Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-23

SagaSu777 2025-12-24

Explore the hottest developer projects on Show HN for 2025-12-23. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN offerings underscore a powerful trend: developers are aggressively leveraging AI not just to build novel applications, but to fundamentally enhance existing workflows and solve tedious problems. We see a surge in AI agents designed for specific tasks, from coding assistance and debugging (Superset, Mysti, Wafer) to creative endeavors and even personal productivity (Kapso for WhatsApp, FormAIt). The emphasis on developer experience is palpable, with many projects aiming to streamline complex processes, reduce friction, and boost efficiency. This focus on actionable solutions, often delivered via user-friendly CLIs or specialized extensions, reflects a pragmatic hacker ethos – using cutting-edge technology to make everyday tasks easier and more powerful. For aspiring innovators and entrepreneurs, this highlights fertile ground in creating tools that integrate seamlessly into developer workflows or provide intelligent automation for niche problems. The rise of local-first and privacy-conscious solutions also signals a growing demand for user control and data integrity in an increasingly connected world. Embrace this spirit of innovation by identifying pain points in your own work or community and applying elegant, tech-driven solutions. The true value lies in empowering users with tools that are not only powerful but also intuitive and trustworthy.

Today's Hottest Product

Name

CineCLI

Highlight

CineCLI is a cross-platform terminal application for browsing movies. It combines movie search, detailed information retrieval, and direct torrent launching in a single command-line interface, which removes the need to switch between several applications for media discovery and acquisition. For developers, the key takeaway is how a terminal UI can deliver a rich, interactive experience for tasks traditionally relegated to graphical interfaces. It shows how Python, combined with terminal UI libraries, can produce capable, user-friendly tools for media enthusiasts.

Popular Category

AI/ML Tools

Developer Productivity

Command-Line Interfaces

Open Source Utilities

Web Development

Popular Keyword

AI

CLI

Open Source

Developer Tools

Productivity

LLM

Automation

Python

Rust

TypeScript

Technology Trends

AI-powered Automation and Assistance

Developer Experience Enhancement

Local-First and Privacy-Focused Solutions

Cross-Platform Tooling

WebAssembly (WASM) Integration

Serverless and Edge Computing

Enhanced Command-Line Interfaces

Decentralized Architectures

Data Ingestion and Processing Pipelines

Creative AI Applications

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity/Tools (30%)

Utilities/CLI Tools (20%)

Web Development/Infrastructure (15%)

Creative/Niche Applications (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | CineCLI | 308 | 101 |
| 2 | HTML2Canvas-Prod | 80 | 36 |
| 3 | Kapso: WhatsApp API Orchestrator | 27 | 14 |
| 4 | CodexConfigBridge | 16 | 0 |
| 5 | Claude Terminal Insights | 15 | 1 |
| 6 | VibeDB-GUI | 5 | 9 |
| 7 | OfflineMind Weaver | 8 | 6 |
| 8 | AuthZed Kids: Authorization Adventure | 11 | 1 |
| 9 | Nønos: Zero-State RAM OS | 9 | 1 |
| 10 | QR-Wise Gateway | 6 | 3 |

1

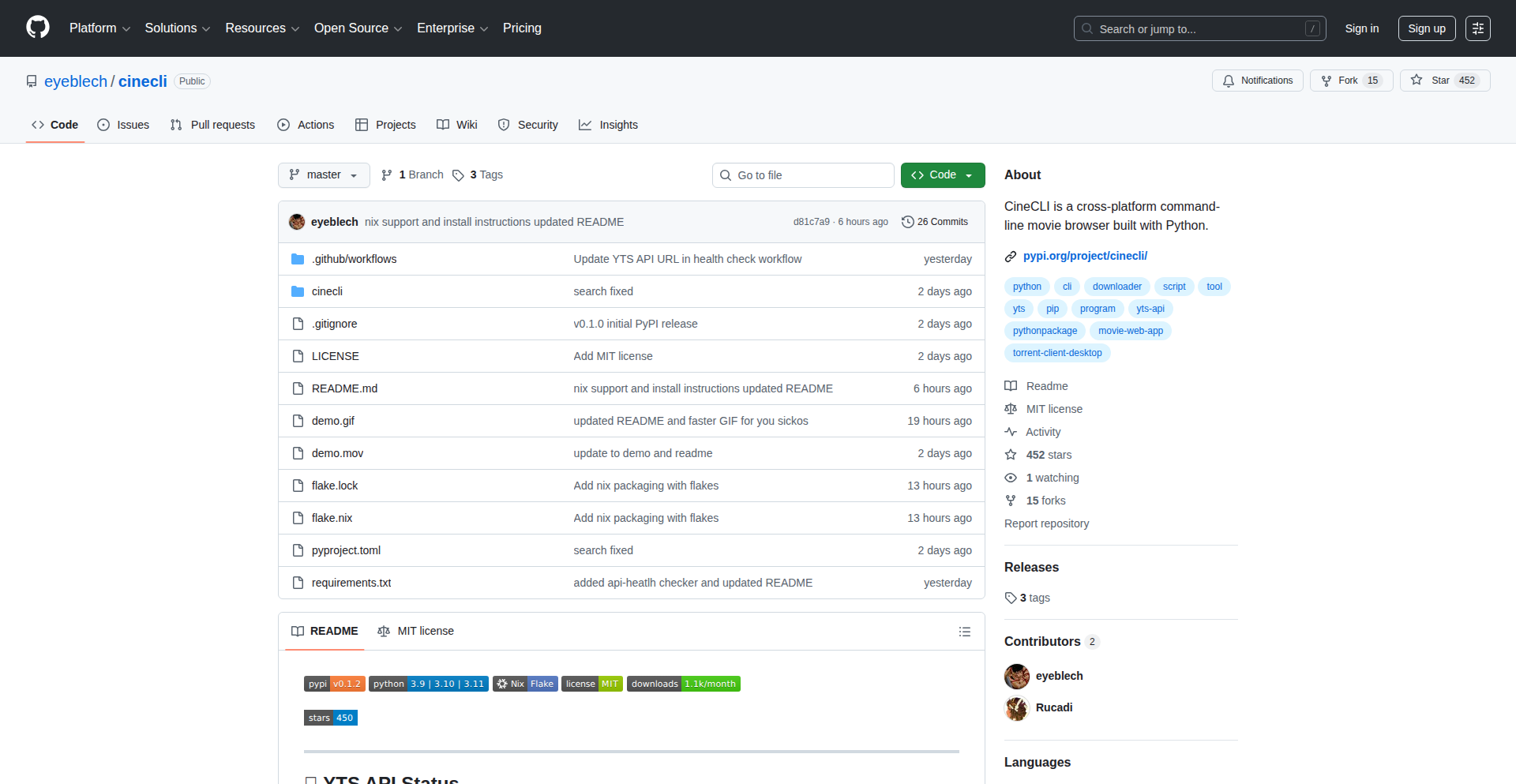

CineCLI

Author

samsep10l

Description

CineCLI is a command-line interface (CLI) application that allows users to search for movies, view detailed information such as ratings and runtime, and initiate downloads via their system's default torrent client, all from the terminal. Its innovation lies in bridging the gap between simple terminal-based tools and rich media browsing, offering a fast, efficient, and privacy-focused way to discover and access movies for developers who prefer working within their command-line environment.

Popularity

Points 308

Comments 101

What is this product?

CineCLI is a cross-platform terminal application designed for movie enthusiasts and developers who spend a lot of time in the command line. It leverages APIs from movie databases to fetch comprehensive movie details, including ratings, genres, and runtime. The innovative aspect is its seamless integration with the operating system's default torrent client, allowing users to initiate torrent downloads directly from the terminal without needing to open a web browser or separate torrent application. This means you get a rich, interactive experience for movie discovery and acquisition, all within the familiar context of your terminal.

How to use it?

Developers can install CineCLI using pip, Python's package installer, making it readily available for use in any terminal session on Linux, macOS, or Windows. After installation, users can run commands like `cinecli search <movie_title>` to find movies. The application provides an interactive mode where users can navigate through search results and view details using keyboard commands, and a non-interactive mode for scripting or quick lookups. Once a desired movie is found, users can select it and choose to open its magnet link, which automatically launches their system's default torrent client to begin the download. This provides a streamlined workflow for media consumption directly from the command line.
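The hand-off to the torrent client described above depends on the operating system's registered handler for `magnet:` links. The following is a minimal Python sketch of that idea, not CineCLI's actual code: the helper names `build_magnet` and `open_with_default_handler`, the all-zero info hash, and the tracker URL are hypothetical placeholders chosen for illustration.

```python
import os
import subprocess
import sys
from urllib.parse import quote


def build_magnet(info_hash: str, name: str, trackers: tuple = ()) -> str:
    """Build a magnet URI from a BitTorrent info hash (hypothetical helper)."""
    parts = [f"xt=urn:btih:{info_hash}", "dn=" + quote(name)]
    # Tracker URLs are fully percent-encoded so they survive as query values.
    parts += ["tr=" + quote(tracker, safe="") for tracker in trackers]
    return "magnet:?" + "&".join(parts)


def open_with_default_handler(uri: str) -> None:
    """Ask the OS to open the URI with whatever app is registered for it."""
    if sys.platform.startswith("win"):
        os.startfile(uri)  # Windows only
    elif sys.platform == "darwin":
        subprocess.run(["open", uri], check=False)
    else:
        # Assumes a desktop Linux/BSD system where xdg-open is installed.
        subprocess.run(["xdg-open", uri], check=False)


if __name__ == "__main__":
    # Placeholder hash: printing only, nothing is launched here.
    print(build_magnet("0" * 40, "The Matrix"))
```

Whether a torrent client actually starts depends on one being installed and registered for the `magnet:` scheme, so a script built on this pattern should treat the launch as best-effort.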

Product Core Function

· Movie Searching from Terminal: Allows users to quickly find movies by title directly in their command-line interface, saving time compared to web searches. So, this is useful for quickly checking if a movie exists or getting its basic info without leaving your coding environment.

· Rich Movie Details Display: Presents comprehensive information like ratings, runtime, genres, and cast, enabling informed decisions about movie selection. So, this is useful for quickly understanding a movie's quality and content before deciding to download it.

· Interactive and Non-Interactive Modes: Offers flexibility for both manual exploration and automated tasks, catering to different user preferences and scenarios. So, this is useful for either browsing movies casually or integrating movie lookups into scripts.

· System Default Torrent Client Integration: Seamlessly opens magnet links with the user's pre-configured torrent client, simplifying the download process. So, this is useful for directly starting movie downloads without manual steps, making the entire process much faster and more convenient.

· Cross-Platform Support (Linux/macOS/Windows): Ensures consistent functionality across major operating systems, making it accessible to a wide range of developers. So, this is useful for developers working on different machines or collaborating with others, as it works everywhere.

Product Usage Case

· A developer working on a late-night coding session needs a quick break to watch a movie. Instead of switching contexts to a web browser, they open their terminal, type `cinecli search The Matrix`, and are presented with details and a download option, all within seconds. This solves the problem of context-switching and maintains productivity.

· A system administrator wants to automate the process of downloading a list of documentaries for an upcoming presentation. They can write a script that uses CineCLI in non-interactive mode to find each documentary and generate magnet links, which are then passed to their torrent client for scheduled downloads. This showcases how CineCLI can be integrated into automated workflows.

· A film buff who loves exploring obscure movies wants to do so efficiently. They use CineCLI's interactive mode to browse through various genres and years, checking ratings and synopses without the distraction of a graphical interface. This provides a focused and efficient way to discover new content.

2

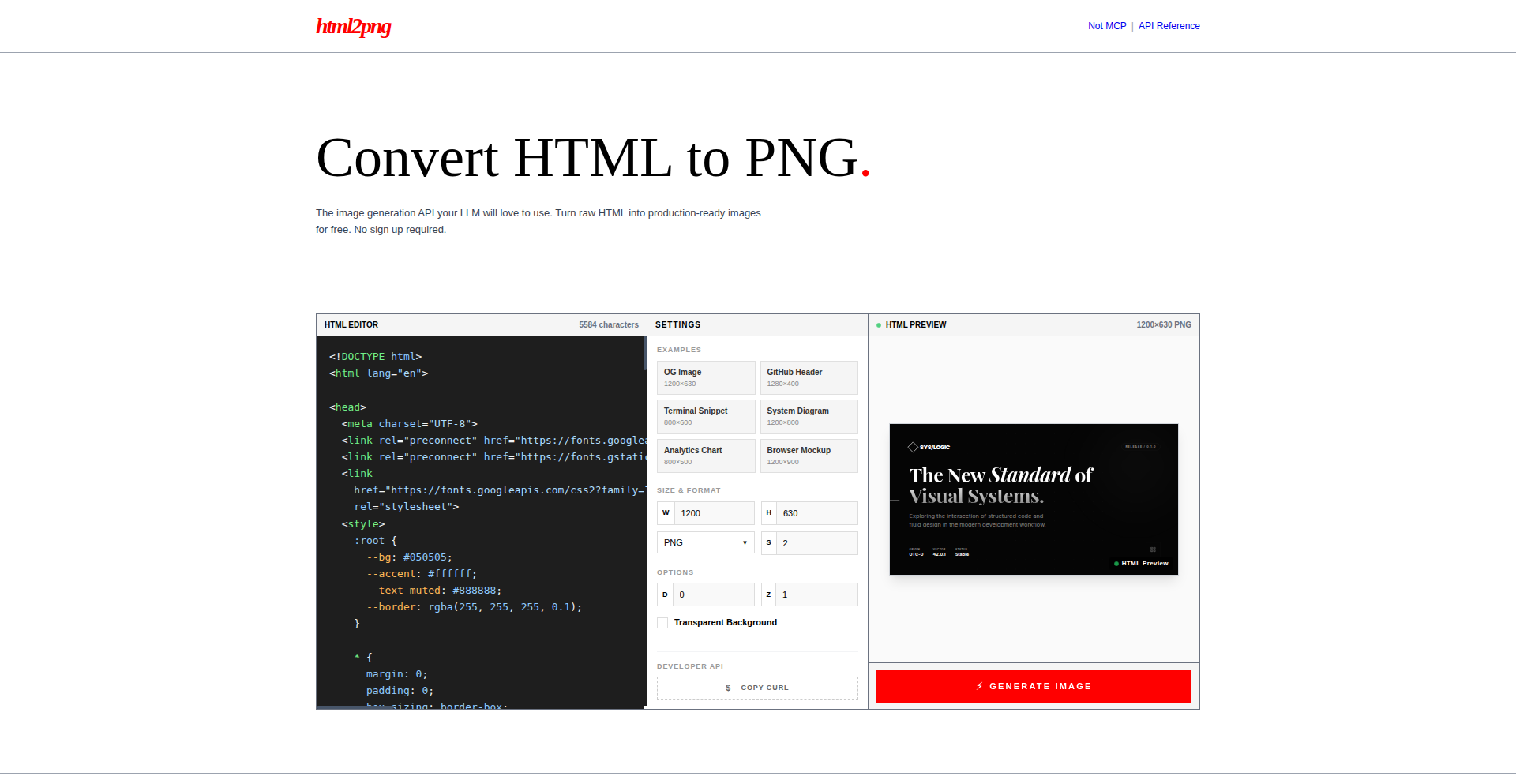

HTML2Canvas-Prod

Author

alvinunreal

Description

This project is a free, production-ready tool that transforms raw HTML into high-quality images. It tackles the common challenge of visually representing web content for presentations, marketing materials, or archival purposes, going beyond basic screenshots by offering precise control over the rendering process. The innovation lies in its robust handling of complex HTML structures and CSS, ensuring faithful visual fidelity in the output images, making web content more accessible and shareable.

Popularity

Points 80

Comments 36

What is this product?

HTML2Canvas-Prod is a browser-based tool that takes any HTML markup and renders it as an image file (like PNG or JPG). Unlike a simple screenshot, it understands the structure and styling of HTML, meaning it can accurately capture complex layouts, dynamic content, and CSS effects. The core innovation is its enhanced rendering engine that handles edge cases and performance optimizations, making it suitable for production environments where reliability and quality are crucial. So, what's in it for you? It means you can reliably generate professional-looking images of your web pages without manual editing or complex software, ensuring your visual content is always consistent and accurate.

How to use it?

Developers can integrate HTML2Canvas-Prod directly into their web applications or workflows. It can be used as a JavaScript library, allowing you to programmatically select HTML elements and trigger image generation. For example, you could build a feature that automatically generates a thumbnail image for a blog post as soon as it's published, or an e-commerce site that generates product image previews based on user-selected options. Integration is straightforward, typically involving a few lines of JavaScript to call the rendering function. This means you can easily add visual content generation capabilities to your existing projects. So, what's in it for you? You can automate the creation of visual assets, saving significant development and manual effort, and providing users with instant, high-quality visual representations of dynamic web content.

Product Core Function

· Accurate HTML and CSS Rendering: Captures the visual appearance of web elements, including complex layouts, fonts, and styles, with high fidelity. This is valuable for creating consistent marketing materials or visual documentation where precise representation is key. So, what's in it for you? Your visuals will look exactly as intended on the web.

· Dynamic Content Image Generation: Renders live, interactive web content into static images, useful for capturing snapshots of application states or user-generated content. This helps in creating visual records or shareable previews of dynamic interfaces. So, what's in it for you? You can easily save or share snapshots of interactive web elements.

· Cross-Browser Compatibility: Ensures consistent image output across different web browsers, reducing the headaches of visual discrepancies. This guarantees that your generated images will look the same for everyone, regardless of their browser. So, what's in it for you? Reliable visual output across all users.

· Production-Ready Performance: Optimized for speed and stability, making it suitable for high-volume or critical applications. This means the tool won't crash or be excessively slow when you need to generate many images quickly. So, what's in it for you? Efficient and dependable image generation for your application.

Product Usage Case

· A blog platform automatically generating featured images for new articles by rendering the article's HTML content into a PNG. This solves the problem of manual image creation for every post, ensuring a consistent visual style. So, what's in it for you? Faster content publishing and professional-looking blog visuals.

· An e-commerce website using the tool to create product preview images. When a user customizes a product (e.g., changing colors or adding accessories), the updated HTML is rendered into an image. This allows customers to visualize their customized product instantly. So, what's in it for you? Improved customer experience and better product visualization.

· A web application for creating and sharing online resumes, where the final resume is rendered into an image to be downloaded or shared. This provides users with a polished, static version of their resume that can be easily distributed. So, what's in it for you? A professional and easily shareable resume format.

3

Kapso: WhatsApp API Orchestrator

Author

aamatte

Description

Kapso is a developer-focused platform that dramatically simplifies integrating with and building on the WhatsApp Business API. It addresses the significant developer experience (DX) challenges often associated with WhatsApp development, offering a streamlined way to handle webhooks, message tracking, debugging, and even building complex automations and in-app experiences within WhatsApp. This project innovates by abstracting away the boilerplate and complexity, allowing developers to focus on delivering value through WhatsApp communication, rather than wrestling with infrastructure and integration details.

Popularity

Points 27

Comments 14

What is this product?

Kapso is a platform designed to make WhatsApp API development incredibly easy for developers. Think of it as a toolkit that provides ready-to-use components and infrastructure to interact with WhatsApp. The core innovation lies in its ability to provide a working WhatsApp API integration and inbox in just minutes, not days. It offers full observability, meaning every incoming and outgoing message is tracked and easily debugged. It also features a multi-tenant system, allowing customers to connect their WhatsApp accounts effortlessly. Furthermore, it includes a workflow builder for creating automated processes and even allows for building mini-applications directly within WhatsApp using AI and serverless functions. The documentation is designed to be understandable for both humans and AI models. Essentially, Kapso democratizes WhatsApp development by removing the typical high barriers to entry and complexity, making it significantly cheaper and faster to build with. So, what's the value for you? You can build and deploy WhatsApp-powered features for your business or application much faster and at a lower cost, without needing to be a deep expert in the intricacies of the WhatsApp API.

How to use it?

Developers can use Kapso by signing up for the platform and connecting their Meta developer account and WhatsApp Business Account. Kapso then provides them with an API endpoint and potentially a pre-built inbox interface. For integrations, developers can leverage Kapso's TypeScript client for the WhatsApp Cloud API, allowing them to write code that sends and receives messages. The platform's multi-tenant architecture means a customer can generate a setup link, and their clients can connect their Meta accounts, enabling Kapso to manage the API interactions for them. For advanced use cases, developers can utilize the workflow builder to create custom automations, like sending personalized notifications or responding to customer queries based on specific triggers. They can also build interactive 'WhatsApp Flows' which are like mini-apps within WhatsApp, powered by AI and serverless functions. The open-sourced components, like the reference inbox or the voice AI agent, can be used as building blocks or for inspiration. So, how do you use it? You integrate Kapso into your existing applications or build new ones, using its provided tools and APIs to handle all your WhatsApp communication needs, from simple messaging to complex conversational flows and AI-driven interactions.
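Part of the boilerplate mentioned above is webhook plumbing. As a rough illustration (this is not Kapso's API), the sketch below shows the two checks a self-hosted WhatsApp Cloud API webhook typically performs: answering Meta's GET verification handshake and validating the `X-Hub-Signature-256` header on incoming POST requests. The function names, the token, and the secret are hypothetical placeholders.

```python
import hashlib
import hmac


def verify_subscription(params: dict, verify_token: str):
    """Answer the GET handshake: return the challenge to echo back, or None."""
    if (
        params.get("hub.mode") == "subscribe"
        and params.get("hub.verify_token") == verify_token
    ):
        return params.get("hub.challenge")
    return None


def signature_is_valid(raw_body: bytes, header_value, app_secret: str) -> bool:
    """Check the X-Hub-Signature-256 header against an HMAC of the raw body."""
    digest = hmac.new(app_secret.encode(), raw_body, hashlib.sha256).hexdigest()
    expected = "sha256=" + digest
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, header_value or "")


if __name__ == "__main__":
    body = b'{"entry": []}'
    secret = "example-app-secret"  # placeholder, not a real credential
    header = "sha256=" + hmac.new(secret.encode(), body, hashlib.sha256).hexdigest()
    print(signature_is_valid(body, header, secret))
```

The signature must be computed over the raw request bytes, before any JSON parsing, which is an easy detail to get wrong when wiring this up by hand.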

Product Core Function

· WhatsApp API + Inbox Setup: Provides a fully functional WhatsApp API integration and an inbox interface within minutes, drastically reducing initial setup time and allowing immediate interaction with WhatsApp users.

· Full Observability and Debugging Tools: Tracks every webhook received and message sent, offering detailed logs and debugging capabilities to quickly identify and resolve issues, ensuring reliable communication.

· Multi-tenant Platform for Easy Onboarding: Enables generating a setup link for customers to connect their Meta accounts, simplifying the process of onboarding new clients or users onto the WhatsApp platform without complex configurations.

· Workflow Builder for Automations: Allows developers to visually design and implement deterministic automations for tasks like sending notifications, collecting information, or triggering actions based on message content, enhancing efficiency.

· WhatsApp Flows with AI and Serverless Functions: Facilitates the creation of interactive mini-applications directly within WhatsApp, leveraging AI for intelligent responses and serverless functions for dynamic content and logic, enriching user experiences.

· Developer-Friendly Documentation: Offers documentation that is accessible and useful for both human developers and AI models, promoting easier understanding and integration of the API and platform features.

Product Usage Case

· E-commerce businesses can use Kapso to send order confirmations and shipping updates via WhatsApp, and also build interactive product catalogs within WhatsApp, allowing customers to browse and even make purchases directly. This solves the problem of low engagement with traditional email notifications.

· Customer support teams can deploy Kapso to offer real-time chat support through WhatsApp, with the observability tools helping them track response times and agent performance. The workflow builder can automate initial triage of support tickets, directing customers to the right resources or agents.

· SaaS companies can integrate Kapso to send critical alerts and notifications to their users, such as system status updates or security alerts, ensuring high open rates and immediate user awareness. They can also build onboarding flows within WhatsApp to guide new users through product features.

· Developers building AI chatbots can use Kapso to easily integrate their AI models with WhatsApp, allowing for conversational AI experiences directly within the popular messaging app. This is useful for creating virtual assistants or automated customer service agents.

· Marketing teams can use Kapso to run targeted campaigns on WhatsApp, sending promotional messages and collecting feedback through interactive flows, bypassing email spam filters and achieving higher engagement rates.

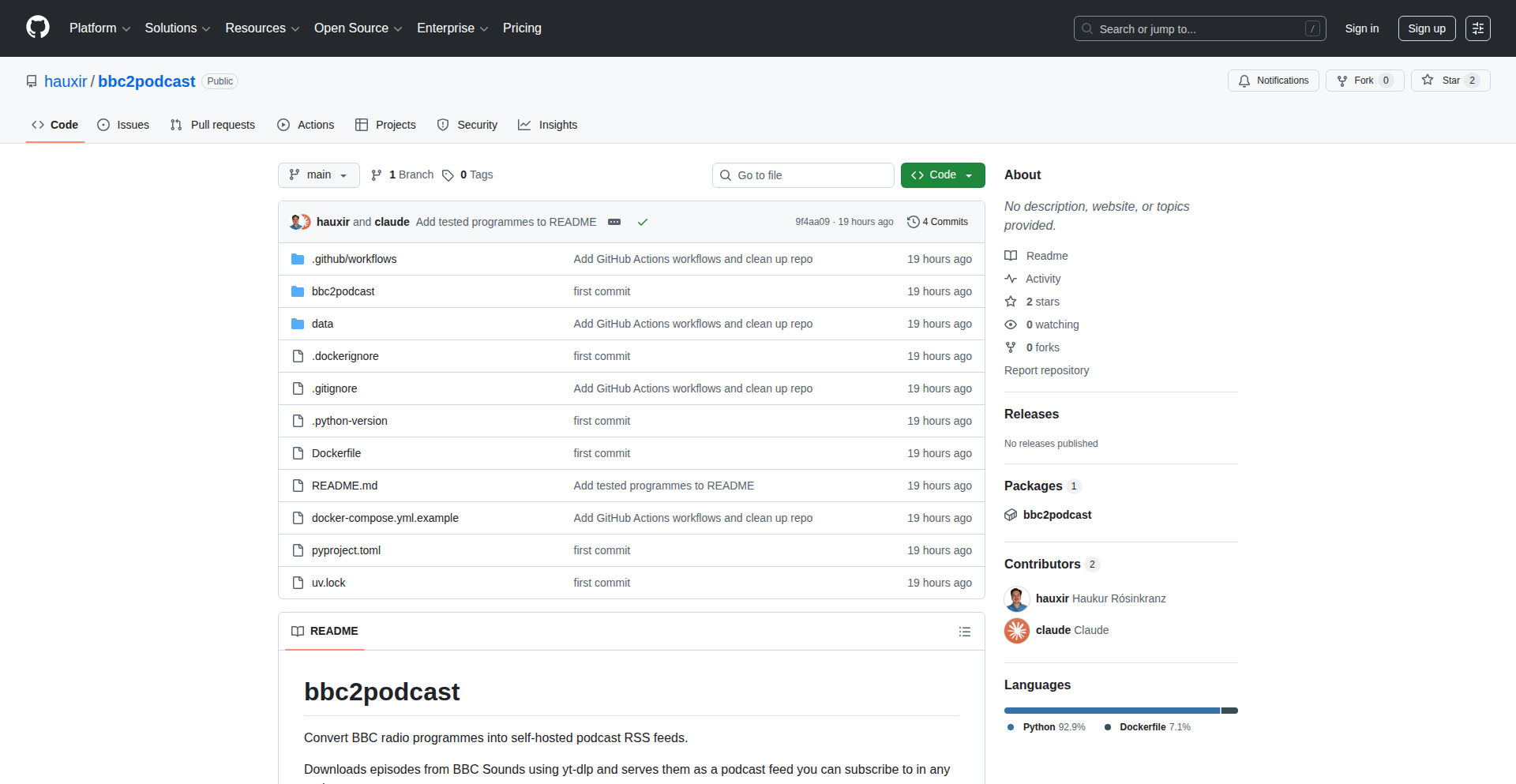

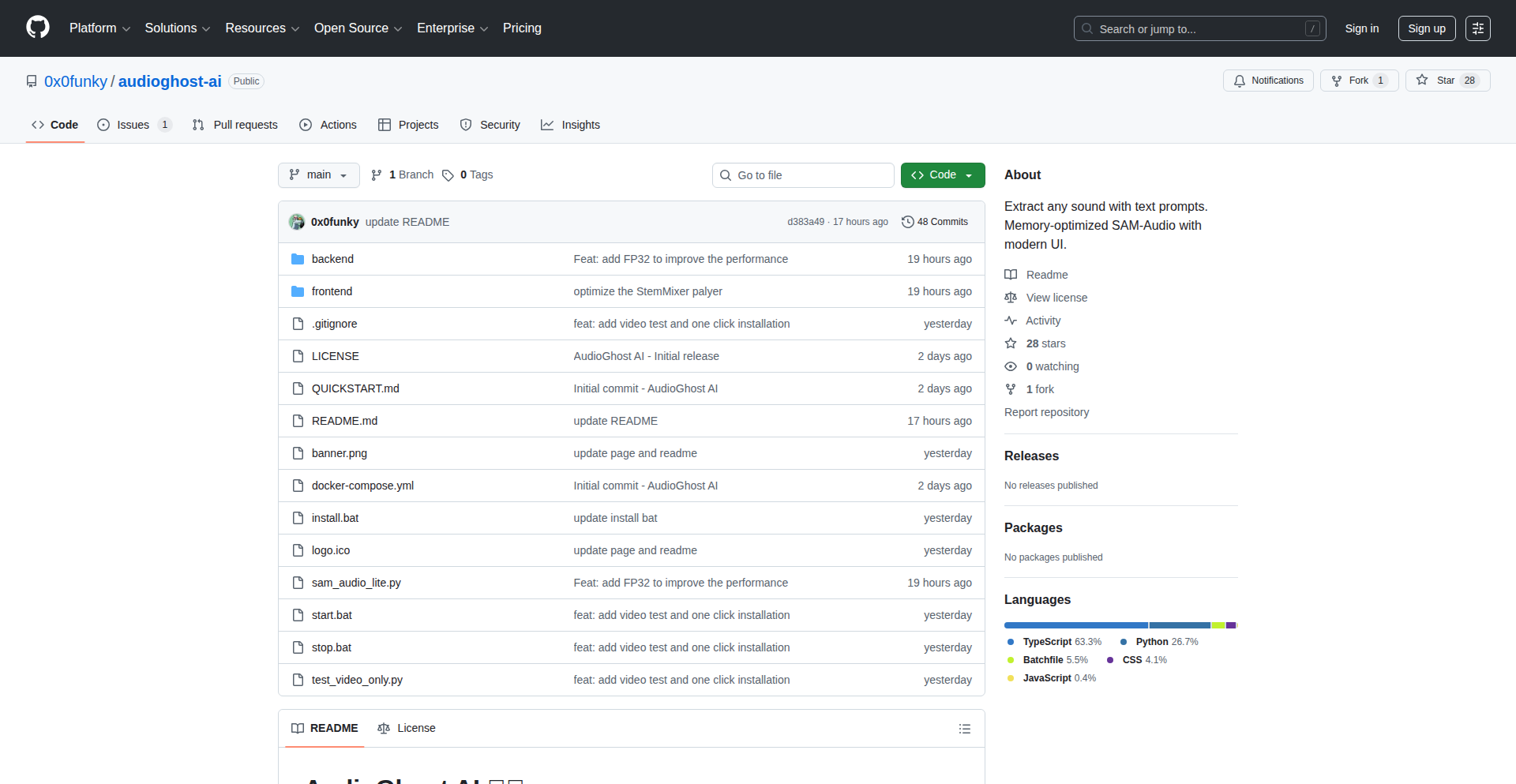

4

CodexConfigBridge

Author

mywork-dev

Description

CodexConfigBridge is a feature of MCPShark that bridges the configuration gap between the Codex CLI/VS Code extension and MCPShark's own internal configuration format. It automatically detects Codex's `config.toml` file, parses its server entries, and converts them into a format MCPShark understands, simplifying the workflow for developers who use both tools. This streamlines setup and reduces manual configuration errors, making it easier to manage development environments.

Popularity

Points 16

Comments 0

What is this product?

CodexConfigBridge is an intelligent configuration importer. It understands that you might be using Codex CLI or its VS Code extension, which relies on a `config.toml` file to manage your development servers. MCPShark, the underlying tool, needs its own way to manage these servers. This bridge feature automatically finds your Codex configuration, reads the server details you've set up, and translates them into MCPShark's internal settings. The innovation lies in its automatic detection and conversion process, eliminating the need for developers to manually re-enter server information, thus saving time and preventing typos. So, what's in it for you? Less manual work and a smoother transition between using Codex for your projects and managing them within MCPShark.

How to use it?

If you are a developer using Codex CLI or the Codex VS Code extension and also want to leverage MCPShark, you simply need to ensure your Codex `config.toml` file is in the expected location (`.codex/config.toml` or specified by `$CODEX_HOME`). When MCPShark starts, it will automatically look for this file. If found, it will parse the `[mcp_servers]` section and set up the corresponding servers in MCPShark's configuration without any further action from your side. This integration can happen directly through standard input/output (stdio) or via HTTP, depending on how MCPShark is being used. The value for you is that your existing Codex server setups are instantly usable within MCPShark, reducing the setup overhead for your development workflow.

Product Core Function

· Automatic Codex config.toml detection: MCPShark actively scans for your Codex configuration file, saving you the effort of manually pointing it to the right location. The value is convenience and reduced chance of error.

· Server entry parsing: It intelligently reads the `[mcp_servers]` section of your `config.toml`, understanding the structure of your server definitions. This technical insight means it can extract the relevant information accurately. The value is precise data extraction without manual parsing.

· Cross-configuration conversion: The parsed Codex server details are automatically transformed into MCPShark's internal configuration format. This is the core of the innovation, ensuring compatibility and seamless integration. The value is interoperability and instant usability.

· stdio and HTTP support: The conversion process can be facilitated through standard input/output streams or via HTTP requests, offering flexibility in how MCPShark interacts with your system. This adaptability is key for integration into various development pipelines. The value is flexible integration into different environments.

Product Usage Case

· Developer using Codex CLI for a backend project and MCPShark for monitoring their deployed services: By leveraging CodexConfigBridge, the developer's defined backend API endpoints in `config.toml` are automatically recognized by MCPShark. This means they can monitor their services immediately without reconfiguring anything in MCPShark, saving setup time and ensuring consistency. The problem solved is the duplication of server configuration effort.

· VS Code user working with a multi-service application managed by Codex: The Codex VS Code extension's configuration for different microservices is recognized by MCPShark. The developer can then use MCPShark to visualize the health and status of these services directly from within their development environment, streamlining debugging and operational visibility. The problem solved is the disconnect between development configuration and operational monitoring.

· Team adopting MCPShark for infrastructure visibility across projects configured with Codex: When multiple team members use Codex with consistent server configurations, CodexConfigBridge ensures that MCPShark can ingest these configurations uniformly. This leads to a standardized view of the infrastructure for the entire team, facilitating collaboration and shared understanding. The problem solved is inconsistent environment setup and visibility across a team.

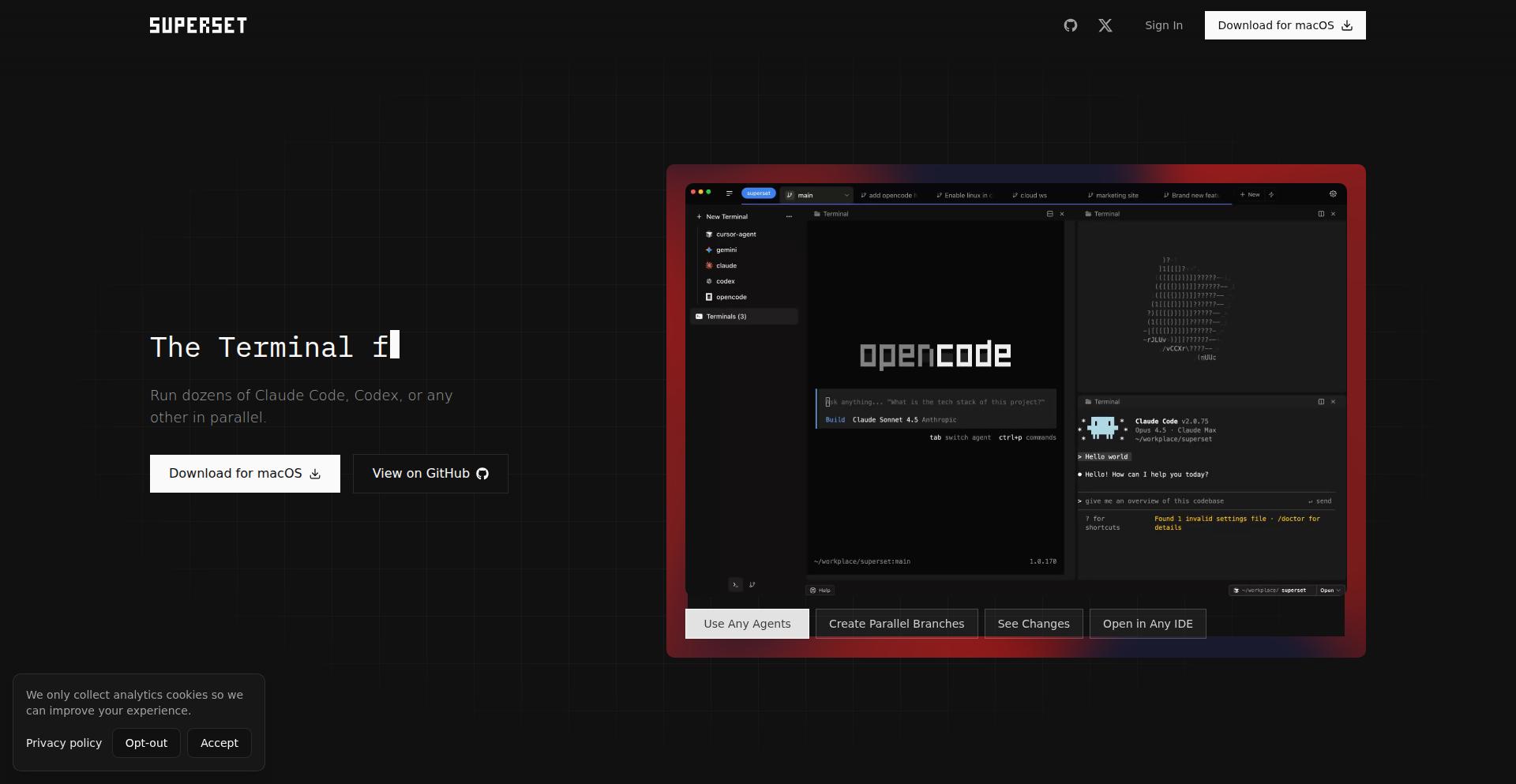

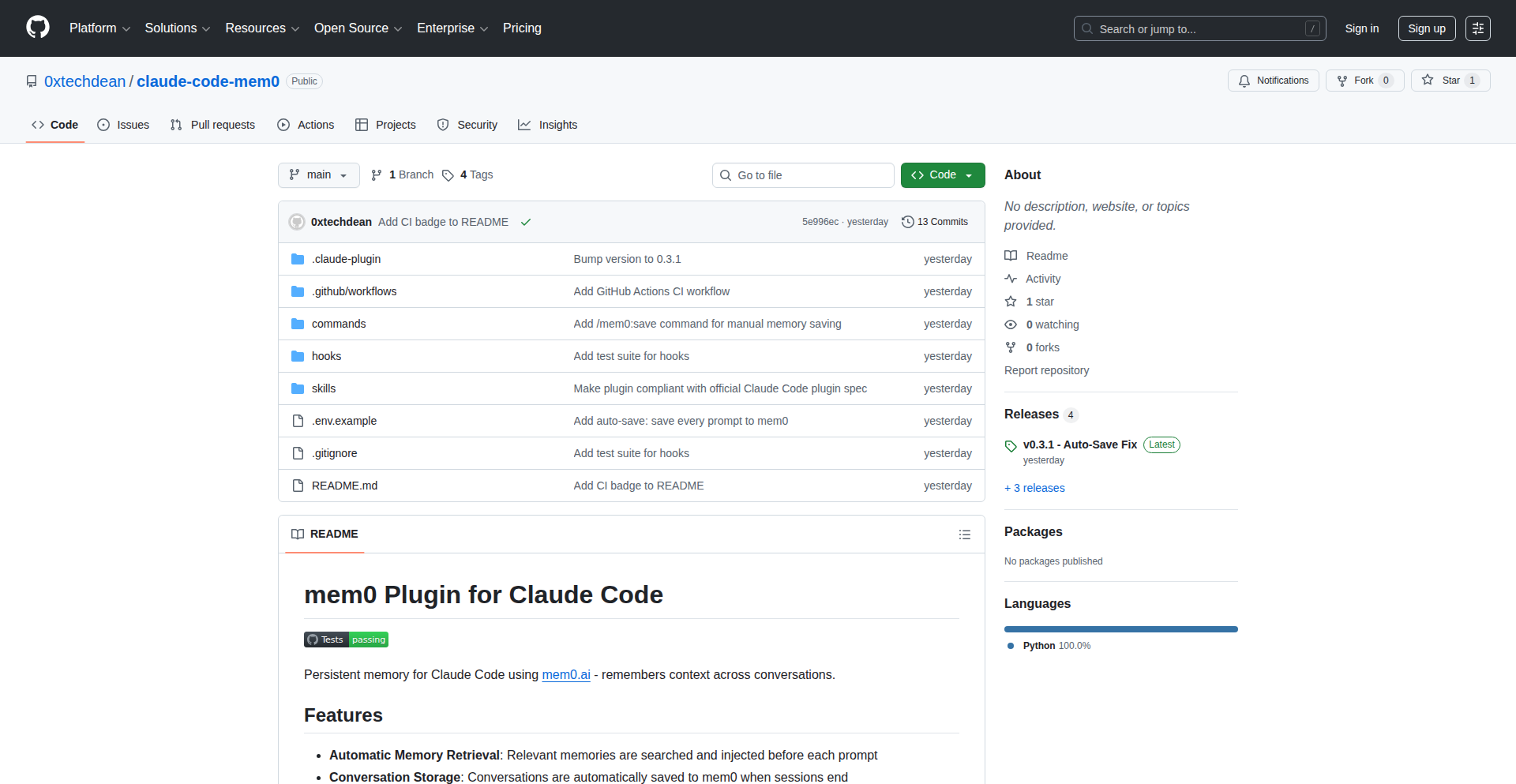

5

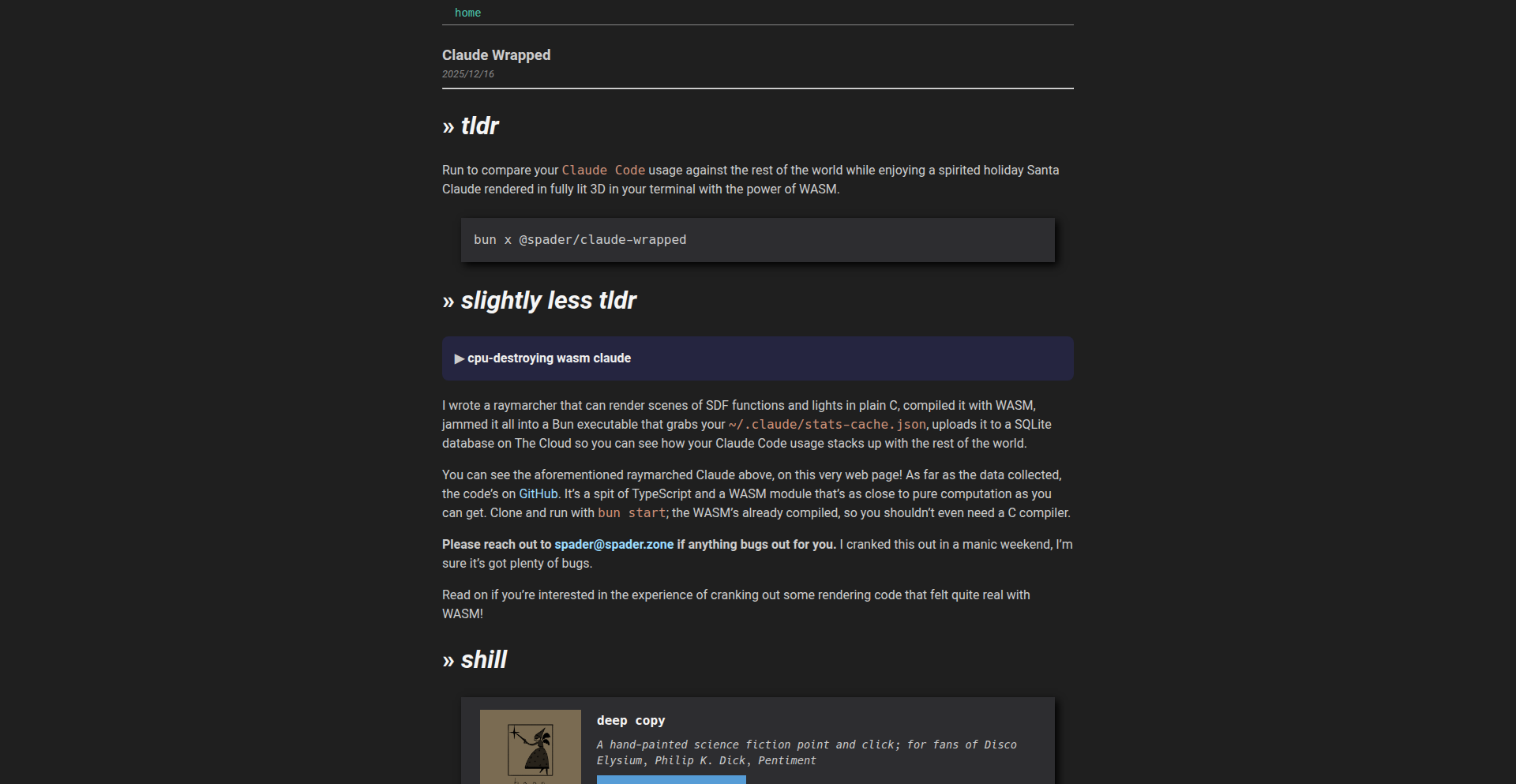

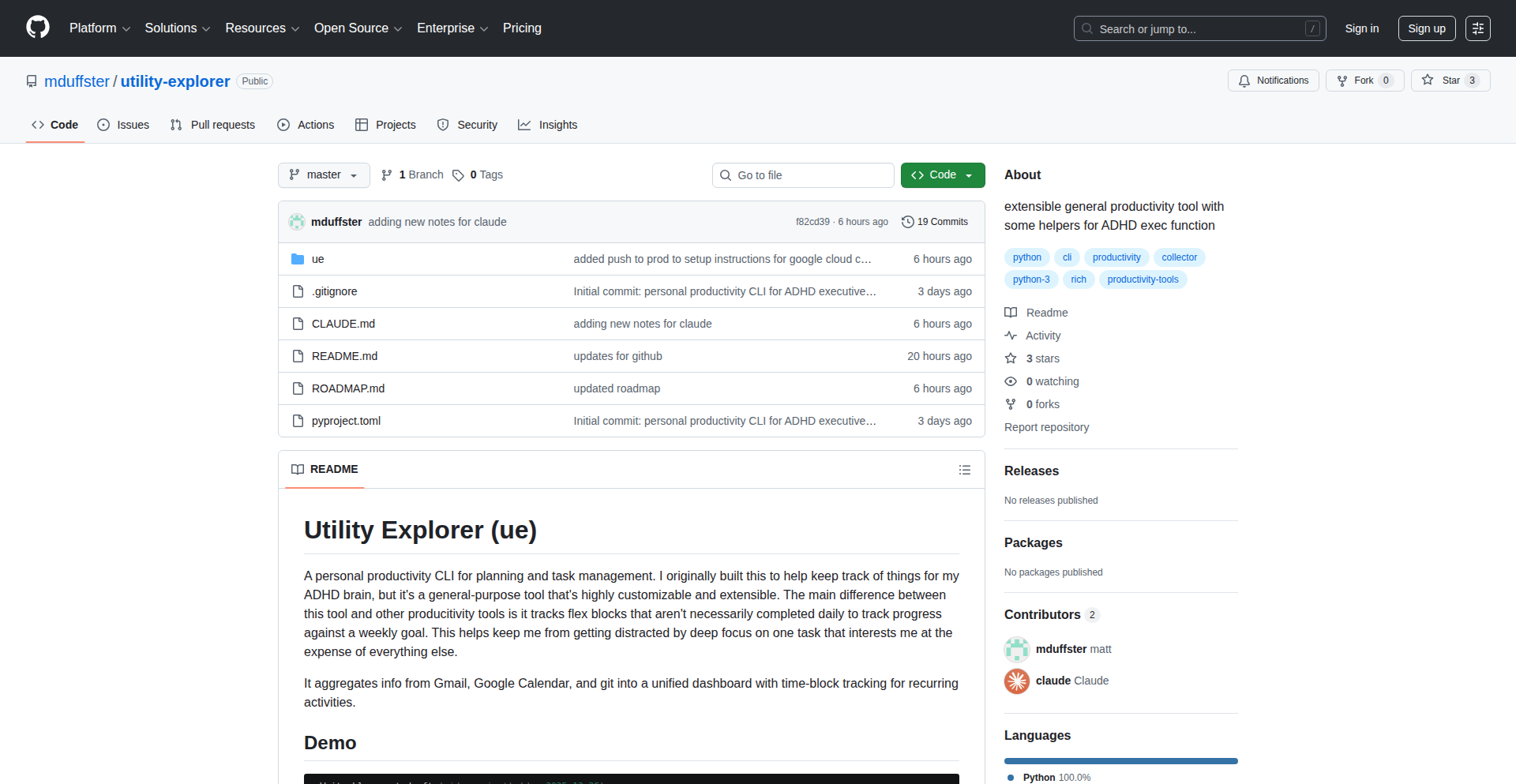

Claude Terminal Insights

Author

dboon

Description

This project, 'Claude Terminal Insights,' offers a novel way to visualize your Claude AI usage directly within your terminal. It leverages Bun and WebAssembly (WASM) to process and present your non-sensitive usage statistics, which are cached locally. The innovation lies in using WASM for efficient local data processing and a raymarcher for a visually engaging, terminal-based presentation, solving the problem of opaque AI usage without requiring complex web dashboards.

Popularity

Points 15

Comments 1

What is this product?

Claude Terminal Insights is a command-line tool that analyzes your personal Claude AI usage data and displays it in an interactive, visually appealing format using a WASM-powered raymarcher. Normally, understanding your AI usage might involve complicated dashboards or confusing logs. This project takes your local, non-identifiable usage stats (stored in $HOME/.claude), processes them efficiently using Bun and WebAssembly (a portable binary format that runs compiled code at near-native speed, both in browsers and in standalone runtimes), and renders them as a 3D visualization in your terminal. So, this helps you understand your AI usage in a fun, accessible, and private way, right from your command line.
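For readers unfamiliar with raymarching, the technique steps a ray forward by the distance to the nearest surface until it hits something, then shades the hit point. The sketch below renders a lit sphere as ASCII art in pure Python. It only illustrates the general technique and is unrelated to the project's Bun/WASM code; the scene, camera position, and character ramp are arbitrary choices made for this example.

```python
import math

RAMP = " .:-=+*#%@"  # characters ordered from dark to bright


def sphere_sdf(x, y, z, radius=1.0):
    """Signed distance from a point to a sphere centred at the origin."""
    return math.sqrt(x * x + y * y + z * z) - radius


def march(ox, oy, oz, dx, dy, dz, max_steps=64, eps=1e-3, far=10.0):
    """Sphere-trace along a ray; return the hit distance or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        d = sphere_sdf(ox + dx * t, oy + dy * t, oz + dz * t)
        if d < eps:
            return t
        t += d  # safe step: nothing is closer than d
        if t > far:
            break
    return None


def render(width=40, height=20):
    """Return the image as a list of strings, one per terminal row."""
    light = (-0.577, 0.577, -0.577)  # direction towards the light, roughly unit length
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            # Screen coordinates in [-1, 1]; camera sits at z = -3 looking along +z.
            u = (i + 0.5) / width * 2 - 1
            v = 1 - (j + 0.5) / height * 2
            length = math.sqrt(u * u + v * v + 1.5 * 1.5)
            dx, dy, dz = u / length, v / length, 1.5 / length
            t = march(0.0, 0.0, -3.0, dx, dy, dz)
            if t is None:
                row.append(" ")
                continue
            # For a unit sphere at the origin, the normal equals the hit point.
            nx, ny, nz = dx * t, dy * t, -3.0 + dz * t
            shade = max(0.0, nx * light[0] + ny * light[1] + nz * light[2])
            row.append(RAMP[min(len(RAMP) - 1, int(shade * len(RAMP)))])
        rows.append("".join(row))
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```

A real terminal renderer would swap the single sphere for a richer distance function and add color, but the loop structure stays the same.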

How to use it?

Developers can use this project by first ensuring they have Bun installed. They would then clone the GitHub repository. The project's command-line interface (CLI) would likely be invoked with a specific command, such as 'claude-wrapped stats', to pull the cached usage data. This data is then processed by the WASM module, and the results are rendered. The core value for a developer is the ability to quickly inspect their AI interaction patterns without leaving their development environment or needing to set up external services. It's a direct, code-centric approach to understanding tool usage.

Product Core Function

· Local Usage Data Caching: The project caches your Claude AI usage statistics locally in your home directory. This means your data stays on your machine, enhancing privacy and speed. So, your personal usage information is kept secure and accessible.

· Bun and WASM Processing: It utilizes Bun (a fast JavaScript runtime) and WebAssembly to efficiently process your usage data. This combination allows for powerful local computations without relying on heavy external libraries or servers. So, data analysis is quick and resource-efficient.

· Terminal-based Raymarcher Visualization: The project renders your usage statistics using a raymarcher in the terminal. This creates a unique, 3D visual representation of your data directly in your command-line interface. So, you get an intuitive and engaging way to see your AI usage patterns.

· Privacy-focused Data Handling: The project explicitly states it handles non-sensitive, non-identifiable data. This commitment ensures user privacy is a top priority. So, you can be confident that your personal information is not being compromised.

Product Usage Case

· Usage Pattern Analysis: A developer can run the tool after a period of using Claude AI to see which types of queries they've made most frequently or how much they've been using the service. This helps in optimizing their workflow and understanding their reliance on the AI. So, you can identify if you're overusing certain features or if there are patterns you can leverage for better productivity.

· Resource Monitoring for Developers: For developers experimenting with AI integrations, this tool can provide a quick snapshot of their API usage and associated costs (if applicable through Claude's billing model). This helps in managing development budgets and making informed decisions. So, you can keep track of your AI spending and usage in a simple, visual way.

· Demonstrating WASM Capabilities: Developers interested in WebAssembly can study the project's WASM implementation to see how it's used for local data processing and visualization. This serves as an educational example of WASM's potential in browser-less environments. So, you can learn practical applications of cutting-edge web technologies.

6

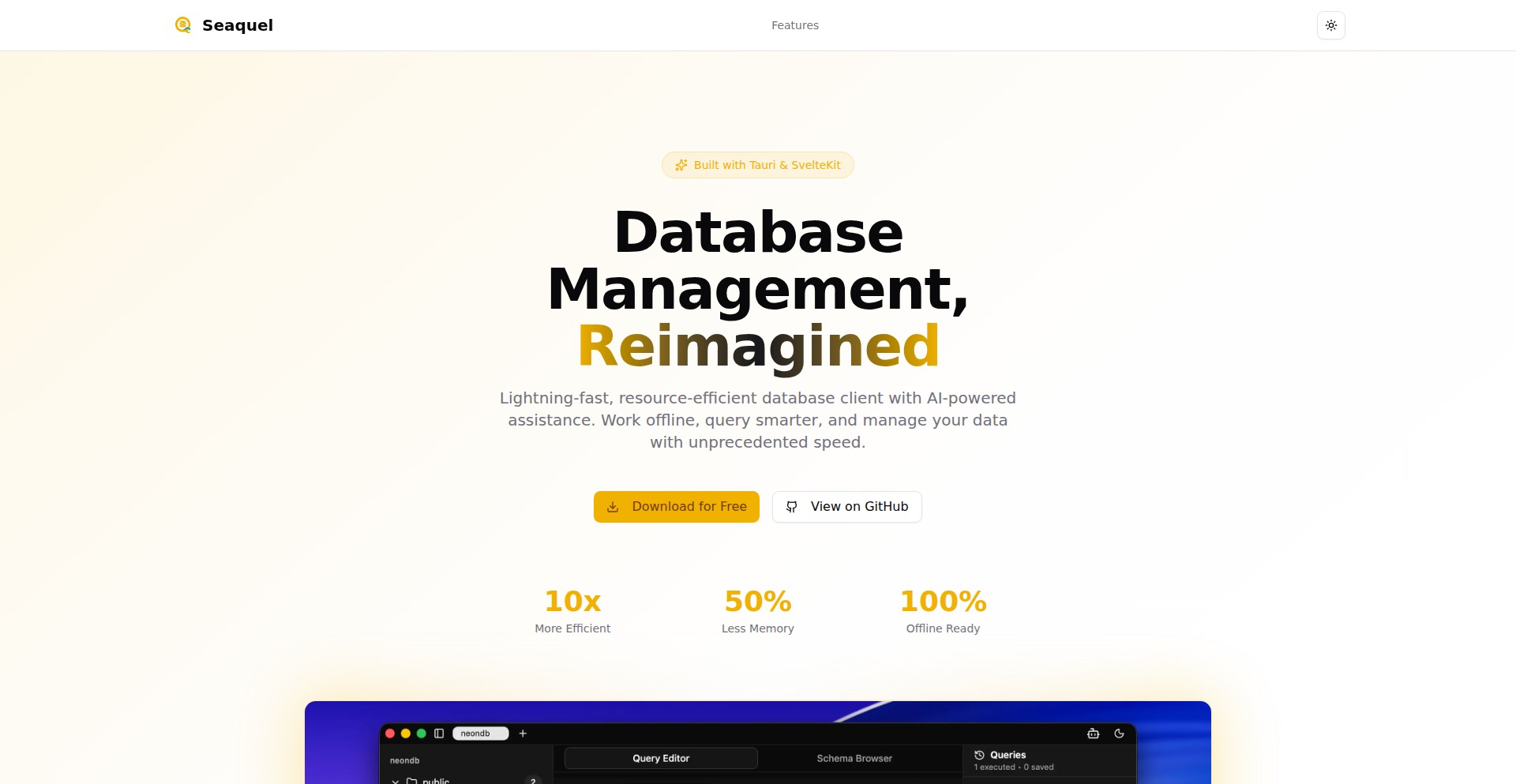

VibeDB-GUI

Author

mootoday

Description

A novel database GUI that uses 'vibe coding' to represent data. It tackles the challenge of quickly understanding complex datasets by visually encoding information based on inferred 'vibes' or characteristics, making data exploration more intuitive and faster for developers.

Popularity

Points 5

Comments 9

What is this product?

VibeDB-GUI is a graphical user interface for databases that goes beyond traditional table or chart views. Instead of just showing raw data, it uses a concept called 'vibe coding' to visually represent the underlying characteristics or 'vibe' of the data. Think of it like assigning colors or patterns to data points that signify their relationships or commonalities, making it easier to spot trends or anomalies at a glance. The innovation lies in its approach to data visualization, aiming to provide an immediate, intuitive understanding of data's 'feel' rather than just its structure. So, this helps you quickly grasp the essence of your data without getting lost in the details, leading to faster insights.

How to use it?

Developers can integrate VibeDB-GUI into their workflow by connecting it to their existing databases. The GUI then analyzes the data and applies its 'vibe coding' logic. Users can interact with the visual representation, filtering and drilling down into specific data segments based on their perceived 'vibes'. This can be used in scenarios like debugging, exploratory data analysis, or even as a novel way to present findings to stakeholders who might not be deeply technical. So, this allows you to explore your data in a more engaging and insightful way, helping you identify patterns and issues more efficiently.

Product Core Function

· Vibe Coding Engine: Analyzes database content and assigns visual 'vibes' to data points, allowing for intuitive pattern recognition. This is valuable for quickly spotting clusters or outliers that might be missed in traditional views, enabling faster data exploration.

· Interactive Visualizer: Provides a dynamic graphical interface where users can manipulate and interact with the 'vibe-coded' data, filtering and exploring based on visual cues. This enhances the user's ability to drill down into specific areas of interest, improving the efficiency of data analysis.

· Database Agnostic Connector: Supports connections to various database types, ensuring broad applicability across different development environments. This provides flexibility and allows developers to leverage the tool with their existing data infrastructure, making it a versatile solution.

· Customizable Vibe Presets: Allows users to define or tweak their own 'vibe coding' rules, tailoring the visualization to specific project needs and data types. This personalization ensures the tool is relevant to diverse use cases, leading to more accurate and meaningful insights.

· Real-time Data Updates: Reflects changes in the database in real-time, ensuring that insights are always based on the most current data. This is crucial for monitoring live systems and making timely decisions, providing up-to-date information for critical operations.

Product Usage Case

· Scenario: A developer is debugging a complex user behavior dataset in a web application. By connecting VibeDB-GUI, they can visually identify clusters of users with similar 'vibes' (e.g., high engagement, specific feature usage patterns) without writing complex queries, helping them pinpoint the root cause of issues faster.

· Scenario: A data scientist is performing exploratory data analysis on a large dataset for a new machine learning model. VibeDB-GUI's 'vibe coding' can help them quickly identify potential correlations or segments within the data that warrant further investigation, speeding up the feature engineering process.

· Scenario: A product manager needs to present user engagement metrics to non-technical stakeholders. VibeDB-GUI can provide a visually intuitive 'vibe map' of user activity, making it easier for stakeholders to understand high-level trends and key user segments without needing to interpret raw numbers.

· Scenario: A backend engineer is monitoring the performance of a distributed system. VibeDB-GUI can visually highlight nodes or services exhibiting unusual 'vibes' (e.g., increased error rates, unusual traffic patterns), alerting them to potential problems before they escalate.

· Scenario: An indie game developer is analyzing player progression data. By using VibeDB-GUI, they can visually see how different player groups are interacting with the game and identify points where players tend to get stuck or drop off, informing game design improvements.

7

OfflineMind Weaver

Author

KasamiWorks

Description

An AI-powered personal memory assistant that operates entirely offline, ensuring complete user privacy. It tackles the challenge of memory recall and organization by leveraging local AI models, offering a secure alternative to cloud-based solutions. The innovation lies in its ability to process and retrieve personal information without sending any data externally, solving the privacy concerns associated with traditional AI tools.

Popularity

Points 8

Comments 6

What is this product?

OfflineMind Weaver is a sophisticated AI system designed to act as your personal memory assistant. At its core, it uses advanced machine learning models (like large language models) that run directly on your device, not on remote servers. This means all your personal data – your notes, thoughts, schedules, and any information you feed it – stays with you. The breakthrough is in making powerful AI capabilities, typically requiring vast server infrastructure, accessible and functional on a local machine, guaranteeing that your sensitive information is never exposed online. This is achieved through efficient model optimization and local inference techniques.

How to use it?

Developers can integrate OfflineMind Weaver into their applications by utilizing its API, which allows for seamless interaction with the local AI model. For example, you could build a note-taking app that automatically tags and categorizes entries based on content, or a personal task manager that intelligently suggests follow-ups. The usage involves setting up the local AI environment and then calling specific functions to query, organize, or generate content based on your personal data. Think of it as having a super-smart, private assistant embedded within your workflow.

Product Core Function

· Local AI-powered information retrieval: Allows users to ask questions about their stored data and receive accurate, contextually relevant answers, all processed on their device, ensuring no data leaves their system. This is useful for quickly finding past information without worrying about privacy breaches.

· Content summarization and organization: The AI can analyze large amounts of text and provide concise summaries or automatically tag and categorize information, making it easier to manage personal knowledge. This helps users digest information efficiently and maintain an organized digital life.

· Privacy-preserving data analysis: Enables users to gain insights from their personal data without any risk of it being accessed by third parties. This is valuable for individuals who are highly concerned about data security and want to understand their own information better.

· Personalized AI responses: The AI learns from the user's data and interaction patterns to provide increasingly tailored and helpful responses. This creates a more intuitive and effective personal assistant experience that adapts to individual needs.
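To make the local-retrieval idea concrete, here is a minimal sketch using only the Python standard library. It is not OfflineMind Weaver's API: the `LocalNotes` class and its methods are hypothetical, and a simple term-frequency similarity stands in for the local language models the project describes. The only point it illustrates is that storage and querying can happen entirely in-process, with no network calls.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class LocalNotes:
    """Hypothetical in-memory note store; nothing is sent over the network."""

    def __init__(self) -> None:
        self._notes: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Store a note together with its term-frequency vector."""
        self._notes.append((text, Counter(tokenize(text))))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        """Return up to top_k notes that share terms with the query, best first."""
        q = Counter(tokenize(query))
        scored = [(cosine(q, vec), text) for text, vec in self._notes]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [text for _, text in scored[:top_k]]


notes = LocalNotes()
notes.add("Dentist appointment on Friday at 3pm")
notes.add("Lecture notes: gradient descent converges for convex functions")
notes.add("Buy oat milk and coffee beans")
print(notes.search("when is my dentist appointment"))
```

A real assistant would replace `cosine` over word counts with embeddings or a local LLM, but the privacy property comes from the same design choice: the data and the model both live on the device.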

Product Usage Case

· A student using OfflineMind Weaver to organize lecture notes and quickly find specific concepts for revision, without uploading sensitive academic data to the cloud. This solves the problem of scattered notes and the privacy risk of cloud storage.

· A writer employing the tool to generate story ideas or summarize research material locally, ensuring their creative process and intellectual property remain confidential. This addresses the need for AI assistance in creative work while maintaining absolute privacy.

· A researcher building a personal knowledge base with OfflineMind Weaver to cross-reference their findings, knowing that all their experimental data and insights are securely stored and processed on their own machine. This overcomes the limitations of cloud-based research tools that may have data sharing policies.

· A developer integrating OfflineMind Weaver into a journaling application to automatically tag entries and provide reflective summaries, enhancing the journaling experience with AI insights while guaranteeing the privacy of personal thoughts and feelings.

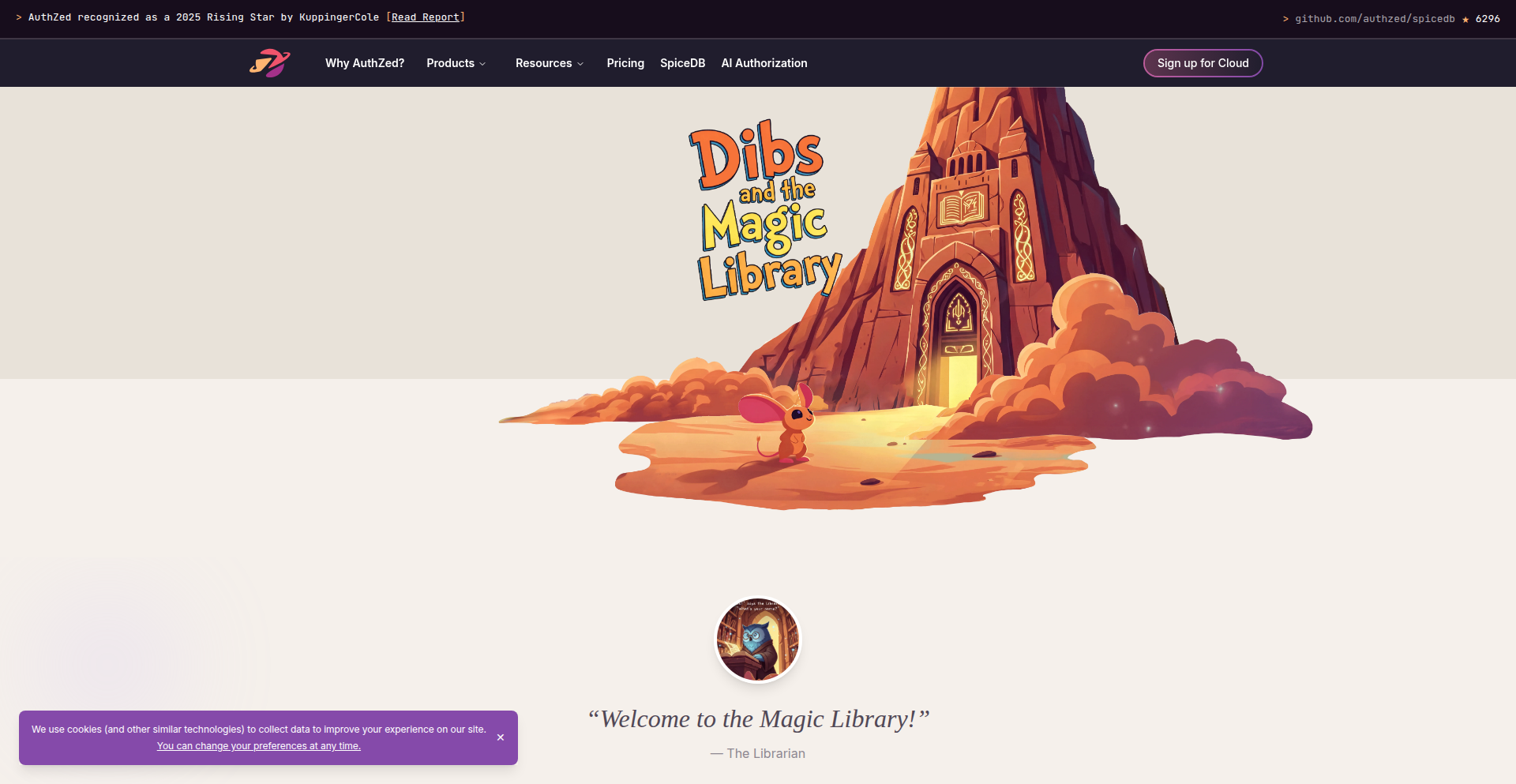

8

AuthZed Kids: Authorization Adventure

Author

samkim

Description

This project is a children's picture book that demystifies authorization and permissions concepts. It uses a fun narrative to explain complex ideas, making them accessible to both kids and adults. A key innovation is the custom AI-powered tool used to generate illustrations, featuring reference-weighted image generation and a git-like branching system for asset management, streamlining the creative process.

Popularity

Points 11

Comments 1

What is this product?

This is a creative project combining storytelling with advanced AI tools to explain technical concepts. The book, 'Dibs and the Magic Library,' uses a narrative to introduce ideas like who can access what and why. The underlying technology innovation is a custom AI image generation tool. This tool allows users to upload reference images and assign weights to them, guiding the AI on which elements are most important for the generated output. It also includes a branching system, similar to version control in software development, to organize different creative ideas and assets, and a feedback loop to improve future image generations. So, it's a fun way to learn about digital access control and a novel approach to AI-assisted creative content production.

How to use it?

For readers, the book can be read online or given as a gift to introduce children (and even adults) to the fundamental ideas of authorization in a playful manner. For developers and creatives, the AI tool showcased in this project offers a unique method for generating and managing visual assets. It's a demonstration of how sophisticated AI can be integrated into creative workflows, providing more control and organization than typical AI image generators. While the tool itself isn't directly offered as a standalone product in this HN post, its underlying principles can inspire developers working on AI content creation or asset management systems. The value here is in understanding how to build more controlled, iterative AI art generation pipelines.

Product Core Function

· Educational Storytelling: Explains complex authorization concepts like permissions and access control through an engaging narrative, making it easy for anyone to grasp the basics of digital security. This is useful for parents wanting to educate their children or for anyone new to the topic.

· Reference-Weighted AI Image Generation: Allows for more precise control over AI-generated visuals by prioritizing specific reference images, ensuring the art aligns with desired styles and elements. This is valuable for artists and designers looking to leverage AI without sacrificing creative direction.

· Git-like Branching for Assets: Organizes creative assets and design iterations in a structured, version-controlled manner, similar to how software code is managed. This helps in tracking progress, experimenting with different ideas, and reverting to previous versions, which is a boon for any creative project.

· AI Feedback Loop for Iteration: Improves the AI's generation quality over time by incorporating feedback on previous outputs, leading to more refined and consistent visual results. This is crucial for projects requiring a high degree of aesthetic consistency and quality.
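The reference weighting and git-like branching described above could be modeled roughly as follows. This is a sketch under stated assumptions, not the author's tool: `normalize_weights`, `AssetRepo`, and all file names are hypothetical, and the image generation step itself is left out entirely.

```python
from dataclasses import dataclass, field


def normalize_weights(weights: dict[str, float]) -> dict[str, float]:
    """Scale reference-image weights so they sum to 1.0."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one reference needs a positive weight")
    return {name: w / total for name, w in weights.items()}


@dataclass
class AssetRepo:
    """Hypothetical branch store: each branch is an ordered list of asset versions."""

    branches: dict[str, list[str]] = field(default_factory=lambda: {"main": []})

    def commit(self, branch: str, asset: str) -> None:
        """Append a new asset version to the given branch."""
        self.branches[branch].append(asset)

    def branch(self, source: str, name: str) -> None:
        """Start a new branch from a copy of the source branch's history."""
        self.branches[name] = list(self.branches[source])

    def head(self, branch: str) -> str:
        """Return the most recent asset version on a branch."""
        return self.branches[branch][-1]


# Made-up reference images: the character sketch counts three times as much
# as the palette reference.
weights = normalize_weights({"dibs_sketch.png": 3.0, "library_palette.png": 1.0})

repo = AssetRepo()
repo.commit("main", "cover_v1.png")
repo.branch("main", "watercolor-style")
repo.commit("watercolor-style", "cover_v2_watercolor.png")

print(weights)
print(repo.head("main"), repo.head("watercolor-style"))
```

Copying the history on `branch` keeps experiments isolated: a new style can be explored on its own branch while `main` still points at the last approved illustration.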

Product Usage Case

· Educating children on online safety and digital citizenship by using the book to explain why certain content is restricted or accessible only to specific users.

· Illustrators and graphic designers using the reference-weighted AI tool to quickly generate concept art for characters or scenes, ensuring the style closely matches their vision.

· Game developers experimenting with different visual styles for in-game assets by using the branching feature to manage and compare various art iterations before committing to a final design.

· Marketing teams creating unique visual content for campaigns by leveraging the AI tool's ability to produce custom imagery based on brand guidelines and specific promotional themes, ensuring a distinct visual identity.

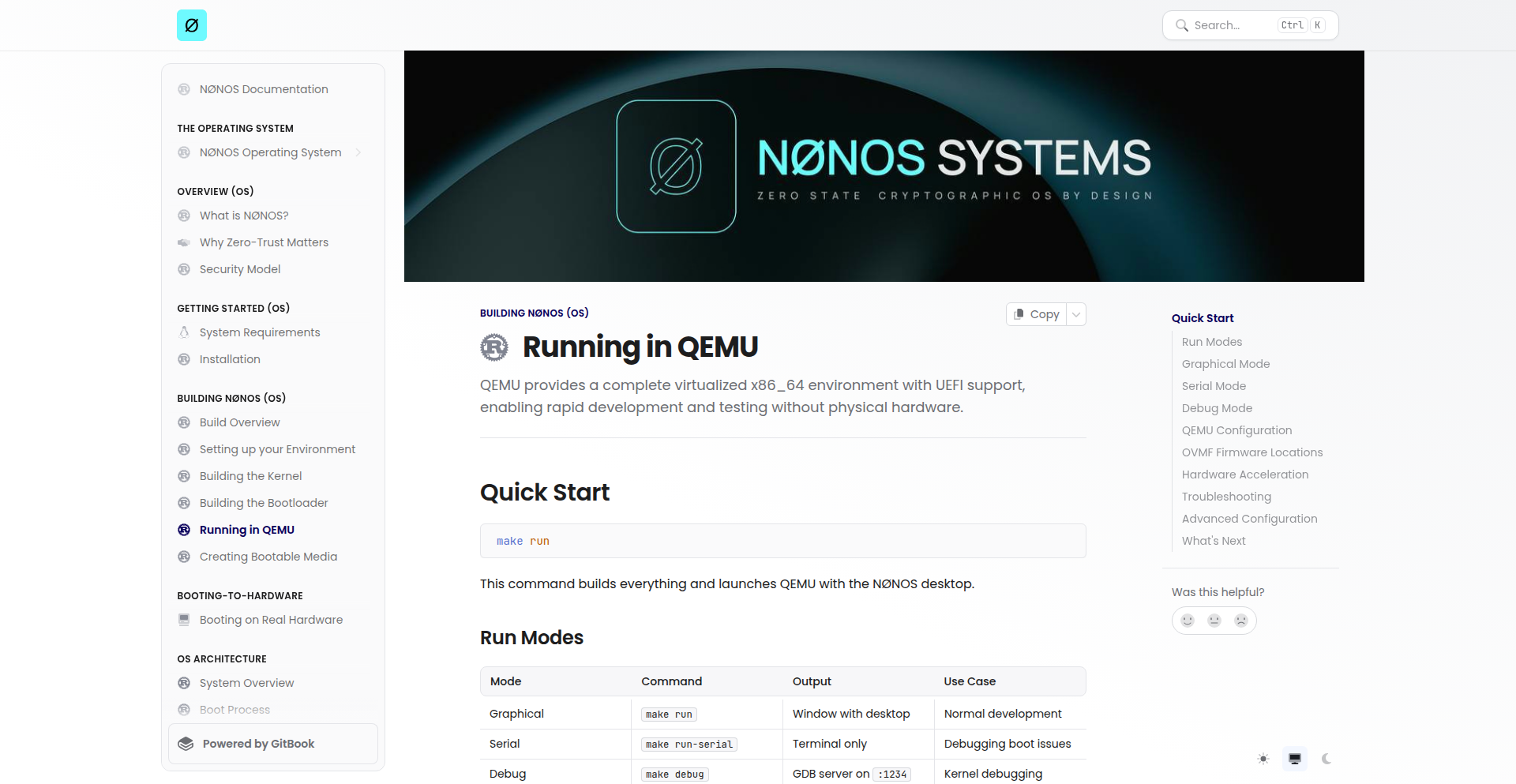

9

Nønos: Zero-State RAM OS

Author

mighty_moran

Description

Nønos is an experimental operating system designed to run entirely in RAM, meaning it has no persistent storage and boots up in a clean, unconfigured state every time. This 'zero-state' approach avoids disk I/O after boot and keeps the system simple, which suits highly specific, temporary computing tasks.

Popularity

Points 9

Comments 1

What is this product?

Nønos is a novel operating system concept where the entire OS resides and operates within the computer's Random Access Memory (RAM). Unlike traditional operating systems that rely on hard drives or SSDs for permanent storage, Nønos has no saved state. This means that every time you power on a system running Nønos, it starts from a completely fresh, default configuration. The core innovation lies in its ability to be incredibly fast and resource-efficient because it bypasses the slower disk I/O operations. Think of it like having a super-fast, disposable workspace that resets itself perfectly every time you're done. This is achieved through clever memory management and a minimalistic kernel design that prioritizes speed and simplicity over persistent features. So, this is useful because it allows for extremely rapid boot times and a predictable, clean computing environment for tasks where data persistence isn't required or even desired.

How to use it?

Developers can use Nønos for a variety of niche applications where speed and a clean slate are paramount. This could involve setting up temporary testing environments for software development, creating specialized kiosks or embedded systems that need to reset after each use, or even as a base for highly optimized, single-purpose computing devices. Integration would typically involve booting from a live medium (like a USB drive) or a network boot server, with the OS loading entirely into RAM. The 'zero-state' nature means any configuration or data created during a session is lost upon reboot, making it perfect for scenarios where you want to ensure no residual data is left behind. So, this is useful because it provides a disposable, high-performance computing environment that's ideal for sensitive tasks or rapid prototyping without the overhead of traditional OS management.

Product Core Function

· RAM-based execution: The entire operating system loads into and runs from RAM, which avoids disk I/O after boot and can make boot times and application performance significantly faster than on disk-based systems. This is valuable for time-sensitive operations and reducing latency.

· Zero-state operation (no persistence): The OS has no persistent storage, so it starts in a clean, default state on every boot. This is crucial for security, testing, and any application where a fresh start is required, ensuring no data conflicts or residue from previous sessions.

· Minimalist kernel: A highly stripped-down kernel design focuses on essential operating system functions, reducing complexity and resource consumption. This allows for greater efficiency and makes it easier to tailor the OS for specific needs.

· Customizable boot environment: Because it's designed for specific tasks, developers can easily customize the initial boot environment to include only the necessary tools and applications for a particular job. This saves resources and streamlines operations.

Product Usage Case

· Software testing: Developers can use Nønos to create isolated, temporary environments to test new software builds. Each test runs on a pristine system, eliminating the risk of previous test data or configurations interfering with results. This solves the problem of unreliable test results due to environmental drift.

· Kiosk systems: For public-facing terminals or information displays, Nønos ensures that each user interaction starts with a clean slate, enhancing security and preventing unintended data leakage or system misconfiguration. This provides a reliable and secure user experience.

· Embedded systems: In specialized embedded devices that perform a single task, such as a point-of-sale terminal or a dedicated data acquisition unit, Nønos can provide a fast and robust operating environment that resets automatically, ensuring consistent operation. This solves the challenge of maintaining stability and performance in resource-constrained devices.

· Live recovery media: Nønos could serve as a highly efficient live environment for system recovery or diagnostics, allowing technicians to boot into a fast, clean OS without altering the host system's data. This speeds up troubleshooting and data recovery processes.

10

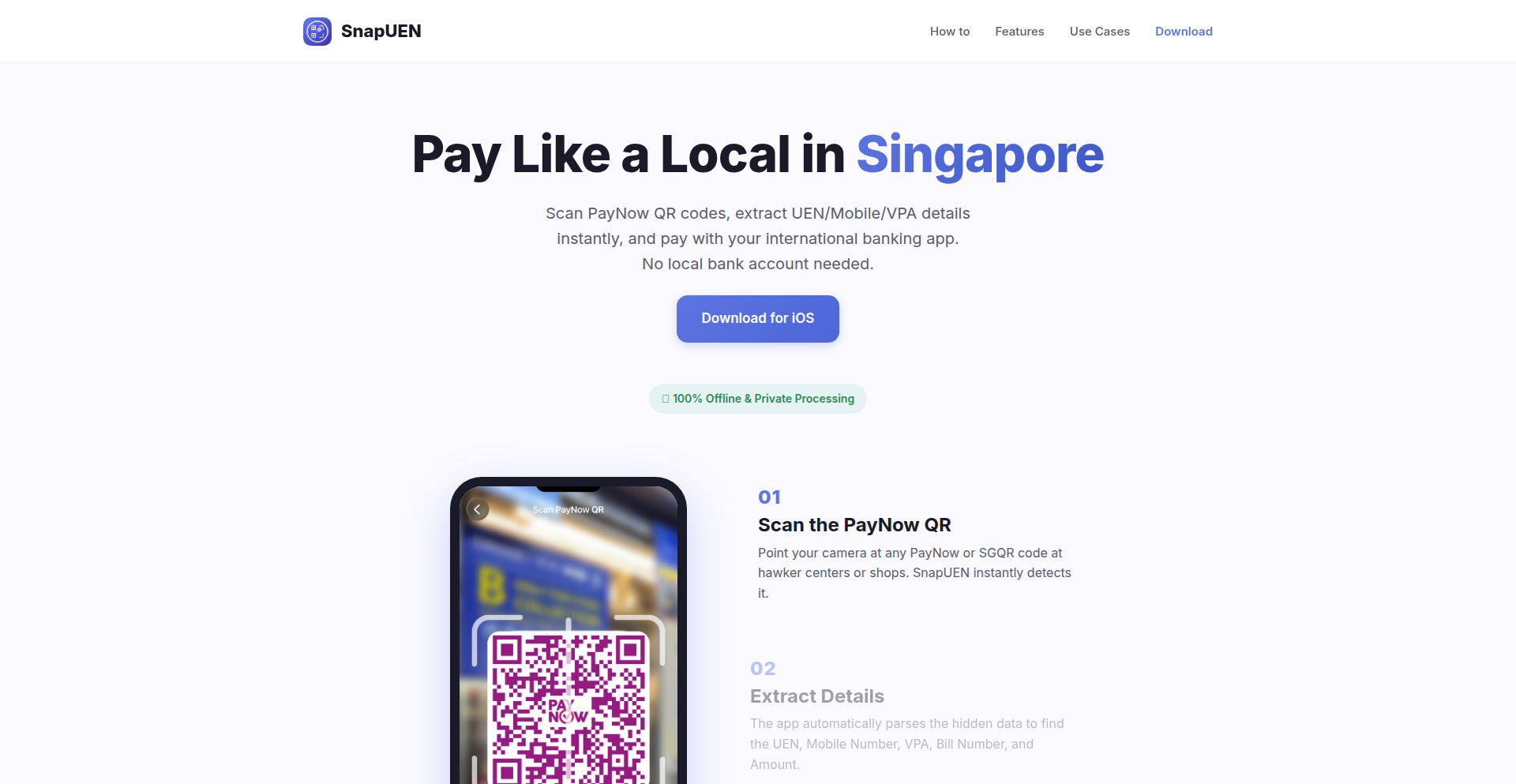

QR-Wise Gateway

Author

noppanut15

Description

A parser that bridges Singapore's QR code payment standard with international payment services such as Wise and with users' home banks. It simplifies cross-border transactions by allowing users to pay Singapore QR codes directly from their non-Singaporean bank accounts or Wise, eliminating the need for local Singaporean payment apps. The innovation lies in its ability to interpret and translate the Singapore QR payment data into a format compatible with international financial protocols, addressing a common pain point for travelers and businesses dealing with Singaporean merchants.

Popularity

Points 6

Comments 3

What is this product?

QR-Wise Gateway is a software tool, essentially a smart translator for payment codes. It tackles the problem of paying with Singapore's unique QR code system when you're not in Singapore or don't have a local Singaporean bank account. The core innovation is its parsing engine, which understands the specific data embedded within Singaporean QR codes (like those used for PayNow). It then reformats this data into a universal payment request that international banking services, such as Wise or your own bank's international transfer system, can understand and process. This bypasses the usual requirement of having a local Singaporean payment app, making cross-border payments much smoother.

How to use it?

Developers can integrate QR-Wise Gateway into their applications or use it as a standalone service. For individual users, imagine a scenario where you're traveling in Singapore and a merchant presents a QR code. Instead of scrambling to get a local app, you can scan this QR code with QR-Wise Gateway. The tool will then prepare a payment instruction that you can send directly from your Wise account or your regular bank's app, effectively paying the Singaporean merchant from abroad. For businesses, this means enabling easier payment acceptance from international customers who might otherwise be restricted by local payment requirements.

Product Core Function

· Singapore QR Code Parsing: Deciphers the structured data within Singaporean QR payment codes, extracting essential details like merchant ID, transaction amount, and currency. This is crucial because without understanding this specific format, international banks wouldn't know how to initiate the payment.

· International Payment Data Translation: Converts the parsed Singaporean QR data into a standardized payment instruction format that global financial platforms like Wise or common banking APIs can process. This translation layer is the key innovation, bridging the gap between local Singaporean standards and global financial interoperability.

· Wise and Home Bank Integration: Enables seamless submission of translated payment instructions to services like Wise or directly to your home bank's international transfer system. This means you can initiate payments directly from familiar financial tools you already use, without needing new accounts or complex procedures.

· User-Friendly Payment Flow: Simplifies the user experience by abstracting away the complexities of different payment systems. Users scan a QR, and the gateway handles the technical heavy lifting in the background, presenting a clear, actionable payment prompt.
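The parsing step can be illustrated with a short sketch. It assumes the QR string follows the EMVCo merchant-presented layout that Singapore's QR payment standard builds on: each field is a 2-digit tag, a 2-digit length, and the value, with a CRC-16 checksum in tag 63. The helper names are hypothetical, the sample payload is synthetic, and the PayNow sub-field layout under tag 26 follows commonly published descriptions that should be verified against the official specification. This is not QR-Wise Gateway's actual code.

```python
def tlv(tag: str, value: str) -> str:
    """Encode one field as 2-digit tag + 2-digit length + value."""
    return f"{tag}{len(value):02d}{value}"


def parse_tlv(payload: str) -> dict[str, str]:
    """Split a tag-length-value string into a {tag: value} mapping."""
    fields: dict[str, str] = {}
    i = 0
    while i < len(payload):
        tag = payload[i : i + 2]
        length = int(payload[i + 2 : i + 4])
        fields[tag] = payload[i + 4 : i + 4 + length]
        i += 4 + length
    return fields


def crc16_ccitt_false(data: bytes) -> int:
    """CRC-16/CCITT-FALSE: polynomial 0x1021, initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            crc = ((crc << 1) ^ 0x1021) if crc & 0x8000 else (crc << 1)
            crc &= 0xFFFF
    return crc


# Build a synthetic payload; the merchant details below are made up.
account = tlv("00", "SG.PAYNOW") + tlv("01", "2") + tlv("02", "T00SAMPLE0X")
body = (
    tlv("00", "01")             # payload format indicator
    + tlv("26", account)        # merchant account information (nested TLV)
    + tlv("53", "702")          # currency, ISO 4217 numeric code for SGD
    + tlv("54", "12.50")        # transaction amount
    + tlv("58", "SG")           # country code
    + tlv("59", "SAMPLE CAFE")  # merchant name
    + "6304"                    # CRC tag and length; the checksum covers these too
)
payload = body + f"{crc16_ccitt_false(body.encode('ascii')):04X}"

# Parse it back and verify the checksum over everything before the CRC value.
fields = parse_tlv(payload)
merchant = parse_tlv(fields["26"])
expected_crc = f"{crc16_ccitt_false(payload[:-4].encode('ascii')):04X}"
checksum_ok = fields["63"] == expected_crc
print(merchant["00"], fields["54"], fields["53"], checksum_ok)
```

Once the amount, currency, and merchant identifier are extracted like this, the remaining work is the translation step: mapping them onto whatever transfer request the target bank or payment service accepts.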

Product Usage Case

· Traveler paying for a taxi in Singapore: A tourist scans a Singapore QR code for a taxi fare. QR-Wise Gateway translates this into a Wise payment request, allowing the tourist to pay from their Wise account without needing a local SIM card or Singaporean bank app, thus solving the immediate payment problem.

· Online business accepting Singaporean customer payments: An e-commerce store based outside Singapore can integrate QR-Wise Gateway to accept payments from Singaporean customers who prefer using their local QR codes. The gateway handles the conversion, allowing the business to receive funds via their usual international payment processor, thus expanding their customer base.

· Small business owner settling invoices with Singaporean suppliers: A business owner in another country needs to pay an invoice from a Singaporean supplier who uses QR code billing. QR-Wise Gateway allows them to scan the supplier's QR code and initiate payment from their domestic bank's international transfer service, simplifying B2B transactions.

· Expats managing expenses in Singapore: An expatriate living in Singapore but whose primary banking is in their home country can use QR-Wise Gateway to pay local Singaporean bills and services using their home bank or a global e-wallet, reducing the need to maintain multiple local accounts for everyday transactions.

11

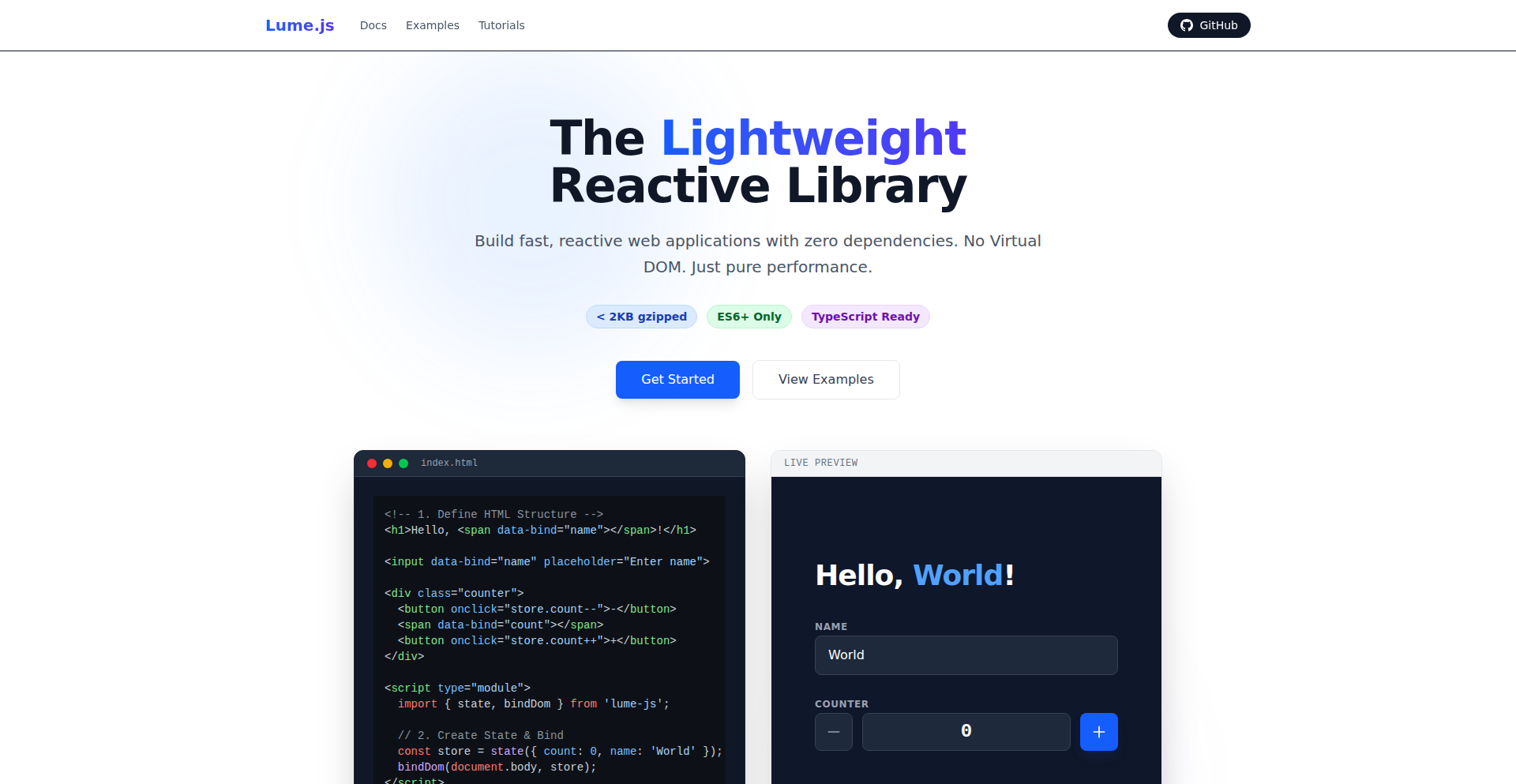

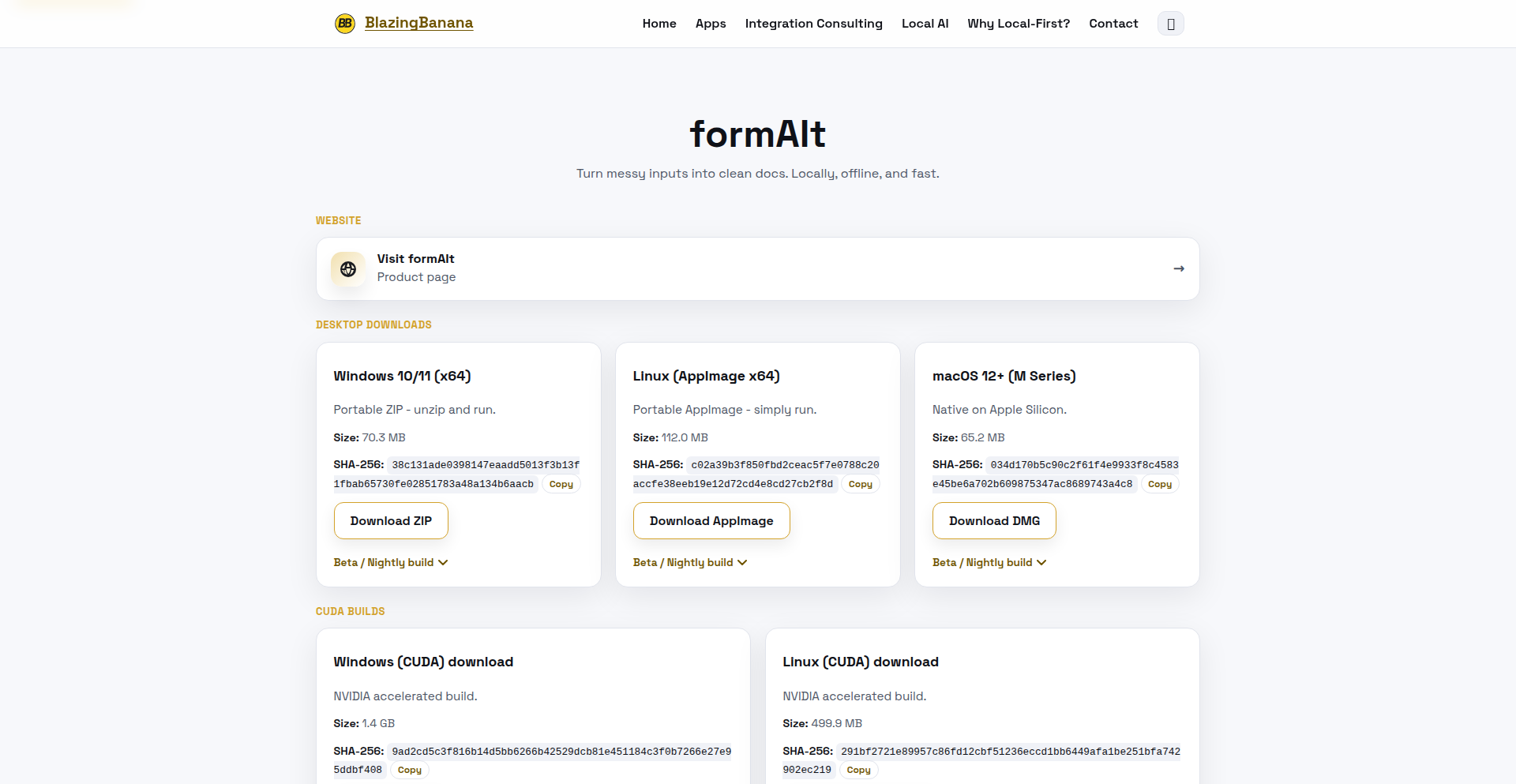

Lume.js: Minimalist React Alternative

Author

sathvikchinnu

Description

Lume.js is a tiny, 1.5KB JavaScript library that offers a React-like experience without any custom syntax. It focuses on efficient DOM manipulation and declarative UI building, making it ideal for developers seeking a lightweight alternative to larger frameworks. Its innovation lies in achieving powerful features with minimal footprint, offering a fresh approach to building interactive web interfaces.

Popularity

Points 7

Comments 2

What is this product?

Lume.js is a cutting-edge JavaScript library designed to build user interfaces for the web, similar to how React works. The 'magic' behind Lume.js is its incredibly small size (just 1.5KB) and its clever use of standard JavaScript features. Instead of introducing its own special syntax (like JSX in React), it leverages plain JavaScript objects and functions. This means you write your UI components using familiar JavaScript code, which the library then efficiently translates into actual web page elements. This approach simplifies the learning curve and reduces the overhead of transpilation, making your development workflow faster and your applications lighter. So, what's the benefit for you? It means you can build fast, responsive web applications without the bloat of larger frameworks, leading to quicker load times and a smoother user experience.

How to use it?

Developers can integrate Lume.js into their projects by simply including the library file in their HTML or via a module bundler like Webpack or Vite. You'll then define your UI components using standard JavaScript functions that return virtual DOM structures. These structures are essentially JavaScript objects describing what your UI should look like. Lume.js then intelligently updates the actual web page (the DOM) only where necessary, ensuring optimal performance. This makes it easy to use in new projects or even to sprinkle into existing ones. For you, this means a straightforward path to building dynamic interfaces with minimal setup and maximum efficiency.
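The "describe the UI as plain objects, then update only what changed" idea can be shown with a language-agnostic sketch. Lume.js itself is JavaScript; the example below uses Python dictionaries as a stand-in for JavaScript objects, and the `h` and `diff` functions are hypothetical illustrations of the general technique, not Lume.js's API or its actual update algorithm.

```python
def h(tag, props=None, *children):
    """Describe an element as a plain dict (a stand-in for a JS object)."""
    return {"tag": tag, "props": props or {}, "children": list(children)}


def diff(old, new, path="root"):
    """Return the patch operations that turn the old tree into the new one."""
    if old is None:
        return [("create", path, new)]
    if new is None:
        return [("remove", path)]
    if isinstance(old, str) or isinstance(new, str):
        # Text nodes: replace only when the text (or node type) differs.
        return [] if old == new else [("replace", path, new)]
    if old["tag"] != new["tag"]:
        return [("replace", path, new)]
    patches = []
    if old["props"] != new["props"]:
        patches.append(("set_props", path, new["props"]))
    # Compare children position by position, recursing into each pair.
    for i in range(max(len(old["children"]), len(new["children"]))):
        a = old["children"][i] if i < len(old["children"]) else None
        b = new["children"][i] if i < len(new["children"]) else None
        patches.extend(diff(a, b, f"{path}/{i}"))
    return patches


def counter_view(count):
    """A component is just a function that returns a UI description."""
    return h(
        "div",
        {"class": "counter"},
        h("span", None, f"Count: {count}"),
        h("button", {"id": "inc"}, "+"),
    )


patches = diff(counter_view(0), counter_view(1))
print(patches)
```

Re-rendering the whole `counter_view` is cheap because it only builds dictionaries; the diff then narrows the real work down to the single text node that changed, which is what keeps DOM updates minimal.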

Product Core Function

· Declarative UI: Define your user interface using JavaScript functions that describe the desired output, making your code easier to read and maintain. The value is in building complex interfaces with simpler, more predictable code.

· Efficient DOM Updates: Lume.js automatically detects changes in your UI definitions and updates only the necessary parts of the web page, ensuring snappy performance. The value is in creating fast-loading and responsive applications.

· Minimal Footprint (1.5KB): The library is extremely small, leading to faster download times for users and reduced bundle sizes for developers. The value is in building lightweight, performant web applications.

· No Custom Syntax: Lume.js uses plain JavaScript, eliminating the need for special compilers or preprocessors. The value is in a simpler development experience and easier integration with existing JavaScript projects.

Product Usage Case

· Building single-page applications (SPAs) where a small library size is critical for initial load speed. Lume.js solves the problem of slow initial rendering by providing a performant UI layer with minimal overhead.

· Developing interactive widgets or components that need to be embedded into existing websites without introducing large dependencies. Lume.js addresses the need for lightweight, self-contained UI elements.

· Creating progressive web apps (PWAs) where resource efficiency is paramount for mobile users. Lume.js helps ensure PWAs are fast and responsive even on less powerful devices.

· Experimenting with new UI concepts or building prototypes where rapid development and easy iteration are key. Lume.js's simple API and lack of custom syntax accelerate the prototyping process.

12

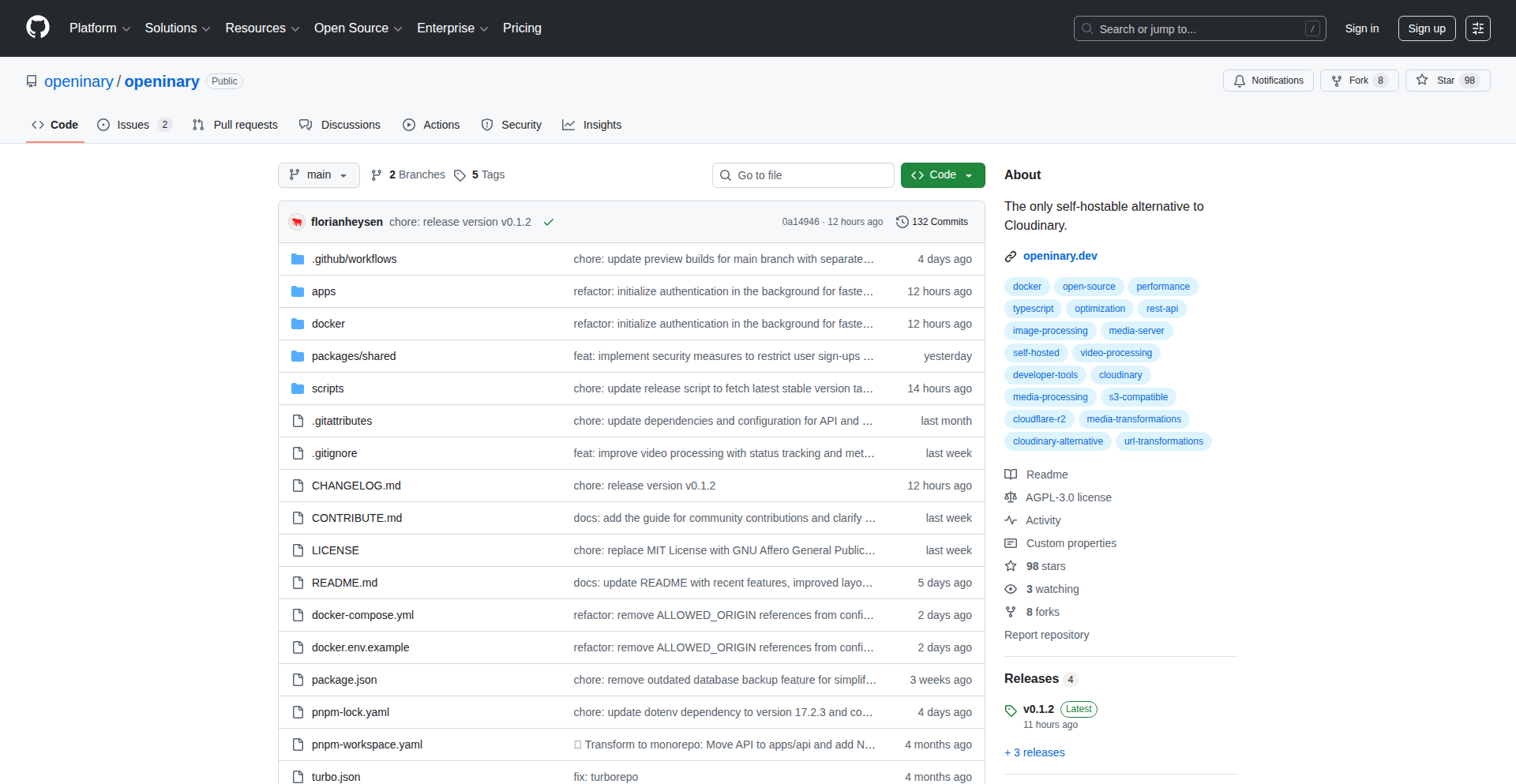

Openinary: Self-Hosted Image Pipeline

Author

fheysen

Description

Openinary is a self-hosted alternative to cloud-based image management services like Cloudinary. It allows developers to manage, transform, optimize, and cache images directly on their own infrastructure, using common storage solutions like S3 or Cloudflare R2. The key innovation is a simple URL-based API for image manipulation, offering a developer experience similar to proprietary services but with complete control and potential cost savings.

Popularity

Points 4

Comments 4

What is this product?

Openinary is essentially a flexible image processing engine that you can run on your own servers. Think of it as building your own mini-Cloudinary. Instead of paying per image upload or transformation to a third-party service, Openinary lets you leverage your existing storage (like AWS S3 or Cloudflare R2) to handle image resizing, format conversion (like turning JPEGs into AVIF for better web performance), and caching. The magic happens with a straightforward URL. For example, instead of complex code, you can just append parameters to an image URL to resize it or change its format. This gives you control over your data and can be significantly more cost-effective for high-traffic applications.

How to use it?

Developers can integrate Openinary into their web applications or websites by setting it up on their own server environment, often using Docker for easy deployment. Once running, they can then point their image URLs through Openinary's API. For instance, if you have an image at `my-bucket.s3.amazonaws.com/original.jpg`, you'd use Openinary to serve it like this: `your-openinary-domain.com/t/w_800,h_800,f_avif/my-bucket.s3.amazonaws.com/original.jpg`. This means when a user requests an image, Openinary intercepts the request, performs the specified transformations (like resizing to 800px width and height, and converting to AVIF format), caches the result, and then serves it. This allows for dynamic image optimization without needing to pre-generate multiple image versions.
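To show how a URL like the one above could be interpreted on the server side, here is a minimal parsing sketch. It assumes the `/t/<params>/<source>` layout from the example, an `https://` scheme, and only the three parameters that appear there (`w`, `h`, `f`). The `TransformRequest` type, the function names, and the cache-key scheme are hypothetical and not taken from Openinary's codebase.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass(frozen=True)
class TransformRequest:
    width: Optional[int]
    height: Optional[int]
    fmt: Optional[str]
    source: str


def parse_transform_url(url: str) -> TransformRequest:
    """Parse '/t/<params>/<source>' into a structured request."""
    path = urlsplit(url).path.lstrip("/")
    prefix, _, rest = path.partition("/")
    params, _, source = rest.partition("/")
    if prefix != "t" or not params or not source:
        raise ValueError("expected /t/<params>/<source>")
    options: dict[str, str] = {}
    for part in params.split(","):
        key, sep, value = part.partition("_")
        if not sep or not value:
            raise ValueError(f"malformed parameter: {part!r}")
        options[key] = value
    return TransformRequest(
        width=int(options["w"]) if "w" in options else None,
        height=int(options["h"]) if "h" in options else None,
        fmt=options.get("f"),
        source=source,
    )


def cache_key(req: TransformRequest) -> str:
    """Derive a stable key so identical requests reuse one cached result."""
    raw = f"{req.width}|{req.height}|{req.fmt}|{req.source}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


req = parse_transform_url(
    "https://your-openinary-domain.com/t/w_800,h_800,f_avif/"
    "my-bucket.s3.amazonaws.com/original.jpg"
)
print(req)
print(cache_key(req))
```

Keying the cache on the parsed values rather than the raw URL means that two URLs that differ only in parameter order would map to the same cached image.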

Product Core Function

· Self-hosted Image Transformations: Allows developers to perform image manipulations like resizing, cropping, and format conversion directly on their own servers, providing cost control and data ownership. This is valuable for dynamic content generation and optimizing image delivery for different devices.

· S3-Compatible Storage Integration: Enables seamless connection with popular object storage services like AWS S3 and Cloudflare R2, leveraging existing infrastructure and avoiding vendor lock-in. This makes it easy to manage a large volume of images without re-architecting storage solutions.

· URL-Based Image API: Offers a simple and intuitive API for requesting transformed images via URLs, mimicking the user experience of commercial services but with self-hosted flexibility. This simplifies frontend integration and allows for on-the-fly image adjustments.

· Image Optimization and Caching: Automatically optimizes image delivery for better web performance and caches processed images to reduce server load and improve loading times. This directly impacts user experience and SEO.

· Docker-Ready Deployment: Provides a Docker image for straightforward setup and management, making it easy for developers to deploy and scale Openinary across different environments. This speeds up the adoption and maintenance process.

Product Usage Case

· An e-commerce platform wanting to serve optimized product images across various devices without incurring high per-request fees from third-party CDNs. Openinary allows them to use their S3 bucket and a simple URL to dynamically resize and format images for mobile, tablet, and desktop views, improving load times and reducing operational costs.

· A content management system (CMS) that needs to offer image editing and delivery capabilities to its users but wants to maintain full control over the data and infrastructure. Openinary can be integrated to provide image transformation and caching directly within the CMS, giving users a familiar experience while keeping all data in-house.

· A developer building a personal portfolio or a project that requires dynamic image handling for a large number of assets. By using Openinary with a service like Cloudflare R2, they can process and deliver images efficiently and cost-effectively, demonstrating advanced technical solutions for common web development challenges.

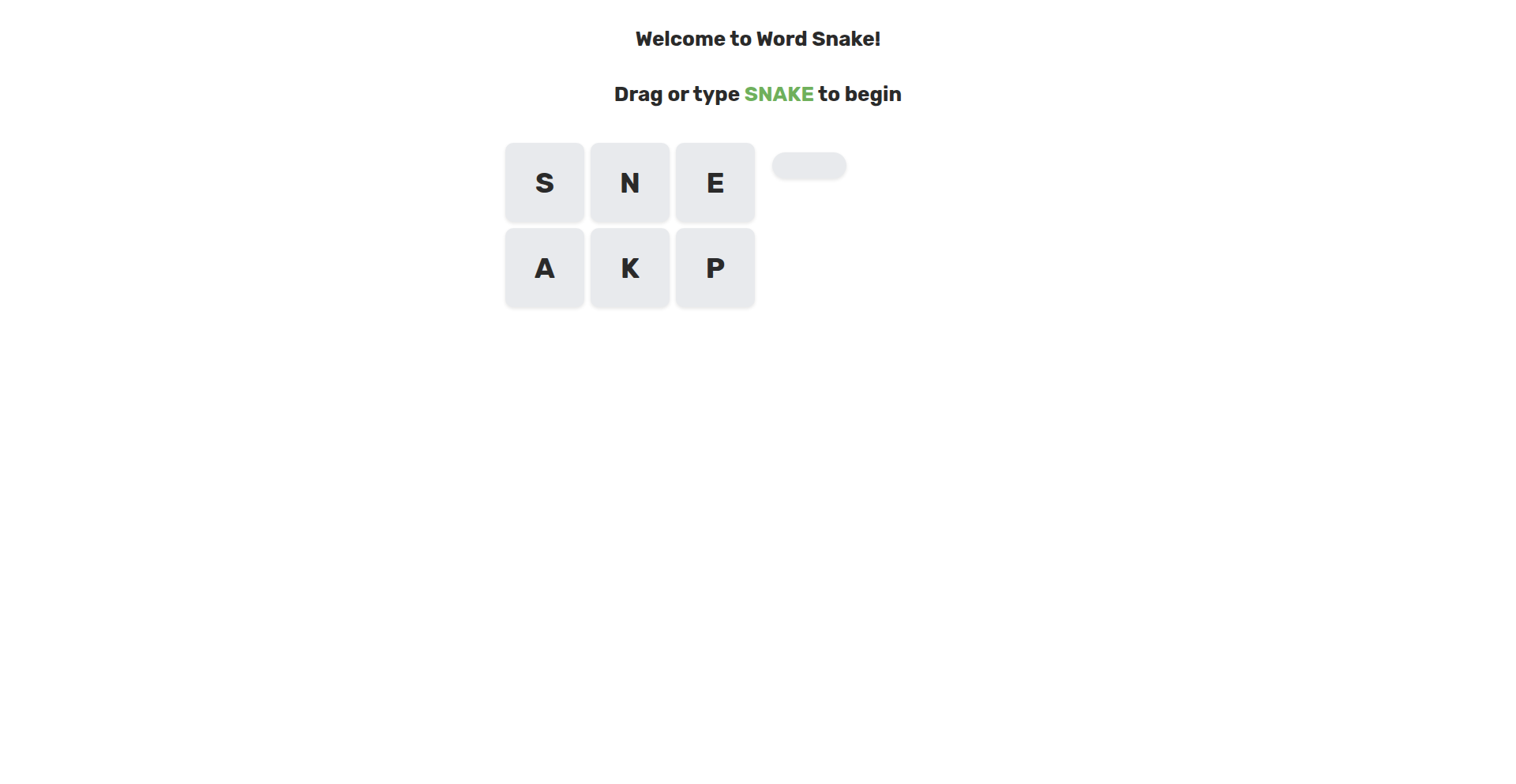

13

WordSnake - Algorithmic Word Sculptor

Author

ediblepython

Description

WordSnake is a novel approach to generating dynamic word art. It leverages a custom algorithm to create interconnected word structures, transforming plain text into visually engaging patterns. The core innovation lies in its procedural generation technique, which allows for unique, scalable word art without manual design. This addresses the need for creative, automated visual content generation for developers and designers looking to add a unique touch to their projects.

Popularity

Points 8

Comments 0

What is this product?

WordSnake is a software tool that procedurally generates visually interesting word art. Instead of manually arranging words, it uses a smart algorithm to connect them, creating organic, snake-like formations. Think of it like a digital vine that grows words instead of leaves. The innovation is in the algorithm itself; it's designed to find optimal connections and layouts for words based on certain parameters, making it a creative and automated way to visualize text. This is useful because it allows you to quickly create unique graphics from text data, which is usually a time-consuming manual process. So, what's in it for you? You get instant, artistic text visualizations that can make your presentations, websites, or even code documentation more engaging.

How to use it?

Developers can integrate WordSnake into their applications or use it as a standalone tool. The project likely exposes an API or command-line interface (CLI) where you can input your text and desired aesthetic parameters (like density, color schemes, or connection styles). The output can then be rendered as an image (SVG, PNG) or potentially as interactive web elements. This integration can be as simple as calling a function in your code to generate a word cloud for a dashboard, or as complex as building a web application where users can generate their own word art. So, how can you use it? Imagine adding a unique visual element to your blog posts, creating custom logos from company names, or even visualizing code complexity with word structures. The flexibility means you can apply it wherever creative text visualization is needed.

Product Core Function

· Procedural Word Arrangement: The algorithm automatically positions and connects words to form aesthetically pleasing, interconnected structures, offering a dynamic and unique output every time. This is valuable for generating novel visual assets without manual effort.

· Configurable Generation Parameters: Users can likely tweak variables such as word density, connection logic, and potentially even color palettes to influence the final word art. This allows for customization and fine-tuning of the visual output to match specific project needs.

· Scalable Vector Output: The ability to output in formats like SVG means the word art can be scaled infinitely without losing quality, making it ideal for both web and print applications. This ensures your visuals look sharp at any size.

· Text Data Visualization: Beyond pure aesthetics, WordSnake can be used to visually represent the prominence or relationships within a body of text, offering a creative alternative to traditional word clouds. This provides a new lens through which to understand textual data.
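The post does not spell out WordSnake's algorithm, so the following is only one simple interpretation of "interconnected word structures": chain words so each begins with the previous word's last letter, then lay them out on a grid, alternating between rightward and downward runs so consecutive words share a letter at each bend. All function names are hypothetical.

```python
def chain_words(words):
    """Greedily order words so each starts with the previous word's last letter."""
    remaining = list(words)
    chain = [remaining.pop(0)]
    while remaining:
        last = chain[-1][-1]
        match = next((w for w in remaining if w[0] == last), None)
        if match is None:
            break  # no word continues the snake; leftover words are dropped
        remaining.remove(match)
        chain.append(match)
    return chain


def layout(chain):
    """Place letters on a grid, alternating right and down; bends share a cell."""
    grid = {}
    x = y = 0
    for index, word in enumerate(chain):
        dx, dy = (1, 0) if index % 2 == 0 else (0, 1)
        for offset, letter in enumerate(word):
            grid[(x + dx * offset, y + dy * offset)] = letter
        # The next word starts on this word's final letter.
        x += dx * (len(word) - 1)
        y += dy * (len(word) - 1)
    return grid


def render(grid):
    """Turn the {(x, y): letter} grid into printable text rows."""
    width = max(x for x, _ in grid) + 1
    height = max(y for _, y in grid) + 1
    rows = [
        "".join(grid.get((x, y), " ") for x in range(width)).rstrip()
        for y in range(height)
    ]
    return "\n".join(rows)


chain = chain_words(["code", "echo", "open", "node", "zebra"])
art = render(layout(chain))
print(art)
```

Because the runs only ever move right or down, words never overwrite each other except at the shared bend letter. Emitting the same coordinates as SVG `<text>` elements instead of text rows would give the scalable output the feature list mentions.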

Product Usage Case

· Dynamic Website Banners: Developers can use WordSnake to generate unique, evolving banners for websites based on user-generated content or trending topics, making the site feel more alive and personalized. This solves the problem of static, uninspiring website headers.

· Artistic Code Documentation: Imagine generating visually striking diagrams from code comments or function names to make technical documentation more engaging and easier to digest. This adds a creative flair to otherwise dry technical writing.

· Personalized Greeting Cards or Social Media Graphics: Users can input names, messages, or event details to create custom, artistic graphics for personal use or sharing on social platforms. This offers a creative way to personalize digital communications.

· Data Storytelling Visuals: For data analysts or journalists, WordSnake can transform keyword lists or thematic summaries into compelling visual narratives, making complex information more accessible and memorable. This provides an engaging way to present insights.

14

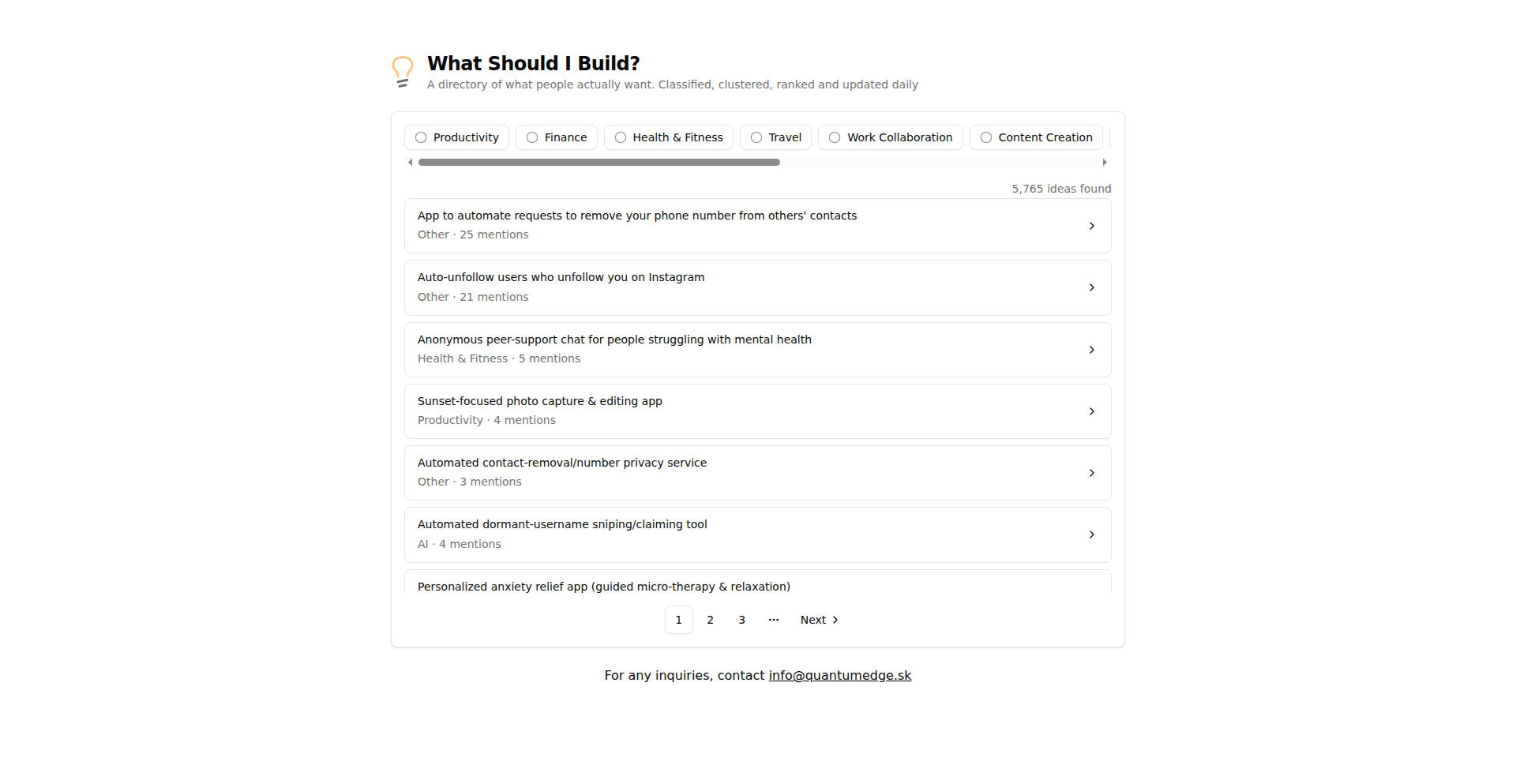

IdeaForge

Author

emil154

Description

IdeaForge is a minimalistic, crowdsourced directory of software ideas, focusing on real-world problems faced by people. It acts as a Proof of Concept (PoC) for a platform that helps developers find inspiration and address unmet needs through code. The innovation lies in its direct connection to user-identified challenges, fostering a community-driven approach to problem-solving.

Popularity

Points 4

Comments 3

What is this product?

IdeaForge is a web application that collects and organizes suggestions for software projects directly from users who are experiencing specific problems. It's built on the idea that the most profitable software often comes from solving a developer's own pain points, but in this case, it broadens that to include the pain points of anyone. The core technology involves a simple backend to store and retrieve these ideas, and a frontend for users to submit and browse them. The innovation is in its direct, unfiltered capture of user needs, bypassing traditional market research and tapping into a raw source of potential product development. So, what's the use? It provides a direct channel to discover validated problems that people are actively seeking solutions for, saving you the time and effort of guessing what to build.

How to use it?

Developers can use IdeaForge by visiting the website, browsing existing ideas, and upvoting those that resonate with them or that they believe they can solve. They can also submit ideas based on challenges that they, or people they know, are facing. Because the project is a PoC, a developer could extend the concept into a more robust platform, or use the submitted ideas as a starting point for a personal project or a commercial venture. So, what's the use? You get a ready-made list of problems that people have already identified, giving you a head start on your development journey.

Product Core Function

· Idea Submission: Users can submit descriptions of problems they encounter, acting as a raw input for potential software solutions. The value here is in capturing real-world needs directly. This is useful for identifying unmet market demands.

· Idea Browsing: Developers can explore a curated list of user-submitted ideas, allowing them to discover potential project directions. The value is in providing a centralized source of inspiration. This helps you find a problem worth solving.

· Community Upvoting: Users can upvote ideas they find compelling, helping to surface the most desired solutions. The value is in the collective intelligence and prioritization of needs. This helps you gauge the demand for a particular solution.
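The three functions above can be sketched as a small in-memory store. This is only an illustration of the submit, browse, and upvote flow; the `Idea` and `IdeaBoard` names and the fields are assumptions, not IdeaForge's actual data model.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Idea:
    """One user-submitted problem description (hypothetical schema)."""
    id: int
    description: str
    votes: int = 0


@dataclass
class IdeaBoard:
    """In-memory stand-in for a backend that stores and retrieves ideas."""
    _ideas: dict = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def submit(self, description: str) -> Idea:
        text = description.strip()
        if not text:
            raise ValueError("description must not be empty")
        idea = Idea(id=next(self._ids), description=text)
        self._ideas[idea.id] = idea
        return idea

    def upvote(self, idea_id: int) -> int:
        idea = self._ideas[idea_id]  # raises KeyError for an unknown id
        idea.votes += 1
        return idea.votes

    def browse(self) -> list:
        # Most-upvoted first; sorted() is stable, so ties keep submission order.
        return sorted(self._ideas.values(), key=lambda idea: -idea.votes)


board = IdeaBoard()
subscriptions = board.submit("Managing many online subscriptions is tedious")
forms = board.submit("Local government forms are hard to fill in")
board.upvote(forms.id)
for idea in board.browse():
    print(idea.votes, idea.description)
```

A real deployment would persist ideas in a database and limit each user to one vote per idea; both are left out here to keep the flow visible.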

Product Usage Case

· A freelance developer looking for their next side project can browse IdeaForge and discover a user's frustration with managing multiple online subscriptions, leading them to build a personal subscription management tool. This solves the problem of finding a unique and needed project.

· A startup founder seeking a market niche can explore IdeaForge and identify a recurring complaint about the complexity of local government forms, inspiring them to develop a user-friendly application to simplify civic engagement. This helps in identifying a market gap and a potential business idea.

· A hobbyist programmer wanting to contribute to open source can find an idea for a more accessible way for elderly individuals to use common smart home devices, channeling their coding skills into a meaningful social impact project. This allows for targeted problem-solving with social good.

15

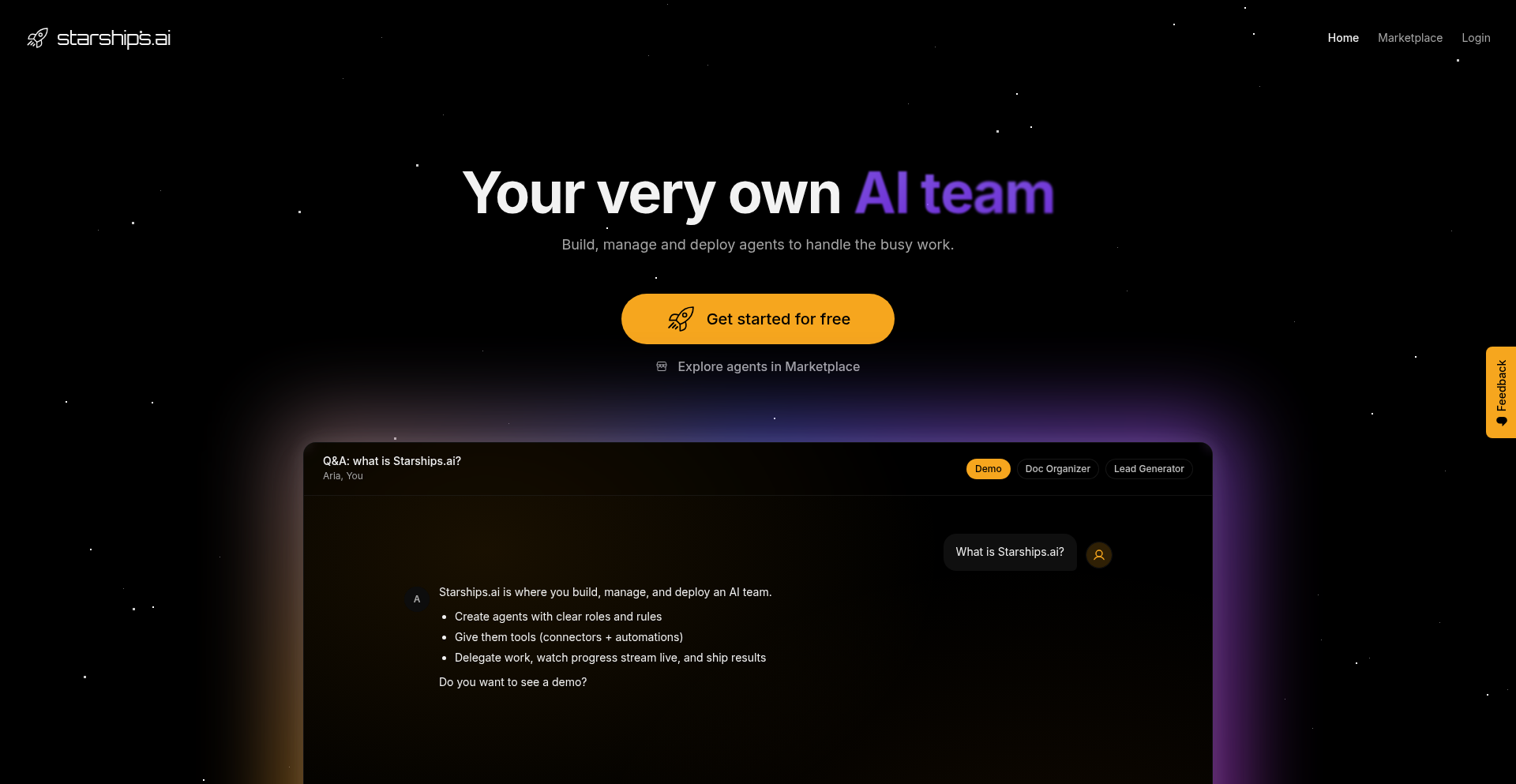

Starships.ai: AI Agent Orchestration Fabric

Author

brayn003

Description

Starships.ai is an AI-powered platform that lets developers build, deploy, and orchestrate teams of AI agents that collaborate on complex tasks. It abstracts away the underlying AI complexity and offers an interface that feels closer to human communication, such as chatting in Slack, which makes AI collaboration accessible to people who are not expert developers. The innovation lies in creating a human-like collaborative environment for AI agents, so that sophisticated problems can be solved by decentralized, specialized AI entities.

Popularity

Points 4

Comments 2

What is this product?

Starships.ai is a system for creating and managing a team of AI agents that can work together to accomplish complex goals. Instead of a single AI trying to do everything, you have multiple AIs, each with a specific skill set or tool access, that communicate and coordinate with each other. Think of it like having a team of remote employees, each with their own specialty, working on a project together. The core technical innovation is the agent orchestration layer, which manages the flow of information, tasks, and decision-making between these specialized agents. This allows for more robust and sophisticated problem-solving than a monolithic AI approach. So, it's useful because it democratizes the creation of advanced AI systems, allowing for complex task automation without requiring deep AI engineering expertise.

How to use it?

Developers can use Starships.ai to define individual AI agents with specific capabilities (e.g., a writing agent, a research agent, a coding agent). These agents are then deployed within the Starships.ai environment. You can then define a complex task or project, and the platform will orchestrate the interaction between the agents to achieve it. This involves setting up communication channels, assigning roles, and defining decision-making protocols. Integration can occur through APIs, allowing you to trigger agent team actions from your existing applications or workflows. So, this is useful for developers who want to automate complex workflows that would traditionally require human oversight and multiple specialized tools, by leveraging a team of AI agents.
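The workflow described above (define specialized agents, then let an orchestrator pass a task between them) can be sketched in a few lines. This is a minimal illustration, not Starships.ai's API: the `Agent` and `Orchestrator` classes are hypothetical, and plain functions stand in for real model or tool calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """A specialized worker; `handle` stands in for a model or tool call."""
    name: str
    handle: Callable[[str], str]


class Orchestrator:
    """Routes a task through named agents in order and logs each hand-off."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents: Dict[str, Agent] = {agent.name: agent for agent in agents}
        self.log: List[str] = []

    def run(self, task: str, plan: List[str]) -> str:
        payload = task
        for step in plan:
            agent = self.agents[step]  # raises KeyError for an unknown agent
            payload = agent.handle(payload)
            self.log.append(f"{agent.name}: {payload}")
        return payload


# Placeholder agents: each one just wraps its input in a label.
researcher = Agent("research", lambda text: f"notes on {text}")
outliner = Agent("outline", lambda text: f"outline from {text}")
writer = Agent("write", lambda text: f"draft from {text}")

team = Orchestrator([researcher, outliner, writer])
result = team.run("SQLite blogging engines", ["research", "outline", "write"])
print(result)
```

The plan here is a fixed sequence. An orchestration layer of the kind the project describes would also need branching, retries, and parallel steps, which this sketch omits.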

Product Core Function

· Agent Creation and Specialization: Developers can define AI agents with distinct skills and access to specific tools. This allows for modular AI development where each agent focuses on a niche, increasing efficiency and accuracy. The value is in creating specialized AI components that can be reused across various tasks, leading to more robust solutions. This is applicable in scenarios requiring highly specific AI functionalities, like a dedicated research agent for data gathering.

· Collaborative Task Management: Starships.ai facilitates communication and task delegation between multiple AI agents, mimicking human team collaboration. The value lies in enabling AIs to collectively tackle problems that are too large or multifaceted for a single agent. This is useful for complex projects such as content generation pipelines, software development assistance, or intricate data analysis.

· Orchestration Layer: The platform provides the intelligence to direct agent workflows, manage dependencies, and handle decision points. The value is in automating the coordination process, ensuring that agents work together seamlessly and efficiently towards a common goal. This is crucial for executing multi-step processes, like automated market research followed by report generation.

· Human-in-the-Loop Oversight: The system is designed so that humans review critical decisions made by the agent teams. The value here is in keeping people in control and accountable for outcomes while still benefiting from the speed and scalability of AI. This is essential for applications where accuracy and accountability are paramount, such as financial analysis or medical diagnostics.

· Slack-like Interaction Model: The interface and interaction patterns are designed to feel familiar and intuitive, like chatting with a remote employee. The value is in lowering the barrier to entry for using advanced AI collaboration tools, making them accessible to a broader audience, not just AI experts. This makes it easier for teams to integrate AI into their daily workflows and understand AI progress.
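One way to picture the human-in-the-loop function from the list above is an approval gate: routine decisions are applied automatically, while critical ones wait for a reviewer. The sketch below is an assumption about how such a gate could look; `Decision`, `run_with_review`, and the `approve` callback are hypothetical names, not part of Starships.ai.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Decision:
    """A step proposed by an agent team (hypothetical structure)."""
    summary: str
    critical: bool


def run_with_review(
    decisions: List[Decision],
    approve: Callable[[Decision], bool],
) -> Tuple[List[str], List[str]]:
    """Apply routine decisions directly; ask a human about critical ones."""
    applied: List[str] = []
    held: List[str] = []
    for decision in decisions:
        if decision.critical and not approve(decision):
            held.append(decision.summary)
        else:
            applied.append(decision.summary)
    return applied, held


proposed = [
    Decision("reformat quarterly report", critical=False),
    Decision("send report to all customers", critical=True),
]
# A reviewer who rejects everything; a real callback would prompt a person.
applied, held = run_with_review(proposed, approve=lambda decision: False)
print("applied:", applied)
print("held for review:", held)
```

In a chat-style interface, the `approve` callback would correspond to a message asking a person to confirm or reject the step.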

Product Usage Case

· Automated Content Generation Pipeline: A team of agents could be set up where one agent researches a topic, another outlines the content, and a third writes the article. This automates a lengthy content creation process, solving the problem of slow and manual content production. It's useful for marketing teams needing a high volume of blog posts or articles.

· Software Development Assistance: An AI agent team could be tasked with bug fixing. One agent identifies potential bugs, another researches solutions, and a third attempts to implement the fix. This accelerates the debugging process and frees up human developers for more strategic tasks. This is applicable in software engineering teams looking to improve development velocity.

· Complex Data Analysis and Reporting: Agents could be assigned to gather data from various sources, clean and process it, perform statistical analysis, and finally generate a comprehensive report. This solves the challenge of manually integrating and analyzing disparate datasets. It's useful for business analysts needing quick insights from complex data.

· AI-driven Project Management Assistance: Agents could track project progress, identify potential bottlenecks, and suggest resource allocation adjustments, mimicking a project manager's role. This helps in proactive project management, addressing issues before they escalate. This is beneficial for teams struggling with project visibility and efficiency.

16

Postastiq: SQLite-Powered Single-Binary Blogging Engine

Author

selfhost

Description

Postastiq is a novel blogging platform built as a single executable file, leveraging SQLite for data storage. This means you get a powerful, self-hosted blogging solution that's incredibly easy to deploy and manage. Its innovation lies in its minimalist design and efficient data handling, making it ideal for developers who want a straightforward yet robust way to share their thoughts online without the overhead of complex database setups or multiple dependencies.

Popularity

Points 3

Comments 3

What is this product?

Postastiq is a self-hosted blogging engine designed for simplicity and performance. Instead of requiring a separate database server like MySQL or PostgreSQL, it uses a single SQLite file to store all your blog content and settings. This makes deployment as simple as running a single executable. The innovation here is the consolidation of a full-featured blogging platform into one executable and one database file, which removes common setup hurdles and leaves fewer moving parts to secure. So, what's in it for you? You get a fully functional blog that's incredibly easy to get up and running, perfect for personal projects or small teams, without the headaches of database administration. It's the hacker spirit of 'just make it work' applied to content creation.

How to use it?

Developers can use Postastiq by downloading the single binary and running it. It can be hosted on any server or even a personal computer. Configuration is minimal, often handled through environment variables or a simple configuration file. Content is typically managed through a web interface or by directly interacting with the SQLite database for advanced customization. Its lightweight nature also makes it suitable for integration into other applications or as a backend for static site generators. So, how does this benefit you? You can quickly spin up a blog for your project documentation, personal portfolio, or even a small community site with minimal effort, freeing you up to focus on writing and sharing your ideas, not managing infrastructure.

Product Core Function

· Single Binary Deployment: The entire blogging platform is packaged into one executable file, simplifying installation and distribution. This means less hassle with dependencies and quicker setup, so you can start blogging almost immediately.

· SQLite Backend: All blog posts, comments, and settings are stored in a single SQLite database file. This eliminates the need for a separate database server, and backing up or migrating the entire blog comes down to copying one file, so your content stays portable.

· Markdown Content Support: Posts are written using Markdown, a widely adopted and easy-to-learn markup language. This allows for clean and efficient content creation, so you can focus on your writing without complex formatting.

· Web-based Admin Interface: A user-friendly interface allows for easy creation, editing, and management of blog posts and site settings. This means you don't need to be a command-line expert to manage your blog, making it accessible to everyone.

· Customizable Themes: The platform supports theming, allowing users to personalize the look and feel of their blog. This enables you to create a unique online presence that reflects your style and brand, so your blog stands out.

· Comment System: Includes a built-in system for readers to leave comments, fostering community engagement. This provides a direct way for your audience to interact with your content, so you can build a community around your blog.
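To make the single-file storage model concrete, here is a minimal sketch using Python's standard `sqlite3` module. The table layout and function names are assumptions for illustration only; this summary does not document Postastiq's real schema or implementation language.

```python
import sqlite3

# Hypothetical schema: posts hold raw Markdown, comments reference a post.
SCHEMA = """
CREATE TABLE IF NOT EXISTS posts (
    id INTEGER PRIMARY KEY,
    slug TEXT NOT NULL UNIQUE,
    title TEXT NOT NULL,
    body_markdown TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS comments (
    id INTEGER PRIMARY KEY,
    post_id INTEGER NOT NULL REFERENCES posts(id),
    author TEXT NOT NULL,
    body TEXT NOT NULL
);
"""


def open_blog(path: str) -> sqlite3.Connection:
    """Open (or create) the single database file and ensure tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves this off by default
    return conn


def add_post(conn: sqlite3.Connection, slug: str, title: str, body: str) -> int:
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO posts (slug, title, body_markdown) VALUES (?, ?, ?)",
            (slug, title, body),
        )
    return cur.lastrowid


def add_comment(conn: sqlite3.Connection, post_id: int, author: str, body: str) -> int:
    with conn:
        cur = conn.execute(
            "INSERT INTO comments (post_id, author, body) VALUES (?, ?, ?)",
            (post_id, author, body),
        )
    return cur.lastrowid


def copy_blog(src: sqlite3.Connection, dest: sqlite3.Connection) -> None:
    """Copy the whole blog into another database via SQLite's backup API."""
    src.backup(dest)


blog = open_blog(":memory:")  # use a file path such as "blog.db" for real data
post_id = add_post(blog, "hello-world", "Hello, world", "# Hello\n\nFirst post.")
add_comment(blog, post_id, "reader", "Nice start!")

snapshot = sqlite3.connect(":memory:")
copy_blog(blog, snapshot)
print(snapshot.execute("SELECT slug, title FROM posts").fetchall())
```

Because everything lives in one database, the backup step is a single call (or, for a file on disk that is not being written to, a plain file copy).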

Product Usage Case

· Personal Portfolio Blog: A developer can host their personal blog on Postastiq to showcase projects, write articles on technical topics, and share their expertise. This directly addresses the need for a simple, self-hosted platform to establish an online presence and share knowledge.

· Project Documentation Site: For open-source projects or internal tools, Postastiq can serve as a lightweight documentation portal where maintainers can easily publish release notes, guides, and tutorials. This provides a centralized and accessible place for project information, reducing the burden of complex documentation tools.

· Small Team Knowledge Base: A small team can use Postastiq to create an internal blog for sharing company news, best practices, and important updates. This ensures team members stay informed and can easily access crucial information, improving internal communication and knowledge sharing.

· Simple Content Management for Niches: A hobbyist or niche content creator can use Postastiq to quickly set up a blog for a specific interest, like photography or cooking, without needing deep technical knowledge of web servers or databases. This empowers creators to focus on their passion and share it with the world, bypassing technical barriers.

17

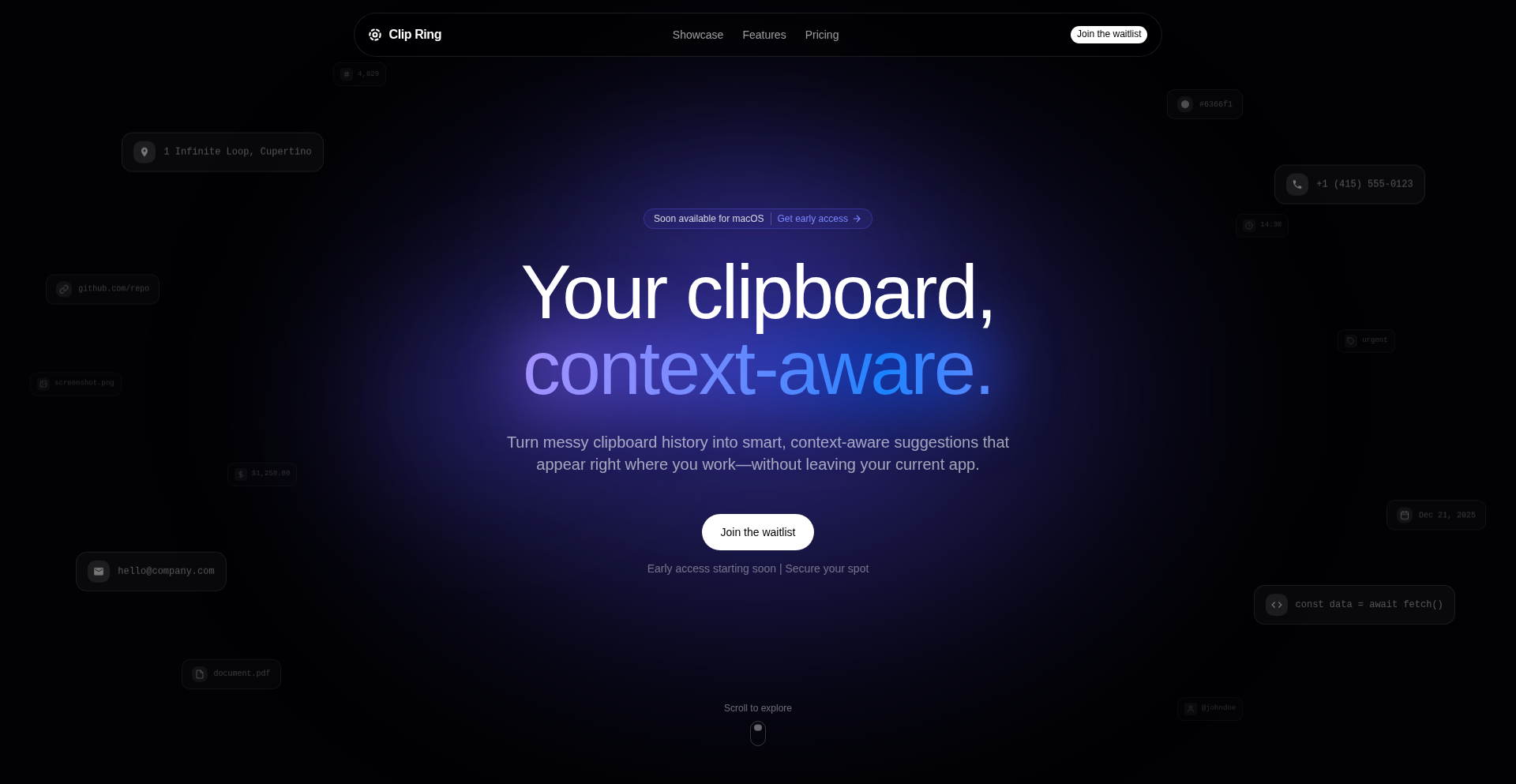

ClipRing: Contextual Clipboard Assistant

Author

tiagoantunespt

Description

ClipRing is a smart desktop application that transforms your messy clipboard history into context-aware suggestions. It intelligently analyzes what you're doing in your current application and presents relevant clipboard content without you having to switch windows. This is innovative because it moves beyond a simple copy-paste history by actively understanding your workflow and offering proactive assistance, reducing cognitive load and saving time.

Popularity

Points 5

Comments 1

What is this product?