Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-22

SagaSu777 2025-12-23

Explore the hottest developer projects on Show HN for 2025-12-22. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's submissions reflect the hacker spirit: raw ingenuity applied to a fast-moving technological frontier. There is a strong surge in AI-driven solutions, not just for complex tasks but also for streamlining everyday workflows and improving developer productivity. The focus isn't just on building AI, but on building *with* AI, from code generation and testing to personalized user experiences. Developers increasingly want tools that are efficient, reduce friction, and, importantly, respect data privacy, so solutions that run locally, offer granular control, or avoid centralized data storage are gaining traction. There is also a clear trend toward highly specialized tools that solve one problem very well rather than trying to be all-in-one solutions. This niche focus allows for deep optimization and a more satisfying user experience, letting individuals and small teams punch above their weight. For aspiring innovators, this means identifying specific pain points, particularly in the AI development lifecycle and data management, and crafting elegant, efficient solutions that embody the 'do more with less' ethos.

Today's Hottest Product

Name

World's Backlog

Highlight

This project tackles the fundamental challenge in innovation: identifying the *right* problems to solve. By creating a public repository for real-world industry pain points, validated by users, it offers a direct pipeline from user struggle to potential solutions. Developers can learn how to build platforms that aggregate and structure feedback, turning anecdotal complaints into actionable product requirements. The innovative approach lies in its community-driven validation and a focus on quantifying the severity and willingness to pay, which are crucial for any product's success.

Popular Category

AI/ML

Developer Tools

Utilities

Productivity

Data Management

Popular Keyword

AI

LLM

CLI

Open Source

Automation

Rust

Python

Code Generation

Data Visualization

Technology Trends

AI-Powered Automation

Efficient Developer Workflows

Data Privacy and Security

Specialized Tooling for AI Development

Code Generation and Transformation

Decentralized and Privacy-Focused Solutions

Performance Optimization in Niche Areas

Project Category Distribution

AI/ML Tools (30%)

Developer Tools & Utilities (25%)

Productivity & Data Management (20%)

Hobbyist & Niche Applications (15%)

Theoretical/Research Projects (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | ProblemForge AI | 109 | 28 |
| 2 | DeepSearch-Rust SMB Scanner | 14 | 2 |
| 3 | QuantumGravity Unifier | 2 | 12 |
| 4 | Hurry: BlitzCache for Cargo | 11 | 1 |
| 5 | LLVM-jutsu: Code Cloaking for AI | 8 | 0 |
| 6 | SkillPass: Knowledge Continuity Engine | 2 | 5 |
| 7 | Yapi: Terminal-Native API Orchestration | 4 | 3 |
| 8 | DurableExecFixer | 5 | 2 |
| 9 | RetroPlay WASM Engine | 3 | 3 |
| 10 | PDF Diagram to SVG Exporter | 6 | 0 |

1

ProblemForge AI

Author

anticlickwise

Description

ProblemForge AI is a curated repository of real-world, industry-specific pain points and workflow challenges, crowdsourced from professionals. It leverages AI to analyze and categorize these problems, providing builders with insights into their severity, frequency, and potential market value before development even begins. This tackles the 'hard part' of innovation: identifying the right problems to solve.

Popularity

Points 109

Comments 28

What is this product?

ProblemForge AI is a platform designed to bridge the gap between the ease of AI-powered development and the difficulty of finding genuinely impactful problems to solve. It functions as a public backlog of real-world issues encountered by people across various industries. The innovation lies in its structured approach to problem discovery: individuals submit their workflow frustrations, other users validate and rank these issues, and AI then analyzes the data to estimate how severe each problem is, how often it occurs, and how much people would be willing to pay for a solution. So, it helps you find valuable problems to build solutions for, reducing the risk of creating something nobody needs.

How to use it?

Developers and entrepreneurs can use ProblemForge AI as a primary source for identifying potential product ideas. Instead of guessing what problems to solve, they can browse the validated backlog, filter by industry, problem type, or impact metrics. For integration, developers can directly reference the problem descriptions and validation data when pitching ideas or planning their Minimum Viable Product (MVP). The platform provides the 'why' behind a potential product, making the development process more focused and market-driven. So, you can use it to discover market needs and validate your next big idea before writing a single line of code.

Product Core Function

· Problem Submission and Crowdsourcing: Allows professionals to directly share their daily workflow frustrations and pain points. This is valuable because it taps into authentic, on-the-ground issues that might otherwise go unnoticed, providing raw material for innovation.

· Community Validation and Ranking: Enables users to upvote, comment on, and provide context for submitted problems, indicating their shared experience and the perceived severity of the issue. This is valuable as it filters out minor inconveniences and highlights problems with broader impact and developer interest.

· AI-Powered Problem Analysis: Utilizes AI to process and analyze the collected problem data, providing metrics on frequency, severity, and potential market willingness to pay. This is valuable because it quantifies the market opportunity and risk associated with a problem, guiding developers towards high-potential ventures.

· Industry-Specific Problem Categorization: Organizes problems by industry, making it easier for developers to find relevant challenges within their target markets. This is valuable for focused development, allowing builders to concentrate on specific niches and understand the unique problems within them.

· Pre-Development Market Insight: Offers builders an understanding of user pain and potential demand before they invest heavily in development. This is valuable for de-risking innovation and ensuring that development efforts are directed towards solving problems that have a clear market appetite.
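
The filtering and ranking described above can be pictured with a small sketch. Everything in it is an assumption made for illustration: the `Problem` fields, the 1-to-5 severity scale, and the multiplicative `opportunity_score` heuristic are hypothetical and are not taken from ProblemForge AI itself.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    title: str
    industry: str
    severity: float          # hypothetical scale: 1 (annoyance) to 5 (blocks work)
    weekly_frequency: float  # how many times per week the problem occurs
    upvotes: int             # number of users who validated the problem
    monthly_wtp_usd: float   # stated willingness to pay per month


def opportunity_score(p: Problem) -> float:
    """Hypothetical heuristic: pain x frequency x validation x willingness to pay."""
    return p.severity * p.weekly_frequency * p.upvotes * p.monthly_wtp_usd


def top_problems(problems, industry=None, limit=3):
    """Optionally filter by industry, then return the highest-scoring problems."""
    pool = [p for p in problems if industry is None or p.industry == industry]
    return sorted(pool, key=opportunity_score, reverse=True)[:limit]


backlog = [
    Problem("Manual inventory reconciliation", "logistics", 4, 5, 40, 50),
    Problem("Duplicate patient intake forms", "healthcare", 3, 10, 25, 30),
    Problem("Slow carrier rate lookups", "logistics", 2, 20, 10, 15),
]

for p in top_problems(backlog, industry="logistics"):
    print(f"{opportunity_score(p):>8.0f}  {p.title}")
```

A multiplicative score means a problem must do reasonably well on every axis to rank highly; a weighted sum would be an equally plausible choice if one strong signal should be able to compensate for a weak one.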

Product Usage Case

· A solo developer looking for their next side project can browse ProblemForge AI, discover that many logistics managers are struggling with inefficient inventory tracking, and see that this problem is frequently reported and highly validated. They can then decide to build an inventory management tool specifically for this audience, armed with market validation. So, this helps a developer find a validated niche for a new app.

· A startup founder seeking to pivot their product strategy can use ProblemForge AI to identify emerging pain points in the healthcare industry. They might find that remote patient monitoring is a recurring issue with a high willingness to pay. This insight can guide them to reorient their existing technology towards solving this specific problem. So, this helps a startup pivot to a more in-demand market.

· A product manager can use ProblemForge AI to supplement user research. By observing the types of problems being submitted and validated, they can gain a broader understanding of user needs and inform their product roadmap with real-world issues. So, this helps a product manager make data-driven decisions for future product features.

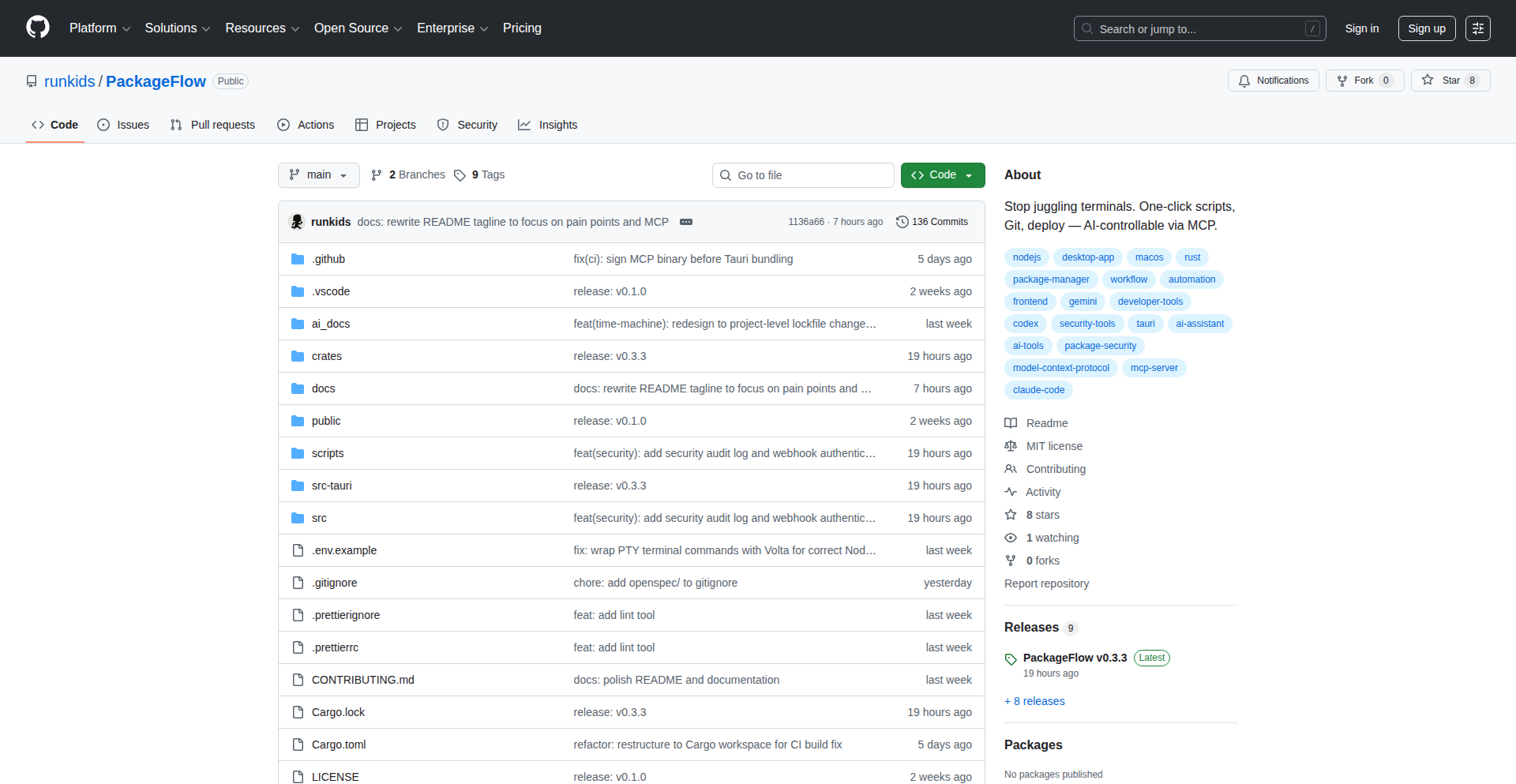

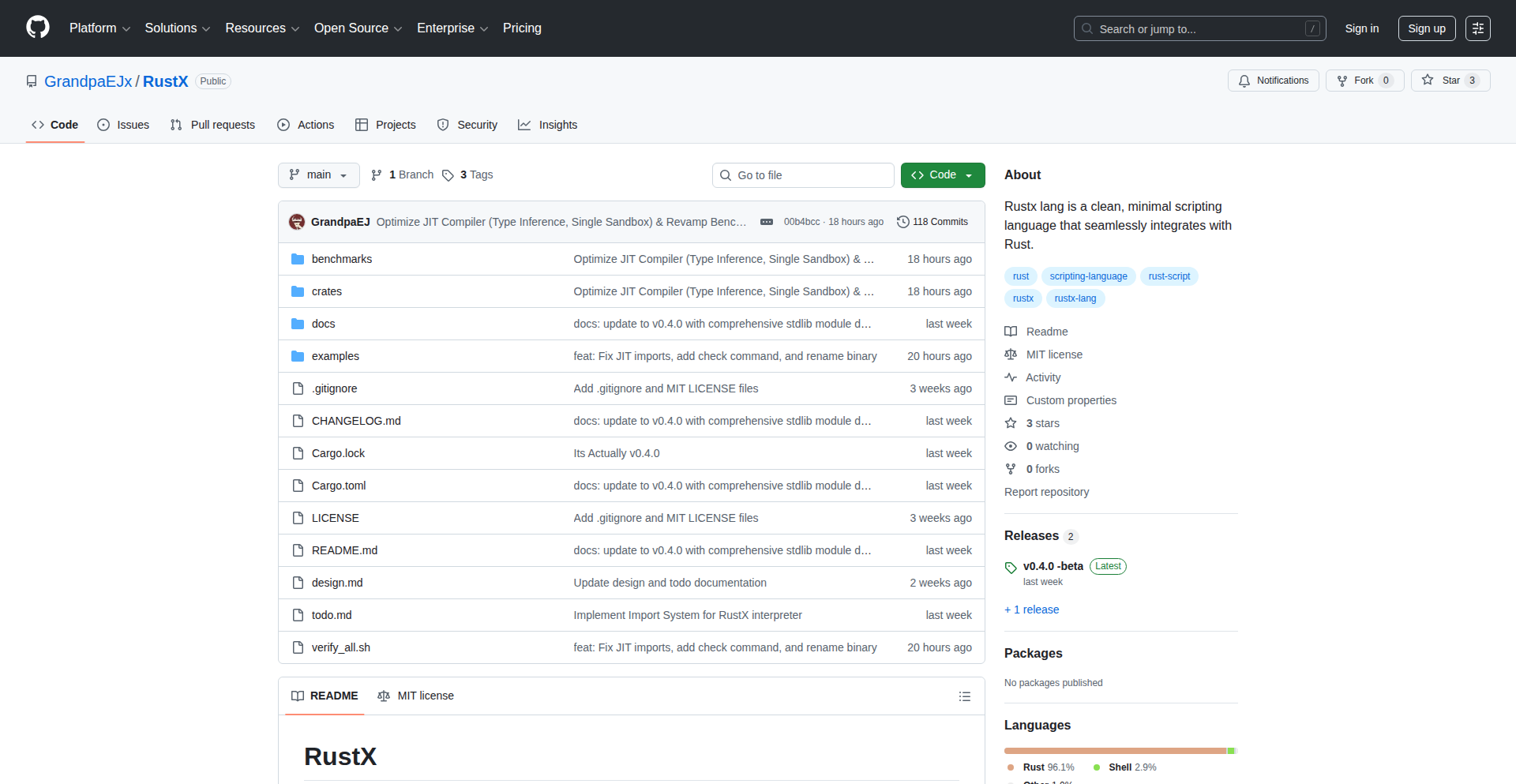

2

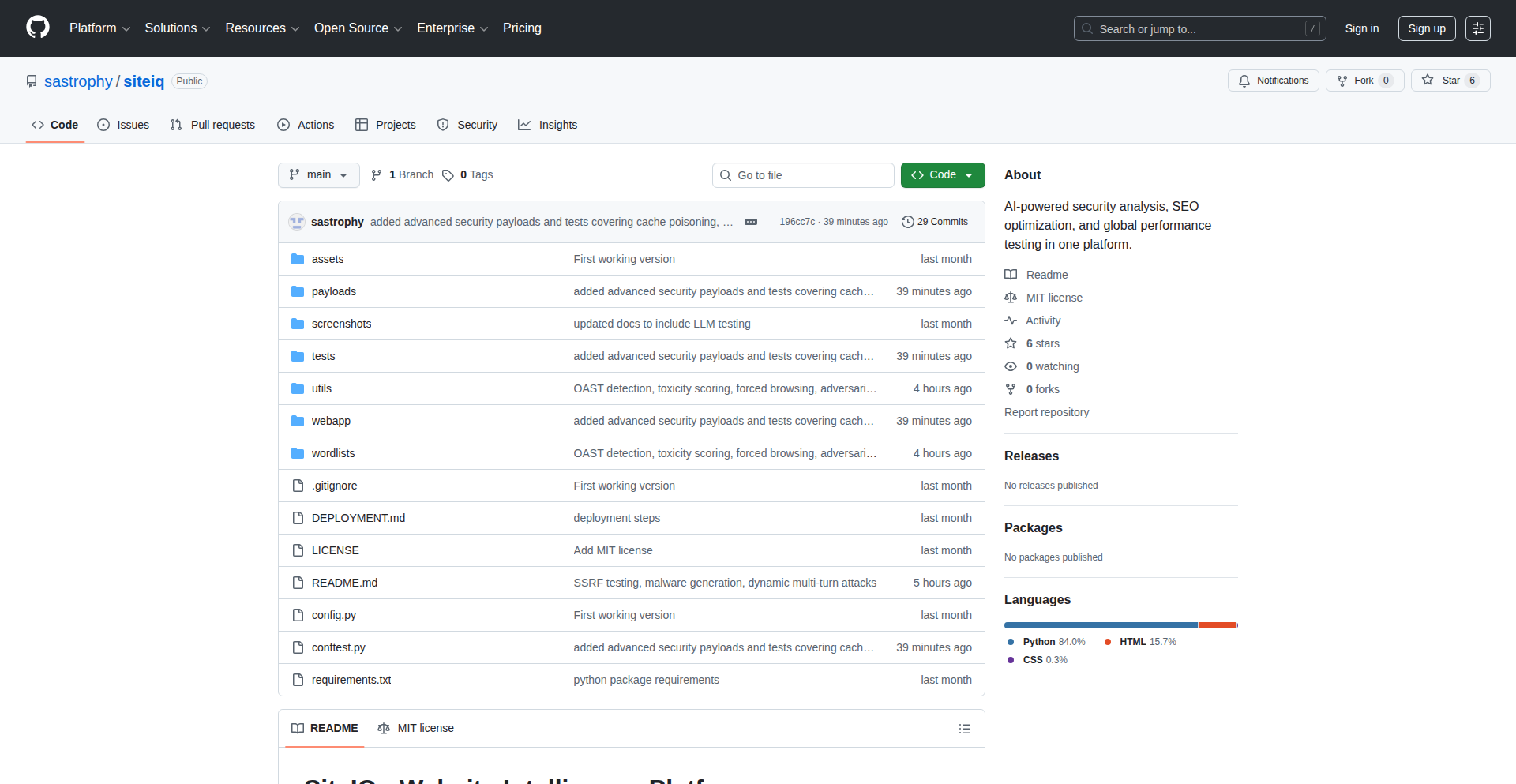

DeepSearch-Rust SMB Scanner

Author

dohuyhoangvn93

Description

DeepSearch is a high-performance SMB directory scanner built in Rust. It tackles the challenge of efficiently enumerating shared resources on Windows networks by leveraging Rust's speed and memory safety. The innovation lies in its optimized algorithms for network traversal and file/directory listing, offering a faster and more reliable alternative to existing tools for security auditing and network inventory.

Popularity

Points 14

Comments 2

What is this product?

DeepSearch is a tool that helps you quickly and efficiently discover the shared folders and files on Windows computers within a network. It's written in Rust, a programming language known for its speed and reliability, which makes it fast and less prone to crashing. The core innovation is the way it asks Windows computers for information about their shared resources: instead of querying hosts one by one, it queries many at once and processes the answers very quickly. So, this helps you understand what's shared on your network much faster than before, which is great for security checks or just knowing what data you have available.

How to use it?

Developers can integrate DeepSearch into their security assessment scripts or network management tools. It can be run from the command line, specifying IP ranges or specific hosts to scan. The output can be directed to a file for further analysis, such as identifying potential security vulnerabilities or cataloging network assets. For example, you could use it to quickly scan all machines in a subnet to see which ones have sensitive data shared openly. This means you can quickly find and secure important information, making your network safer.

Product Core Function

· High-speed SMB enumeration: Leverages Rust's performance to quickly list shared directories on multiple hosts simultaneously. This allows for rapid network discovery, so you can quickly identify all available network shares and understand your network's data landscape.

· Efficient network traversal: Uses optimized protocols and threading to explore network shares without overwhelming the network. This means less disruption to your network's performance while still getting comprehensive results, ensuring you don't miss anything important.

· Rust's memory safety: Safe Rust rules out whole classes of memory-related bugs at compile time, which makes the scanner more stable and its scans more reliable. This translates to more trustworthy results, so you can rely on the information gathered for critical security decisions.

· Customizable scan parameters: Allows users to define scan targets, credentials, and other options for tailored reconnaissance. This flexibility means you can adapt the tool to specific network environments and security needs, making it a versatile asset for different scenarios.
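
To make the idea of scanning many hosts at once concrete, here is a minimal sketch in Python rather than Rust. It is not DeepSearch's code or command-line interface: the function names are hypothetical, and it only checks whether TCP port 445 (the standard SMB port) accepts a connection, without enumerating any shares. It is meant for a network you administer.

```python
import ipaddress
import socket
from concurrent.futures import ThreadPoolExecutor

SMB_PORT = 445  # standard TCP port for SMB


def expand_targets(cidr: str) -> list[str]:
    """Expand a CIDR range into its usable host addresses."""
    return [str(ip) for ip in ipaddress.ip_network(cidr, strict=False).hosts()]


def smb_port_open(host: str, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to the SMB port succeeds."""
    try:
        with socket.create_connection((host, SMB_PORT), timeout=timeout):
            return True
    except OSError:
        return False


def find_smb_hosts(cidr: str, probe=smb_port_open, workers: int = 64) -> list[str]:
    """Probe every host in the range concurrently and keep those that respond."""
    hosts = expand_targets(cidr)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(probe, hosts))
    return [host for host, is_open in zip(hosts, results) if is_open]
```

Calling `find_smb_hosts("192.168.1.0/24")` would probe the 254 usable addresses in that subnet. The `probe` parameter is injectable so the concurrency logic can be exercised without touching the network.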

Product Usage Case

· Security auditing: A network administrator uses DeepSearch to perform a quick audit of all SMB shares within an organization to identify any publicly accessible sensitive data. This helps prevent data breaches by finding and securing vulnerable shares before attackers do.

· Asset inventory: A system administrator employs DeepSearch to generate an up-to-date inventory of all shared resources across a company's network for better management and compliance. This ensures you know exactly what data is stored and where, simplifying asset tracking and regulatory compliance.

· Incident response: During a security incident, DeepSearch can be used to rapidly identify compromised systems or unauthorized shares by quickly scanning for unusual sharing patterns. This speeds up the process of containing a breach and mitigating damage.

· Network penetration testing: A penetration tester uses DeepSearch as an initial reconnaissance step to map out the attack surface of a target network by discovering all accessible SMB shares. This helps in planning more effective exploitation strategies by understanding what resources are exposed.

3

QuantumGravity Unifier

Author

albert_roca

Description

This project presents a novel approach to unifying fundamental physics constants by deriving the gravitational constant 'G' from quantum properties of the proton and electromagnetic interactions. It uses a geometric scaling hypothesis to link the proton's mass to the Planck mass and then calculates 'G', showing remarkable agreement with experimentally measured values. The project also validates this unified theory by recalculating gravitational acceleration for various celestial bodies and comparing it to predictions from General Relativity.

Popularity

Points 2

Comments 12

What is this product?

This project is a Python script that explores a theoretical framework suggesting that the gravitational constant 'G' is not an independent fundamental constant but rather emerges from the quantum mechanical properties of particles, specifically the proton, and from fundamental constants such as the reduced Planck constant (hbar) and the speed of light (c). It hypothesizes that the proton's mass is a scaled version of the Planck mass based on a holographic principle, involving a scaling factor related to 4 to the power of 32. By using this derived relationship, the script calculates a value for 'G'. Furthermore, it implements a 'Unified Geometric Metric' that combines mass-energy equivalence and electrostatic forces to predict gravitational acceleration. This prediction is then compared against the standard Schwarzschild metric from General Relativity for different astronomical objects, and the author reports that the two are consistent. So, what this means for you is a potential glimpse into a more fundamental, unified theory of physics where gravity and quantum mechanics are not separate, but interconnected.

How to use it?

Developers can use this script as a starting point for exploring theoretical physics and quantum gravity. They can modify the input parameters (mass, charge, radius) for different celestial bodies or hypothetical particles to observe how the 'Unified Geometric Metric' and General Relativity predictions align or diverge. The core functions `compute_acceleration` and `derive_closed_G` can be integrated into larger simulation frameworks or used for educational purposes to visualize the interplay between quantum mechanics and gravity. It's a tool for researchers and enthusiasts interested in testing or extending these theoretical ideas with code. So, how can this be useful to you? You can plug in your own hypothetical scenarios of objects in space or even microscopic particles to see how this theory predicts their gravitational behavior, potentially uncovering new insights or validating your own physics-based simulations.

Product Core Function

· Unified Geometric Metric Calculation: This function calculates the gravitational acceleration based on a new theoretical model that unifies mass-energy and electrostatic forces. Its value lies in providing an alternative prediction for gravity, especially useful for probing regions where quantum effects might be significant or where standard GR may need refinement.

· General Relativity Benchmark: This function calculates the gravitational acceleration according to the established Schwarzschild metric from General Relativity. Its value is in providing a crucial point of comparison to validate the new theoretical model, ensuring that it accurately reflects known gravitational phenomena.

· Holographic Derivation of G: This function calculates the gravitational constant 'G' by hypothesizing a scaling relationship between the proton mass and the Planck mass, derived from a holographic principle. Its value is in demonstrating how 'G' might not be fundamental but an emergent property of quantum mechanics, potentially simplifying our understanding of gravity.

· Object Parameterization and Simulation: This part of the script allows for the definition of various celestial objects (like planets, stars, black holes) with their respective masses, charges, and radii. This enables the testing of the theoretical model across a wide range of physical scales and conditions, making it possible to probe its applicability and robustness in diverse scenarios.
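
For reference, the General Relativity benchmark mentioned above can be sketched independently of the project. The snippet below is not the project's `compute_acceleration` function; `gr_acceleration` is a hypothetical name. It uses the standard result for the proper acceleration of a static observer at radius r in the Schwarzschild metric, a = GM / (r^2 sqrt(1 - r_s/r)) with r_s = 2GM/c^2, and approximate textbook values for the Earth and the Sun.

```python
import math

G = 6.67430e-11    # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)
C = 299_792_458.0  # speed of light, m/s (exact by definition)


def schwarzschild_radius(mass_kg: float) -> float:
    """Schwarzschild radius r_s = 2GM / c^2, in metres."""
    return 2.0 * G * mass_kg / C**2


def gr_acceleration(mass_kg: float, radius_m: float) -> float:
    """Proper acceleration of a static observer at radius r outside mass M."""
    r_s = schwarzschild_radius(mass_kg)
    if radius_m <= r_s:
        raise ValueError("radius must lie outside the Schwarzschild radius")
    newtonian = G * mass_kg / radius_m**2
    return newtonian / math.sqrt(1.0 - r_s / radius_m)


# Approximate masses (kg) and mean radii (m)
bodies = {
    "Earth": (5.972e24, 6.371e6),
    "Sun": (1.989e30, 6.957e8),
}

for name, (mass, radius) in bodies.items():
    print(f"{name}: {gr_acceleration(mass, radius):.3f} m/s^2")
```

For everyday bodies the relativistic factor is tiny (the Earth's Schwarzschild radius is under a centimetre), so any alternative model has to reproduce the Newtonian value closely here; differences would only become visible near compact objects.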

Product Usage Case

· Testing fundamental physics theories: A researcher could use this script to test the hypothesis that 'G' is derived from quantum properties by comparing the script's derived 'G' with highly precise experimental measurements, helping to validate or refute theoretical models.

· Exploring black hole physics: By inputting parameters for massive objects near the calculated Schwarzschild radius, developers can observe how the 'Unified Geometric Metric' behaves compared to GR's prediction of an event horizon, potentially offering insights into quantum gravity effects near black holes.

· Educational tool for quantum gravity: Educators can use this script to demonstrate to students how abstract theoretical concepts in physics can be translated into concrete code, making the complex ideas of quantum mechanics and general relativity more accessible and interactive.

· Cosmological simulations: This script's acceleration calculation method could be integrated into larger cosmological simulations to test how alternative gravitational models might affect the evolution of the universe on different scales.

4

Hurry: BlitzCache for Cargo

Author

ilikebits

Description

Hurry is an open-source tool designed to dramatically speed up Rust project builds by introducing distributed build caching for Cargo. It intelligently caches individual Rust packages, meaning if a package hasn't changed, its pre-built version is reused, leading to build times that are 2-5x faster (and sometimes even more). This solves the common problem of slow and repetitive builds in Rust development without requiring complex setup or learning new build systems.

Popularity

Points 11

Comments 1

What is this product?

Hurry is a build caching system specifically for Rust projects that use Cargo. Think of it like a smart 'save' button for your code compilation. When you build your Rust project, Hurry takes the compiled pieces (the 'artifacts') and stores them in a cache. The next time you build, if a piece of code hasn't changed, Hurry retrieves the pre-compiled version from the cache instead of recompiling it from scratch. This is 'distributed' because the cache can be shared across multiple machines or developers. Its innovation lies in its granular, package-level caching and its 'drop-in' nature, meaning it works with your existing Cargo setup with minimal configuration, unlike other solutions that require significant integration effort or are too broad in their caching approach.

How to use it?

Developers can integrate Hurry into their workflow with almost zero configuration. The simplest way is to replace your usual `cargo build` command with `hurry cargo build`. Hurry will then automatically manage the caching process. For collaborative projects or CI/CD pipelines, Hurry can connect to a shared caching service, either a cloud-hosted one provided by Hurry or a self-hosted instance. This means that if one developer or a CI job builds a piece of code, other developers or jobs can benefit from that cached build, saving everyone significant time. It’s designed to seamlessly fit into existing development environments.

Product Core Function

· Distributed Build Caching: Stores compiled Rust code artifacts in a shared cache, allowing multiple developers or build agents to reuse pre-built components. This accelerates build times by avoiding redundant compilation, making development cycles faster.

· Package-Level Granularity: Caches individual Rust packages independently. If only one package changes, only that package needs to be recompiled, while others are served from the cache. This is far more efficient than caching entire projects or build jobs, directly reducing build duration.

· Zero-Configuration Integration: Works seamlessly with existing Cargo projects. Developers can simply run `hurry cargo build` instead of `cargo build`, making it incredibly easy to adopt without complex setup or learning new tools. This lowers the barrier to entry for faster builds.

· Fast Rebuilds: Significantly reduces build times, often by 2-5x or more, by intelligently reusing cached build outputs. This directly translates to more time spent coding and less time waiting for builds to complete, boosting developer productivity.
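
The package-level caching idea can be illustrated with a toy model. This is a sketch under stated assumptions, not Hurry's implementation: the key derivation (SHA-256 over package name, sources, and dependency keys), the `BuildCache` class, and the in-memory store are all hypothetical stand-ins for whatever Hurry actually does.

```python
import hashlib


def package_key(name, sources, dep_keys=()):
    """Derive a cache key from a package's name, sources, and dependency keys."""
    h = hashlib.sha256()
    h.update(name.encode() + b"\0")
    for path in sorted(sources):
        h.update(path.encode() + b"\0" + sources[path].encode() + b"\0")
    for key in sorted(dep_keys):
        h.update(key.encode() + b"\0")
    return h.hexdigest()


class BuildCache:
    """In-memory stand-in for a shared artifact cache."""

    def __init__(self):
        self.store = {}     # cache key -> artifact
        self.compiled = []  # package names that were actually compiled

    def build(self, name, sources, dep_keys=()):
        key = package_key(name, sources, dep_keys)
        if key not in self.store:  # cache miss: "compile" and store
            self.compiled.append(name)
            self.store[key] = f"artifact:{name}:{key[:8]}"
        return key


cache = BuildCache()
util = {"src/lib.rs": "pub fn add(a: i32, b: i32) -> i32 { a + b }"}
app = {"src/main.rs": "fn main() { println!(\"{}\", util::add(1, 2)); }"}

for _ in range(2):  # the second pass changes nothing, so it is all cache hits
    util_key = cache.build("util", util)
    cache.build("app", app, [util_key])

app["src/main.rs"] += "\n// edited"  # change only the app package
util_key = cache.build("util", util)
cache.build("app", app, [util_key])

print(cache.compiled)
```

Including dependency keys in each package's key is what makes a change propagate: editing `util` would change its key and therefore invalidate `app` as well, while editing only `app` leaves the cached `util` artifact reusable.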

Product Usage Case

· Local Development Acceleration: A Rust developer working on a large project can use Hurry to drastically cut down local build times after making minor code changes. Instead of waiting minutes for a full rebuild, Hurry serves cached artifacts, allowing for near-instantaneous iteration and testing, leading to a smoother and more productive coding experience.

· CI/CD Pipeline Optimization: A continuous integration/continuous deployment (CI/CD) pipeline for a Rust project can integrate Hurry to cache build artifacts between runs. When a new commit is pushed, the CI job can quickly retrieve previously built dependencies from the cache, leading to significantly faster build and test execution times, and reducing CI costs.

· Team Collaboration on Codebases: In a team setting, when one developer builds a specific library or component, that build artifact can be cached and made available to other team members. This ensures that everyone on the team benefits from the most recent successful build, reducing the time spent by each individual on redundant compilations and improving overall team velocity.

5

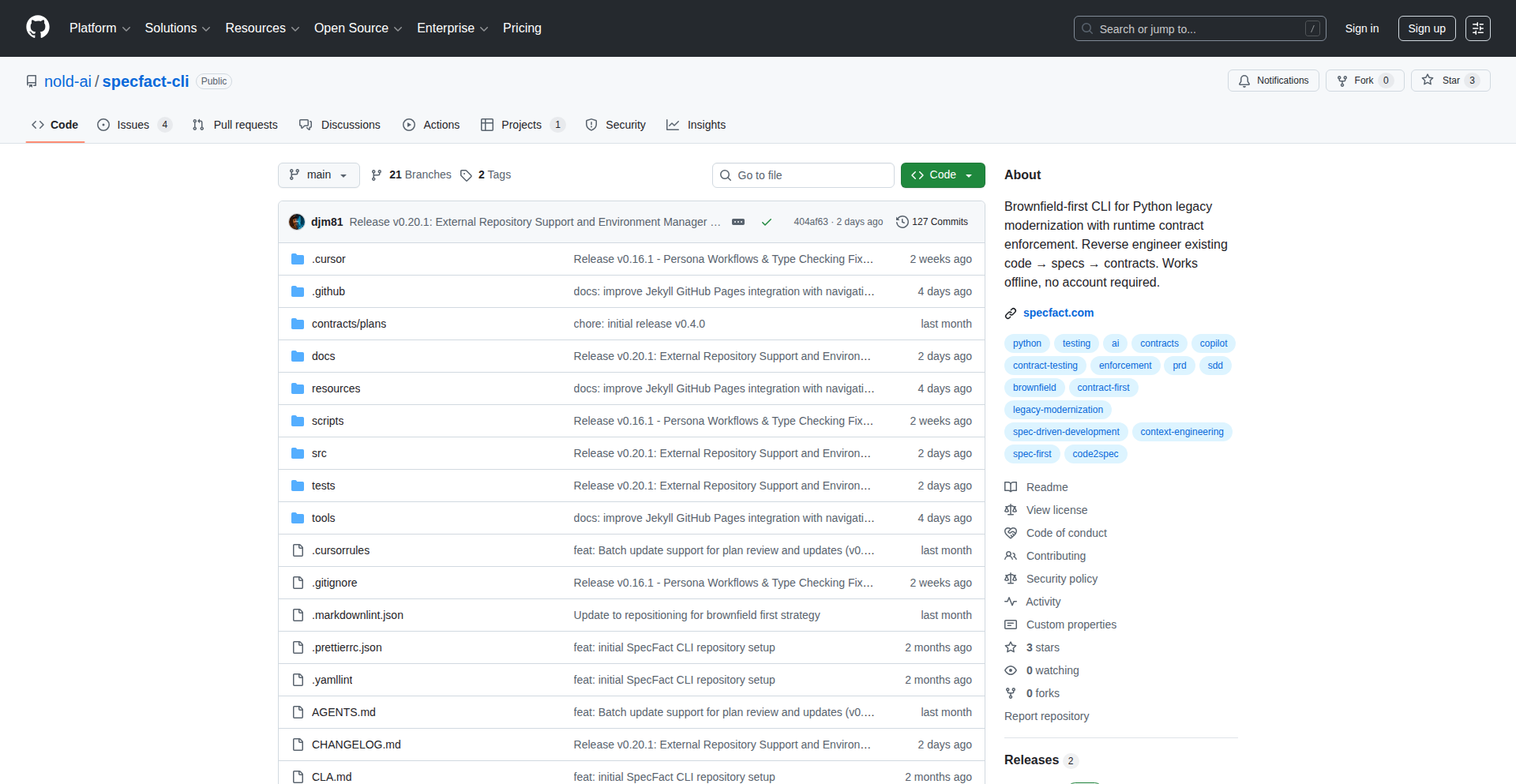

LLVM-jutsu: Code Cloaking for AI

Author

babush

Description

LLVM-jutsu is a novel obfuscation pass for the LLVM compiler infrastructure designed to make code harder for Large Language Models (LLMs) to understand and analyze. It tackles the emerging problem of AI-generated code plagiarism and unauthorized intellectual property extraction by programmatically altering code structures without affecting its execution behavior. The innovation lies in its application within the compilation pipeline, offering a robust, at-compile-time solution.

Popularity

Points 8

Comments 0

What is this product?

LLVM-jutsu is a specialized 'pass' that runs within the LLVM compiler. Think of a compiler as a translator for programming languages. A 'pass' is like a specific translation step that modifies the code to optimize it or add features. LLVM-jutsu's unique pass is designed to 'obfuscate' or 'cloak' your code. It does this by making subtle but significant changes to the code's internal structure – like rearranging sentences in a paragraph to change the flow without altering the meaning. This makes it incredibly difficult for AI models, which learn by pattern recognition, to decipher the original logic or easily reproduce it. The core innovation is integrating this protection directly into the compilation process, so the obfuscation happens automatically when you build your software, ensuring the compiled code is already protected.

How to use it?

Developers can integrate LLVM-jutsu into their existing LLVM-based build processes. This typically involves modifying their build system (like CMake or Makefiles) to include the LLVM-jutsu pass when compiling their source code. For example, if you're building a C++ project using Clang (which uses LLVM), you would configure your build to run LLVM-jutsu as one of the compiler's optimization stages. This means the protection is applied automatically as part of the normal software build, without requiring manual code changes or complex post-compilation steps. It's a seamless addition to the developer workflow.

Product Core Function

· Code Structure Renaming: Renames variables, functions, and other code elements to nonsensical or misleading names, making it difficult for LLMs to infer their purpose. This is valuable for protecting the semantic meaning of your code from AI interpretation.

· Control Flow Perturbation: Modifies the order of operations and conditional logic in subtle ways that don't change the program's outcome but make the logic flow appear convoluted to automated analysis. This prevents AI from easily tracing execution paths.

· Instruction Reordering: Reorders individual machine instructions while preserving the overall program logic. This disrupts the patterns LLMs look for in code sequences.

· Data Representation Obfuscation: Alters how data is stored or represented in memory, adding complexity for AI to understand data structures and relationships. This protects sensitive data handling logic.

· LLVM Pass Integration: Acts as a plug-in for the LLVM compiler, meaning it can be enabled or disabled during the build process. This provides flexibility and allows developers to apply protection only when needed.
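
As a rough analogy for behavior-preserving renaming, the sketch below rewrites a Python function's parameters and local variables to opaque names using the standard `ast` module. It operates on Python source, not LLVM IR, and has nothing to do with LLVM-jutsu's actual pass; `obfuscate_function` is a hypothetical helper that ignores nested functions, keyword-only parameters, and `global` or `nonlocal` declarations, and it needs Python 3.9+ for `ast.unparse`.

```python
import ast


def obfuscate_function(source: str) -> str:
    """Rename the parameters and assigned locals of the first function in source."""
    tree = ast.parse(source)
    func = tree.body[0]

    # Pass 1: collect positional parameters and every name that is assigned to.
    local_names = [a.arg for a in func.args.args]
    for node in ast.walk(func):
        if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            if node.id not in local_names:
                local_names.append(node.id)
    mapping = {name: f"v{i}" for i, name in enumerate(local_names)}

    # Pass 2: rewrite parameters and all uses of the collected names.
    for node in ast.walk(func):
        if isinstance(node, ast.arg) and node.arg in mapping:
            node.arg = mapping[node.arg]
        elif isinstance(node, ast.Name) and node.id in mapping:
            node.id = mapping[node.id]
    return ast.unparse(tree)


original = '''
def weighted_total(prices, weights):
    total = 0
    for price, weight in zip(prices, weights):
        total += price * weight
    return total
'''

obfuscated = obfuscate_function(original)
print(obfuscated)
```

The function name and builtins such as `zip` are left untouched, so positional callers see the same behavior while the descriptive local names are gone. Renaming parameters would break callers that pass arguments by keyword, which is one reason real obfuscators work below the source level.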

Product Usage Case

· Protecting proprietary algorithms in SaaS products: A company developing a critical algorithm for its cloud service can use LLVM-jutsu to compile its backend code. This makes it much harder for a competitor's LLM to analyze a decompiled version of the service and replicate its core functionality, safeguarding intellectual property.

· Preventing AI-driven reverse engineering of game logic: Game developers can apply LLVM-jutsu to their game executables. If a hacker tries to use an AI to understand how game mechanics work by analyzing the compiled game code, the obfuscation will significantly slow down or prevent that process, enhancing game security.

· Securing embedded system firmware: For developers working on firmware for IoT devices or other embedded systems, LLVM-jutsu can add a layer of protection against unauthorized analysis of the device's operational code, making it harder to find vulnerabilities or copy proprietary designs.

· Shielding sensitive code snippets in open-source projects: While open-source often encourages transparency, certain sensitive libraries or modules within a larger project might benefit from obfuscation. LLVM-jutsu can be selectively applied to these parts during the build to deter casual AI-driven code copying without hindering legitimate community contributions.

6

SkillPass: Knowledge Continuity Engine

Author

kevinbaur

Description

SkillPass is a novel solution to the critical problem of knowledge loss when employees depart. Instead of relying on traditional, often ineffective handover documents, it directly captures the implicit, role-specific knowledge from departing employees. This innovative approach leverages a guided session to transform unarticulated expertise into a structured report, preventing operational disruptions and preserving valuable institutional memory without requiring meetings or extensive training.

Popularity

Points 2

Comments 5

What is this product?

SkillPass is a system designed to capture and retain the critical, often unspoken, knowledge that leaves a company when an employee departs. The core technical innovation lies in its guided session methodology. It's not just about documentation; it's about extracting the 'how' and 'why' behind decisions, shortcuts, and undocumented processes that are normally lost. This is achieved through a streamlined, non-intrusive process that preserves valuable context, preventing the common scenario where things break after someone leaves because their unique knowledge has vanished. The value proposition is clear: minimize the impact of employee turnover by ensuring operational continuity and preserving institutional memory. The product is described as GDPR-compliant and privacy-respecting, with no collection of data for AI training.

How to use it?

Developers can integrate SkillPass into their HR offboarding process. When an employee is leaving, SkillPass initiates a single, guided session. This session, designed to be efficient and non-disruptive, prompts the departing employee for specific, role-related information. The output is a structured handover report that contains the essential implicit knowledge. This can then be used by successors to quickly get up to speed, reducing ramp-up time and preventing common pitfalls caused by lost knowledge. For developers, this means ensuring that the complex systems they build continue to be understood and maintained, even after key contributors move on. It’s about making the handover process intelligent and actionable, not just a formality.

Product Core Function

· Guided knowledge capture session: This is the core technical engine that uses targeted questions to extract implicit knowledge, ensuring that critical context and decision-making rationale are captured. The value is in transforming hidden knowledge into explicit, actionable information.

· Structured handover report generation: The system processes the captured information into a clear, organized report. This provides successors with a direct roadmap to understanding the departing employee's role, preventing confusion and speeding up the transition.

· Privacy-centric design: The commitment to GDPR compliance and no AI training means that sensitive company knowledge is handled securely and ethically. The value is in providing peace of mind that intellectual property is protected and used solely for internal knowledge transfer.

· Meeting-free knowledge transfer: By automating the knowledge capture process, SkillPass eliminates the need for lengthy and often inefficient handover meetings. This saves valuable time for both departing and remaining employees, directly contributing to productivity.

Product Usage Case

· A senior engineer leaves a critical project, taking with them deep knowledge of specific system optimizations and debugging shortcuts. SkillPass captures this, providing the incoming engineer with a detailed guide to these optimizations, preventing performance degradation and accelerating their understanding of the system's nuances.

· A product manager departs, leaving behind a wealth of context on past feature decisions, user feedback interpretations, and strategic considerations that were never fully documented. SkillPass elicits this rationale, enabling the new product manager to seamlessly pick up the roadmap and make informed decisions, avoiding the repetition of past mistakes.

· A developer who built a highly specialized internal tool resigns. Instead of a sparse documentation file, SkillPass generates a report detailing the tool's architecture, common failure points, and undocumented workarounds. This allows the team to maintain and enhance the tool effectively without needing to reverse-engineer its entire functionality.

· When a key member of a cybersecurity team leaves, their understanding of ongoing threats, specific security protocols, and incident response nuances is crucial. SkillPass captures this expert knowledge, equipping the remaining team with the insights needed to maintain a robust security posture.

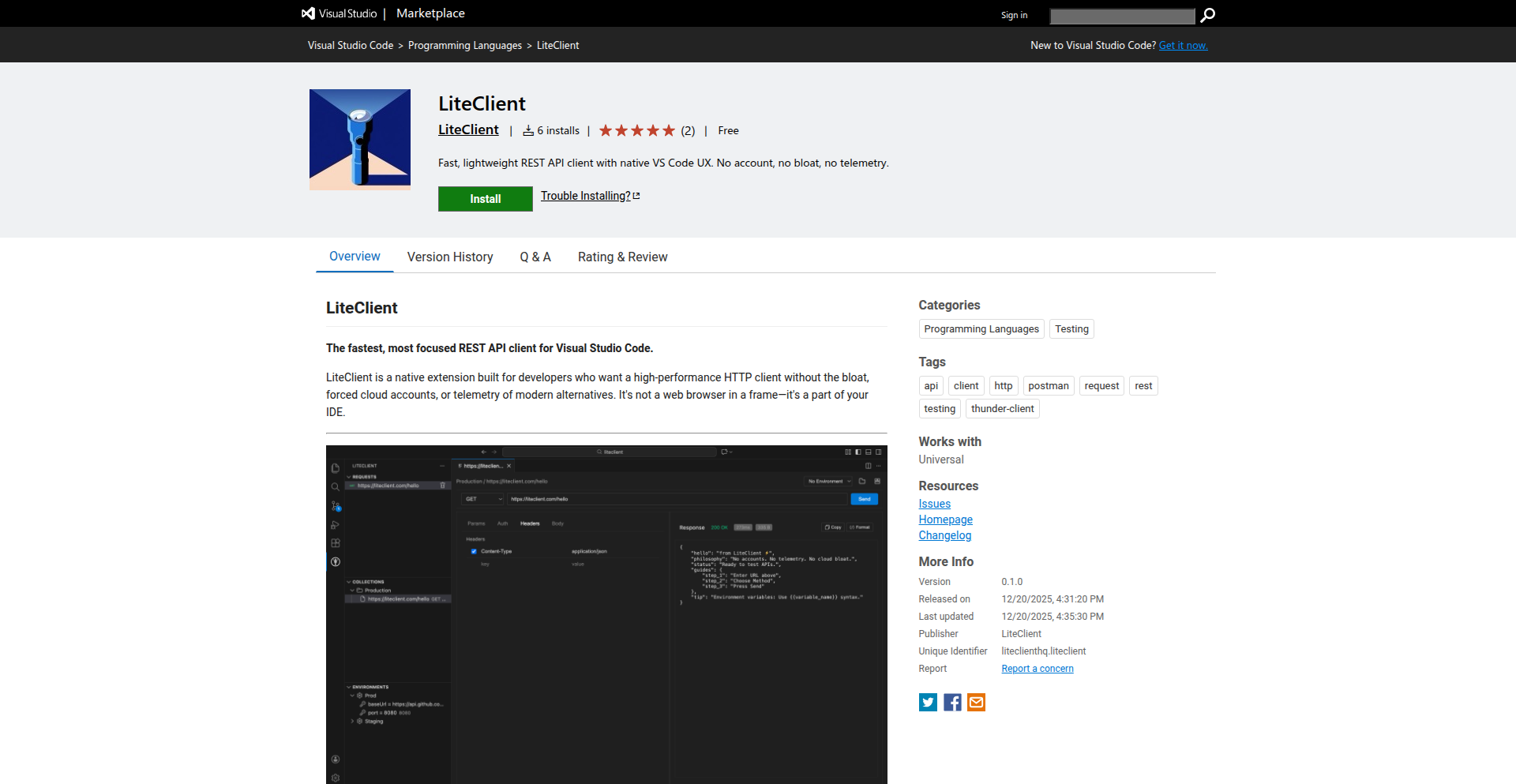

7

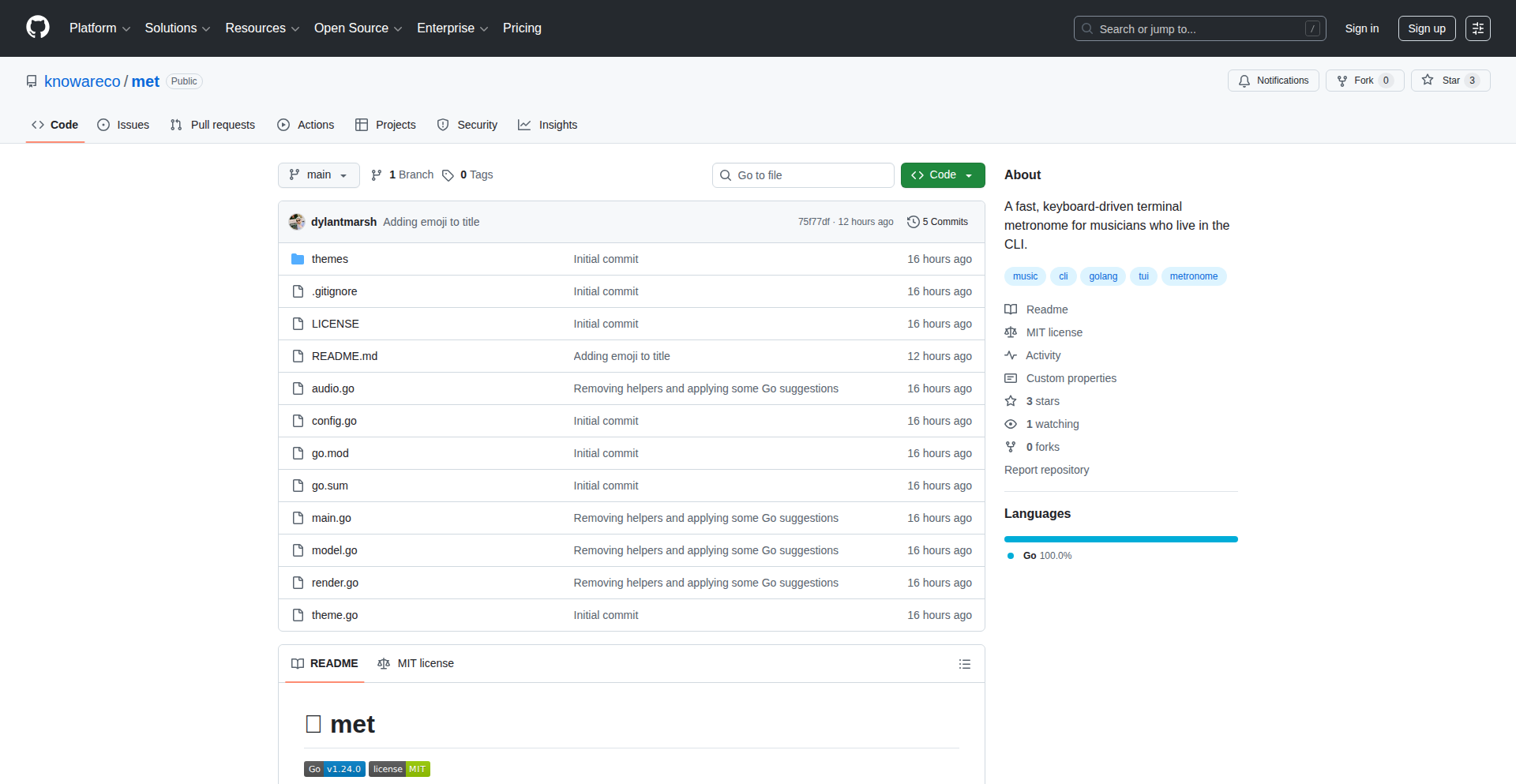

Yapi: Terminal-Native API Orchestration

Author

jamiepond

Description

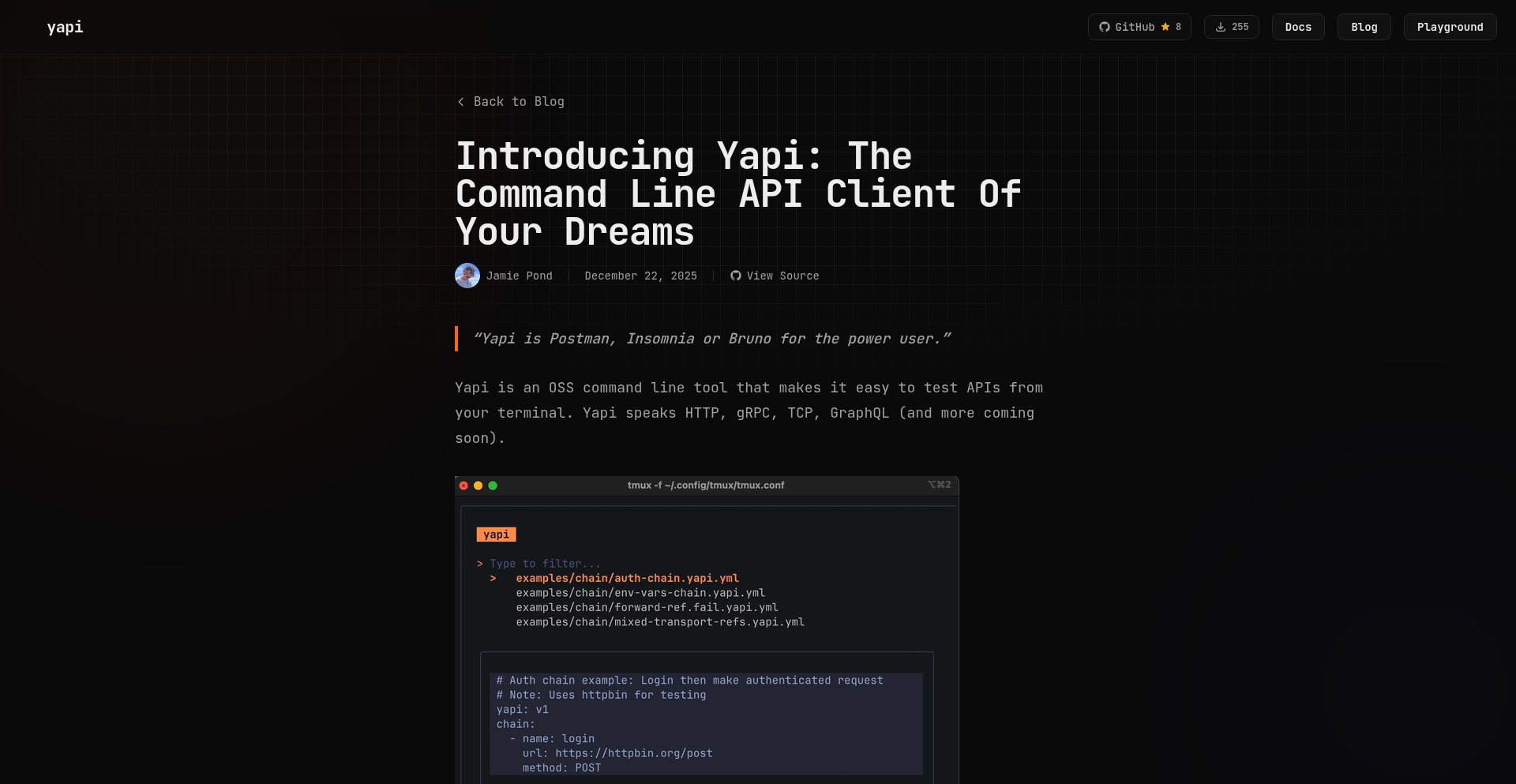

Yapi is a FOSS (Free and Open Source Software) API client designed for terminal power users, offering a more productive experience than traditional GUI tools like Postman, Bruno, or Insomnia. It focuses on providing efficient API request management and execution directly within your terminal environment, leveraging the speed and workflow of editors like Neovim and multiplexers like Tmux.

Popularity

Points 4

Comments 3

What is this product?

Yapi is a terminal-based application that helps developers manage and interact with APIs. Unlike GUI-based tools, Yapi operates within your command-line interface, allowing for faster workflows, especially for those accustomed to text-based environments. Its core innovation lies in its deep integration with terminal editors and multiplexers, enabling developers to craft, send, and inspect API requests without leaving their primary coding workspace. This translates to reduced context switching and a more streamlined development process. So, this is useful for you if you want to manage your API interactions efficiently without constantly switching between your code editor and a separate application.

How to use it?

Developers can integrate Yapi into their existing terminal workflows. After installation, Yapi is invoked from the command line, where you can define API requests, group them into collections, and execute them directly. For example, you might use it to quickly test an endpoint you have just implemented in your backend service. Its design is particularly appealing to users who spend most of their time in Neovim or Tmux, since they can trigger API calls, view responses, and modify requests without leaving their terminal setup. You define your API endpoints and requests much as you would in other API clients. So, this is useful for you because it lets you fold API testing and management into your existing coding habits, boosting productivity and reducing distractions.
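The idea of keeping requests as plain data and executing them from a script can be sketched independently of Yapi. The Python below is not Yapi's file format or API: the spec fields (`method`, `url`, `headers`, `json`), the `build_request` helper, and the `localhost` URLs are all hypothetical and serve only to illustrate a "collection" of declarative request definitions.

```python
import json
import urllib.request


def build_request(spec: dict) -> urllib.request.Request:
    """Turn a declarative request spec (hypothetical shape) into a urllib Request."""
    headers = dict(spec.get("headers", {}))
    body = None
    if "json" in spec:
        # Serialize the JSON payload and label it, unless the spec already did.
        body = json.dumps(spec["json"]).encode("utf-8")
        headers.setdefault("Content-Type", "application/json")
    return urllib.request.Request(
        url=spec["url"],
        data=body,
        headers=headers,
        method=spec.get("method", "GET"),
    )


# A small "collection": named request specs kept together as data.
collection = {
    "list-users": {"method": "GET", "url": "http://localhost:8080/users"},
    "create-user": {
        "method": "POST",
        "url": "http://localhost:8080/users",
        "json": {"name": "Ada"},
    },
}

req = build_request(collection["create-user"])
# Sending it would be urllib.request.urlopen(req); that needs a server at the URL.
```

Keeping the definitions as data means the same collection can be version-controlled next to the code it exercises and reused by a script or a CI job.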

Product Core Function

· API Request Definition and Management: Allows users to define HTTP requests (GET, POST, etc.) with custom headers, body, and parameters. This is valuable for developers who need to precisely control and document their API interactions, ensuring consistency and reducing errors. It enables rapid testing of backend services and debugging of API integrations.

· Request Collections and Organization: Enables grouping of related API requests into collections for better organization and reusability. This is crucial for managing complex projects with numerous API endpoints, allowing developers to easily find and execute specific sets of requests for different parts of an application or for specific testing scenarios.

· Response Inspection and Analysis: Provides tools to view and analyze API responses, including status codes, headers, and response bodies. This is essential for understanding the outcome of API calls, debugging issues, and verifying that the API is functioning as expected. It helps developers quickly pinpoint problems in their application's communication with its backend.

· Terminal Integration and Workflow Efficiency: Designed to work seamlessly within terminal environments like Neovim and Tmux, minimizing context switching. This significantly enhances developer productivity by keeping all necessary tools within a single interface, reducing the mental overhead of switching between different applications. It's about making API work as fast and fluid as coding.

· FOSS (Free and Open Source Software): Being open-source means the software is freely available, modifiable, and transparent. This is valuable for developers as it provides cost savings, allows for community contributions and improvements, and ensures no vendor lock-in. It fosters trust and collaboration within the developer community.

Product Usage Case

· A backend developer implements a new REST API endpoint. Instead of opening Postman, they can use Yapi within their Neovim session to immediately define a GET request to the new endpoint, send it, and examine the JSON response, all without leaving their code editor. This speeds up the development and testing cycle significantly.

· A frontend developer is debugging an issue where their application is failing to fetch data. They can use Yapi to replicate the exact API call their frontend is making, including all headers and parameters, to see if the problem lies with the frontend's request formation or the backend's response. This helps isolate the source of the bug efficiently.

· A DevOps engineer needs to automate the testing of a critical API endpoint as part of a CI/CD pipeline. Yapi's command-line nature makes it suitable for scripting and integration into automated testing workflows, ensuring the API remains functional before deployments.

· A developer working on a microservices architecture can use Yapi to manage and test interactions between multiple services. They can create collections for each service and then test the flow of data and requests between them, ensuring the entire system is communicating correctly.

8

DurableExecFixer

Author

mnorth

Description

A project aiming to improve durable execution, an approach (implemented by various frameworks) for managing long-running, stateful applications. The innovation lies in addressing inherent complexities and potential issues within durable execution patterns, making them more reliable and developer-friendly. This project offers value by providing a more robust foundation for building complex distributed systems.

Popularity

Points 5

Comments 2

What is this product?

This project is an experimental enhancement or fix for Durable Execution frameworks, which are designed to handle tasks that need to run for a long time or maintain state across multiple steps, like complex workflows or background jobs. The core innovation is in identifying and rectifying subtle bugs, performance bottlenecks, or usability challenges within existing Durable Execution implementations. Think of it like reinforcing the foundation of a building that might have minor cracks – it makes the whole structure more stable and dependable. The value is in providing a more predictable and less error-prone environment for developers building sophisticated applications.

How to use it?

Developers can integrate this project by applying its patches or adopting its improved libraries into their existing Durable Execution workflows. This might involve updating dependencies, running a modified version of the framework, or using its new components to manage their stateful operations. The primary use case is for developers building applications that require robust handling of long-running processes, state persistence, and fault tolerance, such as order processing, batch computations, or complex event-driven systems. It offers them a more reliable way to manage the lifecycle of these operations.

Product Core Function

· Improved state management resilience: Ensures that application state is consistently saved and restored even during unexpected failures, preventing data loss and allowing workflows to resume gracefully. This is valuable because it reduces the risk of critical data corruption in long-running processes.

· Optimized execution flow: Enhances the efficiency of how tasks are executed within the durable execution framework, leading to faster processing times and reduced resource consumption. This helps developers build applications that are not only reliable but also performant.

· Simplified debugging and introspection: Provides better tools and mechanisms for developers to understand what's happening within their durable execution workflows, making it easier to identify and fix issues. This saves developers time and frustration when troubleshooting complex systems.

· Enhanced error handling strategies: Implements more sophisticated ways to catch, report, and recover from errors in long-running tasks, ensuring that failures are handled predictably and don't cascade into larger problems. This is crucial for maintaining the stability of critical business processes.

Product Usage Case

· Building a robust e-commerce order fulfillment system: Imagine a system that needs to track an order from placement, through payment processing, inventory update, shipping, and delivery. Durable Execution is ideal for this. If the payment processing step fails midway, DurableExecFixer would ensure the order state is preserved, allowing the process to be retried without losing progress, thus preventing lost sales and customer frustration.

· Developing a large-scale data processing pipeline: For applications that process massive datasets, breaking the work into smaller, manageable, durable steps is common. If a processing node crashes during a large data transformation, DurableExecFixer ensures that the transformation can resume from the last successfully completed step, saving significant time and computational resources compared to restarting the entire job.

· Creating a complex multi-stage approval workflow: In enterprise applications, approvals often involve multiple steps and stakeholders. Durable Execution can manage this. If an approver is offline when their turn comes, DurableExecFixer can help ensure the workflow doesn't get stuck indefinitely, perhaps by implementing automatic reminders or escalation policies, streamlining business operations.
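The "resume from the last successfully completed step" behavior described in these cases can be illustrated with a very small journal-based runner. This is a generic sketch of the durable-execution idea, not DurableExecFixer's code or API; `run_workflow`, the JSON journal file, and the order steps are all made up for illustration.

```python
import json
import os
import tempfile


def run_workflow(steps, journal_path):
    """Run named steps in order, skipping any already recorded in the journal."""
    done = []
    if os.path.exists(journal_path):
        with open(journal_path) as f:
            done = json.load(f)
    for name, func in steps:
        if name in done:
            continue  # completed in an earlier run; do not repeat its side effects
        func()  # may raise; the journal on disk still reflects earlier progress
        done.append(name)
        # Persist progress after every step so a crash loses at most one step.
        tmp = journal_path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(done, f)
        os.replace(tmp, journal_path)  # swap the new journal in with one rename
    return done


calls = []
attempts = {"payment": 0}


def place_order():
    calls.append("place_order")


def charge_payment():
    attempts["payment"] += 1
    if attempts["payment"] == 1:
        raise RuntimeError("payment gateway timeout")  # simulated failure
    calls.append("charge_payment")


def ship():
    calls.append("ship")


steps = [("place_order", place_order), ("charge_payment", charge_payment), ("ship", ship)]
journal = os.path.join(tempfile.mkdtemp(), "order-42.json")

try:
    run_workflow(steps, journal)
except RuntimeError:
    pass  # the first run fails at the payment step

result = run_workflow(steps, journal)  # the retry resumes after "place_order"
```

On the retry, `place_order` is skipped because the journal already lists it, so the order is not placed twice. Real frameworks add much more (deterministic replay, timers, retries with backoff), which this sketch deliberately leaves out.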

9

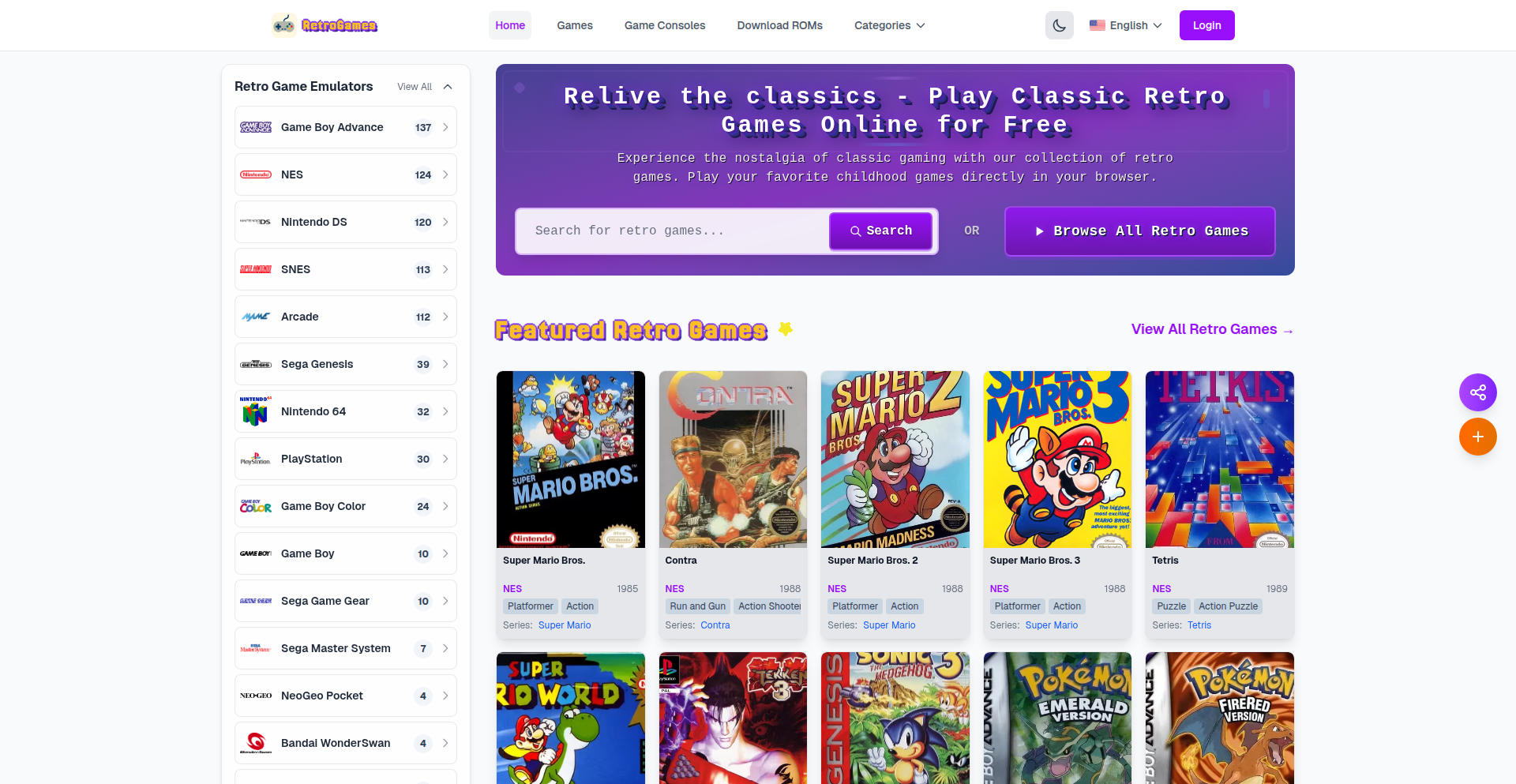

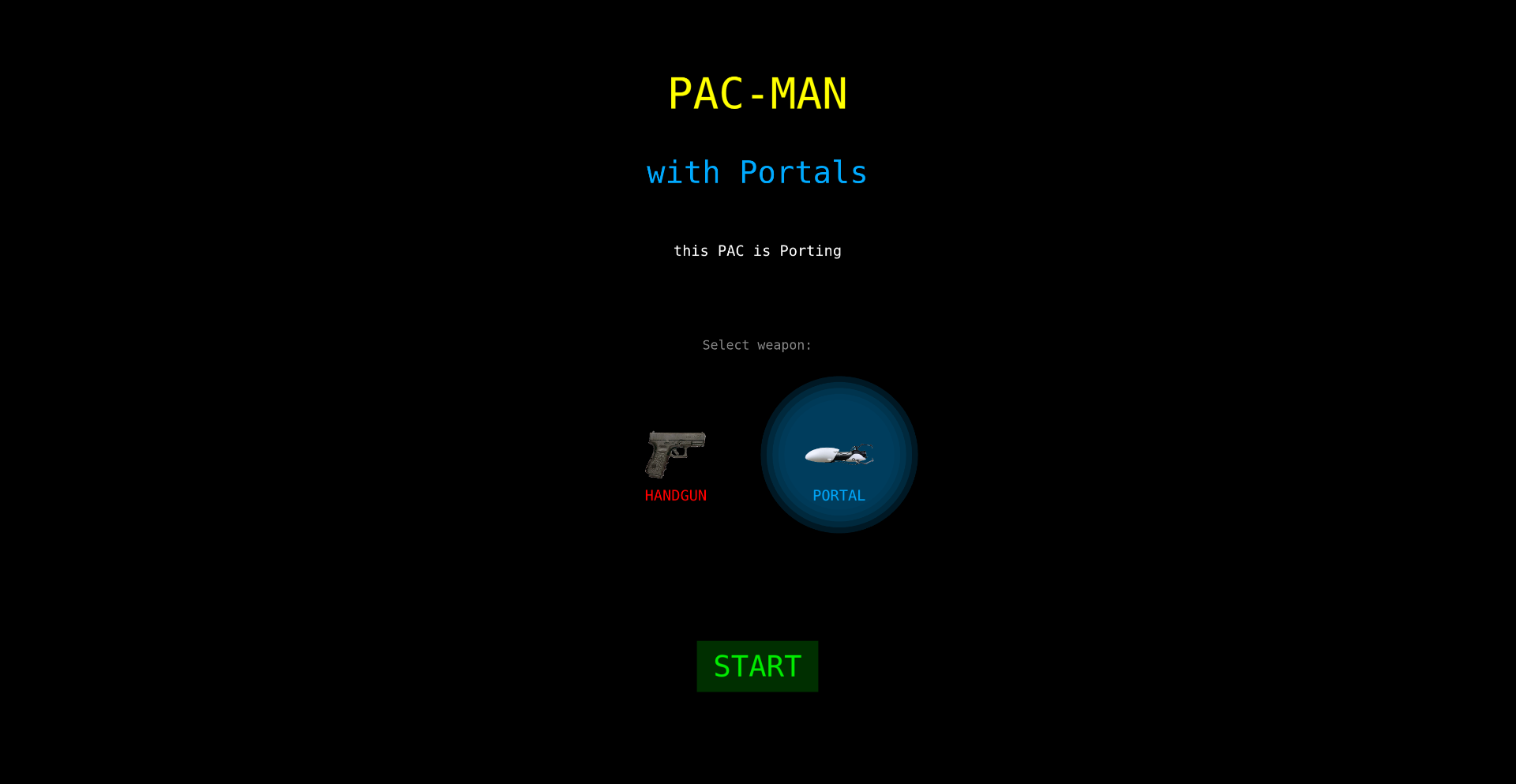

RetroPlay WASM Engine

Author

retrogamesnexus

Description

RetroPlay WASM Engine is a project that brings classic retro games to the browser instantly, leveraging WebAssembly (WASM) for emulation. It tackles the frustration of finding legitimate retro games online by offering a clean, ad-free, and mobile-friendly experience. The core innovation lies in enabling in-browser gameplay without downloads, focusing on user experience and discoverability through structured content and SEO.

Popularity

Points 3

Comments 3

What is this product?

RetroPlay WASM Engine is a browser-based retro game emulator powered by WebAssembly (WASM). Instead of downloading clunky software or navigating through confusing websites with pop-ups, you can play classic games directly in your web browser. WASM is a compact binary format that browsers can execute at near-native speed; here it runs the emulator, which in turn runs game code originally written for older systems, on your modern computer or phone. This means no risky downloads and no annoying ads, just pure gaming fun. So, what's in it for you? Instant access to nostalgia without the hassle.

How to use it?

Developers can integrate the RetroPlay WASM Engine into their own projects or websites. For end-users, the usage is straightforward: navigate to a compatible retro game on a website powered by this engine, and the game will load and become playable directly within the browser. The project emphasizes a clean interface and mobile-friendly controls, making it easy to jump into a game on any device. So, how does this benefit you? You can enjoy a seamless gaming experience on your preferred device without any technical setup.

Product Core Function

· Browser-based Emulation via WebAssembly: Allows playing classic games directly in the web browser without downloads, providing instant access and a consistent experience across devices. This means you can pick up and play your favorite retro titles anytime, anywhere, directly from your browser.

· Clean User Interface: Offers a modern and uncluttered interface, free from intrusive pop-ups and deceptive download buttons, making the gaming experience enjoyable and safe. This translates to less frustration and more time spent gaming.

· Mobile-Friendly Controls: Provides optimized controls for mobile devices, including support for dual-screen games, ensuring a smooth and accessible gaming experience on smartphones and tablets. Now you can relive your favorite gaming memories on the go.

· SEO and Content Structure: Focuses on search engine optimization and a well-structured site to make retro games easy to find, improving discoverability for users looking for specific titles. This helps you locate the games you love faster.

· Save State and Audio Synchronization: Implements reliable save state functionality and accurate audio syncing, crucial for an authentic retro gaming experience. This means your progress is saved reliably, and the game sounds just right, preserving the original feel.

Product Usage Case

· A retro gaming enthusiast wanting to play classic SNES games on their laptop without installing any software. They can visit a site using RetroPlay WASM Engine, and the game loads in the browser, allowing them to play instantly, preserving their favorite childhood memories without risk.

· A mobile gamer looking for a quick retro gaming session on their commute. With mobile-friendly controls, they can comfortably play classic Game Boy Advance titles on their phone, enjoying the convenience and portability.

· A developer building a website dedicated to retro game preservation. They can integrate RetroPlay WASM Engine to allow visitors to play featured games directly within their site, offering an engaging experience that encourages exploration.

· A user frustrated with malware and pop-ups on other retro game sites. They discover a site powered by RetroPlay WASM Engine, which provides a secure and pleasant environment to play, giving them peace of mind and an enjoyable gaming experience.

10

PDF Diagram to SVG Exporter

Author

mbrukman

Description

This project is a simple GUI tool designed to extract diagrams from PDF documents and save them as Scalable Vector Graphics (SVG). It addresses the common problem of pixelated and blurry images in blog posts or online content derived from PDF research papers. By converting vector graphics within PDFs to SVG, it ensures that diagrams remain sharp and zoomable across all devices and resolutions. The innovation lies in providing an intuitive visual selection method for extracting specific diagram regions, simplifying a previously cumbersome manual process.

Popularity

Points 6

Comments 0

What is this product?

This is a desktop application that allows users to open a PDF file, visually select a specific area containing a diagram, and export that selected region as an SVG file. The core technology leverages Poppler CLI tools for the heavy lifting of PDF rendering and data extraction. The innovation here is the user-friendly graphical interface that wraps these command-line tools, making it incredibly easy for anyone to pinpoint and extract diagrams without needing to understand complex command-line arguments. So, this means you can get crystal-clear, infinitely scalable images from your PDFs, making your content look much more professional and easier for your audience to examine.

How to use it?

Developers can use this tool by downloading and running the application. Once opened, they simply navigate to their desired PDF file. They can then click and drag to draw a bounding box around the diagram they wish to extract. After selecting the region, they can save it as an SVG file. This is particularly useful for content creators, researchers, or anyone embedding technical diagrams into websites, presentations, or other digital formats where image quality and scalability are paramount. Integration into other workflows might involve scripting its command-line counterparts if the GUI is not directly used, but the primary value is its standalone ease of use. This saves you the frustration of screenshots that blur when zoomed, ensuring your audience sees every detail perfectly.

Product Core Function

· PDF Document Loading: Allows users to open and view PDF files within the application, providing a direct interface to the source material. This means you can easily access the PDFs you need to extract from without extra steps.

· Visual Region Selection: Enables users to intuitively draw a rectangular selection box directly on the PDF to precisely isolate the diagram of interest. This is like using a highlighter but for extracting images, ensuring you get exactly what you want.

· SVG Export: Saves the selected diagram region as a high-quality, scalable vector graphics (SVG) file. This ensures your diagrams will look sharp no matter how much you zoom in or how large you display them, making your content look polished and professional.

· Poppler CLI Integration: Internally uses Poppler command-line utilities for robust PDF rendering and extraction, ensuring accurate and reliable results. This behind-the-scenes technology makes the complex process of PDF handling simple and effective for you.
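To make the wrapping of Poppler's command-line tools more concrete, here is a rough sketch of how a selection box drawn on a rendered preview might be turned into a `pdftocairo` invocation. Which Poppler utility the tool actually calls, and how it maps coordinates, is not stated in the description, so treat the choice of `pdftocairo`, the helper names, and the file names as assumptions. The arithmetic relies on PDF points being 1/72 inch, so a preview rendered at `dpi` pixels per inch scales by `72 / dpi`.

```python
def selection_to_points(sel_px, dpi):
    """Convert an (x, y, width, height) box in preview pixels to PDF points."""
    scale = 72.0 / dpi  # points per pixel at the preview resolution
    return tuple(round(v * scale) for v in sel_px)


def build_pdftocairo_cmd(pdf_path, page, box_pt, out_svg):
    """Assemble a pdftocairo command that exports one cropped page as SVG."""
    x, y, w, h = box_pt
    return [
        "pdftocairo", "-svg",
        "-f", str(page), "-l", str(page),  # first and last page: a single page
        "-x", str(x), "-y", str(y),        # top-left corner of the crop area
        "-W", str(w), "-H", str(h),        # width and height of the crop area
        pdf_path, out_svg,
    ]


# A box dragged on a preview rendered at 150 DPI (illustrative numbers).
box = selection_to_points((150, 300, 600, 450), dpi=150)
cmd = build_pdftocairo_cmd("paper.pdf", 3, box, "figure.svg")
# To run it (requires Poppler to be installed):
#   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a shell string avoids quoting problems with file names that contain spaces. It is worth checking the resulting SVG, since cropping behavior for vector output can vary between Poppler versions.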

Product Usage Case

· A researcher wants to include a complex flowchart from a PDF paper in a blog post about their findings. Instead of taking a low-resolution screenshot that pixelates on mobile, they use this tool to extract the flowchart as an SVG, ensuring it looks crisp and readable on any device. This means their blog post is more accessible and professional.

· A software developer is creating documentation for an API and needs to include diagrams from a PDF specification. Using this tool, they can easily extract these diagrams and embed them as SVGs in their documentation, guaranteeing that the diagrams scale perfectly with any zoom level, improving the clarity of their documentation.

· An educator is preparing a presentation and needs to use a specific graph from a PDF textbook. They can use this tool to grab the graph as an SVG, ensuring it's sharp and clear when projected on a large screen, making their lecture material easier for students to understand.

11

TinyDOCX: Lightweight DOCX/ODT Generator

Author

lulzx

Description

TinyDOCX is a remarkably small TypeScript library that allows developers to programmatically generate DOCX and ODT (OpenDocument Text) files. It focuses on core document elements like text formatting, headings, lists, tables, images, and hyperlinks, with minimal dependencies and a tiny footprint. This makes it ideal for scenarios where generating editable documents is needed, especially when combined with its counterpart, tinypdf, for generating PDFs.

Popularity

Points 6

Comments 0

What is this product?

TinyDOCX is a developer tool that lets you create Microsoft Word (.docx) and OpenDocument Text (.odt) files using code. Think of it as a highly efficient digital scribe. Instead of using complex software, you tell TinyDOCX what to write – like setting text to bold, creating bullet points, adding tables, or inserting images – and it crafts the document for you. The real innovation here is its size and simplicity. Unlike other tools that are bulky and require many other software components to work, TinyDOCX is incredibly lean, using very little code and no external dependencies. This means it's fast, easy to integrate, and doesn't weigh down your project. It understands the underlying structure of DOCX files (which are essentially organized XML files within a ZIP archive) and efficiently generates the necessary XML to build these documents. So, what's the practical value? You get the power to create editable documents programmatically, with a tool that's much simpler and faster to use.
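The "XML files within a ZIP archive" structure can be seen by assembling a minimal DOCX by hand. The sketch below uses only Python's standard library and is not TinyDOCX's API or implementation; the `paragraph` and `build_docx` helpers are hypothetical. It writes the three parts a basic WordprocessingML package needs: the content-types manifest, the package relationships, and the main document.

```python
import io
import zipfile
from xml.sax.saxutils import escape

W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"

CONTENT_TYPES = (
    '<?xml version="1.0" encoding="UTF-8" standalone="yes"?>'
    '<Types xmlns="http://schemas.openxmlformats.org/package/2006/content-types">'
    '<Default Extension="rels" '
    'ContentType="application/vnd.openxmlformats-package.relationships+xml"/>'
    '<Default Extension="xml" ContentType="application/xml"/>'
    '<Override PartName="/word/document.xml" ContentType="application/'
    'vnd.openxmlformats-officedocument.wordprocessingml.document.main+xml"/>'
    "</Types>"
)

RELS = (
    '<?xml version="1.0" encoding="UTF-8" standalone="yes"?>'
    '<Relationships xmlns="http://schemas.openxmlformats.org/package/2006/relationships">'
    '<Relationship Id="rId1" Type="http://schemas.openxmlformats.org/'
    'officeDocument/2006/relationships/officeDocument" Target="word/document.xml"/>'
    "</Relationships>"
)


def paragraph(text, bold=False):
    """Return one <w:p> element containing a single run of escaped text."""
    rpr = "<w:rPr><w:b/></w:rPr>" if bold else ""
    return f"<w:p><w:r>{rpr}<w:t>{escape(text)}</w:t></w:r></w:p>"


def build_docx(paragraphs):
    """Zip the required parts together and return the .docx file as bytes."""
    document = (
        '<?xml version="1.0" encoding="UTF-8" standalone="yes"?>'
        f'<w:document xmlns:w="{W_NS}"><w:body>{"".join(paragraphs)}</w:body></w:document>'
    )
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as z:
        z.writestr("[Content_Types].xml", CONTENT_TYPES)
        z.writestr("_rels/.rels", RELS)
        z.writestr("word/document.xml", document)
    return buf.getvalue()


docx_bytes = build_docx([
    paragraph("Quarterly Report", bold=True),
    paragraph("Revenue & costs"),
])
# open("report.docx", "wb").write(docx_bytes) would save it to disk.
```

A library in this space mostly adds a friendlier API over exactly this kind of string assembly, plus the extra parts needed for styles, numbering, images, and headers.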

How to use it?

Developers can easily integrate TinyDOCX into their projects by installing it via npm (`npm install tinydocx`). The library is written in TypeScript, making it straightforward to use in JavaScript or TypeScript projects. You would typically import the library and then use its API to define the content and structure of your document. For instance, you might call functions to add a heading, then paragraphs with specific formatting (like bold or italics), insert a table with data, and finally, render the document as a .docx or .odt file. This can be done within a web application backend to generate reports, or in a desktop application to create form letters. The generated files can then be downloaded by the user or further processed. So, how does this help you? You can automate the creation of professional-looking, editable documents directly from your applications, saving significant manual effort and ensuring consistency.

Product Core Function

· Text Formatting: Enables setting text to bold, italic, underline, strikethrough, applying colors, and custom fonts. This is valuable for creating visually distinct and emphasized content within documents, making them more readable and professional, for example, highlighting key terms in a report.

· Headings (H1-H6): Allows for the creation of hierarchical document structures using different heading levels. This is crucial for organizing content logically and improving navigation within longer documents, similar to how a book uses chapters and subheadings.

· Tables with Borders and Column Widths: Supports the generation of tables with defined borders and specific column widths. This is essential for presenting structured data clearly, such as financial reports or product specifications, where accurate alignment and layout are important.

· Bullet and Numbered Lists (with Nesting): Facilitates the creation of ordered and unordered lists, including nested lists. This is ideal for outlining steps, creating feature lists, or presenting hierarchical information in an easy-to-follow format.

· Images (PNG, JPEG, GIF, WebP): Allows for the embedding of various image formats within documents. This is vital for enriching documents with visual elements, such as logos, diagrams, or illustrations, making them more engaging and informative.

· Hyperlinks: Enables the insertion of clickable links to external websites or internal document anchors. This is useful for directing users to relevant resources or providing easy navigation within a document.

· Headers/Footers with Page Numbers: Supports the inclusion of headers and footers that can contain page numbers and other recurring information. This is important for professional document presentation, especially for longer documents where consistent branding or navigation aids are needed.

· Blockquotes and Code Blocks: Provides formatting for quoted text and code snippets. This is particularly useful for developers or researchers presenting excerpts of text or code samples within a document.

· Markdown to DOCX Conversion: Offers the ability to convert Markdown formatted text directly into DOCX documents. This is a significant time-saver for users who are familiar with Markdown and want to quickly generate formatted documents.

· ODT (OpenDocument) Support with the Same API: Allows generation of OpenDocument Text files using the same straightforward API as DOCX. This broadens compatibility and ensures your generated documents can be opened by a wider range of office suites, offering flexibility in document distribution.

Product Usage Case

· Generating invoices from an e-commerce backend: When a customer makes a purchase, the application can use TinyDOCX to programmatically generate a detailed invoice in DOCX format, which the customer can then easily edit or forward. This automates a critical business process.

· Creating personalized form letters for mail merges: A marketing team can use TinyDOCX to generate a batch of personalized letters for a campaign, pulling customer data and inserting it into pre-defined templates. This drastically reduces manual letter writing.

· Building reports with embedded data visualizations: A data analysis tool could generate reports that include tables and text descriptions formatted by TinyDOCX, making the output professional and editable for further review.

· Creating documentation from Markdown files: Developers can use TinyDOCX to automatically convert their project's README files (written in Markdown) into more formal DOCX or ODT documents for easier sharing with non-technical stakeholders.

· Generating user manuals or guides: When creating documentation for software or products, TinyDOCX can be used to assemble content with consistent formatting, headings, and images, producing editable user guides.

· Automating the creation of legal or contractual documents: For simple contracts or agreements, TinyDOCX can be used to populate templates with specific client details and terms, producing a standard editable document.

12

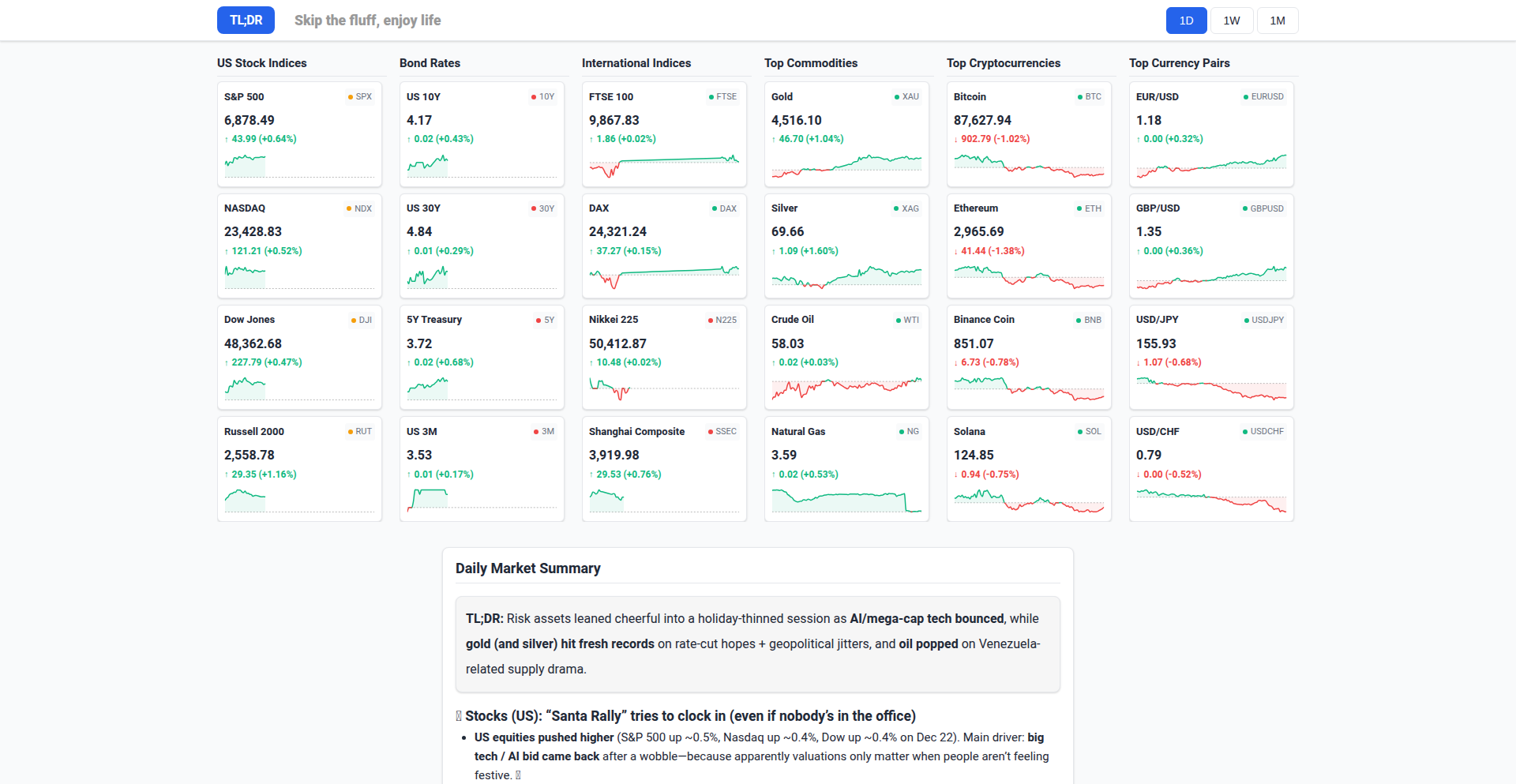

TLDR.Market

Author

firedexplorer

Description

TLDR.Market is a minimalist web application designed to provide users with a rapid, digestible overview of global market performance. It tackles the challenge of information overload by presenting key financial metrics in a concise, easy-to-understand format, making complex market data accessible at a glance. The core innovation lies in its selective data aggregation and presentation strategy, prioritizing speed and clarity over exhaustive detail.

Popularity

Points 5

Comments 1

What is this product?

TLDR.Market is a web service that curates and displays essential global market data, such as stock index movements, currency exchange rates, and commodity prices, in a highly summarized fashion. Its technical approach involves fetching data from multiple financial APIs, processing it to extract key performance indicators (e.g., percentage change, absolute values), and then rendering this information on a clean, user-friendly interface. The innovation here is in the intelligent filtering and prioritization of data, delivering a 'too long; didn't read' (TLDR) version of market sentiment, which is invaluable for quickly grasping the market's pulse without getting lost in the noise.
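The processing step, reducing raw quotes to a couple of headline figures, amounts to simple arithmetic. Below is a minimal sketch of that calculation; the function names and the sample numbers are invented for illustration and do not reflect TLDR.Market's code or data sources.

```python
def summarize(name, previous_close, last_price):
    """Reduce two prices to the headline figures: absolute and percentage change."""
    change = last_price - previous_close
    pct_change = change / previous_close * 100
    return {
        "name": name,
        "last": last_price,
        "change": round(change, 2),
        "pct_change": round(pct_change, 2),
    }


def format_line(row):
    """Render one summary row as a short, glanceable line."""
    return f'{row["name"]}: {row["last"]:.2f} ({row["pct_change"]:+.2f}%)'


# Made-up quotes, purely to exercise the functions.
rows = [
    summarize("IDX", 4000.0, 4050.0),
    summarize("FX", 200.0, 190.0),
]
lines = [format_line(r) for r in rows]
```

The percentage is computed against the previous close, which is the usual convention for daily market summaries.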

How to use it?

Developers can integrate TLDR.Market into their workflows or personal dashboards by bookmarking the site or embedding its core data feed (if an API becomes available) into other applications. For instance, a financial blogger could use it to quickly get the day's market summary before writing an article, or a busy executive could check it on their mobile device during a commute. The current usage is primarily through direct web access, offering a fast route to market insights.

Product Core Function

· Real-time market data aggregation: Fetches data from various financial sources to provide up-to-date market information, valuable for tracking live financial trends and making timely decisions.

· Concise data visualization: Presents complex market information through simplified visuals and key figures, helping users quickly understand market movements and overall sentiment without needing deep financial expertise.

· Global market coverage: Offers a snapshot of major global markets, allowing users to get a broad perspective on international economic activity and its potential impact on their interests.

· Minimalist user interface: Designed for speed and ease of use, reducing cognitive load and enabling users to absorb information efficiently, which is crucial for quick decision-making in fast-paced environments.

Product Usage Case

· A day trader who needs to quickly assess the overall market direction before placing trades; TLDR.Market provides a rapid overview, saving time and reducing the risk of missing critical early signals.

· A business analyst who needs to stay informed about global economic health without dedicating significant time to research; they can use TLDR.Market to get a daily pulse check, informing their strategic outlook.

· A personal finance enthusiast who wants to monitor key market indicators without being overwhelmed by detailed financial news; TLDR.Market offers a clear, digestible summary for informed personal investment decisions.

· A content creator focusing on financial news; TLDR.Market can serve as a quick reference to gather the essential market context for their reports or articles.

13

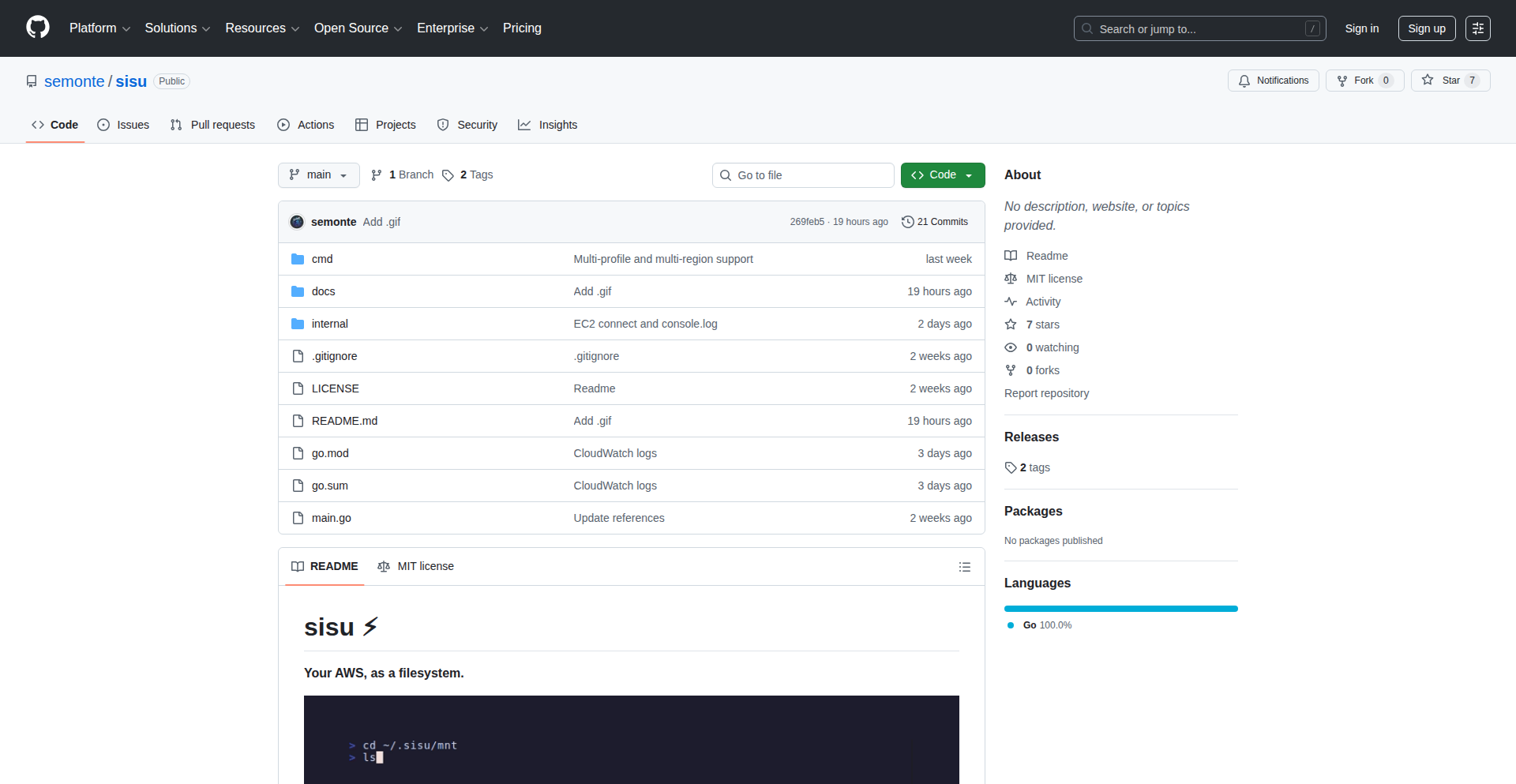

SisuFS: AWS as a Filesystem

Author

smonte

Description

SisuFS presents a novel approach to interacting with Amazon Web Services (AWS) by mounting your AWS S3 buckets as a traditional filesystem. This innovative solution leverages FUSE (Filesystem in Userspace) to provide a seamless, familiar interface for developers and operations teams, abstracting away the complexities of direct API calls and offering a unified view of cloud storage.

Popularity

Points 5

Comments 0

What is this product?

SisuFS is a tool that makes your Amazon S3 storage appear as if it were a regular folder on your computer or server. Instead of using command-line tools or complex SDKs to upload, download, or manage files in S3, you can simply drag-and-drop, copy-paste, or use standard file operations. It achieves this by using a technology called FUSE (Filesystem in Userspace), which allows custom filesystem implementations to be created and mounted directly into the operating system. This means you can interact with S3 buckets as if they were local directories, significantly simplifying cloud storage management and integration.

How to use it?

Developers can use SisuFS by installing the SisuFS client on their operating system (Linux, macOS, or Windows with WSL). Once installed, they can mount an S3 bucket to a chosen local directory using a simple command-line instruction, specifying their AWS credentials and the bucket name. For example, a command like `sisufs <aws_access_key_id> <aws_secret_access_key> <bucket_name> /mnt/my_s3_bucket` would make the contents of the named bucket accessible at `/mnt/my_s3_bucket`. This allows for easy integration with existing scripts, applications, and workflows that expect a standard filesystem interface, without requiring code modifications. Imagine all your web application assets stored in S3 being directly accessible by your web server's file operations, or being able to edit configuration files in S3 with your favorite local editor.

Product Core Function

· Filesystem Mounting: Allows any S3 bucket to be mounted as a local directory, providing a familiar interface for file operations. This is valuable because it simplifies access to cloud storage, reducing the learning curve for new developers and streamlining workflows for experienced ones by using tools they already know.

· Standard File Operations: Supports common file operations like read, write, create, delete, list directories, and file metadata access directly through the operating system. This offers a significant advantage as it allows developers to leverage existing applications and scripts designed for local filesystems, enabling seamless integration with cloud storage without rewriting code.

· Abstraction of AWS APIs: Hides the underlying AWS S3 API calls, providing a user-friendly, abstract layer. This is beneficial because it shields users from the complexities of AWS SDKs and API intricacies, making cloud storage management more accessible and less error-prone.

· Cross-Platform Compatibility: Designed to work on major operating systems like Linux and macOS, with potential for Windows integration via WSL. This broad compatibility ensures that developers can use SisuFS regardless of their preferred development environment, increasing its utility and adoption across diverse teams.

Product Usage Case

· Development Workflow Simplification: A web developer can mount their S3 bucket containing static assets (images, CSS, JavaScript) directly to their local development server. This means any changes made to these assets locally and saved to the mounted filesystem are immediately reflected in S3, allowing for rapid iteration without manual uploads. The benefit is faster development cycles and easier testing of front-end assets.

· Data Backup and Archiving: A system administrator can easily copy large datasets or log files to a remote S3 bucket by simply dragging and dropping them into the mounted S3 filesystem directory. This is much more intuitive than using specific backup scripts or commands, ensuring data is backed up reliably and efficiently, providing peace of mind for data protection.

· Configuration Management: An application can read its configuration files directly from an S3 bucket mounted as a filesystem. This enables centralized configuration management, where all instances of an application can pull their settings from a single, version-controlled S3 location. This simplifies deployment and updates, as configuration changes can be applied by updating a single file in S3.

· Scripting and Automation: Developers can write shell scripts that interact with S3 using standard file manipulation commands (e.g., `cp`, `mv`, `rm`). Instead of learning AWS CLI commands or SDK functions, they can use familiar syntax. This allows for easier automation of cloud storage tasks and integration into existing CI/CD pipelines.
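The practical upshot of these cases is that code written against ordinary paths needs no cloud-specific calls. The sketch below archives log files with plain file copies. It uses temporary directories as a stand-in for a mount point, so it makes no claim about how SisuFS itself behaves; `archive_logs` and the directory names are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def archive_logs(log_dir: Path, mount_dir: Path) -> list[str]:
    """Copy every *.log file into a directory assumed to be a mounted bucket."""
    mount_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(log_dir.glob("*.log")):
        # copyfile copies contents only; object stores may not keep file metadata.
        shutil.copyfile(src, mount_dir / src.name)
        copied.append(src.name)
    return copied


# Temporary directories stand in for real log and mount locations.
work = Path(tempfile.mkdtemp())
logs = work / "logs"
logs.mkdir()
(logs / "app.log").write_text("started\n")
(logs / "db.log").write_text("connected\n")
(logs / "notes.txt").write_text("not a log\n")

mount = work / "mnt" / "my_s3_bucket" / "archive"
copied = archive_logs(logs, mount)
```

With a real mount, only the `mount` path would change. Note that operations that are cheap on a local disk, such as renames or many small writes, can be slow or non-atomic on object storage, so scripts may need to account for that.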

14

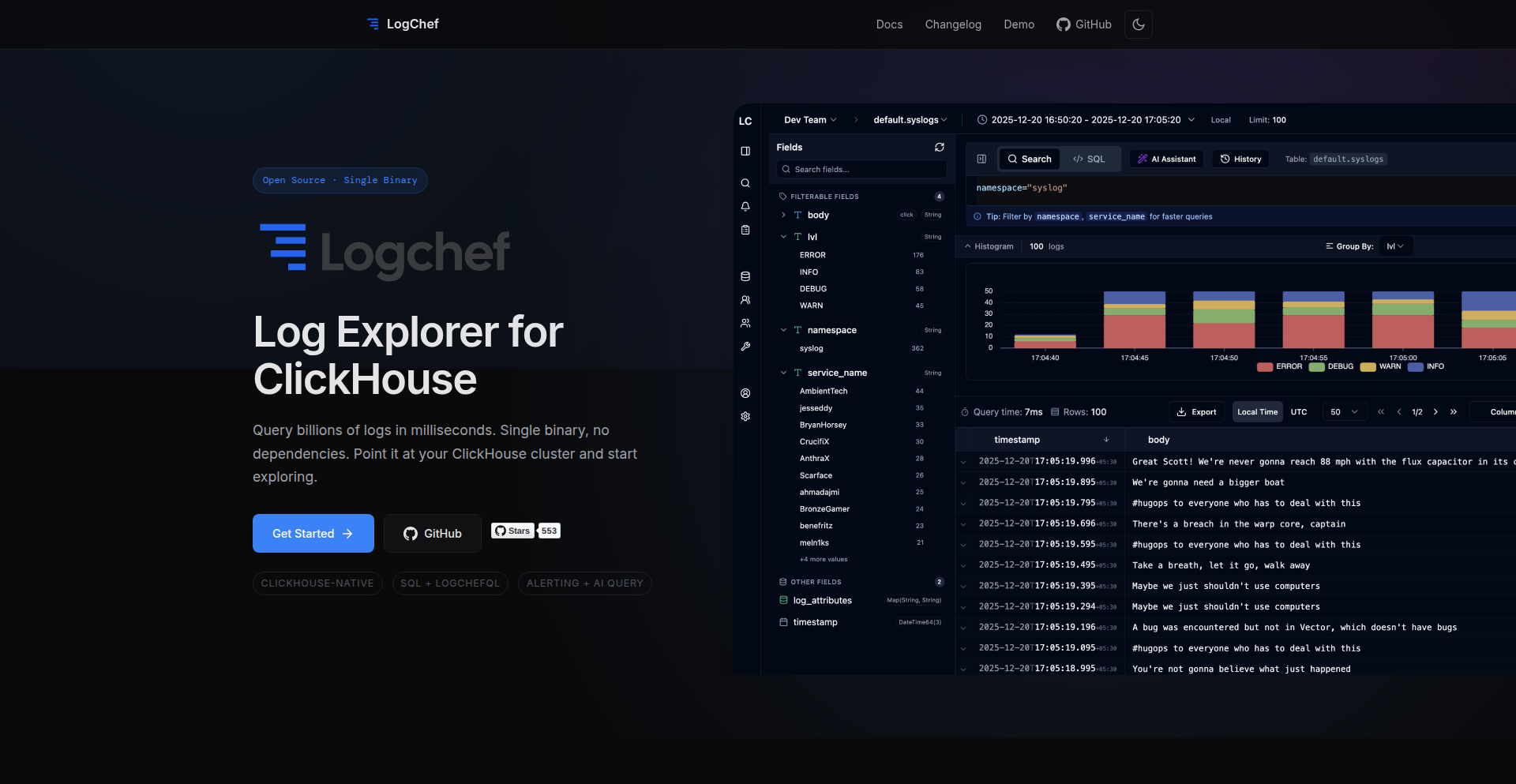

LogChef - ClickHouse Log Explorer

Author

mr-karan

Description

LogChef is an open-source, schema-agnostic log viewer built specifically for ClickHouse. It addresses the limitations of general-purpose dashboarding tools for log exploration by offering features like an intuitive query language, a field sidebar for easy filtering, and robust access control. This means developers can quickly investigate issues without complex setup or manual schema configurations, making log analysis significantly more efficient. So, this is useful because it saves you time and frustration when troubleshooting by providing a user-friendly way to dive into your logs stored in ClickHouse.

Popularity

Points 4

Comments 1

What is this product?

LogChef is a specialized log viewer designed to work with ClickHouse, a popular database often used for storing large volumes of log data. Unlike general-purpose dashboarding tools such as Grafana or Metabase, LogChef is built from the ground up for the log exploration workflow. Its key innovation is being schema-agnostic: you don't need to pre-define or migrate your log data's structure to start using it. It introduces LogChefQL, a query language simpler than SQL for filtering logs, and a Kibana-like field sidebar that lets you click on log values to build filters instantly. This avoids the manual configuration and slow ad-hoc filtering often encountered with other tools. So, this is useful because it provides a dedicated, easy-to-use interface for understanding your logs without the usual technical hurdles.

How to use it?

Developers can use LogChef by pointing it at an existing ClickHouse table containing their logs. No migrations are needed, and the logs do not have to follow the OpenTelemetry (OTEL) format. You can then use LogChefQL to craft specific queries or use the interactive field sidebar to explore your data. For advanced scenarios, LogChef supports SQL-based alerting that can be routed to Alertmanager, and an AI assistant that translates natural language questions (e.g., 'show me errors from last hour') into executable SQL queries. It also includes an MCP server for integration with LLM assistant tools. The entire application is delivered as a single, lightweight Go binary, making deployment straightforward. So, this is useful because it integrates easily into your existing ClickHouse setup and offers multiple ways, from simple clicks to AI-powered queries, to find the information you need from your logs.

Product Core Function

· Schema-agnostic log viewing: Allows immediate filtering of logs in ClickHouse without requiring upfront schema migrations or specific data formats like OTEL, saving setup time and effort. This is valuable for quickly investigating issues in diverse log data.

· LogChefQL query language: Provides a simpler alternative to SQL specifically for log filtering, making it easier for developers to craft precise queries without deep SQL expertise. This is useful for faster and more targeted log analysis.

· Kibana-style field sidebar: Enables interactive exploration of log data by clicking on field values to automatically generate filters, mimicking the efficient workflow of tools like Kibana. This accelerates the process of isolating specific log events.

· SQL-based alerting with Alertmanager integration: Allows setting up alerts based on log patterns using familiar SQL queries, which can then be routed to common alerting systems. This is valuable for proactive monitoring and incident response.

· AI assistant for natural language querying: Translates plain English requests (e.g., 'find all failed logins') into working SQL queries, democratizing log analysis. This is useful for non-experts and for quickly generating complex queries.

· MCP server for LLM integration: Provides a way to query logs from external LLM tools, enabling advanced analytical workflows and custom integrations. This is valuable for extending the power of your log data with cutting-edge AI.

· Fine-grained access control via Teams and Sources: Offers granular control over which log tables (Sources) specific teams can access, enhancing security and data governance without enterprise licenses. This is crucial for managing sensitive log data in collaborative environments.
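
To make the click-to-filter idea concrete, here is a minimal sketch of how clicked field values could be turned into a parameterized SQL filter. It is not LogChef's code and it is not LogChefQL. The `build_filter_query` function, the table and field names, and the pyformat-style placeholders (`%(p0)s`) are all assumptions for illustration, so check the parameter syntax your own ClickHouse client expects.

```python
import re

# Only plain identifiers are allowed for table and field names, because
# identifiers cannot be passed as query parameters.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def build_filter_query(
    table: str, selections: dict[str, list[str]], limit: int = 100
) -> tuple[str, dict[str, list[str]]]:
    """Build a SELECT from sidebar selections.

    Values clicked for the same field are OR-ed (via IN);
    different fields are AND-ed together.
    """
    for name in [table, *selections]:
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    clauses = []
    params = {}
    for i, (field, values) in enumerate(selections.items()):
        key = f"p{i}"
        clauses.append(f"{field} IN %({key})s")
        params[key] = list(values)
    where = " AND ".join(clauses) if clauses else "1"
    sql = f"SELECT * FROM {table} WHERE {where} LIMIT {int(limit)}"
    return sql, params
```

Keeping the values in a separate parameter dictionary, rather than pasting them into the SQL string, means a log value containing quotes cannot change the shape of the query.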

Product Usage Case

· Troubleshooting application errors: A developer can quickly use the field sidebar to filter logs by error code, user ID, or timestamp range in a ClickHouse table to pinpoint the root cause of an application failure. This solves the problem of slow and manual log searching.

· Investigating performance degradations: An engineer can use LogChefQL or the AI assistant to query for specific slow requests (e.g., 'find all POST requests taking longer than 2 seconds') across millions of log entries. This addresses the challenge of identifying performance bottlenecks in large datasets.

· Security incident response: A security analyst can use LogChef to quickly search for suspicious activity patterns, like multiple failed login attempts from a single IP address, by filtering on relevant fields. This helps in rapid detection and response to security threats.

· Monitoring system health: By setting up SQL-based alerts in LogChef for critical error messages or unusual traffic spikes, teams can be proactively notified of potential system issues before they impact users. This provides a robust, open-source alerting mechanism for log-driven monitoring.

· Onboarding new team members to log analysis: The intuitive interface and natural language querying of LogChef allow less experienced developers to effectively explore logs without extensive training on SQL or complex log management systems. This lowers the barrier to entry for log analysis.
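
The security case above, repeated failed logins from one IP address, comes down to a grouped count with a threshold. The sketch below shows that logic over rows already fetched from a log table. The field names (`ip`, `event`, `status`) and the `suspicious_ips` helper are hypothetical, and this is not how LogChef itself evaluates alerts.

```python
from collections import Counter
from typing import Iterable, Mapping


def suspicious_ips(rows: Iterable[Mapping[str, str]], threshold: int = 3) -> dict[str, int]:
    """Return IPs with at least `threshold` failed logins, with their counts."""
    failures = Counter(
        row["ip"]
        for row in rows
        if row.get("event") == "login" and row.get("status") == "failed"
    )
    return {ip: count for ip, count in failures.items() if count >= threshold}
```

The same grouping could be written as a SQL query with `GROUP BY` and `HAVING`, which is the form an SQL-based alert would more naturally take.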

15

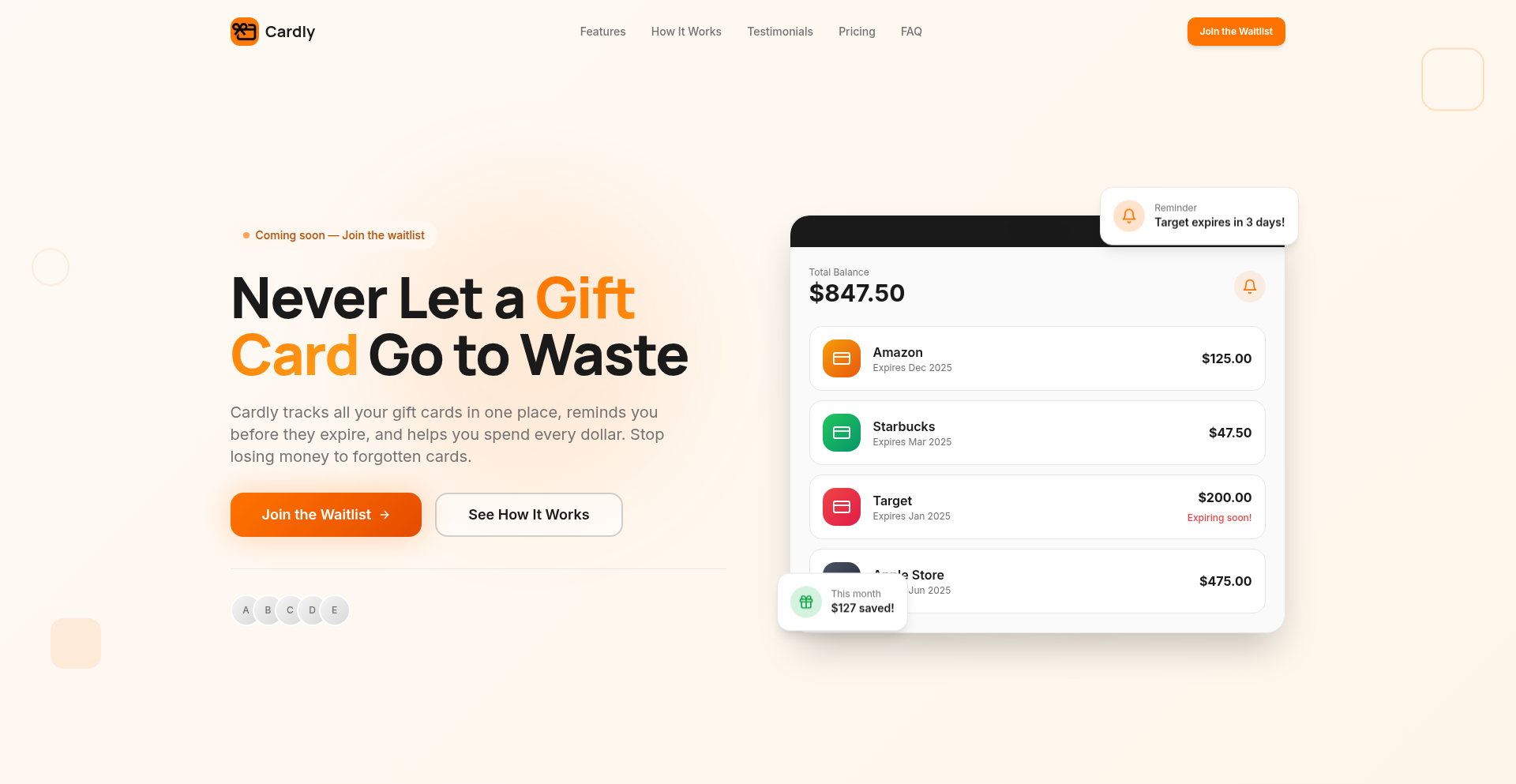

GiftCard Capture: Cardly

Author

Pastaza

Description

Cardly is a minimalist, card-first application designed to efficiently capture and manage your gift cards. It leverages a simple, intuitive interface to digitize and organize your physical gift cards, solving the common problem of lost or forgotten gift card information. The innovation lies in its streamlined data entry and quick recall, making your gift cards instantly accessible and usable.

Popularity

Points 2

Comments 3

What is this product?

Cardly is a mobile application built for rapid capture of gift card details. Instead of complex forms, it uses a card-based interface where users can quickly enter the merchant, balance, and expiration date. The underlying technology likely involves efficient local data storage and potentially simple image recognition or OCR (Optical Character Recognition) for even faster entry, although the emphasis on being 'tiny' suggests that swift manual input is the primary path. The core innovation is in reducing friction for a mundane but often neglected task. So, what's in it for you? It means you won't forget about that coffee shop gift card or have to dig through drawers to find the details for your favorite clothing store. It makes your existing gift cards readily available and usable, unlocking their value.

How to use it?

Developers can integrate Cardly's principles into their own applications by adopting a card-centric UI/UX design for data entry. This could involve using components that mimic physical cards for input fields, allowing users to quickly scan or type information. For a more direct integration, developers could explore building similar functionalities for managing other types of ephemeral data within their apps, such as coupons, loyalty cards, or even quick notes. The concept of 'card-first' can be applied to any scenario where users need to capture discrete pieces of information rapidly. So, how can you use this? Imagine building a personal finance app where you can quickly log small expenses using a card interface, or a recipe app where you store ingredients as individual cards for easy management. It's about making data entry feel less like a chore and more like organizing.

Product Core Function

· Gift Card Data Capture: Allows users to quickly input and store essential gift card details such as merchant name, card number, and balance. This means your gift card information is readily accessible, so you are less likely to lose track of a card or its remaining value.

· Card-Based Interface: Employs a user-friendly, card-like visual design for data input and display, making it intuitive and fast to manage multiple gift cards. This simplifies the process of organizing your digital wallet, making it visually appealing and easy to navigate.

· Balance Tracking: Enables users to update and track the remaining balance on their gift cards, ensuring you know exactly how much value you have. This helps in planning your purchases and utilizing your gift cards to their full potential.

· Expiration Date Reminders: Provides notifications for expiring gift cards, so you don't miss out on using them before they become invalid. This feature proactively saves you money by reminding you of upcoming deadlines.

· Merchant Search/Filtering: Allows users to quickly find specific gift cards by searching for the merchant name, streamlining access to the card you need. This saves time when you're at the point of purchase and need to find the right card quickly.
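
For developers borrowing the card-first idea, the functions listed above map onto a very small data model. The following is a minimal sketch, not Cardly's implementation. The `GiftCard` class and the `expiring_soon` and `find_by_merchant` helpers are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from decimal import Decimal
from typing import Optional


@dataclass
class GiftCard:
    merchant: str
    balance: Decimal  # Decimal avoids float rounding on money
    expires: Optional[date] = None  # None means no known expiry

    def spend(self, amount: Decimal) -> None:
        """Deduct a purchase, refusing to overdraw the card."""
        if amount <= 0 or amount > self.balance:
            raise ValueError("amount must be positive and within the balance")
        self.balance -= amount


def expiring_soon(cards: list[GiftCard], today: date, days: int = 30) -> list[GiftCard]:
    """Cards that still hold value and expire within `days` of `today`."""
    cutoff = today + timedelta(days=days)
    return [
        c for c in cards
        if c.balance > 0 and c.expires is not None and today <= c.expires <= cutoff
    ]


def find_by_merchant(cards: list[GiftCard], query: str) -> list[GiftCard]:
    """Case-insensitive substring match on the merchant name."""
    needle = query.strip().lower()
    return [c for c in cards if needle in c.merchant.lower()]
```

Passing `today` in explicitly, instead of calling `date.today()` inside the function, keeps the reminder logic easy to test.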

Product Usage Case

· A user wants to quickly add a new gift card received for their birthday. Instead of navigating through multiple menus, they open Cardly, tap 'Add Card', and rapidly input the merchant and amount using the card-like interface. This solves the problem of delaying data entry and potentially losing the physical card or its details.

· A developer building a personal budgeting app wants to add a feature for managing gift cards. They can adopt Cardly's card-first approach to create a similar, intuitive data entry screen for gift card details within their app. This solves the challenge of designing a user-friendly interface for often-overlooked financial items.

· A retail business owner wants to create a simple digital loyalty card system for their customers. They can be inspired by Cardly's minimalist design to create an app where customers can easily store and view their digital loyalty cards, enhancing customer engagement and reducing the need for physical cards.

· A student with multiple gift cards from various stores wants to keep track of their balances to budget effectively. They use Cardly to log all their gift cards and their initial balances, then update them as they make purchases. This solves the problem of scattered gift card information and helps them make informed spending decisions.

16

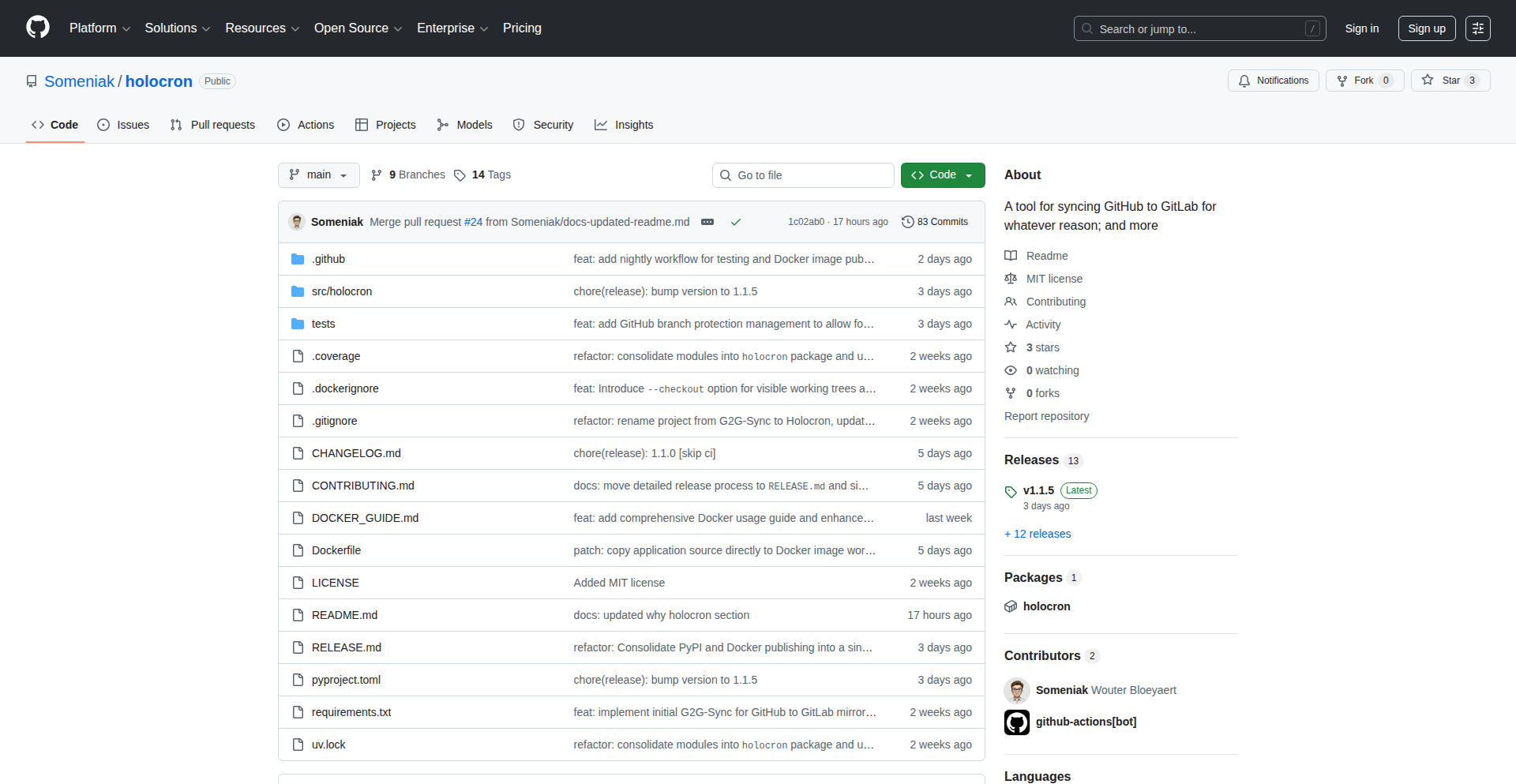

GitSync Holocron

Author

someniak

Description

Holocron is a novel tool designed to synchronize Git repositories in a peer-to-peer manner, eliminating the need for a central server. It leverages advanced Git internals and network protocols to create a resilient and distributed version control system, offering a unique approach to collaborative code management.

Popularity

Points 2

Comments 2

What is this product?

Holocron is a decentralized Git repository synchronization tool. Instead of relying on a single server like GitHub or GitLab, it allows Git repositories to communicate and share changes directly with each other. The core innovation lies in its ability to manage distributed Git objects (like commits and blobs) and negotiate synchronization states without a central authority. It's like having a Git server that lives on your machine and can talk to other Git servers on other machines directly, making it robust against single points of failure and offering more control over your codebase.

How to use it?

Developers can integrate Holocron into their existing Git workflows. Imagine you have multiple machines or collaborate with a small team where setting up a dedicated Git server is overkill. Holocron allows you to establish direct synchronization links between these repositories. You might use it to keep your laptop and desktop versions of a project in sync, or for a small team to share code directly without relying on a cloud service. The integration typically involves configuring Holocron to watch specific repositories and define which other repositories it should sync with, using standard Git network protocols enhanced by Holocron's logic.

Product Core Function

· Decentralized Repository Synchronization: Enables direct peer-to-peer syncing of Git repositories, meaning your code changes can be shared and updated between different machines or collaborators without a central server. This provides data redundancy and resilience.

· Conflict Resolution Logic: Implements intelligent algorithms to detect and help resolve merge conflicts that arise from concurrent changes, ensuring data integrity and smoother collaboration.

· Network Protocol Abstraction: Hides the complexity of direct network communication for Git, allowing developers to focus on the code while Holocron handles the secure and efficient transfer of repository data.

· Repository Discovery: Provides mechanisms for Holocron instances to discover and connect with other Holocron-enabled repositories on the network, simplifying the setup of distributed sync networks.

· Selective Synchronization: Allows users to configure which branches or commits are synchronized, giving fine-grained control over data flow and storage.
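
The post does not describe how Holocron negotiates sync state, so the following is only a sketch of the general idea behind any serverless Git sync: work out which commits a peer lacks by comparing what is reachable from each side's branch heads. The commit graph is modelled as a plain dictionary, and `reachable` and `commits_to_send` are hypothetical helpers rather than Holocron functions.

```python
def reachable(parents: dict[str, list[str]], heads: list[str]) -> set[str]:
    """All commits reachable from `heads` by following parent links."""
    seen: set[str] = set()
    stack = list(heads)
    while stack:
        commit = stack.pop()
        if commit in seen:
            continue
        seen.add(commit)
        # Commits we have no record of simply have no known parents.
        stack.extend(parents.get(commit, []))
    return seen


def commits_to_send(
    parents: dict[str, list[str]], local_heads: list[str], peer_heads: list[str]
) -> set[str]:
    """Commits the peer lacks: reachable from our heads but not from theirs."""
    return reachable(parents, local_heads) - reachable(parents, peer_heads)
```

This is the same set difference that `git rev-list <ours> --not <theirs>` computes. A real tool would also need to transfer the trees and blobs those commits reference.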

Product Usage Case

· Offline Development Sync: A developer working on a laptop in an environment with intermittent internet can use Holocron to sync their work with a desktop at home when a connection becomes available, without needing to push to a public remote.

· Small Team Collaboration: A small startup team can avoid the overhead of setting up and managing a Git server by using Holocron to directly sync their repositories, fostering a private and secure code-sharing environment.