Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-21

SagaSu777 2025-12-22

Explore the hottest developer projects on Show HN for 2025-12-21. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN projects reveal a powerful trend: the democratization of complex technologies. We see a surge in AI and LLM applications, not just for novel creations, but for practical problem-solving across diverse domains. From analyzing public sentiment about tech CEOs to generating code, structuring data, and even automating forensic accounting, AI is becoming an accessible force for individual developers. The emphasis on local-first execution and privacy-preserving designs, often leveraging WebAssembly, speaks to a growing demand for user control and data security. Furthermore, the proliferation of developer tools, particularly CLIs and productivity enhancers, highlights a core hacker ethos: streamline workflows, automate tedious tasks, and empower fellow developers. For aspiring innovators, this is a clear signal to explore how AI can augment existing workflows, how to build privacy-centric solutions, and how to create tools that directly address developer pain points. The barrier to entry for sophisticated applications is lowering, encouraging a new wave of creative problem-solving.

Today's Hottest Product

Name

HN Sentiment API – I ranked tech CEOs by how much you hate them

Highlight

This project leverages AI (GPT-4o mini) to analyze Hacker News comments, extract entities, and classify sentiment towards them. It tackles the challenge of processing a vast amount of unstructured text to derive meaningful insights about public opinion. Developers can learn about practical NLP applications, sentiment analysis techniques, and API design for data aggregation and analysis. The developer's approach to entity extraction and sentiment classification provides a blueprint for building similar analytical tools.

Popular Category

AI/ML

Developer Tools

Data Analysis

Open Source

Web Applications

Popular Keyword

AI

LLM

API

Developer Tools

Open Source

Rust

WASM

CLI

Productivity

Data

Technology Trends

AI-powered Content Generation and Analysis

Local-First and Privacy-Focused Applications

Developer Productivity Tools

Efficient Data Processing and Management

Cross-Platform Development

WebAssembly (WASM) for Client-Side Performance

LLM Integration for Various Use Cases

Project Category Distribution

Developer Tools (30%)

AI/ML Applications (25%)

Data Analysis & Visualization (15%)

Web Applications (20%)

Utilities/Lifestyle (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | HN Book Insights Engine | 470 | 167 |
| 2 | WalletForge | 387 | 103 |
| 3 | Shittp: Ephemeral Dotfiles Sync via SSH | 125 | 72 |
| 4 | YAMLCanvas CV Renderer | 79 | 40 |
| 5 | BG-TrainTracker | 74 | 22 |
| 6 | Mushak: Effortless Zero-Downtime Docker Deployments | 26 | 15 |
| 7 | HN Pulse API | 27 | 6 |
| 8 | Luminoid-RS: Cross-Platform Lighting Data Toolkit | 29 | 0 |
| 9 | Mactop | 22 | 1 |
| 10 | PuntualFeedback | 10 | 0 |

1

HN Book Insights Engine

Author

seinvak

Description

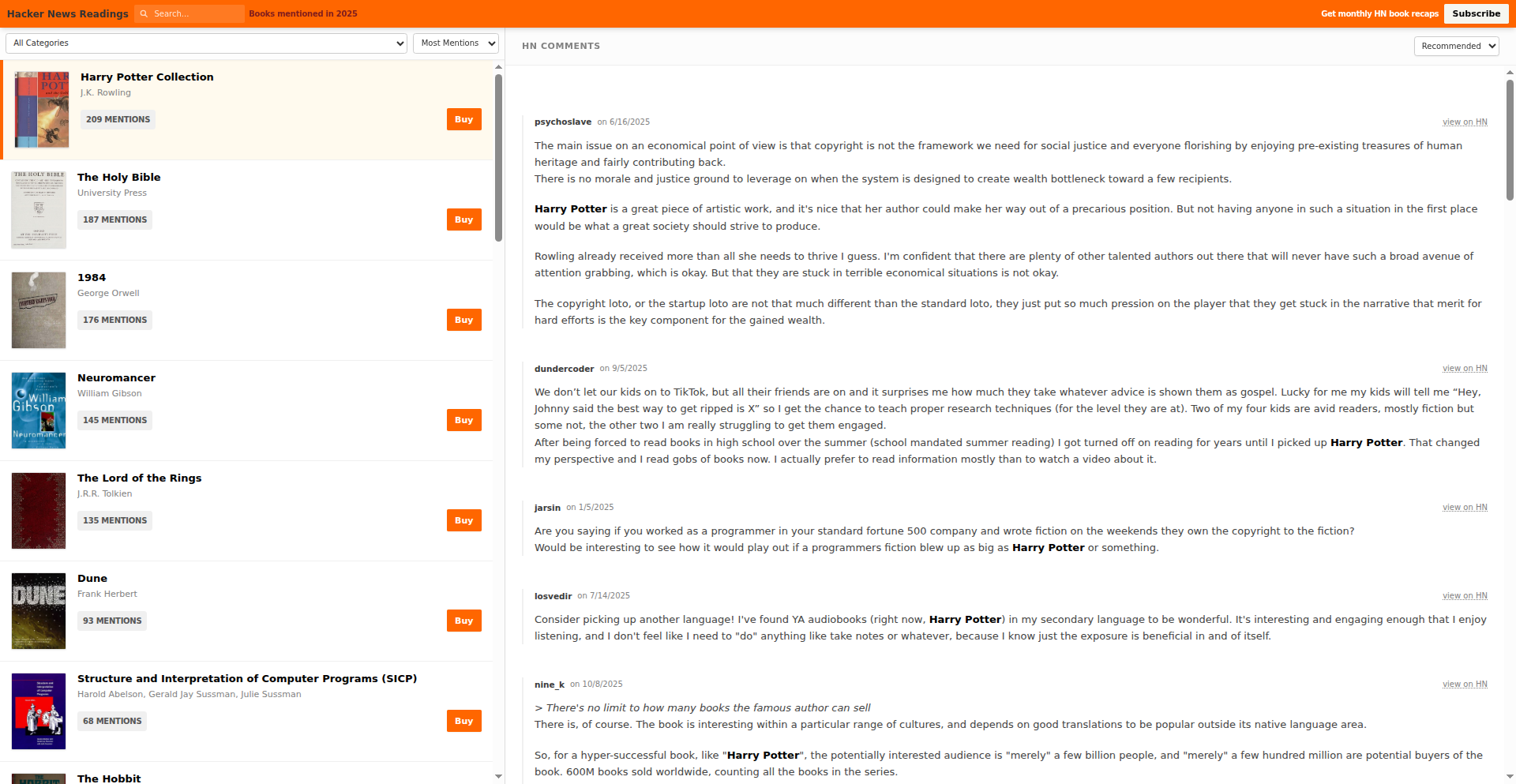

This project is a data-driven exploration of books frequently recommended or discussed on Hacker News in 2025. It leverages natural language processing and data aggregation to identify trending literature within the tech community. The innovation lies in its ability to surface what the developer zeitgeist is reading and learning from, offering insights into relevant knowledge domains beyond pure code.

Popularity

Points 470

Comments 167

What is this product?

This project is essentially a trend analysis tool for literature within the Hacker News community. It works by scanning Hacker News discussions from 2025, identifying mentions of books, and then aggregating this data to reveal which books are most popular or influential. The core technology involves natural language processing (NLP) techniques to extract book titles from unstructured text, followed by data visualization and ranking algorithms. The innovation is in using the collective 'signal' from a highly technical audience to discover valuable reading material, essentially crowdsourcing a reading list for self-improvement and staying informed about emerging ideas.
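The extract-then-rank pipeline described above can be sketched roughly as follows. This is a toy illustration, not the project's actual code: it assumes book titles appear in double quotes followed by "by" and an author name, whereas a real pipeline would more likely rely on an NER model or an LLM. The sample comments and the `extract_titles` and `rank_books` helpers are hypothetical.

```python
import re
from collections import Counter

# Hypothetical sample comments; the real project scans Hacker News threads.
COMMENTS = [
    'I finally read "Designing Data-Intensive Applications" by Martin Kleppmann.',
    'Second vote for "Designing Data-Intensive Applications" by Kleppmann, great book.',
    'For management, "The Mythical Man-Month" by Fred Brooks still holds up.',
]

# Naive heuristic: a quoted phrase immediately followed by "by <Capitalized name>".
TITLE_PATTERN = re.compile(r'"([^"]+)"\s+by\s+[A-Z]')


def extract_titles(comment: str) -> list[str]:
    """Return quoted titles that look like book mentions."""
    return TITLE_PATTERN.findall(comment)


def rank_books(comments: list[str]) -> list[tuple[str, int]]:
    """Count mentions per title and sort from most to least mentioned."""
    counts = Counter(title for c in comments for title in extract_titles(c))
    return counts.most_common()


ranking = rank_books(COMMENTS)
print(ranking)
```

A heuristic like this misses unquoted titles and misreads quoted phrases that are not books, which is one reason a production system would probably use a trained model for the extraction step.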

How to use it?

Developers can use this project as a curated discovery tool. Instead of sifting through endless articles, they can quickly see what books are resonating with their peers. It can be integrated into personal learning workflows, used to inform book club selections, or even guide corporate training initiatives. Imagine a developer looking for books to deepen their understanding of AI ethics, system design, or even product management – this engine can provide a data-backed starting point.

Product Core Function

· Book Mention Extraction: Utilizes NLP to identify book titles within Hacker News comments and articles, enabling the automatic discovery of reading material. This is useful for quickly finding relevant books without manual searching.

· Trend Analysis and Ranking: Aggregates book mentions to identify the most frequently discussed and influential books, providing a prioritized list of reading recommendations. This helps developers focus on popular and impactful literature.

· Data Visualization: Presents the book data in an understandable format, allowing users to easily see trends and patterns in reading habits within the tech community. This makes complex data accessible and actionable.

· Community Insight Generation: Offers a glimpse into the intellectual interests and learning priorities of the Hacker News demographic, providing valuable context for personal and professional development. This helps developers understand what knowledge is valued by their peers.

Product Usage Case

· A developer aiming to broaden their knowledge in areas like distributed systems receives a list of highly recommended books from the 'HN Book Insights Engine,' saving them hours of research and ensuring they pick titles relevant to current industry discussions. This directly addresses the need for efficient learning in a fast-evolving field.

· A startup team looking to improve their product management skills can use the engine to discover books that are actively being discussed and appreciated by experienced product professionals in the tech space. This helps them acquire practical, community-vetted knowledge for better decision-making.

· An individual seeking to understand the ethical implications of emerging technologies can use the engine to find books that are resonating with thought leaders and practitioners on Hacker News. This provides a data-driven approach to identifying crucial, though perhaps niche, learning resources.

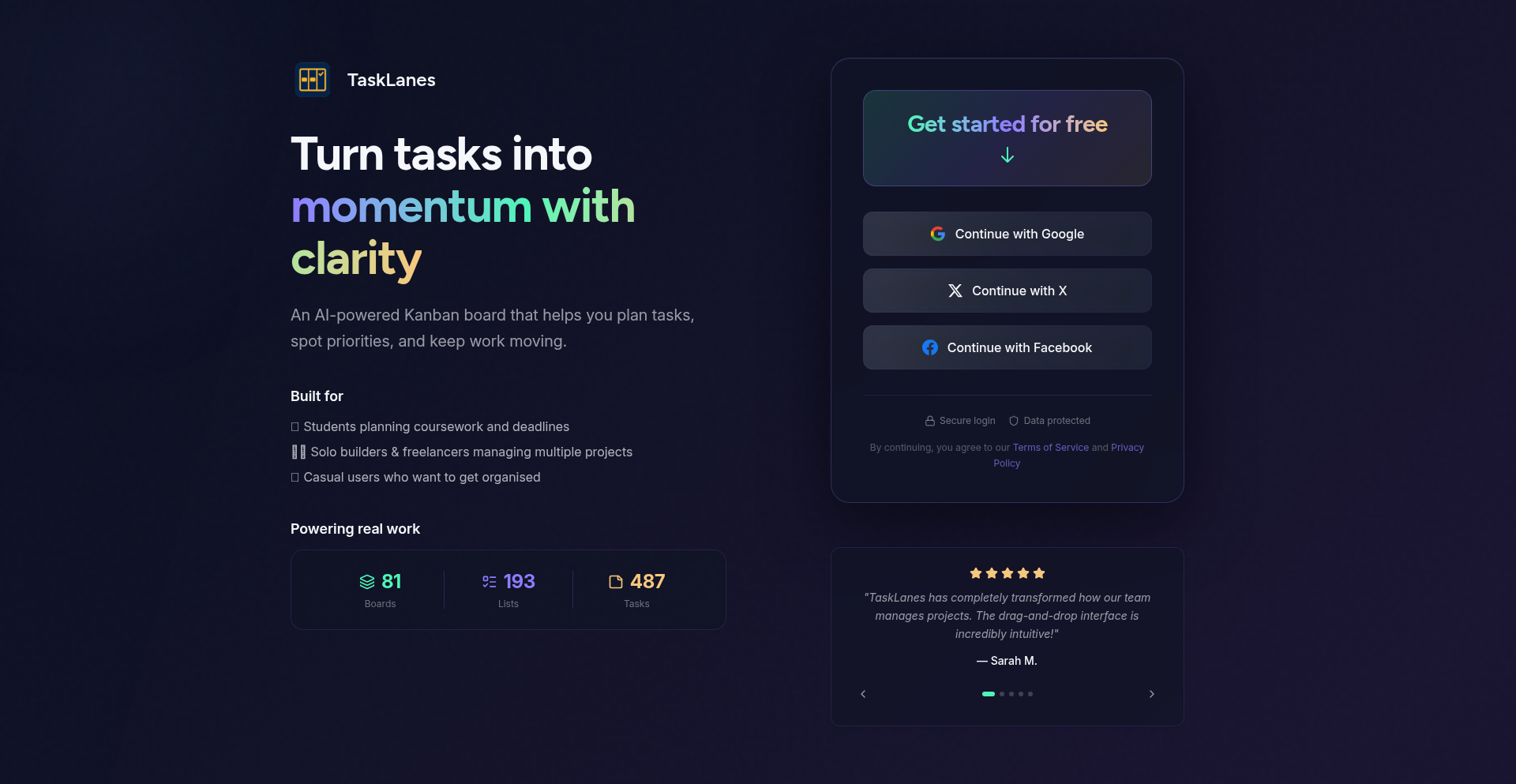

2

WalletForge

Author

alentodorov

Description

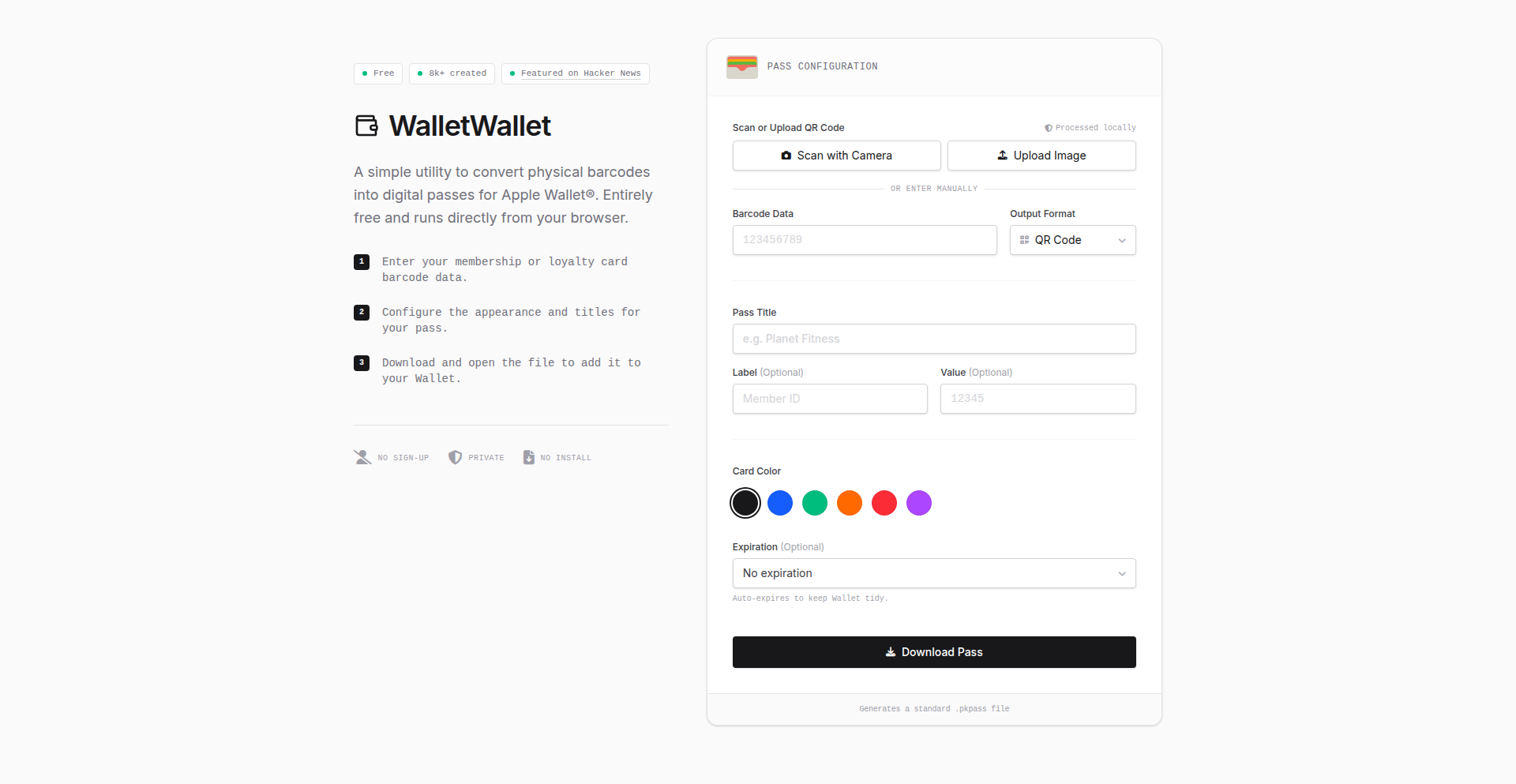

WalletForge is a minimalist application that empowers users to create custom Apple Wallet passes from any source. Instead of relying on complex systems or AI, it focuses on the core functionality of signing passes by manually entering barcode information. This approach minimizes potential errors and provides a direct, hackable solution for integrating services that don't natively support Apple Wallet.

Popularity

Points 387

Comments 103

What is this product?

WalletForge is a tool for generating Apple Wallet passes from arbitrary data, like barcodes from local shops that don't offer them. The innovation lies in its simplicity and directness: it circumvents the need for a full-fledged service by focusing solely on the cryptographic signing process required for Apple passes. You manually input the barcode data, and the app uses your developer certificate to sign it, making it a valid pass for your iPhone's Wallet app. This is useful because it lets you digitize loyalty cards, event tickets, or any other scannable item that doesn't have an official digital pass, directly solving the inconvenience of carrying physical cards or struggling with incompatible systems. It’s a developer-centric approach to a common user problem.

How to use it?

Developers can use WalletForge by first obtaining an Apple Developer certificate. Once the certificate is in place, they can launch the app and manually enter the barcode information (like a store loyalty number or event code). The app then takes this data and, using the developer certificate, generates a signed Apple Wallet pass. This pass can then be added to the user's iPhone Wallet app. The primary use case is for developers who want to create personalized passes for their own use or for a small group of users, especially when dealing with systems that lack native Apple Wallet integration. It’s a straightforward integration for solving a specific personal or small-scale ticketing/loyalty card problem.
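To make the signing flow more concrete, here is a minimal sketch of the data that gets signed, based on Apple's documented pass package layout (a `pass.json` file plus a `manifest.json` of SHA-1 hashes). It is not WalletForge's code. The identifiers, organization name, and the `build_pass` and `build_manifest` helpers are placeholders invented for illustration, and the actual signing step is only described in a comment.

```python
import hashlib
import json


def build_pass(barcode_message: str, serial: str) -> dict:
    """Build a minimal pass.json payload for a store-card style pass."""
    return {
        "formatVersion": 1,
        # Placeholder identifiers: real values come from your Apple Developer account.
        "passTypeIdentifier": "pass.com.example.loyalty",
        "teamIdentifier": "ABCDE12345",
        "organizationName": "Example Shop",
        "description": "Loyalty card",
        "serialNumber": serial,
        "barcodes": [
            {
                "format": "PKBarcodeFormatQR",
                "message": barcode_message,
                "messageEncoding": "iso-8859-1",
            }
        ],
        "storeCard": {},
    }


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file in the pass bundle to its SHA-1 hex digest."""
    return {name: hashlib.sha1(data).hexdigest() for name, data in files.items()}


pass_bytes = json.dumps(build_pass("1234567890", "card-001")).encode("utf-8")
files = {"pass.json": pass_bytes, "icon.png": b"placeholder image bytes"}
manifest = build_manifest(files)
# The remaining step (not shown) is a detached PKCS#7 signature over manifest.json,
# made with the pass certificate; the files plus the signature are then zipped
# into a .pkpass archive.
print(json.dumps(manifest, indent=2))
```

Because the signature covers the manifest, and the manifest covers every file, changing the barcode after signing invalidates the pass. That is why the certificate is required even for a purely personal card.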

Product Core Function

· Manual Barcode Entry: Allows users to input any barcode data directly, providing a flexible input method for diverse data types. This is valuable because it ensures compatibility with any scannable item, regardless of its origin or format.

· Developer Certificate Signing: Leverages a user's Apple Developer certificate to cryptographically sign generated passes. This is crucial for ensuring the passes are recognized and trusted by the Apple Wallet app, providing essential security and functionality.

· Custom Pass Generation: Enables the creation of personalized Apple Wallet passes from the provided barcode data. This is useful for creating digital versions of physical cards or tickets that don't have official digital support, simplifying user convenience.

Product Usage Case

· A small local bookstore doesn't offer Apple Wallet integration for its loyalty program. A developer can use WalletForge to manually enter their loyalty card barcode and create a custom pass, eliminating the need to carry the physical card. This directly addresses the inconvenience of managing multiple physical cards.

· An event organizer for a small community gathering wants to provide digital tickets but lacks the resources for a full ticketing platform. A developer can use WalletForge to generate individual passes for attendees by inputting their ticket barcode, offering a simple and cost-effective digital ticketing solution. This solves the problem of distributing and managing tickets efficiently.

· A developer wants to create a personalized pass for a specific membership or access card that isn't supported by Apple Wallet. They can use WalletForge to manually input the necessary credentials and generate a functional pass, allowing for seamless access control or membership identification. This provides a direct and practical way to integrate less common access methods into the Apple Wallet ecosystem.

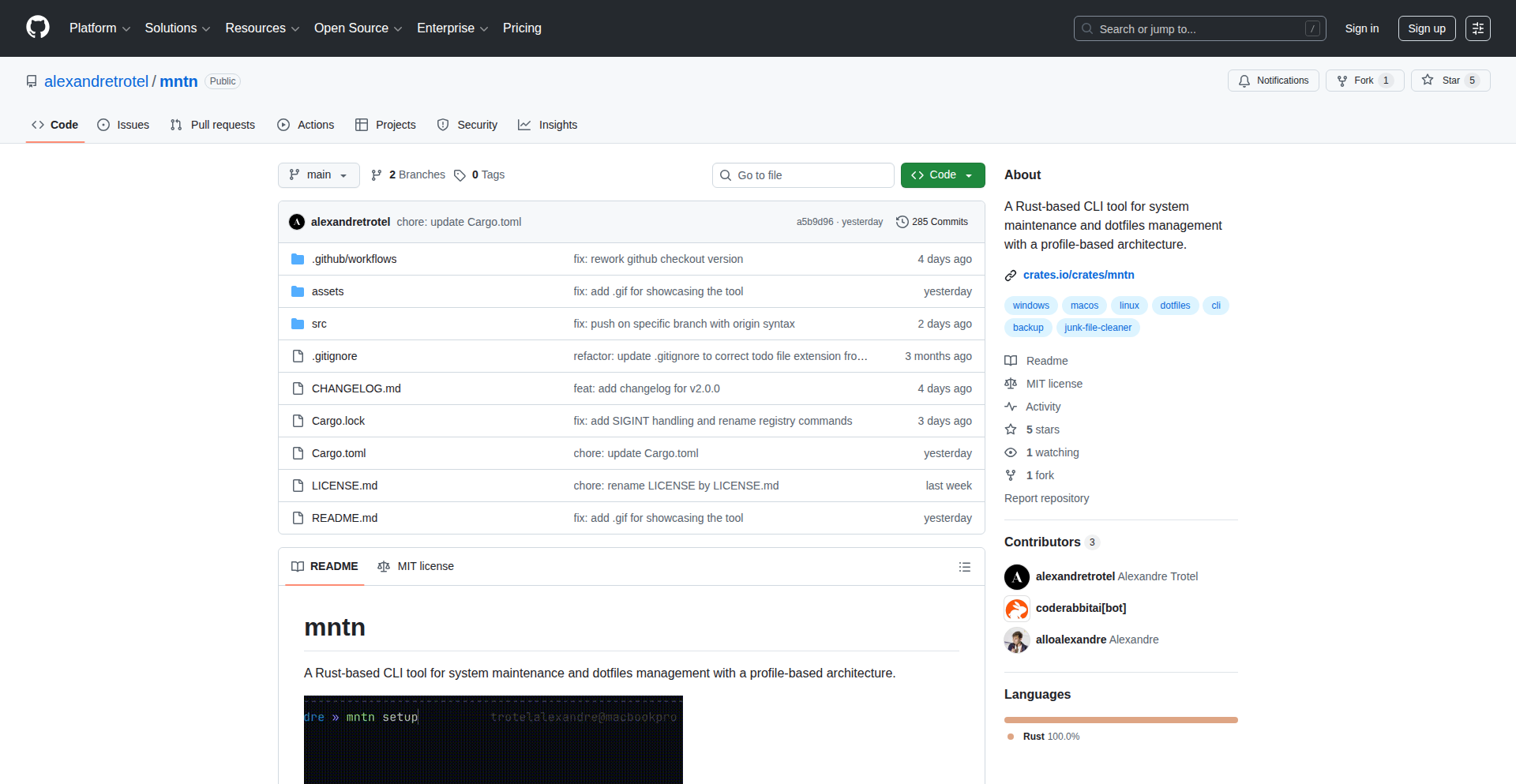

3

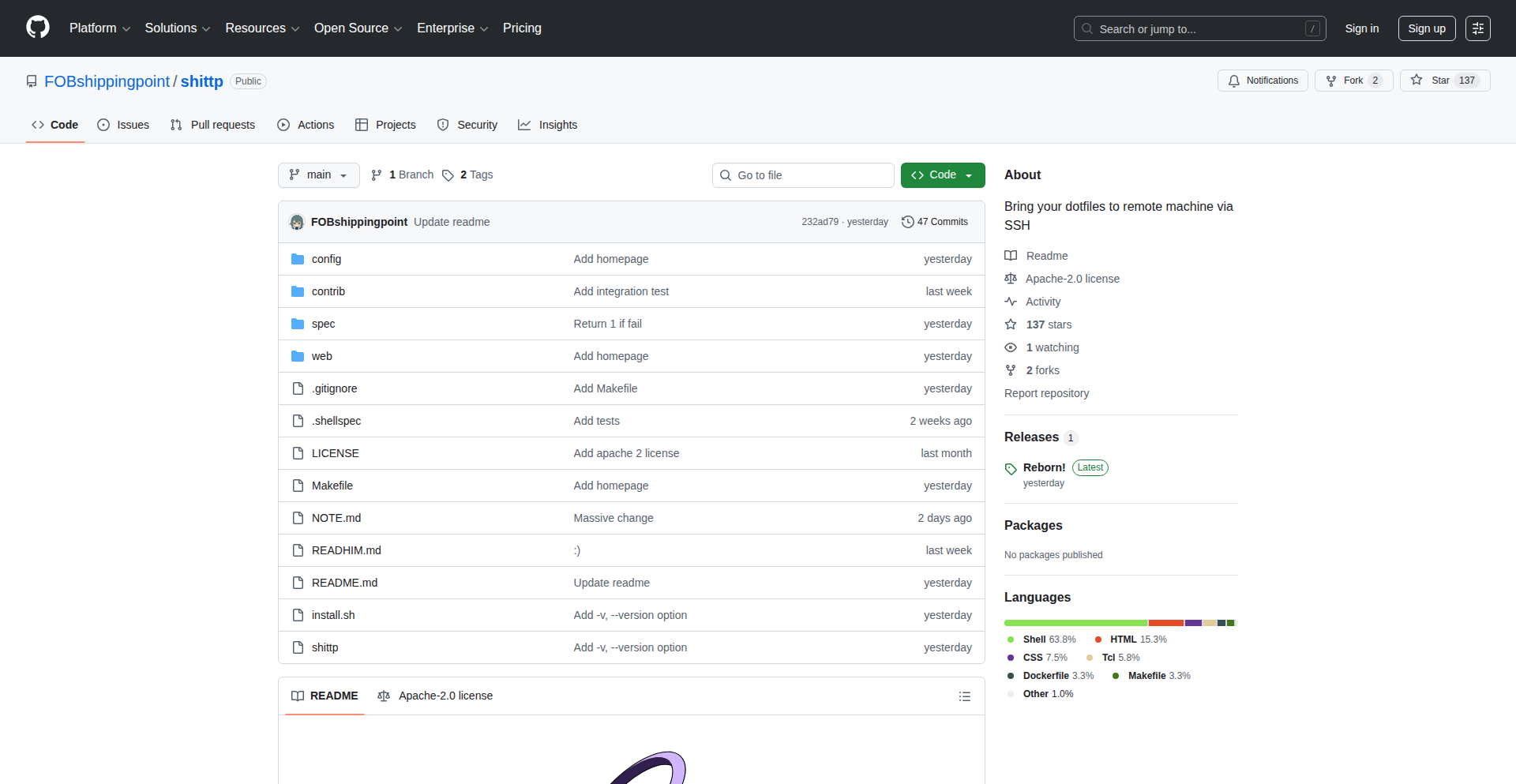

Shittp: Ephemeral Dotfiles Sync via SSH

Author

sdovan1

Description

Shittp is a novel utility for developers to temporarily synchronize their configuration files (dotfiles) across different machines using SSH. Its core innovation lies in its 'volatile' nature, meaning the synced files are designed to be short-lived, promoting security and preventing unintended persistence of sensitive settings.

Popularity

Points 125

Comments 72

What is this product?

Shittp is a command-line tool that uses SSH to push and pull your dotfiles (like `.bashrc`, `.vimrc`, `.gitconfig`) to a remote server for a temporary period. The 'volatile' aspect means these files are not stored permanently on the remote server; they exist only as long as the connection or a short timer is active. This lets you share configurations without leaving them on an untrusted machine and without the complexity of traditional sync solutions. Transfer runs over standard SSH, and the volatile behavior may rely on temporary file system mounts or in-memory storage, which keeps the tool lightweight.

How to use it?

Developers can use Shittp to quickly share their current setup with a new machine they're working on, or to access their familiar environment from a borrowed laptop. It's integrated into a developer's workflow by running a simple command before and after a session. For instance, on your primary machine, you might run `shittp push <server_ip>:<path>` to upload your dotfiles, and on the secondary machine, `shittp pull <server_ip>:<path>` to download them. The key is that this is for temporary use, not long-term backup.
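The "volatile" idea can be illustrated locally, without SSH, as a staging directory that disappears when the session ends. The sketch below is an analogy under that assumption, not Shittp's implementation; the `stage_dotfiles` helper and the sample `.bashrc` content are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def stage_dotfiles(sources: list[Path], staging_dir: Path) -> list[Path]:
    """Copy the given dotfiles into a staging directory and return the copies."""
    staged = []
    for src in sources:
        dest = staging_dir / src.name
        shutil.copy2(src, dest)
        staged.append(dest)
    return staged


# Create a throwaway "home" with a sample dotfile so the sketch is self-contained.
with tempfile.TemporaryDirectory() as fake_home:
    bashrc = Path(fake_home) / ".bashrc"
    bashrc.write_text("alias ll='ls -la'\n")

    # The staging directory exists only inside this block.
    with tempfile.TemporaryDirectory(prefix="dotfiles-") as staging:
        staged = stage_dotfiles([bashrc], Path(staging))
        staged_names = [p.name for p in staged]
        existed_during_session = staged[0].exists()

    # After the block exits, the staged copies are gone.
    exists_after_session = staged[0].exists()

print(staged_names, existed_during_session, exists_after_session)
```

Tying cleanup to the end of a scope rather than to a manual command is what keeps forgotten copies from lingering on a machine you do not control.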

Product Core Function

· Secure Dotfiles Transfer: Utilizes SSH for encrypted transmission of configuration files, ensuring data privacy during transit.

· Volatile Storage Mechanism: Implements a temporary storage strategy for dotfiles on the remote, reducing the risk of data leakage or unauthorized access after use.

· Cross-Machine Environment Synchronization: Enables quick setup of a familiar development environment on any machine with SSH access, boosting productivity.

· Lightweight and Minimalist Design: Built for speed and simplicity, offering a direct solution without heavy dependencies or complex configurations.

Product Usage Case

· Scenario: A developer needs to work on a client's machine for a short period. Problem: The client's machine lacks their usual development tools and configurations. Solution: The developer uses Shittp to quickly sync their essential dotfiles (like shell aliases, editor settings) via SSH, allowing them to be productive immediately without compromising security by leaving persistent files.

· Scenario: A developer is setting up a new personal server for a coding project. Problem: Manually configuring the server with all their preferred settings is time-consuming. Solution: Shittp can be used to push their dotfiles to the server temporarily, allowing them to apply their familiar configurations and then clean them up, avoiding permanent storage of potentially sensitive credentials within dotfiles.

· Scenario: Collaborative coding session on a shared machine. Problem: Multiple developers need to quickly switch between their personalized environments. Solution: Shittp can be used to push and pull configurations on demand, enabling rapid switching between different developer setups without complex profile management.

4

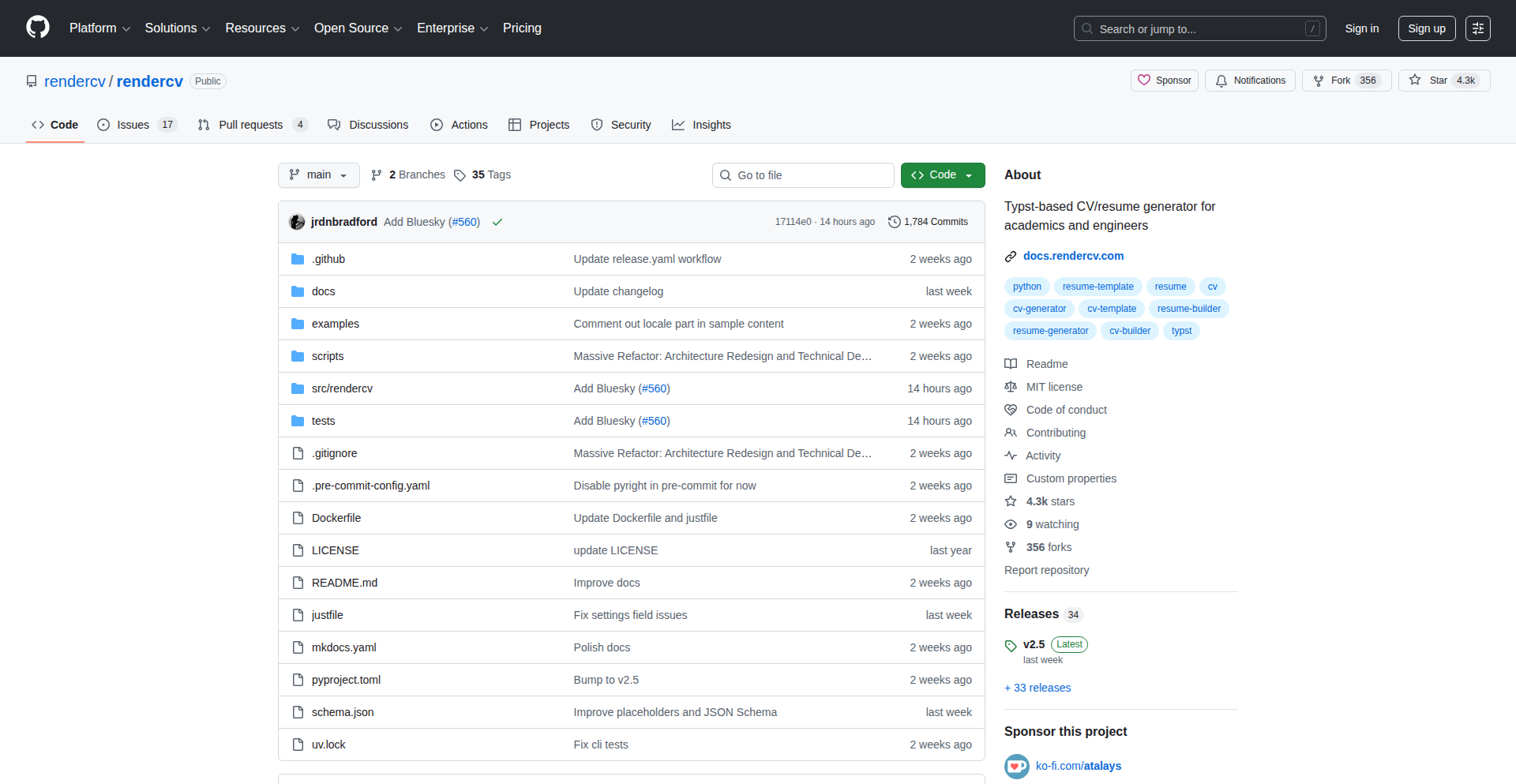

YAMLCanvas CV Renderer

Author

sinaatalay

Description

YAMLCanvas CV Renderer (RenderCV) is an open-source tool that transforms a single YAML file into a cleanly typeset PDF resume. It addresses the common frustrations of layout issues in word processors and the complexity of LaTeX, offering a developer-friendly approach to CV creation and management. The core innovation is that content, design, and formatting all live in one version-controllable text file, which makes the CV easy to hand to an LLM and easy to collaborate on.

Popularity

Points 79

Comments 40

What is this product?

YAMLCanvas CV Renderer is a command-line utility that takes a structured CV definition written in YAML and renders it into a professional-looking PDF document. It uses Typst, a modern typesetting system known for its precision and speed, under the hood. The YAML file acts as a single source of truth, defining not just your work experience and education, but also the visual design elements like margins, fonts, and colors. This approach offers precise control over the final output, solving the common problem of inconsistent formatting and difficult editing found in traditional document editors.

How to use it?

Developers can integrate YAMLCanvas CV Renderer into their workflow by creating a `cv.yaml` file that describes their resume. With the tool installed, they can simply run the command `rendercv render cv.yaml` in their terminal. This will generate a PDF file of their CV. Its text-based nature makes it ideal for version control systems like Git, allowing for easy tracking of changes, branching, and merging of CV drafts. It can also be easily integrated into CI/CD pipelines or scripting for automated CV generation for different job applications, especially when combined with AI tools.
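Scripted generation of per-application variants might look something like the sketch below, which layers a small set of overrides on top of a base CV. The field names are invented for illustration and do not follow the tool's real YAML schema. The result is printed as JSON to keep the example dependency-free; writing it back out as YAML would need a YAML library.

```python
import copy
import json

# Hypothetical CV structure; the real tool defines its own YAML schema.
BASE_CV = {
    "name": "Jane Doe",
    "summary": "Software engineer with a focus on backend systems.",
    "skills": ["Python", "PostgreSQL", "Docker"],
    "design": {"font": "Source Sans 3", "margin_cm": 2},
}


def deep_merge(base: dict, overrides: dict) -> dict:
    """Return a copy of base with overrides applied recursively."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


data_role = deep_merge(
    BASE_CV,
    {"summary": "Engineer focused on data pipelines.", "design": {"margin_cm": 1.5}},
)
print(json.dumps(data_role, indent=2))
```

Keeping each variant as a small override file rather than a full copy means a fix to the base CV reaches every variant on the next render.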

Product Core Function

· Version-controllable CVs: Store your entire CV as a text file, enabling diffing, tagging, and easy rollback of changes, making it a robust solution for tracking your professional history.

· LLM-friendly CV generation: Easily copy-paste your YAML CV into AI models like ChatGPT to tailor it for specific job descriptions, then paste the modified content back and re-render, allowing for rapid creation of multiple resume variants.

· Pixel-perfect typography: Leverages Typst for precise alignment and spacing, ensuring a professional and polished look that is hard to achieve with WYSIWYG editors.

· Full design control via YAML: Customize margins, fonts, colors, and other design aspects directly within the YAML file, offering granular control over your CV's appearance without complex coding.

· Editor integration with JSON Schema: Provides autocompletion and inline documentation within code editors, reducing errors and speeding up the writing process of your CV definition.

Product Usage Case

· A software engineer wants to maintain a single, up-to-date CV that can be easily adapted for various job applications. By using YAMLCanvas CV Renderer, they can create a base YAML file and then programmatically tweak sections for each application, ensuring consistency and saving time.

· A job seeker needs to create multiple versions of their resume tailored to different industries. They can use an LLM to suggest content modifications based on job descriptions and then feed these changes back into their YAML file for quick re-rendering into distinct PDF resumes.

· A developer wants to track the evolution of their CV over time, similar to how they track code. By committing their `cv.yaml` file to Git, they can see precisely what changed between versions and revert to previous states if needed.

· A freelancer needs to generate CVs for different clients quickly. They can set up a templated YAML file and then use scripting to populate project-specific details before rendering the final PDF.

5

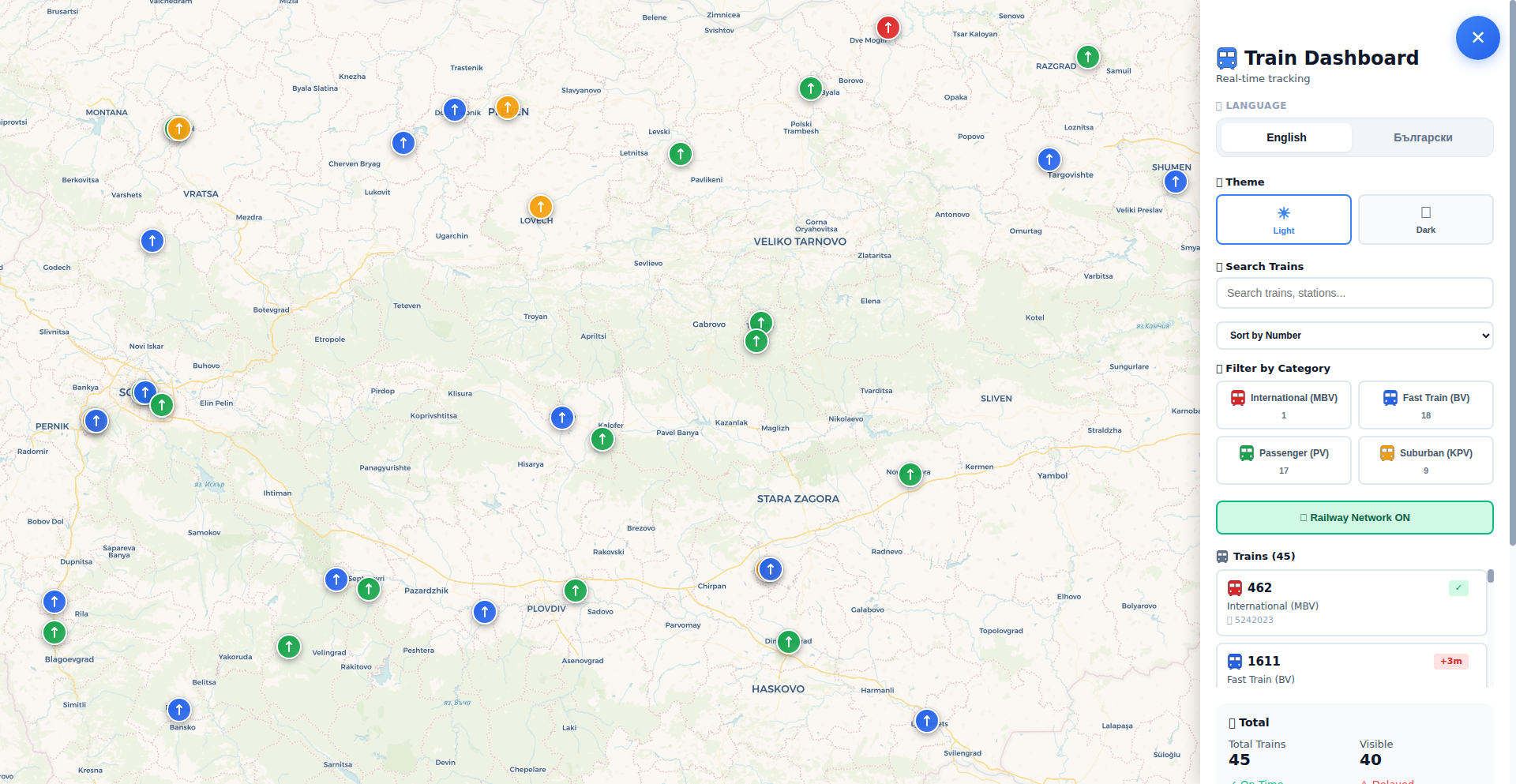

BG-TrainTracker

Author

Pavlinbg

Description

A personal project developed to overcome the lack of a public API for the Bulgarian national railway carrier (BDZ). It scrapes data from the official, outdated, and laggy train map to provide a more functional and informative user experience, offering better route context. This showcases a classic hacker mindset: when faced with a closed system, build your own solution.

Popularity

Points 74

Comments 22

What is this product?

BG-TrainTracker is a web application built by a junior developer to address the shortcomings of the official Bulgarian national railway (BDZ) map. Because BDZ offers no public API (a documented way for other programs to request train data), the developer reverse-engineered the existing website and scrapes train information directly from the official map, whose own interface is slow and clunky. The innovation lies in taking data that is publicly visible but hard to access and making it usable: the app gives a clearer view of train routes and their context, which makes it a better way to check train information than the official site.

How to use it?

Developers can use BG-TrainTracker as a reference for how to approach similar problems where official data sources are locked down or poorly implemented. For instance, if you encounter a local government service with no API, you might learn from this project's technique of inspecting the website's network traffic to understand how data is loaded and then programmatically extracting it. It's an example of web scraping and data aggregation. You could integrate similar scraping logic into your own tools or dashboards to monitor real-time data from various sources. So, this is useful for developers looking to build tools that access data from websites that don't offer APIs, helping them understand web scraping techniques.
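As a rough illustration of the extraction step, the sketch below pulls rows out of an HTML table using only Python's standard library. The markup, train numbers, and field names are made up for the example; the real site's structure is not described here, and the project may well read a JSON endpoint found through network inspection instead of parsing HTML.

```python
from html.parser import HTMLParser

# Hypothetical markup; the real site's structure is not described in this article.
SAMPLE_HTML = """
<table id="trains">
  <tr><td>8601</td><td>Sofia</td><td>Varna</td><td>+5 min</td></tr>
  <tr><td>2612</td><td>Plovdiv</td><td>Burgas</td><td>on time</td></tr>
</table>
"""


class TrainTableParser(HTMLParser):
    """Collect the text of each <td> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        # Only keep text that sits inside a table cell.
        if self._in_cell and self.rows:
            self.rows[-1].append(data.strip())


parser = TrainTableParser()
parser.feed(SAMPLE_HTML)
trains = [
    {"number": r[0], "origin": r[1], "destination": r[2], "status": r[3]}
    for r in parser.rows
]
print(trains)
```

Scrapers of this kind break whenever the page layout changes, so it helps to keep the parsing isolated in one small class and to respect the site's terms of use and request rates.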

Product Core Function

· Data Scraping and Extraction: The system programmatically extracts real-time train schedules and location data by parsing the HTML and JavaScript of the official BDZ website. This allows for the collection of data that would otherwise be inaccessible. The value here is in democratizing information, making it available to users and potentially other developers. This is useful for anyone who needs to access train information for planning trips or building related applications.

· Improved User Interface for Route Visualization: Instead of relying on the sluggish official map, BG-TrainTracker presents train routes and their context in a more user-friendly and responsive manner. This enhances the user experience by providing clearer insights into journey details. The value is in making complex travel information easy to understand and use, directly benefiting travelers.

· Data Aggregation and Contextualization: By collecting and processing data from the official map, the project provides a more comprehensive view of train movements and routes than what is readily available. This contextualization helps users understand the bigger picture of their train journey. The value is in providing a richer, more informative experience that aids in better travel planning and decision-making.

Product Usage Case

· A traveler planning a trip on the Bulgarian national railway can use BG-TrainTracker to get a clear and up-to-date overview of available routes and train times, overcoming the difficulties posed by the official BDZ website's poor performance. This solves the problem of unreliable and frustrating trip planning due to bad official tools.

· A developer wanting to build a comparative travel app that includes Bulgarian train data could study BG-TrainTracker's scraping techniques to integrate BDZ information into their platform. This demonstrates how to overcome data access limitations in a competitive market.

· A student learning about web scraping and API alternatives could use BG-TrainTracker as a case study to understand how to extract data from websites that don't provide official APIs, applying these skills to other data-scarce scenarios. This provides a practical learning resource for future developers.

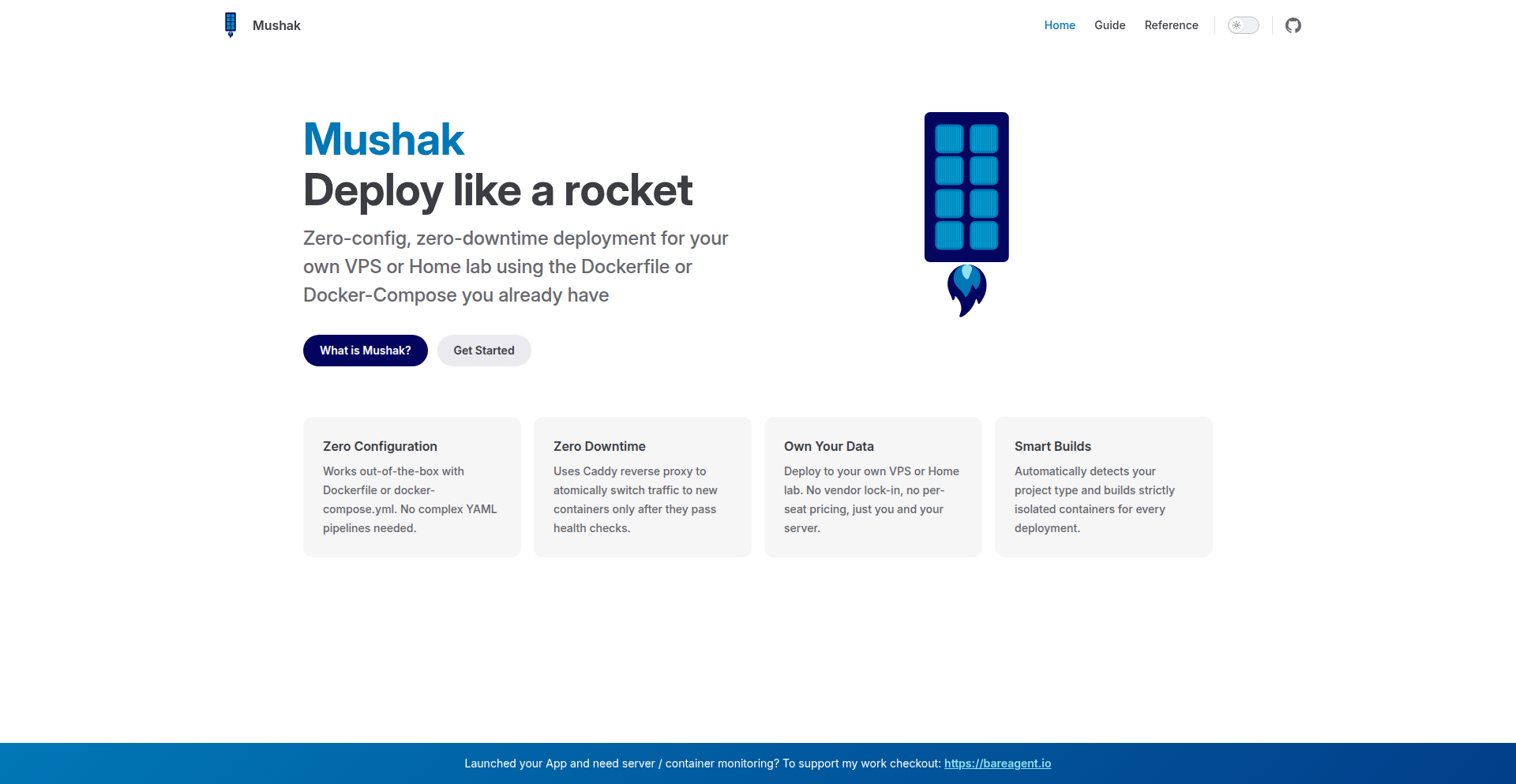

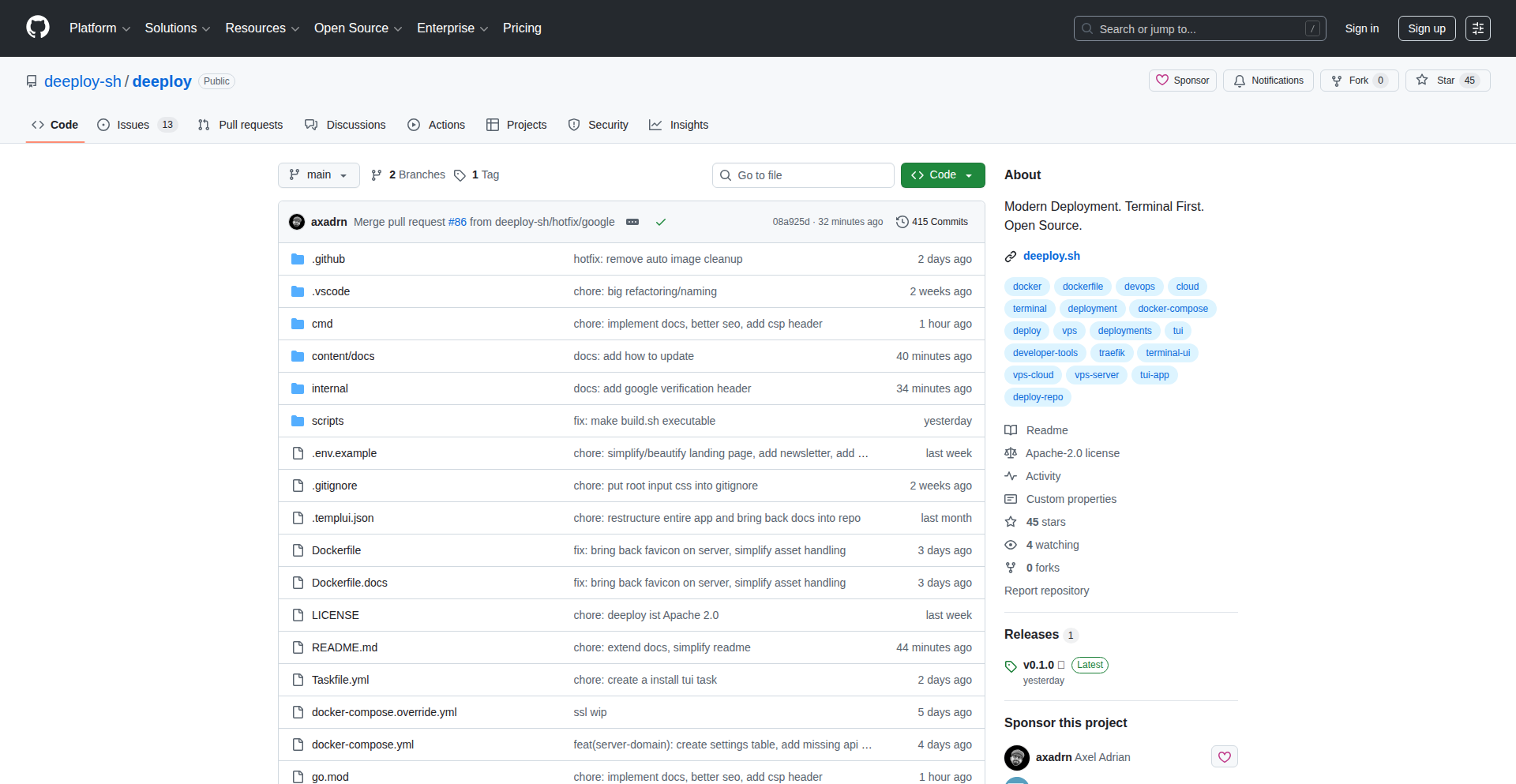

6

Mushak: Effortless Zero-Downtime Docker Deployments

Author

hmontazeri

Description

Mushak is a tool that automates the deployment of Docker and Docker Compose applications to servers with zero configuration and zero downtime. It focuses on streamlining the often complex and error-prone process of getting your containerized applications live and keeping them running smoothly, even during updates. The innovation lies in its ability to abstract away the intricacies of server management and deployment orchestration, making it accessible to developers without extensive DevOps expertise.

Popularity

Points 26

Comments 15

What is this product?

Mushak is a deployment automation tool designed for Docker and Docker Compose applications. Its core technical innovation is its 'zero-config' approach. Instead of requiring complex setup and scripting, it intelligently infers deployment needs from your existing Docker or Docker Compose files. It achieves zero-downtime by implementing strategies like rolling updates, where new versions of your application are deployed and tested before old ones are removed, ensuring continuous availability. This means you can update your live applications without interrupting service for your users.
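The start-new, check, then switch sequence behind a zero-downtime update can be sketched as a small simulation. Nothing below talks to Docker, and the `Container` class, `zero_downtime_swap` function, and image tags are hypothetical stand-ins rather than Mushak's internals.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Container:
    name: str
    image: str
    running: bool = False


def zero_downtime_swap(
    current: Container,
    new_image: str,
    health_check: Callable[[Container], bool],
) -> Container:
    """Start the new version next to the old one and switch only if it is healthy."""
    candidate = Container(name=f"{current.name}-next", image=new_image, running=True)
    if not health_check(candidate):
        # Roll back: discard the candidate and keep serving from the old container.
        candidate.running = False
        return current
    # Healthy: traffic would be pointed at the candidate here, then the old one stops.
    current.running = False
    return candidate


live = Container(name="web", image="app:1.0", running=True)
after_good = zero_downtime_swap(live, "app:1.1", lambda c: True)

live2 = Container(name="web", image="app:1.0", running=True)
after_bad = zero_downtime_swap(live2, "app:broken", lambda c: False)

print(after_good.image, after_bad.image)
```

The key property is ordering: the old container is stopped only after the new one passes its health check, so a failed release leaves the running version untouched.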

How to use it?

Developers can use Mushak by simply pointing it to their server and their Docker/Docker Compose project. The tool then handles the entire deployment lifecycle. You can integrate it into your CI/CD pipeline or run it manually. For example, if you have a Docker Compose file defining your web application and database, Mushak can automatically deploy and manage these services on your target server. This drastically reduces the time and effort spent on manual server provisioning, configuration, and deployment, allowing you to focus on building your application.

Product Core Function

· Zero-configuration deployment: Mushak automatically analyzes your Docker or Docker Compose files to determine the necessary deployment steps, eliminating the need for manual scripting or complex configuration files. This is valuable because it saves you time and reduces the chance of errors, so you can deploy faster.

· Zero-downtime updates: Implements rolling update strategies to replace old application versions with new ones seamlessly, ensuring your services remain available to users at all times. This is valuable because it prevents service interruptions and maintains a positive user experience, so your users never see a 'down for maintenance' message.

· Automated container orchestration: Manages the lifecycle of your Docker containers on the server, including starting, stopping, and restarting services as needed. This is valuable because it ensures your application is always running and healthy, so you don't have to manually monitor and manage individual containers.

· Simplified server provisioning: Abstracts away the complexities of setting up and configuring servers for containerized applications. This is valuable because it makes deploying to new servers much easier and faster, so you can scale your application without becoming a server expert.

Product Usage Case

· Deploying a web application with a database: A developer has a web application defined in a Docker Compose file along with its database. Instead of manually setting up servers, installing Docker, and configuring networking, they can use Mushak to deploy both services to a server in minutes, ensuring the application is live and accessible without any downtime for users.

· Automating microservice deployments: A team managing multiple microservices can use Mushak to automate the deployment of each service independently. Mushak's zero-downtime capabilities ensure that updates to one microservice do not affect the availability of others, enabling faster iteration cycles and continuous delivery.

· Rapid prototyping and testing: For developers building and testing new features, Mushak offers a quick way to deploy experimental versions of their applications to a staging server. The ease of use and zero-downtime ensure that testing is not disruptive, allowing for faster feedback loops.

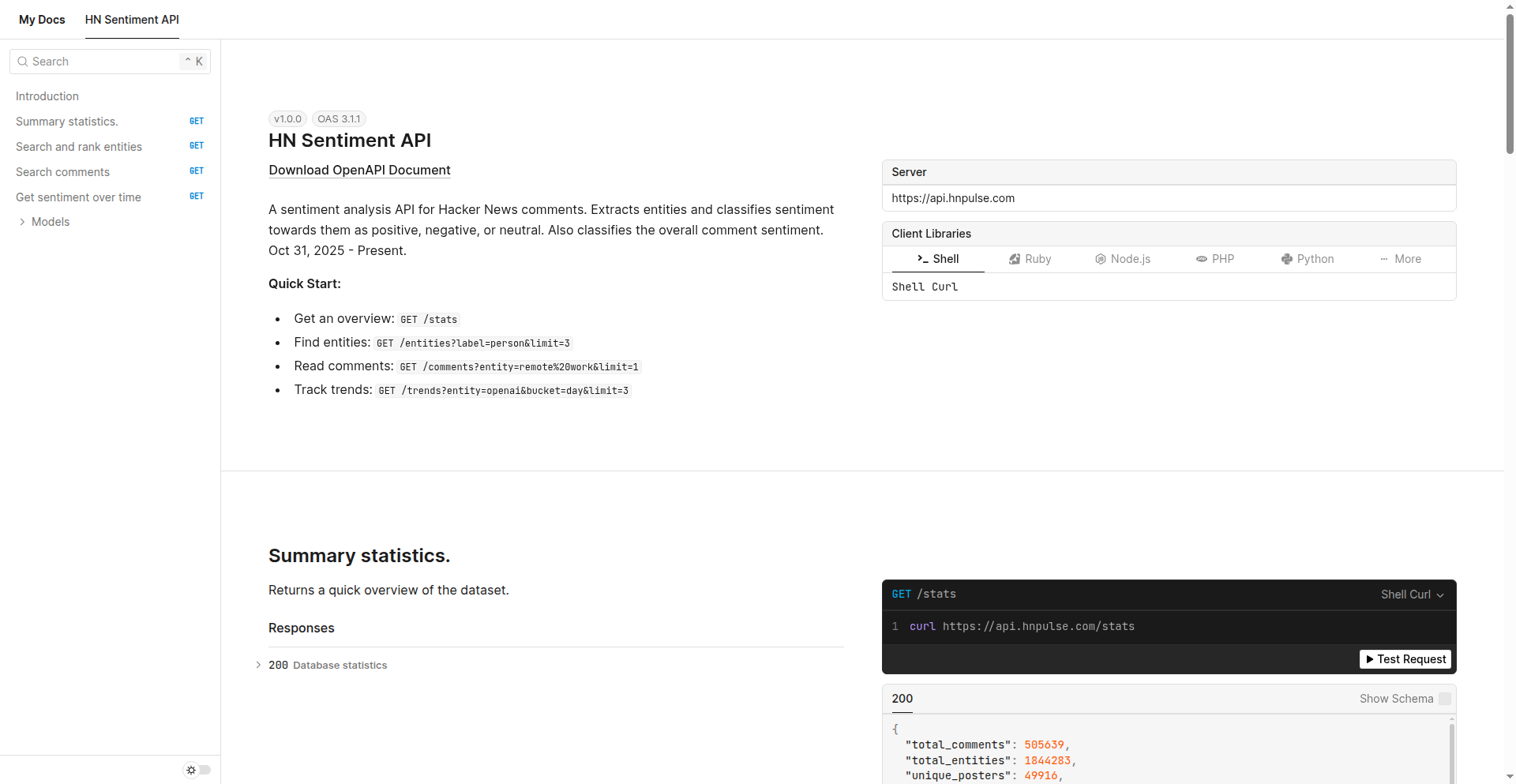

7

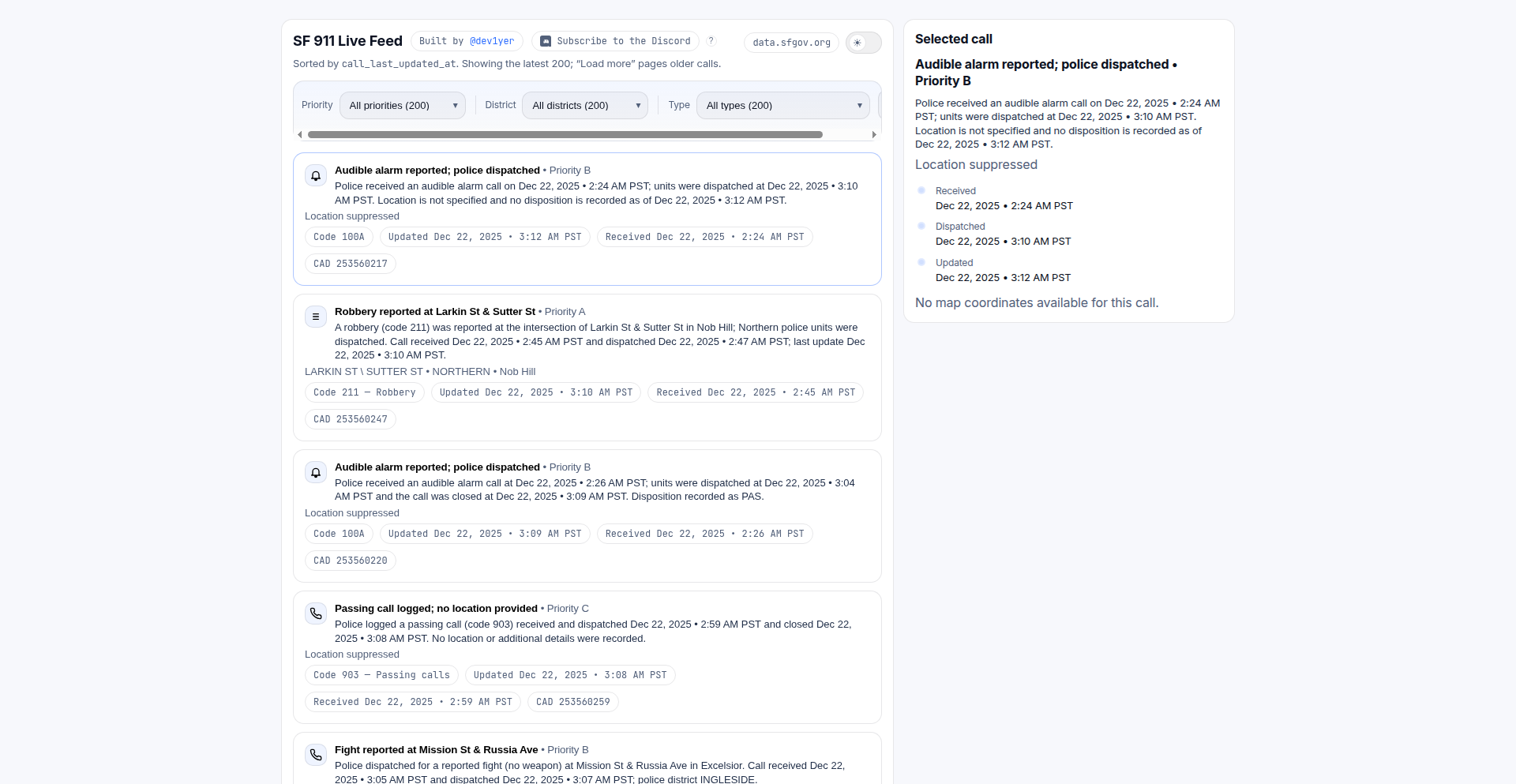

HN Pulse API

Author

kingofsunnyvale

Description

This API extracts named entities from Hacker News comments, classifies their sentiment (positive, negative, neutral) and identifies entity types like people, locations, and technologies. It offers insights into public opinion and trending topics within the developer community by analyzing over 500,000 comments. So, it helps you understand what the tech world is really talking about and how they feel about it.

Popularity

Points 27

Comments 6

What is this product?

HN Pulse API is a powerful tool that uses advanced natural language processing (NLP) techniques, specifically leveraging GPT-4o mini, to process and understand the vast amount of information shared in Hacker News comments. It goes beyond simple keyword searching by identifying specific entities (like people, companies, or technologies) and then analyzing the sentiment expressed towards them. Think of it as a super-smart reader that not only tells you who is being discussed but also whether the discussion is good, bad, or neutral. The innovation lies in its ability to synthesize unstructured text data into actionable insights about community sentiment and entity relationships, providing a unique lens into developer discourse. So, it gives you a clear, data-driven understanding of the prevailing opinions and hot topics in the tech sphere, far beyond what a simple search could offer.

How to use it?

Developers can integrate the HN Pulse API into their applications or use it directly via simple `curl` commands to query vast amounts of Hacker News comment data. For example, you can ask 'Who does HN talk about the most?' by requesting a list of people sorted by mention count. Or, you can find out 'What are people saying about remote work?' by searching for comments related to that entity. You can even track 'Is OpenAI's reputation getting worse?' by looking at sentiment trends over time. The API is designed for ease of use, with clear endpoints for entity discovery, comment retrieval, and trend analysis, allowing you to quickly build features that leverage community sentiment. So, you can easily embed real-time tech community sentiment into your dashboards, research tools, or even news aggregation platforms without needing to build complex NLP systems yourself.
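A question like "who does HN talk about the most, and how does it feel about them?" reduces to a simple aggregation once entity and sentiment labels are available. The sketch below works on hard-coded sample records. The record shape, the scoring scheme, and the `summarize` helper are assumptions for illustration, since the API's actual endpoints and response format are not shown here.

```python
from collections import defaultdict

# Hypothetical records shaped like (entity, sentiment); the API's real
# response format is not shown in this article.
MENTIONS = [
    ("OpenAI", "negative"),
    ("OpenAI", "positive"),
    ("OpenAI", "negative"),
    ("Rust", "positive"),
    ("Rust", "positive"),
    ("remote work", "neutral"),
]

# Assumed scoring: +1 for positive, 0 for neutral, -1 for negative.
SCORE = {"positive": 1, "neutral": 0, "negative": -1}


def summarize(mentions):
    """Return per-entity mention counts and net sentiment, most mentioned first."""
    stats = defaultdict(lambda: {"mentions": 0, "net_sentiment": 0})
    for entity, sentiment in mentions:
        stats[entity]["mentions"] += 1
        stats[entity]["net_sentiment"] += SCORE[sentiment]
    return sorted(stats.items(), key=lambda item: item[1]["mentions"], reverse=True)


summary = summarize(MENTIONS)
for entity, s in summary:
    print(entity, s["mentions"], s["net_sentiment"])
```

Tracking whether a reputation is getting worse would apply the same aggregation per time bucket (for example per month) and compare the net scores.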

Product Core Function

· Entity Extraction: Identifies and labels key entities such as people, organizations, locations, and technologies within Hacker News comments, providing structured data for analysis. This helps in understanding precisely which subjects are being discussed, offering a clear value for market research and trend identification.

· Sentiment Analysis: Classifies the sentiment expressed towards each extracted entity as positive, negative, or neutral, revealing community opinions. This is invaluable for brand monitoring, product feedback analysis, and understanding public perception of individuals and companies.

· Overall Comment Sentiment: Assigns a general sentiment score to each comment, giving a broader picture of the discussion's tone. This helps in quickly gauging the mood of a conversation thread or a collection of comments on a specific topic.

· Entity Co-occurrence Analysis: Allows you to discover which entities are frequently mentioned together, revealing relationships and contextual discussions. For instance, seeing which technologies are often discussed alongside a specific city can highlight emerging tech hubs or regional interests.
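Co-occurrence analysis, the last function listed above, amounts to counting entity pairs that appear in the same comment. A minimal sketch, assuming each comment has already been reduced to a list of entity names (the sample lists and the `co_occurrences` helper are hypothetical):

```python
from collections import Counter
from itertools import combinations

# Hypothetical per-comment entity lists.
COMMENT_ENTITIES = [
    ["Rust", "WebAssembly", "Berlin"],
    ["Rust", "WebAssembly"],
    ["Python", "Berlin"],
]


def co_occurrences(entity_lists):
    """Count how often each unordered pair of entities shares a comment."""
    pairs = Counter()
    for entities in entity_lists:
        # Sort and de-duplicate so ("A", "B") and ("B", "A") count as one pair.
        for pair in combinations(sorted(set(entities)), 2):
            pairs[pair] += 1
    return pairs


pairs = co_occurrences(COMMENT_ENTITIES)
print(pairs.most_common(3))
```

Raw pair counts favor entities that are simply mentioned a lot, so a fuller analysis would likely normalize by each entity's overall frequency.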

Product Usage Case

· Market Research: A startup can use the API to gauge developer sentiment towards competing technologies or product features before launching their own. By analyzing comments about existing solutions, they can identify pain points and market gaps. So, they can build a product that truly resonates with their target audience.

· Brand Monitoring: A company can track mentions of their brand and key executives on Hacker News to understand public perception and quickly address any negative sentiment. This allows for proactive reputation management. So, they can maintain a positive public image and respond effectively to criticism.

· Content Strategy: A tech blogger or journalist can identify trending topics and the general sentiment around them to inform their content creation, ensuring they are covering subjects that the developer community is actively engaged with. So, they can create content that is relevant and highly engaging for their readership.

· Investment Analysis: An investor can analyze sentiment trends for specific companies or founders discussed on Hacker News to gain insights into community confidence and potential future performance. This provides a unique, community-driven perspective for investment decisions. So, they can make more informed investment choices based on real-time developer sentiment.

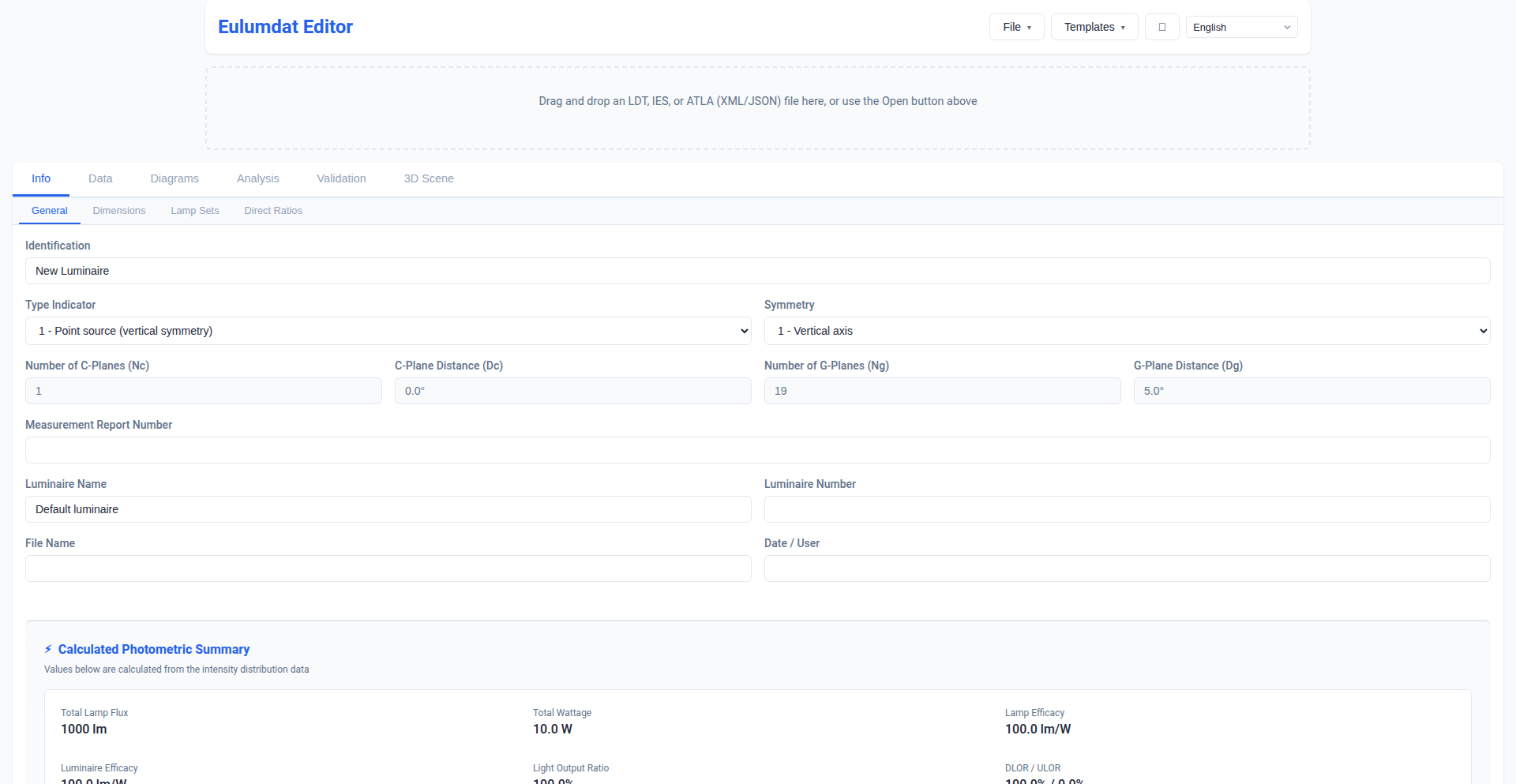

8

Luminoid-RS: Cross-Platform Lighting Data Toolkit

Author

holg

Description

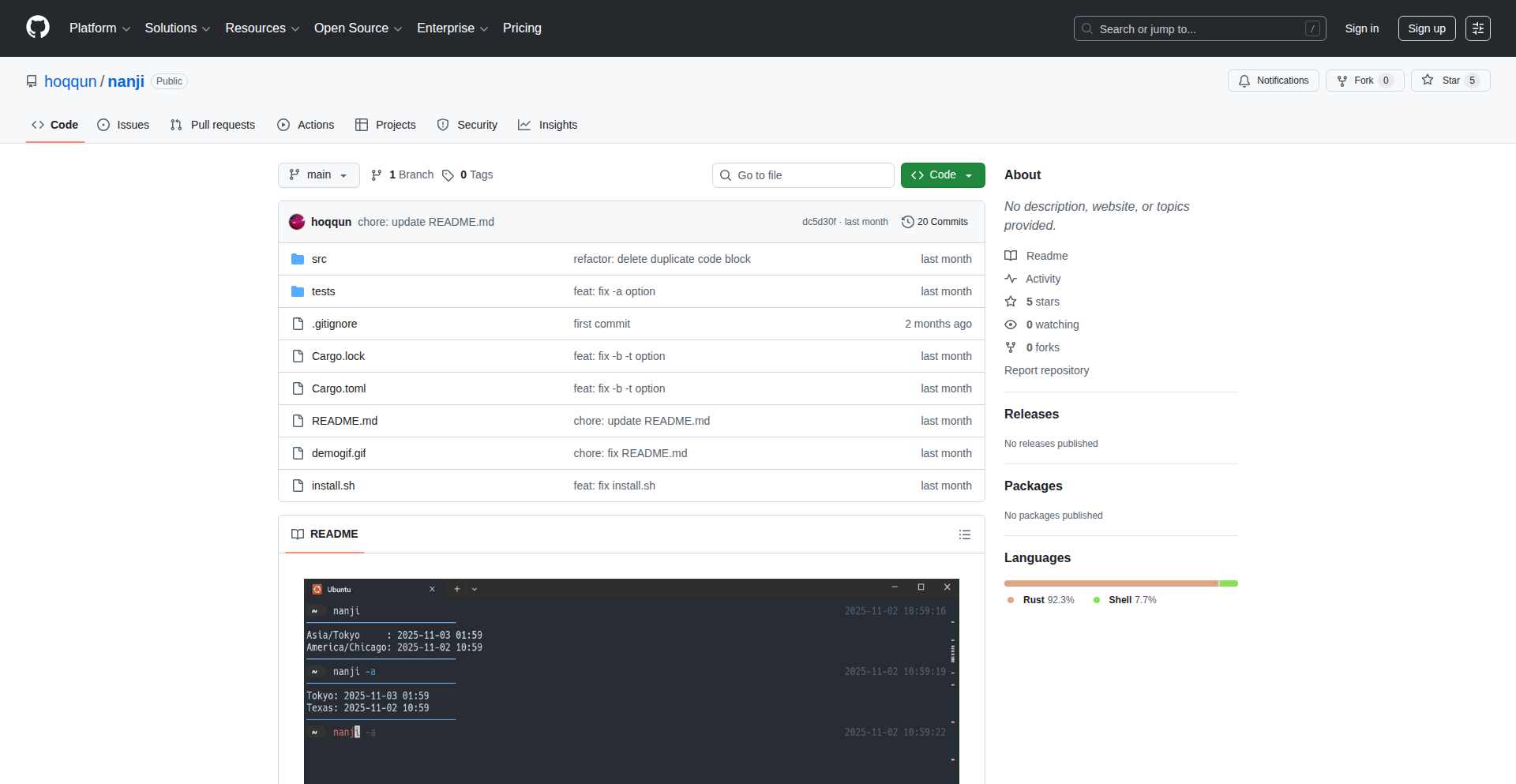

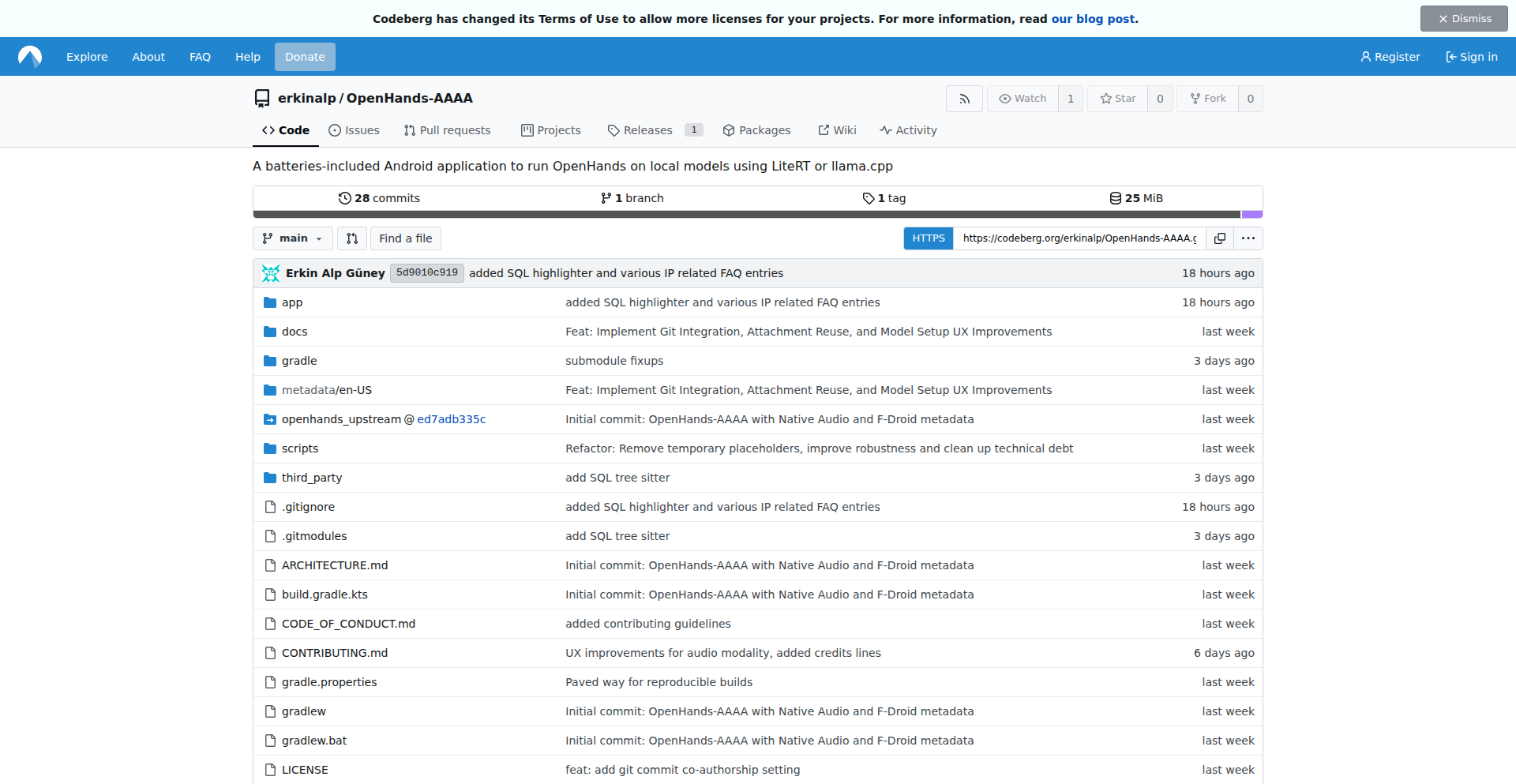

Luminoid-RS is a Rust toolkit that bridges old and new lighting data standards. It parses legacy file formats such as EULUMDAT and IES and also handles modern spectral data formats such as TM-33 and ATLA-S001. A single Rust codebase targets WASM for the web, desktop GUIs (egui, SwiftUI), mobile (Jetpack Compose), and Python, with UniFFI generating bindings for the non-Rust front ends. Its core innovation lies in its ability to process both historical and cutting-edge photometric data, providing a unified solution for developers working with lighting simulation and analysis tools. So, this helps you work with lighting data no matter the format, making your tools compatible with both older and newer industry standards.

Popularity

Points 29

Comments 0

What is this product?

Luminoid-RS is a lighting data processing toolkit written in Rust. Its core innovation is its ability to handle both traditional photometric file formats (EULUMDAT, IES), which store luminous intensity distributions, and newer spectral data formats (TM-33, ATLA-S001), which capture more detailed wavelength distribution information. It does this by parsing these different data structures and providing a unified way to access and process them. The 'why it's cool' part is how it uses UniFFI bindings to make this Rust core available on almost any platform, from web browsers to mobile apps and desktop programs, from a single source of code. So, it's like having a universal translator for lighting data that works everywhere.

How to use it?

Developers can integrate Luminoid-RS into their projects via its bindings for various languages and platforms. For web applications, it can be compiled to WebAssembly (WASM) and used with frameworks like Leptos. For desktop applications, it offers bindings for egui and SwiftUI. Mobile developers can use it with Jetpack Compose, and Python developers can leverage PyO3. The toolkit can parse lighting data files, extract photometric information, and potentially generate visualizations like SVGs or 3D models using integrated components like the Bevy engine for the 3D viewer. So, you can plug this into your existing software to add robust lighting data handling capabilities without rewriting everything for each platform.

Product Core Function

· Legacy format parsing (EULUMDAT, IES): Processes older lighting data files that contain basic photometric information. This is valuable for maintaining compatibility with existing lighting simulation and design tools. So, you can still use your old lighting data files.

· Spectral data parsing (TM-33, ATLA-S001): Handles modern lighting data that includes detailed wavelength distribution, enabling more accurate simulations and analysis. This is crucial for developers working with the latest lighting standards. So, you can work with the newest and most detailed lighting information.

· Cross-platform builds with UniFFI bindings: Allows a single Rust codebase to be deployed on web (WASM), desktop (egui, SwiftUI), mobile (Jetpack Compose), and Python environments. This reduces development effort and keeps behavior consistent across platforms. So, build it once and run it everywhere.

· SVG output generation: Can create Scalable Vector Graphics representations of lighting data, useful for visualizations and reports. This helps in communicating lighting design effectively. So, you can easily create visual representations of your lighting data.

· On-demand 3D viewer (Bevy engine): Integrates a 3D rendering capability to visualize lighting distributions in a spatial context. This is beneficial for understanding light placement and impact in real-world scenarios. So, you can see how lights behave in 3D space.
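
To give a feel for what legacy format parsing involves, here is a rough sketch of reading the numeric body of an IES (LM-63) file. It is not the Luminoid-RS API: `parse_ies` and `SAMPLE` are hypothetical, the field layout reflects the common LM-63 structure as I understand it, and only the `TILT=NONE` case is handled. A real parser also needs keyword handling, tilt data, and validation.

```python
def parse_ies(text):
    """Parse the numeric body of an IES LM-63 file that uses TILT=NONE."""
    lines = text.splitlines()
    tilt = [i for i, line in enumerate(lines)
            if line.strip().upper().startswith("TILT=")]
    if not tilt or lines[tilt[0]].strip().upper() != "TILT=NONE":
        raise ValueError("this sketch only handles files with TILT=NONE")

    # Everything after the TILT line is free-format numbers
    # separated by spaces, commas, or newlines.
    body = " ".join(lines[tilt[0] + 1:]).replace(",", " ")
    values = [float(token) for token in body.split()]

    multiplier = values[2]
    n_vert, n_horiz = int(values[3]), int(values[4])
    pos = 13  # skip 10 lamp/geometry fields and 3 ballast/wattage fields

    vertical = values[pos:pos + n_vert]
    pos += n_vert
    horizontal = values[pos:pos + n_horiz]
    pos += n_horiz

    # One row of candela values per horizontal angle.
    candela = []
    for _ in range(n_horiz):
        candela.append([v * multiplier for v in values[pos:pos + n_vert]])
        pos += n_vert

    return {
        "lumens_per_lamp": values[1],
        "vertical_angles": vertical,
        "horizontal_angles": horizontal,
        "candela": candela,
    }


# Hypothetical, hand-written sample; not real photometric data.
SAMPLE = """IESNA:LM-63-2002
[TEST] hypothetical sample
TILT=NONE
1 1000 1.0 3 2 1 2 0 0 0
1.0 1.0 50
0 45 90
0 90
100 80 10
100 70 5
"""

data = parse_ies(SAMPLE)
print(data["candela"])
```

The point of the sketch is that a legacy file boils down to an angle grid plus an intensity table, which is the kind of structure a unified toolkit can expose next to spectral data.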

Product Usage Case

· Developing a web-based lighting design tool: A web developer can use the WASM build of Luminoid-RS to allow users to upload and analyze EULUMDAT or TM-33 files directly in their browser. This solves the problem of needing desktop software for basic photometric analysis. So, users can analyze lighting data online without installing anything.

· Building a cross-platform lighting simulation application: A developer can use Luminoid-RS to create a single application that runs on Windows, macOS, iOS, and Android, all capable of parsing and processing both legacy and spectral lighting data. This reduces development time and maintenance costs. So, your lighting app works everywhere with less effort.

· Integrating advanced photometric analysis into an existing Python script: A Python developer can use the PyO3 bindings to leverage Luminoid-RS's capabilities for handling spectral lighting data, enhancing their script's ability to perform complex lighting calculations. This solves the limitation of Python's native libraries for newer lighting standards. So, you can add powerful new lighting data features to your Python code.

· Creating interactive lighting documentation: A developer can use Luminoid-RS to generate dynamic SVG visualizations of lighting data that can be embedded in technical documentation or websites, providing a more engaging way to present photometric information. So, your technical documents can be more visually informative.

9

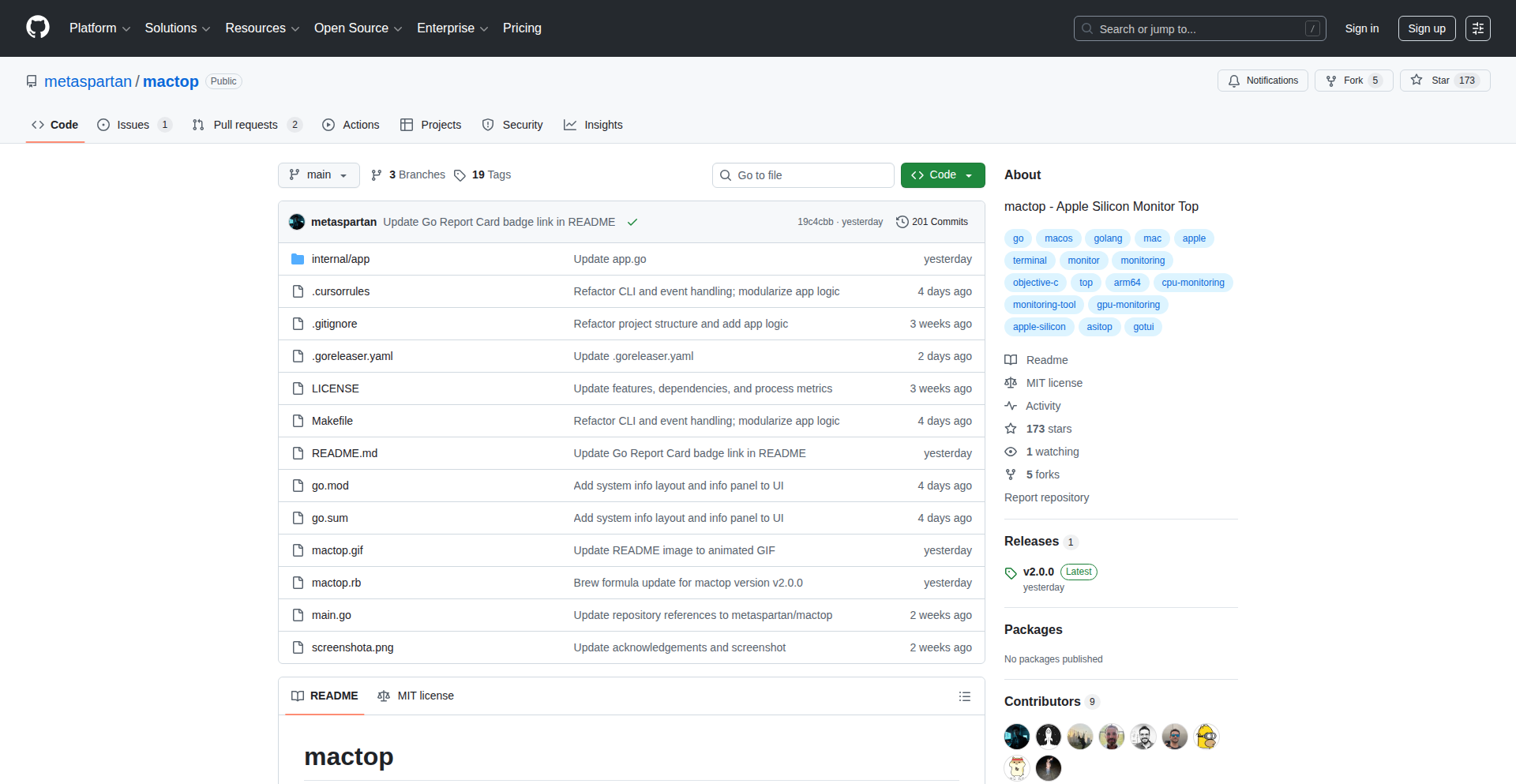

Mactop

Author

carsenk

Description

Mactop v2.0.0 is a real-time system monitoring tool for macOS that visualizes system resource usage with a command-line interface. It's innovative in how it provides detailed, dynamic insights into CPU, memory, disk, and network activity directly in the terminal, offering an alternative to GUI-based monitoring tools and empowering developers with immediate, actionable data without leaving their workflow.

Popularity

Points 22

Comments 1

What is this product?

Mactop is a command-line utility for macOS that shows you, in real-time, how your computer's resources (like CPU, RAM, disk, and network) are being used. Think of it as a dashboard for your Mac's performance, but displayed right in your terminal window. Its innovation lies in its ability to aggregate and present complex system performance metrics in a clear, dynamic, and easily digestible format within the text-based environment of the terminal. This means developers can quickly understand what's happening under the hood of their Mac without needing to switch to a separate graphical application, making it highly efficient for debugging and performance tuning.

How to use it?

Developers can use Mactop by installing it (often via package managers like Homebrew) and then running the 'mactop' command in their terminal. Once executed, it will display a continuously updating table of system resource usage. This is particularly useful when running intensive tasks, debugging performance bottlenecks, or simply understanding what processes are consuming the most resources on their Mac. It can be easily integrated into scripting or automated workflows where a quick, non-GUI system status check is needed.

Product Core Function

· Real-time CPU usage monitoring: Shows individual process CPU consumption and overall system load, helping identify performance bottlenecks. This is useful for understanding why your application might be slow.

· Dynamic Memory (RAM) utilization: Displays memory allocation per process and system-wide, aiding in detecting memory leaks or excessive memory consumption. This helps you ensure your application isn't hogging system memory.

· Disk I/O activity tracking: Monitors read and write operations for disks, crucial for identifying storage-related performance issues. This is valuable when your application involves heavy file operations.

· Network traffic visualization: Tracks incoming and outgoing network data per process, useful for diagnosing network performance problems or understanding data transfer. This helps when your application relies on network communication.

· Process-centric resource breakdown: Allows users to see exactly which processes are consuming specific resources, enabling targeted optimization. This gives you the power to pinpoint and fix resource hogs.
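
For scripted, non-GUI checks of the kind described above, a process-centric breakdown can be approximated with the standard `ps` command. The sketch below is not how Mactop collects its metrics; `parse_ps`, `top_cpu`, and `snapshot` are hypothetical helper names, and the sample text is made up.

```python
import subprocess


def parse_ps(output):
    """Turn `ps -A -o pid= -o pcpu= -o comm=` output into (pid, cpu, command) tuples."""
    rows = []
    for line in output.splitlines():
        parts = line.split(None, 2)  # the command itself may contain spaces
        if len(parts) < 3:
            continue
        pid, cpu, command = parts
        # Some locales print a decimal comma; normalise before converting.
        rows.append((int(pid), float(cpu.replace(",", ".")), command.strip()))
    return rows


def top_cpu(rows, n=5):
    """Return the n rows with the highest CPU percentage."""
    return sorted(rows, key=lambda row: row[1], reverse=True)[:n]


def snapshot():
    """Run ps once and parse the result (requires a Unix-like system)."""
    result = subprocess.run(
        ["ps", "-A", "-o", "pid=", "-o", "pcpu=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    )
    return parse_ps(result.stdout)


# Made-up sample output so the parsing can be tried without running ps.
sample = (
    "    1   0.0 /sbin/launchd\n"
    "   42  12.5 /usr/bin/python3\n"
    "   77   3.1 /Applications/My App.app/Contents/MacOS/My App\n"
)
rows = parse_ps(sample)
print(top_cpu(rows, 2))
```

Calling `top_cpu(snapshot())` on a Mac gives a one-shot list of the busiest processes, which is easier to use in a script than an interactive display.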

Product Usage Case

· During application development, a developer can run Mactop to see if their new feature is causing a spike in CPU usage, helping them immediately identify and fix the issue. This saves time in the debugging process.

· A system administrator can use Mactop to monitor a Mac remotely via SSH to quickly assess overall system health without needing graphical access, ensuring system stability and responsiveness.

· When troubleshooting a slow-performing application, a developer can launch Mactop to see if the issue is related to excessive memory usage by a specific process, guiding them towards a solution for memory leaks.

· For performance testing, Mactop can be run alongside an application to visually confirm that resource utilization remains within acceptable limits, providing concrete data on application efficiency.

10

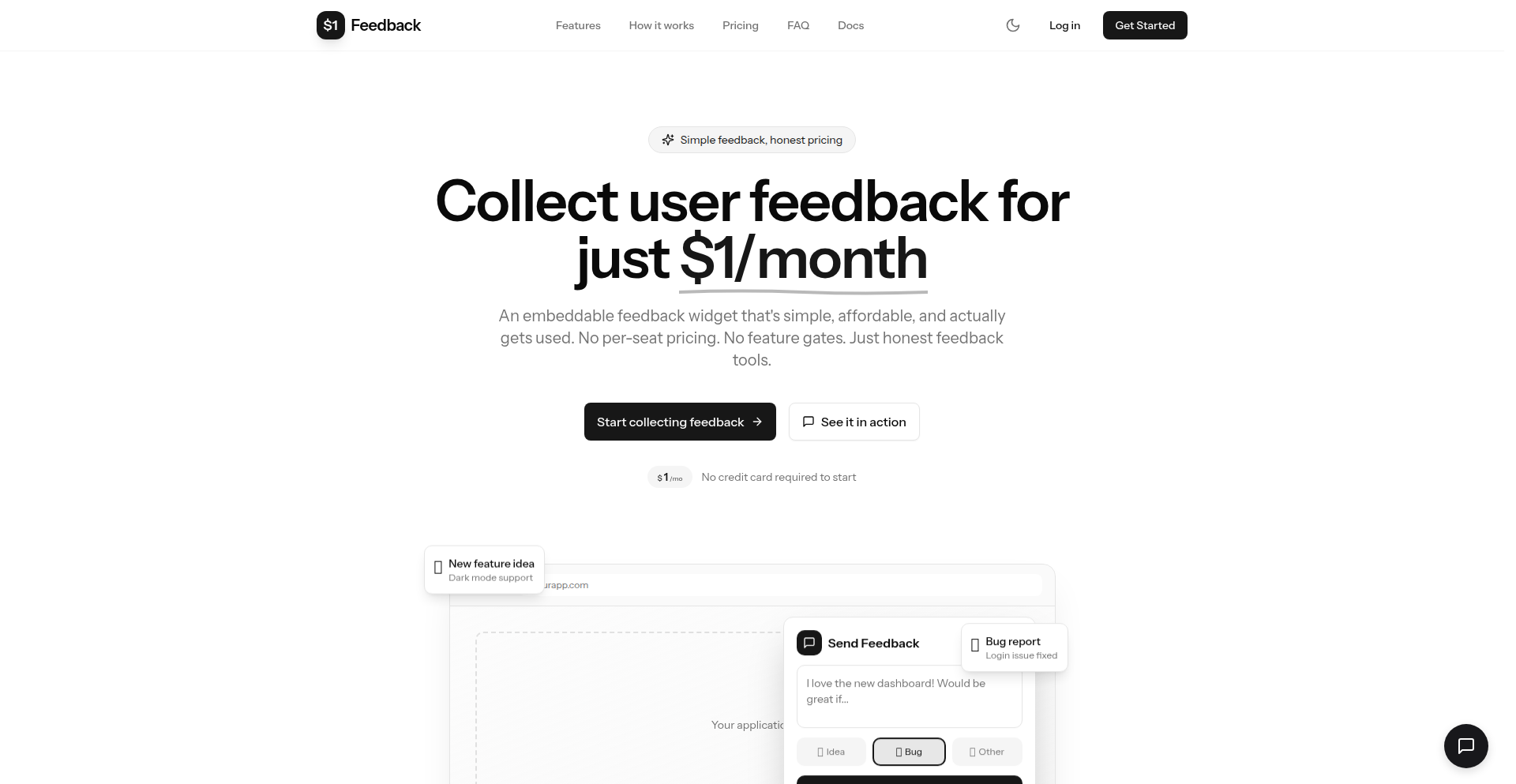

PuntualFeedback

Author

jeremy0405

Description

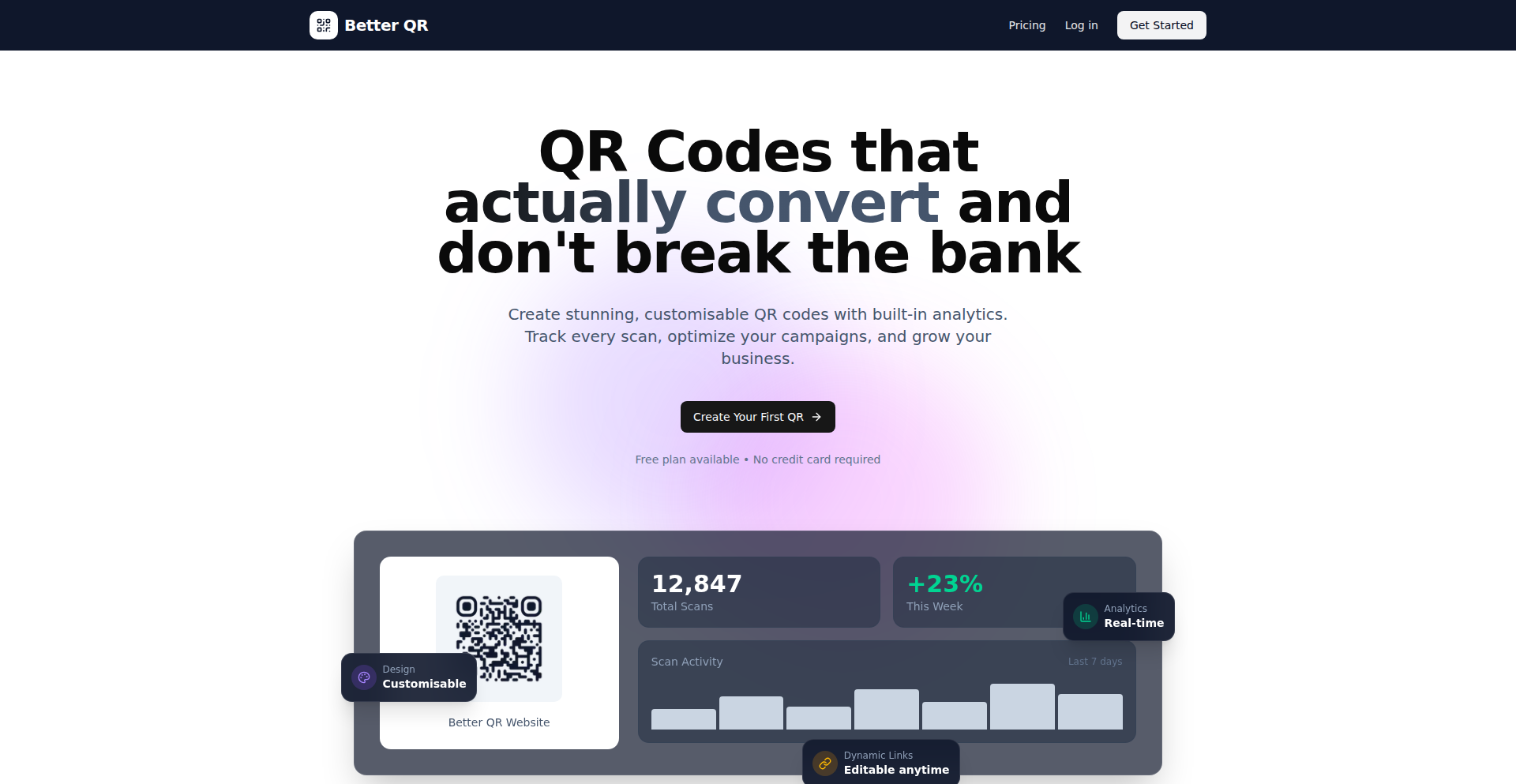

A radically affordable feedback widget, built as a weekend experiment to challenge the status quo of expensive SaaS solutions. It offers a simple yet effective way for users to collect feedback at a minimal cost, proving that essential tools don't need to break the bank. The innovation lies in its stripped-down, cost-effective architecture and a bold pricing strategy.

Popularity

Points 10

Comments 0

What is this product?

PuntualFeedback is a simple, one-dollar feedback collection tool. The core technical insight is that the underlying technology for most feedback widgets is straightforward and inexpensive to host. The developer recognized that the high prices often charged by existing solutions are not justified by the technical complexity. Instead of relying on complex infrastructure or proprietary algorithms, PuntualFeedback leverages basic web technologies to provide a functional and reliable feedback mechanism. The innovation is in its pragmatic approach to pricing, making feedback collection accessible to everyone by reflecting the true low cost of operation and development.

How to use it?

Developers can integrate PuntualFeedback into their websites or applications with minimal effort. The tool is designed for easy embedding, likely through a simple JavaScript snippet or a readily available widget. This allows any developer, regardless of their project's scale or budget, to quickly add a feedback channel. Imagine you have a new feature launched and want to gauge user reaction immediately without a complex setup. You'd simply add the PuntualFeedback snippet to your page, and users could start submitting their thoughts. This means you can get insights directly from your audience without needing to build or pay for an elaborate system.
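
To illustrate why the backend for such a widget can be small, here is a minimal sketch of a feedback endpoint using only Python's standard library. It is an assumption about what a service like this needs, not PuntualFeedback's actual code: the `/feedback` path, the `message` field, and all names below are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

FEEDBACK = []       # in-memory store; a real service would persist this
MAX_BODY = 10_000   # reject oversized request bodies


def validate(payload):
    """Return a cleaned message string, or None if the payload is unusable."""
    if not isinstance(payload, dict):
        return None
    message = payload.get("message")
    if not isinstance(message, str):
        return None
    message = message.strip()
    return message[:2000] if message else None


class FeedbackHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        if self.path != "/feedback" or length > MAX_BODY:
            self.send_response(400)
            self.end_headers()
            return
        try:
            payload = json.loads(self.rfile.read(length))
        except ValueError:
            payload = None
        message = validate(payload)
        if message is None:
            self.send_response(400)
        else:
            FEEDBACK.append(message)
            self.send_response(204)
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the sketch quiet


def make_server(port=0):
    """Create (but do not start) a server bound to localhost."""
    return HTTPServer(("127.0.0.1", port), FeedbackHandler)
```

Running `make_server(8000).serve_forever()` starts it locally. A widget embedded on another site would also need CORS headers, spam protection, and durable storage, which this sketch leaves out.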

Product Core Function

· Simple Feedback Submission: Allows users to submit text-based feedback easily. The value here is a direct and unobtrusive way for your users to share their thoughts, providing actionable insights for product improvement.

· Low-Cost Infrastructure: Built on lean principles to ensure minimal operational expenses. This translates to an incredibly low price point, making it accessible for even the smallest projects or personal websites to gather user opinions.

· Easy Integration: Designed for quick and straightforward embedding into any web project. The benefit is rapid deployment, allowing you to start collecting feedback within minutes, saving significant development time and resources.

· Affordable Pricing Model: Priced at $1, challenging the conventional SaaS model for feedback tools. This provides immense value by democratizing access to user feedback, enabling more creators and businesses to engage with their audience without financial barriers.

Product Usage Case

· A solo indie game developer wants to collect feedback on a new game build before a public release. By integrating PuntualFeedback, they can offer a low-friction way for testers to report bugs or suggest improvements, directly contributing to a better final product without any significant cost.

· A blogger running a personal website wants to understand what content their readers find most valuable or what topics they'd like to see covered next. Adding PuntualFeedback as a widget on their posts allows them to gather this audience intelligence effortlessly, guiding their future content strategy.

· A small e-commerce startup is launching a new product line and needs to quickly collect initial impressions from early adopters. PuntualFeedback provides a discreet and inexpensive way to solicit feedback on the product's design, features, or overall appeal, helping them iterate faster.

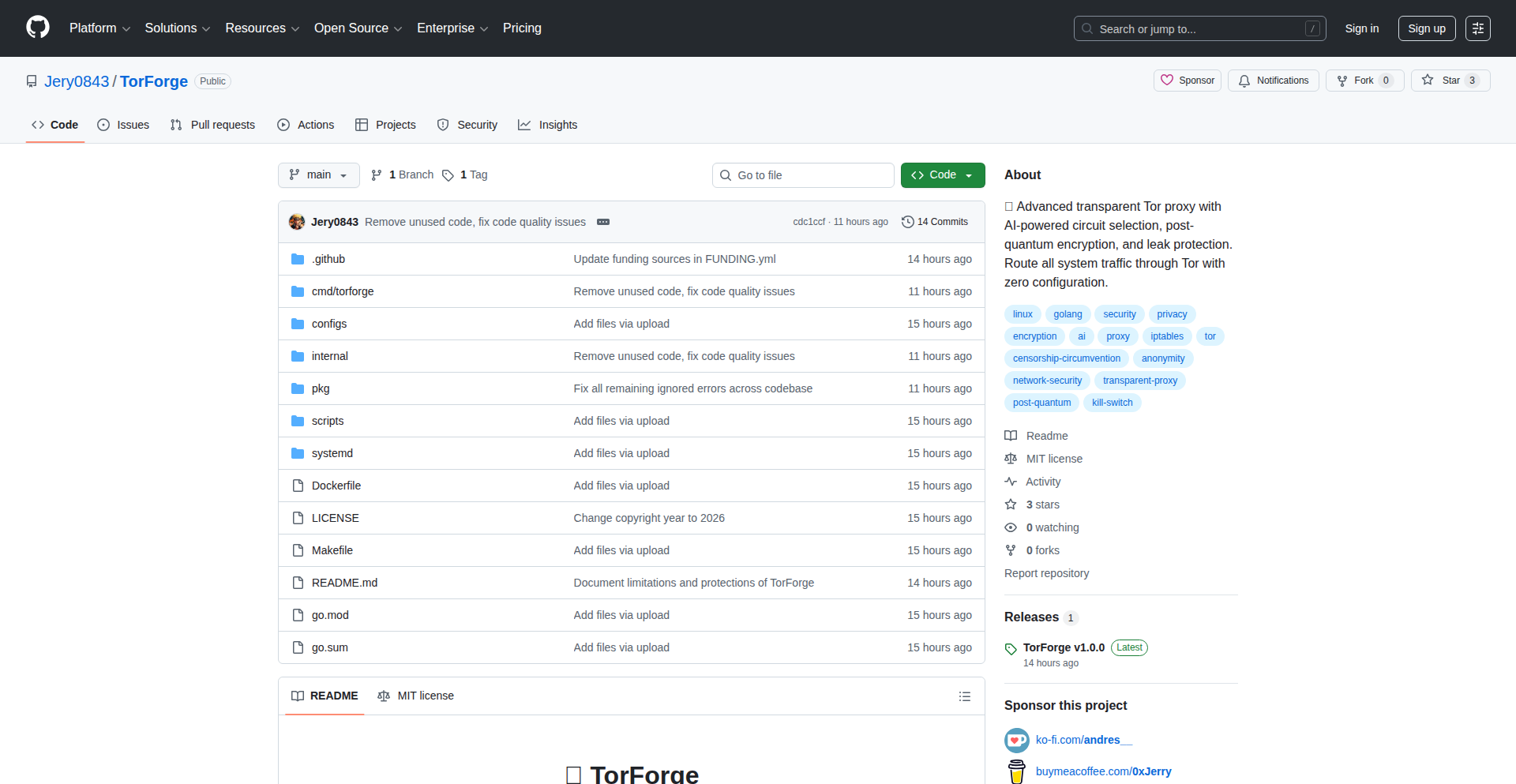

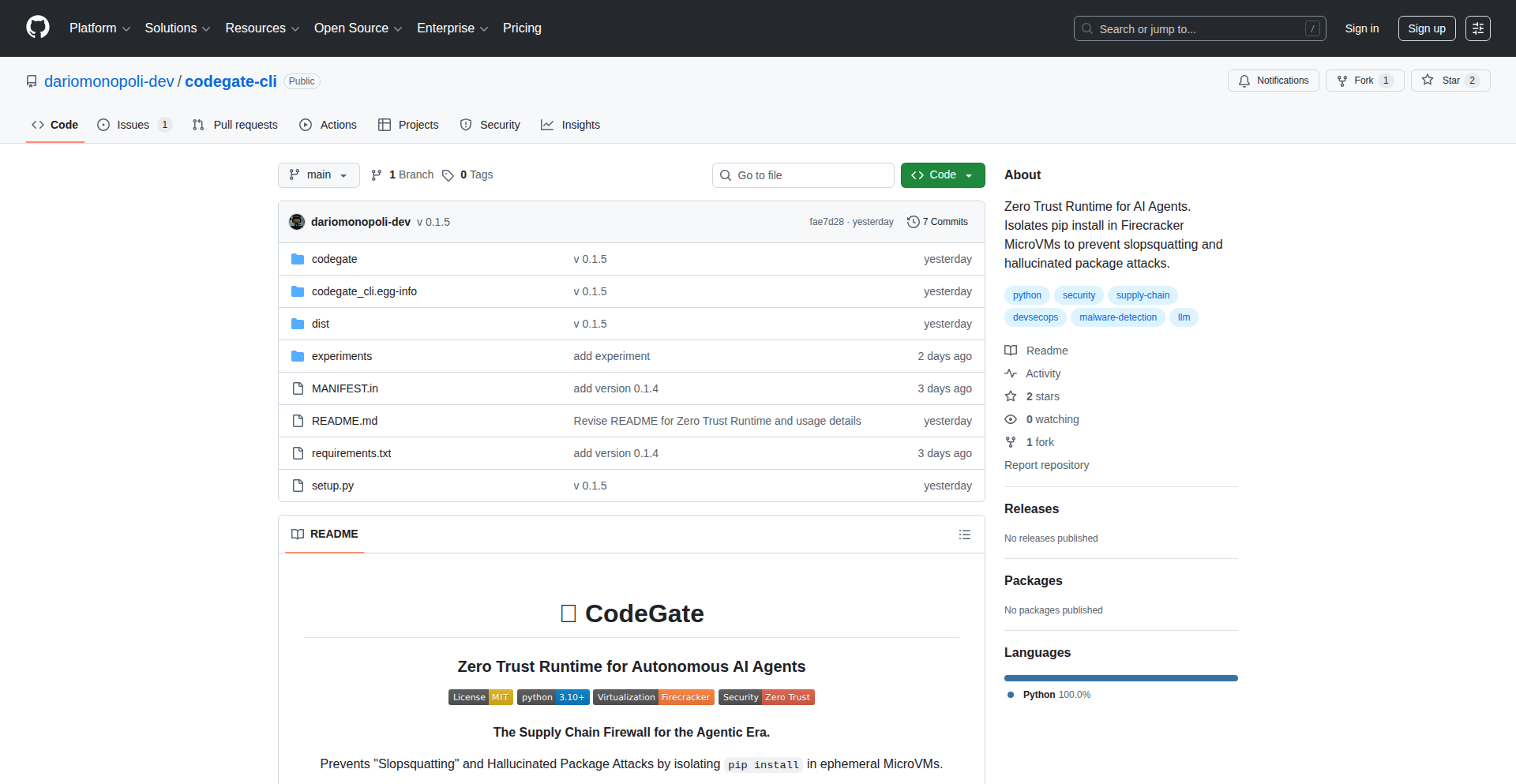

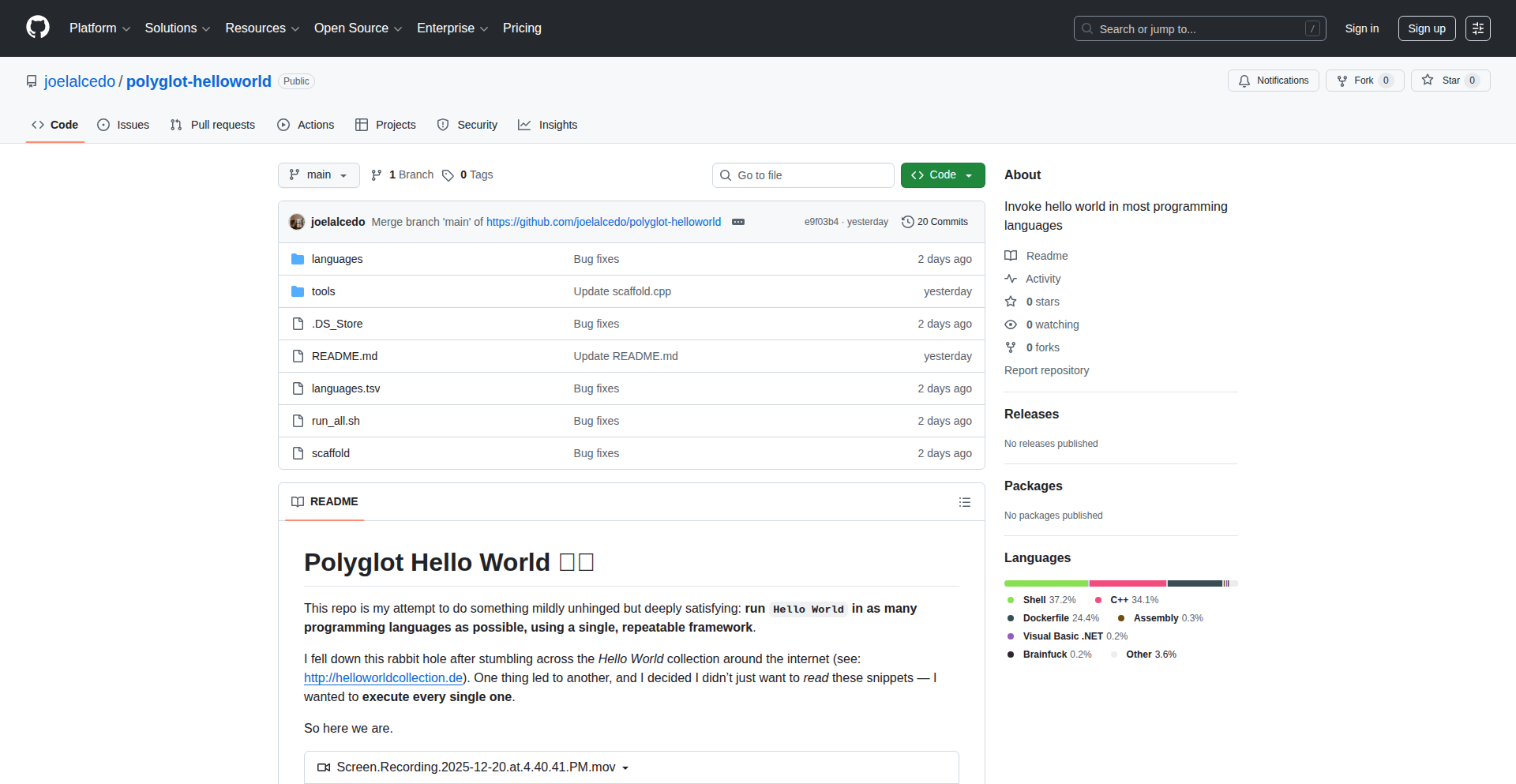

11

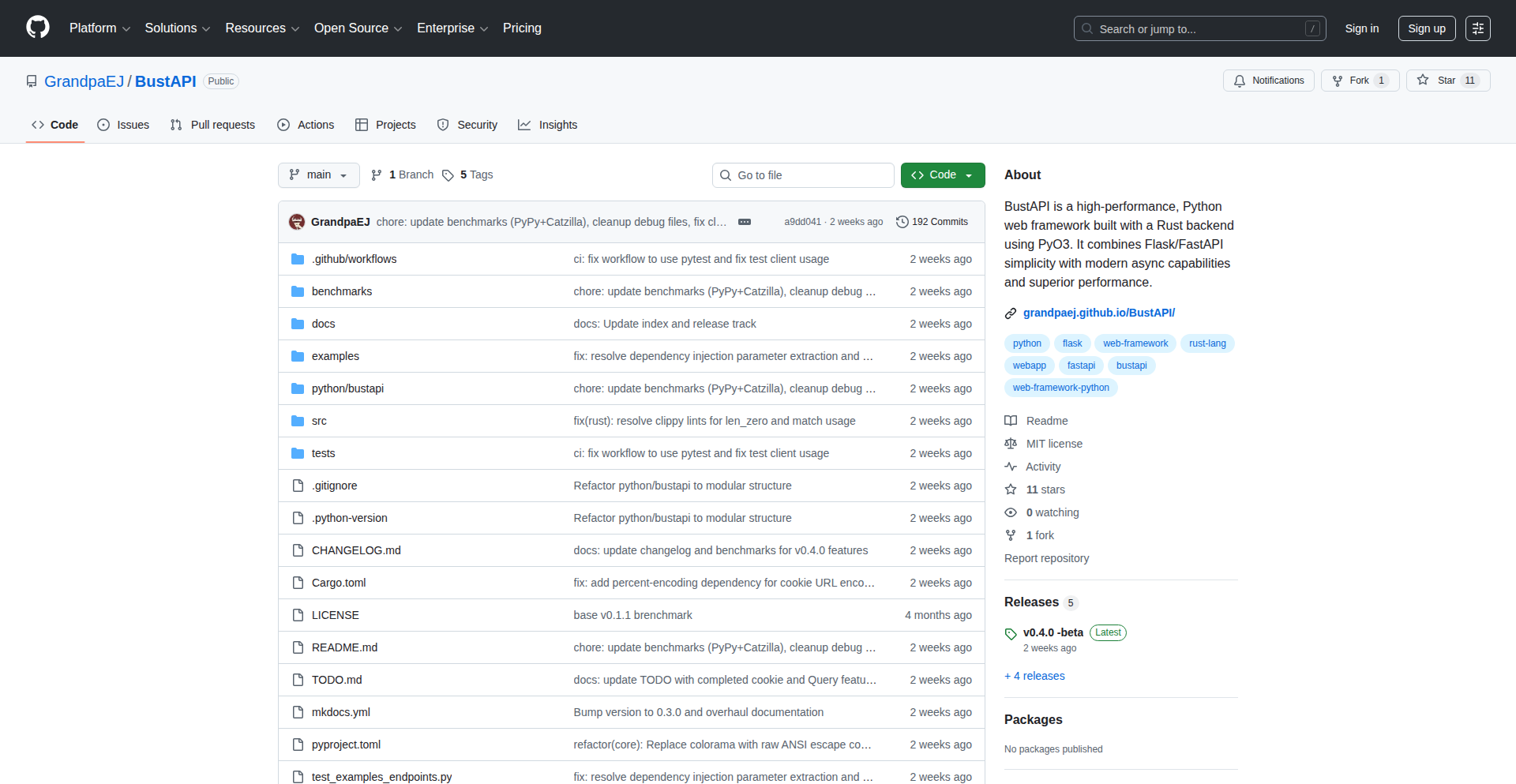

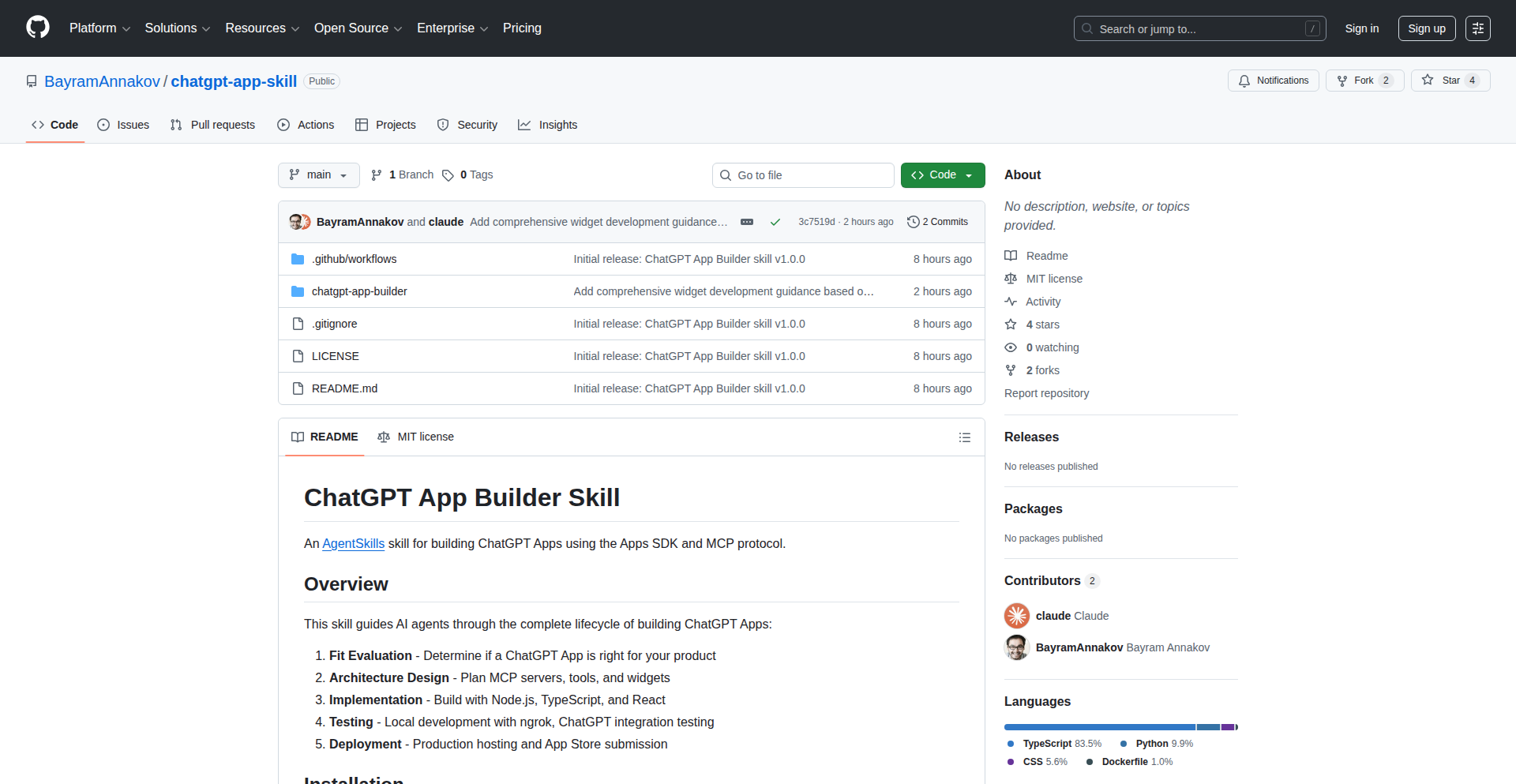

RustPython FusionEngine

Author

ZOROX

Description

This project is a hybrid web framework that embeds a high-performance Rust (Actix-Web) core directly into the Python runtime. It aims to eliminate the traditional performance trade-off of Python web services, offering significantly faster execution speeds without sacrificing developer happiness. So, it means you can get the speed of Rust for your web backend while still enjoying the ease of development with Python. It's a 'cheat code' to speed up Python web applications.

Popularity

Points 5

Comments 3

What is this product?

RustPython FusionEngine is a web framework designed to narrow the performance gap between Python and compiled languages like Rust. It does this by integrating a Rust-based web server (specifically Actix-Web, known for its speed) directly within the Python execution environment. Python code can then rely on Rust for handling web requests and processing data, easing Python's typical performance limits in I/O-bound or CPU-intensive web tasks. The innovation lies in this hybrid architecture, which lets developers write Python while the critical parts of the web service run in optimized Rust code. So, this project offers a way to have your Python cake and eat it too.

How to use it?

Developers can use RustPython FusionEngine to build web services that require high throughput and low latency. The framework allows you to define your API endpoints and business logic in Python, while the underlying engine routes performance-critical operations to the embedded Rust core. The integration aims for an experience similar to using traditional Python frameworks like Flask or Django. You'd typically install the package, import its components, and define your routes and handlers in Python files. The framework handles the boundary between the Python code and the embedded Rust core. So, you can integrate this into your existing Python web projects to boost performance without a complete rewrite.
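
As a rough picture of the Python-facing side of such a hybrid design, the sketch below shows a decorator-based route table with a single `dispatch` entry point that a native server core could call for each request. Everything here (`App`, `route`, `dispatch`) is hypothetical and written in pure Python; it is not the framework's real API and contains no Rust.

```python
import json


class App:
    """Hypothetical Python-facing layer: routes live in a plain dict."""

    def __init__(self):
        self._routes = {}

    def route(self, method, path):
        """Decorator that registers a handler for (method, path)."""
        def register(handler):
            self._routes[(method.upper(), path)] = handler
            return handler
        return register

    def dispatch(self, method, path, body=b""):
        """Entry point a native core could call; returns (status, body bytes)."""
        handler = self._routes.get((method.upper(), path))
        if handler is None:
            return 404, b'{"error": "not found"}'
        payload = json.loads(body) if body else None
        return 200, json.dumps(handler(payload)).encode()


app = App()


@app.route("GET", "/health")
def health(_payload):
    return {"status": "ok"}


@app.route("POST", "/echo")
def echo(payload):
    return {"you_sent": payload}


print(app.dispatch("GET", "/health"))
print(app.dispatch("POST", "/echo", b'{"a": 1}'))
```

In this arrangement the server core owns sockets, parsing, and concurrency, and crosses into Python only to run the handler, which is where the claimed speedup would have to come from.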

Product Core Function

· Hybrid Execution Core: Leverages a Rust (Actix-Web) engine for web request handling and processing, offering significantly higher performance than pure Python. This is valuable for applications needing to handle many requests quickly or perform complex computations, leading to a snappier user experience and reduced server costs.

· Python Runtime Integration: Seamlessly embeds the Rust core into the Python runtime, allowing developers to write most of their application logic in familiar Python. This preserves developer productivity and ease of use, making high performance accessible without a steep learning curve. It's useful for teams already invested in Python.

· Performance Benchmarking Tools: Includes tools and configurations for benchmarking its performance against other popular web frameworks. This allows developers to objectively measure the speed improvements and understand the 'Python tax' reduction. This is important for validating performance claims and making informed architectural decisions.

· Simplified API Definition: Provides Pythonic ways to define API endpoints, request/response structures, and middleware. This abstracts away the complexities of the Rust backend, making it feel like a native Python framework. This means developers can build fast APIs without needing to become Rust experts.

Product Usage Case

· Building a high-traffic API gateway: A developer could use RustPython FusionEngine to create an API gateway that needs to handle thousands of concurrent requests with minimal latency. The Rust core would manage the high-speed routing and forwarding of requests, while Python could handle authentication and logging. This solves the problem of a Python gateway becoming a bottleneck.

· Developing a real-time data processing service: For applications that ingest and process large volumes of data in real-time, such as stock tickers or IoT sensor feeds, this framework can provide the necessary speed. Python can define the data models and application logic, while the Rust engine ensures fast data ingestion and initial processing. This addresses the slow processing issue in Python for real-time streams.

· Creating a microservice with demanding performance requirements: A developer could build a microservice that needs to respond to requests in milliseconds. By using RustPython FusionEngine, they can leverage Python's flexibility for business logic while ensuring the critical request-response cycle is handled by the super-fast Rust core. This resolves the performance bottlenecks commonly found in Python-based microservices.

12

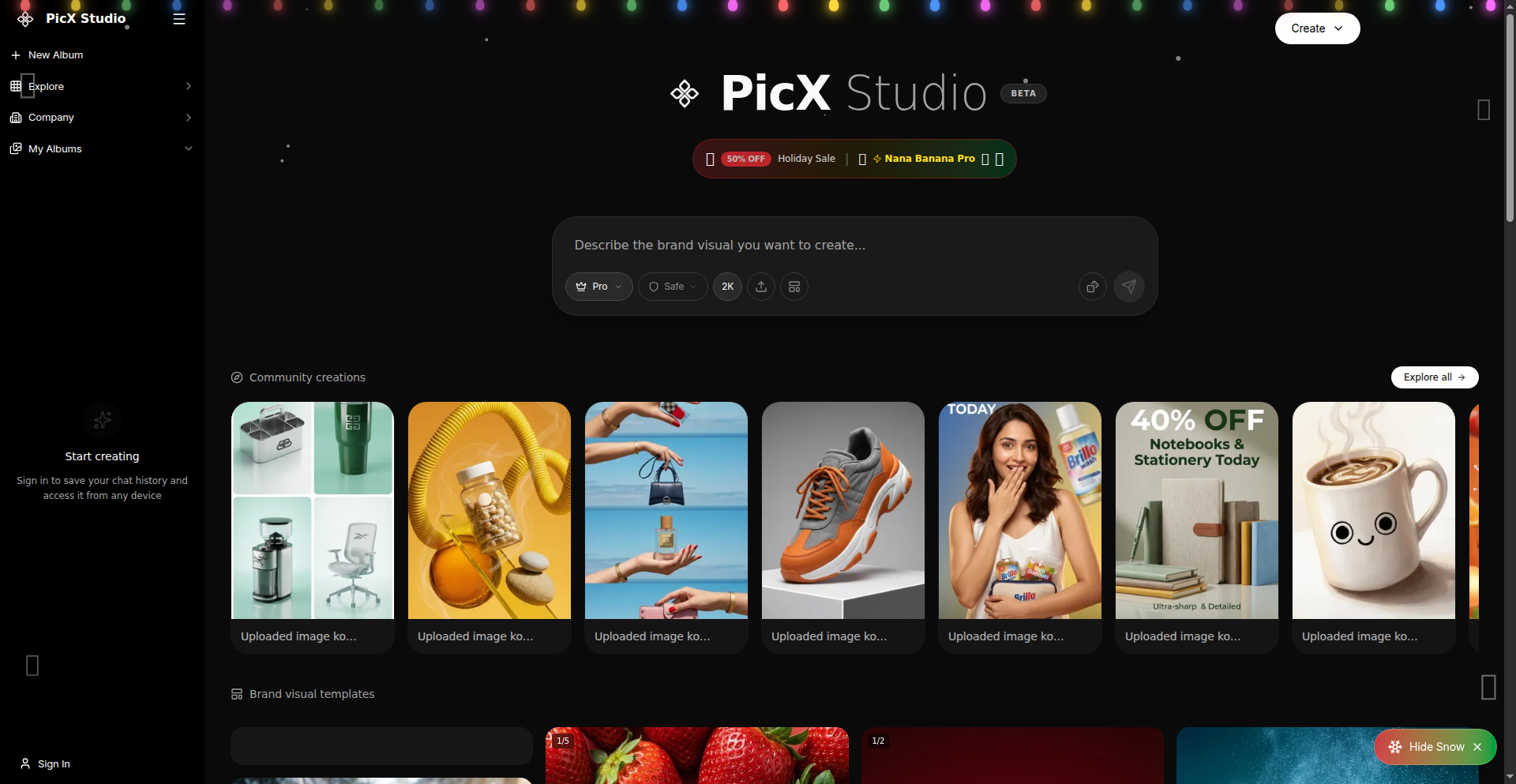

PicX Studio - B2B AI Visualizer

Author

Yash16

Description

PicX Studio is an AI image generation platform pivoting from consumer-facing to business-to-business applications. Initially, it faced significant challenges with user-generated content abuse, particularly for NSFW imagery. By shifting its focus to professional use cases like product photography and headshots, PicX Studio aims to leverage its AI generation capabilities in a more controlled and valuable B2B environment, solving the problem of unregulated misuse by creating a more focused and less problematic service.

Popularity

Points 3

Comments 5

What is this product?

PicX Studio is an AI-powered image generation tool that has transitioned from a consumer product to a business solution. The core technology involves machine learning models, likely diffusion models or generative adversarial networks (GANs), trained to create high-quality images from text prompts. The innovation lies in its strategic pivot to the B2B market, recognizing that while the core AI is powerful, the application context significantly impacts its viability. By targeting businesses needing product photos or professional headshots, the platform can implement stricter usage policies and cater to a less volatile user base. This is useful because it transforms a potentially problematic consumer tool into a focused, professional service that addresses the demand for customized visual assets without the associated content moderation nightmares.

How to use it?

Businesses can integrate PicX Studio into their workflow by subscribing to the service. For product photography, marketing teams can upload existing product images or provide detailed descriptions and receive AI-generated variations, lifestyle shots, or mockups. For headshots, individuals or companies can use the tool to generate professional portraits for websites, LinkedIn profiles, or company directories. The usage would typically involve a web-based interface where users input their requirements, select styles, and generate images. Integration might involve API access for larger enterprises to automate image creation within their existing content management systems. This is useful because it provides an efficient and cost-effective way to generate professional visual content on demand, saving time and resources compared to traditional photography or design services.

Product Core Function

· AI-powered product photo generation: Enables businesses to create high-quality product images with various backgrounds, angles, and lighting. This is useful for e-commerce stores seeking to enhance their listings with professional visuals without expensive photoshoots.

· Professional headshot creation: Generates realistic and polished headshots suitable for corporate branding and individual professional profiles. This is useful for companies looking to standardize employee profiles or individuals wanting a professional online presence.

· Customizable image styles: Allows users to specify stylistic elements and artistic preferences for generated images. This is useful for marketers aiming to match visuals with specific brand aesthetics or campaign themes.

· B2B focused content moderation: Implements robust content filters and usage policies to prevent misuse and ensure professional output. This is useful for businesses that need a reliable and safe platform for visual content creation, avoiding the pitfalls of uncontrolled generative AI.

· API integration for enterprise clients: Offers programmatic access to the AI generation engine for seamless integration into existing business workflows and content creation pipelines. This is useful for large organizations seeking to automate their visual asset production at scale.

Product Usage Case

· An e-commerce startup uses PicX Studio to generate multiple lifestyle images for each product, showcasing them in different settings and contexts. This solves the problem of needing diverse product visuals without hiring photographers for every scenario, leading to increased customer engagement and sales.

· A marketing agency utilizes PicX Studio to create personalized header images for client social media campaigns based on specific campaign themes and target audience demographics. This addresses the need for rapid, tailored visual content production, improving campaign responsiveness and effectiveness.

· A software company uses PicX Studio to generate consistent and professional headshots for all its employees, ensuring a unified look for its corporate website and internal communications. This solves the challenge of inconsistent employee photography, enhancing brand professionalism and recognition.

· A freelance graphic designer uses PicX Studio's API to integrate AI-generated elements into larger design projects, such as creating unique textures or background elements for branding materials. This allows for more creative possibilities and faster project completion, expanding their service offerings.

13

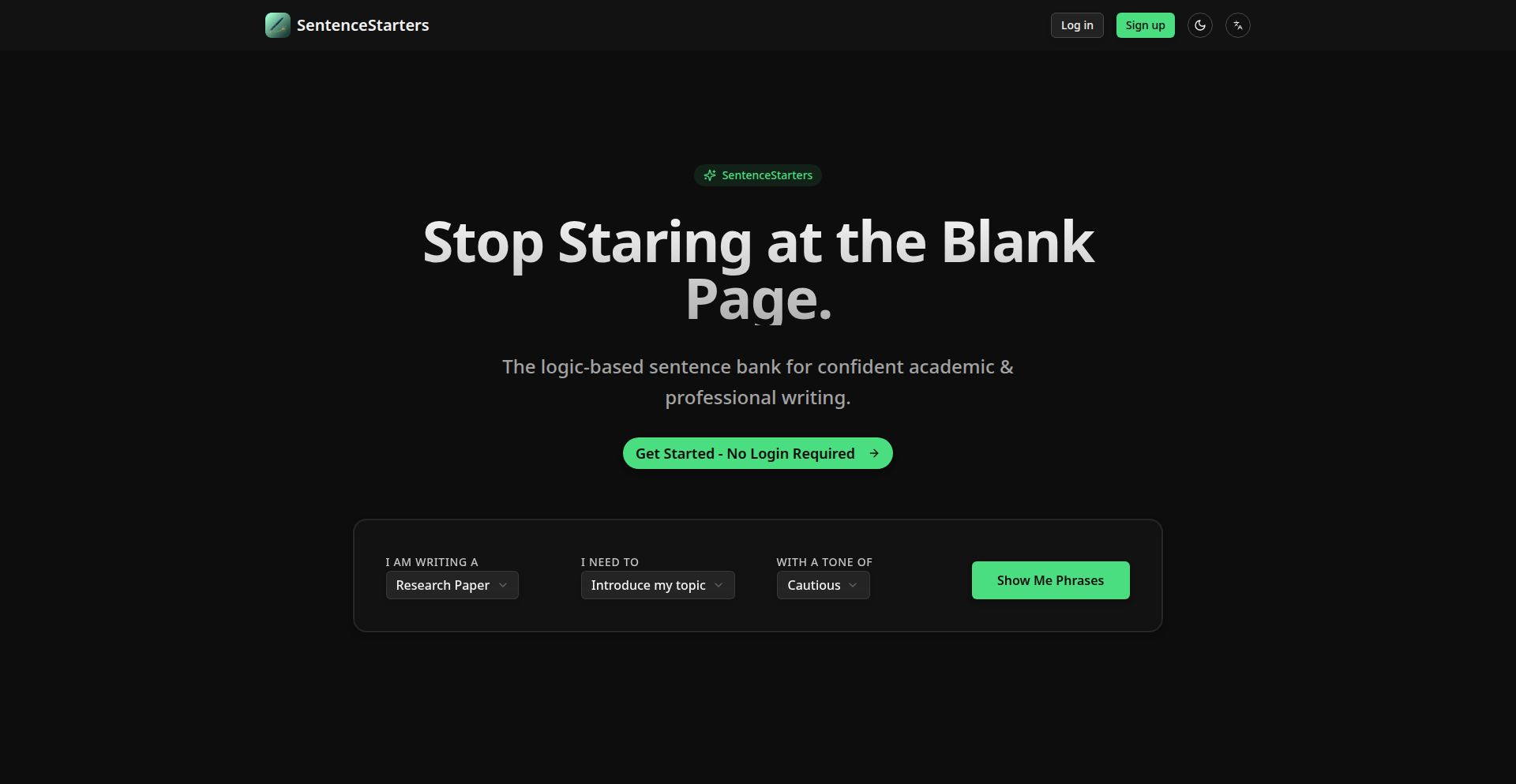

AcademicPhraseEngine

Author

superhuang

Description

AcademicPhraseEngine is a tool designed to generate effective sentence starters for academic and professional writing. It addresses the common challenge of getting started with complex writing tasks by providing context-aware phrase suggestions, aiming to reduce writer's block and improve the clarity and professionalism of written content.

Popularity

Points 4

Comments 3

What is this product?

AcademicPhraseEngine is a smart phrase generator that leverages natural language processing (NLP) techniques to suggest relevant and sophisticated sentence openings. Instead of users having to brainstorm from scratch, the system analyzes the writing context (e.g., the topic, the type of sentence needed) and offers a curated list of phrases. The innovation lies in its ability to go beyond simple keyword matching, understanding the nuance of academic and professional discourse to provide genuinely helpful prompts that can elevate the quality of writing. So, what's in it for you? It helps you overcome that initial hurdle of starting an important document, making your writing process smoother and your final output more polished and impactful.

How to use it?

Developers can integrate AcademicPhraseEngine into their writing applications, content management systems, or even as a standalone browser extension. The core interaction involves sending text context to the engine, which then returns a list of suggested sentence starters. For instance, a user could highlight a paragraph and request starters for the next sentence, or specify the type of statement they want to make (e.g., introduce a counter-argument, provide evidence). This integration could be achieved through a simple API call. For you, this means your existing writing tools could become smarter, offering instant suggestions that save you time and boost your writing confidence.
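
The request and response shape described above can be sketched with a simple lookup table. This is a deliberately naive stand-in, not the engine's NLP: the `STARTERS` table, the category names, and `suggest` are hypothetical, and the phrases are taken from the usage cases in this write-up.

```python
# Hypothetical phrase table keyed by the kind of sentence the writer needs.
STARTERS = {
    "method": [
        "To address this, we employed a",
        "The experimental design involved",
    ],
    "counter_argument": [
        "While it is often argued that",
        "However, an alternative perspective suggests",
        "Despite these considerations, a significant point remains",
    ],
    "concept": [
        "Fundamentally, this system operates on the principle of",
        "At its core, this technology is designed to",
    ],
}


def suggest(kind, context="", limit=3):
    """Return up to `limit` starters of the given kind not already used in context."""
    candidates = STARTERS.get(kind, [])
    lowered = context.lower()
    # Skip starters the writer has already used in the surrounding text.
    fresh = [s for s in candidates if s.lower() not in lowered]
    return fresh[:limit]


draft = "While it is often argued that remote work hurts focus, the data is mixed."
print(suggest("counter_argument", context=draft, limit=2))
```

A real engine would rank candidates by how well they fit the surrounding text. The lookup only shows the interface: context and sentence type in, a short list of starters out.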

Product Core Function

· Contextual Phrase Generation: The system analyzes the surrounding text to suggest sentence starters that fit the flow and topic. This is valuable because it ensures your writing remains coherent and relevant, making your arguments easier for readers to follow.

· Discourse Type Awareness: It can differentiate between, for example, an introductory sentence, a transitional phrase, or a concluding statement, offering appropriate suggestions for each. This helps structure your writing logically, guiding your reader through your thoughts effectively.

· Professionalism Enhancement: By suggesting more formal and sophisticated phrasing, the tool helps elevate the overall tone and quality of academic and professional documents. This makes your work appear more credible and well-researched.

· Writer's Block Mitigation: Providing immediate and relevant starting points helps users overcome the psychological barrier of a blank page, enabling them to get started and maintain momentum. This directly translates to faster writing and less stress.

· Customizable Suggestion Pool: Potentially, users could tailor the types of phrases offered based on their field or writing style, ensuring the suggestions are highly personalized and useful. This ensures the tool serves your specific writing needs, not generic ones.

Product Usage Case

· An academic researcher struggling to begin a new section on methodology can use AcademicPhraseEngine to get suggestions like 'To address this, we employed a...' or 'The experimental design involved...'. This helps them articulate their research approach clearly and professionally, avoiding generic phrasing.

· A business professional writing a proposal might use the tool to find strong opening lines for a new paragraph, such as 'Building upon our previous findings, it is evident that...' or 'To achieve the desired outcome, a strategic approach will be necessary...'. This helps make their proposals more persuasive and impactful.

· A student writing an essay can get help crafting the perfect transition into a counter-argument with phrases like 'While it is often argued that...', 'However, an alternative perspective suggests...', or 'Despite these considerations, a significant point remains...'. This improves the depth and complexity of their arguments.

· A content writer creating a technical document could use it to introduce complex concepts, receiving suggestions like 'Fundamentally, this system operates on the principle of...' or 'At its core, this technology is designed to...'. This ensures clarity and precision when explaining intricate subjects.

14

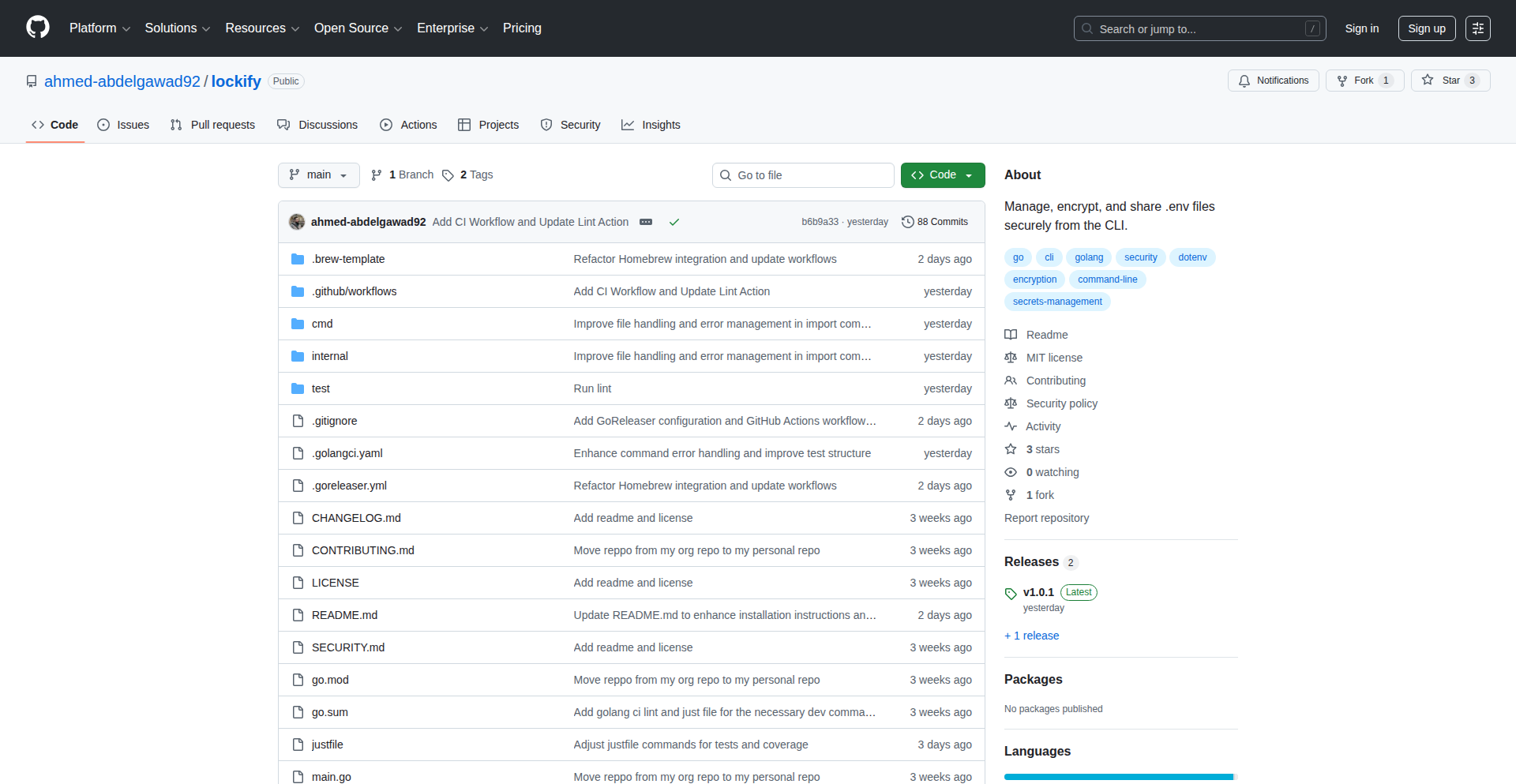

Lockify: Encrypted Env CLI

Author

ahmedabdelgawad

Description

Lockify is a Go-based Command Line Interface (CLI) tool designed for developers to securely encrypt and decrypt files locally. It prioritizes simplicity, speed, and ease of use directly from the terminal, eliminating the need for cloud-based encryption services. This innovative approach offers a straightforward, secure way to manage sensitive environment variables and other configuration files without external dependencies.

Popularity

Points 4

Comments 2

What is this product?

Lockify is a command-line utility written in Go that provides a simple, secure, and fast method for encrypting and decrypting files on your local machine. Its core innovation lies in its minimalist design and focus on local operations. Instead of sending your sensitive data to a cloud service for encryption, Lockify performs all operations directly on your computer. This means your data never leaves your system during the encryption/decryption process, offering a higher level of privacy and security, especially for developers managing sensitive API keys, database credentials, or other configuration information for their projects. The 'developer-friendly' aspect means it's built with the command-line workflow in mind, making it easy to integrate into scripts or daily development tasks.

How to use it?

Developers can use Lockify by downloading and installing the Go binary. Once installed, it's used from the terminal. For example, to encrypt a file named `config.env`, a developer might run a command like `lockify encrypt config.env`. To decrypt it later, they would use `lockify decrypt config.env.locked`. The tool prompts for a password to perform the encryption and decryption, ensuring that only those with the correct password can access the file's contents. This makes it ideal for scenarios where developers need to commit encrypted configuration files to a version control system like Git, or share them securely without exposing sensitive details. It can be easily incorporated into CI/CD pipelines or build scripts for automated secure file handling.
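
A password-based tool like this usually has to turn the typed password into a fixed-size key before any cipher is applied. The sketch below shows only that step, using PBKDF2 from Python's standard library. It is an assumption about the general technique, not Lockify's actual scheme (Lockify is written in Go and its algorithm is not described here), and `derive_key` is a hypothetical name.

```python
import hashlib
import hmac
import os


def derive_key(password, salt, iterations=600_000):
    """Derive a 32-byte key from a password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=32
    )


# A fresh random salt is generated per file and stored next to the ciphertext.
salt = os.urandom(16)
key = derive_key("correct horse battery staple", salt)

# The same password and salt reproduce the key, which makes decryption possible.
same = derive_key("correct horse battery staple", salt)
print(hmac.compare_digest(key, same))
```

The derived key would then feed an authenticated cipher from a vetted library. The salt is not secret, but it must be kept with the encrypted file so the key can be rebuilt at decryption time.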

Product Core Function

· Local File Encryption: Encrypts files using a password provided by the user. This is valuable because it allows developers to keep sensitive information like API keys or database credentials on their machines with much less exposure, as the data is unreadable without the correct password. So, this helps you protect your secrets locally.

· Local File Decryption: Decrypts files that were previously encrypted by Lockify, requiring the correct password. This is crucial for accessing your sensitive information when needed for development or deployment, ensuring that only authorized individuals with the password can unlock the data. So, this allows you to securely access your protected information.

· Command-Line Interface (CLI) Simplicity: Offers a straightforward command-line interface for intuitive operation. The value here is that developers can quickly and easily perform encryption/decryption tasks without complex graphical interfaces or learning convoluted commands, fitting seamlessly into their existing terminal-based workflows. So, this makes managing your secrets fast and easy from your usual development environment.

· Go-based Implementation: Built using the Go programming language, which often translates to fast execution and small binary sizes. The technical benefit is a highly performant tool that doesn't consume many system resources, and is easy to distribute and run across different operating systems. So, this means the tool is efficient and easy to get started with.

Product Usage Case

· Securely storing API keys in a project's `.env` file that is then committed to a public GitHub repository. The developer encrypts the `.env` file using Lockify before committing, and their team members can decrypt it after cloning the repository, using a password shared through a separate secure channel. This reduces the risk of accidentally exposing credentials in public code.

· Managing sensitive database connection strings for a staging environment. The encrypted connection string file can be deployed to the staging server, and the server's deployment script can decrypt it using a securely managed password to establish the connection. This provides a controlled way to handle sensitive deployment configurations.

· Sharing encrypted configuration files with a colleague for a joint project. Instead of emailing sensitive information, the developer can encrypt the file and share the encrypted version along with the password via a secure communication channel, ensuring the data is protected in transit and only accessible to the intended recipient. This solves the problem of insecure file sharing for sensitive data.

15

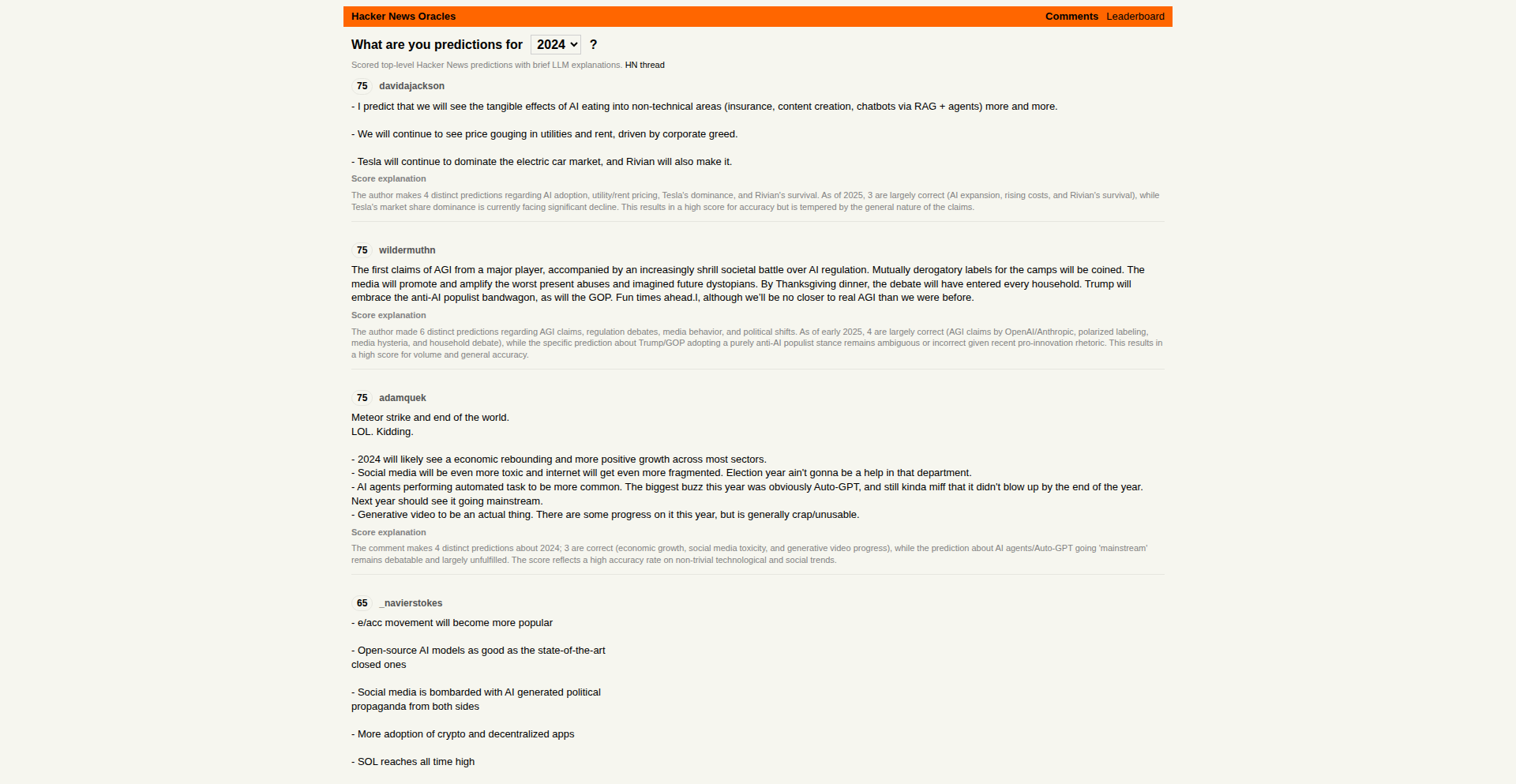

Hacker News Oracles - LLM-Powered Prediction Insights

Author

dotneter

Description

Hacker News Oracles is a fun LLM experiment that analyzes top comments from 'What are your predictions for {year}?' posts on Hacker News. It uses AI to score these predictions, offering a unique, data-driven perspective on community foresight. The innovation lies in applying LLM analysis to unstructured text, extracting actionable insights and ranking predictive accuracy within the tech community.

Popularity

Points 2

Comments 4

What is this product?

This project, Hacker News Oracles, is essentially an AI-powered system that reads through prediction-themed discussions on Hacker News. Imagine asking the community about their forecasts for the future, and this tool then uses a Large Language Model (LLM) to understand, categorize, and score those predictions based on their insightfulness and perceived accuracy. The innovation is in transforming raw human opinions into a ranked leaderboard, demonstrating how LLMs can find patterns and value in informal, community-generated text. So, this helps us understand what the collective wisdom of the tech community is predicting in a structured and quantifiable way.

How to use it?

Developers can use Hacker News Oracles to gain a deeper understanding of trends and potential future developments as perceived by the Hacker News community. It's useful for market research, identifying emerging technologies, or even just for personal curiosity about what the tech world anticipates. You can explore the leaderboard (link provided by the author) to see which predictions are ranked highest. For integration, one could imagine an API that allows developers to pull ranked predictions into their own applications or dashboards to inform strategic decisions. So, this gives you an aggregated, AI-scored view of tech foresight, which can help you make more informed decisions or identify new opportunities.

Product Core Function

· LLM-based comment analysis: The system employs LLMs to process and understand the nuances of user-submitted predictions, extracting key themes and sentiment. This is valuable for automatically identifying significant insights within large volumes of text, saving manual review time.

· Prediction scoring and ranking: An AI model assigns scores to predictions based on various criteria (e.g., clarity, plausibility, potential impact), creating a leaderboard. This provides a quantifiable measure of prediction quality, enabling objective comparison and identification of top-tier foresight.

· Trend identification: By analyzing aggregated predictions across multiple years, the system can highlight recurring themes and emerging trends within the tech community. This offers valuable insights into future technological directions and market shifts.

· Community foresight visualization: The project presents prediction data in an accessible leaderboard format, making complex community insights easily digestible. This allows users to quickly grasp the collective wisdom and identify areas of high interest or anticipation.
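
The ranking step can be sketched as follows. This is a minimal illustration, not the author's implementation: the `ScoredPrediction` record, the 0-to-1 score range, and the rule of ranking authors by mean score are all assumptions, and the LLM call that would produce the scores is left out.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredPrediction:
    """One prediction comment with a score (hypothetical schema)."""
    author: str   # Hacker News username of the commenter
    year: int     # year of the predictions thread
    text: str     # the prediction itself
    score: float  # assumed LLM-assigned score in the range 0..1


def build_leaderboard(predictions, min_predictions=1):
    """Rank authors by mean score.

    Ties are broken by number of predictions (more first), then by name.
    Returns a list of (author, mean_score, prediction_count) tuples.
    """
    scores_by_author = defaultdict(list)
    for prediction in predictions:
        scores_by_author[prediction.author].append(prediction.score)

    rows = [
        (author, sum(scores) / len(scores), len(scores))
        for author, scores in scores_by_author.items()
        if len(scores) >= min_predictions
    ]
    rows.sort(key=lambda row: (-row[1], -row[2], row[0]))
    return rows
```

Raising `min_predictions` is one way to keep a single lucky guess from topping the board; whether the real leaderboard applies any such threshold is not stated by the author.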

Product Usage Case

· A startup founder could use the ranked predictions to identify emerging technologies that are gaining traction within the developer community, potentially informing their product roadmap and investment decisions. This helps them stay ahead of the curve by understanding what the tech world is buzzing about.

· A venture capitalist might leverage the data to spot areas where the community anticipates significant disruption or growth, guiding their investment strategy. This provides an early signal of potential high-growth sectors based on community opinion.

· A developer looking to understand the future of a specific technology could analyze predictions related to that domain to gauge community sentiment and potential breakthroughs. This helps them anticipate future challenges and opportunities in their field.

· A journalist writing an article on future tech trends could use the leaderboard as a source to quote influential predictions and support their narrative with data-backed insights from the tech community. This adds credibility and depth to their reporting.

16

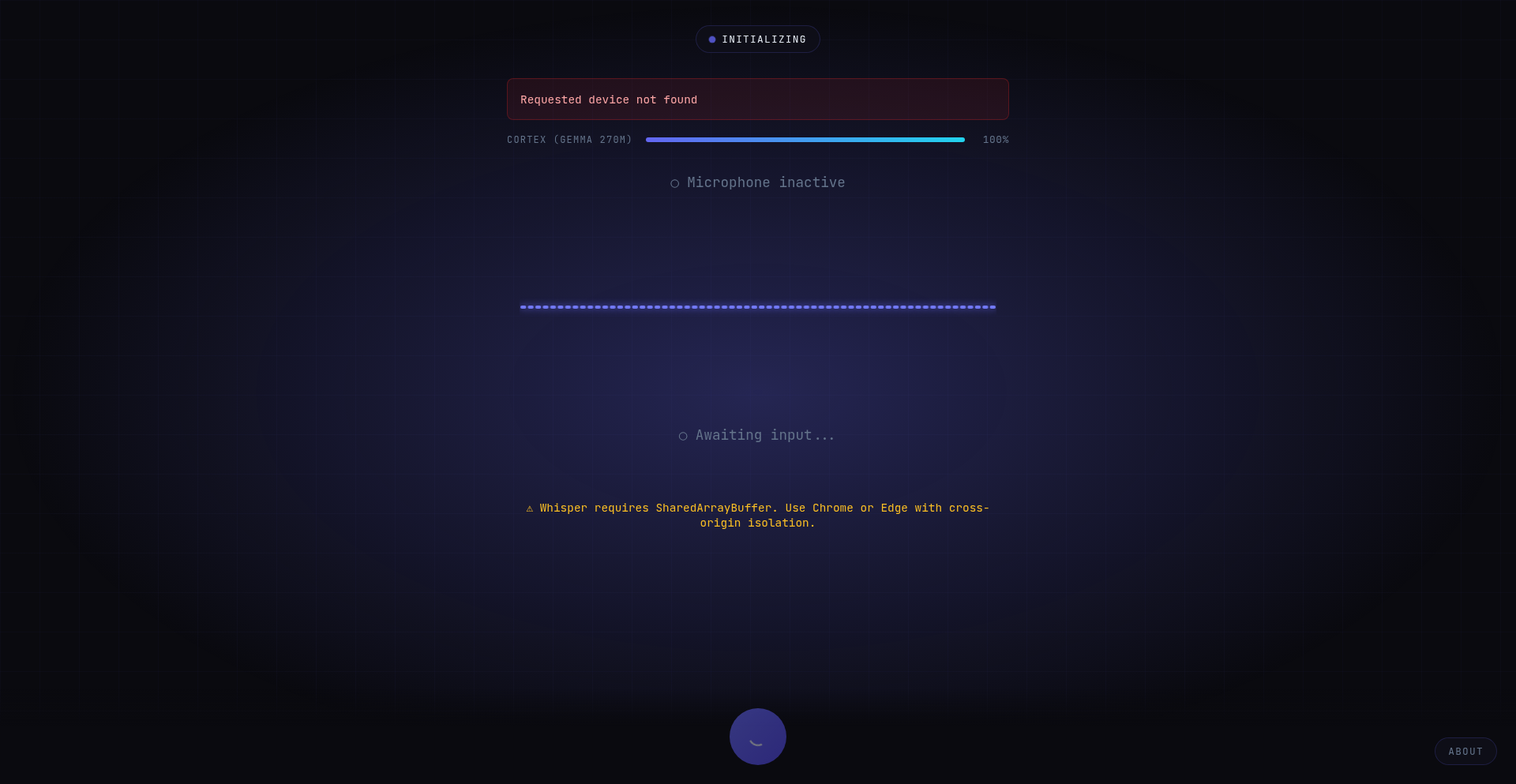

Ava: Client-Side AI Voice Pipeline

Author

muthukrishnanwz

Description

Ava is an open-source AI voice assistant that operates entirely within your web browser. It leverages WebAssembly (WASM) to run speech-to-text (Whisper tiny en), a large language model (Qwen 2.5 0.5B via llama.cpp WASM port), and text-to-speech (native browser API) all locally on your device. This eliminates the need for backend servers or API calls, demonstrating the power of modern browsers for running complex AI tasks offline with acceptable latency. So, what's the use? You get a private, fast, and capable voice assistant that works without sending your data to the cloud, making advanced AI accessible for everyone.

Popularity

Points 2

Comments 3

What is this product?

Ava is an AI voice assistant that performs all its functions (understanding your voice, processing your requests with AI, and speaking back to you) directly in your web browser. It uses WebAssembly (WASM) to run AI models, such as Whisper for speech-to-text and Qwen for language processing, on your local machine. The browser's native SpeechSynthesis API handles the voice output. The key innovation is running this entire AI pipeline client-side, which means no data leaves your device, and once loaded, it works offline. So, what's the use? This is a meaningful step for privacy and accessibility in AI, showing that a full voice pipeline can run locally and offer secure, responsive voice interaction without relying on external servers. This is like having a personal AI assistant that lives entirely on your computer, always available and always private.

How to use it?

Developers can integrate Ava into their web applications to add voice control capabilities. This involves a one-time initial download of about 380MB, which is then cached for subsequent use. Ava requires modern browsers like Chrome or Edge (version 90+) due to its reliance on WebAssembly threading features. For developers looking to build offline voice-enabled experiences, Ava provides a robust, client-side solution. You can essentially embed Ava's capabilities into your own projects, allowing users to interact with your web application using their voice, without requiring any server-side AI processing. This is perfect for creating interactive educational tools, private journaling apps, or any application where voice input and output are desired without compromising user data. So, what's the use? You can empower your web applications with a secure, private, and always-on voice interface, enhancing user experience and expanding accessibility.

Product Core Function

· Real-time Speech-to-Text (Whisper tiny en via WASM): Captures your spoken words and converts them into text instantly. This allows your application to understand what you're saying in real-time, enabling immediate command processing and interaction. So, what's the use? You can build applications that respond to voice commands as you speak them, making interactions feel natural and fluid.

· On-Device LLM Processing (Qwen 2.5 0.5B via llama.cpp WASM): Processes your text requests using a compact language model that runs locally. This means your AI queries and the generated responses are handled entirely on your device, which keeps them private and avoids network round trips. So, what's the use? You can leverage AI for tasks like generating text, answering questions, or summarizing information, all without sending your sensitive data to the cloud.

· Streaming Text-to-Speech (Native Browser API): Converts AI-generated text responses back into natural-sounding speech. The system intelligently streams responses, starting to speak as soon as a sentence is ready, rather than waiting for the entire reply. So, what's the use? You get immediate audio feedback from your AI assistant, making conversations feel more natural and allowing you to multitask while the AI speaks.

· Complete Offline Operation (Post-Initial Load): Once Ava is loaded, it functions entirely without an internet connection. This ensures uninterrupted AI assistance regardless of network availability. So, what's the use? You can rely on your AI assistant even in areas with poor or no internet connectivity, ensuring continuous productivity and access to information.
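
The sentence-level streaming described above comes down to buffering model output and releasing text at sentence boundaries. Below is a minimal sketch of that buffering logic, written in Python for brevity (Ava itself runs in the browser). The function name `stream_sentences` and the punctuation-based boundary rule are assumptions, not Ava's actual code.

```python
import re

# Assumed boundary rule: '.', '!' or '?' followed by whitespace.
# Abbreviations such as "e.g. " would be split too; a real pipeline
# would likely need a more careful rule.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def stream_sentences(chunks):
    """Yield complete sentences as soon as they appear in a stream of text chunks."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        # The last part may still be growing, so keep it buffered.
        buffer = parts[-1]
    # Flush whatever remains once the model stops generating.
    if buffer.strip():
        yield buffer.strip()
```

In a browser, each yielded sentence would be handed to the SpeechSynthesis API as its own utterance, so playback can begin while the model is still generating the rest of the reply.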

Product Usage Case

· Building a private, offline journaling application: Users can dictate their thoughts and memories directly into the app, with all data remaining on their device. Ava handles the speech-to-text and can even use the LLM for suggesting entry titles or summarizing content. So, what's the use? You can create a secure and personal space for reflection, knowing your private thoughts are never exposed online.

· Developing an educational tool for language learning: Students can practice speaking and receive instant feedback on pronunciation (via speech-to-text analysis) and grammar (via LLM). Ava can also read out vocabulary and phrases for practice. So, what's the use? You can offer an interactive and accessible language learning experience that doesn't require an internet connection for core functionality.

· Creating a voice-controlled personal assistant for desktop productivity: Users can control applications, set reminders, or quickly search for information using voice commands, all processed locally. The streaming TTS ensures quick auditory feedback. So, what's the use? You can enhance workflow efficiency and accessibility by enabling hands-free control of digital tasks on your computer.

17

Matle: Daily Chess Mate Puzzle

Author

matle_io

Description

Matle is a daily chess guessing puzzle inspired by the popular Wordle game. It presents users with a real chess checkmate position where some squares are hidden. The challenge is to deduce what is on the hidden squares, reconstruct the exact position, and identify the mate. This project showcases an innovative application of game mechanics to a complex domain like chess, offering a fresh way for enthusiasts to engage with chess positions.

Popularity

Points 2

Comments 3

What is this product?

Matle is a web-based application that provides a daily chess puzzle. The core innovation lies in its approach to interactive chess learning and engagement. Instead of traditional chess problems, it uses a guessing mechanic similar to Wordle. Users are shown a checkmate position with obscured squares. The underlying technology likely involves a robust chess engine to validate user guesses and generate valid checkmate positions. The value proposition is a daily, accessible, and engaging way to improve one's understanding of chess tactics and board visualization, even for those who might find traditional study methods daunting. So, what's in it for you? It's a fun, brain-teasing daily challenge that sharpens your chess intuition and tactical recognition, all disguised as a simple guessing game.

How to use it?

Developers can integrate Matle's core logic or similar puzzle generation mechanisms into their own applications. For instance, a chess learning platform could embed Matle-style puzzles to offer a more interactive experience. The project demonstrates how to combine chess game logic with a user-friendly puzzle interface. Technical integration could involve leveraging a chess library (like `python-chess` for Python or `chess.js` for JavaScript) to manage board states, validate moves, and determine checkmate conditions. The hidden square mechanic can be implemented by programmatically masking parts of a valid chess position. So, how can you use this? Imagine building a chess training app where users guess missing pieces to solve tactical puzzles, or even a casual game that tests users' knowledge of common opening or endgame positions. It's about repurposing game design patterns for educational and entertainment purposes.
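
The masking idea can be sketched with nothing but the standard library. This is an illustration under stated assumptions, not Matle's code: the helper names, the choice to hide only occupied squares, and the per-square true/false feedback are all hypothetical. The example position is the well-known Fool's Mate.

```python
import random

# Position after 1.f3 e5 2.g4 Qh4# (Fool's Mate), in FEN notation.
FOOLS_MATE_FEN = "rnb1kbnr/pppp1ppp/8/4p3/6Pq/5P2/PPPPP2P/RNBQKBNR w KQkq - 1 3"


def fen_to_grid(fen):
    """Expand the piece-placement field of a FEN string into an 8x8 grid.

    grid[0] is rank 8 and grid[7] is rank 1; empty squares are '.'.
    """
    placement = fen.split()[0]
    grid = []
    for rank in placement.split("/"):
        row = []
        for ch in rank:
            if ch.isdigit():
                row.extend("." * int(ch))  # a digit encodes a run of empty squares
            else:
                row.append(ch)
        grid.append(row)
    return grid


def mask_squares(grid, count, seed):
    """Hide `count` occupied squares; return (masked_grid, hidden_answers)."""
    rng = random.Random(seed)  # seeding by date would give one puzzle per day
    occupied = [(r, c) for r in range(8) for c in range(8) if grid[r][c] != "."]
    masked = [row[:] for row in grid]
    answers = {}
    for r, c in rng.sample(occupied, count):
        answers[(r, c)] = grid[r][c]
        masked[r][c] = "?"
    return masked, answers


def check_guess(answers, guess):
    """Return per-square feedback: True where the guessed piece is correct."""
    return {square: guess.get(square) == piece for square, piece in answers.items()}
```

To confirm that a source position really is mate before masking it, `python-chess` offers `chess.Board(fen).is_checkmate()`.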

Product Core Function

· Daily unique checkmate puzzle generation: This allows for a recurring engagement loop, ensuring users have a fresh challenge each day. The value is in maintaining user interest and providing consistent learning opportunities. Its application is in daily puzzle apps, educational games, or gamified learning platforms.

· Interactive board state guessing: Users can guess the location of missing chess pieces. This functional core offers a unique way to train spatial reasoning and tactical visualization in chess. The value is in actively engaging users in problem-solving rather than passive observation. This is useful for any interactive educational tool or skill-building game.

· Real checkmate position validation: The system ensures that the reconstructed position is a legitimate checkmate scenario. This provides an educational foundation and ensures the puzzles are technically sound. The value is in offering accurate and meaningful challenges. This is crucial for any application aiming to teach or test skills accurately.

· User-friendly interface for guessing and feedback: The design prioritizes ease of use for players to input their guesses and receive feedback. The value is in accessibility and a positive user experience, making complex chess concepts approachable. This is vital for any product targeting a broad audience, not just expert players.

Product Usage Case

· A chess learning platform could use Matle's puzzle mechanics to create a daily 'Tactical Guess' feature, helping beginner and intermediate players improve their ability to spot mating patterns and visualize piece placement. It addresses the dryness of traditional tactical trainers by turning the exercise into a guessing game.

· A game developer could build a mobile game where players reconstruct famous historical chess games by guessing the positions of key pieces at different moments. This would apply Matle's concept of hidden information within a known context to make historical game analysis more engaging.