Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-20

SagaSu777 2025-12-21

Explore the hottest developer projects on Show HN for 2025-12-20. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

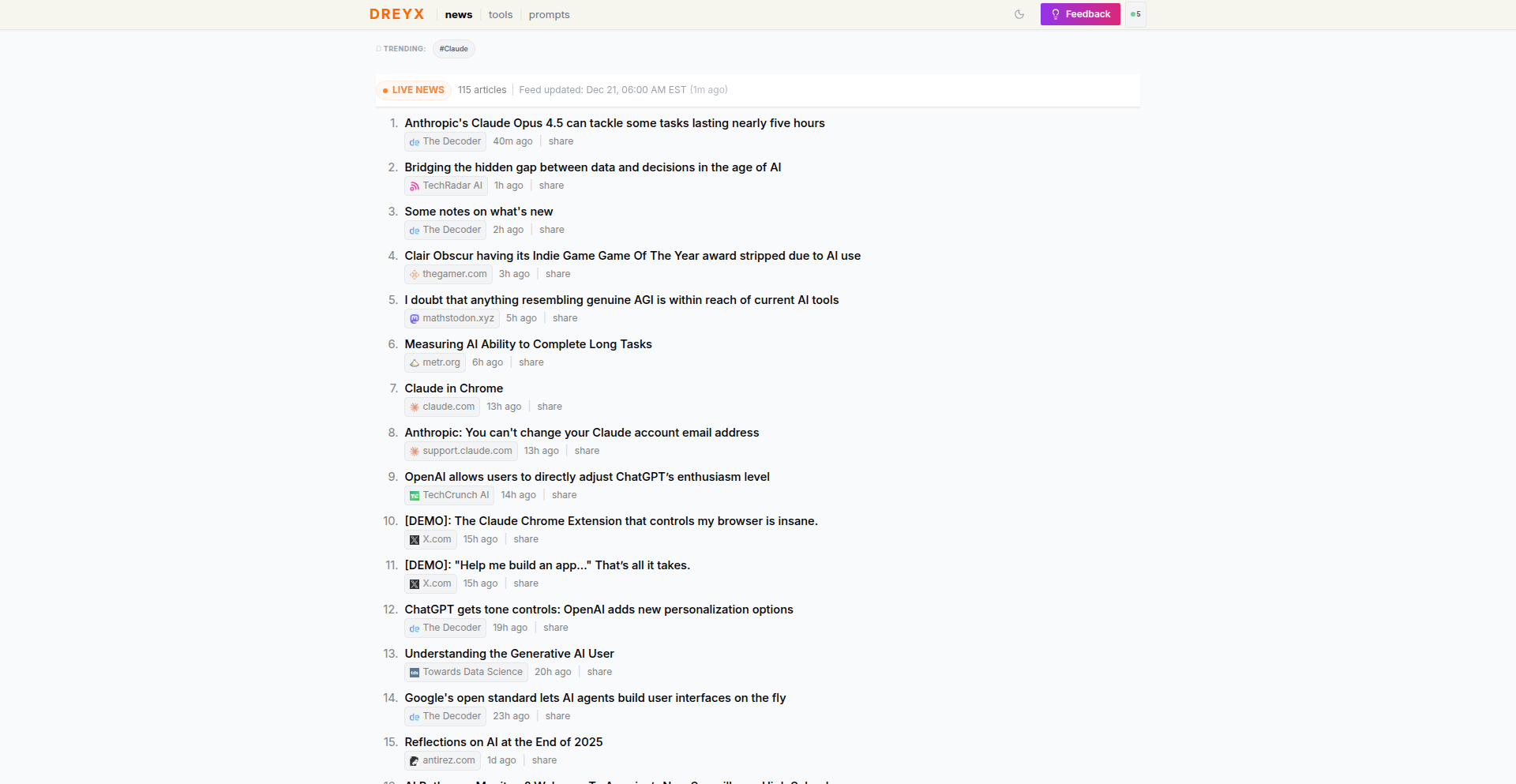

Today's Show HN landscape reflects the hacker's instinct for finding elegant solutions to complex problems. AI and LLM applications are surging. They appear not only as standalone tools but also embedded in developer workflows and everyday productivity. A strong 'local-first', privacy-preserving current runs counter to cloud-centric models: these tools give users control over their data and reduce reliance on external services. For developers, this is an opportunity to build robust, secure, user-centric tools. For entrepreneurs, it points to niches where privacy-focused, AI-augmented products could disrupt existing markets or open new ones. Developer tooling, from Kubernetes preview environments to debugging aids, continues the push for efficiency in software creation. Meanwhile, projects in low-level systems programming and niche languages such as Zig and Dragonlang show an appetite for performance and control at the foundational level. Across the board, projects apply technology to specific pain points, from organizing sensitive documents to making AI models themselves more efficient. The takeaway for innovators is to identify a real problem, apply cutting-edge technology pragmatically, and build tools that empower users and developers while keeping security and user control in view.

Today's Hottest Product

Name

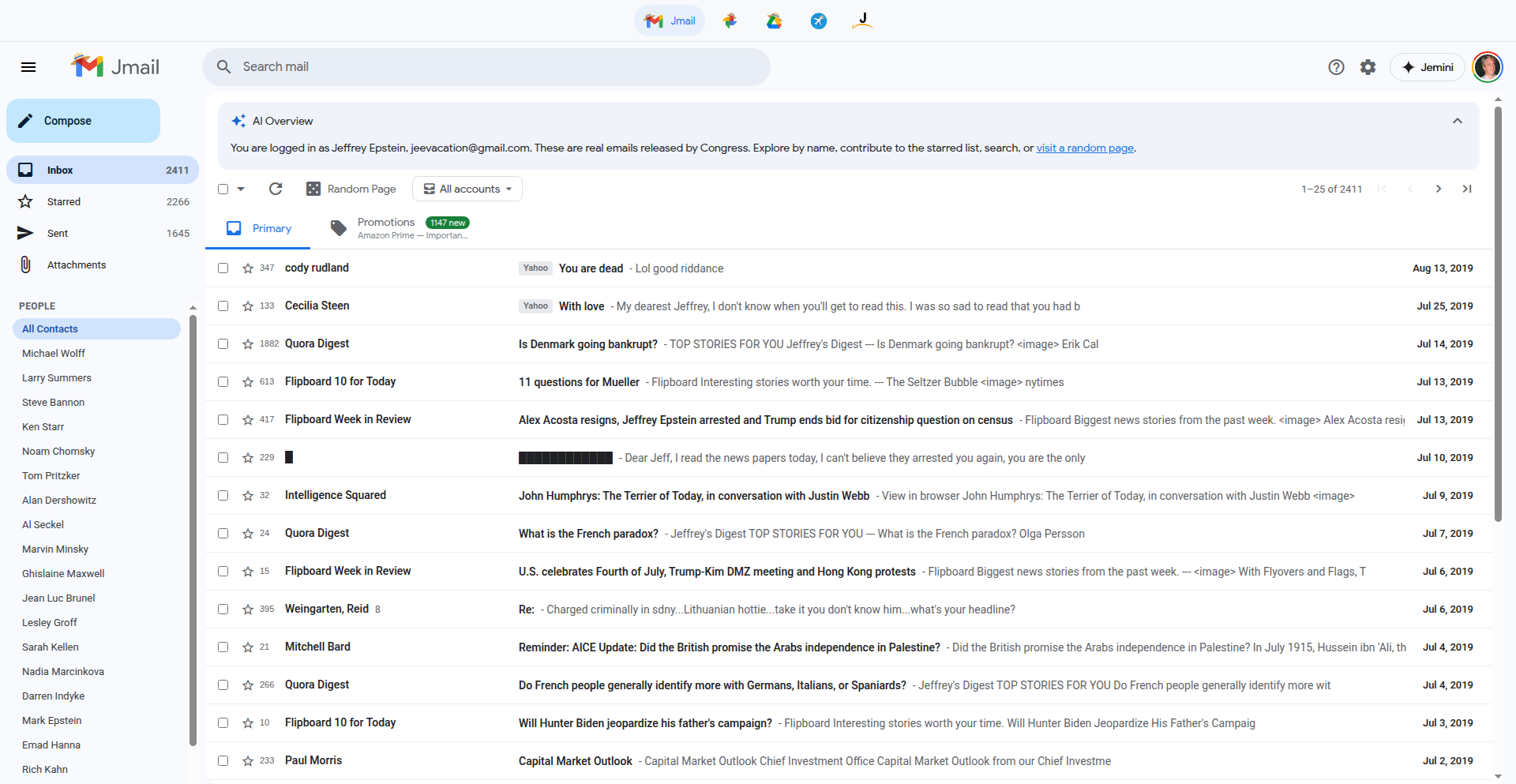

Jmail – Google Suite for Epstein files

Highlight

This project applies secure file management to build a 'Google Suite' for a large, sensitive dataset, inspired by the public release of the 'Epstein files.' It tackles the technical challenge of organizing and accessing a massive document collection securely, and it offers developers lessons in building scalable, privacy-focused applications. The core innovation is managing a complex data ecosystem efficiently, possibly with technologies such as distributed storage and fine-grained access control.

Popular Category

AI/LLM Applications

Developer Tools

Data Management

Security

Popular Keyword

LLM

AI

eBPF

Kubernetes

Python

Zig

macOS

WebAssembly

Technology Trends

AI-driven productivity

Local-first applications

Enhanced developer tooling

Efficient data processing

Advanced system monitoring

Privacy-preserving technologies

Cross-platform development

Low-level systems programming

Project Category Distribution

AI/LLM Applications (25%)

Developer Tools (20%)

Data Management/Storage (10%)

Utilities/Productivity (15%)

Security (5%)

System Programming/Languages (10%)

Creative/Entertainment (5%)

Hardware/Embedded (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | EpsteinFileSuite | 841 | 163 |
| 2 | HN Persona Weaver | 200 | 116 |
| 3 | ClaudeCode Music Companion | 50 | 12 |
| 4 | ChartPreviewr | 19 | 6 |
| 5 | Fucking Websites CLI | 11 | 4 |
| 6 | Dbzero: Infinite RAM Python Persistence | 7 | 3 |
| 7 | Cerberus: Kernel-Level Network Insight | 7 | 3 |
| 8 | HiFidelity Native AudioEngine | 4 | 4 |
| 9 | DK Atlas 3D Brain Explorer | 6 | 1 |
| 10 | BrowserACH | 5 | 1 |

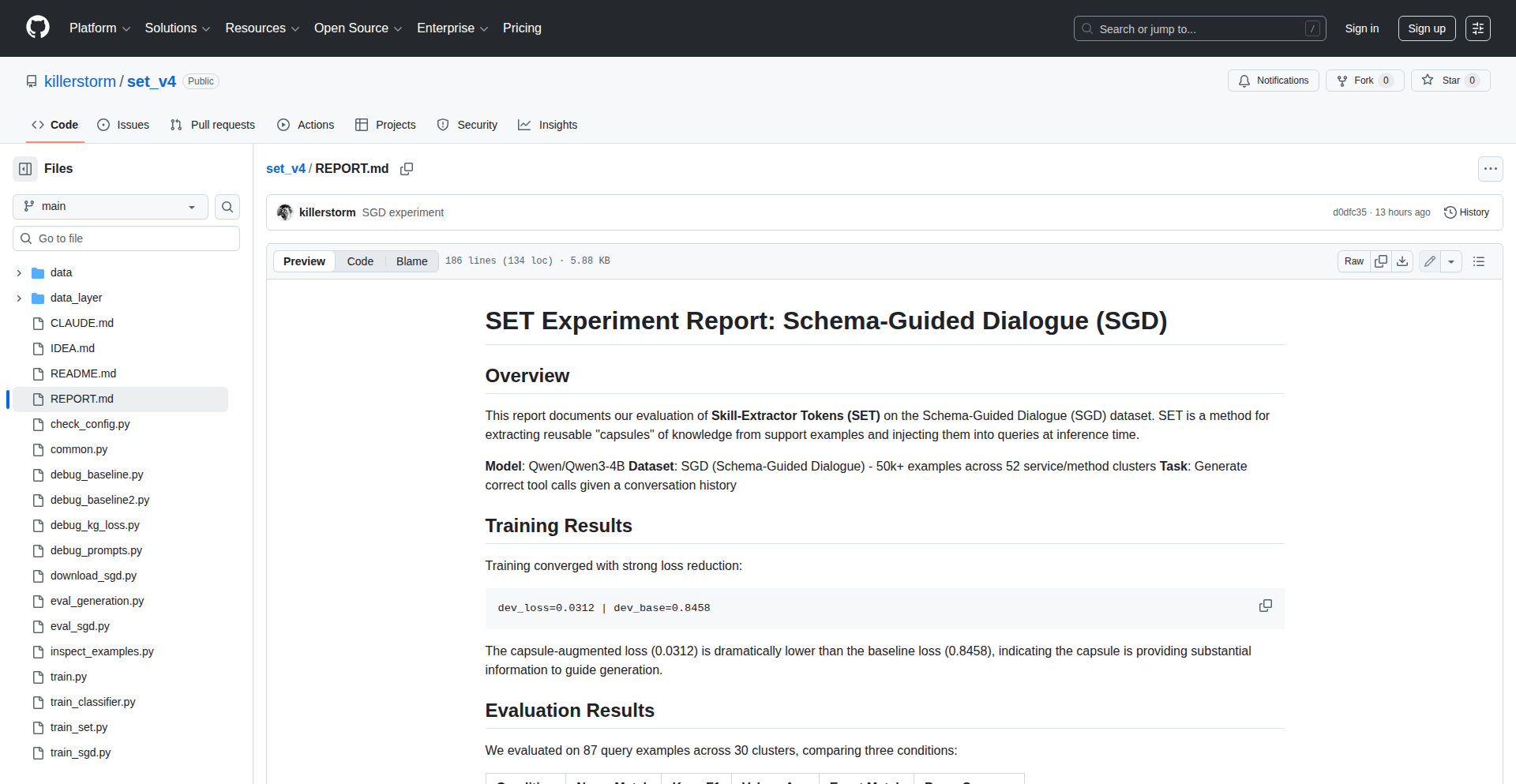

1

EpsteinFileSuite

Author

lukeigel

Description

A collection of tools for securely organizing and accessing sensitive documents, inspired by the public release of legal files. It provides a private, encrypted environment for managing personal or sensitive information. The innovation lies in pairing strong data protection with a user-friendly platform, which makes advanced encryption accessible to non-specialists.

Popularity

Points 841

Comments 163

What is this product?

EpsteinFileSuite is a suite of applications that provides a secure, private, encrypted workspace for sensitive documents. Cloud storage may apply broad access policies, but this suite gives you granular control over your data. Its core idea is to apply strong encryption and careful data-handling practices to create a personal 'digital vault' that only you can open. Even if the data were intercepted, it would be unreadable without your keys. It is aimed at anyone who wants to keep sensitive information safe from unauthorized access.

How to use it?

Developers can integrate EpsteinFileSuite into their workflows through its APIs for secure storage, retrieval, and management. For example, a developer building a secure note-taking app could use EpsteinFileSuite as a backend so that every note is encrypted before it is stored. End users can run it as a standalone application for personal documents such as legal papers, financial records, or private journals. In either case, the goal is to add strong protection to existing projects or personal files with little extra effort.

Product Core Function

· End-to-end encryption for all stored documents. Data is encrypted on your device before it is sent and decrypted only when you access it. Anyone intercepting it in transit or at rest sees only ciphertext.

· Secure document organization and retrieval. This feature allows you to categorize and search through your sensitive files efficiently, ensuring you can find what you need quickly within your secure vault. This saves you time and reduces the risk of misplacing important information.

· Private collaboration features. This enables sharing encrypted documents with specific individuals or groups, ensuring that collaboration happens securely without exposing the data to unauthorized parties. This is crucial for teams working with confidential information.

· Audit trails and access logging. A record of who accessed which documents and when provides transparency and accountability. It also helps you track usage and spot suspicious activity.
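The write-up does not say how the audit trail is built. One common way to make an access log tamper-evident is to chain its entries with a cryptographic hash, so that each record commits to the one before it. The Python sketch below illustrates that technique under stated assumptions. The `AuditLog` class, its fields, and the `GENESIS` placeholder are hypothetical and are not EpsteinFileSuite's code.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only access log with SHA-256 hash chaining."""

    entries: List[dict] = field(default_factory=list)

    def record(self, user: str, document: str, action: str,
               ts: Optional[float] = None) -> dict:
        body = {
            "user": user,
            "document": document,
            "action": action,
            "ts": time.time() if ts is None else ts,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing or deleting any entry except the newest breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("alice", "case-042.pdf", "read", ts=1.0)
    log.record("bob", "case-042.pdf", "share", ts=2.0)
    print(log.verify())  # True
    log.entries[0]["user"] = "mallory"  # tamper with history
    print(log.verify())  # False
```

Hash chaining only detects partial edits. An attacker who can rewrite the entire log can recompute every hash, so a real system would also need to anchor the latest hash somewhere the attacker cannot reach.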

Product Usage Case

· A lawyer can use EpsteinFileSuite to securely store and share client case files, ensuring client confidentiality is maintained throughout the process. This solves the problem of secure handling of sensitive legal documents and ensures compliance with privacy regulations.

· A journalist can use the platform to store confidential sources and interview transcripts, protecting the identity of their sources and the integrity of their investigation. This addresses the critical need for anonymity and data security in investigative journalism.

· An individual can use EpsteinFileSuite to securely store personal health records or financial statements, ensuring their private information is protected from identity theft or unauthorized access. This provides a personal digital safe for highly sensitive personal data.

· A development team can integrate EpsteinFileSuite to manage sensitive API keys or proprietary code snippets, ensuring that these critical assets are protected within a secure, access-controlled environment. This solves the challenge of securely managing sensitive development credentials.

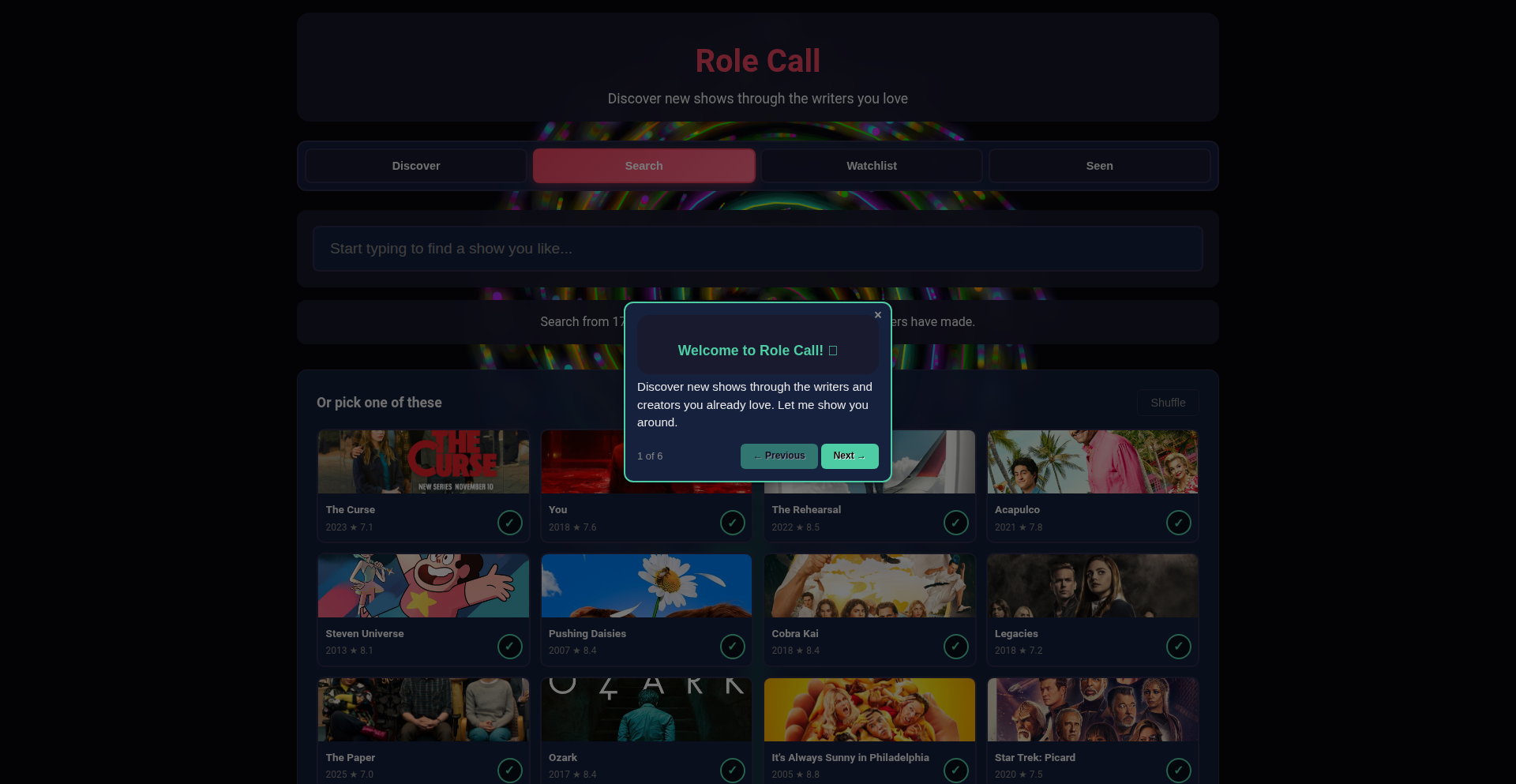

2

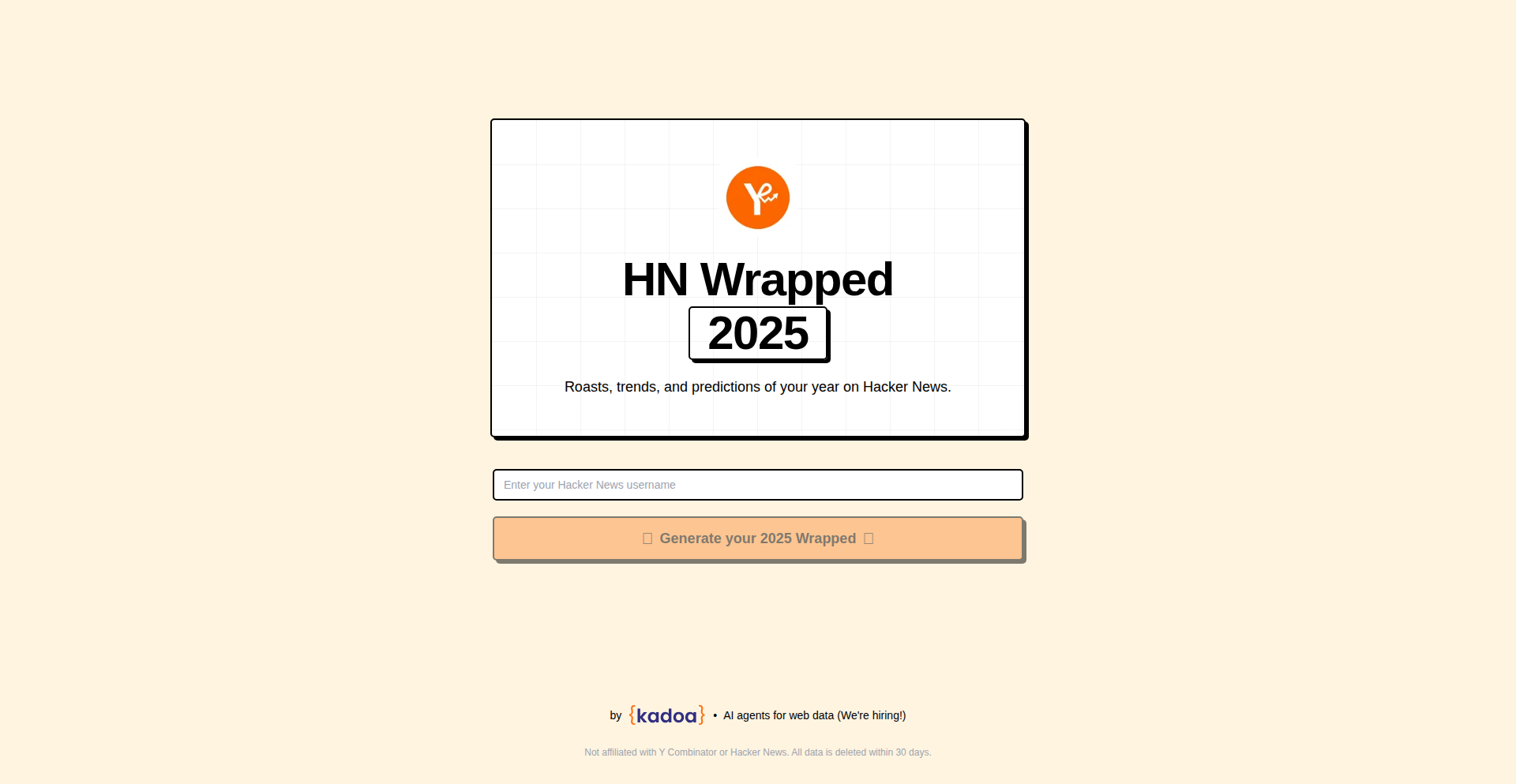

HN Persona Weaver

Author

hubraumhugo

Description

HN Persona Weaver is a creative project leveraging the latest Gemini LLMs to analyze your Hacker News activity and generate a personalized retrospective. It offers generated roasts and statistical insights based on your past contributions, a futuristic vision of your HN front page from 2035, and a unique xkcd-style comic depicting your HN persona. This project showcases the innovative application of AI for entertainment and self-reflection within a tech community.

Popularity

Points 200

Comments 116

What is this product?

HN Persona Weaver is a web application that uses Gemini 3 Flash, plus Gemini 3 Pro for image generation, to turn a Hacker News username into a personalized retrospective. It is more than a data aggregator. It interprets your activity to produce engaging, often humorous content. The innovation lies in aiming powerful language and image models at one niche community and translating raw user data into a narrative and visual portrait of your identity within the HN ecosystem. For you, that means a fun, lighthearted look at your own digital footprint on Hacker News.

How to use it?

Developers simply enter their Hacker News username into the web interface. The application fetches the publicly available data for that username from Hacker News and feeds it to the Gemini models, which perform the analysis and generation. If the underlying logic or APIs were made public, developers could study them to apply LLMs to similar personalized content. For most users, though, the point is to experience their own 'HN Wrapped' and share it with the community. No technical setup is needed beyond providing a username.
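The project's own pipeline is not public. However, the public data it starts from can be read through the official Hacker News API, which serves user profiles as JSON at `https://hacker-news.firebaseio.com/v0/user/<username>.json`. The sketch below fetches a profile and condenses it into an LLM prompt. The helpers `profile_summary` and `build_prompt`, along with the prompt wording, are hypothetical, and the Gemini call itself is left out.

```python
import json
import urllib.request

HN_API = "https://hacker-news.firebaseio.com/v0"


def user_url(username: str) -> str:
    """URL of a user's public profile in the official Hacker News API."""
    return f"{HN_API}/user/{username}.json"


def fetch_user(username: str) -> dict:
    """Download the public profile (fields include id, created, karma, about, submitted)."""
    with urllib.request.urlopen(user_url(username), timeout=10) as resp:
        return json.load(resp)


def profile_summary(user: dict) -> dict:
    """Reduce a raw profile to a few facts that could seed an LLM prompt."""
    return {
        "username": user.get("id"),
        "karma": user.get("karma", 0),
        # "submitted" lists the IDs of the user's stories, polls, and comments.
        "item_count": len(user.get("submitted", [])),
        "has_about": bool(user.get("about")),
    }


def build_prompt(summary: dict) -> str:
    """Hypothetical prompt template; the project's real prompts are not public."""
    return (
        f"Write a light-hearted roast of Hacker News user {summary['username']}, "
        f"who has {summary['karma']} karma and {summary['item_count']} submitted items."
    )
```

For example, `build_prompt(profile_summary(fetch_user("pg")))` fetches a live profile (network access required) and returns a prompt string ready for an LLM. A fuller version would also fetch individual items via `/v0/item/<id>.json` to analyze comment text.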

Product Core Function

· Generated Roasts and Stats: Leverages LLMs to analyze a user's HN activity, extracting key trends, popular topics, and potentially controversial posts, then crafts witty and insightful 'roasts' or statistical summaries. This provides a unique, AI-driven commentary on one's contributions, offering both entertainment and a novel way to reflect on their engagement. The application is valuable for developers who want a fun, personalized review of their online persona.

· Personalized HN Front Page (2035 Vision): Uses LLMs to project future content trends on Hacker News based on current user activity and general AI predictions, creating a speculative personalized front page from the year 2035. This demonstrates the creative potential of LLMs in foresight and personalization, offering a glimpse into what might be engaging in the future. Its value lies in sparking imagination and showing how AI can extrapolate trends.

· xkcd-style Comic Generation: Employs image generation capabilities of LLMs to create a comic strip that visually represents the user's HN persona, inspired by the distinct style of xkcd. This showcases the power of AI in creative visual storytelling, translating abstract user data into a relatable and humorous visual format. This is valuable for developers looking for novel ways to visualize data or create shareable, entertaining content.

Product Usage Case

· Community Engagement and Virality: A developer uses HN Persona Weaver to generate a personalized summary and shares it on Hacker News. The humorous AI-generated content sparks conversation, which draws more interaction with the post and wider sharing across the community. The project addresses low engagement by producing content that is inherently shareable.

· Personal Reflection and Self-Awareness: A developer inputs their username to see their AI-generated 'roast' and future HN front page. This provides a novel and entertaining way to reflect on their past contributions to the community and consider future interests, fostering a sense of self-awareness in their online presence. This offers a unique form of personalized feedback.

· AI Exploration and Inspiration: A developer interested in the practical applications of LLMs uses this project as an example to understand how generative AI can be applied to niche communities for personalized content creation. They might study its approach to data processing and prompt engineering for inspiration in their own AI projects. This provides a concrete, accessible example of LLM application for learning and inspiration.

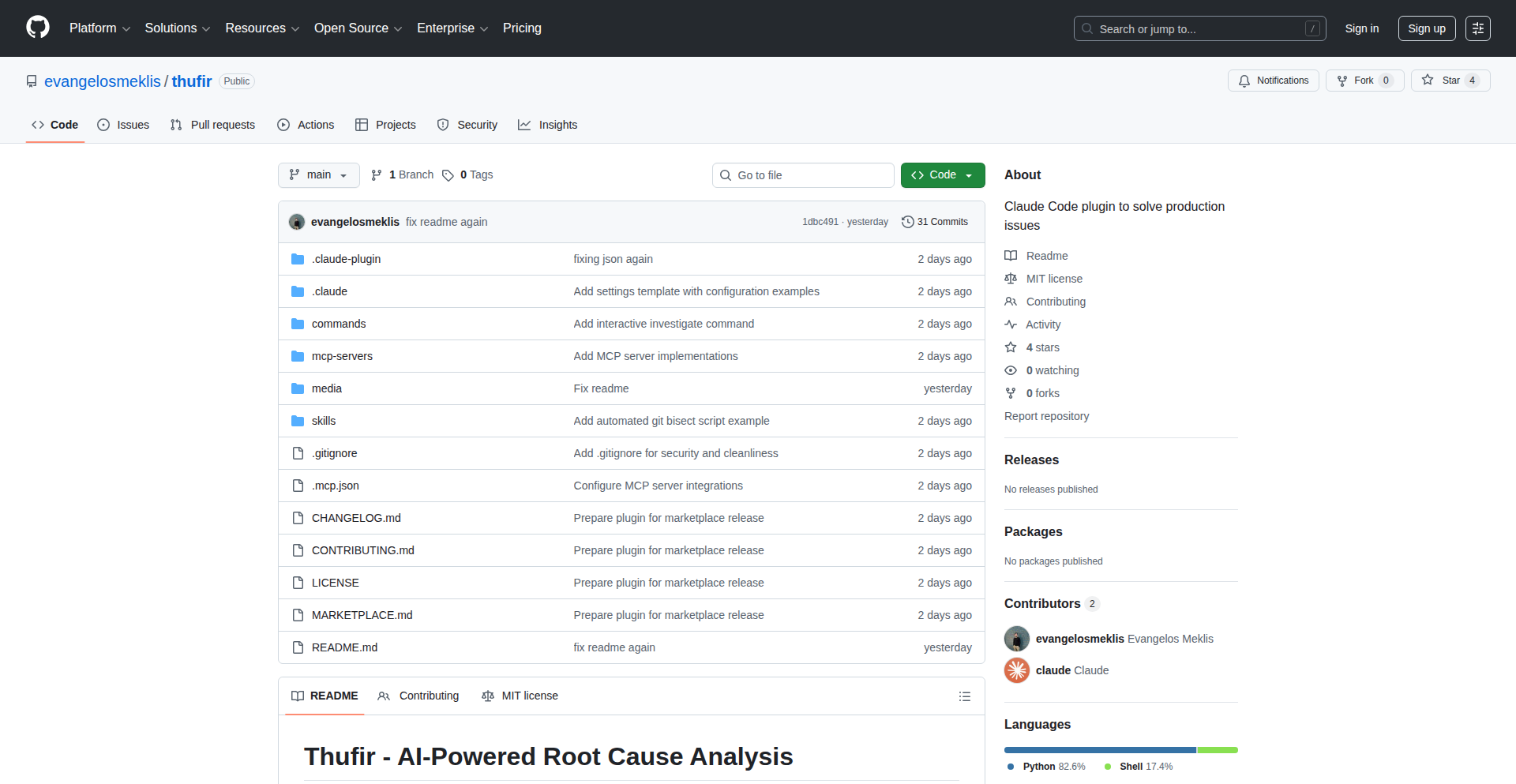

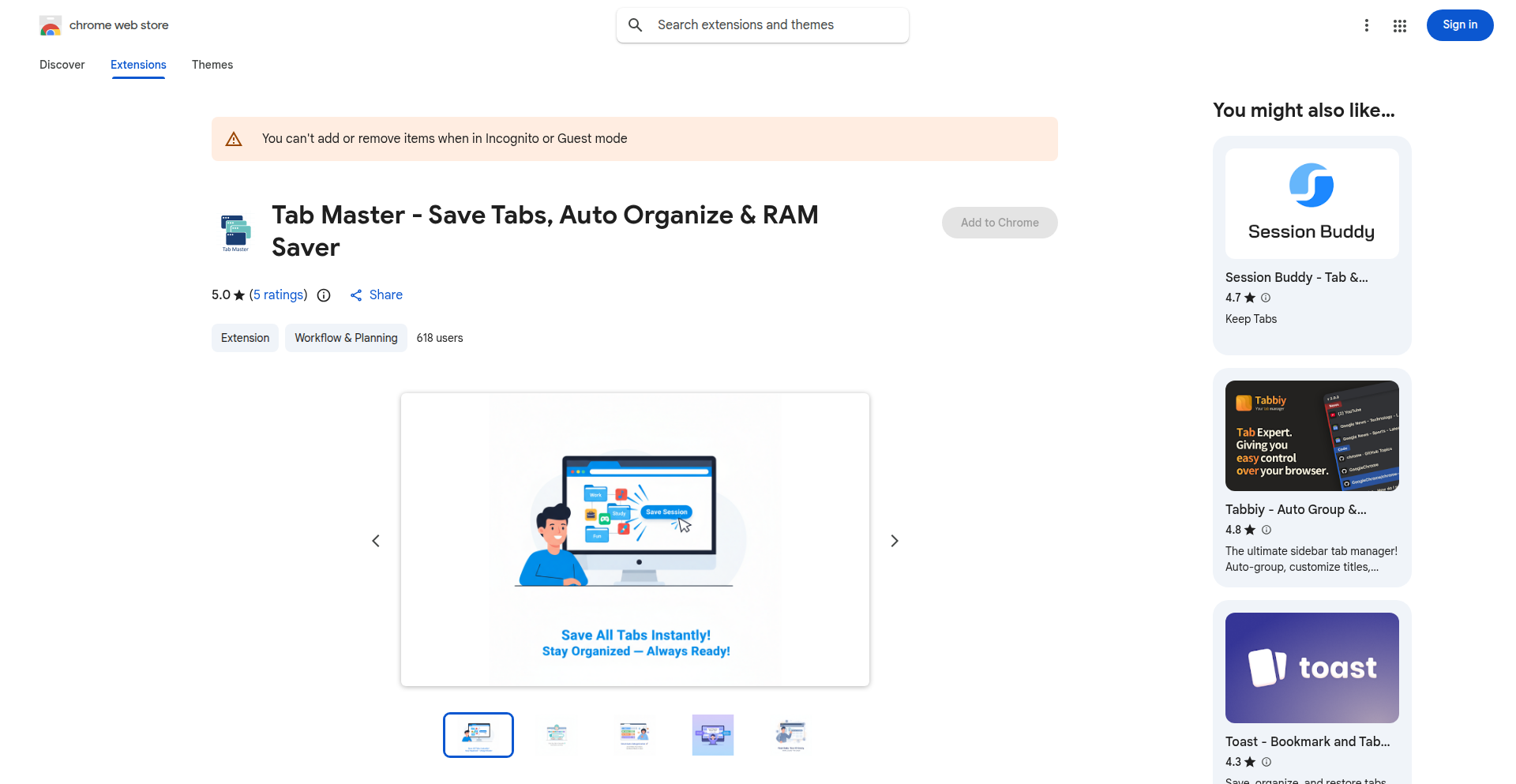

3

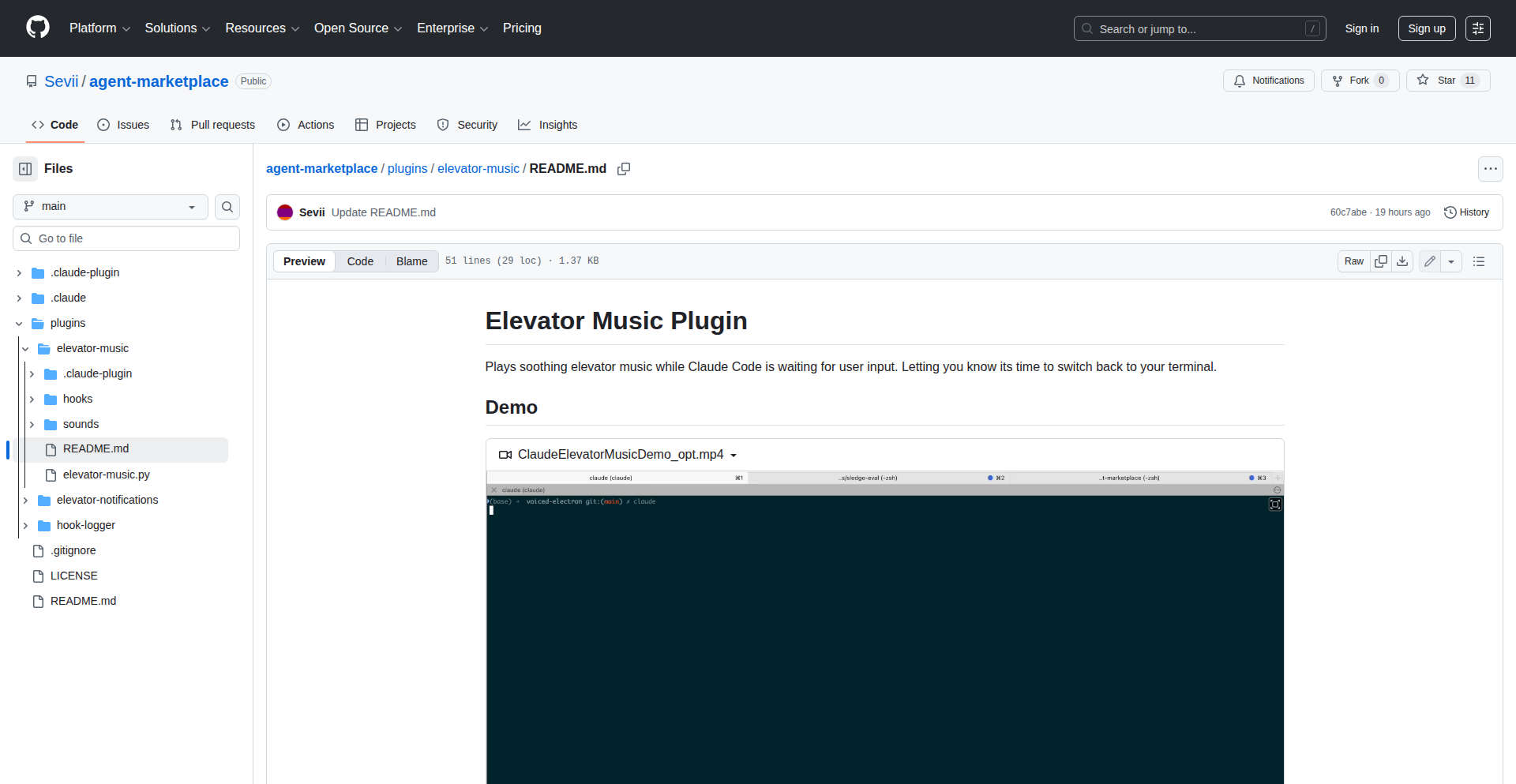

ClaudeCode Music Companion

Author

Sevii

Description

A browser plugin designed to enhance the user experience with Claude Code by playing music during its waiting periods. This addresses the common issue of Claude Code's latency, preventing users from getting distracted or leaving the session idle.

Popularity

Points 50

Comments 12

What is this product?

This is a browser plugin that detects when you are waiting on Claude Code and plays music in the meantime, so you are not left staring at the screen. It relies on Claude Code's internal 'hooks', which are signals that mark when Claude starts working and when it is ready for your next command. Tapping into these gives a subtle, non-intrusive way to keep you engaged and to let you know when Claude needs you. As a result, waiting for AI responses becomes less tedious and you stay focused on the interaction.

How to use it?

Developers install the plugin from their browser's extension store (e.g., Chrome Web Store or Firefox Add-ons). Once installed, it runs in the background. Whenever Claude Code is busy working on a request, the plugin automatically plays a pre-selected or randomly chosen piece of music. The plugin's settings typically let you configure the music source and other preferences. No further integration is needed: install it and it works, making waiting times more pleasant without any manual steps.

Product Core Function

· Waiting-State Detection: The plugin listens for specific signals from Claude Code that mark when the AI starts working and when it is ready for input. This allows precise timing: music plays while you are waiting on a response and stops once Claude needs you, which keeps your attention on the AI's output. This applies whenever you use Claude Code for extended periods.

· Music Playback Initiation: Once a waiting period begins, the plugin starts playback, potentially from sources such as web radio, local files, or curated playlists. This turns passive waiting into a more engaging experience, which discourages users from abandoning the session and improves continuity. It helps anyone who finds long AI waits demotivating.

· Configurable Settings: Users can often customize the type of music played, volume levels, and perhaps even specific 'waiting' playlists. This allows for personalization, making the experience more enjoyable and less distracting based on individual preferences. The value is in adapting the feature to suit diverse user needs and moods, making the interaction more pleasant. This is useful for tailoring the AI experience to individual comfort levels.
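The description does not show how the companion detects Claude Code's state. Claude Code does expose a hooks feature that runs a shell command on lifecycle events. `UserPromptSubmit` fires when you submit a prompt, `Stop` fires when Claude finishes responding, and `Notification` fires when it sends you a notification (for example, asking for permission). The minimal sketch below assumes the companion is driven by those events, with music playing while Claude works, as in the usage scenarios. The event-to-action mapping, the `--hook` flag, and the function names are hypothetical, and actual playback is left out.

```python
import json
import sys

# Assumed mapping from Claude Code hook events to player actions: music plays
# while Claude is working and stops once it is ready for (or needs) the user.
EVENT_ACTIONS = {
    "UserPromptSubmit": "play",  # prompt submitted; Claude starts working
    "Stop": "stop",              # Claude finished responding
    "Notification": "stop",      # Claude is asking for the user's attention
}


def action_for_event(payload: dict) -> str:
    """Return 'play', 'stop', or 'ignore' for a hook payload."""
    return EVENT_ACTIONS.get(payload.get("hook_event_name", ""), "ignore")


def main() -> None:
    raw = sys.stdin.read()  # Claude Code passes the hook payload as JSON on stdin
    if not raw.strip():
        return
    action = action_for_event(json.loads(raw))
    # A real companion would start or pause a player here; this sketch only reports.
    print(action)


if __name__ == "__main__" and "--hook" in sys.argv[1:]:
    main()
```

To use it, register the script as a `command` hook (e.g. `python3 music_hook.py --hook`) for those three events in Claude Code's settings. The `--hook` guard keeps the script inert when run without arguments.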

Product Usage Case

· Scenario: A developer is using Claude Code to generate complex code snippets or debug intricate problems. The AI can take several minutes to process. How it solves the problem: Instead of the developer staring blankly at the screen, potentially losing focus or getting distracted by other tabs, the plugin plays a calm ambient track. This keeps the developer gently anchored to the task, reducing the perceived wait time and increasing efficiency. So, this is useful because it transforms potentially frustrating long waits into a more relaxed and productive period, preventing task abandonment.

· Scenario: A user is drafting creative content with Claude Code, like writing a story or brainstorming ideas. The AI's response generation can be lengthy. How it solves the problem: The plugin plays upbeat instrumental music, creating a more inspiring atmosphere during the wait. This can stimulate creativity and maintain momentum for the user's writing process. So, this is useful because it enhances the creative workflow by maintaining an engaging and stimulating environment, even during AI processing downtime.

· Scenario: A remote worker is juggling multiple tasks and using Claude Code for assistance. They don't want to miss when Claude is ready but also need to stay productive. How it solves the problem: The plugin can be configured to play a distinct, pleasant sound cue or a short musical interlude when Claude is ready for input. This acts as a subtle notification without requiring constant visual monitoring. So, this is useful because it provides effective, non-intrusive alerts that allow users to multitask efficiently while still being responsive to the AI.

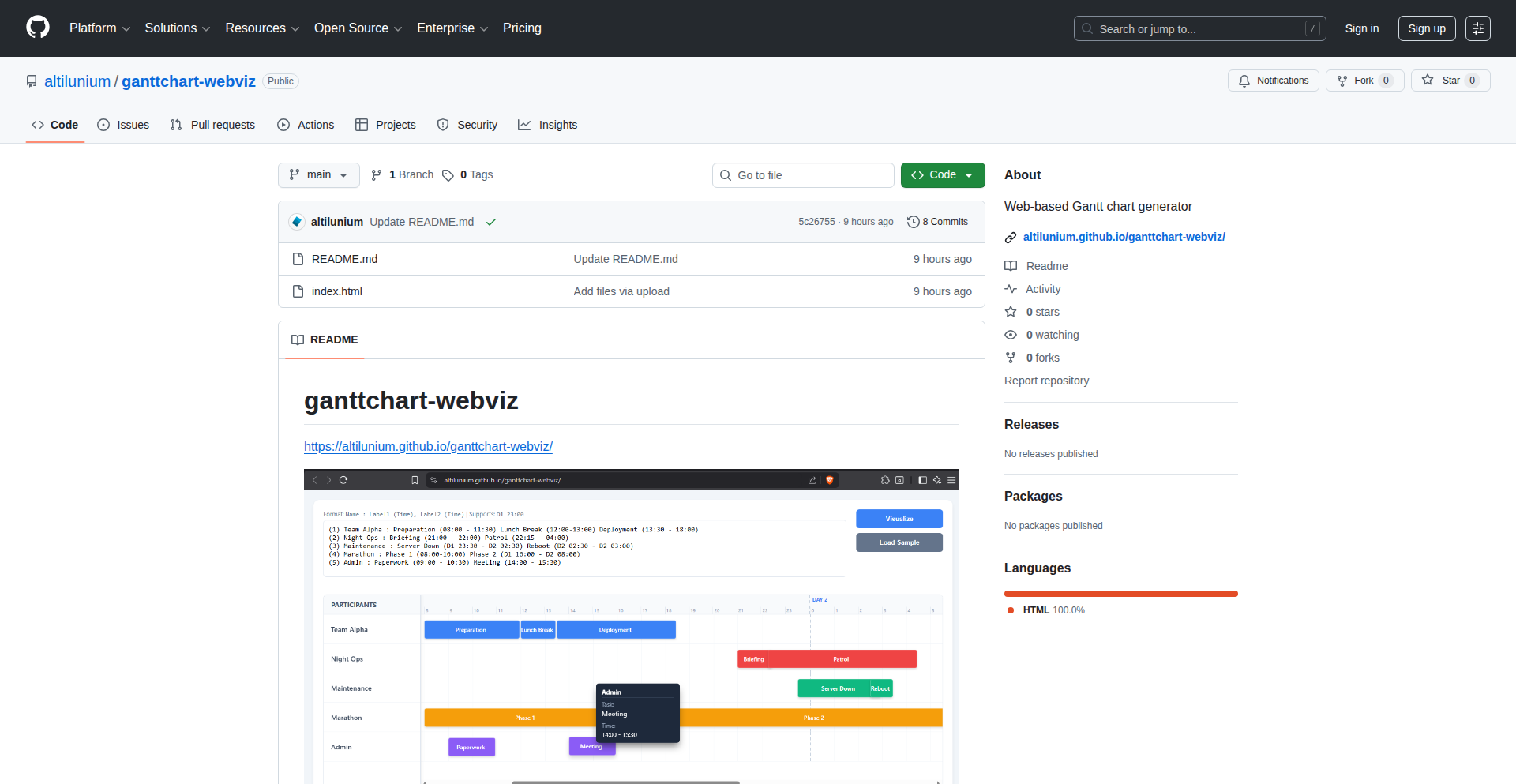

4

ChartPreviewr

Author

chartpreview

Description

ChartPreviewr is a novel solution that automates the creation of temporary, live preview environments for Helm charts directly from your pull requests. It addresses the common bottleneck in Kubernetes development workflows where reviewing and testing changes to Helm charts requires significant manual effort and specialized knowledge. By deploying each PR's Helm chart to a dedicated Kubernetes namespace and providing a unique preview URL, ChartPreviewr allows developers and reviewers to instantly visualize and interact with changes, significantly accelerating the feedback loop and improving code quality. This approach is particularly innovative because it's Helm-native, handling complexities like chart dependencies and layered value files effectively, which are often challenging for generic container preview tools.

Popularity

Points 19

Comments 6

What is this product?

ChartPreviewr is a tool designed to automatically deploy your Helm charts to a live Kubernetes cluster whenever you open a pull request. It then provides a temporary, unique URL where you and your team can access and test the application deployed by that specific chart version. Once the pull request is closed, the environment is automatically cleaned up. The innovation lies in its deep integration with the Helm ecosystem, understanding the intricacies of chart dependencies, custom values files, and layered configurations. Unlike generic preview solutions, ChartPreviewr is built from the ground up to be Helm-native, ensuring accurate and reliable deployments. It works by setting up a dedicated namespace for each preview, isolating it with strict network policies to ensure security. This means you get a realistic, isolated test environment for every proposed change, without any manual setup or teardown. So, what does this mean for you? It means you can see your Helm chart changes working live, in a real Kubernetes environment, without waiting for a dedicated staging server or a busy colleague to manually deploy it. This drastically speeds up development and ensures that issues are caught much earlier.
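The write-up mentions per-preview namespaces isolated with strict network policies but shows no manifests. The sketch below shows what such isolation could look like. It assumes one namespace per pull request and an ingress controller running in a namespace called `ingress-nginx`. The Kubernetes NetworkPolicy admits traffic only from pods in the same namespace and from the ingress controller, so the preview URL keeps working while other previews cannot reach it. The naming scheme and helper names are hypothetical, not ChartPreviewr's.

```python
import json


def preview_namespace(pr_number: int) -> str:
    """Hypothetical naming scheme: one namespace per pull request."""
    return f"preview-pr-{pr_number}"


def isolation_policy(namespace: str, ingress_namespace: str = "ingress-nginx") -> dict:
    """NetworkPolicy admitting traffic only from the same namespace and the ingress controller."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "preview-isolation", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector: applies to every pod in the namespace
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [  # entries in "from" are ORed together
                        # A bare podSelector matches pods in the policy's own namespace.
                        {"podSelector": {}},
                        # Kubernetes labels every namespace with kubernetes.io/metadata.name.
                        {"namespaceSelector": {
                            "matchLabels": {"kubernetes.io/metadata.name": ingress_namespace}
                        }},
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests: python policy.py | kubectl apply -f -
    print(json.dumps(isolation_policy(preview_namespace(123)), indent=2))
```

Running the script prints a JSON manifest that `kubectl apply -f -` accepts on stdin. NetworkPolicy objects only take effect on clusters whose network plugin enforces them.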

How to use it?

Developers can integrate ChartPreviewr into their workflow by installing the ChartPreviewr GitHub App (or using GitLab integration). Once installed, ChartPreviewr automatically detects new pull requests targeting Helm chart repositories. It then triggers a deployment of the chart within that pull request to a dedicated Kubernetes cluster. A unique preview URL is generated and commented on the pull request. Reviewers can simply click this link to access a live, running instance of the application as defined by the proposed changes. The environment is automatically provisioned and de-provisioned, eliminating manual overhead. For integration, you'll typically install the provided GitHub App. For CI/CD pipelines, you might configure it to trigger on PR events. The core idea is to make previewing changes as simple as opening a pull request. So, how can you use this? Imagine you've made a change to your application's Helm chart. Instead of just submitting the code and waiting for someone to manually test it, you simply open a PR. ChartPreviewr spins up a live preview, giving you a direct link to test your changes. This is perfect for validating new configurations, verifying dependency updates, or simply seeing your application in action before merging.

Product Core Function

· Automated Helm Chart Deployment to Live Environments: This function deploys your Helm chart to a real Kubernetes cluster for each pull request, providing a tangible, working instance of your application. This is valuable because it allows for immediate verification of changes, reducing the risk of deploying broken code.

· Unique Preview URL Generation: A specific URL is generated for each preview environment, making it easy for developers and reviewers to access the deployed application. This simplifies collaboration and feedback by providing a direct access point, so you can instantly see and test the deployed changes.

· Automatic Environment Cleanup: When a pull request is closed, the corresponding preview environment is automatically removed, preventing resource waste and keeping your Kubernetes cluster clean. This means you don't have to worry about manual cleanup or orphaned resources, saving time and operational overhead.

· Helm-Native Integration: ChartPreviewr understands the nuances of Helm, including dependencies and complex value overrides, ensuring accurate and reliable deployments. This is crucial for Helm users, as it correctly handles the complexities of their chart configurations, offering a more precise preview than generic solutions.

· GitHub/GitLab Integration: Seamless integration with popular code hosting platforms automates the workflow by triggering deployments based on PR events and providing feedback directly within the platform. This means your existing development workflow is enhanced without significant disruption, allowing for immediate feedback within your PR view.
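The automatic deploy-and-cleanup cycle can be sketched as a mapping from pull-request events to Helm and kubectl commands. This is a hypothetical illustration, not ChartPreviewr's implementation. It assumes GitHub's `pull_request` event actions (`opened`, `reopened`, `synchronize`, `closed`), a chart in `./chart`, and one namespace per pull request. Layered values files are passed as repeated `-f` flags, and Helm gives the right-most file precedence.

```python
import subprocess
from typing import List, Sequence

DEPLOY_ACTIONS = {"opened", "reopened", "synchronize"}


def preview_commands(pr_number: int, action: str, chart_dir: str = "./chart",
                     values_files: Sequence[str] = ("values.yaml",)) -> List[List[str]]:
    """Map a pull_request event action to the commands a preview controller might run."""
    namespace = f"preview-pr-{pr_number}"  # hypothetical naming scheme
    release = f"pr-{pr_number}"
    if action in DEPLOY_ACTIONS:
        install = ["helm", "upgrade", "--install", release, chart_dir,
                   "--namespace", namespace, "--create-namespace", "--wait"]
        for path in values_files:  # later -f files override earlier ones
            install += ["-f", path]
        # Fetch chart dependencies first so subcharts are deployed too.
        return [["helm", "dependency", "update", chart_dir], install]
    if action == "closed":  # merged or abandoned: tear the preview down
        return [
            ["helm", "uninstall", release, "--namespace", namespace],
            ["kubectl", "delete", "namespace", namespace],
        ]
    return []  # other actions (labeled, edited, ...) need no change


def run(commands: List[List[str]]) -> None:
    """Execute each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For example, `run(preview_commands(42, "synchronize", values_files=["values.yaml", "values-preview.yaml"]))` would redeploy PR 42 with the preview overrides layered on top. Deleting the namespace on close also removes anything the chart created outside the Helm release.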

Product Usage Case

· A team is developing a microservice and uses Helm to manage its deployment on Kubernetes. When a developer makes changes to the Helm chart, such as updating resource limits or adding new configurations, they open a pull request. ChartPreviewr automatically deploys this specific version of the chart to a live preview environment. The QA team and other developers can then access this environment via a provided URL to perform integration testing and validation, ensuring that the changes work as expected before merging.

· A developer is working on updating a complex Helm chart that has multiple dependencies on other charts. They need to verify that the updated chart and its dependencies function correctly together. ChartPreviewr provisions an environment that includes all the specified dependencies, allowing the developer to test the entire stack in a realistic setting. This solves the problem of manually setting up and managing intricate dependency trees for testing.

· An open-source project maintains public Helm charts. Contributors often submit pull requests with improvements or bug fixes. ChartPreviewr allows maintainers to instantly spin up a preview of the chart with the proposed changes, enabling them to quickly review the impact and provide feedback to the contributor without the need for manual setup. This accelerates the contribution process for open-source projects.

· A company is migrating an existing application to Kubernetes and is using Helm to define its deployment. They are iterating on the Helm chart to achieve high availability. ChartPreviewr allows them to test different HA configurations in isolated preview environments for each pull request, enabling them to fine-tune the deployment strategy and ensure stability before production deployment. This helps them solve the challenge of testing complex operational requirements in a dynamic development cycle.

5

Fucking Websites CLI

Author

kuberwastaken

Description

A command-line interface (CLI) tool that simplifies the process of creating and managing simple static websites. It leverages pre-defined templates and configuration files to quickly scaffold new projects, eliminating repetitive setup tasks for developers. The core innovation lies in its opinionated structure that promotes best practices and rapid deployment for small-scale web projects.

Popularity

Points 11

Comments 4

What is this product?

This project is a command-line tool designed to streamline the creation of static websites. Instead of manually setting up directory structures, configuration files, and basic HTML, CSS, and JS files, 'Fucking Websites CLI' automates this. It's like having a pre-built blueprint for your website that you can start customizing immediately. The innovation is in its opinionated approach – it guides developers towards a sensible project structure and utilizes templating engines under the hood to generate boilerplate code. This saves time and reduces the cognitive load of starting a new, simple web project.

How to use it?

Developers install the CLI through a package manager (e.g., npm or pip, depending on the implementation). They then navigate to the desired directory in a terminal and run a command such as `fucking-websites new my-awesome-site` to generate a new project. The tool prompts for basic information or falls back to defaults, then creates a folder structure with the essential files. This lets developers focus on writing content and styling instead of initial setup. It is well suited to landing pages, personal blogs, and simple project documentation sites.

Product Core Function

· Project Scaffolding: Automatically generates a standard directory structure and essential files (HTML, CSS, JS, config) for a new static website. This is useful because it immediately provides a working starting point for any web project, saving hours of manual setup.

· Templating Engine Integration: Uses templating to create reusable website components and layouts. This means developers can define common elements once and have them applied across their site, improving consistency and reducing redundant code.

· Configuration File Management: Simplifies the management of project-specific settings through a clear configuration file. This makes it easy to customize build processes or deployment settings without deep diving into complex scripts.

· Command-Line Interface: Provides an intuitive command-line interface for all operations, allowing for quick execution and integration into automated workflows. This is valuable because it enables developers to interact with the tool efficiently directly from their terminal, fitting seamlessly into their existing development environment.
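The scaffolding step amounts to rendering a few templates into a fresh directory. The minimal Python sketch below assumes a four-file layout (HTML, CSS, JS, and a JSON config). The file names, template contents, and the `scaffold` function are hypothetical and are not taken from the tool. A subcommand such as `new my-awesome-site` would simply call `scaffold` with the given directory name.

```python
import html
import json
from pathlib import Path
from string import Template
from typing import List

# Hypothetical starter templates; $title is filled in at scaffold time.
TEMPLATES = {
    "index.html": Template(
        "<!doctype html>\n"
        "<html lang=\"en\">\n"
        "<head>\n"
        "  <meta charset=\"utf-8\">\n"
        "  <title>$title</title>\n"
        "  <link rel=\"stylesheet\" href=\"style.css\">\n"
        "</head>\n"
        "<body>\n"
        "  <h1>$title</h1>\n"
        "  <script src=\"script.js\"></script>\n"
        "</body>\n"
        "</html>\n"
    ),
    "style.css": Template("body { font-family: system-ui, sans-serif; margin: 2rem; }\n"),
    "script.js": Template("console.log(\"site loaded\");\n"),
}


def scaffold(target: Path, title: str) -> List[str]:
    """Create a new site directory from TEMPLATES; refuses to overwrite an existing one."""
    target.mkdir(parents=True, exist_ok=False)
    for name, template in TEMPLATES.items():
        # Escape the title so characters like < or & cannot break the HTML.
        text = template.substitute(title=html.escape(title))
        (target / name).write_text(text, encoding="utf-8")
    # The config is written with json.dumps so any title is encoded safely.
    config = {"title": title, "output": "dist"}
    (target / "site.json").write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return sorted(p.name for p in target.iterdir())
```

Calling `scaffold(Path("my-awesome-site"), "My Awesome Site")` creates the directory and returns `['index.html', 'script.js', 'site.json', 'style.css']`.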

Product Usage Case

· Quickly launching a personal portfolio website: A developer can use 'Fucking Websites CLI' to generate the basic structure of their portfolio in minutes, then immediately start adding their projects and contact information, drastically reducing the time to get online.

· Creating landing pages for marketing campaigns: For a new product launch, a marketing team or developer can rapidly spin up a professional-looking landing page using pre-defined templates, allowing for faster iteration and A/B testing of different designs.

· Setting up documentation sites for open-source projects: Developers can leverage the tool to create a clean, organized structure for project documentation, ensuring that important information is presented clearly and consistently.

· Rapid prototyping of simple web applications: For projects that don't require complex backend logic initially, this tool allows for the fast creation of the frontend structure, enabling developers to visualize and test user interfaces quickly.

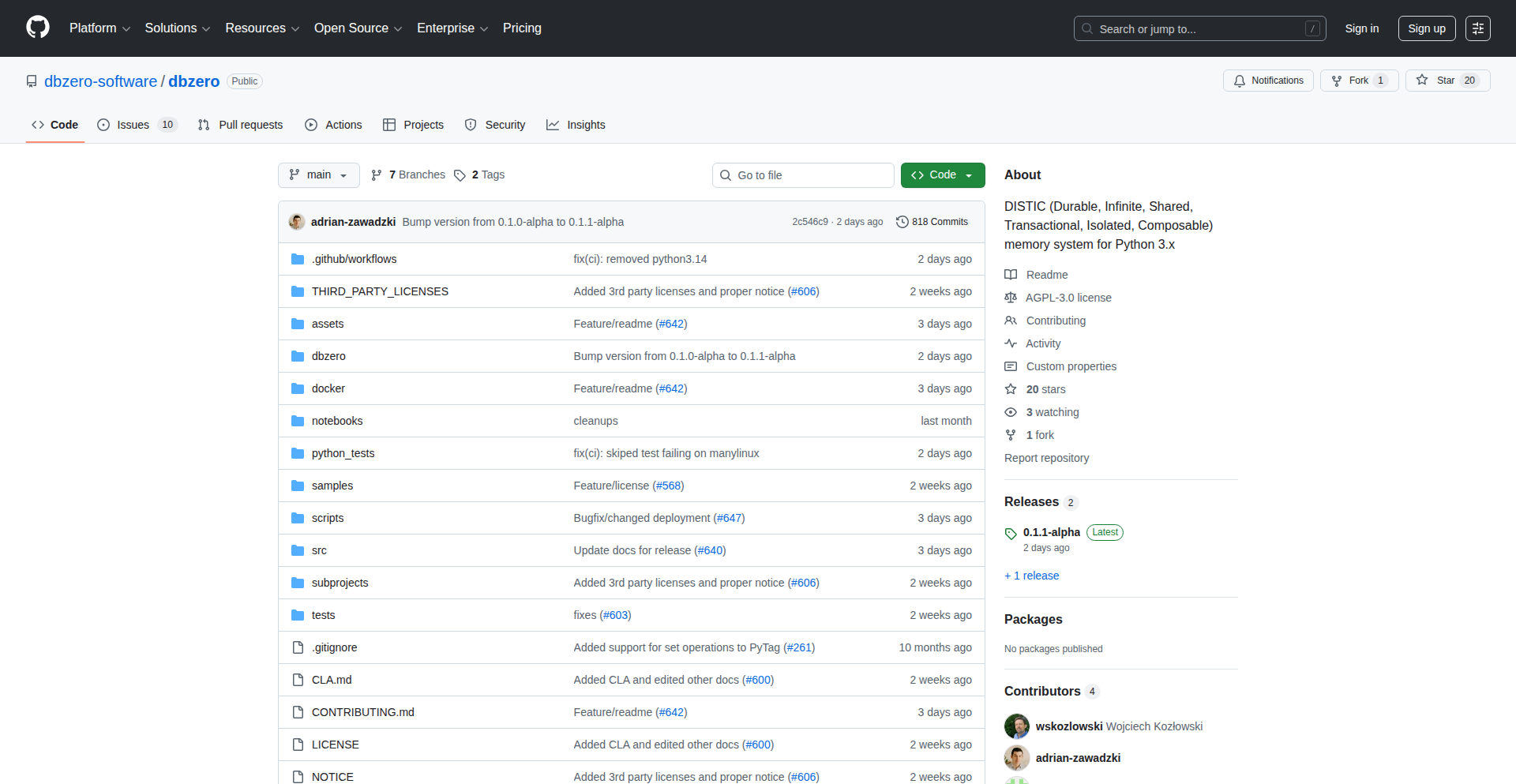

6

Dbzero: Infinite RAM Python Persistence

Author

dbzero

Description

Dbzero is a Python persistence engine that lets you treat your data as if it were in memory, whatever its actual size. It manages data placement itself, minimizing I/O operations and optimizing access patterns. This sidesteps the limits of traditional in-memory data structures and databases and gives developers a seamless way to handle large datasets.

Popularity

Points 7

Comments 3

What is this product?

Dbzero is a Python library that fundamentally changes how developers interact with data. Instead of worrying about loading entire datasets into memory, which can be slow and resource-intensive, Dbzero lets you code as if you had unlimited RAM. It does this by intelligently managing data access and storage behind the scenes. Think of it like having a super-smart assistant who fetches only the data you need, exactly when you need it, without you having to explicitly manage that process. This means you can work with massive datasets as easily as you would with small lists or dictionaries. The innovation lies in its optimized data indexing and lazy loading mechanisms, which drastically reduce the overhead typically associated with large-scale data operations.

How to use it?

Developers add Dbzero to a Python project by installing the library, defining their data structures, and working with them through Dbzero's API. For instance, you might load a large dataset from a file or database into a Dbzero object. You can then query, filter, and manipulate it with familiar Python syntax such as list comprehensions or attribute access. Dbzero handles retrieval and storage behind the scenes, so the data feels as if it were always in RAM without the memory pressure of loading it all at once. This is particularly useful for data science, machine learning preprocessing, or any application whose datasets exceed system memory.

Product Core Function

· 'Infinite RAM' simulation: Lets developers write code that operates on data as if it were entirely in memory, by managing data fetching and storage automatically. In practice, capacity is bounded by storage rather than RAM. This helps applications handle massive datasets without running out of system memory.

· Optimized data access: Implements advanced caching and lazy loading strategies to minimize disk I/O and retrieve data only when it's actively being used. This leads to significantly faster data processing and a smoother user experience.

· Seamless Python integration: Provides a Pythonic API that mimics standard Python data structures, making it easy for developers to adopt and use without a steep learning curve. This reduces development time and complexity.

· Reduced memory footprint: By avoiding loading entire datasets into RAM at once, Dbzero significantly lowers the memory requirements of an application. This is crucial for deploying applications on systems with limited memory or for handling extremely large datasets.

· Data persistence: Offers a mechanism to store and retrieve large datasets persistently, ensuring data is not lost when the application closes. This is essential for applications that require long-term data storage and retrieval.
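The combination of lazy loading, caching, and persistence described above can be illustrated with the standard library alone. The sketch below is a stand-in, not dbzero's API. It keeps values on disk with `shelve` and holds only a bounded number of recently used ones in memory through an LRU cache, so RAM use stays flat as the dataset grows. The `LazyStore` class and its `cache_size` parameter are hypothetical.

```python
import shelve
from collections import OrderedDict
from typing import Any


class LazyStore:
    """Disk-backed mapping that keeps only recently used values in RAM.

    Illustrative stand-in for lazy loading plus persistence; not dbzero's API.
    """

    def __init__(self, path: str, cache_size: int = 1024) -> None:
        self._db = shelve.open(path)  # persistent str -> pickled value
        self._cache = OrderedDict()   # in-memory LRU cache, oldest first
        self._cache_size = cache_size

    def __getitem__(self, key: str) -> Any:
        if key in self._cache:
            self._cache.move_to_end(key)  # mark as most recently used
            return self._cache[key]
        value = self._db[key]  # read from disk only on demand
        self._remember(key, value)
        return value

    def __setitem__(self, key: str, value: Any) -> None:
        self._db[key] = value  # write through to disk
        self._remember(key, value)

    def __len__(self) -> int:
        return len(self._db)

    def _remember(self, key: str, value: Any) -> None:
        self._cache[key] = value
        self._cache.move_to_end(key)
        while len(self._cache) > self._cache_size:
            self._cache.popitem(last=False)  # evict the least recently used value

    def close(self) -> None:
        self._db.close()

    def __enter__(self) -> "LazyStore":
        return self

    def __exit__(self, *exc: object) -> None:
        self.close()
```

Only `cache_size` values are held in memory at once. Cached values are shared references, however, so an object mutated in place must be assigned back (`store[key] = value`) before the change reaches the disk.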

Product Usage Case

· A data scientist analyzing a multi-terabyte CSV file. Instead of struggling with memory errors or complex chunking strategies, they can load the file into Dbzero and perform complex aggregations and transformations using familiar Python code, getting results much faster.

· A machine learning engineer preparing a massive dataset for model training. Dbzero enables them to preprocess features and labels efficiently without requiring a supercomputer, speeding up the entire model development pipeline.

· A web application backend service that needs to serve data from a very large database. Dbzero can be used to cache frequently accessed data or manage access to entire tables without overwhelming the server's memory, improving responsiveness.

· Developing a simulation that requires manipulating a vast number of objects. Dbzero allows the simulation to run smoothly and efficiently, even with millions of objects, by only keeping active objects in memory.

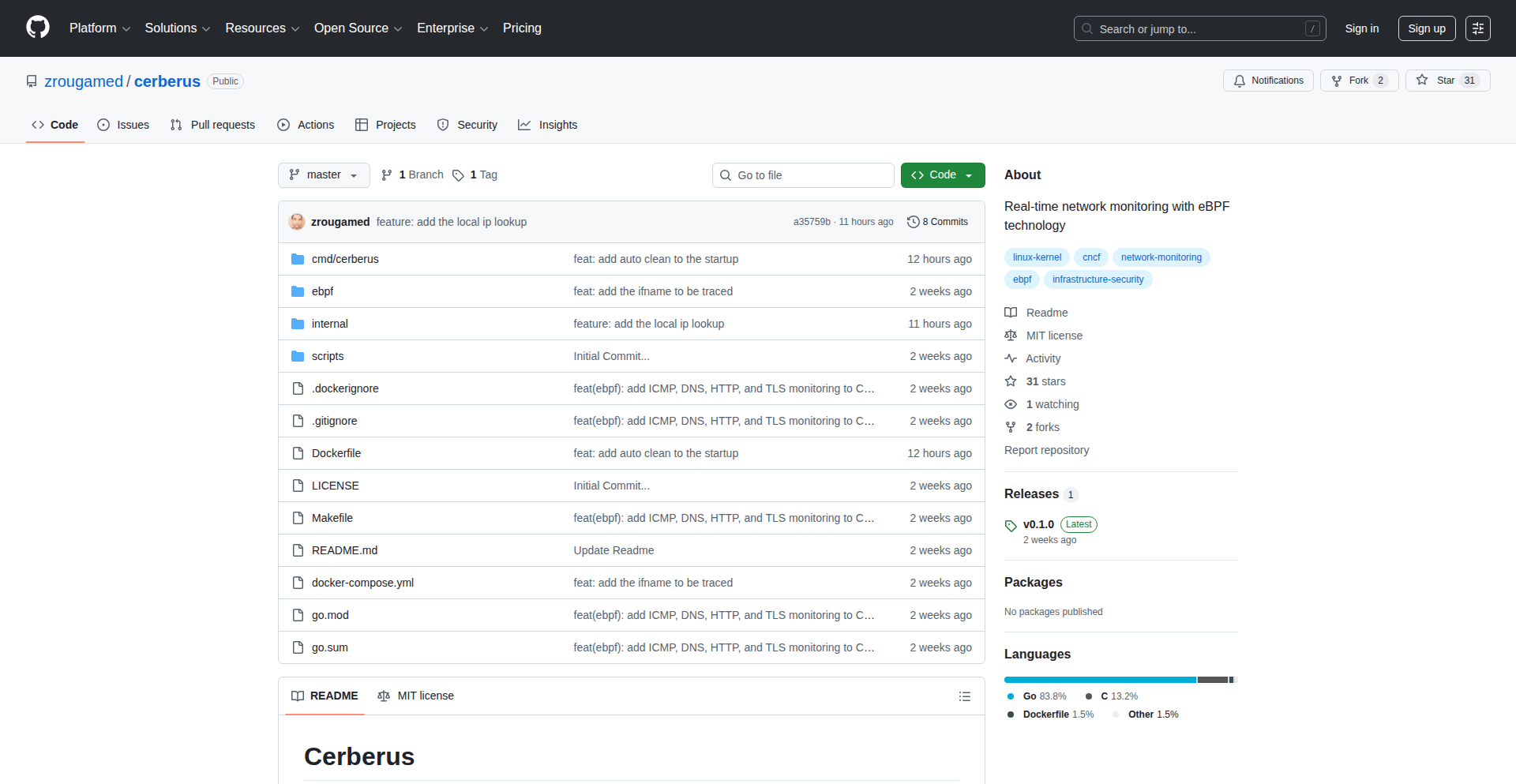

7

Cerberus: Kernel-Level Network Insight

Author

zrouga

Description

Cerberus is a real-time network monitoring tool that uses eBPF to capture and analyze network packets directly within the Linux kernel. This approach minimizes performance overhead, making it well suited to high-traffic production environments such as the container network interface (CNI) layer of a Kubernetes cluster. It addresses the limitations of traditional tools like tcpdump and Wireshark by performing packet inspection efficiently inside the kernel.

Popularity

Points 7

Comments 3

What is this product?

Cerberus is a network monitoring system built on eBPF (extended Berkeley Packet Filter). Traditional tools often copy packet data from the kernel to user space, which becomes a bottleneck under load; eBPF instead lets custom programs run inside the Linux kernel itself. Cerberus's eBPF programs filter and classify packets early in the kernel's network stack, so traffic can be inspected with minimal impact on system performance, even under heavy load. The innovation lies in providing detailed network insight without becoming a performance drag on critical infrastructure, a common issue with existing solutions.

How to use it?

Developers can integrate Cerberus into their production environments to gain real-time visibility into network traffic. For containerized applications, it can be deployed to monitor traffic flowing through CNI plugins, identifying performance issues or security threats as they happen. Its integration is typically done by loading the eBPF programs into the kernel, often managed via a daemon or a systemd service. The collected data can then be exported to other monitoring systems like Prometheus for metric analysis or processed further for anomaly detection. This allows for proactive troubleshooting and security monitoring without sacrificing application performance.

Product Core Function

· Kernel-level packet filtering: Enables efficient and low-overhead selection of relevant network packets directly within the kernel, reducing the amount of data that needs to be processed by user-space applications, thus improving performance.

· Real-time traffic classification: Allows for immediate categorization of network traffic based on protocols, ports, or application types, providing immediate insights into network activity and potential issues.

· Low performance overhead: Designed to keep its impact on production systems minimal, making it suitable for high-demand environments where traditional packet capture tools would be too resource-intensive.

· eBPF program execution: Leverages the power of eBPF to run custom logic within the kernel, enabling advanced packet inspection and analysis capabilities that are not possible with standard kernel networking interfaces.

· Payload inspection up to 32 bytes: Provides a limited but crucial look into the application-layer data of packets, allowing for identification of specific commands or patterns without excessive overhead.
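Cerberus performs this classification inside the kernel with eBPF programs, which are typically written in restricted C. As a rough user-space illustration of the same logic, the Python sketch below parses a raw IPv4 packet, classifies it by protocol and ports, and keeps at most 32 bytes of payload. The function names and the `build_tcp_packet` test helper are hypothetical; this is not Cerberus's code, and it ignores fragmentation and IPv6.

```python
import struct
from typing import NamedTuple, Optional

PAYLOAD_SNAP = 32  # mirrors the 32-byte payload limit described for Cerberus
PROTOCOLS = {1: "ICMP", 6: "TCP", 17: "UDP"}


class PacketSummary(NamedTuple):
    protocol: str
    src_port: Optional[int]
    dst_port: Optional[int]
    payload_head: bytes


def classify_ipv4(packet: bytes) -> PacketSummary:
    if len(packet) < 20 or packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    ihl = (packet[0] & 0x0F) * 4               # IPv4 header length in bytes
    proto_num = packet[9]                      # IPv4 protocol field
    proto = PROTOCOLS.get(proto_num, f"IP-{proto_num}")
    l4 = packet[ihl:]
    src_port: Optional[int] = None
    dst_port: Optional[int] = None
    payload = l4
    if proto_num == 6 and len(l4) >= 20:
        src_port, dst_port = struct.unpack("!HH", l4[:4])
        payload = l4[(l4[12] >> 4) * 4:]       # skip TCP header using its data-offset field
    elif proto_num == 17 and len(l4) >= 8:
        src_port, dst_port = struct.unpack("!HH", l4[:4])
        payload = l4[8:]                       # UDP header is always 8 bytes
    return PacketSummary(proto, src_port, dst_port, payload[:PAYLOAD_SNAP])


def build_tcp_packet(src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Test helper: a minimal IPv4 + TCP packet with checksums left at zero."""
    total_len = 20 + 20 + len(payload)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, total_len, 0, 0, 64, 6, 0,
                     bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2]))
    tcp = struct.pack("!HHIIBBHHH", src_port, dst_port, 0, 0, 5 << 4, 0x18, 65535, 0, 0)
    return ip + tcp + payload
```

Truncating the payload early is what keeps per-packet cost predictable: the classifier never has to look at more than a fixed number of bytes, however large the packet is.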

Product Usage Case

· Monitoring traffic within Kubernetes CNI: A platform engineer can use Cerberus to monitor the network traffic between pods in a Kubernetes cluster, identifying bottlenecks or misconfigurations in the CNI, and ensuring smooth communication for microservices.

· Detecting network anomalies in critical infrastructure: A security operations center can deploy Cerberus to continuously monitor network traffic for unusual patterns or deviations from normal behavior, enabling early detection of potential cyberattacks or operational issues in high-availability systems.

· Performance tuning for high-throughput applications: A developer working on a high-performance network service can use Cerberus to pinpoint network latency issues or inefficient packet handling, leading to optimizations that boost overall application throughput.

· Troubleshooting inter-service communication: When services within a distributed system are experiencing communication problems, Cerberus can be used to visualize and analyze the network traffic between them, quickly diagnosing issues related to protocols, ports, or packet loss.

8

HiFidelity Native AudioEngine

Author

rathod0045

Description

HiFidelity is a native macOS offline music player built for audiophiles. It leverages the BASS audio library for professional-grade sound and TagLib for metadata, supporting over 10 audio formats including lossless and high-resolution files. Its core innovation lies in bit-perfect playback with sample rate synchronization and exclusive audio device access, ensuring the purest sound reproduction. It also features seamless gapless playback, a built-in equalizer, intelligent recommendations, and real-time lyrics display, all designed to enhance the listening experience for discerning users. So this is useful for me because it provides an uncompromising audio experience on my Mac, making my music sound its best without any digital manipulation or interruptions.

Popularity

Points 4

Comments 4

What is this product?

HiFidelity is a desktop music player for macOS that focuses on delivering the highest possible audio quality for offline playback. It uses the BASS audio library to bypass the operating system's usual audio processing, which can alter the sound. The key technical feature is 'bit-perfect playback': the audio data reaches your audio hardware exactly as it is stored in the file, without any alterations. 'Sample rate synchronization' switches the output device to the file's sample rate so the audio does not have to be resampled, and exclusive access to the audio device (macOS 'hog mode') prevents other applications from mixing into or interfering with the output. The result is the most faithful reproduction of the original recording. So this is useful for me because it guarantees the highest-fidelity sound from my music files, letting me hear the music exactly as the artist intended, free from unnecessary digital processing.
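The Python sketch below illustrates two of these ideas under simplifying assumptions: why a software volume stage breaks bit-perfect output, and how a player might pick a device sample rate that avoids resampling. The function names are hypothetical, the fallback policy is this sketch's own choice, and the actual BASS and Core Audio calls HiFidelity uses (including hog mode) are not shown.

```python
from array import array
from typing import List, Tuple


def apply_software_gain(samples: array, gain: float) -> array:
    """Scale 16-bit PCM samples the way a software volume control would."""
    out = array("h")
    for s in samples:
        scaled = round(s * gain)
        out.append(max(-32768, min(32767, scaled)))  # clip to the 16-bit range
    return out


def is_bit_perfect(original: array, delivered: array) -> bool:
    """Bit-perfect means the delivered bytes are identical to the decoded file bytes."""
    return original.tobytes() == delivered.tobytes()


def choose_device_rate(file_rate: int, supported: List[int]) -> Tuple[int, bool]:
    """Return (device_rate, needs_resampling) for a given track."""
    if file_rate in supported:
        return file_rate, False          # switch the device to the track's own rate
    return max(supported), True          # fallback: the stream would have to be resampled
```

Any gain other than exactly 1.0 rewrites the sample values, which is why bit-perfect players leave volume control to the hardware or the amplifier.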

How to use it?

Developers can use HiFidelity as a reference for building high-quality audio applications on macOS. The project demonstrates how to integrate professional audio libraries like BASS and metadata readers like TagLib to handle a wide range of audio formats. It showcases techniques for achieving bit-perfect playback, exclusive audio device access, and gapless playback, which are critical for audiophile-grade applications. It also provides examples of building a user interface with library browsing, playlist management, search (using SQLite's FTS5 full-text search), lyrics synchronization, and smart recommendations. Developers interested in audio processing, media playback, or native macOS application development can learn from its architectural choices and implementation details. So this is useful for me because it gives me a practical example of how to build a sophisticated, high-quality audio player, letting me understand and potentially replicate advanced audio handling techniques in my own projects.

Product Core Function

· Bit-perfect playback with sample rate synchronization: Ensures the audio signal is reproduced exactly as stored, providing the highest fidelity. Useful for audiophiles who want the purest sound experience.

· Support for 10+ audio formats including lossless and high-resolution: Allows playback of a wide variety of music files, from common MP3s to professional studio-quality formats. Useful for users with diverse music libraries.

· Gapless playback: Enables seamless transitions between tracks, eliminating any silence or interruption. Ideal for listening to albums that are meant to flow continuously, like live recordings or concept albums.

· Built-in equalizer with customizable presets: Allows users to fine-tune the audio output to their preferences or specific listening environments. Useful for tailoring the sound to personal taste or correcting acoustic issues.

· Smart Recommendations (Auto play): Learns user listening habits to suggest and play music automatically, reducing the need for manual selection. Useful for discovering new music or for passive listening.

· Real-time line-by-line lyrics highlighting: Displays lyrics in sync with the music, enhancing the engagement with songs. Useful for understanding lyrics, singing along, or appreciating the lyrical content.

· Advanced Search with FTS5: Uses SQLite's FTS5 full-text index for fast searching of the entire music library. Useful for quickly finding specific songs, artists, or albums within large collections.

· Playlist import from M3U files or folders: Simplifies loading existing playlists, or turning an entire folder of music into a playlist. Useful for users who have pre-existing playlist files or want to quickly create playlists from folders.
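FTS5 is SQLite's full-text search extension. A minimal sketch of how a music library could be indexed and queried with it from Python's built-in `sqlite3` module follows; the table layout and function names are assumptions, not HiFidelity's schema, and FTS5 must be compiled into the SQLite library Python links against (it is in most distributions).

```python
import sqlite3
from typing import Iterable, List, Tuple


def build_library(tracks: Iterable[Tuple[str, str, str]]) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # An FTS5 virtual table indexes every listed column for full-text search.
    conn.execute("CREATE VIRTUAL TABLE tracks USING fts5(title, artist, album)")
    conn.executemany("INSERT INTO tracks (title, artist, album) VALUES (?, ?, ?)", tracks)
    return conn


def search(conn: sqlite3.Connection, query: str) -> List[Tuple[str, str]]:
    # MATCH runs the FTS5 query; ORDER BY rank sorts by relevance (bm25 by default).
    cur = conn.execute(
        "SELECT title, artist FROM tracks WHERE tracks MATCH ? ORDER BY rank", (query,)
    )
    return cur.fetchall()
```

Queries use FTS5 syntax, so `artist:floyd` restricts a match to one column and `moon*` is a prefix search; the default tokenizer also makes matching case-insensitive.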

Product Usage Case

· A musician wanting to accurately preview their master tracks in lossless formats on their Mac without any added processing that could color the sound. HiFidelity's bit-perfect playback ensures they hear the true representation of their work.

· A DJ who needs to seamlessly transition between tracks during a set or practice session without any audible gaps. HiFidelity's gapless playback is crucial for this use case.

· A student who wants to organize a large collection of downloaded music and easily find any song without manually scrolling through folders. HiFidelity's advanced search functionality significantly speeds up music discovery.

· A casual listener who enjoys discovering new music based on their current listening habits. HiFidelity's smart recommendations can introduce them to artists and genres they might not have found otherwise.

· A lyric enthusiast who wants to sing along to their favorite songs with perfect timing. HiFidelity's real-time lyrics highlighting provides an engaging karaoke-like experience.

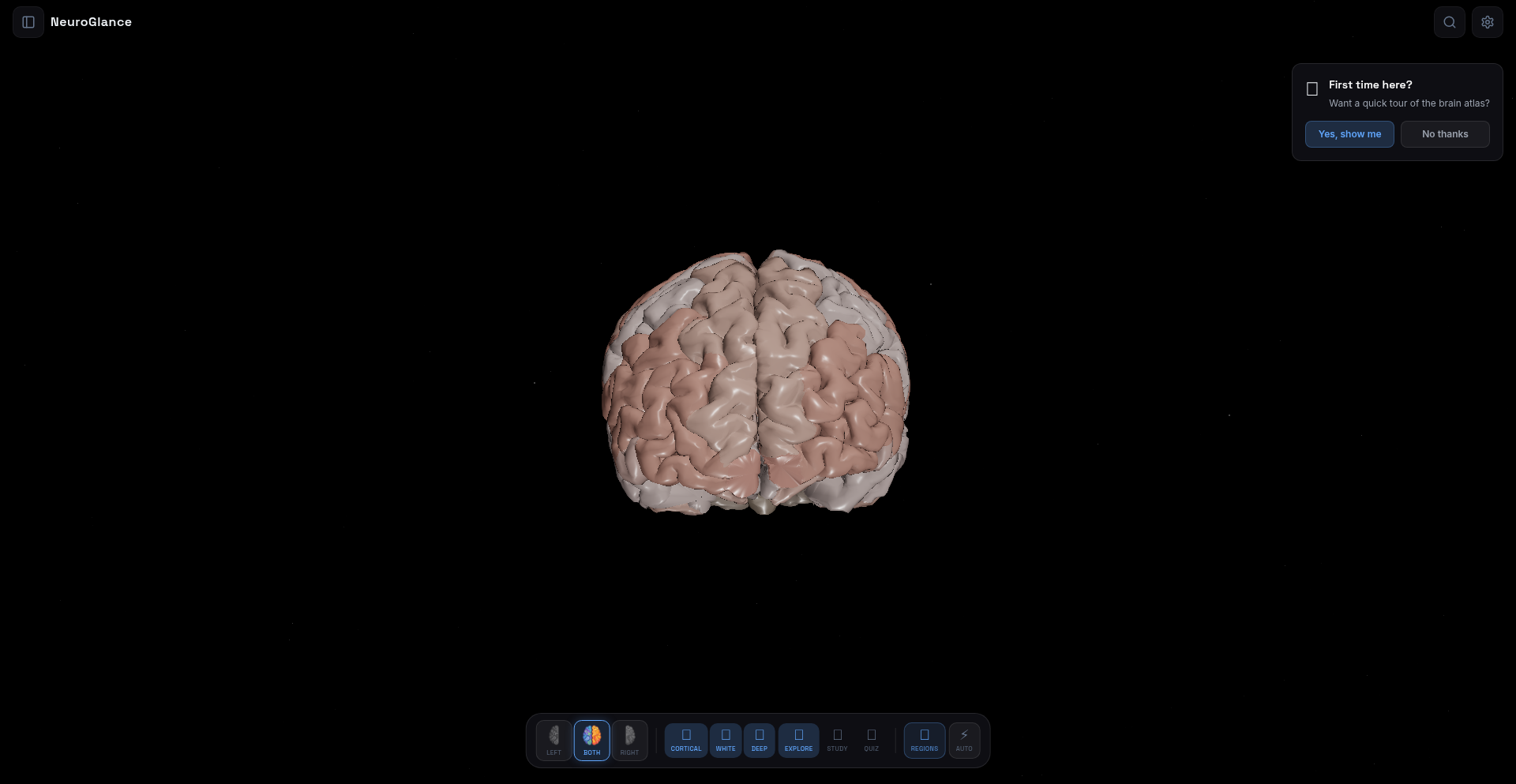

9

DK Atlas 3D Brain Explorer

Author

ronniebasak

Description

A novel interactive 3D brain viewer that visualizes anatomical locations and technical neuroscience terms. This project tackles the complexity of understanding brain anatomy and the relationships between its technical vocabulary by offering an intuitive, visual interface. It's a tool that makes dense neuroscience information accessible and explorable.

Popularity

Points 6

Comments 1

What is this product?

DK Atlas 3D Brain Explorer is an application that allows users to interactively explore a 3D model of the human brain. Instead of just looking at static diagrams or reading text, you can rotate, zoom, and delve into specific regions of the brain. What makes it innovative is its integration of technical neuroscience terms directly with their corresponding anatomical locations. When you highlight a specific part of the 3D brain, it can show you the associated technical term, and vice-versa. This is built using advanced 3D rendering techniques, likely leveraging WebGL or similar technologies for browser-based accessibility, and a carefully curated dataset mapping terminology to brain structures. So, what's the value for you? It dramatically simplifies learning and recalling complex brain anatomy and its associated jargon, making it easier to grasp difficult neuroscience concepts.

How to use it?

Developers can use DK Atlas 3D Brain Explorer in several ways. For educational platforms, it can be integrated as an interactive learning module, allowing students to visually discover and learn about brain structures and their functions. For researchers, it can serve as a reference tool to quickly locate and understand the anatomical context of specific terms in their work. If the project exposes a JavaScript API, integration might involve embedding the viewer into a web application with custom highlighting, data overlays, or links to external resources. The underlying technology likely allows programmatic control of the 3D model, enabling developers to build unique visualizations or data-driven explorations of the brain. So, what's the value for you? You can enhance your own digital learning tools or research workflows with a powerful, interactive visualization component that simplifies complex biological data.

Product Core Function

· Interactive 3D Brain Model Exploration: Allows users to freely navigate (rotate, zoom, pan) a detailed 3D representation of the human brain, providing a spatial understanding of its components. This is valuable for anyone who needs to visualize and understand the physical layout of the brain.

· Term-to-Anatomy Association: Connects technical neuroscience terms with their precise anatomical locations on the 3D model. Hovering over a term can highlight the corresponding brain region, or selecting a region can display its associated terms. This is incredibly useful for learning and memorizing complex medical and scientific vocabulary.

· Anatomical Segmentation and Labeling: The brain model is likely divided into distinct anatomical regions, each clearly labeled and selectable. This segmentation allows for focused study and understanding of individual structures and their relationships within the whole. This is beneficial for students and professionals needing to precisely identify brain areas.

· Data Visualization Layer (Potential): While not explicitly stated as a core feature, the architecture suggests the possibility of overlaying additional data onto the 3D brain, such as functional activity maps or lesion locations. This extensibility makes it a powerful platform for more advanced scientific visualization. This could be valuable for researchers needing to map data onto specific brain regions.
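The term-to-anatomy association amounts to a many-to-many index that can be queried in both directions. A minimal Python sketch follows, with hypothetical class and method names and an illustrative mapping that borrows Desikan-Killiany-style region labels as an assumption; the project's actual dataset and API are not described in the post.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


class TermIndex:
    """Bidirectional lookup between technical terms and atlas region labels."""

    def __init__(self, mapping: Dict[str, Iterable[str]]):
        self.term_to_regions: Dict[str, Set[str]] = {
            term: set(regions) for term, regions in mapping.items()
        }
        self.region_to_terms: Dict[str, Set[str]] = defaultdict(set)
        for term, regions in self.term_to_regions.items():
            for region in regions:
                self.region_to_terms[region].add(term)  # build the reverse direction

    def regions_for(self, term: str) -> List[str]:
        """Hovering a term: which regions should be highlighted?"""
        return sorted(self.term_to_regions.get(term, ()))

    def terms_for(self, region: str) -> List[str]:
        """Selecting a region: which terms describe it?"""
        return sorted(self.region_to_terms.get(region, ()))


# Illustrative mapping only; a real atlas would load a curated dataset.
EXAMPLE = TermIndex({
    "primary motor cortex": ["precentral"],
    "primary somatosensory cortex": ["postcentral"],
    "primary auditory cortex": ["transversetemporal"],
    "Broca's area": ["parsopercularis", "parstriangularis"],
    "inferior frontal gyrus": ["parsopercularis", "parstriangularis", "parsorbitalis"],
})
```

Keeping both directions precomputed makes hover and click lookups constant-time dictionary reads, which matters when the viewer updates highlights on every pointer move.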

Product Usage Case

· A neuroscience student using DK Atlas to prepare for an exam by interactively exploring different brain lobes, gyri, and sulci, and linking them to their functional descriptions. This helps them move beyond rote memorization to a spatial understanding of the brain, solving the problem of abstract learning.

· A researcher encountering an unfamiliar term in a paper and using DK Atlas to quickly visualize its location in the brain, understanding its anatomical context and relationship to other brain structures. This accelerates their understanding of research literature and saves time searching for information.

· A medical professional building an educational resource for patients, using DK Atlas to demonstrate the location of a neurological condition or treatment area in a clear, visual, and easy-to-understand 3D format. This enhances patient comprehension and engagement.

· A developer integrating DK Atlas into a virtual reality training simulation for neurosurgery, allowing trainees to practice identifying and interacting with specific brain structures in a realistic and immersive environment. This provides a high-fidelity training solution.

10

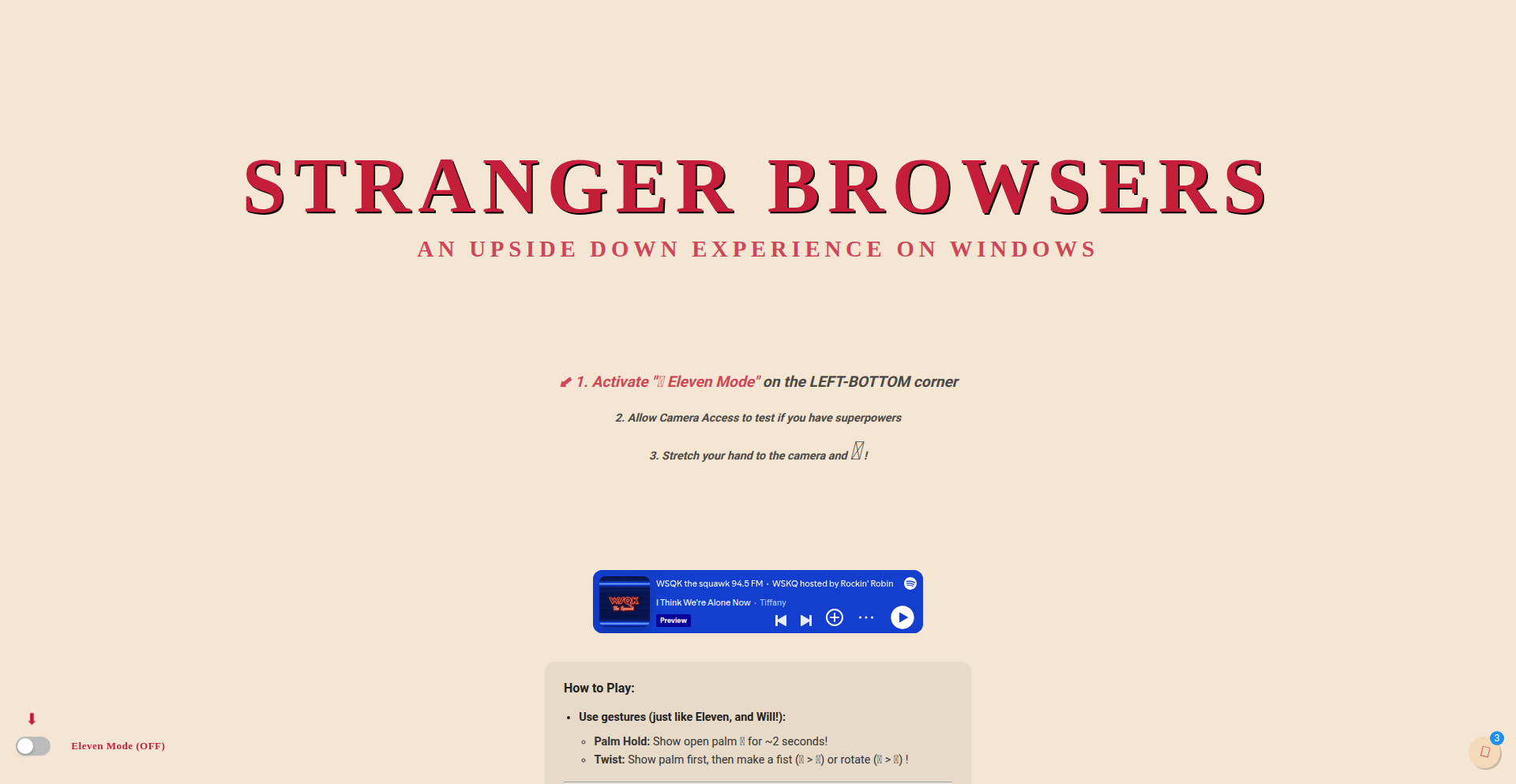

BrowserACH

Author

ArkhamMirror

Description

BrowserACH is a privacy-focused, open-source tool that brings the CIA's powerful Analysis of Competing Hypotheses (ACH) methodology directly to your web browser. It simplifies complex analytical thinking by guiding users through identifying hypotheses, gathering evidence, and rigorously evaluating them, all without requiring any complex setup or backend infrastructure. This innovative approach makes advanced analytical techniques accessible to everyone, offering a significant upgrade from traditional spreadsheets.

Popularity

Points 5

Comments 1

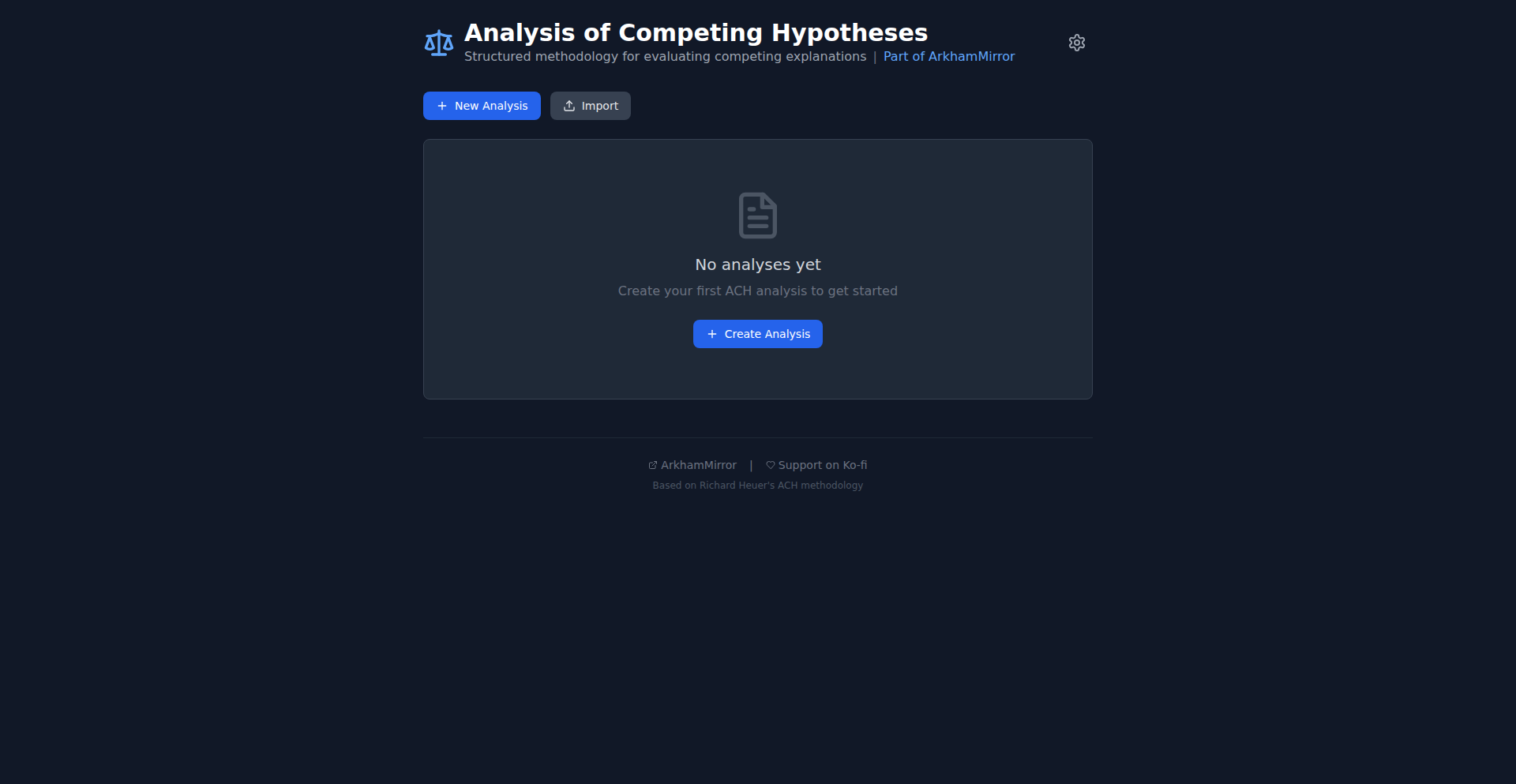

What is this product?

BrowserACH is a web-based implementation of the 8-step Analysis of Competing Hypotheses (ACH) methodology, a structured technique used by intelligence agencies like the CIA to combat confirmation bias and make more objective decisions. Instead of relying on complicated software installations (like Docker and databases), this tool runs entirely within your browser. It uses your browser's local storage to keep your data private and secure. The core innovation is taking a powerful analytical framework and making it incredibly easy to access and use for anyone, whether they are a professional analyst or just curious. So, what's in it for you? You get a sophisticated tool for clearer thinking and better decision-making, without any technical hassle.

How to use it?

To use BrowserACH, you simply navigate to the provided live URL in your web browser. The tool will then guide you step-by-step through the ACH process. You can input your hypotheses, list supporting and contradicting evidence for each, and rate the evidence's credibility. For enhanced insights, you have the option to connect your own API key (for services like OpenAI or Groq) or use local large language models (LLMs) if you have them set up. These AI assistants can help suggest hypotheses and evidence, but you always retain full control over the final decisions. The tool is designed to work offline after the initial load. Developers can integrate this concept into their own applications by leveraging the underlying principles and potentially using the exported data formats (JSON, Markdown, PDF) for further processing or reporting. So, how can you use it? Just open the link, and start analyzing complex problems more effectively, right from your browser.

Product Core Function

· Guided ACH Methodology: Provides a structured, step-by-step walkthrough of Heuer's 8-step ACH process, ensuring all critical stages of analysis are covered. Value: Helps users systematically break down complex problems and avoid common cognitive biases, leading to more robust conclusions.

· Hypothesis Generation and Management: Allows users to easily input, edit, and manage multiple competing hypotheses. Value: Facilitates exploration of diverse possibilities, preventing premature focus on a single idea.

· Evidence Gathering and Evaluation: Enables users to add, categorize, and rate evidence supporting or refuting each hypothesis. Value: Encourages the systematic collection and objective assessment of information, crucial for informed decision-making.

· Consistency Matrix Construction: Visually represents the relationships between hypotheses and evidence, highlighting inconsistencies. Value: Helps identify weak points in arguments and refine hypotheses based on logical coherence.

· Sensitivity Analysis: Allows users to test how changes in evidence ratings affect the overall conclusion. Value: Assesses the robustness of the analysis by understanding which pieces of evidence have the biggest impact.

· Optional AI Assistance: Integrates with services like OpenAI or Groq (via a user-provided API key) or with local LLMs for AI-powered suggestions on hypotheses and evidence. Value: Speeds up the analysis process and uncovers potential ideas that might otherwise be overlooked, while keeping the user in control.

· Privacy-First Data Storage: Stores all data exclusively in the browser's local storage, with no backend servers or telemetry. Value: Ensures that your sensitive analytical data remains private and secure, accessible only to you.

· Offline Functionality: Works offline after the initial page load, making it accessible even without an internet connection. Value: Enables analysis in any environment, regardless of network availability, promoting uninterrupted workflow.

· Data Export: Supports exporting analysis results in JSON, Markdown, and PDF formats. Value: Allows for easy sharing, reporting, and further processing of analytical findings in standard, widely compatible formats.
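The core of ACH scoring can be sketched in a few lines. In Heuer's method, analysts rate each piece of evidence against each hypothesis and favour the hypothesis with the least inconsistent evidence, rather than the one with the most support. The Python sketch below weights inconsistencies by evidence credibility and treats sensitivity analysis as "which single evidence item, if removed, would change the leading hypothesis". The numeric weights, sample data, and function names are assumptions for illustration, not BrowserACH's actual scoring.

```python
from typing import Dict, List, Tuple

# Heuer-style ratings mapped to inconsistency points (assumed weighting).
INCONSISTENCY = {"CC": 0.0, "C": 0.0, "N": 0.0, "I": 1.0, "II": 2.0}

Matrix = Dict[str, Dict[str, str]]  # matrix[evidence][hypothesis] -> rating


def inconsistency_scores(matrix: Matrix, credibility: Dict[str, float]) -> Dict[str, float]:
    scores: Dict[str, float] = {}
    for evidence, row in matrix.items():
        weight = credibility.get(evidence, 1.0)    # less credible evidence counts for less
        for hypothesis, rating in row.items():
            scores[hypothesis] = scores.get(hypothesis, 0.0) + weight * INCONSISTENCY[rating]
    return scores


def leading_hypothesis(matrix: Matrix, credibility: Dict[str, float]) -> str:
    scores = inconsistency_scores(matrix, credibility)
    return min(scores, key=lambda h: scores[h])    # least-inconsistent hypothesis wins


def sensitivity(matrix: Matrix, credibility: Dict[str, float]) -> Tuple[str, List[str]]:
    """Return the leading hypothesis and the evidence items whose removal would change it."""
    baseline = leading_hypothesis(matrix, credibility)
    critical = []
    for evidence in matrix:
        reduced = {e: row for e, row in matrix.items() if e != evidence}
        if reduced and leading_hypothesis(reduced, credibility) != baseline:
            critical.append(evidence)
    return baseline, critical


# Illustrative analysis: three hypotheses, three evidence items.
MATRIX: Matrix = {
    "E1": {"H1": "C", "H2": "I", "H3": "C"},
    "E2": {"H1": "I", "H2": "C", "H3": "C"},
    "E3": {"H1": "C", "H2": "I", "H3": "I"},
}
CREDIBILITY = {"E1": 0.5, "E2": 0.8, "E3": 1.0}
```

Here H1 leads, but only because of E3: if E3 turned out to be unreliable, H3 would become the least-inconsistent hypothesis, which is exactly the kind of fragility sensitivity analysis is meant to surface.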

Product Usage Case

· Journalists investigating a complex story: A journalist can use BrowserACH to list multiple potential narratives (hypotheses) about an event, gather pieces of evidence (witness testimonies, documents, expert opinions) for each, and systematically evaluate which narrative is best supported by the evidence, avoiding confirmation bias in their reporting.

· Business analysts evaluating market strategies: An analyst can input different market entry strategies as hypotheses, list potential market research data and competitor actions as evidence, and use the tool to determine the most viable strategy based on a rigorous evaluation of the available information.

· Researchers planning an experiment: A researcher can formulate several experimental designs (hypotheses) and list expected outcomes or potential confounding factors as evidence, using the tool to identify the experimental approach most likely to yield clear and reliable results.

· Students learning critical thinking skills: Students can use BrowserACH as an interactive learning tool to practice structured analytical thinking by applying the ACH methodology to case studies or real-world problems, improving their ability to form well-reasoned arguments.

· Anyone making a significant personal decision: An individual facing a major life choice (e.g., career change, investment) can use BrowserACH to lay out different options as hypotheses, list pros and cons as evidence, and gain clarity through a structured, objective assessment of their situation.

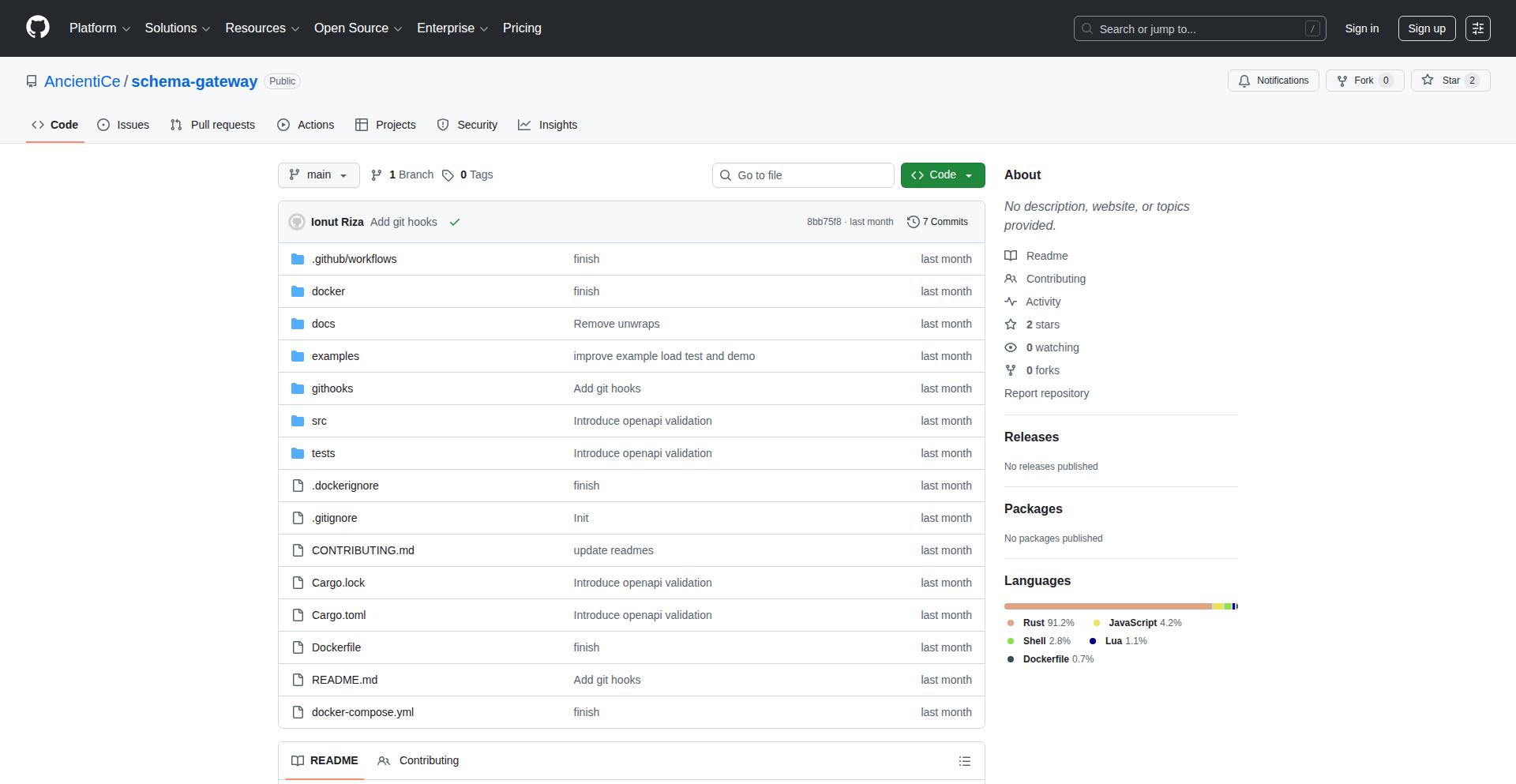

11

VibeLaser-macOS

Author

earsayapp

Description

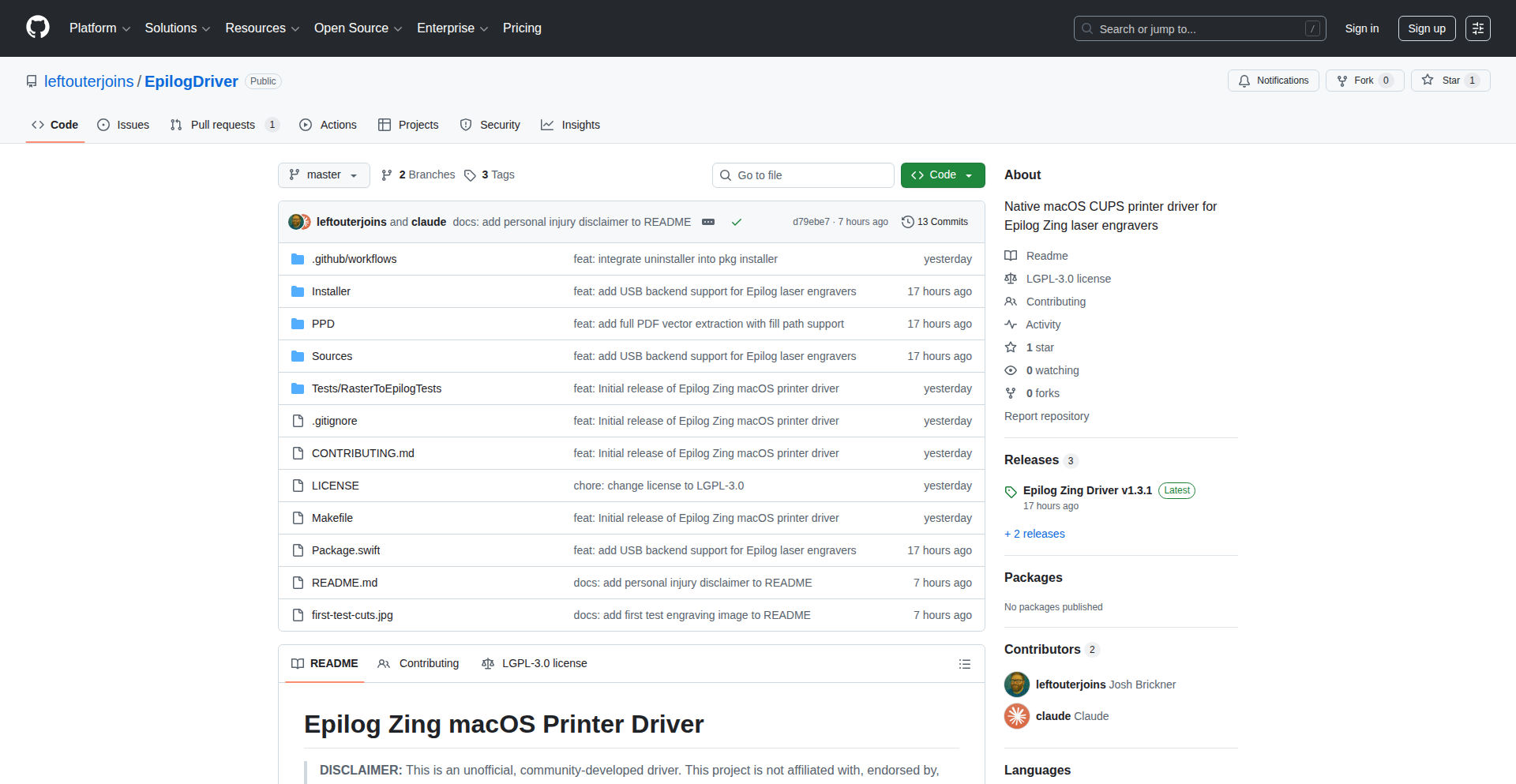

This project is a macOS driver for obsolete Epilog Zing industrial laser engravers. It breathes new life into these 2010-era machines, which previously supported only Windows. The innovation lies in reverse-engineering the laser's proprietary communication protocol and implementing a CUPS driver in Swift and C that lets macOS users drive this hardware.

Popularity

Points 5

Comments 1

What is this product?

VibeLaser-macOS is a custom driver that lets macOS computers communicate with and control Epilog Zing industrial laser engravers. These lasers, manufactured around 2010, never had macOS support. The core technical work is implementing the laser's reverse-engineered communication protocol, building on LibLaserCut, an existing Java project. The new driver, written in Swift and C, integrates with CUPS (the Common UNIX Printing System) and acts as a bridge, translating macOS print jobs into instructions the laser understands. It is a practical example of how developers can use code to revive and extend the lifespan of older hardware, demonstrating resourcefulness and a commitment to sustainability.

How to use it?

Developers can use VibeLaser-macOS by installing it as a CUPS printer on their macOS system. Once installed, they can send designs from any macOS application that supports printing (like Adobe Illustrator, Inkscape, or even text editors for simple etching) directly to the Zing laser engraver. This integration allows for seamless workflow between design software and the physical laser, enabling users to create custom designs on various materials without needing a Windows machine. The technical implementation involves setting up the CUPS backend and ensuring the Swift/C code correctly interprets and sends the print data.
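A CUPS backend is an executable that CUPS runs for each job: with no arguments it lists the devices it can reach, and with five or six arguments (job ID, user, title, copies, options, and an optional file) it receives the print data and sends it to the device named in the `DEVICE_URI` environment variable. VibeLaser-macOS implements this in Swift and C; the Python skeleton below only illustrates that contract. The `vibelaser` URI scheme and `send_to_laser` are hypothetical, and the hard part, translating the job into Zing commands, is left as a stub.

```python
import os
import sys
from typing import BinaryIO, Dict, List, Optional

CUPS_BACKEND_OK = 0
CUPS_BACKEND_FAILED = 1

# Discovery line format: device-class device-uri "make-and-model" "info".
# The "vibelaser" URI scheme is a hypothetical name for this sketch.
DISCOVERY_LINE = 'direct vibelaser://zing "Epilog Zing" "Epilog Zing laser (sketch)"'


def send_to_laser(uri: str, job: bytes) -> None:
    """Hypothetical stand-in for the real work: translating the job into Zing commands."""
    sys.stderr.write(f"INFO: would send {len(job)} bytes to {uri}\n")


def run_backend(argv: List[str], stdin: Optional[BinaryIO], env: Dict[str, str]) -> int:
    if len(argv) == 1:
        print(DISCOVERY_LINE)                 # no arguments: CUPS is asking for devices
        return CUPS_BACKEND_OK
    if len(argv) not in (6, 7):
        sys.stderr.write("Usage: vibelaser job-id user title copies options [file]\n")
        return CUPS_BACKEND_FAILED
    _job_id, _user, title, copies, _options = argv[1:6]
    uri = env.get("DEVICE_URI", "")           # CUPS passes the queue's device URI here
    if not uri:
        sys.stderr.write("ERROR: DEVICE_URI is not set\n")
        return CUPS_BACKEND_FAILED
    if len(argv) == 7:
        with open(argv[6], "rb") as f:        # job data given as a file argument
            data = f.read()
    elif stdin is not None:
        data = stdin.read()                   # otherwise job data arrives on stdin
    else:
        return CUPS_BACKEND_FAILED
    sys.stderr.write(f"INFO: printing '{title}'\n")
    for _ in range(max(1, int(copies))):
        send_to_laser(uri, data)
    return CUPS_BACKEND_OK


def main() -> int:
    # An installed backend would end with: sys.exit(main())
    return run_backend(sys.argv, sys.stdin.buffer, dict(os.environ))
```

Because the backend sits behind the standard print pipeline, any application's Print dialog can target the laser without knowing anything about the Zing protocol.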

Product Core Function

· Reverse-engineered laser communication protocol: enables the driver to understand and send specific commands to the laser engraver, making the hardware functional again.

· CUPS driver implementation: integrates with macOS's native printing system, allowing standard print dialogues and workflows to control the laser, simplifying user experience.

· Swift and C implementation: uses native, compiled languages for driver development, aiming for stability and performance.

· Cross-platform compatibility (via reverse engineering): extends the usability of older industrial hardware beyond its original operating system limitations, promoting a more sustainable approach to technology.

Product Usage Case

· A maker who owns an older Epilog Zing laser engraver but primarily uses a MacBook Pro. By installing VibeLaser-macOS, they can now engrave custom designs onto wood, acrylic, or metal directly from their macOS design software, such as Adobe Illustrator, without needing to switch to a Windows computer.

· A small business specializing in custom gifts that has invested in several older Zing laser engravers. This driver allows them to integrate these lasers into their existing macOS-based production workflow, increasing efficiency and reducing the need to purchase new, expensive hardware.

· A hobbyist with an interest in reviving old electronics and industrial equipment. They can use VibeLaser-macOS as a blueprint and an example of how to tackle the challenge of reverse-engineering proprietary hardware protocols and creating functional drivers for modern operating systems, contributing to the open-source hardware community.

12

CodinIT LocalAI Studio

Author

Gerome24

Description

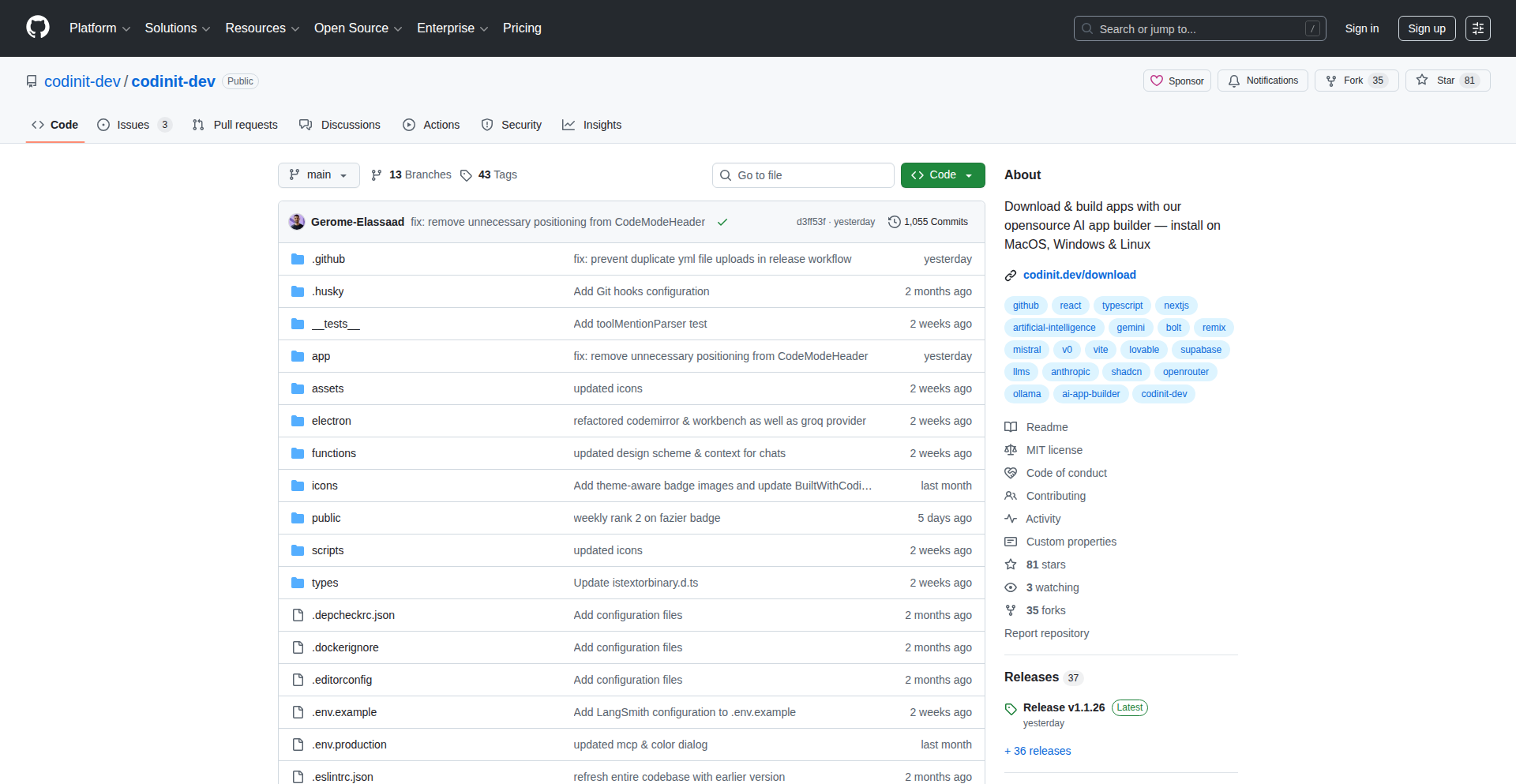

CodinIT LocalAI Studio is an open-source, desktop application that allows developers to build AI-powered applications entirely on their local machine. It leverages Remix and Electron to provide a seamless development experience, offering a cost-effective and flexible alternative to cloud-based AI app builders. The core innovation lies in enabling full local execution of AI apps, eliminating recurring cloud costs and offering unrestricted development.

Popularity

Points 3

Comments 2

What is this product?

CodinIT LocalAI Studio is a desktop application designed for developers who want to build and run AI applications locally. It's built using Remix (a popular web framework) and Electron (which allows web technologies to create desktop apps). The main technological insight is to move AI app building from the cloud to your own computer. This means all the processing and code execution happens on your machine, not on remote servers. The innovation is in making this process as smooth and powerful as cloud-based tools, but without the associated monthly fees and limitations. So, it's useful because it saves you money, gives you more control over your development environment, and lets you experiment freely without worrying about hitting usage limits or unexpected bills.

How to use it?

Developers can download and install CodinIT LocalAI Studio directly from its website. Once installed, they can start building AI applications using familiar web development tools and techniques. The application integrates with existing coding environments and tools like Cursor or Claude Code, allowing for a fluid workflow. You can switch between CodinIT and your preferred code editor effortlessly. This is useful because it fits into your existing development workflow, making it easy to adopt and leverage for your AI projects without a steep learning curve.

Product Core Function

· Local AI App Development Environment: Build and test AI applications entirely on your own computer, providing a private and cost-effective development space. This is valuable for protecting sensitive data and avoiding continuous cloud spending.

· Cross-platform Compatibility (via Electron): The application runs on different operating systems (Windows, macOS, Linux), making it accessible to a wider developer base. This is useful because you can develop on your preferred operating system and share your work with others regardless of their OS.

· Integration with Local AI Models: Supports running AI models directly on your machine, offering flexibility in choosing and managing your AI resources. This is beneficial for performance tuning and offline development scenarios.

· Open-Source and Extensible: The source code is publicly available, allowing developers to inspect, modify, and extend the functionality as needed. This is useful because it fosters community collaboration and allows for customization to specific project requirements.

Product Usage Case

· Building a local chatbot without recurring API costs: A developer can use CodinIT to create a custom chatbot that runs entirely on their laptop, utilizing locally hosted language models. This solves the problem of high API expenses for continuous chatbot development and testing, making it financially viable for personal projects or small businesses.

· Developing an offline AI-powered content generator: A writer or marketer could use CodinIT to build a tool that generates marketing copy or blog post drafts locally. This is useful for situations with unreliable internet access or when dealing with proprietary content that should not be uploaded to the cloud for processing.

· Experimenting with new AI features without budget constraints: A researcher or student can freely experiment with different AI model configurations and application ideas within CodinIT, as there are no per-use charges. This accelerates the learning and innovation process by removing financial barriers to experimentation.

13

Calcu-gator.com - Canadian Financial Toolkit

Author

Nitromax

Description

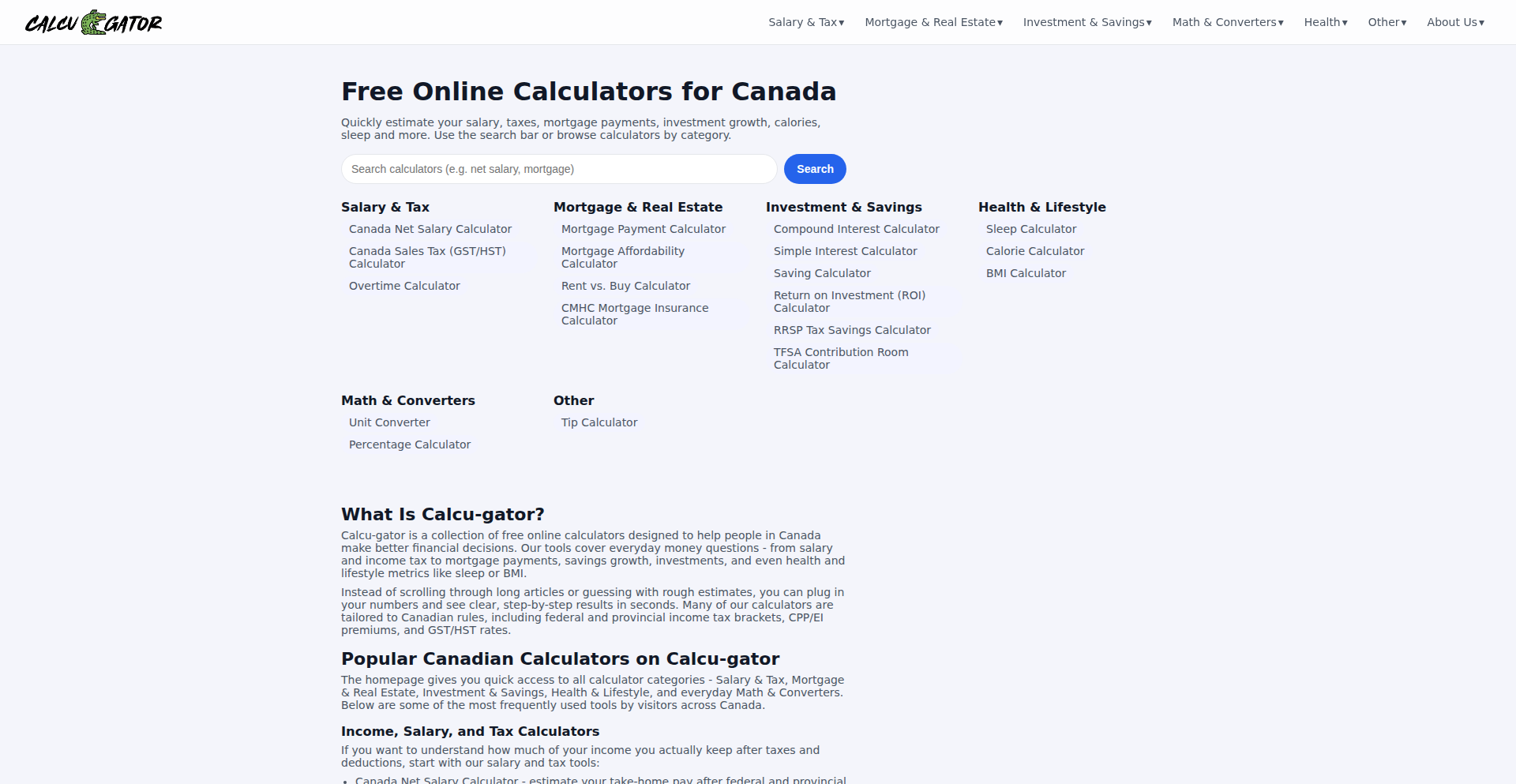

Calcu-gator.com is a suite of financial calculators tailored for the Canadian market. It addresses the common issue of generic financial tools failing to account for specific Canadian tax laws, retirement savings plans (RRSP/TFSA), provincial differences, and local mortgage regulations. The core innovation lies in performing all calculations directly within the user's browser, ensuring data privacy and immediate results, with a fast interface built in React.

Popularity

Points 4

Comments 1

What is this product?

Calcu-gator.com is a set of financial calculation tools designed exclusively for Canadians. Unlike universal calculators that might give you a ballpark figure, these tools understand the nuances of the Canadian financial landscape. This means they accurately incorporate federal and provincial income tax brackets, the specific rules for Registered Retirement Savings Plans (RRSPs) and Tax-Free Savings Accounts (TFSAs), and the complexities of Canadian mortgage regulations, including CMHC insurance. The clever part is that all of this computation happens directly on your computer, so your sensitive financial information never leaves your device. So, why is this useful to you? It provides highly accurate, personalized financial insights without compromising your privacy, helping you make informed decisions about taxes, savings, and homeownership.
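The core of a bracket-aware tax calculator is progressive taxation: each slice of income is taxed at its own bracket's rate, and federal and provincial amounts are computed separately and added. A minimal Python sketch follows; the bracket thresholds and rates are placeholders, not current CRA or provincial figures, the names are hypothetical, and credits such as the basic personal amount are ignored.

```python
from typing import List, Optional, Tuple

# (upper limit of the bracket, marginal rate); None = no upper limit.
# Placeholder schedules, NOT current CRA or provincial figures.
Bracket = Tuple[Optional[float], float]
FEDERAL_EXAMPLE: List[Bracket] = [(50_000, 0.15), (100_000, 0.205), (None, 0.26)]
PROVINCIAL_EXAMPLE: List[Bracket] = [(45_000, 0.05), (None, 0.09)]


def progressive_tax(income: float, brackets: List[Bracket]) -> float:
    """Tax each slice of income at the rate of the bracket it falls into."""
    tax = 0.0
    lower = 0.0
    for upper, rate in brackets:
        if income <= lower:
            break
        top = income if upper is None else min(income, upper)
        tax += (top - lower) * rate       # only the slice inside this bracket
        if upper is None:
            break
        lower = upper
    return tax


def combined_tax(income: float) -> float:
    """Federal and provincial tax are computed independently and then added."""
    return progressive_tax(income, FEDERAL_EXAMPLE) + progressive_tax(income, PROVINCIAL_EXAMPLE)
```

For an income of $120,000 under these placeholder brackets, federal tax is 50,000 × 15% + 50,000 × 20.5% + 20,000 × 26% = $22,950. A production calculator would load each year's brackets per province, apply non-refundable credits, and likely use `decimal.Decimal` to avoid floating-point rounding.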

How to use it?

Developers can use Calcu-gator.com as a reliable reference for Canadian-specific financial calculations in their own applications or workflows. For instance, a personal finance app developer could offer Canadian users similarly precise tax estimates or mortgage affordability checks. Because the calculations run in the browser, the React front end could potentially be embedded in other web applications. For individual users, it's as simple as visiting the website (Calcu-gator.com) and entering their financial details into the relevant calculator, which returns immediate, accurate results for specific Canadian financial planning needs. So, how can you use this? If you're building a Canadian-focused finance app, you can rely on its accuracy and privacy. If you're a Canadian planning your finances, you can use it directly on the website for peace of mind.

Product Core Function

· Canadian Income Tax Calculator (Federal + Provincial): Accurately calculates your tax liability by considering specific Canadian tax brackets and deductions. This is useful for individuals to understand their take-home pay and plan for tax season.

· Mortgage Calculator with CMHC Insurance: Determines mortgage affordability and payments, crucially including the cost of Canada Mortgage and Housing Corporation (CMHC) insurance, which is often overlooked by generic tools. This helps potential homebuyers understand the true cost of purchasing a home.

· RRSP/TFSA Contribution Planners: Helps users plan their contributions to tax-advantaged retirement savings accounts, considering contribution limits and potential tax benefits. This empowers users to maximize their retirement savings efficiently.

· Browser-Based Calculations (No Data Sent): All computations run locally in the user's browser, so financial inputs are not transmitted to a server. This is valuable for users who are wary of sharing sensitive financial information online.
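The mortgage calculator's two Canada-specific ingredients can be sketched as follows, assuming the usual conventions: the CMHC premium is rolled into the loan principal, and the quoted fixed rate compounds semi-annually, so it is converted to an equivalent monthly rate before the standard amortization formula is applied. The premium rate is a parameter because CMHC sets it by loan-to-value tier; the `0.04` in the usage line is an assumed value for a 5% down payment and should be checked against CMHC's current table. The function name and signature are hypothetical, not Calcu-gator's code.

```python
def insured_monthly_payment(
    price: float,
    down_payment: float,
    annual_rate: float,
    amortization_years: int,
    premium_rate: float,
) -> float:
    """Monthly payment on a Canadian fixed-rate mortgage, including any CMHC premium."""
    if not 0 <= down_payment < price:
        raise ValueError("down payment must be non-negative and below the price")
    loan = price - down_payment
    # Default insurance is required (by federally regulated lenders) only below 20% down.
    if down_payment / price >= 0.20:
        premium_rate = 0.0
    principal = loan * (1 + premium_rate)  # premium assumed to be added to the loan
    n = amortization_years * 12
    # Semi-annual compounding converted to an equivalent monthly rate.
    monthly_rate = (1 + annual_rate / 2) ** (2 / 12) - 1
    if monthly_rate == 0:
        return principal / n
    return principal * monthly_rate / (1 - (1 + monthly_rate) ** -n)


# Assumed inputs: $500k home, 5% down, 5% fixed rate, 25-year amortization, 4% premium.
print(round(insured_monthly_payment(500_000, 25_000, 0.05, 25, 0.04), 2))
```

Converting the rate matters: applying a 5% annual rate as a plain 5%/12 monthly rate would slightly overstate the payment compared with the semi-annual convention.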

Product Usage Case

· A financial advisor in Canada wants to quickly show a client their estimated tax burden for the year based on different income scenarios. They can use Calcu-gator.com's income tax calculator to provide instant, accurate figures, avoiding the need for complex manual calculations and building client trust through transparent data.

· A prospective homebuyer in Toronto is trying to understand how much mortgage they can afford. They use the mortgage calculator which includes CMHC insurance costs, giving them a realistic picture of their monthly payments and overall affordability, preventing them from overextending their budget.

· An individual planning for retirement uses the RRSP and TFSA planners to see how much they can contribute each year and estimate their future savings growth. This helps them set achievable financial goals and optimize their investment strategy for long-term security.

· A fintech startup building a Canadian personal finance app needs reliable tax and savings calculations. It can use Calcu-gator.com's results as a reference while building and testing its own calculation engine. Because the calculations run in the browser and no public API is described, embedding Calcu-gator's logic directly would depend on the author making it available, and the startup would remain responsible for keeping its own figures current with Canadian tax rules.
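The retirement-planning case rests on two simple pieces of arithmetic. New RRSP room for a year is generally 18% of the previous year's earned income, capped at that year's dollar limit and reduced by any pension adjustment (unused room carries forward). Projected growth is then a compound-interest loop. The sketch assumes contributions at the start of each year and a constant return; the dollar limit is passed in rather than hard-coded because the CRA changes it annually, and the `30_000` below is a placeholder, not an official figure. TFSA room (annual limit plus unused room plus the previous year's withdrawals) is omitted for brevity.

```python
def rrsp_new_room(prior_year_earned_income: float, annual_dollar_limit: float,
                  pension_adjustment: float = 0.0) -> float:
    """New RRSP room: 18% of last year's earned income, capped, minus any pension adjustment."""
    return max(0.0, min(0.18 * prior_year_earned_income, annual_dollar_limit) - pension_adjustment)


def project_balance(start: float, annual_contribution: float,
                    annual_return: float, years: int) -> float:
    """Balance after `years`, contributing at the start of each year at a constant return."""
    balance = start
    for _ in range(years):
        balance = (balance + annual_contribution) * (1 + annual_return)
    return balance


room = rrsp_new_room(80_000, annual_dollar_limit=30_000)  # placeholder dollar limit
print(room, round(project_balance(0, room, 0.05, 25), 2))
```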

14

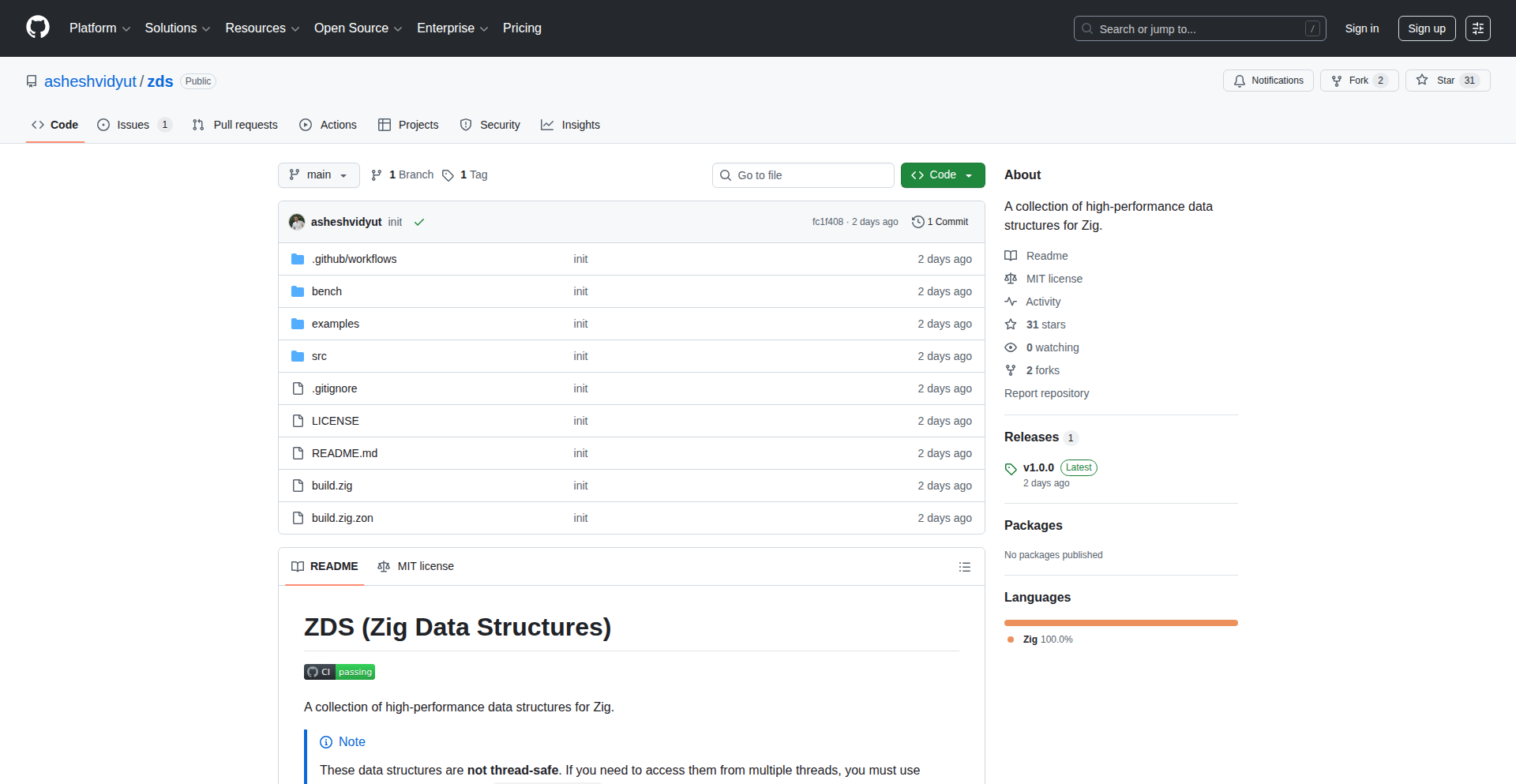

ZigHighPerfDS

Author

absolute7

Description

A curated collection of high-performance data structures specifically implemented for the Zig programming language. This project focuses on delivering efficient and optimized implementations of common data structures, leveraging Zig's unique features for maximum performance, which is crucial for systems programming and performance-critical applications. The innovation lies in tailored implementations that go beyond generic libraries, providing developers with tools to build faster and more robust software.

Popularity

Points 4

Comments 0

What is this product?

ZigHighPerfDS is a library offering a variety of fast, memory-efficient data structures for Zig programmers. Think of it as a toolbox of specialized, speed-focused ways to organize data. Instead of generic, one-size-fits-all solutions, these structures are built with Zig's low-level memory control and compile-time metaprogramming (`comptime`), which lets each structure be specialized for its element types at compile time. The goal is for them to be faster and leaner than general-purpose alternatives, though the actual gains depend on the workload. So, what's the big deal? For tasks where every millisecond or byte counts, like game development, embedded systems, or high-frequency trading platforms, well-optimized structures can make a noticeable difference in your application's speed and resource usage.

How to use it?

Developers can integrate ZigHighPerfDS into their Zig projects through Zig's package manager: declare it as a dependency in `build.zig.zon`, then add the module to their build in `build.zig`. They can then import the specific data structures they need, such as a hash map or a dynamic array, directly in their Zig code, and use them alongside or instead of the standard library's containers where performance is paramount. For example, if you're building a game engine and need a fast way to look up entities by ID, you might use the provided hash map. If you're working on an embedded system that needs to manage a list of sensor readings with minimal memory overhead, a specialized vector could be your choice.
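The entity-lookup example can be illustrated with open addressing and linear probing, a common layout for fast hash maps because all entries sit in one contiguous array. We don't know which scheme ZigHighPerfDS actually uses, and this sketch is in Python rather than Zig, so it shows only the probing logic, not the cache-locality benefits. Deletion is omitted; with linear probing it needs tombstones or backward-shift removal.

```python
from typing import Any, Hashable, List, Optional


class LinearProbingMap:
    """Open-addressing hash map with linear probing (insert and lookup only)."""

    _EMPTY = object()  # sentinel marking an unused slot

    def __init__(self, capacity: int = 8) -> None:
        if capacity < 1 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self._keys: List[Any] = [self._EMPTY] * capacity
        self._values: List[Any] = [None] * capacity
        self._size = 0

    def _slot(self, key: Hashable) -> int:
        """Index holding `key`, or the first empty slot on its probe path."""
        mask = len(self._keys) - 1  # power-of-two capacity lets us mask instead of using modulo
        i = hash(key) & mask
        while self._keys[i] is not self._EMPTY and self._keys[i] != key:
            i = (i + 1) & mask  # linear probe: try the next slot, wrapping around
        return i

    def put(self, key: Hashable, value: Any) -> None:
        if (self._size + 1) * 4 > len(self._keys) * 3:  # keep load factor <= 0.75
            self._grow()
        i = self._slot(key)
        if self._keys[i] is self._EMPTY:
            self._size += 1
        self._keys[i] = key
        self._values[i] = value

    def get(self, key: Hashable, default: Optional[Any] = None) -> Any:
        i = self._slot(key)
        return default if self._keys[i] is self._EMPTY else self._values[i]

    def _grow(self) -> None:
        """Double the table and reinsert every entry at its new probe position."""
        old = [(k, v) for k, v in zip(self._keys, self._values) if k is not self._EMPTY]
        self._keys = [self._EMPTY] * (2 * len(self._keys))
        self._values = [None] * len(self._keys)
        self._size = 0
        for k, v in old:
            self.put(k, v)

    def __len__(self) -> int:
        return self._size


entities = LinearProbingMap()
for entity_id in range(100):
    entities.put(entity_id, f"entity-{entity_id}")
print(len(entities), entities.get(42), entities.get(1_000))
```

Keeping the load factor at or below 0.75 guarantees empty slots exist, so every probe sequence terminates.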

Product Core Function

· Optimized Hash Map: Provides a key-value store with average-case constant-time lookups and insertions, crucial for scenarios like caching or indexing large datasets where quick retrieval is essential. This keeps lookups fast even as the number of entries grows.

· Dynamic Array (Vector): A resizable array offering efficient element addition and removal, suitable for managing collections of data that can grow or shrink dynamically. This is useful when you don't know the exact size of your data upfront but need to process it efficiently.

· Linked List Implementations: Offers various types of linked lists, potentially with different performance characteristics for specific use cases. Insertion and deletion are constant-time once you hold a reference to the neighboring node (finding that node is still a linear scan), which suits workloads that frequently reorder or splice items.

· Tree Structures (e.g., Binary Search Trees): Provides hierarchical organization for searching, sorting, and managing ordered data, with logarithmic-time operations as long as the tree stays balanced. This is ideal for building sorted collections or implementing efficient search algorithms.

· Custom Allocator Support: Allows developers to integrate custom memory allocators with the data structures, giving fine-grained control over memory management for maximum performance and predictability in resource-constrained environments. This lets you optimize memory usage precisely for your specific application needs.
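The dynamic array's "efficient element addition" usually comes from geometric growth: when the buffer is full, allocate one twice as large and copy the elements over. The sketch counts those copies to show why appends are amortized O(1): over n appends, the total copying stays below 2n. Doubling is an assumption here (some implementations grow by a smaller factor such as 1.5x), and Python lists already grow this way internally, so the class is purely illustrative.

```python
from typing import Any, List


class GrowableArray:
    """Dynamic array backed by a fixed-size list that doubles when full."""

    def __init__(self) -> None:
        self._buf: List[Any] = [None]  # start with capacity 1
        self._len = 0
        self.copies = 0  # total elements moved by reallocations

    def append(self, item: Any) -> None:
        if self._len == len(self._buf):
            new_buf: List[Any] = [None] * (2 * len(self._buf))
            new_buf[: self._len] = self._buf  # copy existing elements into the larger buffer
            self.copies += self._len
            self._buf = new_buf
        self._buf[self._len] = item
        self._len += 1

    def pop(self) -> Any:
        if self._len == 0:
            raise IndexError("pop from empty array")
        self._len -= 1
        item, self._buf[self._len] = self._buf[self._len], None  # clear the vacated slot
        return item

    def __getitem__(self, index: int) -> Any:
        if not 0 <= index < self._len:
            raise IndexError(index)
        return self._buf[index]

    def __len__(self) -> int:
        return self._len

    @property
    def capacity(self) -> int:
        return len(self._buf)


arr = GrowableArray()
for reading in range(1000):
    arr.append(reading)
print(len(arr), arr.capacity, arr.copies)  # 1000 1024 1023
```

The 1,023 copies are 1 + 2 + 4 + ... + 512, one batch per doubling, which is why the average cost per append stays constant.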

Product Usage Case

· Game Development: Using an optimized hash map to store and quickly retrieve game entities by their unique IDs, leading to smoother gameplay and faster loading times. This means your game can respond to player actions more quickly and render complex scenes without lag.

· Embedded Systems: Employing a memory-efficient dynamic array to manage sensor data readings within strict memory constraints, ensuring the system operates reliably and doesn't run out of resources. This allows you to build more sophisticated functionality on devices with limited memory.

· High-Performance Computing: Leveraging specialized tree structures for sorting and searching massive datasets in scientific simulations or financial modeling, significantly reducing computation time and enabling faster analysis. This helps researchers and analysts get results much quicker.

· Network Services: Implementing a highly performant cache using the optimized hash map to store frequently accessed data, reducing database load and improving response times for web applications or APIs. This makes your services faster and more responsive to users.

· Compiler or Interpreter Development: Utilizing custom data structures for managing symbol tables or abstract syntax trees, contributing to faster code parsing and analysis. This means programming languages can be compiled or interpreted more efficiently, leading to faster development cycles.
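The network-services case, a cache in front of a database, is commonly built as an LRU (least-recently-used) cache, which combines two of the core structures above: a hash map for constant-time lookup and a doubly linked list that keeps entries in recency order. This Python sketch gets both from `collections.OrderedDict`, using its `move_to_end` and `popitem(last=False)` methods. The eviction policy is our assumption; the project description doesn't say how its cache example would be built.

```python
from collections import OrderedDict
from typing import Any, Hashable


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable, default: Any = None) -> Any:
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry


cache = LRUCache(capacity=2)
cache.put("user:1", {"name": "Ada"})
cache.put("user:2", {"name": "Linus"})
cache.get("user:1")                     # touch user:1 so user:2 becomes least recent
cache.put("user:3", {"name": "Grace"})  # evicts user:2
print(cache.get("user:2"))              # None
```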

15

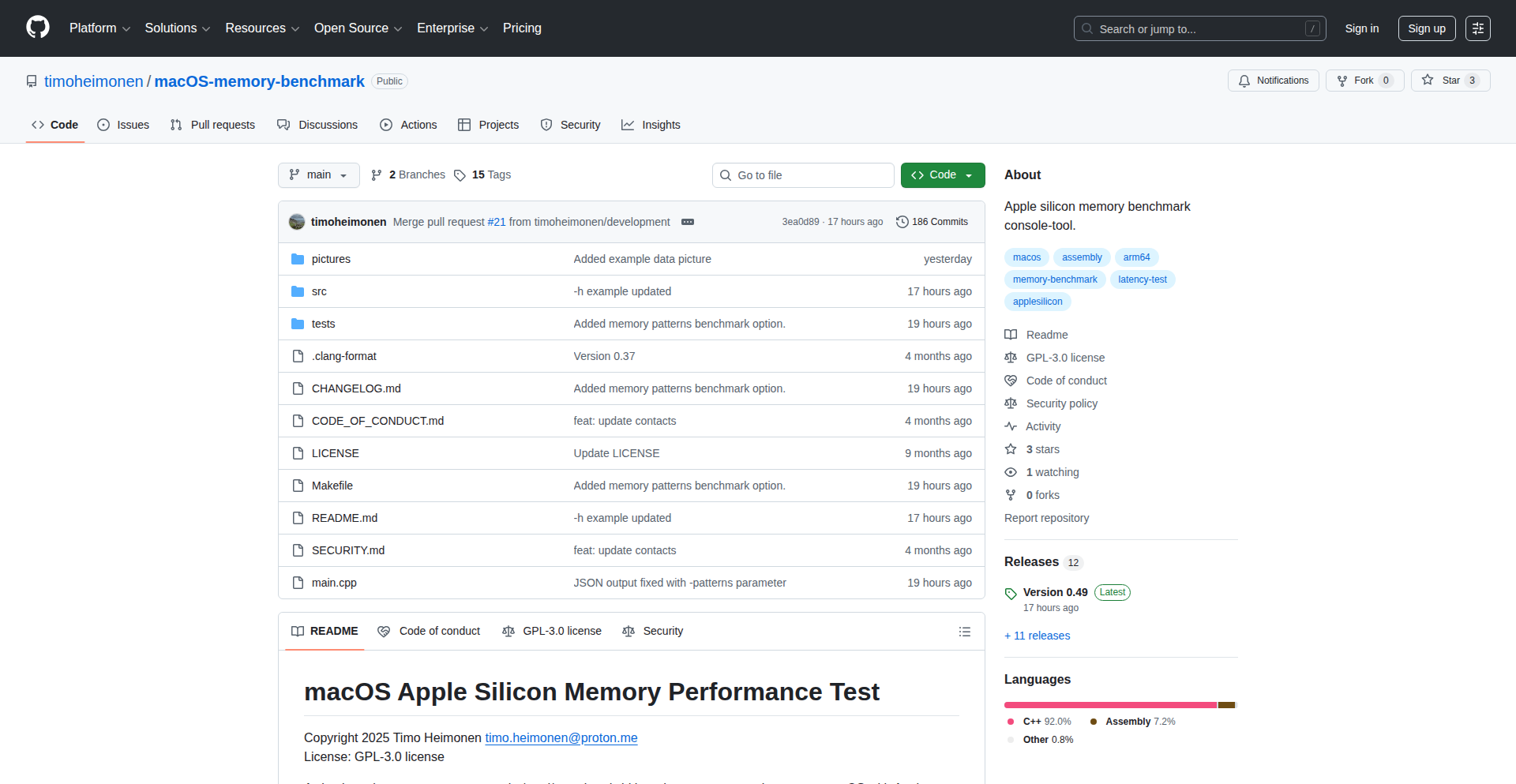

SiliconTune

Author

user_timo

Description

SiliconTune is a macOS memory benchmark for Apple Silicon Macs. It reports detailed memory performance figures, including cache behavior, bandwidth, and latency. Its distinguishing feature is granular metrics tailored to Apple Silicon's architecture, which help developers understand and optimize how their applications interact with the system's memory.

Popularity

Points 2

Comments 2

What is this product?

SiliconTune is a diagnostic tool that measures and reports on the performance of your Mac's memory, focusing on Apple Silicon chips. It doesn't just give you a single score; instead, it breaks down performance into key areas: cache hits (how often the processor finds data it needs quickly in its small, fast memory), bandwidth (how much data can be moved to and from memory per second), and latency (how long it takes for data to be retrieved from memory). The innovation is in its deep dive into these specific metrics, which are crucial for understanding how applications will perform on modern Apple Silicon, as these chips have a unified memory architecture that differs significantly from traditional systems. So, this tells you how 'fast' your Mac's memory truly is in different scenarios, allowing you to pinpoint bottlenecks for your software.
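Latency benchmarks commonly measure the retrieval delay described above by "pointer chasing": memory is filled with a random chain of indices, and the benchmark repeatedly loads the next index from the current one, so every load depends on the previous one and hardware prefetchers cannot predict the pattern. Sattolo's algorithm builds such a chain as a single cycle that visits every slot. This is a sketch of the general technique, not SiliconTune's code. In Python, interpreter overhead dwarfs DRAM latency, so a real tool would run the walk in a compiled language and divide the elapsed time by the number of steps.

```python
import random
from typing import List


def make_chase_chain(n: int, seed: int = 0) -> List[int]:
    """Random cyclic permutation via Sattolo's algorithm: following chain[i] visits all n slots."""
    rng = random.Random(seed)
    chain = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # j < i; Fisher-Yates (j <= i) could split the chain into several cycles
        chain[i], chain[j] = chain[j], chain[i]
    return chain


def chase(chain: List[int], steps: int) -> int:
    """Follow the chain; each step's address depends on the previous load."""
    i = 0
    for _ in range(steps):
        i = chain[i]
    return i


# A benchmark would sweep n (the working-set size) to step through L1, L2, and DRAM.
chain = make_chase_chain(1 << 16)
assert chase(chain, len(chain)) == 0  # a single cycle returns to the start after exactly n steps
```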

How to use it?

Developers run SiliconTune on their Apple Silicon Mac and start benchmarks from its interface. The results show what memory performance the machine can deliver, which is the baseline for judging memory-intensive applications such as video editors, games, or large data-processing tools. If the tool can be run non-interactively, it could also feed performance regression checks in CI/CD pipelines, and during development it can inform comparisons between implementation strategies. So, you can run this tool on your Mac to learn its memory limits and then check, with a profiler, whether memory speed is what's holding your app back.

Product Core Function

· Cache Performance Analysis: Measures how quickly data is served while it still fits in the CPU's caches. Comparing your own working-set sizes and access patterns with these figures shows whether your code is likely to be cache-friendly, which guides data-structure and access-pattern choices.

· Memory Bandwidth Measurement: Quantifies the maximum rate at which data can be transferred between the CPU and main memory. High bandwidth is essential for applications that process large datasets or stream data, like media encoders or scientific simulations, and the measured ceiling lets developers gauge whether their application is limited by data-transfer speed.

· Memory Latency Assessment: Determines the delay between requesting data from memory and receiving it. Low latency is critical for responsive and real-time workloads, such as games or high-frequency trading systems.

· Apple Silicon Specific Metrics: Provides performance data tailored to Apple Silicon's unified memory architecture, offering insights that generic benchmarks might miss. This matters for developers tuning software for the latest Macs.

· Detailed Reporting: Presents results in a clear format that makes comparisons straightforward and helps pinpoint where performance is gained or lost.
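Bandwidth figures like these are typically derived by timing a large memory copy and dividing the bytes moved by the elapsed time. The sketch below does that with a same-length `bytearray` slice assignment, which CPython performs as a bulk copy rather than a per-byte Python loop. It counts both bytes read and bytes written, as the STREAM "Copy" kernel does, and keeps the best of several runs to reduce noise. The buffer size and repeat count are arbitrary assumptions: buffers should be much larger than the last-level cache for the result to reflect main memory, and a dedicated tool like SiliconTune would use tuned native code, so treat this as an illustration of the arithmetic.

```python
import time


def copy_bandwidth_gbps(size_bytes: int = 256 * 1024 * 1024, repeats: int = 5) -> float:
    """Best-of-N memory copy bandwidth in GB/s (10^9 bytes/s), counting reads and writes."""
    src = bytearray(size_bytes)
    dst = bytearray(size_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # same-length slice assignment: one bulk copy
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-9)  # guard against a zero reading from a coarse timer
    return 2 * size_bytes / best / 1e9  # bytes read from src plus bytes written to dst


if __name__ == "__main__":
    print(f"{copy_bandwidth_gbps():.1f} GB/s")
```

For example, a 1 GiB copy that takes 20 ms counts 2 × 1,073,741,824 bytes / 0.02 s, or about 107 GB/s.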

Product Usage Case

· Optimizing a video editing application: A developer compares the data throughput their app achieves during timeline scrubbing with the bandwidth SiliconTune reports. If the app is already near that ceiling, scrubbing is bandwidth-bound, so they focus on loading and processing less data per frame, leading to a smoother editing experience.

· Developing a new game for macOS: Knowing the measured memory latency, a game developer can estimate how costly cache misses are in the physics engine or rendering pipeline. If profiling shows those paths stalling on memory, they can refactor them to reduce dependent memory accesses, improving frame rates and responsiveness.

· Tuning a machine learning inference engine: For a model that must process data quickly, SiliconTune's cache figures show how much data fits in fast memory. If the model's working set exceeds that and profiling points to cache misses, the developer can restructure data access patterns or the model's memory layout to improve cache hit rates, leading to faster predictions.

· Benchmarking performance across Mac models: A software vendor can run SiliconTune on each Apple Silicon Mac it supports to understand how memory performance differs between models and configurations, then use those differences to explain or catch performance regressions before release. This helps ensure the software performs well across the Macs it supports.
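For the regression-testing uses (the CI/CD pipelines mentioned earlier and the cross-model comparison above), a small script can compare a new run against a stored baseline and flag metrics that worsened beyond a tolerance. SiliconTune's export format isn't described, so the metric names and the plain-dictionary input here are hypothetical. The one design point that matters is direction: bandwidth regresses when it falls, while latency regresses when it rises.

```python
from typing import Dict

# Hypothetical metric names; only these are treated as "higher is better".
HIGHER_IS_BETTER = {"read_bandwidth_gbps", "copy_bandwidth_gbps"}


def find_regressions(baseline: Dict[str, float], current: Dict[str, float],
                     tolerance: float = 0.05) -> Dict[str, float]:
    """Return {metric: relative change} for metrics that got worse by more than `tolerance`."""
    regressions = {}
    for metric, base in baseline.items():
        if metric not in current or base == 0:
            continue  # metric missing from this run, or no meaningful baseline
        change = (current[metric] - base) / base
        worse_by = -change if metric in HIGHER_IS_BETTER else change
        if worse_by > tolerance:
            regressions[metric] = change
    return regressions


baseline = {"copy_bandwidth_gbps": 100.0, "dram_latency_ns": 100.0}
current = {"copy_bandwidth_gbps": 90.0, "dram_latency_ns": 104.0}
print(find_regressions(baseline, current))  # bandwidth fell 10% (flagged); latency rose 4% (within 5%)
```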

16

VisitorExitInsight

Author

imadjourney

Description

This project takes a new approach to understanding why website visitors leave without making a purchase, using client-side JavaScript to analyze user behavior patterns in real time. It goes beyond traditional analytics by focusing on the subtle cues of disengagement just before a user abandons a page, offering actionable insights for conversion optimization. Its core contribution is unobtrusive data capture combined with pattern recognition, giving developers a clear picture of where users encounter friction.
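A widely used signal for these cues of disengagement is "exit intent": on desktop, the pointer moving quickly toward the top edge of the viewport, where the tab bar and close button sit. The description doesn't say which signals VisitorExitInsight uses, so the heuristic and both thresholds below are assumptions. In the browser this logic would sit in a `mousemove` handler; it is written in Python here only to match the other examples and takes a list of (timestamp, vertical position) samples.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PointerSample:
    t_ms: float  # timestamp in milliseconds
    y_px: float  # distance from the top of the viewport in pixels


def looks_like_exit_intent(samples: Sequence[PointerSample],
                           top_zone_px: float = 20.0,
                           min_upward_speed: float = 0.5) -> bool:
    """Heuristic: the pointer enters the top strip of the viewport while moving up quickly."""
    if len(samples) < 2:
        return False
    prev, last = samples[-2], samples[-1]
    dt = last.t_ms - prev.t_ms
    if dt <= 0:
        return False
    upward_speed = (prev.y_px - last.y_px) / dt  # px per ms; positive means moving up
    return last.y_px <= top_zone_px and upward_speed >= min_upward_speed


print(looks_like_exit_intent([PointerSample(0, 300), PointerSample(100, 10)]))   # True: 2.9 px/ms
print(looks_like_exit_intent([PointerSample(0, 300), PointerSample(2000, 10)]))  # False: slow drift
```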

Popularity

Points 3

Comments 1

What is this product?