Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-19

SagaSu777 2025-12-20

Explore the hottest developer projects on Show HN for 2025-12-19. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

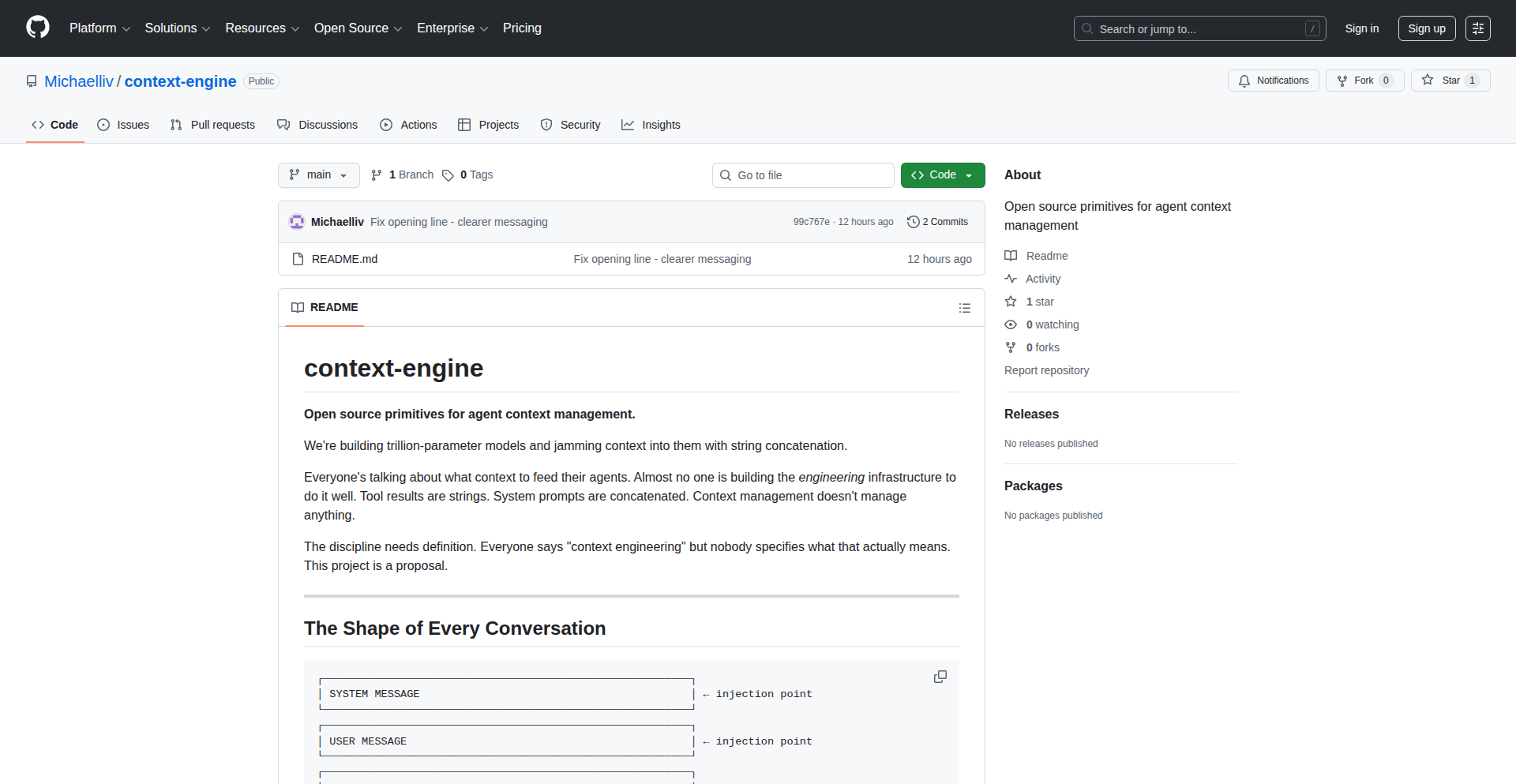

Today's Show HN offerings paint a vibrant picture of innovation, with a strong emphasis on leveraging AI to enhance developer workflows and create more intuitive user experiences. The prevalence of 'local-first' applications and self-hosted solutions highlights a growing desire for data privacy and control, moving away from over-reliance on cloud vendors. Developers are clearly focused on building tools that solve specific pain points, from streamlining B2B SaaS development with vendor-agnostic starters to creating niche developer utilities like AI-powered mocking servers and image diffing tools. The surge in AI-related projects, particularly those focused on agentic behavior, prompt engineering, and efficient LLM interaction, indicates a maturing ecosystem where the focus is shifting from just using AI to building robust infrastructure and applications around it. For aspiring developers and entrepreneurs, this signals a rich opportunity to identify underserved niches within these emerging fields. Don't be afraid to dive deep into specific problems, whether it's optimizing LLM context management with new architectures like `Agents.db`, or building user-friendly interfaces for complex AI tasks. The hacker spirit of solving real-world problems with creative technical solutions is alive and well, driving forward the next wave of software innovation.

Today's Hottest Product

Name

Show HN: I open-sourced my Go and Next B2B SaaS Starter (deploy anywhere, MIT)

Highlight

This project tackles the common pain point of vendor lock-in for B2B SaaS products. The developer has built a robust, production-ready full-stack engine using Go for the backend and Next.js for the frontend, with a modular monolith architecture. Key innovations include utilizing SQLC for type-safe SQL queries, integrating with services like Stytch for auth and Polar.sh for billing, and offering separate Docker containers for flexible deployment on any VPS. Developers can learn a lot about building scalable, vendor-agnostic SaaS backends, modular architecture design, and efficient deployment strategies. The focus on a single binary deployment for the Go backend showcases an elegant approach to managing complexity without resorting to microservices for smaller teams.

Popular Category

AI & Machine Learning

Developer Tools

SaaS & Productivity

Open Source

Popular Keyword

AI

LLM

Developer Tools

Open Source

SaaS

Local-First

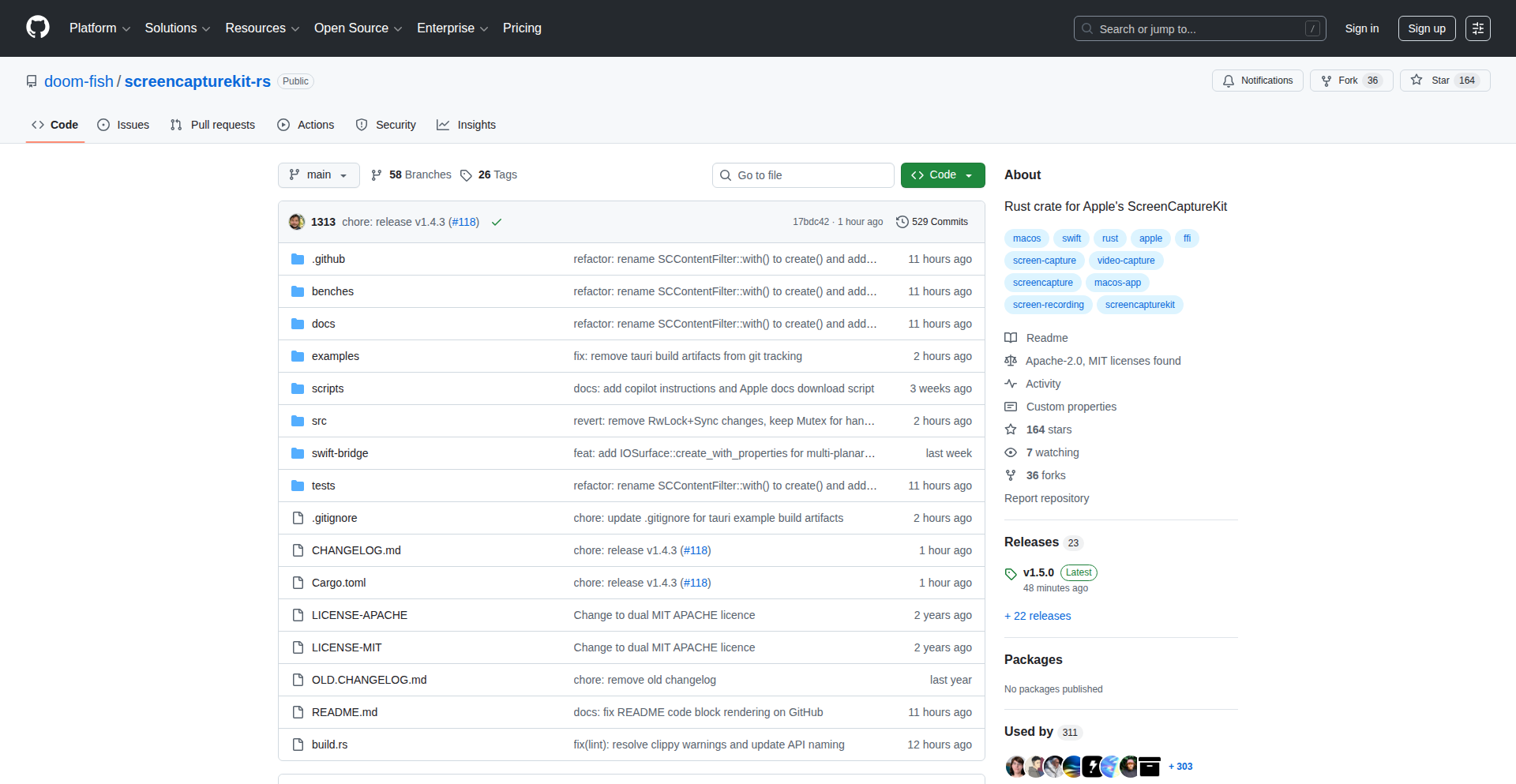

Rust

Technology Trends

AI Integration

Local-First Applications

Developer Productivity Tools

Open-Source SaaS Starters

Decentralized/Self-Hosted Solutions

Rust Ecosystem Growth

Efficient Data Handling

WebAssembly (WASM)

Project Category Distribution

AI & Machine Learning Tools (30%)

Developer Utilities & Productivity (25%)

Open Source Frameworks & Starters (15%)

Web & App Development Tools (10%)

Data & Infrastructure (10%)

Miscellaneous (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | HackerNews Clickbait Transform | 182 | 73 |
| 2 | Modular Monolith Go+Next.js SaaS Engine | 77 | 33 |
| 3 | MobileScreenCastr | 67 | 39 |
| 4 | Voice2Sticker AI Printer | 42 | 50 |
| 5 | Linggen - Code Context Weaver | 32 | 10 |
| 6 | Orbit: Shell Scripting's LLVM Compiler | 17 | 13 |
| 7 | Credible AI Insight | 10 | 4 |
| 8 | Zynk DirectSync | 11 | 2 |
| 9 | Vanishfile | 3 | 5 |
| 10 | BlazeDiff v2 - HyperSpeed Image Comparison Engine | 7 | 0 |

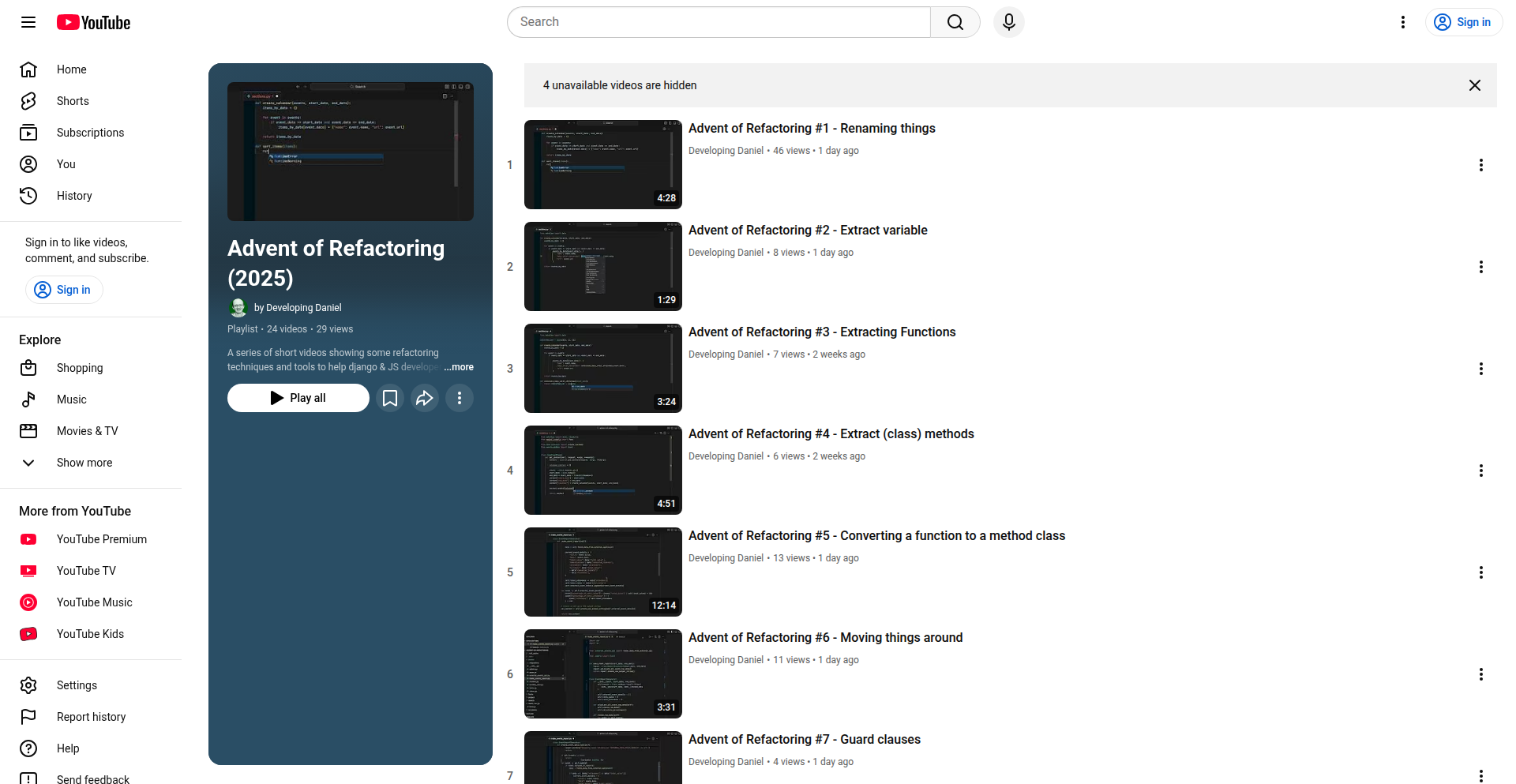

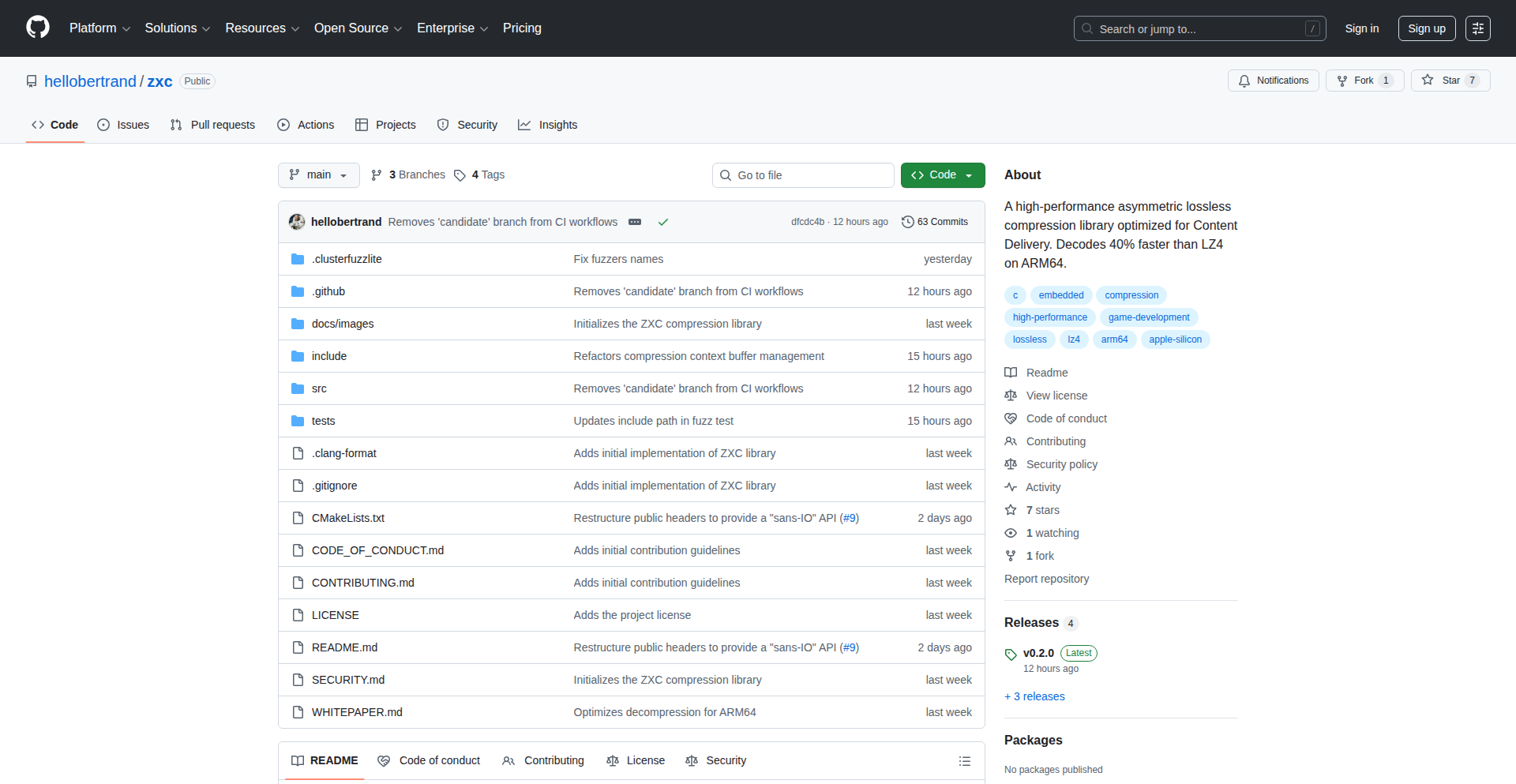

1

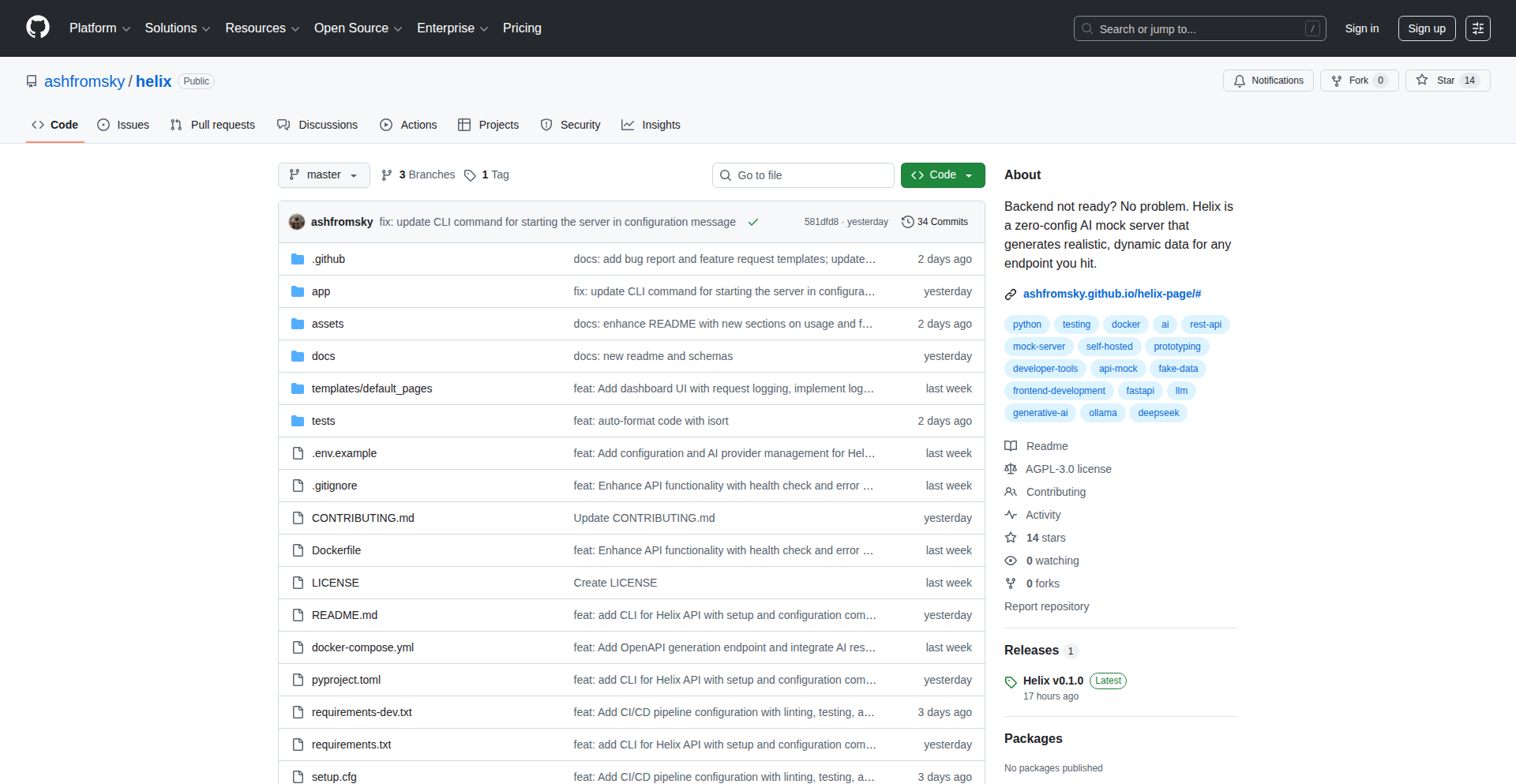

HackerNews Clickbait Transform

Author

keepamovin

Description

This project takes Hacker News headlines and transforms them into hysterical, exaggerated clickbait, demonstrating a novel approach to natural language generation (NLG) by leveraging LLMs to inject sensationalism into factual titles. It showcases how AI can be used to reframe information for engagement, exploring the creative and potentially humorous side of AI-powered content manipulation. The innovation lies in its ability to understand the underlying sentiment and context of a news headline and then artificially inflate it with clickbait tropes, offering a unique perspective on information presentation and its impact on user perception.

Popularity

Points 182

Comments 73

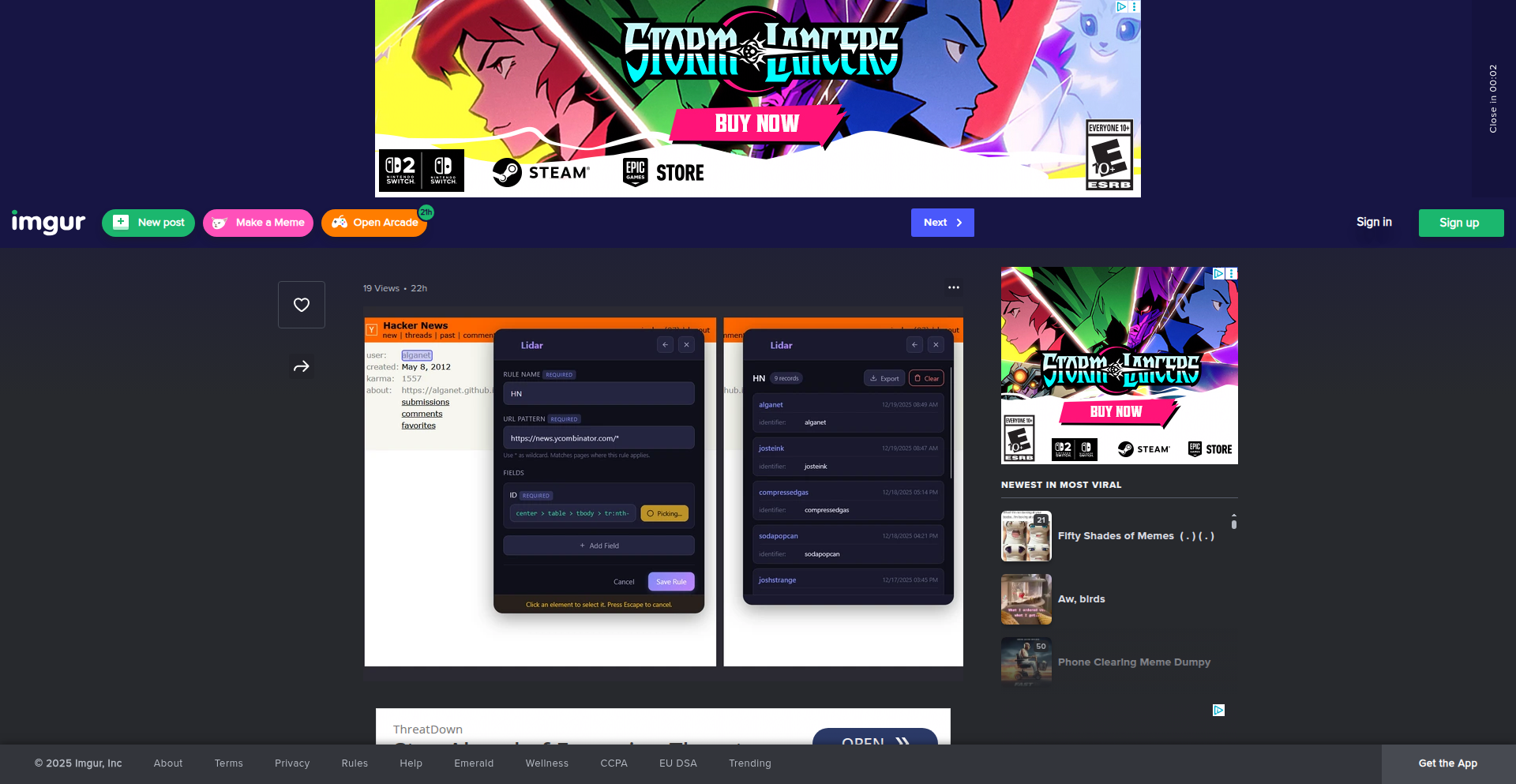

What is this product?

This is a project that applies AI, specifically a Large Language Model (LLM), to re-write Hacker News headlines, making them sound like over-the-top clickbait. The core technology involves feeding an LLM the original headline and instructing it to generate a new version that uses common clickbait techniques like hyperbole, urgent calls to action, and vague promises of shocking revelations. The innovation here is not just generating text, but understanding the nuance of what makes something 'clickbait' and applying that creatively to technical news. So, what's the use? It provides a fun, lighthearted way to explore how language can be manipulated for attention and offers a glimpse into the creative potential of AI beyond straightforward information delivery.

How to use it?

Developers can use this project as a creative tool or as a learning resource. For creative use, it can be integrated into a browser extension or a dedicated web app where users can paste or fetch Hacker News headlines to see their clickbait makeover. This can be used for entertainment, or even to analyze how sensationalism might affect the perception of technical news. Technically, it involves setting up an API call to an LLM (like GPT-3/4, or open-source alternatives), sending the original headline as a prompt with specific instructions for clickbait transformation, and displaying the generated output. This offers a practical example of prompt engineering and integrating LLM capabilities into an application. The value for developers is in understanding and experimenting with LLM APIs for creative text generation tasks.
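The prompt-plus-headline flow described above can be sketched in a few lines. This is only an illustration: the system prompt, the `build_clickbait_request` helper, and the `example-model` name are assumptions, not the project's actual prompt or code. The request body follows the common chat-style "messages" layout, and no network call is made.

```python
import json

# Hypothetical instructions; the project's real prompt is not shown in the source.
SYSTEM_PROMPT = (
    "Rewrite the Hacker News headline you are given as exaggerated clickbait. "
    "Use hyperbole, urgency, and a vague promise of a shocking reveal. "
    "Keep the original topic recognizable and reply with the new headline only."
)


def build_clickbait_request(headline: str, model: str = "example-model") -> dict:
    """Build a chat-style request body for one headline (nothing is sent)."""
    cleaned = " ".join(headline.split())  # collapse stray whitespace
    if not cleaned:
        raise ValueError("headline must not be empty")
    return {
        "model": model,  # placeholder model name, an assumption
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": cleaned},
        ],
    }


if __name__ == "__main__":
    body = build_clickbait_request("Show HN:  A faster image diffing tool")
    print(json.dumps(body, indent=2))
```

Keeping the style instructions in the system message and the headline in the user message makes it easy to iterate on the clickbait "voice" without touching the code that fetches headlines.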

Product Core Function

· Headline Clickbait Transformation: Leverages LLMs to rewrite factual headlines into exaggerated, attention-grabbing clickbait, demonstrating creative NLG. This is useful for exploring AI's ability to manipulate sentiment and engagement.

· Prompt Engineering for Sensationalism: Develops and refines prompts to guide LLMs in adopting clickbait writing styles, showcasing the importance of precise instructions for AI. This helps developers understand how to get specific creative outputs from LLMs.

· Demonstration of AI Creative Potential: Provides a tangible example of AI being used for humorous and engaging content generation, moving beyond purely functional applications. This highlights the broader creative applications of AI for the tech community.

· Information Reframing Exploration: Illustrates how the same core information can be presented in vastly different ways to influence perception and engagement. This is valuable for understanding media effects and content strategy.

Product Usage Case

· Entertainment Platform: Imagine a browser extension that automatically 'clickbait-ifies' Hacker News headlines as you browse, adding a layer of humor and absurdity to your tech news consumption. This solves the problem of routine news consumption by injecting fun.

· Content Strategy Experimentation: A startup could use this to experiment with different headline styles for their own technical blog posts, analyzing which styles (factual vs. clickbait-like) generate more initial interest, helping them understand audience engagement tactics.

· AI Prompt Engineering Tutorial: A developer looking to learn advanced LLM prompt engineering can dissect the prompts used in this project to understand how to elicit specific stylistic outputs from AI models, accelerating their learning curve.

· Social Media Content Generation Aid: A tech influencer might use this as a tool to generate attention-grabbing social media posts derived from technical articles, making complex topics more accessible and shareable.

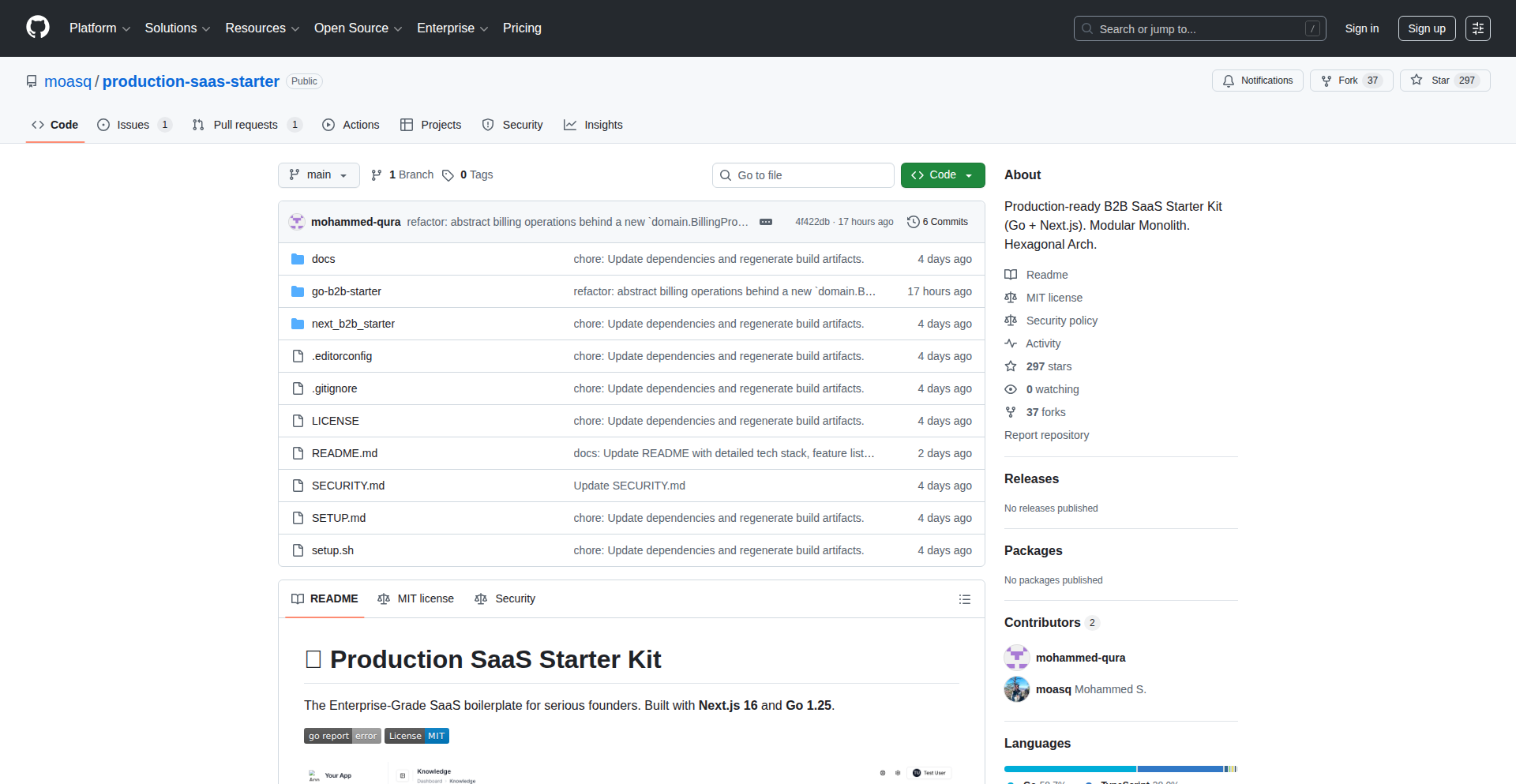

2

Modular Monolith Go+Next.js SaaS Engine

Author

moh_quz

Description

This project is a production-ready, full-stack B2B SaaS starter kit. It provides a Go backend and a Next.js frontend, both fully Dockerized and designed for independent deployment. The core innovation lies in its 'Modular Monolith' architecture, offering the separation of microservices without the complexity, and its vendor-agnostic approach, allowing developers complete control over their infrastructure and avoiding vendor lock-in. It solves the problem of unpredictable scaling costs and difficult migrations common with platform-dependent SaaS starters.

Popularity

Points 77

Comments 33

What is this product?

This project is a comprehensive B2B Software-as-a-Service (SaaS) starter kit that includes both the backend and frontend code. The backend is built with Go, a programming language known for its efficiency and speed, and uses frameworks like Gin for web handling and SQLC for type-safe database queries. The frontend is built with Next.js, a popular React framework for building modern web applications. The key innovation here is the 'Modular Monolith' design for the Go backend. Instead of breaking down the application into many small, independent microservices which can be complex to manage, this approach organizes the backend into distinct modules (like Authentication, Billing, AI). These modules are loosely coupled, meaning they can be developed and updated somewhat independently, but they are deployed as a single, unified application. This offers the benefits of cleaner code separation and easier team collaboration without the overhead of managing numerous distributed services. Furthermore, the entire application is containerized using Docker, meaning it can be easily packaged and run consistently across different environments. The project explicitly avoids proprietary services like Vercel or Supabase, giving you the freedom to deploy your application on any cloud provider or even your own servers, offering significant cost predictability and migration flexibility. The value proposition is having a robust, scalable, and customizable foundation for a B2B SaaS product without the initial heavy lifting of setting up core infrastructure and features, and crucially, without being tied to specific vendor platforms.

How to use it?

Developers can use this project as a foundation to build their B2B SaaS products. The setup involves cloning the GitHub repository and following the included `setup.md` guide, which likely involves running a script (`./setup.sh`) to initialize the Docker environment and set up the necessary dependencies locally. Once set up, developers can start customizing the application logic to fit their specific product features. The modular architecture means they can easily integrate new features or replace existing ones. For instance, if a developer needs a different payment provider than the included Polar.sh, they can modify the billing module's interface. Similarly, if they don't require OCR functionality, they can omit that module. The frontend can be swapped out for other frameworks that communicate via HTTP APIs, offering flexibility in UI development. The independent Docker containers for the frontend and backend allow for flexible deployment strategies: both can run on the same server, or they can be deployed on different cloud providers (e.g., frontend on Cloudflare Pages, backend on a VPS). This project provides a robust starting point, allowing developers to focus on their unique value proposition rather than reinventing common SaaS functionalities like authentication, billing, and multi-tenancy.
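To make the "swap the billing provider by changing the module's interface" idea concrete, here is a minimal sketch of the pattern. It is written in Python for brevity, although the starter itself is in Go, and every name in it (`BillingProvider`, `InMemoryBilling`, `BillingModule`, `build_app`) is hypothetical rather than taken from the repository.

```python
from dataclasses import dataclass, field
from typing import Protocol


class BillingProvider(Protocol):
    """Hypothetical interface that the billing module depends on."""

    def create_subscription(self, org_id: str, plan: str) -> str: ...


@dataclass
class InMemoryBilling:
    """Stand-in provider for illustration; a real one would call a billing API."""

    subscriptions: dict = field(default_factory=dict)

    def create_subscription(self, org_id: str, plan: str) -> str:
        sub_id = f"sub_{len(self.subscriptions) + 1}"
        self.subscriptions[sub_id] = (org_id, plan)
        return sub_id


@dataclass
class BillingModule:
    """One module of the monolith; it knows the interface, not the vendor."""

    provider: BillingProvider

    def upgrade(self, org_id: str, plan: str) -> str:
        if plan not in {"starter", "pro"}:  # example plan names, an assumption
            raise ValueError(f"unknown plan: {plan}")
        return self.provider.create_subscription(org_id, plan)


@dataclass
class App:
    """The single deployable unit: modules are wired together in one process."""

    billing: BillingModule


def build_app(provider: BillingProvider) -> App:
    return App(billing=BillingModule(provider=provider))
```

Replacing the payment vendor then means writing another class with the same `create_subscription` method and passing it to `build_app`; the rest of the application is untouched.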

Product Core Function

· Production-grade B2B Backend Engine: The Go backend is designed for performance and scalability, handling essential business logic for SaaS products. This is valuable because it provides a stable and efficient core for your application, reducing development time for fundamental server-side operations.

· Modern Full-Stack Frontend Framework: The Next.js frontend provides a feature-rich and user-friendly interface, leveraging React and Tailwind CSS for efficient UI development. This is valuable because it allows for quick creation of dynamic and responsive user interfaces, crucial for customer engagement.

· Modular Monolith Architecture: The Go backend is structured into isolated modules (Auth, Billing, AI, etc.) that can be independently developed but deployed as a single unit. This is valuable because it offers the organizational benefits of microservices with the deployment simplicity of a monolith, making code cleaner and development more manageable.

· Vendor-Agnostic Deployment: The entire stack is Dockerized and designed to be deployed on any infrastructure, avoiding lock-in to specific cloud providers like Vercel or Supabase. This is valuable because it gives developers full control over their hosting costs, infrastructure, and data, and makes future migrations easier.

· Integrated Authentication and RBAC: Includes a Stytch B2B integration for robust user authentication and Role-Based Access Control (RBAC), with multi-tenant data isolation handled at the database query level. This is valuable because it ensures secure user management and data privacy from the outset, a critical requirement for any B2B application.

· Subscription and Billing Management: Features integration with Polar.sh for handling subscriptions, invoices, and global tax/VAT. This is valuable because it offloads the complex and often error-prone task of managing recurring payments and compliance, allowing founders to focus on product growth.

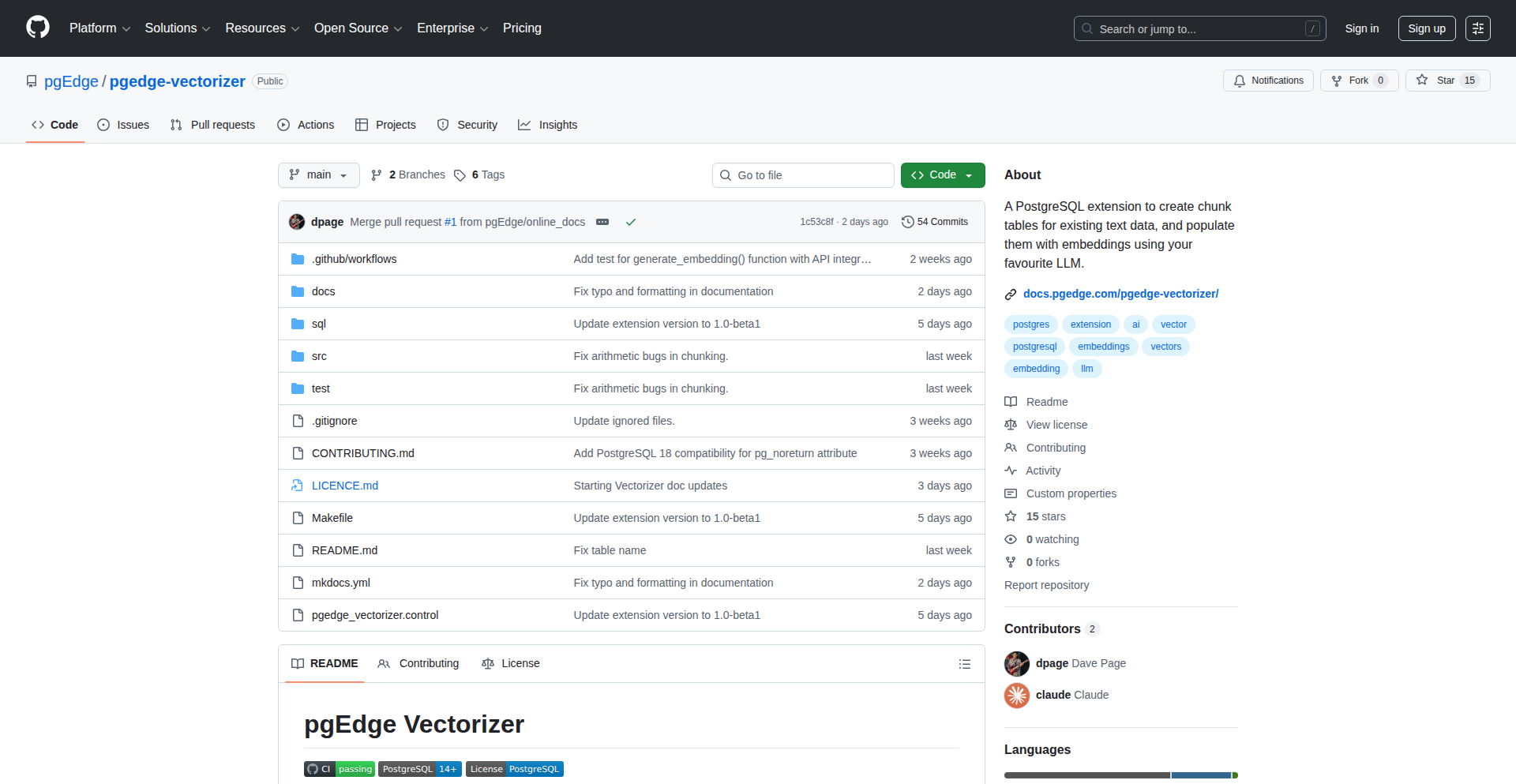

· AI-Powered Features with RAG and pgvector: Incorporates an AI pipeline that combines Retrieval-Augmented Generation (RAG) using OpenAI models with PostgreSQL's pgvector extension for intelligent data retrieval and content generation. This is valuable because it allows for the integration of advanced AI capabilities into your product, such as intelligent search or content creation, with a focus on minimizing factual errors.

· Document OCR and Extraction: Integrates Mistral AI for Optical Character Recognition (OCR) to extract information from documents. This is valuable because it enables automated data extraction from unstructured document formats, streamlining workflows and reducing manual data entry.

· Scalable File Storage: Integrates with Cloudflare R2 for object storage, offering a cost-effective and scalable solution for storing application assets. This is valuable because it provides a robust and affordable way to handle large amounts of file data, essential for many SaaS applications.
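Multi-tenant isolation "at the database query level" usually means that every query is scoped by a tenant identifier. The sketch below illustrates that idea with Python's built-in `sqlite3` as a stand-in for PostgreSQL and SQLC; the `documents` table, its columns, and the helper names are assumptions made for the example.

```python
import sqlite3


def open_demo_db() -> sqlite3.Connection:
    """Create an in-memory database holding rows for two tenants."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE documents ("
        "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, title TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO documents (tenant_id, title) VALUES (?, ?)",
        [("acme", "Q1 report"), ("acme", "Contract"), ("globex", "Roadmap")],
    )
    return conn


def list_documents(conn: sqlite3.Connection, tenant_id: str) -> list[str]:
    """Return titles for one tenant only; the tenant filter is part of the query."""
    rows = conn.execute(
        "SELECT title FROM documents WHERE tenant_id = ? ORDER BY id",
        (tenant_id,),  # bound parameter, never string-formatted into the SQL
    ).fetchall()
    return [title for (title,) in rows]


if __name__ == "__main__":
    db = open_demo_db()
    print(list_documents(db, "acme"))
```

Because the tenant filter lives inside the data-access function, calling code cannot accidentally read another organization's rows by forgetting a `WHERE` clause.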

Product Usage Case

· A startup founder wants to launch a new B2B SaaS product quickly and affordably. They can use this starter kit to deploy a fully functional application on a $6 VPS, focusing their limited resources on marketing and feature development rather than building core infrastructure from scratch.

· A development team needs to build a complex SaaS application with a modular structure to ensure maintainability and scalability. The Modular Monolith approach allows them to manage code separation effectively within a single deployment unit, leading to faster iteration cycles and easier onboarding for new team members.

· A company is concerned about vendor lock-in and unpredictable cloud costs with their current SaaS platform. They can migrate their existing application or start a new one using this starter kit, deploying it on their preferred infrastructure (e.g., AWS, GCP, or on-premise), thereby gaining cost control and flexibility.

· A developer wants to integrate AI-powered features into their B2B application, specifically for generating reports from uploaded documents. They can leverage the pre-built AI pipeline with RAG and OCR modules to quickly add intelligent document analysis and report generation capabilities.

· A SaaS provider needs to handle international payments and complex tax regulations. By using the integrated Polar.sh billing module, they can ensure compliance with global tax laws and manage subscriptions efficiently, reducing the risk of financial penalties and operational overhead.
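For readers new to the RAG pipeline mentioned above, the retrieval step boils down to "find the stored chunks whose embeddings are closest to the question's embedding, then put them in the prompt". The following sketch uses brute-force cosine similarity in plain Python in place of pgvector, with tiny hand-made vectors instead of real embeddings; all function names are illustrative.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k chunk texts most similar to the query vector."""
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vec, chunk[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the retrieved context and the question into one prompt string."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = [
        ("Invoices are due in 30 days.", [1.0, 0.0, 0.0]),
        ("Refunds take 5 days.", [0.0, 1.0, 0.0]),
        ("Late invoices incur a fee.", [0.9, 0.1, 0.0]),
    ]
    context = top_k([1.0, 0.0, 0.0], store)
    print(build_prompt("When are invoices due?", context))
```

In a real deployment the similarity search would run inside the database rather than in application code; grounding the answer in retrieved text is what the "minimizing factual errors" claim refers to.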

3

MobileScreenCastr

Author

admtal

Description

MobileScreenCastr is a lightweight, developer-focused application that enables the creation of screen recording videos directly from mobile devices, mimicking the functionality of popular desktop tools like Loom. It addresses the challenge of quickly capturing and sharing mobile-specific user flows, bugs, or feature demonstrations without requiring complex external setups. The core innovation lies in its on-device recording and simple export capabilities, aiming to streamline the demo video creation process for mobile developers.

Popularity

Points 67

Comments 39

What is this product?

MobileScreenCastr is a tool designed to record your mobile device's screen and interactions, then package them into a shareable video. Think of it as a personal, on-the-go video production studio for your phone or tablet. Its technical innovation is in its direct integration with the mobile operating system to capture screen output and user touches in real-time, then efficiently encoding it into a video format. This avoids the need to connect to a computer, use complicated mirroring software, or perform extensive post-production, making it incredibly fast to capture and share what's happening on your device. So, it's useful because it lets you instantly show someone exactly what you're seeing and doing on your mobile device, without any fuss.

How to use it?

Developers can use MobileScreenCastr by installing the application on their Android or iOS device. Once installed, they can initiate a recording session from within the app. The app then overlays a recording control, allowing the user to start, pause, and stop the screen capture. After recording, the video can be reviewed and exported directly from the device. This makes it ideal for quickly demonstrating a bug encountered on a specific device, showcasing a new UI flow to a designer, or creating a brief tutorial for a new feature. The integration is straightforward, acting as a standalone app that leverages the device's native screen recording capabilities. Therefore, it's useful because you can start recording your mobile screen in seconds to show off a problem or a new feature, right from where the problem or feature exists.

Product Core Function

· On-device screen recording: Captures video directly from the mobile operating system's display output, allowing for high-fidelity recording without external hardware. This is valuable for accurately representing the user experience.

· Real-time interaction overlay: Records touch gestures and user inputs visually on the screen, providing context for how an action was performed. This is useful for demonstrating precise user flows or accidental interactions.

· Simple video export: Allows users to export recorded videos in standard formats directly from the device, eliminating the need for file transfers or cloud syncing for immediate sharing. This speeds up the feedback loop.

· Basic editing capabilities: Offers minimal editing features to trim the beginning or end of a recording, ensuring the final video is concise and focused. This reduces the need for separate editing software for simple adjustments.

· Direct sharing options: Integrates with mobile sharing functionalities to easily send recordings via messaging apps, email, or cloud storage services. This facilitates quick dissemination of information within teams.

Product Usage Case

· A mobile QA tester encounters a rare bug on a specific device. They use MobileScreenCastr to record the steps leading up to the bug, then share the video with the development team. This solves the problem of trying to describe a complex, intermittent bug in text.

· A product designer wants to show a developer a new animation sequence on a prototype app. They record a short video using MobileScreenCastr and send it directly to the developer. This solves the problem of bridging the gap between design intent and implementation.

· A developer is testing a new feature and wants to quickly show its functionality to a colleague before committing. They record a quick demo with MobileScreenCastr and share it via a team chat. This speeds up collaboration and iterative feedback.

· A user is having trouble with a specific setting in an app. They use MobileScreenCastr to record their attempts to fix it and send it to customer support. This provides clear, actionable evidence of the user's problem, improving support efficiency.

4

Voice2Sticker AI Printer

Author

spydertennis

Description

Stickerbox, listed here as Voice2Sticker AI Printer, is a voice-activated sticker printer that leverages AI image generation to transform children's spoken ideas into tangible stickers. This project creatively merges cutting-edge AI technology with simple, physical output, making complex generative AI accessible and safe for kids. The innovation lies in abstracting the AI process into a magical, real-world creation, fostering imagination and creativity through a tangible, interactive experience.

Popularity

Points 42

Comments 50

What is this product?

Voice2Sticker AI Printer is a unique device that allows children to bring their imagination to life by verbally describing their ideas, which are then translated into physical stickers using AI image generation and a thermal printer. The core technical innovation is the seamless integration of a user-friendly voice interface, an AI image generation model (the specific model is not stated; diffusion models such as Stable Diffusion or DALL-E are typical for this kind of output), and a safe, simple thermal printing mechanism. It makes advanced AI, typically confined to screens, a tangible and interactive experience for young users, focusing on safety and ease of use. The 'magic' for kids is holding their dreamt-up creations as real stickers, like a 'ghost on a skateboard' or 'a dragon doing its taxes', making abstract concepts concrete.

How to use it?

Developers can imagine integrating this concept into educational tools or creative platforms. For users, a child would simply speak their idea into the printer's microphone, such as 'a purple cat wearing a hat'. The device processes this voice input, converts it into a descriptive prompt for the AI image generator, which then creates a unique image. This image is then sent to the thermal printer to produce a physical sticker. This could be integrated into apps for story-telling, art projects, or even personalized stationery, allowing for easy integration of custom sticker creation into digital workflows.
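The voice-to-sticker flow described above is essentially a three-stage pipeline. The sketch below wires the stages together with plain callables so that each one can be swapped or stubbed; the `make_pipeline` helper, the prompt wording, and the black-and-white styling hint are assumptions for illustration and say nothing about how the actual device is built.

```python
from typing import Callable


def make_pipeline(
    transcribe: Callable[[bytes], str],
    generate_image: Callable[[str], bytes],
    print_sticker: Callable[[bytes], None],
) -> Callable[[bytes], str]:
    """Compose speech-to-text, image generation, and printing into one function."""

    def run(audio: bytes) -> str:
        idea = transcribe(audio).strip()
        if not idea:
            raise ValueError("no speech recognized")
        # Hypothetical prompt template: monochrome line art is assumed here
        # because thermal printers typically print in a single color.
        prompt = (
            f"A simple black-and-white sticker illustration of {idea}, "
            "bold outlines, child-friendly"
        )
        image = generate_image(prompt)
        print_sticker(image)
        return prompt

    return run


if __name__ == "__main__":
    printed: list[bytes] = []
    run = make_pipeline(
        transcribe=lambda audio: "a purple cat wearing a hat",  # stub
        generate_image=lambda prompt: prompt.encode(),          # stub
        print_sticker=printed.append,                           # stub
    )
    print(run(b"fake-audio"))
```

Passing the stages in as functions keeps the orchestration testable without a microphone, a model, or a printer attached.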

Product Core Function

· Voice-to-text transcription: Converts spoken words into text prompts, allowing for intuitive user input without complex interfaces, enabling quick idea capture.

· AI image generation: Creates unique visual representations of user-described concepts, unlocking creative potential and allowing for virtually limitless sticker designs.

· Thermal sticker printing: Produces durable, physical stickers instantly from AI-generated images, providing a tangible output that enhances the user's sense of accomplishment and ownership.

· Kid-safe design and materials: Ensures the product is safe for children, addressing concerns around materials (BPA/BPS free paper) and user data privacy, building trust for parents and guardians.

· Simplified user interface: Designed for ease of use by young children, abstracting away technical complexities so users can focus on creativity rather than operation.

Product Usage Case

· Educational Tool: A classroom could use Voice2Sticker AI Printer for art classes to illustrate concepts from stories, allowing students to visualize characters or scenes described in text and create their own sticker interpretations.

· Creative Play: Children can use it at home to create personalized stickers for their belongings, notebooks, or to share with friends, turning their daily thoughts and imaginative ideas into shareable physical objects.

· Therapeutic Aid: In a therapeutic setting, children could use it to express emotions or ideas they find difficult to verbalize, by describing them and seeing them manifest as stickers, providing a non-verbal communication channel.

· Personalized Gifting: Users can create unique, personalized stickers for gifts or special occasions, describing a custom design that holds personal meaning, offering a novel and heartfelt way to show affection.

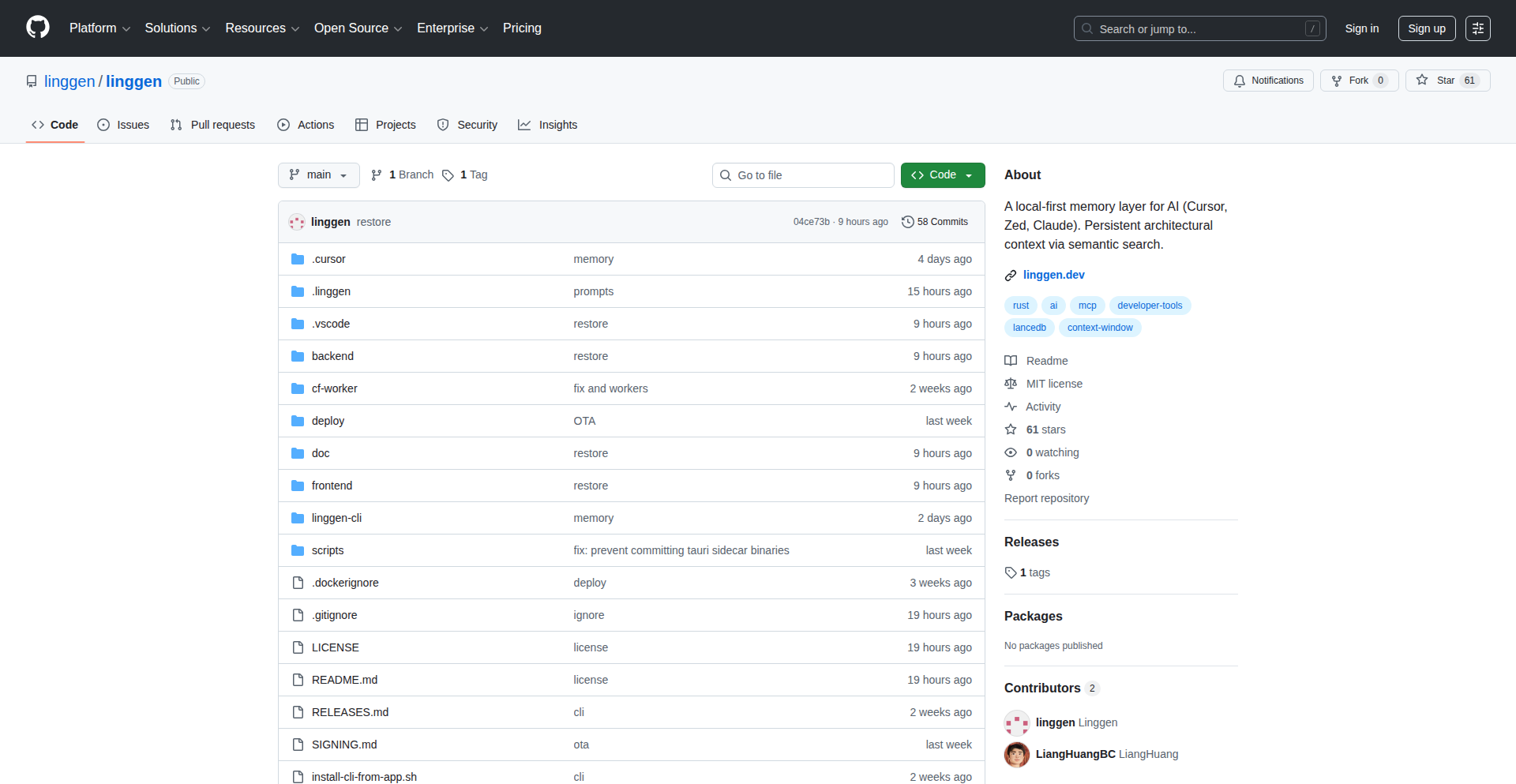

5

Linggen - Code Context Weaver

Author

linggen

Description

Linggen is a local-first memory layer for AI, designed to help developers quickly recall and understand complex multi-node systems. It indexes project documentation and code, allowing AI models to instantly access architectural context, thereby eliminating the 'cold start' problem and saving significant developer time. The innovation lies in its local-first approach using Rust and LanceDB, ensuring data privacy and offline functionality, and its ability to create a 'team memory' for seamless knowledge sharing among developers and their AI assistants.

Popularity

Points 32

Comments 10

What is this product?

Linggen is a smart system that acts like your project's long-term memory for AI. Imagine you're working on a large, complicated software project with many interconnected parts. It's easy to forget the details of how everything fits together, especially when you switch between tasks or join a new team. Linggen solves this by reading all your project's documentation and code, and then creating a searchable, intelligent index of this information. When you or your AI assistant needs to understand a specific part of the system, Linggen can instantly recall and provide all the relevant context, like architectural diagrams or past decisions. The technical innovation is that it does all of this locally on your computer using fast technologies like Rust and LanceDB, meaning your code and data stay private, and it doesn't need an internet connection. It also allows for 'team memory,' where your teammates' AI assistants can also benefit from this shared understanding, reducing the need for constant re-explanation.

How to use it?

Developers can integrate Linggen into their workflow through a VS Code extension. By 'initializing their day' with Linggen, they can instantly load the full architectural context of their projects. This means that when you're about to start coding, instead of spending time re-reading documentation or trying to remember how different modules interact, Linggen will have that knowledge readily available for you and your AI coding assistants. It supports popular AI interfaces like Cursor, Zed, and Claude Desktop, allowing seamless integration with your preferred AI tools. The 'Visual Map' feature helps in understanding file dependencies and predicting the impact of code changes, offering a clear picture of 'blast radius' before making modifications.

Product Core Function

· Local-First Knowledge Indexing: Indexes your project's code and documentation on your machine using Rust and LanceDB. This provides fast, private access to information without relying on cloud services, meaning you can work offline and your sensitive code never leaves your environment. This is useful for developers who want to maintain data privacy and have instant access to project context.

· Instant AI Context Loading: Allows AI models to immediately access a rich understanding of your project's architecture and code. This eliminates the 'cold start' problem where AI needs time to learn about your project, making AI-assisted coding significantly more efficient from the get-go. This benefits developers by speeding up AI interactions and reducing the frustration of repeated context setup.

· Team Knowledge Sharing: Creates a shared 'memory' that can be accessed by multiple developers and their AI assistants. This ensures everyone on the team is on the same page regarding project knowledge, reducing miscommunication and redundant explanations. This is valuable for teams working on collaborative projects, fostering better teamwork and faster development cycles.

· Visual Dependency Mapping: Generates visual representations of file dependencies within a project. This helps developers understand how different parts of the codebase are connected and to assess the potential impact of changes, preventing unintended consequences. This is crucial for refactoring and maintaining large codebases, allowing for more confident and less risky code modifications.
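The "blast radius" idea behind the dependency map can be illustrated with a small graph traversal: invert the "file imports file" edges, then walk outward from the changed file. This is a generic sketch, not Linggen's implementation; the `blast_radius` function and the example file names are made up.

```python
from collections import defaultdict, deque


def blast_radius(imports: dict[str, list[str]], changed: str) -> set[str]:
    """Return every file that directly or transitively depends on `changed`.

    `imports` maps each file to the files it imports.
    """
    # Invert the edges: for each file, who imports it?
    dependents: dict[str, set[str]] = defaultdict(set)
    for source, targets in imports.items():
        for target in targets:
            dependents[target].add(source)

    # Breadth-first walk over the reversed graph.
    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent != changed and dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    graph = {
        "api.py": ["auth.py", "db.py"],
        "auth.py": ["db.py"],
        "cli.py": ["api.py"],
        "db.py": [],
        "docs.py": [],
    }
    print(sorted(blast_radius(graph, "db.py")))
```

The `affected` set doubles as the visited set, so circular imports do not cause an infinite loop.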

Product Usage Case

· A developer working on a complex microservices architecture uses the Linggen VS Code extension to load the entire system's documentation and inter-service communication patterns before starting a new feature. This allows their AI assistant to immediately suggest relevant APIs and potential integration points, saving hours of manual research and recall.

· A new developer joins a project with thousands of lines of code. Instead of struggling to understand the codebase through reading endless documentation, they use Linggen to get an instant overview of the project's structure, key components, and their relationships. This dramatically reduces their onboarding time and allows them to become productive much faster.

· A team is performing a large-scale refactoring. Using Linggen's visual dependency map, they can identify all the files and modules that might be affected by a change to a core library, predicting the 'blast radius' and planning their refactoring effort more effectively, thus avoiding costly bugs and rework.

· A developer working on a sensitive R&D project needs to leverage AI for code generation but cannot send their proprietary code to a cloud-based AI service. Linggen's local-first approach allows them to index their project locally and use an AI model that can access this local index, enabling AI-assisted development without compromising intellectual property.

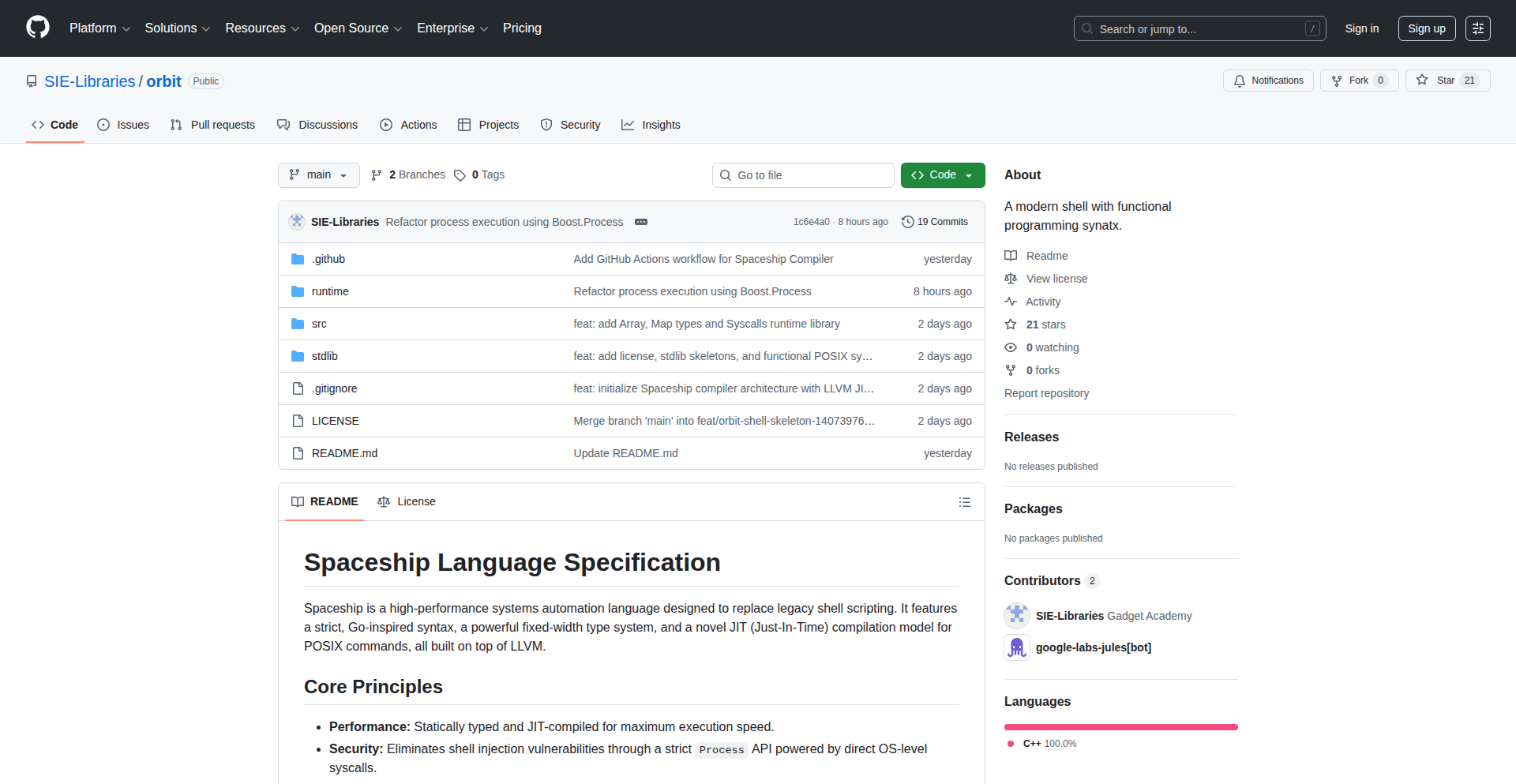

6

Orbit: Shell Scripting's LLVM Compiler

Author

TheCodingDecode

Description

Orbit is a systems-level programming language that innovates by compiling traditional shell scripts (.sh) into LLVM Intermediate Representation (IR). This allows shell scripts to be optimized and executed with the performance characteristics of compiled languages, bridging the gap between scripting ease and compiled efficiency. It tackles the inherent performance limitations and debugging complexities of pure shell scripting by leveraging the powerful LLVM compiler infrastructure.

Popularity

Points 17

Comments 13

What is this product?

Orbit is a novel programming language designed to transform your existing shell scripts into highly optimized code. Instead of executing shell commands line by line, Orbit parses your .sh files and translates them into LLVM IR. LLVM is a sophisticated compiler backend used by many high-performance languages like C++ and Rust. By compiling to LLVM IR, Orbit enables your shell scripts to benefit from advanced optimizations such as dead code elimination, instruction scheduling, and even Just-In-Time (JIT) compilation. This means your scripts can run significantly faster, consume fewer resources, and be more reliable, effectively giving shell scripting the power and speed of compiled languages without sacrificing its user-friendly syntax. So, what's in it for you? You get faster, more efficient scripts that can handle more demanding tasks.

How to use it?

Developers can use Orbit by writing their scripts in a syntax that closely resembles standard shell scripting but with extended capabilities that Orbit understands. The Orbit compiler then processes these .sh files and produces either LLVM bitcode that can be executed directly or a native binary. Adoption is straightforward: you can replace your current `bash your_script.sh` command with `orbit your_script.sh`, or run the compiled binary instead. Orbit can also be integrated into existing CI/CD pipelines so that scripts are compiled and optimized before they run. For complex system administration tasks, automation workflows, or performance-critical batch processing, Orbit allows you to write your logic in a familiar scripting style and then compile it for maximum performance. So, how does this help you? You can supercharge your existing automation and system tools with minimal changes, making them run faster and more reliably.

Product Core Function

· Shell Script Compilation to LLVM IR: Orbit parses .sh files and generates LLVM Intermediate Representation. This allows for static analysis and aggressive optimizations that are impossible with traditional shell interpreters. Value: Enables significant performance gains and deeper code analysis for your scripts. Use Case: Optimizing critical automation tasks that are currently bottlenecked by shell script speed.

· LLVM Optimization Passes: Leverages LLVM's extensive suite of optimization passes to refine the generated IR. This includes techniques like loop unrolling, function inlining, and common subexpression elimination. Value: Achieves performance comparable to compiled languages for your scripting logic. Use Case: Making resource-intensive data processing scripts run much faster and more efficiently.

· Native Binary Generation: Orbit can compile the LLVM IR into standalone native executables. This eliminates the need for a specific runtime environment to execute the script. Value: Creates portable and self-contained executables, simplifying deployment and reducing dependencies. Use Case: Distributing utility scripts to environments where the Orbit compiler might not be present.

· Enhanced Scripting Constructs: While maintaining shell-like syntax, Orbit might introduce or clarify constructs that are more amenable to compilation and optimization, potentially offering clearer control flow and error handling. Value: Provides more robust and predictable script behavior, especially in complex scenarios. Use Case: Developing sophisticated system management tools that require precise control and error resilience.

Product Usage Case

· Performance-critical data processing pipelines: Imagine a script that processes large log files daily. By using Orbit, this script can be compiled to run significantly faster, reducing processing time from hours to minutes. It solves the problem of slow execution for heavy-duty data tasks. How it helps you: Your daily data crunching will be dramatically faster and more efficient.

· System automation and orchestration: For scripts that manage cloud infrastructure or deploy applications, speed and reliability are crucial. Orbit allows these scripts to be compiled for faster execution, ensuring quicker deployments and more responsive system management. It addresses the need for high-performance automation. How it helps you: Your infrastructure management and deployment processes will be significantly faster and more reliable.

· Developer tooling and command-line utilities: When building new command-line tools, developers often start with shell scripts for quick iteration. Orbit allows them to compile these scripts into optimized, standalone executables, offering a better user experience and performance. It solves the 'script feels slow' problem for developer tools. How it helps you: You can build and distribute faster, more professional-feeling command-line tools for yourself and others.

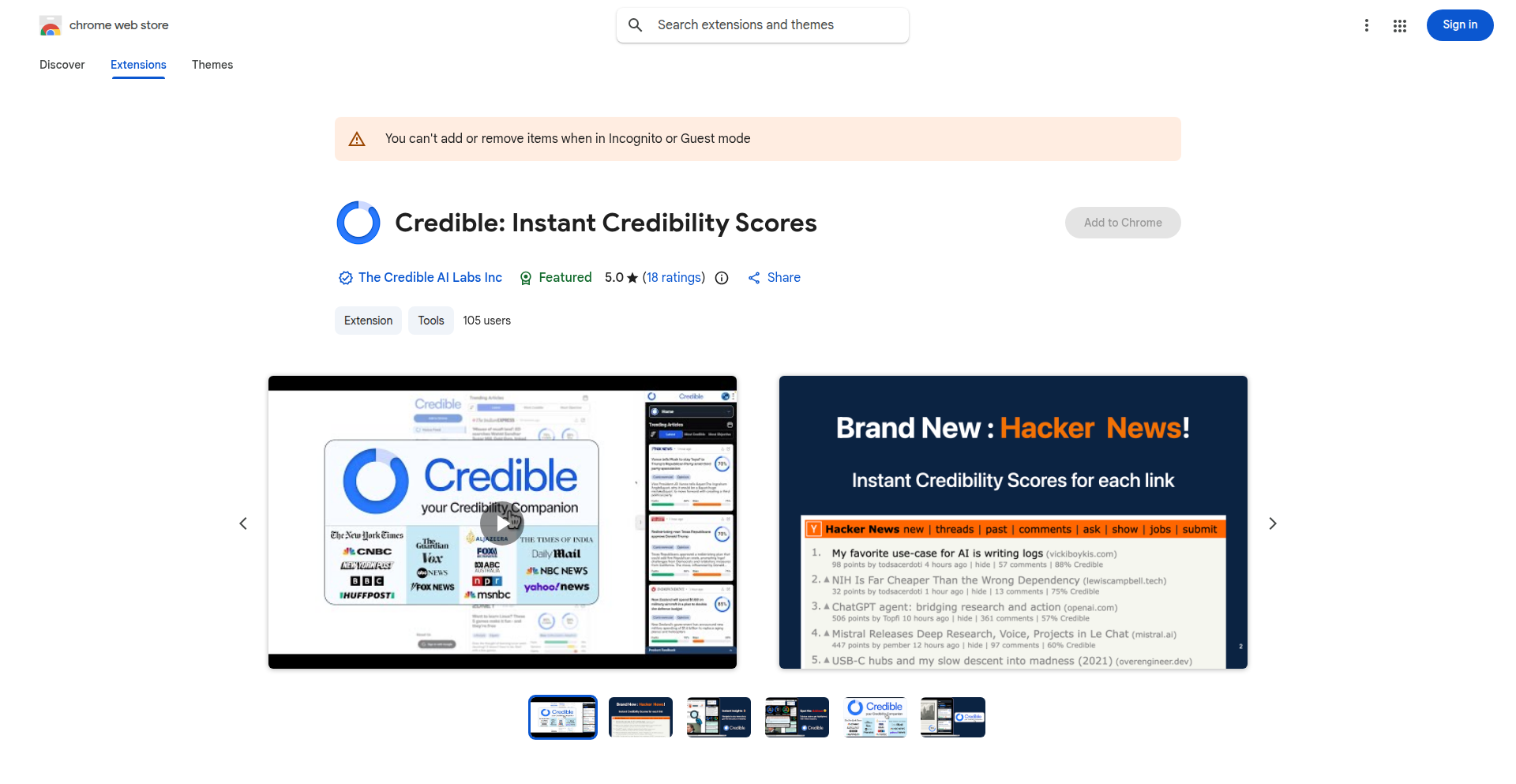

7

Credible AI Insight

Author

betterhealth12

Description

Credible is a Chrome extension that instantly displays credibility scores and detailed analyses of online content directly within your browser. It tackles the problem of information overload and time wasted verifying sources by providing key takeaways, bias detection, and a breakdown of claims (facts vs. opinions vs. dubious) without requiring you to leave the page. This innovation helps users quickly assess the trustworthiness of articles and comments, saving valuable time and mental energy.

Popularity

Points 10

Comments 4

What is this product?

Credible is a sophisticated browser extension, acting as your personal fact-checker and time-saver. It uses advanced natural language processing (NLP) and AI models to analyze articles and comments in real-time. When you're browsing websites like Hacker News, Credible analyzes the linked content. It then overlays a 'credibility score' next to each link on the main page and provides a comprehensive analysis on the article's page. This analysis includes summarizing the main points of the article, identifying potential biases (like political leaning or sensationalism), and distinguishing between factual statements, opinions, and unsubstantiated claims. The innovation lies in its ability to perform this deep analysis instantly and in-context, preventing users from having to navigate away and conduct manual research, which is a significant time sink and prone to confirmation bias. So, for you, this means less time spent sifting through unreliable information and more confidence in what you read.

How to use it?

Using Credible is straightforward. You simply install it as a Chrome extension from the Chrome Web Store. Once installed, it automatically becomes active when you browse the web. On platforms like Hacker News, you'll notice a credibility score appearing next to each link. Clicking on a link will take you to the article, where Credible will display a detailed breakdown of its content, including its credibility score, detected biases, and a classification of its claims. You can also access a mobile-friendly feed of analyzed content on mycredible.ai/feeds/hacker-news. Integration is seamless; it works in the background as you browse. This is useful for anyone who consumes a lot of online content and wants to quickly understand the reliability and essence of information, especially in fast-paced environments like tech forums or news aggregators. So, for you, this means a more efficient and trustworthy browsing experience, integrated directly into your workflow.

Product Core Function

· Instant Credibility Scoring: Assigns a numerical score to online content reflecting its trustworthiness based on various analytical factors. This allows for a quick, at-a-glance assessment of information reliability, helping you prioritize what to read. Its application is in quickly filtering out potentially misleading or low-quality content.

· Bias Detection: Identifies and flags potential biases within articles, such as political leanings, sensationalism, or specific agendas. This helps you understand the perspective from which information is presented, leading to a more balanced understanding of complex topics. This is valuable for critical thinking and informed decision-making.

· Claim Breakdown: Differentiates between factual statements, opinions, and dubious claims within an article. This function empowers you to critically evaluate the evidence presented and distinguish between objective reporting and subjective viewpoints, enhancing your ability to discern truth from falsehood.

· Key Takeaways Summarization: Extracts and presents the most important points of an article in a concise summary. This saves you time by providing the core message of a piece without needing to read the entire text, making information consumption more efficient. This is especially useful for busy professionals or students.

· In-Context Analysis: Displays all credibility information and analysis directly on the webpage you are viewing, without requiring you to leave the current page. This preserves your browsing flow and significantly reduces the effort needed to verify information, making the process of critical evaluation seamless. This means you can stay focused and informed without interruption.

Product Usage Case

· On Hacker News, when browsing the front page, you see a Credible score next to each article title. This allows you to quickly decide which articles are likely to be well-researched and trustworthy before investing your time in reading them. It solves the problem of not knowing which links to click for reliable information.

· While reading a news article about a current event, Credible analyzes the content and highlights claims that are presented as fact but may be opinions or lack supporting evidence. This helps you identify potential misinformation or propaganda, allowing you to form a more accurate understanding of the event. This is crucial for making informed decisions in a complex world.

· In online discussions where users link to external articles, Credible provides an immediate analysis of the linked content. This helps participants in the discussion to quickly assess the credibility of the sources being referenced, leading to more productive and fact-based conversations. This can be applied to any forum or social media where content sharing occurs.

· For individuals who frequently consume content from various sources for research or professional development, Credible acts as a preliminary filter, indicating the reliability of sources. This saves significant time that would otherwise be spent on manual verification, allowing for faster progress in learning and analysis. This is useful for researchers, students, and lifelong learners.

8

Zynk DirectSync

Author

justmarc

Description

Zynk DirectSync is a cross-platform file transfer and messaging application focused on reliable, end-to-end encrypted transfers between any devices. It tackles the common frustration of moving files across different operating systems and devices, even when networks are unstable, by prioritizing direct peer-to-peer connections with auto-resume capabilities. This offers a private and efficient alternative to cloud storage for large or frequent transfers.

Popularity

Points 11

Comments 2

What is this product?

Zynk DirectSync is a sophisticated file transfer and messaging tool built on a peer-to-peer (P2P) architecture. The core innovation lies in its robust auto-resume functionality, meaning if a file transfer is interrupted due to network changes or device sleep, it can pick up exactly where it left off, similar to how file synchronization tools like rsync work. When direct P2P connections fail to establish (often due to network configurations like NAT or firewalls), it intelligently falls back to a secure cloud relay. Crucially, all data, whether transferred directly or via relay, is protected by end-to-end encryption, ensuring only the sender and intended recipient can access the content. This technology solves the problem of unreliable file transfers and privacy concerns associated with traditional cloud services.
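The auto-resume idea can be sketched with local files standing in for the two ends of a transfer. This is not Zynk's protocol: the function name `resume_copy` and the rule "resume from the number of bytes already at the destination" are assumptions for illustration, and a real tool would also verify the partial data (for example with checksums) before trusting it.

```python
import os
import tempfile


def resume_copy(src_path: str, dst_path: str, chunk_size: int = 64 * 1024) -> int:
    """Copy src to dst, continuing from however many bytes dst already holds.

    Returns the number of bytes written during this call.
    """
    # Bytes already present at the destination act as the resume offset.
    offset = os.path.getsize(dst_path) if os.path.exists(dst_path) else 0
    written = 0
    with open(src_path, "rb") as src, open(dst_path, "ab") as dst:
        src.seek(offset)
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            written += len(chunk)
    return written


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "source.bin")
    dst = os.path.join(tmp, "dest.bin")
    payload = bytes(range(256)) * 1000  # 256,000 bytes
    with open(src, "wb") as f:
        f.write(payload)
    # Simulate an interrupted transfer: only the first 100,000 bytes arrived.
    with open(dst, "wb") as f:
        f.write(payload[:100_000])
    sent = resume_copy(src, dst)
    with open(dst, "rb") as f:
        complete = f.read() == payload
    print(sent, complete)  # 156000 True
```

Only the missing 156,000 bytes are sent on the second attempt, which is the property that matters for large files on unstable links.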

How to use it?

Developers can use Zynk DirectSync in various ways, leveraging both its graphical user interface (GUI) and command-line interface (CLI). For everyday use, simply install the app on macOS, Windows, Linux, iOS, Android, or even Steam Deck. You can then initiate direct file and folder transfers between your own devices or share them with others. The CLI version is invaluable for scripting automated file transfers to and from servers, build pipelines, or for integration into existing workflows. For sharing files with individuals who don't have Zynk installed, Web Drops allow you to create secure, time-limited share links, making it easy to send or request files without requiring the recipient to sign up. This offers a seamless integration into personal and professional workflows where reliable, private data exchange is paramount.

Product Core Function

· Direct Device-to-Device Transfers: Enables file and folder transfers of unlimited size directly between devices, bypassing cloud intermediaries for privacy and speed. This is useful for moving large project assets or backups between your personal machines.

· Auto-Resume Capability: Automatically resumes interrupted file transfers when the connection is restored, preventing data loss and saving time on re-transfers. This is a lifesaver when transferring large files over unstable Wi-Fi or cellular networks.

· End-to-End Encryption (E2EE): Guarantees that all data transferred is encrypted from the source to the destination, making it unreadable by Zynk or any third party. This is essential for transferring sensitive documents or proprietary code.

· Cross-Platform Support: Available on a wide range of operating systems including macOS, Windows, Linux, iOS, Android, and Steam Deck, ensuring seamless file sharing across all your devices. This allows a developer to send a file from their Linux workstation to their iPhone instantly.

· Command-Line Interface (CLI): Provides powerful scripting capabilities for automating file transfers on servers, embedded systems (like Raspberry Pi), or within CI/CD pipelines. This enables automated deployment of build artifacts or log file collection.

· Web Drops/Share Links: Allows users to share files with or request files from people who don't have Zynk installed, via secure, configurable web links. This is perfect for collaborating with external partners or clients without requiring them to install any software.

· P2P First with Cloud Relay Fallback: Prioritizes direct P2P connections for efficiency and privacy, but intelligently uses secure cloud relays when direct connections aren't feasible, ensuring a robust transfer experience. This handles complex network setups where direct connections might be blocked.

Product Usage Case

· A software developer needs to transfer a large dataset for machine learning model training from their powerful workstation to a portable device for offline analysis. Zynk's direct, unlimited transfer with auto-resume ensures the process is fast and won't fail if their laptop briefly loses connection. This saves hours of waiting and prevents potential data corruption.

· A freelance graphic designer needs to send high-resolution design files to a client who isn't technically savvy. Using Zynk's Web Drop feature, they can create a secure link for the client to download the files without needing to install any application, simplifying the collaboration process and ensuring the files arrive securely.

· A system administrator needs to regularly transfer log files from multiple remote servers to a central logging system. The Zynk CLI can be scripted to automate this process, ensuring logs are collected reliably and securely via end-to-end encryption, even across firewalls.

· A user wants to sync important documents between their work laptop and personal desktop, but distrusts cloud storage for sensitive information. Zynk's direct P2P synchronization provides a private and secure method for keeping files consistent across their devices, offering peace of mind.

· A developer is working on a project that involves deploying code to a Raspberry Pi. The Zynk CLI can be used in a build script to automatically push updated code to the Pi after a successful build, streamlining the deployment workflow.

9

Vanishfile

Author

crosshairflaws

Description

Vanishfile is a temporary and secure file sharing service designed to automatically delete files after a set period or number of downloads. It emphasizes privacy by not requiring accounts, offering optional client-side encryption, and password protection, allowing users to share sensitive data without leaving a permanent digital footprint. This tackles the problem of needing to share files that shouldn't persist indefinitely on a server, providing a practical solution for transient data sharing.

Popularity

Points 3

Comments 5

What is this product?

Vanishfile is a file sharing platform built with a focus on ephemeral data. The core technical innovation lies in its self-destructing file mechanism. Files uploaded to Vanishfile are associated with expiry conditions, either a time-based duration (e.g., 7 days) or a download limit. Once these conditions are met, the file is automatically removed from the server. Additionally, it implements optional client-side encryption, meaning the file is encrypted in the user's browser before being uploaded, so even the server administrators cannot access the file's content. Password protection adds another layer of security, ensuring only authorized individuals with the correct password can initiate a download. This approach leverages server-side logic for expiry and client-side operations for encryption to maximize privacy and minimize data retention.
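The two expiry conditions described above can be sketched as a small data model. This is not Vanishfile's code: the `SharedFile` class, its field names, and the choice to check expiry at download time are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class SharedFile:
    uploaded_at: datetime
    ttl: Optional[timedelta] = None      # time-based expiry, e.g. 7 days
    max_downloads: Optional[int] = None  # download-count expiry
    downloads: int = 0

    def is_expired(self, now: datetime) -> bool:
        """True once either configured expiry condition has been met."""
        if self.ttl is not None and now >= self.uploaded_at + self.ttl:
            return True
        if self.max_downloads is not None and self.downloads >= self.max_downloads:
            return True
        return False

    def record_download(self, now: datetime) -> bool:
        """Count a download if the file is still live; return whether it was served."""
        if self.is_expired(now):
            return False
        self.downloads += 1
        return True


uploaded = datetime(2025, 12, 19, tzinfo=timezone.utc)
f = SharedFile(uploaded_at=uploaded, ttl=timedelta(days=7), max_downloads=2)
print(f.record_download(uploaded + timedelta(hours=1)))  # True
print(f.record_download(uploaded + timedelta(hours=2)))  # True
print(f.record_download(uploaded + timedelta(hours=3)))  # False, limit reached
```

A real service would also need a background job that deletes expired files from storage; checking at download time alone only stops them from being served.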

How to use it?

Developers can use Vanishfile for various scenarios requiring temporary file sharing. For instance, if you need to share a large configuration file with a colleague that should only be valid for a short period, you can upload it to Vanishfile, set an expiry, and share the generated link. If you're collaborating on a document and want to share a draft that should be automatically removed after a few reviewers have seen it, Vanishfile provides this capability. The HN post does not explicitly describe an API, but one would be a natural extension for a tool like this: programmatic uploads and link generation would enable automated, secure sharing of temporary artifacts from build processes or temporary data dumps. The primary use case is sharing files where long-term storage is undesirable or a security risk.

Product Core Function

· Automatic File Expiration: Files are set to self-destruct after a specified time or download count, ensuring data is not permanently stored, thus reducing long-term security risks and storage overhead.

· No Account Required: Users can upload and share files without creating an account, simplifying the process and enhancing user anonymity for quick, ad-hoc sharing needs.

· Client-Side Encryption: Files can be encrypted in the user's browser before upload, meaning the server only stores encrypted data, and the content is unreadable to the service provider, offering a high degree of privacy.

· Password Protection: A password can be set for file access, ensuring that only individuals with the correct password can download the file, adding a crucial layer of access control for sensitive information.

· Download Limits: The ability to limit the number of downloads for a file prevents excessive access and ensures the file is only shared with a controlled audience.

Product Usage Case

· Sharing a temporary build artifact with a client: A developer can upload a specific build output to Vanishfile, set it to expire after 24 hours, and share the link. This ensures the client receives the artifact but it doesn't remain on a server indefinitely, mitigating potential vulnerabilities from outdated or forgotten builds.

· Collaborating on a sensitive document draft: When sharing a draft of a confidential document with a small team, you can upload it to Vanishfile with a download limit of 5 and client-side encryption. This ensures only the intended recipients can access it, and once it has been downloaded five times it becomes inaccessible, preventing unintended wider distribution.

· Providing temporary credentials or keys: For short-term access or provisioning, you can share a file containing temporary credentials via Vanishfile with password protection and a short expiry time. This minimizes the risk of these sensitive details being compromised if the link or file were to be accidentally exposed.

· Distributing a temporary configuration file for an event: If you're setting up a temporary service for an event, you might need to distribute a configuration file. Vanishfile allows you to share this file with an expiry, ensuring it's only available during the event's duration and automatically removed afterwards.

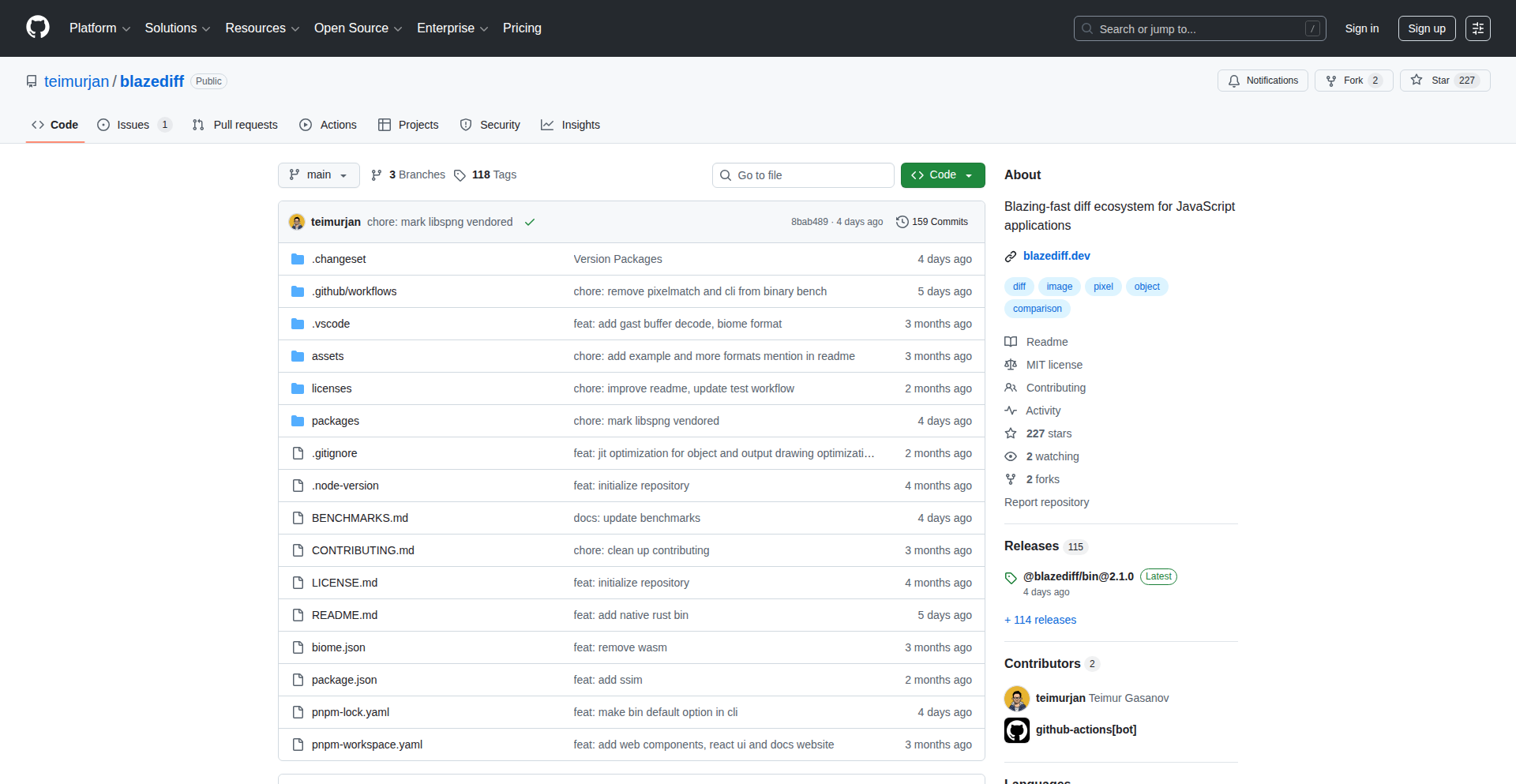

10

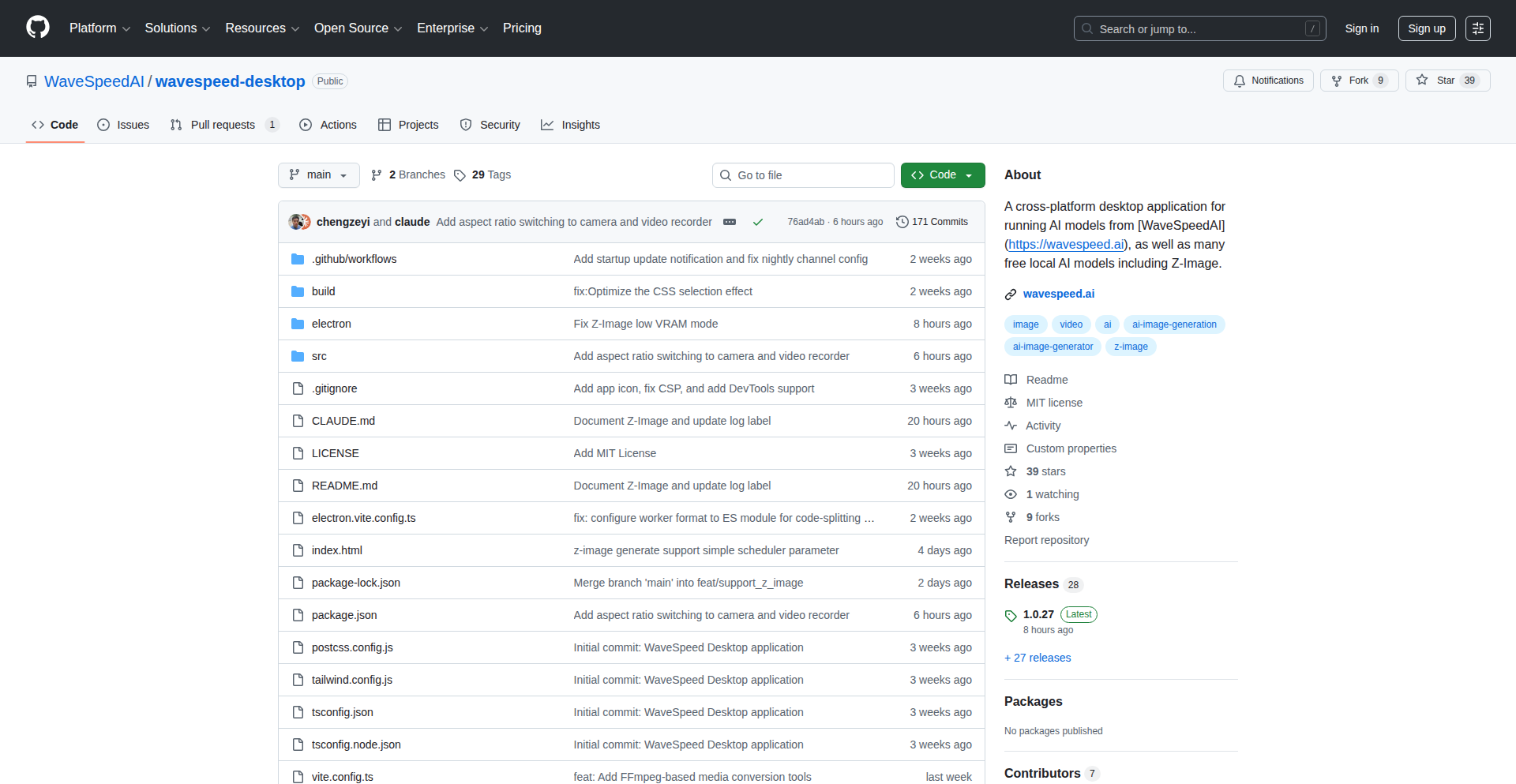

BlazeDiff v2 - HyperSpeed Image Comparison Engine

Author

teimurjan

Description

BlazeDiff v2 is a groundbreaking open-source image comparison tool that achieves unparalleled speed by rewriting its core in Rust and leveraging SIMD instructions. It dramatically speeds up the process of finding differences between two images, making it significantly faster than existing solutions, especially for high-resolution images. This means you can get visual feedback on image changes in a fraction of the time.

Popularity

Points 7

Comments 0

What is this product?

BlazeDiff v2 is a highly optimized tool designed to precisely identify the differences between two images. Its innovation lies in its 'smart scanning' approach. Instead of checking every single pixel, it first quickly identifies 'suspicious' areas that are likely to contain differences. Then, it focuses its intensive comparison only on these flagged areas. This intelligent filtering, combined with performance-enhancing technologies like Rust and SIMD (which allows the computer to perform the same operation on multiple data points simultaneously), makes it incredibly fast. So, if you need to know exactly what changed between two versions of an image, this tool does it much, much faster.
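The two-pass idea can be sketched in plain Python. This is not BlazeDiff's implementation: the post only says that suspicious areas are found first, so the block size, the row-slice equality check used as the cheap first pass, and the function name `diff_pixels` are assumptions, and no SIMD is involved here.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale pixels, rows of equal length


def diff_pixels(a: Image, b: Image, block: int = 8, threshold: int = 0) -> List[Tuple[int, int]]:
    """Return (x, y) coordinates whose values differ by more than threshold."""
    height, width = len(a), len(a[0])
    changed: List[Tuple[int, int]] = []
    for by in range(0, height, block):
        for bx in range(0, width, block):
            rows = range(by, min(by + block, height))
            end = min(bx + block, width)
            # Pass 1: cheap check, skip blocks whose rows are exactly equal.
            if all(a[y][bx:end] == b[y][bx:end] for y in rows):
                continue
            # Pass 2: per-pixel comparison only inside the flagged block.
            for y in rows:
                for x in range(bx, end):
                    if abs(a[y][x] - b[y][x]) > threshold:
                        changed.append((x, y))
    return changed


before = [[0] * 32 for _ in range(32)]
after = [row[:] for row in before]
after[5][20] = 255
after[6][21] = 255
print(diff_pixels(before, after))  # [(20, 5), (21, 6)]
```

For screenshots where most of the frame is unchanged, nearly every block exits at the first pass, which is where the time savings come from.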

How to use it?

Developers can integrate BlazeDiff v2 into their workflows by using its provided API, which is designed to be a drop-in replacement for the popular 'odiff' tool. This means if you're already using odiff, switching to BlazeDiff v2 requires minimal code changes. It's ideal for automated testing where you need to compare screenshots of UI elements, or in CI/CD pipelines to detect unintended visual regressions. Its small binary size and high performance make it suitable for resource-constrained environments as well. Essentially, any process that involves verifying visual consistency between images can benefit.

Product Core Function

· Ultra-fast image comparison: Achieves significantly faster difference detection than traditional methods, allowing for quicker verification of visual changes, which is crucial for rapid development cycles.

· Intelligent difference highlighting: Focuses comparison on potentially modified areas, reducing processing time and enabling quicker insights into image discrepancies, useful for pinpointing specific UI bugs.

· Cross-platform compatibility: Leverages SIMD instructions (NEON for ARM, SSE4.1 for x86) for optimized performance on various architectures, ensuring consistent speed across different development environments.

· Small binary footprint: Ships a smaller binary than alternatives, making it easier to distribute and integrate into projects without significant overhead, which is beneficial for large-scale deployments.

· API compatibility with odiff: Provides a seamless transition for users familiar with existing image diff tools, reducing the learning curve and accelerating adoption, allowing immediate performance gains.

Product Usage Case

· Automated UI testing: In a web development scenario, automatically compare screenshots of a web page before and after a code change to instantly detect any visual bugs introduced, saving manual testing time.

· Continuous Integration (CI) pipelines: Integrate BlazeDiff v2 into your CI process to automatically flag any visual regressions in image assets or user interface components, preventing faulty builds from reaching production.

· Game development: Developers can use it to compare textures or UI elements across different builds of a game, ensuring visual consistency and identifying unexpected graphical changes.

· Design workflow: Designers can use this to quickly compare different iterations of a design asset, enabling faster feedback and iteration on visual elements.

· Data visualization comparison: In scientific or data analysis contexts, compare generated plots or charts to verify accuracy and detect subtle changes introduced by algorithm updates.

11

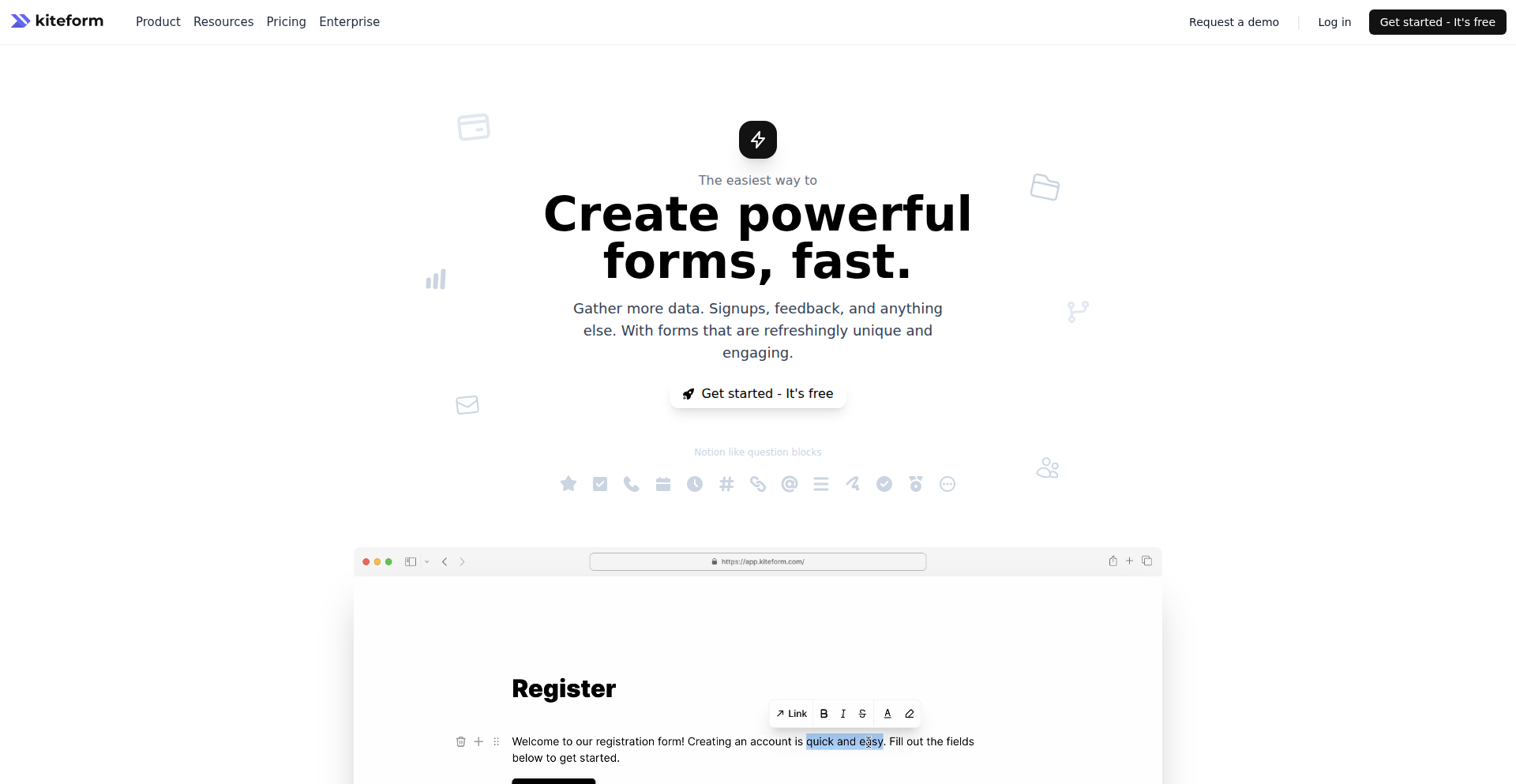

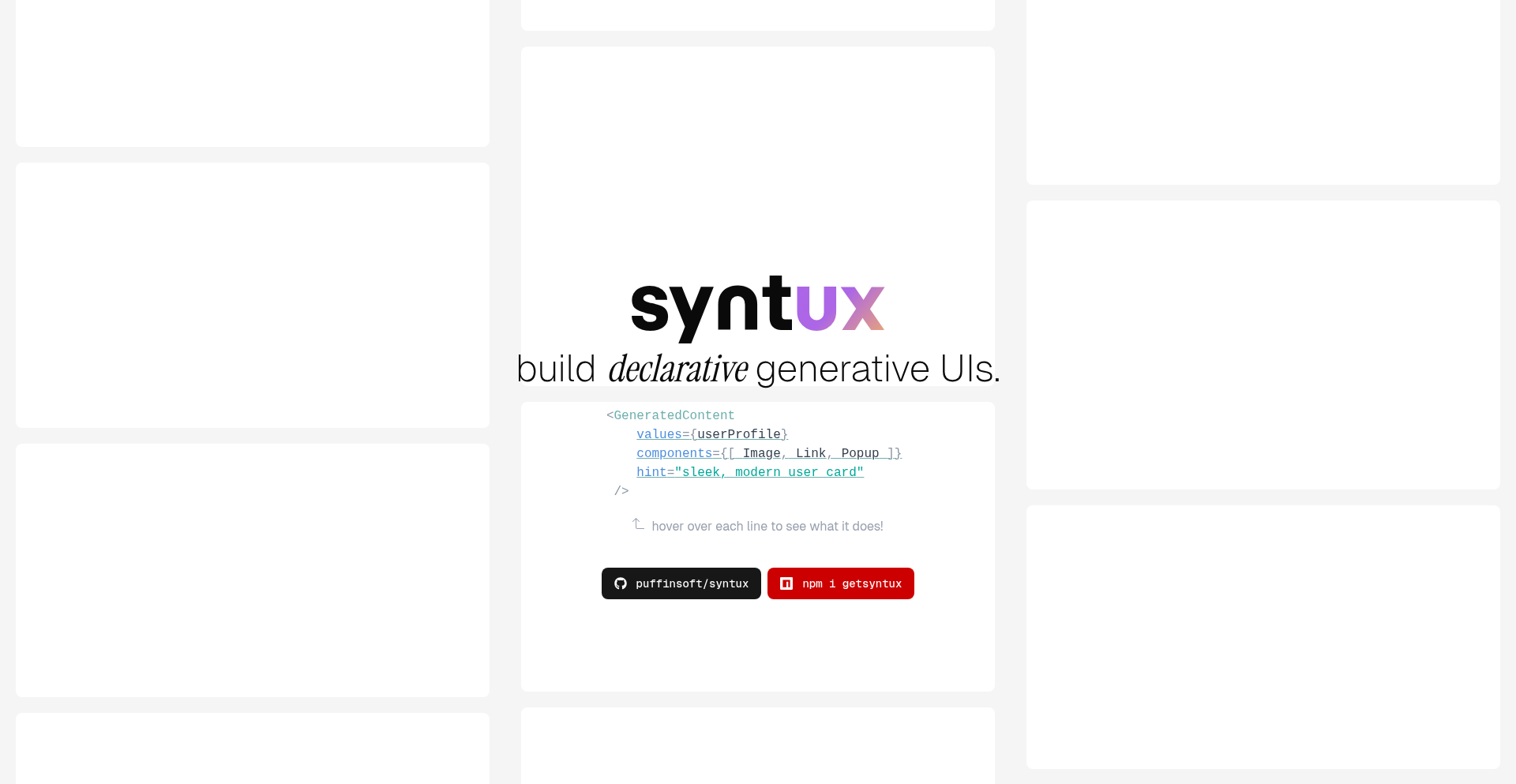

Kiteform: The Declarative Form Synthesizer

Author

18chetanpatel

Description

Kiteform is a novel form builder that leverages a declarative approach, allowing developers to define forms using a simple, human-readable syntax. It abstracts away the complexities of UI rendering, state management, and data validation, empowering developers to create and manage forms with unprecedented ease. The core innovation lies in its ability to translate a high-level form specification into functional, interactive web forms.

Popularity

Points 4

Comments 2

What is this product?

Kiteform is a developer-centric tool that simplifies the creation of web forms. Instead of writing traditional HTML, JavaScript, and CSS for each form element, developers describe the desired form structure and behavior using a specific, easy-to-understand language. Kiteform then automatically generates the necessary front-end code (HTML, CSS, JavaScript) to render and manage the form. The innovation is in this 'declarative' nature; you tell it what you want the form to be, not how to build it step-by-step. This means less boilerplate code for you, faster development, and a more consistent user experience across your applications. So, what's in it for you? You get to build forms in minutes, not hours, with fewer bugs and less frustration.

How to use it?

Developers can integrate Kiteform into their web projects by defining their form structures in the Kiteform language. This definition can be a standalone file or embedded within their existing codebase. Kiteform then provides an API or a CLI tool to compile this definition into usable web components or plain JavaScript, HTML, and CSS that can be directly included in a web page or application framework (like React, Vue, or Angular). It's designed for rapid prototyping and building forms for various applications, from simple contact forms to complex multi-step questionnaires. So, what's in it for you? You can quickly drop in sophisticated forms into your existing web applications or new projects without a steep learning curve.

Product Core Function

· Declarative Form Definition: Developers define form fields, their types (text, number, select, etc.), validation rules, and layout using a straightforward syntax. This allows for intuitive form creation and easy modification. The value is a significant reduction in the code needed to build a form and improved maintainability. This is applicable to any scenario where forms are a crucial part of user interaction.

· Automatic UI Generation: Kiteform takes the declarative definition and automatically renders functional and styled form elements in the browser. This eliminates the need for manual HTML/CSS coding for form elements. The value is faster development cycles and a consistent look and feel for forms. This is useful for any web application that requires user input.

· Built-in Validation Engine: The system incorporates robust validation capabilities, allowing developers to define complex validation rules (e.g., required fields, email format, custom patterns) directly within the form definition. The value is ensuring data integrity and providing immediate feedback to users, leading to better data quality. This is essential for any form that collects critical user data.

· State Management Abstraction: Kiteform handles the internal state of the form (e.g., user input, validation status) without requiring developers to write custom state management logic. The value is simplifying the development process by removing boilerplate code for form state. This is particularly beneficial for complex forms with many fields.

· Extensibility Hooks: The platform is designed with extensibility in mind, allowing developers to inject custom logic or integrate with third-party services at various stages of form processing. The value is enabling advanced customization and integration capabilities for specific business needs. This is useful for tailoring forms to unique workflows or integrating with backend systems.
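The declarative definition and built-in validation described above can be sketched as follows. Kiteform's actual syntax is not shown in the post, so the `CONTACT_FORM` spec format, its keys, and the `validate` function are hypothetical and only illustrate describing what a form is rather than how to build it.

```python
import re
from typing import Any, Dict, List

# Hypothetical declarative spec; this is not Kiteform's real syntax.
CONTACT_FORM: List[Dict[str, Any]] = [
    {"name": "email", "type": "text", "required": True, "pattern": r"[^@\s]+@[^@\s]+\.[^@\s]+"},
    {"name": "age", "type": "number", "required": False, "min": 18},
    {"name": "plan", "type": "select", "required": True, "options": ["free", "pro"]},
]


def validate(spec: List[Dict[str, Any]], data: Dict[str, Any]) -> Dict[str, str]:
    """Return a mapping of field name to error message; empty means valid."""
    errors: Dict[str, str] = {}
    for field in spec:
        name = field["name"]
        value = data.get(name)
        if value is None or value == "":
            if field.get("required"):
                errors[name] = "required"
            continue
        if field["type"] == "number":
            if not isinstance(value, (int, float)) or isinstance(value, bool):
                errors[name] = "must be a number"
            elif "min" in field and value < field["min"]:
                errors[name] = f"must be at least {field['min']}"
        elif field["type"] == "select" and value not in field["options"]:
            errors[name] = "not an allowed option"
        elif "pattern" in field and not re.fullmatch(field["pattern"], str(value)):
            errors[name] = "invalid format"
    return errors


print(validate(CONTACT_FORM, {"email": "a@example.com", "plan": "pro"}))  # {}
print(validate(CONTACT_FORM, {"email": "not-an-email", "age": 12, "plan": "team"}))
```

Because the rules live in data rather than in handlers, the same spec could in principle drive both rendering and validation, which is the main appeal of the declarative approach.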

Product Usage Case

· Rapid Prototyping of User Interfaces: A startup building a new SaaS product needs to quickly create registration and profile forms. Using Kiteform, they can define all their forms with basic fields and validation rules in a single afternoon, significantly accelerating their MVP development. This solves the problem of slow UI development.

· Building Data Collection Forms for Surveys: A researcher needs to create a detailed online survey with various question types, including conditional logic. Kiteform allows them to define these complex structures declaratively, ensuring all data is collected accurately and consistently. This addresses the challenge of building intricate survey logic manually.

· Integrating Forms into Existing Applications: A legacy web application needs a new feedback form. Instead of refactoring large parts of the existing codebase, the developer can use Kiteform to generate a self-contained form component that seamlessly integrates with the current system. This solves the problem of integrating new features into older systems.

· Developing Internal Tools with Forms: A company needs to build an internal tool for sales representatives to log customer interactions. Kiteform enables them to quickly create a standardized form for this purpose, ensuring all necessary information is captured consistently, regardless of the user's technical skill. This tackles the need for user-friendly internal tools.

12

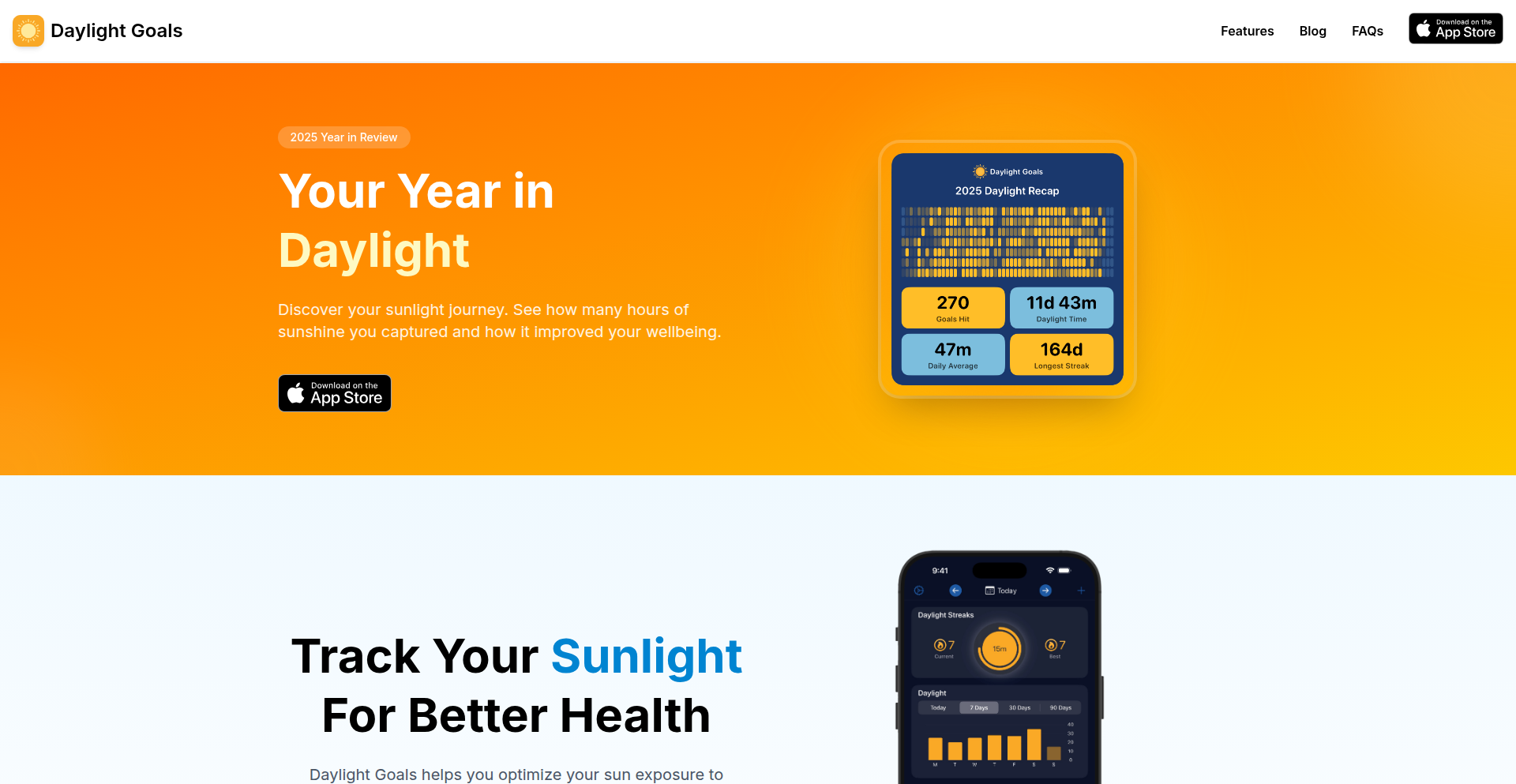

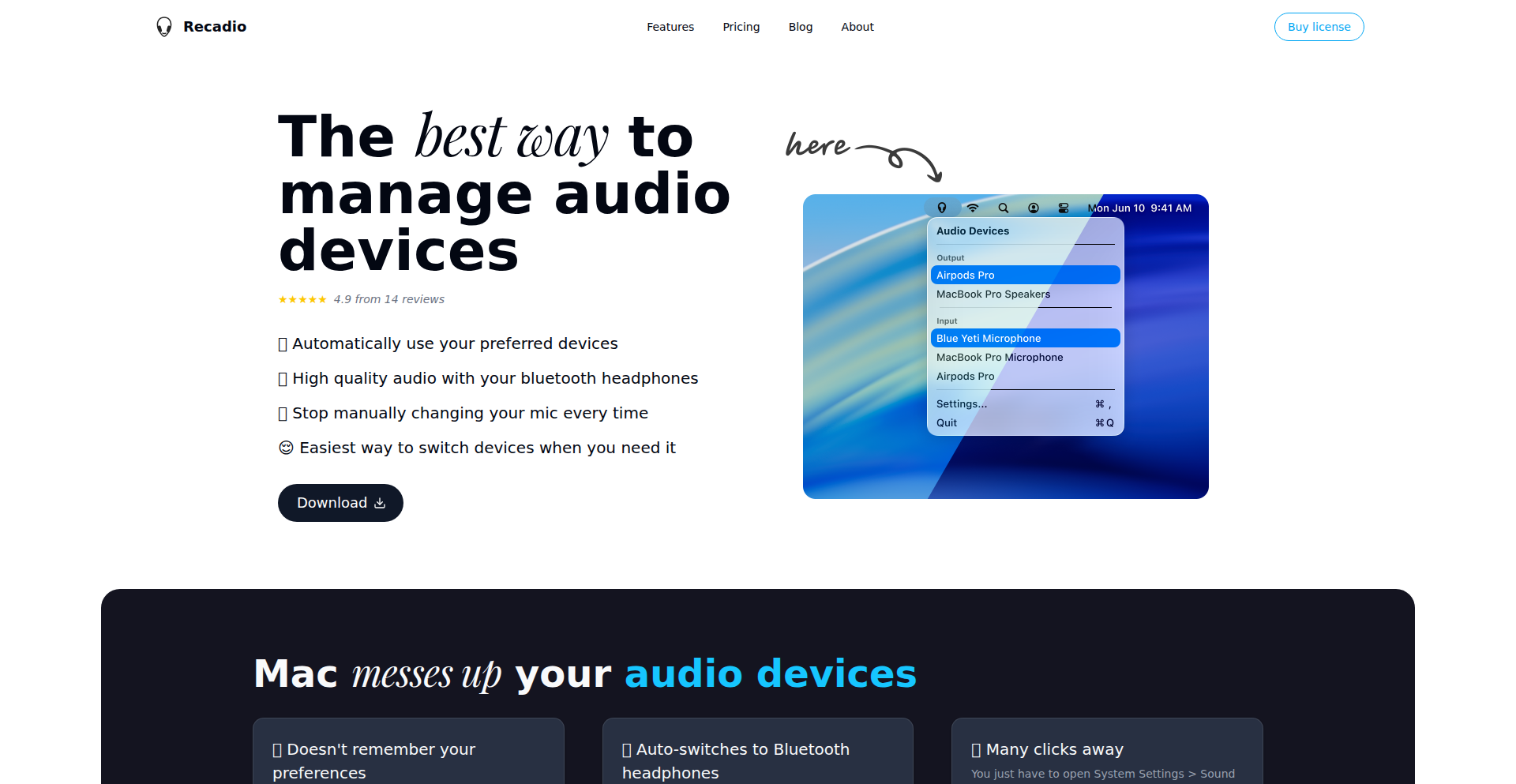

Daylight Goals: Sunlight Habit Tracker

Author

jclardy

Description

Daylight Goals is an iPhone and Apple Watch app that analyzes your outdoor time, tracked by your Apple Watch, and provides insights into your daily sunlight exposure. It helps you achieve a daily goal of 20 minutes of sunlight, and now offers a 'Daylight Recap' for 2025, visualizing your year-long sun exposure habits. This app leverages the ambient light and motion data from your Apple Watch to understand when you're outdoors and benefiting from sunlight.

Popularity

Points 2

Comments 4

What is this product?

Daylight Goals is a mobile application that acts as a personal sunlight tracker. It ingeniously utilizes the sensors built into your Apple Watch, specifically its ability to detect ambient light and movement, to estimate how much time you're spending outdoors. The core innovation lies in transforming raw sensor data into actionable insights about your daily sunlight intake. It's not just about counting minutes; it's about understanding your exposure patterns and encouraging healthy habits. The 'Daylight Recap' feature is a sophisticated way to aggregate and present a year's worth of this data, making complex personal analytics accessible and engaging. So, this helps you understand how much natural light you're getting and encourages you to prioritize it for your well-being.

How to use it?

Developers can integrate Daylight Goals into their personal wellness routines by simply installing the app on their iPhone and pairing it with their Apple Watch. The app automatically starts tracking outdoor time when the Apple Watch is worn and the screen is exposed to light. For developers who are interested in the technical underpinnings, the app showcases how to access and interpret sensor data for health and wellness applications. It provides a practical example of using wearable technology for behavioral tracking and goal reinforcement. So, you can use it to set and achieve your personal sunlight goals and gain insights into your device's potential for health tracking.

Product Core Function

· Automatic Outdoor Time Tracking: The app uses Apple Watch's ambient light and motion sensors to detect when you are outdoors. This provides a passive and unobtrusive way to gather data without manual input, making it easy to track your habits over time. The value is effortless data collection for understanding your lifestyle.

· Daily Sunlight Goal Setting and Monitoring: Users can set a daily target for sunlight exposure (e.g., 20 minutes) and the app visualizes progress towards this goal. This gamifies healthy behavior and provides motivation. The value is a clear, achievable target for improving your well-being.

· Data Visualization and Analysis: The app presents tracked outdoor time through charts and graphs, offering insights into daily, weekly, and monthly patterns. This allows users to identify trends and understand their habits better. The value is a clear picture of your sunlight exposure, enabling informed lifestyle adjustments.

· Daylight Recap 2025: A comprehensive review of your year-long sunlight exposure, presented in an engaging format. This feature provides a long-term perspective on your habits and achievements. The value is a year-end summary of your efforts and a benchmark for future goals.

· Apple Watch Integration: Seamless integration with Apple Watch allows for on-the-go tracking and on-wrist notifications, making it convenient to stay on top of your goals. The value is convenience and immediate feedback right from your wrist.
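The goal-monitoring idea in the list above can be sketched in a few lines. This is a minimal illustration, not the app's implementation: it assumes outdoor time has already been detected and is available as hypothetical `(start, end)` intervals, and the function names are made up for this example. Only the 20-minute goal comes from the project description.

```python
from collections import defaultdict
from datetime import datetime

DAILY_GOAL_MINUTES = 20  # daily goal mentioned in the project description


def daily_daylight_minutes(intervals):
    """Sum outdoor minutes per calendar day from (start, end) datetime pairs.

    Assumption: each interval starts and ends on the same calendar day.
    """
    totals = defaultdict(float)
    for start, end in intervals:
        if end <= start:
            continue  # skip empty or inverted intervals
        totals[start.date()] += (end - start).total_seconds() / 60.0
    return dict(totals)


def goal_progress(totals, goal=DAILY_GOAL_MINUTES):
    """Return {day: (minutes, goal_met)} for each tracked day."""
    return {day: (minutes, minutes >= goal) for day, minutes in totals.items()}


# Hypothetical sample data: two outdoor sessions on one day, one on the next.
sample = [
    (datetime(2025, 12, 19, 8, 0), datetime(2025, 12, 19, 8, 15)),
    (datetime(2025, 12, 19, 12, 0), datetime(2025, 12, 19, 12, 10)),
    (datetime(2025, 12, 20, 9, 0), datetime(2025, 12, 20, 9, 5)),
]
progress = goal_progress(daily_daylight_minutes(sample))
```

A yearly recap could be built on the same per-day totals, for example by counting the days on which the goal was met.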

Product Usage Case

· For a developer focused on personal health and productivity, using Daylight Goals helps them understand how their work-from-home setup might be impacting their natural light exposure. By tracking their outdoor time, they can identify days where they've been indoors too much and make a conscious effort to step outside. This addresses the technical problem of quantifying and encouraging healthy outdoor activity within a digitally-centric lifestyle.

· A developer interested in building fitness or wellness applications could study the approach Daylight Goals takes to interpreting sensor data. The app demonstrates a practical use case of translating raw sensor inputs into meaningful health metrics, providing inspiration for their own projects. This highlights the technical insight into leveraging existing wearable hardware for innovative applications.

· For any developer who experiences burnout or wants to improve their overall well-being, Daylight Goals offers a simple yet effective tool. By encouraging regular outdoor breaks, the app indirectly promotes mental clarity and reduces eye strain. This solves the problem of remembering to take essential breaks in a busy development schedule.

· A developer keen on understanding user engagement with passive tracking features would find Daylight Goals a case study. The app's success relies on its ability to work in the background, making it easy for users to benefit without active participation. This showcases a valuable approach to designing user-friendly and effective health-monitoring tools.

13

SkySpottr AR Tracker

Author

auspiv

Description

SkySpottr is an innovative iOS application that leverages augmented reality (AR) to display real-time aircraft information directly overlaid on your device's camera feed. It aggregates ADS-B data from community feeders and uses kinematic prediction to provide smooth positional updates, all without relying on complex AR frameworks like ARKit. The project showcases remarkable technical ingenuity by building a complete, performant application from backend to frontend with significant AI assistance, demonstrating a modern approach to software development.

Popularity

Points 3

Comments 2

What is this product?

SkySpottr is an augmented reality aircraft tracking application for iOS. Its core technical innovation lies in its use of native device capabilities: AVFoundation for the camera, and CoreLocation and CoreMotion for positional and orientation data, combined with mathematical projections that render aircraft information in real time. Instead of using a dedicated AR SDK, it uses device GPS and heading data to position the AR overlays. Kinematic prediction algorithms interpolate aircraft positions between data updates, resulting in a smoother visual experience. The project also documents a journey of AI-assisted development: AI tools were used to build various components, but the process exposed the need for human oversight when debugging, particularly with a UI scaling-factor issue whose symptoms looked like a more complex problem.
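To make the projection step concrete, here is a rough sketch of how an aircraft's position could be mapped onto the camera image using only location, heading, and pitch. It is an illustration under stated assumptions, not SkySpottr's code: the function names, the field-of-view and screen-size defaults, and the simplifications (no device roll, no Earth curvature in the elevation angle, a simple pinhole camera model) are all assumptions made for this example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def bearing_and_distance(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing (degrees from north) and haversine distance (m)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlon)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    a = math.sin((p2 - p1) / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    return bearing, distance


def project_to_screen(observer, aircraft, heading_deg, pitch_deg,
                      hfov_deg=40.0, vfov_deg=65.0, width=1080, height=1920):
    """Map an aircraft to pixel coordinates, or None if it is outside the view.

    observer and aircraft are (lat, lon, altitude_m) tuples. The FOV and
    screen-size defaults are placeholders, not real device parameters.
    """
    bearing, dist = bearing_and_distance(observer[0], observer[1], aircraft[0], aircraft[1])
    elevation = math.degrees(math.atan2(aircraft[2] - observer[2], dist))
    # Angular offsets from the camera's optical axis, wrapped to [-180, 180).
    d_az = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    d_el = elevation - pitch_deg
    if abs(d_az) >= hfov_deg / 2 or abs(d_el) >= vfov_deg / 2:
        return None
    # Pinhole model: the tangent of the angular offset scales linearly to pixels.
    x = width / 2 * (1 + math.tan(math.radians(d_az)) / math.tan(math.radians(hfov_deg / 2)))
    y = height / 2 * (1 - math.tan(math.radians(d_el)) / math.tan(math.radians(vfov_deg / 2)))
    return x, y
```

An aircraft directly along the camera axis lands at the screen center; anything outside the assumed field of view returns `None` so the caller can skip drawing it.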

How to use it?

Developers can use SkySpottr as a prime example of how to build location-aware AR experiences using fundamental device sensors and custom mathematical logic, rather than relying solely on high-level AR frameworks. This approach offers greater control and potentially lower resource overhead. The project's backend, built with Django and C#, demonstrates how to efficiently handle real-time data streams (ADS-B via WebSockets) and serve them to a mobile client. The use of Postgres and Redis for data storage and caching on a self-managed VPS provides a blueprint for cost-effective, performant infrastructure. Developers can draw inspiration from its approach to handling sensor data, projecting 3D elements onto a 2D camera feed, and managing real-time data pipelines, especially in resource-constrained environments or when seeking a deep understanding of the underlying mechanics.

Product Core Function

· Real-time aircraft tracking: Utilizes ADS-B data from community feeders to display live aircraft positions, altitudes, and speeds. Value: Provides immediate situational awareness for aviation enthusiasts and researchers.

· Augmented reality overlay: Projects aircraft information directly onto the device's camera view, creating an intuitive visual experience. Value: Enhances understanding and engagement by merging digital information with the physical world.

· Kinematic prediction: Smooths aircraft position updates between ADS-B data points using mathematical interpolation. Value: Ensures a fluid and continuous tracking experience, preventing jerky movements of the AR elements.

· Native iOS sensor integration: Leverages CoreLocation and CoreMotion for precise GPS and heading data without relying on ARKit. Value: Demonstrates efficient use of device hardware for AR, potentially leading to better performance and wider compatibility.

· AI-assisted development pipeline: The entire application, including backend, frontend, and deployment infrastructure, was built largely with AI tools. Value: Showcases the rapid prototyping capabilities of AI in software development, accelerating feature delivery.

· Self-hosted infrastructure: Deployed on a single VPS with Postgres, Redis, Django, and C#, avoiding cloud provider dependencies. Value: Offers a model for cost-effective and control-oriented application hosting for developers.
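The kinematic prediction item above can be illustrated with a simple dead-reckoning step. This sketch assumes constant ground speed, track, and vertical rate between reports and uses a flat-Earth approximation that is only reasonable over a few seconds; the function name and parameters are hypothetical, and the project's actual prediction model may differ.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def predict_position(lat, lon, alt_m, ground_speed_mps, track_deg,
                     vertical_rate_mps, dt_s):
    """Extrapolate a position dt_s seconds after the last report.

    Assumes constant velocity over the interval and a locally flat Earth.
    """
    distance = ground_speed_mps * dt_s
    track = math.radians(track_deg)
    d_north = distance * math.cos(track)  # metres moved toward north
    d_east = distance * math.sin(track)   # metres moved toward east
    new_lat = lat + math.degrees(d_north / EARTH_RADIUS_M)
    new_lon = lon + math.degrees(d_east / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    new_alt = alt_m + vertical_rate_mps * dt_s
    return new_lat, new_lon, new_alt
```

Calling this once per rendered frame with the time elapsed since the last report gives a continuously moving overlay; when a new report arrives, the prediction restarts from the reported position.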

Product Usage Case

· Developing location-based AR games: This project's approach to sensor fusion and AR rendering can inform the development of AR games where virtual objects need to be accurately placed and tracked in the real world based on device location and orientation.

· Building educational tools for aviation: SkySpottr can serve as a foundation for educational applications that teach about air traffic, flight paths, and aircraft types in an interactive, visual manner.

· Creating custom surveillance or tracking systems: For niche applications requiring real-time tracking of objects in a defined area, the principles of data ingestion, processing, and AR visualization can be adapted.

· Exploring cost-effective backend architectures: The successful deployment of a robust backend on a self-managed VPS offers a practical case study for developers looking to reduce infrastructure costs.

· Debugging complex UI issues with AI: The project highlights both the power and limitations of AI in debugging, offering valuable lessons on how AI can assist in identifying issues, but also emphasizing the irreplaceable role of human analytical thinking when faced with subtle bugs like UI scaling problems.

14

LlamaImageCaptioner

Author

paradox460

Description

An experimental tool that uses Llama.cpp to automatically generate captions and tags for your local image library. It leverages the power of large language models to understand image content and store this metadata directly within the image's EXIF data, offering a novel way to organize and search your photos locally.

Popularity

Points 5

Comments 0

What is this product?

This project is a local image captioning and tagging tool that runs on your own machine. It utilizes Llama.cpp, a popular C/C++ inference engine for running Llama-family and other large language models locally, to process images. You point it to a directory of photos, and it generates descriptive captions and relevant tags for each image. The innovation lies in its ability to perform this on your local system without uploading your images to the cloud, and it writes the generated metadata directly into the image's EXIF tags. This means your photo descriptions are portable and accessible by other EXIF-aware applications. So, what's in it for you? It's a privacy-preserving, offline way to enrich your image library with meaningful descriptions, making your photos easier to find and manage without relying on external services.

How to use it?

To use LlamaImageCaptioner, you first need to have Llama.cpp set up and a compatible Llama model downloaded. You then run the tool and point it to the directory containing your images. The tool will iterate through each image, sending it to the Llama model for captioning. Once captions and tags are generated, an editable interface is presented, allowing you to review and refine them. After you're satisfied, you can save the changes, which embeds the metadata into the image's EXIF data and moves to the next image. This is ideal for photographers, digital artists, or anyone with a large personal photo collection who wants to enhance discoverability and organization. You can integrate this into your existing photo management workflow by ensuring your viewing software supports EXIF metadata. So, how does this benefit you? It allows you to quickly add searchable descriptions to thousands of photos without manually typing, saving you significant time and effort.
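The caption, review, save loop described above could be organized roughly as follows. This is a sketch of the workflow shape only: `caption_fn`, `review_fn`, and `write_fn` are hypothetical callables standing in for the model call, the editable interface, and the metadata writer, none of which are specified in the source. The list of file suffixes is likewise an assumption.

```python
from pathlib import Path

# Assumed set of image extensions; the real tool may support others.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".tif", ".tiff"}


def find_images(directory):
    """Return image files in a directory, sorted by name (non-recursive)."""
    return sorted(
        p for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def process_library(directory, caption_fn, review_fn, write_fn):
    """Caption, review, and save metadata for each image in turn.

    caption_fn(path) -> (caption, tags)            hypothetical model call
    review_fn(path, caption, tags) -> (caption, tags), or None to skip the image
    write_fn(path, caption, tags)                  hypothetical metadata writer
    """
    saved = []
    for path in find_images(directory):
        caption, tags = caption_fn(path)
        reviewed = review_fn(path, caption, tags)
        if reviewed is None:
            continue  # user chose not to save this image
        write_fn(path, *reviewed)
        saved.append(path.name)
    return saved
```

Keeping the three steps as separate callables means the review step can be a GUI, a terminal prompt, or an automatic pass-through without changing the loop.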

Product Core Function

· Local Image Caption Generation: Utilizes Llama.cpp to analyze image content and generate descriptive captions offline. This is valuable for users concerned about privacy or those with slow internet connections, enabling rich descriptions for any photo.

· Automatic Tagging: Extracts relevant keywords and tags from the generated captions, making images more searchable and categorized within your local filesystem. This directly improves your ability to find specific photos later.

· EXIF Metadata Integration: Writes generated captions and tags directly into the EXIF data of the image files. This keeps the metadata portable and readable by photo viewers and management tools that support EXIF, so your organization efforts travel with the files.

· Editable Interface: Provides a user-friendly interface to review, edit, and confirm generated captions and tags before saving. This gives you full control over the accuracy and relevance of the metadata, ensuring it meets your specific needs.

· Batch Processing: Scans and processes an entire directory of images, streamlining the organization of large photo libraries. This massively reduces the manual labor involved in cataloging a substantial collection.
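As a rough illustration of the tagging and EXIF steps listed above, the sketch below derives tags from a caption with a simple stopword filter and builds an ExifTool command line to store the result. Both parts are assumptions for illustration: the source does not say how the tool derives tags (it may ask the model directly) or which library writes the EXIF data, and ExifTool is swapped in here only as a familiar example. The command is constructed but not executed.

```python
import re

# Small illustrative stopword list; a real one would be longer.
STOPWORDS = {"a", "an", "the", "of", "on", "in", "at", "and", "with",
             "is", "are", "to", "by", "near"}


def tags_from_caption(caption, max_tags=8):
    """Pick distinct non-stopword words from a caption, in order of appearance."""
    tags = []
    for word in re.findall(r"[a-z0-9]+", caption.lower()):
        if word not in STOPWORDS and len(word) > 2 and word not in tags:
            tags.append(word)
    return tags[:max_tags]


def exiftool_args(image_path, caption, tags):
    """Build an ExifTool argument list using its -TAG=VALUE syntax (not run here).

    Assumption: the caption goes in EXIF ImageDescription and the tags in
    EXIF XPKeywords as a semicolon-separated list.
    """
    return [
        "exiftool",
        f"-EXIF:ImageDescription={caption}",
        f"-EXIF:XPKeywords={';'.join(tags)}",
        str(image_path),
    ]
```

Passing the returned list to `subprocess.run` would apply the change on a machine with ExifTool installed; by default ExifTool keeps a backup copy of the original file.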

Product Usage Case

· A wildlife photographer with thousands of raw image files needs to quickly tag species, locations, and behaviors. LlamaImageCaptioner can automate the initial tagging, saving hours of manual work and allowing for faster retrieval of specific shots for publication. This solves the problem of tedious manual cataloging for professionals.

· A hobbyist artist wants to organize their digital art portfolio for their website. By using LlamaImageCaptioner, they can add descriptive keywords about the style, medium, and subject matter of each artwork, making it easier for potential clients to search and find specific pieces on their online gallery. This helps them showcase their work more effectively.

· A user wants to build a personal photo archive with detailed descriptions for future reference. LlamaImageCaptioner can automatically generate narratives for family photos, travel pictures, or event snapshots, preserving memories with rich contextual information that might otherwise be forgotten. This adds a layer of personal history to their digital memories.

· A developer looking to build a more advanced local photo search engine could use this tool as a backend to enrich their image dataset with AI-generated metadata. This allows for more sophisticated search queries that go beyond simple filenames. This provides a foundational data enrichment layer for further development.

15

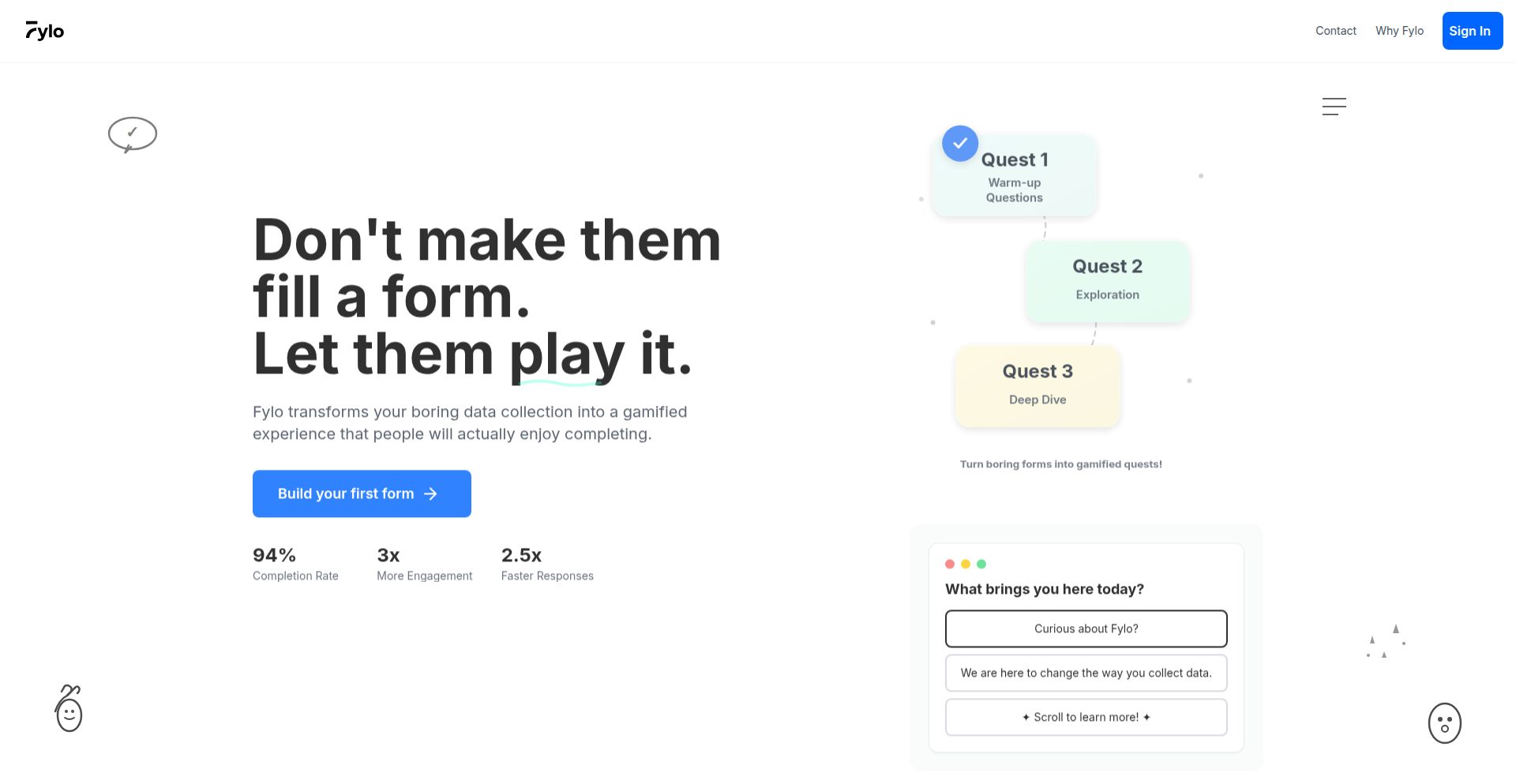

Fylo: Interactive Form Flow

Author

studlydev

Description

Fylo is a form builder that transforms static, unengaging forms into interactive experiences. It addresses the common problem of low form completion rates by focusing on elements like progress indicators, dynamic interactions, and fluid transitions, making form filling feel more like a conversation and less like a task. This project demonstrates a technical innovation in user experience design through code.

Popularity

Points 4

Comments 1

What is this product?