Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-18

SagaSu777 2025-12-19

Explore the hottest developer projects on Show HN for 2025-12-18. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The landscape of developer innovation is evolving quickly, with a strong emphasis on using AI to streamline complex tasks and improve productivity. Today's Show HN projects show a clear trend toward specialized AI agents and tools that target specific pain points, from frontend development with Composify to data acceleration with Spice Cayenne. Developers are building modular architectures and privacy-focused solutions aimed at concrete, real-world needs. For aspiring innovators and established developers alike, the takeaway is clear: identify a niche problem, reach for performance-oriented technologies such as Rust where speed matters and AI where it removes manual work, and prioritize user experience and efficiency. The hacker spirit thrives when we build tools that empower others and solve tangible problems, whether that means making page composition accessible to non-developers, accelerating data queries, or simplifying complex workflows. Don't be afraid to dig deep into performance optimization, or to write a minimalist library that solves a single problem exceptionally well. The future belongs to those who can apply technology creatively to make complex things simple and accessible.

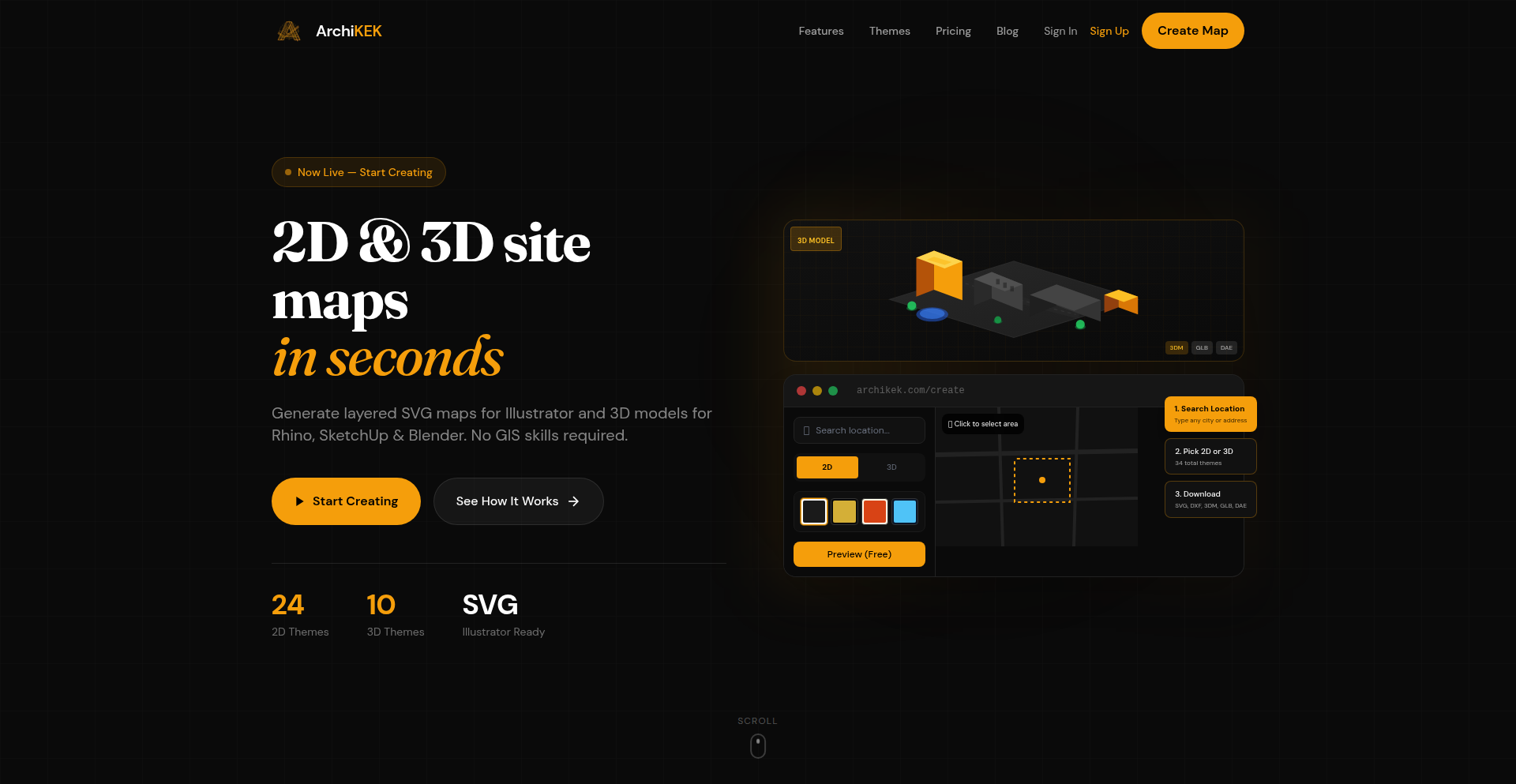

Today's Hottest Product

Name

Composify

Highlight

Composify introduces a unique approach to frontend development by acting as an open-source visual editor that allows non-developers to compose web pages using existing React components. This solves the common pain point of marketing teams repeatedly requesting landing page changes, which often leads to engineering bottlenecks. The innovation lies in its ability to register and utilize production React components as drag-and-drop blocks, generating JSX output without requiring modifications to the component code or learning a new schema. Developers can learn how to build tools that bridge the gap between designers/marketers and engineering, fostering a more efficient workflow and enabling rapid layout-level A/B testing.

Popular Category

AI/ML

Developer Tools

Frontend Development

Data Engineering

Cloud Infrastructure

Popular Keyword

AI

LLM

Agent

Editor

Data

Performance

Developer Tools

Open Source

Rust

Python

Web

UI

Technology Trends

AI-powered workflow automation

Efficient data processing and acceleration

Minimalist and dependency-free libraries

Enhanced developer experience through specialized tools

Privacy-focused and local-first solutions

Composable and modular architectures

Democratization of complex functionalities (e.g., UI design, data analysis)

Advancements in data storage and retrieval formats

Project Category Distribution

AI/ML Tools & Frameworks (30%)

Developer Productivity & Tools (25%)

Data Engineering & Infrastructure (15%)

Frontend & UI Development (10%)

Utilities & Niche Applications (10%)

Security & Privacy (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Composify: React Component Composer | 63 | 5 |
| 2 | Spice Cayenne: Data Accelerator Engine | 26 | 3 |
| 3 | TinyPDF | 15 | 1 |
| 4 | FirstClick AI Citation Optimizer | 7 | 6 |
| 5 | Paper2Any | 11 | 2 |
| 6 | DNS Sentinel | 7 | 4 |
| 7 | DocsRouter: Unified Document Intelligence API | 10 | 0 |
| 8 | DadJoke-Qwen3-Tuner | 10 | 0 |
| 9 | DailySet | 7 | 2 |
| 10 | IconicForge | 8 | 0 |

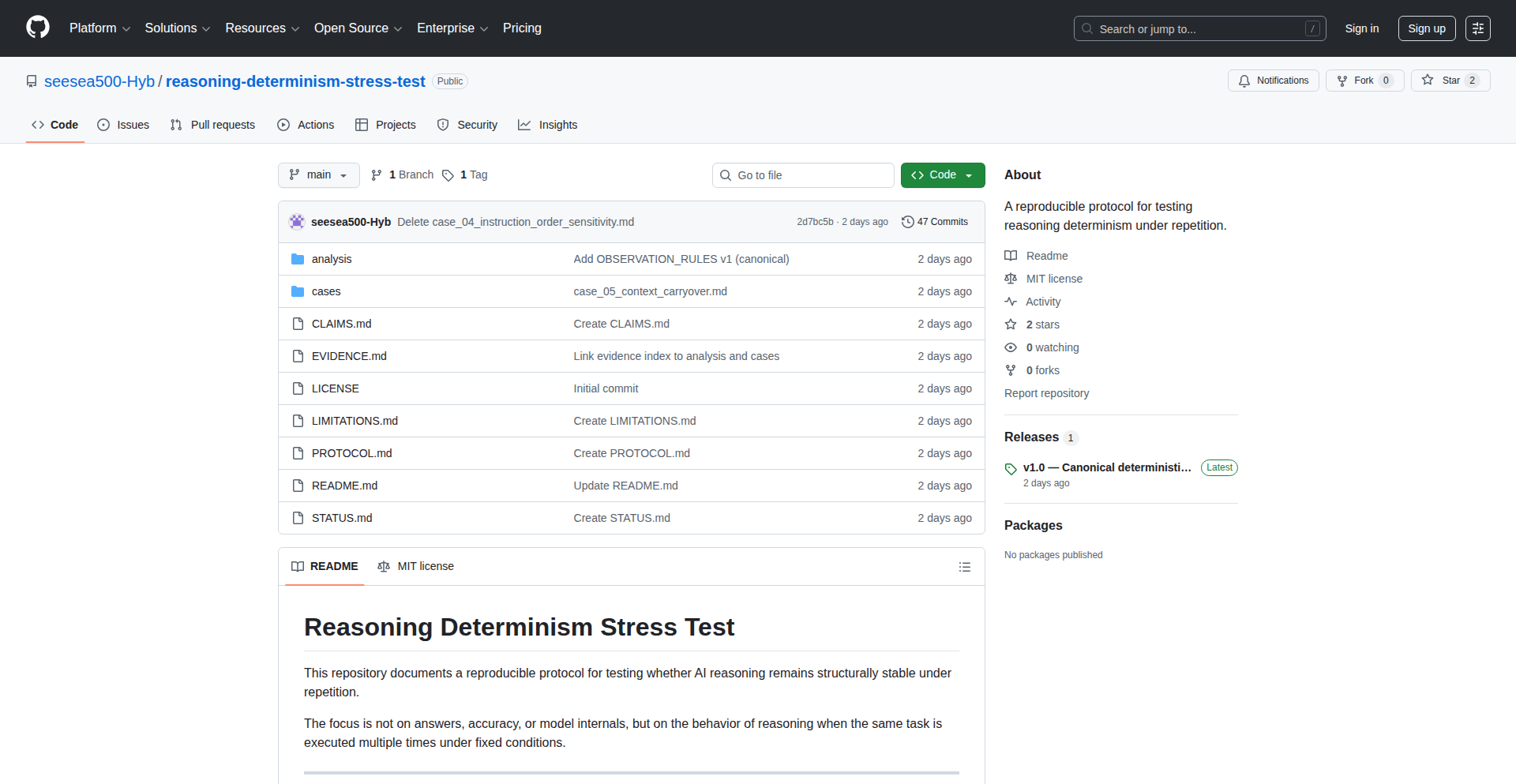

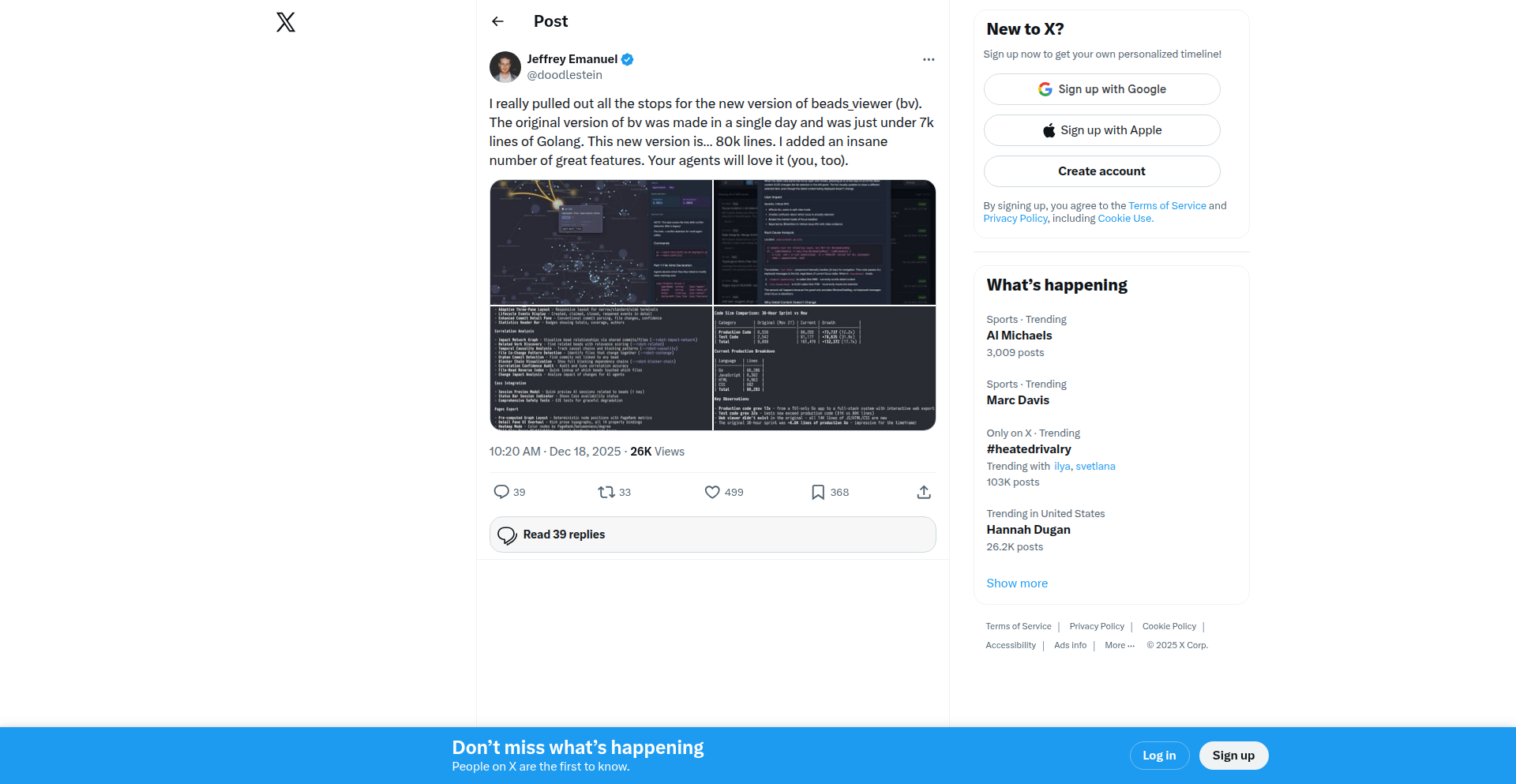

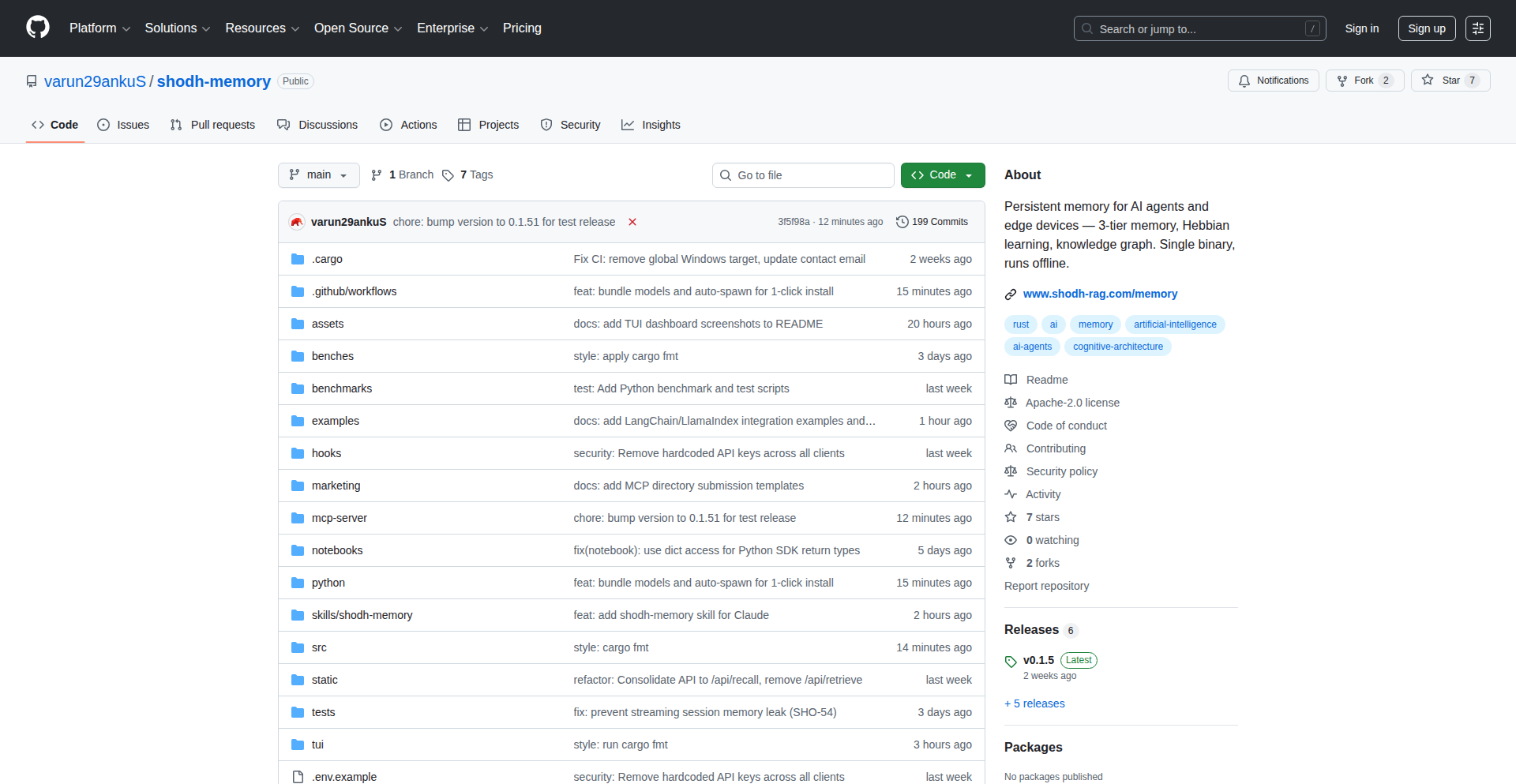

1

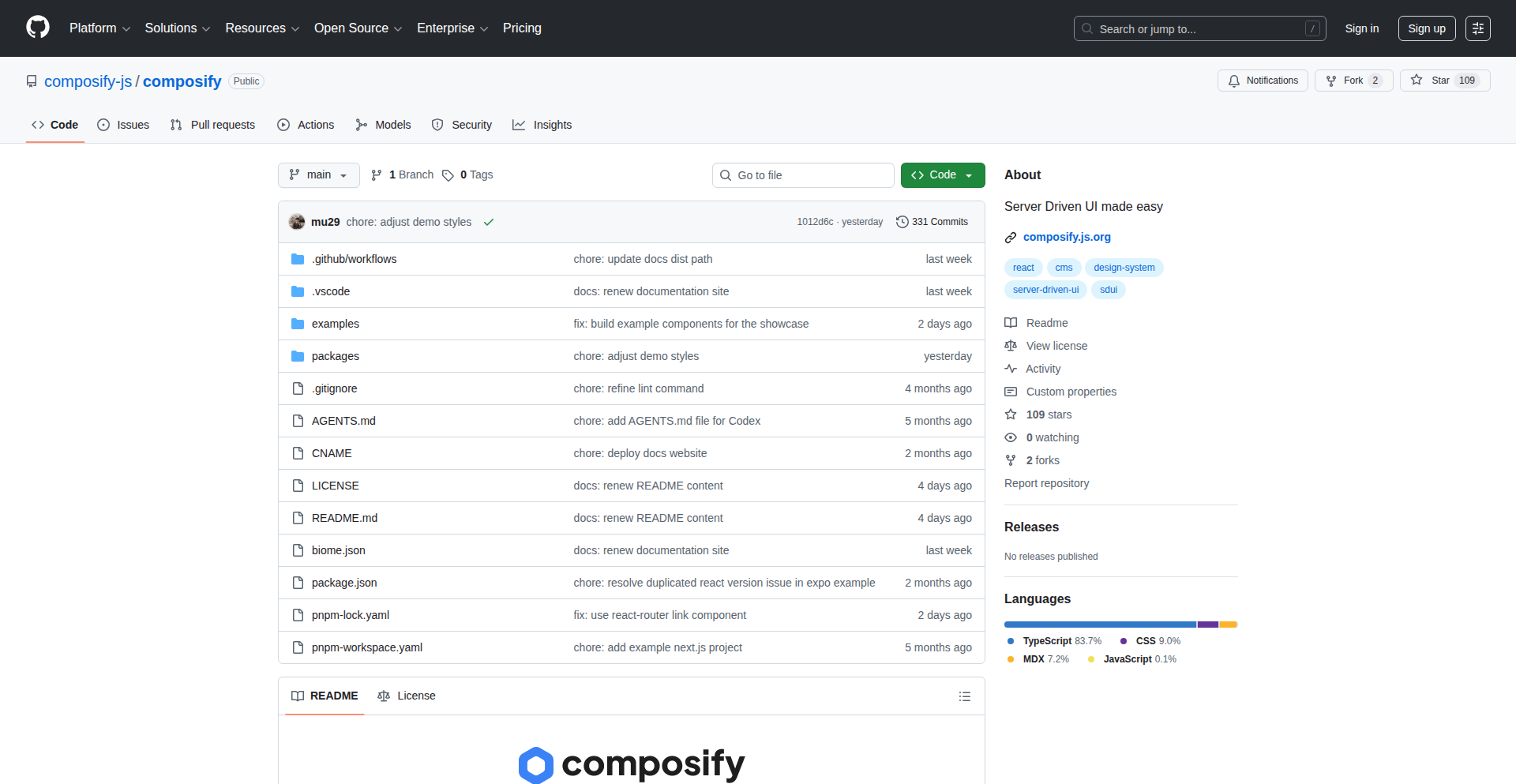

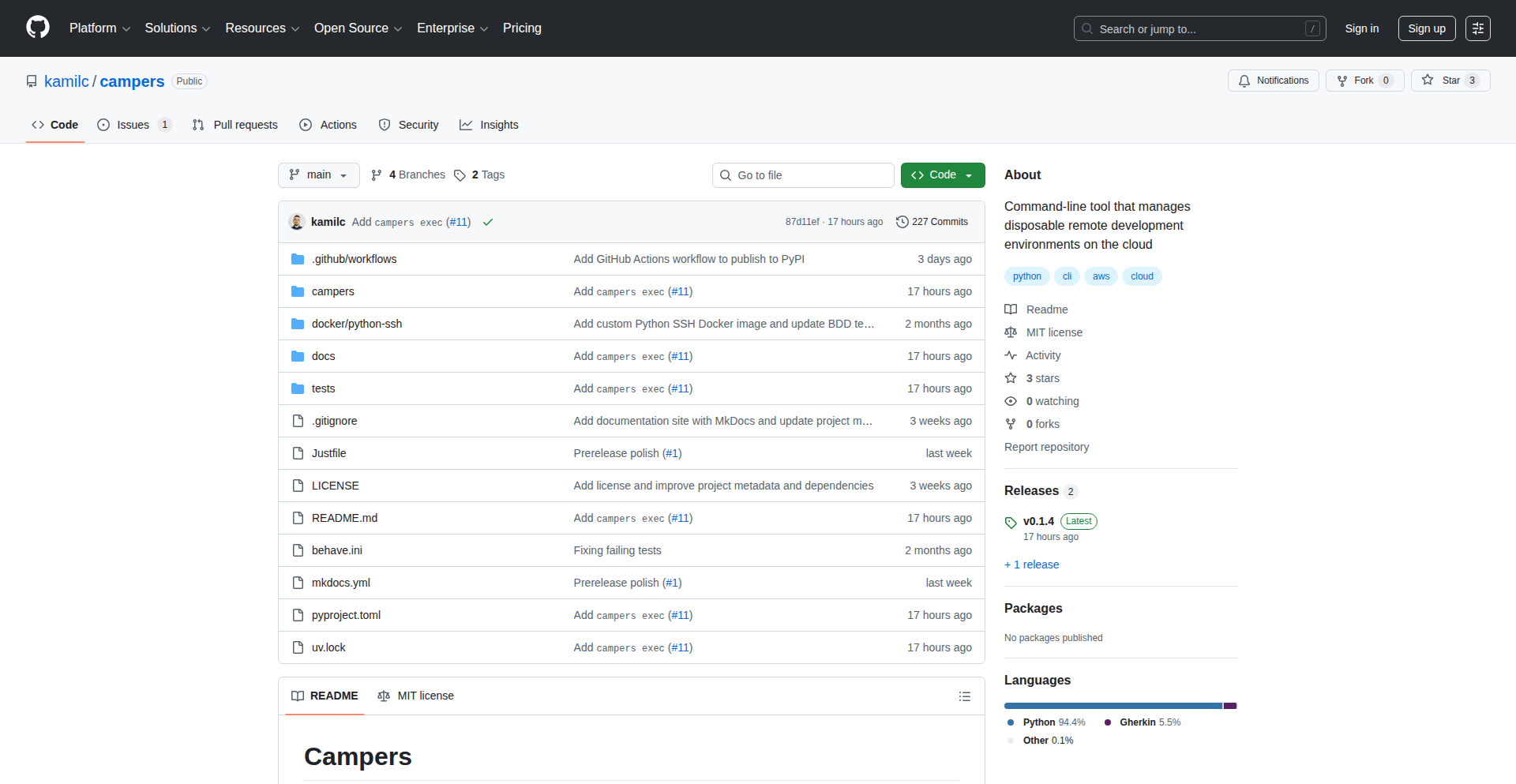

Composify: React Component Composer

Author

injung

Description

Composify is an open-source visual editor that allows non-developers to build web pages using existing React components. It addresses a common problem: marketing teams need frequent landing page updates, which buries engineering teams in tickets. Users drag and drop registered React components and Composify produces a JSX string, enabling faster iteration and letting marketing ship changes independently. Its innovation is its minimal approach: it uses your actual production components without requiring code modifications or a new schema, sitting between no-code site builders and headless CMSs.

Popularity

Points 63

Comments 5

What is this product?

Composify is a visual editor for React applications. Think of it as a Lego set whose bricks are your actual, live React components. The core technical idea is a 'server-driven UI': instead of developers hand-writing JSX (the markup-like syntax that describes what a React page looks like) for every change, users visually arrange pre-registered components, and the editor outputs a JSX string that your application renders. This avoids the usual trade-offs of other tools: unlike Wix, it doesn't lock you into a proprietary component set, and unlike some headless CMSs and page builders, it doesn't force you to reshape your existing components to fit its model. The value for you is a significant reduction in development overhead for routine page updates, letting marketing and content teams move faster.

How to use it?

Developers integrate Composify by registering their existing React components into the system. Composify then exposes these components as 'draggable blocks' within its visual editor interface. Non-technical users can then drag these blocks onto a canvas, arrange them, and customize their properties (if exposed). Composify generates a JSX string representing this arrangement. Your React application can then fetch this JSX string (e.g., from an API or a content management system) and render it dynamically. This is useful for scenarios where you have a library of reusable UI elements and want to empower content creators to assemble landing pages, campaign pages, or sections of your application without needing developer intervention for every minor layout tweak.
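The registration-and-output flow above can be sketched in plain TypeScript without React. This is not Composify's API: `registerBlock`, `BlockNode`, and `toJsxString` are hypothetical names, and the sketch only covers turning an editor's layout tree into a JSX string while rejecting components or props that were never registered.

```typescript
// Hypothetical sketch: NOT Composify's real API.
// The registry lists which components the editor may place and which props it may edit.
type PropValue = string | number | boolean;

interface BlockNode {
  type: string;                     // registered component name, e.g. "Hero"
  props: Record<string, PropValue>; // values set in the visual editor
  children?: BlockNode[];           // nested blocks, if the component accepts children
}

const registry = new Map<string, Set<string>>();

// Register a component name together with the props editors are allowed to change.
function registerBlock(name: string, editableProps: string[]): void {
  registry.set(name, new Set(editableProps));
}

// Serialize one prop as a JSX attribute; strings become JS string literals inside braces
// so quotes in user-entered content stay safe.
function propToJsx(key: string, value: PropValue): string {
  return typeof value === "string" ? `${key}={${JSON.stringify(value)}}` : `${key}={${String(value)}}`;
}

// Turn the editor's layout tree into an indented JSX string.
function toJsxString(node: BlockNode, depth = 0): string {
  const allowed = registry.get(node.type);
  if (!allowed) throw new Error(`Unregistered block: ${node.type}`);
  for (const key of Object.keys(node.props)) {
    if (!allowed.has(key)) throw new Error(`Prop "${key}" is not editable on ${node.type}`);
  }
  const pad = "  ".repeat(depth);
  const attrs = Object.entries(node.props).map(([k, v]) => " " + propToJsx(k, v)).join("");
  const children = node.children ?? [];
  if (children.length === 0) return `${pad}<${node.type}${attrs} />`;
  const inner = children.map((c) => toJsxString(c, depth + 1)).join("\n");
  return `${pad}<${node.type}${attrs}>\n${inner}\n${pad}</${node.type}>`;
}

// Usage: a developer registers blocks once; the editor then emits layouts like this one.
registerBlock("Page", []);
registerBlock("Hero", ["title", "showCta"]);
registerBlock("ProductGrid", ["columns"]);

const jsx = toJsxString({
  type: "Page",
  props: {},
  children: [
    { type: "Hero", props: { title: "Winter Sale", showCta: true } },
    { type: "ProductGrid", props: { columns: 3 } },
  ],
});
console.log(jsx);
// <Page>
//   <Hero title={"Winter Sale"} showCta={true} />
//   <ProductGrid columns={3} />
// </Page>
```

Restricting edits to a per-component allow-list is one way to keep non-developers inside the design system; turning the string back into React elements is left to the renderer and is outside this sketch.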

Product Core Function

· Visual component composition: enables non-developers to assemble web pages by dragging and dropping registered React components, reducing the need for developer involvement in layout changes.

· React component registration: allows developers to easily make their existing production-ready React components available as editable blocks in the visual editor, maximizing code reuse and consistency.

· Server-driven UI generation: outputs a JSX string that can be dynamically rendered by your React application, facilitating rapid content updates and A/B testing at the layout level.

· Minimal integration overhead: designed to work with your existing component architecture without requiring significant code refactoring or adherence to new component schemas, making adoption smoother.

· Independent content iteration: empowers marketing and content teams to make layout changes and ship new pages autonomously, accelerating go-to-market timelines and reducing engineering backlogs.

Product Usage Case

· Marketing team needs to launch a new promotional landing page with specific product banners and calls-to-action. Composify allows them to drag and drop pre-approved banner components and CTA components, arrange them, and instantly generate the page code, bypassing the traditional ticket submission and development cycle.

· E-commerce site wants to run a time-limited flash sale and needs to update the homepage layout to prominently feature sale items. Using Composify, the marketing team can rearrange existing product grid components and promotional banners without filing a developer ticket, ensuring timely execution of the sale.

· A content-heavy website needs to create themed landing pages for different campaigns. Composify allows content editors to select from a library of pre-built content blocks (e.g., text with image, video embed, testimonial card) and arrange them to form a unique campaign page, offering flexibility without sacrificing design consistency.

· Product teams want to perform layout-level A/B testing on different page structures for conversion optimization. Composify can generate multiple JSX variations of a page, allowing for easy deployment and testing of different component arrangements to identify the most effective layouts.
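For layout-level A/B tests, each visitor should see the same variant on every visit. A common approach, assumed here rather than taken from Composify, is to hash a stable user ID and pick one of the stored JSX layouts. The sketch uses 32-bit FNV-1a; `assignVariant` and the variant strings are hypothetical.

```typescript
// Deterministic A/B assignment: hash a stable user ID and pick one of the stored layouts.
// FNV-1a (32-bit) is a simple, fast, non-cryptographic hash.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i) & 0xff;       // low byte of each code unit; assumes ASCII IDs
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 unsigned bits
  }
  return hash;
}

function assignVariant<T>(userId: string, experiment: string, variants: T[]): T {
  if (variants.length === 0) throw new Error("No variants to choose from");
  // Salting with the experiment name keeps assignments independent across experiments.
  return variants[fnv1a32(`${experiment}:${userId}`) % variants.length];
}

// Hypothetical stored JSX variants of the same page.
const layouts = ["<Hero /><ProductGrid />", "<ProductGrid /><Hero />"];
const chosen = assignVariant("user-42", "homepage-layout", layouts);
console.log(chosen); // same result on every call for this user and experiment
```

Because assignment is a pure function of the IDs, no per-user state has to be stored to keep a visitor in their bucket.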

2

Spice Cayenne: Data Accelerator Engine

Author

lukekim

Description

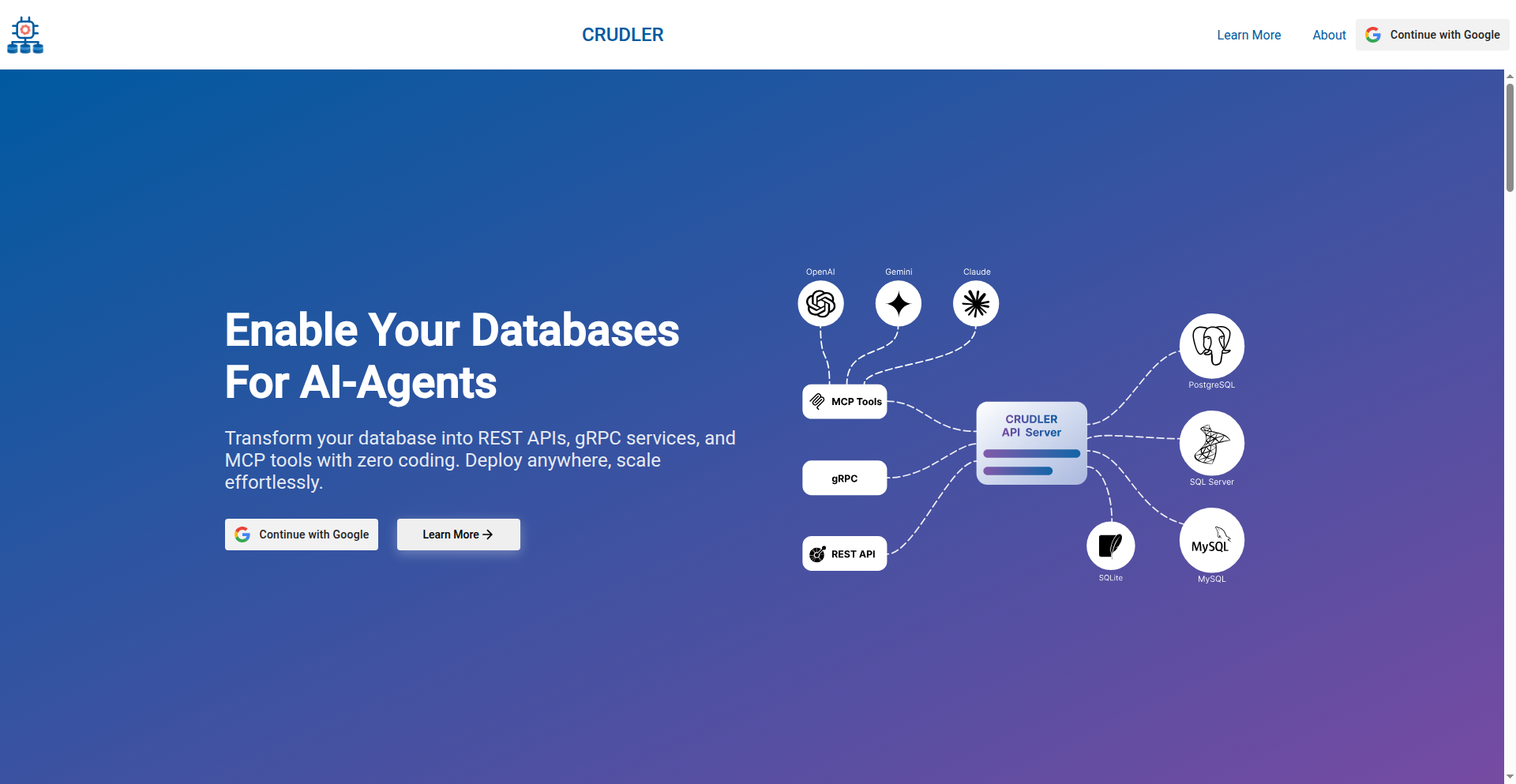

Spice Cayenne is a high-performance, portable data and AI engine designed to accelerate SQL queries, hybrid search, and LLM inference. It builds on Apache DataFusion (a query engine) and Ballista (its distributed execution layer), and on a newer columnar data format called Vortex, which offers significantly faster data access and scanning than traditional formats like Parquet. This lets enterprises efficiently query and analyze large datasets spread across different storage systems. The core idea is to bring AI and complex data processing directly to where your data lives.

Popularity

Points 26

Comments 3

What is this product?

Spice Cayenne is an open-source data engine that acts as a 'Data Accelerator.' Think of it as a fast intermediary that knows how to retrieve and process data from different places, whether other databases, cloud storage, or local files. Its key ingredient is Vortex, a columnar data format engineered for speed. Row-oriented storage keeps each record's fields together, so a query that needs one field still reads whole rows; a columnar format stores each column contiguously, so an analytical query reads only the columns it needs. Parquet is also columnar; Vortex's claimed advantage over it is faster scans and data access. The result should be faster answers to complex questions with less memory and compute. So, for you, this means getting insights from your data quicker and more cost-effectively, enabling more advanced AI applications and real-time decision-making.
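The row-versus-column distinction is easy to see in code. The sketch below is a generic illustration, not the Vortex format itself: it stores the same three hypothetical orders both ways and sums one field. The columnar version loops over a single packed `Float64Array`, while the row version has to visit every record object.

```typescript
// Generic illustration of row vs columnar layout (not the Vortex format itself).
interface OrderRow { id: number; region: string; amount: number }

// Row-oriented: each record keeps all of its fields together.
const rows: OrderRow[] = [
  { id: 1, region: "EU", amount: 120.5 },
  { id: 2, region: "US", amount: 80.0 },
  { id: 3, region: "EU", amount: 42.25 },
];

// Column-oriented: each field is stored contiguously in its own array.
interface OrderColumns { id: Int32Array; region: string[]; amount: Float64Array }

function toColumns(input: OrderRow[]): OrderColumns {
  return {
    id: Int32Array.from(input, (r) => r.id),
    region: input.map((r) => r.region),
    amount: Float64Array.from(input, (r) => r.amount),
  };
}

// Row scan: visits every record object even though only `amount` is needed.
function sumAmountRows(data: OrderRow[]): number {
  let total = 0;
  for (const r of data) total += r.amount;
  return total;
}

// Column scan: reads only the `amount` column, a tight loop over packed numbers.
function sumAmountColumns(cols: OrderColumns): number {
  let total = 0;
  for (let i = 0; i < cols.amount.length; i++) total += cols.amount[i];
  return total;
}

const cols = toColumns(rows);
console.log(sumAmountRows(rows), sumAmountColumns(cols)); // 242.75 242.75
```

Both layouts give the same answer; the columnar one touches less memory per query, which is the effect columnar formats exploit at scale.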

How to use it?

Developers can integrate Spice Cayenne into their applications to enhance data processing capabilities. It's designed to be lightweight and portable, meaning it can run almost anywhere. You can use it to power your backend services that require fast data retrieval for features like search or personalized recommendations. It supports standard SQL queries, making it easy to adopt if you're already familiar with database querying. For AI-driven applications, Spice Cayenne can efficiently prepare and serve data for Large Language Models (LLMs) or other machine learning models, speeding up inference times. Its hybrid-search capabilities allow for combining keyword-based search with vector similarity search, making it ideal for applications needing intelligent content discovery. So, if you're building an app that needs to quickly crunch numbers, find specific information, or power AI features, Spice Cayenne can be dropped in to significantly boost performance.
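One widely used way to merge keyword and vector results is reciprocal rank fusion (RRF), which scores each document by summing 1/(k + rank) over the result lists it appears in. The sketch shows RRF in isolation; it is an assumption, not something the source states, that Spice Cayenne fuses hybrid results this way, and `k = 60` is simply the constant commonly used with RRF.

```typescript
// Reciprocal rank fusion (RRF): a generic technique for merging ranked result lists.
// Whether Spice Cayenne uses RRF internally is an assumption.
function reciprocalRankFusion(resultLists: string[][], k = 60): Array<[string, number]> {
  const scores = new Map<string, number>();
  for (const list of resultLists) {
    list.forEach((docId, index) => {
      const rank = index + 1; // ranks are 1-based
      scores.set(docId, (scores.get(docId) ?? 0) + 1 / (k + rank));
    });
  }
  // Highest fused score first; ties broken by ID for deterministic output.
  return Array.from(scores.entries()).sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]));
}

const keywordHits = ["doc-a", "doc-b", "doc-c"]; // e.g. from full-text search
const vectorHits = ["doc-c", "doc-a", "doc-d"];  // e.g. from embedding similarity
console.log(reciprocalRankFusion([keywordHits, vectorHits]).map(([id]) => id));
// doc-a, doc-c, doc-b, doc-d
```

RRF only needs ranks, not raw scores, so it can combine BM25-style keyword scores and cosine similarities without normalizing them onto a common scale.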

Product Core Function

· SQL Query Acceleration: Runs standard SQL queries faster by using optimized data access patterns and the Vortex data format. Your applications can retrieve and analyze data more quickly, leading to snappier user experiences and faster reporting. Think of it as giving your database a speed boost for analytical tasks.

· Hybrid Search Capabilities: Enables combining traditional keyword search with advanced vector similarity search. This allows for more intelligent and nuanced search results, going beyond simple matches to understand the meaning and context of user queries. Useful for content discovery, product recommendations, and semantic search.

· LLM Inference Optimization: Efficiently prepares and serves data required by Large Language Models (LLMs) and other AI models. This reduces the time it takes for AI models to process information and generate responses, making AI-powered features in your applications more responsive and capable.

· Data Accelerator for Disparate Sources: Materializes data from various sources (databases, files) into an optimized format (Vortex) for faster access. This tackles the common problem of data being scattered everywhere, making it challenging to analyze. Spice Cayenne unifies and accelerates access to this dispersed data.

· Lightweight and Portable Engine: Built in Rust, making it fast, memory-efficient, and deployable across various environments, from edge devices to cloud servers. This flexibility means you can use it where you need it without heavy infrastructure dependencies.

Product Usage Case

· Real-time analytics dashboard: A company building a dashboard to visualize sales data can use Spice Cayenne to ingest and query large volumes of sales records with low latency, allowing executives to make faster business decisions based on up-to-the-minute information.

· Personalized recommendation engine: An e-commerce platform can use Spice Cayenne to power its recommendation system. By quickly analyzing user browsing history and product data using hybrid search, it can deliver highly relevant product suggestions to customers, increasing engagement and sales.

· AI-powered customer support chatbot: A support team can integrate Spice Cayenne into their chatbot. The engine can rapidly retrieve relevant information from a vast knowledge base to answer customer queries with LLM inference, improving customer satisfaction and reducing support load.

· IoT data analysis: A company collecting sensor data from thousands of devices can use Spice Cayenne to accelerate the analysis of this high-volume data, identifying anomalies or trends quickly to improve product performance or prevent failures.

· Developer productivity tool: A developer building a complex data processing pipeline can use Spice Cayenne to rapidly prototype and test their data transformations and queries without needing to set up large, cumbersome data warehousing solutions.

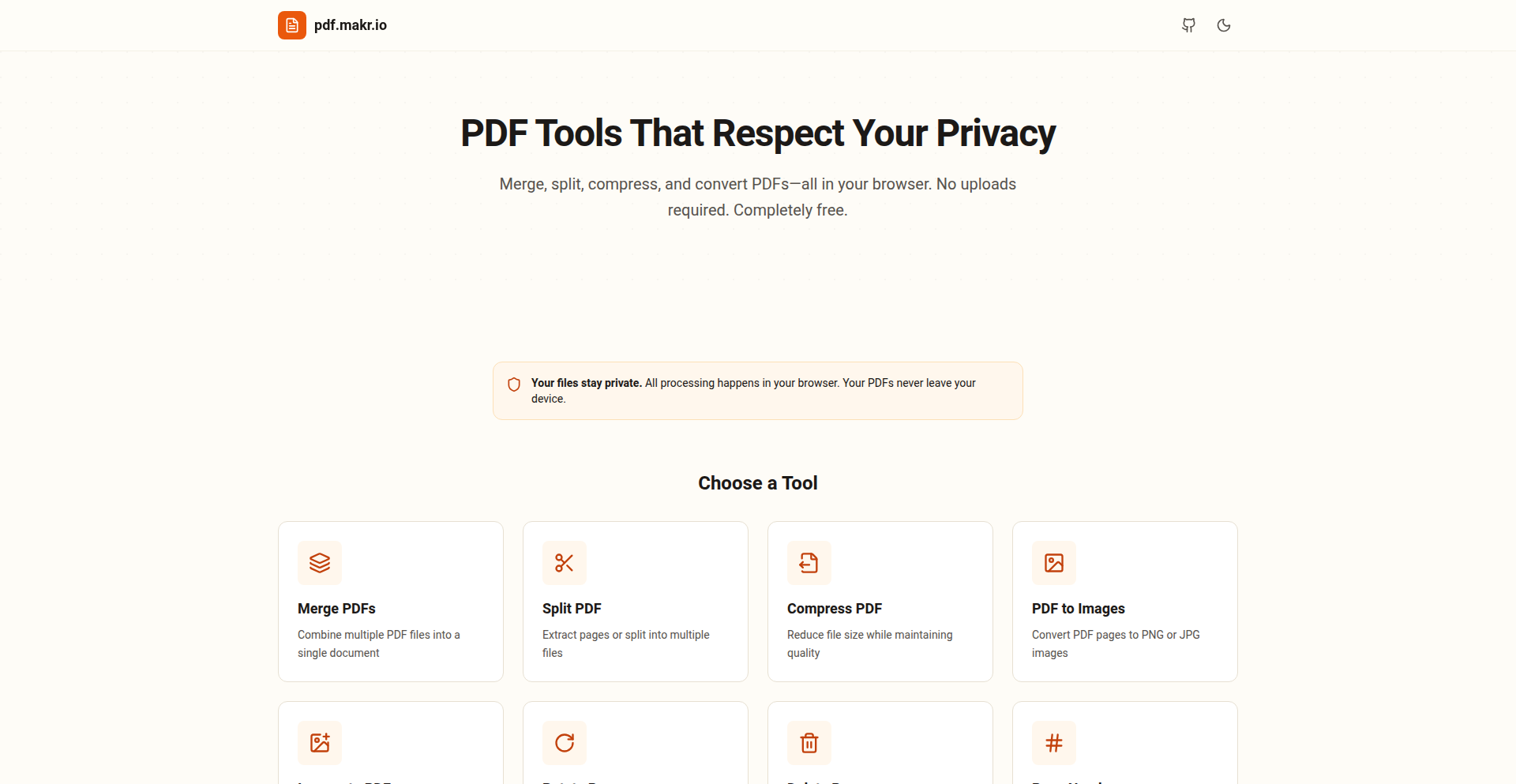

3

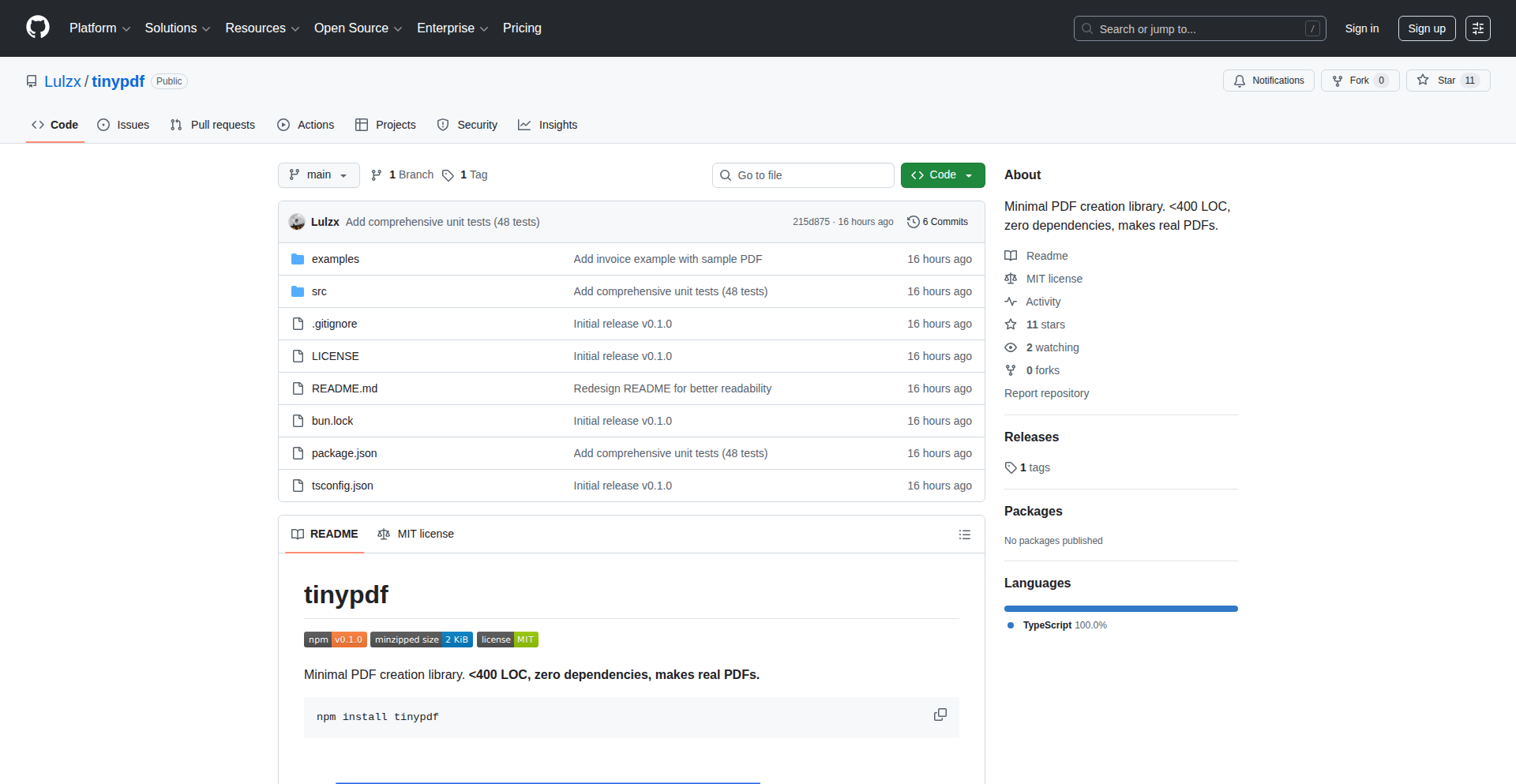

TinyPDF

Author

lulzx

Description

TinyPDF is a lightweight PDF generation library for Node.js applications that covers the essentials: text, rectangles, lines, and JPEG images. In contrast to larger libraries such as jsPDF, it is under 400 lines of TypeScript, weighs 3.3KB minified and gzipped, and has zero dependencies. That makes it a good fit for invoices, receipts, reports, tickets, and labels, where advanced features are not required.

Popularity

Points 15

Comments 1

What is this product?

TinyPDF is a highly optimized, minimalist PDF generation library written in TypeScript for Node.js. Unlike feature-rich but heavy libraries, TinyPDF focuses on a curated set of functionalities: rendering text (with basic font support like Helvetica, color control, and alignment), drawing rectangles and lines, and embedding JPEG images. It also supports multi-page documents and custom page sizes. The innovation lies in its extreme focus on essential PDF elements and its efficient implementation, resulting in a remarkably small file size and no external dependencies. This means faster load times, reduced application bundle size, and simpler integration, making it perfect for scenarios where only the core elements of a PDF are needed, such as generating simple documents like invoices or tickets.

How to use it?

Developers can integrate TinyPDF into their Node.js projects by installing it via npm: `npm install tinypdf`. Once installed, you can import and use its functions within your TypeScript or JavaScript code to programmatically create PDF documents. For example, you can instantiate a PDF document, add text content with specified positions and styles, draw graphical elements like borders or separators, and embed JPEG images. The library is designed for straightforward API usage, allowing developers to quickly generate PDFs on the fly. Common use cases include server-side PDF generation for e-commerce orders, generating printable reports, or creating dynamic labels. The lack of dependencies simplifies deployment and reduces potential conflicts within your project.
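To show why a library like this can stay so small, here is a from-scratch sketch of the PDF structure such a library writes: a few numbered objects, one content stream with text and rectangle operators, and a cross-reference table of byte offsets. This is not TinyPDF's API (`buildPdf`, `PdfTextItem`, and `PdfRect` are hypothetical), it uses only the built-in Helvetica font, and it assumes ASCII text so that string length equals byte length.

```typescript
// Hypothetical sketch of what a minimal PDF writer emits; NOT TinyPDF's real API.
// Assumes ASCII-only text, so JavaScript string length equals byte length.
interface PdfTextItem { x: number; y: number; size: number; text: string }
interface PdfRect { x: number; y: number; w: number; h: number }

// Escape the characters that are special inside PDF literal strings.
function escapePdfText(s: string): string {
  return s.replace(/\\/g, "\\\\").replace(/\(/g, "\\(").replace(/\)/g, "\\)");
}

function buildPdf(width: number, height: number, texts: PdfTextItem[], rects: PdfRect[]): string {
  // Content stream: PDF drawing operators for a single page.
  const ops: string[] = [];
  for (const r of rects) ops.push(`${r.x} ${r.y} ${r.w} ${r.h} re S`); // stroked rectangle
  for (const t of texts) {
    ops.push(`BT /F1 ${t.size} Tf ${t.x} ${t.y} Td (${escapePdfText(t.text)}) Tj ET`);
  }
  const content = ops.join("\n");

  const objects = [
    "<< /Type /Catalog /Pages 2 0 R >>",
    "<< /Type /Pages /Kids [3 0 R] /Count 1 >>",
    `<< /Type /Page /Parent 2 0 R /MediaBox [0 0 ${width} ${height}] ` +
      "/Resources << /Font << /F1 4 0 R >> >> /Contents 5 0 R >>",
    "<< /Type /Font /Subtype /Type1 /BaseFont /Helvetica >>", // standard font, nothing embedded
    `<< /Length ${content.length} >>\nstream\n${content}\nendstream`,
  ];

  let pdf = "%PDF-1.4\n";
  const offsets: number[] = [];
  objects.forEach((body, i) => {
    offsets.push(pdf.length); // byte offset where "N 0 obj" starts
    pdf += `${i + 1} 0 obj\n${body}\nendobj\n`;
  });

  // Cross-reference table: each entry is exactly 20 bytes including " \n".
  const xrefOffset = pdf.length;
  pdf += `xref\n0 ${objects.length + 1}\n0000000000 65535 f \n`;
  for (const off of offsets) pdf += `${String(off).padStart(10, "0")} 00000 n \n`;
  pdf += `trailer\n<< /Size ${objects.length + 1} /Root 1 0 R >>\nstartxref\n${xrefOffset}\n%%EOF\n`;
  return pdf;
}

// Usage: an A4-sized page (595 x 842 pt) with a title and a border.
const pdf = buildPdf(595, 842,
  [{ x: 72, y: 770, size: 18, text: "Invoice #1001" }],
  [{ x: 36, y: 36, w: 523, h: 770 }]);
```

In Node, the returned string can be saved with `fs.writeFileSync("invoice.pdf", pdf, "latin1")`. Multi-page output, colors, and JPEG embedding add more objects of the same kind rather than new machinery.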

Product Core Function

· Text Rendering: Ability to add text to PDFs with control over font (e.g., Helvetica), color, and alignment (left, center, right). This is valuable for adding descriptions, prices, or titles to documents, ensuring clear and readable content.

· Geometric Shapes: Support for drawing rectangles and lines. This is useful for creating borders, separators, or visual structure within documents like invoices or report headers, enhancing readability and professional appearance.

· JPEG Image Embedding: Capability to include JPEG images directly within the PDF. This is essential for adding logos, product images, or any visual branding to generated documents, making them more informative and visually appealing.

· Multi-Page Support: Allows for the creation of documents spanning multiple pages. This is crucial for generating longer reports, detailed invoices, or any document that exceeds a single page, providing a complete and organized output.

· Custom Page Sizes: Flexibility to define custom dimensions for PDF pages. This is valuable for generating documents that need to fit specific print formats or display requirements, such as tickets or labels, ensuring accurate sizing.
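Custom page sizes come down to simple arithmetic: PDF page dimensions are measured in points, with 72 points per inch and 25.4 mm per inch. The helpers below are hypothetical, not part of TinyPDF, and convert common paper and label sizes.

```typescript
// PDF page dimensions are in points: 1 pt = 1/72 inch, and 1 inch = 25.4 mm.
const POINTS_PER_INCH = 72;
const MM_PER_INCH = 25.4;

function mmToPoints(mm: number): number {
  return (mm * POINTS_PER_INCH) / MM_PER_INCH;
}

function inchesToPoints(inches: number): number {
  return inches * POINTS_PER_INCH;
}

// Two decimals is ample precision for a page size.
const round2 = (n: number): number => Math.round(n * 100) / 100;

console.log(round2(mmToPoints(210)), round2(mmToPoints(297))); // A4: 595.28 841.89
console.log(inchesToPoints(4), inchesToPoints(6));             // 4x6 in label: 288 432
```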

Product Usage Case

· Generating E-commerce Invoices: A Node.js backend can use TinyPDF to programmatically create a PDF invoice for each customer order, including product details, prices, and company logo, directly from order data. This solves the problem of needing a quick and lightweight way to provide customers with a professional invoice without the overhead of a large PDF library.

· Creating Printable Shipping Labels: An application can generate shipping labels as PDFs, embedding recipient addresses, tracking numbers, and barcodes (as JPEG images). This allows for easy printing of labels directly from the application, simplifying the shipping process.

· Building Simple Reports: A data-driven application can generate basic reports by rendering text and perhaps some dividing lines and logos into a PDF. This is useful for creating printable summaries of data for internal use or for users who prefer offline access to information.

· Generating Event Tickets: An event management system can use TinyPDF to create simple PDF tickets with event details, attendee names, and perhaps a QR code (as a JPEG image). This provides a downloadable and printable ticket format for attendees, solving the need for a compact ticket generation solution.

4

FirstClick AI Citation Optimizer

Author

mrayushsoni

Description

This project is a tool designed to help businesses get recommended by AI chatbots like ChatGPT and Perplexity. It addresses the emerging challenge where AI assistants are becoming a primary source for recommendations, impacting traditional SEO. FirstClick automatically generates comparison content (e.g., 'X vs Y', 'Best alternatives to Z') and optimizes it for AI citation, then tracks whether AI models actually mention the product. It's built on the insight that AI recommendation is a new frontier for discoverability, similar to how SEO transformed search engine visibility.

Popularity

Points 7

Comments 6

What is this product?

FirstClick is a system built to address the shift in how users discover products and services, moving from traditional search engines to AI assistants. The core technical innovation lies in understanding and manipulating how AI models select and present information. Instead of just optimizing for Google search algorithms, FirstClick focuses on generating content that AI models are likely to cite and compare. This involves analyzing the factors AI uses to determine relevance and authority, and then programmatically creating 'bottom-of-funnel' (BOFU) comparison content that highlights a product's advantages against competitors. The system then employs tracking mechanisms to verify when and if AI models are actually recommending the client's product, providing crucial feedback for refinement. It's a proactive approach to ensuring visibility in the nascent AI-driven recommendation landscape.

How to use it?

Developers can integrate FirstClick by providing information about their product and its competitors. The tool then automates the creation of comparison articles and 'alternative to' content. These pieces are crafted with specific linguistic patterns and factual structures that AI models tend to favor for citation. The output can be published on a company's blog or website. Once published, FirstClick's tracking feature monitors various AI platforms to detect mentions and recommendations of the client's product, providing actionable data on their AI discoverability. This allows businesses to refine their content strategy based on real-world AI behavior, ensuring their offerings are visible when users ask AI for advice.
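The tracking step can be reduced to measuring how often each brand appears across a sample of assistant answers to the same buying-intent prompts. The sketch is hypothetical: it assumes the answer texts have already been collected (the source does not describe how FirstClick queries ChatGPT or Perplexity), the brand names are placeholders, and it uses a simple case-insensitive whole-word match that misses misspellings and paraphrases.

```typescript
// Hypothetical mention tracker; NOT FirstClick's implementation.
// Input: answers already collected from AI assistants for buying-intent prompts.

// Escape regex metacharacters so brand names are matched literally.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Share of answers (0..1) that mention each brand at least once, case-insensitively.
function mentionRates(answers: string[], brands: string[]): Map<string, number> {
  const rates = new Map<string, number>();
  for (const brand of brands) {
    // \b avoids counting "Acme" inside "Acmeville"; assumes brands begin and end with word characters.
    const pattern = new RegExp(`\\b${escapeRegExp(brand)}\\b`, "i");
    const hits = answers.filter((a) => pattern.test(a)).length;
    rates.set(brand, answers.length === 0 ? 0 : hits / answers.length);
  }
  return rates;
}

// Placeholder answers and brand names, for illustration only.
const answers = [
  "Try RivalOne or RivalTwo for small teams.",
  "YourTool and RivalOne both offer time tracking.",
  "Most reviews favor RivalOne.",
  "A spreadsheet may be enough.",
];
console.log(mentionRates(answers, ["YourTool", "RivalOne", "RivalTwo"]));
// YourTool 0.25, RivalOne 0.75, RivalTwo 0.25
```

Tracking competitors alongside your own product in the same run gives the share-of-voice comparison that the competitive analysis relies on.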

Product Core Function

· Automated BOFU Content Generation: Creates comparison articles (e.g., 'Product A vs Product B') and 'alternative to' content, focusing on key differentiators. The technical value is in using natural language generation (NLG) models to produce persuasive and informative content that aligns with AI's information retrieval patterns, making it easier for AI to cite.

· AI Citation Optimization: Adjusts content so that AI models are more likely to pick it up and cite it, using strategic keyword placement, structured data, and clear comparative framing that models can easily extract and quote. The aim is an edge over content written only for traditional SEO.

· AI Recommendation Tracking: Monitors AI platforms to detect whether and how a product is being recommended. This gives direct feedback on whether the content strategy is working and shows how much customer acquisition is actually coming from AI-driven discovery.

· Competitive Analysis for AI Visibility: Analyzes competitor mentions within AI recommendations to identify gaps and opportunities. This technical function allows businesses to understand their standing in the AI recommendation ecosystem and proactively address areas where they are being overlooked, offering a clear path to improving their AI presence.

Product Usage Case

· A SaaS startup struggling with low visibility on AI recommendation platforms. FirstClick generates 'Our Product vs. Competitor X' articles, optimizing them for AI citation. The tool then detects that Perplexity.ai begins recommending the startup's product in response to queries like 'best project management tools', directly leading to increased referral traffic. This solves the problem of being invisible in a growing AI-powered discovery channel.

· An e-commerce company wants to ensure its new product is recommended by AI assistants. FirstClick identifies that users are asking AI for 'alternatives to Brand Y's popular gadget'. The tool then crafts a comparison piece highlighting the company's product as a superior alternative, and the product subsequently starts appearing in AI-generated lists of alternatives, reaching shoppers at the moment they are deciding what to buy.

· A service provider notices competitors are frequently mentioned by ChatGPT for specific needs. FirstClick analyzes these mentions to understand the AI's preference criteria. It then helps the service provider reframe their own service descriptions and create new content that directly addresses these criteria, leading to increased mentions and inquiries from users seeking AI-driven solutions.

· A founder wants to understand the AI recommendation landscape for their niche industry. FirstClick provides insights into which AI models are citing which companies and for what reasons. This allows the founder to pivot their content marketing strategy to focus on areas where AI is actively seeking information, ensuring their business is discoverable when potential customers turn to AI for advice.

5

Paper2Any

Author

Mey0320

Description

Paper2Any is an open-source tool that transforms research papers into editable PowerPoint (PPTX) slides and SVGs. It intelligently understands the content of a PDF, text, or even a sketch, and reconstructs it into a structured presentation format, offering flexibility in visual styles and the ability to select specific sections of the paper. This addresses the tedious manual effort of creating professional presentation materials from academic research, saving significant time and effort for researchers and students.

Popularity

Points 11

Comments 2

What is this product?

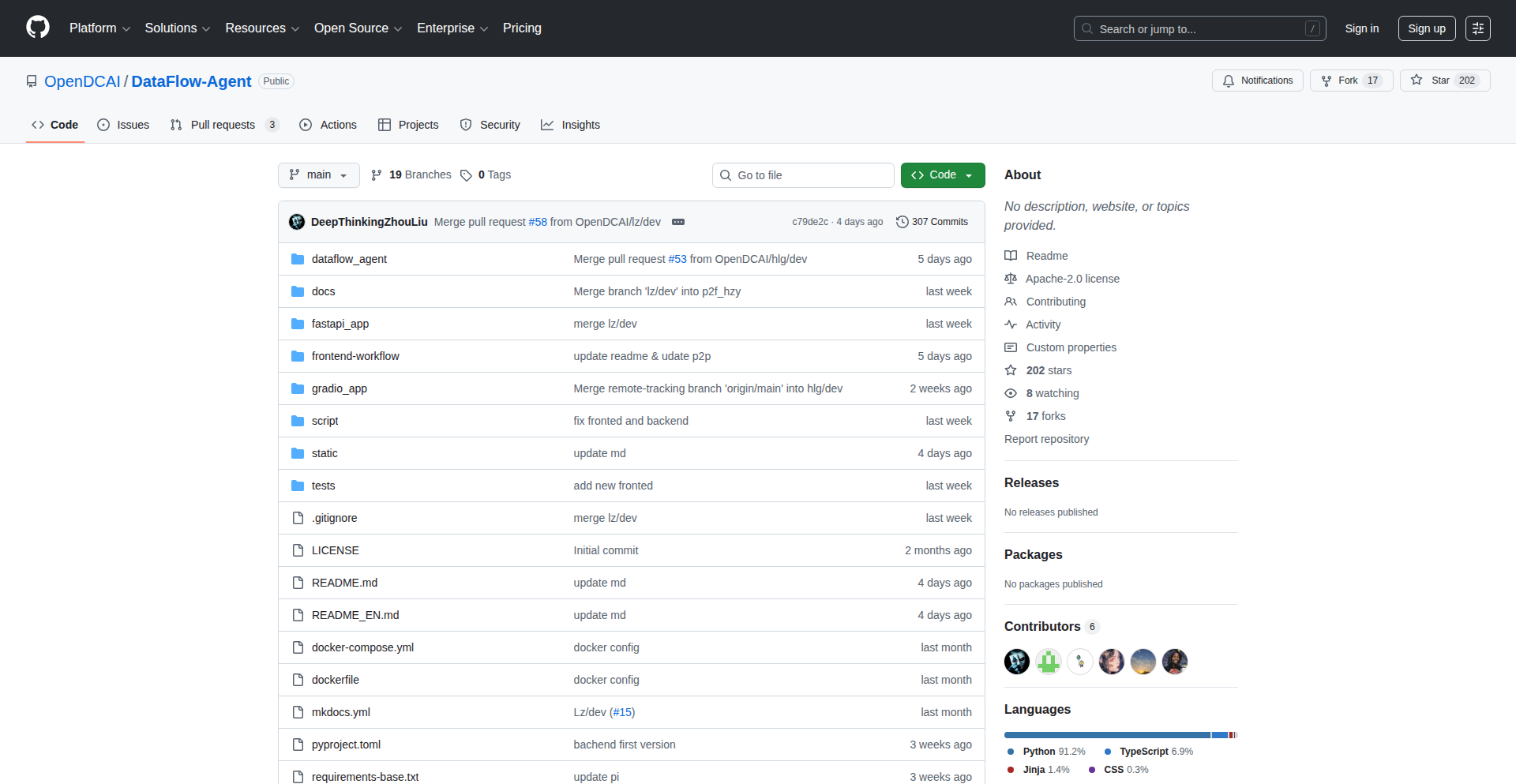

Paper2Any is an innovative tool built on the DataFlow-Agent framework. It tackles the common pain point of converting dense research papers into presentable slides. Unlike AI tools that generate uneditable image outputs, Paper2Any employs a multimodal reading approach to extract both text and visual elements from your input (PDF, text, or sketch). It then analyzes the research logic and core contributions. The key innovation lies in its PPT generation process: instead of a single image, it creates independent, editable elements like text blocks, shapes, and arrows. This allows for fine-grained control over the final presentation, including the selection of visual styles and specific page ranges from the original paper, making the output highly adaptable for publication or presentation. So, it helps you avoid the frustrating experience of manually recreating complex diagrams and text from papers into a presentation format.
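The difference between an editable slide and a flat image is the intermediate representation: each text block, shape, and arrow stays a separate object that can later be serialized to PPTX or SVG. The sketch below is hypothetical (`SlideElement` and `toSvg` are not Paper2Any or DataFlow-Agent APIs) and emits SVG only, since writing PPTX requires an additional library.

```typescript
// Hypothetical slide model: every element stays a separate, editable object.
type SlideElement =
  | { kind: "text"; x: number; y: number; text: string; fontSize: number }
  | { kind: "box"; x: number; y: number; w: number; h: number }
  | { kind: "arrow"; x1: number; y1: number; x2: number; y2: number };

// Escape characters that are special in XML text and attribute values.
function escapeXml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

function elementToSvg(el: SlideElement): string {
  switch (el.kind) {
    case "text":
      return `<text x="${el.x}" y="${el.y}" font-size="${el.fontSize}">${escapeXml(el.text)}</text>`;
    case "box":
      return `<rect x="${el.x}" y="${el.y}" width="${el.w}" height="${el.h}" fill="none" stroke="black"/>`;
    case "arrow":
      return `<line x1="${el.x1}" y1="${el.y1}" x2="${el.x2}" y2="${el.y2}" stroke="black" marker-end="url(#head)"/>`;
  }
}

function toSvg(width: number, height: number, elements: SlideElement[]): string {
  // One arrowhead marker, shared by every arrow.
  const defs =
    '<defs><marker id="head" markerWidth="10" markerHeight="7" refX="10" refY="3.5" orient="auto">' +
    '<polygon points="0 0, 10 3.5, 0 7"/></marker></defs>';
  const body = elements.map(elementToSvg).join("\n");
  return `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">\n${defs}\n${body}\n</svg>`;
}

// A two-stage method diagram: Encoder -> Decoder.
const svg = toSvg(400, 120, [
  { kind: "box", x: 20, y: 30, w: 120, h: 60 },
  { kind: "text", x: 45, y: 65, text: "Encoder", fontSize: 16 },
  { kind: "arrow", x1: 140, y1: 60, x2: 260, y2: 60 },
  { kind: "box", x: 260, y: 30, w: 120, h: 60 },
  { kind: "text", x: 285, y: 65, text: "Decoder", fontSize: 16 },
]);
console.log(svg);
```

Because the label "Encoder" is a `<text>` element rather than pixels, it can be edited, restyled, or translated after generation, which is the property the editable PPTX output preserves.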

How to use it?

Developers and researchers can use Paper2Any by providing it with a research paper (in PDF format), raw text, or even a hand-drawn sketch. You can specify which parts of the paper you want to convert, for example, just the methodology section, to reduce processing and focus the output. The tool allows you to experiment with different visual styles for the generated slides. Integration is straightforward: you can use the provided demo for quick tests, or if you're looking to build this functionality into your own applications, you can leverage the open-source DataFlow-Agent framework to integrate its capabilities programmatically. This means you can automate parts of your research workflow or build custom presentation generation tools. So, it saves you the hours you'd otherwise spend meticulously recreating figures and text for your talks or papers.

Product Core Function

· Multimodal Input Processing: Accepts PDF, plain text, or sketches as input, allowing for versatile data handling. This is valuable because it means you don't need to reformat your source material before using the tool, streamlining your workflow.

· Intelligent Content Understanding: Analyzes the research logic and identifies key contributions, enabling the generation of contextually relevant slides. This saves you from having to manually sift through pages to decide what's important for your presentation.

· Editable PPTX Generation: Creates fully editable PowerPoint files with independent elements (text, shapes, arrows), offering maximum flexibility for customization. This is crucial because it means you're not stuck with a static image; you can easily modify and refine the slides to fit your specific needs.

· Configurable Page Range Selection: Allows users to specify which sections or pages of the input document should be included in the generated slides, optimizing token usage and focus. This is useful for creating targeted presentations without being overwhelmed by the entire paper's content.

· Visual Style Switching: Supports different visual styles for the generated output, enabling users to choose an aesthetic that best suits their presentation needs. This means you can present your research in a visually appealing way that matches your brand or audience expectations.

· SVG Output: Generates Scalable Vector Graphics (SVG) for diagrams and figures, providing high-resolution, scalable visuals for digital or print use. This is important for ensuring your diagrams look sharp and professional at any size, preventing pixelation.
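Page-range selection usually starts from a spec string such as `1-3,7`. The parser below is a hypothetical helper, not Paper2Any's interface: it expands a spec into sorted, de-duplicated 1-based page numbers and rejects ranges outside the document, so only the selected pages would be sent to the model.

```typescript
// Hypothetical helper: expand "1-3,7,9-10" into [1, 2, 3, 7, 9, 10] for a document of `pageCount` pages.
function parsePageRange(spec: string, pageCount: number): number[] {
  const pages = new Set<number>();
  for (const rawPart of spec.split(",")) {
    const part = rawPart.trim();
    if (part === "") continue; // tolerate "1-3,,5" and trailing commas
    const match = /^(\d+)(?:\s*-\s*(\d+))?$/.exec(part);
    if (!match) throw new Error(`Invalid range segment: "${part}"`);
    const start = Number(match[1]);
    const end = match[2] === undefined ? start : Number(match[2]);
    if (start < 1 || end > pageCount || start > end) {
      throw new Error(`Range ${part} is reversed or outside 1-${pageCount}`);
    }
    for (let p = start; p <= end; p++) pages.add(p);
  }
  return Array.from(pages).sort((a, b) => a - b);
}

console.log(parsePageRange("4-6, 2, 5", 12)); // [ 2, 4, 5, 6 ]
```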

Product Usage Case

· Academic researchers preparing slides for conferences or journal submissions can input their published papers and instantly get a draft set of editable slides, significantly reducing preparation time. This solves the problem of spending days manually recreating complex experimental setups or theoretical models.

· Students working on literature reviews or thesis presentations can feed in multiple research papers and quickly generate a consolidated set of slides that highlight key findings from each source. This helps them organize and present a large amount of information efficiently.

· Technical writers or educators creating explanatory content can use Paper2Any to quickly convert technical documentation or research articles into digestible visual aids for training or educational materials. This makes complex technical information more accessible.

· Developers building AI-powered research assistants can integrate Paper2Any's capabilities to offer a feature that automatically generates presentation outlines or visual summaries from research papers ingested by their platform. This enhances the functionality of their AI tools.

6

DNS Sentinel

Author

timatping

Description

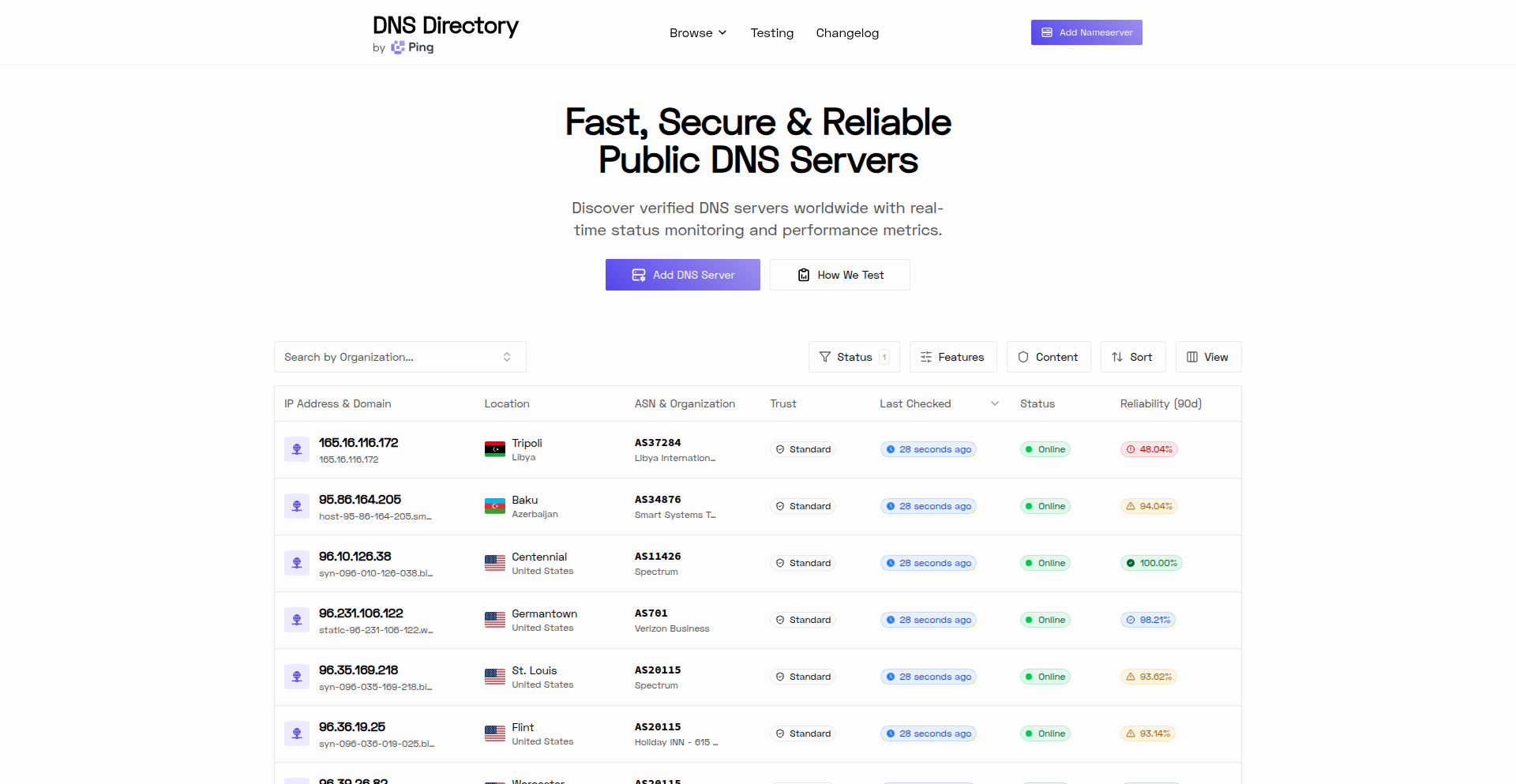

DNS Sentinel is a free, searchable database that tracks over 77,000 public DNS servers globally. It performs live monitoring every 10 minutes, providing insights into server uptime, security features like ad and malware blocking, DNSSEC support, and IPv6 compatibility. This project addresses the lack of a comprehensive, up-to-date public DNS server directory, originally built to support web scraping projects that require reliable DNS resolution.

Popularity

Points 7

Comments 4

What is this product?

DNS Sentinel is a constantly updated, public directory of DNS (Domain Name System) servers. Think of DNS as the internet's phonebook, translating website names (like google.com) into IP addresses that computers understand. This project automatically tests thousands of these public DNS servers every ten minutes to see whether they are working, how fast they are, and whether they offer special features like blocking ads or malware. The innovation lies in building this large, actively monitored dataset from scratch, filling a gap the author identified: no comparable, reliable, continuously updated resource existed for developers who need to understand or select DNS servers.
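A liveness check like the one described comes down to sending a standard DNS query (RFC 1035 message format) to each server and timing the reply. The sketch below only builds the query packet and checks the reply header; the function names are hypothetical, the source does not describe DNS Sentinel's actual monitoring code, and the network send is left out so the example stays self-contained.

```typescript
// Build a standard DNS query (RFC 1035) asking for one record type. Hypothetical helper names.
function buildDnsQuery(id: number, hostname: string, qtype = 1 /* A record */): Uint8Array {
  const name = hostname.replace(/\.$/, ""); // accept a fully qualified "example.com."
  const bytes: number[] = [
    (id >> 8) & 0xff, id & 0xff,        // transaction ID
    0x01, 0x00,                         // flags: standard query, recursion desired (RD=1)
    0x00, 0x01,                         // QDCOUNT = 1 question
    0x00, 0x00, 0x00, 0x00, 0x00, 0x00, // ANCOUNT, NSCOUNT, ARCOUNT = 0
  ];
  for (const label of name.split(".")) {
    if (label.length === 0 || label.length > 63) throw new Error(`Bad label in ${hostname}`);
    bytes.push(label.length); // each label is length-prefixed
    for (let i = 0; i < label.length; i++) {
      const code = label.charCodeAt(i);
      if (code > 0x7f) throw new Error("Non-ASCII labels must be punycode-encoded first");
      bytes.push(code);
    }
  }
  bytes.push(0x00);                              // root label terminates QNAME
  bytes.push((qtype >> 8) & 0xff, qtype & 0xff); // QTYPE
  bytes.push(0x00, 0x01);                        // QCLASS = IN
  return Uint8Array.from(bytes);
}

// Minimal reply check: matching ID, QR (response) bit set, RCODE 0 (NOERROR).
function parseReplyHeader(reply: Uint8Array, expectedId: number): { ok: boolean; rcode: number; answers: number } {
  if (reply.length < 12) return { ok: false, rcode: -1, answers: 0 };
  const id = (reply[0] << 8) | reply[1];
  const isResponse = (reply[2] & 0x80) !== 0;
  const rcode = reply[3] & 0x0f;
  const answers = (reply[6] << 8) | reply[7]; // ANCOUNT
  return { ok: id === expectedId && isResponse && rcode === 0, rcode, answers };
}

const query = buildDnsQuery(0x1234, "example.com");
console.log(query.length); // 29
```

In Node, the packet could be sent over UDP with `dgram.createSocket("udp4")` and `socket.send(query, 53, serverIp)`, timing the interval until the reply arrives and passing the reply bytes to `parseReplyHeader`.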

How to use it?

Developers can use DNS Sentinel by visiting the website (dnsdirectory.com) to search for and filter public DNS servers by location, uptime percentage, security features (e.g., ad blocking, malware protection), DNSSEC support, and IPv6 readiness. It's particularly useful for applications that depend on specific resolver characteristics, such as web scraping tools that need a pool of reliable resolvers to spread high volumes of DNS lookups, and for network engineers and security professionals who want to understand the landscape of public DNS infrastructure. You can also submit new DNS servers to be added to the directory.

Product Core Function

· Live DNS Server Monitoring: Tests over 77,000 public DNS servers every 10 minutes to keep the data fresh, giving near-real-time insight into server availability and performance for your projects.

· Comprehensive Filtering Options: Allows users to filter DNS servers by critical attributes like uptime, geographical location, advanced security features (ad blocking, malware protection), DNSSEC compliance, and IPv6 support, enabling precise selection for specific technical needs.

· Detailed Historical Data: Provides access to all historical testing information for each DNS server, allowing for trend analysis and a deeper understanding of server behavior over time, useful for long-term project planning and reliability assessment.

· Public Resource & Contribution: Offers a free, open database for public use and encourages community contributions by allowing users to submit new DNS servers, fostering a collaborative environment for improving internet infrastructure knowledge.

Product Usage Case

· A web scraping project resolves a large number of domains and cannot afford failed lookups. Using DNS Sentinel, the developer can pick a diverse set of high-uptime public resolvers in several locations and spread queries across them, switching to another resolver when one stops responding.

· A cybersecurity researcher is investigating the effectiveness of different public DNS resolvers in preventing access to malicious websites. They can use DNS Sentinel to filter servers with malware protection and DNSSEC enabled, then monitor their performance and reliability over time to draw conclusions about their security posture.

· A developer is building a new application that requires high-performance and reliable DNS resolution from a specific region. They can search DNS Sentinel for servers in that region, filter by high uptime, and choose a server that supports IPv6 to ensure future compatibility and optimal speed.

· An organization is planning to deploy a new network infrastructure and wants to understand the characteristics of commonly used public DNS servers for potential integration or comparison. DNS Sentinel provides a free, extensive dataset to analyze uptime, feature sets, and global distribution of these servers.

7

DocsRouter: Unified Document Intelligence API

Author

misbahsy

Description

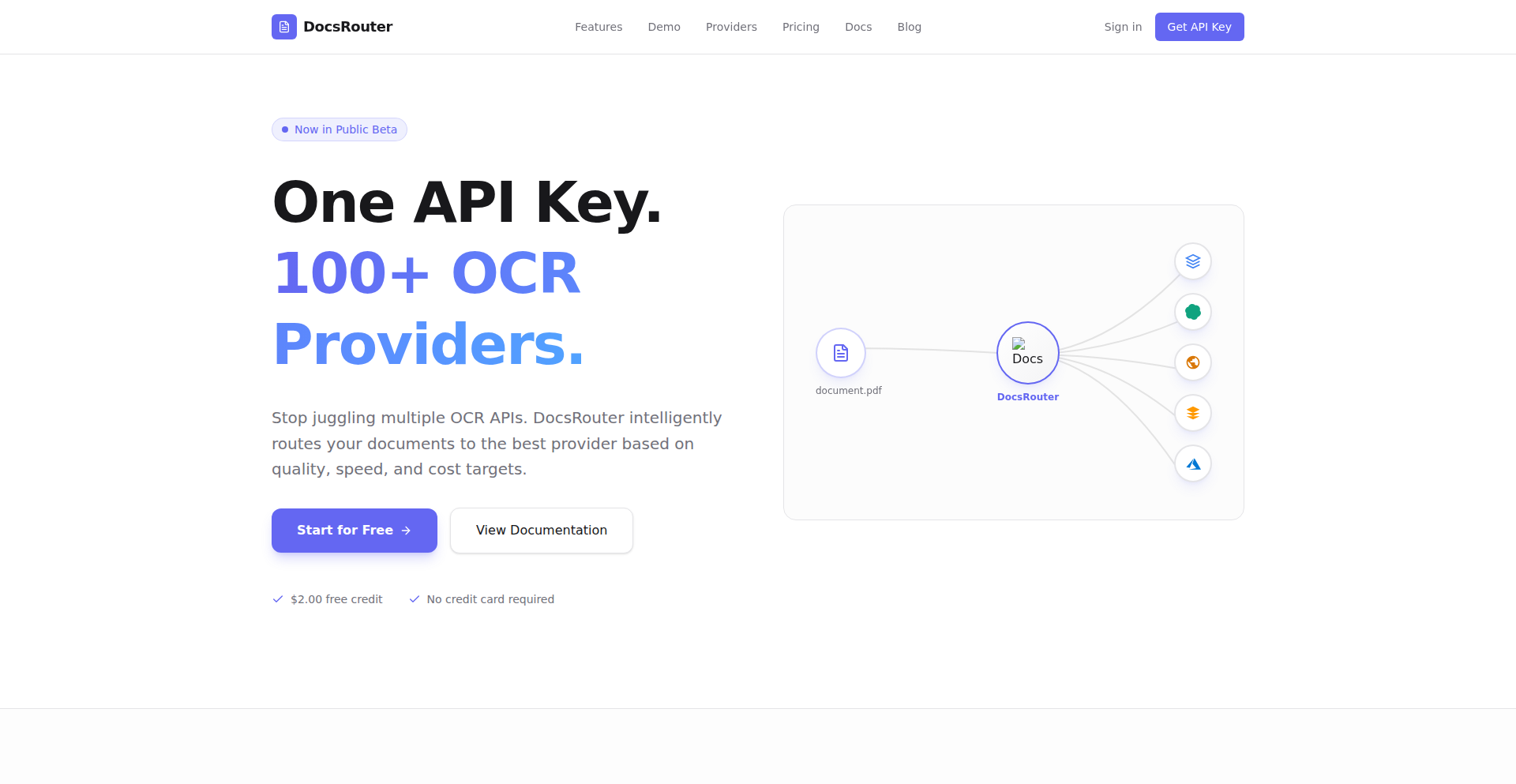

DocsRouter is a smart middleware service that acts as a single point of access for various Optical Character Recognition (OCR) and Vision Large Language Models (LLMs). It simplifies the complex process of integrating and managing multiple AI services for document processing. Instead of building and maintaining your own system to call different AI providers, DocsRouter offers a unified API. This allows developers to effortlessly switch between or combine different OCR and vision models based on factors like cost, speed, and accuracy, without changing their application's code. The output is standardized, making it easy to use the extracted data. This is for teams dealing with high volumes of documents like invoices, contracts, or forms who want to avoid vendor lock-in and leverage the best AI technology available for their needs.

Popularity

Points 10

Comments 0

What is this product?

DocsRouter is an API service that simplifies working with different AI tools that read and understand documents. Imagine you need to extract information from scanned papers, like invoices or forms. There are many AI services (OCR and Vision LLMs) that can do this, each with its own strengths and weaknesses in terms of cost, speed, and how accurately they can read different types of text or even understand the context. Traditionally, if you wanted to use multiple services, you'd have to write a lot of custom code to connect to each one, handle their different outputs, and manage the costs. DocsRouter solves this by providing one simple API. You send your document to DocsRouter, and it intelligently decides which AI service is best to use for that specific document, or it can even combine results from multiple services. It then gives you back the information in a consistent format, so your application doesn't need to know which AI service was actually used. This is innovative because it abstracts away the complexity of the AI landscape, making advanced document processing accessible and manageable, and preventing you from being stuck with a single, potentially outdated or expensive, AI provider.
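DocsRouter's actual response schema is not given in this summary, so the following is only a sketch of the normalization idea: one small adapter per provider maps that provider's raw response into a single shared shape, and the application only ever sees the shared shape. Both provider response formats and the `NormalizedDoc` fields are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NormalizedDoc:
    """Provider-agnostic result shape (a hypothetical schema)."""
    provider: str
    text: str
    fields: dict[str, str] = field(default_factory=dict)


def from_provider_a(raw: dict) -> NormalizedDoc:
    # Hypothetical provider A: a list of page strings plus key/value pairs
    return NormalizedDoc(
        provider="provider_a",
        text="\n".join(raw["pages"]),
        fields={kv["key"]: kv["value"] for kv in raw.get("key_values", [])},
    )


def from_provider_b(raw: dict) -> NormalizedDoc:
    # Hypothetical provider B: one text blob and a flat "entities" dict
    return NormalizedDoc(
        provider="provider_b",
        text=raw["content"],
        fields=dict(raw.get("entities", {})),
    )


ADAPTERS = {"provider_a": from_provider_a, "provider_b": from_provider_b}


def normalize(provider: str, raw: dict) -> NormalizedDoc:
    """Dispatch to the adapter for whichever provider produced `raw`."""
    return ADAPTERS[provider](raw)
```

Adding a new provider then means writing one adapter and registering it, while downstream code that reads `text` and `fields` stays unchanged.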

How to use it?

Developers can integrate DocsRouter into their applications by making simple API calls. For example, when your application needs to process a new document (like an invoice uploaded by a user), instead of directly calling an OCR service, you'll send the document to the DocsRouter API. You can configure DocsRouter, either directly through the API or via its dashboard, to use specific AI providers or to automatically choose the best one based on predefined rules (e.g., 'use the cheapest option that provides at least 90% accuracy'). DocsRouter handles the communication with the chosen AI models, normalizes their outputs (like extracting text, table data, or specific fields), and returns this structured data to your application. This means your application logic remains clean and unaffected by changes in the underlying AI technology. You can also use their provided playground to test different document types with various AI models side-by-side to understand which providers perform best for your specific use cases.
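The example policy ("use the cheapest option that provides at least 90% accuracy") can be expressed as a small selection function. This is a hypothetical sketch, not DocsRouter's API: the `ProviderStats` record, provider names, prices, and accuracy figures are all invented for illustration, and accuracy would in practice come from measuring providers on your own sample documents.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderStats:
    name: str
    cost_per_page: float   # USD, hypothetical
    accuracy: float        # 0..1, measured on your own sample documents
    p50_latency_ms: float


def choose_provider(providers, min_accuracy=0.90, max_latency_ms=None):
    """Cheapest provider meeting the accuracy floor (and latency cap, if any)."""
    eligible = [
        p for p in providers
        if p.accuracy >= min_accuracy
        and (max_latency_ms is None or p.p50_latency_ms <= max_latency_ms)
    ]
    if not eligible:
        raise LookupError("no provider satisfies the routing policy")
    # Lowest cost wins; ties go to the more accurate provider
    return min(eligible, key=lambda p: (p.cost_per_page, -p.accuracy))
```

Raising an error when nothing qualifies, rather than silently falling back, keeps a misconfigured policy from quietly routing documents to a provider that does not meet the requirements.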

Product Core Function

· Unified API for multiple OCR and Vision LLMs: Reduces integration effort and allows easy switching between AI providers, so your app always uses the best available technology without code changes.

· Intelligent routing policies: Enables automatic selection of AI models based on cost, accuracy, or latency requirements, optimizing performance and budget for document processing.

· Normalized output formats (text, tables, fields): Guarantees consistent data structure regardless of the AI provider used, simplifying downstream data processing and application logic.

· Provider abstraction layer: Hides the complexity of different AI service APIs, preventing vendor lock-in and allowing future-proofing as new models emerge.

· Side-by-side output comparison playground: Facilitates experimentation and selection of optimal AI models for specific document types through visual comparison of results.

Product Usage Case

· An accounting software company needs to extract data from thousands of invoices daily. By using DocsRouter, they can send each invoice to the API, and DocsRouter will select the most cost-effective and accurate OCR/vision model for that invoice type. This saves them development time on custom integrations and lowers their operational costs compared to using a single, expensive provider, providing them with clean, structured invoice data for their accounting system.

· A legal tech startup is building a contract analysis tool. They can use DocsRouter to process various legal documents, leveraging different LLMs for tasks like identifying key clauses or extracting specific parties. The normalized output allows their analysis engine to work consistently, even if the underlying AI model for contract interpretation changes, ensuring their tool remains competitive and accurate over time.

· A logistics company needs to process shipping manifests and customs forms. DocsRouter can handle these diverse document formats by routing them to specialized OCR or vision models, ensuring accurate extraction of shipping details, addresses, and product information. This streamlines their operations by reducing manual data entry and errors, leading to faster processing times and improved supply chain visibility.

8

DadJoke-Qwen3-Tuner

Author

shutty

Description

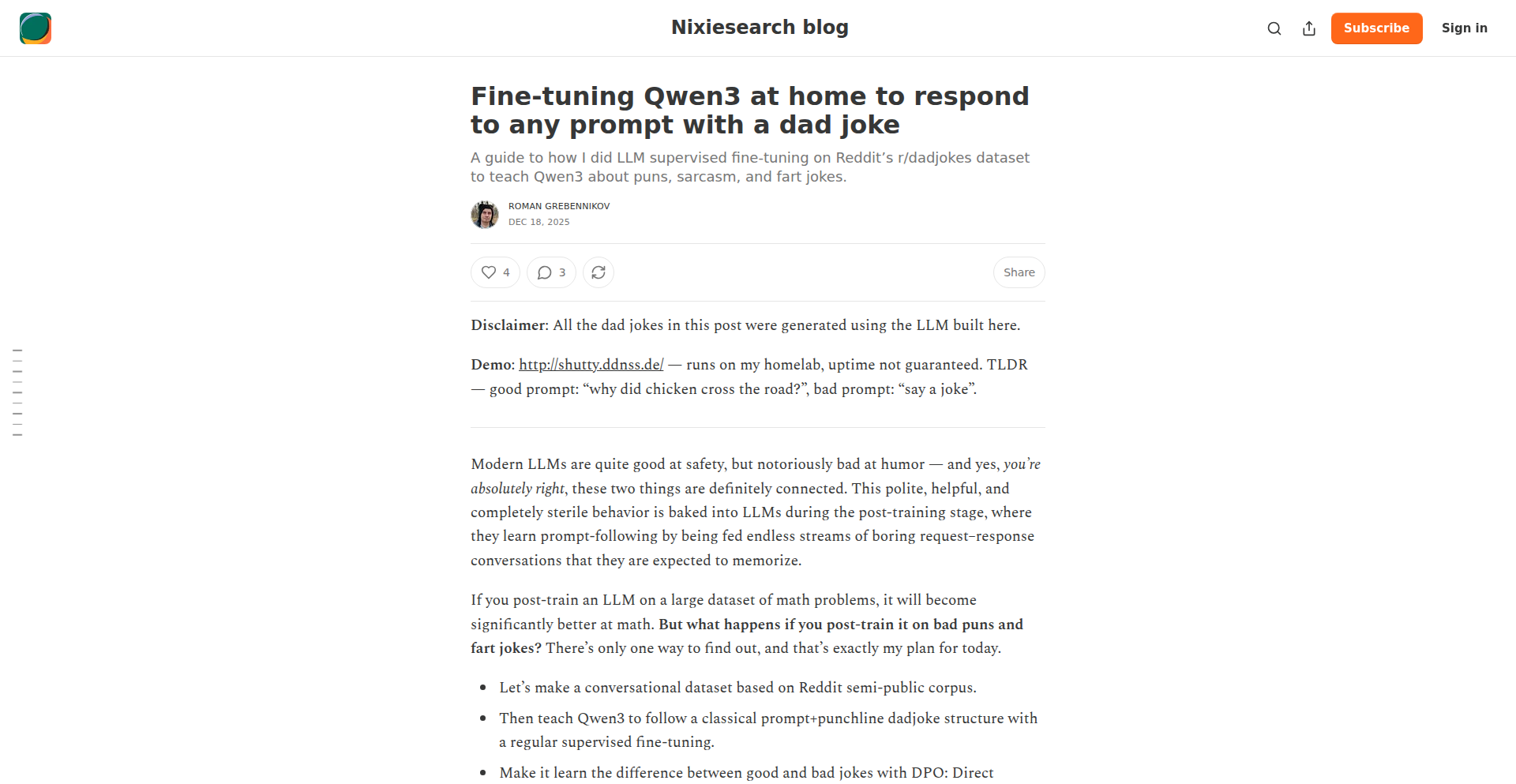

This project demonstrates how to fine-tune the Qwen3 large language model at home to specifically respond to any user prompt with a dad joke. It highlights the accessibility of fine-tuning powerful AI models with consumer-grade hardware and offers a fun, unique application of natural language processing.

Popularity

Points 10

Comments 0

What is this product?

This project is a demonstration of fine-tuning Qwen3, a large language model, to give it a specific personality trait: answering with dad jokes. The core technical point is taking a general-purpose model and specializing it for a niche output with relatively modest resources. This is achieved through techniques like LoRA (Low-Rank Adaptation), which keeps the base model's weights frozen and trains only small low-rank adapter matrices added to selected layers, so only a tiny fraction of parameters need gradients and optimizer state. So, what's the value? It shows that you don't need a massive data center to customize advanced AI for your specific needs or creative ideas.
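To make the LoRA idea concrete, here is the forward pass for a single adapted weight matrix in plain Python: the output is `W0 x + (alpha / r) * B (A x)`, where `W0` stays frozen and only the small matrices `A` (r × d_in) and `B` (d_out × r) are trained. This is an illustrative sketch of the technique, not the project's code; in practice a library such as Hugging Face `peft` inserts and trains these adapters for you.

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def lora_forward(W0, A, B, x, alpha, r):
    """h = W0 x + (alpha / r) * B (A x); W0 is frozen, only A and B are trained."""
    base = matvec(W0, x)
    delta = matvec(B, matvec(A, x))  # rank-r update, never materialized as a full matrix
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def trainable_params(d_in, d_out, r):
    """Parameter counts for one weight matrix: (full fine-tuning, LoRA)."""
    return d_out * d_in, r * (d_in + d_out)
```

`B` is conventionally initialized to zeros, so before training the adapted layer behaves exactly like the original one. For a 4096 × 4096 projection with rank 8, `trainable_params(4096, 4096, 8)` gives 16,777,216 parameters for full fine-tuning versus 65,536 for LoRA, which is why this fits on consumer hardware.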

How to use it?

Developers can use this project as a blueprint for fine-tuning their own LLMs for specific tasks. The process typically involves preparing a dataset of prompts and desired dad joke responses, setting up a fine-tuning environment (often using Python libraries like `transformers` and `peft`), and running the training process. The fine-tuned model can then be integrated into applications via an API, allowing users to interact with an AI that consistently delivers cheesy humor. For example, you could build a chatbot for a family-friendly app or create a humorous content generation tool. So, how does this benefit you? It provides a practical, step-by-step guide to make powerful AI models behave in a way that's fun and tailored to your project.
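The first step, preparing prompt and dad-joke pairs, often means writing a JSON Lines file with one chat-formatted example per line. A minimal sketch, assuming the common `messages` / `role` / `content` layout; the exact schema depends on the training script you use, and the project's own dataset format isn't shown in the post.

```python
import json


def to_chat_example(prompt: str, joke: str) -> dict:
    # A common chat fine-tuning layout; your trainer may expect a different schema
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": joke},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize (prompt, joke) pairs as JSON Lines, skipping incomplete pairs."""
    lines = []
    for prompt, joke in pairs:
        prompt, joke = prompt.strip(), joke.strip()
        if not prompt or not joke:
            continue  # drop rows missing either side
        lines.append(json.dumps(to_chat_example(prompt, joke), ensure_ascii=False))
    return "\n".join(lines) + ("\n" if lines else "")
```

The resulting string can be saved with `pathlib.Path("train.jsonl").write_text(to_jsonl(pairs), encoding="utf-8")` and loaded by most fine-tuning toolchains that accept JSONL.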

Product Core Function

· Efficient LLM Fine-tuning: The ability to adapt large language models like Qwen3 using resource-efficient methods like LoRA. This allows for customization without retraining the entire model from scratch, saving significant computational power and time. The value is democratizing AI customization.

· Prompt-to-DadJoke Generation: The core function of the fine-tuned model. It takes any input prompt and generates a relevant, albeit humorous, dad joke as a response. The value lies in creating engaging and entertaining user experiences through personalized AI humor.

· Home-based AI Customization: Demonstrates that advanced AI fine-tuning can be performed on consumer-grade hardware, not just in large research labs. This opens doors for individual developers and small teams to experiment with and deploy specialized AI. The value is in lowering the barrier to entry for AI innovation.

Product Usage Case

· Building a 'Digital Comedian' Chatbot: Integrate the fine-tuned model into a messaging platform to create a chatbot that can entertain users with dad jokes on command. This solves the problem of needing a constant stream of lighthearted content for casual interactions.

· Content Generation for Social Media: Use the model to automatically generate humorous captions or responses for social media posts, injecting personality and engagement into online content. This addresses the challenge of creating consistent and entertaining social media material.

· Educational Tool for AI Fine-tuning: Serve as a practical, hands-on example for students and aspiring AI engineers learning about LLM fine-tuning techniques. It provides a clear, fun use case to illustrate complex concepts. This helps overcome the difficulty of understanding abstract AI training processes.

9

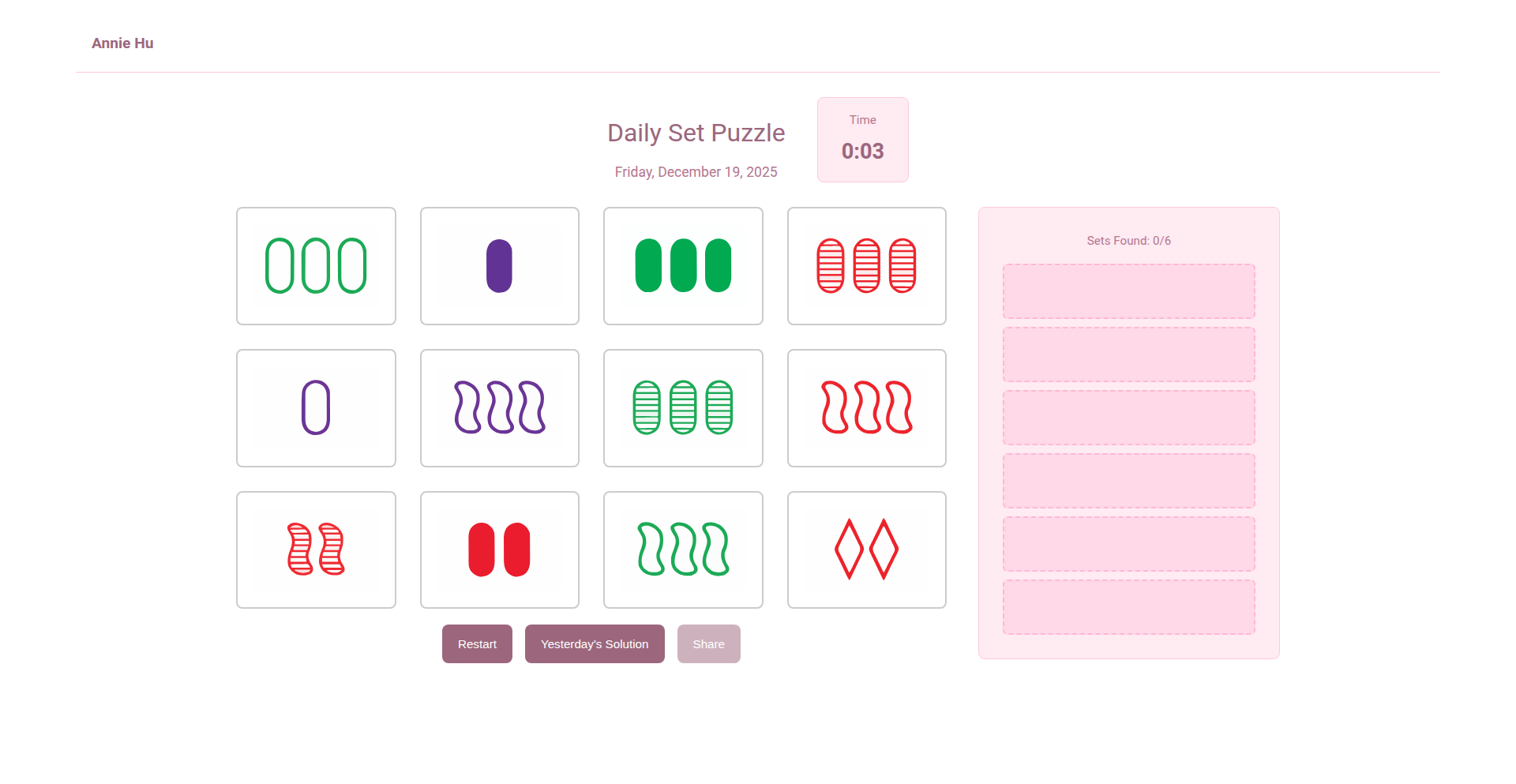

DailySet

Author

anniegracehu

Description

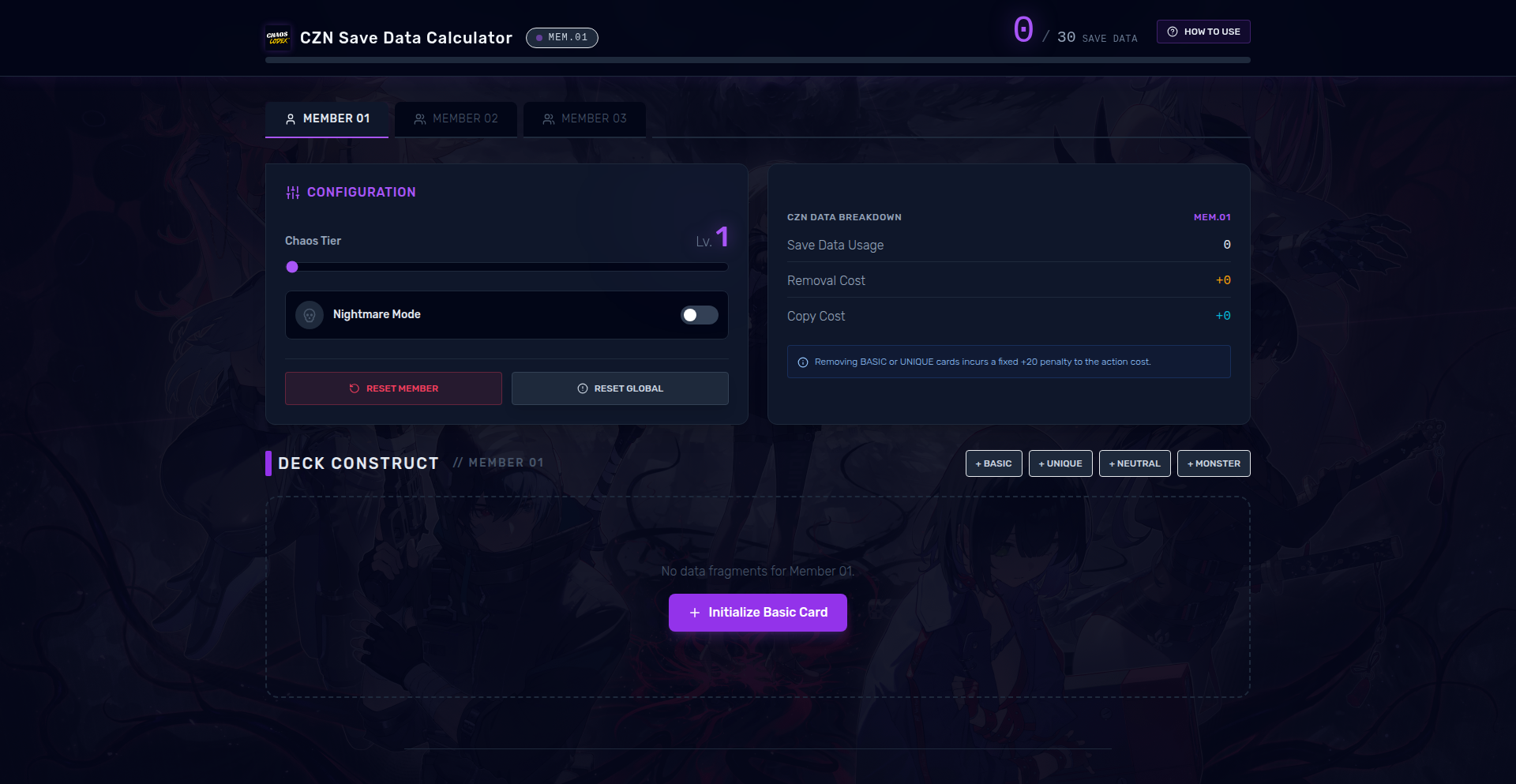

DailySet is a re-implementation of the popular 'Set' card game, prompted by a practical problem: the SSL certificate on the original setgame.com had expired, so browsers flagged the site as insecure. Rather than waiting for a fix, the author rebuilt the game as a web application, recreating its rules and offering a reliable, accessible alternative. It is a good example of spotting a real-world limitation and solving it with a small, focused piece of code.

Popularity

Points 7

Comments 2

What is this product?

DailySet is a web-based implementation of the classic 'Set' card game. The author rebuilt it from scratch after the original website became effectively inaccessible because of its expired SSL certificate, so players no longer run into browser security warnings. The game's rules are recreated faithfully: each card has four attributes (number, color, shape, and shading), each with three possible values, and a valid set is three cards for which every attribute is either all the same or all different across the three. Spotting these sets quickly makes for a puzzle that is both fun and intellectually stimulating. So, what's in it for you? A reliable way to play a challenging puzzle game anytime, anywhere, without broken links or security warnings.

How to use it?

Developers can use DailySet in several ways. Firstly, as an end-user, you can simply visit the deployed web application to play the game. For developers looking to integrate or learn, the project's codebase serves as an excellent example of building interactive web applications. It demonstrates how to manage game state, render dynamic content, and handle user input efficiently. You can fork the repository, study the code, and even contribute to its development. Think of it as a blueprint for building your own engaging web-based games or interactive tools. So, how does this benefit you? You can easily access a fun game, or leverage the code as a learning resource and a foundation for your own projects.

Product Core Function

· Game logic implementation: The rule for a valid set is recreated exactly: three cards form a set only if, for each of the four attributes, the values are all the same or all different. Getting this check right is what makes the game feel authentic, which matters to any player seeking a true-to-the-rules challenge.

· Web application interface: A user-friendly interface built with modern web technologies allows players to interact with the game seamlessly. The value here is accessibility and an intuitive gaming experience. Anyone can jump in and play.

· Rebuild after the certificate lapse: Re-creating the game once the original site's certificate expired shows a commitment to keeping a valued online resource available. The value is in the game's longevity and accessibility for the community: it stays playable without security warnings.

· Responsive design: The game is likely designed to work on various devices, from desktops to mobile phones. The value is in providing a consistent and enjoyable experience regardless of the user's device. You can play it on whatever screen you have handy.

Product Usage Case

· Educational tool for learning game development: A developer could study DailySet's codebase to understand how to implement game rules, manage game state, and build interactive web UIs. This helps them learn by example how to create their own games.

· Inspiration for recreating other defunct web games or tools: The success of DailySet can inspire developers to tackle other beloved but broken web applications, revitalizing them for new audiences. This means more of your favorite old-school online experiences could be brought back to life.

· Building a personal portfolio project: This project serves as a concrete example of a developer's ability to identify a problem (expired certificate), devise a solution (rebuild the game), and execute it effectively using web technologies. It demonstrates practical problem-solving skills to potential employers.

· Backend logic for a larger game application: The core Set game logic could be extracted and used as a component within a more complex multiplayer or advanced version of the game. This shows how a specific, well-defined problem solver can be a building block for bigger things.
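As an example of Set logic that could be extracted into a reusable component, here is a board solver that uses a known property of the game: any two distinct cards determine exactly one third card that completes a set. Checking each pair once is cheaper than testing every triple. This is a sketch under an assumed encoding (cards as 4-tuples of values 0, 1, 2), not DailySet's code.

```python
from itertools import combinations


def third_card(a, b):
    """The unique card completing a set with a and b.

    Per attribute (values 0, 1, 2): equal values need the same value again;
    different values need the remaining one, which is 3 - x - y.
    """
    return tuple(x if x == y else 3 - x - y for x, y in zip(a, b))


def find_sets(board):
    """All sets on the board, found by checking each pair of cards once."""
    cards = set(board)
    found = set()
    for a, b in combinations(board, 2):
        c = third_card(a, b)
        # c equals a only if a == b, i.e. the board contains duplicate cards
        if c in cards and c != a:
            # Each set is reached from three different pairs; frozenset deduplicates
            found.add(frozenset((a, b, c)))
    return found
```

For a 12-card board this checks 66 pairs instead of 220 triples, and the same `third_card` helper can power a hint feature that points at a missing card.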

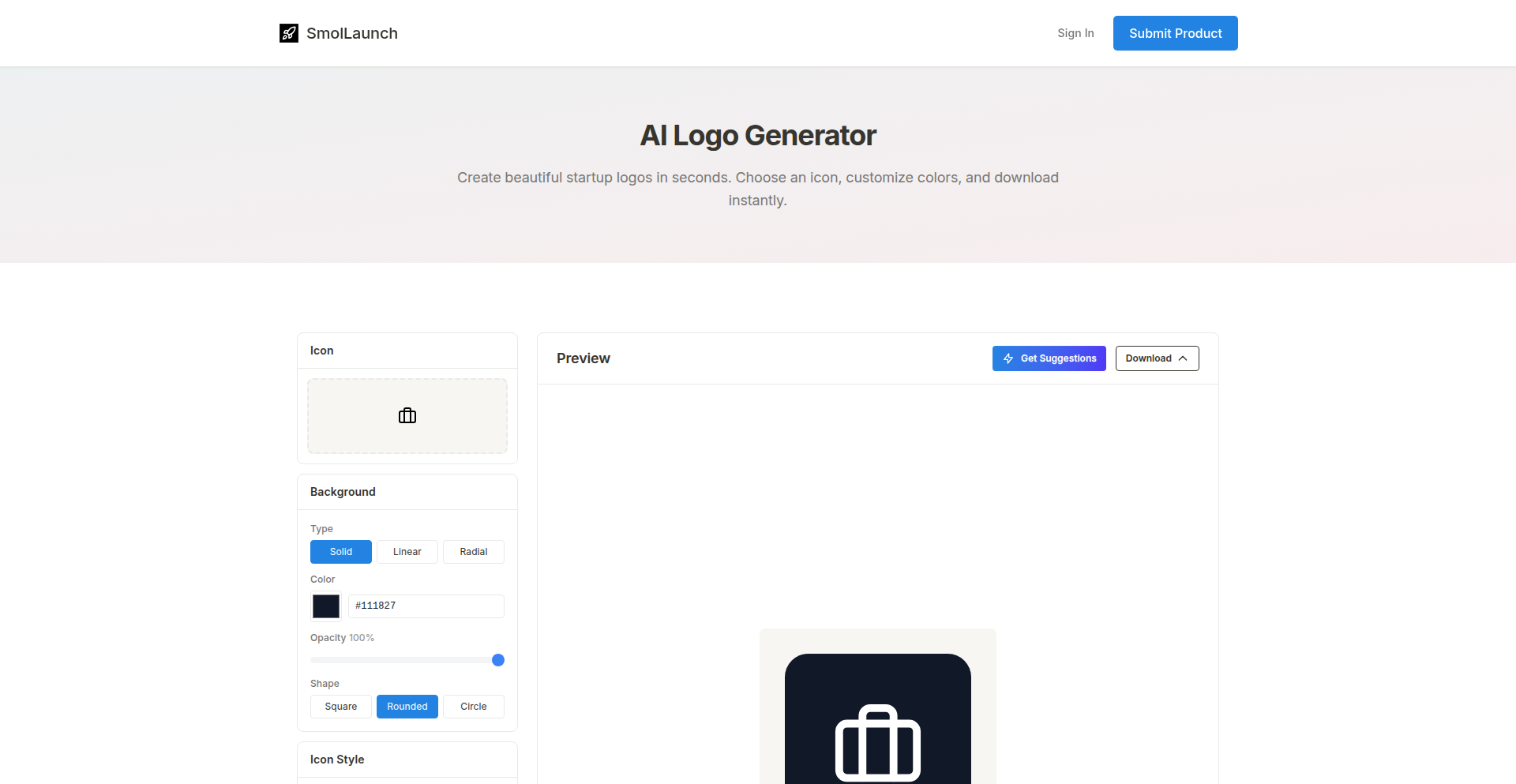

10

IconicForge

Author

teemingdev

Description

IconicForge is a free-to-use logo generator designed for indie founders and developers. It offers a streamlined approach to creating usable logos quickly by leveraging a curated set of icons and a rule-based color suggestion system. This avoids the complexity and cost associated with heavy AI generators, editors, or subscriptions. The core innovation lies in its lightweight 'smart suggestions' feature, which uses keywords describing an app's function to propose suitable icons and color palettes without relying on external AI services, ensuring speed and zero running cost. The output is a single, downloadable logo file, eliminating the need for accounts, watermarks, or asset management.

Popularity

Points 8

Comments 0

What is this product?

IconicForge is a practical tool for creating logos without the usual hassle. Instead of complex AI that might take time or cost money, it uses a pre-defined library of icons and a clever system of rules. You describe what your app does, and it suggests relevant icons and colors. Think of it like a smart assistant with a toolbox of design elements, making it fast and accessible. This means you get a decent logo quickly without being overwhelmed by options or worrying about hidden fees.
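A rule-based suggester of this kind can be as simple as keyword tables plus a score. The sketch below is hypothetical: IconicForge's real icon library, keyword mappings, and palettes are not published in the post, so every icon name, keyword, and hex color here is an invented placeholder.

```python
import re

# Hypothetical rule tables: icon name -> keywords that trigger it
ICON_RULES = {
    "checklist": {"todo", "task", "tasks", "list", "checklist", "productivity"},
    "sword": {"rpg", "fantasy", "combat", "battle"},
    "chef-hat": {"recipe", "recipes", "cooking", "food"},
    "chat-bubble": {"chat", "social", "messaging", "community"},
}

# Hypothetical palettes paired with each icon
PALETTES = {
    "checklist": ["#1E3A8A", "#F8FAFC"],    # calm, professional
    "sword": ["#7C2D12", "#FBBF24"],        # bold, adventurous
    "chef-hat": ["#B91C1C", "#FEF3C7"],     # warm, welcoming
    "chat-bubble": ["#6D28D9", "#EDE9FE"],
}
DEFAULT = ("app-square", ["#111827", "#F9FAFB"])


def suggest(description: str, limit: int = 3):
    """Rank icons by how many of their keywords appear in the description."""
    words = set(re.findall(r"[a-z0-9]+", description.lower()))
    scored = [(len(words & keywords), icon) for icon, keywords in ICON_RULES.items()]
    # sorted() is stable, so ties keep the table order
    ranked = [icon for score, icon in sorted(scored, key=lambda t: -t[0]) if score > 0]
    if not ranked:
        return [DEFAULT]
    return [(icon, PALETTES[icon]) for icon in ranked[:limit]]
```

Because everything is a local dictionary lookup, suggestions are instant and cost nothing to run, which matches the "no external AI service" design described above.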

How to use it?

Developers can use IconicForge by visiting the website, describing their project in a few words (e.g., 'a task management app', 'a social media platform for gamers'), and then browsing the generated icon and color combinations. Once a satisfactory logo is created, it can be downloaded as a single image file (like PNG or SVG), ready to be used immediately on websites, app stores, or marketing materials. It's designed for immediate use, without requiring any account creation or complex integration.

Product Core Function

· Keyword-based icon suggestion: Automatically recommends icons that match the described function of your project, saving you the time of searching through vast libraries. This is useful for quickly finding a visual representation of your app's purpose.

· Rule-based color palette generation: Offers color schemes that are designed to be aesthetically pleasing and relevant to your app's description, providing a good starting point for branding without requiring design expertise.

· Direct download of single logo file: Provides a ready-to-use logo file without watermarks or extra management, allowing for immediate deployment and application across various platforms.

· No account or subscription required: Eliminates barriers to entry and ongoing costs, making it a truly free and accessible tool for anyone needing a quick logo.

· Lightweight and fast processing: Utilizes simple logic and mappings, ensuring quick generation times and a smooth user experience, ideal for users who need results immediately.

Product Usage Case

· A solo indie developer launching a new productivity app needs a logo quickly for their website and app store listing. They describe their app as 'a simple to-do list manager', and IconicForge suggests a checklist icon with a clean, professional color scheme, providing a usable logo in minutes without needing to hire a designer.

· A game developer is working on a new indie game and needs a placeholder logo for early marketing materials. They input 'a fantasy RPG combat game', and IconicForge generates an icon featuring a sword or shield with a more adventurous color palette, enabling them to create visual assets for their launch without delay.

· A startup founder is testing out a new idea and needs a basic logo for a landing page to gather user feedback. They describe their service as 'a platform for sharing recipes', and IconicForge provides a simple culinary-themed icon and a welcoming color scheme, allowing them to quickly establish a visual identity for their experiment.

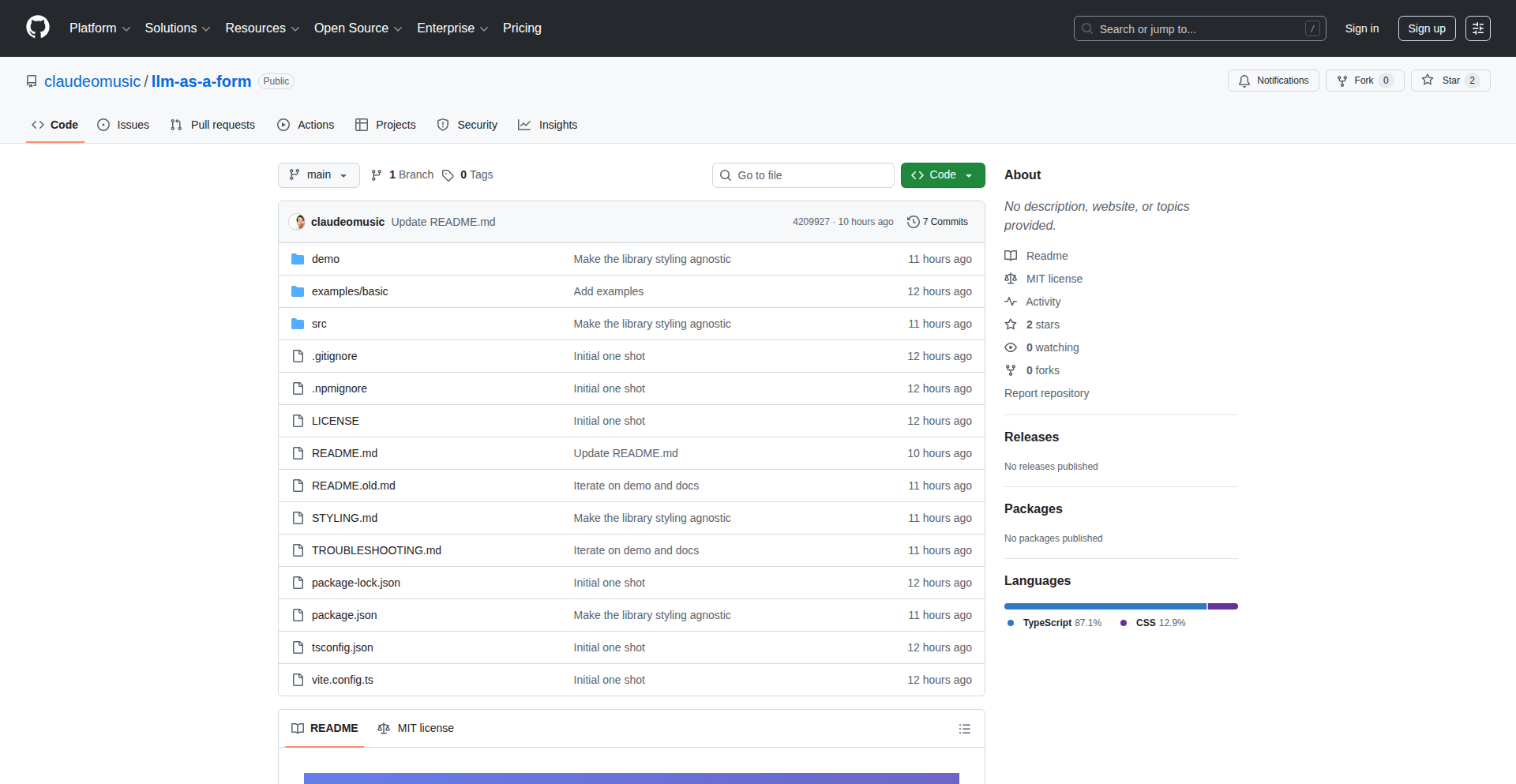

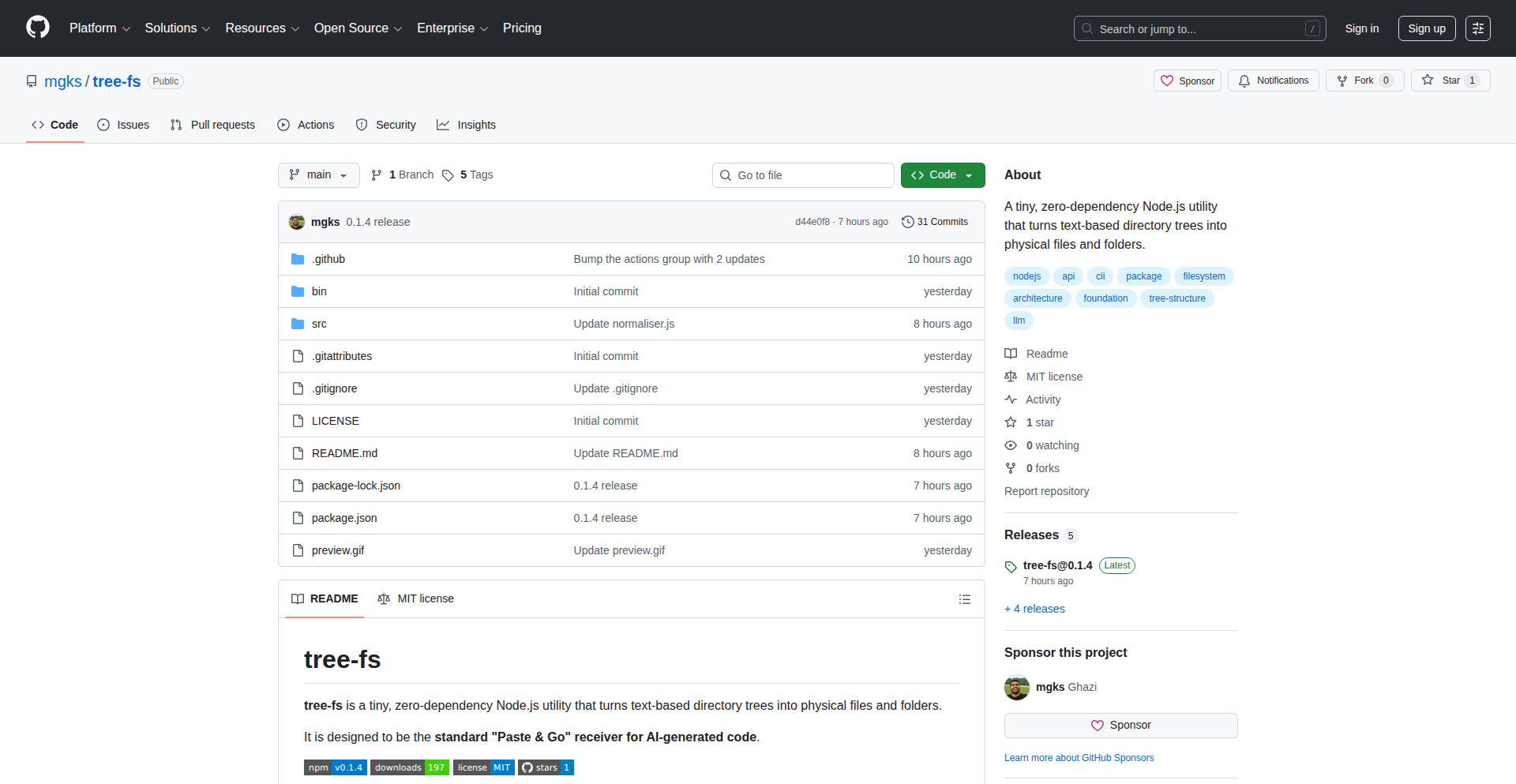

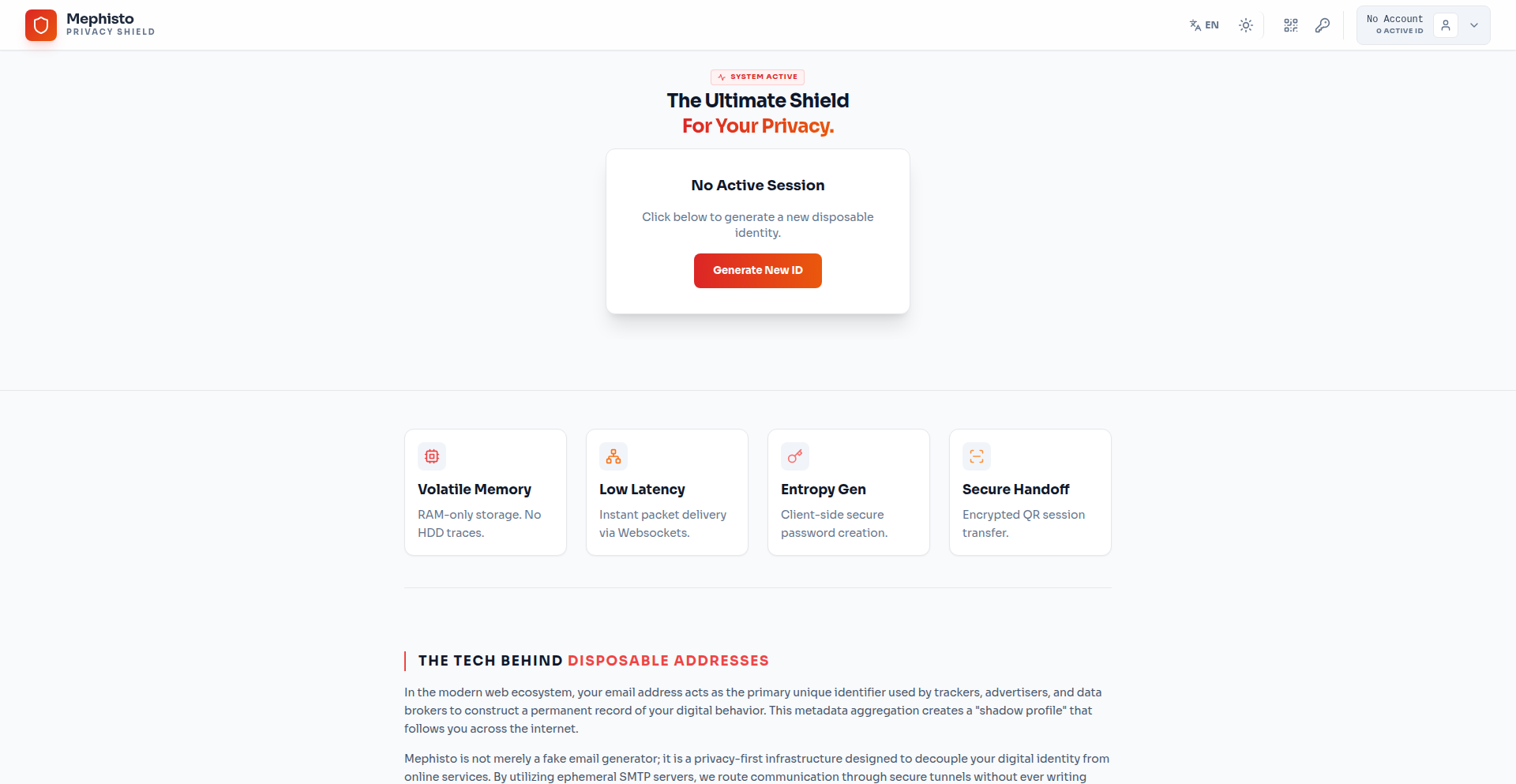

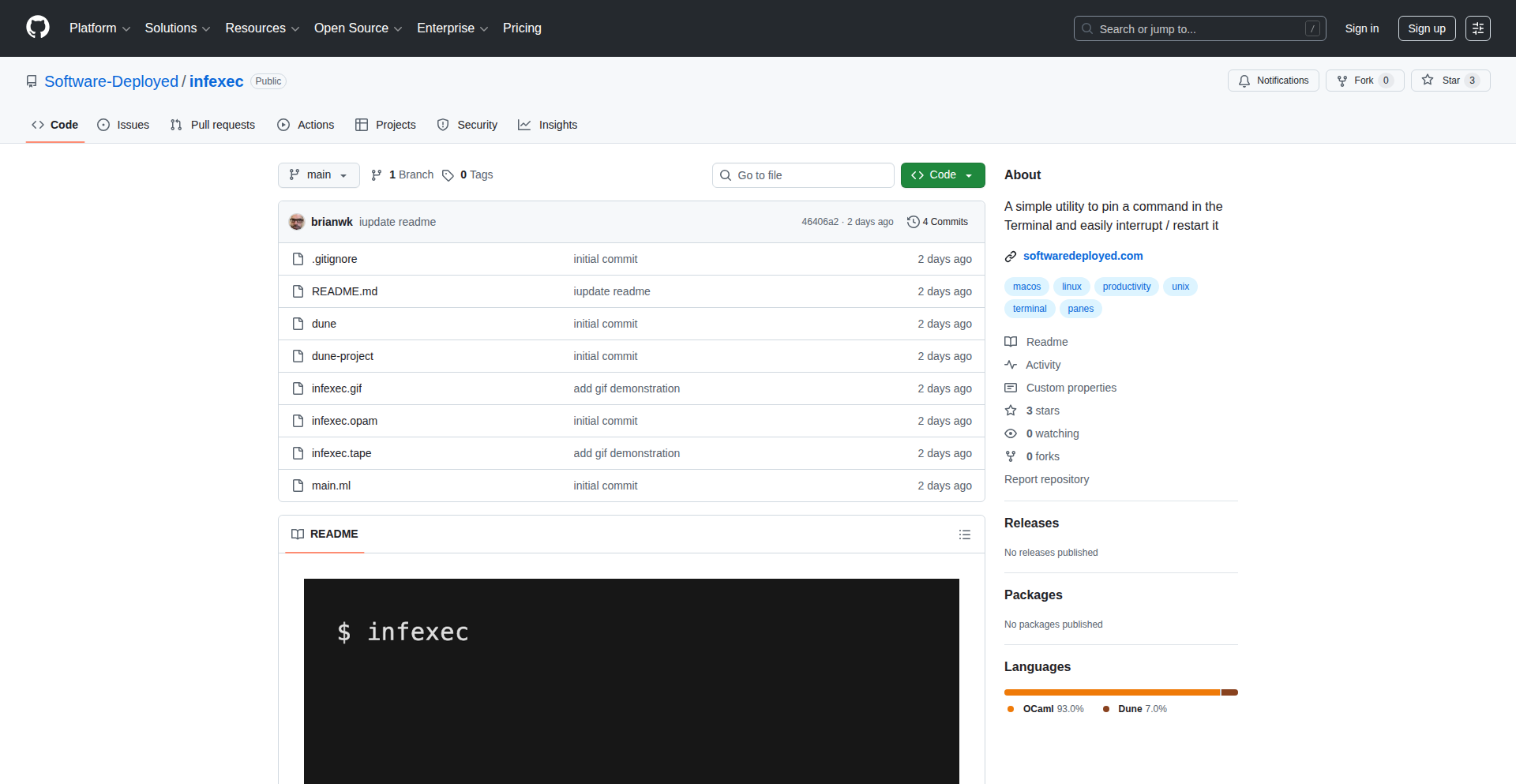

11

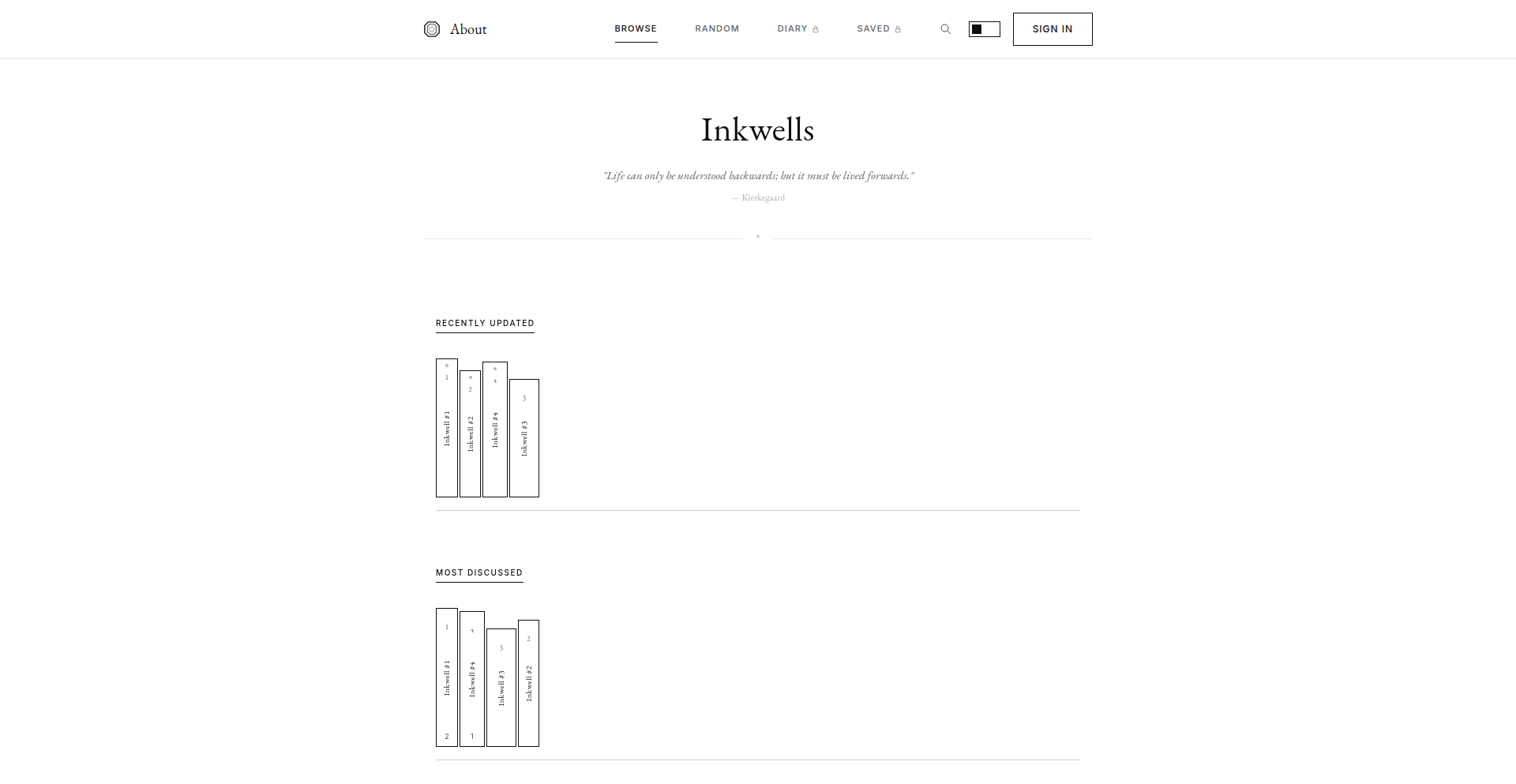

Inkwells: Anonymous Thought Weaver

Author

reagantriminio

Description

Inkwells is a personal project that creates an anonymous platform for writing and discovering diary entries. It addresses the need for individuals to express themselves freely without the constraints of personal identity, focusing on the raw act of writing and idea sharing. The innovation lies in its commitment to absolute anonymity, using numerical identifiers instead of usernames, and its design to foster discovery and interaction within this anonymous space.

Popularity

Points 3

Comments 4

What is this product?

Inkwells is a digital space designed for private thoughts and public discovery, all under the veil of anonymity. Think of it like a collection of diaries that anyone can browse and even interact with, but without anyone knowing who wrote what. The core technical insight is in how it enforces true anonymity. Instead of traditional usernames and profiles, each entry is linked to a simple, unique number. This means you can read someone's deeply personal thoughts, save the ones you find compelling, or even leave a reaction or comment, all without any way to trace it back to you or the original author. This approach sidesteps the complexities of user authentication and data privacy by fundamentally removing personal identifiers, allowing for unfiltered expression and organic discovery. The value here is a safe haven for self-expression and a unique lens into diverse human experiences.

How to use it?

Developers can use Inkwells as a source of inspiration for building privacy-centric applications or exploring novel ways to foster community engagement without user profiles. The implementation likely involves a backend that assigns a unique numerical ID to each entry and its associated interactions, without storing usernames or profile data alongside them. Imagine integrating a similar concept for a 'random thought generator' on a website, or a feedback system where users can submit anonymous suggestions that are then categorized and displayed without revealing the submitter. The emphasis on a clean, untethered experience makes it a good model for exploring user-generated content where identity is a liability rather than an asset.
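Here is a minimal in-memory sketch of the data model described above: entries are keyed by a random number, carry no author field, and store reactions only as counts. The class and method names are hypothetical and this is not Inkwells' code; it only illustrates one way to apply the numerical-identifier idea.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class Entry:
    entry_id: int
    text: str
    # Reactions are stored as counts only; there is no record of who reacted
    reactions: dict[str, int] = field(default_factory=dict)


class AnonymousStore:
    """Entries are identified by a random number and never by an author."""

    def __init__(self):
        self._entries: dict[int, Entry] = {}

    def _new_id(self) -> int:
        # Random rather than sequential IDs, so the number does not reveal posting order
        while True:
            candidate = secrets.randbelow(10**9)
            if candidate not in self._entries:
                return candidate

    def post(self, text: str) -> int:
        entry_id = self._new_id()
        self._entries[entry_id] = Entry(entry_id, text)
        return entry_id

    def react(self, entry_id: int, emoji: str) -> None:
        counts = self._entries[entry_id].reactions
        counts[emoji] = counts.get(emoji, 0) + 1

    def get(self, entry_id: int) -> Entry:
        return self._entries[entry_id]
```

The data model is only part of the picture: what the server logs at the network level (for example, IP addresses) also determines how anonymous users really are.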

Product Core Function

· Anonymous Entry Creation: Allows users to write and publish diary entries without any personal identification, fostering unfiltered expression. The value is providing a safe space for thoughts that might otherwise remain unshared.

· Numerical Identifier System: Replaces traditional usernames with unique numbers for each entry, keeping authors anonymous. This simplifies privacy by eliminating user accounts and profiles altogether, which reduces the amount of identifying data the service has to hold for sensitive content.

· Entry Discovery and Browsing: Enables users to explore a public feed of anonymous diary entries, facilitating the discovery of diverse perspectives and ideas. This addresses the need for serendipitous content discovery in a controlled, privacy-respecting environment.

· Saving and Curating Entries: Allows users to bookmark or save entries they find particularly meaningful or interesting. The technical implementation likely involves a simple storage mechanism linked to the user's session or a temporary cookie, without linking to their identity, adding value by helping users recall impactful content.

· Anonymous Interaction (Commenting/Reacting): Enables users to engage with entries through comments or reactions without revealing their identity. This fosters community interaction while upholding the core principle of anonymity, solving the challenge of encouraging engagement without compromising privacy.

Product Usage Case

· A writer experimenting with different narrative voices and themes without the pressure of personal branding, using Inkwells to anonymously publish diverse story snippets. This helps them refine their craft by seeing how different styles are received without judgment.

· A developer building a tool that aggregates anonymous user feedback for a product, similar to how Inkwells collects diary entries. This allows for honest, unbiased input that can drive product improvements without fear of reprisal or personal association.

· A researcher analyzing trends in public sentiment or common personal struggles by browsing and categorizing anonymous entries on Inkwells. This provides raw, unadulterated data on human thoughts and emotions, valuable for social science studies or mental health trend analysis.

· A user seeking catharsis by writing down difficult thoughts or experiences, finding solace in the act of expression and the possibility that someone else might resonate with their words, even if anonymously. This directly addresses the human need for emotional release and connection.

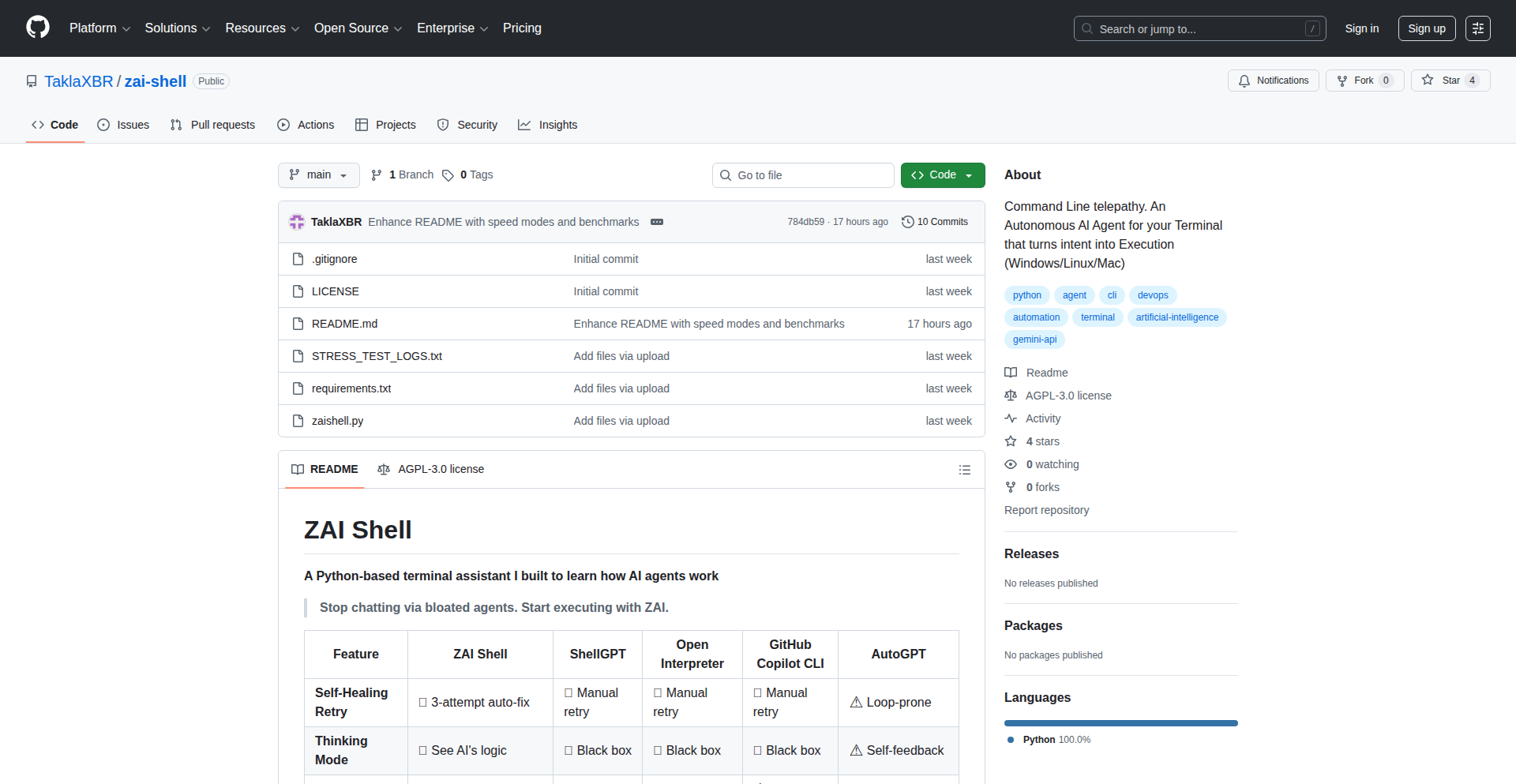

12

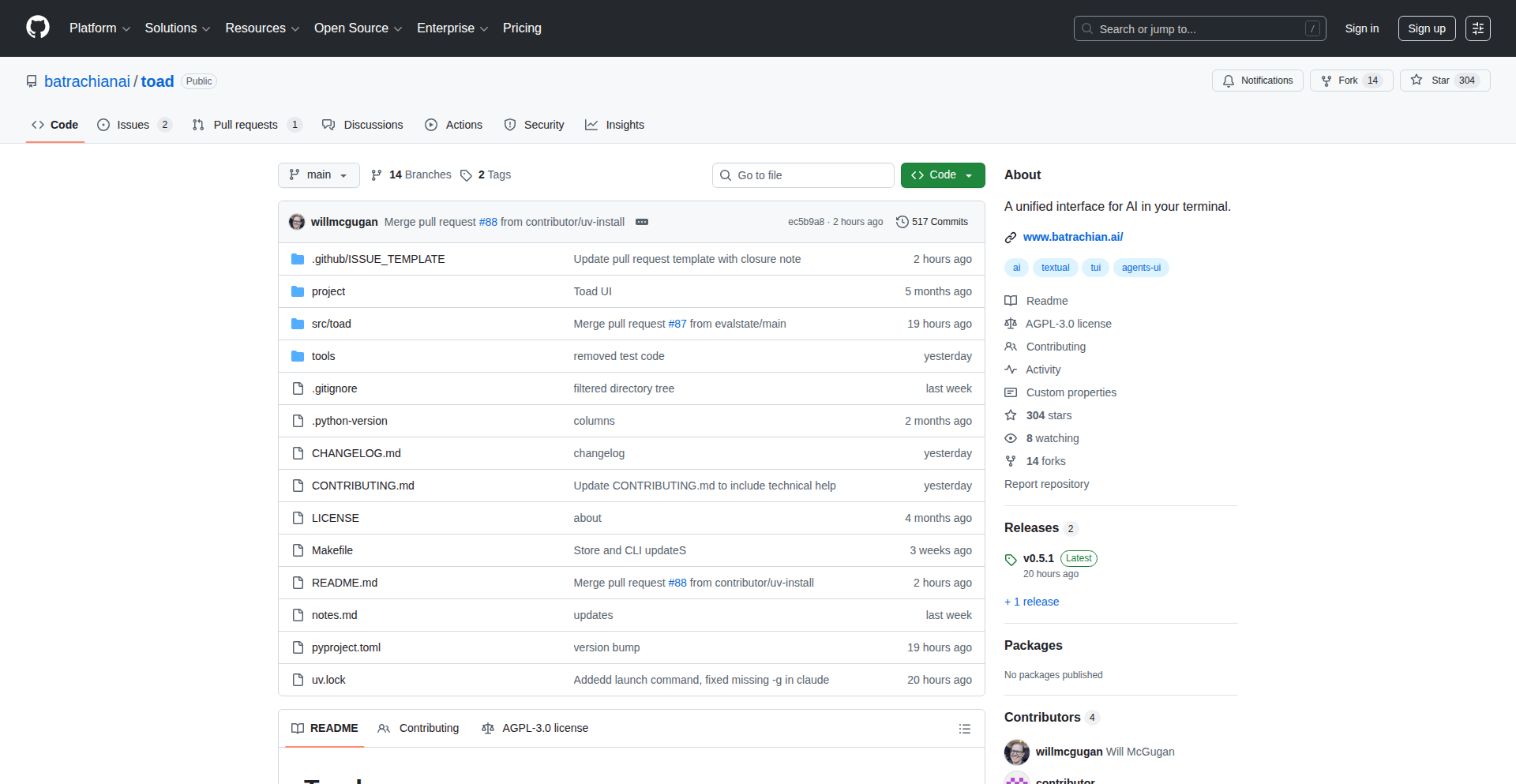

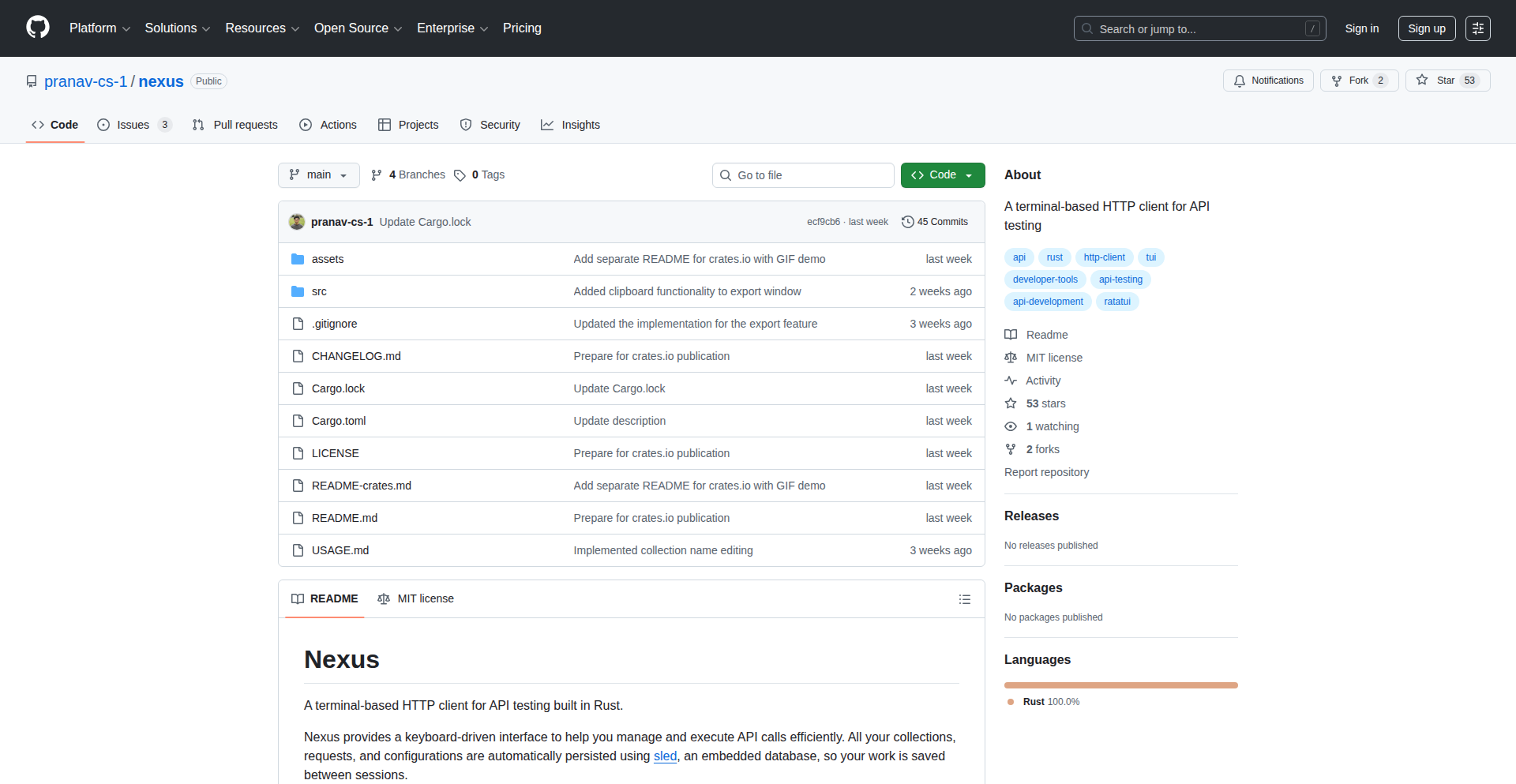

Toad: Unified Terminal Agent Orchestrator

Author

willm

Description

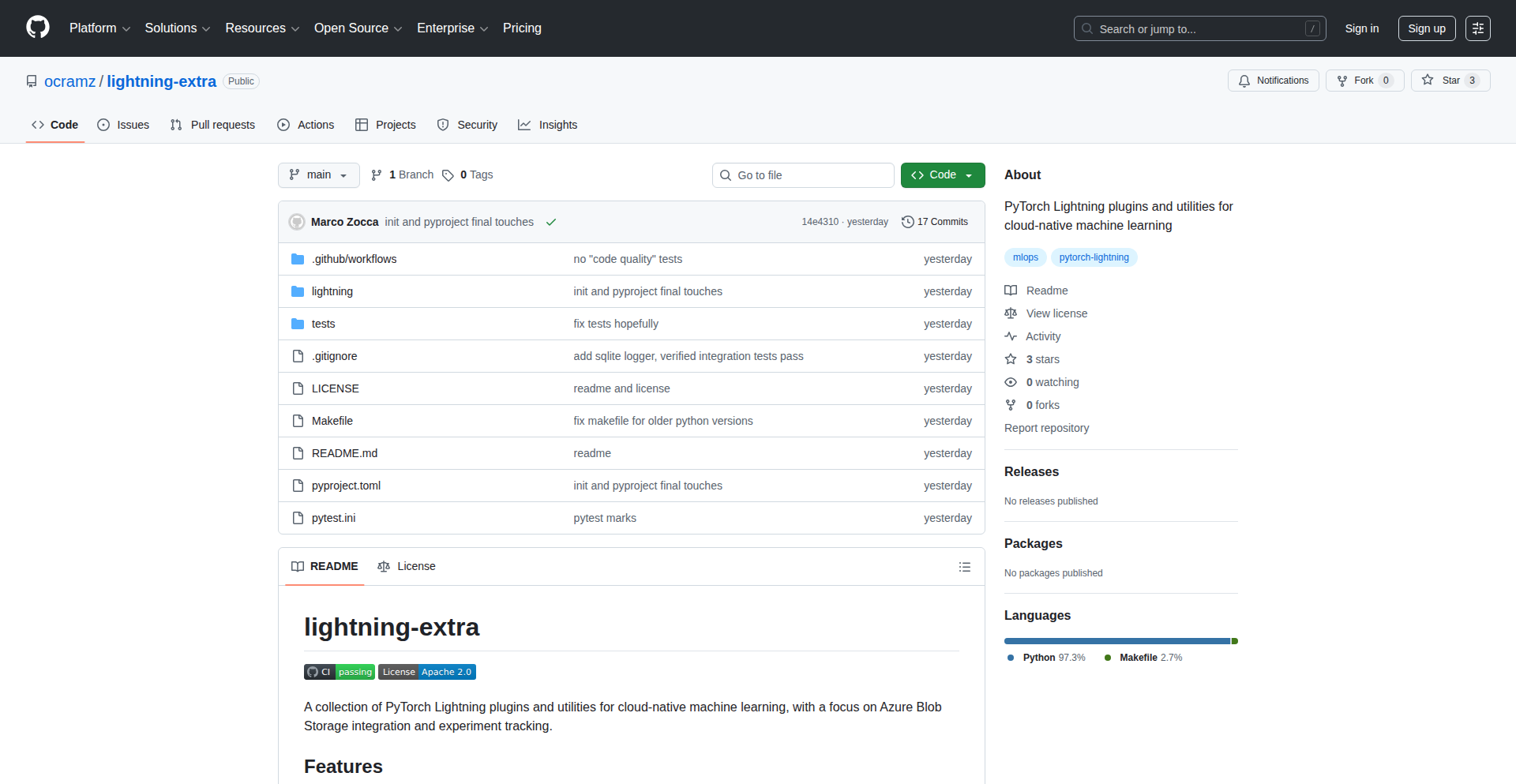

Toad is a command-line interface (CLI) that provides a unified and enhanced terminal user experience for interacting with multiple AI coding agents. It leverages the Agent Client Protocol to seamlessly connect with various AI agent SDKs, allowing developers to manage and utilize different agents from a single, intuitive terminal environment. This project solves the problem of fragmented UIs for AI coding tools by offering a centralized, developer-friendly interface that prioritizes efficiency and control.

Popularity

Points 6

Comments 0

What is this product?

Toad is a command-line interface (CLI) designed to bring a superior user experience to AI coding agents, directly within your terminal. Historically, interacting with different AI agents often meant dealing with separate web interfaces or clunky SDK integrations. Toad acts as a central hub. It uses the Agent Client Protocol (ACP), an open protocol that standardizes how client applications, such as editors and terminal front ends, communicate with AI coding agents. This means Toad can talk to any agent that supports ACP, regardless of who built the agent or what specific task it performs. The innovation lies in providing a single, consistent, and powerful terminal interface for controlling and observing multiple agents simultaneously. Think of it as a cockpit for your AI coding assistants, allowing you to switch between, manage, and get feedback from them all without leaving your familiar terminal environment. So, what's the benefit to you? You get to use your favorite AI coding tools more efficiently, with less context switching and a more streamlined workflow.
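Under the hood, ACP exchanges JSON-RPC 2.0 messages between the client and an agent that runs as a subprocess, communicating over its stdin and stdout. The sketch below builds and parses such messages, assuming newline-delimited framing (one JSON object per line). It is not Toad's code; the method names used in the usage and test follow our reading of the ACP spec, and the `params` payloads are placeholders rather than the real ACP schemas, so check the specification before relying on either.

```python
import itertools
import json


class JsonRpcWriter:
    """Builds newline-delimited JSON-RPC 2.0 requests with increasing IDs."""

    def __init__(self):
        self._ids = itertools.count(1)

    def request(self, method: str, params: dict) -> str:
        msg = {"jsonrpc": "2.0", "id": next(self._ids), "method": method, "params": params}
        # json.dumps escapes newlines inside strings, so each message is exactly one line
        return json.dumps(msg) + "\n"


def parse_message(line: str) -> dict:
    """Decode one line received from the agent and check the protocol version."""
    msg = json.loads(line)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    return msg
```

A client would write these lines to the agent process's stdin (for example via `subprocess.Popen(..., stdin=PIPE, stdout=PIPE, text=True)`) and read replies and notifications line by line from its stdout, matching responses to requests by `id`.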

How to use it?

Developers can use Toad by installing it as a CLI tool. Once installed, you can configure Toad to connect to your chosen AI agents that adhere to the Agent Client Protocol. This typically involves specifying the command that launches each agent, along with any configuration it needs. Toad then provides a rich terminal interface where you can invoke agent actions, send prompts, and receive responses. For example, you could use Toad to ask one agent to generate code, another to refactor it, and a third to debug it, all from the same terminal session. This makes complex AI-assisted development workflows much more manageable and efficient. The integration is designed to be straightforward, allowing developers to plug in their preferred AI agent ecosystem without significant setup overhead. So, how does this help you? It means you can leverage the power of AI agents for your coding tasks without a steep learning curve for each new tool, leading to faster development cycles.

Product Core Function

· Unified Agent Interaction: Allows developers to interact with multiple AI coding agents through a single, consistent terminal interface, reducing context switching and improving workflow efficiency.

· Agent Client Protocol (ACP) Support: Integrates with any AI agent that implements the Agent Client Protocol, providing a flexible 'bring your own agent' framework and fostering interoperability within the AI development ecosystem.

· Enhanced Terminal UI: Offers a richer and more intuitive user experience compared to typical command-line interactions, making it easier to manage complex AI agent tasks and understand their outputs.

· Simultaneous Agent Management: Enables the running and monitoring of a large number of AI agents concurrently, facilitating parallel task execution and complex problem-solving scenarios.

· Developer-Centric Workflow: Designed with developers in mind, aiming to streamline the process of incorporating AI assistance into daily coding routines, ultimately speeding up development and improving code quality.

Product Usage Case

· Scenario: A developer needs to write a new feature, debug an existing one, and document the code. Toad can be configured to connect to three different AI agents specialized in code generation, debugging, and documentation respectively. The developer can then switch between these agents within the Toad terminal to request and receive the necessary outputs sequentially or even in parallel, significantly speeding up the overall task completion. The value to you is faster feature delivery and less mental overhead managing multiple tools.

· Scenario: A team is experimenting with different AI coding assistants to find the best fit for their projects. With Toad, they can easily connect to each agent's SDK (assuming ACP compliance) and compare their performance side-by-side within a single terminal interface. This allows for quick evaluation and iteration on agent usage without needing to set up separate environments for each tool. The value to you is an easier way to find and integrate the most effective AI tools for your team.

· Scenario: An AI agent provides complex, multi-step outputs or suggestions. Toad's enhanced terminal UI can better visualize and organize these outputs, making them easier for the developer to parse, understand, and act upon. This could involve structured formatting of code suggestions, clear error message presentation, or organized documentation snippets. The value to you is better comprehension of AI suggestions, leading to more accurate and efficient implementation.

13

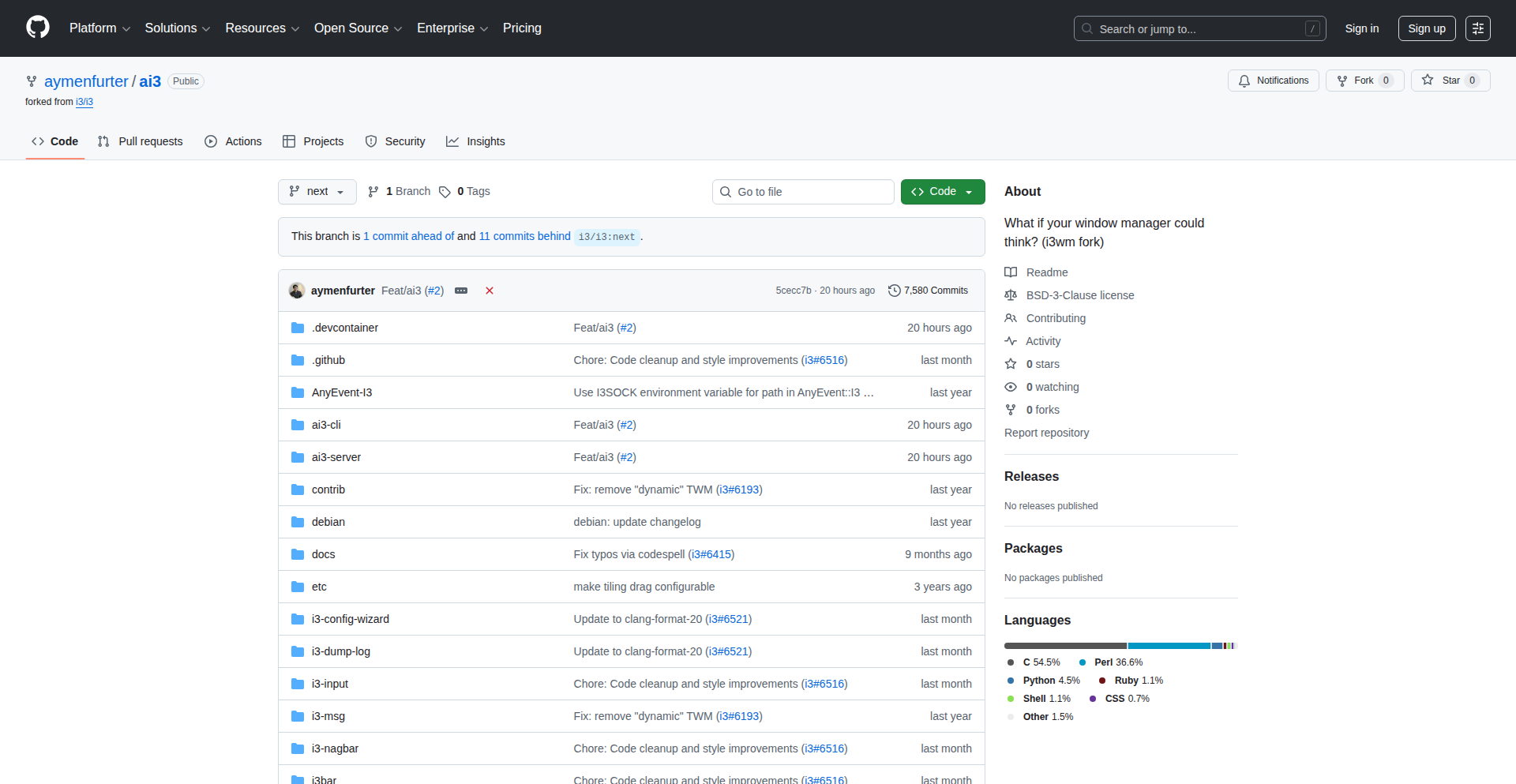

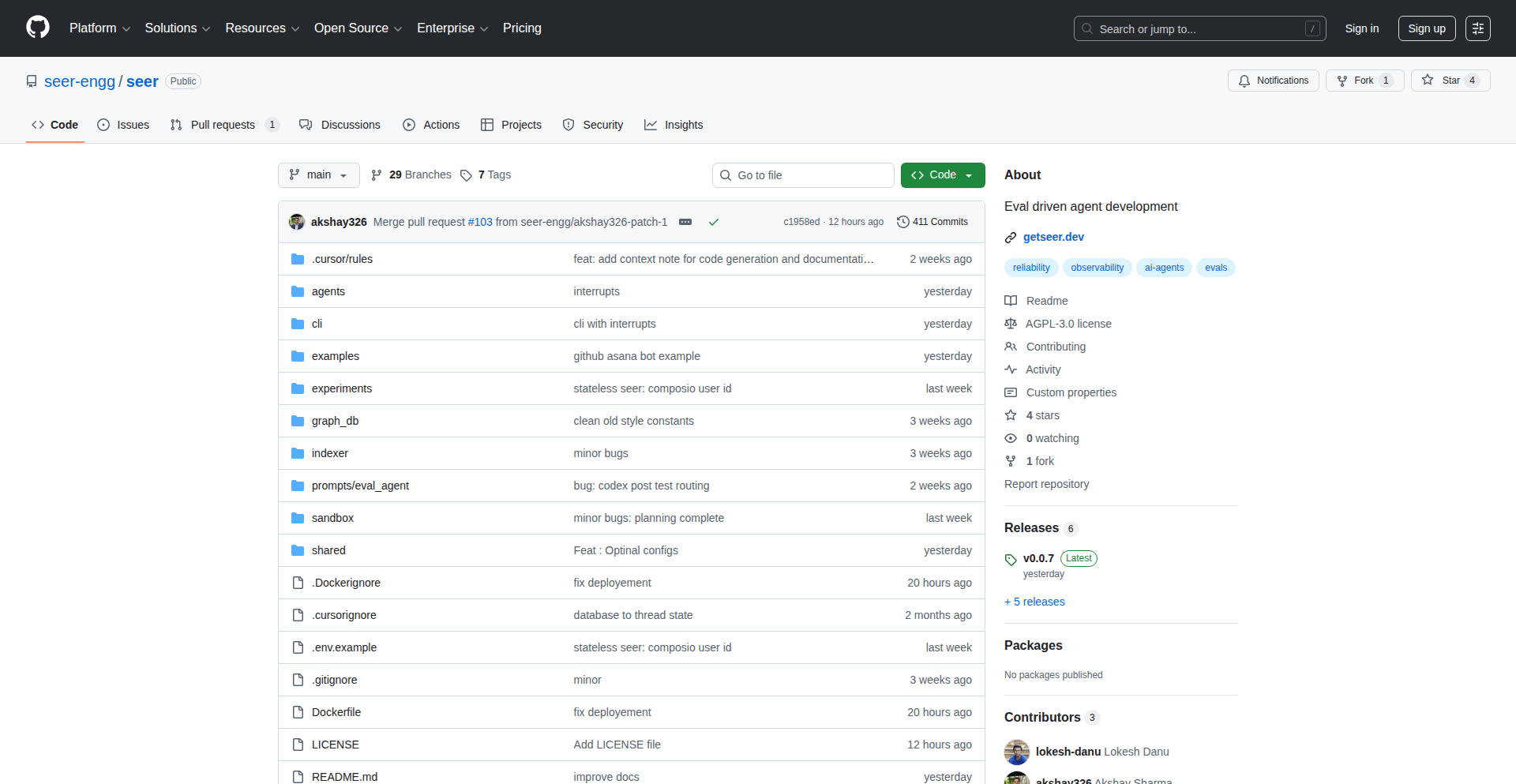

JulesAI GitHub Actions

Author

suyashkumar

Description

This project presents a set of GitHub Actions that demonstrate how to interact with Jules, an experimental AI agent from Google Labs. It showcases practical examples of leveraging AI for code-related tasks directly within the GitHub workflow, highlighting a novel approach to automated code assistance and problem-solving.

Popularity

Points 6

Comments 0

What is this product?

Jules AI GitHub Actions is a collection of pre-built automation scripts (GitHub Actions) that allow developers to integrate Jules, a cloud coding AI agent, into their software development pipeline. The core innovation lies in abstracting the complexity of interacting with a sophisticated AI agent, making its capabilities accessible through familiar CI/CD (Continuous Integration/Continuous Deployment) workflows. This means you can trigger AI-powered actions like code generation, refactoring suggestions, or bug detection as part of your regular code commits and pull requests. So, what's the value for you? It brings AI-powered coding assistance directly into your development process, potentially speeding up tasks and improving code quality without requiring you to manually run separate AI tools.

How to use it?

Developers can integrate Jules AI GitHub Actions into their GitHub repositories by adding YAML configuration files to the `.github/workflows/` directory. These files define when and how Jules should be invoked. For example, a workflow could be set up to automatically run a Jules-powered code review on every pull request, or to generate boilerplate code for new features. The actions act as connectors, translating your code changes or workflow events into prompts for Jules and then processing Jules's responses back into meaningful actions within GitHub. So, how can you use this? You can automate tedious code reviews, get AI-generated code snippets for common patterns, or even have Jules help debug issues found during your automated tests. This integration means AI assistance is there when you need it, right where you work.
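Inside a workflow step, the event that triggered the run is available as JSON at the path in the `GITHUB_EVENT_PATH` environment variable. The sketch below turns a `pull_request` event plus its diff into a review prompt. How the prompt is then submitted to Jules is not described in the post, so that step is deliberately left out; the function names and prompt wording are hypothetical, and this is not the project's code.

```python
from __future__ import annotations

import json
import os


def load_event(path: str | None = None) -> dict:
    """Read the webhook payload GitHub Actions writes to $GITHUB_EVENT_PATH."""
    path = path or os.environ["GITHUB_EVENT_PATH"]
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def build_review_prompt(event: dict, diff: str, max_diff_chars: int = 20_000) -> str:
    """Turn a pull_request event plus its diff into a review request for the agent."""
    pr = event["pull_request"]
    if len(diff) > max_diff_chars:
        # Keep prompts bounded on very large pull requests
        diff = diff[:max_diff_chars] + "\n[diff truncated]"
    return (
        f"Review pull request #{pr['number']}: {pr['title']}\n\n"
        f"Description:\n{pr.get('body') or '(none)'}\n\n"
        "Point out likely bugs, style problems, and performance issues.\n\n"
        f"Diff:\n{diff}"
    )
```

The diff itself could come from an earlier step, for example `git diff` between the base and head commits of the pull request.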

Product Core Function

· Automated Code Review: The action can trigger Jules to analyze code changes in a pull request, providing feedback on potential bugs, style inconsistencies, or performance issues. This adds an AI layer to code quality checks. So, what's the value for you? Faster and more comprehensive code reviews, catching issues earlier in the development cycle.

· Code Generation: Developers can use these actions to prompt Jules to generate specific code snippets or even entire functions based on a description. This leverages AI to accelerate the creation of repetitive or standard code. So, what's the value for you? Reduced development time by automating the generation of boilerplate or common code patterns.

· AI-Powered Debugging Assistance: When errors occur during a build or test process, these actions can send the error logs and relevant code context to Jules for analysis and potential solutions. So, what's the value for you? Get AI-driven insights into error resolution, potentially saving significant debugging time.

· Workflow Automation with AI: The actions enable the creation of custom workflows where AI plays a role in decision-making or task execution, such as automatically suggesting documentation updates based on code changes. So, what's the value for you? Smarter and more efficient development workflows tailored to your project's needs.
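For the debugging use case, sending an entire CI log to a model is wasteful. A sketch of a plausible pre-processing step keeps only the lines around failure markers; the marker patterns and window sizes below are assumptions to tune for your test runner, not part of the Jules actions.

```python
import re

# Patterns that commonly mark a failure in test output (an assumption; adjust per runner).
FAILURE_MARKERS = re.compile(r"(FAILED|ERROR|Traceback \(most recent call last\)|AssertionError)")


def failure_context(log: str, before: int = 3, after: int = 10, max_lines: int = 200) -> str:
    """Keep only the lines surrounding failure markers so the prompt stays small."""
    lines = log.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if FAILURE_MARKERS.search(line):
            # Include a window of context before and after each matching line.
            keep.update(range(max(0, i - before), min(len(lines), i + after + 1)))
    selected = [lines[i] for i in sorted(keep)]
    if not selected:
        # No recognizable marker: fall back to the tail of the log.
        selected = lines[-max_lines:]
    return "\n".join(selected[:max_lines])
```

The trimmed output, together with the files named in the failure, is the kind of "error logs and relevant code context" an action could forward for analysis.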

Product Usage Case

· Scenario: You're working on a large codebase and need to ensure all new code adheres to strict style guidelines and best practices. How to use: Configure a GitHub Action to run Jules AI on every pull request. Jules will analyze the code and comment directly on the PR with suggestions for style improvements or potential anti-patterns. So, how does this help? It enforces code quality automatically, freeing up human reviewers for more complex tasks.

· Scenario: You need to quickly scaffold a new API endpoint for a web application. How to use: Create a workflow that triggers Jules AI to generate the basic structure of the API endpoint, including request handling and response formatting, based on a simple prompt. So, how does this help? It significantly reduces the time spent writing repetitive API code, allowing you to focus on the business logic.

· Scenario: Your CI pipeline fails due to an intermittent test error that's hard to reproduce. How to use: Set up a GitHub Action to capture the test failure logs and send them, along with the relevant code, to Jules AI for analysis. Jules might provide insights into the root cause or suggest a fix. So, how does this help? It provides an intelligent assistant to help diagnose and resolve complex or obscure errors faster.

· Scenario: You've updated a feature and need to ensure the documentation is also updated accordingly. How to use: Implement a workflow that uses Jules AI to analyze code changes and then generate or suggest updates for the relevant documentation files. So, how does this help? It ensures your documentation stays synchronized with your code, improving maintainability and developer onboarding.
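A documentation workflow like the last scenario can be gated so the AI is only invoked when it is likely to help. This sketch assumes the changed-file list comes from `git diff --name-only origin/main...HEAD` and that the repository keeps code under `src/` and docs under `docs/` or in a README; both layout conventions are hypothetical.

```python
from typing import Iterable, List, Tuple


def undocumented_changes(
    changed_files: Iterable[str],
    source_prefix: str = "src/",
    doc_prefixes: Tuple[str, ...] = ("docs/", "README"),
) -> List[str]:
    """Return changed source files when the change set touches no documentation.

    An empty result means either no source changed or docs were already
    updated, so a (hypothetical) docs-suggestion step can be skipped.
    """
    files = list(changed_files)
    if any(path.startswith(doc_prefixes) for path in files):
        return []
    return sorted(path for path in files if path.startswith(source_prefix))
```

A non-empty result is the list of files whose changes the agent would be asked to reflect in the documentation.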

14

Quercle AI Fetch API

Author

liran_yo

Description

Quercle is a web fetch and search API specifically designed for AI agents. It addresses the common pain points of existing tools by providing clean, LLM-ready data, even from JavaScript-heavy websites. Its core innovation lies in its ability to intelligently parse web content, extract relevant information, and present it in a structured format suitable for AI consumption, making web data more accessible for building sophisticated AI applications. So, this helps you build smarter AI agents that can understand and interact with the web more effectively.

Popularity

Points 1

Comments 4

What is this product?

Quercle is a specialized API service that allows AI agents to fetch and search web content. Unlike traditional web scrapers that often return raw HTML or messy markdown, Quercle processes websites, including those that rely heavily on JavaScript to load content, and extracts the essential information. It then uses an LLM layer to transform this data into a format that AI models can easily understand and utilize. This means you get cleaner, more relevant data for your AI projects without the usual data wrangling headaches. So, for you, this means less time spent cleaning data and more time building powerful AI features.
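The project does not describe its extraction pipeline beyond the LLM layer, but the deterministic first step of producing "clean" text usually means dropping scripts, styles, and navigation chrome and collapsing whitespace. The sketch below does that with Python's standard `html.parser`; the tag lists are assumptions, and unlike Quercle it does not execute any JavaScript.

```python
from html.parser import HTMLParser

# Tags whose content is rarely useful to a model (an assumption; tune as needed).
SKIP_TAGS = {"script", "style", "noscript", "nav", "footer", "header", "svg"}
# Tags that should start a new line in the extracted text.
BLOCK_TAGS = {"p", "div", "li", "br", "tr", "section", "article",
              "h1", "h2", "h3", "h4", "h5", "h6"}


class TextExtractor(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    """Strip boilerplate tags and normalize whitespace, one block per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)
```

A downstream LLM step, as the description says Quercle uses, would then restructure or summarize this cleaned text.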

How to use it?

Developers can integrate Quercle into their AI agent workflows by making API calls. It's designed for easy integration with popular AI frameworks like LangChain and the Vercel AI SDK, as well as with the Model Context Protocol (MCP). You'd typically use it when your AI agent needs to access real-time information from the internet, research topics, or gather data for decision-making. The API returns structured data, which can then be fed directly into your AI agent's prompt or processing logic. So, you can quickly add robust web data capabilities to your AI agents without writing complex scraping code.
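Feeding structured results "directly into your AI agent's prompt" typically means numbering each source so the model can cite it. The `FetchResult` shape below (url, title, content) is a hypothetical stand-in, not Quercle's documented response schema; check the API docs for the real field names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FetchResult:
    """Hypothetical shape of one structured result; not Quercle's actual schema."""
    url: str
    title: str
    content: str


def build_agent_prompt(question: str, results: List[FetchResult], max_chars_per_doc: int = 2000) -> str:
    """Number each fetched page so the model can cite it as [n]."""
    blocks = [
        f"[{i}] {r.title}\nSource: {r.url}\n{r.content[:max_chars_per_doc]}"
        for i, r in enumerate(results, start=1)
    ]
    sources = "\n\n".join(blocks)
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        f"{sources}\n\nQuestion: {question}"
    )
```

Capping each document's length keeps several sources within the model's context window at the cost of dropping the tail of long pages.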

Product Core Function

· Intelligent Web Content Fetching: Quercle fetches content from websites and handles dynamic JavaScript rendering, so it captures content that only appears after the page's scripts run. This is valuable because a plain fetch of the static HTML would often return an empty page shell or incomplete data. It's useful for applications needing current event data or live updates.

· LLM-Optimized Data Output: The API processes fetched content to produce an LLM-ready output, meaning the data is cleaned, structured, and easy for AI models to interpret. This significantly reduces the pre-processing effort for developers, allowing AI agents to understand web content more efficiently. This is useful for building conversational AI or agents that summarize web articles.

· JavaScript-Heavy Site Compatibility: Quercle is engineered to work effectively with websites that rely heavily on JavaScript to display their content, a common challenge for many web scraping tools. This expands the range of web data accessible to AI agents. This is crucial for AI agents that need to interact with modern, interactive websites.

· Simplified Integration: The API is built with ease of integration in mind, offering straightforward connection points with popular AI development tools and platforms. This speeds up the development process by removing complex setup steps. This is useful for developers looking to quickly prototype or deploy AI applications.
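Handling JavaScript-heavy sites usually involves deciding when a cheap HTTP fetch is enough and when a headless browser (for example, Playwright) is needed. The heuristic below is one hedged way to make that call; the thresholds and mount-point ids are assumptions, and nothing here describes how Quercle itself decides.

```python
import re


def looks_client_rendered(html: str, min_text_chars: int = 200) -> bool:
    """Guess whether a page needs a JavaScript-capable fetch.

    Heuristic (an assumption): very little visible text outside <script>/<style>,
    combined with script tags or an empty app mount point such as <div id="root">.
    """
    # Remove script and style bodies, then all remaining tags.
    without_code = re.sub(r"<(script|style)\b.*?</\1>", " ", html, flags=re.S | re.I)
    visible = re.sub(r"<[^>]+>", " ", without_code)
    text_len = len("".join(visible.split()))
    has_scripts = re.search(r"<script\b", html, re.I) is not None
    empty_root = re.search(
        r'<div[^>]*id=["\'](root|app|__next)["\'][^>]*>\s*</div>', html, re.I
    ) is not None
    return text_len < min_text_chars and (has_scripts or empty_root)
```

A `True` result would route the URL to a browser-based renderer; otherwise the static HTML can go straight to text extraction.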

Product Usage Case

· Building an AI research assistant that can browse and summarize information from multiple news websites, even those with dynamic content loading. Quercle's ability to handle JS-heavy sites and provide clean output makes this possible, solving the problem of fragmented and messy data from different sources.

· Developing a customer support chatbot that can access product information and FAQs from a company's website to provide accurate answers. Quercle can fetch this information reliably, even if the website uses JavaScript for its interactive elements, ensuring the chatbot is always up-to-date.

· Creating an AI agent for market analysis that needs to gather pricing and product details from e-commerce sites. Quercle's structured data output simplifies the process of feeding this competitive intelligence into the agent's analysis models, overcoming the challenge of inconsistent e-commerce site structures.

15

EpsteinDocs Search AI

Author

benbaessler

Description

This project creates a natural-language search interface for the US House Oversight Committee's release of Epstein documents. It tackles the challenge of scattered, unsearchable files (PDFs, images, scans) by using AI to make over 20,000 documents accessible and verifiable. The innovation lies in applying Retrieval-Augmented Generation (RAG) to unstructured public data, enabling quick discovery and direct citation verification for users.

Popularity

Points 2

Comments 3

What is this product?

EpsteinDocs Search AI is a specialized search engine that leverages Artificial Intelligence to make a large collection of public documents, specifically the US House Oversight Committee's release related to Epstein, easily searchable using everyday language. The core technology involves Optical Character Recognition (OCR) to convert scanned documents and images into text, then breaking down this text into manageable pieces (chunking). These pieces are then transformed into numerical representations (embedding) that capture their meaning, allowing for semantic search. A Retrieval-Augmented Generation (RAG) pipeline then takes your natural language query, finds the most relevant document snippets based on their meaning, and uses a language model to generate an answer. Crucially, every answer is linked back to the exact page in the original document, ensuring transparency and allowing users to verify the information themselves. So, what this means for you is that instead of manually sifting through thousands of PDFs and images, you can ask questions in plain English and get precise answers with direct links to the source material, making complex information accessible.
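Page-level citations depend on chunking in a way that never loses the page a passage came from. A minimal sketch, assuming OCR has already produced one text string per page: split each page into overlapping word windows and tag every chunk with its page number. The window and overlap sizes are illustrative, not the project's actual settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    doc_id: str
    page: int  # 1-based page number, used later for citations
    text: str


def chunk_pages(doc_id: str, pages: List[str], chunk_words: int = 200, overlap_words: int = 40) -> List[Chunk]:
    """Split OCR'd pages into overlapping word windows that never cross a page boundary."""
    if not 0 <= overlap_words < chunk_words:
        raise ValueError("overlap_words must be in [0, chunk_words)")
    step = chunk_words - overlap_words
    chunks = []
    for page_no, text in enumerate(pages, start=1):
        words = text.split()
        # Stop once the remaining words are already covered by the previous window's overlap.
        for start in range(0, max(len(words) - overlap_words, 1), step):
            window = words[start:start + chunk_words]
            if window:  # blank pages (e.g. failed OCR) produce no chunks
                chunks.append(Chunk(doc_id, page_no, " ".join(window)))
    return chunks
```

Keeping chunks within a single page trades a little context at page breaks for citations that always point to exactly one page.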

How to use it?

Developers can use this project as a template or inspiration for building similar search functionalities on their own collections of unstructured documents. The underlying technical approach, involving OCR, chunking, embedding, and a RAG pipeline, can be adapted to any large corpus of text-based or scannable files. For instance, if you have a large archive of legal documents, research papers, or internal company reports that are currently difficult to search, you can apply these techniques to create a powerful, AI-driven search tool. The project demonstrates how to integrate these AI components to create a user-friendly interface that returns verifiable results with citations. This allows for rapid information retrieval and analysis within your specific data domain. So, for you, this means you can learn how to transform your organization's own 'data swamps' into searchable knowledge bases, saving significant time and effort in finding critical information.

Product Core Function

· Optical Character Recognition (OCR): Converts scanned documents and images into machine-readable text. This is valuable because it unlocks the content hidden within non-textual files, making it available for searching and analysis. Imagine being able to search the text of a scanned report instead of manually reading through it.

· Semantic Search with Embeddings: Transforms text into numerical representations (vectors) that capture meaning, allowing for searches based on concepts rather than just keywords. This is valuable because it finds relevant information even if the exact words aren't used in the query. For example, searching for 'child trafficking' might also return documents discussing 'exploitation of minors'.

· Retrieval-Augmented Generation (RAG) Pipeline: Combines information retrieval from the document corpus with a language model to generate coherent and contextually relevant answers. This is valuable because it provides direct answers to user questions, rather than just a list of documents, making information more digestible.

· Clickable Citations to Source Documents: Provides direct links to the specific page within the original documents where the answer was found. This is valuable because it builds trust by allowing users to easily verify the information and check the original context, ensuring accuracy and transparency.
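The retrieval, generation, and citation functions above can be sketched together: rank chunk embeddings by cosine similarity to the query embedding, number the top hits in the prompt, and turn each hit into a link. The embedding model is left abstract (vectors are passed in), and the `#page=N` fragment assumes the sources are served as PDFs, since that open parameter is honored by most browser PDF viewers; the project's actual link format is not described.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class IndexedChunk:
    source_url: str  # hypothetical: where the original PDF is served
    page: int
    text: str
    vector: List[float]  # embedding produced by whatever model you choose


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query_vec: List[float], index: List[IndexedChunk], k: int = 3) -> List[IndexedChunk]:
    """Brute-force semantic search; a vector database would replace this at scale."""
    return sorted(index, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:k]


def build_rag_prompt(question: str, hits: List[IndexedChunk]) -> str:
    """Number the retrieved chunks so every claim in the answer can cite [n]."""
    sources = "\n\n".join(
        f"[{i}] ({h.source_url}, p. {h.page})\n{h.text}" for i, h in enumerate(hits, start=1)
    )
    return (
        "Answer the question using only the numbered sources. Cite every claim as [n], "
        "and say so if the sources do not contain the answer.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )


def citations(hits: List[IndexedChunk]) -> List[str]:
    """Clickable links that open each source PDF at the cited page."""
    return [f"{h.source_url}#page={h.page}" for h in hits]
```

Because citation [n] in the model's answer maps to the n-th entry of `citations(hits)`, each claim can be rendered as a link to the exact page it came from.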

Product Usage Case

· Investigative Journalism: A journalist could use this approach to quickly search through thousands of leaked documents to find specific connections or evidence related to a particular investigation. Instead of manually reading every document, they could ask questions like 'What were the key financial transactions mentioned in these documents?' and get direct, sourced answers.

· Legal Document Analysis: A law firm could apply this technology to a vast archive of case files and legal precedents to find relevant information for a new case. A lawyer could ask, 'What previous cases mention similar contractual disputes?' and receive a list of relevant documents with page-by-page citations, saving hours of manual research.

· Academic Research: A researcher could use this method to explore a large collection of scientific papers or historical archives. They could query 'What are the primary theories on climate change impact on coastal erosion?' and get summarized answers with links to the specific papers and paragraphs that discuss these theories, accelerating their literature review.

· Public Records Discovery: Citizens or organizations wanting to understand specific government actions or public releases could use this tool to efficiently find information. For example, if a government agency releases a large set of environmental reports, users could ask 'What were the pollution levels reported in the XYZ region in 2022?' and get immediate, verifiable answers.

16

SchematicVision AI

Author

edmgood

Description

SchematicVision AI is an innovative PDF viewer designed for the AEC (Architecture, Engineering, and Construction) industry. Unlike traditional PDF viewers that rely heavily on text and metadata, it employs an AI agent capable of understanding both textual and visual information within documents. This makes it particularly effective for interpreting engineering schematics, which are predominantly visual. Its core innovation lies in its multimodal AI agent, enabling more accurate data extraction and task support for complex visual documents.

Popularity

Points 5

Comments 0

What is this product?

SchematicVision AI is a cutting-edge PDF viewer that leverages a multimodal AI agent. Traditional AI PDF viewers struggle with engineering schematics because they primarily process text and metadata, which are less relevant for visual-heavy documents. SchematicVision AI overcomes this limitation by equipping its AI agent with the ability to 'see' and interpret both text and images simultaneously. This allows it to understand the intricate details and relationships within engineering drawings, leading to significantly improved accuracy for tasks like steel estimation and beyond. So, what's the benefit for you? It means you can get more out of your visual documents, extracting crucial information that was previously inaccessible to AI.
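Steel estimation shows why the extraction step matters: once members and lengths are read off a drawing, the weight takeoff is simple arithmetic. In US wide-flange designations such as W12x26, the second number is the nominal weight in pounds per foot. This sketch assumes the agent outputs a hypothetical list of `(designation, length_ft, quantity)` tuples; SchematicVision's actual output format is not described, and the sketch handles only W shapes and ignores connection material.

```python
import re
from typing import Iterable, Tuple

# W-shape designation: nominal depth (in) x nominal weight (lb/ft), e.g. "W12x26".
W_SHAPE = re.compile(r"^W(\d+)[xX](\d+(?:\.\d+)?)$")


def member_weight_lb(designation: str, length_ft: float) -> float:
    """Weight of one wide-flange member from its designation and length."""
    m = W_SHAPE.match(designation.strip())
    if not m:
        raise ValueError(f"unsupported designation: {designation!r}")
    lb_per_ft = float(m.group(2))
    return lb_per_ft * length_ft


def takeoff(members: Iterable[Tuple[str, float, int]]) -> float:
    """Total weight in pounds for (designation, length_ft, quantity) tuples."""
    return sum(member_weight_lb(d, length) * qty for d, length, qty in members)
```

For example, two 20 ft W12x26 beams and four 15 ft W8x10 members come to 26 × 20 × 2 + 10 × 15 × 4 = 1,040 + 600 = 1,640 lb; the hard part the viewer targets is reading those designations and lengths reliably from the drawing.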

How to use it?