Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-17

SagaSu777 2025-12-18

Explore the hottest developer projects on Show HN for 2025-12-17. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions highlight a powerful trend: the democratization of complex technologies and the ingenious application of existing tools to solve new problems. We're seeing a surge in AI-powered developer tools, from code generation and analysis to intelligent agents that can automate tedious tasks. This isn't just about fancy algorithms; it's about practical applications that reduce costs, boost productivity, and enhance user experience. The emphasis on open-source and zero-cost solutions, like GitForms, underscores a growing desire for accessible, transparent, and maintainable technology. Developers and entrepreneurs should focus on identifying pain points within their workflows or industries and explore how AI, or novel combinations of existing tech, can offer elegant, cost-effective, and user-friendly solutions. The 'hacker' spirit is alive and well, pushing boundaries by repurposing and combining technologies in unexpected ways to achieve remarkable outcomes.

Today's Hottest Product

Name

GitForms – Zero-cost contact forms using GitHub Issues as database

Highlight

This project cleverly sidesteps traditional database and backend costs by leveraging GitHub Issues as a persistent store for form submissions. It's a fantastic example of creative resourcefulness, integrating seamlessly with Next.js and offering instant email notifications from GitHub. Developers can learn about building cost-efficient web applications by thinking outside the conventional infrastructure box and utilizing existing developer tools in novel ways. The key innovation lies in abstracting the database functionality to a developer-centric platform, reducing operational overhead to near zero.

Popular Category

AI/ML

Developer Tools

Web Development

Data Management

Utilities

Popular Keyword

AI

LLM

Open Source

API

Python

Rust

Web Scraping

Database

Cloud

Technology Trends

AI-driven development tools

Cost-optimization in cloud infrastructure

Open-source solutions for common problems

Deterministic and reproducible computation

Developer productivity enhancements

Data privacy and security innovations

Unified API interfaces

Serverless and edge computing

Project Category Distribution

AI/ML (20%)

Developer Tools (30%)

Web Development (25%)

Data Management (10%)

Utilities (15%)

Today's Hot Product List

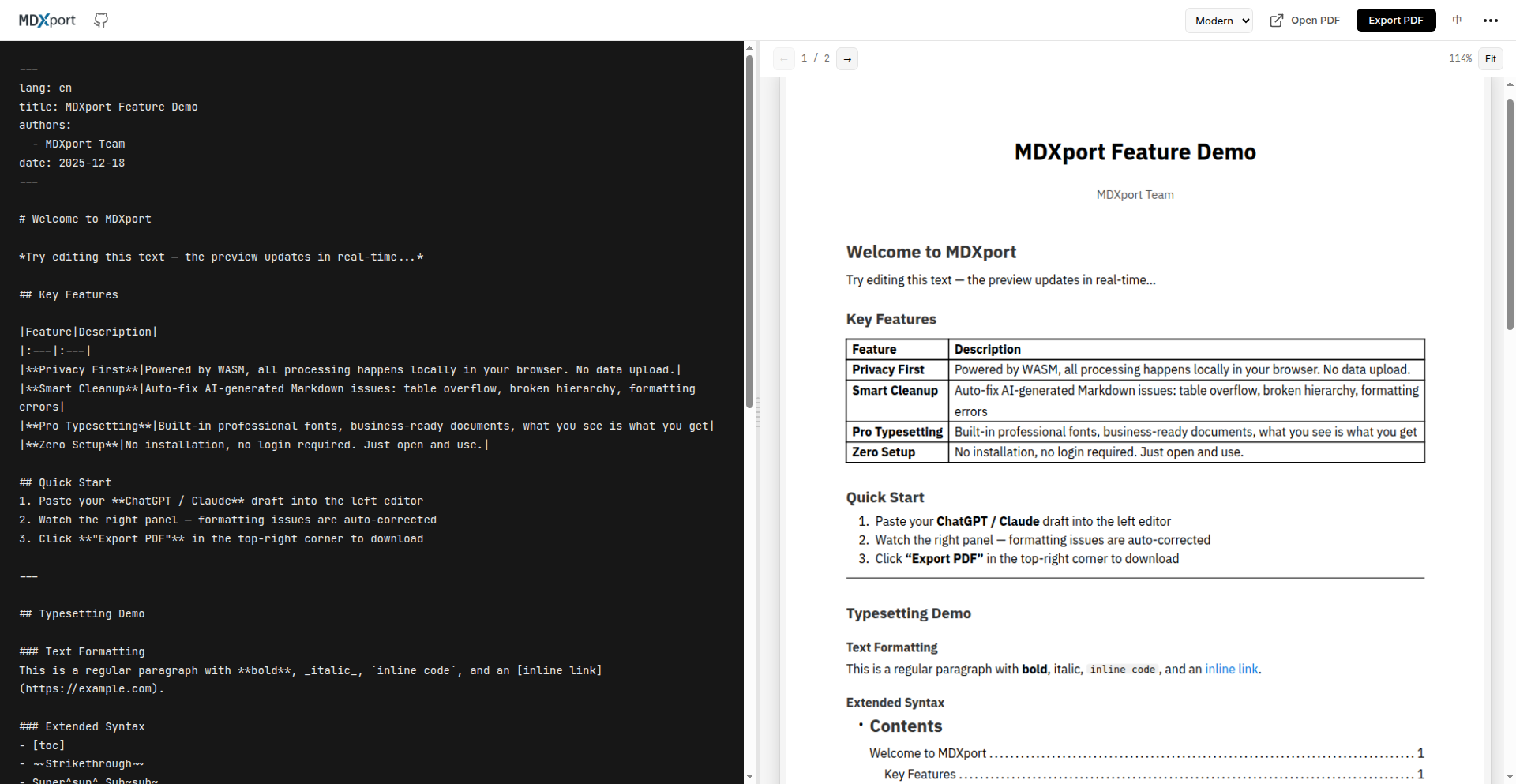

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | RustWaveletMatrixPy | 86 | 7 |
| 2 | GitForms: GitHub Issues Powered Contact Forms | 34 | 23 |
| 3 | Mephisto | 21 | 31 |
| 4 | MCPShark VSCE: In-Editor MCP Traffic Inspector | 16 | 0 |
| 5 | Catsu Embeddings Hub | 7 | 5 |
| 6 | NanoDL: C-based Minimal DL with Naive CUDA/CPU Ops and Autodiff | 10 | 1 |
| 7 | Open-Schematics ML Engine | 11 | 0 |
| 8 | Valmi: Outcome-Driven AI Agent Billing | 4 | 6 |
| 9 | AeroViz 3D | 8 | 2 |
| 10 | Tonbo: Serverless & Edge Embedded DB | 6 | 2 |

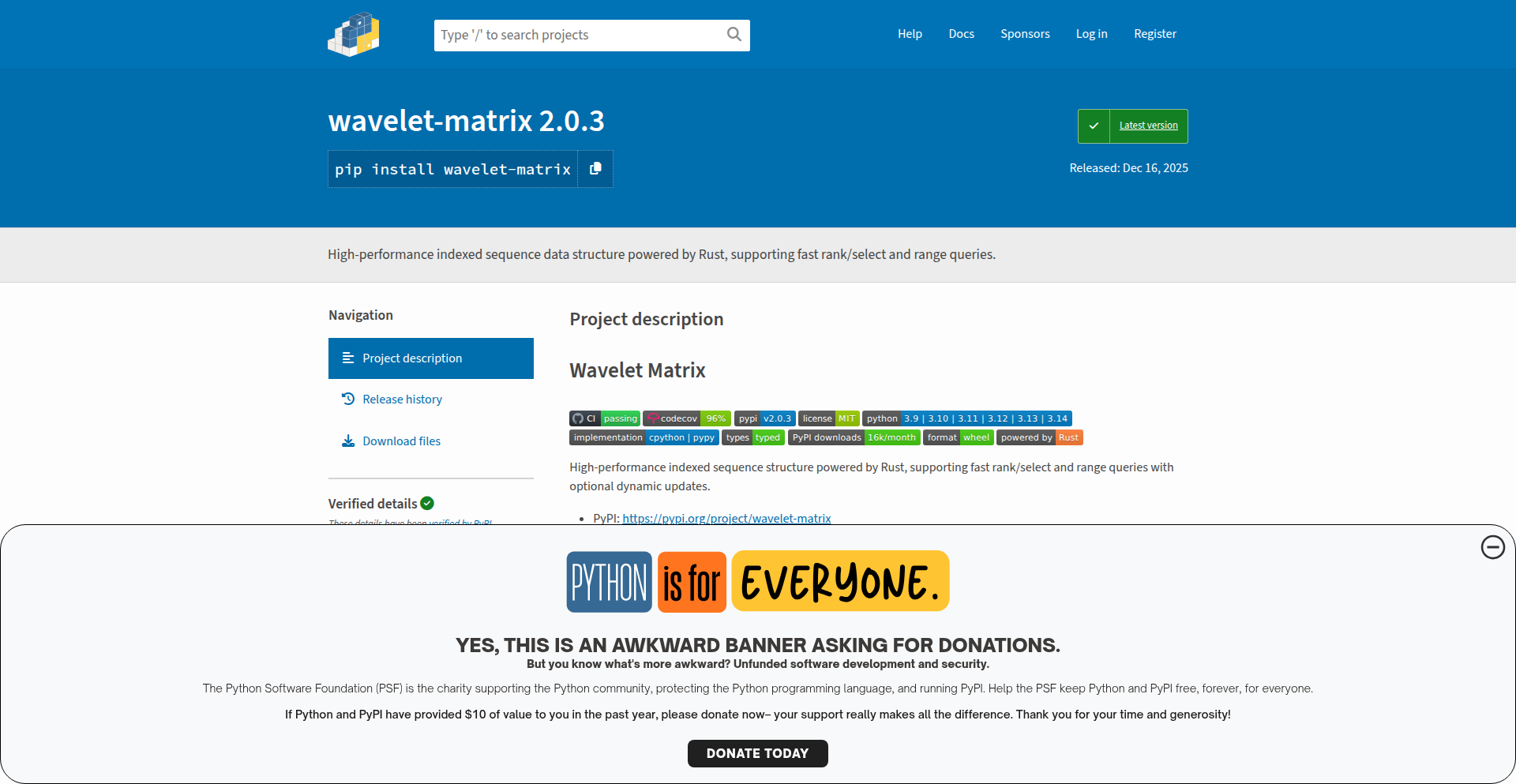

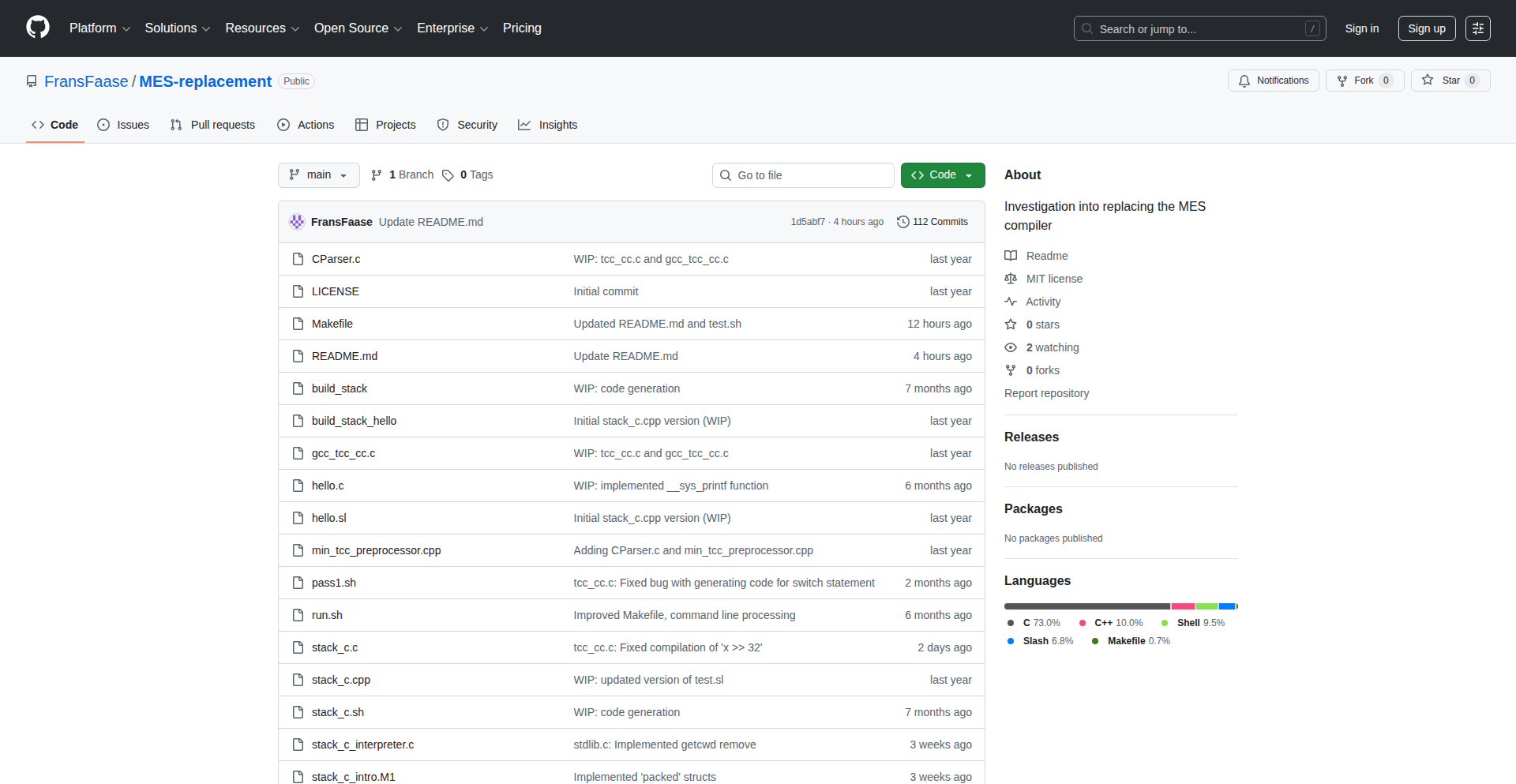

1

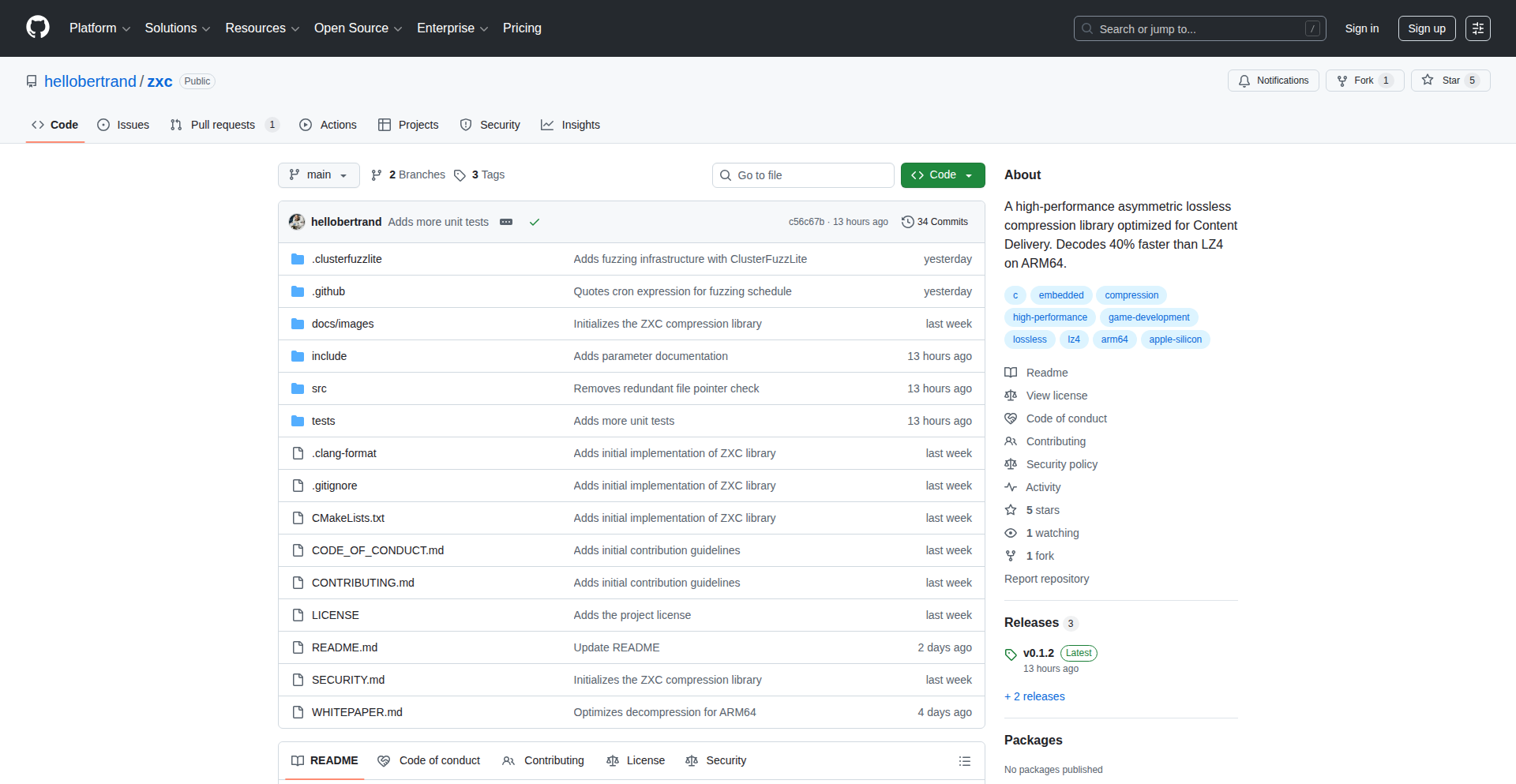

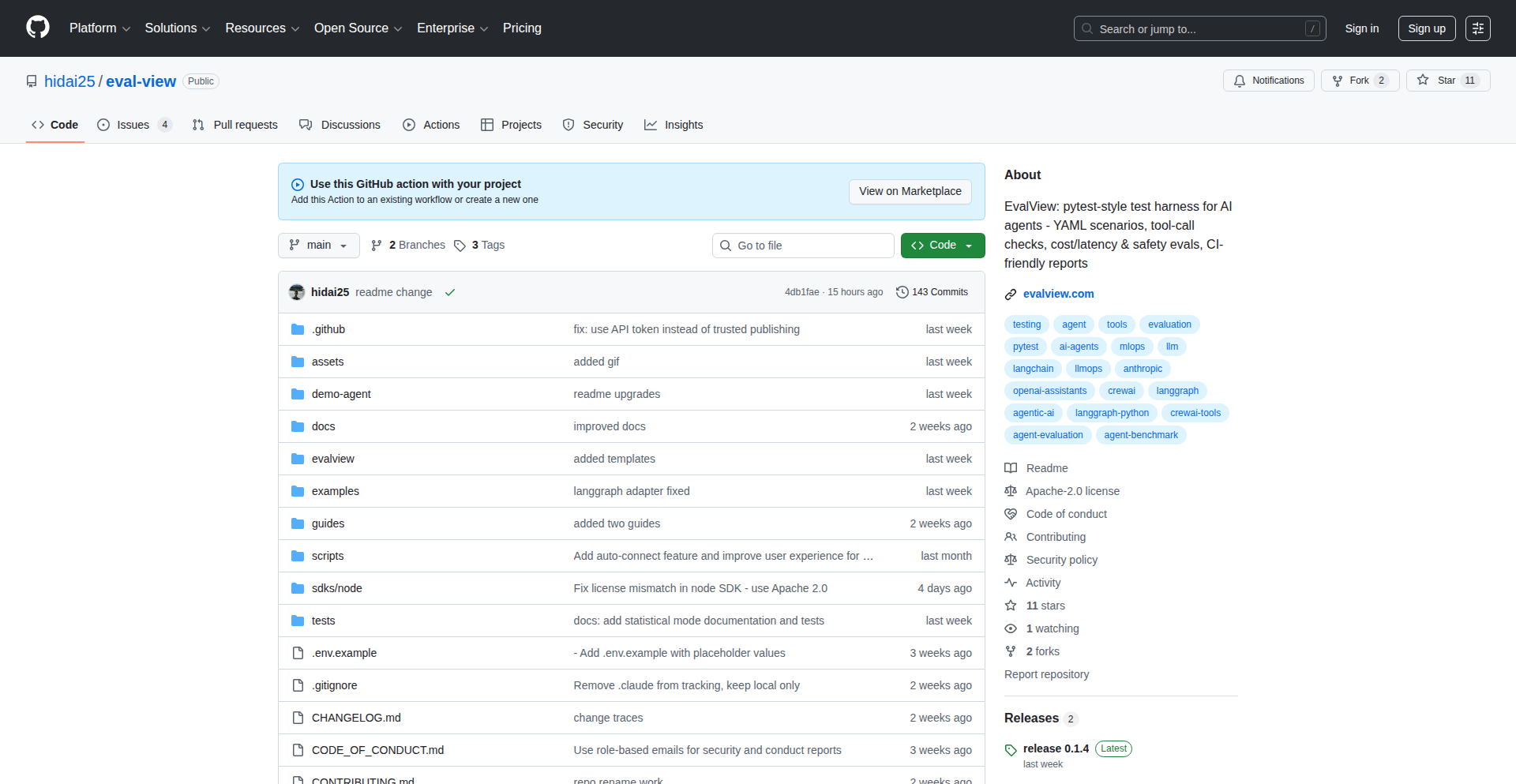

RustWaveletMatrixPy

Author

math-hiyoko

Description

A high-performance Wavelet Matrix library for Python, built using Rust. It addresses the scarcity of robust and efficient Wavelet Matrix implementations in Python, offering fast query capabilities and typed APIs for improved developer experience. This project brings advanced data structure functionality to Python with a focus on speed and reliability.

Popularity

Points 86

Comments 7

What is this product?

RustWaveletMatrixPy is a Python library that provides a Wavelet Matrix data structure, implemented in Rust for performance. A Wavelet Matrix is a succinct data structure that answers queries such as rank, select, and quantile over a sequence of symbols (for example, the characters of a string or a list of integers). Think of it as an index for searching and analyzing large amounts of ordered data. The innovation here is bringing a performant implementation to Python developers, avoiding the typical bottlenecks of pure Python solutions. It's built in Rust because Rust offers low-level control and memory safety, both of which matter for getting speed without sacrificing reliability in a structure this intricate. So, for you, this means you can run complex queries on large sequences from Python considerably faster than a pure Python implementation would allow.

How to use it?

Developers can integrate RustWaveletMatrixPy into their Python projects by installing it via pip. Once installed, they can instantiate a Wavelet Matrix object with their data (e.g., a list of integers or characters). The library exposes a clean, typed API for performing operations like rank (counting occurrences of an element up to a certain position), select (finding the position of the k-th occurrence of an element), top-k queries (finding the k most frequent elements in a range), quantile queries (finding the k-th smallest element in a range), and range queries. It also supports dynamic updates, allowing the data to be modified after the matrix is built. This means if you're building a data analytics application, a search engine backend, or any system that needs to quickly process and query large sequences, you can plug this library in for fast queries. For example, if you have a long text stored as a sequence of characters (or token IDs), you can efficiently count how many times a specific symbol occurs before a given position.

Product Core Function

· Fast Rank Queries: Efficiently counts the occurrences of a symbol up to a given index in the sequence. This is useful for tasks like analyzing the frequency distribution of characters in a text within specific segments, helping you understand data patterns quickly.

· Fast Select Queries: Quickly finds the index of the k-th occurrence of a given symbol. This is invaluable for locating specific data points or patterns within large datasets, such as finding the position of the 100th instance of a particular event in a log file.

· Top-K Queries: Determines the k most frequent symbols within a specified range of the sequence. This is extremely powerful for real-time analytics, identifying trending items, or summarizing large data segments by highlighting the most common elements.

· Quantile Queries: Retrieves the element at a specific rank (e.g., the median) within a range of the sequence. This enables efficient statistical analysis and data profiling, allowing you to understand the distribution of your data without sorting the entire dataset.

· Range Queries: Allows for efficient querying of information within a sub-section of the sequence. This is a fundamental capability for many data processing tasks, enabling focused analysis on specific parts of your data.

· Dynamic Updates: Supports adding or modifying elements in the sequence after the Wavelet Matrix has been constructed. This provides flexibility for applications where data is not static, allowing you to maintain an efficient query structure even as your data evolves.
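
To make the query names above concrete, here is a naive reference sketch of what rank, select, quantile, and top-k are expected to return. It is plain Python over a list and does not use RustWaveletMatrixPy; the function names and signatures are illustrative assumptions, not the library's API, and a real Wavelet Matrix answers the same questions without scanning or sorting the range.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def rank(seq: Sequence[int], symbol: int, end: int) -> int:
    """Count occurrences of `symbol` in seq[0:end]."""
    return sum(1 for x in seq[:end] if x == symbol)


def select(seq: Sequence[int], symbol: int, k: int) -> int:
    """Return the index of the k-th (1-based) occurrence of `symbol`, or -1."""
    seen = 0
    for i, x in enumerate(seq):
        if x == symbol:
            seen += 1
            if seen == k:
                return i
    return -1


def quantile(seq: Sequence[int], start: int, end: int, k: int) -> int:
    """Return the k-th smallest (0-based) value in seq[start:end]."""
    return sorted(seq[start:end])[k]


def topk(seq: Sequence[int], start: int, end: int, k: int) -> List[Tuple[int, int]]:
    """Return the k most frequent (value, count) pairs in seq[start:end]."""
    counts = Counter(seq[start:end])
    # Sort by descending count, then ascending value so ties are deterministic.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:k]


data = [5, 4, 5, 5, 2, 1, 5, 6, 1, 3, 5, 0]
print(rank(data, 5, 9))         # occurrences of 5 in data[0:9]
print(select(data, 5, 4))       # index of the 4th occurrence of 5
print(quantile(data, 2, 12, 8)) # 9th smallest value in data[2:12]
print(topk(data, 1, 10, 2))     # two most frequent values in data[1:10]
```

A reference like this is also handy as a test oracle: run the same queries against the library and compare the answers on small random inputs.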

Product Usage Case

· Building a highly responsive search index for large text corpora: Developers can use RustWaveletMatrixPy to create an index that allows for extremely fast text searching, including complex pattern matching and frequency analysis, significantly improving user experience in document retrieval systems.

· Implementing real-time analytics dashboards for streaming data: By leveraging the fast query capabilities, developers can process and analyze high-volume data streams in real-time, providing up-to-the-minute insights for monitoring systems or user behavior tracking.

· Optimizing bioinformatics sequence analysis: In fields like genomics, where vast amounts of sequence data are common, this library can accelerate tasks such as motif finding and variant analysis, making research more efficient.

· Developing efficient data compression and retrieval systems: Wavelet matrices and the closely related wavelet trees are building blocks of compressed text indexes such as the FM-index. This library can be integrated into systems that need to query data held in compact form, saving storage space and retrieval time.

· Creating efficient algorithms for competitive programming problems involving sequence analysis: For developers participating in coding competitions, this library offers a powerful tool to solve complex problems related to permutations, rank, and select queries with optimal time complexity.

2

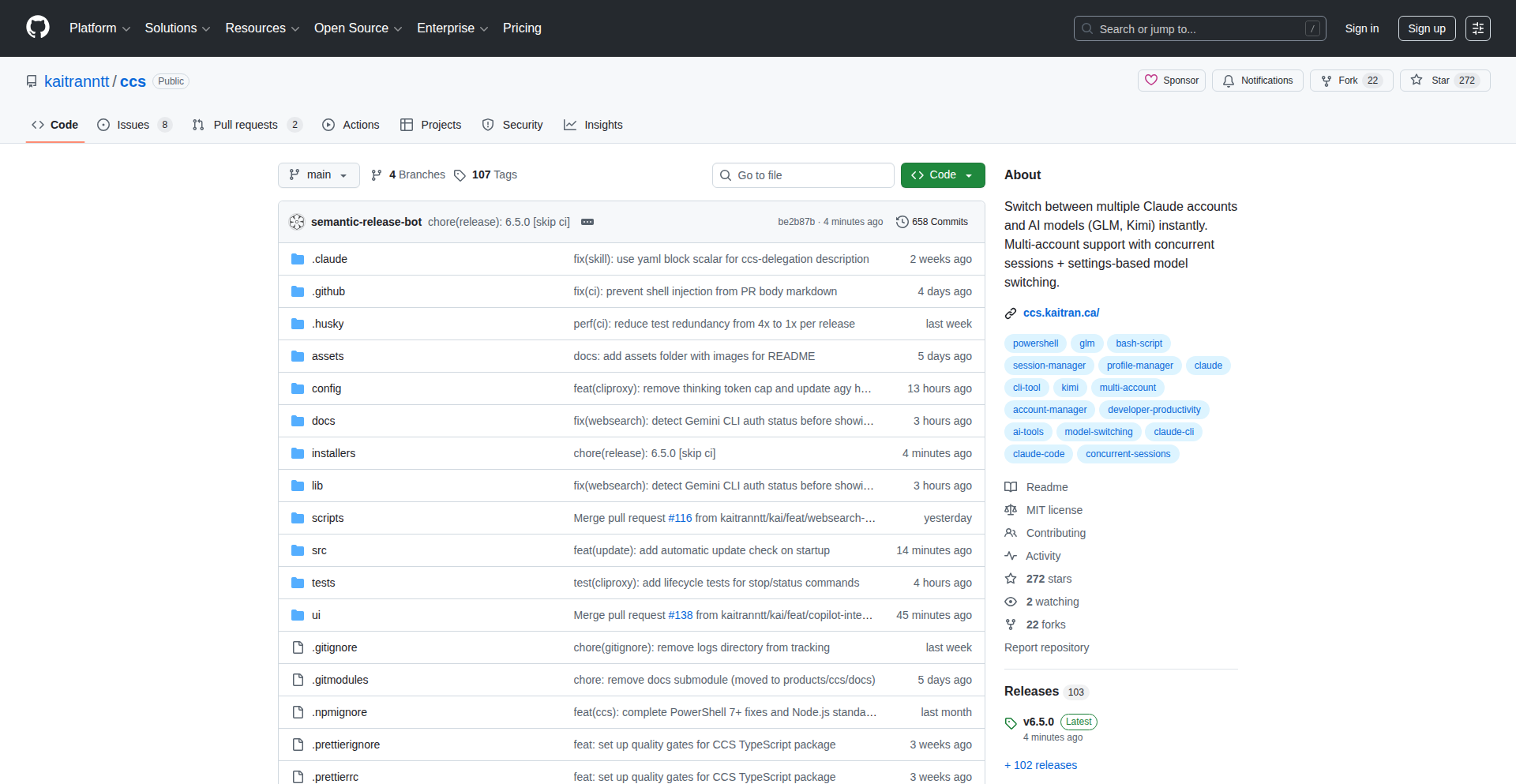

GitForms: GitHub Issues Powered Contact Forms

Author

lgreco

Description

GitForms is an open-source contact form solution that leverages GitHub Issues as its backend. Instead of paying for expensive form services, it stores form submissions directly as issues in your GitHub repository. This eliminates ongoing costs associated with traditional form providers, databases, and backend servers, making it an ideal solution for developers looking for a free and efficient way to collect feedback and inquiries on their landing pages, portfolios, or MVPs.

Popularity

Points 34

Comments 23

What is this product?

GitForms is a novel approach to handling contact form submissions by repurposing GitHub Issues. When a user submits a form on your website, GitForms, built with Next.js 14, Tailwind CSS, and TypeScript, uses the GitHub API to create a new issue in a designated GitHub repository. This means you receive form submissions as actionable GitHub Issues, complete with all the submitted data. The innovation lies in its zero-cost, serverless architecture. It avoids the need for separate databases or backend servers, making deployment incredibly simple and free on platforms like Vercel or Netlify. Configuration for themes, text, and even multi-language support is handled through a simple JSON file, embodying the hacker ethos of using existing tools creatively to solve common problems.

How to use it?

Developers can integrate GitForms into their Next.js 14 applications. After setting up the project, you'll configure a JSON file with your desired settings, such as the GitHub repository where submissions should be stored, API tokens for authentication, and styling options. The form component can then be embedded into your website's pages, like landing pages, portfolios, or MVP project sites. Upon submission, the data is automatically sent to your GitHub repo as a new issue. You'll receive email notifications from GitHub for each new submission, allowing you to track and manage inquiries directly within your development workflow. This integration is particularly useful for projects hosted on Vercel or Netlify, where its serverless nature fits perfectly into free tier offerings.
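
The core idea, turning a form submission into a GitHub issue, comes down to one call to GitHub's REST endpoint `POST /repos/{owner}/{repo}/issues`. The sketch below is not GitForms' code (the project itself is TypeScript on Next.js); it is a minimal Python illustration, and the function name, field names, and issue title format are assumptions made for the example.

```python
import json
import urllib.request


def build_issue_request(owner: str, repo: str, token: str,
                        name: str, email: str, message: str) -> urllib.request.Request:
    """Build (but do not send) the HTTP request that files a submission as an issue."""
    url = f"https://api.github.com/repos/{owner}/{repo}/issues"
    payload = {
        # Title and body layout are illustrative choices, not GitForms' format.
        "title": f"Contact form: {name}",
        "body": f"**From:** {name} <{email}>\n\n{message}",
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


req = build_issue_request("octocat", "site-inbox", "TOKEN",
                          "Ada", "ada@example.com", "Hello there")
print(req.get_method(), req.full_url)
# To actually create the issue: urllib.request.urlopen(req)
```

Whatever the implementation language, the token should stay on the server side (for example in a serverless function's environment) rather than being shipped to the browser, since it grants write access to the repository.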

Product Core Function

· Stores contact form submissions as GitHub Issues: This provides a free, organized, and trackable way to manage user inquiries without additional database costs, making it perfect for personal projects and MVPs.

· Zero ongoing costs: Eliminates monthly fees for form services, databases, and backend servers by utilizing GitHub's free tier and serverless deployment, directly saving developers money.

· Serverless deployment: Easily deployable on platforms like Vercel and Netlify, allowing for quick setup and free hosting for low-volume use cases.

· Configurable via JSON: Offers flexibility in customizing form themes, text, and multi-language support through a simple JSON file, enabling easy adaptation to different project needs.

· Instant email notifications from GitHub: Ensures prompt awareness of new submissions by leveraging GitHub's built-in notification system, streamlining the feedback process.

Product Usage Case

· Building a personal portfolio website: A developer can use GitForms to add a contact section to their portfolio. When potential employers or collaborators submit inquiries through the form, each submission becomes a GitHub issue, allowing the developer to easily track and respond to opportunities without setting up a separate contact management system.

· Launching a Minimum Viable Product (MVP): For a new web application or service, GitForms can be integrated to collect early user feedback and bug reports. Submissions are automatically filed as issues in the project's repository, providing developers with a clear list of user concerns to address as they iterate on the product.

· Creating simple landing pages for side projects: A developer launching a small tool or project can embed a GitForms form on its landing page to gather interest or support requests. This allows for immediate feedback collection without the overhead of managing a dedicated backend or paying for a form service, perfect for hobby projects.

3

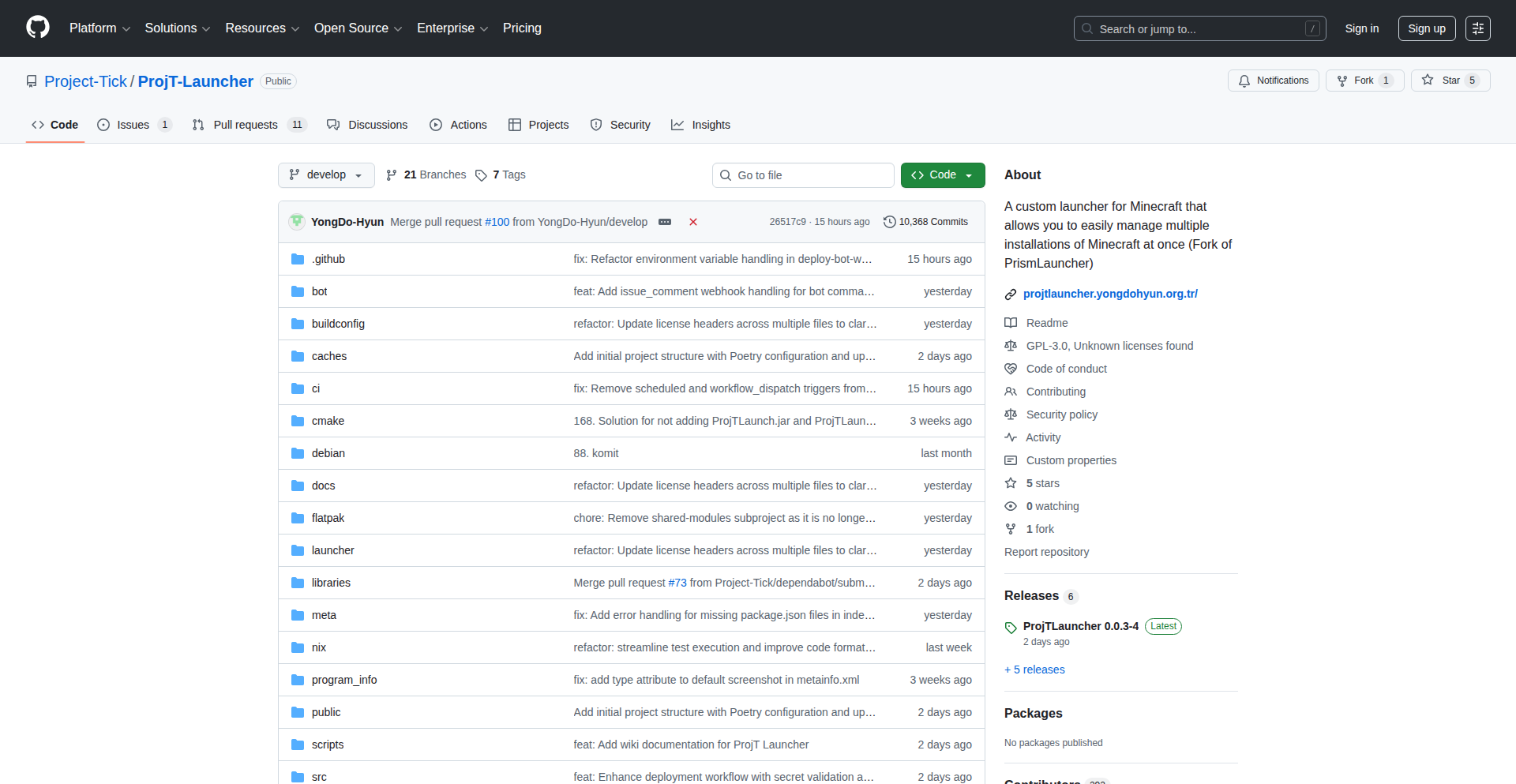

Mephisto

Author

benmxrt

Description

Mephisto is a disposable email service that prioritizes privacy and developer experience. It runs entirely in volatile memory, meaning no data is stored permanently. It features a client-side password generator, offline capabilities via PWA, and uses WebSockets for real-time mail delivery without polling. This addresses the common frustrations with intrusive ads, trackers, and captchas found in many disposable email services, offering a clean, developer-focused utility.

Popularity

Points 21

Comments 31

What is this product?

Mephisto is a disposable email service designed as a developer tool. Its core innovation lies in its 'RAM-only' architecture, where all data is held in volatile memory and is erased once a session ends. This means no persistent storage on servers, enhancing privacy. It also utilizes client-side entropy for its password generator, ensuring your keys are never transmitted to the server. The Progressive Web App (PWA) design allows for offline use and uses WebSockets for instant email reception, eliminating the need for constant checking. This is fundamentally different from typical disposable email services that often compromise user privacy for ad revenue. So, what's in it for you? You get a secure, fast, and ad-free way to use temporary email addresses for sign-ups and testing, without worrying about your data being stored or your experience being interrupted by ads and captchas.

How to use it?

Developers can use Mephisto by visiting the website and immediately creating a temporary email address. The service is designed for quick, on-the-fly use, perfect for signing up for services where you don't want to use your primary email, or for testing registration flows without creating permanent accounts. Its PWA nature means you can install it on your device for quick access and even use it offline for certain functionalities. For seamless transitions, you can use the mobile handoff feature, which uses an encrypted QR code to transfer an active session to your mobile device. This means you can start a session on your desktop and easily continue it on your phone without re-entering any information. So, how does this help you? It provides a streamlined and secure workflow for managing temporary email needs, directly integrated into your development or testing process.

Product Core Function

· Volatile Memory Email Storage: Emails are stored only in active RAM and deleted upon session termination. This provides enhanced privacy by preventing data persistence, useful for sign-ups where you want to protect your primary email. The value here is peace of mind and data security.

· Client-Side Password Generation: The password generation logic runs directly in your browser, meaning your generated keys are never sent to the server. This significantly boosts security for any password-protected services you might be using this email with. The value is keeping your credentials private and secure.

· Progressive Web App (PWA) with WebSockets: Mephisto functions as a PWA, allowing for installation and offline access. It uses WebSockets for real-time email delivery, ensuring you receive incoming messages instantly without needing to repeatedly refresh the page. The value is a fast, responsive, and convenient email experience.

· Encrypted QR Code Mobile Handoff: You can transfer an active email session from one device to another (e.g., desktop to mobile) using an encrypted QR code. This enables a seamless continuation of your workflow across devices. The value is flexibility and uninterrupted workflow management.

· Ad-Free and Tracker-Free Interface: Unlike many disposable email services, Mephisto is free from intrusive advertisements and tracking scripts. This provides a clean, uncluttered, and privacy-respecting user experience. The value is a distraction-free and secure interaction.
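
As a rough illustration of the RAM-only idea described above, the sketch below keeps mail in a process-local dictionary and drops a session once it expires or is closed. It is not Mephisto's implementation; the class name, the TTL-based expiry, and the method names are all assumptions made for the example.

```python
import time
from typing import Callable, Dict, List


class VolatileMailbox:
    """Holds messages only in process memory; nothing is written to disk."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._sessions: Dict[str, dict] = {}

    def open_session(self, address: str) -> None:
        self._sessions[address] = {"expires": self._clock() + self._ttl, "mail": []}

    def deliver(self, address: str, message: str) -> bool:
        """Store a message if the session is still alive; otherwise drop it."""
        self._purge()
        session = self._sessions.get(address)
        if session is None:
            return False
        session["mail"].append(message)
        return True

    def inbox(self, address: str) -> List[str]:
        self._purge()
        session = self._sessions.get(address)
        return list(session["mail"]) if session else []

    def close_session(self, address: str) -> None:
        self._sessions.pop(address, None)

    def _purge(self) -> None:
        """Delete every session whose lifetime has elapsed."""
        now = self._clock()
        expired = [a for a, s in self._sessions.items() if s["expires"] <= now]
        for address in expired:
            del self._sessions[address]


box = VolatileMailbox(ttl_seconds=600)
box.open_session("demo@tmp.example")
box.deliver("demo@tmp.example", "Your verification code is 123456")
print(box.inbox("demo@tmp.example"))
```

In a design like this, a process restart also wipes every mailbox, which is the property the "volatile memory" description relies on.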

Product Usage Case

· Testing sign-up flows for a new web application: A developer can use Mephisto to quickly generate temporary email addresses to test user registration, email verification, and password reset functionalities without creating actual user accounts or spamming their inbox. This accelerates the testing cycle.

· Signing up for online services without revealing personal email: When a user needs to sign up for a forum, a free trial, or a one-time download, they can use a Mephisto email address to avoid receiving spam or marketing emails in their primary inbox. This keeps personal inboxes clean and reduces exposure to unwanted communication.

· Experimenting with third-party services that require email verification: If a developer is testing an API integration or a service that mandates email confirmation, Mephisto provides a convenient way to get a verified email without committing a permanent address. This allows for rapid experimentation.

· Using a temporary email for secure testing of sensitive operations: For scenarios where an email address is required for testing sensitive actions like account recovery simulations, the RAM-only nature of Mephisto ensures no trace of the test email account or its associated data remains after the session. This enhances the security of testing environments.

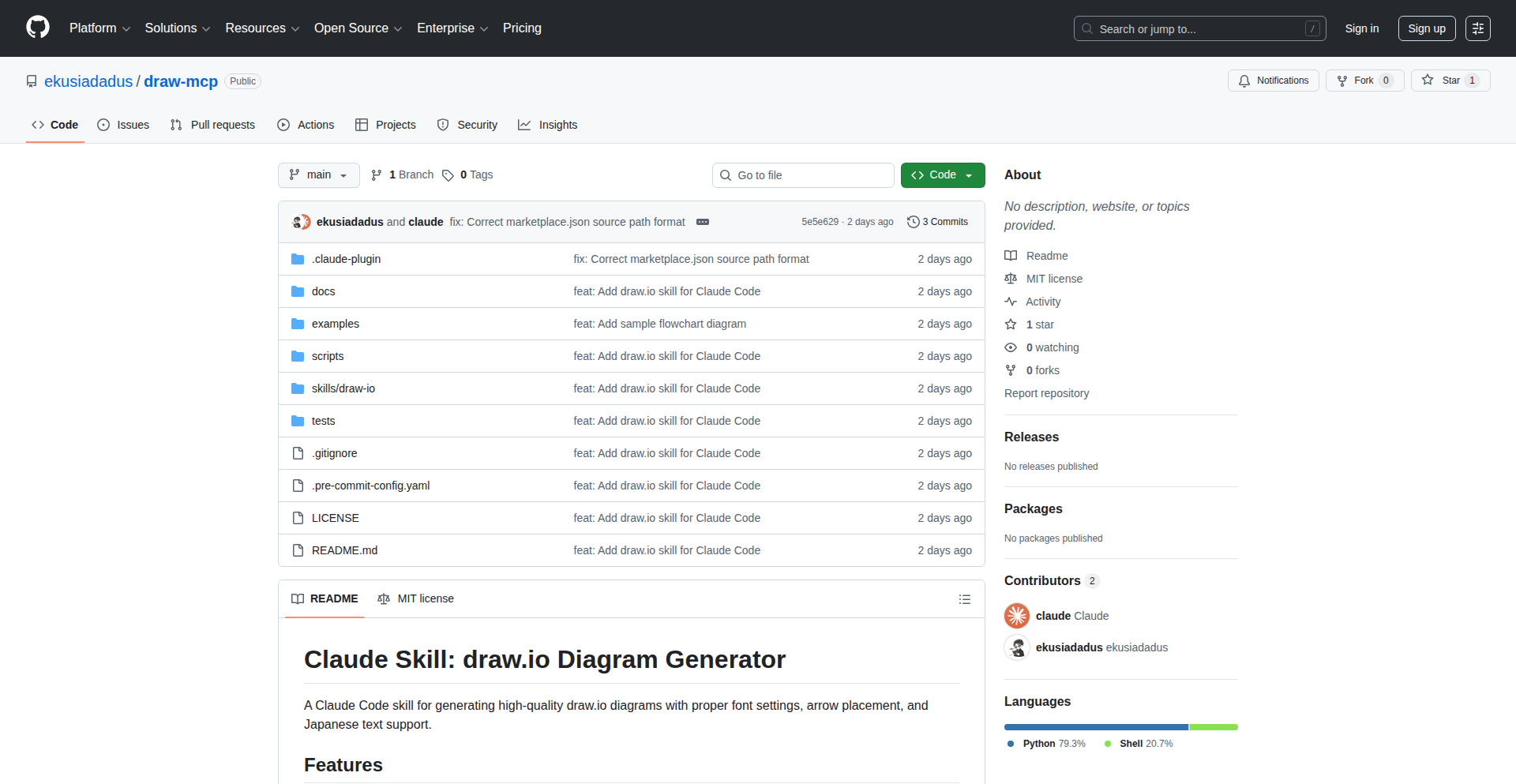

4

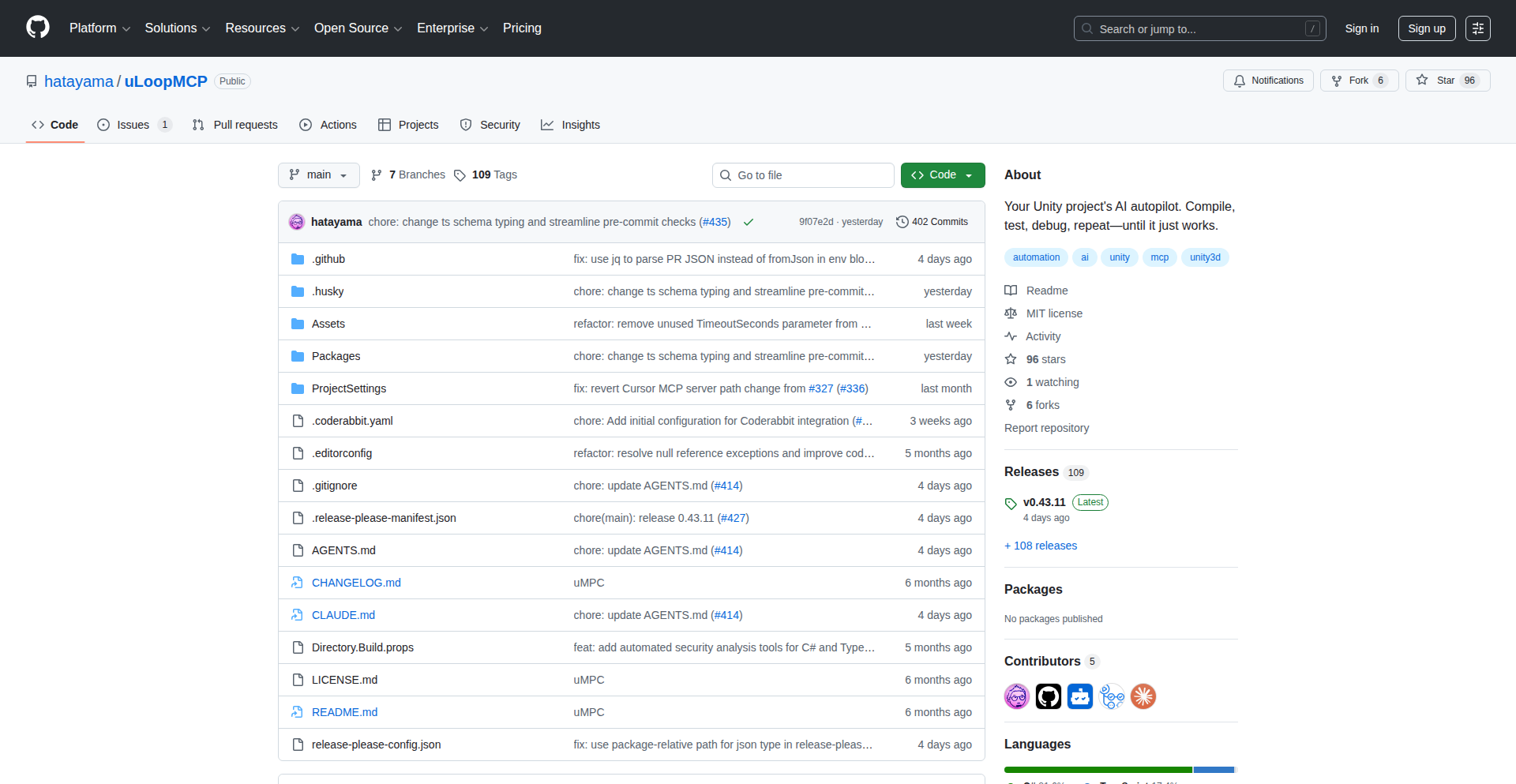

MCPShark VSCE: In-Editor MCP Traffic Inspector

Author

mywork-dev

Description

This project is a VS Code and Cursor editor extension that allows developers to view and debug MCP (Model Context Protocol) traffic directly within their IDE. It eliminates the need to constantly switch between the editor, terminals, and logs, significantly streamlining the debugging process for MCP-based applications. The innovation lies in bringing the network traffic inspection capabilities directly into the developer's primary workspace, making it easier to understand how data is flowing and troubleshoot issues.

Popularity

Points 16

Comments 0

What is this product?

MCPShark Viewer is a VS Code and Cursor extension that acts as an in-editor traffic inspector for the Model Context Protocol (MCP). Instead of manually parsing logs or using separate tools to see the data exchanged between MCP agents or tools, this extension intercepts and displays that traffic directly within your code editor. The core innovation is its integration: it uses the editor's extension API to create a dedicated view for visualizing MCP messages. This allows developers to see exactly what data is being sent and received in real-time, without context switching. For example, if you're building an AI agent that communicates using MCP, you can see the conversation flow right next to your code. This solves the problem of fragmented debugging environments where developers spend a lot of time trying to correlate code with network activity.

How to use it?

Developers can install the MCPShark Viewer extension directly from the VS Code Marketplace or Cursor's extension marketplace. Once installed, when working with applications that use MCP, the extension automatically detects and displays the MCP traffic in a dedicated panel within the editor. Developers can then interact with this panel to view message details, identify patterns, and troubleshoot communication issues. For example, you might open a new tab in your editor that shows a live feed of MCP messages, with options to filter, search, and drill down into individual message payloads. This makes debugging MCP agents significantly more efficient by keeping all relevant information in one place.

Product Core Function

· Real-time MCP traffic visualization: Displays incoming and outgoing MCP messages directly in the editor, allowing developers to see data flow as it happens, making it easy to understand the communication between different parts of an application.

· In-editor debugging panel: Provides a dedicated interface within VS Code/Cursor to inspect MCP messages, reducing the need to switch between multiple tools and improving debugging efficiency.

· Message detail inspection: Allows developers to click on individual messages to see their full payload and metadata, helping to pinpoint the exact data causing issues.

· Contextual relevance: By displaying traffic alongside the code that generates or consumes it, developers can more easily correlate network activity with their application logic.
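
MCP messages are JSON-RPC 2.0 objects, so the first step of any traffic inspector is sorting each message into request, notification, response, or error. The sketch below shows that classification step only; it is not taken from the extension, and the function name and returned fields are assumptions for illustration.

```python
import json
from typing import Any, Dict


def classify_mcp_message(raw: str) -> Dict[str, Any]:
    """Classify one JSON-RPC 2.0 message as it might appear in MCP traffic."""
    msg = json.loads(raw)
    if "method" in msg and "id" in msg:
        kind = "request"        # expects a reply carrying the same id
    elif "method" in msg:
        kind = "notification"   # fire-and-forget, no id
    elif "error" in msg:
        kind = "error"          # failed reply to an earlier request
    elif "result" in msg:
        kind = "response"       # successful reply to an earlier request
    else:
        kind = "unknown"
    return {"kind": kind, "id": msg.get("id"), "method": msg.get("method")}


traffic = [
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
    '{"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}}',
]
for line in traffic:
    print(classify_mcp_message(line))
```

Pairing each response or error with the request that shares its `id` is what lets an inspector show a request and its reply as one exchange.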

Product Usage Case

· Debugging an AI agent that uses MCP to communicate with a knowledge base: Developers can see the exact queries sent to the knowledge base and the responses received, all within their editor, allowing them to quickly identify if the agent is formulating requests correctly or if the knowledge base is returning unexpected data.

· Troubleshooting a distributed system where components communicate via MCP: Developers can observe the message exchange between different services, identify bottlenecks or communication errors, and ensure data integrity without leaving their IDE.

· Developing new MCP-based tools and agents: By having a clear view of MCP traffic, developers can iterate faster, test their implementations in real-time, and ensure their protocol adherence.

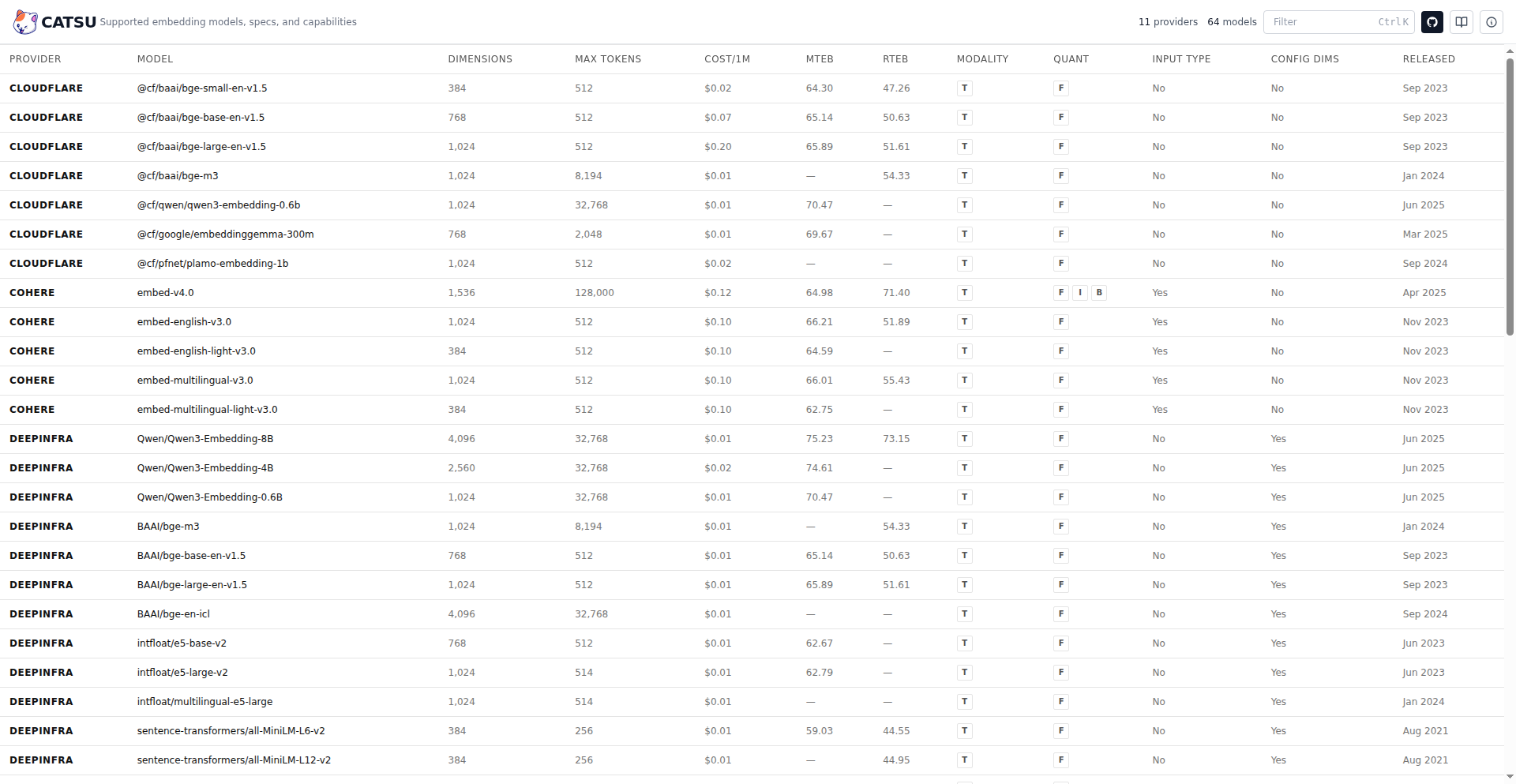

5

Catsu Embeddings Hub

Author

bhavnicksm

Description

Catsu is a Python client designed to simplify interactions with various embedding API providers. It addresses the common pain point of dealing with inconsistent SDKs, hidden limitations, and frequent breaking changes across different services. By offering a single, unified API, Catsu allows developers to easily switch between providers, track costs, and benefit from built-in resilience features like automatic retries. So, this helps you save significant development time and reduces the complexity of integrating AI embeddings into your applications.

Popularity

Points 7

Comments 5

What is this product?

Catsu is essentially a universal translator for embedding APIs. Instead of learning and managing the unique ways each embedding service (like OpenAI, VoyageAI, Cohere, etc.) works, Catsu provides one consistent interface. It abstracts away the underlying complexities, including vendor-specific bugs, undocumented limits, and breaking changes between SDK releases. Think of it as a layer that standardizes how you send text to get its 'meaning' represented as numbers (embeddings). This standardization makes it easy to experiment with different embedding models and providers without rewriting your code. So, this means you can quickly find the best and most cost-effective embedding solution for your needs without getting bogged down in technical differences between providers.

How to use it?

Developers can integrate Catsu into their Python projects by installing it via pip. Once installed, they instantiate a Catsu client and can then use its `embed` function to generate embeddings for their text. The client allows specifying the desired embedding model. Catsu handles the communication with the chosen provider, including error handling and retries. It also provides insights into usage costs. This makes it suitable for a wide range of applications, from building search engines and recommendation systems to implementing AI-powered content analysis and natural language understanding features. So, you can easily add powerful AI embedding capabilities to your existing Python applications with just a few lines of code.

Product Core Function

· Unified API for 11+ embedding providers: This allows developers to easily switch between different embedding services (like OpenAI, VoyageAI, Cohere, etc.) without needing to rewrite their integration code. This is valuable because it provides flexibility and avoids vendor lock-in, enabling developers to choose the best provider based on cost, performance, or specific features. It simplifies development and speeds up experimentation.

· Bundled database of 50+ models with pricing, dimensions, and benchmark scores: This provides developers with a centralized resource to compare and select the most suitable embedding models for their use case. Understanding model characteristics and costs upfront helps in making informed decisions, optimizing performance, and managing budgets effectively. This is useful for selecting the most efficient and cost-effective AI components for a project.

· Built-in retry with exponential backoff: This feature automatically retries failed API requests with increasing delays, improving the reliability of embedding generation. This is important because network issues or temporary service outages can disrupt applications. By automatically handling retries, Catsu ensures smoother operation and reduces the likelihood of application failures due to external API instability.

· Automatic cost tracking per request: Catsu monitors and reports the cost associated with each embedding request. This transparency is crucial for developers to manage their cloud spending and optimize their AI usage. Understanding costs allows for better resource allocation and prevents unexpected expenses. This is valuable for controlling project budgets and ensuring financial efficiency.

· Full asynchronous support: Catsu is designed to work seamlessly with Python's asynchronous programming features. This allows developers to handle multiple embedding requests concurrently without blocking their application's main thread, leading to significantly improved performance and responsiveness, especially in high-throughput applications. This is useful for building scalable and efficient applications that can handle many operations at once.
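
Retry with exponential backoff, one of the features listed above, is a general pattern that can be sketched independently of any provider. The code below is not Catsu's implementation or API; the function name, parameters, and the choice of which exceptions count as transient are assumptions for the example.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
    jitter: bool = True,
) -> T:
    """Call `call()` until it succeeds, doubling the wait after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = base_delay * (2 ** attempt)  # 0.5, 1.0, 2.0, ...
            if jitter:
                # Random extra wait so many clients do not retry in lockstep.
                delay += random.uniform(0, delay)
            sleep(delay)
    raise AssertionError("unreachable")


attempts = {"n": 0}


def flaky_embed() -> list:
    """Stand-in for a provider call that fails twice, then succeeds."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("temporary outage")
    return [0.1, 0.2, 0.3]


waits: list = []
vector = retry_with_backoff(flaky_embed, sleep=waits.append, jitter=False)
print(vector, waits)
```

Restricting `retry_on` to transient errors matters: retrying an authentication failure or a malformed request only adds latency and cost.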

Product Usage Case

· Building a semantic search engine: A developer can use Catsu to generate embeddings for documents and user queries. By comparing the embeddings, the search engine can find documents semantically similar to the query, even if they don't share exact keywords. Catsu's unified API allows easily testing different embedding models to find the one that provides the best search accuracy for their specific data. This solves the problem of needing to integrate and manage multiple, complex search-related AI models.

· Implementing personalized recommendation systems: For an e-commerce platform, Catsu can embed product descriptions and user behavior data. By analyzing these embeddings, the system can recommend products that are similar to those a user has liked or viewed. The ability to quickly switch providers or models with Catsu helps in A/B testing different recommendation algorithms for optimal user engagement. This helps businesses offer more relevant and engaging product suggestions to their customers.

· Developing AI-powered content moderation: A platform dealing with user-generated content can use Catsu to embed text posts and identify potentially harmful or inappropriate content based on its semantic meaning. The automatic cost tracking feature helps manage the expenses associated with processing large volumes of user content. This solves the challenge of efficiently and cost-effectively identifying problematic content at scale.

· Creating intelligent chatbots and virtual assistants: Catsu can embed user queries and pre-defined responses or knowledge base articles. This allows the chatbot to understand the intent behind user questions and retrieve the most relevant information, leading to more natural and helpful conversations. The reliable retry mechanism ensures the chatbot remains functional even if there are temporary issues with the embedding API provider. This enhances the user experience by providing faster and more accurate responses.

6

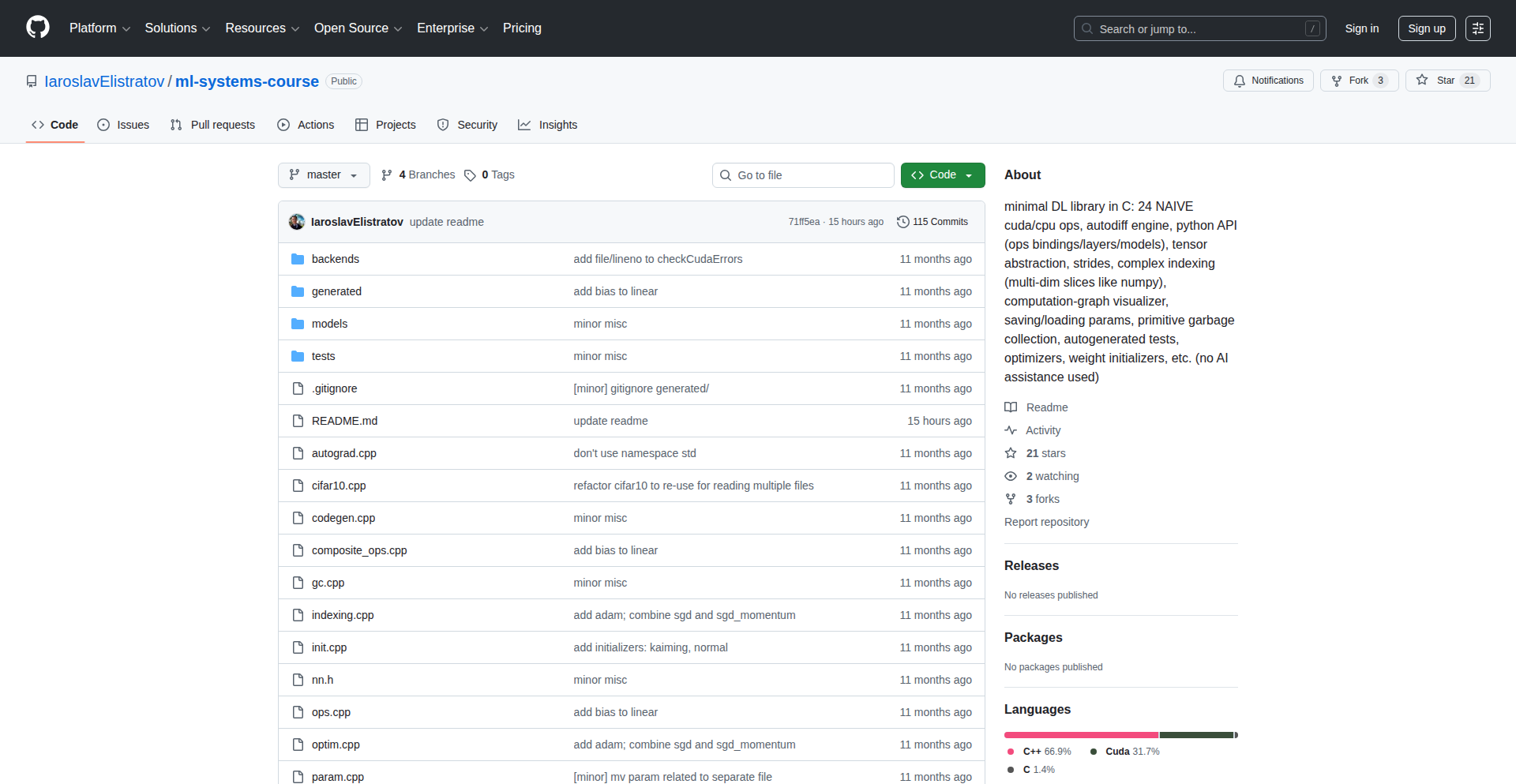

NanoDL: C-based Minimal DL with Naive CUDA/CPU Ops and Autodiff

Author

iaroo

Description

NanoDL is a lightweight deep learning library written in C, featuring 24 fundamental CUDA and CPU operations. It boasts automatic differentiation capabilities and a Python API, making it an experimental yet powerful tool for developers looking to understand the core mechanics of deep learning or build custom low-level models without the overhead of larger frameworks.

Popularity

Points 10

Comments 1

What is this product?

NanoDL is a minimalist deep learning library implemented in C. Its core innovation lies in its "naive" yet foundational set of 24 operations, which can be executed on both CPU and CUDA-enabled GPUs. This allows developers to see and manipulate the very basic building blocks of neural networks. The inclusion of automatic differentiation (autodiff) means that gradients, essential for training models, are computed automatically, simplifying the development process. The Python API acts as a user-friendly interface, abstracting away some of the C-level complexities. So, what's the value for you? It's a transparent window into how deep learning works at a granular level, enabling custom optimizations and a deeper understanding of performance bottlenecks that might be hidden in more complex libraries.

How to use it?

Developers can integrate NanoDL by leveraging its Python API to define and train neural network models. For instance, you could use it to experiment with custom layer implementations or to benchmark the performance of basic operations on specific hardware. The library is suitable for researchers exploring novel neural network architectures, educators demonstrating core ML concepts, or engineers needing fine-grained control over tensor operations for specialized applications. So, how can you use it? You can import it into your Python scripts to build simple neural networks, experiment with different activation functions or loss functions, and even integrate it into existing C/C++ projects for performance-critical components. This gives you flexibility and a deep dive into the underlying computation.

Product Core Function

· 24 Naive CUDA/CPU Operations: Provides fundamental tensor operations such as matrix multiplication, addition, and activation functions, implemented in a straightforward ("naive") way for both general-purpose CPUs and NVIDIA GPUs. You can choose the execution environment that suits your workload, and because the kernels are deliberately simple, they are easy to read and profile. The value is in understanding and controlling these fundamental computations for performance tuning and custom model designs.

· Automatic Differentiation (Autodiff): This system automatically calculates the gradients of your model's loss function with respect to its parameters. This is crucial for training neural networks via backpropagation. The value here is significant time savings and reduced error in gradient calculation, allowing you to focus on model architecture rather than manual derivative computation.

· Python API: This offers a high-level, user-friendly interface to the C-based library, making it accessible for most developers. It allows for easy model definition, training, and inference within a familiar Python environment. The value is in bridging the gap between low-level C performance and high-level Python development ease, making powerful computations readily available.

· Minimalist Design: The library focuses on a small set of essential operations, promoting clarity and understandability. This makes it easier to debug, modify, and learn from. The value is in demystifying complex DL frameworks and providing a solid foundation for further learning and experimentation without being overwhelmed by features.
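
To make the autodiff idea above concrete, here is a minimal sketch of reverse-mode automatic differentiation on scalars. It is not NanoDL's API: the `Value` class and its methods are hypothetical names invented for illustration, and a real library would work on tensors rather than single numbers.

```python
import math


class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad into the parents' grads

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def backward_fn():
            # d tanh(a)/da = 1 - tanh(a)^2
            self.grad += (1.0 - t * t) * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        """Run backpropagation from this node through the recorded graph."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Visit nodes in reverse topological order so each gradient is complete
        # before it is propagated further back.
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


# y = w * x + b, so dy/dw = x, dy/dx = w, dy/db = 1
w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b
y.backward()
print(w.grad, x.grad, b.grad)
```

Each operation stores a small closure that knows its local derivative; `backward()` then applies the chain rule once per node, which is the bookkeeping an autodiff system saves you from doing by hand.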

Product Usage Case

· Custom Layer Implementation: A developer could use NanoDL's C backend to create a highly specialized neural network layer not present in standard libraries, optimizing its performance for a specific data type or hardware architecture. This allows fine-grained performance customization for niche problems.

· Educational Tool for Deep Learning Fundamentals: An instructor could use NanoDL to demonstrate how basic operations like matrix multiplication and gradient descent are implemented and executed on GPUs, providing students with a tangible understanding of deep learning mechanics. This makes abstract concepts concrete and easier to grasp.

· Performance Benchmarking: A researcher could use NanoDL to isolate and benchmark the performance of individual CUDA operations on their hardware, identifying bottlenecks and optimizing their custom deep learning workflows. This helps in understanding hardware capabilities and optimizing computational pipelines for maximum efficiency.

· Building Domain-Specific DL Models: An engineer working on an embedded system with limited resources might use NanoDL to build a lean, custom deep learning model that only includes the essential operations needed for their task, ensuring efficient deployment. This enables the creation of tailored solutions for resource-constrained environments.

7

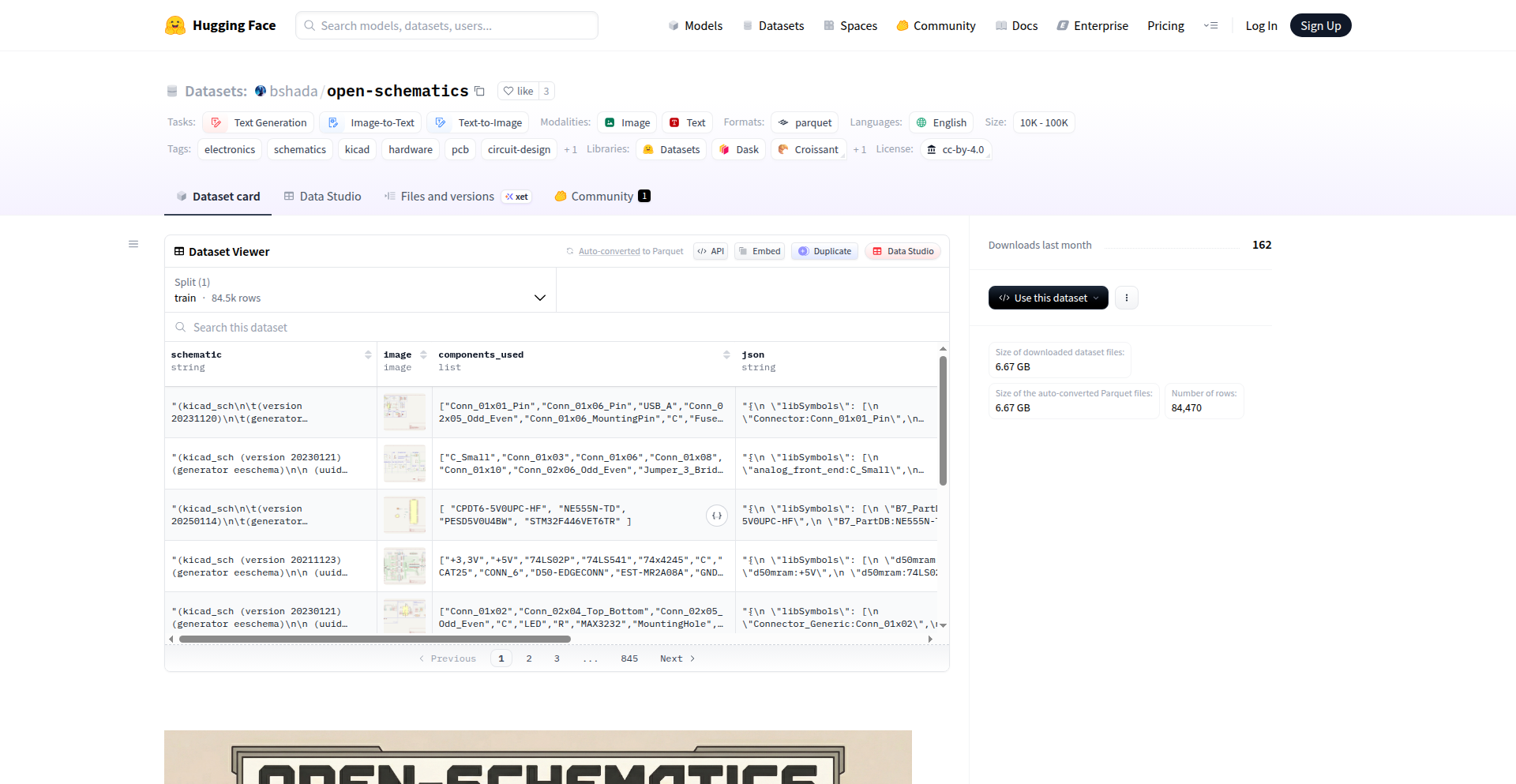

Open-Schematics ML Engine

Author

_bshada

Description

Open-Schematics is a massive public collection of electronic circuit diagrams (schematics) paired with visual representations and organized data. This project leverages machine learning to unlock new possibilities in understanding, searching, and verifying electronic circuits. Think of it as a powerful visual search engine and knowledge base for all things electronic circuits, making complex designs accessible and analyzable.

Popularity

Points 11

Comments 0

What is this product?

Open-Schematics is a comprehensive dataset of electronic schematics, going beyond just the raw diagram files. It includes rendered images of the schematics and structured metadata. The innovation lies in preparing this data for machine learning. By transforming raw circuit designs into a format that AI can understand, we can train models to recognize circuit patterns, understand their functionality, retrieve similar designs quickly, and even validate the correctness of new schematics. This is like teaching a computer to 'read' and 'understand' electronic blueprints.

How to use it?

Developers can integrate Open-Schematics into their projects in several ways. For instance, you could use it to build a smart circuit design assistant that suggests components or existing patterns based on your current design. It can also power a circuit search engine where you describe a desired functionality, and the system returns matching schematics. For educational purposes, it allows for building interactive learning tools that explain circuit behavior. Integration might involve using its API to query the dataset or using its pre-trained models for specific tasks like component identification within a schematic.

Product Core Function

· Circuit Image Rendering: Transforms raw schematic data into clear, visual images, making them easily interpretable by both humans and machine learning models. This allows for visual pattern recognition and analysis that was previously difficult with raw data alone.

· Structured Metadata Generation: Organizes complex circuit information into a searchable and analyzable format. This structured data is crucial for training machine learning models to understand relationships between components and their functions, enabling efficient retrieval and validation.

· Machine Learning Model Training: Provides the foundation for training AI models to understand circuit functionality, identify components, and recognize design patterns. This opens up possibilities for automated circuit analysis, design optimization, and advanced fault detection.

· Circuit Retrieval System: Enables searching for specific electronic circuits based on functional descriptions or visual similarity. This drastically reduces the time engineers spend searching for existing solutions, promoting reuse and faster development.

· Circuit Validation and Verification: Allows for automated checking of schematic correctness and adherence to design rules. This helps catch errors early in the design process, saving time and resources, and improving the reliability of electronic products.
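
As a rough illustration of how structured metadata can drive circuit retrieval, the sketch below ranks schematics by the overlap between their component lists and a query. The records, field names, and the `search` function are hypothetical and do not reflect the dataset's actual schema; a production system would more likely use learned embeddings than a plain set overlap.

```python
def jaccard(a, b):
    """Jaccard similarity between two collections, treated as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical metadata records, invented for this example.
SCHEMATICS = [
    {"id": "amp-01", "components": ["LM386", "capacitor", "resistor", "speaker"]},
    {"id": "blink-01", "components": ["NE555", "capacitor", "resistor", "LED"]},
    {"id": "reg-01", "components": ["LM7805", "capacitor", "diode"]},
]


def search(query_components, records, top_k=2):
    """Return the top_k (id, score) pairs, most similar first."""
    scored = [(jaccard(query_components, r["components"]), r["id"]) for r in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(rid, round(score, 3)) for score, rid in scored[:top_k]]


results = search(["NE555", "LED", "resistor"], SCHEMATICS)
print(results)
```

The same ranking loop works with any similarity function, so swapping the set overlap for a vector similarity over image or text embeddings would not change the surrounding code.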

Product Usage Case

· Building an AI-powered circuit design tool that suggests optimal component choices or common sub-circuit implementations based on the user's current design, speeding up the initial design phase.

· Developing a search engine for electronic engineers where they can describe the desired performance of a circuit (e.g., 'low-power amplifier for audio') and get relevant schematics from the dataset as potential starting points.

· Creating an educational platform that uses AI to explain how different parts of a circuit work together and visualize signal flow for students learning electronics.

· Implementing an automated system for quality control in PCB manufacturing that uses schematics to predict potential issues or verify component placement against the intended design.

8

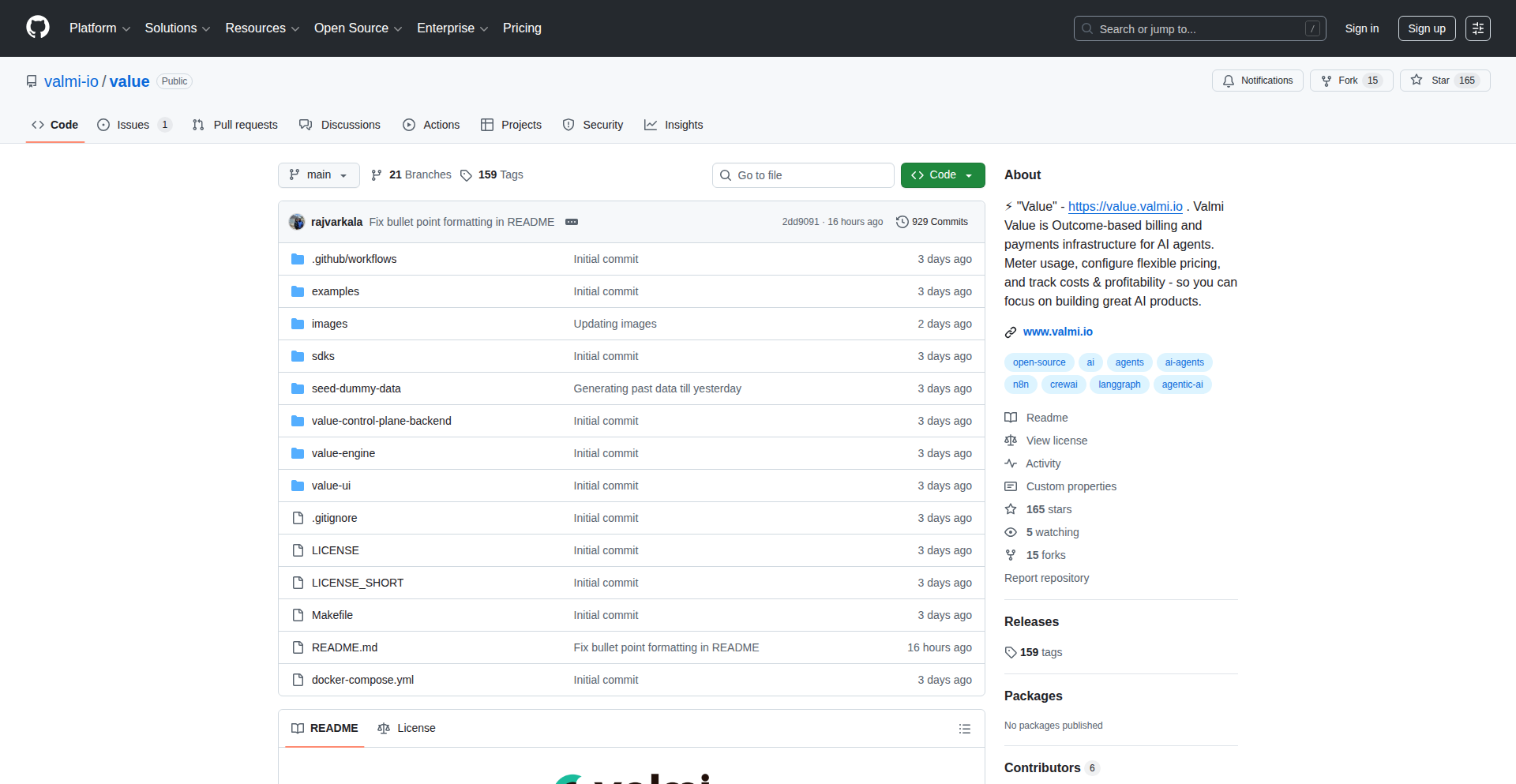

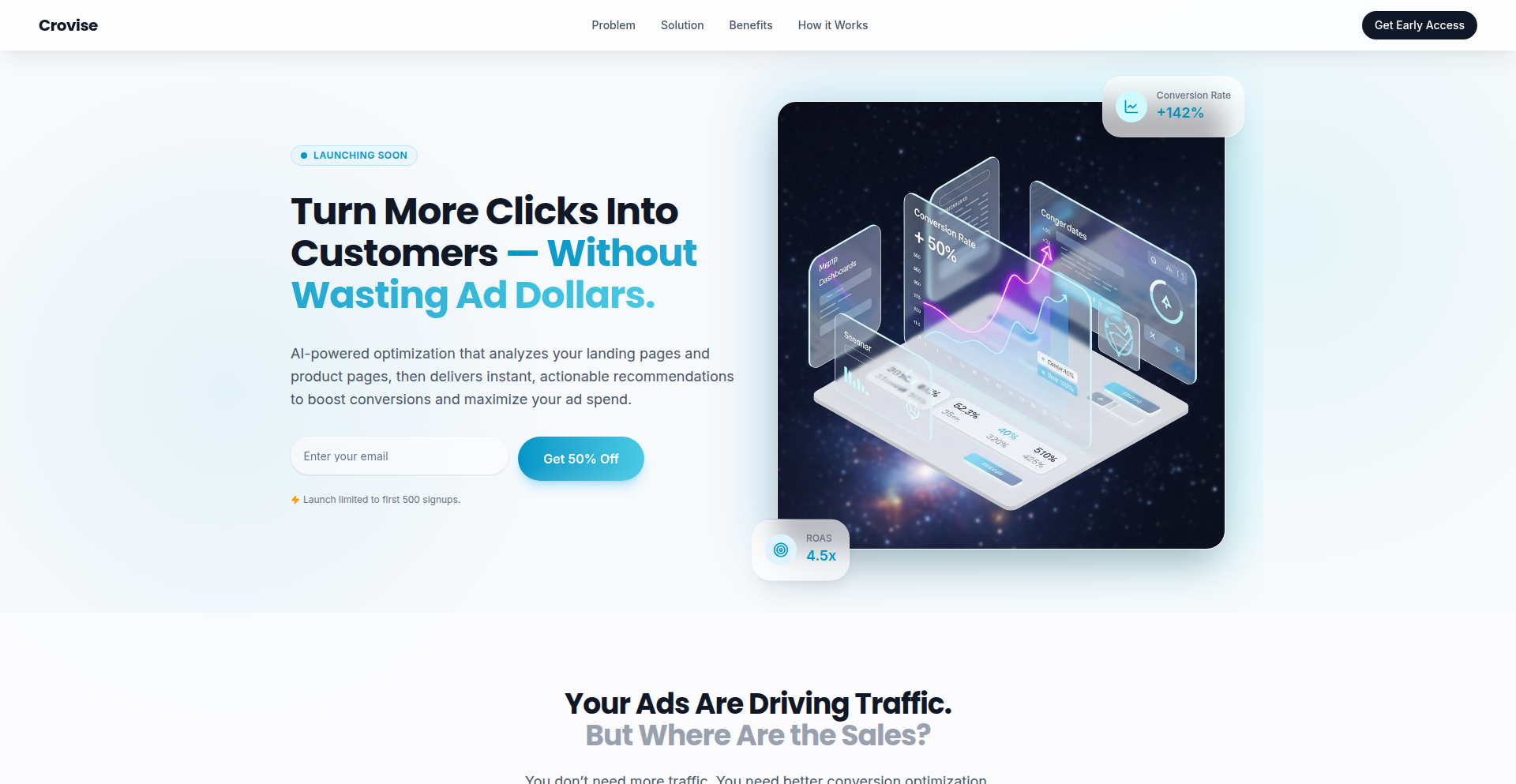

Valmi: Outcome-Driven AI Agent Billing

Author

rajvarkala

Description

Valmi is an open-source billing platform designed for AI agents, shifting the focus from usage-based pricing (like tokens or API calls) to outcome-based pricing. It allows developers to charge customers only when their AI agents successfully complete a task or deliver a meaningful result, solving the problem of unpredictable costs and misaligned value in AI agent development. This approach builds trust by ensuring customers pay for tangible results, not just computational effort.

Popularity

Points 4

Comments 6

What is this product?

Valmi is an open-source billing system that revolutionizes how AI agents are priced and paid for. Instead of traditional billing models that charge for API calls, tokens, or compute time, Valmi enables 'outcome-based billing'. This means customers are charged only when the AI agent achieves a specific, predefined goal, such as resolving a support ticket or generating a successful report. The technical innovation lies in treating 'outcomes' as first-class billable units. It tracks the cost associated with each agent run, allowing for transparent margin calculation per agent or customer. It supports flexible pricing models including pure outcome, usage-based, or a hybrid approach, all while offering open-source SDKs and a self-hostable stack for maximum control and transparency. For developers, this means a fairer and more predictable revenue model, and for customers, it means paying for value delivered, not just the effort expended.

How to use it?

Developers can integrate Valmi into their AI agent applications using its open-source SDKs. When an AI agent completes a task that is defined as a billable outcome, Valmi records this success. The system then calculates the cost incurred for that specific run (including LLM costs, tool usage, and any retries) and applies the pre-defined pricing strategy (outcome-based, usage-based, or hybrid). This allows developers to easily set up billing that aligns with the actual value their AI agents provide. For instance, a customer service AI agent that successfully closes a support ticket would trigger a successful outcome charge, whereas an agent that fails to resolve the issue wouldn't incur a charge for that specific attempt. Valmi can be self-hosted, giving developers complete ownership of their billing infrastructure.
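
The flow described above can be sketched in a few lines. This is not the Valmi SDK: `AgentRun`, `Pricing`, `charge_cents`, and `margin_cents` are hypothetical names, amounts are kept in integer cents, and the usage component is simplified to a percentage of the tracked run cost.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    succeeded: bool   # did the run reach the billable outcome?
    llm_cents: int    # LLM spend for this run
    tool_cents: int   # tool usage spend
    retry_cents: int  # spend on retries

    @property
    def cost_cents(self) -> int:
        return self.llm_cents + self.tool_cents + self.retry_cents


@dataclass
class Pricing:
    outcome_cents: int = 0  # charged once per successful outcome
    usage_percent: int = 0  # share of run cost billed regardless of outcome


def charge_cents(run: AgentRun, pricing: Pricing) -> int:
    """Amount billed to the customer for one run."""
    usage_part = run.cost_cents * pricing.usage_percent // 100
    outcome_part = pricing.outcome_cents if run.succeeded else 0
    return usage_part + outcome_part


def margin_cents(run: AgentRun, pricing: Pricing) -> int:
    """Charge minus tracked cost; negative when a run loses money."""
    return charge_cents(run, pricing) - run.cost_cents


pure_outcome = Pricing(outcome_cents=150)
hybrid = Pricing(outcome_cents=100, usage_percent=50)

resolved = AgentRun(succeeded=True, llm_cents=12, tool_cents=3, retry_cents=5)
unresolved = AgentRun(succeeded=False, llm_cents=12, tool_cents=3, retry_cents=5)

for name, pricing in [("pure outcome", pure_outcome), ("hybrid", hybrid)]:
    for run in (resolved, unresolved):
        print(name, run.succeeded, charge_cents(run, pricing), margin_cents(run, pricing))
```

Under pure outcome pricing the failed run is billed nothing and shows a negative margin, which is exactly why per-run cost tracking matters: the provider absorbs the cost of unsuccessful attempts and needs to see it.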

Product Core Function

· Outcome-based billing: Enables charging customers specifically when an AI agent achieves a defined, valuable outcome, making billing directly tied to delivered results and increasing customer trust by ensuring they pay for tangible success.

· Cost tracking per agent run: Automatically monitors and reports the cost of each individual AI agent execution, providing developers with clear insights into their expenses and enabling accurate margin calculations for each service.

· Flexible pricing models: Supports a variety of pricing strategies including pure outcome-based, usage-based (like API calls or tokens), and hybrid models, allowing developers to tailor their billing to best suit their AI agent's functionality and market value.

· Transparent margin analysis: Provides detailed visibility into the profit margins for each AI agent or customer, helping developers optimize their pricing strategies and business operations for better financial performance.

· Open-source SDKs and self-hostable stack: Offers developers the freedom and flexibility to integrate Valmi into their existing systems and host the entire billing infrastructure themselves, ensuring data privacy, customization, and avoiding vendor lock-in.

Product Usage Case

· A customer support AI agent that automatically resolves user queries: Instead of charging for each API call the agent makes, Valmi bills the client only when the AI successfully closes a support ticket, ensuring the client pays for a solved problem, not just an attempted one.

· An AI content generation service that produces marketing copy: Developers can configure Valmi to charge clients per article generated and approved, directly linking the billing to the creation of valuable content rather than the time or number of LLM prompts used.

· An AI-powered market research tool: Valmi can be used to bill clients based on the successful completion of research tasks, such as generating a detailed competitor analysis report, ensuring the client pays for actionable intelligence.

· An internal AI assistant for a company that automates data entry: Billing can be set up to trigger only when the AI successfully processes and inputs a batch of data, demonstrating clear cost savings and return on investment for the business.

9

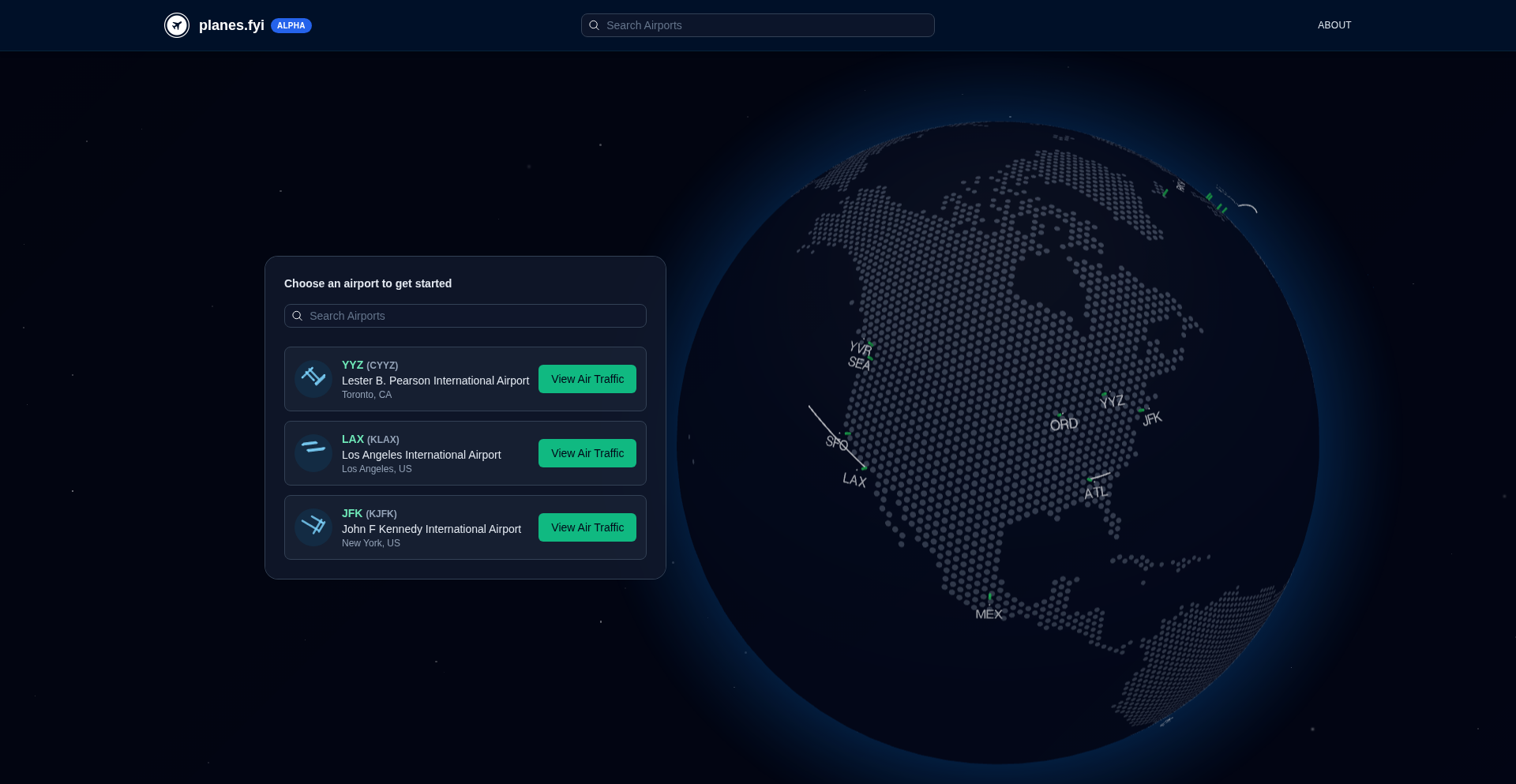

AeroViz 3D

Author

ryry

Description

AeroViz 3D is a novel project that offers a dynamic 3D visualization of aircraft in real-time around specific airports, integrated with live weather and airport operational data like ATIS. It's a creative technical experiment to explore how complex aviation data can be presented in an intuitive, visually engaging way, offering a unique perspective for aviation enthusiasts and developers interested in data visualization.

Popularity

Points 8

Comments 2

What is this product?

AeroViz 3D is a 3D interactive map that visualizes live aircraft movements. It goes beyond just showing planes; it pulls in real-time weather data and essential airport information such as ATIS (Automatic Terminal Information Service) broadcasts, the continuously repeated recordings that give pilots the current weather, active runways, and other airport notices. The innovation lies in its ability to render this complex, multi-layered data into a cohesive 3D environment, allowing users to explore aviation activity from a new, more immersive angle. Think of it as a live, interactive diorama of an airport's airspace.

How to use it?

Developers can explore AeroViz 3D by visiting the website and selecting specific airports to view. For those interested in the underlying technology, the project serves as a useful example of real-time data integration and 3D rendering. It can inspire developers working on geospatial applications, flight simulation tools, or any project requiring the visualization of dynamic, multi-source data. The project is an experiment rather than a platform, so there isn't a direct API for integration yet, but the concept itself is a blueprint for building similar visualization platforms.

Product Core Function

· Real-time 3D Aircraft Tracking: Visualizes the live positions of airplanes in a 3D space around selected airports. This is valuable for understanding air traffic patterns and provides a compelling visual for aviation enthusiasts, helping them grasp the scale and movement of aviation in real-time.

· Integrated Weather Visualization: Overlays current weather conditions onto the 3D map. This allows users to see how weather might be impacting flight operations, offering immediate context for observed aircraft movements and providing a practical view for understanding real-world aviation challenges.

· ATIS and Airport Data Overlay: Displays essential airport operational data, such as ATIS broadcasts. This adds a layer of crucial, often overlooked, information directly into the visual environment, making it useful for pilots, aviation students, or anyone needing to understand airport communication and status.

· Unique Data Presentation: Explores novel ways to visualize complex aviation data beyond traditional 2D maps or raw text. The value here is in demonstrating creative problem-solving through visualization, pushing the boundaries of how information can be understood and appreciated.

· Interactive Exploration: Allows users to freely navigate and explore the 3D environment. This interactivity makes the learning and exploration process engaging and intuitive, enabling users to zoom in on specific aircraft or pan across the entire airspace to gain a comprehensive understanding.
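
One step any such visualization needs is turning an aircraft's latitude, longitude, and altitude into scene coordinates centered on the airport. The sketch below uses a flat-earth (equirectangular) approximation, which is reasonable within a few tens of kilometres of the reference point. It is an assumption about how this could be done, not AeroViz 3D's code; the function name is hypothetical and the JFK coordinates are approximate.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius
FEET_TO_M = 0.3048


def to_local_xyz(lat, lon, alt_ft, airport_lat, airport_lon, airport_elev_ft=0.0):
    """Return (east, north, up) in metres relative to the airport reference point."""
    d_lat = math.radians(lat - airport_lat)
    d_lon = math.radians(lon - airport_lon)
    # Longitude lines converge toward the poles, so scale by cos(latitude).
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(airport_lat))
    north = EARTH_RADIUS_M * d_lat
    up = (alt_ft - airport_elev_ft) * FEET_TO_M
    return east, north, up


# Approximate reference point for JFK (assumed values for illustration).
JFK_LAT, JFK_LON, JFK_ELEV_FT = 40.6413, -73.7781, 13.0

# An aircraft 0.1 degrees north of the field at 3,013 ft.
east, north, up = to_local_xyz(40.7413, -73.7781, 3013.0, JFK_LAT, JFK_LON, JFK_ELEV_FT)
print(round(east, 1), round(north, 1), round(up, 1))
```

The resulting tuple can be fed straight into a 3D scene as a position vector; for larger areas a proper geodetic-to-ENU conversion would be the safer choice.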

Product Usage Case

· Aviation Enthusiast Visualization: A user who loves planes can open the map for a busy airport like JFK and see exactly where planes are in the sky, their flight paths, and the current weather conditions affecting them. This provides a much richer and more engaging experience than simply looking at flight tracking websites.

· Student Pilot Learning Tool: A student pilot can use this visualization to understand how weather fronts impact flight paths around an airport, or to get a feel for the density of traffic during different times of day. It offers a dynamic, practical learning environment that complements theoretical knowledge.

· Developer Inspiration for Geospatial Data: A developer building a real-time city traffic visualization tool could draw inspiration from AeroViz 3D's approach to rendering dynamic objects and overlaying multiple data streams in a 3D space. It demonstrates a creative application of 3D rendering for complex, real-world data.

· Research into Air Traffic Visualization: Researchers interested in human-computer interaction for aviation could study how users interact with this 3D environment to gather insights into more effective air traffic control visualizations.

· Hobbyist Project Showcase: Developers looking to build visually impressive projects with real-time data can see how this project cleverly combines different data sources into a compelling 3D experience, encouraging them to experiment with their own data visualization ideas.

10

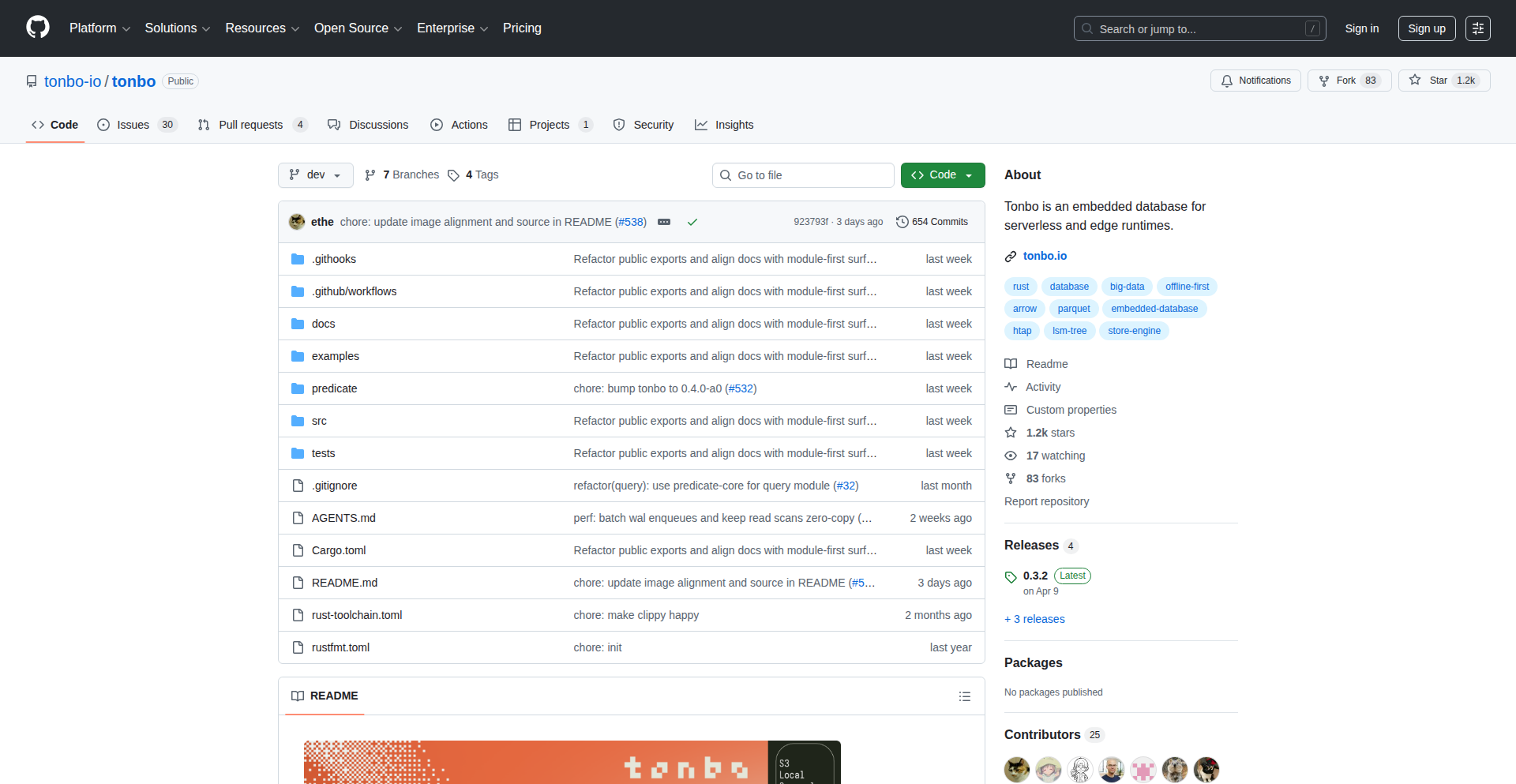

Tonbo: Serverless & Edge Embedded DB

Author

ethegwo

Description

Tonbo is a novel embedded database designed specifically for serverless and edge computing environments. It addresses the challenges of state management and data persistence in ephemeral, distributed, and resource-constrained runtimes by offering a lightweight, high-performance, and offline-first solution. The innovation lies in its ability to operate efficiently within these modern deployment models, providing developers with a seamless way to handle data without relying on traditional, heavy database servers.

Popularity

Points 6

Comments 2

What is this product?

Tonbo is an embedded database. Unlike traditional databases that run as separate servers, Tonbo is designed to be compiled directly into your application code, running within the same process. This makes it incredibly efficient for environments like serverless functions (e.g., AWS Lambda, Cloud Functions) and edge devices, where setting up and managing external database connections can be slow, costly, or impossible. Its core innovation is its optimized architecture for low-latency, high-concurrency access in resource-limited scenarios, combined with a robust offline-first capability. This means your application can function even when connectivity is intermittent, synchronizing data when it's available. So, why is this useful? It allows your applications running in these modern, distributed environments to reliably store and retrieve data locally, dramatically improving performance and responsiveness, and enabling functionality that wasn't practical before.

How to use it?

Developers can integrate Tonbo by including its library into their project. It typically involves initializing the Tonbo database within their serverless function or edge application code. For example, in a Node.js Lambda function, you would import the Tonbo library, configure its storage path (which could be in-memory or persist to a local file system if available), and then use its API to perform CRUD (Create, Read, Update, Delete) operations. Tonbo's embedded nature means there's no separate server to manage or connect to; the database lives with your code. Integration scenarios include mobile applications that need offline data storage, IoT devices collecting sensor data, and serverless backends needing fast, local data access. So, how does this help you? You can build applications that are faster, more resilient to network issues, and simpler to deploy in challenging environments.
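
Tonbo's own API is not shown in the post, so the sketch below uses Python's built-in `sqlite3` module purely as a stand-in to illustrate the embedded pattern: the database is opened inside the function's process, with no server to connect to. The `open_store` and `handler` functions and the table layout are hypothetical.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open an in-process database; ':memory:' keeps it in RAM, a file path persists it."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings ("
        "sensor TEXT, ts INTEGER, value REAL, PRIMARY KEY (sensor, ts))"
    )
    return conn


def handler(event, store):
    """Hypothetical serverless-style handler: store one reading, return the latest few."""
    with store:  # commits on success, rolls back on an exception
        store.execute(
            "INSERT OR REPLACE INTO readings VALUES (?, ?, ?)",
            (event["sensor"], event["ts"], event["value"]),
        )
    return store.execute(
        "SELECT ts, value FROM readings WHERE sensor = ? ORDER BY ts DESC LIMIT 3",
        (event["sensor"],),
    ).fetchall()


store = open_store()
handler({"sensor": "t1", "ts": 1, "value": 20.5}, store)
latest = handler({"sensor": "t1", "ts": 2, "value": 21.0}, store)
print(latest)
```

The point of the pattern is that reads and writes are ordinary function calls inside the handler, so there is no connection pool, network hop, or separate service to provision.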

Product Core Function

· Lightweight Embedded Database Engine: Provides efficient data storage and retrieval directly within the application runtime, reducing overhead and latency compared to client-server databases. This is useful for applications where every millisecond counts, like real-time data processing on edge devices.

· Offline-First Data Persistence: Allows applications to operate and store data even when network connectivity is unavailable, automatically synchronizing changes when a connection is re-established. This is invaluable for mobile apps or IoT sensors in remote areas, ensuring data isn't lost and functionality is maintained.

· Optimized for Serverless & Edge Runtimes: Specifically engineered to perform well in resource-constrained environments with short execution times and limited local storage. This means your serverless functions can handle data operations effectively without hitting performance bottlenecks, which makes the backend more capable without a separate database service.

· High Concurrency and Low Latency Operations: Designed to handle multiple read and write requests simultaneously with minimal delay, crucial for responsive user experiences and real-time data streams. This makes your applications feel snappier and more capable of handling user demand.

· Simplified State Management: Eliminates the complexity of managing external database servers and connections, making it easier to develop and deploy applications in distributed systems. This streamlines development and reduces operational headaches.
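
The offline-first behaviour described in the list above is commonly built around a local store plus an outbox of unsent writes. The following is a generic sketch of that pattern under stated assumptions, not Tonbo's implementation; `OfflineFirstStore` and `push_to_remote` are hypothetical names.

```python
from collections import deque


class OfflineFirstStore:
    """Writes land locally first; an outbox remembers what still has to be synced."""

    def __init__(self):
        self.local = {}
        self.outbox = deque()  # writes not yet acknowledged by the remote side

    def put(self, key, value):
        self.local[key] = value
        self.outbox.append((key, value))

    def get(self, key):
        return self.local.get(key)

    def sync(self, push):
        """Push queued writes in order; stop at the first connection failure."""
        sent = 0
        while self.outbox:
            key, value = self.outbox[0]
            try:
                push(key, value)
            except ConnectionError:
                break  # still offline, keep the remaining writes queued
            self.outbox.popleft()
            sent += 1
        return sent


remote = {}
network = {"up": False}


def push_to_remote(key, value):
    """Stand-in for a network call to a central server."""
    if not network["up"]:
        raise ConnectionError("offline")
    remote[key] = value


store = OfflineFirstStore()
store.put("sensor:1", 20.5)
store.put("sensor:2", 21.0)

sent_offline = store.sync(push_to_remote)  # network down: nothing leaves the device
network["up"] = True
sent_online = store.sync(push_to_remote)   # both queued writes are pushed
print(sent_offline, sent_online, remote)
```

Reads always hit the local copy, so the application keeps working while disconnected; a real system would also need conflict handling when several devices write the same key.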

Product Usage Case

· Building a mobile application that needs to store user preferences and local data for offline access. Tonbo would allow the app to read and write data locally, and then sync it to a cloud backend when online, improving user experience and data reliability.

· Developing an IoT application on an edge device to collect and process sensor readings. Tonbo can store this data locally, perform initial analysis, and then send summarized data to a central server, reducing bandwidth usage and enabling real-time local alerts.

· Creating a serverless API backend for a web application where rapid data access is critical. Tonbo can be embedded within the serverless function to provide near-instantaneous access to frequently used data, reducing API response times and improving scalability.

· Implementing a distributed data synchronization system for multiple edge devices that might lose network connectivity. Tonbo's offline-first capabilities ensure that data is captured and eventually synced across devices, maintaining data integrity.

11

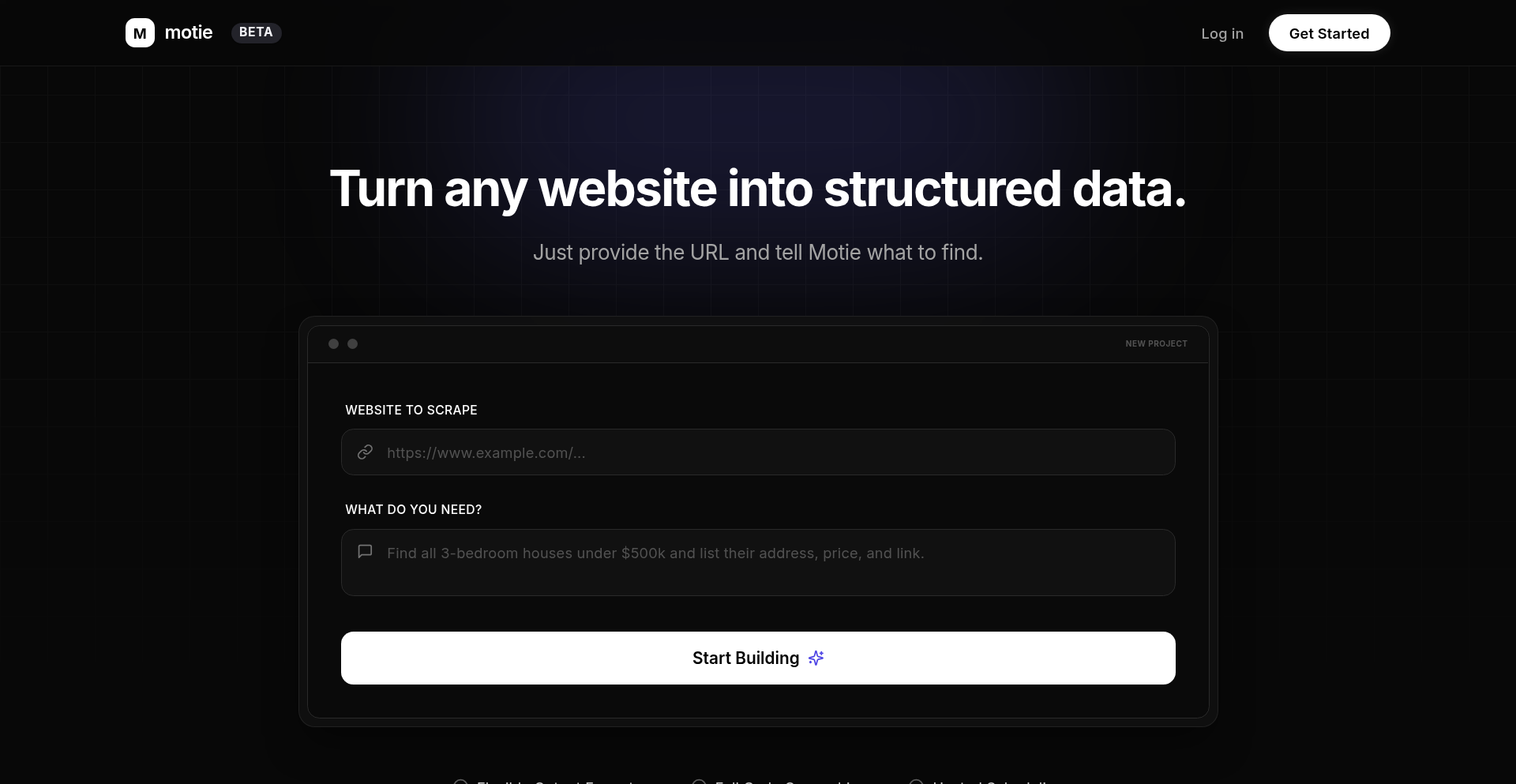

Motie AI Web Scraper

Author

jb_hn

Description

Motie is an AI-powered agent that transforms natural language requests into structured data extracted from the web. It addresses the common challenges of complex web scraping by allowing users to simply describe what data they need and from which URL, generating the necessary scraping code automatically. This makes data extraction accessible to a wider audience and provides technical users with foundational code for further development.

Popularity

Points 4

Comments 3

What is this product?

Motie is an AI agent designed to simplify web scraping. Instead of manually writing complex code with specific selectors (like CSS selectors), you can describe the data you want using plain English. For instance, you can tell Motie to 'extract all product titles and prices from this e-commerce page.' Motie uses advanced AI models to understand your request, process the web page, and then automatically generates the code needed to perform the extraction. This innovation lies in its ability to bridge the gap between human language and machine-executable scraping logic, making data extraction more intuitive and less code-intensive. The core technical insight is leveraging large language models (LLMs) to interpret user intent and translate it into the precise instructions required by web scraping libraries, effectively acting as an 'AI Data Engineer.'

How to use it?

Developers can use Motie in several ways. For rapid data extraction, you can visit the Motie website (app.motie.dev), provide a URL and your natural language prompt (e.g., 'Get the titles and links of the top 10 articles on this news page'). Motie will then process this and give you the extracted data, usually in CSV or JSON format. For more advanced use cases, Motie exports the generated scraping code. This allows technical users to take the code and integrate it into their own projects, customize it further, or use it as a starting point for more sophisticated scraping tasks. Integration can be as simple as copying and pasting the generated code into your development environment, or using Motie's hosted scheduling to run scraping jobs automatically.

Product Core Function

· Natural Language Data Extraction: Users can describe the data they need using text prompts. This fundamentally changes how users interact with web scraping, moving from complex code to simple instructions. The value is significantly reduced learning curve and faster initial data retrieval for anyone.

· Automated Code Generation: Motie generates the actual web scraping code (e.g., in Python using libraries like BeautifulSoup or Scrapy). This provides developers with tangible assets they can use, modify, and build upon, offering full code ownership and flexibility, which is invaluable for custom applications and learning.

· Structured Data Output: The extracted data is provided in easily usable formats like CSV and JSON. This ensures that the retrieved information is immediately ready for analysis, database import, or further processing, eliminating the need for manual data cleaning and formatting.

· Hosted Scheduling and Orchestration: Motie offers a service to schedule and run scraping tasks automatically. This is crucial for tasks requiring regular data updates, ensuring data freshness without constant manual intervention, and streamlining data pipelines for businesses and researchers.
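
To give a feel for the kind of code such a tool might hand back for a prompt like "extract all product titles and prices", here is a small, self-contained sketch. It is not actual Motie output: the sample HTML and class names are invented, and it uses the standard library's `html.parser` instead of BeautifulSoup or Scrapy so that it runs without extra dependencies.

```python
import json
from html.parser import HTMLParser

# Invented sample page; a real job would fetch the HTML from the target URL.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="title">Kettle</span><span class="price">$24.99</span></li>
  <li class="product"><span class="title">Toaster</span><span class="price">$39.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects {'title': ..., 'price': ...} for every <li class="product">."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # which field the current <span> holds, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class", "")
        if tag == "li" and cls == "product":
            self.products.append({})
        elif tag == "span" and cls in ("title", "price") and self.products:
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = ProductParser()
parser.feed(SAMPLE_HTML)
rows = parser.products
print(json.dumps(rows, indent=2))
```

Having the extraction logic as plain code like this is what makes the export useful: the selectors can be adjusted by hand when a site changes its markup, and the JSON output can be piped into whatever comes next.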

Product Usage Case

· Market Research: A small business owner wants to understand competitor pricing for a specific product category. They can use Motie to extract product names, prices, and review counts from competitor websites by simply describing what they are looking for. This helps them make informed pricing and product decisions without needing to hire a developer or learn complex scraping techniques.

· Content Aggregation: A blogger wants to curate trending news from various tech sites. They can use Motie to extract headlines, article links, and author names from multiple news sources, and then use the generated code to build an automated feed for their blog. This saves them hours of manual copy-pasting and data organization.

· Academic Research: A researcher needs to collect data on public opinion expressed on social media or forums related to a specific topic. They can leverage Motie to extract relevant posts, sentiment indicators, and user engagement metrics from specified URLs. The generated code can then be adapted for more in-depth analysis and hypothesis testing.

· Personal Data Management: An individual wants to track their online order history from various e-commerce platforms. They can use Motie to extract order details, shipping status, and item prices from their account pages on different websites, consolidating this information into a single, manageable dataset.

12

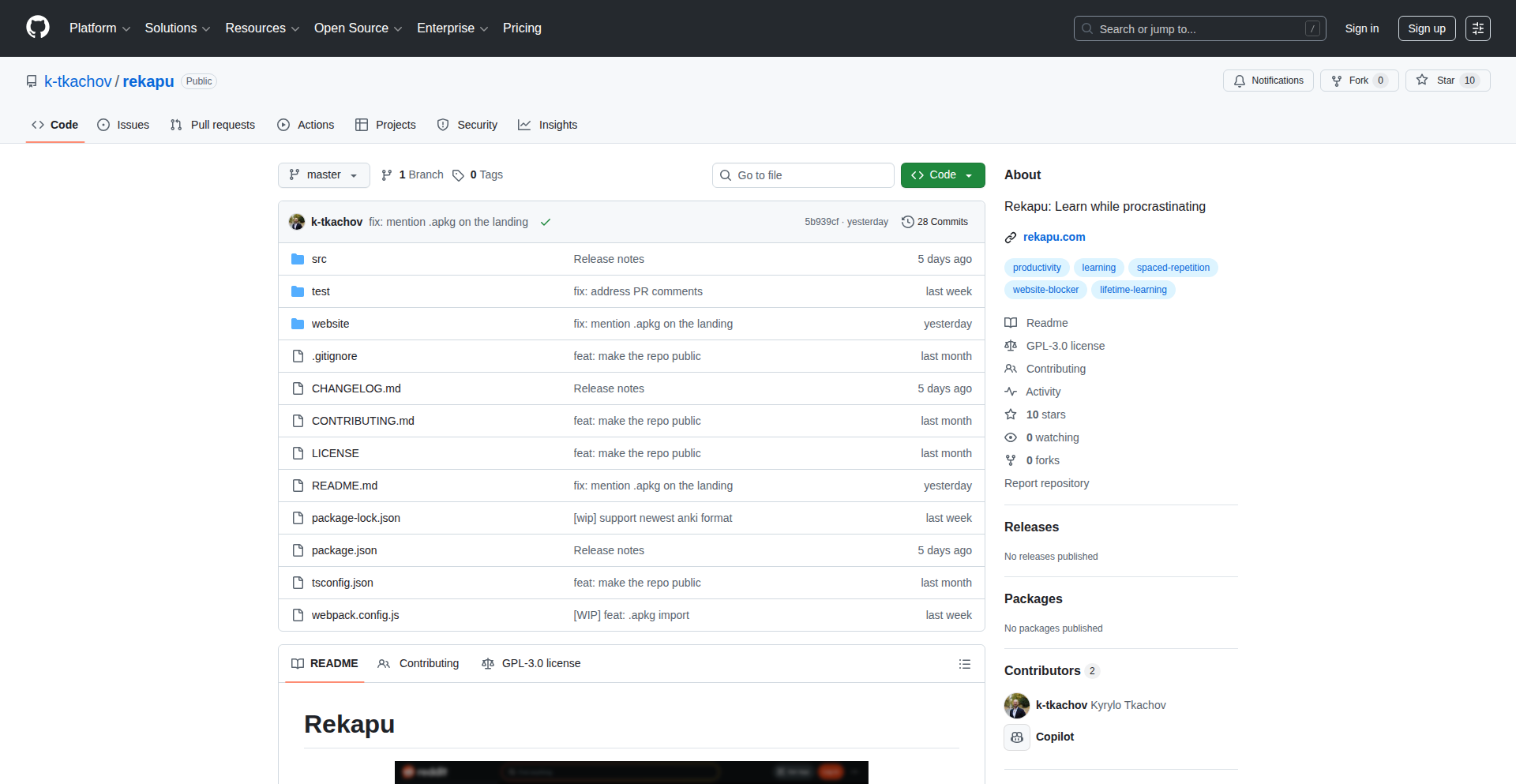

Procrastination Weaver

Author

kee_real

Description

Rekapu is a browser extension designed to help users learn new languages or subjects by integrating learning into their procrastination habits. Instead of a hard block, it presents a single flashcard when you try to access distracting websites. Answering correctly grants you access for a set period. This approach leverages existing behavior to create a low-friction learning experience, making continuous learning effortless and guilt-free.

Popularity

Points 7

Comments 0

What is this product?

Rekapu is a smart browser extension that transforms unproductive browsing into a learning opportunity. The core technical insight is to use existing procrastination patterns as a gateway for learning. When you attempt to visit a website known for distraction, Rekapu doesn't just block it; it presents a single learning flashcard. The innovation lies in its seamless integration: answering the card allows immediate continuation on the original site via an overlay, preserving your scroll position and context. This avoids the jarring experience of traditional blockers and creates a natural, almost subconscious, learning loop, powered by spaced repetition principles for effective memorization.

How to use it?

Developers can integrate Rekapu into their workflow by installing it as a Chrome extension. Users can then import their existing Anki flashcard decks (supporting media and cloze deletions) or create new ones. By configuring which websites trigger a flashcard prompt, developers can turn their usual procrastination destinations (like Hacker News or social media) into spaced repetition learning sessions. The extension stores all data locally using IndexedDB, ensuring privacy and offline functionality. This allows developers to build consistent learning habits without needing to rely on willpower or drastic website blocking.

Product Core Function

· Spaced Repetition Learning: Implements spaced repetition algorithms (using ratings like Again, Hard, Good, Easy) to optimize memorization. This means you'll be shown flashcards at just the right time to reinforce learning, making your study sessions highly efficient and effective.

· Anki Deck Import: Supports importing Anki .apkg decks, including media. This allows users to leverage their existing study materials and rich multimedia content (like audio or images) within Rekapu, making learning more engaging and comprehensive.

· Google TTS Support: Integrates with Google Text-to-Speech (TTS) for pronunciation. This is incredibly valuable for language learners, as it provides native-sounding audio for words and phrases, greatly improving pronunciation and listening comprehension.

· Cloze Deletion Cards: Supports cloze deletion flashcards, where parts of a sentence are hidden. This advanced flashcard format challenges recall and understanding of context, offering a deeper learning experience beyond simple Q&A.

· Activity Streaks & Daily Goals: Tracks learning streaks and allows setting daily learning goals. This gamified approach motivates consistent engagement by visually representing progress and encouraging daily practice, making learning a rewarding habit.

· Local Data Storage (IndexedDB): All learning data is stored locally in the browser's IndexedDB. This ensures your privacy is protected as no personal data is sent to any servers, and it allows the tool to function seamlessly offline.
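
The four-button rating scheme mentioned above can be illustrated with a simplified scheduler in the spirit of SM-2. This is a sketch under assumptions, not Rekapu's actual algorithm: the `Card` fields, the multipliers, and the first-review intervals are illustrative choices.

```python
import math
from dataclasses import dataclass

RATINGS = ("again", "hard", "good", "easy")
MIN_EASE = 1.3


@dataclass
class Card:
    interval_days: int = 0  # 0 means the card is new or was just forgotten
    ease: float = 2.5       # growth factor applied on a "good" review


def review(card: Card, rating: str) -> Card:
    """Return the card's next scheduling state after one review."""
    if rating not in RATINGS:
        raise ValueError(f"unknown rating: {rating}")
    if rating == "again":
        # Forgotten: show it again soon and make future growth slower.
        return Card(0, max(MIN_EASE, card.ease - 0.2))
    if card.interval_days == 0:
        # First successful recall: start with a short fixed interval.
        return Card(4 if rating == "easy" else 1, card.ease)
    if rating == "hard":
        return Card(math.ceil(card.interval_days * 1.2), max(MIN_EASE, card.ease - 0.15))
    if rating == "good":
        return Card(math.ceil(card.interval_days * card.ease), card.ease)
    # rating == "easy": grow faster and raise the ease factor.
    return Card(math.ceil(card.interval_days * card.ease * 1.3), card.ease + 0.15)


card = Card()
history = []
for rating in ("good", "good", "good", "again"):
    card = review(card, rating)
    history.append(card.interval_days)
print(history, card.ease)
```

Intervals stretch with every successful recall and collapse on a lapse, which is what lets a one-card-per-visit tool still cover a large deck over time.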

Product Usage Case

· Scenario: A remote developer working for a US company needs to improve their Polish for daily life in Poland but struggles to find dedicated study time. Rekapu Solution: By setting Polish vocabulary flashcards to appear when they visit their usual news sites, they learn a few words each time they 'procrastinate', turning passive browsing into active language acquisition without disrupting their workflow.

· Scenario: A student preparing for a technical certification exam finds themselves constantly distracted by social media during study sessions. Rekapu Solution: They can import their Anki decks into Rekapu and set the extension to prompt them with exam-related questions whenever they attempt to access social media. Answering correctly grants them uninterrupted access, ensuring their study time is focused and productive.

· Scenario: A game developer wants to learn a new programming language but finds traditional online courses too demanding. Rekapu Solution: They can create cloze deletion flashcards for syntax and concepts of the new language. When they feel the urge to browse gaming forums or other development sites, Rekapu will present a flashcard. Successfully answering unlocks the site, making learning a bite-sized, integrated part of their daily digital routine.

13

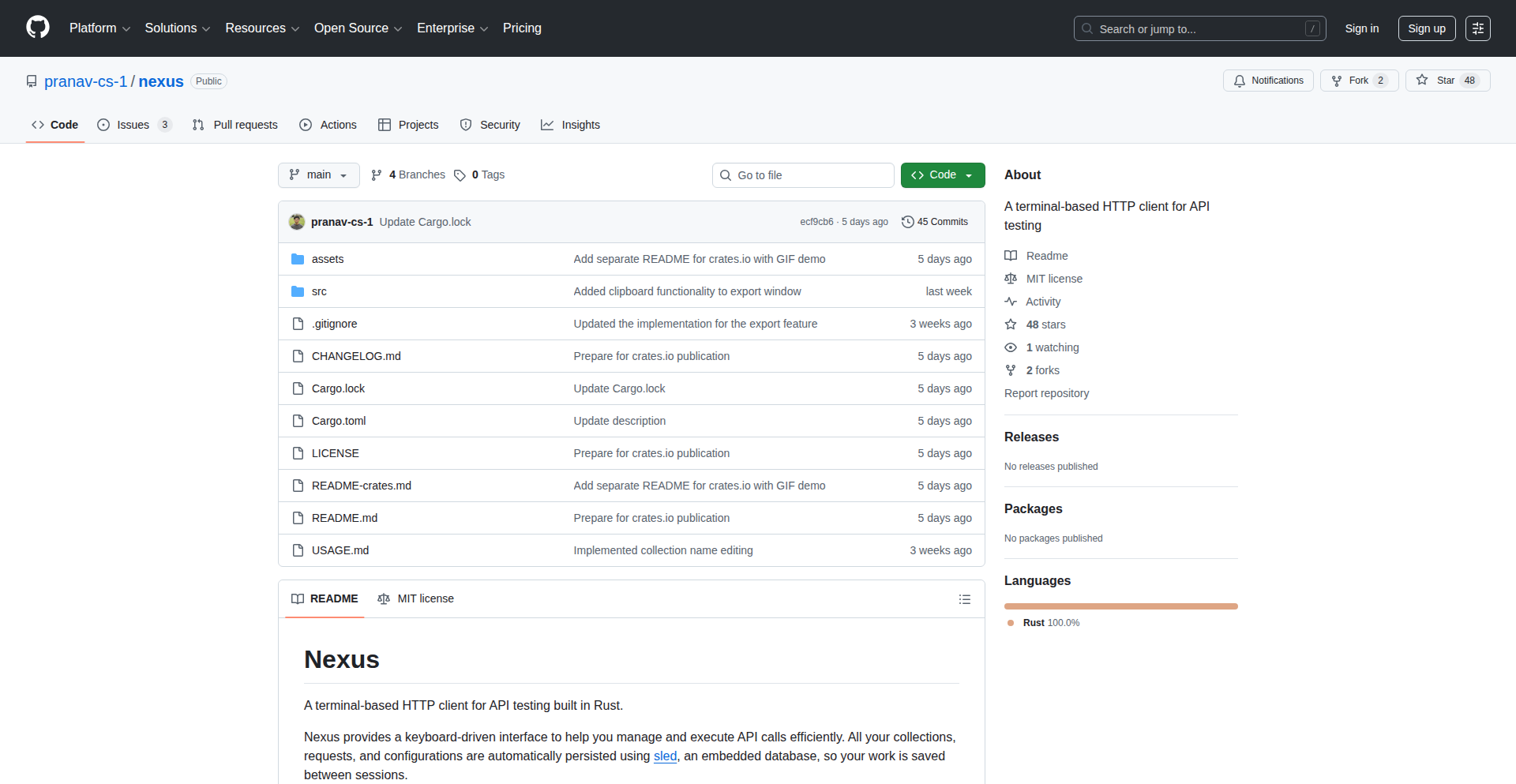

LibreTech Nexus

Author

iris-digital

Description

This project is a curated portal and initiative aimed at consolidating and promoting open-source and privacy-respecting hardware and software alternatives to mainstream BigTech products. It highlights a growing ecosystem of devices like Fairphone, Framework laptops, and privacy-focused operating systems, addressing the fragmentation and tedium of assembling a complete ethical tech stack. The innovation lies in its conceptualization of a unified approach to support and invest in these individual components, creating a stronger collective impact for the libre technology movement.

Popularity

Points 5

Comments 2

What is this product?

LibreTech Nexus is a conceptual initiative and informational hub that gathers and showcases a range of open-source and privacy-respecting hardware and software products. It recognizes that while many excellent individual ethical technology options exist (like privacy-focused phones, customizable laptops, and open operating systems), their fragmentation makes them difficult for consumers to discover, adopt, and collectively support. The innovation is in proposing a unified strategy to increase their visibility, drive sales, and encourage further investment, thereby accelerating the development of a complete ethical technology ecosystem. It acts as a rallying point for individuals and the community to support these products.

How to use it?

Developers and consumers can use LibreTech Nexus as a central resource to discover and learn about available ethical technology products. For developers working on open-source hardware or software, it serves as a platform to gain visibility and attract potential users and contributors. The project encourages discussion and brainstorming on how to better integrate and market these disparate components, fostering collaboration within the open-source community. It can be used to identify gaps in the ethical tech landscape and inspire new projects. The associated website (aol.codeberg.page/eci/status.html) provides a list of current offerings, serving as a starting point for exploration and advocacy.

Product Core Function

· Curated directory of ethical tech products: Provides a centralized list of privacy-respecting hardware and software, helping users discover alternatives to proprietary systems. This addresses the problem of information overload and makes it easier to find trusted options.

· Community engagement and discussion platform: Facilitates conversations among users, developers, and advocates to brainstorm strategies for supporting and growing the ethical tech movement. This fosters collaboration and shared problem-solving.

· Advocacy for investment and adoption: Aims to increase attention, sales, and investment in open-source and privacy-focused products. This is crucial for the long-term sustainability and improvement of these alternatives.

· Educational resource on the ethical computing landscape: Informs users about the importance of digital freedom and privacy, and how existing products contribute to this goal. This empowers users to make informed choices.

Product Usage Case

· A developer looking to build a completely open-source laptop setup can use LibreTech Nexus to find compatible components like a Framework laptop, an open firmware solution, and a privacy-focused Linux distribution. This solves the problem of needing to research each component individually across multiple vendors.

· An individual concerned about data privacy can discover smartphones such as Fairphone that run a privacy-enhanced OS. This allows them to replace their data-collecting device with a more ethical alternative that better protects their personal information.

· A group of enthusiasts wanting to promote open hardware could use LibreTech Nexus to identify complementary products and propose integrated bundles or marketing campaigns. This amplifies their reach and impact by leveraging existing community efforts.

· A programmer seeking to contribute to the open-source ecosystem can identify emerging projects and their specific needs through the discussions and curated lists. This directs their efforts to areas where they can make a significant contribution to privacy and freedom in technology.

14

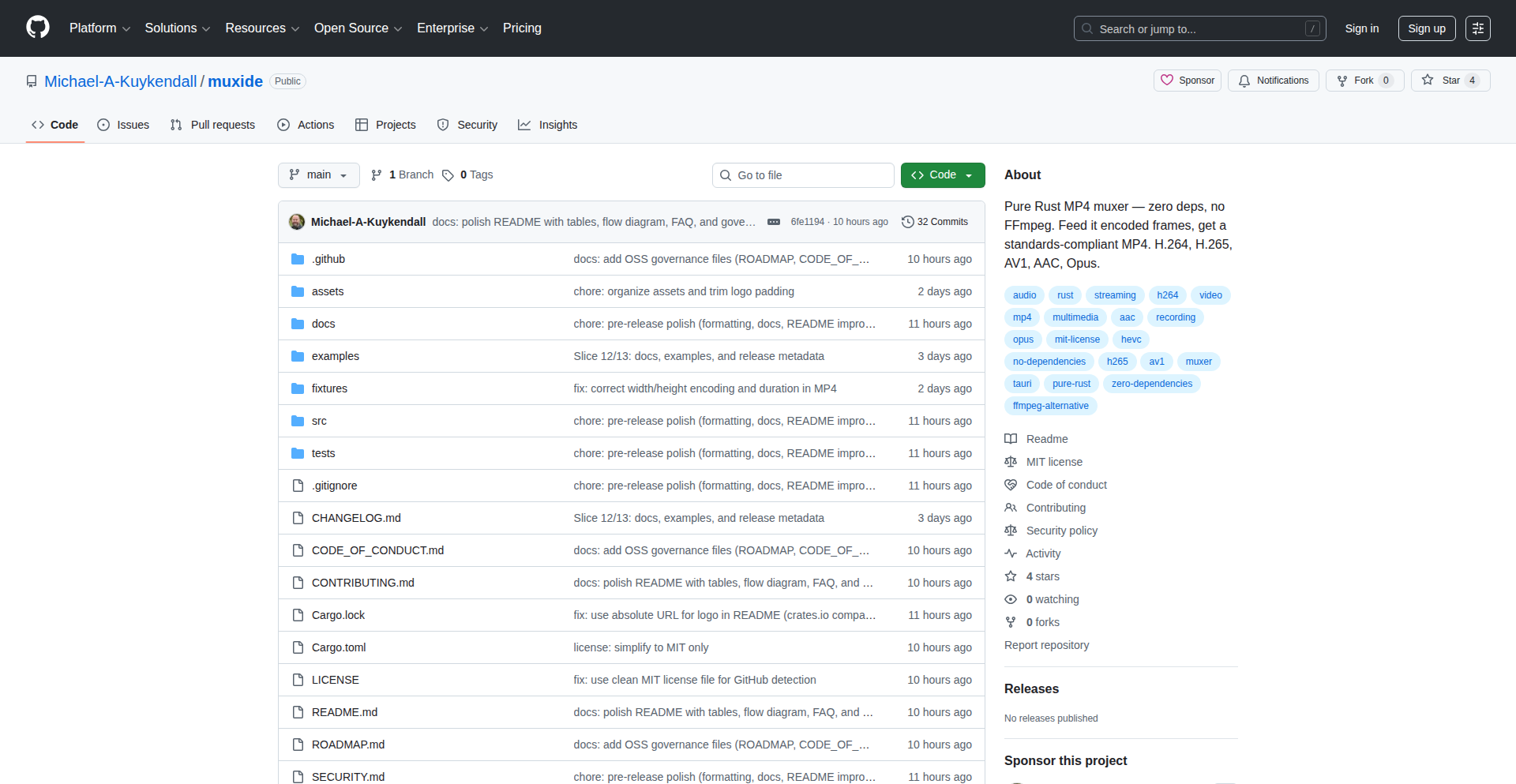

Muxide - Rust Native MP4 Weaver

Author

MKuykendall

Description

Muxide is a pure Rust library designed for creating MP4 files from raw video and audio streams, supporting modern codecs like H.264, H.265, and AV1. Its key innovation lies in its complete independence from external dependencies like FFmpeg, offering a lightweight, performant, and secure solution for developers who need fine-grained control over MP4 generation directly within their Rust applications. This significantly simplifies integration and reduces build complexity.

Popularity

Points 6

Comments 1

What is this product?

Muxide is a library written in the Rust programming language that lets developers construct MP4 video files from separate video and audio data. The innovation here is that it's built from scratch in Rust, without relying on other complex software like FFmpeg. Because it is a smaller, self-contained piece of code, it is intended to be lightweight, more predictable to build and deploy, and less exposed to security issues. Think of it like building a custom Lego set without needing any pre-made special pieces: you get to control every brick. So, this is useful because it provides a secure, efficient, and dependency-free way to create video files programmatically, perfect for applications needing to embed video generation capabilities.

How to use it?

Developers can integrate Muxide into their Rust projects by adding it as a dependency in their Cargo.toml file. They can then use Muxide's API to feed it encoded video frames (H.264, H.265, or AV1) and audio samples. The library handles the intricate process of packaging these streams into a valid MP4 container. This is useful for building custom video processing pipelines, live streaming servers that need to package content, or embedding video creation features into applications without the overhead of external tools. For example, a developer could use Muxide to programmatically assemble a short video clip from an already-encoded image sequence and an audio track directly within their application.
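
To make "packaging streams into an MP4 container" more concrete, here is a minimal sketch of the box layout that MP4 (ISO base media file format) files are built from: each box is a 4-byte big-endian size, a 4-byte type, and a payload. This is not Muxide's API, and it is written in Python rather than Rust purely for illustration; the helper names (`make_box`, `make_ftyp`, `read_box_header`) and the brand values are assumptions chosen for the example. A playable file would also need a `moov` box describing the tracks, which is the part a muxer like Muxide takes care of.

```python
import struct


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Serialize one box: 4-byte big-endian size, 4-byte type, then payload."""
    if len(box_type) != 4:
        raise ValueError("box type must be exactly 4 bytes")
    size = 8 + len(payload)  # 8-byte header plus payload
    return struct.pack(">I", size) + box_type + payload


def make_ftyp(major_brand: bytes = b"isom",
              minor_version: int = 0x200,
              compatible_brands: tuple = (b"isom", b"iso2", b"mp41")) -> bytes:
    """Build a file-type box; the brand values here are illustrative defaults."""
    payload = (major_brand
               + struct.pack(">I", minor_version)
               + b"".join(compatible_brands))
    return make_box(b"ftyp", payload)


def read_box_header(data: bytes, offset: int = 0):
    """Return (size, type) of the box that starts at the given offset."""
    size, box_type = struct.unpack_from(">I4s", data, offset)
    return size, box_type


ftyp = make_ftyp()
# Placeholder payload only; a real mdat box carries encoded video/audio samples.
mdat = make_box(b"mdat", b"\x00" * 16)
stream = ftyp + mdat
```

Walking the result with `read_box_header(stream, 0)` and then again at the offset given by the first box's size shows how a reader steps through a file box by box.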

Product Core Function

· Pure Rust MP4 Muxing: Provides the ability to create MP4 files entirely within a Rust environment, eliminating the need for external binary dependencies like FFmpeg. This offers a more stable and predictable build process and reduces the attack surface for security vulnerabilities. Its value is in providing a self-contained, reliable video file creation tool.

· Codec Support (H.264, H.265, AV1): Enables the muxing of modern and efficient video codecs into the MP4 container. This allows for creating high-quality video files with better compression, saving storage space and bandwidth. The value here is in supporting current video standards for broader compatibility and efficiency.

· Zero External Dependencies: Built entirely from scratch in Rust, Muxide avoids the complexities and potential compatibility issues of linking to external libraries. This simplifies integration into existing projects and ensures a cleaner, more manageable codebase. Its value is in making integration seamless and reducing build system headaches.

· Performance and Safety: Rust's inherent memory safety features and performance characteristics are leveraged to create a fast and secure MP4 muxer. This leads to efficient video processing and reduces the risk of common programming errors that can lead to crashes or security flaws. The value is in delivering a robust and performant tool.

Product Usage Case

· Building a custom video transcoding service in Rust: A developer could receive video streams, encode them into H.264/H.265/AV1 with a separate encoder if needed, and then use Muxide to package them into MP4 files, all within a single Rust application. This removes the need to call out to FFmpeg for the packaging step, simplifying deployment. The problem solved is reducing external dependencies and increasing control over the transcoding process.

· Creating dynamic video content for web applications: A backend service written in Rust could use Muxide to generate personalized video clips on-the-fly based on user data or events. This could be for personalized advertisements or dynamic report generation. The value is in enabling real-time, customized video creation without heavy external dependencies.

· Developing embedded systems with video output capabilities: For devices where resource constraints are a concern, a lightweight MP4 muxer like Muxide can be invaluable. It allows for generating video files directly on the device without needing to install or manage larger, more complex multimedia frameworks. This solves the problem of efficient video handling in resource-limited environments.

· Contributing to open-source multimedia tools: Developers can leverage Muxide as a foundational component for building new, innovative multimedia applications or enhancing existing ones, knowing they have a solid, dependency-free Rust-based muxer to work with. This fosters further innovation within the Rust ecosystem and the broader developer community.

15

SOAP-AI Bridge

Author

Ugyen_Tech

Description

This project introduces middleware that connects SOAP APIs, common in legacy systems, with modern AI agents. The project claims to shorten such integrations from a typical 6 months to about 2 weeks, and to cut the tokens an AI agent has to process by approximately 70%. Its core innovation is that it targets any legacy system that exposes a SOAP interface, bridging the gap between old and new technologies.

Popularity

Points 2

Comments 4

What is this product?

This is a middleware solution that acts as an intermediary, allowing modern AI agents to communicate with old, often cumbersome, SOAP APIs. SOAP (Simple Object Access Protocol) is an older messaging protocol for exchanging structured information in web services. Legacy systems often rely heavily on this. AI agents, on the other hand, typically use more modern data formats and communication patterns. The innovation here is the efficient translation and simplification of data and requests between these two disparate systems. It achieves a significant token reduction (around 70%), meaning less data needs to be processed by the AI, leading to faster responses and lower costs. So, what's the benefit for you? It makes it dramatically faster and cheaper to integrate advanced AI capabilities into systems that were built decades ago, unlocking new possibilities without a complete system overhaul.

How to use it?

Developers can integrate this middleware into their existing architecture. It acts as a translator. When an AI agent needs to interact with a legacy system via a SOAP API, the request first goes to the SOAP-AI Bridge. The middleware then intelligently reformats the request into a SOAP-compatible format and sends it to the legacy system. The response from the legacy system is then processed and translated back into a format that the AI agent can easily understand. This is particularly useful in scenarios where you have existing business logic or data locked within legacy systems that you want to leverage with AI for analytics, automation, or new user interfaces. So, what's the benefit for you? You can easily add AI features to your old applications without extensive custom coding, saving considerable development time and resources.
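
The translation round trip described above can be sketched with Python's standard library. This is an illustration under stated assumptions, not the project's actual code: the function names, the `GetCustomer` operation, and the `http://example.com/crm` namespace are hypothetical, and only the SOAP 1.1 envelope namespace is a real, standard value.

```python
import xml.etree.ElementTree as ET

# Standard SOAP 1.1 envelope namespace.
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def build_soap_request(operation: str, params: dict, service_ns: str) -> str:
    """Wrap a simple agent-style call (operation name plus flat params) in a SOAP envelope."""
    ET.register_namespace("soap", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    op = ET.SubElement(body, f"{{{service_ns}}}{operation}")
    for name, value in params.items():
        child = ET.SubElement(op, f"{{{service_ns}}}{name}")
        child.text = str(value)
    return ET.tostring(envelope, encoding="unicode")


def parse_soap_response(xml_text: str) -> dict:
    """Reduce a SOAP response to a flat dict of the first Body element's children."""
    root = ET.fromstring(xml_text)
    body = root.find(f"{{{SOAP_NS}}}Body")
    if body is None or len(body) == 0:
        raise ValueError("SOAP response has no Body payload")
    result = body[0]
    # Strip the "{namespace}" prefix that ElementTree puts on each tag.
    return {child.tag.split("}")[-1]: (child.text or "") for child in result}


# Hypothetical operation and namespace, for illustration only.
request_xml = build_soap_request(
    "GetCustomer", {"customerId": 42}, "http://example.com/crm"
)
```

A real bridge would also need to handle SOAP faults, nested types, and WSDL-defined schemas, which this sketch leaves out.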

Product Core Function

· SOAP API Translation: Converts modern AI agent requests into the SOAP format required by legacy systems. This allows AI to 'speak' the language of old software. So, what's the benefit for you? Your AI can now interact with your existing backend without you needing to become a SOAP expert.

· Data Transformation and Reduction: Optimizes data exchange by reducing token usage (around 70% reduction). This means less data is sent and processed, leading to faster AI responses and lower operational costs. So, what's the benefit for you? Your AI applications will run faster and be more cost-effective.

· Universal Legacy System Compatibility: Designed to work with any legacy system that uses SOAP APIs, regardless of its age or complexity. This provides a flexible solution for modernizing diverse IT environments. So, what's the benefit for you? You can apply this solution to almost any of your old systems, rather than needing specialized tools for each one.

· AI Agent Integration Layer: Provides a standardized interface for AI agents to interact with legacy systems, simplifying the integration process for AI developers. So, what's the benefit for you? AI developers can focus on building smart applications without getting bogged down in the complexities of legacy system integration.
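
One plausible way a reduction like this could come about is by stripping namespaces, envelope wrappers' attributes, and empty fields before handing data to the model. The sketch below shows that idea with hypothetical helper names; it measures characters as a rough stand-in for tokens and makes no claim about how the project reaches its reported figure.

```python
import json
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix ElementTree attaches to tags."""
    return tag.rsplit("}", 1)[-1]


def compact(element: ET.Element):
    """Recursively turn an XML element into a plain dict, omitting empty fields.

    Limitation of this sketch: repeated sibling tags overwrite each other.
    """
    children = list(element)
    if not children:
        return (element.text or "").strip()
    out = {}
    for child in children:
        value = compact(child)
        if value not in ("", {}):  # skip empty leaves and empty containers
            out[local_name(child.tag)] = value
    return out


def reduction_ratio(xml_text: str) -> float:
    """Fraction of characters saved by the compact JSON form (a proxy, not a token count)."""
    root = ET.fromstring(xml_text)
    slim = json.dumps(compact(root), separators=(",", ":"))
    return 1 - len(slim) / len(xml_text)
```

Actual token savings depend on the tokenizer and on how verbose a given service's responses are, so the ratio from this helper is only indicative.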

Product Usage Case

· Integrating a customer service AI chatbot with a 15-year-old CRM system that only exposes its data via SOAP APIs. The middleware translates the chatbot's natural language queries into SOAP requests, fetches customer data from the CRM, and formats it back for the chatbot to provide personalized responses. So, what's the benefit for you? You can offer instant, AI-powered customer support powered by your existing customer data.

· Enabling an AI-driven fraud detection system to access transaction history from an ancient banking core system through its SOAP interface. The middleware efficiently retrieves and formats the necessary historical data for the AI to analyze for suspicious patterns. So, what's the benefit for you? You can enhance your financial security with advanced AI analytics on your historical transaction data.

· Connecting a modern cloud-based analytics platform to an on-premises ERP system using SOAP APIs for data extraction. The middleware handles the complex SOAP communication, making the ERP data readily available for advanced business intelligence and reporting. So, what's the benefit for you? You can gain deeper insights into your business operations by combining data from your legacy systems with modern analytics tools.

16

HN++: Enhanced Hacker News Navigator

Author

7moritz7

Description

HN++ is a browser extension that supercharges the Hacker News experience. It introduces intelligent visual cues like rainbow indentation for comments, native filtering by upvote or comment count, and a 'read later' feature for posts and comments. It also streamlines navigation with a sticky header, infinite scroll, and smarter link handling, while adding practical features like favicons and dark mode, all aimed at making browsing HN more efficient and enjoyable.

Popularity

Points 3

Comments 3

What is this product?

HN++ is a browser extension designed to improve how you interact with Hacker News. It brings a set of highly requested features that go beyond the standard Hacker News interface. At its core, it uses client-side JavaScript to manipulate the existing Hacker News page, adding visual enhancements and functional improvements. The 'rainbow indentation' feature uses the depth of a comment to assign a color stripe, making it easier to follow nested conversations. Native filters allow you to sort and discover content based on popularity metrics like upvotes and comment volume, even identifying 'controversial' discussions where comments outnumber upvotes. The 'read later' functionality saves selected posts and comments directly in your browser's local storage, so you can easily return to them without losing your place. It's built with a focus on developer productivity and a hacker's mindset of improving existing tools.
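
The logic behind two of these features is simple enough to sketch. The extension itself runs as client-side JavaScript; the version below is written in Python for brevity, and the palette, the `Story` type, and the function names are all assumptions made for the example rather than HN++'s actual code.

```python
from dataclasses import dataclass

# Hypothetical palette; the extension's real colors are not stated in the source.
RAINBOW = ["#e6194b", "#f58231", "#ffe119", "#3cb44b", "#4363d8", "#911eb4"]


def stripe_color(depth: int) -> str:
    """Pick a stripe color from comment nesting depth, cycling through the palette."""
    return RAINBOW[depth % len(RAINBOW)]


@dataclass
class Story:
    title: str
    points: int
    comments: int


def is_controversial(story: Story) -> bool:
    """'Controversial' as described above: more comments than upvotes."""
    return story.comments > story.points


def filter_stories(stories, min_points=0, min_comments=0, only_controversial=False):
    """Keep stories that meet the thresholds, optionally only controversial ones."""
    kept = []
    for story in stories:
        if story.points < min_points or story.comments < min_comments:
            continue
        if only_controversial and not is_controversial(story):
            continue
        kept.append(story)
    return kept


front_page = [
    Story("Quiet launch", points=120, comments=15),
    Story("Heated debate", points=40, comments=95),
    Story("New post", points=3, comments=1),
]
heated = filter_stories(front_page, only_controversial=True)
```

Cycling with a modulo keeps deeply nested threads colored without needing a palette entry for every possible depth.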

How to use it?