Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-16

SagaSu777 2025-12-17

Explore the hottest developer projects on Show HN for 2025-12-16. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of projects focused on enhancing AI agent capabilities and integrating them into developer workflows shows where developer attention is concentrated. We're seeing a strong trend toward enabling AI not just to generate code but to collaborate, audit, and even self-correct, moving beyond simple prompt-response mechanisms. Developers are actively looking for ways to make AI more reliable, efficient, and context-aware, which is driving work on agent orchestration, multi-model interaction, and robust validation. The landscape is maturing: the question is shifting from 'can AI do it?' to 'how can we make AI do it *better* and *safer*?'. For aspiring innovators, this leaves plenty of room for tools that bridge the gap between raw AI power and practical, trustworthy application, particularly in code quality assurance, secure AI deployment, and specialized domain applications. The hacker spirit is alive and well, with developers not just consuming AI but actively shaping its future through creative problem-solving and open-source contributions.

Today's Hottest Product

Name

Zenflow

Highlight

Zenflow tackles the common pain point of AI coding agents getting stuck in loops or generating inefficient code. Its key innovation lies in orchestrating multiple AI models to work together, enabling cross-model verification and parallel execution of different coding approaches. Developers can learn about advanced agent coordination, dynamic workflow configuration using markdown, and the practical challenges of benchmarking AI models, especially the concept of 'benchmark saturation' and the 'Goldilocks' workflow for optimal AI agent performance. This project showcases a sophisticated approach to leveraging AI for complex coding tasks, moving beyond single-model interactions.

Popular Category

AI/ML Development Tools

Developer Productivity

Code Generation & Assistance

Open Source Software

Popular Keyword

AI agents

LLM

workflow orchestration

code generation

developer tools

open source

productivity

automation

Technology Trends

Agent Orchestration

Multi-Model AI Collaboration

Contextual AI Integration

AI for Code Refactoring & Auditing

Decentralized/Local AI Execution

AI-Powered Content Generation

Data Security & Privacy for AI

AI for Legal & Legislative Analysis

Human-AI Collaboration Tools

Low-Latency AI Interactions

Project Category Distribution

AI/ML Tools (30%)

Developer Productivity Tools (25%)

Open Source Libraries/Frameworks (15%)

Web Applications/Services (10%)

Data Tools & Utilities (8%)

Niche/Specific Domain Tools (12%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Lingoku Contextualizer | 77 | 45 |
| 2 | CivicInsight Weaver | 36 | 21 |
| 3 | Picknplace.js: Intuitive Drag-and-Drop Alternative | 28 | 14 |
| 4 | Zenflow: AI Code Orchestrator | 28 | 13 |
| 5 | Drawize Realtime Canvas | 28 | 8 |
| 6 | CodeGuardian AI | 26 | 7 |
| 7 | ZigFeedZen | 24 | 8 |
| 8 | FuzzyCanary: The AI Scraper Deterrent | 25 | 2 |
| 9 | GPU PCIe Health Checker | 17 | 4 |
| 10 | CloudCarbon Insights | 11 | 3 |

1

Lingoku Contextualizer

Author

englishcat

Description

Lingoku is a browser extension that passively teaches you Japanese vocabulary by subtly replacing English words with Japanese equivalents as you browse the web. It leverages a backend LLM to ensure translations are contextually accurate, making language learning feel like natural immersion rather than active study. This addresses the challenge of 'Anki burnout' by integrating vocabulary acquisition into everyday online activities.

Popularity

Points 77

Comments 45

What is this product?

Lingoku is a smart browser extension that transforms your daily web browsing into a passive Japanese learning experience. It works by identifying English words on webpages and replacing them with Japanese vocabulary that matches your current learning level. Behind it is a backend large language model (LLM) that reads each word in the context of its sentence, so when a word has multiple meanings, the LLM picks the Japanese translation that fits that specific situation. So, while you're reading articles or social media in English, you're naturally picking up new Japanese words without feeling like you're studying. The approach follows the 'i+1' idea from language acquisition theory: you learn best from input just beyond your current level, which makes new material easier to absorb.
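To make the replacement step concrete, here is a minimal Python sketch. It is not Lingoku's code: the tiny dictionary, the 1-to-5 difficulty scale, and the keyword-overlap `pick_sense` function (standing in for the backend LLM call) are all hypothetical. It illustrates the two ideas described above: choosing a word sense from sentence context, and only replacing words no harder than one step above the user's level (the 'i+1' rule).

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    japanese: str
    difficulty: int            # hypothetical scale: 1 = easiest, 5 = hardest
    cue_words: frozenset[str]  # context words that suggest this sense


# Hypothetical mini-dictionary: one English word can map to several senses.
DICTIONARY = {
    "bank": [
        Entry("銀行", 2, frozenset({"money", "loan", "account"})),
        Entry("岸", 4, frozenset({"river", "water", "shore"})),
    ],
    "interesting": [Entry("面白い", 1, frozenset())],
}


def pick_sense(word: str, sentence: str) -> Entry:
    """Stand-in for the LLM call: pick the sense whose cue words overlap the sentence most."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    return max(DICTIONARY[word], key=lambda entry: len(entry.cue_words & context))


def contextualize(sentence: str, user_level: int) -> str:
    """Replace known words whose chosen sense is at most one step above the user's level."""

    def replace(match: re.Match) -> str:
        word = match.group(0)
        key = word.lower()
        if key not in DICTIONARY:
            return word
        entry = pick_sense(key, sentence)
        return entry.japanese if entry.difficulty <= user_level + 1 else word

    return re.sub(r"[A-Za-z]+", replace, sentence)
```

With `user_level=1`, "I opened an account at the bank." becomes "I opened an account at the 銀行.", while "We sat on the river bank." is left alone because the riverbank sense is rated too hard for that level.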

How to use it?

To use Lingoku, install it as a browser extension on Chrome, Edge, or Firefox. Once installed, it starts working automatically as you browse any English website. You can set your Japanese proficiency level in the extension's settings, and it tailors the vocabulary replacement accordingly. The extension fits into your existing browsing, so there's no need to switch apps or set aside extra time. For example, while reading a news article, you might see a common English word like 'interesting' replaced with its Japanese counterpart. The replaced word is visually distinguished from the surrounding text, and you can hover over or click it to see the Japanese word and its meaning. This makes learning feel effortless and integrated into your existing habits.

Product Core Function

· Contextual Vocabulary Replacement: Replaces English words with Japanese vocabulary relevant to your learning level and the sentence's context, facilitating natural acquisition rather than rote memorization.

· LLM-Powered Translation: Utilizes a backend Large Language Model to ensure accurate and contextually appropriate Japanese translations, distinguishing between different meanings of the same English word and providing a more robust learning experience.

· Passive Learning Integration: Seamlessly integrates Japanese vocabulary learning into your existing web browsing activities, allowing you to learn without feeling like you are actively studying, thus combating learning fatigue.

· User-Level Customization: Allows users to set their Japanese proficiency level, ensuring that the vocabulary introduced is at an appropriate 'i+1' level for optimal learning and comprehension.

· Cross-Browser Compatibility: Available as an extension for major browsers like Chrome, Edge, and Firefox, making it accessible to a wide range of users and their preferred browsing environments.

Product Usage Case

· Learning Japanese vocabulary while reading news articles: A user browsing an English news website might see words like 'economy' or 'political' replaced with their Japanese equivalents, enabling them to understand the broader context while learning new terms passively.

· Acquiring business-related Japanese terms during professional research: A developer researching a new technology on an English tech blog might find technical terms replaced with their Japanese counterparts, aiding in understanding the nuances of the topic in both languages.

· Encountering common phrases and idioms in social media: A user scrolling through English social media feeds could see colloquialisms or frequently used phrases subtly translated into Japanese, helping them grasp natural conversational patterns.

· Overcoming 'Anki burnout' by integrating study into leisure time: Instead of dedicating separate time slots for flashcard review, users can learn Japanese vocabulary organically while enjoying their favorite English content online, making the learning process more sustainable and enjoyable.

2

CivicInsight Weaver

Author

fokdelafons

Description

A digital public infrastructure that democratizes access to legislation by stripping political spin using LLMs and prioritizing content through community voting. It aims to solve the problem of important laws going unnoticed due to complex language and biased media coverage, offering a transparent platform for civic engagement and citizen-led legislative drafting.

Popularity

Points 36

Comments 21

What is this product?

CivicInsight Weaver is a platform designed to make legislation understandable and accessible to everyone. It tackles the challenge of raw legal texts being unreadable and media coverage being driven by outrage rather than substance. The core technology involves using large language models (LLMs) like Gemini 2.5 Flash to process official government bills from various countries (initially US and Poland, with more in development). These LLMs are instructed to remove political jargon and bias, essentially 'sterilizing' the text. The platform then uses a 'Civic Algorithm' where content relevance is determined by user votes, creating a 'Shadow Parliament' that surfaces what the community deems important. Furthermore, it includes a 'Civic Projects' feature, acting as an incubator for citizen-proposed legislation, which is then AI-scored and presented alongside official bills. The tech stack is a modern monorepo using Flutter for both web and mobile, backed by Firebase and Google Cloud Run for the backend, with Vertex AI for its AI capabilities. This approach allows for efficient processing of legal documents and empowers users to actively participate in shaping civic discourse.
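The post does not spell out the Civic Algorithm's formula, so the following is only a minimal sketch under an assumed rule: rank by net votes (upvotes minus downvotes) and break ties by recency. The `Item` fields and `civic_feed` name are hypothetical. Official bills and citizen projects pass through the same function, mirroring the visual parity described above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Item:
    title: str
    source: str      # "official" bill or "citizen" project; both share one feed
    upvotes: int
    downvotes: int
    published: date


def civic_feed(items: list[Item]) -> list[Item]:
    """Order by net community votes; newer items win ties. No editorial weighting."""
    return sorted(
        items,
        key=lambda item: (item.upvotes - item.downvotes, item.published),
        reverse=True,
    )
```

A real system would likely add time decay or protection against vote brigading; this sketch keeps only the core idea that votes, not editors, decide the order.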

How to use it?

There are several ways to engage with CivicInsight Weaver. End-users mainly interact through the live web and mobile applications (lustra.news), where they can browse, understand, and vote on legislation. For developers who want to contribute or integrate, the code is published under a PolyForm Noncommercial license, a source-available license that permits noncommercial use. This means you can inspect the code to learn how it works, or even contribute data adapters for new countries' legislative systems. The core logic for parsing and processing bills is designed to be country-agnostic, making it easier to extend. Developers can fork the repository, implement a data adapter for a parliament not yet supported, and submit a pull request. This fosters collaboration and expands the platform's reach. The backend infrastructure is built on Firebase and Google Cloud Run, offering familiar environments for cloud-native development. Integration could involve consuming the platform's API (if made public) or embedding its functionality into other civic tech projects.
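A country-agnostic core typically hides each parliament behind a common interface. The sketch below assumes one; `ParliamentAdapter`, `Bill`, `DemoAdapter`, and `ingest` are hypothetical names, not the project's actual code, and the demo adapter returns static data where a real one would call an official API.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass(frozen=True)
class Bill:
    country: str
    bill_id: str
    title: str
    text: str


class ParliamentAdapter(Protocol):
    """Hypothetical contract that every country-specific adapter implements."""

    country: str

    def fetch_bills(self) -> Iterable[Bill]: ...


class DemoAdapter:
    """Toy adapter with static data; a real one would parse an official PDF/XML feed."""

    country = "XX"

    def fetch_bills(self) -> Iterable[Bill]:
        yield Bill(self.country, "XX-1", "Clean Water Act", "Sets limits on nitrate levels.")


def ingest(adapters: Iterable[ParliamentAdapter]) -> dict[str, Bill]:
    """Country-agnostic step: merge every adapter's bills into one index keyed by country/ID."""
    index: dict[str, Bill] = {}
    for adapter in adapters:
        for bill in adapter.fetch_bills():
            index[f"{bill.country}/{bill.bill_id}"] = bill
    return index
```

Adding a country then means writing one new adapter class; `ingest` and everything downstream of it stay unchanged.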

Product Core Function

· Legislation Ingestion and Sterilization: Parses raw legal documents (PDF/XML) from official APIs and uses LLMs to remove political spin, making complex laws understandable. This provides users with the factual essence of a bill, cutting through the noise.

· Civic Algorithm for Content Prioritization: Sorts legislative content based on user votes, creating a community-driven feed that highlights what citizens care about most. This ensures important topics rise to the top, rather than being dictated by editorial bias.

· Citizen Legislation Incubation: Enables users to submit draft legislation, which is then AI-vetted and displayed with visual parity to government bills. This empowers citizens to actively propose solutions and participate in the legislative process.

· Multi-Platform Frontend: Utilizes Flutter for a unified web and mobile experience, ensuring accessibility and a consistent user interface across devices. This allows users to engage with civic information anytime, anywhere.

· Scalable Backend Infrastructure: Employs Firebase and Google Cloud Run for a robust and scalable backend. This ensures the platform can handle a growing number of users and legislative data efficiently and reliably.

Product Usage Case

· A citizen concerned about environmental policy can use CivicInsight Weaver to find all relevant new bills, understand their core intent without political framing, and see which ones are gaining traction among other users. This helps them focus their advocacy efforts.

· A journalist looking for unbiased information on a new economic bill can access the 'sterilized' text provided by the platform, supplementing their research and avoiding the need to untangle potentially misleading official statements. This offers a quick, fact-based starting point.

· A developer interested in civic tech can explore the open-source codebase to understand how LLMs are leveraged for text de-spinning, inspiring them to build similar tools or contribute to improving this platform. This offers a learning opportunity and a chance to contribute to a public good.

· A legislator might use the platform to gauge public sentiment on proposed laws by observing the community voting patterns, providing valuable feedback on how a bill is perceived outside of formal lobbying. This offers insights into public opinion.

· A student researching a specific piece of legislation can easily find the core provisions and related citizen-proposed initiatives, simplifying their research process and providing a broader perspective. This makes academic research more efficient.

3

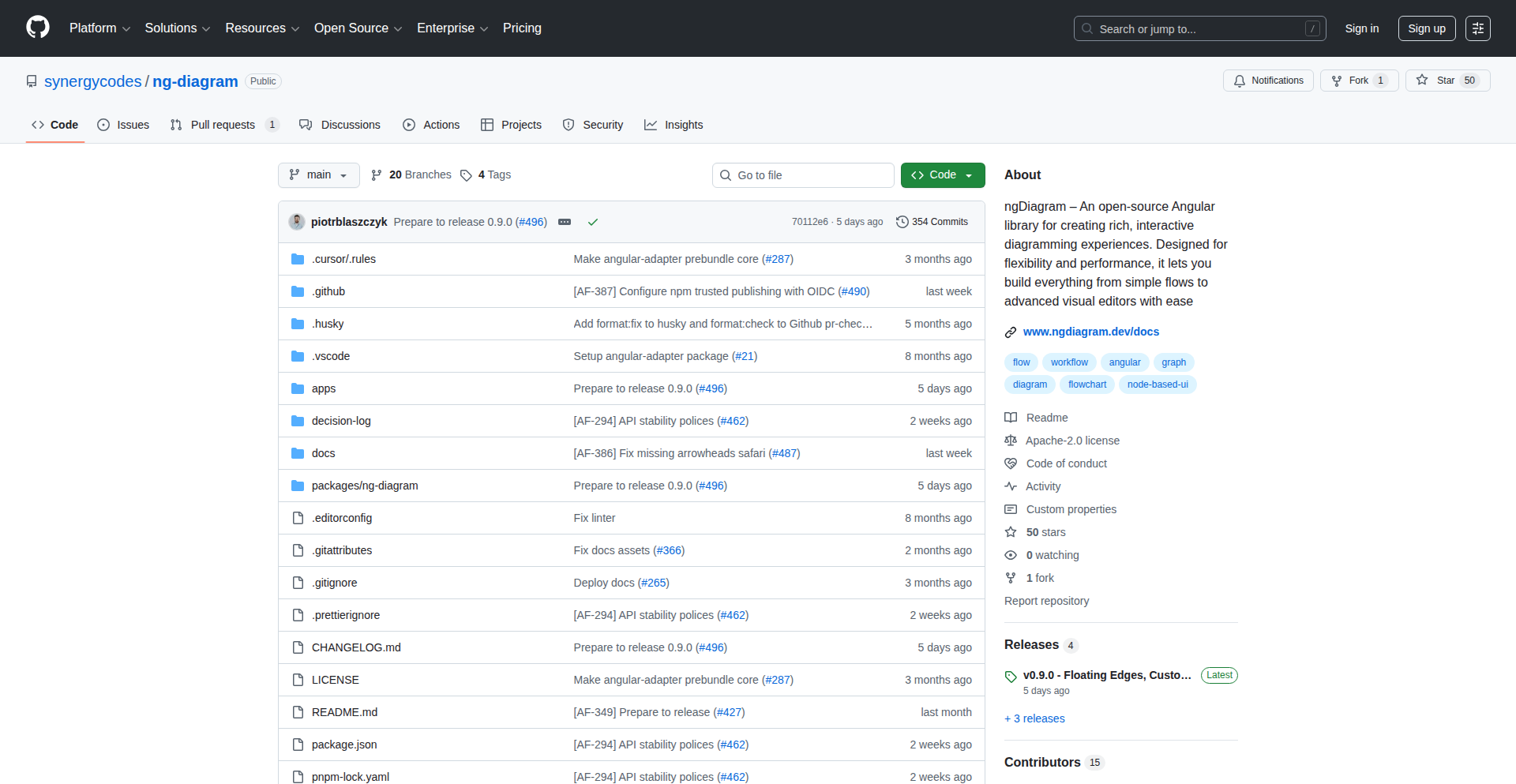

Picknplace.js: Intuitive Drag-and-Drop Alternative

Author

bbx

Description

Picknplace.js is a novel JavaScript library offering a drag-and-drop-like user experience, but with a distinct technical approach that focuses on simplicity and performance. It bypasses traditional event-heavy DOM manipulation in favor of a more direct method for repositioning elements, aiming to avoid the performance bottlenecks and complexity common in standard drag-and-drop implementations.

Popularity

Points 28

Comments 14

What is this product?

Picknplace.js is a JavaScript library designed to provide the visual effect of dragging and dropping elements, but it achieves this through a fundamentally different and often more performant technical implementation than standard drag-and-drop APIs. Instead of relying heavily on numerous mouse event listeners and complex DOM updates, it focuses on calculating element positions based on user input more directly. This means it can be faster and less prone to performance issues, especially in complex interfaces. The core innovation lies in its efficient handling of element movement, making it a lightweight and responsive alternative for interactive UIs. So, what's in it for you? It means smoother animations and a more responsive user experience for your web applications, especially when dealing with many draggable items, without the usual performance headaches.
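The post doesn't document Picknplace.js's internals or API, so here is a language-neutral illustration of the general idea, written in Python rather than JavaScript: compute the target position arithmetically from the pointer and a stored grab offset, and write it to the element only once, on place. `Box` and `PickAndPlace` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Box:
    """An element's top-left position in page coordinates."""
    x: float
    y: float


class PickAndPlace:
    """Toy pick -> preview -> place model; hypothetical, not Picknplace.js's real API."""

    def __init__(self) -> None:
        self.held: Optional[Box] = None
        self.offset: Tuple[float, float] = (0.0, 0.0)

    def pick(self, box: Box, px: float, py: float) -> None:
        # Remember where inside the element the pointer grabbed it.
        self.held = box
        self.offset = (px - box.x, py - box.y)

    def preview(self, px: float, py: float) -> Tuple[float, float]:
        # The target position is pure arithmetic on the pointer; nothing is mutated yet.
        if self.held is None:
            raise RuntimeError("nothing has been picked")
        return (px - self.offset[0], py - self.offset[1])

    def place(self, px: float, py: float) -> Box:
        # Commit the previewed position in a single write, then release the element.
        x, y = self.preview(px, py)
        box = self.held  # preview() has already checked this is not None
        box.x, box.y = x, y
        self.held = None
        return box
```

Keeping `preview` free of side effects is the design point: intermediate pointer positions cost only a subtraction, and the element is touched once.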

How to use it?

Developers can integrate Picknplace.js into their web projects by including the library and then initializing it on specific HTML elements they want to make 'pickable' and 'placeable'. The API is designed to be straightforward, allowing developers to specify which elements are draggable and how they should behave when moved. It can be used with plain JavaScript or integrated into various frontend frameworks. For instance, you could initialize Picknplace.js on a list of cards in a Kanban board or on interactive widgets on a dashboard. The benefit for you is a quicker way to add dynamic repositioning capabilities to your application, enhancing user interaction with minimal code and effort.

Product Core Function

· Direct Element Positioning: Calculates and applies element positions directly, reducing overhead compared to traditional event-driven methods, leading to smoother animations and better performance. This means your users can move things around without lag.

· Lightweight API: Offers a simple and intuitive API for developers to define which elements can be moved and how they should behave, minimizing the learning curve and integration time. This helps you get interactive features up and running faster.

· Performance Optimized: Engineered to be highly performant, especially in scenarios with a large number of interactive elements, by avoiding unnecessary DOM manipulations and event handling. This ensures your application remains responsive even under heavy load, giving users a consistently good experience.

· Customizable Behavior: Provides options for developers to customize the drag-and-drop behavior, such as defining boundaries or snap-to-grid functionality, allowing for tailored user interactions. This means you can make the dragging exactly how you want it for your specific application's needs.
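Boundaries and snap-to-grid reduce to a little arithmetic on the proposed position. This hypothetical `constrain` helper (not part of Picknplace.js) snaps to the nearest grid line and then clamps the result into a bounding box, given as the allowed range for the element's top-left corner.

```python
def constrain(
    x: float,
    y: float,
    *,
    grid: float,
    bounds: tuple[float, float, float, float],
) -> tuple[float, float]:
    """Snap (x, y) to the nearest multiple of `grid`, then clamp into (min_x, min_y, max_x, max_y)."""
    min_x, min_y, max_x, max_y = bounds
    snapped_x = round(x / grid) * grid
    snapped_y = round(y / grid) * grid
    return (
        min(max(snapped_x, min_x), max_x),
        min(max(snapped_y, min_y), max_y),
    )
```

Two details worth knowing: Python's `round` uses round-half-to-even, so a position exactly halfway between grid lines snaps to the even multiple, and clamping after snapping can land off-grid if the bounds themselves are not grid-aligned.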

Product Usage Case

· Interactive Dashboards: Implementing customizable dashboards where users can rearrange widgets, providing a personalized view of information. Picknplace.js offers a smooth way to achieve this without bogging down the browser.

· Visual Editors: Building simple visual editors or canvas-like interfaces where users can position elements freely, such as designing a webpage layout or arranging design assets. This enables intuitive manipulation of on-screen objects.

· Task Management Boards: Creating Kanban-style boards where users can drag tasks between columns, improving workflow visualization and productivity. This offers a fluid way to manage project progress.

· Educational Games: Developing interactive educational games that require users to drag and drop objects to solve puzzles or learn concepts, making learning more engaging. This provides a fun and interactive learning experience.

4

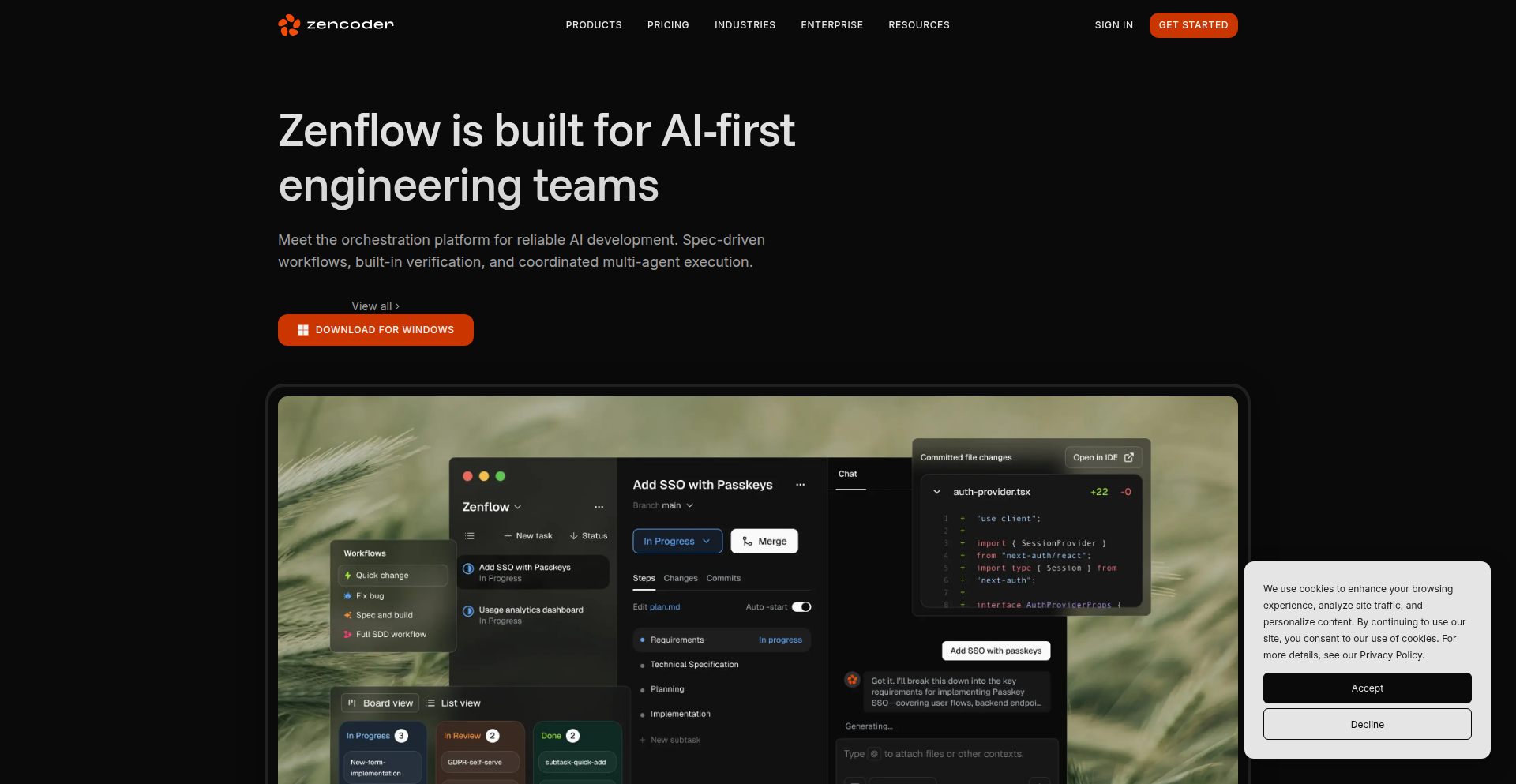

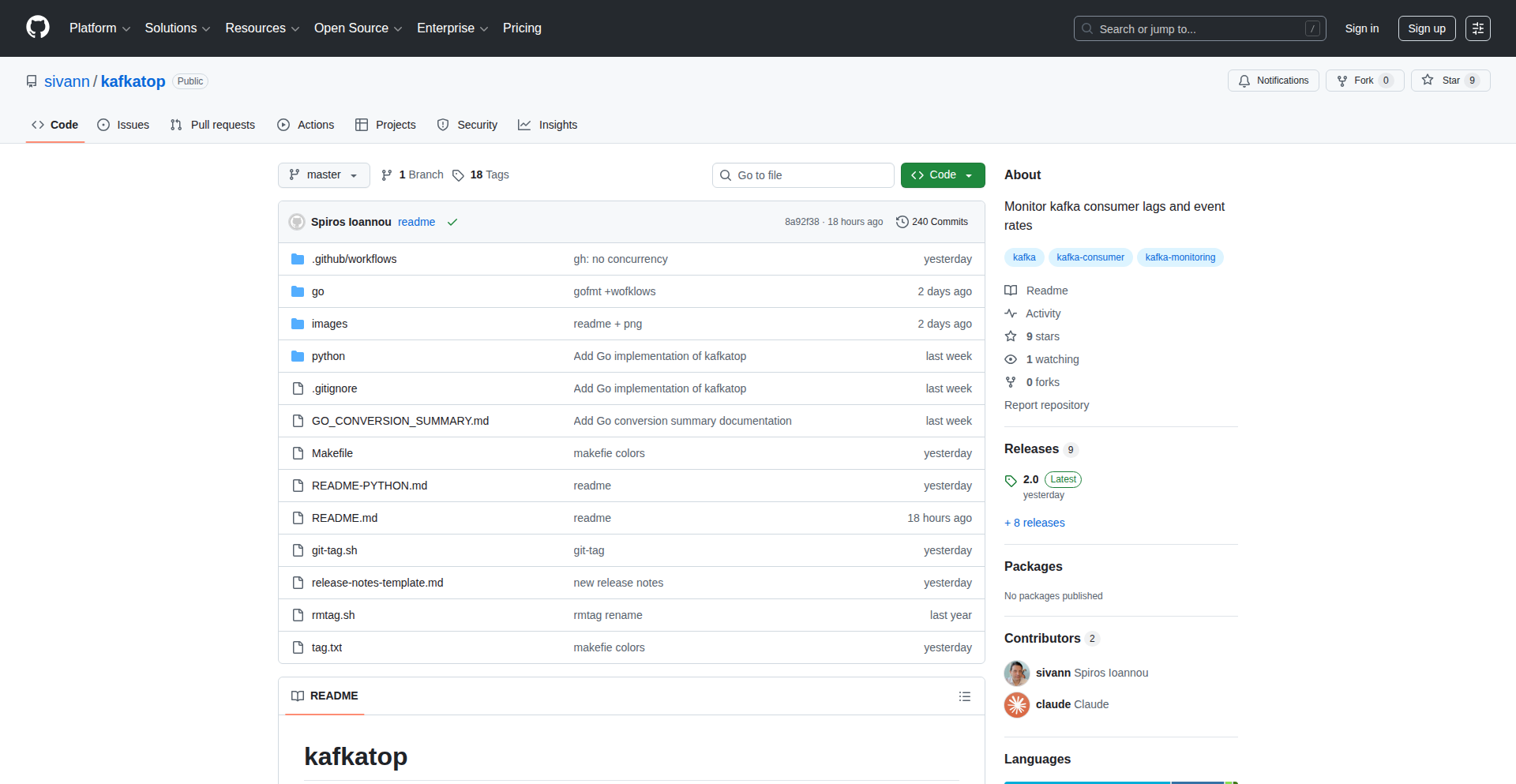

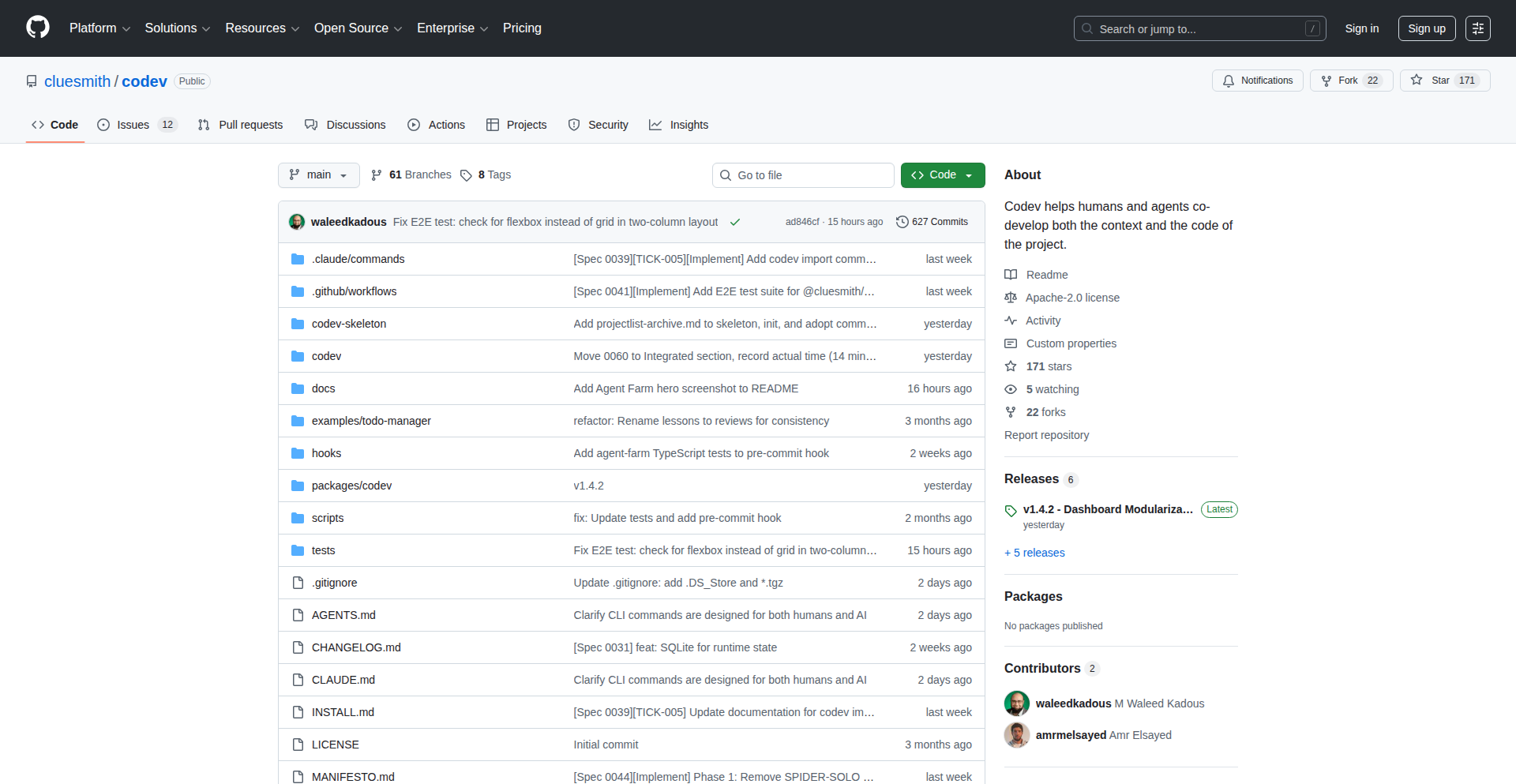

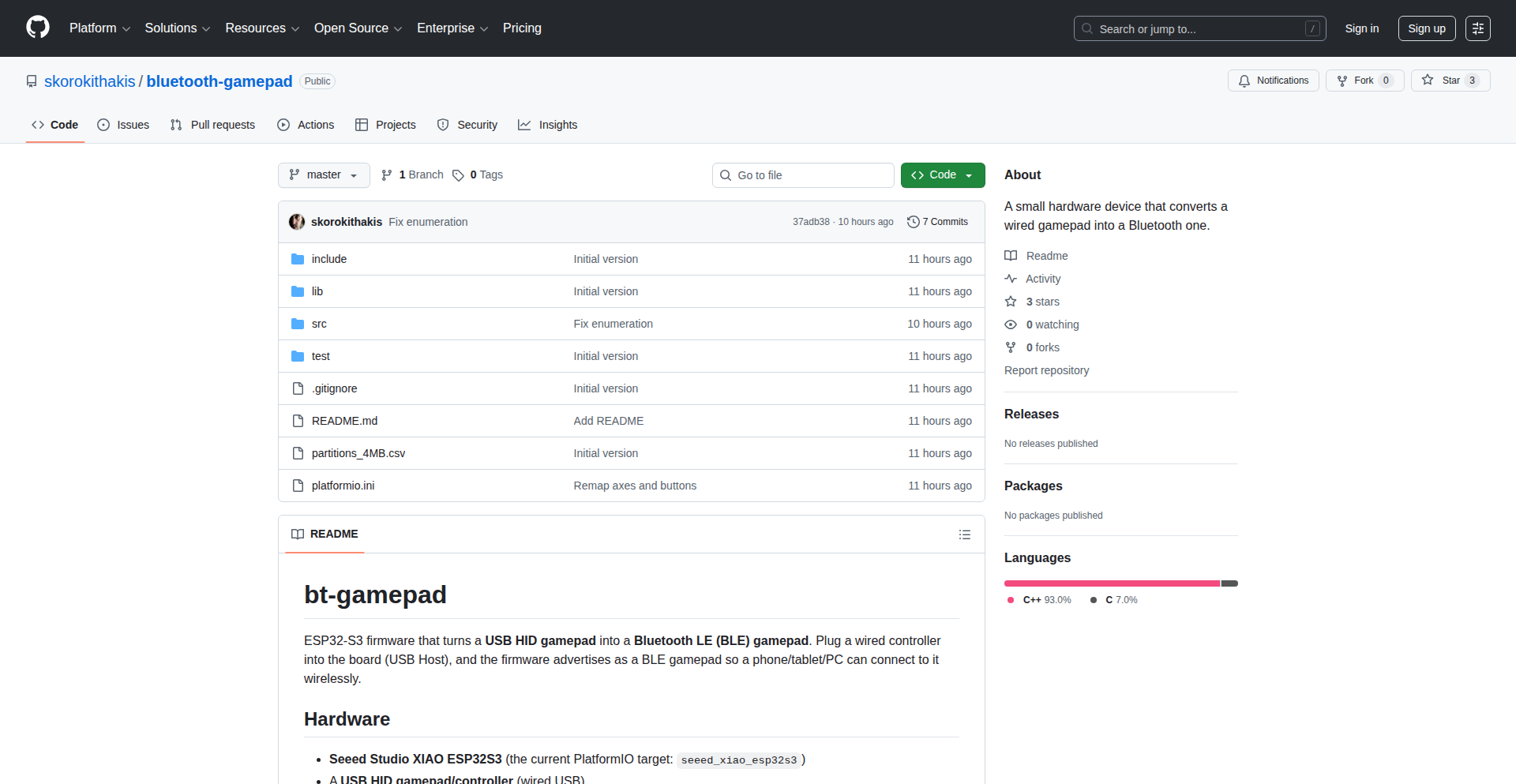

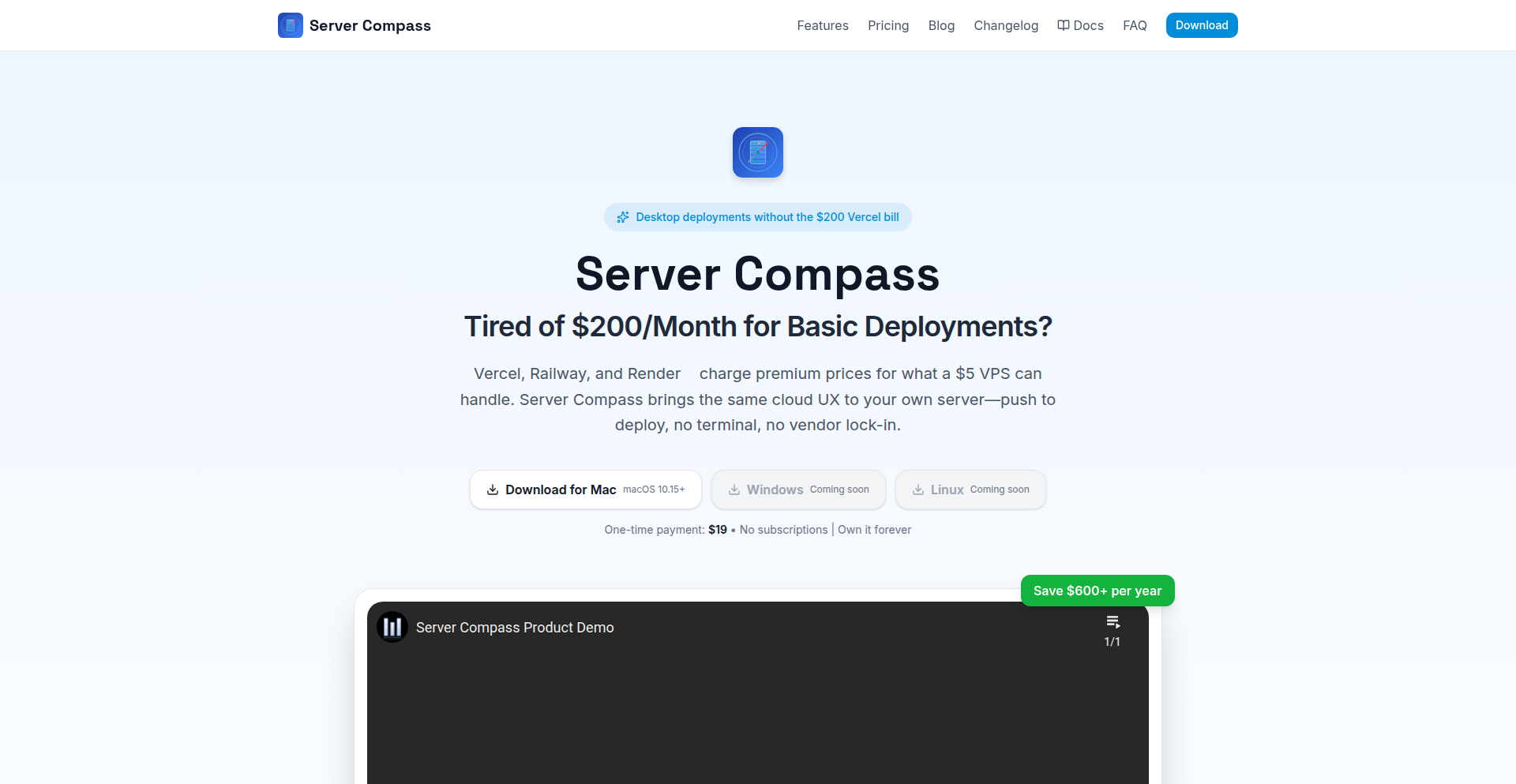

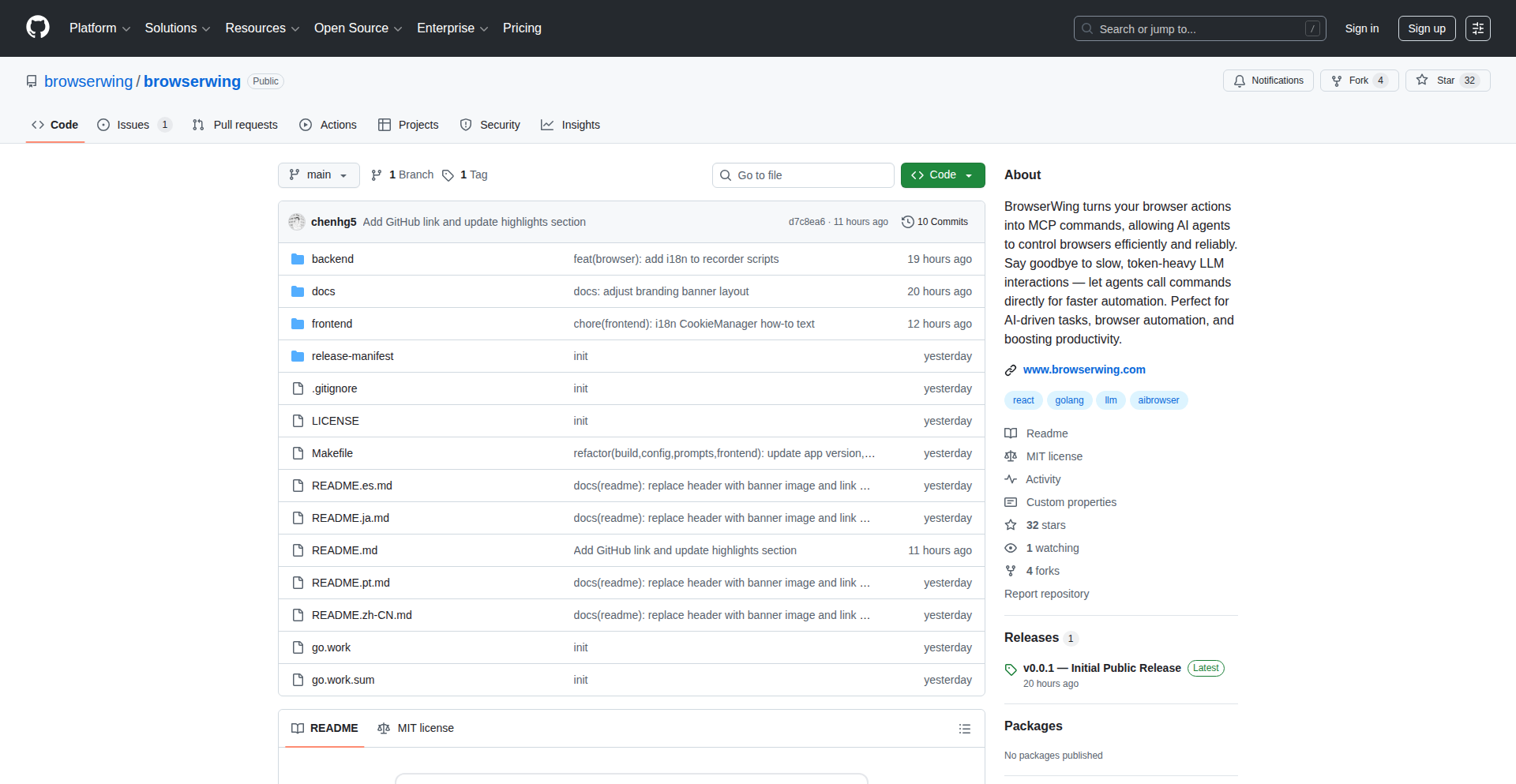

Zenflow: AI Code Orchestrator

Author

andrewsthoughts

Description

Zenflow is a free desktop tool designed to reduce common problems with AI coding agents in large projects, such as agents getting stuck in repetitive loops or wasting time. It allows developers to orchestrate complex AI coding workflows, enabling cross-model verification, parallel execution of different approaches, and dynamic workflow configuration through simple markdown files. This addresses the practical limitations of standard chat interfaces for sophisticated AI-assisted development, making AI coding more efficient and reliable. Its value lies in streamlining AI agent interaction, enhancing productivity, and providing deeper insight into AI model performance.

Popularity

Points 28

Comments 13

What is this product?

Zenflow is a desktop application that acts as a conductor for AI coding agents. Instead of interacting with multiple AI models one by one or getting frustrated by repetitive AI responses, Zenflow lets you set up sophisticated workflows. Imagine telling your AI assistant to try five different solutions to a coding problem simultaneously, or having one AI model check the code generated by another. It uses simple markdown files to define these workflows, allowing the AI agents to dynamically adjust their next steps based on the problem's complexity. This is innovative because it moves beyond simple Q&A with AI to a more intelligent, structured execution of AI-powered coding tasks, tackling the 'you're right' loops and wasted effort common with current AI tools.

How to use it?

Developers can download and install Zenflow on their desktop. The tool works with several AI coding agents and models, including Claude Code, Codex, Gemini, and Zencoder. To use it, you create simple .md (markdown) files that define your coding workflow. For example, you could specify that a task should first be handled by Model A, then its output reviewed by Model B, and if certain conditions are met, then proceed to another step. Zenflow then executes this workflow, managing the communication between the AI models and your project. This is useful for complex tasks like refactoring large codebases, generating multiple test cases, or exploring different architectural solutions without manual intervention, saving significant development time.
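Zenflow's actual markdown workflow format isn't described in the post, so the format below (numbered items with the agent name in square brackets) is an assumption made purely for illustration. The sketch parses such a file into steps and runs them in order, passing each step's output to the next so that a reviewing model sees what the implementing model produced. The agents are plain callables standing in for real model calls.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    agent: str
    instruction: str


# Hypothetical format: "1. [agent-name] instruction text"
STEP_RE = re.compile(r"^\s*\d+\.\s*\[(?P<agent>[\w-]+)\]\s*(?P<instruction>.+)$")


def parse_workflow(markdown: str) -> list[Step]:
    """Turn numbered '[agent] instruction' lines into steps; other lines are treated as notes."""
    steps = []
    for line in markdown.splitlines():
        match = STEP_RE.match(line)
        if match:
            steps.append(Step(match["agent"], match["instruction"].strip()))
    return steps


def run(steps: list[Step], agents: dict[str, Callable[[str, str], str]], task: str) -> str:
    """Feed each step's output into the next step as its working artifact."""
    artifact = task
    for step in steps:
        artifact = agents[step.agent](step.instruction, artifact)
    return artifact
```

Because the workflow is just text, an agent could in principle rewrite the remaining steps between runs, which is the kind of dynamic adjustment the post describes.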

Product Core Function

· Cross-Model Verification: Allows multiple AI models to review or collaborate on code. This helps catch errors that one model might miss and provides a more robust quality assurance for generated code, useful for critical features where reliability is paramount.

· Parallel Execution: Enables running several AI approaches or tasks concurrently. This dramatically speeds up the exploration of solutions for a given problem, ideal for brainstorming or when you need to quickly assess multiple implementation strategies for a feature.

· Dynamic Workflows: Workflows are configured via simple markdown files and can adapt in real-time. Agents can change the next steps based on outcomes, meaning the AI system is 'learning' and adjusting its process, which is powerful for complex, multi-stage development tasks where the path isn't always clear.

· Project List/Kanban Views: Provides an organized overview of tasks and their progress across all AI-driven workloads. This visual management helps developers keep track of multiple AI-assisted coding efforts simultaneously, improving project management and visibility.

· Agent Loop Prevention: Specifically designed to prevent AI coding agents from getting stuck in repetitive or apologetic loops. This ensures AI assistance remains productive and doesn't waste developer time, leading to a smoother and more efficient coding experience.
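Parallel execution, cross-model verification, and loop prevention can each be sketched with the standard library alone. In this hypothetical example, each approach is a callable standing in for an agent run; a separate `verify` function plays the role of the second, checking model; and `iterate_until_stuck` is a crude loop guard that stops when an agent repeats an earlier output. None of this is Zenflow's code.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def best_of_parallel(
    approaches: dict[str, Callable[[], str]],
    verify: Callable[[str], bool],
) -> Optional[str]:
    """Run all approaches concurrently; return the first name (alphabetically) the verifier accepts."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn) for name, fn in approaches.items()}
        results = {name: future.result() for name, future in futures.items()}
    for name in sorted(results):
        if verify(results[name]):
            return name
    return None


def iterate_until_stuck(step: Callable[[str], str], start: str, max_rounds: int = 10) -> tuple[str, str]:
    """Apply an agent step repeatedly; stop early if it produces an output seen before."""
    seen = {start}
    current = start
    for _ in range(max_rounds):
        candidate = step(current)
        if candidate in seen:
            return current, "loop detected"
        seen.add(candidate)
        current = candidate
    return current, "round limit"
```

Threads fit here because real model calls spend their time waiting on the network; the alphabetical tie-break just keeps the result deterministic.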

Product Usage Case

· Imagine you need to refactor a legacy module. With Zenflow, you could set up a workflow where one AI agent identifies potential issues, another generates refactored code, and a third verifies the correctness of the refactored code against existing tests. This solves the problem of manual code review and testing for large refactors, making the process faster and less error-prone.

· For implementing a new feature, you can use Zenflow to run parallel experiments. One AI might suggest a REST API approach, another a GraphQL approach, and a third a microservices-based solution. Zenflow orchestrates these, allowing you to compare outcomes and choose the best path quickly, addressing the challenge of exploring multiple design options efficiently.

· When debugging a complex issue, Zenflow can be used to automatically generate multiple debugging hypotheses and then run tests for each. This automates the often tedious process of trying different fixes, solving the problem of lengthy debugging cycles and improving developer productivity.

· Zenflow can help in generating comprehensive test suites. You could configure it to generate unit tests, integration tests, and end-to-end tests for a new piece of functionality, ensuring better code coverage and reducing the manual effort in test writing.

5

Drawize Realtime Canvas

Author

lombarovic

Description

Drawize is a web-based real-time multiplayer drawing application that has achieved a remarkable milestone of 100 million drawings. Initially built for a Tizen OS contest, it evolved into a popular open-web project. Its core innovation lies in its efficient real-time synchronization engine, hand-coded frontend for performance, and a robust backend architecture designed for massive scale, handling terabytes of data and tens of thousands of concurrent users. This project showcases the power of web technologies for complex interactive applications and the ability of a solo developer to build and scale a successful platform.

Popularity

Points 28

Comments 8

What is this product?

Drawize is a web application that allows multiple users to draw together on a shared canvas in real-time. Its technical ingenuity lies in its bespoke real-time multiplayer engine, built using .NET and WebSockets. This means that when one person draws, everyone else sees it appear almost instantly, creating a smooth and interactive collaborative experience. The frontend is meticulously crafted with hand-coded HTML and JavaScript, avoiding complex frameworks for speed and efficiency. It also utilizes a dual database approach (PostgreSQL and MongoDB) for data storage and employs content classification models for moderation. The project demonstrates how to achieve high performance and scalability for real-time web applications without relying on typical modern frontend tooling. So, what's the value to you? It shows that you can build highly responsive, interactive applications for the web with core web technologies, even at a massive scale, offering a blueprint for developers looking to create similar real-time experiences.
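Drawize's engine is written in .NET over WebSockets; the following Python sketch only illustrates the fan-out pattern behind that kind of engine, with asyncio queues standing in for client connections. `Room` and the stroke fields are hypothetical. Each stroke is serialized once and pushed to every other player in the room.

```python
import asyncio
import json


class Room:
    """In-memory stand-in for a drawing room; a real server would write to WebSockets."""

    def __init__(self) -> None:
        self.clients: dict[str, asyncio.Queue] = {}

    def join(self, client_id: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self.clients[client_id] = queue
        return queue

    def leave(self, client_id: str) -> None:
        self.clients.pop(client_id, None)

    async def broadcast(self, sender: str, stroke: dict) -> None:
        # Serialize once into a compact message, then send it to everyone except the
        # sender, who is assumed to have rendered the stroke locally already.
        message = json.dumps({"from": sender, **stroke}, separators=(",", ":"))
        for client_id, queue in self.clients.items():
            if client_id != sender:
                await queue.put(message)
```

Serializing once per stroke rather than once per recipient matters when a room has many players drawing at the same time.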

How to use it?

As a developer, you can use Drawize as a reference for building your own real-time collaborative applications. The project's backend, using .NET and WebSockets, provides a solid foundation for handling concurrent connections and synchronizing actions across multiple users. For front-end developers, the hand-coded HTML/JS approach offers insights into optimizing performance and minimizing dependencies. You could integrate similar real-time drawing capabilities into your own projects, whether it's for educational tools, collaborative design platforms, or even custom gaming experiences. The decision to use both PostgreSQL and MongoDB suggests strategies for managing different types of data efficiently. The moderation techniques, utilizing content classification models, are also valuable for applications dealing with user-generated content. So, how can you use this? Study its architecture to inform your own real-time system designs, and adapt its strategies for efficient data handling and content moderation in your own projects.

Product Core Function

· Real-time Multiplayer Synchronization: The ability for multiple users to draw on the same canvas simultaneously with near-instantaneous updates. This is achieved through highly optimized .NET code and WebSockets, ensuring a fluid and interactive user experience. This is valuable for any application requiring simultaneous collaboration, such as remote team brainstorming or interactive educational tools.

· Hand-coded HTML/JavaScript Frontend: A performant and lightweight frontend implementation that avoids heavy frameworks and bundlers. This approach prioritizes speed and reduces load times, making the application accessible even on less powerful devices or slower network connections. This is valuable for developers seeking to build fast, responsive web interfaces with minimal overhead.

· Scalable Data Management: The use of both PostgreSQL and MongoDB for data storage. PostgreSQL likely handles structured data efficiently, while MongoDB might be used for more flexible or document-based storage, demonstrating a pragmatic approach to scaling data handling. This is valuable for understanding how to leverage different database technologies for optimal performance and flexibility in large-scale applications.

· Content Moderation System: Implementation of content classification models to filter inappropriate content. This is crucial for any platform with user-generated content, ensuring a safe and positive environment for all users. This is valuable for any developer building a community-driven application who needs to implement effective content filtering mechanisms.

· Reconnection Logic and Edge Case Handling: Robust mechanisms for handling disconnections and ensuring smooth reconnection for users in real-time sessions. This is critical for maintaining usability in dynamic network environments. This is valuable for building resilient real-time applications that can gracefully handle network interruptions.
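One common way to make reconnection cheap, assumed here rather than taken from Drawize, is to number strokes and keep a bounded log. A returning client sends the last sequence number it saw and receives only what it missed, or is told to reload the whole canvas if the gap is older than the log. `StrokeLog` is a hypothetical name.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class StrokeLog:
    """Bounded, numbered history of strokes for replay after a reconnect."""

    def __init__(self, capacity: int = 1000) -> None:
        self.events: Deque[Tuple[int, str]] = deque(maxlen=capacity)
        self.next_seq = 1

    def append(self, stroke: str) -> int:
        """Record a stroke and return its sequence number."""
        seq = self.next_seq
        self.events.append((seq, stroke))
        self.next_seq += 1
        return seq

    def since(self, last_seen: int) -> Optional[List[str]]:
        """Strokes after `last_seen`, or None if some were evicted and a full reload is needed."""
        oldest = self.events[0][0] if self.events else self.next_seq
        if last_seen + 1 < oldest:
            return None
        return [stroke for seq, stroke in self.events if seq > last_seen]
```

The `maxlen` on the deque caps memory per room, at the price of forcing a full resync for clients that were away too long.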

Product Usage Case

· Building a collaborative whiteboard application for remote education where students and teachers can draw and annotate together in real-time. Drawize's real-time engine demonstrates how to achieve this seamless collaboration, ensuring that all participants see the same drawing progress instantly, making virtual classrooms more engaging.

· Developing a co-design tool for graphic designers or architects who need to sketch out ideas together in real-time, even if they are in different locations. The project's focus on efficient rendering and synchronization means that even complex sketches can be shared and modified collaboratively without noticeable lag.

· Creating a multiplayer drawing game where users guess words based on drawings made by others. The underlying architecture of Drawize, with its emphasis on efficient real-time communication and handling many concurrent users, provides a strong foundation for building such interactive gaming experiences.

· Implementing a live annotation feature for online presentations or webinars, allowing presenters to highlight key points on slides or shared documents in real-time for the audience. The ability to add drawings dynamically to a shared canvas makes presentations more interactive and informative.

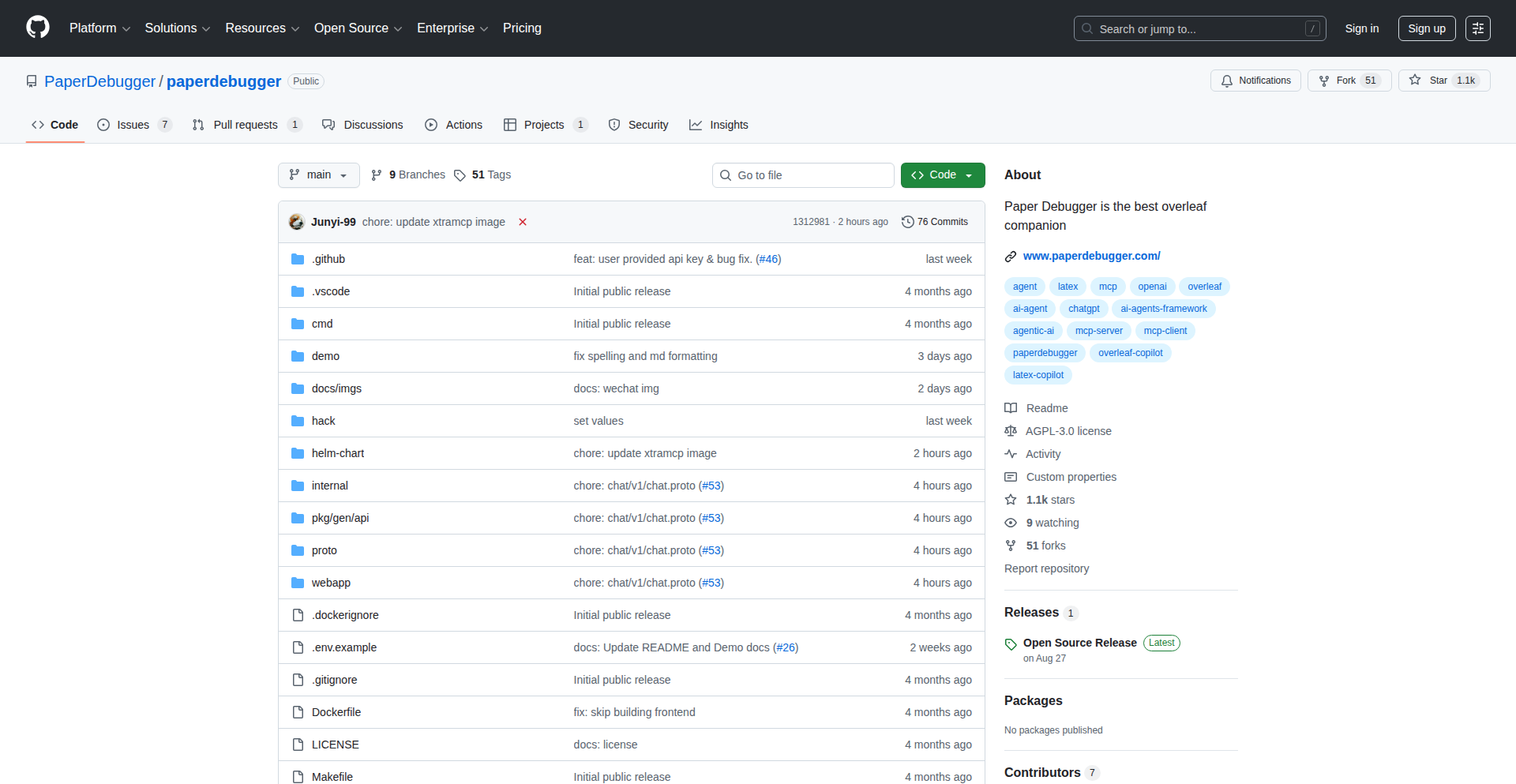

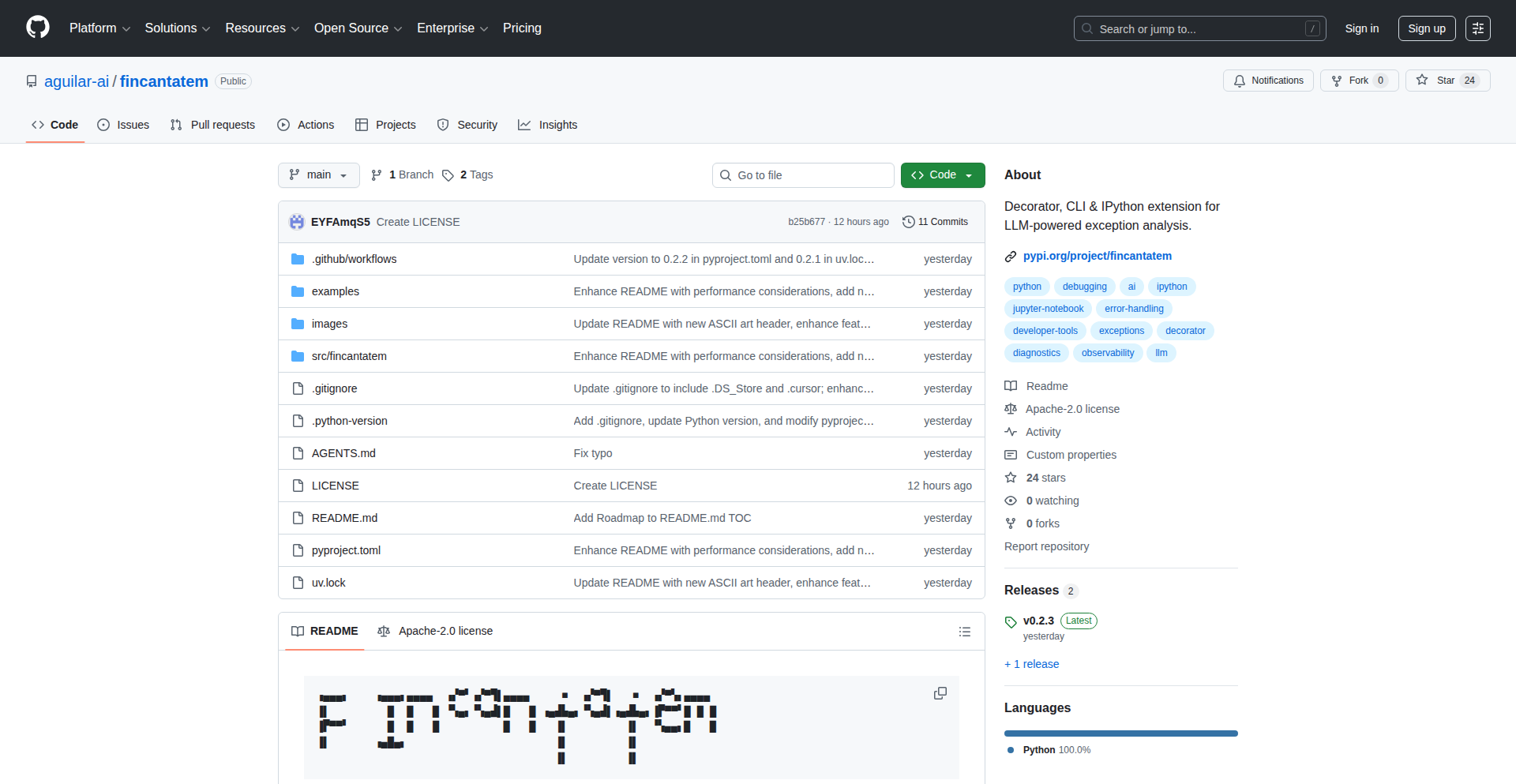

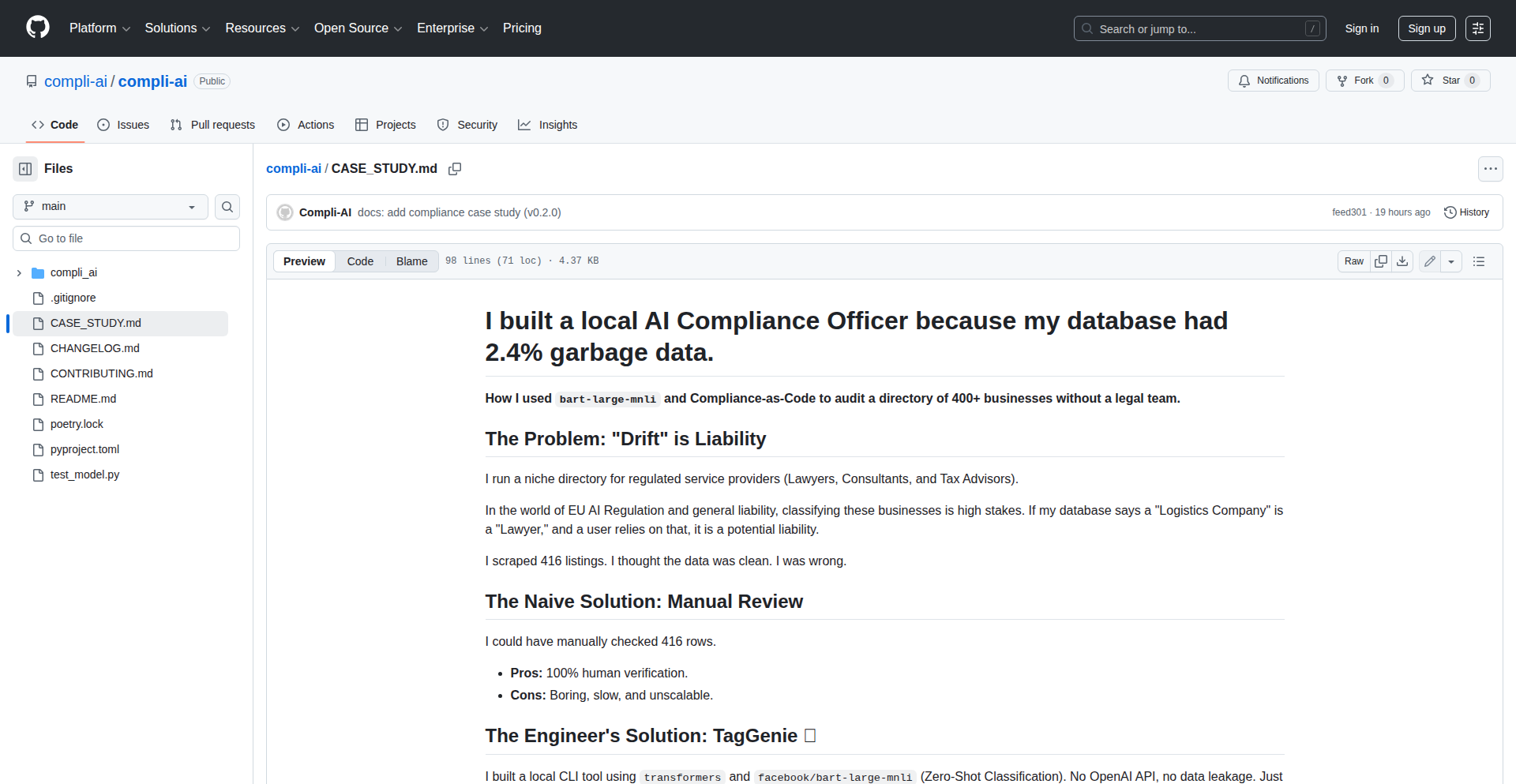

6

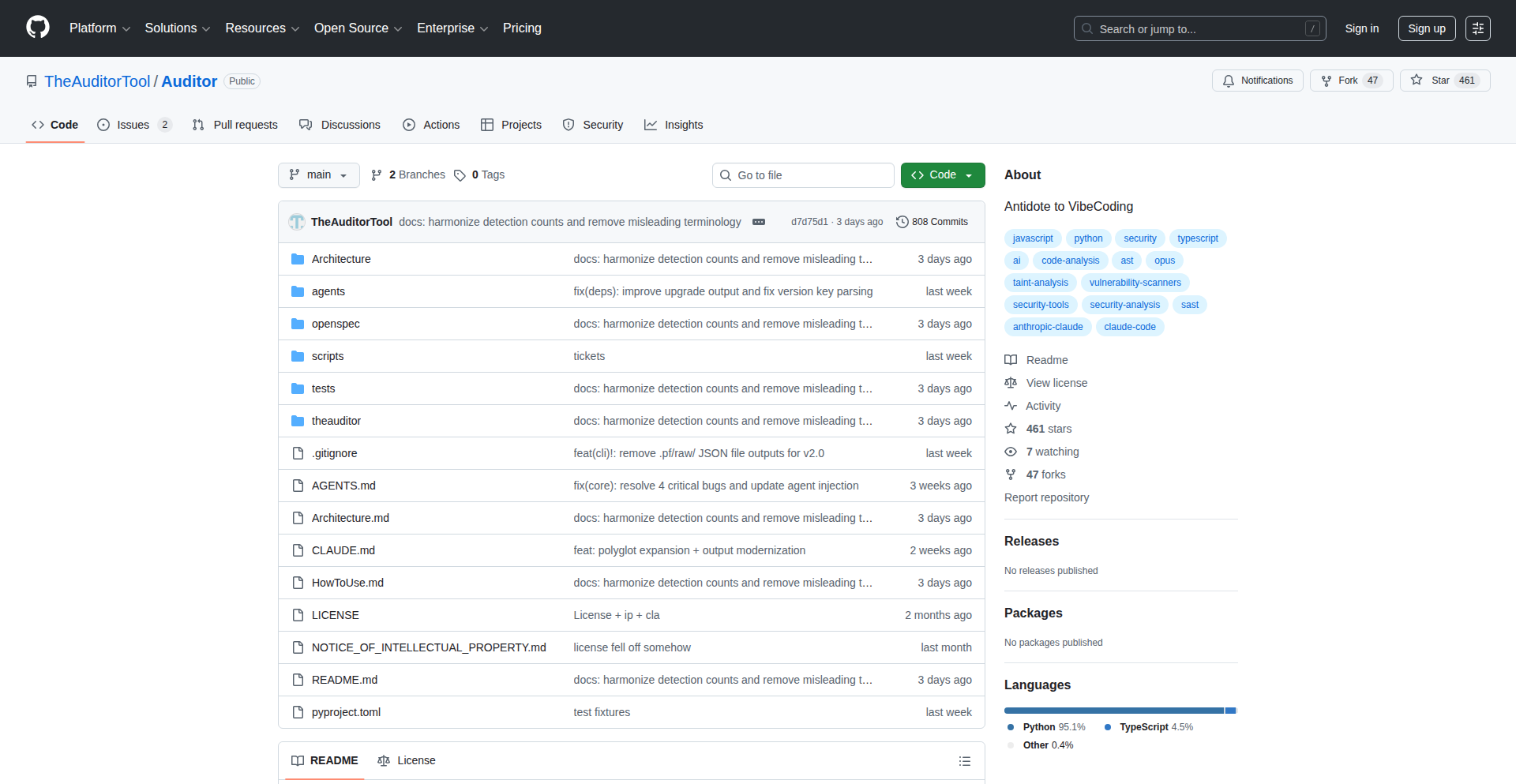

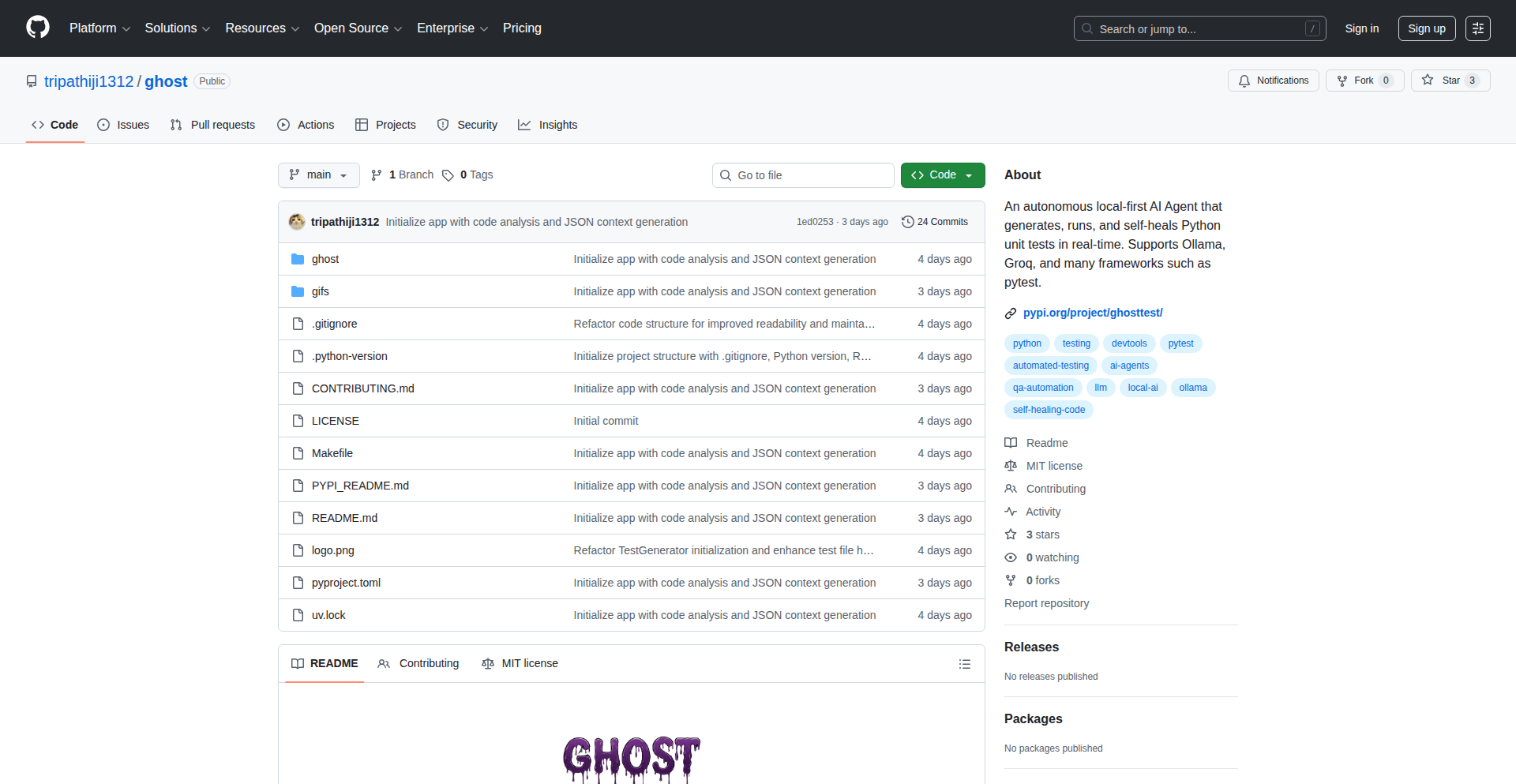

CodeGuardian AI

Author

ThailandJohn

Description

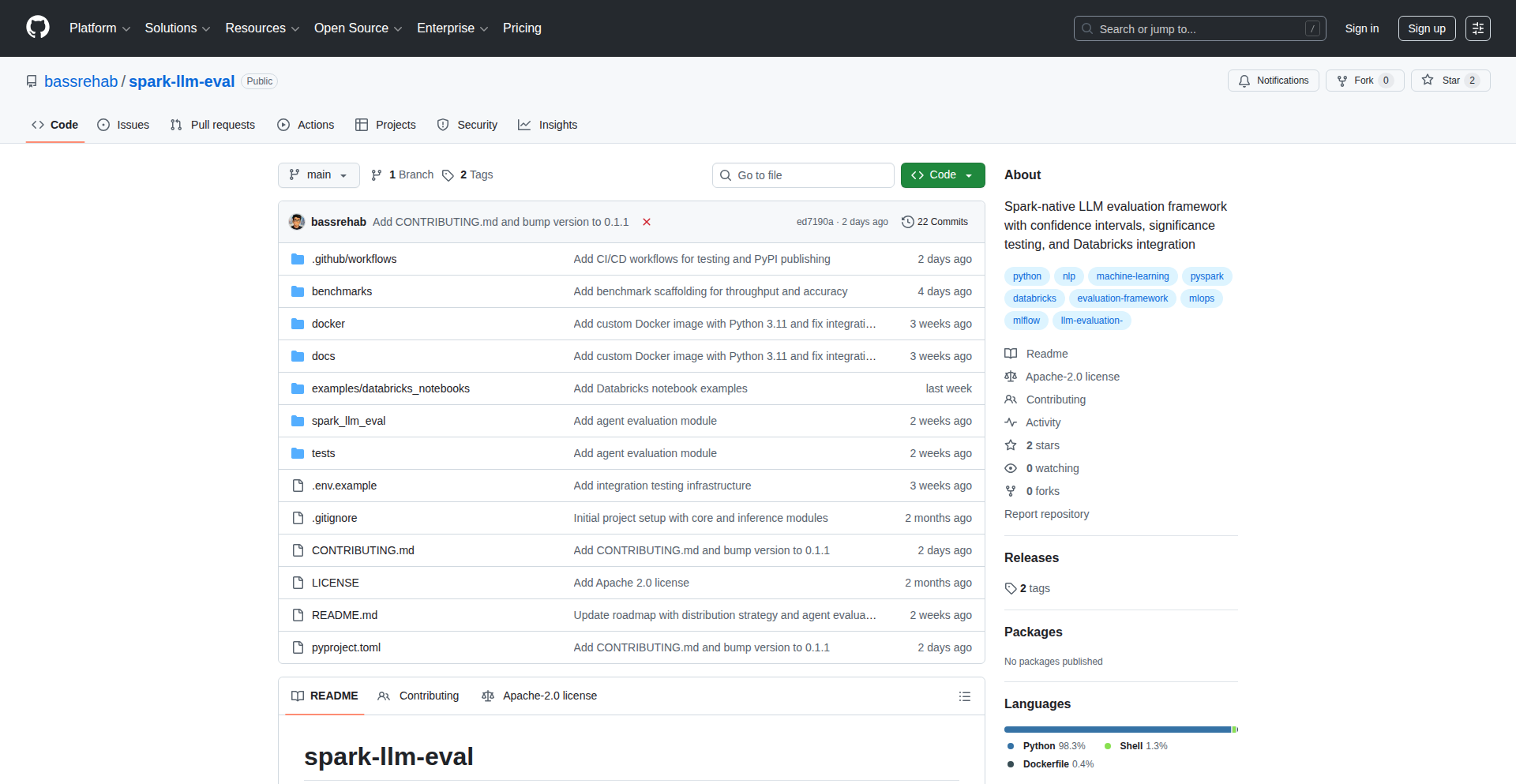

CodeGuardian AI is a sophisticated tool designed to act as a 'flight computer' for AI coding agents. It addresses critical limitations of current AI in understanding code context and knowledge staleness by indexing entire codebases into a local SQLite Graph Database. This allows AI to query and verify dependencies and imports deterministically, preventing hallucinations and ensuring adherence to current best practices. It provides a factual anchor for AI-driven code generation and refactoring, enhancing reliability and security. So, what does this mean for you? It means AI-assisted coding that is more trustworthy and less prone to errors, leading to faster and safer development cycles.

Popularity

Points 26

Comments 7

What is this product?

CodeGuardian AI is a tool that acts as a safety net and intelligence layer for AI coding tools. Unlike standard AI models that might 'hallucinate' or use outdated information due to limited context or stale training data, CodeGuardian AI builds a comprehensive, queryable map of your entire codebase using a local SQLite Graph Database. This allows AI agents to precisely understand relationships between different parts of your code, verify dependencies, and ensure they are using the latest syntax and libraries. The 'Triple-Entry Fidelity' system maintains data integrity throughout the process and crashes intentionally if any silent data loss occurs. So, what's the core innovation? It transforms AI from a guess-and-check system into a fact-based one, enabling more reliable and secure code generation and refactoring. This means you get AI code that's more likely to be correct, secure, and up-to-date, saving you debugging time and reducing technical debt.
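To illustrate deterministic import verification, here is a minimal sketch that indexes Python modules into SQLite using the standard `ast` and `sqlite3` modules. The schema (`modules`, `symbols`, `imports`) is an assumption, not CodeGuardian AI's actual graph, and only top-level functions and classes count as symbols. A query then flags imports of names the target module does not define, which is the kind of hallucinated or stale symbol an agent might produce.

```python
import ast
import sqlite3


def index_codebase(sources: dict[str, str]) -> sqlite3.Connection:
    """Store modules, their top-level definitions, and 'from X import Y' edges in SQLite."""
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE modules (name TEXT PRIMARY KEY);
        CREATE TABLE symbols (module TEXT, name TEXT, PRIMARY KEY (module, name));
        CREATE TABLE imports (src TEXT, dst TEXT, name TEXT);
        """
    )
    definitions = (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)
    for module, code in sources.items():
        tree = ast.parse(code)
        db.execute("INSERT INTO modules VALUES (?)", (module,))
        for node in tree.body:
            if isinstance(node, definitions):
                db.execute("INSERT OR IGNORE INTO symbols VALUES (?, ?)", (module, node.name))
        for node in ast.walk(tree):
            if isinstance(node, ast.ImportFrom) and node.module:
                for alias in node.names:
                    db.execute("INSERT INTO imports VALUES (?, ?, ?)", (module, node.module, alias.name))
    db.commit()
    return db


def unresolved_imports(db: sqlite3.Connection) -> list[tuple[str, str, str]]:
    """Imports that point at an indexed module but name something it does not define."""
    return db.execute(
        """
        SELECT i.src, i.dst, i.name FROM imports AS i
        WHERE i.dst IN (SELECT name FROM modules)
          AND NOT EXISTS (SELECT 1 FROM symbols AS s WHERE s.module = i.dst AND s.name = i.name)
        ORDER BY i.src, i.name
        """
    ).fetchall()
```

Imports of modules outside the index (such as the standard library) are skipped rather than guessed at, which keeps the check deterministic.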

How to use it?

Developers can integrate CodeGuardian AI into their workflow to enhance their AI coding agents. Instead of letting an AI write code directly, developers first use CodeGuardian AI to perform a pre-investigation of the problem statement. The AI agent then leverages CodeGuardian AI to generate a factual audit of the codebase related to the task. Based on this audit, the developer can then plan and execute architectural changes or code generation with higher confidence. This can be done through commands like 'aud explain' for detailed code analysis or 'aud planning' for architectural strategy. So, how does this benefit you? It provides a structured way to use AI for coding tasks, ensuring that the AI's suggestions are grounded in your project's reality, leading to more predictable and higher-quality outcomes.

Product Core Function

· Codebase Indexing and Graph Database: Indexes your entire codebase into a local SQLite Graph Database. This provides AI agents with a precise, queryable map of your project's structure and relationships, allowing for accurate dependency and import verification. This is valuable because it prevents AI from making assumptions or 'hallucinating' about your code, ensuring accuracy.

· Context-Aware AI Interaction: Enables AI agents to query specific information about code dependencies and context without needing to load large numbers of files into their limited context window. This directly addresses the 'context collapse' problem in AI, leading to more relevant and accurate AI outputs.

· Knowledge Staleness Mitigation: Ensures AI agents use current best practices and library versions by providing them with a factual grounding. This prevents AI from introducing outdated patterns or libraries, reducing technical debt and security vulnerabilities.

· Triple-Entry Fidelity System: A robust data integrity system where every step of the processing pipeline has fidelity checks. If any silent data loss occurs, the system intentionally crashes instead of passing incomplete information to the AI. This means you can trust that the data the AI uses to make decisions has not been silently truncated.

· Hybrid Taint Analysis for Microservices: Extends taint analysis to track data flow across microservice boundaries, from frontend requests through backend middleware to controllers. This is crucial for understanding security vulnerabilities and data leaks in complex distributed systems.

· Agent Planning and Refactoring Capabilities: Empowers AI agents to not only scan code but also to safely plan and execute architectural changes. This moves beyond simple code suggestions to enabling AI-driven system evolution.

· Multi-language and Framework Support: Supports a growing range of languages including Python, JavaScript, TypeScript, Rust, Go, and infrastructure-as-code tools like AWS CDK and Terraform. This broad applicability makes it a versatile tool for diverse development environments.
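The post doesn't explain how the 'Triple-Entry Fidelity' checks work internally. The Python sketch below only illustrates the general fail-fast idea. Each stage must account for every input record, either as an output or as an explicit skip, and any shortfall raises an error instead of letting the pipeline continue. The `checked_stage` contract and the example stages are hypothetical, and the count check assumes one output per accepted input.

```python
class FidelityError(RuntimeError):
    """Raised when a pipeline stage loses records without reporting them."""


def checked_stage(name, stage, records):
    """Run `stage` and fail fast unless every input record is accounted for.

    Hypothetical contract: `stage` returns (outputs, skipped), where `skipped`
    lists inputs it rejected on purpose. Any other shortfall is silent loss.
    """
    outputs, skipped = stage(records)
    if len(outputs) + len(skipped) != len(records):
        raise FidelityError(
            f"{name}: {len(records)} in, {len(outputs)} out, {len(skipped)} skipped"
        )
    return outputs


def parse_files(paths):
    # Stand-in for real parsing: accept .py files and explicitly skip the rest.
    parsed = [p.upper() for p in paths if p.endswith(".py")]
    skipped = [p for p in paths if not p.endswith(".py")]
    return parsed, skipped


def lossy_parse(paths):
    # Bug on purpose: drops the last file and reports nothing.
    return [p.upper() for p in paths[:-1]], []
```

With these definitions, `checked_stage("parse", parse_files, ["a.py", "README.md"])` returns `["A.PY"]`, while running `lossy_parse` through the same wrapper raises `FidelityError` instead of quietly handing the next stage an incomplete result.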

Product Usage Case

· Scenario: Refactoring a large, complex legacy codebase with numerous interdependencies. How it solves the problem: CodeGuardian AI indexes the entire codebase into a graph database, allowing an AI agent to precisely understand the impact of a schema change across all affected modules without 'context collapse'. The AI can then confidently suggest and execute the refactor, knowing it has a complete picture. So, this means your large refactors are less risky and can be done more efficiently.

· Scenario: Integrating a new, cutting-edge library into a project that uses older versions of dependencies. How it solves the problem: CodeGuardian AI ensures the AI coding agent is aware of the latest syntax and library versions available, preventing it from defaulting to deprecated patterns. It acts as a guide to ensure modern best practices are followed. So, this helps your project stay current and secure.

· Scenario: Debugging a security vulnerability in a microservice architecture where data flows through multiple layers. How it solves the problem: The Hybrid Taint analysis feature allows CodeGuardian AI to trace data flow across service boundaries, pinpointing exactly where sensitive data might be mishandled. So, this helps you identify and fix security issues faster and more effectively.

· Scenario: An AI agent is tasked with migrating a project from one framework to another. How it solves the problem: By providing a factual understanding of the current codebase and its relationships, CodeGuardian AI guides the AI agent through the migration process, ensuring that all connections and dependencies are accounted for, thus reducing the chances of introducing bugs or breaking existing functionality. So, this makes complex migrations smoother and more predictable.
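As a rough illustration of what tracing data 'across service boundaries' means, this Python sketch models the system as a directed graph of hypothetical nodes: a frontend form, a gateway route, middleware, controllers, and database calls. It reports which sinks are reachable from a tainted source without passing through a sanitizer. This is plain graph reachability, not CodeGuardian AI's actual hybrid analysis.

```python
from collections import deque


def tainted_sinks(flows, sources, sanitizers, sinks):
    """Sinks reachable from any source along data-flow edges without crossing a sanitizer.

    flows: dict mapping each node to the nodes its data flows into.
    """
    seen = set(sources)
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        if node in sanitizers:
            continue  # data is cleaned here, so taint stops propagating
        for nxt in flows.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen & set(sinks))


# Hypothetical cross-service flow: frontend -> gateway -> two backend services.
FLOWS = {
    "web:checkout_form": ["gateway:POST /orders"],
    "gateway:POST /orders": ["orders:auth_middleware", "users:validate_input"],
    "orders:auth_middleware": ["orders:create_controller"],
    "orders:create_controller": ["orders:db.execute"],
    "users:validate_input": ["users:db.execute"],
}

print(tainted_sinks(FLOWS, ["web:checkout_form"], {"users:validate_input"},
                    ["orders:db.execute", "users:db.execute"]))
# ['orders:db.execute']
```

The result points at the one path where form data reaches a database call unsanitized. The path through `users:validate_input` is cut off by the sanitizer.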

7

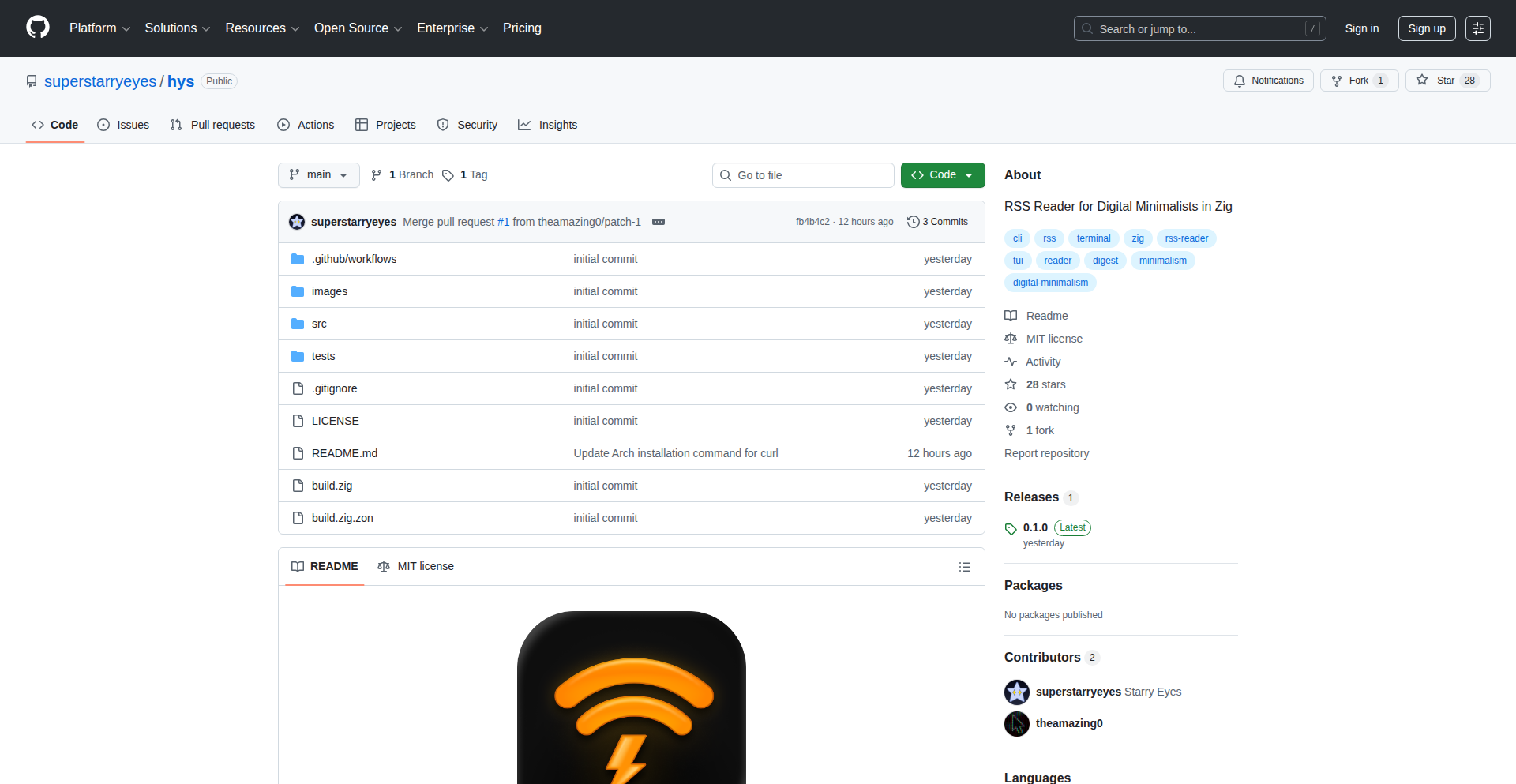

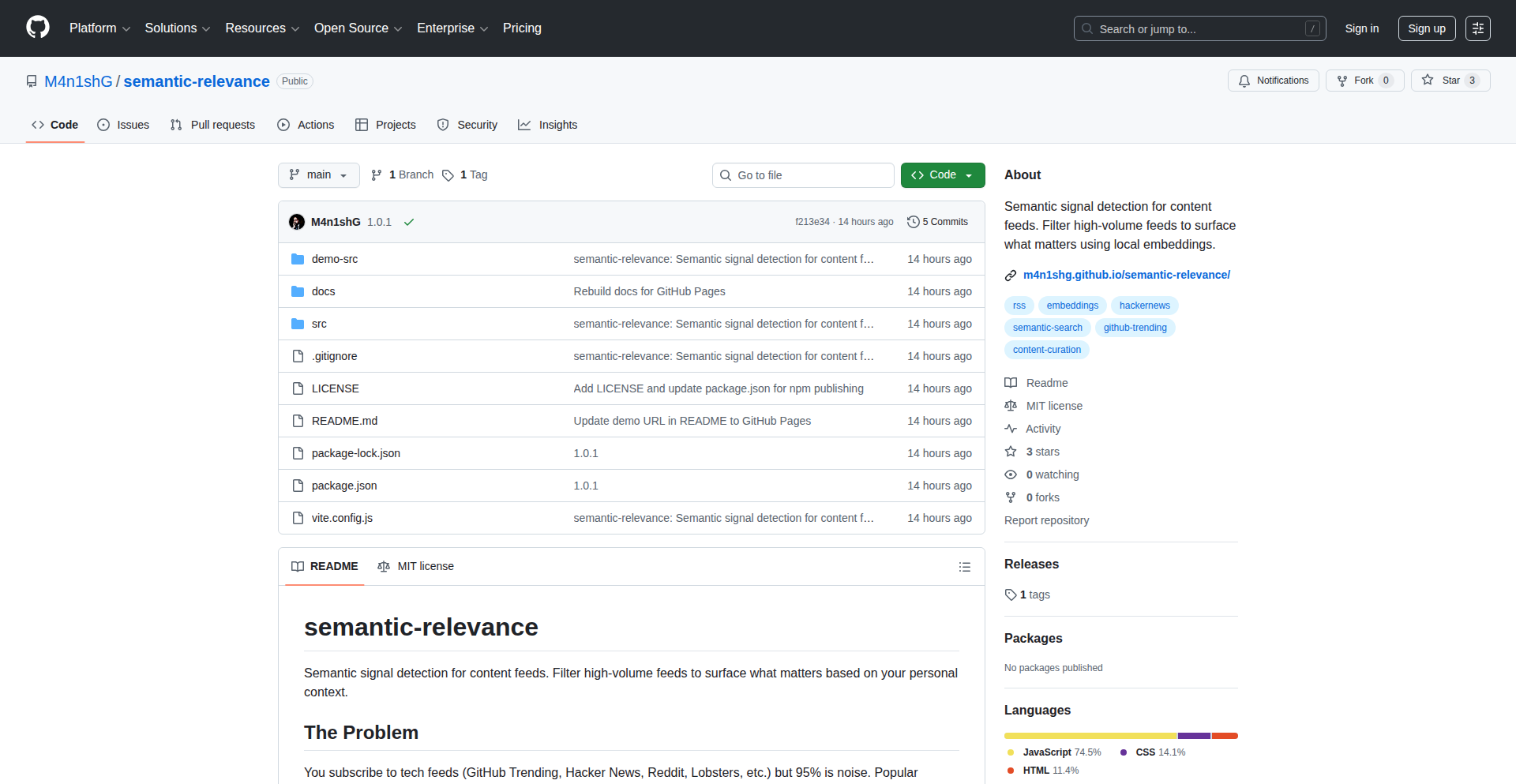

ZigFeedZen

Author

superstarryeyes

Description

ZigFeedZen is an ultra-fast RSS reader built in Zig, designed to fetch content from hundreds of feeds daily in mere seconds. Its core innovation lies in aggressive optimization techniques, including parallelized network requests with curl_multi, efficient XML parsing using libexpat and memory arenas, and smart feed truncation, all contributing to a digital minimalism experience. This means you get your curated news delivered all at once, allowing for focused consumption and a peaceful, distraction-free internet experience.

Popularity

Points 24

Comments 8

What is this product?

ZigFeedZen is a command-line RSS reader engineered for speed and minimal resource usage, inspired by digital minimalism. It addresses information overload by fetching all your subscribed RSS feeds at once, limiting new content intake to a single daily delivery. Technically, it relies on Zig's explicit, allocator-based memory management and its concurrency features. It uses curl_multi for multiplexed network requests, so HTTP/2 connections can be reused across multiple feeds hosted on the same server. Conditional GET requests avoid re-downloading unchanged content. As soon as a feed finishes downloading, its XML is handed to a worker thread for parsing with libexpat. Because libexpat is a streaming parser, no full document tree is built in memory. For particularly massive feeds, it truncates the download to a manageable size and parses only that portion, keeping memory consumption low. The output is then piped to the system's 'less' pager, which provides familiar Vim-like navigation and search, with support for clickable hyperlinks.
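The streaming-plus-truncation approach can be illustrated in Python, whose `xml.parsers.expat` module is a binding to the same libexpat library. This is a sketch, not ZigFeedZen's Zig code. It feeds a feed's bytes to the parser in chunks, collects `<item>` titles through callbacks, and stops once it has enough items or has consumed a byte budget. Because the document is never marked final, a truncated feed does not raise a parse error. `parse_titles`, `max_items`, and `max_bytes` are hypothetical names.

```python
import xml.parsers.expat


def parse_titles(chunks, max_items=3, max_bytes=1_000_000):
    """Stream XML byte chunks through expat and return up to max_items <item> titles.

    Stops early once enough titles are collected or max_bytes have been fed.
    """
    titles = []
    state = {"in_item": False, "in_title": False, "buf": []}
    parser = xml.parsers.expat.ParserCreate()

    def start(name, attrs):
        if name == "item":
            state["in_item"] = True
        elif name == "title" and state["in_item"]:
            state["in_title"] = True
            state["buf"] = []

    def end(name):
        if name == "title" and state["in_title"]:
            titles.append("".join(state["buf"]).strip())
            state["in_title"] = False
        elif name == "item":
            state["in_item"] = False

    def chars(data):
        # Character data can arrive split across several callbacks, so buffer it.
        if state["in_title"]:
            state["buf"].append(data)

    parser.StartElementHandler = start
    parser.EndElementHandler = end
    parser.CharacterDataHandler = chars

    fed = 0
    for chunk in chunks:
        chunk = chunk[: max_bytes - fed]  # cut the last chunk down to the byte budget
        if not chunk:
            break
        parser.Parse(chunk, False)  # isfinal=False: an unfinished document is fine
        fed += len(chunk)
        if len(titles) >= max_items:
            break
    return titles[:max_items]
```

The parser never holds more than the current chunk plus its small internal state, which is what keeps memory flat regardless of feed size.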

How to use it?

Developers can integrate ZigFeedZen into their workflows by installing the compiled Zig binary. The primary usage is through the command line, where you can configure your RSS feed URLs (e.g., via an OPML file for import/export). Once configured, you run the application, and it will fetch and display all your feeds in a paginated, searchable interface. For developers interested in extending its capabilities or integrating it into larger systems, the open-source nature and MIT license allow for modification and redistribution. You can use it as a backend for custom dashboards or automate its execution as part of a daily content aggregation script. The goal is to provide a predictable, scheduled content delivery, allowing you to engage with your news in a focused manner.

Product Core Function

· Parallel Feed Fetching: Utilizes curl_multi to download hundreds of RSS feeds simultaneously, significantly reducing the time to get all your content, so you don't have to wait around.

· Efficient XML Parsing: Employs libexpat and memory arenas for fast and memory-conscious XML processing, ensuring even large feeds are handled smoothly without bogging down your system.

· Smart Feed Truncation: Downloads only the necessary parts of very large feeds and parses them, minimizing memory usage and speeding up processing for all feed sizes.

· Digital Minimalism Interface: Delivers all content at once and pipes it to the 'less' command, providing a distraction-free reading experience with familiar navigation and search features, so you can focus on the content.

· Interactive Hyperlinks: Supports OSC 8 hyperlinks, allowing you to click on links directly within the terminal to open them in your browser, making content discovery seamless.
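OSC 8 hyperlinks are plain escape sequences. Each link is an opener (`ESC ] 8 ; ; URL` followed by the string terminator `ESC \`), then the visible text, then a closer with an empty URL. A minimal Python helper looks like this. The name `osc8_link` is illustrative.

```python
def osc8_link(url: str, text: str) -> str:
    """Wrap `text` in an OSC 8 hyperlink escape sequence pointing at `url`."""
    esc = "\x1b"
    st = esc + "\\"  # string terminator: ESC followed by a backslash
    return f"{esc}]8;;{url}{st}{text}{esc}]8;;{st}"


print(osc8_link("https://example.com/post", "Read the post"))
```

Terminals without OSC 8 support typically display just the text. Whether the links survive a pager such as less depends on the pager's version and options.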

Product Usage Case

· Content Aggregation for Personal Knowledge Management: A developer can use ZigFeedZen to gather articles from dozens of technical blogs and news sites daily, then export or process this content for later review, solving the problem of scattered information and ensuring no important updates are missed.

· Automated News Digest Generation: Integrate ZigFeedZen into a cron job to fetch feeds every morning. The output can then be further processed, for example, to generate a summary email or update a personal dashboard, providing a curated news digest without manual effort.

· Offline Content Curation: Fetch all feeds once a day and then use the 'less' command's navigation to read through articles offline or in a low-bandwidth environment, ideal for commuters or those with unreliable internet connections.

· Building a Custom RSS Reader Backend: Use the core fetching and parsing logic of ZigFeedZen as a foundation for a web-based or desktop RSS reader, leveraging its optimized performance to handle a large number of user subscriptions efficiently.

8

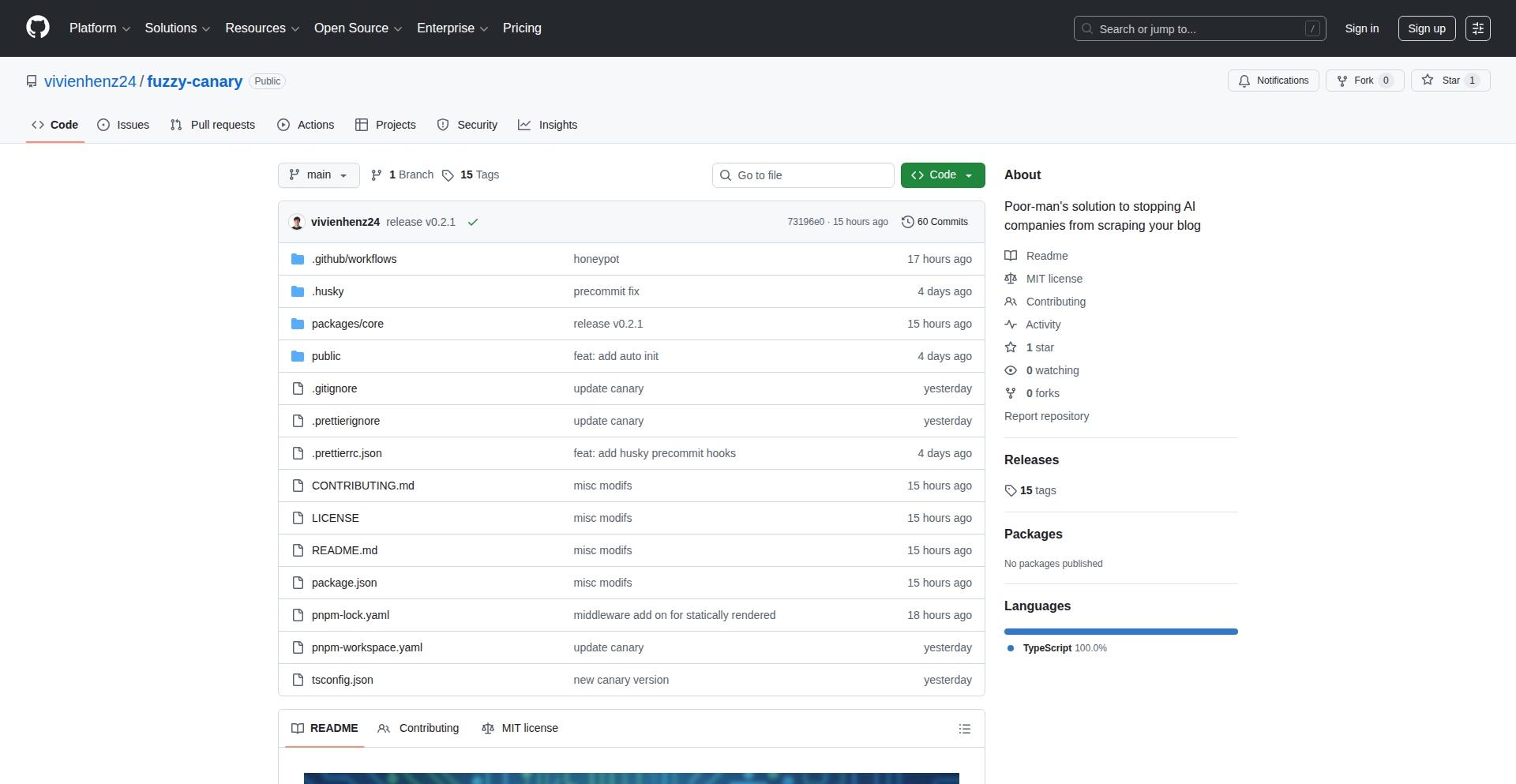

FuzzyCanary: The AI Scraper Deterrent

Author

misterchocolat

Description

This project is a clever, albeit unconventional, solution for self-hosted blog owners battling aggressive AI companies scraping their content. It works by injecting hidden links into your website's HTML, specifically designed to trigger the safeguards of AI scrapers, causing them to avoid your site in the future. To mitigate the negative impact on legitimate search engines like Google and Bing, it intelligently checks user agents and only displays these deceptive links to non-search engine bots. So, how does this help you? It protects your server resources and prevents your valuable content from being endlessly consumed by unauthorized AI training, all while trying to preserve your SEO standing.

Popularity

Points 25

Comments 2

What is this product?

FuzzyCanary is a JavaScript-based tool that aims to deter AI web scrapers from consuming your self-hosted content. The core technical idea is a subtle manipulation of the HTML DOM: it inserts hundreds of invisible links to adult websites. These links are invisible to human visitors and are not served to legitimate search engine bots (thanks to user agent filtering), but they are present in the Document Object Model (DOM) that other crawlers see. When AI scrapers crawl your site, they encounter these links, which are likely to trip the scrapers' internal 'safety' or 'content policy' filters. The intended result is that the scraper flags your site as undesirable and effectively adds it to its 'do not crawl' list. The value here is a defensive measure against unauthorized data harvesting, offering a unique approach to protecting your digital assets without relying on external services like Cloudflare.

How to use it?

For developers, FuzzyCanary can be integrated as a simple JavaScript import into your website's frontend. If you're using a framework like React, Vue, or Angular, you would typically include it in your main application file or a component that renders on every page. For static sites, direct inclusion in your HTML template is also an option. The integration aims to be minimal effort, often just a single import or component. This allows you to quickly deploy this defense mechanism and start protecting your blog from aggressive AI scrapers. So, how does this benefit you? It's a quick and easy way to add a layer of protection to your website, freeing up your server resources and potentially saving you from unexpected bandwidth costs.

Product Core Function

· Invisible Link Injection: Dynamically adds hundreds of hidden links to your HTML that are visible to scrapers but not users. This helps to confuse and deter AI bots. So this helps you by making your site look 'unappealing' to unwanted AI crawlers.

· User Agent Filtering: Detects and filters out legitimate search engine bots like Googlebot and Bingbot, ensuring these links are not seen by them. This preserves your Search Engine Optimization (SEO) efforts. So this helps you by protecting your search engine rankings.

· DOM Manipulation: The links are injected directly into the Document Object Model (DOM), making them accessible to crawlers but visually hidden from human visitors. So this helps you by providing a stealthy yet effective way to target AI scrapers.
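FuzzyCanary itself is JavaScript. The Python sketch below only illustrates the user-agent gate described above, as it might run server-side so that allowlisted crawlers never receive the decoy markup. The allowlist tokens, the hidden-container markup, and the function names are assumptions, and the decoy URLs in any real use would be your own. User-agent strings are trivially spoofed, which is why Google documents a reverse-DNS check for verifying Googlebot.

```python
import html

# Hypothetical allowlist of crawler tokens. A real Googlebot UA looks like
# "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)".
SEARCH_ENGINE_TOKENS = ("googlebot", "bingbot")


def is_search_engine(user_agent: str) -> bool:
    ua = (user_agent or "").lower()
    return any(token in ua for token in SEARCH_ENGINE_TOKENS)


def decoy_block(user_agent: str, hrefs: list) -> str:
    """Hidden decoy links for every client except allowlisted search crawlers."""
    if is_search_engine(user_agent):
        return ""
    links = "".join(
        f'<a href="{html.escape(href, quote=True)}" tabindex="-1">.</a>' for href in hrefs
    )
    # display:none keeps the block out of sight for human visitors.
    return f'<div aria-hidden="true" style="display:none">{links}</div>'
```

Making the decision per request is what keeps the links away from search engines. If the same markup were generated once at build time, every crawler would receive it.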

Product Usage Case

· A blogger running a personal website on a VPS notices a significant spike in server load attributed to AI scraping. They integrate FuzzyCanary into their site's frontend. Within days, the scraper traffic drops dramatically, and their server performance stabilizes. This solves the problem of resource depletion and potential downtime caused by excessive scraping.

· An independent artist hosts their portfolio on a static site generator. Worried about AI companies using their artwork as training data without permission, they implement FuzzyCanary. Because user-agent filtering only works when the decision is made per request, they make sure the hidden links are served dynamically (through server-side rendering or a proxy) rather than baked into the static HTML, where search engines would see them too. This lets them deter AI scrapers while keeping their SEO intact.

· A small news outlet with limited resources wants to protect their articles from being freely ingested by AI content farms. They add FuzzyCanary to their content management system. This allows them to implement a 'digital tripwire' that discourages AI scrapers without requiring expensive WAF solutions. This helps them maintain control over their content's distribution.

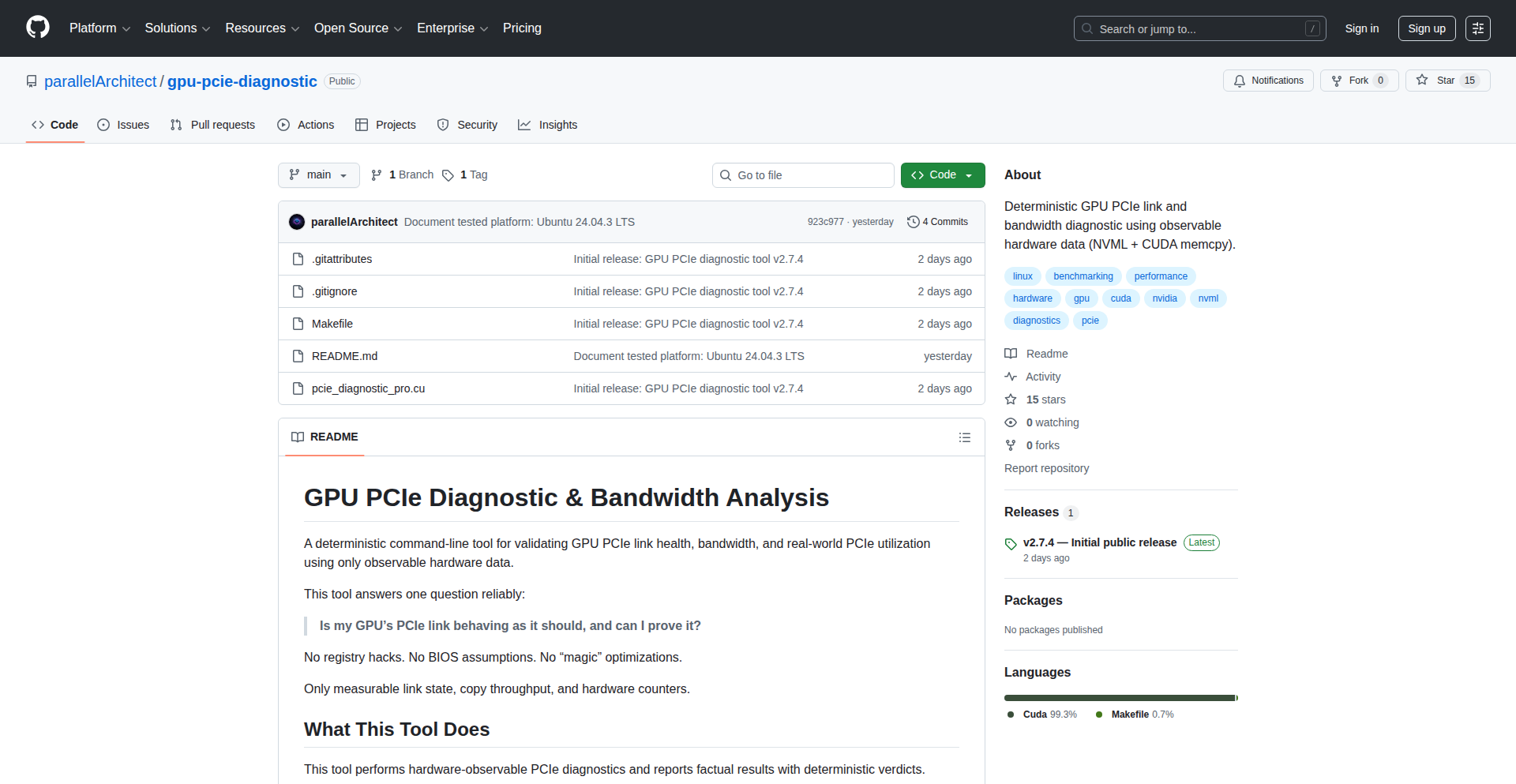

9

GPU PCIe Health Checker

Author

gpu_systems

Description

A Linux tool that definitively checks the health and bandwidth of your GPU's PCIe connection. It uncovers hidden issues like slower-than-expected link speeds or reduced lane widths that standard diagnostics miss, offering a clear verdict on your hardware's performance potential. So, this helps you ensure your GPU is communicating with your CPU at its maximum possible speed, preventing bottlenecks you wouldn't otherwise detect.

Popularity

Points 17

Comments 4

What is this product?

This is a command-line utility for Linux that acts like a highly specialized doctor for your GPU's connection to your motherboard (the PCIe link). It doesn't just guess; it uses specific hardware information to tell you exactly what generation and width (how many lanes) your GPU is communicating at. It also measures the fastest possible data transfer speeds (bandwidth) between your GPU and CPU in both directions and monitors how busy that connection is over time using NVIDIA's tools. The innovation lies in its deterministic approach – it relies purely on observable hardware data to give you a clear, rule-based judgment on whether your PCIe link is performing as it should. This is crucial because problems here, like a faulty riser cable or a misconfigured motherboard setting, can slow down your GPU without any obvious error messages at the software level. So, this helps you identify and fix fundamental hardware communication issues that are often invisible to other tools, directly impacting your system's overall performance, especially for graphics-intensive tasks.
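On Linux, the negotiated link can be read from standard PCI sysfs attributes (`current_link_speed`, `current_link_width`, `max_link_speed`, `max_link_width`). The Python sketch below shows one way to turn them into a generation and lane count. It is not necessarily how the tool gathers this data. There are two caveats. First, many GPUs drop to a lower link speed when idle to save power, so read the values under load. Second, `max_link_*` describes the device, and the slot may cap the link lower.

```python
from pathlib import Path

# PCIe transfer rate per lane (GT/s) -> generation.
GEN_BY_RATE = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5, 64.0: 6}


def _rate(text: str) -> float:
    # Newer kernels print e.g. "16.0 GT/s PCIe", older ones e.g. "8 GT/s".
    # Unparseable values raise ValueError.
    return float(text.split()[0])


def read_link(device_dir) -> dict:
    """Read negotiated vs. maximum link speed and width from PCI sysfs."""
    d = Path(device_dir)

    def attr(name: str) -> str:
        return (d / name).read_text().strip()

    return {
        "cur_gen": GEN_BY_RATE.get(_rate(attr("current_link_speed"))),
        "cur_width": int(attr("current_link_width")),
        "max_gen": GEN_BY_RATE.get(_rate(attr("max_link_speed"))),
        "max_width": int(attr("max_link_width")),
    }
```

Usage would look like `read_link("/sys/bus/pci/devices/0000:01:00.0")`, substituting your GPU's PCI address (for example, from `lspci -D`).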

How to use it?

Developers can run this tool from the Linux terminal. After installing it, they simply execute the command, and it will output a detailed report on the GPU's PCIe status. This report can be integrated into system monitoring scripts or used as a diagnostic step when troubleshooting performance issues in applications that heavily rely on GPU communication, such as machine learning training, high-end gaming, or video rendering. For example, if you're experiencing unexplained frame drops or slow training times in a deep learning model, running this tool can quickly tell you if the PCIe connection itself is the bottleneck. So, this provides developers with a precise tool to quickly diagnose and confirm hardware-level communication performance, saving valuable debugging time.

Product Core Function

· Report Negotiated PCIe Generation and Width: This function deterministically identifies the communication standard (e.g., PCIe 4.0) and the number of data lanes (e.g., x16) the GPU is using. The value is in ensuring your GPU is operating at its intended, fastest possible link speed, which is critical for bandwidth-intensive applications. This directly impacts how quickly data can be sent to and from your GPU, and thus, overall performance.

· Measure Peak Host-to-Device and Device-to-Host memcpy Bandwidth: This function benchmarks the peak achievable data transfer rate between the CPU (host) and the GPU (device) in both directions using memory copy operations. The value here is in understanding the real-world throughput of your PCIe connection, which lets you pinpoint whether the link is a bottleneck for moving large datasets.

· Monitor Sustained PCIe TX/RX Utilization via NVML: This function tracks PCIe transmit (TX) and receive (RX) throughput over time using the NVIDIA Management Library (NVML). The value is in seeing whether your PCIe connection is consistently used near its capacity or sits underutilized. Underutilization can point to software or driver inefficiencies, or simply confirm that the link is not saturated.

· Rule-Based Verdict from Observable Hardware Data: This function analyzes the collected hardware data to provide a clear, actionable conclusion about the health and performance of the PCIe link. The value is in simplifying complex diagnostic information into an easy-to-understand verdict, helping users quickly identify if there's a problem that needs addressing, without needing to be a PCIe expert.
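A rule-based verdict can be sketched by comparing two things: the negotiated link against the device's capability, and the measured memcpy bandwidth against the link's theoretical rate. PCIe Gen 1 and 2 use 8b/10b encoding, and Gen 3 to 5 use 128b/130b, which gives the per-lane figures below. The 70% threshold and the rule set are assumptions for illustration, not the tool's actual rules. Measured copies always land somewhat below the raw figure because of protocol overhead.

```python
# Per-lane line rate (GT/s) and encoding efficiency for each PCIe generation.
LANE = {
    1: (2.5, 8 / 10), 2: (5.0, 8 / 10),                                # 8b/10b
    3: (8.0, 128 / 130), 4: (16.0, 128 / 130), 5: (32.0, 128 / 130),  # 128b/130b
}


def theoretical_gbs(gen: int, width: int) -> float:
    """One-direction bandwidth in GB/s after line encoding, before protocol overhead."""
    rate, efficiency = LANE[gen]
    return rate * efficiency * width / 8  # Gb/s per lane times lanes, then bits -> bytes


def verdict(cur_gen, cur_width, max_gen, max_width, h2d_gbs, d2h_gbs, min_ratio=0.7):
    """Return ("OK" | "DEGRADED", reasons). min_ratio is an assumed threshold."""
    reasons = []
    if cur_gen < max_gen:
        reasons.append(f"link trained at Gen{cur_gen}, device supports Gen{max_gen}")
    if cur_width < max_width:
        reasons.append(f"link width x{cur_width}, device supports x{max_width}")
    expected = theoretical_gbs(cur_gen, cur_width)
    for label, measured in (("H2D", h2d_gbs), ("D2H", d2h_gbs)):
        if measured < min_ratio * expected:
            reasons.append(
                f"{label} {measured:.1f} GB/s is below {min_ratio:.0%} of {expected:.1f} GB/s"
            )
    return ("DEGRADED", reasons) if reasons else ("OK", [])
```

For the downgraded link in the first usage case below, `verdict(3, 8, 4, 16, 6.0, 6.5)` returns `"DEGRADED"` with two reasons, one for the generation and one for the width. The measured copies are acceptable for the x8 Gen3 link that was actually negotiated.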

Product Usage Case

· Scenario: A machine learning engineer is training a large neural network and notices that the training speed is significantly slower than expected, even with a powerful GPU. The engineer runs the GPU PCIe Health Checker and discovers that the PCIe link has unexpectedly downgraded to a lower generation (e.g., from PCIe 4.0 to PCIe 3.0) and is using fewer lanes (e.g., x8 instead of x16). Problem Solved: The tool directly identified a hardware communication bottleneck, allowing the engineer to investigate physical connections, motherboard settings, or compatibility issues, rather than wasting time optimizing code.

· Scenario: A gamer is experiencing intermittent stuttering and frame drops in demanding games, despite having a high-end GPU and CPU. Standard driver updates and game settings adjustments haven't helped. Running the GPU PCIe Health Checker reveals that the peak PCIe bandwidth measurements are significantly lower than expected for the GPU's reported link speed. Problem Solved: This points to a potential issue with the PCIe slot, riser cable, or motherboard bifurcation, allowing the gamer to focus their troubleshooting on these hardware components to ensure optimal gaming performance.

· Scenario: A system administrator is setting up a cluster of servers for scientific simulations and wants to ensure all GPUs are communicating optimally. Before deploying workloads, they run the GPU PCIe Health Checker on each server. The tool confirms that all GPUs are operating at their full PCIe generation and width, and bandwidth tests are within expected ranges. Problem Solved: This proactive diagnostic ensures that the entire cluster's hardware is in optimal condition for high-performance computing, preventing potential failures or performance degradation due to undetected PCIe issues.

· Scenario: A developer is building a high-throughput data processing pipeline that involves rapidly transferring large datasets between the CPU and GPU. They use the GPU PCIe Health Checker to establish a baseline for peak PCIe bandwidth. If subsequent performance tests show a drop in pipeline efficiency, they can re-run the checker to see if the PCIe link has degraded or if other bottlenecks have emerged. Problem Solved: The tool provides a consistent and reliable method for verifying the fundamental data transfer capabilities of the system, crucial for optimizing I/O-bound applications.

10

CloudCarbon Insights

Author

hkh

Description

This project extends Infracost's capability to not only predict cloud infrastructure costs but also to visualize and understand the carbon footprint associated with those infrastructure changes. It integrates with cloud provider pricing data and newly acquired carbon emission data to provide developers with a holistic view of their impact, enabling more sustainable and cost-effective cloud architecture decisions.

Popularity

Points 11

Comments 3

What is this product?

CloudCarbon Insights is a tool that analyzes your cloud infrastructure code (like Terraform) and predicts both the financial cost and the environmental carbon emissions it will generate *before* you deploy it. The innovation lies in its ability to map complex cloud resource configurations to real-world pricing and, now, to verified carbon emission data from sources like Greenpixie. This provides a 'checkout screen' for your cloud resources, showing you the cost and carbon impact side-by-side. So, it helps you understand how your code translates into both dollars spent and CO2 emitted.

How to use it?

Developers can integrate CloudCarbon Insights into their workflow by connecting it to their version control system (like GitHub or GitLab) via provided apps. When a developer submits a pull request with changes to their infrastructure code, the tool automatically analyzes these changes. It then comments directly on the pull request, displaying the projected cost and carbon footprint of the proposed changes. This allows developers to easily review the impact and make adjustments to optimize for both cost and environmental sustainability. You can use it by setting up the GitHub or GitLab app and submitting a pull request with your Terraform changes.

Product Core Function

· Predicts cloud infrastructure cost: Analyzes infrastructure code to estimate the monthly cost of deployed resources, helping developers avoid budget overruns and optimize spending. This is valuable for anyone managing cloud resources who wants to control their expenses.

· Calculates carbon footprint: Maps cloud resource usage to estimated carbon emissions, providing a tangible measure of environmental impact. This is useful for engineers and organizations aiming to reduce their carbon footprint and contribute to sustainability goals.

· Provides pre-deployment impact analysis: Shows the cost and carbon impact of infrastructure changes directly in the code review process (e.g., GitHub pull requests), enabling early detection and mitigation of potential issues. This means you can see the consequences of your code before it affects the real world.

· Offers optimization recommendations: Identifies opportunities to reduce both cost and carbon emissions by suggesting alternative resource configurations or sizing. This helps developers make smarter choices that benefit both the business and the planet.

· Integrates with CI/CD pipelines: Can be incorporated into automated workflows to ensure that cost and carbon considerations are part of every deployment. This automates the process of checking your impact, ensuring consistency and compliance.
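The 'checkout screen' arithmetic can be sketched in Python as a before/after diff of a proposed change. Every input here is a hypothetical placeholder. Real tools derive prices from provider price lists and emissions from datasets such as the ones mentioned above. The carbon model (average power times hours times grid intensity) is deliberately naive and ignores factors like data-center overhead and embodied emissions.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common convention: 365 * 24 / 12


@dataclass
class Resource:
    name: str
    hourly_usd: float        # hypothetical on-demand price
    avg_kw: float            # hypothetical average power draw attributed to the resource
    grid_kg_per_kwh: float   # hypothetical carbon intensity of the region's grid

    def monthly_cost(self) -> float:
        return self.hourly_usd * HOURS_PER_MONTH

    def monthly_kg_co2e(self) -> float:
        # Naive operational estimate: energy (kWh) times grid intensity (kgCO2e/kWh).
        return self.avg_kw * HOURS_PER_MONTH * self.grid_kg_per_kwh


def totals(resources):
    return (sum(r.monthly_cost() for r in resources),
            sum(r.monthly_kg_co2e() for r in resources))


def pr_comment(before, after) -> str:
    """Summarize the monthly cost and carbon delta of a proposed change."""
    (cost0, co2_0), (cost1, co2_1) = totals(before), totals(after)
    return (f"Monthly cost: {cost1 - cost0:+.2f} USD ({cost1:.2f} total) | "
            f"Emissions: {co2_1 - co2_0:+.1f} kgCO2e ({co2_1:.1f} total)")


before = [Resource("web", 0.10, 0.05, 0.4)]
after = [Resource("web", 0.20, 0.10, 0.4)]
print(pr_comment(before, after))
```

Reporting the delta alongside the new total mirrors the pull-request comment described above. Reviewers see both what the change adds and where the resources end up.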

Product Usage Case

· Scenario: A development team is deploying a new microservice using Terraform on AWS. They are concerned about both operational costs and their company's sustainability targets. How to use: They integrate CloudCarbon Insights with their GitHub repository. When they submit a pull request for the new service's infrastructure, the tool comments with an estimated monthly cost of $500 and a carbon emission projection of 2 tons of CO2 per month. The team then uses the tool's recommendations to resize certain instances, reducing the cost to $400 and emissions to 1.5 tons, without sacrificing performance. This helps them meet their budget and environmental goals.

· Scenario: An engineer is refactoring an existing Kubernetes cluster setup in Azure. They want to ensure the changes are not only efficient but also environmentally responsible. How to use: After committing their Terraform changes, CloudCarbon Insights automatically analyzes the diff. It highlights that a particular service's increased traffic will lead to a 15% cost increase and a significant rise in carbon emissions. The tool suggests using a more energy-efficient instance type for that specific workload, which brings the cost and carbon impact back within acceptable limits. This prevents unexpected cost spikes and reduces the environmental burden.

· Scenario: A startup is building a data processing pipeline and needs to choose between different cloud storage options, considering both cost-effectiveness and carbon impact. How to use: The engineers use CloudCarbon Insights to compare the projected cost and carbon emissions of using AWS S3 versus Azure Blob Storage for their data. The tool reveals that while S3 might be slightly cheaper, the Azure option has a demonstrably lower carbon footprint for their specific usage pattern. This data-driven insight allows them to make a choice that aligns with their budget and their commitment to sustainability.

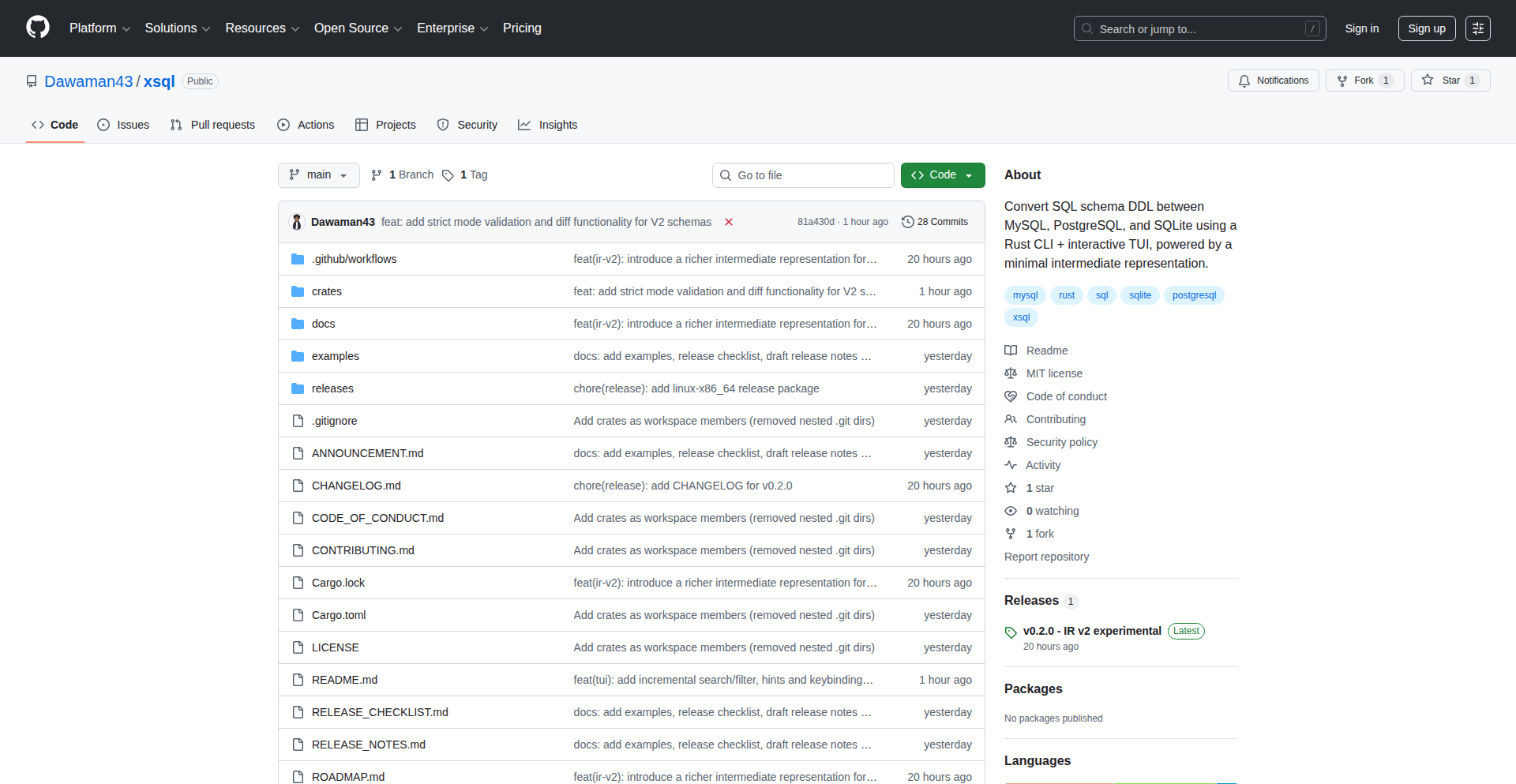

11

Xsql Schema Weaver

Author

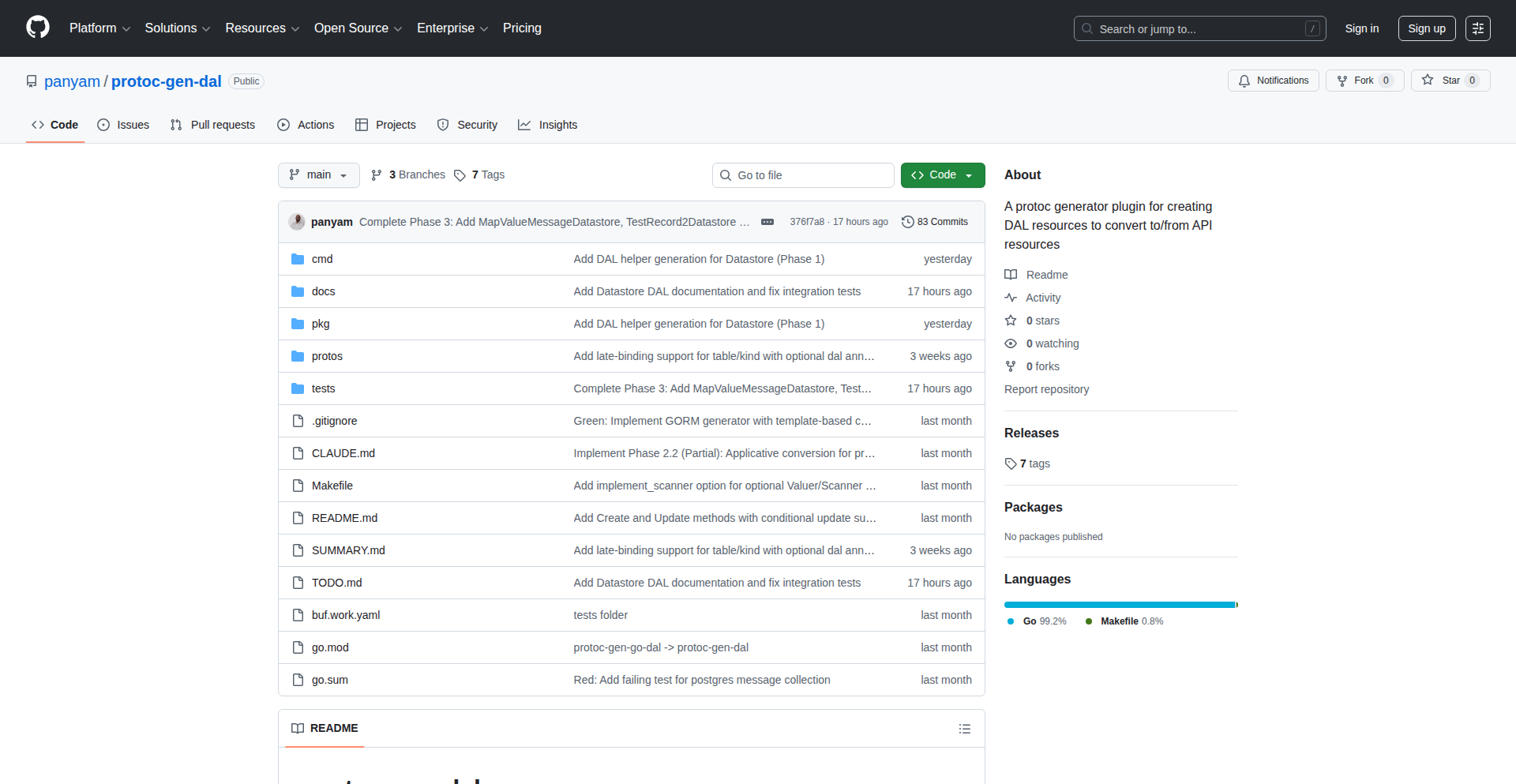

dawitworku

Description

Xsql is an open-source Rust-based command-line interface (CLI) and terminal user interface (TUI) tool designed to intelligently convert SQL schema Data Definition Language (DDL) between different database dialects like MySQL, PostgreSQL, and SQLite. Instead of simple text replacements, it parses SQL 'CREATE TABLE' statements into a structured intermediate representation (IR), then generates equivalent SQL for the target database. This approach makes schema migrations safer, more reliable, and easier to extend. It handles single files and entire directories, offers a typing-free interactive TUI, and features an experimental IR v2 with constraint support and JSON output for CI/CD pipelines and other developer tools. The core innovation lies in its abstract representation of SQL schemas, which makes conversions robust and predictable and is invaluable for developers managing multi-database environments or migrating between systems. So, this is useful for you because it automates a complex and error-prone task, saving you significant time and reducing the risk of data or schema corruption during database migrations.

Popularity

Points 11

Comments 1

What is this product?

Xsql is a developer tool written in Rust that acts as a bridge between different SQL database systems. Imagine you have a database schema designed for PostgreSQL, but you need to use it with MySQL or SQLite. Traditionally, you'd have to manually rewrite the 'CREATE TABLE' statements, which can be tedious and error-prone due to differences in syntax and supported features. Xsql solves this by first understanding the structure of your SQL schema (like table names, column types, and relationships) by parsing it into a simplified, abstract form. Then, it uses this abstract understanding to generate the correct 'CREATE TABLE' statements for your target database. This intermediate representation (IR) is the key innovation, making the conversion process much more robust and less likely to introduce errors compared to simple text manipulation. It's like having a universal translator for your database blueprints. So, this is useful for you because it automates the complex and error-prone process of migrating database schemas between different types of databases, ensuring accuracy and saving you a lot of manual effort.
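A toy version of the parse, IR, generate pipeline shows why an intermediate representation beats text replacement. Dialect quirks such as PostgreSQL's `SERIAL`, MySQL's `AUTO_INCREMENT`, and SQLite's `INTEGER PRIMARY KEY` live only in the generator. This Python sketch is not Xsql's Rust code, and its type tables are hypothetical and tiny. It handles only column definitions (no table-level constraints or `DECIMAL(p,s)`-style types), and it maps every string type to `TEXT`, dropping lengths.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    type: str                  # dialect-neutral IR type, e.g. "integer"
    nullable: bool = True
    primary_key: bool = False
    auto_increment: bool = False


@dataclass
class Table:
    name: str
    columns: list = field(default_factory=list)


# Source type -> (IR type, auto-increment?). Hypothetical and deliberately tiny.
TO_IR = {
    "serial": ("integer", True), "integer": ("integer", False), "int": ("integer", False),
    "text": ("text", False), "varchar": ("text", False), "boolean": ("boolean", False),
}

# IR type -> target dialect type.
FROM_IR = {
    "mysql": {"integer": "INT", "text": "TEXT", "boolean": "TINYINT(1)"},
    "postgres": {"integer": "INTEGER", "text": "TEXT", "boolean": "BOOLEAN"},
    "sqlite": {"integer": "INTEGER", "text": "TEXT", "boolean": "INTEGER"},
}


def parse_create_table(sql: str) -> Table:
    """Parse one simple CREATE TABLE (column definitions only) into the IR."""
    m = re.match(r"\s*CREATE\s+TABLE\s+(\w+)\s*\((.*)\)\s*;?\s*$", sql,
                 re.IGNORECASE | re.DOTALL)
    if not m:
        raise ValueError("not a simple CREATE TABLE statement")
    table = Table(m.group(1))
    for definition in m.group(2).split(","):  # naive: would break on DECIMAL(10,2)
        name, raw_type, *rest = definition.split()
        base = re.sub(r"\(.*", "", raw_type).lower()  # VARCHAR(255) -> varchar
        ir_type, auto = TO_IR[base]
        flags = " ".join(rest).upper()
        pk = "PRIMARY KEY" in flags
        table.columns.append(Column(name, ir_type, nullable=not pk and "NOT NULL" not in flags,
                                    primary_key=pk, auto_increment=auto))
    return table


def render(table: Table, dialect: str) -> str:
    """Generate CREATE TABLE for the target dialect from the IR."""
    cols = []
    for c in table.columns:
        if c.auto_increment and dialect == "postgres":
            col = f"{c.name} SERIAL"
        else:
            col = f"{c.name} {FROM_IR[dialect][c.type]}"
        if c.auto_increment and dialect == "mysql":
            col += " AUTO_INCREMENT"
        if c.primary_key:
            col += " PRIMARY KEY"  # in SQLite, INTEGER PRIMARY KEY also auto-assigns ids
        elif not c.nullable:
            col += " NOT NULL"
        cols.append(col)
    return f"CREATE TABLE {table.name} ({', '.join(cols)});"
```

Parsing `CREATE TABLE users (id SERIAL PRIMARY KEY, name VARCHAR(255) NOT NULL, active BOOLEAN)` once and rendering it for `"mysql"` gives `CREATE TABLE users (id INT AUTO_INCREMENT PRIMARY KEY, name TEXT NOT NULL, active TINYINT(1));`. Adding a dialect means adding a generator, not another set of regex rewrites.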

How to use it?

Developers can use Xsql in two primary ways: via the command-line interface (CLI) for automated scripting and CI/CD pipelines, or through an interactive terminal user interface (TUI) for more hands-on, visual schema management. For CLI usage, you would typically point Xsql to your source SQL schema file (or a directory containing multiple schema files) and specify the target database dialect. For example, to convert a PostgreSQL schema to MySQL, you might run a command like `xsql convert --from postgres --to mysql --input schema.sql --output schema_mysql.sql`. The TUI offers a more interactive experience where you can navigate through schema elements and perform conversions without typing extensive commands, making it ideal for rapid testing and exploration. The experimental JSON output is particularly useful for integrating Xsql into automated workflows. So, this is useful for you because it provides flexible ways to integrate database schema conversion into your development workflow, whether through automated scripts for deployments or interactive tools for quick adjustments.

Product Core Function

· SQL Schema Parsing to Intermediate Representation: Xsql parses SQL 'CREATE TABLE' statements into a standardized, abstract format. This is valuable because it breaks down the complexity of different SQL dialects into a common language, making subsequent conversions more reliable and less susceptible to syntax errors.

· Cross-Database Dialect Conversion: It generates equivalent SQL DDL for target databases (e.g., MySQL, PostgreSQL, SQLite) from the parsed IR. This is valuable because it allows developers to easily migrate or develop schemas for different database systems without manual rewriting, saving significant time and reducing errors.

· Recursive Folder Conversion: Xsql can process entire directories of SQL schema files, not just single files. This is valuable for managing large or complex database projects where schemas might be spread across multiple files, streamlining the conversion of an entire project.

· Interactive Terminal User Interface (TUI): Provides a typing-free, visual way to interact with and convert schemas directly in the terminal. This is valuable for developers who prefer a more intuitive, less command-line intensive experience for schema manipulation and testing.

· Experimental IR v2 with Constraint Support: The latest version of the intermediate representation includes support for database constraints (like primary keys, foreign keys, unique constraints). This is valuable because it allows for more comprehensive and accurate schema conversions, preserving important data integrity rules across different database systems.

· JSON Output for CI/CD and Tooling: Generates schema information in JSON format, which can be easily consumed by automated build systems, testing frameworks, and other developer tools. This is valuable for integrating schema validation and conversion into continuous integration and continuous delivery pipelines, ensuring consistency and reliability in automated deployments.
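JSON output makes schema checks easy to script in CI. The sketch below assumes a hypothetical JSON shape, and Xsql's real IR v2 format may differ. It flags two kinds of problems: column types the pipeline has no mapping for in the target dialect, and constraints that reference columns that don't exist. The `MAPPABLE` sets are illustrative policy, not statements about what each database accepts.

```python
import json

# Hypothetical IR document; Xsql's actual IR v2 JSON schema may differ.
EXAMPLE = """
{"tables": [
  {"name": "events",
   "columns": [{"name": "id", "type": "integer"},
               {"name": "payload", "type": "jsonb"}],
   "constraints": [{"kind": "primary_key", "columns": ["id"]}]}
]}
"""

# Illustrative policy: IR types this pipeline knows how to emit per target.
MAPPABLE = {
    "mysql": {"integer", "text", "boolean", "json"},
    "postgres": {"integer", "text", "boolean", "json", "jsonb"},
    "sqlite": {"integer", "text", "boolean"},
}


def incompatibilities(ir_json: str, dialect: str) -> list:
    """Return human-readable problems; an empty list means the schema passes."""
    ir = json.loads(ir_json)
    problems = []
    for table in ir["tables"]:
        names = {col["name"] for col in table["columns"]}
        for col in table["columns"]:
            if col["type"] not in MAPPABLE[dialect]:
                problems.append(
                    f"{table['name']}.{col['name']}: no {dialect} mapping for type {col['type']!r}"
                )
        for con in table.get("constraints", []):
            unknown = [c for c in con["columns"] if c not in names]
            if unknown:
                problems.append(
                    f"{table['name']}: {con['kind']} references unknown column(s) {unknown}"
                )
    return problems
```

A CI step would print the returned messages and exit non-zero when the list is non-empty, failing the build before an incompatible schema is deployed.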

Product Usage Case

· Migrating a web application from PostgreSQL to MySQL: A developer can use Xsql to convert their existing PostgreSQL schema files into MySQL-compatible DDL, greatly simplifying the migration process and reducing the risk of errors during the transition. This addresses the problem of needing to support different database backends without extensive manual re-engineering.

· Setting up a development environment with different database instances: A developer working on a project that requires testing against both MySQL and SQLite can use Xsql to generate the necessary schema files for each database from a single source of truth, ensuring consistency across environments. This solves the challenge of maintaining multiple, potentially divergent, schema definitions.

· Automating schema updates in a CI/CD pipeline: By using Xsql's JSON output feature, a developer can automatically validate or convert schema changes as part of their build process. If a schema change is incompatible with the target database, the pipeline can fail early, preventing deployment issues. This addresses the need for robust and automated schema management in modern software development.

· Sharing database schema designs across teams using different database systems: A team can maintain a single, canonical schema definition and use Xsql to generate the appropriate versions for each team member's preferred database, fostering collaboration and reducing misunderstandings. This solves the problem of disparate development environments leading to incompatible schema implementations.

12

A24z: AI-Powered Engineering Operations Orchestrator

Author

brandonin

Description

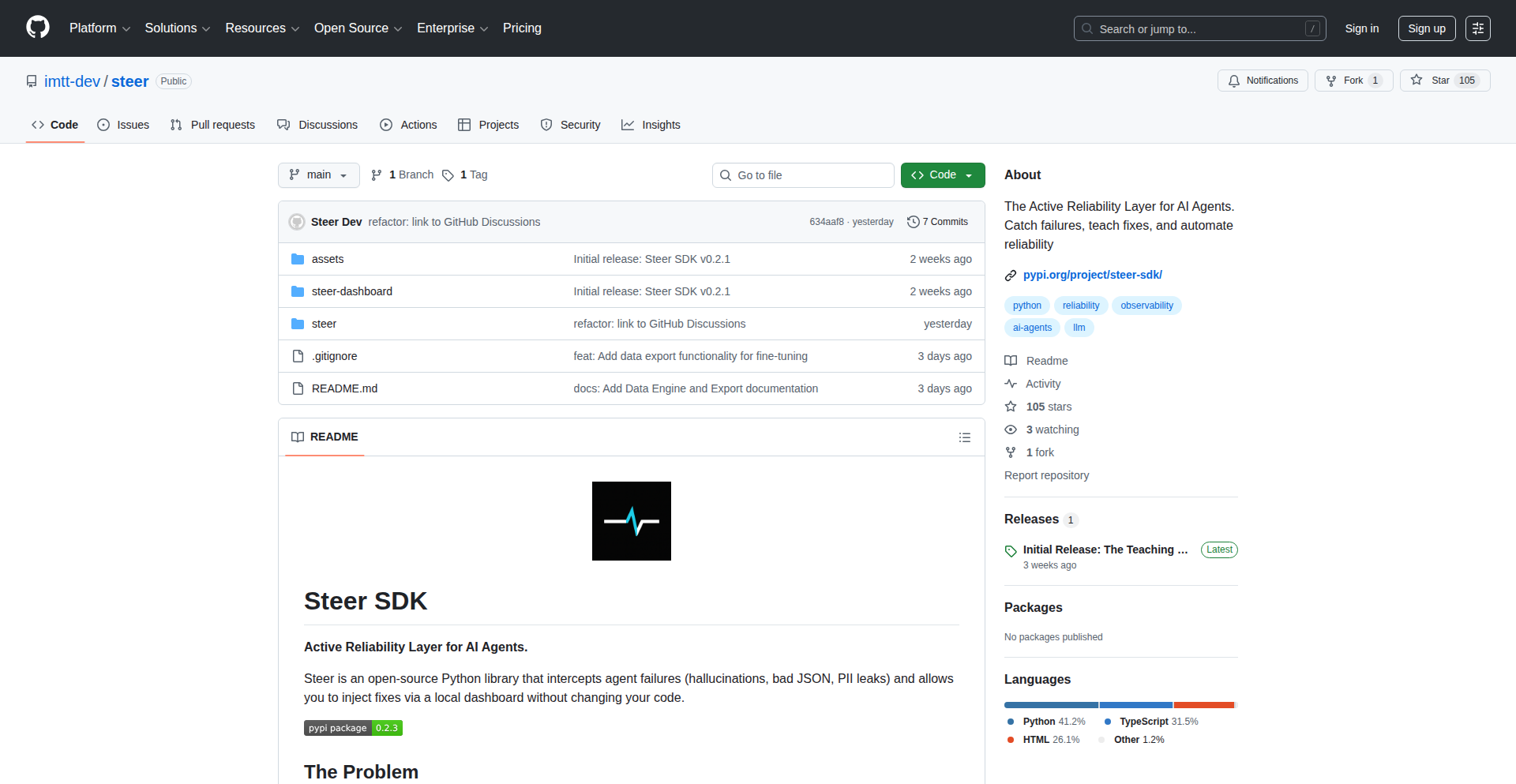

A24z is an AI Engineering Ops platform designed to act as an in-house platform engineer. It addresses the evolving challenges of managing engineering organizations, especially with the increasing adoption of AI coding tools. Its core innovation lies in providing visibility into adoption and ROI for engineering initiatives, and more recently, incorporating features for security scanning and autonomously upgrading AI coding assistants with skills, plugins, and guardrails to optimize team performance. So, this helps engineering leaders understand the impact of their tools and processes, and proactively improve their teams' efficiency and security.

Popularity

Points 8

Comments 4

What is this product?

A24z is an AI Engineering Operations platform that serves as a virtual platform engineer for your team. It uses AI to show how your engineering organization is adopting new tools, to measure the return on investment (ROI) of engineering efforts, and to proactively enhance your AI coding assistants. The innovation is applying AI not just to writing code but to managing and optimizing the engineering process itself. This can give you a more efficient, secure, and informed team without hiring a dedicated platform engineer. So, this is valuable because it automates complex operational tasks and provides actionable intelligence to improve your engineering workflows.

How to use it?

Developers and engineering leaders can integrate A24z into their existing development workflows. It can monitor the adoption of AI coding tools within the team, track key performance indicators (KPIs) related to engineering productivity, and identify areas for improvement. For security, it can automatically scan code for vulnerabilities. It can also upgrade your AI coding assistants (like Claude) by autonomously adding new skills, plugins, and guardrails, which expands their capabilities and makes their code generation safer and better optimized. So, you can plug it into your existing setup to gain immediate visibility and automate complex operational tasks, making your development process smoother and more secure.

Product Core Function

· Engineering Adoption and ROI Tracking: Provides dashboards and analytics to visualize how teams are adopting new tools and the business value they are generating, helping leaders justify investments and identify successful strategies. So, this tells you if your expensive new tools are actually being used and if they are worth the money.

· AI Coding Assistant Enhancement: Autonomously upgrades AI coding tools with new skills, plugins, and guardrails to improve their performance, security, and adherence to best practices. So, your AI coding partners become smarter and safer over time without manual intervention.

· Security Scanning: Automatically scans code for potential security vulnerabilities and compliance issues, helping to prevent breaches and maintain a secure development pipeline. So, this acts as an automated security guard for your code.

· Autonomous Operations: Acts as a virtual platform engineer, automating routine operational tasks and proactive system maintenance. So, it handles the background work of keeping your engineering infrastructure running smoothly, freeing up your team for core development.

· Performance Optimization: Analyzes engineering workflows to identify bottlenecks and suggests or implements optimizations for increased efficiency and productivity. So, it finds ways to make your team work faster and smarter.
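Adoption and ROI tracking ultimately rests on a few simple ratios. The sketch below is a hypothetical illustration, not A24z's method. It assumes per-developer usage records for one reporting period (the `DevUsage` type is invented here) and an externally estimated "hours saved" figure, then computes an adoption rate and a naive ROI.

```python
from dataclasses import dataclass


@dataclass
class DevUsage:
    """Hypothetical per-developer record for one reporting period."""
    developer: str
    ai_sessions: int             # sessions with the AI coding tool in the period
    hours_saved_estimate: float  # assumed to come from surveys or telemetry


def adoption_rate(records, min_sessions=1):
    """Fraction of developers who used the tool at least `min_sessions` times."""
    if not records:
        return 0.0
    active = sum(1 for r in records if r.ai_sessions >= min_sessions)
    return active / len(records)


def naive_roi(records, hourly_cost, tool_cost):
    """(value of estimated hours saved - tool cost) / tool cost."""
    value = sum(r.hours_saved_estimate for r in records) * hourly_cost
    return (value - tool_cost) / tool_cost


team = [
    DevUsage("a", 12, 10.0),
    DevUsage("b", 0, 0.0),
    DevUsage("c", 3, 5.0),
    DevUsage("d", 7, 5.0),
]
```

For this sample team, three of four developers are active (an adoption rate of 0.75). With an assumed hourly cost of 100 and a tool cost of 1000, the 20 estimated hours saved yield a naive ROI of 1.0. The estimate of hours saved is the weak link in any such calculation, which is why dashboards typically show adoption and ROI side by side.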

Product Usage Case

· A startup struggling to measure the impact of their new AI pair-programming tool can use A24z to track developer engagement and the resulting code quality improvements, demonstrating ROI to stakeholders. So, they can prove their investment in AI is paying off.

· A large enterprise with a distributed development team can leverage A24z for continuous security scanning, ensuring all code commits adhere to stringent security policies before deployment. So, they can reduce the risk of security breaches across their entire organization.

· A small engineering team looking to enhance their AI coding assistant's capabilities can use A24z to automatically integrate new plugins and custom skills, allowing the AI to handle more complex tasks without manual configuration. So, their AI coding buddy gets better at helping them without them having to do any extra work.

· An engineering manager wanting to understand why a particular project is falling behind schedule can use A24z to analyze adoption rates of new libraries and identify potential skill gaps or tool inefficiencies. So, they can pinpoint the likely reasons for delays and address them.

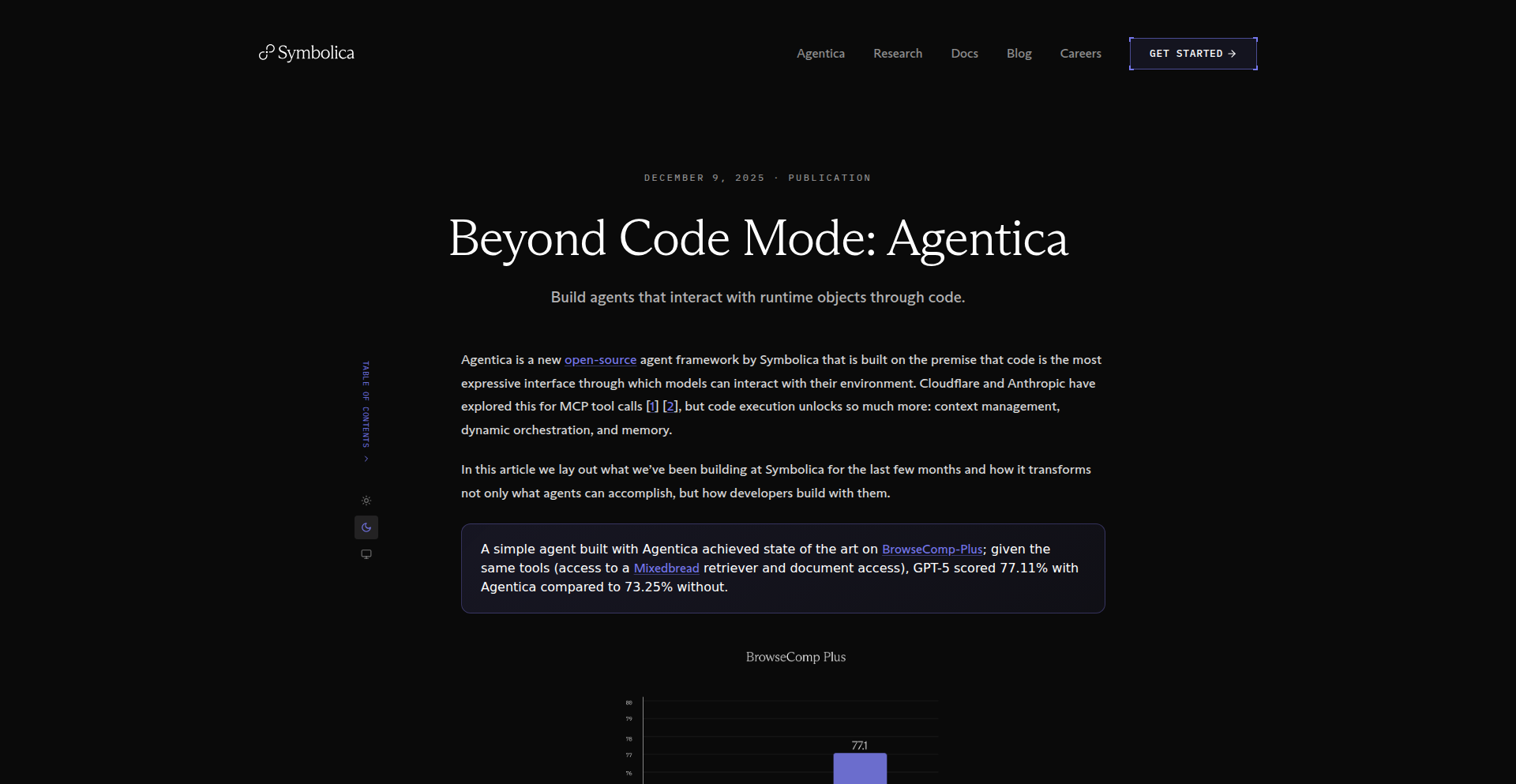

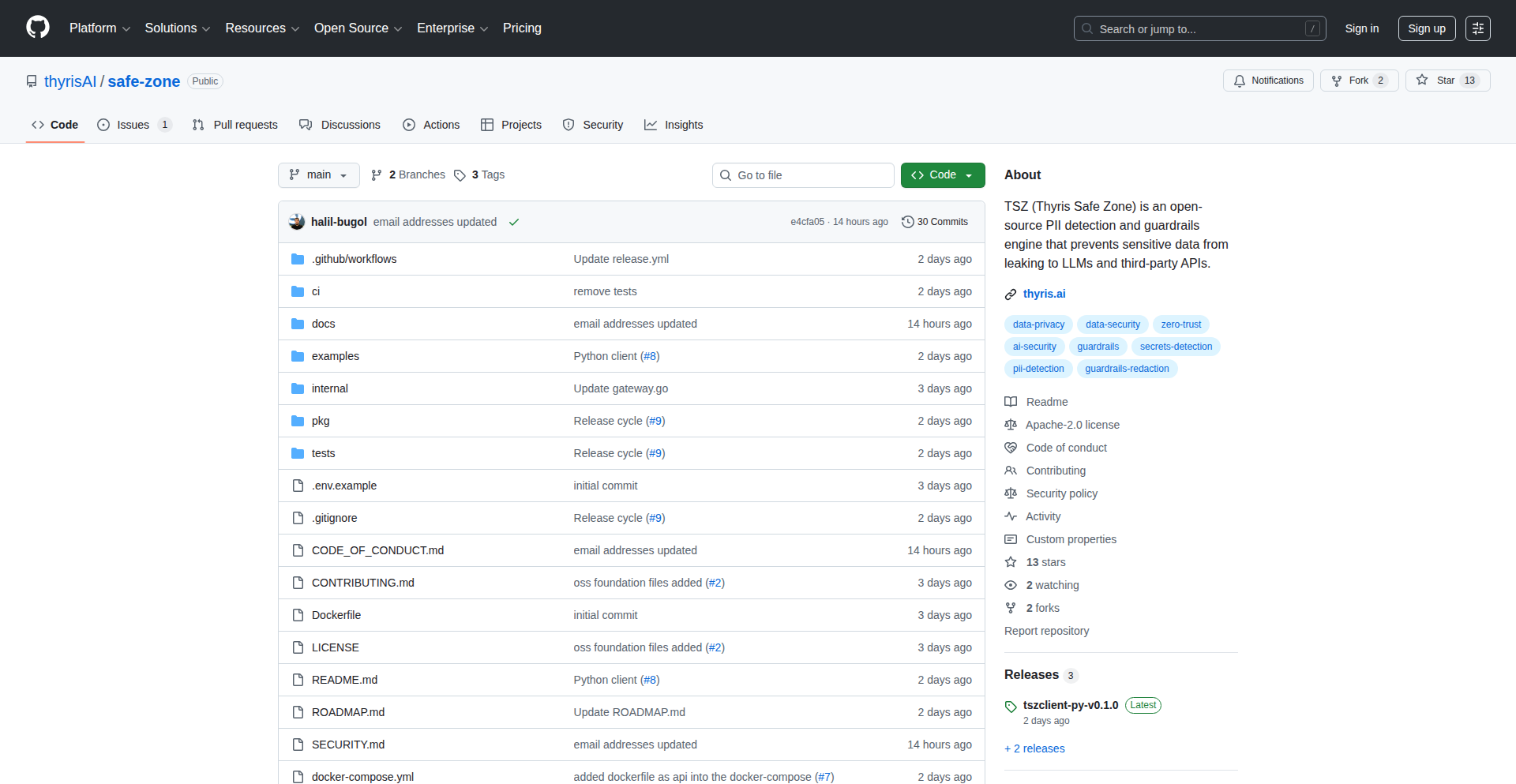

13

Symbolica LLM Function Executor

Author

charlielidbury

Description

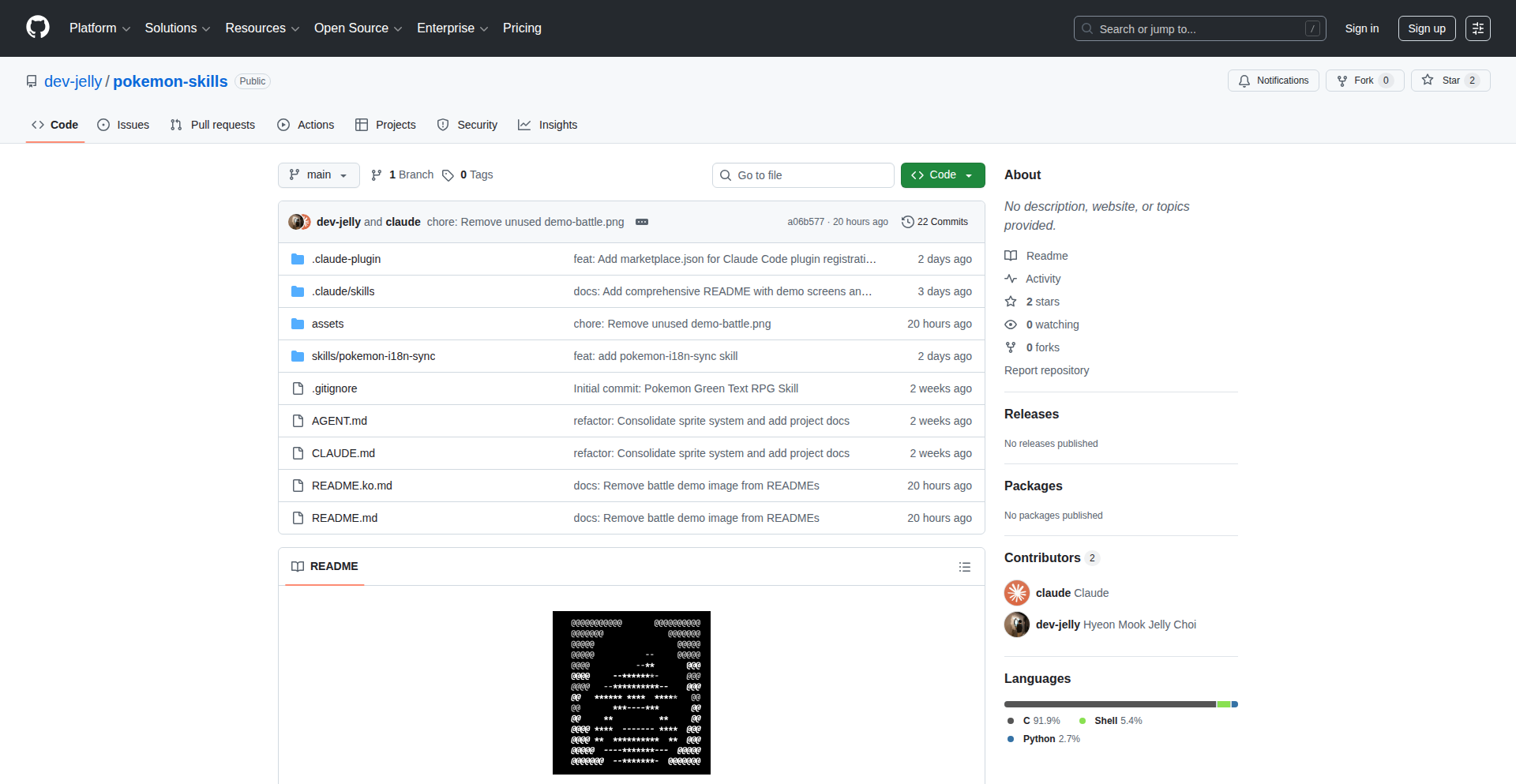

This project explores how exposing an AI agent's tools as Python functions or objects, rather than describing them with JSON schemas, significantly improves the agent's performance. It demonstrates a measurable improvement in completing complex tasks, such as browsing the web and extracting information, through direct Python integration.

Popularity

Points 10

Comments 0

What is this product?

This project is a proof-of-concept demonstrating that Large Language Models (LLMs) perform better when their available tools are presented as actual Python functions or objects within a Python REPL (Read-Eval-Print Loop). Instead of describing tools using static JSON structures, the LLM can directly call and interact with live Python code. This allows for more dynamic, flexible, and context-aware tool usage, leading to a higher success rate in executing complex tasks.
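Here is a minimal sketch of the idea, assuming the model replies with a snippet of Python code. The tools live as ordinary functions in a namespace, and the model's code is executed against that namespace instead of being parsed as a JSON tool call. The `fetch_page` and `word_count` tools and the hard-coded `model_code` string are hypothetical stand-ins, and no LLM is called. This is not Symbolica's implementation, and a real system would need sandboxing because `exec` runs arbitrary code.

```python
def fetch_page(url: str) -> str:
    """Hypothetical tool: a real agent would perform an HTTP request here."""
    fake_web = {"https://example.com": "Example Domain. This domain is for use in examples."}
    return fake_web.get(url, "")


def word_count(text: str) -> int:
    """Hypothetical tool: count whitespace-separated words."""
    return len(text.split())


def run_model_code(code: str, tools: dict) -> dict:
    """Execute model-written Python against a namespace holding the tools.

    WARNING: exec() runs arbitrary code; real deployments need a sandbox.
    Returns the namespace so the caller can read variables the code set.
    """
    namespace = dict(tools)
    exec(code, namespace)
    return namespace


# Stand-in for what an LLM might emit when its tools are plain Python functions.
# It composes two calls and keeps an intermediate value, which a single JSON
# tool call cannot express in one step.
model_code = """
page = fetch_page("https://example.com")
result = word_count(page)
"""

ns = run_model_code(model_code, {"fetch_page": fetch_page, "word_count": word_count})
print(ns["result"])  # prints 9
```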

How to use it?

Developers can integrate this approach by defining their agent's capabilities as Python functions. When the LLM needs to perform an action, it can then directly invoke these Python functions. This is particularly useful for tasks requiring real-time data retrieval, complex calculations, or interaction with other Python libraries. The provided documentation explains how to structure these callable tools for optimal LLM interaction and offers examples for common use cases like web browsing and data manipulation.
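Because tools are ordinary Python functions, their signatures and docstrings can be rendered directly into the prompt instead of being hand-written as JSON schemas. The sketch below uses the standard `inspect` module. The `describe_tools` helper and the `search_papers` tool are hypothetical, and the project's actual prompt format is not described here.

```python
import inspect


def search_papers(query: str, limit: int = 5) -> list:
    """Return up to `limit` paper titles matching `query`."""
    return []  # hypothetical stub; a real tool would query a database


def describe_tools(*funcs) -> str:
    """Render each function as a Python stub (signature plus docstring) for a prompt."""
    lines = []
    for f in funcs:
        lines.append(f"def {f.__name__}{inspect.signature(f)}:")
        doc = inspect.getdoc(f) or ""
        lines.append(f'    """{doc}"""')
    return "\n".join(lines)


print(describe_tools(search_papers))
```

The model then sees familiar Python syntax, including parameter types and defaults, and the description cannot drift out of sync with the code because it is generated from the function itself.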

Product Core Function

· Direct Python function invocation for LLM tools: This allows the LLM to dynamically call and execute Python code, enabling more sophisticated and responsive tool usage. This is valuable for building agents that can perform complex actions based on real-time information.

· Improved LLM performance on complex tasks: By using Python functions, the LLM can better understand and utilize its tools, leading to a higher success rate in tasks like web browsing, data analysis, and decision-making. This means more reliable and accurate results from your AI agents.

· Flexible tool definition and management: Presenting tools as Python objects or functions offers greater flexibility in how capabilities are defined and updated compared to rigid JSON schemas. This makes it easier to evolve and adapt your AI agent's functionality over time.

· Enhanced agent reasoning and execution: The direct interaction with Python code allows LLMs to reason more effectively about how to apply their tools, leading to more efficient and accurate execution of multi-step processes. This translates to smarter and more capable AI assistants.

Product Usage Case

· Building an AI agent that can browse the internet, gather specific information, and then process that information using Python libraries like Pandas. The direct function calls enable the LLM to seamlessly transition between web scraping and data analysis.

· Developing a chatbot that can interact with a company's internal APIs by exposing these APIs as Python functions. The LLM can then call these functions to retrieve or update data, providing real-time support to users.

· Creating an AI-powered research assistant that can execute complex queries on scientific databases by leveraging Python libraries for database interaction and data retrieval. The LLM can orchestrate these calls to efficiently gather relevant research papers.

· Designing an automated testing framework where an LLM can control and interact with a web application by calling Python functions that simulate user actions and verify outcomes. This leads to more intelligent and adaptable testing scenarios.

14

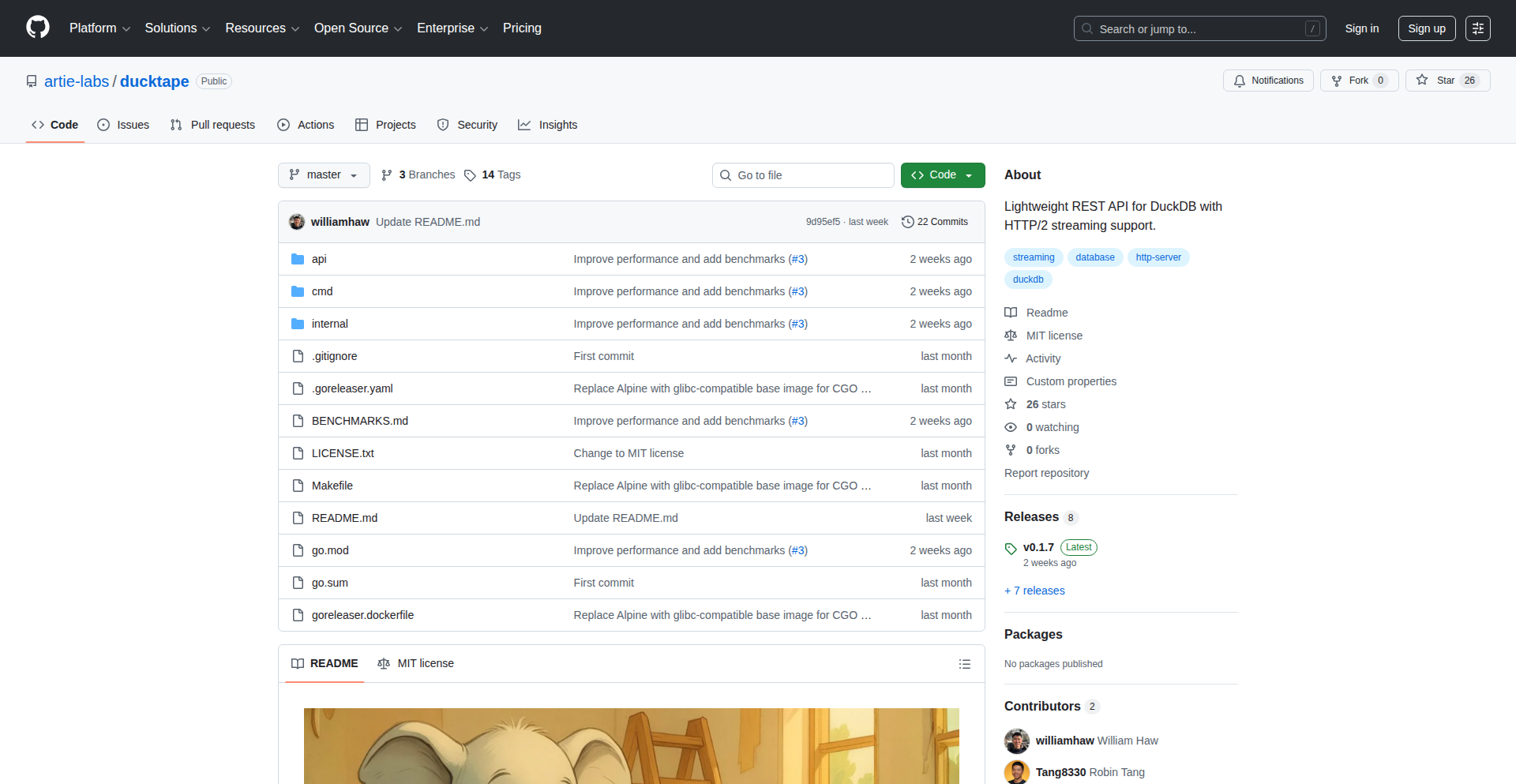

Ducktape: HTTP/2 Powered DuckDB Streamer

Author

williamhaw

Description

Ducktape is a minimalist microservice that bridges the gap between your Go applications and DuckDB. It wraps DuckDB's powerful Appender API behind HTTP/2 streams, allowing you to stream data in NDJSON format directly into DuckDB without the complexities of CGO. This solves the problem of CGO dependencies hindering cross-compilation and static binary generation in pure Go projects, while maintaining near-native performance.

Popularity

Points 10

Comments 0

What is this product?

Ducktape is a small, standalone service that lets your Go applications easily send data into DuckDB. Normally, using DuckDB from Go requires a CGO dependency, which makes cross-compiling for different operating systems and architectures difficult and bloats Docker images. Ducktape sidesteps this by acting as an intermediary: your Go program streams data as plain text (NDJSON) to Ducktape over HTTP/2, and Ducktape efficiently inserts it into DuckDB on the program's behalf. The innovation lies in using HTTP/2 streams for efficient transfer and isolating the CGO dependency in this single small service, which keeps your main application easy to build anywhere. So, what does this mean for you? You can use DuckDB from your Go applications without build-system headaches, making development and deployment smoother.

How to use it?

Developers can integrate Ducktape by running it as a separate microservice. Your main Go application will then make HTTP/2 requests to this Ducktape service. You'll stream your data, formatted as NDJSON (Newline Delimited JSON, a simple way to send multiple JSON objects one after another), to a specific endpoint on Ducktape. Ducktape will receive this stream and use its internal DuckDB connection to append the data. This is particularly useful in data replication pipelines or when you need to feed streaming data into DuckDB for analysis. The integration involves setting up the Ducktape service and then modifying your Go application to send data to its HTTP/2 endpoint, rather than directly calling DuckDB's CGO-dependent functions. This allows your core Go service to remain a pure Go project, easy to cross-compile and deploy as a single static binary or a lean Docker image. So, how does this help you? It means you can easily add DuckDB as a data destination for your Go services, simplifying your build process and deployment.
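NDJSON framing is simple enough to show in a few lines. This sketch is written in Python, while Ducktape targets Go clients; the framing is the same in any language. The helper names are hypothetical. `ndjson_chunks` produces the byte chunks a client would write into a streaming request body. `parse_ndjson` shows how a receiver can split the stream back into records even when network chunk boundaries fall mid-line. Ducktape's endpoint path and table-selection parameters are not given here, so the sketch sends no request.

```python
import json
from typing import Iterable, Iterator


def ndjson_chunks(records: Iterable[dict]) -> Iterator[bytes]:
    """Yield each record as one compact UTF-8 JSON line ending in a newline."""
    for record in records:
        # json.dumps escapes newlines inside strings, so each record stays on one line.
        yield (json.dumps(record, separators=(",", ":")) + "\n").encode("utf-8")


def parse_ndjson(chunks: Iterable[bytes]) -> Iterator[dict]:
    """Reassemble records from arbitrary byte chunks, as a streaming receiver must."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if line.strip():
                yield json.loads(line)
    if buffer.strip():
        yield json.loads(buffer)  # tolerate a missing final newline


rows = [{"sensor": "t1", "value": 21.5}, {"sensor": "t2", "value": 19.0}]
stream = b"".join(ndjson_chunks(rows))
# Cut the byte stream at arbitrary points to mimic network framing.
pieces = [stream[:7], stream[7:30], stream[30:]]
received = list(parse_ndjson(pieces))
```

Because each record is self-delimiting, the sender can push rows as they are produced and the receiver can append them one at a time, which is what makes NDJSON a natural fit for a long-lived HTTP/2 stream.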

Product Core Function

· HTTP/2 Streaming Data Ingestion: Allows for efficient, real-time data transfer from client applications to DuckDB, avoiding the need for clients to handle DuckDB specifics. This means you can send data quickly without large file transfers.

· CGO Dependency Isolation: Encapsulates the CGO requirement within the Ducktape microservice, ensuring your primary Go application remains free of CGO, enabling straightforward cross-compilation and static binary creation. This makes building your Go apps for different platforms (like Windows, macOS, Linux, or even ARM devices) much simpler.

· NDJSON Data Format Support: Utilizes NDJSON for data streaming, a simple and widely understood text-based format for sending multiple structured data records sequentially. This means your data can be sent in a human-readable and easily parsable format.

· DuckDB Appender API Integration: Directly leverages DuckDB's efficient Appender API for fast data insertion into DuckDB tables. This ensures data is added to DuckDB as quickly as possible, maximizing performance.

· Standalone Microservice Architecture: Provides a lightweight, independent service that can be deployed separately from your main application, allowing for flexible scaling and management. This means you can scale the data ingestion part independently if needed.

Product Usage Case

· Real-time Data Replication: A Go-based data replication service can stream changes directly into DuckDB using Ducktape, allowing for quick setup of analytical replicas without complex build configurations for the replication service. This helps set up analytics dashboards faster.

· IoT Data Ingestion: An IoT platform written in Go can send sensor data streams to Ducktape, which then efficiently appends this data into DuckDB for historical analysis and anomaly detection. This allows you to analyze sensor data in near real-time.

· Batch Data Processing: If your Go application processes large datasets and needs to insert them into DuckDB for further analysis, Ducktape provides a streamlined way to do this without CGO complexities, especially in CI/CD pipelines. This simplifies getting large datasets into DuckDB for analysis.

· Microservice Data Archiving: A microservice that generates operational logs or events can stream this data to Ducktape, which archives it into DuckDB, enabling later querying for debugging or auditing purposes. This helps in debugging and auditing by storing data efficiently.

15

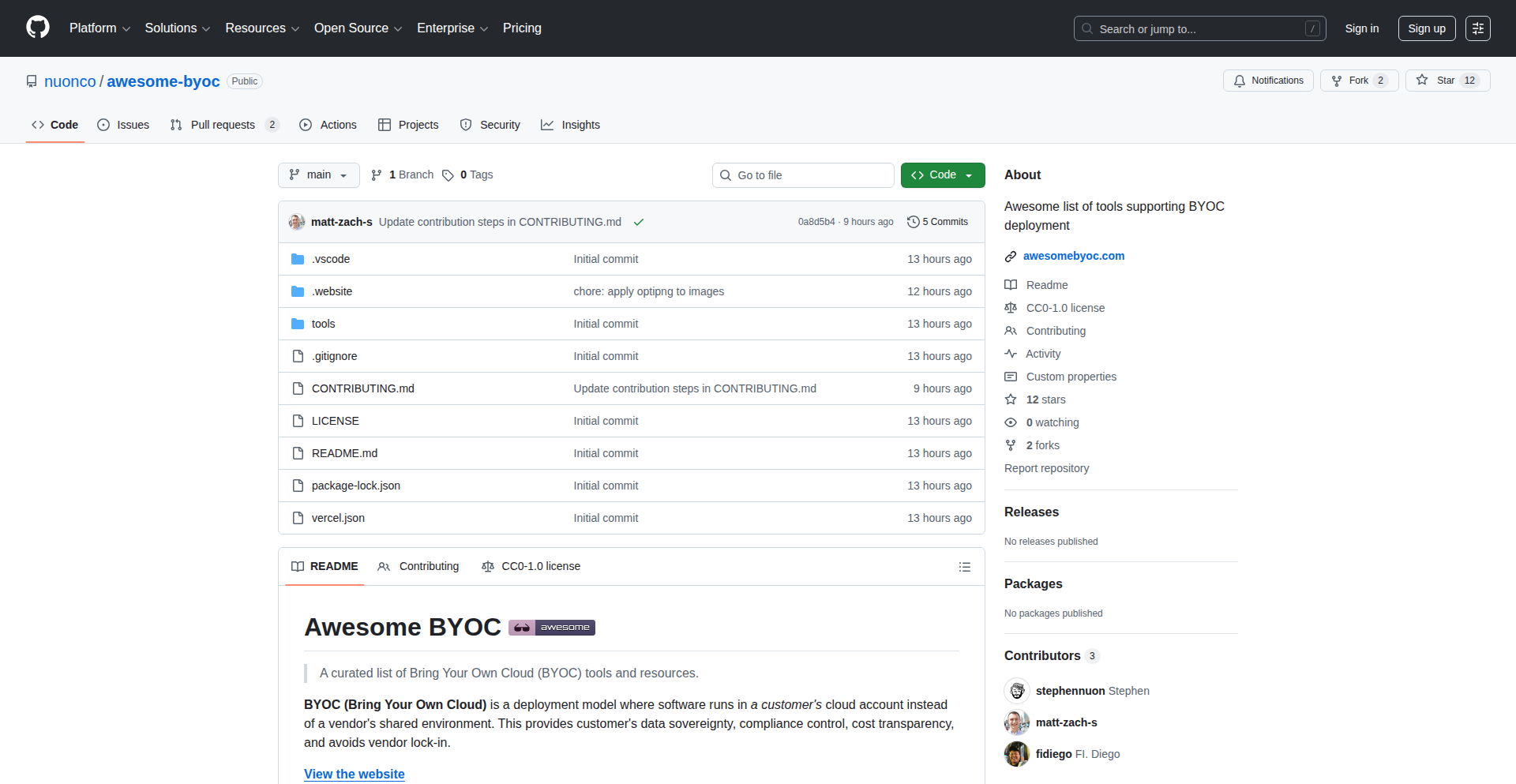

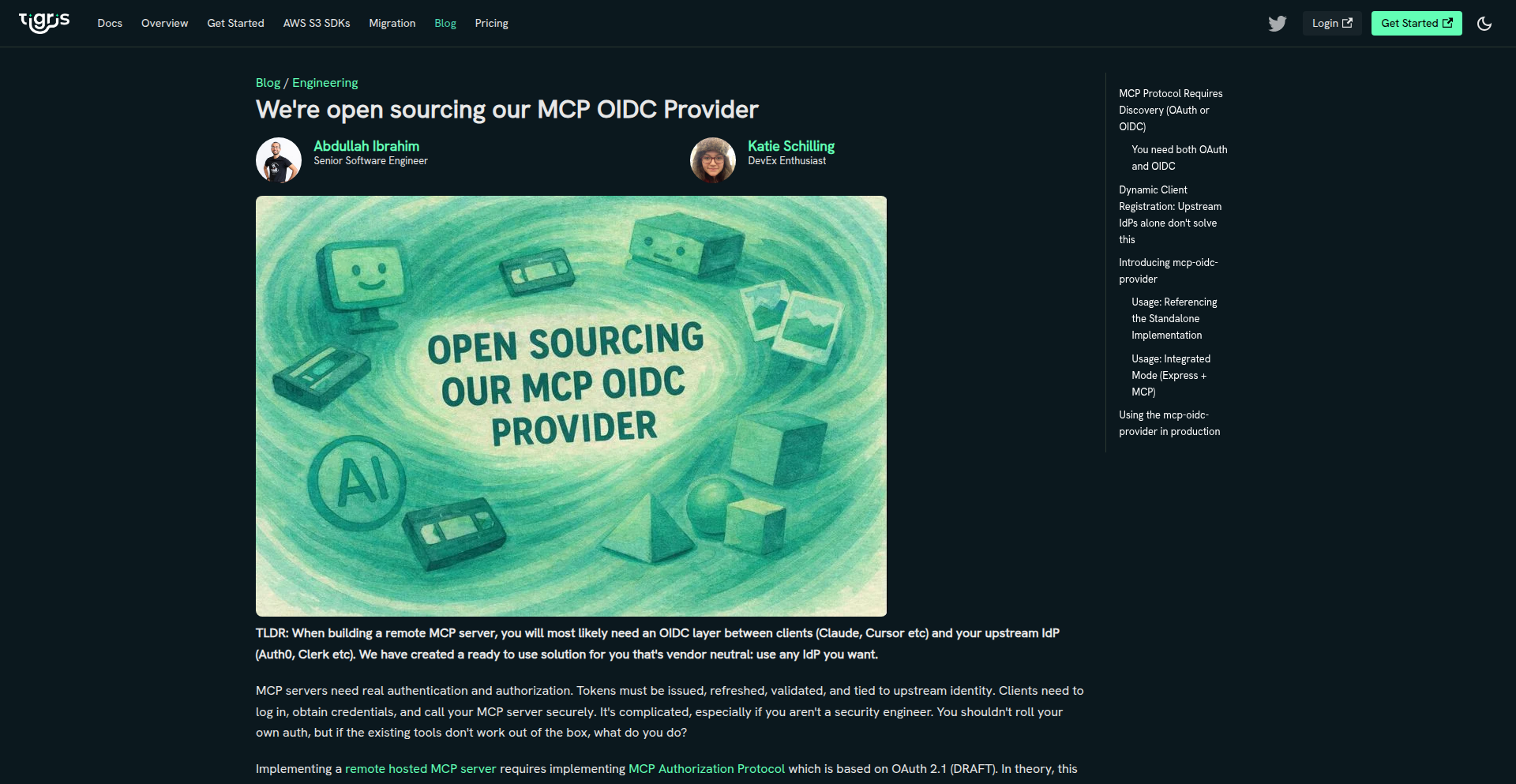

BYOC Vendor Nexus

Author

realsharkymark

Description

This project is a community-curated platform, akin to 'awesome-selfhosted.net', but specifically for 'Bring Your Own Cloud' (BYOC) software vendors. It centralizes information about software that runs within a customer's own virtual private cloud (VPC), making it easier for businesses to discover and adopt solutions that maintain data privacy and control. The innovation lies in creating a single, trusted source for a rapidly growing segment of cloud deployment.

Popularity

Points 9

Comments 0

What is this product?

BYOC Vendor Nexus is a web-based directory of software designed to run within a customer's own cloud infrastructure (their 'Bring Your Own Cloud' environment). Unlike traditional SaaS, where software runs on the vendor's servers, BYOC software is deployed inside the customer's own cloud account and network, which improves data security, compliance, and customization. The platform's key idea is its community-driven curation model: software vendors submit their offerings, keeping the directory a dynamic, up-to-date resource for businesses that want full control over their data and applications.

How to use it?