Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-09

SagaSu777 2025-12-10

Explore the hottest developer projects on Show HN for 2025-12-09. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

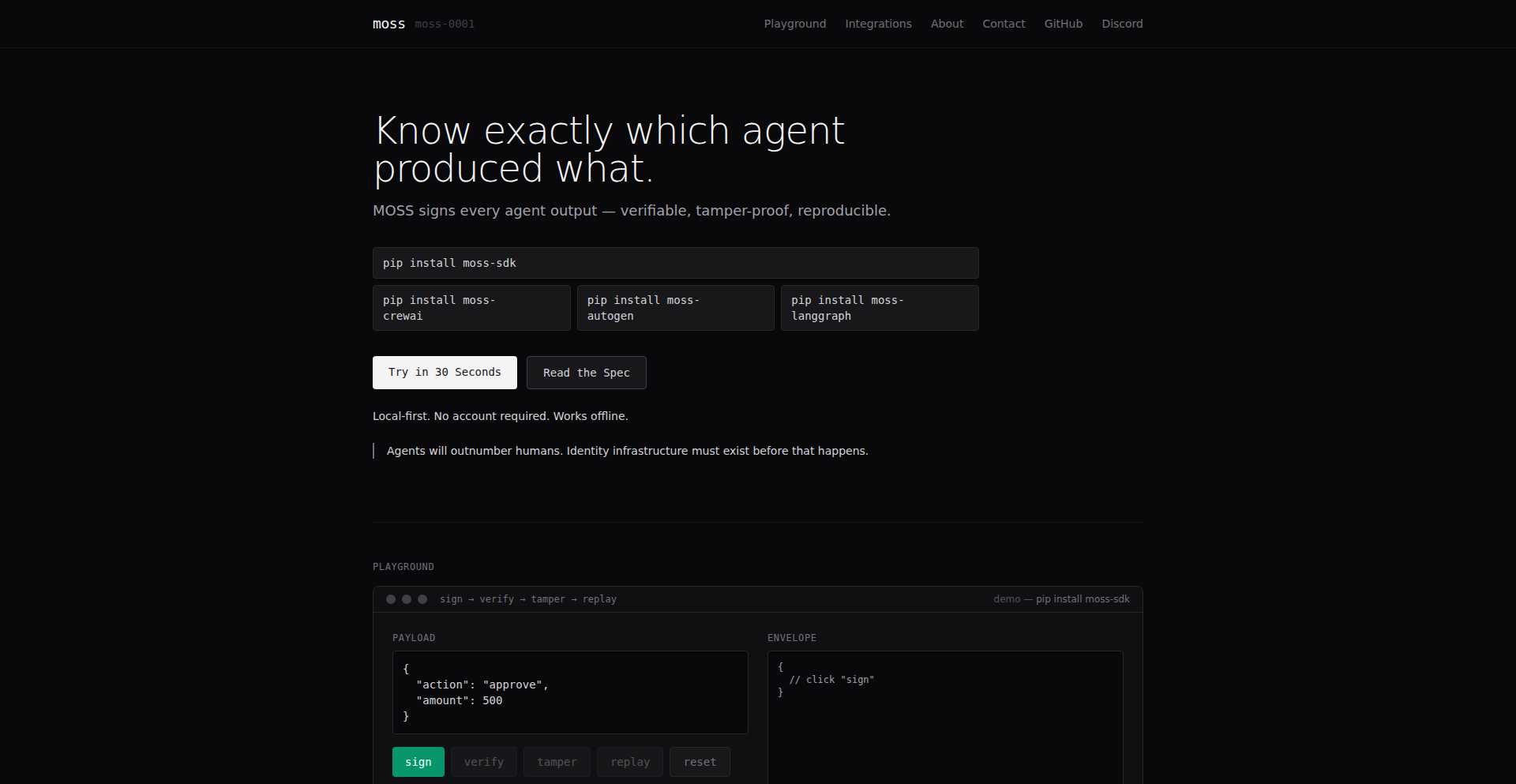

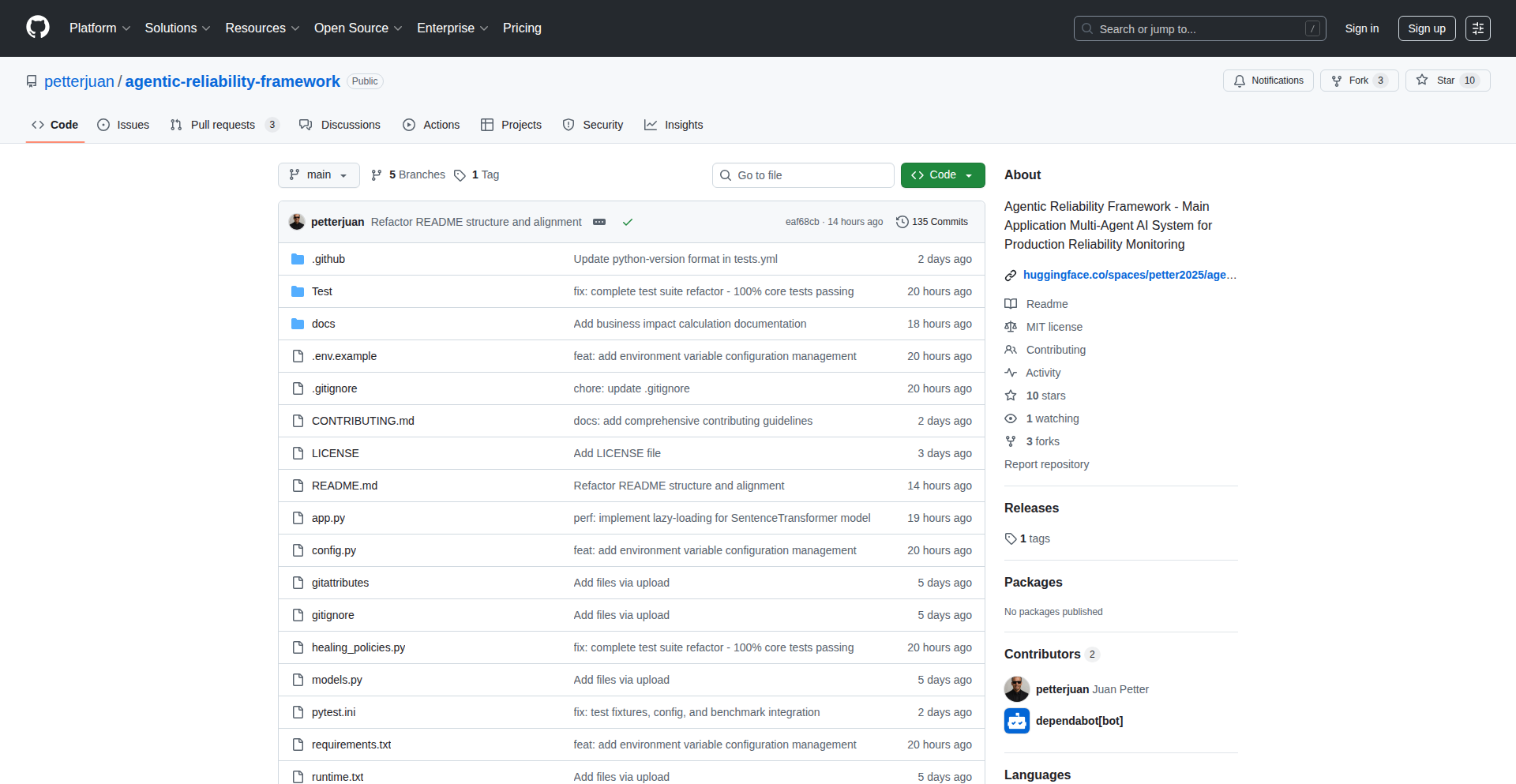

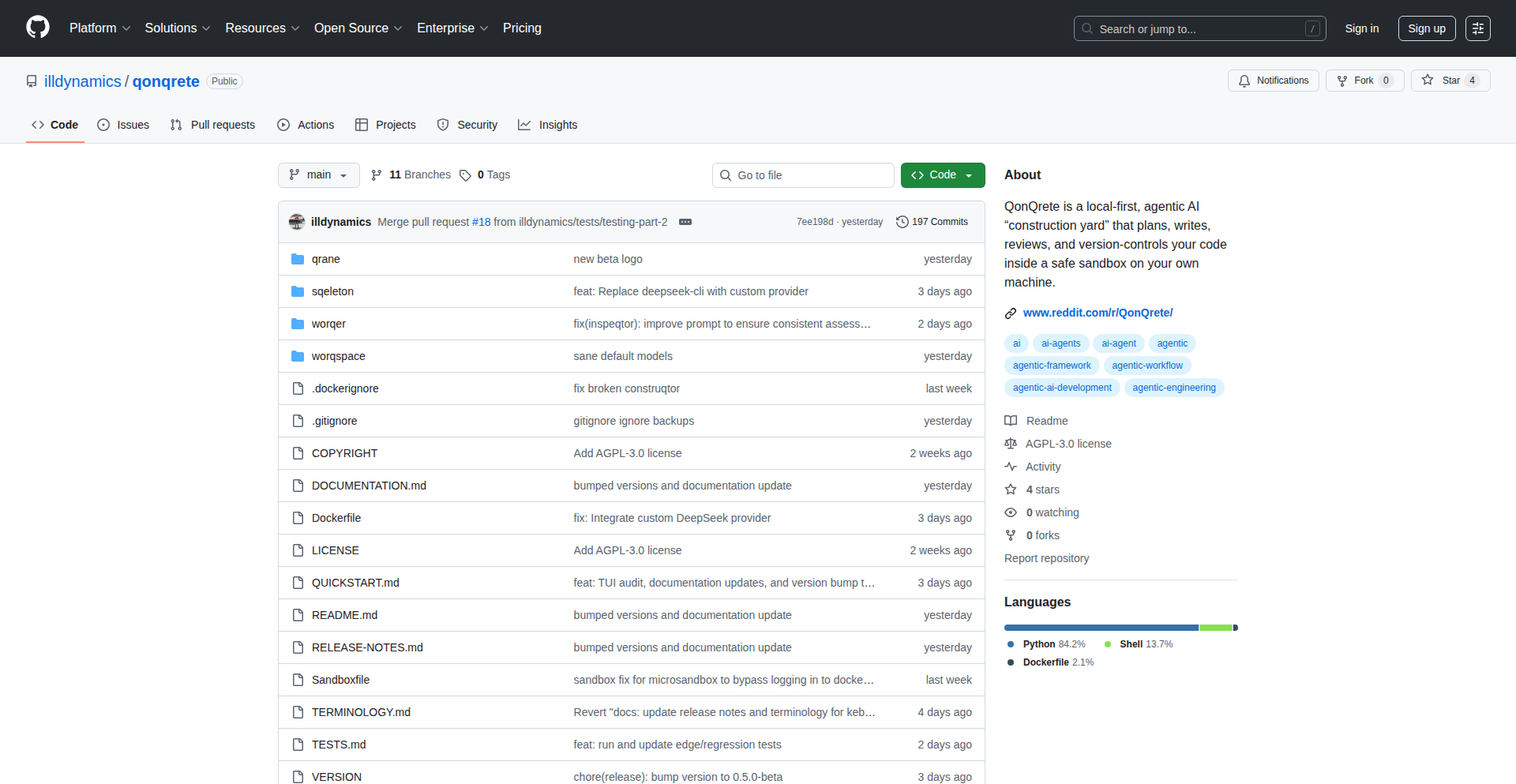

Today's Show HN reveals a vibrant ecosystem of innovation, heavily influenced by AI and a drive to boost developer productivity. A significant trend is AI agents that don't just generate code but actively analyze, debug, and optimize it, exemplified by 'Detail', which uses AI for sophisticated bug finding. This push toward intelligent automation extends to developer workflows, with projects aiming to streamline tasks such as learning LeetCode patterns ('AlgoDrill') or managing multiple AI tools in a unified IDE ('HiveTechs'). The embrace of multi-agent systems is also striking: developers are exploring ways to orchestrate multiple AI entities for complex problem-solving, from code generation ('QonQrete') to reliability management ('Agentic Reliability Framework').

For developers, this means an opportunity to use AI not just as a coding assistant but as a partner in debugging, optimization, and system design. For entrepreneurs, one promising path is to identify niche problems within existing development or business processes and explore how AI, particularly agentic systems and advanced code analysis, can solve them more efficiently. The focus on tools that reduce friction, improve efficiency, and offer deeper insight signals a growing maturity in how we build and interact with software.

Today's Hottest Product

Name

Detail, a Bug Finder

Highlight

Detail is an innovative bug finder that leverages AI to analyze codebases, write tests, and identify lurking bugs and vulnerabilities. The core technical innovation lies in its ability to spin up hundreds of local development environments to exercise code in thousands of ways, significantly improving the signal-to-noise ratio for bug detection. This approach trades compute for quality, making it practical for finding subtle issues that traditional methods might miss. Developers can learn from its strategy of using tests as guardrails and its sophisticated approach to automated code analysis and behavior anomaly detection.
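To make "trading compute for quality" concrete, here is a minimal differential-testing sketch: it runs an old and a new version of the same function on thousands of random inputs and reports every input where their behavior diverges. The pricing functions, the seed, and the run count are hypothetical; this illustrates the general technique, not Detail's actual implementation.

```python
import random

def price_v1(qty: int, unit_cents: int) -> int:
    """Reference behavior: order total in cents, 10% off for 10 or more items."""
    total = qty * unit_cents
    return total - total // 10 if qty >= 10 else total

def price_v2(qty: int, unit_cents: int) -> int:
    """Hypothetical refactor with an unintended change: '>' instead of '>='."""
    total = qty * unit_cents
    return total - total // 10 if qty > 10 else total

def behavior_changes(old, new, runs: int = 5000, seed: int = 42):
    """Call both versions on the same random inputs and collect every
    input where their outputs disagree."""
    rng = random.Random(seed)  # fixed seed so findings are reproducible
    diffs = set()
    for _ in range(runs):
        args = (rng.randint(0, 20), rng.randint(1, 500))
        if old(*args) != new(*args):
            diffs.add(args)
    return sorted(diffs)

if __name__ == "__main__":
    found = behavior_changes(price_v1, price_v2)
    print(f"{len(found)} differing inputs, e.g. {found[:3]}")
```

Every reported input has `qty == 10`, which points straight at the changed boundary; the existing version acts as the guardrail that the new one is checked against.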

Popular Category

AI/ML

Developer Tools

Productivity

Popular Keyword

AI

Code

Automation

LLM

Agent

Data

Framework

Platform

Technology Trends

AI-powered code analysis

Multi-agent systems

Developer productivity tools

Data visualization and manipulation

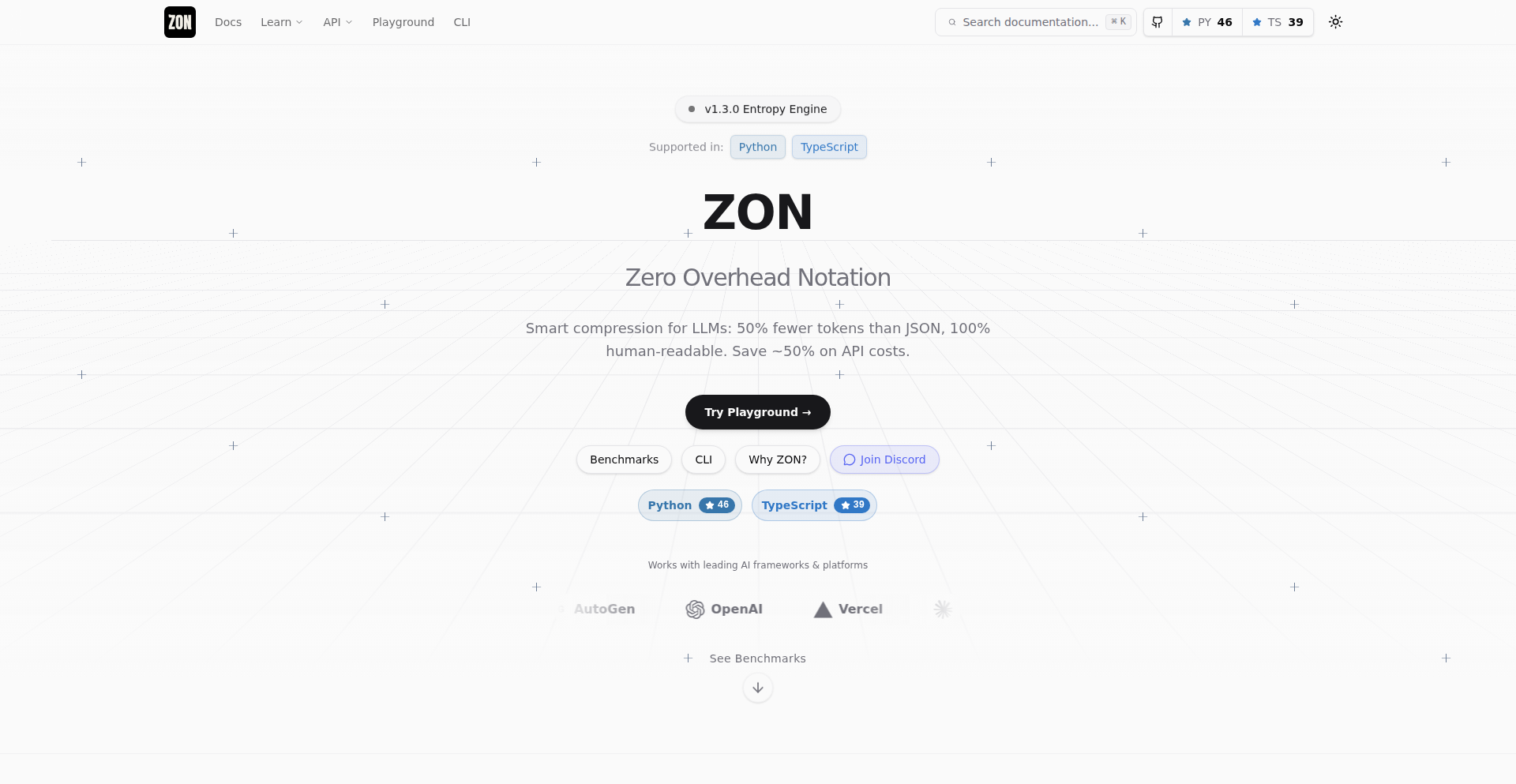

Efficient data formats

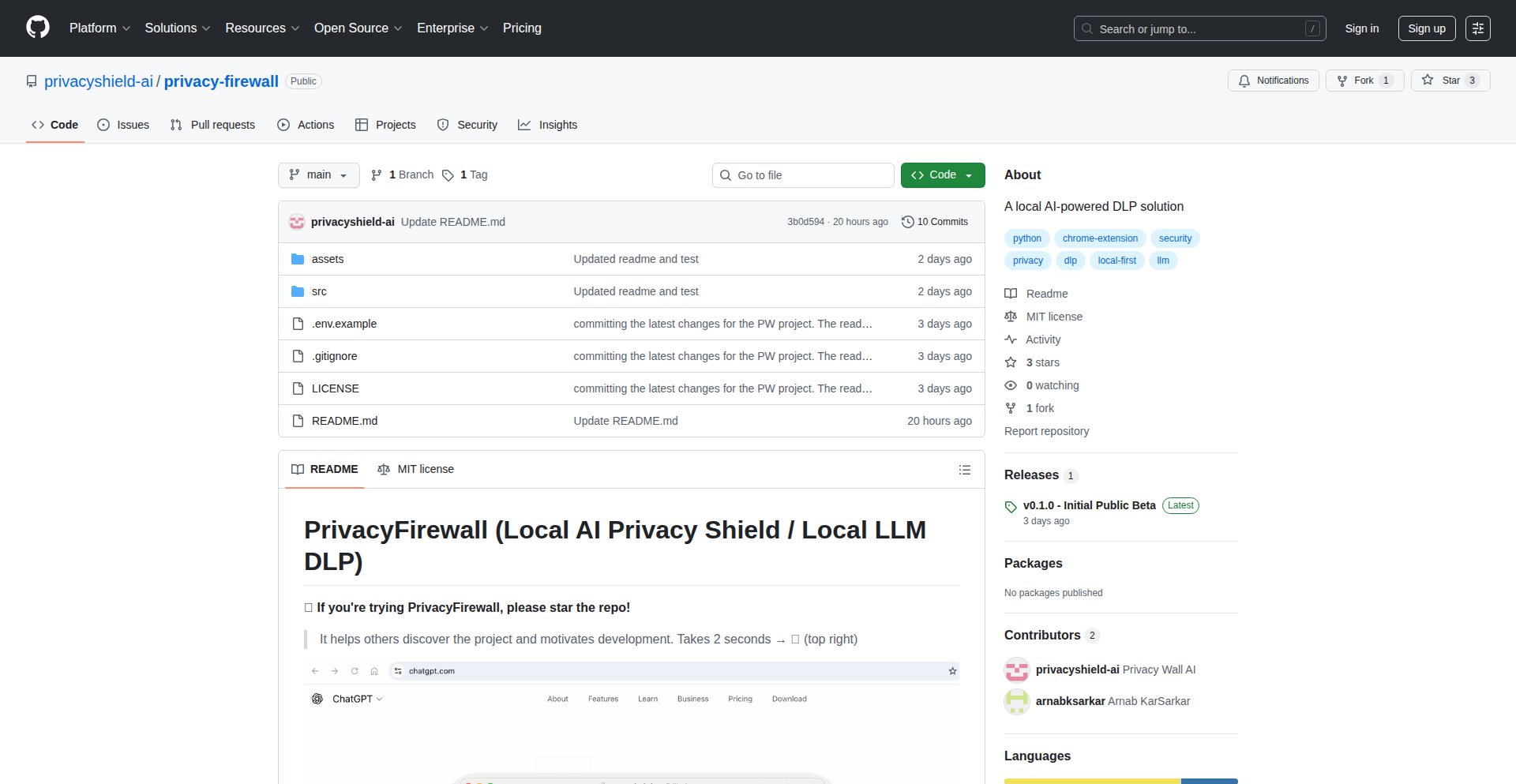

Decentralized/Local-first applications

AI-assisted content creation

Developer experience enhancement

Low-code/No-code solutions

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity (20%)

Data & Analytics (15%)

Web Development Tools (15%)

Utilities & Libraries (10%)

Creative & Design Tools (5%)

Productivity & Lifestyle (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Generative Future Insight Engine | 2652 | 781 |

| 2 | AlgoDrill: Pattern-Recall Coding Coach | 163 | 98 |

| 3 | DeepScan AI | 63 | 26 |

| 4 | AIForge IDE | 15 | 13 |

| 5 | GPT-Driven Traffic Surfer | 16 | 11 |

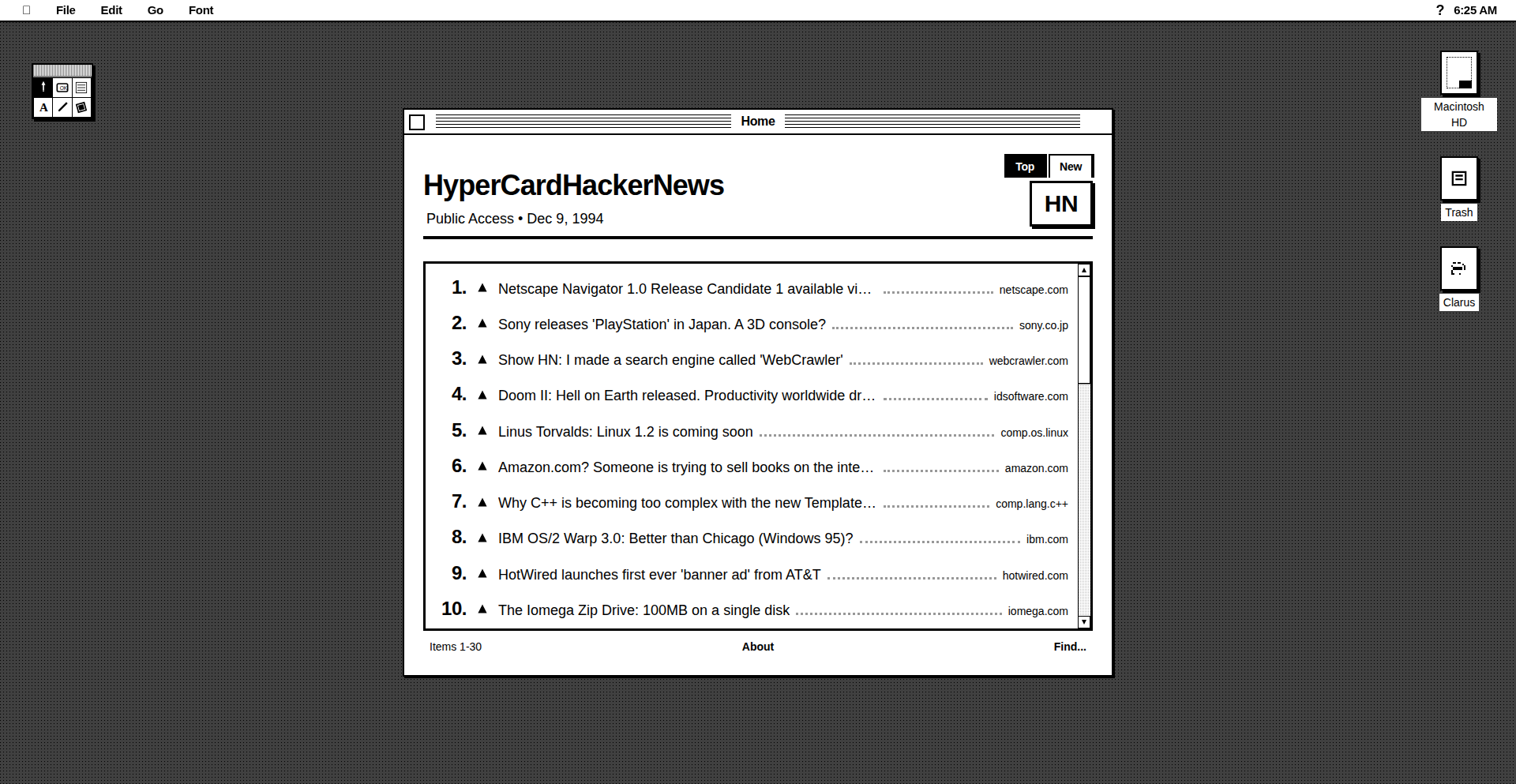

| 6 | Gemini 94: HyperCard Hacker News Reimagined | 12 | 11 |

| 7 | Fate: React & tRPC Data Framework | 22 | 1 |

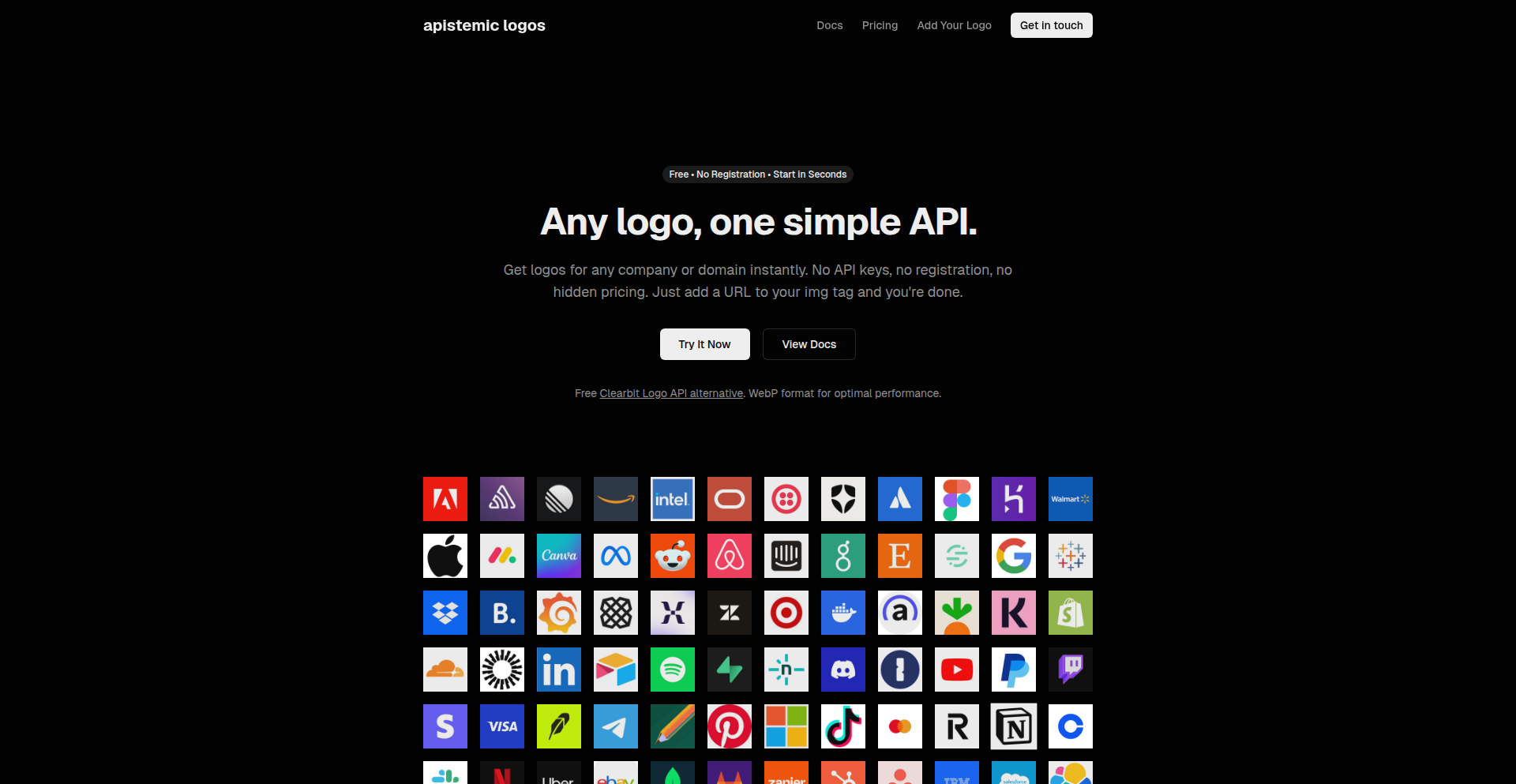

| 8 | Logos API | 9 | 6 |

| 9 | TSP-4h-Genius | 11 | 3 |

| 10 | ImmiForm Genius | 8 | 1 |

1

Generative Future Insight Engine

Author

keepamovin

Description

This project showcases the experimental capabilities of the Gemini Pro 3 large language model by prompting it to hallucinate the Hacker News front page 10 years in the future. The core innovation lies in exploring the model's creative generation and predictive potential, highlighting its ability to synthesize information and imagine future technological trends and community discussions. It demonstrates a playful yet insightful approach to understanding AI's generative power and its implications for content creation and trend forecasting.

Popularity

Points 2652

Comments 781

What is this product?

This is an experimental demonstration of the Gemini Pro 3 large language model's generative capabilities. Instead of asking it to perform a factual task, the author prompted it to 'hallucinate', or creatively invent, a Hacker News front page as it might appear a decade from now. The interest lies in observing how the model extrapolates current trends, imagines new technologies, and crafts speculative headlines and discussions. It's like giving the AI a creative writing prompt about the future of tech and seeing what it comes up with, which reveals how well it has absorbed the community's style, interests, and preoccupations. So, what's in it for you? It shows the creative potential of advanced AI for imaginative content generation and for exploring future scenarios.

How to use it?

Developers can use this project as a conceptual starting point for exploring prompt engineering for generative AI. By studying the output, developers can learn how to craft prompts that elicit creative, speculative, or trend-forecasting content from models like Gemini Pro, for example by varying the timeframe, the technology focus areas, or the community sentiment. Integration would typically involve calling the Gemini API from a custom application to generate similar future-looking content for creative brainstorming, market research, or inspiration for science fiction and future-themed projects. So, what's in it for you? It provides a blueprint for putting AI's creative side to work in your own projects and generating your own visions of the future.
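One way to experiment systematically is to parameterize the prompt and sweep its knobs. The sketch below is a hypothetical prompt template (the class name, fields, wording, and the 2025 base year are all assumptions, not the author's actual prompt); it only builds strings, so it runs without an API key.

```python
from dataclasses import dataclass, field

@dataclass
class FuturePagePrompt:
    """Hypothetical knobs for a speculative 'future front page' prompt."""
    years_ahead: int = 10
    base_year: int = 2025
    focus_areas: list[str] = field(default_factory=list)
    mood: str = "mixed"
    n_stories: int = 30

    def render(self) -> str:
        target = self.base_year + self.years_ahead
        # Fall back to an open-ended focus when no areas are given.
        focus = ", ".join(self.focus_areas) or "any area of technology"
        return (
            f"Imagine the Hacker News front page on a typical day in {target}. "
            f"List {self.n_stories} plausible story titles with point and comment counts. "
            f"Emphasize {focus}. The community mood should be {self.mood}. "
            "This is speculative fiction: invent freely but stay internally consistent."
        )

# Sweep a few timeframes and focus areas to compare how the output changes.
variants = [
    FuturePagePrompt(years_ahead=y, focus_areas=areas)
    for y in (5, 10, 20)
    for areas in ([], ["quantum computing", "AI ethics"])
]
```

Each rendered string could then be sent to a model; with Google's `google-genai` Python SDK that is roughly `genai.Client().models.generate_content(model=..., contents=prompt.render())`, but check the SDK documentation for current model names.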

Product Core Function

· Generative Future Simulation: Uses Gemini Pro 3 to create hypothetical future content based on current trends and the model's imaginative capabilities. This is valuable for exploring potential future scenarios in technology and community discussions. So, what's in it for you? It helps you visualize what the future of tech might look like, offering inspiration for innovation.

· Advanced Prompt Engineering Exploration: Demonstrates prompting techniques that steer AI toward creative, speculative output. This is useful for developers who want to push AI content generation beyond factual reporting. So, what's in it for you? It teaches you how to get AI to write imaginatively about future possibilities.

· Trend Extrapolation and Synthesis: Shows how the model combines existing knowledge about technology and community dynamics into plausible-sounding future developments. This is valuable for seeing how AI extrapolates from current trends, though the output is speculation rather than a reliable forecast. So, what's in it for you? It gives you a provocative, if unverified, take on what might come next in technology.

· AI Creativity and Hallucination Analysis: Provides a setting for studying AI 'hallucination' in a creative context, highlighting its potential for novel idea generation. This is important for understanding both the creative potential and the limitations of AI. So, what's in it for you? It shows you the exciting, unpredictable side of AI and how it can lead to new ideas.

Product Usage Case

· Future Technology Trend Forecasting: A developer could prompt Gemini Pro to generate a Hacker News front page from 2034, focusing on breakthroughs in quantum computing and AI ethics. This could suggest possible research directions and market opportunities worth investigating further. So, what's in it for you? It offers a speculative glimpse of future tech trends to spark ideas for your development.

· Creative Writing and World-Building: A science fiction author could use this approach to generate speculative headlines and discussion snippets for a future-tech setting, enriching their narrative with plausible technological advancements. So, what's in it for you? It provides a source of inspiration and detailed ideas for your creative projects.

· AI Ethics and Societal Impact Simulation: Researchers could prompt the model to imagine future discussions on AI regulation or the societal impact of advanced AI, as an aid to ethical foresight and policy development. So, what's in it for you? It helps you anticipate future challenges and ethical considerations related to AI.

· Community Sentiment Analysis and Prediction: By studying the generated 'front page', one can speculate about future community interests and concerns within the tech space, which may inform product development and marketing strategies. So, what's in it for you? It gives you an idea of what the tech community might care about in the future.

2

AlgoDrill: Pattern-Recall Coding Coach

Author

henwfan

Description

AlgoDrill is an interactive platform designed to help developers solidify their understanding of common coding patterns encountered in competitive programming and technical interviews. It moves beyond simply solving problems by employing an active recall methodology, breaking down solutions line by line, and providing clear, principle-based explanations. By categorizing problems by patterns like sliding window, two pointers, and dynamic programming, AlgoDrill enables targeted practice to ensure that these patterns become muscle memory, leading to faster and more confident implementation during actual coding challenges. This addresses the common issue of forgetting implementation details even after understanding a pattern.

Popularity

Points 163

Comments 98

What is this product?

AlgoDrill is an online tool that transforms algorithm practice into a drill-based learning experience. Instead of just solving a LeetCode problem once and moving on, AlgoDrill prompts you to reconstruct the solution step-by-step, line by line, using active recall. It provides in-depth editorials that explain the 'why' behind each line of code, focusing on fundamental principles. Crucially, all problems are tagged with common algorithmic patterns (e.g., sliding window, two pointers, dynamic programming). This approach helps you internalize patterns rather than just memorize solutions. So, the innovation here is shifting from passive problem consumption to active, pattern-focused reconstruction, making your coding skills more robust and transferable.
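For readers new to the pattern tags, here is a standard textbook instance of the "sliding window" pattern, written independently of AlgoDrill (its actual problem set and editorials are not shown in the post). It finds the length of the longest substring without repeated characters by growing a window on the right and shrinking it on the left only when a duplicate enters.

```python
def longest_unique_substring(s: str) -> int:
    """Sliding window: length of the longest substring with no repeated characters."""
    last_seen: dict[str, int] = {}  # char -> index of its most recent occurrence
    left = 0                        # current window is s[left:right + 1]
    best = 0
    for right, ch in enumerate(s):
        # If ch already occurs inside the window, move the left edge
        # just past that earlier occurrence.
        if ch in last_seen and last_seen[ch] >= left:
            left = last_seen[ch] + 1
        last_seen[ch] = right
        best = max(best, right - left + 1)
    return best
```

The `last_seen[ch] >= left` check is the kind of line an active-recall drill targets: without it, an occurrence that already left the window (as in `"abba"`) would wrongly move `left` backward.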

How to use it?

Developers can use AlgoDrill by visiting the website and selecting algorithm problems based on their difficulty or specific patterns they wish to master. The platform will then present a problem and guide the user through rebuilding the solution. You'll be prompted to write code incrementally, with immediate feedback and explanations. This can be integrated into a regular study routine, perhaps dedicating specific sessions to practice a particular pattern or tackling a set of problems tagged with a pattern you're struggling with. It’s perfect for anyone preparing for technical interviews or looking to deepen their algorithmic fluency.

Product Core Function

· Line-by-line solution reconstruction: This allows developers to actively engage with the code, reinforcing understanding of each step. Its value lies in building deep familiarity with how algorithmic solutions are constructed, moving beyond rote memorization.

· First principles editorials: These explanations focus on the underlying logic and 'why' behind each code segment. This is valuable for true comprehension, enabling developers to adapt solutions to new scenarios and troubleshoot effectively.

· Pattern-based tagging: Categorizing problems by algorithmic patterns like 'sliding window' or 'dynamic programming' allows for targeted practice. This is incredibly useful for reinforcing specific concepts that are frequently tested and often confused.

· Active recall mechanism: The act of actively recalling and rebuilding code itself strengthens memory and coding fluency. This is valuable because it simulates the pressure of an interview environment and builds confidence in writing code from scratch.

· Progress tracking (implied by drill structure): While not explicitly detailed, a drill system naturally lends itself to tracking progress on specific patterns. This is valuable for identifying areas of weakness and focusing practice where it's needed most.
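The post does not say how AlgoDrill checks a reconstructed line, so the following is only a hypothetical sketch of a line-by-line grader: it compares each attempted line with the reference after collapsing whitespace and reports the first line to revisit. A real tool might compare more leniently (for example, by parsing the code); every name here is illustrative.

```python
def normalize(line: str) -> str:
    """Ignore indentation and runs of internal whitespace when comparing lines."""
    return " ".join(line.split())

def grade(reference: list[str], attempts: list[str]) -> list[tuple[int, bool]]:
    """Return (line_number, correct) for every reference line;
    a missing attempt line counts as incorrect."""
    results = []
    for i, ref in enumerate(reference):
        attempt = attempts[i] if i < len(attempts) else ""
        results.append((i + 1, normalize(attempt) == normalize(ref)))
    return results

def first_mistake(results: list[tuple[int, bool]]):
    """Line number to show the editorial for next, or None if all lines are correct."""
    return next((n for n, ok in results if not ok), None)
```

Recording which line numbers fail across sessions would be one simple basis for the per-pattern progress tracking mentioned above.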

Product Usage Case

· A developer preparing for a software engineering interview is struggling to implement dynamic programming solutions during mock interviews. They use AlgoDrill to drill DP problems, focusing on reconstructing the recurrence relation and base cases step-by-step, leading to improved performance in subsequent interviews.

· A student learning algorithms for the first time finds it hard to distinguish between different array traversal techniques. By using AlgoDrill's 'two pointers' and 'sliding window' drills, they gain a clearer understanding of when and how to apply these patterns, enabling them to solve a wider range of array-based problems.

· A seasoned developer wants to refresh their skills in graph algorithms before a new project. They use AlgoDrill to practice problems related to graph traversal (BFS, DFS) and shortest path algorithms, actively rebuilding the code and understanding the underlying principles, ensuring they can apply these concepts efficiently to their project.

· Someone who previously solved LeetCode problems but keeps forgetting the implementation details can use AlgoDrill to revisit those patterns. The active recall and detailed explanations help them solidify their knowledge, so they don't blank out when faced with similar problems in a high-pressure situation.
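The first scenario mentions drilling "the recurrence relation and base cases". As a generic example (not taken from AlgoDrill), the classic coin-change problem makes both parts explicit:

```python
def min_coins(coins: list[int], amount: int) -> int:
    """Bottom-up DP: fewest coins that sum to amount, or -1 if impossible.

    State:      dp[a] = fewest coins that make amount a
    Base case:  dp[0] = 0 (zero coins make zero)
    Recurrence: dp[a] = 1 + min(dp[a - c]) over coins c <= a
    """
    INF = float("inf")
    dp = [0] + [INF] * amount
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and dp[a - c] + 1 < dp[a]:
                dp[a] = dp[a - c] + 1
    return dp[amount] if dp[amount] != INF else -1
```

For example, `min_coins([1, 2, 5], 11)` is 3 (5 + 5 + 1), and `min_coins([2], 3)` is -1 because no combination of 2s reaches 3.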

3

DeepScan AI

Author

drob

Description

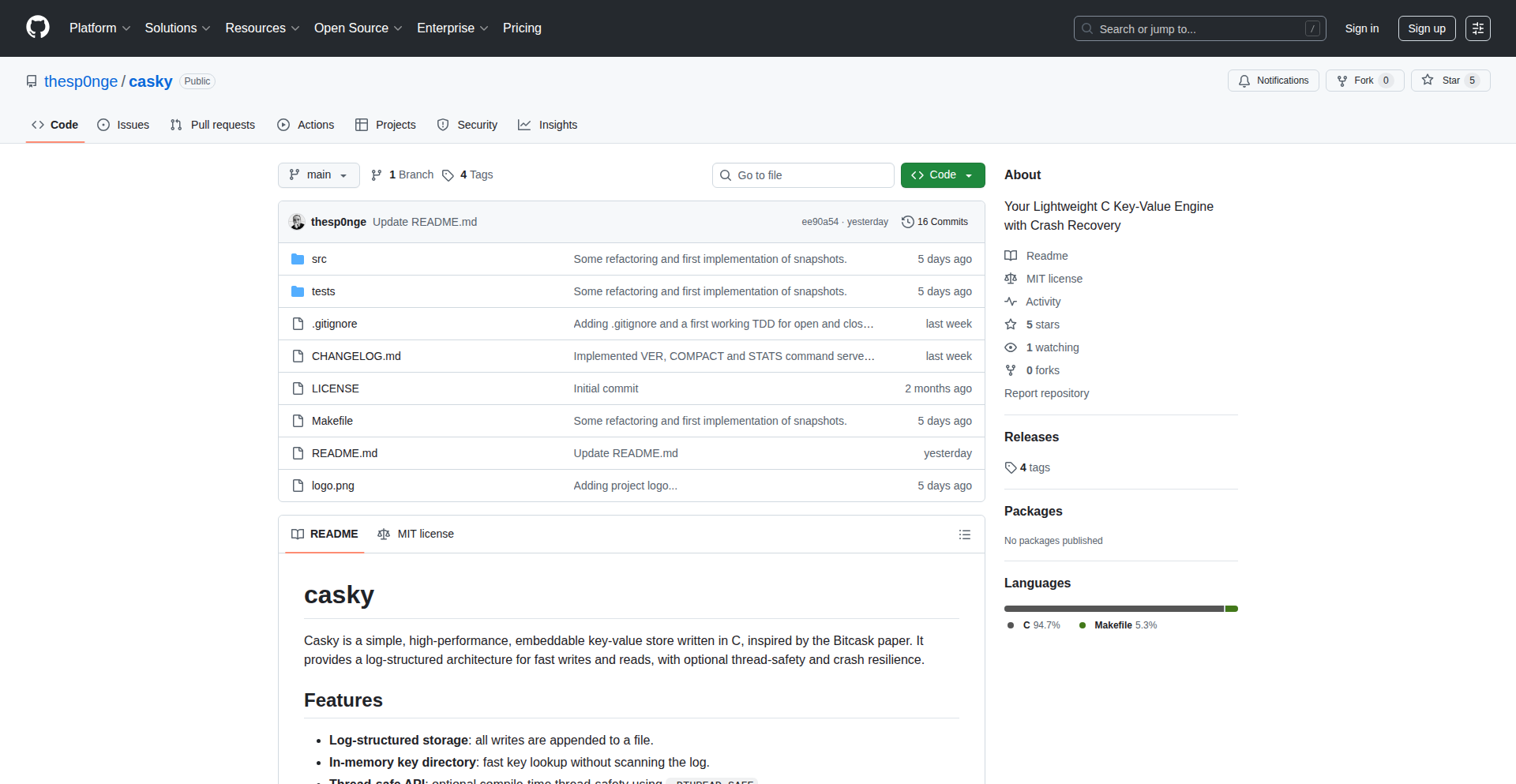

DeepScan AI is a novel bug-finding system that uses large amounts of compute to uncover hidden defects and vulnerabilities in application backends. It automates the detection of unintended behavior changes by exercising the code along thousands of execution paths, offering deeper inspection than traditional methods. This lets developers proactively identify and fix critical issues before they reach production.

Popularity

Points 63

Comments 26

What is this product?

DeepScan AI is an advanced automated bug detection system. Instead of only inspecting code statically, it runs hundreds of copies of your application's backend and exercises the code in thousands of different ways. Think of it as a highly rigorous stress test. It identifies behaviors that seem wrong or unexpected, which often indicate bugs or security vulnerabilities. The innovation lies in the scale of this testing and in flagging only the most significant, actionable issues, which saves developer time by not burying them in false positives.

How to use it?

Developers can integrate DeepScan AI into their workflow by submitting their codebase for analysis. The system checks out the code, figures out how to build and run it locally, and then spins up numerous instances to exercise the application. The results are delivered as clear tickets, GitHub issues, or emails, pinpointing the exact location and nature of the problem. For existing applications, it's a powerful tool to find bugs that might have slipped through testing. For new projects, it acts as a robust safety net. It's particularly useful for app backends where complex interactions can hide subtle errors.

Product Core Function

· Automated code checkout and build execution: This function allows the system to understand and prepare your codebase for testing without manual intervention. Its value is in streamlining the setup process for deep code analysis, making it accessible even for complex projects.

· Massively parallel execution environments: By running hundreds of copies of your application concurrently, this function aggressively probes for edge cases and unexpected behaviors. The value is in uncovering bugs that might only appear under specific, hard-to-replicate conditions.

· Salient issue flagging and reporting: This function intelligently filters through a vast amount of test data to present only the most critical and actionable bugs. The value here is in prioritizing developer attention on significant problems, avoiding alert fatigue and focusing on real impact.

· Vulnerability detection: Beyond functional bugs, this system is designed to identify security weaknesses. The value is in proactively hardening your application against potential exploits, which is crucial for any production system.
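The post does not explain how salient issues are selected, so here is one plausible, hypothetical approach: group raw anomalies by a coarse signature (error type plus code location), discard anything that did not reproduce often enough, and rank the rest by frequency. The `Finding` fields and thresholds are assumptions, not the product's schema.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    """One anomalous run (hypothetical fields)."""
    error_type: str
    location: str  # e.g. "orders.py:88"
    run_id: int

def salient(findings: list[Finding], min_repro: int = 3, top: int = 5):
    """Group findings by (error_type, location), drop signatures seen fewer
    than min_repro times, and return the most frequent ones first."""
    counts = Counter((f.error_type, f.location) for f in findings)
    kept = [(sig, n) for sig, n in counts.items() if n >= min_repro]
    return sorted(kept, key=lambda item: item[1], reverse=True)[:top]
```

Deduplicating by signature turns hundreds of failing runs into a handful of tickets, and the reproduction threshold filters out one-off flakes.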

Product Usage Case

· Identifying race conditions in a high-throughput API backend: By simulating concurrent requests from multiple clients, DeepScan AI can uncover subtle bugs where simultaneous operations interfere with each other, leading to data corruption or incorrect responses. This solves the problem of elusive concurrency bugs that are hard to reproduce manually.

· Detecting unhandled exceptions in complex business logic: When dealing with intricate workflows and multiple dependencies, errors can occur in unexpected places. DeepScan AI's broad execution paths help catch these errors, which could otherwise lead to application crashes or unexpected behavior in production.

· Finding potential security vulnerabilities in user input processing: The system can test how the application handles various forms of input, including malformed or malicious data, to identify areas where security flaws might exist. This helps prevent common attack vectors like SQL injection or cross-site scripting.

· Pinpointing performance bottlenecks hidden within specific code paths: While primarily a bug finder, the extensive testing can sometimes highlight code sections that perform poorly under heavy load, allowing developers to optimize critical parts of the application.
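DeepScan AI reportedly finds concurrency bugs by running real concurrent requests. A complementary, deterministic technique (swapped in here for illustration, not the product's method) is to enumerate every interleaving of two requests' steps and check the result of each. The toy below models two requests doing a non-atomic read-modify-write on a shared counter; the counter and step model are hypothetical.

```python
from itertools import combinations

def interleavings(n_a: int, n_b: int):
    """Yield every schedule of A's n_a steps and B's n_b steps that keeps
    each request's own steps in program order."""
    total = n_a + n_b
    for a_slots in combinations(range(total), n_a):
        slots = set(a_slots)
        yield ["A" if i in slots else "B" for i in range(total)]

def run(schedule: list[str]) -> int:
    """Execute one schedule: step 0 reads the counter, step 1 writes read + 1."""
    shared = {"count": 0}
    local = {"A": 0, "B": 0}
    step = {"A": 0, "B": 0}
    for t in schedule:
        if step[t] == 0:
            local[t] = shared["count"]       # read
        else:
            shared["count"] = local[t] + 1   # write (may overwrite the other's update)
        step[t] += 1
    return shared["count"]

# Schedules where one increment is lost (final count should be 2).
lost = [s for s in interleavings(2, 2) if run(s) != 2]
```

Four of the six schedules lose an update; only the fully serialized ones (`AABB`, `BBAA`) are safe, which is exactly the kind of bug that is hard to reproduce by hand.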

4

AIForge IDE

Author

hivetechs

Description

AIForge IDE is a groundbreaking developer environment that unifies 11 distinct AI models, including Claude, Gemini, and Codex, within a single, integrated workspace. It addresses the fragmentation of AI tools by providing a shared memory and a real IDE experience with a Monaco editor, Git integration, and PTY terminals. This allows developers to leverage the unique strengths of each AI without context loss, significantly boosting productivity for complex coding tasks and AI-assisted development.

Popularity

Points 15

Comments 13

What is this product?

AIForge IDE is an Integrated Development Environment (IDE) designed for developers who use multiple AI models. Its core innovation is running up to 11 different AI assistants (such as Claude for reasoning, Gemini for long context, and Codex for code generation) side by side within a unified interface, so you don't have to copy and paste between separate AI chats or lose the thread of your conversation. Shared memory lets each AI see the context produced by the others, and a consensus validation mechanism has three AIs analyze a problem independently while a fourth synthesizes their results, leading to more robust solutions. It also includes a fully functional IDE with a Monaco editor, built-in Git support, and interactive PTY terminals, making it a complete development environment rather than a front-end wrapper for AI services.

How to use it?

Developers can use AIForge IDE by installing it and configuring their API keys for the supported AI models. Once set up, they can interact with different AIs directly within integrated terminals. For instance, a developer might use Claude to brainstorm architectural patterns, then switch to Codex in another terminal to generate boilerplate code for those patterns, all within the same project context. The shared memory ensures that the code generated by Codex can be immediately understood and acted upon by Claude if further refinement is needed. The IDE's built-in Git allows for seamless version control of AI-generated or augmented code. This provides a vastly improved workflow for tasks like refactoring, debugging, writing tests, or generating documentation by intelligently leveraging the combined power of multiple AI agents.
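A minimal sketch of the shared-memory idea, assuming each assistant is wrapped as a plain function that receives the prompt plus the transcript so far. The `SharedMemory` class, the `Model` signature, and the stand-in assistants are hypothetical; the post does not document AIForge's internals.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model signature: (prompt, shared context so far) -> reply text.
Model = Callable[[str, list[str]], str]

@dataclass
class SharedMemory:
    """Project-wide transcript that every assistant reads before replying
    and appends to afterwards."""
    entries: list[str] = field(default_factory=list)

    def ask(self, name: str, model: Model, prompt: str) -> str:
        # Pass a copy so a model cannot mutate the shared history directly.
        reply = model(prompt, list(self.entries))
        self.entries.append(f"[{name}] {prompt} -> {reply}")
        return reply

# Stand-in "models" for illustration only.
def architect(prompt: str, context: list[str]) -> str:
    return "put a message queue between the order and billing services"

def coder(prompt: str, context: list[str]) -> str:
    latest = context[-1] if context else "no prior context"
    return f"generate code for: {latest}"

if __name__ == "__main__":
    memory = SharedMemory()
    memory.ask("claude", architect, "How should these services talk?")
    print(memory.ask("codex", coder, "Write the boilerplate."))
```

The second assistant never receives the design decision directly in its prompt; it picks it up from the shared transcript, which is what removes the manual copy-paste step.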

Product Core Function

· Integrated AI Terminal Support: Lets developers run and interact with multiple AI models (e.g., Claude, Gemini, Codex) concurrently in separate but connected terminal windows. The value is that you can use the specialized strengths of different AIs for different parts of your workflow without losing context, which speeds up complex problem-solving.

· Shared Memory & Context Preservation: Maintains a unified context and memory across all active AI instances. This means that an AI's response can inform another AI's subsequent action, eliminating the need for manual context re-entry and enabling more coherent, multi-step AI collaborations.

· Consensus Validation Mechanism: Three AIs independently analyze a problem, and a fourth AI synthesizes their findings into a consolidated solution. Cross-validating AI outputs this way adds a layer of reliability and reduces the likelihood of errors, producing more accurate and dependable results.

· Real IDE Features (Monaco Editor, Git, PTY Terminals): Provides a full-fledged Integrated Development Environment experience, including a powerful code editor, native Git integration for version control, and interactive PTY terminals. This allows developers to write, edit, test, and deploy code directly within the environment, enhancing productivity and streamlining the development lifecycle.

· Unified Workspace for AI Tools: Consolidates various AI tools into a single, cohesive platform. The value here is reducing the cognitive load and time spent switching between disparate applications, allowing developers to focus more on coding and less on tool management.
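The consensus pattern above can be sketched as "fan out, then synthesize". In this hypothetical version the analysts are plain functions queried in parallel without seeing each other's answers, and a simple majority vote stands in for the fourth, synthesizing model; real model calls and AIForge's actual synthesis step are not shown in the post.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Analyst = Callable[[str], str]                 # hypothetical: problem -> answer
Synthesizer = Callable[[str, list[str]], str]  # hypothetical: problem, answers -> final

def consensus(problem: str, analysts: list[Analyst], synthesize: Synthesizer) -> str:
    """Query every analyst independently and in parallel (none sees the
    others' output), then hand all answers to a separate synthesizer."""
    if not analysts:
        raise ValueError("need at least one analyst")
    with ThreadPoolExecutor(max_workers=len(analysts)) as pool:
        answers = list(pool.map(lambda analyst: analyst(problem), analysts))
    return synthesize(problem, answers)

def majority_vote(problem: str, answers: list[str]) -> str:
    """Stand-in for the fourth model: the most common answer wins;
    ties go to the answer that appears first."""
    return max(answers, key=answers.count)
```

A language-model synthesizer could merge partially overlapping answers instead of only picking one, at the cost of a fourth model call.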

Product Usage Case

· Scenario: Developing a complex microservice architecture. A developer can use Claude to discuss design patterns and API specifications, then switch to Codex to generate the initial code for each microservice based on those specifications. The shared memory ensures continuity, and the IDE allows for immediate compilation and testing. This solves the problem of fragmented AI tools leading to slow iterations and context loss during architectural design and initial implementation.

· Scenario: Debugging a persistent bug in a large codebase. A developer can feed the error logs and relevant code snippets to one AI (e.g., Gemini for its long context capabilities) to analyze the issue, then use another AI (e.g., Claude for logical reasoning) to propose potential fixes. The consensus mechanism could be used to cross-validate multiple proposed solutions, ensuring a more reliable fix is identified and implemented directly within the IDE's editing and Git functionalities. This addresses the challenge of identifying root causes in complex systems and finding effective solutions.

· Scenario: Writing comprehensive unit tests for a new feature. A developer can instruct an AI to generate test cases based on the feature's requirements, then use another AI to refine those test cases or generate mock data. The ability to have multiple AIs working in parallel, combined with the IDE's code editing and Git integration, allows for rapid creation and versioning of tests, significantly accelerating the testing phase and improving code quality.

5

GPT-Driven Traffic Surfer

Author

ulinycoin

Description

This project showcases a novel approach to content discoverability: treating ChatGPT as a search-engine-like source of traffic rather than relying solely on traditional SEO. It highlights a new way to understand and influence AI-driven content consumption, suggesting that AI assistants can become a significant traffic source.

Popularity

Points 16

Comments 11

What is this product?

This project is an experimental demonstration of how ChatGPT can drive traffic to content when users query it, much as they would search on Google. Instead of optimizing only for traditional search engines, the core idea is to understand how user prompts and the structure of your content affect whether ChatGPT recommends it. It's like teaching an AI to be your content's advocate. The technical insight is that AI models, when asked the right questions, act as discovery engines, offering a new paradigm for content distribution. So, what's in it for you? A potential new channel for getting your content seen, independent of Google's algorithms.

How to use it?

Developers can explore this concept by building custom bots or applications that interact with ChatGPT's API. This involves crafting prompts that mimic real user search queries and checking whether the responses mention or link to the content you want to promote. Your own API calls only measure this visibility; actual visitors arrive when ChatGPT surfaces your pages in other users' conversations. It can also help to pre-process your content so that it is easy to digest and clearly relevant to such AI-mediated 'searches'. For example, you could build a service that automatically generates prompt variations based on your latest blog posts, sends them to ChatGPT, and analyzes the responses to see whether your site is recommended. The value for developers lies in exploring and harnessing this nascent AI-driven traffic stream, opening up new marketing and content distribution strategies. So, how can you use this? By building tools that help AI assistants bring eyeballs to your creations.
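A hypothetical sketch of the monitoring side: expand post topics into user-style questions, then check whether an answer links to your domain. The question templates and helper names are assumptions, and the actual ChatGPT API call is deliberately left out, so any client could supply the answer text.

```python
from urllib.parse import urlparse

# Hypothetical question templates that mimic how readers might ask.
TEMPLATES = [
    "What is a good guide to {topic}?",
    "Recommend articles that explain {topic}.",
    "How do I get started with {topic}?",
]

def prompt_variants(topics: list[str]) -> list[str]:
    """Expand each post topic into several user-style questions."""
    return [t.format(topic=topic) for topic in topics for t in TEMPLATES]

def mentions_domain(answer: str, domain: str) -> bool:
    """True if the answer contains a URL whose host is domain or a subdomain.
    Only scheme-qualified URLs (https://...) are recognized."""
    for token in answer.split():
        host = urlparse(token.strip("()[]<>.,")).hostname or ""
        if host == domain or host.endswith("." + domain):
            return True
    return False
```

Tracking the share of variants whose answers link to you, over time, gives a rough visibility score to compare against the referral numbers in your analytics.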

Product Core Function

· AI-powered content recommendation engine: Relies on ChatGPT's understanding to recommend your content in response to user queries, providing a direct and potentially high-converting traffic source. The value is in bypassing traditional SEO gatekeepers. This is useful for content creators looking for alternative distribution channels.

· Prompt engineering for traffic generation: Develops and tests advanced prompting strategies to elicit positive content recommendations from AI models. The value is in understanding how to 'speak' to AIs to drive desired outcomes, a critical skill in the AI era. This is useful for anyone who wants to optimize their content's visibility in AI-driven environments.

· Traffic analysis and optimization: Monitors and analyzes the traffic generated through AI interactions to refine content and prompting techniques for maximum impact. The value is in data-driven improvement of AI-driven marketing efforts. This is useful for marketers and content strategists who need to adapt to new traffic sources.
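Measuring AI-driven traffic starts with attributing visits. The sketch below classifies hits by query string and referrer. It assumes that visitors arriving from ChatGPT carry a `chatgpt.com` (or older `chat.openai.com`) referrer and/or a `utm_source=chatgpt.com` parameter; verify this against your own logs, since referrer behavior varies by client.

```python
from collections import Counter
from urllib.parse import parse_qs, urlparse

# Assumption: hosts that identify ChatGPT referrals.
AI_HOSTS = {"chatgpt.com", "chat.openai.com"}

def classify(request_url: str, referrer: str) -> str:
    """Label one hit as 'chatgpt', 'google', 'direct', or 'other'."""
    query = parse_qs(urlparse(request_url).query)
    if "chatgpt.com" in query.get("utm_source", []):
        return "chatgpt"
    if not referrer:
        return "direct"
    host = (urlparse(referrer).hostname or "").removeprefix("www.")
    if host in AI_HOSTS:
        return "chatgpt"
    if host == "google.com" or host.startswith("google."):
        return "google"
    return "other"

def traffic_share(hits: list[tuple[str, str]]) -> dict[str, float]:
    """Fraction of (request_url, referrer) hits attributed to each source."""
    counts = Counter(classify(url, ref) for url, ref in hits)
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()} if total else {}
```

Comparing these shares week over week shows whether content or prompt-driven changes actually move ChatGPT referrals.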

Product Usage Case

· A blogger uses a custom script to check whether ChatGPT mentions their recent articles for typical reader questions, and their analytics show a significant portion of website traffic arriving via ChatGPT referrals rather than traditional search. This addresses declining organic search visibility by finding a new, AI-native discovery pathway. So, what's the benefit here? Gaining visibility when traditional SEO falls short.

· A SaaS company builds an internal tool that uses ChatGPT to suggest relevant knowledge base articles to users based on their chat interactions. This effectively reduces support ticket volume by providing proactive, AI-guided solutions. This solves the problem of inefficient information retrieval and user self-service. So, how does this help? It improves user experience and reduces operational costs.

· A researcher develops a system to query ChatGPT about emerging research topics and uses the AI's recommendations to identify overlooked areas for their next paper. This helps overcome the challenge of staying abreast of a rapidly evolving academic landscape. So, what's the value? It accelerates research discovery and innovation.

6

Gemini 94: HyperCard Hacker News Reimagined

Author

benbreen

Description

Gemini 94 is a fascinating technical experiment that reimagines Hacker News as if it were built with HyperCard in 1994. It focuses on the core idea of presenting HN content in a highly interactive, card-based interface, exploring how a different technological paradigm could have shaped our online information consumption. The innovation lies in bridging the gap between modern web content and a nostalgic, highly tactile, and interconnected digital experience.

Popularity

Points 12

Comments 11

What is this product?

Gemini 94 is a project that simulates the Hacker News experience using the principles of HyperCard, a pioneering multimedia authoring system from the early 90s. Instead of a typical web page, content is presented as 'cards' that can be linked together dynamically. The technical approach involves parsing Hacker News's API and re-rendering the data – stories, comments, and user profiles – into distinct HyperCard-like cards. Navigation is achieved through buttons and links embedded within these cards, mimicking the signature 'stack' metaphor of HyperCard. The innovation is in retrofitting modern web data into a fundamentally different interaction model, emphasizing direct manipulation and contextual relationships between pieces of information, rather than linear scrolling. So, what's in it for you? It offers a fresh perspective on information architecture and user interface design, showing how older, simpler technologies can inspire new ways of thinking about digital content organization and interaction, potentially leading to more intuitive and engaging user experiences.

How to use it?

Developers can use Gemini 94 as a conceptual blueprint and a source of inspiration. It's a demonstration of how to take structured data (like from an API) and represent it in a non-traditional, card-based, hyperlinked interface. Potential use cases include building internal knowledge bases, interactive tutorials, or even creating unique digital art installations that repurpose online data. The technical usage involves understanding how to fetch data from sources like the Hacker News API and then programmatically generate visual elements and navigational logic that mimics HyperCard's behavior. So, what's in it for you? It provides a tangible example of how to think outside the typical web framework, encouraging the development of more unique and engaging user interfaces for your own applications.
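Here is a hypothetical sketch of the data-to-cards step. It assumes items were already fetched from the official Hacker News API (`https://hacker-news.firebaseio.com/v0/item/<id>.json`, whose items carry fields such as `id`, `by`, `title`, `text`, `url`, `kids`, `deleted`, and `dead`) and turns a story and its comment tree into a stack of linked cards. The `Card` class and link labels are assumptions, not the project's code, and HN's HTML comment text is passed through unrendered.

```python
from dataclasses import dataclass, field

@dataclass
class Card:
    """One HyperCard-style card; links are (button label, target card id)."""
    card_id: int
    heading: str
    body: str
    links: list[tuple[str, int]] = field(default_factory=list)

def _visible(item) -> bool:
    return item is not None and not item.get("deleted") and not item.get("dead")

def build_stack(items: dict[int, dict], root_id: int) -> dict[int, Card]:
    """Make one card per visible item, with 'Reply' buttons to its children
    and a 'Back' button to its parent."""
    stack: dict[int, Card] = {}
    todo = [(root_id, None)]  # (item id, parent card id)
    while todo:
        item_id, parent = todo.pop()
        item = items.get(item_id)
        if not _visible(item):
            continue
        heading = item.get("title") or f"Comment by {item.get('by', '?')}"
        card = Card(item_id, heading, item.get("text") or item.get("url", ""))
        if parent is not None:
            card.links.append(("Back", parent))
        for kid in item.get("kids", []):
            if _visible(items.get(kid)):  # never link to a missing or deleted card
                card.links.append((f"Reply {kid}", kid))
                todo.append((kid, item_id))
        stack[item_id] = card
    return stack
```

Navigation then becomes a lookup by card id, mirroring HyperCard's "go to card" rather than scrolling a page.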

Product Core Function

· Card-based content rendering: Translates each Hacker News story and comment thread into an individual, interactive 'card'. This allows for a more focused and digestible presentation of information, reducing cognitive load. This is valuable for users who prefer to process information in discrete chunks, improving comprehension and reducing overwhelm.

· Hyperlinked navigation: Implements a system of buttons and links within cards that allow users to jump between related content, similar to navigating a HyperCard stack. This creates a rich, interconnected web of information, enabling deeper exploration and discovery. This is valuable for users who enjoy serendipitous discovery and want to follow threads of information intuitively.

· Interactive comment threads: Renders comment sections as nested, expandable cards, allowing users to easily navigate and engage with discussions. This improves the readability and interactivity of comment sections, making conversations easier to follow. This is valuable for users who participate in online discussions and want a more structured way to engage with them.

· Retro-inspired UI: Emulates the visual and interaction aesthetics of HyperCard, offering a nostalgic and unique user experience. This provides a novel and engaging alternative to standard web interfaces, appealing to users who appreciate unique design and historical computing paradigms. This is valuable for users seeking a departure from conventional UIs and looking for a more memorable online experience.

Product Usage Case

· Developing a personal knowledge management system where each note or resource is a 'card' that can be linked to related entries, creating a mind-map-like structure for information. This solves the problem of information silos and makes complex knowledge easier to navigate and recall. Gemini 94's approach shows how to build interconnectedness.

· Creating an interactive historical timeline of a specific topic, where each event is a card with links to further details, images, and related events. This makes learning about history more engaging and allows users to explore events at their own pace and according to their own interests. This is valuable for educational tools or personal projects.

· Building a prototype for a new type of social media interface that focuses on visual storytelling and direct connections between posts, moving away from endless scrolling feeds. This tackles the challenge of information overload on current platforms and offers a more deliberate and curated content consumption experience.

7

Fate: React & tRPC Data Framework

Author

cpojer

Description

Fate is a novel data framework for React applications, heavily inspired by Relay, that aims to simplify data fetching and management when integrated with tRPC. It focuses on providing a more intuitive and efficient way for developers to handle asynchronous data operations, particularly in the context of real-time applications, by abstracting away complex network interactions and state synchronization logic. The core innovation lies in its declarative approach to data dependencies and its tight integration with tRPC's strong typing, offering a more robust and developer-friendly experience for building complex React UIs.

Popularity

Points 22

Comments 1

What is this product?

Fate is a data framework designed to make fetching and managing data in React applications much smoother, especially when you're using tRPC (a TypeScript RPC framework). Think of it as a smarter way for your React app to ask your server for information and keep that information up to date. It's inspired by Relay, a well-known data-fetching framework, but aims to be more straightforward. Fate's innovation is in how it lets you declare what data your components need while it handles the fetching, caching, and updating automatically. This means you write less code for these common tasks and your application's data is managed more efficiently, which can lead to faster and more reliable user interfaces. So, this helps you by reducing boilerplate for data handling and by keeping your React UI responsive and in sync with the server.

How to use it?

Developers can integrate Fate into new or existing React projects by installing it as a dependency with a standard package manager such as npm or yarn and then configuring it to work with their tRPC server setup. Components then use Fate's hooks or components to declaratively specify the data they require, and Fate fetches that data from the tRPC backend, caches it, and updates the UI whenever the data changes. In the typical workflow, data requirements are defined at the component level and Fate manages the flow of data from the server to the front end. This means you spend less time worrying about loading states and more time building features, which accelerates your development cycle.

Product Core Function

· Declarative Data Fetching: Developers specify the data needed by components, and Fate handles the underlying network requests and data retrieval. This reduces manual data fetching logic and makes code cleaner and more maintainable, so you don't have to write repetitive `useEffect` hooks for fetching data.

· Optimized Data Caching: Fate automatically caches fetched data, preventing redundant requests and improving application performance. This means your app loads faster and feels more responsive, as data is readily available without needing to refetch it every time.

· Automatic State Synchronization: When data on the server changes, Fate automatically updates the relevant parts of your UI. This ensures your application's state is always consistent with the backend, providing a seamless user experience without manual state management.

· tRPC Integration: Tight integration with tRPC leverages its end-to-end TypeScript types to provide a type-safe and efficient data layer between your React frontend and backend. This means fewer runtime errors caused by type mismatches and a more reliable data flow, enhancing overall application stability.
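Relay-style frameworks typically keep fetched records in a normalized cache keyed by ID and notify only the components that depend on a changed record. The TypeScript sketch below illustrates that idea in isolation. `RecordStore`, `write`, `read`, and `subscribe` are hypothetical names chosen for this example, not Fate's actual API, and the tRPC call that would supply the data is replaced by plain objects.

```typescript
type Entity = { id: string; [field: string]: unknown };
type Listener = () => void;

// Minimal normalized store: one record per ID, plus per-ID subscribers.
class RecordStore {
  private records = new Map<string, Entity>();
  private listeners = new Map<string, Set<Listener>>();

  // Merge fresh server data into the cached record and notify its subscribers.
  write(entity: Entity): void {
    const previous = this.records.get(entity.id) ?? { id: entity.id };
    this.records.set(entity.id, { ...previous, ...entity });
    this.listeners.get(entity.id)?.forEach((listener) => listener());
  }

  // Return only the fields a component declared; undefined means "must fetch".
  read<K extends string>(id: string, fields: K[]): Record<K, unknown> | undefined {
    const record = this.records.get(id);
    if (!record || fields.some((f) => !(f in record))) return undefined;
    const selection = {} as Record<K, unknown>;
    for (const f of fields) selection[f] = record[f];
    return selection;
  }

  // Subscribe to changes of one record; returns an unsubscribe function.
  subscribe(id: string, listener: Listener): () => void {
    if (!this.listeners.has(id)) this.listeners.set(id, new Set());
    this.listeners.get(id)!.add(listener);
    return () => {
      this.listeners.get(id)?.delete(listener);
    };
  }
}

// Usage: a "component" declares it needs the `name` field of user:1.
const store = new RecordStore();
let renders = 0;
const unsubscribe = store.subscribe("user:1", () => {
  renders += 1; // a React hook would trigger a re-render here
});
store.write({ id: "user:1", name: "Ada" });
console.log(store.read("user:1", ["name"])); // { name: 'Ada' }
console.log(store.read("user:1", ["email"])); // undefined (field not cached yet)
store.write({ id: "user:1", email: "ada@example.com" });
console.log(renders); // 2
unsubscribe();
```

In a React app, a hook would call `subscribe` inside an effect and re-read its selection on each notification; a production framework additionally fetches missing fields, batches requests, and handles invalidation.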

Product Usage Case

· Building a real-time dashboard: In a dashboard application that displays live stock prices or analytics, Fate can efficiently fetch and update data from the tRPC backend. Components can declare their need for specific stock tickers or metrics, and Fate will ensure the UI is constantly refreshed with the latest information without overwhelming the server or client with unnecessary requests. This results in a highly responsive and accurate real-time view.

· Developing a complex e-commerce product page: For a product page with details, reviews, and related items, Fate can manage the fetching of all these different data points. Each section of the page can declare its data needs, and Fate will intelligently fetch and update them as needed, perhaps fetching related items only after the main product details are loaded. This leads to a faster initial page load and smoother interactions as users browse the product.

· Creating a collaborative editing tool: In an application where multiple users are editing a document simultaneously, Fate can manage the real-time synchronization of changes. Components displaying parts of the document can declare their dependency on specific sections, and Fate will ensure that updates from other users are efficiently fetched and displayed, maintaining a consistent and up-to-date view for everyone involved. This makes collaborative editing feel seamless and instantaneous.

8

Logos API

Author

lorey

Description

This project is a free, drop-in replacement for the Clearbit Logo API, which recently shut down. It provides logos for any company or domain name, is free to use with no signup required, and supports both company and domain names as input. It leverages WebP format for efficient delivery, resulting in smaller payloads and better caching. This innovation addresses a critical need for developers who relied on the now-defunct Clearbit service, offering a seamless and cost-effective alternative. The developer also highlighted the use of AI tools like Claude Code (Max) in its end-to-end development, showcasing a modern approach to building solutions.

Popularity

Points 9

Comments 6

What is this product?

This is a free, no-signup-required API that provides company logos, designed as a direct replacement for the Clearbit Logo API. The core innovation lies in its accessibility and efficiency: developers don't need an API key or an account, so they can integrate it into their projects immediately. The system accepts either a company name or a domain name (like 'google.com') and returns the relevant logo. A key technical detail is the use of WebP, a modern image format that typically compresses significantly better than older formats like JPEG or PNG, so the logo files are smaller. Smaller files mean faster downloads, less bandwidth for both the server and the user, and cheaper caching: browsers and intermediate servers (like CDNs) can store each logo after the first request, so subsequent requests for the same logo are served almost instantly without fetching it from the origin again. The stack behind this combines S3 for storage, a cached FastAPI backend for fast responses, a Next.js frontend for the website, and Cloudflare for CDN and caching.

How to use it?

Developers can integrate the Logos API into their applications by making simple HTTP GET requests to the provided endpoint (https://logos.apistemic.com). For example, to get the logo for 'Example Corp', a developer could request `https://logos.apistemic.com/logo/Example%20Corp` (with the space percent-encoded); for a domain like 'example.com', the URL would be `https://logos.apistemic.com/logo/example.com`. The API returns the logo image directly, usually in WebP format, so it can be used to display company logos on websites, in customer dashboards, in CRM systems, or anywhere else company branding is needed. Because there is no signup and no API key, getting started is as simple as adding the URL to your code. In a web page, for instance, you can point an `<img>` tag's `src` attribute straight at the endpoint: `<img src="https://logos.apistemic.com/logo/Example%20Corp" alt="Example Corp Logo">`. This provides an immediate visual enhancement without any complex setup.
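Because company names can contain spaces and reserved characters such as `&` or `/`, it is safer to percent-encode the identifier before putting it into the path. The following minimal TypeScript sketch assumes the `https://logos.apistemic.com/logo/<name-or-domain>` URL pattern described above; `logoUrl` and `fetchLogo` are hypothetical helper names, and `fetchLogo` needs a runtime with a global `fetch` (modern browsers or Node.js 18+).

```typescript
const LOGOS_BASE = "https://logos.apistemic.com/logo/";

// Build a logo URL for a company name or domain, percent-encoding reserved
// characters (a space becomes %20, "&" becomes %26, while "." is left as-is).
function logoUrl(nameOrDomain: string): string {
  return LOGOS_BASE + encodeURIComponent(nameOrDomain.trim());
}

// Fetch the image bytes, e.g. to embed a client logo in a generated invoice.
// Returns null when the response status is not 2xx; network errors still throw.
async function fetchLogo(nameOrDomain: string): Promise<Blob | null> {
  const response = await fetch(logoUrl(nameOrDomain));
  if (!response.ok) return null;
  return response.blob();
}

console.log(logoUrl("Example Corp")); // https://logos.apistemic.com/logo/Example%20Corp
console.log(logoUrl("example.com")); // https://logos.apistemic.com/logo/example.com
```

For plain display, the string returned by `logoUrl` can go straight into an `<img src>` attribute; `fetchLogo` is only needed when the image bytes themselves must be stored or processed.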

Product Core Function

· Free Logo Retrieval: Provides company logos without any cost, removing financial barriers for developers and small businesses. This is useful for any project needing to display brand identities without a budget for premium services.

· No Signup/API Key Required: Allows immediate integration into projects. Developers can simply start making requests without the overhead of account creation or key management, saving time and simplifying workflows.

· Company and Domain Name Input: Accepts both company names (e.g., 'Microsoft') and domain names (e.g., 'microsoft.com') as identifiers. This flexibility makes it easier to find logos as developers might have one or the other readily available.

· WebP Format Output: Delivers logos in the WebP format for efficient delivery. This means smaller file sizes, leading to faster loading times and reduced bandwidth consumption, which directly improves user experience and lowers hosting costs.

· High Availability and Caching: Leverages Cloudflare for CDN and caching, ensuring fast and reliable access to logos globally. This means your application's logo loading will be consistently quick, even under high traffic.

· End-to-End AI-Assisted Development: The project was developed using AI tools like Claude Code (Max), showcasing a modern, efficient development methodology that can be inspiring for other developers exploring AI in their workflow.

Product Usage Case

· Website Branding: Displaying logos of clients or partners on a company website to build credibility and showcase relationships. The API allows for dynamic fetching of these logos without needing to pre-download and store them.

· CRM and Sales Tools: Integrating company logos into customer relationship management dashboards or sales prospecting tools to provide instant visual recognition of companies being managed or researched. This helps sales teams quickly identify and recall clients.

· Directory Listings: Populating online directories or marketplaces with company logos for each listed business. This enhances the visual appeal and user-friendliness of directory services.

· Invoice and Document Generation: Automatically embedding client logos onto invoices, proposals, or reports. This personalizes outgoing documents and reinforces brand consistency.

· Developer Tooling: Creating internal tools or scripts that automatically fetch and display company logos based on domain names, aiding in quick analysis or data enrichment tasks.

9

TSP-4h-Genius

Author

oblonski

Description

This project is a personal exploration and rapid prototyping of a Traveling Salesperson Problem (TSP) game. The core innovation lies in the speed of development and the demonstration of how complex problems can be tackled iteratively. It showcases a creative approach to visualizing and interacting with an NP-hard problem, likely using a combination of game logic and potentially a simplified TSP solving algorithm to create an engaging experience.

Popularity

Points 11

Comments 3

What is this product?

This project is a demonstration of building a game around the Traveling Salesperson Problem (TSP) in an incredibly short timeframe (4 hours). The TSP is a classic computer science challenge where you try to find the shortest possible route that visits a set of cities exactly once and returns to the starting city. The innovation here isn't necessarily a breakthrough in solving TSP itself, but in the rapid, code-first approach to creating a playable, engaging experience out of a computationally difficult problem. It's about turning theoretical complexity into a tangible, interactive product, highlighting the power of focused development and 'hacker's mindset'. So, what's the value for you? It shows that even complex problems can be approached and a functional prototype can be built quickly, inspiring you to tackle your own ambitious ideas with rapid iteration.
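For readers who want to see what a "route" means computationally, here is a short TypeScript sketch of the tour-length calculation and the classic nearest-neighbour heuristic. This is a generic textbook heuristic chosen for illustration, not the algorithm TSP-4h-Genius uses; it runs in O(n²) time but is not guaranteed to find the optimal tour, which is exactly the gap a TSP game invites players to beat.

```typescript
type Point = { x: number; y: number };

const dist = (a: Point, b: Point): number => Math.hypot(a.x - b.x, a.y - b.y);

// Length of a closed tour that visits the cities in `order` and returns home.
function tourLength(cities: Point[], order: number[]): number {
  let total = 0;
  for (let i = 0; i < order.length; i++) {
    const from = cities[order[i]];
    const to = cities[order[(i + 1) % order.length]]; // wraps back to the start
    total += dist(from, to);
  }
  return total;
}

// Nearest-neighbour heuristic: from the current city, always move to the
// closest unvisited city. Fast, but not guaranteed to be optimal.
function nearestNeighbourTour(cities: Point[], start = 0): number[] {
  const visited = new Array<boolean>(cities.length).fill(false);
  const order = [start];
  visited[start] = true;
  for (let step = 1; step < cities.length; step++) {
    const current = cities[order[order.length - 1]];
    let best = -1;
    let bestDist = Infinity;
    for (let j = 0; j < cities.length; j++) {
      const d = dist(current, cities[j]);
      // Strict "<" keeps the lowest index when two cities are equally close.
      if (!visited[j] && d < bestDist) {
        best = j;
        bestDist = d;
      }
    }
    visited[best] = true;
    order.push(best);
  }
  return order;
}

// Usage: four cities on the corners of a unit square.
const square: Point[] = [
  { x: 0, y: 0 }, { x: 0, y: 1 }, { x: 1, y: 1 }, { x: 1, y: 0 },
];
const tour = nearestNeighbourTour(square);
console.log(tour, tourLength(square, tour)); // [ 0, 1, 2, 3 ] 4
```

In a game setting, `tourLength` can score the player's hand-drawn route while the heuristic (or an exact solver for small inputs) provides a baseline to compare against.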

How to use it?

As this is a 'Show HN' project, it's primarily a demonstration of technical skill and a creative solution. The primary 'use' for developers is inspiration and learning. You might use it to understand how one might gamify algorithmic challenges, or to learn about rapid prototyping techniques. If the project were open-sourced, you could potentially fork it to experiment with different TSP solving algorithms, visualize TSP solutions in novel ways, or even build upon the game mechanics for your own educational or entertainment projects. It's a springboard for your own creative coding. So, how can you use this? Think of it as a blueprint for rapid ideation and a source of inspiration to build your own interactive problem-solving tools.

Product Core Function

· Rapid Prototyping of Algorithmic Games: The ability to conceptualize and build a functional game around a complex algorithm like TSP in just 4 hours highlights efficient development workflows and rapid iteration. This is valuable as it demonstrates that ambitious projects can be started and delivered quickly, encouraging a 'just build it' mentality.

· Gamified Problem Solving: Transforming a theoretical computer science problem (TSP) into an interactive game makes the problem more accessible and engaging. This showcases how complex concepts can be demystified and learned through play, offering a valuable approach for educational tools and interactive experiences.

· Interactive Visualization of Algorithmic Concepts: The game likely provides a visual representation of TSP, allowing users to see how different routes are formed and potentially how algorithms attempt to solve it. This is valuable for understanding abstract concepts visually, aiding comprehension for both beginners and experienced developers.

· Demonstration of 'Hacker's Mindset': Building something complex and engaging in a short time exemplifies the hacker culture's ethos of creative problem-solving and rapid execution. This inspires other developers to push their own boundaries and find efficient, innovative solutions to challenges.

Product Usage Case

· An educational platform developer could use the inspiration from this project to create a series of interactive games that teach students about different algorithms, like sorting, pathfinding, or data structures. The rapid prototyping aspect shows how quickly engaging learning modules can be developed. The value here is in making learning more interactive and fun.

· A game developer looking to incorporate algorithmic challenges into their game could study the approach taken here. They might see how a complex problem like TSP can be simplified into a playable mechanic, providing unique gameplay loops. This helps in designing novel game challenges.

· A solo developer with a backlog of innovative ideas could be inspired by the 4-hour build. It demonstrates that even ambitious projects can be started and a minimum viable product can be achieved very quickly, overcoming the inertia of starting large tasks. The value is in empowering individual creators to take action.

· A programming instructor could use this as a case study to teach students about efficient coding practices, problem decomposition, and the importance of iterative development. It serves as a real-world example of how to tackle a challenging problem with limited time. This helps students learn practical development strategies.

10

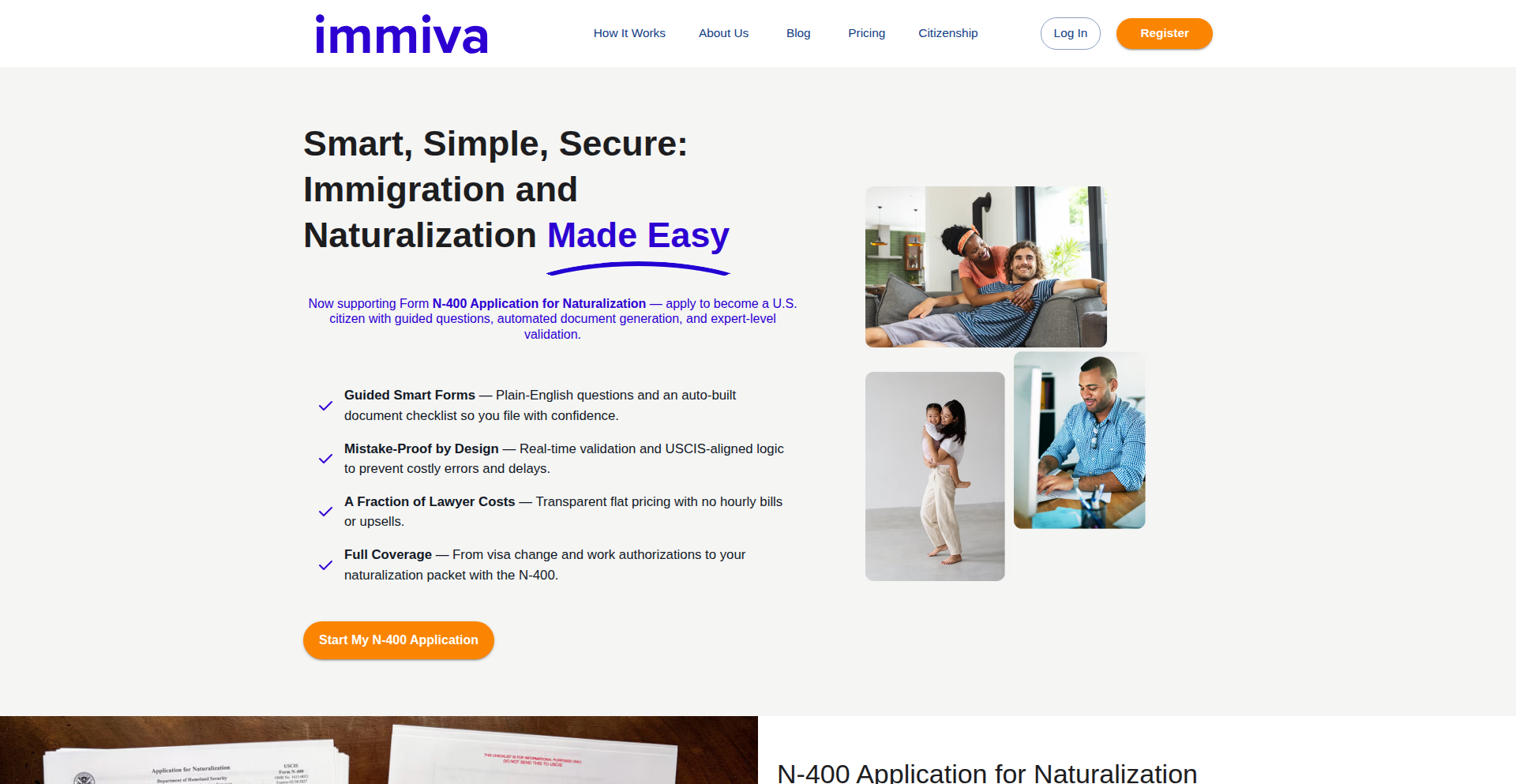

ImmiForm Genius

Author

mjablons

Description

This project is an AI-powered TurboTax-like application specifically designed for generating immigration forms. It leverages natural language processing (NLP) and form generation techniques to simplify the complex and often overwhelming process of filling out immigration paperwork. The innovation lies in its ability to understand user inputs in plain language and translate them into the precise data required for official forms, significantly reducing errors and saving users considerable time and frustration.

Popularity

Points 8

Comments 1

What is this product?

ImmiForm Genius is an intelligent system that acts like a personal assistant for navigating immigration paperwork. Instead of manually reading and filling out lengthy government forms, users interact with the system by providing their information in conversational, natural language. The core technology involves advanced Natural Language Understanding (NLU) to parse user responses, extract relevant entities, and map them to the specific fields on various immigration forms. It then uses a sophisticated form generation engine to populate these forms accurately. The innovation is in democratizing access to legal documentation by making it as simple as having a conversation, and by ensuring data accuracy through programmatic mapping, thus reducing the likelihood of rejections due to common data entry mistakes. So, what's in it for you? It means you can tackle complex immigration forms without needing a legal degree or spending hours deciphering jargon, making a stressful process significantly more manageable.

How to use it?

Developers can integrate ImmiForm Genius into their platforms or services to offer immigration form assistance. This could involve building a standalone web application where users upload their immigration case details or interact via a chatbot. The system can be exposed via an API, allowing other applications to send user data and receive populated immigration forms as output. For example, a legal tech startup could embed this functionality into their case management software, or a community organization could offer it as a free resource to immigrants. The usage involves providing structured or unstructured data about the individual and their immigration case, and the system returns the completed forms. So, what's in it for you? You can build new services or enhance existing ones by providing a highly valuable, automated solution for a critical user need, saving your users significant time and legal costs.

Product Core Function

· Natural Language Input Processing: Understands user-provided information in plain English, extracting key details like names, dates, addresses, and specific case information. This provides value by removing the need for users to memorize form field names or understand legal terminology.

· Automated Form Population: Accurately maps extracted user data to the corresponding fields on official immigration forms. This offers value by ensuring data consistency and reducing manual data entry errors, which can lead to form rejections.

· Multi-Form Support: Capable of handling and generating various types of immigration forms, accommodating different immigration pathways and requirements. This is valuable as it offers a comprehensive solution for diverse immigration needs.

· Error Detection and Validation: Implements checks to identify potential inconsistencies or missing information based on typical form requirements. This adds value by proactively flagging issues before submission, saving users the hassle of corrections and delays.
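The mapping and validation steps can be illustrated with a small TypeScript sketch. It assumes an upstream natural-language step has already extracted entities into key/value pairs; the field IDs, the `FieldSpec` shape, and the rules (required fields, `YYYY-MM-DD` dates) are hypothetical and do not correspond to any real government form or to ImmiForm Genius's internals.

```typescript
// Entities an upstream NLU step might extract from a conversation.
type Extracted = Record<string, string>;

// Hypothetical description of one form field and where its value comes from.
interface FieldSpec {
  fieldId: string; // identifier of the field on the (hypothetical) form
  entity: string; // which extracted entity fills it
  required: boolean;
  kind: "text" | "date";
}

interface FillResult {
  values: Record<string, string>;
  problems: string[]; // issues to flag before anything is submitted
}

const ISO_DATE = /^\d{4}-\d{2}-\d{2}$/;

// Map extracted entities onto form fields and collect validation problems.
function fillForm(specs: FieldSpec[], extracted: Extracted): FillResult {
  const values: Record<string, string> = {};
  const problems: string[] = [];
  for (const spec of specs) {
    const raw = extracted[spec.entity]?.trim();
    if (!raw) {
      if (spec.required) problems.push(`${spec.fieldId}: missing required value`);
      continue;
    }
    // Shallow date check: YYYY-MM-DD shape and accepted by Date.parse.
    // It does not catch every impossible calendar date.
    if (spec.kind === "date" && (!ISO_DATE.test(raw) || Number.isNaN(Date.parse(raw)))) {
      problems.push(`${spec.fieldId}: "${raw}" is not in YYYY-MM-DD format`);
      continue;
    }
    values[spec.fieldId] = raw;
  }
  return { values, problems };
}

// Usage with made-up field IDs.
const specs: FieldSpec[] = [
  { fieldId: "family_name", entity: "lastName", required: true, kind: "text" },
  { fieldId: "date_of_birth", entity: "birthDate", required: true, kind: "date" },
  { fieldId: "middle_name", entity: "middleName", required: false, kind: "text" },
];
const result = fillForm(specs, { lastName: "Doe", birthDate: "01/02/1990" });
console.log(result.values); // { family_name: 'Doe' }
console.log(result.problems); // one problem reported for date_of_birth
```

Keeping the field mapping as data rather than code makes it straightforward to support several form types, which is the multi-form capability described above.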

Product Usage Case

· A startup building a platform for visa applications: ImmiForm Genius can be used to automatically generate visa application forms from user profile data and interview transcripts, simplifying the application process for individuals and reducing the workload for the startup's support staff. This addresses the problem of manual data entry and form completion time.

· A non-profit organization assisting refugees: The system can be integrated to help refugees fill out asylum or refugee status determination forms using simple conversational interfaces, making the process less intimidating and more accessible. This solves the issue of language barriers and complex legal documents.

· A legal aid service for immigrants: ImmiForm Genius can power a self-service portal for clients to begin their immigration paperwork, providing them with accurate and pre-filled forms to bring to their consultations, thus maximizing the efficiency of legal aid resources. This helps streamline the initial data gathering phase of legal assistance.

11

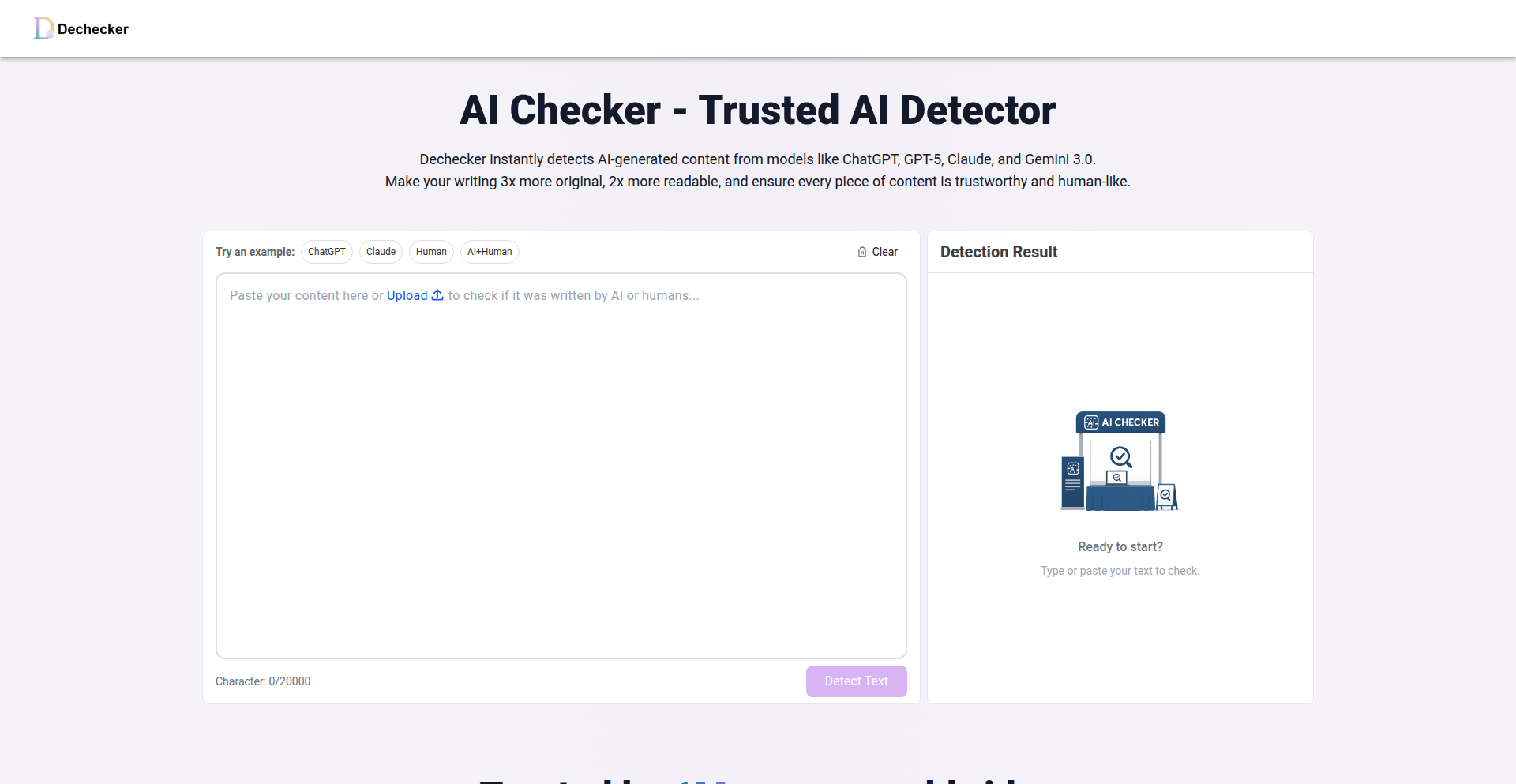

AI-Text Forensics Engine

Author

GrammarChecker

Description

A lightweight yet powerful tool designed to identify text generated by large language models (LLMs) such as ChatGPT, GPT-5, Claude, Gemini, and LLaMA. It offers a fast and free way to distinguish human-written from AI-generated content and gives insight into where a text likely came from, which is useful both for prompt engineering and for checking content authenticity.

Popularity

Points 6

Comments 2

What is this product?

This project is an AI-generated text detection engine. It works by analyzing various statistical and linguistic features within a given text that are characteristic of LLM outputs. Unlike simple keyword matching, it examines aspects like sentence structure variability, perplexity (how surprising or predictable each next word is), burstiness (the uneven distribution of word frequencies), and other subtle patterns that emerge from the way LLMs construct sentences and paragraphs. The innovation lies in its ability to combine these analytical signals into a single detection score, offering a nuanced approach to identifying AI authorship. So, what does this mean for you? It means you get a tool that goes beyond superficial analysis to estimate whether text was likely written by an AI, helping you maintain the integrity of your content or understand the source of information.
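Two of the signals mentioned above are easy to compute once the right inputs are available. The TypeScript sketch below shows perplexity computed from per-token natural-log probabilities (which must come from some language model; obtaining them is out of scope here) and the coefficient of variation of sentence lengths, one common way to quantify how "bursty" a text is. These are generic textbook features, not the AI-Text Forensics Engine's actual scoring, and on their own they are far from a dependable detector.

```typescript
// Perplexity from natural-log token probabilities ln p(token_i | previous tokens):
// PPL = exp(-(1/N) * sum(logProbs)). Lower values mean the text was more
// predictable to the model that produced the log-probabilities.
function perplexity(logProbs: number[]): number {
  if (logProbs.length === 0) throw new Error("need at least one token");
  const mean = logProbs.reduce((sum, lp) => sum + lp, 0) / logProbs.length;
  return Math.exp(-mean);
}

// Split text into sentences with a naive punctuation rule (abbreviations like
// "e.g." will be mis-split) and count the words in each sentence.
function sentenceLengths(text: string): number[] {
  return text
    .split(/[.!?]+/)
    .map((s) => s.trim())
    .filter((s) => s.length > 0)
    .map((s) => s.split(/\s+/).length);
}

// Coefficient of variation (standard deviation / mean) of sentence length.
// The usual heuristic is that human prose mixes short and long sentences,
// which pushes this value up.
function sentenceLengthCV(text: string): number {
  const lengths = sentenceLengths(text);
  if (lengths.length === 0) return 0;
  const mean = lengths.reduce((a, b) => a + b, 0) / lengths.length;
  const variance = lengths.reduce((a, b) => a + (b - mean) ** 2, 0) / lengths.length;
  return Math.sqrt(variance) / mean;
}

console.log(perplexity([Math.log(0.5), Math.log(0.5)]).toFixed(3)); // 2.000
console.log(sentenceLengthCV("One two three. Four five six. Seven eight nine.")); // 0
```

A real detector would combine many such features, calibrate them on labelled human and AI text, and report a probability rather than a raw statistic.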

How to use it?

Developers can integrate DeChecker into their workflows or applications via its API or by using its web interface. For instance, in content moderation systems, it can flag potentially AI-generated content for review. For educators, it can help identify academic integrity issues. For researchers, it aids in analyzing the authenticity of data. Integration typically involves sending the text snippet to the DeChecker service and receiving a probability score indicating the likelihood of it being AI-generated. So, how does this benefit you? It allows you to automate the process of verifying text origin, saving time and effort in applications where authenticity is critical.

Product Core Function

· AI Text Signature Analysis: Analyzes statistical properties of text, such as word frequency distribution, sentence length variation, and grammatical complexity to identify patterns indicative of AI generation. This helps in distinguishing AI from human writing by looking at subtle linguistic cues, providing a robust detection mechanism for your content verification needs.

· Multi-Model Compatibility: Designed to detect text from a wide range of popular LLMs, including ChatGPT, GPT-5, Claude, Gemini, and LLaMA. This broad compatibility ensures that your detection efforts are comprehensive across various AI writing styles, making your authenticity checks more reliable regardless of the AI model used.

· Real-time Detection: Offers fast processing speeds, allowing for near-instantaneous analysis of text. This is crucial for applications requiring immediate feedback, such as live content moderation or interactive writing tools, ensuring that you get quick insights without workflow disruption.

· Free and Accessible: Provides its core detection capabilities free of charge via a web interface and potentially an API. This democratizes access to advanced text analysis tools, enabling individual developers, small teams, and researchers to leverage sophisticated AI detection without significant financial investment.

Product Usage Case

· Content authenticity verification for news outlets: A news organization can use DeChecker to scan articles submitted by freelancers or automated systems and flag content that appears to be entirely AI-generated, helping safeguard its journalistic integrity.

· Academic integrity checks in educational platforms: An online learning platform can integrate DeChecker to analyze student submissions for essays or assignments, flagging potential cases of AI-assisted cheating to maintain a fair learning environment.

· Spam and bot detection in online communities: A forum or social media platform can employ DeChecker to identify and filter out bot-generated comments or posts that are often produced by LLMs, improving the quality of user interactions.

· Prompt engineering analysis for AI developers: Developers working with LLMs can use DeChecker to evaluate the output of their prompts, understanding how different prompt structures might lead to text that is more or less distinguishable from human writing, aiding in prompt optimization.

12

Agentry: React-Powered AI Agent Framework

Author

colinds

Description

Agentry is a novel framework that builds AI agents using React components. It tackles the complexity of AI agent development by leveraging React's declarative nature, composability, and state management. This approach allows developers to treat AI agents and their tools as interchangeable building blocks, enabling dynamic behavior and easier reasoning about complex AI interactions. So, for you, this means a more intuitive and structured way to build sophisticated AI applications, making them more manageable and adaptable.

Popularity

Points 8

Comments 0

What is this product?

Agentry is a framework that reimagines how AI agents are built by treating them as React components. Instead of complex procedural code, you can assemble agents and their functionalities (like tools for web search or code execution) using familiar React patterns. This means agents can inherit capabilities, react to changes in their environment, and even spawn sub-agents, all managed by React's state and component lifecycle. The innovation lies in applying the declarative and composable power of React to the inherently dynamic and stateful world of AI agents. So, what does this mean for you? It translates to building smarter AI applications with less boilerplate and more predictable behavior, harnessing the power of modern web development for AI.

How to use it?

Developers can integrate Agentry into their React projects by treating AI agents and their tools as React components. You can define an agent as a component and then compose it with other components representing tools, such as a web search tool or a code interpreter. The framework uses React's state management and hooks to control the agent's behavior and available tools dynamically. For example, you can conditionally render or enable tools based on the conversation's context using a <Condition> component. This makes it easy to create complex agent workflows and integrate them seamlessly into existing web applications. So, for you, this means leveraging your existing React expertise to build advanced AI features directly within your web applications.

Product Core Function

· AI Agents as React Components: This allows developers to build and manage AI agents using the familiar, declarative structure of React components, making AI logic more organized and easier to reason about. This is valuable for developers who want to integrate AI into their web applications more effectively.

· Composability of Agents and Tools: Agents can be composed of other agents or tools, enabling the creation of hierarchical and modular AI systems. This is valuable for building complex AI behaviors from simpler, reusable parts.

· Dynamic Tool Management: Tools can be mounted or unmounted based on React state, allowing agents to adapt their capabilities dynamically based on the ongoing interaction or task. This is valuable for creating AI agents that can intelligently switch between different functionalities.

· Conditional Behavior (<Condition> component): The framework supports defining conditional logic for agent behavior based on natural language understanding of the conversation history. This is valuable for creating AI agents that can respond contextually and adapt their decision-making.

· Built-in Tool Integrations: Includes ready-to-use integrations for common AI tasks like code execution, web search, and memory management, powered by services like Anthropic's API. This is valuable for accelerating the development of AI agents by providing essential capabilities out-of-the-box.
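The core idea, that the set of active tools is derived from the current state much as React derives UI from props and state, can be sketched without React at all. In this TypeScript sketch, `AgentNode`, `ToolNode`, `ConditionNode`, and `activeTools` are hypothetical names rather than Agentry's API, and the tool names are placeholders. Where Agentry's `<Condition>` is described as evaluating natural-language conditions against the conversation, here the condition is a plain predicate over a state object.

```typescript
// Hypothetical state the agent tree is evaluated against.
interface AgentState {
  intent: "coding" | "research" | "chat";
}

type ToolNode = { kind: "tool"; name: string };
type ConditionNode = {
  kind: "condition";
  when: (state: AgentState) => boolean; // stand-in for an LLM-judged condition
  children: TreeNode[];
};
type AgentNode = { kind: "agent"; name: string; children: TreeNode[] };
type TreeNode = ToolNode | ConditionNode | AgentNode;

// Walk the tree for the current state and collect the tools that are "mounted".
// Like a React render, the result is recomputed from scratch whenever state changes.
function activeTools(node: TreeNode, state: AgentState): string[] {
  if (node.kind === "tool") return [node.name];
  if (node.kind === "condition" && !node.when(state)) return []; // unmounted subtree
  // Agents and satisfied conditions contribute every tool in their subtree.
  return node.children.flatMap((child) => activeTools(child, state));
}

// Usage: an assistant whose tools depend on the detected intent.
const assistant: AgentNode = {
  kind: "agent",
  name: "assistant",
  children: [
    { kind: "tool", name: "memory" },
    {
      kind: "condition",
      when: (s: AgentState) => s.intent === "coding",
      children: [{ kind: "tool", name: "code_execution" }],
    },
    {
      kind: "condition",
      when: (s: AgentState) => s.intent === "research",
      children: [
        { kind: "tool", name: "web_search" },
        { kind: "agent", name: "summarizer", children: [{ kind: "tool", name: "summarize" }] },
      ],
    },
  ],
};

console.log(activeTools(assistant, { intent: "coding" })); // [ 'memory', 'code_execution' ]
console.log(activeTools(assistant, { intent: "research" })); // [ 'memory', 'web_search', 'summarize' ]
```

For simplicity, this sketch flattens a nested sub-agent's tools into its parent's list; a real framework might instead expose each sub-agent as a single delegating tool with its own model context.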

Product Usage Case

· Building a multi-turn chatbot that can search the web for information and then use that information to write code. The web search tool and code execution tool would be dynamically enabled or disabled based on the user's requests, managed by React's state. This addresses the challenge of creating conversational AI that can perform complex, multi-step tasks.

· Developing an AI assistant that can manage different operational modes. For instance, in a coding scenario, it might enable code execution tools, while in a research scenario, it might prioritize web search tools. This is achieved by using the <Condition> component to switch between sets of available tools based on the detected intent. This solves the problem of building AI agents that can adapt their functionality to different user needs.

· Creating a system where an AI agent can delegate tasks to specialized sub-agents. For example, a general-purpose AI agent could pass a complex data analysis request to a dedicated data analysis agent. This is facilitated by treating sub-agents as nested React components, simplifying the architecture of distributed AI intelligence. This tackles the complexity of managing large, monolithic AI systems by breaking them down into manageable, specialized units.

13

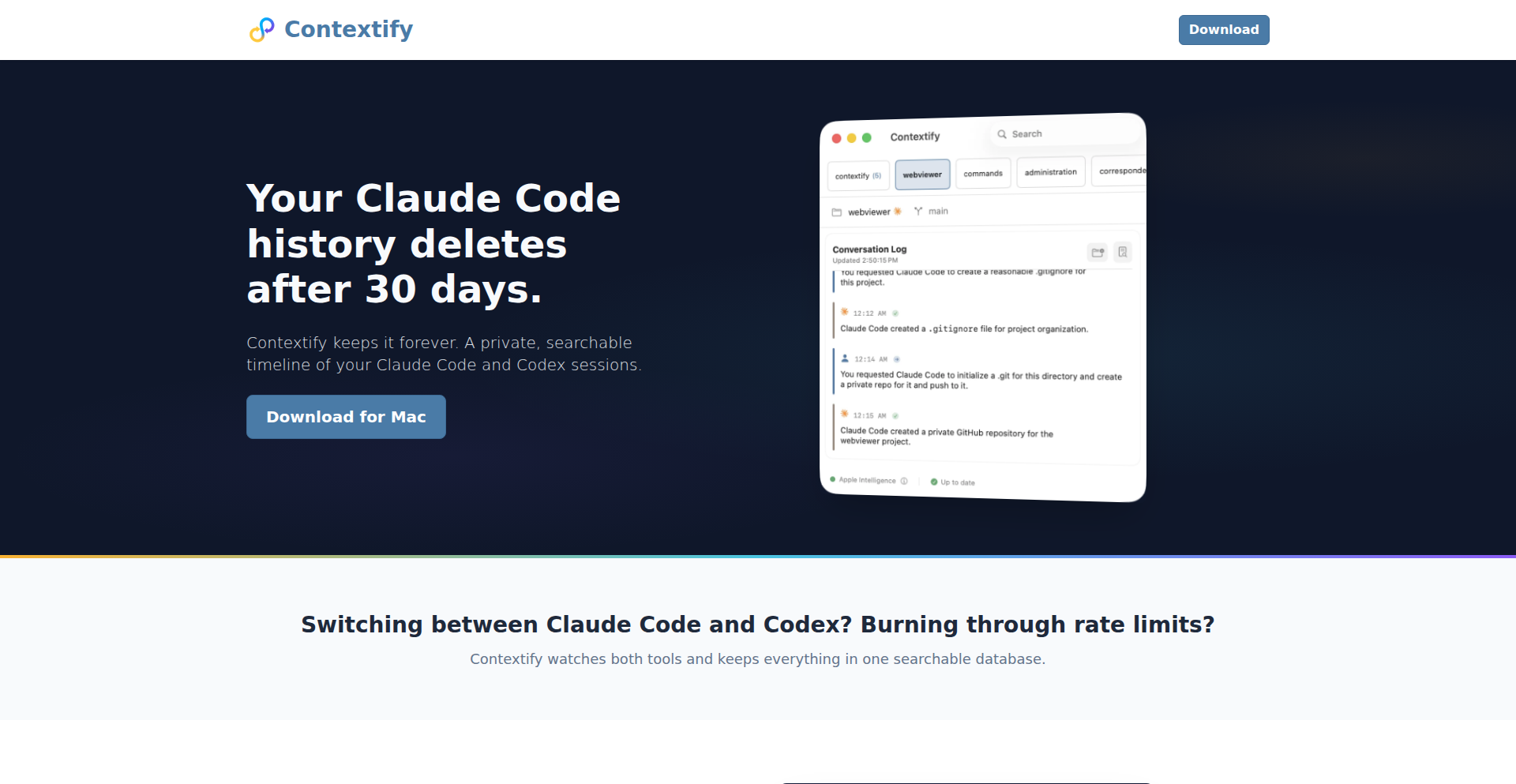

Contextify AI Session Archivist

Author

bredren

Description

Contextify is a native macOS application designed to give developers a persistent, searchable, and locally managed history of their AI coding sessions. It addresses the common problem of AI chat transcripts being ephemeral or inaccessible, offering a private solution that keeps your AI interactions and code-related discussions secure and organized on your machine. It leverages local processing for summaries, ensuring privacy and immediate access to your AI project context.

Popularity

Points 6

Comments 2

What is this product?

Contextify is a sophisticated native macOS application that acts as your personal AI coding assistant's memory bank. It captures and stores your conversations with AI coding tools like Claude Code and Codex CLI, creating a searchable timeline. The innovation lies in its local processing capabilities, where it uses Apple Intelligence to generate summaries of your AI exchanges without sending your sensitive code or discussion data to external servers. This means your AI coding history is private, secure, and readily available for reference, helping you recall past solutions, understand evolving project context, and maintain continuity across your development workflow. So, this is useful because it prevents you from losing valuable insights and code snippets generated during AI coding sessions, effectively giving your AI assistant a long-term memory that you control.

How to use it?

Developers can integrate Contextify into their workflow by installing the native macOS application. Once installed, it automatically monitors and archives sessions from supported AI coding tools and presents them in a unified, searchable timeline. You can then search for specific keywords, project names, or code snippets across all your past conversations. Contextify also automatically detects which project each AI session belongs to and lets you organize projects manually. Dedicated hotkeys (Shift-Command-[ and Shift-Command-]) switch quickly between projects and timelines. Together, these make it easy to revisit earlier AI-driven problem-solving steps or to get back up to speed on a project you haven't worked on recently. So, this is useful because it saves you time and mental effort by making it trivial to find past AI-generated solutions and understand the context of your ongoing projects.

Product Core Function

· Unified AI Session Timeline: Consolidates conversations from various AI coding tools (e.g., Claude Code, Codex CLI) into a single, chronological feed. The value is in providing a centralized view, eliminating the need to jump between different AI platforms and saving developers time in finding relevant past discussions. This applies to any developer using multiple AI coding assistants.

· Local AI-Powered Summarization: Generates summaries of AI exchanges using on-device processing (Apple Intelligence). The value is in offering privacy-preserving insights into complex discussions and ensuring quick access to the essence of AI interactions without data leaving the user's machine. This is valuable for developers concerned about data privacy and for quickly grasping the key takeaways from lengthy AI dialogues.

· Cross-Project Searchability: Enables searching through the entire history of AI conversations across all projects. The value is in powerful recall, allowing developers to find specific code solutions, ideas, or project details they might have forgotten, thus accelerating problem-solving and reducing redundant work. This is a critical feature for managing multiple projects and complex development histories.

· Automatic Project Discovery and Organization: Intelligently identifies and categorizes AI sessions by project. The value is in automated organization, making it easier for developers to manage and access context for different workstreams without manual tagging. This is beneficial for developers working on diverse projects who need to maintain clear separation and context for each.

· Hotkey Navigation: Provides quick shortcuts for switching between project timelines. The value is in efficient workflow management, allowing developers to rapidly access relevant AI context with minimal interruption to their coding flow. This is a productivity booster for developers who frequently switch between tasks or projects.
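The source does not say how Contextify discovers projects. One plausible approach, sketched here purely as an assumption, is to record each session's working directory and map it to the deepest matching folder from a list of known project roots (for example, repositories the user has opened). The function names, the folder-name-as-project-name rule, and the "Unsorted" fallback are all hypothetical. `PurePath.is_relative_to` needs Python 3.9 or later.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, List, Optional


def assign_project(cwd: str, project_roots: List[str]) -> Optional[str]:
    """Return the name of the deepest known project root containing `cwd`."""
    path = PurePosixPath(cwd)
    matches = [PurePosixPath(root) for root in project_roots
               if path.is_relative_to(root)]
    if not matches:
        return None
    # Prefer the most specific root, e.g. a nested package over its parent repo.
    deepest = max(matches, key=lambda root: len(root.parts))
    return deepest.name


def group_by_project(session_cwds: Dict[str, str],
                     project_roots: List[str]) -> Dict[str, List[str]]:
    """Map project name -> session IDs; unmatched sessions go to 'Unsorted'."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for session_id, cwd in session_cwds.items():
        groups[assign_project(cwd, project_roots) or "Unsorted"].append(session_id)
    return dict(groups)


roots = ["/Users/dev/code/api", "/Users/dev/code/api/vendor/lib",
         "/Users/dev/code/web"]
sessions = {"s1": "/Users/dev/code/api/src", "s2": "/Users/dev/code/web",
            "s3": "/tmp/scratch", "s4": "/Users/dev/code/api/vendor/lib/x"}
grouped = group_by_project(sessions, roots)
```

Choosing the deepest match keeps a vendored sub-project (`lib` here) separate from the repository that contains it.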

Product Usage Case

· A developer is working on a complex feature and remembers using an AI assistant to solve a similar problem a few weeks ago. Instead of re-explaining the issue or trying to find the old conversation across multiple AI chat windows, they use Contextify's search function to quickly locate the previous solution, saving hours of debugging or research. This showcases how Contextify addresses the problem of lost knowledge and accelerates problem-solving.

· A developer needs to hand off a project to a colleague. They use Contextify to generate summaries of the recent AI coding sessions related to the project, providing a concise overview of the challenges faced and the solutions implemented. This helps the colleague quickly understand the project's history and current state, facilitating a smoother handover. This demonstrates Contextify's value in knowledge transfer and project continuity.

· A developer is concerned about the privacy of their proprietary code and AI interaction logs. They choose Contextify because it stores session history and generates summaries locally on their Mac, so Contextify itself does not send those logs to external servers. This highlights Contextify's focus on data security and privacy, a key concern for many developers.

· A developer has been experimenting with different AI models for code generation. Contextify allows them to track and compare the outputs and conversations from each experiment in a single, organized timeline, helping them identify the most effective AI strategies for their specific needs. This shows how Contextify aids in evaluating and optimizing AI tool usage for better development outcomes.

14

AnkiMobile++: Enhanced SRS Flashcard App

Author

quantized_state

Description

AnkiMobile++ is a visually appealing and feature-rich alternative to the AnkiMobile app for iOS, specifically designed for Mandarin learners. It addresses the perceived bugs and limitations of the original app by incorporating advanced features like a tuned FSRS5 algorithm for optimized spaced repetition and built-in image occlusion support, allowing for efficient card creation with visual aids. Users can seamlessly import existing Anki decks or start new ones.

Popularity

Points 5

Comments 2

What is this product?

AnkiMobile++ is a native iOS application that functions as a Spaced Repetition System (SRS) flashcard tool. Its core innovation is its scheduler: a tuned version of FSRS5, the fifth version of the Free Spaced Repetition Scheduler. This algorithm schedules reviews of learned material based on your recall performance, aiming to maximize retention with minimal review effort. Unlike some existing solutions, it also offers out-of-the-box image occlusion, a powerful technique for learning from images by hiding parts of them and testing your knowledge of the obscured sections. So, this means you get a smarter way to learn, reviewing each card around the time you're predicted to start forgetting it, and you can learn directly from visuals.
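The scheduling idea behind FSRS can be illustrated with its forgetting curve. The sketch below uses the power-law curve and constants published for FSRS-4.5 and FSRS-5 (a decay of -0.5 and a factor of 19/81, chosen so that recall probability is 90% when the elapsed time equals the card's stability). It omits the per-review updates to stability and difficulty, and it does not reflect the tuning AnkiMobile++ applies, which the source does not describe.

```python
# Forgetting-curve constants as published for FSRS-4.5 / FSRS-5.
DECAY = -0.5
FACTOR = 19 / 81  # makes retrievability exactly 0.9 when elapsed time == stability


def retrievability(elapsed_days: float, stability: float) -> float:
    """Predicted probability of recalling a card `elapsed_days` after its last
    review, given its memory stability S (in days)."""
    return (1 + FACTOR * elapsed_days / stability) ** DECAY


def next_interval(stability: float, desired_retention: float = 0.9) -> float:
    """Days until predicted recall drops to `desired_retention`
    (the forgetting curve solved for elapsed time)."""
    return stability / FACTOR * (desired_retention ** (1 / DECAY) - 1)


print(round(retrievability(10, 10), 3))  # 0.9
print(round(next_interval(10, 0.9), 1))  # 10.0
print(round(next_interval(10, 0.8), 1))  # 24.0
```

Lowering the target retention lengthens intervals (about 24 days instead of 10 for a card with 10-day stability), trading more forgetting for fewer reviews.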

How to use it?

Learners can use AnkiMobile++ by downloading the app from the App Store. For existing Anki users, the primary use case is importing their Anki decks, which works with Anki's standard deck export formats (e.g., .apkg files). For new users, or anyone who wants to create cards faster, the image occlusion feature lets them take a screenshot or upload an image, then mask specific areas, turning the image into a learning card. The tuned FSRS5 algorithm then manages the review schedule. This offers a practical way to build a custom learning tool for any subject, especially those that rely heavily on visual information or specific terminology. So, if you have Anki cards already, you can just bring them over, or if you want to learn from images, this app makes it super easy.
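Anki's legacy .apkg export is a ZIP archive containing the collection as a SQLite database. Each note's fields are stored in the `flds` column of the `notes` table, separated by the 0x1F unit-separator character. The sketch below reads note fields from such a file. It illustrates the format and is not AnkiMobile++'s importer: it ignores media, scheduling data, and note types. Exports from recent Anki versions may store the real collection as zstd-compressed `collection.anki21b`, which this sketch does not handle.

```python
import sqlite3
import tempfile
import zipfile
from typing import List


def read_apkg_notes(apkg_path: str) -> List[List[str]]:
    """Return the field values of every note in a legacy-format .apkg file."""
    with zipfile.ZipFile(apkg_path) as archive, \
            tempfile.TemporaryDirectory() as tmp:
        names = archive.namelist()
        # Prefer the newer schema file when both are present.
        for candidate in ("collection.anki21", "collection.anki2"):
            if candidate in names:
                break
        else:
            raise ValueError("no legacy SQLite collection found in " + apkg_path)
        db_path = archive.extract(candidate, tmp)
        conn = sqlite3.connect(db_path)
        try:
            rows = conn.execute("SELECT flds FROM notes ORDER BY id").fetchall()
        finally:
            conn.close()
    # Fields within one note are joined by the ASCII unit separator (0x1F).
    return [flds.split("\x1f") for (flds,) in rows]
```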

Product Core Function

· Tuned FSRS5 Algorithm for Spaced Repetition: Provides intelligent scheduling of flashcards to maximize long-term memory retention, adapting to individual learning patterns. This means your learning is more efficient, reviewing just when you're about to forget.

· Built-in Image Occlusion: Allows users to create flashcards directly from images by hiding portions of the image and testing recall of the obscured elements. This is great for learning diagrams, maps, or any visual data. So, you can turn any picture into a learning exercise.

· Anki Deck Import: Seamlessly imports existing Anki decks, enabling users to leverage their current study materials. This saves time and effort for existing Anki users. This means you don't have to start your learning all over again.

· Quick Card Addition with Image Feature: Streamlines the process of adding new cards, especially when using image-based learning, making content creation faster. This makes it quicker to get new study material into the app.
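Image occlusion typically turns one image with several masked regions into several cards, one per region. The sketch below shows only that expansion step. The `Mask` and `OcclusionCard` types and the `make_cards` helper are hypothetical, since the source does not describe how AnkiMobile++ stores occlusions, and drawing the masks onto the image is left out. The two modes mirror the "hide all, guess one" and "hide one, guess one" options common in image-occlusion tools.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Mask:
    """Rectangle hidden on the image, in pixel coordinates (hypothetical)."""
    label: str   # what the learner should recall, e.g. a character or organ
    x: int
    y: int
    width: int
    height: int


@dataclass(frozen=True)
class OcclusionCard:
    target: Mask                    # the region tested on this card
    front_masks: Tuple[Mask, ...]   # regions drawn over the image on the front


def make_cards(masks: List[Mask], hide_all: bool = True) -> List[OcclusionCard]:
    """Create one card per mask. With hide_all=True every region stays covered
    on the front ("hide all, guess one"); otherwise only the target region is
    covered ("hide one, guess one")."""
    return [
        OcclusionCard(target=m, front_masks=tuple(masks) if hide_all else (m,))
        for m in masks
    ]


masks = [Mask("hanzi", 10, 10, 80, 80), Mask("pinyin", 10, 100, 80, 30)]
cards = make_cards(masks)
```

"Hide all" prevents neighbouring labels from giving away the answer, which matters for dense diagrams; "hide one" gives more context and suits simpler images.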

Product Usage Case

· Mandarin Language Learning: Learners can import their Mandarin vocabulary and grammar decks from Anki, and use the image occlusion feature to learn Chinese characters by occluding pinyin or meaning on images of characters. This helps solidify understanding of visual forms and pronunciation. So, if you're learning Chinese, you can use this to master characters and their meanings visually.

· Medical/Anatomy Study: Students can import anatomy diagrams or medical images and use the image occlusion feature to create flashcards for identifying organs, muscles, or medical conditions. The FSRS5 algorithm ensures consistent review of this complex visual information. This means you can effectively memorize complex visual information like body parts.

· Technical Diagram Memorization: Developers or engineers can use image occlusion to create flashcards for network diagrams, circuit schematics, or system architecture visuals, testing their ability to identify components or connections. This aids in quickly recalling technical system layouts. So, you can learn and remember complex technical drawings more easily.

· Foreign Language Visual Vocabulary: Beyond Mandarin, learners of any language can use image occlusion with pictures of objects and their corresponding foreign words to build vocabulary in a highly visual and memorable way. This offers an engaging method for acquiring new words. This helps you learn new words by associating them with pictures.

15

Inferbench: Community-Powered Inference Benchmarking

Author

binsquare

Description

Inferbench is a community-driven platform for collecting and sharing inference performance data on various hardware, especially GPUs. It addresses the challenge of understanding real-world inference performance beyond theoretical specs by leveraging user-submitted and volunteer-validated data. The innovation lies in its decentralized approach to building a comprehensive benchmark database.

Popularity

Points 6

Comments 1

What is this product?

Inferbench is a project that creates a public, collaborative database for measuring how fast different hardware, particularly GPUs, can perform AI inference tasks. Think of it as a crowd-sourced report card for AI chips. The core innovation is building this database not through a single company's testing, but by allowing anyone to submit their own performance results and having other users verify them. This makes the data more diverse and representative of real-world conditions, rather than just controlled lab tests. So, what's the value? It provides a much more practical and trustworthy source of information for developers and researchers trying to choose the right hardware for their AI projects.

How to use it?

Developers can use Inferbench in several ways. Firstly, as a consumer of information, they can browse the existing database to find performance metrics for specific hardware configurations and AI models. This helps them make informed decisions when selecting GPUs or other inference accelerators, potentially saving them significant time and money by avoiding underperforming hardware. Secondly, as a contributor, developers can run benchmarks on their own hardware using the provided tools and submit their results to the community. This not only helps them understand their own system's capabilities but also contributes to the collective knowledge base. The integration is straightforward: download the benchmarking tools, run tests on your desired models, and submit the results through the platform. This allows you to see how your setup compares to others and helps the community build a richer dataset.
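The source does not document Inferbench's benchmarking tools or which metrics they report. As a hedged illustration of what a standardized inference measurement usually involves, the hypothetical `benchmark` helper below discards warm-up runs, times repeated calls, and reports median and 90th-percentile latency plus throughput. The `infer` callable is a stand-in for a real model call that returns how many items (tokens, images) it produced.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], int], warmup: int = 3,
              iters: int = 20) -> Dict[str, float]:
    """Time repeated calls to `infer`, which returns the number of items
    (tokens, images, ...) produced per call."""
    if iters < 2:
        raise ValueError("need at least 2 timed iterations for percentiles")
    for _ in range(warmup):
        infer()  # untimed: absorbs lazy initialization and caching effects
    latencies, items = [], 0
    for _ in range(iters):
        start = time.perf_counter()
        items += infer()
        latencies.append(time.perf_counter() - start)
    return {
        "median_latency_s": statistics.median(latencies),
        # 9 cut points for deciles; the last one is the 90th percentile.
        "p90_latency_s": statistics.quantiles(latencies, n=10,
                                              method="inclusive")[-1],
        "throughput_items_per_s": items / sum(latencies),
    }


def fake_infer() -> int:
    """Stand-in for a model call: sleeps briefly and 'produces' 32 tokens."""
    time.sleep(0.002)
    return 32


result = benchmark(fake_infer, warmup=1, iters=5)
```

For GPU inference, the timed callable should block until the device has finished (in PyTorch, for example, by calling `torch.cuda.synchronize()` before returning); otherwise the timings capture only kernel launches.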

Product Core Function

· Community-driven data collection: Developers can submit their own AI inference performance data, creating a rich and diverse dataset that reflects real-world usage scenarios and hardware variations.

· Volunteer validation system: Ensures the accuracy and reliability of submitted data through peer review, building trust in the benchmark results.

· Hardware and model performance database: A searchable repository of inference speed benchmarks across various GPUs and AI models, enabling quick comparison and informed hardware selection.

· Benchmarking tool integration: Provides tools for developers to easily run standardized inference tests on their own hardware and contribute to the database.

· Performance insights and analysis: Offers visualizations and comparative metrics to help developers understand hardware trade-offs and optimize their AI deployments.
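The source describes validation as volunteer peer review and does not mention automated checks. One hedged way such a platform could help reviewers triage submissions is a robust outlier test against results already accepted for the same hardware and model. The sketch below uses the median absolute deviation (MAD), a standard robust statistic chosen here for illustration; the function name, the threshold `k`, and the 5% fallback tolerance are arbitrary, hypothetical choices.

```python
import statistics
from typing import Sequence


def is_plausible(submitted: float, peer_results: Sequence[float],
                 k: float = 3.0) -> bool:
    """Return False if `submitted` lies more than k median absolute deviations
    from the median of peer results for the same hardware/model/settings."""
    if len(peer_results) < 3:
        return True  # too few peers to judge; defer to human reviewers
    med = statistics.median(peer_results)
    mad = statistics.median([abs(x - med) for x in peer_results])
    if mad == 0:
        # All peers agree exactly; allow a small relative tolerance instead.
        return abs(submitted - med) <= 0.05 * abs(med)
    return abs(submitted - med) <= k * mad


peers = [100.0, 102.0, 98.0, 101.0, 99.0]  # illustrative tokens/s values
```

The median and MAD are used instead of mean and standard deviation because a single bad submission among the peers barely moves them.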

Product Usage Case

· A startup is developing a new image recognition application and needs to choose a GPU that offers the best performance-per-dollar for their target inference speed. They consult Inferbench to compare the inference speeds of various GPUs on similar image recognition models, allowing them to select the most cost-effective hardware without needing to purchase and test each one themselves.

· A researcher is working on a cutting-edge natural language processing (NLP) model and wants to understand how different inference hardware configurations will affect its real-time performance in production. They use Inferbench to find inference-speed data for the same or comparable models across a range of GPUs, helping them estimate latency and resource requirements for deployment.

· An independent hardware enthusiast has a custom-built AI workstation and wants to contribute to the community's understanding of inference performance. They download the Inferbench benchmarking tools, run tests on their system with popular AI models, and submit the results for volunteer validation. Once validated, the data helps others with similar hardware configurations make informed decisions.
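The performance-per-dollar comparison in the startup scenario reduces to filtering benchmark records by model and minimum throughput, then sorting by throughput divided by price. The `BenchmarkRecord` type, the placeholder GPU names, and all numbers below are invented for illustration; prices would come from the user, not from Inferbench.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BenchmarkRecord:
    gpu: str
    model: str
    throughput: float   # e.g. images/s for the target model
    price_usd: float    # hardware cost, supplied by the user


def rank_by_value(records: List[BenchmarkRecord], model: str,
                  min_throughput: float) -> List[BenchmarkRecord]:
    """GPUs that meet the throughput target, best throughput-per-dollar first."""
    eligible = [r for r in records
                if r.model == model and r.throughput >= min_throughput]
    return sorted(eligible, key=lambda r: r.throughput / r.price_usd,
                  reverse=True)


# Placeholder data, not real benchmark results.
records = [
    BenchmarkRecord("gpu-a", "resnet50", 1200.0, 1600.0),
    BenchmarkRecord("gpu-b", "resnet50", 900.0, 800.0),
    BenchmarkRecord("gpu-c", "resnet50", 400.0, 300.0),
    BenchmarkRecord("gpu-b", "vit-b16", 350.0, 800.0),
]
ranked = rank_by_value(records, "resnet50", min_throughput=500.0)
```

Here gpu-c has the best ratio overall but misses the 500 images/s target, which is why the filter runs before the ranking; the result is gpu-b followed by gpu-a.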

16

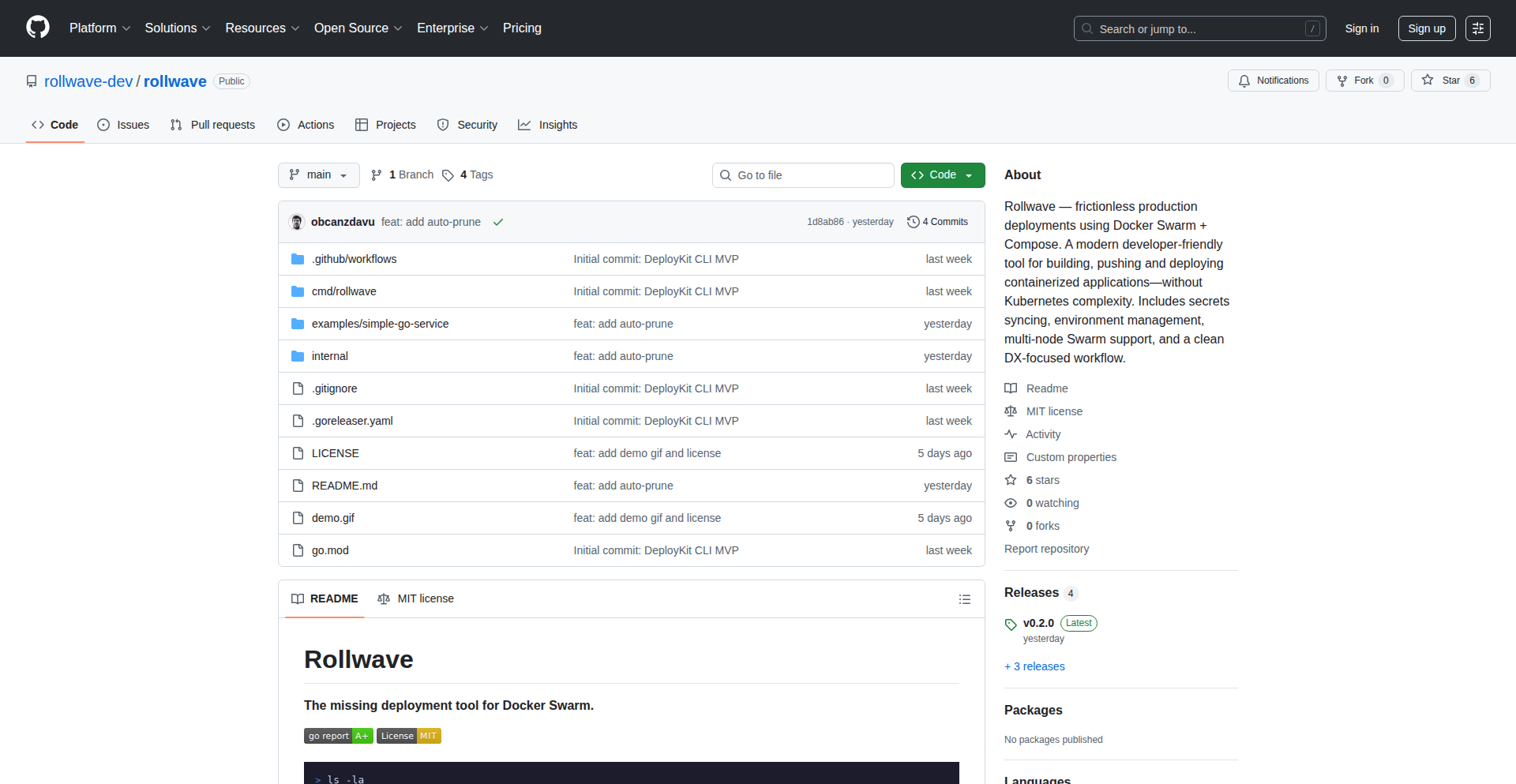

Durable Streams Protocol

Author

kylemathews

Description