Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-08

SagaSu777 2025-12-09

Explore the hottest developer projects on Show HN for 2025-12-08. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The landscape of innovation this week is heavily influenced by the pervasive power of AI and the developer's relentless pursuit of efficiency and privacy. We're seeing AI move beyond simple generation to become a sophisticated tool for analysis, summarization, and even complex problem-solving, as demonstrated by projects that distill vast amounts of information or automate intricate workflows. Developers are not just building tools *with* AI, but are also using AI to *build* tools faster and smarter. Simultaneously, there's a strong undercurrent of empowering developers with better tools: from streamlined secrets management and enhanced code visualization to efficient data processing engines like DuckDB that run entirely client-side, respecting user privacy. The emphasis on open-source and client-side processing signifies a desire for transparency and control, a hallmark of the hacker spirit. For budding entrepreneurs, this means looking for unmet needs where AI can provide intelligent solutions or where existing developer tools can be made significantly more efficient, secure, or privacy-preserving. The ability to leverage AI for rapid prototyping and validation, as seen in several projects, is a powerful advantage for iterating quickly and finding product-market fit.

Today's Hottest Product

Name

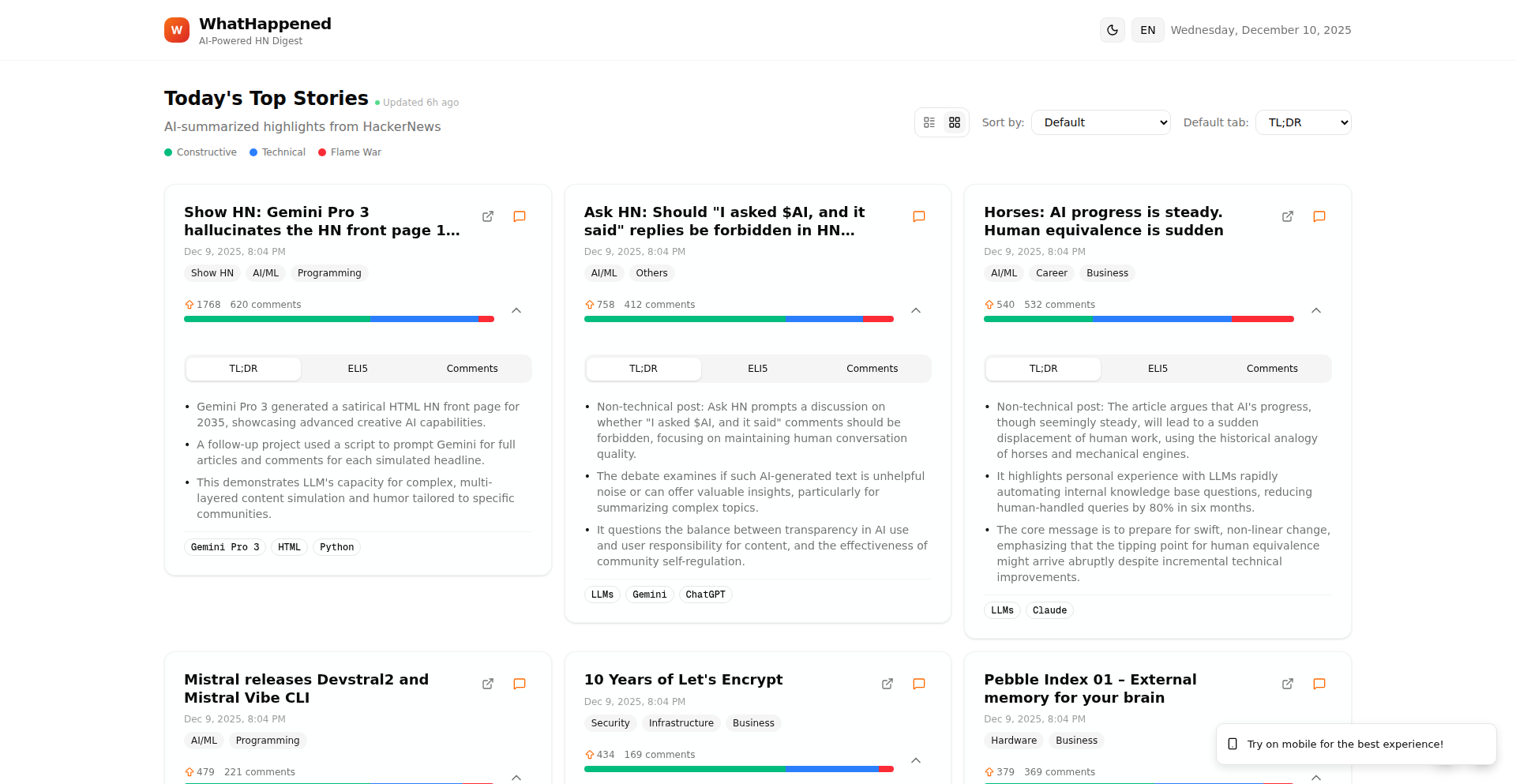

WhatHappened – HN summaries, heatmaps, and contrarian picks

Highlight

This project ingeniously tackles information overload on Hacker News by leveraging AI for concise summaries and analyzing comment sentiment to visualize discussion dynamics. It provides an "ELI5" version and highlights the most upvoted disagreements, offering a truly unique way to digest content. Developers can learn about practical AI integration for content summarization, sentiment analysis, and intelligent content filtering, showcasing how technology can enhance user experience by cutting through noise.

Popular Category

AI/ML

Developer Tools

Productivity

Data Analysis

Popular Keyword

AI

LLM

Data

Analysis

Developer Tools

Automation

Open Source

Privacy

Agent

Technology Trends

AI-powered content analysis and summarization

Efficient data processing with DuckDB

Enhanced developer workflows and security

Decentralized and privacy-focused applications

Agentic systems for complex tasks

Cross-platform and browser-based tools

Project Category Distribution

AI/ML (25%)

Developer Tools (20%)

Productivity (15%)

Data Analysis (10%)

Security (8%)

Utilities (15%)

Other (7%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | ActiveMeet Notes | 159 | 123 |

| 2 | Lockenv: Git-Secured Vault | 100 | 34 |

| 3 | SQLFlow Stream | 74 | 13 |

| 4 | TimeCapsuleMailer | 44 | 28 |

| 5 | AI-Enhanced Code Refiner | 18 | 2 |

| 6 | TypeScript Debugging Playbook | 12 | 7 |

| 7 | Diesel-Guard: SQL Migration Sentinel | 18 | 0 |

| 8 | Octopii: Rust Distributed Runtime | 16 | 0 |

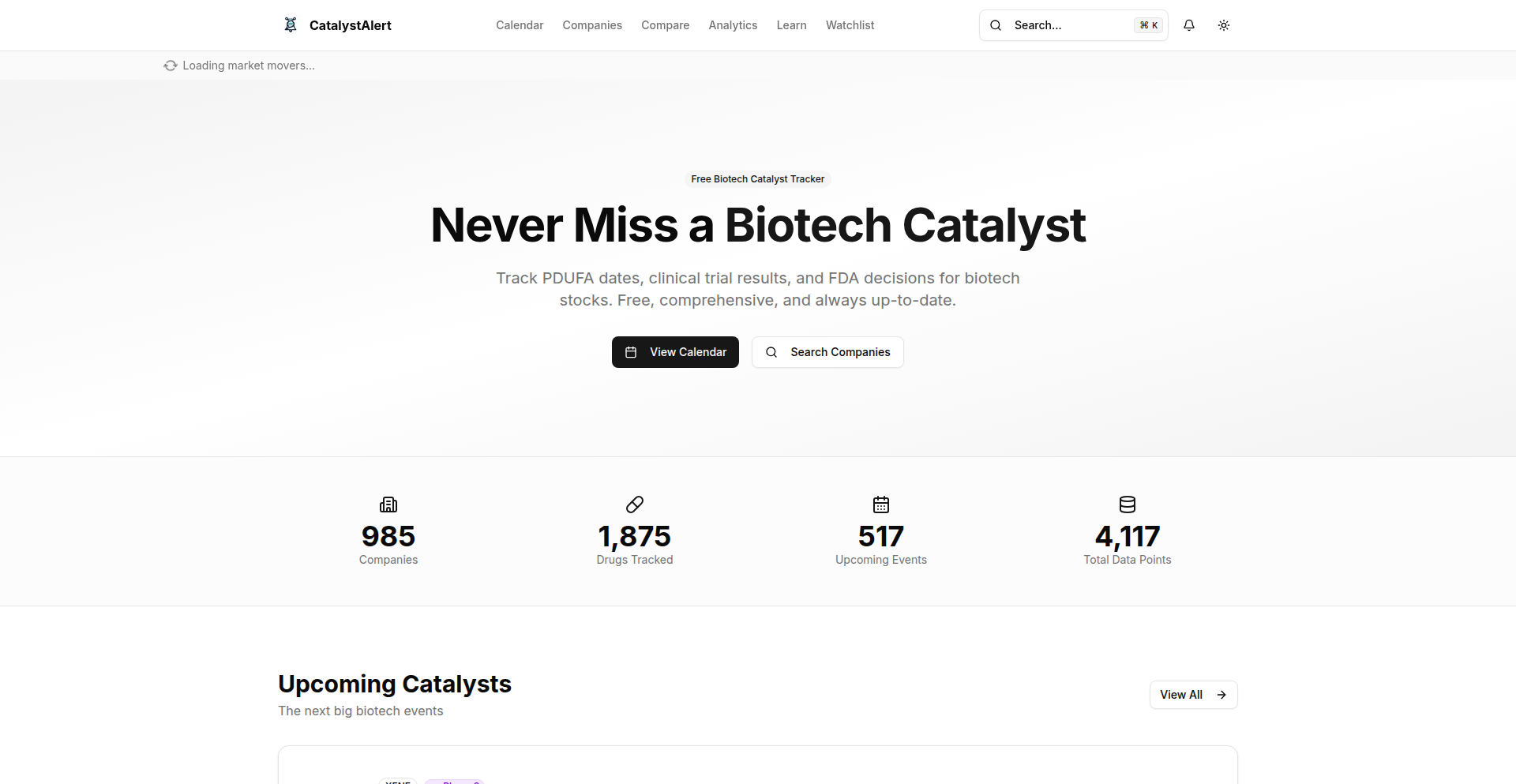

| 9 | CatalystAlert | 8 | 6 |

| 10 | HNInsight Engine | 8 | 2 |

1

ActiveMeet Notes

Author

davnicwil

Description

This project is a specialized note-taking system designed for recurring meetings such as 1-on-1s. It focuses on 'active note-taking,' which means capturing key points, insights, and action items in real-time as the meeting progresses, rather than just transcribing or summarizing. The innovation lies in its tailored workflow that enhances memory recall and historical tracking of meeting themes, offering a more effective way to manage and learn from ongoing discussions.

Popularity

Points 159

Comments 123

What is this product?

ActiveMeet Notes is a digital tool built to address the challenge of effectively capturing and recalling information from frequent, recurring meetings. Unlike generic note-taking apps, it emphasizes 'active note-taking.' This approach encourages users to process information as it's being shared, jotting down concise bullet points that represent key agenda items, emerging insights, spontaneous discussions, and agreed-upon actions. The core technical insight is that by actively engaging with the material and using a structured format, users can better retain information and gain historical context over time. This is particularly useful for tracking the evolution of topics and the effectiveness of discussions in regular meetings. What this means for you is a system that helps you remember what's important and see how your conversations have evolved, making your meetings more productive.

How to use it?

Developers can use ActiveMeet Notes directly through its web interface; the free tier can often be used without signing up. The system is designed for immediate use during a meeting, and the core interaction is typing short, descriptive notes. The initial description does not mention a developer API, but because the notes are structured text, integration with other productivity tools or data analysis pipelines may be possible in the future. Developers looking to improve their personal meeting effectiveness can start using it today to see how it enhances their note-taking process. This translates to a quick start for improving your meeting outcomes.

Product Core Function

· Real-time active note capture: Allows users to jot down concise notes during live conversations, prioritizing key information over exhaustive transcription. This directly helps you remember critical discussion points as they happen.

· Action item tracking: Provides a dedicated mechanism to log action items agreed upon during meetings, making it easier to follow up and ensure tasks are completed. This ensures accountability and progress on agreed tasks.

· Historical meeting analysis: Enables users to review past meetings, providing a chronological view of discussions, evolving themes, and recurring topics. This helps you understand the long-term trajectory of your conversations and meeting effectiveness.

· Customizable note structure: While not explicitly detailed, the mention of 'bullet-like notes' implies a flexible structure that can be adapted to different meeting types. This allows you to tailor the note-taking experience to your specific needs.
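
The functions above (short notes, action items, and history across recurring meetings) suggest a simple data model. The following is a minimal sketch of one way such notes could be structured, not ActiveMeet Notes' actual schema; every class, field, and function name here is hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MeetingNote:
    """One short bullet captured during a meeting (hypothetical model)."""
    text: str
    topic: str
    is_action_item: bool = False
    done: bool = False


@dataclass
class Meeting:
    """A single occurrence of a recurring meeting."""
    held_on: date
    notes: list[MeetingNote] = field(default_factory=list)


def open_action_items(history: list[Meeting]) -> list[MeetingNote]:
    """Collect action items from all past meetings that are still open."""
    return [n for m in history for n in m.notes if n.is_action_item and not n.done]


def recurring_topics(history: list[Meeting], min_meetings: int = 2) -> list[str]:
    """Return topics that came up in at least `min_meetings` distinct meetings."""
    counts = Counter()
    for meeting in history:
        # A set ensures each topic is counted once per meeting.
        counts.update({note.topic for note in meeting.notes})
    return sorted(t for t, c in counts.items() if c >= min_meetings)


history = [
    Meeting(date(2025, 12, 1), [
        MeetingNote("Flaky CI slows reviews", topic="ci"),
        MeetingNote("Draft onboarding doc", topic="onboarding", is_action_item=True),
    ]),
    Meeting(date(2025, 12, 8), [
        MeetingNote("CI still flaky after retry patch", topic="ci"),
        MeetingNote("Pair on CI fix", topic="ci", is_action_item=True, done=True),
    ]),
]

print(recurring_topics(history))
print([n.text for n in open_action_items(history)])
```

Tagging each note with a topic is what makes the historical view cheap to compute: recurring themes fall out of a simple count across meetings.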

Product Usage Case

· For a software engineering lead managing weekly 1-on-1s with their team: The lead can use ActiveMeet Notes to quickly capture feedback, technical challenges discussed, and action items for each team member. This ensures no important feedback is lost and progress on action items can be easily tracked in subsequent meetings, improving team performance and individual growth.

· For a project manager overseeing multiple recurring project update meetings: The PM can use the system to log key decisions, risks identified, and next steps for each project. Reviewing historical notes allows them to spot recurring issues or track the resolution of previously identified problems, leading to better project oversight and risk mitigation.

· For an individual contributor attending various cross-functional team syncs: The user can employ ActiveMeet Notes to record key takeaways, action items assigned to them, and important context shared by other departments. This helps them stay organized, accountable for their contributions, and better understand the broader project landscape, enhancing their overall effectiveness.

2

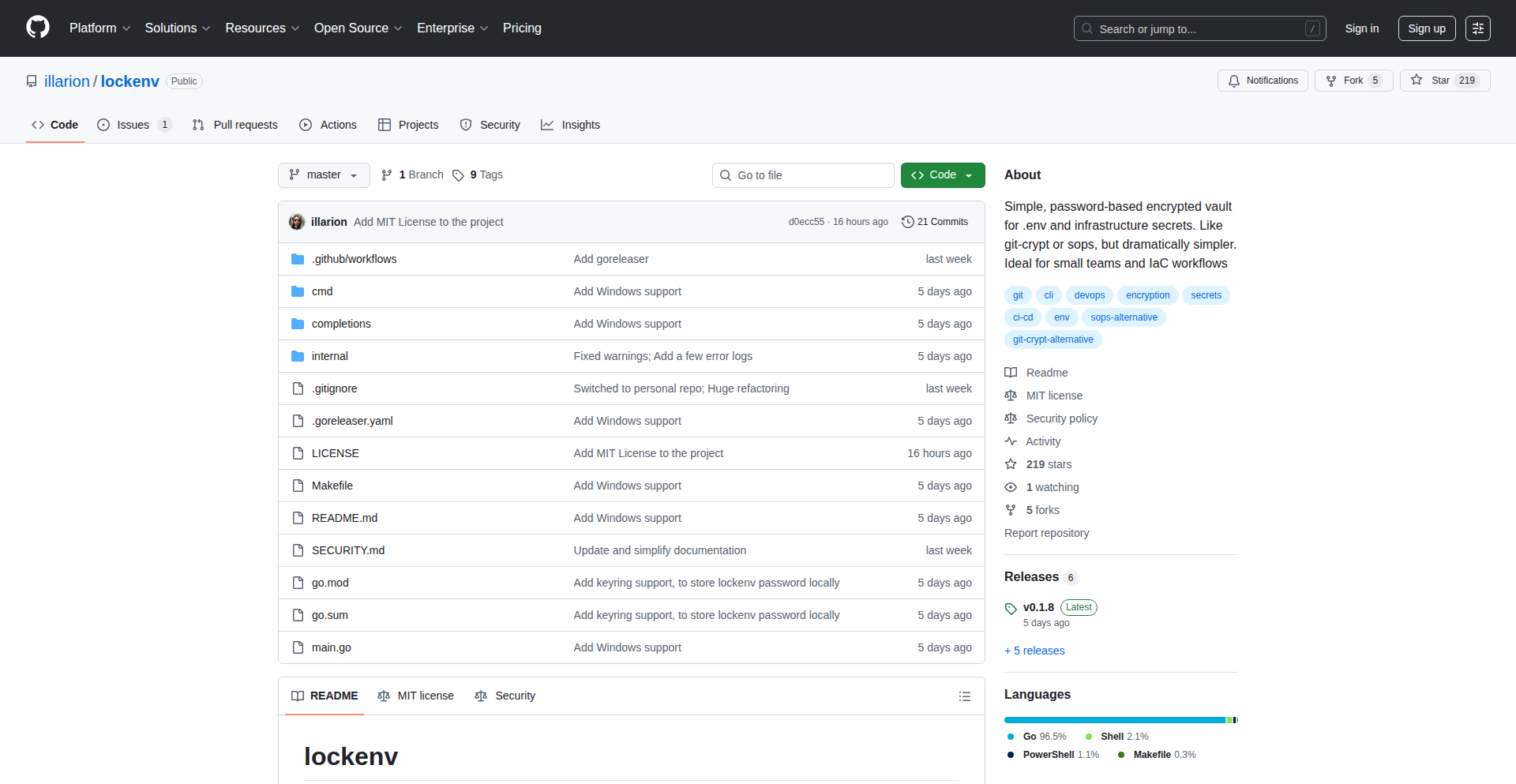

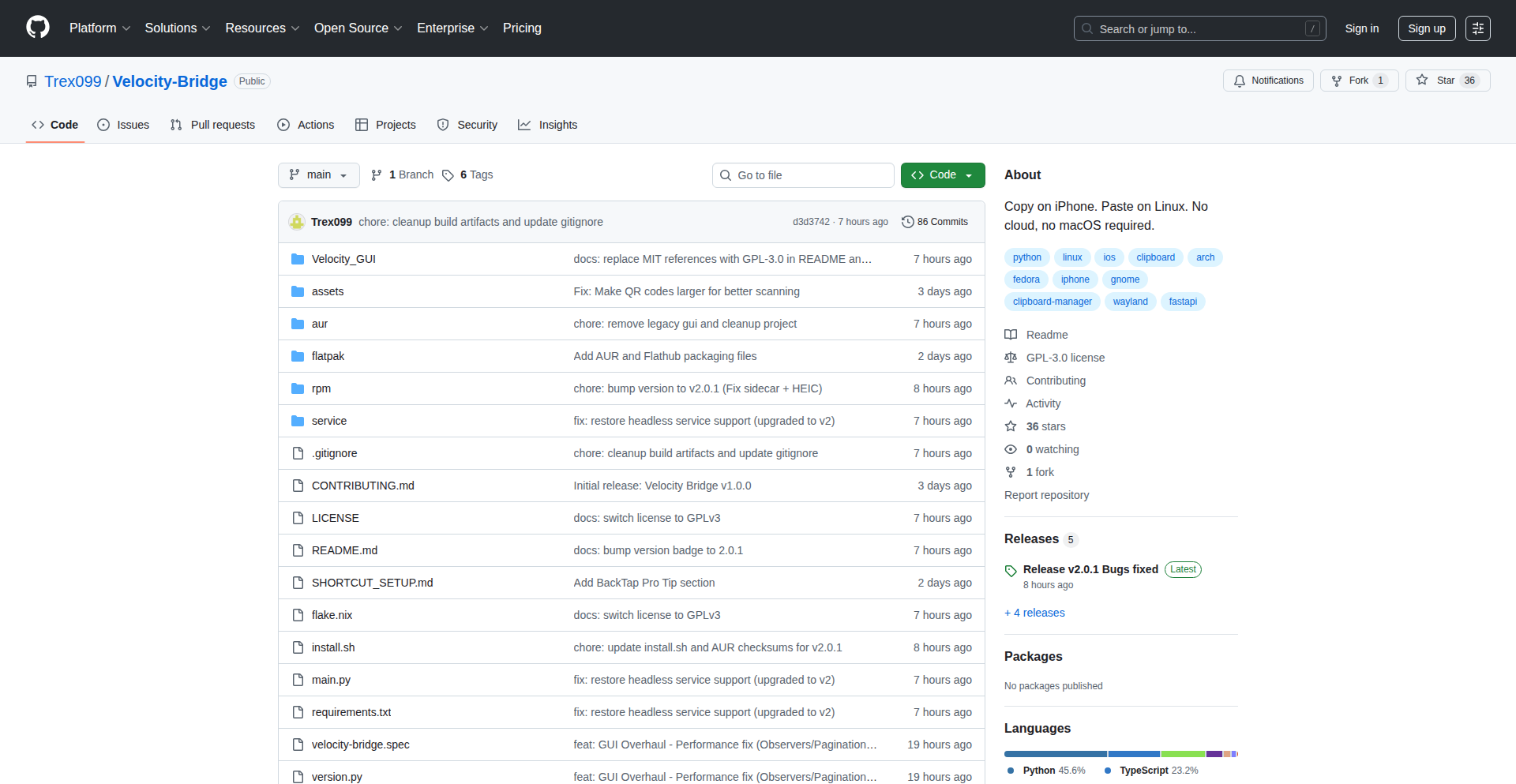

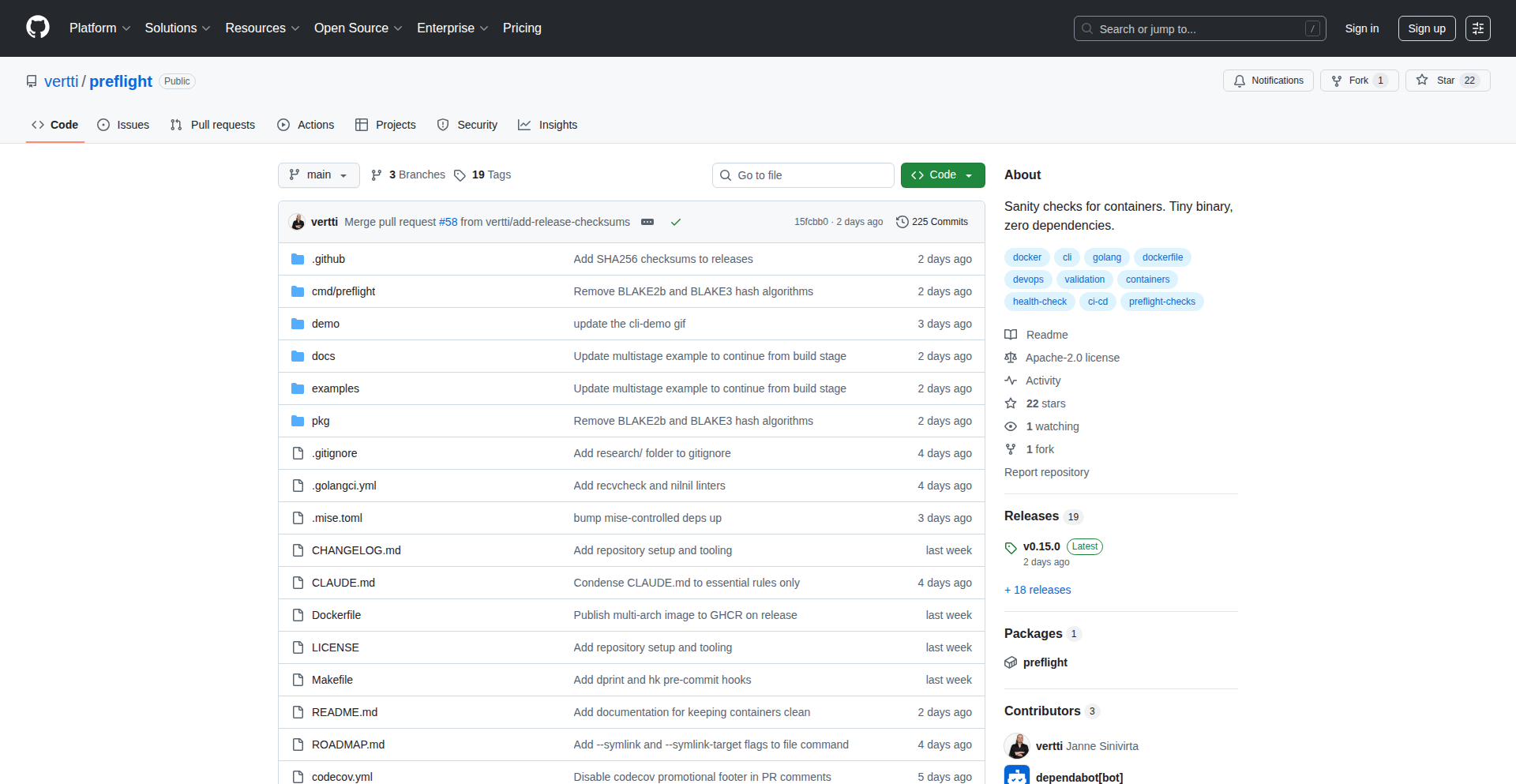

Lockenv: Git-Secured Vault

Author

shoemann

Description

Lockenv is a straightforward, password-protected vault for storing sensitive files like environment variables or secrets directly within your Git repository. It eliminates the complexity of GPG keys or cloud services, offering a simple lock/unlock mechanism. Its integration with OS keyrings minimizes repetitive password entry, making it an ideal solution for developers tired of cumbersome secret management tools, especially for smaller projects or when needing to securely share information without resorting to insecure channels like Slack.

Popularity

Points 100

Comments 34

What is this product?

Lockenv is a command-line tool designed to create and manage encrypted files that can be safely stored in a Git repository. The core innovation lies in its simplicity: instead of complex cryptographic setups, it uses a single password to encrypt and decrypt your secrets. When you initialize Lockenv, it sets up an encrypted vault. You can then add your sensitive files, and Lockenv will encrypt them using your chosen password. When you need to access them, you unlock the vault with the same password. The beauty is that you can commit this encrypted vault to Git, and it remains secure. It leverages the operating system's native keyring (like Keychain on macOS or Credential Manager on Windows) to securely store your password, so you don't have to type it every time you unlock the vault. This is a hacker-ethos approach: building a practical, easy-to-use tool to solve a common developer pain point – secure secret storage – without unnecessary overhead. So, what does this mean for you? It means you can keep your API keys, database credentials, or any other sensitive information safe and version-controlled without wrestling with complicated security software. It’s a quick, clean way to manage secrets for your projects.
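
The post does not describe Lockenv's actual cryptographic scheme, so the following is only a sketch of the general idea behind single-password vaults: stretch the password into a key with a key-derivation function, and store a check tag so a wrong password can be rejected on unlock. The iteration count, the `vault-check` label, and all function names are assumptions. Encrypting the files themselves would additionally need an authenticated cipher from a third-party library, which is left out here.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor, not taken from Lockenv


def derive_key(password: str, salt: bytes) -> bytes:
    """Stretch a password into a 32-byte key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def make_check_tag(key: bytes) -> bytes:
    """Tag stored next to the vault so a wrong password is detected on unlock."""
    return hmac.new(key, b"vault-check", hashlib.sha256).digest()


def password_matches(password: str, salt: bytes, stored_tag: bytes) -> bool:
    """Re-derive the key and compare tags in constant time."""
    candidate = make_check_tag(derive_key(password, salt))
    return hmac.compare_digest(candidate, stored_tag)


salt = os.urandom(16)  # random per vault; not secret, so it can live beside the ciphertext
tag = make_check_tag(derive_key("correct horse", salt))

print(password_matches("correct horse", salt, tag))
print(password_matches("wrong guess", salt, tag))
```

Because the salt and tag are not secret, a vault built this way could be committed to Git; only the password (or the key cached in an OS keyring) has to stay private.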

How to use it?

Developers can integrate Lockenv into their workflow by first installing it via their preferred package manager or by building from source. Once installed, they'd run `lockenv init` to create a new encrypted vault. After initialization, they can add sensitive files to the vault using `lockenv add <filepath>`. The tool will then encrypt these files and store them within the vault, which can be committed to Git. To access the secrets, a developer would run `lockenv unlock`. The tool will prompt for the password and, if correct, decrypt the files. For convenience, Lockenv can store the password securely in the OS keyring, so subsequent unlocks are seamless. This makes it perfect for CI/CD pipelines where secrets need to be accessed securely, or for collaborative projects where sensitive configuration needs to be shared safely. So, how does this help you? You can easily secure your application's configuration files, and have them tracked by Git, ready for deployment or sharing, all without exposing sensitive data.

Product Core Function

· Password-protected encryption: Encrypts sensitive files using a single password, providing a basic yet effective layer of security for your secrets. This is valuable because it simplifies the process of securing information, making it accessible for individuals who might not be cryptography experts.

· Git integration: Allows encrypted files to be stored directly within a Git repository, enabling version control for secrets. This is useful for tracking changes to sensitive information and easily reverting to previous states, ensuring auditability and safety.

· OS keyring integration: Securely stores the vault password in the operating system's keyring, eliminating the need for repeated password entry. This enhances usability and reduces friction for frequent users.

· Simple command-line interface: Provides intuitive commands for initialization, adding files, and unlocking the vault. This straightforward interface makes the tool easy to learn and use, fitting the hacker ethos of efficient problem-solving.

· Cross-platform compatibility (experimental): Designed to work on macOS, Linux, and Windows, aiming for broad accessibility across different development environments. This is valuable as it allows developers to use the same secret management tool regardless of their operating system.

Product Usage Case

· Securing API keys for a personal project: A developer is building a web application that uses external APIs and needs to store their API keys securely. Instead of hardcoding them or storing them in plain text files, they use Lockenv to create an encrypted vault, add the API key file, and commit the vault to their Git repository. This ensures the API key is safe from accidental exposure when sharing code or collaborating. So, what's the benefit for you? Your sensitive keys are protected, and your code remains clean and version-controlled.

· Managing database credentials for a staging environment: A team is deploying their application to a staging environment and needs to manage the database credentials securely. They use Lockenv to encrypt the database configuration file. The encrypted file is then added to the Git repository and deployed. During deployment, the CI/CD pipeline can securely unlock the vault to access the credentials. This eliminates the risk of credentials being exposed in build logs or configuration files. For you, this means secure and automated deployment of your applications without compromising sensitive information.

· Storing sensitive environment variables for local development: A developer frequently switches between different projects, each with its own set of environment variables for local testing. Instead of managing multiple `.env` files scattered across their system, they can use Lockenv to create separate encrypted vaults for each project's environment variables. This keeps their development environment clean and prevents accidental exposure of sensitive variables during local testing. The advantage for you is organized and secure management of your development configurations.

3

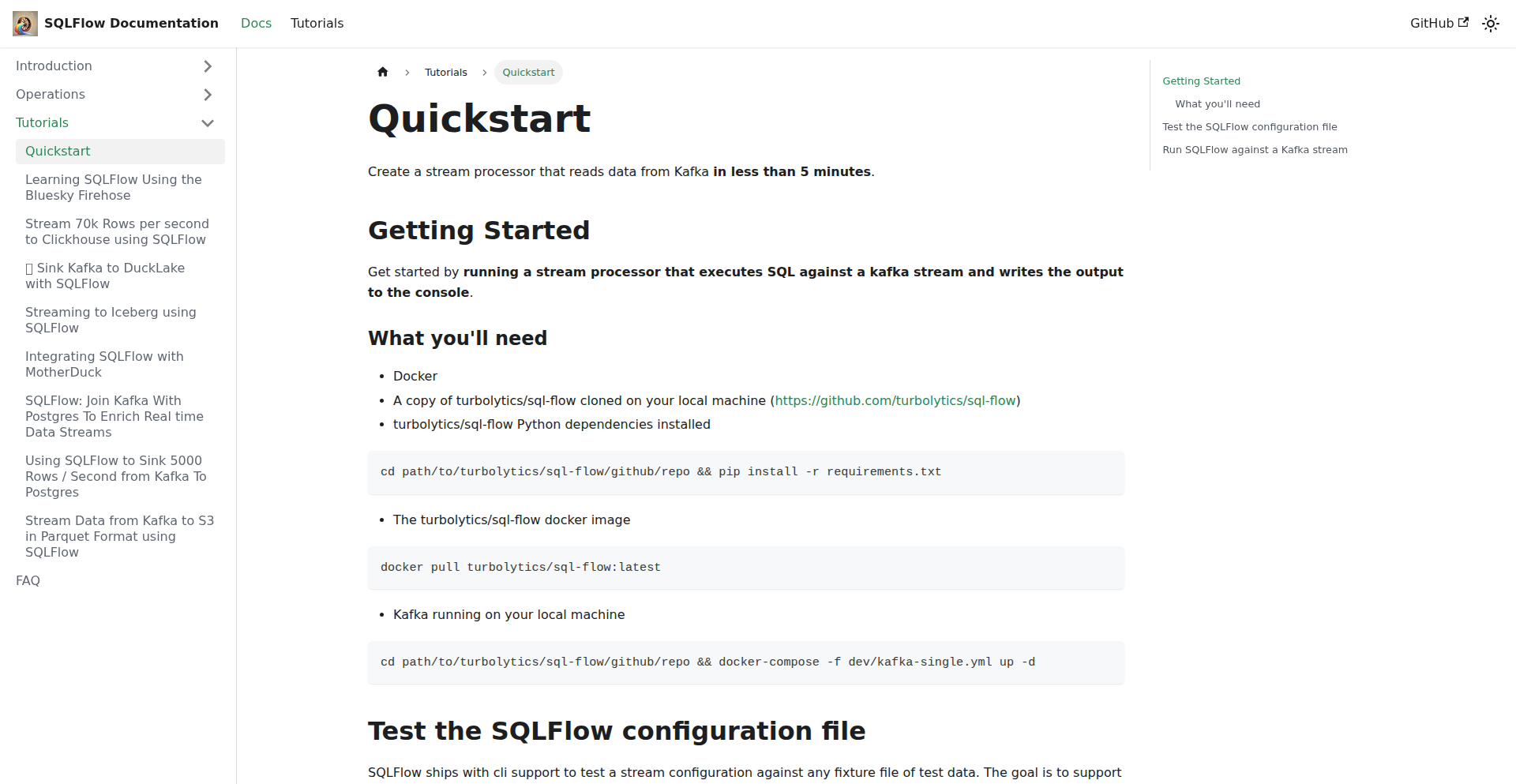

SQLFlow Stream

Author

dm03514

Description

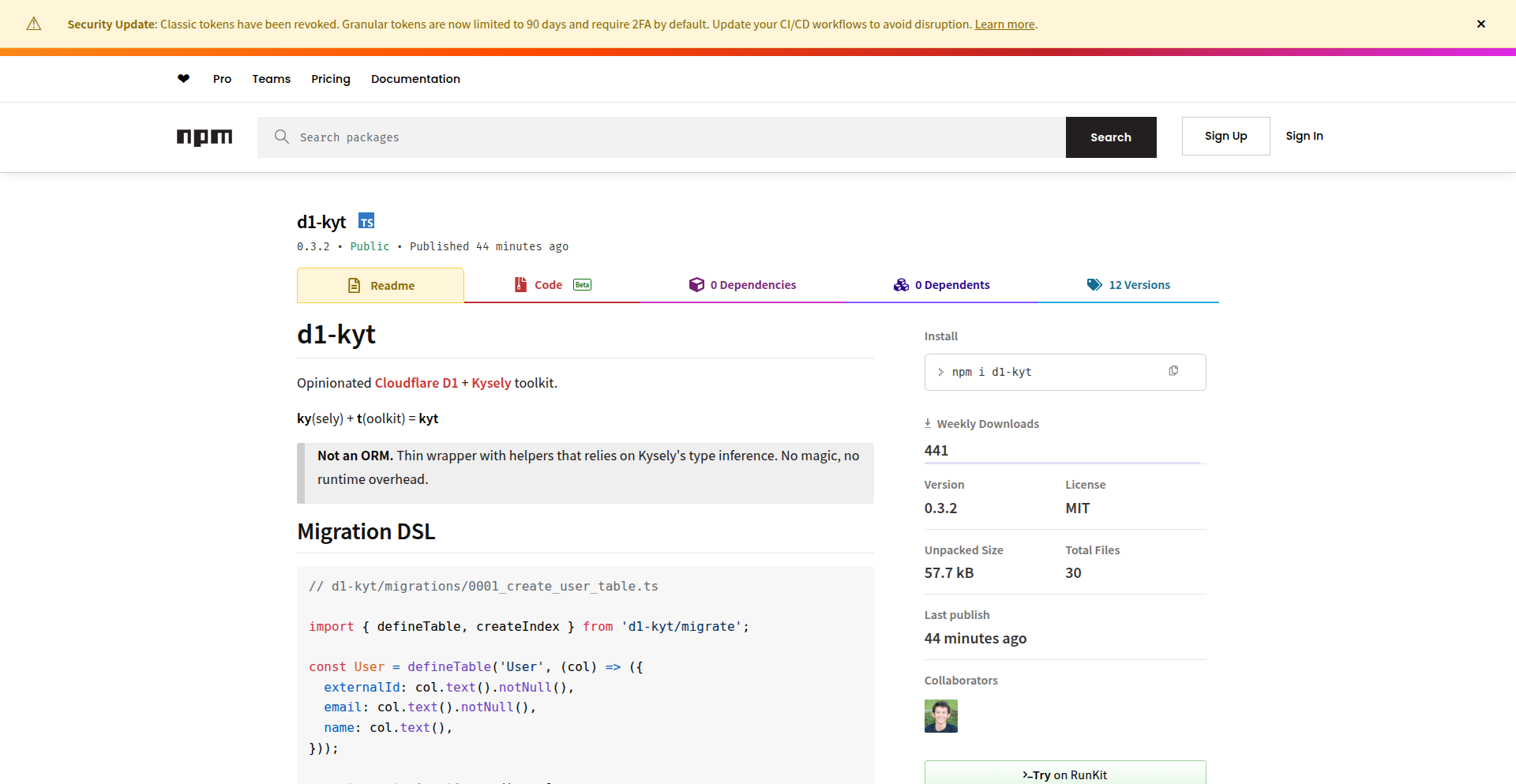

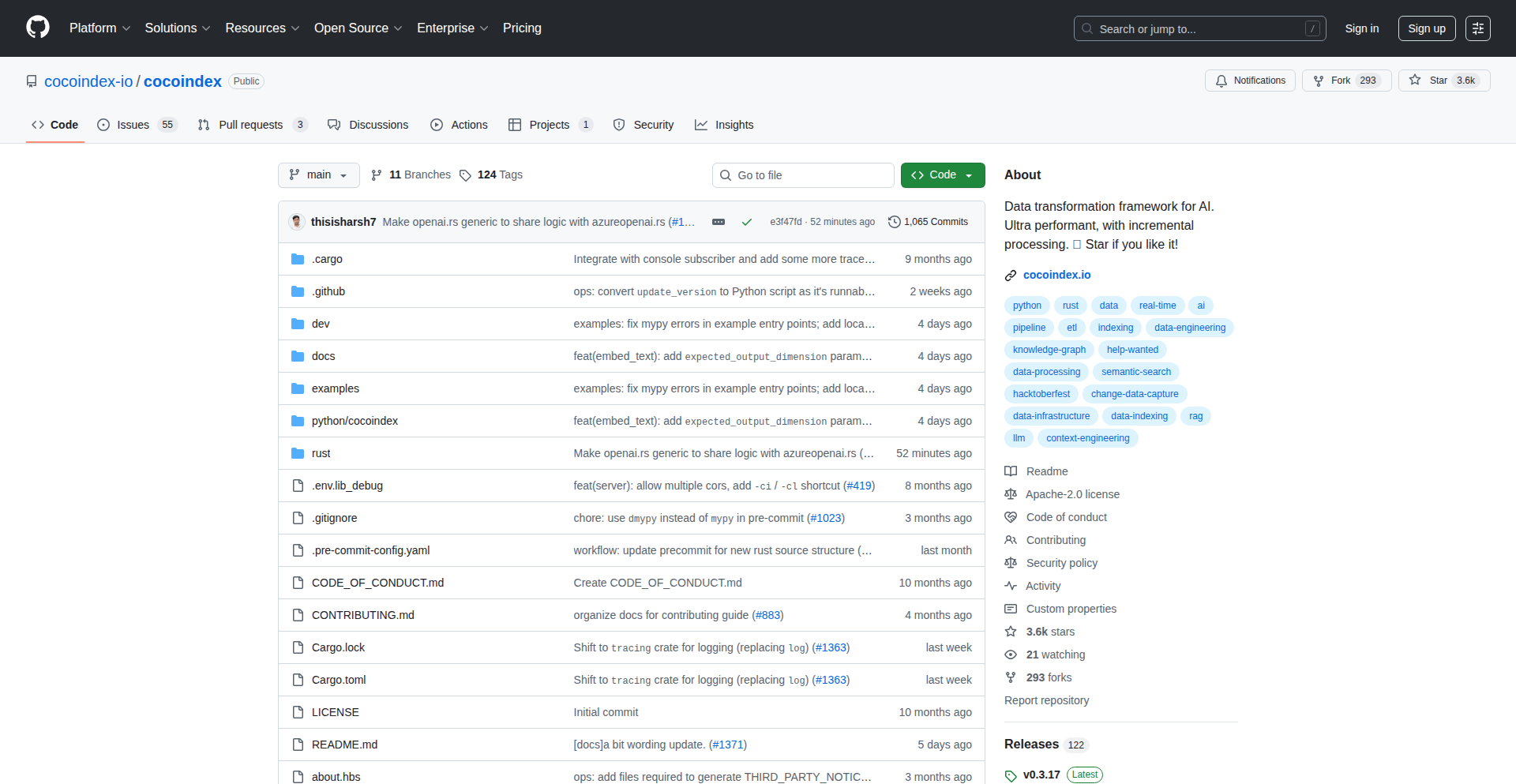

SQLFlow Stream is a lightweight stream processing engine that uses DuckDB for high-speed, low-memory data handling. It tackles the common developer frustration of needing heavy JVM-based systems or custom solutions for simple stream processing tasks, offering a more efficient and accessible alternative. Its innovation lies in harnessing DuckDB's in-process analytical database capabilities for real-time data streams, providing powerful SQL querying for streaming data with minimal resource overhead. This empowers developers to process tens of thousands of messages per second with very little memory, making stream processing more approachable and cost-effective.

Popularity

Points 74

Comments 13

What is this product?

SQLFlow Stream is a stream processing engine designed to handle real-time data streams efficiently. At its core, it utilizes DuckDB, an in-process analytical database, to process data as it arrives. This is innovative because instead of relying on large, distributed systems or dedicated streaming platforms (which often require complex setups and considerable memory), SQLFlow leverages DuckDB's ability to perform analytical queries directly on incoming data. This means you can use familiar SQL commands to filter, aggregate, and transform data streams in near real-time, achieving high throughput (tens of thousands of messages per second) with remarkably low memory usage (around 250MB). This approach simplifies stream processing significantly, making it accessible even for developers who might find traditional big data tools daunting.

How to use it?

Developers can integrate SQLFlow Stream into their applications to process data from sources like Kafka. The primary way to use it is by writing SQL queries that define the desired stream processing logic. For example, you could write a query to continuously monitor incoming messages, filter for specific events, and aggregate counts or averages. DuckDB's extensive connectors allow SQLFlow to interact with various data sources and sinks. This means you can connect to Kafka to ingest data, and then use SQL to process it, potentially writing the results to another Kafka topic, a database, or a file. The project aims for ease of use, allowing developers to define their streaming pipelines using standard SQL, reducing the learning curve and development time. The project provides documentation and tutorials to guide users through setup and common use cases.
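
As a rough illustration of the "SQL over a stream" idea, the sketch below loads each micro-batch of messages into a scratch table and aggregates it with a plain SQL query. To stay dependency-free it uses Python's built-in `sqlite3` as a stand-in for DuckDB and a hard-coded list in place of a Kafka consumer; the table name, message fields, and `process_batch` helper are all hypothetical and not part of SQLFlow.

```python
import json
import sqlite3

# Aggregation applied to every micro-batch, written as ordinary SQL.
BATCH_SQL = """
    SELECT event_type, COUNT(*) AS n, AVG(latency_ms) AS avg_latency_ms
    FROM batch
    GROUP BY event_type
    ORDER BY event_type
"""


def process_batch(conn, raw_messages):
    """Load one batch of JSON messages into a scratch table and aggregate it with SQL."""
    rows = [(m["event_type"], m["latency_ms"]) for m in map(json.loads, raw_messages)]
    conn.execute("DELETE FROM batch")  # each batch starts from an empty table
    conn.executemany("INSERT INTO batch VALUES (?, ?)", rows)
    return conn.execute(BATCH_SQL).fetchall()


conn = sqlite3.connect(":memory:")  # stand-in for an in-process DuckDB connection
conn.execute("CREATE TABLE batch (event_type TEXT, latency_ms REAL)")

# Hypothetical messages, as they might arrive from a Kafka topic.
messages = [
    '{"event_type": "click", "latency_ms": 10}',
    '{"event_type": "view", "latency_ms": 30}',
    '{"event_type": "click", "latency_ms": 20}',
]
result = process_batch(conn, messages)
print(result)
```

In a real pipeline the loop around `process_batch` would poll the source, and the returned rows would be written to a sink such as another topic or a file.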

Product Core Function

· High-throughput message processing: Processes tens of thousands of messages per second, enabling real-time analysis of large data volumes without overwhelming system resources.

· Low memory footprint: Operates efficiently with minimal memory (around 250MB), making it suitable for resource-constrained environments or smaller projects.

· SQL-based stream processing: Allows developers to define data transformations and aggregations using familiar SQL syntax, simplifying the development of streaming logic.

· DuckDB integration: Leverages DuckDB's powerful in-process analytical database capabilities for fast and efficient stream query execution.

· Rich connector ecosystem: Supports integration with various data sources and sinks through DuckDB's extensive connectors, facilitating seamless data flow from ingestion to output.

Product Usage Case

· Real-time anomaly detection: In a financial trading platform, use SQLFlow Stream to monitor incoming transaction data, filter for unusual patterns (e.g., sudden spikes in volume or price), and trigger alerts instantly.

· Live dashboard data aggregation: For a web analytics service, process real-time user activity events from Kafka, aggregate page views, unique visitors, and session durations using SQL queries, and feed these metrics to a live dashboard.

· IoT device data processing: In an industrial IoT application, ingest sensor readings from numerous devices via Kafka, filter out noisy data, calculate average readings per device or location, and store critical alerts or processed data for historical analysis.

· Log stream analysis and alerting: For a microservices architecture, process application logs streamed through Kafka. Use SQLFlow Stream to filter for error messages, count occurrences, and trigger alerts when error rates exceed a predefined threshold.

4

TimeCapsuleMailer

Author

walrussama

Description

A web application that allows users to schedule and send emails to themselves or others at a future date, effectively creating digital 'time capsules' of thoughts, ideas, or reminders. The core innovation lies in its robust scheduling mechanism and secure storage of unsent emails, addressing the common problem of forgotten intentions or valuable future insights.

Popularity

Points 44

Comments 28

What is this product?

This project is a web-based service that acts like a digital time capsule for emails. You write an email now, set a future delivery date, and the system will automatically send it on that day. It's built on a server-side architecture that manages a queue of scheduled emails, using a robust job scheduling system to ensure timely delivery. The innovation is in its ability to reliably store and send messages days, weeks, or even years in the future, overcoming the limitations of simply setting a calendar reminder.
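
The post does not say which job scheduler the service uses. As a minimal sketch of the queue-of-scheduled-emails idea, the example below keeps pending messages in a min-heap ordered by delivery time and pops whatever is due. The class and field names are hypothetical, and a real service would persist the queue in a database rather than in memory.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(order=True)
class ScheduledEmail:
    send_at: datetime                      # the only field used for ordering
    recipient: str = field(compare=False)
    body: str = field(compare=False)


class EmailQueue:
    """Min-heap keyed on delivery time, so the next due email is always on top."""

    def __init__(self):
        self._heap = []

    def schedule(self, email):
        heapq.heappush(self._heap, email)

    def pop_due(self, now):
        """Remove and return every email whose delivery time has passed."""
        due = []
        while self._heap and self._heap[0].send_at <= now:
            due.append(heapq.heappop(self._heap))
        return due


queue = EmailQueue()
queue.schedule(ScheduledEmail(datetime(2026, 12, 8, tzinfo=timezone.utc), "me@example.com", "One year on"))
queue.schedule(ScheduledEmail(datetime(2025, 12, 25, tzinfo=timezone.utc), "me@example.com", "Holiday note"))

# A worker would call pop_due periodically with the current time.
due_now = queue.pop_due(datetime(2026, 1, 1, tzinfo=timezone.utc))
print([e.body for e in due_now])
```

Storing delivery times in UTC avoids ambiguity when a message is scheduled years ahead and time zone rules change in between.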

How to use it?

Developers can integrate this service into their workflows by accessing the web interface. They can compose emails, specify recipient(s), and select a future delivery date. It's useful for personal reflection, sending reminders to future selves, or even for businesses to send automated follow-ups or anniversary messages. Imagine creating a birthday message that arrives a year from now, or sending yourself a note of encouragement when you're about to embark on a new project.

Product Core Function

· Scheduled Email Sending: Allows users to compose an email and schedule its delivery for a specific date and time in the future. This is valuable for ensuring important messages are received at the opportune moment, like a birthday greeting or a project kick-off reminder, so you don't forget.

· Time Capsule Storage: Securely stores unsent emails and their scheduled delivery times. This is crucial for reliability, acting as a digital vault for your future communications, so your intended messages aren't lost.

· Future Self Reminders: Enables users to send messages to themselves for future reflection or action. This helps in personal growth and accountability, providing a tangible way to connect with your future self and recall past intentions.

· Batch Scheduling: Potential for sending multiple emails on a future date, useful for event-based communication or campaign rollouts. This offers efficiency for sending coordinated messages in the future.

Product Usage Case

· Personal Journaling Reinforcement: A user can write about their current aspirations or challenges and schedule the email to arrive when they anticipate a critical decision point, helping them stay aligned with their past self's mindset.

· Event Planning Follow-up: A wedding planner can schedule a 'Thank You' email to be sent to clients a week after the event, ensuring timely appreciation without needing manual intervention post-event.

· Long-Term Goal Setting: A student can write about their career goals and schedule the email to be sent to themselves on their graduation day, serving as a powerful reminder of what they aimed for.

· Creative Project Incubation: A writer can draft story ideas and schedule them to be sent to themselves periodically to spark new creative directions or revisit unfinished narratives.

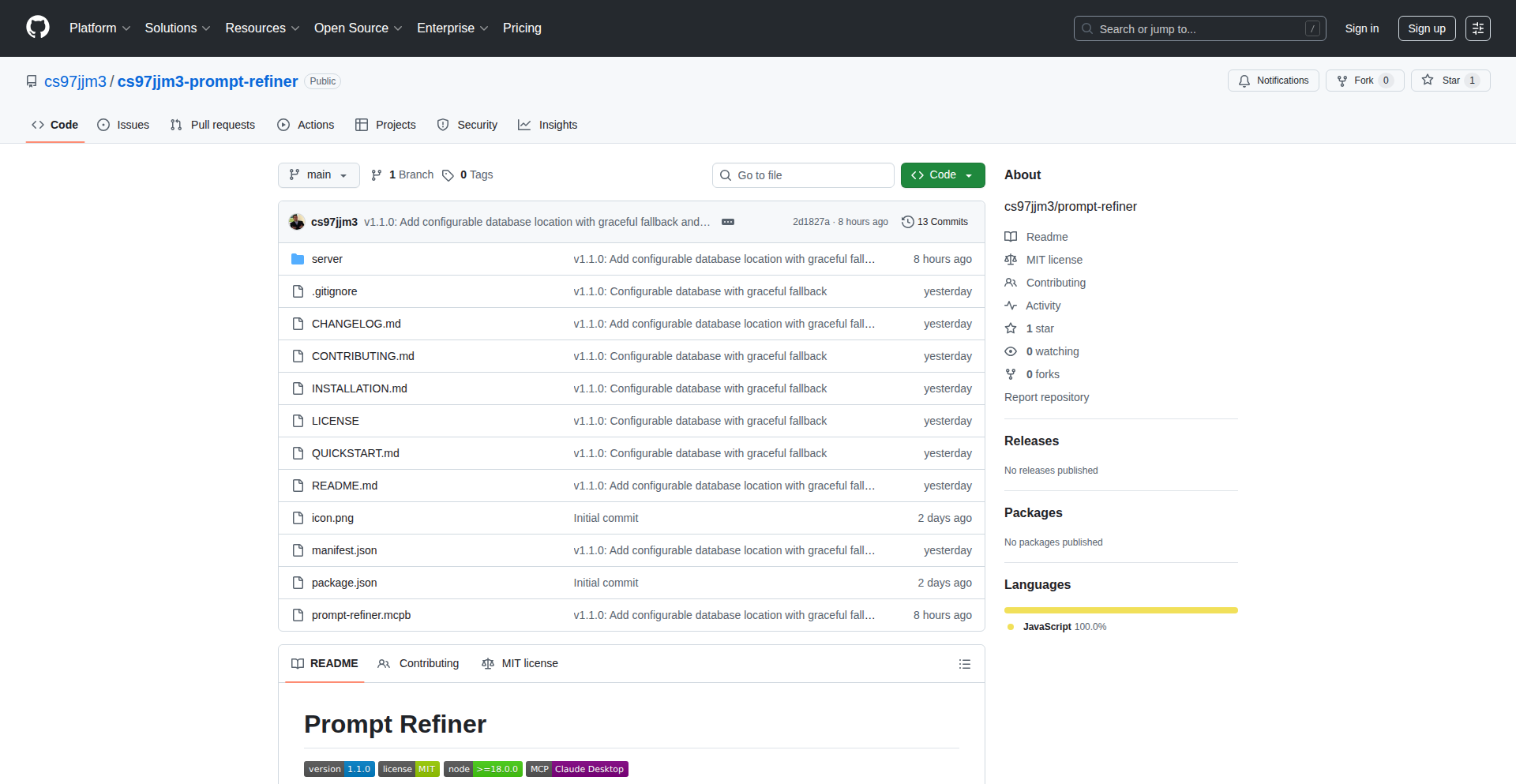

5

AI-Enhanced Code Refiner

Author

Gricha

Description

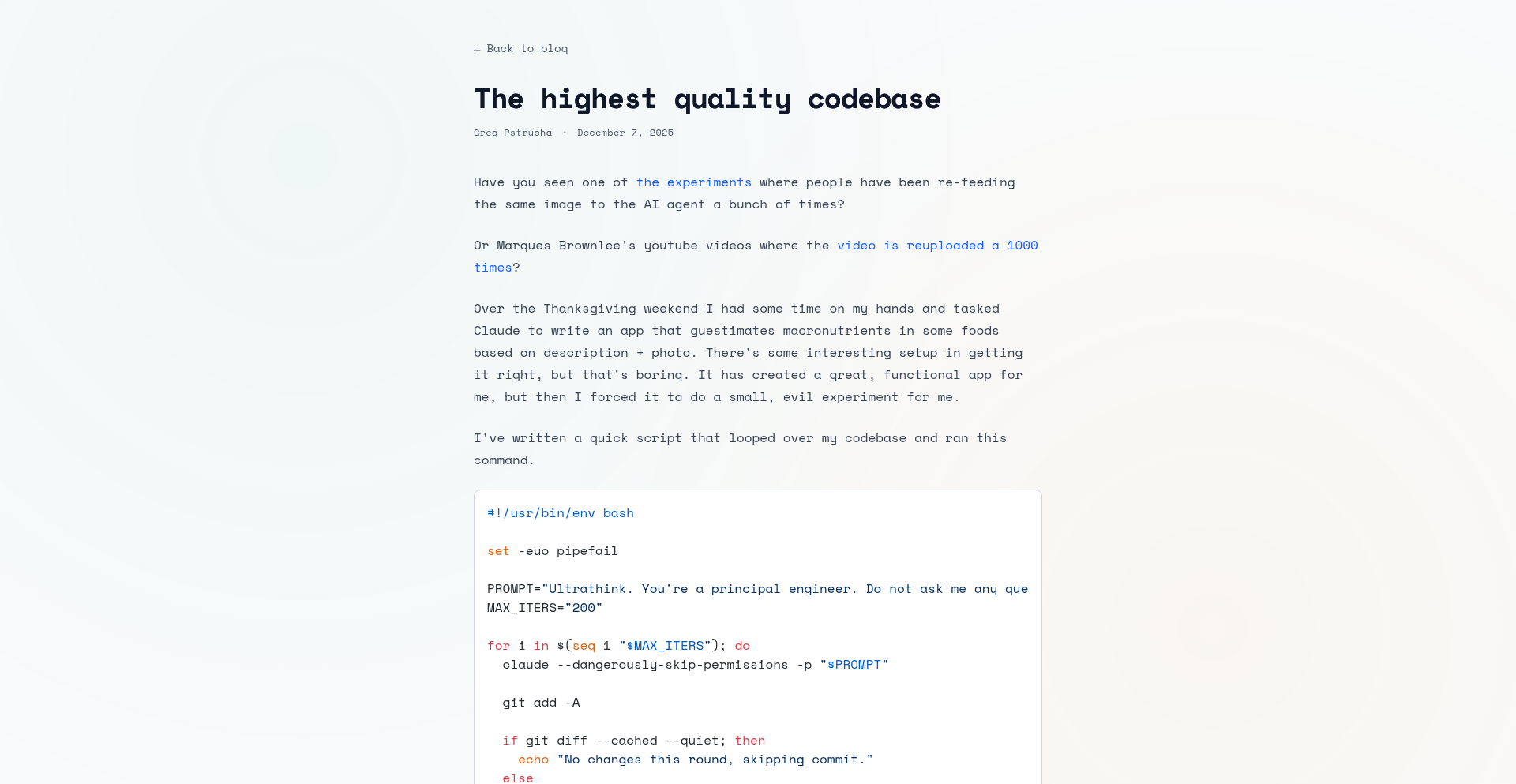

This project leverages Large Language Models (LLMs), specifically Claude, to automatically suggest and implement improvements for codebase quality. It addresses the common developer challenge of maintaining clean, efficient, and readable code by acting as an AI-powered code reviewer and refactorer.

Popularity

Points 18

Comments 2

What is this product?

This project is an experimental tool that uses advanced AI, like Claude, to analyze and enhance your existing code. Instead of developers manually searching for bugs or suboptimal code patterns, the AI acts as a tireless assistant, reviewing your codebase over and over (200 passes in this case). It identifies areas for improvement, suggests specific code changes, and can even apply them. The core innovation lies in using AI's natural language understanding and code generation capabilities to automate and scale code quality improvements, a traditionally human-intensive task. Think of it as having an incredibly smart coding partner who never gets tired of making your code better.

How to use it?

Developers can integrate this tool into their workflow, typically by feeding their codebase or specific code snippets to the AI model. This might involve command-line interfaces, custom scripts, or potential future integrations with IDEs (Integrated Development Environments). The AI then analyzes the code based on predefined quality metrics or general best practices. After analysis, it provides suggestions for refactoring, bug fixing, performance optimization, or improving readability. Developers can then review these suggestions and choose to apply them, thereby rapidly improving their codebase without extensive manual effort. It's a way to offload some of the more tedious aspects of code maintenance to an intelligent agent, freeing up developer time for more creative problem-solving.
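
The repeated review-and-apply cycle can be pictured as a bounded loop that stops once a pass changes nothing. This is a sketch of that control flow only, not the project's actual code: the `improve` callback stands in for a model call, and the whitespace-collapsing function used to exercise it is a deterministic placeholder chosen so the example runs without any API.

```python
from typing import Callable


def refine(source: str, improve: Callable[[str], str], max_passes: int = 200) -> tuple[str, int]:
    """Apply `improve` repeatedly until the code stops changing or the pass budget runs out.

    Returns the final source and the number of passes that changed it.
    """
    changed_passes = 0
    for _ in range(max_passes):
        candidate = improve(source)
        if candidate == source:  # fixed point: nothing left to change
            break
        source = candidate
        changed_passes += 1
    return source, changed_passes


def collapse_one_blank_run(code: str) -> str:
    """Placeholder for a model call: shorten one run of three newlines to two."""
    return code.replace("\n\n\n", "\n\n", 1)


refined, passes = refine("a = 1\n\n\n\nb = 2\n", collapse_one_blank_run)
print(repr(refined), passes)
```

With a real model the output is not deterministic, so a loop like this would normally also run the test suite after each pass and keep a human in the review step.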

Product Core Function

· AI-driven code analysis: The AI's ability to understand code syntax, structure, and common patterns allows it to identify potential issues that human developers might miss or overlook due to fatigue. This provides a more objective and thorough code review process.

· Automated refactoring suggestions: The system proposes concrete code modifications to improve clarity, efficiency, and maintainability. This directly helps developers write cleaner code with less effort, leading to more robust software.

· Iterative code improvement: The demonstrated ability to perform improvements multiple times signifies an iterative refinement process. This means the AI can continuously optimize code over time, leading to a perpetually evolving and improving codebase, which is valuable for long-term project health.

· LLM-powered code generation: By leveraging the generative capabilities of LLMs, the tool can not only identify problems but also propose and potentially generate the corrected code, accelerating the debugging and optimization cycle.

· Scalable quality assurance: This approach offers a scalable way to ensure code quality across large codebases. Instead of relying solely on a limited number of human reviewers, AI can provide consistent and on-demand code quality checks for every part of the project.

Product Usage Case

· Improving legacy codebases: Developers working with older, complex code that lacks proper documentation or testing can use this tool to systematically identify and refactor problematic sections, making the code more manageable and understandable. The AI helps untangle the spaghetti code.

· Enhancing code readability for team collaboration: When multiple developers work on a project, maintaining consistent code style and readability is crucial. This tool can automatically suggest changes that align with best practices, ensuring that code is easier for everyone on the team to read and contribute to.

· Optimizing performance-critical sections of an application: For applications where speed and efficiency are paramount, the AI can analyze critical code paths and suggest optimizations that might not be immediately obvious to human developers, leading to faster execution and better resource utilization.

· Accelerating the onboarding of new developers: New team members often struggle to understand unfamiliar code. By having the AI suggest improvements and explain its reasoning, it can act as a guide, helping new developers quickly grasp the codebase's structure and quality standards.

· Reducing the burden of routine code maintenance: Tasks like fixing minor bugs, updating outdated syntax, or ensuring adherence to coding standards can be time-consuming. This AI tool automates many of these repetitive tasks, allowing developers to focus on higher-level design and feature development.

6

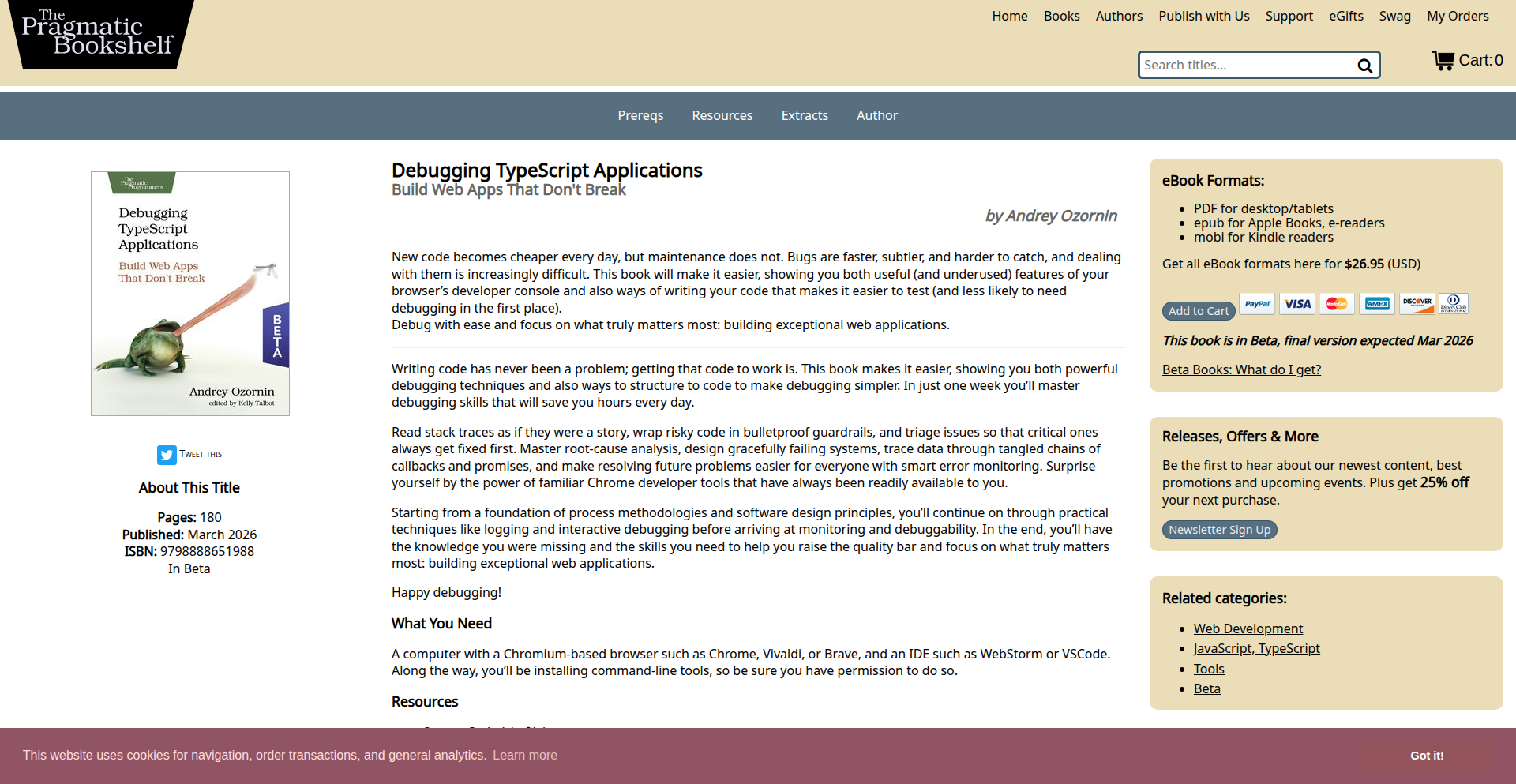

TypeScript Debugging Playbook

Author

ozornin

Description

This project is a beta release of a book focused on the practical art of debugging TypeScript applications. It delves into common pitfalls and advanced techniques for identifying and resolving issues in TypeScript codebases, offering concrete strategies and code examples. The innovation lies in its specialized focus on TypeScript's unique debugging challenges, providing developers with a dedicated resource to improve their problem-solving skills and ship more robust applications. So, what's the value to you? It's about saving time and reducing frustration when bugs inevitably appear, making you a more efficient and effective developer.

Popularity

Points 12

Comments 7

What is this product?

This project is a comprehensive guide, currently in beta, that teaches developers how to effectively debug applications written in TypeScript. It breaks down the complexities of TypeScript debugging, which often differ from plain JavaScript due to features like static typing, type inference, and transpilation. The innovative aspect is its deep dive into these TypeScript-specific challenges, offering insights and solutions that are not readily available in general debugging literature. It's like having a seasoned expert walk you through every tricky bug. So, what's the value to you? You'll learn to find and fix bugs faster and more reliably in your TypeScript projects.

How to use it?

Developers can use this book as a learning resource to enhance their debugging skills. It can be read cover-to-cover or used as a reference guide when encountering specific issues. The book provides actionable advice, code snippets, and explanations of debugging tools and techniques tailored for TypeScript. Integration means applying these learned principles and code examples directly into your development workflow. So, what's the value to you? You can directly apply the book's advice to your current projects to solve existing bugs or prevent future ones, becoming a more skilled troubleshooter.

Product Core Function

· Advanced TypeScript Error Analysis: Learn to decipher complex TypeScript compiler errors and runtime exceptions, understanding their root causes and common resolutions. This helps in quickly pinpointing the source of a bug. The value is in faster bug identification and reduced time spent on deciphering cryptic messages.

· Debugging Type-Related Issues: Discover specific strategies for debugging problems arising from TypeScript's static typing system, such as incorrect type assertions, unintended type widening, or issues with generic types. This ensures your types are correctly enforced and don't become a source of bugs. The value is in building more predictable and less error-prone code.

· Effective Use of Debugging Tools: Explore how to leverage common debugging tools (like browser developer tools and IDE debuggers) with TypeScript, including setting up breakpoints, inspecting variables, and stepping through code effectively, even after transpilation. This makes the debugging process more efficient and insightful. The value is in gaining deeper visibility into your code's execution.

· Performance Debugging in TypeScript: Understand how to identify and resolve performance bottlenecks specific to TypeScript applications, considering aspects like compilation overhead and runtime type checking. This helps in building faster and more responsive applications. The value is in delivering better user experiences through optimized performance.

· Real-world Debugging Scenarios: Study case studies and practical examples that illustrate common debugging challenges faced by developers in production environments and how to overcome them using the techniques taught in the book. This provides practical, applicable knowledge. The value is in learning from others' mistakes and readying yourself for common production issues.

Product Usage Case

· A developer struggling with a complex type error in a React component uses the book to understand how generic types might be misapplied, leading to a quicker fix and a more stable component. This addresses the immediate need to resolve a blocking issue.

· A team building a large-scale Node.js application with TypeScript encounters unexpected runtime behavior. They consult the book's section on debugging asynchronous operations and type guards, which helps them identify a subtle bug in their data validation logic. This improves the reliability of critical application features.

· A junior developer new to TypeScript is finding it difficult to navigate the debugging process. They use the book to learn fundamental techniques for inspecting variables and stepping through code in their IDE, gaining confidence and independence in troubleshooting. This fosters skill development and reduces reliance on senior developers.

· A developer notices performance degradation in their application after introducing new TypeScript features. They refer to the book's performance debugging chapter to identify potential compilation overhead issues and optimize their code for better runtime speed. This leads to a more efficient and responsive application.

7

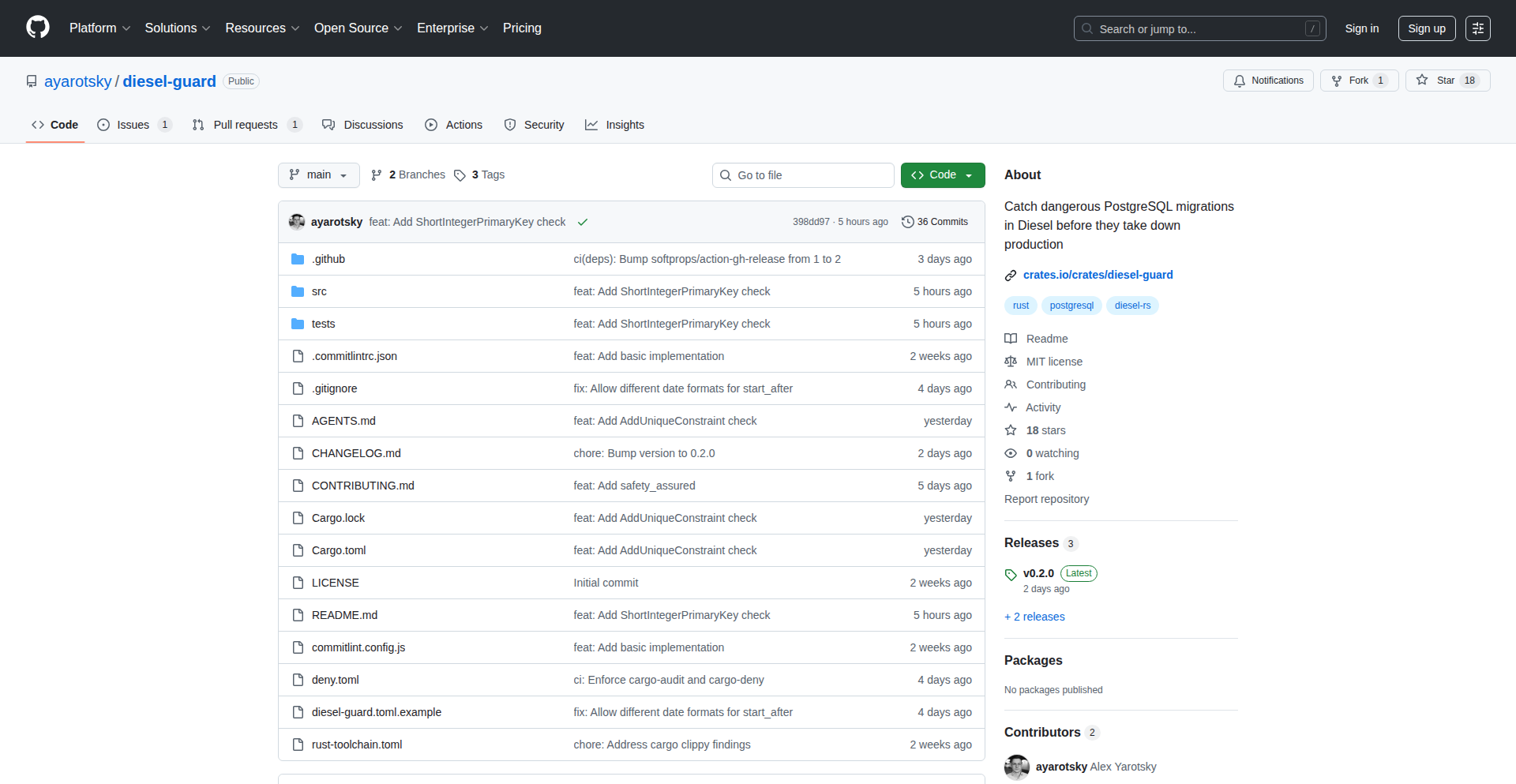

Diesel-Guard: SQL Migration Sentinel

Author

ayarotsky

Description

Diesel-Guard is a sophisticated linter designed to proactively identify potentially unsafe or problematic patterns within Diesel ORM migrations for PostgreSQL. It acts as an early warning system, preventing common pitfalls and ensuring the integrity of your database schema evolution.

Popularity

Points 18

Comments 0

What is this product?

Diesel-Guard is a static analysis tool that scans your Diesel ORM migration files for PostgreSQL. It uses predefined rules to detect common anti-patterns, such as operations that could lead to data loss (e.g., `DROP COLUMN` without proper checks), performance regressions (e.g., unindexed joins), or compatibility issues. Its innovation lies in its targeted approach to database migration safety, providing developers with actionable feedback before migrations are even run, thus reducing the risk of production incidents. Think of it as a spell-checker for your database changes, catching potential mistakes before they become costly problems.

How to use it?

Developers integrate Diesel-Guard into their development workflow. This can be done manually by running the command-line tool on their migration files, or more effectively, by integrating it into their Continuous Integration (CI) pipeline. For example, in a GitHub Actions workflow, you could add a step to run Diesel-Guard on any new migration files committed. If the linter detects any issues, it will fail the CI build, alerting the developer to review and fix the migration before it can be merged or deployed. This ensures that only safe migrations proceed through the development lifecycle.
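The lint-then-fail-the-build flow described above can be sketched in a few lines. This is a minimal illustration in Python, not Diesel-Guard's implementation: the rule names, regular expressions, and the `lint_migration` and `exit_code` helpers are all hypothetical, chosen only to mirror the `DROP COLUMN` and column-type examples mentioned in this entry.

```python
import re
from dataclasses import dataclass

# Hypothetical rule table: (rule name, pattern, advice).
# These are illustrative and are not Diesel-Guard's actual rules.
RULES = [
    (
        "drop-column",
        re.compile(r"\bDROP\s+COLUMN\b", re.IGNORECASE),
        "dropping a column can lose data; consider a phased removal",
    ),
    (
        "alter-column-type",
        re.compile(r"\bALTER\s+COLUMN\s+\w+\s+(SET\s+DATA\s+)?TYPE\b", re.IGNORECASE),
        "changing a column type may fail for existing data",
    ),
]


@dataclass(frozen=True)
class Finding:
    rule: str
    line: int
    advice: str


def lint_migration(sql: str) -> list[Finding]:
    """Return one finding per (rule, line) match in the migration text."""
    findings = []
    for lineno, text in enumerate(sql.splitlines(), start=1):
        # Ignore SQL line comments so commented-out statements are not flagged.
        if text.lstrip().startswith("--"):
            continue
        for name, pattern, advice in RULES:
            if pattern.search(text):
                findings.append(Finding(name, lineno, advice))
    return findings


def exit_code(findings: list[Finding]) -> int:
    """CI convention: a non-zero exit status fails the build."""
    return 1 if findings else 0
```

A CI step would call `lint_migration` on each new migration file and exit with `exit_code(...)`. A line-based regex scan is the simplest possible approach; a real linter would more likely parse the SQL so that multi-line statements and string literals are handled correctly.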

Product Core Function

· Unsafe Operation Detection: Identifies potentially destructive SQL commands like 'DROP COLUMN' or 'ALTER TABLE' that might cause data loss or downtime without proper safeguards. This helps prevent accidental data erasure, saving valuable time and preventing emergency recovery operations.

· Performance Pitfall Analysis: Flags common performance bottlenecks in migrations, such as the absence of necessary indexes on columns involved in joins or WHERE clauses. This ensures your database remains efficient as it grows, leading to faster application performance.

· Schema Change Validation: Analyzes the structure of schema modifications to identify potential conflicts or unexpected side effects. This gives developers confidence that their schema changes won't break existing application logic or introduce subtle bugs.

· Customizable Rule Sets: Allows developers to define their own linting rules tailored to their specific project needs and organizational standards. This provides flexibility and ensures the tool aligns with unique project requirements, enhancing its practical value.

· Integration with Development Workflow: Designed to be easily integrated into CI/CD pipelines and developer tooling, providing immediate feedback. This streamlines the development process by catching errors early, reducing the cost of fixing bugs and accelerating delivery.

Product Usage Case

· Preventing accidental data loss during a schema refactor: A developer plans to remove a column from a table. Without Diesel-Guard, they might accidentally run 'DROP COLUMN' directly in production. With Diesel-Guard, the linter would flag this as a high-risk operation, prompting the developer to implement a safer phased removal strategy or add explicit checks, thus avoiding data loss.

· Improving query performance after adding a new feature: A new feature requires joining two large tables. The migration adds the column used for the join but omits an index on it. Diesel-Guard would detect the missing index, warning the developer to add it within the migration itself, ensuring that the new feature doesn't degrade overall application performance.

· Ensuring backward compatibility of API changes: A backend change requires altering a column type. The migration script attempts a direct type cast that might fail for existing data. Diesel-Guard can identify such potentially problematic casts, prompting the developer to ensure the migration handles existing data gracefully, maintaining API stability.

· Automating security checks for database migrations: In a regulated environment, certain SQL patterns might be disallowed for security reasons. Diesel-Guard can be configured with custom rules to enforce these security policies, preventing the introduction of vulnerable database operations.

8

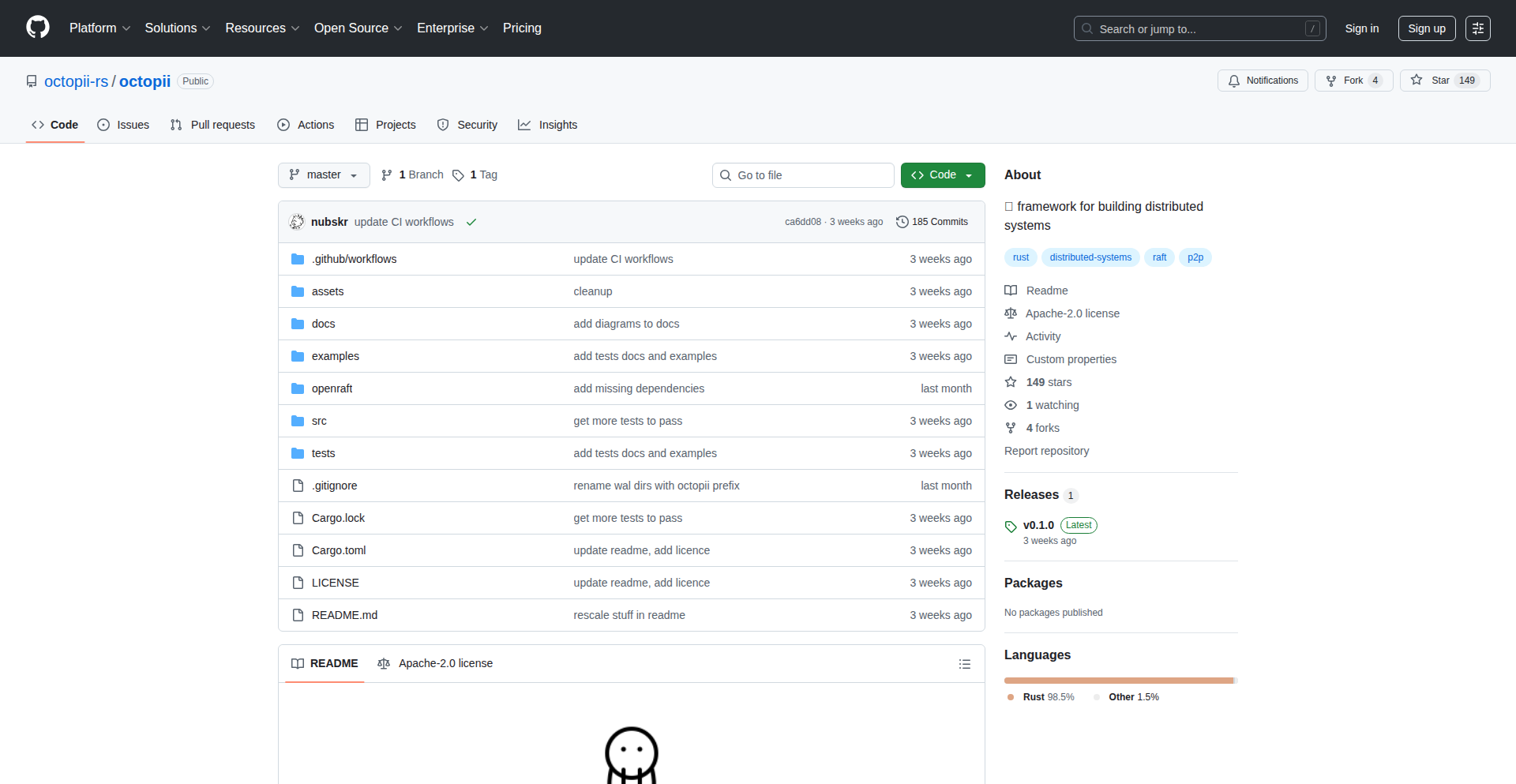

Octopii: Rust Distributed Runtime

Author

puterbonga

Description

Octopii is a novel runtime environment designed for building distributed applications using the Rust programming language. It addresses the inherent complexities of distributed systems by providing a robust framework for managing concurrency, communication, and fault tolerance, allowing developers to focus on application logic rather than low-level infrastructure. The innovation lies in its Rust-native approach, leveraging Rust's safety guarantees and performance to create more reliable and efficient distributed services.

Popularity

Points 16

Comments 0

What is this product?

Octopii is a specialized execution environment, akin to an operating system for distributed applications, specifically built for Rust. It simplifies the creation of systems where multiple processes or machines need to communicate and cooperate. Its core innovation is in how it manages these interactions, using Rust's ownership system and "fearless concurrency" model to make your distributed programs safe, performant, and less prone to common concurrency bugs such as data races. Think of it as a highly optimized and secure toolkit for making your Rust code run seamlessly across many interconnected computers. So, what's in it for you? It means you can build complex, scalable applications with greater confidence, knowing that the underlying framework handles many tricky distributed system challenges for you, resulting in more stable and faster applications.

How to use it?

Developers can integrate Octopii into their Rust projects by defining their application's components and how they communicate within the Octopii framework. This typically involves using Octopii's provided libraries and APIs to define services, message queues, and fault tolerance mechanisms. For instance, you might define a 'worker' service that listens for tasks on a message bus managed by Octopii, and a 'coordinator' service that dispatches these tasks. Octopii then handles the underlying network communication, serialization, and ensures that if a worker fails, the task can be reassigned. The practical use case involves deploying microservices, real-time data processing pipelines, or any application requiring resilient communication between independent units. So, how does this help you? It allows you to quickly scaffold and deploy distributed systems in Rust without needing to be an expert in network protocols or distributed consensus algorithms, speeding up your development cycle.
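The coordinator and worker pattern described above, including reassignment after a worker failure, can be illustrated with a small in-process simulation. This is a language-agnostic sketch written in Python, not Octopii's Rust API: `make_worker`, `dispatch`, `WorkerFailed`, and the round-robin policy are all assumptions made for the example.

```python
from collections import deque


class WorkerFailed(Exception):
    """Raised by a worker that cannot complete a task."""


def make_worker(name, fail_on=()):
    """Build a hypothetical worker: a callable that processes one task."""
    def worker(task):
        if task in fail_on:
            raise WorkerFailed(f"{name} failed on {task}")
        return f"{name} handled {task}"
    return worker


def dispatch(tasks, workers, max_attempts=3):
    """Round-robin tasks over workers; on failure, requeue for another turn."""
    queue = deque((task, 0) for task in tasks)
    results, dead_letter = {}, []
    turn = 0
    while queue:
        task, attempts = queue.popleft()
        worker = workers[turn % len(workers)]
        turn += 1
        try:
            results[task] = worker(task)
        except WorkerFailed:
            if attempts + 1 < max_attempts:
                # Reassign: the task goes to the back of the queue and will be
                # picked up by whichever worker has the next turn.
                queue.append((task, attempts + 1))
            else:
                # Give up after max_attempts and record the task for inspection.
                dead_letter.append(task)
    return results, dead_letter
```

In a real distributed runtime the workers would be separate processes or machines, and failure would be detected through timeouts or heartbeats rather than an exception, but the bookkeeping (attempt counts, requeueing, a dead-letter list) has the same shape.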

Product Core Function

· Distributed Service Orchestration: Octopii manages the lifecycle and communication of individual services within a distributed application, ensuring they can discover and interact with each other reliably. This is valuable for building resilient microservice architectures where services need to be highly available.

· Message Passing and Communication: It provides a safe and efficient mechanism for services to exchange data. This is crucial for real-time data streams or command-and-control systems, offering a structured way to send and receive messages between different parts of your application.

· Fault Tolerance and Resilience: Octopii incorporates strategies to handle failures gracefully, such as automatic retries or service restarts. This is essential for applications that cannot afford downtime, ensuring continuous operation even when individual components encounter issues.

· Concurrency Management: Leverages Rust's strong concurrency features to allow multiple operations to happen simultaneously without data corruption. This directly translates to better performance and responsiveness for your applications, especially in high-throughput scenarios.

Product Usage Case

· Building a scalable real-time analytics platform: A developer could use Octopii to deploy multiple data ingestion nodes that communicate with processing nodes. If one processing node fails, Octopii can automatically redistribute the workload to healthy nodes, ensuring data is processed without interruption. This solves the problem of data loss and processing delays in case of component failures.

· Creating a distributed command and control system for IoT devices: Octopii can manage thousands of device agents. The system can send commands to devices and receive telemetry data, with Octopii handling the network complexity and ensuring commands reach their intended devices even with intermittent network connectivity. This addresses the challenge of reliable communication with a large fleet of devices.

· Developing a high-performance distributed cache: Octopii can facilitate the communication and data synchronization between multiple cache nodes. If a node becomes unavailable, Octopii's resilience features can help maintain data availability and consistency across the remaining nodes, solving the problem of cache performance degradation due to node failures.

9

CatalystAlert

Author

nykodev

Description

CatalystAlert is a free, community-driven biotech catalyst calendar that tracks events and news for 985 companies. Its technical innovation lies in its automated data aggregation and curated presentation, solving the problem of scattered and time-consuming research for biotech professionals.

Popularity

Points 8

Comments 6

What is this product?

CatalystAlert is a web-based calendar that automatically pulls in relevant news, event announcements, and scientific publications from a vast network of biotech companies. The core technical idea is to use web scraping and API integrations to gather information from public sources, then process and present it in a user-friendly calendar format. This bypasses the manual effort of visiting individual company websites or relying on fragmented news feeds. The value for users is a centralized, up-to-date view of crucial biotech happenings, saving significant research time and enabling quicker identification of opportunities or critical developments.
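As a rough illustration of the aggregation step, the sketch below merges event lists from several sources into one chronological calendar and drops duplicates. It is not CatalystAlert's code: the event keys (`company`, `date`, `title`) and the `build_calendar` helper are assumptions, and the scraping or API calls that would produce the input lists are left out.

```python
from collections import defaultdict
from datetime import date


def build_calendar(*sources):
    """Merge event lists from several sources into a date-keyed calendar.

    Each event is a dict with hypothetical keys: company, date (ISO string), title.
    Duplicate (company, date, title) entries across sources are collapsed.
    """
    seen = set()
    calendar = defaultdict(list)
    for events in sources:
        for event in events:
            title = event["title"].strip()
            key = (event["company"], event["date"], title.lower())
            if key in seen:
                continue
            seen.add(key)
            day = date.fromisoformat(event["date"])
            calendar[day].append(f'{event["company"]}: {title}')
    # Return the days in chronological order.
    return dict(sorted(calendar.items()))
```

Deduplicating on a normalized key matters here because the same announcement usually appears in more than one feed.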

How to use it?

Developers can integrate CatalystAlert into their workflows by bookmarking the calendar or, for more advanced use cases, potentially leveraging a future API (if developed) to pull calendar data into their own applications or research dashboards. Specific technical use cases include market researchers needing to stay ahead of competitor announcements, investors monitoring R&D milestones, and scientists tracking potential collaboration partners. It acts as a single source of truth for biotech industry events, making it easy to spot trends and potential shifts.

Product Core Function

· Automated Data Aggregation: Utilizes web scraping and potential API connections to gather information from numerous biotech company sources, providing a comprehensive and up-to-date view of industry events and news. This saves users the tedious manual effort of checking multiple sources.

· Curated Event Calendar: Presents gathered information in a clear, chronological calendar format, allowing users to easily visualize upcoming milestones, product launches, and scientific publication releases. This helps users stay informed and identify potential opportunities or threats.

· Company Tracking: Monitors a large database of biotech companies (985 as of the current listing), ensuring that a wide spectrum of industry activity is covered. This provides a broad perspective on the biotech landscape.

· Free and Community-Driven: Offered at no cost and relies on community input for expansion and accuracy, fostering a collaborative environment for biotech intelligence. This makes valuable industry insights accessible to everyone.

Product Usage Case

· A venture capital analyst uses CatalystAlert to quickly identify companies announcing significant clinical trial results or new funding rounds, enabling faster investment decision-making. It helps by centralizing critical news that would otherwise be scattered across dozens of websites.

· A pharmaceutical researcher uses CatalystAlert to track the publication dates of key scientific papers from competitor companies, informing their own research strategy and identifying potential areas of focus. This saves them from manually checking numerous academic journals and company press releases.

· A biotech startup founder uses CatalystAlert to monitor the launch timelines of new products from established players, helping them to better position their own offerings in the market. It provides a strategic overview of competitor activities.

10

HNInsight Engine

Author

marsw42

Description

This project, 'WhatHappened', is an AI-powered tool designed to distill the essence of Hacker News posts. It tackles the 'wall of text' problem by providing concise AI summaries, visualizing comment section sentiment through a 'Heat Meter,' and highlighting contrasting viewpoints with 'Contrarian Detection.' Built as a mobile-first Progressive Web App (PWA), it offers a streamlined experience for users on the go, allowing them to quickly grasp technical insights without getting lost in noise. The core innovation lies in leveraging AI to filter and present information more effectively.

Popularity

Points 8

Comments 2

What is this product?

HNInsight Engine is a sophisticated system that transforms the often overwhelming content of Hacker News into easily digestible insights. It uses advanced AI models, like Gemini, to generate short, technical summaries (TL;DR) and simplified explanations (ELI5) for each top daily post. Beyond just summarizing, it analyzes the comment sections to create a 'Heat Meter,' showing the balance between constructive discussion, technical debate, and unproductive 'flame wars.' This helps users quickly assess the quality and nature of the conversation. Furthermore, it actively seeks out and highlights the most upvoted dissenting opinions or critical feedback, acting as a 'Contrarian Detector' to expose users to diverse perspectives and break free from echo chambers. The system is architected as a Progressive Web App (PWA) using Next.js and Supabase, ensuring a smooth, mobile-friendly experience that can be added to a device's home screen without needing an app store. So, what's the real value? It saves you time and mental energy by pre-filtering and presenting the most valuable technical discussions and diverse opinions from Hacker News in a format that's easy to consume, especially on your phone.
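The 'Heat Meter' boils down to a distribution over comment categories. The sketch below assumes each comment has already been labeled by some classifier (the source says an AI model does this, but not how) and only shows the aggregation; the category names and the `heat_meter` helper are assumptions for illustration.

```python
from collections import Counter

# Hypothetical category names mirroring the three buckets described above.
CATEGORIES = ("constructive", "technical", "flame_war")


def heat_meter(labels):
    """Turn per-comment labels into whole-number percentages per category."""
    counts = Counter(label for label in labels if label in CATEGORIES)
    total = sum(counts.values())
    if total == 0:
        return {category: 0 for category in CATEGORIES}
    return {
        category: round(100 * counts[category] / total)
        for category in CATEGORIES
    }
```

Because each share is rounded independently, the three values may not sum to exactly 100 for every input; a display layer would need to account for that.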

How to use it?

Developers can integrate HNInsight Engine into their workflow by accessing the WhatHappened PWA through their mobile or desktop browser and adding it to their home screen for quick access, much like a native application. When browsing Hacker News, instead of clicking into every article and comment thread, users can go to the HNInsight Engine. It will present cards for the top posts, already pre-processed. For a specific post, you'll see a concise technical summary and an ELI5 version, allowing you to decide instantly if it's worth a deeper dive. The 'Heat Meter' provides a visual cue to gauge the comment quality – a high 'flame war' score might indicate a thread to avoid or approach with caution. The 'Contrarian Detection' feature directly points you to the most significant disagreements, offering a balanced perspective without manual effort. This means you spend less time sifting through irrelevant content and more time engaging with valuable technical insights. It's designed for efficient information consumption in a world of constant digital noise.

Product Core Function

· AI Technical Summaries: Generates a three-bullet technical TL;DR for each HN post, helping users quickly grasp the core technical concepts and innovations discussed. This is valuable for developers who need to stay updated on emerging technologies but have limited time to read lengthy articles.

· AI ELI5 Summaries: Provides simplified explanations of complex technical topics in each HN post, making advanced concepts accessible to a broader audience and helping developers explain technical ideas to non-technical stakeholders. This reduces the barrier to understanding cutting-edge technology.

· Comment Heat Meter: Visualizes the sentiment distribution of comment sections (Constructive vs. Technical vs. Flame War) using AI analysis. This allows developers to quickly assess the quality and relevance of discussions, helping them prioritize which threads to engage with for genuine technical insights and avoid time wasted on unproductive debates.

· Contrarian Detection: Identifies and highlights the most upvoted dissenting or critical opinions within comment threads. This feature actively combats echo chambers by exposing users to alternative viewpoints and constructive disagreements, fostering a more nuanced understanding of technical topics and encouraging critical thinking among developers.

· Mobile-First PWA Design: Built as a Progressive Web App (PWA) with Next.js, supporting swipe gestures and home screen installation. This offers a seamless and native-like user experience on mobile devices, enabling developers to access and consume HN insights efficiently on the go without needing a dedicated app, ensuring productivity regardless of location.
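The 'Contrarian Detection' function listed above amounts to picking the highest-ranked comment among those that disagree with the post. The sketch below is a minimal version under stated assumptions: the `stance` label is taken to come from an upstream classifier, and a numeric `score` is assumed to be available for each comment. Neither detail is confirmed by the project description.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Comment:
    author: str
    text: str
    score: int   # assumed ranking signal; how the project obtains it is not stated
    stance: str  # hypothetical classifier label: "agree", "disagree", or "neutral"


def contrarian_pick(comments: list[Comment], min_score: int = 1) -> Optional[Comment]:
    """Return the highest-scored dissenting comment, or None if there is none."""
    dissenting = [
        c for c in comments
        if c.stance == "disagree" and c.score >= min_score
    ]
    return max(dissenting, key=lambda c: c.score, default=None)
```

The `min_score` floor keeps a single low-ranked complaint from being surfaced as the headline disagreement.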

Product Usage Case

· A backend developer wants to quickly understand the implications of a new database technology discussed on Hacker News. They use HNInsight Engine to get an AI technical TL;DR, which immediately tells them the key features and potential drawbacks. This saves them from reading a long article, allowing them to move on to their next task.

· A junior developer is trying to understand a complex AI algorithm shared on HN. They use the ELI5 summary provided by HNInsight Engine, which breaks down the algorithm into simple terms. This helps them grasp the core concept without getting bogged down in jargon, accelerating their learning.

· A community manager wants to gauge the general sentiment around a new open-source project announced on HN. By looking at the 'Heat Meter,' they can see if the discussion is primarily technical and constructive, or if it's devolving into a 'flame war.' This informs their strategy for engaging with the community.

· A product manager is researching user feedback on a new feature idea. They use HNInsight Engine's 'Contrarian Detection' to find the most common criticisms or alternative suggestions in the comments section. This provides valuable insights for refining the product strategy by understanding potential pushback early on.

· A developer attending a conference wants to stay updated on tech news during breaks. They access HNInsight Engine on their phone as a PWA, quickly swiping through AI-generated summaries and heatmaps of the latest HN posts. This allows them to stay informed and discover interesting discussions without needing to be at their desktop.

11

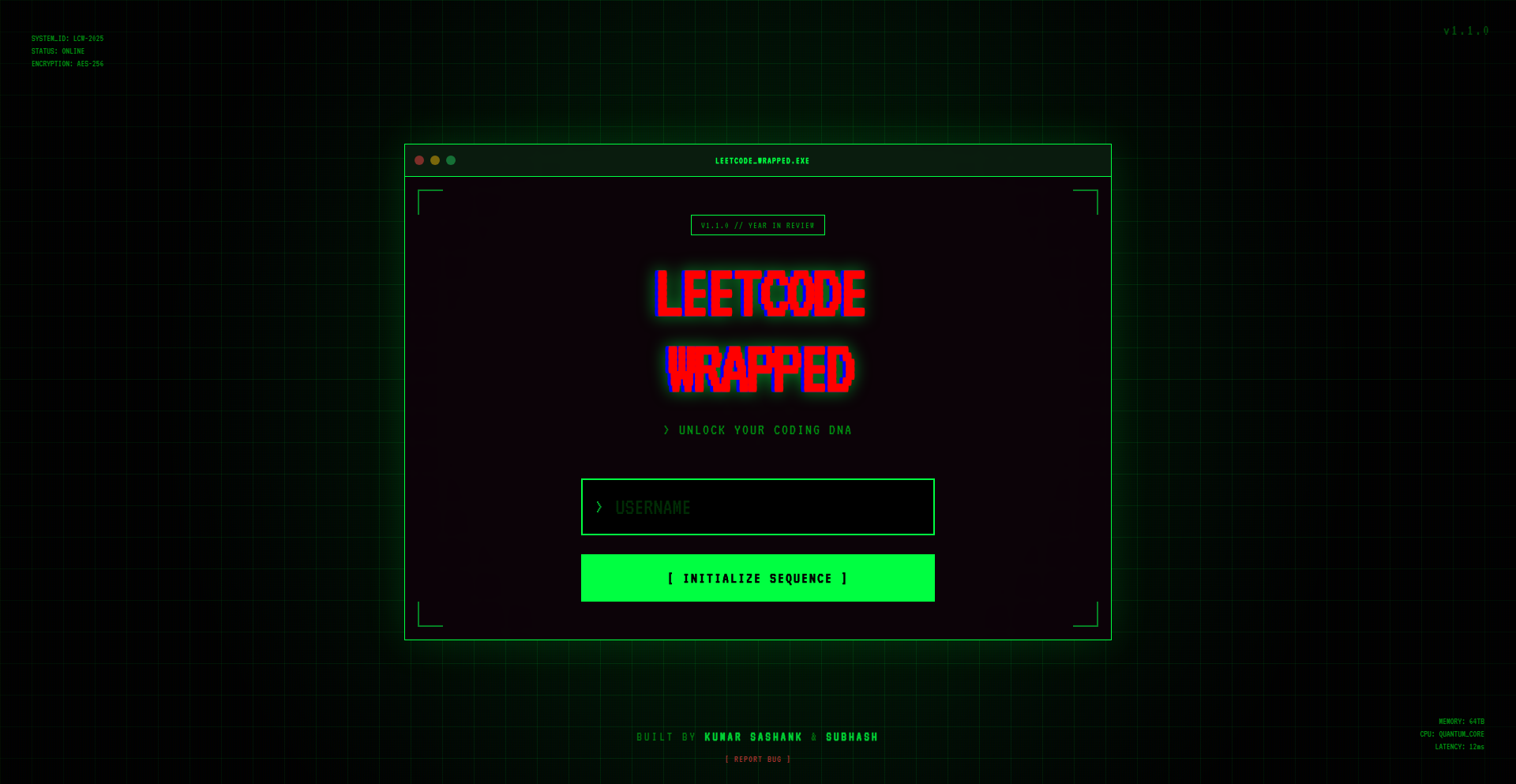

Leetwrap: Your Personalized LeetCode Year in Review

Author

kumarsashank

Description

Leetwrap is a 'Spotify Wrapped' style annual summary for LeetCode users. It provides a visually engaging breakdown of your coding practice, highlighting key statistics, problem-solving streaks, ranking distribution, and fun insights derived from your LeetCode activity. This innovative tool leverages data visualization to offer a unique perspective on your competitive programming journey, making your progress tangible and motivating.

Popularity

Points 6

Comments 2

What is this product?

Leetwrap is a personalized dashboard that aggregates and visualizes your LeetCode activity over a year. It works by connecting to your LeetCode account (with your permission) and extracting data such as problems solved, submission history, contest performance, and ranking trends. This data is then processed to generate charts, graphs, and summaries that are presented in a user-friendly, 'wrapped' format. The core innovation lies in transforming raw coding practice data into an easily digestible and engaging narrative of your growth as a problem solver, similar to how Spotify summarizes your music listening habits.

How to use it?

Developers can use Leetwrap by visiting the Leetwrap website and authorizing it to access their LeetCode profile. Once connected, the tool automatically generates your personalized year-in-review. This can be shared with friends, study groups, or on social media to showcase your progress and achievements in competitive programming. It's a fantastic way to reflect on your learning journey and identify areas for improvement for the next year.

Product Core Function

· Problem Solving Streak Visualization: Shows consecutive days of solving LeetCode problems, providing a gamified incentive for consistent practice and a clear indicator of dedication.

· Ranking Distribution Analysis: Illustrates how your ranking has evolved over time, offering insights into your performance consistency and potential growth areas within the competitive programming landscape.

· Category-wise Problem Breakdown: Details the number and types of problems solved across different difficulty levels and topics, helping users understand their strengths and weaknesses in specific algorithms or data structures.

· Personalized Statistics Dashboard: Presents a comprehensive overview of your LeetCode activity, including total problems solved, submission success rates, and contest participation, offering a holistic view of your engagement.

· Interactive Visualizations: Employs engaging charts and graphs to make complex data understandable and enjoyable, transforming abstract progress into concrete, shareable insights.
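Of the functions listed above, the problem-solving streak is the easiest to make concrete. The sketch below computes the longest run of consecutive calendar days from a list of submission dates. It is an illustration, not Leetwrap's code, and the `longest_streak` helper and its input format are assumptions.

```python
from datetime import date, timedelta


def longest_streak(days):
    """Length of the longest run of consecutive calendar days in `days`."""
    unique = set(days)  # several submissions on one day count once
    best = 0
    for day in unique:
        # Only start counting at the first day of a run.
        if day - timedelta(days=1) in unique:
            continue
        length = 1
        while day + timedelta(days=length) in unique:
            length += 1
        best = max(best, length)
    return best
```

Starting the count only at the first day of each run means every date is visited a constant number of times, so the function stays linear in the number of distinct days.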

Product Usage Case

· A student preparing for technical interviews can use Leetwrap to see if they are consistently practicing problems across different difficulty levels, identifying if they need to focus more on medium or hard problems to be interview-ready.

· A competitive programmer can analyze their ranking distribution to understand their performance in contests over the year, pinpointing if their ranking tends to fluctuate significantly or remain stable, and use this insight to strategize for future competitions.

· A coding bootcamp instructor can encourage their students to use Leetwrap to visualize their collective progress and individual effort, fostering a sense of community and healthy competition as they track their problem-solving streaks.

· A developer looking to document their self-learning journey can share their Leetwrap summary on their personal blog or LinkedIn profile, showcasing their dedication to improving their algorithmic skills and problem-solving abilities to potential employers.

· A group of friends practicing LeetCode together can compare their Leetwrap summaries to see who has been most consistent or who has improved the most in certain areas, using this as a fun motivator for their collaborative learning efforts.

12

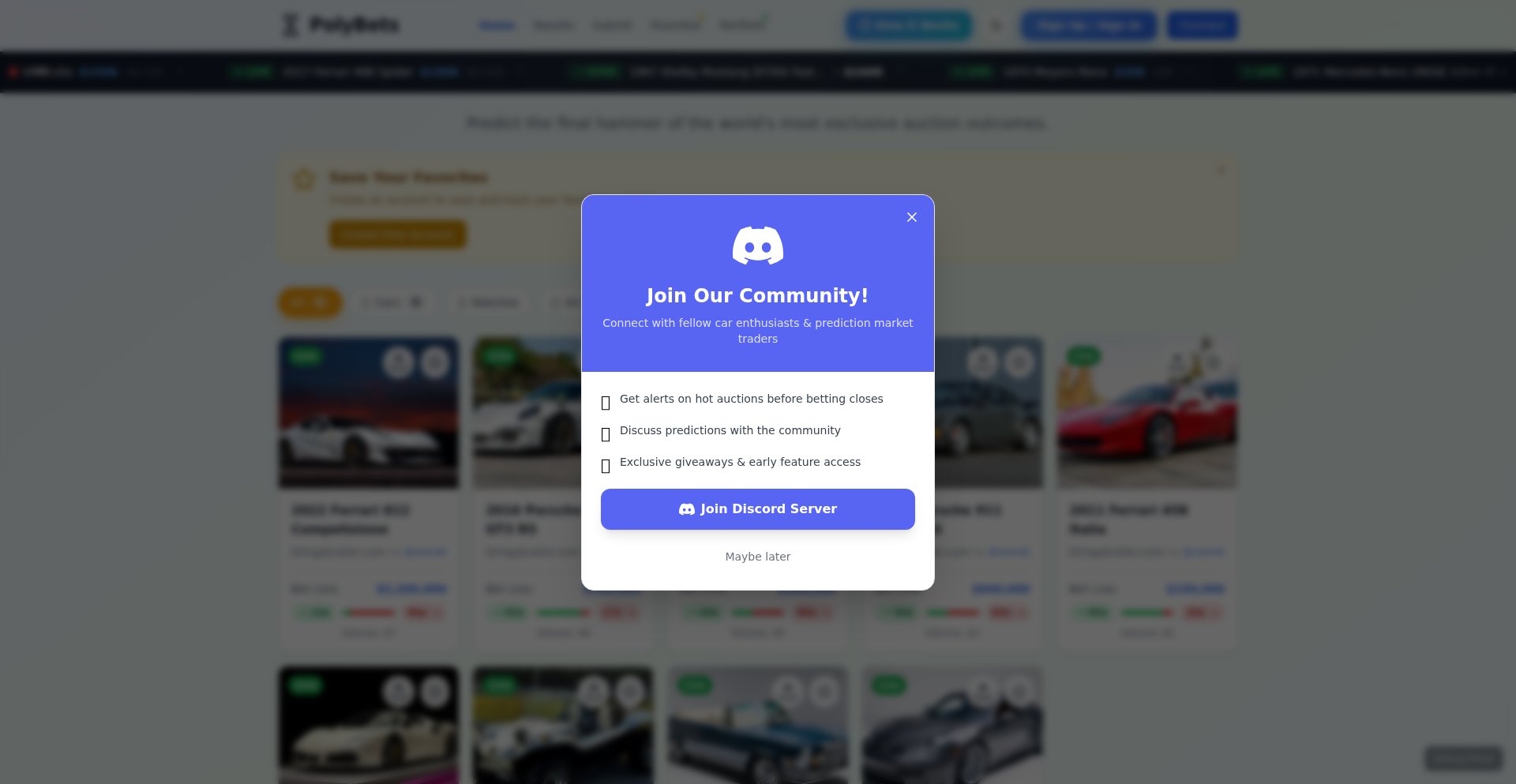

PolyBets

Author

h100ker

Description

PolyBets is a decentralized prediction market built on Polygon Mainnet that allows users to bet on the outcomes of online auctions. It leverages blockchain technology to create a transparent and verifiable way to settle disputes and create engaging betting opportunities around collectibles, art, watches, and especially cars. The core innovation lies in its ability to automatically generate prediction markets from auction links, making it incredibly easy to participate.

Popularity

Points 5

Comments 2

What is this product?

PolyBets is a platform that turns any online auction into a bet. Think of it like a sophisticated way to make a wager with your friends about whether that classic car will sell for over $100,000 or if that rare watch will fetch its estimated price. It uses Polygon, an Ethereum-compatible blockchain with faster, cheaper transactions, to record all the bets and outcomes. The real magic is how it takes a simple link to an auction site and automatically creates a 'prediction market' for it. This means you don't need to be a tech whiz to set up a bet; the system does the heavy lifting. So, for you, it means a fun, trustless way to engage with your favorite auctions and potentially win some crypto.

How to use it?

Developers can use PolyBets by providing a URL to an online auction. The system then scrapes the relevant information (like the item, current bid, and auction end time) and creates a prediction market on the Polygon blockchain. Users can then create accounts, deposit cryptocurrency, and place bets on various outcomes (e.g., 'will sell above X price', 'will sell below Y price'). The platform automatically resolves the bets once the auction concludes based on the verified results. This can be integrated into communities or platforms that discuss auctions, providing a new layer of interaction. So, for developers, it offers a ready-to-use decentralized betting infrastructure that can be easily plugged into existing content or community platforms.

Product Core Function

· Auction Link Parsing: Automatically extracts auction details from provided URLs, enabling quick market creation. This means you can instantly turn any auction you find into a betting opportunity without manual data entry.

· Decentralized Market Creation: Generates prediction markets on Polygon Mainnet, ensuring transparency and immutability of bets. This provides a secure and verifiable way to conduct bets, so you can trust the results.

· On-Chain Betting: Allows users to place bets using cryptocurrency, with all transactions recorded on the blockchain. This means your bets are secure and visible, building trust in the platform.

· Automated Outcome Resolution: Verifies auction results and automatically distributes winnings to the correct participants. This eliminates the need for manual payouts and ensures fair settlement of all bets.

· Polygon Network Integration: Utilizes Polygon for fast and low-cost transactions, making betting accessible and affordable. This means your betting experience will be smooth and won't cost a fortune in fees.
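The automated outcome resolution listed above can be sketched off-chain. The project description does not say how winnings are calculated, so the example assumes a simple pari-mutuel split (the whole pool is shared among winners in proportion to stake). The `resolve_outcome` and `settle_market` helpers, the outcome labels, and the refund rule are all assumptions, and nothing here represents PolyBets' smart contracts.

```python
def resolve_outcome(final_price, threshold):
    """Map the verified sale price to an outcome label; a tie counts as 'below'."""
    return "above" if final_price > threshold else "below"


def settle_market(bets, winning_outcome):
    """Split the whole pool among winning bettors, pro rata to their stake.

    bets: list of (bettor, outcome, stake), stakes as integers in the
    smallest token unit. Returns {bettor: payout}. Any remainder left by
    integer division stays unassigned in this sketch. If nobody picked the
    winning outcome, every stake is refunded.
    """
    pool = sum(stake for _, _, stake in bets)
    winners = [(b, stake) for b, outcome, stake in bets if outcome == winning_outcome]
    winning_total = sum(stake for _, stake in winners)
    payouts = {}
    if winning_total == 0:
        for bettor, _, stake in bets:
            payouts[bettor] = payouts.get(bettor, 0) + stake
        return payouts
    for bettor, stake in winners:
        payouts[bettor] = payouts.get(bettor, 0) + pool * stake // winning_total
    return payouts
```

Integer arithmetic is used on purpose: token amounts on a blockchain are whole numbers of the smallest unit, and floating-point rounding would make payouts hard to verify.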

Product Usage Case

· Car Enthusiast Community: A group of car collectors can use PolyBets to bet on the final selling price of rare classic cars listed on auction sites like Bring a Trailer. This adds excitement to watching auctions and provides a fun way to settle friendly wagers on who predicted the price best.

· Art Collectors: Art lovers can create prediction markets on the final hammer price of artworks at major auction houses. This allows them to engage with art auctions beyond just observation and participate in a decentralized financial market related to art.

· Watch Collectors: Members of a watch collecting forum can use PolyBets to bet on whether a specific limited edition watch will exceed its pre-auction estimate. This creates a gamified experience for members and encourages deeper engagement with auction results.

13

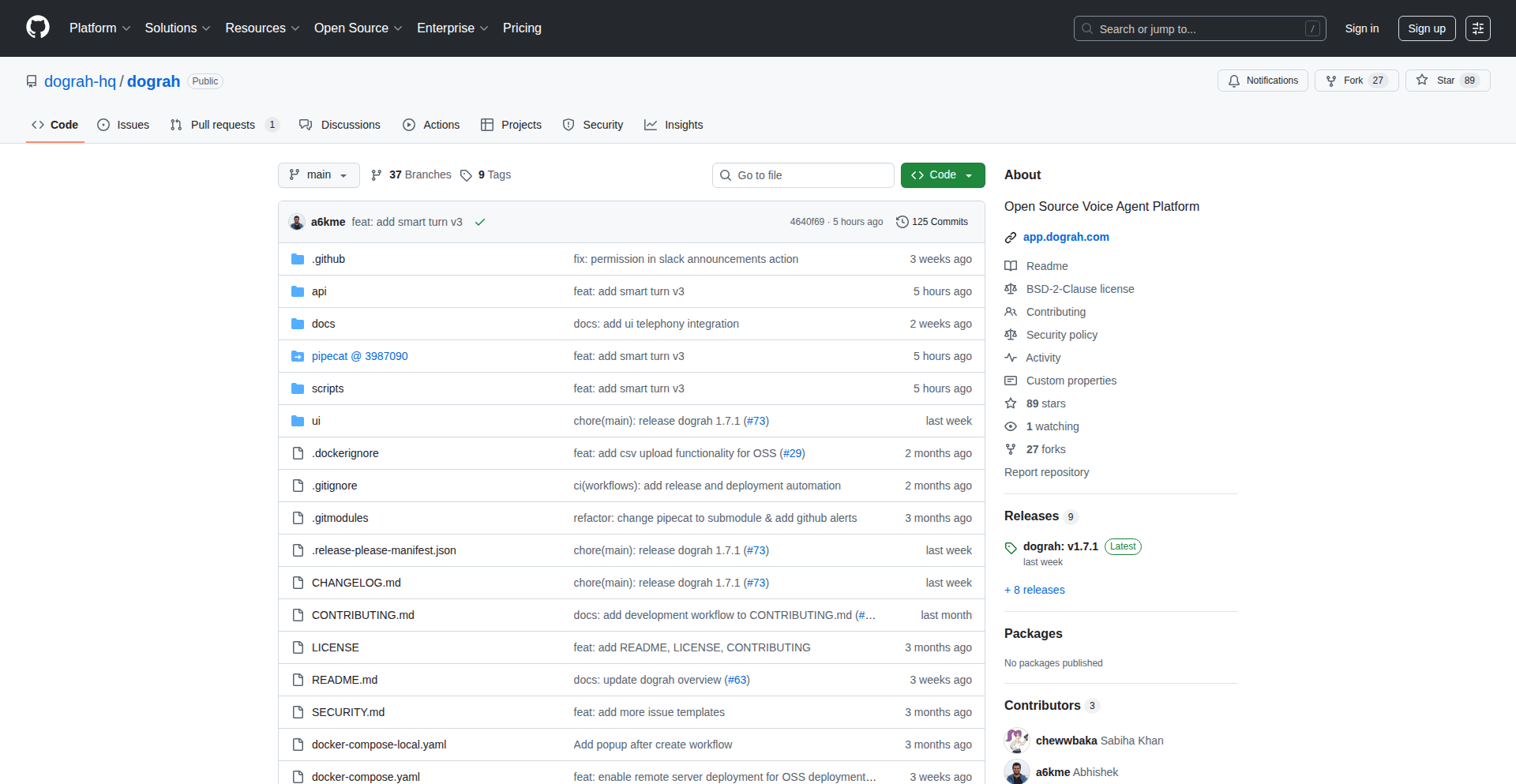

Dograh Voice Agent Fabric

Author

a6kme

Description

Dograh is an open-source framework designed to simplify the creation and testing of voice agents. It addresses the common pain points developers face when integrating various components like real-time audio, speech-to-text (STT), text-to-speech (TTS), and large language models (LLMs). It offers a visual builder, automatic variable extraction, and built-in telephony integrations, allowing for faster development and deployment of sophisticated voice applications.

Popularity

Points 4

Comments 2

What is this product?

Dograh is a fully open-source framework for building voice agents. Think of it as a toolkit that makes it much easier to create AI-powered voice applications, like those you might interact with over the phone. The core innovation lies in providing ready-made solutions for the complex plumbing required to make voice agents work. This includes handling audio streams, converting spoken words to text (STT), understanding that text with an AI (LLM), generating spoken responses (TTS), and connecting to phone networks. Unlike commercial solutions that might lock you in, Dograh gives you full control, allowing you to see, modify, and host every part of the system yourself, ensuring data privacy and flexibility. It simplifies what was previously a highly custom and time-consuming development process.
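The plumbing described above (audio in, speech-to-text, LLM, text-to-speech, audio out) can be pictured as three swappable callables around a conversation history. The sketch below is a generic illustration and not Dograh's API: `VoiceAgent`, `handle_turn`, and the stand-in `fake_*` providers are hypothetical names, and real providers would stream audio rather than pass whole byte strings.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class VoiceAgent:
    """One conversational turn: audio in -> text -> reply text -> audio out."""
    stt: Callable[[bytes], str]        # speech-to-text provider
    llm: Callable[[list[dict]], str]   # chat history -> reply text
    tts: Callable[[str], bytes]        # text-to-speech provider
    history: list[dict] = field(default_factory=list)

    def handle_turn(self, audio_in: bytes) -> bytes:
        user_text = self.stt(audio_in)
        self.history.append({"role": "user", "content": user_text})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return self.tts(reply)


# Stand-in providers so the sketch runs without any external service.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(history: list[dict]) -> str:
    return f"You said: {history[-1]['content']}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")
```

Usage is a single call per turn, for example `VoiceAgent(fake_stt, fake_llm, fake_tts).handle_turn(b"book a table")`. Keeping each stage behind a plain callable is what makes it possible to swap one STT, LLM, or TTS service for another without touching the rest of the agent.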

How to use it?

Developers can use Dograh by cloning its GitHub repository and following the provided setup instructions, which often involve a simple command to spin up a basic, pre-configured multilingual agent. You can then customize this template using the drag-and-drop visual agent builder to define the logic and flow of your voice agent. For more advanced use cases, you can directly interact with the underlying code, fork components, and integrate your preferred LLM, STT, and TTS services. The framework includes built-in integrations with popular telephony providers like Twilio, so you can connect your agent to real phone numbers for testing and deployment. Essentially, Dograh provides a structured environment and essential tools to accelerate the development cycle for voice AI applications, from initial concept to production.

Product Core Function

· LLM-powered agent templating: This provides a starting point for any voice agent use case by automatically generating a basic agent structure. This saves developers from starting from scratch and allows them to quickly iterate on their ideas, understanding what's possible from the outset.

· Drag-and-drop visual agent builder: This allows for intuitive creation and modification of voice agent logic without extensive coding. It significantly speeds up the iteration process, enabling rapid prototyping and easier experimentation with different agent behaviors, making development accessible even to those with less deep coding experience.

· Integrated variable extraction: This feature automatically identifies and extracts key information from conversations (like names, dates, or specific keywords) and feeds it to the LLM. This is crucial for making agents intelligent and context-aware, as it ensures the AI has the necessary data to respond accurately and perform actions, leading to more effective and personalized interactions.

· Built-in telephony integration: Dograh connects seamlessly with various telephony providers, allowing your voice agents to make and receive calls. This removes a significant hurdle in deploying real-world voice applications, enabling you to test and launch agents on actual phone lines without complex network configurations.

· Multilingual support: The framework is designed to handle multiple languages end-to-end, from speech recognition to natural language understanding and text-to-speech. This is vital for building global voice applications that can cater to a diverse user base, expanding the reach and usability of your AI solutions.

· Choice of LLM, STT, and TTS services: Dograh doesn't tie you to specific AI models. You can integrate your preferred services, offering flexibility and cost control. This allows developers to leverage the best-in-class tools for their specific needs and budgets, optimizing performance and cost-effectiveness.

Product Usage Case

· Building a customer support chatbot that can handle inquiries over the phone: A company could use Dograh to quickly prototype and deploy a voice-based customer service agent. Instead of hiring more staff, the AI can answer common questions, route complex issues, and provide 24/7 support. This directly addresses the need for scalable and efficient customer engagement.

· Creating an automated appointment booking system via voice: A clinic or service provider can implement a voice agent that allows patients to book appointments simply by speaking their desired time and date. Dograh's variable extraction and LLM integration ensure the agent understands the request and confirms the booking, simplifying the user experience and reducing administrative overhead.

· Developing a multilingual sales assistant for international markets: A business expanding globally can use Dograh to build voice agents that can converse with potential customers in their native languages, providing product information and gathering leads. The end-to-end multilingual support is key here, allowing for broader market penetration without requiring separate development teams for each language.

· Self-hosting a Vapi-like platform for enhanced data privacy: Organizations with strict data privacy requirements can use Dograh to build and host their voice agent infrastructure on-premises or in their own cloud environment. This provides complete control over sensitive customer data, mitigating risks associated with third-party SaaS solutions.

14

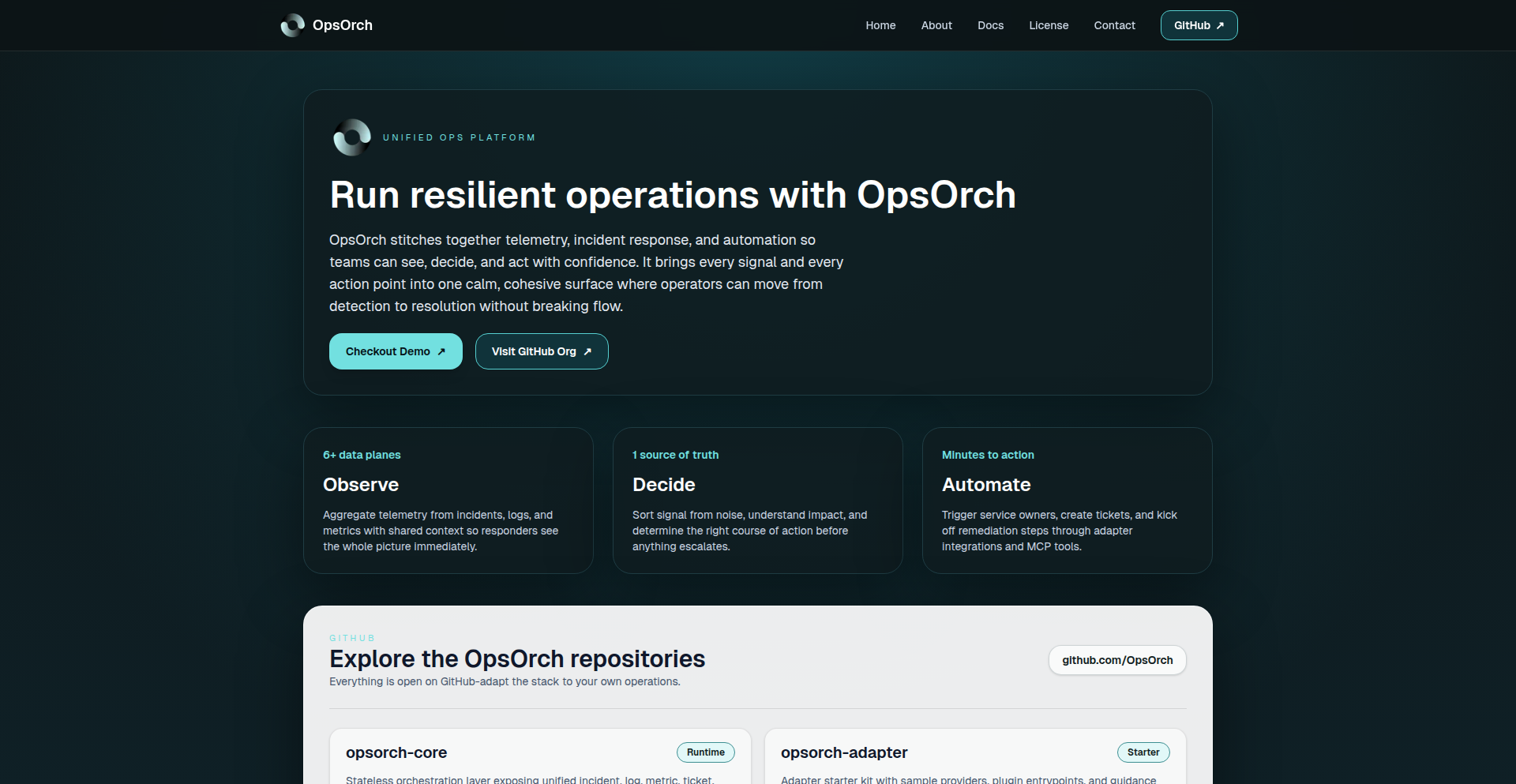

OpsOrch Unified Ops API

Author

yusufaytas

Description

OpsOrch is an open-source orchestration layer that provides a single, unified API for managing incidents, logs, metrics, tickets, messaging, and service metadata. It acts as a 'glue layer' by connecting to existing tools like PagerDuty, Jira, Elasticsearch, Prometheus, and Slack through pluggable adapters, normalizing their data into a consistent schema. This eliminates the need to navigate multiple UIs and APIs, simplifying complex incident workflows. An optional MCP server can expose these capabilities as typed tools for LLM agents, enabling AI-driven operations.

Popularity

Points 6

Comments 0

What is this product?

OpsOrch is a middleware that simplifies how developers and operations teams interact with various IT operational tools. Instead of learning and using the separate APIs and interfaces for each tool (like PagerDuty for incidents, Jira for tickets, or Elasticsearch for logs), OpsOrch offers one consistent API. It uses 'adapters' – essentially small connectors – to talk to each individual tool. These adapters translate the data from each tool into a common format that OpsOrch understands. This is innovative because it reduces complexity and the time spent context-switching between different systems during critical incident response or daily operations, without requiring you to replace the tools you already rely on. It also provides a layer for AI agents to interact with your operational data.
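The adapter idea described above can be sketched in a few lines. This is a minimal illustration, not OpsOrch's actual code or schema: OpsOrch itself is not written in Python, and the `Incident` record, the `IncidentAdapter` interface, the two fake adapters, and the raw payload shapes are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Incident:
    """Hypothetical normalized incident record shared by all adapters."""
    id: str
    title: str
    status: str   # "open" or "resolved"
    source: str   # which backing tool the record came from


class IncidentAdapter(Protocol):
    """Interface every (hypothetical) incident adapter implements."""
    def list_incidents(self) -> list[Incident]: ...


class FakePagerDutyAdapter:
    """Stands in for a PagerDuty connector; the payload shape is invented."""
    def __init__(self, raw: list[dict]) -> None:
        self._raw = raw

    def list_incidents(self) -> list[Incident]:
        return [
            Incident(
                id=item["id"],
                title=item["summary"],
                status="resolved" if item["state"] == "resolved" else "open",
                source="pagerduty",
            )
            for item in self._raw
        ]


class FakeJiraAdapter:
    """Stands in for a Jira connector; the payload shape is invented."""
    def __init__(self, raw: list[dict]) -> None:
        self._raw = raw

    def list_incidents(self) -> list[Incident]:
        return [
            Incident(
                id=item["key"],
                title=item["fields"]["summary"],
                status="resolved" if item["fields"]["done"] else "open",
                source="jira",
            )
            for item in self._raw
        ]


def list_all_incidents(adapters: list[IncidentAdapter]) -> list[Incident]:
    """The 'unified API': callers see one schema regardless of the tool."""
    incidents: list[Incident] = []
    for adapter in adapters:
        incidents.extend(adapter.list_incidents())
    return incidents


adapters = [
    FakePagerDutyAdapter([{"id": "P1", "summary": "API latency high", "state": "triggered"}]),
    FakeJiraAdapter([{"key": "OPS-7", "fields": {"summary": "Disk full", "done": True}}]),
]
for incident in list_all_incidents(adapters):
    print(incident.source, incident.id, incident.status)
```

The point of the design is that code calling `list_all_incidents` never touches a tool-specific field name; supporting another tool means writing one more adapter class.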

How to use it?

Developers can integrate OpsOrch into their existing workflows or build new applications on top of it. You would install the OpsOrch core service and then configure adapters for the tools you use (e.g., PagerDuty, Jira, Prometheus, Slack). Once set up, you can query for incidents, logs, metrics, or create tickets using the single OpsOrch API, regardless of the underlying tool. For AI integration, the optional MCP server can expose OpsOrch's functionalities as 'tools' that Large Language Models (LLMs) can call to retrieve information or trigger actions, making AI-powered incident response or automation more feasible.

Product Core Function

· Unified Incident Management API: Provides a single API endpoint to query, acknowledge, or resolve incidents across different on-call and incident management tools. This means faster incident response as you don't have to log into multiple systems to get the full picture or take action.

· Centralized Log and Metric Querying: Allows you to fetch logs and metrics from various sources like Elasticsearch and Prometheus using a consistent query structure. This saves time and effort in troubleshooting, as you can analyze data from different systems side-by-side without learning each individual query language.

· Standardized Ticket Handling: Enables the creation, updating, and retrieval of tickets from systems like Jira through a single API. This streamlines workflow management and ticket tracking for development and support teams.

· Pluggable Adapter Architecture: Supports custom or pre-built adapters, either implemented in Go or connected over JSON-RPC, to link diverse operational tools. This flexibility ensures OpsOrch can evolve with your tech stack and integrate with a wide range of services, preventing vendor lock-in and allowing you to leverage your existing investments.

· LLM Agent Tooling (MCP Server): Exposes operational data and actions as typed tools that AI agents can utilize. This opens up possibilities for automated incident triaging, root cause analysis, and proactive issue resolution driven by AI.

· No Data Gravity or Vendor Lock-in: OpsOrch does not store your operational data; it acts purely as a broker. This means your data remains with your existing tools, and you are not forced into a new platform or proprietary ecosystem.

Product Usage Case

· Incident Response Automation: An operations engineer can use OpsOrch to programmatically fetch all active alerts from PagerDuty, cross-reference them with relevant logs from Elasticsearch and metrics from Prometheus, and then automatically create a detailed incident ticket in Jira, all through a single API rather than four separate ones. This drastically reduces manual work during high-pressure situations.

· AI-Powered Debugging: An LLM agent, connected to OpsOrch via the MCP server, could be tasked with debugging a recurring application error. The LLM could then use OpsOrch to query application logs for error patterns and system metrics for performance anomalies, synthesize the information, and suggest potential fixes or automatically create a task for the development team.

· Developer Self-Service Operations: Developers could use the OpsOrch API to retrieve performance metrics for their services or check the status of deployed applications without needing direct access to complex monitoring dashboards. This empowers developers to understand and manage their services more independently.

· Streamlined Onboarding for New Tools: When a new logging or monitoring tool is introduced, only a new adapter needs to be built for OpsOrch. Existing applications and workflows that rely on the unified API do not need to be modified, allowing for faster adoption of new technologies.
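To make the incident response scenario above concrete, here is a rough sketch of such a triage script. It runs against an in-memory stand-in rather than a real OpsOrch deployment: `FakeOpsClient` and its methods (`active_alerts`, `query_logs`, `query_metric`, `create_ticket`) are hypothetical and are not taken from OpsOrch's API, and the sample alerts, logs, and metrics are invented.

```python
from dataclasses import dataclass, field


@dataclass
class FakeOpsClient:
    """In-memory stand-in for a unified ops API; every method name is hypothetical."""
    alerts: list[dict]
    logs: list[dict]
    metrics: dict[str, float]
    tickets: list[dict] = field(default_factory=list)

    def active_alerts(self) -> list[dict]:
        return [a for a in self.alerts if a["status"] == "triggered"]

    def query_logs(self, service: str, level: str) -> list[dict]:
        return [
            entry for entry in self.logs
            if entry["service"] == service and entry["level"] == level
        ]

    def query_metric(self, name: str) -> float:
        return self.metrics[name]

    def create_ticket(self, title: str, body: str) -> dict:
        ticket = {"id": f"TICKET-{len(self.tickets) + 1}", "title": title, "body": body}
        self.tickets.append(ticket)
        return ticket


def triage(client: FakeOpsClient) -> list[dict]:
    """Open one ticket per active alert, enriched with logs and a metric."""
    created = []
    for alert in client.active_alerts():
        service = alert["service"]
        errors = client.query_logs(service, level="error")
        error_rate = client.query_metric(f"{service}.error_rate")
        body = (
            f"Alert: {alert['summary']}\n"
            f"Error rate: {error_rate:.1%}\n"
            f"Recent error logs: {len(errors)}\n"
            + "\n".join(f"- {e['message']}" for e in errors)
        )
        created.append(client.create_ticket(f"[{service}] {alert['summary']}", body))
    return created


client = FakeOpsClient(
    alerts=[
        {"service": "checkout", "summary": "5xx spike", "status": "triggered"},
        {"service": "search", "summary": "slow queries", "status": "resolved"},
    ],
    logs=[
        {"service": "checkout", "level": "error", "message": "upstream timeout"},
        {"service": "checkout", "level": "info", "message": "request served"},
    ],
    metrics={"checkout.error_rate": 0.125},
)
tickets = triage(client)
print(tickets[0]["title"])
print(tickets[0]["body"])
```

The script only ever talks to one client object; which tool actually holds the alerts, logs, or tickets is hidden behind it.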

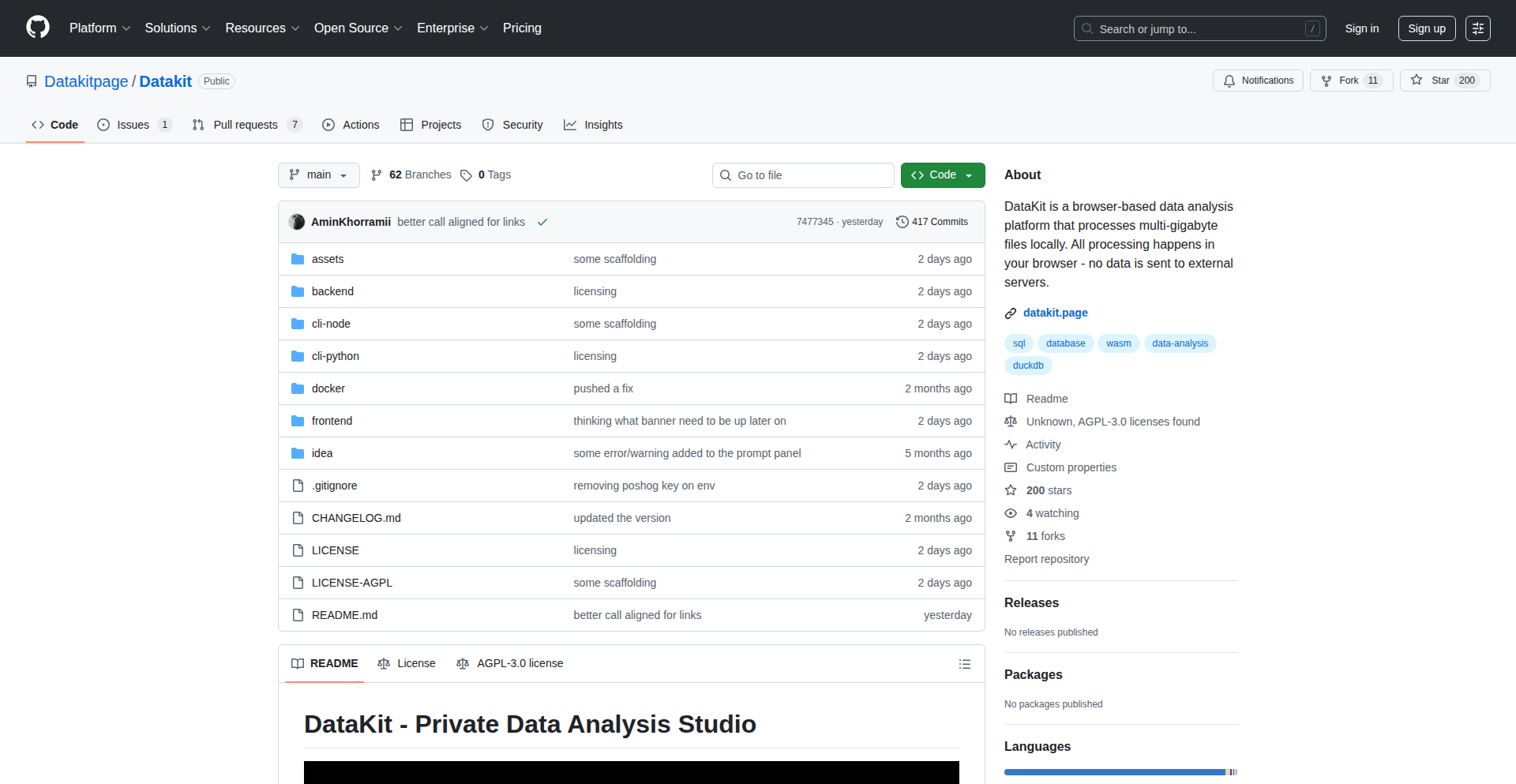

15

DataKit: Client-Side Data Studio

Author

aminkhorrami

Description

DataKit is a groundbreaking browser-based data analysis platform that empowers users to process massive datasets (CSV, Parquet, JSON, Excel) entirely within their web browser. It leverages DuckDB-WASM for client-side execution, meaning your sensitive data never leaves your local machine. This innovative approach eliminates the need for costly server infrastructure or complex local installations, offering a powerful and accessible data studio experience directly in your browser tab.

Popularity

Points 6

Comments 0

What is this product?

DataKit is a full-fledged data studio that runs completely in your web browser, transforming how you analyze large files. The core innovation lies in its use of DuckDB-WASM. DuckDB is a powerful analytical database, and by compiling it to WebAssembly (WASM), it can run directly within the browser's JavaScript environment. This allows DataKit to process multi-gigabyte files without sending any data to a server. Think of it as having a super-fast, local database engine accessible through a web page. It also integrates Python notebooks via Pyodide, another WebAssembly compilation technology, enabling sophisticated data science workflows and even an AI assistant that can understand your data's structure without ever seeing the actual sensitive information.

How to use it?

Developers can use DataKit by simply visiting the live demo website or by cloning the open-source repository and running it locally. For immediate use, you can upload your large CSV, Parquet, JSON, or Excel files directly into the browser interface. DataKit provides a full SQL interface, allowing you to query your data using standard SQL commands. For more advanced analytics and machine learning, you can launch Python notebooks within the same environment. DataKit also supports connecting to remote data sources like PostgreSQL, MotherDuck, and S3, either directly or through an optional proxy, making it a versatile tool for diverse data integration needs. The integration is seamless, allowing you to switch between SQL queries and Python scripts effortlessly within a single browser session.
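For readers who have not used an in-process SQL engine, the sketch below shows the general workflow: load a local file into an embedded database and run an aggregate query, with no server involved. Note the substitution: DataKit runs its queries on DuckDB-WASM in the browser, whereas this example uses Python's built-in `sqlite3` purely as a stand-in that runs anywhere. The `sales` table and its sample rows are invented.

```python
import csv
import io
import sqlite3

# Invented sample data standing in for an uploaded CSV file.
CSV_TEXT = """region,product,amount
EU,widget,120.0
EU,gadget,80.0
US,widget,200.0
US,widget,50.0
"""

# An in-memory, in-process database: nothing leaves this process.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")

# Parse the CSV and convert the numeric column explicitly before inserting.
reader = csv.DictReader(io.StringIO(CSV_TEXT))
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(row["region"], row["product"], float(row["amount"])) for row in reader],
)

# A typical analytical query: totals and order counts per region.
QUERY = """
SELECT region, SUM(amount) AS total, COUNT(*) AS orders
FROM sales
GROUP BY region
ORDER BY total DESC
"""
results = conn.execute(QUERY).fetchall()
for region, total, orders in results:
    print(region, total, orders)
```

The SQL itself is ordinary and would look much the same in any analytical engine; only the loading step is specific to the stand-in used here.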

Product Core Function

· Client-side processing of large files (up to 20GB): This feature eliminates the need for expensive server hardware or cloud storage for data analysis. Your data remains secure on your local machine, making it ideal for handling sensitive information and reducing operational costs. So, you can analyze multi-gigabyte datasets without breaking the bank or compromising privacy.

· Full SQL interface powered by DuckDB-WASM: This provides a powerful and familiar way to query and manipulate your data. You can perform complex joins, aggregations, and filtering directly in the browser, leveraging the speed and efficiency of DuckDB. This means you can get insights from your data quickly and easily, just like you would with a traditional database.

· Python notebooks via Pyodide: This enables advanced data science workflows, including machine learning model development and complex statistical analysis, all within the browser. This allows you to go beyond basic analysis and build sophisticated applications without leaving your familiar browser environment.

· Connection to remote data sources (PostgreSQL, MotherDuck, S3): This offers flexibility in data integration, allowing you to work with data residing in various locations without complex setup. So, you can access and analyze data from wherever it lives, simplifying your data pipelines.

· AI assistant with schema-only access: This provides intelligent assistance for data exploration and understanding without compromising data privacy. The AI can help you discover relationships and patterns based on your data's structure, not its content. This means you can get AI-powered help to understand your data without worrying about exposing sensitive information.
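One way to picture the schema-only assistant listed above: derive column names and rough types locally, and include only that summary in the text handed to the model. The sketch below is an assumption about how such a feature could work, not DataKit's implementation; `infer_type`, `schema_summary`, `build_prompt`, and the sample CSV are all hypothetical.

```python
import csv
import io


def infer_type(values: list[str]) -> str:
    """Guess a column type from its string values (deliberately simplistic)."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "unknown"
    for caster, name in ((int, "integer"), (float, "float")):
        try:
            for v in non_empty:
                caster(v)
            return name
        except ValueError:
            continue
    return "text"


def schema_summary(csv_text: str) -> dict[str, str]:
    """Return {column name: inferred type}; row values are not included."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns: dict[str, list[str]] = {name: [] for name in reader.fieldnames or []}
    for row in reader:
        for name in columns:
            columns[name].append(row[name] or "")
    return {name: infer_type(values) for name, values in columns.items()}


def build_prompt(schema: dict[str, str], question: str) -> str:
    """Compose the text a (hypothetical) assistant would receive."""
    lines = [f"- {name}: {kind}" for name, kind in schema.items()]
    return "Table columns:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


CSV_TEXT = """customer_email,age,lifetime_value
alice@example.com,34,1820.50
bob@example.com,41,96.00
"""

schema = schema_summary(CSV_TEXT)
prompt = build_prompt(schema, "Which columns could explain lifetime value?")
print(prompt)
```

The values are read locally to infer types, but the resulting prompt contains only column names and type labels, so no email address or amount appears in it.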

Product Usage Case

· Analyzing multi-gigabyte sales transaction data for immediate business insights: A marketing analyst needs to quickly identify trends and patterns in a large sales dataset without waiting for IT to provision a server or download the entire file. DataKit allows them to upload the CSV directly into the browser and run SQL queries to segment customers and analyze campaign performance in real-time. This dramatically speeds up decision-making and reduces reliance on backend teams.

· Developing and testing machine learning models on sensitive user data: A data scientist working with personally identifiable information needs to build a recommendation engine. With DataKit, they can load the data into their browser, run Python scripts for feature engineering and model training using Pyodide, and then test the model's performance, all without the data ever leaving their local machine. This ensures compliance with privacy regulations and reduces the risk of data breaches.

· Onboarding new team members with a self-service data exploration tool: A startup wants to empower its non-technical team members to explore company data without extensive training. DataKit provides a user-friendly browser interface with SQL capabilities, allowing them to easily query datasets and discover insights independently. This democratizes data access and reduces the burden on the data engineering team.

· Prototyping data processing pipelines for remote cloud storage: A developer is building an application that needs to process data from an S3 bucket. DataKit's ability to connect to S3 allows them to quickly prototype and test their data processing logic directly in the browser, iterating rapidly before deploying to a production environment. This significantly accelerates the development cycle.

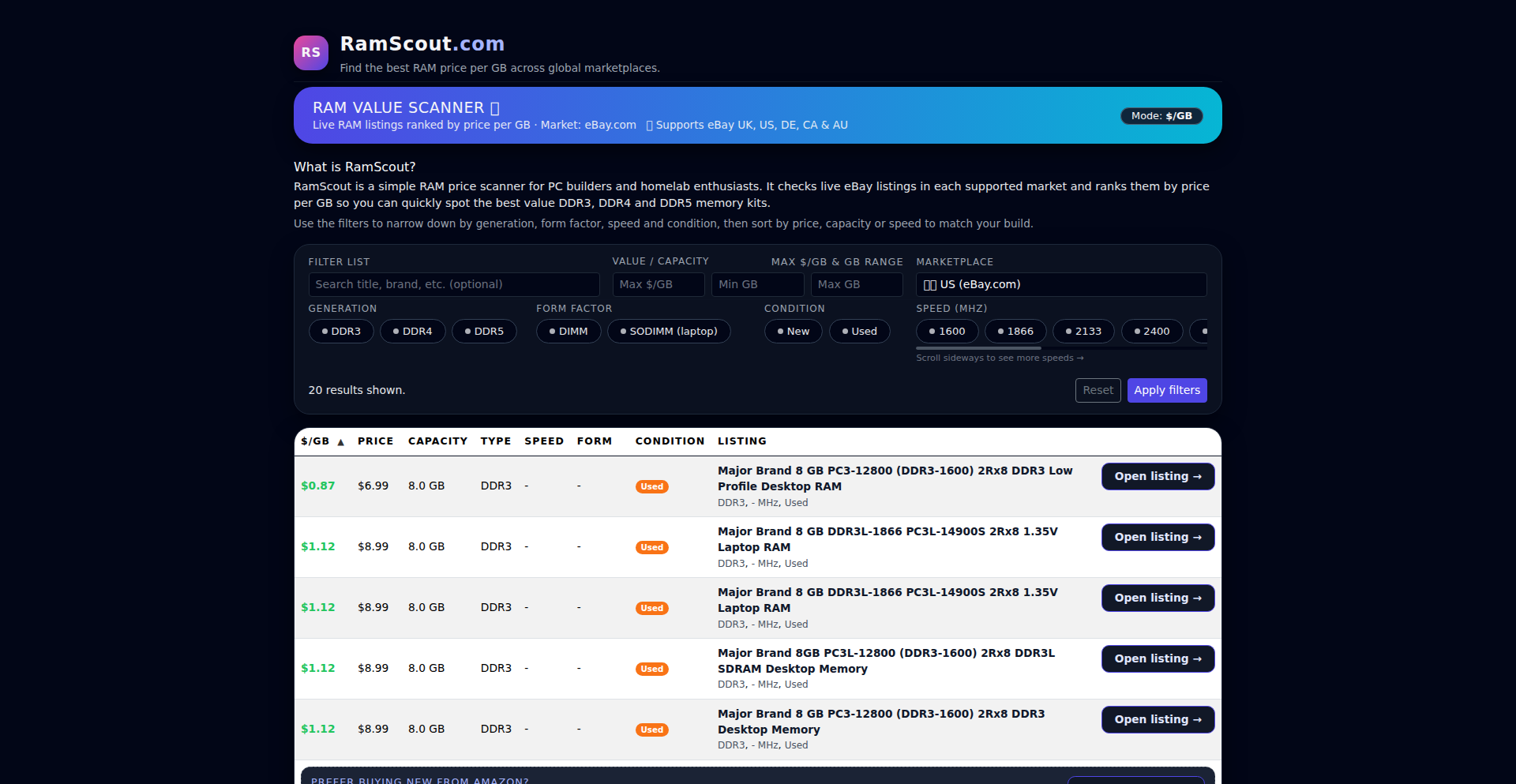

16

RAM Scraper & Deal Finder

Author

chinskee

Description

A minimalist weekend project that intelligently scans eBay (UK/US) for RAM modules, ranking them by price per gigabyte. It helps users find the best deals on DDR3, DDR4, and DDR5 RAM by filtering based on type, capacity, speed, and condition. This provides a quick and efficient way to identify significantly underpriced RAM listings, solving the pain point of rising RAM costs and the difficulty in sourcing affordable components for builds like NAS systems.

Popularity

Points 5

Comments 1

What is this product?

This is a clever tool designed to combat the recent surge in RAM prices. It works by programmatically 'scraping' or collecting data from eBay listings in both the UK and US. The core innovation lies in how it processes this data: instead of just showing raw prices, it calculates and ranks RAM by its 'price per gigabyte' (price divided by the total memory capacity). This immediately highlights the best value for money. It also offers practical filters for RAM type (DDR3, DDR4, DDR5), size, speed, and whether it's new or used. The 'so what?' is: it cuts through the noise of thousands of listings to quickly show you the cheapest RAM for your money, making it easier to snag a good deal for your computer or server.
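The ranking step is simple arithmetic and can be sketched as follows. This is an illustrative example only, not the project's code: the `Listing` fields, the `rank_deals` function, and the sample listings and prices are invented, and the scraping step is left out entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    """Hypothetical, already-parsed listing; the fields are illustrative."""
    title: str
    ddr: str            # "DDR3", "DDR4" or "DDR5"
    capacity_gb: int    # total capacity of the kit
    price: float        # in a single currency
    condition: str      # "new" or "used"

    @property
    def price_per_gb(self) -> float:
        return self.price / self.capacity_gb


def rank_deals(listings, ddr=None, min_capacity_gb=0, condition=None):
    """Filter listings, then sort by ascending price per gigabyte."""
    matches = [
        item for item in listings
        if (ddr is None or item.ddr == ddr)
        and item.capacity_gb >= min_capacity_gb
        and (condition is None or item.condition == condition)
    ]
    return sorted(matches, key=lambda item: item.price_per_gb)


listings = [
    Listing("2x16GB DDR4 3200", "DDR4", 32, 64.00, "used"),
    Listing("1x8GB DDR4 2666", "DDR4", 8, 20.00, "used"),
    Listing("2x16GB DDR5 5600", "DDR5", 32, 112.00, "new"),
    Listing("4x8GB DDR4 3000", "DDR4", 32, 48.00, "used"),
]

for item in rank_deals(listings, ddr="DDR4", min_capacity_gb=16):
    print(f"{item.price_per_gb:.2f}/GB  {item.title}")
```

Sorting on the derived ratio rather than the sticker price is what surfaces a 32GB kit at 48.00 ahead of an 8GB stick at 20.00, even though the latter is cheaper in absolute terms.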

How to use it?

Developers can use RamScout as a standalone web tool to find RAM deals. The project's simplicity means it's likely built with readily accessible web scraping libraries (e.g., Python's BeautifulSoup or Scrapy) and a straightforward frontend to display the results. For integration, one could imagine extending this by building an API that exposes the ranked RAM listings. This would allow other applications or services to programmatically access the best RAM deals, perhaps for a custom PC building configurator or a price alert system. The 'so what?' is: it's a direct, no-nonsense way to find affordable RAM, and its underlying technology could be the foundation for more sophisticated purchasing tools.

Product Core Function