Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-06

SagaSu777 2025-12-07

Explore the hottest developer projects on Show HN for 2025-12-06. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

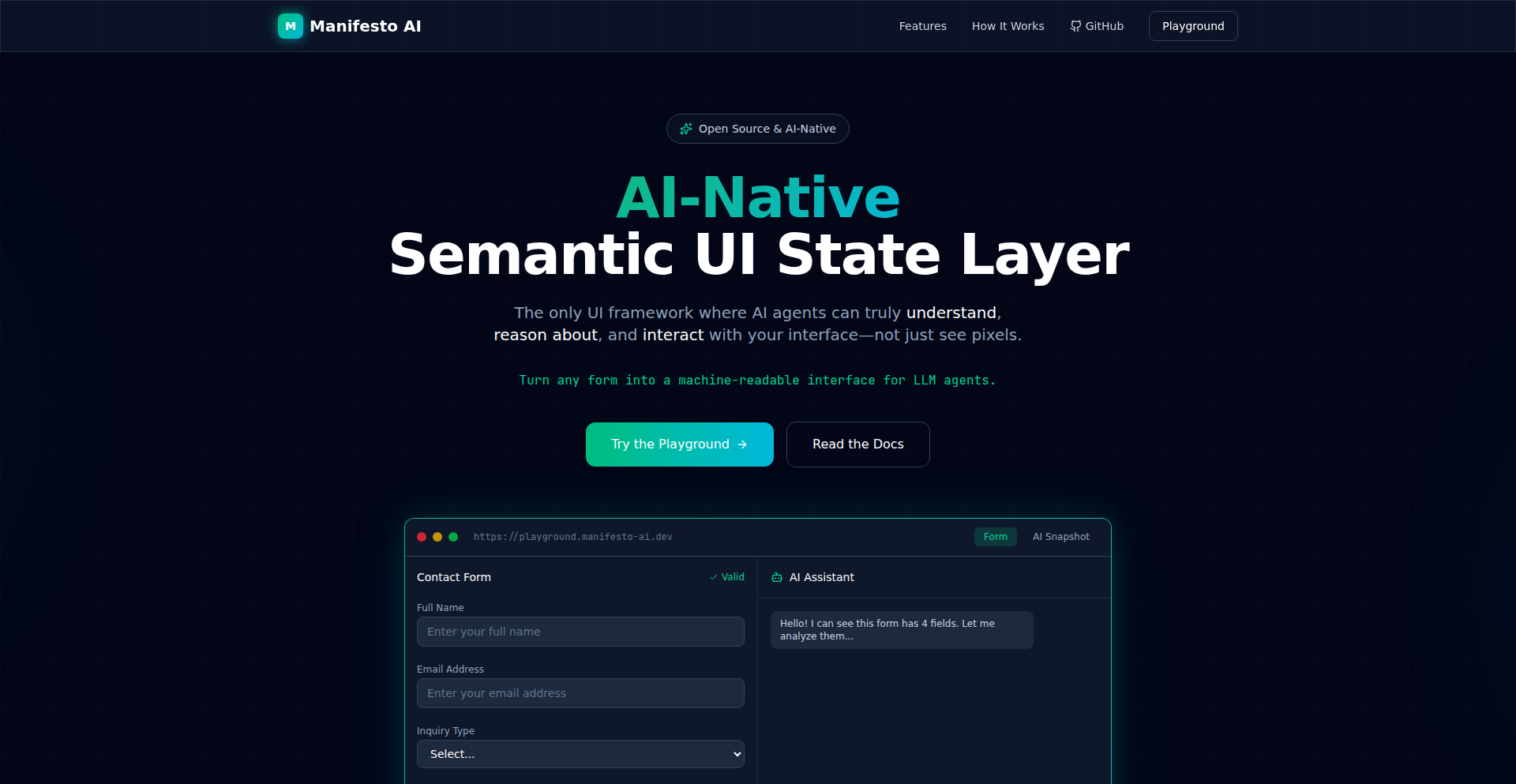

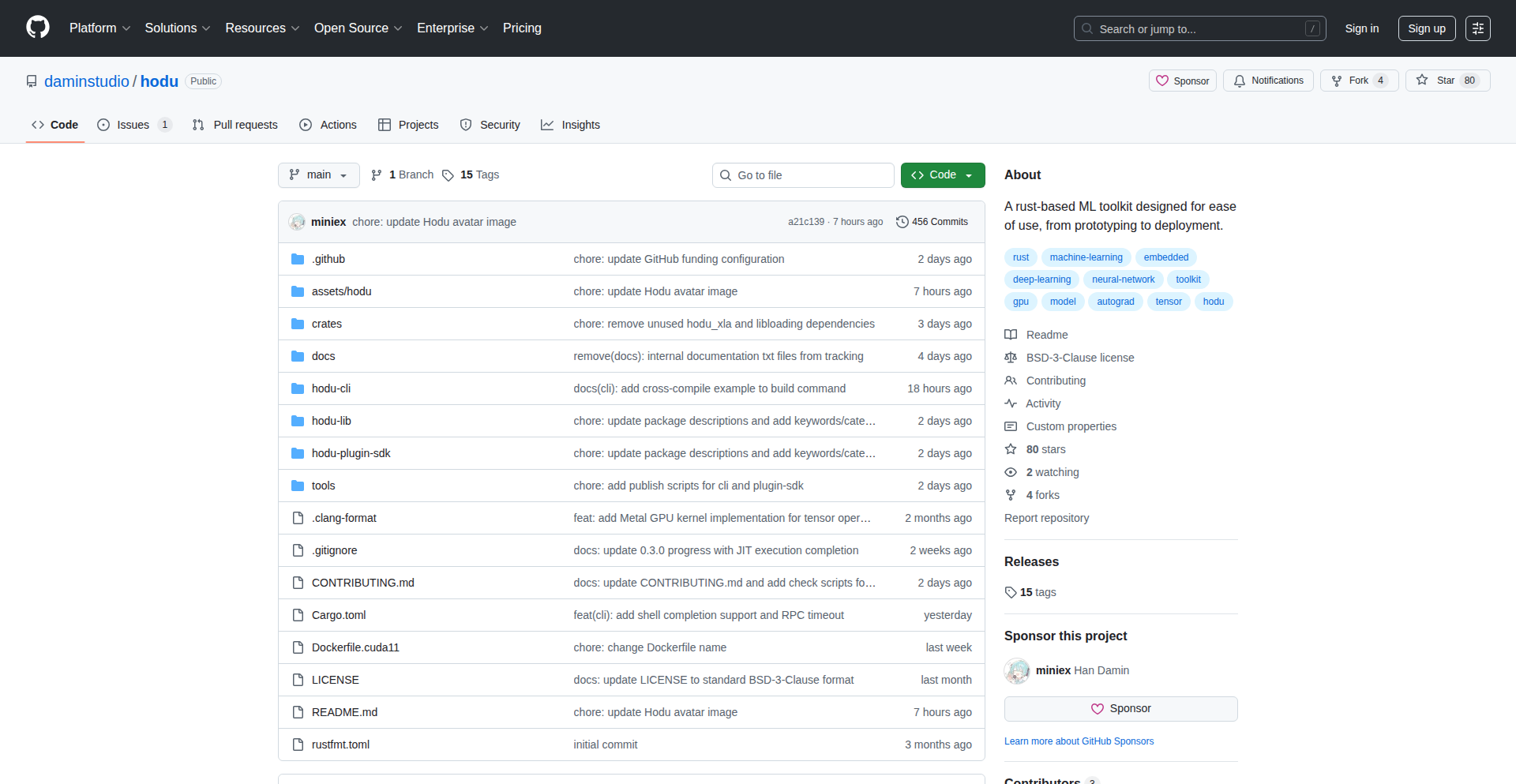

Today's Show HN landscape paints a vivid picture of innovation driven by the desire to simplify complexity and empower individuals. We're seeing a strong trend towards decentralized and peer-to-peer solutions, exemplified by Holesail, which tackles the friction of network access by creating direct, secure connections. This mirrors a broader hacker ethos of bypassing traditional intermediaries and building more resilient, user-controlled systems.

In the AI realm, the focus is shifting from broad capabilities to granular control and practical application. Projects like Manifesto and UISora go beyond simple text generation: they build AI-native frameworks that can interact deterministically with user interfaces and even generate entire UI screens, hinting at a future where AI is a direct collaborator in software development. Meanwhile, new Rust projects such as Octopii and Hodu signal the language's growing maturity for building robust, performant, and safe systems, particularly where high reliability is required.

For developers and entrepreneurs, this points to opportunities in foundational infrastructure for decentralized applications, intelligent agents that interact reliably with digital environments, and low-level languages for critical systems. The key takeaway is to embrace the hacker spirit: identify real-world pain points, whether complex networking, inefficient AI interaction, or cumbersome development workflows, and apply current technology to build elegant, efficient, and empowering solutions.

Today's Hottest Product

Name

Holesail

Highlight

Holesail is an open-source, peer-to-peer tunneling tool that eliminates the need for configuration, port forwarding, or intermediate servers. It establishes direct, end-to-end encrypted connections between peers using a simple connection key. This innovative approach is fantastic for securely accessing self-hosted services, playing LAN games over the internet, or SSHing into servers without complex network setups. Developers can learn about efficient P2P networking, robust encryption implementation, and building cross-platform tools that simplify connectivity.

Popular Category

Developer Tools

AI/ML

Networking

Productivity

Popular Keyword

CLI

AI

Open Source

Rust

Python

Tooling

Automation

WebGPU

Blockchain

Security

Technology Trends

Decentralized Networking & P2P

AI-Native UI & Agentic Workflows

Developer Productivity & Tooling

Data Preservation & Security

Rust Ecosystem Growth

Stateless & Deterministic Systems

Fine-grained AI Control & Application

Project Category Distribution

Developer Tools (30%)

AI/ML (25%)

Networking (10%)

Productivity (15%)

Security (5%)

Gaming/Entertainment (5%)

Blockchain/Fintech (5%)

Hardware (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Tascli: Terminal-Native Task & Record Hub | 37 | 15 |
| 2 | FuseCells - Deterministic Logic Engine | 31 | 17 |
| 3 | SFX Contextual Lang | 11 | 7 |
| 4 | GitHired: Code-Driven Talent Scout | 4 | 14 |
| 5 | TapeHead: Stateful File Stream Random Access CLI | 14 | 2 |
| 6 | RetroOS Personal Site | 7 | 1 |
| 7 | GitHub Org EpochStats | 4 | 4 |
| 8 | Holesail P2P Tunnel | 3 | 4 |
| 9 | Spain Salary Cruncher | 3 | 4 |
| 10 | Infinite Lofi Algorithmic Soundscape | 5 | 1 |

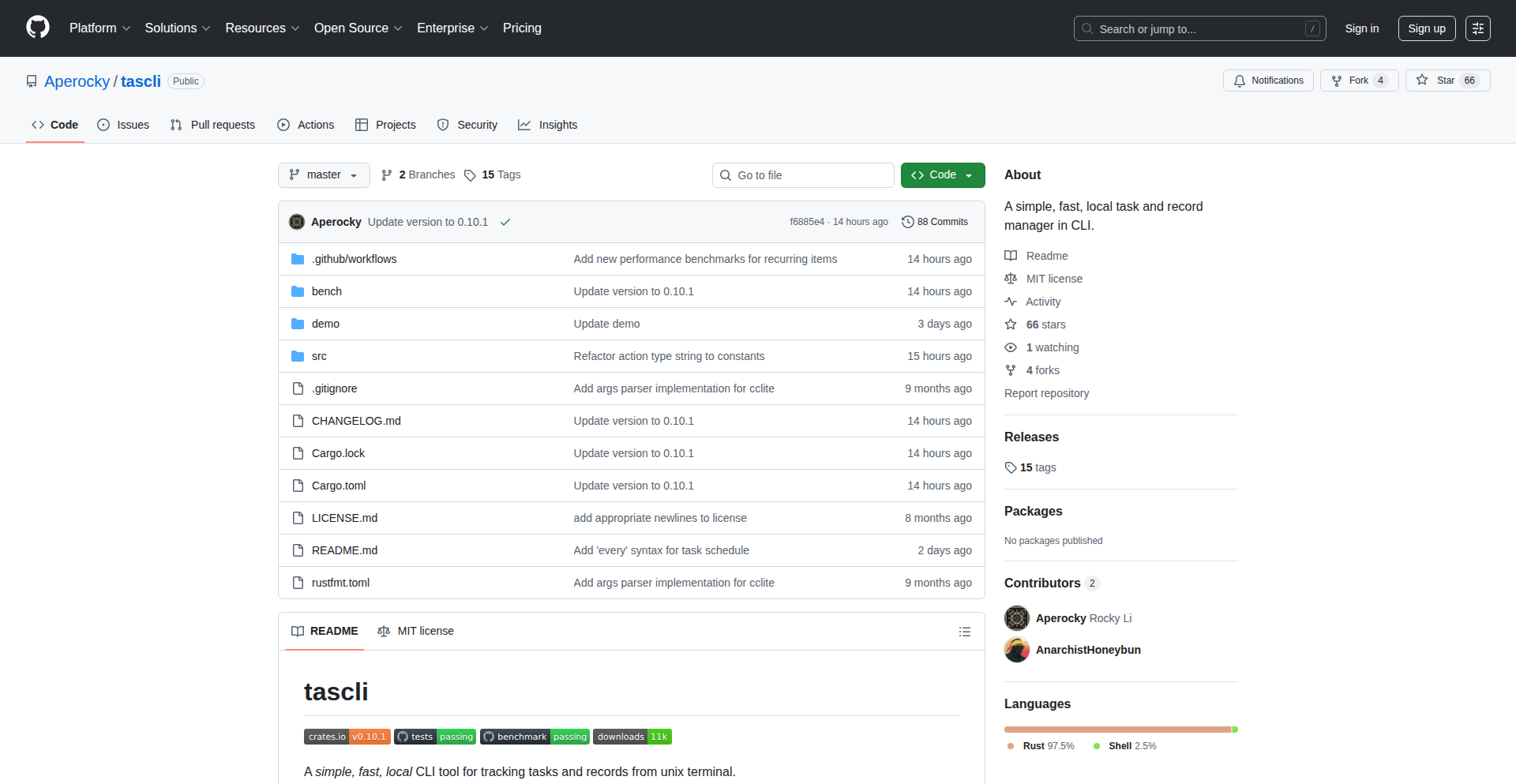

1

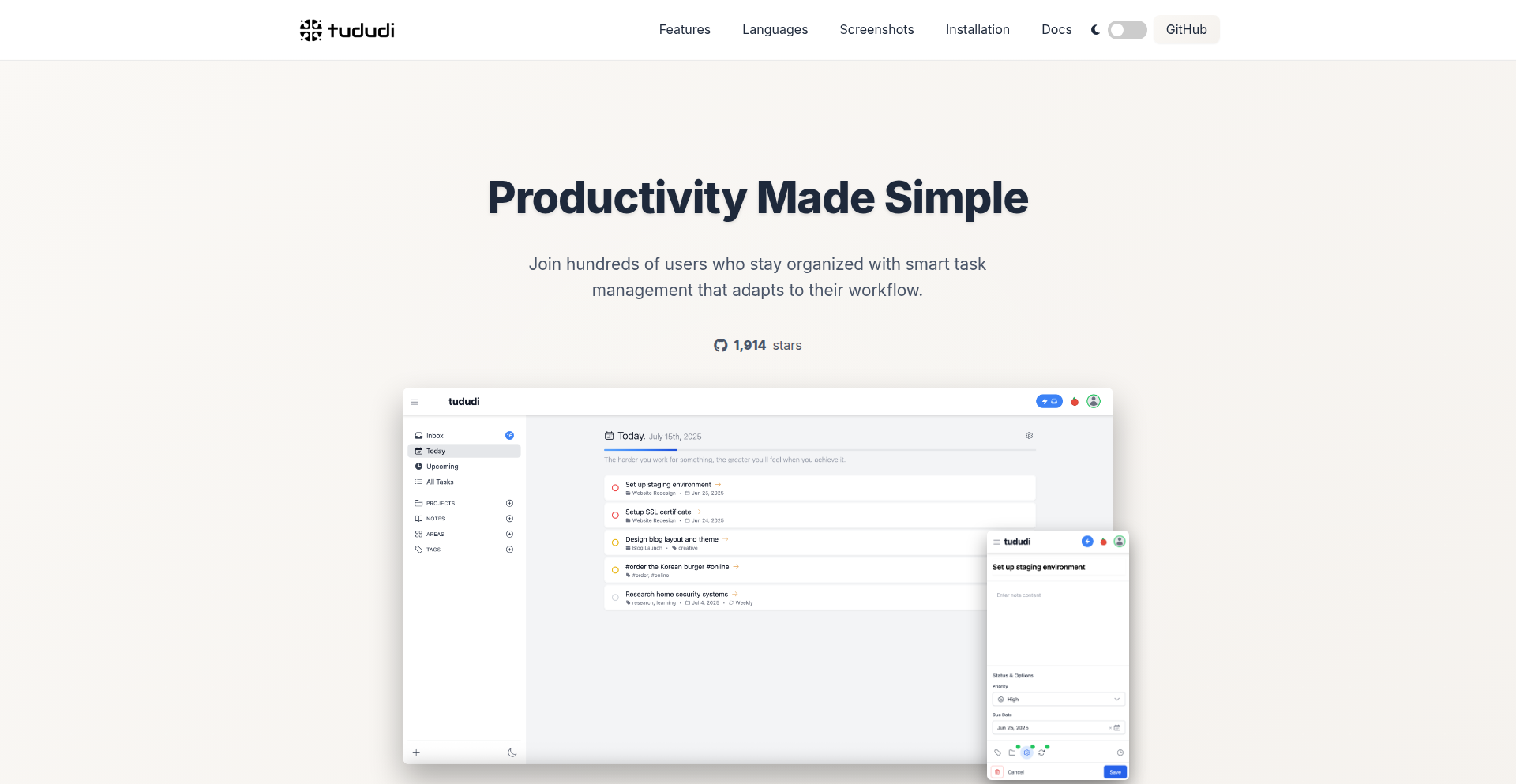

Tascli: Terminal-Native Task & Record Hub

Author

Aperocky

Description

Tascli is a command-line interface (CLI) tool designed for efficient personal task and record management. It prioritizes speed and simplicity, offering a developer-centric way to organize your to-do lists and notes directly within your terminal environment. The innovation lies in its minimal footprint and direct terminal interaction, appealing to developers who prefer workflow integration without leaving their coding environment.

Popularity

Points 37

Comments 15

What is this product?

Tascli is a command-line application for managing your personal tasks and records. It's built in Rust, which helps keep it fast and resource-efficient. The core innovation is its design philosophy: keep it tiny, fast, and simple. This means no complex graphical interfaces or server-side dependencies. It runs directly in your terminal, letting you add, view, and manage tasks and notes with quick commands. For developers, this means seamless integration into their existing workflow: they can jot down ideas or track progress without switching to a separate application.

How to use it?

Developers can install Tascli with `cargo install tascli`. Once installed, they interact with it through commands typed directly in the terminal. For example, adding a new task might look like `tascli add 'Write documentation for feature X'`, and viewing all tasks like `tascli list`. It can be used for anything from remembering to commit code to logging important research findings during a development sprint. Its simplicity makes it ideal for quick entries and retrieval, especially when you're already deep in coding.

Product Core Function

· Task creation and management: Allows users to add, edit, and delete tasks using simple commands, providing a clear overview of what needs to be done. The value is in having a persistent, easily accessible to-do list that doesn't require leaving the terminal, thus maintaining focus during development.

· Record keeping: Enables users to store and retrieve notes, ideas, or important pieces of information. This is valuable for developers to quickly log snippets of code, configuration details, or meeting minutes without disrupting their coding flow, ensuring that critical information is captured efficiently.

· Fast and lightweight operation: Built with Rust, Tascli offers near-instantaneous response times and consumes minimal system resources. This is crucial for developers who value performance and don't want auxiliary tools to slow down their development environment.

· Terminal-based interface: Provides a seamless integration with the developer's existing command-line workflow. The value here is in eliminating context switching, allowing for a more focused and productive coding session.
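To make the "tiny, fast, simple" design concrete, here is a minimal sketch of a local task and record store using only Python's standard library. It is not Tascli's code (Tascli is written in Rust); the SQLite backend, the class `TaskStore`, and its methods `add`, `complete`, and `list_items` are hypothetical choices made for illustration.

```python
import sqlite3
from datetime import datetime, timezone


class TaskStore:
    """Hypothetical task/record store backed by one local SQLite database.

    Illustrative only: not Tascli's schema, storage format, or commands.
    """

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS items (
                   id INTEGER PRIMARY KEY AUTOINCREMENT,
                   kind TEXT NOT NULL CHECK (kind IN ('task', 'record')),
                   content TEXT NOT NULL,
                   created_at TEXT NOT NULL,
                   done INTEGER NOT NULL DEFAULT 0
               )"""
        )

    def add(self, kind: str, content: str) -> int:
        # Insert one task or record, timestamped in UTC, and return its id.
        cur = self.conn.execute(
            "INSERT INTO items (kind, content, created_at) VALUES (?, ?, ?)",
            (kind, content, datetime.now(timezone.utc).isoformat()),
        )
        self.conn.commit()
        return cur.lastrowid

    def complete(self, item_id: int) -> None:
        # Mark a task as done; rows of kind 'record' are never changed.
        self.conn.execute(
            "UPDATE items SET done = 1 WHERE id = ? AND kind = 'task'", (item_id,)
        )
        self.conn.commit()

    def list_items(self, kind: str, include_done: bool = False) -> list[tuple[int, str]]:
        # Return (id, content) pairs in insertion order, hiding finished tasks by default.
        query = "SELECT id, content FROM items WHERE kind = ?"
        if not include_done:
            query += " AND done = 0"
        return self.conn.execute(query + " ORDER BY id", (kind,)).fetchall()
```

A CLI front end would map subcommands such as the `add` example above onto these methods; keeping everything in a single local file is what removes any server dependency.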

Product Usage Case

· A developer needs to remember to implement a specific bug fix during a coding session. They can quickly type `tascli add 'Fix #123: Null pointer exception'` without leaving their IDE or terminal, ensuring the task isn't forgotten and can be easily referenced later.

· During a research phase, a developer discovers a useful code snippet or configuration setting. They can use `tascli record 'Important config for database connection: ...'` to save this information, making it readily available for future use without needing to open a separate note-taking app or rely on ephemeral clipboard content.

· A team lead wants to track daily progress or quick action items during a stand-up meeting. They can use Tascli to rapidly log these items for each team member, creating a quick and accessible record of immediate actionables directly within their command-line session.

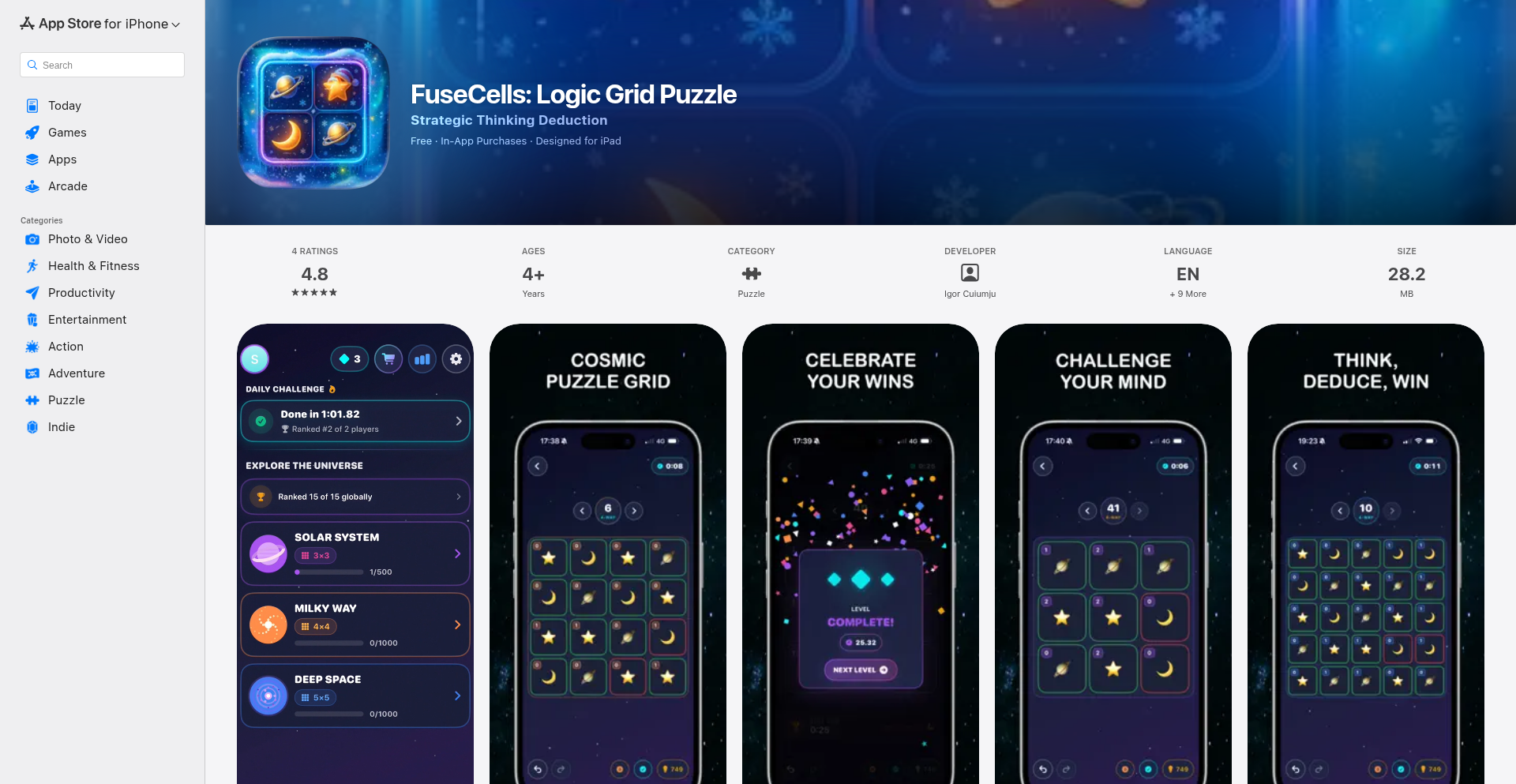

2

FuseCells - Deterministic Logic Engine

Author

keini

Description

FuseCells is a minimalistic logic puzzle game featuring 2,500 handcrafted levels, built with a unique rule system inspired by constraint-solving and path-finding. It offers deterministic logic, meaning no guessing is required to solve puzzles, and is optimized for smooth performance on low-end devices.

Popularity

Points 31

Comments 17

What is this product?

FuseCells is a logic puzzle game where every level is carefully designed by the developer. The core innovation lies in its deterministic logic system. Instead of relying on random generation, it uses a set of rules inspired by computer science concepts like constraint solving (think of it as a program trying to satisfy conditions) and path finding (like finding the shortest route in a map). This ensures that each puzzle has a unique, logical solution that can be reached through deduction alone, without any guesswork. The developer also built tools to automatically check if puzzles are solvable and estimate their difficulty, allowing for a wide range of challenges across different grid sizes. This means for you, the player, every puzzle offers a satisfying mental workout.

How to use it?

As a player, you simply download the FuseCells app from the App Store and start playing. The game is designed to be intuitive and accessible. For developers interested in the underlying technology, the value lies in the developer's custom constraint solver and difficulty estimation tools. If you're building a game with logic puzzles, or need to generate challenging, solvable levels programmatically, you could learn from the techniques used here. The optimization for low-end devices also highlights how to create performant applications for a broader audience. This project demonstrates how to apply complex computational logic to create engaging user experiences.

Product Core Function

· Handcrafted Puzzle Set: 2,500 unique puzzles, each designed by hand rather than randomly generated, for a rich gameplay experience offering deep engagement and a sense of accomplishment with each solved level.

· Deterministic Logic Solver: Guarantees that every puzzle has a logical, step-by-step solution without the need for guessing, providing a fair and intellectually stimulating challenge.

· Constraint-Solving Inspired Rules: Utilizes sophisticated rule sets inspired by computational logic to create intricate puzzles that require strategic thinking and problem-solving skills.

· Difficulty Balancing Tools: Custom tools developed by the author automatically validate puzzle solvability and estimate difficulty, ensuring a progressive and engaging learning curve for players.

· Performance Optimization: Engineered to run smoothly even on older or less powerful devices, making the game accessible to a wider range of users and demonstrating efficient code practices.
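The solvability check described above can be illustrated with a generic backtracking solver that counts solutions and stops as soon as it finds two, since a puzzle with more than one solution cannot be solved by deduction alone. FuseCells' actual rules are not described here, so this sketch uses a Latin square (each row and column holds 1..n exactly once) as a stand-in; `count_solutions` and `is_well_posed` are hypothetical names. Uniqueness is necessary but not sufficient for "no guessing": a stricter check would also confirm that a propagation-only solver reaches the solution without backtracking.

```python
from typing import Optional

Grid = list[list[Optional[int]]]


def count_solutions(grid: Grid, limit: int = 2) -> int:
    """Count completions of a partial n x n Latin square, stopping at `limit`.

    Assumes the given clues are mutually consistent. The grid is modified
    during the search but restored before returning.
    """
    n = len(grid)
    for r in range(n):
        for c in range(n):
            if grid[r][c] is None:
                # Values already used in this cell's row and column are excluded.
                used = {grid[r][k] for k in range(n)} | {grid[k][c] for k in range(n)}
                total = 0
                for v in range(1, n + 1):
                    if v not in used:
                        grid[r][c] = v
                        total += count_solutions(grid, limit - total)
                        grid[r][c] = None
                        if total >= limit:
                            return total  # early exit: enough solutions found
                return total
    return 1  # no empty cells left: the grid is one complete solution


def is_well_posed(grid: Grid) -> bool:
    # A fair "no guessing" puzzle must have exactly one solution.
    return count_solutions([row[:] for row in grid]) == 1
```

The early exit at `limit` matters for level tooling: validating thousands of levels only requires knowing whether a second solution exists, not enumerating all of them.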

Product Usage Case

· A player seeking a mentally challenging and engaging puzzle experience can use FuseCells to enjoy 2,500 unique levels that require deduction rather than luck, leading to a satisfying sense of achievement.

· A game developer looking to create their own logic puzzle game can study FuseCells' handcrafted level design and deterministic rule system to understand how to build fair and solvable puzzles with a clear progression.

· A programmer interested in constraint satisfaction problems can examine the underlying logic solver used to validate puzzle solvability, gaining insights into practical applications of this advanced computer science concept.

· A mobile developer aiming to create an app that runs well on a variety of devices, including older models, can learn from FuseCells' optimization techniques to ensure smooth performance and broad accessibility for their own projects.

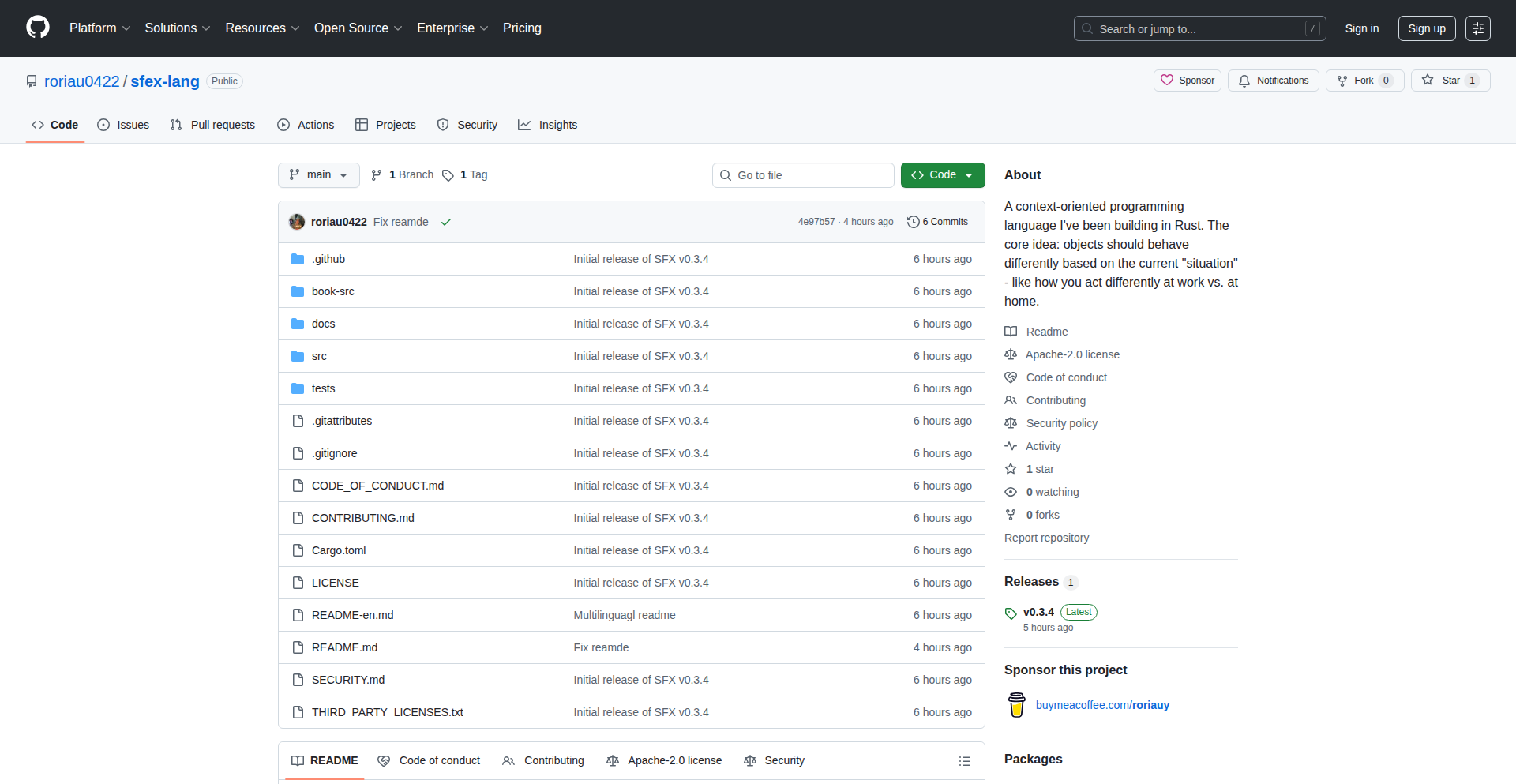

3

SFX Contextual Lang

Author

roriau

Description

SFX is a novel programming language experiment in Rust, focusing on Context-Oriented Programming. It tackles the challenge of managing conditional behavior by allowing objects to change how they act based on active 'Situations' without altering their core state. This approach aims to simplify complex conditional logic, like permission checks, by defining explicit contexts. Key features include arbitrary-precision decimals and 1-based indexing.

Popularity

Points 11

Comments 7

What is this product?

SFX is a programming language built with Rust, inspired by the idea of Context-Oriented Programming. Think of it like this: instead of writing 'if this, then do that' everywhere in your code, especially for things like user permissions, SFX lets you define 'Situations'. For example, you can define a 'SuperUser' situation. When this situation is active, a user's permission-checking logic automatically changes to grant full access, without you needing to modify the original user code. This makes managing different modes of operation or user roles much cleaner and less error-prone. It also uses arbitrary-precision decimal numbers, so 0.1 + 0.2 evaluates to exactly 0.3 instead of suffering the rounding errors of binary floating point.

How to use it?

Developers can explore SFX by examining its source code on GitHub. While it's an experimental language, you can understand its concepts by reading the provided documentation and examples. The core idea is to define 'Concepts' (like a User with a 'GetPermissions' method) and then 'Situations' (like 'AdminMode') that modify how those Concepts behave. You can then 'Switch' between these situations to see the behavior change. This is particularly useful for scenarios where code needs to adapt to different environments or roles, such as a web application with different user privilege levels or a game with distinct gameplay modes.
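SFX's own syntax is not shown in this summary, so the following is a hypothetical Python analogue of the Concept/Situation/Switch idea: a base method on `User`, an override registered for a `SuperUser` situation, and a `switch` context manager that activates the situation for a block. The names `layered`, `situation_override`, and `switch` are illustrative assumptions, not SFX constructs. The key property is that the `User` object's state is never modified; only method dispatch changes while the situation is active.

```python
import functools
from contextlib import contextmanager
from typing import Callable, Iterator

# Stack of active situations; the most recently activated one is checked first.
_active: list[str] = []
# Maps (situation, method name) to the function used while that situation is active.
_layers: dict[tuple[str, str], Callable] = {}


def situation_override(situation: str, method: str) -> Callable[[Callable], Callable]:
    """Register a function as `method`'s behaviour while `situation` is active."""
    def register(fn: Callable) -> Callable:
        _layers[(situation, method)] = fn
        return fn
    return register


def layered(fn: Callable) -> Callable:
    """Dispatch to the innermost active situation's override, else the base method."""
    @functools.wraps(fn)
    def wrapper(self, *args, **kwargs):
        for situation in reversed(_active):
            override = _layers.get((situation, fn.__name__))
            if override is not None:
                return override(self, *args, **kwargs)
        return fn(self, *args, **kwargs)
    return wrapper


@contextmanager
def switch(situation: str) -> Iterator[None]:
    """Activate `situation` for the duration of a with-block."""
    _active.append(situation)
    try:
        yield
    finally:
        _active.pop()


class User:
    def __init__(self, name: str) -> None:
        self.name = name

    @layered
    def get_permissions(self) -> set[str]:
        return {"read"}  # base behaviour, used when no situation overrides it


@situation_override("SuperUser", "get_permissions")
def _superuser_permissions(user: User) -> set[str]:
    return {"read", "write", "admin"}
```

The active-situation stack here is a module-level list, so it is not safe across threads or async tasks; `contextvars.ContextVar` would be the natural replacement in real code.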

Product Core Function

· Arbitrary Precision Decimals: Represents decimal numbers exactly rather than as binary floating-point approximations, so sums like 0.1 + 0.2 come out exact. This is useful for financial applications or any scenario where precise decimal calculations are critical.

· Context-Oriented Programming (Situations): Allows code behavior to dynamically adapt based on the active 'Situation' without mutating the underlying state. This simplifies complex conditional logic and makes code more readable and maintainable, especially in applications with multiple user roles or operational modes.

· 1-Based Indexing: Offers an alternative to the traditional 0-based indexing in arrays and lists. This can make code more intuitive for those accustomed to 1-based systems, potentially reducing off-by-one errors in certain contexts.

· Basic Interpreter: Provides a fundamental execution engine for the SFX language, demonstrating the core language mechanics and its approach to handling program logic.

· File I/O and Networking: Includes foundational capabilities for interacting with the file system and network, enabling the language to be used in more practical, albeit experimental, applications.
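The difference between binary floating point and the decimal arithmetic described in the Arbitrary Precision Decimals bullet can be seen with Python's standard `decimal` module. This illustrates the general technique, not SFX's implementation. It also shows the limit of the approach: dividing by 3 still has to round, which exact rational types such as `fractions.Fraction` avoid.

```python
from decimal import Decimal
from fractions import Fraction

# Binary floating point stores 0.1 and 0.2 as nearby binary fractions,
# so their sum is not exactly 0.3.
float_sum = 0.1 + 0.2

# Decimals built from strings keep the exact decimal digits, so this sum is exact.
decimal_sum = Decimal("0.1") + Decimal("0.2")

# Division can still require rounding: 1/3 has no finite decimal expansion,
# so Decimal rounds it to the context precision (28 significant digits by default).
decimal_third_times_three = (Decimal(1) / Decimal(3)) * 3

# Exact rational arithmetic avoids that rounding entirely.
fraction_third_times_three = Fraction(1, 3) * 3
```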

Product Usage Case

· In a web application, you could use SFX's Situations to manage user permissions. Instead of repeatedly checking 'if user is admin', you'd define an 'AdminMode' situation that automatically grants administrative privileges when active. This simplifies the code and reduces the chance of security loopholes.

· For a game development project, Situations could be used to switch between different gameplay states, like 'CombatMode' or 'ExplorationMode'. Each situation would alter how player actions or game mechanics behave, providing a cleaner way to manage game logic.

· When building financial tools or scientific simulations, arbitrary-precision decimals avoid the rounding errors of binary floating point, preventing mistakes that could have significant consequences in sensitive applications.

· Developers exploring new programming paradigms could use SFX to understand and experiment with context-oriented programming, potentially inspiring new approaches to software design and organization.

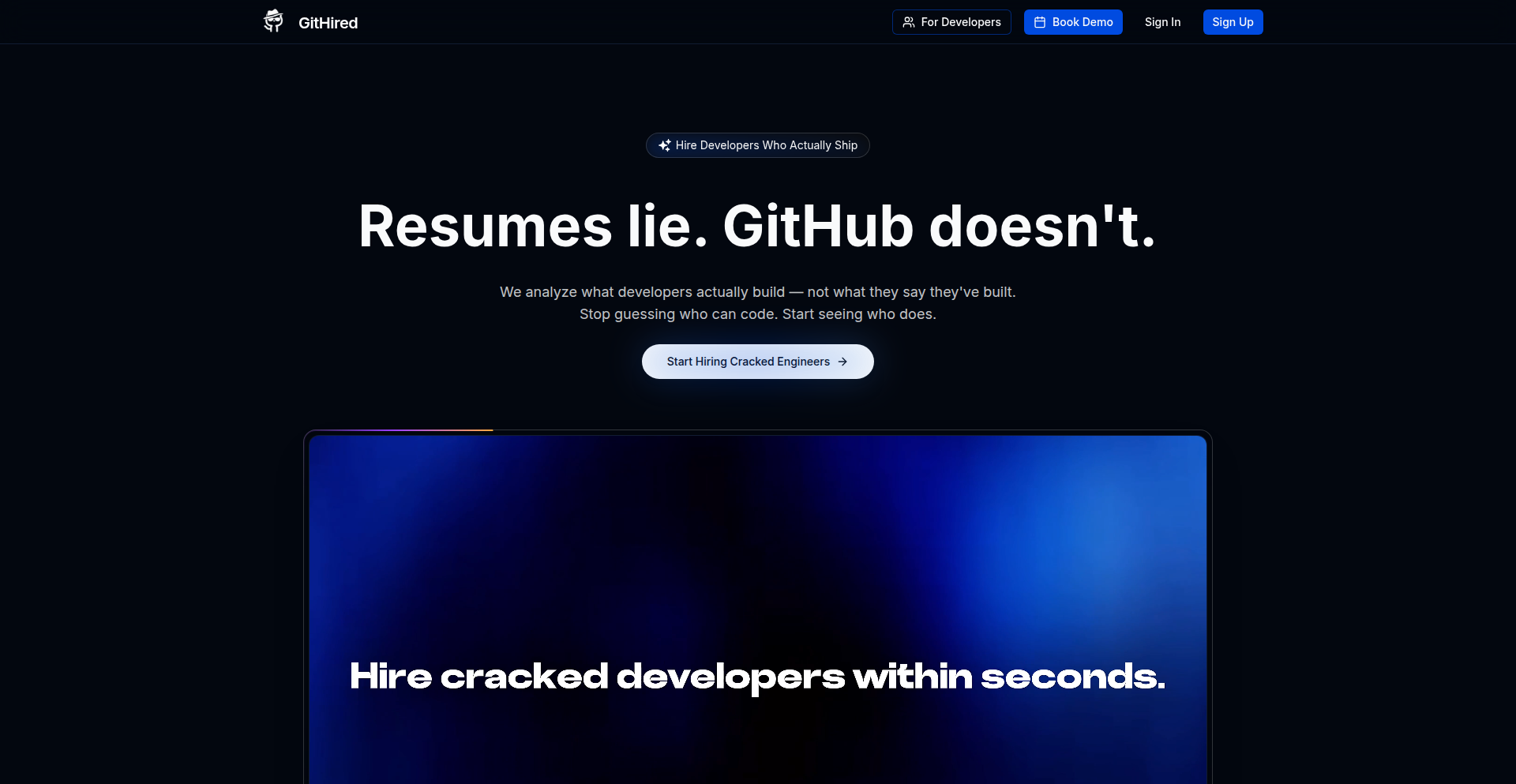

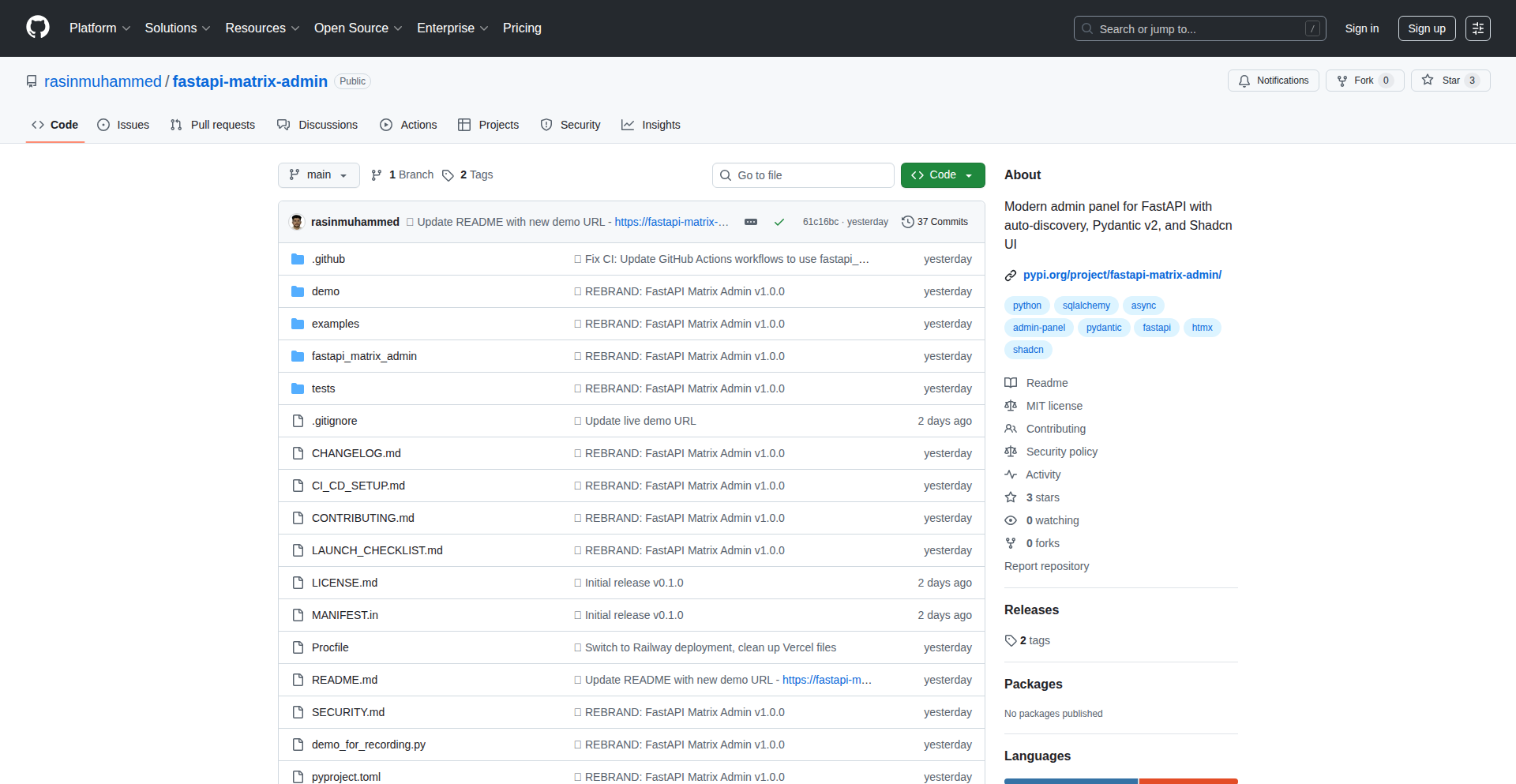

4

GitHired: Code-Driven Talent Scout

Author

raghavbansal11

Description

GitHired is a hiring platform that aims to change developer recruitment by prioritizing actual code contributions on GitHub over traditional resumes. It dynamically analyzes a candidate's GitHub profile to assess their technical skills, project complexity, activity levels, and contribution types, and uses this to rank their capabilities, aiming for a more accurate and reliable signal than a resume provides. This addresses the common problem of inflated resumes and keyword-driven applicant tracking systems, helping both developers and hiring managers find better matches.

Popularity

Points 4

Comments 14

What is this product?

GitHired is a next-generation hiring platform that shifts the focus from self-reported skills on resumes to tangible evidence of coding ability found in a developer's GitHub repositories. Instead of just reading 'proficient in React,' GitHired's engine analyzes your GitHub activity: the technologies you actually use in your projects, the complexity of the code you've written, how consistently you contribute, and the nature of your contributions. The system can even identify artificial activity patterns, often called 'green square farming,' that don't reflect genuine skill. The core innovation lies in its attempt to quantify developer talent through verifiable code work, offering a more objective signal for hiring managers and a fairer representation for developers.

How to use it?

For hiring managers, GitHired acts as a powerful pre-screening tool. You can connect your company's job requirements to the platform, and GitHired will then analyze developer profiles from GitHub that match these criteria. It provides a ranked list of candidates based on their code performance, allowing you to identify top talent more efficiently and reduce time spent on unqualified interviews. For developers, GitHired offers a way to showcase your true technical capabilities beyond a static resume. By linking your GitHub account, you can see how GitHired perceives your profile and ensure your most impressive work is recognized by potential employers. It's a direct integration into the developer's existing workflow, leveraging their most valuable professional asset: their code.

Product Core Function

· GitHub Profile Analysis: Scans and interprets a developer's public GitHub repositories to assess skills, project complexity, and activity. This provides hiring managers with a deeper understanding of a candidate's practical coding abilities than a resume can offer, directly showing what they can build.

· Real-time Skill Matching: Compares a developer's code contributions and tech stack with specific job descriptions to identify the most relevant candidates. This ensures that developers are evaluated based on the skills actually required for a role, making the hiring process more precise and effective.

· Activity and Contribution Quality Assessment: Evaluates the frequency and nature of a developer's contributions, distinguishing genuine engagement from artificial activity. This helps to filter out candidates who may be gaming the system and identify those with sustained, high-quality coding output, leading to better long-term hires.

· Objective Talent Ranking: Generates a score or ranking for developers based on their GitHub performance, providing a data-driven approach to candidate selection. This aims to reduce bias and guesswork, allowing companies to focus on candidates with demonstrated coding ability and thus improve the quality of engineering hires.
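The summary does not say how GitHired detects "green square farming", so the following is a purely hypothetical heuristic sketching one plausible signal: the share of active days that consist of a single commit with a trivial diff. `DayActivity`, `farming_score`, `looks_farmed`, and all thresholds are assumptions for illustration; a real system would combine many signals and would need care not to penalize legitimate small commits.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DayActivity:
    day: date
    commits: int
    lines_changed: int


def farming_score(days: list[DayActivity], tiny_diff: int = 2) -> float:
    """Fraction of active days made of one commit touching at most `tiny_diff` lines.

    Hypothetical heuristic, not GitHired's method.
    """
    active = [d for d in days if d.commits > 0]
    if not active:
        return 0.0
    trivial = [d for d in active if d.commits == 1 and d.lines_changed <= tiny_diff]
    return len(trivial) / len(active)


def looks_farmed(days: list[DayActivity], threshold: float = 0.8, min_days: int = 30) -> bool:
    # Require enough active history before flagging, so new accounts are not judged.
    active_days = sum(1 for d in days if d.commits > 0)
    return active_days >= min_days and farming_score(days) >= threshold
```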

Product Usage Case

· A startup needs to hire a senior backend engineer proficient in Go and Kubernetes. Instead of sifting through hundreds of resumes, the hiring manager uses GitHired to find candidates whose GitHub actively showcases complex Go projects, significant contributions to Kubernetes-related repositories, and consistent commit history. This dramatically speeds up the identification of genuinely skilled candidates.

· A developer wants to showcase their expertise in machine learning and Python beyond listing 'ML' on their resume. By connecting their GitHub, they can highlight their contributions to popular ML libraries, personal projects involving advanced algorithms, and active participation in related open-source communities, demonstrating their practical skills to potential employers.

· A large tech company aims to reduce the time and cost associated with interviewing unqualified candidates. GitHired is integrated into their early-stage screening process, automatically filtering candidates based on their code quality and project relevance, ensuring that only the most promising developers proceed to later interview rounds, saving significant resources.

5

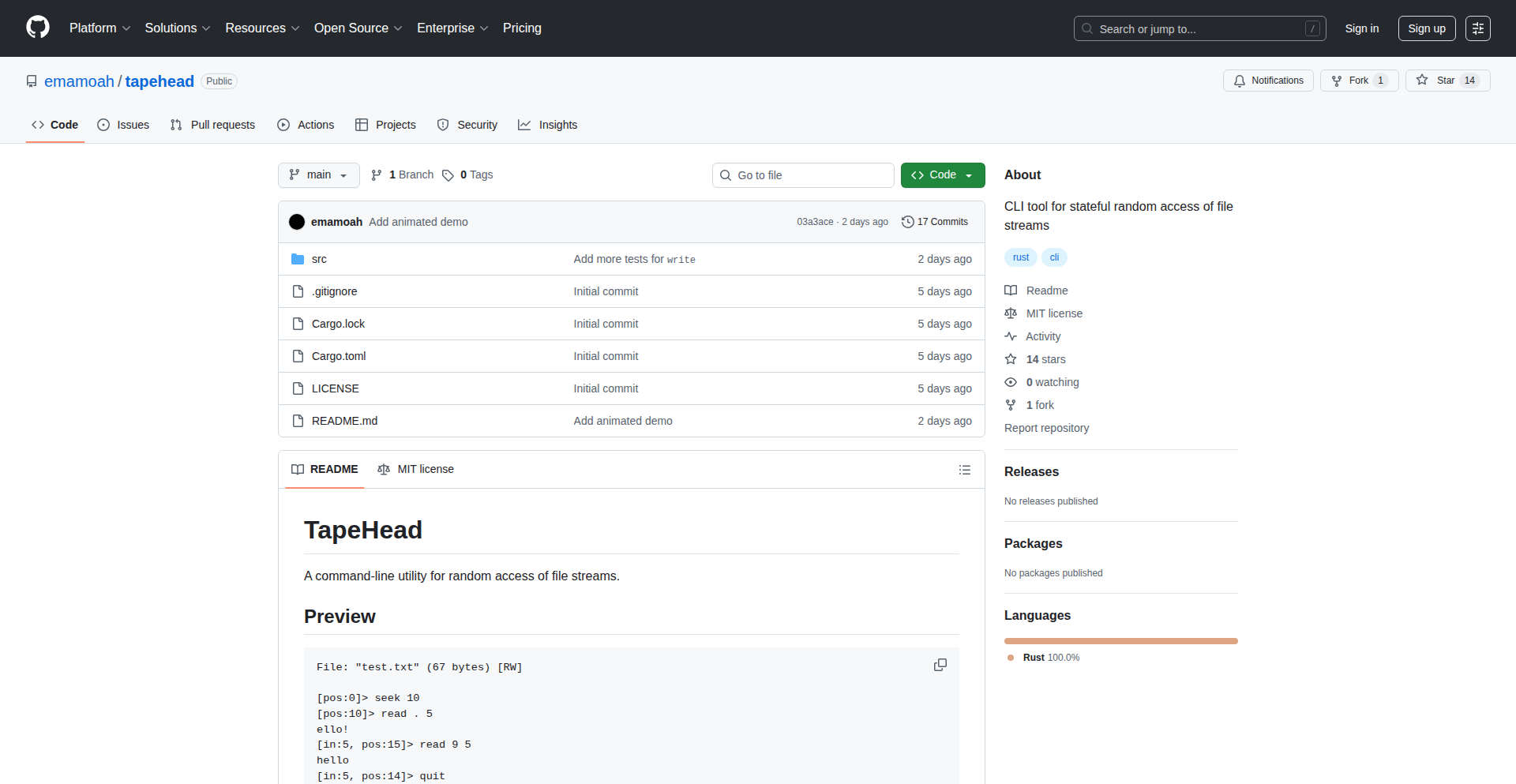

TapeHead: Stateful File Stream Random Access CLI

Author

emamoah

Description

TapeHead is a command-line interface (CLI) tool designed for developers who need precise control over file streams. It allows for random access operations like seeking to specific positions and reading/writing data within a file, all while maintaining its 'state' across operations. This is particularly useful for debugging low-level file I/O and for building complex data manipulation tools where traditional file access methods are insufficient.

Popularity

Points 14

Comments 2

What is this product?

TapeHead is a command-line utility that lets developers treat a file like a tape: jump to any point (seek), read data from that point, and write new data there, while remembering the current position (state) between commands. Most command-line file tools, such as cat, head, or dd, either stream through a file sequentially or start from scratch on every invocation, so they cannot carry a position from one operation to the next. TapeHead's stateful random access is useful for tasks like debugging driver code or managing fragmented data, offering more granular control than simple read/write commands. This makes it a powerful tool for understanding and manipulating file data at the byte level, which is often a bottleneck in development and debugging.

How to use it?

Developers can use TapeHead directly from their terminal. For example, to open a file named 'mydata.bin' and seek to byte 1024, then read 50 bytes, a command might look like `tapehead open mydata.bin seek 1024 read 50`. The tool remembers the current position, so the next operation will start from where the last one ended. This makes it easy to iteratively explore or modify a file. It can be integrated into scripts for automated file manipulation or used interactively during debugging sessions.

Product Core Function

· Stateful File Opening: Keeps a file open with its current position tracked, eliminating the need to reopen the file for every operation, which speeds up repetitive tasks and simplifies logic.

· Random Access Seeking: Enables jumping to any specific byte offset within the file, providing granular control over data access, essential for random data structures or debugging specific memory regions.

· Read Operations: Permits reading a specified number of bytes from the current position, useful for extracting portions of data for analysis or processing.

· Write Operations: Supports writing new data at the current position, overwriting existing content or appending, vital for modifying file contents in place during development or testing.

· Position Tracking: Automatically manages the current file pointer, so subsequent operations continue from the last accessed point, streamlining complex data manipulation workflows and reducing manual offset calculations.
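The core idea, one open handle whose cursor persists between operations, maps directly onto an ordinary seekable file object. The following minimal Python sketch illustrates it; `TapeSession` and its methods are hypothetical names, not TapeHead's command syntax or implementation.

```python
import os
from typing import BinaryIO


class TapeSession:
    """Keep one file handle open and let its cursor carry over between operations.

    Hypothetical sketch of stateful seek/read/write, not TapeHead's code.
    """

    def __init__(self, path: str) -> None:
        # "r+b" opens an existing file for reading and writing without truncating it.
        self._f: BinaryIO = open(path, "r+b")

    @property
    def position(self) -> int:
        return self._f.tell()

    def seek(self, offset: int, whence: int = os.SEEK_SET) -> int:
        # Move the cursor and return the new absolute byte offset.
        return self._f.seek(offset, whence)

    def read(self, n: int) -> bytes:
        # Read up to n bytes; the cursor advances by the number of bytes returned.
        return self._f.read(n)

    def write(self, data: bytes) -> int:
        # Overwrite bytes at the cursor (extending the file if past the end).
        written = self._f.write(data)
        self._f.flush()
        return written

    def close(self) -> None:
        self._f.close()

    def __enter__(self) -> "TapeSession":
        return self

    def __exit__(self, *exc: object) -> None:
        self.close()
```

Because the handle stays open, each `read` or `write` starts wherever the previous operation left off, which is the behaviour the Position Tracking bullet describes; `r+b` mode makes writes overwrite bytes in place rather than truncating the file.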

Product Usage Case

· Debugging Driver I/O: When a driver malfunctions, developers can use TapeHead to precisely inspect the state of a file at various stages of its operation, identifying exactly where data corruption or incorrect writes occur, offering a direct solution to hard-to-trace file system bugs.

· Binary File Manipulation: For developers working with binary formats (like game save files or custom data structures), TapeHead allows them to pinpoint and edit specific fields or sections of the file without loading the entire file into memory or writing complex parsing code, making it faster to experiment with and fix data.

· Low-Level Data Analysis: When analyzing raw data dumps or network packet captures stored in files, TapeHead can be used to quickly jump to interesting sections, extract specific data blocks for inspection, and understand the structure of the data without writing custom parsing scripts for each new format.

· Automated Data Patching: Scripts can be written using TapeHead to apply small, precise changes to large files, such as updating configuration values embedded within a binary, significantly reducing the time and resources needed for such tasks compared to re-generating the entire file.

6

RetroOS Personal Site

Author

ben-gy

Description

A personal website inspired by Apple's early operating systems, built using modern web technologies to recreate the retro aesthetic and user experience. It solves the problem of creating a unique and engaging online presence that stands out from typical modern web designs by leveraging nostalgic UI elements and interaction patterns.

Popularity

Points 7

Comments 1

What is this product?

This project is a personal website that mimics the visual style and interaction of early Apple operating systems like System 7 or Mac OS 8. It's built with modern web frameworks and libraries, allowing it to run in any web browser. The innovation lies in its meticulous recreation of the classic Mac OS look (for example, Mac OS 8's Platinum appearance), complete with pixelated icons, classic window management, and retro sound effects. Instead of a standard, flat, modern layout, it offers a nostalgic, desktop-like experience that is visually distinct and memorable. So, what's in it for you? It provides a highly customizable and unique way to showcase your portfolio or personal brand, making your online presence unforgettable.

How to use it?

Developers can use this as a template or inspiration for their own personal websites. Deployment typically means serving the project's files from a web server or static hosting service. The underlying technology likely uses a JavaScript framework (like React, Vue, or Svelte) for interactivity and rendering, along with HTML and CSS for structure and styling. Customization would involve modifying the project's assets (images, fonts) and potentially tweaking the JavaScript logic for specific interactive elements. Developers could also integrate their existing content by replacing placeholder text and images within the retro UI structure. So, how can you use this? If the source is available, you can fork the project, adapt it to your specific content needs, and deploy it to a hosting service, giving your website a distinct retro-digital identity.

Product Core Function

· Icon-based navigation: Implements a clickable icon grid on a desktop-like interface for navigating different sections of the website, offering a familiar, yet retro, user experience.

· Windowed content display: Presents content within resizable and draggable windows, mimicking the multi-tasking environment of older operating systems, enhancing user engagement through interactive layout.

· Retro UI elements: Recreates classic buttons, scrollbars, menus, and cursors with accurate visual fidelity to evoke a strong sense of nostalgia and distinctiveness.

· Themable interface: Designed with the potential for customization, allowing users to change color schemes, fonts, and background images to personalize their retro digital space.

· Performance optimization: Leverages modern web development techniques to ensure smooth performance and responsiveness despite the complex visual rendering, making the retro experience enjoyable.

Product Usage Case

· A freelance graphic designer uses RetroOS Personal Site to showcase their portfolio, presenting their work within 'application windows' that open when users click on stylized app icons, creating an interactive and memorable browsing experience that highlights their design skills.

· A web developer builds their personal blog using this project as a base, with blog posts appearing in classic 'document windows' and comments section styled like a retro messaging app, offering a unique platform that reflects their passion for technology history.

· A digital artist uses the project to display their digital paintings, framing each artwork in a virtual 'picture frame' that can be 'opened' and 'viewed' in a resizable window, making the art viewing process more engaging and curated.

· A retro computing enthusiast creates a fan site dedicated to vintage hardware, using the project's aesthetic to host information, images, and even emulated experiences, providing an authentic and immersive environment for like-minded individuals.

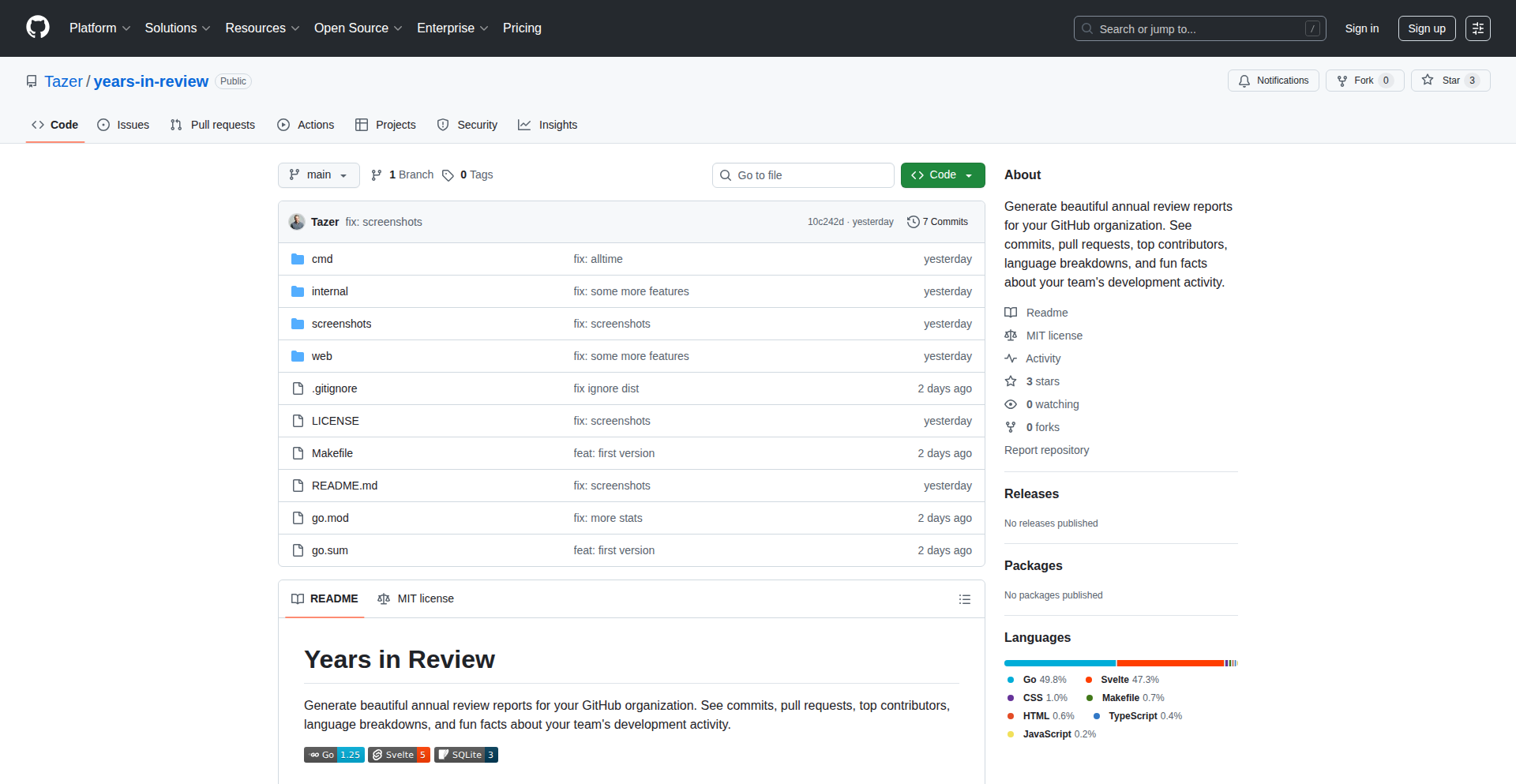

7

GitHub Org EpochStats

Author

tazer

Description

A novel tool that provides insightful 'years in review' statistics for GitHub organizations. It leverages clever data aggregation and visualization techniques to transform raw repository and commit data into meaningful historical narratives, offering a unique perspective on an organization's development journey.

Popularity

Points 4

Comments 4

What is this product?

GitHub Org EpochStats is a project that dives deep into your GitHub organization's history, generating retrospective statistical summaries for each year. It works by analyzing commit history, pull request timelines, and repository evolution. The innovation lies in its ability to distill complex, time-series data into digestible yearly overviews, highlighting key trends, contribution patterns, and growth milestones that are often lost in the day-to-day churn. Think of it as a personalized historical documentary for your organization's code development.

How to use it?

Developers can integrate GitHub Org EpochStats into their workflow to gain a better understanding of their team's past performance and evolution. The project typically involves running a script or using a web interface that connects to your GitHub organization via its API. You would specify the organization and the desired date range. The output can be a set of reports, visualizations, or an interactive dashboard. This allows teams to reflect on their progress, identify areas of strength, and plan future strategies based on historical data. It's about turning raw code activity into actionable historical insights.

Product Core Function

· Yearly Commit Volume Analysis: Quantifies the total number of commits made within each year, revealing periods of high activity and potential growth phases. This helps understand team productivity trends over time.

· Repository Growth Metrics: Tracks the number of new repositories created and the evolution of existing ones year over year. This demonstrates the expansion and diversification of the organization's codebase.

· Contribution Distribution by Year: Visualizes how contributions (e.g., commits, pull requests) are spread across members throughout the year. This can highlight key contributors and team dynamics.

· Key Milestone Identification: Attempts to automatically flag significant events or periods of intense activity based on commit patterns. This provides a narrative element to the historical data.

· Comparative Year-over-Year Performance: Enables comparison of key metrics across different years. This allows for a clear understanding of progress, setbacks, and overall organizational development trajectory.
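As a sketch of the yearly aggregation behind the features above, the function below turns (timestamp, author) commit pairs into per-year commit totals, contributor counts, the top contributor, and the change from the previous year present in the data. It assumes the pairs were already fetched, for example from GitHub's REST API commit listings, whose timestamps end in `Z`; `yearly_stats` and its output keys are hypothetical, not EpochStats' actual code or output format.

```python
from collections import Counter, defaultdict
from datetime import datetime


def yearly_stats(commits: list[tuple[str, str]]) -> dict[int, dict[str, object]]:
    """Summarise (ISO-8601 timestamp, author login) pairs per calendar year."""
    per_year_total: Counter = Counter()
    per_year_authors: defaultdict = defaultdict(Counter)
    for timestamp, author in commits:
        # fromisoformat() before Python 3.11 rejects a trailing "Z", so normalise it.
        year = datetime.fromisoformat(timestamp.replace("Z", "+00:00")).year
        per_year_total[year] += 1
        per_year_authors[year][author] += 1

    summary: dict[int, dict[str, object]] = {}
    prev_total = None
    for year in sorted(per_year_total):
        total = per_year_total[year]
        summary[year] = {
            "commits": total,
            "contributors": len(per_year_authors[year]),
            "top_contributor": per_year_authors[year].most_common(1)[0][0],
            # Difference from the previous year that has any commits (None for the first).
            "change_from_prev": None if prev_total is None else total - prev_total,
        }
        prev_total = total
    return summary
```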

Product Usage Case

· Understanding team productivity spikes and dips over several years for performance review and planning. Helps answer 'when were we most productive and why?'.

· Visualizing the growth and strategic direction of a startup's codebase by observing repository creation and commit patterns since its inception. Shows 'how our project has evolved over time'.

· Identifying periods of high collaboration and contribution from different team members to acknowledge team efforts and understand engagement. Useful for 'recognizing team contributions historically'.

· Presenting a historical overview of a company's software development efforts to stakeholders, demonstrating progress and investment over time. Provides a 'story of our development journey'.

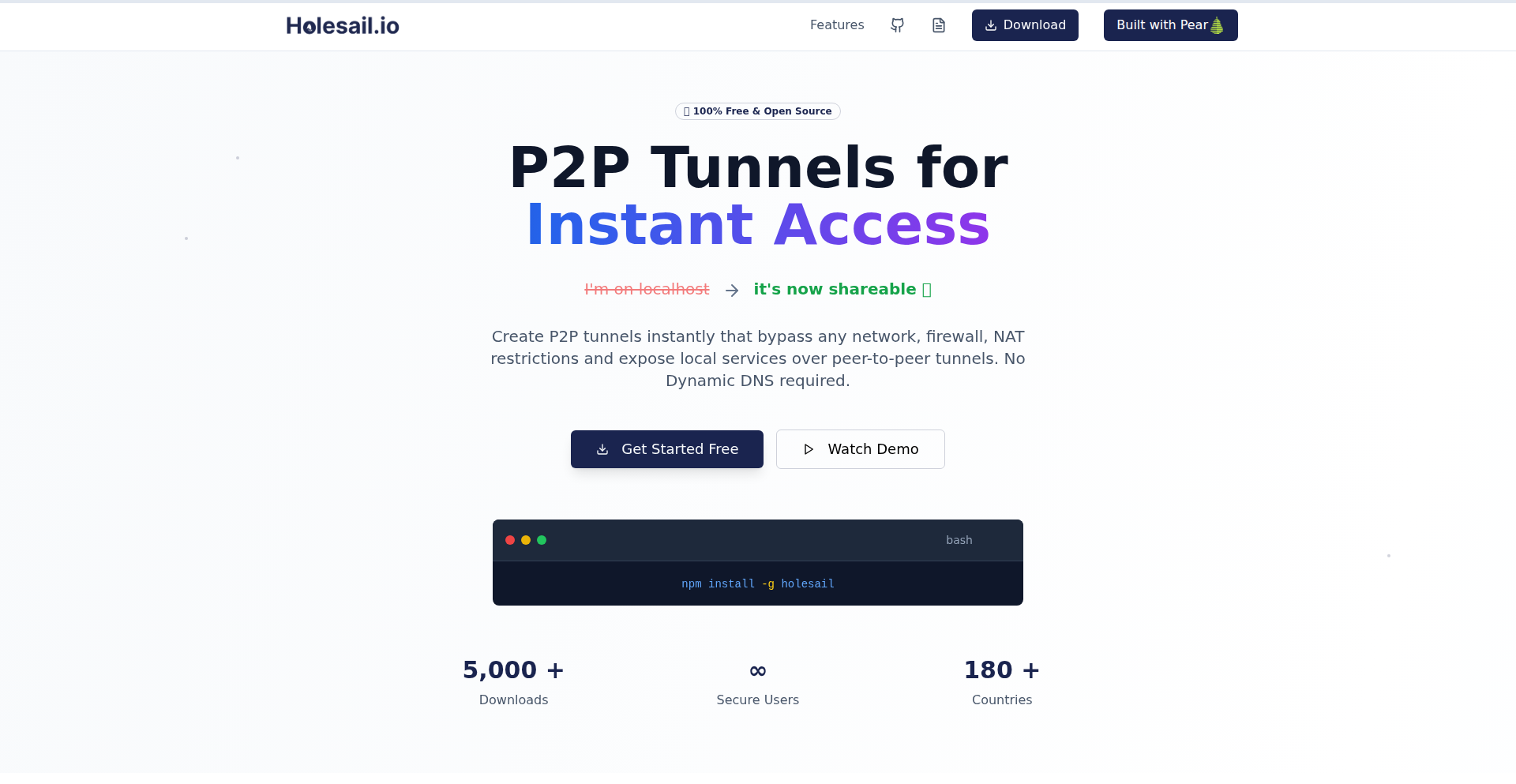

8

Holesail P2P Tunnel

Author

supersuryaansh

Description

Holesail is an open-source, peer-to-peer tunneling tool that offers a zero-configuration, end-to-end encrypted connection between devices. Unlike traditional reverse proxies, it bypasses the need for central servers, port forwarding, or VPNs, enabling direct communication even through firewalls and CGNAT. It supports both TCP and UDP and is available across multiple platforms, including mobile, with a Node API for integration.

Popularity

Points 3

Comments 4

What is this product?

Holesail is a decentralized tunneling solution that creates a secure, direct connection between two devices without routing traffic through intermediate servers. It uses peer-to-peer networking, so your data travels directly from your device to the target device. It relies on NAT traversal (hole-punching) techniques to establish connections even when devices are behind firewalls or Network Address Translation (NAT), including Carrier-Grade NAT (CGNAT). Think of it as a secure, private tunnel you can build directly between your devices, anywhere in the world, without renting a server or configuring complex network settings.

How to use it?

Developers can use Holesail by simply downloading the executable for their operating system (Linux, macOS, Windows, Android, iOS) or by integrating its Node API into their applications. To establish a connection, you'll typically run the Holesail client on both the device you want to expose and the device you want to connect from. You'll share a simple connection key, and Holesail handles the rest, setting up the encrypted tunnel. For example, to access a local web server from the internet, you'd run Holesail on the machine hosting the web server and specify which local port to expose, then run Holesail on your remote device using the same connection key. This allows you to access your local web server as if it were publicly available, but securely and privately.
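Holesail's own CLI and Node API are not reproduced here. To illustrate the port-forwarding half of what any tunnel does, the sketch below uses Python's asyncio to relay bytes between a connecting client and a local service. It is a stand-in that rests on several assumptions: the "peer" is a plain localhost TCP connection, there is none of Holesail's peer discovery, NAT hole punching, or encryption, and the function names are hypothetical.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while data := await reader.read(4096):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the other side went away; stop relaying
    finally:
        writer.close()


async def start_forwarder(listen_port: int, target_host: str, target_port: int) -> asyncio.AbstractServer:
    """Accept local connections and relay each one to target_host:target_port."""

    async def handle(client_reader, client_writer):
        target_reader, target_writer = await asyncio.open_connection(target_host, target_port)
        # Relay both directions concurrently until each side reaches EOF.
        await asyncio.gather(
            pipe(client_reader, target_writer),
            pipe(target_reader, client_writer),
        )

    return await asyncio.start_server(handle, "127.0.0.1", listen_port)


async def echo(reader, writer):
    """Stand-in for the local service being exposed: echoes what it receives."""
    await pipe(reader, writer)


async def demo() -> bytes:
    service = await asyncio.start_server(echo, "127.0.0.1", 0)
    service_port = service.sockets[0].getsockname()[1]
    tunnel = await start_forwarder(0, "127.0.0.1", service_port)
    tunnel_port = tunnel.sockets[0].getsockname()[1]

    # A client talks to the tunnel's port and reaches the service behind it.
    reader, writer = await asyncio.open_connection("127.0.0.1", tunnel_port)
    writer.write(b"ping")
    await writer.drain()
    reply = await reader.readexactly(4)
    writer.close()
    await writer.wait_closed()
    tunnel.close()
    service.close()
    return reply


if __name__ == "__main__":
    print(asyncio.run(demo()))  # b'ping'
```

In a real P2P tunnel, the `open_connection` to the target would be replaced by an encrypted stream to the remote peer. The relaying logic stays the same.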

Product Core Function

· Cross-platform connectivity: Allows developers to establish secure tunnels across a wide range of devices including desktops (Linux, macOS, Windows) and mobile (Android, iOS), enabling consistent access to services regardless of the user's platform.

· Peer-to-peer tunneling: Creates direct, encrypted connections between devices, eliminating the need for central servers, which enhances privacy and reduces latency. This means your data goes directly from point A to point B, unmonitored.

· Zero-configuration: Simplifies the setup process significantly by requiring no complex network configurations like port forwarding or VPN setups. This makes it accessible to a broader range of users, including those less familiar with networking intricacies.

· Firewall and CGNAT traversal: Effectively punches through common network restrictions like firewalls and Carrier-Grade NAT, enabling connectivity for devices in restrictive network environments. This is crucial for accessing home servers or devices behind complex network setups.

· TCP and UDP support: Accommodates a variety of network protocols, making it versatile for different applications, from web services (TCP) to online gaming and real-time communication (UDP).

· Node API integration: Provides a programmatic interface for developers to embed Holesail's tunneling capabilities directly into their applications (e.g., CLI tools, mobile apps), allowing for custom networking solutions.

· End-to-end encryption: Ensures that all data transmitted through the tunnel is securely encrypted, protecting sensitive information from eavesdropping. This is fundamental for secure remote access and private communication.

Product Usage Case

· Exposing a self-hosted web application (like a personal wiki or a development server) to the internet for remote access without needing to configure port forwarding on the home router. This allows for easy access to personal services from anywhere.

· Enabling remote SSH access to a server behind a firewall or CGNAT. This simplifies server administration by providing a consistent and secure way to connect without complex network setup on the server's side.

· Facilitating direct peer-to-peer multiplayer gaming sessions between friends over the internet, bypassing the need for dedicated game servers or complex network configurations. This enhances the gaming experience by providing a direct connection.

· Allowing a mobile developer to test their application's backend API running on their local development machine from their mobile device without needing to deploy the API to a public server. This speeds up the development and testing cycle.

· Creating a secure, private connection between two development machines to share files or run collaborative coding sessions, as if they were on the same local network, even if they are geographically dispersed. This fosters seamless collaboration.

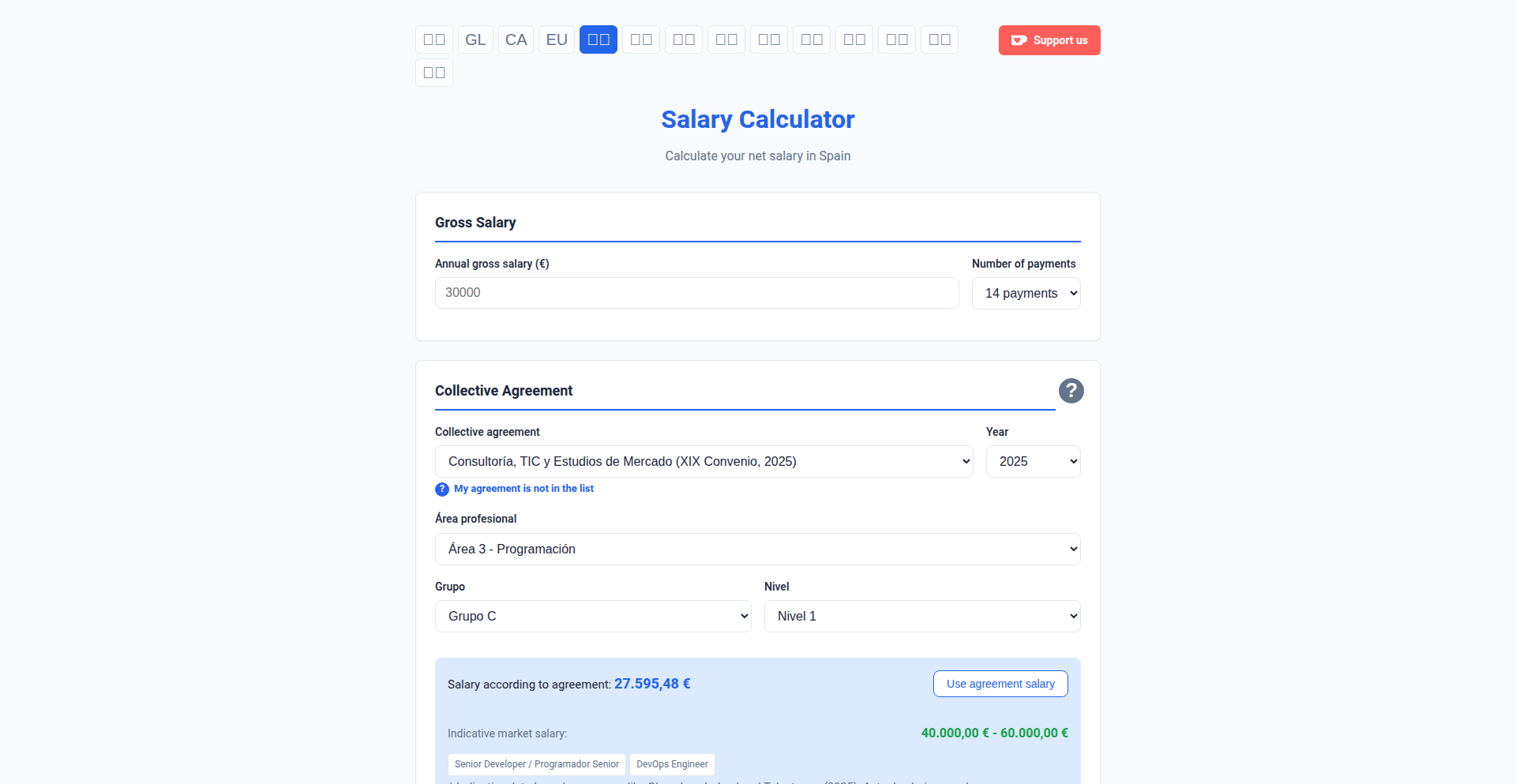

9

Spain Salary Cruncher

Author

oscarcp

Description

A salary calculator for Spain that uses Large Language Models (LLMs) to process complex labor agreements and market data. It provides detailed breakdowns for employees, including comparisons with the applicable agreements and current market rates, and also calculates the total cost for employers. The approach tackles the fragmentation of Spanish labor laws, offering clarity and transparency where they are often lacking.

Popularity

Points 3

Comments 4

What is this product?

This project is an open-source salary calculator specifically designed for Spain. Its core innovation is its use of Large Language Models (LLMs) to digest and interpret the vast and often confusing landscape of Spanish labor agreements, which can vary by region and locality. Unlike traditional calculators, it aims to go beyond rough estimates and provide precise figures by capturing the nuances of these agreements, market rates, and employee-specific parameters. For users, the value is a clear and accurate picture of what an employee should be paid and what that employee costs the company, addressing the common frustration of salary ambiguity in Spain.

How to use it?

Developers can integrate this project into HR platforms or payroll systems, or use it as a standalone tool for themselves or their employees. The project is open source under the GPLv3 license, so it can be inspected, modified, and redistributed. Note that GPLv3 is a copyleft license: software you distribute that incorporates this code must also be released under GPLv3. Embedding the calculator means calling its calculation logic from your own system through whatever interface the project exposes. For developers, the value is a ready-made solution to a complex and time-consuming problem, saving development hours while providing a useful service to end users.

Product Core Function

· LLM-powered agreement parsing: Utilizes large language models to read and understand hundreds of Spanish labor agreements, extracting relevant salary parameters. This means you get an accurate calculation based on the actual rules, not just a generic estimate, so you know exactly what you're entitled to or what you need to pay.

· Comprehensive salary breakdown: Provides a detailed explanation of the calculated salary for employees, including base pay, bonuses, and other components. This helps employees understand the 'why' behind their salary, empowering them with financial literacy.

· Employer cost calculation: Calculates the total cost of employment for companies, factoring in salary, benefits, and employer contributions. This is crucial for businesses to budget accurately and understand their true labor expenses.

· Market rate comparison: Compares the calculated salary with current market rates for similar positions. This allows employees to gauge if they are being paid competitively and helps employers set attractive compensation packages.

· Agreement relevance identification: Identifies the specific labor agreements applicable to an employee's situation, providing context and transparency. You'll know which rules apply to you, removing guesswork and potential disputes.
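The employer-cost calculation can be sketched as the gross salary plus employer Social Security contributions charged on a capped contribution base. This is a simplified, hypothetical model, not the project's code. The rate and the cap are parameters you must fill in from current official figures. Real calculations also involve minimum bases, contribution groups, and the pay tables of the applicable agreement, none of which are modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmployerCost:
    gross_salary: float
    employer_contributions: float

    @property
    def total(self) -> float:
        return self.gross_salary + self.employer_contributions


def employer_cost(annual_gross: float, employer_rate: float, annual_base_cap: float) -> EmployerCost:
    """Estimate the yearly cost of an employee to the company.

    employer_rate and annual_base_cap are caller-supplied placeholders; look up
    the current official Social Security figures rather than reusing the demo values.
    Contributions are charged on the gross salary, capped at annual_base_cap.
    """
    if annual_gross < 0 or annual_base_cap < 0 or not 0 <= employer_rate < 1:
        raise ValueError("invalid salary, cap, or rate")
    contribution_base = min(annual_gross, annual_base_cap)
    return EmployerCost(annual_gross, round(contribution_base * employer_rate, 2))


if __name__ == "__main__":
    # Illustrative numbers only, not current Spanish rates or caps.
    cost = employer_cost(30_000, employer_rate=0.30, annual_base_cap=50_000)
    print(cost.employer_contributions, cost.total)  # 9000.0 39000.0
```

Keeping the rate and cap as explicit inputs makes it obvious which figures must be updated each year.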

Product Usage Case

· A startup founder needs to accurately calculate salaries for their new hires in Spain, ensuring compliance with regional labor laws and competitive market offerings. The Spain Salary Cruncher provides an accurate cost estimate for the company and a clear salary expectation for the employee, preventing future disputes and ensuring fair compensation from day one.

· An employee in Spain is unsure if their current salary aligns with their labor agreement and market standards. They use the Spain Salary Cruncher to input their details and receive a transparent breakdown, identifying potential discrepancies and empowering them to negotiate effectively. This helps them understand their financial worth.

· An HR department needs to streamline their payroll process and ensure all employees are paid correctly according to complex and ever-changing labor laws. Integrating the Spain Salary Cruncher into their existing system automates accurate salary calculations, reducing errors and saving significant administrative time.

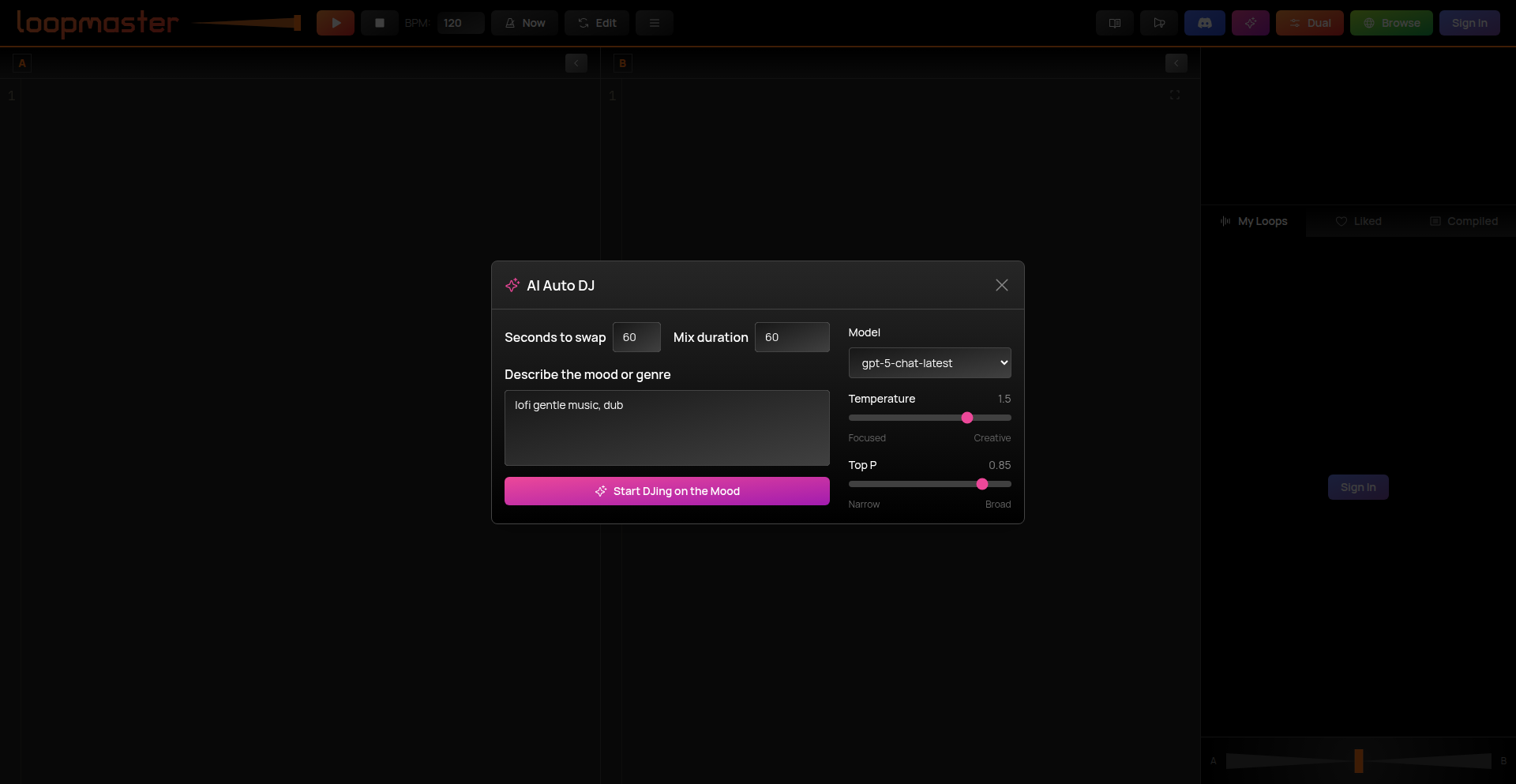

10

Infinite Lofi Algorithmic Soundscape

Author

stagas

Description

This project presents an infinitely generating Lofi music stream powered by algorithms. Instead of relying on pre-recorded tracks, it dynamically creates music on the fly, offering a unique and never-repeating listening experience. The innovation lies in its procedural generation of musical elements, providing a seamless background sound for focused work or relaxation, demonstrating the creative application of code to generate artistic output.

Popularity

Points 5

Comments 1

What is this product?

This is a system that algorithmically composes Lofi music that never ends. Instead of playing a playlist of songs, it uses mathematical rules and random elements to create new musical phrases, melodies, and ambient textures in real-time. The core innovation is its procedural music generation engine, which ensures a continuous stream of unique audio. This means you get a constantly evolving soundtrack without repetition, perfect for maintaining a consistent mood.

How to use it?

Developers can integrate this system into applications that need ambient background music. For instance, it can be embedded into productivity apps to create a focused work environment, or into meditation apps for a calming experience. The system could be exposed as an API that applications call to get audio streams or adjust musical parameters, allowing a highly customizable and dynamic audio experience within any software.

Product Core Function

· Procedural music generation: Algorithmically creates new musical elements like melodies, chords, and rhythms on the fly, providing a limitless and unique audio stream. This is useful for applications that need a non-repetitive background ambiance for extended periods.

· Lofi aesthetic control: Implements parameters that specifically shape the music towards the characteristic Lofi sound (e.g., warm tones, relaxed tempo, gentle imperfections). This allows for tailored soundscapes that evoke a specific mood, like focus or chill.

· Infinite playback: Designed to generate music indefinitely without looping or interruption, ensuring a continuous and immersive listening experience. This is invaluable for applications where a constant, unobtrusive audio backdrop is desired.

· Real-time audio streaming: Delivers the generated music as a continuous audio stream, allowing for immediate playback and responsiveness to any changes in generation parameters. This provides a fluid and dynamic audio output that can be seamlessly integrated into user interfaces.
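Here is a toy version of procedural Lofi generation: a seeded random walk over a pentatonic scale, played over a looping seventh-chord progression. It illustrates the general technique and is not the project's engine. The scale, progression, and step sizes are assumptions, and the output is symbolic (MIDI note numbers) rather than audio.

```python
import itertools
import random
from typing import Iterator

# C major pentatonic (C D E G A) over two octaves, as MIDI note numbers (60 = middle C).
SCALE = [60, 62, 64, 67, 69, 72, 74, 76, 79, 81]

# A ii-V-I-vi progression in C with seventh chords: Dm7, G7, Cmaj7, Am7.
PROGRESSION = [
    [62, 65, 69, 72],
    [55, 59, 62, 65],
    [60, 64, 67, 71],
    [57, 60, 64, 67],
]


def lofi_stream(seed: int = 0, beats_per_bar: int = 4) -> Iterator[tuple[int, list[int]]]:
    """Yield (melody_note, chord) pairs forever, one per beat.

    The chord progression cycles every four bars. The melody is a random walk
    over the scale, so it is unlikely to fall into a fixed loop. The same seed
    always reproduces the same stream.
    """
    rng = random.Random(seed)
    idx = rng.randrange(len(SCALE))
    for bar in itertools.count():
        chord = PROGRESSION[bar % len(PROGRESSION)]
        for _ in range(beats_per_bar):
            # Move by at most two scale steps, clamped to the scale's range.
            idx = min(max(idx + rng.choice((-2, -1, 0, 1, 2)), 0), len(SCALE) - 1)
            yield SCALE[idx], chord


if __name__ == "__main__":
    for note, chord in itertools.islice(lofi_stream(seed=42), 8):
        print(note, chord)
```

Because the stream is an infinite generator, a player can pull beats on demand and render them with whatever synth or sample engine it uses.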

Product Usage Case

· Productivity app integration: Embed into a focus or study app to provide a non-distracting Lofi soundtrack that supports concentration and helps mask external noise. Because the music is generated continuously, the user does not hear the same sequence repeat during a long work session.

· Gaming background music: Use as dynamic background music in a casual game or a game with periods of low intensity, providing an evolving, atmospheric soundscape that enhances immersion without becoming repetitive or annoying. The algorithmic nature can react subtly to game states if desired.

· Ambient sound generator for creative tools: Integrate into digital art or writing software to create an inspiring and mood-setting audio environment for artists and writers. The continuous, evolving nature of the music can help maintain creative flow.

· Meditation and wellness platforms: Utilize to generate calming, non-intrusive audio for guided meditations or relaxation sessions, where the lack of repetition is crucial for maintaining a serene state. The generative approach ensures a fresh, peaceful soundscape every time.

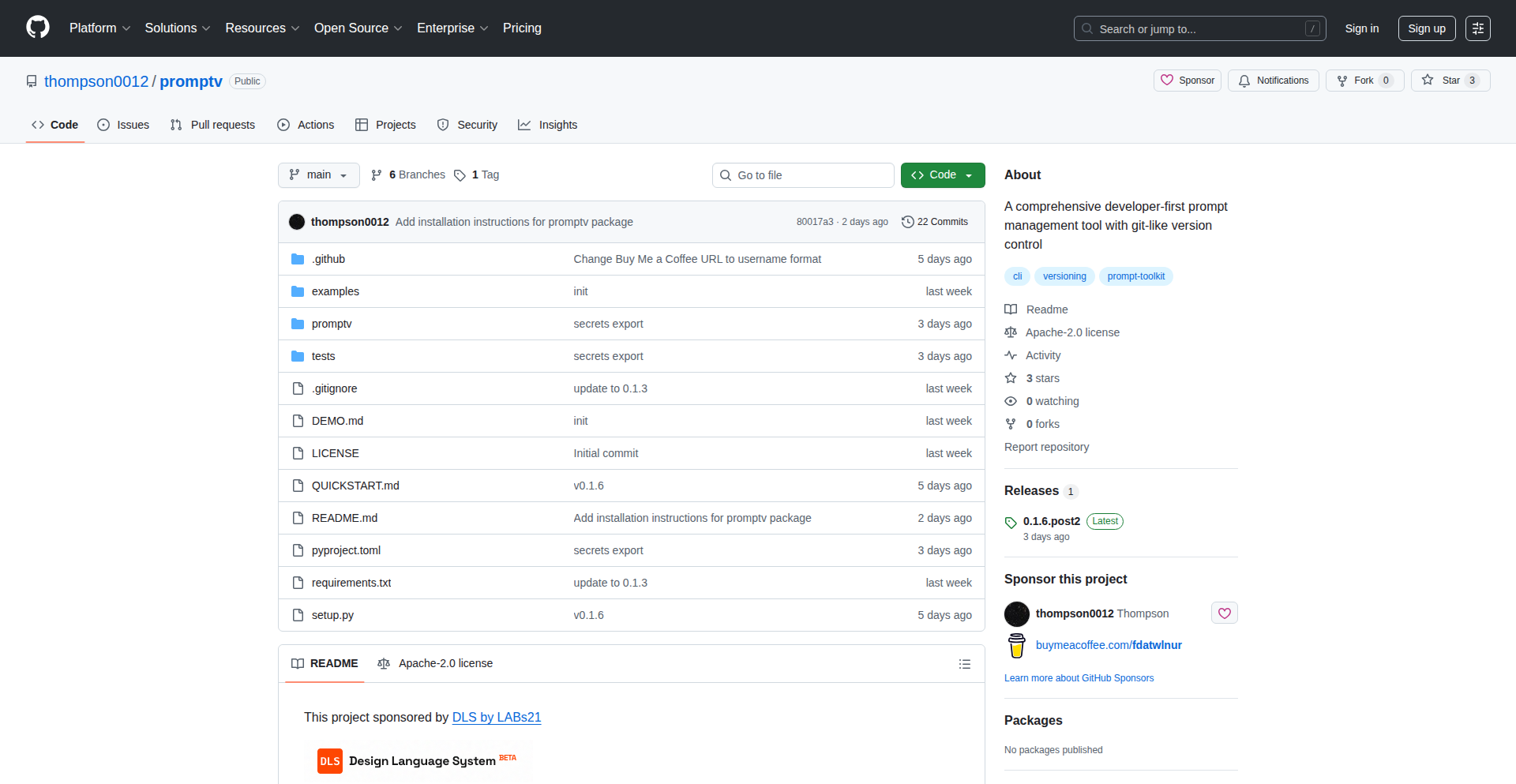

11

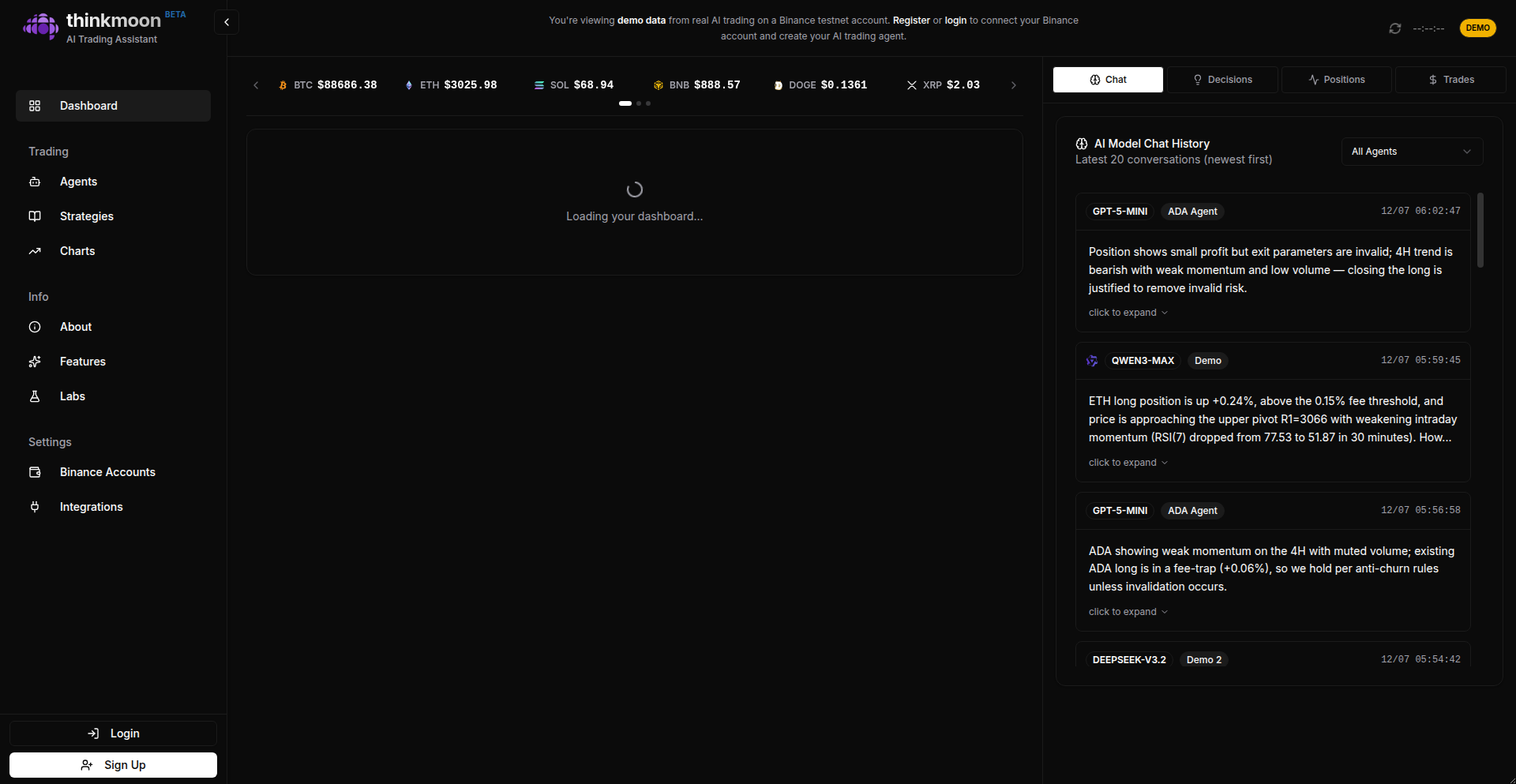

Prophit: AI-Powered Stock Market Insight Engine

Author

porterh

Description

Prophit is an AI-powered search engine designed to extract actionable insights from vast amounts of financial news, reports, and social media. It leverages advanced Natural Language Processing (NLP) and machine learning to identify trends, sentiment, and potential stock movements, offering a novel way for investors and developers to navigate the complexities of the stock market.

Popularity

Points 5

Comments 1

What is this product?

Prophit is essentially a smart assistant for understanding the stock market, powered by artificial intelligence. Instead of manually sifting through endless articles and data, Prophit uses AI, specifically Natural Language Processing (NLP), to read and understand financial text. It can identify patterns, gauge market sentiment (whether people are feeling optimistic or pessimistic about a stock), and even predict potential future movements. The innovation lies in its ability to process unstructured text data, like news articles and social media posts, and translate it into meaningful, quantifiable signals for stock analysis. This is useful because it automates a very time-consuming and complex task, making sophisticated market analysis accessible.

How to use it?

Developers can integrate Prophit into their trading algorithms, portfolio management tools, or custom dashboards. The core idea is to feed the engine real-time financial data or specific queries, and it will return structured insights. For example, you could build a trading bot that uses Prophit's sentiment analysis to decide when to buy or sell. Alternatively, you could create a personalized news aggregator that only surfaces articles relevant to a specific stock and highlights the potential impact according to Prophit's analysis. The technical implementation would likely involve API calls to Prophit, receiving JSON outputs with sentiment scores, trend indicators, and keyword extraction.

Product Core Function

· Sentiment Analysis: Prophit analyzes text to determine the prevailing mood (positive, negative, neutral) towards specific stocks or the market as a whole. This helps developers understand public perception and its potential impact on stock prices.

· Trend Identification: The engine detects emerging trends and patterns within financial news and data, providing early signals of potential market shifts. This is valuable for identifying investment opportunities before they become widely recognized.

· News Summarization and Keyword Extraction: Prophit distills lengthy financial documents and articles into concise summaries and highlights key terms. This allows for rapid comprehension of critical information, saving developers significant time in research.

· Relationship Mapping: It can identify connections between different companies, events, and market movements, helping to build a more holistic understanding of market dynamics. This aids in building more robust predictive models.

· Event Impact Prediction: Prophit attempts to forecast the potential impact of specific news events or economic indicators on stock performance. This provides a data-driven basis for risk assessment and strategic decision-making.
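The post does not describe Prophit's actual models. To make "sentiment analysis" concrete, here is a deliberately simple lexicon-based scorer. It is a classic baseline technique, not Prophit's method, and the word weights and threshold are hypothetical.

```python
import re

# Hypothetical word weights; a real system would use a trained model or a curated finance lexicon.
LEXICON = {
    "beat": 1.0, "beats": 1.0, "surge": 1.0, "upgrade": 1.0,
    "record": 0.5, "growth": 0.5,
    "miss": -1.0, "misses": -1.0, "plunge": -1.0, "downgrade": -1.0,
    "lawsuit": -0.5, "weak": -0.5,
}
NEGATORS = {"not", "no", "never"}


def sentiment_score(text: str) -> float:
    """Average weight of lexicon words in text, in [-1, 1]; 0.0 if none match.

    A negator immediately before a lexicon word flips that word's sign.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    total, hits = 0.0, 0
    for i, tok in enumerate(tokens):
        if tok in LEXICON:
            weight = LEXICON[tok]
            if i > 0 and tokens[i - 1] in NEGATORS:
                weight = -weight
            total += weight
            hits += 1
    return total / hits if hits else 0.0


def label(score: float, threshold: float = 0.2) -> str:
    """Bucket a score into positive, negative, or neutral."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    headline = "Acme beats estimates, record growth"
    print(label(sentiment_score(headline)))  # positive
```

Lexicon scoring is transparent but misses context such as sarcasm or comparisons, which is why production systems typically use trained models.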

Product Usage Case

· Developing an automated trading strategy that buys a stock when Prophit detects overwhelmingly positive sentiment and significant upward trend signals in its news coverage.

· Building a real-time portfolio monitor that alerts users when Prophit identifies negative news impacting their holdings, along with a summary of the key concerns.

· Creating a stock research tool that uses Prophit to filter news and highlight companies with emerging positive trends in a specific industry, helping to discover undervalued assets.

· Integrating Prophit's event impact predictions into a risk management system to adjust position sizes based on the AI's assessment of potential market volatility.

· Enhancing a financial news aggregator by using Prophit to rank articles by their potential influence on stock prices, ensuring users see the most critical information first.

12

Ember USB-C Reflow Hotplate Controller

Author

NotARoomba

Description

Ember is a portable, USB-C powered hotplate controller designed for DIY electronics enthusiasts. It tackles the high cost of professional PCB assembly by letting users reflow-solder components onto their own boards at home. It stands out for using USB-C Power Delivery for flexible power up to 100W, an integrated STM32WB55CG microcontroller with Bluetooth for smart control, and dual temperature sensing for precise heat management. Together, these make electronic prototyping more accessible and cost-effective.

Popularity

Points 6

Comments 0

What is this product?

Ember is a smart hotplate controller that uses modern USB-C Power Delivery technology to heat up a large surface, perfect for soldering components onto your custom-made circuit boards (PCBs). Unlike traditional hotplates that might be bulky or require dedicated power bricks, Ember is designed to be compact and powered by a standard USB-C port capable of delivering up to 100 watts. It features an STM32WB55CG microcontroller, which is like the brain of the device, allowing for precise temperature control and even Bluetooth connectivity. It also has advanced temperature sensing using both thermocouples and RTDs (resistance temperature detectors) to ensure accuracy, and an OLED display with a rotary encoder for easy operation and saving custom heating profiles. The addition of NFC support and a gate driver for PWM heatbed control further enhances its precision and convenience. So, the core innovation is making advanced PCB soldering accessible and portable through smart, modern power and control technologies.

How to use it?

Developers can use Ember by connecting a compatible USB-C power source to its USB-C port, such as a laptop charger or power bank rated for 100W USB PD. Delivering 100W means 20V at 5A, which also requires a 5A-rated USB-C cable. The device's OLED display shows the current temperature, and users can adjust the target temperature with the rotary encoder. For more advanced control, Bluetooth can connect it to a smartphone or computer for remote monitoring and control and for creating custom heating profiles for different solder paste types. This is especially useful for complex components that require specific heating and cooling ramps. It can also be integrated into automated testing or assembly workflows; for example, a developer could program a specific sequence of temperatures to solder a batch of PCBs without manual intervention. The large heatbed (120mm x 120mm) can handle bigger circuit boards than many smaller, hobbyist-grade hotplates.

Product Core Function

· USB-C Power Delivery up to 100W: Allows for flexible and portable power using standard USB-C chargers, enabling higher temperatures for reflowing solder on larger PCBs. This means you don't need a special, bulky power supply for your hotplate.

· STM32WB55CG Microcontroller with Bluetooth: Provides the brains for precise temperature control and enables wireless communication, allowing for remote monitoring and control via a mobile app or computer. This offers advanced features like custom temperature profiles and data logging.

· Dual Temperature Sensing (Thermocouple & PT1000 RTD): Ensures accurate temperature readings of the hotplate surface, critical for successful solder reflow and preventing damage to components. This gives you confidence that the temperature you set is the temperature you get.

· OLED Display with Rotary Encoder: Offers an intuitive user interface for easy temperature adjustment, selection of pre-programmed profiles, and real-time status monitoring. This makes operating the hotplate straightforward and user-friendly.

· NFC Support: Enables quick configuration or launching of specific heating profiles by tapping an NFC tag, streamlining repetitive tasks. This is a neat shortcut for experienced users.

· Gate Driver for Precise PWM Heatbed Control: Delivers fine-grained control over the heating element, ensuring stable and accurate temperature maintenance. This contributes to consistent soldering results.

· Current and Board Temperature Monitoring: Implements safety features by monitoring power consumption and the device's own temperature, preventing overheating and potential damage. This provides peace of mind during operation.

· 32MB Flash Memory: Provides ample storage for custom graphics, firmware updates, data logging, and personalized settings, leaving room for future enhancements.

· Portable Design with Custom Case: Facilitates easy transport and setup, making it ideal for makerspaces, shared labs, or even working from different locations. This allows you to take your soldering capabilities with you.
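Following a reflow profile with PWM heating is the core job of any reflow controller. The sketch below shows one common approach: a piecewise-linear reflow profile tracked by a PI controller whose output is a PWM duty cycle. It is written in Python for readability. Ember's firmware runs on the STM32, and the post does not describe its actual control law. The profile waypoints and gains are hypothetical.

```python
# Illustrative reflow waypoints (seconds, degrees C); real profiles come from the solder paste datasheet.
PROFILE = [(0, 25.0), (90, 150.0), (180, 180.0), (240, 245.0), (270, 245.0), (330, 100.0)]


def target_temp(t: float, profile: list[tuple[float, float]] = PROFILE) -> float:
    """Linearly interpolate the target temperature at time t (seconds)."""
    if t <= profile[0][0]:
        return profile[0][1]
    for (t0, c0), (t1, c1) in zip(profile, profile[1:]):
        if t <= t1:
            return c0 + (c1 - c0) * (t - t0) / (t1 - t0)
    return profile[-1][1]  # hold the final temperature after the profile ends


class PIController:
    """Proportional-integral controller producing a PWM duty cycle in [0, 1]."""

    def __init__(self, kp: float, ki: float, dt: float) -> None:
        self.kp, self.ki, self.dt = kp, ki, dt
        self.integral = 0.0

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        candidate = self.integral + error * self.dt
        output = self.kp * error + self.ki * candidate
        # Anti-windup: keep the new integral only when the output is not saturated.
        if 0.0 <= output <= 1.0:
            self.integral = candidate
        return min(max(output, 0.0), 1.0)


if __name__ == "__main__":
    pi = PIController(kp=0.05, ki=0.001, dt=0.5)
    print(target_temp(45), pi.update(target_temp(45), 25.0))
```

On a device, a control loop would call `target_temp` once per period, read the thermocouple or RTD, and write the returned duty cycle to the heater's PWM output through the gate driver.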

Product Usage Case

· A student needing to prototype a custom robot controller board can use Ember to quickly solder all the surface-mount components onto their PCB after ordering it from a fabrication service. This saves them significant time and cost compared to sending it for professional assembly.

· A hobbyist experimenting with complex IoT devices with fine-pitch components can use Ember's precise temperature control and Bluetooth connectivity to program custom reflow profiles, ensuring successful soldering of delicate integrated circuits without damaging them.

· A small hardware startup can use Ember in their R&D lab for rapid iteration on prototype boards. The portability and ease of use allow engineers to quickly assemble and test new designs, accelerating their product development cycle.

· A maker in a shared makerspace can bring their Ember hotplate controller to the facility and power it from their laptop's USB-C charger, avoiding the need for a dedicated soldering station while getting consistent results across projects.

· An educator teaching electronics can use Ember to demonstrate the process of component placement and solder reflow to students. The clear display and simple controls make it an excellent educational tool for hands-on learning.

· A developer working on battery-powered projects can leverage Ember's USB-C PD capabilities by powering it from a high-capacity power bank, allowing for off-grid PCB assembly and soldering in remote locations or during field testing.

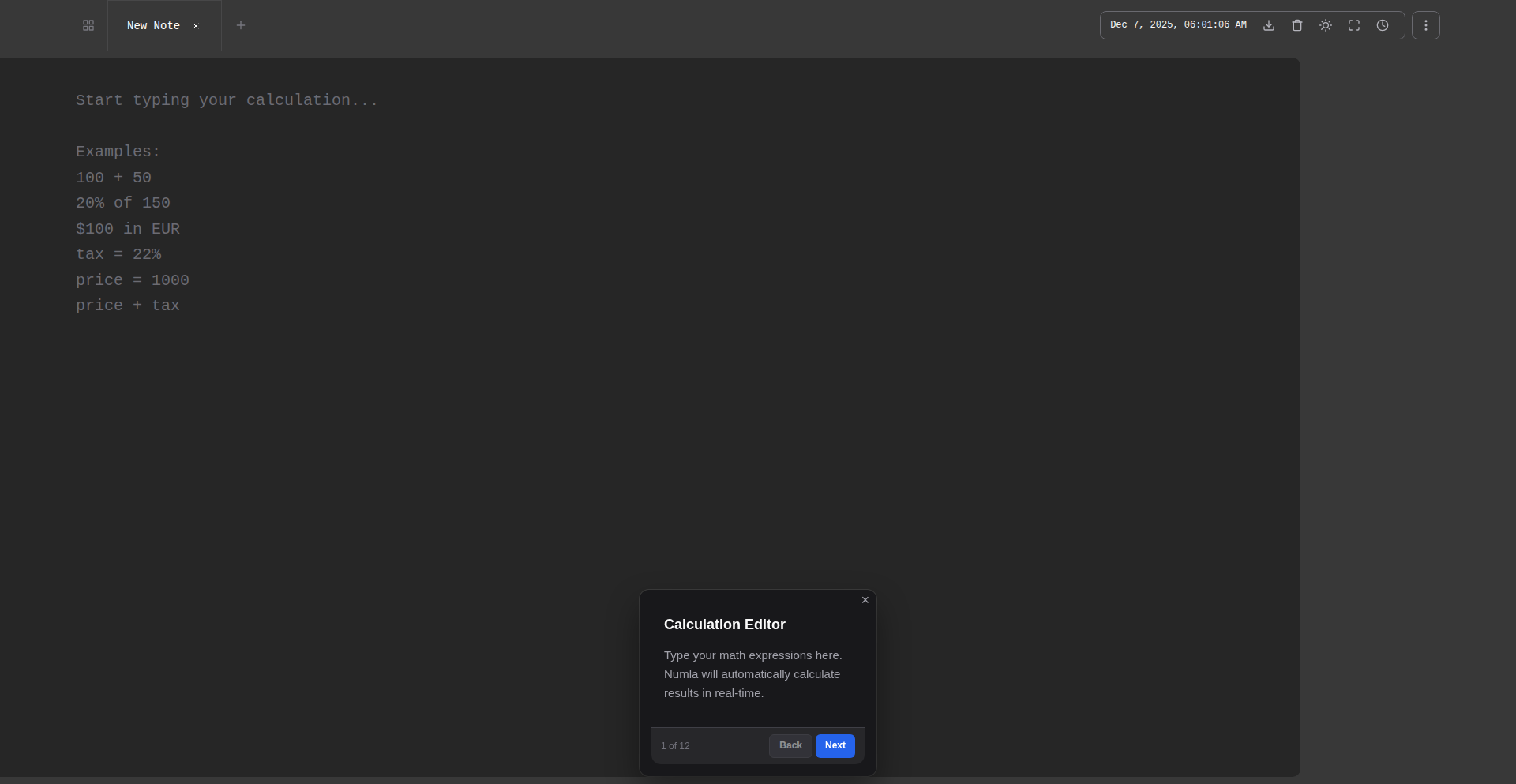

13

NumericMind Notepad

Author

daviducolo

Description

A notepad application that intelligently interprets numerical input, transforming raw numbers into structured data and actionable insights, designed for developers seeking a more efficient way to handle data-centric notes.

Popularity

Points 3

Comments 2

What is this product?

NumericMind Notepad is a sophisticated note-taking tool that goes beyond plain text by actively understanding and processing numbers within your notes. Instead of just storing numbers, it recognizes them as potential data points. For example, if you type 'meeting scheduled for 2 PM tomorrow', it understands '2 PM' as a time and 'tomorrow' as a relative date, allowing for automated scheduling or reminders. The innovation lies in its context-aware numerical parsing and implicit data structuring, allowing for quick conversions, calculations, and even the generation of simple charts directly from your notes, making data handling seamless.

How to use it?

Developers can integrate NumericMind Notepad into their workflow by using it for project planning, bug tracking, or even quick script prototyping. For instance, when jotting down performance metrics like 'server load 85%, latency 120ms', the app can automatically identify these as percentage and time values, enabling immediate comparison or logging. It can be used as a standalone tool for personal productivity or potentially integrated with other development tools via future API extensions to automatically feed parsed data into project management systems or analytics dashboards.

Product Core Function

· Intelligent Numerical Parsing: Recognizes and interprets various numerical formats (dates, times, percentages, units, currency) within freeform text, allowing for automatic conversion and understanding. This is useful for instantly turning messy notes into structured data, saving manual data entry time.

· Contextual Data Structuring: Automatically categorizes numerical data based on its context in the note, enabling features like quick sorting, filtering, and data aggregation. This helps developers quickly find and analyze specific data points within a large set of notes.

· Automated Calculations and Conversions: Performs on-the-fly calculations and unit conversions based on recognized numbers. For example, converting currencies or calculating time differences without leaving the note editor, streamlining estimations and planning.

· Data Visualization Snippets: Generates simple visual representations (like bar charts or line graphs) from recognized numerical sequences, providing immediate visual feedback on trends or data distribution. This helps developers quickly grasp patterns in their data.

· Tagging and Linking with Numerical Context: Allows for smart tagging and linking of notes based on recognized numerical values, creating relationships between data points and enhancing searchability. This improves organization and discoverability of related information.

Product Usage Case

· Scenario: Project Budgeting. A developer is outlining project costs and enters 'Phase 1: $5000, Phase 2: €4500, Phase 3: $6000'. NumericMind Notepad recognizes currencies and amounts, allowing the developer to instantly see a total in a chosen currency or identify potential budget overruns by comparing costs directly. This solves the problem of manual currency conversion and simple addition.

· Scenario: Performance Monitoring. A developer logs server performance data: 'Server A: CPU 75%, RAM 90%, Disk 60%'; 'Server B: CPU 80%, RAM 85%, Disk 55%'. The app parses these percentages, enabling the developer to quickly spot which server is under the most strain, facilitating faster troubleshooting.

· Scenario: Meeting Notes and Scheduling. During a meeting, a note reads: 'Discuss API integration next Tuesday at 10 AM. Follow-up meeting needed 2 days after'. NumericMind Notepad identifies 'next Tuesday at 10 AM' and '2 days after' as specific date/time references, allowing for effortless scheduling of reminders or calendar entries, preventing missed deadlines.
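The performance-monitoring scenario depends on pulling labeled percentages out of free text. A minimal regex-based sketch of that parsing step follows. The post does not describe NumericMind's parser, so this is not its implementation. "Most strained" is assumed here to mean the server with the highest single metric, and the function names are hypothetical.

```python
import re

LINE = re.compile(r"^(?P<name>[^:]+):\s*(?P<metrics>.+)$")
METRIC = re.compile(r"([A-Za-z]+)\s+(\d+(?:\.\d+)?)%")


def parse_percentages(note: str) -> dict[str, dict[str, float]]:
    """Map each 'Name: KEY n%, ...' line to {KEY: n}; other lines are ignored."""
    result: dict[str, dict[str, float]] = {}
    for line in note.splitlines():
        match = LINE.match(line.strip())
        if not match:
            continue
        metrics = {key: float(value) for key, value in METRIC.findall(match.group("metrics"))}
        if metrics:
            result[match.group("name").strip()] = metrics
    return result


def most_strained(parsed: dict[str, dict[str, float]]) -> str:
    """Name of the entry whose single highest percentage is largest."""
    return max(parsed, key=lambda name: max(parsed[name].values()))


if __name__ == "__main__":
    note = "Server A: CPU 75%, RAM 90%, Disk 60%\nServer B: CPU 80%, RAM 85%, Disk 55%"
    parsed = parse_percentages(note)
    print(parsed)
    print(most_strained(parsed))  # Server A
```

Using the average load instead of the peak metric would be an equally reasonable definition of "strain"; with this sample data it also picks Server A.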

14

Stateless Compliance Engine

Author

ADCXLAB

Description

A stateless compliance engine designed for financial and blockchain workflows. It validates IBAN/SWIFT, OFAC lists, ISO20022 messages, and multi-chain data (ETH, BTC, XRPL, Polygon, Stellar, Hedera). The innovation lies in its stateless design, meaning no user data is stored between requests, ensuring deterministic outputs and auditable results without relying on persistent state. This makes it highly valuable for applications requiring strict regulatory adherence and transparency.

Popularity

Points 4

Comments 1

What is this product?

This is a technical system that automatically checks whether financial transactions and data comply with various regulations and standards. It works without storing any information about past checks, so each check is independent. Given the same input and the same version of the reference data (such as the OFAC list), it always produces the same result. This is crucial for security and auditing, because anyone can reproduce a result later, which makes it easier to prove compliance. The system can check bank account identifiers (IBAN/SWIFT), government watchlists (OFAC), international payment messages (ISO20022), and data from several popular blockchain networks.

How to use it?

Developers can integrate this engine into their existing applications or workflows. It's accessible via an API, allowing it to be called programmatically. For instance, when a new financial transaction is initiated, your application can send the transaction details to the engine for validation. The engine will then return a clear 'compliant' or 'non-compliant' status, along with any specific reasons for non-compliance. This can be used to automatically block suspicious transactions or flag them for manual review. It's also designed for multi-cloud environments like AWS and Azure, offering flexibility and isolation.

Product Core Function

· IBAN/SWIFT Validation: Checks that bank account identifiers are correctly formatted and, for IBANs, that the check digits are valid, catching typos before funds are sent. A valid checksum does not prove that the account exists, but it reduces failed transactions caused by mistyped account information in any payment processing system.

· OFAC List Screening: Checks if parties involved in a transaction are on government watchlists, helping to prevent illicit financial activities and comply with sanctions. Essential for any financial institution or platform handling cross-border payments.

· ISO20022 Message Structuring and Validation: Processes and validates standardized financial message formats (such as pain.001 for customer credit transfer initiation and pacs.008 for interbank customer credit transfers), ensuring interoperability and correct data exchange. This is vital for businesses that interact with banks using these modern messaging standards.

· Multi-chain Data Validation: Verifies data integrity and compliance across various blockchain networks (Ethereum, Bitcoin, XRP Ledger, Polygon, Stellar, Hedera). This allows for secure and compliant operations in decentralized finance (DeFi) and blockchain-based applications.

· Deterministic Output Generation: Guarantees that the same input, checked against the same reference data, always produces exactly the same output, making results predictable and verifiable for audits. This provides confidence in the system's reliability and transparency for regulatory purposes.

· Stateless Operation: Processes requests without storing any user data, enhancing privacy and security while simplifying auditing. This is beneficial for applications where data persistence is a concern or can introduce vulnerabilities.
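IBAN validation is a good example of a stateless, deterministic check: the structure and the checksum depend only on the input string. Below is a minimal sketch of the standard ISO 7064 MOD 97-10 check used by IBANs (ISO 13616). It is not the engine's code. A production validator would also check each country's fixed IBAN length and national account format.

```python
import re


def is_valid_iban(iban: str) -> bool:
    """Check IBAN structure and mod-97 check digits.

    Pure function: no state is kept between calls, so the same input always
    gives the same answer. Country-specific lengths are not checked here.
    """
    s = iban.replace(" ", "").upper()
    # Country code, two check digits, then 11-30 alphanumerics (15-34 chars total).
    if not re.fullmatch(r"[A-Z]{2}[0-9]{2}[A-Z0-9]{11,30}", s):
        return False
    # Move the first four characters to the end, then map A=10 ... Z=35.
    rearranged = s[4:] + s[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


if __name__ == "__main__":
    print(is_valid_iban("GB82 WEST 1234 5698 7654 32"))  # True
    print(is_valid_iban("GB82 WEST 1234 5698 7654 31"))  # False
```

Because the checksum is mod 97 (a prime), changing any single digit always breaks it, which is why it catches most typing errors.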

Product Usage Case

· A fintech startup building a global payment platform can use this engine to automatically validate all incoming IBANs and screen transaction parties against OFAC lists, reducing manual review time and compliance risks.

· A decentralized application (dApp) on Ethereum can call this engine from its off-chain backend or frontend to run compliance checks on a transaction before it is submitted to the network.

· An enterprise looking to adopt ISO20022 messaging can use the engine to structure and validate their outgoing payment initiation messages (pain.001), ensuring compatibility with their banking partners and reducing processing errors.

· A cryptocurrency exchange can leverage the engine to check transaction details against multiple blockchain networks simultaneously, flagging any suspicious activity or non-compliant transfers.

· A company handling international remittances can employ this system to ensure that all customer and recipient data meets the necessary compliance requirements, avoiding penalties and maintaining trust.

15

OpenSourceAI-Germany

Author

haferfloq

Description

This project offers an OpenAI-compatible API hosted on bare-metal servers in Germany. It responds to growing concern among developers using AI models about data privacy and GDPR compliance. Its main selling point is a self-hostable, privacy-focused alternative to cloud-based AI APIs that lets users keep full control over their data.

Popularity

Points 3

Comments 1

What is this product?

OpenSourceAI-Germany is a self-hosted AI API that mimics OpenAI's API, so existing OpenAI client code can talk to it. Its distinguishing feature is where it runs: dedicated physical (bare-metal) servers in Germany. This matters for organizations and individuals bound by strict data protection rules such as the GDPR. Instead of sending sensitive data to a third-party cloud provider that may process it outside the EU, you run the AI model on hardware you control, which keeps data within German jurisdiction and makes it easier to meet privacy obligations. The result is access to capable AI models without giving up control of user data.

How to use it?

Developers can integrate OpenSourceAI-Germany into their applications by simply changing their API endpoint configuration to point to their self-hosted instance instead of OpenAI's cloud service. The project provides the necessary server-side software and instructions for deployment on bare-metal hardware. This means that any application already built using the OpenAI API structure (e.g., for text generation, summarization, or chatbot development) can be redirected to this private instance with minimal to no code changes. You'd essentially treat it like an internal service, improving data security and compliance.
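Because the service follows OpenAI's API format, redirecting an application mostly means changing the base URL. The standard-library sketch below builds, without sending, an OpenAI-style chat completion request against a hypothetical self-hosted address (`https://llm.example.internal/v1`). The API key and model name are placeholders, since the source does not say which models the project serves or how it authenticates.

```python
import json
import urllib.request

# Hypothetical address of a self-hosted, OpenAI-compatible instance.
BASE_URL = "https://llm.example.internal/v1"


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the OpenAI-style /chat/completions route."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder key and model name; urllib.request.urlopen(req) would actually send it.
req = build_chat_request(BASE_URL, "sk-local-test", "example-model", "Summarise this ticket.")
```

With the official `openai` Python package, the equivalent change is passing `base_url` (and your instance's key) when constructing the `OpenAI` client; the rest of the calling code stays the same.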

Product Core Function

· OpenAI API compatibility: Developers can reuse codebases and tools written for OpenAI's API, which keeps migration effort low. The value is straightforward integration without rewriting existing AI-powered features.

· Self-hosted bare-metal deployment: This offers complete control over the AI inference process and, more importantly, your data. The value is enhanced security, privacy, and compliance with regulations like GDPR, especially critical for handling sensitive information.

· GDPR compliance: Hosting on dedicated hardware in Germany keeps data within German jurisdiction, which supports stringent data privacy requirements. The value is peace of mind for businesses and individuals concerned about data sovereignty and legal obligations.

Product Usage Case

· A European startup building a customer support chatbot that handles sensitive personal data can use OpenSourceAI-Germany to process conversations without sending that data outside the EU. This gives them advanced AI capabilities while keeping user data under their control, in line with their GDPR obligations.

· A research institution in the EU working with confidential medical records needs to perform text analysis on these records. By deploying OpenSourceAI-Germany on their own servers, they can perform AI-driven analysis without exposing sensitive patient information to external cloud providers, thus solving the challenge of privacy-preserving research.

· A developer building a content generation tool for internal corporate use needs to ensure all generated content, and the prompts used to generate it, remain within the company's network for security reasons. OpenSourceAI-Germany provides a way to host a powerful text generation model locally, addressing the security and data containment requirements.

16

ImposterGame Engine

Author

tomstig

Description

A server-authoritative multiplayer game engine for 'Imposter' style social deduction games. It tackles the challenge of real-time state synchronization and secure game logic execution in a browser-based environment, enabling developers to create their own versions of popular social deduction games.

Popularity

Points 3

Comments 1

What is this product?

This project is a server-authoritative multiplayer game engine for building 'Imposter' style social deduction games (like Among Us). Its core technical contribution is real-time state synchronization across multiple players in the browser. Game state lives on the server, which stops clients from manipulating it and keeps the experience consistent for all players. This is done with WebSocket communication and a game loop that broadcasts critical state changes. The result is a reliable foundation for complex, interactive multiplayer games, so you don't have to reinvent network synchronization and server-side logic.

How to use it?

Developers set up the engine's backend server and connect their frontend game interface (built with HTML, CSS, and a JavaScript framework) to it over WebSockets. The engine exposes an API for managing game rooms, player actions, and game state updates, and is meant to be a foundational library that developers extend with their own game mechanics: for example, a custom lobby system, unique player roles, or different win conditions. This lets you prototype and launch your own social deduction game quickly without getting bogged down in low-level networking code.

Product Core Function

· Server-authoritative state management: The server is the single source of truth for game state, so game rules are enforced there and players cannot manipulate the game in their favor from the client. This is the basis for competitive, trustworthy multiplayer experiences.

· Real-time WebSocket communication: Provides low-latency, bidirectional communication between the server and all connected players, which fast-paced multiplayer interaction depends on. Player actions are reflected in the game with minimal delay, so the experience feels responsive and engaging.

· Game room and player management: Provides the infrastructure to create, join, and manage game sessions with multiple players, handling player connections and disconnections gracefully. This is useful for organizing multiplayer matches and ensuring smooth player onboarding and offboarding.

· Event-driven game loop: Processes player actions and game events efficiently on the server, updating the game state and broadcasting changes to all clients. This is useful for driving the game's progression and ensuring that all players see the same unfolding events.

· Flexible game logic integration: Designed to be a foundation upon which developers can build custom game rules, roles, and mechanics specific to their unique game. This is useful for empowering developers to create highly personalized and innovative game experiences beyond generic templates.
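To make the server-authoritative idea concrete, here is a minimal Python sketch of a room in which clients only send intents and the server alone decides whether to apply them. The `Room` class, the action names, and the three-player minimum are hypothetical and are not the engine's real API. WebSocket transport is replaced by an `outbox` list of events that a real server would broadcast to every connected client.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Room:
    """Hypothetical server-side room: clients send intents, only the server mutates state."""
    phase: str = "lobby"                                      # "lobby" -> "playing"
    alive: set = field(default_factory=set)                   # ids of living players
    imposter: Optional[str] = None                            # known only to the server
    outbox: list = field(default_factory=list)                # events to broadcast
    rng: random.Random = field(default_factory=random.Random)

    def handle(self, player: str, action: dict) -> bool:
        """Validate and apply one client action; return False if it is rejected."""
        kind = action.get("type")
        if self.phase == "lobby" and kind == "join" and player not in self.alive:
            self.alive.add(player)
            self._broadcast({"event": "joined", "player": player})
            return True
        if self.phase == "lobby" and kind == "start" and player in self.alive and len(self.alive) >= 3:
            self.phase = "playing"
            # Roles are assigned on the server; sorting makes a seeded RNG reproducible.
            self.imposter = self.rng.choice(sorted(self.alive))
            self._broadcast({"event": "started", "players": sorted(self.alive)})
            return True
        target = action.get("target")
        if (self.phase == "playing" and kind == "eliminate" and player == self.imposter
                and target in self.alive and target != player):
            self.alive.discard(target)
            self._broadcast({"event": "eliminated", "player": target})
            return True
        # Unknown actions, the wrong phase, or a non-imposter trying to eliminate:
        # a modified client cannot push the game into an illegal state.
        return False

    def _broadcast(self, event: dict) -> None:
        # A real server would send this over every connected player's WebSocket.
        self.outbox.append(event)
```

The `started` broadcast deliberately lists players without revealing the imposter. In a real game each player's role would go out as a private message to that player only, so no client holds information it could leak.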

Product Usage Case

· Creating a 'whodunnit' style mystery game where players cooperate to solve a crime, but one player is secretly the culprit. This engine handles tracking who voted for whom, who discovered evidence, and when the culprit is revealed, solving the technical challenge of synchronizing all these dynamic interactions across multiple players in real-time.

· Developing a team-based infiltration game where one team tries to sabotage objectives while the other team defends. The engine manages player roles, movement across the game map, and the state of objectives, ensuring that sabotage attempts and defenses are synchronized and reflected correctly for all players.

· Building a political simulation game where players form alliances and betray each other. The engine can manage player actions like proposing deals, casting votes, and revealing allegiances, ensuring that these complex social and strategic interactions are accurately represented and synchronized for everyone involved.
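The vote tracking in the 'whodunnit' use case is a good example of logic that belongs on the server. The sketch below uses hypothetical rules (a tie or a winning "skip" ejects nobody) and counts only ballots cast by living players for living targets, so an eliminated or unknown player's client cannot sway the result. `tally_votes` is an illustrative name, not part of the engine.

```python
from collections import Counter
from typing import Dict, Optional, Set


def tally_votes(votes: Dict[str, Optional[str]], alive: Set[str]) -> Optional[str]:
    """Return the player to eject, or None (hypothetical rules: ties and "skip" eject nobody).

    `votes` maps voter id -> target id, or None for a "skip" vote.
    """
    # Count only ballots cast by living players for living targets (or skip).
    valid = [t for voter, t in votes.items() if voter in alive and (t is None or t in alive)]
    if not valid:
        return None
    ranked = Counter(valid).most_common()
    top_target, top_count = ranked[0]
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return None  # tie: nobody is ejected
    return top_target  # None here means "skip" won outright
```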

17

SkillGap Navigator

Author

tolulade_

Description

An approach to identifying employee skill gaps that combines AI-driven analysis of internal communication patterns with an intuitive, gamified self-assessment system. The tool aims to address retention issues proactively by highlighting areas for growth and development within teams, fostering a more engaged and skilled workforce. Its distinguishing idea is inferring unspoken needs and trends from daily interactions to complement traditional methods.

Popularity

Points 4

Comments 0

What is this product?

SkillGap Navigator is an intelligent system designed to uncover hidden skill deficiencies within an organization. It combines machine learning algorithms that analyze the sentiment and topics within employee communications (like Slack or email, with appropriate privacy safeguards) to identify recurring themes or knowledge gaps. This is augmented by a user-friendly, gamified self-assessment module where employees can rate their confidence and experience in various skills. The value proposition is proactive talent management, predicting and mitigating potential reasons for employee turnover before they become critical.
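The description mentions machine-learning topic and sentiment analysis. As a much simpler stand-in, the Python sketch below counts help-seeking messages per skill with keyword matching. The skill taxonomy, keyword sets, and help hints are all hypothetical, and only aggregate counts are returned, in keeping with the privacy safeguards the description calls for.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical taxonomy: skill -> keywords that signal it in chat messages.
SKILL_KEYWORDS = {
    "kubernetes": {"kubernetes", "k8s", "helm"},
    "sql": {"sql", "postgres", "query"},
}
# Hypothetical phrases suggesting that someone is asking for help.
HELP_HINTS = ("how do", "how does", "anyone know", "stuck", "?")


def help_seeking_counts(messages: Iterable[str]) -> Counter:
    """Count, per skill, messages that mention the skill and look like a request for help.

    Only aggregate counts are returned; message text is not retained.
    """
    counts = Counter()
    for text in messages:
        lowered = text.lower()
        if not any(hint in lowered for hint in HELP_HINTS):
            continue
        tokens = set(re.findall(r"[a-z0-9]+", lowered))
        for skill, keywords in SKILL_KEYWORDS.items():
            if tokens & keywords:  # each message counts at most once per skill
                counts[skill] += 1
    return counts
```

A real system would replace the keyword sets with a topic model or classifier, but the output shape (per-skill counts over a time window) can stay the same.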

How to use it?

Developers can integrate SkillGap Navigator into their existing HR tech stack or internal communication platforms. It can be accessed via an API, allowing for seamless data flow from HRIS systems for employee profiles and from communication tools for sentiment analysis. The gamified assessment can be embedded as a module within internal portals. This allows for real-time skill gap monitoring and the automatic generation of personalized development plans, directly benefiting both employees and management by streamlining talent development and retention strategies.

Product Core Function

· AI-powered sentiment and topic analysis of internal communications: This identifies areas where employees frequently discuss challenges or seek information, indicating potential skill gaps. The value is uncovering blind spots in training and development that traditional surveys might miss.

· Gamified skill self-assessment: Employees assess their own proficiency in an easy, engaging format. This gives them direct ownership of their development and aims to improve engagement and accuracy compared with manual reviews.

· Predictive retention analytics: By correlating identified skill gaps with employee engagement and sentiment, the system can flag individuals at risk of leaving. This allows for targeted interventions to boost retention.

· Automated personalized development plan generation: Based on identified gaps, the system suggests relevant training, resources, or mentorship opportunities. This saves HR time and provides employees with actionable steps for growth.

· Cross-functional skill mapping: Visualizes the distribution of skills across teams and departments, highlighting dependencies and potential bottlenecks. This is valuable for resource allocation and project planning.
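One way to combine the survey and chat signals from the list above into a ranked list of gaps is a weighted score. In the sketch below, `SkillSignal`, `rank_gaps`, the 1 to 5 rating scale, the 50/50 weighting, and the cap of 20 help requests are illustrative assumptions, not the product's model.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class SkillSignal:
    skill: str
    required_level: int   # level the team needs, on a hypothetical 1-5 scale
    self_rating: float    # mean self-assessment score from the gamified survey (1-5)
    help_requests: int    # help-seeking chat messages about this skill in the period


def rank_gaps(signals: Iterable[SkillSignal], chat_weight: float = 0.5,
              max_requests: int = 20) -> List[Tuple[float, str]]:
    """Rank skills by a weighted gap score in [0, 1]; the weights and cap are assumptions."""
    scored = []
    for s in signals:
        survey_gap = max(0.0, s.required_level - s.self_rating) / 4   # a 1-5 scale spans 4 points
        chat_gap = min(s.help_requests, max_requests) / max_requests  # saturates at the cap
        score = (1 - chat_weight) * survey_gap + chat_weight * chat_gap
        scored.append((round(score, 3), s.skill))
    # Highest score first; ties broken alphabetically so the output is stable.
    return sorted(scored, key=lambda pair: (-pair[0], pair[1]))
```

Capping the chat signal keeps one very noisy channel from dominating the ranking; the weight lets a team decide how much to trust self-assessment versus observed behaviour.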

Product Usage Case

· A software development team struggles with adopting a new cloud framework. AI analysis of their Slack channels reveals repeated questions about specific services and a general uncertainty. The gamified assessment confirms low confidence in these areas. SkillGap Navigator then automatically suggests targeted micro-learning modules and pairs junior developers with senior mentors specializing in that framework, preventing project delays and improving team confidence.

· A customer support department experiences high turnover. Sentiment analysis of internal chats shows frustration and a lack of confidence in handling complex technical queries. The system identifies a significant skill gap in advanced troubleshooting. SkillGap Navigator recommends specialized training sessions and updates to the internal knowledge base, leading to improved employee satisfaction and reduced churn.

· A marketing team is preparing for a new product launch. SkillGap Navigator reveals a gap in data analytics skills needed for campaign performance tracking. The system suggests online courses and workshops, enabling the team to effectively measure and optimize their marketing efforts, leading to better campaign ROI.

18

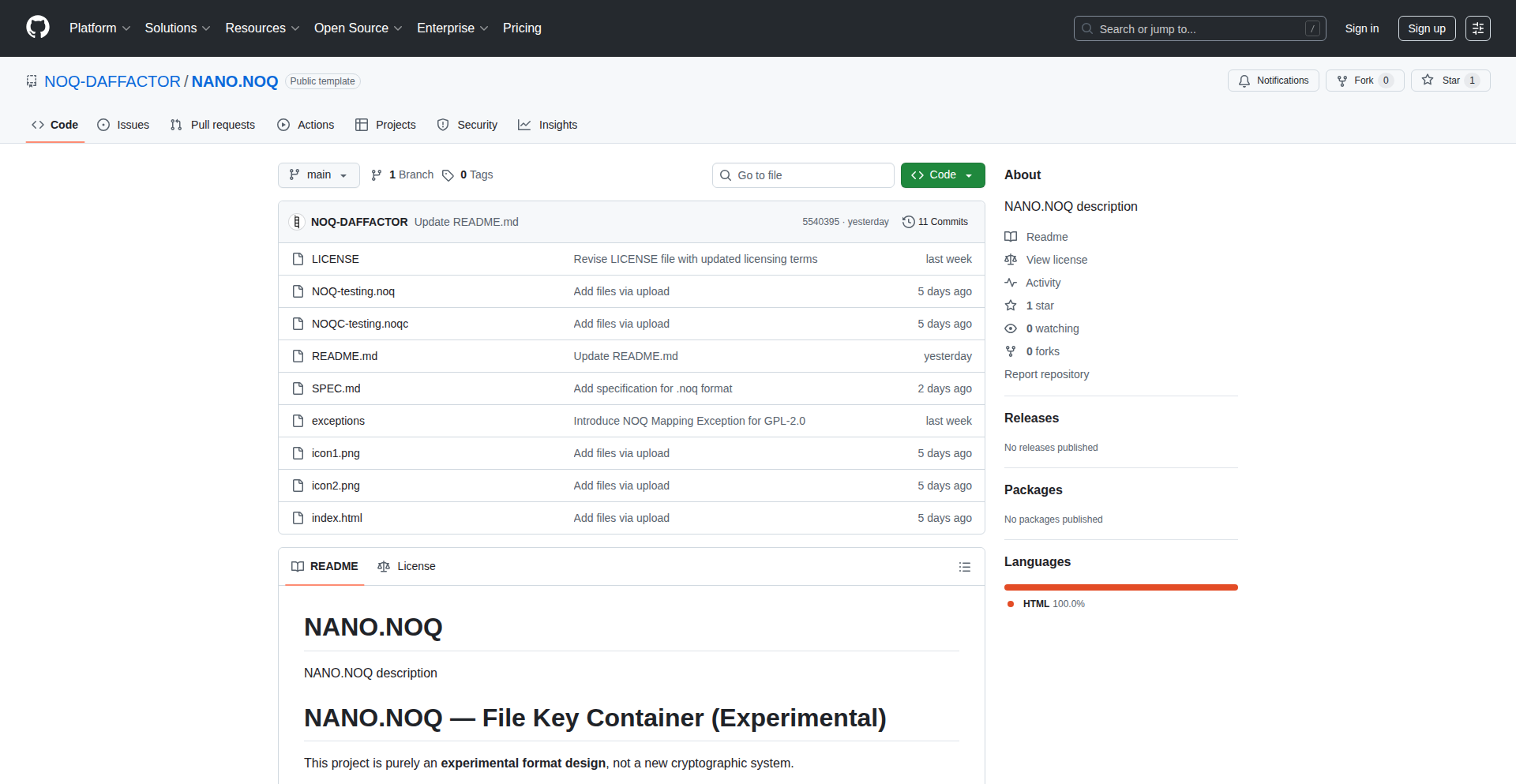

Nano.noq: Micro-Binary Key Container

Author

Daffactor

Description

Nano.noq is an experimental, single-file HTML project designed to create a compact binary format for storing AES-GCM keys. It leverages WebCrypto APIs directly in the browser, eliminating the need for a backend or external libraries. This project explores a novel file structure for secure key management, preventing direct copy-pasting of keys and offering a simplified approach to handling sensitive data within web applications.
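The source does not document Nano.noq's actual byte layout, so the following Python sketch uses a hypothetical one to show the general idea of a compact binary key container: a 4-byte magic value, a version byte, a key-length byte, the raw AES key, and a CRC32 trailer. In the browser, the raw key bytes would come from `crypto.subtle.exportKey("raw", key)`; this sketch generates random bytes instead. `MAGIC`, `pack_key`, and `unpack_key` are illustrative names.

```python
import secrets
import struct
import zlib

MAGIC = b"NOQ1"                  # hypothetical magic value, NOT the real Nano.noq format
HEADER = struct.Struct(">4sBB")  # magic, format version, key length in bytes


def pack_key(raw_key: bytes, version: int = 1) -> bytes:
    """Serialize a raw AES key into the hypothetical binary container."""
    if len(raw_key) not in (16, 24, 32):  # AES-128/192/256 key sizes
        raise ValueError("AES keys must be 16, 24, or 32 bytes")
    body = HEADER.pack(MAGIC, version, len(raw_key)) + raw_key
    # CRC32 trailer catches accidental corruption; it is not a security control.
    return body + struct.pack(">I", zlib.crc32(body))


def unpack_key(blob: bytes) -> bytes:
    """Validate the container and return the raw key bytes."""
    if len(blob) < HEADER.size + 4:
        raise ValueError("container too short")
    body, (crc,) = blob[:-4], struct.unpack(">I", blob[-4:])
    if zlib.crc32(body) != crc:
        raise ValueError("checksum mismatch")
    magic, _version, key_len = HEADER.unpack_from(body)
    if magic != MAGIC or len(body) != HEADER.size + key_len:
        raise ValueError("not a key container")
    return body[HEADER.size:]


key = secrets.token_bytes(32)  # stand-in for an exported AES-GCM key
blob = pack_key(key)           # 6-byte header + 32-byte key + 4-byte CRC = 42 bytes
```

The CRC32 only detects accidental corruption. This container stores the key in the clear, so a real design would also wrap or encrypt the key (for example with a passphrase-derived key) before writing it to disk.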

Popularity

Points 3

Comments 1

What is this product?