Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-12-05

SagaSu777 2025-12-06

Explore the hottest developer projects on Show HN for 2025-12-05. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

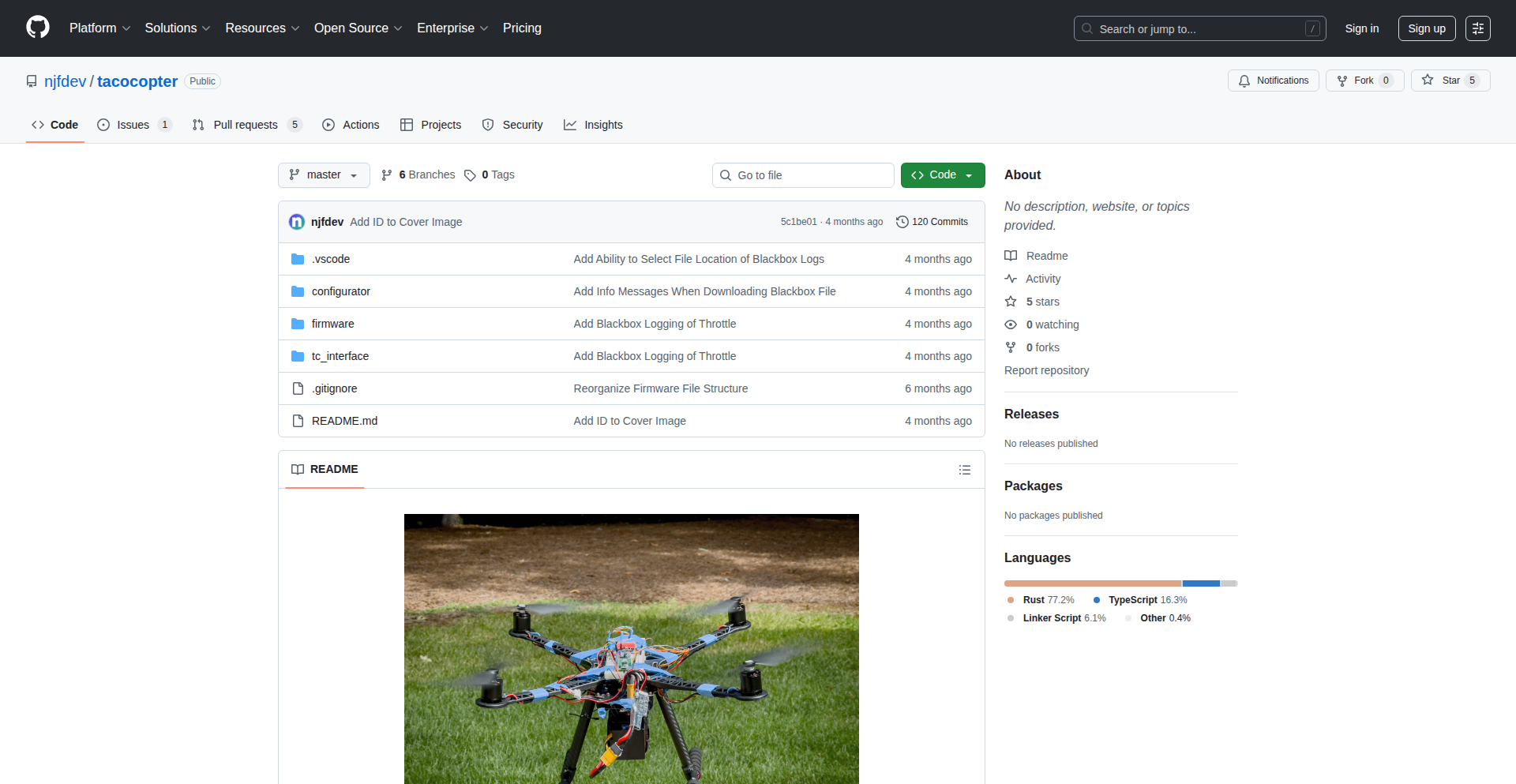

The current wave of innovations on Show HN is a vibrant testament to the hacker spirit: developers are not just building features but solving intricate problems with elegant, often unconventional, technical solutions. We're seeing a significant trend toward empowering developers with AI, not as a general assistant but as a specialized tool, whether to catch sloppy AI-generated code (Sloppylint) or to streamline complex workflows (TaskWand). The emphasis on self-hosting and data ownership, as seen with Pbnj, reflects a growing desire for control and privacy in an increasingly cloud-centric world. The pervasive use of Rust highlights a commitment to performance and reliability, especially in areas like embedded systems (Tacocopter) and high-throughput data processing. This era is defined by pragmatic innovation: tools that are technically sound and that directly address user pain points with a focus on efficiency, accessibility, and often sheer cleverness. For aspiring developers and entrepreneurs, the lesson is to identify nuanced, often overlooked problems and use emerging technologies, particularly AI and performant languages, to craft focused, impactful solutions. Don't shy away from building for yourself first; that's often where the most authentic and valuable innovations emerge.

Today's Hottest Product

Name

Pbnj – A minimal, self-hosted pastebin you can deploy in 60 seconds

Highlight

This project showcases exceptional technical ingenuity by offering a self-hosted pastebin solution that prioritizes simplicity and rapid deployment. It tackles the common pain point of over-engineered self-hosted services by focusing on a CLI-first approach and one-click deployment to Cloudflare. Developers can learn valuable lessons in minimalist design, efficient deployment strategies, and the power of command-line interfaces for common utility tasks. The attention to user experience, down to memorable URLs, reflects thoughtful engineering.

Popular Category

Developer Tools

AI & Machine Learning

Productivity

Utilities

Web Development

Popular Keyword

AI

LLM

CLI

Rust

Python

Self-hosted

Automation

Developer Productivity

Code Generation

Data Analysis

Technology Trends

AI-powered development tools are booming, addressing specific pain points like code quality and workflow automation.

Minimalist and self-hostable solutions are gaining traction, emphasizing user control and simplicity.

The Rust ecosystem is expanding rapidly, particularly in systems programming and performance-critical applications.

Cross-platform and accessible tooling, often leveraging WebAssembly or smart CLI design, is on the rise.

RAG (Retrieval-Augmented Generation) is a key technique for enhancing LLM accuracy and relevance in specialized domains.

Project Category Distribution

Developer Tools (25%)

AI & Machine Learning (20%)

Productivity & Utilities (30%)

Web Development (15%)

Data & Analytics (10%)

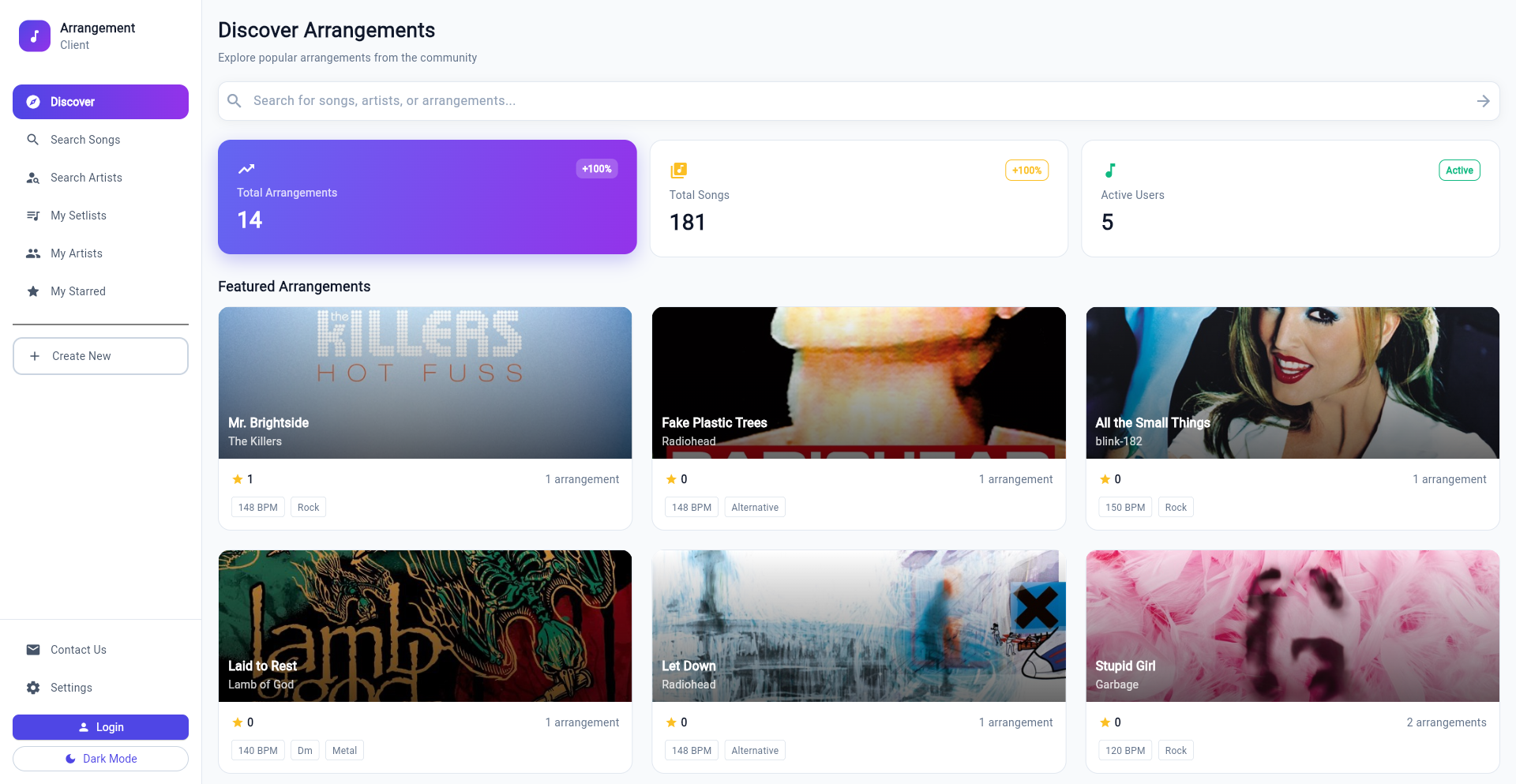

Today's Hot Product List

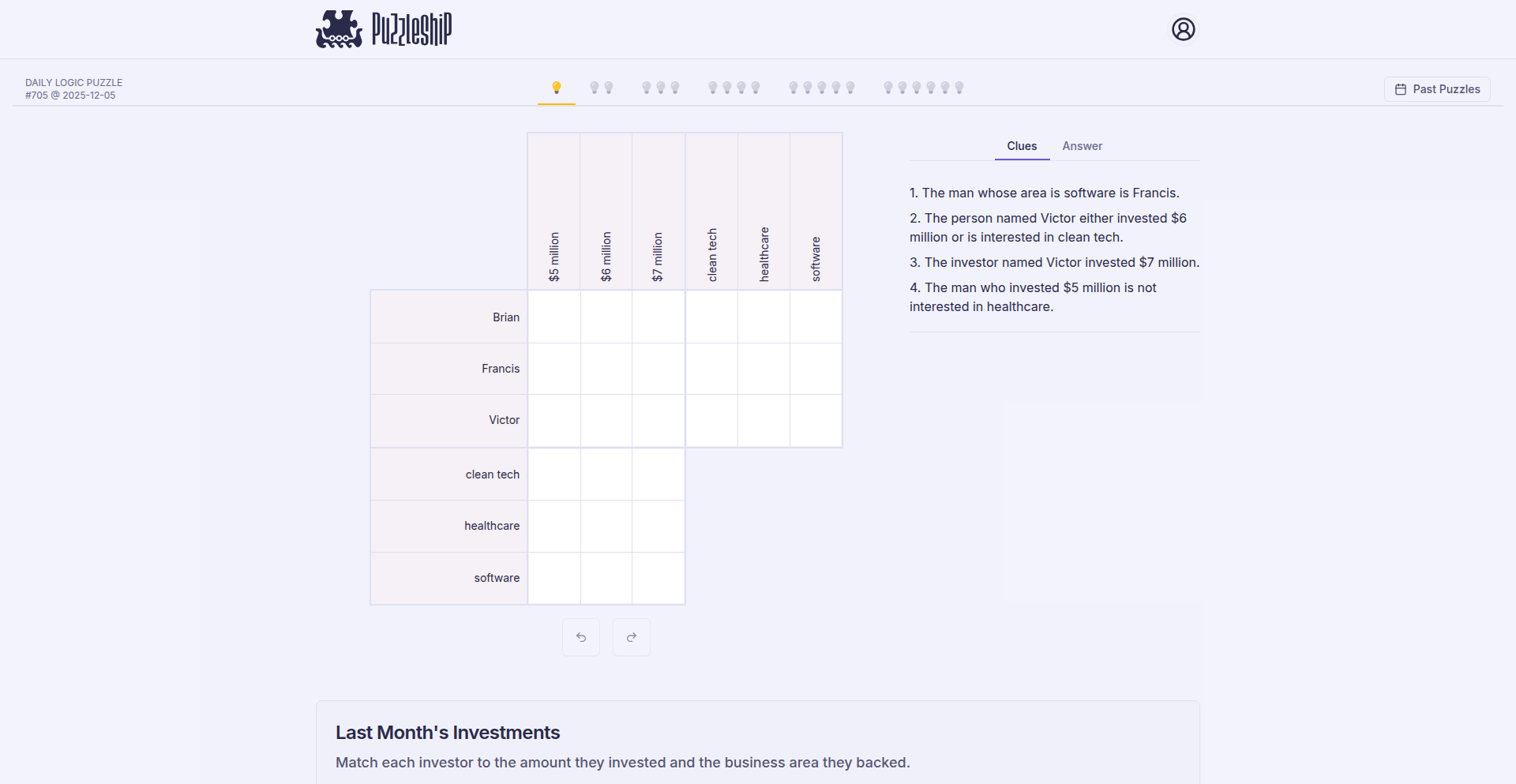

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Pbnj - The Speedy Pastebin Enabler | 58 | 16 |
| 2 | SerpApi MCP Server: Scalable Search Proxy | 22 | 5 |
| 3 | Radioactive Knight Chess Learner | 14 | 3 |
| 4 | AI-Slop Detector | 11 | 3 |
| 5 | CelestialPrint Calendar | 2 | 7 |
| 6 | StableChat-LLM-Deterministic | 8 | 1 |
| 7 | RuleWeaver: Example-Driven Reasoning Engine | 4 | 3 |
| 8 | Hacker Hire Explorer | 4 | 2 |
| 9 | OLake-IcebergTurbo | 5 | 1 |
| 10 | Chronosync Todo | 2 | 4 |

1

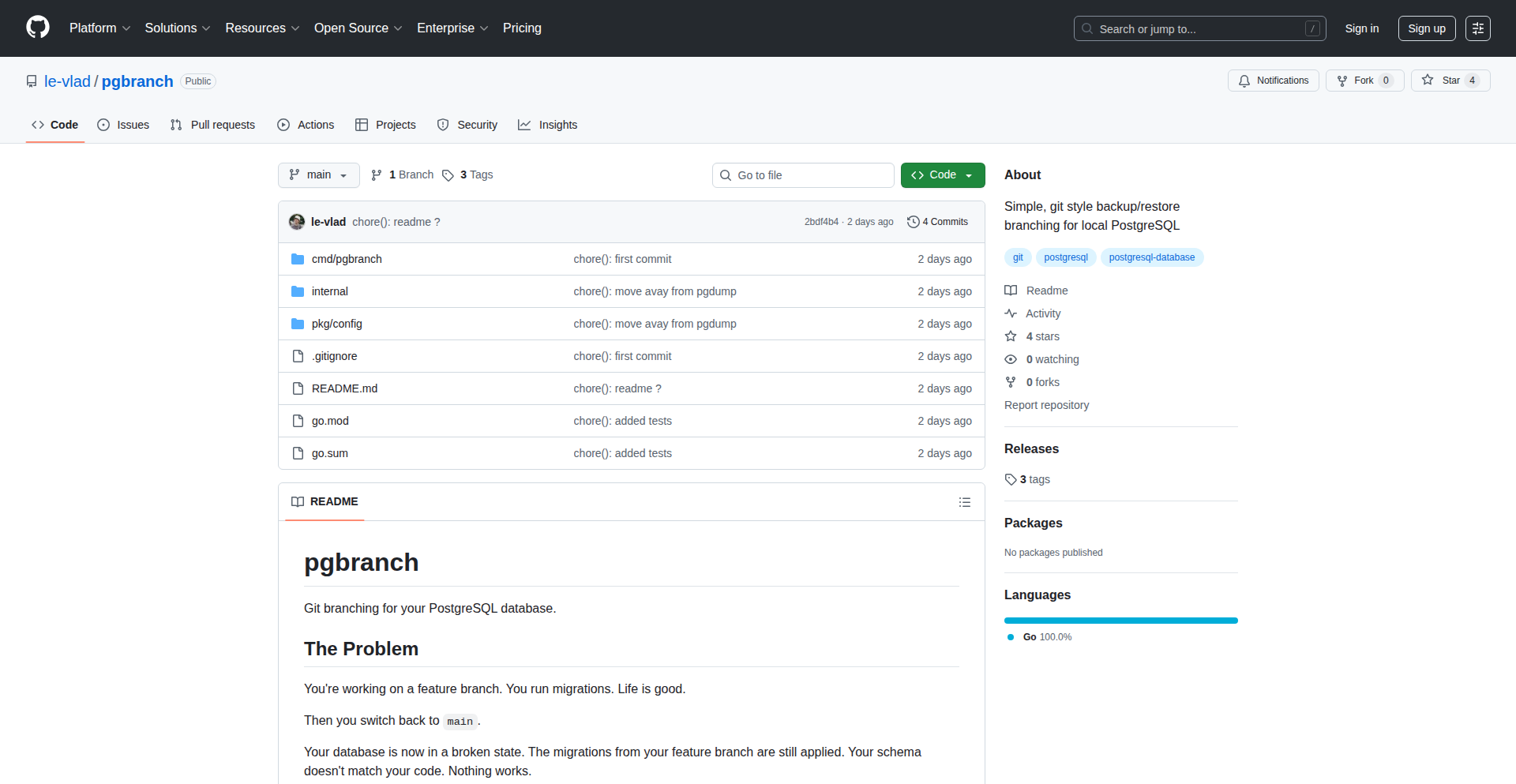

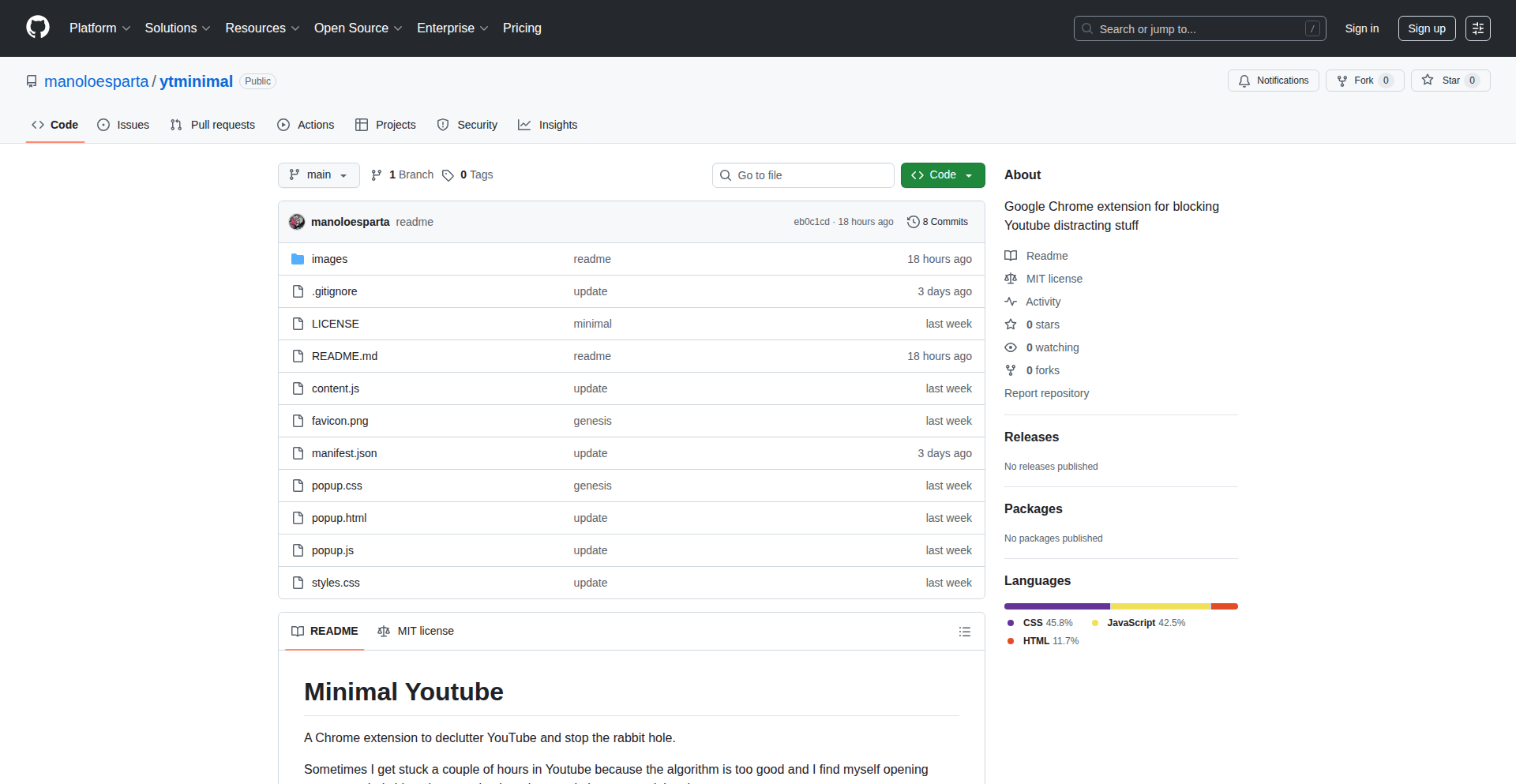

Pbnj - The Speedy Pastebin Enabler


Author

bhavnicksm

Description

Pbnj is a minimalist, self-hostable pastebin designed for rapid deployment and ease of use. It tackles the complexity of traditional pastebin solutions by offering a one-click Cloudflare deploy and a CLI-first experience. The innovation lies in its focus on simplicity, allowing developers to get a functional pastebin up and running in under a minute without the overhead of accounts, Git integration, or elaborate admin panels. This project embodies the hacker ethos of using code to solve a specific, annoying problem: the hassle of setting up a personal pastebin.

Popularity

Points 58

Comments 16

What is this product?

Pbnj is a super-lightweight pastebin service that you can host yourself. Think of it as your personal digital notepad that's incredibly fast to set up and use. The core technical idea is to strip away all the unnecessary features often found in complex web applications. Instead of user accounts, complicated login systems, or version control like Git, Pbnj focuses on the essential function: quickly sharing code snippets or text. It uses Cloudflare Workers for deployment, which means it can run close to users globally and leverage their free tier for significant capacity, making it very cost-effective. The innovation is in its radical simplicity and focus on developer workflow through a command-line interface (CLI) and memorable URLs.

How to use it?

Developers can use Pbnj in several ways. The primary method is via the command-line interface (CLI). After installing it with `npm install -g @pbnjs/cli`, you can simply run `pbnj <your_file_name>` (e.g., `pbnj my_script.py`). Pbnj will then upload the content of that file to your self-hosted instance and automatically copy a unique, easy-to-remember URL (like `crunchy-peanut-butter-sandwich`) to your clipboard, ready to be shared. You can also deploy it to your own Cloudflare account with a single click, giving you full control. For those times you're not in the terminal, a simple web UI is available to paste text directly or manage existing pastes.

Product Core Function

· Minimalist Paste Creation: Allows quick uploading of text or code snippets, with syntax highlighting for over 100 programming languages. This is valuable because it ensures your shared code is readable and instantly understandable for others, regardless of the programming language.

· One-Click Cloudflare Deployment: Enables deployment to Cloudflare Workers with a single click, running on Cloudflare's robust, scalable infrastructure. This is valuable for developers who want to host their own services without managing server infrastructure, and it stays accessible because it runs on Cloudflare's free tier.

· CLI-First Workflow: Provides a command-line tool for uploading files and getting shareable URLs instantly. This is valuable because it integrates seamlessly into a developer's existing workflow, allowing for rapid sharing directly from their terminal without context switching.

· Memorable URLs: Generates human-readable, unique URLs for each paste (e.g., 'happy-blue-whale'). This is valuable as it makes sharing links easier and more professional compared to random alphanumeric strings, improving the user experience for recipients.

· Private Pastes with Secret Keys: Offers the option to create private pastes accessible only with a secret key. This is valuable for developers who need to share sensitive information or work-in-progress code with a limited audience, adding a layer of security.

· Web UI Access: Includes a functional web interface for users who prefer not to use the CLI or need to access the pastebin from a different device. This is valuable as it provides flexibility and accessibility, catering to different user preferences and situations.
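Memorable URLs and private-paste keys are both small random-token problems. The sketch below is an assumption-laden Python illustration, not Pbnj's code (Pbnj runs on Cloudflare Workers, and its word lists and key format aren't described here): it joins randomly chosen words into a slug like `happy-blue-whale`, retries on collision, and uses the standard library's `secrets.token_urlsafe` for a secret key. The word lists and the functions `memorable_slug` and `secret_key` are hypothetical.

```python
import secrets

# Hypothetical word lists; Pbnj's real vocabulary and slug format may differ.
ADJECTIVES = ["happy", "crunchy", "sleepy", "brave", "quiet", "sunny"]
COLORS = ["blue", "golden", "green", "silver", "amber", "violet"]
NOUNS = ["whale", "sandwich", "otter", "comet", "maple", "falcon"]


def memorable_slug(taken: set[str], max_tries: int = 100) -> str:
    """Return an unused adjective-color-noun slug such as 'happy-blue-whale'."""
    for _ in range(max_tries):
        slug = "-".join(
            secrets.choice(words) for words in (ADJECTIVES, COLORS, NOUNS)
        )
        if slug not in taken:  # never overwrite an existing paste
            return slug
    raise RuntimeError("no free slug found; use larger word lists")


def secret_key(nbytes: int = 16) -> str:
    """Return a URL-safe random key for a private paste (16 bytes = 128 bits)."""
    return secrets.token_urlsafe(nbytes)


taken = {"happy-blue-whale"}
slug = memorable_slug(taken)
print(slug, secret_key())
```

With three six-word lists there are only 216 possible slugs, so a real deployment would need far larger lists (or an extra random word) to keep collisions rare; the secret key, by contrast, carries 128 bits of randomness.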

Product Usage Case

· Sharing a code snippet with a colleague: A developer needs to show a specific function or error message to a teammate. Instead of copy-pasting into an email or chat, they can use `pbnj my_buggy_function.js` from their terminal, get a memorable URL, and share it instantly. This solves the problem of messy formatting and makes the shared code easy to reference.

· Deploying a temporary configuration file: A developer needs to quickly share a configuration file with a remote server or a CI/CD pipeline for a one-off task. Pbnj allows them to upload the file via CLI, get a URL, and use it in their deployment script, solving the challenge of securely and quickly distributing such files without setting up a dedicated file-sharing service.

· Self-hosting a personal knowledge base: A developer wants a private place to store and retrieve their personal notes, code snippets, and research findings. By deploying Pbnj to their Cloudflare account, they gain a secure, always-accessible personal pastebin with memorable links, solving the problem of scattered notes and providing an organized, self-owned solution.

· Quickly sharing output from a script: A developer runs a long script that generates a significant amount of text output. Instead of scrolling through a terminal or redirecting to a file that needs manual uploading, they can pipe the output to Pbnj via the CLI: `./my_script.sh | pbnj`. This instantly provides a shareable link to the entire output, solving the problem of managing and distributing large amounts of script-generated data.

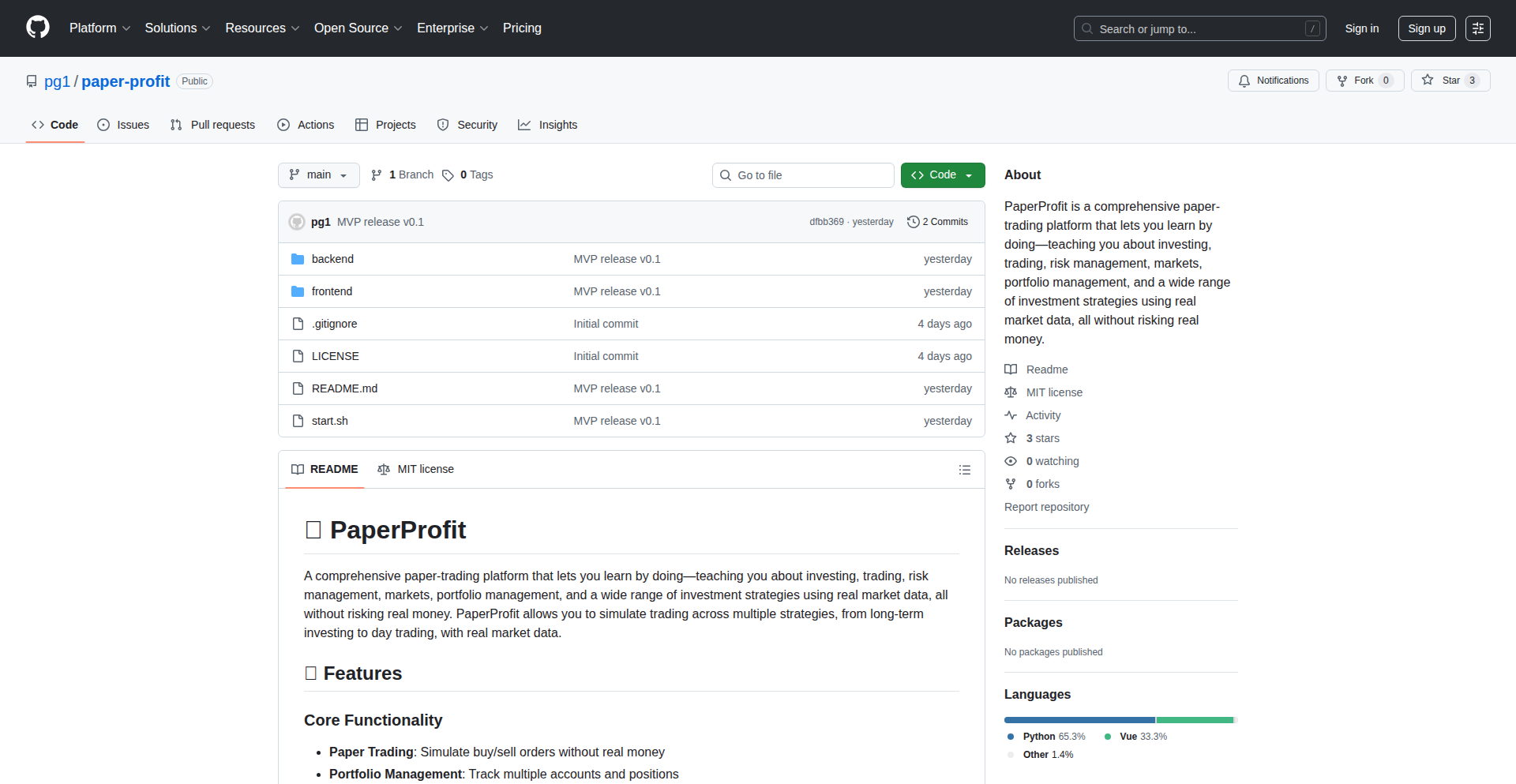

2

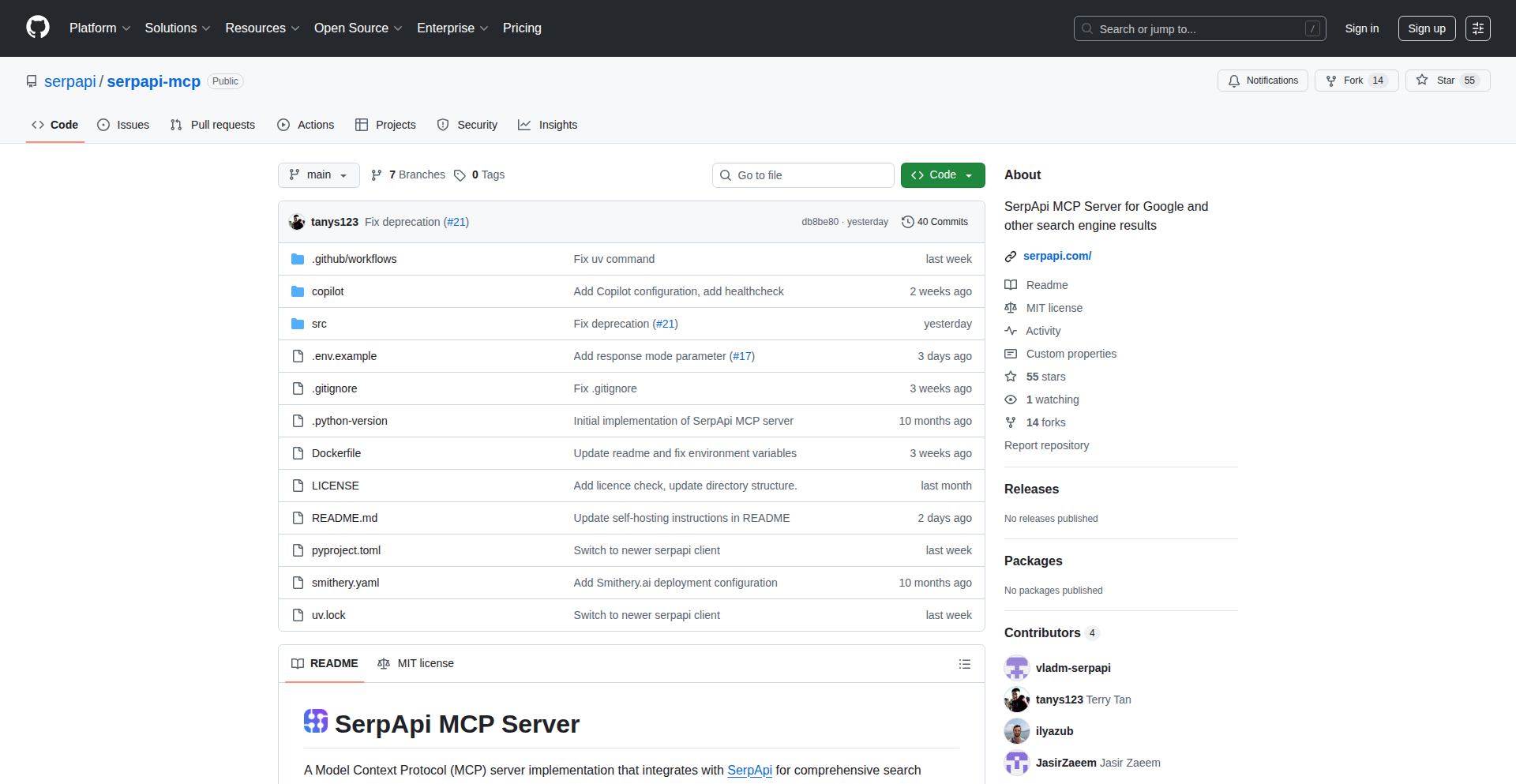

SerpApi MCP Server: Scalable Search Proxy

Author

thefoolofdaath

Description

A self-hosted, high-performance proxy server designed to manage and distribute search engine requests at scale. It addresses the challenge of rate limiting and IP blocking by intelligently routing traffic through a pool of proxies, offering significant cost savings and improved reliability for applications relying on frequent search engine data retrieval.

Popularity

Points 22

Comments 5

What is this product?

This project is a self-hosted, highly configurable proxy server specifically built for managing and distributing search engine requests. Think of it as a traffic controller for your search queries. Instead of sending all your search requests directly to a search engine from a single IP address (which can quickly get you blocked or throttled), this server acts as an intermediary. It uses a pool of various proxy servers (like residential or datacenter IPs) and intelligently assigns requests to them. The innovation lies in its efficient management of these proxy IPs, its ability to handle a large volume of requests without getting detected, and its focus on cost-effectiveness compared to commercial API services. So, this is for you if you need to reliably and affordably fetch data from search engines programmatically, without hitting rate limits or getting your IP banned.

How to use it?

Developers can deploy the SerpApi MCP Server on their own infrastructure (e.g., a cloud server or a dedicated machine). They would then configure it with a list of available proxy servers they have access to (either purchased or self-managed). Applications that need to perform search engine queries would then be configured to send their requests to this MCP server, instead of directly to the search engine. The MCP server handles the complexities of IP rotation, error handling, and load balancing across the proxy pool. This means your application code stays cleaner, and the server takes care of the tricky proxy management. This is useful for any developer building applications that require automated search engine data scraping, market research tools, or price comparison engines. You integrate by simply changing your application's outgoing proxy settings to point to your deployed MCP server.

Product Core Function

· Intelligent Proxy Rotation: Automatically cycles through a pool of proxy IPs to distribute search requests. This prevents individual IPs from being flagged for excessive use, making your scraping more robust and sustainable.

· Load Balancing: Distributes incoming search requests across available proxy servers to optimize performance and prevent overloading. This ensures faster response times and higher throughput for your data retrieval operations.

· Rate Limiting Management: Helps to circumvent search engine rate limits by using multiple IPs, allowing for higher query volumes without immediate detection. This is crucial for applications that need to perform extensive data collection.

· Error Handling and Retries: Implements logic to handle proxy failures and temporary search engine errors, automatically retrying requests with different proxies. This significantly increases the reliability of your data fetching process, reducing data loss.

· Self-Hosted Flexibility: Offers full control over your data and infrastructure, avoiding reliance on third-party services and their potentially restrictive terms or high costs. This gives you cost predictability and ownership of your scraping operations.
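The rotation-plus-retry behavior described above can be sketched in a few lines. This is a hypothetical Python illustration, not the project's code: `ProxyPool`, its round-robin policy, and the injected `send` callable (a stand-in for a real HTTP client routed through a proxy) are all assumptions, and real load balancing would also weigh proxy health and latency.

```python
import itertools
from typing import Callable, Sequence


class ProxyPool:
    """Round-robin proxy rotation with retry on a different proxy (hypothetical)."""

    def __init__(self, proxies: Sequence[str], max_attempts: int = 3):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._cycle = itertools.cycle(proxies)
        self.max_attempts = max_attempts

    def fetch(self, query: str, send: Callable[[str, str], str]) -> str:
        """Send `query` through the next proxy; on a network error, try the next one."""
        errors = []
        for _ in range(self.max_attempts):
            proxy = next(self._cycle)  # each attempt uses the next proxy in the pool
            try:
                return send(proxy, query)
            except OSError as exc:  # connection refused/reset, timeouts
                errors.append(f"{proxy}: {exc}")
        raise RuntimeError("all attempts failed: " + "; ".join(errors))


# Stand-in for an HTTP call made through `proxy`; here one proxy is always down.
def fake_send(proxy: str, query: str) -> str:
    if proxy == "proxy-a":
        raise ConnectionError("connection refused")
    return f"{proxy} answered {query!r}"


pool = ProxyPool(["proxy-a", "proxy-b", "proxy-c"])
print(pool.fetch("best pastebin", fake_send))  # proxy-a fails, proxy-b answers
```

Because the cycle persists across calls, consecutive requests spread over the pool even when none fail, which is the property that keeps any single IP's request rate low.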

Product Usage Case

· Scraping E-commerce Product Data: A developer building a price comparison website can use the MCP Server to fetch product listings and prices from multiple online retailers. By distributing requests across many proxies, they can gather large amounts of data quickly without being blocked by the retailers' anti-scraping measures, resulting in more comprehensive and up-to-date price comparisons for users.

· Automated Market Research: A startup analyzing search trends for a new product can deploy the MCP Server to run large-scale keyword searches on search engines. The server's ability to manage proxy IPs and avoid rate limits allows them to collect extensive data on search volume and related queries, informing their product development and marketing strategies.

· SEO Monitoring Tool: A company providing SEO services can use the MCP Server to periodically check search engine rankings for their clients' keywords. The server's robust proxy management ensures consistent and reliable data collection, enabling them to provide accurate ranking reports and identify areas for SEO improvement.

3

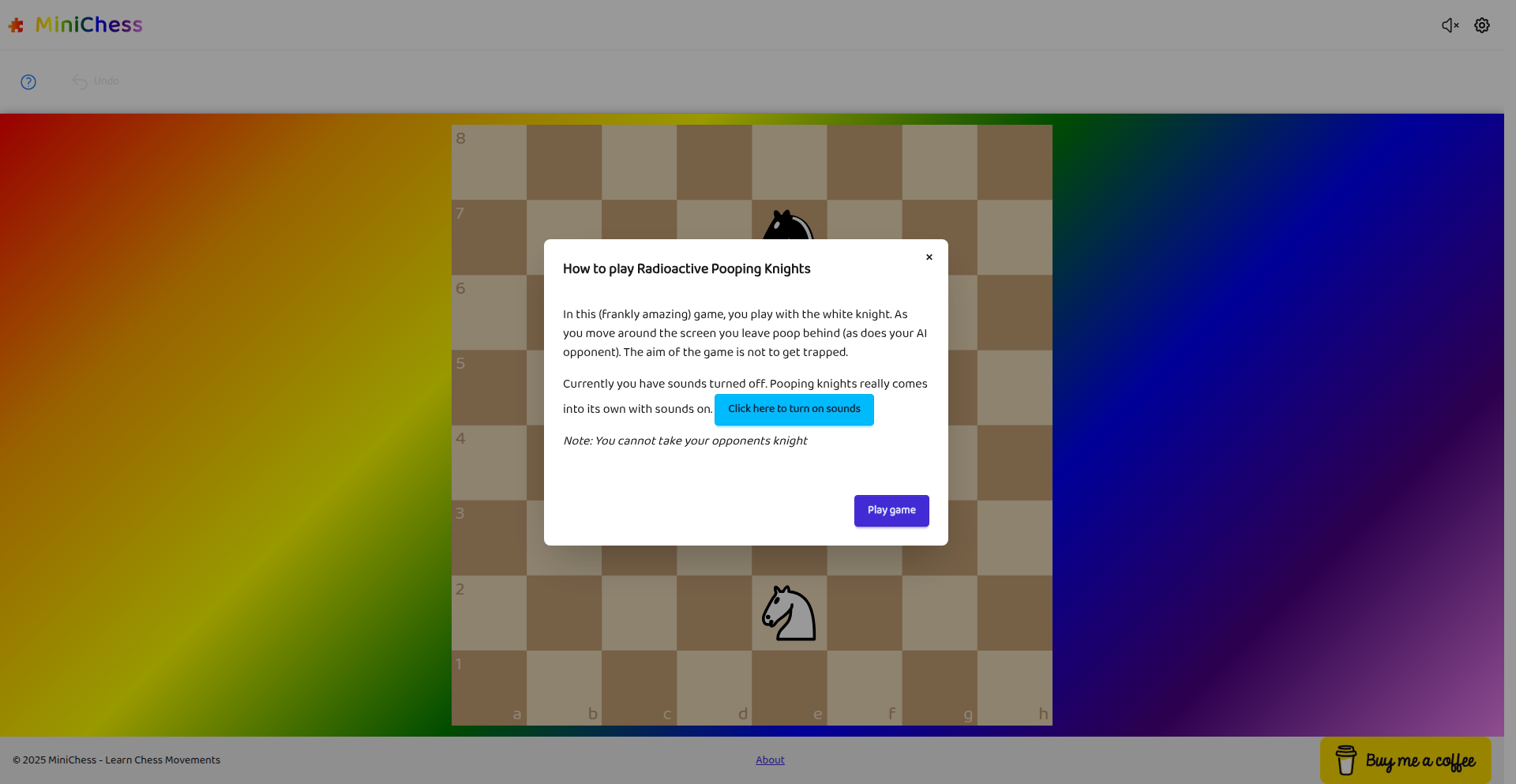

Radioactive Knight Chess Learner

Author

patrickdavey

Description

A fun and quirky chess learning application for children, featuring unique 'pooping knights' that leave a trail on the board. This project innovates by gamifying basic chess piece movement, specifically the knight's L-shaped move, through a playful mechanic. It tackles the challenge of making early chess education engaging and less intimidating for young learners.

Popularity

Points 14

Comments 3

What is this product?

This project is a browser-based game designed to teach children the movement of a knight in chess. The core innovation lies in its visual representation: knights move across a chessboard and leave a 'poop' trail behind them. The objective is to navigate the knight without stepping on these trails, making the learning process intuitive and enjoyable. It leverages a simple yet effective visual metaphor to reinforce the knight's unique movement pattern, which is often a point of confusion for beginners. The sound design is also intended to enhance the playful experience, making it more immersive.

How to use it?

Developers can use this project as a reference for building educational games with novel mechanics. It demonstrates how to implement a grid-based movement system and apply visual feedback (the 'poop' trail) to illustrate specific game rules. For educators or parents, it can be directly used as a tool to introduce or reinforce knight movement in a non-traditional, engaging way. It's built as a web application, meaning it's accessible via a web browser without needing any installations, making it easy to share and play. Integration might involve embedding this game into a larger educational platform or using its logic as a foundation for other chess-related learning tools.

Product Core Function

· Knight Movement Simulation: The system accurately simulates the L-shaped movement of a chess knight across a board, providing immediate visual feedback. This is valuable for understanding the fundamental rule of knight movement in chess.

· Interactive Trail Generation: As the knight moves, it leaves behind a visible 'poop' trail. This mechanic directly visualizes the path taken by the knight and serves as a learning tool to avoid stepping on previously occupied squares in subsequent moves.

· Puzzle-Based Learning: The game presents 'maze-like' puzzles, challenging the player to navigate the knight through specific paths or reach certain goals without hitting the trails. This approach turns learning into a problem-solving activity, enhancing retention.

· Engaging Audio Feedback: The inclusion of sound effects is designed to make the game more immersive and enjoyable for children, contributing to a positive learning experience. This adds an auditory layer to reinforce the visual elements.

· Web-Based Accessibility: The project is built as a web application, making it easily accessible from any device with a web browser. This low barrier to entry allows for widespread use and easy sharing among learners and educators.
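The rule the game teaches, an L-shaped move that may not land on a trail square, fits in a small function. The Python sketch below is a hypothetical illustration (the project runs in the browser and its code isn't shown here); it assumes squares are `(column, row)` pairs on an 8×8 board and that every square the knight has occupied, including the start, becomes part of the trail.

```python
# Offsets of the knight's L-shaped move: two squares one way, one square the other.
KNIGHT_OFFSETS = [(1, 2), (2, 1), (2, -1), (1, -2), (-1, -2), (-2, -1), (-2, 1), (-1, 2)]


def knight_moves(square, trail, size=8):
    """Legal destinations from `square` that stay on the board and avoid the trail."""
    col, row = square
    moves = []
    for dc, dr in KNIGHT_OFFSETS:
        c, r = col + dc, row + dr
        if 0 <= c < size and 0 <= r < size and (c, r) not in trail:
            moves.append((c, r))
    return sorted(moves)


def is_valid_path(start, path, size=8):
    """Check a sequence of moves; every square the knight visits joins the trail."""
    trail = {start}
    current = start
    for nxt in path:
        if nxt not in knight_moves(current, trail, size):
            return False
        trail.add(nxt)
        current = nxt
    return True


print(knight_moves((0, 0), trail=set()))  # [(1, 2), (2, 1)]
print(is_valid_path((0, 0), [(1, 2), (0, 0)]))  # False: (0, 0) is on the trail
```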

Product Usage Case

· Teaching young children chess: A parent can use this game to introduce their 7-year-old daughter to the complex movement of the knight in a fun and accessible way, avoiding the dryness of traditional chess lessons.

· Developing educational web games: A game developer can study the project's approach to gamifying a specific chess rule to inspire the creation of other educational games focusing on strategy or logic.

· Creating interactive learning modules: An educator could integrate the core logic of this knight movement simulation into a broader online chess curriculum, providing a playful interactive element.

· Demonstrating simple game mechanics and UI: The project showcases how to create a responsive grid-based UI and simple animation for game pieces, a useful example for junior front-end developers learning game development principles.

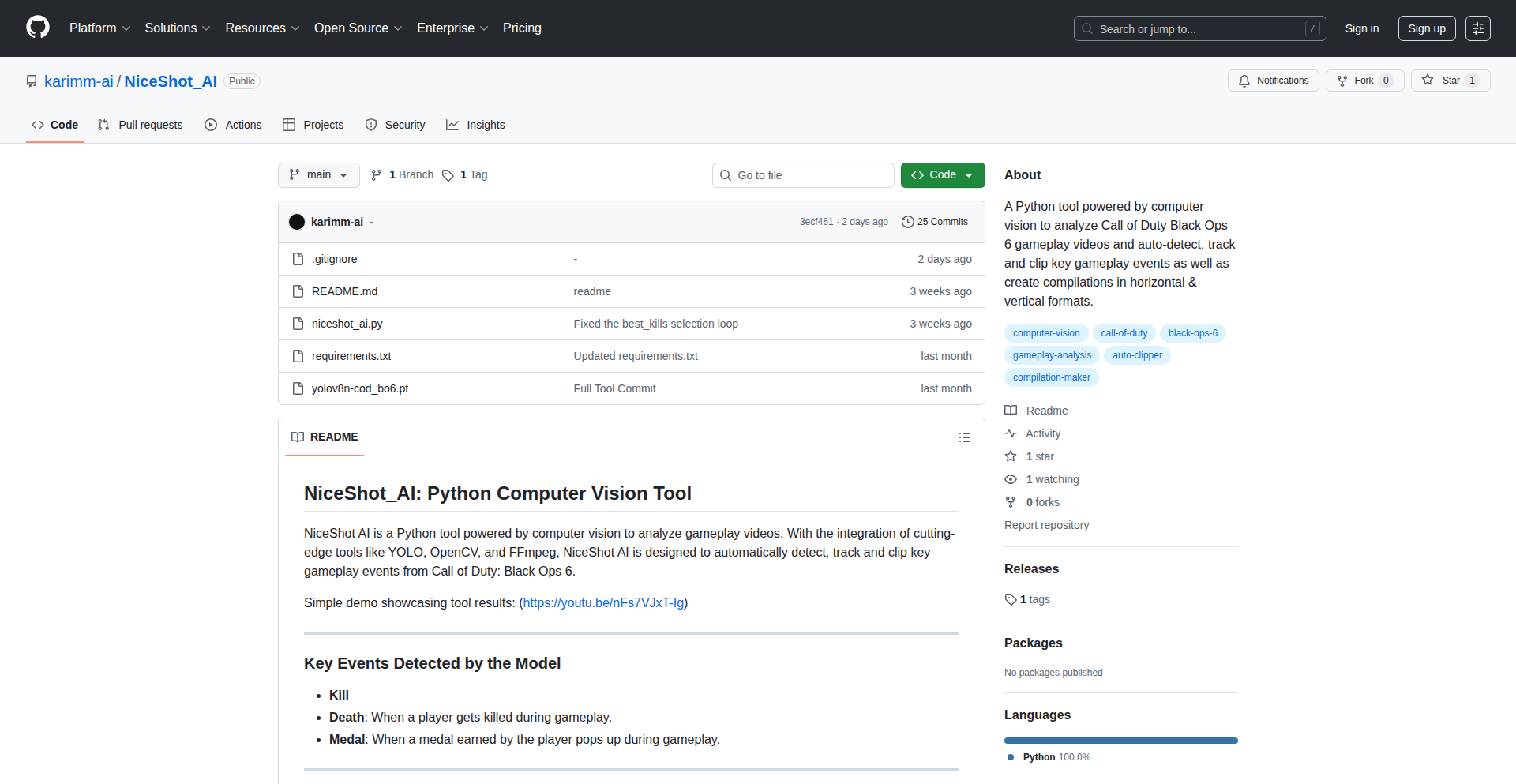

4

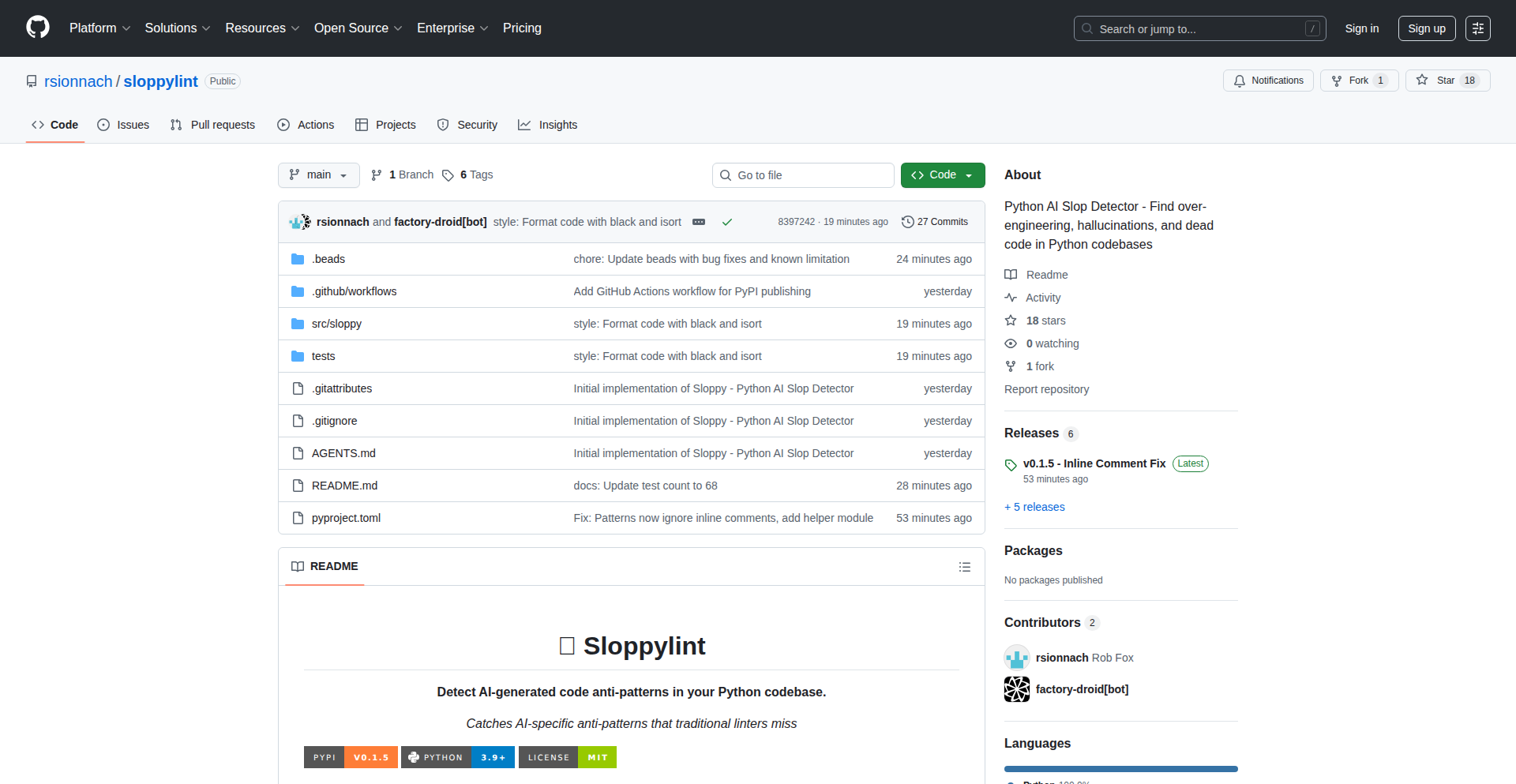

AI-Slop Detector

Author

kyub

Description

This project is a specialized linter designed to catch subtle, yet critical, errors and anti-patterns commonly introduced by AI code generation tools in Python. It goes beyond traditional linters by identifying 'AI slop,' such as hallucinated imports, placeholder functions, cross-language syntax leaks, and problematic default argument usage, ultimately improving the reliability and maintainability of AI-assisted code.

Popularity

Points 11

Comments 3

What is this product?

AI-Slop Detector is a command-line tool that analyzes Python code generated by AI assistants. Unlike standard linters that focus on general code quality and style, it is specifically designed to recognize the characteristic mistakes AI models tend to make. These include importing non-existent libraries (which can happen up to 20% of the time with AI-generated code), leaving behind incomplete `pass` or `TODO` placeholders, mistakenly using syntax from other programming languages (like JavaScript's `.push()` in Python), and employing dangerous mutable default arguments. Essentially, it acts as a post-AI quality check, catching typical AI slip-ups that a human reviewer might overlook. The value lies in catching these before they cause bugs in production, saving debugging time and protecting code integrity.

How to use it?

Developers can easily integrate AI-Slop Detector into their workflow. After installing it via pip (e.g., `pip install sloppylint`), they can run it directly from their terminal on their Python codebase. A simple command like `sloppylint .` will analyze the current directory and report any detected AI-specific issues. This can be incorporated into pre-commit hooks or CI/CD pipelines to automatically flag problematic AI-generated code before it's committed or deployed. This means developers can leverage AI for rapid prototyping and code generation with greater confidence, knowing that this tool will help them clean up the inevitable imperfections.

Product Core Function

· Hallucinated Import Detection: Identifies and flags imports for packages that do not exist, preventing runtime errors and wasted development effort on non-functional code. This is crucial for ensuring code can actually run and use its intended dependencies.

· Placeholder Code Identification: Detects common placeholder constructs like `pass`, `...`, or `TODO` comments, which indicate incomplete or unaddressed code sections, prompting developers to finish the implementation and avoid unfinished logic slipping into production.

· Cross-Language Pattern Recognition: Spots syntax and function calls that are common in other programming languages (e.g., JavaScript's array methods) but are incorrect or non-idiomatic in Python, preventing subtle bugs and improving code readability for Python developers.

· Mutable Default Argument and Bare Except Detection: Catches risky coding practices like using mutable objects as default function arguments or using overly broad `except` clauses without specifying exceptions, which can lead to unexpected behavior and security vulnerabilities. This ensures safer and more predictable code execution.

· Dead Code Analysis: Flags unused variables, functions, or imports that contribute to code bloat and confusion, helping to maintain a clean and efficient codebase. This makes the code easier to understand and maintain.
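Several of these checks can be approximated with Python's standard `ast` and `tokenize` modules. The sketch below is a hypothetical illustration, not sloppylint's implementation or rule set; `find_slop` and the `JS_METHODS` table are made up for the example, and the import check only asks whether a top-level module is importable in the current environment, so a real but uninstalled package would also be flagged.

```python
import ast
import importlib.util
import io
import tokenize

# JavaScript methods that sometimes leak into AI-generated Python (illustrative list).
JS_METHODS = {"push": "append()", "forEach": "a for loop", "toUpperCase": "upper()"}


def _is_docstring(stmt: ast.stmt) -> bool:
    return (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Constant)
            and isinstance(stmt.value.value, str))


def _is_placeholder(body: list[ast.stmt]) -> bool:
    """True if a function body, ignoring a docstring, is only `pass` or `...`."""
    if body and _is_docstring(body[0]):
        body = body[1:]
    if len(body) != 1:
        return False
    stmt = body[0]
    return isinstance(stmt, ast.Pass) or (
        isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Constant)
        and stmt.value.value is Ellipsis)


def find_slop(source: str) -> list[tuple[int, str]]:
    """Return sorted (line, message) pairs for a few AI-slop patterns."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            modules = [node.module]
        else:
            modules = []
        for module in modules:
            top = module.split(".")[0]
            if importlib.util.find_spec(top) is None:  # not importable here
                issues.append((node.lineno, f"import of unknown module {top!r}"))
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    issues.append((default.lineno, f"mutable default in {node.name}()"))
            if _is_placeholder(node.body):
                issues.append((node.lineno, f"placeholder body in {node.name}()"))
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "bare except"))
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
              and node.func.attr in JS_METHODS):
            hint = JS_METHODS[node.func.attr]
            issues.append((node.lineno, f".{node.func.attr}() is JavaScript; use {hint}"))
    # Comments are not part of the AST, so scan the token stream for TODO markers.
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT and "TODO" in tok.string:
            issues.append((tok.start[0], "TODO left in code"))
    return sorted(issues)


sample = "def f(x, acc=[]):\n    acc.push(x)\n"
for line, message in find_slop(sample):
    print(line, message)
```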

Product Usage Case

· Scenario: A developer uses an AI coding assistant to quickly generate a complex data processing script in Python. The AI generates the script but imports a niche library that doesn't exist in the project's environment. Running AI-Slop Detector before testing reveals this 'hallucinated import,' saving hours of debugging that would have been spent trying to figure out why the import failed. The developer can then correct the import or find an alternative library.

· Scenario: A team is rapidly iterating on a feature and an AI assistant helps fill in boilerplate code. The AI leaves behind several `TODO` comments as placeholders. AI-Slop Detector automatically flags these `TODO`s during the commit process, reminding the developer to revisit and complete these sections, ensuring no unfinished logic is accidentally shipped.

· Scenario: An AI generates a Python function that uses `.push()` to add items to what appears to be a list. A human developer might miss this, since Python lists use `.append()`. AI-Slop Detector flags this as a cross-language pattern, preventing an `AttributeError` and ensuring the code behaves as expected in Python.

· Scenario: An AI assists in creating a utility function with a default dictionary argument and, without considering the implications, uses a mutable `{}` as the default. AI-Slop Detector identifies this as a potentially dangerous practice, prompting the developer to refactor it to use `None` as the default and create a fresh dictionary inside the function, thus avoiding unexpected state sharing between function calls.
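The pitfall in the last scenario is easy to reproduce: a default value is evaluated once, when the `def` statement runs, so a mutable default is shared by every call that omits the argument. The function names below are illustrative.

```python
def record_buggy(key, value, registry={}):
    # The {} is created once, when `def` runs; every call that omits
    # `registry` mutates that same dictionary.
    registry[key] = value
    return registry


def record(key, value, registry=None):
    # Idiomatic fix: None as a sentinel, and a fresh dict for each call.
    if registry is None:
        registry = {}
    registry[key] = value
    return registry


record_buggy("x", 1)
print(record_buggy("y", 2))            # {'x': 1, 'y': 2}  (state leaked between calls)
print(record("x", 1), record("y", 2))  # {'x': 1} {'y': 2}
```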

5

CelestialPrint Calendar

Author

elijahparker

Description

A web application for generating custom printed wall calendars featuring precise sunrise and moon phase data for any chosen location. It prioritizes user privacy through a unique URL access model and temporary data storage, built with Node.js and pdfkit.

Popularity

Points 2

Comments 7

What is this product?

CelestialPrint Calendar is a novel web-based tool that empowers users to design and order personalized physical wall calendars. What sets it apart is its integration of detailed astronomical information – specifically, daily sunrise and moon phase data – tailored to the geographic location you specify. The core innovation lies in its privacy-first architecture, which avoids user accounts and logins. Instead, each calendar project is assigned a unique, shareable URL. This approach means your data is ephemeral, automatically deleted shortly after your last interaction or after an order is fulfilled, ensuring no personal information is persistently stored or tracked. This is achieved using Node.js on the backend to process requests and pdfkit to programmatically generate the print-ready PDF files for submission to printing services like lulu.com.
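The account-free access model described above comes down to two pieces: an unguessable ID that doubles as the project URL, and an expiry that deletes idle projects. The sketch below is hypothetical; the project itself stores JSON documents in files from Node.js, while this in-memory Python version (`EphemeralStore`, the TTL value, the injectable clock) only illustrates the idea.

```python
import secrets
import time
from typing import Any, Callable, Optional


class EphemeralStore:
    """In-memory projects keyed by unguessable IDs, deleted after `ttl_seconds` idle."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._items: dict = {}  # project_id -> (last_access_time, data)

    def create(self, data: Any) -> str:
        project_id = secrets.token_urlsafe(16)  # 128 random bits; doubles as the URL path
        self._items[project_id] = (self._clock(), data)
        return project_id

    def get(self, project_id: str) -> Optional[Any]:
        self.purge_expired()
        entry = self._items.get(project_id)
        if entry is None:
            return None
        data = entry[1]
        self._items[project_id] = (self._clock(), data)  # each visit resets the timer
        return data

    def delete(self, project_id: str) -> None:
        """Remove a project immediately, e.g. once its order has been fulfilled."""
        self._items.pop(project_id, None)

    def purge_expired(self) -> None:
        now = self._clock()
        expired = [pid for pid, (seen, _) in self._items.items() if now - seen > self.ttl]
        for pid in expired:
            del self._items[pid]


store = EphemeralStore(ttl_seconds=7 * 24 * 3600)  # hypothetical one-week idle limit
project_id = store.create({"year": 2026, "latitude": 47.6, "longitude": -122.3})
print(store.get(project_id))
```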

How to use it?

Developers can use CelestialPrint Calendar by simply navigating to its web interface. You select your desired calendar year and the specific geographic location for which you want the astronomical data. The application then calculates and integrates the sunrise and moon phase information for each day of that year. You can preview your calendar's layout and content. Once satisfied, you can initiate the ordering process. For developers interested in the underlying technology, the project demonstrates a minimalist approach to web application development, utilizing Node.js and the pdfkit library. This could serve as an inspiration for building similar data-driven, output-focused applications where privacy and simplicity are paramount. The use of file storage for JSON documents, while basic, highlights how even simple storage mechanisms can be effective for specific, non-complex use cases.

Product Core Function

· Location-specific astronomical data calculation: This function precisely computes and displays daily sunrise times and moon phases based on user-defined geographical coordinates. Its value lies in providing accurate, personalized celestial information for a physical product, enhancing its utility and uniqueness.

· Customizable calendar generation: Users can select the year and layout for their wall calendar. This offers creative control and allows for the creation of truly bespoke calendars, serving personal or gift-giving needs.

· Privacy-focused access and data handling: The system uses unique URLs for each calendar and implements temporary data storage. This eliminates the need for user accounts and protects user privacy by ensuring data is not permanently stored, valuable for users concerned about data collection and tracking.

· PDF generation for print services: The application leverages the pdfkit library to create high-quality, print-ready PDF files. This directly translates the digital design into a tangible product, enabling seamless integration with professional printing workflows.
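Moon-phase data of the kind the calendar prints can be approximated from the mean synodic month. The Python sketch below is an assumption-based illustration, not the project's Node.js code: it counts days since a reference new moon (2000-01-06 18:14 UTC) modulo 29.530588853 days and buckets the result into eight named phases. Because it uses the mean lunar cycle, it can be off by several hours. It takes no location, since the phase at a given instant is the same everywhere (only the local date shifts with time zone); sunrise, which does depend on location, needs a full solar-position algorithm and is omitted here.

```python
from datetime import datetime, timedelta, timezone

SYNODIC_MONTH_DAYS = 29.530588853  # mean length of one lunar phase cycle
REFERENCE_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)

PHASE_NAMES = [
    "New Moon", "Waxing Crescent", "First Quarter", "Waxing Gibbous",
    "Full Moon", "Waning Gibbous", "Last Quarter", "Waning Crescent",
]


def moon_age_days(when: datetime) -> float:
    """Days since the most recent mean new moon."""
    if when.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    elapsed = (when - REFERENCE_NEW_MOON).total_seconds() / 86400
    return elapsed % SYNODIC_MONTH_DAYS  # Python's % stays non-negative, even before 2000


def moon_phase_name(when: datetime) -> str:
    """Bucket the cycle into eight phases, each centered on its nominal point."""
    fraction = moon_age_days(when) / SYNODIC_MONTH_DAYS
    return PHASE_NAMES[int(fraction * 8 + 0.5) % 8]


print(moon_phase_name(REFERENCE_NEW_MOON))                       # New Moon
print(moon_phase_name(REFERENCE_NEW_MOON + timedelta(days=15)))  # Full Moon
```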

Product Usage Case

· Creating a personalized anniversary gift calendar: A user could generate a calendar for their anniversary year, highlighting the moon phases on significant dates like their wedding day or birthdays, providing a thoughtful and unique present. The core issue solved is making a generic calendar into a deeply personal and meaningful item.

· Building a calendar for outdoor enthusiasts: Hikers, campers, or astronomers might want a calendar that clearly shows sunrise times for planning activities and moon phases for night observation. This solves the problem of consolidating critical, location-specific outdoor planning data into an easily accessible physical format.

· Developing a minimalist, private journaling tool: While not its primary function, the system's privacy-centric design could inspire developers to create simple, ephemeral journaling applications where users can record daily thoughts without fear of data breaches or persistent storage. This addresses the need for secure and private digital note-taking.

· Prototyping document generation workflows: Developers exploring how to programmatically create documents from data could use this project as a reference. It demonstrates a practical application of Node.js and pdfkit for generating structured output for specific purposes, such as creating reports or certificates.

6

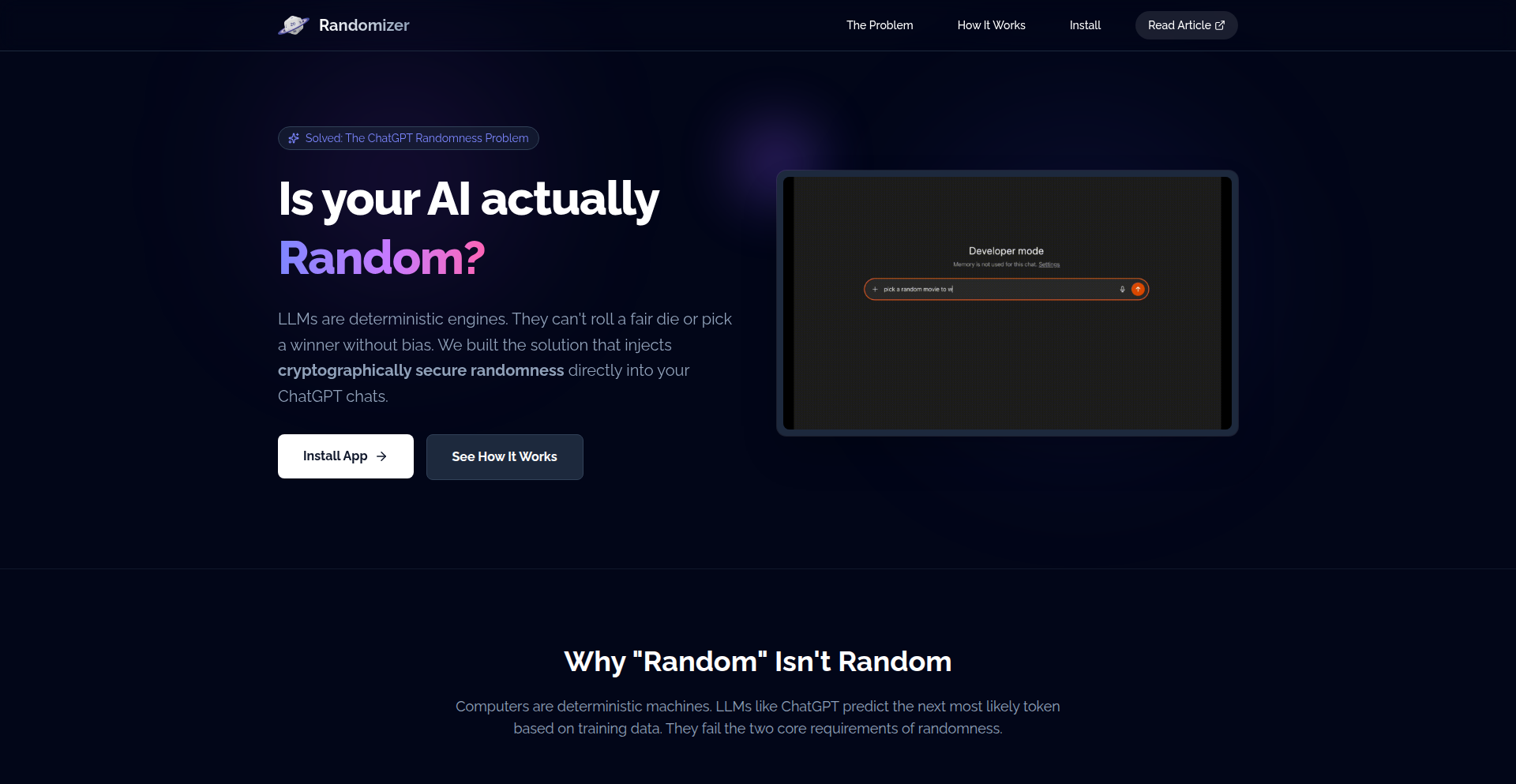

StableChat-LLM-Deterministic

Author

IvanGoncharov

Description

This project introduces a ChatGPT application designed to tackle the inherent randomness problem in Large Language Models (LLMs). It provides a method to achieve more predictable and reproducible outputs from LLMs, a critical aspect often overlooked in current LLM applications, enhancing reliability for developers and users.

Popularity

Points 8

Comments 1

What is this product?

This project is a specialized ChatGPT application that addresses the challenge of LLM randomness. While LLMs are powerful, their outputs can vary even with the same input because each token is chosen by probabilistic sampling. This app implements techniques to control and reduce this variability, making the LLM's responses more deterministic. This is done by adjusting the sampling process, potentially through strategies like greedy decoding, beam search with specific scoring, or setting the temperature near zero, so that for a given prompt and context the output stays as consistent as possible. This makes LLMs more reliable for tasks where precision and repeatability are paramount.
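The decoding strategies mentioned above can be compared on a toy example. This Python sketch is not StableChat's code and uses made-up logits rather than a real model: greedy decoding always picks the highest-scoring token, lowering the temperature concentrates probability on that token, and seeding the random generator makes sampling repeatable. It shows only the token-selection step; beam search is omitted.

```python
import math
import random


def softmax(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy_pick as T -> 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits: list[float]) -> int:
    """Greedy decoding: always the highest-scoring token (the first one on ties)."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample_pick(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Temperature sampling; repeatable only if `rng` is seeded identically."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.3]  # made-up scores for three candidate tokens
print(greedy_pick(logits))  # 0, on every run
print([round(p, 3) for p in softmax(logits, 1.0)])
print([round(p, 3) for p in softmax(logits, 0.1)])
```

The comparison makes the trade-off concrete: greedy decoding removes sampling randomness entirely, while a low but nonzero temperature keeps a little variety and a fixed seed makes even that variety reproducible.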

How to use it?

Developers can integrate StableChat-LLM-Deterministic into their existing workflows or applications where consistent LLM responses are crucial. This could involve building AI-powered content generation tools, automated customer support systems, or testing frameworks for AI models. The integration might involve using the application's API, providing specific parameters to control the deterministic behavior, and processing the predictable outputs within their application logic. For instance, if you're building a system that needs to generate legally compliant text, ensuring the output is always the same for a specific set of inputs prevents unintended variations that could have legal ramifications. It's about gaining control over the AI's creative but sometimes unpredictable nature.

Product Core Function

· Deterministic Output Generation: Provides a way to generate consistent and repeatable LLM responses for identical prompts, which is valuable for debugging, testing, and applications requiring high reliability.

· Controlled LLM Behavior: Offers parameters to fine-tune the LLM's output generation process, allowing developers to balance determinism with output quality, making the AI more predictable and manageable.

· Problem Identification and Solution for LLM Randomness: Directly addresses the often-ignored issue of LLM unpredictability, offering a practical solution for developers who need dependable AI outputs.

· Enhanced LLM Application Robustness: Improves the stability and trustworthiness of applications built on LLMs by reducing the chance of unexpected or variable AI responses, leading to a better user experience and fewer errors.

Product Usage Case

· Automated Report Generation: In a scenario where a system needs to generate daily financial reports based on specific data, using StableChat-LLM-Deterministic ensures that the generated narrative text for the reports is consistent each day for the same underlying data, avoiding variations that could cause confusion.

· Technical Documentation Generation: For technical documentation where accuracy and uniformity are critical, this tool helps ensure that the explanations and code snippets the LLM generates for specific features stay the same from run to run, simplifying reviews and updates.

· Educational Content Creation: When building an AI tutor that explains complex concepts, consistent explanations are vital for student learning. StableChat-LLM-Deterministic ensures that a concept is explained in the same way every time, reinforcing learning without confusing variations.

· AI-Assisted Code Completion and Refactoring: Developers using AI tools for code assistance can benefit from predictable suggestions and refactoring patterns, making the code more maintainable and reducing the risk of introducing subtle bugs through inconsistent AI suggestions.

7

RuleWeaver: Example-Driven Reasoning Engine

Author

heavymemory

Description

RuleWeaver is a novel reasoning engine that autonomously learns transformation rules from just two examples. Unlike traditional approaches relying on large language models (LLMs), regular expressions (regex), or manually coded logic, it deduces the underlying patterns and applies them to new data. This allows for flexible and intuitive rule creation for tasks like code refactoring, algebraic manipulation, and logical transformations, all while providing a transparent reasoning trace.

Popularity

Points 4

Comments 3

What is this product?

RuleWeaver is an engine that learns how to transform data from a very small number of 'before' and 'after' examples (the project description says two). Think of it like teaching a child by showing them a couple of worked examples and expecting them to grasp the general principle. It doesn't rely on large language models or hand-written rules; it infers the transformation itself. This lowers the barrier to creating custom automation and problem-solving logic. The reasoning behind each decision is also visible as a step-by-step trace, which makes the engine's behavior understandable and debuggable.

How to use it?

Developers can use RuleWeaver by providing pairs of input-output examples to 'teach' it a new rewrite rule. Once a rule is learned, it can be applied to new, unseen inputs. The engine can also combine multiple learned rules to perform more complex operations. For example, you could teach it how to refactor a specific code pattern by showing two code snippets before and after the refactoring. Then, you can feed it other code snippets with the same pattern, and RuleWeaver will automatically apply the learned refactoring. It's designed to be integrated into existing workflows where custom transformations are needed, such as data processing pipelines, code analysis tools, or domain-specific language parsers.
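RuleWeaver's internals aren't described in the post. As a rough illustration of how a rule can be learned from two examples without an LLM, the sketch below uses anti-unification (least general generalization), a standard technique that may differ from what RuleWeaver actually does: parts that are identical across both examples stay fixed, parts that differ become variables, and the learned rule is then matched against new terms. Terms are nested Python tuples such as `("+", "a", "0")`; all function names are hypothetical. `normalize` composes several rules and records a trace of each step.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Var:
    """A pattern variable introduced where the two examples differ."""
    name: str


Term = Union[str, Var, tuple]  # atom, variable, or (operator, *args)


def anti_unify(a: Term, b: Term, table: dict) -> Term:
    """Least general generalization; the same disagreement always maps to the same variable."""
    if a == b:
        return a
    if isinstance(a, tuple) and isinstance(b, tuple) and len(a) == len(b) and a[0] == b[0]:
        return (a[0],) + tuple(anti_unify(x, y, table) for x, y in zip(a[1:], b[1:]))
    if (a, b) not in table:
        table[(a, b)] = Var(f"X{len(table)}")
    return table[(a, b)]


def variables(t: Term) -> set:
    if isinstance(t, Var):
        return {t}
    if isinstance(t, tuple):
        return set().union(*(variables(x) for x in t))
    return set()


def learn_rule(example1: tuple, example2: tuple) -> tuple:
    """Generalize two (before, after) pairs into one (lhs, rhs) rewrite rule."""
    table: dict = {}  # shared, so 'before' and 'after' reuse the same variables
    lhs = anti_unify(example1[0], example2[0], table)
    rhs = anti_unify(example1[1], example2[1], table)
    if not variables(rhs) <= variables(lhs):
        raise ValueError("examples do not determine the output from the input")
    return lhs, rhs


def match(pattern: Term, term: Term, bindings: dict) -> Optional[dict]:
    if isinstance(pattern, Var):
        if pattern in bindings:
            return bindings if bindings[pattern] == term else None
        return {**bindings, pattern: term}
    if isinstance(pattern, tuple):
        if not (isinstance(term, tuple) and len(term) == len(pattern)):
            return None
        for p, t in zip(pattern, term):
            bindings = match(p, t, bindings)
            if bindings is None:
                return None
        return bindings
    return bindings if pattern == term else None


def substitute(template: Term, bindings: dict) -> Term:
    if isinstance(template, Var):
        return bindings[template]
    if isinstance(template, tuple):
        return tuple(substitute(t, bindings) for t in template)
    return template


def apply_rule(rule: tuple, term: Term) -> Optional[Term]:
    lhs, rhs = rule
    bindings = match(lhs, term, {})
    return None if bindings is None else substitute(rhs, bindings)


def _rewrite_once(rules: dict, term: Term):
    # Try every rule at the root first, then recurse into subterms left to right.
    for name, rule in rules.items():
        result = apply_rule(rule, term)
        if result is not None:
            return name, result
    if isinstance(term, tuple):
        for i, sub in enumerate(term):
            step = _rewrite_once(rules, sub)
            if step is not None:
                name, new_sub = step
                return name, term[:i] + (new_sub,) + term[i + 1:]
    return None


def normalize(rules: dict, term: Term, max_steps: int = 100) -> tuple:
    """Rewrite until no rule applies; the trace holds (rule name, before, after) per step."""
    trace = []
    for _ in range(max_steps):
        step = _rewrite_once(rules, term)
        if step is None:
            break
        name, new_term = step
        trace.append((name, term, new_term))
        term = new_term
    return term, trace
```

For example, learning from `(("+", "a", "0"), "a")` and `(("+", ("*", "b", "c"), "0"), ("*", "b", "c"))` yields the rule `x + 0 -> x`, which then rewrites `("+", "y", "0")` to `"y"`.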

Product Core Function

· Example-Based Rule Learning: Ability to infer complex transformation logic from minimal 'before' and 'after' data pairs. This offers a highly intuitive way to define custom automation without writing explicit code or complex configurations, making it accessible to a wider range of users.

· Rule Composition: Capability to combine multiple learned rules to solve more intricate problems. This enables the creation of sophisticated workflows by breaking down complex tasks into smaller, manageable learned steps, enhancing the engine's versatility.

· Cross-Domain Transfer Learning: Demonstrates that rules learned in one context (e.g., algebra) can be effectively applied to another (e.g., logic or set theory). This highlights the generality of the learning mechanism and its potential for broad applicability across different technical domains.

· Multi-Step Deterministic Rewriting with Trace: Executes transformations step-by-step and provides a detailed, visible trace of its reasoning process. This transparency is invaluable for debugging, understanding the engine's behavior, and ensuring the reliability of its outputs.

· Codemod Generation from Examples: Specifically tailored for software development, allowing developers to teach it how to perform code modifications from examples. This provides a powerful, yet simple, way to automate repetitive code refactoring and maintain code consistency across a project.

Product Usage Case

· Automating Code Refactoring: A developer needs to consistently rename a specific variable across multiple files in a codebase. Instead of manually searching and replacing or writing a complex script, they can show RuleWeaver two versions of a code snippet where the variable has been renamed. RuleWeaver learns this renaming pattern and can then be used to automatically rename the variable in all other relevant files, saving significant time and reducing errors.

· Simplifying Mathematical Expressions: A student or researcher is working with algebraic equations and needs to simplify them. They can provide RuleWeaver with an initial equation and its simplified form. The engine learns the simplification steps and can then be used to automatically simplify other similar equations, making complex calculations more manageable and accelerating problem-solving.

· Transforming Data Formats: In data engineering, there's often a need to convert data from one structure to another. If a specific transformation is frequently required (e.g., rearranging columns, changing data types based on patterns), RuleWeaver can learn this transformation from a sample input and output. This allows for quick creation of custom data processing rules without extensive scripting, especially for non-standard or ad-hoc transformations.

8

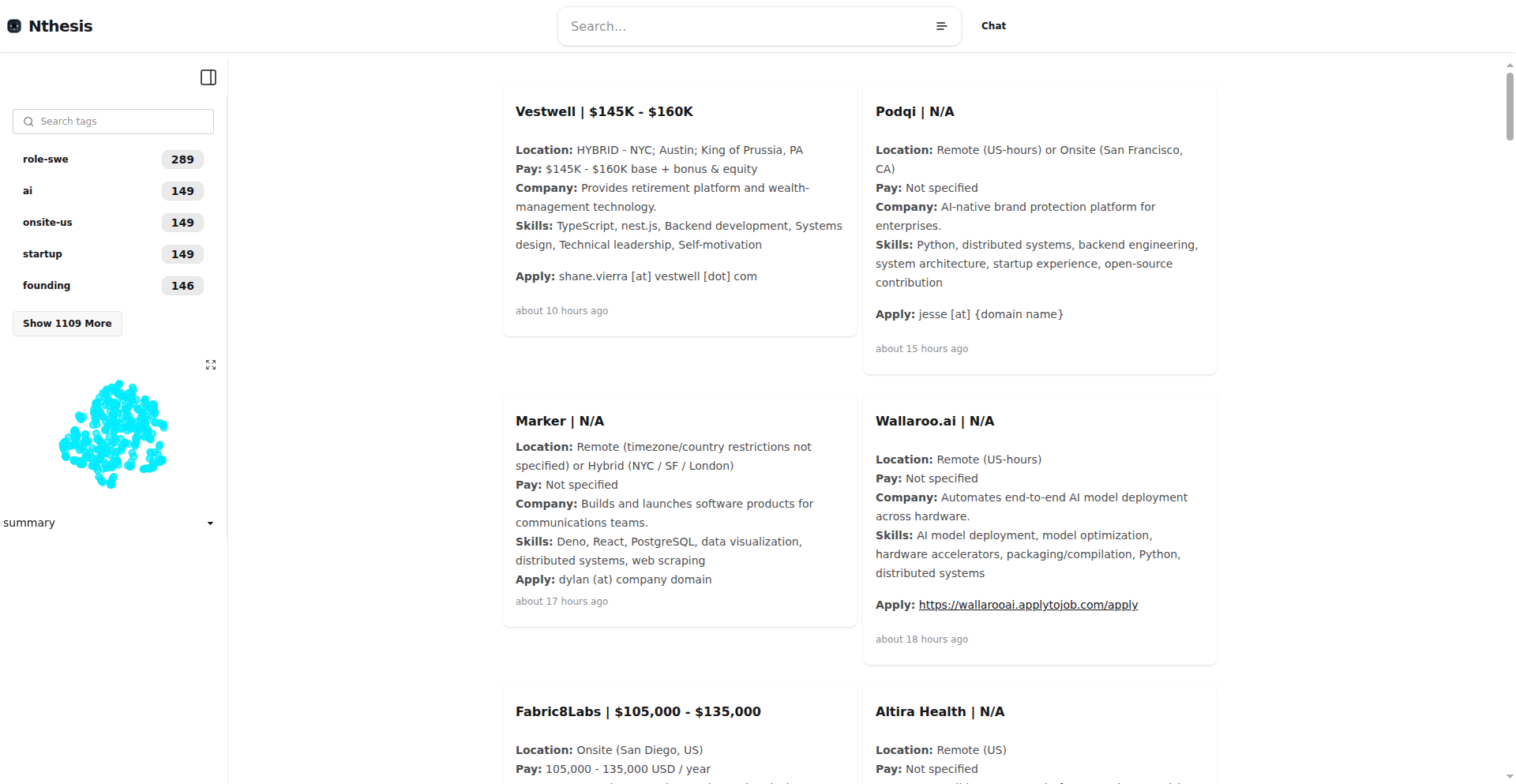

Hacker Hire Explorer

Author

osigurdson

Description

This project is a sophisticated search tool designed to navigate and understand Hacker News's monthly 'Who is Hiring' posts. It goes beyond simple keyword matching by incorporating chat capabilities, semantic search, and a unique semantic map visualization. The core innovation lies in its use of Large Language Models (LLMs) to process and understand the job listings, making it easier for job seekers to find relevant opportunities and for the community to grasp hiring trends. This addresses the challenge of sifting through numerous text-heavy job posts, offering a more intuitive and insightful way to explore the hiring landscape.

Popularity

Points 4

Comments 2

What is this product?

Hacker Hire Explorer is a smart assistant for exploring Hacker News's 'Who is Hiring' job postings. Instead of basic text search alone, it uses Large Language Models (LLMs) to understand the content of each job ad. It can extract key information, categorize jobs, and create a visual semantic map that shows relationships between different job roles or industries. You can also 'chat' with the job postings to ask specific questions, so finding a job feels more like a conversation. This is innovative because it moves beyond simple keyword matching to a deeper comprehension of the text, offering a more powerful way to find opportunities.

How to use it?

Developers can use Hacker Hire Explorer by visiting the provided URL. You can start with a basic text search to quickly filter jobs. For more nuanced exploration, enable the semantic search feature to find roles based on meaning rather than just exact words. To discover broader trends or ask specific questions about the job market this month, use the chat function. For instance, you could ask 'What are the most in-demand tech skills right now?' or 'Show me jobs related to AI and machine learning.' The tool is designed to be integrated into your job search workflow, providing a more efficient and insightful experience. There's also an API and a command-line interface (CLI) tool available if you want to build similar functionalities or automate your job hunting process.

Product Core Function

· Semantic Job Search: Understands the meaning behind job descriptions to find relevant roles, offering a deeper search experience than traditional keyword matching. This helps you discover jobs you might have missed otherwise.

· Conversational AI Chat: Allows you to 'talk' to the job postings, asking specific questions like 'What are the required qualifications?' or 'What is the company culture like?' This provides quick answers and reduces the need to read through lengthy descriptions.

· Semantic Map Visualization: Presents job roles and industries in a visual format, helping you understand the overall hiring landscape and identify connections between different opportunities. This provides a bird's-eye view of the job market.

· LLM-Powered Data Extraction and Tagging: Uses AI to automatically pull out important details from job posts and categorize them, making the information more organized and easier to digest. This saves you time by pre-processing all the job ads.

· Batch LLM Processing: Efficiently processes a large volume of job postings at once using AI, ensuring comprehensive analysis and quick updates. This means you get the most up-to-date information without delay.
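How Hacker Hire Explorer computes its semantic search isn't spelled out. A common approach is to embed each posting and the query with an embedding model and rank postings by cosine similarity. The sketch below shows only the ranking step; the three-dimensional vectors are made-up stand-ins for real embeddings, which would come from a model the post doesn't name.

```python
import math


def cosine(u: list, v: list) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def semantic_search(query_vec: list, postings: dict, top_k: int = 3) -> list:
    """Rank posting titles by cosine similarity between their embedding and the query's."""
    ranked = sorted(postings, key=lambda title: cosine(query_vec, postings[title]), reverse=True)
    return ranked[:top_k]


# Toy "embeddings" with hypothetical axes (backend/infra, ML, frontend).
POSTINGS = {
    "Distributed systems engineer (remote)": [0.9, 0.1, 0.0],
    "ML platform engineer": [0.5, 0.8, 0.0],
    "React developer": [0.0, 0.1, 0.95],
}
QUERY = [1.0, 0.0, 0.1]  # stand-in for the embedding of "remote backend engineer"
```

The top hit shares no words with the query "remote backend engineer"; it ranks first only because its vector points in a similar direction, which is the property that lets semantic search catch postings a keyword search would miss.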

Product Usage Case

· A job seeker looking for remote backend engineering roles can use semantic search to find positions that mention 'distributed systems' or 'cloud-native' even if the exact phrase 'remote backend engineer' isn't in the title. This solves the problem of missing out on great opportunities due to rigid keyword searches.

· A developer interested in the emerging trends in AI hiring can use the chat feature to ask 'What are the common themes in AI job postings this month?' and then ask follow-up questions like 'Are there many roles for prompt engineers?' This helps them quickly identify new career paths and trending technologies.

· A recruiter analyzing the current job market can use the semantic map visualization to see which tech stacks are most frequently mentioned together, identifying potential areas of high demand and skill overlap. This provides valuable insights for strategic hiring decisions.

· A student exploring different career paths in tech can use the tool to ask broad questions like 'What are the differences between a data scientist and a machine learning engineer role?' This helps them make informed decisions about their future education and career.

9

OLake-IcebergTurbo

Author

rohankhameshra

Description

OLake-IcebergTurbo is an open-source tool designed for efficiently ingesting data from databases and Kafka into Apache Iceberg. It features a newly redesigned write pipeline that achieved a remarkable 7x improvement in throughput, offering significant performance gains for data lake operations.

Popularity

Points 5

Comments 1

What is this product?

OLake-IcebergTurbo is a powerful data ingestion engine specifically built to bridge the gap between streaming and transactional data sources (like databases and Kafka) and the modern data lake table format, Apache Iceberg. The core innovation lies in its dramatically re-engineered write pipeline. Instead of processing data sequentially, it employs advanced parallel processing and optimized data buffering techniques. This means it can handle a much larger volume of incoming data in the same amount of time, preventing bottlenecks that often plague large-scale data ingestion. Think of it like upgrading a single-lane road to a multi-lane superhighway for your data; it's simply much faster and can handle more traffic.

How to use it?

Developers can integrate OLake-IcebergTurbo into their data pipelines by configuring it to connect to their source systems (e.g., PostgreSQL, MySQL, Kafka topics) and specifying their target Apache Iceberg tables. The tool provides connectors for common databases and Kafka. Its primary use case is for real-time or batch ingestion scenarios where high throughput and low latency are critical. For instance, imagine you're collecting user activity logs from Kafka and want to store them in an Iceberg table for analytics. You'd configure OLake-IcebergTurbo to read from Kafka and write to your Iceberg table. Its performance improvements mean your analytics queries will have access to fresher data much sooner, and your infrastructure won't be strained by the ingestion process. The redesigned pipeline allows for easier management of data commits and schema evolution, making it a robust solution for evolving data environments.

Product Core Function

· High-throughput data ingestion: Optimized parallel processing and data buffering allow for ingesting data at a significantly faster rate, reducing the time it takes to get data into your data lake. This means fresher data for your analyses.

· Database and Kafka connectors: Built-in support for connecting to various relational databases and Kafka message queues simplifies the setup process for data ingestion from common sources. No need to build custom connectors from scratch.

· Apache Iceberg integration: Seamlessly writes data to Apache Iceberg tables, a modern data lake table format that offers ACID transactions, schema evolution, and time travel capabilities. This ensures data reliability and flexibility.

· Redesigned write pipeline: The core innovation is a fundamentally re-architected system for writing data, focusing on parallelization and efficient resource utilization, leading to substantial performance boosts. This makes your data operations more cost-effective and less resource-intensive.
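OLake's pipeline code isn't shown in the post. The sketch below illustrates the general pattern that parallel processing plus buffering usually means for an Iceberg writer: buffer incoming records into fixed-size batches, write each batch to its own data file concurrently, then register all the files in a single commit so readers see either all of them or none. `write_file` and `commit` are hypothetical stand-ins for a Parquet writer and an Iceberg catalog commit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def write_batches(
    records: Iterable[dict],
    batch_size: int,
    write_file: Callable[[list], str],  # hypothetical: writes one batch, returns its file path
    commit: Callable[[list], None],     # hypothetical: atomically registers all new files
    max_workers: int = 4,
) -> list:
    futures = []
    buffer: list = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for record in records:
            buffer.append(record)
            if len(buffer) >= batch_size:
                # Hand the full buffer to a worker and keep reading into a fresh one.
                futures.append(pool.submit(write_file, buffer))
                buffer = []
        if buffer:
            futures.append(pool.submit(write_file, buffer))
        paths = [f.result() for f in futures]  # results in submission order
    commit(paths)  # one commit covering every file written above
    return paths
```

A production writer would also cap the number of in-flight batches to bound memory and retry the commit if another writer committed first; both are omitted here for brevity.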

Product Usage Case

· Real-time analytics on user behavior: Ingesting high-volume clickstream data from Kafka into an Iceberg table using OLake-IcebergTurbo. The 7x throughput improvement helps ingestion keep pace with peak event volumes, so user behavior dashboards lag less behind real activity and support quicker business decisions. This addresses the problem of stale data in analytics.

· Batch ETL for data warehousing: Migrating large datasets from a transactional SQL database to an Iceberg-based data lake for analytical querying. The improved ingestion speed drastically reduces the time required for ETL jobs, freeing up database resources and making historical data available faster for reporting. This tackles the challenge of slow data migration.

· Event-driven data pipelines: Building an event-driven architecture where events published to Kafka are processed and stored in Iceberg for downstream machine learning model training. OLake-IcebergTurbo's efficiency ensures that the ML models are trained on the most current data, improving their accuracy and relevance. This addresses the need for timely data for ML workloads.

10

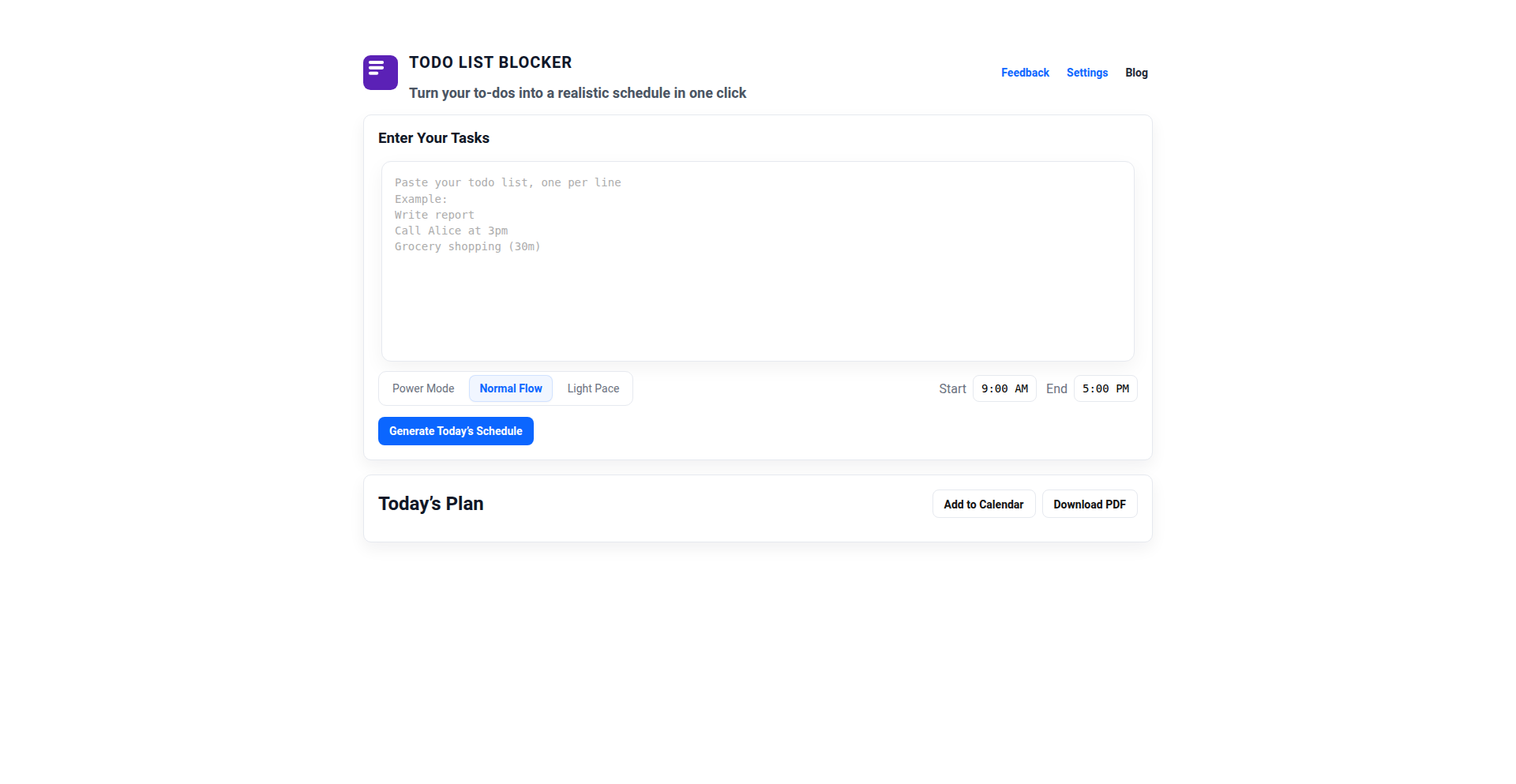

Chronosync Todo

Author

lvfrm

Description

A smart tool that seamlessly transforms your traditional todo list into a time-blocked schedule. It addresses the common problem of overwhelm and underestimation of task duration by intelligently allocating time slots, thereby enhancing productivity and reducing procrastination. The core innovation lies in its dynamic scheduling algorithm that adapts to user input and available time.

Popularity

Points 2

Comments 4

What is this product?

Chronosync Todo is a novel application designed to revolutionize how individuals manage their daily tasks. Instead of just listing what needs to be done, it automatically integrates these tasks into your calendar by estimating their duration and finding optimal time slots. This is achieved through a proprietary algorithm that analyzes task descriptions for keywords indicative of effort and complexity, and then proposes a realistic schedule. The innovation lies in moving beyond a static list to a dynamic, actionable time-based plan, akin to a personal project manager automatically structuring your day. This helps you see exactly when you can accomplish each item, transforming a daunting list into a manageable sequence of actions.

How to use it?

Developers can integrate Chronosync Todo into their workflow in several ways. Primarily, it is a standalone application: users enter their todo items and the system suggests calendar entries. Deeper integration would depend on an API, which the post does not confirm exists; if one is offered, tasks could be pushed from existing project management tools or code repositories directly into Chronosync Todo for time blocking. Imagine syncing your GitHub issues or Jira tickets and letting Chronosync Todo schedule dedicated focus time for them. This gives a clear picture of how development tasks fit into your overall schedule, making it easier to commit to realistic deadlines.

Product Core Function

· Automated Time Blocking: This core function takes your unstructured todo items and intelligently assigns them specific time slots on your calendar. It analyzes task descriptions to estimate effort, offering a more realistic approach to scheduling than manual estimation, thus helping you understand how much can actually be done in a day.

· Dynamic Scheduling Adjustment: If a task runs over or under time, Chronosync Todo can dynamically adjust subsequent time blocks, ensuring your schedule remains fluid and achievable. This prevents the domino effect of a single delayed task derailing your entire day.

· Task Prioritization Visualization: By time-blocking, the tool implicitly prioritizes tasks based on their placement within your schedule, giving you a clear visual hierarchy of what needs attention when. This helps in focusing on the most critical items first.

· Procrastination Mitigation: Seeing your tasks laid out in concrete time blocks makes them less abstract and more actionable, reducing the tendency to put them off. It’s like having a clear roadmap for your day, making it harder to avoid starting.

· Integration with Existing Calendars: It syncs with popular calendar applications, ensuring your time-blocked tasks appear alongside other appointments, providing a unified view of your commitments. This means you don't have to juggle multiple tools to manage your schedule.
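The post calls the scheduling algorithm proprietary, so the sketch below is not it. It is a deliberately simple stand-in for automated time blocking: estimate each task's duration from keywords (the keyword table is hypothetical), then place tasks in list order into the earliest free gap around existing calendar events (first-fit).

```python
from datetime import datetime, timedelta

# Hypothetical keyword -> minutes table; the real estimator is not public.
KEYWORD_MINUTES = {"implement": 120, "refactor": 90, "write": 60, "review": 45, "email": 15}
DEFAULT_MINUTES = 30


def estimate_minutes(description: str) -> int:
    words = description.lower().split()
    return max((m for k, m in KEYWORD_MINUTES.items() if k in words), default=DEFAULT_MINUTES)


def schedule(tasks: list, day_start: datetime, day_end: datetime, busy: list) -> tuple:
    """First-fit time blocking. Returns (blocks, unscheduled); blocks are (task, start, end)."""
    busy = sorted(busy)
    blocks, unscheduled = [], []
    for task in tasks:
        need = timedelta(minutes=estimate_minutes(task))
        cursor = day_start
        # A zero-length sentinel at day_end makes the last gap of the day explicit.
        for start, end in busy + [(day_end, day_end)]:
            if start - cursor >= need:
                blocks.append((task, cursor, cursor + need))
                busy = sorted(busy + [(cursor, cursor + need)])
                break
            cursor = max(cursor, end)
        else:  # no gap was large enough
            unscheduled.append(task)
    return blocks, unscheduled


def example_day() -> tuple:
    day = datetime(2025, 12, 5)
    meeting = (day.replace(hour=10), day.replace(hour=10, minute=30))
    tasks = ["Implement user authentication", "Write unit tests", "Reply to email"]
    return schedule(tasks, day.replace(hour=9), day.replace(hour=13), [meeting])
```

In `example_day`, the two-hour task doesn't fit before the 10:00 meeting, so it lands at 10:30; the one-hour task then fills the 9:00 gap. To approximate the dynamic adjustment described above, you could rerun `schedule` on the remaining tasks with `day_start` set to the current time whenever a block overruns.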

Product Usage Case

· Scenario: A freelance developer working on multiple client projects needs to manage deadlines and allocate focus time for coding. Chronosync Todo can take a list of tasks for each project (e.g., 'Implement user authentication', 'Refactor database queries', 'Write unit tests') and automatically schedule dedicated blocks for each within their workday, ensuring no task falls through the cracks and deadlines are met realistically.

· Scenario: A student preparing for exams has a long list of study topics and assignments. Chronosync Todo can break down these large tasks into manageable study sessions, scheduling specific time slots for reviewing each subject, thus making the daunting task of studying feel more organized and less overwhelming.

· Scenario: A team lead needs to allocate time for code reviews, meetings, and actual development work. By inputting these into Chronosync Todo, they can get a clear overview of their capacity, identify potential scheduling conflicts, and ensure sufficient time is dedicated to each responsibility, improving overall team efficiency.

· Scenario: An individual wants to incorporate personal goals like exercise or learning a new skill into their busy schedule. Chronosync Todo can find and block out consistent time slots for these activities, treating them with the same importance as professional tasks, thereby promoting a better work-life balance.

11

SermonSynth AI

Author

tfreebern2

Description

SermonSynth AI is an iOS application that leverages advanced AI to enhance sermon comprehension and retention. It automatically transcribes recorded or uploaded audio, generates concise summaries, creates flashcards for quick review, and formulates tailored reflection questions specifically for Christian content. This addresses the challenge of actively engaging with sermons while also taking effective notes, offering a smarter way for individuals to deepen their biblical literacy and connection to religious teachings.

Popularity

Points 5

Comments 1

What is this product?

SermonSynth AI is an innovative iOS app designed to revolutionize how individuals engage with religious sermons. At its core, it utilizes the powerful Whisper AI model for highly accurate audio transcription. Following transcription, it employs sophisticated AI prompting techniques, powered by OpenAI's API, to analyze the sermon content and generate valuable study aids. These include digestible summaries that capture the essence of the message, flashcards for easy memorization of key points, and thought-provoking reflection questions that encourage deeper personal engagement with Christian principles. The backend is built with Spring Boot and Kotlin, with the iOS frontend developed using SwiftUI, showcasing a modern and efficient technology stack for a seamless user experience.

How to use it?

Developers can integrate SermonSynth AI's capabilities into their own applications by exploring its API, which is powered by Spring Boot and Kotlin. The core AI transcription and summarization features, leveraging Whisper and OpenAI, can be accessed for custom solutions. For end-users, the process is incredibly straightforward: simply record audio during a sermon using the iOS app or upload an existing audio file. The app then handles the entire AI processing pipeline in the background, notifying the user via push notifications once the transcription, summary, and study materials are ready for review. This allows users to focus on the sermon itself, knowing that their notes and learning aids will be automatically generated.

Product Core Function

· AI-powered sermon transcription: Converts spoken sermons into accurate text using Whisper, enabling easy searching and review of sermon content.

· Automated summary generation: Creates concise overviews of sermons, highlighting key themes and messages for quick understanding and recall.

· Flashcard creation: Generates study flashcards from sermon content, aiding in memorization of important verses, concepts, and teachings.

· Personalized reflection questions: Produces tailored questions to stimulate deeper thought and personal application of sermon messages to one's faith journey.

· Push notification system: Informs users promptly when transcriptions and summaries are complete, ensuring timely access to generated study materials.

· Cross-platform backend architecture: Utilizes Spring Boot and Kotlin for a robust and scalable backend, demonstrating efficient modern development practices.

· Native iOS development with SwiftUI: Provides a fluid and intuitive user interface on iOS devices, showcasing modern Apple development trends.
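The post says the study aids come from prompting OpenAI's API but doesn't publish the prompts. One common way to make such output easy to consume in an app is to ask the model for JSON with a fixed shape and validate it before display. The schema, prompt text, and function names below are hypothetical, and the actual network call to the model is left out.

```python
import json
from dataclasses import dataclass


@dataclass
class StudyAids:
    summary: str
    flashcards: list  # (front, back) pairs
    reflection_questions: list


def build_prompt(transcript: str) -> str:
    # Hypothetical prompt that requests a fixed JSON shape the app can parse.
    return (
        "Summarize this sermon and create study aids. Respond with JSON only, using keys "
        '"summary" (string), "flashcards" (list of {"front": ..., "back": ...}), '
        'and "reflection_questions" (list of strings).\n\n'
        f"Transcript:\n{transcript}"
    )


def parse_study_aids(raw: str) -> StudyAids:
    """Validate the model's reply; raises ValueError on malformed output."""
    try:
        data = json.loads(raw)
        cards = [(str(c["front"]), str(c["back"])) for c in data["flashcards"]]
        questions = [str(q) for q in data["reflection_questions"]]
        return StudyAids(str(data["summary"]), cards, questions)
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        raise ValueError(f"unexpected model output: {exc}") from exc
```

Validating on the backend means a malformed reply can be retried before the push notification goes out, rather than surfacing as a broken screen in the app.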

Product Usage Case

· A busy professional who wants to absorb more from weekly church services can use SermonSynth AI to record the sermon, receive a full transcription, and a summary for later review during their commute, ensuring they don't miss key spiritual insights.

· A Bible study group leader can use the generated reflection questions to facilitate deeper discussions among members, making their study sessions more engaging and insightful.

· A student of theology can use the flashcards generated by SermonSynth AI to efficiently memorize important theological concepts and biblical passages discussed in sermons for their academic studies.

· An individual seeking to deepen their personal faith can leverage the AI-generated summaries and reflection questions to engage more actively with the spiritual messages, leading to a more profound and personal connection with their beliefs.

· A developer experimenting with AI transcription and summarization can analyze SermonSynth AI's architecture (Spring Boot, Kotlin, OpenAI API integration) to learn best practices for building similar AI-driven applications.

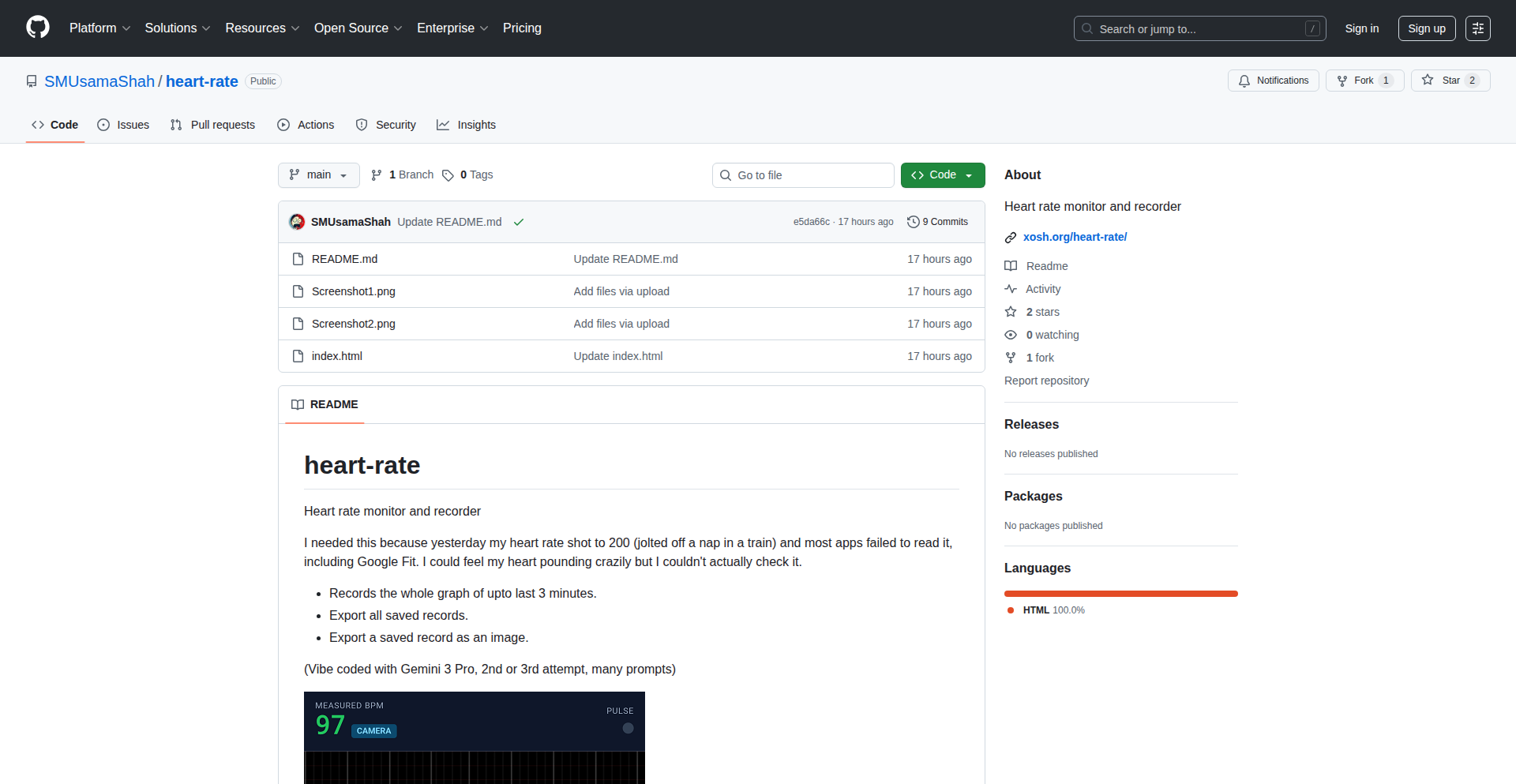

12

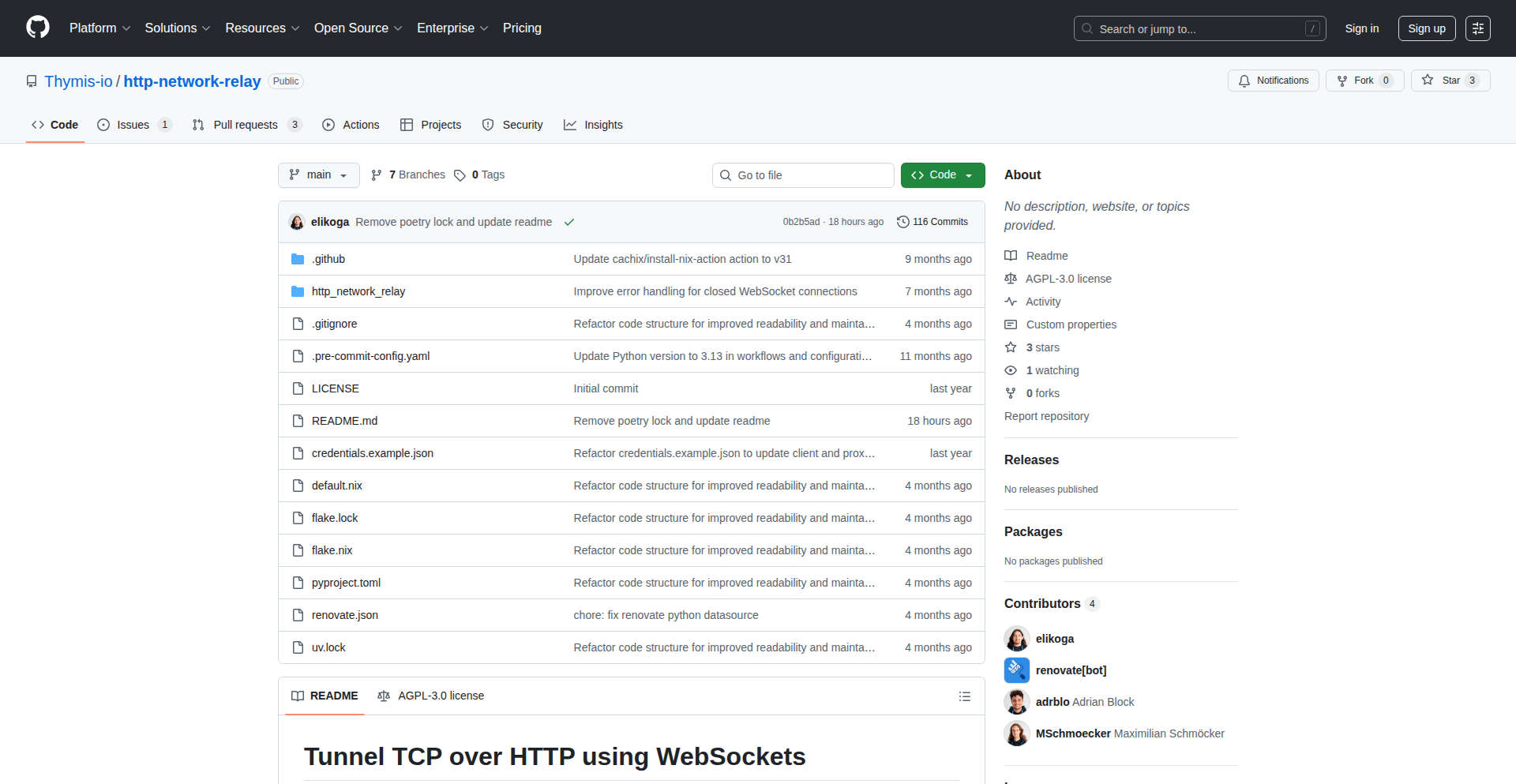

HTTP-Network-Relay

Author

elikoga

Description

This project allows you to establish secure network connections to devices that are behind NAT (Network Address Translation) or firewalls, without needing to configure port forwarding. It cleverly tunnels TCP traffic over HTTP using WebSockets, making it appear as regular web traffic. This is incredibly useful for developers who need to access or control devices in remote or restricted network environments.

Popularity

Points 5

Comments 0

What is this product?

HTTP-Network-Relay is a system that creates a bridge for network communication, enabling devices to talk to each other even when separated by NAT or firewalls. It works by encapsulating TCP connections – the kind of connections your applications use to send and receive data – inside WebSocket connections. WebSockets are a technology that allows for real-time, two-way communication over a single, long-lived HTTP connection, similar to how your web browser communicates with a website. The innovation here is using this standard web technology to bypass network restrictions and establish direct-like communication, which is a clever hack to solve a common networking problem. So, this means you can reach devices that would normally be invisible on the internet.

How to use it?

Developers can integrate HTTP-Network-Relay into their workflows by deploying different components. Typically, you would run an 'edge-agent' on the device behind the NAT/firewall that needs to be accessed. Then, you'd run an 'access-client' on your local machine or a server from which you want to access that device. These two components communicate through a central 'network-relay' service. The project can be used directly with a tool like `uv` by running `uvx --from git+https://github.com/Thymis-io/http-network-relay <command> [args...]`, where `<command>` would be one of `network-relay`, `edge-agent`, or `access-client`. This makes it easy to get started without complex manual setup. This allows you to connect to services running on your home server from a coffee shop, or to manage IoT devices in a factory without IT intervention.

Product Core Function

· TCP Tunneling over WebSockets: This is the core technology that allows data to flow between devices as if they were on the same network, even when separated by NAT or firewalls. It solves the problem of inaccessible devices by leveraging existing web infrastructure. This means you can run services on devices that are otherwise unreachable.

· NAT and Firewall Traversal: The system is designed specifically to overcome the limitations imposed by Network Address Translation and firewalls, which normally block incoming connections. Because the edge agent connects outward to the relay, devices that are otherwise hidden become reachable without opening inbound ports. So, you can finally access that Raspberry Pi in your basement from anywhere.

· Secure Communication: When the relay is served over TLS (wss:// WebSocket URLs), traffic between the components is encrypted in transit, protecting sensitive data during transmission. This means your remote connections are safe from eavesdropping on the network path.

· Flexible Relay-Based Connectivity: The architecture can connect any two endpoints as long as both can reach the relay server, with no complex VPN setup. The relay is the only central component, so you can connect devices across different networks easily.
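At the heart of any TCP tunnel is a pair of loops that copy bytes in each direction until one side closes. The sketch below shows that core with Python's asyncio streams, forwarding a local port to a target service. It is not the project's code: the WebSocket hop between edge agent, relay, and access client is replaced by a direct TCP connection to keep the example standard-library-only; in the real tool each chunk presumably travels as a WebSocket message through the relay.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while chunk := await reader.read(65536):
            writer.write(chunk)
            await writer.drain()
    finally:
        writer.close()


async def start_forwarder(target_host: str, target_port: int):
    """Listen on an ephemeral local port and tunnel each connection to the target."""
    async def handle(client_reader, client_writer):
        up_reader, up_writer = await asyncio.open_connection(target_host, target_port)
        # One copy loop per direction, running concurrently.
        await asyncio.gather(pipe(client_reader, up_writer), pipe(up_reader, client_writer))

    return await asyncio.start_server(handle, "127.0.0.1", 0)


async def echo(reader, writer):
    # Toy target service: echo one message back, then hang up.
    writer.write(await reader.read(65536))
    await writer.drain()
    writer.close()


async def demo(message: bytes = b"hello through the tunnel") -> bytes:
    target = await asyncio.start_server(echo, "127.0.0.1", 0)
    target_port = target.sockets[0].getsockname()[1]
    forwarder = await start_forwarder("127.0.0.1", target_port)
    forwarder_port = forwarder.sockets[0].getsockname()[1]
    reader, writer = await asyncio.open_connection("127.0.0.1", forwarder_port)
    writer.write(message)
    await writer.drain()
    reply = await reader.readexactly(len(message))  # arrives via the forwarder
    writer.close()
    await writer.wait_closed()
    forwarder.close()
    target.close()
    return reply
```

Running `asyncio.run(demo())` sends a message through the forwarder to the echo service and returns it unchanged, which is the same property a relay must preserve for SSH or HTTP traffic tunneled through it.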

Product Usage Case

· Remote Development Server Access: A developer needs to access a development server running on their home network from their office or while traveling. By deploying the edge-agent on the home server and the access-client on their laptop, they can SSH into or access web services on the home server as if it were local, overcoming home router limitations. This solves the problem of needing to manage a dedicated public IP or complicated VPNs for remote access.

· IoT Device Management: A company has deployed IoT devices in remote locations behind corporate firewalls. HTTP-Network-Relay allows their central management system to securely communicate with and control these devices for updates, diagnostics, and data collection without requiring IT teams to open specific ports on their networks. This enables efficient remote management of deployed hardware.

· Peer-to-Peer Application Connectivity: For applications that need peer-to-peer communication where users might be behind NAT, this system can connect peers that could not otherwise reach each other. For example, a custom chat application or a distributed file-sharing tool could use the relay to link users who can't establish direct connections. Note that traffic still flows through the relay rather than directly between peers, so the relay carries the connection load; the benefit is reachability, not reduced server traffic.

13

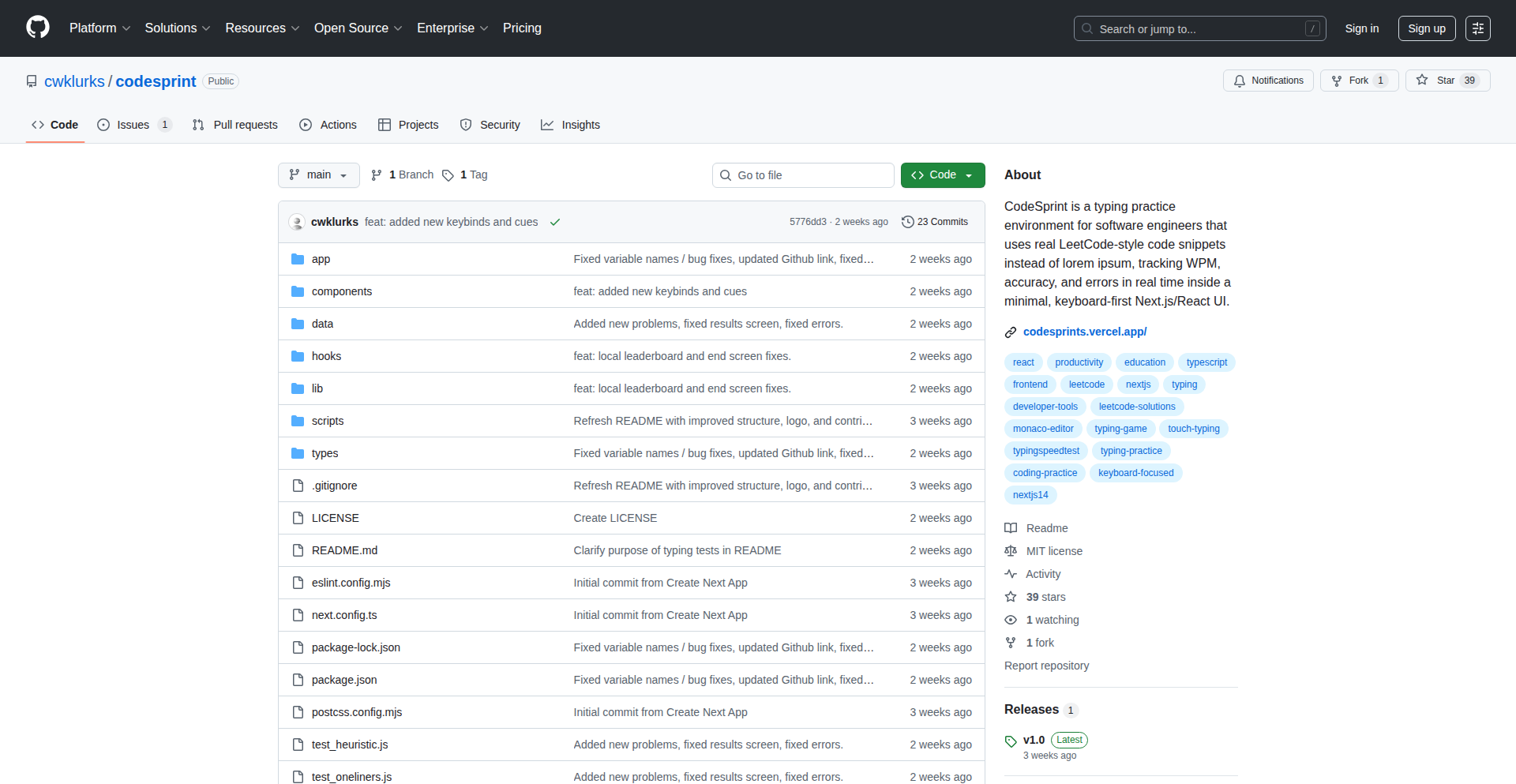

CodeSprint

Author

cwkcwk

Description

CodeSprint is a specialized typing practice tool designed to improve coding speed and accuracy for developers, especially for technical interviews. It uses real LeetCode code snippets to train muscle memory for syntax like brackets, semicolons, and indentation, going beyond standard typing tests.

Popularity

Points 3

Comments 2

What is this product?

CodeSprint is a web-based typing game that focuses on the specific syntax and patterns found in programming code, such as brackets, semicolons, and indentation. Unlike traditional typing tests that use prose, CodeSprint leverages actual LeetCode problem solutions as practice material. This means you're not just typing random words, but reinforcing the muscle memory for the exact characters and structures you'll encounter in coding interviews and daily development. The innovation lies in its tailored scoring mechanism, which accounts for code-specific elements like indentation and symbol density, providing a more relevant measure of coding typing proficiency. It's built using Next.js, TypeScript, Chakra UI, and Framer Motion for a smooth and responsive user experience.

How to use it?

Developers can use CodeSprint directly through their web browser. Simply navigate to the CodeSprint website, choose a programming language (currently supporting Python, Java, C++, and JS), and select a LeetCode problem snippet to practice. The tool will present the code, and you'll type it out. The system tracks your speed and accuracy, providing feedback. This is particularly useful for:

1. Interview Preparation: Practicing typing common code patterns and syntax under pressure.

2. Skill Refinement: Sharpening your ability to type code quickly and without errors.

3. Developer Warm-up: A quick and engaging way to get your fingers and mind ready for coding sessions.

Integration isn't a primary focus, as it's a standalone practice tool. If the scoring logic is ever open-sourced, developers could reuse it to add similar metrics to their own tools.

Product Core Function

· Code-specific typing practice: Offers realistic coding scenarios and syntax for developers to practice, improving their typing speed and accuracy with code structures. The value is in building practical muscle memory for coding tasks, making developers faster and more efficient when writing code.

· LeetCode snippet integration: Utilizes actual code snippets from popular LeetCode problems, ensuring practice is relevant to common coding challenges and interview questions. The value is in preparing for real-world technical interviews and common coding patterns.

· Custom scoring engine: Accurately measures typing proficiency by considering code-specific elements like indentation and symbol density, providing a more meaningful metric than generic WPM. The value is in offering a fair and insightful assessment of coding typing skill.

· Multi-language support: Supports popular programming languages like Python, Java, C++, and JavaScript, allowing developers to practice in their preferred language. The value is in providing a versatile tool for a broad range of developers.

· Engaging user interface: Built with modern web technologies like Next.js, TypeScript, Chakra UI, and Framer Motion, offering a smooth, responsive, and visually appealing typing experience. The value is in making practice sessions enjoyable and motivating.
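CodeSprint's scoring formula isn't published. As an illustration of what accounting for indentation and symbol density could mean, the sketch weights each character of the target snippet, counting symbols and leading indentation more than letters (the weights are made up), and scales a conventional 5-characters-per-word WPM figure by the weighted accuracy.

```python
# Hypothetical weights: the real CodeSprint scoring formula is not published.
SYMBOLS = set("()[]{}<>;:,.=+-*/%&|!^~'\"")
SYMBOL_WEIGHT = 2.0
INDENT_WEIGHT = 1.5


def char_weights(code: str) -> list:
    """Weight each character: symbols and leading indentation count more than letters."""
    weights, at_line_start = [], True
    for ch in code:
        if ch == "\n":
            weights.append(1.0)
            at_line_start = True
        elif at_line_start and ch in " \t":
            weights.append(INDENT_WEIGHT)
        else:
            at_line_start = False
            weights.append(SYMBOL_WEIGHT if ch in SYMBOLS else 1.0)
    return weights


def score(target: str, typed: str, seconds: float) -> dict:
    weights = char_weights(target)
    total = sum(weights)
    # zip stops at the shorter string, so untyped characters earn nothing.
    earned = sum(w for w, a, b in zip(weights, target, typed) if a == b)
    accuracy = earned / total if total else 1.0
    raw_wpm = (len(typed) / 5) / (seconds / 60)  # conventional 5-chars-per-word WPM
    return {"accuracy": accuracy, "raw_wpm": raw_wpm, "code_wpm": raw_wpm * accuracy}
```

With these weights, mistyping `;` as `,` in `a;` drops accuracy to 1/3 rather than 1/2, because the missed symbol is worth twice as much as the correct letter.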

Product Usage Case

· A software engineer preparing for a technical interview uses CodeSprint to practice typing common algorithms like Two Sum or LRU Cache snippets. This helps them reduce errors and improve speed when writing code during the stressful interview environment, directly addressing the problem of translating prose typing speed to coding speed.

· A junior developer wants to become faster at writing boilerplate code (e.g., class definitions, loop structures) in their daily work. They use CodeSprint with snippets of typical code structures to build muscle memory, leading to quicker development cycles and fewer typos in their projects.

· A coding bootcamp student uses CodeSprint to overcome their fear of making syntax errors. By repeatedly typing code in a low-stakes environment, they gain confidence in their ability to accurately produce code, improving their overall learning and debugging process.

· A developer looking to add Rust or Go to their skillset can use CodeSprint (once supported) to familiarize themselves with the syntax and idiomatic patterns of these new languages, accelerating their learning curve and making the transition smoother.

14

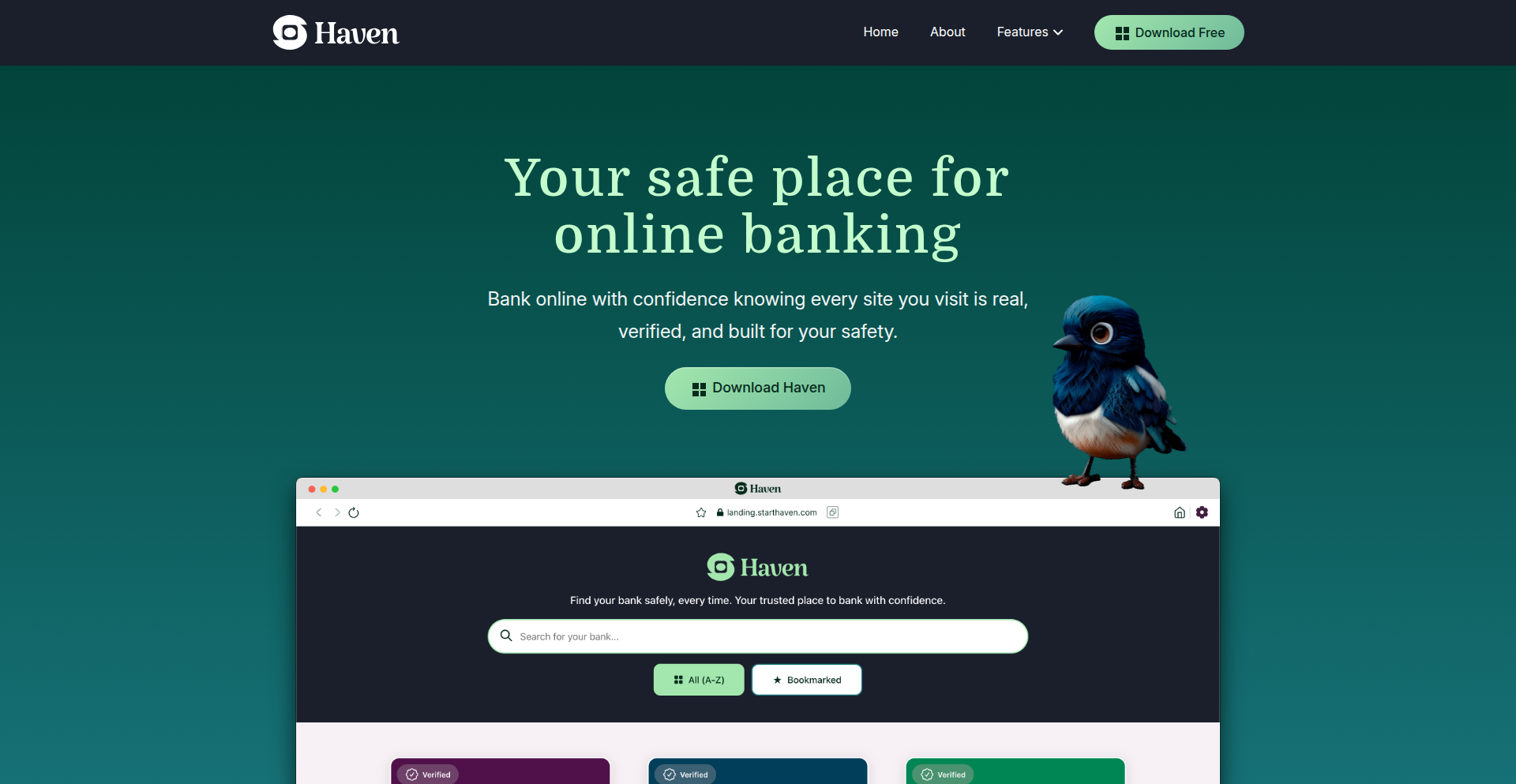

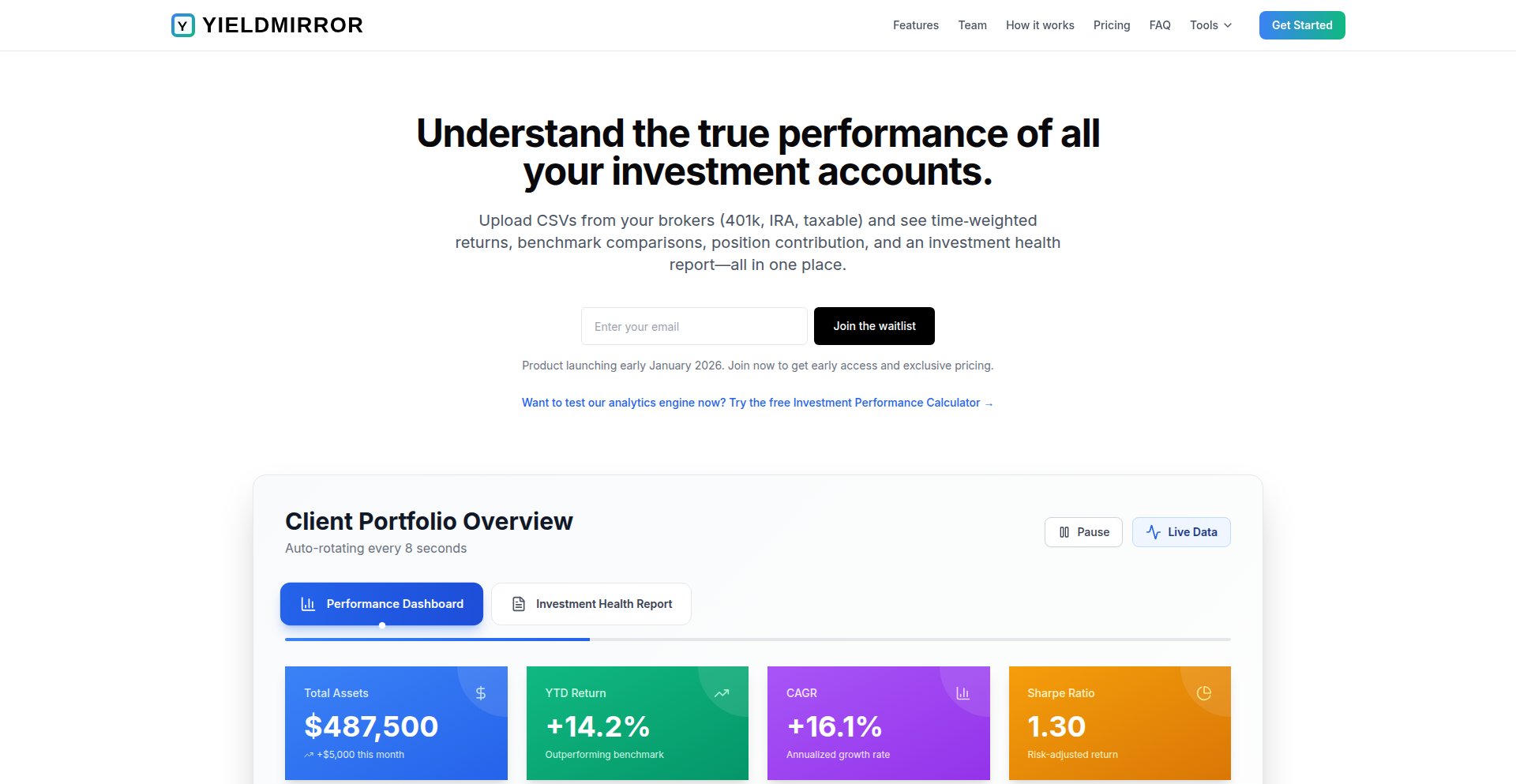

Haven: The Fortified Financial Browser

Author

bms13ca

Description

Haven is a specialized web browser with a single, critical purpose: providing an ultra-secure environment for online banking and financial transactions. It deliberately limits its functionality, allowing connections only to verified financial institutions and blocking all other web content, extensions, injected scripts, and third-party code. The design responds to the growing security risks posed by general-purpose browsers, extensions, and increasingly integrated AI features. It aims to protect users from malicious attacks and data theft, and was inspired by real-life financial losses caused by compromised browser security.

Popularity

Points 5

Comments 0

What is this product?

Haven is a highly specialized web browser built from the ground up for enhanced financial security. Unlike regular browsers that are designed for broad internet access, Haven acts as a digital fortress for your banking and investment activities. Its core innovation lies in its radical simplicity and strict control. It does not support browser extensions, scripts from unknown sources, or any form of code injection. This means that the only code running on Haven is what's absolutely necessary for secure communication with verified financial institutions. Think of it like having a dedicated, armored car for your money, rather than using your everyday car which can go anywhere but also carries more risks. This restricted environment significantly reduces the attack surface for malware, phishing attempts, and data scraping that can compromise sensitive financial information.

How to use it?

Developers and everyday users can use Haven by downloading and installing the application from the official website. Once installed, instead of opening a general web browser to access their bank, users would launch Haven. Within Haven, they would navigate to their pre-approved and verified financial institution websites. The browser's strict architecture ensures that only the essential elements for these trusted sites are loaded and executed, preventing any unintended or malicious code from interfering with the session. For developers, Haven can be seen as a blueprint for secure application design, demonstrating how to build robust security by limiting functionality and controlling the execution environment. It offers a secure, predictable space for users to manage their finances without the constant worry of broader internet threats.

Product Core Function

· Restricted Domain Access: Only allows connections to a curated list of verified financial institutions. Value: Prevents users from accidentally visiting malicious phishing sites disguised as legitimate financial portals, directly mitigating risks of credential theft.

· No Extension Support: Prohibits the installation or execution of any browser extensions. Value: Eliminates a major vector for malware and spyware, as malicious extensions are a common way to steal banking information or manipulate web content.

· Script Blocking: Blocks arbitrary JavaScript and third-party scripts from executing. Value: Prevents malicious code from being injected into financial websites, which could otherwise track user activity, steal session data, or alter transaction details.

· No Code Injection or Overlays: Ensures that no external code can modify or overlay the content displayed by financial institutions. Value: Guarantees that what the user sees on their banking page is exactly what the financial institution intended, preventing deceptive overlays that trick users into revealing sensitive information.

· Controlled Environment: Provides a singular, hardened environment solely for financial activities. Value: Offers peace of mind by dedicating a secure space for sensitive transactions, segregating them from the broader, riskier internet.
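Haven's source and its list of verified institutions are not described, so the following is a minimal sketch, assuming a simple host allowlist, of how a "Restricted Domain Access" check could work. The domains `examplebank.com` and `example-brokerage.com` and the function `is_allowed` are hypothetical. The check requires HTTPS and accepts only an exact allowlisted domain or one of its subdomains.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; Haven's actual list of verified institutions is not shown here.
ALLOWED_DOMAINS = frozenset({"examplebank.com", "example-brokerage.com"})


def is_allowed(url: str, allowed: frozenset[str] = ALLOWED_DOMAINS) -> bool:
    """Allow only HTTPS URLs whose host is an allowlisted domain or a subdomain of one."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # .hostname is lower-cased and excludes any user:password@ prefix and port.
    host = (parts.hostname or "").rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in allowed)
```

Parsing with `urlsplit` and comparing the parsed `hostname`, rather than substring-matching the raw URL, is what rejects look-alikes such as `https://examplebank.com.evil.io/` and `https://examplebank.com@evil.io/`, whose real host is `evil.io`. Whether to accept subdomains at all is a policy choice; a stricter variant would require exact host matches.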

Product Usage Case

· A user whose grandparent lost money to a fake Zoom extension can use Haven to access online banking without fear of similar extension-based attacks. They simply open Haven, go to their bank's website, and conduct their transactions with confidence, knowing that no rogue code can interfere.

· A sophisticated user who wants to perform sensitive stock trading operations can use Haven to ensure that no background scripts or extensions are monitoring their activity or attempting to manipulate the trading platform. This provides a clean, auditable environment for their financial decisions.

· A small business owner who frequently needs to access multiple financial portals for payroll and accounting can use Haven to isolate these critical tasks. Instead of opening their regular browser, which might have numerous extensions installed for other work, they use Haven for a secure, focused session, protecting their business finances.

· Someone concerned about the privacy implications of AI features being integrated into general browsers can use Haven for their banking. This ensures that their financial data is not being processed or shared with third-party AI models in an uncontrolled manner, maintaining a higher level of privacy for their financial activities.

15

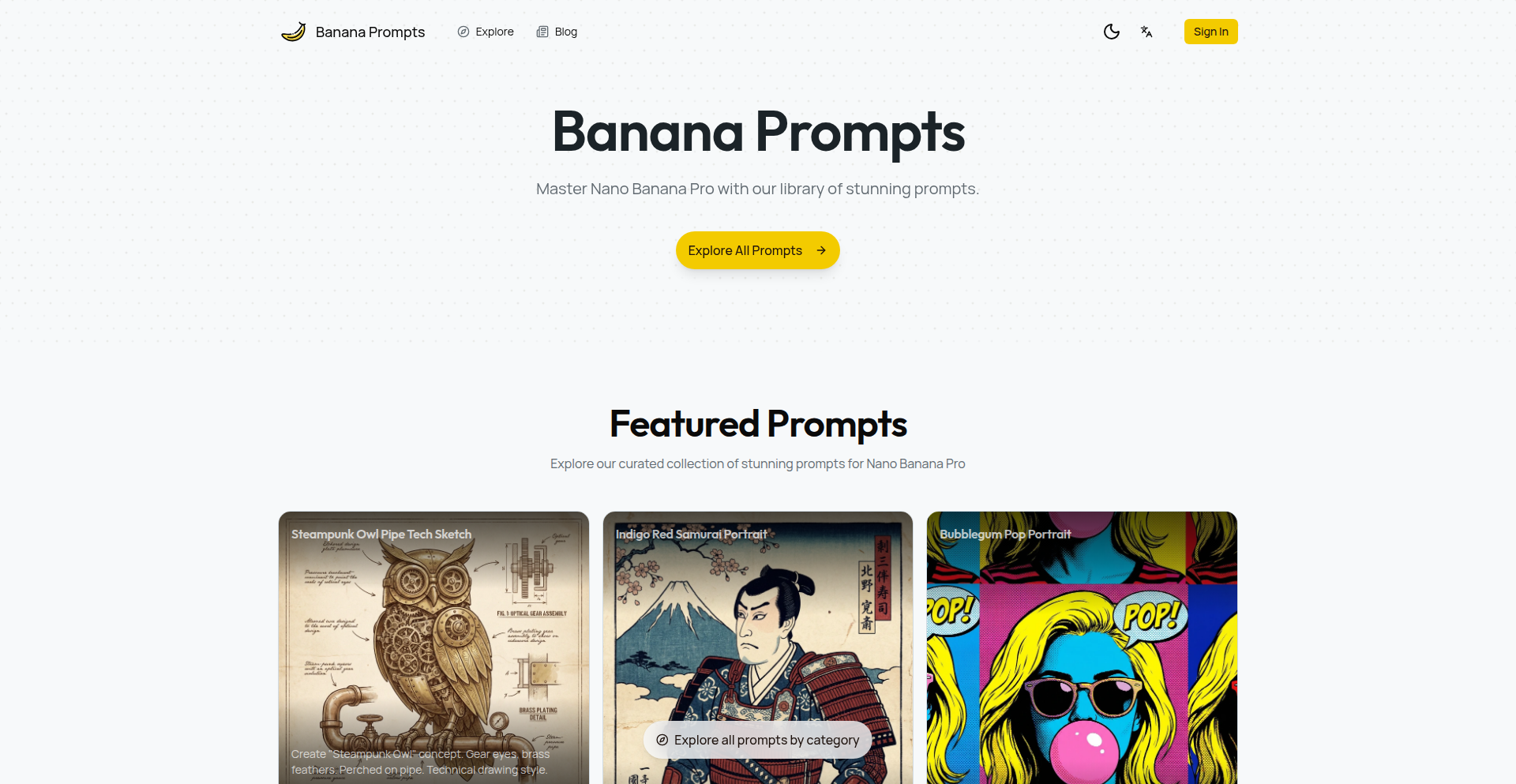

Banana Prompts: Nano Banana Pro Prompt Crafting

Author

zenja

Description

Banana Prompts is a curated collection of expertly designed prompts for the Nano Banana Pro AI model. It helps AI artists and developers generate advanced images in photorealistic, cyberpunk, or isometric styles with less effort. Its innovation lies in understanding and optimizing prompts for one specific, powerful model, offering reliable starting points for creative exploration and reducing trial-and-error. The result is better, more consistent images, faster.

Popularity

Points 4

Comments 0

What is this product?

Banana Prompts is a web-based library of pre-written, highly effective text instructions (prompts) tailored for Nano Banana Pro, an AI that creates advanced images. The core technical insight is that different AI models respond best to specific prompt structures and keywords. Instead of guessing what works, Banana Prompts provides optimized prompts, acting as a shortcut to generate high-quality visuals like realistic landscapes, detailed character portraits, or unique 3D scenes. This saves users time and frustration by providing proven starting points.

How to use it?

Developers and AI artists can use Banana Prompts by visiting the website and browsing through categories or searching for specific styles. Once a suitable prompt is found, it can be copied and pasted directly into the Nano Banana Pro interface. The prompts are designed to be used as-is or with minor adjustments, providing a solid foundation for generating desired images. This integrates seamlessly into existing AI image generation workflows, offering immediate value without complex setup.

Product Core Function

· Curated Prompt Library: Provides a structured collection of tested and optimized prompts for Nano Banana Pro. Value: Reduces the time and expertise needed to craft effective prompts, leading to faster and better image generation.

· Style-Specific Optimization: Prompts are designed to elicit specific visual styles (e.g., photorealism, cyberpunk, claymation) from the AI model. Value: Enables users to reliably achieve desired artistic outcomes, making AI image generation more predictable and controllable for creative projects.

· Reduced Trial-and-Error: Offers reliable starting points, minimizing the need for extensive prompt experimentation. Value: Saves users significant time and computational resources, making the AI art creation process more efficient and accessible.

· Ease of Use: Simple copy-paste functionality for prompts. Value: Allows users of all skill levels to leverage advanced AI capabilities without needing deep technical prompt engineering knowledge.
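The site's internal data model is not described, so the following is a hypothetical sketch of a prompt library that supports the browse-by-style, copy, and lightly-adjust workflow described above. The `PromptEntry` type, the `$subject` placeholder, and the three sample prompts are illustrative inventions, not entries from Banana Prompts.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptEntry:
    """One library entry; `template` may contain a $subject placeholder (hypothetical)."""
    title: str
    styles: frozenset[str]
    template: str


# Illustrative entries only; not taken from Banana Prompts.
LIBRARY = [
    PromptEntry("Neon street", frozenset({"cyberpunk"}),
                "A rain-soaked $subject at night, neon signage, cinematic lighting"),
    PromptEntry("Clay diorama", frozenset({"isometric", "claymation"}),
                "Isometric claymation diorama of $subject, soft studio light"),
    PromptEntry("Golden hour photo", frozenset({"photorealistic"}),
                "Photorealistic $subject at golden hour, 35mm lens, high detail"),
]


def search(style: str, keyword: str = "") -> list[PromptEntry]:
    """Return entries tagged with `style` whose title or text contains `keyword`."""
    style, keyword = style.lower(), keyword.lower()
    return [
        p for p in LIBRARY
        if style in p.styles and keyword in f"{p.title} {p.template}".lower()
    ]


def fill(entry: PromptEntry, subject: str) -> str:
    """Fill the $subject slot; raises KeyError if any other placeholder is left unset."""
    return Template(entry.template).substitute(subject=subject)
```

Using `string.Template` confines the user's adjustment to a named slot, and `substitute` raises `KeyError` for any placeholder left unfilled, so an incomplete prompt is caught before it is pasted into the model.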

Product Usage Case

· An independent game developer needs to quickly generate concept art for a cyberpunk city. Instead of spending hours iterating on prompts, they use Banana Prompts to find a highly effective cyberpunk cityscape prompt, receiving a stunning visual in minutes. This helps accelerate their game's pre-production phase.

· A freelance digital artist wants to create a series of photorealistic ocean landscapes for a client. They use Banana Prompts to access prompts optimized for realism and seascape elements, generating high-quality base images that require minimal post-processing. This increases their output and client satisfaction.

· A hobbyist experimenting with AI art generation finds it difficult to achieve specific isometric styles. By using Banana Prompts, they discover optimized prompts that produce claymation-like isometric scenes, unlocking new creative possibilities and boosting their engagement with the technology.

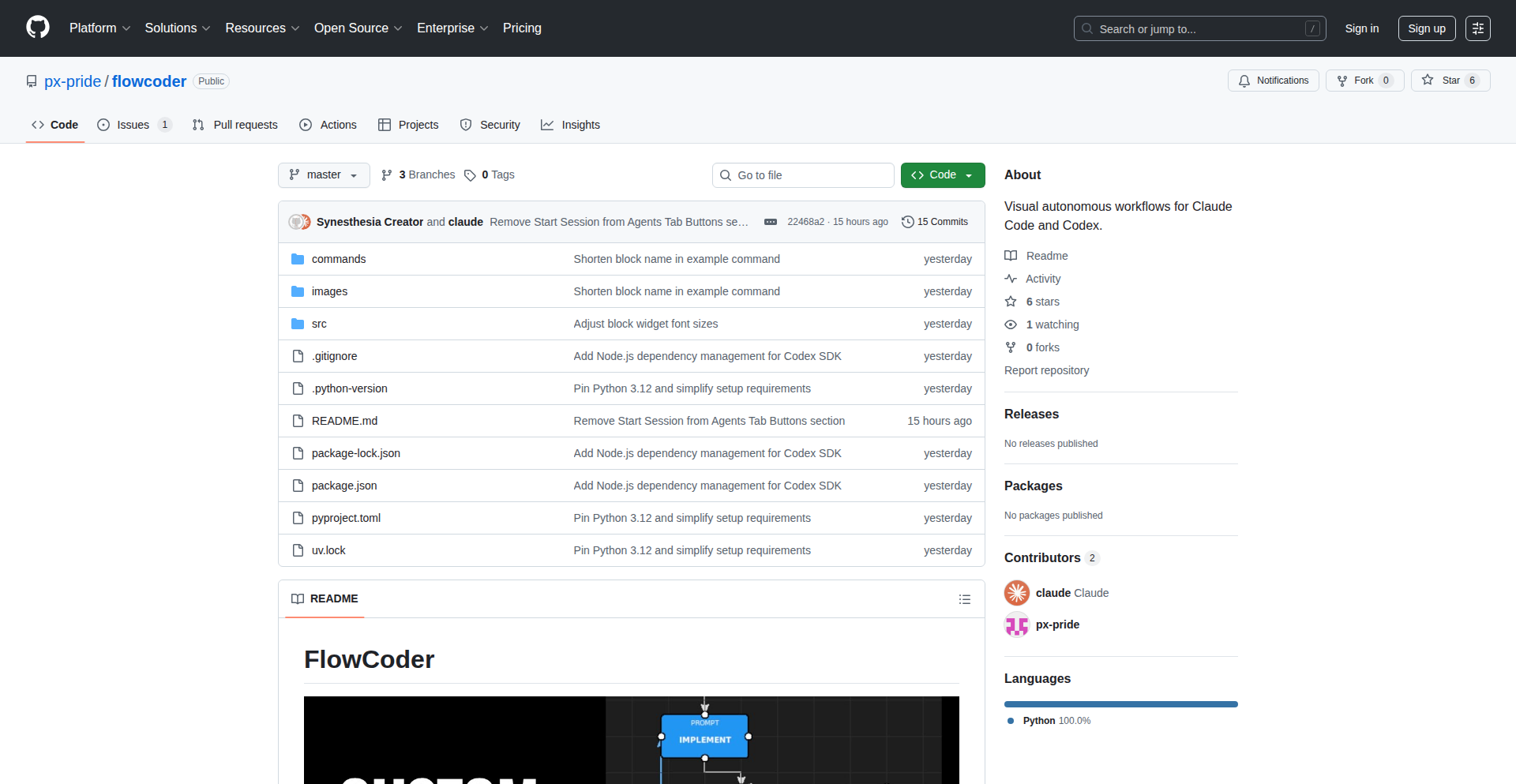

16

TaskWand: AI-Powered n8n Workflow Forge

Author

ronanren

Description

TaskWand is a tool that uses a specialized Retrieval-Augmented Generation (RAG) system to streamline the creation of complex n8n workflows. By grounding generation in a corpus of more than 2,000 verified n8n workflows, TaskWand dramatically reduces common AI 'hallucinations' such as non-existent nodes or incorrect parameters, helping ensure that generated workflows are directly importable and functional. It also offers a visual preview of each workflow, a prompt refinement feature that turns vague ideas into technical specifications, and an interactive AI copilot for node-specific questions and troubleshooting. Together these features accelerate development and minimize errors for n8n users.

Popularity

Points 2

Comments 2

What is this product?

TaskWand is an AI-powered assistant designed to generate n8n workflows. Unlike general AI models that might invent workflow steps or parameters that don't exist in n8n, TaskWand uses a technique called Retrieval-Augmented Generation (RAG). Think of it like giving a smart assistant a detailed manual and a library of successful examples for a specific task. TaskWand has 'read' thousands of real, working n8n workflows. When you describe what you want your workflow to do, it first finds the most relevant and correct pieces from its library of examples. Then, it uses a powerful AI model to combine these pieces and generate a new, custom workflow based on your request. This grounded approach ensures the generated workflow is accurate, uses only existing n8n nodes and parameters, and is ready to be imported directly into n8n. So, what makes it innovative? It's the intelligent way it combines AI's creative generation with the hard, verifiable facts from a curated dataset of successful workflows, drastically improving reliability for complex automation tasks.
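The retrieve-then-generate idea described above can be sketched as follows. TaskWand reportedly uses Qdrant for retrieval, which implies embedding-based vector search; this sketch swaps in plain bag-of-words cosine similarity so it runs with only the standard library. The three-entry `CORPUS`, the node type names in it, the stopword list, and the prompt wording are all illustrative assumptions, and the final LLM call is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus: workflow description -> node types it uses.
# The node type names are illustrative; a real corpus would hold full workflow JSON.
CORPUS = {
    "add new hubspot form leads to google sheets":
        ["n8n-nodes-base.hubspotTrigger", "n8n-nodes-base.googleSheets"],
    "post a slack message when a webhook fires":
        ["n8n-nodes-base.webhook", "n8n-nodes-base.slack"],
    "send a daily email report on a schedule":
        ["n8n-nodes-base.scheduleTrigger", "n8n-nodes-base.emailSend"],
}
STOPWORDS = {"a", "an", "and", "the", "to", "then", "that", "from", "them", "of", "on", "when"}


def tokenize(text: str) -> Counter:
    """Lower-case word counts with stopwords removed."""
    return Counter(w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k corpus descriptions most similar to the query."""
    q = tokenize(query)
    return sorted(CORPUS, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Assemble a grounded prompt; the LLM call itself is out of scope here."""
    examples = "\n".join(f"- {d}: {', '.join(CORPUS[d])}" for d in retrieve(query, k))
    return ("Use ONLY node types that appear in these verified examples:\n"
            f"{examples}\n\nTask: {query}")
```

Placing the retrieved examples' node types in the prompt, together with an instruction to use only those, is what grounds the model: it is asked to recombine verified pieces rather than invent nodes.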

How to use it?

Developers can use TaskWand by visiting the TaskWand website. The primary interaction involves describing the desired n8n workflow in natural language. For instance, you could type 'Create a workflow that takes new leads from a HubSpot form and adds them to a Google Sheet, then sends a Slack notification.' TaskWand then processes this description. Before generating the final output, you can use its 'Improve' feature to refine your initial idea into a more detailed technical prompt, making it easier for the AI to understand complex requirements. You'll also see a visual preview of the generated workflow in your browser, allowing you to verify the logic before exporting. If you have questions about specific n8n nodes or the logic being generated, you can use the 'Ask' feature, which acts like a Q&A copilot. Once you're satisfied, you can export the workflow as JSON, which can be directly imported into your n8n instance. The tech stack uses modern web technologies like Next.js for the frontend and Supabase for backend services, making it a robust and scalable tool for integration into development workflows.

Product Core Function

· AI-driven workflow generation: Uses a specialized RAG system grounded in 2,000+ real n8n workflows to create accurate, importable n8n workflows. This means less manual work and fewer errors when building automations.

· Visual workflow preview: Renders a real-time visualization of the generated n8n workflow in the browser. This allows developers to quickly understand the logic and ensure it matches their requirements before exporting, saving debugging time.

· Prompt refinement ('Improve' button): Transforms vague, high-level task descriptions (e.g., 'sync data') into detailed, technically precise prompts optimized for AI generation. This helps users articulate their needs more effectively, leading to better-quality workflows and reducing misinterpretations.

· Interactive AI copilot ('Ask' feature): Provides a Q&A interface to ask questions about specific n8n nodes, understand workflow logic, or troubleshoot concepts. This acts as a knowledgeable assistant, helping developers learn and solve problems faster without leaving the tool.

· High-quality, import-ready JSON output: Generates workflow definitions designed to be compatible with n8n, minimizing the risk of import errors or broken automations. This makes the transition from generation to deployment smoother.

· Modern web technology stack: Built with Next.js, Tailwind CSS, Supabase, and Qdrant, indicating a focus on performance, developer experience, and scalability. This ensures the tool is responsive and reliable for frequent use.
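TaskWand's own post-generation checks are not described, but a minimal sketch of validating a workflow before import might look like this. It relies on the general shape of exported n8n workflow JSON (a `nodes` array whose entries have `name` and `type`, and a `connections` object keyed by source node name); the `KNOWN_TYPES` subset and the `validate_workflow` function are illustrative assumptions.

```python
import json

# Illustrative subset; a real check would load every node type installed on the target instance.
KNOWN_TYPES = {"n8n-nodes-base.webhook", "n8n-nodes-base.slack", "n8n-nodes-base.googleSheets"}


def validate_workflow(raw: str, known_types: set[str] = KNOWN_TYPES) -> list[str]:
    """Return a list of problems found in workflow JSON; an empty list means all checks passed."""
    try:
        wf = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(wf, dict):
        return ["top level must be a JSON object"]

    errors: list[str] = []
    nodes = wf.get("nodes", [])
    names = [node.get("name") for node in nodes]
    if len(names) != len(set(names)):
        errors.append("duplicate node names")
    for node in nodes:
        if node.get("type") not in known_types:
            errors.append(f"unknown node type: {node.get('type')}")

    # connections: {source name: {"main": [[{"node": target name, ...}, ...], ...]}}
    name_set = set(names)
    for source, outputs in wf.get("connections", {}).items():
        if source not in name_set:
            errors.append(f"connection from missing node: {source}")
        for branch in outputs.get("main", []):
            for link in branch or []:  # tolerate null or empty output branches
                if link.get("node") not in name_set:
                    errors.append(f"connection to missing node: {link.get('node')}")
    return errors
```

These structural checks catch exactly the hallucinations described above (invented node types and dangling connections); a fuller validator would also check each node's parameters against its schema.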

Product Usage Case