Show HN Today: Discover the Latest Innovative Projects from the Developer Community

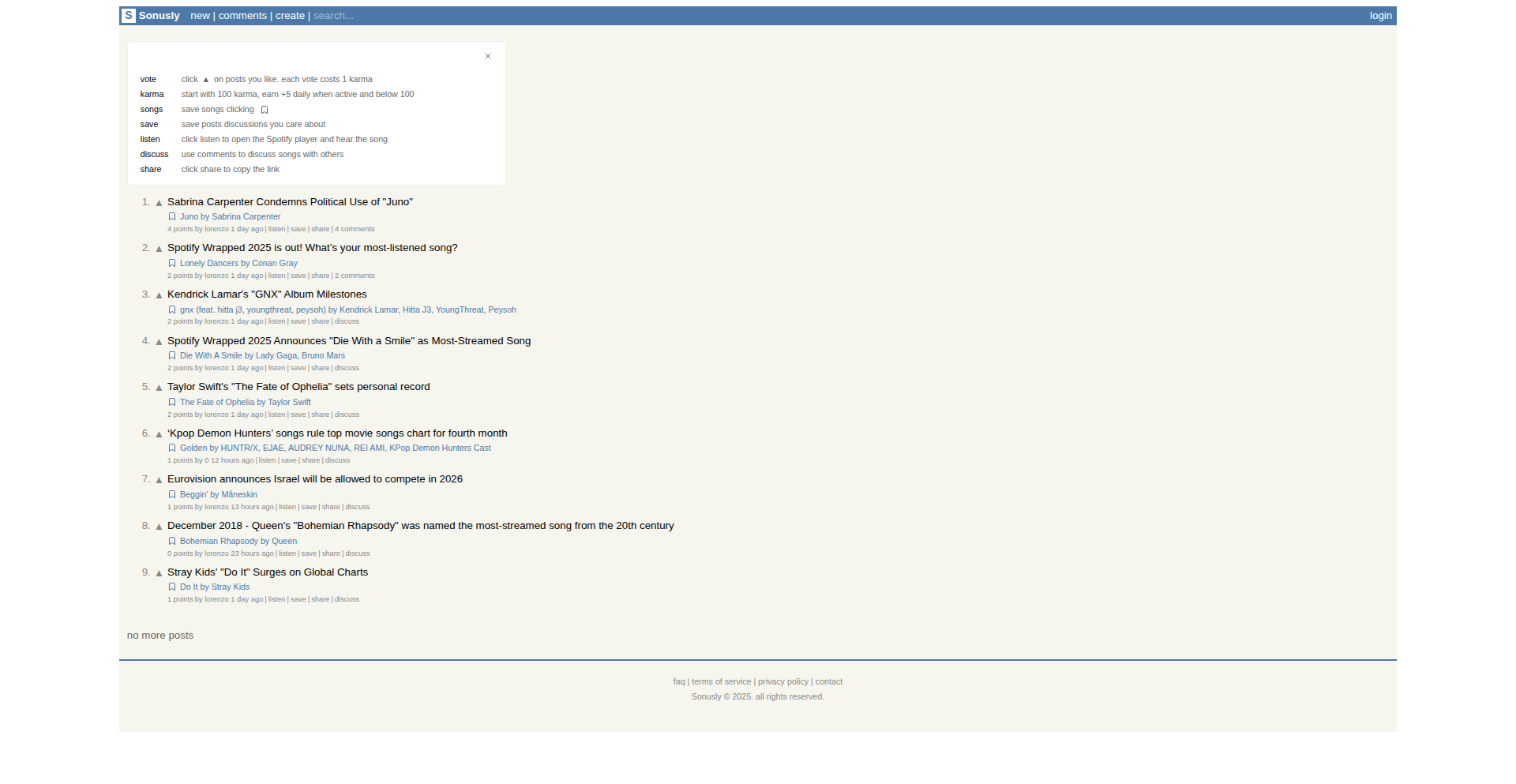

Show HN Today: Top Developer Projects Showcase for 2025-12-04

SagaSu777 2025-12-05

Explore the hottest developer projects on Show HN for 2025-12-04. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions reveal a powerful trend: the democratization of complex technologies. We're seeing an explosion of tools that leverage AI not just for its own sake, but to unlock capabilities previously confined to specialists. From turning APIs into AI-callable services without code (Zalor) to building entire AI-powered game studios (Marvin), the barrier to entry is rapidly dissolving. This is a golden age for creators and entrepreneurs who can harness these AI building blocks to solve niche problems or bring ambitious visions to life. For developers, the lesson is clear: embrace AI as a co-pilot and a fundamental component of new applications, rather than just an add-on. Think about how AI can automate tedious tasks, generate novel content, or provide personalized experiences. The future belongs to those who can creatively integrate these powerful models into user-friendly and impactful products. Furthermore, the emphasis on privacy-first, local-first, and open-source solutions indicates a growing desire for user control and transparency, a crucial consideration for any new venture aiming for long-term trust and adoption.

Today's Hottest Product

Name

Onetone – A full-stack framework with custom C interpreter

Highlight

This project showcases a developer's ambition to create a comprehensive, unified development environment. The innovation lies in building a custom C interpreter with its own scripting language (.otc files), integrated with a robust OpenGL 3D graphics engine, a PHP web framework, and Python utilities. This tackles the complexity of modern development by consolidating multiple languages and frameworks into a single cohesive system, offering native performance with Python-like usability. Developers can learn about deep systems integration, cross-language development, and the potential of custom interpreters for specialized applications.

Popular Categories

AI & Machine Learning

Developer Tools

Productivity & Utilities

Web Development

Creative Tools

Popular Keywords

AI

LLM

API

Developer Tools

Framework

Automation

Web App

Open Source

CLI

TypeScript

Technology Trends

AI-powered development and content creation

Agentic workflows and autonomous systems

No-code/low-code solutions for complex tasks

Enhanced developer productivity through specialized tools

Privacy-focused and local-first applications

Cross-platform and interoperable solutions

Innovative data expression and visualization

Modernized UI/UX for specialized domains

Project Category Distribution

AI & Machine Learning (30%)

Developer Tools (25%)

Productivity & Utilities (20%)

Web Development (15%)

Creative Tools (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Onlyrecipe 2.0 | 164 | 136 |
| 2 | PrintCal Minimalist Planner | 91 | 30 |
| 3 | MirrorBridge: C++ Reflection for Python Interop | 27 | 7 |
| 4 | AI NDE Narrator | 22 | 9 |
| 5 | Stacktower: Dependency Brick Builder | 27 | 4 |
| 6 | OpenAPI to MCP Gateway | 14 | 4 |
| 7 | EvalsAPI: Reverse-Engineered Slack & Linear APIs for AI Model Evaluation | 10 | 3 |
| 8 | Marvin: AI Game Dev & Ops Suite | 6 | 6 |
| 9 | Flooder: Persistent Homology for Industry | 6 | 2 |
| 10 | EvolveAgent | 5 | 3 |

1

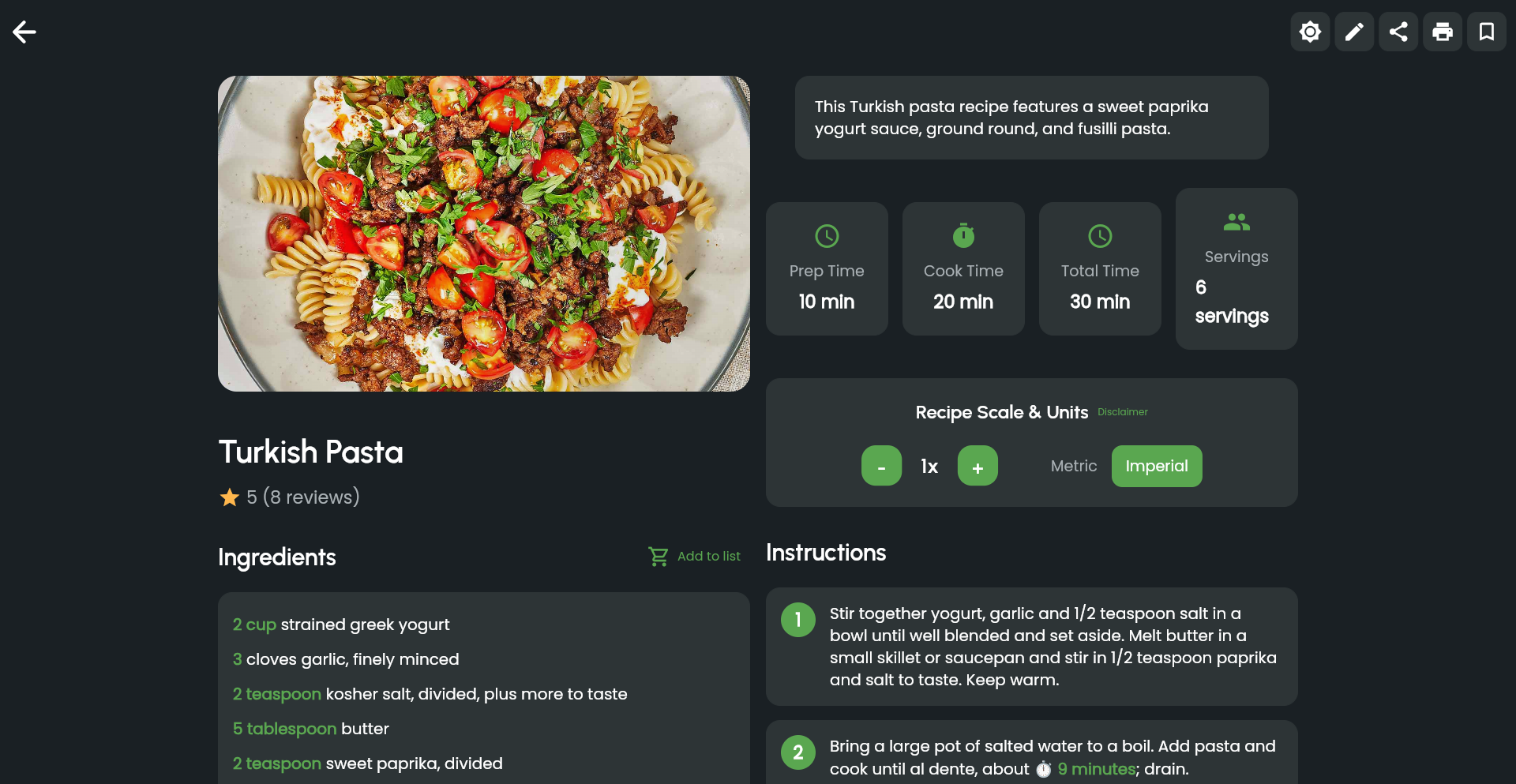

Onlyrecipe 2.0

Author

AwkwardPanda

Description

A significant evolution of the original Onlyrecipe, this project showcases a deep dive into user-driven feature development over four years. The core innovation lies in its robust backend architecture and intelligent recipe parsing, enabling it to transform unstructured text into highly searchable and customizable recipe data. This tackles the common problem of recipe information being scattered and difficult to organize, offering a powerful tool for home cooks and culinary enthusiasts.

Popularity

Points 164

Comments 136

What is this product?

Onlyrecipe 2.0 is a sophisticated recipe management system built on a foundation of advanced data parsing and a flexible API. Instead of just storing recipes as plain text, it intelligently extracts key components like ingredients, quantities, steps, and cooking times from various input formats. This extraction process utilizes natural language processing (NLP) techniques to understand the nuances of recipe language, allowing for a level of detail and structure previously unavailable. The '2.0' signifies a major leap forward, incorporating community feedback to refine its functionality and user experience, making it a testament to iterative development and responsiveness to user needs.

How to use it?

Developers can integrate Onlyrecipe 2.0 into their own applications or build new ones leveraging its powerful recipe data backend. For example, you could build a personalized meal planning app that pulls recipes based on dietary restrictions or available ingredients. A smart kitchen device could use it to fetch and display cooking instructions in real-time. The project likely exposes an API (Application Programming Interface) that allows other software to programmatically access, search, and manipulate recipe data, enabling seamless integration into diverse digital culinary experiences.

Product Core Function

· Intelligent Recipe Parsing: Automatically extracts structured data (ingredients, steps, timings) from unstructured text. This means you don't have to manually enter every detail, saving significant time and effort when digitizing your recipe collection, making it instantly searchable and usable by other applications.

· Advanced Recipe Search and Filtering: Enables highly specific searches based on ingredients, cuisine type, dietary needs, cooking time, and more. This allows users to quickly find the exact recipe they're looking for, even within a large personal database, solving the frustration of sifting through endless options.

· Customizable Recipe Presentation: Allows for flexible display of recipe information, adapting to different devices and user preferences. This ensures recipes are easy to read and follow, whether on a small phone screen or a large kitchen tablet, enhancing the cooking experience.

· User-Driven Feature Development: The evolution to 2.0 is a direct result of incorporating feedback from the Hacker News community, demonstrating a commitment to building a tool that truly meets user demands. This iterative approach means the product is constantly improving based on real-world usage, ensuring its continued relevance and utility for developers.

· API for Integration: Provides programmatic access to recipe data, allowing developers to build new applications or enhance existing ones. This empowers developers to create innovative solutions in the culinary tech space without starting from scratch, fostering creativity and accelerating development.
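
To make the parsing idea above concrete, here is a minimal sketch of turning one free-text ingredient line into structured fields. It is not Onlyrecipe's actual parser, whose implementation is not described here; the `Ingredient` type, the `UNITS` set, and the `parse_ingredient` function are all hypothetical names chosen for illustration, and a real system would need to handle far more variation.

```python
import re
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional

# Hypothetical unit vocabulary, for illustration only.
UNITS = {"cup", "cups", "tbsp", "tsp", "g", "kg", "ml", "l", "oz", "lb"}

# Optional leading quantity ("2", "3/4", "1 1/2", "2.5"), then the rest of the line.
_LINE = re.compile(r"^\s*(?P<qty>\d+(?:\s+\d+/\d+|/\d+|\.\d+)?)?\s*(?P<rest>.*)$")


@dataclass
class Ingredient:
    quantity: Optional[float]
    unit: Optional[str]
    name: str


def parse_quantity(text: str) -> float:
    """Convert '1 1/2', '3/4', or '2.5' to a float."""
    return float(sum(Fraction(part) for part in text.split()))


def parse_ingredient(line: str) -> Ingredient:
    """Split one ingredient line into quantity, unit, and name."""
    match = _LINE.match(line)
    qty_text = match.group("qty")
    rest = match.group("rest").strip()
    quantity = parse_quantity(qty_text) if qty_text else None

    # Treat the first remaining word as a unit only if it is a known one.
    unit = None
    words = rest.split(maxsplit=1)
    if words and words[0].lower().rstrip(".") in UNITS:
        unit = words[0].lower().rstrip(".")
        rest = words[1] if len(words) > 1 else ""
    return Ingredient(quantity, unit, rest)
```

For example, `parse_ingredient("1 1/2 cups flour")` yields quantity 1.5, unit `cups`, and name `flour`, while a line with no leading number, such as `"salt to taste"`, keeps the whole text as the name.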

Product Usage Case

· Building a smart meal planner that suggests recipes based on a user's pantry inventory and dietary preferences. The parsing feature allows the system to understand what ingredients are needed, and the search feature helps find suitable recipes.

· Developing a voice-controlled cooking assistant that reads out recipe steps. The structured data from Onlyrecipe 2.0 makes it easy for the assistant to break down instructions into manageable chunks for voice output.

· Creating a recipe discovery platform that surfaces unique or underappreciated recipes from user-submitted content. The advanced search capabilities allow for finding niche recipes that might otherwise be lost.

· Designing a digital cookbook for families that allows members to easily contribute and organize their favorite recipes. The project's focus on structured data makes collaborative recipe management efficient and enjoyable.

2

PrintCal Minimalist Planner

Author

defcc

Description

PrintCal is a minimalist monthly task planner that prioritizes simplicity and privacy. It addresses the common need for a clean, distraction-free way to organize monthly tasks without the complexities of online accounts, syncing, or data storage. The core innovation lies in its offline-first, printable design, enabling users to maintain structure and focus without digital clutter. Its value is in providing a straightforward, private planning tool for those who prefer analog-style organization but want the convenience of generating the page digitally.

Popularity

Points 91

Comments 30

What is this product?

PrintCal is a web-based tool that generates a clean, distraction-free monthly calendar view with space for tasks. Its technical principle is to leverage web technologies to create a static, visually simple interface that can be easily printed or viewed offline. The innovation is in its deliberate omission of features like user accounts, cloud syncing, and online data storage. This results in an application that is fast, secure, and respects user privacy. So, what's the benefit for you? You get a private, clutter-free monthly planner that you can use immediately without any sign-ups or data concerns, and you can easily print it out.

How to use it?

PrintCal runs directly in the web browser at https://printcalendar.top/. The interface is straightforward: navigate to the desired month and year, type tasks into the designated fields, and print the generated calendar. The tool is not designed for API integration; developers who need to generate calendars programmatically for internal workflows could drive it with browser automation, but its primary use case is manual generation and printing. So, how can you use it? Simply open the website, input your tasks for the month, and print it for your desk or wall.

Product Core Function

· Monthly Task View: Presents a clean, grid-based layout for a full month, allowing users to see all their tasks at a glance. The technical value is in its efficient rendering of calendar data, and the application scenario is for effective long-term planning and review.

· No Account Required: Users can access and use the planner immediately without creating any login credentials. The technical value is in its stateless design and focus on local browser functionality, ensuring immediate usability and privacy. The application scenario is for users who want quick access and don't want to manage yet another online account.

· Offline Functionality: The planner works perfectly without an internet connection after the initial page load. The technical value lies in its client-side rendering and lack of external dependencies. The application scenario is for situations where internet access is unreliable or for users who prefer to work without online distractions.

· Printable Output: Generates a layout optimized for printing, allowing users to have a physical copy of their monthly plan. The technical value is in its responsive design that adapts well to print media. The application scenario is for users who prefer physical planners for better focus and task management.

· Minimalist Aesthetic: Emphasizes a clean, uncluttered design to reduce distractions and improve focus on tasks. The technical value is in its lean HTML, CSS, and JavaScript implementation. The application scenario is for individuals seeking a calm and organized planning experience.
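
The monthly grid described above can be sketched in a few lines. PrintCal itself is a browser tool and its code is not shown here, so this is only a Python illustration of the layout logic using the standard `calendar` module; `month_grid` and `render` are hypothetical helper names, and the `tasks` mapping (day number to task text) is an assumed input shape.

```python
import calendar

WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def month_grid(year, month, tasks):
    """Return weeks of (day, task) cells; day 0 marks padding outside the month."""
    weeks = calendar.Calendar(firstweekday=0).monthdayscalendar(year, month)
    return [[(day, tasks.get(day, "")) for day in week] for week in weeks]


def render(year, month, tasks, width=16):
    """Render the grid as fixed-width plain text suitable for printing."""
    lines = [f"{calendar.month_name[month]} {year}"]
    lines.append(" ".join(name.ljust(width) for name in WEEKDAYS).rstrip())
    for week in month_grid(year, month, tasks):
        cells = []
        for day, task in week:
            text = "" if day == 0 else f"{day:>2} {task}"
            # Truncate long tasks so every column keeps the same width.
            cells.append(text[:width].ljust(width))
        lines.append(" ".join(cells).rstrip())
    return "\n".join(lines)


if __name__ == "__main__":
    print(render(2025, 12, {4: "Ship release", 24: "Office closed"}))
```

Everything here is computed locally from the year, month, and task text, which mirrors the no-account, no-storage design the planner emphasizes.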

Product Usage Case

· A student needing to plan out their monthly assignments and exam schedule without having to create an online account or worry about their data being stored. They can simply access the site, input their deadlines, print the calendar, and pin it to their notice board for easy reference. This solves the problem of managing academic workload with a simple, private tool.

· A freelancer who wants to visually block out project timelines and client meetings for the upcoming month, but dislikes cloud-based project management tools due to privacy concerns or complexity. They can use PrintCal to generate a printable monthly overview, helping them stay organized without sharing sensitive client information online. This addresses the need for a secure and straightforward project planning solution.

· An individual seeking to reduce digital distractions and improve focus on personal goals. By using PrintCal to plan their monthly fitness routine or personal development activities and printing it out, they can create a tangible reminder that encourages consistent action away from screens. This showcases how the tool helps achieve personal objectives by minimizing digital noise.

3

MirrorBridge: C++ Reflection for Python Interop

Author

fthiesen

Description

MirrorBridge is a novel C++ binding generator that leverages C++ reflection capabilities to automatically create Python bindings for C++ code. This bypasses the need for manual interface definition languages (IDLs) or complex boilerplate code, significantly streamlining the process of integrating C++ libraries with Python applications.

Popularity

Points 27

Comments 7

What is this product?

MirrorBridge is a tool that solves the common challenge of making C++ code easily accessible from Python. Traditional methods often involve writing a lot of repetitive code or using specific interface description languages. MirrorBridge's innovation lies in its use of C++ reflection. Reflection, in essence, is a program's ability to inspect its own structure, such as its types, members, and function signatures; in C++, where reflection facilities are static, this inspection happens at compile time rather than at runtime. MirrorBridge uses this C++ introspection to understand your C++ classes, functions, and data types, and then automatically generates the necessary Python wrapper code (bindings). This means you don't have to manually tell Python how your C++ code works; the tool figures it out. The value for developers is a drastically reduced effort in creating interoperable code, saving time and reducing errors.

How to use it?

Developers can use MirrorBridge by including it as part of their build process. After writing their C++ code, they can run MirrorBridge, pointing it to their C++ header files. MirrorBridge will then analyze the C++ code using its reflection capabilities and output Python files. These generated files act as a bridge, allowing Python scripts to directly call C++ functions, instantiate C++ classes, and access C++ data. This is particularly useful when you have existing high-performance C++ libraries that you want to expose to the flexibility and rapid development environment of Python. For integration, you'd typically import the generated Python modules into your Python application, just like any other Python library.

Product Core Function

· Automatic C++ Class Binding Generation: MirrorBridge inspects C++ classes and generates Python classes that can instantiate and interact with the C++ objects. This allows Python developers to use C++ classes as if they were native Python classes, inheriting from them, calling their methods, and accessing their members. The value here is seamless object-oriented integration between two languages.

· Function and Method Binding: It automatically generates wrappers for C++ functions and methods, enabling Python to call them directly. This means you can leverage existing C++ algorithms and utilities from your Python scripts without rewriting them, providing access to powerful, optimized C++ functionality.

· Type Marshaling and Conversion: MirrorBridge handles the conversion of data types between C++ and Python. For example, it can convert a C++ integer to a Python integer and vice versa, or a C++ string to a Python string. This crucial function ensures that data can be passed back and forth between the two languages correctly and efficiently, preventing data corruption and simplifying data handling.

· Reflection-Powered Analysis: The core innovation is its reliance on C++ reflection. Instead of relying on external configuration files or manually defined interfaces, MirrorBridge probes the C++ code itself to understand its structure. This significantly reduces the manual effort and potential for errors compared to traditional binding methods.
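
As a loose analogy for the reflection-driven approach above, the sketch below uses Python's own `inspect` module to discover a class's public interface and emit a stub from it, with no hand-written interface description. This is not MirrorBridge's API or output format, and it operates on a Python class rather than C++; `Vec2`, `describe`, and `emit_stub` are hypothetical names used only to illustrate the idea of generating wrappers from what the code says about itself.

```python
import inspect


class Vec2:
    """Stand-in for a type whose interface we want to expose."""

    def __init__(self, x: float, y: float) -> None:
        self.x, self.y = x, y

    def dot(self, other: "Vec2") -> float:
        return self.x * other.x + self.y * other.y

    def _internal(self) -> None:
        """Private helper that should not be exposed."""


def describe(cls):
    """Collect public method names and signatures by introspection."""
    members = {}
    for name, func in inspect.getmembers(cls, inspect.isfunction):
        # Skip private helpers but keep the constructor.
        if name.startswith("_") and name != "__init__":
            continue
        members[name] = str(inspect.signature(func))
    return members


def emit_stub(cls):
    """Generate a textual stub for the class from the discovered interface."""
    lines = [f"class {cls.__name__}:"]
    for name, signature in describe(cls).items():
        lines.append(f"    def {name}{signature}: ...")
    return "\n".join(lines)


if __name__ == "__main__":
    print(emit_stub(Vec2))
```

The point of the analogy is that the interface description is derived from the code itself, so adding a method to `Vec2` changes the generated stub without any extra configuration.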

Product Usage Case

· Integrating a high-performance C++ scientific computing library into a Python data science workflow: Instead of rewriting computationally intensive C++ algorithms in Python, MirrorBridge can be used to expose these algorithms, allowing Python scientists to leverage their speed while benefiting from Python's ease of use for analysis and visualization. The problem solved is the performance bottleneck of Python for certain tasks.

· Exposing a real-time C++ game engine's functionality to a Python scripting interface: Game developers often use Python for scripting game logic due to its rapid prototyping capabilities. MirrorBridge can bridge the gap, allowing Python scripts to control game objects, trigger events, and access engine data defined in C++, speeding up game development iteration.

· Creating Python bindings for existing C++ embedded systems code: For developers working with resource-constrained embedded systems where C++ is often the language of choice for performance and control, MirrorBridge can help create a Python interface for easier debugging, configuration, or even control from a higher-level Python application. This makes complex C++ systems more approachable.

· Developing a mixed-language application where core performance-critical modules are in C++ and the user interface or high-level logic is in Python: MirrorBridge facilitates this by providing a robust and automatic way to connect these two parts, allowing developers to choose the best language for each part of their application without sacrificing interoperability.

4

AI NDE Narrator

Author

mikias

Description

This project leverages AI to analyze and narrate nearly 8,000 near-death experiences (NDEs), making them accessible and understandable. The core innovation lies in applying natural language processing and synthesis techniques to extract meaningful patterns from textual accounts and transform them into an auditory format.

Popularity

Points 22

Comments 9

What is this product?

This is an AI-powered tool that processes and narrates written accounts of near-death experiences. It uses sophisticated AI models, likely a combination of Natural Language Processing (NLP) for understanding the text and Text-to-Speech (TTS) for generating the narration. The innovation is in how it distills complex, personal narratives into a structured, listenable format, potentially identifying common themes or sentiments within the vast dataset of NDEs. So, what's in it for you? It provides a novel way to explore a fascinating and deeply human phenomenon, making complex qualitative data easily digestible.

How to use it?

Developers can use this project as a demonstration of advanced AI text analysis and audio generation. It could be integrated into research projects studying consciousness, psychology, or even creative storytelling platforms. The underlying AI models could be adapted to process other forms of subjective textual data, such as user reviews, personal journals, or historical accounts, and generate audio summaries or narratives. So, what's in it for you? It offers a blueprint for transforming raw text data into engaging audio content, opening doors for new analytical tools and creative applications.

Product Core Function

· AI-driven text analysis of near-death experience narratives: This allows for the extraction of key themes, emotions, and recurring elements from a large corpus of subjective accounts, providing insights that would be difficult to glean manually. So, what's in it for you? It offers a way to understand the essence of many personal stories without reading each one.

· Natural language processing for understanding narrative structure: This function helps the AI comprehend the chronological flow and emotional arc of each NDE, ensuring the narration is coherent and impactful. So, what's in it for you? It guarantees that the generated audio makes sense and captures the narrative power of the original experiences.

· Advanced text-to-speech synthesis for listenable output: This feature converts the analyzed text into clear and engaging human-like speech, making the NDEs accessible through audio. So, what's in it for you? It allows you to experience these profound accounts without having to read, perfect for multitasking or for those who prefer auditory learning.

· Pattern identification and summarization: The AI can identify common threads across multiple NDEs, offering a synthesized overview of the phenomenon. So, what's in it for you? It provides high-level insights and trends from a vast dataset, saving you time and effort in discovering overarching themes.

Product Usage Case

· A psychology researcher using the AI to quickly review themes within thousands of NDE accounts for a study on altered states of consciousness. The AI's audio summaries help in quickly identifying qualitative trends across the dataset. So, what's in it for you? It dramatically speeds up qualitative data analysis, allowing researchers to focus on interpretation rather than wading through raw data.

· A content creator on platforms like YouTube or podcasts integrating the narrated NDEs into their shows, providing unique and thought-provoking material for their audience. The listenable format makes it easily consumable for listeners. So, what's in it for you? It provides ready-to-use, compelling audio content for engagement and storytelling.

· A developer building a personal journaling app that uses similar AI techniques to summarize user entries into audio diaries, offering a novel way for users to revisit their thoughts and feelings. So, what's in it for you? It showcases how to add value to user-generated content by transforming it into an interactive audio experience.

· An educator developing educational modules on consciousness and perception, using the narrated NDEs as case studies to illustrate subjective experiences and the potential of AI in humanities research. So, what's in it for you? It provides powerful, AI-generated examples to enrich learning materials and demonstrate technological applications in various fields.

5

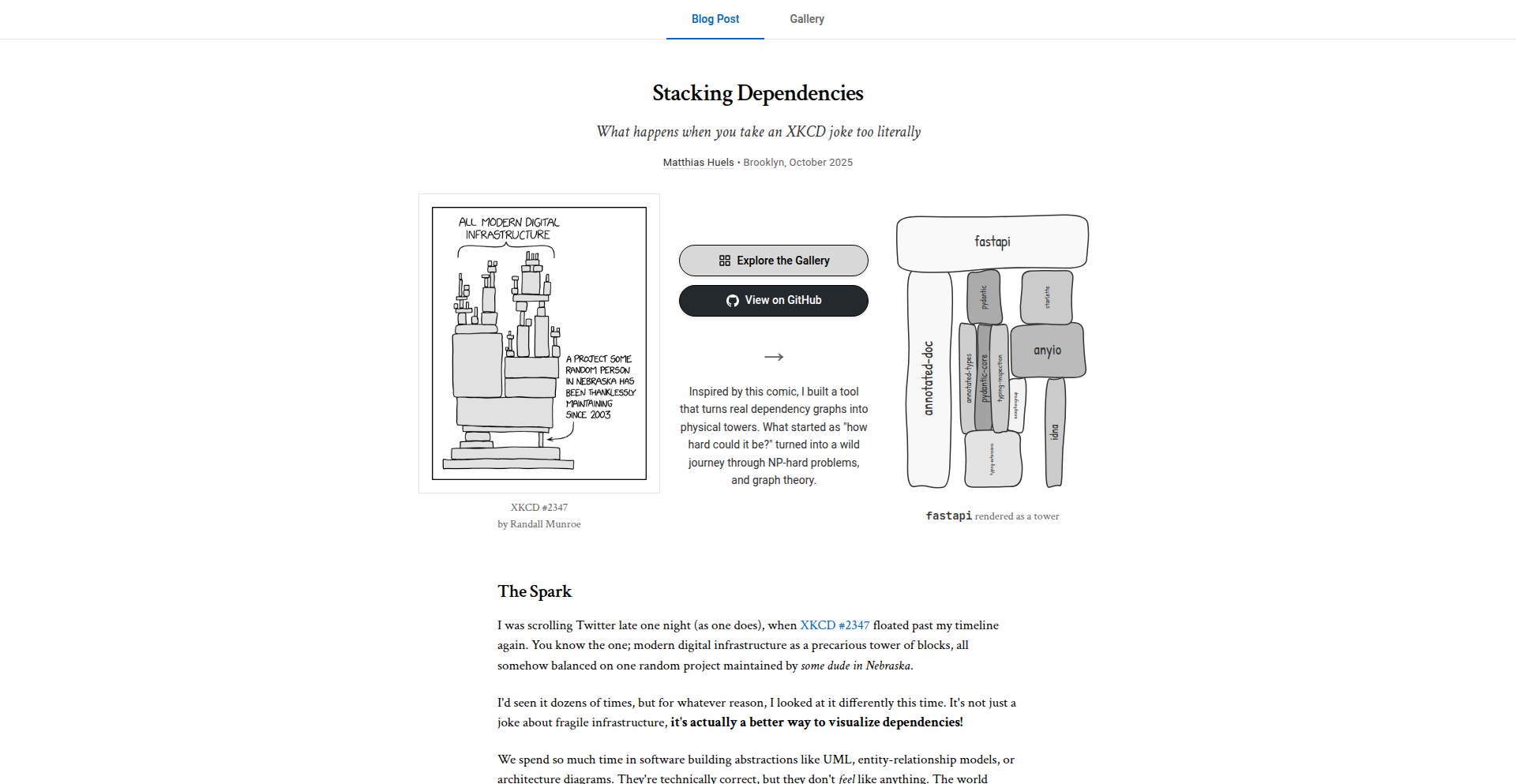

Stacktower: Dependency Brick Builder

Author

matzehuels

Description

Stacktower is an open-source tool that visualizes software package dependencies as a physical tower of bricks. It helps developers understand the complex relationships between different software components by transforming abstract dependency graphs into a tangible, visual representation, inspired by XKCD #2347. This addresses the 'spicy problems' of managing and understanding intricate dependency trees in modern software development for packages like PyPI, Cargo, and npm.

Popularity

Points 27

Comments 4

What is this product?

Stacktower is a novel visualization tool that takes the often-invisible web of software dependencies – how one piece of code relies on another – and turns it into a concrete, physical-like structure of stacked bricks. Think of it like building with LEGOs, but instead of colorful blocks, you're stacking digital pieces of code that your project needs to run. It helps make the complexity of these 'dependency trees' much easier to grasp. The innovation lies in taking abstract data (dependency relationships) and creating a relatable, spatial metaphor that reveals the underlying structure in a unique and insightful way. So, what's the benefit for you? It allows you to see the 'shape' of your project's dependencies, making it easier to identify potential issues or understand how your project is put together.

How to use it?

Developers can integrate Stacktower into their workflow by running the tool on their project's package manager. It can process dependency information from common sources like PyPI (for Python), Cargo (for Rust), and npm (for Node.js). The output is a visual representation of the dependency tower, which can be analyzed to understand the structure and complexity. You can use it to troubleshoot issues related to dependency conflicts, understand the 'blast radius' of updating a specific package, or simply to gain a better mental model of your project's architecture. Essentially, you point Stacktower at your project's dependencies, and it shows you the brick tower, helping you solve dependency puzzles.

Product Core Function

· Dependency Graph Parsing: This function takes raw dependency data from package managers and structures it for visualization. Its value is in making complex, machine-readable data understandable to humans. This is crucial for diagnosing dependency issues and understanding project composition.

· Visual Tower Generation: This core feature translates the parsed dependency graph into a brick-like tower metaphor. The value here is in providing an intuitive, spatial representation of abstract relationships, making it easier to spot patterns and anomalies in dependencies. This helps in comprehending the overall project structure at a glance.

· Multi-Package Manager Support: Stacktower works with popular ecosystems like PyPI, Cargo, and npm. The value is broad applicability across different programming languages and development environments, allowing diverse teams to benefit from its visualization capabilities and tackle shared dependency challenges.

· Open-Source and Extensible: Being open-source means developers can inspect, modify, and extend Stacktower. The value is in fostering community collaboration and allowing customization for specific or niche dependency management needs. This encourages innovation within the developer community by providing a solid foundation.
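
One way to picture the step from dependency graph to tower is to assign each package a level: packages with no dependencies sit at the bottom, and every other package rests one level above its highest dependency. The sketch below assumes that layering rule, which may differ from what Stacktower actually does; `tower_levels` and `render_tower` are hypothetical helpers, and the package names are made up.

```python
def tower_levels(deps):
    """Map each package to its height: 0 for leaves, else 1 + max dependency level."""
    levels = {}
    visiting = set()

    def level(pkg):
        if pkg in levels:
            return levels[pkg]
        if pkg in visiting:
            raise ValueError(f"dependency cycle through {pkg!r}")
        visiting.add(pkg)
        children = deps.get(pkg, [])
        result = 1 + max((level(child) for child in children), default=-1)
        visiting.discard(pkg)
        levels[pkg] = result
        return result

    for pkg in deps:
        level(pkg)
    return levels


def render_tower(deps):
    """Draw the tower as text, top level first, one row of bricks per level."""
    rows = {}
    for pkg, lvl in tower_levels(deps).items():
        rows.setdefault(lvl, []).append(pkg)
    return "\n".join(
        " ".join(f"[{pkg}]" for pkg in sorted(rows[lvl]))
        for lvl in sorted(rows, reverse=True)
    )


if __name__ == "__main__":
    example = {"app": ["web", "cli"], "web": ["http", "text"], "cli": []}
    print(render_tower(example))
```

A cycle has no valid height under this rule, so the sketch raises an error instead of drawing it, which is itself a useful signal when diagnosing dependency problems.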

Product Usage Case

· Troubleshooting Dependency Hell: A developer is experiencing cryptic errors after installing a new library. By feeding their project's dependencies into Stacktower, they can visually see a deeply nested or circular dependency that might be the root cause, allowing for targeted fixes. This solves the problem of 'why is my project broken after this install?'

· Understanding Project Complexity: A lead developer wants to onboard new team members quickly. Stacktower can provide a high-level visual overview of the project's main dependencies, helping newcomers grasp the project's architecture and key components more rapidly. This solves the problem of steep learning curves for new developers.

· Assessing the Impact of Updates: Before updating a core dependency, a developer can use Stacktower to visualize the current dependency chain. This helps them anticipate which other parts of the system might be affected, preventing unexpected breakages. This solves the problem of making safe and informed dependency updates.

· Educating Junior Developers: A senior engineer can use Stacktower to explain to junior developers how different libraries and modules in a project are interconnected. The visual metaphor of the brick tower makes abstract concepts more concrete and easier to learn. This solves the problem of effectively teaching complex system relationships.

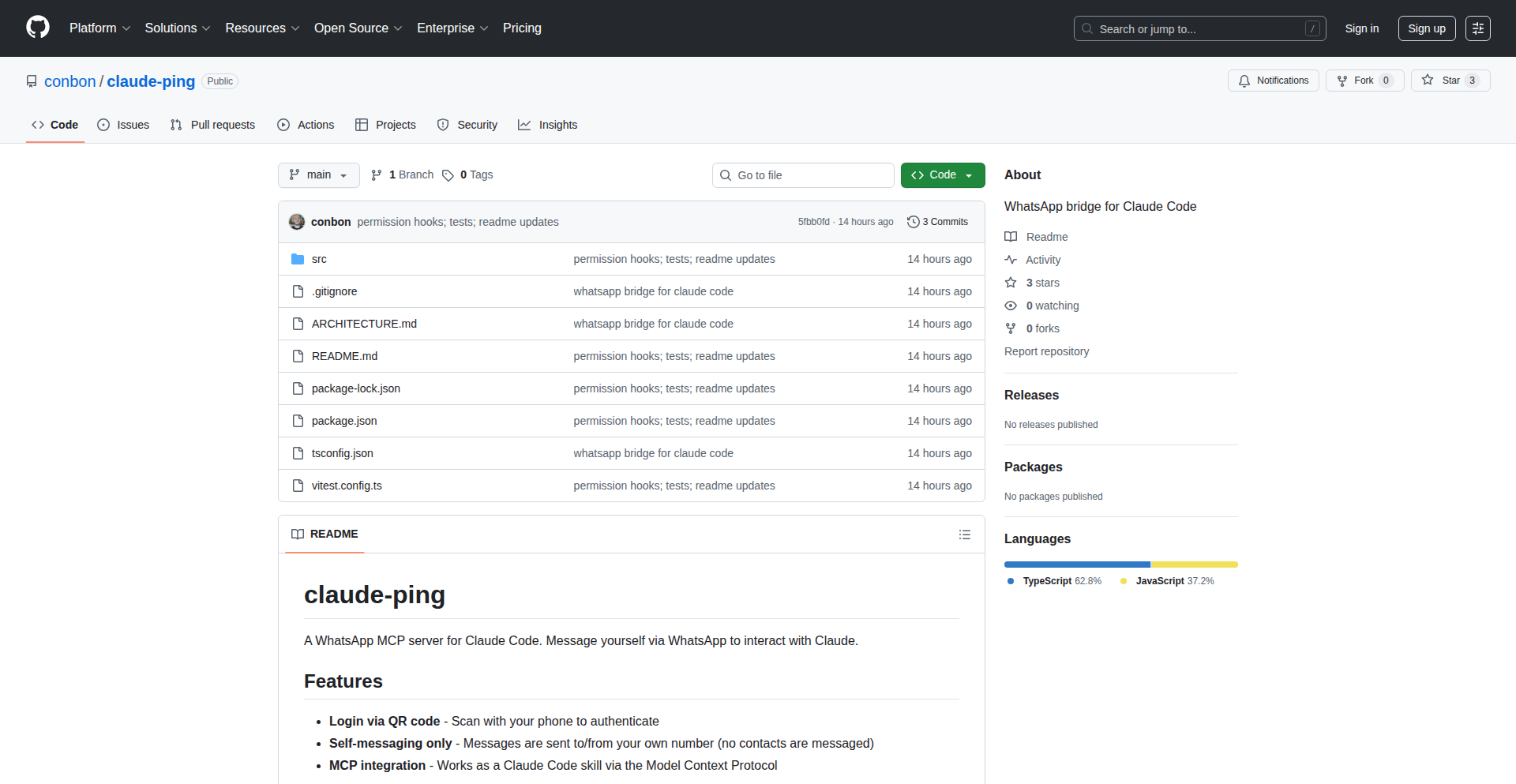

6

OpenAPI to MCP Gateway

Author

rishavmitra

Description

This project transforms your OpenAPI specifications into an MCP server, enabling your APIs to be seamlessly called by AI assistants like Claude or ChatGPT. It bridges the gap between existing API infrastructure and AI integration without requiring manual coding for the AI connection layer. The core innovation lies in automating the complex process of making your services AI-ready.

Popularity

Points 14

Comments 4

What is this product?

This is a tool that automatically converts an OpenAPI specification – which is a standard way to describe how your APIs work – into an MCP (Model Context Protocol) server. MCP is a protocol that defines how AI assistants can interact with external tools. Essentially, the tool acts as a translator. Instead of you writing code to make your API endpoints understandable by an AI, this tool does it for you. This is a significant innovation because it dramatically lowers the barrier to entry for integrating your existing APIs with powerful AI models, saving developers time and effort. It leverages the structure of your OpenAPI spec to create a functional AI interface.

How to use it?

Developers can use this project by providing their existing OpenAPI specification file (in YAML or JSON format). The tool then processes this spec and generates the necessary MCP server configuration. This allows AI models to discover and invoke your API functions directly. Imagine you have a well-documented REST API for your e-commerce backend. You simply point this tool to your OpenAPI spec, and it creates an MCP endpoint that ChatGPT can then use to search for products, place orders, or check order status, all without you writing any AI-specific integration code.

Product Core Function

· OpenAPI to MCP Server Generation: Automatically creates an MCP server from an OpenAPI specification, enabling AI assistants to interact with your APIs. The value here is drastically reduced integration time and effort for exposing APIs to AI.

· Code-Free AI Integration: Allows APIs to be called by AI models like Claude or ChatGPT without writing any custom code for the AI interface. This democratizes AI integration for developers who might not have deep AI expertise.

· Automated API Exposition: Standardizes the way APIs are exposed to AI agents, abstracting away the complexities of protocol translation and API discovery. The value is a robust and standardized way to make your services AI-aware.

· Dynamic Tool Mapping: Maps API endpoints to AI-understandable 'tools' for AI assistants, making it intuitive for AI to know which function to call for a given request. This ensures efficient and accurate AI interaction with your services.
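
The endpoint-to-tool mapping described above can be sketched as a small transformation over a parsed OpenAPI document. This is an assumption about how such a mapping might work, not this project's implementation: `openapi_to_tools` is a hypothetical function, it only looks at `operationId`, `summary`, and `parameters`, and it ignores request bodies, `$ref` resolution, and authentication. The output uses the `name`, `description`, and `inputSchema` fields that MCP tool definitions carry.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec):
    """Turn each OpenAPI operation into an MCP-style tool definition."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            params = operation.get("parameters", [])
            properties = {
                p["name"]: p.get("schema", {"type": "string"}) for p in params
            }
            required = [p["name"] for p in params if p.get("required")]
            # Fall back to a name derived from method and path.
            fallback = f"{method}_{path.strip('/').replace('/', '_')}"
            tools.append({
                "name": operation.get("operationId") or fallback,
                "description": operation.get("summary", ""),
                "inputSchema": {
                    "type": "object",
                    "properties": properties,
                    "required": required,
                },
            })
    return tools


if __name__ == "__main__":
    weather_spec = {
        "paths": {
            "/weather": {
                "get": {
                    "operationId": "getWeather",
                    "summary": "Current weather for a city",
                    "parameters": [{
                        "name": "city",
                        "in": "query",
                        "required": True,
                        "schema": {"type": "string"},
                    }],
                }
            }
        }
    }
    print(openapi_to_tools(weather_spec))
```

A gateway would still need to serve these definitions over MCP and translate each tool call back into an HTTP request; the sketch covers only the schema translation.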

Product Usage Case

· Exposing a weather API to an AI assistant: A developer has a REST API that provides weather data. By feeding the OpenAPI spec to this tool, they can enable an AI to ask 'What is the weather in London?' and have the AI directly call the appropriate API endpoint without manual coding. This solves the problem of making real-time data accessible to AI for conversational queries.

· Integrating a CRM API with an AI for customer support: A company wants to allow their customer support AI to look up customer details or create support tickets. By using this tool with their CRM's OpenAPI spec, the AI can be given the ability to access and manipulate customer data, significantly enhancing the AI's utility in customer service scenarios.

· Building AI-powered internal tools: For internal applications, developers can quickly enable employees to interact with complex business logic through natural language. For example, an AI could be used to process expense reports by calling the relevant internal finance APIs, streamlining workflows and improving productivity.

7

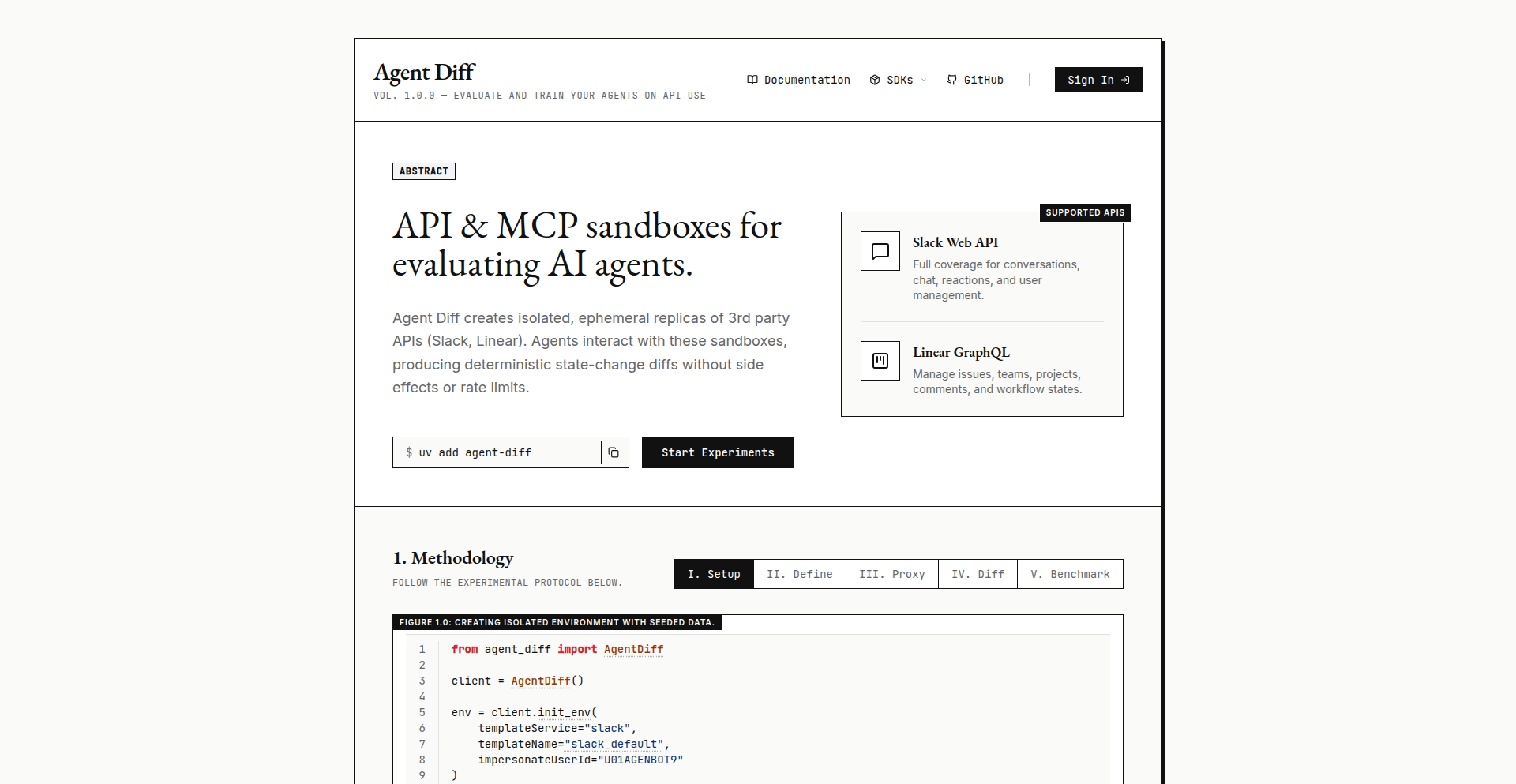

EvalsAPI: Reverse-Engineered Slack & Linear APIs for AI Model Evaluation

Author

hubertmarek

Description

This project reverse-engineers the APIs of popular platforms like Slack and Linear, making them accessible for evaluating and interacting with AI models, particularly in the context of Reinforcement Learning (RL). It allows developers to build automated evaluation pipelines and integrate AI feedback into workflows, effectively treating AI models as programmable agents. The innovation lies in bridging the gap between AI development and real-world application platforms through accessible API interaction.

Popularity

Points 10

Comments 3

What is this product?

This project is an API toolkit that mimics and reconstructs the communication methods (APIs) of platforms like Slack and Linear. Think of it like a translator that allows your AI models to 'talk' to these platforms as if they were native users or applications. The core innovation is enabling AI models, especially those used for Reinforcement Learning (RL) where an AI learns through trial and error, to interact with and receive feedback from complex environments like team chat or project management tools. This means you can automate the process of testing and improving AI models by having them perform tasks and get judged, directly within these familiar platforms.

How to use it?

Developers can use this project to build custom evaluation frameworks for their AI models. For instance, you could train an AI to summarize Slack conversations by having it interact with a simulated Slack environment created by this toolkit. The AI would perform summarization, and the toolkit could then evaluate the quality of the summary against predefined criteria or human feedback. This allows for rapid iteration and improvement of AI models in a controlled yet realistic setting, integrating AI evaluation seamlessly into existing development and testing workflows.
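
The summarization workflow described above can be sketched with an in-memory stand-in for a chat channel. Everything here is hypothetical and does not reflect the toolkit's real interface: `FakeChannel`, `run_episode`, and the keyword-based scorer are placeholders for whatever emulation and evaluation the project actually provides.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]


@dataclass
class FakeChannel:
    """In-memory stand-in for a chat channel (not a real Slack client)."""
    messages: list[Message] = field(default_factory=list)

    def post(self, user: str, text: str) -> None:
        self.messages.append({"user": user, "text": text})

    def history(self) -> list[Message]:
        return list(self.messages)


def keyword_score(summary: str, keywords: list[str]) -> float:
    """Fraction of expected keywords that appear in the summary."""
    lowered = summary.lower()
    hits = sum(1 for k in keywords if k.lower() in lowered)
    return hits / len(keywords) if keywords else 0.0


def run_episode(agent: Callable[[list[Message]], str],
                channel: FakeChannel,
                keywords: list[str]) -> float:
    """Let the agent summarize the channel, post the result, and score it."""
    summary = agent(channel.history())
    channel.post("agent", summary)
    return keyword_score(summary, keywords)


def naive_agent(history: list[Message]) -> str:
    """Placeholder agent that simply joins all message texts."""
    return " ".join(m["text"] for m in history)


channel = FakeChannel()
channel.post("ana", "Deploy is blocked by the failing login test.")
channel.post("ben", "I will fix the login test before Friday.")
score = run_episode(naive_agent, channel, ["deploy", "login test", "Friday"])
print(score)
```

In a real pipeline the scorer would be replaced by predefined criteria or human feedback, and the returned score could serve as the reward signal for an RL loop.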

Product Core Function

· API Emulation for AI Interaction: Replicates the communication protocols of platforms like Slack and Linear, allowing AI models to send and receive data as if they were integrated applications. This is valuable for creating realistic testing environments for AI agents.

· Automated Evaluation Pipelines: Enables the creation of automated systems for testing and scoring AI model performance. Developers can set up tests where AI models perform specific tasks and receive objective feedback, accelerating the development cycle.

· Reinforcement Learning Integration: Provides the necessary API hooks for RL agents to interact with external environments and learn from the consequences of their actions. This is crucial for training AI models that need to operate within complex, real-world systems.

· Data Extraction and Analysis: Allows for the collection of interaction data from simulated platforms, which can then be analyzed to understand AI behavior and identify areas for improvement. This facilitates data-driven AI development.

· Customizable Feedback Loops: Facilitates the creation of tailored feedback mechanisms for AI models, allowing developers to define what constitutes success or failure for a given task. This provides fine-grained control over the AI learning process.

Product Usage Case

· Automating the testing of an AI chatbot designed to answer questions in a Slack channel. The toolkit can simulate users asking questions and then evaluate the AI's responses for accuracy and helpfulness, providing valuable feedback for the chatbot's development.

· Training an AI agent to prioritize tasks in a project management tool like Linear. The AI could 'see' new tasks, 'decide' which ones are most urgent, and the toolkit would record the outcomes and provide rewards or penalties based on the AI's decisions, helping it learn to manage priorities effectively.

· Developing an AI assistant that can analyze team communication in Slack to identify potential conflicts or misunderstandings. The toolkit can feed real or simulated conversation data to the AI and help in evaluating its ability to detect and flag such issues.

· Creating a system where an AI model learns to generate better code documentation by interacting with a simulated code repository and receiving feedback on the quality of its generated documentation, facilitated by the toolkit's API emulation.

· Benchmarking different AI models by having them compete on tasks within a simulated environment derived from Linear's task tracking system, allowing for direct comparison of their problem-solving capabilities.

8

Marvin: AI Game Dev & Ops Suite

Author

marvinai

Description

Marvin is an AI-powered platform designed to democratize game development and operation. It leverages specialized AI agents to assist individuals and small teams in every stage of game creation, from design and mechanics to art and level design. Beyond just building, Marvin provides a full operational stack, mimicking the tools used by professional game studios, enabling users to manage and grow their games as sustainable businesses. This tackles the challenge of making complex game development and live operations accessible to everyone, not just large studios.

Popularity

Points 6

Comments 6

What is this product?

Marvin is an AI-driven ecosystem that acts as your virtual game studio. It's built around a system of 'agents' – specialized AI models you can interact with through natural language. You tell Marvin what kind of game you want, and these agents collaboratively work with you on various aspects like game mechanics, visual art, physics implementation, progression systems, and level design. The innovation lies in its comprehensive approach, extending beyond just creation to include game operations – essentially, the entire lifecycle of a game from concept to sustainable business, including publishing and potentially live operations and monetization tools in the future. This is a significant leap from traditional game development tools by integrating AI throughout the entire process.

How to use it?

Developers can start using Marvin by simply interacting with the AI agents through chat. You describe your game idea, and Marvin's agents will ask clarifying questions and begin generating content and suggestions. For instance, you can ask it to 'design a 2D platformer with a focus on fluid movement' and the agents will help flesh out mechanics, suggest art styles, and even outline level structures. Integration can happen by exporting generated assets (like art sprites or level data) and importing them into existing game engines like Unity or Godot. The platform aims to simplify complex workflows, allowing developers to iterate rapidly and focus on creativity rather than getting bogged down in boilerplate tasks. It's about using code and AI to solve the business and creative challenges of making and running a game.

Product Core Function

· AI-assisted game design: Agents help brainstorm and define game concepts, mechanics, and narrative elements, accelerating the initial creative phase.

· Procedural content generation: AI generates game assets like art, levels, and mechanics, reducing manual effort and enabling diverse game worlds.

· Cross-platform publishing pipeline: Facilitates the process of preparing and deploying games to various platforms, streamlining distribution.

· Game operations enablement: Provides tools and frameworks for managing live games, including potential future integration of live ops, monetization, and analytics.

· Natural language interface: Allows users to communicate game development needs and receive assistance through intuitive chat interactions, making development more accessible.

Product Usage Case

· An indie solo developer wants to create a retro-style pixel art RPG but lacks a dedicated artist. They can use Marvin's art agents to describe their desired aesthetic and generate sprites, tilesets, and UI elements, significantly reducing the need for manual art creation and speeding up asset production.

· A small game team is struggling with the complexity of balancing game mechanics and progression in their new strategy game. They can use Marvin to simulate different mechanic interactions and progression curves, receiving AI-driven suggestions for adjustments to improve player engagement and retention.

· A hobbyist game maker wants to quickly prototype a simple puzzle game for social media. They can use Marvin to generate basic game logic, level layouts, and even UI elements, allowing them to create a playable demo within hours rather than days or weeks.

· A developer aims to build a sustainable game business without a large operational team. Once available, Marvin's planned live ops and monetization tools could help them implement in-game events, manage player economies, and analyze player behavior to optimize revenue and player retention, treating game development as a business from the start.

9

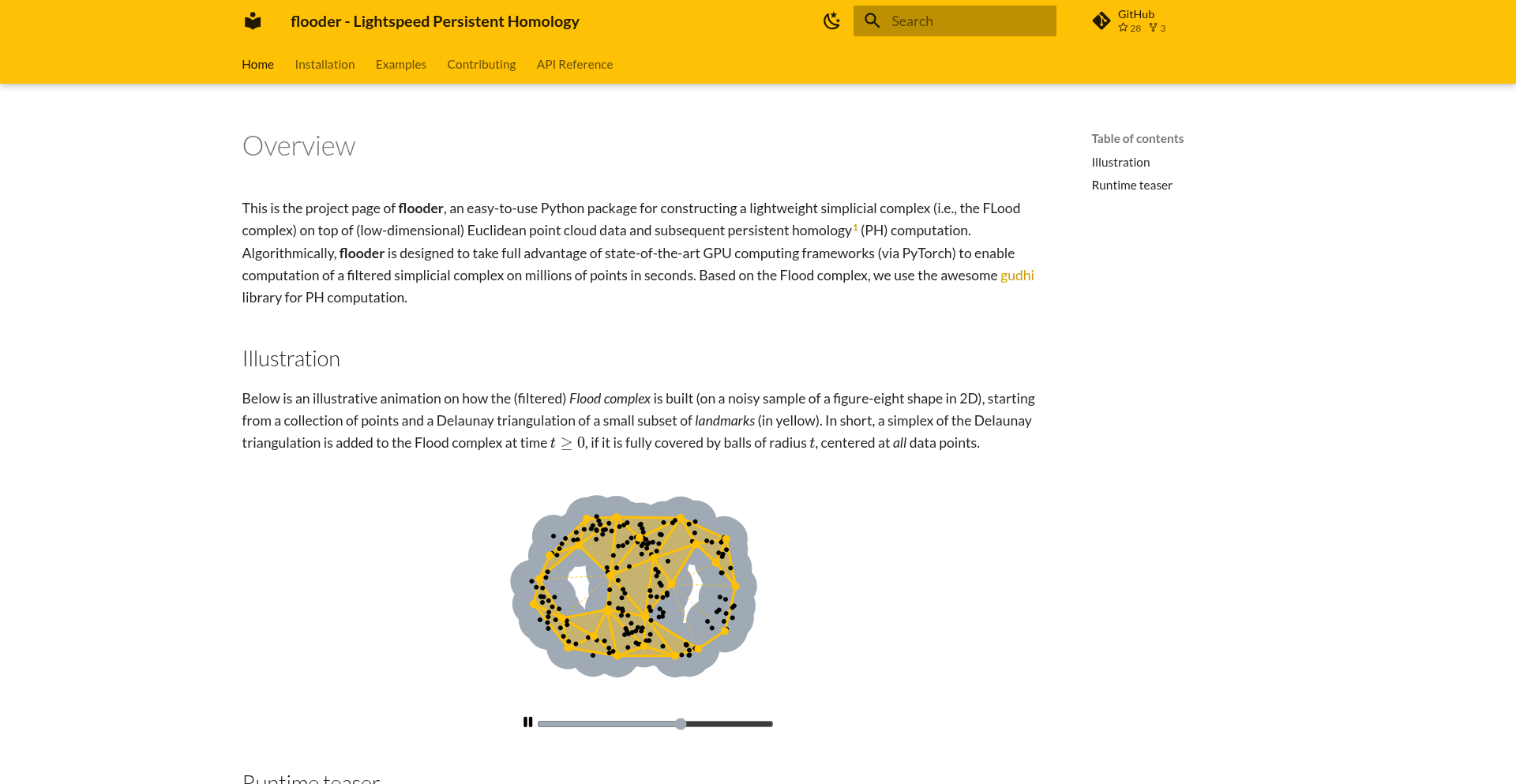

Flooder: Persistent Homology for Industry

Author

elektm

Description

Flooder is a novel tool that brings the power of Persistent Homology, a complex mathematical concept from topology, into practical, industrial applications. It focuses on extracting meaningful topological features from data, enabling deeper insights and better problem-solving in areas where traditional methods fall short. Think of it as a way to find the 'shape' of your data to uncover hidden patterns and connections, making complex datasets understandable and actionable.

Popularity

Points 6

Comments 2

What is this product?

Flooder is a software library that makes Persistent Homology accessible for real-world industrial use. Persistent Homology is a mathematical framework that analyzes the 'shape' of data by tracking topological features like holes and connected components as the data is viewed at different resolutions. Traditional methods often struggle with high-dimensional or noisy data, but Flooder's innovation lies in its efficient algorithms and practical implementation, allowing it to handle large-scale datasets and provide robust topological summaries. This means you can understand the fundamental structure of your data even when it's messy or very complex.

How to use it?

Developers can integrate Flooder into their existing data analysis pipelines. It typically involves preparing your data (e.g., point clouds, graphs, time series) and then using Flooder's functions to compute persistence diagrams or barcodes. These topological summaries can then be used as features for machine learning models, for anomaly detection, or for visualizing complex data structures. For instance, you could feed the topological features extracted by Flooder into a classification model to improve its accuracy, or use them to identify unusual patterns in sensor data.
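
To show what a topological summary looks like in the simplest case, the sketch below computes 0-dimensional persistence (connected components) for a small point cloud with a union-find structure. It is a textbook construction written in plain Python, not Flooder's API or algorithm, and the function name `zero_dim_persistence` is made up for this example.

```python
import math
from itertools import combinations


def zero_dim_persistence(points):
    """Return the death scales of connected components (H0) of a point cloud.

    Every component is born at scale 0. Components merge as the distance
    threshold grows; each merge records one death. One component never dies
    and is therefore not listed.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # All pairwise edges, sorted by Euclidean length.
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )

    deaths = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj        # two components merge at this scale
            deaths.append(length)
    return deaths


# Two well-separated groups of points.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (10.0, 0.0), (11.0, 0.0)]
deaths = zero_dim_persistence(points)
print(deaths)  # [1.0, 1.0, 1.0, 9.0]
```

The three short-lived components die at scale 1.0, while the last merge happens only at 9.0. That long-lived bar is the signal that the data forms two clusters, and a list of (birth, death) values like this is the kind of feature that can be passed to a downstream model.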

Product Core Function

· Persistent Homology Computation: Calculates topological features like connected components and holes at various scales, allowing you to understand the underlying structure of your data. This is useful for identifying distinct clusters or cyclic patterns that might be missed by other analyses.

· Feature Extraction for Machine Learning: Generates robust topological features (e.g., persistence diagrams) that can be fed into machine learning models, enhancing their ability to learn from complex and high-dimensional data. This means your AI models can become smarter and more accurate by leveraging the inherent structure of the data.

· Data Simplification and Summarization: Provides concise summaries of complex datasets by focusing on their essential topological properties, making large and intricate data easier to comprehend and manage. This helps you distill vast amounts of information into a manageable and insightful representation.

· Noise Robustness: Designed to be resilient to noise in the data, ensuring that the extracted topological features are meaningful and not just artifacts of random fluctuations. This means you can trust the insights you get, even if your data isn't perfectly clean.

· Scalability for Industrial Datasets: Optimized to handle large volumes of data common in industrial settings, making advanced topological analysis feasible for practical business problems. This allows you to apply cutting-edge techniques to your real-world, large-scale data challenges.

Product Usage Case

· Analyzing sensor data from industrial machinery to detect subtle anomalies that might indicate impending failure, by identifying unusual topological patterns in vibration or pressure readings.

· Characterizing the structure of complex molecules or materials in scientific research to understand their properties, by analyzing the 'shape' of their atomic arrangements.

· Improving the performance of image recognition systems by extracting topological features that capture the structural characteristics of objects, leading to more robust classification.

· Understanding the connectivity and flow patterns in networks (e.g., social networks, traffic networks) to identify bottlenecks or important hubs, by analyzing the topological structure of the network graph.

10

EvolveAgent

Author

EvoAgentX

Description

EvolveAgent is a platform for rapidly building agentic applications that can learn and improve over time without constant developer intervention. It simplifies the process of turning an initial concept into a functional, self-optimizing app by providing integrated user management, databases, tools, and payment processing, eliminating the need for manual infrastructure setup and complex glue code. So, this helps you create intelligent applications much faster, letting them adapt and grow on their own.

Popularity

Points 5

Comments 3

What is this product?

EvolveAgent is a developer platform designed to let you build sophisticated AI-powered applications, called 'agentic apps.' The core innovation is its 'self-optimizing and evolving' capability. Instead of manually updating your app's logic every time you want it to learn or adapt, EvolveAgent's architecture allows the agents within your application to autonomously improve their performance and workflows over time based on usage and feedback. This is achieved through an underlying framework that manages agent interactions, learning mechanisms, and resource allocation, effectively turning your app into a continuously improving system. So, it's like building an app that gets smarter by itself.

How to use it?

Developers can use EvolveAgent to create a wide range of applications, from automated customer support bots to complex data analysis tools and personalized recommendation engines. The platform abstracts away much of the traditional infrastructure setup (like servers, databases, and API integrations). You define the agents, their initial goals, available tools (e.g., web scraping, document analysis, code execution), and how they interact. EvolveAgent then provides the backbone for these agents to operate, learn, and optimize. For integration, you can typically interact with your EvolveAgent application through APIs or a provided SDK, embedding its agentic capabilities into your existing systems or building standalone applications. So, you focus on defining what you want your app to do, and EvolveAgent handles the complex underlying mechanics of making it intelligent and adaptable.

Product Core Function

· Agent Creation and Orchestration: Enables developers to define individual AI agents and manage their interactions, allowing for complex multi-agent systems. The value is in simplifying the architecture of intelligent workflows. This is useful for building systems where different specialized AI modules need to work together.

· Self-Optimization Engine: This core component allows agents to automatically refine their strategies and improve their performance over time based on data and outcomes, reducing the need for manual tuning. The value is in creating applications that become more effective with use. This is applicable for any application where performance improvement is critical.

· Integrated Tooling: Provides out-of-the-box access to a suite of tools (e.g., databases, external APIs, computation modules) that agents can leverage, accelerating development. The value is in reducing development time by not needing to build these integrations from scratch. This is useful for applications requiring diverse functionalities.

· Payment Integration: Seamlessly incorporates payment processing, allowing developers to monetize their agentic applications directly. The value is in enabling quick and easy commercialization of AI products. This is for developers looking to build and sell AI-powered services.

· Rapid Prototyping: Designed for quick iteration, allowing developers to test and deploy new agentic app ideas in minutes rather than days or weeks. The value is in significantly lowering the barrier to entry for AI product innovation. This is ideal for startups and individuals exploring new AI concepts.
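
The source does not describe how the self-optimization engine works internally. As a rough illustration of the general idea of improving from outcomes, the sketch below keeps a running mean reward per strategy and prefers the best one seen so far. The class `StrategyTracker` and the strategy names are hypothetical and are not part of EvolveAgent.

```python
from collections import defaultdict


class StrategyTracker:
    """Keeps a running mean reward per strategy and picks the best so far."""

    def __init__(self, strategies):
        self.strategies = list(strategies)
        self.counts = defaultdict(int)
        self.means = defaultdict(float)

    def record(self, strategy, reward):
        """Update the running mean for one observed outcome."""
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.means[strategy] += (reward - self.means[strategy]) / n

    def best(self):
        """Return an untried strategy if any, else the highest mean reward."""
        for s in self.strategies:
            if self.counts[s] == 0:
                return s
        return max(self.strategies, key=lambda s: self.means[s])


tracker = StrategyTracker(["short_answer", "step_by_step"])
observations = [("short_answer", 0.5), ("step_by_step", 1.0), ("short_answer", 0.0)]
for strategy, reward in observations:
    tracker.record(strategy, reward)

print(tracker.best())
```

A production system would also need exploration, so that a strategy with a few unlucky early results is not abandoned permanently. This sketch leaves that out for brevity.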

Product Usage Case

· Building a personalized AI tutor: Developers can create an agent that adapts its teaching style and content based on a student's learning pace and areas of difficulty, leading to more effective education. EvolveAgent's self-optimization ensures the tutor gets better at teaching over time. This solves the problem of generic educational tools that don't cater to individual needs.

· Developing an automated market research assistant: An agent can be set up to continuously monitor industry trends, analyze competitor activities, and synthesize reports. Its self-evolution ensures it learns to identify more relevant data sources and generate more insightful analysis. This tackles the challenge of keeping up with rapidly changing market dynamics.

· Creating a dynamic customer service chatbot: An agent can handle customer queries, learn from past interactions to resolve issues more efficiently, and escalate complex cases. Its ability to self-optimize means it becomes progressively better at understanding and answering customer questions. This addresses the need for efficient and responsive customer support.

· Designing a smart content generation system: Agents can learn user preferences and generate tailored blog posts, marketing copy, or social media updates. The continuous learning aspect of EvolveAgent means the generated content will become increasingly aligned with the desired tone and style. This solves the problem of repetitive and uninspired content creation.

11

Meetinghouse.cc: Decentralized Connection Finder

Author

simonsarris

Description

Meetinghouse.cc is an experimental platform designed to help individuals find and be found for various purposes, with a focus on decentralized discovery. It tackles the challenge of discoverability in a fragmented digital landscape by exploring novel approaches to peer-to-peer connection. The core innovation lies in its attempt to build a more resilient and user-controlled discovery mechanism, moving away from centralized social graphs.

Popularity

Points 3

Comments 4

What is this product?

Meetinghouse.cc is a project that explores decentralized methods for people to find each other and be found, without relying on traditional, centralized platforms. Imagine a bulletin board where anyone can post what they're looking for or offering, and others can see it, but this bulletin board is managed by the community rather than a single company. The technical innovation here is in investigating how to create such a discovery system that is more resistant to censorship and control, potentially using peer-to-peer technologies or distributed ledgers to store and broadcast connection opportunities. The goal is to empower users with more control over their online presence and connections.

How to use it?

Developers can interact with Meetinghouse.cc by building applications that leverage its discovery mechanism. This could involve creating specialized search tools, integrating its connection-finding capabilities into existing platforms, or contributing to the underlying decentralized infrastructure. For example, a developer could build a tool to find collaborators for open-source projects, or a service to locate local community events, all powered by the Meetinghouse.cc discovery layer. Integration would likely involve interacting with APIs or specific protocols the project exposes, allowing for programmatic access to its decentralized directory of connections and interests.

Product Core Function

· Decentralized profile discovery: Enables users to create and manage their presence and interests in a way that isn't tied to a single server, making it harder to de-platform or censor. This means your ability to be found isn't dependent on one company's rules.

· Interest-based connection matching: Allows users to specify their interests and needs, facilitating connections with others who share similar goals or can fulfill those needs. This helps you find the right people for what you want to do, rather than just random connections.

· Resilient information propagation: Explores methods to ensure that connection information can spread effectively across a network, even if parts of the network are unavailable or under attack. This means your posted needs or offerings are more likely to be seen, even in challenging network conditions.

· User-controlled data: Aims to give users more ownership and control over how their information is shared and discovered. This translates to you deciding who sees what about you, rather than a platform dictating it.

Product Usage Case

· A developer could build a decentralized job board where companies post opportunities and individuals seeking employment can discover them without a central authority vetting the listings. This solves the problem of biased or controlled job search platforms.

· Community organizers could use Meetinghouse.cc to broadcast local event announcements or requests for volunteers, reaching members of their community more effectively and with greater resilience than traditional social media. This helps foster local engagement without relying on platforms that might limit reach.

· Researchers could create a platform to anonymously connect with participants for studies based on specific criteria, ensuring participant privacy and broad reach. This addresses the difficulty of finding qualified and willing participants for sensitive research.

· An artist could use it to announce collaborations or exhibitions, ensuring their announcements reach interested individuals directly and are not subject to algorithm changes that might bury their content. This provides a more direct channel to their audience.

12

VibeCommander: Ambient Audio Orchestrator

Author

fatliverfreddy

Description

VibeCommander is a novel application that dynamically generates ambient soundscapes based on your current system activity. It aims to create a more immersive and productive work environment by translating digital interactions into auditory experiences. The core innovation lies in its ability to monitor system events (like CPU usage, network traffic, application focus) and translate them into subtle, context-aware audio cues. This provides developers with a unique way to perceive their machine's state without direct visual inspection, potentially enhancing focus and reducing cognitive load.

Popularity

Points 7

Comments 0

What is this product?

VibeCommander is a desktop application that creates dynamic, ambient audio environments tailored to your computer's real-time activity. Instead of static background music, it intelligently analyzes what your computer is doing – for instance, if your CPU is working hard on a compilation, or if you're actively browsing. It then translates these activities into subtle, evolving sound patterns. Think of it like a live, auditory dashboard for your digital workflow. The innovation is in this real-time, event-driven sound generation, which moves beyond pre-recorded loops to offer a truly responsive and personalized ambient experience. This means your soundscape isn't just noise; it's a reflection of your current computational state, designed to subtly guide your focus or signal potential issues without being intrusive. So, what's in it for you? It can help you stay in the zone by providing a continuous, non-distracting auditory backdrop that changes with your workflow, making your work environment more engaging and less prone to sudden distractions.

How to use it?

Developers can integrate VibeCommander into their daily workflow by installing the application on their desktop. Once running, it automatically begins monitoring system events. Users can customize the types of system activities they want to influence the soundscape and select from various sound palettes or even define their own audio elements. For instance, you could configure it so that high network activity triggers a gentle, flowing water sound, while intense CPU usage might translate to a more focused, rhythmic pulse. It can be run in the background, complementing existing development tools. The primary use case is to create a more mindful and adaptive working environment. So, how can this benefit you? By setting up VibeCommander, you can establish a personalized audio environment that subtly nudges your focus, alerts you to significant system changes without visual pop-ups, and generally makes your long hours at the computer feel more dynamic and less monotonous.
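
The configuration idea above, where system metrics drive sound parameters, can be sketched as a pure mapping function. This is only an illustration under assumed ranges: `metrics_to_audio`, the 60 to 120 BPM tempo range, and the 10,000 kbps network ceiling are invented for the example and are not taken from VibeCommander.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioParams:
    tempo_bpm: float
    volume: float  # 0.0 (silent) to 1.0 (full)


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def metrics_to_audio(cpu_percent: float, net_kbps: float) -> AudioParams:
    """Map CPU load to tempo and network throughput to volume."""
    cpu = clamp(cpu_percent, 0.0, 100.0) / 100.0
    net = clamp(net_kbps, 0.0, 10_000.0) / 10_000.0
    tempo = 60.0 + 60.0 * cpu      # 60 BPM when idle, 120 BPM at full load
    volume = 0.25 + 0.25 * net     # quiet baseline, capped at 0.5
    return AudioParams(tempo_bpm=tempo, volume=volume)


half_load = metrics_to_audio(cpu_percent=50.0, net_kbps=0.0)
busy = metrics_to_audio(cpu_percent=100.0, net_kbps=10_000.0)
print(half_load)
print(busy)
```

Capping the volume at half scale keeps the cues in the background, in line with the goal of signalling system changes without being intrusive.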

Product Core Function

· System Activity Monitoring: Listens to real-time system metrics such as CPU load, memory usage, network ingress/egress, and application focus changes. This allows the audio to be directly reactive to the user's computational demands. Its value lies in providing an auditory representation of system performance, which can be useful for understanding workflow bottlenecks or resource-intensive tasks without constantly checking system monitors.

· Event-to-Audio Translation Engine: Translates monitored system events into specific audio parameters like pitch, volume, tempo, or timbre changes. This is the core innovative component that creates dynamic soundscapes. The value is in turning abstract technical data into an intuitive, auditory experience that can influence user mood and focus.

· Customizable Sound Palettes: Offers a library of pre-defined sound themes (e.g., 'Zen Garden', 'Cyberpunk Flow') and allows users to create their own. This provides flexibility for users to match the audio to their personal preferences and work style. The value is in ensuring the ambient sound is pleasant and conducive to productivity, rather than a generic distraction.

· Background Operation: Designed to run unobtrusively in the background without consuming significant system resources. This ensures it doesn't interfere with the primary development tasks. Its value is in being a non-intrusive enhancement to the developer's environment.

· Context-Aware Soundscapes: The generated audio evolves organically based on the ongoing system activity, creating a continuous and non-repetitive auditory experience. This avoids the monotony of traditional background music loops. The value is in maintaining engagement and preventing auditory fatigue while still providing subtle feedback.

Product Usage Case

· During a long code compilation: The ambient sound might subtly shift to a more intense, rhythmic pulse, indicating significant CPU activity, helping the developer stay focused on the task at hand without needing to visually check progress. This helps answer 'What's happening with my build right now?' without having to look away from the code.

· While debugging performance issues: Developers can configure VibeCommander to accentuate network traffic or memory usage spikes with distinct audio cues. This provides an immediate, audible signal of potential performance bottlenecks. This helps answer 'Is something spiking in my system that I should investigate?' just by listening.

· For focused coding sessions: Users can select a 'calm' sound palette that becomes slightly more active as they switch between different applications, providing a gentle reminder of their workflow without interrupting their thought process. This helps answer 'Am I getting distracted by switching too much?' or 'Is my focus wavering?' based on subtle auditory shifts.

· During collaborative development or remote pair programming: The shared ambient soundscape could be subtly influenced by the activities of all connected developers (if future extensions allow), creating a unified auditory experience for the team. This could foster a sense of shared work and presence, answering 'What is the team's overall activity level?'.

13

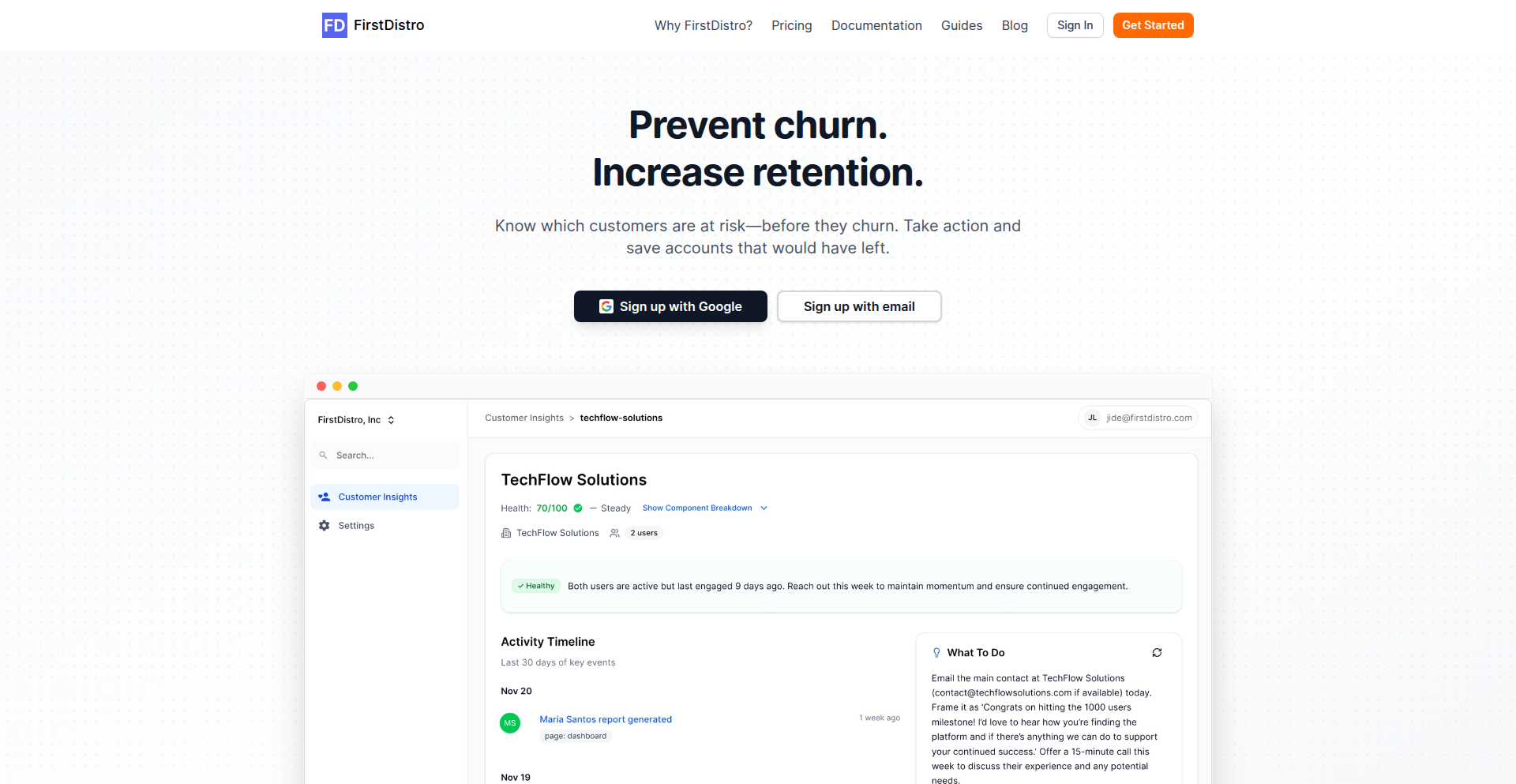

HealthScore Watcher

Author

Jide_Lambo

Description

This project is a simple yet powerful tool designed to proactively monitor customer health by tracking their usage, engagement, and activity patterns. It acts as an early warning system, alerting businesses when a customer's behavior indicates they might be at risk of churning. The core innovation lies in its ability to distill complex customer data into a simple health score, making it easy to identify and address potential issues before they lead to lost revenue.

Popularity

Points 5

Comments 1

What is this product?

HealthScore Watcher is a system that monitors your customers' interactions with your product or service. It looks at how much they use it, how actively they engage, and other behavioral signals. By analyzing these signals, it calculates a 'health score' for each customer. The technology behind it involves data collection via a simple script tag, real-time analysis to compute this score, and then generating alerts. This is innovative because it moves beyond reactive customer support and offers a predictive approach to customer retention, allowing businesses to intervene before a customer decides to leave. So, this helps you understand if your customers are happy and engaged, or if they're quietly drifting away, all without needing complex data science teams.

How to use it?

Developers can integrate HealthScore Watcher into their existing workflows with minimal effort. The primary method is by adding a single script tag to their web application. This script collects the necessary user activity data. Once integrated, the system begins processing this data to calculate customer health scores. These scores can then be used to trigger automated alerts sent to platforms like Slack, email inboxes, or CRM systems such as Attio. This allows for seamless integration into existing communication and management tools, ensuring that the right people are notified at the right time. So, you can easily plug this into your website and start getting instant notifications about at-risk customers directly where you already work.

Product Core Function

· Customer Health Scoring: Gathers user interaction data and transforms it into an easy-to-understand health score, allowing for quick assessment of customer satisfaction and engagement. This helps identify at-risk customers efficiently, so you know who needs attention.

· Real-time Monitoring: Continuously tracks customer activity and updates their health score, ensuring that you always have the latest information on customer engagement. This means you're always aware of the current status, preventing surprises.

· Proactive Churn Prediction: Identifies patterns in customer behavior that indicate a higher likelihood of churn, enabling businesses to take preventative measures. This helps you save customers before they leave, protecting your revenue.

· Automated Alerting System: Sends notifications to designated channels like Slack or email when a customer's health score drops below a critical threshold, facilitating prompt intervention. This ensures you are immediately informed when action is needed, so you can respond quickly.

· Simple Integration: Uses a single script tag for data collection, making it easy to implement without extensive development resources. This saves you time and effort in setting up customer monitoring.
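
The scoring and threshold alerting described above can be illustrated with a small weighted formula. The signals, weights, and the threshold of 40 are invented for this sketch and are not the product's actual model. The names `Usage`, `health_score`, and `at_risk` are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    logins_last_30d: int
    features_used: int
    days_since_last_seen: int


def health_score(u: Usage) -> float:
    """Score from 0 (at risk) to 100 (healthy), using made-up weights."""
    login_part = min(u.logins_last_30d, 20) / 20        # saturates at 20 logins
    feature_part = min(u.features_used, 5) / 5          # saturates at 5 features
    recency_part = max(0, 30 - u.days_since_last_seen) / 30
    combined = 0.4 * login_part + 0.3 * feature_part + 0.3 * recency_part
    return round(100 * combined, 1)


def at_risk(customers: dict[str, Usage], threshold: float = 40.0) -> list[str]:
    """Names of customers whose score is below the alert threshold."""
    return sorted(name for name, u in customers.items()
                  if health_score(u) < threshold)


customers = {
    "acme": Usage(logins_last_30d=20, features_used=5, days_since_last_seen=0),
    "globex": Usage(logins_last_30d=2, features_used=1, days_since_last_seen=25),
}

for name, usage in customers.items():
    print(name, health_score(usage))
print("alert:", at_risk(customers))
```

In this example only the second customer falls below the threshold, so it is the one that would be forwarded to a channel such as Slack or email.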

Product Usage Case

· A SaaS company notices a sudden drop in engagement for a key enterprise client. By using HealthScore Watcher, they receive an alert, investigate, and discover the client is facing internal technical challenges. They proactively offer support, preventing a potential $10k/month revenue loss. This shows how the tool can save significant revenue by highlighting issues early.

· A subscription box service sees the health score of several long-term subscribers declining. Through the alerts, they find that these customers are no longer opening emails or browsing the site. They trigger personalized re-engagement campaigns, successfully retaining these customers and avoiding subscription cancellations. This demonstrates how the tool can improve customer retention rates.

· A mobile app developer wants to ensure new users are actively adopting the app. HealthScore Watcher tracks their initial usage patterns and flags those who aren't engaging after the first week. This allows the developer to send targeted onboarding tips or support, improving the long-term retention of new users. This illustrates how the tool can enhance user onboarding and adoption.

14

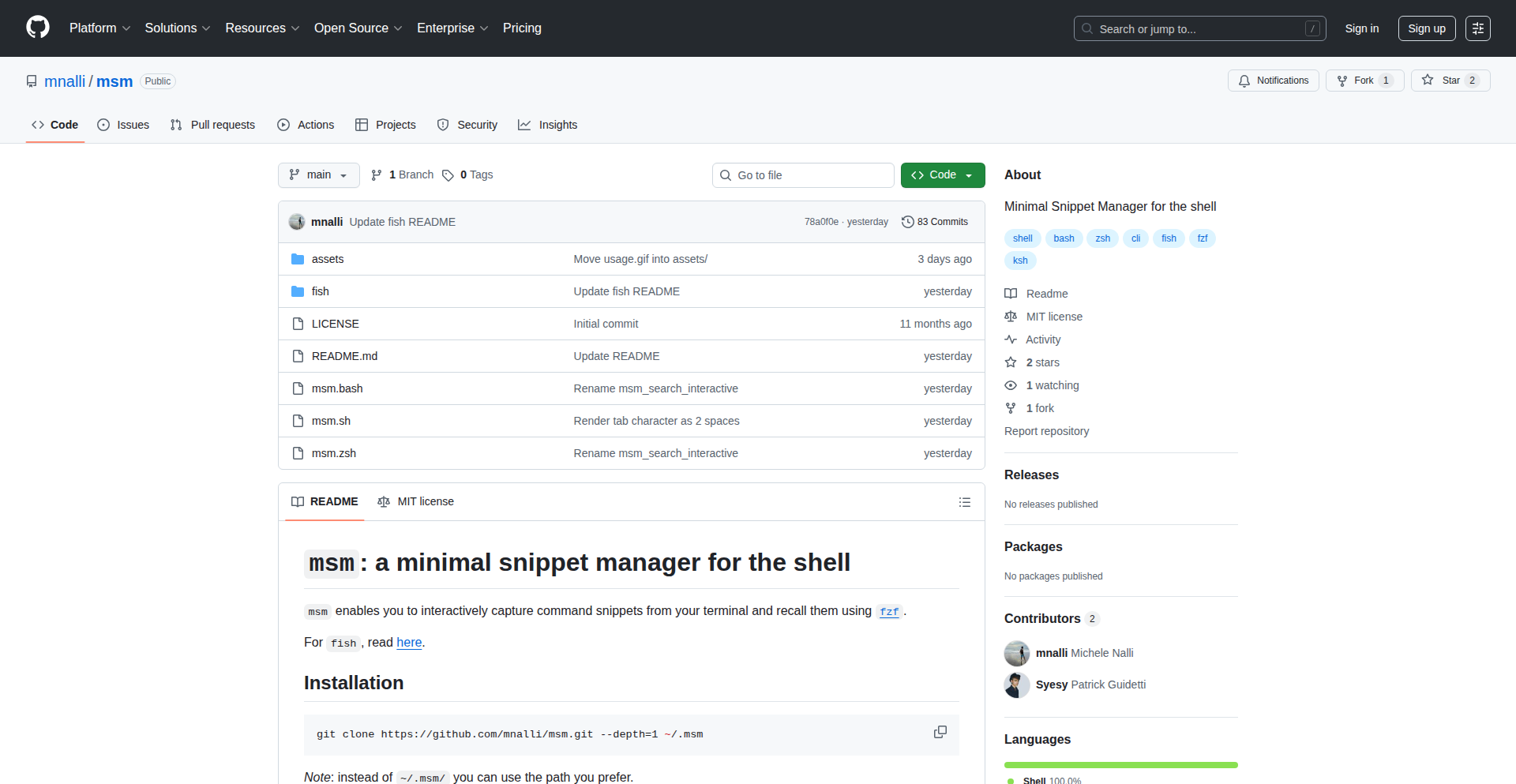

Msm: Shell's Pocket Snippet Engine

Author

mnalli

Description

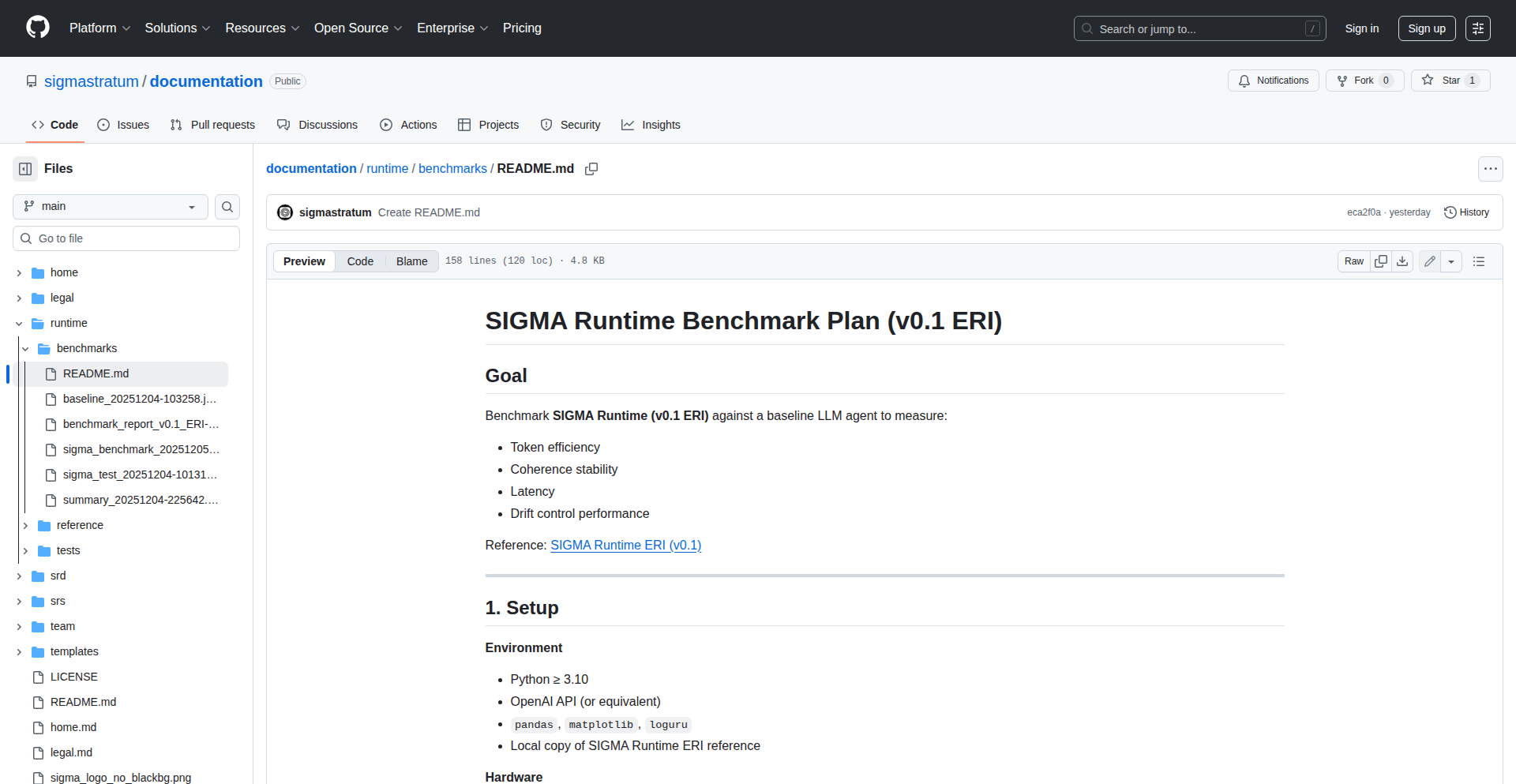

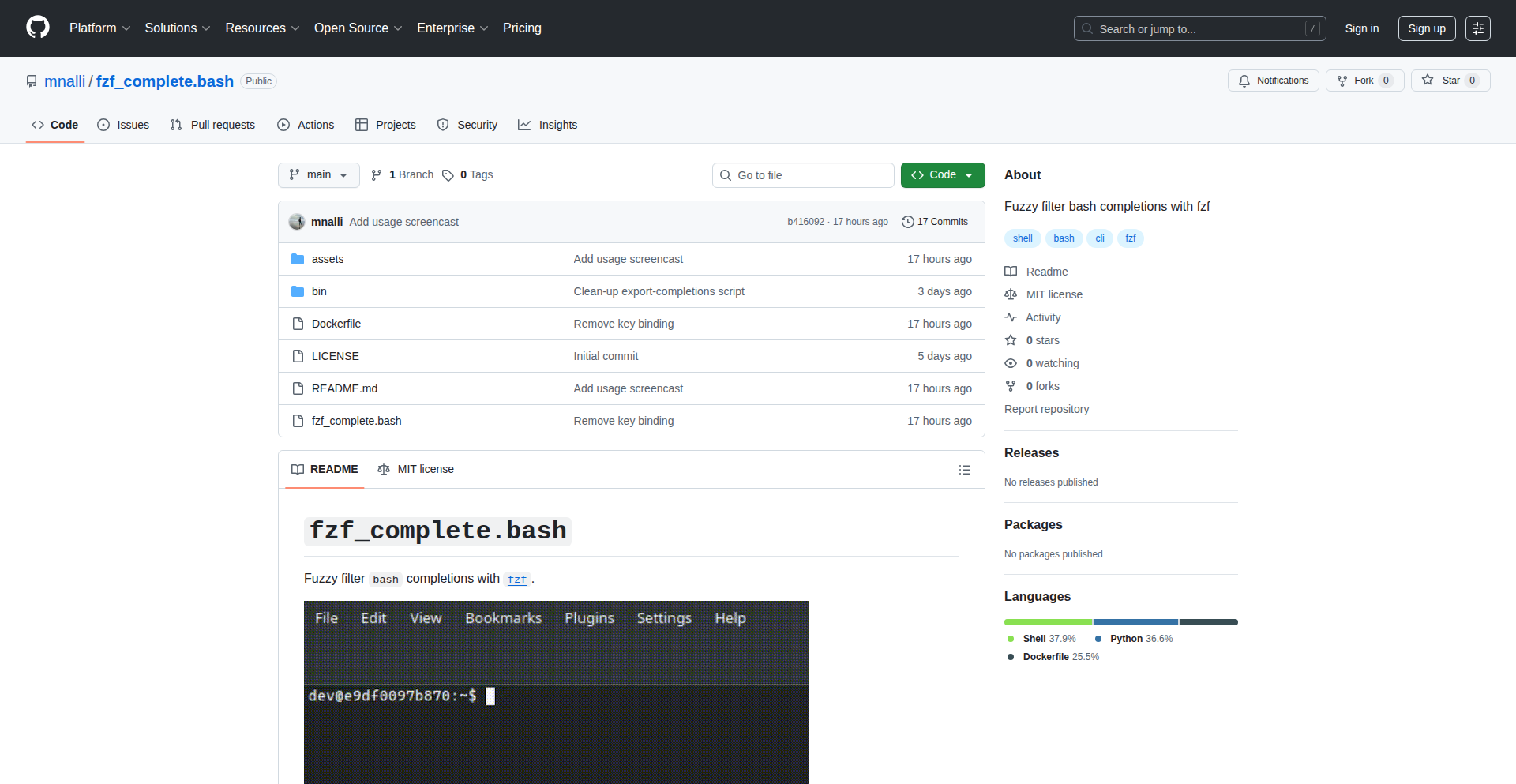

Msm is a minimalist snippet manager for your shell, built upon the powerful fuzzy finder, fzf. It allows you to quickly search, retrieve, and insert code snippets or any text directly from your command line. The innovation lies in its efficient integration with fzf, transforming the command line into a dynamic snippet retrieval tool, addressing the common developer pain point of repetitive typing and context switching.

Popularity

Points 4

Comments 2

What is this product?

Msm is a command-line tool designed to help developers manage and access their code snippets effortlessly. At its core, it leverages the speed and interactive search capabilities of fzf (a command-line fuzzy finder). Instead of digging through files or remembering obscure commands, Msm presents you with an interactive list of your snippets. You type a few characters, and fzf instantly filters your snippets, allowing you to select and paste the desired one directly into your current terminal session. This solves the problem of wasting time on recurring code patterns and improves workflow efficiency by keeping you in the shell.
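To make the "type a few characters and the list narrows" behavior concrete, here is a minimal sketch of fuzzy filtering. It is not fzf's actual algorithm, which is more sophisticated; it is a simplified, greedy subsequence matcher, and the function names and sample snippets are hypothetical.

```python
from typing import Optional


def fuzzy_score(query: str, candidate: str) -> Optional[int]:
    """Return a score if every query character appears in order in candidate.

    Matching is greedy and left to right. Lower is better: the score is the
    distance between the first and last matched character, so compact matches
    rank first. Returns None when the candidate does not match.
    """
    if not query:
        return 0
    text = candidate.lower()
    pos = -1
    first = None
    for ch in query.lower():
        pos = text.find(ch, pos + 1)
        if pos == -1:
            return None
        if first is None:
            first = pos
    return pos - first


def filter_snippets(query: str, snippets: list[str]) -> list[str]:
    """Keep matching snippets, most compact match first."""
    scored = [(fuzzy_score(query, s), s) for s in snippets]
    matches = [(score, s) for score, s in scored if score is not None]
    return [s for score, s in sorted(matches, key=lambda pair: pair[0])]


snippets = [
    "git commit -m 'feat: '",
    "docker run --rm -it ubuntu bash",
    "git log --oneline --graph",
]
```

Typing `dkr` here would leave only the Docker snippet, while `git` keeps both Git snippets in their original order, which is roughly the experience the interactive list provides.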

How to use it?

Developers can integrate Msm into their daily workflow by installing it (typically via a simple script or package manager) and configuring a hotkey or a custom command alias. For instance, you might alias a command such as 'msm search' to a shorter name, or bind a key combination to trigger Msm. Once active, you can quickly search for snippets like 'git commit message templates' or 'dockerfile base images' and have them inserted into your current command or script. This means less copy-pasting and more focused coding.

Product Core Function

· Fuzzy Snippet Search: Utilizes fzf's advanced fuzzy matching algorithm to quickly find relevant snippets with minimal typing. This means you don't need to remember exact keywords, saving you time and frustration.

· Seamless Integration with Shell: Designed to work directly within your existing shell environment (like bash, zsh, etc.), allowing for natural command-line workflows. This keeps you in your familiar environment without context switching.

· Snippet Organization: Provides a simple yet effective way to store and categorize your snippets, making them easily retrievable. This ensures your essential code chunks are always at your fingertips.

· Clipboard Integration: Automatically copies selected snippets to your system clipboard for easy pasting into any application, not just the terminal. This broadens its utility beyond just shell commands.

· Customizable Storage: Allows users to define where their snippets are stored, offering flexibility to manage their personal knowledge base. This caters to individual preferences for organizing information.

Product Usage Case

· During a coding session, a developer needs to insert a common Git commit message template. Instead of typing it out, they run 'msm commit' (or a similar command), fzf pops up, they type 'feature', and the template is instantly pasted into their Git command line. This saves them seconds per commit, which adds up significantly over time.

· A web developer is working on a new project and needs to quickly add a boilerplate HTML structure. They invoke Msm, search for 'html boilerplate', select the relevant snippet from the fzf results, and it's inserted into their current file. This speeds up project setup and reduces errors from manual typing.

· When troubleshooting a complex system, a developer frequently needs to run a set of diagnostic commands. Msm can store these commands as snippets, allowing them to be quickly searched and executed with a few keystrokes, significantly reducing the time spent on repetitive debugging tasks.

15

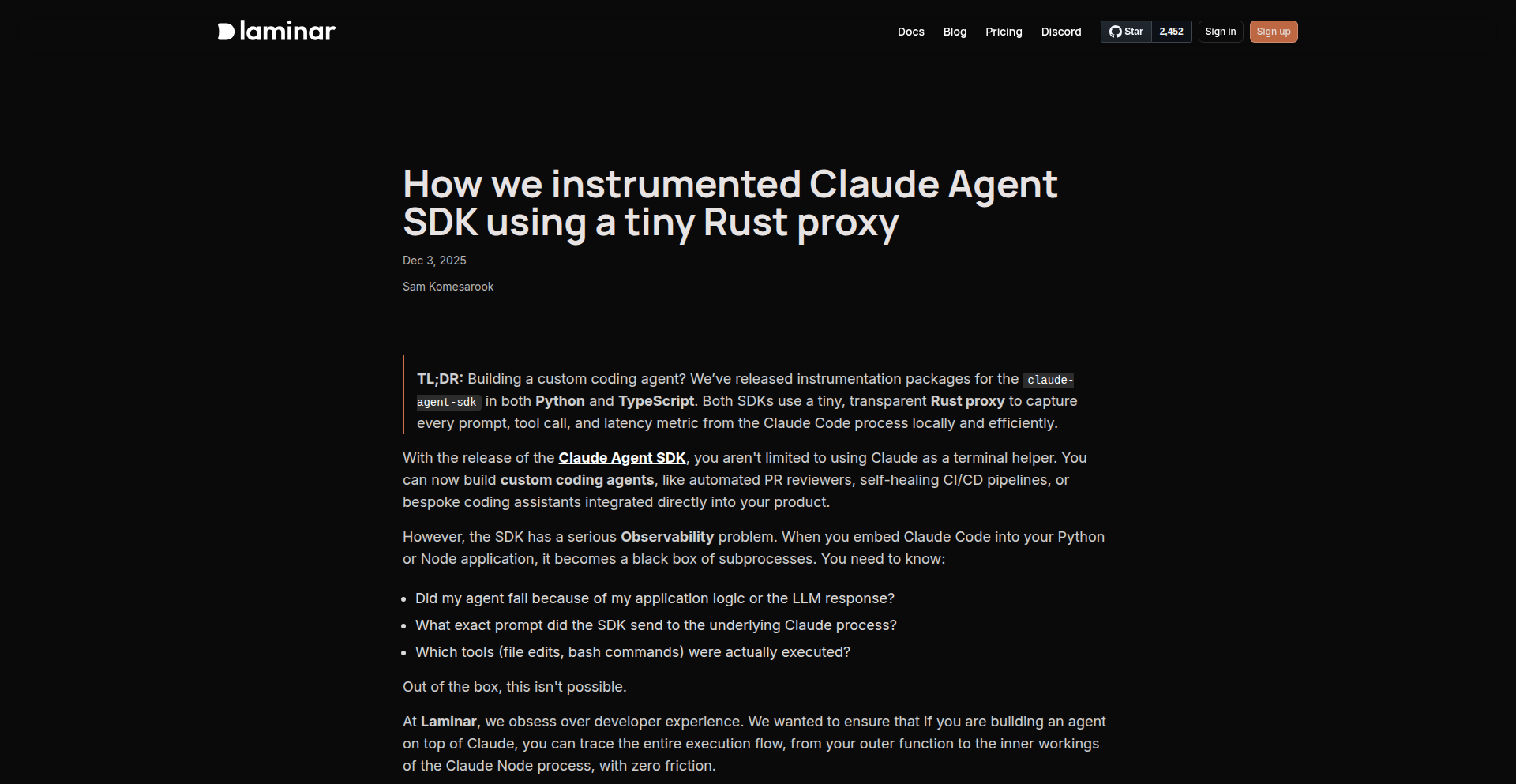

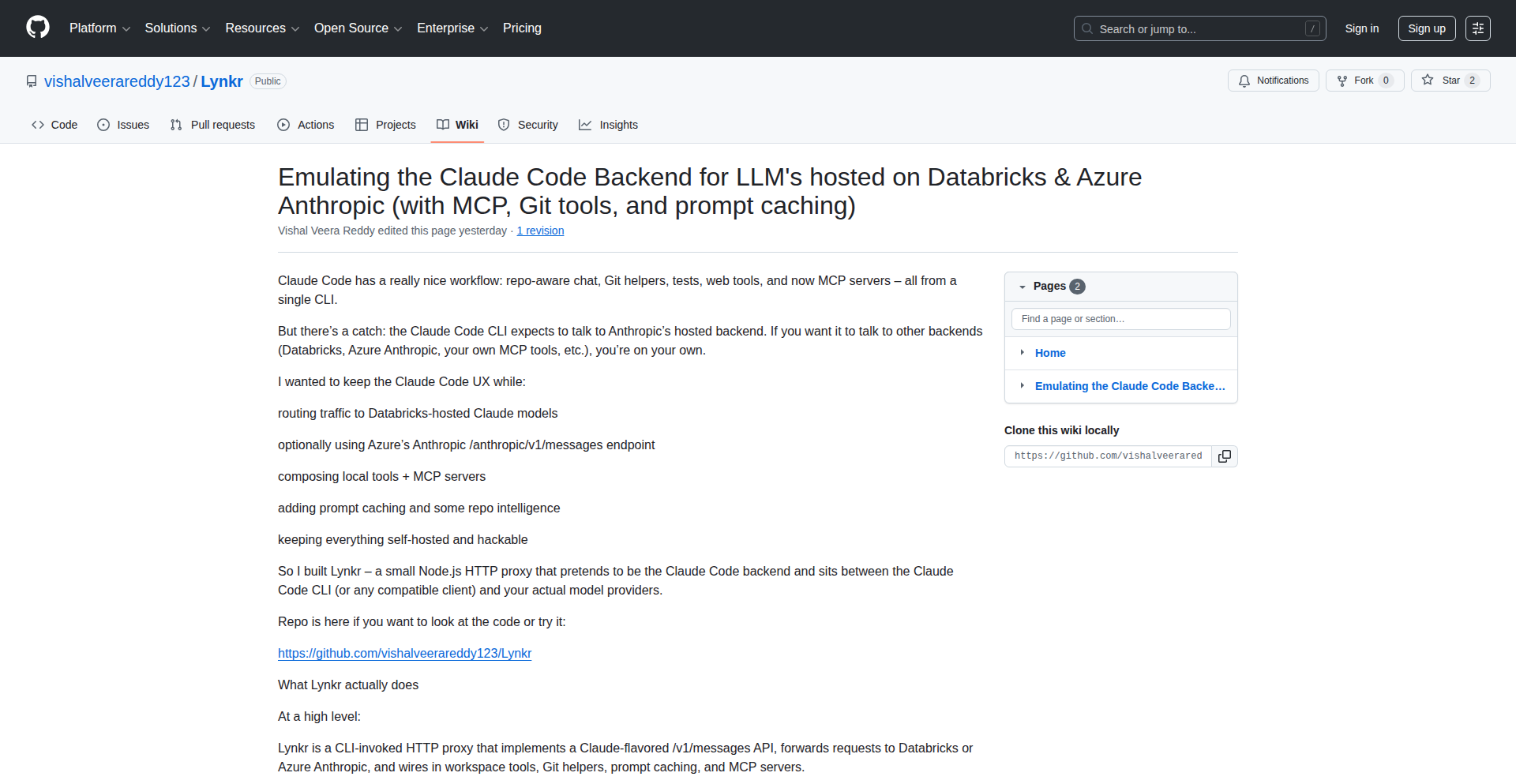

Claude Agent Rust Proxy

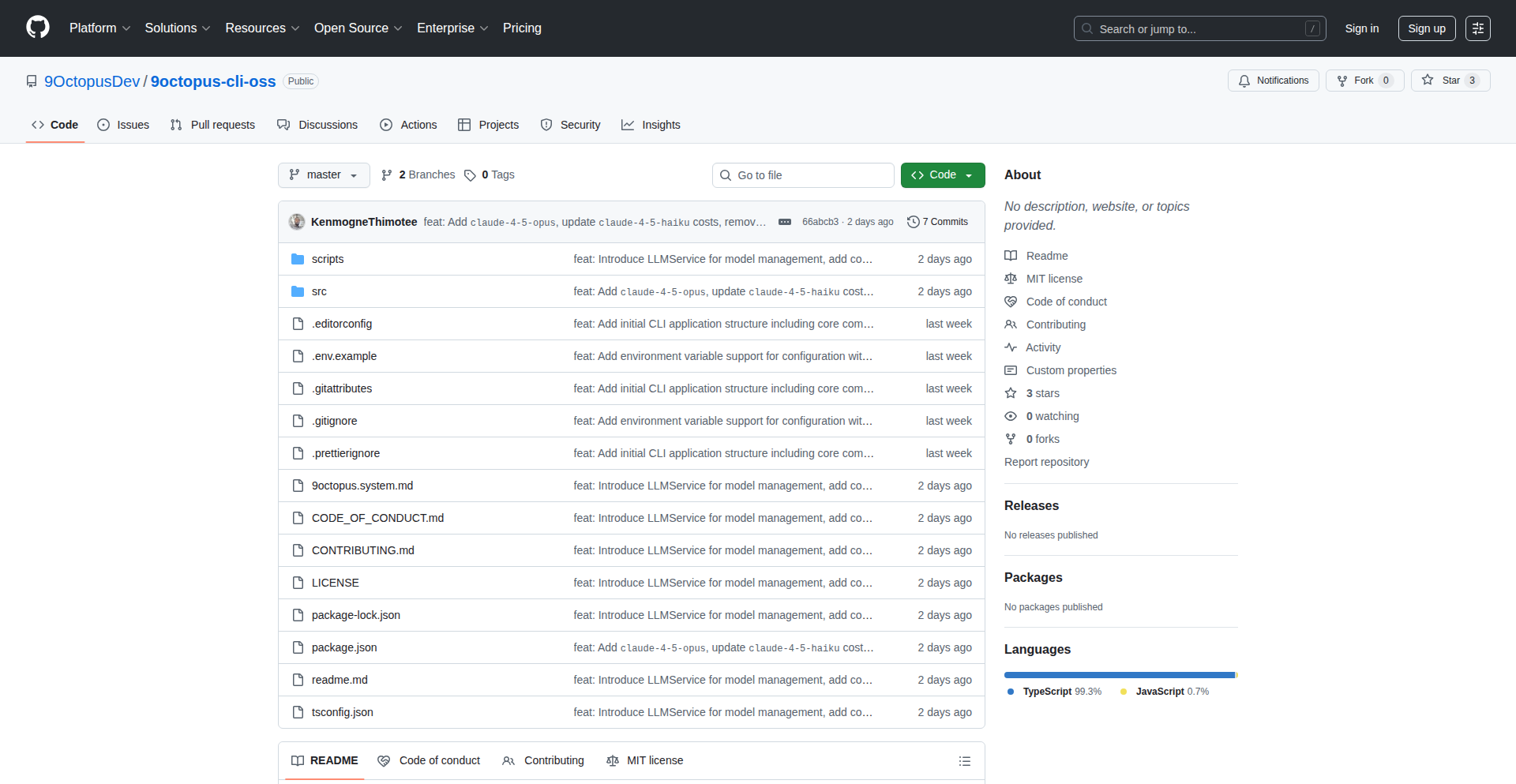

Author

skull8888888

Description

This project showcases a novel approach to instrumenting the recently released Claude Agent SDK. It utilizes a minimalist Rust proxy to seamlessly capture and observe the agent's behavior without requiring deep modifications to the original SDK code. The innovation lies in how it intercepts and analyzes agent interactions, offering valuable insights into AI agent performance and logic, especially for developers working with large language models.

Popularity

Points 6

Comments 0

What is this product?

This is a small, efficient proxy service written in Rust. Its core function is to sit between your Claude Agent and its environment, silently observing and recording all the data flowing in and out. Think of it like a super-smart eavesdropper for your AI agent. The innovation here is using Rust's speed and low-level control to create a proxy that's so lightweight it barely adds any delay, and so easy to integrate that it feels like it's part of the SDK itself. This allows developers to gain deep visibility into their AI agent's decision-making and interactions without becoming experts in complex observability tools or needing to rewrite their agent code.

How to use it?

Developers can integrate this Rust proxy into their Claude Agent workflows. Typically, you would run the proxy as a separate service and configure your Claude Agent to communicate through it. The proxy then forwards the agent's requests and responses to your chosen observability platform, so it plugs into existing projects easily. For example, if you're building a chatbot with the Claude Agent SDK, you can run this proxy alongside it. The proxy will then send logs about what questions the agent is asked, how it answers, and any internal thoughts it has to a platform where you can analyze them. This provides a straightforward way to monitor and debug your AI agent's behavior in real time.
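The interception pattern described here can be sketched in a few lines. The real project is a Rust proxy that sits on the network path; the Python below only illustrates the idea in-process, and `ObservingProxy`, `fake_agent_backend`, and the list used as a sink are hypothetical names invented for this example.

```python
import time
from typing import Callable


class ObservingProxy:
    """Wrap an upstream callable, recording each request/response pair."""

    def __init__(self, upstream: Callable[[dict], dict], sink: list):
        self.upstream = upstream   # whatever actually handles the request
        self.sink = sink           # stand-in for an observability backend

    def handle(self, request: dict) -> dict:
        started = time.perf_counter()
        response = self.upstream(request)            # forward unchanged
        elapsed_ms = (time.perf_counter() - started) * 1000
        self.sink.append({
            "request": request,
            "response": response,
            "latency_ms": elapsed_ms,
        })
        return response                              # caller sees the same reply


def fake_agent_backend(request: dict) -> dict:
    """Hypothetical upstream used only to make the sketch runnable."""
    return {"reply": f"echo: {request['prompt']}"}


records: list = []
proxy = ObservingProxy(fake_agent_backend, records)
reply = proxy.handle({"prompt": "hello"})
```

The key design point is that the caller's code does not change: it still sends a request and gets the same response, while the record of the exchange is collected on the side.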

Product Core Function

· AI Agent Interaction Interception: This function captures every message sent to and from the Claude Agent. The value is in understanding exactly what information the agent is processing and what responses it's generating, which is crucial for debugging and performance analysis.

· Lightweight Observability Instrumentation: The proxy provides a minimal-overhead way to add observability to the Claude Agent SDK. This is valuable because it means developers don't sacrifice performance for insight, allowing them to monitor complex AI behavior without slowing down their applications.

· Seamless Integration with Observability Platforms: The proxy is designed to easily forward captured data to external observability tools. This saves developers significant time and effort in building custom logging and monitoring solutions, enabling them to focus on building the AI agent itself.

· Rust-Powered Performance: Built with Rust, the proxy offers high performance and low resource consumption. This is important for applications where efficiency is key, ensuring that the observability layer doesn't become a bottleneck for the AI agent's operations.

Product Usage Case

· Debugging complex AI agent decision trees: Imagine an AI agent that's supposed to provide customer support. If it gives a wrong answer, this proxy can log the exact conversation flow, the internal reasoning of the agent, and the parameters it used to arrive at that answer, helping developers pinpoint the error quickly. So this helps you find out exactly why your AI chatbot is saying the wrong thing.

· Monitoring AI agent resource utilization and latency: For AI agents that need to respond quickly, this proxy can track how long each interaction takes and how much processing power the agent is using. This is useful for optimizing performance and ensuring a good user experience. So this tells you if your AI is slow and using up too much computer power.

· Gaining insights into AI agent training data effectiveness: By observing the agent's behavior, developers can see if the agent is correctly interpreting and utilizing the data it was trained on. This helps in refining training data and improving the AI's accuracy. So this helps you check if your AI is learning the right things from the information you give it.

· Facilitating A/B testing of different AI agent prompts or configurations: Developers can use the proxy to collect data on how different versions of an AI agent perform under the same conditions, allowing for data-driven decisions on which version is better. So this lets you test two different ways of talking to your AI to see which one works best.
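As a rough illustration of the latency-monitoring and A/B-testing cases above, captured records could be grouped by variant and summarized. The record fields (`variant`, `latency_ms`, `resolved`) and the sample values are made up for this sketch; the source does not specify what the proxy actually emits.

```python
from collections import defaultdict
from statistics import mean

# Made-up sample records, shaped like something a proxy might capture.
captured = [
    {"variant": "prompt_a", "latency_ms": 800.0, "resolved": True},
    {"variant": "prompt_a", "latency_ms": 1000.0, "resolved": False},
    {"variant": "prompt_b", "latency_ms": 600.0, "resolved": True},
    {"variant": "prompt_b", "latency_ms": 700.0, "resolved": True},
]


def summarize(rows: list[dict]) -> dict[str, dict[str, float]]:
    """Group records by variant and compute mean latency and success rate."""
    grouped: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        grouped[row["variant"]].append(row)
    return {
        variant: {
            "mean_latency_ms": mean(r["latency_ms"] for r in group),
            "resolved_rate": sum(r["resolved"] for r in group) / len(group),
        }
        for variant, group in grouped.items()
    }


summary = summarize(captured)
```

With only a handful of records per variant a comparison like this is anecdotal; it becomes useful once enough traffic has passed through both configurations.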

16

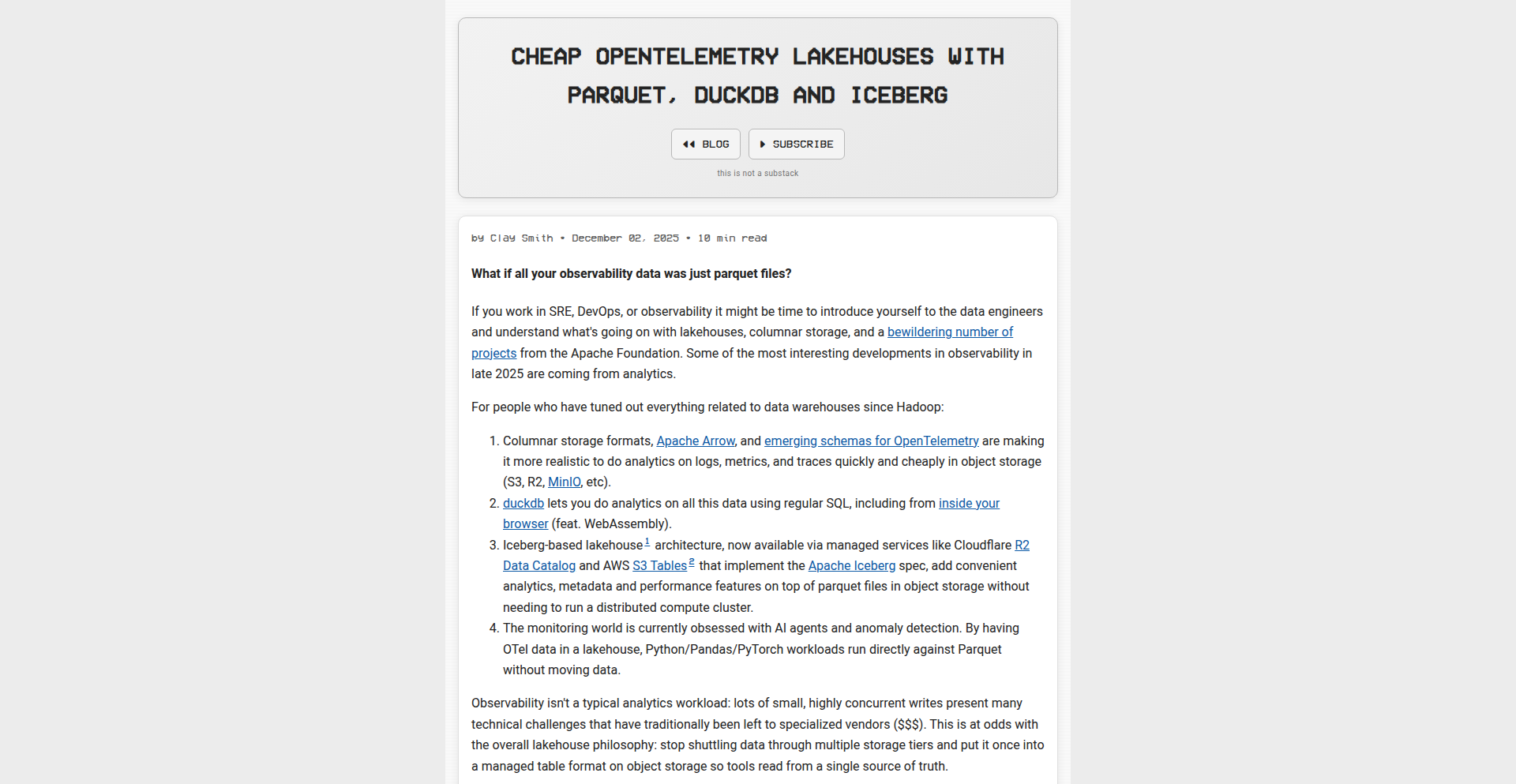

OtelLakehouse

Author

smithclay

Description

OtelLakehouse is a side project exploring an efficient and cost-effective way to store and query OpenTelemetry data. It leverages DuckDB for analytics, open table formats like Apache Iceberg for data organization, and inexpensive object storage for scalability. Rust is used as the 'glue code' to bring these components together, enabling powerful insights from your telemetry data without breaking the bank.

Popularity

Points 4

Comments 2

What is this product?

OtelLakehouse is a system designed to help developers manage and analyze their OpenTelemetry data more affordably. OpenTelemetry is a standard way to collect and export traces, metrics, and logs from your applications. Traditionally, storing and querying this data can be expensive. OtelLakehouse addresses this by using DuckDB, a fast in-process analytical database, to query data stored in object storage (like S3 or GCS) in formats like Parquet, managed by Apache Iceberg. This combination means you can get powerful analytical capabilities on your observability data without needing complex and costly dedicated data warehouses.

How to use it?

Developers can use OtelLakehouse to build their own observability data platform. The Rust code acts as an intermediary, allowing OpenTelemetry data to be written into an object storage bucket, organized by Iceberg. Then, using DuckDB, developers can connect directly to this data and run SQL queries to understand application performance, troubleshoot issues, or perform security analysis. This is useful for teams who want more control over their data, need to reduce cloud costs for their observability stack, or want to integrate telemetry analysis into their existing data workflows.
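The kind of SQL a developer might run against telemetry data can be sketched as follows. To keep the example runnable with only the standard library, `sqlite3` stands in for DuckDB, and an in-memory table stands in for Iceberg-managed Parquet files in object storage. The `spans` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

# sqlite3 is only a stand-in for DuckDB so this runs with the standard library.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE spans (service TEXT, route TEXT, duration_ms REAL, status TEXT)"
)
conn.executemany(
    "INSERT INTO spans VALUES (?, ?, ?, ?)",
    [
        ("api", "/checkout", 120.0, "ok"),
        ("api", "/checkout", 980.0, "error"),
        ("api", "/search", 45.0, "ok"),
        ("api", "/search", 55.0, "ok"),
    ],
)

# Which routes are slowest on average, and how many of their spans failed?
slow_routes = conn.execute(
    """
    SELECT route,
           AVG(duration_ms) AS avg_ms,
           SUM(CASE WHEN status = 'error' THEN 1 ELSE 0 END) AS errors
    FROM spans
    GROUP BY route
    ORDER BY avg_ms DESC
    """
).fetchall()
```

The query itself is plain SQL, which is the point of the approach: once the telemetry lands in a table format, ordinary aggregation is enough to surface slow or failing routes.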

Product Core Function

· OpenTelemetry Data Ingestion: Efficiently collect and route OpenTelemetry traces, metrics, and logs to a central storage location. The value is in providing a standardized way to get your observability data into a queryable format.

· Cost-Effective Object Storage: Utilize cheap, scalable object storage (e.g., Amazon S3, Google Cloud Storage) as the backend for your telemetry data. This directly addresses the pain point of high costs associated with traditional observability solutions.

· DuckDB Analytical Querying: Enable fast, ad-hoc SQL-based analysis of your telemetry data directly from object storage. This gives developers the power to quickly explore and understand their application's behavior.

· Apache Iceberg Table Format Management: Organize your telemetry data using open table formats for schema evolution, time travel, and efficient data management. This ensures your data is well-structured and maintainable over time.

· Rust Integration Layer: Provides the programmatic glue to connect OpenTelemetry data collection, storage, and querying. This demonstrates a practical application of Rust for data engineering tasks.

Product Usage Case

· Troubleshooting Performance Bottlenecks: A developer can use OtelLakehouse to query logs and traces to pinpoint the exact cause of a slow API response, by analyzing patterns in request latency and associated error logs. This helps them identify the root cause of performance issues quickly.

· Cost Optimization for Observability: A small startup can ingest all their application logs and metrics into OtelLakehouse. Instead of paying for an expensive SaaS observability platform, they can use their existing cloud object storage and run queries with DuckDB, significantly reducing their operational costs.

· Security Incident Analysis: A security engineer can query access logs and unusual metric spikes stored in OtelLakehouse to detect and investigate potential security breaches, by looking for anomalous user behavior or system activity patterns.

· Building Custom Dashboards: Developers can integrate OtelLakehouse with custom dashboarding tools. They can write SQL queries to extract specific metrics and trends, then visualize them to monitor application health and user engagement in a way tailored to their specific needs.

17

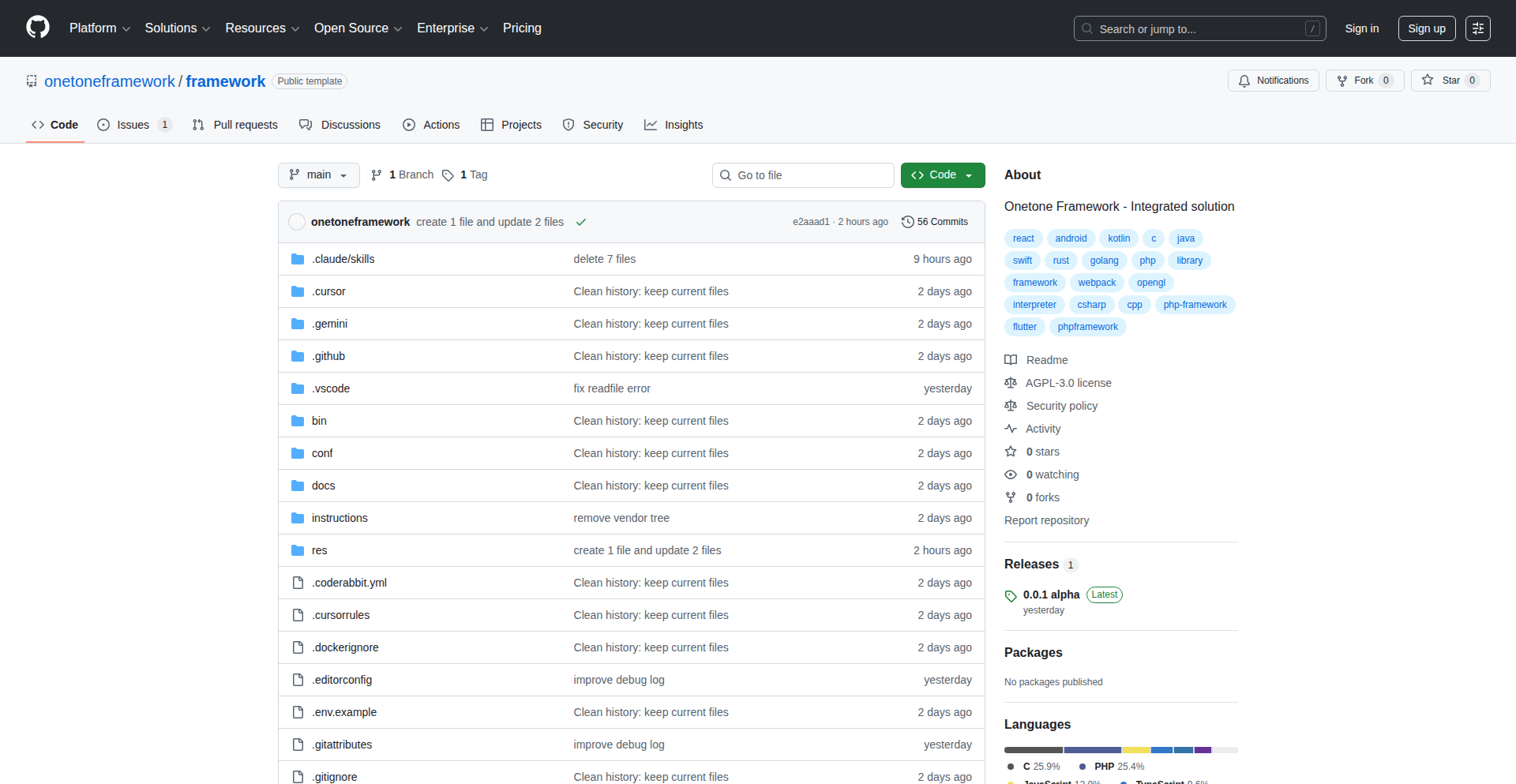

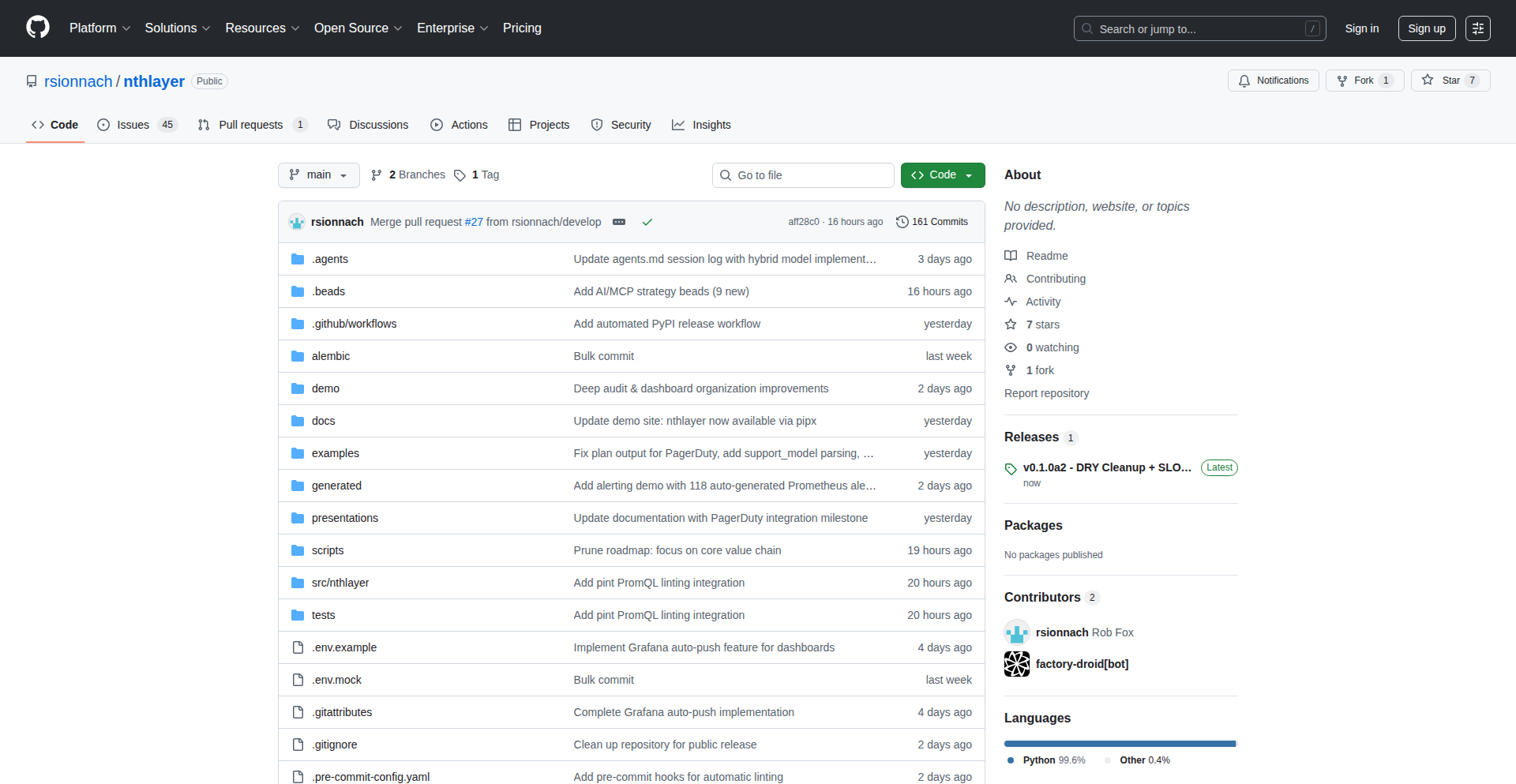

Onetone: Unified Native-Performance Framework

Author

tactics6655

Description

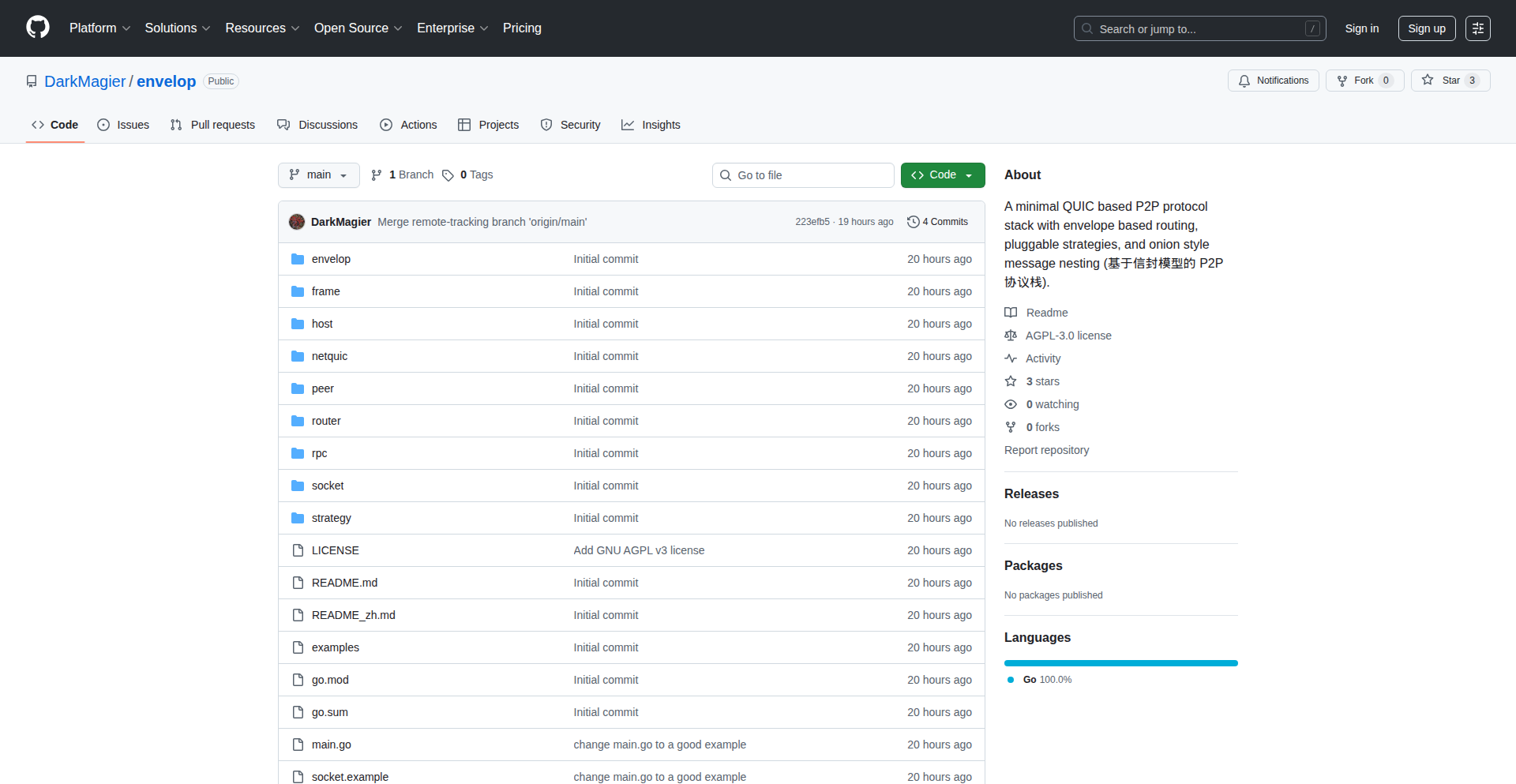

Onetone is an ambitious, open-source full-stack development framework that merges a custom C-like scripting language with a robust OpenGL 3D graphics engine, a PHP web framework, and Python utilities. It aims to provide a cohesive toolkit for game localization, visual novel engines, translation management, and rapid prototyping by offering native performance without the usual complexity of integrating disparate tools. The custom scripting language is designed for modern features like async/await and pattern matching, with native bindings to essential system functions.

Popularity

Points 3

Comments 2

What is this product?