Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-12-03

SagaSu777 2025-12-04

Explore the hottest developer projects on Show HN for 2025-12-03. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current landscape of Show HN projects paints a vibrant picture of innovation, heavily leaning into the transformative power of AI and the ever-present quest for developer efficiency. We're seeing a clear surge in tools that aim to simplify complex AI interactions, whether it's enabling AI agents to access structured data safely with projects like Pylar, or providing developers with local, privacy-first alternatives to cloud-based AI assistants like PhenixCode. The focus on 'local-first' and 'privacy-preserving' is a critical theme, addressing growing concerns about data security and control. Developers are also pushing the boundaries of performance with tools like Fresh, demonstrating that even foundational software like text editors can be reimagined with modern languages and innovative architectural patterns. For aspiring creators and seasoned engineers alike, this is a call to arms: embrace the AI revolution by building bridges, not walls. Focus on abstracting complexity, democratizing access to powerful AI capabilities, and prioritizing user control and data privacy. The hacker spirit thrives in identifying pain points and leveraging technology to create elegant, impactful solutions that empower others.

Today's Hottest Product

Name

Show HN: Fresh – A new terminal editor built in Rust

Highlight

This project showcases a remarkably efficient and user-friendly terminal editor, 'Fresh', meticulously crafted in Rust. Its core innovation lies in its approach to handling massive files – it employs a lazy-loading piece tree to selectively load only necessary data, drastically reducing memory consumption and load times compared to established editors. For developers, this offers a compelling case study in performance optimization for text-heavy applications and a fresh perspective on building developer tools with modern languages like Rust. The integration of TypeScript for plugins via Deno also highlights an accessible and extensible architecture.

Popular Category

AI/ML Tools

Developer Tools

Productivity Software

Data Visualization

Open Source Software

Popular Keyword

LLM

AI agent

Rust

Open Source

CLI

Python

Vector Database

RAG

Frontend

UI

Technology Trends

AI Agent Ecosystem

Local-First AI

Performance-Optimized Developer Tools

No-Code/Low-Code AI Integration

Data Privacy and Security in AI

WebAssembly for Frontend Performance

Advanced Text Editing Techniques

Composable AI Pipelines

Project Category Distribution

AI/ML Tools (35%)

Developer Tools (25%)

Productivity Software (15%)

Data Visualization (10%)

Utilities/Services (10%)

Educational Tools (5%)

Today's Hot Product List

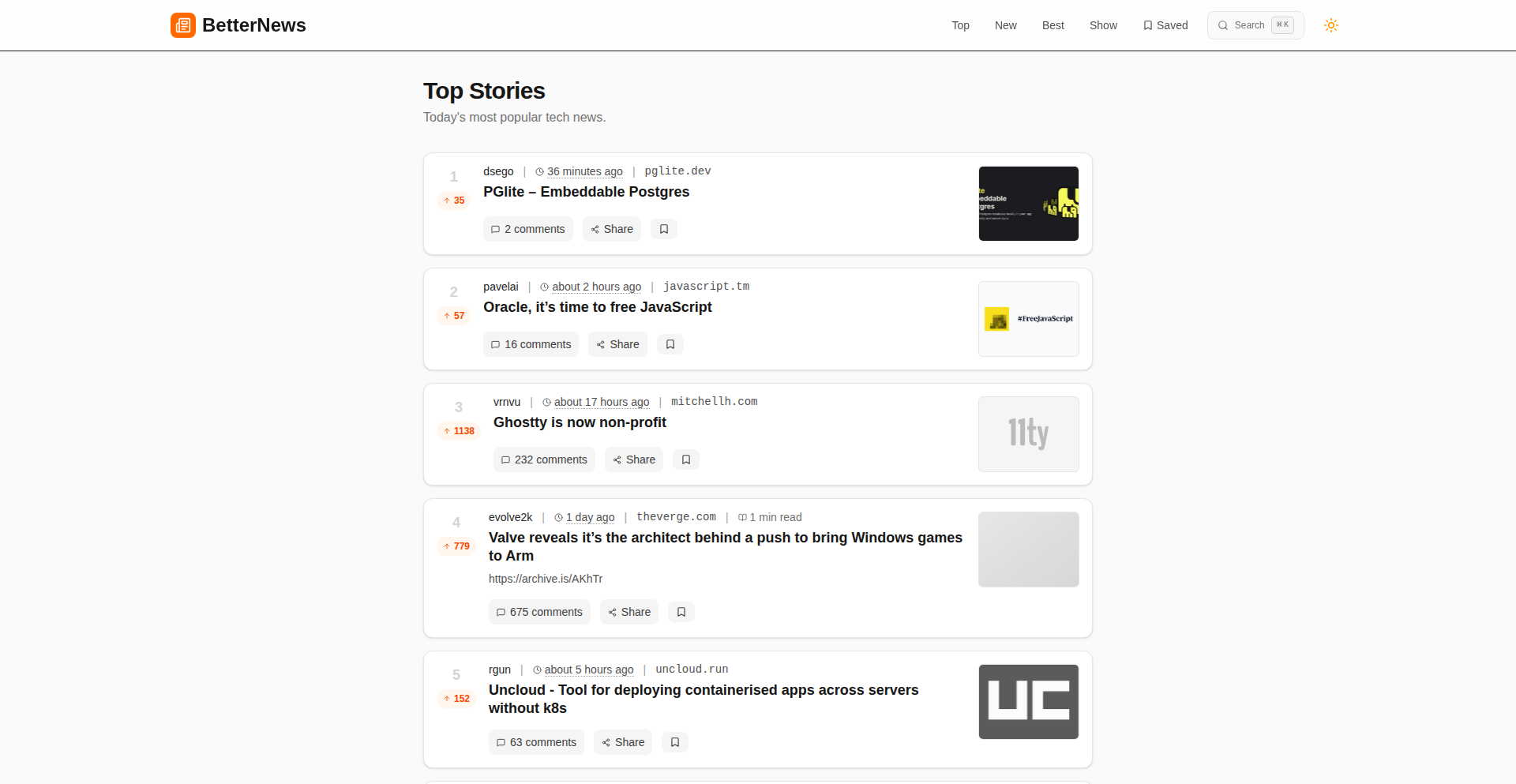

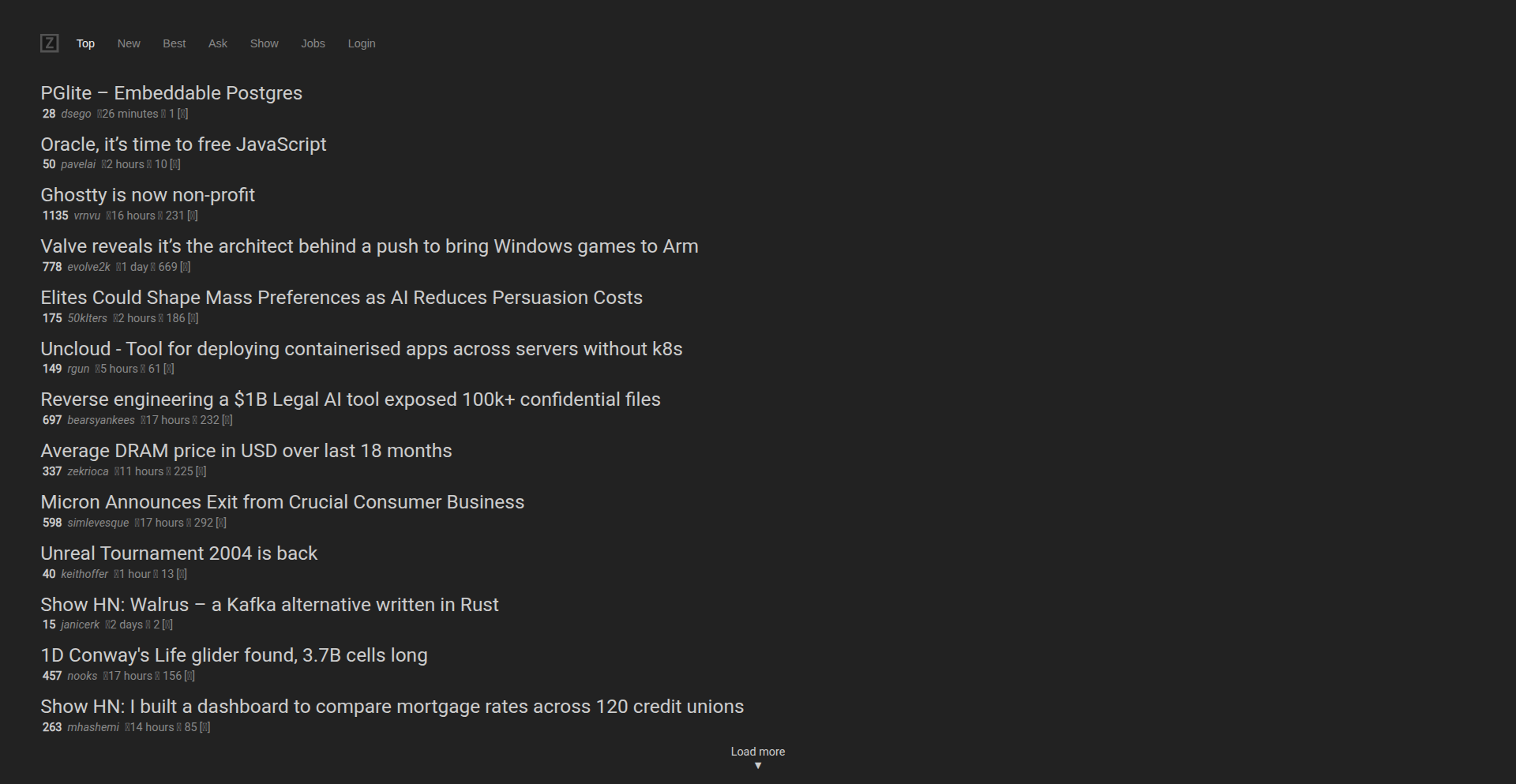

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | CreditUnionRateScan | 263 | 84 |

| 2 | FreshCode-Rust | 153 | 112 |

| 3 | Microlandia: Deno-Powered Data-Driven City Builder | 87 | 17 |

| 4 | SoloInvoice Coder | 11 | 4 |

| 5 | Avolal: Contextual Flight Booker | 9 | 6 |

| 6 | TakaStackLang | 10 | 4 |

| 7 | LLM Orchestrator Hub | 10 | 3 |

| 8 | AI Photo Lumina | 6 | 6 |

| 9 | GoalBet Engine | 6 | 5 |

| 10 | SafeKey AI Input Firewall | 4 | 6 |

1

CreditUnionRateScan

Author

mhashemi

Description

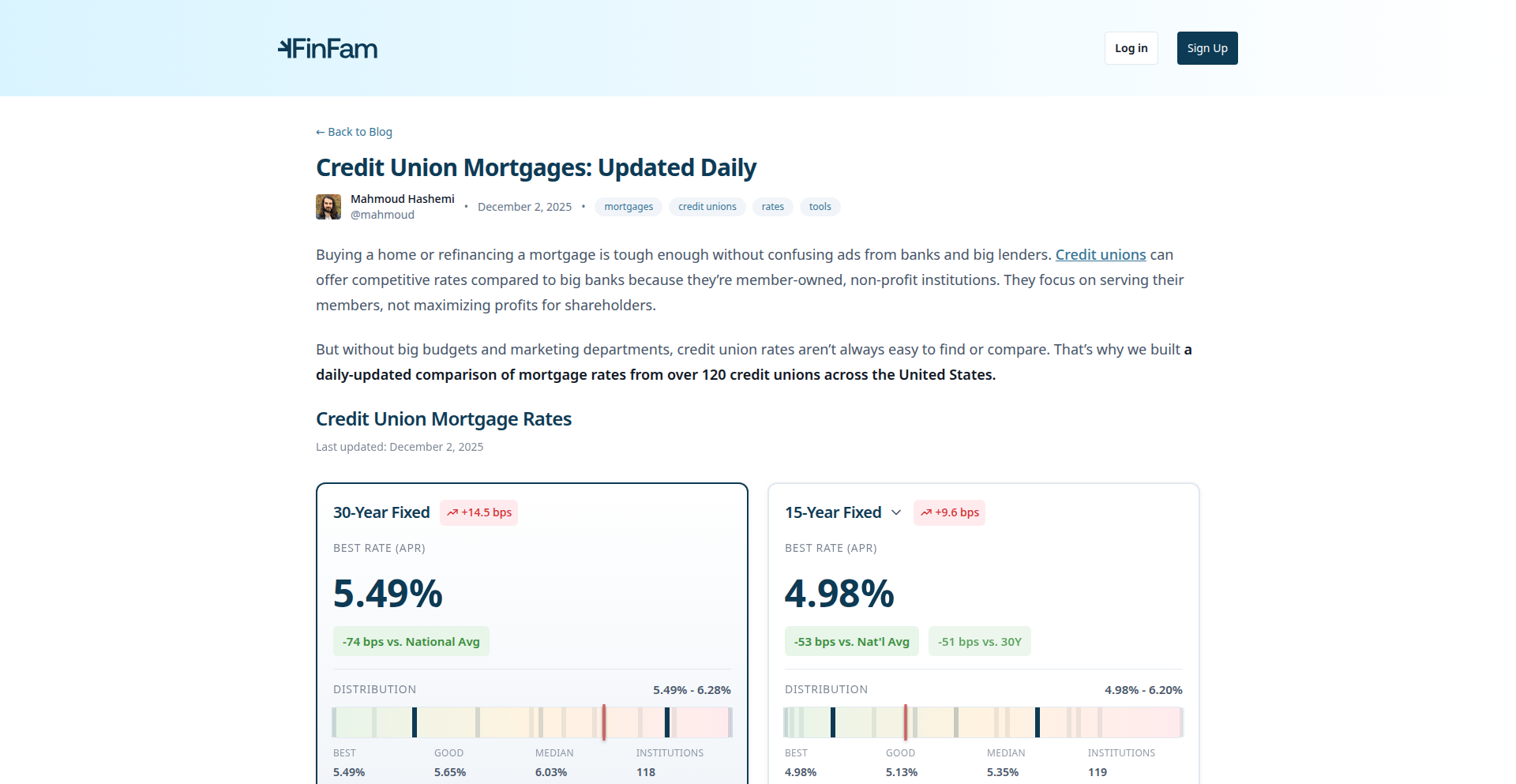

A dashboard that scrapes and compares mortgage rates from over 120 credit unions against national benchmarks. It helps users find better mortgage deals by highlighting the savings achievable by choosing credit unions over traditional big banks, often due to lower marketing costs. The project uses Python for backend data processing and Svelte/SvelteKit for a user-friendly frontend, offering a transparent and ad-free experience.

Popularity

Points 263

Comments 84

What is this product?

This is a web-based tool that collects publicly available mortgage interest rates from more than 120 credit unions. It then compares these rates to the national average benchmark (FRED data) and provides filtering options for loan type, eligibility, and rate type. The innovation lies in its ability to aggregate and present this information in a clear, actionable format, revealing significant cost savings that are often overlooked by consumers. The core idea is that credit unions, being non-profit and having smaller marketing budgets, can offer better rates on standardized financial products like mortgages, and this tool makes that advantage easily discoverable.

How to use it?

Developers can use this project as a reference for building similar data aggregation and comparison tools for other financial products or services. The backend, likely built with Python, demonstrates techniques for web scraping public data, data cleaning, and API integration (for FRED data). The frontend, using Svelte/SvelteKit, showcases how to build interactive and responsive dashboards for data visualization and user interaction. It provides a solid foundation for projects that require comparing dispersed information and presenting it clearly to end-users. The project can be a learning resource for understanding how to leverage open data for consumer benefit.

Product Core Function

· Mortgage Rate Aggregation: Gathers real-time mortgage rates from over 120 credit union websites, providing a centralized view of available options. This is valuable for developers building financial comparison platforms or for understanding data aggregation strategies.

· National Benchmark Comparison: Compares aggregated credit union rates against the official national mortgage rate benchmark, quantifying potential savings. This helps developers implement data comparison logic and highlight value propositions in their own projects.

· Advanced Filtering: Allows users to filter by loan type (e.g., 30-year, 15-year), eligibility criteria, and rate types, enabling tailored searches. This demonstrates how to build complex filtering mechanisms in a user interface for structured data.

· Payment Calculator with Refinance Mode: Includes a calculator that estimates mortgage payments and supports refinance scenarios. This is a practical feature for developers creating personal finance tools or loan simulation applications.

· Direct Link Integration: Provides direct links to each credit union's rate and eligibility pages, streamlining the user's research process. This highlights the value of clear navigation and outbound linking in user-centric applications.

Product Usage Case

· A developer building a personal finance app could integrate the rate aggregation logic to offer users real-time mortgage rate comparisons within their app. This solves the problem of users having to manually check multiple websites, saving them time and potentially money.

· A fintech startup looking to disrupt traditional lending could use the project's methodology as inspiration for building a platform that transparently surfaces the cost advantages of non-traditional lenders. This addresses the market gap of opaque pricing in the mortgage industry.

· An educational project could leverage the data and visualization techniques (like the seaborn plots mentioned) to teach about financial markets and consumer economics. This demonstrates how to use code to explain complex financial concepts in an accessible way.

· A developer experimenting with web scraping and data analysis could use this project as a case study to learn how to collect, process, and present large datasets for practical application. It provides a real-world example of solving a common consumer problem with code.

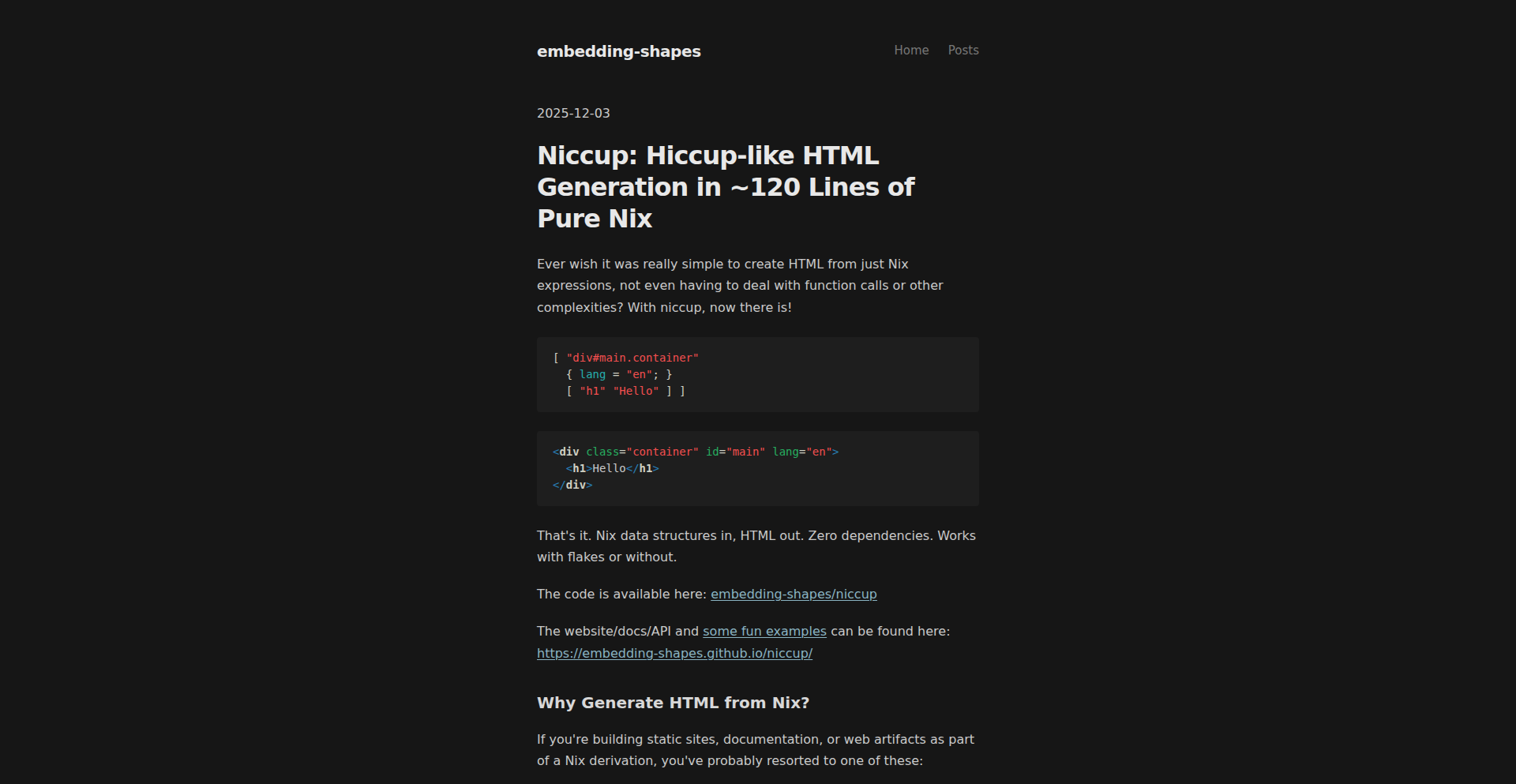

2

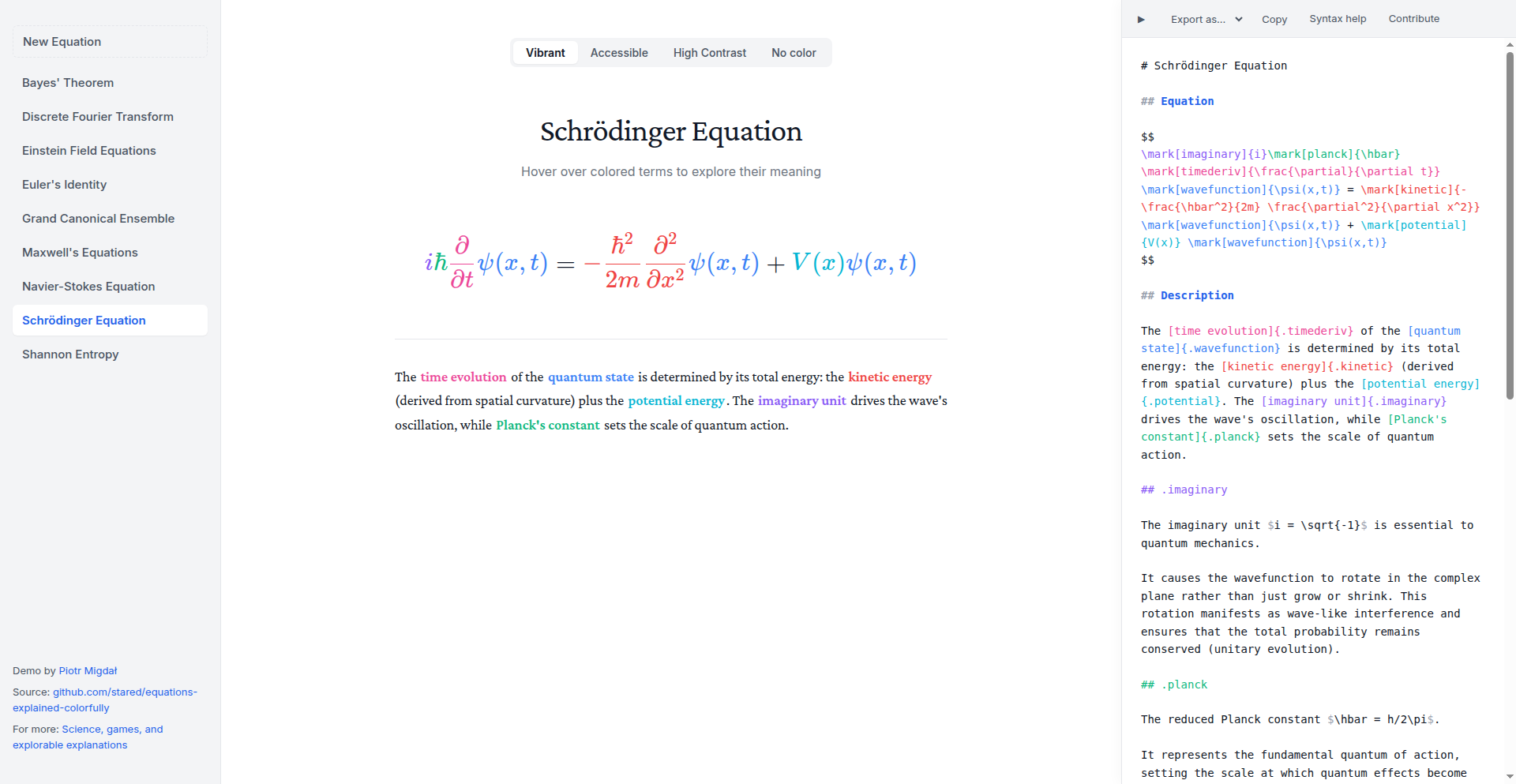

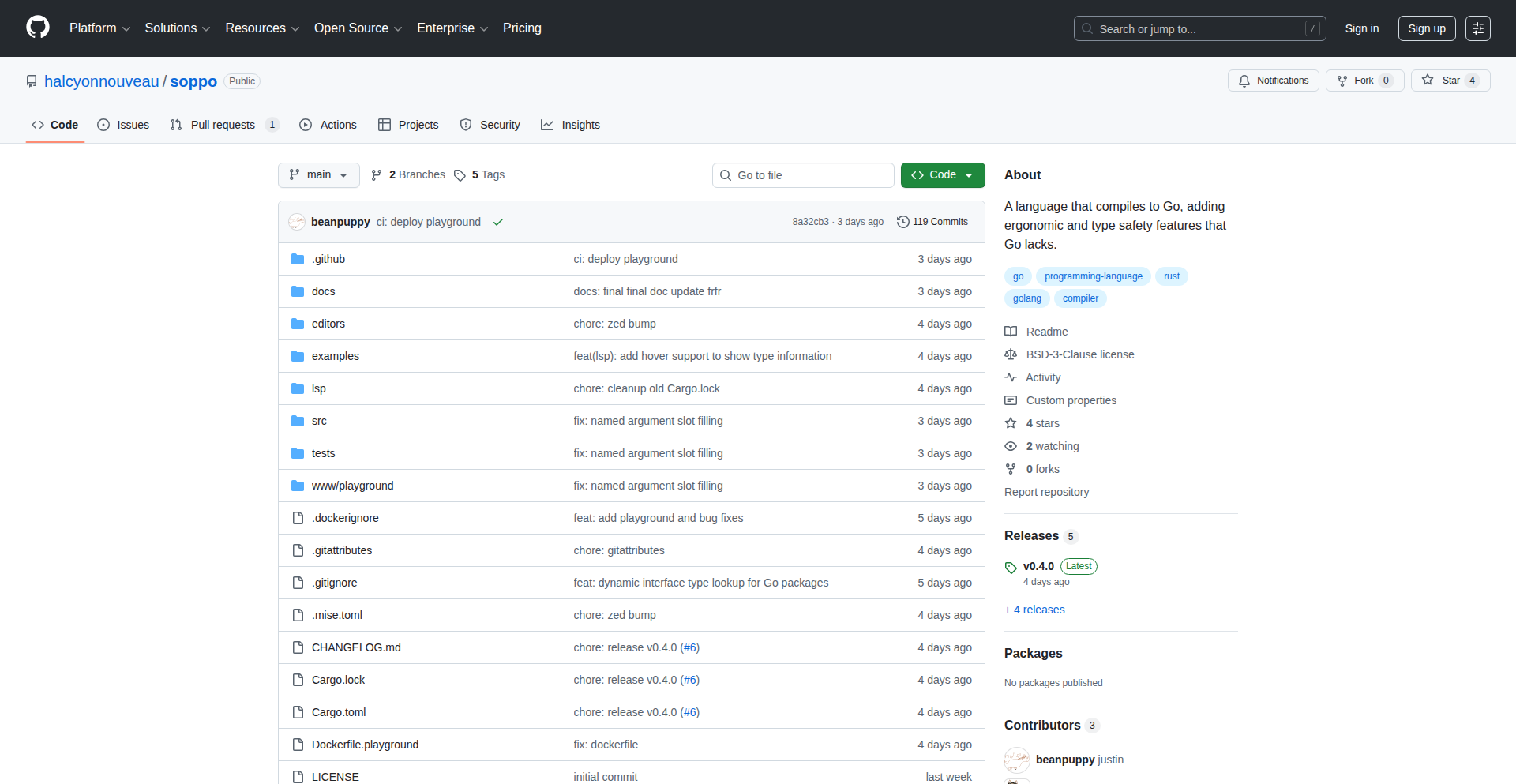

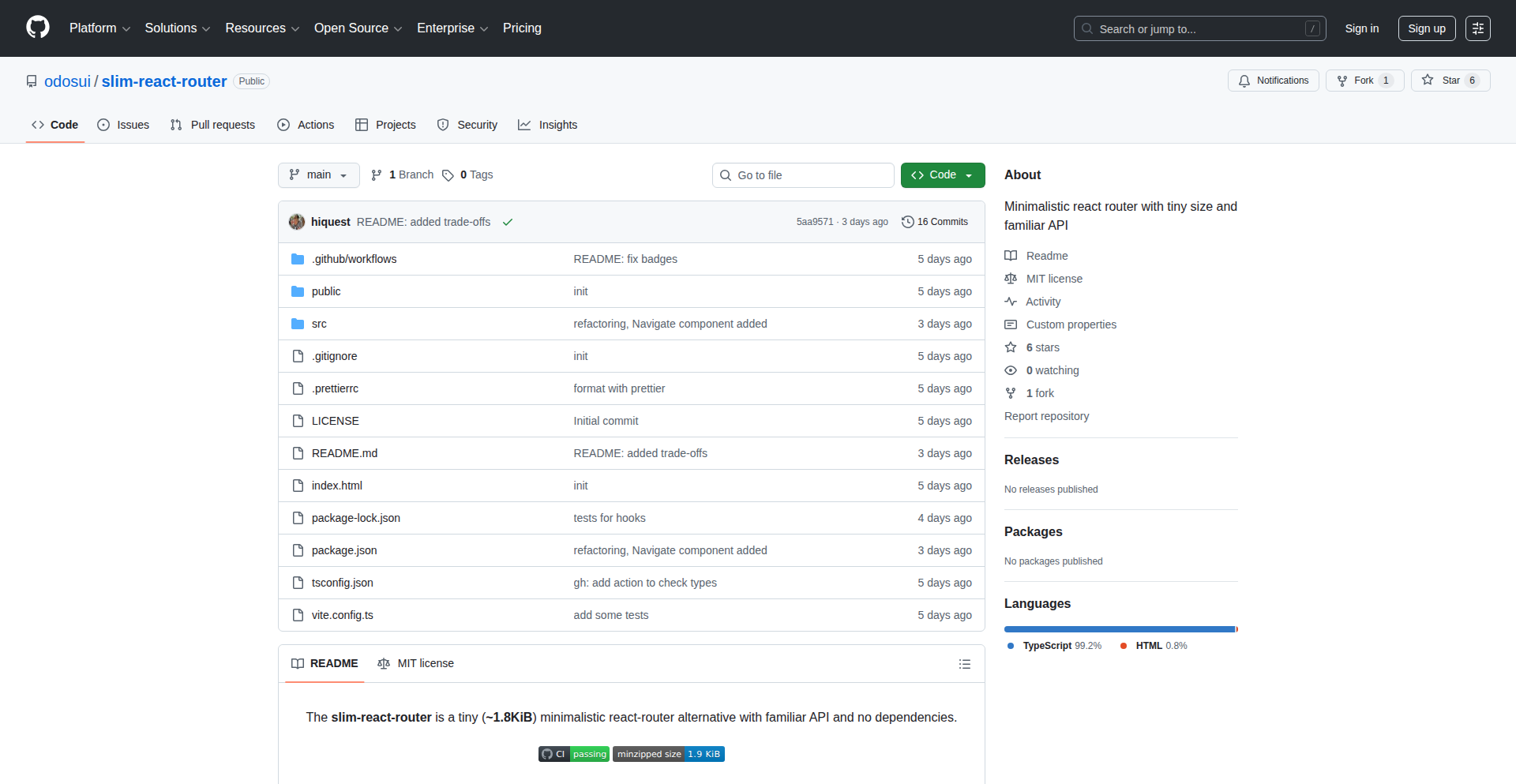

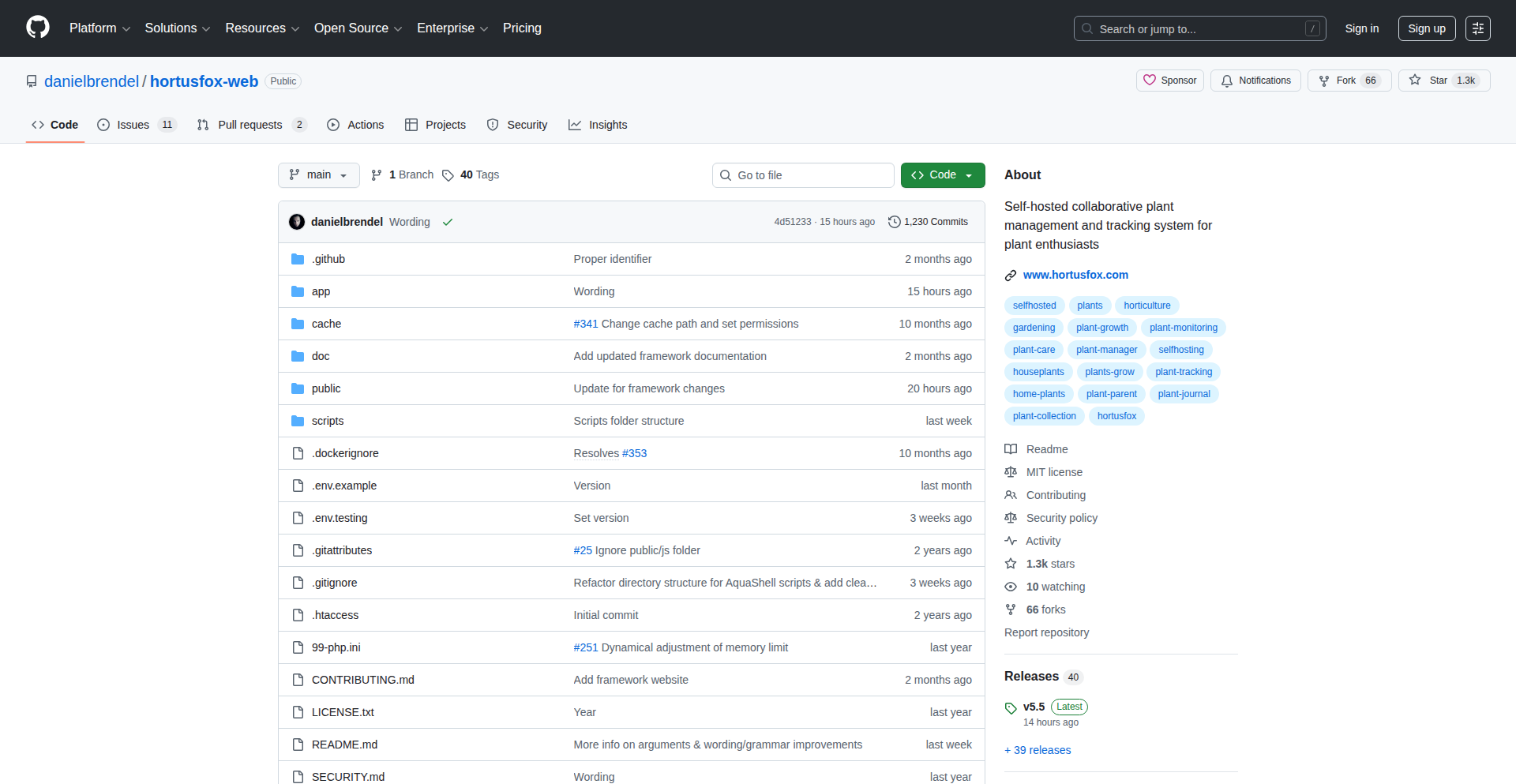

FreshCode-Rust

Author

_sinelaw_

Description

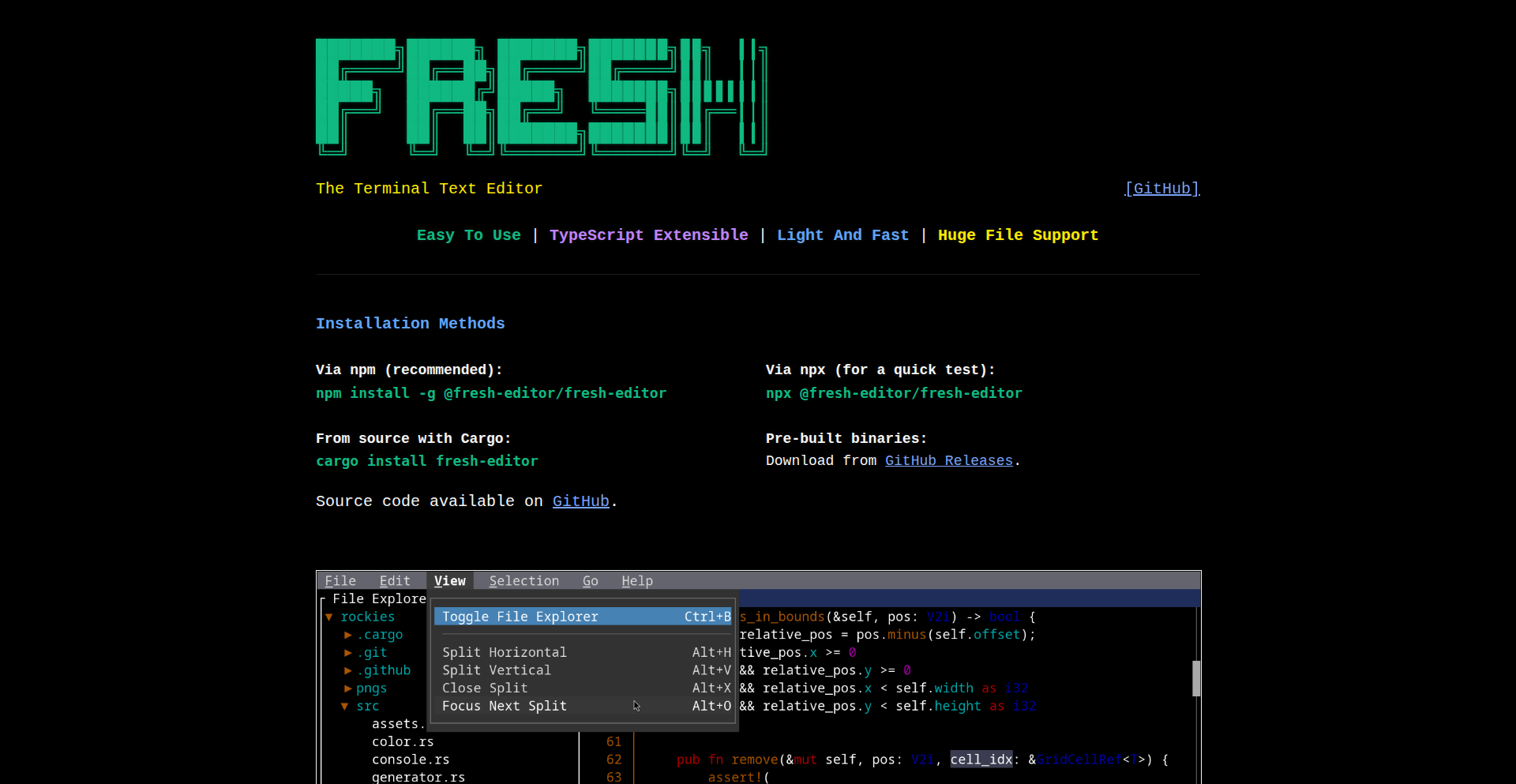

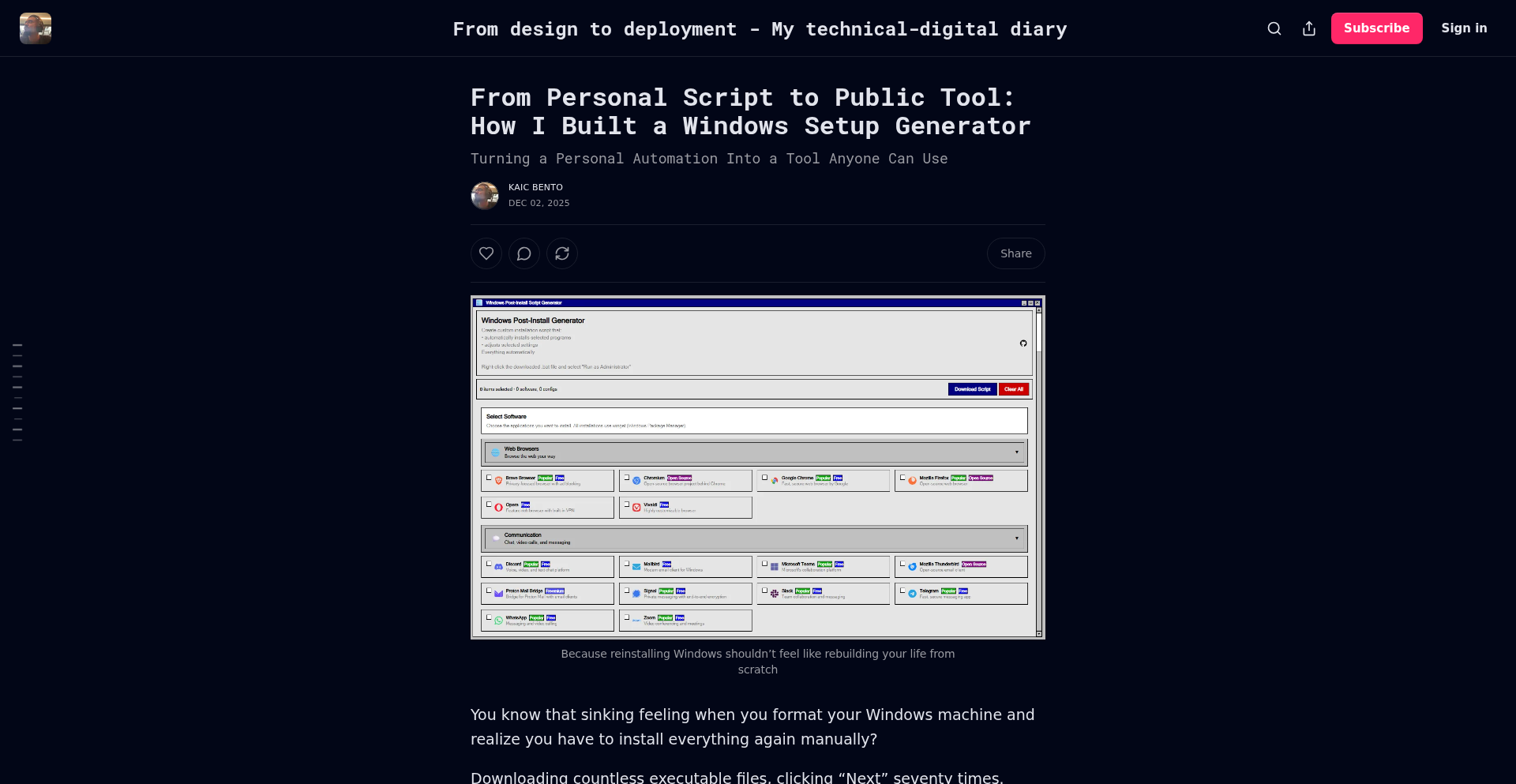

FreshCode-Rust is a blazingly fast, resource-efficient terminal-based text editor built with Rust. It aims to provide the usability and features of modern GUI editors, like command palettes and LSP integration, without the steep learning curve or heavy resource consumption. Its core innovation lies in its lazy-loading piece tree and non-modal design, making it exceptional for handling massive files swiftly and offering a smooth editing experience for developers.

Popularity

Points 153

Comments 112

What is this product?

FreshCode-Rust is a new kind of text editor that runs directly in your terminal. Unlike traditional terminal editors that can be complex to learn, FreshCode-Rust prioritizes ease of use with familiar keyboard shortcuts and a non-modal interface, meaning you don't have to switch between different modes to perform actions. Its technological marvel is its 'piece tree' data structure, which intelligently loads only the parts of a file you're actively working on. This means it can open and edit incredibly large files (gigabytes!) in seconds and use very little memory, which is a game-changer for developers working with huge log files, datasets, or codebases. It's built in Rust for peak performance and supports modern features like code completion through Language Server Protocol (LSP) and customizable plugins written in TypeScript, making it accessible to a wide range of developers.

How to use it?

Developers can use FreshCode-Rust directly in their terminal. Installation would typically involve downloading a binary or building from source. Once installed, you'd launch it from your command line, for example: `freshcode <filename>`. You can then edit files using intuitive keyboard shortcuts, similar to what you might expect from a GUI editor. For advanced features like code completion, you would need to set up a Language Server for your programming language, and FreshCode-Rust would integrate with it automatically. Plugin development is straightforward using TypeScript and Deno, allowing developers to extend its functionality to suit their specific workflows or to build custom tools within the editor.

Product Core Function

· Extremely fast file loading and editing: Implemented using a lazy-loading 'piece tree' data structure, allowing for near-instantaneous opening and manipulation of multi-gigabyte files, significantly reducing developer wait times and improving productivity.

· Low memory consumption: Designed to be highly resource-efficient, using minimal RAM even with very large files, which is crucial for systems with limited resources or when running multiple applications simultaneously.

· Intuitive non-modal editing: Prioritizes a user-friendly experience with standard keybindings and a design that avoids complex mode switching, lowering the barrier to entry for new users and making it easier for experienced users to switch from other editors.

· Modern GUI editor features in the terminal: Includes features like a command palette for quick access to commands and actions, and built-in support for Language Server Protocol (LSP) for intelligent code completion, error highlighting, and other code intelligence features, enhancing developer workflow and code quality.

· Extensible plugin system using TypeScript and Deno: Allows developers to easily create custom plugins and extensions using a widely adopted language, fostering a vibrant ecosystem and enabling tailored editor experiences.

· Rust-based performance: Leverages the speed and safety of Rust for its core implementation, ensuring a robust and performant editing environment.

Product Usage Case

· Editing massive log files: A DevOps engineer needs to analyze a 5GB log file to troubleshoot an issue. Instead of struggling with slow or crashing editors, they can open the file in FreshCode-Rust in under a second, search for specific error patterns, and view ANSI color codes for better readability, directly within their terminal.

· Working on large codebases with limited RAM: A developer on a constrained laptop is working on a large project with thousands of files. FreshCode-Rust's efficient memory usage allows them to open and navigate the entire project quickly without the system becoming unresponsive or running out of memory, unlike heavier IDEs.

· Rapid prototyping and script editing: A data scientist needs to quickly write and test a Python script. FreshCode-Rust's non-modal interface and quick startup time allow them to launch the editor, write code, and get instant feedback from the LSP for syntax checking and autocompletion, accelerating their development cycle.

· Customizing terminal workflows with plugins: A web developer wants to integrate a specific linting tool or code formatter into their terminal editing workflow. They can write a simple TypeScript plugin for FreshCode-Rust that hooks into its event system, automatically running the tool on save and providing feedback directly in the editor.

3

Microlandia: Deno-Powered Data-Driven City Builder

Author

phaser

Description

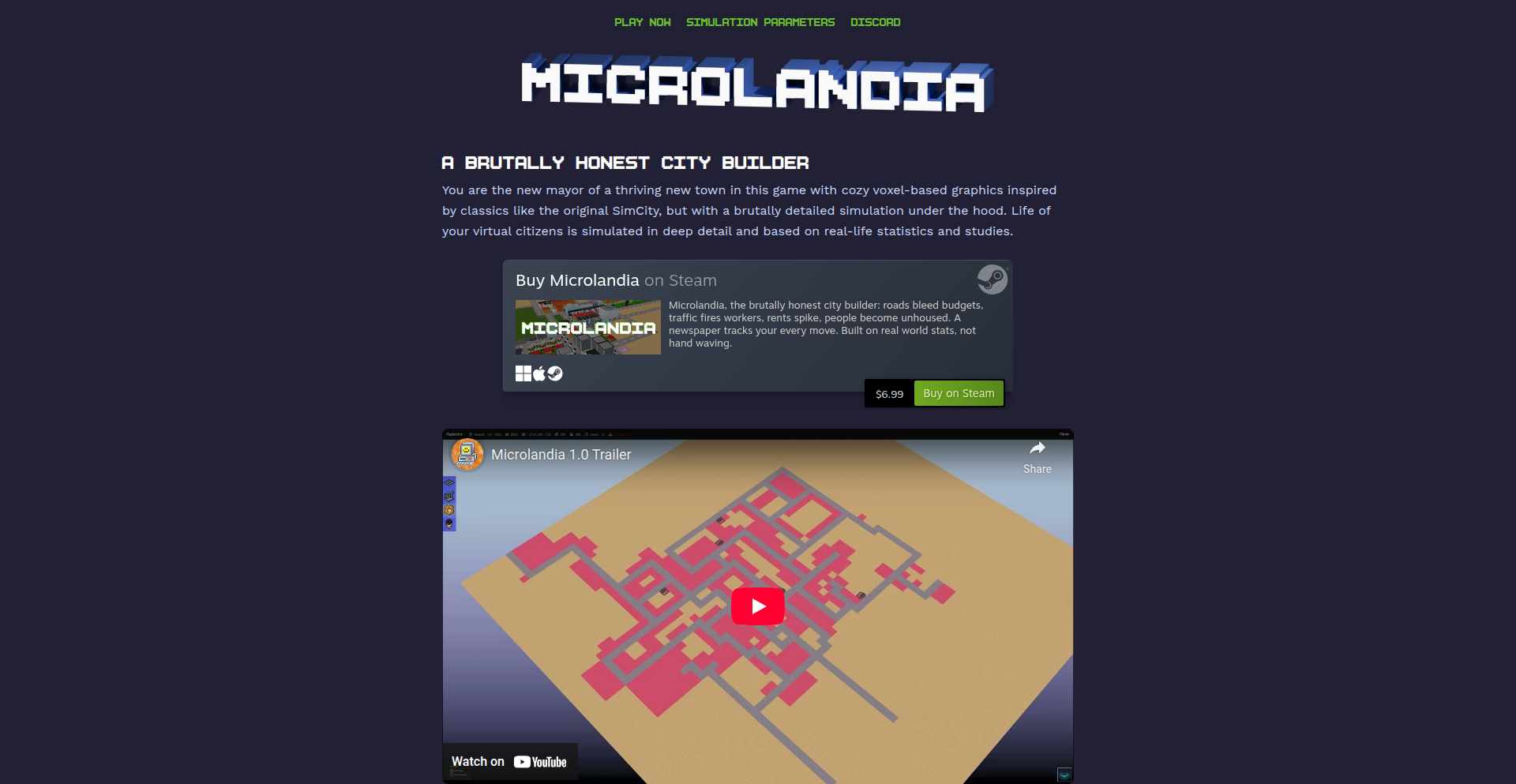

Microlandia is a city-building simulation game, inspired by SimCity Classic, that uniquely leverages Deno and its SQLite driver for its core mechanics. It incorporates real-world datasets and statistics to create a more realistic and introspective simulation, even including often-overlooked aspects like homelessness. This project showcases the power of server-side JavaScript (Deno) for game development and data-intensive applications, offering a glimpse into a developer's creative problem-solving with code.

Popularity

Points 87

Comments 17

What is this product?

Microlandia is a city-building game built using Deno, a modern JavaScript runtime, and its integrated SQLite driver. The innovation lies in using Deno's capabilities to process and integrate real-world data, such as statistics and research parameters, directly into the game's simulation engine. This means your city's development is influenced by actual data, making it a more 'brutally honest' and thought-provoking experience compared to typical games. So, what's in it for you? It offers a unique blend of entertainment and educational value, allowing you to experiment with city management while learning about the complexities of real-world urban planning and societal issues.

How to use it?

As a player, you'll interact with Microlandia through its user interface on Steam. From a developer's perspective, the underlying technology demonstrates how Deno can be effectively used for game development, particularly for projects that require significant data processing and persistence. The project utilizes Deno's built-in SQLite driver, which is a straightforward way to store and manage game state and data. This approach allows developers to build scalable and maintainable applications using familiar JavaScript syntax and a modern runtime environment. So, how can this inspire you? If you're a JavaScript developer interested in game development or data-driven applications, you can learn from Microlandia's architecture and see how Deno can be a powerful tool in your arsenal.

Product Core Function

· Data-driven simulation engine: Utilizes real-world datasets to influence game mechanics, providing a more realistic and challenging city-building experience. Value: Offers a unique, educational, and thought-provoking gameplay loop that goes beyond typical game simulations. Use case: Players looking for deeper strategic challenges and a more grounded simulation.

· Deno runtime integration: Leverages Deno for its modern JavaScript execution environment and built-in features like the SQLite driver. Value: Demonstrates efficient and modern backend development for games and applications, showcasing Deno's capabilities. Use case: Developers interested in exploring Deno for their own game or application projects.

· SQLite data persistence: Employs SQLite for storing and managing game data, ensuring save states and simulation parameters are reliably handled. Value: Provides robust and efficient data management, crucial for any complex application or game. Use case: Developers building applications that require local data storage.

· Societal aspect modeling: Includes parameters and mechanics that reflect complex societal issues like homelessness. Value: Adds depth and realism to the simulation, encouraging players to consider the broader social impact of their decisions. Use case: Players interested in a more nuanced and critical simulation experience.

Product Usage Case

· Building a city that thrives by understanding and addressing real-world economic factors: In Microlandia, you might find that high employment rates directly correlate with specific industrial policies you implement, mirroring real-world economic principles. This helps players learn about economic management in a practical, game-based environment. Problem solved: Demonstrates how data integration can create more educational and realistic gameplay.

· Developing a sustainable urban plan by considering environmental impact data: The game could simulate pollution levels based on industrial output and traffic, forcing players to balance growth with environmental concerns, similar to real-world urban planning challenges. Problem solved: Highlights the importance of environmental sustainability within a game context.

· Experimenting with social welfare policies to combat issues like homelessness: Microlandia might introduce mechanics where implementing specific social programs directly affects homelessness rates, showing the complex interplay between policy and societal well-being. Problem solved: Offers a platform to explore the impact of social policies without real-world consequences.

4

SoloInvoice Coder

Author

mightbefun

Description

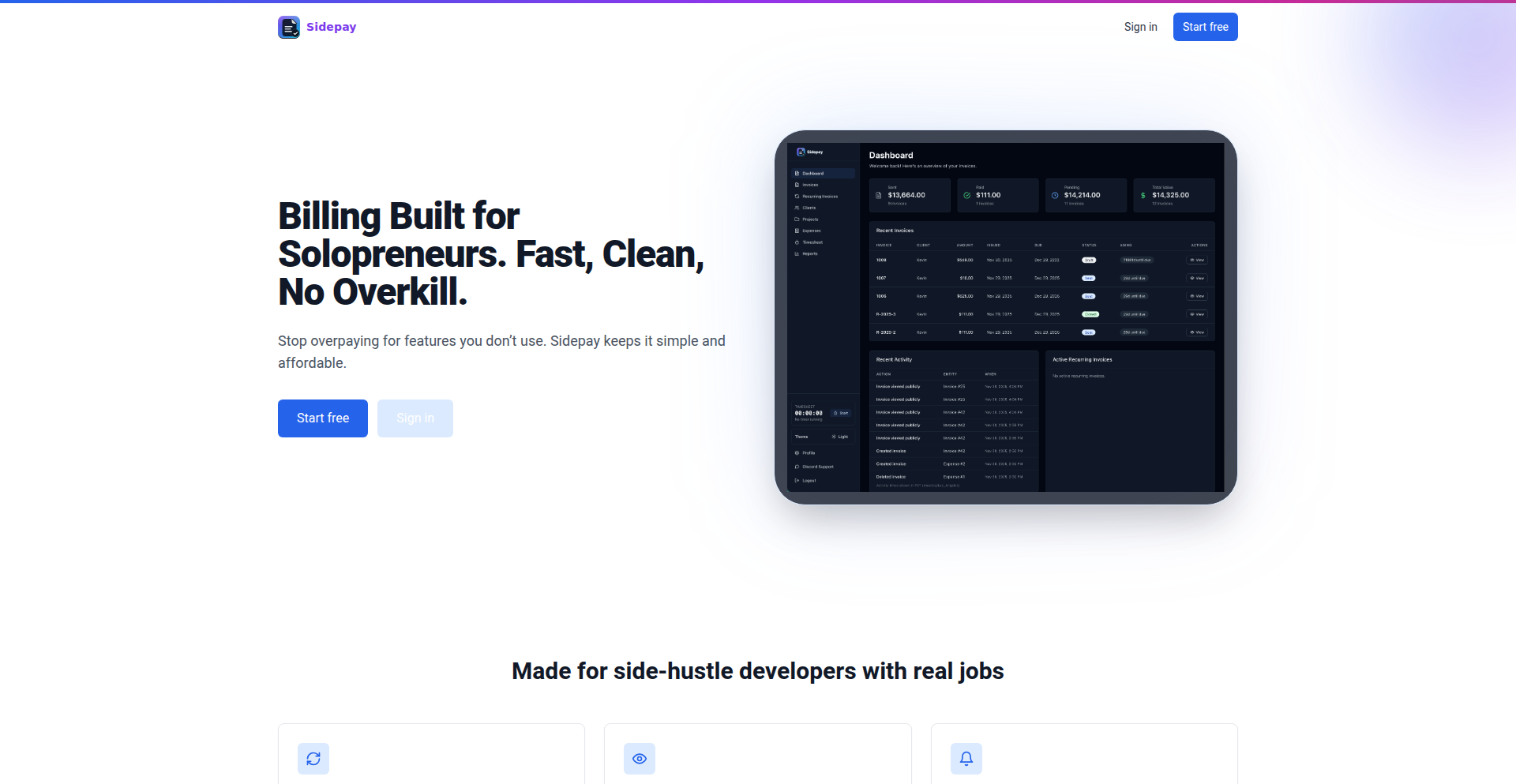

A hyper-lightweight, cost-effective invoicing platform specifically designed for solo developers and freelancers. It strips away unnecessary features found in bloated enterprise solutions, offering only the essential tools to create, send, and track invoices with an emphasis on simplicity and affordability. The core innovation lies in its minimalist approach, directly addressing the pain points of cost and complexity in existing invoicing software for individual professionals.

Popularity

Points 11

Comments 4

What is this product?

SoloInvoice Coder is a software tool that helps independent professionals like software developers and freelancers easily manage their billing. Instead of offering a complex system with features you might never use, this tool focuses on the absolute essentials: creating invoices quickly, sending them out via email, and sending automatic reminders if they aren't paid. It also handles recurring bills, like for a monthly service, and gives you a straightforward way to see which invoices are paid and which are still outstanding. The innovation is in its deliberate simplicity and very low price point ($20 per year), which is a direct response to the high costs and feature overload of typical business invoicing software, making professional invoicing accessible to individuals.

How to use it?

Developers can integrate SoloInvoice Coder into their workflow by simply signing up for the service. Once registered, they can immediately start creating invoices by inputting client details, service descriptions, and pricing. Invoices can be sent directly from the platform via email to clients. For ongoing services, recurring invoice settings can be configured. A clean dashboard provides a quick overview of payment status. This tool is designed to be used standalone and doesn't require complex integration, making it a practical solution for immediate use, especially when dealing with one-off projects or retainer-based client work.

Product Core Function

· Invoice Creation: Quickly generate professional invoices with essential details, saving you time and effort from manual data entry. This is valuable because it allows you to get paid faster and spend less time on administrative tasks.

· Email Sending: Send invoices directly to your clients' inboxes from the platform, ensuring a professional and immediate delivery. This is useful for streamlining communication and providing clients with easy access to their billing information.

· Automatic Reminders: The system automatically sends follow-up emails for unpaid invoices, helping you get paid on time without constant manual follow-up. This feature is a significant time-saver and improves your cash flow.

· Recurring Invoices: Set up invoices to be automatically generated and sent on a regular schedule, perfect for subscription-based services or retainers. This automates a repetitive task, ensuring consistent billing and revenue.

· Simple Dashboard: A clear overview of your financial status, showing paid and unpaid invoices at a glance. This helps you stay organized and understand your income stream easily.

· No Bloat Features: By intentionally omitting complex features like CRM or team management, the platform remains incredibly fast and easy to use for individuals. This means you're not paying for or learning features you don't need, making it incredibly cost-effective.

Product Usage Case

· A freelance web developer needs to send an invoice for a completed project. They can use SoloInvoice Coder to quickly create a professional invoice with line items for development hours and project milestones, then email it directly to the client. This solves the problem of needing a formal billing document without the complexity of full accounting software.

· A contract software engineer working on a monthly retainer needs to ensure they are billed consistently. SoloInvoice Coder's recurring invoice feature can be set up to automatically generate and send the invoice each month, saving the engineer the task of manually creating it and ensuring prompt payment.

· A solo game developer selling their indie game needs to manage payments from publishers or distributors. They can use the platform to track outgoing invoices and receive payment confirmations, keeping their business finances organized without needing a dedicated finance team.

5

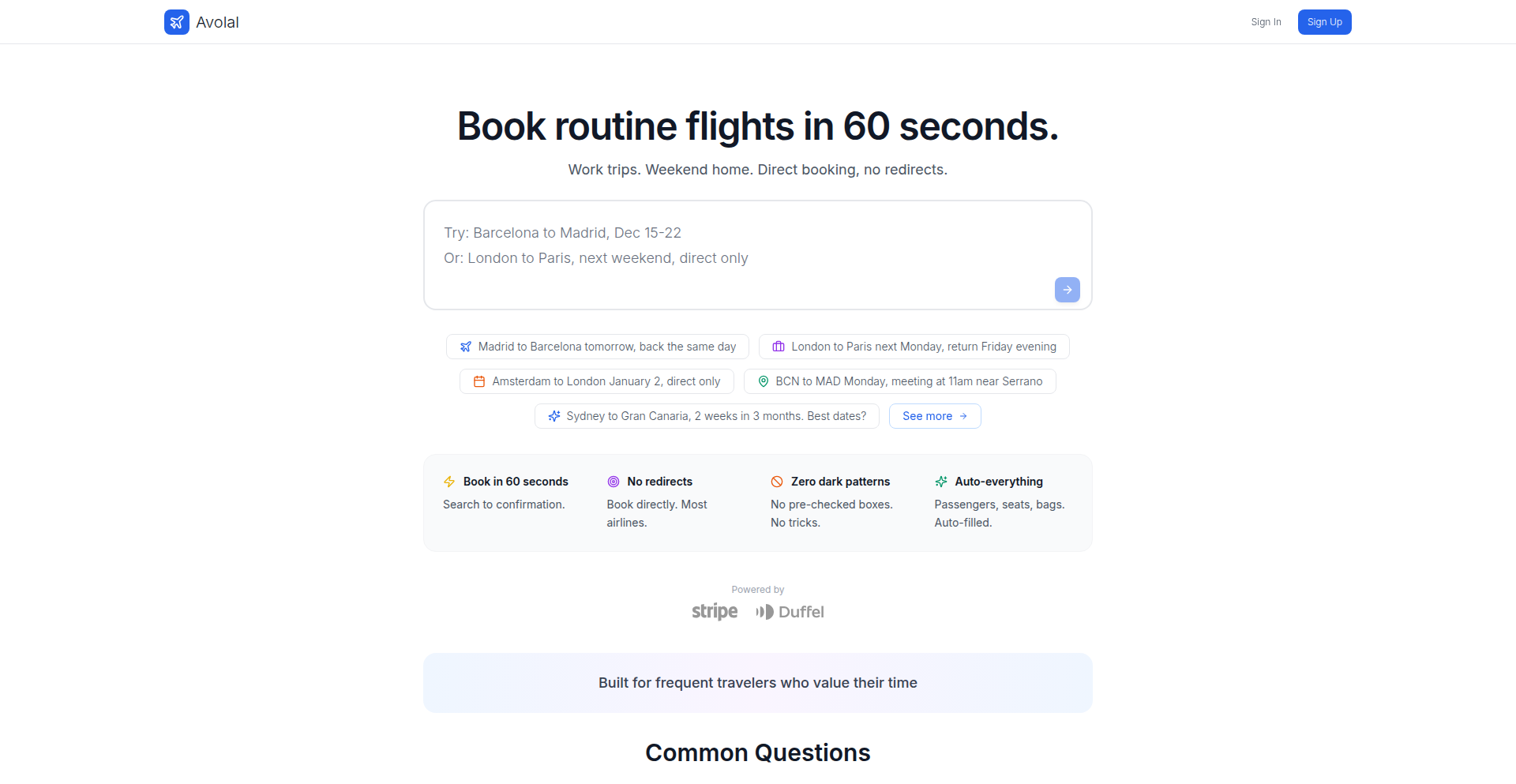

Avolal: Contextual Flight Booker

Author

midito

Description

Avolal is a flight booking tool designed to eliminate the frustrations of typical airline websites. It uses natural language processing to understand complex search queries, learns user preferences, and ranks flights based on overall value rather than airline commission. This results in a faster, more personalized, and transparent booking experience.

Popularity

Points 9

Comments 6

What is this product?

Avolal is an intelligent flight booking platform that leverages natural language processing (NLP) to interpret user requests in a conversational manner. Instead of navigating through rigid search forms, users can type queries like 'San Francisco to Seattle next weekend' and Avolal understands the implied dates (e.g., Friday to Sunday). It also learns and remembers user preferences for seats, fare types, and preferred routes, significantly speeding up the booking process. A key innovation is its 'actual value' ranking system, which considers not just the price but also travel time, airport quality, and other factors that truly matter to the traveler, moving away from commission-driven rankings. This approach aims to provide a more intuitive and user-centric way to book flights.

How to use it?

Developers can use Avolal by visiting avolal.com directly in their browser. For searching routine flights, you can simply type your destination and desired travel dates in a natural, conversational way (e.g., 'New York to London for a business meeting next Tuesday'). Avolal will then present a curated list of flights based on your input and learned preferences. To integrate Avolal's capabilities into other applications or workflows, one would typically look for an API. While not explicitly mentioned in the provided snippet, the underlying technology suggests the potential for an API that developers could use to programmatically search for flights, manage user profiles, and retrieve personalized flight recommendations, streamlining travel booking within their own services.

Product Core Function

· Natural Language Search: Understands context and colloquialisms in flight search queries (e.g., 'weekend trip,' 'meeting at 2 PM'), making it easier to find flights without precise date and time input. Value: Saves time and reduces cognitive load for users who don't want to deal with rigid search forms.

· Preference Learning and Saving: Remembers user preferences for seats, fare classes, and preferred routes, automating repetitive choices. Value: Significantly speeds up the booking process for frequent travelers and those with specific needs.

· Value-Based Flight Ranking: Ranks flights based on a comprehensive 'actual value' metric (price + time + airport quality) rather than airline commissions. Value: Empowers users with objective information to make better, more cost-effective decisions that align with their personal priorities, avoiding hidden biases.

· Ad-Free and Dark Pattern-Free Interface: Provides a clean, transparent booking experience without intrusive ads or manipulative design elements. Value: Builds trust and ensures a frustration-free user journey, focusing solely on finding the best flight option.

Product Usage Case

· Frequent Business Traveler: A user who regularly flies between New York and San Francisco for meetings can simply type 'NYC to SF for my meeting next Thursday,' and Avolal will automatically understand the need for a mid-week return and potentially prioritize flights arriving at SFO based on learned preferences for airport convenience. This solves the problem of repeatedly entering similar search criteria and saves valuable time.

· Leisure Traveler Planning a Weekend Getaway: Someone looking to book a quick trip to Miami for the weekend can type 'LA to Miami this Friday night, back Sunday evening.' Avolal will correctly interpret 'this Friday night' and 'Sunday evening' and present flights optimized for a short weekend trip, solving the issue of manually sifting through multiple date and time combinations.

· User with Specific Seat Preferences: A traveler who always prefers an aisle seat in the front of the plane can set this preference in Avolal. When searching for flights, Avolal will automatically filter and rank options that have such seats available, addressing the common frustration of not being able to secure preferred seating during booking.

6

TakaStackLang

Author

mgunyho

Description

TakaStackLang is a minimalist, stack-based programming language designed for concise problem-solving, particularly for challenges like Advent of Code. Its innovation lies in its use of forward Polish notation (prefix notation) combined with a stack data structure, allowing for very expressive and compact code. This approach simplifies complex operations by breaking them down into a series of pushes and pops on a stack, making it easier to reason about program state and execute logic.

Popularity

Points 10

Comments 4

What is this product?

TakaStackLang is a novel programming language that leverages a stack data structure and forward Polish notation (also known as prefix notation) to execute code. Instead of traditional infix notation (like `2 + 3`), prefix notation writes the operator before the operands (like `+ 2 3`). When combined with a stack, operations are performed by pushing operands onto the stack and then applying operators that consume operands from the stack. This creates a highly efficient and often surprisingly readable way to express computations, especially for recursive or nested logic, as it directly mirrors how function calls and expression evaluation work internally. Its core value is providing a clean, declarative way to solve problems with minimal boilerplate.

How to use it?

Developers can use TakaStackLang by writing programs as sequences of operations and values. The language interpreter reads these sequences, pushing values onto an internal stack and executing operators when encountered. For example, to add two numbers, you might write `+ 2 3`, where `2` and `3` are pushed onto the stack, and then the `+` operator pops them, adds them, and pushes the result back. It's ideal for developers who enjoy exploring alternative programming paradigms or tackling computational puzzles where clear, step-by-step execution is beneficial. Integration might involve writing custom scripts or embedding the language interpreter within other applications to handle specific data processing or logic tasks.

Product Core Function

· Stack-based execution: Operands are pushed onto a stack, and operators pop operands from the stack to perform operations. This allows for efficient management of intermediate results and a clear view of program state, making debugging easier by tracking values as they move through the stack. Its value is in simplifying complex state management.

· Forward Polish Notation (Prefix Notation): Operators precede their operands, allowing for unambiguous expression parsing without the need for parentheses. This leads to more compact code and a more direct representation of computational flow, reducing cognitive load for understanding nested logic.

· Minimalist Instruction Set: Designed with a small set of core operations, making it easy to learn and implement. This simplicity reduces the learning curve and makes the language itself easier to extend or adapt for specific problem domains, offering a highly flexible foundation.

· Declarative Problem Solving: Encourages thinking about problems in terms of data transformation and operation sequences. This paradigm shift can lead to more elegant and maintainable solutions, especially for tasks that involve significant data manipulation or algorithmic challenges.

Product Usage Case

· Solving Advent of Code puzzles: Developers can use TakaStackLang to implement solutions for these annual programming challenges, leveraging its conciseness and clear execution flow to quickly prototype and verify algorithms. It provides a novel way to approach complex algorithmic problems.

· Implementing small, specialized scripting engines: For applications requiring custom logic execution or domain-specific languages, TakaStackLang can serve as a lightweight interpreter, allowing for flexible and extensible rule-based systems. This offers a powerful way to add dynamic behavior to applications.

· Educational tool for programming concepts: Its straightforward stack-based execution and prefix notation make it an excellent tool for teaching fundamental computer science principles like expression evaluation, recursion, and data structures. It demystifies how programs execute at a lower level.

· Prototyping algorithms with clear state transitions: When developing algorithms that involve significant state changes or complex intermediate calculations, TakaStackLang's explicit stack manipulation provides a transparent way to visualize and test the algorithm's behavior. This aids in identifying and fixing logic errors early in the development process.

7

LLM Orchestrator Hub

Author

supreetgupta

Description

This project addresses a common challenge when connecting Large Language Models (LLMs) to various external tools. As you add more tools, the way they connect to the LLM can become a tangled mess (an N×M mesh). Each tool needs its own authentication, error handling, and logging, leading to fragmentation. LLM Orchestrator Hub provides a single, centralized point to manage authentication, access control, routing of requests, and monitoring for these tool integrations, simplifying the architecture and improving manageability. What this means for you is a cleaner, more robust way to integrate LLMs with your existing services.

Popularity

Points 10

Comments 3

What is this product?

LLM Orchestrator Hub is a system designed to streamline the integration of LLMs with multiple external tools or services. Instead of each LLM agent directly connecting to every tool (which becomes complex and hard to manage), this gateway acts as a central traffic manager. It handles crucial tasks like verifying who can access which tool (authentication and authorization), directing requests to the correct tool (routing), and providing a unified view of what's happening (observability). The innovation lies in moving from a point-to-point N×M integration mess to a cleaner hub-and-spoke model. This makes it easier to add, remove, or update tools without rewriting integrations everywhere. So, for you, it means less complexity and more control when building LLM-powered applications.

How to use it?

Developers can integrate LLM Orchestrator Hub by directing their LLM agent's calls through this gateway. For example, if an LLM needs to access a database, a calendar, or an external API, these requests would first go to the Orchestrator Hub. The Hub then authenticates the request, checks if the LLM is authorized to use that specific tool, and forwards the request to the correct tool. The response is then sent back through the Hub to the LLM. The project supports standards like OAuth2 for secure authentication and offers 'Virtual MCP Servers' to group related tools. You can think of it like a smart receptionist for your LLM's conversations with the outside world. Integration typically involves configuring your LLM's tool-calling mechanism to point to the Hub's API endpoint. This allows you to easily manage access and monitor interactions without deeply modifying your LLM's core logic. So, you can add new tools or enforce security policies with minimal code changes.

Product Core Function

· Centralized Authentication and Authorization: Manages who can access which tools, ensuring security and compliance. This simplifies security management across many integrations, meaning you don't have to reinvent authentication for every new tool connection.

· Unified Request Routing: Directs LLM requests to the appropriate external tools based on the request context. This optimizes performance and ensures the LLM gets the right information, saving you the effort of building complex logic to figure out which tool to call.

· Consolidated Observability and Logging: Provides a single place to monitor all LLM-tool interactions, including performance metrics and error tracking. This makes debugging and understanding your LLM application's behavior much easier, so you can quickly spot and fix issues.

· Simplified Tool Management: Allows for easier addition, removal, or updates of external tools without disrupting existing integrations. This agility means you can evolve your LLM application faster by adding new capabilities seamlessly.

· Support for Standards (e.g., OAuth2): Leverages industry-standard security protocols for robust and secure integrations. This ensures your integrations are built on proven security foundations, giving you peace of mind.

· Virtual Tool Groups (Virtual MCP Servers): Organizes tools into logical sets, allowing for curated access and management. This helps in managing complexity by grouping related functionalities, making it easier to control access to specific feature sets.

Product Usage Case

· An LLM customer service bot needs to access user account information, place orders, and check shipping status. Instead of the bot directly integrating with the user database, order management system, and shipping API individually, all these requests go through the LLM Orchestrator Hub. The Hub authenticates the bot, routes the request to the correct backend service, and logs the interaction. This makes it simple to add a new shipping provider or update the user database schema without modifying the bot's core logic.

· A research assistant LLM needs to query multiple scientific databases and external APIs for data retrieval. The Orchestrator Hub can manage credentials for each database and API, ensure rate limits are respected, and route queries to the most appropriate source. If a new research database becomes available, it can be added to the Hub, and the LLM can start using it immediately after configuration, without complex code refactoring.

· An enterprise application uses an LLM for internal task automation. The Orchestrator Hub can enforce organizational policies on which LLM agents can access sensitive internal tools, such as HR systems or financial data. It provides a clear audit trail of all LLM-initiated actions, ensuring accountability and compliance. This means your IT department can easily manage and monitor AI-driven automation across the organization.

8

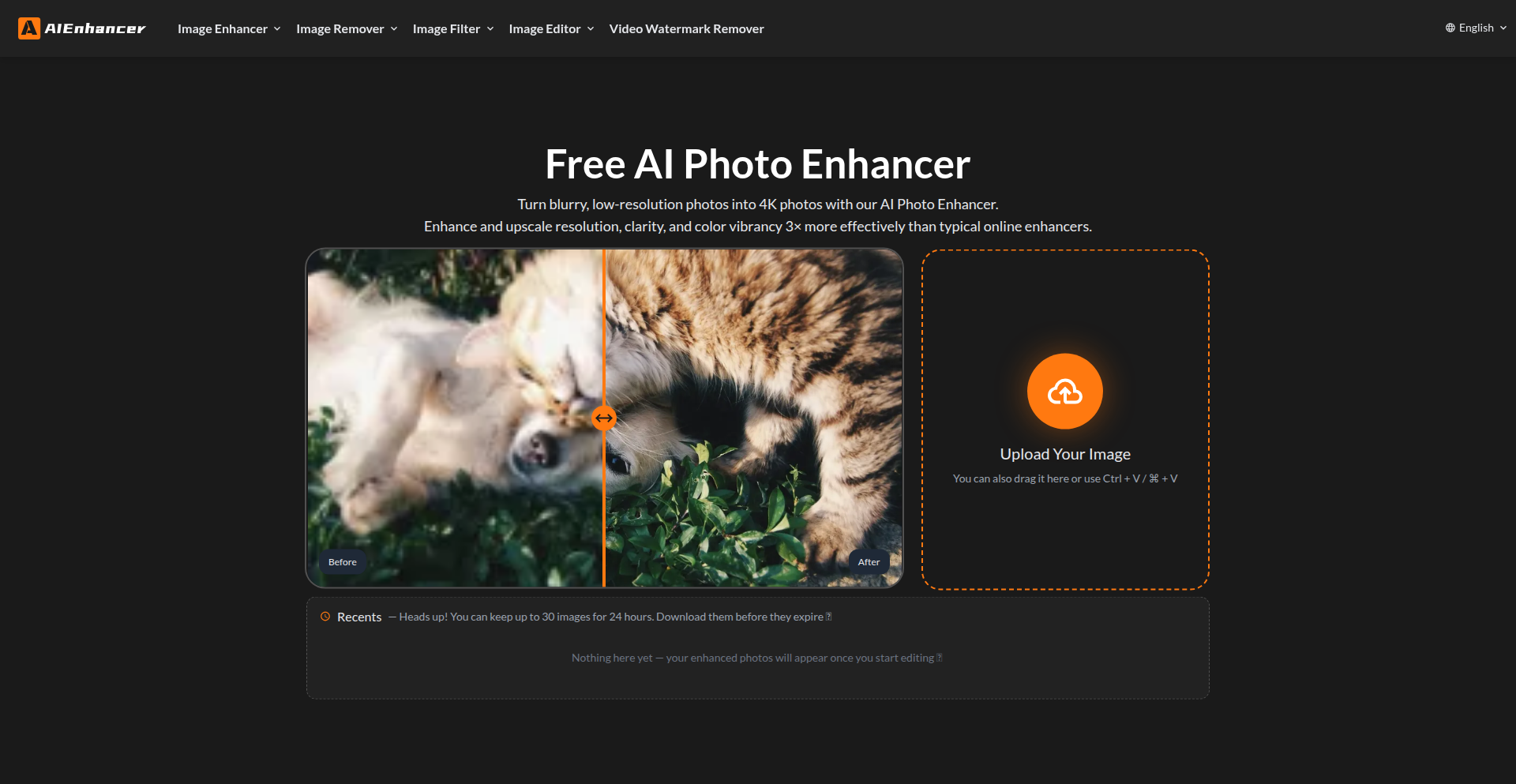

AI Photo Lumina

Author

passioner

Description

An experimental AI-powered photo enhancer that delivers surprisingly good results without any paywalls or complex interfaces. It leverages advanced image processing algorithms to automatically improve photo quality, making it a valuable tool for quick, accessible image refinement.

Popularity

Points 6

Comments 6

What is this product?

AI Photo Lumina is a free, open-source project that utilizes sophisticated artificial intelligence models to automatically enhance the visual quality of photographs. Unlike many commercial tools that hide advanced features behind subscriptions or charge per image, this project focuses on delivering robust image improvements with a straightforward, accessible approach. The core innovation lies in its ability to intelligently analyze image content and apply targeted enhancements to areas like brightness, contrast, sharpness, and noise reduction, resulting in a more pleasing and professional-looking image without manual intervention. This democratizes access to high-quality photo editing capabilities.

How to use it?

Developers can integrate AI Photo Lumina into their workflows by leveraging its underlying AI models and image processing libraries. Depending on the specific implementation, this could involve running the models locally on their machine or through an API if one is exposed. A common use case would be to build batch processing tools for large photo collections, or to add an 'enhance' button directly into content management systems or user-uploaded image pipelines. For instance, a web application could automatically process user-submitted profile pictures to ensure they look their best, improving the overall user experience.

Product Core Function

· AI-driven image quality enhancement: Automatically adjusts brightness, contrast, and color balance to make photos more vibrant and lifelike. This is useful for making dull photos pop, improving the overall aesthetic appeal of images without needing to be a professional editor.

· Intelligent noise reduction: Reduces graininess and digital artifacts in photos, especially those taken in low-light conditions. This means your low-light photos will look clearer and less pixelated, making them more presentable.

· Automatic sharpness improvement: Enhances details and textures in images, making them appear crisper and more defined. This makes your photos look sharper and more professional, bringing out fine details that might otherwise be missed.

· User-friendly interface (or API): Designed for ease of use, allowing for quick and efficient photo processing without a steep learning curve. This saves you time and effort, as you don't need to spend hours tweaking settings to get a good result.

Product Usage Case

· A freelance photographer needs to quickly process a large batch of event photos. By integrating AI Photo Lumina, they can automatically enhance dozens of images in minutes, saving significant post-processing time and delivering better-looking results to clients.

· A blogger wants to improve the visual appeal of their website's featured images. They can use AI Photo Lumina to automatically enhance these images before uploading, making their blog more engaging and professional-looking without requiring graphic design skills.

· A developer building a social media platform for amateur photographers can use AI Photo Lumina to offer an 'auto-enhance' feature. This empowers users to easily improve their photos, fostering a more positive and aesthetically pleasing community experience.

· A researcher working with historical image archives can use AI Photo Lumina to restore and enhance faded or damaged photographs, making them clearer and more interpretable for study and public display.

9

GoalBet Engine

Author

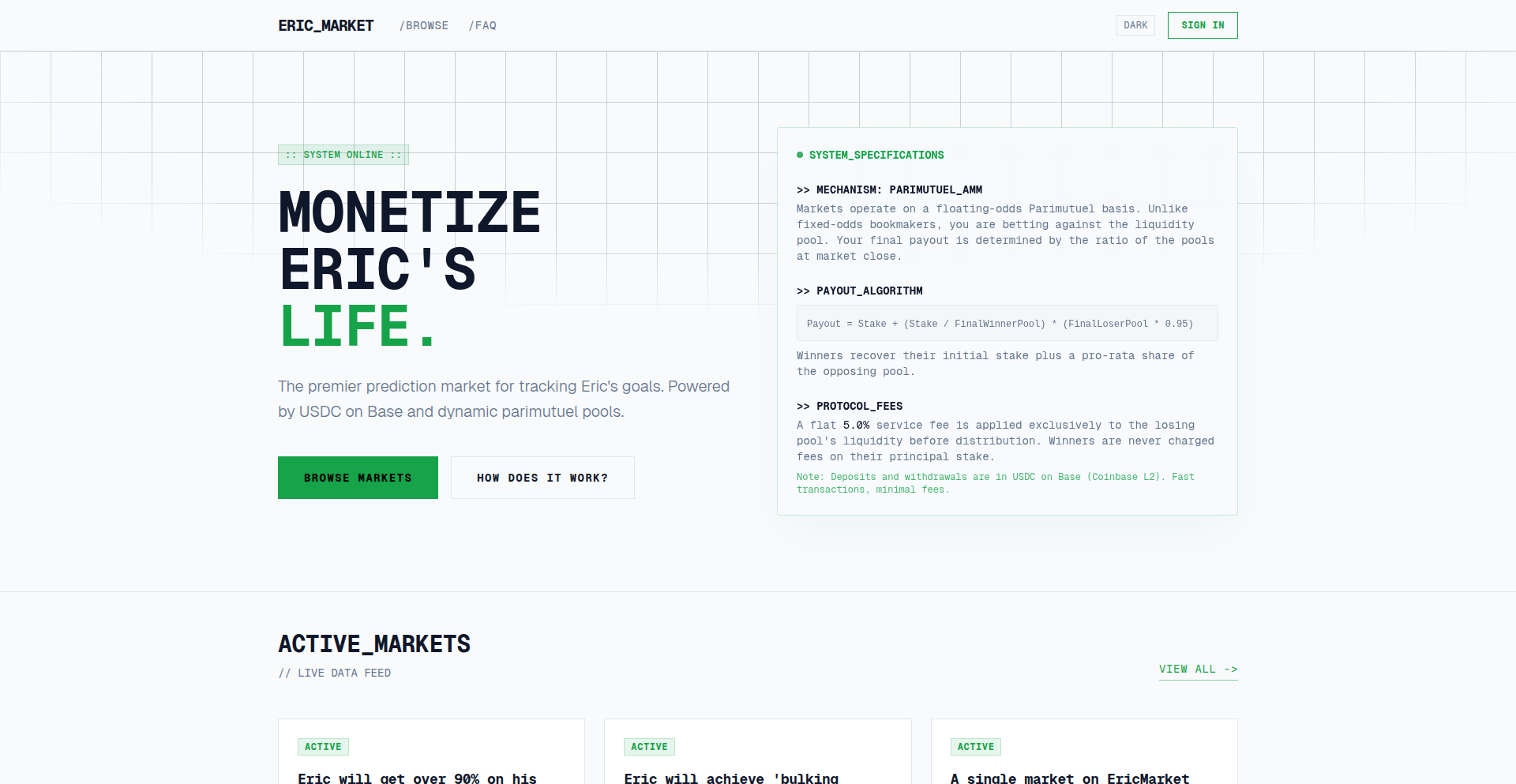

ericlmtn

Description

An experimental prediction market built for betting against personal goals. It leverages a decentralized mechanism to quantify uncertainty and allows users to speculate on the likelihood of certain outcomes. This project showcases a novel application of prediction market principles to individual achievement, offering a unique way to engage with personal aspirations through a gamified, data-driven approach. The core innovation lies in applying a robust financial market mechanism to a non-financial, personal domain, demonstrating the versatility of these systems.

Popularity

Points 6

Comments 5

What is this product?

This project is a decentralized prediction market specifically designed for individuals to bet on whether the creator (and by extension, anyone participating) will achieve their personal goals. The underlying technology is inspired by sophisticated financial market models, but simplified for a personal context. Instead of stock prices, the market's 'price' reflects the perceived probability of a goal being met. For example, if a goal is 'finish writing a book by December', the market might show a price of $0.30, meaning participants believe there's a 30% chance of it happening. This price dynamically adjusts based on how much people are willing to buy or sell 'shares' in that outcome. The innovation is in applying this community-driven probability assessment to personal objectives, making uncertainty tangible and creating a form of social accountability or motivation.

How to use it?

Developers can interact with this project primarily by understanding its underlying architecture and potentially adapting its principles. For instance, a developer might integrate a similar prediction market mechanism into a personal productivity app to encourage users to set realistic goals and provide a mechanism for community feedback or even micro-stakes betting on goal achievement. You could imagine building a plugin for a task management tool where users can create a 'goal contract' and others can 'buy in' based on their confidence. The core idea is to use the concept of a prediction market to crowdsource probability assessments for personal endeavors, which can then inform behavior or provide insights.

Product Core Function

· Decentralized Goal Prediction: Allows users to create verifiable goals and for others to speculate on their achievement, fostering a community-driven assessment of likelihood. This provides a novel way to quantify personal ambition.

· Dynamic Probability Adjustment: Uses market forces (buying and selling 'shares' of goal success) to continuously update the perceived probability of a goal being met. This offers real-time feedback on confidence levels.

· Betting Mechanism: Enables users to place 'bets' (figuratively or literally, depending on implementation) on goal outcomes, providing a gamified incentive and a form of social commitment.

· Transparent Outcome Verification: The system is designed to eventually verify goal outcomes, ensuring the integrity of the predictions and the market. This builds trust in the system's results.

Product Usage Case

· Personal Productivity Enhancement: A developer could build a feature for a habit-tracking app where users can bet on themselves to complete a certain number of workouts in a week. If they succeed, they win; if not, they might forfeit a small amount, encouraging commitment.

· Team Project Milestones: Imagine a small development team using this to predict the likelihood of completing specific features by a deadline. The market's price can highlight areas of high risk or high confidence, prompting proactive problem-solving.

· Learning Goal Assessment: A developer learning a new programming language could set a goal to complete a project using it by a certain date. Friends or colleagues could bet on this, providing encouragement and a playful accountability measure.

· Creative Project Completion: An artist or writer could set a goal for finishing a piece of work. The community's prediction can offer a unique form of external validation or motivation, translating abstract aspirations into a quantifiable market.

10

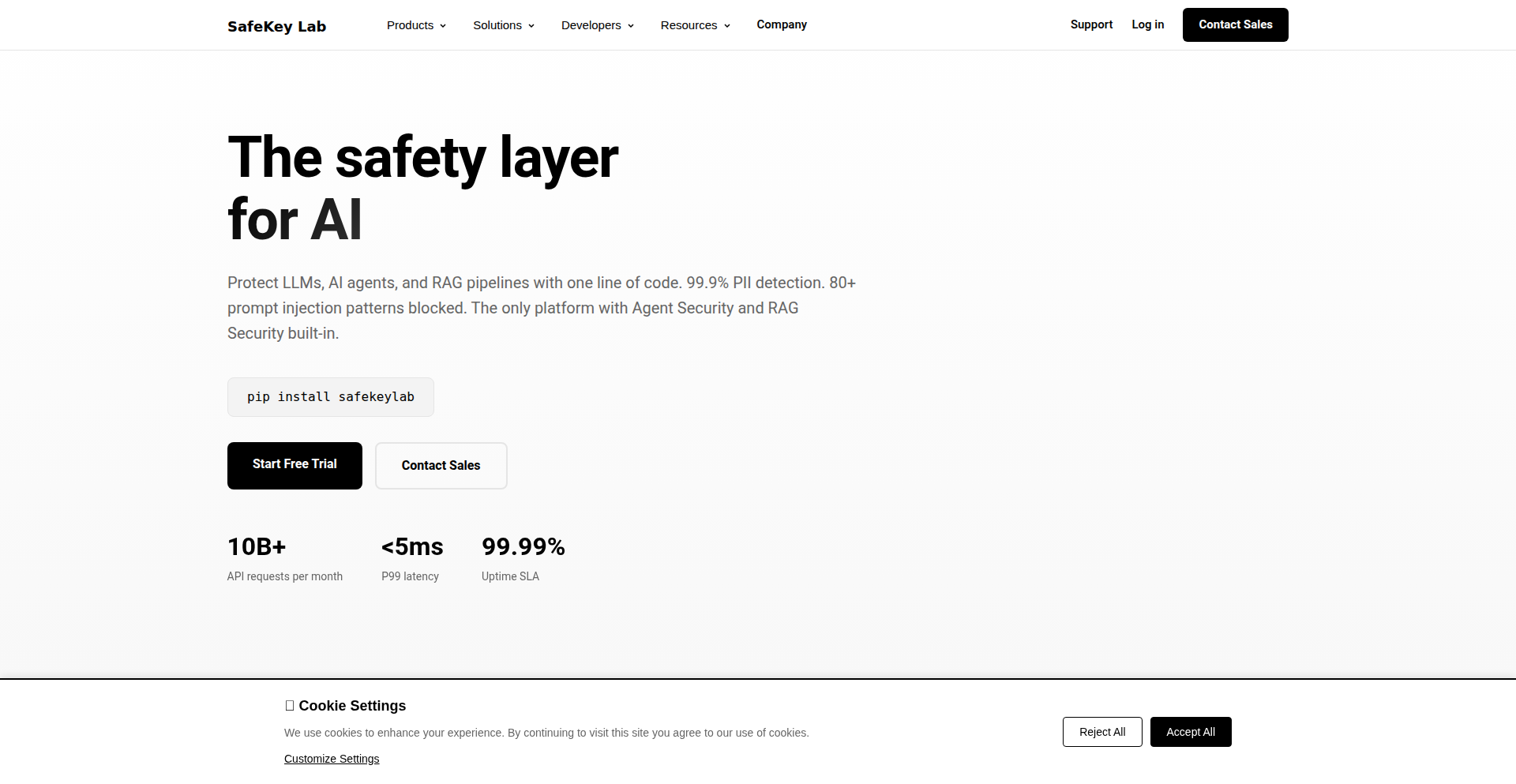

SafeKey AI Input Firewall

Author

safekeylab

Description

SafeKey is an AI input firewall designed to protect sensitive data when interacting with Large Language Models (LLMs). It acts as a protective layer between your application and the AI model, automatically identifying and redacting Personally Identifiable Information (PII) before it ever leaves your environment. This innovative solution tackles the critical challenge of data leakage in LLM applications, especially when dealing with private or confidential information.

Popularity

Points 4

Comments 6

What is this product?

SafeKey is an advanced AI security solution that acts as a gatekeeper for data sent to AI models. Its core innovation lies in its ability to accurately detect and remove sensitive personal information (like names, addresses, social security numbers, etc.) from various data types – text, images, audio, and even video – with over 99% accuracy. Beyond PII, it also offers robust protection against malicious AI prompts, known as 'prompt injection' and 'jailbreaks', ensuring AI models behave as intended. It achieves this with extremely low latency, making it practical for real-time applications. So, for you, this means being able to leverage the power of AI without the fear of accidentally exposing private patient data, customer details, or proprietary business information.

How to use it?

Developers can easily integrate SafeKey into their existing AI workflows using drop-in SDKs compatible with major LLM providers like OpenAI, Anthropic, Azure, and AWS Bedrock. SafeKey can be deployed either within your own secure network environment (VPC) or via their cloud service. The firewall sits between your application's input and the LLM API. When your application sends data to the LLM, SafeKey intercepts it, performs its redaction and security checks, and then forwards the cleaned data to the LLM. This process is incredibly fast, taking less than 30 milliseconds. This means you can integrate advanced AI features into your applications with confidence, knowing that sensitive data is protected. For example, if you're building a customer service chatbot that needs to access user history, SafeKey ensures that personally identifiable details from the user's profile are masked before being sent to the AI for processing.

Product Core Function

· PII Redaction (Text, Image, Audio, Video): Accurately identifies and removes sensitive personal information from all forms of input data, ensuring compliance and privacy. This is crucial for applications handling any kind of personal data, preventing accidental breaches.

· AI Prompt Injection & Jailbreak Defense: Prevents malicious attempts to manipulate AI models into unintended behavior or to bypass safety guidelines, safeguarding the integrity of AI outputs.

· Autonomous AI Workflow Security: Protects against vulnerabilities in complex AI agent systems, ensuring that AI-driven processes remain secure and predictable.

· RAG (Retrieval-Augmented Generation) Pipeline Security: Secures AI systems that fetch information from external sources before generating responses, preventing data leakage during the retrieval process.

· Low Latency Processing: Operates with sub-30ms latency, ensuring that security checks do not significantly slow down AI application performance, making it suitable for real-time use cases.

Product Usage Case

· Healthcare AI Applications: Protecting patient medical records and sensitive health information when using LLMs for diagnosis assistance or patient interaction, ensuring HIPAA compliance.

· Customer Service Chatbots: Masking customer names, account numbers, and other PII before sending user queries to an LLM for personalized support, enhancing customer trust.

· Financial Data Analysis: Securing confidential financial data and customer details when using AI for fraud detection or market analysis, preventing data leaks.

· Internal Business Intelligence Tools: Safeguarding proprietary company information and employee data when employing LLMs for internal report generation or data summarization.

11

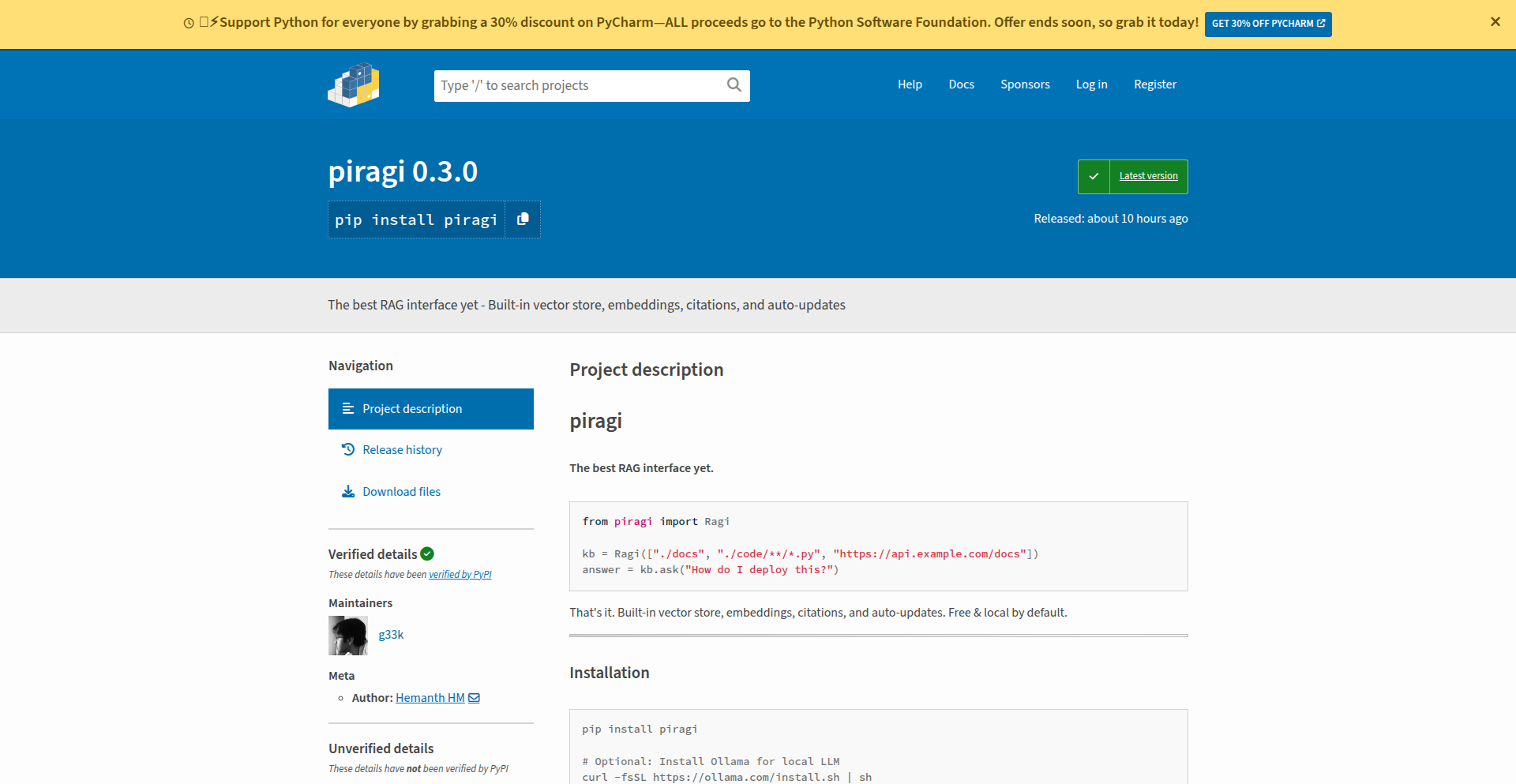

Ragi: Universal RAG Orchestrator

Author

init0

Description

Ragi is a Python library that drastically simplifies the process of building Retrieval Augmented Generation (RAG) systems. It abstracts away the complexities of integrating various data sources, embedding models, and retrieval strategies, allowing developers to set up powerful AI search and question-answering capabilities with just a few lines of code. It addresses the common pain point of repetitive boilerplate code in RAG development.

Popularity

Points 8

Comments 2

What is this product?

Ragi is a smart wrapper for Retrieval Augmented Generation (RAG) that makes it incredibly easy to build AI systems that can answer questions based on your own documents, code, or even web pages. Instead of spending a lot of time setting up complex pipelines for handling different file types, converting them into a format AI can understand (embeddings), and figuring out the best way to search through that information, Ragi does it all for you. It can ingest almost any type of data – PDFs, Word docs, code files, URLs, images, and audio – and automatically set up a powerful search mechanism. The innovation lies in its ability to abstract away the intricate details of RAG implementation, offering a streamlined, plug-and-play solution that runs locally with open-source tools like Ollama for language models and sentence-transformers for embeddings, eliminating the need for API keys for basic usage.

How to use it?

Developers can integrate Ragi into their Python projects with a simple installation (`pip install piragi`). The core usage involves creating an instance of the `Ragi` class, specifying the data sources (local directories, file patterns, or URLs) it should index. Once initialized, you can immediately start asking questions using the `.ask()` method. Ragi handles all the background processing, including data ingestion, embedding, and retrieval. It also offers flexible configuration options to customize retrieval methods (like using HyDE, hybrid search, or cross-encoder re-ranking) or even swap in commercial LLMs like OpenAI's GPT models by providing their API keys. This makes it suitable for quick prototyping or as a robust backend for more complex applications.

Product Core Function

· Universal Data Ingestion: Supports a wide array of file formats including PDF, Word, Excel, Markdown, code (Python, etc.), web page URLs, images, and audio. This means you can build AI search on virtually any content you have without needing to write custom parsers for each type. The value is in saving significant development time and effort by having a single point of entry for diverse data.

· Automatic Background Updates: Continuously monitors specified data sources and automatically refreshes its knowledge base in the background. This ensures that the AI's answers are always up-to-date without manual intervention, providing zero query latency after the initial indexing. The value is in maintaining real-time relevance for your AI-powered features.

· Source Citations: Every answer provided by Ragi includes clear citations to the original sources of information. This is crucial for verifying the AI's responses, building trust, and allowing users to dive deeper into the referenced content. The value is in transparency and accountability of the AI's output.

· Advanced Retrieval Strategies: Implements sophisticated techniques for finding the most relevant information, including HyDE (Hypothetical Document Embeddings) for better query understanding, hybrid search (combining keyword and semantic search) for comprehensive results, and cross-encoder reranking for precise answer selection. The value is in delivering highly accurate and contextually relevant answers, significantly improving the quality of the AI's responses.

· Intelligent Chunking: Employs semantic, contextual, and hierarchical strategies for breaking down large documents into manageable pieces for the AI. This ensures that the AI can effectively process and retrieve information from complex texts. The value is in optimizing the AI's ability to understand and utilize your data, leading to better query results.

· LLM Agnosticism (OpenAI Compatible): Allows seamless switching between local LLMs (via Ollama) and commercial LLMs like OpenAI's GPT series. Developers can leverage the benefits of local processing for privacy and cost-efficiency or opt for powerful commercial models when needed. The value is in flexibility and the ability to choose the best LLM for specific use cases and budgets.

Product Usage Case

· Building a developer documentation search engine: A developer can point Ragi to their project's codebase (e.g., Python files) and Markdown documentation. When asking questions like 'How do I authenticate this API?', Ragi will retrieve relevant code snippets and documentation sections, providing accurate answers with code examples and links to specific files. This solves the problem of navigating large codebases and scattered documentation.

· Creating an internal knowledge base for a company: Ragi can ingest a variety of company documents like PDFs of policies, Word documents of procedures, and Excel spreadsheets of data. Employees can then ask questions in natural language, such as 'What is the vacation policy?' or 'What were the sales figures for Q3?', and receive precise answers with references to the original documents. This significantly speeds up information retrieval for employees.

· Developing a customer support chatbot that understands product manuals: A company can feed Ragi all their product manuals, FAQs, and support articles. The chatbot can then answer customer queries with high accuracy by retrieving relevant information from these sources, reducing the workload on human support agents. The value here is in providing instant, accurate support to customers.

· Answering questions about a collection of research papers: A researcher can feed Ragi a folder of PDF research papers. They can then ask complex questions like 'What are the latest advancements in transformer architectures for NLP?' and Ragi will synthesize information from multiple papers, providing a concise answer with citations to the specific publications. This helps researchers stay up-to-date and discover relevant work more efficiently.

12

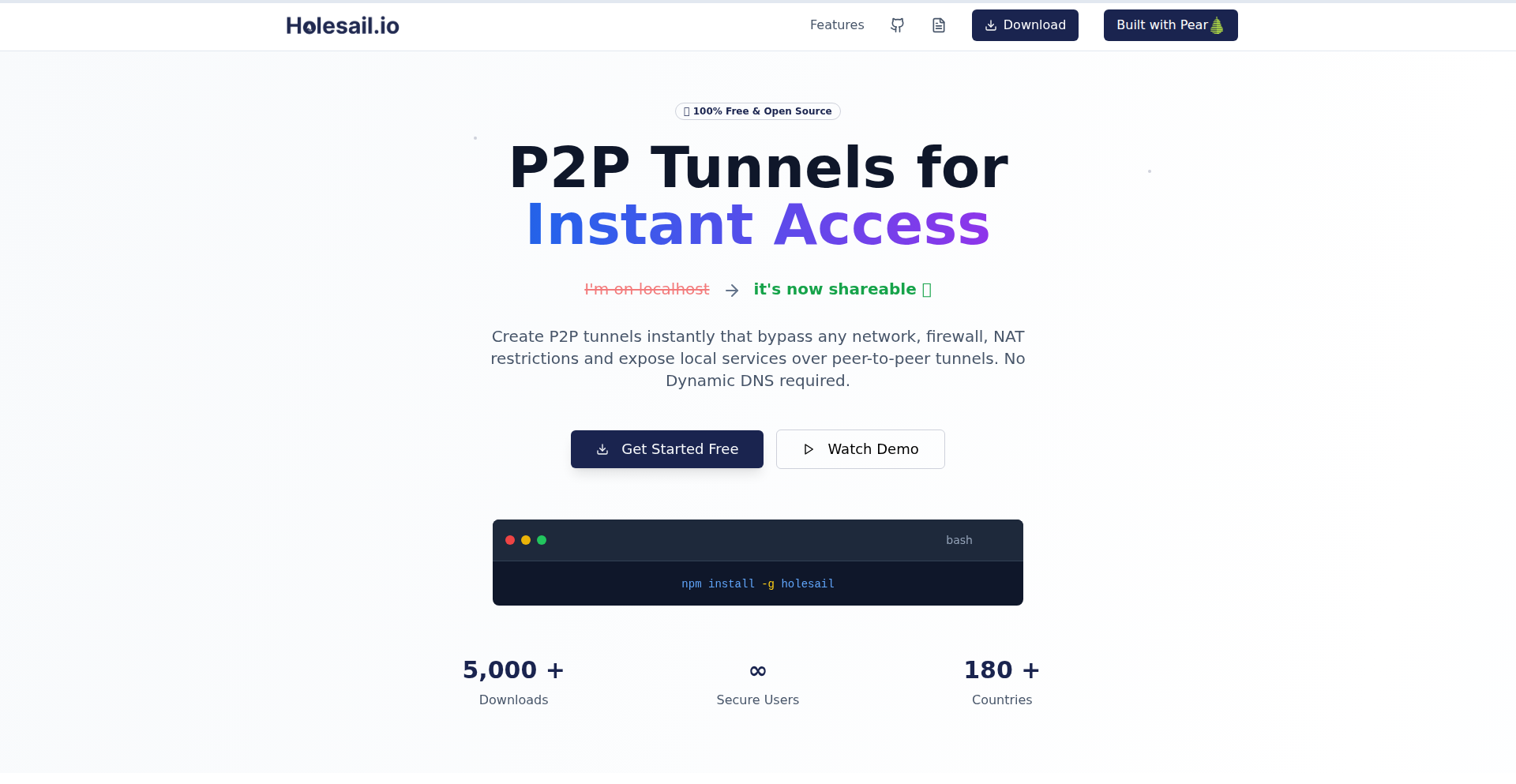

Holesail: Peer-to-Peer Tunneling Engine

Author

supersuryaansh

Description

Holesail is a lightweight, peer-to-peer tunneling tool designed to simplify the sharing of local self-hosted services. It establishes direct, end-to-end encrypted connections between two peers without requiring port forwarding, VPNs, or central servers. This innovative approach leverages a simple connection key to create secure tunnels for various applications, making it an ideal solution for developers and hobbyists needing fast, reliable, and private connectivity for tasks like sharing development servers, enabling remote access, or integrating P2P features into applications.

Popularity

Points 1

Comments 6

What is this product?

Holesail is a peer-to-peer (P2P) tunneling tool. Instead of relying on central servers or complex network configurations like port forwarding (which opens specific doors in your router to the internet), Holesail directly connects two devices, say your laptop and your friend's laptop, using a unique 'connection key'. Think of it like having a secret handshake that allows two computers to talk directly to each other over the internet, even if they are behind different firewalls or routers. The connection is encrypted from end-to-end, meaning only the two connected devices can read the data, making it very private and secure. It supports both TCP and UDP traffic, which are the two main ways data is sent over the internet. This means it can handle almost any kind of network traffic, from simple web requests to game data. It's built to be efficient and works across a wide range of operating systems like Linux, Mac, Windows, Android, and iOS, making it very versatile. The core innovation is its ability to bypass traditional networking complexities, offering a direct, secure, and easy-to-use connection for sharing local resources.

How to use it?

Developers can use Holesail to instantly share services running on their local machine with others. For example, if you're developing a web application on your laptop and want a colleague to test it without deploying it to a public server, you can run Holesail on both machines. You'd generate a connection key, share it with your colleague, and then run Holesail with that key. This would create a secure tunnel, allowing your colleague to access your local web server as if it were publicly available, but with the added security of a direct, encrypted connection. It can be integrated into applications by leveraging its command-line interface or by using its underlying library (if available for programmatic access) to establish P2P connections for features like file sharing, real-time communication, or distributed computing. The absence of central servers means no infrastructure management is needed for basic P2P connectivity, making it a 'set it and forget it' solution for many scenarios. The value here is speed and simplicity: you can get a secure, direct connection up and running in minutes, saving significant setup time and avoiding complex network configurations.

Product Core Function

· Peer-to-peer direct connection: Enables direct communication between two devices without intermediaries. This reduces latency and removes reliance on central server infrastructure, providing faster and more reliable connections for your applications.

· End-to-end encryption: Secures all data transmitted between peers. This ensures privacy and protects sensitive information, which is crucial for sharing development environments or any private data.

· Support for TCP and UDP: Accommodates a wide range of network protocols. This means Holesail can be used for almost any type of network service, from web applications to online gaming, offering broad applicability.

· Cross-platform compatibility: Runs on Linux, Mac, Windows, Android, and iOS. This allows for seamless sharing and access across diverse devices and operating systems, making it incredibly versatile for various user needs.

· Simple connection key authentication: Facilitates easy and quick connection setup. Users only need to share a simple key to establish a secure tunnel, significantly lowering the barrier to entry for P2P networking.

Product Usage Case

· Sharing a local development web server with a remote teammate: A developer can run their web application on their laptop and use Holesail to create a tunnel. The teammate can then access the web application through the tunnel, allowing for real-time collaboration and testing without complex deployment steps. This solves the problem of inaccessible development environments.

· Enabling remote SSH access to a home server without port forwarding: A user can run Holesail on their home server and on their laptop when they are away. By connecting with a shared key, they can establish a secure SSH tunnel directly to their home server, bypassing the need to configure their home router for port forwarding, which can be a security risk and technically challenging.

· Facilitating LAN-style multiplayer gaming over the internet: Players can use Holesail to create a direct connection between their machines, allowing them to play games that normally require a local network as if they were on the same LAN. This solves the problem of games not supporting direct internet connections or requiring complex server setups.

· Allowing a mobile app to access a local development API: A mobile developer can run their API locally and use Holesail to expose it to their Android or iOS device. This is useful for testing and debugging mobile applications that interact with backend services, eliminating the need for cloud-based staging environments for every test.

13

Cloudflare Workers WarpDrive

Author

kilroy123

Description

A blazing-fast website leveraging Cloudflare Workers, showcasing innovative approaches to edge computing and serverless architectures. The core innovation lies in pushing dynamic content generation and complex logic directly to Cloudflare's global network, minimizing latency and improving performance significantly for users worldwide. This project demonstrates how to build performant web applications without traditional backend servers, tackling the challenge of delivering responsive user experiences in a distributed environment.

Popularity

Points 2

Comments 5

What is this product?

This project is a website built using Cloudflare Workers, a serverless compute platform that runs code directly on Cloudflare's edge network. Instead of sending requests all the way to a central server and back, the logic for this website is executed on servers geographically closer to the end-user. This drastically reduces latency because the data and computation happen at the 'edge' of the internet. The innovation here is in architecting a dynamic website where processing happens distributedly, making it incredibly fast. Think of it like having mini-computational hubs all over the world, ready to respond instantly.

How to use it?

Developers can use this project as a blueprint for building their own high-performance web applications. The core idea is to write JavaScript or WebAssembly code that runs within Cloudflare's Workers environment. This can be used to handle API requests, serve dynamic content, implement authentication, or even run complex business logic without managing traditional servers. Integration typically involves deploying your Worker script to Cloudflare, and then configuring your domain's DNS to point to Cloudflare's network, which will then execute your Worker code for incoming requests. This approach is particularly useful for applications requiring low latency and high availability.

Product Core Function

· Edge-side dynamic content generation: Instead of a backend server generating HTML or JSON, Cloudflare Workers on the edge network do it. This means faster delivery because the processing is closer to the user, leading to a snappier website experience.

· Serverless architecture: No need to provision or manage servers. The code runs on demand when a request comes in, scaling automatically. This reduces operational overhead and costs, allowing developers to focus on features, not infrastructure.

· Global low-latency serving: By running code across Cloudflare's vast global network, users experience minimal delays, regardless of their location. This is crucial for applications where every millisecond counts, like real-time data dashboards or interactive games.

· Reduced infrastructure complexity: The entire backend logic can be encapsulated within Workers. This simplifies the overall system architecture and makes it easier to deploy and maintain applications.

· Cost-effectiveness: Pay-as-you-go model for compute execution. You only pay for the resources your code actually consumes, which can be significantly cheaper than maintaining always-on servers, especially for applications with spiky traffic.

Product Usage Case

· Building a real-time analytics dashboard: Imagine displaying live website traffic or performance metrics. By using Cloudflare Workers, the data can be fetched and processed at the edge, then updated on the dashboard with minimal delay, providing users with up-to-the-minute insights.

· Creating a global content delivery network for dynamic APIs: For applications that need to serve personalized content or API responses to users worldwide, Workers can handle the request routing and data fetching at the edge, ensuring users get the fastest possible response tailored to their region or preferences.

· Implementing rapid A/B testing and feature flagging: Developers can use Workers to dynamically serve different versions of a website or feature to specific user segments in real-time, allowing for quick experimentation and iteration without redeploying entire applications.

· Developing a scalable authentication and authorization service: Instead of a dedicated authentication server, Workers can handle user verification and access control at the edge, offloading this critical functionality and ensuring fast, secure access for users across the globe.

· Powering interactive web games: For web-based games that require low latency for player actions and game state updates, Workers can process game logic close to the players, leading to a smoother and more responsive gaming experience.

14

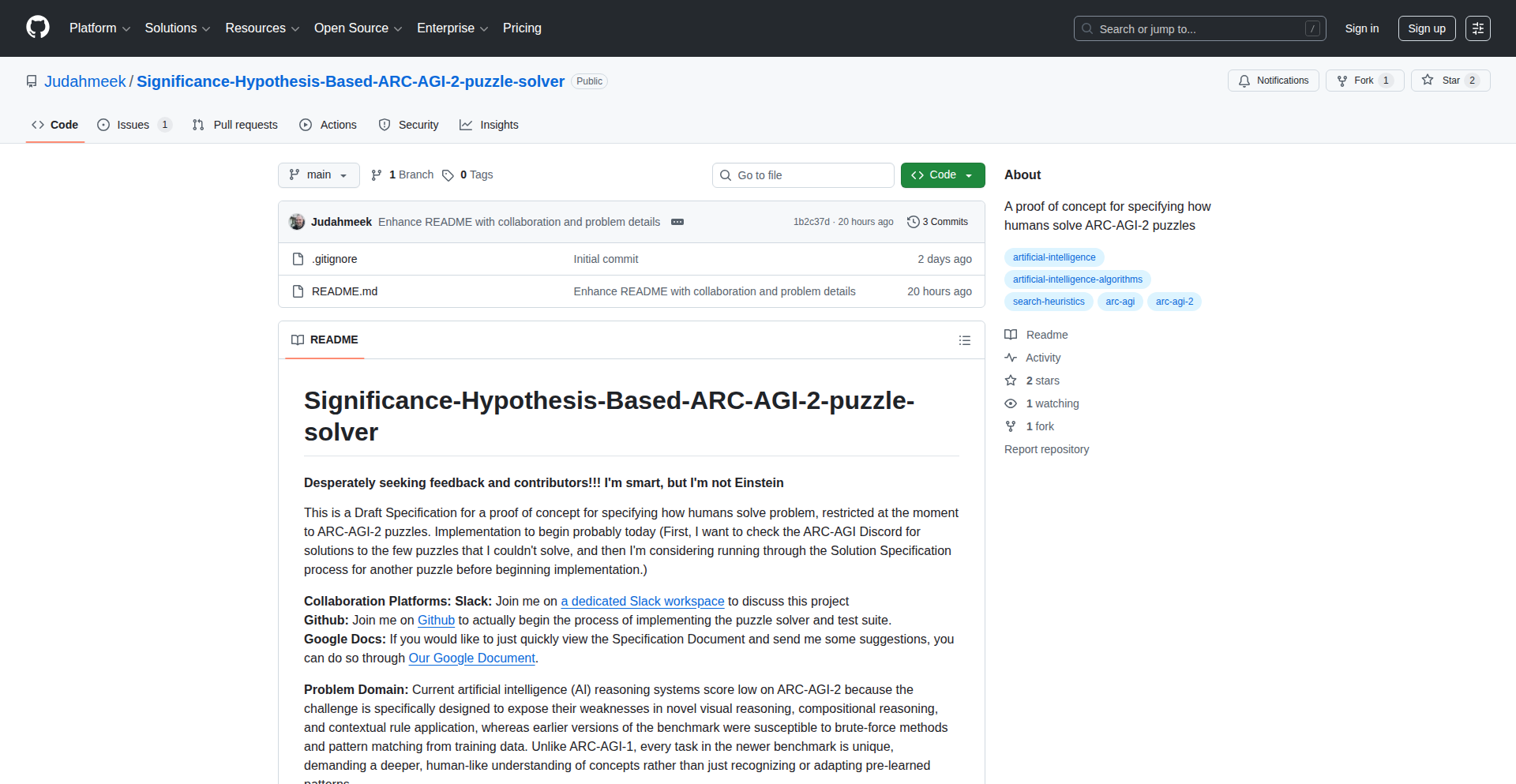

Hypothesis Navigator

Author

judahmeek

Description

This project explores a novel approach to Artificial General Intelligence (AGI) by focusing on testing hypotheses through prediction. It aims to create systems that can not only generate ideas but also rigorously test them against reality, a critical step towards more robust and capable AI.

Popularity

Points 3

Comments 3

What is this product?

Hypothesis Navigator is a conceptual framework and early-stage proof-of-concept for developing Artificial General Intelligence (AGI). The core idea is to move beyond simply generating solutions or insights, and instead to build AI systems that can form specific, testable predictions about the world. By comparing these predictions with actual outcomes, the AI learns and refines its understanding, much like a scientist conducting experiments. This iterative process of prediction, testing, and learning is seen as a fundamental building block for achieving true AGI, where AI can adapt, reason, and solve problems across a wide range of domains. The innovation lies in structuring AI development around a scientific method, using prediction as the primary validation mechanism.

How to use it?

For developers, Hypothesis Navigator offers a new paradigm for designing AI systems. Instead of just focusing on algorithms for data processing or pattern recognition, developers can leverage this framework to build AI agents that actively engage with their environment (real or simulated). This could involve integrating the system with data streams or simulation environments where it can make predictions, observe results, and then update its internal models. It's about architecting AI that doesn't just process information, but intelligently questions and verifies its own understanding. This could be integrated into research platforms for AI development, or in applications where an AI needs to dynamically learn and adapt to changing conditions.

Product Core Function

· Hypothesis Generation: The ability of the system to formulate clear, actionable predictions based on its current knowledge and observations. This is valuable for identifying potential avenues for learning and discovery.

· Prediction-Outcome Comparison: The mechanism for comparing the AI's generated predictions against actual observed results. This core function enables learning and validation, allowing the AI to correct its errors and improve accuracy.

· Knowledge Refinement Loop: The process by which the AI updates its internal understanding and models based on the discrepancies or confirmations found during prediction-outcome comparison. This is crucial for building robust, adaptable AI.

· Domain-Specific Application Modules: The project utilizes a 'minimalistic problem domain' (like ARC-AGI-2) to demonstrate the core concepts. This means the system can be adapted and specialized for various tasks, making it a versatile foundation for AI research.

Product Usage Case

· In a scientific research setting, an AI could be tasked with predicting the outcome of a chemical reaction under specific conditions. By comparing its predictions with actual lab results, it learns the nuances of chemical interactions, accelerating discovery.

· For autonomous systems like self-driving cars, this framework could enable an AI to predict the behavior of other vehicles or pedestrians in complex traffic scenarios. If the predictions are inaccurate, the AI learns to adjust its driving strategy, enhancing safety and reliability.

· In financial modeling, an AI could predict market fluctuations based on various indicators. By testing these predictions against real market data, the AI can refine its trading algorithms and provide more accurate forecasts.

15

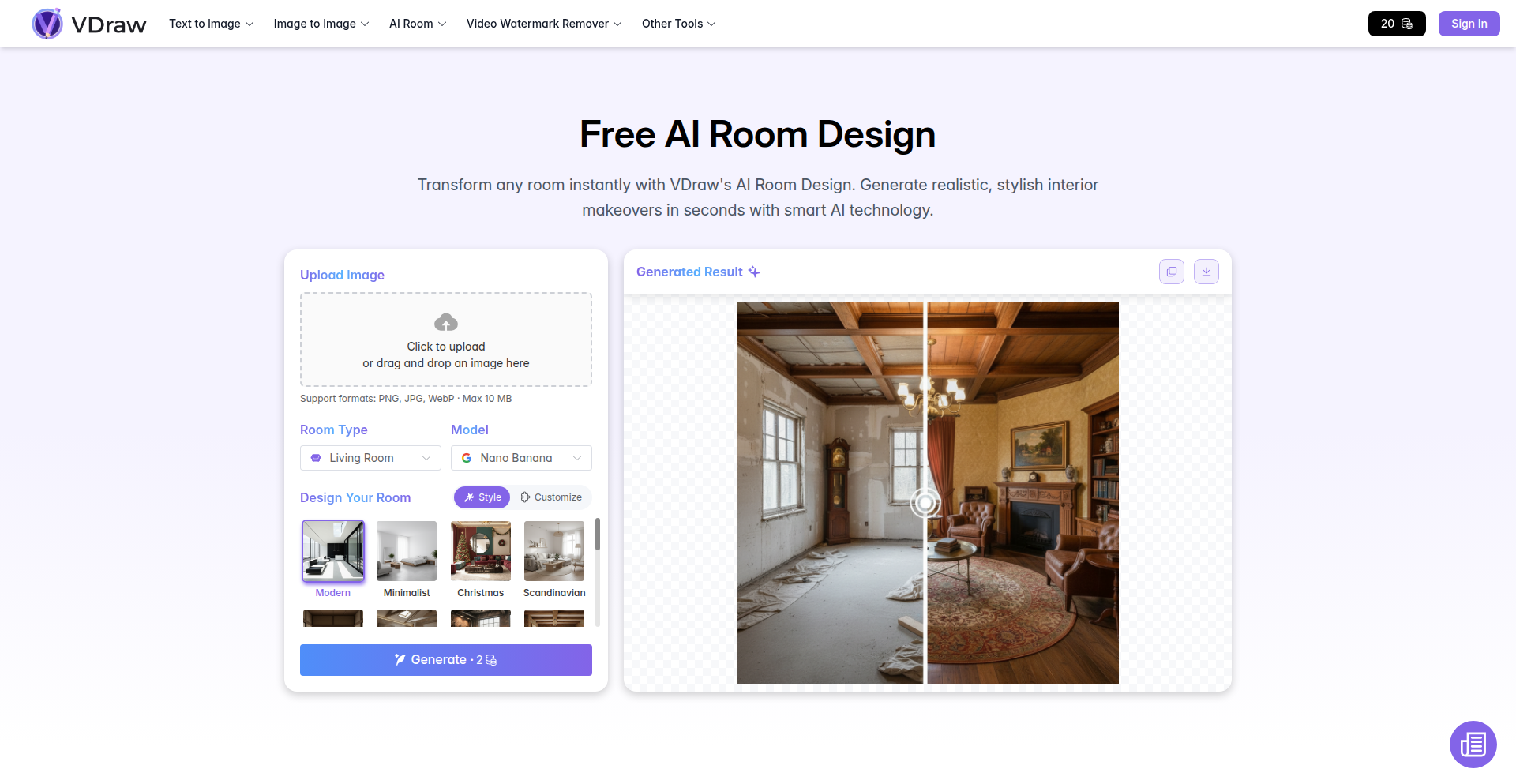

AI Room Stylizer

Author

passioner

Description

A free, browser-based AI tool that takes a photo of your room and instantly generates multiple redesigned versions in various popular styles like modern, minimalist, Scandinavian, and industrial. It intelligently preserves your room's layout while applying the new aesthetic, requiring no login for immediate use.

Popularity

Points 6

Comments 0

What is this product?

This is an AI-powered web application that uses advanced computer vision and generative AI models to reimagine interior design. You upload a picture of your existing room, and the AI analyzes its structure and dimensions. Then, it applies a chosen design style (e.g., modern, bohemian, industrial) by intelligently placing new furniture, changing wall colors, and adjusting lighting and textures, all while keeping the original room's layout intact. The innovation lies in its ability to perform this complex visual transformation directly in your web browser without needing to upload your data to a server or require a login, making it incredibly accessible and private.

How to use it?

Developers can use this tool by simply navigating to the website in their browser. They upload a photograph of any interior space. Then, they select from a predefined list of design styles. Within seconds, the tool presents several AI-generated concept images of their room in the chosen style. This can be used for quick design exploration, generating inspiration for personal projects, or even as a starting point for more detailed design work. For integration into other applications, one would typically use a similar backend AI model that handles image analysis and style transfer, though the current product focuses on direct browser-based user interaction.

Product Core Function

· AI-powered room style transformation: This core function allows users to upload an image of their room and have it automatically redesigned into different aesthetic styles, providing immediate visual concepts. The value is in rapid ideation and design exploration without manual effort.

· Preservation of room layout: The AI intelligently maintains the original room's dimensions and spatial arrangement while applying new styles. This ensures the redesigned rooms are realistic and practical, offering a tangible starting point for actual design changes.

· Multiple design style options: Users can choose from a variety of popular interior design aesthetics, such as modern, minimalist, Scandinavian, and industrial. This provides creative breadth and caters to diverse user preferences, enabling exploration of different design directions.

· Browser-based direct execution: The tool runs entirely within the user's web browser, meaning no software installation or server uploads are necessary. This drastically improves accessibility, speed, and user privacy, making sophisticated design tools available to anyone with a web connection.

· No login requirement: Users can access and utilize the tool instantly without creating an account. This reduces friction and encourages immediate experimentation, making it ideal for quick, spontaneous design inspiration.

Product Usage Case

· A homeowner wanting to redecorate a living room could upload a photo, select 'modern' style, and instantly see how new furniture and color palettes would look, helping them decide on a direction without hiring a designer or spending hours on mood boards.

· A real estate agent could use the tool to quickly generate aspirational images of vacant properties with different interior styles, helping potential buyers visualize the space's potential and making listings more appealing.

· An interior design student could use this to rapidly generate multiple design concepts for a single room, allowing them to explore a wider range of ideas and refine their creative process more efficiently.

· A furniture retailer could integrate a similar backend technology to allow customers to virtually 'try out' different pieces of furniture in their own room images, enhancing the online shopping experience and reducing purchase uncertainty.

16

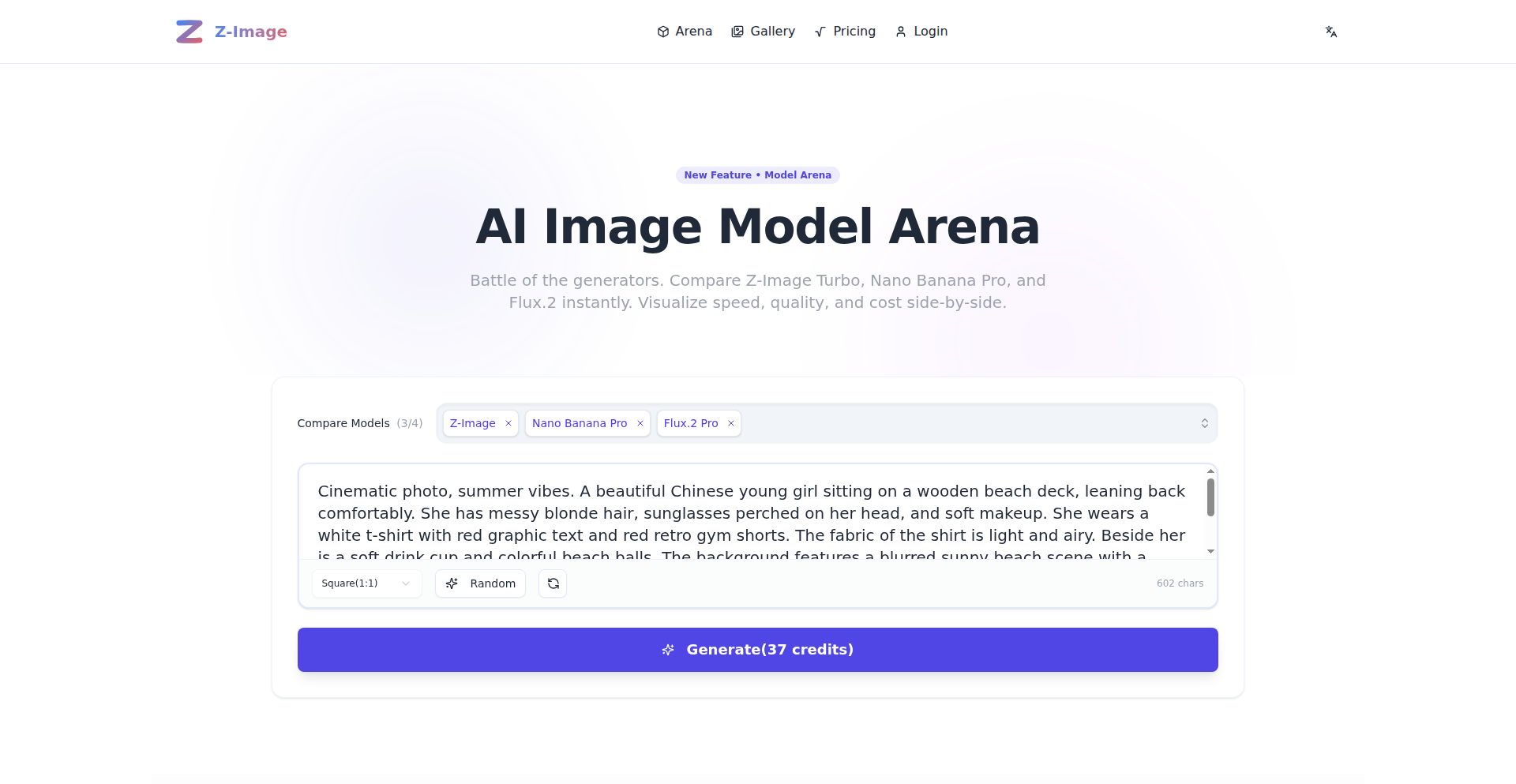

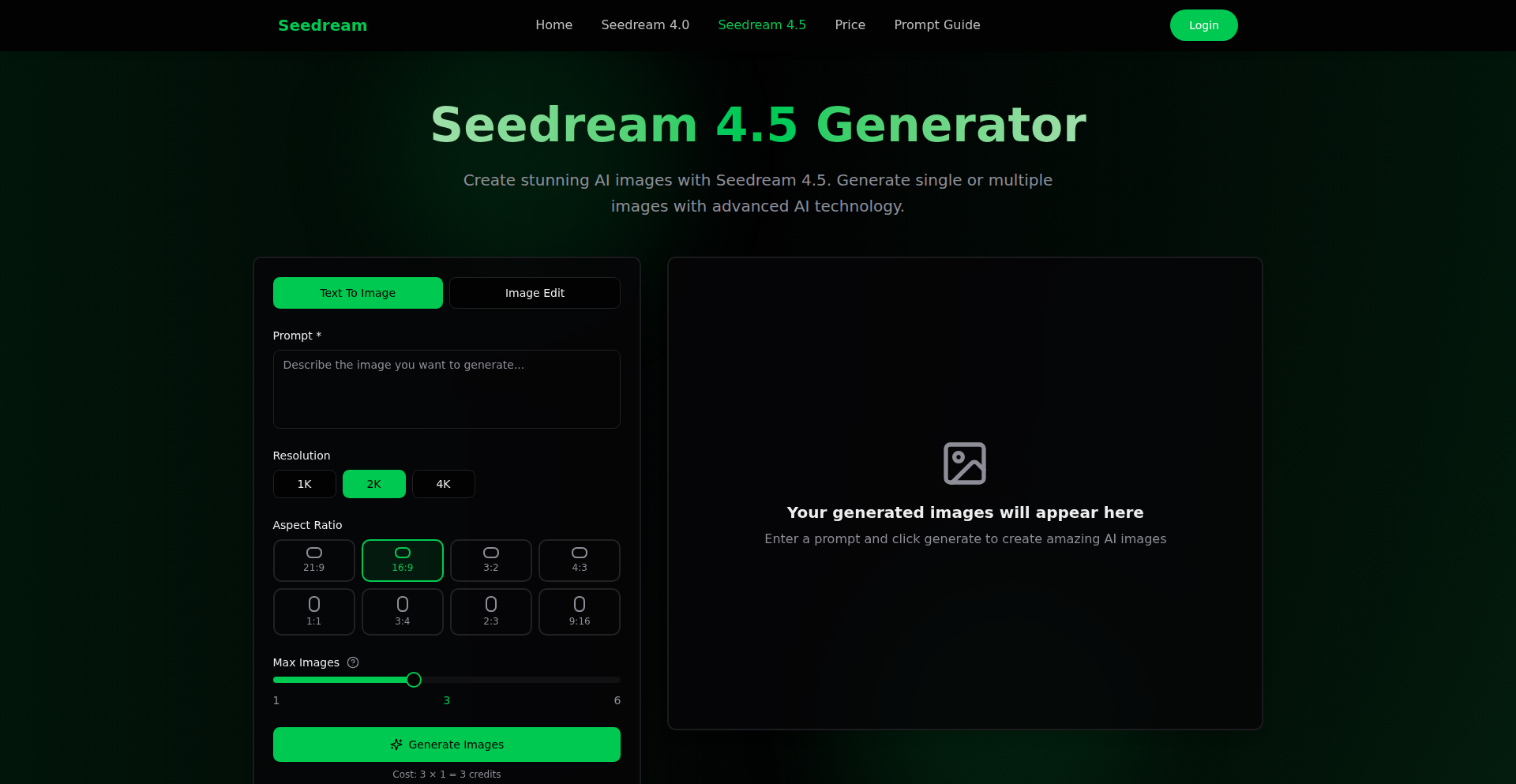

Doubao Seedream AI Image Weaver

Author

Viaya

Description

A next-generation image generation and editing AI model, Doubao Seedream 4.5, from Volcano Engine. It significantly enhances editing consistency, portrait retouching, small text generation, and multi-image compositing. This makes it a powerful upgrade for creators building AI-powered creative tools, offering more precise, artistic, and coherent visual results.

Popularity

Points 5

Comments 0

What is this product?

Doubao Seedream 4.5 is an advanced AI model designed to create and edit images. It builds upon previous versions by offering much better consistency when you make changes to an image, ensuring details, lighting, and colors remain as intended. It also excels at making portraits look more natural and high-quality. A key innovation is its improved ability to generate clear, readable text within images, which is useful for things like signs or interface labels. Furthermore, it's now much better at combining multiple images or ideas into a single, cohesive, and visually pleasing picture. This means it can handle complex creative requests with greater accuracy and artistic flair.

How to use it?

Developers can integrate Doubao Seedream 4.5 into their applications to power AI-driven creative features. This could involve building new image generation tools, enhancing existing illustration pipelines, or streamlining concept art workflows. For example, a graphic design application could leverage its editing consistency to allow users to modify specific elements of an image without altering the overall style. A game development tool might use its multi-image compositing to quickly generate environment assets by combining various reference images. Its natural language processing capabilities mean developers can simply describe the desired output, and the model will generate it, offering a seamless way to add advanced image capabilities to software.

Product Core Function

· Enhanced Editing Consistency: Maintains fine details, lighting, and color tone during edits. This is valuable because it ensures your creative vision isn't lost when making adjustments, leading to more predictable and professional results.

· Improved Portrait Retouching: Yields more natural and high-quality human images. This is useful for applications dealing with photography or character design, as it allows for believable and aesthetically pleasing human visuals.

· Superior Small Text Generation: Creates clearer and more readable embedded text. This is important for designers and developers needing to add text overlays, labels, or signage within images, ensuring legibility and clarity.

· Robust Multi-Image Compositing: Combines multiple input images or prompts reliably for coherent results. This enables complex scene creation and asset blending, allowing for sophisticated visual storytelling and rapid prototyping.

· Advanced Inference Performance and Aesthetics: Delivers more precise and artistic visual outputs. This means users get higher quality, more refined images faster, boosting productivity and creative output.

Product Usage Case

· A creative agency building an AI-powered ad campaign generator can use Doubao Seedream 4.5 to produce consistent visual styles across multiple ad variations, ensuring brand adherence and improving efficiency.

· A game developer can utilize its multi-image compositing to quickly generate diverse background assets by feeding in different environmental elements and style prompts, accelerating the art pipeline.

· A UI/UX designer can leverage its small text generation capabilities to create realistic interface mockups with embedded labels, ensuring the visual representation is accurate and easy to understand.

· A freelance digital artist can use its enhanced portrait retouching to create professional-grade character portraits with natural-looking features, saving time on manual adjustments.

· A content creator can use its editing consistency to experiment with different styles and themes for their visuals, knowing that core elements will remain intact, facilitating rapid iteration and exploration.

17

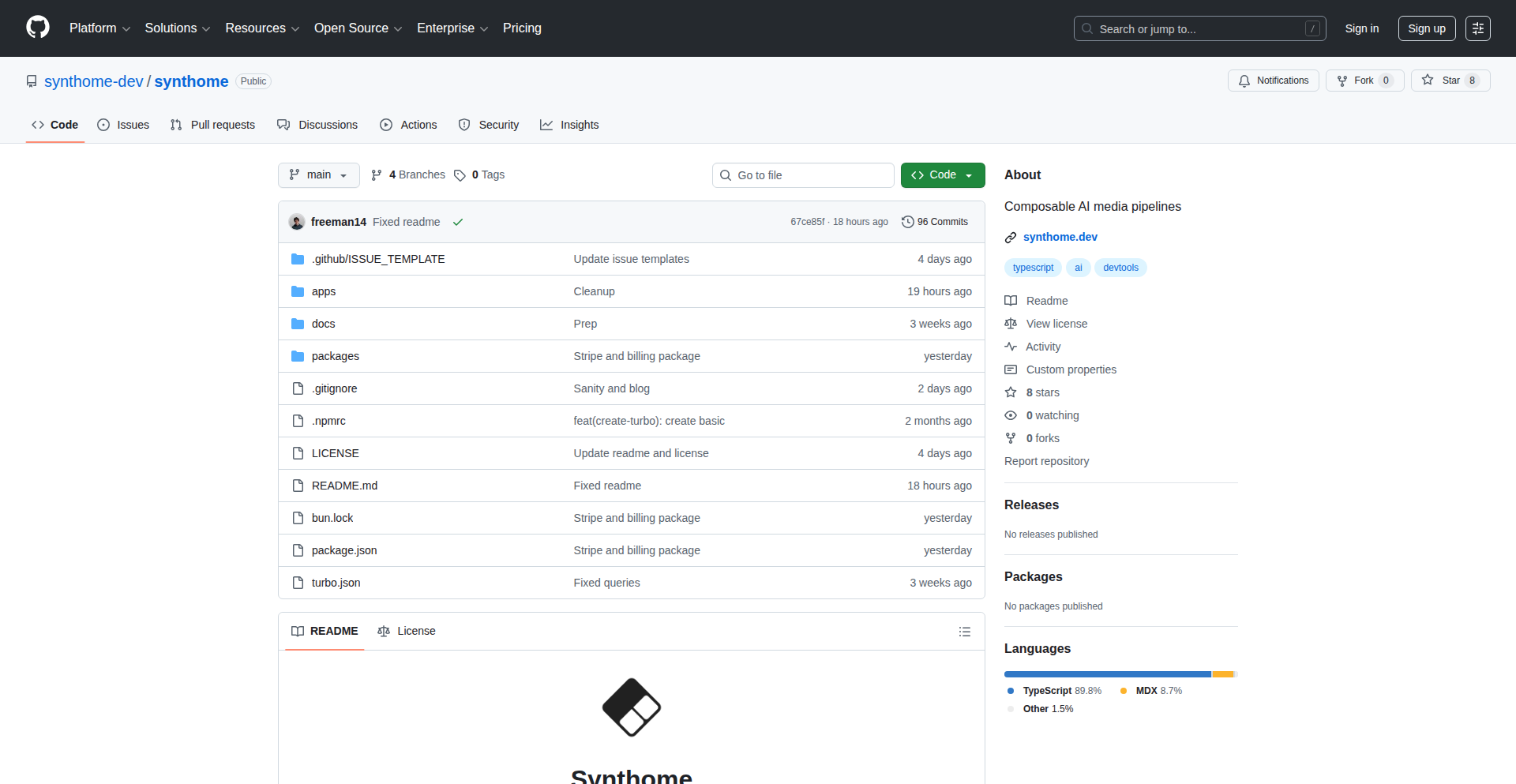

AI Slop Journal Orchestrator

Author

popidge

Description