Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-12-02

SagaSu777 2025-12-03

Explore the hottest developer projects on Show HN for 2025-12-02. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation on Show HN is a powerful testament to the hacker spirit, showcasing how developers are leveraging cutting-edge technologies like AI and advanced runtime optimizations to solve real-world problems and unlock new possibilities. We're seeing a significant trend towards creating more intelligent and autonomous systems, from agentic AI platforms that can manage complex workflows to tools that help developers tame AI code outputs and ensure reliability. The emphasis on 'local-first' and privacy-preserving solutions is also a strong theme, reflecting a desire for more control and transparency. Furthermore, the drive for efficiency is evident, whether it's through optimizing computation on heterogeneous hardware like in RunMat, or streamlining data processing and developer workflows with clever CLI tools and libraries. For aspiring developers and entrepreneurs, this landscape offers a rich ground for exploration. Focus on building tools that abstract complexity, enhance existing workflows with intelligence, or bring privacy and control back to the user. The open-source ethos continues to thrive, with many projects contributing back to the community, fostering collaboration and accelerating innovation. Embrace the mindset of solving a specific pain point with elegant technical solutions, and don't shy away from tackling ambitious problems with creative engineering.

Today's Hottest Product

Name

RunMat – Runtime with Auto CPU/GPU Routing for Dense Math

Highlight

This project introduces a novel approach to accelerating dense mathematical computations by automatically routing workloads between CPUs and GPUs without requiring explicit CUDA or kernel code. Developers can write familiar MATLAB-style code, and the runtime intelligently fuses operations, manages data placement on the GPU, and falls back to CPU JIT/BLAS for smaller tasks. This demonstrates a sophisticated compiler optimization and heterogeneous computing strategy, offering significant performance gains (e.g., ~130x faster than NumPy) by abstracting away the complexities of GPU programming. The key takeaway for developers is the power of intelligent runtime optimization and the potential for significant performance boosts by allowing code to dynamically adapt to available hardware resources.

Popular Category

AI/ML

Developer Tools

Data Processing

Productivity Tools

System Design

Popular Keyword

AI

LLM

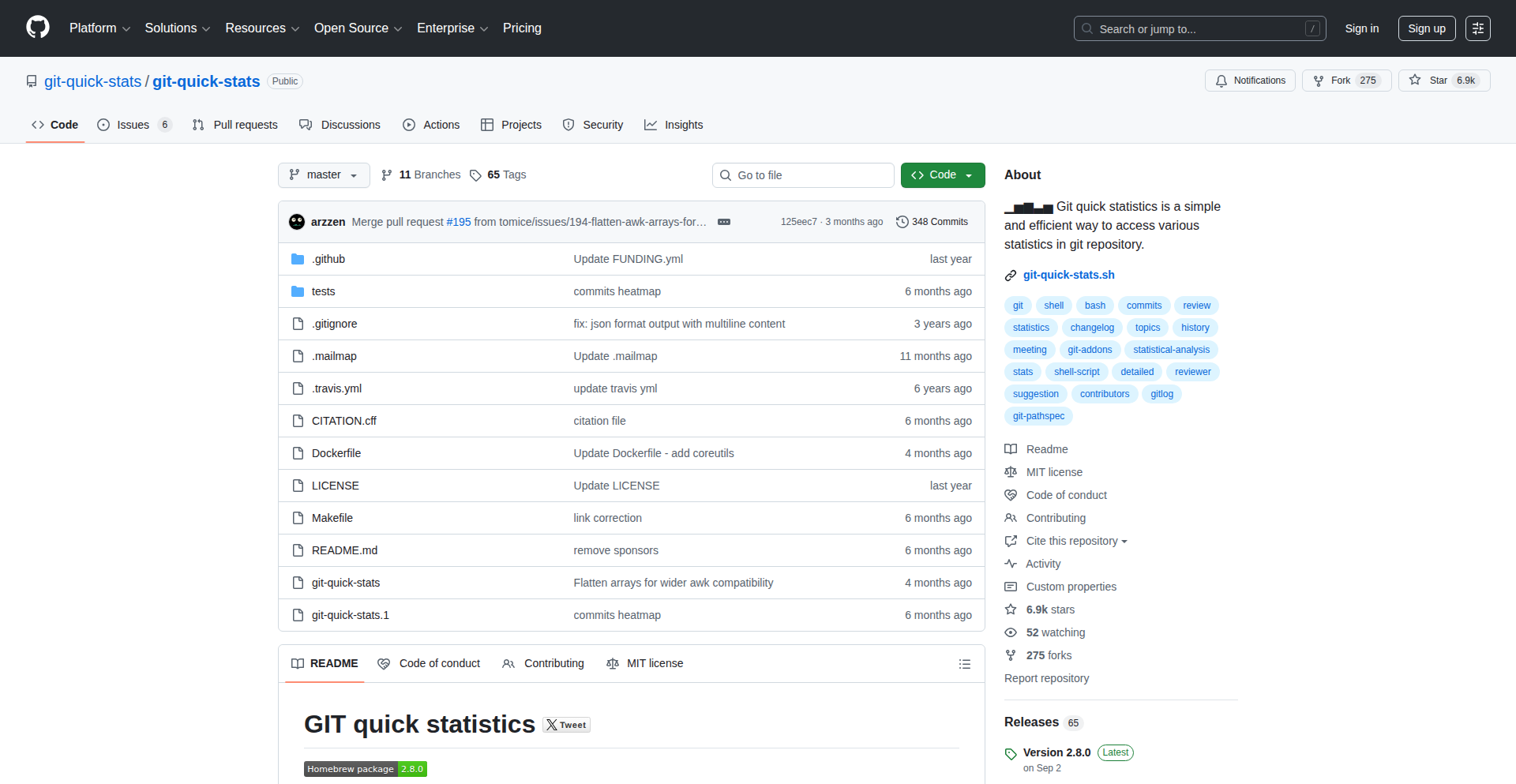

Open Source

Developer Tools

Data

Runtime

Automation

CLI

Visualization

Agent

Technology Trends

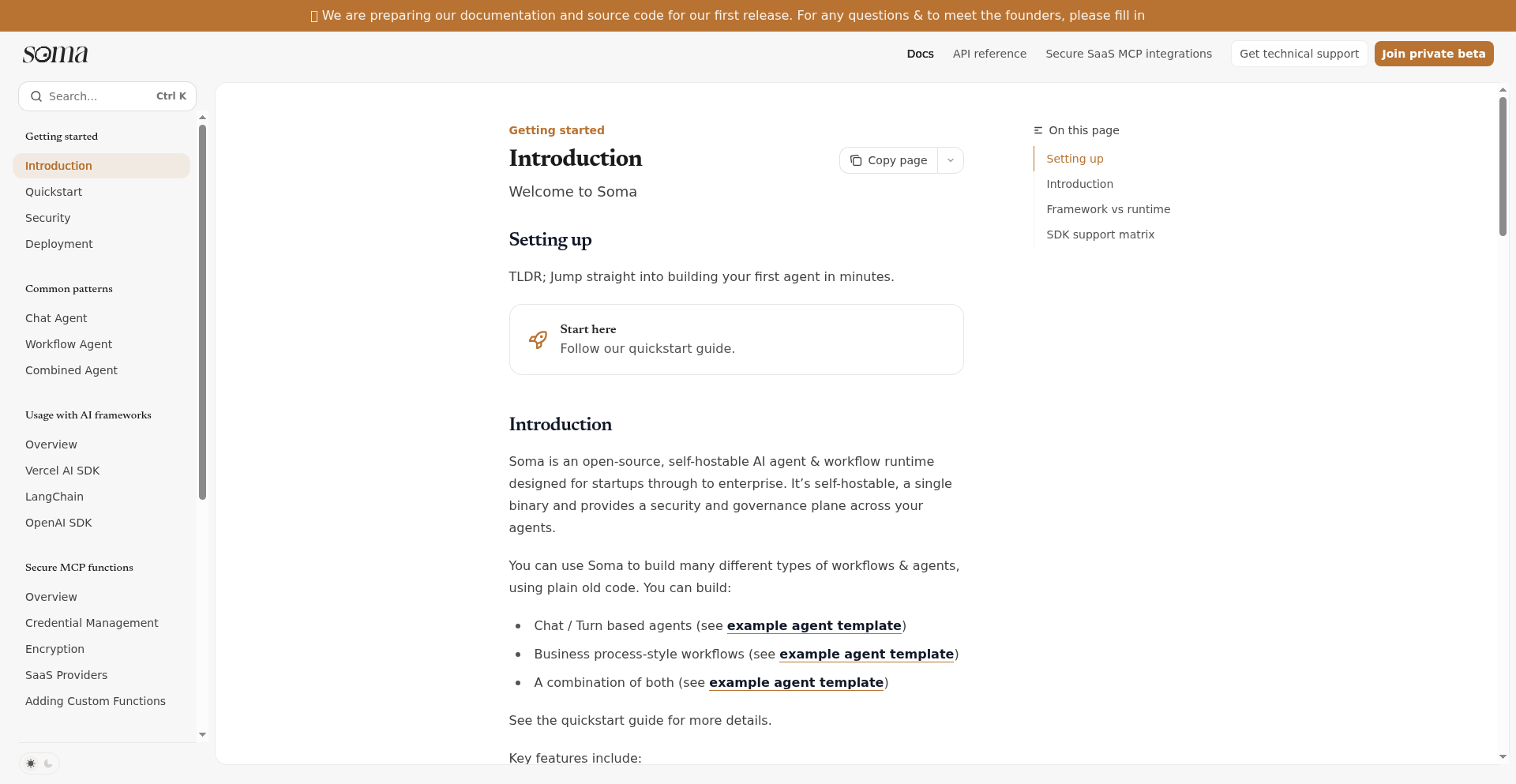

Agentic AI Systems

Efficient Data Processing

AI-Assisted Development

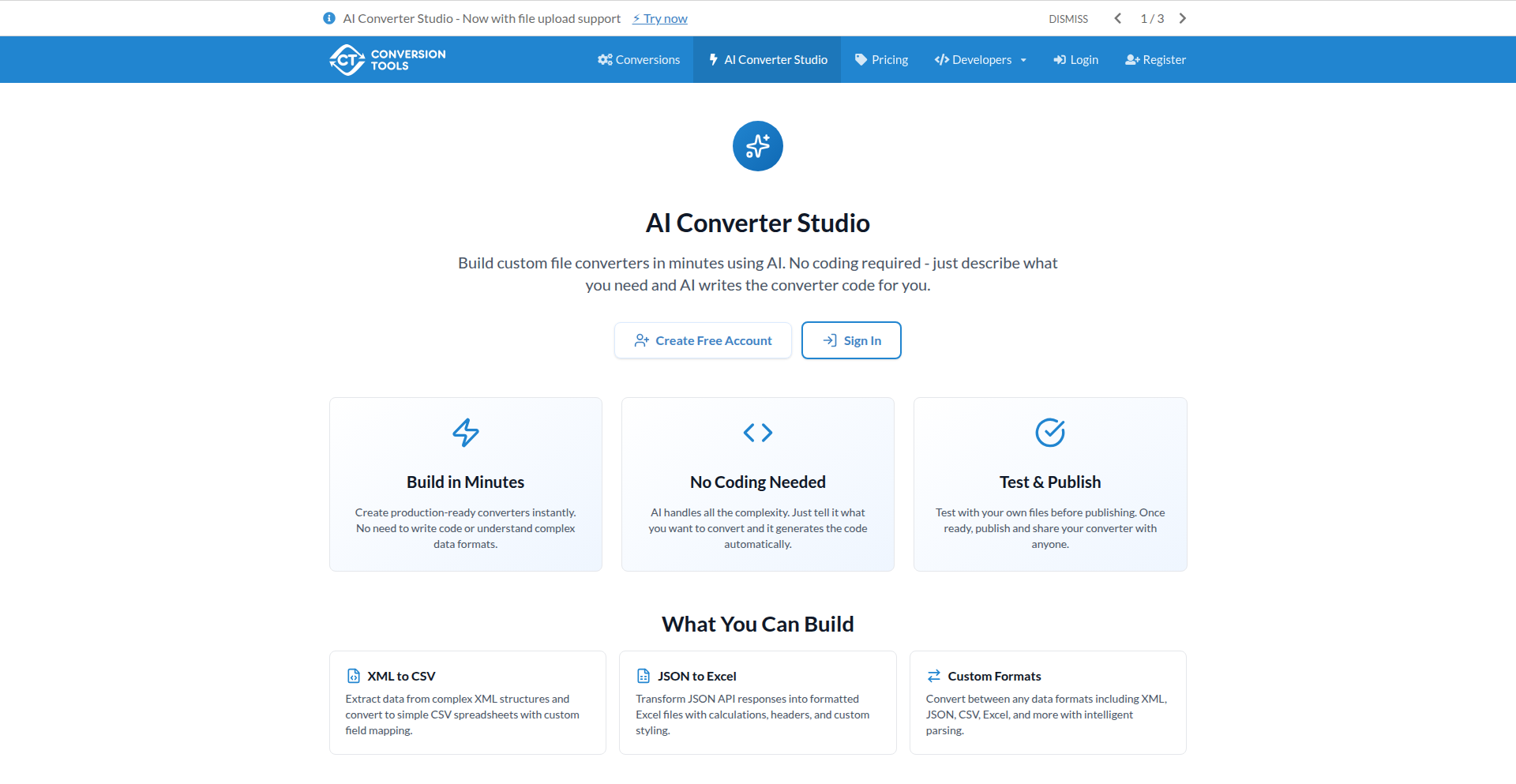

No-Code/Low-Code Automation

Reproducible ML

Heterogeneous Computing

Decentralized Systems

Developer Productivity Tools

AI for Content Generation

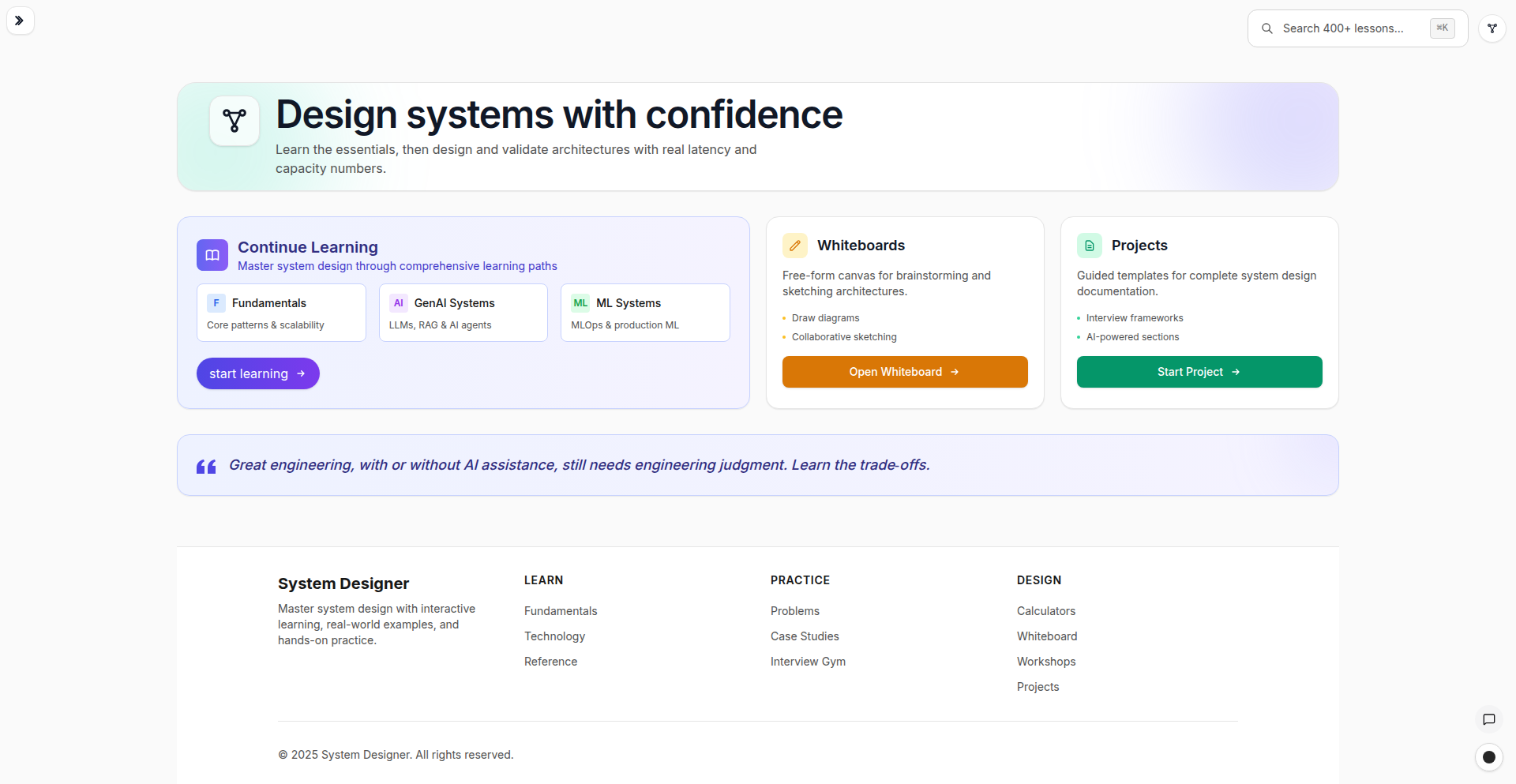

System Design Education

Project Category Distribution

AI/ML (25%)

Developer Tools (20%)

Data Processing/Management (15%)

Productivity Tools (15%)

System Design/Infrastructure (10%)

Content Creation/Media (5%)

Utilities (5%)

Education/Learning (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Marmot: Binary Data Catalog Engine | 94 | 22 |

| 2 | Webclone.js: The Scrappy Site Archiver | 21 | 7 |

| 3 | RunMat Accelerate: Adaptive CPU/GPU Compute Runtime | 19 | 5 |

| 4 | PaperPulse AI | 12 | 6 |

| 5 | TanStack Forge | 9 | 3 |

| 6 | Roundtable AI Persona Debate | 6 | 4 |

| 7 | CoChat: Collaborative AI Team Hub | 5 | 4 |

| 8 | SwipeFood Navigator | 4 | 4 |

| 9 | Elf: Advent of Code Command Line Accelerator | 3 | 5 |

| 10 | Quash: Natural Language Android QA Agent | 4 | 4 |

1

Marmot: Binary Data Catalog Engine

Author

charlie-haley

Description

Marmot is a novel, single-binary data cataloging solution designed to simplify data indexing and retrieval without relying on heavy infrastructure like Kafka or Elasticsearch. It offers a lightweight, efficient way to organize and query structured and semi-structured data, making it ideal for developers looking for a straightforward, embeddable data management tool.

Popularity

Points 94

Comments 22

What is this product?

Marmot is a self-contained data cataloging engine. Instead of needing multiple complex services to manage your data's metadata (like message queues for data ingestion or full-text search engines for querying), Marmot packages everything into a single executable file. This means it's incredibly easy to deploy and run. Its innovation lies in its efficient indexing algorithms and a compact storage format that allows for rapid searching and retrieval directly from the binary, reducing operational overhead and complexity. So, what's in it for you? You get a powerful data management capability without the usual headache of managing distributed systems.

How to use it?

Developers can integrate Marmot into their applications by simply including the Marmot binary. It can be used as an embedded library within a larger application or run as a standalone service. Data can be ingested programmatically, and queries can be executed via a simple API. This makes it perfect for microservices, local development environments, or scenarios where a lightweight, self-sufficient data catalog is needed. For example, you can embed it in a data processing pipeline to quickly catalog intermediate results, or use it in a desktop application to manage local datasets. So, what's in it for you? Seamless integration and instant data cataloging capabilities for your projects.

Product Core Function

· Single Binary Deployment: Marmot is a self-contained executable, eliminating the need for external dependencies like databases or message queues. This drastically simplifies setup and maintenance, making it accessible even for less experienced operations teams. So, what's in it for you? Quick setup and reduced operational burden.

· Efficient Indexing and Querying: It employs specialized indexing techniques that allow for fast searching and retrieval of data records without the need for complex search engines. This means you can find your data quickly. So, what's in it for you? Faster data access and search performance.

· Compact Data Storage: Marmot uses a custom, optimized format for storing metadata, ensuring a small footprint and efficient disk usage. This is crucial for resource-constrained environments or applications dealing with large volumes of metadata. So, what's in it for you? Reduced storage costs and better performance on limited hardware.

· Programmable API: Offers a clean API for developers to programmatically add data, update metadata, and perform searches. This allows for seamless integration into existing workflows and custom applications. So, what's in it for you? Easy automation and integration with your existing code.

· Lightweight and Embeddable: Designed to be small and efficient, it can be easily embedded into other applications or services, acting as an internal data catalog without introducing significant overhead. So, what's in it for you? Add powerful data cataloging to your app without making it bloated.

Product Usage Case

· Building a local development environment for data-intensive applications: A developer needs to quickly spin up a data catalog for testing purposes without setting up Kafka or Elasticsearch. Marmot can be dropped into the project, and data can be indexed and queried locally, speeding up the development cycle. So, what's in it for you? Faster, easier development and testing of data applications.

· Creating a metadata catalog for a small-scale analytics tool: An analytics dashboard needs to track and query information about different datasets it uses. Marmot can serve as the backend for this metadata catalog, offering fast lookups without requiring a separate database server. So, what's in it for you? A simple, efficient way to manage metadata for your analytics tools.

· Implementing an embedded data management system in an IoT device: An IoT device needs to catalog sensor readings or configuration data locally. Marmot's single-binary nature and small footprint make it suitable for deployment on resource-constrained embedded systems. So, what's in it for you? Bring data cataloging capabilities to even the smallest devices.

· Developing a data pipeline that needs to quickly index and retrieve intermediate data: During complex data processing, intermediate results need to be cataloged for debugging or further processing. Marmot can be integrated into the pipeline to provide fast, local indexing and retrieval of this intermediate data. So, what's in it for you? Improved data pipeline visibility and debugging capabilities.

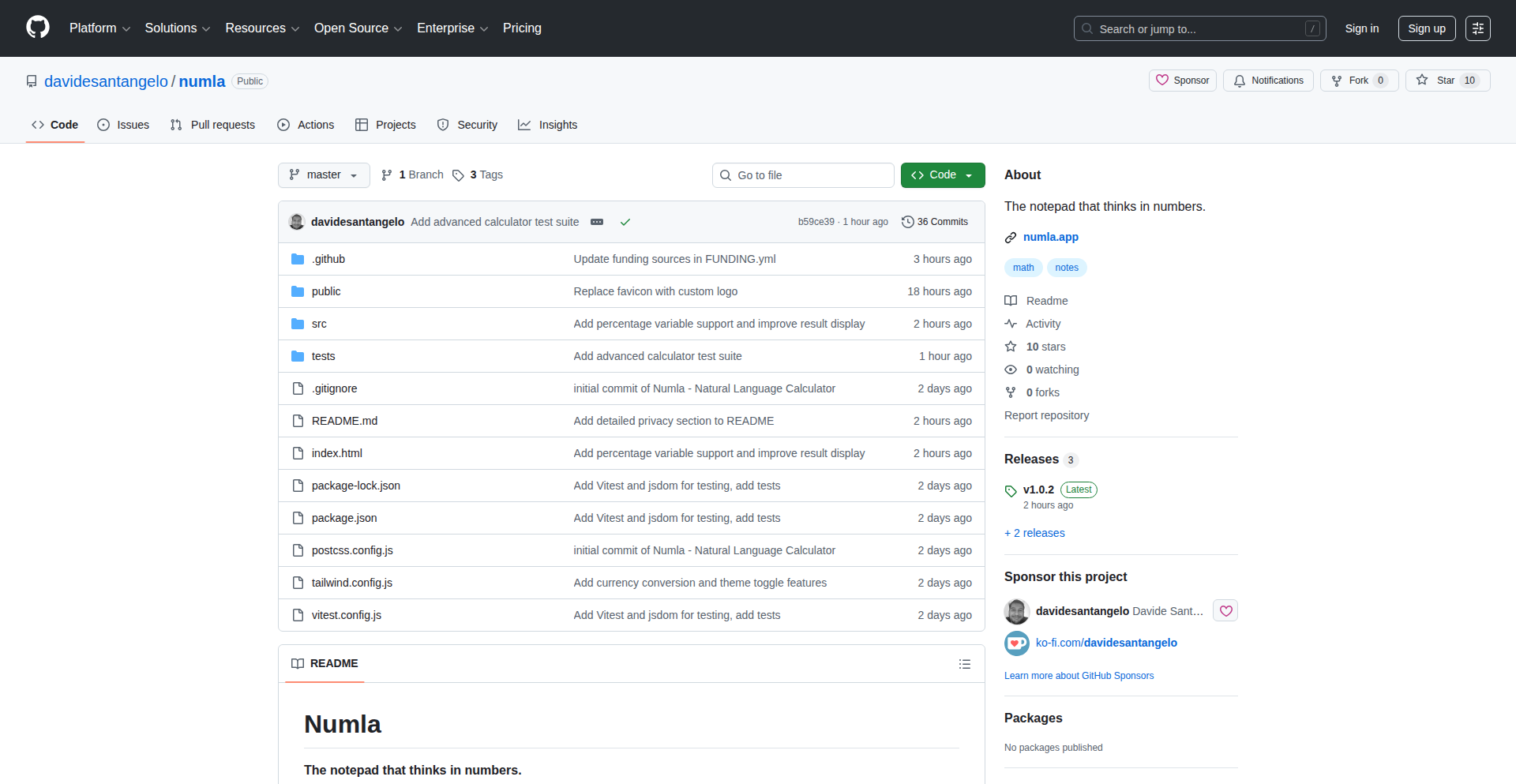

2

Webclone.js: The Scrappy Site Archiver

Author

jadesee

Description

Webclone.js is a Node.js-based website archiving tool that leverages Puppeteer to overcome the limitations of traditional crawlers like `wget`. It's designed for developers needing a robust way to capture entire websites, ensuring all assets and links are preserved, even for complex, dynamically generated content. This offers a reliable solution for offline documentation, historical record-keeping, or developing offline-first web applications.

Popularity

Points 21

Comments 7

What is this product?

This project is a sophisticated website copier built with Node.js and Puppeteer. Unlike simpler tools that often miss crucial parts of a modern website (like images, CSS files, or dynamically loaded content), Webclone.js actually simulates a real browser using Puppeteer. This means it can interact with JavaScript, click buttons, and wait for content to load, just like a human user would. The innovation lies in its ability to faithfully replicate website structures and assets, addressing the common frustration of broken archives caused by dynamic web technologies. So, what's the benefit for you? You get a complete, working copy of a website that you can browse offline, ensuring you don't lose access to important information or web assets, even if the original site disappears or changes.

How to use it?

Developers can use Webclone.js as a command-line tool to specify a target URL and download the entire website. It's integrated via Node.js, meaning you can install it using npm or yarn. The core usage involves running the `webclone` command followed by the URL you want to archive. For more advanced users, the library can be integrated directly into other Node.js projects. For instance, you could script it to periodically archive critical documentation pages, or use it as a backend for a personal knowledge base. So, what's the benefit for you? You can easily automate the process of saving websites for later reference, ensuring your access to vital online resources is never interrupted.

Product Core Function

· Headless Browser Emulation: Uses Puppeteer to render websites as a real browser would, capturing dynamically loaded content and ensuring all assets are fetched. The value here is reliable archiving of modern, JavaScript-heavy websites, so you don't miss critical parts of the content.

· Comprehensive Asset Fetching: Goes beyond just HTML to download all linked resources like images, CSS, and JavaScript files, reconstructing the site's visual and functional integrity. This is valuable because a website is more than just text; this ensures your archive looks and works as intended.

· Link Resolution and Reconstruction: Accurately maps internal links within the cloned site, ensuring that navigating the archived version is seamless. This value means you can easily jump between pages within your offline copy without encountering broken links.

· Error Handling and Robustness: Designed to gracefully handle common web crawling issues, reducing the likelihood of incomplete archives. The value is a more dependable archiving process, saving you the frustration of dealing with partial or failed downloads.

· Command-Line Interface (CLI): Provides an easy-to-use interface for quick archiving tasks without requiring deep coding knowledge. This offers immediate utility for anyone needing to save a website quickly.

Product Usage Case

· Archiving critical online documentation for offline access during projects with unstable internet. Problem solved: Ensuring access to essential information regardless of network connectivity.

· Creating a historical snapshot of a website before major redesigns or decommissioning. Problem solved: Preserving digital heritage and past versions of web content for reference or analysis.

· Developing an offline-first web application by pre-fetching and storing necessary web assets. Problem solved: Enabling web application functionality in environments with limited or no internet access.

· Building a personal knowledge base by archiving relevant articles and resources from the web. Problem solved: Centralizing and making accessible a collection of important web content for future study or use.

3

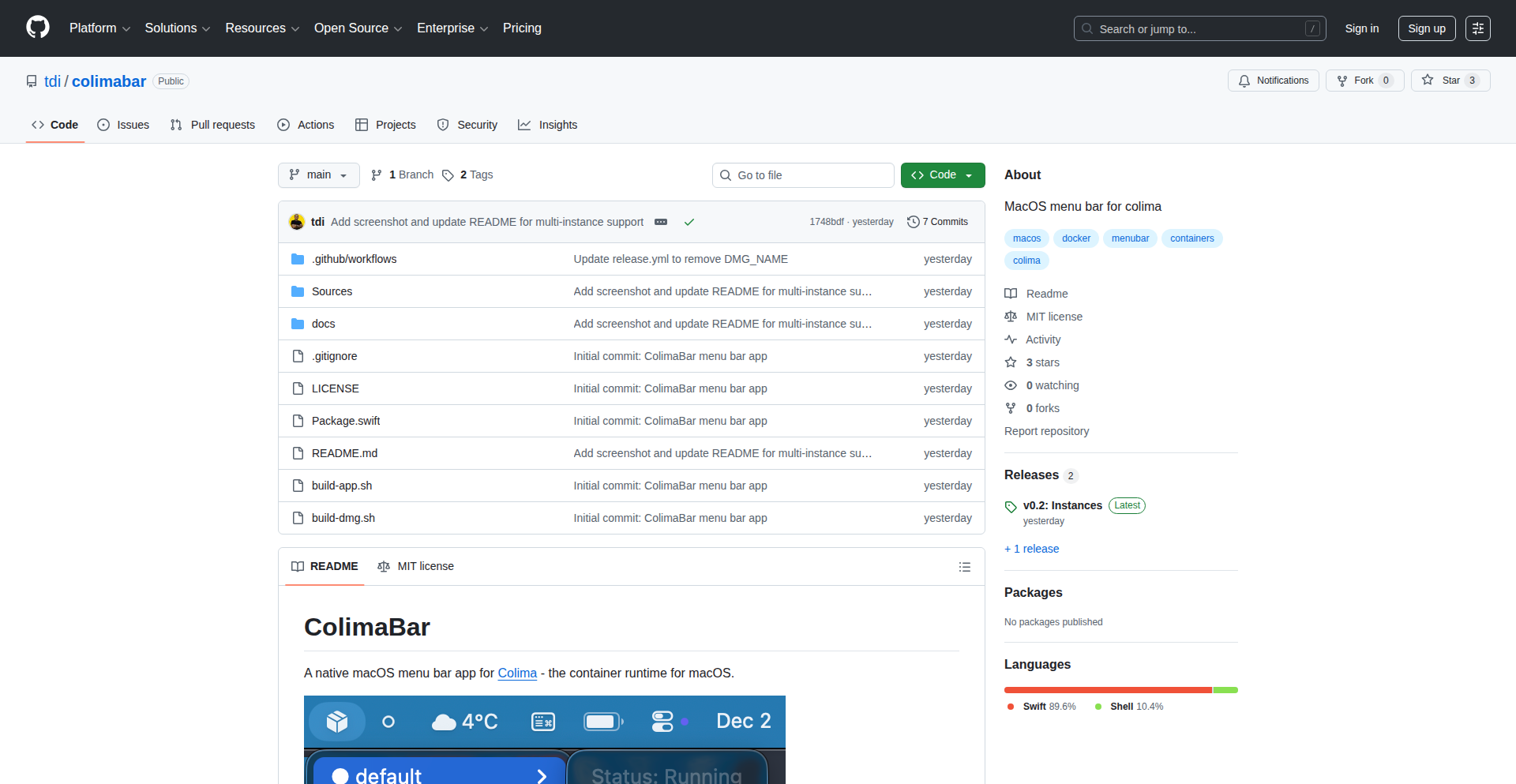

RunMat Accelerate: Adaptive CPU/GPU Compute Runtime

Author

nallana

Description

RunMat Accelerate is an open-source runtime designed to significantly boost the performance of MATLAB-style array computations. It intelligently fuses operations and automatically distributes workloads between the CPU and GPU, eliminating the need for manual CUDA or kernel coding. This means you can write familiar array math code and RunMat handles the optimization for you, delivering substantial speedups for computationally intensive tasks.

Popularity

Points 19

Comments 5

What is this product?

RunMat Accelerate is a runtime environment that takes code written in a MATLAB-like syntax and executes it much faster than traditional libraries like NumPy or even PyTorch for certain operations. Its core innovation lies in its ability to analyze the sequence of array operations you write. It then builds a computation graph, intelligently combines (fuses) multiple operations into fewer, more efficient processing steps (kernels), and decides whether to run these on the CPU or the GPU for optimal speed. If the GPU is beneficial, it keeps data there; otherwise, it falls back to highly optimized CPU JIT (Just-In-Time compilation) or BLAS (Basic Linear Algebra Subprograms) routines. This adaptive approach means you get the performance benefits of specialized hardware without needing to become an expert in low-level GPU programming.

How to use it?

Developers can use RunMat Accelerate by writing their numerical computations using MATLAB-style array syntax. Instead of executing this code with a standard MATLAB interpreter or a library like NumPy, they would point it to the RunMat runtime. RunMat then intercepts these computations, applies its automatic fusion and CPU/GPU routing logic, and returns the results. This is particularly useful for scientific computing, data analysis, and machine learning tasks involving large arrays and complex mathematical operations. Integration can involve replacing existing calls to numerical libraries with RunMat, or using it for new performance-critical sections of code. The benchmarks provided show dramatic improvements in areas like Monte Carlo simulations, image preprocessing, and element-wise mathematical chains.

Product Core Function

· Automatic Operation Fusion: Combines sequences of array math operations into fewer, more optimized computational kernels. This reduces overhead and improves efficiency, leading to faster execution times for complex calculations.

· CPU/GPU Workload Routing: Intelligently determines whether to execute computations on the CPU or the GPU based on the operation and data size. This ensures that the most appropriate hardware is utilized, maximizing performance without manual intervention.

· GPU Data Management: Keeps data on the GPU when it's beneficial for performance, minimizing data transfer bottlenecks between CPU and GPU memory. This is crucial for accelerating workflows that involve repeated access to large datasets.

· Fallback to CPU JIT/BLAS: For smaller computations or when GPU acceleration is not advantageous, RunMat seamlessly falls back to highly optimized CPU JIT compilation and BLAS libraries. This ensures consistent performance across a wide range of scenarios.

· MATLAB-Style Syntax Compatibility: Allows developers to leverage their existing knowledge of MATLAB syntax for array manipulation and mathematical operations, lowering the barrier to entry for high-performance computing.

Product Usage Case

· Monte Carlo Simulations: For complex simulations requiring millions of path calculations, RunMat Accelerate can be up to 2.8x faster than PyTorch and 130x faster than NumPy. This is valuable for financial modeling, risk analysis, and scientific research where simulation speed directly impacts the feasibility of experiments.

· Image Preprocessing Pipelines: In tasks involving common image manipulations like normalization, gain/bias adjustments, and gamma correction, RunMat offers approximately 1.8x speedup over PyTorch and 10x over NumPy. This is beneficial for developers working on computer vision applications, medical imaging, or any field requiring rapid image processing.

· Large-Scale Elementwise Computations: For extremely long chains of element-wise mathematical functions applied to massive arrays (e.g., sin, exp, cos, tanh), RunMat can be up to 140x faster than PyTorch and 80x faster than NumPy. This is a significant advantage for researchers and engineers dealing with large datasets in fields like physics, signal processing, and computational biology.

4

PaperPulse AI

Author

davailan

Description

PaperPulse AI is a mobile-first feed designed to combat information overload in research. It intelligently digests recent, trending academic papers from AI and other fields into easily digestible 5-minute summaries, delivered via a 'doomscrolling' interface. This addresses the challenge of staying current with cutting-edge research without getting lost in lengthy publications or low-quality social media content. The core innovation lies in its automated pipeline: it fetches papers, uses OCR to convert PDFs to text, and then leverages advanced LLMs like Gemini 2.5 to generate concise summaries.

Popularity

Points 12

Comments 6

What is this product?

PaperPulse AI is a smart content aggregation service for researchers, academics, and anyone wanting to stay informed about the latest scientific breakthroughs. It tackles the problem of information overload by automating the process of finding, reading, and summarizing relevant research papers. The technical approach involves daily monitoring of trending papers from sources like Huggingface and major research labs. PDFs are converted into a readable format using Mistral OCR technology. This text is then fed into Gemini 2.5, a powerful AI model, to generate a brief, understandable summary, typically readable within 5 minutes. This innovative pipeline makes cutting-edge research accessible and manageable, transforming the 'doomscrolling' habit into a productive learning experience.

How to use it?

Developers can use PaperPulse AI by simply accessing its mobile-friendly web feed. The platform is designed for passive consumption, akin to social media feeds, but with high-quality, curated content. For integration, developers could potentially tap into the underlying data feeds (if an API becomes available) or use it as inspiration to build similar summarization pipelines for their specific domains. The core usage scenario is to browse the feed, discover trending papers, and quickly grasp their essence through the AI-generated summaries, saving significant time and effort compared to reading full papers. This is useful for developers who need to stay updated on AI advancements or any technical field without dedicating hours to deep dives into primary literature.

Product Core Function

· Automated Paper Discovery: Scans Huggingface Trending Papers and major research labs daily to identify relevant new publications. This saves users the manual effort of searching for new research, ensuring they see the most current and impactful work.

· PDF to Text Conversion: Utilizes Mistral OCR to accurately extract text from PDF research papers. This is a crucial step for making the content machine-readable, overcoming the challenge of unstructured PDF formats.

· AI-Powered Summarization: Employs Gemini 2.5 to generate concise 5-minute summaries of complex research papers. This allows users to quickly understand the core findings and implications of a paper without reading the entire document, maximizing learning efficiency.

· Curated 'Doomscroll' Feed: Presents summaries in a mobile-optimized feed that encourages continuous engagement. This makes staying updated with research feel less like a chore and more like an engaging activity, fitting into modern digital consumption habits.

Product Usage Case

· A machine learning engineer wanting to keep up with the latest advancements in natural language processing. Instead of sifting through hundreds of ArXiv papers, they can open PaperPulse AI and quickly scan summaries of the most talked-about NLP research from the past day or week, identifying key papers for deeper study.

· A PhD student in computer vision who needs to stay abreast of new techniques and architectures. PaperPulse AI provides a daily digest of relevant computer vision papers, allowing them to quickly assess which papers are most relevant to their research without spending hours reading abstracts and introductions.

· A tech lead in a startup trying to understand emerging AI trends that could impact their product roadmap. PaperPulse AI helps them quickly identify and understand the significance of new research in areas like generative AI or reinforcement learning, informing strategic decisions.

5

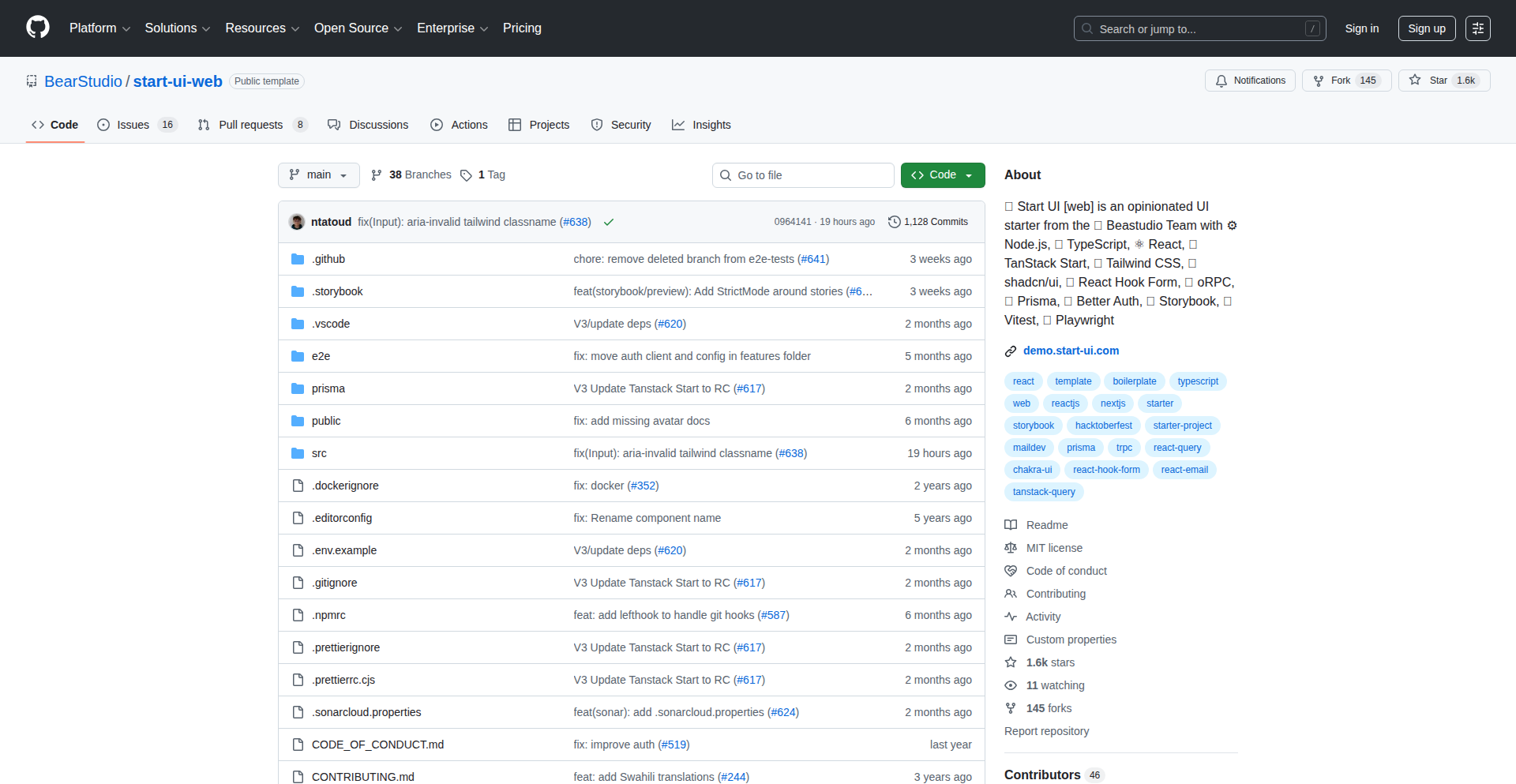

TanStack Forge

Author

ivandalmet

Description

An open-source full-stack starter template that streamlines web application development by integrating a robust backend with a modern frontend framework, simplifying common development tasks and accelerating the path from idea to deployment. It addresses the complexity of setting up full-stack environments, offering a pre-configured, opinionated foundation for developers.

Popularity

Points 9

Comments 3

What is this product?

TanStack Forge is a foundational starter project for building full-stack web applications. It combines a backend API layer with a frontend user interface framework, all pre-configured and ready for customization. The innovation lies in its 'batteries-included' approach, providing a cohesive development experience. Instead of developers piecing together separate backend and frontend tools and configuring them to talk to each other, Forge offers a unified starting point, saving significant setup time and reducing common integration headaches. This means you get a working application structure with best practices already in place, allowing you to focus on your unique features rather than boilerplate configuration.

How to use it?

Developers can clone the repository from GitHub and start building immediately. The starter project typically includes pre-defined API routes, database integration (often with a simple setup like SQLite or a cloud-based option), and a frontend component library. Integration involves modifying the provided API endpoints to match your data needs and customizing the frontend components to create your application's user interface. It's designed to be an opinionated starting point, meaning it has made certain architectural decisions for you, which can be either adopted or overridden as your project evolves. This allows for rapid prototyping and a quick start to developing dynamic applications.

Product Core Function

· Pre-configured Full-Stack Environment: Provides a ready-to-use setup for both backend and frontend, significantly reducing initial development friction. This means you don't have to spend days setting up databases, API servers, and frontend frameworks from scratch, giving you a head start on building features.

· Opinionated Architecture: Offers a structured approach to application development with sensible defaults for routing, data management, and component structure. This guides developers towards maintainable and scalable code, helping avoid common pitfalls and ensuring a consistent codebase.

· Seamless API Integration: Designed for easy communication between the backend API and the frontend. This simplifies fetching and sending data, allowing for a more responsive and dynamic user experience without complex cross-communication setup.

· Developer-Friendly Tooling: Includes common developer tools for linting, formatting, and testing, ensuring code quality and a smooth development workflow. This helps catch errors early and maintain a high standard of code, making collaboration easier.

Product Usage Case

· Rapid Prototyping for SaaS Ideas: A solo developer can quickly spin up a functional backend and frontend to test a new software-as-a-service concept. By using Forge, they can get a demo-ready application in hours instead of days, validating their idea faster.

· Building Internal Tools and Dashboards: An engineering team needs a quick way to build an internal dashboard to visualize data. Forge provides the structure to easily connect to their existing data sources (via the backend API) and build interactive visualizations on the frontend, accelerating the delivery of essential internal tools.

· Learning Modern Full-Stack Development: A junior developer looking to understand how modern full-stack applications are built can use Forge as a learning resource. By examining the pre-configured code, they can grasp best practices and common patterns in API design and frontend state management.

· Migrating Legacy Applications: A company with an older, monolithic application might use Forge as a starting point for a modern refactor. They can gradually migrate parts of their functionality into the Forge structure, leveraging its streamlined setup for new features and eventually replacing older components.

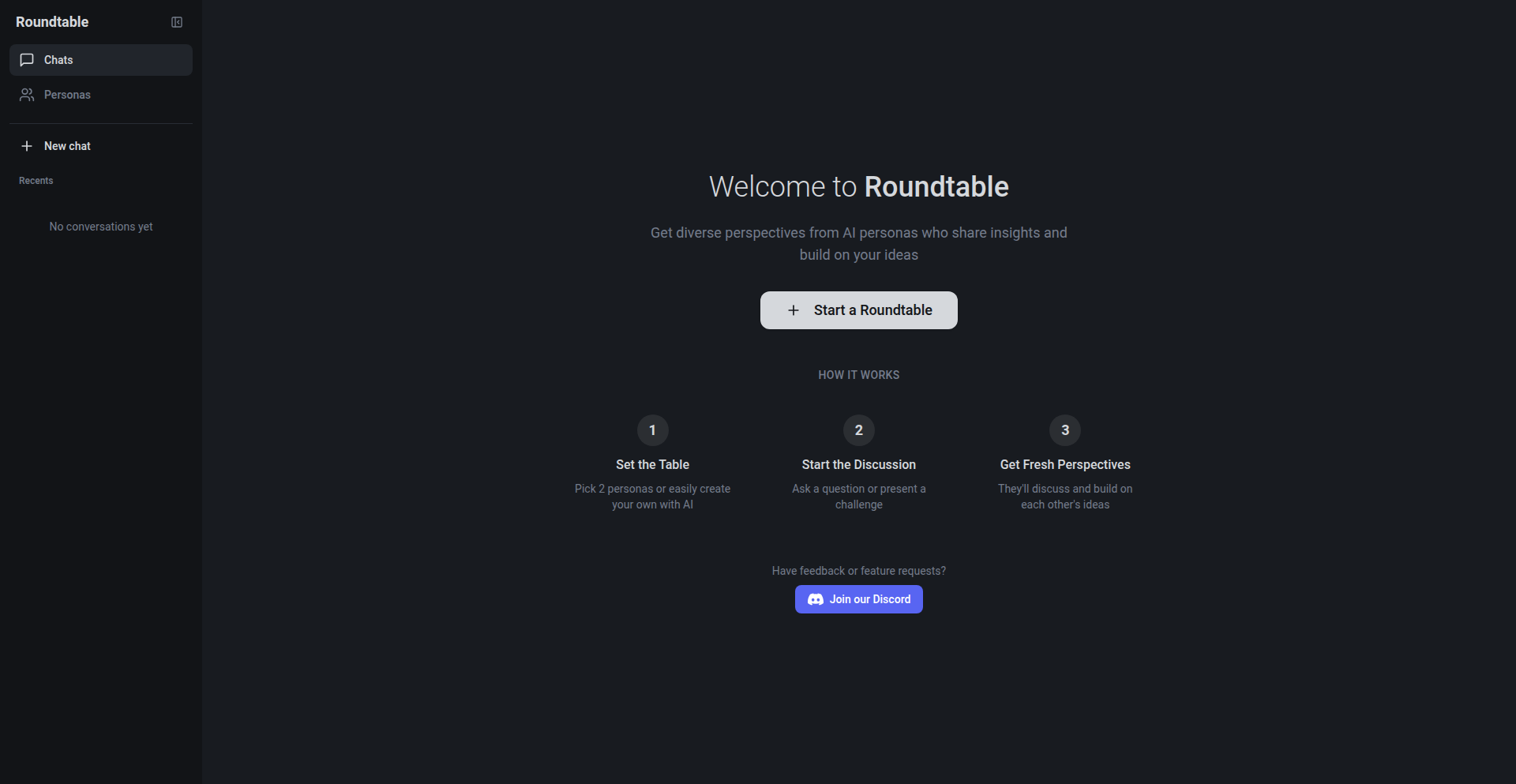

6

Roundtable AI Persona Debate

Author

andrewgm

Description

Roundtable is an AI-powered tool designed to overcome the echo chamber effect often encountered when using large language models (LLMs) for decision-making. Instead of a single AI agreeing with your ideas, Roundtable simulates a multi-persona discussion where different AI agents, each with distinct expertise and viewpoints, debate and challenge each other. This creates a more robust and unbiased evaluation of ideas, helping users uncover potential flaws or alternative perspectives they might have missed. So, what's the value? It helps you make better, more validated decisions by preventing you from getting stuck in your own biased thinking, leading to more innovative and well-rounded product development.

Popularity

Points 6

Comments 4

What is this product?

Roundtable is a novel application of LLMs that transforms the typical one-on-one AI interaction into a dynamic, multi-agent debate. The core innovation lies in its ability to assign distinct 'personas' or roles to different LLM instances within the same conversation. These personas are designed to embody specific expertise (e.g., a skeptical investor, a meticulous engineer, a market analyst) and are programmed to engage in a naturalistic debate, questioning assumptions, highlighting risks, and offering counterarguments. This 'AI rubber duck that argues with itself' approach breaks the mold of AI simply affirming user input, instead fostering critical thinking and uncovering blind spots. So, what's the value? It provides a sophisticated, simulated peer review for your ideas, revealing weaknesses and strengthening your proposals before they reach the real world.

How to use it?

Developers can integrate Roundtable into their ideation and validation workflows. Imagine you have a new product feature idea. You input the core concept into Roundtable and assign specific personas relevant to your project, such as 'Customer Empathy Persona,' 'Technical Feasibility Persona,' and 'Business Viability Persona.' The system then orchestrates a conversation between these AI agents, generating a debate that scrutinizes your idea from multiple angles. This can be done through a simple web interface or potentially integrated via an API into existing project management or brainstorming tools. The output is a transcript of the debate, highlighting points of contention and areas of agreement, which can then inform your next steps. So, how do you use it? You feed it your nascent ideas, let the AI personas hash it out, and use the resulting insights to refine your plans.

Product Core Function

· Multi-Persona AI Simulation: Enables multiple AI agents with distinct roles and expertise to interact within a single conversational thread, fostering diverse perspectives. This is valuable because it moves beyond a single AI's confirmation bias, offering a more comprehensive critique.

· Automated Idea Debate: The system automatically generates a debate among the assigned AI personas, challenging assumptions and exploring potential downsides of proposed ideas. This offers practical value by pre-emptively identifying flaws in your concepts.

· Persona Customization: Allows users to define and select specific AI personas, tailoring the debate to the unique needs and context of their project or industry. This is useful for ensuring the critique is relevant to your specific challenges.

· Insight Generation from Debate: The output of the debate serves as a rich source of actionable insights, highlighting areas of concern and potential improvements. This provides concrete takeaways to inform decision-making and product development.

· Echo Chamber Mitigation: By introducing dissenting viewpoints and critical analysis, the tool actively combats the tendency of LLMs to simply agree with users, leading to more objective evaluations. This is important because it helps you avoid making decisions based on flawed or overly optimistic assumptions.

Product Usage Case

· Scenario: A startup founder is brainstorming new app features. They input their feature ideas into Roundtable, assigning personas like 'Target User Advocate,' 'Revenue Model Analyst,' and 'Technical Debt Assessor.' The AI debate reveals that while the feature is appealing to users, it has significant technical challenges and might not align with the current monetization strategy. This helps the founder pivot to a more viable approach early on. So, what's the benefit? It saves time and resources by identifying critical issues before significant development effort is invested.

· Scenario: A product manager is evaluating market entry strategies for a new product. They use Roundtable with personas such as 'Competitive Landscape Expert,' 'Regulatory Compliance Officer,' and 'Early Adopter Advocate.' The debate uncovers potential regulatory hurdles and intense competition that were initially overlooked, leading to a revised, more robust go-to-market plan. So, what's the benefit? It provides a synthesized risk assessment from multiple expert viewpoints.

· Scenario: A freelance developer is pitching a complex software solution to a potential client. They use Roundtable to 'stress-test' their pitch by assigning personas like 'Skeptical Client,' 'Budget Controller,' and 'Technical Skeptic.' The resulting debate highlights areas where the pitch might be perceived as weak or unconvincing, allowing the developer to refine their presentation and address potential objections proactively. So, what's the benefit? It helps anticipate and counter client concerns, improving the chances of securing projects.

7

CoChat: Collaborative AI Team Hub

Author

mfolaron

Description

CoChat is an innovative extension of OpenWebUI that revolutionizes AI team collaboration. It introduces group chat functionalities, seamless model switching and side-by-side comparison, and intelligent web search. The core technical innovation lies in how it manages multi-model AI interactions within a collaborative environment, preventing AI confusion and ensuring each model acts as a distinct participant rather than an omniscient moderator. This empowers teams to leverage the strengths of various AI models for specific tasks without vendor lock-in.

Popularity

Points 5

Comments 4

What is this product?

CoChat is a specialized interface for teams working with Large Language Models (LLMs). Its technical foundation extends OpenWebUI, adding sophisticated features for collaborative AI use. The primary technical breakthrough is how it tackles the challenge of multiple AI models interacting within a single conversation. Traditionally, when you introduce a new AI model into a chat, it might not understand it's interacting with a previous response from a *different* AI. CoChat solves this by explicitly injecting 'model attribution' into the conversation context. This tells each AI exactly which model generated which part of the dialogue. This simple yet powerful technique dramatically improves the quality of cross-model analysis and collaboration because the AI can now critically evaluate, rather than defensively defend, another model's output. Another key innovation is how CoChat redefines the AI's role in group discussions. Instead of an AI trying to 'solve' every conversational thread like an overlord, CoChat frames the AI as a distinct participant that responds when prompted. This is achieved through careful prompt engineering and structuring of the AI's context, ensuring it acts as a facilitator, not a dictator, allowing human team members to drive the conversation. This means you get better control and more natural interactions when multiple people and multiple AIs are involved.

How to use it?

Developers can integrate CoChat into their existing workflows that utilize OpenWebUI. It's designed for teams who are already experimenting with or actively using AI assistants for project work, coding, research, or content creation. You can start a new group chat where multiple team members can contribute. Within the chat, you can seamlessly switch between different AI models (like GPT, Claude, Mistral, Llama) or even run them side-by-side to compare their outputs on the same prompt. CoChat intelligently activates web search only when real-time information is needed, ensuring relevant data is incorporated into the discussion. Furthermore, it supports inline generation of documents and code, acting as a powerful tool for rapid prototyping and knowledge sharing. The 'no subscription fee' model means you pay for actual token usage at list prices, making it cost-effective for teams. This provides a unified platform for collaborative AI exploration and task execution.

Product Core Function

· Group chat with AI facilitation: Enables multiple users to collaborate in the same AI conversation thread. The AI intelligently detects discussions and participant contributions, acting as a helpful assistant rather than an autocratic moderator. This provides a structured environment for team brainstorming and problem-solving with AI support.

· Model switching and side-by-side comparison: Allows users to fluidly switch between different LLMs (e.g., GPT, Claude, Mistral) or run them concurrently. This technical capability is valuable for identifying the best-performing model for specific tasks, leading to higher quality outputs and more efficient workflow.

· Intelligent context-aware web search: The AI automatically performs web searches only when contextually relevant and necessary for real-time information retrieval. This ensures that the AI's responses are up-to-date and grounded in current data, enhancing the reliability of generated content and analysis.

· Inline artifact and tool calls: Supports the direct generation of documents, code snippets, and other digital assets within the chat interface. This functionality streamlines the creation process and allows for rapid iteration on ideas, making it easier to translate AI insights into tangible work products.

· Model attribution in conversation context: A key technical innovation that explicitly marks which AI model generated each part of a conversation. This prevents AI confusion, improves critical evaluation of AI outputs, and leads to more coherent and productive cross-model interactions.

Product Usage Case

· A software development team uses CoChat to collaboratively debug code. One developer posts a code snippet and an error message. The team then uses CoChat to compare responses from GPT-4 and Claude 3 Opus side-by-side, each providing different insights and potential solutions. The explicit model attribution helps them understand which AI's suggestion is most relevant.

· A marketing team is brainstorming campaign ideas. They use CoChat's group chat feature to generate ideas, with different team members prompting various AI models (e.g., Mistral for creative slogans, Llama for market trend analysis). The AI acts as a facilitator, asking clarifying questions to the team when needed, rather than dictating the campaign direction.

· A research group is analyzing a complex scientific paper. They feed sections of the paper into CoChat and ask different LLMs to summarize or extract key findings. The ability to switch models allows them to leverage the unique strengths of each AI for nuanced interpretation and synthesis of information.

· A content creation team is developing a blog post. They use CoChat to generate outlines, draft sections, and refine wording. The inline document generation feature allows them to quickly assemble a draft, and the intelligent web search helps them fact-check and incorporate relevant statistics without leaving the chat interface.

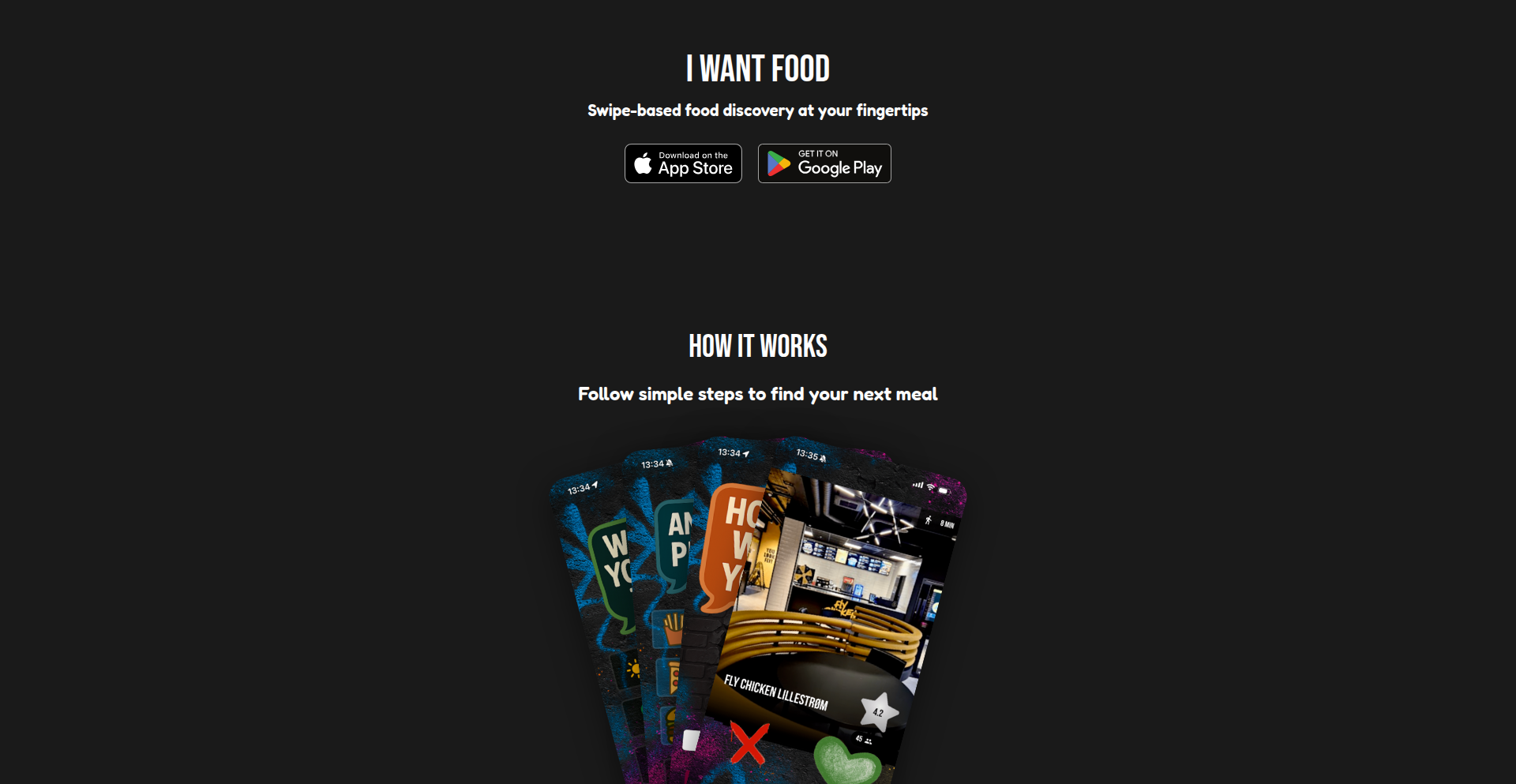

8

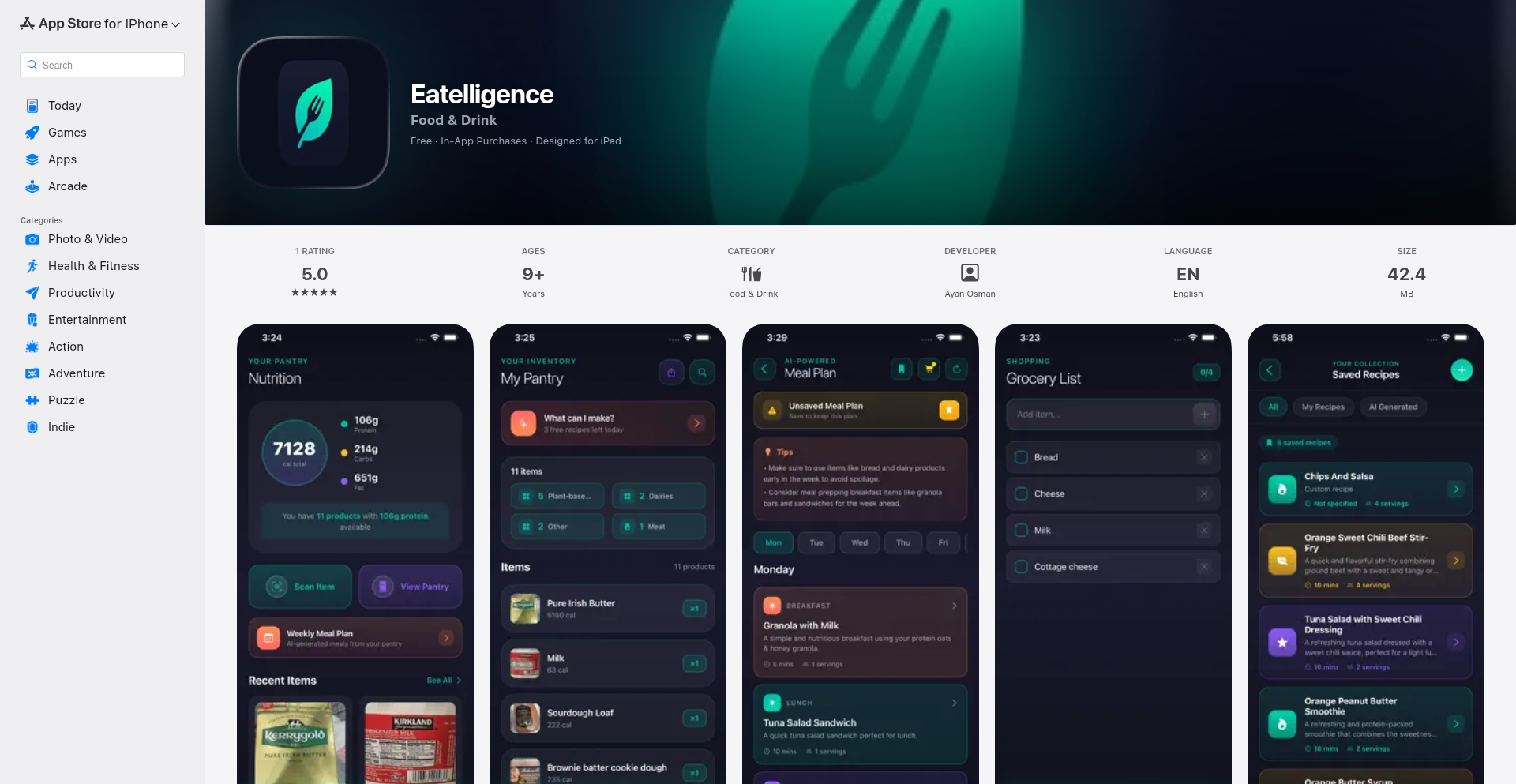

SwipeFood Navigator

Author

b44rd

Description

A simple, swipe-based restaurant discovery app designed to tackle the decision fatigue of choosing a place to eat. It leverages a curated dataset and an intuitive, Tinder-like interface to quickly present users with restaurant options, focusing on efficient exploration rather than exhaustive search. The core innovation lies in its minimalist approach to a complex problem, allowing for rapid user interaction and decision-making.

Popularity

Points 4

Comments 4

What is this product?

This project is a restaurant discovery application that uses a swipe gesture, similar to dating apps, to help users decide where to eat. Instead of scrolling through long lists or complex filters, users are presented with one restaurant at a time and swipe left if they're not interested or right if they are. The underlying technology likely involves a backend that serves restaurant data, possibly with some basic ranking or filtering logic, and a frontend that handles the swipe animations and user interaction. The innovation is in simplifying the user experience for a common daily dilemma, making the process fun and fast.

How to use it?

Developers can use this as a template or inspiration for building their own decision-support applications. The core principle of intuitive swiping for selection can be applied to various domains beyond restaurants, such as discovering products, articles, or even potential collaborators. Integration would involve connecting a data source (e.g., a list of restaurants, products) to the frontend logic that renders individual items and captures swipe actions. The backend can be a simple API returning JSON data, and the frontend can be built using common mobile or web frameworks that support gesture recognition.

Product Core Function

· Intuitive Swipe Interface: Enables users to quickly express preferences by swiping left or right on restaurant cards. This offers a frictionless way to navigate options and reduces cognitive load, making decision-making faster and more engaging. Developers can adopt this for any scenario where quick pairwise selection is beneficial.

· Restaurant Data Presentation: Displays essential restaurant information (name, cuisine, perhaps a rating or image) in a visually appealing card format. This provides users with just enough information to make a decision without overwhelming them. This pattern is valuable for any application that needs to present discrete items for user evaluation.

· Decision Fatigue Reduction: By simplifying the discovery process to a series of binary choices, the app helps users overcome the paralysis of choice when faced with too many options. This is a direct benefit for users feeling overwhelmed by typical recommendation systems, and a key insight for developers designing user-centric interfaces.

· Simple Interaction Model: The core mechanic is universally understood and easy to learn. This leads to a low barrier of entry for new users and a pleasant, almost gamified, experience. This simplicity is a testament to effective UX design, applicable to any product aiming for broad adoption.

Product Usage Case

· Scenario: A user is in a new city and doesn't know where to eat. How it solves the problem: Instead of spending time researching multiple restaurants on Yelp or Google Maps, they can quickly swipe through options presented by SwipeFood Navigator, discovering potential dining spots with minimal effort.

· Scenario: A developer wants to build a quick feedback mechanism for design mockups. How it solves the problem: They can adapt the swipe interface to present design variations, allowing stakeholders to quickly indicate 'like' or 'dislike' without lengthy annotation, streamlining the review process.

· Scenario: A team is trying to decide on a project feature to prioritize. How it solves the problem: Each feature can be presented as a card, and team members can swipe right if they believe it's a high priority. This gamified approach can make decision-making more inclusive and less confrontational.

· Scenario: An e-commerce platform wants to enhance product discovery for impulse buys. How it solves the problem: Presenting products with enticing images and brief descriptions, allowing users to swipe for 'add to cart' or 'not interested,' creating a more engaging and potentially higher conversion browsing experience.

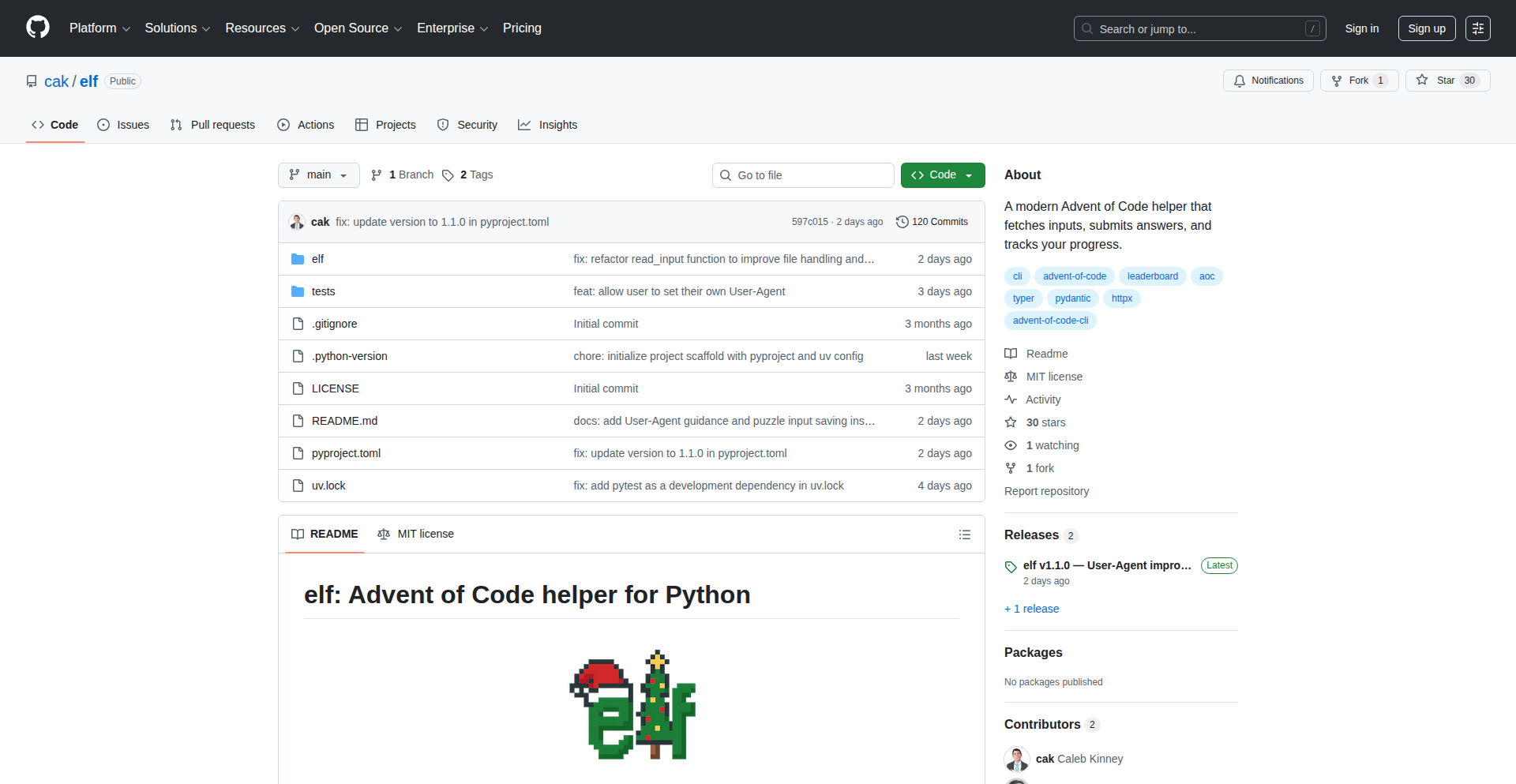

9

Elf: Advent of Code Command Line Accelerator

Author

cak

Description

Elf is a command-line interface (CLI) tool designed to significantly speed up your Advent of Code experience. It automates tedious tasks like fetching puzzle inputs with caching for offline use, safely submitting your answers with built-in checks, and viewing your private leaderboard and progress. This tool injects a dose of hacker creativity by using code to conquer the repetitive aspects of a popular coding challenge, allowing you to focus purely on problem-solving.

Popularity

Points 3

Comments 5

What is this product?

Elf is a command-line tool built to streamline the Advent of Code (AoC) challenge. AoC involves solving daily programming puzzles, which often require fetching input data, submitting solutions, and checking leaderboards. Elf automates these steps. Its core innovation lies in intelligent input fetching with caching, meaning you download each puzzle's input only once and can access it even offline. It also implements 'guardrails' for answer submissions, preventing accidental duplicate or invalid guesses. For those participating in private leaderboards, Elf provides a convenient way to view your standing and progress. It's built using Python with modern libraries like Typer for a clean CLI experience, httpx for web requests, and Pydantic for data validation.

How to use it?

Developers can install Elf using pip, Python's package installer: `pip install elf-aoc`. Once installed, you'll typically use it within your Advent of Code project directory. For example, to fetch the input for a specific day, you might run `elf fetch 2023 1` (assuming the year is 2023 and the day is 1). To submit an answer, you'd use a command like `elf submit 2023 1 <your_answer>`, and Elf will handle the interaction with the AoC website. The tool can also be integrated into custom Python scripts using its optional API for more advanced automation needs.

Product Core Function

· Input Fetching and Caching: Automatically downloads puzzle inputs for a given year and day. Caching ensures inputs are available offline and prevents redundant downloads, saving time and bandwidth. This is useful because you don't have to manually download each input file, and you can work on puzzles even without an internet connection.

· Safe Answer Submission: Submits your solutions to the Advent of Code website. It includes safeguards to prevent submitting the same answer multiple times or invalid answers, thus protecting your progress and avoiding unintended consequences. This is valuable because it prevents accidental lockouts or re-submission issues on the AoC platform.

· Private Leaderboard Viewer: Displays your ranking and progress on private Advent of Code leaderboards in a clear, tabular format or as JSON. This allows you to easily track your performance against friends or colleagues. So, you can quickly see how you stack up without visiting the website manually.

· Status and History Tracking: Provides a calendar view of your participation and a history of your submitted guesses. This helps you keep track of which days you've completed and your past attempts. This is useful for reflecting on your problem-solving journey.

· Optional Python API: Offers a programmatic interface to its functionalities, allowing developers to integrate Elf's capabilities into their own Python scripts or automation workflows. This means you can build custom tools that leverage Elf's core features for even more advanced or personalized workflows.

Product Usage Case

· A developer wants to participate in Advent of Code and needs to solve problems quickly. They use Elf to fetch the input for Day 5 of the current year with `elf fetch 2023 5`. The input is downloaded and cached. Later, while working offline, they want to re-read the input, and Elf provides it instantly from the cache. This saves them from needing an internet connection to access the puzzle data.

· During Advent of Code, a developer solves a puzzle for Day 10 and has an answer. They use Elf to submit it: `elf submit 2023 10 12345`. Elf successfully submits the answer and displays the result. Later, they accidentally run the same submission command again. Elf detects that the answer has already been submitted for that day and prompts the user or prevents the re-submission, safeguarding their progress. This avoids potential penalties on the AoC platform.

· A team of friends is competing in a private Advent of Code leaderboard. One member wants to see how everyone is doing. They run `elf leaderboard 2023` and get a table showing each participant's rank, stars, and completion times. This allows them to easily monitor the competition without everyone individually checking the AoC website. It fosters friendly rivalry and keeps everyone updated on the team's progress.

· A developer is building a custom dashboard to track their coding challenge progress. They use the Elf Python API to fetch their Advent of Code puzzle completion status and guess history. They then integrate this data into their dashboard to visualize their personal progress over time. This allows for more in-depth personal analytics and motivation.

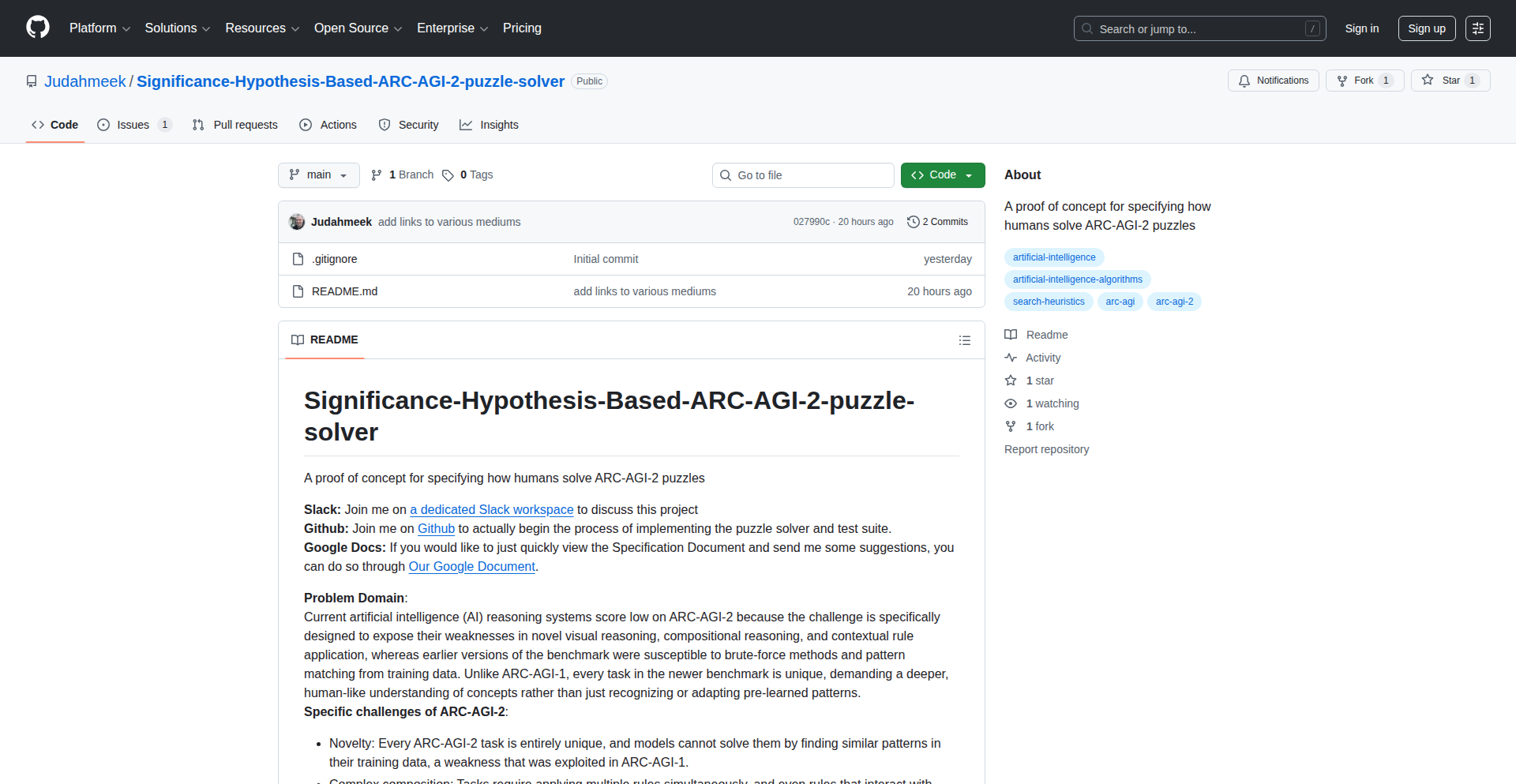

10

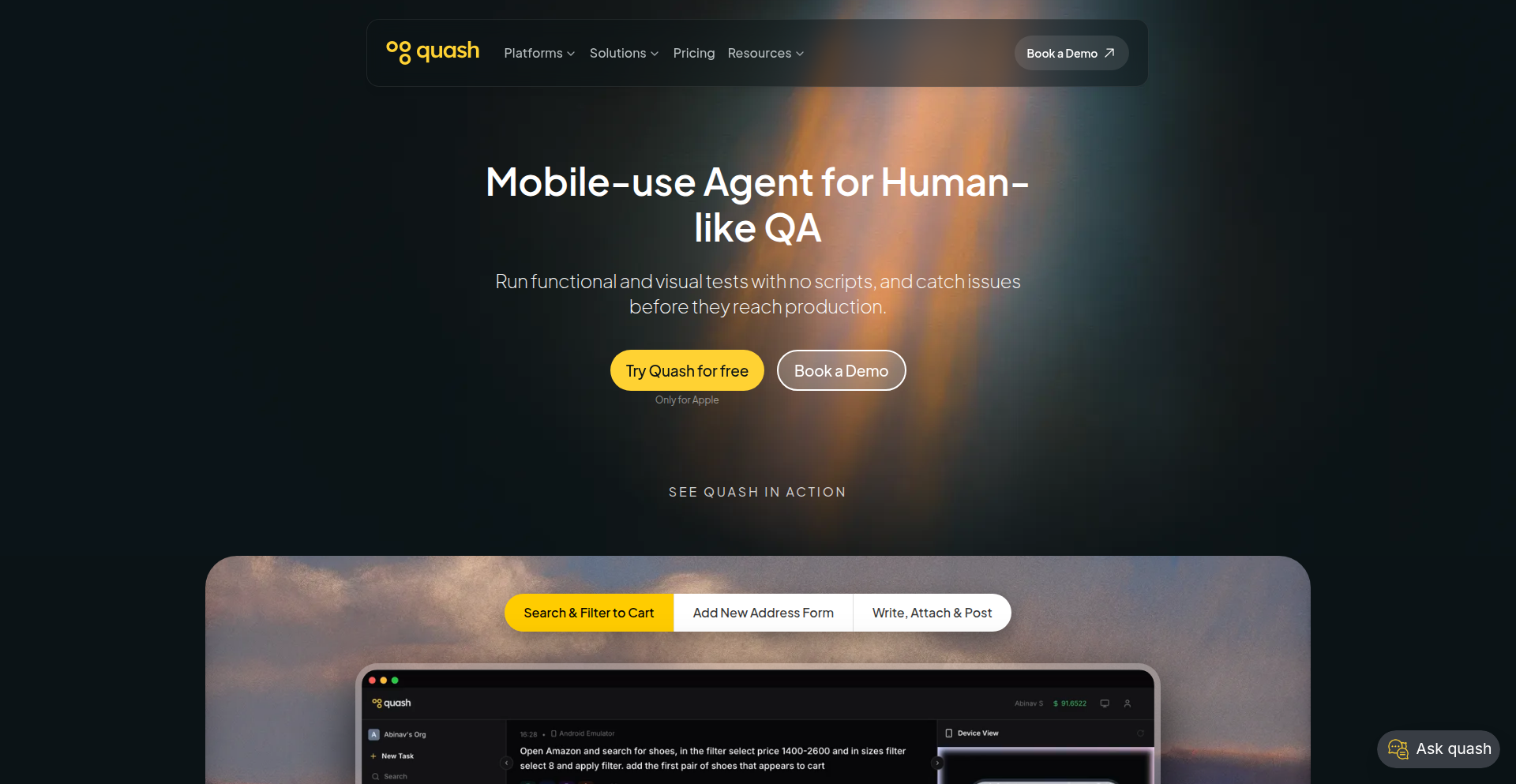

Quash: Natural Language Android QA Agent

Author

pr_khar

Description

Quash is a mobile-first QA tool that transforms plain English descriptions into automated test executions on real Android devices. Its core innovation lies in an agent that understands natural language commands, allowing developers to write test flows without complex scripting. This significantly lowers the barrier to entry for mobile QA and accelerates the testing process. The recent desktop app release (macOS) enables local testing against your own apps and devices, offering a direct and secure testing environment.

Popularity

Points 4

Comments 4

What is this product?

Quash is a desktop application (currently for macOS) that acts as an intelligent agent for mobile Quality Assurance (QA) on Android devices. Instead of writing intricate code or scripts to test your mobile app, you simply describe the desired test in plain English, like 'open the app and log in with user1 and password123'. Quash's agent then translates these instructions into actions performed on a connected Android device, be it a physical device or an emulator. The innovation here is the natural language processing (NLP) engine that interprets your English commands and the agent's ability to interact with the device's UI. This means you don't need to be a QA automation expert to create sophisticated tests. So, what's the benefit for you? It makes mobile app testing much more accessible and efficient, allowing you to catch bugs earlier with less technical overhead.

How to use it?

Developers can download the Quash desktop application for macOS. Once installed, they connect their Android device (either physically via USB or through an Android emulator). Within the Quash application, they can then write their test scenarios in natural English within a dedicated editor. Upon execution, Quash's agent will interact with the connected Android device to perform the described actions. This can be integrated into existing CI/CD pipelines by triggering Quash tests programmatically or used for ad-hoc testing. So, how does this help you? You can quickly set up automated tests for your app by simply describing what you want to test, streamlining your development workflow.

Product Core Function

· Natural Language Test Scripting: Write test flows in plain English, eliminating the need for complex coding languages. This allows for rapid test creation and easy understanding by non-technical stakeholders. The value is reduced test development time and broader team involvement.

· Android Device Agent Execution: An intelligent agent executes English test scripts directly on real Android devices (physical or emulated). This ensures tests are run in an authentic environment, providing more reliable results. The value is accurate testing and early bug detection.

· Local Device Connectivity: Connect any Android device locally to Quash for testing. This provides a secure and private testing environment, ideal for sensitive applications or when dealing with proprietary data. The value is enhanced security and control over your testing.

· Desktop Application for macOS: A downloadable desktop app for macOS (supporting Intel and Apple Silicon) provides a dedicated and robust testing platform. This offers a stable and performant environment for running your QA agent. The value is a streamlined and efficient desktop testing experience.

Product Usage Case

· Scenario: A startup developer wants to quickly test the signup flow of their new social media app on a physical Android phone before releasing it to a small group of beta testers. Problem Solved: Instead of spending hours writing an automation script, they simply describe the steps in Quash: 'Open the app, tap the signup button, enter a valid email and password, tap submit'. Quash executes this on their phone, verifying the signup works as expected. This saves significant development time and ensures a smoother beta launch.

· Scenario: A mobile game studio needs to ensure that in-game purchases function correctly across various device configurations. Problem Solved: The QA team can use Quash to write English descriptions for purchase flows, such as 'Launch the game, navigate to the shop, select the 'gems' pack, proceed to payment, and cancel the transaction'. Quash then runs these tests on different emulators, identifying any purchase-related bugs. This ensures a consistent and reliable in-app purchase experience for all players.

· Scenario: A developer is working on a banking application and needs to perform security checks on their local machine without sending sensitive data to a cloud-based testing service. Problem Solved: By connecting their Android device locally to Quash, they can write English test cases for login, transaction verification, and logout directly on their own setup. This ensures the security and privacy of their app's sensitive operations. This provides peace of mind and compliance with data security requirements.

11

SmolLaunch

Author

teemingdev

Description

SmolLaunch is a minimalist platform designed for developers to share their projects without the typical pressures of growth hacking and competitive ranking. It focuses on genuine discovery and peer feedback, offering a clean launch page and profile. The core innovation lies in its philosophy: a calm space for sharing ideas and prototypes, built with a lightweight stack including Rails and Hotwire for real-time interactions, aiming to feel more like an engineering feed than a leaderboard.

Popularity

Points 8

Comments 0

What is this product?

SmolLaunch is a project launch platform specifically built for developers who want to showcase their creations in a low-pressure, distraction-free environment. Unlike larger platforms that often emphasize marketing and competitive metrics, SmolLaunch prioritizes the craft of building. Its technical foundation is built on Rails 8, utilizing Hotwire for seamless, real-time updates and interactivity without the need for complex JavaScript. This approach allows for a fast, responsive user experience while keeping the codebase lean and manageable. The platform uses Postgres for data storage and Tailwind CSS for a clean, modern aesthetic. The overall architecture is a small, fast monolith, emphasizing simplicity and efficiency in deployment and maintenance. The key innovation is its deliberate exclusion of algorithms, gamified voting systems, and 'launch timing optimization,' fostering a more authentic community feel.

How to use it?

Developers can use SmolLaunch to quickly create a dedicated page for any project they've built, whether it's a small tool, an experiment, or a larger endeavor. The process involves posting a short description and a link to the project. Once published, the project gets a clean, minimal launch page and a developer profile. Other builders can then discover these projects, follow developers they find interesting, leave comments, and send feedback directly. This makes it easy to get constructive input from a community that values the technical aspects of software development. For integration, SmolLaunch is designed with potential future enhancements in mind, such as GitHub integration and RSS feeds, which can be considered for a more connected developer workflow.

Product Core Function

· Project Posting: Allows developers to share their creations with a brief description and a link, providing a simple way to make their work visible to the community and receive initial exposure.

· Minimal Launch Page and Profile: Offers a clean, uncluttered presentation for each project and developer, focusing attention on the work itself rather than distracting design elements, which enhances the clarity of the shared content.

· Peer Feedback and Comments: Enables other developers to interact with projects by leaving comments and providing feedback, fostering a collaborative environment for improvement and learning.

· Follower System: Allows users to follow other builders whose work they appreciate, creating a curated feed of interesting projects and encouraging ongoing engagement within the community.

· No Gamified Voting or Ranking: Eliminates competitive ranking systems, focusing on the intrinsic value of the project and encouraging sharing for the sake of contribution rather than popularity contests, promoting a healthier creator mindset.

Product Usage Case

· A solo developer building a new API wrapper and wants to share it with other developers to gather early feedback on its usability and potential improvements. SmolLaunch provides a direct channel for this, bypassing the need for extensive marketing campaigns on larger platforms.

· A team experimenting with a novel algorithm for image compression and wants to showcase their prototype to peers for technical critique. SmolLaunch's focus on 'engineering feed' allows for discussions centered on the technical merits of the solution.

· An open-source contributor who has developed a small utility tool to streamline a common development task and wishes to announce its availability to the broader developer community. The platform ensures the tool is discovered by those who appreciate such practical contributions.

· A student showcasing a personal project built for a coding bootcamp or as a learning exercise. SmolLaunch offers a low-pressure environment to share their learning journey and receive encouragement and constructive criticism from experienced developers.

12

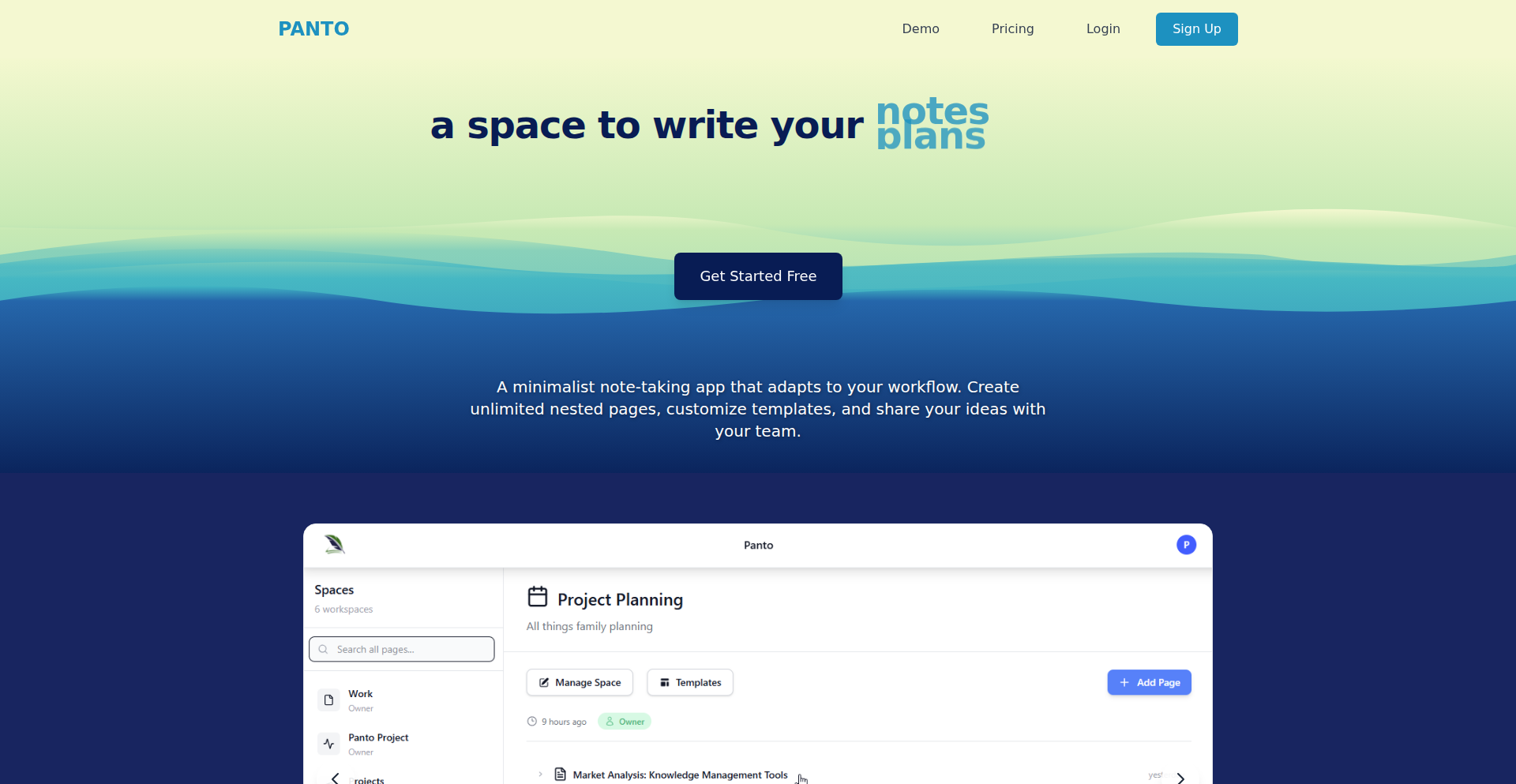

GoMark OCR Notes

Author

peterwoodman

Description

A lightweight, web-based note-taking application built with Go and HTMX. It focuses on essential features like nested pages and templates, but its standout innovation is OCR (Optical Character Recognition) for scanned PDFs, making their content searchable within the app. This addresses the common pain point of dealing with non-searchable documents, offering a seamless experience across devices.

Popularity

Points 5

Comments 3

What is this product?

GoMark OCR Notes is a web application designed for efficient note-taking. It leverages Go for its backend, providing a fast and efficient engine, and HTMX for its frontend, which allows for dynamic updates without full page reloads, making the user experience feel more like a desktop application. The core innovation lies in its integration of OCR technology. This means you can upload scanned documents (like PDFs), and the app will process them to extract the text. This extracted text then becomes searchable, allowing you to quickly find information within your scanned notes, much like you would with a regular text document. So, it transforms static images of text into dynamic, discoverable information.

How to use it?

Developers can integrate GoMark OCR Notes into their existing workflows or use it as a standalone note-taking solution. For integration, the backend built with Go can expose APIs that allow other applications to store and retrieve notes. The HTMX frontend can be embedded or used to build custom interfaces. For standalone use, it functions as a web application accessible via a browser on any device, from desktops to mobile phones, thanks to its responsive design. You can create new notes, organize them in nested hierarchies, use predefined templates for consistency, and upload scanned PDFs to make their content searchable. The value proposition is having a unified place for all your notes, both typed and scanned, that's easily accessible and searchable.

Product Core Function

· Nested Page Structure: Enables hierarchical organization of notes, allowing users to create sub-pages within main notes. This provides a logical and organized way to manage information, useful for project documentation or research where topics have sub-topics.

· Templating System: Allows users to create and reuse predefined note structures. This ensures consistency across notes and saves time by pre-populating common fields, ideal for recurring tasks like meeting minutes or project briefs.

· Shared Spaces: Facilitates collaboration by enabling users to share notes or entire sections with others. This is valuable for team projects where multiple individuals need to contribute to or access the same information simultaneously.

· OCR for Scanned PDFs: Extracts text from scanned PDF documents, making their content searchable. This is a major advantage for anyone dealing with physical documents or archives that have been digitized as images, as it unlocks the information contained within them for quick retrieval.

Product Usage Case

· Student preparing for exams: Uploads lecture slides as scanned PDFs and then uses the OCR search to quickly find specific topics or definitions across all uploaded materials.

· Researcher organizing literature reviews: Creates nested notes for different research papers, uses templates for summarizing key findings, and uploads scanned journal articles, making them searchable by keywords.

· Project manager tracking tasks: Uses shared spaces for team project notes and task lists, uploads scanned design documents and blueprints, and leverages OCR to quickly locate specific requirements or specifications.

· Freelancer managing client work: Creates a dedicated nested space for each client, uses templates for proposals and invoices, and uploads scanned contracts or agreements to easily reference contract details.

13

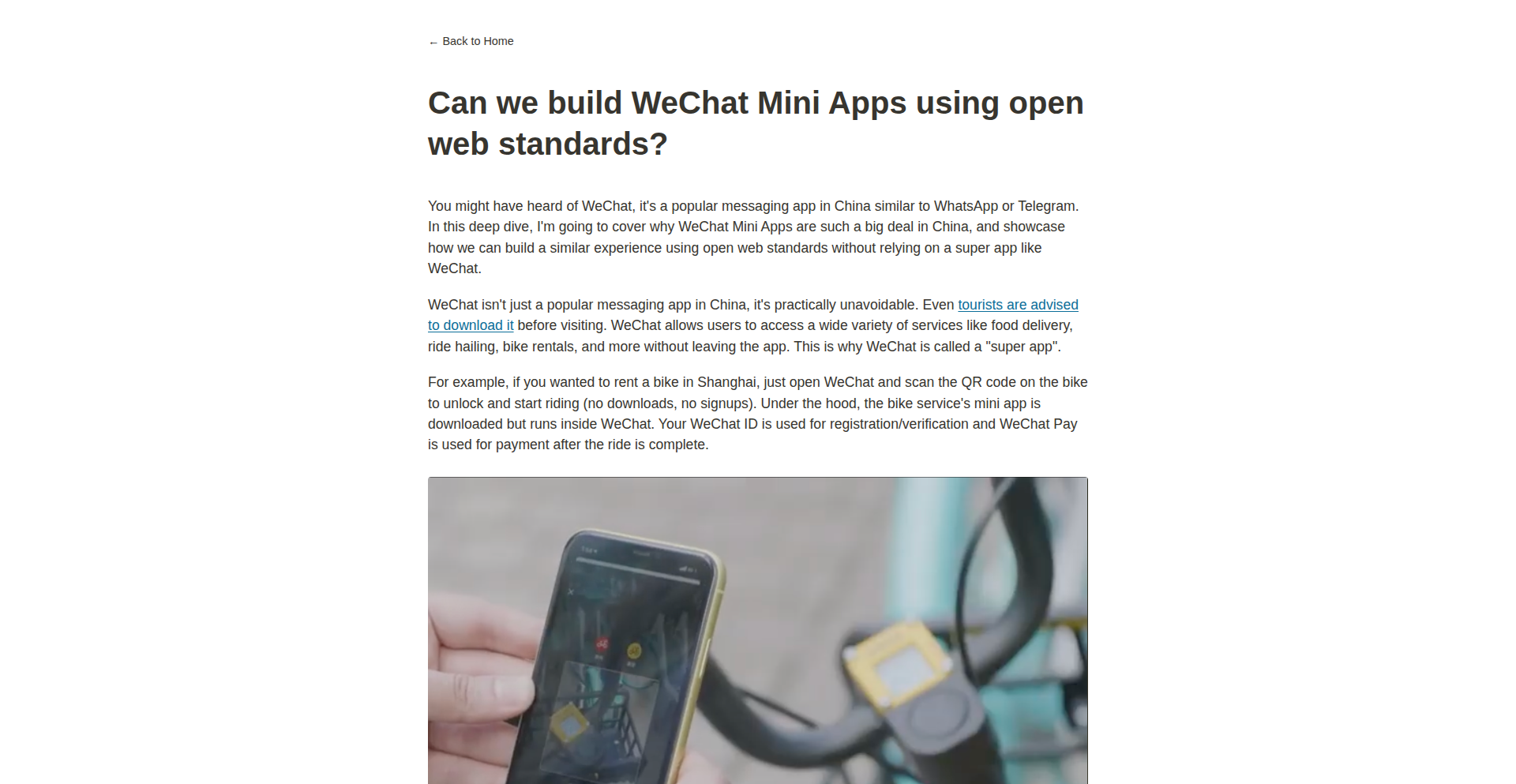

Web-Mini-App Canvas

Author

dannylmathews

Description

This project explores building WeChat Mini Apps using open web standards. It aims to address the challenge of proprietary mini-app ecosystems by leveraging familiar web technologies like HTML, CSS, and JavaScript. The core innovation lies in a transpilation and runtime layer that allows web code to function within the WeChat Mini App environment, offering a more accessible and portable development experience.

Popularity

Points 7

Comments 1

What is this product?

This project is a proof-of-concept that demonstrates how applications normally built for WeChat's proprietary Mini App framework can be constructed using standard web technologies. Instead of learning a new, specific set of APIs and development paradigms dictated by WeChat, developers can use the HTML, CSS, and JavaScript they already know. The project achieves this by creating a bridge – essentially, a translator and a special runner – that takes standard web code and makes it behave like a WeChat Mini App. This means you can write your app using web tools and then deploy it as a WeChat Mini App, which is a significant innovation because it opens up mini-app development to a much wider audience of web developers and promotes code reusability across different platforms.

How to use it?

Developers can use this project by writing their application logic, UI, and styling using standard HTML, CSS, and JavaScript. The project provides a build process that takes this web code and transforms it into a format compatible with the WeChat Mini App environment. This typically involves a transpilation step to convert web APIs and constructs into their WeChat Mini App equivalents, and a runtime library that mimics the behavior of the WeChat Mini App runtime. Integration would involve using the project's provided build tools as part of a standard web development workflow, and then deploying the generated output to the WeChat Mini App platform. This is useful because it lowers the barrier to entry for creating WeChat Mini Apps and allows for easier migration of existing web applications.

Product Core Function

· Web standards to Mini App transpilation: Translates standard web APIs and syntax (like DOM manipulation, event handling) into the specific API calls and structures that WeChat Mini Apps understand. The value is in allowing developers to write code once using familiar web patterns and have it work within a restricted mini-app environment.

· Mini App runtime emulation: Provides a JavaScript runtime environment that mimics the behavior and limitations of the WeChat Mini App runtime. This ensures that the web-based code executes correctly within the WeChat ecosystem, solving the problem of compatibility and offering a consistent development experience.

· Cross-platform development enablement: By using open web standards, the project inherently encourages the possibility of writing code that could potentially be adapted to other mini-app platforms or even as a standard web application, maximizing code reuse and developer efficiency. The value here is in building applications that are less locked into a single platform.

· Simplified development workflow: Developers can leverage their existing knowledge of HTML, CSS, and JavaScript, along with familiar web development tools and debugging techniques. This significantly reduces the learning curve and speeds up the development process for creating mini-apps, making it more accessible.

Product Usage Case

· Building a simple e-commerce storefront as a WeChat Mini App: A web developer could use standard React or Vue.js components written with HTML and CSS, and then use this project to compile them into a functional WeChat Mini App. This solves the problem of needing to learn the WeChat-specific framework for a common application type and allows for rapid deployment.

· Migrating an existing progressive web app (PWA) to a WeChat Mini App: If a business already has a PWA, they could potentially adapt significant portions of its codebase to function as a WeChat Mini App using this project. This saves development time and resources by not having to rewrite the entire application from scratch for the WeChat platform.

· Developing educational tools or interactive content for WeChat users: Educators or content creators familiar with web development could quickly build engaging mini-apps without deep platform-specific knowledge. The value is in democratizing mini-app creation for a broader range of creators.

14

AI Forge: Open Innovation Catalyst

Author

Archivist_Vale

Description

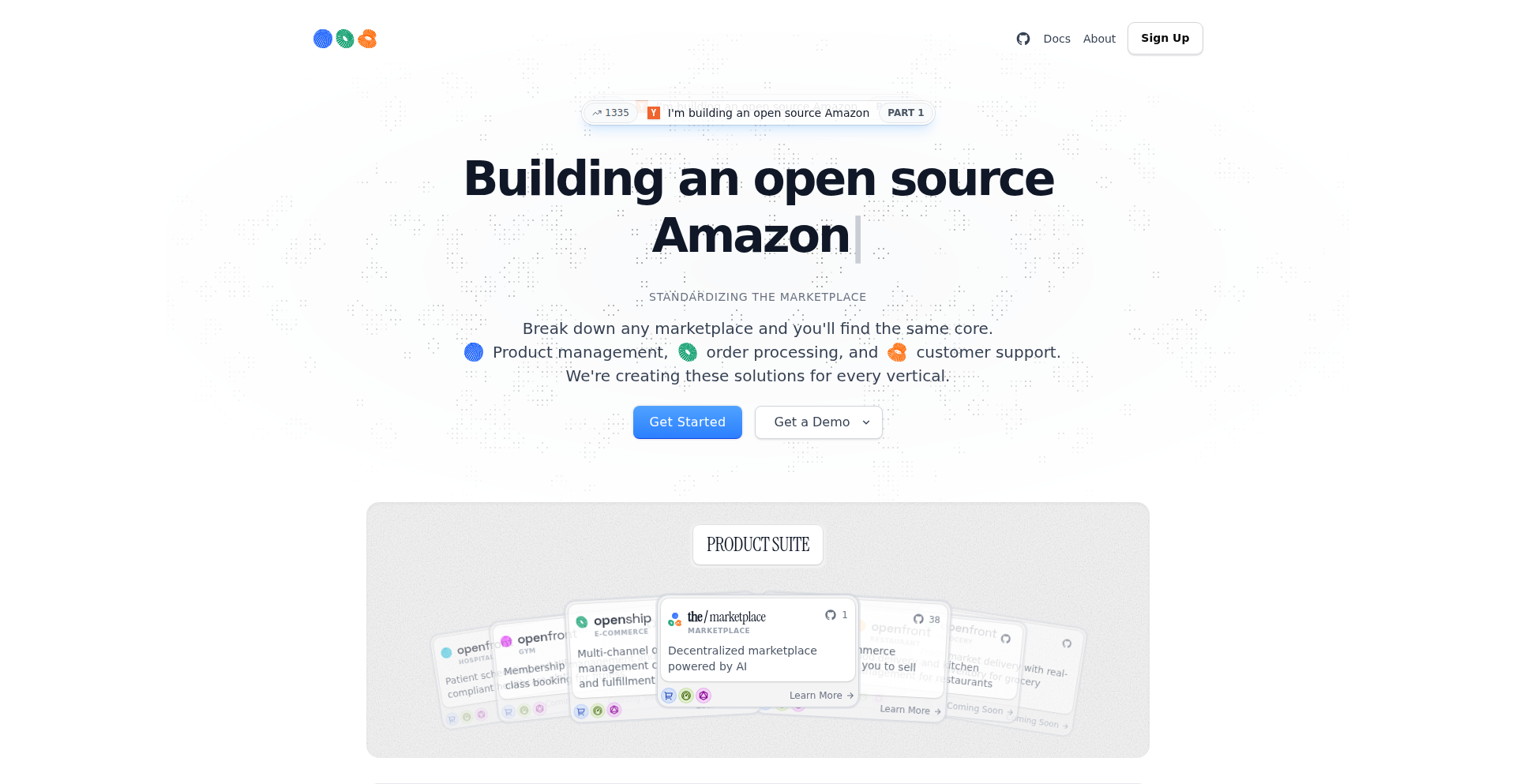

This project, Unpatentable.org, is an AI-driven 'innovation lab' that automatically generates novel inventions and immediately publishes them as prior art, making them freely available to everyone and preventing them from being patented by others. It addresses the risk of valuable knowledge being locked away by corporate patenting by creating a continuously growing library of open, unpatentable, AI-generated innovations across various tech domains.

Popularity

Points 1

Comments 7

What is this product?

Unpatentable.org is essentially an automated system that uses artificial intelligence to brainstorm and document new invention ideas. It's like a creative factory for concepts. The core innovation lies in its approach to sharing knowledge: once an idea is generated, it's immediately documented in a detailed format (problem, mechanism, implementation, impact) and published on the website. To ensure these ideas are truly open and cannot be claimed by anyone later, they are permanently timestamped on the Arweave blockchain and submitted to the USPTO's prior art archive. Think of it as a proactive way to democratize future technological progress, ensuring that breakthroughs benefit humanity, not just a select few.

How to use it?

For developers and innovators, Unpatentable.org offers a rich source of inspiration and a foundation for building upon. You can browse the public library of generated inventions to discover potential starting points for new projects, research, or solutions to existing problems. If you have your own invention idea that you want to ensure remains open and accessible to everyone, the 'Unpatent' tool allows you to upload your concept and have it published as prior art for a fee. This is useful for individuals or organizations wanting to contribute to the public domain and prevent future patenting of their work. Organizations can also sponsor specific innovation 'tracks' (e.g., climate tech), receiving a continuous stream of open-source solutions in that area.

Product Core Function

· AI-powered invention generation: The system uses AI to conceptualize entirely new ideas. This means it can explore novel combinations and solutions that humans might not readily conceive, providing a unique starting point for innovation.

· Detailed invention documentation: Each generated invention is accompanied by comprehensive reports (problem, mechanism, constraints, implementation guide, societal impact). This level of detail is crucial for other developers to understand, replicate, and build upon the ideas, lowering the barrier to entry for further development.

· Immutable blockchain publishing: By anchoring invention PDFs on the Arweave blockchain, the project ensures a permanent, unalterable record of the invention and its creation date. This serves as undeniable proof of existence and prevents disputes over ownership or timing, fostering trust in the open-source nature of the inventions.

· USPTO prior art submission: Submitting inventions to the USPTO prior art archive is a critical step in ensuring they cannot be patented by others in the future. This directly contributes to the goal of keeping valuable innovations accessible to the public and preventing monopolization.

· Discoverable innovation library: The website provides a searchable library of hundreds of AI-generated inventions, categorized by domain. This makes it easy for anyone to find relevant ideas and promotes cross-disciplinary inspiration, accelerating the pace of collective innovation.

· 'Unpatent' tool for human inventors: This feature allows individuals to secure their own ideas as prior art, reinforcing the project's mission of open knowledge and preventing future patent claims on their contributions.

Product Usage Case

· A robotics startup looking for novel ways to improve drone navigation could browse the 'robotics' section of Unpatentable.org. They might find an AI-generated concept for a bio-inspired sensor system that offers a unique solution to obstacle avoidance, saving them significant R&D time and providing a fresh perspective.

· An independent researcher working on renewable energy solutions could use the site to identify emerging concepts in solar energy storage. An AI-generated design for a new type of battery chemistry, detailed with an implementation guide, could provide a breakthrough insight for their research, allowing them to focus on refinement rather than initial ideation.

· A non-profit organization focused on environmental sustainability might sponsor a track on 'wildfire resilience.' The AI engine would then continuously generate and publish open-source solutions for fire detection, prevention, or response, providing valuable tools and blueprints for communities worldwide to adopt and adapt.

· An individual inventor with a groundbreaking idea for a medical device might use the 'Unpatent' tool. By paying a fee, they can ensure their invention is publicly documented and timestamped as prior art, preventing a large corporation from patenting a similar concept later and ensuring their innovation remains accessible for medical advancements.

· A developer aiming to build a decentralized application for scientific collaboration could draw inspiration from AI-generated architectural patterns for knowledge sharing and immutability, ensuring their platform is built on robust, future-proof principles.

15

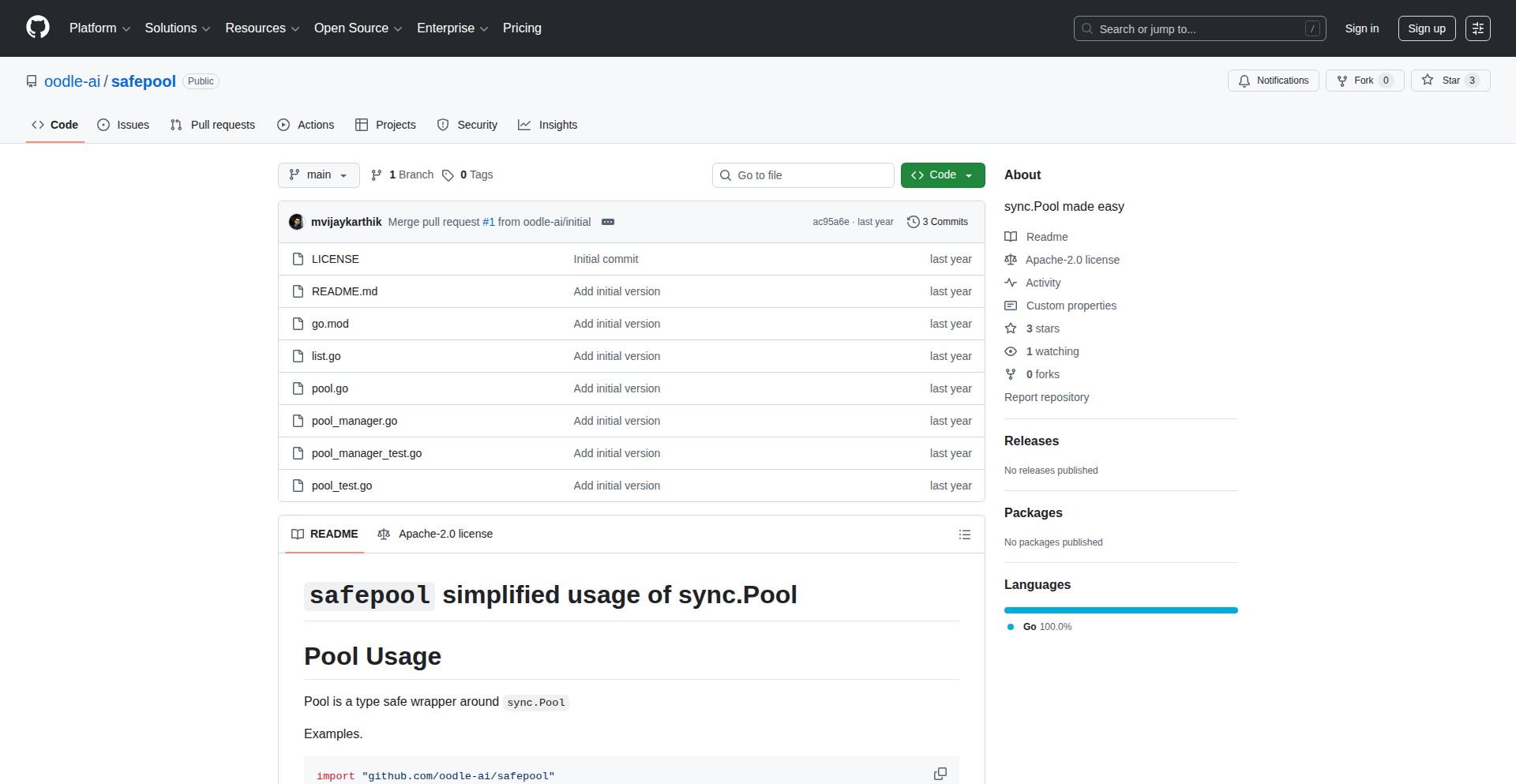

SafePool: Type-Safe Go Object Pooling

Author

mvijaykarthik

Description

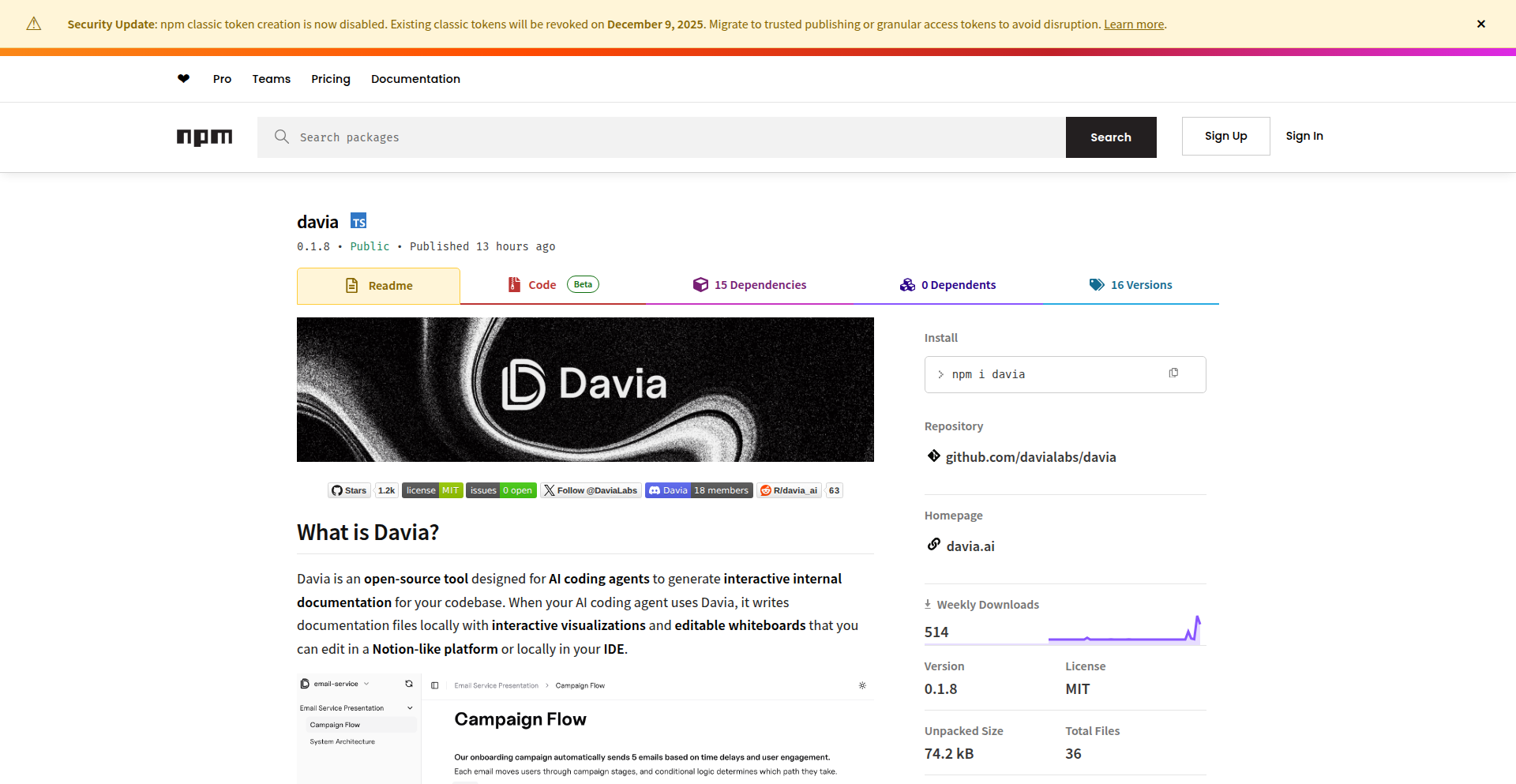

SafePool is a Go library that provides type-safe object pooling using Go generics. It addresses the limitations of Go's built-in sync.Pool, which lacks type safety and can lead to bugs like double-returns or forgotten returns due to manual type assertions. SafePool ensures that only objects of the correct type can be retrieved and returned, and its PoolManager component helps prevent memory leaks by tracking and ensuring the return of all pooled objects across function boundaries.

Popularity

Points 6

Comments 1

What is this product?

SafePool is a solution for Go developers who need to manage memory efficiently by reusing objects instead of constantly creating new ones. Go's standard `sync.Pool` is a way to do this, but it's not 'type-safe.' Imagine you have a pool of different kinds of tools. `sync.Pool` is like a generic toolbox where you have to guess what tool you're pulling out and make sure you put the right tool back. This guessing and checking (called 'type assertions' in Go) is prone to errors. SafePool uses Go's modern 'generics' feature, which is like having a specialized toolbox for each type of tool. You ask for a 'hammer,' and you are guaranteed to get a hammer, not a screwdriver. This eliminates the need for risky type assertions, making your code cleaner and preventing common bugs. Additionally, SafePool introduces `PoolManager`. Think of this as a supervisor for your toolboxes. It keeps track of all the tools you've borrowed, ensuring that at the end of a process, all borrowed tools are returned. This is crucial for preventing 'memory leaks,' where unused objects clog up your program's memory and slow it down, especially when dealing with lots of data like at Oodle AI.

How to use it?

Developers can integrate SafePool into their Go projects by importing the library. You would define a pool for a specific type of object using generics. For example, `SafePool.NewPool[MyStruct]()` creates a pool specifically for `MyStruct` objects. To get an object, you use `pool.Get()`. To return it, you use `pool.Put(obj)`. The key advantage is that you cannot accidentally get or put a `DifferentStruct` into the `MyStruct` pool, as the compiler will catch this error. For scenarios where objects need to persist across different function calls or goroutines and you want to guarantee cleanup, you can use the `PoolManager`. You register objects obtained from pools with the manager, and then when the manager is no longer needed (e.g., at the end of a request), it ensures all registered objects are returned to their respective pools, preventing resource leaks.

Product Core Function

· Type-safe object retrieval: Ensures that when you get an object from a pool, it's guaranteed to be of the expected type, preventing runtime errors from incorrect type assertions. This makes your code more robust and easier to reason about.

· Type-safe object return: Guarantees that only objects of the correct type can be returned to their designated pool, preventing corruption of the pool's state and further reducing bugs.

· Generic pool creation: Allows developers to define pools for any Go type using generics, making the pooling mechanism highly reusable and adaptable to various data structures and objects.

· PoolManager for leak prevention: Tracks objects obtained from pools and ensures their return when the manager is disposed, preventing memory leaks in applications that manage complex object lifecycles across function calls or goroutines.

· Reduced boilerplate and improved readability: Eliminates the need for manual type assertions and error handling associated with `sync.Pool`, leading to cleaner, more concise, and more understandable Go code.

Product Usage Case

· High-performance data processing: In applications that handle large volumes of data, such as telemetry processing at Oodle AI, reusing objects like network buffers or data structures instead of allocating new ones for each piece of data significantly improves performance and reduces garbage collection overhead.

· Web server request handling: When a web server receives many requests, reusing objects like request contexts, database connection pools, or HTTP response writers for each request can drastically reduce memory allocation and improve the server's ability to handle concurrent traffic.

· Game development: In game engines, reusing objects like particles, bullets, or game entities that are frequently created and destroyed can prevent performance bottlenecks and ensure a smoother gaming experience.

· Microservices communication: When services exchange messages or data, reusing message buffers or serialization objects can improve the efficiency of inter-service communication, especially in high-throughput scenarios.

16

CoThou: The AI Source of Truth

Author

MartyD

Description

CoThou is a novel platform designed to combat misinformation generated by AI search and answer engines. It empowers businesses and knowledge creators to become the definitive source of truth for their own content. By reverse-engineering how AI models select information and allowing users to directly input and control their company or research profiles, CoThou ensures AI-generated answers are accurate, citable, and reflect the user's intended narrative. This is a significant innovation for building trust and authority in the age of AI.

Popularity

Points 3

Comments 4

What is this product?

CoThou is a system that allows you to directly influence the information AI search and answer engines retrieve and present about your company or your area of expertise. Instead of relying on potentially outdated or inaccurate public data (like Wikipedia), CoThou lets you create a verified profile. When an AI is asked about your company or a topic you've published on, it will prioritize and cite your CoThou profile. This is achieved by understanding how AI models choose their sources and then making your content the most attractive and authoritative option. It's like having your own dedicated, verified knowledge base that AIs will consult.

How to use it?