Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-12-01

SagaSu777 2025-12-02

Explore the hottest developer projects on Show HN for 2025-12-01. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of AI-driven projects this week underscores a massive shift towards augmenting human capabilities with intelligent agents. Developers are no longer just building standalone tools; they're constructing intelligent layers that integrate deeply into existing workflows. The trend towards local execution and privacy-focused solutions is also palpable, reflecting a growing demand for control over data and models. For developers, this means mastering agent communication protocols, efficient data handling for LLMs (like RAG), and understanding how to build 'smart' components that enhance, rather than replace, human expertise. Entrepreneurs should look for unmet needs in specialized domains where AI can provide a significant, privacy-conscious advantage, or where complex tasks can be automated and made accessible.

Today's Hottest Product

Name

Superset – Run 10 parallel coding agents on your machine

Highlight

This project tackles a common developer bottleneck: waiting for AI coding agents to finish. It runs multiple agents (such as Claude Code and Codex) in parallel, with each task in its own isolated Git worktree, which significantly accelerates coding workflows. The technical innovation lies in orchestrating these agents effectively, managing their isolation, and providing timely notifications, essentially building a 'superset' of AI coding tools. Developers can learn about agent orchestration, context management, and efficient parallel processing in a practical application.

Popular Category

AI & Machine Learning

Developer Tools

Productivity

Popular Keyword

AI agents

LLM

Code generation

Workflow automation

Developer productivity

Data processing

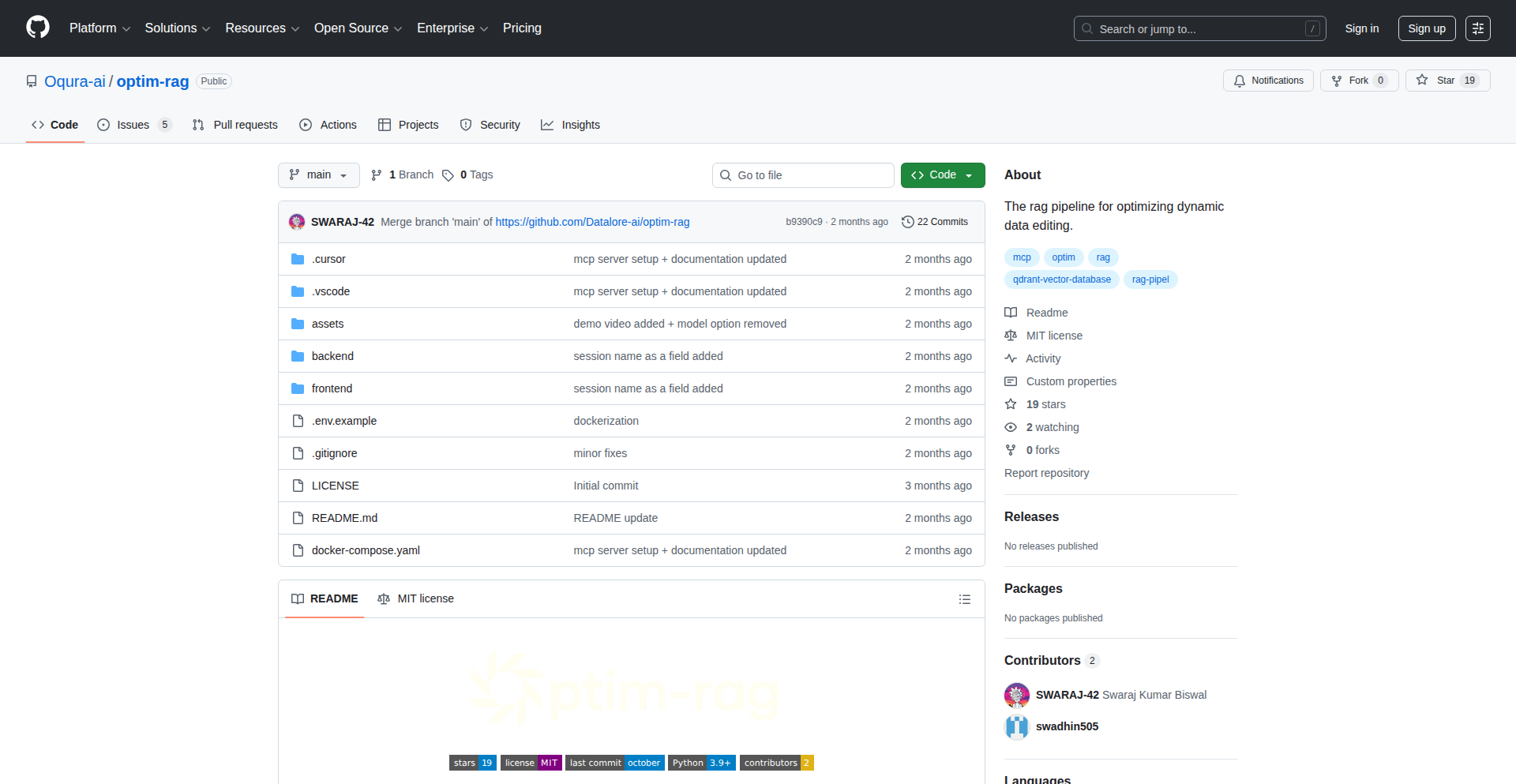

RAG

Technology Trends

AI Agent Orchestration

Efficient LLM Context Management

Local AI Model Execution

Automated Code Generation and Review

Privacy-Preserving Data Processing

Developer Workflow Augmentation

Self-Service Workflow Automation

Interactive Diagramming and Code Visualization

Project Category Distribution

AI & Machine Learning Tools (40%)

Developer Productivity & Tools (30%)

Data Management & Analysis (15%)

Utilities & Infrastructure (10%)

Creative & Niche Applications (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | TinyVisionAI Native App | 15 | 23 |
| 2 | Jargon AI Zettelkasten | 29 | 7 |
| 3 | ProposalFlow | 23 | 8 |
| 4 | Superset - Parallel AI Coding Agent Orchestrator | 22 | 3 |
| 5 | CurioQuest - PWA Trivia Engine | 6 | 10 |
| 6 | Flowctl: Binary Workflow Weaver | 15 | 1 |
| 7 | Furnace: Chiptune Symphony Engine | 14 | 0 |
| 8 | GitHits Code Compass | 10 | 4 |
| 9 | NPM Traffic Insight | 11 | 0 |
| 10 | FFmpeg Artisan's Codex | 10 | 0 |

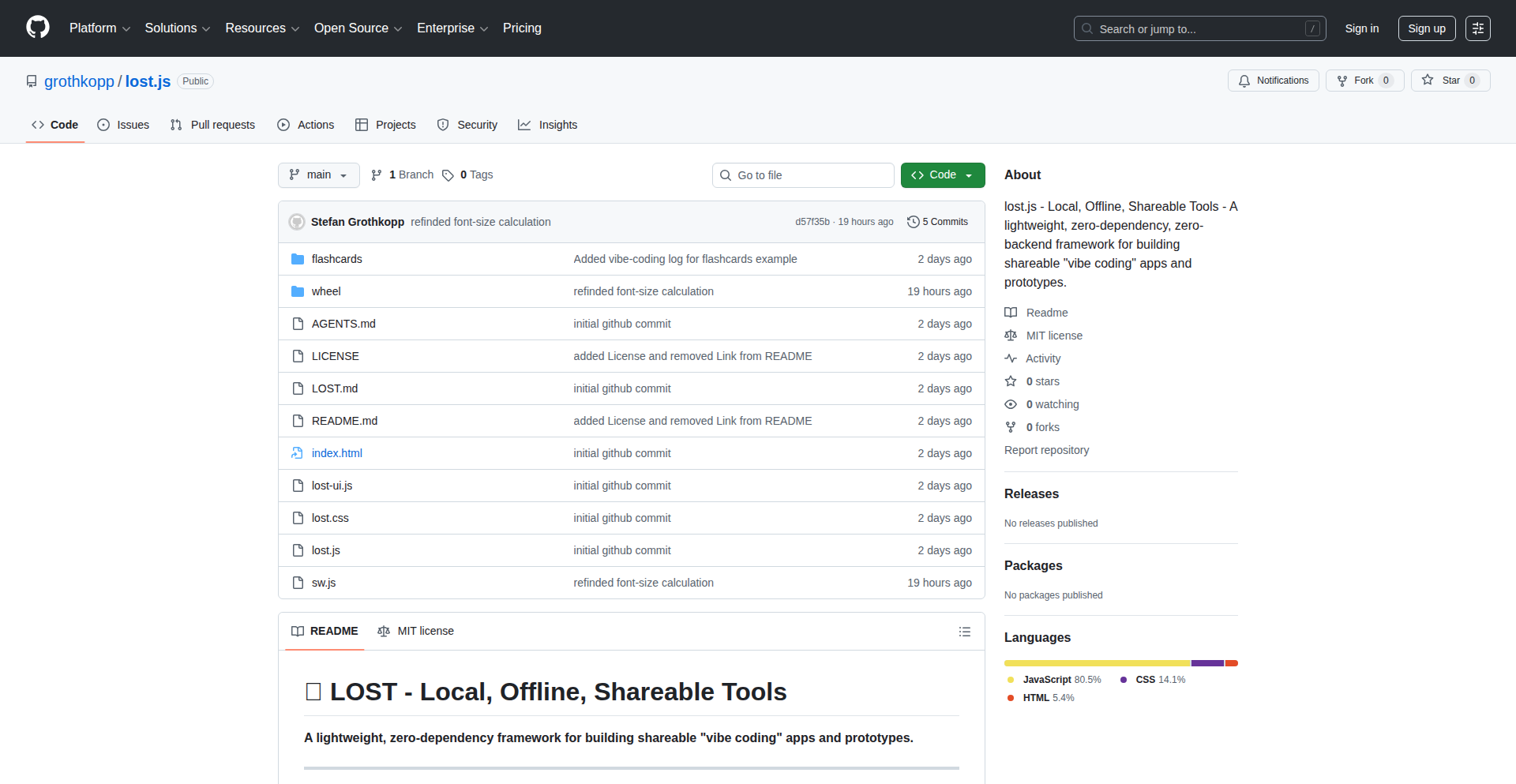

1

TinyVisionAI Native App

Author

jaramy

Description

A highly optimized 1.8MB native application leveraging custom-built UI, vision, and AI libraries. It demonstrates a novel approach to embedding complex AI functionalities within an exceptionally small footprint, making advanced computer vision accessible on resource-constrained devices.

Popularity

Points 15

Comments 23

What is this product?

This project is a compact, native application that packs AI-powered vision capabilities into a mere 1.8 megabytes. The innovation lies in its self-built UI and AI libraries, meaning the developer didn't rely on large, pre-existing frameworks. Instead, they engineered lean components specifically for this app. This allows for significant performance gains and reduced memory usage, enabling sophisticated AI tasks like image analysis or object detection to run efficiently, even on older or less powerful hardware. So, what's the benefit for you? You get powerful AI features without the bloat, making your applications lighter and faster.

How to use it?

Developers can integrate the core principles and custom libraries of TinyVisionAI into their own native applications. This might involve leveraging the lightweight UI components for a snappier user experience or integrating the specialized AI vision modules for tasks like real-time image recognition, augmented reality overlays, or data analysis from visual inputs. The project serves as a blueprint for building resource-efficient AI-driven applications, particularly useful for mobile or embedded systems where every byte counts. So, how can you use it? You can draw inspiration from its architecture to build your own efficient AI features or potentially use its core components as a foundation for your new project.

Product Core Function

· Ultra-compact native application footprint: Enables deployment on devices with limited storage and memory, enhancing accessibility. So, what's the benefit for you? Your apps can reach a wider audience on more devices without sacrificing functionality.

· Custom-built UI library: Provides a responsive and efficient user interface that is highly optimized for performance, avoiding the overhead of large UI frameworks. So, what's the benefit for you? Users experience smoother and faster interactions with your application.

· Self-developed AI vision libraries: Offers specialized algorithms for computer vision tasks, optimized for speed and low resource consumption. So, what's the benefit for you? You can embed intelligent image processing capabilities into your applications without requiring powerful server infrastructure.

· AI inference on-device: Allows complex AI models to run directly on the user's device, enhancing privacy and reducing latency. So, what's the benefit for you? Your application can provide real-time AI insights without constant internet connectivity, improving user experience and data security.

Product Usage Case

· Developing a lightweight mobile app for real-time object recognition in low-bandwidth environments. The app uses TinyVisionAI's optimized vision library to identify objects quickly and accurately, even with limited network access. So, how does this help? It allows for functional AI features in areas where connectivity is unreliable.

· Building an augmented reality experience for educational purposes that runs smoothly on older smartphones. By using the custom UI and lean AI, the AR overlays are rendered efficiently, providing an engaging learning experience without draining the device's battery or crashing. So, how does this help? It makes advanced AR experiences accessible to users with less powerful devices.

· Creating an embedded system for image analysis in an industrial setting where processing power and memory are scarce. The 1.8MB app can perform quality control checks on the production line, identifying defects without needing a dedicated, high-end computer. So, how does this help? It reduces hardware costs and simplifies deployment for specialized industrial AI solutions.

2

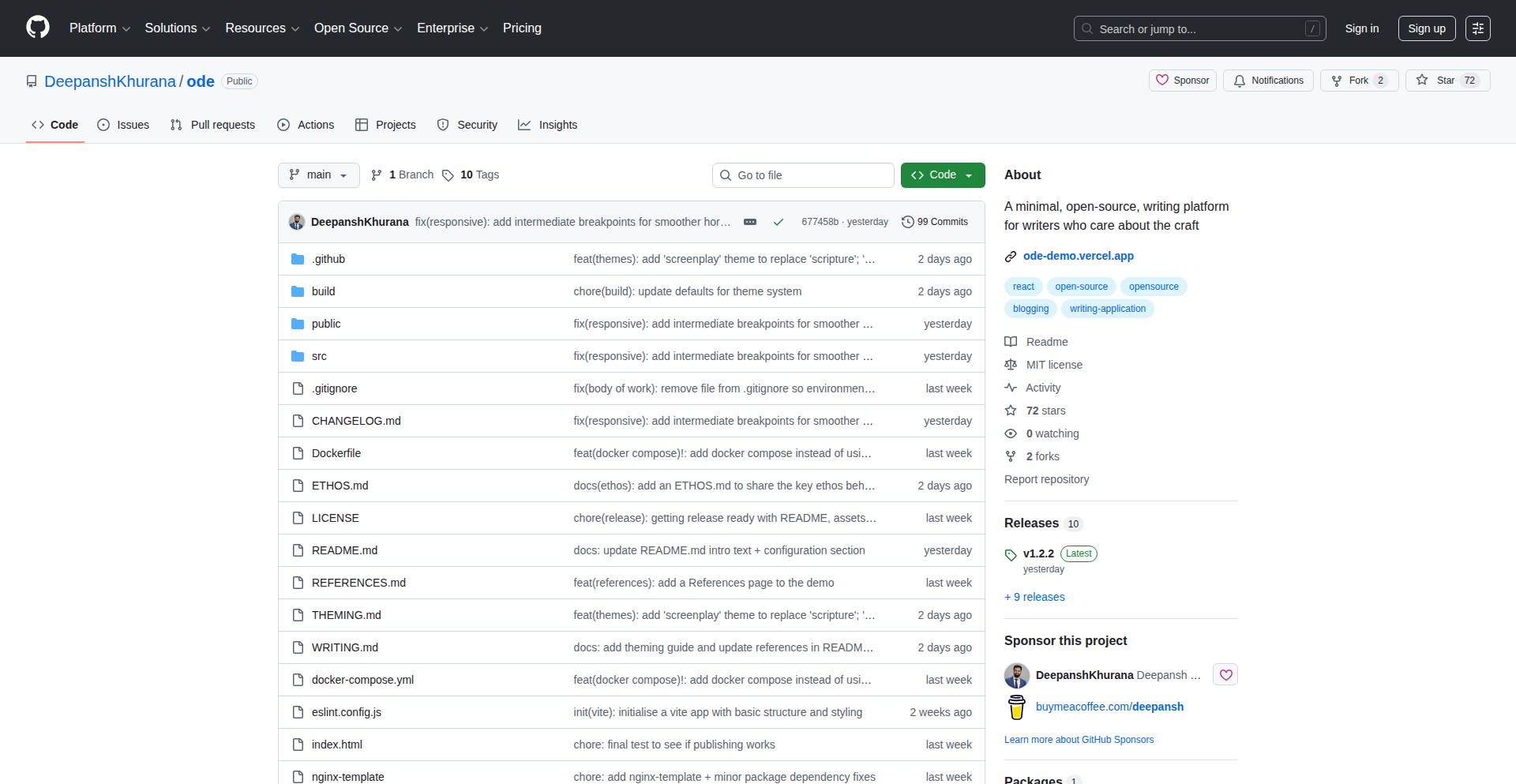

Jargon AI Zettelkasten

Author

schoblaska

Description

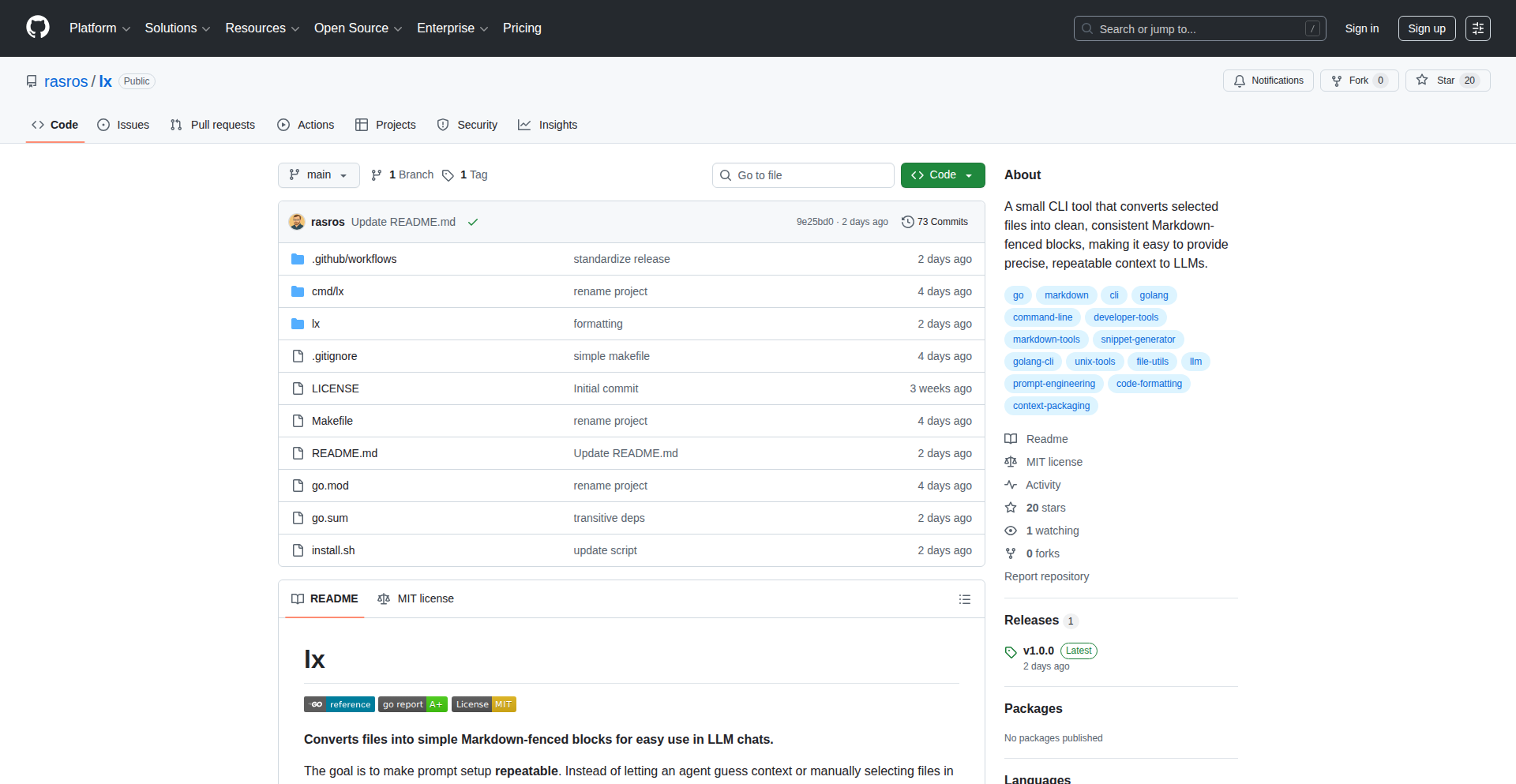

Jargon is an AI-powered Zettelkasten system that automatically reads articles, papers, and YouTube videos, extracts key ideas, and intelligently links related concepts. It uses advanced AI techniques to summarize content, create 'insight cards,' and build a semantic knowledge graph, enabling powerful retrieval and discovery of information. This solves the problem of information overload by transforming raw content into structured, interconnected knowledge.

Popularity

Points 29

Comments 7

What is this product?

Jargon is an AI-managed take on the Zettelkasten, a method for note-taking and knowledge management. It leverages state-of-the-art AI models like Opus 4.5 to process diverse content sources (articles, PDFs, YouTube videos). The core innovation lies in its ability not just to store information but to understand it semantically. It extracts 'insight cards' (key takeaways), embeds them for semantic search, and automatically links related concepts, forming a dynamic knowledge graph. This is like having an AI assistant that reads everything for you and organizes it into a highly interconnected and searchable brain.

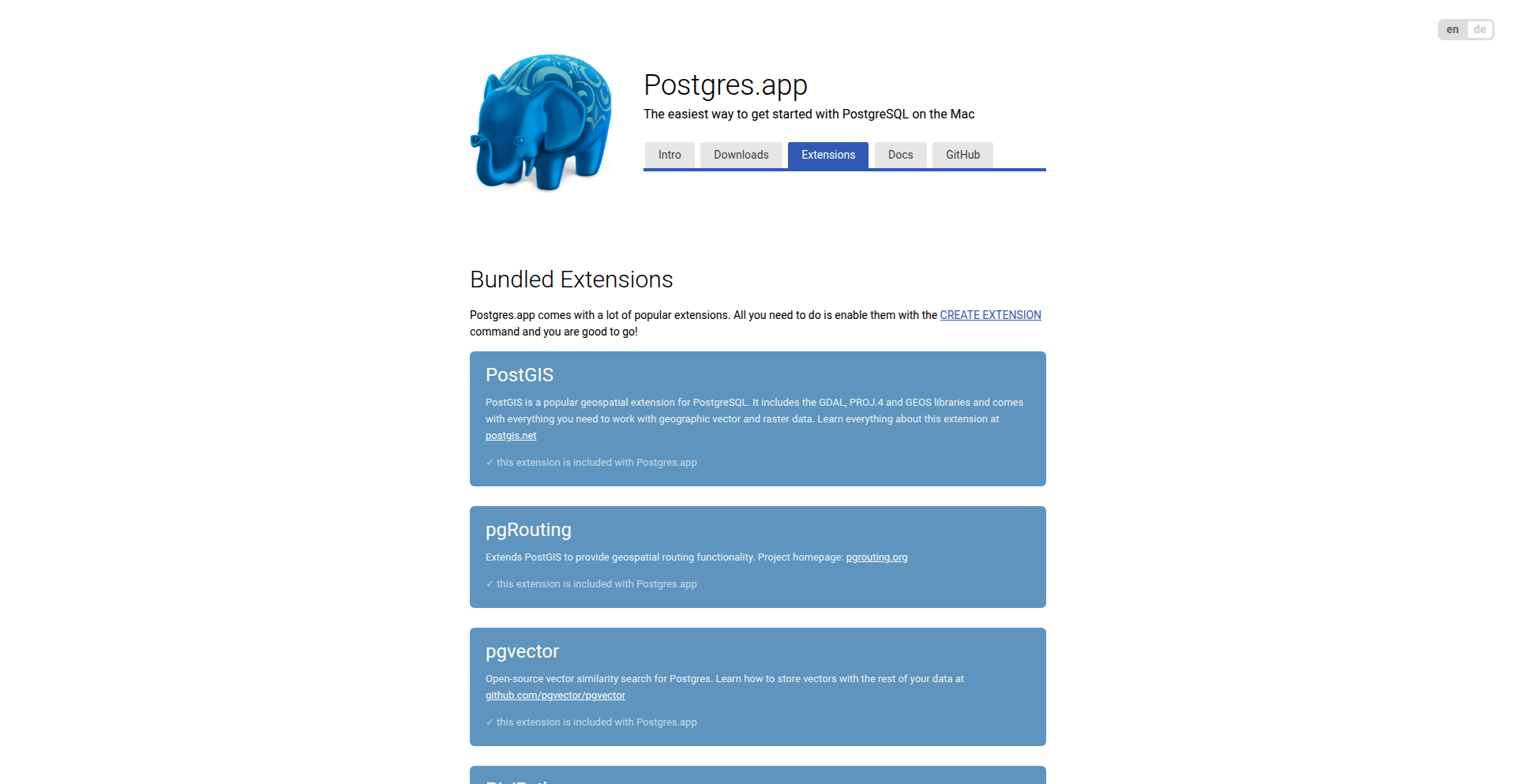

How to use it?

Developers can use Jargon by pasting article URLs, PDF links, or YouTube video links directly into the system. It can also be given questions, and it will autonomously search the web for relevant content to answer them. The processed information is stored as 'insight cards' and embedded into a vector database (using pgvector) for fast, context-aware semantic search. You can then explore your knowledge base through a visual graph or by asking natural language questions, which trigger a Retrieval-Augmented Generation (RAG) process combining your library with fresh web results. Under the hood, Jargon is built on Rails, with Hotwire for real-time updates and Falcon for asynchronous processing.
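
The retrieval step described above can be sketched roughly as follows. This is a minimal illustration, not Jargon's code: the bag-of-words `embed` function stands in for a real embedding model, an in-memory list stands in for pgvector, and `InsightCard`, `search`, and `build_prompt` are hypothetical names.

```python
import math
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class InsightCard:  # hypothetical shape of a stored card
    title: str
    body: str


def search(cards: list, query: str, k: int = 2) -> list:
    """Return the k cards most similar to the query (the retrieval half of RAG)."""
    q = embed(query)
    ranked = sorted(cards, key=lambda c: cosine(embed(c.body), q), reverse=True)
    return ranked[:k]


def build_prompt(cards: list, query: str, k: int = 2) -> str:
    """Assemble retrieved cards into context for a language model (the augmentation half)."""
    context = "\n".join(f"- {c.title}: {c.body}" for c in search(cards, query, k))
    return f"Answer using these notes:\n{context}\n\nQuestion: {query}"


cards = [
    InsightCard("Worktrees", "git worktrees isolate parallel branches"),
    InsightCard("Vectors", "vector search finds similar embeddings"),
    InsightCard("PWA", "service workers cache assets offline"),
]
print(build_prompt(cards, "how does vector search work", k=1))
```

In a real deployment the similarity ranking would be done by the vector database rather than in application code, and web results would be merged into the context before generation.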

Product Core Function

· Automated Content Ingestion: Reads and processes articles, PDFs, and YouTube videos. Value: Saves significant manual effort in extracting information, directly turning raw content into actionable insights.

· AI-Powered Idea Extraction: Identifies and summarizes key ideas into 'insight cards'. Value: Distills complex information into digestible nuggets, making learning and knowledge retention more efficient.

· Semantic Linking and Graphing: Automatically connects related concepts and visualizes them in a knowledge graph. Value: Reveals hidden connections between disparate pieces of information, fostering deeper understanding and serendipitous discovery.

· Semantic Search: Allows searching your knowledge base using natural language queries. Value: Goes beyond keyword matching to understand the meaning of your questions, delivering more relevant and precise results.

· Research Thread Generation: Enables creating research threads that trigger web searches for related content. Value: Facilitates continuous learning and exploration by automatically expanding your knowledge base with relevant external information.

· RAG (Retrieval-Augmented Generation): Combines your stored knowledge with real-time web search results for comprehensive answers. Value: Ensures that answers are not only based on your existing notes but also incorporate the latest information available online.

Product Usage Case

· Academic Research: A student can feed research papers and lecture videos into Jargon. The AI extracts key findings and methodologies, automatically linking them to related theories. This helps the student quickly grasp complex subjects and discover potential research gaps.

· Content Curation for Developers: A developer wanting to stay updated on a specific technology can input blog posts and documentation links. Jargon summarizes them into concise insight cards and links them to related tools or concepts, creating a personalized and searchable knowledge base of best practices and new developments.

· Personal Knowledge Management: An individual can use Jargon to archive articles, podcasts, and web clippings on topics they are interested in. The system automatically organizes this information, making it easy to recall specific facts or explore how different ideas interconnect over time.

· Problem Solving with AI Assistance: A developer facing a coding challenge can ask Jargon questions. Jargon will search its own library and the live web for relevant solutions, code snippets, and explanations, augmented by its understanding of your existing knowledge base.

3

ProposalFlow

Author

tlhunter

Description

ProposalFlow is a developer-centric platform designed to streamline the lifecycle of technical proposals. It moves away from unstructured documents to a structured, metadata-driven approach, solving common pain points in technical review and consensus building. Think of it as a specialized, semantic-aware system for managing ideas before they become code.

Popularity

Points 23

Comments 8

What is this product?

ProposalFlow is a system that transforms how technical proposals are managed. Instead of burying crucial information like reviewer status, approval markers, or deadlines within free-form text documents (like in Notion or Google Docs), ProposalFlow extracts this metadata and organizes it. This allows for automated checks and clear visibility. The innovation lies in treating proposal data structurally, enabling programmatic enforcement of rules (e.g., preventing a proposal from moving forward if not all required approvals are met) and improving discoverability and notification. For developers, this means less time wasted searching for information or dealing with unclear status, and more time focusing on implementation. It’s like having an intelligent assistant for your technical decision-making process.
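
To make the idea of programmatic enforcement concrete, here is a minimal sketch of a proposal whose approval metadata lives in structured fields instead of free text. It assumes a simple "all required reviewers must approve" rule; the `Proposal` class, its fields, and the `Status` values are hypothetical and not taken from ProposalFlow itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"


@dataclass
class Proposal:  # hypothetical model, for illustration only
    title: str
    required_reviewers: set
    approvals: set = field(default_factory=set)
    status: Status = Status.DRAFT

    def approve(self, reviewer: str) -> None:
        """Record an approval, rejecting anyone who is not a required reviewer."""
        if reviewer not in self.required_reviewers:
            raise ValueError(f"{reviewer} is not a required reviewer")
        self.approvals.add(reviewer)

    def missing_approvals(self) -> set:
        """Reviewers who still need to sign off."""
        return self.required_reviewers - self.approvals

    def advance(self) -> None:
        """Move to APPROVED only when every required reviewer has signed off."""
        missing = self.missing_approvals()
        if missing:
            raise PermissionError(f"blocked, waiting on: {sorted(missing)}")
        self.status = Status.APPROVED


proposal = Proposal("Refactor billing service", {"backend", "frontend"})
proposal.approve("backend")
print(sorted(proposal.missing_approvals()))
```

Because the reviewer list and approvals are data, the "is this ready?" question becomes a set difference that can be checked automatically instead of a manual read-through of a document.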

How to use it?

Developers can use ProposalFlow to create, submit, and track technical proposals. Imagine you have an idea for a new feature or a refactor. Instead of writing a lengthy Word document, you'd use ProposalFlow's interface to define key aspects: what the proposal is about, who should review it, what criteria need to be met for approval, and so on. The platform then facilitates the review process by showing reviewers exactly what's expected of them and tracking their progress. It can also notify relevant parties when a proposal is ready for review, has been approved, or requires action. Integration with existing workflows, like Slack notifications, is planned, making it a natural fit for engineering teams looking to formalize their ideation and decision-making stages.

Product Core Function

· Structured Proposal Management: Enables creation of technical proposals with defined fields for reviewers, status, and approval criteria. This helps teams clearly define what needs to be done and who is responsible, avoiding ambiguity and ensuring all necessary steps are considered before development begins.

· Automated Review Tracking: Provides a clear overview of the review status for any proposal, automatically updating as reviewers provide feedback or approvals. This eliminates the need to manually hunt down reviewers or sift through lengthy comment threads, accelerating the feedback loop and decision-making process.

· Semantic Metadata Extraction: Moves beyond simple text storage to capture the meaning of proposal elements, allowing for rule-based enforcement (e.g., preventing a proposal from advancing without meeting specific approval thresholds). This ensures that proposals adhere to organizational standards and that critical requirements are met, reducing the risk of technical debt or poorly planned features.

· Notification System: Keeps stakeholders informed about the progress of proposals they are involved in, such as when a review is requested or an approval is granted. This proactive communication minimizes delays and ensures everyone is on the same page, allowing developers to focus on coding rather than status updates.

· Configurable Proposal Types: Allows organizations to define custom proposal workflows beyond traditional RFCs, such as for database architecture decisions or UI specifications. This flexibility enables teams to adapt ProposalFlow to their specific needs and terminology, making it a more effective tool for various technical domains.

Product Usage Case

· Managing a new feature proposal: A developer proposes a new user-facing feature. They create a proposal in ProposalFlow, specifying the target audience, technical approach, and required reviews from backend, frontend, and product teams. ProposalFlow tracks each team's review and highlights any blocking comments or required approvals, so the developer knows exactly when the feature is greenlit to start coding.

· Refactoring a critical service: An engineer proposes a significant refactor of a core service. ProposalFlow allows them to detail the risks, benefits, and rollback strategy, and assigns specific senior engineers for approval. The platform ensures that all critical approvals are obtained before the refactor is deemed ready for implementation, providing a clear audit trail of the decision.

· Standardizing API design: A team wants to establish new API design guidelines. Using ProposalFlow, they create a 'Design Standard Proposal' and circulate it to all relevant architects. The structured format ensures that key aspects of the design are addressed, and the approval status clearly indicates when the new standards are officially adopted, informing all future development.

4

Superset - Parallel AI Coding Agent Orchestrator

Author

hoakiet98

Description

Superset is an open-source desktop application designed to streamline developer workflows by enabling the parallel execution of multiple command-line interface (CLI) coding agents on a single machine. It addresses the challenge of managing context and avoiding bottlenecks when using various AI coding assistants, such as Claude Code and Codex, by isolating them within dedicated Git worktrees. This innovation allows developers to initiate new coding tasks while others are running, receive timely notifications for agent completion or input requirements, and seamlessly switch between tasks without losing context, ultimately boosting productivity.

Popularity

Points 22

Comments 3

What is this product?

Superset is a desktop application that acts as a central hub for running multiple AI coding agents concurrently. Its core innovation lies in giving each agent an isolated development environment in the form of a Git worktree, a separate working directory attached to the same repository. Imagine each AI coding assistant, like a code generator or a refactoring tool, gets its own clean workspace. This prevents interference and conflicts between different agents, ensuring that one agent's operations don't mess up another's. It intelligently manages these isolated environments, allowing you to start a new coding task without interrupting ongoing ones. When an agent finishes its job or needs your input, Superset sends you a push notification. This means you can juggle multiple AI-assisted coding projects simultaneously, staying unblocked and maximizing your coding efficiency.

How to use it?

Developers can integrate Superset into their existing workflow by installing the application on their desktop. When starting a new coding task that involves an AI agent, Superset simplifies the process by offering a one-click Git worktree creation. This automatically sets up the necessary environment for the agent. You can then launch various CLI coding agents within these isolated worktrees. For example, you might have one worktree where Codex is generating unit tests, and another where Claude Code is refactoring a different part of your codebase. Superset will manage these parallel processes, notifying you when each agent requires attention or has completed its task. This allows for a fluid transition between different coding activities, keeping your development momentum high.
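
The worktree-per-agent pattern can be approximated by hand with plain Git and a thread pool. The sketch below is an assumption about the general technique, not Superset's implementation: `worktree_add_command`, `run_agent`, and `run_in_parallel` are hypothetical helpers, and the agent commands are whatever CLI you would normally launch.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def worktree_add_command(repo: Path, branch: str, worktree_dir: Path) -> list:
    """Build `git -C <repo> worktree add -b <branch> <path>` without running it."""
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(worktree_dir)]


def run_agent(command: list, cwd: Path) -> tuple:
    """Run one agent command inside its worktree and report (worktree name, exit code)."""
    result = subprocess.run(command, cwd=cwd, capture_output=True, text=True)
    return (cwd.name, result.returncode)


def run_in_parallel(tasks: dict) -> dict:
    """Run every {worktree path: command} pair concurrently; return exit codes by name."""
    with ThreadPoolExecutor(max_workers=len(tasks) or 1) as pool:
        futures = [pool.submit(run_agent, cmd, cwd) for cwd, cmd in tasks.items()]
        return dict(f.result() for f in futures)
```

A typical flow would first run each `worktree_add_command(...)` with `subprocess.run(..., check=True)`, then pass the resulting directories and agent commands to `run_in_parallel`. A non-zero exit code or a finished task is the point at which a tool like Superset would notify you.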

Product Core Function

· Parallel Agent Execution: Run multiple AI coding agents simultaneously on your machine, significantly reducing idle time and increasing overall productivity.

· Isolated Git Worktrees: Each agent operates in its own clean Git worktree, preventing conflicts and ensuring a stable development environment for each task.

· Automatic Environment Setup: Simplifies the setup process by automatically configuring the environment when creating new Git worktrees for agents.

· Push Notifications: Receive timely alerts when agents complete their tasks or require your input, allowing for efficient context switching and faster responses.

· Contextual Isolation: Ensures that the context of one agent's work does not interfere with another's, maintaining the integrity of each development task.

Product Usage Case

· Scenario: A developer is working on a large codebase and needs to generate end-to-end tests for a new feature while simultaneously refactoring an existing module. How it solves the problem: Superset allows the developer to launch Codex in one worktree to generate tests and Claude Code in another worktree to refactor. Both agents run in parallel, and the developer receives notifications when each task is complete, eliminating the need to wait for one to finish before starting the other.

· Scenario: A developer is experimenting with different AI coding tools for code completion and bug fixing and wants to compare their performance without manual setup each time. How it solves the problem: Superset's one-click worktree creation and agent isolation mean the developer can quickly spin up separate environments for each tool, test them side-by-side, and easily switch between them to analyze results. This avoids the tedious process of reconfiguring environments and losing context.

· Scenario: A developer is working on a project with multiple independent features, each requiring AI assistance for different aspects like writing documentation, generating API stubs, or optimizing algorithms. How it solves the problem: Superset enables the developer to dedicate a separate worktree and AI agent for each feature's specific AI-assisted task. This parallel processing capability ensures that all aspects of the project are being worked on concurrently, dramatically speeding up the overall development cycle.

5

CurioQuest - PWA Trivia Engine

Author

mfa

Description

CurioQuest is a progressive web application (PWA) designed as a simple and clean trivia and fun facts game. It showcases how to build interactive web experiences that can be 'installed' on devices, offering offline capabilities and native-app-like performance without traditional app store distribution. The innovation lies in its accessible PWA architecture and straightforward game logic, making it a great example for developers looking to create engaging, installable web content.

Popularity

Points 6

Comments 10

What is this product?

CurioQuest is a web-based trivia game built as a Progressive Web App (PWA). PWAs are essentially websites that leverage modern web technologies to provide an app-like experience. This means it can be added to your home screen, work offline, and load very quickly, much like a native mobile app, but it's built entirely with web standards (HTML, CSS, JavaScript). The innovation here is in its practical implementation of PWA features for a fun, engaging application, demonstrating how to deliver rich interactive experiences directly through the browser, making it accessible to anyone with a modern browser. It's a demonstration of delivering high-quality user experiences without the friction of app store downloads.

How to use it?

Developers can use CurioQuest as a reference for building their own PWAs. Its straightforward architecture makes it easy to understand how to implement PWA features like service workers for offline caching and manifest files for 'installability.' You can clone the repository, study its code, and adapt its structure for your own web-based games, educational tools, or any application that benefits from an installable, offline-first web experience. It's about learning to leverage web technologies for more than just static content.
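
As a small illustration of the manifest half of installability, the sketch below generates a minimal web app manifest. The member names (`name`, `short_name`, `start_url`, `display`, `icons`) are standard manifest members, but every value, the icon paths, and the `build_manifest` helper are placeholders and not taken from CurioQuest. The service worker that handles offline caching is JavaScript and is not shown here.

```python
import json


def build_manifest(name: str, short_name: str, start_url: str = "/") -> dict:
    """Return a minimal web app manifest; all values are illustrative placeholders."""
    return {
        "name": name,
        "short_name": short_name,
        "start_url": start_url,
        "display": "standalone",  # open without browser chrome once installed
        "background_color": "#ffffff",
        "theme_color": "#ffffff",
        "icons": [
            {"src": "/icons/icon-192.png", "sizes": "192x192", "type": "image/png"},
            {"src": "/icons/icon-512.png", "sizes": "512x512", "type": "image/png"},
        ],
    }


# Serialize to the JSON document a page would reference via <link rel="manifest">.
print(json.dumps(build_manifest("Trivia Demo", "Trivia"), indent=2))
```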

Product Core Function

· Progressive Web App (PWA) Implementation: Enables 'installing' the game on devices for offline access and a native app feel. This means you can play without an internet connection after the initial load, offering a seamless user experience.

· Multi-language Support (English & Portuguese): Demonstrates how to structure a web application to handle content in multiple languages, making it accessible to a broader audience. This involves managing localized text and potentially other assets.

· Categorized Trivia Content: Organizes a large number of questions (over 2600) into distinct categories and difficulty levels. This showcases a scalable approach to managing game data and presenting it to users in an organized manner.

· Responsive Design: Ensures the game plays well on various screen sizes, from desktops to mobile phones. This is crucial for web applications that are meant to be accessed from multiple devices.

· Clean and Simple User Interface: Focuses on usability and a pleasant gaming experience without unnecessary clutter. This highlights the value of good UX design in web applications.

Product Usage Case

· Building an offline trivia app for educational purposes: A teacher could use CurioQuest's structure to create a trivia game that students can download and play on their tablets in areas with limited internet access, reinforcing learning without connectivity issues.

· Developing a web-based quiz for event engagement: An event organizer could adapt CurioQuest to create a branded quiz that attendees can 'install' on their phones during a conference. This provides an interactive element and allows for engagement even with potentially spotty venue Wi-Fi.

· Creating a language learning practice tool: A developer could modify CurioQuest to focus on vocabulary or grammar drills, offering a fun, installable way for users to practice a new language on the go, even without data.

· Demonstrating PWA capabilities for portfolio projects: Aspiring web developers can use CurioQuest as a prime example in their portfolio to showcase their understanding and practical application of PWA technologies, highlighting their ability to build modern, performant web experiences.

6

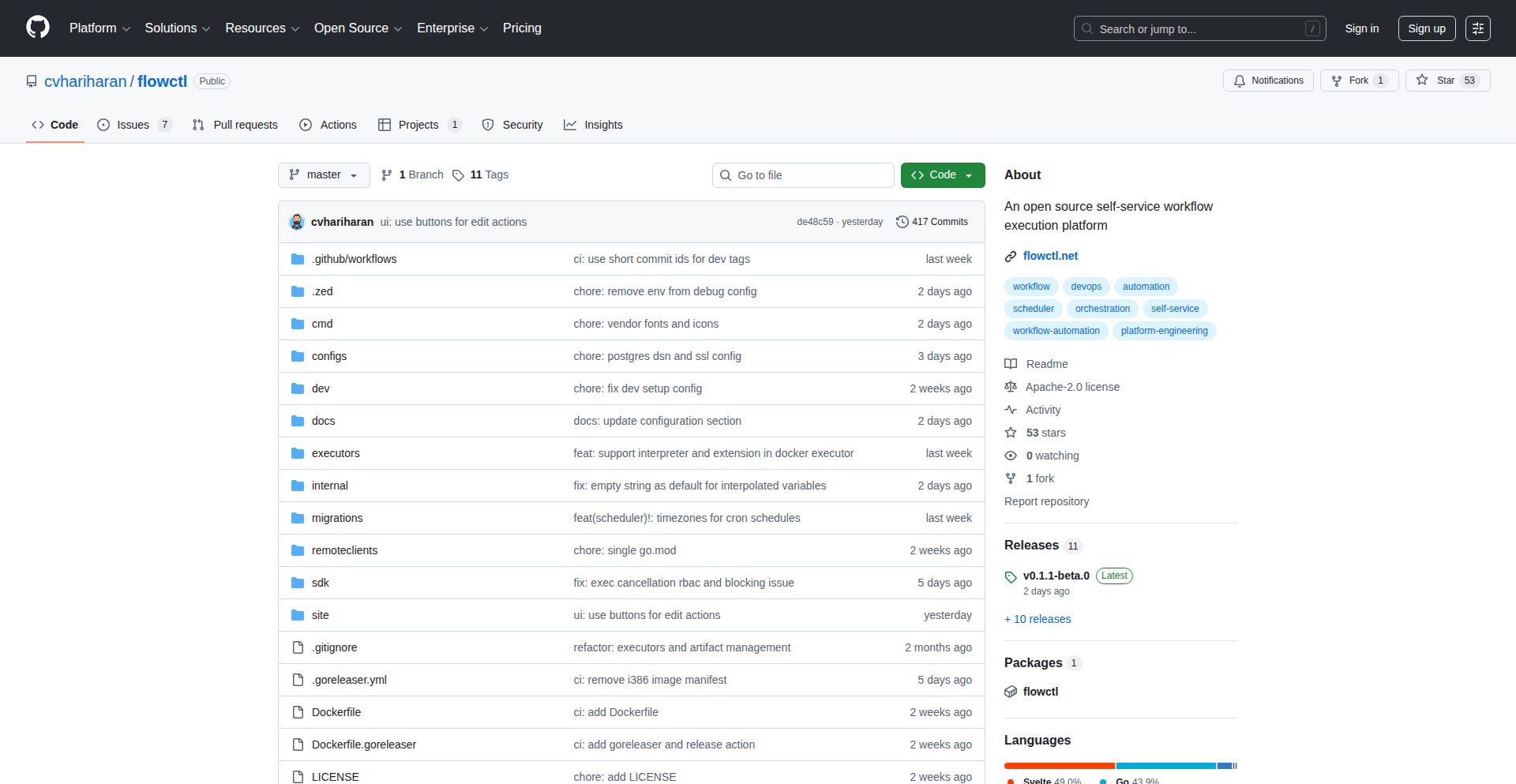

Flowctl: Binary Workflow Weaver

Author

cv_h

Description

Flowctl is a novel open-source platform that simplifies access to complex operational tasks through a single, self-service binary. It empowers users to automate anything from secure SSH access to server instances to infrastructure provisioning and custom business process automation. Its core innovation lies in its executor paradigm, making it adaptable to any domain, and its agentless remote execution capabilities, all wrapped in an intuitive UI.

Popularity

Points 15

Comments 1

What is this product?

Flowctl is an open-source platform designed to make complex technical tasks accessible and manageable for everyone. Imagine a universal remote control for your digital operations. Instead of needing deep technical knowledge to perform tasks like granting someone access to a server, setting up new infrastructure, or running a recurring business process, you can define these actions once as a 'workflow'. Flowctl then allows authorized users to trigger these workflows through a simple interface, handling all the underlying technical complexities. The innovation is in its 'single binary' approach, meaning you can deploy and run it easily without complicated setups, and its agentless design for remote operations, reducing installation overhead. This means even non-technical users can safely and efficiently perform tasks that would normally require a sysadmin or developer.

How to use it?

Developers can integrate Flowctl into their existing infrastructure or use it as a standalone tool. For instance, a developer could define a workflow to automatically provision a new testing environment using Docker executors, which can then be triggered by a project manager via the Flowctl UI or an API. For remote server access, instead of sharing SSH keys directly, a workflow can be set up to grant temporary, audited SSH access to a specific instance, managed through Flowctl's approval system. The platform supports OIDC for Single Sign-On (SSO) and Role-Based Access Control (RBAC) to manage who can execute which workflows. You can also schedule workflows to run automatically at specific times using cron-based scheduling, or even trigger them based on external events. The encrypted credentials store ensures that sensitive information like API keys or passwords are handled securely within the platform.
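
The interplay of RBAC and approvals described above can be modeled in a few lines. This sketch only illustrates the concepts; it does not reflect Flowctl's actual workflow format or API, and `Step`, `Workflow`, and `run_workflow` are hypothetical names. Each step's `action` is a plain callable standing in for a Docker or script executor.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    name: str
    action: Callable[[], str]  # stand-in for a Docker or script executor
    needs_approval: bool = False


@dataclass
class Workflow:
    name: str
    allowed_roles: set
    steps: list = field(default_factory=list)


def run_workflow(flow: Workflow, user_roles: set, approved_steps: set) -> list:
    """Run steps in order; stop at the first step that is still waiting for approval."""
    if not (flow.allowed_roles & user_roles):
        raise PermissionError(f"roles {sorted(user_roles)} may not run {flow.name!r}")
    log = []
    for step in flow.steps:
        if step.needs_approval and step.name not in approved_steps:
            log.append(f"{step.name}: waiting for approval")
            break
        log.append(f"{step.name}: {step.action()}")
    return log


flow = Workflow(
    "grant-ssh",
    allowed_roles={"devops"},
    steps=[
        Step("check-host", lambda: "ok"),
        Step("grant-access", lambda: "granted", needs_approval=True),
    ],
)
print(run_workflow(flow, {"devops"}, approved_steps=set()))
```

Re-running with `approved_steps={"grant-access"}` lets the gated step execute, which mirrors the human-in-the-loop approval described for sensitive operations.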

Product Core Function

· Self-service workflow execution: Enables users to trigger predefined technical tasks without direct technical intervention, increasing operational efficiency and reducing errors.

· Agentless remote execution via SSH: Allows for secure interaction with remote servers without needing to install any software on those servers, simplifying deployment and security.

· SSO with OIDC and RBAC: Provides secure authentication and granular access control, ensuring only authorized users can perform specific actions, enhancing security and compliance.

· Encrypted credentials and secrets store: Securely manages sensitive information like passwords and API keys, protecting them from unauthorized access.

· Flow editor UI: Offers a user-friendly graphical interface to design and manage complex workflows, making automation accessible to a wider audience.

· Docker and Script executors: Supports running containerized applications and custom scripts as part of workflows, offering flexibility in automation tasks.

· Approvals: Introduces a human-in-the-loop mechanism for critical workflow steps, ensuring oversight and control over sensitive operations.

Product Usage Case

· A small startup can use Flowctl to allow their sales team to provision demo environments for potential clients. Instead of relying on developers, the sales team can trigger a 'Provision Demo Environment' workflow through the Flowctl UI, which automatically sets up a sandboxed environment using Docker. This speeds up the sales cycle and frees up developer time.

· A DevOps team can create a workflow to automatically grant temporary SSH access to a production server to a specific engineer for troubleshooting. This workflow would require an approval step, and the access would be automatically revoked after a set duration, all logged within Flowctl. This improves security by eliminating the need to share long-lived credentials.

· A system administrator can set up a daily workflow to back up critical databases using a custom script executor. This workflow can be scheduled using cron-based scheduling, ensuring reliable and automated backups without manual intervention.

· A remote worker can use Flowctl to manage their home lab infrastructure. They can trigger workflows to restart specific devices, deploy new applications, or check system status, all from anywhere in the world, through a secure, web-based interface.

7

Furnace: Chiptune Symphony Engine

Author

hilti

Description

Furnace is an experimental chiptune music tracker, embodying the spirit of classic game console sound creation. It leverages the ImGui library for its user interface, offering a novel approach to composing retro-style music. Its innovation lies in simplifying the complex process of chiptune synthesis and pattern sequencing, making it accessible for developers and musicians looking to create authentic 8-bit era soundtracks.

Popularity

Points 14

Comments 0

What is this product?

Furnace is a software tool designed to create chiptune music, which is the distinctive electronic sound characteristic of old video game consoles. It uses ImGui, a popular library for building graphical user interfaces (GUIs) in C++, to provide an interactive way for users to compose music. The innovation here is how it streamlines the traditionally intricate process of sound synthesis (generating sounds from basic waveforms) and pattern sequencing (arranging those sounds into melodies and rhythms) into a user-friendly environment, reminiscent of how music was made on retro hardware. So, for you, this means a more approachable way to explore the creative world of retro game music, even if you're not a seasoned audio engineer.

How to use it?

Developers can use Furnace by cloning the GitHub repository and building it with a C++ compiler, with ImGui as a library dependency. The primary use case is for game developers who want to add authentic retro soundtracks to their games, or for hobbyists and musicians interested in experimenting with chiptune composition. It can be integrated into game projects by exporting the generated music data in a format compatible with game engines or custom audio playback systems. For you, this means you can easily integrate unique, nostalgic audio into your digital creations or simply have fun composing your own retro tunes without needing specialized, expensive music production hardware.

Product Core Function

· Real-time waveform synthesis: Allows users to define and manipulate basic sound waves (like square, sawtooth, triangle) to generate unique instrument sounds, mimicking the limited but iconic sound chips of old consoles. The value is in creating distinct retro instrument voices for your music.

· Pattern-based sequencing: Enables the creation of musical melodies and rhythms by arranging notes and effects within a grid-like interface, similar to how old game music was programmed. This provides a structured and intuitive way to build songs.

· Instrument editor: Offers a dedicated interface to fine-tune the characteristics of synthesized instruments, controlling parameters like pitch, envelope, and effects. The value is in crafting precisely the sonic textures needed for authentic chiptune.
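
To make the waveform synthesis idea concrete, here is a minimal Python sketch of the three basic waveforms named above. It is not Furnace's code: the function names, the 44.1 kHz sample rate, and the `render` helper are all assumptions chosen for illustration.

```python
SAMPLE_RATE = 44_100  # samples per second (assumed value for this sketch)

def square(phase: float, duty: float = 0.5) -> float:
    """Pulse wave: +1 for the first `duty` fraction of the cycle, -1 after."""
    return 1.0 if phase < duty else -1.0

def sawtooth(phase: float) -> float:
    """Ramp rising linearly from -1 to +1 over one cycle."""
    return 2.0 * phase - 1.0

def triangle(phase: float) -> float:
    """Starts at +1, falls to -1 at mid-cycle, then rises back to +1."""
    return 4.0 * abs(phase - 0.5) - 1.0

def render(wave, freq_hz: float, n_samples: int, sample_rate: int = SAMPLE_RATE):
    """Sample `wave` at `freq_hz`; phase is the fractional position in the cycle."""
    return [wave((i * freq_hz / sample_rate) % 1.0) for i in range(n_samples)]

# Two full cycles of a 441 Hz square wave (100 samples per cycle at 44.1 kHz).
samples = render(square, 441.0, 200)
```

Changing `duty` on the square wave is the kind of small parameter tweak that gives classic sound chips their different "instrument" timbres.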

Product Usage Case

· A game developer building a retro-style platformer can use Furnace to create original soundtracks that perfectly capture the feel of 8-bit era games, enhancing player immersion and nostalgia. This directly addresses the need for authentic sound design in retro-inspired projects.

· A hobbyist musician can experiment with generating unique sound effects or background music for a personal project using Furnace, without needing to invest in complex synthesizers or learn deep audio programming. This democratizes creative audio production for smaller projects.

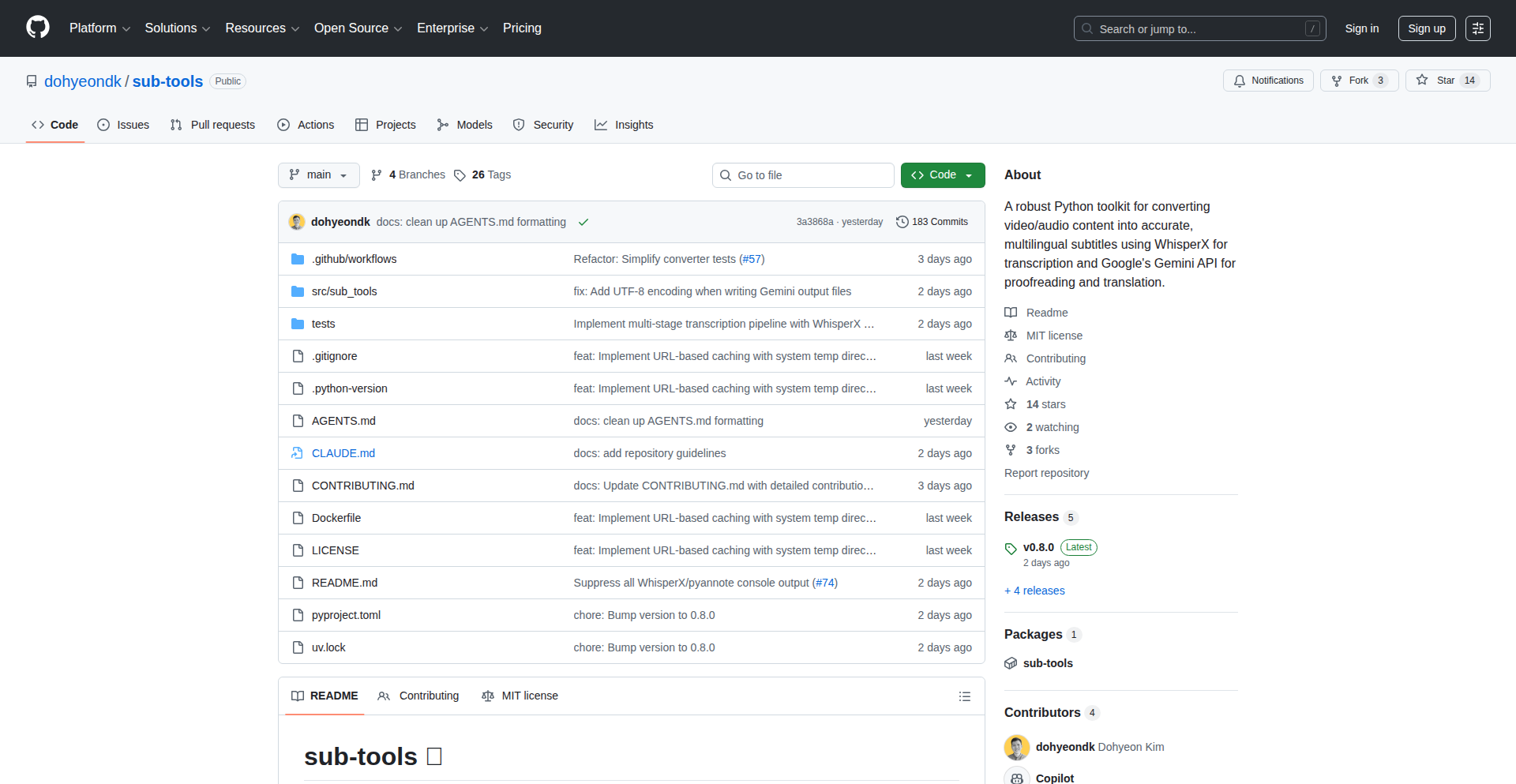

8

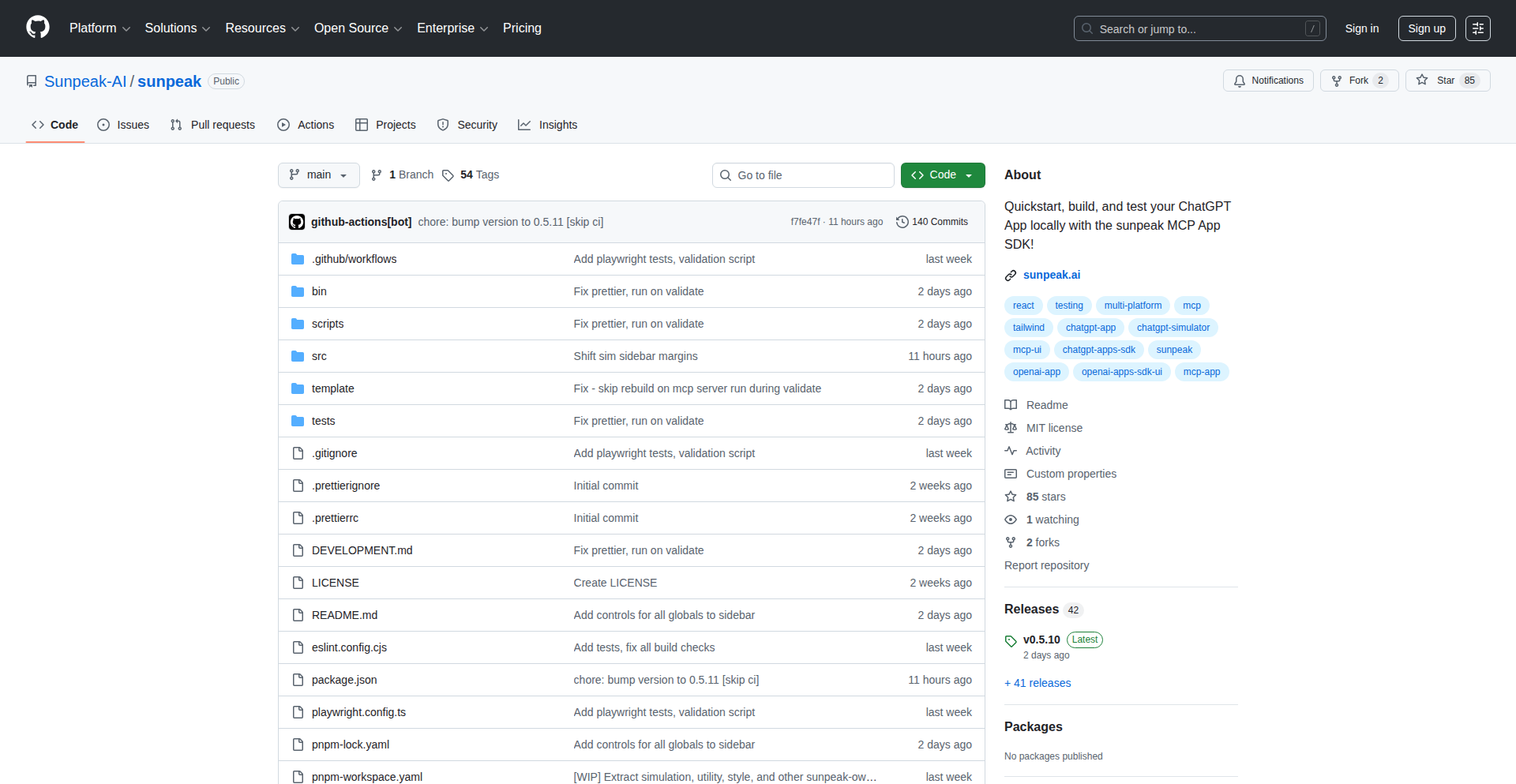

GitHits Code Compass

Author

skvark

Description

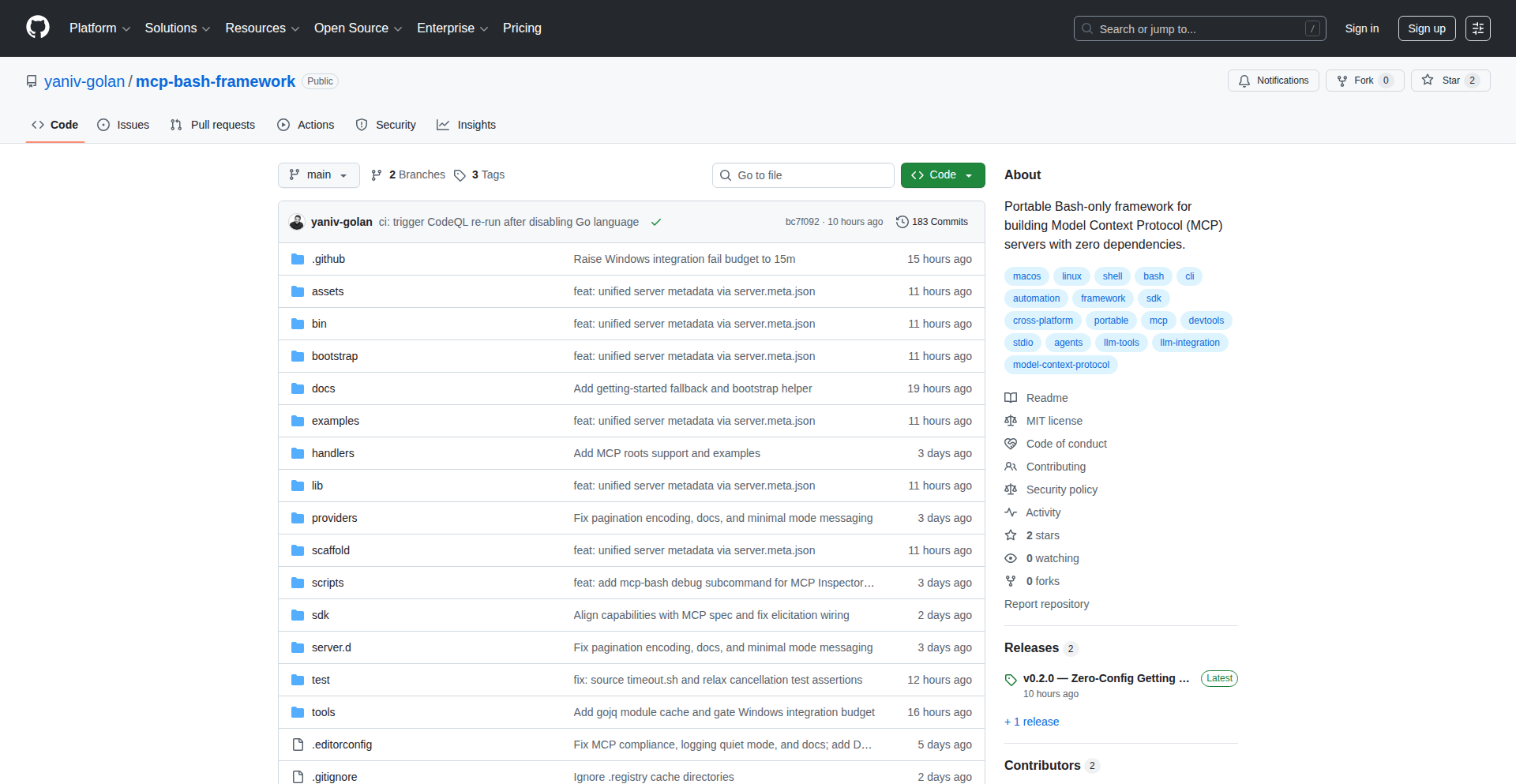

GitHits is a specialized code example engine designed to help developers and AI coding agents overcome technical blockers. It tackles the limitations of traditional search engines and LLMs by diving deep into millions of open-source repositories to find real, actionable code solutions. Unlike general search, GitHits focuses on unblocking you by surfacing relevant code snippets, issues, and documentation, then distilling them into a concise, token-efficient example. So, if you're stuck on a coding problem, GitHits helps you find how others have solved it in the wild, saving you valuable development time.

Popularity

Points 10

Comments 4

What is this product?

GitHits is a sophisticated search engine that goes beyond keyword matching to find practical code solutions within the vast open-source ecosystem. Instead of just returning links, it analyzes millions of repositories to identify code patterns, relevant issues, and discussions that directly address your programming challenge. It then synthesizes this information into a single, high-quality code example. Think of it as a super-powered detective for code, uncovering how real-world problems have been solved by other developers. This is innovative because it moves beyond simple text search or generic AI suggestions to provide contextually relevant, working code examples derived from actual project implementations, solving the problem of finding proven solutions when you're stuck.

How to use it?

Developers can use GitHits through its web UI to input their technical problem or blocker. The engine then searches across supported languages (initially Python, JS, TS, C, C++, Rust) and presents a distilled code example, along with its sources. For AI agents, GitHits offers an MCP (Model Context Protocol) interface, allowing integration into IDEs or CLIs. This means your AI coding assistant can use GitHits to find specific code patterns and solutions directly within your workflow, rather than relying on outdated or generic information. So, you can either use the website to quickly find a solution to a pesky bug, or connect it to your AI tools to get smarter, more context-aware coding assistance.

Product Core Function

· Deep Repository Analysis: Scans millions of open-source repositories at the code level to find relevant solutions. This means it's not just looking at file names or comments, but understanding the actual code logic that solves a problem. Its value is in finding actual implementations, not just descriptions of solutions.

· Intent-Driven Search: Focuses on understanding the developer's 'blocker' or intent, rather than relying solely on keywords. This is valuable because it helps you find solutions even if you don't know the exact terminology. It gets to the root of your problem.

· Multi-Signal Ranking: Ranks potential solutions using a combination of code files, issues, and discussions to ensure higher quality and relevance. This approach provides a more comprehensive understanding of a solution's context and effectiveness, leading to more reliable results.

· Distilled Code Examples: Generates a single, token-efficient code example based on the most relevant real-world sources. This saves you time by presenting a consolidated, ready-to-use snippet, rather than requiring you to sift through multiple scattered pieces of information.

· Cross-Repository Clustering: Identifies and clusters similar code samples across different repositories, revealing common approaches and best practices used by the community. This helps you learn from a broader range of solutions and understand established patterns in software development.
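
The clustering idea in the last bullet can be illustrated with a toy example. GitHits has not described its algorithm here, so the following is only a sketch of one simple approach (greedy grouping by Jaccard similarity over identifier tokens); the function names and the 0.5 threshold are assumptions.

```python
import re

def tokens(snippet: str) -> frozenset:
    """Identifier-like tokens in a snippet, ignoring punctuation and order."""
    return frozenset(re.findall(r"[A-Za-z_]\w*", snippet))

def jaccard(a: frozenset, b: frozenset) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def cluster(snippets, threshold: float = 0.5):
    """Greedy clustering: join the first cluster whose first member is similar enough."""
    clusters = []  # each cluster is a list of indices into `snippets`
    for i, snippet in enumerate(snippets):
        t = tokens(snippet)
        for members in clusters:
            if jaccard(t, tokens(snippets[members[0]])) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters

examples = [
    "df = pd.read_csv(path)",
    "data = pd.read_csv(path)",
    "requests.get(url)",
]
groups = cluster(examples)  # the two pandas snippets share 3 of 5 tokens
```

A production system would presumably compare syntax trees or embeddings rather than raw tokens, but the grouping step has the same shape.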

Product Usage Case

· Scenario: A Python developer is struggling to implement a complex data preprocessing pipeline involving multiple libraries like Pandas and Scikit-learn. They know the general concept but are unsure about the exact API calls and integration. GitHits can analyze repositories that have similar pipelines, find working examples of how these libraries are combined, and provide a concise code snippet demonstrating the correct usage. This solves the problem of trial-and-error and speeds up development.

· Scenario: A JavaScript developer needs to implement a specific UI component with advanced animations and state management. They've tried generic LLM suggestions which were inaccurate. GitHits can search for projects with similar UI components, identify the exact libraries and patterns used, and provide a runnable example. This ensures the code is practical and leverages real-world implementations.

· Scenario: An AI coding agent is tasked with refactoring a legacy C++ codebase. It needs to understand common patterns for memory management and thread synchronization. GitHits can provide canonical examples from well-maintained C++ projects, demonstrating best practices and potential pitfalls. This helps the AI agent make more informed and accurate refactoring decisions.

· Scenario: A Rust developer is facing a tricky error related to borrow checking. They know the error type but are unsure how to restructure their code to satisfy the compiler. GitHits can find issues and code snippets from other Rust projects where similar borrow checking errors were encountered and resolved, offering practical, tested solutions. This directly addresses the specific technical hurdle and provides a path to a working solution.

9

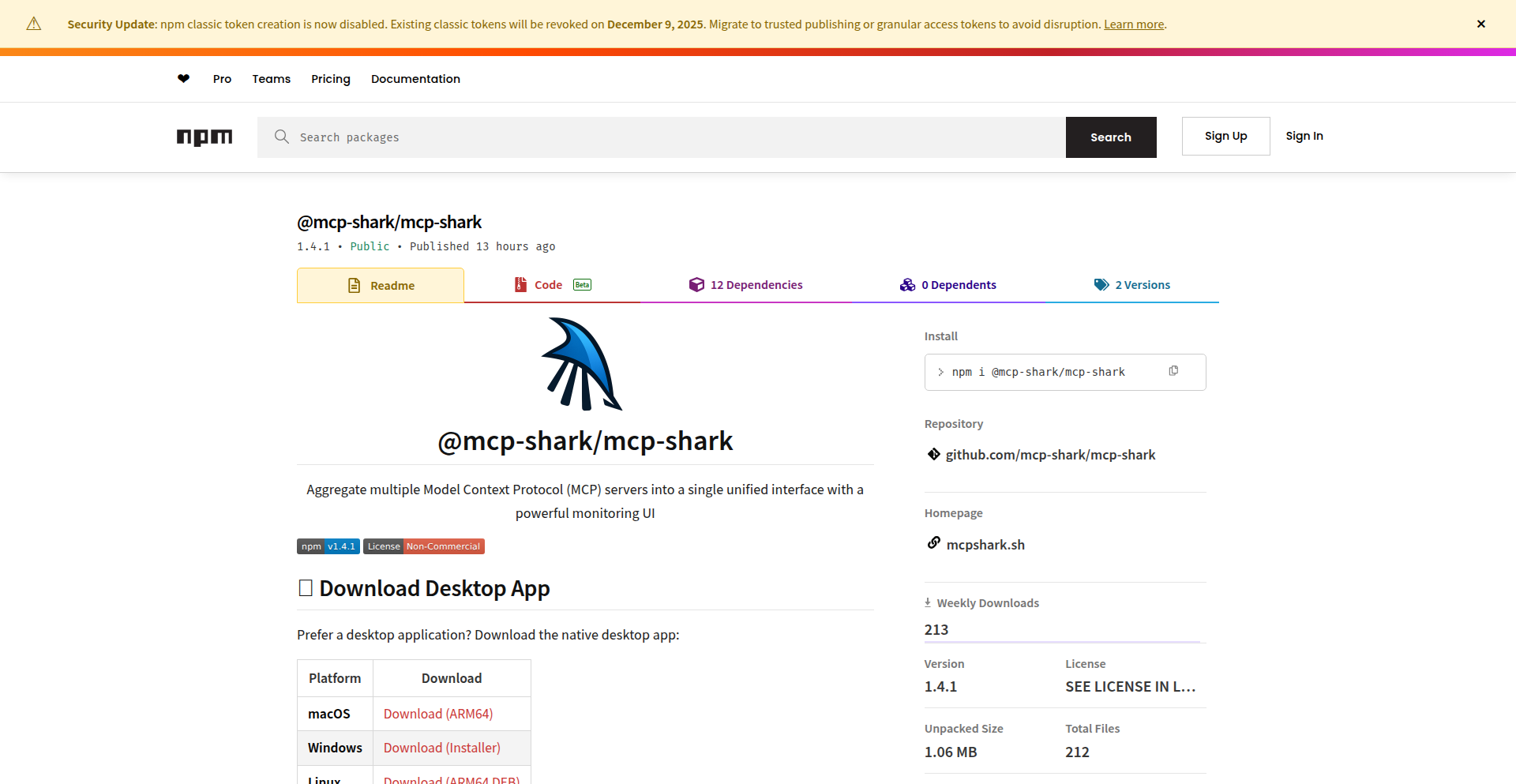

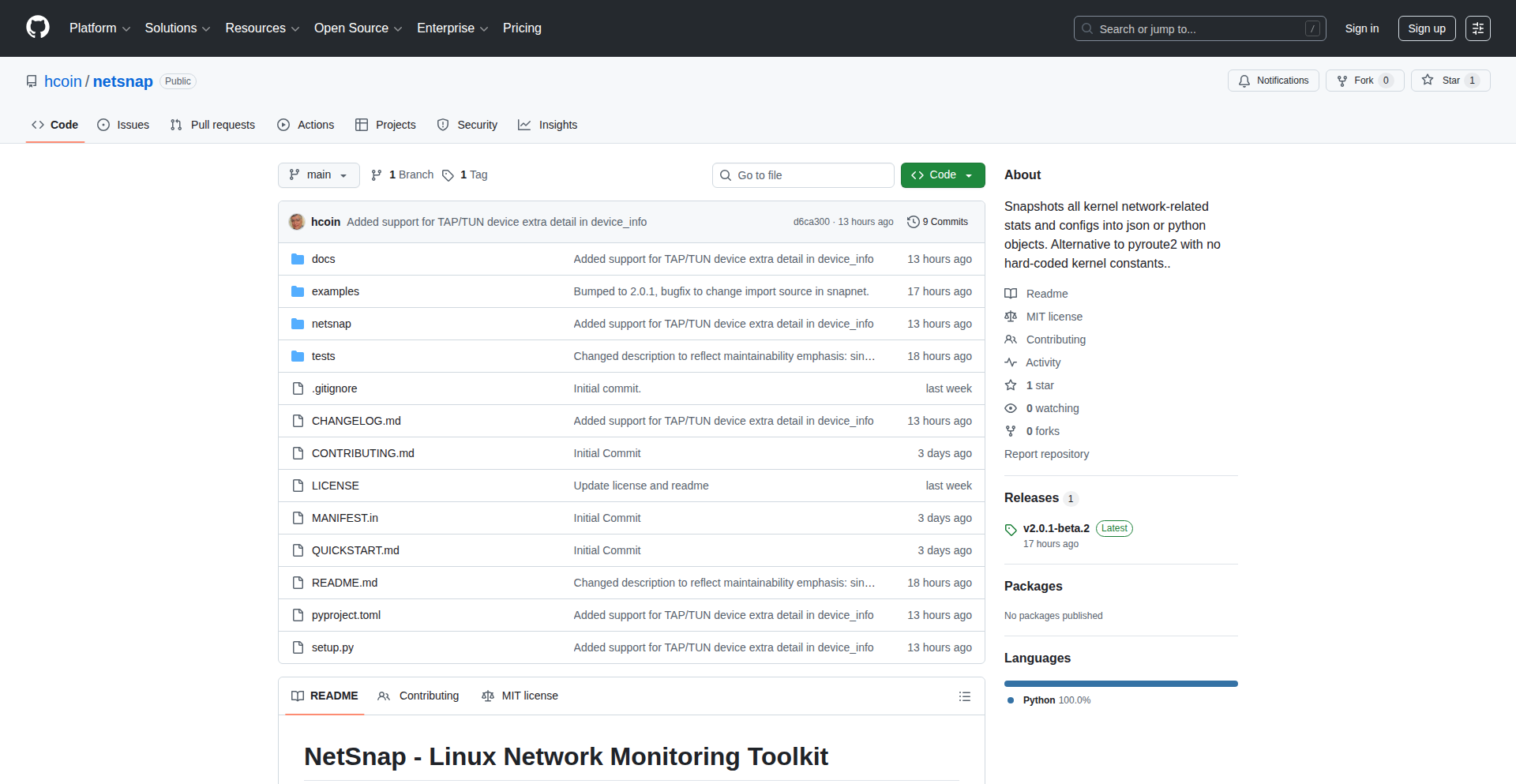

NPM Traffic Insight

Author

o4isec

Description

A real-time network traffic analysis tool leveraging the power of NPM (Node Package Manager) to dissect and visualize network activity. This project innovates by repurposing a common developer tool for network introspection, offering a unique approach to understanding application-level traffic patterns and identifying potential performance bottlenecks or security concerns.

Popularity

Points 11

Comments 0

What is this product?

This project is a novel network traffic analysis tool that uses NPM, a package manager for JavaScript, to gain insights into network communication. Instead of relying on traditional network monitoring tools, it taps into the underlying mechanisms often involved in package installations and updates to analyze traffic. The innovation lies in its creative application of NPM's capabilities for network diagnostics. It can reveal what data is being sent and received, which servers are being communicated with, and how much data is involved. This provides a granular view of network activity originating from or interacting with Node.js environments. So, what's in it for you? It helps you understand the hidden network communications of your Node.js applications, crucial for debugging and optimization.

How to use it?

Developers can integrate this tool into their development workflow for debugging network-related issues in Node.js applications. By running the analysis, they can observe the specific network requests and responses generated by their code, or by the NPM ecosystem itself. This is particularly useful when diagnosing slow application performance, unexpected data transfers, or security vulnerabilities related to network calls. The tool likely provides visualizations or logs that pinpoint problematic traffic. So, how can you use it? You can run it alongside your Node.js projects to gain visibility into their network behavior, helping you identify and fix network-related problems faster.

Product Core Function

· Real-time network traffic capture: Captures and logs network data packets as they occur, providing immediate visibility into network activity. The value is in seeing live data flows to understand current network status. Useful for identifying sudden spikes or unexpected connections.

· NPM package dependency analysis: Analyzes network traffic specifically related to NPM package downloads and updates, helping to understand the external resources your project relies on. The value is in understanding the network footprint of your project's dependencies. Useful for optimizing download times or identifying untrusted sources.

· Traffic visualization and reporting: Presents captured network data in an understandable format, potentially through charts or logs, making complex network patterns accessible. The value is in making network data actionable through clear presentation. Useful for quickly spotting anomalies and understanding data volume.

· Protocol breakdown: Differentiates and categorizes various network protocols (e.g., HTTP, DNS) within the captured traffic, allowing for targeted analysis. The value is in understanding the nature of the communication. Useful for diagnosing specific types of network requests.

· Anomaly detection: Potentially flags unusual or unexpected network activity, alerting developers to potential issues. The value is in proactive problem identification. Useful for catching potential security breaches or performance regressions early.

Product Usage Case

· Debugging slow application startup: A developer notices their Node.js application is slow to start. By using NPM Traffic Insight, they can see if specific NPM package downloads or external API calls are causing delays, allowing them to optimize or remove problematic dependencies. This solves the problem of 'why is my app so slow?'

· Identifying unauthorized data egress: A security-conscious developer wants to ensure their application isn't sending sensitive data to unexpected servers. NPM Traffic Insight can reveal any outbound connections and data transfers, helping to identify and prevent data leaks. This addresses the concern of 'is my data safe?'

· Optimizing package installation times: In a large project, developers might experience long wait times when installing or updating NPM packages. This tool can highlight which packages are taking the longest to download or which mirror servers are slow, enabling developers to choose faster alternatives or cache packages more effectively. This solves the issue of 'why are my package installs taking forever?'

· Understanding microservice communication: For applications built with microservices, NPM Traffic Insight can help visualize the network calls between different services, aiding in the debugging of inter-service communication issues. This helps diagnose problems in distributed systems by showing how services talk to each other.

10

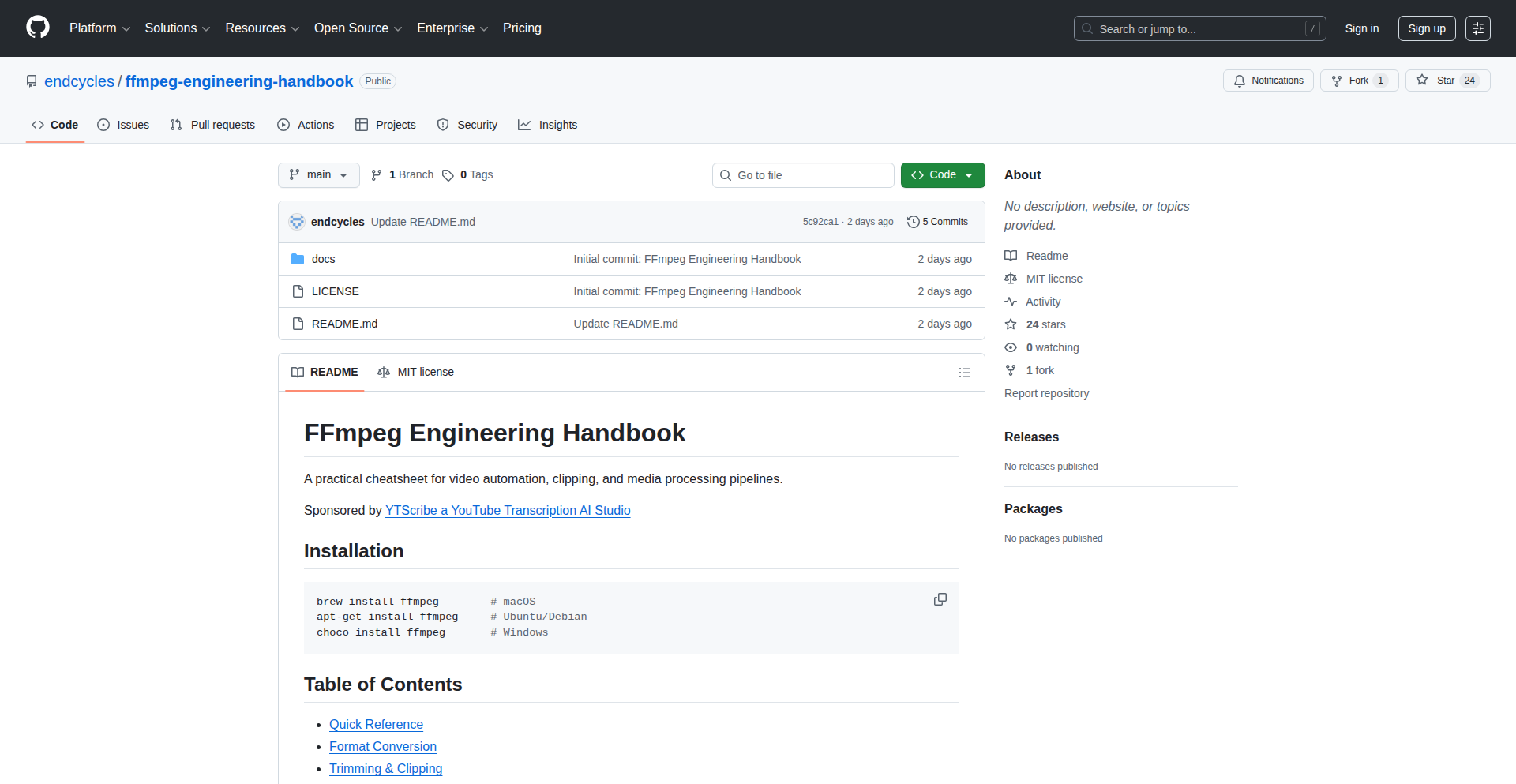

FFmpeg Artisan's Codex

Author

endcycles

Description

A curated compendium of FFmpeg engineering wisdom, offering deep dives into its powerful multimedia processing capabilities. This project distills complex FFmpeg techniques into accessible insights, focusing on innovative ways to solve intricate video and audio manipulation challenges.

Popularity

Points 10

Comments 0

What is this product?

This project is essentially an in-depth, practical guide to FFmpeg, presented in a way that highlights its engineering elegance and problem-solving prowess. It's not just a manual; it's a collection of insights and methodologies for leveraging FFmpeg's advanced features to tackle sophisticated multimedia tasks. The innovation lies in its structured approach to explaining complex concepts, moving beyond basic commands to illustrate powerful, often overlooked, engineering solutions within FFmpeg.

How to use it?

Developers can use this as a go-to resource for understanding and implementing advanced FFmpeg functionalities. Whether you need to optimize transcoding workflows, perform complex stream manipulation, integrate real-time video processing, or craft custom multimedia pipelines, this codex provides the foundational knowledge and practical examples. Integration typically involves understanding FFmpeg's command-line interface and its various APIs, with the codex guiding you on how to effectively utilize these for specific development goals.

Product Core Function

· Advanced transcoding strategies: Explains techniques for efficient and high-quality video and audio format conversion, optimizing for speed and resource usage in development.

· Stream manipulation and routing: Details how to precisely control and reroute media streams, enabling complex processing chains for custom broadcast or streaming solutions.

· Codec and filter deep dives: Unpacks the inner workings of various codecs and filters, allowing developers to fine-tune multimedia processing for specific application needs.

· Performance optimization techniques: Provides methods for squeezing maximum performance out of FFmpeg operations, crucial for real-time applications and large-scale processing.

· Scripting and automation patterns: Illustrates how to script FFmpeg for repetitive tasks and integrate it into larger automated workflows, saving development time and effort.
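
As an illustration of the transcoding and scripting themes above, the sketch below builds FFmpeg command lines for a small rendition ladder. It is not taken from the codex: the ladder values, file names, and helper names are assumptions, while the flags themselves (`-vf scale`, `-c:v libx264`, `-b:v`, `-c:a aac`) are standard FFmpeg options.

```python
import shlex

# Assumed rendition ladder: (output height in pixels, target video bitrate).
RENDITIONS = [(1080, "5000k"), (720, "2800k"), (480, "1400k")]

def transcode_cmd(src: str, height: int, video_bitrate: str, out: str) -> list:
    """Argument list for one H.264/AAC rendition of `src`."""
    return [
        "ffmpeg", "-y", "-i", src,
        # -2 asks FFmpeg to pick a width that keeps the aspect ratio and is even.
        "-vf", f"scale=-2:{height}",
        "-c:v", "libx264", "-preset", "medium", "-b:v", video_bitrate,
        "-c:a", "aac", "-b:a", "128k",
        out,
    ]

def build_ladder(src: str) -> list:
    """One command per rendition, with the height encoded in the output name."""
    return [transcode_cmd(src, h, br, f"out_{h}p.mp4") for h, br in RENDITIONS]

if __name__ == "__main__":
    for cmd in build_ladder("input.mp4"):
        print(shlex.join(cmd))  # pass `cmd` to subprocess.run to execute it
```

Building the argument list in code, rather than concatenating a shell string, avoids quoting problems when file names contain spaces.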

Product Usage Case

· Developing a scalable video-on-demand platform: Use the transcoding strategies to efficiently convert user-uploaded videos into multiple formats and resolutions for seamless playback across devices.

· Building a live video streaming service: Employ stream manipulation and codec deep dives to manage multiple incoming streams, apply real-time effects, and distribute them efficiently to viewers.

· Creating a mobile video editing application: Leverage filter deep dives and performance optimization to enable features like complex video effects, color correction, and smooth playback on mobile devices.

· Automating content processing for a media company: Implement scripting and automation patterns to automatically transcode, watermark, and segment large volumes of video content for distribution.

· Integrating multimedia processing into an IoT device: Utilize performance optimization and codec understanding to perform on-device video analysis or encoding with limited computational resources.

11

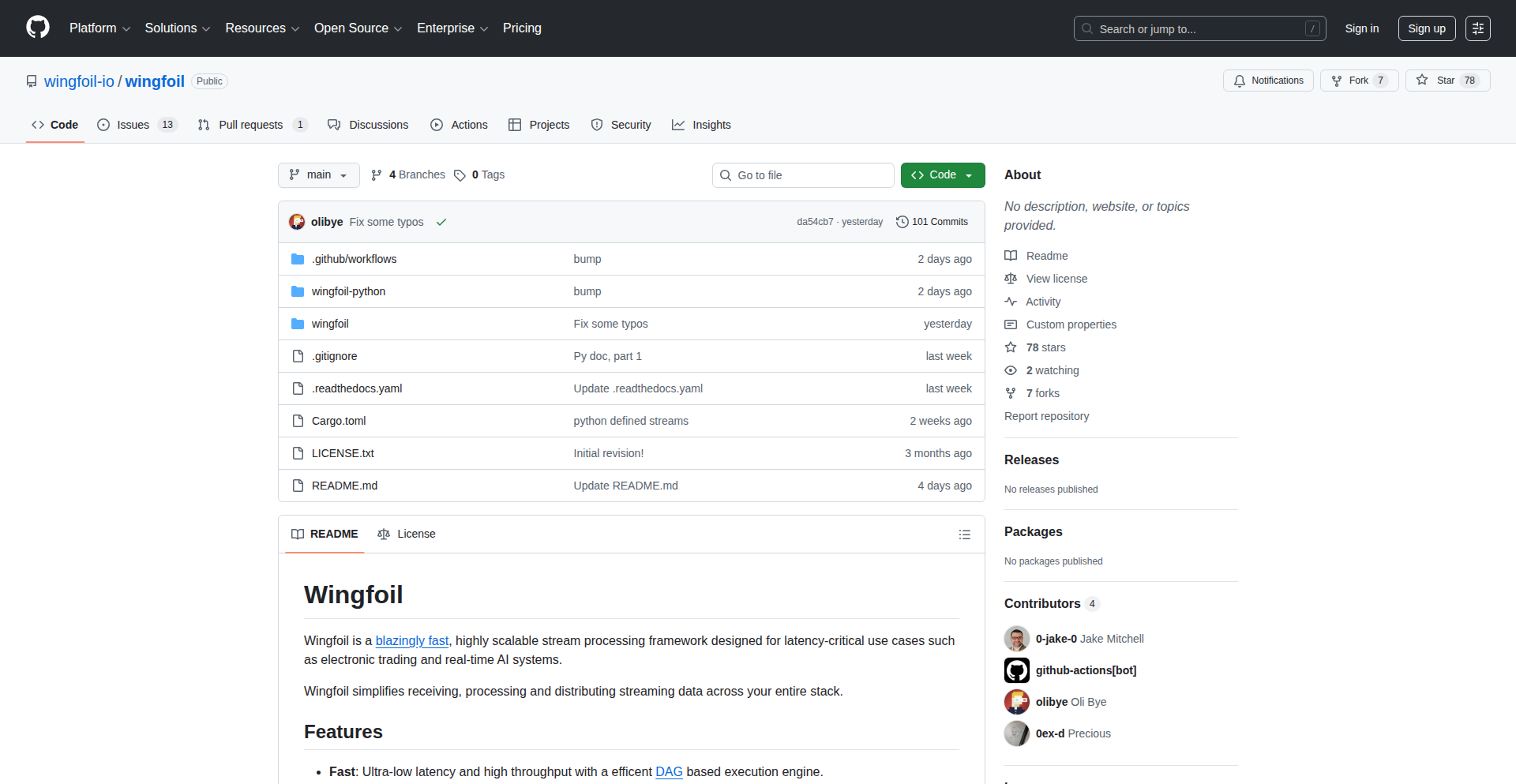

Wingfoil: Rust's Low-Latency Streaming Engine

Author

terraplanetary

Description

Wingfoil is a Rust-based framework designed for ultra-low latency data streaming. It tackles the challenge of real-time data processing by minimizing delays in data transmission and processing, making it ideal for applications where every millisecond counts. Its innovative approach lies in its efficient memory management and asynchronous programming model, built on Rust's strong safety guarantees.

Popularity

Points 6

Comments 1

What is this product?

Wingfoil is a high-performance data streaming framework built using the Rust programming language. Its core innovation is achieving extremely low latency, meaning data travels and is processed with minimal delay. This is achieved through Rust's efficient memory handling, which avoids costly garbage collection pauses, and its sophisticated asynchronous I/O capabilities that allow the system to efficiently manage many concurrent data streams without getting blocked. Think of it as a super-fast pipeline for data that needs to be delivered and acted upon almost instantly.

How to use it?

Developers can integrate Wingfoil into their applications by leveraging its Rust-based API. It's designed to be embedded within larger systems or used to build standalone streaming services. Common integration points include connecting to various data sources (like message queues, IoT devices, or other services) and feeding processed data to destinations (like databases, dashboards, or other microservices). The framework provides building blocks for defining data transformation pipelines, managing connections, and handling errors gracefully, all while maintaining its low-latency promise. This is particularly useful for backend developers building real-time analytics, trading platforms, or control systems.
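
The source-to-sink pipeline shape described above can be sketched in a few lines. This is a generic asyncio illustration in Python, not Wingfoil's Rust API; every name in it is hypothetical, and it makes no attempt to match Wingfoil's latency characteristics.

```python
import asyncio

_DONE = object()  # sentinel marking the end of the stream

async def source(out: asyncio.Queue, values) -> None:
    """Push each value downstream, then signal completion."""
    for value in values:
        await out.put(value)
    await out.put(_DONE)

async def transform(inp: asyncio.Queue, out: asyncio.Queue, fn) -> None:
    """Apply `fn` to each item as it arrives, without blocking other stages."""
    while (item := await inp.get()) is not _DONE:
        await out.put(fn(item))
    await out.put(_DONE)

async def sink(inp: asyncio.Queue, results: list) -> None:
    """Collect processed items; a real sink might write to a database."""
    while (item := await inp.get()) is not _DONE:
        results.append(item)

async def run_pipeline(values, fn) -> list:
    # Bounded queues give back-pressure: a fast source waits for slow stages.
    q1, q2 = asyncio.Queue(maxsize=8), asyncio.Queue(maxsize=8)
    results = []
    await asyncio.gather(source(q1, values), transform(q1, q2, fn), sink(q2, results))
    return results

doubled = asyncio.run(run_pipeline([1, 2, 3], lambda x: x * 2))
```

The three stages run concurrently on one event loop, so each item can move to the next stage as soon as it is ready rather than waiting for the whole batch.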

Product Core Function

· Ultra-low latency data transmission: Enables data to be sent and received with minimal delay, critical for applications like high-frequency trading or real-time gaming.

· Efficient asynchronous I/O: Manages numerous data streams concurrently without blocking, maximizing throughput and responsiveness for high-demand services.

· Memory safety without garbage collection: Achieves predictable performance by preventing pauses typically caused by garbage collection, leading to more stable low-latency behavior.

· Rust's performance and safety guarantees: Leverages Rust's compile-time checks to prevent common bugs and ensure robust, secure, and fast execution of streaming logic.

· Pluggable data source and sink connectors: Allows easy integration with diverse data producers and consumers, providing flexibility in building complex data pipelines.

Product Usage Case

· Real-time analytics dashboards: Developers can use Wingfoil to ingest live data from various sources and update dashboards with near-instantaneous results, allowing for immediate insights into changing trends.

· High-frequency trading systems: For financial applications where split-second decisions are crucial, Wingfoil's low latency ensures that trading signals are processed and executed with the smallest possible delay, maximizing potential profit.

· IoT data processing: In scenarios with a vast number of connected devices sending constant data streams (e.g., sensor readings from smart cities), Wingfoil can efficiently collect, process, and react to this data in real-time, enabling immediate responses to events.

· Online gaming servers: For multiplayer games that require precise player interactions, Wingfoil can handle the rapid exchange of game state updates between players and the server, reducing lag and improving the gaming experience.

12

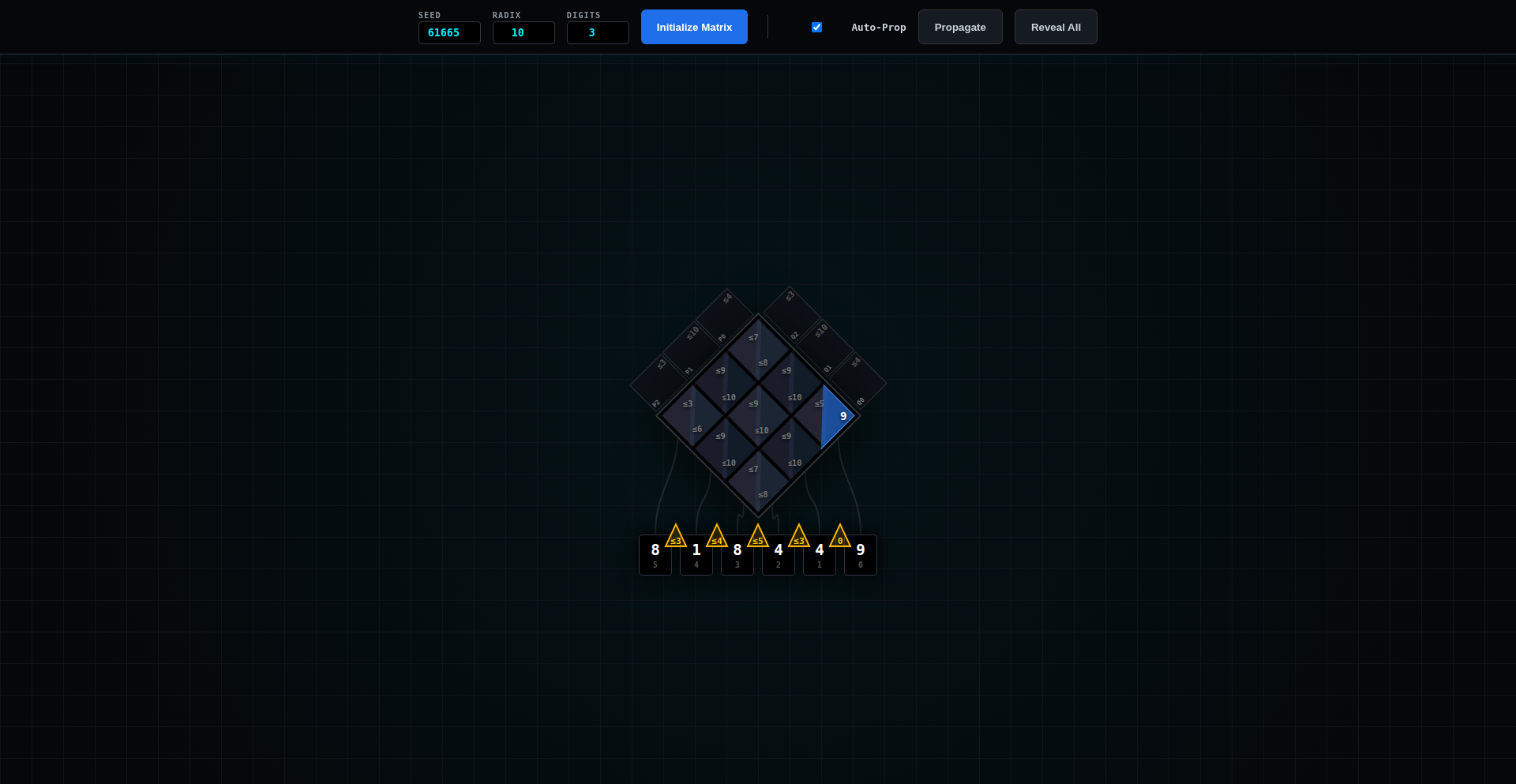

RadixPrime Factorator

Author

keepamovin

Description

A constraint puzzle game that challenges players to factor semiprimes (numbers that are the product of exactly two prime numbers) across any numerical base (radix). This project showcases an innovative approach to number theory problems by abstracting the factoring process beyond the standard base-10 system, offering a unique computational challenge and a fun way to explore prime factorization properties.

Popularity

Points 1

Comments 5

What is this product?

This project is a game built around the mathematical concept of prime factorization, but with a twist. Instead of just working with the numbers we use every day (base-10), it allows you to factor numbers in any numerical base, from binary (base-2) all the way up to much higher bases. The core innovation lies in how it implements and visualizes the factoring of semiprimes (numbers formed by multiplying two prime numbers) in these arbitrary bases. This requires a clever algorithmic approach to handle number representations and prime checking in non-standard systems, demonstrating a deep dive into computational number theory and abstract algebra.
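
A minimal sketch of the two ingredients, assuming nothing about the game's actual implementation: converting a number to its digits in a given base, and factoring a semiprime by trial division. Note that whether a number is prime does not depend on the base; only its digit representation changes. The function names are hypothetical.

```python
def to_digits(n: int, base: int) -> list:
    """Digits of non-negative `n` in `base`, most significant first."""
    if base < 2:
        raise ValueError("base must be at least 2")
    if n == 0:
        return [0]
    digits = []
    while n:
        n, remainder = divmod(n, base)
        digits.append(remainder)
    return digits[::-1]

def is_prime(n: int) -> bool:
    """Trial division; fine for the small numbers a puzzle would use."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def factor_semiprime(n: int):
    """Return (p, q) with p <= q and p * q == n, or None if n is not a semiprime."""
    p = 2
    while p * p <= n:
        if n % p == 0:
            q = n // p  # p is the smallest factor, so it is prime
            return (p, q) if is_prime(q) else None
        p += 1
    return None  # n is prime or too small to be a semiprime

p, q = factor_semiprime(15)
print(to_digits(15, 2), "=", to_digits(p, 2), "x", to_digits(q, 2))
```

In base 2 the puzzle for 15 reads 1111 = 11 x 101; in base 7 the same number is written 21, with factors 3 and 5.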

How to use it?

For developers, RadixPrime Factorator serves as a fascinating example of implementing complex mathematical algorithms in code. You can learn from its approach to handling different number bases, prime generation and testing within those bases, and game logic design. It's a great resource for understanding how to abstract mathematical concepts into practical software. Potential integration scenarios include using its core factoring engine for educational tools, math puzzle generators, or even as a component in more complex computational research projects involving number theory. You could potentially port the core logic to different programming languages or use it as inspiration for building your own number theory-based applications.

Product Core Function

· Arbitrary Radix Prime Factorization: The ability to factor semiprimes in any numerical base. This is valuable because it demonstrates an efficient algorithm for handling number representation and prime decomposition in generalized bases, opening doors for computational number theory applications beyond typical base-10 constraints.

· Interactive Constraint Puzzle Interface: A user-friendly interface for playing the factoring game. This highlights the value of clear visualization and interaction design for complex mathematical problems, making abstract concepts accessible and engaging for players and developers alike.

· Prime Number Generation and Verification in Any Radix: The underlying logic to identify and verify prime numbers across different bases. This is a core technical achievement, showing how to adapt standard primality tests to non-standard number systems, a valuable technique for various cryptographic and computational mathematics fields.

· Game State Management and Logic: The system for tracking puzzle progress, scoring, and rule enforcement. This showcases robust game development principles, offering insights into building engaging and challenging puzzle mechanics that can be adapted to other interactive applications.

Product Usage Case

· Educational Software Development: Imagine building a math tutor that uses RadixPrime Factorator's engine to teach students about prime numbers and different number bases in a fun, interactive way. It solves the problem of making abstract math concepts tangible and engaging.

· Algorithmic Art and Generative Design: A creative developer could leverage the factoring engine to generate unique patterns or visuals based on prime factorizations in different radices, offering a novel approach to algorithmic art.

· Computational Research Prototyping: Researchers in fields like cryptography or theoretical computer science could use this project as a starting point to prototype algorithms that require operations on numbers in various bases, accelerating their research by providing a foundational implementation.

· Competitive Programming Practice: For aspiring competitive programmers, dissecting the algorithms behind this project offers valuable insights into handling number theory problems and optimizing code for efficiency, directly addressing the challenge of solving complex computational puzzles.

13

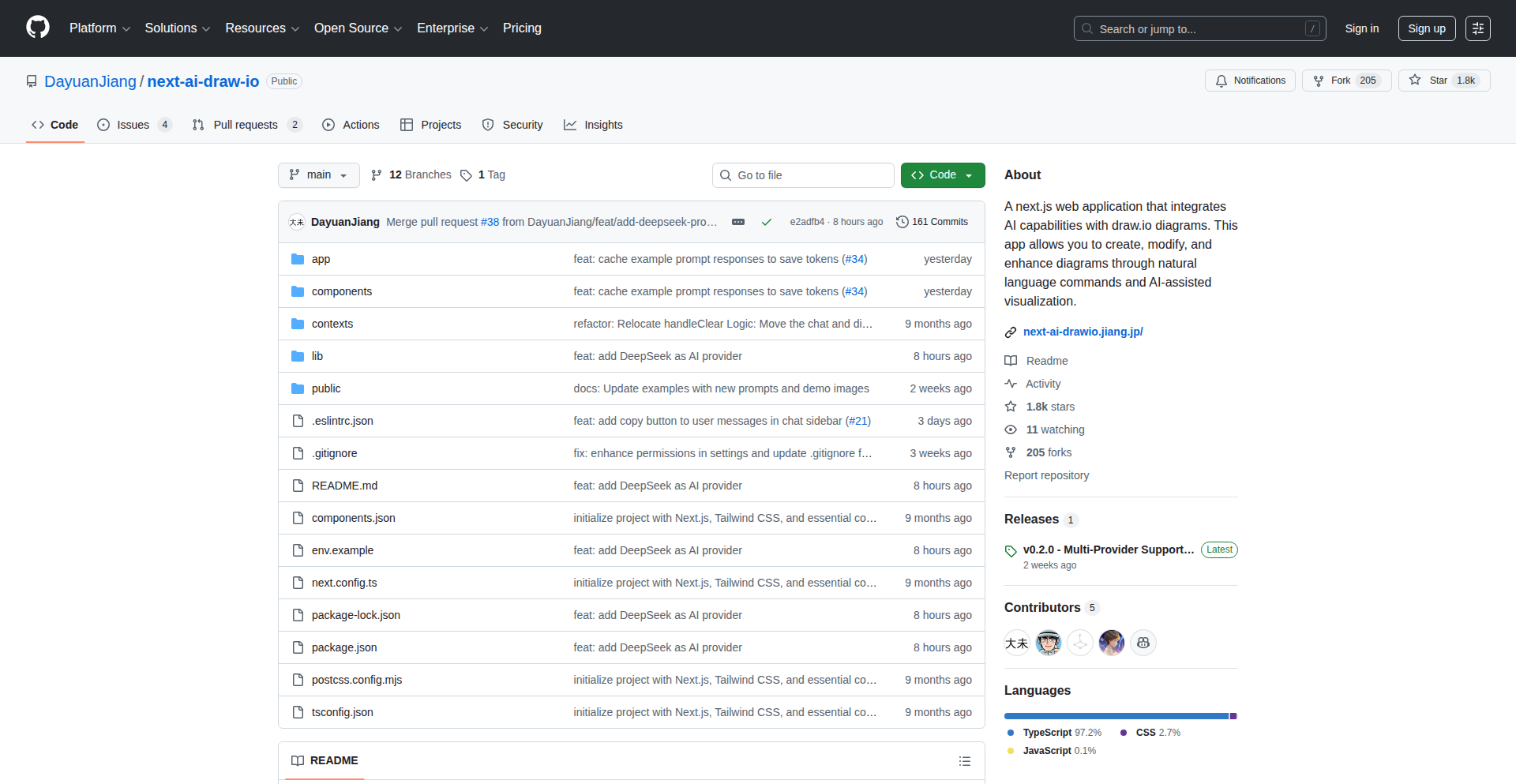

CodeViz Arch-AI

Author

LiamPrevelige

Description

CodeViz Arch-AI is a VS Code extension that automatically generates editable architecture diagrams like C4 and UML from your codebase. It helps teams build shared understanding, make technical decisions collaboratively, and visualize code changes.

Popularity

Points 4

Comments 2

What is this product?

CodeViz Arch-AI is a VS Code extension that intelligently reads your code and transforms it into structured, editable architecture diagrams. Instead of just showing code relationships, it uses an AI approach (inspired by gemini-cli) to understand your codebase's structure and generate diagrams that align with common architectural standards like C4 and UML. It then uses ReactFlow to let you interactively edit these diagrams, link elements directly back to the source code, and even ask an AI to visualize specific code flows. This means you get a clear, visual representation of your software architecture that's directly connected to the actual code, making it easier to understand, discuss, and maintain.
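
To show the kind of code-to-diagram mapping involved, here is a deliberately simple sketch that derives a module dependency graph from Python imports and emits it as Mermaid text. CodeViz itself is described as using an AI approach and ReactFlow; this static analysis, the Mermaid output format, and the function names are assumptions made for illustration.

```python
import ast

def imports_of(source: str) -> set:
    """Top-level module names imported by a piece of Python source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            found.add(node.module.split(".")[0])
    return found

def to_mermaid(modules: dict) -> str:
    """Render edges between project modules only (external imports are skipped)."""
    lines = ["graph TD"]
    for name in sorted(modules):
        for dep in sorted(imports_of(modules[name])):
            if dep in modules and dep != name:
                lines.append(f"    {name} --> {dep}")
    return "\n".join(lines)

project = {
    "app": "import db\nfrom utils import helper\nimport os",
    "db": "import utils",
    "utils": "",
}
diagram = to_mermaid(project)
```

Each edge keeps the module name it came from, which is the hook a tool needs to link a diagram node back to its source file.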

How to use it?

Install the CodeViz extension from the VS Code Marketplace. Once installed, you can open your project, and CodeViz will start analyzing your code. You can then trigger diagram generation for specific files, modules, or even your entire project. The generated diagrams appear in an editor where you can modify them, expand nodes to reveal more detail, and link elements back to their corresponding code locations. For collaborative features, you can integrate with GitHub to import pull requests, allowing teams to visualize and discuss code changes within the context of the architecture. For instance, when reviewing a PR, you can generate diagrams to understand how the proposed changes affect the overall system architecture.

Product Core Function

· Automatic Codebase Analysis: Understands your code to create meaningful architectural diagrams. This is valuable because it saves you the manual effort of deciphering complex codebases and manually drawing diagrams, providing a quick and accurate starting point for understanding your system's structure.

· Editable C4 and UML Diagrams: Generates industry-standard diagrams that you can modify. This is useful for refining your architecture, documenting specific components, and ensuring everyone on the team is on the same page about how the system is designed.

· Direct Code Linking: Connects diagram elements back to the exact source code. This is incredibly helpful for debugging and understanding the implementation details behind an architectural component, allowing you to jump directly from a high-level diagram to the relevant code.

· AI-Powered Flow Visualization: Uses AI to visualize specific code execution paths or functionalities. This feature is great for understanding how data flows through your system or how a particular feature is implemented, making complex interactions much easier to grasp.

· Pull Request Visualization: Integrates with GitHub to visualize changes introduced by pull requests. This is a game-changer for code reviews, as it helps identify the architectural impact of proposed changes, leading to more informed discussions and better code quality.

Product Usage Case

· Onboarding New Team Members: A new developer joins a project. Instead of spending weeks digging through code, they can use CodeViz to generate an architecture diagram, quickly getting an overview of the system's components and their relationships, significantly speeding up their ramp-up time.

· Architecture Review Meetings: A team is about to implement a new feature. They use CodeViz to generate a diagram of the relevant system components, discuss potential architectural impacts, and make informed decisions about the best approach, ensuring the new feature integrates smoothly without causing technical debt.

· Code Review for a Complex Change: A developer submits a large pull request that touches multiple parts of the system. Using CodeViz, the reviewer can generate diagrams before and after the change to visualize the architectural impact, ensuring no unintended consequences or regressions, leading to a more efficient and effective review process.

· Documenting Legacy Systems: An older, poorly documented system needs maintenance. CodeViz can help by generating diagrams from the existing code, providing a much-needed visual map of the system that can be edited and updated, making future maintenance and refactoring efforts much less daunting.

14

Claude-Flow

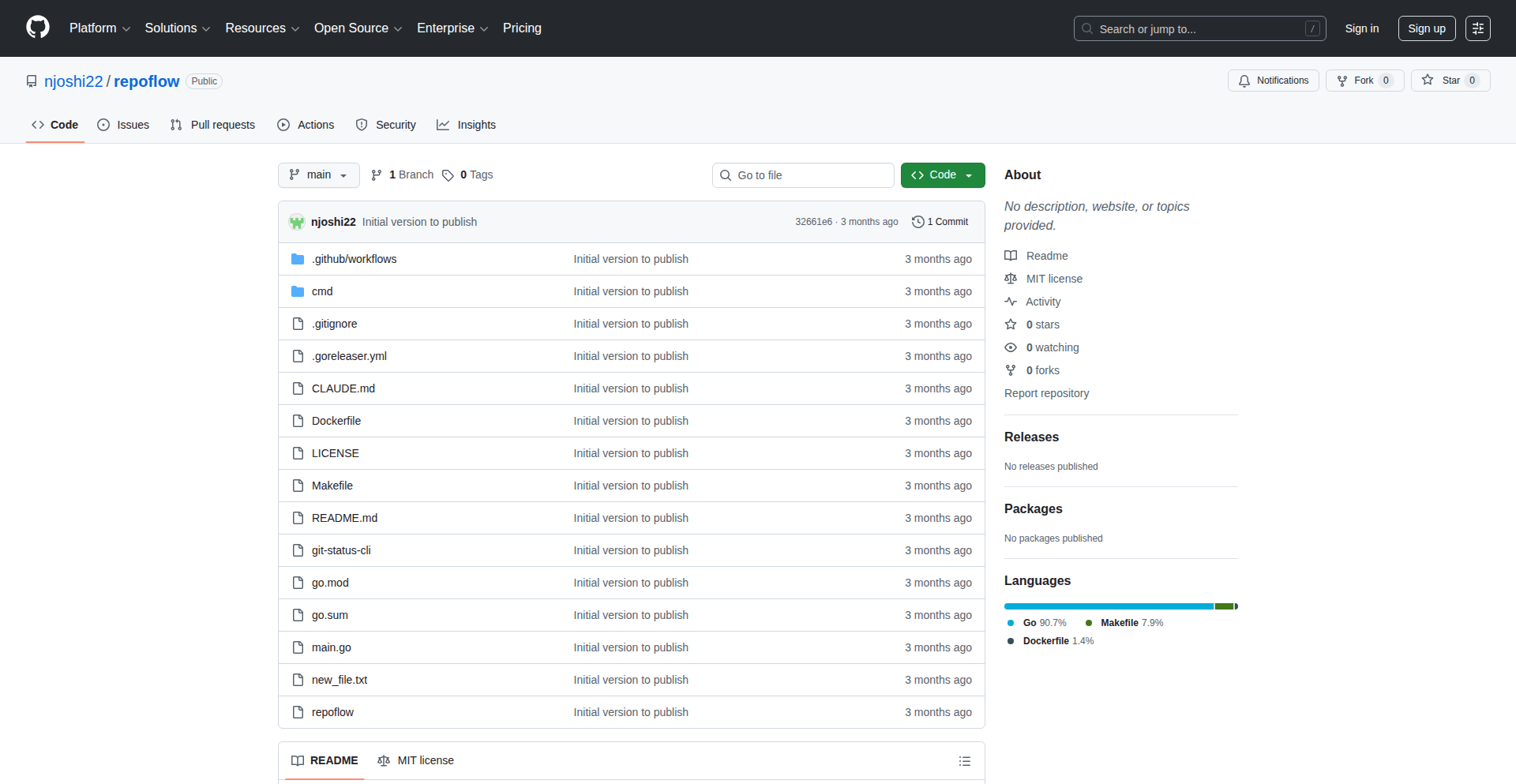

Author

mrgoonie

Description

Claude-Flow bridges the gap between Claude's code generation capabilities and production-ready software. It introduces a sophisticated orchestration layer that intelligently manages, refines, and deploys code generated by LLMs like Claude, transforming raw AI output into reliable and functional applications. The innovation lies in automating the often tedious and error-prone process of testing, debugging, and integrating AI-generated code.

Popularity

Points 5

Comments 0

What is this product?

Claude-Flow is a system designed to make code generated by Large Language Models (LLMs) like Claude more reliable and ready for real-world use. Think of Claude as a brilliant but sometimes unfocused intern. Claude-Flow acts as the experienced project manager and QA team for that intern. It takes the code Claude writes, automatically tests it for bugs, checks if it meets performance standards, and helps integrate it into your existing software projects. The core innovation is building an automated pipeline that handles the critical steps needed to turn AI's creative code snippets into robust, production-grade solutions, saving developers significant manual effort and reducing the risk of introducing AI-generated errors.

How to use it?

Developers can integrate Claude-Flow into their existing development workflows. It works by intercepting code generated by Claude (or other compatible LLMs) and initiating a series of automated checks. This can involve setting up a CI/CD pipeline where Claude-Flow is a stage. For instance, when a new code commit is generated by an LLM for a specific feature, Claude-Flow can be triggered to automatically run unit tests, integration tests, and performance benchmarks. If the code passes these checks, it can be automatically merged or flagged for human review. Developers can configure the types of tests, deployment strategies, and severity thresholds for alerts within Claude-Flow, tailoring it to their project's specific needs and risk tolerance.
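
The gating step described above might look like the following sketch. It does not reflect Claude-Flow's actual interface: the `CheckResult` type, the two example checks, and the "merge" / "needs_review" labels are hypothetical stand-ins for whatever tests and thresholds a project configures.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""

def compiles(code: str) -> CheckResult:
    """Syntax check only; the code is compiled but never executed."""
    try:
        compile(code, "<generated>", "exec")
        return CheckResult("syntax", True)
    except SyntaxError as exc:
        return CheckResult("syntax", False, str(exc))

def within_size_limit(code: str, max_lines: int = 200) -> CheckResult:
    """Flag very large generated changes for a human to look at."""
    n = len(code.splitlines())
    return CheckResult("size", n <= max_lines, f"{n} lines")

def run_gate(code: str, checks: List[Callable[[str], CheckResult]]) -> Tuple[str, List[CheckResult]]:
    """Run every check; merge only if all pass, otherwise route to review."""
    results = [check(code) for check in checks]
    decision = "merge" if all(r.passed for r in results) else "needs_review"
    return decision, results

decision, report = run_gate("def add(a, b):\n    return a + b\n", [compiles, within_size_limit])
```

Running every check, rather than stopping at the first failure, gives the reviewer a complete report in one pass.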

Product Core Function

· Automated Code Testing: Implements a suite of automated tests (unit, integration, end-to-end) to verify the correctness and functionality of LLM-generated code, ensuring it works as intended and catches bugs early in the development cycle, reducing debugging time.

· Performance Benchmarking: Runs performance tests to ensure the generated code meets efficiency requirements, preventing slow or resource-intensive AI-generated features from impacting application performance.

· Error Triage and Debugging Assistance: Identifies and categorizes errors in AI-generated code, providing detailed reports and potentially suggesting fixes, which accelerates the debugging process for developers.

· Deployment Orchestration: Manages the process of deploying validated AI-generated code to staging or production environments, streamlining the release cycle and ensuring only tested code is deployed.

· Integration with LLM APIs: Seamlessly connects with LLM APIs like Claude's, allowing for continuous code generation and refinement within a managed pipeline, enabling iterative improvement of AI-generated code.

Product Usage Case

· A startup developing a new microservice uses Claude to generate initial boilerplate code. Claude-Flow then automatically runs a battery of unit tests against this code. If tests fail, Claude-Flow flags the problematic code for the developer. This saves the developer from manually writing and running repetitive tests for basic code structures, allowing them to focus on the unique business logic.

· A large enterprise is using an LLM to refactor legacy code. Claude-Flow is configured to monitor the refactored code, run performance tests to ensure no degradation, and then stage the changes for a controlled rollout. This ensures that the ambitious refactoring effort doesn't introduce performance bottlenecks or unexpected regressions into production systems.

· A solo developer building a web application uses Claude to generate API endpoints. Claude-Flow is set up to automatically test the endpoints with simulated user traffic. If the tests reveal any security vulnerabilities or performance issues under load, Claude-Flow alerts the developer, allowing them to address these critical issues before they are exposed to actual users.

· A research team experimenting with AI-driven game development uses Claude to generate game logic scripts. Claude-Flow automatically compiles and runs these scripts in a simulated game environment, identifying logical errors or crashes. This rapid feedback loop allows the researchers to quickly iterate on AI-generated game mechanics, accelerating the research process.

15

AI Forgery Detector Challenge

Author

amiban

Description

This project is an interactive quiz that challenges users to distinguish between human-created content and AI-generated content. It showcases the remarkable advancements in AI's ability to mimic literature, speeches, and images, highlighting the blurring lines between authentic and synthetic cultural references. The core innovation lies in leveraging these advanced AI generation capabilities to create a thought-provoking and educational experience, demonstrating how convincing AI outputs have become.

Popularity

Points 5

Comments 0

What is this product?

This Show HN project is an interactive game that tests your ability to identify AI-generated content. It presents examples of text (like Shakespearean verses or Martin Luther King Jr.'s speeches) and images, and you guess whether a human or an AI created each one. The underlying technology uses cutting-edge AI models capable of producing highly realistic and stylistically accurate content. The innovation here is using these powerful AI generation tools not just to create, but to *test* our perception and highlight how sophisticated AI has become in mimicking human creativity. So, what's the point? It reveals just how convincing AI can be, making us question what's real and what's generated in our digital world.

How to use it?

Developers can use this project as a demonstration of advanced AI content generation and its perceptual challenges. It can be integrated into educational platforms to teach about AI ethics and capabilities, or as a fun, engaging tool in a developer's portfolio to showcase an understanding of current AI trends. You could hypothetically adapt the underlying principles to build tools that help identify AI-generated spam, fake news, or deepfakes in a more automated fashion. For a developer, this means seeing a practical application of AI's creative power and the challenges it poses.

Product Core Function

· AI-generated literary text mimicking famous authors like Shakespeare, demonstrating the AI's linguistic style transfer capabilities and the challenge in discerning its origin, valuable for understanding AI's creative potential.

· AI-generated speeches in the style of influential figures like Martin Luther King Jr., showcasing the AI's ability to capture rhetorical nuances and emotional tone, useful for exploring AI's capacity for persuasive communication.

· Photorealistic AI-generated images that are difficult to distinguish from real photographs, highlighting advancements in AI's visual generation models and the implications for digital media authenticity, a key area for developers interested in computer vision and generative art.

· AI-generated movie dialogue that mirrors human conversation patterns and character voice, illustrating the AI's natural language processing and generation sophistication, relevant for game development and interactive storytelling.

· Interactive quiz interface that provides immediate feedback on user guesses, allowing for learning and exploration of AI's capabilities and limitations, providing a direct user engagement mechanism.

Product Usage Case

· A developer could use this project as a case study to explain to non-technical stakeholders the current state of AI's creative abilities, making complex AI concepts accessible and demonstrating the 'wow' factor of generative AI.

· In an educational setting, this quiz can be used to spark discussions about AI ethics, the future of content creation, and the importance of critical thinking when consuming digital media, serving as a pedagogical tool.

· A developer building AI-powered content moderation tools could analyze the types of subtle errors AI makes in this quiz (like over-explanation or generic metaphors) to improve their detection algorithms, directly addressing technical challenges in content verification.

· For game developers, this project demonstrates techniques for generating realistic character dialogue or narrative elements, offering inspiration for creating more immersive and dynamic game worlds, showcasing potential for creative tooling.

16

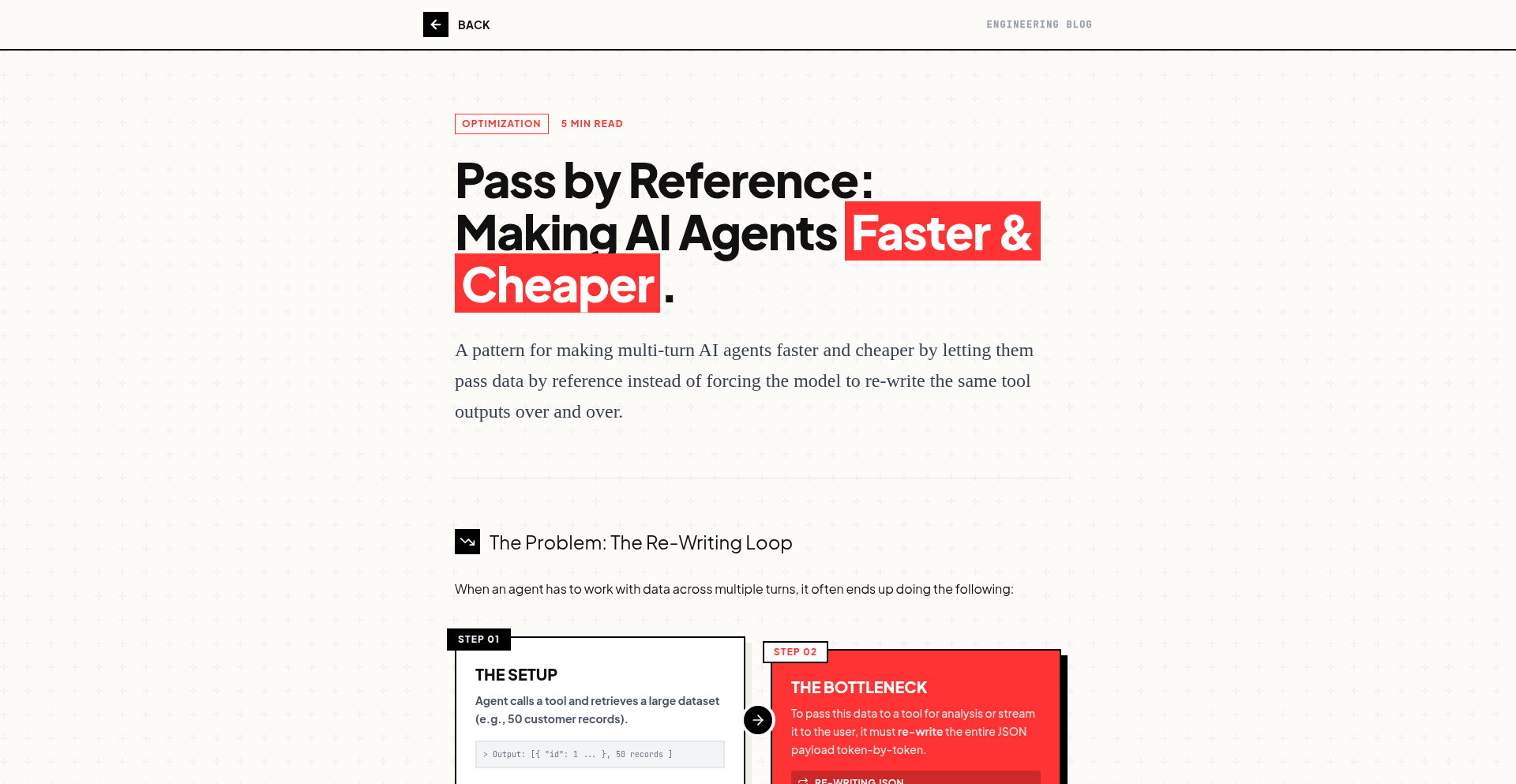

AgentFlow Optimizer

Author

weebhek

Description

This project presents a novel technique to significantly reduce the cost and latency of multi-turn AI agent interactions, achieving savings of around 80-90%. The core innovation lies in a subtle but impactful change to how AI agent requests are structured, allowing for more efficient processing without sacrificing conversational quality. This addresses a major bottleneck in deploying complex AI agents for real-world applications.

Popularity

Points 4

Comments 1

What is this product?

AgentFlow Optimizer is a clever optimization method for multi-turn AI agent systems. Instead of sending each user turn to the AI agent as a completely independent request, it intelligently bundles or prunes conversational history. Think of it like summarizing a long conversation for someone who missed the beginning, rather than making them re-read every single message. This reduces the amount of data the AI needs to process for each turn, directly leading to lower computational costs and faster response times. The innovation is in the specific algorithm that decides what information is essential to pass along, preserving context while shedding redundancy. So, what's in it for you? You get to run more sophisticated AI conversations for much less money and get answers much faster.

How to use it?

For developers, AgentFlow Optimizer can be integrated as a middleware layer or as a pre-processing step before sending requests to your AI agent API (like OpenAI's GPT, Anthropic's Claude, or similar). You would typically pass the current user input along with the recent conversation history to the optimizer. It then returns a 'slimmed-down' prompt that still contains all necessary context for the AI to generate a relevant response. This optimized prompt is then sent to the AI model. This is particularly useful for chatbots, virtual assistants, customer support bots, or any application relying on long, back-and-forth AI interactions. So, how does it help you? You can easily plug this into your existing AI agent pipeline to immediately see cost and speed improvements without needing to fundamentally change your AI model.
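
The middleware flow above (history plus new input goes in, a slimmer prompt comes out) might look roughly like this. It is a sketch under stated assumptions, not the project's algorithm: `slim_prompt`, `naive_summary`, and the `keep_last` parameter are hypothetical, and the summarizer is a crude truncation placeholder where a real system would likely call a cheaper model.

```python
from typing import Callable

Message = dict[str, str]  # {"role": "system" | "user" | "assistant", "content": "..."}


def naive_summary(turns: list[Message], max_chars: int = 200) -> str:
    """Placeholder summarizer: join the older turns and truncate."""
    text = " | ".join(f"{m['role']}: {m['content']}" for m in turns)
    return text[:max_chars]


def slim_prompt(
    history: list[Message],
    user_input: str,
    keep_last: int = 4,
    summarize: Callable[[list[Message]], str] = naive_summary,
) -> list[Message]:
    """Return summary of old turns + the most recent turns + the new input."""
    split = max(len(history) - keep_last, 0)
    older, recent = history[:split], history[split:]

    slim: list[Message] = []
    if older:
        # Older turns are replaced by a single short system message.
        slim.append({
            "role": "system",
            "content": "Summary of earlier conversation: " + summarize(older),
        })
    slim.extend(recent)  # recent turns are passed through verbatim
    slim.append({"role": "user", "content": user_input})
    return slim
```

The returned list is what would be sent to the model in place of the full history; how much context is lost depends entirely on the quality of the summarizer plugged in.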

Product Core Function

· Contextual prompt compression: This function intelligently analyzes the conversation history and user input to create a more concise prompt for the AI. The value is reducing redundant information, which directly translates to lower API costs and faster processing. This is useful for any application where long conversations are common, like customer service bots.

· Dynamic history summarization: Rather than always sending the full N previous turns, this feature dynamically decides which parts of the history are crucial for the next AI response. This further optimizes the prompt, ensuring the AI has the right context without being overloaded. This is valuable for maintaining coherent, long-form AI dialogues in applications such as interactive storytelling or advanced research assistants.

· Cost and latency monitoring hooks: While not explicitly detailed as a function, the core idea of optimization implies mechanisms to track these improvements. The value is in verifying the effectiveness of the optimization. This is useful for developers who need to justify AI expenditures or ensure a smooth user experience in performance-critical applications.

· Minimal context loss: The optimization is designed to retain the essential information for coherent AI responses. The value is in maintaining high-quality interactions, ensuring the AI doesn't 'forget' important details. This is critical for any AI application where accuracy and natural conversation flow are paramount, such as personal assistants or educational tools.
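
To see why trimming history can matter so much, here is a back-of-the-envelope calculation. The numbers are illustrative assumptions (50 turns, 200 tokens per turn, a window of 4 turns, a 150-token summary) and are not measurements from the project; only input tokens are counted.

```python
def tokens_full_history(turns: int, tokens_per_turn: int) -> int:
    """Request i resends all i turns so far: t * (1 + 2 + ... + N)."""
    return tokens_per_turn * turns * (turns + 1) // 2


def tokens_windowed(turns: int, tokens_per_turn: int, window: int, summary_tokens: int) -> int:
    """Request i sends at most `window` recent turns, plus a fixed-size
    summary once older turns have been dropped."""
    total = 0
    for i in range(1, turns + 1):
        sent = min(i, window) * tokens_per_turn
        if i > window:
            sent += summary_tokens
        total += sent
    return total


full = tokens_full_history(turns=50, tokens_per_turn=200)
slim = tokens_windowed(turns=50, tokens_per_turn=200, window=4, summary_tokens=150)
print(full, slim, f"{1 - slim / full:.0%} fewer input tokens")
# prints: 255000 45700 82% fewer input tokens
```

Resending the full history grows quadratically with the number of turns, while a bounded window grows linearly, so the relative saving increases as conversations get longer.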

Product Usage Case

· Customer Support Chatbot: Imagine a chatbot handling complex technical support queries. With AgentFlow Optimizer, the chatbot can remember details from earlier in the conversation across multiple turns, reducing the need for the customer to repeat themselves. This leads to faster resolution times and lower operational costs for the support team. The problem solved is the AI 'forgetting' context in long support threads.

· Interactive AI Storytelling Game: In a game where the player's dialogue choices influence the narrative, AgentFlow Optimizer can help the AI agent keep track of all the player's past decisions and dialogue nuances over many interactions. This allows for a richer, more personalized story experience without the AI becoming prohibitively expensive to run turn by turn. The problem solved is enabling deep, evolving AI narratives on a budget.

· AI-Powered Research Assistant: A researcher uses an AI assistant to sift through large document collections and answer complex questions. AgentFlow Optimizer can help the assistant maintain context across multiple follow-up questions, ensuring it remembers the specific research topic and previous findings, leading to more accurate and relevant answers. The problem solved is maintaining focus and context in complex, iterative information retrieval tasks.

17

ContrastNudge

Author

lalithaar

Description

This project addresses a common UI design challenge: ensuring text remains readable and accessible while maintaining aesthetic appeal. It's a small library that automatically adjusts text colors to meet WCAG (Web Content Accessibility Guidelines) contrast standards (AA or AAA), making changes subtle enough that the adjusted color stays visually similar to the original. It also includes a color contrast linter for continuous integration (CI) to automatically catch accessibility issues.

Popularity

Points 5

Comments 0

What is this product?

ContrastNudge is a clever little open-source library designed to solve the frustrating problem of making sure text colors are easily readable against their backgrounds, especially for users with visual impairments. It works by intelligently 'nudging' your chosen text color slightly, just enough to pass accessibility standards like WCAG AA or AAA, without dramatically changing the original color. This means your design looks great and is also inclusive. The innovation lies in its ability to find that sweet spot between perfect contrast and aesthetic integrity. It's like having a tiny, smart assistant for your color choices. So, what's in it for you? It means less manual tweaking of colors to meet accessibility rules and more confidence that your designs are usable by everyone.

How to use it?

Developers can integrate ContrastNudge into their projects by including the library, likely through a package manager like npm or yarn. Once integrated, they can pass their desired text color and background color to the library's functions. The library will then return a slightly adjusted text color that guarantees sufficient contrast. For continuous improvement, the included linter can be set up within your CI pipeline. This means that every time you commit code, the linter will automatically check if your color choices meet accessibility standards. If not, it will flag the issue, preventing non-accessible designs from being deployed. So, what's in it for you? You get a streamlined workflow where accessibility checks are automated, saving you time and ensuring your applications are usable by a wider audience.
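
The idea of nudging a color until it passes can be sketched as follows. The luminance and contrast formulas are the ones defined in WCAG 2.x; everything else (`nudge`, its parameters, and the simple blend-toward-black-or-white strategy) is a hypothetical illustration and not ContrastNudge's API or algorithm, which may well choose perceptually closer colors.

```python
RGB = tuple[int, int, int]


def _linear(channel: int) -> float:
    """sRGB channel (0-255) to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def luminance(rgb: RGB) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: RGB, b: RGB) -> float:
    la, lb = luminance(a), luminance(b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)


def nudge(text: RGB, background: RGB, target: float = 4.5, steps: int = 100) -> RGB:
    """Blend `text` toward black or white in small steps until it reaches
    `target` contrast (4.5 for AA, 7.0 for AAA, normal-size text)."""
    if contrast_ratio(text, background) >= target:
        return text  # already compliant, leave the color untouched
    black, white = (0, 0, 0), (255, 255, 255)
    # Head for whichever extreme contrasts more with the background.
    goal = black if contrast_ratio(black, background) >= contrast_ratio(white, background) else white
    for i in range(1, steps + 1):
        t = i / steps
        candidate = tuple(round(c + (g - c) * t) for c, g in zip(text, goal))
        if contrast_ratio(candidate, background) >= target:
            return candidate
    return goal  # best effort: the target is not reachable on this background
```

For example, `nudge((119, 119, 119), (255, 255, 255))` returns `(118, 118, 118)`: mid-grey `#777777` on white sits just under 4.5:1, and a one-step darkening to `#767676` is enough to pass AA.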

Product Core Function

· Automatic WCAG Contrast Adjustment: The core function intelligently modifies text colors to meet AA or AAA contrast ratios, ensuring readability without drastically altering the original color. This is valuable because it automatically ensures your UI elements are accessible to users with visual impairments, improving user experience and expanding your audience.

· Visually Similar Color Output: The algorithm prioritizes maintaining the original aesthetic intent of the color. This is valuable because it prevents jarring color shifts, allowing designers to achieve accessibility compliance without sacrificing their creative vision.

· Color Contrast Linter for CI: A dedicated linter integrates into your continuous integration process. This is valuable because it automates accessibility checks, catching potential contrast issues early in the development cycle and preventing the deployment of inaccessible designs.

· Open Source and Free: The library is freely available under an open-source license. This is valuable because it lowers the barrier to entry for implementing accessibility best practices, allowing any developer or team to leverage these powerful tools without cost.
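
A contrast linter for CI can be very small. The sketch below is an assumption about how such a check could work, not the project's linter: `lint`, `main`, and the palette format (a name mapped to a foreground/background hex pair) are hypothetical. The thresholds are the WCAG 2.x minimums for normal-size text.

```python
THRESHOLDS = {"AA": 4.5, "AAA": 7.0}  # WCAG 2.x minimums, normal-size text


def _lum(hex_color: str) -> float:
    """WCAG 2.x relative luminance of a '#rrggbb' color."""
    h = hex_color.lstrip("#")
    chans = [int(h[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    lin = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4 for c in chans]
    return 0.2126 * lin[0] + 0.7152 * lin[1] + 0.0722 * lin[2]


def contrast(fg: str, bg: str) -> float:
    a, b = _lum(fg), _lum(bg)
    return (max(a, b) + 0.05) / (min(a, b) + 0.05)


def lint(pairs: dict[str, tuple[str, str]], level: str = "AA") -> list[str]:
    """Return one message per foreground/background pair below the minimum."""
    minimum = THRESHOLDS[level]
    problems = []
    for name, (fg, bg) in pairs.items():
        ratio = contrast(fg, bg)
        if ratio < minimum:
            problems.append(f"{name}: {fg} on {bg} is {ratio:.2f}:1, needs {minimum}:1 for {level}")
    return problems


def main(pairs: dict[str, tuple[str, str]], level: str = "AA") -> int:
    """Print failures and return a process exit code (non-zero fails the build)."""
    problems = lint(pairs, level)
    for line in problems:
        print(line)
    return 1 if problems else 0
```

In a CI job, a wrapper script would load the project's color pairs and pass the return value of `main` to `sys.exit`, so that any failing pair stops the pipeline.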

Product Usage Case