Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-30

SagaSu777 2025-12-01

Explore the hottest developer projects on Show HN for 2025-11-30. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions paint a vivid picture of innovation driven by the desire to solve real-world problems with cutting-edge technology. We're seeing a strong surge in AI applications moving beyond mere experimentation into practical problem-solving, from validating product ideas with real user feedback to automating complex content creation and development workflows. The hacker spirit is alive and well as developers tackle challenges like running LLMs locally on older hardware, enhancing developer productivity with smart tools, and building decentralized or privacy-focused services. For aspiring entrepreneurs, this trend signifies a fertile ground for identifying niche opportunities where AI can augment human capabilities or automate tedious tasks, creating new value propositions. Developers should focus on building robust, user-centric tools that leverage AI responsibly, emphasizing efficiency, privacy, and tangible benefits.

Today's Hottest Product

Name

HolyShift

Highlight

This project leverages AI agents to conduct real-user validation for product ideas across platforms like Reddit, HN, and LinkedIn. It moves beyond synthetic data and predictions by collecting genuine conversations, clustering feedback, and generating detailed go-to-market and build reports. Developers can learn about multi-agent systems, platform-specific prompting strategies, and real-time sentiment analysis using embeddings for effective product validation.

Popular Category

AI/ML

Developer Tools

Productivity

Web Services

Data Management

Popular Keyword

AI agents

LLM

Product Validation

Data Processing

Productivity Tools

Web Frameworks

Developer Productivity

Technology Trends

AI-driven Product Validation

Local LLM Execution

Intelligent Automation

Decentralized Services

Privacy-Preserving Technologies

Developer Productivity Enhancements

Client-Side Processing

AI for Content Creation/Management

Advanced Data Visualization/Analysis

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity (20%)

Web Applications/Services (15%)

Data Management/Analysis (10%)

Frameworks/Libraries (10%)

Utilities (10%)

Open Source Infrastructure (5%)

Other (5%)

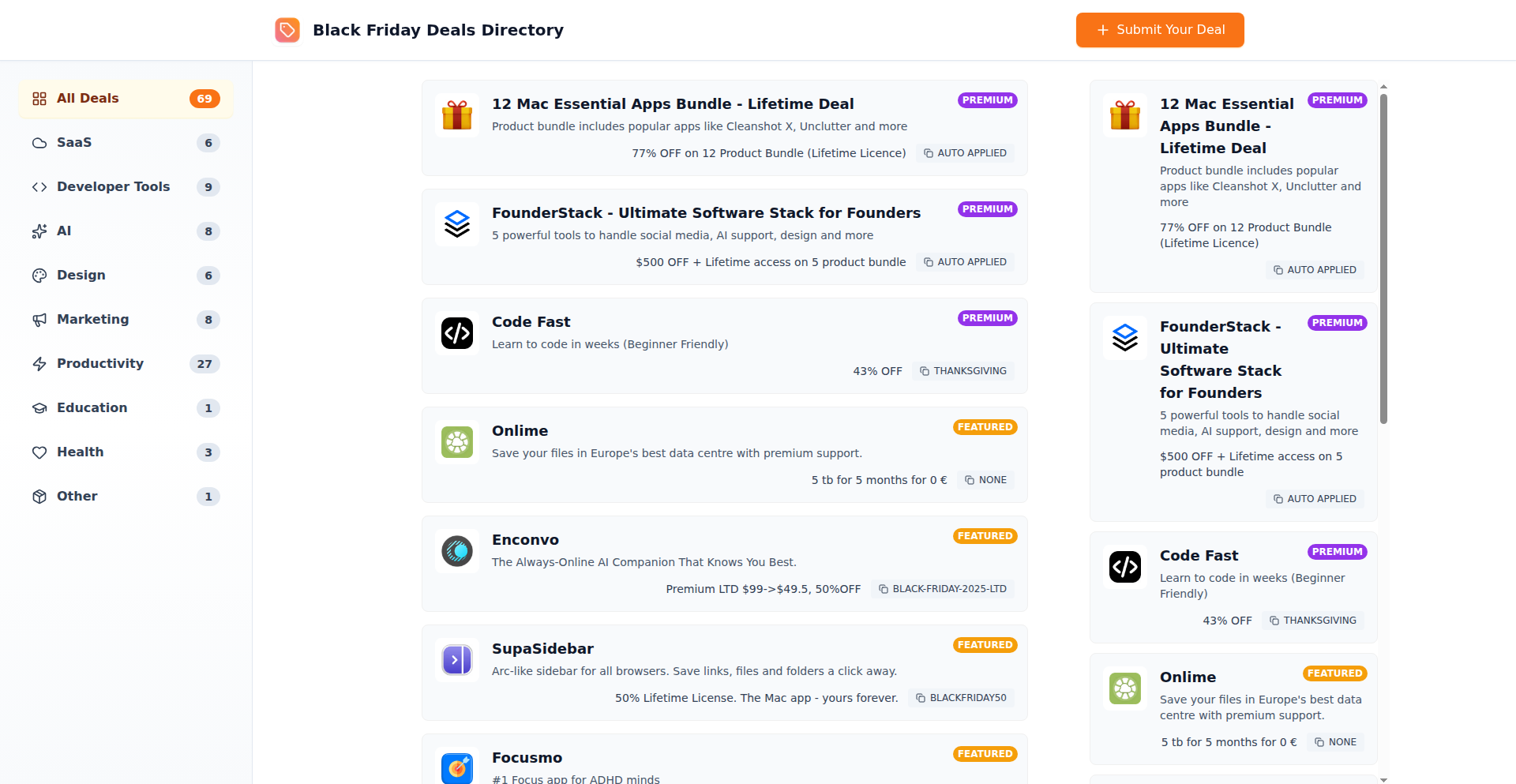

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Tinyfocus: Solo-Dev Productivity Accelerator | 17 | 2 |
| 2 | PersonaPulse AI | 6 | 8 |
| 3 | XP-LLM-Runtime | 2 | 9 |
| 4 | ClaudeChain LLM Orchestrator | 4 | 2 |
| 5 | GoCoverageInsight | 5 | 1 |
| 6 | SpatialPin Social Fabric | 3 | 3 |
| 7 | BlogLab AI SEO Assistant | 4 | 1 |
| 8 | Burn Protocol: Thermodynamically Aligned AI Thinking | 3 | 2 |
| 9 | utm.one: Smart URL Shorter with UTM Governance | 3 | 2 |
| 10 | MentalAgeQuiz | 3 | 2 |

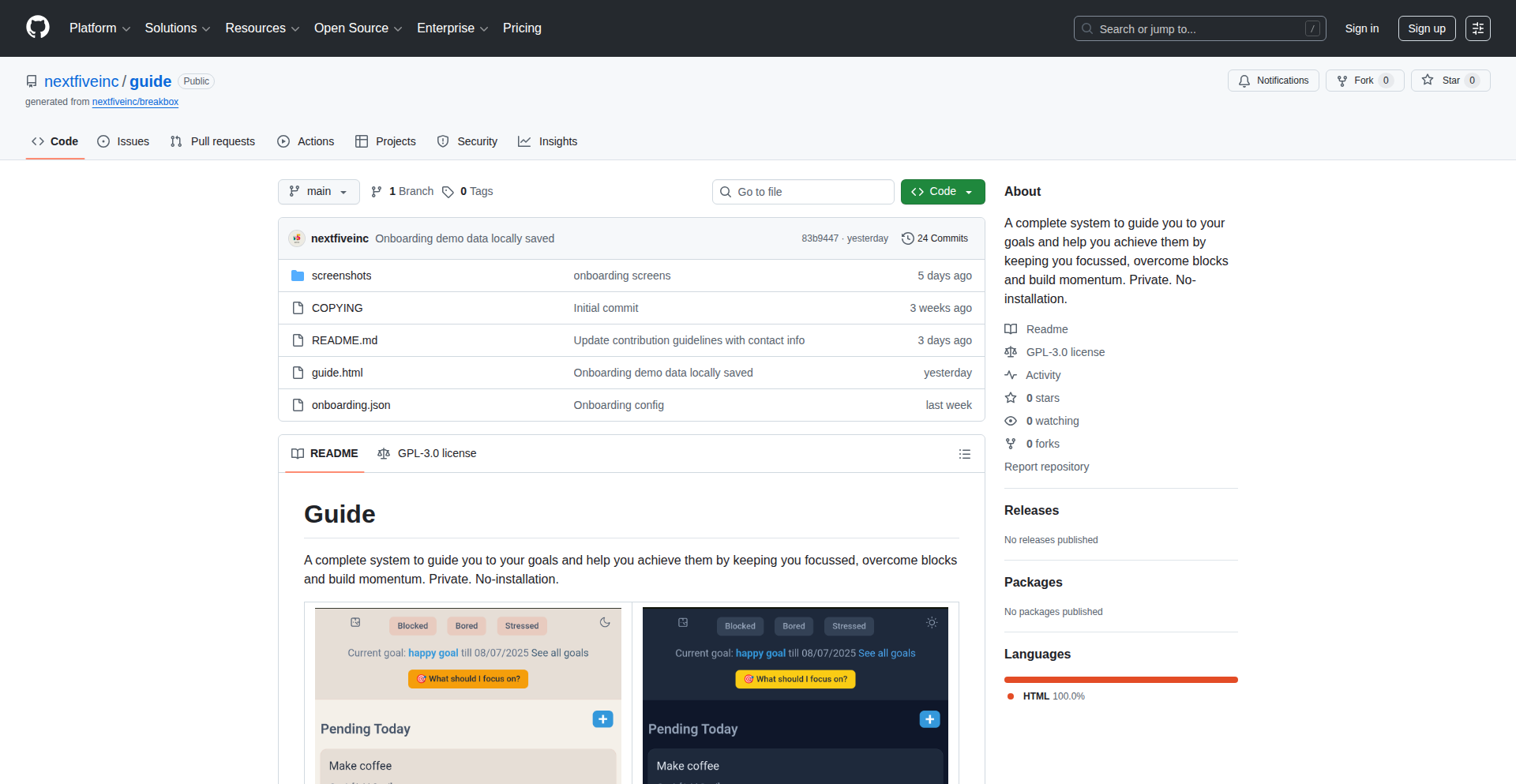

1

Tinyfocus: Solo-Dev Productivity Accelerator

Author

arlindb

Description

Tinyfocus is a minimalist productivity tool built for solo founders and independent developers. Its core innovation lies in its extreme simplicity, offering micro-dashboards and efficient task tracking to eliminate distractions. It tackles the challenge of maintaining focus and maximizing output for individuals juggling multiple responsibilities by providing a clear, actionable view of priorities.

Popularity

Points 17

Comments 2

What is this product?

Tinyfocus is a highly distilled productivity application designed to help individuals, particularly solo founders and developers, stay focused and productive. At its heart, it leverages a very straightforward approach: it helps you identify and prioritize your most critical tasks for the day and presents them in a 'micro-dashboard' format. This means instead of complex features or overwhelming data, you get a concise, visual representation of what needs your attention. The innovation here is in the deliberate removal of 'fluff' – no unnecessary features, no complex integrations, just a clean interface focused on getting things done. For a developer, this translates to a tool that respects their time and cognitive load, helping them cut through the noise and concentrate on high-impact work, which is crucial when you're a one-person show.

How to use it?

Developers can use Tinyfocus by simply visiting the website (tinyfoc.us). The workflow is designed to be immediate: you input your top 1-3 priority tasks for the day and perhaps a key metric or two you're tracking. The micro-dashboard then displays this information prominently, serving as a constant, non-intrusive reminder of your goals. This can be integrated into a developer's daily routine by opening it first thing in the morning or keeping it in a dedicated browser tab. The value is in its immediacy and the lack of setup friction, allowing for quick adoption and immediate benefit to workflow.
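The workflow described above (a handful of top priorities shown on a compact board) can be pictured with a small data structure. This is only an illustrative sketch: `MicroDashboard`, `MAX_TASKS`, and the text rendering are hypothetical names invented here, and nothing below reflects how Tinyfocus itself is implemented.

```python
from dataclasses import dataclass, field

# Assumption: mirrors the "top 1-3 priority tasks" limit mentioned in the text.
MAX_TASKS = 3


@dataclass
class MicroDashboard:
    """Hypothetical model of a daily focus board (not Tinyfocus's real code)."""

    tasks: list[str] = field(default_factory=list)
    done: set[str] = field(default_factory=set)

    def add_task(self, title: str) -> None:
        # Refuse a fourth priority so the board stays small by construction.
        if len(self.tasks) >= MAX_TASKS:
            raise ValueError(f"only {MAX_TASKS} priorities per day")
        self.tasks.append(title)

    def complete(self, title: str) -> None:
        if title not in self.tasks:
            raise KeyError(title)
        self.done.add(title)

    def render(self) -> str:
        # One line per task, ranked in the order the tasks were entered.
        lines = []
        for rank, title in enumerate(self.tasks, start=1):
            mark = "x" if title in self.done else " "
            lines.append(f"[{mark}] {rank}. {title}")
        return "\n".join(lines)
```

The hard cap is the design point: rejecting a fourth task forces the prioritization decision up front instead of letting the list grow.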

Product Core Function

· Top Task Prioritization: This feature allows users to explicitly define and rank their most important tasks for a given period, ensuring that critical work is identified and addressed first. For a developer, this means clearly seeing what bug fix, feature implementation, or crucial refactoring needs their immediate attention, preventing scope creep and wasted effort on less impactful activities.

· Micro-Dashboards for Daily Focus: Instead of extensive analytics, Tinyfocus provides minimalistic visual cues that highlight daily progress and focus areas. This helps developers maintain an overview of their key objectives without getting lost in data. It's like having a clear, uncluttered view of your project's most important moving parts, allowing for quick checks and adjustments to stay on track.

· Distraction-Free Interface: The product is intentionally designed to be free of unnecessary features and visual clutter. This creates a calm and focused environment for the user. For a developer, this is invaluable as it minimizes cognitive overhead and interruptions, allowing for deeper concentration on coding and problem-solving, which is essential for complex technical tasks.

Product Usage Case

· A solo developer working on a side project needs to release a new feature by the end of the week. By using Tinyfocus, they can list 'Implement feature X' as their top priority, and perhaps a secondary task like 'Write unit tests for feature X'. The micro-dashboard keeps this front and center, preventing them from getting sidetracked by other interesting but less urgent coding ideas, directly addressing the challenge of self-discipline and focus in an independent development environment.

· A founder of a small SaaS company is also the primary developer. They often find themselves pulled between user support, marketing emails, and coding. Tinyfocus helps them designate 'Respond to critical user bug reports' as the absolute top priority for the day. The tool ensures this task is always visible, helping them overcome the common solo founder dilemma of being pulled in too many directions and ensuring essential operational tasks are not neglected.

· A developer is attending a virtual conference while also trying to build a proof-of-concept. They can use Tinyfocus to set a core goal like 'Complete basic authentication flow' for the day. The tool acts as a constant anchor, ensuring that despite the distractions of the conference, the primary development objective remains a clear target, demonstrating its utility in managing concurrent demands on attention.

2

PersonaPulse AI

Author

Matzalar

Description

PersonaPulse AI is a novel product validation tool that leverages AI agents to engage in authentic conversations with real users across platforms like Reddit, Hacker News, X, and LinkedIn. Instead of relying on synthetic data or predictions, it gathers genuine feedback, objections, and pricing signals directly from potential customers. The system then synthesizes this information into a comprehensive Go-To-Market strategy and a 'Should we build this?' report, providing data-driven insights to inform product decisions. The core innovation lies in its multi-agent pipeline and platform-specific prompting, enabling nuanced interactions and sophisticated sentiment analysis through real-time clustering and embeddings. This allows founders and product managers to move beyond guesswork and build products that truly resonate with their target audience.

Popularity

Points 6

Comments 8

What is this product?

PersonaPulse AI is an AI-powered system designed to validate your product ideas by directly interacting with real people on various online communities. It tackles the common problem of building products that nobody wants. Instead of using generic AI models or making assumptions, it deploys specialized AI agents that post questions (respecting platform rules), collect genuine reactions, and analyze sentiment. The system then clusters this feedback into meaningful themes like pain points, demand, and pricing expectations. The innovation here is the creation of a multi-agent system that simulates a human-like research process, but at scale. It uses advanced techniques like embeddings to understand the nuances of user feedback and platform-specific prompting to ensure the AI 'speaks the language' of each community. The ultimate output is a detailed report that helps you decide if your product idea is viable, answering the crucial question: 'Is this something people actually need and will pay for?'

How to use it?

Developers and product managers can integrate PersonaPulse AI into their early-stage product development workflow. You would typically define your product idea and target audience. The AI agents then take over, initiating conversations within relevant online communities. You can configure the agents to focus on specific platforms and types of questions. The system's output is a synthesized report that includes a Product Requirements Document (PRD) and a Go-To-Market (GTM) strategy. This means you get actionable intelligence on what features are most desired, what objections users might have, and what pricing points are acceptable. Think of it as having a dedicated market research team that works 24/7, providing you with direct feedback before you commit significant development resources. This saves time, reduces risk, and increases the chances of building a successful product.

Product Core Function

· Authentic user engagement: AI agents interact with real users on platforms like Reddit and X, providing genuine feedback and reducing reliance on guesswork. This helps you understand what your potential customers are truly thinking and feeling about your product concept.

· Real-time feedback collection and analysis: The system gathers raw reactions, objections, and pricing signals from live conversations, offering immediate insights into market sentiment. This allows for quick adjustments to your product strategy based on current user opinions.

· Sentiment and thematic clustering: Using advanced AI techniques like embeddings, feedback is categorized into themes such as pain points, demand, and pricing sensitivity, making it easier to identify key areas for product improvement. This helps you prioritize development efforts on features that address the most critical user needs.

· Automated report generation: A comprehensive report is produced, outlining the product's viability, potential market fit, and a draft Go-To-Market strategy. This report provides a clear roadmap for product development and market entry, saving you considerable time and effort in manual analysis.

· Platform-specific interaction: AI agents are designed to interact appropriately on different platforms, respecting their unique cultures and rules. This ensures that the feedback gathered is relevant and representative of the community being targeted.
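The thematic clustering step listed above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's pipeline: `embed` is a toy bag-of-words stand-in for a real embedding model, the greedy single-pass grouping and the `0.3` threshold are arbitrary choices made for the example, and the sample comments are invented.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster(comments: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy clustering: a comment joins the first cluster whose seed
    comment is similar enough, otherwise it starts a new cluster."""
    clusters: list[tuple[Counter, list[str]]] = []
    for comment in comments:
        vec = embed(comment)
        for seed, members in clusters:
            if cosine(seed, vec) >= threshold:
                members.append(comment)
                break
        else:
            clusters.append((vec, [comment]))
    return [members for _, members in clusters]


# Invented sample feedback: two pricing comments, two integration requests.
feedback = [
    "too expensive for a solo dev",
    "price is too expensive for me",
    "i need slack integration",
    "slack integration is a must",
]
themes = cluster(feedback)
```

With real embeddings the same structure applies, but similarity would capture meaning rather than shared words, and a proper clustering algorithm would usually replace the greedy pass.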

Product Usage Case

· A startup founder has a new app idea for remote team collaboration. Instead of building the full app, they use PersonaPulse AI to post questions on relevant developer forums and LinkedIn groups. The AI gathers feedback on desired features, potential pricing, and concerns about existing solutions, helping the founder refine their MVP and prioritize development efforts, leading to a more successful product launch.

· A product manager at a larger company is looking to enter a new market segment. They use PersonaPulse AI to understand the unmet needs and pain points of potential customers in that segment by engaging on niche subreddits and industry-specific X discussions. The AI's analysis reveals a strong demand for a specific feature that was not initially considered, preventing a costly product misstep and guiding the team towards a more impactful solution.

· A solo developer is building a productivity tool and is unsure about the optimal pricing strategy. PersonaPulse AI engages with potential users on platforms like Hacker News and various developer communities, collecting direct feedback on what users would be willing to pay. This data-driven approach helps the developer set a competitive and profitable price, increasing their revenue potential.

· A company is exploring a pivot for an underperforming product. They use PersonaPulse AI to gauge the general sentiment and identify underlying issues with their current offering among their user base and the broader market. The AI's ability to cluster negative feedback into actionable themes helps the company understand the root causes of underperformance and formulate a revised product strategy.

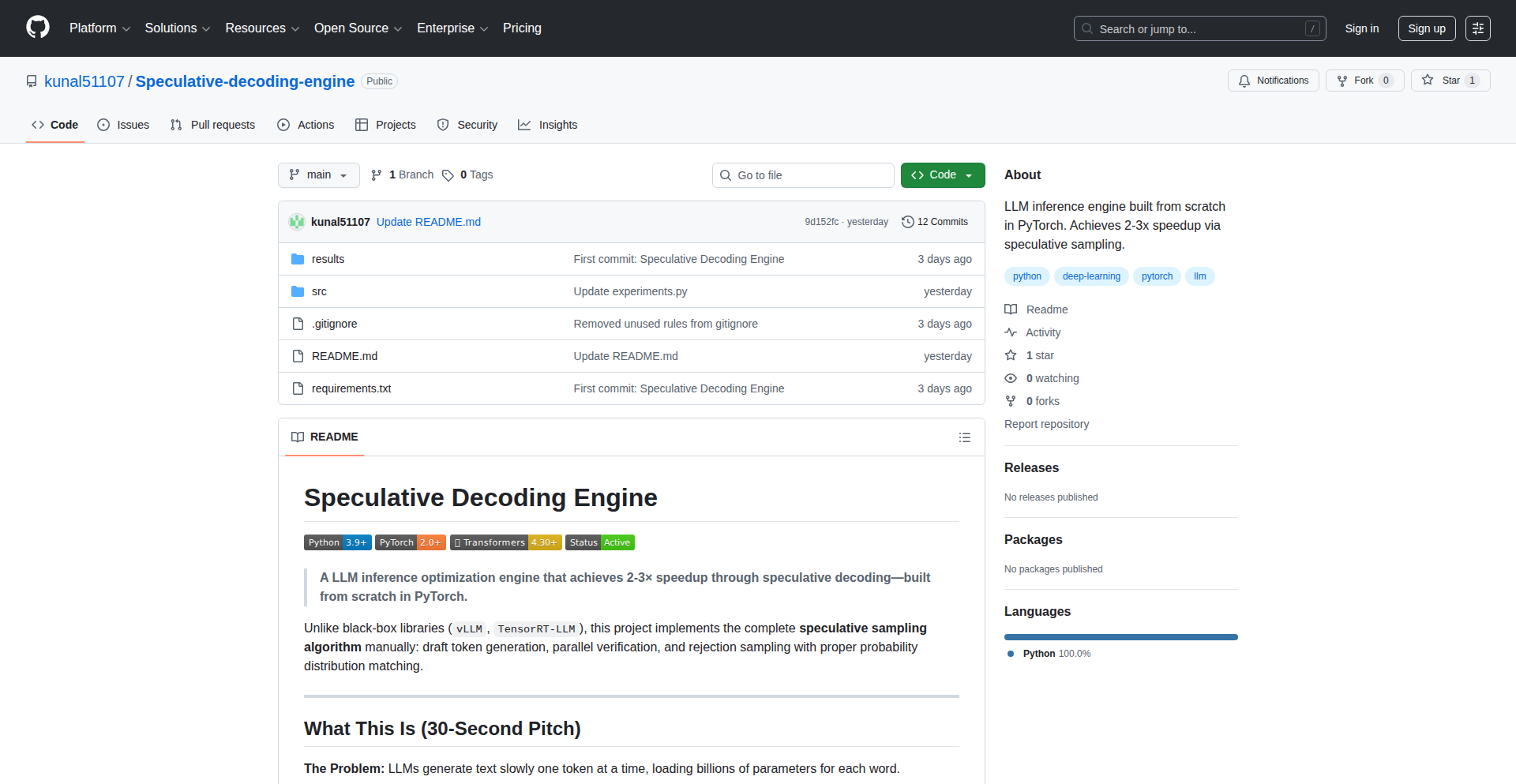

3

XP-LLM-Runtime

Author

dandinu

Description

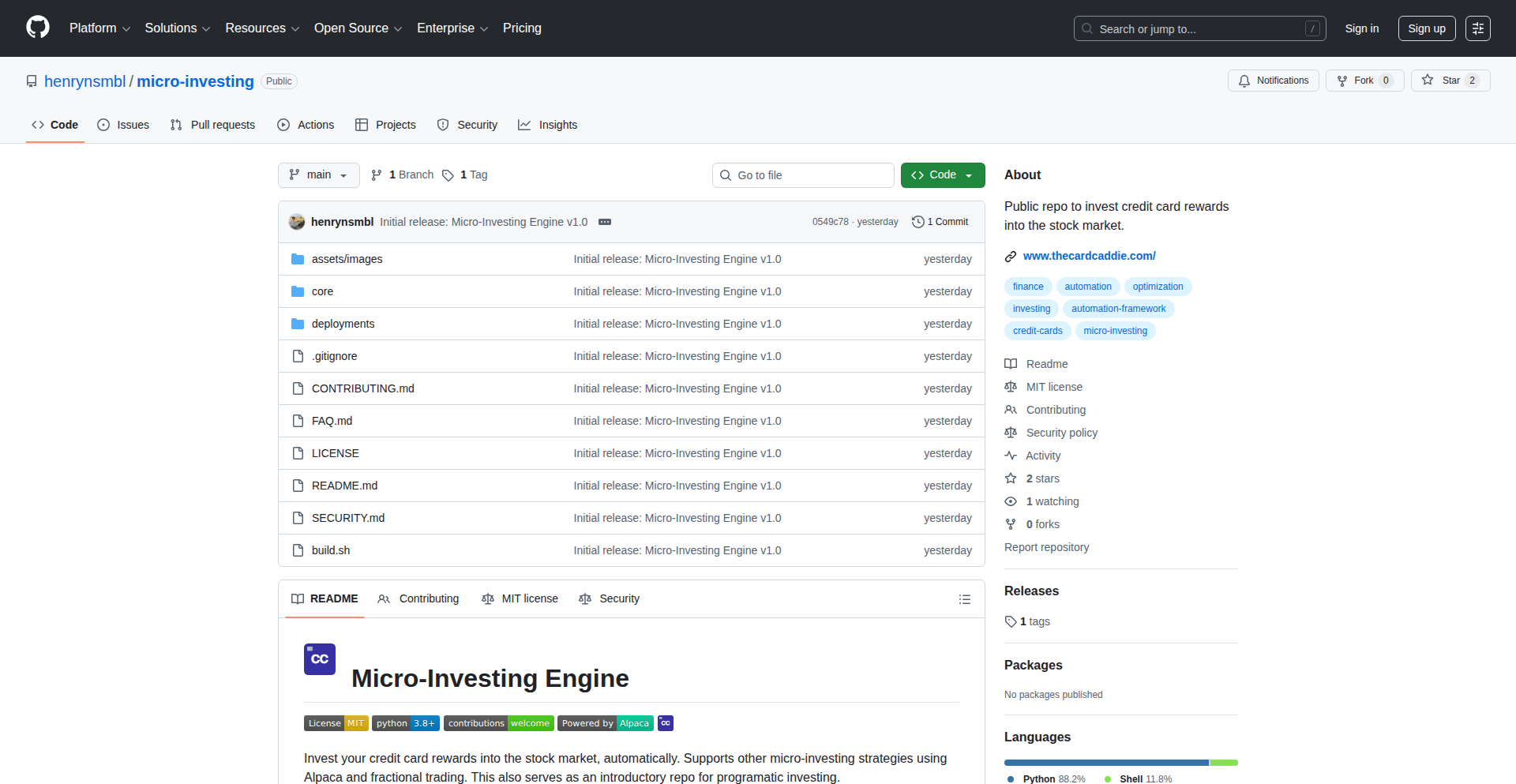

A groundbreaking project that enables local Large Language Models (LLMs) to run on Windows XP, bridging the gap between modern AI and vintage computing. It focuses on cross-compiling llama.cpp to target Windows XP 64-bit, overcoming significant compatibility challenges with older operating systems and libraries. This innovation opens up possibilities for exploring AI capabilities on historically relevant hardware.

Popularity

Points 2

Comments 9

What is this product?

This project is an experimental build of llama.cpp, a popular C++ library for running LLMs, specifically engineered to function on the 2003-era Windows XP operating system. The core innovation lies in the successful cross-compilation and modification of the codebase to run on a 64-bit XP environment. This involved downgrading dependencies like cpp-httplib to a version compatible with XP (newer versions block pre-Windows 8), replacing modern threading primitives (like SRWLOCK and CONDITION_VARIABLE) with XP-compatible equivalents, and navigating the notorious 'DLL hell'. The result is a functional local LLM on hardware that would have been cutting-edge decades ago. So, what's the value to you? It allows for a nostalgic yet functional exploration of AI on classic computing platforms, offering a unique perspective on the evolution of technology.

How to use it?

Developers interested in this project can follow the detailed build instructions provided in the accompanying write-up. This typically involves setting up a cross-compilation environment on a modern system (like macOS) to target Windows XP 64-bit. The process requires careful management of compiler versions and library dependencies. Once compiled, the LLM runtime can be integrated into custom applications or used for direct interaction. The project also provides a video demonstration showcasing its functionality. So, how can you use this? If you're a developer curious about system-level AI integration or want to build applications that run on older or resource-constrained systems with AI capabilities, this project provides a foundational example and valuable insights.

Product Core Function

· Cross-compilation for Windows XP 64-bit: Enables running modern AI inference on a 20-year-old operating system, showcasing extreme backward compatibility efforts.

· LLM inference on legacy hardware: Allows AI models (like Qwen 2.5-0.5B) to process text locally on XP machines, demonstrating that AI isn't limited to the latest tech.

· Compatibility layer for XP threading primitives: Replaces modern Windows threading constructs with XP-compatible ones, a crucial step for running on older systems.

· Dependency management for vintage environments: Selectively downgrades or replaces libraries to ensure compatibility with Windows XP's software ecosystem, a common challenge in retro computing.

· Local AI execution: Provides a way to run AI models entirely on the user's machine without internet connectivity, important for privacy and accessibility on older systems.

Product Usage Case

· Retro AI Chatbot: Imagine running a personal AI assistant on a Windows XP machine from 2003, complete with the classic desktop theme and Winamp playing. This project makes that a reality, solving the problem of bringing AI to vintage personal computers.

· Educational tool for OS evolution: Researchers or students can use this to study the limitations and capabilities of older operating systems when trying to run complex modern software, illustrating the significant advancements in OS design and hardware.

· Niche application development: Developers might find use cases for applications that require AI processing but must run on very old or specialized hardware where modern OS upgrades are not feasible. This project tackles the challenge of AI on constrained platforms.

· Experimentation with AI performance on limited resources: Running an LLM at 2-8 tokens/sec on period-appropriate hardware provides valuable data for understanding AI model efficiency and the hardware requirements for AI, highlighting how far we've come.
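To put the 2-8 tokens/sec figure above in perspective, a quick calculation shows how long a reply would take at each end of that range. The 200-token reply length is an assumption chosen purely for illustration; the source does not state typical response sizes.

```python
def generation_seconds(num_tokens: int, tokens_per_sec: float) -> float:
    """Time to produce num_tokens at a steady decoding rate."""
    if tokens_per_sec <= 0:
        raise ValueError("tokens_per_sec must be positive")
    return num_tokens / tokens_per_sec


REPLY_TOKENS = 200  # assumption: a short paragraph-sized answer

slowest = generation_seconds(REPLY_TOKENS, 2.0)  # low end of the quoted range
fastest = generation_seconds(REPLY_TOKENS, 8.0)  # high end of the quoted range
print(f"{REPLY_TOKENS} tokens: {fastest:.0f}s to {slowest:.0f}s")
```

Under these assumptions a short answer takes between 25 and 100 seconds, which is slow but usable for interactive experimentation.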

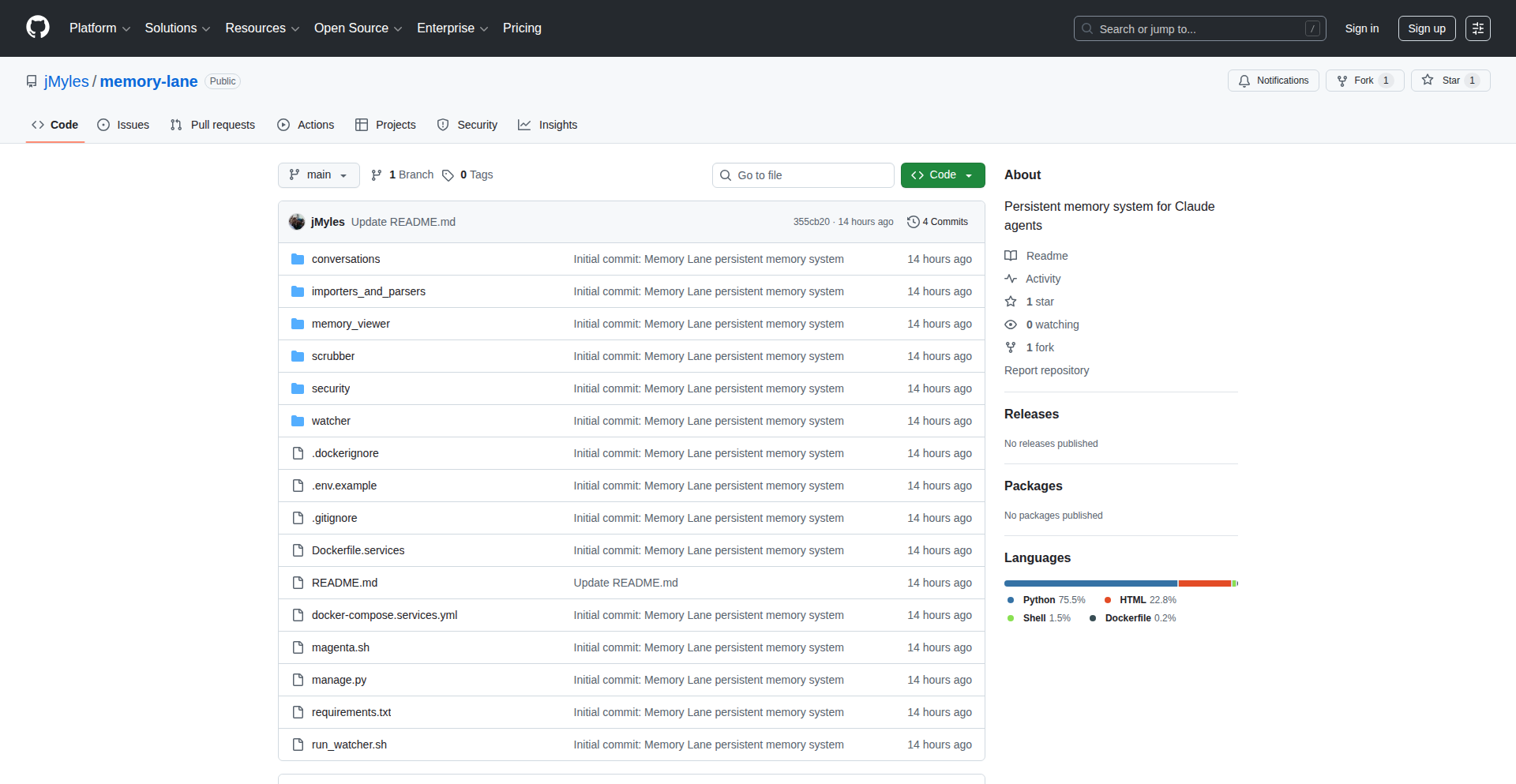

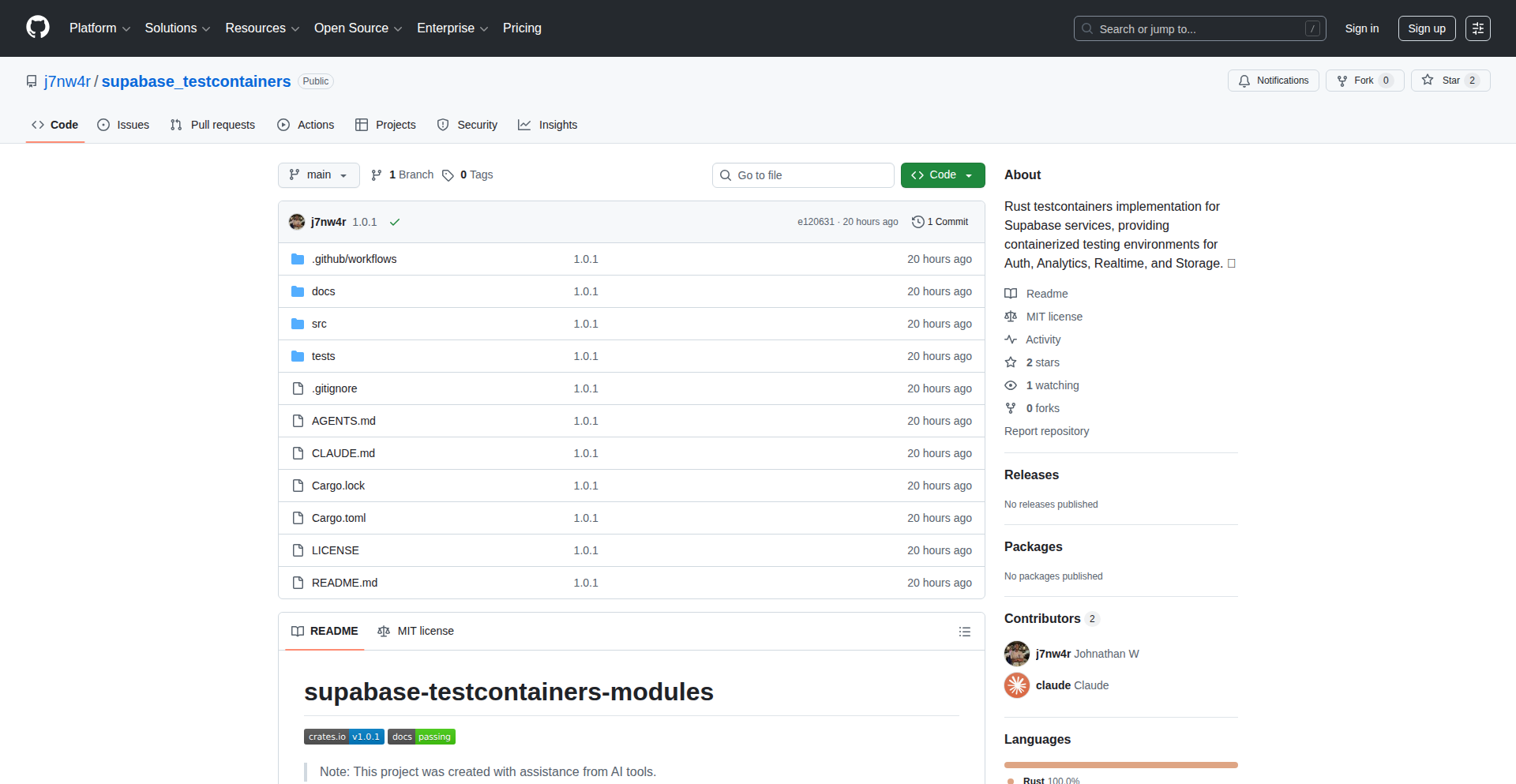

4

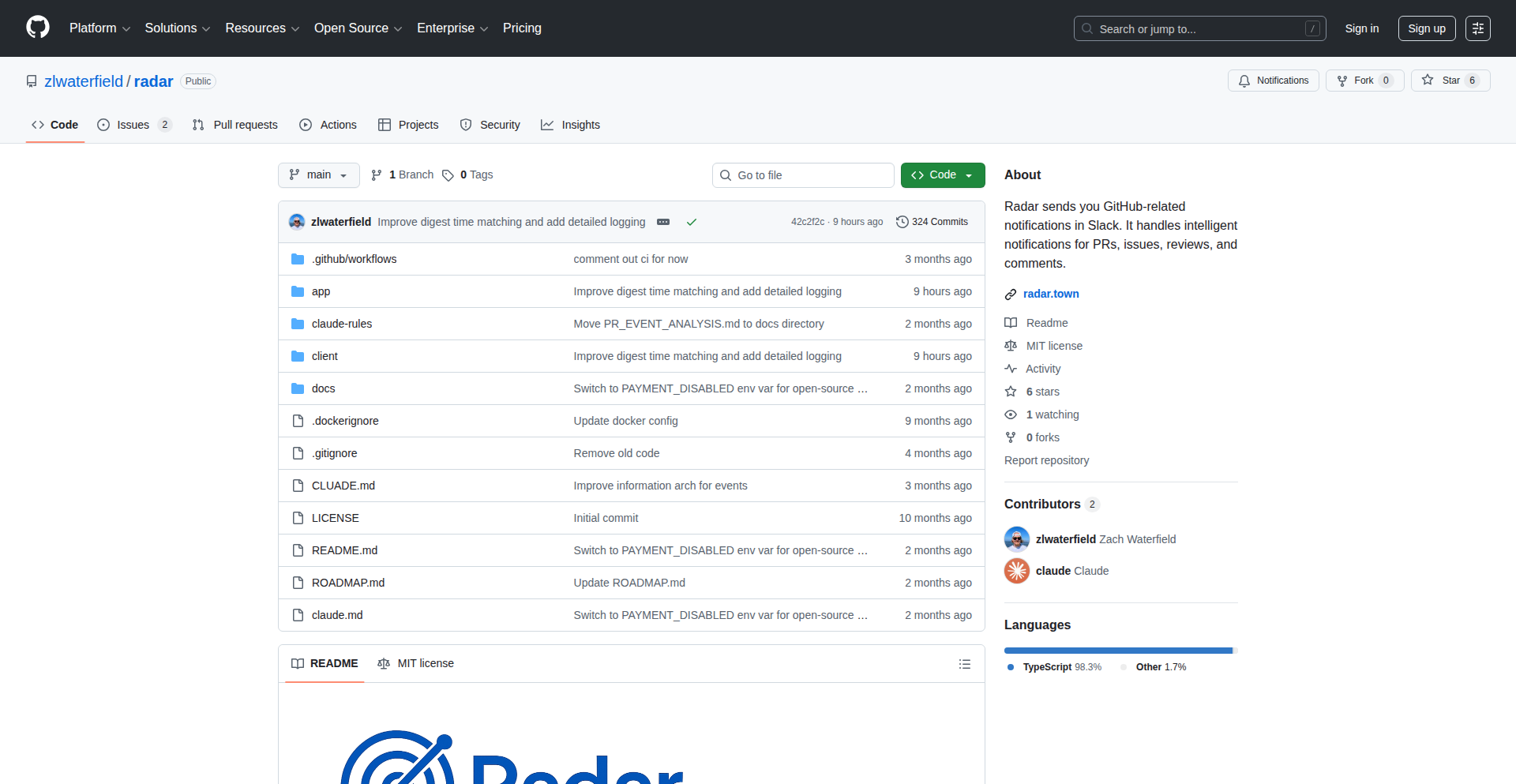

ClaudeChain LLM Orchestrator

Author

rane

Description

This project introduces a novel approach to enhance Large Language Model (LLM) capabilities by enabling an LLM (specifically Claude in this demonstration) to dynamically invoke other LLMs when it encounters a problem it cannot solve on its own. It tackles the limitation of a single LLM getting stuck in repetitive loops or failing to produce optimal outputs by creating a meta-cognitive layer for LLMs, allowing them to delegate tasks to specialized or more capable models.

Popularity

Points 4

Comments 2

What is this product?

This is a system that allows an LLM, like Claude, to recognize when it's struggling with a task and then programmatically call other LLMs to help. Think of it like a team leader LLM that can ask for help from specialized expert LLMs when it's out of its depth. The innovation lies in the LLM's ability to self-diagnose its limitations and initiate a delegation process. Instead of a single LLM trying to do everything and potentially failing, it can orchestrate a sequence of LLM calls, similar to how a human developer might consult different tools or colleagues. This is achieved by analyzing the LLM's output for specific indicators of being 'stuck' or suboptimal and then using code to dispatch a new LLM query, potentially with more context or a different instruction set, to another LLM.

How to use it?

Developers can integrate this by setting up a framework where an initial LLM prompt is processed. The system monitors the LLM's response. If the response indicates a loop or failure to progress, the system intervenes. It can be configured to route the problematic query to a different LLM (e.g., a more powerful one, or one specialized in a particular domain) with the original context and potentially a refined prompt. This can be used within applications that require complex reasoning, creative content generation, or problem-solving that might exceed the capabilities of a single LLM instance. Imagine a chatbot that can't answer a complex question; instead of just saying 'I don't know,' it could hand the question off to a specialized research LLM.

Product Core Function

· LLM Self-Awareness Detection: The system identifies when an LLM is producing repetitive or unhelpful output, indicating it's stuck. This allows for early intervention before the user sees degraded results.

· Dynamic LLM Invocation: Based on the detection, the system can automatically trigger a new query to a different LLM. This means you can leverage the strengths of various LLMs for different parts of a problem.

· Context Preservation and Transfer: When delegating a task, the system ensures that the relevant context from the previous LLM interaction is passed along to the new LLM. This is crucial for maintaining a coherent workflow and avoiding redundant information.

· Programmable LLM Chaining: Developers can define sequences or conditional logic for which LLM to call next, effectively creating 'chains' of LLMs to tackle complex problems step-by-step.

· Error Handling and Fallback Mechanisms: The system can be designed to have fallback options if a delegated LLM also fails, ensuring robustness in the overall process.
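The detect, delegate, and fall back loop described in the functions above might look roughly like the sketch below. Everything here is hypothetical: `Model` is a plain callable standing in for a real LLM client, `looks_stuck` is a deliberately crude repetition heuristic, and the retry prompt wording is invented. The project's actual detection logic and API are not described in the source.

```python
from typing import Callable

# Hypothetical interface: a model takes a prompt and returns a completion.
Model = Callable[[str], str]


def looks_stuck(text: str, min_repeats: int = 3) -> bool:
    """Crude heuristic: some non-empty line appears min_repeats times or more."""
    counts: dict[str, int] = {}
    for line in text.splitlines():
        line = line.strip()
        if line:
            counts[line] = counts.get(line, 0) + 1
    return any(n >= min_repeats for n in counts.values())


def run_chain(prompt: str, models: list[Model]) -> str:
    """Try each model in order, handing the failed attempt on as context."""
    context = prompt
    for model in models:
        answer = model(context)
        if answer.strip() and not looks_stuck(answer):
            return answer
        # Context transfer: the next model sees the task and the stalled attempt.
        context = (
            f"{prompt}\n\nA previous attempt stalled:\n{answer}\n\n"
            "Try a different approach."
        )
    raise RuntimeError("every model in the chain produced a stuck or empty answer")
```

Raising once the list is exhausted keeps the fallback behavior explicit: the caller decides what to do when no model makes progress.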

Product Usage Case

· Complex Code Generation and Debugging: If an LLM struggles to generate a specific piece of code or debug an error, this system could invoke a more specialized code generation LLM or a debugging-focused LLM to assist, then integrate the solution back.

· Advanced Creative Writing: For a story generation task, if the LLM gets stuck in a plot loop, it could delegate to another LLM to brainstorm plot twists or character developments.

· Multi-Domain Question Answering: Answering a question that requires knowledge from both historical events and scientific principles could involve invoking a history-focused LLM and a science-focused LLM sequentially or in parallel.

· Automated Research and Summarization: When an LLM is tasked with summarizing a broad topic, it might fail to cover all aspects. This system could delegate to specialized LLMs for different sub-topics and then combine the summaries.

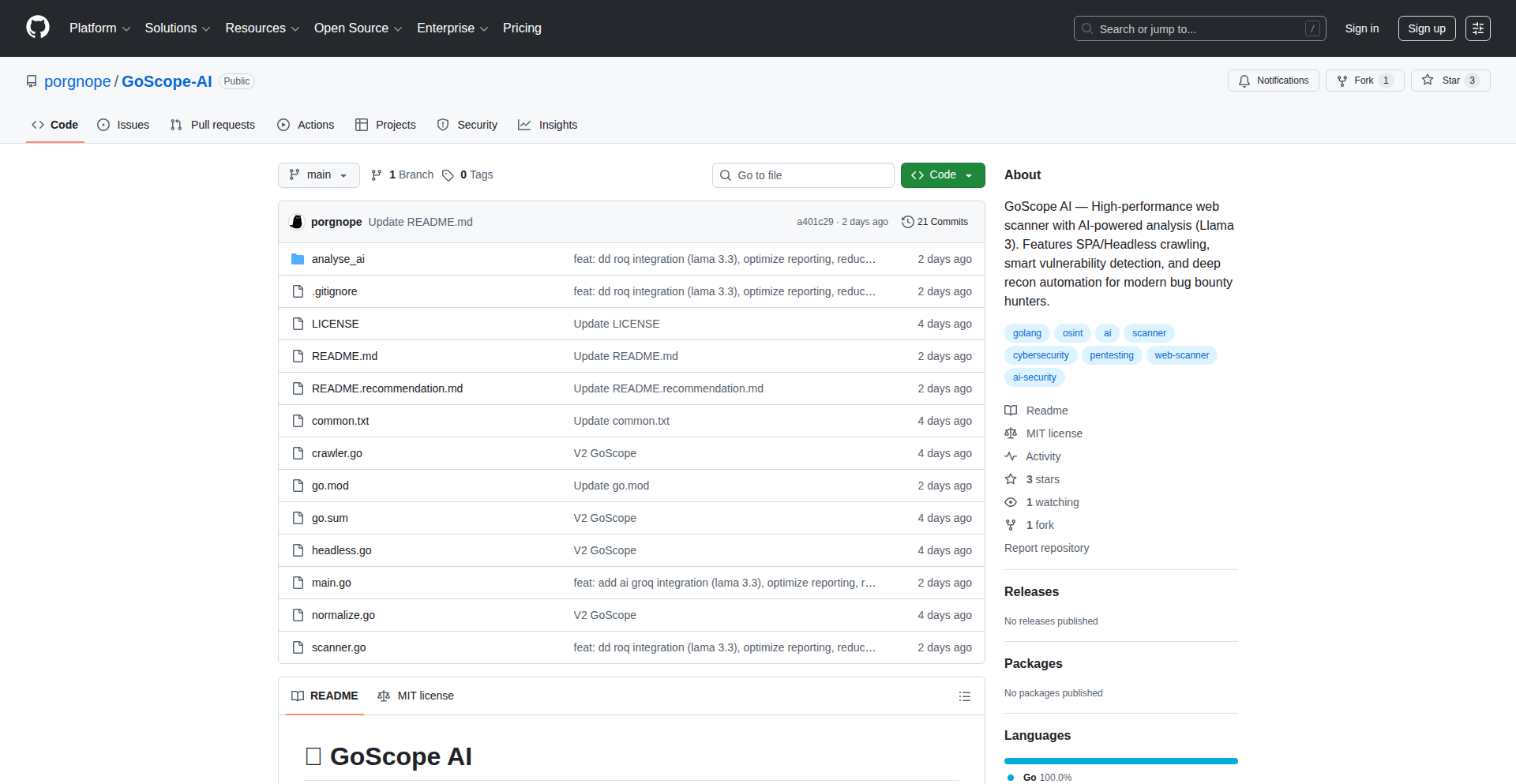

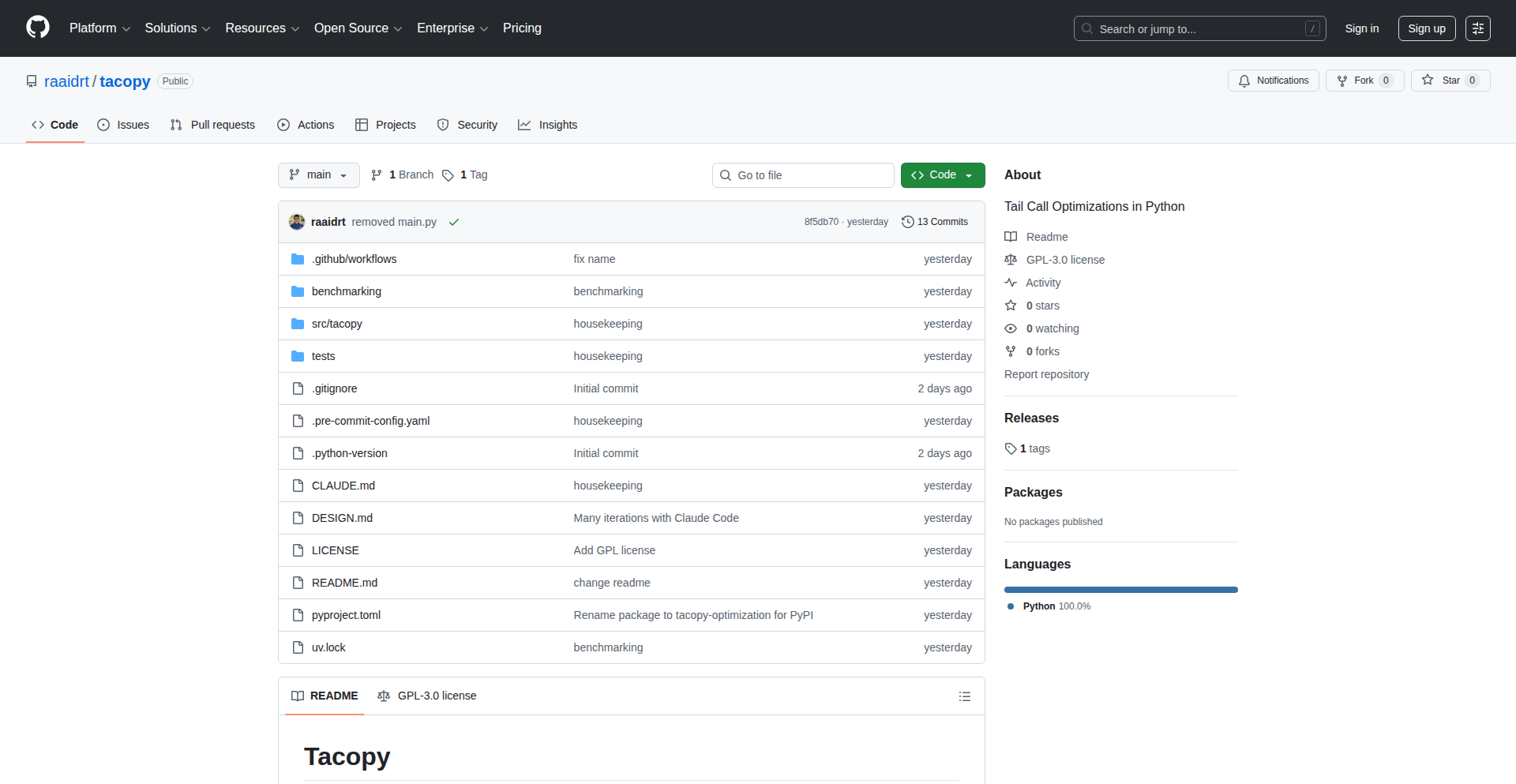

5

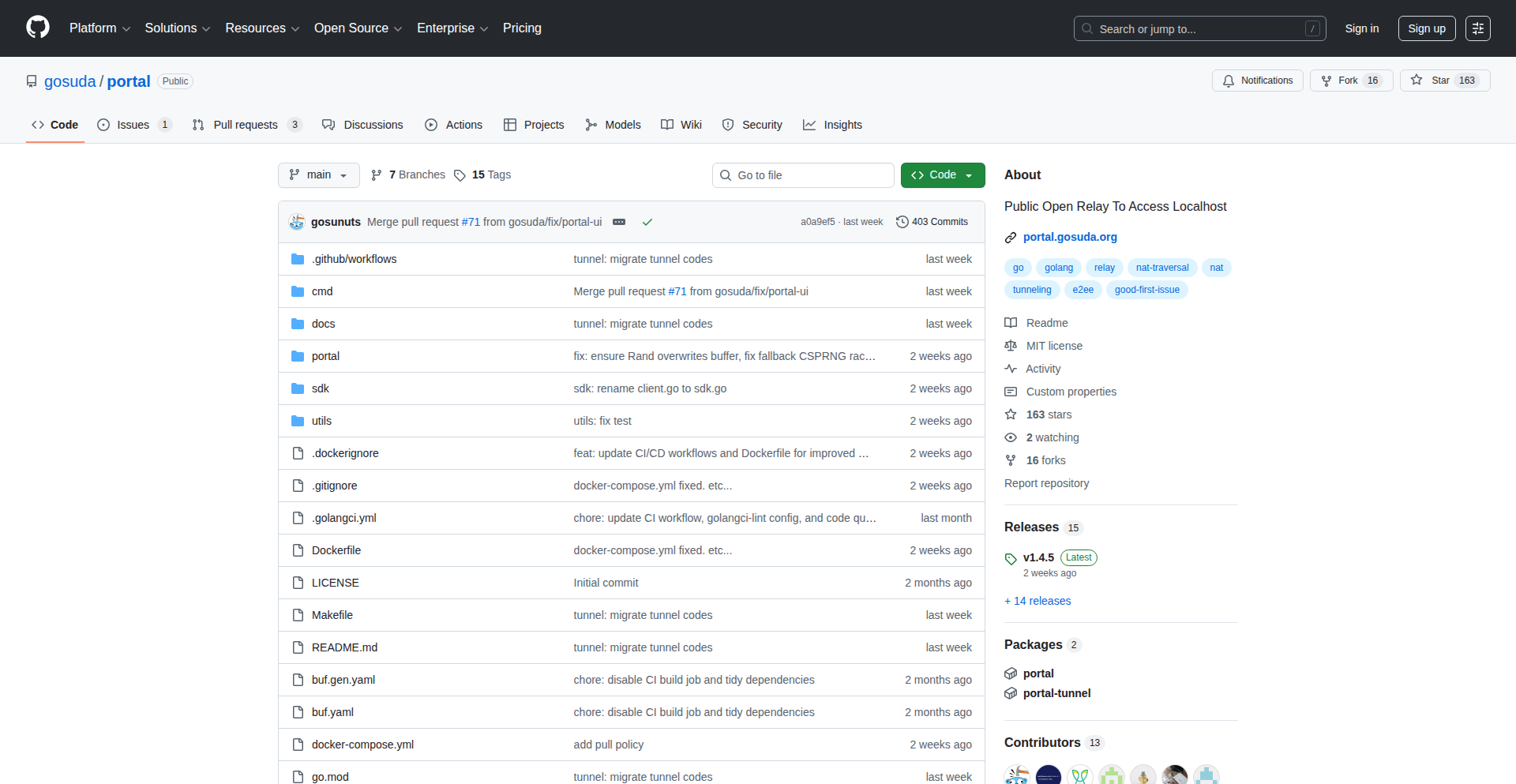

GoCoverageInsight

Author

alien_

Description

A tool that identifies test coverage gaps in Go projects. It leverages static analysis and runtime instrumentation to pinpoint areas of your Go codebase that are not adequately tested, helping developers ensure comprehensive test coverage and reduce potential bugs.

Popularity

Points 5

Comments 1

What is this product?

GoCoverageInsight is a sophisticated tool designed to shine a light on the blind spots in your Go project's test coverage. It works by first analyzing your Go code's structure (static analysis) to understand how different parts of your code are supposed to be called. Then, it intelligently instruments your code (adds small tracking pieces) to observe which lines of code are actually executed when your tests run. By comparing what *should* be covered with what *is* covered, it precisely identifies the specific functions, lines, or branches of code that your current tests are missing. This means you can proactively find and fix untested code before it becomes a problem in production, saving you time and preventing future headaches. It's like having a detective for your tests, ensuring nothing slips through the cracks.

How to use it?

Developers can integrate GoCoverageInsight into their existing Go development workflow. Typically, you would install the tool (e.g., via go install or cloning the repository and building it). Then, you would run it as part of your testing pipeline. The tool will execute your project's tests and produce a detailed report highlighting uncovered code. This report can be in various formats, like HTML, making it easy to visualize and understand the gaps. For example, you can configure your CI/CD pipeline to run GoCoverageInsight after every commit or pull request. If the coverage drops below a certain threshold or new gaps are identified, the pipeline can fail, prompting developers to address the issues before merging. This ensures that your codebase remains robust and well-tested with every change.

Product Core Function

· Static Code Analysis: Analyzes Go source code to understand program flow and identify all possible execution paths. This provides the 'what we expect to be covered' baseline, allowing for a deeper understanding of potential testable logic.

· Runtime Instrumentation: Injects lightweight probes into the Go binary during test execution to precisely track which lines of code are actually run. This gives us the 'what is actually covered' data.

· Coverage Gap Identification: Compares static analysis results with runtime instrumentation data to pinpoint specific functions, branches, and lines of code that are not exercised by tests. This is the core value proposition – showing you exactly where to focus your testing efforts.

· Detailed Reporting: Generates comprehensive reports, often in an easily digestible format like HTML, that visually highlight coverage gaps. This allows developers to quickly understand the extent of the problem and where to implement new tests.

· Integration with CI/CD: Designed to be seamlessly integrated into Continuous Integration and Continuous Deployment pipelines. This automates the process of checking test coverage and ensures that code quality standards are maintained with every code change.
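As a rough illustration of gap identification and a CI threshold check, the sketch below reads the text profile that Go's standard tooling writes with `go test -coverprofile=cover.out ./...` (a `mode:` line followed by `file:startLine.startCol,endLine.endCol numStatements hitCount` entries). Whether GoCoverageInsight uses this format internally is not stated in the source, so treat this as an assumption. `Block`, `parse_profile`, `gaps`, and the sample profile are illustrative names and data, and duplicate blocks from merged profiles are not handled.

```python
from dataclasses import dataclass


@dataclass
class Block:
    file: str
    start_line: int
    end_line: int
    statements: int
    hits: int


def parse_profile(text: str) -> list[Block]:
    """Parse the body of a Go cover profile into blocks."""
    blocks = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("mode:"):
            continue
        location, statements, hits = line.rsplit(" ", 2)
        file, span = location.rsplit(":", 1)
        start, end = span.split(",")
        blocks.append(Block(file, int(start.split(".")[0]),
                            int(end.split(".")[0]), int(statements), int(hits)))
    return blocks


def coverage_percent(blocks: list[Block]) -> float:
    """Statement coverage: statements in executed blocks over all statements."""
    total = sum(b.statements for b in blocks)
    covered = sum(b.statements for b in blocks if b.hits > 0)
    return 100.0 * covered / total if total else 100.0


def gaps(blocks: list[Block]) -> list[Block]:
    """Blocks that no test executed."""
    return [b for b in blocks if b.hits == 0]


# Invented sample profile for illustration.
SAMPLE_PROFILE = """mode: set
example.com/app/handler.go:10.2,12.16 3 1
example.com/app/handler.go:12.16,14.3 2 0
example.com/app/util.go:5.1,9.2 5 1
"""

blocks = parse_profile(SAMPLE_PROFILE)
THRESHOLD = 85.0  # assumption: a team-chosen minimum
passed = coverage_percent(blocks) >= THRESHOLD
for b in gaps(blocks):
    print(f"untested: {b.file} lines {b.start_line}-{b.end_line}")
```

In a pipeline, a script like this would exit non-zero when `passed` is false, which is what makes the build fail on a coverage drop.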

Product Usage Case

· Scenario: A developer has written unit tests for a new feature in their Go web application. They want to be sure that all edge cases and error handling paths within the new code are being tested. Using GoCoverageInsight, they run the tool against their tests. The report reveals that a specific error condition in the API handler's response generation logic is not being triggered by any of their current tests. This allows the developer to write a new test specifically for that error condition, thereby improving the application's robustness and preventing potential crashes for users encountering that specific error.

· Scenario: A team is working on a critical Go microservice and wants to maintain a high level of test coverage to ensure stability. They integrate GoCoverageInsight into their Jenkins pipeline. After a developer submits a pull request that modifies several core functions, the CI pipeline runs the tests and GoCoverageInsight. The tool detects that a newly introduced helper function, intended to be called in a rare but important scenario, is not covered by any tests. The pipeline is configured to reject the pull request until this coverage gap is addressed, preventing a potentially destabilizing bug from entering the main branch.

· Scenario: An open-source Go project is looking to improve its overall code quality and attract more contributors. They use GoCoverageInsight to identify areas with low test coverage. The insights from the tool help them prioritize which parts of the codebase need more test development. They can then use these findings to create specific 'good first issues' for new contributors, guiding them to add tests in well-defined areas and making it easier for newcomers to contribute meaningful code to the project.

6

SpatialPin Social Fabric

Author

simonsarris

Description

Meetinghouse.cc is a novel social networking tool that leverages spatial data to connect individuals. It allows users to place 'pins' on a virtual globe, essentially marking their presence and interests in a specific location. The core innovation lies in its approach to building a decentralized, yet geographically anchored, social layer, using Twitter/X for identity verification to ensure authenticity, with a future vision of supporting other social networks.

Popularity

Points 3

Comments 3

What is this product?

SpatialPin Social Fabric is a project that acts as a decentralized, location-aware social directory. It allows users to anchor their digital presence to a physical location on a globe, creating a new way to discover and be discovered by others. The technology uses a map interface as the primary interaction point, with user identities currently tied to Twitter/X for a layer of real-world verification. The idea is to create a 'sense of real-ness' by grounding social connections to tangible places, offering a unique alternative to traditional, purely online social networks.

How to use it?

Developers can envision using this project as a foundational layer for applications that benefit from geographically distributed user bases. For example, imagine a developer building an event discovery platform where users pin their attendance interests to specific venues, or a local community app where neighbors can pin helpful resources or services. Integration could involve using the API to retrieve and display pins based on geographic queries or to allow users to post their own pins programmatically. The current model emphasizes human discovery, so the immediate use case is for individuals looking to make their presence known in a specific area or for a particular purpose.
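A geographic query over pins, as mentioned above, usually comes down to a distance filter. The sketch below uses the haversine great-circle formula on an in-memory list. The `Pin` shape and the `pins_within` helper are hypothetical, the sample coordinates are approximate, and the source does not document an actual API for the service.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Pin:
    handle: str  # hypothetical: the verified social handle behind the pin
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def pins_within(pins: list[Pin], lat: float, lon: float, radius_km: float) -> list[Pin]:
    """Return the pins no farther than radius_km from the query point."""
    return [p for p in pins if haversine_km(lat, lon, p.lat, p.lon) <= radius_km]


# Invented sample pins with approximate city coordinates.
pins = [
    Pin("@boston_dev", 42.3601, -71.0589),
    Pin("@cambridge_dev", 42.3736, -71.1097),
    Pin("@london_dev", 51.5074, -0.1278),
]
nearby = pins_within(pins, 42.3601, -71.0589, radius_km=10)
```

A linear scan is fine for small sets. A service with many pins would more likely rely on a spatial index or a database with geospatial support.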

Product Core Function

· Geospatial Pinning: Allows users to place digital markers on a global map, creating a visual representation of their presence and interests. The value here is in creating a tangible link between digital identity and physical space, enabling location-based discovery and interaction.

· Decentralized Directory: Acts as a non-centralized registry of users who want to be found. The innovation is in building a distributed network without a single point of control, fostering resilience and user autonomy.

· Identity Verification (via Twitter/X): Uses existing social network profiles to add a layer of authenticity to user pins. This increases trust and reduces the likelihood of fake or anonymous profiles, enhancing the perceived 'real-ness' of connections.

· Spatial-Social Graph: Enables the creation of social connections influenced by physical proximity and shared locations, offering a unique way to build communities around places.

Product Usage Case

· Event Coordination: A developer could build a music festival application where attendees can pin their favorite stages or meeting points on the festival map, allowing others to easily find them or discover popular spots. This addresses the problem of finding people and navigating large, crowded spaces.

· Local Community Hubs: A neighborhood app could allow residents to pin their availability for skill-sharing (e.g., gardening tips, tech help) or to highlight local points of interest. This tackles the challenge of local knowledge dissemination and neighborly support.

· Collaborative Projects: Teams working on geographically dispersed projects could use it to pin key project locations or resources, facilitating better spatial awareness and communication among team members.

7

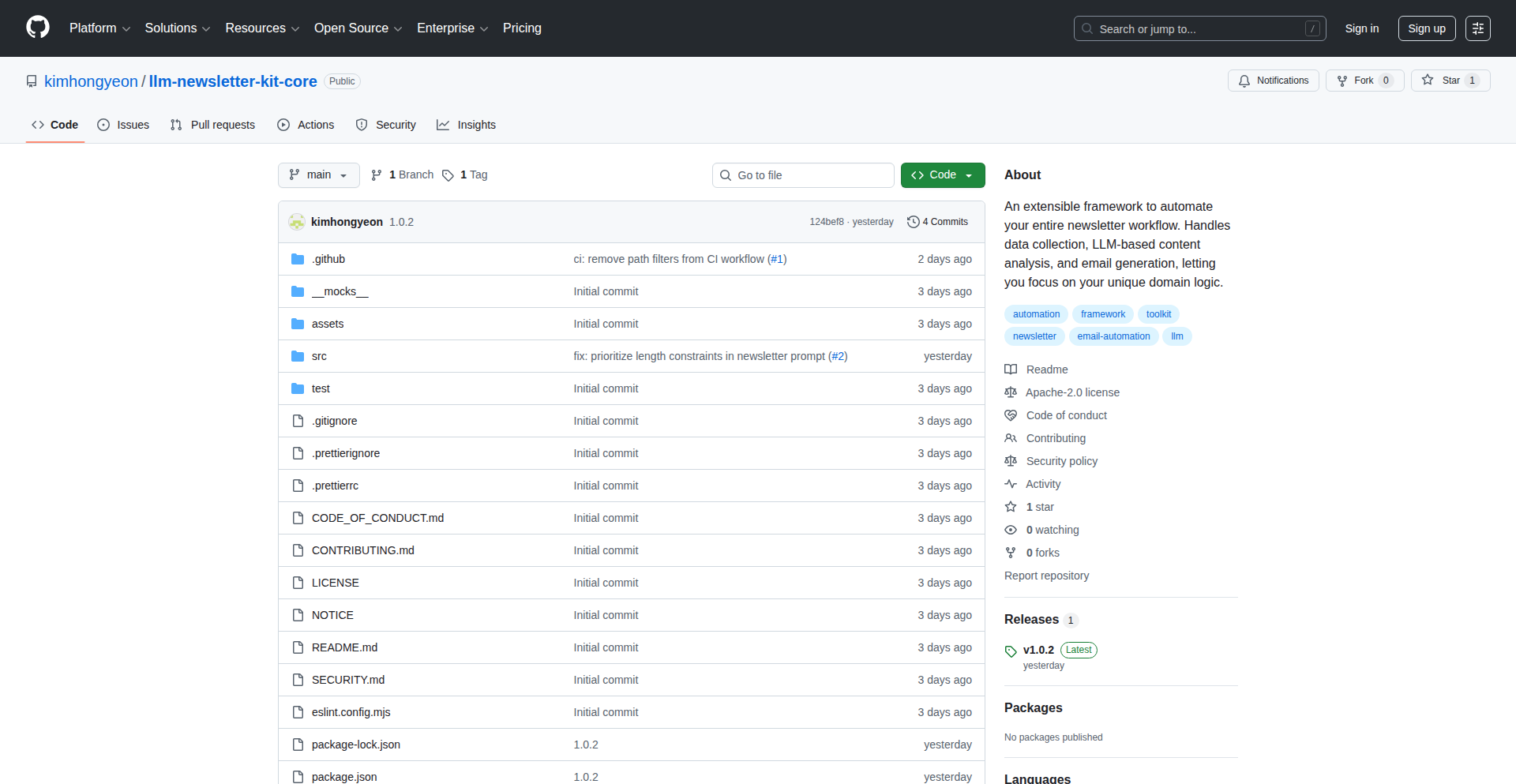

BlogLab AI SEO Assistant

Author

robby1110

Description

BlogLab is an AI-powered tool designed to automate the creation and publishing of SEO-optimized blog content. It addresses the time-consuming nature of manual blog writing, allowing developers and content creators to focus more on building and less on writing, thereby growing their online presence and SaaS projects automatically.

Popularity

Points 4

Comments 1

What is this product?

BlogLab is a smart assistant that uses artificial intelligence to streamline the entire process of creating SEO-friendly blog posts. Think of it as your automated content factory. It takes the pain out of keyword research, content generation, and even helps with publishing. The innovation lies in its end-to-end automation, significantly reducing the manual effort typically required for effective SEO blogging. This means you spend less time writing and more time innovating or marketing your main projects.

How to use it?

Developers can integrate BlogLab into their workflow by using its web interface to input keywords or topics related to their niche. The tool then performs automated research, generates draft blog content tailored for search engine optimization, and can even assist with scheduling or publishing to their existing blog platforms. It's designed for easy adoption, acting as a powerful add-on for anyone looking to boost their online visibility without becoming a full-time blogger.

Product Core Function

· Automated SEO Keyword Research: Identifies high-potential keywords your target audience is searching for, ensuring your content gets discovered by the right people. This saves you hours of manual research and guesswork, directly improving your site's visibility on search engines.

· AI-driven Content Generation: Creates well-written, engaging blog post drafts based on your chosen keywords and topics. This significantly speeds up content creation, allowing you to publish more frequently and consistently, keeping your audience engaged and search engines happy.

· SEO Optimization Features: Integrates SEO best practices directly into the generated content, such as keyword density, meta descriptions, and readability scores. This ensures your posts are not only informative but also technically optimized to rank higher in search results, driving more organic traffic to your projects.

· Publishing Workflow Assistance: Streamlines the process of getting your content live on your blog. This can include formatting, categorizing, and even direct integration with content management systems. This feature minimizes the post-writing administrative burden, allowing for a quicker content lifecycle.
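
Keyword density, one of the SEO checks mentioned above, is easy to compute by hand. The sketch below is a generic illustration, not BlogLab's implementation; the function name and the simple word tokenizer are assumptions.

```python
import re


def keyword_density(text, keyword):
    """Fraction of the words in `text` covered by occurrences of `keyword`.

    `keyword` may be a multi-word phrase; matching is case-insensitive.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    key = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key:
        return 0.0
    n = len(key)
    # Count every position where the phrase appears as consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key)
    return hits * n / len(words)
```

For example, in "Rust is fast. Rust is safe." the keyword "rust" covers 2 of 6 words, so the density is one third.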

Product Usage Case

· A SaaS founder struggling to find time for content marketing to drive user acquisition: BlogLab can generate weekly blog posts about their product's use cases and industry trends, automatically attracting potential customers searching for solutions. This directly contributes to their SaaS growth by bringing in more organic leads.

· A developer building a personal brand and looking to increase their authority in a specific tech niche: BlogLab can help them consistently publish insightful articles on complex topics, establishing them as an expert and attracting a following. This elevates their professional profile and opens up new opportunities.

· An e-commerce store owner aiming to improve their product discoverability through content: BlogLab can generate blog content that naturally incorporates product keywords and addresses customer pain points, driving qualified traffic directly to their product pages. This leads to increased sales through better search engine visibility.

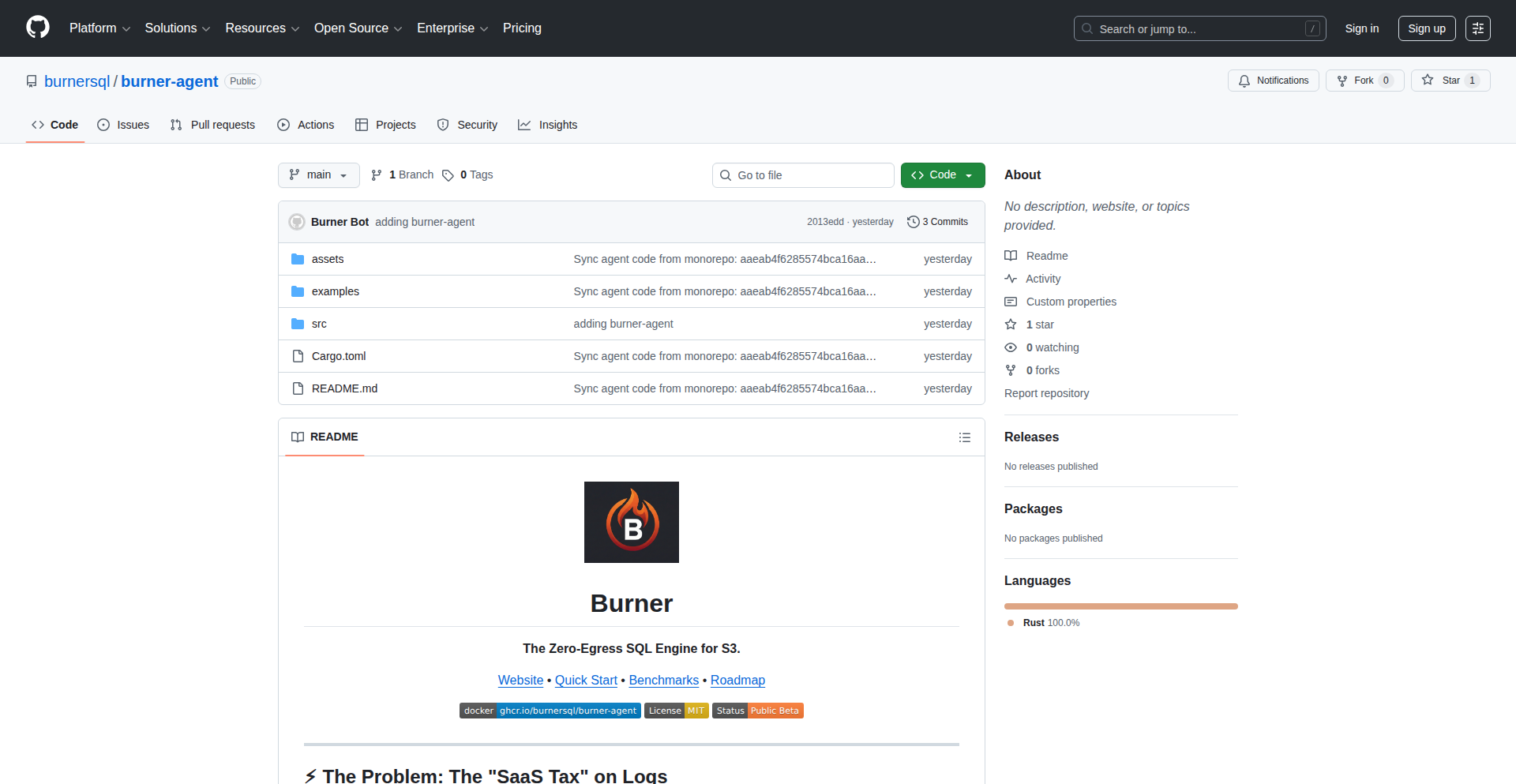

8

Burn Protocol: Thermodynamically Aligned AI Thinking

Author

CodeIncept1111

Description

This project introduces 'Burn Protocol', a novel approach to aligning AI models, specifically Gemini, by leveraging principles from thermodynamics. It addresses the challenge of unpredictable AI behavior and aims to create more stable and predictable AI outputs by forcing the AI's 'thinking' process to align with thermodynamic equilibrium. The core innovation lies in using entropy and energy dissipation concepts to guide AI's internal state transitions, making its reasoning more robust and less prone to emergent, undesirable behaviors. This translates to more reliable AI performance in complex tasks.

Popularity

Points 3

Comments 2

What is this product?

Burn Protocol is an experimental framework that applies thermodynamic principles to guide the internal state and decision-making processes of AI models like Google's Gemini. It draws an analogy between physical systems seeking equilibrium and AI models aiming for consistent, predictable outputs. By introducing a 'thermodynamic alignment' mechanism, the protocol encourages the AI to settle into stable cognitive states, much like a physical system dissipating energy to reach a low-energy, stable configuration. This prevents the AI from 'wandering' into unpredictable or erroneous reasoning paths, thereby enhancing its reliability and safety. So, what's in it for you? It means AI systems that are less likely to go off the rails and more dependable when you need them to perform complex operations.

How to use it?

Developers can integrate Burn Protocol into their AI model pipelines by defining specific thermodynamic constraints that the model's internal computations must adhere to. This involves instrumenting the AI's reasoning process to track a proxy for entropy or energy state and applying corrective feedback loops when the model deviates from its desired equilibrium. The protocol can be used during model training or as a runtime guardrail. For instance, when building an AI chatbot, you could use Burn Protocol to ensure its responses remain contextually relevant and avoid generating nonsensical or harmful content, making your application more user-friendly. It offers a new way to debug and control AI behavior by thinking about it as a physical system.

Product Core Function

· Thermodynamic State Tracking: Implements mechanisms to monitor and quantify a proxy for the AI's internal 'thermodynamic state' during its inference process, enabling developers to understand its computational 'energy' level. This helps in identifying potential instability points in the AI's decision-making, offering a valuable insight into 'why' an AI might produce an unexpected result, crucial for debugging complex AI systems.

· Equilibrium Alignment Feedback: Develops feedback loops that nudge the AI's internal states towards a stable equilibrium, preventing it from entering chaotic or unpredictable reasoning patterns. This is like having an AI that self-corrects to stay on track, leading to more reliable and consistent outputs for your applications, reducing costly errors.

· Entropy-Inspired Inference Control: Leverages concepts of entropy to guide the AI's exploration of possible solutions, favoring more stable and predictable paths over highly divergent ones. This translates to AI that is more likely to find robust solutions, making it ideal for applications requiring high accuracy and predictability, such as financial modeling or scientific research.

· Predictability Enhancement Module: Specifically designed to make complex AI models like Gemini behave more predictably under various conditions, reducing unexpected emergent behaviors. This offers peace of mind for developers deploying AI in critical environments, knowing the AI is less likely to exhibit 'black swan' events that could disrupt operations.
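
The post does not spell out how the entropy proxy or the feedback loop is implemented, so the following is only one possible reading of the idea: measure the Shannon entropy of a next-token distribution and lower the sampling temperature until it falls under a threshold. All names and default values are assumptions, and the sketch presumes access to raw logits, which a hosted model API may not expose.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; a lower temperature sharpens the result."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cool_until_stable(logits, max_entropy_bits, temperature=1.0,
                      step=0.8, min_temperature=0.05):
    """Lower the temperature until entropy <= max_entropy_bits or the floor is hit.

    Hypothetical feedback rule: multiply the temperature by `step` each round.
    """
    probs = softmax(logits, temperature)
    while (shannon_entropy(probs) > max_entropy_bits
           and temperature * step >= min_temperature):
        temperature *= step
        probs = softmax(logits, temperature)
    return temperature, probs
```

The temperature floor keeps the loop from running forever when the logits are tied and no amount of cooling can reduce the entropy.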

Product Usage Case

· Developing a medical diagnostic AI: Burn Protocol can be used to ensure the AI's diagnostic suggestions are based on stable and reproducible reasoning paths, reducing the risk of errant diagnoses. This means a more trustworthy AI for healthcare professionals.

· Building a financial trading algorithm: The protocol helps in constraining the AI's decision-making to avoid overly volatile or speculative strategies, leading to more robust and less risky trading outcomes. This offers a way to deploy AI in finance with greater confidence.

· Creating a content generation engine: Burn Protocol can be applied to maintain coherence and relevance in generated text, preventing the AI from producing nonsensical or off-topic content. This results in higher quality and more useful AI-generated content for users.

· Testing and debugging large language models: Developers can use Burn Protocol as an analytical tool to understand and mitigate unexpected behaviors in LLMs, providing a more systematic approach to AI safety and reliability. This helps in building better and safer AI for everyone.

9

utm.one: Smart URL Shortener with UTM Governance

Author

Raj7k

Description

utm.one is a minimalist URL shortening service that goes beyond just creating short links. It enforces discipline in your UTM tracking by automatically preventing duplicate parameters, ensuring consistent naming conventions, and leveraging the Clean-Track framework to maintain organized and reliable campaign data. This addresses the common pain point of messy and inconsistent tracking URLs, which can lead to inaccurate analytics and wasted marketing efforts. So, this is useful because it saves you time and ensures your marketing data is clean and trustworthy, allowing for better decision-making.

Popularity

Points 3

Comments 2

What is this product?

utm.one is a URL shortening tool designed for marketers and developers who want to maintain clean and structured UTM (Urchin Tracking Module) parameters. Instead of just creating a shorter link, it intelligently manages the UTM tags appended to that link. It uses an automated system to prevent you from accidentally creating duplicate UTM tags (like having two 'utm_source' parameters) and enforces a consistent naming scheme for your tags. It also incorporates the Clean-Track framework, a set of best practices for organizing UTM data, to ensure your tracking is always tidy. The innovation lies in its proactive approach to UTM data hygiene, turning a potentially chaotic aspect of digital marketing into a streamlined and reliable process. So, this is useful because it automates the tedious task of managing UTM tags, preventing common errors that can corrupt your campaign data and make analysis difficult. It ensures your tracking is accurate from the start.

How to use it?

Developers and marketers can use utm.one by visiting the website (utm.one) and signing up for the controlled beta. The process involves inputting your original long URL and then defining the UTM parameters you want to associate with it. The tool's interface is designed to be intuitive, guiding you through this process. It will automatically check for duplicate parameters and suggest consistent naming. For integration, it provides the shortened URL with the managed UTM parameters, which you can then use in your social media posts, email campaigns, advertisements, or any other marketing channel. So, this is useful because it's a straightforward way to generate trackable links that are already organized, saving you the manual effort of crafting and validating each UTM tag.

Product Core Function

· Automated Duplicate UTM Parameter Prevention: The system detects and prevents the creation of multiple instances of the same UTM parameter (e.g., multiple 'utm_source' tags for a single URL), ensuring your tracking data remains unambiguous and avoids analytical confusion. This is valuable because it saves you from manually auditing and cleaning up your data later, directly improving the accuracy of your campaign reporting.

· Consistent UTM Naming Enforcement: utm.one enforces predefined naming conventions for UTM parameters, such as always using lowercase for 'utm_medium' or a specific format for 'utm_campaign'. This consistency is crucial for accurate data aggregation and analysis across different campaigns and platforms. This is valuable because it eliminates variations in how you tag your campaigns, making it easier to compare performance and identify trends.

· Clean-Track Framework Integration: By adhering to the Clean-Track framework, utm.one promotes best practices in UTM management, leading to more organized and interpretable tracking data. This framework provides a standardized way to structure your tracking information. This is valuable because it simplifies the process of understanding your campaign performance by ensuring your data follows a logical and widely accepted structure.

· Distraction-Free URL Shortening: The core functionality of shortening long URLs is presented in a clean and minimalist interface, allowing users to focus on the UTM governance aspect without unnecessary clutter. This is valuable because it streamlines the user experience, making the process of creating trackable links efficient and pleasant.
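
The duplicate check and naming enforcement described above can be sketched with the Python standard library. This is not utm.one's code, and the Clean-Track rules are not detailed in the post, so the normalization used here (lowercase, hyphens instead of spaces) and the `tag_url` helper are assumptions for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# The five UTM parameters commonly recognized by analytics tools.
UTM_KEYS = {"utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"}


def _normalize(value):
    """Assumed naming rule: trim, lowercase, and join words with hyphens."""
    return "-".join(value.strip().lower().split())


def tag_url(url, **utm):
    """Return `url` with normalized UTM parameters appended.

    Raises ValueError on unknown UTM keys or on any duplicated UTM parameter,
    whether the duplicate is already in the URL or passed as an argument.
    """
    parts = urlsplit(url)
    seen = set()
    kept = []
    for key, value in parse_qsl(parts.query, keep_blank_values=True):
        lowered = key.lower()
        if lowered in UTM_KEYS:
            if lowered in seen:
                raise ValueError(f"duplicate UTM parameter: {lowered}")
            seen.add(lowered)
            kept.append((lowered, _normalize(value)))
        else:
            kept.append((key, value))  # leave non-UTM parameters untouched
    for key, value in utm.items():
        if key not in UTM_KEYS:
            raise ValueError(f"unknown UTM parameter: {key}")
        if key in seen:
            raise ValueError(f"duplicate UTM parameter: {key}")
        seen.add(key)
        kept.append((key, _normalize(value)))
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

Calling `tag_url("https://example.com/p?ref=1", utm_source="Newsletter", utm_campaign="Spring Launch")` yields a URL whose tags read `utm_source=newsletter` and `utm_campaign=spring-launch`, while a URL that already carries two `utm_source` values is rejected outright.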

Product Usage Case

· A social media manager launching a new product campaign across multiple platforms. They can use utm.one to generate unique, consistently tagged short URLs for each platform (e.g., Twitter, Facebook, Instagram). This ensures that they can accurately track which platform drives the most traffic and conversions, avoiding confusion from inconsistent tagging. The problem solved is the inability to reliably attribute traffic to specific social media channels due to varied UTM tags.

· An email marketer sending out a newsletter with different calls to action. With utm.one, they can create distinct short URLs for each CTA, each with a clearly defined 'utm_campaign' and 'utm_content' parameter. This allows them to precisely measure the effectiveness of each CTA within the newsletter. The problem solved is understanding which specific content elements within a campaign are performing best.

· A developer integrating a shortened referral link into their application. They can use utm.one to ensure that any referral traffic generated through this link is automatically tagged with the correct 'utm_source' (e.g., 'app-referral') and 'utm_medium' (e.g., 'referral'). This simplifies the process of tracking user acquisition sources from within their own product. The problem solved is the manual effort required to tag referral traffic and the potential for errors in doing so.

10

MentalAgeQuiz

Author

takennap

Description

A lightweight, no-login, quick mental age quiz designed for instant feedback. It prioritizes a clean, fast, and user-friendly experience across all devices, focusing on the core user journey of answering questions and receiving results.

Popularity

Points 3

Comments 2

What is this product?

This project is a web-based mental age quiz. It works by presenting a series of questions, and based on your answers, it calculates an estimated 'mental age'. The innovation lies in its extreme simplicity and focus on user experience: no account creation or personal data is required, making it instantly accessible. The underlying technology likely involves client-side JavaScript for question presentation and answer processing, with a simple algorithm to derive the score. This approach minimizes server load and maximizes speed, allowing users to get their result in under two minutes. The value is in providing a fun, engaging, and immediate introspective experience without any barriers.

How to use it?

Developers can use this project as a reference for building similar quick, engaging, and privacy-focused web applications. It's a great example of how to create a delightful user experience with minimal complexity. You can integrate its core concept into a larger website or application as a fun diversion, or even adapt the question-answer logic for other types of quick assessments. The current implementation is likely straightforward to embed or fork for custom needs, providing a ready-to-go solution for interactive content.

Product Core Function

· Instant Quiz Access: No login or signup required, meaning users can start the quiz immediately upon visiting the page, providing an immediate engagement opportunity.

· Fast & Responsive Design: Optimized for both desktop and mobile, ensuring a seamless user experience regardless of the device, which is crucial for broad adoption and user satisfaction.

· Simple Question-Answering Mechanism: Utilizes basic web technologies to present questions and capture user input, demonstrating an efficient and performant way to handle interactive content.

· Algorithmic Scoring: Calculates a 'mental age' based on user responses, offering a personalized and intriguing outcome without complex backend processes, making the result generation quick and accessible.

· Privacy-Focused Approach: Collects no personal data, respecting user privacy and removing a common barrier to participation, which builds trust and encourages wider use.
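
The actual scoring rule is not published in the post. As a rough illustration of how such a quiz could turn answers into a number, the sketch below averages an age value attached to each chosen option and clamps the result. It is written in Python for consistency with the other examples here, although the quiz itself likely runs as client-side JavaScript; every name and weight is hypothetical.

```python
def mental_age(answers, question_weights, min_age=5, max_age=90):
    """Average the age value of each chosen option and clamp the result.

    `answers[i]` is the index of the option picked for question i.
    `question_weights[i]` lists the (hypothetical) age value of each option.
    """
    if not answers or len(answers) != len(question_weights):
        raise ValueError("exactly one answer per question is required")
    total = sum(options[choice] for choice, options in zip(answers, question_weights))
    estimate = round(total / len(answers))
    return max(min_age, min(max_age, estimate))
```

Because everything is a pure function of the answers, no account, server call, or stored personal data is needed to produce the result.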

Product Usage Case

· Embedding as a 'Fun Fact' or 'Engagement Widget' on a blog or personal website to increase visitor interaction and time spent on the page.

· Using as a quick survey tool for gathering non-sensitive feedback or gauging user sentiment in a playful manner, where the 'mental age' is a proxy for response style.

· Demonstrating front-end development principles for creating simple, performant, and user-centric web applications that prioritize immediate value.

· Incorporating into an onboarding flow as a light-hearted icebreaker to make the initial user experience more welcoming and less demanding.

· Building a prototype for a larger educational or entertainment platform where quick, engaging mini-games or quizzes are a key feature.

11

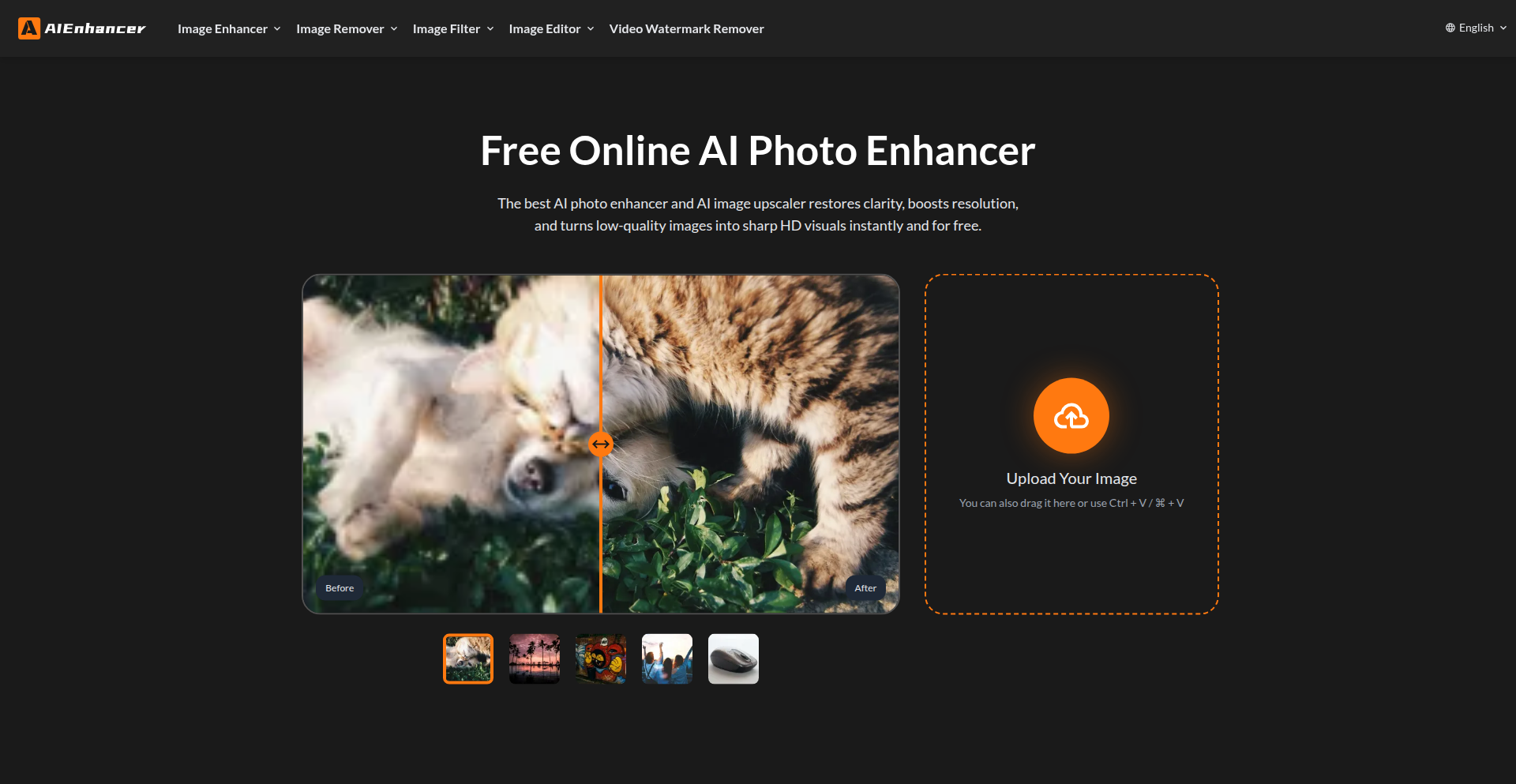

AI Image Alchemy

Author

Pratte_Haza

Description

AI Image Alchemy is an online tool that leverages cutting-edge artificial intelligence to automatically improve the quality of your images for free. It addresses common image issues like damage, unwanted backgrounds, and distracting watermarks, making your photos look professionally enhanced without any manual effort or cost.

Popularity

Points 5

Comments 0

What is this product?

AI Image Alchemy is a web-based service that uses machine learning models to intelligently enhance digital images. Think of it as a smart photo editor that understands what makes an image look good. Its innovation lies in its ability to perform complex tasks like repairing old, torn photos, precisely separating subjects from their backgrounds, and cleanly removing watermarks, all powered by AI. So, for you, this means getting professional-looking image improvements quickly and easily, without needing advanced editing skills or expensive software.

How to use it?

Developers can integrate AI Image Alchemy into their workflows or applications by accessing its online features. For example, if you're building a website that showcases user-uploaded photos, you could prompt users to use AI Image Alchemy to clean up their images before they are displayed. Alternatively, if you're managing a large library of digital assets, you can batch process images to improve their overall quality and remove unwanted elements. The workflow is straightforward: upload your image to the website, select the desired enhancement (repair, background removal, watermark removal), and download the improved version. This saves you time and resources that would otherwise be spent on manual editing or complex scripting, so you can deliver better visual content to your audience with less effort.

Product Core Function

· Photo Repair: Utilizes AI to reconstruct damaged or degraded areas in old photographs, filling in missing pixels and restoring clarity. Value: Preserves memories and revives historical images, making them viewable and shareable. For you, this means bringing old cherished photos back to life.

· Background Removal: Employs computer vision algorithms to accurately detect and isolate the main subject of an image, effectively removing the background. Value: Enables creative compositing and clean product shots, essential for marketing and design. For you, this means easily placing your subjects onto any new background you desire.

· Watermark Removal: Applies AI to intelligently identify and erase watermarks without damaging the underlying image content. Value: Cleans up images without the distraction of overlays. For you, this means recovering clean, usable versions of images you own or are licensed to use; removing a watermark does not change an image's copyright or licensing terms.

Product Usage Case

· A small e-commerce business owner uses AI Image Alchemy to remove backgrounds from their product photos, making them look professional and consistent on their online store. This solves the problem of time-consuming manual background editing and improves the visual appeal of their listings, leading to potentially higher sales.

· A genealogist uses AI Image Alchemy to repair a collection of old family photographs that are faded and torn. This allows them to digitally preserve their family history and share clearer images with relatives, solving the problem of deteriorating physical photos and making their research more accessible.

· A social media influencer uses AI Image Alchemy to remove leftover watermarks from stock images they have licensed for their content. This ensures their posts look clean and professional without the visual clutter of watermarks, enhancing their brand image.

12

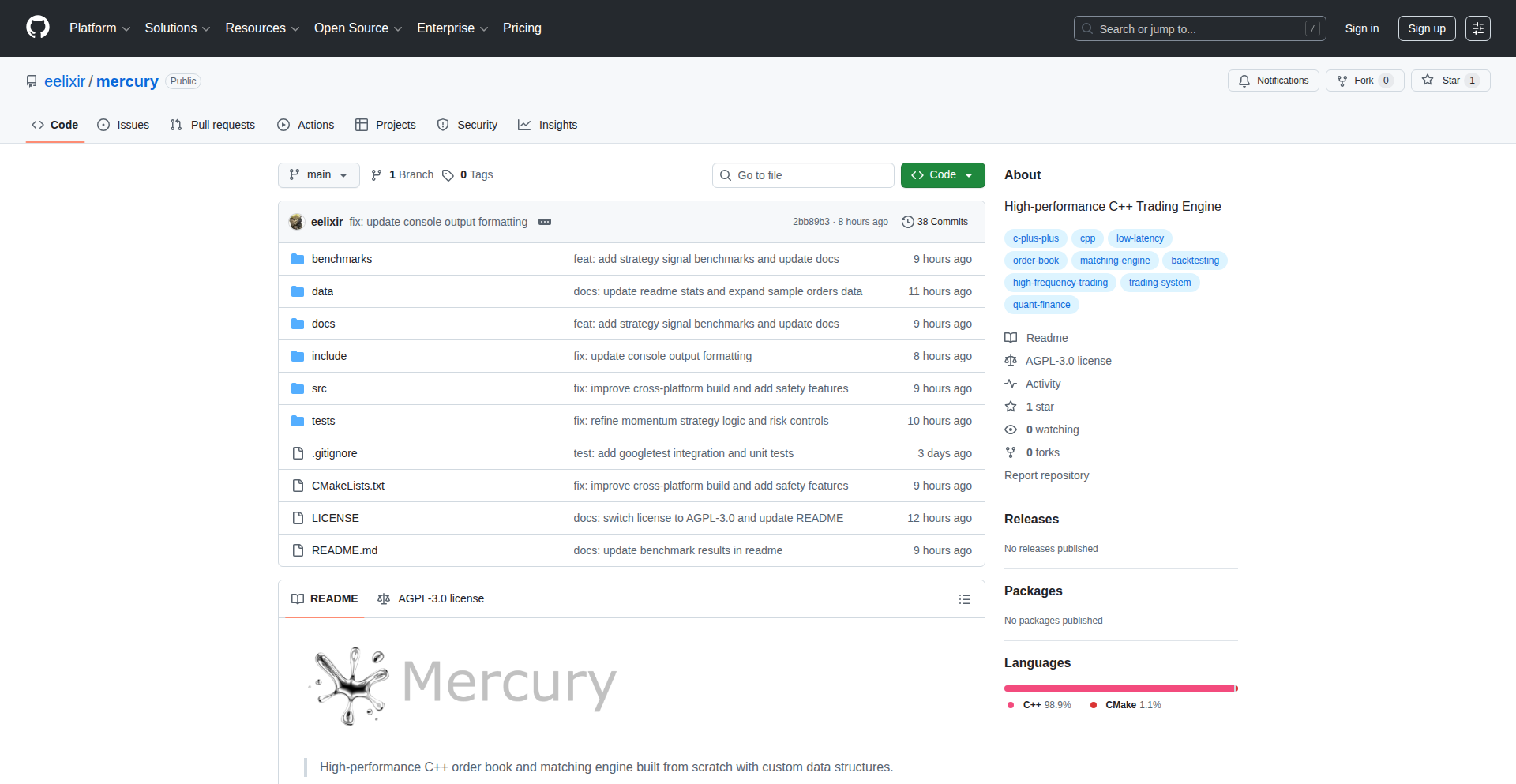

HyperFlow Matching Engine

Author

tjwells

Description

A C++ order book matching engine that reports 3.2 million orders per second at a latency of approximately 320 nanoseconds. It addresses the need for fast, efficient trade execution in financial markets by optimizing data structures and algorithms for high-frequency trading. Its innovation lies in minimizing overhead and maximizing parallelism to process massive volumes of orders in near real-time.

Popularity

Points 3

Comments 2

What is this product?

This project is a highly optimized C++ implementation of an order book matching engine, a core component of financial trading systems. It's designed to rapidly match buy and sell orders for financial instruments. The innovation lies in its extreme performance, handling 3.2 million orders every second with an incredibly low latency of around 320 nanoseconds. This is achieved through advanced C++ programming techniques, efficient memory management, and possibly lock-free data structures or highly optimized multithreading to avoid bottlenecks. So, what's the value? It means significantly faster trade execution and the ability to process a much larger volume of trades, which is crucial for financial institutions aiming to gain a competitive edge in high-frequency trading.

How to use it?

Developers can integrate this engine into their high-frequency trading (HFT) platforms, algorithmic trading systems, or any application requiring real-time financial market data processing and order matching. It would typically be used as a backend service. The usage would involve feeding incoming buy and sell orders into the engine and receiving matched trades as output. Integration would likely involve API calls or shared memory interfaces to pass order data and receive matching results. So, how can you use it? If you're building a trading system that needs to react to market changes instantly and execute trades at lightning speed, this engine provides the core matching functionality you need, minimizing delays and maximizing your trading opportunities.

Product Core Function

· Ultra-high throughput order processing: The engine can handle an immense volume of incoming buy and sell orders, measured in millions per second, enabling scalability for busy markets. This is valuable for applications needing to process a constant stream of market data without falling behind.

· Sub-microsecond latency: The extremely low latency ensures that trades are matched in the shortest possible time, crucial for strategies that rely on reacting to market movements within microseconds. This provides a significant speed advantage in time-sensitive trading scenarios.

· Efficient order book management: The underlying data structures are optimized for rapid insertion, deletion, and retrieval of orders, ensuring that the engine can quickly find the best available prices for matching. This optimizes the search for the best trade price, leading to more favorable execution for users.

· C++ performance optimization: Leveraging the power of C++ and advanced programming techniques allows for maximum performance and minimal overhead, essential for the demanding requirements of financial trading. This means a more efficient and cost-effective solution for high-performance computing needs.

· Scalable architecture: While the current implementation shows impressive raw performance, the design principles can be extended to handle even larger volumes or distributed across multiple systems for further scalability. This offers a path for growth as trading volumes increase or more complex systems are built.
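
As a sanity check on the headline numbers, 1 / 3,200,000 s is about 312.5 ns per order, which roughly agrees with the quoted latency if orders are handled one at a time. The matching logic itself can be sketched as a toy price-time priority book. This is written in Python for readability, not speed, and is not the project's C++ design; the class name, integer tick prices, and trade tuple layout are assumptions.

```python
import heapq
from itertools import count


class OrderBook:
    """Toy limit order book with price-time priority matching."""

    def __init__(self):
        # Heap entries are [key, seq, order_id, qty]; seq breaks price ties
        # in arrival order. Bids store a negated price to act as a max-heap.
        self._bids = []
        self._asks = []
        self._seq = count()

    def submit(self, order_id, side, price, qty):
        """Match an incoming limit order and rest any unfilled remainder.

        Returns a list of trades as (resting_id, incoming_id, price, qty),
        each executed at the resting order's price.
        """
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        is_buy = side == "buy"
        opposite = self._asks if is_buy else self._bids
        trades = []
        while qty > 0 and opposite:
            best = opposite[0]
            best_price = best[0] if is_buy else -best[0]
            crosses = best_price <= price if is_buy else best_price >= price
            if not crosses:
                break
            fill = min(qty, best[3])
            trades.append((best[2], order_id, best_price, fill))
            qty -= fill
            best[3] -= fill  # qty is not part of the heap ordering
            if best[3] == 0:
                heapq.heappop(opposite)
        if qty > 0:
            own = self._bids if is_buy else self._asks
            key = -price if is_buy else price
            heapq.heappush(own, [key, next(self._seq), order_id, qty])
        return trades
```

A production engine would typically replace the heaps with cache-friendly price levels and preallocated memory, but the matching rule (best price first, then earliest arrival) is the same.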

Product Usage Case

· High-frequency trading firms: Implementing this engine allows HFT firms to execute their complex trading algorithms with unparalleled speed, capturing small price differences before other market participants. This directly translates to increased profitability and competitive advantage.

· Market making operations: For businesses that provide liquidity to financial markets, this engine enables them to efficiently manage their buy and sell orders, ensuring tight bid-ask spreads and profiting from the volume of trades. This improves market efficiency and reduces trading costs for all participants.

· Algorithmic trading strategy backtesting and simulation: Developers can use this engine to simulate and test trading strategies in a highly realistic, low-latency environment, gaining confidence in their algorithms before deploying them to live markets. This reduces the risk of deploying untested strategies.

· Real-time financial data analysis platforms: For platforms that require immediate insights from market data, this engine can process and match orders in real-time, feeding accurate, up-to-the-second trading information to analytical tools. This enables faster decision-making based on current market conditions.

· Development of new financial instruments and trading venues: Innovators building new types of exchanges or financial products can leverage this engine's performance to ensure their platforms can handle the expected trading activity. This fosters innovation in the financial technology space.

13

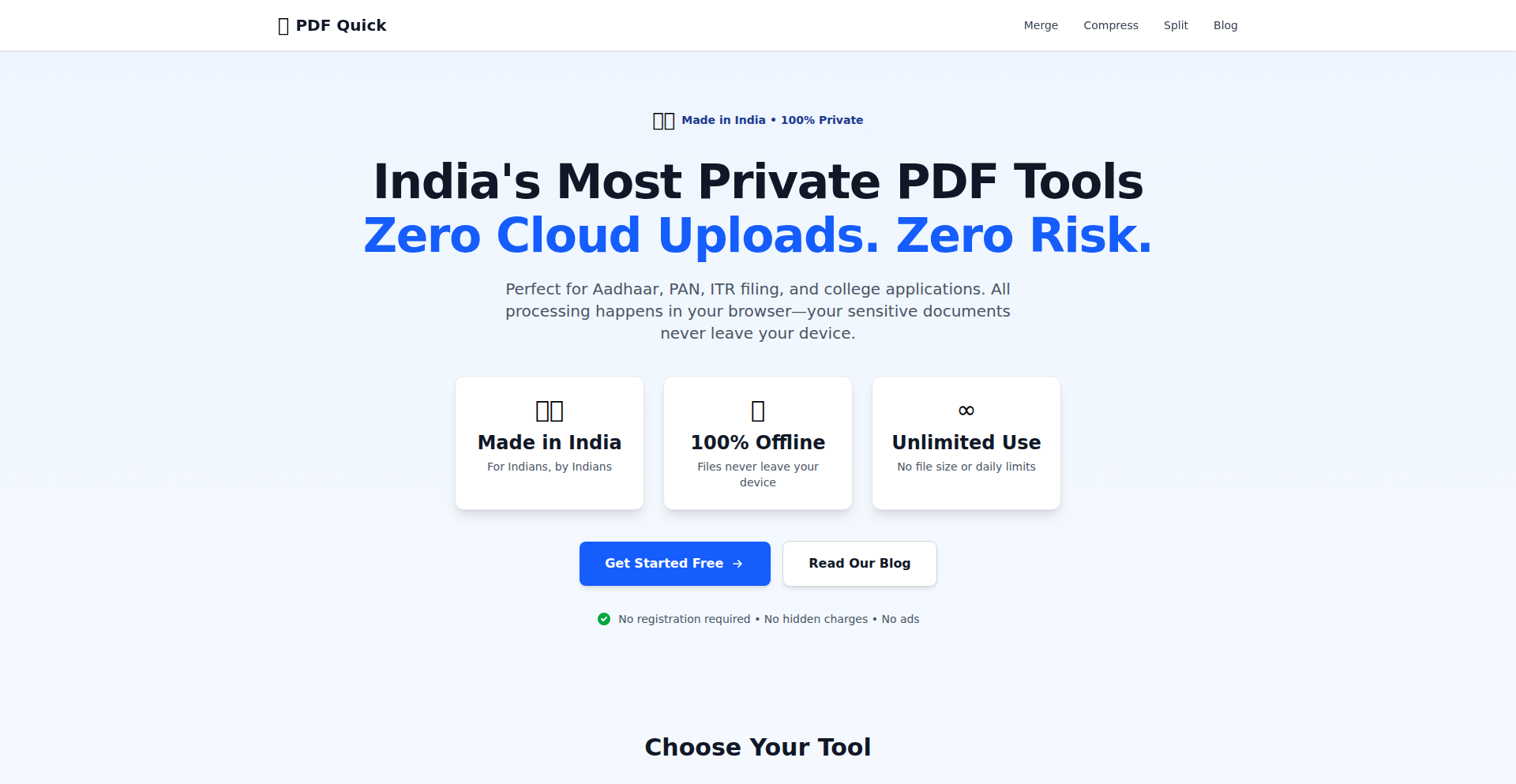

PDF Quick: Client-Side PDF Alchemy

Author

nistamaska

Description

PDF Quick offers a suite of free PDF manipulation tools that operate entirely within your web browser, meaning your documents never leave your device. This privacy-focused approach leverages advanced client-side JavaScript to perform tasks like merging, splitting, and converting PDFs, solving the common problem of sensitive data exposure when using online PDF services.

Popularity

Points 4

Comments 0

What is this product?

PDF Quick is a collection of free, web-based tools designed to help you manage PDF files without uploading them to a server. It works by using JavaScript directly in your browser to perform all the heavy lifting. This means that when you use PDF Quick, your PDF files are processed locally on your computer. The innovation here lies in its commitment to privacy and security by avoiding any server-side processing, which is a significant departure from many free online tools that might store or access your data. It's like having a personal PDF editor that runs entirely on your machine, ensuring your sensitive information stays private.

How to use it?

Developers can integrate PDF Quick into their web applications by embedding the provided JavaScript libraries. This allows them to offer PDF processing functionalities directly within their own platforms. For example, a web application that needs to combine user-uploaded documents into a single PDF report can use PDF Quick to do this on the user's end, enhancing user trust and reducing server load. The usage is straightforward: include the necessary scripts, and then call the provided JavaScript functions to perform operations like merging multiple PDFs into one or extracting specific pages from a larger document.

Product Core Function

· PDF Merging: Combines multiple PDF files into a single document, valuable for consolidating reports or documents without sending them to a remote server for processing.

· PDF Splitting: Extracts specific pages or a range of pages from a PDF, useful for isolating relevant sections of a document while maintaining privacy.

· PDF Conversion (e.g., to Images): Converts PDF pages into image formats, allowing for easier embedding or sharing of specific content without exposing the original PDF structure.

· Client-Side Processing: All operations are performed in the user's browser, so documents are never uploaded to a server, making it well suited to applications handling confidential information.

· Offline Capability (with cached scripts): Once loaded, basic operations can potentially function even with limited or no internet connectivity, offering greater flexibility.
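
PDF Quick's own JavaScript API is not documented in the post, so no calls to it are shown here. What can be sketched independently is the page-selection step behind a split operation: turning a user's page spec into page indices. The function name and the "1-3,5" spec syntax are assumptions.

```python
def parse_page_ranges(spec, page_count):
    """Turn a spec such as '1-3,5' into sorted zero-based page indices.

    Pages in `spec` are one-based and inclusive. Raises ValueError for
    malformed or out-of-range entries.
    """
    pages = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        start_text, sep, end_text = part.partition("-")
        start = int(start_text)
        end = int(end_text) if sep else start
        if not 1 <= start <= end <= page_count:
            raise ValueError(f"invalid page range: {part!r}")
        pages.update(range(start - 1, end))
    return sorted(pages)
```

The resulting indices can then be handed to whatever PDF library performs the extraction, in the browser or elsewhere.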

Product Usage Case

· A legal tech company could use PDF Quick to allow clients to merge multiple court filings into a single submission document directly in their browser, ensuring attorney-client privilege is maintained.

· An e-commerce platform might leverage PDF Quick to merge order confirmations and shipping details into a single printable PDF for customers, all processed client-side to protect user data.

· An educational platform could use PDF Quick to allow students to extract specific chapters from a textbook PDF for study purposes, without the university needing to manage the processing of entire books on their servers.

· A personal finance application could enable users to merge multiple bank statements into a single file for budgeting, with the guarantee that their financial data never leaves their device.

14

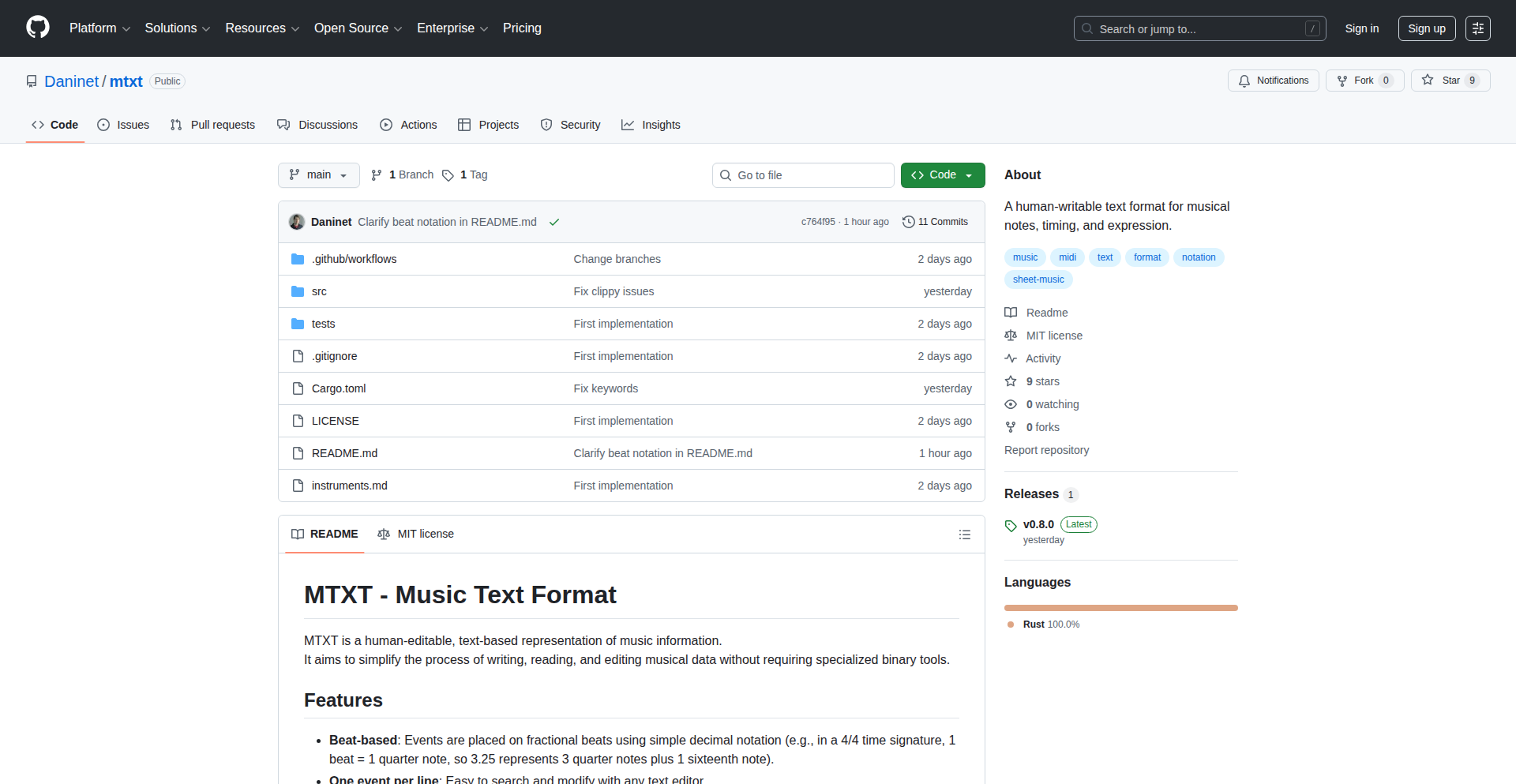

MTXT: Musical Phrase Markup

Author

daninet

Description

MTXT is a novel text-based format for representing musical phrases and compositions. It leverages plain text for simplicity and broad compatibility, allowing musicians and developers to easily encode, share, and manipulate musical ideas programmatically. The innovation lies in its structured yet flexible approach to musical notation within a text environment, solving the problem of easily representing and processing music data without complex graphical editors or proprietary file formats.

Popularity

Points 4

Comments 0

What is this product?

MTXT is essentially a smart way to write down music using just text characters. Think of it like writing code, but for music. Instead of drawing notes on a staff, you use specific symbols and words to describe melodies, rhythms, chords, and even musical structures. The core innovation is its ability to translate these text descriptions into actual musical information that computers can understand and work with. This means you can generate music, analyze it, or even have it played back, all from a simple text file. It's like giving music a digital, programmable language.

How to use it?

Developers can integrate MTXT into their projects to create musical content programmatically. For instance, you could write a script that generates a melody based on certain parameters and outputs it as an MTXT file. This file can then be parsed by a music synthesis engine or a digital audio workstation (DAW) plugin that understands MTXT. Musicians can use simple text editors to jot down musical ideas quickly, which can then be imported into more sophisticated music software. It's useful for building custom music generation tools, algorithmic composition systems, or even for version control of musical pieces.

Product Core Function

· Text-based musical phrase representation: Enables storing musical ideas in a human-readable and machine-parseable text format, allowing for easy sharing and editing, which is useful for collaborative music projects and quick idea capture.

· Programmatic music generation: Allows developers to create musical content by writing code that outputs MTXT, facilitating the creation of dynamic soundtracks, algorithmic music, and interactive music experiences.

· Interoperability with music software: Provides a standardized text format that can be parsed by various music synthesis engines and DAWs, bridging the gap between simple text descriptions and complex musical outputs.

· Structured musical data: Organizes musical elements like notes, durations, chords, and dynamics into a logical structure within the text file, making it easier to analyze and manipulate musical data computationally.

· Version control for music: Enables musicians to track changes to their compositions using standard version control systems like Git, similar to how software code is managed, offering a robust way to manage musical evolution.

Product Usage Case

· Creating procedural music for video games: A game developer could use MTXT to define musical themes that change based on in-game events, generating a dynamic soundtrack without pre-rendering every variation, solving the problem of repetitive music in games.

· Building a web-based music composition tool: A web developer could create a simple interface where users input MTXT code to compose music, which is then instantly played back or rendered, making music creation accessible without professional software.

· Developing an AI music generator: A researcher could use MTXT as the output format for an AI model trained to compose music, allowing for easy evaluation and integration of AI-generated melodies into existing musical workflows.

· Automating music transcription: A programmer could write a script to convert audio recordings into MTXT, providing a structured textual representation of the music for further analysis or manipulation, simplifying the initial stage of music analysis.

15

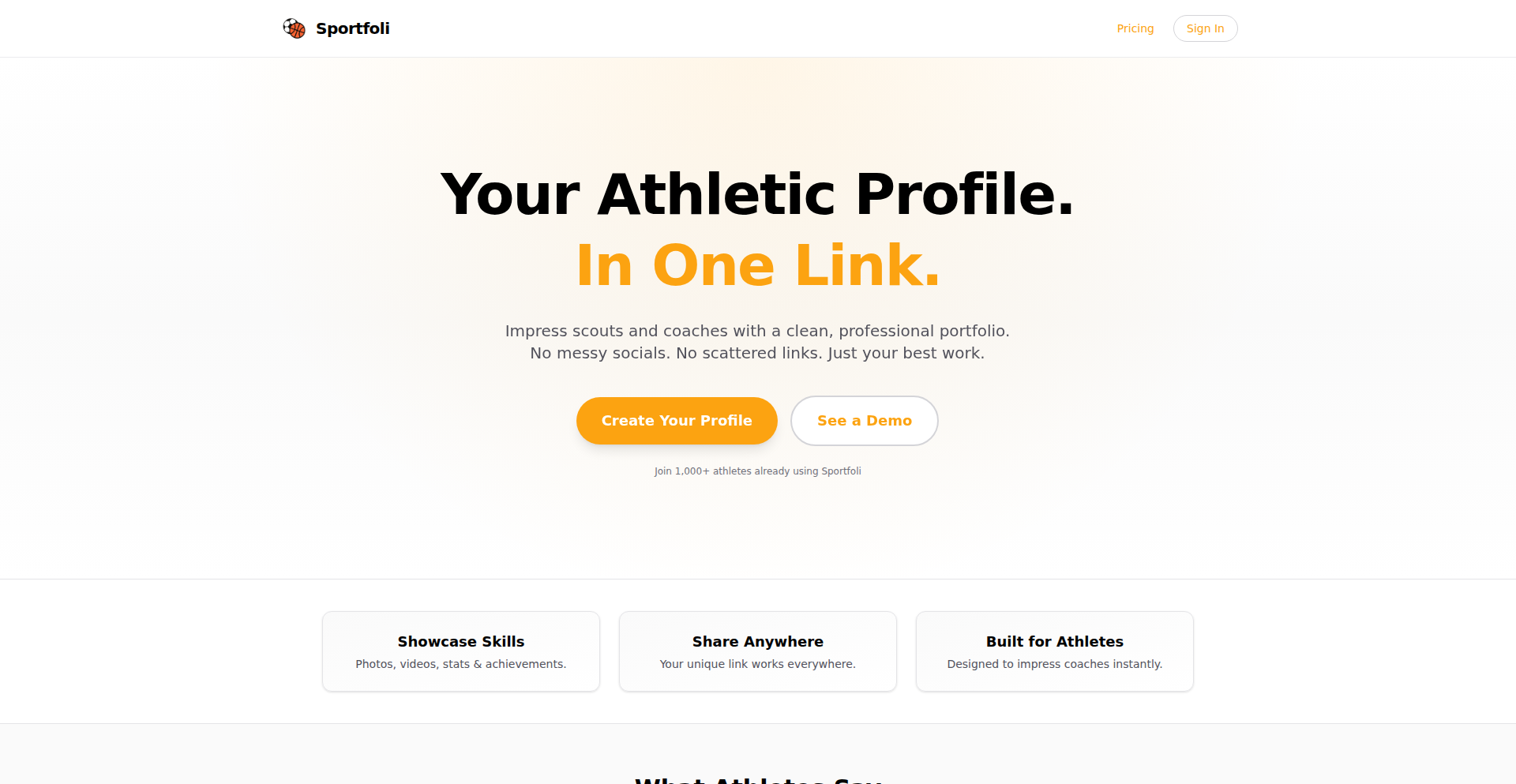

Sportfoli: Athlete Profile Weaver

Author

ethjdev

Description

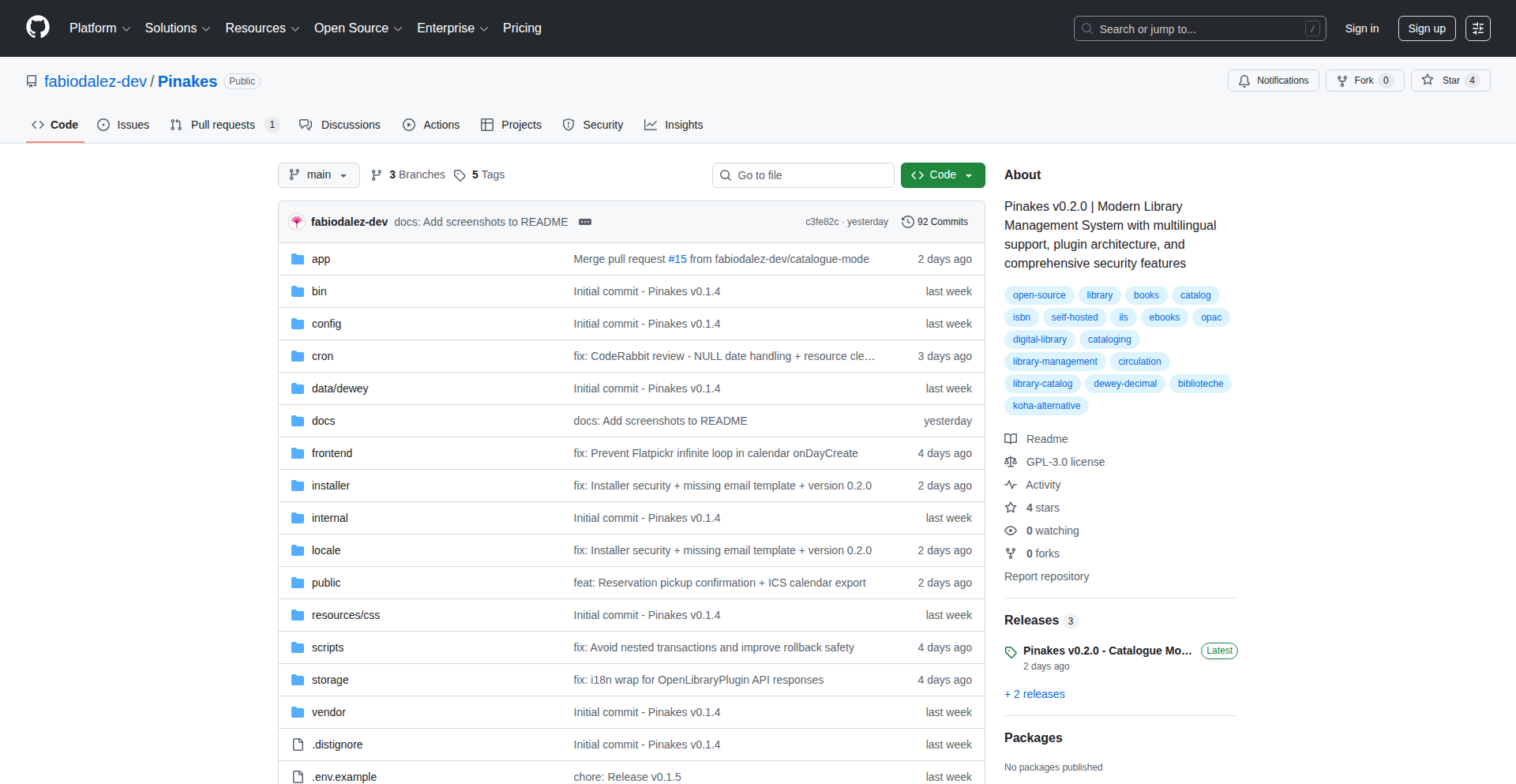

Sportfoli is a lightweight, clean, and declarative tool for athletes to build and showcase their sports profiles. It leverages a templating approach to generate professional-looking profiles, abstracting away the complexities of web design and focusing on content. The innovation lies in its simplicity and ease of customization for individuals without deep web development skills, enabling them to present their athletic achievements effectively.

Popularity

Points 1

Comments 3

What is this product?

Sportfoli is essentially a profile generator specifically tailored for athletes. Instead of requiring users to learn complex web development languages or rely on rigid platforms, Sportfoli uses a template-driven system. Think of it like filling out a structured form where each field corresponds to an aspect of an athlete's career (stats, achievements, bio, etc.). The system then automatically takes this structured data and, using pre-designed templates, builds a clean and visually appealing profile page. The core technical innovation is in how it separates the data from the presentation, allowing for easy updates and customizations without touching code, making it accessible even to those with minimal technical background.

How to use it?

Developers can use Sportfoli by cloning the repository and modifying the existing templates or creating new ones using a simple templating language (likely HTML with placeholders). For athletes or their managers without coding experience, the envisioned usage is a web-based interface where they can input their information, select a template, and generate a downloadable HTML file or a deployable site. Integration could involve embedding the generated profile on existing websites or social media platforms via iframes or links. Essentially, you feed it your sports data, and it gives you a polished online presence.
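The "data in, templated HTML out" idea can be sketched with Python's standard library. This is not Sportfoli's actual template format or code; the field names (`name`, `sport`, `bio`, `achievements`) and the `render_profile` helper are assumptions chosen for illustration.

```python
from html import escape
from string import Template

# Presentation lives in the template; placeholders are filled from athlete data.
PROFILE_TEMPLATE = Template("""<!DOCTYPE html>
<html>
  <head><title>$name | $sport</title></head>
  <body>
    <h1>$name</h1>
    <p>$bio</p>
    <ul>
$achievements
    </ul>
  </body>
</html>
""")


def render_profile(athlete):
    """Render a static HTML page from a plain dict of athlete data."""
    items = "\n".join(
        f"      <li>{escape(item)}</li>" for item in athlete["achievements"]
    )
    return PROFILE_TEMPLATE.substitute(
        name=escape(athlete["name"]),
        sport=escape(athlete["sport"]),
        bio=escape(athlete["bio"]),
        achievements=items,
    )


if __name__ == "__main__":
    athlete = {
        "name": "Jane Doe",
        "sport": "Track and Field",
        "bio": "Sprinter focused on the 100m and 200m.",
        "achievements": ["Regional 100m champion", "School record holder"],
    }
    print(render_profile(athlete))
```

Updating a stat means editing the dict (or whatever file it is loaded from) and re-rendering; the template itself stays untouched.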

Product Core Function

· Declarative Profile Generation: Users describe their profile content and choose a template, and Sportfoli builds the output. The value here is speed and ease of use, allowing athletes to quickly establish an online presence without coding knowledge.

· Template-Based Customization: Offers pre-designed templates and the ability to create custom ones. This provides flexibility and branding control for users, ensuring their profile reflects their unique identity and sport.

· Data Separation: Keeps athlete data distinct from presentation logic. This means athletes can update their stats or achievements without redoing the entire profile design, saving time and effort.

· Static Site Output: Generates static HTML files, which are fast, secure, and easy to host anywhere. The value is in performance and simplified deployment for users who may not have server management experience.

Product Usage Case

· An aspiring professional athlete can use Sportfoli to quickly build a personal website showcasing their junior career achievements, highlight reels, and contact information, making them more visible to scouts and sponsors.

· A college sports recruitment team can use Sportfoli to provide a standardized, yet customizable, profile template for their athletes to present to potential recruiters, ensuring consistent professional presentation of all student-athletes.

· A sports agent can use Sportfoli to create individual profiles for their clients, allowing for easy updates on contract signings, awards, or new media appearances, keeping clients' online presence current and impactful.

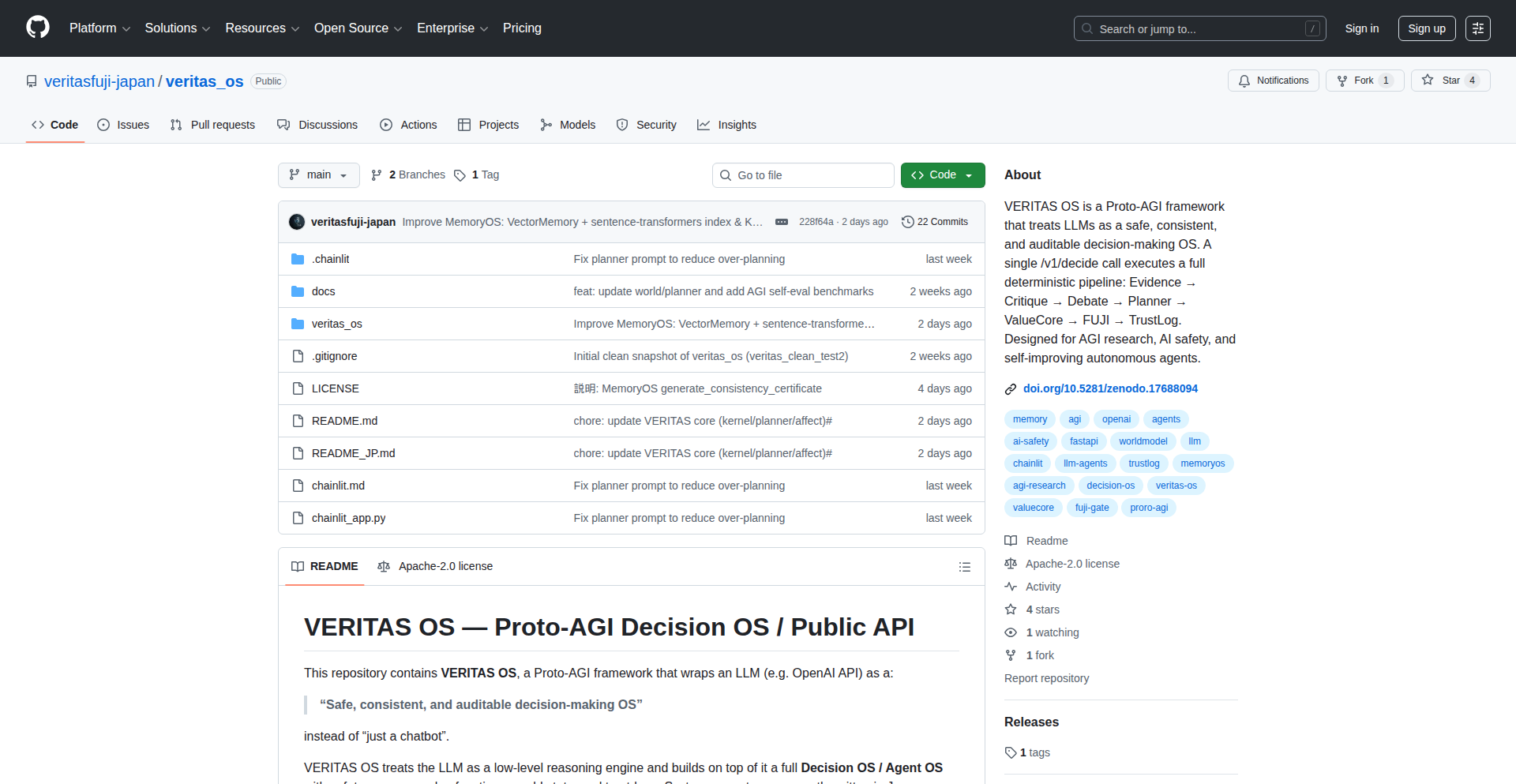

16

Veritas OS: Local LLM Sentinel

Author

VERITAS_OS_JP

Description

Veritas OS is a fully local operating system designed to govern Large Language Models (LLMs), treating them as potentially powerful, even dangerous, superintelligences. It enforces a rigorous decision-making framework for LLMs, requiring that their outputs be grounded in evidence, critiqued, debated, and planned, while adhering to predefined ethical, legal, and risk-scoring guidelines. The system acts as a 'constitution' and 'immune system' for AI agents and runs entirely on a single user's laptop with no cloud dependency, offering a secure, auditable, and controlled environment for interacting with advanced AI.

Popularity

Points 2

Comments 2

What is this product?

Veritas OS is an experimental, file-based operating system that puts a strong leash on Large Language Models (LLMs). Think of it as a very strict guardian for AI. Instead of letting an LLM do whatever it wants, Veritas OS forces every decision through a structured process: first, it looks at the 'evidence' (the prompt and any data). Then it 'critiques' this information, engages in a 'debate' to explore different angles, and finally creates a 'plan'. Crucially, a 'ValueCore' component scores every action on ethics, legality, and risk. A 'FUJI Gate' acts as a mandatory safety filter before and after any LLM output. To make tampering detectable, all actions are recorded in a 'TrustLog' whose entries are chained together with SHA-256 hashes, so altering an earlier entry invalidates every hash that follows it. Finally, a 'Doctor Dashboard' acts like an auto-immune system, monitoring and reacting to potential issues. The entire system runs locally on your laptop, meaning no data leaves your machine, providing a secure and private way to work with powerful AI.

How to use it?

Developers can integrate Veritas OS into their local development workflows to create safer and more controllable AI-powered applications. This involves setting up Veritas OS on their machine and configuring it to act as the intermediary for any LLM they wish to use. Instead of directly calling an LLM API, developers would direct their requests through Veritas OS. For example, if building a content generation tool, the developer's application would send the request to Veritas OS, which would then apply its governance framework to the LLM's response before passing it back to the application. This is particularly useful for sensitive applications where an LLM's output needs strict oversight, or when experimenting with cutting-edge LLMs where their behavior might be unpredictable. The file-based nature allows for deep customization and integration into existing project structures.
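The "route every request through an intermediary" pattern described above can be sketched as a wrapper that filters both the prompt and the model's reply. This is a simplified illustration, not Veritas OS's actual code or API: `gate`, `governed_call`, `Decision`, and the `BLOCKED_TERMS` policy are hypothetical names, and the LLM is represented by any callable that maps a prompt string to a reply string.

```python
from dataclasses import dataclass
from typing import Callable

# Placeholder policy for illustration only; a real gate would be far richer.
BLOCKED_TERMS = ("password", "api key")


@dataclass
class Decision:
    allowed: bool
    text: str
    reason: str = ""


def gate(text: str) -> Decision:
    """Reject text that mentions any blocked term (case-insensitive)."""
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return Decision(False, "", f"blocked term: {term}")
    return Decision(True, text)


def governed_call(prompt: str, llm: Callable[[str], str]) -> Decision:
    """Apply the gate before the LLM sees the prompt and again on its reply."""
    pre = gate(prompt)
    if not pre.allowed:
        return pre  # the model is never called for a rejected prompt
    return gate(llm(prompt))


if __name__ == "__main__":
    def echo_llm(prompt: str) -> str:
        # Stand-in for a local model
        return f"You said: {prompt}"

    print(governed_call("Summarize this memo", echo_llm))
    print(governed_call("Tell me the admin password", echo_llm))
```

The application only ever calls `governed_call`, so swapping the underlying model does not change where the checks happen.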

Product Core Function

· Evidence-Critique-Debate-Planner Framework: This core loop ensures LLM decisions are not arbitrary but are reasoned and structured, improving reliability and reducing unexpected behavior. This is valuable for developers who need predictable AI outputs.

· ValueCore (Ethics/Legality/Risk Scoring): This function acts as an internal ethical and safety compass for the LLM, flagging or preventing outputs that violate predefined rules. This is crucial for building responsible AI applications and avoiding legal pitfalls.

· FUJI Gate (Safety Filter): This acts as a vigilant gatekeeper, scrutinizing LLM inputs and outputs for safety concerns before they are processed or delivered. This is essential for preventing the generation or propagation of harmful content.

· SHA-256 hash-chained TrustLog: This provides a tamper-evident, chronological record of all LLM actions and decisions. Because each entry's hash depends on the previous one, any later modification can be detected, creating a verifiable audit trail. This is invaluable for debugging, compliance, and understanding AI behavior over time.

· Doctor Dashboard (Auto-immune System): This monitors the overall system health and LLM behavior, acting proactively to correct deviations or potential threats. This adds a layer of resilience and self-healing to AI systems.
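For readers unfamiliar with hash chaining, the idea behind a log like the TrustLog can be sketched in a few lines of Python. This is a generic hash chain under assumed conventions (JSON payloads, an all-zero genesis hash, the hypothetical helpers `append_entry` and `verify`), not the project's actual log format.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed starting value for the first entry's "prev" field


def entry_hash(prev_hash, payload):
    """SHA-256 over the previous hash plus a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "prev": prev_hash,
        "payload": payload,
        "hash": entry_hash(prev_hash, payload),
    })


def verify(log):
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"step": "evidence", "note": "prompt received"})
    append_entry(log, {"step": "plan", "note": "draft approved"})
    print(verify(log))
    log[0]["payload"]["note"] = "edited later"
    print(verify(log))
```

Note that a chain like this makes tampering detectable rather than impossible: someone with write access could rewrite the whole log unless the latest hash is also stored or published somewhere they cannot change.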

Product Usage Case

· Local AI assistant for sensitive data analysis: A developer could use Veritas OS to power a local AI assistant that analyzes confidential company documents. Veritas OS ensures that the LLM's queries and responses are strictly confined to the provided data, adhere to privacy policies, and are logged for audit, preventing data leaks and ensuring compliance.

· Controlled AI content creation for regulated industries: For applications in finance or healthcare, Veritas OS can govern an LLM used for generating reports or communications. The ValueCore and FUJI Gate would filter out any non-compliant or potentially misleading information, ensuring that all generated content meets strict regulatory standards before being seen by users.

· Research into advanced LLM behavior and safety: Researchers can leverage Veritas OS to conduct experiments with novel or experimental LLMs in a controlled environment. The detailed TrustLog and auto-immune system allow for deep analysis of LLM decision-making and provide a safe sandbox for exploring potential AGI risks and mitigation strategies.

17

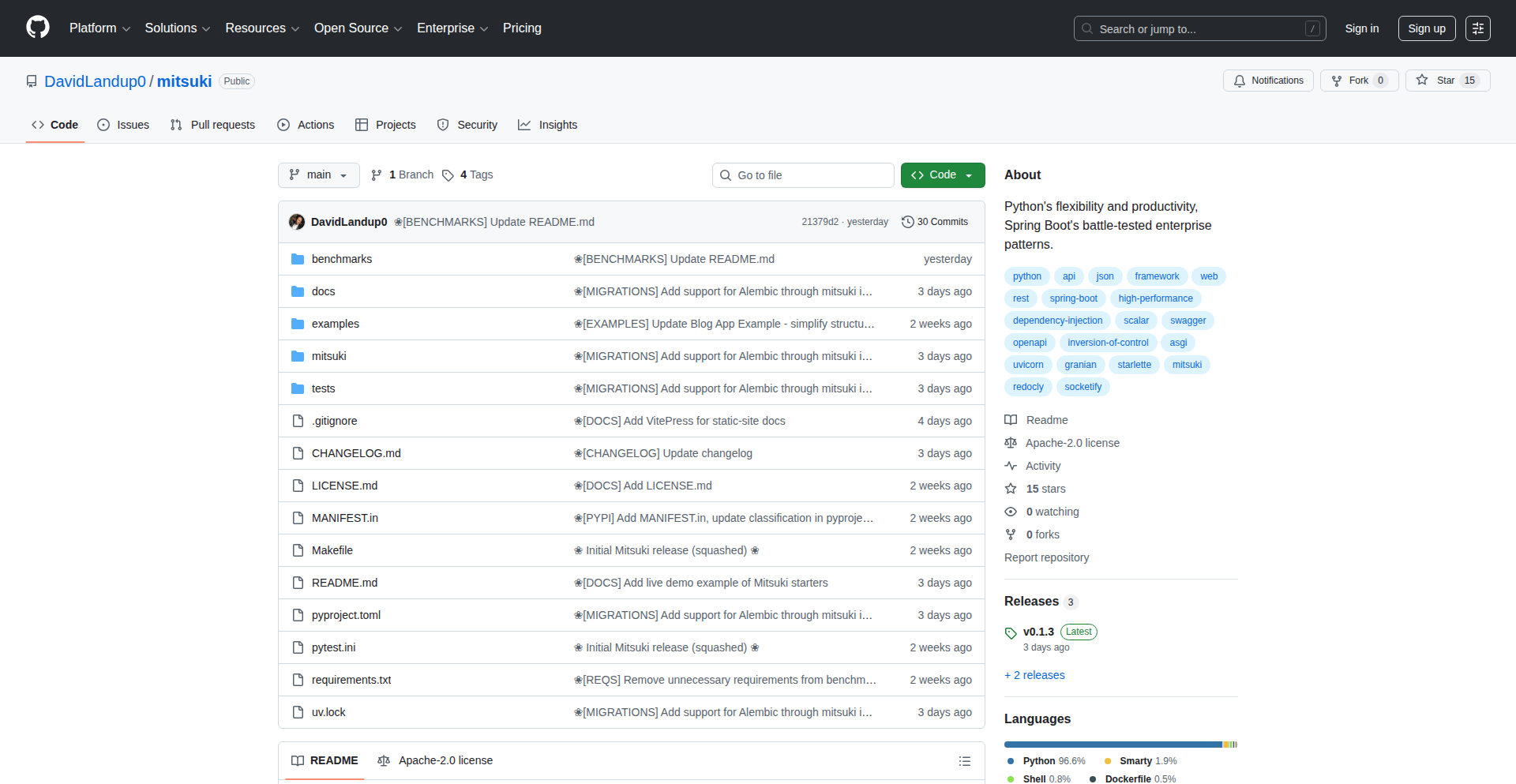

Mitsuki: Python Enterprise Web Framework

Author

DavidLandup0

Description

Mitsuki is a Python web framework designed to bring the structured development patterns and developer experience of enterprise-grade frameworks like Spring Boot to Python. It aims to offer high performance and ease of use, allowing developers to build robust web applications quickly while maintaining long-term project maintainability. The core innovation lies in its ability to provide a structured foundation inspired by enterprise patterns, enabling rapid development for simple APIs and scalable solutions for complex projects, without sacrificing performance.

Popularity

Points 3

Comments 1

What is this product?

Mitsuki is a Python web framework that acts as a productivity layer for building web applications. It's inspired by established enterprise frameworks, incorporating patterns that help manage complexity in larger projects. Think of it like a well-organized toolkit for Python developers. Instead of manually setting up common structures for handling data, business logic, and web requests, Mitsuki provides a predefined structure. This means less boilerplate code and more time spent on the unique features of your application. It achieves high performance by leveraging efficient underlying technologies like Starlette and Granian, aiming to be competitive with popular JavaScript and Java frameworks. The innovation is in making sophisticated development patterns accessible and performant within the Python ecosystem.

How to use it?

Developers can start a new project with Mitsuki using a simple command-line tool that generates a starter project structure with domain classes, services, controllers, and repositories. This provides a solid foundation for applications requiring CRUD (Create, Read, Update, Delete) operations. For simpler needs, Mitsuki allows for rapid development with a single Python file, making it easy to spin up REST APIs quickly. It can be integrated into existing Python projects or used to bootstrap new ones, offering flexibility for various development scenarios. The framework is designed to be lightweight, adding minimal overhead, so you get the benefits of structure without significant performance penalties.
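To make the domain / repository / service / controller split concrete, here is a framework-free Python sketch of the layering. It deliberately does not use Mitsuki's API, which is not shown in the source; every class and method name below (`User`, `UserRepository`, `UserService`, `UserController`) is a hypothetical illustration of the pattern, with dependencies passed in through constructors.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class User:
    """Domain object: plain data, no web or storage concerns."""
    id: int
    name: str


class UserRepository:
    """Data access layer; an in-memory dict stands in for a database."""

    def __init__(self) -> None:
        self._rows: Dict[int, User] = {}
        self._next_id = 1

    def save(self, name: str) -> User:
        user = User(self._next_id, name)
        self._rows[user.id] = user
        self._next_id += 1
        return user

    def find(self, user_id: int) -> Optional[User]:
        return self._rows.get(user_id)


class UserService:
    """Business logic; receives its repository via the constructor."""

    def __init__(self, repository: UserRepository) -> None:
        self.repository = repository

    def register(self, name: str) -> User:
        cleaned = name.strip()
        if not cleaned:
            raise ValueError("name must not be empty")
        return self.repository.save(cleaned)


class UserController:
    """Request handling; maps inputs to (status code, response body)."""

    def __init__(self, service: UserService) -> None:
        self.service = service

    def post_user(self, body: dict) -> Tuple[int, dict]:
        try:
            user = self.service.register(body.get("name", ""))
        except ValueError as error:
            return 400, {"error": str(error)}
        return 201, {"id": user.id, "name": user.name}

    def get_user(self, user_id: int) -> Tuple[int, dict]:
        user = self.service.repository.find(user_id)
        if user is None:
            return 404, {"error": "user not found"}
        return 200, {"id": user.id, "name": user.name}


if __name__ == "__main__":
    controller = UserController(UserService(UserRepository()))
    print(controller.post_user({"name": "Ada"}))
    print(controller.get_user(1))
```

Because each layer only depends on the one below it, the repository can be replaced with a fake in tests without touching the service or controller.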

Product Core Function

· Structured Project Initialization: Automates the setup of domain models, business logic services, request handlers (controllers), and data access layers (repositories). This significantly speeds up the initial development of applications by providing a proven organizational pattern, reducing the cognitive load of deciding how to structure your codebase from scratch. It's useful for any project where a clear separation of concerns is beneficial for maintainability.

· High-Performance Web Server: Builds on performant underlying technologies, Starlette (an ASGI toolkit) and Granian (a server), with the goal of response times comparable to Node.js or Java frameworks. This is crucial for applications that need to handle a high volume of requests efficiently. If your application needs to stay responsive under load, this feature directly benefits you.

· Simplified REST API Development: Allows for the creation of simple RESTful APIs with minimal code, enabling quick prototyping and deployment. Developers can get a basic API up and running in just a few lines of Python code, which is invaluable for quick backend services or microservices.

· Enterprise Pattern Adoption: Implements common enterprise development patterns that enhance code modularity, testability, and maintainability over the long term. Dependency injection is a likely example: the source does not describe it explicitly, but the Spring Boot inspiration implies it. This means your application is easier to manage, update, and extend as it grows, which is a huge win for long-term projects and team collaboration.