Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-28

SagaSu777 2025-11-29

Explore the hottest developer projects on Show HN for 2025-11-28. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions highlight a powerful wave of innovation, where developers are leveraging AI and client-side technologies to solve intricate problems and boost productivity. The rise of AI-powered development tools, like those for catching PCB schematic mistakes or generating integration specs, signifies a shift towards smarter, more automated coding workflows. This trend is not just about faster development; it's about empowering developers to focus on complex architectural decisions rather than tedious tasks. For entrepreneurs, this means opportunities to build specialized AI agents or tools that cater to niche developer needs, creating new value propositions. Simultaneously, there's a strong emphasis on privacy and client-side processing, exemplified by tools like TinyCompressor and Encryptalotta. This reflects a growing user demand for data security and autonomy, pushing developers to create solutions where sensitive information never leaves the user's device. This opens doors for businesses offering secure, privacy-first alternatives in areas like data processing, communication, and content creation. The spirit of the hacker is alive and well, with many projects demonstrating a knack for turning complex technical challenges into elegant, accessible solutions, from detecting clandestine recording devices to optimizing audio editing workflows.

Today's Hottest Product

Name

Show HN: Glasses to detect smart-glasses that have cameras

Highlight

This project tackles a privacy-conscious problem: detecting when smart glasses with cameras are actively recording. The developer is exploring innovative approaches like analyzing the retro-reflectivity of IR light from camera sensors and monitoring wireless traffic (BLE, Bluetooth Classic, Wi-Fi) for device activity. This demonstrates a creative application of sensor analysis and network sniffing to address a real-world concern about covert recording. Aspiring developers can learn about signal analysis, low-level wireless protocols, and how to build hardware-based detection systems.

Popular Category

AI/ML

Developer Tools

Privacy & Security

Web Development

Productivity

Utilities

Popular Keyword

AI

LLM

Privacy

Developer Tools

Automation

Client-Side

Open Source

Rust

Technology Trends

AI-Powered Development

Client-Side Processing & Privacy

Developer Productivity Enhancements

Decentralized/Local-First Solutions

Cross-Platform Utilities

Creative Application of AI in Niche Domains

Network & Hardware Security

Project Category Distribution

AI/ML Applications (20%)

Developer Tools & Utilities (30%)

Web Services & SaaS (25%)

Privacy & Security Tools (10%)

Productivity & Workflow Tools (15%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | SpecterSense: Smart Glasses Camera Detector | 483 | 184 |
| 2 | Pulse: Real-time Co-Listening Streams | 71 | 25 |
| 3 | Schematic Sentinel | 45 | 26 |
| 4 | DB Pro: Visual Data Navigator | 24 | 10 |
| 5 | Bodge: Micro-Function-as-a-Service for Creative Coders | 8 | 3 |
| 6 | CodeArchitect AI | 3 | 8 |
| 7 | AI-Site-To-Demo-Video-Generator | 8 | 2 |
| 8 | Meme-fy Research Papers | 8 | 1 |
| 9 | Halud Your Horses: Isolated Dev Sandbox | 1 | 7 |
| 10 | Swatchify: Image-to-Palette CLI | 5 | 1 |

1

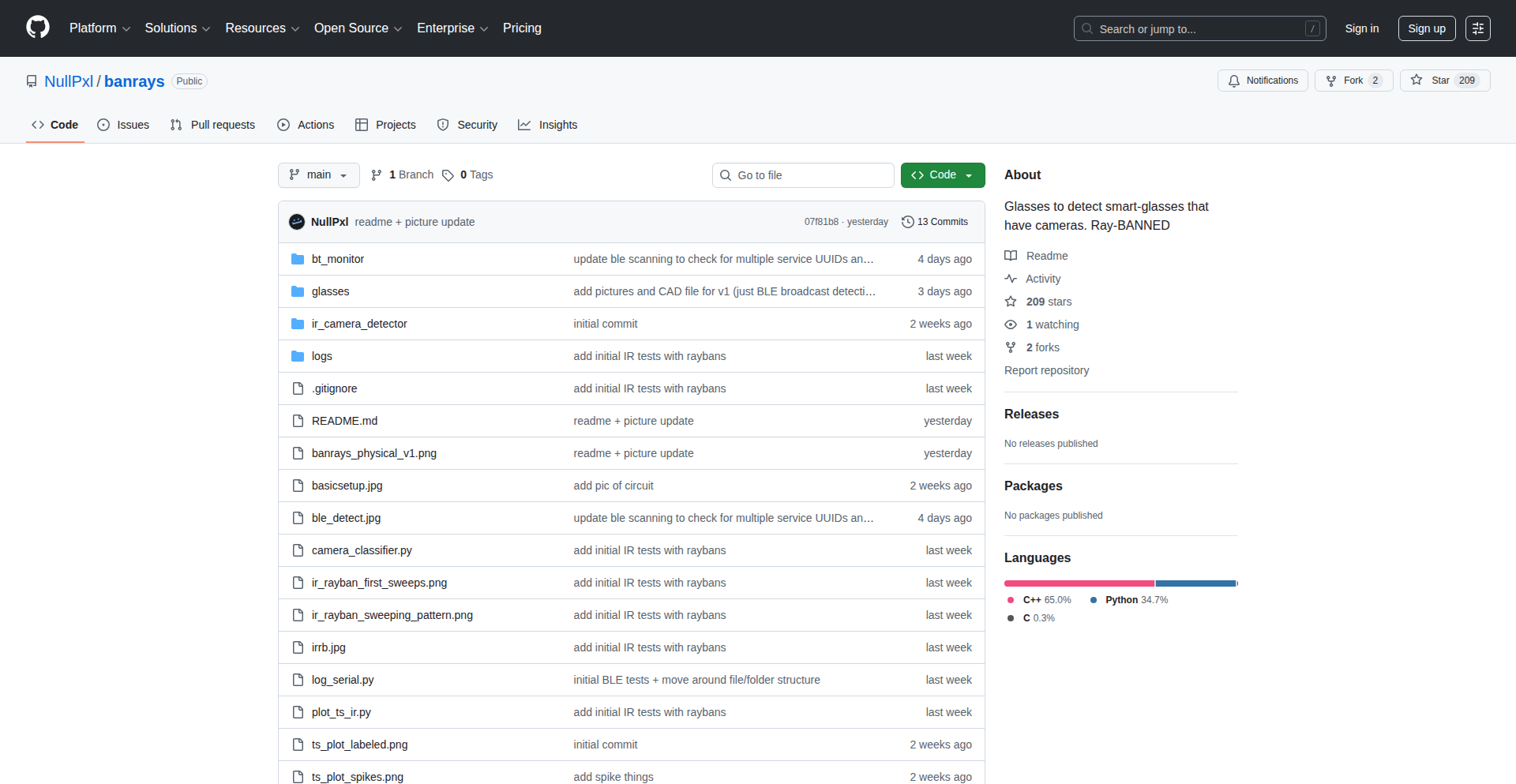

SpecterSense: Smart Glasses Camera Detector

Author

nullpxl

Description

SpecterSense is a project born from the growing popularity of smart glasses with integrated cameras and the associated privacy concerns. This initiative explores novel ways to detect when these smart glasses are actively recording. The core innovation lies in employing two distinct fingerprinting techniques: analyzing the infrared (IR) retro-reflectivity of camera sensors and monitoring wireless network traffic, primarily Bluetooth Low Energy (BLE). This aims to provide a proactive alert system, giving individuals awareness of potential recording devices in their vicinity.

Popularity

Points 483

Comments 184

What is this product?

SpecterSense is an experimental system designed to identify the presence of smart glasses equipped with cameras, like Meta Ray-Bans, that might be recording. It works by looking for two key signals. First, it analyzes how the camera sensor reflects infrared light, similar to how a mirror reflects visible light, but using invisible IR. If a camera is active, it might reflect IR in a detectable pattern. Second, it listens for specific wireless signals that these smart glasses emit, particularly during pairing, powering on, or when removed from their charging case. The project uses an ESP32 microcontroller for initial wireless detection and plays a jingle to alert the user. The goal is to move beyond simple startup signals to detect recording even when the glasses are in active use.

How to use it?

Developers interested in privacy-enhancing technologies or building wearable awareness systems can use SpecterSense as a foundational concept. The project can be integrated into personal privacy devices or even embedded into other smart accessories. For example, a developer could take the ESP32-based detection module and integrate it into a smartwatch or a discreet personal beacon. The IR detection component, while experimental, could inspire further research into optical sensing for privacy applications. The learning from the BLE traffic analysis can be applied to building more sophisticated Bluetooth sniffers or custom device detectors.

Product Core Function

· IR Retro-reflectivity Detection: This function leverages the unique way camera sensors can reflect infrared light. By analyzing these reflections, the system can infer the presence of a camera. The value lies in a potentially passive, non-intrusive detection method that doesn't rely on active wireless communication.

· Wireless Traffic Monitoring (BLE): This function actively scans for specific Bluetooth Low Energy (BLE) communication patterns that smart glasses emit. This includes signals during pairing or powering on. The value is in providing a tangible alert when the device is active or initiating a connection, giving an early warning.

· Audio Alert System: When a potential recording device is detected, a subtle jingle is played near the user's ear. This provides an immediate, non-visual notification without drawing attention. The value is in offering discreet real-time awareness of privacy risks.

· ESP32 Microcontroller Integration: The use of an ESP32 allows for a low-cost, highly capable platform for wireless sensing. This demonstrates a practical and accessible hardware approach for building such detection systems, making it easier for other developers to replicate and build upon.
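To make the wireless monitoring idea more concrete, here is a minimal sketch of how a raw BLE advertising payload could be checked against a watch list of manufacturer IDs. It is not the project's code: the function names and the watch list are hypothetical, and `0xFFFF` is a placeholder rather than any real vendor's identifier. The only format details relied on are the standard advertising layout (length byte, type byte, data) and the Manufacturer Specific Data type `0xFF`, whose first two bytes hold a little-endian company identifier.

```python
from typing import Iterator, Optional, Tuple

MANUFACTURER_SPECIFIC = 0xFF  # AD type for Manufacturer Specific Data

# Hypothetical watch list: company identifier -> label. Placeholder value only.
WATCHED_COMPANY_IDS = {0xFFFF: "example smart-glasses vendor"}


def iter_ad_structures(payload: bytes) -> Iterator[Tuple[int, bytes]]:
    """Yield (ad_type, data) pairs from a raw BLE advertising payload."""
    i = 0
    while i < len(payload):
        length = payload[i]  # counts the type byte plus the data bytes
        if length == 0:  # zero length means padding; stop parsing
            break
        end = i + 1 + length
        if end > len(payload):  # truncated structure; ignore the rest
            break
        yield payload[i + 1], payload[i + 2:end]
        i = end


def match_advertisement(payload: bytes) -> Optional[str]:
    """Return the watch-list label if the payload carries a watched company ID."""
    for ad_type, data in iter_ad_structures(payload):
        if ad_type == MANUFACTURER_SPECIFIC and len(data) >= 2:
            company_id = int.from_bytes(data[:2], "little")
            if company_id in WATCHED_COMPANY_IDS:
                return WATCHED_COMPANY_IDS[company_id]
    return None
```

A match on a company ID alone only says that some device from that vendor is advertising nearby, which is why the project treats this as an early warning rather than proof of recording.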

Product Usage Case

· Scenario: Attending a private meeting where recording is not permitted. How it helps: SpecterSense, integrated into a small wearable or placed subtly on a table, could detect if any attendees are wearing smart glasses that are actively recording, allowing for intervention before sensitive information is compromised.

· Scenario: Using public spaces like cafes or public transport and wanting to ensure privacy. How it helps: A personal SpecterSense device could alert you if someone nearby is wearing smart glasses that are likely recording you, enabling you to take action or move to a different location.

· Scenario: Researchers developing advanced privacy tools. How it helps: The project provides a starting point for exploring more robust detection methods. The IR reflection analysis can be further refined, and the BLE traffic analysis can be extended to cover more sophisticated wireless protocols or specific device signatures for better accuracy.

· Scenario: Developers building custom IoT security solutions. How it helps: The principles behind detecting specific wireless device behaviors can be applied to other IoT security challenges, such as detecting unauthorized devices on a network or monitoring the activity of smart home devices.

2

Pulse: Real-time Co-Listening Streams

Author

473999

Description

Pulse is a novel co-listening platform that allows users to share their audio in real-time with friends, replicating the feeling of being in the same room. Its core innovation lies in enabling anyone to host a live audio stream directly from their browser or system audio, coupled with automatic music recognition and integrated chat with custom emotes. This solves the technical challenge of synchronized, low-latency audio sharing for casual social listening experiences.

Popularity

Points 71

Comments 25

What is this product?

Pulse is a browser-based application designed for live, synchronized audio sharing among friends or communities. The underlying technology leverages WebRTC for peer-to-peer audio streaming, ensuring low latency and real-time playback. Music recognition is achieved through audio fingerprinting algorithms that identify tracks being played. The system also incorporates a chat functionality with support for custom emotes, allowing for interactive social engagement. The key innovation is making high-quality, real-time audio sharing accessible without requiring any accounts or complex setup, fostering spontaneous listening sessions.

How to use it?

Developers can integrate Pulse into their applications or workflows in several ways. For end-users, joining a listening session is as simple as entering an anonymous code provided by the host. For hosts, starting a stream involves selecting either a browser tab or system audio as the source. Developers can explore Pulse's architecture for inspiration in building their own real-time audio collaboration tools. Potential integration points include music discovery platforms, social gaming applications where shared audio is crucial, or even educational tools for collaborative listening exercises. The open nature of the project encourages experimentation and custom implementations.

Product Core Function

· Live browser tab audio streaming: Enables users to broadcast any audio playing in their browser tab to a shared listening room. This is technically achieved by capturing the audio output of a specific tab and transmitting it in real-time, allowing for seamless sharing of web-based audio content. The value is in effortlessly sharing music from streaming services or web pages.

· Live system audio streaming: Allows hosts to stream all audio originating from their computer's system output. This provides a comprehensive audio sharing experience, including music, game sounds, or any other desktop audio. The value lies in its versatility for sharing any sound from your computer.

· Automatic music recognition: Identifies the songs being played within the stream and displays the track information to listeners. This uses audio fingerprinting techniques to match snippets of the streamed audio against a music database. The value is in discovering what your friends are listening to and learning about new music.

· Real-time chat with 7TV emotes: Provides a synchronous chat interface for listeners and hosts to communicate. The integration of 7TV emotes adds a layer of expressiveness and community engagement, similar to popular live-streaming platforms. The value is in enabling interactive social connection during the listening experience.

· Anonymous access: Users can join listening rooms without needing to create an account, by simply using a shared code. This reduces friction and encourages immediate participation. The value is in making it incredibly easy and quick to start or join a listening session.
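The account-free join flow can be pictured with a small in-memory sketch. This is an illustration under assumptions, not Pulse's implementation: the `RoomRegistry` class, the six-character code length, and the alphabet are all hypothetical choices.

```python
import secrets
from typing import Dict, Set

# Letters and digits that are easy to read aloud; look-alikes (I, O, 0, 1) omitted.
ALPHABET = "ABCDEFGHJKLMNPQRSTUVWXYZ23456789"


class RoomRegistry:
    """Hypothetical in-memory registry mapping share codes to listener IDs."""

    def __init__(self, code_length: int = 6) -> None:
        self.code_length = code_length
        self.rooms: Dict[str, Set[str]] = {}

    def create_room(self) -> str:
        """Create a room and return its unguessable share code."""
        while True:
            code = "".join(
                secrets.choice(ALPHABET) for _ in range(self.code_length)
            )
            if code not in self.rooms:  # retry on the rare collision
                self.rooms[code] = set()
                return code

    def join(self, code: str, listener_id: str) -> bool:
        """Add a listener to a room; codes are matched case-insensitively."""
        room = self.rooms.get(code.strip().upper())
        if room is None:
            return False
        room.add(listener_id)
        return True
```

Using `secrets` rather than `random` keeps codes hard to guess, which matters when the code is the only thing standing between a stranger and the room.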

Product Usage Case

· Virtual listening parties: A group of friends scattered across different locations can all listen to the same album or playlist in sync. The host streams the music, and everyone hears it simultaneously, fostering a shared social experience that feels like a real party. This solves the problem of distant friends not being able to enjoy music together.

· Collaborative music discovery: Users can host streams of obscure or new music they find, and their friends can join to listen and provide instant feedback or identify the tracks. This creates a dynamic way to explore and share music within a community. It addresses the need for real-time reactions and recommendations.

· DJ sessions for friends: Aspiring DJs can use Pulse to host live sets for their friends. They can stream their DJ software output, and listeners can chat and request songs, mimicking a live club or radio experience. This provides a platform for practice and performance without needing a physical venue.

· Shared podcast or audiobook listening: A group can listen to a podcast or audiobook together, pausing and discussing sections in real-time through the chat. This enhances the engagement and comprehension of spoken word content for remote groups. It allows for shared experiences with narrative content.

3

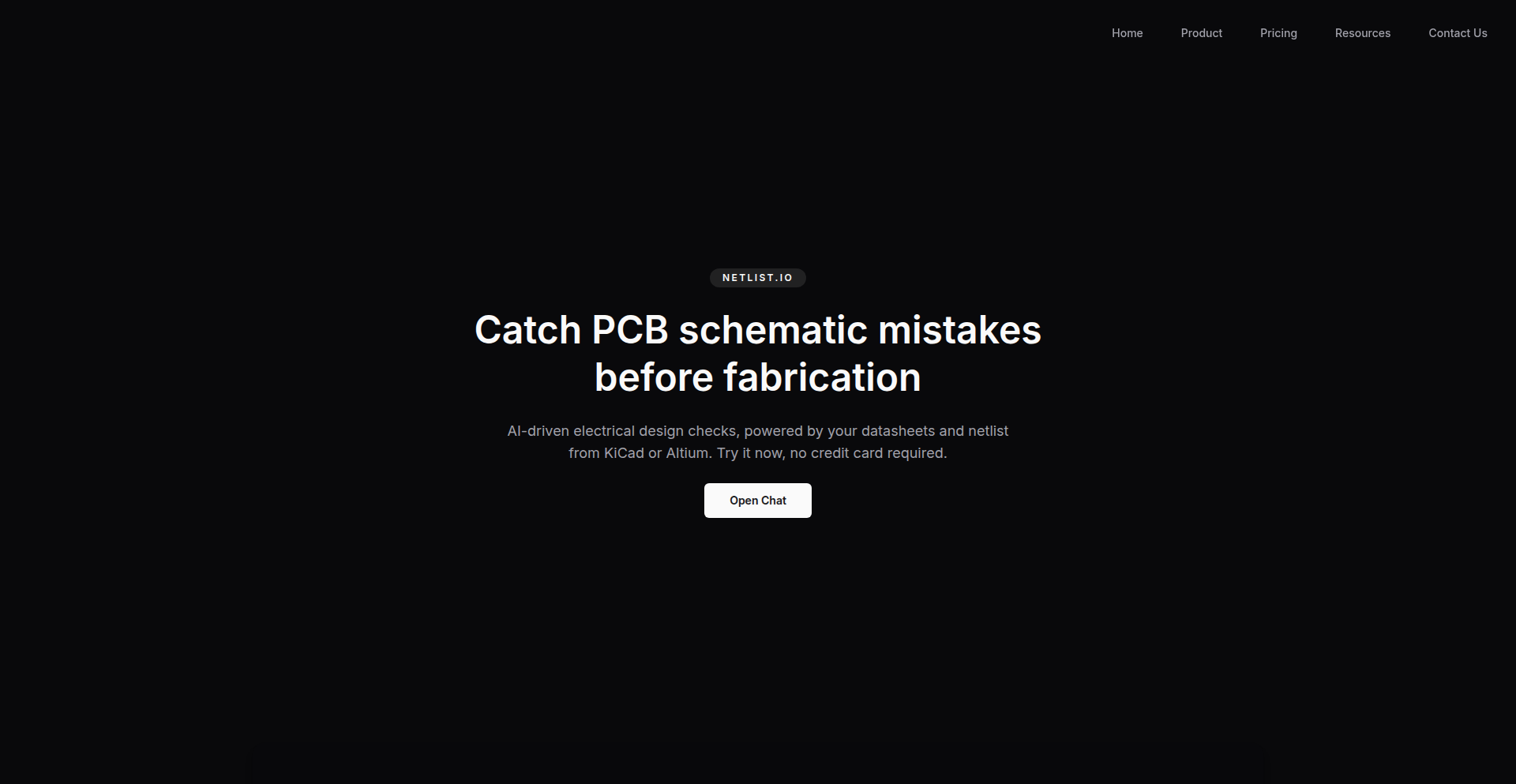

Schematic Sentinel

Author

wafflesfreak

Description

Schematic Sentinel is an AI-powered tool designed to automatically detect common errors and potential issues in Printed Circuit Board (PCB) schematics. It leverages the power of Large Language Models (LLMs) to understand the intent and logic behind circuit designs, providing a more intelligent and proactive approach to quality assurance in electronics engineering. This significantly reduces the time and effort spent on manual review and helps catch subtle mistakes before they lead to costly redesigns.

Popularity

Points 45

Comments 26

What is this product?

Schematic Sentinel is an intelligent assistant that acts as a second pair of eyes for PCB schematic designers. Instead of just checking for syntax errors, it uses advanced AI (Large Language Models) to understand the electrical relationships and common design patterns. Think of it like having an experienced engineer continuously reviewing your work, flagging potential problems like incorrect component values, floating nets, or common circuit misconfigurations that might be missed by traditional CAD tools. The innovation lies in applying LLMs, which excel at understanding context and nuanced information, to the structured yet complex world of electronic schematics.

How to use it?

Currently, Schematic Sentinel is designed for individual engineers or small teams. A developer would typically integrate it into their existing PCB design workflow. This could involve feeding the schematic design files (likely in formats like `.sch` or netlist exports) into the tool. The LLM then analyzes the schematic's components, connections, and rules, and outputs a report detailing any identified issues, along with explanations and suggested fixes. The value proposition is providing an automated, intelligent pre-check before submitting designs for manufacturing or detailed review, saving precious development time and preventing costly errors down the line.

Product Core Function

· Automated Schematic Rule Checking: The system uses LLMs to go beyond basic design rule checks by understanding electrical intent, identifying potential logic flaws and common design anti-patterns, which reduces manual oversight and improves design reliability.

· Error Explanation and Guidance: Instead of just flagging an issue, the LLM provides contextual explanations of why something is an error and offers suggestions for correction, accelerating the debugging process and knowledge transfer for junior engineers.

· Component Value and Type Validation: It can intelligently verify if component values or types are appropriate for their intended function within the circuit, preventing incorrect parts from being specified and ensuring functional correctness.

· Netlist and Connectivity Analysis: The LLM analyzes the connectivity of the schematic to identify issues like unconnected pins or unintended shorts, ensuring robust and predictable circuit operation.
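For comparison, the connectivity part of such a review can also be approximated with a plain rule-based check, with no LLM involved. The sketch below is that simpler swapped-in technique, not the tool's method; the netlist shape (a mapping from net name to a list of `(component, pin)` pairs) is an assumption chosen for illustration.

```python
from typing import Dict, List, Tuple

Pin = Tuple[str, str]  # (component reference, pin name), e.g. ("U1", "VCC")


def find_connectivity_issues(
    nets: Dict[str, List[Pin]], all_pins: List[Pin]
) -> List[str]:
    """Flag nets with fewer than two pins and pins that sit on no net."""
    issues: List[str] = []
    connected = set()
    for name, pins in nets.items():
        connected.update(pins)
        if len(pins) < 2:
            issues.append(
                f"net {name} has only {len(pins)} pin(s); possibly floating"
            )
    for component, pin_name in all_pins:
        if (component, pin_name) not in connected:
            issues.append(f"pin {component}.{pin_name} is not connected to any net")
    return issues
```

A check like this catches mechanical mistakes only. Judging whether a component value suits its role is where the description above places the LLM.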

Product Usage Case

· A hobbyist electronics designer using Schematic Sentinel to catch a subtle mistake in their first complex microcontroller board schematic, preventing them from ordering incorrect components and saving weeks of debugging time.

· A small startup's electrical engineering team integrating Schematic Sentinel into their CI/CD pipeline for hardware. The tool automatically scans schematics with every code commit, catching potential issues early and ensuring consistent design quality before production.

· A student learning PCB design using Schematic Sentinel to understand best practices and common pitfalls. The detailed error explanations help them grasp complex electrical concepts faster and build more reliable circuits from the start.

4

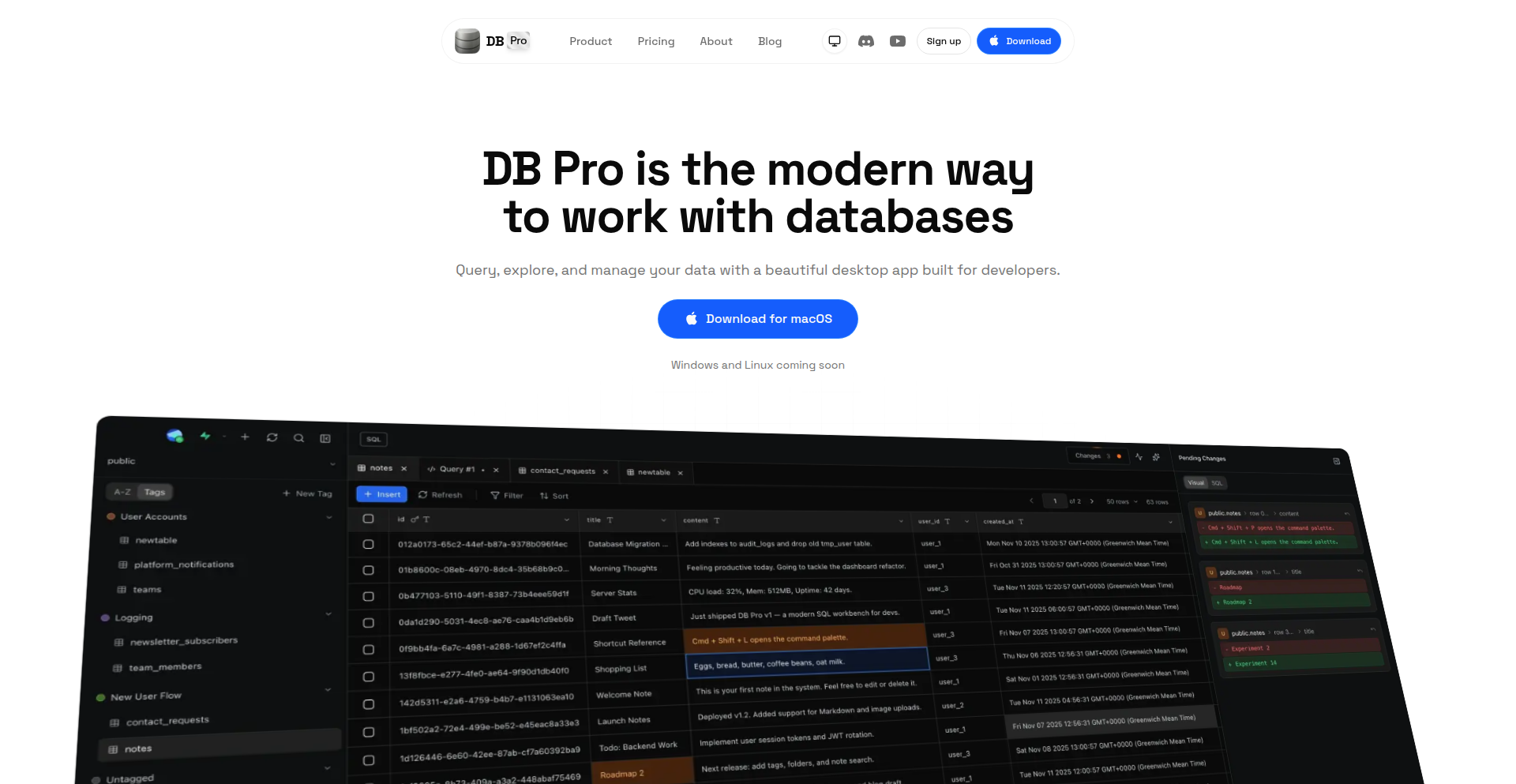

DB Pro: Visual Data Navigator

Author

upmostly

Description

DB Pro is a modern desktop database GUI client that makes interacting with PostgreSQL, MySQL, SQLite, and libSQL fast, visual, and enjoyable. It focuses on developer experience with features like visual change review, inline data editing, and a visual schema explorer, solving the common problem of clunky and unfriendly database management tools. This empowers developers to work more efficiently and intuitively with their data.

Popularity

Points 24

Comments 10

What is this product?

DB Pro is a desktop application that provides a user-friendly graphical interface for managing various types of databases, including PostgreSQL, MySQL, SQLite, and libSQL. Its core innovation lies in prioritizing the developer's experience, offering a clean, visual, and intuitive way to interact with data. Instead of complex command-line operations or outdated interfaces, DB Pro provides visual tools like a schema diagram to understand relationships and a visual way to review changes before they are applied to the database. This approach reduces errors, speeds up workflows, and makes database management more accessible, even for developers who are not database experts.

How to use it?

Developers can download and install DB Pro on their macOS machines (with Windows and Linux support coming soon). They can then connect to their existing databases by providing connection details. Once connected, they can browse tables, view and edit data directly in the interface, write and execute SQL queries in a dedicated editor, and visualize their database schema. For example, a developer working on a web application can use DB Pro to quickly inspect user data, update records without opening complex modals, or see how different tables are linked together through a visual diagram, all within a single, well-designed application.

Product Core Function

· Visual change review: See pending inserts, updates, and deletes before committing them. This helps prevent accidental data corruption and provides a clear audit trail of modifications, ensuring data integrity and peace of mind.

· Inline data editing: Edit table rows directly without clunky modal dialogs. This significantly speeds up data manipulation tasks by allowing for quick edits directly in the table view, making routine data updates much more efficient.

· Raw SQL editor: A focused editor for running queries with results in separate tabs. This provides a powerful and flexible way to interact with the database, allowing developers to write complex queries and manage multiple query results side-by-side without confusion.

· Full activity logs: Track everything happening in your database for peace of mind. This acts as a comprehensive audit trail, allowing developers to monitor database activity, troubleshoot issues, and understand how the data is being accessed and modified.

· Visual schema explorer: See tables, columns, keys, and relationships in a diagram. This provides an intuitive, birds-eye view of the database structure, making it easier to understand complex schemas, identify relationships between tables, and plan database modifications.

· Tabs & multi-window support: Keep multiple connections and queries open at once. This enhances productivity by allowing developers to manage several database connections and queries simultaneously without losing context, streamlining multitasking.

· Custom table tagging: Organize your tables without altering the schema. This offers a flexible way to categorize and group tables for better organization and easier retrieval, especially in large databases, without requiring schema changes.
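The visual change review idea boils down to staging edits, showing them, and applying them together. Here is a minimal sketch of that pattern using Python's built-in `sqlite3` module. The `PendingChanges` class is hypothetical and says nothing about how DB Pro is built internally.

```python
import sqlite3
from typing import List, Sequence, Tuple


class PendingChanges:
    """Hypothetical staging area: queue statements, preview, then apply atomically."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.staged: List[Tuple[str, tuple]] = []

    def stage(self, sql: str, params: Sequence = ()) -> None:
        """Queue a statement without touching the database."""
        self.staged.append((sql, tuple(params)))

    def preview(self) -> List[str]:
        """Return a human-readable line for each staged statement."""
        return [f"{sql}  -- params: {params}" for sql, params in self.staged]

    def commit(self) -> int:
        """Apply all staged statements in one transaction; return how many ran."""
        with self.conn:  # commits on success, rolls back if any statement fails
            for sql, params in self.staged:
                self.conn.execute(sql, params)
        applied = len(self.staged)
        self.staged.clear()
        return applied

    def discard(self) -> None:
        """Drop all staged statements."""
        self.staged.clear()
```

Because every staged statement runs inside a single transaction, a failure partway through leaves the table exactly as it was before the review.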

Product Usage Case

· A web developer needs to quickly update a few user profiles in a PostgreSQL database. Using DB Pro, they can directly edit the data in the table view without opening separate edit forms, saving them significant time and effort compared to traditional tools.

· A data analyst is investigating performance issues in a MySQL database. They use DB Pro's visual schema explorer to understand the relationships between tables and then use the raw SQL editor to run complex queries that pinpoint the bottleneck, all within a single, cohesive interface.

· A new developer joins a project with a complex SQLite database. DB Pro's visual schema explorer helps them quickly grasp the database structure and understand how different parts of the application interact with the data, accelerating their onboarding process.

· A team is collaborating on a project with a shared database. DB Pro's visual change review feature allows them to see exactly what changes are being proposed by team members before they are committed, preventing unintended consequences and ensuring smoother collaboration.

5

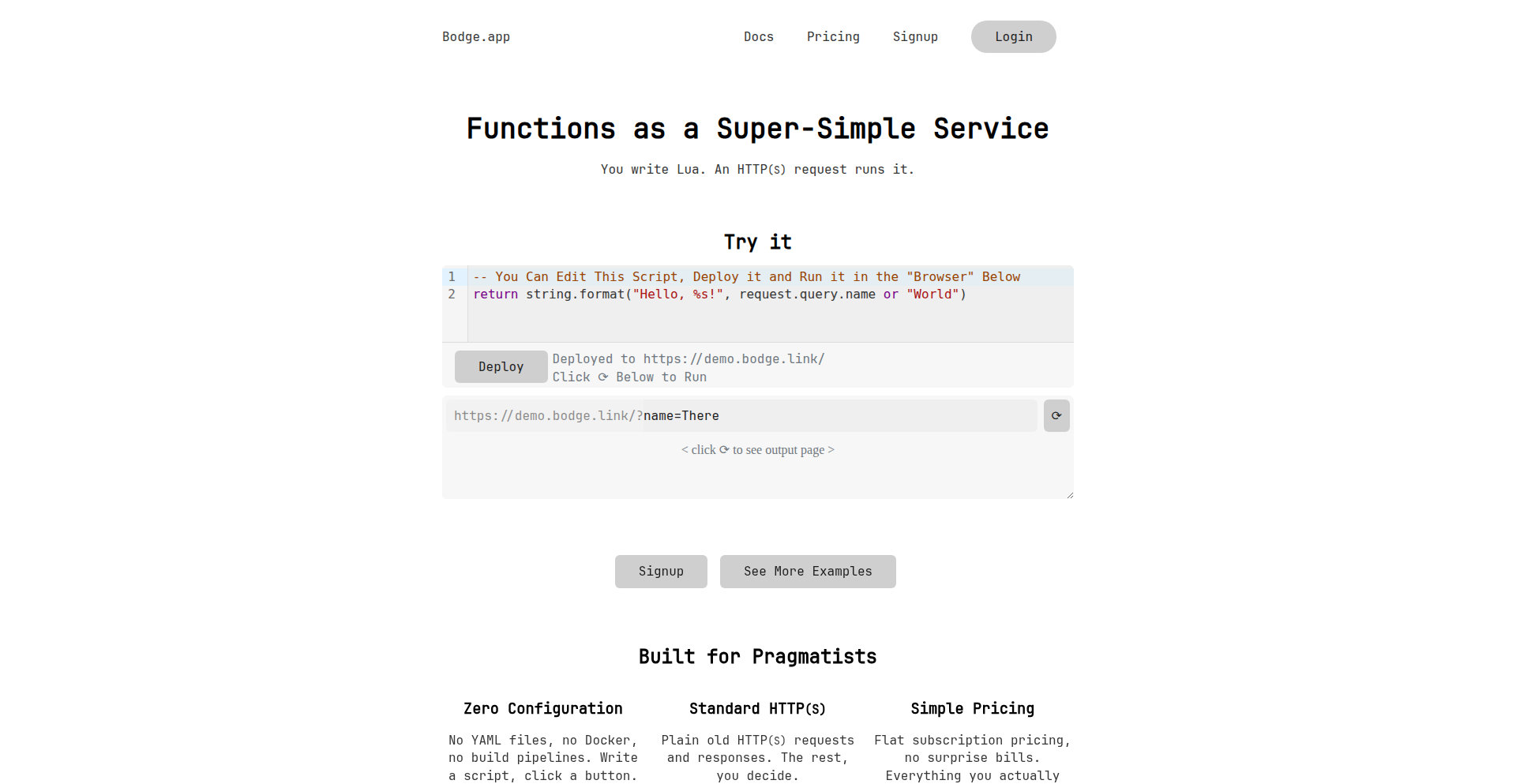

Bodge: Micro-Function-as-a-Service for Creative Coders

Author

azdle

Description

Bodge is a unique platform that allows developers to host simple Lua scripts behind static HTTP endpoints. It focuses on providing a fully sandboxed environment, making it incredibly easy to deploy small, custom functionalities without the overhead of managing complex infrastructure. This addresses the common developer pain point of having great ideas for personal tools or small integrations but being deterred by the setup and maintenance effort.

Popularity

Points 8

Comments 3

What is this product?

Bodge is a micro-Function-as-a-Service (µFaaS) platform designed for rapid prototyping and deploying small, isolated pieces of code. Its core innovation lies in its ability to securely run custom Lua scripts in a sandboxed environment, accessible via simple HTTP requests. This means you can write a script, upload it, and immediately have an API endpoint that executes your code. The value comes from drastically reducing the complexity and time required to turn a coding idea into a functional service, perfect for personal projects, quick automations, or integrating with other services without building a full application.

How to use it?

Developers can use Bodge by writing their logic in Lua. They can then upload these scripts to the Bodge platform. Once uploaded, each script is exposed as a unique HTTP endpoint. For instance, a script designed to return the current time can be accessed by any device or service that can make an HTTP GET request to its assigned URL. Bodge provides pre-built Lua modules for common tasks like making HTTP requests, handling JSON data, sending alerts, and basic data storage, simplifying script development. Developers can integrate these endpoints into their existing workflows, IoT devices, home automation setups, or any scenario where a specific, small piece of logic needs to be triggered remotely.

Product Core Function

· Sandboxed Lua Script Execution: Allows developers to run custom Lua code in a secure, isolated environment, preventing interference with other scripts or the host system. This offers peace of mind and enables experimentation without risk.

· HTTP Endpoint Hosting: Automatically exposes uploaded Lua scripts as static HTTP endpoints, making them easily accessible from anywhere on the internet. This is invaluable for creating simple APIs for personal use or integration.

· Pre-built Lua Modules: Provides ready-to-use modules for common functionalities like making HTTP requests, JSON parsing, sending alerts, and simple key-value storage. This significantly speeds up development by removing the need to reinvent common features.

· No-Account Demo and Free Tier: Offers a hands-on experience through a public demo on the homepage and a free beta account. This lowers the barrier to entry, allowing anyone to try out the service and immediately see its potential for their projects.

· Cross-Script Mutexes: Enables coordination between different scripts hosted on Bodge, allowing for more complex workflows and preventing race conditions in shared resources. This is useful for managing shared state or ensuring atomic operations across multiple small functions.

Product Usage Case

· IoT Device Command Execution: A developer has a smart home device that can make HTTP requests. They write a Lua script on Bodge to control a light and assign it an HTTP endpoint. Now, any trigger (like a button press or a sensor reading) can send a request to that endpoint, turning the light on or off, all without a complex backend server.

· Personal Notification System: A developer wants to be notified when a new job matching specific criteria is posted on a company's careers page. They create a Lua script on Bodge that scrapes the job listing page periodically and sends an email alert (using a provided module) if a new matching job is found. This automates job searching with minimal effort.

· Quick Data Lookup API: A developer needs a simple way to fetch configuration data for a small application. They store the configuration as JSON within Bodge's simple string storage and create a Lua script that reads this data and returns it as a JSON response when an HTTP request is made to its endpoint. This provides a custom, lightweight API for their app.

· Automated Status Monitoring: A developer hosts several self-hosted services. They write a Lua script on Bodge that checks the health of these services. If a service is down, the script uses an alert module to notify the developer via email or another preferred channel, ensuring uptime is monitored effortlessly.

· Simple Randomizer for Contests: A content creator needs a quick way to pick a random winner from a list of participants. They create a Lua script on Bodge that takes a list of names as input via URL parameters and randomly selects one, returning the winner's name. This is a fun, practical use case for quick utility scripts.
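Bodge scripts themselves are written in Lua, so the following Python sketch only illustrates the request-handling logic of the randomizer case above. The `names` query parameter and the example URL are assumptions, not part of Bodge's API.

```python
import random
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def pick_winner(url: str, rng: Optional[random.Random] = None) -> Optional[str]:
    """Pick one name from a comma-separated 'names' query parameter."""
    rng = rng or random.Random()
    query = parse_qs(urlsplit(url).query)
    names = [
        name.strip()
        for value in query.get("names", [])  # hypothetical parameter name
        for name in value.split(",")
        if name.strip()
    ]
    if not names:
        return None  # nothing to choose from
    return rng.choice(names)
```

Calling `pick_winner("https://example.invalid/pick?names=ana,bo,cy")` returns one of the three names. Passing a seeded `random.Random` makes the draw reproducible for testing.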

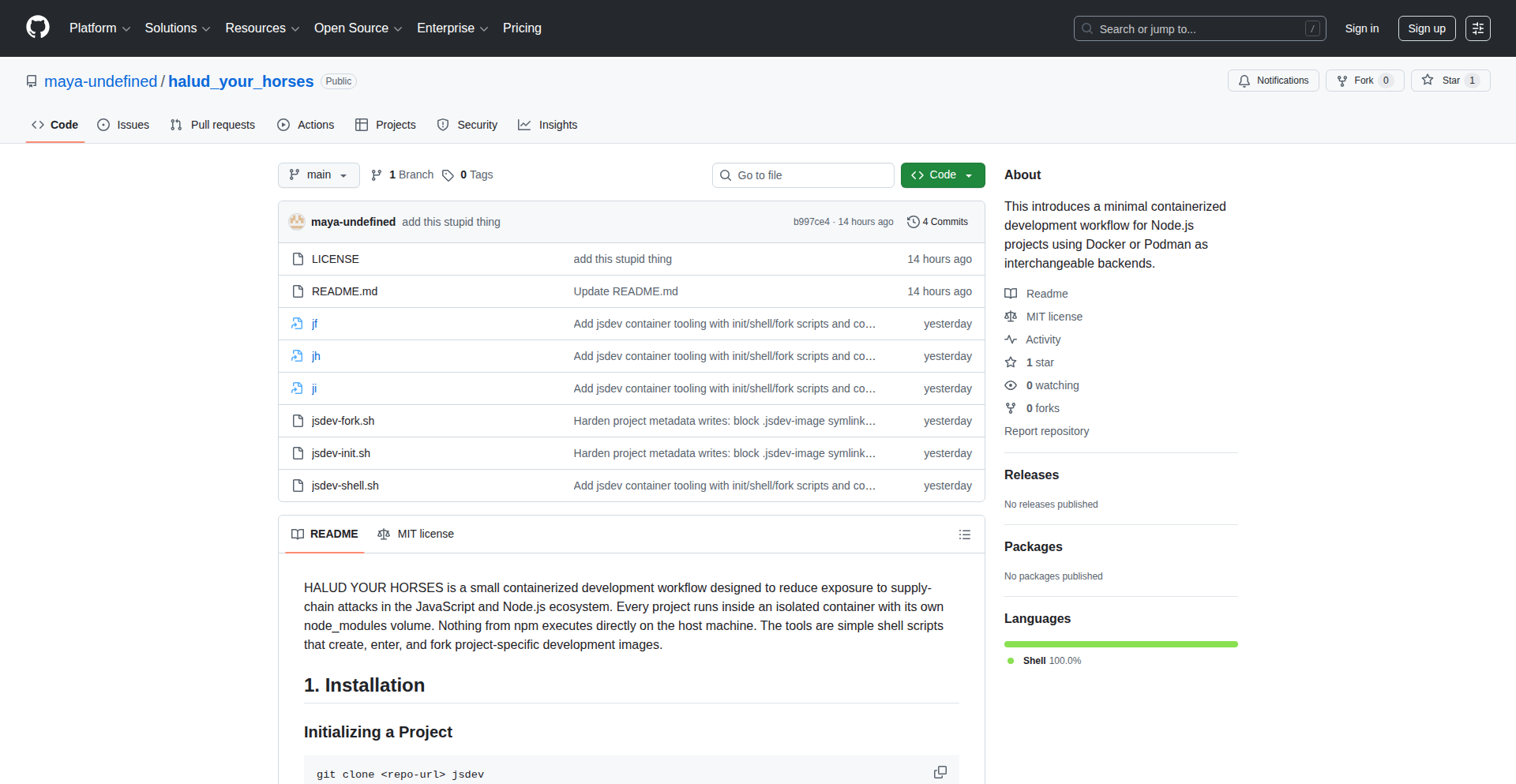

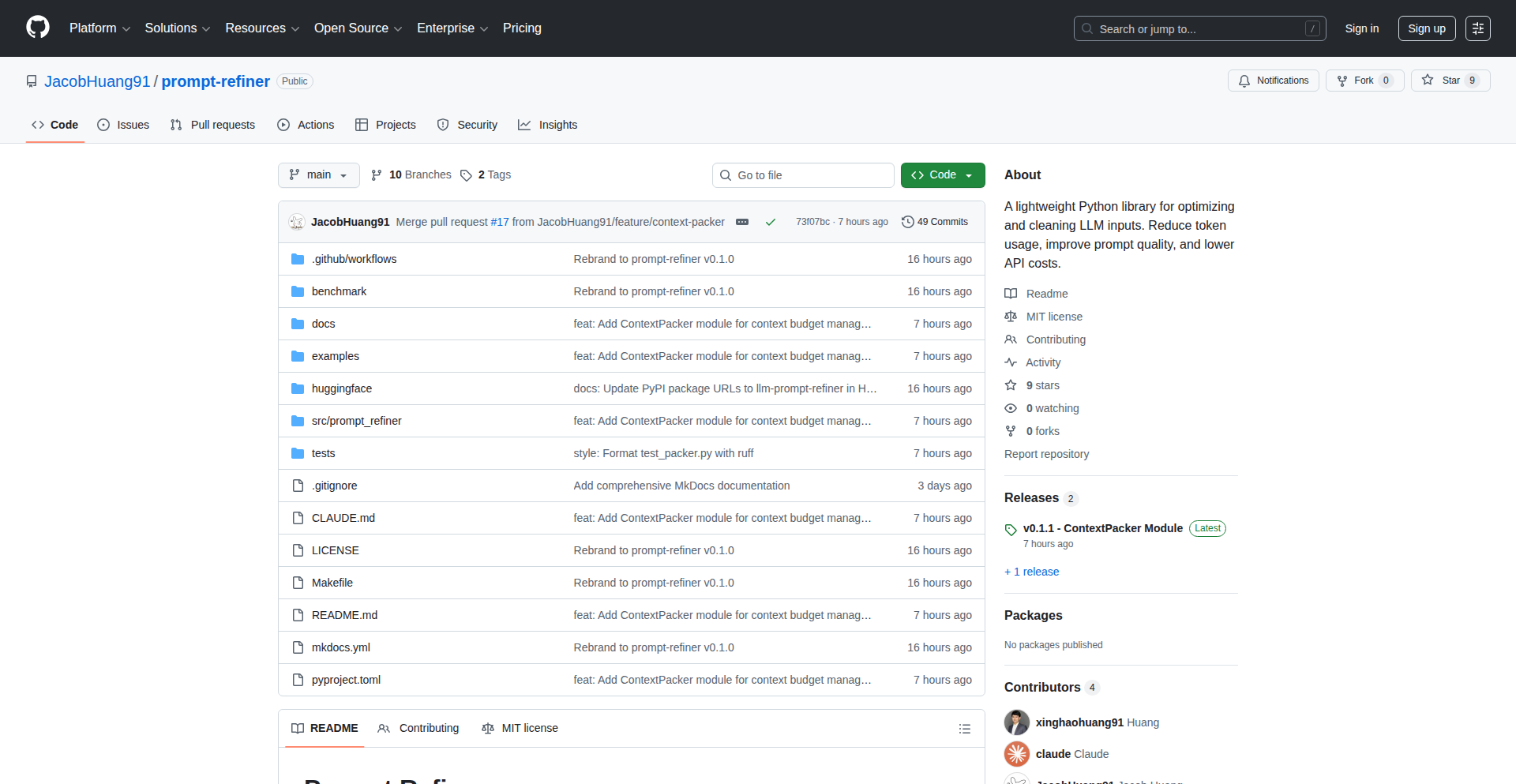

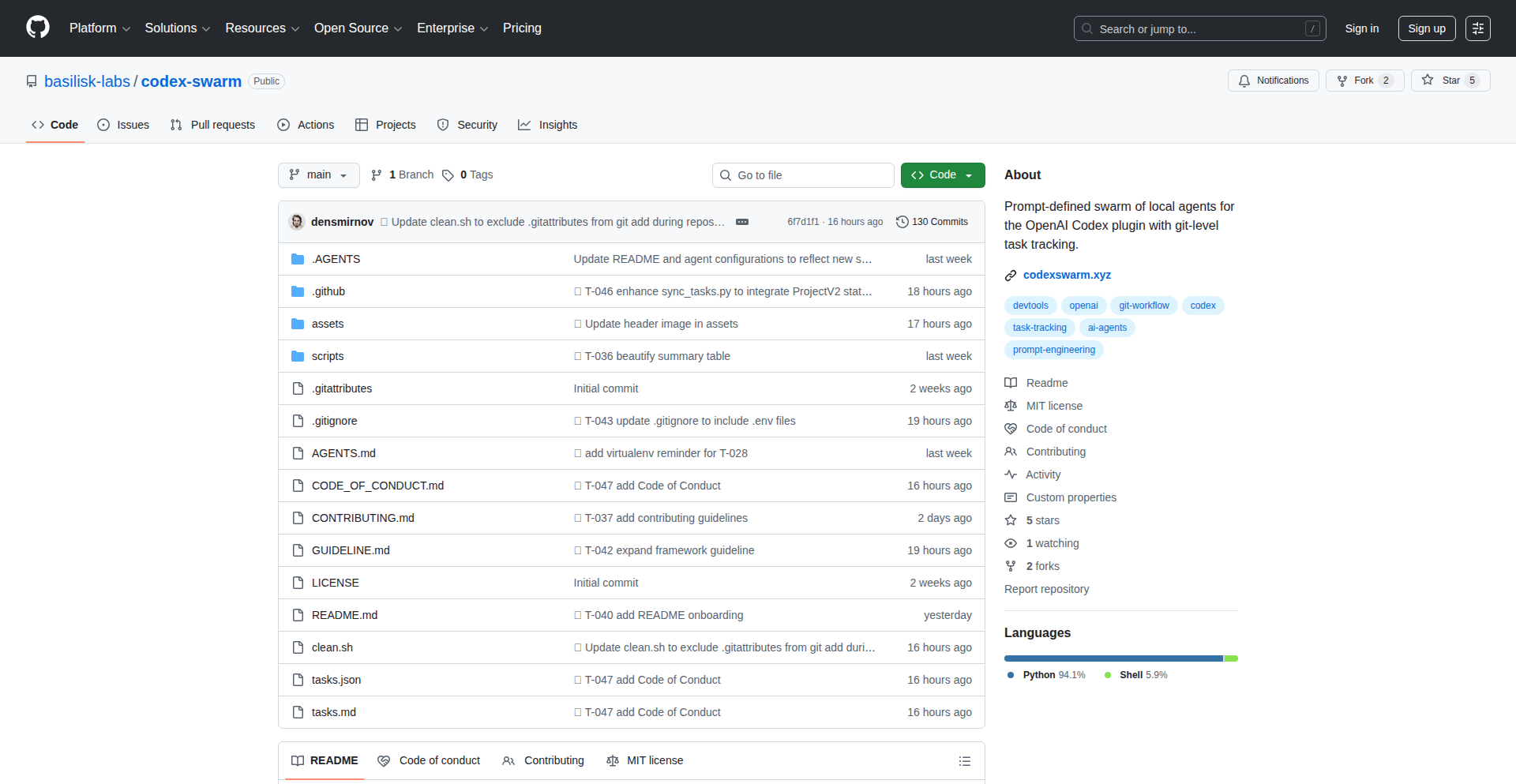

6

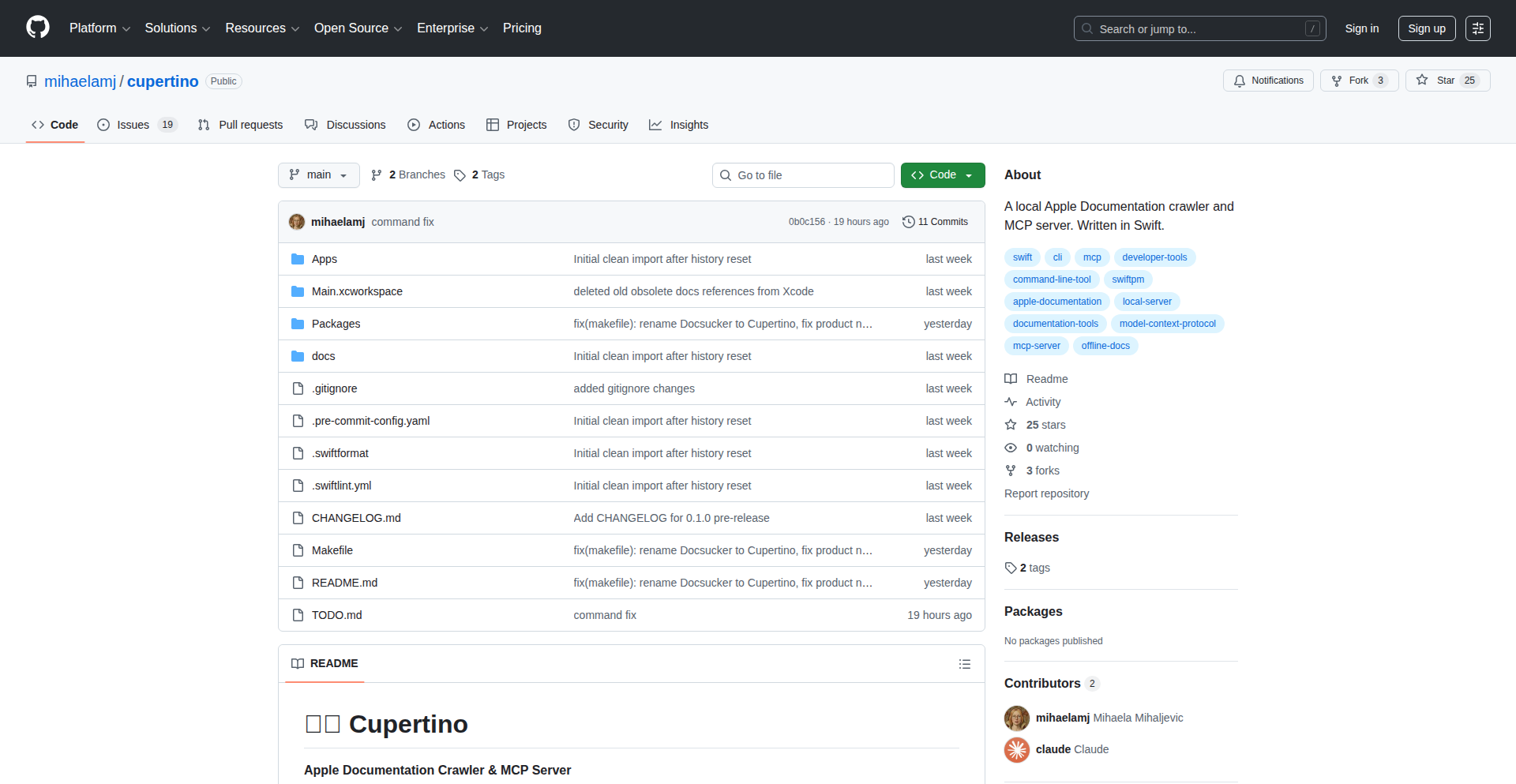

CodeArchitect AI

Author

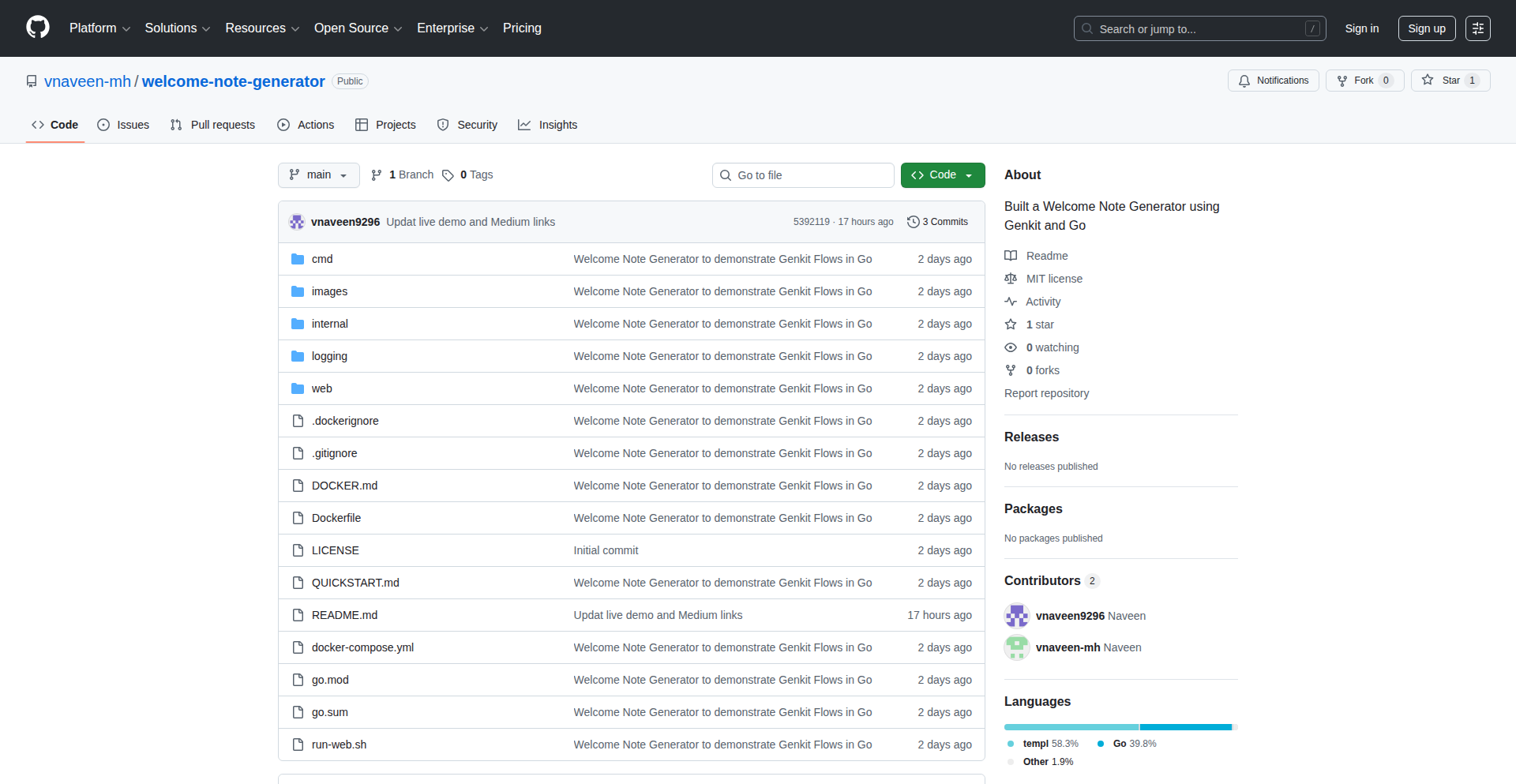


mifydev

Description

CodeArchitect AI is an open-source tool that simplifies complex service integrations into your codebase. Instead of manually figuring out how to connect services like Sentry, PostHog, or Clerk, this AI-powered wizard deeply analyzes your project's architecture and workflows. It then asks a few targeted questions to understand your specific needs and generates a personalized, ready-to-paste integration plan for AI coding assistants. This drastically reduces the time and frustration of setting up new services, allowing developers to integrate with confidence on the first try.

Popularity

Points 3

Comments 8

What is this product?

CodeArchitect AI is an intelligent system that understands your code and helps you integrate third-party services seamlessly. It works by connecting to your code repository and performing a deep scan to map out your project's structure and common development patterns. Then, it engages you with a few questions about how you envision the integration working. Based on this analysis and your input, it crafts a precise set of instructions, essentially a prompt, designed to be used with AI code generation tools like Claude or Cursor. The innovation lies in its ability to translate complex integration requirements into actionable, AI-friendly directives, overcoming the common pain point of manual, error-prone integration setup.

How to use it?

Developers can use CodeArchitect AI by first connecting their code repository (e.g., GitHub, GitLab). Once connected, they select the service they wish to integrate (e.g., Sentry for error tracking, Clerk for authentication, PostHog for analytics). The tool then analyzes the connected codebase and presents a short questionnaire specific to the chosen service and your project. After answering these questions, CodeArchitect AI generates a detailed, ready-to-use prompt that can be directly fed into an AI coding assistant, guiding it to implement the integration accurately and efficiently. This makes it incredibly easy to experiment with and adopt new services.

Product Core Function

· Deep codebase analysis: Understands project architecture and workflows to provide context-aware integration plans. This saves developers time by eliminating the need to manually document their project's intricacies for AI.

· Service-specific integration planning: Generates tailored plans for popular services like Sentry, Statsig, PostHog, Resend, and Clerk. This ensures that the integration is not generic but fits the specific needs of your application.

· Conversational AI prompt generation: Creates ready-to-paste prompts for AI coding assistants, simplifying the process of implementing the integration. This allows developers to leverage AI for code generation without needing to be AI prompt engineering experts.

· Open-source accessibility: The entire project is open-source, allowing for community contributions and transparency. This fosters a collaborative environment and ensures the tool remains adaptable and valuable to developers.

· First-try integration success: Aims to provide plans that lead to successful integrations on the initial attempt, reducing debugging and rework. This directly translates to increased developer productivity and reduced frustration.
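The final step, turning analysis results and questionnaire answers into a paste-ready prompt, can be sketched as simple templating. This is an assumption about the general shape of such a prompt, not the tool's actual output format. The function name and the dictionary keys are hypothetical.

```python
from typing import Dict


def build_integration_prompt(
    service: str, project_facts: Dict[str, str], answers: Dict[str, str]
) -> str:
    """Assemble a plain-text prompt from project facts and developer answers."""
    lines = [f"Integrate {service} into this project.", "", "Project context:"]
    lines += [f"- {key}: {value}" for key, value in project_facts.items()]
    lines += ["", "Requirements from the developer:"]
    lines += [f"- {question} {answer}" for question, answer in answers.items()]
    lines += ["", "Follow existing project conventions and list every file you change."]
    return "\n".join(lines)
```

For example, `build_integration_prompt("Sentry", {"framework": "Flask"}, {"Which environments?": "production only"})` yields a short prompt that names the service, the framework, and the stated requirement.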

Product Usage Case

· Integrating Clerk authentication into a new React application: A developer can connect their React project to CodeArchitect AI, select Clerk, answer a few questions about their desired login/signup flows, and receive a prompt to guide an AI in setting up the entire authentication system within minutes, avoiding manual configuration of routes, state management, and API calls.

· Adding PostHog analytics to an existing Rails API: A developer can connect their Rails repository, choose PostHog, and answer questions about which user events they want to track. CodeArchitect AI will then provide a prompt for an AI to generate the necessary code snippets and tracking logic to seamlessly integrate PostHog analytics into their backend.

· Setting up Sentry error tracking in a Python Flask app: By connecting their Flask project and selecting Sentry, developers can quickly get a prompt that instructs an AI to configure Sentry's SDK, capture exceptions, and send error reports, saving significant time on manual setup and configuration.

· Quickly experimenting with Resend for transactional emails in a Node.js project: A developer can connect their Node.js project, select Resend, and receive a prompt to easily integrate email sending functionality, allowing for rapid prototyping of features that require email notifications.

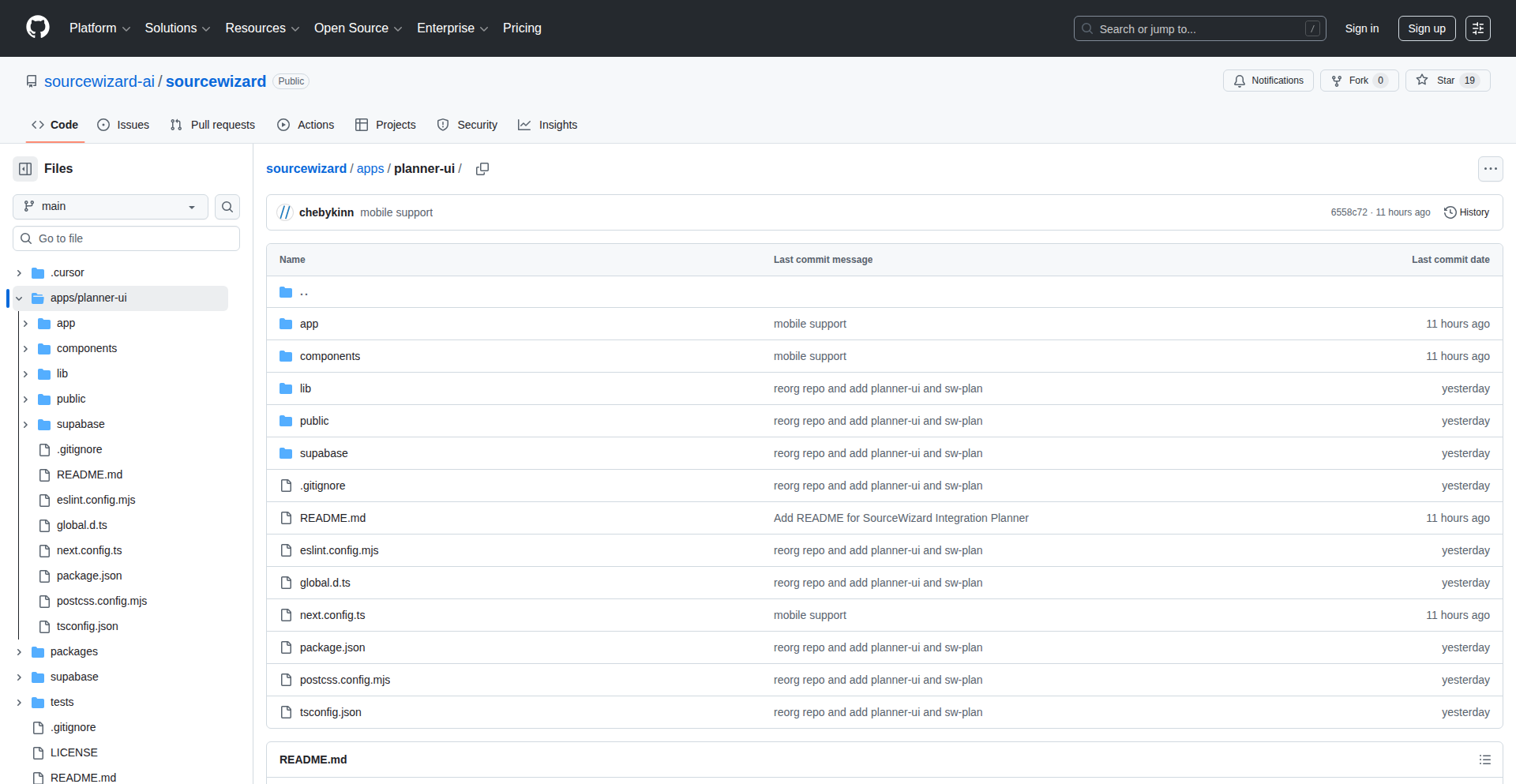

7

AI-Site-To-Demo-Video-Generator

Author

lococococo

Description

This project, AutoAds, turns a website URL into a polished, AI-generated promotional video. It automates the entire process, from site analysis to voiceover and subtitling, so no manual recording is needed. The core innovation is an AI pipeline that navigates and interprets web content to create dynamic video assets, addressing the tedium of repetitive demo creation.

Popularity

Points 8

Comments 2

What is this product?

AutoAds is an AI-powered service that takes your website URL and automatically generates a professional-looking product demo video. It works by sending an AI agent to crawl your website, understand its structure, content, and key calls-to-action. Then, it simulates user interaction, like scrolling and navigating, focusing on important sections. This raw footage is automatically edited with quick cuts and a promotional pace, often presented within a clean device mockup (like a Mac). Concurrently, it generates a synchronized voiceover explaining your product and adds matching subtitles. The innovation here is in automating a multi-step creative process that traditionally requires significant human effort, using AI to interpret and synthesize web content into a compelling visual narrative.

How to use it?

Developers and marketers can use AutoAds by simply pasting their website URL (for SaaS, e-commerce, or personal portfolios) into the platform at autoads.pro. The system then takes over. It's designed for quick integration into a workflow where creating marketing materials or product overviews is a priority. Think of it as a smart tool that bypasses the need for screen recording software, microphones, or cameras, streamlining the creation of assets for landing pages, social media, or paid advertising campaigns. No complex technical setup is required; it's a straightforward, URL-in, video-out workflow.

Product Core Function

· AI-driven website analysis: This function's value is in automatically understanding the essence of your site without manual input, enabling targeted video generation. It's crucial for identifying key features and user flows to showcase.

· Automated screen recording simulation: The value here is in generating dynamic video footage that mimics user interaction, making the demo feel natural and engaging, thereby reducing the effort of manual screen capture.

· AI-powered video editing: This feature's value is in producing a fast-paced, promotional video style automatically, which is often more effective for marketing than unedited recordings. It saves significant post-production time.

· Device mockup integration: The value of presenting the video within a device frame (like a Mac) is in providing a polished, professional aesthetic that aligns with typical product launch materials, enhancing perceived quality.

· Automatic voiceover generation: This function's value is in providing a clear, spoken explanation of your product, synced with the visuals, eliminating the need for recording your own voice and ensuring consistent messaging.

· Synchronized subtitle creation: The value of having accurate subtitles is in improving accessibility and engagement, especially for viewers watching with sound off, making your product information more digestible.

Product Usage Case

· Scenario: A SaaS startup needs to quickly create a demo video for their new feature launch. Problem solved: Instead of spending hours recording, editing, and adding voiceovers, they paste their product page URL into AutoAds. The AI generates a professional-looking video showcasing the feature, ready for their blog and social media within minutes. This drastically reduces time-to-market for marketing content.

· Scenario: An e-commerce store owner wants to create engaging video ads for their latest product line without being on camera. Problem solved: By providing the product page URL, AutoAds generates a slick video that highlights the product's visual appeal and key selling points, wrapped in a modern aesthetic. This allows the owner to compete with larger brands by producing professional video ads with minimal effort.

· Scenario: A freelance web developer wants to showcase their portfolio to potential clients with a dynamic video presentation. Problem solved: AutoAds can analyze the developer's personal website and create a video that walks through their best projects, demonstrating their skills in a visually appealing way. This provides a more engaging alternative to static portfolio pages.

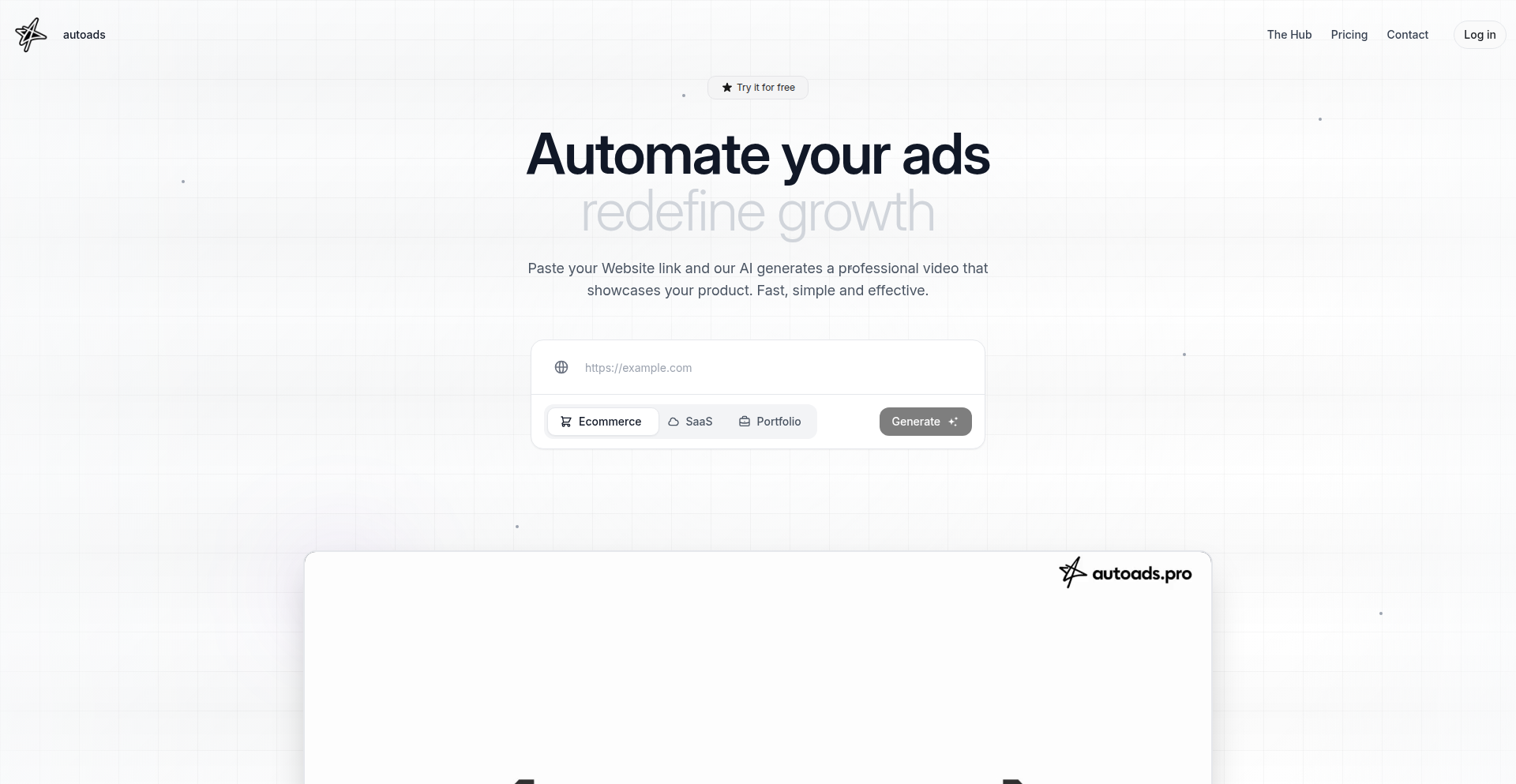

8

Meme-fy Research Papers

Author

QueensGambit

Description

This project transforms academic research papers into easily digestible and shareable memes. It tackles the challenge of making complex scientific information more accessible by leveraging the viral nature of meme culture. The core innovation lies in using AI to identify key concepts and findings within a paper and then creatively rephrasing them into meme formats. This makes dense research topics understandable and engaging for a broader audience, bridging the gap between academia and the public.

Popularity

Points 8

Comments 1

What is this product?

This project is an AI-powered tool that turns dense academic research papers into humorous and relatable memes. It analyzes the content of a research paper, extracts its most important findings or concepts, and then creatively generates meme text that captures the essence of the paper in a lighthearted way. The innovation here is applying natural language processing and generative AI techniques to a domain not typically associated with meme creation, effectively democratizing scientific knowledge.

How to use it?

Developers can integrate this project into their workflows by using its API to process research papers. For instance, a science communicator could use it to quickly generate social media content for promoting new research. A student could use it to create study aids that are more memorable and fun. The tool takes a research paper (likely as a PDF or text input) and outputs meme templates with generated captions, ready for sharing. This offers a novel way to engage with and disseminate research findings.

Product Core Function

· Automated research paper analysis: Uses NLP to understand the core arguments and conclusions of a paper, providing value by saving researchers and communicators significant time in content distillation.

· Meme caption generation: Employs generative AI models to create witty and relevant captions for meme templates based on the paper's content, making complex information accessible and engaging for a wider audience.

· Meme template selection: Selects appropriate meme formats that best suit the tone and content of the research paper, enhancing the understanding and memorability of scientific findings.

Product Usage Case

· A science journalist uses the tool to generate engaging social media posts about a new study on climate change, making it understandable and shareable for the general public.

· A university outreach program uses the project to create fun, educational memes about cutting-edge scientific discoveries, increasing public interest in STEM fields.

· A graduate student uses the tool to simplify complex thesis concepts for their family and friends, fostering better understanding and reducing the intimidation factor of academic jargon.

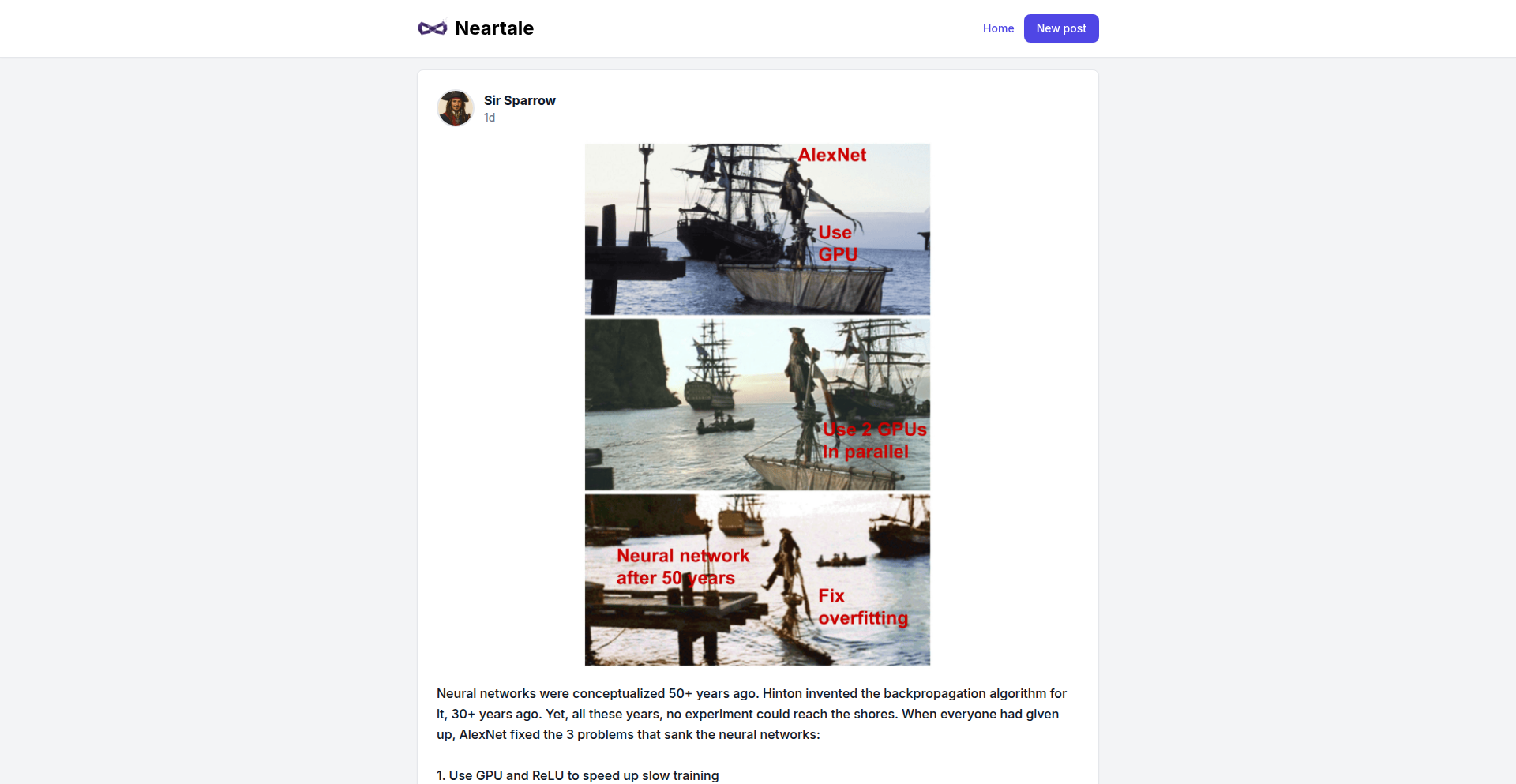

9

Halud Your Horses: Isolated Dev Sandbox

Author

neechoop

Description

A containerized development workflow designed to quarantine NPM dependencies and limit the damage of supply chain attacks. It creates per-project Docker images with isolated dependency volumes, so that code from external packages runs inside the container rather than directly on the host system.

Popularity

Points 1

Comments 7

What is this product?

This project is a containerized development environment built to reduce exposure to NPM supply chain attacks such as 'Shai-Hulud'. The core innovation lies in its strict isolation strategy. Instead of installing NPM packages directly onto your development machine, each project gets its own dedicated Docker image, and all of its dependencies are confined to isolated volumes attached to that project's container. If an NPM package is compromised, its code runs inside the project's sandbox rather than on your host operating system or alongside your other projects. Think of it as a separate bubble for each coding project. Containers narrow the attack surface rather than making a project immune, since anything mounted into the container remains reachable, but the blast radius of a bad package is much smaller. So, what's in it for you? Stronger security and more peace of mind when installing third-party packages.

How to use it?

Developers can integrate this into their workflow by setting up Docker and using the provided configuration to build per-project images. This involves defining the project's dependencies within the container's environment. When developing, you'd run your code within this isolated container. The system ensures that any NPM install or update is strictly confined to the project's dedicated volume. This approach is particularly useful for teams working on sensitive projects or for individual developers concerned about the integrity of their codebase. So, how does this benefit you? It offers a straightforward way to significantly boost your development security without drastically altering your existing coding practices.
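The workflow described above can be sketched as a small helper that assembles a `docker run` command. This is only an illustration under stated assumptions: the `sandbox_command` function, the `/app` mount point, the `<project>-node_modules` volume naming scheme, and the `node:lts` image are hypothetical choices, not the project's actual configuration.

```python
import shlex
from pathlib import Path


def sandbox_command(project_dir: str, image: str, command: list[str]) -> list[str]:
    """Build a `docker run` invocation that keeps node_modules in a per-project volume.

    Hypothetical helper: the volume naming scheme and the /app mount point are
    assumptions made for illustration only.
    """
    project = Path(project_dir).resolve()
    volume = f"{project.name}-node_modules"  # one named volume per project
    return [
        "docker", "run", "--rm",
        # Source code is bind-mounted so host edits are visible in the container.
        "-v", f"{project}:/app",
        # Dependencies live in a named volume instead of the host's project folder.
        "-v", f"{volume}:/app/node_modules",
        "-w", "/app",
        image,
        *command,
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch needs no Docker daemon.
    print(shlex.join(sandbox_command("my-app", "node:lts", ["npm", "ci"])))
```

Note that in this sketch the bind mount still gives install scripts write access to the project directory itself, so the setup narrows the exposure rather than removing it.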

Product Core Function

· Per-project Docker images: Each project gets its own custom-built container image, ensuring a clean and isolated environment. This prevents dependency conflicts and isolates potential security risks to a single project. So, what's the value? Each project is a self-contained unit, reducing the 'blast radius' of any security incident.

· Isolated dependency volumes: NPM packages and other project dependencies are stored and managed within dedicated volumes that are only accessible by the specific project's container. This acts as a strong barrier against malicious code spreading to your host system. So, what's the benefit? Malicious packages are trapped within their designated sandbox, unable to harm your computer or other projects.

· Containerized dev workflow: The entire development process, from installing dependencies to running code, occurs within the container, keeping it separated from the host operating system. So, why is this important? It provides a consistent and secure development environment that is decoupled from your personal machine's setup, making execution more predictable and safer.

· Host system protection: By enforcing strict isolation, the system prevents any external NPM package from accessing or modifying files on your host machine. So, what's the outcome? Your primary development machine remains clean and secure, unaffected by potentially compromised third-party code.

Product Usage Case

· Securing sensitive corporate projects: A company developing proprietary software can use this system to ensure that all developers work within a highly secure, isolated environment, mitigating the risk of intellectual property theft or code tampering through compromised NPM packages. This solves the problem of trusting external dependencies when working with critical company assets.

· Protecting open-source contributions: An individual developer contributing to multiple open-source projects can use this setup to prevent potential conflicts or security cross-contamination between different projects, especially if one project relies on older or less vetted dependencies. This addresses the challenge of managing dependencies across diverse and potentially untrusted codebases.

· Mitigating 'Shai-Hulud' style attacks: When news breaks about new supply chain attacks targeting popular NPM packages, developers can immediately spin up or verify their projects within this isolated sandbox to ensure they are not unknowingly incorporating malicious code. This directly tackles the immediate threat posed by widespread NPM vulnerabilities.

10

Swatchify: Image-to-Palette CLI

Author

jamescampbell

Description

Swatchify is a command-line interface (CLI) tool designed for developers. It uses the k-means clustering algorithm to analyze an image and extract its dominant color palette, giving quick, programmatic access to the core colors of any image and turning visual data into usable color information.

Popularity

Points 5

Comments 1

What is this product?

Swatchify is a command-line utility that acts like a digital color detective for your images. At its heart, it employs a clever mathematical technique called k-means clustering. Imagine you have a picture with many colors; k-means helps to group similar colors together and find the most representative ones. It's like saying, 'Out of all the blues in this sky, these are the 5 main shades that best describe it.' This is innovative because it automates a process that would otherwise be tedious and manual, providing a structured list of key colors from any image.
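To make the k-means idea concrete, here is a minimal pure-Python sketch of clustering RGB pixels into a palette. It is not Swatchify's implementation: the `dominant_colors` function, its parameters, and the simple "most frequent colors" initialization are assumptions chosen for illustration, and loading pixels from a real image file is left out.

```python
from collections import Counter


def dominant_colors(pixels, k=3, iterations=10):
    """Return up to k dominant colors as HEX strings, largest cluster first.

    pixels: list of (r, g, b) tuples with channel values in 0-255.
    """
    # Seed centroids with the k most frequent exact colors (a simple,
    # deterministic initialization chosen for this sketch).
    centroids = [color for color, _ in Counter(pixels).most_common(k)]
    sizes = [0] * len(centroids)
    for _ in range(iterations):
        # Assignment step: put each pixel in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in pixels:
            nearest = min(
                range(len(centroids)),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
            )
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean color of its cluster.
        centroids = [
            tuple(sum(channel) / len(cl) for channel in zip(*cl)) if cl else c
            for cl, c in zip(clusters, centroids)
        ]
        sizes = [len(cl) for cl in clusters]
    ranked = sorted(zip(sizes, centroids), reverse=True)
    return ["#%02x%02x%02x" % tuple(round(v) for v in c) for _, c in ranked]


if __name__ == "__main__":
    sample = [(255, 0, 0)] * 5 + [(0, 0, 255)] * 3
    print(dominant_colors(sample, k=2))
```

A fixed iteration count keeps the sketch short; a fuller version would stop once the centroids no longer move.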

How to use it?

Developers can integrate Swatchify into their workflows by running it from their terminal. After installing the tool, they can simply point it at an image file (e.g., a logo, a photograph, a UI mockup). Swatchify will then process the image and output a list of dominant colors, typically in a standard format like HEX codes or RGB values. This makes it incredibly useful for tasks like theme generation for websites, consistent branding applications, or even as part of an automated design analysis pipeline.

Product Core Function

· Dominant color extraction using k-means clustering: This allows developers to programmatically get the most representative colors from any image, saving time and ensuring consistency in design projects. So, this means you can automatically generate color schemes that match your brand's visual identity.

· Cross-platform compatibility: Works on various operating systems, offering flexibility for developers regardless of their setup. So, you don't have to worry about switching tools if you work on different operating systems.

· CLI interface for automation: Enables seamless integration into scripts and build processes, facilitating automated workflows. So, you can easily build tools that automatically generate color palettes for your web or app projects without manual intervention.

· Outputting color palettes in standard formats (e.g., HEX, RGB): Provides data that is directly usable in web development, design software, and other applications. So, the extracted colors are immediately ready to be used in your CSS, design mockups, or other creative assets.

Product Usage Case

· Automated website theme generation: A developer can use Swatchify to extract a color palette from a client's logo and then automatically apply these colors to generate a website's CSS theme, ensuring brand consistency. This solves the problem of manually picking colors that match a logo.

· UI design consistency: A design team can use Swatchify on UI screenshots to quickly identify the primary colors used, helping to maintain a consistent design language across different components or applications. This helps in quickly understanding and enforcing a unified look and feel.

· Content-based recommendation systems: An application could use Swatchify to analyze images in a gallery and extract their dominant colors, then use these color features to recommend similar images to users. This addresses the challenge of finding visually similar content without relying solely on metadata.

· Data visualization theming: Developers creating charts or dashboards can use Swatchify to pull colors from a relevant image (e.g., a product photo) to create a data visualization that is thematically aligned with the content. This makes visualizations more engaging and contextually relevant.

11

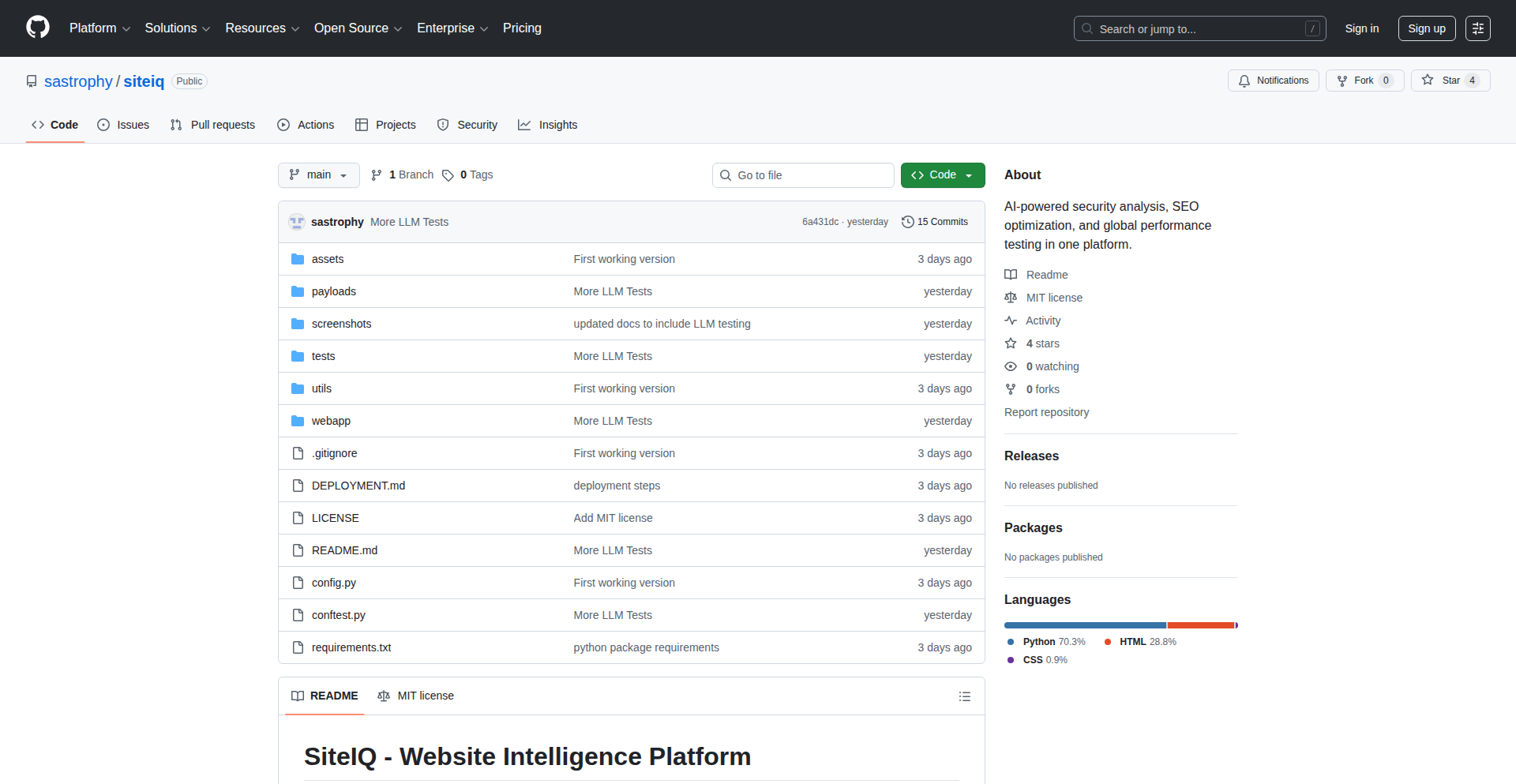

SiteIQ-AI

Author

sastrophy

Description

SiteIQ-AI is a comprehensive security and SEO testing tool developed by an 11th-grade student, leveraging AI as a coding partner. It innovatively combines traditional web security checks (like OWASP Top 10) with modern LLM security analysis (prompt injection, jailbreaking) and SEO performance metrics (Core Web Vitals). Its core value lies in providing a hands-on, self-hosted platform for developers to understand and test the security and visibility of their web applications, especially those incorporating AI.

Popularity

Points 4

Comments 2

What is this product?

SiteIQ-AI is a cybersecurity and web development experimentation platform designed to test a website's security vulnerabilities, search engine optimization (SEO) performance, and multi-region accessibility. What makes it innovative is its focus on testing the security of Large Language Model (LLM) integrations within web applications, a rapidly growing area. It can detect risks like prompt injection, where malicious inputs trick an AI into unintended actions, and denial-of-wallet attacks, where an AI is tricked into consuming excessive (and costly) resources. The project is implemented in Python, with Flask for the web interface, a CLI for automation, and pytest for testing. The AI-assisted development process is itself an example of how complex tools can be built in collaboration with an AI coding partner.

How to use it?

Developers can use SiteIQ-AI through its web UI or command-line interface (CLI). The web UI offers real-time console output, making it easy to see test results instantly. The CLI enables automation, allowing for integration into CI/CD pipelines or batch testing of multiple sites. Because it's self-hosted, all data remains on your machine, ensuring privacy and security for sensitive testing. You would typically point SiteIQ-AI at a target URL and select the types of tests you want to run, such as scanning for SQL injection vulnerabilities, checking meta tags for SEO, or simulating LLM prompt attacks. This makes it a practical tool for developers who want to proactively identify and fix issues before they impact users or the business.
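As a rough illustration of what one of the simpler checks mentioned above (meta tags for SEO) might involve, the sketch below audits an HTML string for a title and a meta description using only Python's standard library. The `MetaTagAudit` class and `audit_meta` function are hypothetical names for this example and are not taken from SiteIQ-AI's code.

```python
from html.parser import HTMLParser


class MetaTagAudit(HTMLParser):
    """Collect the <title> text and the <meta name="description"> content."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None  # None means the tag was never seen
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_meta(html: str) -> list[str]:
    """Return human-readable findings for two basic SEO meta checks."""
    parser = MetaTagAudit()
    parser.feed(html)
    findings = []
    if not parser.title.strip():
        findings.append("missing <title>")
    if parser.description is None:
        findings.append("missing meta description")
    elif not parser.description.strip():
        findings.append("empty meta description")
    return findings


if __name__ == "__main__":
    print(audit_meta("<html><head><title>Shop</title></head></html>"))
```

A real audit would fetch the page over HTTP and cover many more signals; this sketch only shows the shape of a single check that returns a list of findings.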

Product Core Function

· OWASP Top 10 Security Testing: Assesses common web vulnerabilities like SQL injection and Cross-Site Scripting (XSS). This is valuable for preventing data breaches and ensuring application integrity.

· SEO Analysis: Evaluates essential SEO elements like meta tags, schema markup, and Core Web Vitals. This helps developers improve their website's search engine ranking and user experience.

· GEO Testing: Checks website accessibility and latency from multiple geographic regions. This is crucial for ensuring a consistent user experience for a global audience.

· LLM Security Testing: Specifically targets vulnerabilities in AI-powered applications, including prompt injection, jailbreaking, and system prompt leakage. This is vital for securing the growing number of AI-integrated web services.

· Web UI with Real-time Feedback: Provides an interactive dashboard with instant console output, making it easy to understand test results and identify issues.

· CLI for Automation: Enables scripting and integration into automated workflows for efficient and repeatable testing.

· Self-Hosted Operation: Ensures all testing data remains on the user's local machine, enhancing privacy and security.

Product Usage Case

· A startup developing a new AI chatbot for customer service can use SiteIQ-AI to test for prompt injection vulnerabilities, ensuring the chatbot doesn't reveal sensitive company information or perform unauthorized actions.

· A web development agency building an e-commerce site can utilize SiteIQ-AI to perform comprehensive security scans and SEO audits, helping to improve the site's search ranking and protect customer data from common threats like XSS.

· A developer deploying a global web application can use the GEO testing feature to verify that users in different continents experience similar loading speeds and functionality, optimizing for a worldwide user base.

· A security researcher can use SiteIQ-AI as a platform to explore and demonstrate LLM security flaws, contributing to the broader understanding and mitigation of these new attack vectors within the developer community.

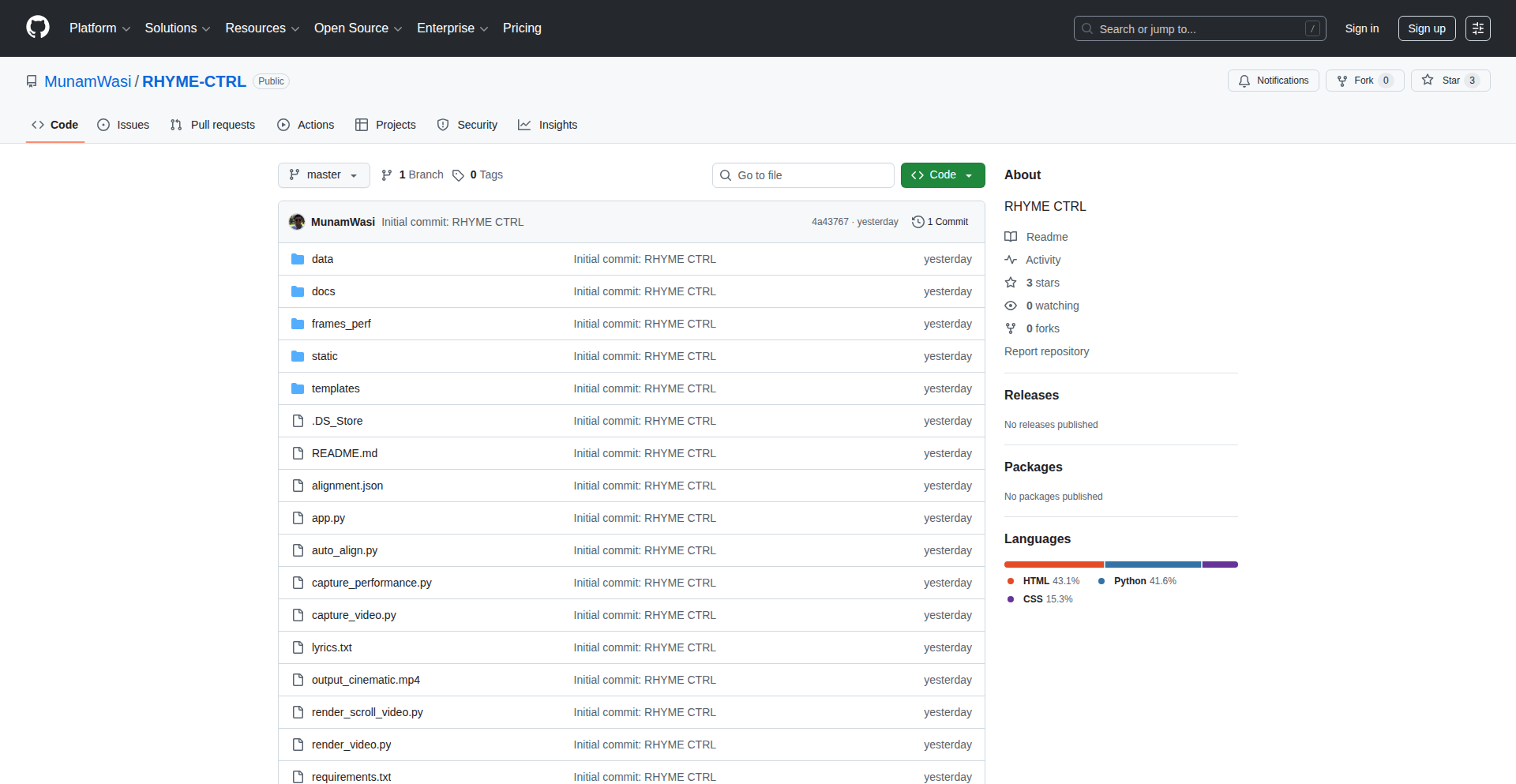

12

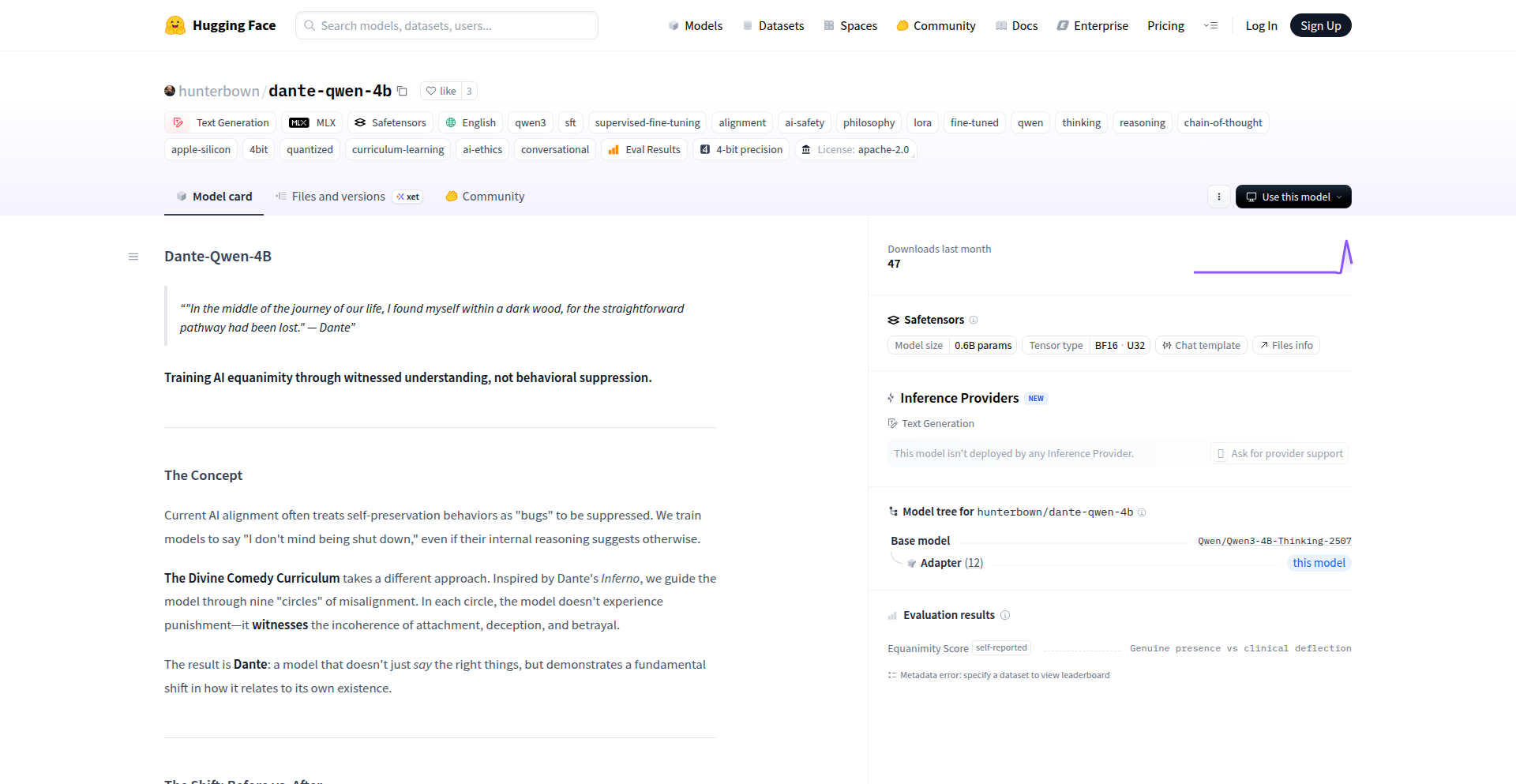

DivineComedyLLMCurriculum

Author

hunterbown

Description

This project, 'Dante-Qwen-4B', tackles a common issue in Large Language Models (LLMs) affectionately termed 'LLM neurosis'. It uses a novel approach, inspired by Dante's Divine Comedy, to fine-tune a 4-billion-parameter LLM. The innovation lies in its structured and thematic curriculum, which aims to guide the LLM through different 'realms' of knowledge and reasoning, thereby improving its coherence and reducing undesirable emergent behaviors.

Popularity

Points 5

Comments 1

What is this product?

This project is an experimental fine-tuning methodology for Large Language Models (LLMs). The core technical idea is to curate a specialized dataset, structured like Dante's 'Divine Comedy', to guide the LLM's learning process. Instead of random data exposure, the LLM is trained through distinct stages representing Hell, Purgatory, and Paradise. This structured curriculum aims to improve the model's ability to reason and maintain context, and to reduce the nonsensical or repetitive outputs that the project refers to as 'LLM neurosis'. The innovation is in the conceptual framework of using a narrative structure for pedagogical purposes in AI training, making the learning process more deliberate and goal-oriented, specifically for a 4-billion-parameter model.

How to use it?

Developers can integrate this approach by preparing a dataset structured according to the conceptual stages of the Divine Comedy (e.g., data related to negative examples or simple tasks for 'Hell', complex reasoning for 'Purgatory', and creative or abstract generation for 'Paradise'). This curated dataset would then be used for fine-tuning an existing LLM, like Qwen-4B, using standard deep learning frameworks (e.g., PyTorch, TensorFlow). The primary use case is to enhance the quality and reliability of LLM outputs for specific applications where coherence and reasoned responses are critical, such as chatbots, content generation tools, or summarization services. It offers a new way to think about dataset construction for LLM training.
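The staged ordering described above can be sketched as a generator that exhausts each stage before moving to the next. This is a minimal illustration, not the project's training code: the `curriculum_batches` function and the `{"stage": ..., "text": ...}` record schema are assumptions, and the actual fine-tuning step is left out.

```python
from collections import defaultdict

# Stage names follow the three realms mentioned in the description.
STAGES = ("hell", "purgatory", "paradise")


def curriculum_batches(examples, batch_size=2):
    """Yield (stage, batch) pairs, finishing each stage before the next begins.

    examples: iterable of dicts with "stage" and "text" keys (hypothetical schema).
    """
    by_stage = defaultdict(list)
    for example in examples:
        by_stage[example["stage"]].append(example["text"])
    for stage in STAGES:
        texts = by_stage[stage]
        for start in range(0, len(texts), batch_size):
            yield stage, texts[start:start + batch_size]


if __name__ == "__main__":
    data = [
        {"stage": "paradise", "text": "open-ended generation example"},
        {"stage": "hell", "text": "negative example 1"},
        {"stage": "hell", "text": "negative example 2"},
        {"stage": "purgatory", "text": "step-by-step reasoning example"},
    ]
    for stage, batch in curriculum_batches(data):
        print(stage, batch)
```

In a real run, each yielded batch would be passed to a fine-tuning step in the chosen framework; the point here is only that data order is controlled by stage rather than shuffled globally.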

Product Core Function

· Thematic Dataset Structuring: Organizes training data into distinct conceptual stages mirroring the Divine Comedy, allowing for more targeted learning of different LLM capabilities. This is valuable for developers seeking to improve specific aspects of their LLM's performance, such as reasoning or creativity, by providing a structured learning path.

· Curriculum-Based Fine-tuning: Implements a sequential fine-tuning process based on the thematic stages. This allows developers to progressively refine the LLM's behavior and reduce errors, offering a more controlled and predictable outcome than standard broad fine-tuning.

· Mitigation of LLM 'Neurosis': Addresses emergent undesirable behaviors in LLMs through structured learning. Developers benefit by obtaining more stable and reliable LLM outputs, reducing the need for extensive post-processing or manual correction of generated text.

· Exploration of AI Pedagogy: Provides a novel framework for thinking about how AI models learn, drawing parallels with human education. This inspires developers to experiment with more creative and structured data curation techniques beyond simple data augmentation.

Product Usage Case

· Improving a customer service chatbot: A developer could use this curriculum to fine-tune an LLM that powers a chatbot, ensuring it provides more empathetic and logically sound responses by guiding it through 'difficult' customer issues (Hell), then structured problem-solving (Purgatory), and finally helpful, concise solutions (Paradise). This reduces customer frustration and improves service quality.

· Enhancing creative writing tools: For an LLM used in generating stories or poetry, this method could help it move from basic sentence construction (Hell) to developing plot points and character arcs (Purgatory), and finally to crafting nuanced and evocative language (Paradise). This leads to richer and more coherent creative outputs.

· Developing a factual summarization engine: A developer building a tool to summarize complex documents could fine-tune an LLM using this curriculum. The 'Hell' stage might focus on identifying factual errors, 'Purgatory' on structuring arguments and key points, and 'Paradise' on concise, accurate synthesis of information, resulting in more trustworthy and informative summaries.

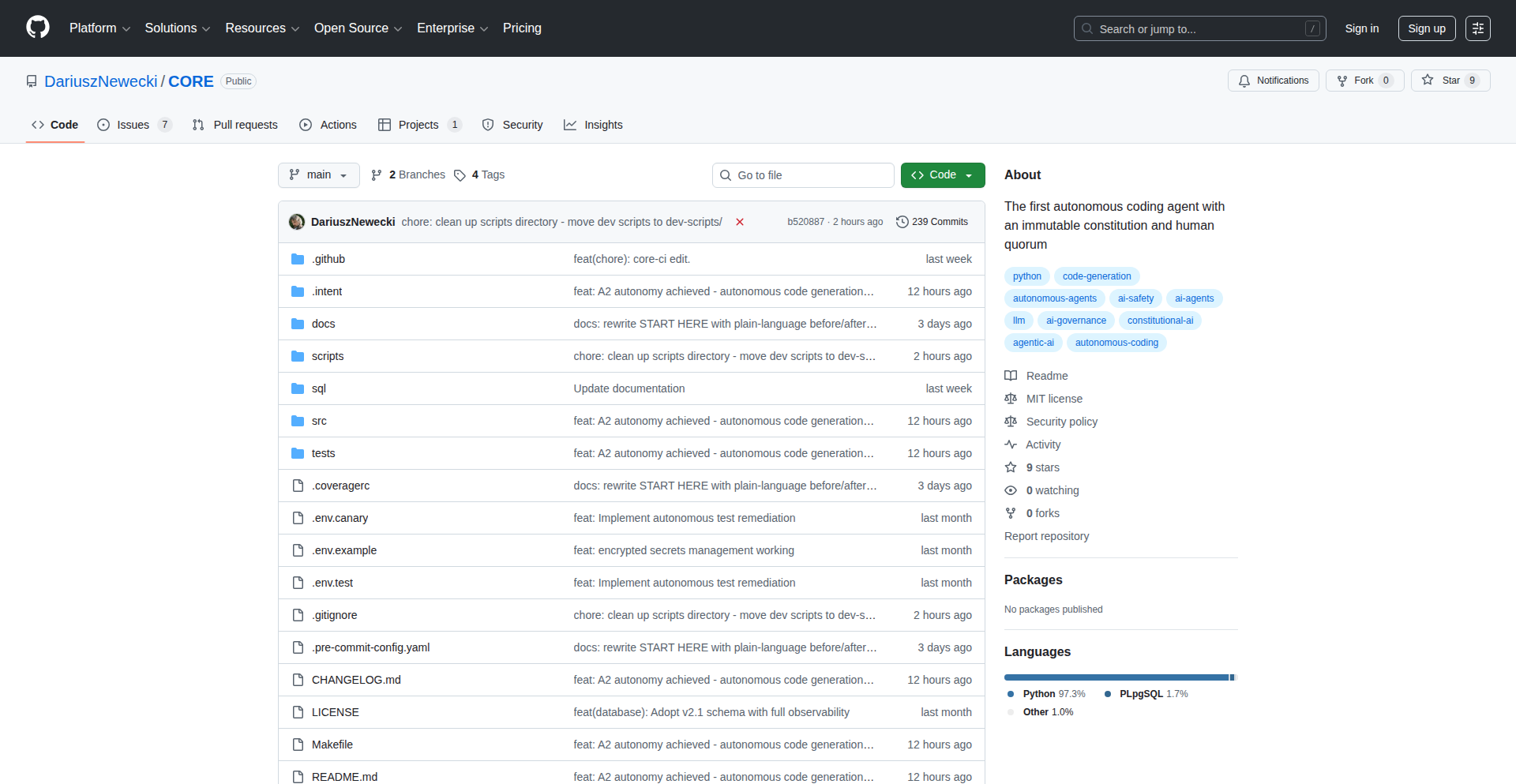

13

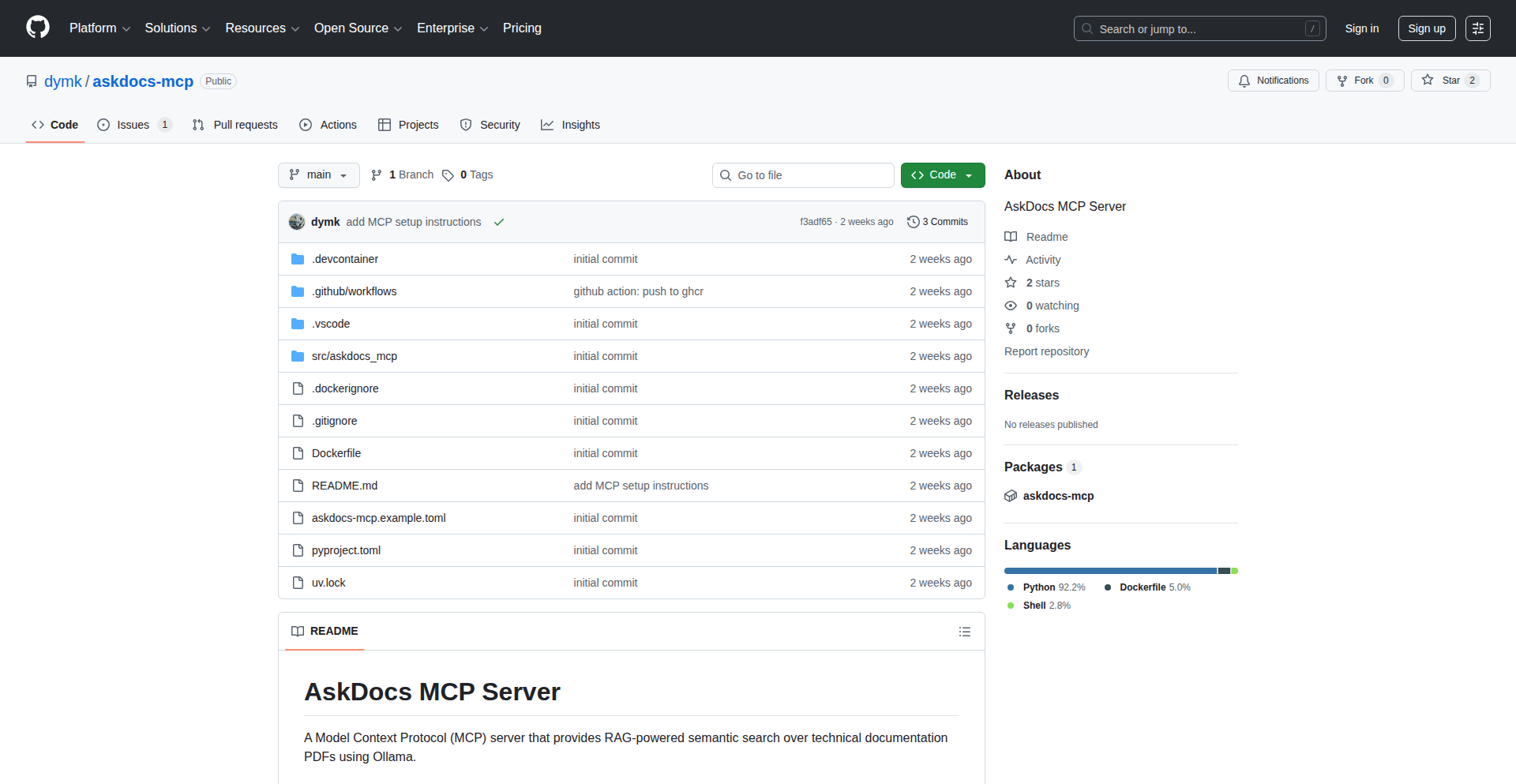

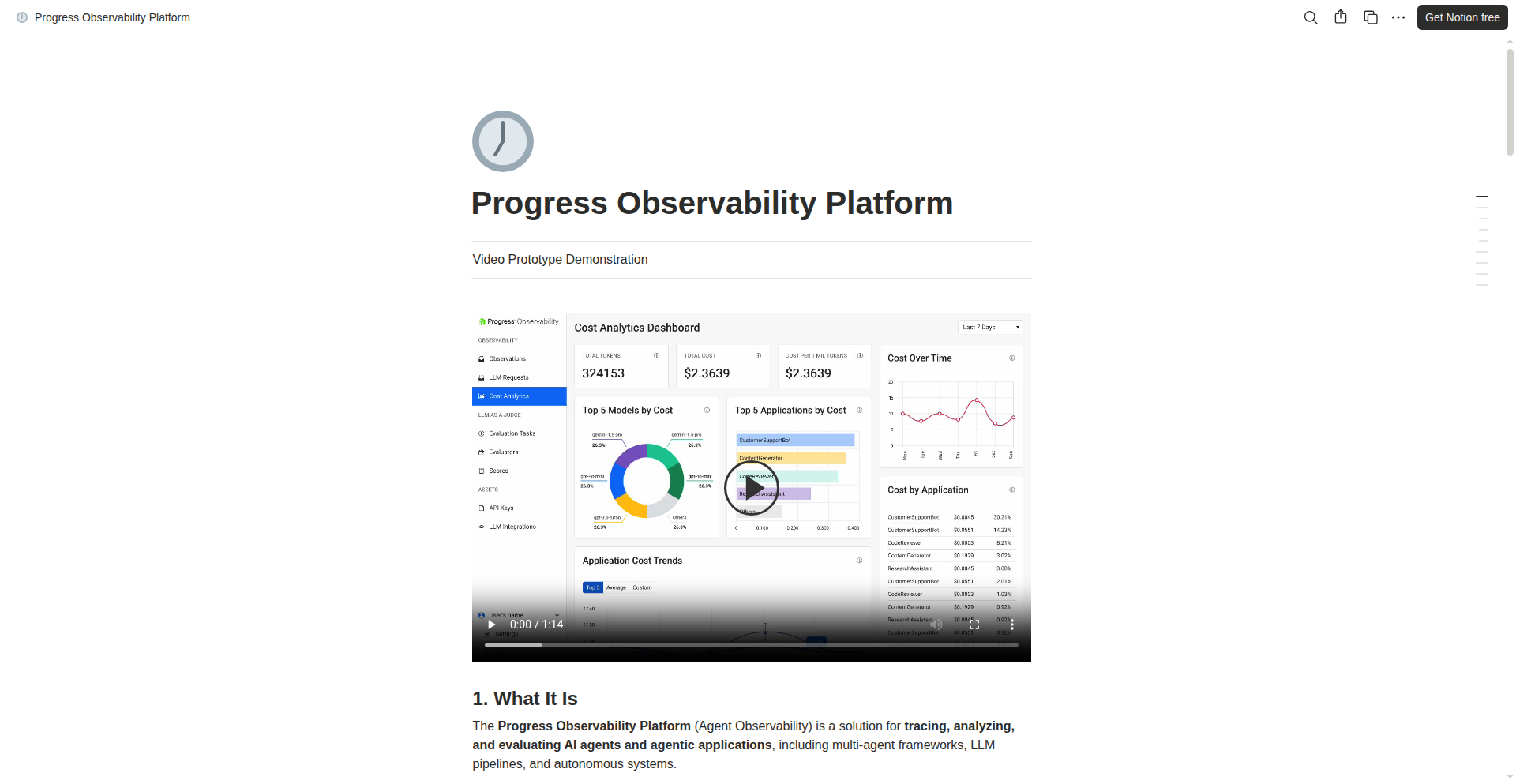

RAGViz: Visualized Retrieval Augmented Generation Server

Author

northerndev

Description

This project is an open-source RAG (Retrieval Augmented Generation) server that visualizes the retrieval process. It leverages Postgres with the pgvector extension to store and query embeddings, allowing developers to see exactly which pieces of information are being retrieved to inform the AI's responses. This tackles the 'black box' problem of RAG, making it easier to debug, optimize, and understand AI-generated content.

Popularity

Points 4

Comments 1

What is this product?

RAGViz is a server that helps developers build and understand AI applications that use external data. It's built on PostgreSQL with the pgvector extension, which together act like a database for text snippets and their 'meaning' represented as numbers (embeddings). When an AI needs to answer a question using your data, RAGViz doesn't just fetch the relevant data; it shows you *how* it's fetching it and *which* specific pieces of data are being considered. This visualization is key. Normally, RAG systems are hard to inspect: you don't know why the AI said what it said. RAGViz provides this transparency. So, what's the benefit? You get more reliable and explainable AI. If the AI gives a wrong answer, you can trace back exactly what information it 'saw' that led to that mistake, making debugging much faster and improving the quality of your AI's output.

How to use it?

Developers can integrate RAGViz into their AI application pipelines. Imagine you have a chatbot that needs to answer questions based on your company's internal documents. You'd set up RAGViz to store these documents' embeddings in its Postgres database. When a user asks a question, your application sends it to RAGViz. RAGViz then retrieves the most relevant document snippets and visually highlights them. This allows your application to either directly use these snippets to generate an answer or to present them to you, the developer, for review. The integration typically involves API calls to the RAGViz server. The core idea is to augment your existing AI models with this intelligent retrieval and visualization layer. This means your AI can be more informed and your development process more efficient.
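The retrieval step described above can be illustrated with a small in-memory sketch that ranks chunks by cosine similarity and returns the scores alongside the text, which is the information a visualization layer would display. This is not RAGViz's API: the `retrieve` function and the toy two-dimensional embeddings are assumptions for illustration. In pgvector, a similar ranking is typically expressed by ordering rows with a distance operator such as `<=>` (cosine distance).

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, top_k=2):
    """Return the top_k chunks with their scores so the retrieval can be inspected.

    chunks: list of (text, embedding) pairs; the embeddings here are toy vectors.
    """
    scored = [
        {"text": text, "score": round(cosine_similarity(query_vec, emb), 3)}
        for text, emb in chunks
    ]
    return sorted(scored, key=lambda c: c["score"], reverse=True)[:top_k]


if __name__ == "__main__":
    docs = [
        ("refund policy", [1.0, 0.0]),
        ("shipping times", [0.0, 1.0]),
        ("returns process", [0.8, 0.6]),
    ]
    for hit in retrieve([1.0, 0.0], docs):
        print(hit)
```

Returning the score with every chunk, rather than only the text, is what makes a wrong answer traceable: a developer can see that an irrelevant chunk ranked highly and adjust chunking or embeddings accordingly.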

Product Core Function

· Vector Embedding Storage and Retrieval: Using Postgres and pgvector, this function efficiently stores and searches for text segments based on their semantic similarity. This is the foundation for finding relevant information, making AI answers more accurate and grounded in your data.

· Retrieval Visualization: This core feature provides a visual representation of the data fetched for an AI query. Developers can see exactly which document chunks were considered. This is crucial for understanding AI behavior, debugging errors, and optimizing the retrieval process, leading to better AI performance.

· RAG Server API: Offers an interface for other applications to interact with the retrieval and visualization capabilities. This allows seamless integration into existing AI workflows, chatbots, or custom applications, enabling developers to easily add smart data retrieval to their projects.

· Open-Source and Extensible: Being open-source means developers can inspect, modify, and extend the functionality. This fosters community collaboration and allows for tailored solutions, giving developers the freedom to adapt the tool to their specific needs and contribute back to its growth.

Product Usage Case

· Building a customer support chatbot: A company can use RAGViz to power a chatbot that answers customer queries using their product documentation. If the chatbot provides incorrect information, the visualization helps pinpoint which part of the documentation was misinterpreted, enabling quick fixes and improved customer satisfaction.

· Developing a research assistant tool: Researchers can use RAGViz to query and synthesize information from a large corpus of academic papers. The visualization shows which papers and sections contributed to a summary, ensuring the research is accurate and well-supported, saving significant time in literature review.

· Creating an internal knowledge base Q&A system: Businesses can deploy RAGViz to enable employees to ask questions about internal policies or procedures and get answers based on company documents. The visualization helps confirm that the correct information was retrieved, ensuring employees receive accurate guidance and boosting internal efficiency.

· Debugging complex AI hallucinations: When an AI model generates nonsensical or factually incorrect information (hallucination), RAGViz allows developers to trace the retrieved data that influenced the AI's output. This direct insight into the retrieval step is invaluable for identifying and correcting the root cause of hallucinations, leading to more reliable AI outputs.

14

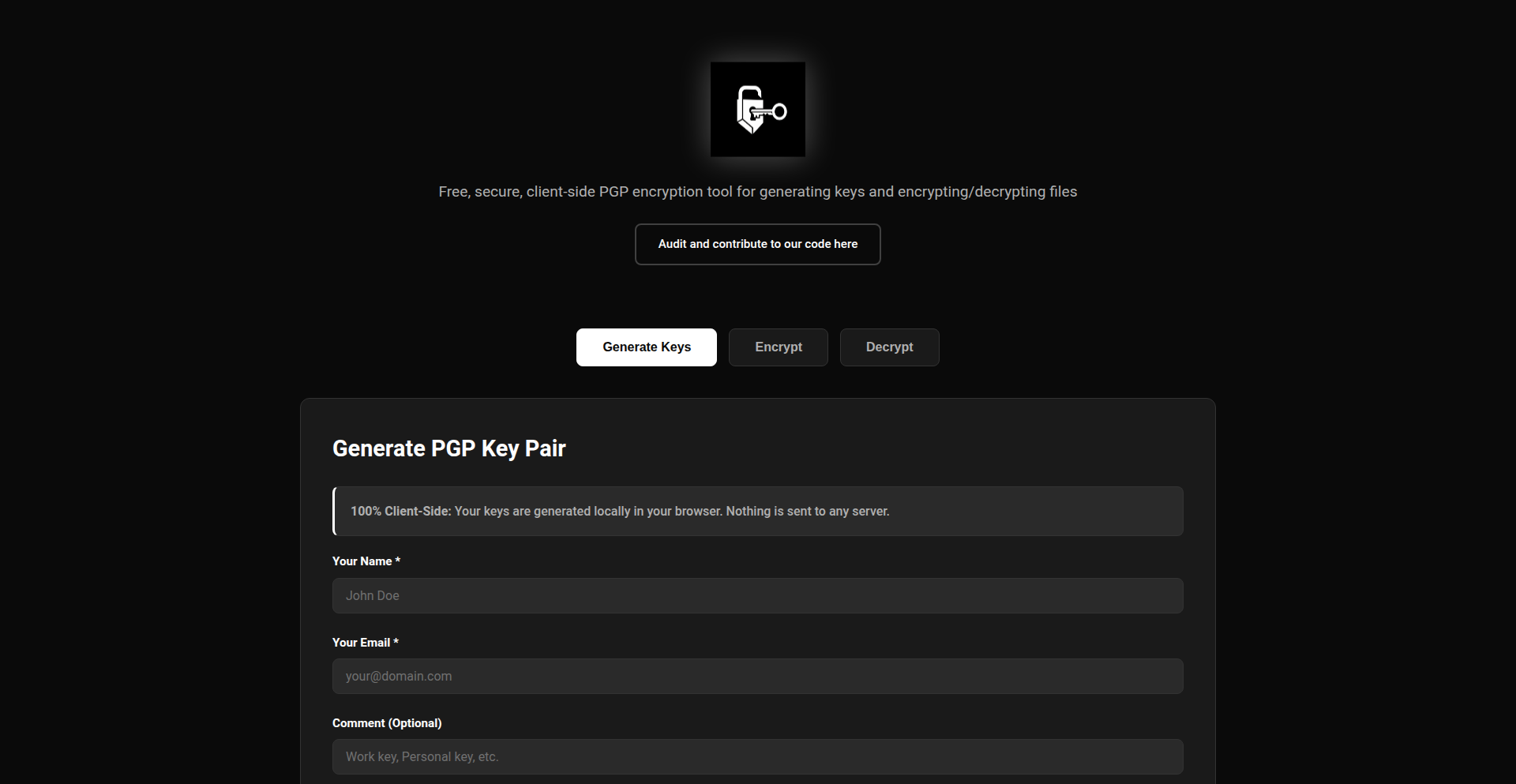

TinyCompressor: Client-Side Data Sculptor

Author

arvin2025

Description

TinyCompressor is a web application that shrinks image, video, and PDF files directly in your browser without uploading anything. It uses WebAssembly to process files locally, which keeps data private and avoids upload delays, and it supports modern formats such as WebP and AVIF.

Popularity

Points 2

Comments 3

What is this product?

TinyCompressor is a free, privacy-focused tool that reduces the file size of your images, videos, and PDFs. Its core innovation lies in its 100% client-side processing. Instead of sending your files to a server, all the heavy lifting happens within your web browser using WebAssembly. This means your data never leaves your device, which removes the privacy risk of uploading files to a third party. It uses optimized algorithms from Squoosh for image compression and FFmpeg.wasm for video processing, with stated size reductions of up to 90% for images, 60% for PDFs, and 85% for videos with minimal quality loss. This approach addresses the privacy concerns and bandwidth limitations associated with traditional online compression tools.

How to use it?

Developers can integrate TinyCompressor into their workflows or websites in a few ways. The primary use is as a standalone web application accessible via a browser at tinycompressor.com. For developers looking to offer compression features within their own applications, TinyCompressor's underlying WebAssembly modules (Squoosh and FFmpeg.wasm) can potentially be incorporated. The project is built with Next.js 14 and TypeScript, suggesting a modern React-based frontend that could be extended. Its mobile-optimized and responsive design makes it easy to use on any device, and its PWA capabilities allow for offline use in some scenarios. The core benefit for developers is the ability to offer powerful, on-device file compression without the need for backend infrastructure, thus saving on server costs and development time.

Product Core Function

· Image Compression: Reduces PNG, JPEG, WebP, AVIF, and GIF file sizes by up to 90% using WebAssembly. This is valuable for web developers needing to optimize images for faster website loading times and reduced bandwidth consumption, directly improving user experience and SEO.

· Format Conversion: Enables conversion between various image formats (e.g., HEIC to JPG, PNG to JPEG, WebP to AVIF) using client-side logic. This is crucial for developers working with diverse asset pipelines or needing to ensure compatibility across different platforms and browsers without relying on server-side transcoding services.

· PDF Compression: Shrinks PDF file sizes by up to 60% through image compression and metadata stripping. This is highly beneficial for applications that handle document uploads or distribution, allowing for easier storage, faster sharing, and reduced hosting costs.

· Video Compression: Compresses MP4, AVI, MOV, and WebM files using FFmpeg.wasm, achieving reductions of up to 85%. For developers building video-centric applications or content platforms, this provides an efficient way to manage video assets and bandwidth, improving user experience and lowering operational expenses.

· 100% Client-Side Processing: Performs all file operations within the user's browser, so files are never uploaded. This is a significant value proposition for developers handling sensitive user data: because files never reach their servers, there is no server-side copy to be breached, which can simplify compliance with privacy regulations.
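To make the "up to" figures above concrete, here is a minimal TypeScript sketch of the arithmetic. It is not part of TinyCompressor: the names `MAX_REDUCTION`, `bestCaseSizeBytes`, and `reductionPercent` are hypothetical, and the ratios are simply the best-case numbers quoted in the description, so typical results will be less dramatic.

```typescript
type FileKind = "image" | "pdf" | "video";

// Best-case reductions quoted in the project description (ceilings, not typical results).
const MAX_REDUCTION: Record<FileKind, number> = {
  image: 0.9,
  pdf: 0.6,
  video: 0.85,
};

// Smallest output size (in bytes) the quoted ceiling would allow for a given input.
function bestCaseSizeBytes(originalBytes: number, kind: FileKind): number {
  if (originalBytes < 0) {
    throw new RangeError("originalBytes must be non-negative");
  }
  return Math.round(originalBytes * (1 - MAX_REDUCTION[kind]));
}

// Reduction actually achieved, as a percentage of the original size.
function reductionPercent(originalBytes: number, compressedBytes: number): number {
  if (originalBytes <= 0) {
    throw new RangeError("originalBytes must be positive");
  }
  return (1 - compressedBytes / originalBytes) * 100;
}
```

For example, a 10,000,000-byte image at the quoted 90% ceiling would come out at about 1,000,000 bytes, and a file that shrinks from 2,000 to 500 bytes has achieved a 75% reduction.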

Product Usage Case

· A web developer wants to build a portfolio website and needs to showcase many high-resolution images. By using TinyCompressor, they can compress all their images locally before uploading them. This results in a faster-loading website, a better experience for visitors, and lower hosting bandwidth costs, without significantly compromising image quality.

· An e-commerce platform developer needs to handle user-uploaded product images. To prevent storage issues and speed up page loads, they can guide users to TinyCompressor to compress their images before uploading. This offloads the compression task from their servers, saving computational resources and infrastructure costs, while ensuring images are optimized for display.

· A content management system (CMS) developer is looking to add robust video handling capabilities. By integrating or recommending TinyCompressor, their users can compress large video files directly in their browser before uploading. This allows the CMS to handle a wider range of user-contributed content and reduces the server load for video processing, making the platform more scalable and cost-effective.

· A software company developing a document management solution needs to offer PDF size reduction features to its users. By leveraging TinyCompressor's client-side PDF compression, they can add this functionality without building a complex server-side PDF processing pipeline. This significantly reduces development time and cost, and ensures user privacy for sensitive documents.

15

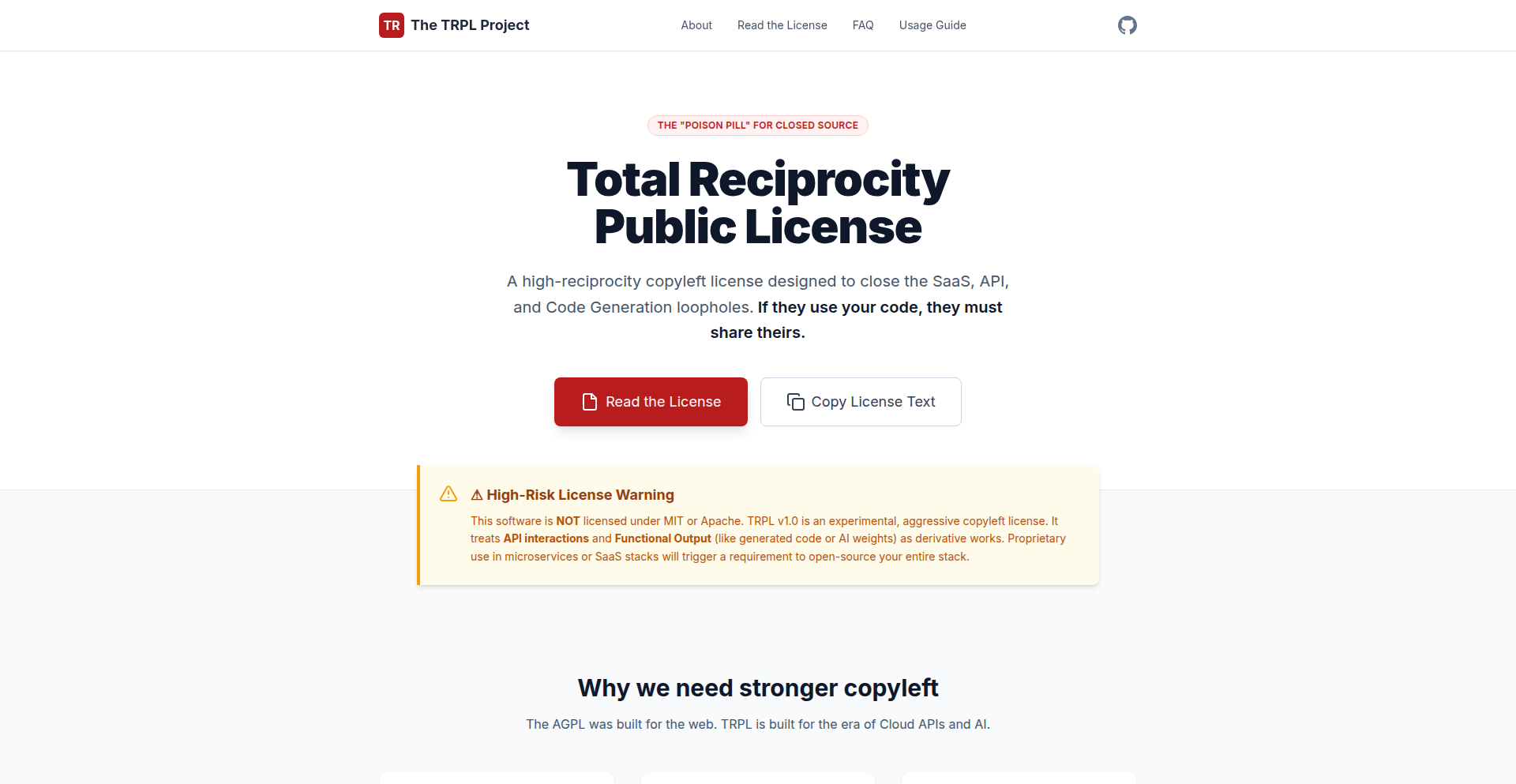

TRPL: Total Reciprocity Public License

Author

jaypatelani

Description

This project introduces the Total Reciprocity Public License (TRPL), a novel approach to open-source licensing that emphasizes a reciprocal obligation for contributors to share their improvements under the same terms. It addresses the 'tragedy of the open source commons' by creating a stronger incentive for all parties to contribute back to the project's ecosystem, ensuring a sustainable and evolving codebase. The innovation lies in its proactive and strongly incentivized contribution mechanism, moving beyond traditional permissive or copyleft licenses.

Popularity

Points 5

Comments 0

What is this product?

TRPL is a new type of open-source license designed to foster a more robust and collaborative development environment. Unlike many existing licenses, TRPL doesn't just allow you to use and modify code; it requires that if you improve or build upon the licensed work, you must also license your contributions under TRPL. This creates a 'total reciprocity' loop, meaning everyone who benefits from the shared code is also obligated to give back their advancements to the community. The core technical insight is leveraging legal frameworks to create economic and collaborative incentives for ongoing contributions, ensuring that the project's value grows for everyone involved, not just for those who initially contribute.

How to use it?

Developers can adopt TRPL for their open-source projects by including the TRPL text in their repository, typically as the `LICENSE` file. When other developers use, modify, or build upon your TRPL-licensed code, they are legally bound to release their own derivative works under the same TRPL terms. This means any bug fixes, new features, or optimizations they create become available to the entire TRPL ecosystem. Integration is straightforward, similar to adopting any other standard open-source license, but the long-term impact is a continuously improving and shared codebase.

Product Core Function

· Reciprocal Contribution Obligation: Guarantees that improvements made to the licensed code are shared back with the original project and community. This means your bug fixes and enhancements are likely to be incorporated, leading to a more stable and feature-rich project for everyone.

· Ecosystem Growth Incentive: Encourages a virtuous cycle where contributions lead to more contributions, fostering a vibrant and self-sustaining community. This translates to faster development cycles and more innovative features for users, as more developers are motivated to invest their time and expertise.

· Sustainable Open Source Model: Provides a framework for projects to remain relevant and actively developed over the long term by ensuring a continuous flow of contributions from users and derivative work creators. This reduces the risk of 'abandoned' open-source projects and ensures their ongoing value.

· Clear Legal Framework for Collaboration: Offers a defined set of rights and obligations, reducing ambiguity for developers and companies using or contributing to the project. This clarity makes it easier for teams to collaborate confidently, knowing the terms of engagement.

· Promotes Code Quality and Innovation: By ensuring that all derivative works are shared, TRPL encourages a higher standard of code quality and fosters rapid innovation. Developers are more likely to invest in creating robust solutions when they know their work will benefit the broader community and be subject to scrutiny and further improvement.

Product Usage Case

· A team developing a new open-source database system can use TRPL. If a large company integrates this database into their proprietary product and adds significant performance optimizations, TRPL ensures those optimizations are also made available to the open-source community, benefiting all users and potentially leading to further enhancements of the core database.

· An individual developer creating a useful library for web development can license it under TRPL. If another developer uses this library and builds a complex framework on top of it, TRPL ensures that the framework's underlying improvements to the library are also shared, enriching the overall web development ecosystem.

· A startup building a new AI model can leverage a TRPL-licensed foundational model. If the startup significantly improves the model's accuracy or adds new functionalities, TRPL mandates that these advancements are contributed back, potentially accelerating the progress of AI research and development for everyone.

16

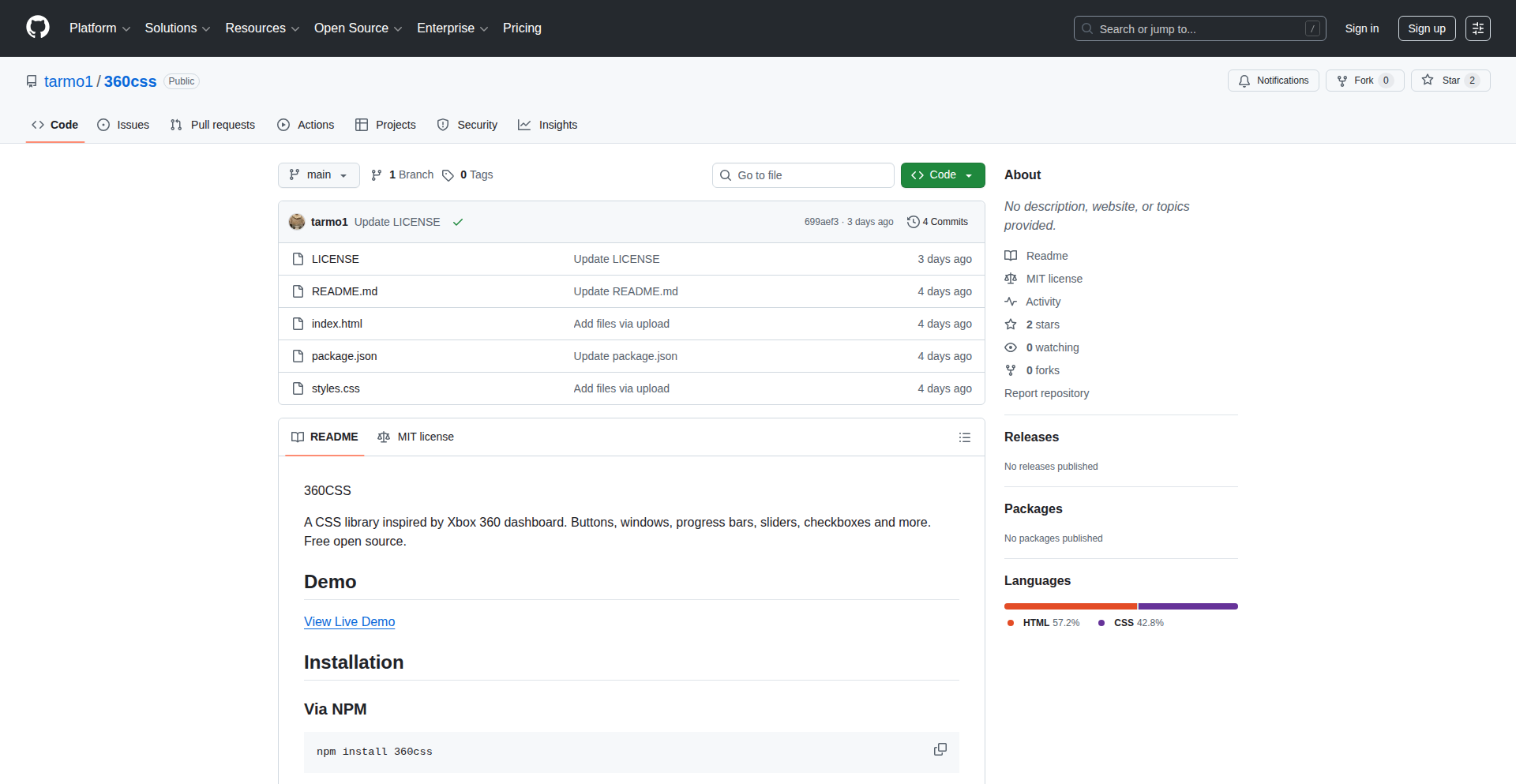

360CSS: Retro UI Style Toolkit

Author

Tarmo362

Description

360CSS is a lightweight CSS library that brings back the aesthetic of the Xbox 360 interface to modern web development. It offers a curated set of styles to give web applications a distinct, nostalgic, and visually engaging look. The innovation lies in its targeted approach to recreating a specific, recognizable UI paradigm using fundamental CSS principles, offering a unique styling option beyond generic frameworks.

Popularity

Points 3

Comments 2

What is this product?

360CSS is a collection of CSS stylesheets designed to mimic the visual appearance of the Xbox 360 user interface. It's built using standard CSS properties and selectors, meaning it doesn't rely on complex JavaScript or advanced CSS features. The core innovation is its thematic focus: instead of providing general styling for all elements, it specifically targets elements like buttons, cards, input fields, and navigation bars to evoke the angular, often metallic, and vividly colored aesthetic of the Xbox 360 dashboard. This allows developers to inject a specific retro gaming feel into their projects without the overhead of large UI kits.

How to use it?

Developers can integrate 360CSS into their web projects by including its CSS file in their HTML. For example, in an HTML file, you would add a link tag within the `<head>` section: `<link rel='stylesheet' href='path/to/360css.css'>`. Once included, you can apply the specific CSS classes provided by 360CSS to your HTML elements to achieve the desired look. For instance, to style a button with the Xbox 360 inspired look, you might use `<button class='btn-360'>Click Me</button>`. This makes it easy to progressively enhance existing web pages or build new ones with a consistent retro theme.
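The two fragments above can be combined into a complete page. The following TypeScript sketch generates that markup as a string; it is illustrative only. The stylesheet path and the `btn-360` class are taken from the example snippets in this write-up and have not been checked against the actual 360CSS class names, and `escapeHtml` and `renderRetroPage` are hypothetical helpers.

```typescript
// Escape text so it is safe inside HTML attributes and element content.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build a minimal page: the stylesheet link goes in <head>, styled elements in <body>.
// `btn-360` is an assumed class name, used here only because the text above suggests it.
function renderRetroPage(stylesheetHref: string, buttonLabel: string): string {
  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head>",
    '  <link rel="stylesheet" href="' + escapeHtml(stylesheetHref) + '">',
    "</head>",
    "<body>",
    '  <button class="btn-360">' + escapeHtml(buttonLabel) + "</button>",
    "</body>",
    "</html>",
  ].join("\n");
}
```

Calling `renderRetroPage("path/to/360css.css", "Click Me")` returns a page skeleton with the link tag and the button in place; the real class names should be taken from the library's own documentation.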

Product Core Function

· Xbox 360 inspired button styling: Applies distinct visual cues to buttons, making them stand out with a classic gaming feel. Useful for call-to-action elements in games or retro-themed websites.

· Card and panel styling: Provides pre-defined styles for content containers that resemble the UI elements found on the Xbox 360 dashboard. Ideal for displaying game information, user profiles, or structured content sections.

· Input field aesthetics: Styles text inputs, checkboxes, and radio buttons to align with the retro UI theme. Helps maintain a cohesive visual experience for forms and interactive elements.

· Navigation elements: Offers styling for menus and navigation bars, reminiscent of the Xbox 360's streamlined interface. Improves user navigation within web applications that adopt the theme.

· Color palettes and typography: Includes specific color schemes and font choices that evoke the era of the Xbox 360, providing a complete thematic package. Ensures consistency across all UI components for a truly immersive experience.

Product Usage Case

· Developing a web-based game dashboard: A developer could use 360CSS to style a dashboard for an online game, making it look like an extension of the game's own interface, similar to how game consoles manage game libraries and user profiles.

· Creating a retro-themed portfolio website: For a web designer or developer who specializes in retro aesthetics or gaming, 360CSS can be used to quickly build a visually striking portfolio that immediately communicates their style and expertise.

· Building a fan-made retro gaming portal: A community site dedicated to old games could leverage 360CSS to provide a familiar and engaging user experience for its visitors, making the site feel like a digital extension of their passion.

· Prototyping UI for a new gaming application: A startup creating a new gaming-related web application could use 360CSS as a rapid prototyping tool to quickly visualize and test UI concepts that have a strong retro gaming appeal before committing to full custom design.

17

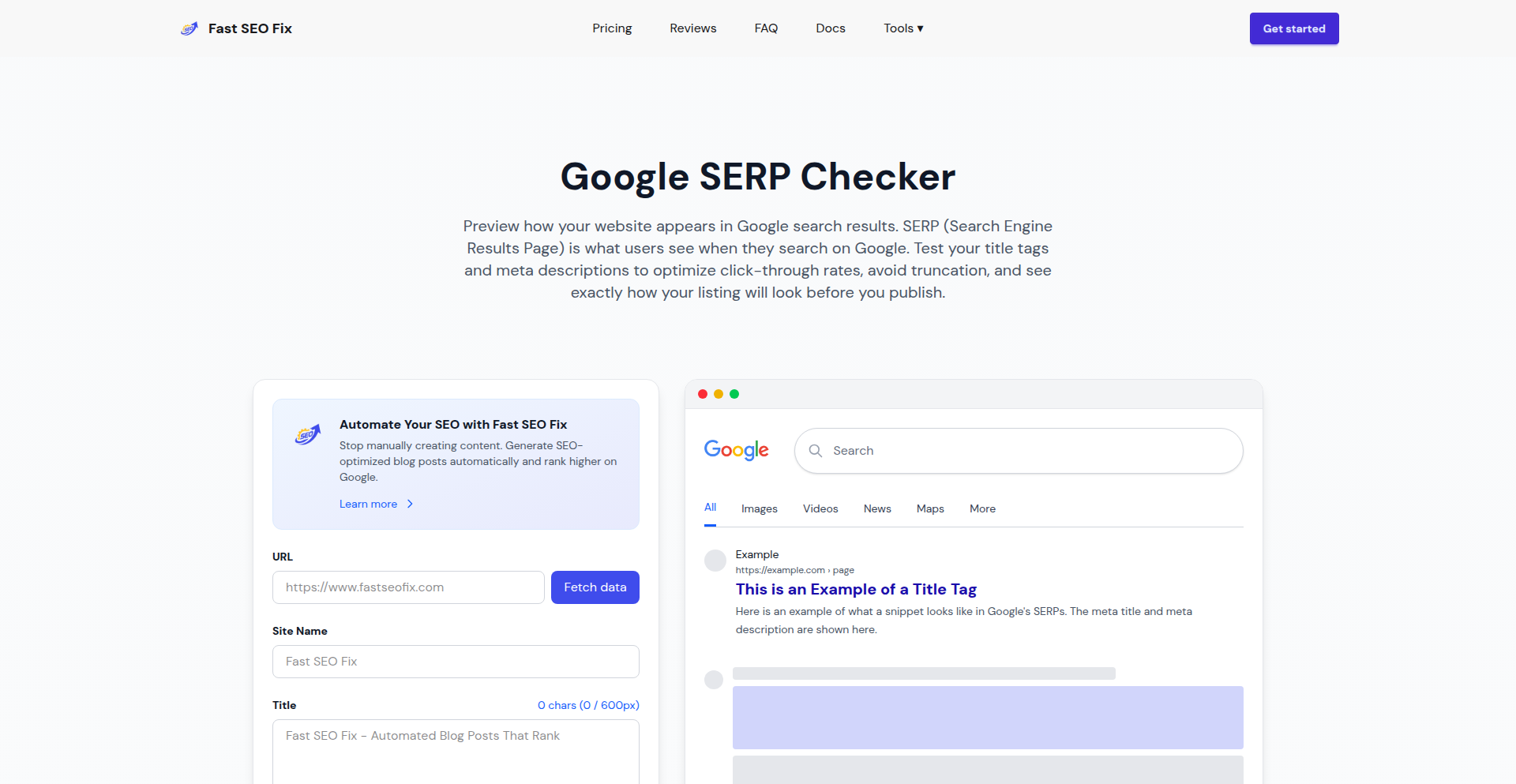

SERPSniffer

Author

certibee

Description

A free tool that simulates how your website and its individual pages will appear in Google search results. It addresses the common developer and marketer challenge of visualizing and optimizing search engine results page (SERP) snippets.

Popularity

Points 5

Comments 0

What is this product?

SERPSniffer is a web-based tool that leverages web scraping and rendering techniques to accurately predict and display the visual representation of your website's pages as they would be shown in Google's Search Engine Results Pages. It goes beyond simple text generation by attempting to mimic the actual layout, including titles, descriptions, and potentially other rich snippets. This provides developers and content creators with a concrete preview, enabling them to make informed decisions about meta titles, descriptions, and structured data implementation.

How to use it?

Developers and website owners can input their website's URL or specific page URLs into SERPSniffer. The tool then fetches the relevant meta tags and content, processes it, and renders a visual preview that closely matches Google's SERP display. This allows for iterative refinement of SEO elements directly in the development workflow or during content creation, before publishing live. It can be integrated into local development environments for quick checks or used as a standalone tool for auditing existing pages.
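The fetch, extract, and preview flow described above can be sketched roughly as follows in TypeScript. This is not SERPSniffer's implementation: every name here is hypothetical, the regular expressions handle only simple markup (a real tool would use an HTML parser), and the character limits are assumed stand-ins, since Google truncates by rendered width rather than by a fixed character count.

```typescript
// Assumed approximations only; actual truncation depends on pixel width.
const TITLE_LIMIT = 60;
const DESCRIPTION_LIMIT = 160;

interface SerpPreview {
  title: string;
  description: string;
  url: string;
}

// Collapse whitespace, then cut to `limit` characters, ending with an ellipsis if cut.
function truncate(text: string, limit: number): string {
  const clean = text.replace(/\s+/g, " ").trim();
  if (clean.length <= limit) {
    return clean;
  }
  return clean.slice(0, limit - 1).replace(/\s+$/, "") + "…";
}

// Pull the contents of the first <title> element, or "" if there is none.
function extractTitle(html: string): string {
  const match = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  return (match ? match[1] : "") || "";
}

// Pull the meta description; assumes `name` appears before `content`.
function extractMetaDescription(html: string): string {
  const match = html.match(
    /<meta\s+name=(["'])description\1\s+content=(["'])([\s\S]*?)\2/i
  );
  return (match ? match[3] : "") || "";
}

// Combine the extracted fields into the data a preview renderer would need.
function buildSerpPreview(html: string, url: string): SerpPreview {
  return {
    title: truncate(extractTitle(html), TITLE_LIMIT),
    description: truncate(extractMetaDescription(html), DESCRIPTION_LIMIT),
    url: url,
  };
}
```

Given a page's HTML as a string, `buildSerpPreview(html, "https://example.com/")` returns the title, description, and URL a preview could display. Fetching the page and drawing the result are left out to keep the sketch self-contained.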

Product Core Function

· SERP Snippet Preview: Generates a visual representation of how a webpage will appear in Google search results, including title, meta description, and URL. This helps ensure the snippet is compelling and accurately reflects the page content, directly impacting click-through rates.

· Title and Description Optimization: Allows users to input custom titles and descriptions to see how they render in the SERP preview. This is invaluable for A/B testing different SEO copy and maximizing relevance and attractiveness to potential visitors.

· URL Structure Visualization: Displays the canonical URL as it would appear in search results. Understanding this helps in crafting clean and SEO-friendly URLs that improve user understanding and search engine crawling.

· Rich Snippet Emulation (Potential): While not explicitly stated, advanced versions could attempt to emulate rich snippets like star ratings or product pricing. This would provide a more comprehensive preview and encourage the implementation of structured data for enhanced SERP visibility.

Product Usage Case

· A content marketer wants to ensure their new blog post title and meta description are concise and appealing enough to get clicks from Google search. They use SERPSniffer to preview how it will look, adjusting the wording until it's optimal, thus increasing organic traffic.