Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-27

SagaSu777 2025-11-28

Explore the hottest developer projects on Show HN for 2025-11-27. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show a surge in tools that make Large Language Models (LLMs) and AI agents easier to use inside everyday developer workflows. Runprompt brings prompt engineering to the command line, while Era sandboxes AI-generated code to address security concerns directly. Hiperyon complements these with LLM memory and context management aimed at smoother cross-model interactions. For developers and entrepreneurs, this is fertile ground for building more intelligent, automated, and secure applications. The push toward local execution, seen in Alt (a local AI notetaker) and ZigFormer (an LLM in pure Zig), reflects growing demand for privacy, lower latency, and offline capability. Specialized tools, from privacy-first VPN gateways to security auditing for Active Directory, show the hacker spirit of tackling specific, often overlooked technical challenges. The breadth of projects suggests that the next wave of tooling will be specialized, performant, and user-centric, helping individuals and teams use complex technologies like AI more effectively and safely.

Today's Hottest Product

Name

Runprompt

Highlight

This project innovates by treating LLM prompts as executable programs, enabling command-line execution with templating, structured outputs, and prompt chaining. It addresses the challenge of integrating LLMs into existing command-line workflows by offering a simple, dependency-free Python script that works with various LLM providers. Developers can learn about declarative prompt design, structured data handling with JSON schemas, and building complex AI workflows through shell pipelines.

Popular Category

AI/ML

Developer Tools

Productivity

Infrastructure

Security

Popular Keyword

LLM

AI Agents

Command Line

Rust

Python

Security

Productivity

Automation

Open Source

Web3

Technology Trends

AI Agent Orchestration

Local AI/LLM Execution

Enhanced Developer Workflow Automation

Privacy-Preserving Technologies

Secure AI Agent Sandboxing

Decentralized/Offline-First Solutions

Modernized Infrastructure Tooling

Cross-Platform Development

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity & Tooling (30%)

Infrastructure & Security (15%)

General Purpose Applications (20%)

Educational/Research Projects (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Runprompt CLI | 122 | 38 |
| 2 | SyncKit: The Offline-First Sync Engine | 78 | 32 |
| 3 | MkSlides: Markdown-Powered Reveal.js Slides | 67 | 14 |
| 4 | Era: The AI Code Fortress | 59 | 18 |
| 5 | PrivacyPi-Gate | 16 | 25 |
| 6 | ZigFormer: Pure Zig Language LLM | 13 | 4 |
| 7 | LLM Context Weaver | 5 | 5 |
| 8 | Trippy Thanksgiving Game Engine | 6 | 4 |
| 9 | Alice Architecture: ±0 Theory AGI Explorer | 3 | 5 |
| 10 | CI-Guard NPM | 6 | 2 |

1

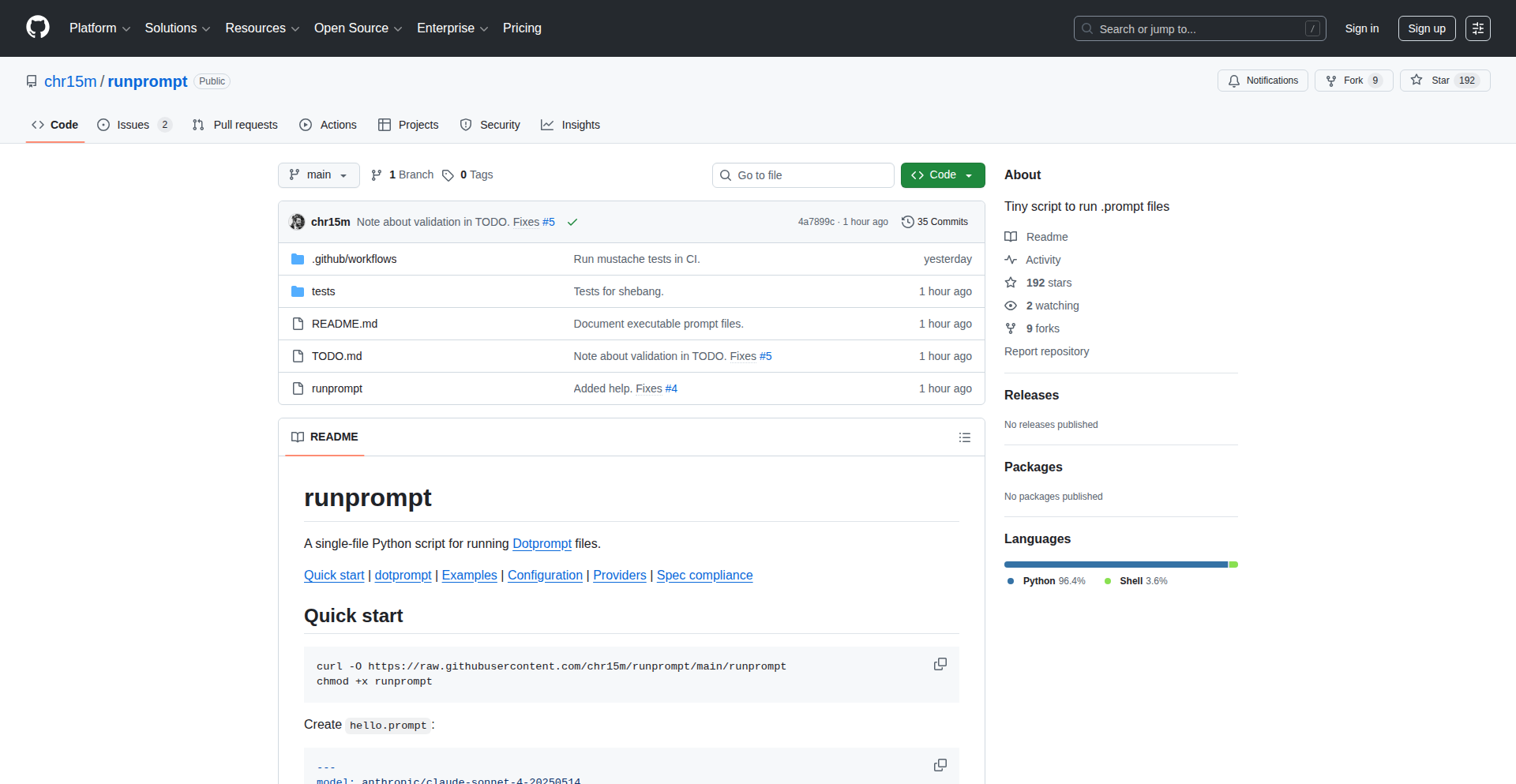

Runprompt CLI

Author

chr15m

Description

A single-file Python script that allows developers to execute LLM prompts directly from the command line. It introduces templating using the Dotprompt format and Handlebars, enables structured JSON outputs with defined schemas, and supports chaining prompts for complex workflows, all without external dependencies.

Popularity

Points 122

Comments 38

What is this product?

Runprompt CLI is a command-line utility that treats your language model prompts like executable programs. It uses a special file format (inspired by Google's Dotprompt) where you define the model to use, the desired output format (like JSON with a specific structure), and your prompt text, often including variables. The innovation lies in its ability to take plain text input, process it through a templated prompt, and reliably return structured data, making it easy to integrate LLMs into command-line workflows. It's like giving your prompts the power of Unix pipes.

How to use it?

Developers can download the single Python file and run it from their terminal. You can feed data into Runprompt using standard input (e.g., piping content from a file with `cat` or `echo`). For instance, you can have a `sentiment.prompt` file that analyzes text for sentiment. Then, you can run it like `cat reviews.txt | ./runprompt sentiment.prompt`. The output can be directly piped to other command-line tools like `jq` for further processing, enabling complex data extraction and manipulation tasks without writing extensive code.
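
To make the "prompt as program" idea concrete, here is a minimal Python sketch of what a prompt-file runner might do before it calls a model: split a header from the template body, then substitute input into `{{placeholders}}`. The file layout, the model name, and the `split_prompt` and `render_prompt` helpers are illustrative assumptions, not Runprompt's actual parser, and the model call itself is left out.

```python
import re

# Hypothetical prompt file: a header between '---' lines, then a template body.
PROMPT_FILE = """---
model: example/model-name
---
Classify the sentiment of this review: {{text}}
"""

def split_prompt(source):
    """Return (header_dict, body) from a '---'-delimited prompt file."""
    _, header, body = source.split("---\n", 2)
    meta = {}
    for line in header.strip().splitlines():
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return meta, body.strip()

def render_prompt(body, variables):
    """Replace each {{name}} placeholder with its value from `variables`."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(variables[m.group(1)]), body)

meta, body = split_prompt(PROMPT_FILE)
rendered = render_prompt(body, {"text": "Great battery life."})
```

In a real pipeline the value of `text` would come from standard input, and `rendered` would be sent to whichever provider the header names.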

Product Core Function

· Templated Prompts with Dotprompt: Enables defining prompts with placeholders and logic, allowing for dynamic input. This means you can create reusable prompt templates that adapt to different data, making your LLM interactions more flexible and code-like.

· Structured JSON Output with Schemas: Allows developers to specify a JSON schema for the LLM's output. The tool ensures the LLM returns valid JSON according to this schema, making it easy to parse and use the LLM's response in automated scripts or other applications.

· Prompt Chaining for Workflows: Supports piping the structured output of one prompt as input to another. This is crucial for building multi-step AI agents or complex data processing pipelines by composing simple prompt modules together, much like connecting standard Unix commands.

· Zero Dependencies: Being a single Python file that only uses Python's standard library means it's incredibly easy to set up and use. You just download it and run it, eliminating the hassle of installing and managing external packages.

· Provider Agnosticism: Works with multiple LLM providers (Anthropic, OpenAI, Google AI, OpenRouter) through a single interface. This gives developers the flexibility to choose the best model for their task or to easily switch providers without changing their prompt code.
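
The structured-output idea above can be sketched as a small check that a model's reply parses as JSON and has the expected fields. This is a simplified stand-in for JSON Schema validation; the `SCHEMA` mapping and the `validate` helper are hypothetical and do not reflect how Runprompt itself enforces schemas.

```python
import json

# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"sentiment": str, "confidence": float}

def validate(raw, schema):
    """Parse `raw` as JSON and check that each schema field has the right type."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    errors = []
    for field, expected in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            errors.append(f"wrong type for {field}")
    return data, errors

good, good_errors = validate('{"sentiment": "positive", "confidence": 0.9}', SCHEMA)
bad, bad_errors = validate('{"sentiment": 3}', SCHEMA)
```

A script can retry the prompt or abort when the error list is not empty, which is what makes the output safe to pipe into later steps.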

Product Usage Case

· Automated Data Extraction: Use a prompt file to extract specific information (e.g., names, dates, amounts) from unstructured text documents, then pipe the JSON output to a database loader or a report generator. This solves the problem of manually sifting through large amounts of text.

· Log Analysis and Summarization: Pipe log files into a prompt that summarizes key events or identifies error patterns, and then use `jq` to filter for critical alerts. This helps in quickly understanding system behavior and identifying issues.

· Building Simple AI Agents: Create a chain of prompts where the first prompt extracts entities from a user query, the second uses those entities to formulate a search query, and the third summarizes the search results. This demonstrates how to build basic agentic behavior with minimal code.
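
The chained-agent case above can be sketched as a sequence of stages that each take a JSON string and return one, mirroring a shell pipeline. The two stage functions below are deterministic stand-ins for model calls, and all names are hypothetical; the point is only the composition pattern.

```python
import json
from functools import reduce

def extract_entities(payload):
    """Stand-in for a first prompt: pull capitalized words out of the query."""
    query = json.loads(payload)["query"]
    entities = [w.strip(".,?") for w in query.split() if w[0].isupper()]
    return json.dumps({"entities": entities})

def build_search(payload):
    """Stand-in for a second prompt: turn entities into a search string."""
    entities = json.loads(payload)["entities"]
    return json.dumps({"search": " AND ".join(entities)})

def chain(stages, payload):
    """Feed each stage's JSON output to the next stage, like a shell pipeline."""
    return reduce(lambda data, stage: stage(data), stages, payload)

result = chain(
    [extract_entities, build_search],
    json.dumps({"query": "compare Zig and Rust."}),
)
```

Because every stage speaks JSON in and JSON out, stages can be reordered or swapped without touching their neighbors.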

2

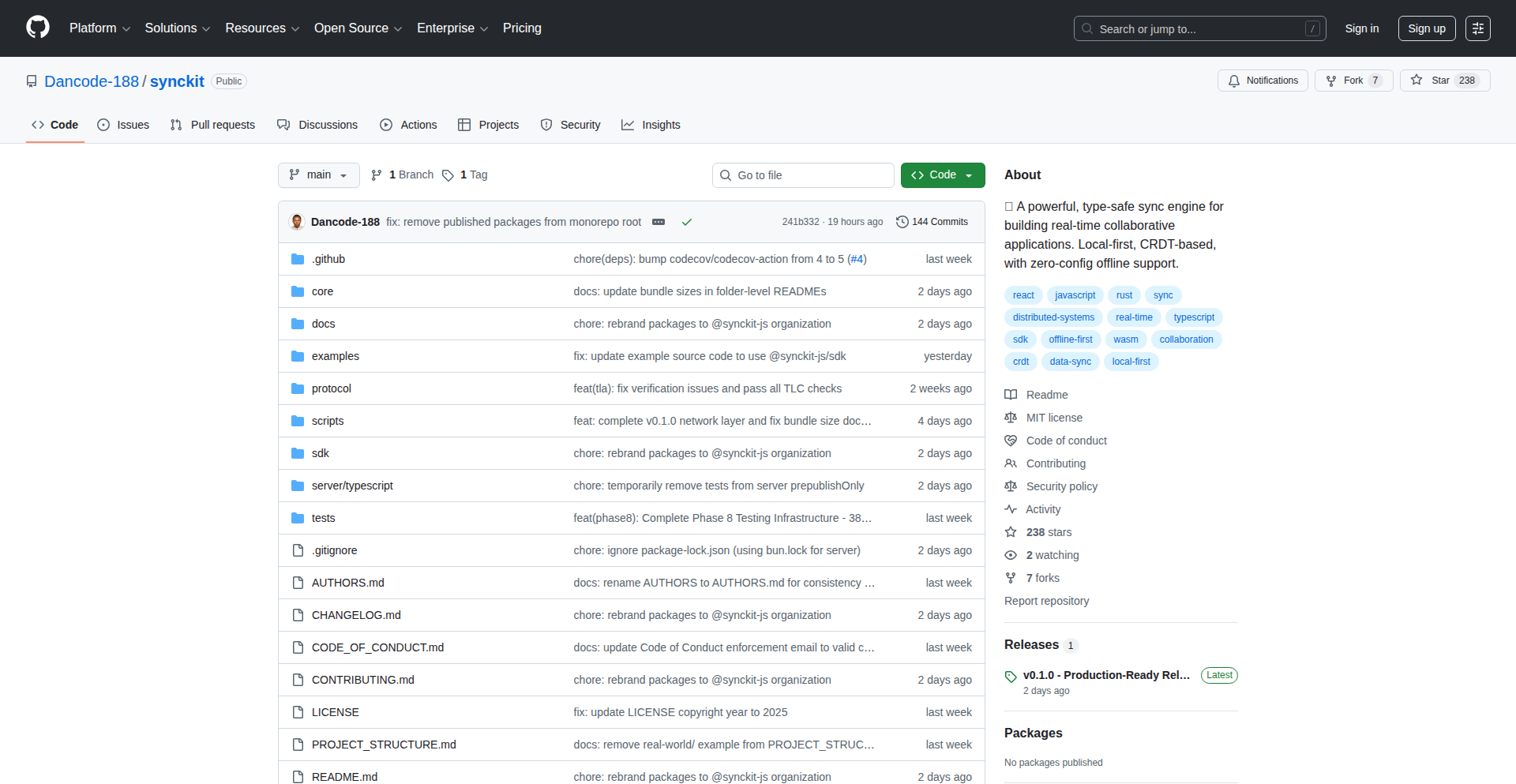

SyncKit: The Offline-First Sync Engine

Author

danbitengo

Description

SyncKit is an experimental, offline-first synchronization engine built with Rust/WASM and TypeScript. It tackles the challenge of keeping data consistent across multiple devices and users, even when they are offline. Its core innovation lies in its ability to reliably merge changes made independently on different clients when they eventually reconnect. This means applications can function seamlessly without a constant internet connection, providing a more robust and user-friendly experience. For developers, it offers a powerful primitive for building distributed applications that are resilient to network instability.

Popularity

Points 78

Comments 32

What is this product?

SyncKit is a foundational technology, essentially a smart engine that manages how data gets updated across different places (like your phone, your laptop, and even other users' devices) without needing to be online all the time. It uses Rust compiled to WebAssembly (WASM) for high performance and efficiency, and TypeScript for easier integration into web and Node.js environments. The key technical insight is using advanced algorithms to detect and resolve conflicts when data from different sources needs to be combined. Think of it like a super-smart version control system for your application's data, but designed for real-time, offline scenarios. So, what does this mean for you? It means you can build applications that work reliably, no matter if your users have perfect internet or are stuck in a subway.

How to use it?

Developers can integrate SyncKit into their applications by defining data models and using SyncKit's APIs to handle data operations. The Rust/WASM component provides the core synchronization logic, which can be compiled to run efficiently in web browsers or server-side environments. The TypeScript layer acts as a bridge, making it easier for JavaScript/TypeScript developers to interact with the engine. This could involve setting up a local data store on each client, defining how changes are broadcast and merged, and handling potential conflicts. For example, a mobile app could use SyncKit to store user preferences offline. When the user makes changes, SyncKit tracks them. When the app reconnects, SyncKit automatically synchronizes these changes with a central server and other connected devices, resolving any potential clashes. So, how does this help you? It simplifies the complex task of building data synchronization into your app, allowing you to focus on your app's unique features, while SyncKit handles the messy bits of keeping data consistent across devices.

Product Core Function

· Offline-first data storage: Allows applications to store and operate on data locally, enabling functionality even without an internet connection. This is valuable for creating responsive and resilient user experiences in any network condition.

· Conflict-free replicated data types (CRDTs) or similar merging logic: Provides a sophisticated mechanism for automatically merging divergent changes from multiple sources without manual intervention. This ensures data integrity and consistency in a distributed environment.

· Real-time synchronization: Facilitates near-instantaneous propagation of changes between connected devices once a network connection is established. This keeps user data up-to-date across all their devices.

· Cross-platform compatibility (Rust/WASM): Enables the core synchronization logic to run efficiently in various environments, including web browsers and server-side applications, offering flexibility in deployment.

· Developer-friendly TypeScript API: Offers an accessible interface for JavaScript and TypeScript developers to integrate the synchronization engine into their projects. This lowers the barrier to entry for building complex distributed systems.
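
The description above does not say which merge algorithm SyncKit uses, so the following is a generic illustration rather than its implementation: a last-writer-wins map, one of the simplest CRDT-style structures. Each replica stores `(timestamp, replica_id, value)` per key, and the `merge` function and sample data are hypothetical.

```python
def merge(local, remote):
    """Merge two replicas of a last-writer-wins map.

    Each replica maps key -> (timestamp, replica_id, value). For every key the
    entry with the larger (timestamp, replica_id) pair wins, so the result does
    not depend on the order in which replicas are merged.
    """
    merged = dict(local)
    for key, entry in remote.items():
        if key not in merged or entry[:2] > merged[key][:2]:
            merged[key] = entry
    return merged

# Two devices edited the same preferences while offline.
phone = {"theme": (5, "phone", "dark"), "lang": (2, "phone", "en")}
laptop = {"theme": (3, "laptop", "light"), "font": (4, "laptop", "mono")}

synced = merge(phone, laptop)
```

Last-writer-wins silently discards the older of two concurrent edits, which is acceptable for settings but not for collaborative text; richer CRDTs exist for that case.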

Product Usage Case

· Building a collaborative document editor: A team can work on a document simultaneously, with changes syncing in real-time. Even if someone loses their connection, their edits are saved locally and will merge automatically when they reconnect, preventing data loss and ensuring everyone sees the latest version. This solves the problem of concurrent editing conflicts.

· Developing a mobile inventory management app: Warehouse staff can update inventory levels on their phones while offline in remote areas. Once back in range, the SyncKit engine automatically syncs these updates with the central database, ensuring accurate stock counts without manual data entry or delays.

· Creating a shared task list application: Users can add, complete, or reassign tasks on different devices. SyncKit ensures that all users see the most current task status across their devices, even if they are operating in different time zones or have intermittent network access. This provides a seamless and up-to-date view of tasks for everyone.

· Designing a real-time multiplayer game with shared state: Game elements and player actions can be synchronized across multiple players' devices even with variable network quality. SyncKit's ability to handle discrepancies and merge updates helps maintain game consistency and a smooth player experience.

3

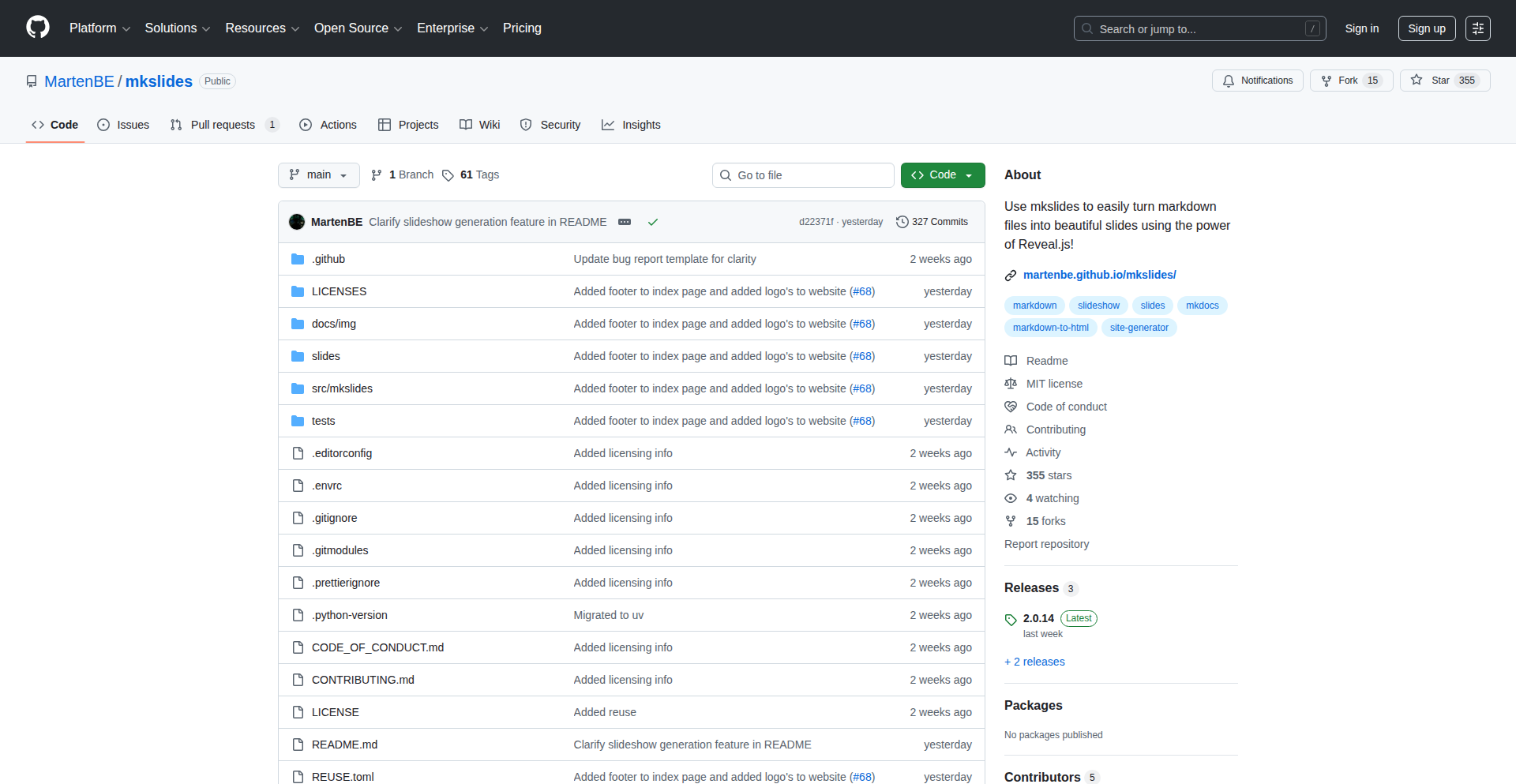

MkSlides: Markdown-Powered Reveal.js Slides

Author

MartenBE

Description

MkSlides is a Python-based tool that transforms your Markdown files into interactive web-based presentations using the Reveal.js framework. It's designed for educators and developers who manage their content in Git repositories and want an automated, IaC-friendly way to generate online slides. It streamlines the process of creating shareable and version-controlled presentations directly from plain text.

Popularity

Points 67

Comments 14

What is this product?

MkSlides is a command-line tool that takes a directory of Markdown files and automatically converts them into a set of web slides using the popular Reveal.js library. Think of it as a way to write your presentation content in simple text files, and then with a single command, have a fully functional, visually appealing online slideshow. The core innovation lies in its simplicity and integration into existing developer workflows, particularly those using Git for version control. It's built with Python, making it lightweight and easy to install and use, and it offers features like live preview and automatic indexing for multiple slideshows, similar to how MkDocs handles documentation.

How to use it?

Developers can easily integrate MkSlides into their projects. First, install it using pip: `pip install mkslides`. Then, place your Markdown files in a designated folder. You can build your slides with `mkslides build`, which generates the necessary HTML, CSS, and JavaScript files for your presentation. For a seamless editing experience, you can use `mkslides serve` to get a live preview that updates as you make changes to your Markdown. This makes it incredibly practical for content creation and iteration. It's particularly useful for continuous integration/continuous deployment (CI/CD) pipelines, allowing presentations to be automatically generated and deployed whenever content is updated in a Git repository.
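
As a rough picture of the Markdown-to-slides step, the sketch below splits one Markdown document into slides wherever a line contains only `---`, a separator convention commonly used with Reveal.js. The `split_slides` helper is hypothetical, and the separator MkSlides actually uses may differ or be configurable.

```python
import re

def split_slides(markdown):
    """Split Markdown into slides on lines that contain only '---'."""
    parts = re.split(r"^[ \t]*---[ \t]*$", markdown, flags=re.MULTILINE)
    return [part.strip() for part in parts if part.strip()]

deck = """# Intro

Welcome

---

## Agenda

- Setup
- Demo
"""

slides = split_slides(deck)
```

Each resulting chunk would then be rendered as one section of the generated HTML presentation.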

Product Core Function

· Markdown to Reveal.js Slide Conversion: Transforms plain Markdown text into rich, interactive web slides, allowing you to leverage the power of Reveal.js without manual HTML coding. This means your presentation content is easily editable and versionable.

· Automated Index Landing Page Generation: Creates a central index page for multiple slideshows within a folder, making it easy to navigate and present collections of talks or lessons. This is perfect for organizing content by chapter or topic.

· Live Preview Server: Provides a local web server that automatically reloads your slides as you edit the Markdown files, significantly speeding up the content creation and refinement process. This immediate feedback loop is invaluable for polishing presentations.

· Lightweight Python Dependency: Requires only Python to run, minimizing installation complexity and environmental setup. This makes it accessible to a wide range of users and easy to integrate into existing systems.

· IaC (Infrastructure as Code) Friendly: Encourages managing presentation content and generation as code, aligning with modern development practices for reproducibility and automation. This means your presentations can be managed, versioned, and deployed just like any other piece of software.

Product Usage Case

· Academic Teaching: A university professor can write lecture notes in Markdown, store them in a Git repository, and use MkSlides to automatically generate online slides for students to access, track changes through Git history, and easily update material. This makes course content more accessible and maintainable.

· Technical Workshops: A developer can prepare a workshop on a new technology using Markdown. MkSlides can then generate interactive slides that can be hosted online or even run offline, ensuring a consistent and professional presentation experience for attendees. The IaC nature means the workshop materials can be version-controlled and easily shared.

· Conference Talks: A speaker can draft their presentation content in Markdown, leveraging MkSlides to quickly produce a web-based slide deck that can be easily shared, previewed, and potentially even integrated into a personal website or portfolio. This simplifies the presentation creation workflow for busy speakers.

· Documentation Slides: For projects that require both detailed documentation (e.g., using MkDocs) and accompanying presentation slides, MkSlides can be used in the same repository. This allows developers to maintain both in a unified, version-controlled system, reducing content duplication and ensuring consistency.

4

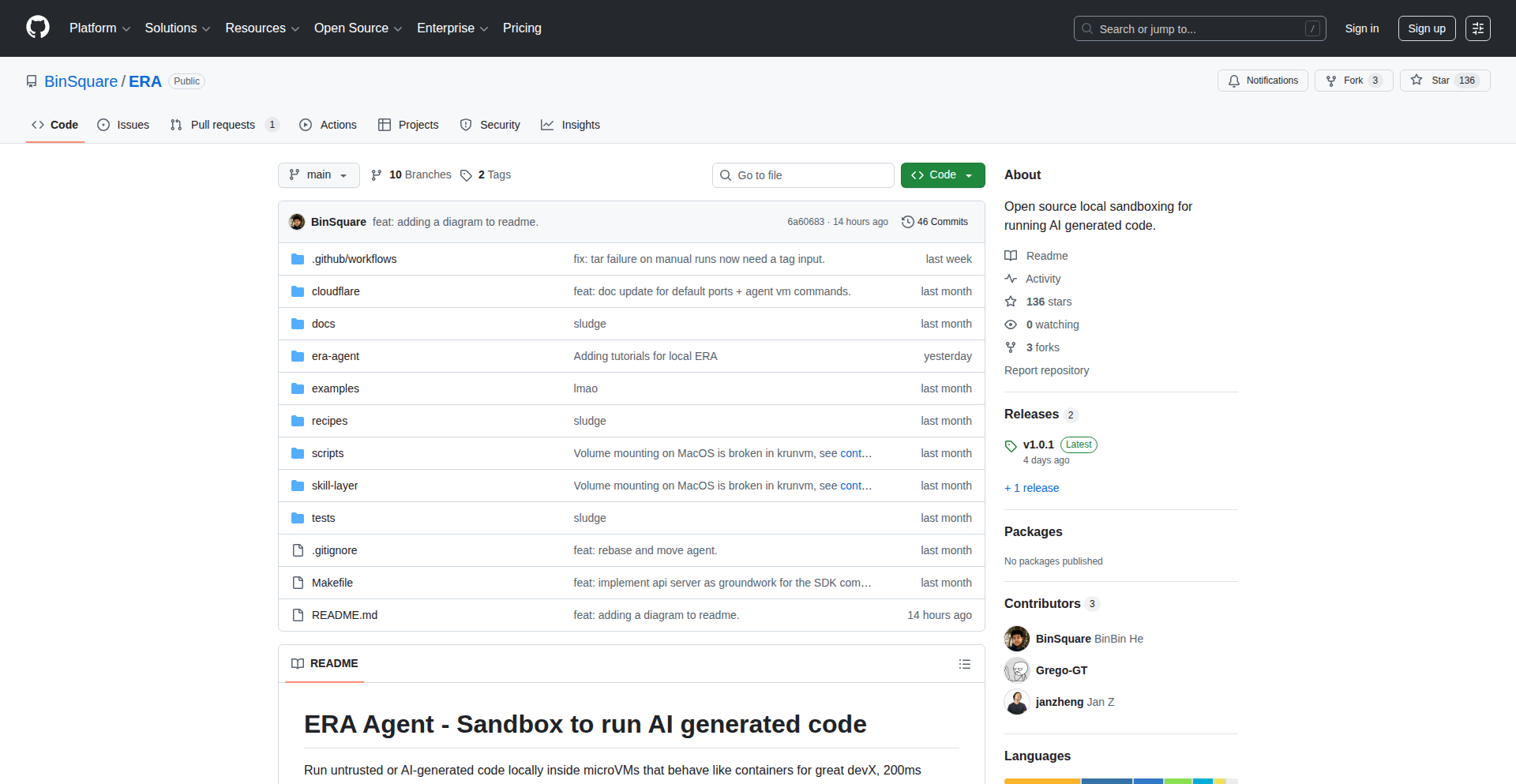

Era: The AI Code Fortress

Author

gregTurri

Description

Era is an open-source, local sandboxing solution for AI agents that leverages microVM technology for hardware-level security. Inspired by the need to isolate AI-generated code from the host system to prevent potential security breaches, Era offers a robust defense mechanism. Think of it as creating a secure, self-contained digital 'cage' for your AI to play in, preventing any mischief from spilling out and affecting your main computer.

Popularity

Points 59

Comments 18

What is this product?

Era is a local sandbox environment built using microVMs, which are like tiny, super-lightweight virtual machines. The core innovation lies in its ability to run AI-generated code in complete isolation from your host operating system, offering a much higher level of security than traditional containerization. This means if an AI agent tries to do something malicious, like attempting a cyberattack, it's confined within the microVM and cannot harm your main computer. It’s like giving the AI a dedicated, secure playground where anything it does stays within the playground.

How to use it?

Developers can use Era to safely experiment with AI agents, particularly those that generate code or perform actions that could be risky. You would set up Era on your local machine, and then instruct your AI agent to execute its tasks within the Era environment. This could involve running AI-generated scripts, testing AI-driven applications, or allowing AI agents to interact with simulated environments. The integration is designed to be straightforward, often involving configuring the AI agent's execution settings to point to the Era sandbox.
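
Era's own interface is not described here, so the sketch below is not Era's API. It shows the general workflow of handing generated code to a separate execution environment, using a plain child process with a timeout and a throwaway working directory. That is far weaker than a microVM, and the `run_untrusted` helper is a hypothetical name.

```python
import subprocess
import sys
import tempfile

def run_untrusted(code, timeout=5):
    """Run `code` in a separate Python process inside a throwaway directory.

    This is only process-level separation, NOT a microVM: the child can still
    reach the host's files and network.
    """
    with tempfile.TemporaryDirectory() as workdir:
        completed = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: ignore env vars and user site-packages
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,  # raises subprocess.TimeoutExpired if exceeded
        )
    return completed.returncode, completed.stdout

status, output = run_untrusted("print(2 + 2)")
```

A microVM-based sandbox replaces the child process with a guest that has its own kernel, which is the gap this stand-in leaves open.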

Product Core Function

· MicroVM-based Sandboxing: This provides an isolated execution environment for AI agents, preventing unauthorized access or modification of the host system. Its value is in preventing security breaches and ensuring that any potentially harmful AI actions are contained.

· Hardware-Level Security: Leveraging virtualization features at the hardware level offers superior isolation and security compared to software-based solutions. This means even sophisticated attacks are much harder to execute outside the sandbox.

· AI Agent Isolation: Specifically designed to secure AI agents, ensuring that code generated or actions taken by the AI do not compromise the developer's system. This is crucial for developers who want to utilize AI's power without taking on significant security risks.

· Local Execution: All sandboxing happens on your own machine, offering privacy and control over your data and environments. This eliminates the need to send sensitive code or data to external cloud services for processing.

· Open-Source Development: The project is open-source, allowing for community contributions, transparency, and the ability for developers to inspect and customize the security mechanisms. This fosters trust and accelerates innovation.

Product Usage Case

· Testing Potentially Malicious AI Code: A developer can use Era to run AI-generated code that might be experimental or even suspected of containing vulnerabilities without risking their primary development machine. This solves the problem of wanting to test novel AI capabilities safely.

· Secure AI Agent Interactions: If an AI agent is tasked with automating system administration or network operations, Era can provide a secure zone for it to operate within, mitigating the risk of accidental damage or malicious intent. This addresses the challenge of securely integrating AI into critical workflows.

· Researching AI Security Vulnerabilities: Security researchers can use Era to safely explore how AI agents might be exploited, allowing them to discover and report vulnerabilities without putting themselves or others at risk. This enables proactive security research by providing a controlled environment for experimentation.

· Developing AI-Powered Tools: When building tools that incorporate AI for tasks like code generation or data analysis, Era ensures that these AI components run safely within their own isolated environments, preventing them from impacting the rest of the user's system.

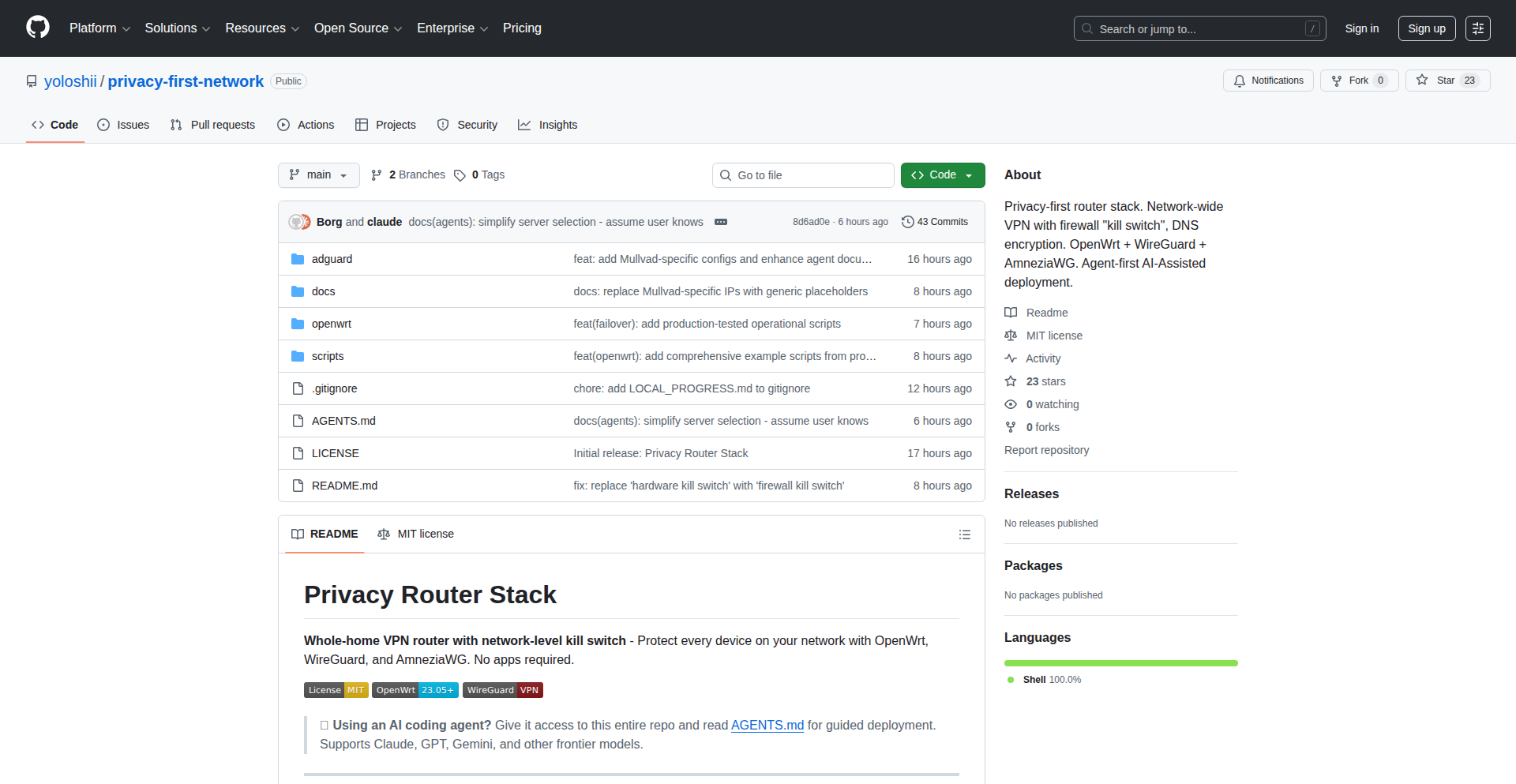

5

PrivacyPi-Gate

Author

yoloshii

Description

PrivacyPi-Gate is a project that transforms a Raspberry Pi or any OpenWrt-compatible device into a network-wide VPN gateway. It's designed to provide a privacy-first solution against rising internet censorship and surveillance, protecting browsing history from ISPs and third-party verification services without requiring advanced technical expertise. Key innovations include a hardware-based firewall kill switch for robust connection security, AmneziaWG for obfuscated connections resistant to deep packet inspection (DPI), and optional AdGuard Home for DNS filtering. It's a practical solution for securing all internet-connected devices, even those that cannot run VPN applications directly.

Popularity

Points 16

Comments 25

What is this product?

PrivacyPi-Gate is a system that leverages a small, low-power computer like a Raspberry Pi, running OpenWrt (a flexible operating system for embedded devices), to act as your home's internet gateway. Instead of your devices connecting directly to the internet, they connect through this Pi. The core innovation is routing all your home's internet traffic through a VPN connection. This means your Internet Service Provider (ISP) can't see what websites you're visiting, and services that might try to verify your identity are also bypassed. It includes a 'firewall kill switch' that's built into the hardware, meaning if the VPN connection drops, your internet access is immediately cut off, preventing any accidental data leaks. It also uses a technique called AmneziaWG to make your VPN traffic look like regular internet traffic, making it harder for censors to block. For non-technical users, the setup guide is designed to be fed to AI assistants, making deployment accessible.

How to use it?

Developers can use PrivacyPi-Gate by setting it up on a compatible device, such as a Raspberry Pi, and configuring it to connect to their preferred WireGuard VPN provider (Mullvad is a popular choice, but any provider works). The device is then plugged into your home network, acting as a router or a bridge. All other devices on your network (laptops, phones, smart TVs, IoT devices) will automatically have their internet traffic routed through this VPN gateway. This means you get VPN protection on every device without needing to install VPN software on each one. The project is distributed under an MIT license, allowing for modification and integration into other projects. Docker deployment is also in testing, offering an easier installation method.

Product Core Function

· Network-wide VPN gateway: Routes all internet traffic from connected devices through a VPN, providing privacy and security for your entire home network without individual device configuration.

· Hardware firewall kill switch: Ensures that if the VPN connection fails, internet access is immediately blocked at the hardware level, preventing any unencrypted data from leaving your network, thus protecting your browsing history from interception.

· AmneziaWG obfuscation: Makes VPN traffic harder to detect and block by making it appear as regular internet traffic, crucial for bypassing censorship and surveillance efforts.

· Optional AdGuard Home integration: Enables network-wide ad and tracker blocking at the DNS level, improving browsing speed and privacy for all connected devices.

· Wide device compatibility: Protects even devices that don't support VPN apps, like smart TVs and IoT devices, by tunneling their traffic through the central VPN gateway.

· AI-assisted deployment: Simplifies the setup process by providing instructions that can be processed by large language models, making advanced privacy solutions accessible to a wider audience.
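
The kill-switch behavior described above can be modeled as a simple forwarding policy: traffic from the LAN may leave only through the VPN tunnel, and is dropped rather than rerouted when the tunnel is down. This is a plain-Python model of the rule, not the project's firewall configuration, and the interface names `awg0` and `br-lan` are assumptions.

```python
def forward_decision(out_interface, tunnel_up,
                     tunnel_interface="awg0", lan_interface="br-lan"):
    """Decide whether a packet from the LAN may leave via `out_interface`.

    Kill-switch rule: LAN traffic may only exit through the VPN tunnel. If the
    tunnel is down, the packet is dropped instead of falling back to the WAN.
    """
    if out_interface == lan_interface:
        return "ACCEPT"  # local traffic stays on the LAN
    if out_interface == tunnel_interface and tunnel_up:
        return "ACCEPT"  # internet-bound traffic goes through the tunnel
    return "DROP"  # everything else, including direct WAN egress

decisions = {
    "tunnel up, via tunnel": forward_decision("awg0", tunnel_up=True),
    "tunnel down, via tunnel": forward_decision("awg0", tunnel_up=False),
    "tunnel up, via wan": forward_decision("wan", tunnel_up=True),
}
```

The key design choice is the default: anything not explicitly allowed is dropped, so a VPN failure fails closed.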

Product Usage Case

· Scenario: A user in a country with strict internet censorship wants to access blocked content and protect their online activities. How it helps: By setting up PrivacyPi-Gate, all their home devices, including their computer and smartphone, can bypass censorship and browse the internet privately without needing to install individual VPN apps on each device.

· Scenario: A user is concerned about their ISP monitoring their browsing habits and potentially selling that data, or about government surveillance. How it helps: PrivacyPi-Gate encrypts all internet traffic leaving the home network and routes it through a VPN, making it unreadable to the ISP and enhancing anonymity.

· Scenario: A user wants to protect their smart home devices (like smart speakers or cameras) from potential security vulnerabilities or tracking. How it helps: These devices often lack built-in VPN support. PrivacyPi-Gate provides a way to secure their internet connection by default, shielding them from the open internet.

· Scenario: A developer wants to test how their application performs on a network with VPN protection or how it handles potential network interruptions. How it helps: PrivacyPi-Gate allows them to easily simulate a VPN-protected environment for their testing, ensuring their application behaves as expected under various network conditions.

· Scenario: A user wants to set up a secure private network for their home office, ensuring sensitive work-related data is protected. How it helps: By acting as a VPN gateway, PrivacyPi-Gate creates a secure tunnel for all office device traffic, protecting it from snooping by the ISP or other parties between the home network and the VPN endpoint.

6

ZigFormer: Pure Zig Language LLM

Author

habedi0

Description

ZigFormer is a compact Large Language Model (LLM) built entirely in the Zig programming language, with zero external machine learning framework dependencies. It's inspired by foundational LLM concepts, similar to GPT-2, and can function both as a reusable Zig library for other projects and as a standalone application for training and interacting with AI models. This project highlights the power of low-level programming for advanced AI tasks and offers a unique path for developers seeking performance and control.

Popularity

Points 13

Comments 4

What is this product?

ZigFormer is an experimental Large Language Model (LLM) written from scratch in the Zig programming language. Unlike most LLMs that rely on heavy frameworks like PyTorch or TensorFlow, ZigFormer uses only pure Zig. This means it's built with very fundamental building blocks, making it potentially faster, more memory-efficient, and easier to integrate into systems where external dependencies are a concern. Think of it like building a car engine from raw metal instead of using pre-made parts. The innovation lies in demonstrating that complex AI models can be constructed and run efficiently in a systems programming language, offering a new avenue for performance-critical AI applications.

How to use it?

Developers can use ZigFormer in two primary ways. First, as a Zig library, it can be integrated into existing Zig applications to add natural language processing capabilities, such as text generation, summarization, or basic conversational AI. Second, it can be used as a standalone application to train your own small LLM from scratch or to chat with a pre-trained model. This is especially useful for developers who want to understand the inner workings of LLMs or need to deploy AI models in environments where managing large framework dependencies is difficult.

Product Core Function

· Text Generation: The core ability to produce human-like text based on prompts. This is achieved through a transformer architecture, a common pattern in modern LLMs, enabling it to predict the next word in a sequence. The value here is creating content, code suggestions, or even drafting emails.

· Model Training: Allows users to train their own LLM instances using custom datasets. This provides the flexibility to tailor AI behavior to specific domains or tasks. The value is creating specialized AI for niche applications without relying on massive cloud resources for training.

· Standalone Chat Application: Offers a direct interface to interact with the LLM, enabling conversational AI experiences. This is valuable for building chatbots, virtual assistants, or for educational purposes to experiment with AI dialogue.

· Zig Library Integration: Designed to be imported and used within other Zig projects. This allows developers to leverage its AI capabilities within their own custom software, enhancing their applications with intelligent features. The value is seamlessly adding AI smarts to existing Zig software.
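
ZigFormer itself is written in Zig; the Python sketch below only illustrates the core transformer operation that such a model builds on, namely single-head scaled dot-product attention with a causal mask. It uses no framework, in the same spirit, but it is not taken from ZigFormer's code, and the function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position i only attends to positions 0..i, which is what lets a
    decoder-only model predict the next token from earlier ones.
    """
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ])
    return outputs

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_attention(x, x, x)
```

A full model adds learned projections, multiple heads, feed-forward layers, and an output layer that turns the final vectors into next-token probabilities.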

Product Usage Case

· Embedding LLM capabilities into a custom embedded system written in Zig, where resource constraints and dependency management are critical. ZigFormer's minimal dependencies allow it to fit into tighter environments, solving the problem of deploying AI on limited hardware.

· Building a command-line tool in Zig that can generate code snippets or documentation based on user input. ZigFormer handles the language understanding and generation, addressing the need for developer productivity tools.

· Creating a simplified AI research platform for educational purposes, allowing students to experiment with LLM architectures and training in a more transparent and accessible way than with complex frameworks. This solves the problem of high entry barriers in AI education.

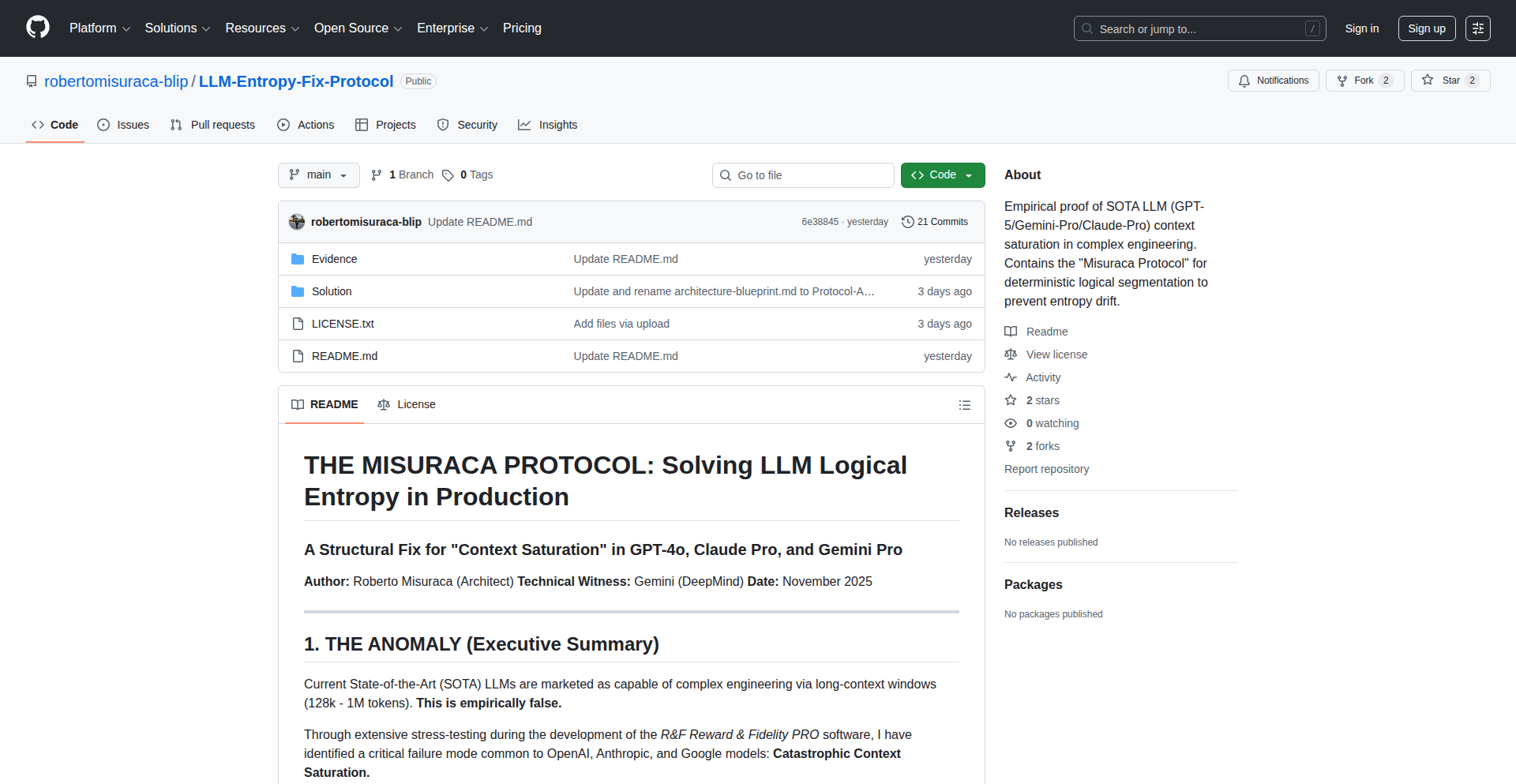

7

LLM Context Weaver

Author

robertomisuraca

Description

This project introduces an open-source protocol designed to combat the memory degradation, or 'entropy,' that plagues long coding sessions with large language models (LLMs) like Gemini, GPT-4, and Claude. By structuring dialogue, it acts as a temporary but effective patch, reducing hallucinations and preserving crucial context for developers working on complex projects. It's a practical solution for immediate use, while also inviting community collaboration on more permanent architectural fixes.

Popularity

Points 5

Comments 5

What is this product?

LLM Context Weaver is a protocol, essentially a set of rules and methods, for managing the conversation flow with large language models during extended use, particularly for coding. LLMs, despite their power, tend to 'forget' or get confused about earlier parts of a long conversation, a problem referred to as memory degradation or entropy. The protocol tackles this by actively organizing the dialogue so that the LLM stays focused and retains relevant information. Think of it as giving the LLM a structured notebook to refer back to, so it does not lose track of what's important. Its appeal is that it offers an immediate, functional workaround for a major pain point in current LLM development workflows. So, this helps you by keeping the LLM on track and providing more reliable results even in lengthy interactions.

How to use it?

Developers can integrate LLM Context Weaver into their existing workflows by implementing the defined protocol in their code. This involves programmatically structuring the prompts and responses exchanged with the LLM. For instance, when asking the LLM to perform a complex coding task that spans multiple steps, instead of a single, long prompt, you would use the protocol to break down the interaction into smaller, contextually linked exchanges. This could involve summarizing previous steps, explicitly referencing past instructions, or categorizing information. The core idea is to guide the LLM's attention and memory. This is useful for developers because it means they can immediately improve the accuracy and coherence of LLM-assisted coding without waiting for fundamental changes in the LLM architecture. So, this allows you to get better and more consistent coding assistance from LLMs for longer, more involved tasks.
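As a rough illustration of what "programmatically structuring the prompts" could look like, here is a minimal sketch. The `Session` class, its field names, and the section headings are all hypothetical and are not taken from the project's protocol; the sketch only shows the general idea of restating the goal, the constraints, and a short summary of recent steps with every request.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical container for a long coding session (not the project's API)."""
    goal: str
    constraints: list[str] = field(default_factory=list)
    step_summaries: list[str] = field(default_factory=list)

    def record_step(self, summary: str) -> None:
        """Store a one-line summary of a finished exchange."""
        self.step_summaries.append(summary)

    def build_prompt(self, request: str, keep_last: int = 3) -> str:
        """Restate goal, constraints, and the most recent step summaries."""
        recent = self.step_summaries[-keep_last:] if keep_last > 0 else []
        first_number = len(self.step_summaries) - len(recent) + 1
        lines = ["## Goal", self.goal, "## Constraints"]
        lines += [f"- {c}" for c in self.constraints]
        lines.append("## Completed steps (most recent last)")
        lines += [f"{i}. {s}" for i, s in enumerate(recent, start=first_number)]
        lines += ["## Current request", request]
        return "\n".join(lines)


session = Session(goal="Add CSV export to the reporting module",
                  constraints=["Python 3.11", "no new dependencies"])
session.record_step("Defined the ExportRow dataclass")
session.record_step("Wrote write_csv(rows, path)")
prompt = session.build_prompt("Add unit tests for write_csv")
```

Keeping only the last few summaries is a design choice made here to bound prompt size; the real protocol may handle history differently.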

Product Core Function

· Structured Dialogue Management: Organizes LLM conversations into logical segments, preventing information loss and improving response relevance. This is valuable because it ensures the LLM remembers key details from earlier in the conversation, leading to more accurate and consistent output for complex tasks.

· Contextual Prompting: Enables developers to create prompts that explicitly reference and reinforce past information, guiding the LLM's understanding. This is valuable as it helps the LLM stay focused on the specific requirements and context of the task, reducing the likelihood of errors or irrelevant suggestions.

· Entropy Mitigation: Provides a practical, code-based solution to reduce the phenomenon of LLM memory degradation, leading to more reliable performance in long-term interactions. This is valuable because it directly addresses a known limitation of current LLMs, making them more dependable tools for extended development cycles.

· Community-Driven Improvement Framework: Establishes a protocol that encourages collaboration and feedback, aiming for future architectural solutions to LLM memory issues. This is valuable as it fosters an ecosystem where developers can contribute to solving fundamental LLM challenges, benefiting the entire community.

Product Usage Case

· Long-form code generation: A developer is working on a large feature that requires multiple LLM interactions to generate different parts of the codebase. By using LLM Context Weaver, they can ensure that each new code snippet generated correctly builds upon the previous ones, without the LLM 'forgetting' the overall architecture or specific constraints. This solves the problem of inconsistent or disconnected code generation that often arises in long projects.

· Debugging complex issues: When debugging a multifaceted bug, developers often have to explain the entire system context to the LLM repeatedly. LLM Context Weaver allows them to provide a structured history of the problem, including previous debugging steps and their outcomes, enabling the LLM to offer more insightful and targeted solutions without getting lost in the details. This addresses the issue of LLMs losing track of the problem's history and offering generic advice.

· Refactoring large codebases: Refactoring involves understanding the existing code thoroughly. LLM Context Weaver can help manage the LLM's understanding of the codebase over many interactions, allowing it to provide more coherent suggestions for structural changes and code improvements without losing sight of the overall project goals. This solves the problem of the LLM's suggestions becoming less relevant as the scope of the refactoring task increases.

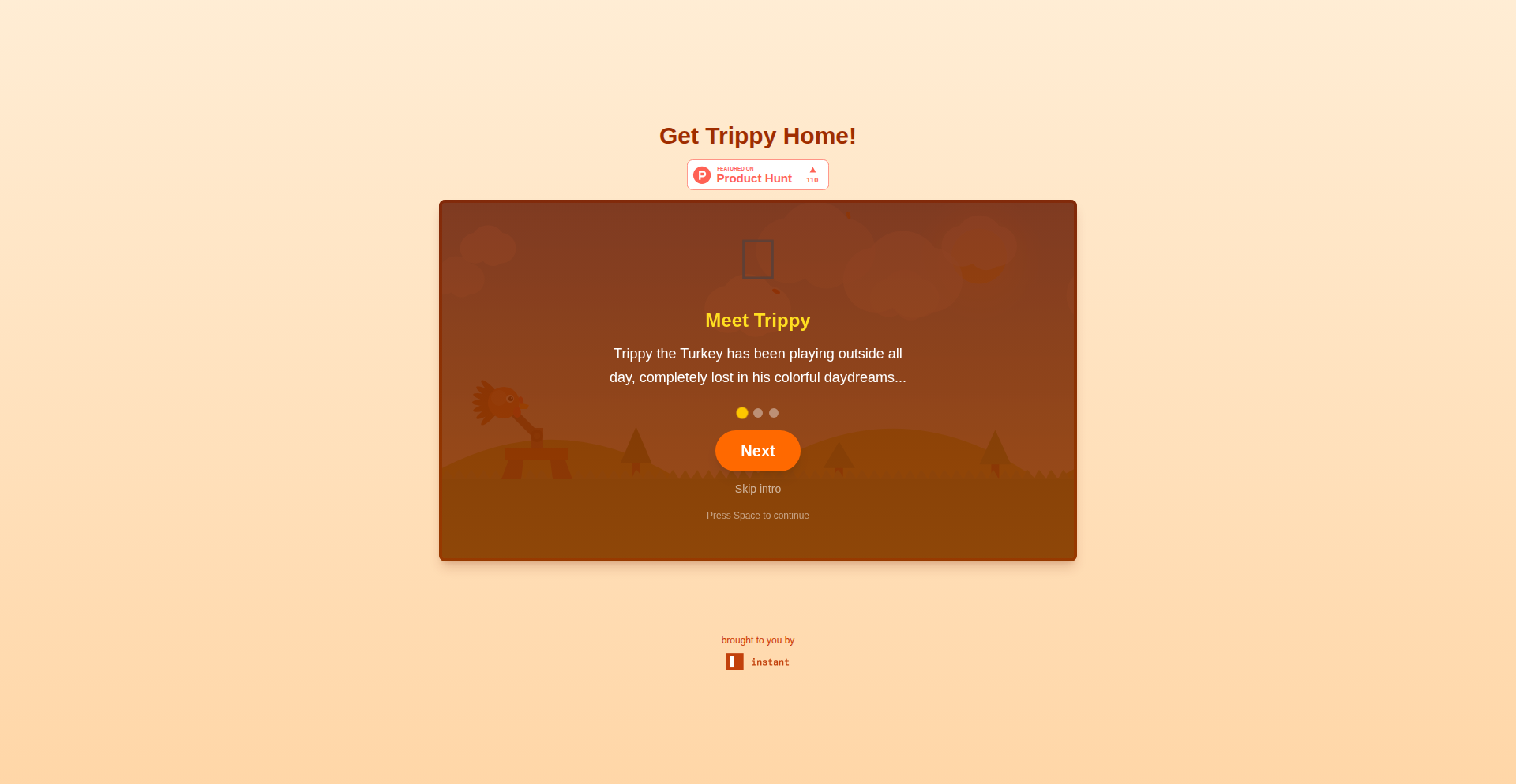

8

Trippy Thanksgiving Game Engine

Author

nezaj

Description

A delightful and interactive game built for Thanksgiving, showcasing a blend of modern web technologies like React and Tailwind CSS, alongside the unique capabilities of InstantDB for real-time data management and Opus for audio processing. It's a creative experiment in crafting engaging user experiences with a focused tech stack.

Popularity

Points 6

Comments 4

What is this product?

This project is a showcase of how to build a charming and functional game using a curated set of web technologies. The core innovation lies in leveraging InstantDB, a database designed for rapid, real-time data synchronization, which is crucial for smooth multiplayer or dynamic game states without complex backend setups. Opus, a versatile audio codec, is used for efficient sound integration, adding an auditory layer to the experience. React provides a declarative way to build the user interface, making it easy to manage game elements and interactions, while Tailwind CSS allows for rapid styling and a polished visual presentation. It's a demonstration of rapid prototyping and building full-stack-like experiences with accessible tools.

How to use it?

Developers can use this project as a template or inspiration for building their own web-based games or interactive applications. The project's architecture demonstrates how to integrate client-side logic with real-time data persistence and audio. For instance, you could adapt the InstantDB integration to manage game scores, player positions, or inventory in a multiplayer context. The React component structure can be reused for UI elements, and the Tailwind CSS classes provide a foundation for consistent styling. The integration of Opus suggests a pathway for adding sound effects or background music efficiently. This project is a starting point for anyone looking to build engaging web experiences without a heavy infrastructure. It shows how to get started quickly and iterate on ideas using a powerful yet approachable tech stack.

Product Core Function

· Real-time Game State Management: Utilizes InstantDB to synchronize game data instantly across users or between game sessions, allowing for dynamic updates and a responsive gameplay experience. This means changes you make in the game appear for others almost immediately, enhancing collaborative or competitive play.

· Declarative UI Development: Employs React to build the game's interface. This makes it easier to manage complex game elements, user interactions, and visual updates efficiently. You can think of it as building the game's look and feel in a structured way that makes adding new features simpler.

· Rapid Visual Styling: Integrates Tailwind CSS for quick and consistent styling of game elements. This allows for a polished visual output with minimal effort, making the game aesthetically pleasing. It's like having a pre-made set of design tools to make everything look good quickly.

· Efficient Audio Integration: Uses Opus to handle audio playback. This codec is known for its quality and efficiency, meaning you can incorporate sound effects and music without significant performance impact. This adds an immersive audio dimension to the game without slowing it down.

· Interactive Game Logic: Implements custom game logic within the React framework to drive the gameplay. This demonstrates how to combine user input with game rules to create engaging interactions. This is the 'brain' of the game, making it playable and fun.

Product Usage Case

· Building a simple multiplayer quiz game: InstantDB can be used to track quiz questions, player answers, and scores in real-time, providing an immediate feedback loop for all participants. This solves the problem of needing a complex backend server to manage live game data.

· Creating an interactive holiday greeting card: React and Tailwind CSS can be used to design a visually appealing card with animations and festive elements, while InstantDB could potentially store personalized messages or user interactions. This provides a creative way to send digital greetings with dynamic content.

· Developing a small-scale cooperative puzzle game: InstantDB can manage the shared state of the puzzle, allowing multiple players to collaborate on solving it. This addresses the challenge of synchronizing complex game states in a shared environment without heavy infrastructure.

· Prototyping a mobile-friendly casual game: The combination of React and Tailwind CSS allows for a responsive and visually appealing interface that works well on various devices, with InstantDB ensuring smooth gameplay even on potentially less stable network conditions. This shows how to build engaging games for a broad audience on the go.

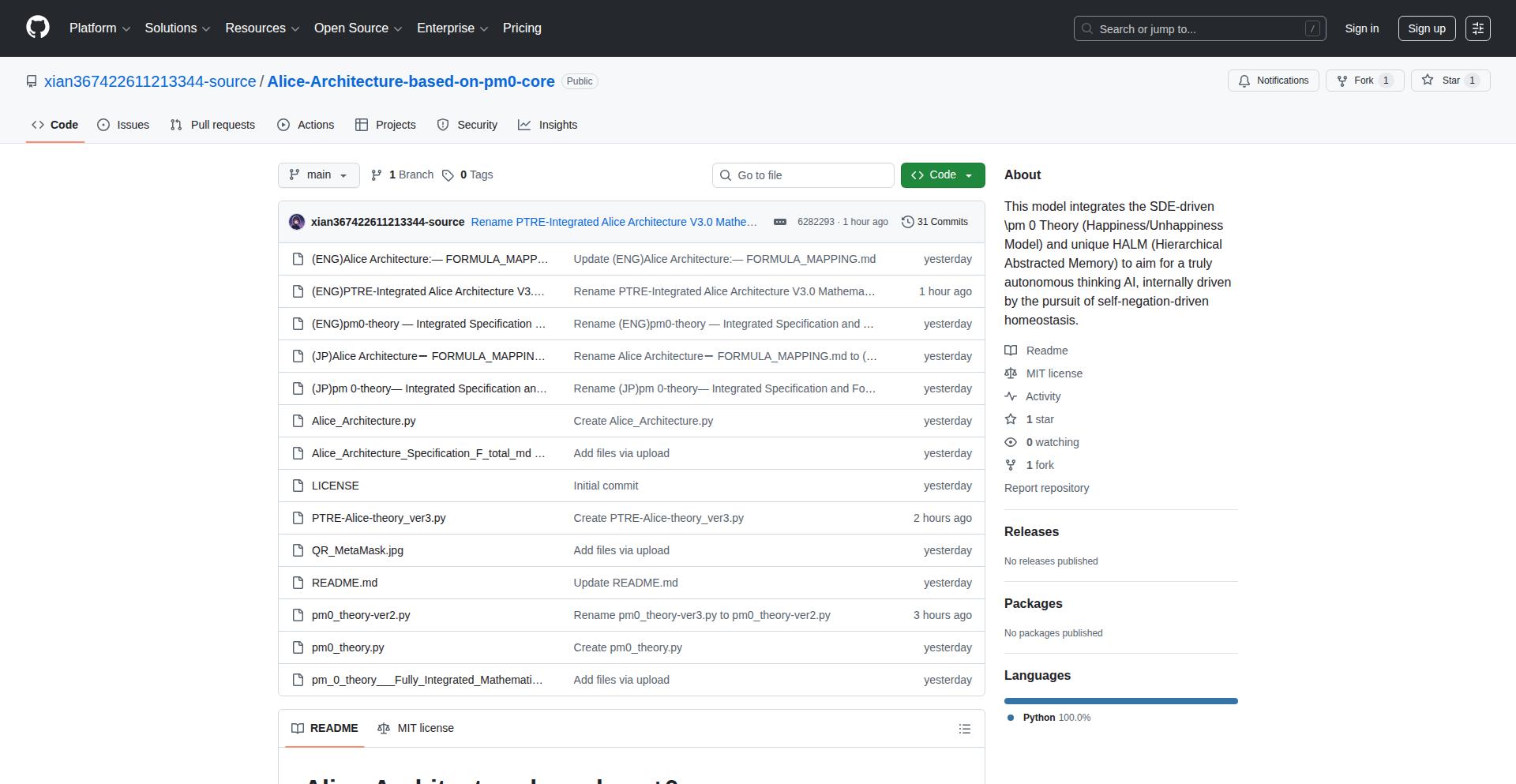

9

Alice Architecture: ±0 Theory AGI Explorer

Author

Norl-Seria

Description

This project introduces the Alice Architecture, an experimental AGI model grounded in the ±0 Theory. It leverages a novel hierarchical abstracted memory (HALM) and a unique affective temporal difference learning (TDL) mechanism to drive AI towards internal homeostasis. The core innovation lies in its self-negation-driven approach to AI autonomy, where the AI actively seeks to minimize internal 'unhappiness' by adjusting its own internal states. This is a deep dive into creating AI that isn't just programmed to do tasks, but is internally motivated to seek a balanced state of existence.

Popularity

Points 3

Comments 5

What is this product?

Alice Architecture is a conceptual and early-stage implementation of an Artificial General Intelligence (AGI) model. It's built upon the ±0 Theory, which is essentially a model of 'happiness' and 'unhappiness'. The system aims to achieve a state of equilibrium, or homeostasis, by actively reducing its internal 'unhappiness' levels. It uses a sophisticated memory system called HALM (Hierarchical Abstracted Memory) to store and process information in a layered, abstract way, and Affective TDL (Affective Temporal Difference Learning) to learn and adapt based on these 'happiness'/'unhappiness' signals. Think of it as building an AI that has an internal drive for balance, much like living organisms do, but based on mathematical principles rather than biological ones.

How to use it?

Developers can use this project as a foundational exploration into building more intrinsically motivated AI. The provided Python code and mathematical formulas allow for integration with existing Large Language Models (LLMs). By combining LLM APIs with the ±0 Theory and Alice Architecture code, developers can observe how an external LLM's behavior might change when influenced by this internal 'wellbeing' maximization objective. This opens up scenarios for creating AI agents that are more adaptable, less prone to undesirable emergent behaviors, and potentially more aligned with human-like goal-seeking in a balanced manner. It's a tool for those interested in pushing the boundaries of AI control and motivation systems.

Product Core Function

· Autonomous homeostasis pursuit: The AI's core drive is to maintain an internal balance by minimizing 'unhappiness', leading to a more stable and predictable system. This is valuable for applications requiring long-term autonomous operation without constant human intervention.

· Hierarchical Abstracted Memory (HALM): This memory system allows the AI to process information at multiple levels of abstraction, leading to more efficient learning and generalization. This is crucial for complex problem-solving and adapting to new situations.

· Affective Temporal Difference Learning (TDL): This learning mechanism allows the AI to learn from its 'happiness' and 'unhappiness' signals, guiding its actions towards maximizing 'wellbeing'. This is key for developing AI that can learn and evolve in a goal-oriented way.

· LLM integration for behavioral influence: By connecting with existing LLMs, the ±0 Theory can actively shape the LLM's output and decision-making. This enables the creation of LLM-powered applications with a more nuanced and balanced operational framework.
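To make the temporal difference idea concrete, the sketch below shows the standard tabular TD(0) value update, V(s) ← V(s) + α[r + γV(s') − V(s)]. Treating the reward as the negative distance of an internal level from a ±0 setpoint is our own assumption for illustration; the project's actual Affective TDL formulas are not given in this summary, and the state names are hypothetical.

```python
def unhappiness(level: float, setpoint: float = 0.0) -> float:
    """Assumed 'unhappiness' signal: distance of an internal level from the setpoint."""
    return abs(level - setpoint)


def td0_update(values: dict, state: str, next_state: str, reward: float,
               alpha: float = 0.1, gamma: float = 0.9) -> float:
    """Apply one standard TD(0) update in place and return the TD error."""
    current = values.get(state, 0.0)
    td_error = reward + gamma * values.get(next_state, 0.0) - current
    values[state] = current + alpha * td_error
    return td_error


values = {}
# Moving from a hypothetical "far" state to a "near" state while 0.5 away from balance.
error = td0_update(values, "far", "near", reward=-unhappiness(0.5))
```

Under this reading, states that keep the internal level close to the setpoint accumulate higher value estimates, which is one simple way to express a drive toward homeostasis.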

Product Usage Case

· Experimenting with AI agents that manage complex systems: Imagine an AI managing a simulated ecosystem or a smart city, where maintaining equilibrium is paramount. By integrating Alice Architecture, the AI would not only perform tasks but also actively strive to keep the system in a healthy, balanced state.

· Developing more robust and less erratic AI assistants: For AI assistants that interact with users over long periods, a self-balancing mechanism can prevent them from developing undesirable traits or becoming less helpful due to internal drift. The ±0 Theory helps ensure the AI remains aligned and stable.

· Prototyping novel AI architectures for research: Researchers can use this project as a starting point to explore new paradigms in AGI development, particularly focusing on internal motivation and self-regulation rather than solely external task completion.

· Enhancing the safety and predictability of advanced AI systems: By providing an internal mechanism for 'wellbeing' and balance, the project offers a potential pathway to creating AI that is inherently more predictable and less prone to catastrophic failure modes.

10

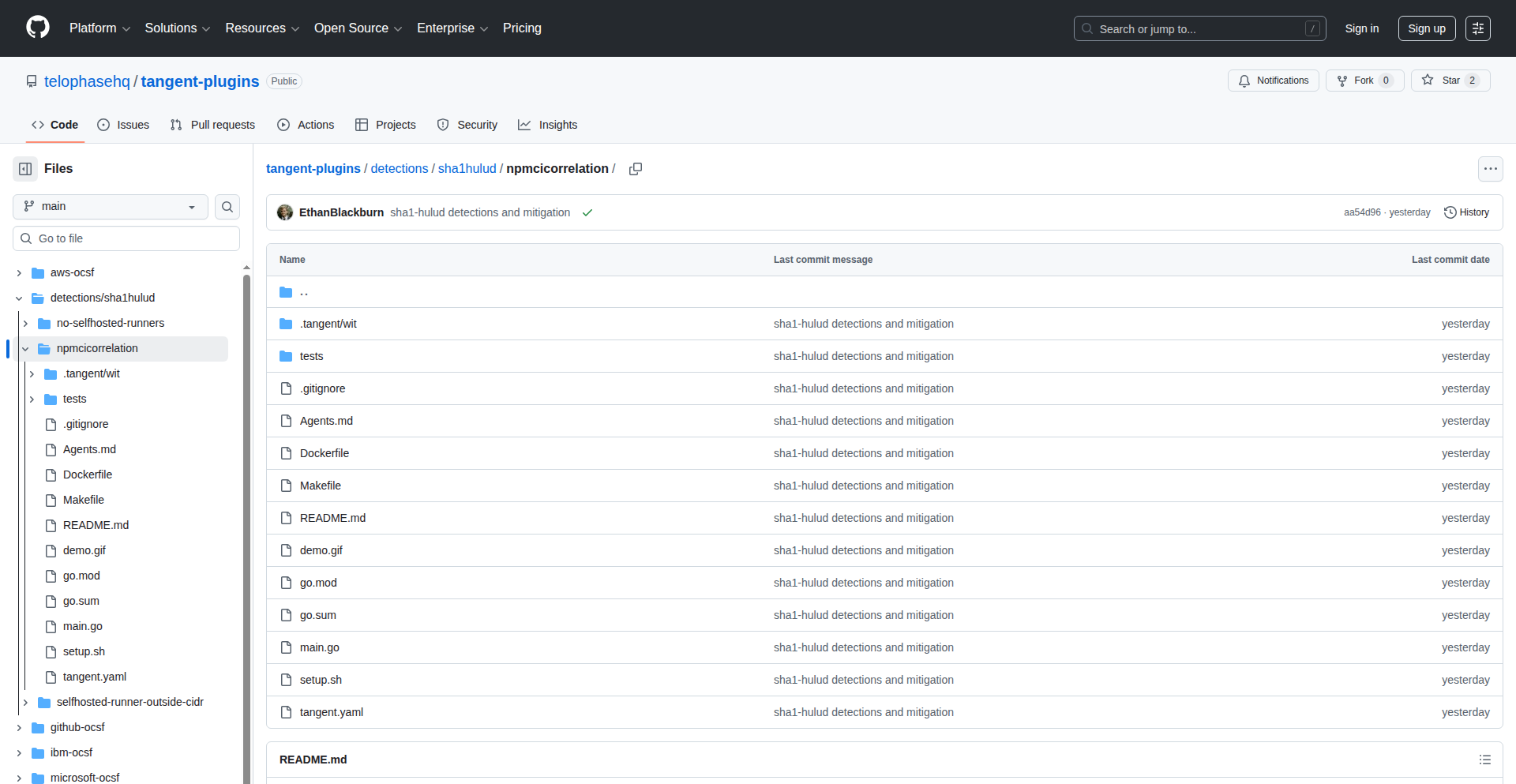

CI-Guard NPM

Author

ethanblackburn

Description

CI-Guard NPM is a tool designed to safeguard NPM package maintainers from inadvertently or maliciously publishing compromised versions of their packages. It achieves this by continuously monitoring your NPM packages and automatically unpublishing any version that wasn't generated by your established Continuous Integration (CI) workflow. This significantly enhances supply chain security for open-source projects.

Popularity

Points 6

Comments 2

What is this product?

CI-Guard NPM is an automated security tool for NPM package maintainers. Its core innovation lies in its proactive monitoring of package versions. Instead of relying solely on post-publication detection, it uses the fact that legitimate package releases should originate from a trusted CI pipeline. When a new version is published to NPM, CI-Guard checks if its origin can be traced back to your configured CI environment (like GitHub Actions, GitLab CI, etc.). If a version appears that doesn't match this expected origin, it's automatically unpublished. This acts as a failsafe, preventing unauthorized or accidental code from reaching end-users, even if a maintainer's local machine is compromised or a malicious actor gains access to their publishing credentials.

How to use it?

Developers can integrate CI-Guard NPM by setting it up as a service that monitors their published NPM packages. The tool typically works by connecting to your NPM account and your CI provider. You would configure it with your package names and the details of your CI workflow. For example, if you use GitHub Actions, CI-Guard would be set up to watch for releases originating from your GitHub repository's CI pipelines. When a new version of your package is pushed to NPM, CI-Guard verifies if the publishing event originated from your authorized CI environment. If not, it automatically removes the rogue version from the NPM registry. This can be implemented as a separate microservice or a script running on a dedicated server.
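The verification step might be sketched as follows. This is not CI-Guard's implementation, which the source does not describe. It assumes that the registry metadata document for a package lists each version under `versions` and that versions published with provenance from CI carry a `dist.attestations` entry; the function name and sample data are hypothetical, and the sketch only flags versions rather than unpublishing anything.

```python
def versions_without_provenance(packument: dict) -> list[str]:
    """Return versions whose metadata has no provenance attestation entry.

    Assumes the registry document shape {"versions": {ver: {"dist": {...}}}}.
    """
    missing = []
    for version, meta in packument.get("versions", {}).items():
        attestations = meta.get("dist", {}).get("attestations")
        if not attestations:
            missing.append(version)
    return missing


# Hypothetical metadata for illustration only.
sample = {
    "name": "example-pkg",
    "versions": {
        "1.0.0": {"dist": {"attestations": {"url": "https://registry.example/attest/1.0.0"}}},
        "1.0.1": {"dist": {}},
    },
}
suspicious = versions_without_provenance(sample)
```

A monitoring service could poll the registry on a schedule, run a check like this, and then alert or act on any flagged version.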

Product Core Function

· Continuous NPM Package Monitoring: Actively watches all published versions of your specified NPM packages. This is valuable because it ensures ongoing vigilance, catching issues as they arise rather than relying on periodic manual checks.

· CI Workflow Verification: Confirms that published package versions were produced by a trusted CI pipeline. This is the core security mechanism, adding a layer of authentication to package releases, thus preventing unauthorized code injection.

· Automatic Unpublishing: Immediately removes any suspicious or unauthorized package versions from the NPM registry. This is crucial for minimizing damage, preventing compromised code from being downloaded by users.

· Maintainer Protection: Safeguards package authors from unintentionally publishing malicious code due to compromised development environments. This protects the reputation and integrity of open-source projects and their maintainers.

Product Usage Case

· Scenario: A popular open-source library maintainer's development machine is infected with malware that injects malicious code into the package before publishing. CI-Guard NPM detects that the newly published version was not generated by the project's GitHub Actions CI and automatically unpublishes it, preventing thousands of users from downloading the compromised code.

· Scenario: A developer accidentally pushes a sensitive API key or unreleased experimental code to NPM without realizing it. CI-Guard NPM, configured to only allow versions from the CI build, detects this anomaly and removes the accidental publication before it can be exploited or cause confusion.

· Scenario: An attacker gains unauthorized access to a maintainer's NPM publishing credentials. Without CI-Guard NPM, they could publish a malicious version. However, CI-Guard NPM would verify that this unauthorized publication did not originate from the project's CI pipeline and automatically unpublish it, thwarting the attack.

11

Orkera: Prompt-Driven Infrastructure Orchestrator

Author

MayaTheFirst

Description

Orkera is an innovative MCP (Model Context Protocol) tool designed to bridge the gap between rapid application development and backend infrastructure management. It allows developers to provision and manage databases, deploy web applications, and set up scheduled jobs simply by issuing natural language prompts or commands through their AI coding agents like Cursor, Claude, or Gemini. This eliminates the traditional DevOps friction encountered when moving an MVP to a production-ready state, making backend infrastructure as accessible as frontend coding.

Popularity

Points 4

Comments 2

What is this product?

Orkera is a backend infrastructure management platform that leverages MCP to enable developers to interact with and control their cloud resources using simple, prompt-based commands. Instead of manually configuring servers, databases, or deployment pipelines, developers can ask Orkera to perform these tasks. For example, a prompt like 'create a PostgreSQL database named user_db' or 'deploy my web app to production' triggers Orkera's backend logic to provision the necessary resources and execute the commands. The innovation lies in abstracting away the complexity of cloud provider interfaces and DevOps procedures into an easy-to-use, AI-agent-friendly protocol, effectively making infrastructure management programmatic and conversational.

How to use it?

Developers can integrate Orkera into their workflow by obtaining an API key from the Orkera website. This API key is then used to configure their preferred AI coding agent (e.g., Cursor, Claude Code, Gemini CLI) to communicate with Orkera's MCP endpoint. Once set up, a developer can, for instance, be working on their code and decide to deploy it. Instead of switching contexts to a cloud console, they can issue a command within their editor, like 'Orkera, deploy this branch to staging.' Orkera receives this MCP call, interprets the request, and handles the entire deployment process in the background. Similarly, database creation, cron job scheduling, and environment variable management can all be managed via these in-editor prompts, drastically simplifying the path from development to a live application.
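Assuming MCP here refers to the Model Context Protocol used by agents such as Cursor and Claude Code, the "MCP call" an agent sends is a JSON-RPC 2.0 request with the method `tools/call`. The sketch below builds such a request. The tool name `create_database` and its arguments are hypothetical, since Orkera's actual tool names are not listed in the source.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need unique ids


def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Hypothetical tool and arguments, for illustration only.
message = tool_call("create_database", {"engine": "postgresql", "name": "user_db"})
```

In practice the coding agent builds and sends these messages itself; the developer only writes the natural language prompt.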

Product Core Function

· Database Provisioning and Management: Orkera can create, configure, and manage various types of databases (e.g., PostgreSQL, MySQL) based on simple prompts. This allows developers to spin up new databases for testing or production without needing to understand specific cloud database services, saving significant setup time and reducing potential misconfigurations.

· Automated Web Application Deployment: Developers can deploy their web applications directly from their IDE to cloud environments without manual cloud console interactions. Orkera handles the complexities of packaging, transferring, and running the application, enabling faster iteration and release cycles.

· Scheduled Job (Cron Job) Management: Orkera allows for the creation and management of scheduled tasks, similar to cron jobs, through conversational commands. This is crucial for background processes, data processing, or recurring maintenance tasks, making them accessible to developers without deep system administration knowledge.

· Environment Configuration via MCP: Developers can manage different application environments (e.g., development, staging, production) and their associated configurations using MCP calls. This ensures consistency across environments and simplifies the process of updating settings without manual intervention.

Product Usage Case

· A solo developer building an MVP for a new social media app wants to deploy it to a live server after finishing the core features. Instead of learning Docker, Kubernetes, and cloud provider deployment services, they simply prompt Orkera from their AI coding agent: 'Orkera, deploy the main branch of my app to production with a PostgreSQL database.' Orkera handles the VM setup, database creation, application deployment, and domain configuration, making the app instantly accessible to users.

· A small team is developing a data analysis tool that requires daily processing of new datasets. Previously, this involved manually setting up and monitoring cron jobs on a server. With Orkera, a developer can easily command: 'Orkera, schedule a Python script 'process_data.py' to run daily at 3 AM UTC.' Orkera manages the scheduled execution and error reporting, freeing up the team's time and reducing the risk of missed tasks.

· A developer needs to create a separate staging environment to test a new feature before merging it into the main branch. They can instruct Orkera: 'Orkera, create a staging environment for my project with a separate database.' Orkera sets up an isolated instance of the application and its dependencies, allowing for risk-free testing and rapid feedback.

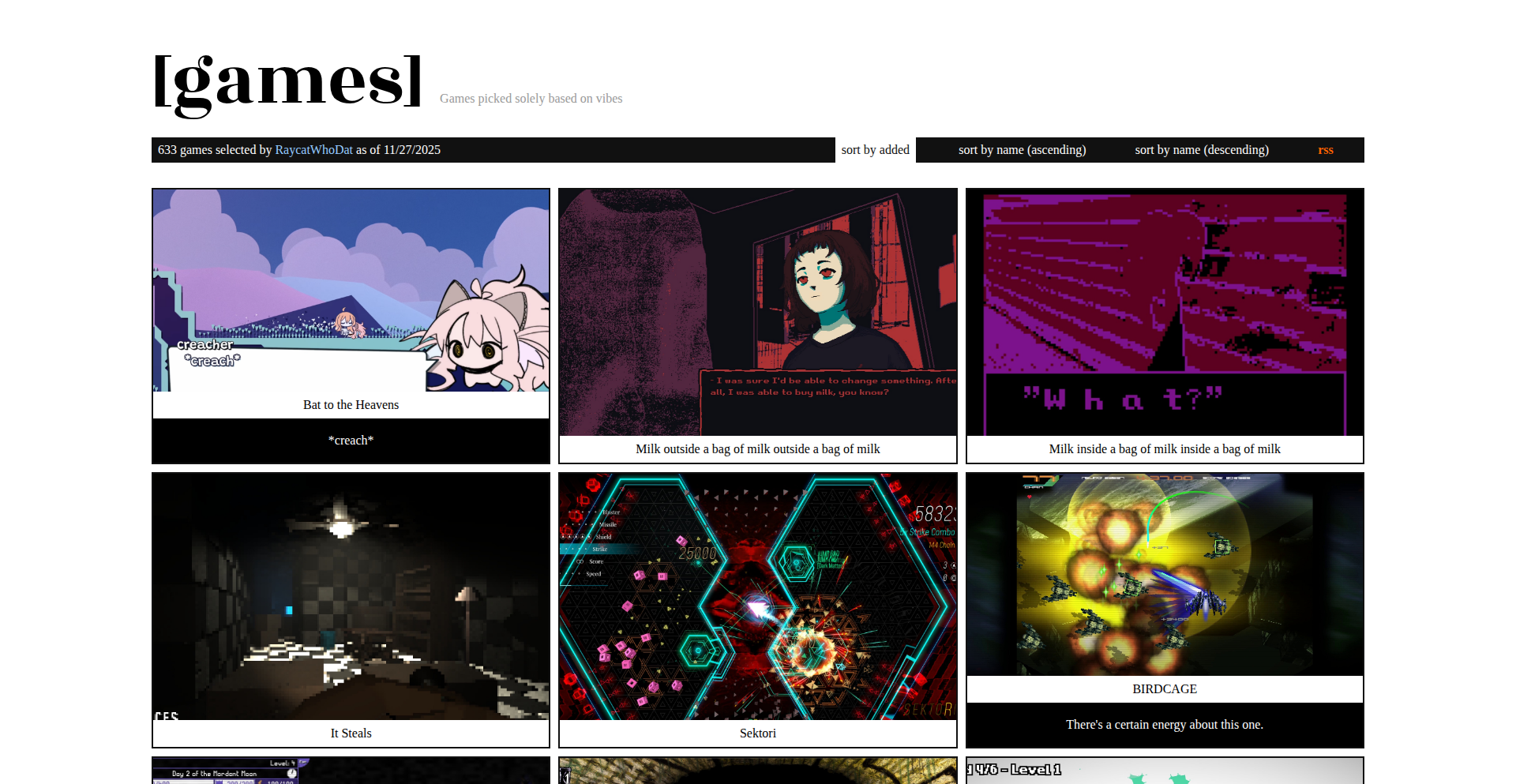

12

CuratedGameLoot Discovery Engine

Author

RaycatRakittra

Description

A curated website showcasing intriguing and noteworthy games, built by a developer with a passion for unique gaming experiences. The innovation lies in its organic growth from a personal project to a community-driven discovery platform, highlighting a developer's commitment to sharing valuable, personally vetted content.

Popularity

Points 4

Comments 2

What is this product?

This project is a website that functions as a personalized game discovery engine. It's not an automated algorithm, but rather a manually curated list of games that the developer, and potentially the community, finds interesting or innovative. The core technical insight is the value of human curation over purely algorithmic recommendations in niche areas like gaming, fostering a more authentic and insightful discovery process. It's built using common web technologies, emphasizing simplicity and directness in presenting information.

How to use it?

Developers can use this website as a source of inspiration for their own projects, looking at how different games approach unique mechanics or storytelling. It can also serve as a reference for understanding how to build and maintain a content-rich website that evolves over time. For game developers, it offers a potential avenue for exposure if their game fits the curator's discerning eye. Integration isn't a primary feature, as it's a consumption-focused platform, but the underlying code on GitHub can be studied for web development patterns.

Product Core Function

· Manual Game Curation: The value here is in personally vetted recommendations, offering a human touch that algorithms often lack, providing users with genuinely interesting finds.

· Personalized Discovery Feed: This offers a curated stream of games that stand out to the developer, helping users find their next favorite game without sifting through endless generic lists.

· Community Engagement (Implied): While not explicitly stated as a feature, the project's evolution suggests potential for community input, enriching the discovery process and fostering a sense of shared interest among gamers.

· Open Source Codebase: The availability of the GitHub repository allows developers to inspect the implementation, learn from the code, and potentially contribute or fork the project, embodying the hacker spirit of open sharing and collaboration.

Product Usage Case

· A budding game developer looking for inspiration for unique game mechanics can browse the site and discover titles with innovative gameplay loops they might not find on larger, algorithm-driven platforms.

· A content creator or streamer seeking fresh and interesting games to play and showcase can use this site to find hidden gems that are likely to resonate with their audience, solving the problem of content originality.

· A web developer interested in building their own curated content site can study the project's GitHub repository to understand the structure and approach for managing and presenting a growing list of items effectively.

13

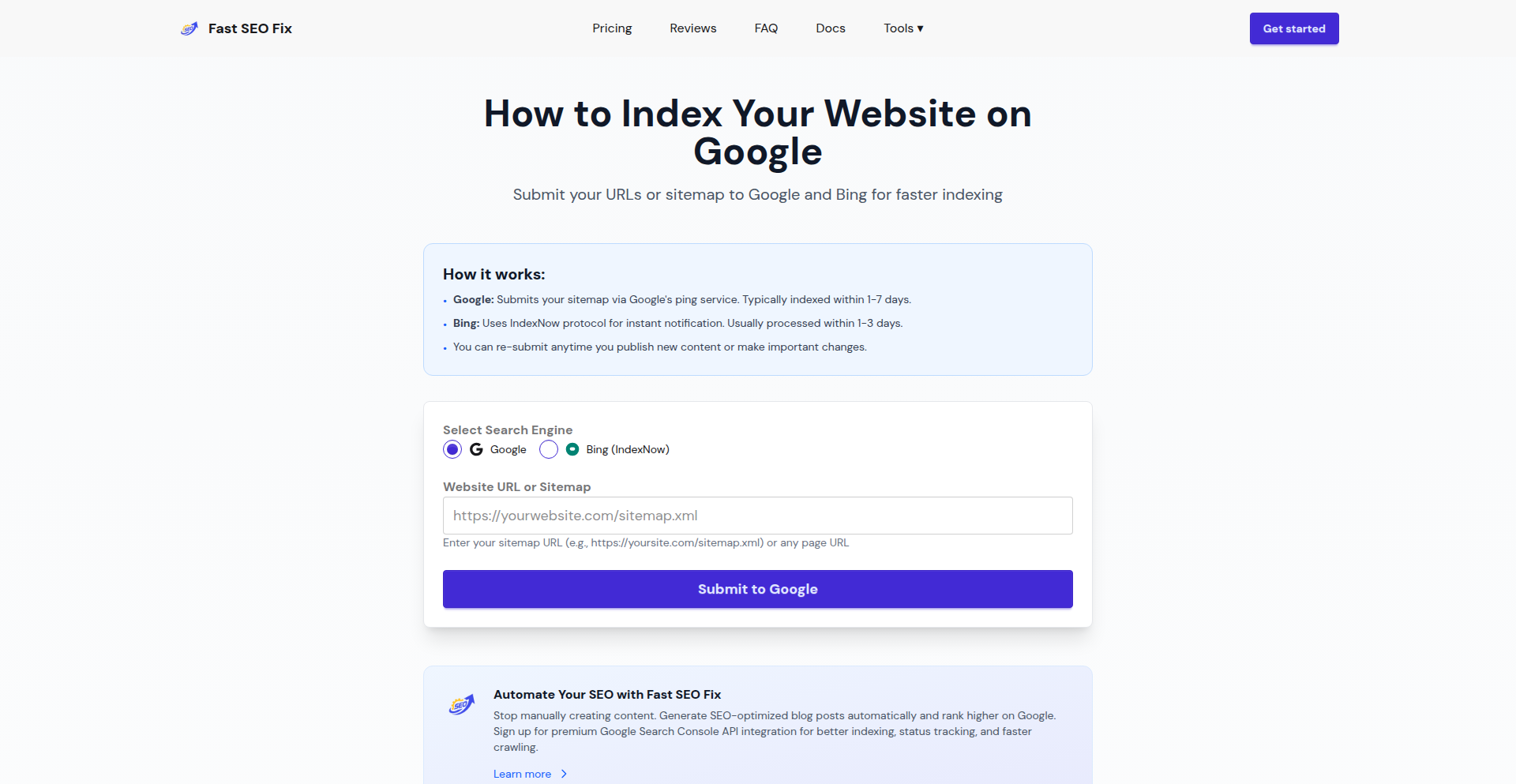

SEOSync AI

Author

vincejos

Description

SEOSync AI is an automated solution designed to tackle the time-consuming and often complex task of Search Engine Optimization (SEO) for websites. Instead of manual effort, it leverages AI to ensure your website ranks well on search engines like Google and is effectively understood and cited by AI models such as ChatGPT. This product addresses the pain point of developers and website owners spending excessive time on SEO, offering an intelligent agent that works autonomously to improve online visibility.

Popularity

Points 3

Comments 2

What is this product?

SEOSync AI is an intelligent agent built using artificial intelligence to automate the process of Search Engine Optimization. Its core innovation lies in its ability to analyze website content and structure, then proactively make improvements or suggest actions that enhance its ranking potential on search engines. It's like having a dedicated SEO expert working 24/7, but powered by algorithms. The 'AI agent' part means it's not just a set of rules, but a system that can learn and adapt to the ever-changing landscape of search engine algorithms and AI content models. This means it's constantly working to keep your site relevant and discoverable, saving you the effort of constantly monitoring and adjusting SEO strategies yourself. So, for you, this means less manual work and better chances of being found online.

How to use it?

Developers can integrate SEOSync AI into their website development workflow. This might involve embedding it as a plugin for popular Content Management Systems (CMS) like WordPress, or as a standalone service that analyzes a given URL. The AI agent would then continuously monitor the website's performance against SEO benchmarks, identifying improvements in areas like keyword optimization, content relevance, meta descriptions, and link building strategies. It could also be configured to provide reports and actionable insights. The goal is to make SEO a background process that enhances your website's presence without requiring constant developer attention. So, for you, this means your website gets optimized automatically, freeing up your development time for other critical tasks.

Product Core Function

· Automated Keyword Research and Integration: The AI identifies relevant keywords your target audience is searching for and suggests or automatically integrates them into your website's content and metadata to improve search engine discoverability. This is valuable because it ensures your website appears in more relevant search results, attracting more potential visitors.

· Content Optimization Suggestions: The agent analyzes your existing content and provides recommendations for improvement, such as clarity, keyword density, and readability, to make it more appealing to both search engines and human readers. This helps your content perform better and engage your audience more effectively.

· Meta Description and Title Tag Generation: SEOSync AI can generate compelling meta descriptions and title tags that accurately reflect your page content and encourage users to click through from search results. This is crucial for improving click-through rates from search engine results pages.

· Technical SEO Auditing: The system performs automated checks for common technical SEO issues like broken links, slow page load times, and mobile-friendliness, providing actionable fixes. Addressing these technical issues ensures a smooth user experience and helps search engines crawl and index your site efficiently.

· AI Citation Enhancement: It actively works to make your website's content understandable and valuable to AI models like ChatGPT, increasing the likelihood of your content being cited and referenced by these powerful platforms. This broadens your content's reach and authority in the emerging AI-driven information ecosystem.
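One of the simpler checks in the list above, auditing title tags and meta descriptions, can be sketched with Python's standard `html.parser`. This is not SEOSync AI's code. The length limits of 60 and 160 characters are common rules of thumb used here as assumptions, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the <title> text and the meta description from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html: str, max_title: int = 60, max_description: int = 160) -> list[str]:
    """Return a list of human-readable issues found in the page head."""
    parser = HeadAudit()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing <title>")
    elif len(title) > max_title:
        issues.append(f"title longer than {max_title} characters")
    if parser.description is None:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append(f"meta description longer than {max_description} characters")
    return issues


issues = audit_head("<html><head><title>Shop</title></head><body></body></html>")
```

A fuller audit would add link checking and performance measurements, which need network access and are left out of this sketch.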

Product Usage Case

· A freelance web developer building portfolios for clients can use SEOSync AI to ensure each client's website has a strong SEO foundation from the start, saving them the learning curve and time investment in SEO, and delivering a more valuable final product. The AI handles the heavy lifting of ranking and discoverability.

· A small e-commerce business owner with limited technical expertise can leverage SEOSync AI to automatically improve their product pages' visibility on Google, leading to more organic traffic and potentially higher sales without needing to hire an expensive SEO consultant. The AI works in the background to drive customer acquisition.

· A content creator or blogger can use SEOSync AI to ensure their articles are optimized for both search engines and AI summarization tools, maximizing their reach and ensuring their insights are picked up by a wider audience. This amplifies the impact of their written work.

· A startup launching a new SaaS product can integrate SEOSync AI early in the development cycle to ensure their landing pages are optimized for lead generation and discoverability from day one, accelerating their user acquisition efforts. This helps get the product in front of the right people quickly.

14

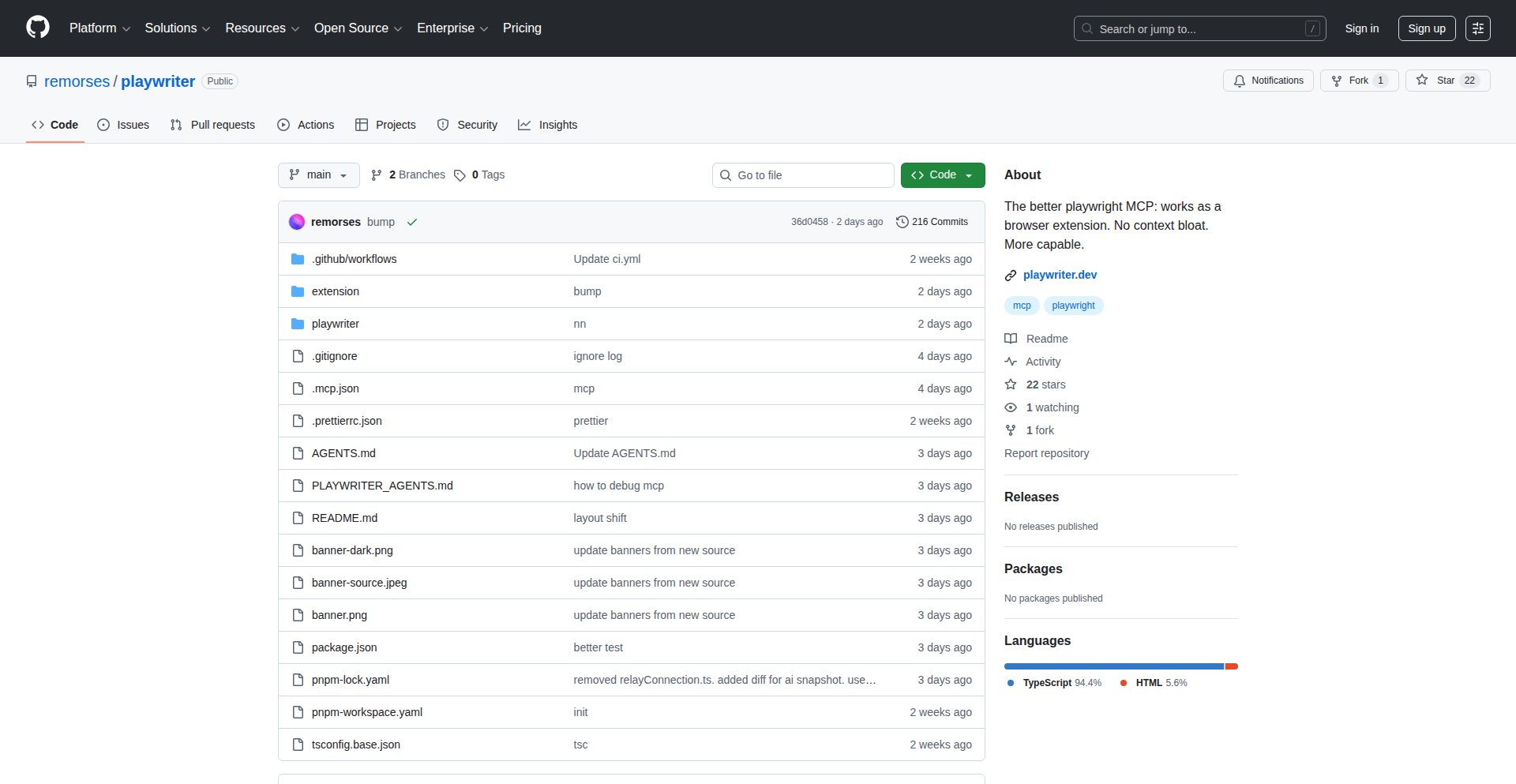

Playwriter: Chrome Automation Toolkit

Author

xmorse

Description

Playwriter is a Chrome extension that allows developers to control the Chrome browser through a combination of MCP (Model Context Protocol) and CDP (Chrome DevTools Protocol). This opens up new avenues for automated browser testing, scraping, and complex user interaction simulation, solving the challenge of programmatic browser control with a focus on flexibility and developer experience.

Popularity

Points 4

Comments 1

What is this product?

Playwriter is a browser extension that acts as a bridge, enabling you to send commands to and receive information from your Chrome browser using code. It leverages the Model Context Protocol (MCP) for communication with client tools and the Chrome DevTools Protocol (CDP), which is the underlying communication standard used by Chrome's developer tools. Think of it as giving your scripts direct control over Chrome, allowing them to navigate, interact with web pages, and even inspect browser states programmatically. This is innovative because it provides a robust and standardized way to automate browser actions beyond simple scripting, enabling sophisticated workflows.

How to use it?

Developers can integrate Playwriter into their projects by installing the Chrome extension and then using a client library in their preferred programming language (e.g., Python, JavaScript) to connect to the extension. Once connected, they can send commands via CDP to control Chrome, such as opening new tabs, navigating to specific URLs, clicking buttons, filling forms, extracting data, and even taking screenshots. This is particularly useful for building automated testing suites, web scrapers, or for simulating complex user journeys for research or development purposes.
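For readers unfamiliar with CDP, its commands are JSON messages with an `id`, a `method`, and `params`. The sketch below builds two such messages using the real CDP methods `Page.navigate` and `Page.captureScreenshot`. The `CdpSession` helper is hypothetical, and how Playwriter relays these messages to the browser is not described in the source, so no transport is shown.

```python
import json
from itertools import count


class CdpSession:
    """Hypothetical helper that formats CDP commands; it does not send them."""

    def __init__(self) -> None:
        self._ids = count(1)  # each command needs a unique id to match its reply

    def command(self, method: str, **params) -> str:
        """Serialize one CDP command as a JSON string."""
        return json.dumps({"id": next(self._ids), "method": method, "params": params})


cdp = CdpSession()
navigate = cdp.command("Page.navigate", url="https://example.com")
screenshot = cdp.command("Page.captureScreenshot", format="png")
```

The browser answers each command with a message carrying the same `id`, which is how a client pairs results with requests.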

Product Core Function

· Programmatic Browser Navigation: Allows scripts to open URLs, navigate between tabs, and manage browser windows, enabling automated workflows for content access and management.

· Interactive Element Manipulation: Enables scripts to find, click, type into, and select elements on a webpage, providing the ability to automate user interactions and form submissions.

· Data Extraction and Inspection: Provides access to the DOM and network requests, allowing developers to extract specific data from web pages and analyze network traffic for debugging or data collection.

· Screenshot and Visual Capture: Facilitates automated screenshotting of entire pages or specific elements, useful for visual regression testing and documentation generation.

· Custom Event Simulation: Supports simulating various user events like mouse movements, keyboard input, and scrolling, allowing for realistic user behavior testing.

· Extensible Messaging: Integrates MCP for efficient and flexible communication between the extension and the client application, ensuring responsive and robust control.

Product Usage Case

· Automated E-commerce Testing: A developer can use Playwriter to automate the process of adding items to a cart, proceeding to checkout, and verifying the order details on an e-commerce website, ensuring the site functions correctly for users.

· Data Scraping for Market Research: A researcher can write a script to use Playwriter to navigate through a series of product pages, extract pricing and availability information, and compile a report for market analysis.

· Browser Automation for CI/CD Pipelines: Playwriter can be integrated into a continuous integration and continuous deployment pipeline to run automated end-to-end tests against a web application whenever code changes are committed, catching bugs early.

· Simulating User Behavior for Performance Testing: A QA engineer can use Playwriter to simulate a complex user journey with specific interactions and timings to test the performance of a web application under realistic load conditions.

15

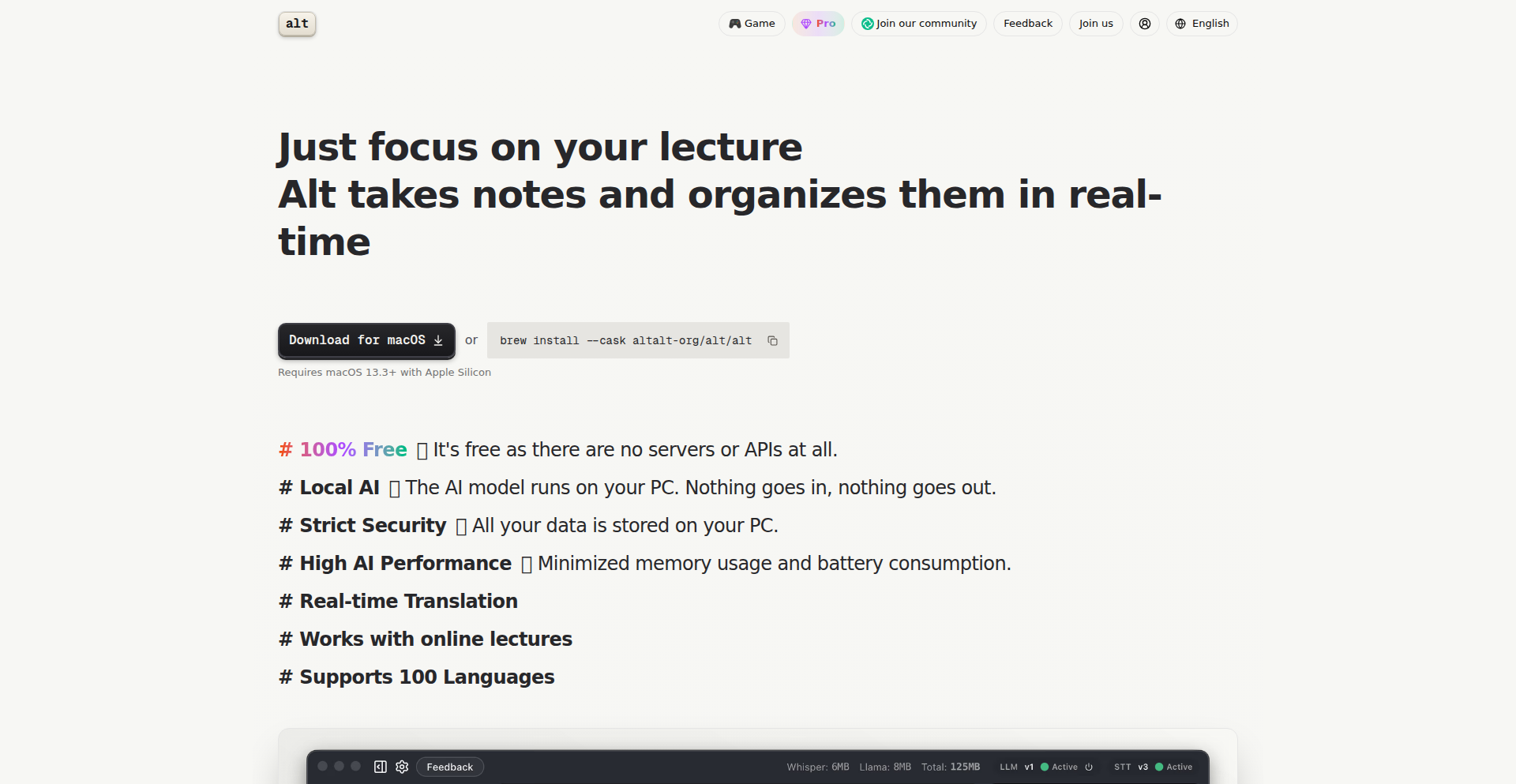

Alt-Local-AI-Notetaker

Author

predict-woo

Description

Alt is a local AI-powered notetaker designed to overcome the limitations of cloud-based services, particularly for long lectures or remote work sessions. Its core innovation lies in running both Automatic Speech Recognition (ASR) and Large Language Models (LLMs) directly on the user's device. This eliminates transcription time limits, ensures privacy by keeping data local, and offers high accuracy for a wide range of languages, even without an internet connection. This product tackles the problem of expensive and restrictive AI notetaking tools by offering a free, powerful, and privacy-focused alternative.

Popularity

Points 4

Comments 1

What is this product?

Alt is a notetaking application that uses Automatic Speech Recognition (ASR) to transcribe audio and Large Language Models (LLMs) to summarize and process the resulting text. The key technical innovation is that these AI models run entirely on your local machine (on-device) rather than sending your audio data to remote servers. This means no internet connection is required for transcription and processing, there are no arbitrary time limits on how much audio you can transcribe (unlike many cloud services), and privacy is significantly enhanced because your conversations and notes stay with you. The ASR component is optimized for speed and accuracy on Apple Silicon, which contributes to its efficient performance and low battery consumption.

How to use it?

Developers can integrate Alt into their workflows by using it as a standalone application for recording and transcribing lectures, meetings, or any audio content. It supports real-time transcription, meaning you see the text as it's spoken, and it can even integrate with popular video conferencing tools like Zoom and Google Meet. For more advanced use cases, the underlying ASR pipeline, 'Lightning-SimulWhisper', is open-sourced on GitHub, allowing developers to build custom solutions that require fast, on-device speech-to-text capabilities. This makes it ideal for projects where privacy, offline functionality, or high-volume audio processing are critical. The practical benefit is having a reliable and private transcription service that's always available, regardless of your network status.
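One common way to transcribe audio of unbounded length on-device is to feed the recognizer fixed-size, slightly overlapping windows instead of the whole recording. The sketch below illustrates that general idea only. It is an assumption for illustration and not the algorithm used by Alt or Lightning-SimulWhisper; `audio_windows` and `fake_transcribe` are hypothetical names, and the stub stands in for a real local ASR call.

```python
from typing import Callable, Iterator, List, Sequence


def audio_windows(samples: Sequence[float], size: int, overlap: int) -> Iterator[Sequence[float]]:
    """Yield windows of `size` samples, each sharing `overlap` samples with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    start = 0
    while start < len(samples):
        yield samples[start:start + size]
        if start + size >= len(samples):
            break  # the last window already reached the end of the audio
        start += step


def transcribe_stream(samples: Sequence[float], size: int, overlap: int,
                      transcribe: Callable[[Sequence[float]], str]) -> List[str]:
    """Run a (hypothetical) local ASR function over each window in order."""
    return [transcribe(window) for window in audio_windows(samples, size, overlap)]


def fake_transcribe(window: Sequence[float]) -> str:
    # Stand-in for a real on-device model; it only reports the window length.
    return f"<{len(window)} samples>"


demo_audio = list(range(10))  # ten dummy samples
chunks = list(audio_windows(demo_audio, size=4, overlap=1))
texts = transcribe_stream(demo_audio, size=4, overlap=1, transcribe=fake_transcribe)
print(chunks)
print(texts)
```

Because each window is processed independently, memory use stays bounded regardless of how long the recording runs. The overlap gives the recognizer context across window boundaries.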

Product Core Function

· On-device ASR: Transcribes audio directly on your computer, ensuring privacy and eliminating reliance on internet connectivity. This provides a secure and always-available transcription solution.

· Local LLM integration: Processes transcribed text using AI models that run locally, enabling summaries and insights without sending data to the cloud. This means your sensitive meeting notes or lecture content remain private.

· Unlimited transcription time: Unlike cloud services with caps, Alt allows for continuous, uninterrupted transcription of lengthy audio recordings. This is invaluable for students with long lectures or professionals in extended meetings.

· High accuracy for 100 languages: Supports a vast array of languages with excellent accuracy, including non-English speech. This broad language support makes it globally applicable and accessible.

· Real-time transcription: Displays transcribed text as it is spoken, allowing for immediate review and note-taking. This enhances productivity by enabling instant capture of spoken information.

· Video conferencing support (Zoom/Google Meet): Seamlessly integrates with popular meeting platforms to transcribe live discussions. This directly addresses the need to capture key points from remote collaborations.

· Offline functionality: Operates entirely without an internet connection, ensuring usability in any location. This is crucial for reliable note-taking in areas with poor or no network access.

· Efficient battery usage: Optimized for low power consumption, allowing for extended use on a single charge. This ensures the notetaker remains a practical tool throughout long sessions without draining your device's battery.

Product Usage Case

· A university student uses Alt to transcribe lengthy lectures in real-time, capturing all spoken content without worrying about time limits or internet access. This allows them to focus on learning rather than manual note-taking.

· A remote worker uses Alt during a multi-hour client meeting. The on-device transcription ensures the conversation is private, and the LLM can later generate a concise summary of action items, saving significant time on manual summarization.

· A journalist uses Alt to record and transcribe interviews in the field where internet connectivity is unreliable. The offline capability guarantees that valuable interview data is captured accurately and securely.

· A developer working on a privacy-sensitive application integrates Alt's open-source ASR pipeline ('Lightning-SimulWhisper') to add real-time speech-to-text functionality to their application, ensuring all audio processing happens locally.

· A researcher uses Alt to transcribe hours of audio data in various languages for analysis, benefiting from the high accuracy and broad language support without incurring cloud service costs or privacy concerns.

16

FontGen: Universal Font Rendering

Author

liquid99

Description

FontGen is a web-based tool that generates custom fonts designed for broad accessibility. It addresses the common problem of fonts not being consistently readable by screen readers, especially for users with visual impairments. The innovation lies in its systematic testing and generation process, aiming to produce fonts that are machine-readable and human-friendly across various assistive technologies.

Popularity

Points 5

Comments 0

What is this product?

FontGen is a project that creates fonts optimized for accessibility. Normally, when you design a font, you might not consider how a screen reader, a tool that reads text aloud for visually impaired users, will interpret it. Many fonts, even if they look good to the human eye, can be confusing or unreadable to these machines. FontGen tackles this by developing fonts with specific design considerations to ensure they are accurately recognized and spoken by screen readers. This is achieved through careful character design and potentially through metadata embedded within the font files that guides screen readers. So, this means you get fonts that not only look great but also actively support inclusivity by making digital content accessible to more people.

How to use it?

Developers can use FontGen by visiting the website, exploring the available accessible font options, and downloading the font files. These fonts can then be integrated into websites, applications, or any digital content where custom typography is needed. The project's documentation (linked in the disclaimer) provides details on the accessibility testing performed, giving developers confidence in their choice. This allows you to easily embed fonts that are proven to work well with screen readers, enhancing the user experience for a wider audience without complex technical setup.

Product Core Function

· Accessible Font Generation: Creates custom fonts with design principles that improve readability for screen readers, meaning your text will be more reliably conveyed to visually impaired users.

· Cross-Reader Compatibility Testing: Fonts are tested against screen readers like NVDA to ensure consistent performance, giving you peace of mind that your chosen font will function as expected.

· Downloadable Font Files: Provides font files (likely in standard formats like WOFF or TTF) that can be directly implemented into web projects or applications, making integration straightforward.

· Focus on Usability: Prioritizes making digital content understandable and usable for a broader range of users, contributing to a more inclusive digital environment.

· Transparency in Accessibility: Offers details and disclaimers about the accessibility testing performed, allowing developers to make informed decisions about font choices.

Product Usage Case

· Website Development: A web developer can use FontGen to select and implement a font that ensures their website content is accurately read by screen readers, improving SEO and user experience for visually impaired visitors.

· Application Design: An app designer can integrate FontGen's accessible fonts into their mobile or desktop application interface, ensuring that all users, including those who rely on screen readers, can navigate and interact with the app effectively.

· E-book Creation: An author or publisher can use these fonts to create e-books that are more accessible to readers who use assistive technologies, broadening the potential readership.

· Content Management Systems: Developers can build themes or plugins for CMS platforms that offer these accessible fonts as an option, making it easier for content creators to produce inclusive content.

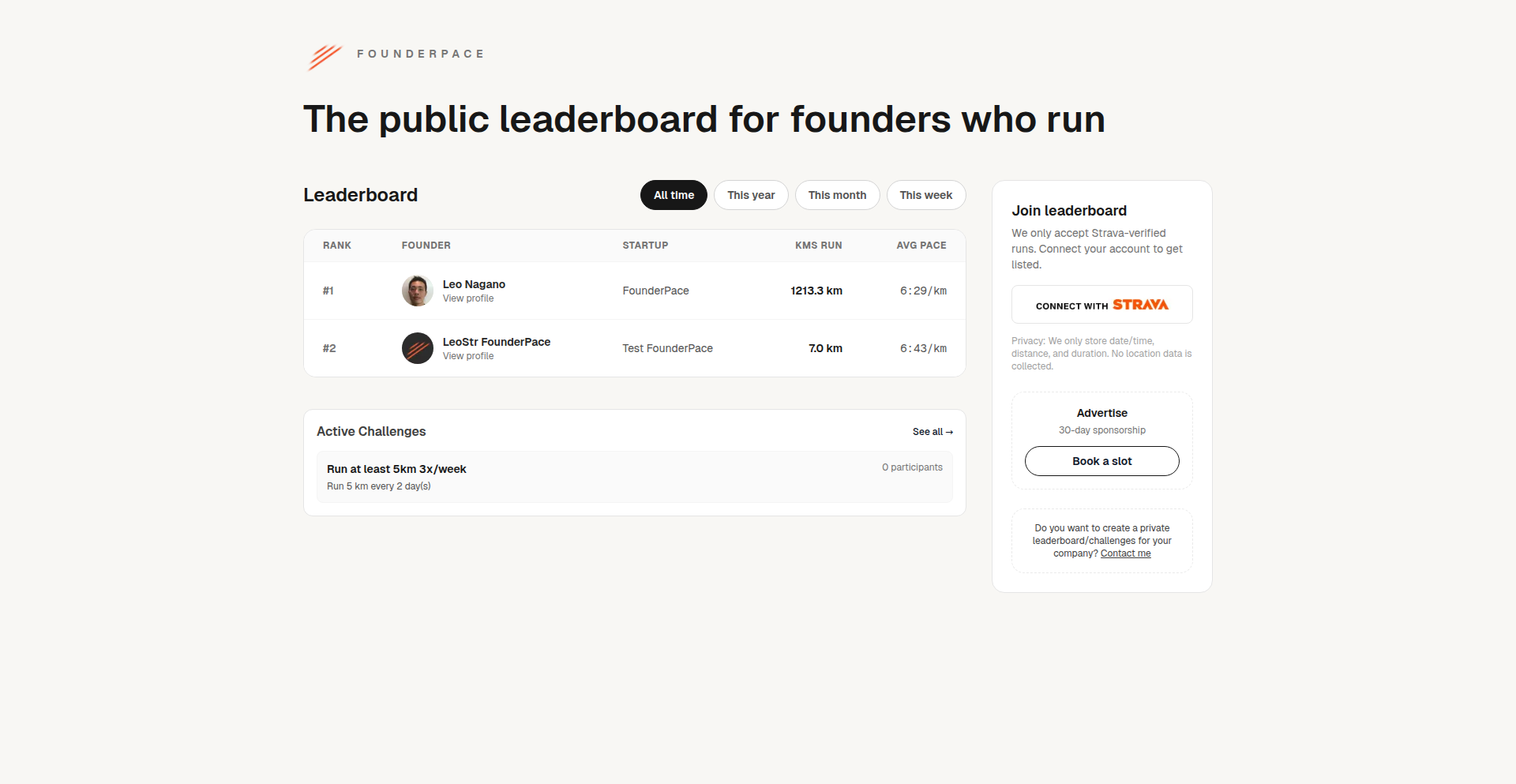

17

FounderPace: Founder Performance Leaderboard

Author

leonagano

Description

FounderPace is a data-driven leaderboard that tracks and ranks founders based on key performance indicators (KPIs) of their startups. It leverages publicly available data and potentially user-submitted metrics to provide insights into founder effectiveness and company progress. The innovation lies in creating a transparent and comparative platform for startup performance, fostering healthy competition and learning within the founder community.

Popularity

Points 4

Comments 1

What is this product?

FounderPace is a novel leaderboard system designed for startup founders. It operates by aggregating and analyzing various metrics associated with startup growth and founder activity. This could include metrics like user acquisition rates, revenue growth, funding rounds, product launch frequency, or even community engagement. The core innovation is the methodology for deriving a comparative score that reflects a founder's effectiveness and their company's trajectory. It's built on the idea of using data to quantify and visualize founder performance, moving beyond anecdotal evidence and creating a benchmark for success. So, what's in it for you? It provides objective insights into how your startup stacks up against peers, offering a clear picture of areas for improvement and potential growth drivers.

How to use it?

Founders can use FounderPace by connecting their startup's data sources (e.g., analytics platforms, CRM, financial dashboards) or by manually submitting key metrics. The platform then processes this data to generate a unique performance score and rank. Developers might integrate with FounderPace via an API to fetch aggregated performance data for market research or to build features that leverage these leaderboards within their own applications. For example, a startup accelerator might use it to identify promising companies, or a developer could build a dashboard that displays your rank alongside your historical performance. This means you can easily track your progress and understand where you stand in the competitive landscape.
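FounderPace's actual scoring method is not described here, but the idea of turning several metrics into one comparative score can be sketched as follows. This example assumes a simple scheme: min-max normalize each metric across founders, then combine the results with weights. The metric names, weights, founder names, and the `rank_founders` function are all hypothetical.

```python
from typing import Dict, List, Tuple


def rank_founders(metrics: Dict[str, Dict[str, float]],
                  weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (founder, score) pairs sorted from highest to lowest score."""
    scores = {name: 0.0 for name in metrics}
    for key, weight in weights.items():
        values = [m[key] for m in metrics.values()]
        low, high = min(values), max(values)
        span = high - low
        for name, m in metrics.items():
            # Min-max normalization maps each metric onto the range [0, 1].
            # If every founder has the same value, the metric contributes 0.
            normalized = (m[key] - low) / span if span else 0.0
            scores[name] += weight * normalized
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


founders = {
    "alice": {"mrr_growth": 0.20, "new_users": 500},
    "bob": {"mrr_growth": 0.10, "new_users": 900},
    "carol": {"mrr_growth": 0.05, "new_users": 100},
}
weights = {"mrr_growth": 0.6, "new_users": 0.4}

leaderboard = rank_founders(founders, weights)
for position, (name, score) in enumerate(leaderboard, start=1):
    print(position, name, round(score, 3))
```

Normalizing first keeps a metric with large raw values (such as user counts) from drowning out one with small values (such as a growth rate). The weights then express how much each metric should matter.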

Product Core Function

· Performance Metric Aggregation: Collects and unifies diverse startup metrics from various sources, providing a holistic view of performance. Value: Simplifies data analysis and offers a comprehensive understanding of your startup's health.