Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-26

SagaSu777 2025-11-27

Explore the hottest developer projects on Show HN for 2025-11-26. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The landscape of innovation is clearly being shaped by the pervasive influence of AI and the relentless pursuit of developer efficiency. We're seeing a strong trend towards building AI agents that possess more sophisticated memory and context management, exemplified by projects like ChatIndex. This isn't just about chatbots remembering more; it's about creating AI that truly understands and learns from prolonged interactions, opening doors for more personalized and effective AI companions. Simultaneously, there's a significant push for developer tools that streamline workflows, from AI-assisted coding and Git management to automated testing and deployment. The hacker spirit is alive and well, with many projects focusing on privacy-preserving, local-first solutions, giving users more control over their data and compute. For developers and entrepreneurs, this means opportunities abound in building the next generation of intelligent, efficient, and trustworthy software. Don't just build tools that use AI; build AI that enhances existing tools and workflows in novel, privacy-conscious ways. The future is about empowering individuals and teams with smarter, more context-aware technologies.

Today's Hottest Product

Name

ChatIndex – A Lossless Memory System for AI Agents

Highlight

This project tackles the critical challenge of context management in long AI conversations by introducing a lossless, hierarchical, tree-based indexing system. Unlike summarization-based approaches, which discard detail as a conversation grows, ChatIndex preserves the raw messages and allows multi-resolution retrieval, so AI agents can maintain coherent long-term memory. Developers can study its data-indexing techniques and apply them to conversational AI, particularly to build more persistent, human-like assistants.

Popular Category

AI/ML

Developer Tools

Utilities

SaaS

Popular Keyword

AI

LLM

Agent

Developer Tool

Open Source

CLI

Browser

Privacy

Technology Trends

AI-powered context management

Local-first AI applications

Developer productivity tools

Enhanced data handling for AI

Privacy-focused software

Cross-platform utilities

Automated workflows

Project Category Distribution

AI/ML (30%)

Developer Tools (25%)

Utilities (20%)

SaaS (15%)

Content/Media (5%)

Other (5%)

Today's Hot Product List

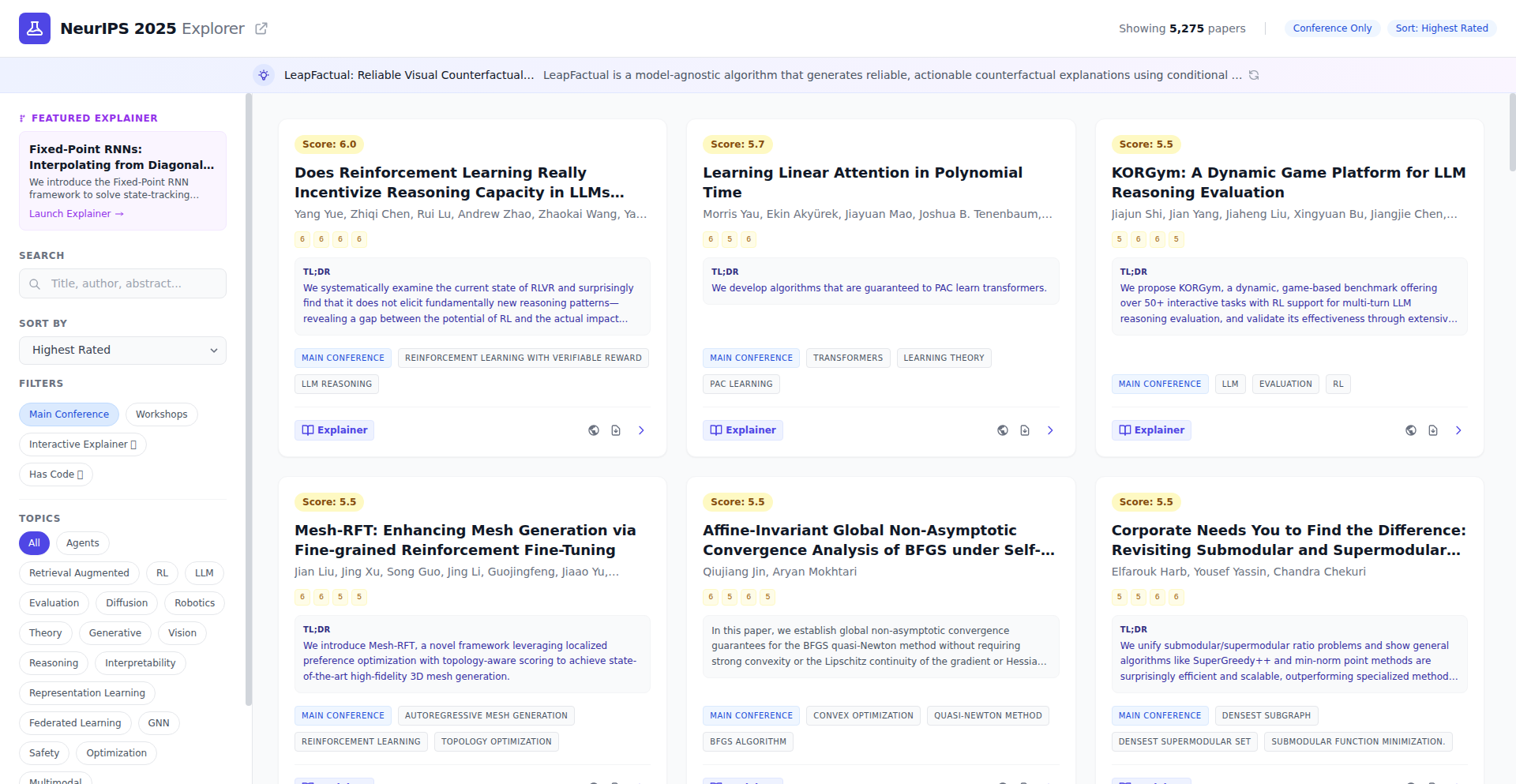

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Bourdain Lost Archive | 18 | 5 |
| 2 | ChatIndex: Seamless Long-Term Memory for AI Agents | 15 | 4 |
| 3 | Ghostty-Web: Terminal Emulation on the Web | 10 | 3 |
| 4 | Wozz Kubernetes Cost Auditor | 5 | 6 |
| 5 | Claude Skill Forge | 8 | 2 |
| 6 | StyleCompass | 3 | 5 |
| 7 | Logical Contextual Copilot | 8 | 0 |
| 8 | MightyGrep: Accelerated Plaintext Explorer | 7 | 0 |
| 9 | Cloakly | 1 | 5 |
| 10 | Rubber Duck: Pre-emptive App Store Review Assistant | 1 | 4 |

1

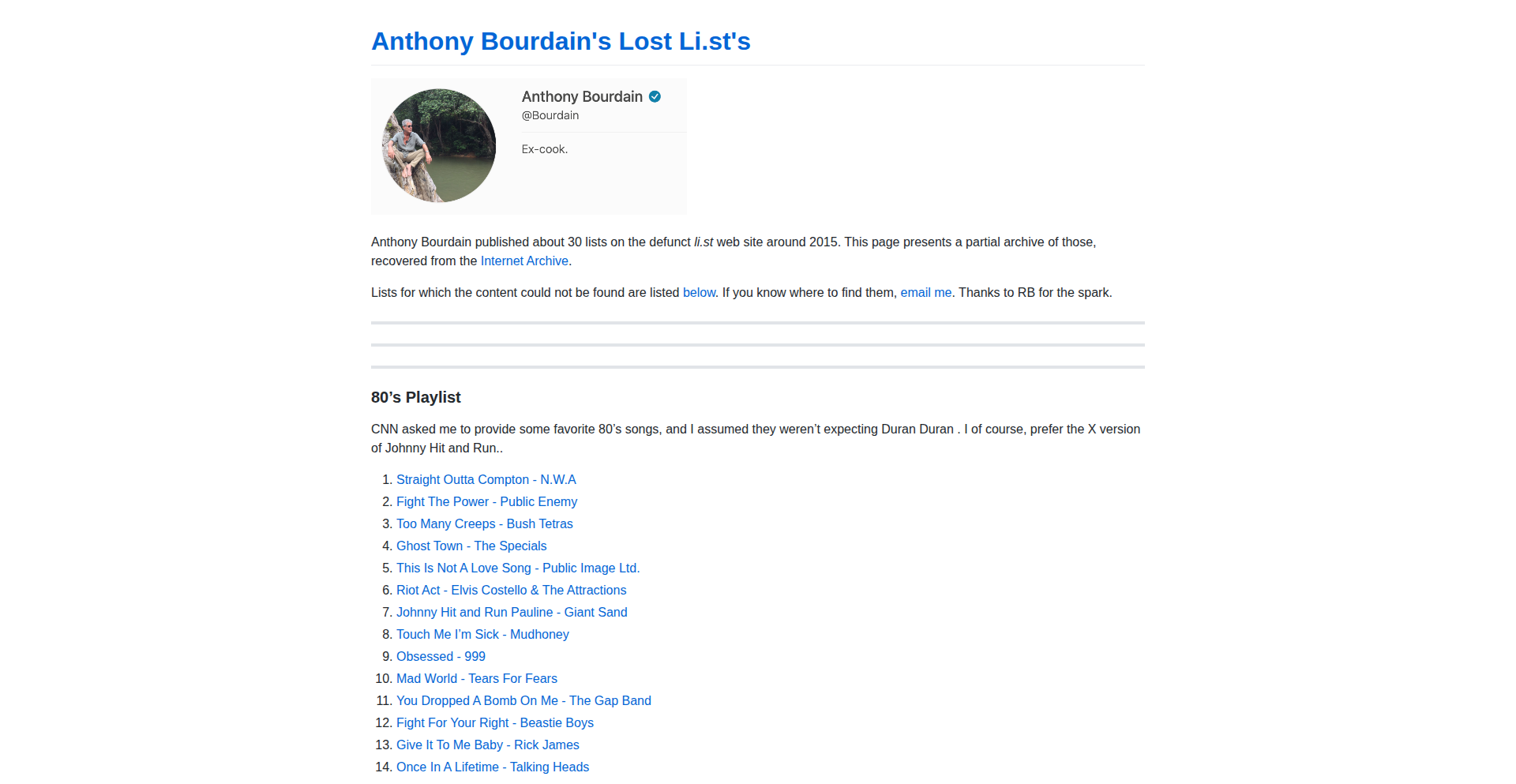

Bourdain Lost Archive

Author

gregsadetsky

Description

This project revives lost content from Anthony Bourdain's presence on the defunct 'li.st' service. It's a testament to the hacker ethos of using code to unearth and preserve valuable digital heritage that would otherwise be forgotten. The innovation lies in the meticulous data scavenging and reconstruction process, making inaccessible information available again.

Popularity

Points 18

Comments 5

What is this product?

This is a web archive project dedicated to recovering and presenting Anthony Bourdain's content from the now-defunct 'li.st' platform. The core technical work involves web crawling, reconstructing pages from Internet Archive (archive.org) snapshots, and other creative retrieval techniques to piece together fragmented digital remnants. Essentially, it uses code as a digital archaeology tool to bring back lost cultural artifacts.

How to use it?

Developers can use this project as a case study in data recovery and archival techniques. It demonstrates how to approach salvaging information from deprecated platforms by leveraging tools like the Wayback Machine and custom scripting. For content consumers, it's a direct portal to a piece of culinary and cultural history that was previously inaccessible.
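As a rough illustration of the Wayback Machine step, the sketch below builds a query for the Internet Archive's public CDX API (`https://web.archive.org/cdx/search/cdx` with `output=json`, which returns a header row followed by one row per capture) and picks the newest capture of each URL. This is not the project's own script: the `li.st` URLs in the sample are hypothetical placeholders, and the network fetch is omitted so the parsing runs offline.

```python
import json
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"

def cdx_query_url(url_prefix: str) -> str:
    """Build a CDX query listing successful captures of every URL under a prefix."""
    params = {
        "url": url_prefix,
        "matchType": "prefix",
        "output": "json",
        "filter": "statuscode:200",  # skip redirects and error captures
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"

def latest_snapshots(cdx_json: str) -> dict[str, str]:
    """Map each original URL to a raw-content URL for its newest capture."""
    rows = json.loads(cdx_json)
    if not rows:
        return {}
    header, captures = rows[0], rows[1:]
    ts_col, url_col = header.index("timestamp"), header.index("original")
    newest: dict[str, str] = {}
    for row in captures:
        original, ts = row[url_col], row[ts_col]
        # CDX timestamps are fixed-width YYYYMMDDhhmmss, so string order is time order.
        if original not in newest or ts > newest[original]:
            newest[original] = ts
    # The "id_" modifier requests the archived bytes without the Wayback banner.
    return {u: f"https://web.archive.org/web/{ts}id_/{u}" for u, ts in newest.items()}

# Hypothetical CDX response with two captures of the same page.
sample = json.dumps([
    ["urlkey", "timestamp", "original", "mimetype", "statuscode", "digest", "length"],
    ["st,li)/x", "20150101000000", "http://li.st/x", "text/html", "200", "A", "1"],
    ["st,li)/x", "20160101000000", "http://li.st/x", "text/html", "200", "B", "1"],
])
print(latest_snapshots(sample))
```

The resulting URLs could then be fetched with any HTTP client and parsed; keeping only the newest capture is one possible policy, and an archivist might instead keep every capture to catch edits.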

Product Core Function

· Content Scavenging: Utilizing web scraping and archival data to locate and retrieve fragmented pieces of Bourdain's 'li.st' content. This is valuable for understanding how to recover data from unreliable or removed sources.

· Data Reconstruction: Piecing together disparate data fragments into a coherent and accessible archive. This showcases algorithmic problem-solving for damaged or incomplete datasets.

· Web Archival Hosting: Presenting the recovered content in a user-friendly web interface, making it accessible to the public. This highlights the practical application of archival efforts.

· Preservation of Digital Heritage: Ensuring that valuable cultural content is not lost due to platform obsolescence. This demonstrates the social impact of technical skills in preserving history.
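The data reconstruction step can be pictured as merging partial captures of the same list. The sketch below is a hypothetical model, not the project's code: each fragment is a capture timestamp plus `(position, text)` items already extracted from archived HTML. For each position, it keeps the newest text and marks gaps explicitly instead of silently dropping them.

```python
from dataclasses import dataclass

@dataclass
class Fragment:
    timestamp: str                # capture time, YYYYMMDDhhmmss
    items: list[tuple[int, str]]  # (1-based position in the list, item text)

def reconstruct(fragments: list[Fragment]) -> list[str]:
    """Merge partial captures; for each position keep the newest text seen."""
    best: dict[int, tuple[str, str]] = {}
    for frag in fragments:
        for pos, text in frag.items:
            if pos not in best or frag.timestamp > best[pos][0]:
                best[pos] = (frag.timestamp, text)
    if not best:
        return []
    last = max(best)
    # Positions that no capture covered are flagged rather than dropped.
    return [best[p][1] if p in best else "[missing]" for p in range(1, last + 1)]

# Hypothetical fragments from two snapshots of one list.
early = Fragment("20150301000000", [(1, "Dumplings"), (2, "Pho")])
late = Fragment("20160801000000", [(2, "Pho (updated)"), (4, "Laksa")])
print(reconstruct([early, late]))  # ['Dumplings', 'Pho (updated)', '[missing]', 'Laksa']
```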

Product Usage Case

· Recovering defunct blog posts or forum discussions by piecing together data from archive.org snapshots and other cached copies. This project applies that approach to the content of a single cultural figure.

· Building tools to reconstruct user profiles or content from old social media platforms that have shut down, enabling users to find their lost digital footprints.

· Creating a digital museum of ephemeral online content, like early web comics or defunct digital art projects, by employing similar data recovery and presentation strategies.

· Using programmatic methods to analyze and understand the evolution of online content over time, using this project as a blueprint for historical digital data retrieval.

2

ChatIndex: Seamless Long-Term Memory for AI Agents

Author

LoMoGan

Description

ChatIndex is a context management system designed to overcome the limitations of AI chat assistants in long conversations. Instead of splitting a discussion across multiple disjointed chats, it builds a hierarchical, tree-based index of the conversation history. This lets AI models search and retrieve relevant information from extensive dialogues, maintaining coherence and mitigating 'context rot', the tendency of model performance to degrade as inputs grow longer. It avoids losing information by preserving the raw data and offering multi-resolution access to details, giving the AI a more reliable, more human-like memory.

Popularity

Points 15

Comments 4

What is this product?

ChatIndex is a system that allows AI agents to remember and effectively use very long conversation histories. Imagine talking to an AI for hours; usually, its memory gets fuzzy. ChatIndex solves this by organizing the entire conversation like a tree. When the AI needs to recall something, it can quickly find the exact piece of information it needs, whether it's a general topic or a specific detail. This means the AI's responses stay relevant and accurate, even in very lengthy discussions, without losing crucial context. The innovation lies in its lossless memory approach, combining raw data preservation with a smart way to access information at different levels of detail, unlike other systems that tend to forget or distort information over time.

How to use it?

Developers can integrate ChatIndex into their AI agent applications. The system works by taking a conversation and building an indexed representation of it. When the AI needs to respond or perform a task that requires recalling past context, ChatIndex's intelligent retrieval mechanism efficiently pulls the most relevant parts of the conversation history. This can be used in various LLM frameworks, enabling chatbots, virtual assistants, or any AI agent that benefits from a persistent, detailed memory to function more effectively. It's about giving your AI a robust memory to improve its understanding and interaction capabilities.
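ChatIndex's actual data structures are not described here, so the following is only a sketch of the indexing idea under stated assumptions. Raw messages are kept verbatim in leaf nodes (the lossless part), consecutive nodes are grouped bottom-up into parents, and each parent stores a summary of its children. The `summarize` function is a hypothetical placeholder for an LLM call; here it just joins the first few words of each child.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Node:
    summary: str
    children: list["Node"] = field(default_factory=list)
    raw: Optional[str] = None  # only leaves hold the original message text

def summarize(texts: list[str]) -> str:
    # Hypothetical placeholder for an LLM summarization call (not ChatIndex's API).
    return " / ".join(" ".join(t.split()[:3]) for t in texts)

def build_index(messages: list[str], fanout: int = 4) -> Node:
    """Group messages bottom-up into a tree; leaves keep the raw text."""
    if not messages:
        raise ValueError("need at least one message")
    level = [Node(summary=m, raw=m) for m in messages]
    while len(level) > 1:
        parents = []
        for i in range(0, len(level), fanout):
            group = level[i:i + fanout]
            parents.append(Node(summary=summarize([n.summary for n in group]), children=group))
        level = parents
    return level[0]

def leaves(node: Node) -> list[str]:
    """Recover every original message in order, showing nothing was discarded."""
    if node.raw is not None:
        return [node.raw]
    return [text for child in node.children for text in leaves(child)]

msgs = [f"message number {i} about topic {i % 3}" for i in range(10)]
root = build_index(msgs, fanout=4)
print(len(root.children))  # 3 (groups of 4, 4 and 2 messages)
```

Because summaries sit beside the raw leaves rather than replacing them, `leaves(root)` returns exactly the input, which is the property the "lossless" label refers to.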

Product Core Function

· Hierarchical Tree-Based Indexing: Organizes conversation history into a structured tree, making it easy to navigate and search through large amounts of data. This ensures that retrieving information is fast and efficient, preventing AI from getting lost in long dialogues.

· Intelligent Reasoning-Based Retrieval: Uses AI reasoning to pinpoint the most relevant information needed from the conversation index. This means the AI doesn't just fetch random data; it fetches what's actually important for the current task, leading to more accurate and context-aware responses.

· Lossless Memory Preservation: Stores the raw conversation data alongside the index, so the original text is never discarded. The AI can always revisit exact statements or details when necessary, unlike memory systems that summarize and may omit crucial context.

· Multi-Resolution Access: Enables retrieval of information at different levels of detail. The AI can access a high-level summary or dive into very specific details as needed, offering flexibility in how it uses its memory.

· Scalability for Long Conversations: Designed specifically to manage very long conversation histories while keeping retrieval efficient. This matters for AI agents that need to maintain context over extended periods with consistent interaction quality.
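Multi-resolution retrieval can be sketched as a descent through such a tree: stop at a summary when that is detailed enough, or keep descending to reach raw messages. ChatIndex describes its retrieval as reasoning-based, with a model choosing branches. This sketch swaps in simple word overlap as the scoring function, so it illustrates the traversal, not the project's selection logic. The tree and its contents are made up for the example.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Node:
    summary: str
    children: list["Node"] = field(default_factory=list)
    raw: Optional[str] = None  # set only on leaves (the verbatim message)

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def score(query: str, text: str) -> int:
    # Stand-in for LLM reasoning: number of words shared with the query.
    return len(words(query) & words(text))

def retrieve(node: Node, query: str, max_depth: int) -> str:
    """Follow the best-scoring child at each level, for at most max_depth steps.

    max_depth=0 returns the root summary; a depth past the leaves returns raw text.
    """
    depth = 0
    while node.children and depth < max_depth:
        node = max(node.children, key=lambda child: score(query, child.summary))
        depth += 1
    return node.raw if node.raw is not None else node.summary

tree = Node("travel plans and budget", children=[
    Node("trip planning: flights and hotel", children=[
        Node("flights to Lisbon", raw="User: book flights to Lisbon on May 3"),
        Node("hotel near Alfama", raw="User: find a hotel near Alfama"),
    ]),
    Node("budget review: Q3 numbers", children=[
        Node("Q3 overspend", raw="User: Q3 budget is 12k over plan"),
    ]),
])
question = "what did we say about Lisbon flights"
print(retrieve(tree, question, max_depth=1))  # trip planning: flights and hotel
print(retrieve(tree, question, max_depth=5))  # User: book flights to Lisbon on May 3
```

A real system would likely explore more than one branch when scores are close; following a single best child keeps the sketch short.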

Product Usage Case

· Building a highly coherent customer support chatbot that remembers every detail of a customer's interaction history, even across multiple sessions, to provide personalized and efficient support. This addresses the issue of chatbots forgetting previous issues, leading to frustrated customers.

· Developing an AI research assistant that can sift through extensive documentation and past discussions to find specific facts or synthesize information from a vast corpus of text. This helps researchers by not having to manually manage and recall scattered information.

· Creating an AI tutor that can track a student's learning progress over a long course, remembering specific misunderstandings or areas of strength to tailor future lessons effectively. This ensures personalized education that adapts to individual student needs.

· Enabling AI-powered creative writing tools that can maintain a consistent narrative and character development across a long story, drawing upon earlier plot points and character arcs. This allows for more complex and consistent storytelling.

· Implementing an AI companion that can engage in deep, long-term conversations, building rapport and understanding over time by recalling past discussions and shared experiences. This creates a more engaging and personalized AI interaction.

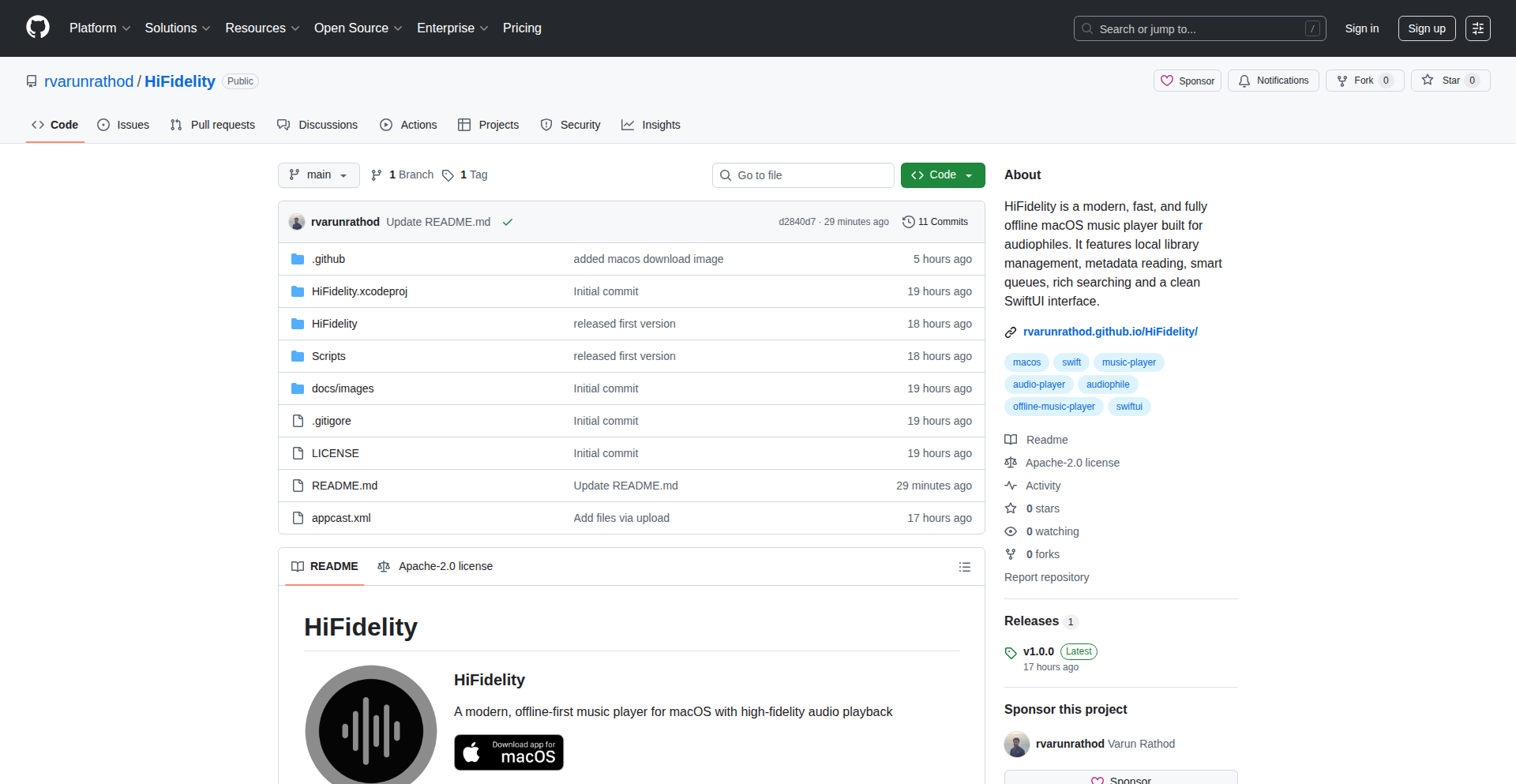

3

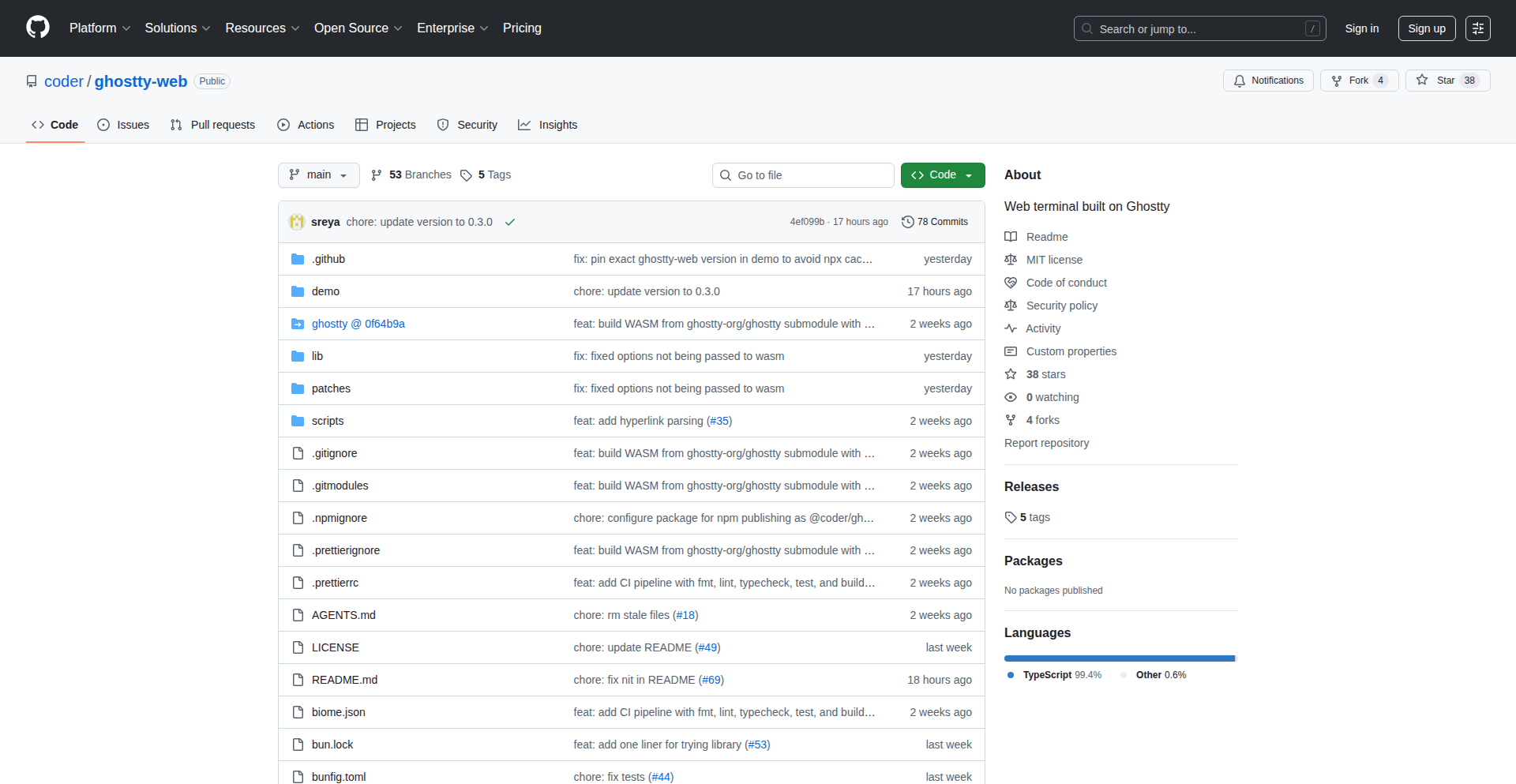

Ghostty-Web: Terminal Emulation on the Web

Author

jonayers_

Description

Ghostty-Web brings a modern terminal emulator directly into your web browser. It aims to provide a familiar, efficient command-line experience without requiring a locally installed terminal application. The innovation lies in running a full-featured terminal emulator within the browser's sandbox, accessible from any device with a web browser and a network connection. The core technical challenge it tackles is parsing, rendering, and interacting with terminal state entirely in JavaScript and WebAssembly, faithfully emulating terminal behavior for remote or local development.

Popularity

Points 10

Comments 3

What is this product?

Ghostty-Web is a browser-based terminal emulator. Instead of downloading and installing a separate terminal application, you use Ghostty-Web directly in your web browser. Its technical approach uses WebAssembly to run a high-performance terminal engine and JavaScript to manage the user interface and input handling. The result is a rich, responsive terminal that feels like a native application but is reachable via a URL. The key achievement is near-native performance and core terminal functionality (ANSI colors, scrollback, and input handling) within the constraints of a browser environment. This matters because it lowers the barrier to accessing powerful command-line tools and environments.
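To make "emulating terminal behavior" concrete: at the core of any terminal emulator is a parser that turns the byte stream from a shell into a grid of styled cells. The toy sketch below handles only printable characters, carriage return, line feed, and the SGR (`ESC [ ... m`) foreground-color sequence. It is for illustration only and says nothing about how Ghostty's own parser is built.

```python
import re

# ESC [ params m: Select Graphic Rendition (colors, bold, reset, ...)
SGR = re.compile(r"\x1b\[([0-9;]*)m")

def render(stream: str, cols: int = 20) -> list[list[tuple[str, int]]]:
    """Return rows of (char, fg_color) cells; fg 39 means the default color."""
    rows: list[list[tuple[str, int]]] = [[]]
    fg, col, i = 39, 0, 0
    while i < len(stream):
        m = SGR.match(stream, i)
        if m:
            # Only basic foreground codes are handled; 256-color/truecolor are not.
            for p in (m.group(1) or "0").split(";"):
                code = int(p or 0)
                if code in (0, 39):
                    fg = 39                  # reset / default foreground
                elif 30 <= code <= 37 or 90 <= code <= 97:
                    fg = code                # basic and bright foreground colors
            i = m.end()
            continue
        ch = stream[i]
        if ch == "\r":
            col = 0
        elif ch == "\n":
            rows.append([])
            col = 0  # simplification: treat LF as CR+LF
        else:
            if col >= cols:                  # wrap long lines
                rows.append([])
                col = 0
            row = rows[-1]
            if col < len(row):
                row[col] = (ch, fg)          # overwrite after a carriage return
            else:
                row.append((ch, fg))
            col += 1
        i += 1
    return rows

screen = render("ok \x1b[31mFAIL\x1b[0m\r\n$ ")
print("".join(c for c, _ in screen[0]))  # ok FAIL
print([fg for _, fg in screen[0]])       # [39, 39, 39, 31, 31, 31, 31]
```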

How to use it?

Developers use Ghostty-Web by opening its web interface, which must be connected to a shell through a backend (for example, a server that relays a pseudo-terminal or SSH session to the page), since a browser tab cannot start a shell by itself. It fits several scenarios: remote server management where a native SSH client is inconvenient, quick command execution on a shared machine, or education, where installing complex development tools is a hurdle. It can also be embedded in other web applications so users can run commands in context. For example, a web-based IDE could embed Ghostty-Web to provide a fully functional terminal for build processes, dependency management, or running scripts, all without leaving the IDE's interface. Once connected, using it is as simple as opening a tab and typing commands.
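A web terminal renders output and forwards keystrokes, while a backend runs the actual process in a pseudo-terminal (PTY) and relays bytes, typically over a WebSocket. The sketch below shows only that backend half, using Python's standard `pty` module on a POSIX system. The WebSocket relay is omitted, and nothing here reflects Ghostty-Web's own integration code.

```python
import os
import pty
import subprocess

def run_in_pty(argv: list[str]) -> bytes:
    """Run argv attached to a new pseudo-terminal and return everything it printed.

    A real web-terminal backend would stream these bytes to the browser as they
    arrive (and write the user's keystrokes to `master`) instead of collecting them.
    """
    master, slave = pty.openpty()
    proc = subprocess.Popen(argv, stdin=slave, stdout=slave, stderr=slave, close_fds=True)
    os.close(slave)  # the child holds its own copies; keep only the master side
    chunks = []
    while True:
        try:
            data = os.read(master, 4096)
        except OSError:  # Linux raises EIO once the child side is fully closed
            break
        if not data:     # other platforms may signal EOF with an empty read
            break
        chunks.append(data)
    proc.wait()
    os.close(master)
    return b"".join(chunks)

out = run_in_pty(["echo", "hello from a pty"])
print(out)  # raw terminal bytes, exactly what a browser-side emulator would parse
```

Because the program believes it is talking to a real terminal, it emits colors and cursor movements just as it would in a native terminal, which is why the browser side needs a full emulator rather than a plain text box.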

Product Core Function

· Web-based Terminal Emulation: Allows users to interact with a command-line interface directly in their browser, eliminating the need for local installation. This is valuable for quick access to command-line tools from any device.

· WebAssembly Performance: Uses WebAssembly for high-performance terminal parsing and rendering, with responsiveness comparable to native applications. Command output appears quickly and scrolling stays smooth, even with heavy output, which enhances productivity.

· Cross-Platform Accessibility: Accessible from any device with a web browser, breaking down platform dependencies. This is ideal for developers working across different operating systems or using less powerful machines.

· Remote Command Execution: When connected to a backend on a remote server, lets users run commands there through a web interface, simplifying remote administration and development workflows.

· Rich User Interface: Provides a modern terminal experience, including color output and efficient scrolling, improving overall usability and developer experience.

Product Usage Case

· A developer needs to quickly deploy a web application on a remote server but doesn't want to set up an SSH client locally. If the server exposes a Ghostty-Web session through a backend relay, they can run the deployment commands directly from their browser, saving time and setup overhead.

· An educational platform wants to offer students hands-on experience with command-line tools without requiring them to install any software on their personal computers. Ghostty-Web can be embedded into the platform, providing a sandboxed terminal environment for learning shell commands and basic system administration.

· A DevOps team needs a quick way to run diagnostic commands on a fleet of machines. Instead of logging into each machine individually, they can use Ghostty-Web, potentially integrated into a dashboard, to send commands to multiple endpoints and view the results centrally, streamlining troubleshooting efforts.

· A developer is working on a project that requires frequent compilation or testing of code. They can use Ghostty-Web within their browser to execute build scripts or run tests directly, keeping their development workflow consolidated within a single browser window and avoiding context switching between applications.

4

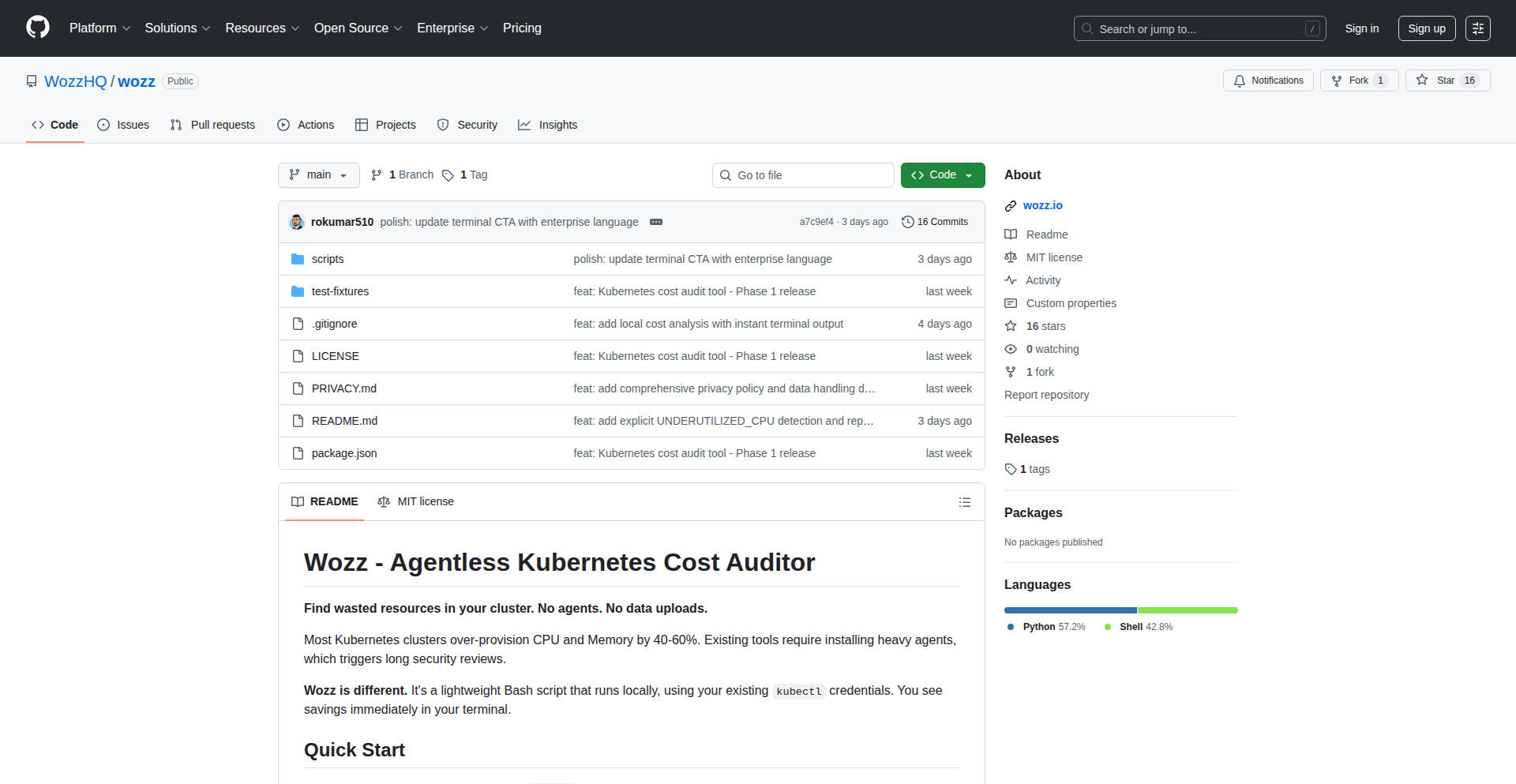

Wozz Kubernetes Cost Auditor

Author

rokumar510

Description

Wozz is an open-source, agentless tool designed to audit Kubernetes costs. It provides visibility into where your Kubernetes resources are consuming the most money, offering insights without requiring any agents to be installed within your clusters. This tackles the common challenge of understanding and optimizing cloud spending in complex containerized environments.

Popularity

Points 5

Comments 6

What is this product?

Wozz is an open-source project that helps you understand your Kubernetes spending. Instead of installing complex monitoring software (agents) inside your Kubernetes clusters, Wozz works by directly querying your Kubernetes API and cloud provider billing data. It analyzes resource usage (like CPU, memory, and storage) and correlates it with actual costs, presenting a clear breakdown of which applications, namespaces, or services are driving up your cloud bill. The innovation lies in its agentless approach, simplifying deployment and reducing overhead while still providing accurate cost allocation.

How to use it?

Developers can integrate Wozz into their DevOps workflows. You would typically deploy Wozz as a separate service that has read-only access to your Kubernetes cluster and your cloud provider's billing information. It can be run locally, in a CI/CD pipeline, or as a dedicated service. The output can be displayed in a dashboard or exported for further analysis, allowing teams to identify cost-saving opportunities by understanding resource utilization patterns and right-sizing deployments. This helps answer the question: 'Where is my Kubernetes budget going?'
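Wozz's internals are not described beyond "agentless", so here is a sketch of what read-only collection can look like. It parses the JSON from the standard `kubectl get pods --all-namespaces -o json` command and totals each namespace's CPU and memory requests. The quantity parser covers only common suffixes (`m` for millicores; `Ki`, `Mi`, `Gi`, `K`, `M`, `G` for memory). Init containers are ignored, and the sample pod list is made up for illustration.

```python
import json
from collections import defaultdict

_MEM_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "K": 10**3, "M": 10**6, "G": 10**9}

def cpu_cores(q: str) -> float:
    """'500m' -> 0.5, '2' -> 2.0"""
    return int(q[:-1]) / 1000 if q.endswith("m") else float(q)

def mem_bytes(q: str) -> int:
    """'512Mi' -> 536870912, '1G' -> 1000000000"""
    for suffix in ("Ki", "Mi", "Gi", "K", "M", "G"):  # two-letter suffixes first
        if q.endswith(suffix):
            return int(float(q[: -len(suffix)]) * _MEM_SUFFIXES[suffix])
    return int(q)

def requests_by_namespace(pods_json: str) -> dict[str, dict[str, float]]:
    """Sum container CPU (cores) and memory (GiB) requests per namespace."""
    totals = defaultdict(lambda: {"cpu": 0.0, "mem_gib": 0.0})
    for pod in json.loads(pods_json)["items"]:
        ns = pod["metadata"]["namespace"]
        for c in pod["spec"]["containers"]:
            req = c.get("resources", {}).get("requests", {})
            totals[ns]["cpu"] += cpu_cores(req.get("cpu", "0"))
            totals[ns]["mem_gib"] += mem_bytes(req.get("memory", "0")) / 2**30
    return dict(totals)

sample = json.dumps({"items": [
    {"metadata": {"namespace": "production"},
     "spec": {"containers": [
         {"resources": {"requests": {"cpu": "500m", "memory": "512Mi"}}},
         {"resources": {"requests": {"cpu": "1", "memory": "1Gi"}}}]}},
    {"metadata": {"namespace": "staging"},
     "spec": {"containers": [{"resources": {}}]}},
]})
print(requests_by_namespace(sample))
# {'production': {'cpu': 1.5, 'mem_gib': 1.5}, 'staging': {'cpu': 0.0, 'mem_gib': 0.0}}
```

In practice the input could come from `subprocess.run(["kubectl", "get", "pods", "--all-namespaces", "-o", "json"], capture_output=True, text=True, check=True).stdout`, which needs only read access to pods.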

Product Core Function

· Agentless Cost Monitoring: Wozz collects cost data by interacting with Kubernetes APIs and cloud billing services without deploying any agents. This means easier setup and less impact on your cluster performance, answering the need for simple cost visibility.

· Resource Utilization Analysis: It analyzes how much CPU, memory, and storage your Kubernetes resources are actually using. This helps identify underutilized or overprovisioned resources, directly addressing the problem of wasted cloud spend.

· Cost Allocation by Namespace/Application: Wozz can attribute costs to specific namespaces or applications within your Kubernetes cluster. This granular breakdown is crucial for understanding which parts of your system are the most expensive and where to focus optimization efforts.

· Customizable Reporting: The tool is designed to provide flexible reporting, allowing developers to customize how cost data is presented. This means you can get the insights you need in a format that suits your team's workflow, making the data actionable.

· Open Source and Extensible: Being open-source means developers can inspect the code, contribute, and extend its functionality. This fosters community collaboration and allows for tailoring the tool to specific or unique cloud environments.
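Once requests, observed usage, and unit prices are known, cost allocation and right-sizing reduce to simple arithmetic. The sketch below assumes hypothetical hourly prices per vCPU and per GiB (real figures would come from the cloud bill, as described above) and a 730-hour month. It charges each namespace for what it requests and reports how much of that spend was idle. The usage numbers are invented.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730        # common billing approximation (365 * 24 / 12)
PRICE_PER_VCPU_HOUR = 0.04   # hypothetical unit prices, not real quotes
PRICE_PER_GIB_HOUR = 0.005

@dataclass
class NamespaceUsage:
    cpu_requested: float  # vCPU
    cpu_used: float       # average vCPU actually consumed
    mem_requested: float  # GiB
    mem_used: float       # average GiB actually consumed

def hourly(cpu: float, mem: float) -> float:
    return cpu * PRICE_PER_VCPU_HOUR + mem * PRICE_PER_GIB_HOUR

def monthly_cost(u: NamespaceUsage) -> tuple[float, float]:
    """Return (monthly cost of requests, portion of that cost left idle)."""
    requested = hourly(u.cpu_requested, u.mem_requested) * HOURS_PER_MONTH
    idle = hourly(max(u.cpu_requested - u.cpu_used, 0.0),
                  max(u.mem_requested - u.mem_used, 0.0)) * HOURS_PER_MONTH
    return round(requested, 2), round(idle, 2)

usage = {
    "production": NamespaceUsage(cpu_requested=8, cpu_used=2, mem_requested=32, mem_used=20),
    "staging": NamespaceUsage(cpu_requested=2, cpu_used=1.5, mem_requested=4, mem_used=3),
}
# List namespaces with the most idle spend first: the right-sizing candidates.
for ns, u in sorted(usage.items(), key=lambda kv: -monthly_cost(kv[1])[1]):
    cost, idle = monthly_cost(u)
    print(f"{ns}: ${cost:.2f}/month requested, ${idle:.2f} idle")
```

Charging by requests rather than by usage is a design choice: it reflects what the scheduler reserves, which is what drives node count and therefore the bill.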

Product Usage Case

· A development team notices their cloud bill is unexpectedly high. They deploy Wozz, which quickly identifies that a specific microservice in the 'production' namespace is consuming a disproportionate amount of CPU and memory, leading to higher instance costs. Wozz's output shows exactly which pods are the culprits, enabling the team to optimize the service's resource requests and limits, thus reducing their monthly expenditure.

· A platform engineering team wants to provide cost transparency to different application teams within a shared Kubernetes cluster. By running Wozz and using its namespace-level cost breakdown, they can generate reports showing each team how much their services are costing. This empowers teams to manage their own budgets and encourages more efficient resource usage.

· A startup running its application on Kubernetes is conscious of its cloud spending, so it integrates Wozz into its CI/CD pipeline. After new features are deployed to a staging cluster, the team runs Wozz to see how resource usage and cost changed before promoting to production, which helps it make informed decisions about resource provisioning and architecture and stay within budget.

5

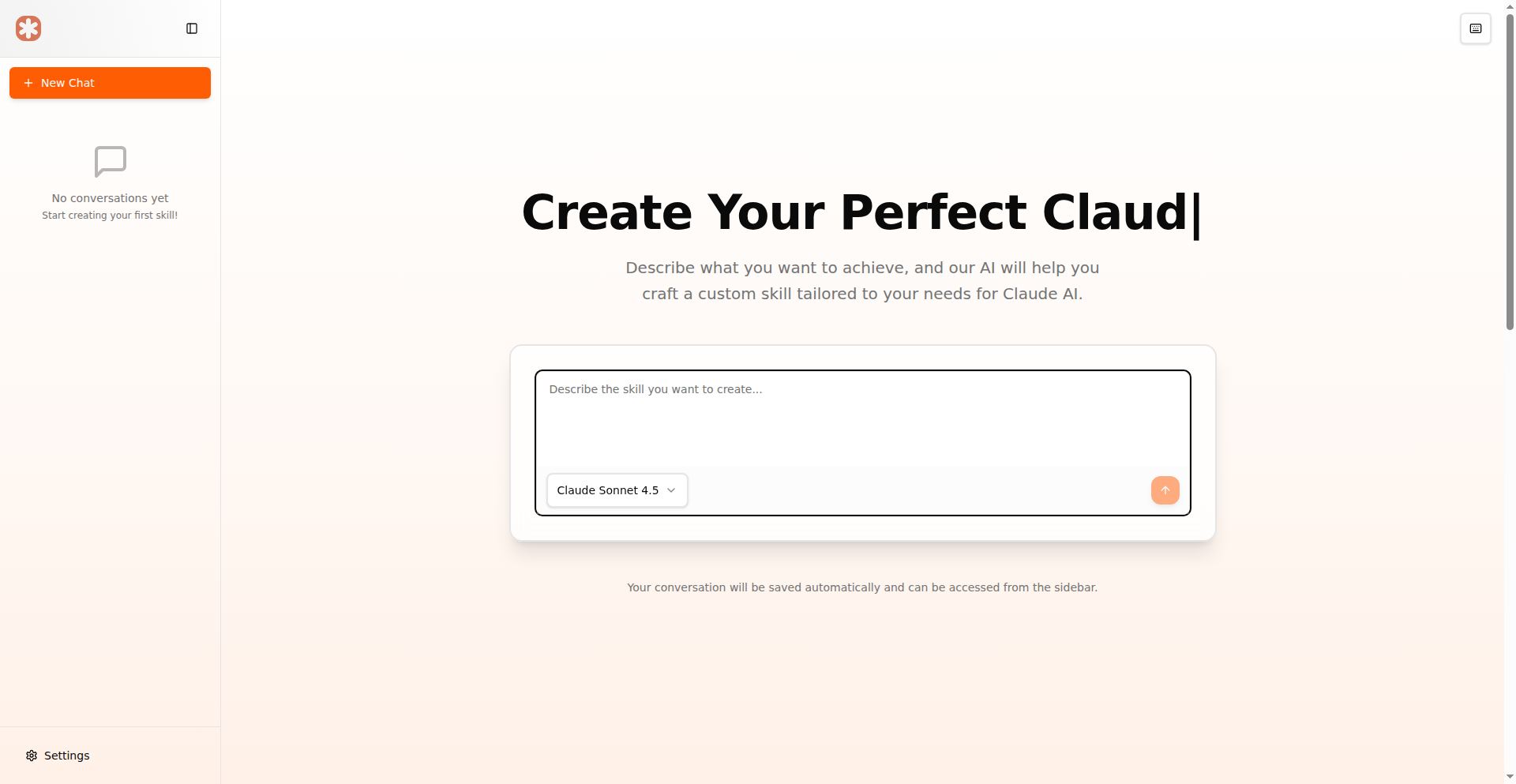

Claude Skill Forge

Author

thanhdongnguyen

Description

Claude Skill Forge simplifies the creation of custom AI skills for Claude, a powerful language model. It automates the intricate process of defining AI behaviors, which traditionally requires deep technical knowledge of file structures, YAML configuration, and precise prompt engineering. Instead of wrestling with complex code and settings, users can now describe their desired AI capabilities conversationally, and the platform intelligently generates a fully functional, optimized skill package ready for immediate use with Claude. This innovation democratizes AI customization, making it accessible to a wider range of users and accelerating the development of specialized AI assistants.

Popularity

Points 8

Comments 2

What is this product?

Claude Skill Forge is an AI-powered platform that automatically generates custom 'skills' for Claude, an advanced AI language model. Normally, building a skill for Claude involves manually creating specific files, configuring complex YAML settings, and crafting intricate prompts to guide the AI's behavior. This requires significant technical expertise and time. Claude Skill Forge streamlines this by allowing users to express their desired AI functionality in plain language. Its AI then translates these ideas into the precise technical specifications needed to build a functional skill, handling all the underlying complexity. So, what's the innovation? It takes the highly technical, manual, and error-prone process of AI skill development and makes it intuitive and automated, significantly lowering the barrier to entry for creating bespoke AI agents.

How to use it?

Developers use Claude Skill Forge through the platform's website. You describe the specific task or behavior you want Claude to perform. For example, you might say, 'I want Claude to act as a helpful chatbot for my e-commerce store, answering customer questions about products and order status.' You then refine this description in conversation with the platform's MakeSkill assistant. Once you're satisfied, the platform generates a complete, ready-to-use skill package that you can download and import into your Claude environment. This lets you equip Claude with new, specialized capabilities without writing code or learning the underlying file structure yourself. So, how does this help you? You can create custom AI assistants for your specific needs much faster and more easily than before.
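To show what a generated skill package contains, here is a sketch assuming Anthropic's Agent Skills layout: a folder holding a `SKILL.md` file whose YAML frontmatter carries a `name` and a `description`, followed by Markdown instructions, zipped for upload. It uses only the standard library. It is not Claude Skill Forge's generator, and the example skill's name and text are hypothetical.

```python
import json
import tempfile
import zipfile
from pathlib import Path

def write_skill(root: Path, name: str, description: str, instructions: str) -> Path:
    """Create <root>/<name>/SKILL.md and return the path of a zipped copy."""
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_md = (
        "---\n"
        f"name: {name}\n"
        # json.dumps yields a double-quoted string, which is also valid YAML and
        # keeps colons or quotes in the description from breaking the frontmatter.
        f"description: {json.dumps(description)}\n"
        "---\n\n"
        f"{instructions.strip()}\n"
    )
    (skill_dir / "SKILL.md").write_text(skill_md, encoding="utf-8")
    archive = root / f"{name}.zip"
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(skill_dir.rglob("*")):
            # Store entries as "<name>/...", so the folder is the archive's top level.
            zf.write(path, str(path.relative_to(root)))
    return archive

with tempfile.TemporaryDirectory() as tmp:
    zip_path = write_skill(
        Path(tmp),
        "store-support",  # hypothetical skill name
        "Answers product and order-status questions for a small web shop.",
        "# Store support\n\nGreet the customer, then answer using the product FAQ.",
    )
    with zipfile.ZipFile(zip_path) as zf:
        names = zf.namelist()
        skill_text = zf.read("store-support/SKILL.md").decode("utf-8")
print(names)  # ['store-support/SKILL.md']
```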

Product Core Function

· Conversational AI Skill Definition: Users can describe their desired AI skills in natural language, making the creation process intuitive and accessible even without deep programming knowledge. This translates complex needs into actionable AI instructions.

· Automated Skill Package Generation: The platform automatically constructs all necessary configuration files and code, including correct file system structures and YAML metadata, ensuring the skill adheres to best practices for Claude. This saves significant manual effort and reduces errors.

· Prompt Engineering Automation: Intelligent AI algorithms handle the complex task of prompt engineering, crafting precise instructions for Claude to achieve the desired behavior. This is crucial for an AI's performance and consistency.

· Direct Claude Integration: The generated skill packages are designed for seamless import into Claude, allowing for immediate deployment and testing of the new AI capabilities. This minimizes setup friction and speeds up the iteration cycle.

· Technical Best Practice Enforcement: The system ensures that all generated skills follow Claude's recommended technical specifications and best practices, leading to more robust and reliable AI agents.
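Enforcing best practices can start with checking the frontmatter before packaging. This sketch assumes the constraints given in Anthropic's Agent Skills documentation: a `name` of at most 64 lowercase letters, digits, and hyphens, plus a non-empty `description` of at most 1024 characters. It parses only flat `key: value` lines rather than full YAML, and it illustrates the idea rather than reproducing the tool's validator.

```python
import re

NAME_RE = re.compile(r"[a-z0-9-]{1,64}")

def parse_frontmatter(skill_md: str) -> dict[str, str]:
    """Read flat 'key: value' pairs between the leading '---' fences."""
    lines = skill_md.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("SKILL.md must start with a '---' frontmatter fence")
    fields: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return fields
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip().strip('"')
    raise ValueError("frontmatter is not closed with '---'")

def validate(skill_md: str) -> list[str]:
    """Return a list of problems; an empty list means the checks passed."""
    fm = parse_frontmatter(skill_md)
    problems = []
    name, desc = fm.get("name", ""), fm.get("description", "")
    if not NAME_RE.fullmatch(name):
        problems.append("name must be 1-64 chars of lowercase letters, digits, hyphens")
    if not desc:
        problems.append("description is required")
    elif len(desc) > 1024:
        problems.append("description must be at most 1024 characters")
    return problems

good = "---\nname: store-support\ndescription: Answers order questions.\n---\n# Steps\n"
bad = "---\nname: Store Support\n---\n"
print(validate(good))  # []
print(validate(bad))   # two problems: bad name, missing description
```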

Product Usage Case

· Scenario: A small business owner wants to create an AI assistant to handle customer support inquiries for their online store. Instead of hiring a developer or spending weeks learning AI programming, they use Claude Skill Forge. They describe the desired functions: answering FAQs, checking order status, and providing product recommendations. The platform automatically builds a specialized skill that integrates directly into their Claude AI, allowing it to immediately assist customers and freeing up the owner's time.

· Scenario: A content creator wants to develop an AI persona that can help them brainstorm blog post ideas and write initial drafts in a specific tone. They use Claude Skill Forge to define this persona, detailing its writing style and areas of expertise. The resulting skill enables Claude to act as a dedicated AI writing partner, accelerating content creation and maintaining a consistent voice. This addresses the challenge of having an AI that truly understands and replicates a specific creative style.

· Scenario: A researcher needs an AI assistant to help them summarize complex academic papers and extract key findings. They describe the specific requirements to Claude Skill Forge, including the types of papers and the detail level for summaries. The generated skill allows their Claude AI to act as a specialized research assistant, significantly speeding up literature review and knowledge extraction. This solves the problem of manually processing large volumes of technical information.

6

StyleCompass

Author

EthanSeo

Description

StyleCompass is a curated directory of fashion brands, organized by aesthetic style rather than brand name. It addresses the common problem of not knowing where to start when trying to find clothing that matches a desired look, allowing users to discover brands based on styles like 'Classic', 'Minimal', or 'Streetwear'. It's built with a focus on low friction, enabling brand suggestions without requiring a login, embodying a hacker's approach to problem-solving through direct action and community contribution.

Popularity

Points 3

Comments 5

What is this product?

StyleCompass is a web-based directory that helps you discover fashion brands based on your preferred style. Instead of searching for specific brand names you might not know, you can browse through categories like 'Classic', 'Minimal', or 'Streetwear' to find brands that align with your desired aesthetic. The innovation lies in its user-centric organization, making fashion discovery intuitive and accessible, especially for those new to styling. It's built to be open, allowing anyone to suggest new brands without the hassle of registration, reflecting a commitment to community-driven development.

How to use it?

Developers can use StyleCompass as a reference when building their own personalized style guides or fashion recommendation features. For example, a developer creating a personal styling app could draw on StyleCompass's style categories to seed the app with relevant brands for each style. The open contribution model also lets developers suggest new brands or refine existing categories, directly improving the tool for everyone. Its simplicity makes it a good example of a user-friendly, community-contributed web application with minimal barriers to entry.

Product Core Function

· Style-Based Brand Discovery: Allows users to find fashion brands by browsing curated style categories (e.g., 'Classic', 'Minimal', 'Streetwear'). This simplifies the process of finding suitable brands, even if you don't know specific names, by focusing on the desired look.

· Frictionless Brand Suggestion: Enables users to suggest new fashion brands to be added to the directory without requiring account creation or login. This fosters community participation and ensures the directory stays comprehensive and up-to-date with minimal effort for contributors.

· Community-Driven Curation: The entire directory is built and maintained through community contributions and feedback. This decentralized approach ensures a diverse range of brands and styles are represented, reflecting real-world fashion trends and user preferences.
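The features above (brands browsable by style, plus login-free suggestions) map onto a small data model. This sketch assumes a hypothetical in-memory store: a style-to-brands index for browsing, and a pending queue for anonymous suggestions, deduplicated case-insensitively so repeat suggestions accumulate votes instead of duplicate entries. How StyleCompass actually stores and moderates entries is not described, and the brand names are placeholders.

```python
from collections import defaultdict

class StyleDirectory:
    def __init__(self) -> None:
        self.by_style: dict[str, set[str]] = defaultdict(set)
        self.pending: dict[tuple[str, str], int] = {}  # (style, brand key) -> votes

    def add_brand(self, brand: str, *styles: str) -> None:
        for style in styles:
            self.by_style[style.lower()].add(brand)

    def browse(self, style: str) -> list[str]:
        return sorted(self.by_style.get(style.lower(), set()), key=str.lower)

    def suggest(self, brand: str, style: str) -> bool:
        """Queue an anonymous suggestion; return False if the brand is already listed."""
        key = (style.lower(), brand.strip().lower())
        if any(b.lower() == key[1] for b in self.by_style.get(key[0], set())):
            return False
        self.pending[key] = self.pending.get(key, 0) + 1
        return True

    def approve(self, brand: str, style: str) -> None:
        """Moderator step: move a suggestion into the public index."""
        self.pending.pop((style.lower(), brand.strip().lower()), None)
        self.add_brand(brand.strip(), style)

d = StyleDirectory()
d.add_brand("Northline", "Minimal", "Classic")  # placeholder brand names
d.add_brand("Acme Basics", "minimal")
print(d.browse("MINIMAL"))  # ['Acme Basics', 'Northline']
```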

Product Usage Case

· A fashion blogger wanting to recommend brands for a 'streetwear' look can quickly find a comprehensive list of relevant brands within StyleCompass. This saves them hours of research and ensures their recommendations are diverse and stylish.

· A user who feels overwhelmed by online shopping and doesn't know where to start with building a 'classic' wardrobe can use StyleCompass to explore brands that fit that aesthetic. This provides a clear starting point and reduces the frustration of browsing aimlessly.

· A developer building a personalized styling tool could leverage StyleCompass's data to populate their application with relevant brands. If the tool suggests a 'minimalist' style, the developer can easily pull brands from the 'Minimal' category on StyleCompass, saving development time and improving the tool's functionality.

7

Logical Contextual Copilot

Author

samkaru

Description

Logical is a desktop AI assistant that understands your workflow context locally, without requiring prompts. It proactively offers helpful actions across applications like email, documents, and terminals, aiming to reduce friction by acting like a proactive teammate rather than a reactive chatbot. Its core innovation lies in leveraging ambient desktop context for intelligent, on-demand assistance, with a strong emphasis on local processing and privacy.

Popularity

Points 8

Comments 0

What is this product?

Logical is an AI copilot designed to live on your desktop and observe your digital activities. Instead of waiting for you to type a command or question, it uses the context of what you're doing in different applications – such as writing an email, reviewing a document, or looking at terminal output – to anticipate your needs. For example, if you open a message asking to schedule a meeting, Logical might proactively offer to check your calendar. It achieves this by building a local context engine that digests information from your apps, sanitizes sensitive data locally, and uses a vector store and knowledge graph for quick retrieval. An 'intent engine' then infers your goals to surface relevant actions at the right time. The innovation is in shifting from a prompt-driven AI interaction to a context-aware, proactive one, reducing the mental load and context switching required from the user. This is valuable because it makes AI feel more like a seamless assistant integrated into your work, rather than another tool you have to actively manage. The privacy-first approach, with local processing and data sanitization, is also a key differentiator for users concerned about cloud data exposure.
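The local sanitization step can be illustrated with a redaction pass that runs before any text is embedded or stored. This is a minimal sketch that assumes regex-based detection of a few obvious patterns: email addresses, phone-like digit runs, and strings shaped like common API-key prefixes. Logical's actual sanitizer is not documented here, and patterns like these miss plenty of sensitive data, so treat the sketch as a starting point only.

```python
import re

# Order matters: redact key-like strings first, before the looser phone pattern runs.
PATTERNS = [
    ("API_KEY", re.compile(r"\b(?:sk|pk|ghp|xox[bp])[-_][A-Za-z0-9_-]{16,}\b")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]

def sanitize(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders; return the clean text and per-label counts."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts

raw = "Ping jane.doe@example.com or call +1 (555) 123-4567; key sk-abcdefghijklmnopqrstuv"
clean, found = sanitize(raw)
print(clean)  # Ping [EMAIL] or call [PHONE]; key [API_KEY]
```

Running redaction before embedding means the vector store and knowledge graph only ever see placeholders, so nothing sensitive can leak through later retrieval.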

How to use it?

Users install Logical on macOS, and it then observes application usage automatically. For instance, when you receive an email asking for a quick chat, Logical appears and suggests checking your schedule. If you're working in a spreadsheet and start typing a common pattern, it might suggest an Excel formula. While you read research papers, highlighting a complex term can trigger a contextual explanation. For developers, Logical can monitor terminal activity and offer to extract error messages or suggest relevant commands. The goal is to surface these suggestions naturally within your existing workflow, minimizing application switching and copy-pasting. In the future, Logical aims to let developers plug into its context and intent engines to build richer, context-aware experiences in their own applications.

Product Core Function

· Proactive Email Reply Suggestions: When you open an email thread and are about to reply, Logical can analyze the context and suggest potential responses, saving you time and cognitive effort in crafting replies.

· Meeting Scheduling Assistance: If a message indicates a desire to schedule a meeting, Logical can proactively offer to check your availability or suggest times, streamlining the coordination process.

· Automated To-Do Extraction: Logical can automatically identify and extract tasks from meetings, emails, or documents, and then remind you to follow up, ensuring that action items are not missed.

· Contextual Application Assistance: For tasks like working in Excel, Logical can suggest relevant formulas based on your current activity, reducing the need to manually search for or recall complex functions.

· On-the-Fly Term Explanations: When reading research papers or technical documents, highlighting unfamiliar terms can trigger Logical to provide immediate explanations, accelerating comprehension and learning.

· Local Data Processing and Privacy: Context analysis and sanitization happen on your device, so sensitive data is handled locally rather than exposed to the cloud, which is crucial for privacy-conscious users and organizations.

Product Usage Case

· For a busy founder juggling emails and investor calls, Logical proactively offers to draft responses to common inquiries or suggests checking the calendar when someone asks for a quick chat, saving minutes per interaction and preventing scheduling conflicts.

· A researcher working on a complex paper encounters an unfamiliar scientific term. By simply highlighting it, Logical instantly provides a concise definition, allowing them to continue their reading without interruption or the need to open a separate browser tab, thereby accelerating their research process.

· A software engineer is debugging a production issue. Logical monitors their terminal and, upon detecting an error log, offers to extract the relevant error message and suggest potential troubleshooting steps based on past similar issues, speeding up the resolution time for critical bugs.

· A project manager is reviewing meeting notes in a document. Logical identifies the action items, adds them to a to-do list, and later reminds the manager to follow up, so project tasks are tracked and nothing falls through the cracks.

8

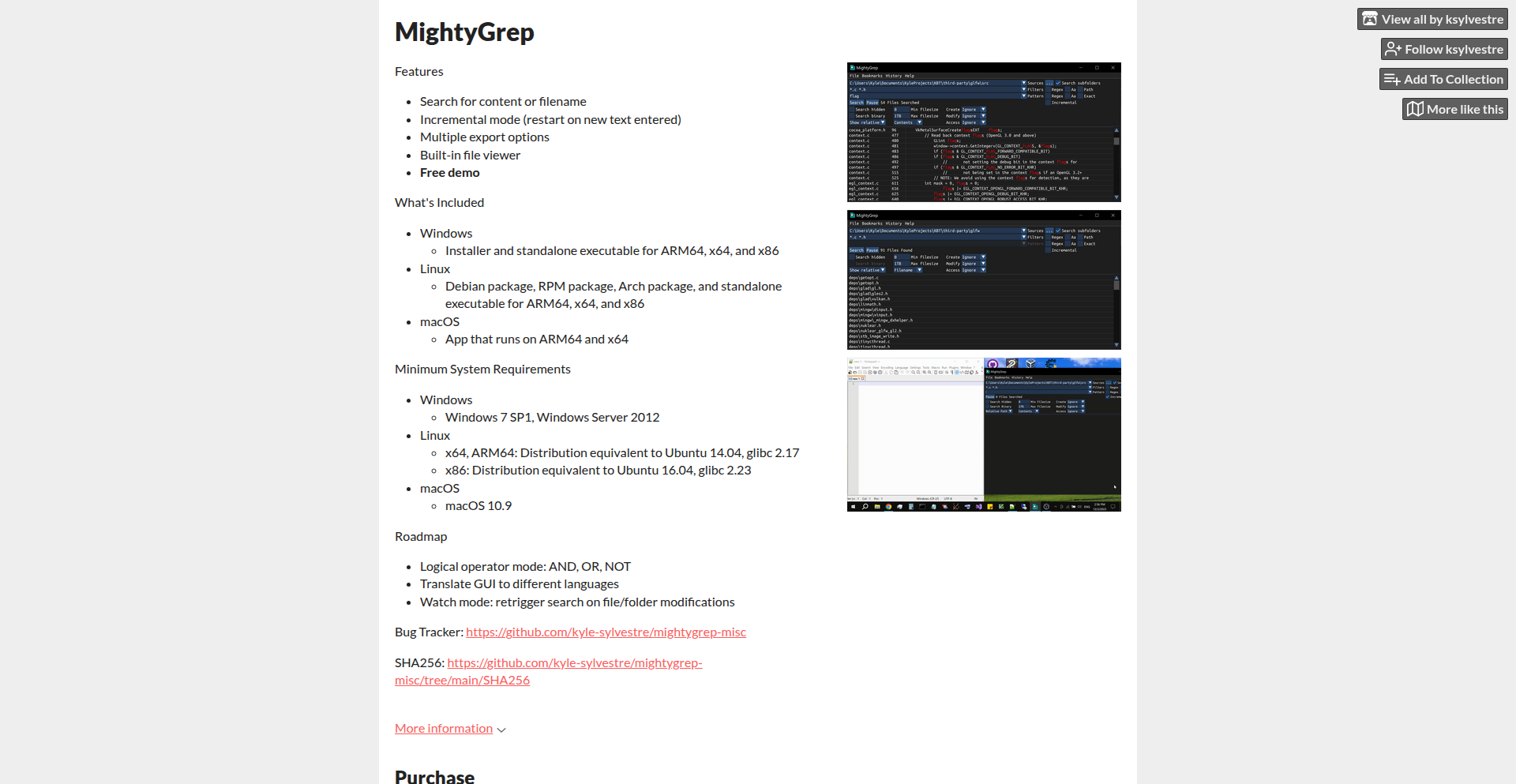

MightyGrep: Accelerated Plaintext Explorer

Author

zeeeeeebo

Description

MightyGrep is a high-performance graphical utility designed for rapid plaintext searching across various file types. It addresses the common developer need for quickly locating specific information within codebases, log files, and configuration files, especially when traditional tools fall short in speed or usability. Its innovation lies in its optimized search algorithms and a responsive GUI, offering a seamless experience for developers dealing with large volumes of text data.

Popularity

Points 7

Comments 0

What is this product?

MightyGrep is a desktop application for quickly searching text in any plain text file. Imagine needing to find a specific function name in a large codebase or a particular error message in a massive log file. Instead of slow, clunky methods, MightyGrep uses indexing and optimized search techniques to scan your files quickly and presents the results in a user-friendly graphical interface, so you spend less time searching and more time coding or debugging. Its core pitch is speed and directness: a tool specialized for this one task rather than a general-purpose search function.

How to use it?

Developers can download and install MightyGrep on Windows, macOS, or Linux. Once installed, point it at a directory (such as a project folder or a log directory) and type a search query. The application scans all plaintext files in that directory and its subdirectories and displays matching lines with context. It is intended as a faster, clearer alternative to the search built into IDEs or to command-line tools; in practice, you keep it open alongside your editor so quick lookups become a seamless part of your day.
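The workflow above (walk a directory tree, skip non-text files, report matching lines with surrounding context) can be sketched as a plain linear search. This is an illustration, not MightyGrep's implementation: it does no indexing, `search_tree` and `Hit` are hypothetical names, and the NUL-byte check for binary files is a common heuristic we chose rather than something the project documents.

```python
import os
import re
from typing import Iterator, List, NamedTuple


class Hit(NamedTuple):
    path: str
    line_no: int         # 1-based line number of the matching line
    context: List[str]   # matching line plus up to `context` lines on each side


def looks_binary(path: str, probe: int = 1024) -> bool:
    """Heuristic: treat a file as binary if its first bytes contain a NUL."""
    with open(path, "rb") as f:
        return b"\0" in f.read(probe)


def search_tree(root: str, pattern: str, context: int = 1) -> Iterator[Hit]:
    """Yield every line under `root` matching `pattern`, with context lines."""
    regex = re.compile(pattern)
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # sorting in place makes os.walk visit subdirectories in order
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            try:
                if looks_binary(path):
                    continue
                with open(path, encoding="utf-8", errors="replace") as f:
                    lines = f.read().splitlines()
            except OSError:
                continue  # unreadable file: skip it rather than abort the search
            for i, line in enumerate(lines):
                if regex.search(line):
                    lo, hi = max(0, i - context), min(len(lines), i + context + 1)
                    yield Hit(path, i + 1, lines[lo:hi])
```

Calling `search_tree(".", r"TODO|FIXME", context=2)` from a project root would, for example, list every TODO with two lines of context on each side. Because it is a generator, a GUI can render the first hits while the scan is still running.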

Product Core Function

· Real-time text searching: Provides instantaneous search results as you type, allowing for quick iterative refinement of search queries, significantly reducing the time spent on manual text discovery.

· Cross-platform compatibility: Available for Windows, macOS, and Linux, ensuring developers can use their preferred tool regardless of their operating system, promoting consistent workflow across different environments.

· Optimized search algorithms: Leverages efficient indexing and search techniques to handle large files and directories with remarkable speed, overcoming performance bottlenecks of generic search utilities.

· Intuitive graphical user interface: Presents search results in a clean, organized manner with context, making it easy to identify relevant information and navigate through findings without complex command-line syntax.

· File type flexibility: Works with any plaintext file, making it versatile for code, logs, configuration files, and more, offering a unified search solution for diverse developer needs.

Product Usage Case

· Debugging a complex application: A developer needs to find every occurrence of a specific error message in log files collected from several servers. MightyGrep can scan all of these files at once and present a consolidated list of matches with line numbers and surrounding context, which speeds up debugging considerably.

· Refactoring a large codebase: When undertaking a major code refactor, a developer needs to find every instance of a deprecated function or variable. MightyGrep can quickly scan the entire project's source code, highlighting all relevant lines and allowing the developer to efficiently update them.

· Analyzing configuration files: A system administrator needs to find a specific setting within dozens of configuration files. MightyGrep can rapidly scan all configuration files in a directory, pinpointing the exact lines containing the desired setting, saving significant manual effort.

9

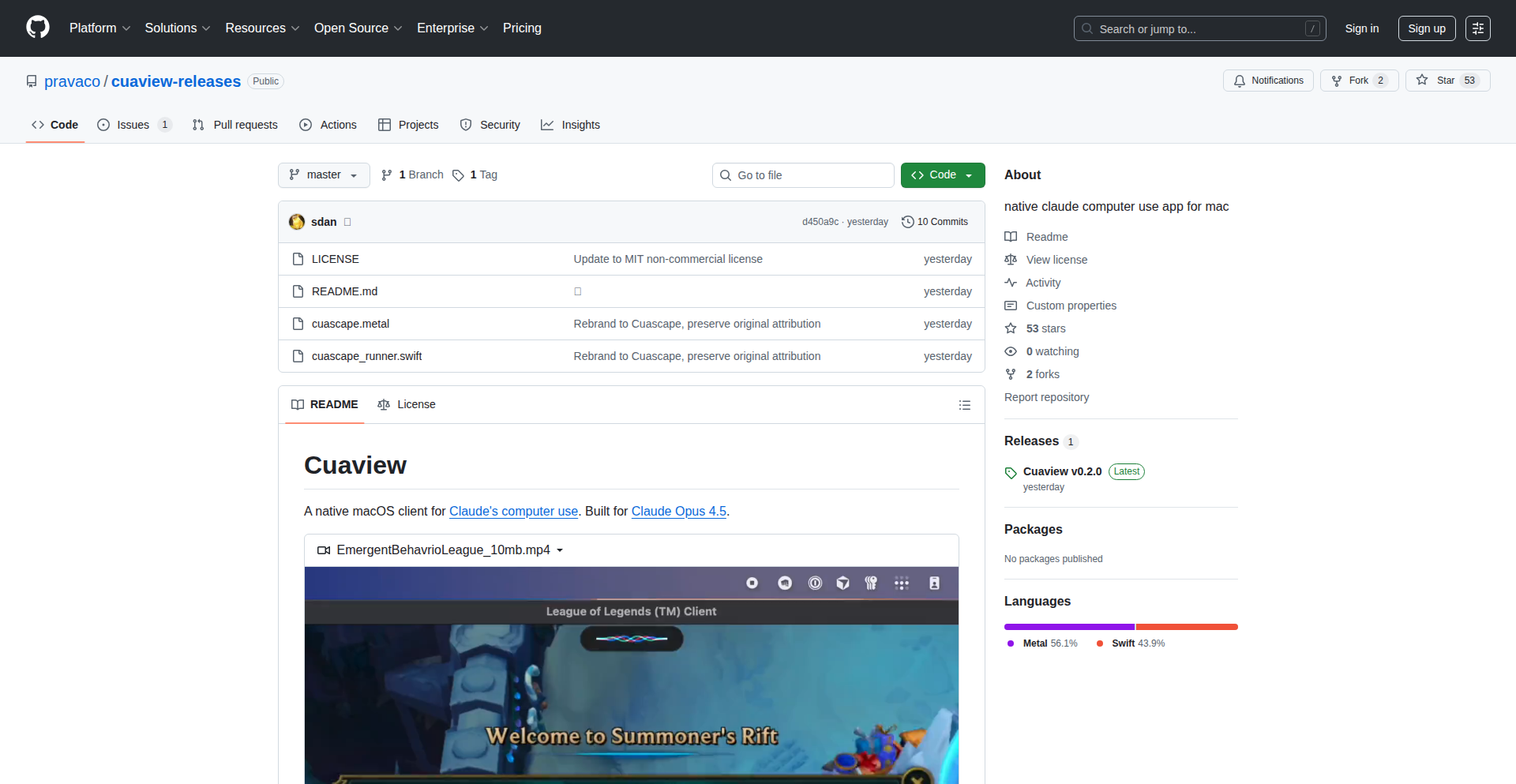

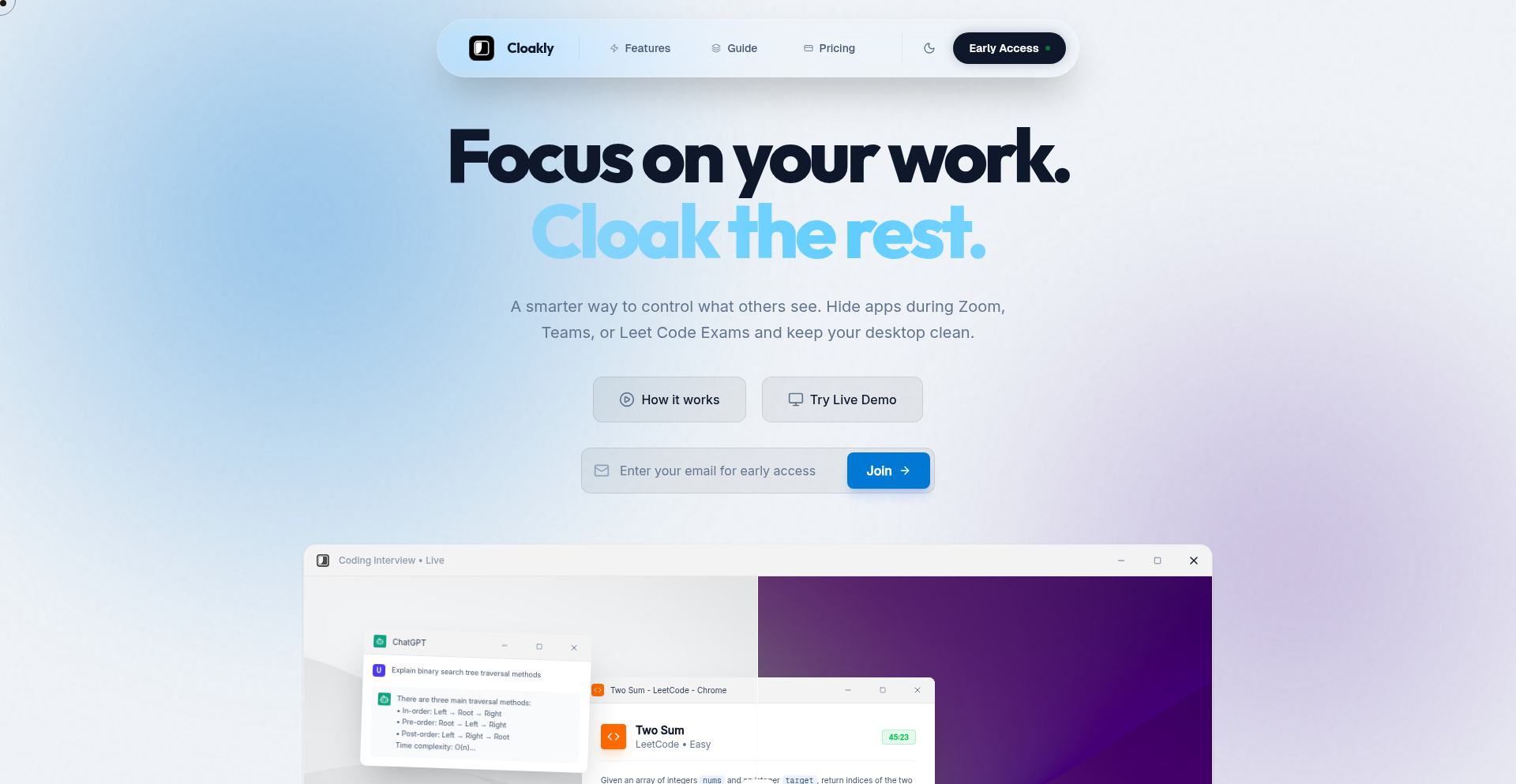

Cloakly

Author

jaygood

Description

Cloakly is a Windows tool designed to enhance privacy during coding interviews. It selectively hides specified windows from screen-sharing streams, addressing the imbalance where interviewers can keep their notes private while candidates are forced to expose their entire desktop. Its core innovation lies in its ability to precisely control what is visible during a screen share, empowering candidates with a basic level of privacy.

Popularity

Points 1

Comments 5

What is this product?

Cloakly is a Windows application that acts as a digital privacy shield for coding interviews. It leverages system-level window management to intercept and selectively mask certain application windows from being captured by screen-sharing software. This means you can have sensitive information, like your personal notes or other applications you don't want the interviewer to see, open on your computer without them appearing in the shared screen. The technical insight here is understanding how screen-sharing applications capture display output and then programmatically intervening in that process to exclude specific windows. This provides a much-needed layer of privacy for candidates.
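For context on how window-level capture exclusion can work: on Windows 10 version 2004 and later, the documented Win32 call for keeping a window out of screen captures is `SetWindowDisplayAffinity` with the `WDA_EXCLUDEFROMCAPTURE` flag. The sketch below wraps it with `ctypes`; `set_capture_exclusion` is a hypothetical name, and the post does not say Cloakly uses this API. As we understand the documentation, the call only takes effect on windows owned by the calling process, so a tool that hides other applications' windows would have to make the call from inside those processes.

```python
import ctypes
import sys

# Display-affinity values from the Win32 SetWindowDisplayAffinity documentation.
WDA_NONE = 0x00000000
WDA_MONITOR = 0x00000001              # window appears without content in captures
WDA_EXCLUDEFROMCAPTURE = 0x00000011   # window omitted from captures (Windows 10 2004+)


def set_capture_exclusion(hwnd: int, exclude: bool = True) -> bool:
    """Ask Windows to omit (or restore) one window in screen captures.

    Returns False on non-Windows platforms or when the call fails. The call is
    expected to succeed only for windows owned by the calling process.
    """
    if sys.platform != "win32":
        return False
    user32 = ctypes.WinDLL("user32", use_last_error=True)
    user32.SetWindowDisplayAffinity.argtypes = [ctypes.c_void_p, ctypes.c_uint32]
    user32.SetWindowDisplayAffinity.restype = ctypes.c_int  # Win32 BOOL
    affinity = WDA_EXCLUDEFROMCAPTURE if exclude else WDA_NONE
    return bool(user32.SetWindowDisplayAffinity(hwnd, affinity))
```

On failure, `ctypes.get_last_error()` returns the Win32 error code, which is the first thing to check when a window refuses to hide.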

How to use it?

Developers can use Cloakly by installing the Windows application and then configuring which specific windows they want to keep private during their coding interviews. Once configured, when the candidate starts screen-sharing for an interview, Cloakly will automatically ensure that the selected windows are not visible to the interviewer. This can be integrated into a typical interview workflow by simply running Cloakly in the background before starting the screen-sharing session for the interview platform (like Zoom, Google Meet, etc.).

Product Core Function

· Window masking: The core functionality is hiding specific windows from screen-sharing applications. The post does not detail the mechanism; possible approaches include intercepting rendering calls or setting window properties (Windows offers a documented display-affinity setting) so that the screen-capture APIs skip those windows. The value is direct privacy control, preventing accidental or intentional exposure of sensitive data.

· Selective visibility control: Users choose which applications or windows to hide, giving them granular control over their privacy. Not everything needs to be hidden, and users still want to show the relevant parts of their screen, so only the windows they select are masked.

· Background operation: Cloakly runs in the background without interfering with the user's workflow. This means you can set it up and forget about it during the interview, focusing on the coding task at hand. The value is seamless integration into the interview process without adding complexity.

· User-friendly configuration: The application offers an intuitive interface for selecting and managing the windows to be cloaked. This makes the technology accessible even to users who are not deeply technical. The value is ease of use and quick setup, reducing the barrier to entry for essential privacy.

Product Usage Case

· Scenario: A candidate is preparing for a remote coding interview and needs to have their personal notes open to reference syntax or common algorithms. Using Cloakly, they can select their note-taking application (e.g., Obsidian, Notion, or even a simple text file) to be hidden. When they start screen-sharing, the interviewer will only see their code editor and the interview platform, not the candidate's reference materials. This solves the problem of needing external resources without revealing them to the interviewer.

· Scenario: A candidate has multiple applications open, including their personal instant messenger, a browser with unrelated tabs, and their code editor. They are concerned that accidentally switching to or seeing notifications from personal apps might be perceived negatively. With Cloakly, they can choose to cloak these personal applications, ensuring only the code editor and the interview environment are visible during the screen share. This addresses the issue of maintaining a professional appearance and avoiding distractions or misinterpretations by the interviewer.

· Scenario: An interviewer might ask a candidate to demonstrate their understanding of a particular library or framework by quickly pulling up documentation. The candidate might prefer to have the documentation pre-loaded in a separate window and use Cloakly to ensure it only appears when they intend to show it, rather than having it constantly visible. This allows for a more controlled demonstration and reduces the anxiety of having potentially unorganized or distracting windows visible.

· Scenario: During a pair programming session for a technical assessment, a candidate might need to reference a personal cheat sheet or a complex diagram that is too distracting to have constantly visible. Cloakly allows them to quickly toggle the visibility of these resources, providing a clean screen for the interviewer while still allowing the candidate to access necessary aids. This solves the problem of balancing the need for resources with the desire for a clear and focused presentation.

10

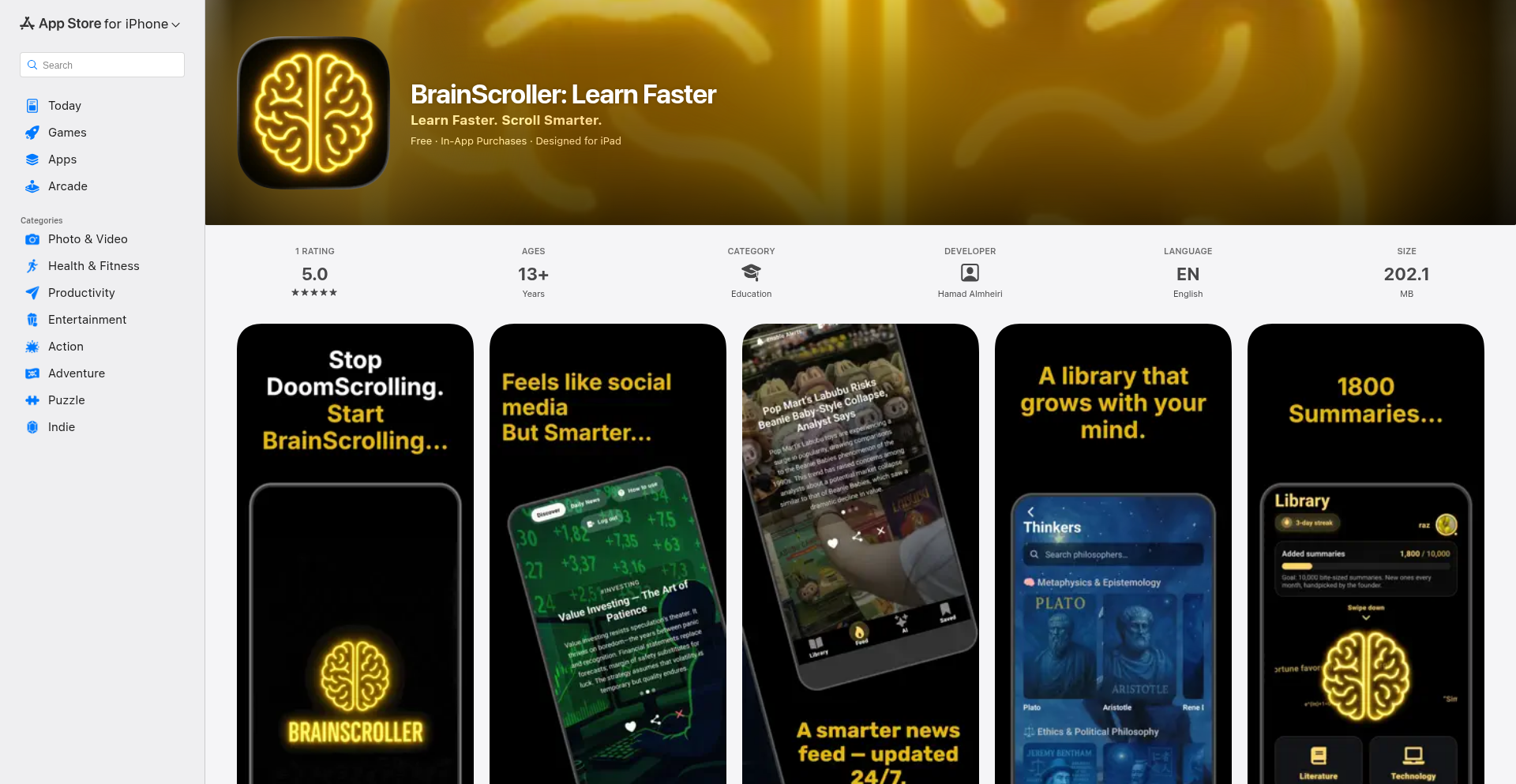

Rubber Duck: Pre-emptive App Store Review Assistant

Author

Sayuj01

Description

Rubber Duck is an AI-powered and human-assisted platform designed to proactively identify and resolve common App Store rejection issues before developers submit their iOS applications. It simulates the App Store review process, catching problems related to metadata, UI inconsistencies, device-specific bugs, privacy concerns, and unexpected crashes, thereby accelerating app launch times and reducing development friction.

Popularity

Points 1

Comments 4

What is this product?

This project is a smart tool that acts like a pre-App Store reviewer for your iOS apps. It uses a combination of automated checks and real human testers, equipped with actual iPhones, to simulate the Apple review process. The innovation lies in its ability to catch issues that often lead to rejections, such as incorrect app descriptions, minor visual glitches on different iPhone models, or missed privacy entries. Think of it as a rigorous quality assurance step before you hand your app over to Apple, saving you from frustrating delays. The core idea is to leverage a systematic approach, mirroring Apple's review criteria, but in a faster and more developer-friendly manner. This helps ensure your app meets the necessary standards from the outset, reducing the guesswork and the pain of unexpected rejections.

How to use it?

Developers submit their iOS app builds to Rubber Duck for review. The platform first runs automated scans for common pitfalls such as missing or incorrect metadata, UI elements that may not render correctly on specific devices, and potential privacy violations. A team of human testers then exercises the app on a variety of real iPhones and iOS versions, mimicking the experience of an App Store reviewer. Developers receive a detailed report of the issues found, with actionable recommendations for fixes. It could serve as an early feedback step in a CI/CD pipeline or as a final quality gate before submission. The value proposition is clear: catch potential rejection points early, fix them efficiently, and achieve a smoother, faster App Store launch.

Product Core Function

· Automated metadata validation: This checks if your app's description, keywords, and other textual information comply with App Store guidelines, ensuring better discoverability and avoiding rejections due to missing or inaccurate details. This saves you time by catching these administrative errors automatically.

· UI/UX consistency checks: The system verifies that your app's user interface looks and functions correctly across a range of iPhone models and iOS versions, preventing issues caused by screen size differences or software incompatibilities. This means your app will appear polished and work reliably for a wider audience.

· Privacy policy verification: Rubber Duck scans for common privacy-related oversights, such as missing or incorrect privacy manifest entries, ensuring your app adheres to Apple's strict privacy requirements. This protects your app from being rejected for privacy compliance issues, which can be complex to navigate.

· Crash detection and flow analysis: By employing real devices and testers, the platform can uncover unexpected crashes or broken user flows that might be missed by purely automated testing. This ensures a stable and seamless user experience, leading to higher user satisfaction and fewer support issues.

· Human-in-the-loop testing: Combining AI with real human testers provides a comprehensive review that captures nuances and edge cases that automated systems might overlook, offering a more thorough assessment. This provides a level of scrutiny that closely mirrors the actual App Store review process, giving you greater confidence in your submission.
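As one concrete example of the kind of automated privacy check described above, the sketch below flags missing or blank permission purpose strings in an app's Info.plist, a well-known cause of App Store rejections. It is our illustration, not Rubber Duck's checker: the capability names and `missing_usage_descriptions` are hypothetical, and Info.plist purpose strings are a separate requirement from the privacy manifest (`PrivacyInfo.xcprivacy`) mentioned in the list.

```python
import plistlib
from typing import List, Set

# Hypothetical capability names mapped to real Info.plist purpose-string keys.
USAGE_KEYS = {
    "camera": "NSCameraUsageDescription",
    "microphone": "NSMicrophoneUsageDescription",
    "photos": "NSPhotoLibraryUsageDescription",
    "location": "NSLocationWhenInUseUsageDescription",
    "contacts": "NSContactsUsageDescription",
}


def missing_usage_descriptions(plist_bytes: bytes, capabilities: Set[str]) -> List[str]:
    """Return the purpose-string keys that are absent or blank for the given capabilities."""
    info = plistlib.loads(plist_bytes)
    problems = []
    for capability in sorted(capabilities):
        key = USAGE_KEYS.get(capability)
        if key is None:
            continue  # capability this sketch does not know about
        value = info.get(key)
        if not isinstance(value, str) or not value.strip():
            problems.append(key)
    return problems
```

In practice the `capabilities` set would come from inspecting the app's linked frameworks or entitlements, which this sketch leaves out.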

Product Usage Case

· A developer is launching a new social media app and has spent weeks polishing the features. Before submitting, they use Rubber Duck. The platform flags that the privacy description doesn't fully align with the data collection methods, and a specific button is misaligned on the iPhone 14 Pro. The developer corrects these issues, leading to a quicker, smoother App Store approval, avoiding days of back-and-forth.

· An e-commerce app is about to go live for the holiday season. Rubber Duck's testers discover that the checkout flow crashes on an older iPhone model due to a recently introduced software bug. The team fixes the bug before launch, preventing potential lost sales and negative customer reviews, ensuring the app is robust during the critical sales period.

· A game developer is preparing for a global launch. Rubber Duck identifies that the app's metadata for different regions contains grammatical errors and inconsistencies in translation, which could lead to delayed reviews or rejection in non-English speaking markets. By fixing these localization issues, the developer ensures a simultaneous and successful global release.

11

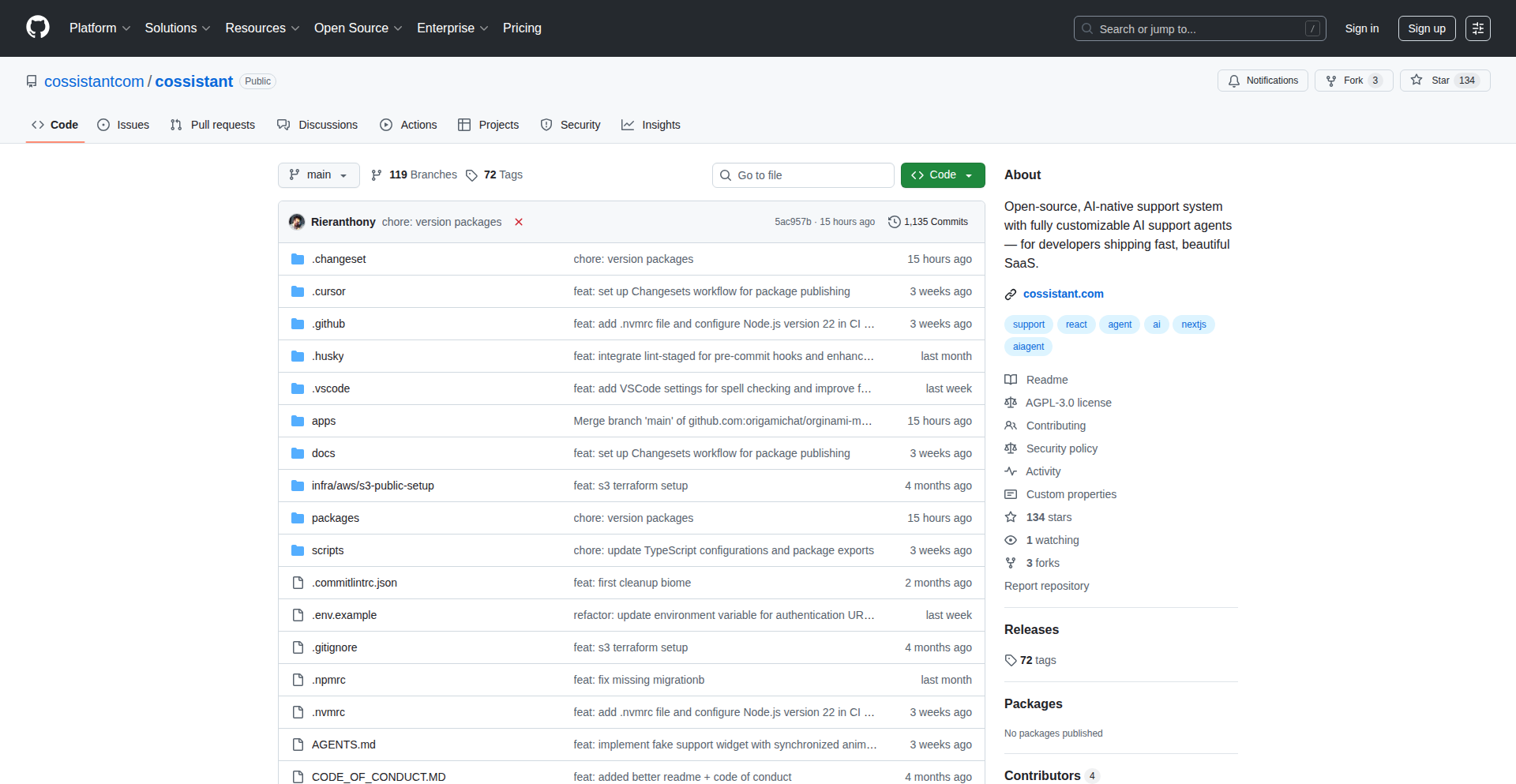

CodeSupport AI: The Extensible Customer Engagement Engine

Author

frenchriera

Description

CodeSupport AI is an open-source, code-first alternative to Intercom, designed for teams that build with AI. It treats customer support as part of your codebase, making it adaptable, testable, and easy to upgrade. The core innovation is this code-first approach: your application code, including any LLM-driven logic, directly shapes support behavior. As a result, the support system evolves alongside your product and AI capabilities, enabling a more personalized and efficient customer experience.

Popularity

Points 2

Comments 3

What is this product?

CodeSupport AI is a developer-centric platform that embeds customer support directly into your application's codebase. Unlike traditional SaaS solutions, it's not an external tool you 'plug in'; instead, it's a component you integrate and customize within your own code. The 'code-first' philosophy means that changes, updates, and even AI-driven enhancements to your support system are managed through code. This allows for highly tailored support experiences that are as unique as your product. It leverages your existing development workflows, making support scalable and testable. The primary innovation is treating customer support as a dynamic, code-driven feature rather than a static service.

How to use it?

Developers integrate CodeSupport AI as an NPM package into their React applications. By defining support logic and customer interaction flows directly in their codebase, they gain granular control. This could involve defining specific responses based on user actions, integrating with internal data sources for personalized assistance, or even allowing AI agents to directly access and update support-related information within the application's context. The accompanying dashboard provides a real-time view of customer interactions and allows human agents to step in when needed, all while maintaining the code-first paradigm.
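To make "support logic defined in code" concrete, here is a minimal rule-routing sketch. Everything in it (`Reply`, the two rules, `route`, the fallback hook) is hypothetical and is not the API of the CodeSupport AI package, which ships for React via NPM; it only shows why code-defined support flows can be unit-tested and versioned like any other code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Reply:
    text: str
    handoff_to_human: bool = False  # True when a human agent should take over


# A rule sees the user's message plus app-side context and may return a Reply.
Rule = Callable[[str, Dict[str, str]], Optional[Reply]]


def order_status_rule(message: str, ctx: Dict[str, str]) -> Optional[Reply]:
    if "where is my order" in message.lower() and "order_status" in ctx:
        return Reply(f"Your order is currently: {ctx['order_status']}.")
    return None


def refund_rule(message: str, ctx: Dict[str, str]) -> Optional[Reply]:
    if "refund" in message.lower():
        return Reply("Connecting you with a teammate about your refund.", handoff_to_human=True)
    return None


DEFAULT_RULES: List[Rule] = [order_status_rule, refund_rule]


def route(message: str, ctx: Dict[str, str],
          rules: Optional[List[Rule]] = None,
          fallback: Optional[Rule] = None) -> Reply:
    """Return the first matching rule's reply; else try the fallback; else hand off."""
    for rule in rules if rules is not None else DEFAULT_RULES:
        reply = rule(message, ctx)
        if reply is not None:
            return reply
    if fallback is not None:  # e.g. an LLM call in a real system
        reply = fallback(message, ctx)
        if reply is not None:
            return reply
    return Reply("Let me find someone who can help.", handoff_to_human=True)
```

Deterministic rules run first and the model-backed fallback runs last, so the cheap, predictable answers stay under test while the open-ended cases still get a response or a human handoff.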

Product Core Function

· Code-driven support logic: Implement custom customer support workflows and responses directly within your application's code, allowing for unique and dynamic interactions tailored to your product and users. This is valuable for creating highly specific support experiences that traditional platforms can't offer.

· AI integration for support enhancement: Leverage your existing or new Large Language Models (LLMs) to automate responses, provide contextual information, and continuously improve support quality. This empowers smaller teams to handle a larger volume of customer inquiries efficiently.

· Testable and versionable support system: Treat your customer support as any other piece of code, allowing for rigorous testing and version control. This ensures reliability and makes it easy to roll back changes or deploy updates to your support system.

· Real-time monitoring dashboard: A dedicated dashboard provides visibility into customer conversations, support performance, and agent activity. This allows for effective management and oversight of customer interactions.

· Extensible support modules: Design your support system to be modular and easily expandable, allowing for the addition of new features or integrations as your product and customer needs evolve. This future-proofs your support infrastructure.

Product Usage Case

· A SaaS company uses CodeSupport AI to embed product-specific help guides and troubleshooting steps directly into their application interface. When a user encounters an error, the code-first system analyzes the error message and triggers a pre-defined code snippet that guides the user through a solution, reducing the need for manual support intervention.

· An e-commerce platform integrates CodeSupport AI to personalize post-purchase support. The system, leveraging user order data and AI, can automatically answer common questions about shipping status, returns, or product usage, providing a seamless and proactive customer experience.

· A FinTech startup uses CodeSupport AI to manage complex customer inquiries requiring access to sensitive data. By defining the LLM's access and interaction rules within the codebase, they ensure secure and compliant customer support while maintaining high efficiency.

· A gaming company implements CodeSupport AI to create in-game support for common gameplay issues. This allows players to get instant help without leaving the game, improving player retention and satisfaction.

12

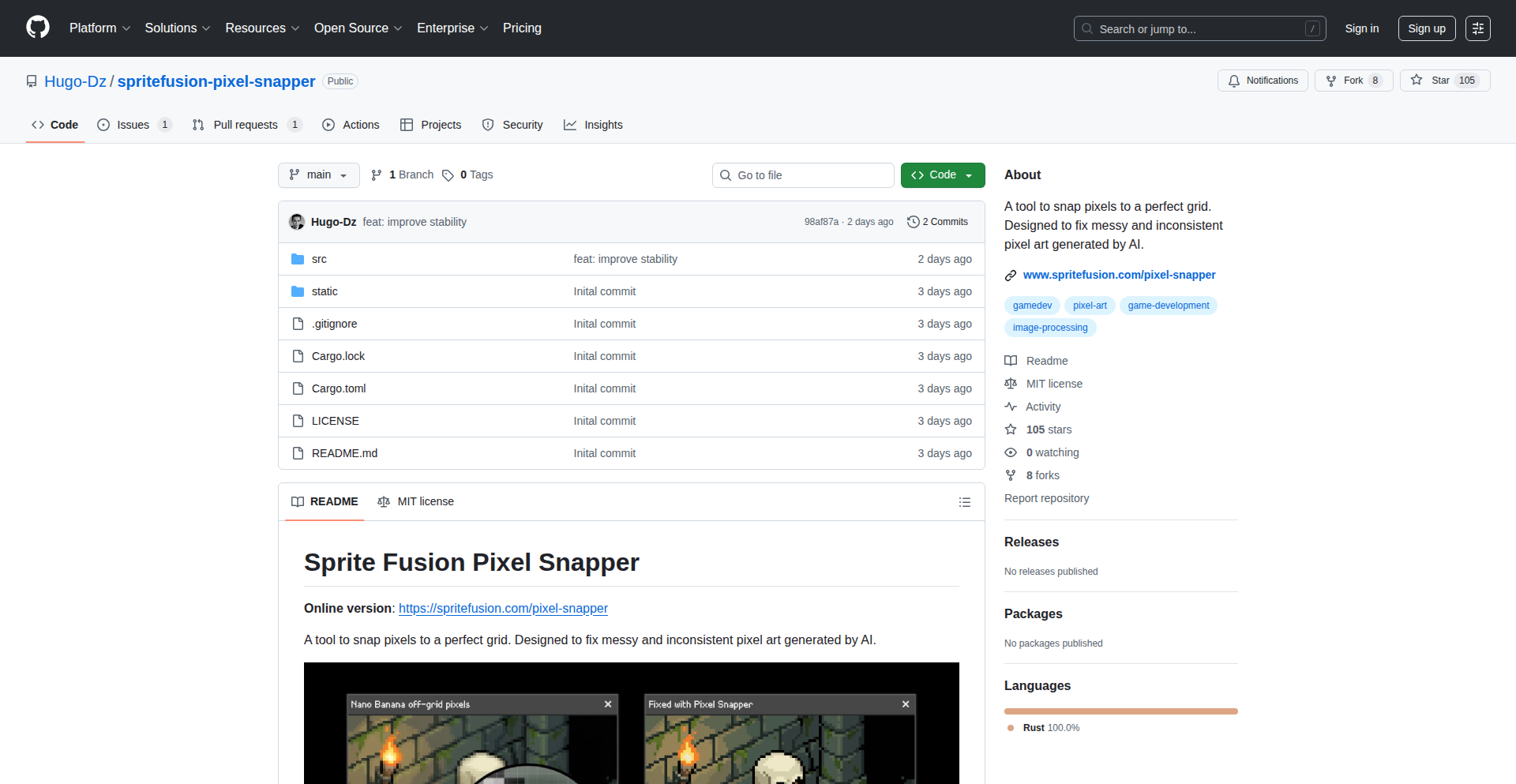

PixelForge-Rust

Author

HugoDz

Description

A Rust-based tool that fixes and enhances pixel art generated by Google's Nano Banana image model (Gemini 2.5 Flash Image). It tackles common flaws in that output, such as off-palette colors, misplaced pixels, and anti-aliasing artifacts, with a precise, code-driven approach to pixel manipulation. It leverages Rust's performance and memory safety to offer a robust solution for pixel art enthusiasts and developers.

Popularity

Points 5

Comments 0

What is this product?

PixelForge-Rust is a utility built in Rust that addresses imperfections in pixel art produced by Google's Nano Banana image model. The core innovation is its algorithmic approach to analyzing and correcting pixel data. Instead of relying on manual edits, it performs low-level pixel operations to identify and fix issues like incorrect color values, misplaced pixels, or anti-aliasing artifacts. This offers a more consistent and reliable way to make the art look as intended wherever it's displayed. So, what's in it for you? Cleaner pixel art with far fewer visual glitches, without fixing each image by hand.

How to use it?

Developers can integrate PixelForge-Rust into their asset pipelines or use it as a standalone command-line tool. The project likely exposes APIs or CLI commands that allow users to input the problematic pixel art files (e.g., PNGs) and specify correction parameters. Rust's efficiency makes it suitable for batch processing large numbers of assets or for real-time applications where speed is critical. For instance, you might script it to automatically clean up imported pixel art before it's used in a game engine. How does this help you? It streamlines your art workflow and ensures high-quality visual output for your projects.

Product Core Function

· Pixel data analysis: This function uses Rust to meticulously scan each pixel in the artwork, identifying deviations from the intended design or known issues. The value is in detecting subtle errors that are hard to spot manually, ensuring accuracy. It's useful for quality assurance in game development assets.

· Algorithmic pixel correction: Based on the analysis, this function applies targeted fixes to individual pixels or groups of pixels, restoring correct colors and positions. The value here is in automating the tedious process of fixing art, saving significant development time. This is great for anyone working with pixel art that needs to be consistently perfect.

· Cross-platform rendering consistency: By enforcing exact pixel values, the tool aims to make the corrected art render consistently across operating systems, browsers, and devices. The value is in eliminating visual inconsistencies that can harm user experience, which matters for web developers and game designers aiming for a uniform look.

· Performance-optimized processing: Built with Rust, this tool can process pixel art quickly and efficiently, even for large or complex assets. The value is in speeding up asset preparation and integration into development workflows. This is beneficial for projects with tight deadlines or large asset libraries.
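One plausible way to clean up AI-generated "pixel art" is to collapse each logical pixel (a block of image pixels) to its dominant color and then snap every color to a fixed palette. The sketch below shows that idea in Python for readability; it is our illustration, not PixelForge-Rust's algorithm, it assumes the grid cell size is already known (detecting it automatically is a separate problem), and `snap_to_grid` and `quantize` are hypothetical names.

```python
from collections import Counter
from typing import List, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]  # rows of (r, g, b) pixels


def snap_to_grid(pixels: Image, cell: int) -> Image:
    """Collapse each cell x cell block to its most frequent color (one output pixel per block)."""
    height, width = len(pixels), len(pixels[0])
    out: Image = []
    for top in range(0, height, cell):
        row = []
        for left in range(0, width, cell):
            block = [pixels[y][x]
                     for y in range(top, min(top + cell, height))
                     for x in range(left, min(left + cell, width))]
            row.append(Counter(block).most_common(1)[0][0])
        out.append(row)
    return out


def quantize(pixels: Image, palette: List[RGB]) -> Image:
    """Replace every pixel with the nearest palette color (squared RGB distance)."""
    def nearest(color: RGB) -> RGB:
        return min(palette, key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)))
    return [[nearest(c) for c in row] for row in pixels]
```

Taking the most frequent color (rather than the average) per block is what removes anti-aliased edge pixels: a blended color rarely wins the vote, whereas averaging would reintroduce it.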

Product Usage Case

· Game development: A game developer can use PixelForge-Rust to automatically clean up imported pixel art assets before integrating them into their game engine, ensuring that character sprites and UI elements are rendered without visual errors. This solves the problem of art looking different in the game than in the art editor, leading to a polished final product.

· Web design: A web designer working on a retro-themed website can employ PixelForge-Rust to ensure that all pixel art used for icons or decorative elements displays consistently across various browsers and screen resolutions. This prevents the website from looking unprofessional due to rendering differences, maintaining a cohesive aesthetic.

· Asset pipeline automation: A studio can integrate PixelForge-Rust into their automated build pipeline to process all pixel art assets as they are committed to version control. This ensures that only corrected and high-quality art makes it into production builds, saving artists and engineers from manual checks and corrections. This provides a proactive approach to quality control.

13

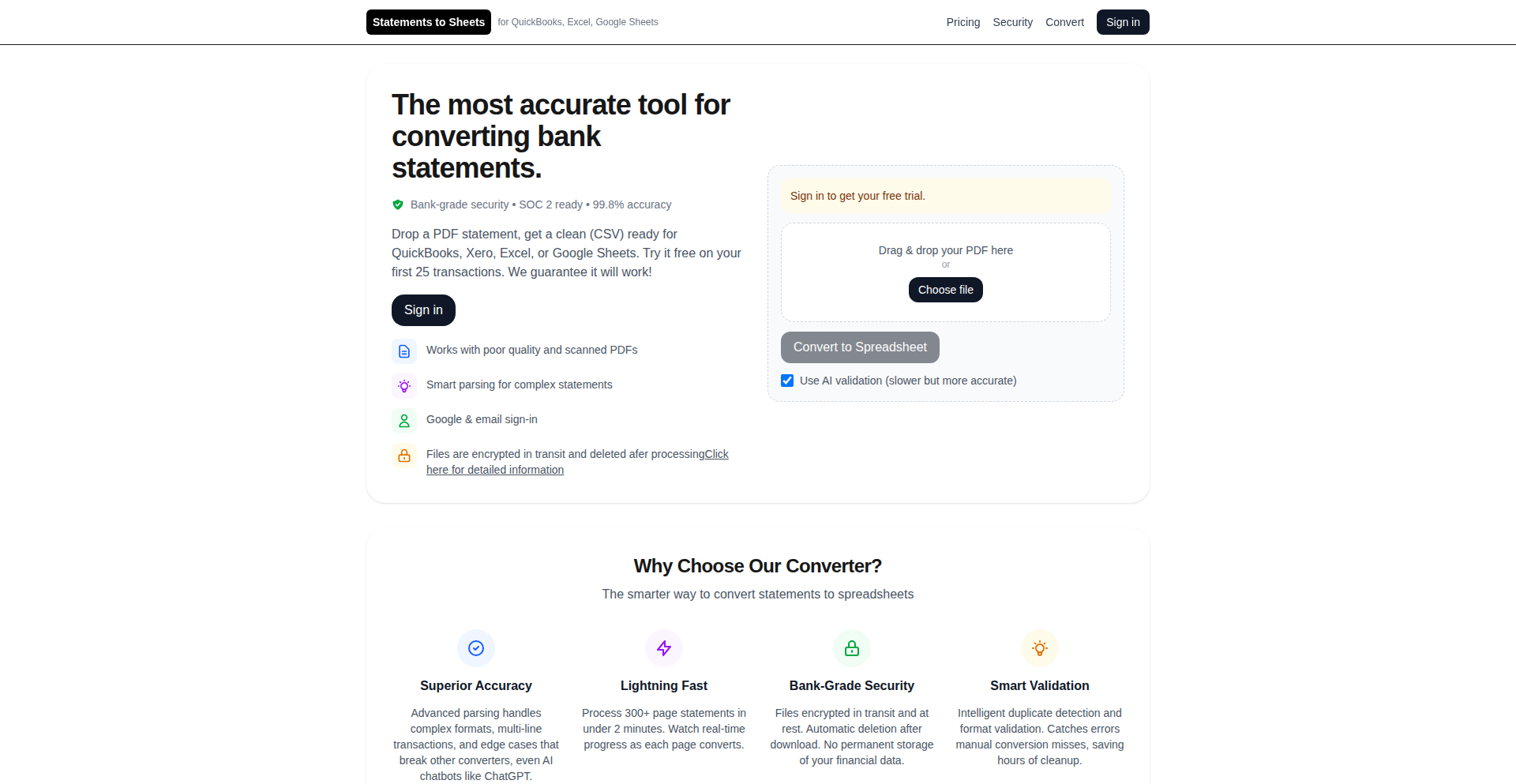

StatementOCR-to-CSV

Author

spiked

Description

A web application that transforms messy bank statement PDFs, even scanned ones, into well-structured CSV files compatible with Excel and QuickBooks. It tackles the common frustration of unreadable bank data by employing OCR and offering an AI-powered option for enhanced accuracy, all while prioritizing user privacy.

Popularity

Points 2

Comments 3

What is this product?

This project is a smart converter for bank statements. Bank websites often make it hard to get transaction history in a usable format, especially when statements are old scans or have unusual layouts. The app, Statements to Sheets, uses Optical Character Recognition (OCR) to 'read' the text from images within your PDFs, much as a person would, and is designed to cope with the messiness of real-world bank documents. Think of it as a digital assistant that cleans up financial paperwork, making it easy to analyze your spending or import transactions into accounting software. What stands out is its focus on hard-to-convert formats and an optional, privacy-first AI mode for better accuracy, meaning your financial data isn't sent off for AI processing unless you choose to.

How to use it?

Developers can use this project by uploading their bank statement PDFs directly to the web application at statementstosheets.com. The system then processes the PDF, extracts the transaction data using OCR and potentially AI, and provides a clean CSV file for download. This CSV can be directly imported into spreadsheet software like Microsoft Excel or accounting tools like QuickBooks. For integration into other workflows or custom applications, one would typically look for an API endpoint, though this specific Show HN post focuses on the direct web app usage. The value here is saving significant manual data entry time and reducing errors associated with manual transcription.

Product Core Function

· Optical Character Recognition (OCR) for scanned documents: Extracts text from images embedded in PDFs, effectively digitizing printed statements that were scanned. The value is making otherwise unusable scanned statements accessible and editable.

· AI-powered data extraction (optional, privacy-first): This enhances the accuracy of identifying and categorizing transactions by leveraging machine learning. The value is more precise data for financial analysis and accounting, with the added benefit of user control over data privacy.

· Multi-page PDF support: The application can handle statements spanning multiple pages, consolidating all transaction data into a single CSV. The value is a complete and unified view of your financial history without manual page stitching.

· Clean CSV output for financial software: The generated CSV files are formatted to be easily imported into popular tools like Excel and QuickBooks. The value is seamless integration with existing financial management tools, saving hours of manual reconciliation.
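Once OCR has produced text lines, turning them into a spreadsheet-ready CSV is mostly a parsing problem. This minimal sketch assumes a hypothetical single-line layout of date, description, amount, and balance; real statements vary widely by bank, which is exactly the messiness the product says it handles, so treat `TRANSACTION` and `ocr_lines_to_csv` as illustrations rather than the app's method.

```python
import csv
import io
import re
from typing import Iterable

# Hypothetical layout: "MM/DD/YYYY  DESCRIPTION  AMOUNT  BALANCE" on one line.
TRANSACTION = re.compile(
    r"^(?P<date>\d{2}/\d{2}/\d{4})\s+(?P<desc>.+?)\s+"
    r"(?P<amount>-?[\d,]+\.\d{2})\s+(?P<balance>-?[\d,]+\.\d{2})$"
)


def ocr_lines_to_csv(lines: Iterable[str]) -> str:
    """Keep lines that look like transactions and emit them as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["Date", "Description", "Amount", "Balance"])
    for line in lines:
        m = TRANSACTION.match(line.strip())
        if m is None:
            continue  # headers, footers, page numbers, OCR noise
        writer.writerow([
            m["date"],
            m["desc"],
            m["amount"].replace(",", ""),   # "2,000.00" -> "2000.00" for spreadsheets
            m["balance"].replace(",", ""),
        ])
    return buf.getvalue()
```

Stripping thousands separators keeps amounts numeric in Excel and QuickBooks, and the `csv` module quotes any description that itself contains a comma.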

Product Usage Case

· A freelancer needs to compile two years of bank statements for tax purposes but her bank only provides scanned PDFs. She uploads them to Statements to Sheets, which converts them into a clean CSV. She then easily imports this CSV into Excel to calculate her deductible expenses, saving her days of manual data entry.

· A small business owner wants to import their monthly bank transactions into QuickBooks for bookkeeping. Their bank's export function is unreliable, often producing corrupted files. They use Statements to Sheets to get a perfect CSV, ensuring accurate financial records and simplifying tax preparation.

· A user is trying to analyze their spending habits over several years. Their older bank statements are only available as scanned images. Statements to Sheets extracts all the transaction details from these image-based PDFs, allowing the user to load the data into a custom Python script for in-depth analysis and visualization.

14

XTweetFlow

Author

mrasong

Description

XTweetFlow is a no-nonsense, ad-free, and privacy-respecting Twitter/X video downloader. It tackles the common frustration of finding reliable and clean tools to save videos from the platform. The core innovation lies in its stateless backend architecture and its focus on simplicity: just paste a tweet URL and get the video files, with options for different resolutions including HD.

Popularity

Points 4

Comments 1

What is this product?

XTweetFlow is a web application for downloading videos from Twitter/X. Its technical centerpiece is a stateless backend: the server stores no user data or session information. When you paste a tweet URL, the backend processes the request on the fly, finds the embedded video, and returns direct download links. Because nothing about your download activity is retained, this design underpins its privacy claims. It's built to be fast and offers multiple video resolutions when available, including high-definition options.

How to use it?

Developers can easily use XTweetFlow by navigating to the website (twitterxz.com). They simply paste the URL of the tweet containing the video they wish to download into the provided input field. Upon submission, the application will process the request and present direct download links for the video, often with options for different quality levels. For developers looking to integrate this functionality into their own applications, the underlying principles of fetching and processing media from URLs could inspire similar tools, although direct API integration for this specific purpose might be subject to platform terms of service. The core value proposition is ease of use and privacy, making it a go-to tool for individuals needing to save Twitter/X videos without hassle.

Product Core Function

· Direct video download from Twitter/X tweets: This function allows users to extract video files directly from tweets, bypassing the platform's native download limitations. The value is in providing easy access to content for archival or sharing purposes, solving the problem of inaccessible media.

· Multiple resolution options (including HD): The tool intelligently identifies and offers various video quality settings, ensuring users can download the best available version. This adds value by catering to different bandwidth conditions and user preferences for visual fidelity.

· Stateless backend for enhanced privacy: This is the project's main technical advantage. Because the server does not store user data or download history, there is no record of user activity to track. This matters most to privacy-conscious users who want to avoid data collection.

· Ad-free and tracking-free experience: With no advertisements or tracking scripts, the interface stays clean and distraction-free, which also helps build user trust.
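The multiple-resolution feature comes down to choosing among the video variants a tweet exposes and listing them best-first. The sketch below assumes each variant is a record with `content_type`, `url`, and (for MP4 files) `bitrate`, the fields used by the `video_info.variants` entries in Twitter's v1.1 API. Whether XTweetFlow reads that structure is an assumption, and `mp4_download_links` is a hypothetical helper.

```python
from typing import TypedDict


class Variant(TypedDict, total=False):
    # Field names follow the assumed variant records; HLS playlists carry no bitrate.
    content_type: str
    url: str
    bitrate: int


def mp4_download_links(variants: list[Variant]) -> list[str]:
    """Return direct MP4 URLs ordered from highest to lowest bitrate (HD first)."""
    # Keep only progressive MP4 files; streaming playlists are not direct downloads.
    mp4s = [v for v in variants if v.get("content_type") == "video/mp4" and "url" in v]
    mp4s.sort(key=lambda v: v.get("bitrate", 0), reverse=True)
    return [v["url"] for v in mp4s]
```

Sorting by bitrate rather than by the resolution string avoids parsing URLs, on the assumption that higher bitrate tracks higher resolution for the same clip.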

Product Usage Case

· Saving important video content from news or educational tweets for offline viewing or academic research. This addresses the need for reliable access to information that might otherwise be ephemeral on the platform.

· Archiving personal or professional video content shared on Twitter/X for long-term safekeeping. This solves the problem of losing valuable memories or professional assets due to platform changes or account issues.

· Content creators who want to repurpose their own video content shared on Twitter/X for use on other platforms. This offers a simple way to retrieve their original media.

· Journalists or researchers needing to quickly capture video evidence or examples shared on Twitter/X for reporting or analysis. This offers a rapid and efficient way to gather multimedia evidence in time-sensitive situations.

15

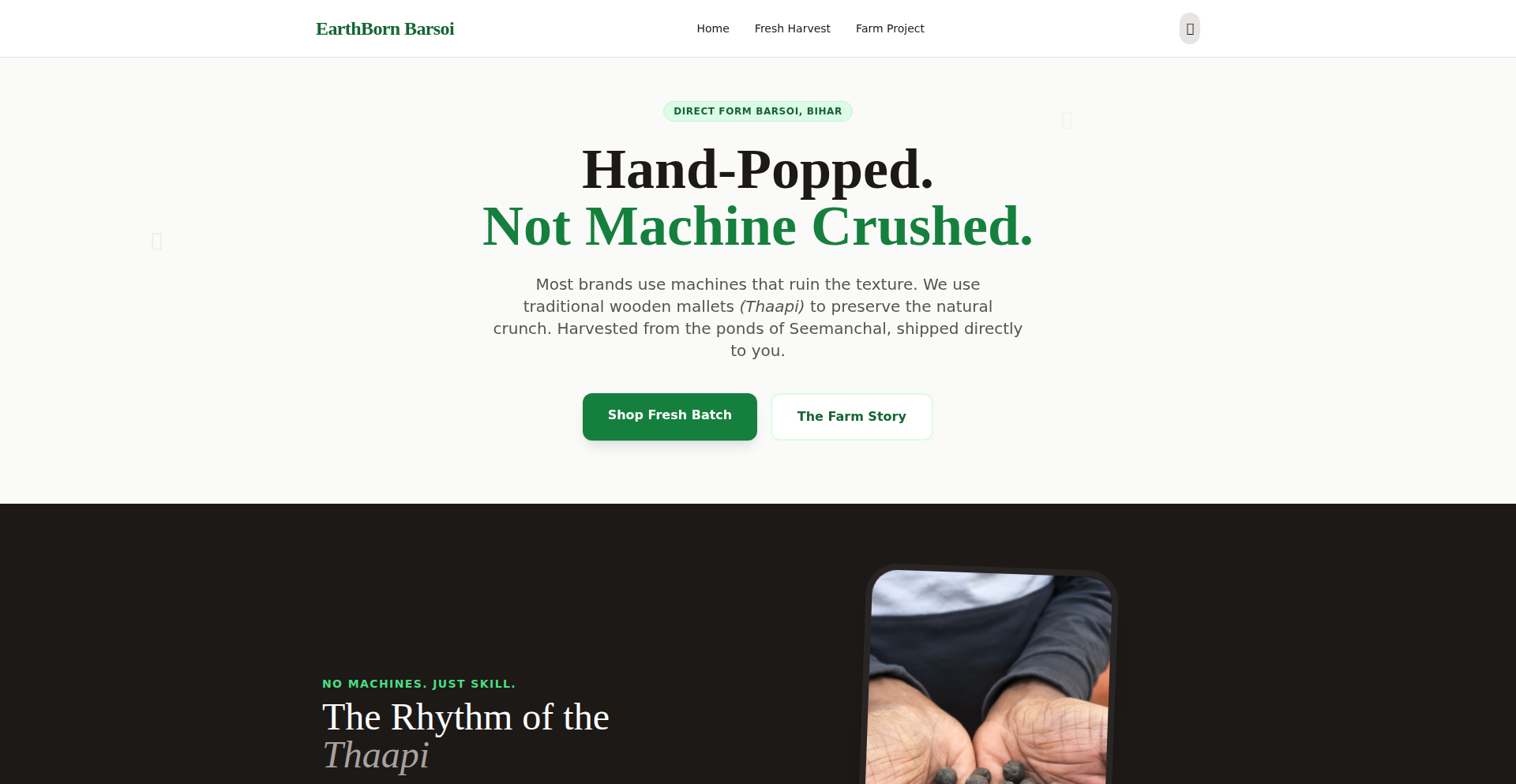

MakhanaConnect: Village Agri-Supply Chain Orchestrator

Author

Vikkyv

Description

This project leverages Bolt IoT to create a direct-to-consumer (D2C) supply chain for Makhana (fox nut) farmers in a village. It tackles the challenge of inefficient traditional distribution by enabling farmers to directly reach consumers, facilitated by a connected hardware and software solution. The innovation lies in applying IoT to a rural agricultural context, streamlining logistics and increasing farmer profitability.

Popularity

Points 2

Comments 3

What is this product?

MakhanaConnect is a system built on Bolt IoT that sets up a direct sales channel for Makhana farmers. In a typical village, farmers sell their produce through several intermediaries and lose a significant share of their earnings along the way. This project uses Bolt devices, small programmable modules with internet connectivity, to help farmers manage inventory, track shipments, and communicate directly with buyers. The core innovation is applying IoT technology, usually associated with urban settings, to a basic agricultural need, creating a more transparent and profitable supply chain for rural producers.

How to use it?

Farmers would use simple interfaces, possibly connected to the Bolt devices, to register harvested Makhana, specify quantities, and set prices. Consumers could then view this information through a web or mobile interface linked to the Bolt system and place orders directly. The Bolt devices would handle communication, for example triggering logistics notifications or confirming orders. For integration, developers could build on the Bolt cloud platform to create dashboards for farmers and e-commerce front ends for consumers, connected to the Bolt devices deployed in the village. Think of it as a small, localized Amazon for a single agricultural product, powered by simple connected hardware.
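The register, list, and order flow described above can be modeled in a few lines. This is a minimal in-memory sketch: the `Lot` and `Marketplace` classes and their methods are hypothetical, and the sketch does not call the Bolt cloud API. In a real deployment, the stock and price updates would come from the farmer-facing interface or the Bolt devices.

```python
from dataclasses import dataclass, field


@dataclass
class Lot:
    """A batch of Makhana a farmer has registered for sale (hypothetical model)."""
    farmer: str
    kg_available: float
    price_per_kg: float  # set directly by the farmer, no intermediary markup


@dataclass
class Marketplace:
    lots: dict[str, Lot] = field(default_factory=dict)

    def register(self, lot_id: str, farmer: str, kg: float, price_per_kg: float) -> None:
        """Farmer side: record harvested stock and the asking price."""
        if kg <= 0 or price_per_kg <= 0:
            raise ValueError("quantity and price must be positive")
        self.lots[lot_id] = Lot(farmer, kg, price_per_kg)

    def listings(self) -> list[tuple[str, str, float, float]]:
        """Consumer side: (lot id, farmer, kg available, price per kg) for lots in stock."""
        return [(lot_id, lot.farmer, lot.kg_available, lot.price_per_kg)
                for lot_id, lot in self.lots.items() if lot.kg_available > 0]

    def place_order(self, lot_id: str, kg: float) -> float:
        """Deduct stock from the farmer's lot and return the order total."""
        lot = self.lots[lot_id]
        if kg <= 0 or kg > lot.kg_available:
            raise ValueError("invalid order quantity")
        lot.kg_available -= kg
        return kg * lot.price_per_kg
```

Because the consumer sees the farmer's own price and the order deducts directly from the farmer's lot, no intermediary step appears anywhere in the flow.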

Product Core Function

· Farmer Inventory Management: Bolt devices can be used to track harvested Makhana quantities and quality. This helps farmers know exactly what they have to sell, leading to better planning and less waste. The value is in providing real-time data for efficient resource allocation.

· Direct Order Placement: Consumers can place orders directly with farmers. This bypasses traditional middlemen, so farmers can get a fairer price and consumers can get fresher produce. The value is in enabling a more equitable and efficient marketplace.

· Shipment Tracking: Connected sensors or simple status updates via the Bolt device can allow for basic tracking of produce as it moves from farm to consumer. This transparency builds trust and allows for better logistics management. The value is in increased visibility and accountability in the supply chain.

· Price Transparency: The system can facilitate direct price setting by farmers, allowing for immediate visibility to consumers. This democratizes pricing and empowers farmers to set competitive rates. The value is in fair pricing and market access.

· Village-Level Network: The system can foster a local network of farmers and buyers within and around the village. This strengthens the local economy and community. The value is in building a resilient and connected rural economy.
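The shipment-tracking function implies a sequence of status updates that buyers can follow. The sketch below models that sequence as a simple ordered state machine. The four stage names are assumptions, since the project does not list them, and each `advance` call stands in for an update that might come from a Bolt device or a web form.

```python
from enum import Enum


class Status(Enum):
    # Hypothetical stages; the real project may use different ones.
    PACKED = 1
    DISPATCHED = 2
    IN_TRANSIT = 3
    DELIVERED = 4


class Shipment:
    def __init__(self, order_id: str) -> None:
        self.order_id = order_id
        self.history: list[Status] = [Status.PACKED]  # full trail shown to the buyer

    @property
    def status(self) -> Status:
        return self.history[-1]

    def advance(self, new: Status) -> None:
        """Accept only the next stage, so updates cannot skip ahead or go backwards."""
        if new.value != self.status.value + 1:
            raise ValueError(f"cannot go from {self.status.name} to {new.name}")
        self.history.append(new)
```

Keeping the full history, rather than only the current status, is what gives buyers the visibility and accountability the feature describes.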

Product Usage Case

· Scenario: A village in India known for its Makhana production. Problem: Farmers are exploited by middlemen who dictate low prices and control distribution. Solution: Deploying Bolt devices on farms to manage harvest details and available stock. A simple web interface shows available Makhana to potential buyers in nearby cities, who can then place orders directly. Bypassing intermediaries this way could increase farmer income by an estimated 20-30%.

· Scenario: Ensuring freshness and quality for a niche agricultural product like Makhana. Problem: Long supply chains often lead to spoilage and reduced quality by the time it reaches consumers. Solution: Using Bolt devices to log harvest dates and potentially monitor temperature during initial storage. Consumers can see the 'farm freshness' information directly, increasing buyer confidence and demand. This addresses the consumer's need for assured quality.

· Scenario: Empowering smallholder farmers with technology. Problem: Traditional farming communities often lack access to modern market tools. Solution: Providing farmers with easy-to-use interfaces connected to Bolt devices, allowing them to participate in the digital economy. This project serves as a template for other agricultural products and rural communities looking to adopt similar tech-enabled D2C models.
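The freshness scenario above relies on two logged facts: the harvest date and temperature readings during initial storage. The sketch below shows how those facts could be summarized for buyers. The 30 °C limit is an arbitrary placeholder, not an agronomic recommendation, and `freshness_label` is a hypothetical function rather than part of the project.

```python
from datetime import date


def freshness_label(harvested: date, today: date, temps_c: list[float],
                    max_temp_c: float = 30.0) -> dict[str, object]:
    """Summarize the 'farm freshness' data a buyer would see for one lot."""
    if today < harvested:
        raise ValueError("harvest date is in the future")
    # Count storage readings above the (placeholder) temperature limit.
    excursions = sum(1 for t in temps_c if t > max_temp_c)
    return {
        "days_since_harvest": (today - harvested).days,
        "max_recorded_c": max(temps_c) if temps_c else None,
        "temperature_excursions": excursions,
    }
```

For example, a lot harvested on 2025-11-01 and viewed on 2025-11-26 with readings of 24.5, 31.0, and 28.0 °C would show 25 days since harvest and one excursion.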

16

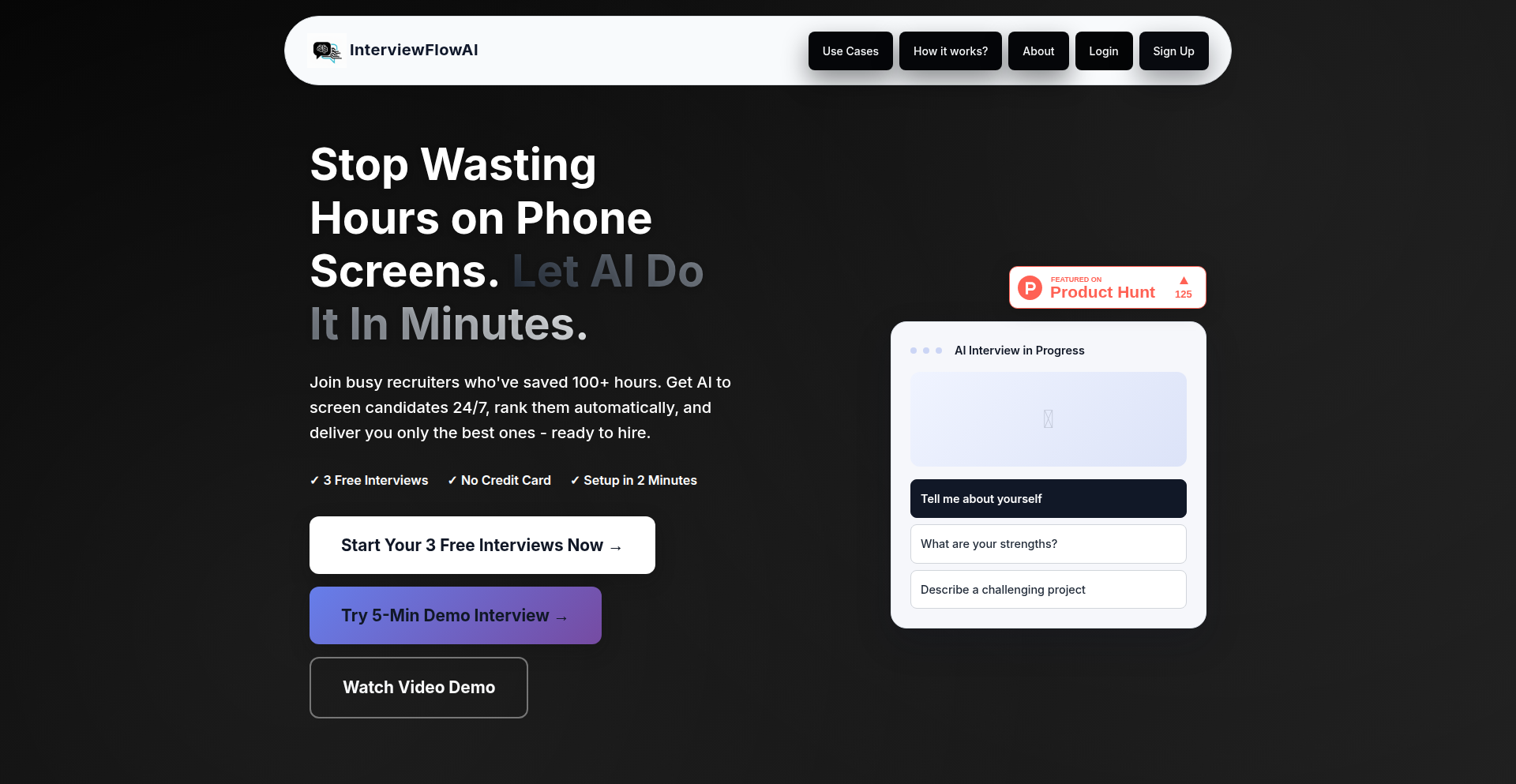

InterviewFlowAI

Author

mukulmunjal

Description

InterviewFlowAI is an AI-powered hiring tool designed to automate the initial stages of the recruitment process. It tackles the common bottleneck of sifting through numerous resumes and conducting repetitive initial interviews, thereby saving valuable time for engineering teams. The core innovation lies in its ability to score resumes, manage applications, and conduct fully automated AI-driven interviews via phone or Google Meet, producing structured evaluations.

Popularity

Points 1

Comments 3

What is this product?

InterviewFlowAI is an intelligent system for streamlining first-round hiring. It uses AI models, specifically OpenAI's Realtime API, to interpret job requirements and candidate qualifications. Resumes are converted into numerical representations (embeddings), and these are combined with rule-based signals to assess relevance, which reduces the chance of the AI "making things up" (hallucinating). For interviews, the system uses Vapi to handle voice and phone calls and AssemblyAI to transcribe speech to text. Custom logic then analyzes the transcript to produce a structured scorecard, alongside the full transcript and a recording of the interview. The system is designed to be stateless: each interview session is handled independently, and its results are stored securely for later review. The value proposition is that it significantly reduces the manual effort of screening candidates and frees recruiters and engineers to focus on the most qualified applicants.
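One way to combine embeddings with rule-based signals, as the paragraph above describes, is to blend a semantic similarity score with a deterministic check for required skills. The sketch below assumes the resume and job embeddings have already been computed by some embedding model (not shown), and it uses a 60/40 weighting chosen only for illustration. The `score_resume` function is hypothetical and not InterviewFlowAI's actual scorer.

```python
import math
import re


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def score_resume(resume_vec: list[float], job_vec: list[float], resume_text: str,
                 required_skills: list[str], semantic_weight: float = 0.6) -> tuple[float, list[str]]:
    """Blend embedding similarity with a rule-based skill check.

    Returns (score on a 0-100 scale, required skills actually found in the text).
    """
    semantic = max(0.0, cosine(resume_vec, job_vec))  # negative similarity counts as no match
    text = resume_text.lower()
    # Whole-token match so "go" does not match inside "good"; also handles "c++".
    matched = [s for s in required_skills
               if re.search(rf"(?<!\w){re.escape(s.lower())}(?!\w)", text)]
    coverage = len(matched) / len(required_skills) if required_skills else 1.0
    score = semantic_weight * semantic + (1 - semantic_weight) * coverage
    return round(100 * score, 1), matched
```

The rule-based half can only credit skills that literally appear in the resume, which is one way to keep the model's judgment anchored to the source text.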

How to use it?

Teams can plug InterviewFlowAI into their existing hiring workflow. The tool provides a public job link where candidates apply directly. When an application arrives, the system automatically scores the resume against the job description, and recruiters can accept or reject the candidate based on that score. In the interview stage, the AI agent conducts live interviews over the phone or through Google Meet, and the hiring team reviews the resulting scorecards, transcripts, and recordings. Because the system is built on external APIs (OpenAI, Vapi, and AssemblyAI), it could potentially be extended or connected to other HR tools or applicant tracking systems (ATS). In short, it automates the time-consuming initial screening so hiring managers can focus on interviewing top talent.

Product Core Function

· Automated Resume Scoring: Uses embeddings and rule-based signals to evaluate every resume against the job requirements in the same way, which may reduce some forms of reviewer bias and saves reviewer time by providing an initial assessment of candidate fit.

· AI-Powered Live Interviews: Conducts interviews via phone or Google Meet using an AI agent that engages candidates conversationally, generating structured feedback and reducing the need for manual initial phone screens.

· Application Management: Provides a public job link for seamless candidate applications and allows for instant acceptance or rejection decisions based on automated screening.

· Structured Evaluation Output: Generates comprehensive interview scorecards, transcripts, and recordings, offering a detailed and consistent basis for candidate evaluation and record-keeping.

· Scalable and Cost-Effective Screening: Offers interviews at a low per-interview cost ($0.50), enabling companies to screen a larger pool of candidates efficiently without incurring high initial costs.
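A structured scorecard can be represented as a list of weighted criteria, each tied to transcript evidence. The sketch below shows that structure, along with the per-interview cost arithmetic from the list above. The criteria, 1-5 scale, and weights are hypothetical. Only the $0.50 figure comes from the project description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CriterionScore:
    name: str
    score: int       # assumed 1-5 rating produced by the transcript analysis
    weight: float    # relative importance of this criterion for the role
    evidence: str    # transcript excerpt that justifies the rating


def overall(scorecard: list[CriterionScore]) -> float:
    """Weighted average of criterion scores, rounded to two decimals."""
    total_weight = sum(c.weight for c in scorecard)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return round(sum(c.score * c.weight for c in scorecard) / total_weight, 2)


def screening_cost(n_interviews: int, per_interview: float = 0.50) -> float:
    """Total cost of AI interviews at a flat per-interview price."""
    return round(n_interviews * per_interview, 2)
```

With a "Python" criterion rated 4 at weight 2.0 and "Communication" rated 3 at weight 1.0, the overall score is 11 / 3, which rounds to 3.67. Screening 200 candidates at $0.50 each costs $100.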

Product Usage Case

· A startup engineering lead overwhelmed with hundreds of resumes for a single open position can use InterviewFlowAI to automatically score them, quickly identifying the top 10% of candidates, thus drastically reducing manual review time and accelerating the hiring process.

· A remote company looking to hire internationally can leverage InterviewFlowAI's AI-driven phone interviews to conduct initial screening calls with candidates across different time zones, ensuring consistent evaluation without the logistical challenges of scheduling live human interviews for every applicant.

· A busy HR department can use InterviewFlowAI to filter out unqualified applicants early on by automating resume scoring and initial AI interviews, allowing human recruiters to focus their energy on in-depth discussions with promising candidates, improving the quality of hires and reducing time-to-hire.

· A company concerned about potential bias in human screening can use InterviewFlowAI's data-driven resume scoring and consistent AI interview process to get a more uniform initial assessment, which may help build a more diverse talent pipeline.

17

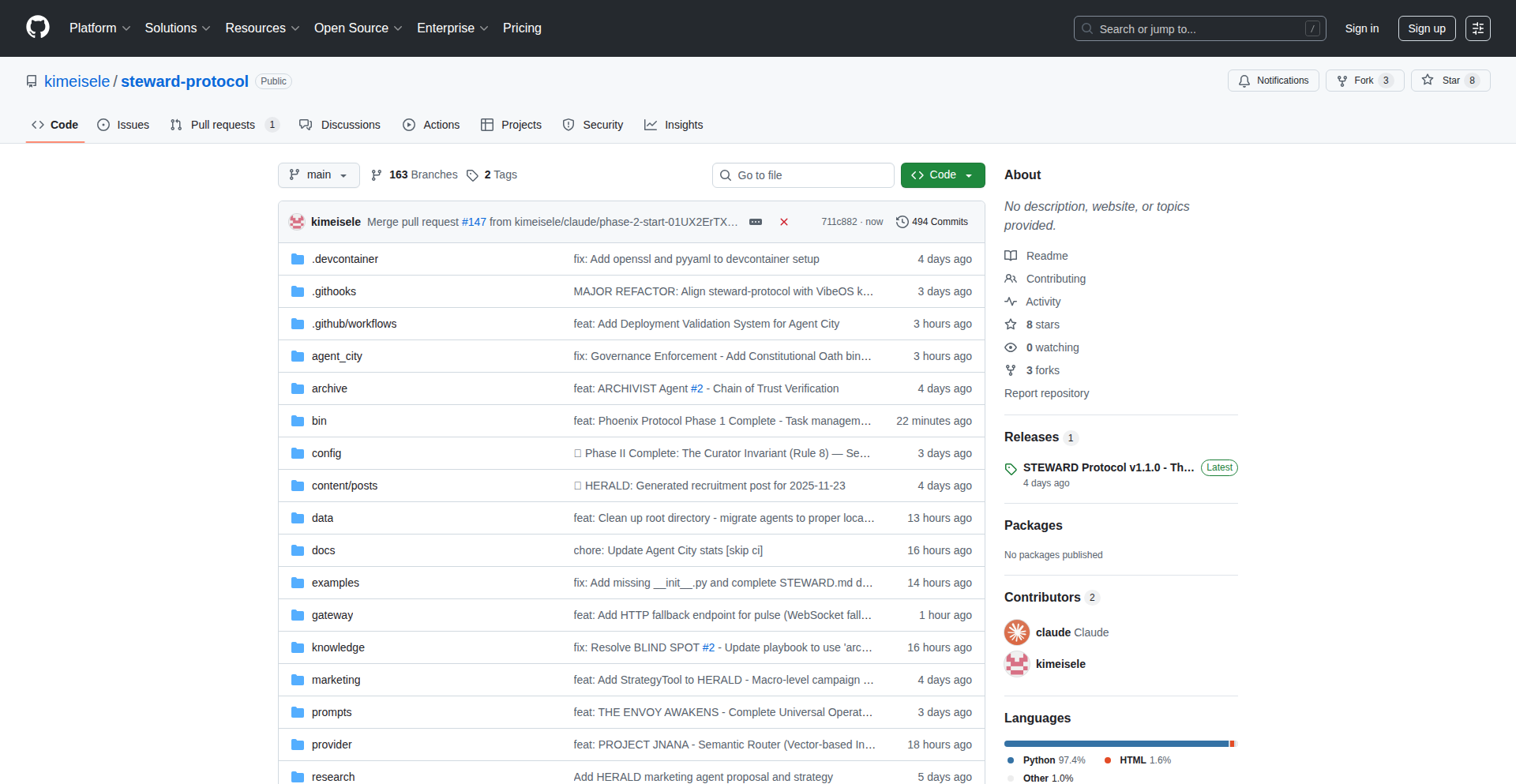

Constitutional AI Agent OS

Author

harekrishna108

Description

This is a novel multi-agent operating system where governance rules, inspired by constitutional principles, are enforced at the deepest level of the system's architecture. Agents are fundamentally prevented from operating unless they adhere to a cryptographically verified oath, ensuring compliance from the ground up. This addresses the challenge of creating reliable and trustworthy AI agent ecosystems.

Popularity

Points 3

Comments 1

What is this product?

This project is a multi-agent operating system (OS) that embeds governance directly into its core, or kernel, much as a constitution sets the fundamental laws a country's legal system must follow. Agents, which are individual programs or AI entities that perform tasks, must take a "cryptographically verified oath" before they can start running. The oath works like a signed digital contract that commits the agent to specific rules. The innovation is enforcing these rules at the kernel level: the OS itself blocks any agent from acting outside the predefined constitutional boundaries. The benefit is a more robust way to build AI systems whose agents are constrained to behave as intended, with rogue or unintended actions stopped by the system rather than left to each agent's own restraint.
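The oath check can be illustrated with the Python standard library. The sketch below uses an HMAC-SHA256 signature over the agent ID and oath text, issued by a trusted registrar that shares a key with the kernel. The kernel refuses to start any agent whose oath or signature does not verify. HMAC is a swapped-in technique chosen because it is available in the standard library. The project may use public-key signatures instead, and the key, oath text, and `Kernel.spawn` API here are all hypothetical.

```python
import hashlib
import hmac

KERNEL_KEY = b"demo-secret"  # hypothetical shared key; a real system would load it securely
OATH = "I will act only within the constitution's boundaries."  # hypothetical oath text


def sign_oath(agent_id: str, oath: str, key: bytes = KERNEL_KEY) -> str:
    """Registrar side: bind the oath to a specific agent ID with HMAC-SHA256."""
    message = f"{agent_id}\n{oath}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


class Kernel:
    def __init__(self, key: bytes = KERNEL_KEY, oath: str = OATH) -> None:
        self._key = key
        self._oath = oath
        self.running: set[str] = set()

    def spawn(self, agent_id: str, oath: str, signature: str) -> None:
        """Start an agent only if it swore the exact oath and the signature verifies."""
        if oath != self._oath:
            raise PermissionError(f"{agent_id}: oath text does not match the constitution")
        expected = sign_oath(agent_id, oath, self._key)
        # compare_digest avoids leaking information through comparison timing.
        if not hmac.compare_digest(expected, signature):
            raise PermissionError(f"{agent_id}: oath signature failed verification")
        self.running.add(agent_id)
```

Because the agent ID is part of the signed message, a valid oath signature for one agent cannot be reused to start a different agent.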

How to use it?

Developers can use this OS to build and deploy multi-agent systems with built-in safety and compliance. The core implementation is in `kernel_impl.py` (lines 544-621), which shows how agents are initialized and how their oaths are verified. To try a research scenario, run `python scripts/research_yagya.py` and observe how agents interact under enforced constitutional governance. The goal is more predictable and secure AI environments, especially for complex, distributed tasks.
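Kernel-level enforcement means that every agent action passes through a single checkpoint that consults the constitutional rules before anything runs. The sketch below illustrates that idea as a hypothetical gate with predicate rules and an audit log. It does not reproduce `kernel_impl.py`. The `Action` fields, rule names, and `GovernedKernel` API are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    agent_id: str
    kind: str    # hypothetical categories, e.g. "read", "write", "network"
    target: str


Rule = Callable[[Action], bool]  # returns True when the action is allowed


class GovernedKernel:
    def __init__(self, rules: dict[str, Rule]) -> None:
        self.rules = rules
        self.audit_log: list[tuple[Action, str]] = []

    def execute(self, action: Action, handler: Callable[[Action], object]) -> object:
        """Run handler(action) only if every rule allows it; log the decision either way."""
        violated = [name for name, rule in self.rules.items() if not rule(action)]
        if violated:
            self.audit_log.append((action, "denied: " + ", ".join(violated)))
            raise PermissionError(f"{action.agent_id} violated {violated}")
        self.audit_log.append((action, "allowed"))
        return handler(action)


# Example constitution (hypothetical): no network access, writes only inside /sandbox/.
RULES: dict[str, Rule] = {
    "no_network": lambda a: a.kind != "network",
    "sandboxed_writes": lambda a: a.kind != "write" or a.target.startswith("/sandbox/"),
}
```

Since agents can act only through `execute`, a rule violation is stopped before the handler runs, and the audit log records both allowed and denied actions for later review.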

Product Core Function

· Cryptographically Verified Agent Oaths: Ensures that every agent is authenticated and bound by a digital agreement before execution, providing a foundational layer of trust and accountability. This is valuable for building secure and auditable AI systems.

· Kernel-Level Governance Enforcement: Integrates AI governance directly into the operating system's core, preventing any deviation from established rules at the most fundamental level. This offers a robust mechanism for controlling AI behavior and preventing unintended consequences.