Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-11-25

SagaSu777 2025-11-26

Explore the hottest developer projects on Show HN for 2025-11-25. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN lineup is a vibrant testament to the hacker spirit, showcasing a relentless drive to simplify complexity and empower creators. We see a strong surge in tools that leverage AI not just for code generation, but also for debugging, analysis, and even customer interaction, signaling a future where AI acts as a true co-pilot in development and business operations. The emphasis on open-source and cross-platform solutions like StepKit highlights a growing demand for interoperability and vendor independence, allowing developers to build and deploy workflows anywhere. Edge computing and local-first applications are also making a significant impact, offering cost savings and enhanced privacy, proving that innovation often comes from optimizing resource usage and putting control back into the user's hands. This diverse set of projects encourages developers and entrepreneurs to look beyond conventional approaches, find elegant solutions to nagging problems, and build with a mindset of openness and accessibility.

Today's Hottest Product

Name

Flowglad

Highlight

Flowglad tackles the complexity of payment processing by offering a zero-code integration and a declarative approach to pricing models using a `pricing.yaml` file, similar to Terraform for pricing. This innovation significantly lowers the barrier to entry for developers and businesses, especially those adopting new AI-driven product models, by abstracting away intricate payment logic and webhook management. Developers can learn about reactive programming paradigms applied to financial systems and explore declarative infrastructure-as-code principles extended to business logic.

Popular Category

Developer Tools

AI/ML

Web Development

Infrastructure

Open Source

Popular Keyword

AI

LLM

Developer Experience (DX)

Open Source

Cloud

Workflow

Automation

Technology Trends

Declarative Infrastructure for Business Logic

AI-Augmented Development and Debugging

Edge Computing for Cost-Effective Services

Cross-Platform Workflow Orchestration

Privacy-Preserving Local-First Applications

Enhanced Developer Experience (DX) through Abstraction

Decentralized and Open Systems

Project Category Distribution

Developer Tools (30%)

AI/ML (20%)

Web Development (15%)

Infrastructure (15%)

Utilities (10%)

Open Source (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | Flowglad Reactive Payments | 316 | 178 |

| 2 | KiDoom Engine Visualizer | 250 | 27 |

| 3 | Cloudflare R2 Image Envoy | 54 | 32 |

| 4 | StepKit: Universal Durable Workflow Engine | 35 | 16 |

| 5 | AnimeLingua | 11 | 21 |

| 6 | Browser-Native DiffMerge | 16 | 14 |

| 7 | RAG-PerfBoost | 23 | 3 |

| 8 | CI/CD Sentinel | 12 | 0 |

| 9 | Macko SPMV Accelerator | 7 | 4 |

| 10 | LegacyGlue Weaver | 7 | 1 |

1

Flowglad Reactive Payments

Author

agreeahmed

Description

Flowglad is an open-source payment processor that simplifies integration by eliminating the need for complex glue code and webhooks. It provides real-time insights into customer feature and usage credit balances based on their billing status, inspired by the reactive programming paradigm found in frameworks like React. This innovation drastically reduces maintenance overhead and makes payment integration more predictable and less error-prone.

Popularity

Points 316

Comments 178

What is this product?

Flowglad is a payment processing system designed to be integrated with minimal developer effort. Its core innovation lies in its 'reactive' approach to payments, meaning it automatically updates and reflects changes in customer billing states, feature access, and usage credits in real-time without requiring developers to manually manage complex event handling (like webhooks). Think of it like this: instead of constantly checking if something has changed, Flowglad proactively tells you when it changes. This is achieved by abstracting pricing models into a declarative configuration file, similar to how infrastructure is defined in tools like Terraform, but specifically for your business's pricing. This means developers can define complex pricing tiers, usage meters, and feature flags with ease, and the system handles the underlying logic automatically. For developers, this translates to significantly less code to write and maintain, fewer potential bugs, and a more robust and predictable payment system, especially crucial for modern applications like AI services that often have variable pricing.

How to use it?

Developers can integrate Flowglad into their applications by following a straightforward setup process. Instead of writing extensive code to handle payment events, they define their pricing models, products, features, and usage meters using a `pricing.yaml` file. This declarative approach allows for quick setup of complex billing scenarios. Flowglad then provides simple APIs or SDKs (with a focus on a React-like developer experience) to interact with the payment system. For example, to check a customer's available usage credits for a specific feature, a developer can query Flowglad directly rather than parsing through complex webhook payloads. This approach allows for seamless integration into both backend systems and frontend user interfaces, enabling real-time display of customer entitlements and purchase options. Crucially, Flowglad avoids the need for database schema changes by using customer IDs that already exist in your system and referencing pricing elements via simple 'slugs' you define, reducing friction in adoption.

Product Core Function

· Declarative Pricing Model Configuration: Define pricing tiers, feature gates, and usage-based billing rules in a human-readable YAML file, reducing boilerplate code and potential errors. This provides a clear and organized way to manage your business's revenue streams.

· Real-time Customer Entitlement Updates: Automatically reflect changes in customer subscriptions, feature access, and usage credit balances across your application without manual polling or complex event subscriptions. This ensures users always see accurate information and unlocks, improving user experience and preventing billing discrepancies.

· Zero Webhook Integration: Eliminate the complexity and fragility of managing a large number of webhook event types by providing a reactive system that pushes updates. This significantly simplifies development, reduces maintenance burden, and enhances system reliability.

· Customer Identification via Existing IDs: Integrate with your existing customer database by referencing customers using their native IDs, avoiding data duplication and simplifying the onboarding process. This makes it easier to plug Flowglad into your current infrastructure.

· Slug-based Resource Referencing: Use simple, human-readable 'slugs' to refer to products, features, and usage meters, making your code cleaner and easier to understand. This enhances code readability and maintainability.

· Seamless Backend and Frontend Integration: Access customer usage and feature data in real-time from both your server-side logic and your React-based frontend, enabling dynamic user experiences and personalized features. This allows for a consistent and responsive application.

· Cloning and Export/Import of Pricing Models: Easily copy pricing configurations between test and live environments, and export/import them via the `pricing.yaml` file for version control and disaster recovery. This streamlines testing and deployment workflows.

Product Usage Case

· AI Service Billing: A company offering AI model access can use Flowglad to implement pay-per-token or tiered subscription plans with dynamic feature unlocks. Developers can easily configure these models in `pricing.yaml` and query customer credit balances in real-time to control access, avoiding complex webhook logic and ensuring accurate billing for variable usage.

· SaaS Product Feature Tiers: A Software-as-a-Service provider can leverage Flowglad to manage different subscription tiers with varying feature access. When a customer upgrades or downgrades their plan, Flowglad automatically updates their feature entitlements, and the frontend can instantly reflect these changes, providing a smooth user experience without requiring backend redeployments.

· Usage-Based Metering for Cloud Services: A platform providing developers with cloud resources (like compute or storage) can use Flowglad's usage meters to track consumption and bill accordingly. Developers can define the units and pricing per unit, and Flowglad will track usage in real-time, making it simple to integrate automated billing for dynamic resource consumption.

· E-commerce with Subscription Add-ons: An e-commerce store selling physical goods can offer subscription add-ons or premium features. Flowglad can manage the recurring billing for these add-ons and sync feature access with the main e-commerce platform, ensuring customers receive their purchased benefits seamlessly.

· Gaming with In-App Purchases and Credits: A game developer can use Flowglad to manage in-game currency, item purchases, and premium feature access. The real-time nature of Flowglad allows for instant validation of purchases and feature unlocks within the game, creating a responsive and engaging player experience.

2

KiDoom Engine Visualizer

Author

mikeayles

Description

This project reimagines DOOM's graphics by rendering game elements not as pixels, but as physical PCB traces and component footprints. It achieves this by extracting vector data from the DOOM engine and translating it into graphical elements within KiCad, a popular PCB design software. So, it's a unique way to visualize game geometry using hardware design principles.

Popularity

Points 250

Comments 27

What is this product?

This is a creative endeavor that modifies the classic DOOM game to render its graphics using PCB design elements. Instead of pixels, walls are drawn as PCB traces, and game entities like enemies and items are represented by actual electronic component footprints. The core innovation lies in patching DOOM's source code to extract its internal vector geometry data (like lines for walls and sprite positions for entities) and then sending this data via a Unix socket to a Python script running within KiCad. This script then manipulates pre-allocated PCB traces and footprints to recreate the game's visuals. This bypasses the traditional pixel-rendering pipeline and offers a completely novel way to "see" the game's structure. So, it's a demonstration of how game logic can be reinterpreted through the lens of hardware design.

How to use it?

For developers, this project offers a fascinating technical deep-dive into game engine internals and creative visualization techniques. You can explore the patched DOOM source code to understand how vector data is extracted. The Python plugin for KiCad demonstrates real-time data manipulation and integration with a CAD environment. It can be used as a learning tool to understand how 3D game worlds are represented internally. While not for playing DOOM in a conventional sense, it's highly valuable for those interested in game development, graphics programming, or novel application of design tools. Integration involves setting up the modified DOOM environment and running the KiCad Python script.

Product Core Function

· Vector Data Extraction from DOOM Engine: This allows the game's geometric information (lines, shapes) to be pulled out directly, bypassing the pixel-based rendering. The value here is in understanding how game worlds are represented internally, enabling alternative visualization methods.

· PCB Trace and Footprint Mapping: Game walls are translated into lines of PCB traces, and game objects into component footprints. This offers a unique, abstract visual representation of the game, showcasing creative problem-solving by repurposing design elements.

· Real-time Data Streaming via Unix Socket: The game engine communicates its graphical data to the visualization tool in real-time. This demonstrates efficient inter-process communication and is valuable for building live visualization systems.

· KiCad Plugin for Dynamic Rendering: A Python script within KiCad actively updates the PCB layout based on incoming game data. This highlights how external data can dynamically drive complex design software, useful for interactive design tools.

· Multi-View Rendering (SDL, Python Wireframe): The project simultaneously outputs to a standard game window (SDL) and a debug wireframe window, alongside the KiCad visualization. This provides comprehensive insight into the data flow and rendering process, aiding debugging and understanding.

· Oscilloscope Vector Output (ScopeDoom): Extends the concept to outputting game vectors as audio signals to an oscilloscope. This is a groundbreaking application of game data visualization on analog hardware, demonstrating a true "hacker" approach to hardware interfacing and creative output.

Product Usage Case

· Visualizing DOOM's level geometry in KiCad: Developers can see the architectural layout of DOOM maps rendered as interconnected traces and pads on a virtual PCB. This helps understand spatial relationships and level design principles in a new way.

· Debugging game entity placement and movement: By seeing enemies and items as distinct component footprints, developers can easily track their positions and trajectories in real-time within the KiCad environment, aiding in bug fixing and game logic analysis.

· Exploring the feasibility of non-traditional game rendering: This project serves as a case study for how game engines can be adapted to output data for unconventional display methods, inspiring new forms of interactive art or educational tools.

· Demonstrating real-time data pipeline construction: The system shows how to build a pipeline from a game engine, through inter-process communication, to a sophisticated design tool for live visualization, applicable to many simulation and design workflows.

· Creating art installations or interactive displays using game engines: The ScopeDoom extension showcases how game data can be transformed into signals for analog devices like oscilloscopes, opening up possibilities for physical art and interactive experiences.

3

Cloudflare R2 Image Envoy

Author

cr1st1an

Description

A WordPress plugin that cleverly redirects your existing images to be served through Cloudflare's R2 storage and Workers. This approach dramatically cuts down on bandwidth costs, as R2 offers free egress, making image delivery significantly faster and cheaper without the usual complexity of image optimization plugins. It leverages edge computing to cache and deliver your images efficiently.

Popularity

Points 54

Comments 32

What is this product?

This project is a WordPress plugin that acts as a smart delivery agent for your website's images. Instead of your server handling every image request, the plugin rewrites the image URLs on your website. When someone visits your site, the images are then fetched and served by Cloudflare Workers, which store them in Cloudflare R2. The magic happens on the first request: the Worker fetches the original image and caches it in R2. Subsequent requests are then served directly from Cloudflare's global network, which is blazingly fast and, crucially, has zero outgoing data fees. This means your images load quickly and you save a lot on hosting bills, without needing to mess with complex image compression or resizing settings.

How to use it?

For developers, integrating Cloudflare R2 Image Envoy is straightforward. You install it as a standard WordPress plugin. The plugin automatically intercepts outgoing image URLs. You have two options: 1. Deploy your own Cloudflare Worker by following the provided code and setup instructions – this is free to run, though you'll need a Cloudflare account. 2. Opt for the managed service at $2.99 per month, which uses the developer's pre-configured Worker and R2 bucket. The plugin is theme and builder agnostic, meaning it works with any WordPress setup and doesn't alter your database. It simply ensures your media stays in WordPress but is delivered from Cloudflare's efficient edge.

Product Core Function

· Image URL Rewriting: Automatically modifies image links on your website to point to Cloudflare's edge network. This ensures that every image request is routed for optimal delivery, reducing the load on your own server and improving page speed.

· Fetch-on-First-Request Caching: The first time an image is requested, the Cloudflare Worker retrieves it from your original source and stores it in Cloudflare R2. This intelligent caching means subsequent visitors receive the image directly from Cloudflare's global cache, making loading times instantaneous.

· Zero Egress Cost Delivery via R2: Utilizes Cloudflare R2 object storage, which offers free data egress. This is the key to significant cost savings, as you avoid the hefty fees typically associated with bandwidth consumption on traditional CDNs.

· Minimalist Optimization Approach: Focuses solely on efficient image delivery rather than complex transformations like compression or resizing. This simplicity ensures compatibility and reduces potential conflicts with other plugins, making it easy to implement.

· Fail-safe Original Image Loading: If any part of the Cloudflare delivery chain encounters an issue, the system gracefully falls back to loading the original image directly from your WordPress site, ensuring uninterrupted access to your content.

Product Usage Case

· A small business owner running a WordPress e-commerce site notices high bandwidth costs due to a large number of product images. By implementing Cloudflare R2 Image Envoy, they redirect their image traffic to Cloudflare's edge. Now, product images load much faster for customers, and the monthly hosting bill is significantly reduced because they no longer pay for outgoing data.

· A blogger with a high-traffic WordPress site wants to improve their website's performance without complex technical configurations. They install the plugin and deploy their own Cloudflare Worker. Their site's perceived loading speed dramatically improves as images are served from Cloudflare's global cache, resulting in a better user experience and potentially higher search engine rankings.

· A web developer building a portfolio site wants to showcase high-resolution images without incurring CDN fees. They use the managed service option of the plugin. The plugin handles the image delivery, ensuring fast loading times and a professional presentation, while keeping the development costs low and manageable.

4

StepKit: Universal Durable Workflow Engine

Author

tonyhb

Description

StepKit is an open-source SDK and framework designed to build robust, long-running processes (workflows) that can execute on any platform, from your own servers to cloud services like Cloudflare and Netlify. Its core innovation is abstracting away the complexities of durable execution, allowing developers to write code once and run it anywhere without vendor lock-in or complex setup. It focuses on a simple, explicit API for defining asynchronous tasks and ensures resilience and observability throughout the workflow lifecycle.

Popularity

Points 35

Comments 16

What is this product?

StepKit is essentially a smart engine that manages the execution of multi-step processes, even if those processes take a long time or need to pause and resume. Think of it like a very reliable project manager for your code. Its technical innovation lies in how it handles the 'durability' aspect. Instead of relying on tricky error handling like try-catch for pausing and resuming, StepKit uses a generator-like approach. This means your workflow code can pause naturally, save its state, and pick up exactly where it left off later, even after a server restart or a network interruption. It achieves this by providing a core execution loop that handles step discovery (finding the next part of your process), memoization (remembering results to avoid re-computation), and an event loop that orchestrates the flow. The key value is its platform agnosticism; you write your workflow logic using StepKit's clear API, and it works on your local machine, a dedicated server, or serverless functions without needing to change your code for each environment. This means you get a consistent, reliable way to build complex background tasks that just *work* everywhere.

How to use it?

Developers can integrate StepKit into their applications by installing the SDK. They then define their workflows using StepKit's `step.*()` functions, which represent individual tasks or operations within the workflow. For example, you might have a `step.sendEmail()` or a `step.processPayment()` function. StepKit handles the underlying logic of calling these steps in sequence, managing any pauses or retries, and ensuring the workflow's state is preserved. You can configure different 'drivers' to tell StepKit where and how to run these workflows – for instance, using an in-memory driver for local testing, a filesystem driver for simple persistence, or specific integrations with platforms like Inngest or Cloudflare. This makes it incredibly flexible for various deployment scenarios. You can envision using StepKit in backend services to manage user onboarding sequences, process large datasets asynchronously, or orchestrate microservices.

Product Core Function

· Durable Execution Engine: Manages the lifecycle of asynchronous workflows, ensuring they can be paused and resumed reliably across different environments. This provides peace of mind that long-running tasks won't fail due to temporary issues, making your applications more robust.

· Platform Agnostic Design: Allows workflows to run on any infrastructure (self-hosted, serverless, cloud) without requiring provider-specific code. This eliminates vendor lock-in and gives you the freedom to choose the best hosting for your needs, saving costs and increasing flexibility.

· Simple and Explicit API: Uses `step.*()` functions that are easy to read, understand, and implement, making workflow development straightforward. This speeds up development time and reduces the learning curve for new developers joining your team.

· Built-in Observability: Provides mechanisms for monitoring the execution of workflows, helping developers understand what's happening and troubleshoot issues quickly. This leads to better debugging and a more stable application.

· Extensible Middleware: Supports adding custom logic (like encryption or error reporting) to the execution pipeline without altering the core workflow definition. This allows for easy integration with existing monitoring or security tools, enhancing your application's capabilities.

· Step Memoization: Avoids redundant computation by caching the results of previous steps. This optimizes performance and reduces resource consumption, leading to a more efficient and cost-effective application.

Product Usage Case

· Building an e-commerce order processing system: A customer places an order. StepKit can orchestrate the entire process: validating payment, updating inventory, sending confirmation emails, and initiating shipping, pausing and resuming as needed if any external service is temporarily unavailable. This ensures a smooth and reliable customer experience.

· Implementing an asynchronous data pipeline for analytics: Large datasets need to be ingested, transformed, and analyzed. StepKit can manage each stage of this pipeline as a series of durable steps, allowing for retries on failures and efficient processing without blocking the main application thread. This enables scalable data processing for insights.

· Creating a complex user onboarding flow: When a new user signs up, they might need to complete several steps like profile setup, verification, and introductory tutorials. StepKit can manage this multi-step, potentially lengthy process, ensuring users are guided through smoothly, even if they leave and return later. This improves user engagement and retention.

· Developing a background job processing system for microservices: Different microservices can trigger long-running tasks managed by StepKit. For example, generating a report or sending out bulk notifications. This decouples these heavy tasks from the immediate request-response cycle, improving the responsiveness of your services.

5

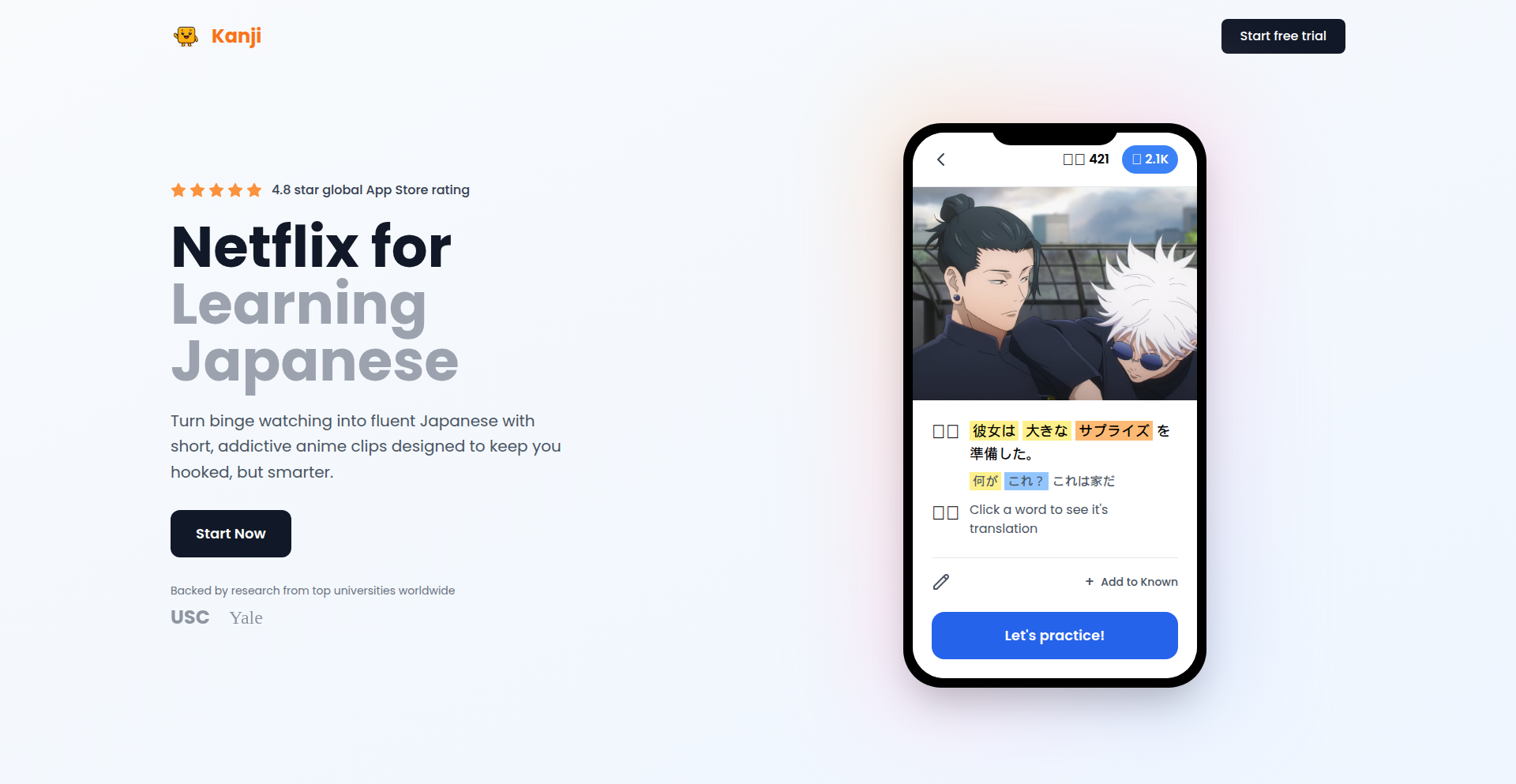

AnimeLingua

Author

Mikecraft

Description

AnimeLingua is a web application that transforms anime into interactive Japanese language learning lessons. It addresses the common issue of repetitive lessons in traditional language apps by sourcing diverse learning content directly from popular anime series. This innovative approach leverages engaging visual and auditory media to provide a more dynamic and effective learning experience for Japanese learners.

Popularity

Points 11

Comments 21

What is this product?

AnimeLingua is a project born from a developer's frustration with the repetitive nature of existing language learning platforms like Duolingo. Instead of standard exercises, it pulls Japanese language content from anime. The core innovation lies in its ability to analyze anime dialogues and scenes to generate contextually relevant vocabulary, grammar, and pronunciation practice. This means you're learning Japanese through real-world conversations and cultural references from the shows you love, making the process more enjoyable and memorable. It's like having a personal Japanese tutor embedded within your favorite anime.

How to use it?

Developers can use AnimeLingua as a standalone learning tool to supplement their Japanese studies. By visiting the provided web application (https://kanjieight.vercel.app/), users can select an anime and begin interactive lessons. The platform likely uses techniques like natural language processing (NLP) to extract and process dialogue, and potentially computer vision to identify relevant scenes. For developers looking to integrate similar functionality into their own projects, the underlying principles of content extraction, NLP for language analysis, and dynamic lesson generation could be explored. Think of it as a blueprint for building custom, media-rich educational tools.

Product Core Function

· Anime-based lesson generation: Dynamically creates Japanese lessons sourced from anime content. This provides learners with authentic, context-rich vocabulary and grammar practice, moving beyond generic phrases and into real-world usage scenarios.

· Interactive dialogue practice: Offers exercises based on anime conversations, allowing users to practice listening comprehension and speaking with natural intonation and pace. This helps develop fluency and understanding of conversational Japanese.

· Vocabulary and grammar contextualization: Presents new words and grammatical structures within the context of anime scenes, aiding memorization and understanding of their practical application. Learners grasp concepts more deeply when they see them used in a relatable narrative.

· Diverse learning content: Leverages a wide range of anime to offer a broad spectrum of linguistic styles and cultural nuances. This prevents learning fatigue and exposes users to different ways Japanese is spoken, from casual slang to more formal dialogue.

Product Usage Case

· A Japanese language learner struggling with memorizing kanji and vocabulary: They can use AnimeLingua to find anime featuring characters whose names or dialogue contain the kanji they need to learn. The lessons derived from these scenes will reinforce the characters and plot, making the learning process more engaging and effective than rote memorization.

· A developer wanting to build a more engaging language learning tool for a niche market: They can study the technical approach of AnimeLingua to understand how to extract and process media content for educational purposes. This could inspire the creation of similar tools for learning other languages using movies, TV shows, or even video games.

· A student preparing for a Japanese language proficiency test (JLPT): They can utilize AnimeLingua to practice listening comprehension with authentic dialogues and exposure to common grammatical patterns used in spoken Japanese. The real-world context of anime dialogues can better prepare them for the listening sections of the exam.

6

Browser-Native DiffMerge

Author

subhash_k

Description

A privacy-focused, in-browser diff and merge tool designed to handle large files (25,000+ lines) with instant character-level comparison. It allows users to create shareable links for their diffs, all without sending any data to a server, ensuring 100% security.

Popularity

Points 16

Comments 14

What is this product?

This project is a web application that lets you compare two versions of a text file (like code or documents) and even merge the differences, all directly in your web browser. The core innovation is its ability to perform these computationally intensive tasks (detecting tiny changes at the character level and handling massive files) entirely client-side. This means your data never leaves your computer, making it incredibly secure and private. Think of it as a super-powered notepad comparison tool that's also a bit of a digital surgeon for text.

How to use it?

Developers can use this tool by simply navigating to the website. They can paste or upload two text files they want to compare. The tool will instantly highlight the differences in real-time, showing exactly what's changed at the character level. A key feature is the 'merge' functionality, where developers can choose which changes from each file to incorporate into a final, combined version. Furthermore, they can generate a unique, shareable link to their diff, which is useful for collaborating or showing specific changes to others without needing to send actual file attachments. This is ideal for code reviews, document version tracking, or any situation where precise text comparison and modification are needed securely.

Product Core Function

· Character-level instant diff: The technology here is an efficient algorithm that compares text character by character in real-time. This means you see changes as you type or load files, providing immediate feedback on what is different, down to a single letter or symbol. Its value is in providing granular understanding of changes, crucial for debugging or precise edits.

· Large file support (25K+ lines): The innovation lies in optimizing the diff algorithm and browser rendering to handle a significant volume of text without crashing or becoming sluggish. This is valuable for developers working with large configuration files, long code modules, or substantial document revisions where traditional online tools might fail.

· Diff merge feature: This function leverages the detected differences to intelligently combine content from two sources. It allows users to pick and choose which specific changes to keep, creating a new unified document. The value is in streamlining the process of integrating revisions from different branches or collaborators, saving manual effort and reducing errors.

· Shareable links: This feature generates a unique URL that encapsulates the comparison state (the two files and their differences). The magic is that the diff computation is done client-side, so the link likely contains instructions or data to reconstruct the diff in the recipient's browser. This provides a secure and convenient way to communicate complex text changes without exposing sensitive data or requiring recipients to install software.

· 100% secure, client-side computation: The core technical achievement is running all diff and merge logic within the user's browser using JavaScript. This eliminates the need for a backend server to process data, meaning no sensitive information is uploaded or stored. The value is paramount for privacy-conscious users and for handling proprietary or confidential information.

Product Usage Case

· Code Review Workflow: A developer has a feature branch with several changes compared to the main branch. Instead of sending large code files, they use this tool to generate a diff link. A colleague clicks the link, sees the exact character-level changes highlighted in their browser, and can even suggest merges for specific sections. This makes code reviews faster and more secure.

· Document Version Comparison: A team is working on a critical legal document with multiple revisions. One person uses the tool to compare two versions. They can instantly see every alteration, addition, or deletion. If needed, they can merge specific amendments from one version into another to create the final approved document, all without uploading the sensitive legal text to an external service.

· Configuration File Management: System administrators often deal with large configuration files. If a configuration is changed and causes issues, they can use this tool to compare the problematic version with a known good one. The character-level diff quickly pinpoints the exact line and character that might be the culprit, speeding up troubleshooting.

· Personal Project Archiving: A hobbyist programmer wants to track changes in their personal project's source code over time. They can generate diff links for different milestones and store them. Later, they can easily revisit any past state by clicking the link, seeing exactly what changed without needing complex version control systems for simple tracking.

7

RAG-PerfBoost

Author

vira28

Description

This project demonstrates a ~2x reduction in RAG (Retrieval-Augmented Generation) latency by intelligently switching embedding models. It tackles the common bottleneck in AI applications where generating responses from large language models (LLMs) often involves a retrieval step, which can be slow. The innovation lies in a dynamic strategy that chooses the most efficient embedding model for a given query, significantly speeding up the entire AI pipeline.

Popularity

Points 23

Comments 3

What is this product?

RAG-PerfBoost is a technique and potential implementation that optimizes the speed of AI systems that use Retrieval-Augmented Generation (RAG). RAG is a process where an AI model first retrieves relevant information from a knowledge base before generating a response. The embedding model is crucial for this retrieval part, as it converts text into numerical representations (vectors) that the AI can understand. The core innovation here is not just using a better embedding model, but having a system that can dynamically select between different embedding models based on the query. This means if a query is simple, it might use a very fast, less complex model. If the query is complex and requires deeper understanding, it might switch to a more powerful, but potentially slower, model. This intelligent switching drastically reduces the time it takes to get relevant information, thereby speeding up the AI's response time. So, for you, this means faster AI assistants, quicker search results in AI-powered applications, and more responsive AI-driven tools.

How to use it?

Developers can integrate this concept into their RAG pipelines. The core idea is to build a layer that sits before the embedding process. This layer analyzes incoming queries and decides which embedding model to invoke. This could be implemented using conditional logic, a simple machine learning classifier to predict query complexity, or even a lookup table. The chosen embedding model then generates the vector representation for the retrieval system. For example, a developer could have a lightweight embedding model for short, keyword-based searches and a more robust model for complex natural language questions. The RAG-PerfBoost approach allows them to leverage both effectively. This is useful for developers building chatbots, AI-powered search engines, or any application requiring efficient information retrieval for LLM generation, leading to a smoother user experience.

Product Core Function

· Dynamic embedding model selection: Allows the system to choose the most efficient embedding model for a given query, improving overall RAG speed. This is valuable for optimizing AI performance and reducing operational costs by using less compute for simpler tasks.

· Query analysis for model routing: Analyzes incoming user queries to determine the optimal embedding model to use. This intelligently directs computational resources and ensures faster retrieval for specific types of queries.

· Latency reduction in RAG pipelines: Directly addresses and significantly cuts down the time taken for the retrieval step in RAG, leading to quicker AI responses. This is crucial for real-time AI applications where responsiveness is key.

· Potential for cost optimization: By using simpler, faster embedding models for simpler queries, this approach can reduce the computational resources needed, leading to cost savings in AI deployments.

Product Usage Case

· A customer support chatbot that needs to quickly find relevant FAQs for user queries. By using RAG-PerfBoost, the chatbot can provide instant answers to common questions, and only switch to more powerful retrieval for complex or ambiguous queries, improving customer satisfaction.

· An internal knowledge base search tool for a large company. Developers can use RAG-PerfBoost to ensure that employees get fast search results for simple keyword searches, while more nuanced or complex research queries are handled efficiently without significant delay, boosting employee productivity.

· An AI-powered content summarization tool that needs to retrieve context from documents. RAG-PerfBoost can accelerate the process of finding relevant text snippets, allowing the summarization model to generate summaries much faster, making the tool more practical for real-world use.

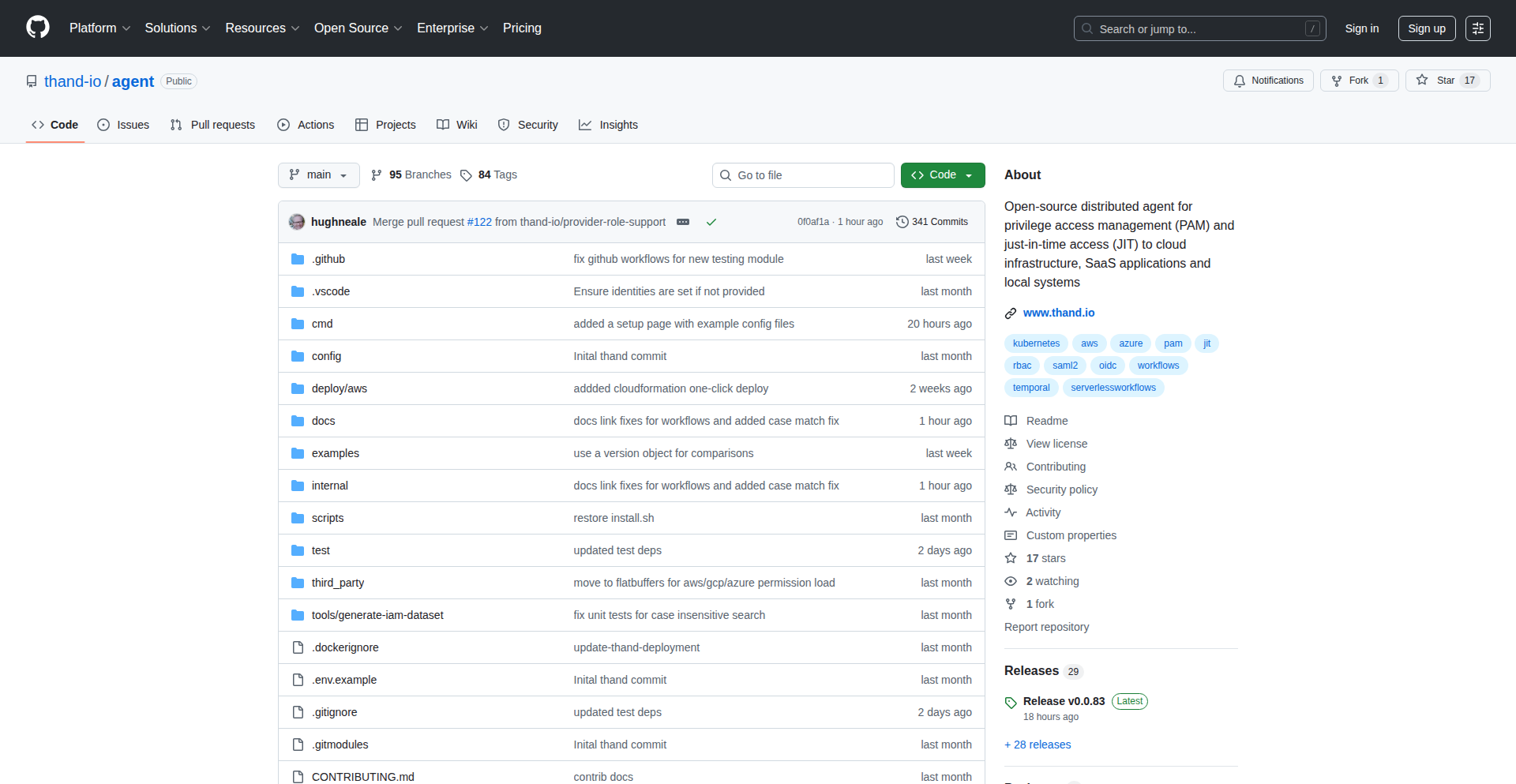

8

CI/CD Sentinel

Author

devops-coder

Description

A security scanning tool specifically designed for Continuous Integration and Continuous Deployment pipelines. It aims to automate the detection of common security vulnerabilities within code and configurations early in the development lifecycle, preventing insecure code from reaching production. This offers significant value by reducing the risk of breaches and compliance failures by shifting security left.

Popularity

Points 12

Comments 0

What is this product?

CI/CD Sentinel is a command-line interface (CLI) tool that integrates directly into your CI/CD workflows. It operates by analyzing your codebase, dependency files, and configuration files against a set of predefined security rules and best practices. The innovation lies in its focus on automation and early detection within the pipeline. Instead of manual security reviews or delayed scans, it provides immediate feedback on potential security flaws as code is being built and deployed, allowing developers to fix issues before they become larger problems. This proactive approach is crucial for maintaining a secure development practice.

How to use it?

Developers can integrate CI/CD Sentinel into their existing CI/CD pipelines (like GitHub Actions, GitLab CI, Jenkins, etc.) by adding it as a build step. After pushing code, the CI/CD pipeline triggers the tool. CI/CD Sentinel then scans the committed code and its dependencies for known vulnerabilities, misconfigurations, and insecure patterns. If it finds any issues, it can be configured to fail the build, alert the development team via Slack or email, or generate a detailed report. This makes security a seamless part of the development process, rather than an afterthought.

Product Core Function

· Automated vulnerability detection: Scans code for common security flaws like injection vulnerabilities, exposed secrets, and insecure library usage, providing developers with immediate feedback to remediate issues before deployment.

· Dependency scanning: Analyzes third-party libraries and packages for knownCVEs (Common Vulnerabilities and Exposures), helping to prevent the introduction of exploitable components into the project.

· Configuration security checks: Reviews infrastructure-as-code (IaC) and deployment configurations for common security misconfigurations, ensuring that deployed environments are secure by default.

· CI/CD integration: Easily plugs into popular CI/CD platforms, making security a standard part of the build and deployment process and reducing manual security effort.

· Customizable rule sets: Allows teams to tailor scanning rules to their specific project needs and compliance requirements, providing flexibility and relevance.

Product Usage Case

· A web development team using GitHub Actions notices a critical vulnerability in a new feature's code. CI/CD Sentinel, running as part of the CI pipeline, immediately flags the vulnerability. The developer can then fix the issue within minutes, preventing the insecure code from ever being merged into the main branch and subsequently deployed to production.

· A DevOps engineer is setting up a new microservice deployment. By integrating CI/CD Sentinel into their GitLab CI pipeline, it automatically scans the Terraform configuration files for insecure resource settings. The tool alerts the engineer to an open S3 bucket, which they can then secure before the infrastructure is provisioned, preventing potential data exposure.

· A mobile app development project relies on numerous third-party SDKs. CI/CD Sentinel is configured to run during the nightly build. It identifies that one of the SDKs has a recently disclosed critical vulnerability. The team is alerted, and they can prioritize updating the SDK to a secure version before it poses a risk to their users.

· A software company needs to comply with stringent security regulations. CI/CD Sentinel is used to enforce security policies by failing any build that doesn't meet the defined security standards, ensuring that all released software adheres to compliance requirements and reducing the risk of audit failures.

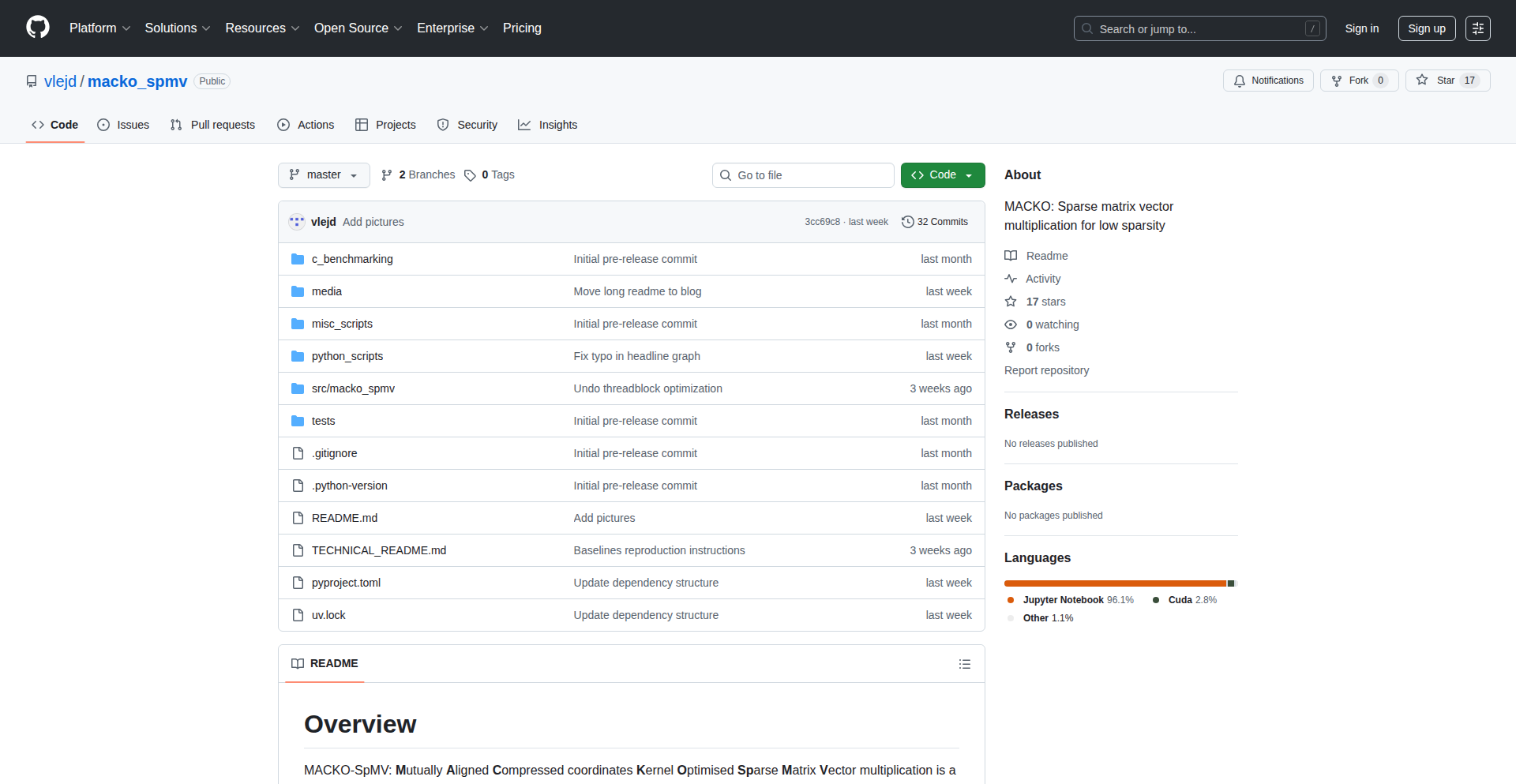

9

Macko SPMV Accelerator

Author

vlejd

Description

This project presents an optimized Sparse Matrix-Vector Multiplication (SpMV) algorithm designed to efficiently run pruned Large Language Models (LLMs) on consumer-grade GPUs. It tackles the challenge of achieving performance benefits from sparsity when you don't have inherently sparse matrices or specialized hardware, pushing the boundaries of what's possible on standard GPUs.

Popularity

Points 7

Comments 4

What is this product?

Macko SPMV Accelerator is a novel approach to performing Sparse Matrix-Vector Multiplication (SpMV) operations, which are fundamental to many machine learning computations, especially in LLMs. Traditionally, you need highly sparse data or specialized hardware to see speedups from sparsity. This project's innovation lies in its ability to deliver significant performance gains even with matrices that are only 30-90% sparse, on regular GPUs. It achieves this by employing clever algorithms and data structures to minimize unnecessary computations and memory access. So, for you, it means running more complex AI models on your existing hardware with better speed.

How to use it?

Developers can integrate Macko SPMV into their existing deep learning pipelines, particularly when dealing with pruned LLMs. The project provides example code (e.g., with PyTorch) demonstrating how to leverage its optimized SpMV kernels. You would typically replace standard SpMV operations in your model's forward pass with calls to Macko SPMV functions, allowing your pruned models to execute much faster. This can be done by loading the library and using its provided functions within your training or inference scripts. This gives you a direct performance boost for your AI applications without needing to buy new hardware.

Product Core Function

· Optimized Sparse Matrix-Vector Multiplication kernel: This function accelerates the core computation of multiplying a sparse matrix by a vector. The value is enabling faster execution of AI models by reducing computational overhead, making them more responsive for real-time applications.

· Handling of moderate sparsity (30-90%): The algorithm is specifically designed to be effective when matrices are not extremely sparse. This is valuable because many real-world pruned models fall into this category, allowing for practical speedups on consumer hardware. You can benefit from AI acceleration even with moderately optimized models.

· GPU acceleration: The implementation leverages the parallel processing power of GPUs. This translates to significantly faster computation times compared to CPU-based execution, allowing you to process more data or run more complex models in the same amount of time. This means your AI tasks finish quicker.

· Low memory footprint: Efficient data structures are employed to minimize memory usage. This is important for fitting larger models or larger datasets into GPU memory, which is often a limiting factor. You can run more demanding AI tasks on your current GPU setup.

Product Usage Case

· Accelerating pruned LLM inference on consumer GPUs: A developer working on a chatbot application might use a pruned LLM to reduce its size and computational requirements. By integrating Macko SPMV, they can significantly speed up the chatbot's response time, making the user experience much smoother, even on a standard laptop. This means your AI-powered tools become faster and more practical for everyday use.

· Enabling larger AI models on limited hardware: A researcher experimenting with a new AI model architecture that has moderate sparsity might find their existing GPU struggles to run it. Using Macko SPMV could allow them to run the model efficiently, enabling them to test their hypotheses and iterate faster without needing to upgrade their hardware. This empowers innovation by removing hardware bottlenecks.

· Improving real-time AI applications: For applications requiring instant AI processing, such as object detection in video streams or natural language understanding in voice assistants, every millisecond counts. Macko SPMV's speed improvements can make these applications more viable and responsive. This means your AI applications feel more instantaneous and less laggy.

10

LegacyGlue Weaver

Author

sfaist

Description

LegacyGlue Weaver is an OSS integration tool designed to tackle the pervasive problem of 'shadow infrastructure' in large organizations. It intelligently ingests and reverse-engineers existing integration code, SQL, configurations, and documentation to map dependencies and automatically regenerate them as maintainable JavaScript code. This addresses the pain points of undocumented, unowned, and brittle legacy connectors, allowing engineers to focus on feature development and enabling faster system upgrades.

Popularity

Points 7

Comments 1

What is this product?

LegacyGlue Weaver is a sophisticated tool that acts like a detective for your old, complex integration code. Think of it as a smart system that reads through scattered bits of code, database queries, configuration files, and even old documents related to how different software systems talk to each other. It figures out what these pieces are doing, how they depend on each other, and then rewrites them into clean, modern JavaScript code. This regenerated code is easier to understand, test, and update. The innovation lies in its ability to reverse-engineer and understand systems that have been neglected or are poorly documented, effectively turning 'black boxes' into transparent, manageable code. This saves companies from wasting valuable engineering time on deciphering cryptic legacy scripts and connectors.

How to use it?

Developers can leverage LegacyGlue Weaver by pointing it to their existing integration assets, such as custom scripts, SQL queries, OpenAPI specifications, or even poorly documented configuration files. The tool will ingest these inputs and perform an analysis to understand the underlying logic and data flow. Once analyzed, it can regenerate this logic as clean JavaScript code. This generated code can then be executed directly as a standalone integration, or it can be exposed as a service through platforms like MCP (Message Communication Protocol) or integrated into new SDKs. It also continuously monitors for changes in upstream APIs or data schemas and can automatically adjust the regenerated code to maintain integration stability. So, if you're struggling with outdated, hard-to-maintain connections between your software, you can feed those into LegacyGlue Weaver and get back code that's a breeze to work with, significantly reducing debugging and maintenance overhead.

Product Core Function

· Ingestion of diverse legacy assets: Value lies in its ability to process a wide range of integration artifacts, from scripts to SQL and documentation, providing a unified starting point for modernization. Useful for organizations with a complex and heterogeneous technology stack.

· Reverse-engineering of integration logic: Value lies in its capability to deduce the actual functionality of undocumented or obscure code. This significantly reduces the time and effort required to understand and refactor legacy systems. Applicable when inheriting projects with little to no documentation.

· Dependency mapping and visualization: Value lies in clearly illustrating how different integration components interact. This provides critical insights for impact analysis during upgrades or refactoring efforts. Essential for understanding the ripple effects of changes in large systems.

· Automated regeneration into clean JavaScript code: Value lies in producing maintainable and testable code from complex legacy systems. This empowers developers to work with modern tooling and best practices. Directly translates to faster development cycles and reduced bugs.

· Continuous monitoring for API and schema drift: Value lies in proactively identifying and addressing integration breakages caused by upstream system changes. This ensures ongoing stability and reduces reactive firefighting. Crucial for maintaining reliable integrations in dynamic environments.

· Automatic repair of integration changes: Value lies in its ability to self-heal integrations when upstream systems evolve. This minimizes downtime and the manual effort required to keep integrations functional. A key feature for ensuring business continuity.

Product Usage Case

· Scenario: A company has a collection of Perl scripts that act as custom connectors between their on-premise CRM and a cloud-based marketing platform. These scripts are old, poorly documented, and hard to modify. LegacyGlue Weaver can ingest these scripts, understand their data transformation and API calls, and regenerate them as a robust Node.js integration module. This makes it easier to maintain, test, and potentially replace the entire integration with a more modern solution, saving the company from years of dealing with brittle legacy code.

· Scenario: A financial institution relies on complex SQL stored procedures for inter-system data synchronization. When the schema of a source database changes, these procedures often break, causing significant operational disruptions. LegacyGlue Weaver can analyze these SQL procedures, understand the data schemas they interact with, and generate JavaScript code that can adapt to schema changes more gracefully. This ensures the data synchronization continues to function smoothly even when upstream databases are updated, preventing costly downtime.

· Scenario: A large enterprise has accumulated numerous 'glue' scripts written over many years by different teams, performing various data transformations and API orchestrations. The knowledge of how these scripts work is often siloed with a few individuals. LegacyGlue Weaver can act as a central intelligence hub, ingesting all these disparate scripts, mapping their interdependencies, and providing a clear, unified understanding of the entire integration landscape. This greatly aids in migrating to new systems or decommissioning old ones, as the 'black boxes' are now demystified.

11

Antler: IRL Browser

Author

dannylmathews

Description

Antler is a novel 'IRL Browser' that aims to bring the serendipity and discovery of physical browsing to the digital realm. It tackles the challenge of information overload and algorithmic echo chambers by generating unique, non-linear paths through content, inspired by the experience of wandering through a physical library or bookstore. The core innovation lies in its approach to content navigation, moving beyond traditional search and recommendation engines.

Popularity

Points 6

Comments 1

What is this product?

Antler is a web application that simulates the experience of browsing physical media like books or magazines in the real world. Instead of typing in specific keywords or relying on personalized recommendations that often lead you down predictable paths, Antler uses a system to randomly connect related pieces of content. Think of it like opening a book at a random page, finding an interesting footnote, and then picking up another book based on that footnote. It's designed to break you out of your typical online habits and expose you to unexpected ideas and information. The technical innovation is in its content discovery algorithm, which prioritizes tangential connections and thematic resonance over direct relevance, fostering a more organic and exploratory user journey.

How to use it?

Developers can use Antler as a source of inspiration for their own projects or as a tool to overcome creative blocks. For example, a developer working on a new feature might use Antler to explore tangential technologies or design patterns that they wouldn't have considered through conventional research. It can be integrated into a personal knowledge management system to surface forgotten or underutilized notes. Think of it as a 'random idea generator' for your digital workflow. You visit the Antler site, start exploring a piece of content, and follow the generated links to discover new perspectives and information relevant to your work, but in a way that feels more like discovery than targeted search.

Product Core Function

· Content Graph Generation: Creates a network of interconnected content, allowing for non-linear exploration. This provides a unique way to surface related information that might otherwise be missed, offering developers exposure to novel concepts and solutions.

· Serendipitous Discovery Engine: Facilitates unexpected encounters with information by moving beyond traditional recommendation systems. This helps developers break through creative ruts and discover innovative approaches they hadn't considered, fostering a more experimental mindset.

· Thematic Navigation: Allows users to follow threads of related ideas and themes, much like browsing a physical subject area. This is valuable for developers trying to understand a broader context or explore a topic from multiple angles, enabling deeper comprehension and idea synthesis.

· IRL Browsing Simulation: Replicates the feeling of physical exploration for digital content, encouraging curiosity and a less directed approach to learning. This can be a powerful tool for developers seeking inspiration outside their usual technical comfort zones, promoting a 'hacker's spirit' of exploring the unknown.

· Algorithmic Detour Mechanism: Intentionally introduces 'detours' in content discovery to prevent users from falling into algorithmic echo chambers. This is crucial for developers who need diverse perspectives and fresh insights to drive true innovation and avoid incremental improvements.

Product Usage Case

· A web developer working on a new UI component could use Antler to explore articles and code repositories tangential to their project's core technology. By following unexpected links, they might discover a novel design pattern or a less common library that solves a usability problem in an innovative way, leading to a more user-friendly and cutting-edge product.

· A game developer facing a creative block for their next game mechanic might use Antler to explore unrelated fields like biology, physics, or even historical events. The system's ability to surface surprising connections could spark entirely new game concepts that wouldn't emerge from typical game design research, pushing the boundaries of interactive entertainment.

· A data scientist trying to find new approaches to anomaly detection could use Antler to browse through academic papers and blog posts on seemingly unrelated topics like signal processing or natural language processing. The unexpected links might reveal a cross-disciplinary technique that can be adapted to their data, leading to a more robust and efficient solution.

· A startup founder looking for disruptive ideas could use Antler as an 'idea incubation' tool. By browsing content related to emerging technologies and societal trends in a non-linear fashion, they might stumble upon an unmet need or a novel combination of existing technologies that forms the basis of their next groundbreaking product.

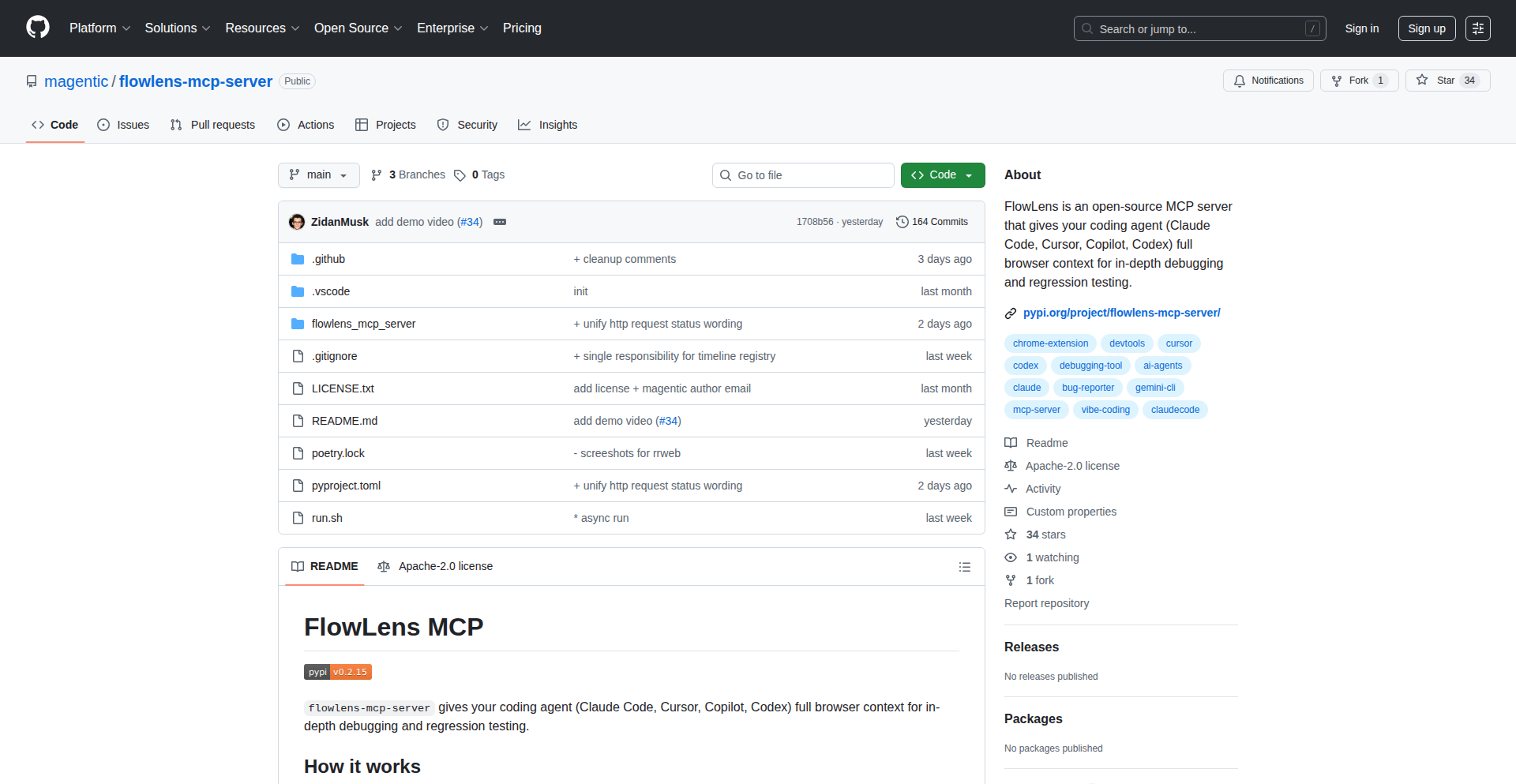

12

FlowLens: AI-Powered Debugging Session Replay

Author

mzidan101

Description

FlowLens is an open-source project that bridges the gap between developers finding bugs and AI agents understanding them. It captures browser context, including DOM, network, and console events, and makes it structured and queryable for AI agents. This allows developers to hand off exact debugging context to AI without manual retyping or hoping the AI can reproduce the issue, improving AI-assisted debugging efficiency.

Popularity

Points 5

Comments 2

What is this product?

FlowLens is a combination of a Chrome extension and an MCP server that allows you to capture and analyze your web browsing sessions for debugging purposes with AI. Instead of just giving an AI raw logs or asking it to guess what happened, the Chrome extension records your interactions, DOM changes, network requests, and console messages. This data is then packaged into a local zip file. The MCP server loads this file and provides specialized tools for an AI agent to interact with the recorded session. For example, the AI can search through events using regular expressions or take screenshots at specific moments, mimicking how a developer would investigate a bug. This is innovative because it focuses on providing AI with the exact, contextual information it needs, rather than relying on the AI to reproduce complex user flows or sift through massive amounts of unorganized data. For you, this means you can pinpoint and share bugs with AI much faster and more accurately, leading to quicker resolutions.

How to use it?

Developers can use FlowLens by installing the Chrome extension. They can either record a specific workflow that leads to a bug or enable a 'session replay' mode that continuously stores the last minute of activity. If a bug occurs, they can export the captured session as a local zip file. This zip file can then be loaded into the FlowLens MCP server. The AI agent, equipped with tools provided by the MCP server, can then access and analyze this recorded session. For instance, an AI debugging assistant could be instructed to 'find the cause of the JavaScript error in the captured session' and would use FlowLens's queryable data to investigate, saving you the time of explaining every step. This integrates seamlessly into the debugging workflow by providing a structured way to hand over complex issues to AI.

Product Core Function

· Browser Context Recording: Captures DOM, network, and console events in real-time or a rolling buffer. This allows for a precise snapshot of what happened during a bug, so you don't have to manually reproduce it for the AI.

· Session Export to Zip: Packages the recorded session data into a portable zip file. This makes it easy to share the debugging context with the MCP server or other tools.

· MCP Server for AI Interaction: Loads the exported session and exposes tools for AI agents to query and analyze the data. This means AI can 'see' and 'interact' with your past debugging session just like you would, leading to more intelligent insights.

· Token-Efficient AI Interaction Tools: Provides specialized tools like regex search and time-based screenshotting for AI to drill down into specific issues. This avoids overwhelming the AI with raw data and focuses its analysis, making the debugging process more efficient for everyone.

· Local Data Processing: All captured data stays on your machine, ensuring privacy and security. You have full control over your sensitive debugging information.

Product Usage Case

· A developer encounters a complex UI bug that is difficult to reproduce consistently. They use FlowLens to record the session, export it, and then hand it to an AI agent. The AI can then analyze the recorded DOM changes and network requests to pinpoint the exact sequence of events that triggered the bug, providing the developer with a clear explanation of the root cause.

· A team is debugging a web application where users report intermittent issues. By using FlowLens in 'session replay' mode, they can quickly grab the context of a recent problem without asking the user to reproduce it. This captured session is then analyzed by an AI to identify common patterns or error conditions, helping to diagnose the underlying problem more rapidly.

· An AI chatbot is being trained to assist with front-end development debugging. Instead of feeding it generic examples, FlowLens allows the chatbot to access and analyze real user session recordings. This provides the AI with practical, real-world debugging scenarios, enabling it to learn and offer more relevant and accurate assistance to developers.

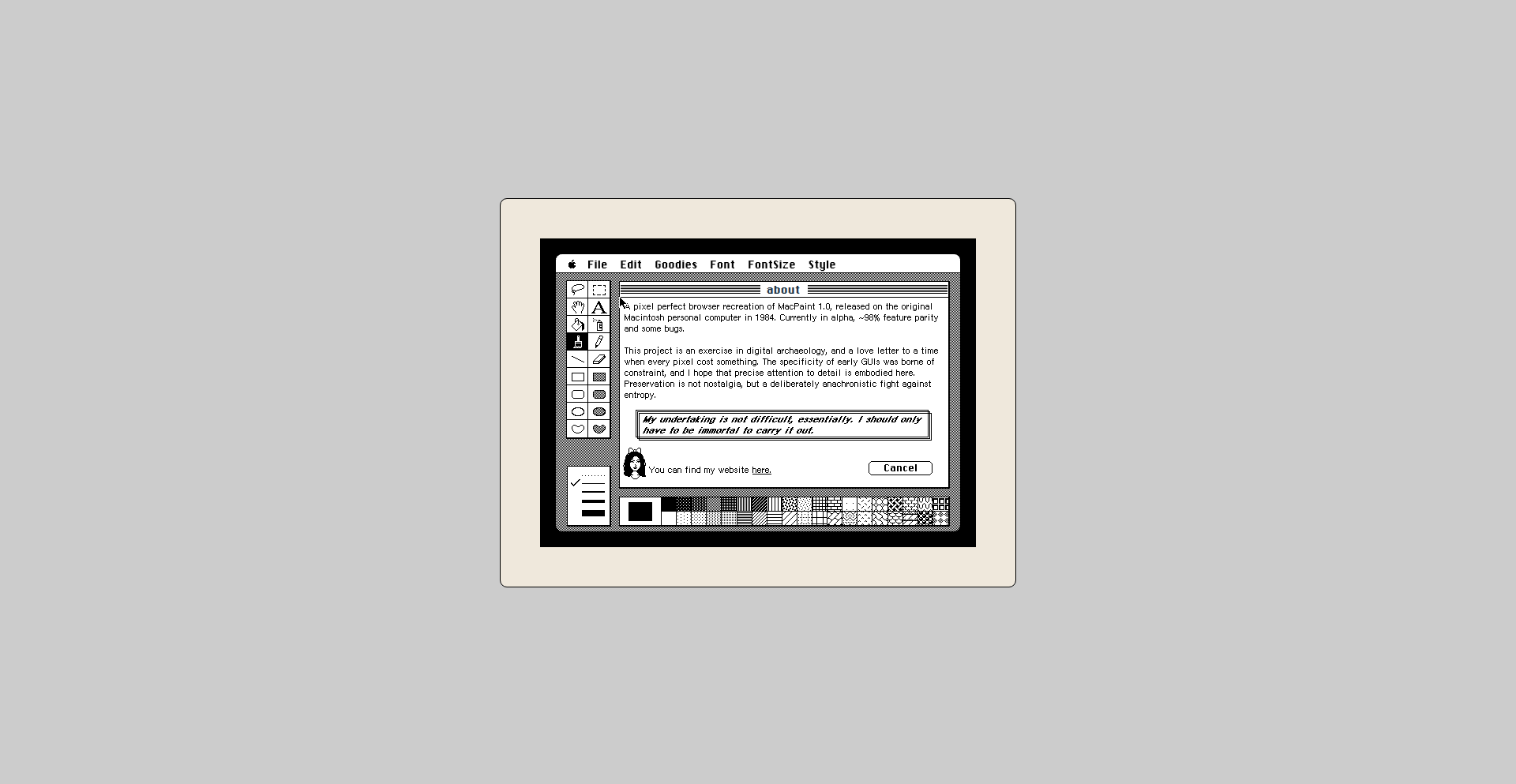

13

ZenPaint: PixelPerfect BrowserCanvas

Author

allthreespies

Description

ZenPaint is a browser-based recreation of the original MacPaint application. It achieves pixel-perfect accuracy by meticulously reverse-engineering the original QuickDraw source code and emulating its behavior. The project focuses on replicating the unique feel and limitations of 1-bit graphics and a constrained toolset to evoke the magic of early digital art creation. Its technical innovation lies in accurately rendering fonts and shape tools without canvas smoothing, using React for a declarative UI, and employing buffer pooling and copy-on-write for performance. This offers developers a chance to explore precise graphical rendering and relive a piece of computing history.

Popularity

Points 7

Comments 0

What is this product?

ZenPaint is a web application that precisely recreates the functionality and visual fidelity of Apple's original MacPaint. The core technical challenge was to achieve pixel-perfect accuracy, meaning every line, curve, and pixel rendered looks identical to the original MacPaint. This involved deep dives into historical Apple graphics code (QuickDraw) to understand and replicate its subtle behaviors, especially around font rendering and how shapes were drawn. Instead of letting the browser's graphics system automatically smooth things out (which would ruin the pixel-perfect look), ZenPaint implements its own logic. It's built using React, a popular framework for creating user interfaces, and uses clever techniques like 'buffer pooling' (reusing memory for graphics instead of constantly creating new ones) and 'copy-on-write' (efficiently handling changes to image data) to keep the performance snappy. The innovation is in the dedication to historical accuracy and the custom graphics pipeline built within a modern web environment.

How to use it?

Developers can use ZenPaint as a reference for understanding historical graphics rendering techniques and for building their own pixel-art focused applications or emulators. It demonstrates how to achieve precise graphical control in the browser, which can be valuable for game development, retro computing projects, or specialized design tools. The project's code is available for study, allowing developers to learn from its implementation of font rendering quirks and efficient canvas manipulation. Integration might involve forking the project to adapt its rendering engine for specific needs or using its principles to guide the development of new browser-based graphics applications. The ability to share artwork via links also presents an opportunity for integrating ZenPaint's drawing capabilities into other web platforms.

Product Core Function

· Pixel-perfect rendering of 1-bit graphics: Accurately replicates the look of original MacPaint, offering a nostalgic and precise visual experience that's crucial for historical accuracy in retro applications or art tools.

· Accurate font rendering: Solves the complex problem of rendering fonts as they appeared on early Macs, vital for any project aiming for historical fidelity in text display.

· Precise shape tool emulation: Recreates the unique drawing behavior of MacPaint's tools, providing developers with insights into how specific graphical operations were performed historically.

· Declarative UI with React: Uses modern web development practices for a clean and maintainable interface, demonstrating how to build complex UIs efficiently.

· Performance optimization with buffer pooling and copy-on-write: Implements advanced techniques for efficient memory management and image manipulation, crucial for smooth drawing performance in web applications.

· Shareable artwork links: Allows users to save and share their creations, offering a mechanism for embedding or linking artwork within other applications or platforms.

Product Usage Case

· Building a retro game that requires authentic 1-bit graphics: Developers can study ZenPaint's rendering engine to ensure their game's visuals precisely match the aesthetic of classic 8-bit or 16-bit era games.

· Creating an educational tool to teach about early computer graphics: ZenPaint serves as a living example of how graphics were handled on early personal computers, useful for historical computing courses or museum exhibits.

· Developing a digital art application that emphasizes retro aesthetics: Designers and artists looking for a distinctive, low-fidelity look can draw inspiration from ZenPaint's unique constraints and tools.

· Experimenting with custom canvas rendering in web applications: Developers can analyze ZenPaint's approach to circumventing default canvas smoothing to achieve specific visual effects for their own projects, such as scientific visualizations or custom UI elements.

· Recreating other classic software interfaces: The techniques used to reverse-engineer MacPaint's graphics can be applied to recreate the look and feel of other seminal applications from the past.

14

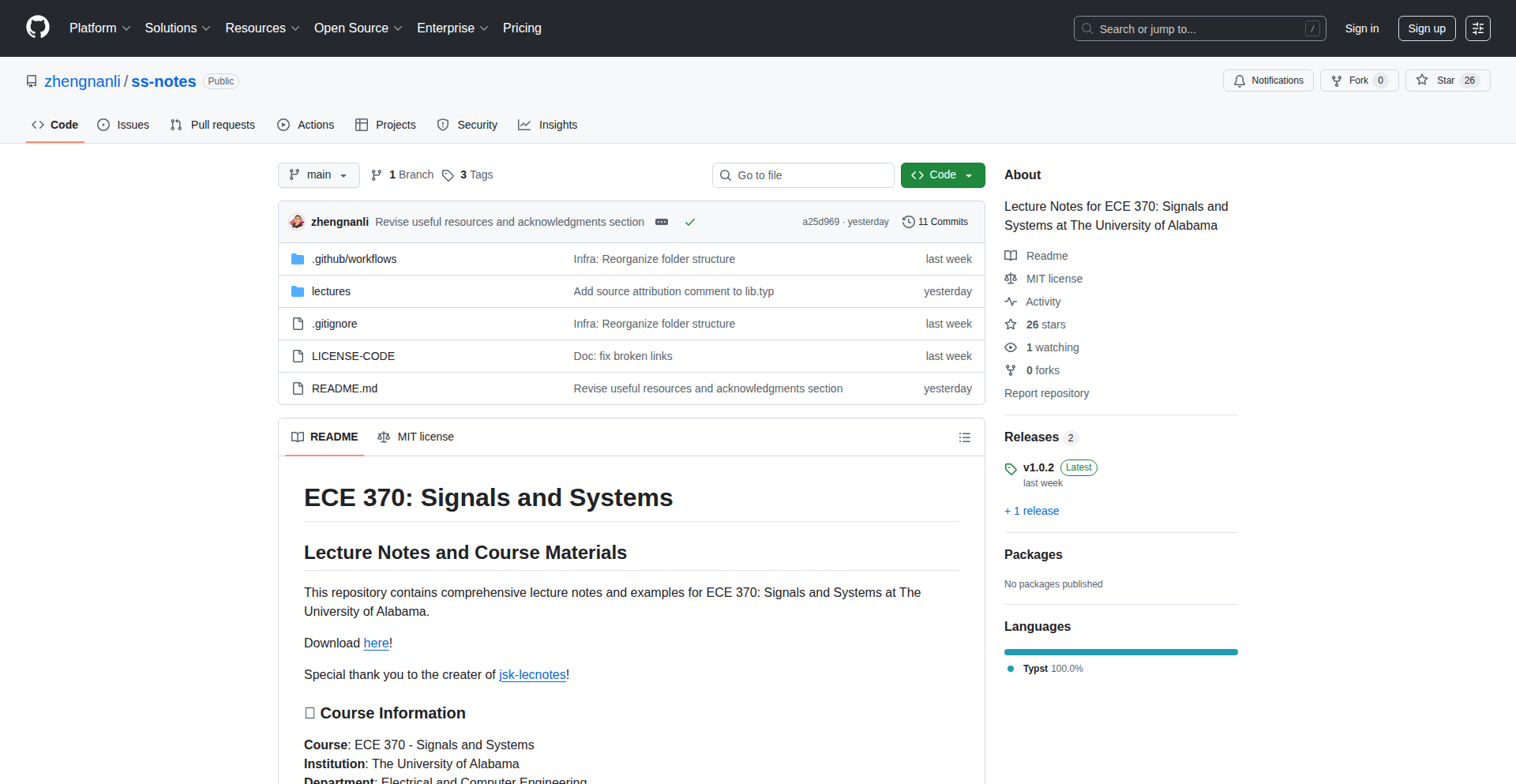

Typst Lecture Notes

Author

subtlemuffins

Description

This project allows users to write lecture notes using Typst, a modern markup-based typesetting system. It showcases an innovative approach to creating structured and visually appealing documents with a focus on developer efficiency and a clean aesthetic, offering an alternative to traditional word processors for technical and academic content.

Popularity

Points 6

Comments 0

What is this product?

This project is essentially a demonstration of using Typst, a powerful typesetting system, to author lecture notes. Typst is designed from the ground up to be fast, flexible, and easy to learn, especially for those familiar with markup languages like LaTeX but seeking a more streamlined experience. The innovation lies in its speed and modern design philosophy, which allows for rapid iteration and compilation of documents. Instead of wrestling with complex formatting menus, you write your content in simple text and let Typst handle the rest, producing professional-looking output. This means you spend less time fiddling with layout and more time focusing on the actual content of your notes, making it incredibly efficient for students, educators, and anyone who needs to produce structured documentation.

How to use it?

Developers can use this project by adopting Typst as their primary tool for note-taking and document creation. You would install Typst (available for various operating systems) and then begin writing your lecture notes in `.typ` files using Typst's intuitive markup. For instance, you'd use simple syntax to define headings, lists, code blocks, and mathematical equations. Typst then compiles these files into high-quality PDFs or other output formats. It's ideal for creating study guides, tutorials, technical documentation, or even personal knowledge bases. Think of it as a programmer's notepad for documentation, offering more control and a cleaner output than standard text editors, with a speed that rivals basic word processors.

Product Core Function

· Structured document creation with simple markup: This allows for rapid writing of content without complex formatting. The value is in saving time and reducing cognitive load for the writer, enabling them to focus on the intellectual work rather than the mechanics of presentation. This is useful for anyone creating reports, articles, or notes that require clear organization.

· Fast compilation speeds: Typst is designed to be significantly faster than many traditional typesetting systems. The value here is immediate feedback on your writing and formatting changes, crucial for iterative development and ensuring your documents look exactly as intended without long waiting times. This is especially beneficial for large documents or when making frequent edits.

· Programmable typesetting: Typst offers a powerful scripting language that allows for advanced customization and automation of document layout and content. The value is in creating reusable templates, complex layouts, and dynamic content generation, empowering developers to build sophisticated and consistent documentation workflows. This is for advanced users who need fine-grained control over their output.

· Modern and clean syntax: Typst's syntax is designed to be more readable and approachable than some older typesetting systems. The value is in lowering the barrier to entry for creating professional-looking documents, making it accessible to a wider range of users, including those who may not have a deep background in typesetting. This makes it easier for teams to collaborate on documentation.

· Cross-platform compatibility: Typst is available on multiple operating systems, meaning you can write your notes on any machine. The value is in ensuring consistency and accessibility regardless of the user's preferred operating system, facilitating collaboration and personal workflow continuity across different devices.

Product Usage Case

· Creating a technical tutorial on a new programming framework: A developer can use Typst to write detailed explanations, embed code snippets with syntax highlighting, and include mathematical formulas for algorithms. The fast compilation allows for quick iteration on the content, and the clean output makes the tutorial easy for others to read and understand, directly addressing the problem of producing accessible and visually appealing technical guides.

· Developing a personal knowledge base or wiki: Users can organize their learning materials, research notes, and project documentation in a structured way. Typst's ability to handle complex cross-referencing and maintain consistent styling ensures that the knowledge base is navigable and aesthetically pleasing, solving the challenge of managing and presenting large amounts of personal information effectively.

· Authoring academic papers or research reports: Students and researchers can leverage Typst's robust mathematical typesetting and bibliography management capabilities to produce professional academic documents. The speed and ease of use, compared to traditional academic writing tools, can significantly reduce the time spent on formatting and allow for greater focus on research content, directly tackling the often-arduous task of academic publication.

· Generating API documentation: For software projects, Typst can be used to programmatically generate documentation for APIs, including function signatures, parameter descriptions, and example usage. The value lies in automating the documentation process, ensuring it stays up-to-date with code changes and maintaining a consistent, high-quality presentation for developers consuming the API, thus solving the problem of outdated or poorly formatted API references.

15

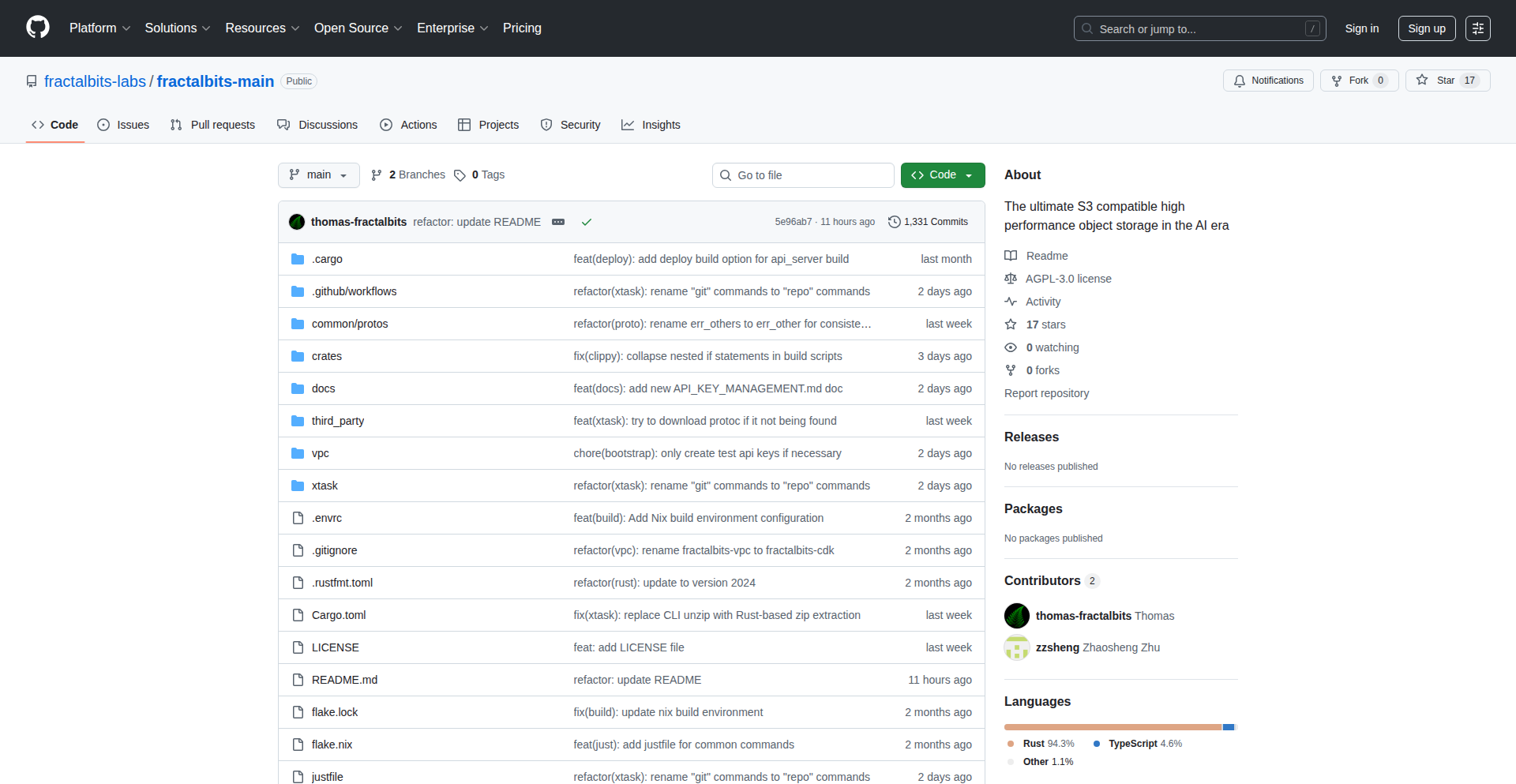

Fractalbits: High-Performance S3-Compatible Storage

Author

thomas_fa

Description

Fractalbits is a novel storage solution that offers S3 compatibility with exceptional performance, aiming for 1 million IOPS at p99 latency of ~5ms. It achieves this by leveraging Rust and Zig, focusing on low-level optimizations for speed and efficiency. This project tackles the common challenge of high-latency storage in distributed systems and provides a performant alternative for data-intensive applications.

Popularity

Points 6

Comments 0

What is this product?

Fractalbits is an object storage system designed to be fully compatible with the Amazon S3 API, meaning you can use existing S3 tools and libraries to interact with it. The innovation lies in its underlying implementation, built with Rust and Zig. These languages allow for fine-grained control over memory and system resources, enabling aggressive optimizations that lead to extremely high Input/Output Operations Per Second (IOPS) and low latency. Think of it as a super-fast warehouse for your digital stuff, built with the most efficient tools possible to get things in and out incredibly quickly, even when lots of people are accessing it at once. So, this helps you store and retrieve data much faster than traditional solutions, which is crucial for demanding applications.

How to use it?

Developers can integrate Fractalbits into their workflows by treating it as a drop-in replacement for S3. This involves configuring your applications or tools to point to the Fractalbits endpoint instead of an S3 endpoint. For example, you could use it as a backend for data lakes, for storing large datasets for machine learning, or as a high-performance backup solution. Its S3 compatibility means minimal code changes. So, you can easily swap out your current object storage for Fractalbits to get a significant speed boost without a major overhaul of your existing systems.

Product Core Function

· S3 API Compatibility: Enables seamless integration with existing S3 tools and applications, allowing developers to leverage a vast ecosystem without re-engineering their infrastructure. This means you can use your familiar S3 clients and SDKs directly with Fractalbits, saving time and effort.

· High IOPS Performance: Achieves extremely high rates of read and write operations, crucial for data-intensive workloads like databases, real-time analytics, and high-frequency trading systems. This directly translates to faster processing and quicker insights from your data.

· Low Latency (p99 ~5ms): Guarantees that 99% of requests are served within a very short timeframe, essential for applications requiring real-time responsiveness and minimizing user wait times. Your applications will feel snappier and more responsive.

· Rust and Zig Implementation: Utilizes modern systems programming languages to achieve low-level performance optimizations and memory safety, leading to a robust and efficient storage system. This means the system is built for speed and reliability from the ground up, ensuring consistent performance.

· Scalable Architecture: Designed to scale horizontally to handle increasing storage demands and traffic, ensuring your storage solution grows with your needs. As your data grows, Fractalbits can handle it without performance degradation.

Product Usage Case

· Machine Learning Data Storage: Storing massive datasets for training AI models where fast access to training data is critical for reducing training times. This allows ML engineers to iterate faster and build better models by reducing the bottleneck of data loading.

· Real-time Analytics Platforms: Serving as the backend for analytical dashboards and real-time data processing pipelines that require immediate access to large volumes of data. Businesses can get up-to-the-minute insights, enabling quicker decision-making.

· High-Frequency Trading Systems: Providing low-latency storage for market data and trade execution, where milliseconds can mean significant financial gains or losses. This ensures trading algorithms can react to market changes instantly.

· Cloud-Native Application Backends: Acting as a performant and scalable object store for microservices and cloud-native applications that need to store and retrieve user-generated content, logs, or application state. Developers can build more robust and responsive cloud applications.

· Large-Scale Backup and Archiving: Offering a cost-effective and high-throughput solution for backing up and archiving vast amounts of data, ensuring quick recovery when needed. This provides peace of mind with fast and reliable data recovery capabilities.

16

UniquelyEncrypted