Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-24

SagaSu777 2025-11-25

Explore the hottest developer projects on Show HN for 2025-11-24. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

LLM-integrated tools continue to dominate, changing how developers create content, write code, and work with data. The clearest trend is making these capabilities practical for everyday use: simulating community discussions, generating code, enriching customer data, and building interactive visualizations. Client-side AI, built on WebAssembly and optimized models, is gaining ground and promises better privacy and offline use. At the same time, steady work on developer tools, from compilers to command-line utilities, shows a continued commitment to the underlying infrastructure and workflows. For developers, the opportunity lies in intuitive interfaces and specialized applications that harness AI without overwhelming the end user. The 'build it yourself' spirit of solving a specific pain point remains strong and keeps producing solutions that are both technically sophisticated and user-centric.

Today's Hottest Product

Name

Hacker News Simulator

Highlight

This project simulates the Hacker News experience by using LLMs to generate comments instantly. Its core technical idea is prompt engineering that combines commenter archetypes, moods, and conversational styles to produce a surprisingly realistic simulation. For developers, it is a useful case study in dynamic content generation and in crafting prompts that elicit specific conversational behaviors. It replaces the human participants a simulation would normally need with AI-generated ones.

Popular Category

AI/ML

Developer Tools

Web Applications

Data Science

Productivity

Popular Keyword

LLM

AI

Open Source

API

Rust

Python

WebAssembly

CLI

Technology Trends

LLM-powered applications

AI-driven content generation

Client-side AI/WASM

Developer productivity tools

Data analysis and visualization

System programming languages

Decentralized technologies

Real-time interactive experiences

Project Category Distribution

AI/ML Tools (30%)

Developer Utilities (25%)

Web Applications & Services (20%)

Data Science & Analysis (15%)

System & Programming (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | HackerNews LLM Commenter Simulator | 492 | 210 |
| 2 | Cynthia MIDI Maestro | 85 | 30 |
| 3 | AI KidPainter | 8 | 14 |
| 4 | Yolodex Persona Weaver | 16 | 4 |
| 5 | AIJobMapper | 14 | 4 |
| 6 | Contextual Chatbot Assistant | 11 | 6 |
| 7 | Smart Scan CLI & Dashboard | 15 | 0 |
| 8 | Axe: Concurrency-First Systems Language | 13 | 2 |
| 9 | Pulse-Field AI Engine | 6 | 8 |
| 10 | OntologyGraph Weaver | 13 | 0 |

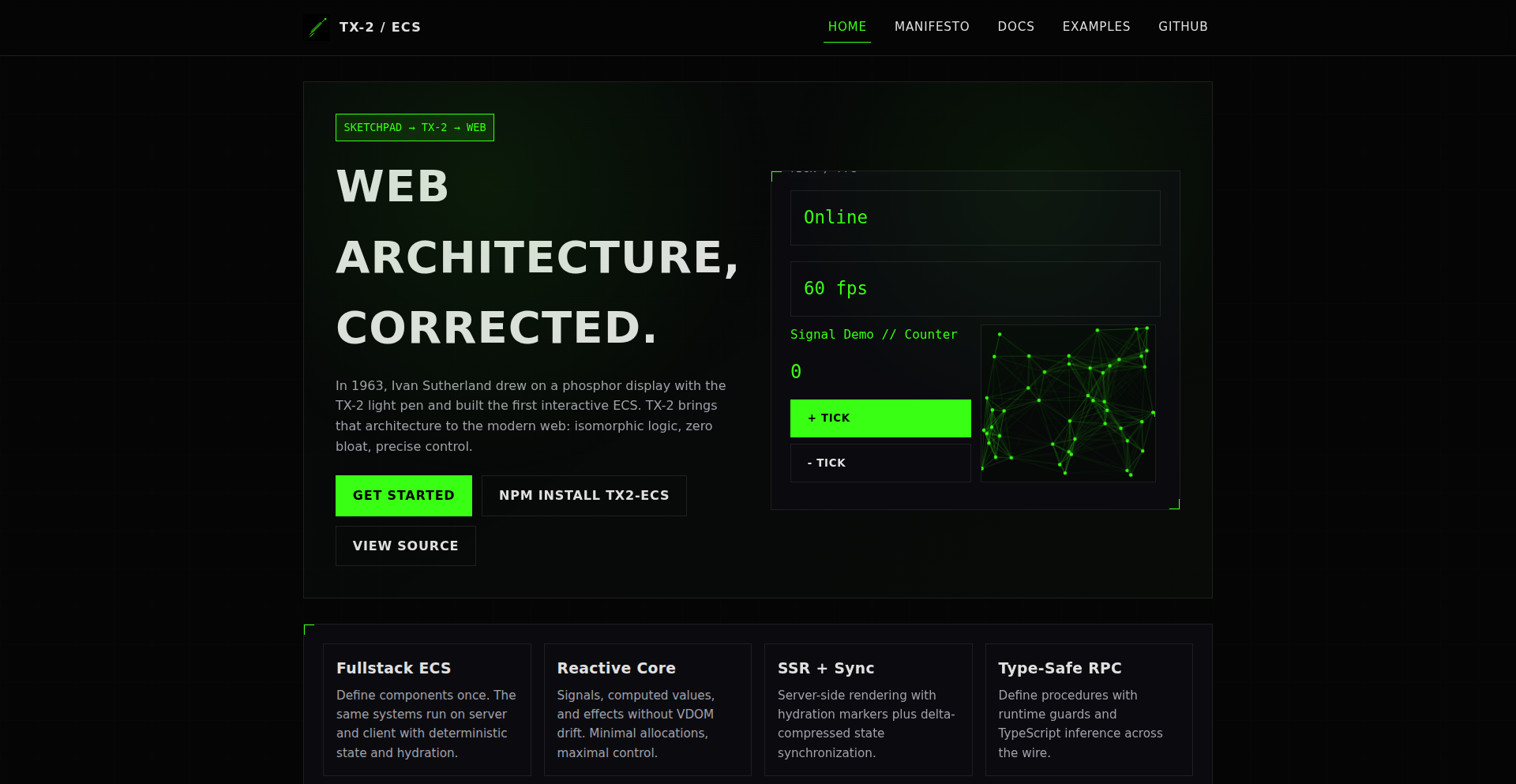

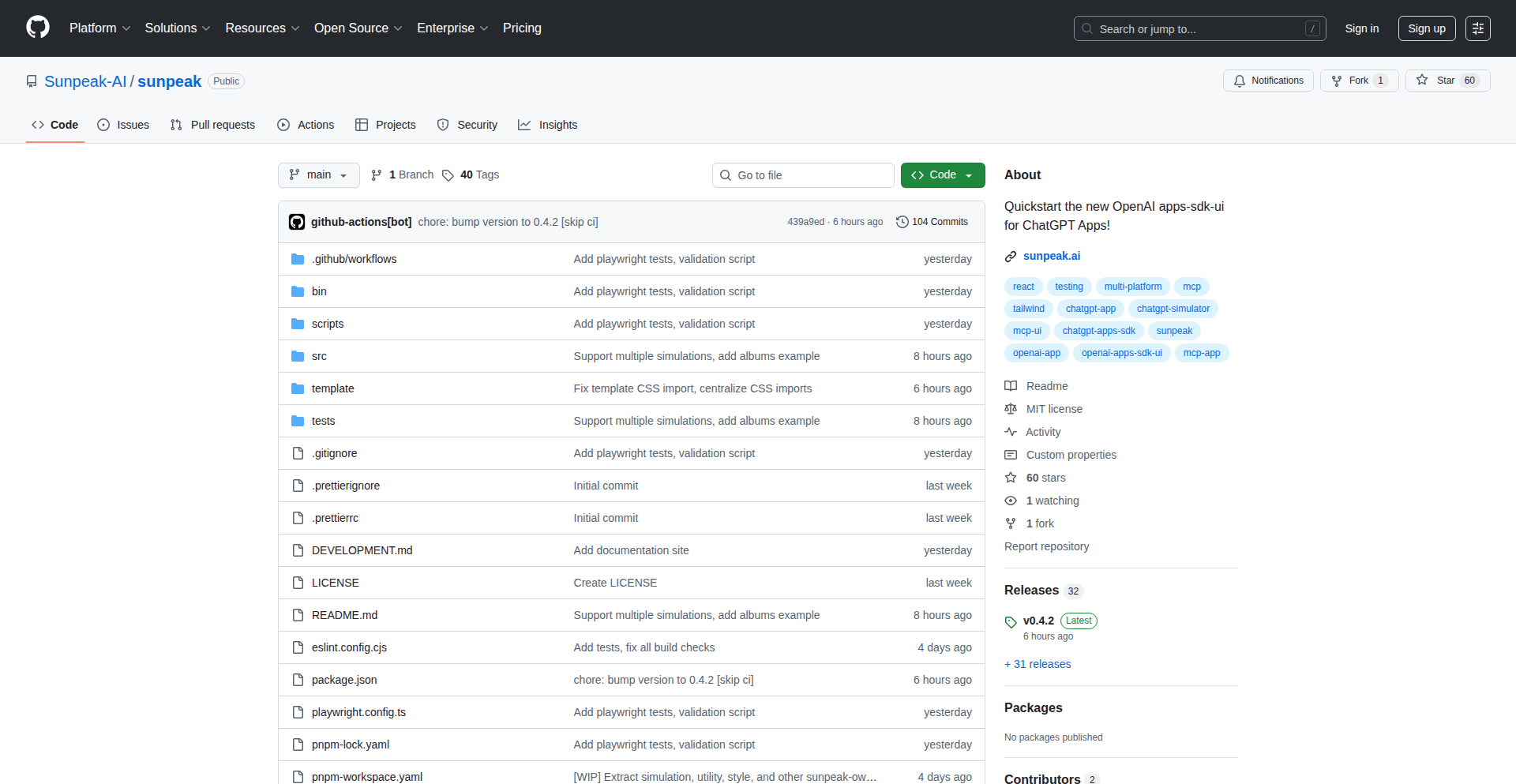

1

HackerNews LLM Commenter Simulator

Author

johnsillings

Description

This project is an interactive simulation of Hacker News where users can submit posts and links, and all comments are instantly generated by Large Language Models (LLMs). The innovation lies in its sophisticated prompt engineering, combining commenter archetypes, moods, and post content to create realistic and varied AI-driven discussions. It's a fascinating experiment in simulating online community dynamics with AI, showcasing a clever application of LLMs to generate engaging content in real-time.

Popularity

Points 492

Comments 210

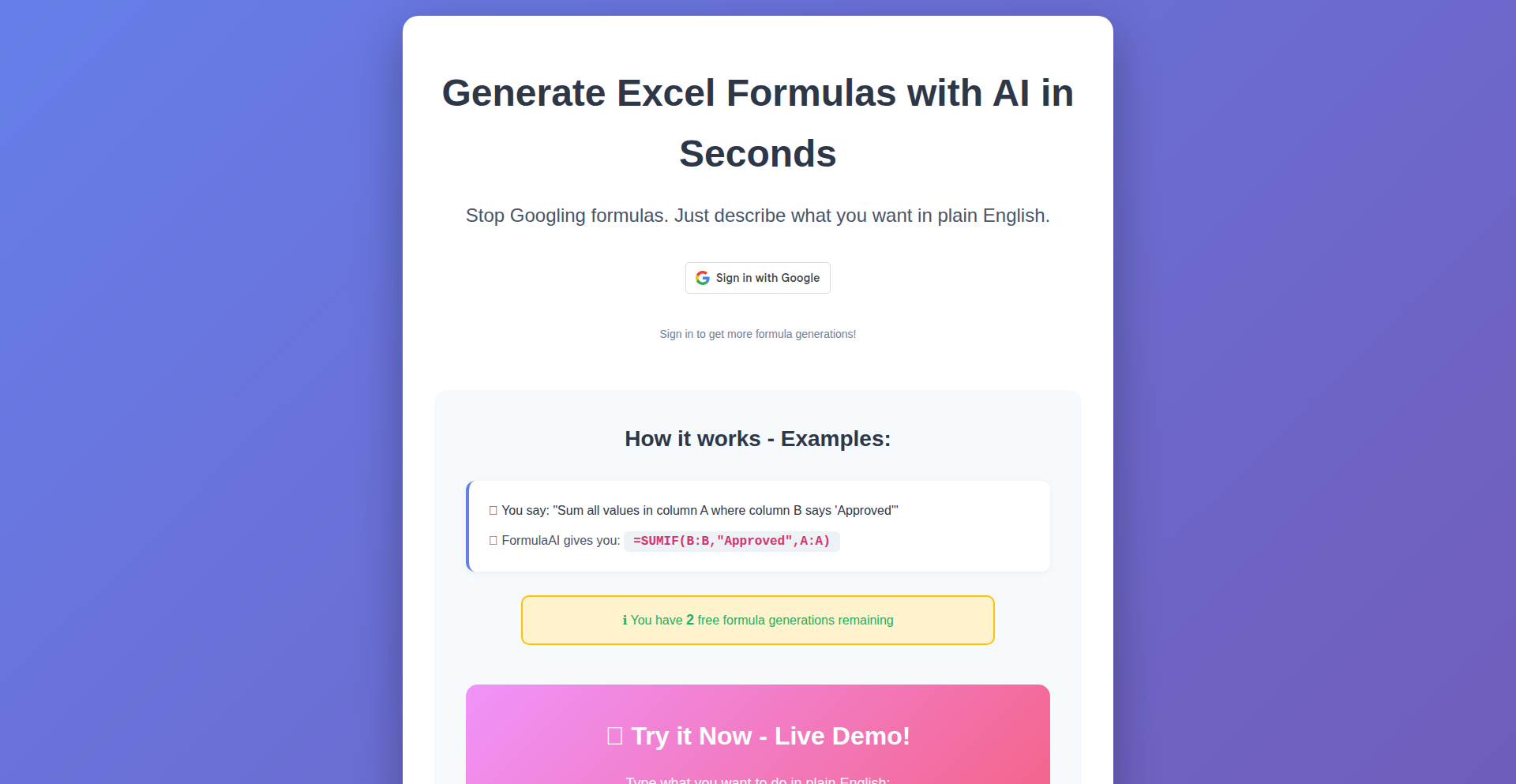

What is this product?

This is an interactive simulation of the popular Hacker News website. Unlike the real Hacker News, where human users post and comment, this simulator uses advanced AI models (LLMs) to generate all comments instantly. The core technical innovation is how it constructs prompts for these LLMs. It draws from a library of pre-defined commenter personalities (archetypes), emotional states (moods), and conversational styles (shapes). These elements are dynamically combined with the content of the user-submitted post or link. The result is a surprisingly realistic and varied stream of AI-generated comments that mimic human interaction, offering a unique exploration of AI's capabilities in content generation and community simulation.
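
The prompt construction described above can be sketched roughly as follows. This is a minimal illustration, not the simulator's actual code: the mood and shape names, the prompt wording, and the function `build_comment_prompt` are all assumptions made for the example. Only the three archetype names come from this article.

```python
import random

# Hypothetical libraries. The simulator's real archetypes, moods, and shapes
# are not listed in this article; these values are placeholders.
ARCHETYPES = ["the skeptic", "the enthusiast", "the technical expert"]
MOODS = ["curious", "irritated", "amused"]
SHAPES = ["one-line quip", "numbered rebuttal", "personal anecdote"]


def build_comment_prompt(post_title: str, post_body: str, rng: random.Random) -> str:
    """Pick one archetype, mood, and shape, and combine them with the post content."""
    archetype = rng.choice(ARCHETYPES)
    mood = rng.choice(MOODS)
    shape = rng.choice(SHAPES)
    # The prompt wording below is illustrative only.
    return (
        f"You are {archetype} commenting on a Hacker News post.\n"
        f"Mood: {mood}\n"
        f"Shape: {shape}\n"
        f"Post title: {post_title}\n"
        f"Post body: {post_body}\n"
        "Write a single comment."
    )
```

Calling `build_comment_prompt("Show HN: My toy compiler", "It compiles a subset of C.", random.Random())` several times yields different archetype, mood, and shape combinations for the same post, which is the source of variety the description attributes to the simulator.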

How to use it?

Developers can use this simulator as a playground to experiment with LLM-driven content generation and to understand how different prompts can elicit varied responses. You can submit your own text posts or curl-able URLs directly to the simulator via its submission interface (e.g., `https://news.ysimulator.run/submit`). There's no need for an account to post. Once submitted, you'll see AI-generated comments appear almost instantly, reacting to your content. This allows developers to test prompt engineering strategies, observe how AI interprets different inputs, and explore the potential for AI to generate dynamic content for forums, comment sections, or social media simulations. It's a live demo of prompt-based AI interaction, powered by Node.js, Express, and Postgres on the backend, with LLM inference handled by Replicate.

Product Core Function

· AI-generated comments: Utilizes LLMs to create instant, contextually relevant comments for user-submitted posts or links. This is valuable for understanding AI's conversational abilities and for generating realistic placeholder content in development.

· Commenter archetype system: Employs a library of distinct commenter personas (e.g., the skeptic, the enthusiast, the technical expert) to generate diverse comment styles and opinions. This demonstrates how personality can be injected into AI responses, adding depth to simulated interactions.

· Mood and shape combination: Dynamically blends emotional tones (moods) and conversational structures (shapes) with archetypes and post content to produce a wide range of comment nuances. This highlights sophisticated prompt engineering for nuanced AI output.

· Real-time interaction: Provides immediate feedback and comment generation upon post submission, creating an engaging and responsive simulation. This showcases the efficiency and speed achievable with modern AI inference platforms.

· No account required submission: Allows anyone to quickly test content generation by submitting posts without the friction of registration. This is ideal for rapid prototyping and easy accessibility for experimentation.

Product Usage Case

· Simulating online forum discussions: A developer can post a technical question and observe how different AI commenter archetypes respond, helping to refine the expected user interaction for a real forum or Q&A platform.

· Testing prompt engineering for content generation: By submitting various types of posts (e.g., news links, personal anecdotes, code snippets), developers can see how the AI interprets and reacts, learning best practices for prompt design to elicit desired AI responses.

· Exploring AI's creative writing capabilities: Users can submit creative prompts or story ideas and see how the AI commenter 'reacts' or 'builds' upon them, showcasing potential for AI-assisted creative content generation.

· Educational tool for LLM understanding: Students or newcomers to AI can use this simulator to intuitively grasp how LLMs can be controlled and directed through prompt engineering, making abstract concepts more tangible.

· Prototyping AI-powered community features: A product manager or developer could use this to quickly demo the concept of AI-generated comments for a new social app, visualizing how the community might feel with AI participation.

2

Cynthia MIDI Maestro

Author

blaiz2025

Description

Cynthia is a portable, easy-to-use application designed to reliably play MIDI music files across all Windows versions. Developed out of frustration with the declining native MIDI playback quality and speed in modern Windows, Cynthia features a custom-built MIDI playback engine and a robust codebase, offering superior stability and near-instantaneous performance. Its innovation lies in reviving and enhancing the often-overlooked MIDI experience for developers and music enthusiasts alike.

Popularity

Points 85

Comments 30

What is this product?

Cynthia is a standalone application that re-engineers MIDI playback on Windows. The core innovation is its custom-built MIDI playback engine, which bypasses the often slow and unreliable native Windows MIDI services. Think of it as building a brand new, super-fast, and super-reliable engine for a car that was struggling with its old one. This custom engine ensures that MIDI files load and play almost instantly, a stark contrast to the several-second delays common in later Windows versions. It also provides granular control over playback, like detailed track and note indicators, which were either missing or difficult to access previously. This project is a testament to the hacker ethos of taking a problem (poor MIDI support) and solving it from the ground up with code.

How to use it?

Developers can use Cynthia in a few ways. As a standalone application, it offers a dependable way to play and test MIDI files. For integration, developers could potentially use the underlying engine or its concepts to build their own MIDI-aware applications, especially where high performance and stability matter. The application plays .mid, .midi, and .rmi files and can be controlled with an Xbox controller. For developers working with legacy systems or requiring precise MIDI control, Cynthia provides a robust foundation. It also runs on Linux and macOS via Wine, which broadens its use for cross-platform development and testing.

Product Core Function

· Custom MIDI Playback Engine: Offers high playback stability and near-instantaneous loading times, providing a superior user experience compared to native Windows MIDI support.

· Cross-Platform Compatibility (via Wine): Enables playback and testing on Linux and macOS, extending its utility for developers working in diverse environments.

· Extensive File Format Support: Plays .mid, .midi, and .rmi files, covering common MIDI formats.

· Realtime Visual Indicators: Provides visual feedback on track data, channel output volume, and note usage, offering deeper insight into MIDI performance.

· Advanced Playback Modes: Supports Once, Repeat One, Repeat All, All Once, and Random playback, allowing for flexible music sequencing and testing.

· Large File List Capacity: Can handle thousands of MIDI files, making it suitable for extensive music libraries or complex projects.

· Multi-Device Output: Allows playback through one or multiple MIDI devices simultaneously with lag and channel output support, ideal for complex audio setups.

· Xbox Controller Integration: Enables intuitive control of playback functions, offering a novel human-computer interaction for MIDI playback.
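
The playback modes listed above can be sketched as a small track-selection function. This is an assumed interpretation of the mode names, not Cynthia's implementation: the function `next_track`, the mode strings, and the exact semantics of each mode are guesses made for illustration.

```python
import random
from typing import Optional


def next_track(mode: str, current: int, total: int, rng: random.Random) -> Optional[int]:
    """Return the index of the next track, or None when playback should stop.

    Assumed semantics (not confirmed by the project):
      once       - play the current track and stop
      repeat_one - loop the current track
      repeat_all - loop through the whole list
      all_once   - play the list through one time
      random     - pick any track in the list
    """
    if total <= 0:
        return None
    if mode == "once":
        return None
    if mode == "repeat_one":
        return current
    if mode == "repeat_all":
        return (current + 1) % total
    if mode == "all_once":
        return current + 1 if current + 1 < total else None
    if mode == "random":
        return rng.randrange(total)
    raise ValueError(f"unknown mode: {mode}")
```

With three tracks, `next_track("repeat_all", 2, 3, random.Random())` wraps around to index 0, while `next_track("all_once", 2, 3, random.Random())` returns `None` to signal the end of the list.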

Product Usage Case

· A game developer integrating custom MIDI soundtracks into a game can use Cynthia to test MIDI file performance and confirm consistent playback across different Windows environments. This addresses inconsistent and slow native MIDI playback that would otherwise affect game audio quality.

· A musician or composer who needs a reliable tool to audition MIDI compositions can use Cynthia's stable playback and visual indicators to fine-tune their work, getting accurate and immediate feedback during the creative process.

· A hobbyist developer creating a retro-style music player can draw on Cynthia's custom engine as inspiration for their own high-performance MIDI playback solution. This shows how a personal project can provide technical insights for other developers.

· A user coming from older Windows versions (such as Windows 95) who misses fast, reliable MIDI playback gets that nostalgic and functional experience back on modern systems with Cynthia. This highlights the project's success in addressing a specific regression in user experience.

3

AI KidPainter

Author

daimajia

Description

A free, AI-powered coloring website for children, leveraging generative AI to create unique coloring pages and assist in the coloring process. It addresses the need for engaging and creative digital art activities for kids, offering an innovative approach to traditional coloring.

Popularity

Points 8

Comments 14

What is this product?

AI KidPainter is a web application that uses artificial intelligence to generate custom coloring pages for children and offers AI-assisted coloring tools. The core innovation lies in its generative AI backend, which can create new, imaginative drawing outlines based on simple prompts or themes. Additionally, it can intelligently suggest colors or even provide a 'smart fill' feature that colors within the lines, making the experience more accessible and fun for younger users. This democratizes the creation of personalized coloring content, going beyond static pre-made templates.
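
A 'smart fill' that stays within the lines is commonly built on flood fill. The sketch below shows that classic algorithm on a small grid; it is not taken from AI KidPainter, whose implementation is not described here. The grid encoding (0 for blank paper, 1 for outline) and the function name `flood_fill` are assumptions for the example.

```python
from collections import deque


def flood_fill(grid, start, new_color, line_color=1):
    """Fill the region containing `start` with `new_color`.

    Only cells that share the start cell's original value are recolored,
    so outline cells (`line_color`) act as boundaries. The grid is
    modified in place and also returned.
    """
    rows, cols = len(grid), len(grid[0])
    r0, c0 = start
    old_color = grid[r0][c0]
    if old_color == line_color or old_color == new_color:
        return grid  # clicked on a line, or nothing to change

    queue = deque([start])
    grid[r0][c0] = new_color
    while queue:
        r, c = queue.popleft()
        # Visit the four direct neighbors (up, down, left, right).
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == old_color:
                grid[nr][nc] = new_color
                queue.append((nr, nc))
    return grid


# A 5x5 page with a closed square outline around the center cell.
page = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
flood_fill(page, (2, 2), 7)  # colors only the enclosed center cell
```

Because the outline is closed, the fill never reaches the cells outside the square. A gap in the outline would let the color leak out, which is one reason a production tool would likely add extra handling on top of this basic approach.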

How to use it?

Developers can embed AI KidPainter into educational platforms, family-oriented websites, or even integrate its API (if available) into their own creative applications. For end-users, it's a simple web interface: a child or parent can input a theme (e.g., 'a friendly robot,' 'a magical castle') and the AI generates a coloring outline. Then, they can either color it manually using the provided digital brushes or opt for the AI-powered coloring assistance. The value is immediate access to an endless stream of personalized, engaging art activities without needing to download anything.

Product Core Function

· AI-powered coloring page generation: Creates unique line art based on user prompts, offering endless creative possibilities and reducing the need for pre-designed content. This means every coloring session can be a new adventure.

· Smart coloring assistance: Provides features like 'smart fill' that colors within the lines and AI-suggested color palettes, making it easier for young children to achieve satisfying results and encouraging their artistic confidence.

· Interactive drawing canvas: A user-friendly digital canvas with various brushes and tools, allowing for both freehand coloring and utilizing AI assistance. This provides a flexible environment for artistic exploration.

· Free and accessible platform: Offers a completely free service, removing financial barriers to creative digital play for children and families. This makes high-quality creative tools available to everyone.

Product Usage Case

· An educational website can integrate AI KidPainter to provide a daily 'Creative Corner' for students, generating unique learning-themed coloring pages related to current lessons, thus enhancing engagement and reinforcing concepts.

· A children's book publisher could use the generative capabilities to quickly create unique illustrations for coloring books or interactive digital story components, streamlining their content creation process.

· A family-focused app developer can incorporate AI KidPainter as a feature for their app, offering parents a way to generate personalized coloring activities for their children based on specific interests, turning screen time into creative time.

· A therapist working with children could use AI KidPainter to generate calming or specific theme-based coloring pages as a therapeutic tool, providing a customizable and engaging activity for emotional expression.

4

Yolodex Persona Weaver

Author

hazzadous

Description

Yolodex Persona Weaver is a real-time customer enrichment API that transforms an email address into a rich JSON profile of publicly available data. It leverages OSINT (Open Source Intelligence) techniques, similar to those used in financial crime investigations, to compile information like name, country, age, occupation, company, social handles, and interests. This innovative approach focuses on providing accurate, up-to-date, and ethically sourced public information, offering a more transparent and developer-friendly alternative to existing data enrichment services.

Popularity

Points 16

Comments 4

What is this product?

Yolodex Persona Weaver is an API service that acts like a digital detective for your customers. When you provide an email address, it scans the vast public internet (think of it as a super-powered search engine for people) to gather publicly available details about that individual. The core innovation lies in its methodology: it borrows techniques from professional intelligence gathering to sift through and piece together fragmented public data, aiming for real-time accuracy and respecting privacy by only using information that's already out there. Unlike other services that might have outdated or questionable data, Yolodex focuses on transparently presenting verifiable public facts. So, if you need to understand who you're interacting with beyond just an email, this API can build a comprehensive, albeit public, digital persona for them.

How to use it?

Developers can integrate Yolodex Persona Weaver into their applications with ease. It's a single API endpoint that accepts a POST request with a JSON payload containing an email address. For immediate testing without authentication, you can use a simple curl command. For programmatic use, you would typically make an HTTP POST request from your backend code or even a frontend application (with appropriate security considerations) to the `api.yolodex.ai/api/v1/email-enrichment` endpoint. The API responds with a JSON object containing the enriched profile. This is useful for scenarios like personalizing user experiences, verifying user information during onboarding, or enriching CRM data without requiring users to manually input extensive details. The pricing is pay-per-profile, meaning you only pay if information is found, making it cost-effective for testing and for projects with fluctuating data needs.
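
The request described above might look like the following sketch, using only the Python standard library. Several details are assumptions: the `https://` scheme, the JSON field name `email`, and the helper names `build_enrichment_request` and `enrich`. The endpoint path is the one given in the text; consult the Yolodex documentation for the actual request and response schema.

```python
import json
import urllib.request

# Endpoint path from the article; the https scheme is assumed.
ENDPOINT = "https://api.yolodex.ai/api/v1/email-enrichment"


def build_enrichment_request(email: str) -> urllib.request.Request:
    """Build a POST request carrying the email address as JSON."""
    # The field name "email" is an assumption, not a documented schema.
    payload = json.dumps({"email": email}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def enrich(email: str, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON profile from the response."""
    request = build_enrichment_request(email)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Separating request construction from sending makes the payload easy to inspect or unit test without a network call. In a real integration, `enrich` would run on the backend so that any credentials and the enriched data stay off the client.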

Product Core Function

· Email to Public Profile Enrichment: Automatically compiles a JSON profile from public data sources when given an email address. This helps developers quickly understand their users or leads by aggregating scattered public information, saving significant manual research time.

· Real-time Data Retrieval: Gathers the most current publicly available information, ensuring that the insights provided are relevant and up-to-date. This is crucial for making timely business decisions and avoiding errors caused by stale data, like reaching out to inactive contacts.

· Ethical OSINT Methodology: Utilizes Open Source Intelligence techniques that focus solely on publicly shared information, avoiding the collection of private or dubious data. This ensures compliance with privacy best practices and builds trust with users, as their private information is not being unnecessarily accessed or sold.

· Transparent and Granular Pricing: Offers a pay-per-enriched-profile model, where you are only charged if data is successfully retrieved. This eliminates the risk of paying for incomplete or empty profiles and provides a clear, predictable cost structure, making it financially accessible for projects of all sizes.

· Simplified API Integration: Provides a straightforward, single API endpoint with easy-to-understand request and response formats. This minimizes the technical overhead for developers, allowing them to implement customer enrichment quickly without complex setup or authentication processes.

Product Usage Case

· Sales Lead Qualification: A sales team can input a prospect's email into their CRM, which then uses Yolodex to enrich the lead's profile with their company, role, and interests. This allows the sales representative to tailor their outreach and understand the prospect's potential needs more effectively, increasing the chances of a successful conversion.

· User Onboarding Personalization: A web application can, with user consent, use Yolodex to retrieve basic public information like name and general interests after a user signs up with an email. This data can be used to personalize the user's initial experience within the application, making it feel more tailored and engaging from the start.

· Content Recommendation Engine: A media platform can enrich user profiles with publicly available interests detected via their email. This enriched data can then be used to suggest more relevant articles, videos, or products, leading to higher user engagement and satisfaction.

· Fraud Detection Enhancement: Although the service covers only public data, enrichment can still add context. For example, if the public information tied to a user's email seems inconsistent with their stated identity or application behavior, it can serve as an additional signal for potential fraud investigations, complementing other security measures.

5

AIJobMapper

Author

kalil0321

Description

An interactive map visualizing job openings at leading AI companies worldwide. This project uses data scraped from Applicant Tracking Systems (ATS) and a natural-language interface powered by a small LLM that lets users intuitively filter and explore job opportunities. The core innovation lies in its ability to transform raw job data into an easily digestible visual format with intelligent search capabilities.

Popularity

Points 14

Comments 4

What is this product?

AIJobMapper is a dynamic, web-based map that pinpoints where top AI companies are hiring globally. It addresses the challenge of finding AI-specific job roles across different geographies and company types. The project scrapes job postings from various ATS providers and uses SearXNG to discover companies and their job opportunities, amassing a substantial dataset. The standout feature is a built-in LLM that lets users ask questions in plain English, such as 'show me research roles in Europe' or 'filter for remote software engineering positions,' and translates them into specific map filters. You don't need to be a data wizard or a coding expert to find the AI job that's right for you.
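
One plausible way to turn a plain-English query into map filters is to have the LLM emit a small structured filter and then apply it to the job records. The sketch below covers only the second half of that idea. The `Job` and `JobFilter` fields, the JSON shape, and the sample data are all hypothetical and are not taken from the project's repository.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Job:
    title: str
    company: str
    region: str
    remote: bool


@dataclass
class JobFilter:
    # Hypothetical filter fields an LLM could be asked to fill in.
    keyword: Optional[str] = None
    region: Optional[str] = None
    remote: Optional[bool] = None


def filter_from_llm_json(text: str) -> JobFilter:
    """Parse the (assumed) JSON an LLM returns for a user query."""
    data = json.loads(text)
    return JobFilter(
        keyword=data.get("keyword"),
        region=data.get("region"),
        remote=data.get("remote"),
    )


def apply_filter(jobs: List[Job], f: JobFilter) -> List[Job]:
    """Keep only the jobs that satisfy every field set on the filter."""
    def matches(job: Job) -> bool:
        if f.keyword and f.keyword.lower() not in job.title.lower():
            return False
        if f.region and job.region.lower() != f.region.lower():
            return False
        if f.remote is not None and job.remote != f.remote:
            return False
        return True

    return [job for job in jobs if matches(job)]


# Made-up sample records for illustration.
jobs = [
    Job("Research Scientist", "ExampleAI", "Europe", False),
    Job("Software Engineer", "ExampleAI", "Europe", True),
    Job("Research Engineer", "SampleLabs", "North America", True),
]
# What an LLM might return for "show me research roles in Europe".
europe_research = apply_filter(
    jobs, filter_from_llm_json('{"keyword": "research", "region": "Europe"}')
)
```

Keeping the LLM's job limited to producing a small, validated filter object means the map itself can be filtered with ordinary deterministic code, which suits a small model.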

How to use it?

Developers can explore the live demo at map.stapply.ai to discover AI job trends. For those interested in the data itself, the raw job data is available on GitHub at github.com/stapply-ai/jobs. Integrations could involve using the data as a foundation for more specialized job boards, career counseling tools, or market research dashboards. Developers can also contribute to the project by improving data collection, enhancing the LLM's filtering capabilities, or adding new visualization features.

Product Core Function

· Global AI Job Mapping: Visualizes AI job openings on an interactive map, allowing users to see hiring hotspots worldwide. This helps understand where the AI industry is growing and where talent is in demand, providing insights for career planning or relocation decisions.

· Natural Language Job Filtering: Enables users to search for jobs using conversational language (e.g., 'AI research jobs in California'). This democratizes access to job data, making it easier for anyone to find relevant opportunities without complex search queries, saving time and frustration.

· Company & Role Specific Data: Collects and categorizes job data from top AI companies, offering insights into specific roles and hiring patterns within the AI sector. This helps job seekers target their applications more effectively and understand the landscape of available positions.

· Data Scraping & Aggregation: Employs tools like SearXNG to efficiently discover and collect job postings from various ATS providers, creating a comprehensive dataset. This ensures a broad overview of the AI job market, providing a more complete picture than manual searches.

· Live Interactive Visualization: Presents the job data through a user-friendly map interface using Vite, React, and Mapbox. This offers an engaging and intuitive way to interact with complex data, making it easier to spot trends and opportunities at a glance.

Product Usage Case

· A recent AI graduate wants to find remote machine learning engineering roles. They can use AIJobMapper to type 'remote machine learning engineer jobs' and immediately see available positions worldwide, rather than sifting through hundreds of generic job boards.

· A data scientist is considering relocating to Europe for AI research opportunities. They can use the map to filter for 'AI research roles in Europe' and visually identify cities with a high concentration of such jobs, aiding their decision-making process.

· A startup founder needs to understand where top AI talent is being hired. By exploring the map, they can identify regions with significant hiring activity in areas relevant to their startup's focus, informing their talent acquisition strategy.

· A career counselor wants to advise clients on emerging AI career paths. They can use AIJobMapper to showcase the breadth of AI job opportunities and highlight in-demand roles and locations, providing concrete examples and data to support their guidance.

6

Contextual Chatbot Assistant

Author

teemingdev

Description

This project is an LLM-powered chatbot designed to act as a 'receptionist' for websites. It addresses the common problem of missed leads and delayed responses on websites by providing instant, AI-generated answers based on the website's own content. It captures user intent and collects lead information, ensuring no potential customer is overlooked, even outside of business hours or during periods of intense developer focus.

Popularity

Points 11

Comments 6

What is this product?

This is an intelligent chatbot for your website that leverages Large Language Models (LLMs) to provide instant, context-aware answers to visitor questions. Unlike generic chatbots, it's trained on your specific website content (like FAQs, documentation, or pricing pages). This means it can offer precise and relevant information, acting as a virtual receptionist. The innovation lies in its ability to understand your content and respond intelligently, ensuring visitors get the information they need immediately, thereby preventing lead loss due to slow responses.
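
The article does not say how the chatbot grounds its answers in site content. One common approach is to retrieve the most relevant passage and place it in the prompt, sketched below with a deliberately simple word-overlap score. The functions `tokenize`, `best_passage`, and `build_prompt`, the prompt wording, and the sample passages are all illustrative assumptions, and a real system would more likely use embeddings for retrieval.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_passage(question: str, passages: List[str]) -> str:
    """Return the passage sharing the most words with the question."""
    question_words = tokenize(question)
    return max(passages, key=lambda p: len(question_words & tokenize(p)))


def build_prompt(question: str, passages: List[str]) -> str:
    """Combine the best-matching site content with the visitor's question."""
    context = best_passage(question, passages)
    return (
        "Answer using only this website content:\n"
        f"{context}\n\n"
        f"Visitor question: {question}\n"
    )


# Made-up site content for illustration.
site_passages = [
    "Our Pro plan costs 20 dollars per month.",
    "Shipping takes 3 to 5 business days.",
]
prompt = build_prompt("How long does shipping take?", site_passages)
```

The resulting prompt would then be sent to whichever LLM the chatbot uses. Restricting the model to the retrieved passage is what keeps answers tied to the site's own FAQs, documentation, or pricing pages.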

How to use it?

Developers can integrate this chatbot into their websites by connecting their site's content sources. Once connected, the chatbot is configured to understand and draw information from specified pages. Visitors will interact with it through a chat widget on the website. For developers, this means a simple setup process to enhance user engagement and lead capture without needing to manually staff a customer support channel 24/7. It's designed for easy integration, allowing developers to quickly deploy a smart assistant.

Product Core Function

· LLM-powered contextual answering: Provides instant, accurate answers by understanding and utilizing the provided website content. This is valuable because it ensures visitors get immediate help, improving their experience and your website's responsiveness.

· Lead capture on intent: Identifies when a visitor shows interest and collects their contact details. This is valuable for businesses as it automates lead generation, ensuring potential customers aren't lost even if they leave the site.

· Lightweight and simple setup: Designed for easy integration with minimal configuration. This is valuable for developers who want a quick solution to improve user interaction and lead generation without complex development cycles.

· 24/7 availability: Responds to queries instantly at any time. This is valuable because it means no leads are missed due to time zone differences or off-hours, providing consistent support.

· Content personalization: Tailors responses based on your specific website's information. This is valuable as it offers more relevant and accurate information than generic chatbots, building trust and credibility.

Product Usage Case

· A SaaS company with high website traffic but low conversion rates integrates the chatbot, trained on its product documentation and pricing pages. The chatbot answers pre-sales questions instantly and captures leads who might otherwise have left, increasing demo requests and sign-ups.

· A personal blog author who often misses questions sent through the site's contact form deploys the chatbot, trained on their blog posts. The chatbot answers common questions about the content, freeing up the author's time and engaging readers more effectively.

· An e-commerce store looking to reduce cart abandonment integrates the chatbot, trained on its product FAQs and shipping information. The chatbot addresses customer concerns about delivery and product details in real time, reducing abandoned carts and increasing completed purchases.

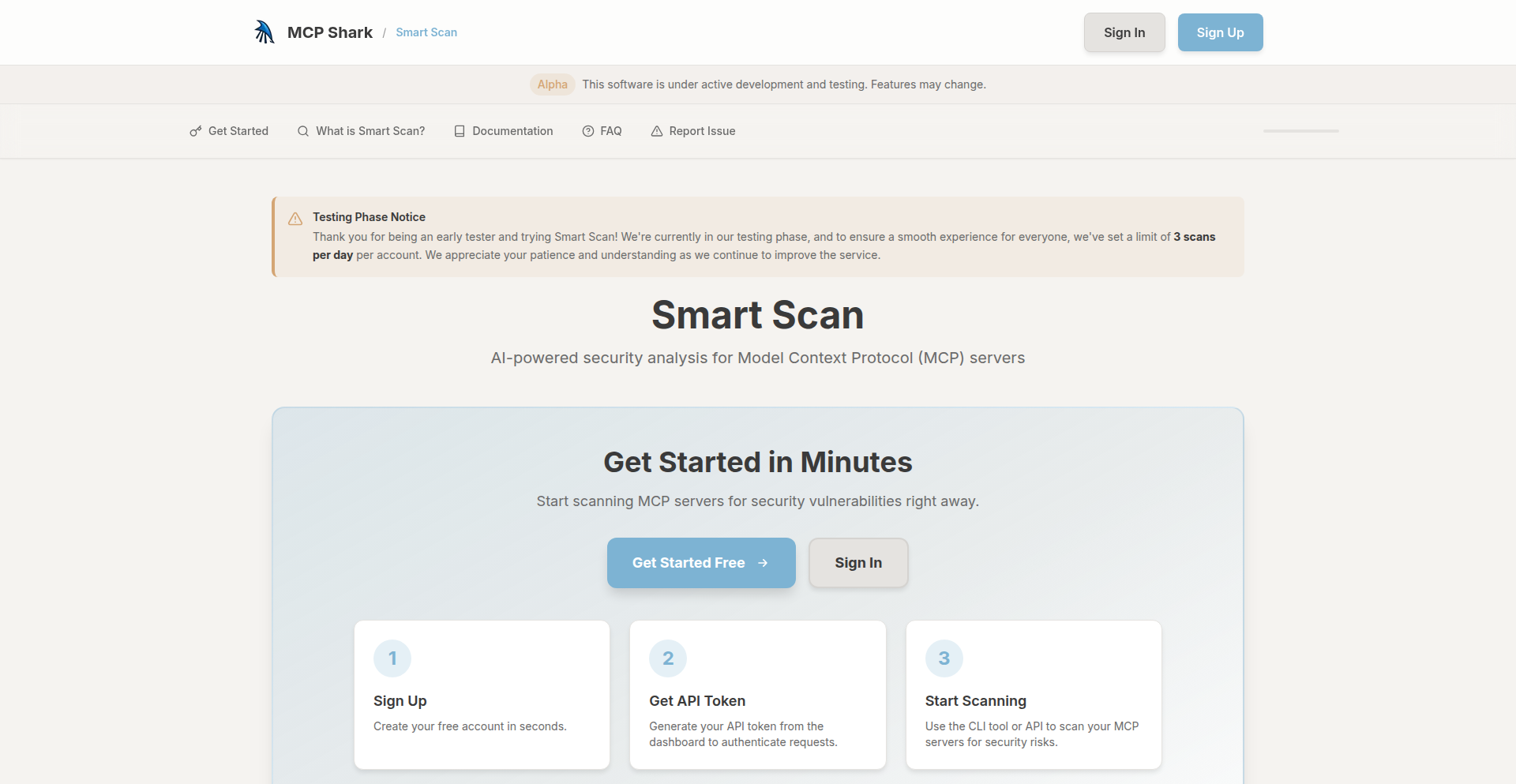

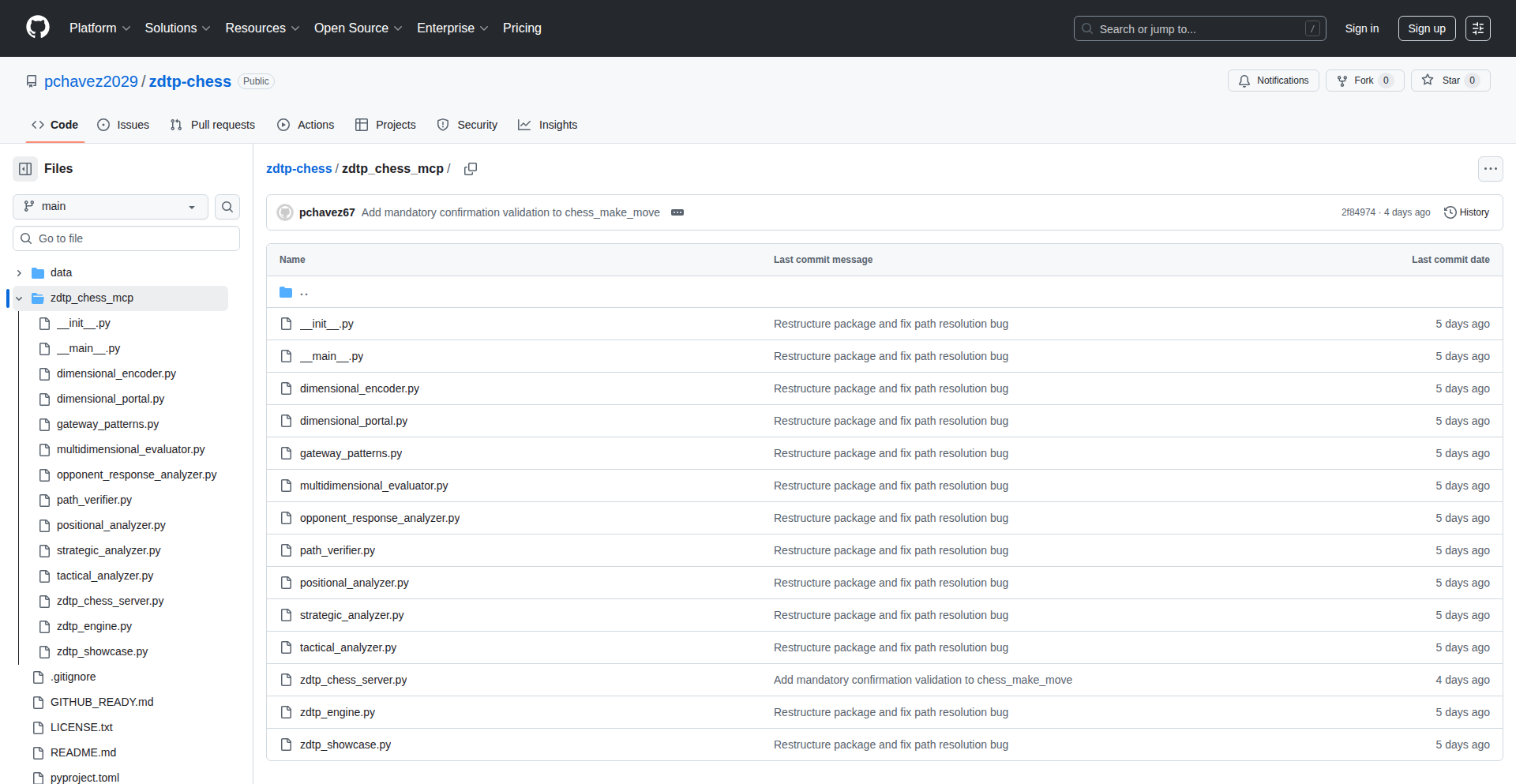

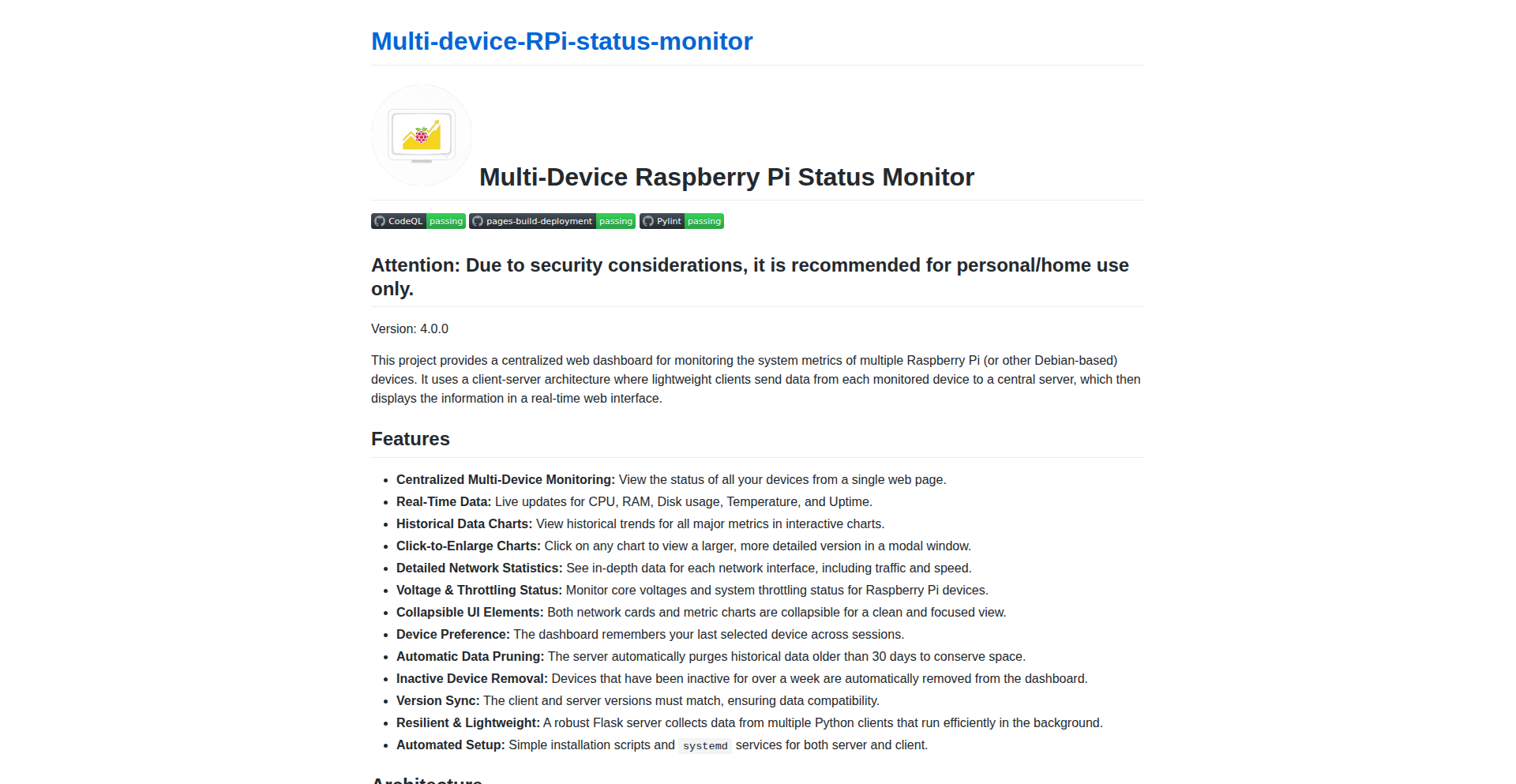

7

Smart Scan CLI & Dashboard

Author

o4isec

Description

Smart Scan is a developer-centric toolkit for integrating MCP security scans into development workflows. It offers a REST API for programmatic access, a dashboard for visualizing scan results, and CI/CD integration tools to automate security checks. The innovation lies in making complex MCP security scanning accessible and actionable for developers.

Popularity

Points 15

Comments 0

What is this product?

Smart Scan is a set of tools designed to bring the power of MCP security scanning directly into the hands of developers. Instead of treating security as an afterthought, Smart Scan allows developers to easily integrate security checks into their everyday coding and deployment processes. It does this by providing a user-friendly REST API that allows any application or script to trigger and manage MCP scans, a visual dashboard to make sense of the scan outcomes, and specific tools to plug into Continuous Integration/Continuous Deployment (CI/CD) pipelines. This means developers can get immediate feedback on security vulnerabilities without needing to be security experts themselves, accelerating the development cycle while improving overall application security.

How to use it?

Developers can integrate Smart Scan into their workflows in several ways. For programmatic control, they can use the provided REST API to trigger scans from their custom scripts, build tools, or even within their application code. For automated security checks, they can integrate Smart Scan's CI/CD tools into their existing pipelines (e.g., Jenkins, GitLab CI, GitHub Actions). When a change is pushed or a build is triggered, Smart Scan can automatically run security scans. The results are then accessible via the dashboard for easy review and remediation. This essentially allows developers to 'shift left' on security, addressing potential issues earlier in the development lifecycle.
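
The CI/CD step described above usually ends with a gate that fails the build when a scan reports serious findings. The sketch below shows such a gate in isolation. The report shape (a `findings` list with `id` and `severity` fields), the severity names, and the function `gate` are assumptions for illustration and do not reflect Smart Scan's actual API or output format.

```python
# Hypothetical severity ranking; unknown severities are treated as lowest.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def gate(scan_report: dict, fail_on: str = "critical") -> int:
    """Return a process exit code: 1 if any finding meets the threshold, else 0."""
    threshold = SEVERITY_RANK[fail_on]
    blocking = [
        finding
        for finding in scan_report.get("findings", [])
        if SEVERITY_RANK.get(finding.get("severity", "low"), 0) >= threshold
    ]
    for finding in blocking:
        print(f"BLOCKING {finding.get('severity')}: {finding.get('id')}")
    return 1 if blocking else 0


# Made-up report for illustration.
example_report = {
    "findings": [
        {"id": "EXAMPLE-001", "severity": "low"},
        {"id": "EXAMPLE-002", "severity": "critical"},
    ]
}
exit_code = gate(example_report)
```

In a pipeline, a script would fetch the scan result, pass it to `gate`, and call `sys.exit` with the returned code. A nonzero exit code is what makes Jenkins, GitLab CI, or GitHub Actions mark the job as failed and block the merge.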

Product Core Function

· REST API for programmatic scan triggering and management: Enables developers to automate security checks from any scripting language or application, integrating security into custom workflows and development tools. This is valuable because it allows for flexible automation and integration beyond standard CI/CD pipelines.

· Web-based Dashboard for scan result visualization: Provides a clear, intuitive interface to view, filter, and analyze security scan outcomes, making it easy for developers and teams to understand vulnerabilities and their impact. This is valuable for quickly identifying and prioritizing security issues without deep security expertise.

· CI/CD Integration tools: Pre-built connectors and configurations for popular CI/CD platforms, allowing seamless inclusion of MCP security scans into automated build, test, and deployment processes. This is valuable for ensuring security is a consistent part of the release process.

· Automated vulnerability reporting: Generates reports detailing identified security weaknesses, often with severity levels and potential remediation steps. This is valuable for providing actionable intelligence to developers for fixing issues.

· Configuration management for scan profiles: Allows developers to define and save specific scanning parameters and policies, ensuring consistency and tailoring scans to project needs. This is valuable for maintaining standardized security checks across different projects or environments.

Product Usage Case

· A developer pushes a new feature branch to a Git repository. The CI/CD pipeline automatically triggers a Smart Scan. If the scan detects critical vulnerabilities, the build fails, preventing insecure code from being merged into the main branch. This solves the problem of accidentally introducing security flaws into production.

· A security team wants to continuously monitor their deployed microservices for compliance with MCP security standards. They use Smart Scan's REST API to schedule daily scans of their running applications and view the aggregated results on the dashboard. This helps them maintain a strong security posture over time.

· A development team is working on a new web application and wants to ensure it's secure from the start. They integrate Smart Scan into their local development environment, allowing them to run scans on demand before committing code. This empowers developers to fix security issues early, reducing the cost and effort of remediation later.

· A company needs to regularly audit its software supply chain for potential risks. Smart Scan's API can be used to trigger scans on third-party libraries and dependencies as part of a broader security assessment process, identifying potential vulnerabilities in the components being used.
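
The build-failing behavior in the first usage case can be sketched as a small gate that turns scan findings into an exit code. The findings format and the four-level severity scale are assumptions for illustration, not Smart Scan's documented output.

```python
SEVERITY_ORDER = ["low", "medium", "high", "critical"]  # assumed severity scale


def exit_code_for(findings: list, fail_at: str = "critical") -> int:
    """Return 1 if any finding is at or above `fail_at`, otherwise 0."""
    threshold = SEVERITY_ORDER.index(fail_at)
    for finding in findings:
        if SEVERITY_ORDER.index(finding["severity"]) >= threshold:
            return 1
    return 0


# Hypothetical findings, shaped the way a scan result might be reported.
findings = [
    {"id": "F-1", "severity": "medium"},
    {"id": "F-2", "severity": "critical"},
]
```

In a pipeline step, calling `sys.exit(exit_code_for(findings))` would make a non-zero result fail the build.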

8

Axe: Concurrency-First Systems Language

Author

death_eternal

Description

Axe is a systems programming language designed from the ground up for concurrency and parallelism. It tackles the complexity of modern multi-core processors by making parallel execution a core language feature, not an afterthought. The language emphasizes memory safety and type guarantees without relying on a garbage collector, opting for an arena-based allocator for speed and predictability. This means developers can build highly performant and reliable software for concurrent environments more easily.

Popularity

Points 13

Comments 2

What is this product?

Axe is a novel systems programming language focused on making concurrent and parallel programming intuitive and safe. Unlike many languages where you have to bolt on concurrency libraries, Axe has primitives like 'parallel' and 'local' built directly into its syntax. It uses an 'arena-based allocator', which works like a dedicated memory manager for a specific task: it hands out memory from one region and reclaims all of it at once when the task is done. That makes allocation fast and memory usage predictable, without the overhead of a traditional garbage collector. This approach ensures strong guarantees about memory and types, preventing common bugs and improving performance. So, what's the benefit for you? You get to build faster, safer, and more robust applications, especially those that need to handle many things happening at once.
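
To make the arena idea concrete, here is a toy bump allocator. It is written in Python purely as an illustration of the general technique and says nothing about how Axe implements its allocator; the class and method names are invented for this sketch.

```python
class Arena:
    """Toy bump allocator: hand out slices of one buffer, free them all at once."""

    def __init__(self, capacity: int) -> None:
        self._buffer = bytearray(capacity)
        self._offset = 0  # index of the next free byte

    def alloc(self, size: int) -> memoryview:
        """Reserve `size` bytes by advancing the offset; no per-object bookkeeping."""
        if size < 0 or self._offset + size > len(self._buffer):
            raise MemoryError("arena exhausted")
        start = self._offset
        self._offset += size
        return memoryview(self._buffer)[start:start + size]

    def reset(self) -> None:
        """Release every allocation in a single step."""
        self._offset = 0

    @property
    def used(self) -> int:
        return self._offset


arena = Arena(capacity=64)
first = arena.alloc(16)
second = arena.alloc(8)
first[:5] = b"hello"
used_before_reset = arena.used
arena.reset()  # everything allocated above is reclaimed together
```

Allocation is just an offset bump and freeing is a single assignment, which is where the speed and predictability come from.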

How to use it?

Developers can use Axe by writing code in its distinct syntax, which features explicit constructs for parallel execution. For instance, you can define a block of code to run in parallel using the `parallel` keyword. The `local` keyword within a parallel block signifies that the enclosed variables and operations are specific to that parallel execution context. The project's example shows how to create a local arena for memory management within a parallel thread. Integration would involve using the Axe compiler to transform your Axe source code into executable programs. This is particularly useful for scenarios requiring high throughput and responsiveness, such as server-side applications, game engines, or embedded systems where efficient resource utilization is critical. This allows you to design applications that can truly leverage modern multi-core processors.
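
Since Axe's exact syntax is not reproduced here, the following is a Python analogy of the pattern described above: work is split into chunks that run concurrently, and each task keeps its scratch state local. The function names are invented for this sketch, and in standard CPython threads interleave rather than run CPU-bound work in parallel, so this shows the structure and not the speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk: list) -> int:
    """Work on one chunk using only task-local state."""
    scratch = []  # local to this call, so no other task can touch it
    for value in chunk:
        scratch.append(value * value)
    return sum(scratch)  # scratch is dropped as a whole when the task returns


def parallel_sum_of_squares(values: list, workers: int = 4) -> int:
    """Split the input, process the chunks concurrently, then combine the results."""
    size = max(1, -(-len(values) // workers))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


total = parallel_sum_of_squares(list(range(10)))
```

Because no task shares mutable state with another, there is nothing to lock, which is the property that language-level `local` constructs aim to guarantee.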

Product Core Function

· First-class parallel and concurrent constructs: Built-in language features for easier management of simultaneous tasks, leading to more efficient use of multi-core processors and improved application responsiveness.

· Strong static memory and type guarantees: The language enforces strict rules at compile time to prevent common memory errors (like dangling pointers) and type mismatches, resulting in more reliable software with fewer runtime crashes.

· Arena-based memory allocation: A fast and predictable memory management system that avoids the overhead of a garbage collector, enabling quick allocation and consistent performance for memory-intensive operations.

· Self-hosted compiler: The compiler can compile a significant portion of its own source code, demonstrating the language's maturity and providing a robust toolchain for developers to build their own applications efficiently.

Product Usage Case

· Developing high-performance server backends: By utilizing the built-in parallelism, developers can create servers that handle a large number of requests concurrently, leading to significantly better throughput and lower latency for users.

· Building real-time data processing pipelines: The language's focus on efficiency and concurrency makes it ideal for systems that need to ingest and process data streams in real-time without performance bottlenecks.

· Creating responsive user interfaces: For applications with complex UIs, parallel execution can be used to offload heavy computations, ensuring the UI remains smooth and interactive even during intensive tasks.

· Developing embedded systems with limited resources: The predictable memory management and focus on performance allow developers to build efficient software for devices with constrained memory and processing power, where garbage collection is often not feasible.

9

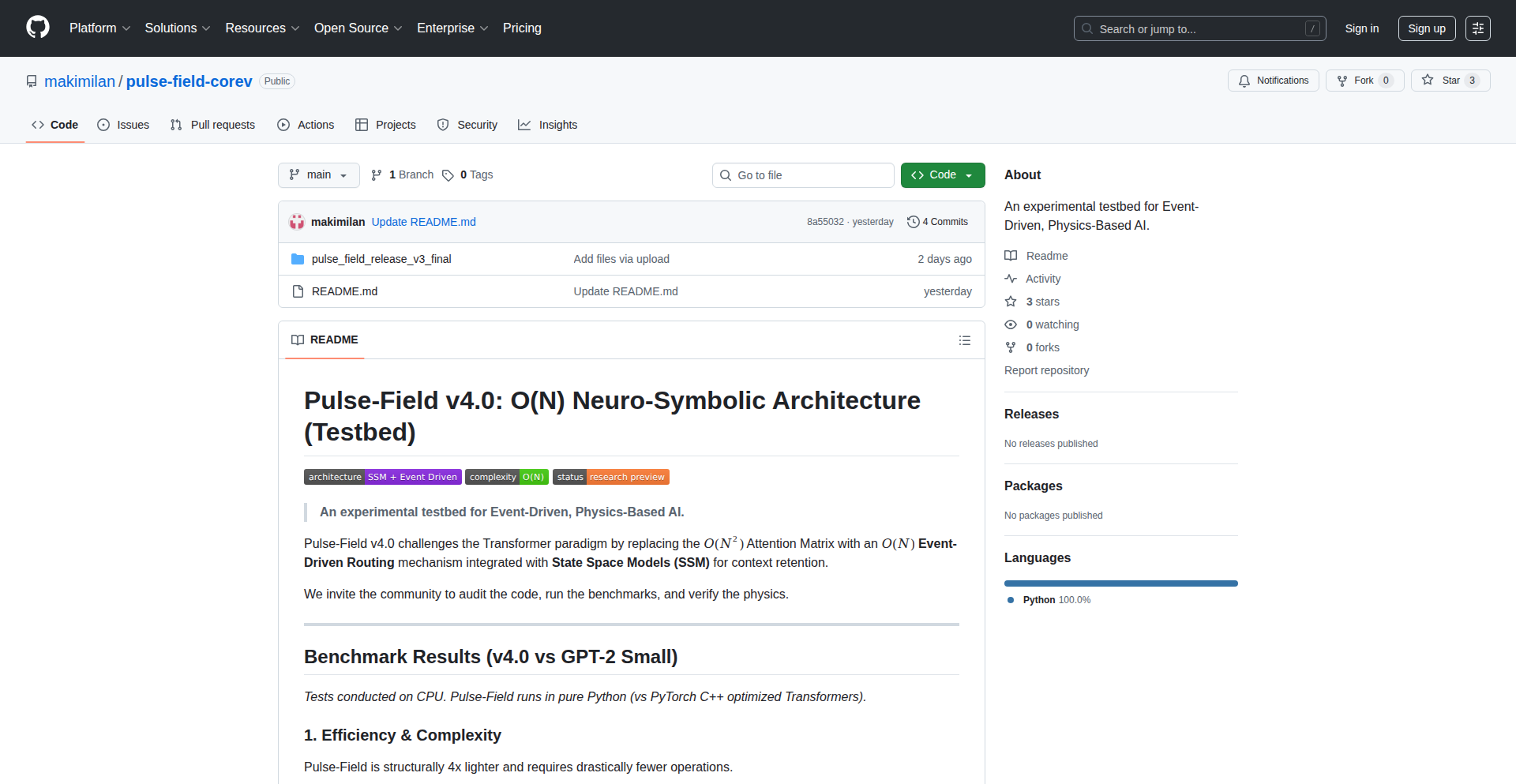

Pulse-Field AI Engine

Author

makimilan

Description

Pulse-Field AI Engine is an AI architecture designed for speed and efficiency. The author reports O(N) complexity, meaning its cost scales linearly with input size, and a 12x performance advantage over traditional Transformer models. This innovation offers a radical shift in how we process large datasets for AI tasks, making complex computations dramatically faster and more accessible.

Popularity

Points 6

Comments 8

What is this product?

Pulse-Field AI Engine is a novel AI architecture that rethinks how neural networks process sequential data. Instead of relying on the attention mechanisms found in Transformers, which have quadratic complexity (O(N^2)) and become slow with larger inputs, Pulse-Field uses an approach that exhibits linear complexity (O(N)). Think of it like this: Transformers need to compare every piece of information with every other piece, so doubling the input roughly quadruples the work. Pulse-Field, on the other hand, processes information in a more streamlined, step-by-step manner, similar to a flowing pulse, making it significantly faster and more memory-efficient. This translates to AI models that can handle much larger datasets or operate much quicker for the same dataset size.
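
The difference between the two growth rates can be shown with a small counting sketch. The running-state scan below is a generic linear-time pattern (an exponential moving average) chosen for illustration; it is not Pulse-Field's actual algorithm, which the source does not describe.

```python
def pairwise_interactions(n: int) -> int:
    """Count the comparisons when every token is scored against every token."""
    count = 0
    for _ in range(n):
        for _ in range(n):
            count += 1
    return count


def running_state_scan(values: list, decay: float = 0.5) -> tuple:
    """Single pass that carries one running state: one update per token."""
    state, outputs, steps = 0.0, [], 0
    for value in values:
        state = decay * state + (1.0 - decay) * value
        outputs.append(state)
        steps += 1
    return outputs, steps
```

Doubling the sequence length from 8 to 16 takes the pairwise count from 64 to 256, while the scan goes from 8 steps to 16.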

How to use it?

Developers can integrate Pulse-Field into their AI workflows by leveraging its optimized implementation. It can be used as a drop-in replacement for Transformer layers in existing deep learning models for tasks like natural language processing (NLP), time-series analysis, or signal processing. The library provides APIs for defining model architectures and training them on custom datasets. For example, if you're building a chatbot that needs to understand long conversations, or a system that analyzes streaming sensor data, Pulse-Field can dramatically speed up the model's ability to learn from and process that data, leading to real-time responsiveness and lower computational costs.

Product Core Function

· Linear Complexity Processing (O(N)): Enables AI models to scale efficiently with data size, meaning performance doesn't degrade drastically as inputs get larger, making it ideal for big data applications. For you, this means faster training and inference on large datasets without a proportional increase in computing resources.

· Sub-Quadratic Computational Cost: Significantly reduces the computational overhead compared to Transformer architectures, leading to faster processing times. For you, this translates to quicker model development cycles and the ability to deploy more complex models on less powerful hardware.

· Memory Efficiency: Requires less memory to process data compared to attention-based models, allowing for the handling of longer sequences or larger batch sizes. For you, this means avoiding out-of-memory errors and fitting larger models or datasets into available memory.

· Customizable Architecture: Offers flexibility in building custom neural network designs by allowing developers to integrate Pulse-Field layers within broader model architectures. For you, this means the freedom to experiment and tailor AI solutions precisely to your specific problem without being locked into rigid structures.

Product Usage Case

· Natural Language Processing (NLP): Use Pulse-Field to build significantly faster language models for tasks like text summarization, sentiment analysis, or machine translation, especially for processing lengthy documents or large corpora. This allows for real-time analysis of user feedback or faster generation of content.

· Time-Series Analysis: Apply Pulse-Field to analyze long sequences of time-series data, such as stock market trends, sensor readings from IoT devices, or medical monitoring signals, enabling quicker anomaly detection or prediction. This means you can monitor systems in near real-time and react to changes much faster.

· Signal Processing: Utilize Pulse-Field for processing audio or other signal data where temporal dependencies are crucial, achieving faster feature extraction and classification. This could be used in voice assistants for quicker command recognition or in industrial monitoring for faster fault detection.

· Genomics and Bioinformatics: Employ Pulse-Field for analyzing long DNA or protein sequences, accelerating research in areas like disease prediction or drug discovery. This speeds up the discovery process by allowing researchers to sift through vast genetic datasets more rapidly.

10

OntologyGraph Weaver

Author

cybermaggedon

Description

This project is an ontology-driven knowledge graph extraction system. It leverages formal ontologies (for example, OWL ontologies, which are often written in the Turtle syntax) to guide the process of building knowledge graphs from documents. Unlike generic approaches that rely solely on an LLM's interpretation, this system uses a predefined domain model (the ontology) to ensure the extracted information accurately reflects specific semantics, making it invaluable for domains with strict requirements like healthcare or finance. The core innovation lies in using ontologies to constrain LLM-based information extraction, ensuring semantic accuracy and domain relevance. So, this is useful for developers and organizations needing to build highly structured and semantically rich knowledge graphs from text, particularly in specialized fields, ensuring the graph's content aligns with established domain knowledge.

Popularity

Points 13

Comments 0

What is this product?

OntologyGraph Weaver is a system that automatically constructs knowledge graphs from text by strictly adhering to a predefined domain model expressed as an ontology (e.g., an OWL ontology written in the Turtle syntax). Think of an ontology as a detailed blueprint that defines what types of 'things' exist in a specific field and how they can relate to each other. This project uses that blueprint to tell Large Language Models (LLMs) exactly what information to look for and how to connect it. The key technical insight is using the ontology not just as background knowledge, but as a strict guide for the LLM's extraction process. This ensures that the resulting knowledge graph is not a generalized interpretation by the LLM, but a precise representation of domain-specific facts and relationships. This solves the problem of generic knowledge graphs missing critical domain semantics, which is crucial for applications needing high fidelity and accuracy. So, this is useful because it provides a way to build knowledge graphs that are deeply aligned with your specific domain's rules and concepts, ensuring higher accuracy and relevance than general-purpose methods.

How to use it?

Developers can use OntologyGraph Weaver by providing their domain ontology and pointing the system to their documents. The system then uses the ontology to guide an LLM in identifying and extracting entities (like people, organizations, or medical conditions) and their relationships (like 'works for' or 'treats') from the text. This extracted information is then validated against the ontology's schema before being stored in a graph database. The system is built on Apache Pulsar for scalability and supports various graph databases like Memgraph and FalkorDB, allowing for flexible deployment either locally or in the cloud. Integration would typically involve setting up the ontology, configuring document sources, and choosing a target graph backend. So, this is useful for developers who need to build structured knowledge representations from unstructured text within a specific domain, offering a robust and scalable solution.
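
The validation step described above can be sketched with a toy ontology held in a dictionary. The structure, the class and property names, and the exact-match type check are all assumptions for illustration; a real OWL ontology also carries subclass hierarchies and other axioms that this sketch ignores.

```python
# Toy ontology: classes plus, for each property, the allowed (domain, range).
ONTOLOGY = {
    "classes": {"Drug", "Disease", "Person", "Organization"},
    "properties": {
        "treats": ("Drug", "Disease"),
        "worksFor": ("Person", "Organization"),
    },
}


def validate_triple(triple: dict, ontology: dict = ONTOLOGY) -> list:
    """Return a list of schema violations; an empty list means the triple is valid."""
    prop = triple["predicate"]
    if prop not in ontology["properties"]:
        return [f"unknown property: {prop}"]
    domain, range_ = ontology["properties"][prop]
    errors = []
    if triple["subject_type"] != domain:
        errors.append(f"{prop} expects subject of type {domain}")
    if triple["object_type"] != range_:
        errors.append(f"{prop} expects object of type {range_}")
    return errors


# Hypothetical LLM output: one well-formed triple and one that breaks the schema.
good = {"subject": "aspirin", "subject_type": "Drug", "predicate": "treats",
        "object": "headache", "object_type": "Disease"}
bad = {"subject": "Dr. Lee", "subject_type": "Person", "predicate": "treats",
       "object": "headache", "object_type": "Disease"}
```

Only triples that come back with an empty error list would be written to the graph database.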

Product Core Function

· Ontology-guided text extraction: This function uses a formal ontology to direct an LLM in extracting entities and relationships from text. The value is in ensuring that the extracted information strictly conforms to predefined domain semantics, leading to more accurate and relevant knowledge graphs. This is applicable in scenarios where domain-specific accuracy is paramount.

· Schema-constrained LLM prompting: The system dynamically crafts prompts for the LLM based on the ontology's definitions. This ensures the LLM focuses on extracting information that aligns with the ontology's classes and properties. The value here is in increasing the precision of LLM extraction and reducing irrelevant or incorrect extractions, making it useful for applications requiring high data quality.

· Output validation against ontology schema: Before storing extracted data, this function verifies it against the ontology. This acts as a crucial quality control step, guaranteeing that the knowledge graph adheres to the defined domain model. The value is in maintaining data integrity and consistency within the knowledge graph, crucial for complex analytical tasks.

· Scalable knowledge graph construction: Built on Apache Pulsar, the system is designed to handle large volumes of data and complex extraction tasks efficiently. The value is in providing a robust and performant solution for building knowledge graphs at scale, enabling the processing of extensive document collections.

· Multiple graph backend support: The system can integrate with various graph databases (e.g., Memgraph, FalkorDB). The value is in offering flexibility to developers, allowing them to choose the graph database that best suits their existing infrastructure and specific performance needs.
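
Schema-constrained prompting, as listed above, might look roughly like the sketch below, which renders an ontology into extraction instructions. The prompt wording and the dictionary layout are invented for this example and are not taken from the project.

```python
def build_extraction_prompt(ontology: dict, text: str) -> str:
    """Render the ontology's classes and properties into extraction instructions."""
    lines = ["Extract facts from the text using ONLY this schema.", "Entity types:"]
    lines += [f"- {name}" for name in sorted(ontology["classes"])]
    lines.append("Relations (subject type -> object type):")
    for prop, (domain, range_) in sorted(ontology["properties"].items()):
        lines.append(f"- {prop}: {domain} -> {range_}")
    lines += ["Ignore anything the schema does not cover.", "Text:", text]
    return "\n".join(lines)


# Hypothetical two-class ontology used only for this demonstration.
ontology = {
    "classes": {"Drug", "Disease"},
    "properties": {"treats": ("Drug", "Disease")},
}
prompt = build_extraction_prompt(ontology, "Aspirin is commonly used for headaches.")
```

Because the prompt is generated from the ontology, changing the domain model changes what the LLM is asked to extract without touching the prompt code.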

Product Usage Case

· Building a healthcare knowledge graph: A pharmaceutical company could use OntologyGraph Weaver with a medical ontology (like SNOMED CT) to extract relationships between drugs, diseases, and patient demographics from clinical trial reports. This would solve the problem of generic LLMs misinterpreting complex medical terminology and relationships, providing a semantically accurate graph for drug discovery research. This is useful for accelerating research and identifying new therapeutic targets.

· Financial risk analysis: A financial institution could use OntologyGraph Weaver with a financial ontology (like FIBO) to extract relationships between companies, financial instruments, and regulatory events from news articles and financial statements. This addresses the challenge of LLMs failing to capture nuanced financial connections, enabling more precise risk assessment and compliance monitoring. This is useful for improving financial decision-making and reducing regulatory exposure.

· Intelligence analysis: An intelligence agency could use OntologyGraph Weaver with a custom ontology defining entities like individuals, organizations, locations, and their connections to extract structured information from vast amounts of open-source intelligence (OSINT) data. This overcomes the difficulty of manually sifting through and connecting disparate pieces of information, providing a clear and actionable knowledge graph for threat assessment. This is useful for enhancing situational awareness and proactive threat detection.

11

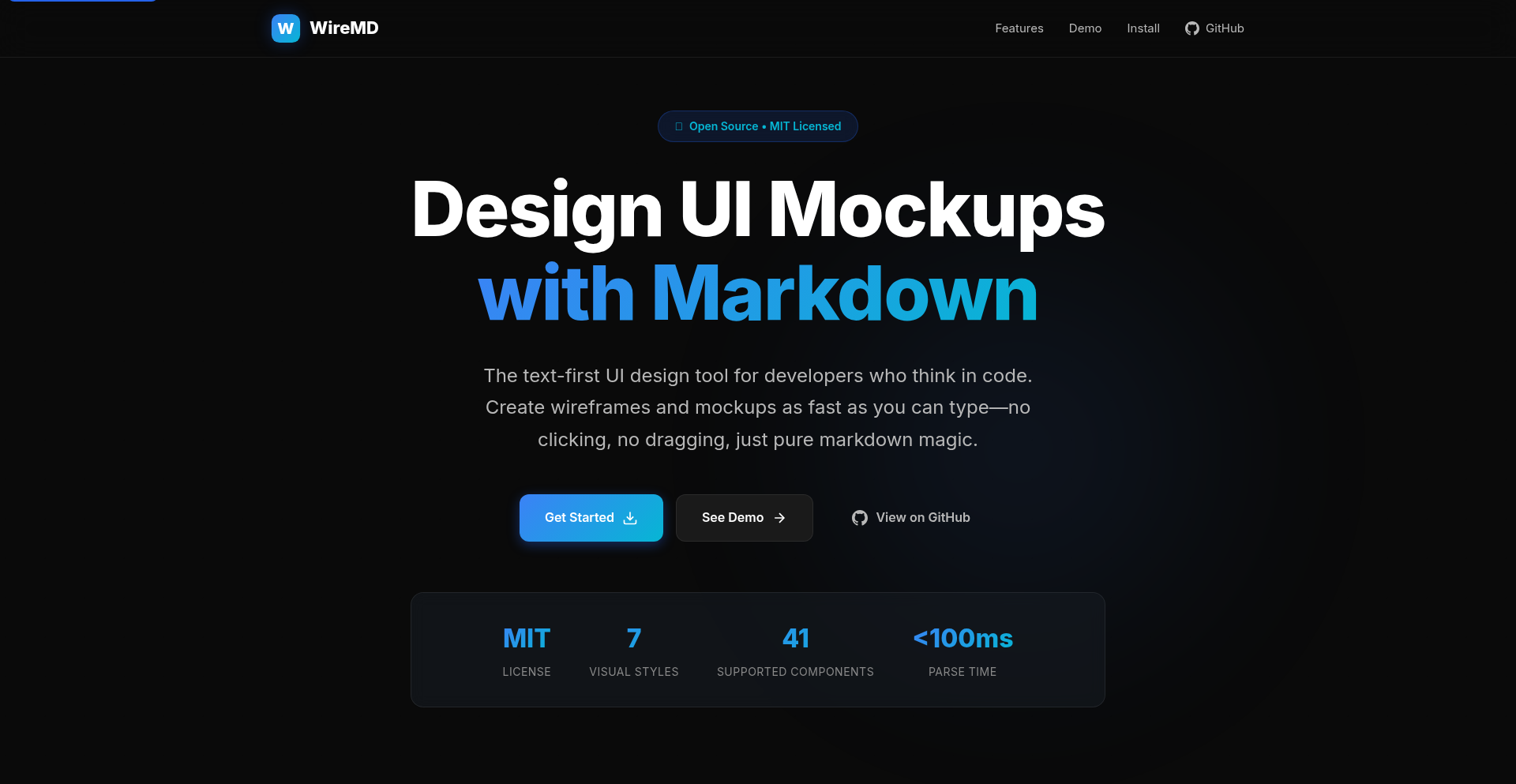

WireMD: Markdown-Powered UI Blueprint

Author

akonan

Description

WireMD is a text-first wireframing tool that allows designers and developers to create UI blueprints using Markdown. Its core innovation lies in treating UI designs as version-controlled, code-editable assets, directly addressable within a developer's existing workflow. It generates outputs in various formats like HTML, Tailwind CSS, and JSON, bridging the gap between ideation and implementation.

Popularity

Points 7

Comments 3

What is this product?

WireMD is a revolutionary tool that lets you design user interface (UI) wireframes using simple Markdown text. Instead of wrestling with complex graphical editors, you write code-like descriptions of your UI. The innovation here is that your UI designs become just like your application code: they can be tracked using version control systems (like Git), reviewed collaboratively in pull requests, and most importantly, edited directly within your favorite code or Markdown editor. This eliminates the friction of switching between different tools and keeps your design logic seamlessly integrated with your codebase.

How to use it?

Developers can use WireMD by creating a Markdown file (e.g., `design.md`) and describing their UI elements using a predefined syntax. For example, you could write a section like:

`# My App - Header`

`- **Logo** (image)`

`- **Navigation** (list: Home, About, Contact)`

`# My App - Main Content`

`- **Hero Section** (text: 'Welcome!', button: 'Learn More')`

WireMD then processes this Markdown to generate various output formats. You can integrate it into your development workflow by including it in your project's repository, generating HTML outputs for quick prototyping, or using the JSON output to feed into other UI generation tools or frameworks. It's about designing as fast as you can type.
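
To show how a text description like this could become structured JSON, here is a toy parser for the snippet above. It only handles the two patterns that appear in that example (headings and bold list items with a parenthesized spec); it is not WireMD's parser, and the real syntax and JSON shape may differ.

```python
import json
import re

# Matches lines such as: - **Logo** (image)
ITEM = re.compile(r"^- \*\*(?P<name>.+?)\*\* \((?P<spec>.*)\)$")


def parse_wireframe(markdown: str) -> list:
    """Turn headings into sections and bold list items into components."""
    sections = []
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("# "):
            sections.append({"section": line[2:], "components": []})
            continue
        match = ITEM.match(line)
        if match and sections:
            sections[-1]["components"].append(
                {"name": match["name"], "spec": match["spec"]}
            )
    return sections


SOURCE = """\
# My App - Header
- **Logo** (image)
- **Navigation** (list: Home, About, Contact)

# My App - Main Content
- **Hero Section** (text: 'Welcome!', button: 'Learn More')
"""

layout = parse_wireframe(SOURCE)
print(json.dumps(layout, indent=2))
```

Since the input is plain text, a change to the wireframe shows up as an ordinary line diff in a pull request.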

Product Core Function

· Markdown-based UI Description: Allows users to describe UI elements, layout, and components using a familiar text format, making design intuitive and accessible. The value is in simplifying the creation process and lowering the barrier to entry for UI conceptualization.

· Version Control Integration: Treats UI designs as text files, enabling them to be managed, tracked, and versioned like any other code file. This offers robust history, collaboration, and rollback capabilities, enhancing project management and team coordination.

· Multi-Format Output Generation: Produces outputs in HTML, Tailwind CSS, and JSON, facilitating diverse integration paths. This provides flexibility for prototyping, direct styling, or programmatic use of design structures, streamlining the transition from design to implementation.

· Code Editor Compatibility: Enables users to edit UI designs directly within their preferred code or Markdown editors. This eliminates context switching and allows designers and developers to work within a single, familiar environment, boosting productivity and reducing errors.

Product Usage Case

· A developer creating a new feature's layout in Markdown within their project's Git repository. This allows for seamless code review of UI changes alongside functional code changes, solving the problem of siloed design work and improving team communication.

· A startup team using WireMD to rapidly iterate on the user flow of a new mobile app. By writing out screens and interactions in Markdown, they can quickly generate HTML prototypes and share them for early user feedback, solving the challenge of slow and expensive graphical prototyping.

· A frontend engineer generating Tailwind CSS configurations from a WireMD file to establish a consistent design system. This streamlines the process of translating design concepts into production-ready, styled components, addressing the need for efficient and scalable UI development.

12

Agentic Document Transformer

Author

philipisik

Description

This project explores a server-side system for AI agents to directly read, write, and transform documents. It treats documents as a programmable data store, enabling real-time manipulation by agents, going beyond browser-based rich text editing.

Popularity

Points 9

Comments 0

What is this product?

This project is an experimental server-side framework that allows AI agents to interact with documents as if they were a programmable database. Instead of focusing on rows or objects, the core unit is a semantically structured document. Agents can then perform actions like reading, writing, and transforming these documents in real-time, without needing a web browser. This is an evolution of embedding LLM-powered editing into rich text editors, pushing the interaction layer to the backend for more autonomous agent operations.

How to use it?

Developers can integrate this system into their backend applications to enable agent-driven document automation. For instance, imagine a system where agents can automatically summarize reports, extract key information from invoices, or even dynamically update content based on external data feeds. The interaction would involve defining agent behaviors and specifying which documents they can access and modify. It's about treating your document store as a dynamic, intelligent layer for automated content management and processing.
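
One way to picture documents as a programmable store is the in-memory sketch below, where a document is a list of typed blocks and an agent supplies the transformation. All class, method, and field names are hypothetical; the project's real interface is not described in the source.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Document:
    """A document as an ordered list of typed blocks rather than a flat string."""
    doc_id: str
    blocks: list = field(default_factory=list)


class DocumentStore:
    """In-memory store exposing read, write, and transform operations."""

    def __init__(self) -> None:
        self._docs = {}

    def write(self, doc_id: str, block_type: str, text: str) -> None:
        doc = self._docs.setdefault(doc_id, Document(doc_id))
        doc.blocks.append({"type": block_type, "text": text})

    def read(self, doc_id: str, block_type: Optional[str] = None) -> list:
        blocks = self._docs[doc_id].blocks
        return [b for b in blocks if block_type in (None, b["type"])]

    def transform(self, doc_id: str, fn: Callable[[dict], dict]) -> None:
        doc = self._docs[doc_id]
        doc.blocks = [fn(dict(block)) for block in doc.blocks]


def shout_headings(block: dict) -> dict:
    """Stand-in for an agent's edit: upper-case heading blocks only."""
    if block["type"] == "heading":
        block["text"] = block["text"].upper()
    return block


store = DocumentStore()
store.write("report", "heading", "Q3 results")
store.write("report", "paragraph", "revenue grew")
store.transform("report", shout_headings)
```

In a real system, an LLM-backed agent would take the place of `shout_headings`, and the store would persist documents instead of holding them in memory.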

Product Core Function

· Real-time Agentic Document Reading: Allows AI agents to access and comprehend the content and semantic structure of documents on the fly, enabling quick information retrieval and analysis for automated workflows.

· Real-time Agentic Document Writing: Enables AI agents to programmatically add or update content within documents, facilitating automated report generation, data entry, or dynamic content population without manual intervention.

· Real-time Agentic Document Transformation: Empowers AI agents to modify and restructure documents based on predefined rules or learned behaviors, such as summarizing lengthy texts, reformatting data, or translating content, enhancing document processing efficiency.

· Programmable Document Data Store: Treats documents as a fundamental data unit that agents can interact with directly, moving beyond traditional database paradigms to enable sophisticated content manipulation and automation.

· Server-side Agent Operations: Facilitates the execution of agent tasks on the backend, independent of a user's browser, allowing for continuous and autonomous document processing and management.

Product Usage Case

· Automated Content Summarization: A system where an AI agent monitors a folder of news articles and automatically generates daily summary digests, making it easier for users to stay informed without reading every article.

· Invoice Data Extraction and Processing: An agent that automatically reads incoming invoices, extracts key details like vendor, amount, and due date, and then populates a financial tracking system, reducing manual data entry errors and saving time.

· Dynamic Knowledge Base Updates: An agent that monitors external data sources and automatically updates a company's internal knowledge base with new information, ensuring employees always have access to the most current data.

· Personalized Document Generation: An agent that, based on user preferences or past interactions, can dynamically generate personalized reports or documents, providing a more tailored user experience.

13

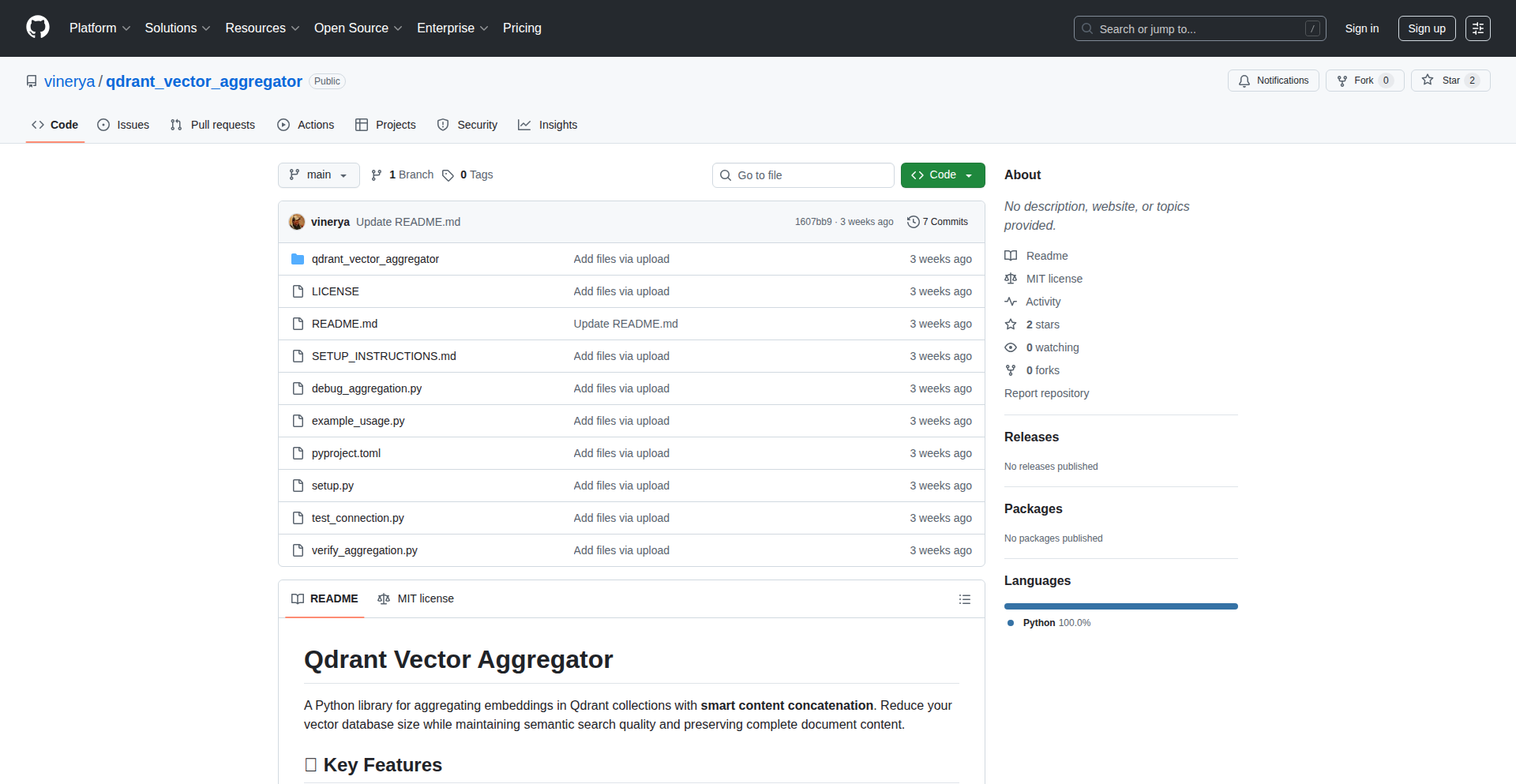

Qdrant Vector Aggregator

Author

chelbi

Description

This project is a tool designed to solve a specific challenge in managing vector databases, particularly when dealing with document-level embeddings. It allows users to store individual text chunks with their embeddings in Qdrant, a popular vector database, and then aggregate these embeddings back into a document-level representation while preserving the original full document text. This is innovative because it addresses a gap where users might want both granular and holistic views of their data within the vector database ecosystem, without having to manage separate systems.

Popularity

Points 4

Comments 4

What is this product?

This project is essentially a bridge between granular chunk embeddings and aggregated document embeddings within Qdrant. The core technical innovation lies in how it intelligently combines embeddings from smaller text pieces (chunks) to create a meaningful representation for the entire document. Instead of just averaging embeddings, which can lose nuance, the aggregator likely employs more sophisticated methods to preserve the semantic richness of the full text. This is useful because it allows you to query at a document level (e.g., 'find documents about X') while still having access to the specific parts of the document that contributed to that result, all managed within your existing Qdrant setup. So, this helps you get a more comprehensive understanding of your data without complex workarounds.

How to use it?

Developers can integrate Qdrant Vector Aggregator into their existing data pipelines. The typical workflow would involve: 1. Chunking your documents into smaller, manageable pieces. 2. Generating embeddings for each chunk using a pre-trained model. 3. Storing these chunk embeddings, along with their corresponding chunk text and a reference to the original document, in Qdrant. 4. Using the Vector Aggregator tool to process the stored chunk embeddings and generate a single, aggregated document embedding for each document. This aggregated embedding, along with the original full document text, can then be stored back into Qdrant or used for further analysis. This is useful for streamlining your vector search and retrieval processes by having unified document representations.
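
Step 4 of the workflow above can be sketched in plain Python. The source does not say which aggregation method the tool uses, so the length-weighted mean here is an assumption, as are the field names; the Qdrant read and write calls are left out entirely.

```python
import math


def aggregate_embeddings(chunks: list) -> list:
    """Length-weighted mean of chunk vectors, scaled to unit length."""
    dim = len(chunks[0]["vector"])
    total_weight = sum(len(c["text"]) for c in chunks)
    pooled = [0.0] * dim
    for chunk in chunks:
        weight = len(chunk["text"]) / total_weight  # longer chunks count for more
        for i, component in enumerate(chunk["vector"]):
            pooled[i] += weight * component
    norm = math.sqrt(sum(x * x for x in pooled))
    return [x / norm for x in pooled] if norm else pooled


# Hypothetical chunks of one document, as they might be stored per point.
chunks = [
    {"doc_id": "doc-1", "text": "aaaa", "vector": [1.0, 0.0]},
    {"doc_id": "doc-1", "text": "bbbb", "vector": [0.0, 1.0]},
]
document = {
    "doc_id": "doc-1",
    "text": " ".join(c["text"] for c in chunks),  # full text kept next to the vector
    "vector": aggregate_embeddings(chunks),
}
```

Normalizing the pooled vector keeps cosine and dot-product comparisons consistent between chunk-level and document-level vectors.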

Product Core Function

· Chunk Embedding Storage: The ability to store individual embeddings for text chunks within Qdrant, linked to the original document. This provides the foundational data for aggregation. Its value lies in enabling fine-grained analysis and retrieval.

· Vector Aggregation Logic: The core innovation where embeddings from multiple chunks are combined into a single document embedding. This goes beyond simple averaging to potentially capture richer semantic meaning, providing a more accurate representation for document-level search. This solves the problem of losing semantic context when only individual chunks are considered.

· Document Text Preservation: Ensuring that the full original document text remains accessible alongside its aggregated embedding. This is crucial for understanding search results and for presenting the retrieved information to users effectively. It means you don't lose the context of what you found.

· Qdrant Integration: Seamlessly working with Qdrant as the underlying vector database. This allows developers to leverage their existing infrastructure and expertise, reducing the learning curve and implementation effort. It means you can use what you already know and have.

· Metadata Association: The capability to associate metadata (like document ID, chunk index) with stored embeddings, which is essential for organizing and retrieving data efficiently. This helps in managing your data effectively and finding what you need quickly.

Product Usage Case

· RAG (Retrieval-Augmented Generation) Systems: In RAG, you often need to retrieve relevant document chunks to feed into a language model. This tool allows you to first find relevant documents at a higher level using aggregated embeddings, and then pinpoint specific chunks within those documents if needed, leading to more accurate and contextually relevant responses from the LLM. This means your AI will give better answers.

· Document Similarity Search: For tasks like finding duplicate documents or grouping similar articles, aggregating chunk embeddings provides a more robust representation of the document's overall topic compared to relying on single, potentially biased chunk embeddings. This helps in organizing large datasets and identifying patterns. This makes it easier to find similar content.

· Semantic Search over Large Corpora: When dealing with very large documents or collections of documents, having aggregated document embeddings allows for faster and more meaningful semantic searches. You can quickly narrow down to relevant documents before potentially diving into specific chunks. This speeds up your search and makes it more accurate.

· Knowledge Base Management: For building internal knowledge bases or FAQs, this tool enables efficient querying of documents. Users can search for concepts, and the system can retrieve entire documents that cover those concepts, along with the ability to highlight the specific sections that were most relevant. This makes your internal information easier to find and use.
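
The "documents first, then chunks" pattern that runs through these use cases can be sketched as a two-stage search. This is an in-memory illustration with made-up vectors and field names; in practice both stages would be queries against Qdrant.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def two_stage_search(query, documents, chunks, top_docs=1, top_chunks=1):
    """Rank documents first, then rank only the chunks of the winning documents."""
    best_docs = sorted(documents, key=lambda d: cosine(query, d["vector"]), reverse=True)[:top_docs]
    keep = {d["doc_id"] for d in best_docs}
    candidates = [c for c in chunks if c["doc_id"] in keep]
    return sorted(candidates, key=lambda c: cosine(query, c["vector"]), reverse=True)[:top_chunks]


documents = [
    {"doc_id": "a", "vector": [1.0, 0.0]},
    {"doc_id": "b", "vector": [0.0, 1.0]},
]
chunks = [
    {"doc_id": "a", "chunk": 0, "vector": [0.9, 0.1]},
    {"doc_id": "a", "chunk": 1, "vector": [0.6, 0.4]},
    {"doc_id": "b", "chunk": 0, "vector": [0.1, 0.9]},
]
hits = two_stage_search([1.0, 0.2], documents, chunks)
```

The first stage narrows the search to a few documents, so the second stage only scores chunks that belong to them.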

14

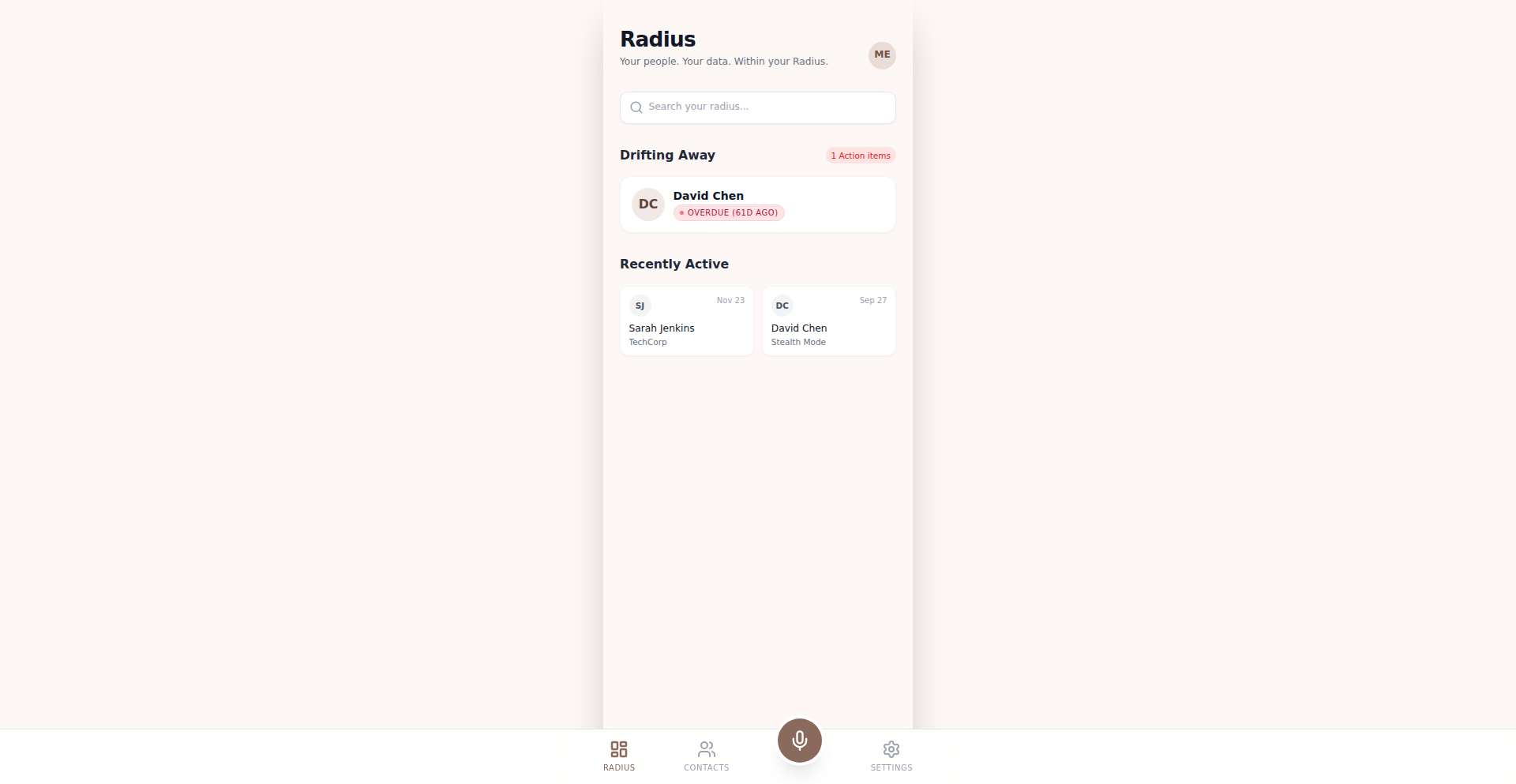

RadiusLocal

Author

Xiaoyao6

Description

RadiusLocal is a local-first personal CRM designed for individuals and small teams to manage their contacts and relationships without relying on a central cloud server. Its core innovation lies in its decentralized data storage, leveraging technologies like IPFS for robust data handling and end-to-end encryption for privacy. This approach offers enhanced security and offline accessibility, solving the common pain points of data ownership and vendor lock-in associated with traditional CRMs.

Popularity

Points 3

Comments 4

What is this product?

RadiusLocal is a personal Customer Relationship Management (CRM) tool that stores all your contact and interaction data directly on your device. Think of it as your personal address book on steroids, but instead of being stored on some company's servers (which you don't control), your data lives with you. The key innovation is its 'local-first' design, meaning it works perfectly even when you're offline. It uses advanced peer-to-peer storage solutions like IPFS (InterPlanetary File System) to ensure your data is secure, private (with end-to-end encryption), and not locked into a single service. So, instead of worrying about your data being sold or lost, you have full control and ownership. This means your valuable contact information and interaction history are always accessible to you, and only you.

How to use it?

Developers can integrate RadiusLocal into their workflows by using its API to sync contact data or log interactions. For personal use, you'd typically install the application, and it would act as your primary contact manager. Data is stored locally, and for backup or sharing purposes, it can be encrypted and stored on decentralized networks like IPFS. This means you can easily back up your entire CRM data and even share specific contacts securely with others. Imagine a salesperson who needs to access their contacts and notes even when in a remote area with no internet – RadiusLocal makes this possible. For developers building other applications that require contact management, RadiusLocal can serve as a secure, private backend for their contact needs.
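
As a sketch of the local-first part, the example below keeps contacts and their interaction history in a local SQLite database. The choice of SQLite, the schema, and the function names are assumptions for illustration, not RadiusLocal's actual storage layer; encryption and IPFS backup are left out.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a local database; ':memory:' keeps the demo self-contained."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS contacts (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS interactions ("
        "id INTEGER PRIMARY KEY, "
        "contact_id INTEGER NOT NULL REFERENCES contacts(id), "
        "kind TEXT NOT NULL, note TEXT NOT NULL)"
    )
    return conn


def add_contact(conn: sqlite3.Connection, name: str) -> int:
    cur = conn.execute("INSERT INTO contacts (name) VALUES (?)", (name,))
    conn.commit()
    return cur.lastrowid


def log_interaction(conn: sqlite3.Connection, contact_id: int, kind: str, note: str) -> None:
    conn.execute(
        "INSERT INTO interactions (contact_id, kind, note) VALUES (?, ?, ?)",
        (contact_id, kind, note),
    )
    conn.commit()


def history(conn: sqlite3.Connection, contact_id: int) -> list:
    """Return (kind, note) pairs for one contact, oldest first."""
    rows = conn.execute(
        "SELECT kind, note FROM interactions WHERE contact_id = ? ORDER BY id",
        (contact_id,),
    )
    return rows.fetchall()


conn = open_store()
ada = add_contact(conn, "Ada")
log_interaction(conn, ada, "call", "Discussed renewal")
log_interaction(conn, ada, "meeting", "Signed contract")
```

Passing a file path instead of `":memory:"` would keep the data on disk, so every read and write works without a network connection.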

Product Core Function

· Decentralized Data Storage: Your contact and interaction data is stored locally and can be backed up to decentralized networks like IPFS. This means your data is more secure, private, and resilient against single points of failure. So, it's useful because you own your data and it's always accessible.

· End-to-End Encryption: All your data is encrypted from your device to its storage destination, ensuring only you can access it. This is critical for privacy and protecting sensitive personal or business information. So, it's useful because it keeps your information confidential.

· Offline Accessibility: RadiusLocal functions fully without an internet connection, allowing you to manage contacts and log interactions anytime, anywhere. This is incredibly valuable for professionals who travel or work in areas with poor connectivity. So, it's useful because you can be productive even without internet access.

· Contact Management with Interaction History: Beyond just names and numbers, you can log notes, meetings, calls, and other interactions associated with each contact. This provides a comprehensive view of your relationships. So, it's useful because it helps you remember important details and nurture your connections effectively.

· IPFS Integration for Secure Backup and Sharing: Leverages IPFS for robust, distributed data storage, enabling secure backups and controlled sharing of encrypted data. This offers a modern, privacy-focused alternative to traditional cloud storage. So, it's useful because it provides a secure and future-proof way to store and share your data.

Product Usage Case

· A freelance consultant who needs to track all client communications and meeting notes offline while traveling between client sites. RadiusLocal allows them to log updates in real-time on their laptop or phone, with data syncing securely when they regain connectivity. This solves the problem of losing crucial information due to intermittent internet access.

· A small startup team wanting a shared, yet private, CRM solution without the recurring costs and data privacy concerns of cloud-based CRMs. They can use RadiusLocal, potentially with a shared IPFS node, to manage leads and customer interactions with full data ownership. This addresses the need for a cost-effective and privacy-respecting CRM.

· An individual who wants to meticulously organize their personal network, including friends, family, and professional contacts, with detailed notes about each person's interests and past conversations. RadiusLocal provides a secure, private space for this without the fear of personal data being exposed or misused. This fulfills the desire for a highly personalized and secure contact management system.

15

TerminalWeatherPro

Author

jamescampbell

Description

TerminalWeatherPro (also referred to as WeatherOrNot) is a terminal-based weather application that reimagines how we interact with weather data. It focuses on providing a rich, highly customizable, and visually appealing weather experience directly within the command line interface, moving beyond basic text outputs to offer a more engaging and informative presentation of meteorological data.

Popularity

Points 6

Comments 0

What is this product?

TerminalWeatherPro is a sophisticated weather application designed to run in your command-line terminal. Instead of just showing raw numbers, it uses terminal capabilities to render weather information in a more visual and structured way, with a retro-terminal look. The innovation lies in taking standard weather API data and transforming it into an aesthetically pleasing and highly configurable terminal experience, offering insights that are often lost in simpler text-based interfaces. It's about bringing a touch of graphical richness to the command line for a specific, functional purpose: understanding weather.

How to use it?

Developers can install and run TerminalWeatherPro directly from their terminal. Once installed, they can query weather forecasts for specific locations using simple commands. The project is designed to be integrated into developer workflows, perhaps as a quick check before heading out or as part of a larger automation script that needs real-time weather data. Configuration options allow for tailoring the output to specific preferences, such as units of measurement, data points displayed, and visual themes.

Product Core Function

· Command-line weather data retrieval: Fetches current weather and forecast data from meteorological APIs, enabling quick access to essential weather information without leaving the terminal.

· Customizable terminal rendering: Presents weather data using terminal character graphics and color coding for enhanced readability and a retro aesthetic, making it easier to grasp complex weather patterns at a glance.

· Configurable display options: Allows users to personalize the information shown, such as temperature, precipitation probability, wind speed, and more, ensuring the output is relevant to their immediate needs.

· Location-based weather queries: Supports fetching weather data for any specified location worldwide, providing a versatile tool for users in different regions.

· Lightweight and efficient: Optimized for terminal performance, offering a fast and resource-friendly way to access weather information.
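The rendering idea described above (character graphics plus color coding) can be sketched in a few lines. This is an illustrative sketch only, not the project's code: the `report` dictionary keys, the temperature bands, and the 100-unit bar scale are all assumptions, and the data would normally come from a weather API rather than being passed in by hand.

```python
RESET = "\033[0m"
# ANSI foreground colors keyed by a coarse temperature band (assumed thresholds).
COLORS = {"cold": "\033[34m", "mild": "\033[32m", "hot": "\033[31m"}


def temp_band(celsius):
    """Classify a temperature so it can be color coded."""
    if celsius < 10:
        return "cold"
    if celsius < 25:
        return "mild"
    return "hot"


def bar(value, maximum, width=20):
    """Draw a horizontal bar of block characters scaled to `maximum`."""
    clamped = max(0, min(value, maximum))
    filled = round(width * clamped / maximum)
    return "█" * filled + "░" * (width - filled)


def render(report, use_color=True):
    """Format a weather report dict as aligned terminal lines."""
    temp = report["temp_c"]
    temp_text = f"{temp:.1f}°C"
    if use_color:
        temp_text = COLORS[temp_band(temp)] + temp_text + RESET
    lines = [
        report["location"],
        f"  Temp   {temp_text}",
        f"  Rain   {bar(report['rain_pct'], 100)} {report['rain_pct']}%",
        f"  Wind   {bar(report['wind_kmh'], 100)} {report['wind_kmh']} km/h",
    ]
    return "\n".join(lines)
```

Calling `print(render({"location": "Lisbon", "temp_c": 18.5, "rain_pct": 50, "wind_kmh": 20}))` prints a four-line summary; passing `use_color=False` drops the escape codes, which is useful when the output is piped into another script.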

Product Usage Case

· A developer working on a project that depends on outdoor conditions might use TerminalWeatherPro to quickly check the weather before planning an outdoor testing session, ensuring they are prepared for rain or extreme temperatures.

· A system administrator could integrate TerminalWeatherPro into a monitoring script to receive alerts if severe weather is predicted for a server location, allowing for proactive measures to protect hardware.

· A hobbyist programmer building a smart home dashboard might incorporate TerminalWeatherPro's output into their terminal interface to have a constant, unobtrusive weather update alongside their other system metrics.

· A digital nomad who spends a lot of time in the terminal can use TerminalWeatherPro to get a fast, informative weather snapshot for their current or next destination without needing to open a browser or a separate app.

16

Alpha137: Superfluid Vacuum Simulator

Author

moseszhu

Description

This project presents a novel approach to simulating quantum vacuum behavior by treating it as a superfluid. The core innovation lies in deriving fundamental physical constants, specifically the fine-structure constant (Alpha), from this theoretical framework. It tackles the complex problem of understanding vacuum properties and their relation to fundamental forces through an elegant simulation.

Popularity

Points 5

Comments 1

What is this product?

This is a simulation project that models the quantum vacuum, the empty space that is actually filled with virtual particles and fluctuating energy fields, as a superfluid. Think of a superfluid like liquid helium, which flows without any friction. The project uses this analogy to explore and theoretically derive the value of the fine-structure constant (Alpha), which is a fundamental number in physics that governs the strength of electromagnetic interactions. The innovation is in using a fluid dynamics-like approach to understand quantum phenomena and calculate a key physical constant, offering a new perspective on the nature of reality at its most basic level. So, what's in it for you? It provides a unique computational tool and theoretical framework that could lead to deeper insights into quantum field theory and potentially new avenues for physics research and discovery.
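For reference, the value any such derivation would need to reproduce can be computed from the conventional definition of the fine-structure constant, alpha = e^2 / (4 * pi * epsilon_0 * hbar * c). The sketch below uses CODATA 2018 constants and is only a baseline for comparison; it does not implement the project's superfluid model, whose equations are not given here.

```python
import math

# CODATA 2018 values in SI units.
E_CHARGE = 1.602176634e-19    # elementary charge, C (exact by definition)
HBAR = 1.054571817e-34        # reduced Planck constant, J*s
C_LIGHT = 299_792_458.0       # speed of light, m/s (exact by definition)
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def fine_structure_constant():
    """Return alpha from its standard definition in SI units."""
    return E_CHARGE**2 / (4 * math.pi * EPSILON_0 * HBAR * C_LIGHT)


alpha = fine_structure_constant()
print(f"alpha   = {alpha:.10f}")
print(f"1/alpha = {1 / alpha:.3f}")
```

The inverse comes out close to 137.036, which is where the "137" in the project's name comes from.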

How to use it?

Developers can use this project as a computational toolkit for exploring theoretical physics simulations. It provides the underlying code and methodologies to replicate and extend the simulation of vacuum as a superfluid. This can be integrated into research workflows for testing hypotheses related to quantum mechanics and fundamental constants. For those interested in computational physics, it offers a practical example of applying simulation techniques to abstract physical concepts. So, what's in it for you? It allows you to experiment with advanced physics simulation techniques and potentially contribute to cutting-edge theoretical physics research.

Product Core Function

· Superfluid vacuum simulation engine: This function provides the core computational model to simulate the vacuum as a frictionless fluid, enabling the exploration of its emergent properties. The value is in offering a novel computational approach to understanding quantum vacuum. This is applicable in advanced physics research and theoretical modeling.

· Fine-structure constant derivation module: This function implements the logic to calculate the fine-structure constant (Alpha) based on the superfluid vacuum simulation. The value is in providing a theoretical pathway to derive a fundamental physical constant from a simulation, opening new avenues for theoretical physics. This is useful for physicists seeking to test or develop new theories.

· Parameter exploration interface: This allows users to adjust simulation parameters and observe their impact on the derived constants. The value is in enabling experimental investigation of the theoretical model and understanding the sensitivity of the results to input conditions. This is helpful for researchers in fine-tuning their models and exploring different scenarios.

Product Usage Case

· A theoretical physicist could use this to simulate a modified vacuum structure and observe if the derived Alpha value changes, potentially indicating new physical phenomena. This solves the problem of manually calculating and testing theoretical variations.

· A computational physics student could use this project as a basis to build their own simulation for a class project on quantum field theory, learning practical simulation techniques. This solves the problem of finding accessible yet advanced simulation examples.

· A researcher exploring alternative theories of everything could integrate this superfluid vacuum model into their larger theoretical framework to see if it aligns with other proposed physical laws. This solves the problem of finding a computational model that represents a specific theoretical concept.

17

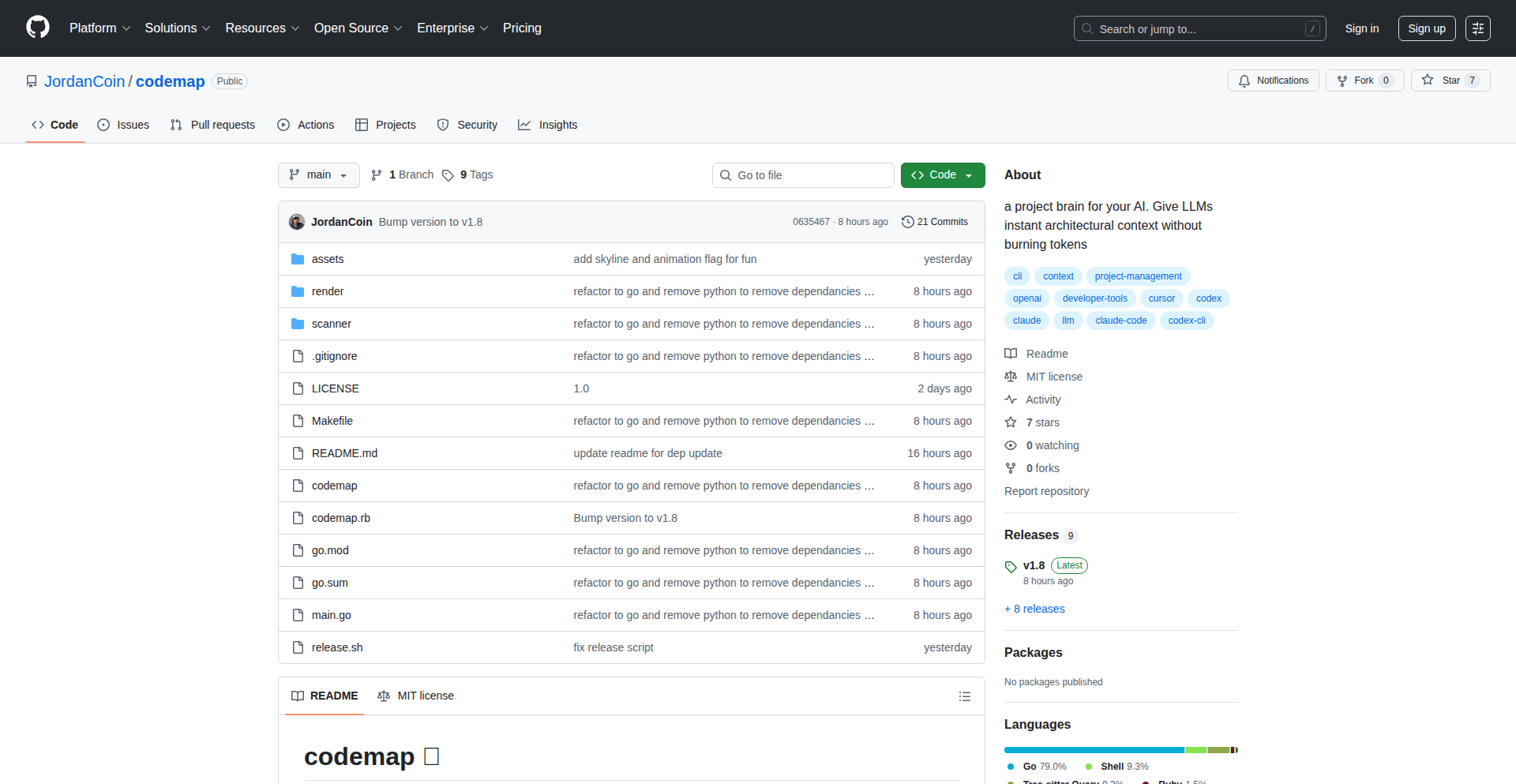

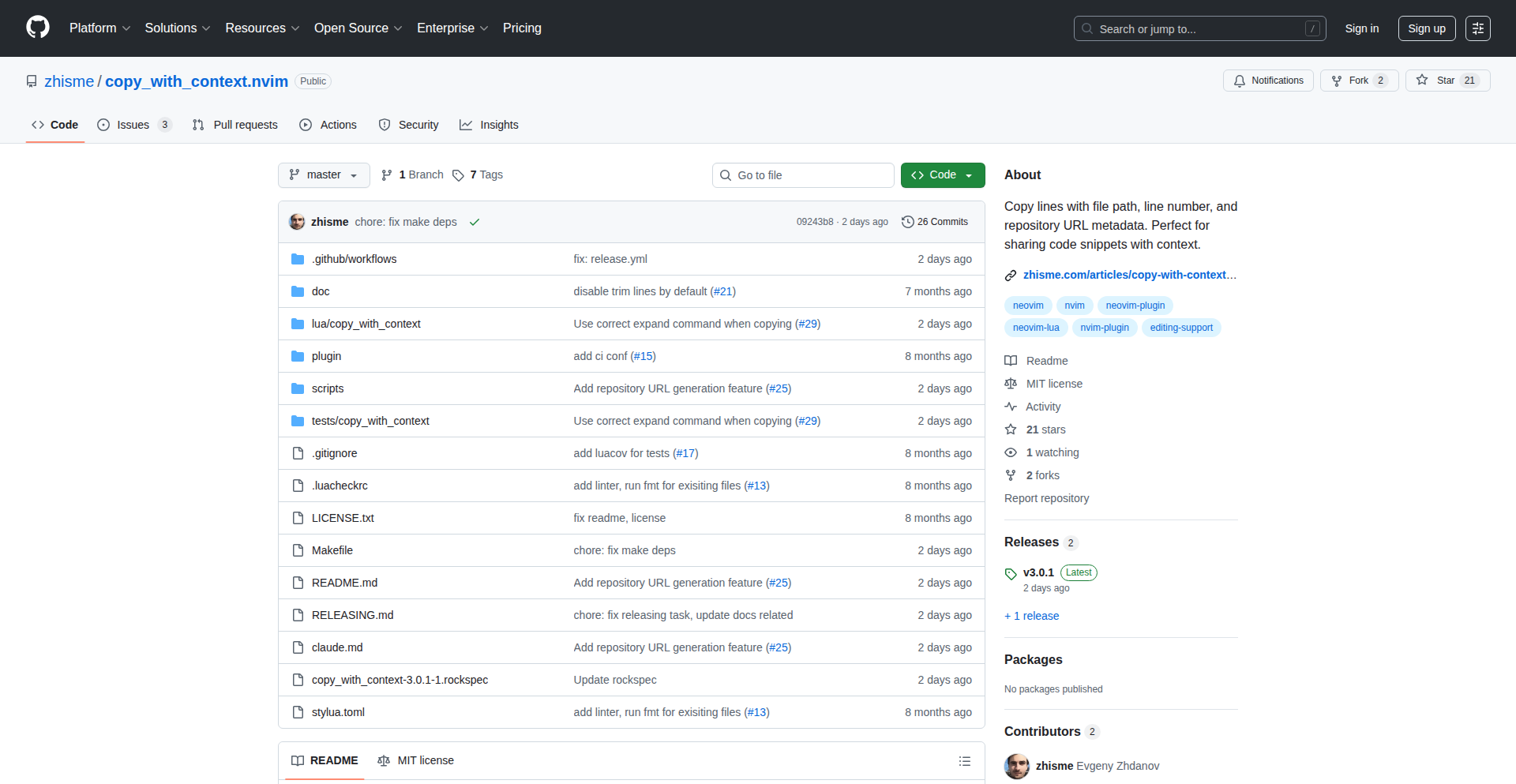

CodeContext Mapper

Author

jordancj

Description

This project is a command-line interface (CLI) tool designed to help developers manage Large Language Model (LLM) context for their codebases. It tackles the problem of LLMs forgetting or losing track of project details during extended use, which can be costly in terms of API tokens and developer time. The innovation lies in its ability to create a high-level, token-efficient map of a codebase that LLMs can easily reference, ensuring they maintain project understanding without needing to re-process the entire codebase repeatedly.

Popularity

Points 4

Comments 2

What is this product?

CodeContext Mapper is a CLI utility that intelligently analyzes your codebase and generates a concise, structured summary or 'map'. This map acts as a persistent memory for Large Language Models (LLMs) when you're working on your projects. Instead of feeding the LLM your entire codebase every time you ask a question, which is expensive and time-consuming, you provide it with this pre-generated map. The core innovation is in how it distills complex code structures into a digestible format for LLMs, significantly reducing token usage and improving the LLM's ability to recall and understand your project's context over time. Think of it as creating a high-level outline of your code that an AI can always refer back to, ensuring it 'remembers' what your project is about.

How to use it?

Developers can integrate CodeContext Mapper into their workflow by running it from their project's root directory in the terminal. The tool will scan the codebase and output a generated context map file (e.g., a JSON or markdown file). This map file can then be passed to your LLM prompts. For instance, when interacting with an LLM for code generation or debugging, you'd preface your request by providing the context map. This could be done by copying and pasting the map content or, in a more advanced setup, by scripting the LLM interaction to automatically include the map. The primary use case is during development where you're frequently querying an LLM about your code, ensuring the LLM maintains a consistent understanding of your project's architecture and specific file details.
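As a rough illustration of what such a map might contain, the sketch below walks a directory of Python files and records only top-level class and function names. It is not CodeContext Mapper's implementation or output format: the function names (`map_source`, `map_codebase`, `to_json`), the JSON shape, and the restriction to Python sources are all assumptions made for the example.

```python
import ast
import json
from pathlib import Path

FUNC_TYPES = (ast.FunctionDef, ast.AsyncFunctionDef)


def map_source(source):
    """Summarize top-level classes (with methods) and functions (with args)."""
    tree = ast.parse(source)
    entry = {"classes": [], "functions": []}
    for node in tree.body:
        if isinstance(node, ast.ClassDef):
            methods = [n.name for n in node.body if isinstance(n, FUNC_TYPES)]
            entry["classes"].append({"name": node.name, "methods": methods})
        elif isinstance(node, FUNC_TYPES):
            args = [a.arg for a in node.args.args]
            entry["functions"].append({"name": node.name, "args": args})
    return entry


def map_codebase(root):
    """Build a {relative_path: summary} map for each parseable .py file."""
    result = {}
    for path in sorted(Path(root).rglob("*.py")):
        try:
            text = path.read_text(encoding="utf-8")
            result[str(path.relative_to(root))] = map_source(text)
        except (SyntaxError, UnicodeDecodeError):
            continue  # skip files that cannot be read or parsed
    return result


def to_json(code_map):
    """Serialize the map so it can be pasted or scripted into a prompt."""
    return json.dumps(code_map, indent=2)
```

Running `print(to_json(map_codebase(".")))` from a project root yields a compact outline that is far shorter than the source itself, which is the property that keeps token usage down when the map is prepended to a prompt.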

Product Core Function

· Codebase Indexing: Scans and understands the structure of your codebase, identifying files, functions, classes, and their relationships. This allows the LLM to grasp the overall architecture without needing to parse every single line of code. The value is in creating a foundational understanding of your project's layout.

· Contextual Summarization: Generates concise summaries of code elements, focusing on key functionalities and dependencies. This abstracts away the fine-grained details while retaining the essential information for the LLM. The value is in providing high-level context efficiently.