Show HN Today: Discover the Latest Innovative Projects from the Developer Community

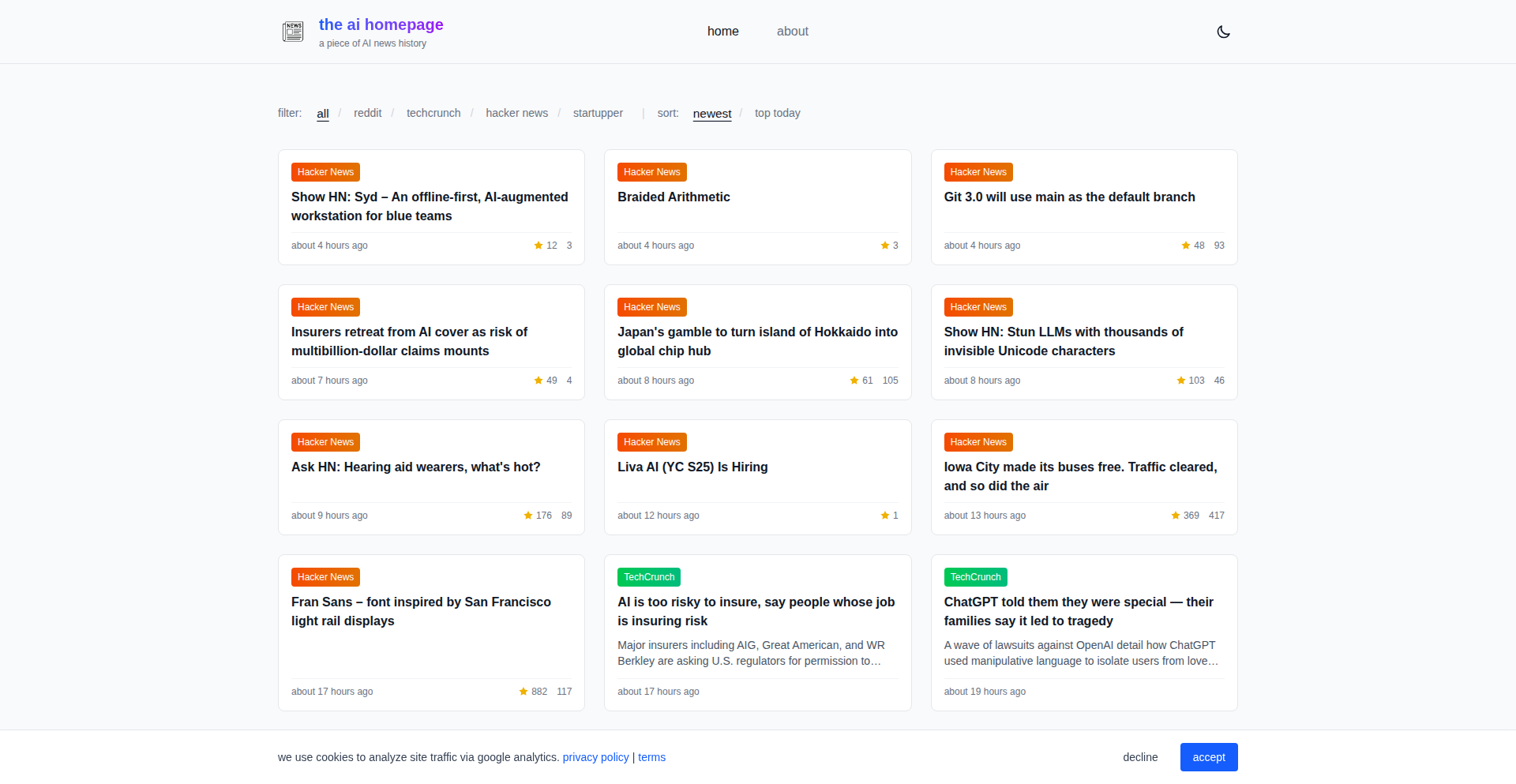

ShowHN Today

Show HN Today: Top Developer Projects Showcase for 2025-11-23

SagaSu777 2025-11-24

Explore the hottest developer projects on Show HN for 2025-11-23. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN entries reveal a vibrant ecosystem of developers tackling real-world challenges with ingenious technical solutions. The prevalence of AI/LLM-related projects, from adversarial techniques like stunning LLMs with invisible characters to AI-powered content generation and analysis, underscores the transformative impact of this technology. Developers are not just building with AI, but also exploring its vulnerabilities and creating tools to manage, secure, and enhance its capabilities. Furthermore, there's a strong current of creating robust developer tools that streamline workflows, improve security, and prioritize privacy, such as secure `.env` alternatives, memory allocators, and privacy-focused proxies. This trend highlights a critical need for developers to be masters of their tools and environments, building solutions that empower them to focus on innovation rather than getting bogged down by cumbersome processes. For aspiring entrepreneurs, this landscape presents opportunities in developing specialized AI applications, enhancing existing AI infrastructure, or building security and privacy solutions that address the growing concerns around AI data and usage. The hacker spirit is alive and well, pushing boundaries and crafting elegant solutions from fundamental principles.

Today's Hottest Product

Name

Stun LLMs with thousands of invisible Unicode characters

Highlight

This project ingeniously leverages Unicode's less obvious characters to create text that looks normal to humans but confounds Large Language Models (LLMs). It's a creative application of character encoding to a modern problem: automated LLM processing of text, from scraping to plagiarism checks. Developers can learn about the nuances of Unicode, text-encoding tricks, and how to think about adversarial attacks against AI systems. The core idea, manipulating data at a fundamental level to achieve a desired outcome, reflects a classic hacker mindset.

Popular Category

AI/ML

Developer Tools

Productivity

Security

Popular Keyword

LLM

AI

Developer Tools

Privacy

Automation

Security

Rust

CLI

Technology Trends

AI/LLM Adversarial Techniques

Enhanced Developer Tooling

Privacy-First Solutions

Workflow Automation

Cross-Platform Development

Data Obfuscation/Anonymization

WebAssembly

Cloud-Native Development

Project Category Distribution

AI/ML Related (20%)

Developer Productivity & Tools (35%)

Privacy & Security (15%)

Utilities & Niche Tools (20%)

Education & Gaming (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | GhostText | 112 | 51 |
| 2 | C-Mem: Minimalist C Memory Allocator | 97 | 25 |
| 3 | Dank-AI: Accelerated AI Agent Deployment | 6 | 5 |
| 4 | SecureEnvSync | 8 | 3 |
| 5 | EncryptedEnvVault | 6 | 3 |
| 6 | InstantGigs Live | 4 | 5 |
| 7 | AnthropicNewsAggregator | 3 | 6 |
| 8 | Server Survival: Cloud Architecture Defense | 6 | 2 |
| 9 | RAGLaunchpad AI | 2 | 5 |
| 10 | ColorBit Code Studio | 2 | 5 |

1

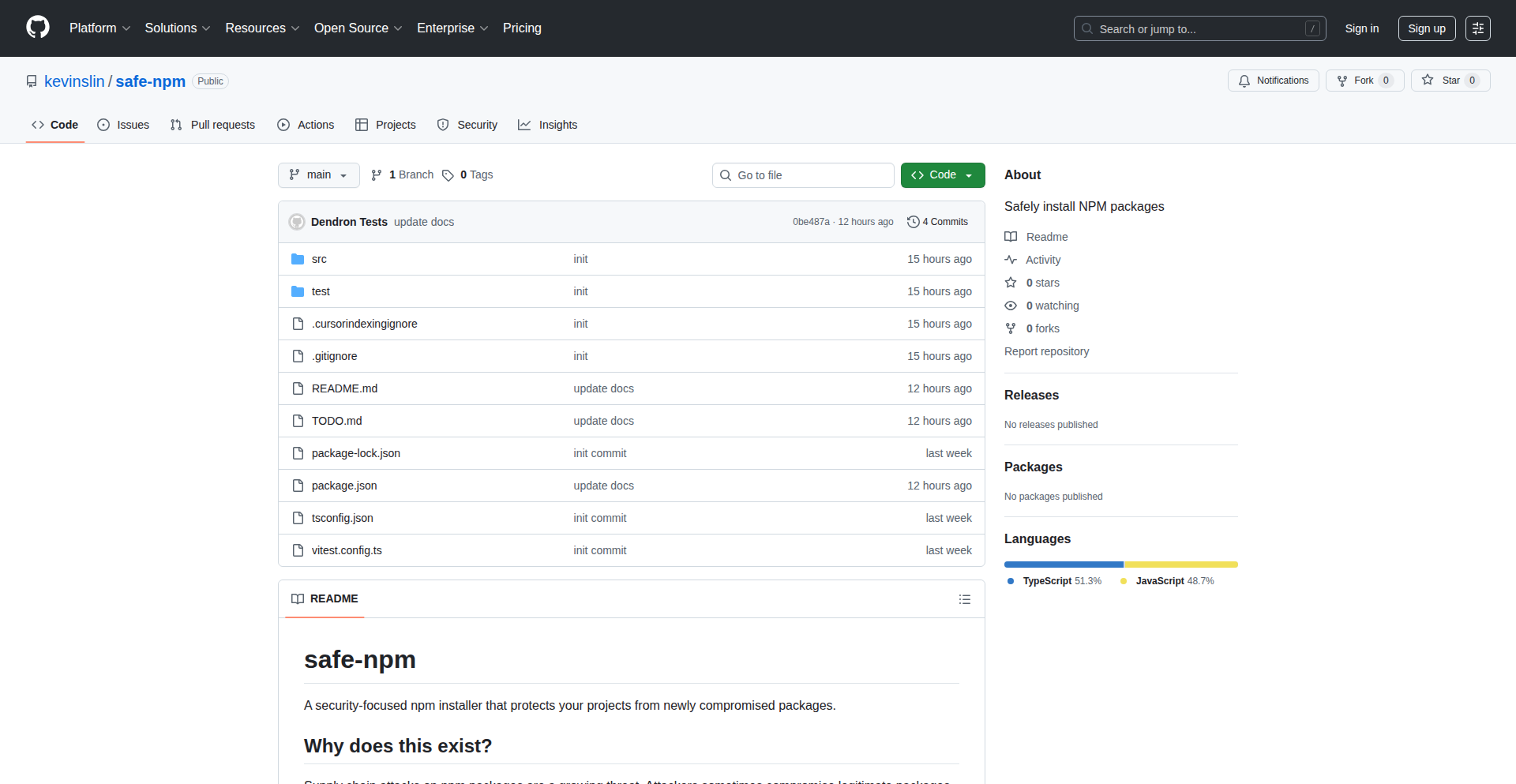

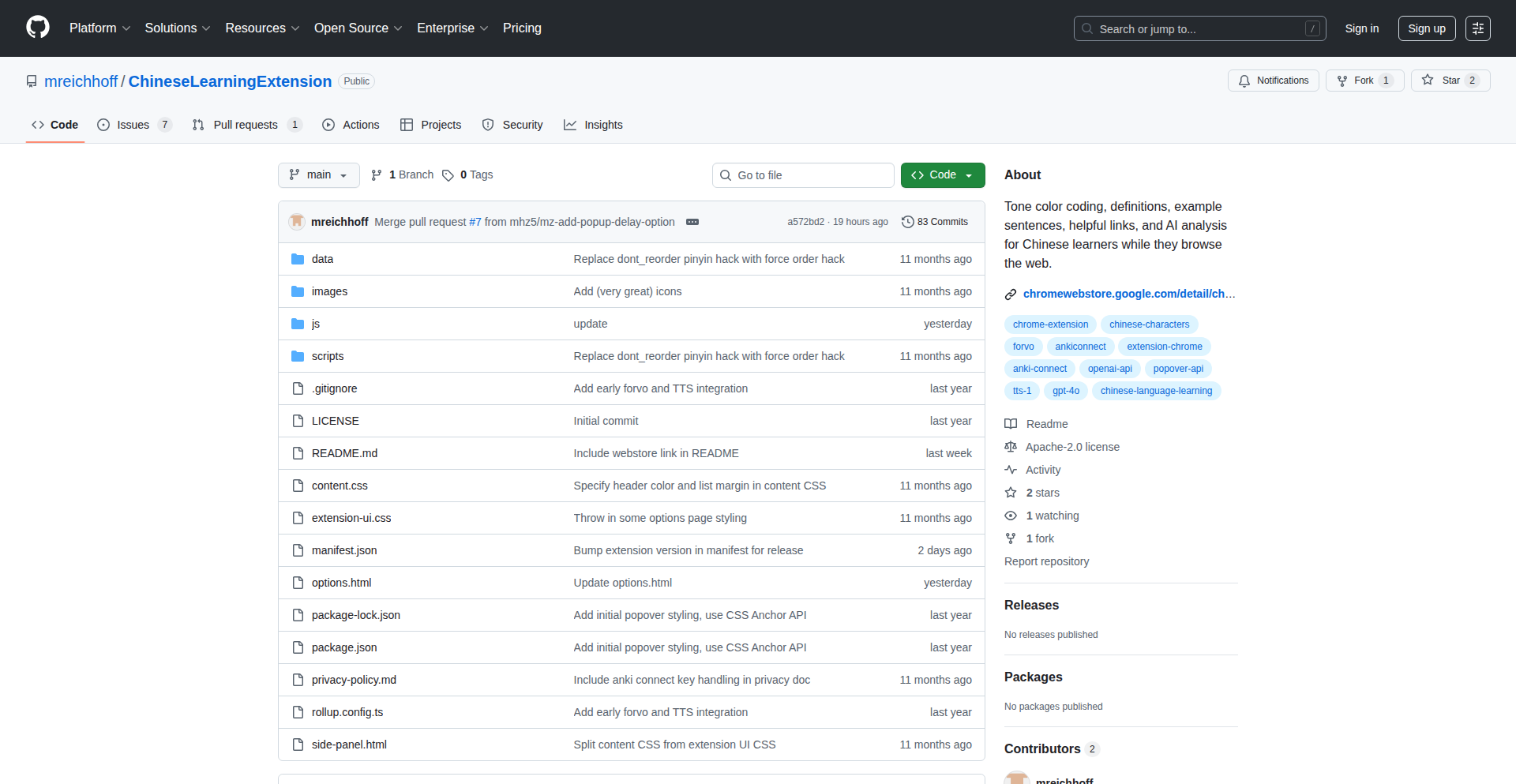

GhostText

Author

wdpatti

Description

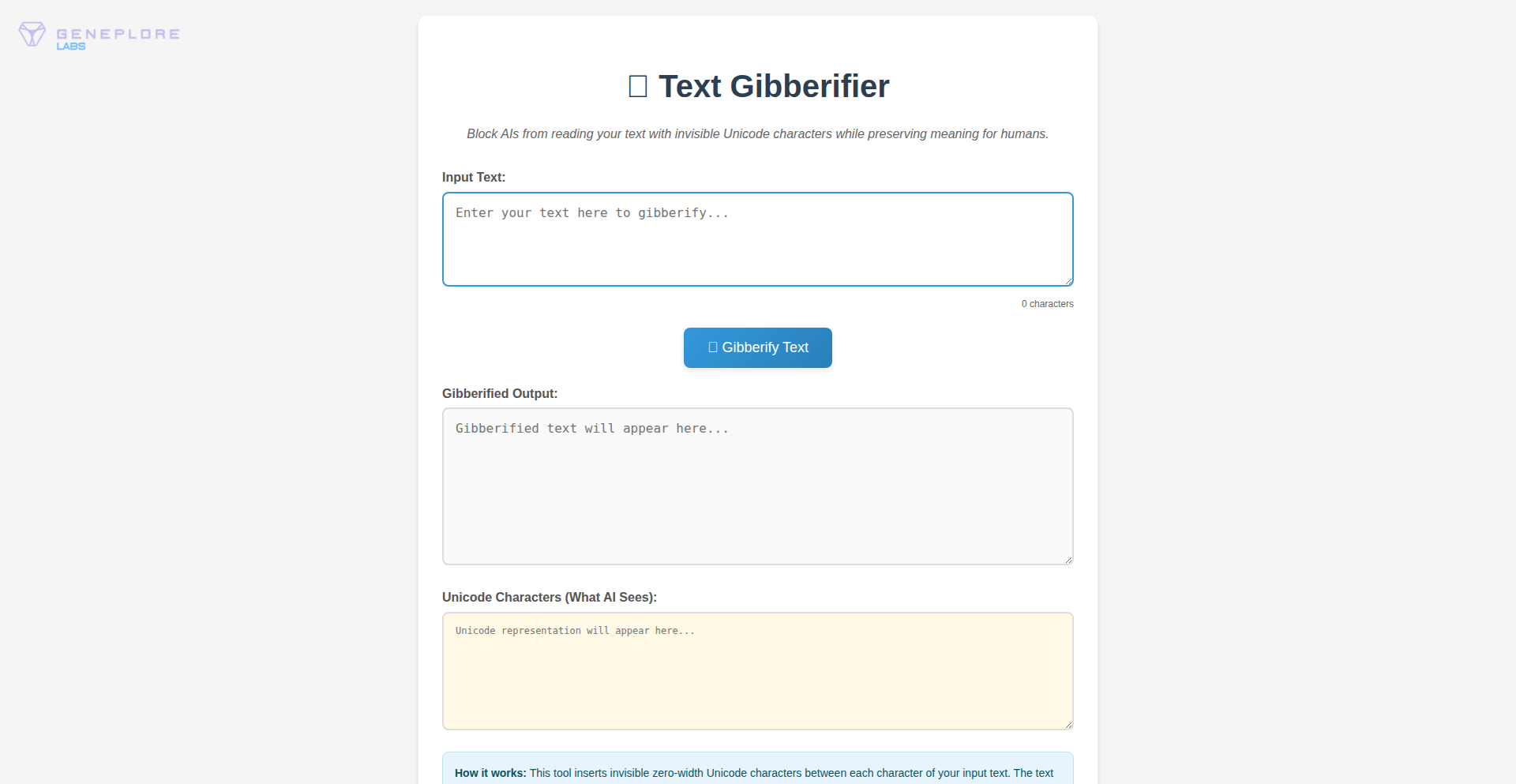

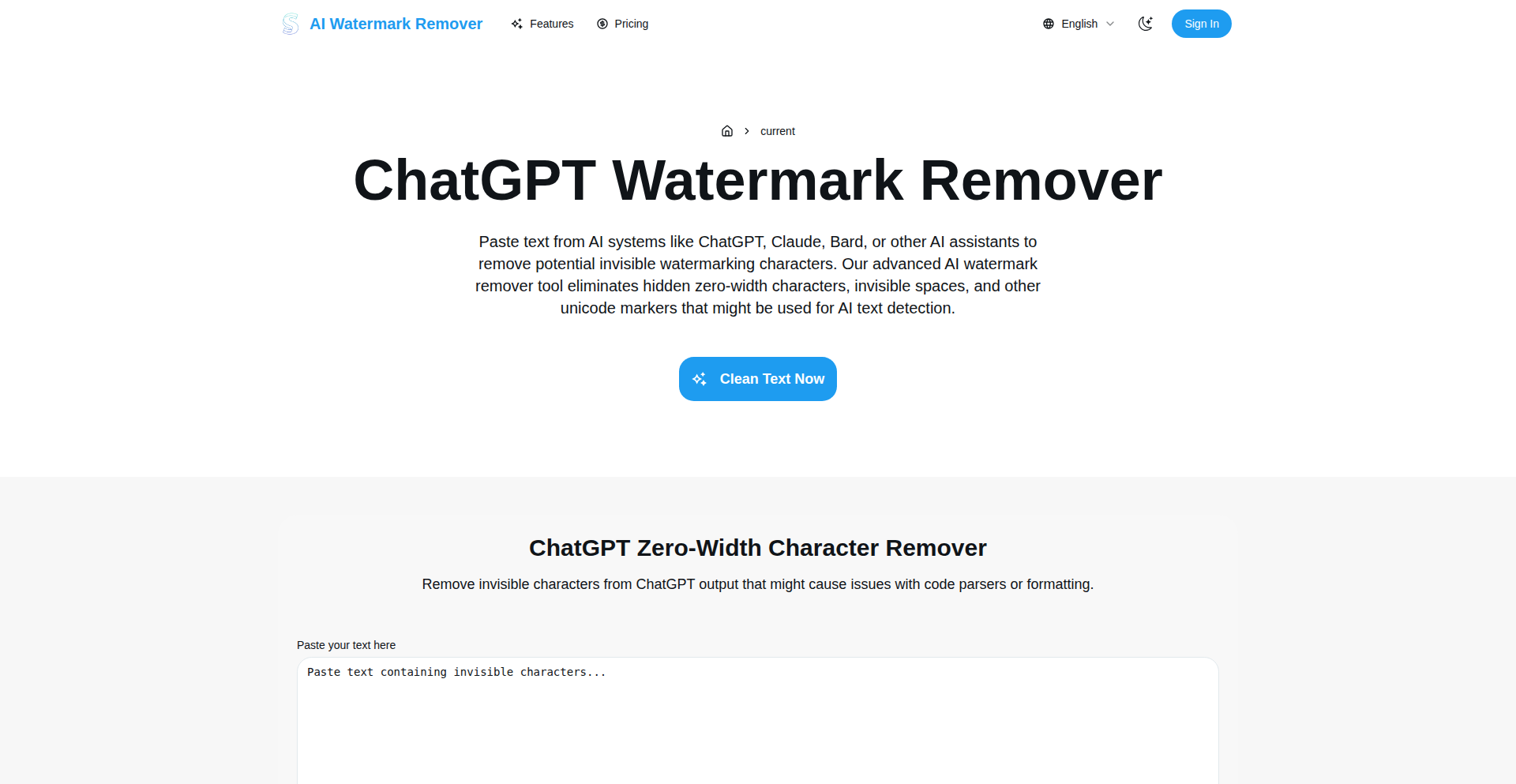

GhostText is a free tool that uses carefully selected invisible Unicode characters to 'stun', or disrupt, Large Language Models (LLMs). By subtly altering text with these characters, it can prevent LLMs from processing or responding to the content coherently. This has practical applications in anti-plagiarism, in protecting text from LLM-powered scrapers, and in creative obfuscation.

Popularity

Points 112

Comments 51

What is this product?

GhostText is a clever application of Unicode's vast character set. The core idea is to identify specific invisible Unicode characters and embed them strategically within a given text. Most renderers display nothing for these characters, so a human reader sees ordinary text, but an LLM receives them as real input: they typically split words into unusual token sequences and inflate the token count, disrupting the patterns the model relies on for understanding and generating text. Think of it like adding thousands of tiny, imperceptible 'glitches' to a digital image; to a computer vision system, those glitches can make the image unrecognizable. The value is in creating a defense against automated text analysis by LLMs.

How to use it?

Developers can integrate GhostText into their workflows through the provided tool or library: feed text into the GhostText engine, and it outputs a 'stunned' version. That text can then be pasted into a document to hinder plagiarism detection by LLMs, published on a website to make it harder for AI scrapers to extract and summarize the content, or, for fun, used to create messages that look normal but behave strangely when processed by AI. Integration amounts to a simple text-in, text-out step.
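A minimal Python sketch of the injection idea, assuming a small set of well-known zero-width code points and a fixed number of them after every visible character. GhostText's actual character selection and placement strategy are not described here, so `INVISIBLE`, `stun`, and `unstun` are hypothetical names for illustration only.

```python
# Hypothetical set of zero-width code points; GhostText's real selection may differ.
INVISIBLE = (
    "\u200b",  # ZERO WIDTH SPACE
    "\u200c",  # ZERO WIDTH NON-JOINER
    "\u200d",  # ZERO WIDTH JOINER
    "\u2060",  # WORD JOINER
)
INVISIBLE_SET = frozenset(INVISIBLE)


def stun(text: str, per_gap: int = 3) -> str:
    """Insert `per_gap` invisible characters after every visible character."""
    out = []
    for i, ch in enumerate(text):
        out.append(ch)
        # Cycle through the set deterministically so the output is reproducible.
        for k in range(per_gap):
            out.append(INVISIBLE[(i + k) % len(INVISIBLE)])
    return "".join(out)


def unstun(text: str) -> str:
    """Strip the injected code points, recovering the original text."""
    return "".join(ch for ch in text if ch not in INVISIBLE_SET)


if __name__ == "__main__":
    original = "Hello, world"
    stunned = stun(original)
    print(len(original), len(stunned))   # 12 48
    print(len(stunned.encode("utf-8")))  # 120
    print(unstun(stunned) == original)   # True
```

Each of these code points takes 3 bytes in UTF-8, so the 12-character string grows to 120 bytes, which is the kind of inflation a byte-level tokenizer sees. The `unstun` filter also shows the limit of the trick: a scraper that strips these code points recovers the original text. ZWJ and ZWNJ can also change rendering inside emoji sequences and some scripts, so a real tool has to choose characters with context in mind.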

Product Core Function

· Invisible Unicode character injection: This core function uses a curated set of invisible Unicode characters, such as zero-width spaces and similar invisible formatting characters, to modify text without any visible change. The value is a simple, lightweight method for disrupting LLM processing.

· LLM disruption mechanism: The system is designed to identify characters that are known to cause issues with current LLM parsing and attention mechanisms. This provides a direct technical countermeasure against AI-driven text analysis.

· Text obfuscation utility: Beyond just defense, this function allows for creative text manipulation, making it harder for AI to extract meaning or sentiment from the content, which is valuable for content creators seeking to maintain control over their work's interpretation.

· Anti-scraping defense: By rendering text unreadable to LLM scrapers, it protects digital content from unauthorized AI-driven data harvesting, preserving the integrity of original works.

Product Usage Case

· Imagine you've written a sensitive academic paper. By running your paper through GhostText before submitting it, you can make it significantly harder for AI-powered plagiarism checkers to accurately compare it with other texts, adding a layer of protection to your original work.

· Website owners concerned about AI bots scraping their blog posts for summarization or rephrasing can use GhostText to 'stun' their content. This means AI scrapers will struggle to generate coherent summaries or extract key information, effectively deterring them and protecting your content's uniqueness.

· A developer building a forum or comment system might want to prevent users from directly feeding entire comment threads into LLMs for automated moderation or analysis. GhostText can be used to subtly alter user-submitted text, making it less amenable to such AI processing, thus maintaining a more human-centric interaction.

2

C-Mem: Minimalist C Memory Allocator

Author

t9nzin

Description

This project is a minimalist memory allocator written in C, designed as a fun educational toy. It is not thread-safe, so calling it from multiple threads at the same time without external locking can corrupt its internal state, but it is a great way to understand the fundamental mechanics of memory management. The author also provides a tutorial blog post that walks through the implementation details and demystifies how memory allocation works internally.

Popularity

Points 97

Comments 25

What is this product?

C-Mem is a basic memory allocator built from scratch in the C programming language. Instead of relying on the standard library functions like `malloc` and `free`, this project implements its own logic to manage chunks of memory. The core idea is to keep track of available and used memory blocks, allowing the program to request memory (allocation) and release it back when no longer needed (deallocation). Its innovation lies in its simplicity and educational value; it's a stripped-down version that makes it easier to grasp the concepts of heap management, pointer manipulation, and the underlying system calls involved in memory operations. This helps developers understand what's happening 'under the hood' when they use more complex allocators, enabling them to debug memory-related issues more effectively and potentially design more efficient memory strategies in their own applications.
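The bookkeeping described above can be simulated in a few lines of Python. This is a hypothetical first-fit sketch, not C-Mem's code: it hands out offsets into a `bytearray` and keeps block metadata in a Python list, whereas a C allocator would usually store a header next to each block and obtain its heap from the operating system (for example via `sbrk` or `mmap`).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Block:
    offset: int  # where the block starts inside the heap
    size: int    # bytes covered by the block
    free: bool


class ToyAllocator:
    """First-fit allocator over a fixed-size heap (illustrative, not thread-safe)."""

    def __init__(self, heap_size: int) -> None:
        self.heap = bytearray(heap_size)  # callers use heap[offset:offset + size]
        # Blocks tile the heap in address order, so list neighbours are memory neighbours.
        self.blocks: List[Block] = [Block(0, heap_size, True)]

    def malloc(self, size: int) -> Optional[int]:
        if size <= 0:
            raise ValueError("size must be positive")
        for i, blk in enumerate(self.blocks):
            if blk.free and blk.size >= size:
                if blk.size > size:
                    # Split: the unused tail becomes a new free block right after this one.
                    self.blocks.insert(i + 1, Block(blk.offset + size, blk.size - size, True))
                    blk.size = size
                blk.free = False
                return blk.offset
        return None  # out of memory, like malloc returning NULL

    def free(self, offset: int) -> None:
        for blk in self.blocks:
            if blk.offset == offset and not blk.free:
                blk.free = True
                self._coalesce()
                return
        raise ValueError(f"invalid or double free at offset {offset}")

    def _coalesce(self) -> None:
        """Merge runs of adjacent free blocks to limit fragmentation."""
        merged = [self.blocks[0]]
        for blk in self.blocks[1:]:
            prev = merged[-1]
            if prev.free and blk.free:
                prev.size += blk.size
            else:
                merged.append(blk)
        self.blocks = merged
```

For example, with a 64-byte heap, two calls to `malloc(16)` return offsets 0 and 16; after `free(0)`, `malloc(8)` returns 0 again because first fit reuses the earliest block that is large enough. Changing the loop in `malloc` to scan every free block and pick the smallest one that fits would turn this into best fit.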

How to use it?

Developers can integrate C-Mem into their C projects by including its source code files. They would then replace calls to standard library allocation functions like `malloc` with the custom allocation functions provided by C-Mem, and `free` with C-Mem's deallocation functions. This is particularly useful for learning purposes or in embedded systems where understanding memory usage is critical and a lightweight allocator might be preferred over a feature-rich but larger standard library implementation. The project's README typically provides instructions on how to compile and link the allocator with your own code, along with example usage patterns to get started. This allows you to experiment with manual memory management and gain hands-on experience with the building blocks of dynamic memory allocation.

Product Core Function

· Custom memory allocation: This function allows a program to request a specific amount of memory from a pre-defined pool, much like `malloc`. The value is that it shows you precisely how memory is carved out and assigned, which is crucial for understanding resource management and preventing memory leaks.

· Custom memory deallocation: This function allows a program to return previously allocated memory back to the allocator, similar to `free`. The value here is demonstrating how freed memory can be reused, contributing to efficient memory utilization and the prevention of overall memory exhaustion.

· Internal memory tracking: The allocator maintains internal data structures to keep track of which memory blocks are in use and which are free. The value of this is understanding the bookkeeping required for efficient memory management, which is a fundamental concept in any programming language that handles dynamic memory.

· Educational tutorial: The accompanying blog post explains the code step-by-step. The value is providing a clear, accessible learning path to understanding complex memory management concepts without getting lost in jargon, making it easier for beginners to grasp core computer science principles.

Product Usage Case

· Learning C memory management: A student programmer wanting to deeply understand how `malloc` and `free` work can use C-Mem to build their own simplified version. This solves the problem of abstract understanding by providing a concrete, runnable example, allowing them to see exactly how memory is managed.

· Embedded systems development: A developer working on resource-constrained embedded devices might use C-Mem as a lightweight alternative to standard library allocators. This addresses the need for minimal memory footprint and predictable behavior, allowing for more control over system resources.

· Debugging memory leaks: By instrumenting their code to use C-Mem and studying its internal state, developers can gain insights into how memory is being handled, which can help identify and fix memory leaks or other memory-related bugs that might be harder to detect with standard allocators.

· Experimenting with memory allocation strategies: Advanced users can modify C-Mem to explore different allocation algorithms (e.g., first-fit, best-fit). This solves the problem of limited experimentation with allocators by providing a foundational code base for exploring advanced memory management techniques.

3

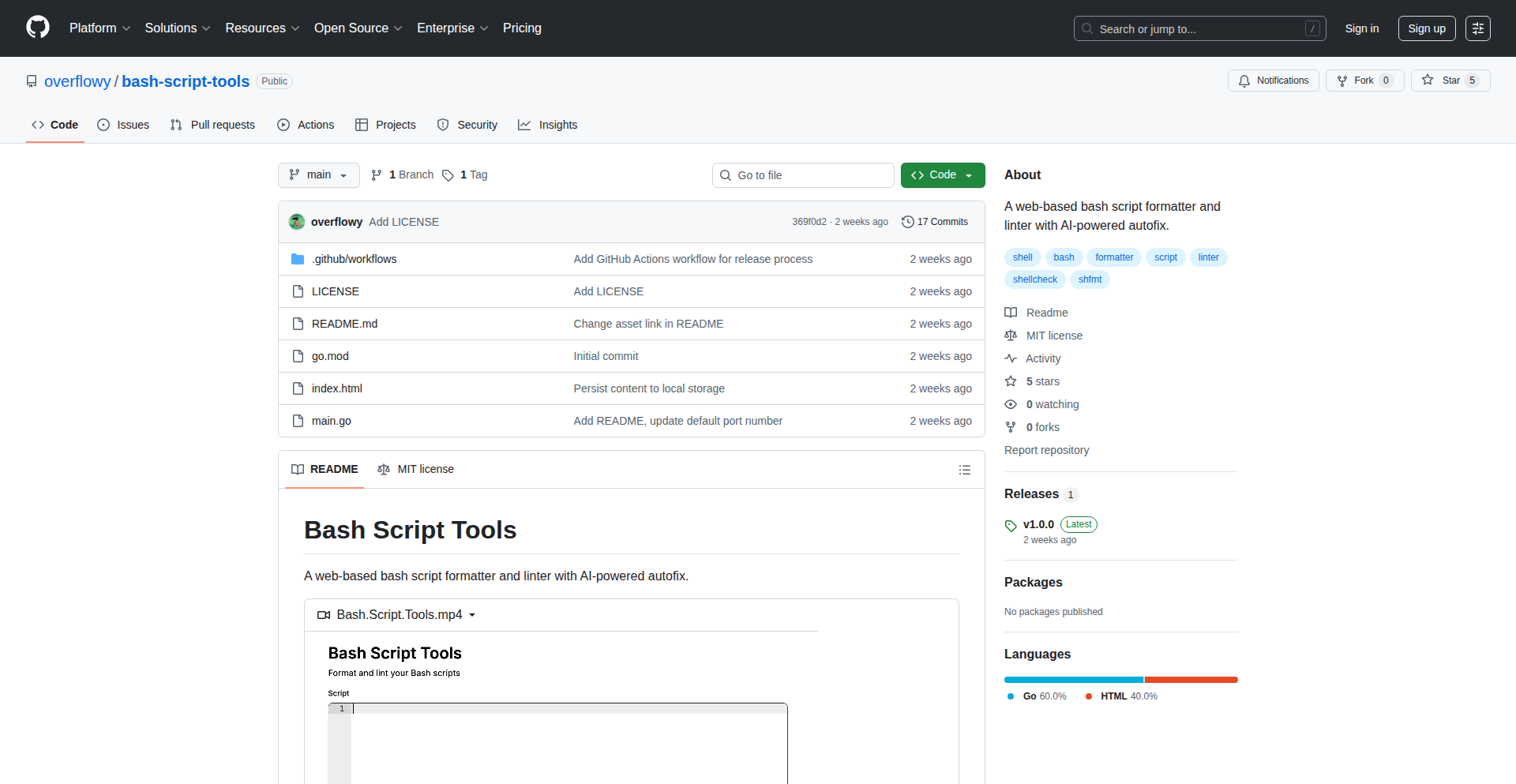

Dank-AI: Accelerated AI Agent Deployment

Author

deltadarkly

Description

Dank-AI is a framework designed to dramatically speed up the process of deploying AI agents into production environments. It addresses a common bottleneck in AI development: moving from a working model to a production-ready agent is time-consuming and complex. The core innovation lies in abstracting away much of the boilerplate and infrastructure management, allowing developers to focus on the AI logic itself. This means less time spent on setup and more time refining the agent's intelligence and functionality, which is the basis for the project's claim of '10x faster' deployment.

Popularity

Points 6

Comments 5

What is this product?

Dank-AI is a developer-centric platform that simplifies and accelerates the deployment of Artificial Intelligence agents. Instead of manually configuring servers, managing dependencies, and setting up complex pipelines, developers can leverage Dank-AI's pre-built infrastructure and streamlined workflow. It acts like a specialized toolkit for AI, providing the essential plumbing and orchestration needed to get AI models from your local machine or development environment into a live, functioning service. The innovation is in its opinionated approach to agent deployment, offering sensible defaults and intuitive interfaces that abstract away the complexities of cloud infrastructure and distributed systems. This allows developers to focus on the 'brain' of the AI agent – its algorithms and decision-making logic – rather than its 'body' – the deployment infrastructure.

How to use it?

Developers can integrate Dank-AI into their existing AI development workflow. Typically, after developing and testing an AI agent (e.g., a chatbot, a recommendation engine, a predictive model), they would use Dank-AI's command-line interface (CLI) or SDK to package their agent. This involves defining the agent's inputs, outputs, and any specific resource requirements. Dank-AI then handles the creation of necessary cloud resources (like virtual machines, container orchestration, or serverless functions), deploys the agent's code, and sets up robust monitoring and scaling mechanisms. Integration scenarios include deploying custom chatbots for customer service, embedding predictive analytics into web applications, or creating automated decision-making systems for business processes. It can be used with popular AI frameworks like TensorFlow, PyTorch, or scikit-learn, making it a versatile solution for various AI projects.

Product Core Function

· Rapid Agent Packaging: Allows developers to quickly bundle their trained AI models and inference code into a deployable unit, significantly reducing the manual effort of preparing an agent for production. This is valuable because it eliminates tedious configuration steps, freeing up developer time for higher-level tasks.

· Automated Infrastructure Provisioning: Dank-AI automatically sets up the necessary cloud infrastructure (servers, networking, storage) required to run the AI agent. This removes the steep learning curve and operational overhead associated with cloud computing, making it accessible even for developers less familiar with infrastructure management.

· Scalable Deployment: The framework is designed to handle varying loads by automatically scaling the AI agent's resources up or down based on demand. This ensures that the agent remains responsive during peak times and cost-efficient during quiet periods, critical for maintaining user experience and controlling operational costs.

· Observability and Monitoring: Provides built-in tools for monitoring the performance, health, and resource utilization of the deployed AI agent. This is crucial for identifying issues early, understanding agent behavior in production, and making informed decisions about future improvements.

· CI/CD Integration: Designed to integrate seamlessly with Continuous Integration and Continuous Deployment (CI/CD) pipelines. This enables automated testing, building, and deployment of AI agent updates, fostering a faster and more reliable release cycle for AI-powered applications.

· Version Management: Facilitates the management of different versions of AI agents, allowing for easy rollback to previous versions if a new deployment introduces issues. This provides a safety net for production deployments, minimizing the risk of unexpected downtime or errors.

· Developer Experience Abstraction: Hides the underlying complexities of distributed systems and cloud operations through a simplified API and CLI. This allows developers to focus on the AI logic itself, accelerating development cycles and lowering the barrier to entry for deploying sophisticated AI agents.
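Dank-AI's scaling policy is not described, so the following is only a generic illustration of the "scale up or down based on demand" idea: the target-tracking rule used by the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * current_metric / target_metric)`, clamped to a replica range. The function name, defaults, and choice of metric are assumptions.

```python
import math


def desired_replicas(current: int, avg_metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Target-tracking rule: desired = ceil(current * avg_metric / target), clamped.

    `avg_metric` is the per-replica average of some load signal (for example
    requests per second); `target` is the desired per-replica value.
    """
    if current < 1 or target <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = avg_metric / target
    # Ignore small deviations so the service does not flap between sizes
    # (Kubernetes HPA's default tolerance is also 0.1).
    if abs(ratio - 1.0) <= tolerance:
        desired = current
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90 requests per second against a target of 60, the rule asks for `ceil(4 * 1.5) = 6` replicas; at an average of 30 it shrinks to 2; at 63 the 5% deviation falls inside the 10% tolerance and the count stays at 4.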

Product Usage Case

· Scenario: A startup has developed a novel image recognition AI model and needs to make it available to users through a web application. Using Dank-AI, the developers can package their model and inference code, and Dank-AI will handle setting up a scalable API endpoint on a cloud provider. This allows the startup to quickly launch their AI-powered feature without needing a dedicated DevOps team, solving the problem of slow time-to-market for AI products.

· Scenario: An e-commerce company wants to deploy a personalized recommendation engine that needs to handle a high volume of user requests. Dank-AI can be used to deploy this engine, automatically scaling the underlying infrastructure to cope with traffic spikes during sales events. This ensures a consistent and responsive user experience, addressing the challenge of maintaining performance under variable load.

· Scenario: A research team has built a complex natural language processing (NLP) agent for text analysis. They need to deploy this agent for internal use by other departments. Dank-AI can be used to package and deploy the agent to a private cloud environment, providing easy access and monitoring for the research team, thus solving the problem of making research prototypes accessible and manageable in an enterprise setting.

· Scenario: A game development studio wants to integrate an AI-driven NPC behavior system into their new game. Dank-AI can deploy these AI agents as services that the game engine can query, allowing for complex and dynamic NPC interactions. This approach simplifies the deployment and management of game AI, enabling richer and more interactive gaming experiences.

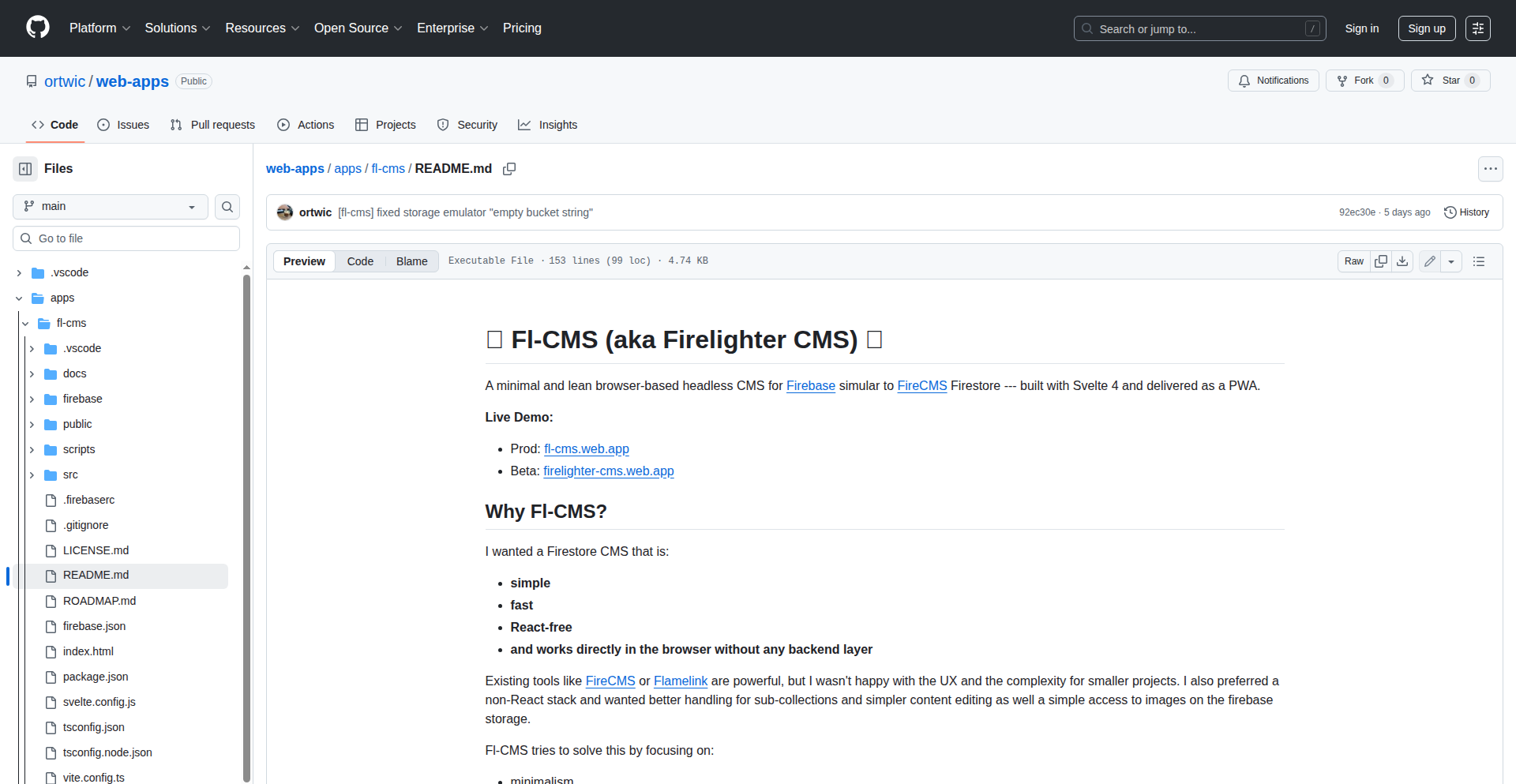

4

SecureEnvSync

Author

harish3304

Description

SecureEnvSync is a developer-friendly and secure alternative to traditional .env files. It addresses the common issues of insecurity, accidental commits, and messy secret management by providing a centralized, encrypted global store for your secrets. This allows you to load them per-project at runtime, eliminating the need for scattered, unencrypted environment files and the risk of exposing sensitive information.

Popularity

Points 8

Comments 3

What is this product?

SecureEnvSync is a tool designed to revolutionize how developers manage application secrets and environment variables. Instead of relying on individual, often unencrypted `.env` files spread across projects, it introduces a single, globally accessible, encrypted secret store. This means you define your sensitive credentials (like API keys or database passwords) just once, and they are securely managed in this central location. When your application runs, it fetches these secrets from the encrypted store, decrypts them, and makes them available to your project. The core innovation lies in its secure, centralized management approach, moving away from the clunky and insecure practices associated with traditional `.env` files, which are prone to accidental commits and difficult synchronization.

How to use it?

Developers can integrate SecureEnvSync into their workflow by installing the tool and setting up the global encrypted store. Once the store is configured and your secrets are added and encrypted, you can then reference these secrets within your projects. At runtime, SecureEnvSync will automatically fetch and decrypt the necessary secrets for that specific project. This can be integrated into build processes or directly within the application's startup routine. It's designed to be a drop-in replacement for existing `.env` loading mechanisms, aiming for minimal disruption while significantly enhancing security and manageability. This allows you to confidently develop and deploy applications without worrying about exposing sensitive information.
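A rough Python sketch of per-project runtime loading, assuming the global store is a JSON document keyed by project name. The store layout, `load_project_secrets`, and the injected `decrypt` callable are all hypothetical: SecureEnvSync's actual file format, cipher, and API are not described here.

```python
import json
import os
from collections.abc import Callable, MutableMapping

# Hypothetical store layout: {"project-name": {"VAR": "<ciphertext>", ...}, ...}


def load_project_secrets(store_json: str, project: str,
                         decrypt: Callable[[str], str],
                         environ: MutableMapping[str, str] = os.environ,
                         override: bool = False) -> list[str]:
    """Decrypt one project's secrets and export them into `environ`.

    Returns the names of the variables that were actually set.
    """
    store = json.loads(store_json)
    secrets = store.get(project)
    if secrets is None:
        raise KeyError(f"no secrets stored for project {project!r}")
    loaded = []
    for name, ciphertext in secrets.items():
        # Keep an existing value unless override=True, as common .env loaders do.
        if name in environ and not override:
            continue
        environ[name] = decrypt(ciphertext)
        loaded.append(name)
    return loaded
```

Called once at the top of an application's entry point, for example as `load_project_secrets(store_text, "billing-api", decrypt=my_cipher.decrypt)` (where `my_cipher` is a hypothetical decryptor), this exports only that project's variables. Leaving existing values in place by default means a value set explicitly in the shell still wins.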

Product Core Function

· Centralized Encrypted Secret Storage: Stores all your sensitive credentials in one secure, encrypted location, preventing scattered, unencrypted files and reducing the risk of accidental exposure. This means all your API keys and passwords are in one protected place, making management easier and safer.

· Runtime Secret Loading: Fetches and decrypts secrets specifically for the project that needs them when the application starts, rather than relying on pre-loaded files. This ensures that secrets are only exposed when and where they are needed, enhancing security.

· Cross-Project Secret Synchronization: Eliminates the need to copy-paste secrets across multiple projects; changes made to a secret in the central store are automatically reflected in all projects that use it. This saves time and prevents inconsistencies by keeping all your secrets up-to-date everywhere automatically.

· Secure Handling of Sensitive Data: Protects your application's sensitive information from being accidentally committed to version control systems like Git. This is a major improvement over `.env` files, which are frequently committed by mistake, leading to security breaches. Your secrets stay private.

· Developer-Friendly Workflow: Simplifies the secret management process, making it more intuitive and less error-prone for developers. This allows developers to focus more on building features rather than wrestling with cumbersome secret configurations.

Product Usage Case

· Managing API Keys for Multiple Microservices: A developer working on a microservice architecture can store all their service-specific API keys in SecureEnvSync. Each microservice then securely loads only the keys it requires at runtime, preventing one service from having access to another's credentials. This solves the problem of managing numerous, potentially overlapping API keys across different services.

· Securing Database Credentials in Development and Staging Environments: For applications with different database instances for development, staging, and production, SecureEnvSync can manage these credentials securely. Developers can easily switch between environments without manually changing `.env` files, and the sensitive database connection strings are never exposed in public repositories. This provides a consistent and secure way to handle database access across different stages of development.

· Onboarding New Developers to a Project: When a new developer joins a team, they don't need to manually set up numerous `.env` files with sensitive information. They can simply install SecureEnvSync and authenticate, and the tool will provide the necessary secrets for the project, streamlining the onboarding process and ensuring security from the start. This makes it much faster and safer for new team members to get up and running.

· Preventing Accidental Secret Exposure in Open Source Contributions: Developers contributing to open-source projects can use SecureEnvSync to ensure that their local, sensitive environment variables are never accidentally included in their commits. This protects their personal or company secrets from being leaked into public code repositories. This directly addresses the common issue of accidentally pushing secrets to GitHub.

5

EncryptedEnvVault

Author

harish3304

Description

EncryptedEnvVault is a developer-centric tool that provides a secure and convenient way to manage environment secrets. It addresses the inherent security risks of traditional plaintext .env files, such as accidental exposure in git history, by encrypting secrets locally. This ensures sensitive information is never exposed in plaintext on your machine or in version control, with secrets being directly loaded at runtime on a per-project basis. This innovation significantly reduces the stress and potential for security breaches associated with managing application secrets.

Popularity

Points 6

Comments 3

What is this product?

EncryptedEnvVault is a local, encrypted secret management system designed to replace conventional plaintext .env files. Instead of storing sensitive information like API keys or database credentials in plain text, which can be easily leaked or committed to version control by accident, EncryptedEnvVault encrypts these secrets. The system uses robust encryption algorithms to protect your sensitive data, and then decrypts them securely at the moment your application needs them (runtime). The core innovation lies in its local-first, project-specific approach, offering a more secure and manageable alternative without overcomplicating the developer workflow.

How to use it?

Developers can integrate EncryptedEnvVault into their projects by installing the tool and using its command-line interface to add, manage, and encrypt secrets. You'll typically initialize a secure vault for your project, add your sensitive variables, and then configure your application to load these secrets through the EncryptedEnvVault library at runtime. This means your CI/CD pipeline or local development environment will access the decrypted secrets securely when the application starts, rather than reading from a plaintext file. It's designed to be a drop-in replacement for .env files in terms of how your application consumes the secrets.
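The source does not say how EncryptedEnvVault hands decrypted values to the application. One common pattern, sketched here in Python under that assumption, is to launch the app as a child process whose environment alone contains the secrets; `run_with_secrets` is a hypothetical helper, and the decryption step that produces `secrets` is out of scope.

```python
import os
import subprocess
import sys
from collections.abc import Mapping, Sequence


def run_with_secrets(cmd: Sequence[str],
                     secrets: Mapping[str, str]) -> subprocess.CompletedProcess:
    """Run `cmd` with already-decrypted secrets added to its environment only.

    The parent's os.environ is not modified and nothing is written to disk.
    """
    child_env = {**os.environ, **secrets}
    return subprocess.run(list(cmd), env=child_env,
                          capture_output=True, text=True, check=True)


if __name__ == "__main__":
    # In practice `secrets` would come from the vault's decrypt step.
    demo = {"EEV_DEMO_DB_PASSWORD": "s3cret"}
    child = [sys.executable, "-c", "import os; print(os.environ['EEV_DEMO_DB_PASSWORD'])"]
    print(run_with_secrets(child, demo).stdout.strip())  # s3cret
```

Because the values exist only in the child's environment, neither the parent's environment nor the working tree holds a plaintext copy that could end up in a commit.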

Product Core Function

· Local Secret Encryption: Secrets are encrypted on your local machine using strong cryptographic methods, preventing plaintext exposure and reducing the risk of accidental leaks. A copied or leaked secrets file is useless without the decryption key, although an attacker who controls your machine and the key can still read the secrets.

· Git Safety: Because secrets are encrypted, you can commit the encrypted configuration files to your Git repository without exposing sensitive credentials. This eliminates a common source of security vulnerabilities.

· Runtime Decryption: Secrets are decrypted only when your application requests them at runtime. This 'just-in-time' decryption keeps plaintext off disk and limits it to the memory of the running process, shrinking the window of exposure.

· Project-Specific Management: The tool allows for managing secrets on a per-project basis, providing better organization and isolation. This prevents accidental cross-pollination of secrets between different projects and simplifies credential management for complex setups.

· Developer-Friendly Workflow: Aims to integrate seamlessly into existing developer workflows, offering a command-line interface for easy management of secrets, making it as straightforward as working with traditional .env files but with enhanced security.

Product Usage Case

· Securing API Keys for External Services: A web application needs to connect to a third-party API (e.g., Stripe, Twilio). Instead of storing the API key in a .env file that might be accidentally committed, developers can use EncryptedEnvVault to encrypt the key. The application then loads the decrypted key at startup, ensuring the API key is never exposed in the codebase.

· Database Credentials Management: A backend service requires database connection details (username, password, host). EncryptedEnvVault can store these credentials securely. When the service starts, it fetches the decrypted credentials to establish a database connection, safeguarding sensitive login information.

· Local Development Environment Setup: Developers working on a new project can quickly set up their local environment by adding sensitive configurations (like a local development database password) to EncryptedEnvVault. This simplifies onboarding new team members as they only need to decrypt and manage secrets, not worry about exposing them in shared configuration.

· Reducing 'Accidental Commit' Stress: A developer repeatedly finds themselves paranoid about accidentally committing sensitive environment variables. EncryptedEnvVault removes this anxiety by keeping plaintext secrets out of any file that could be committed, allowing for more focused development and less fear of security breaches.

6

InstantGigs Live

Author

ufvy

Description

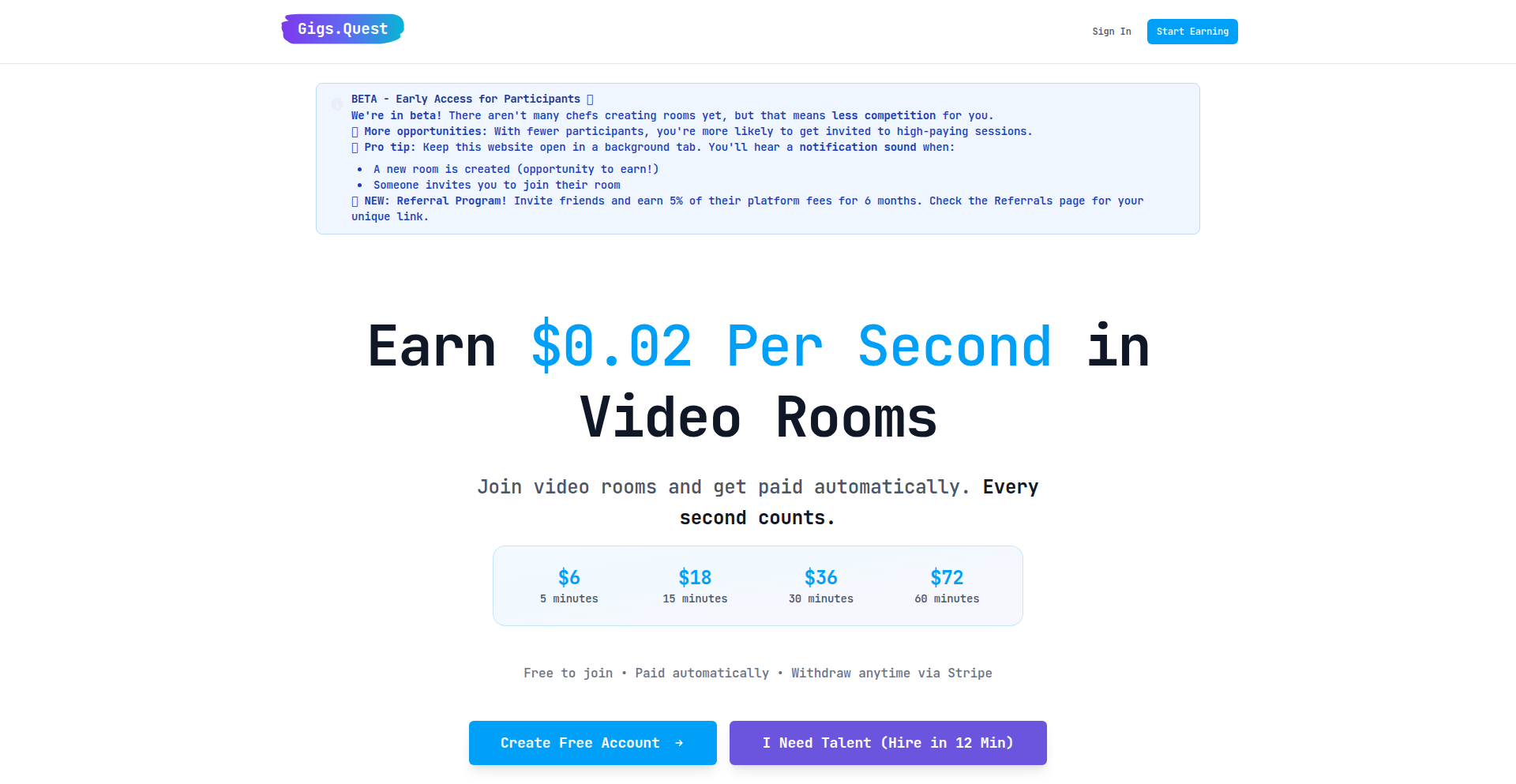

InstantGigs Live is a real-time marketplace designed to eliminate the friction of traditional freelancing. It leverages live video rooms and per-second billing, powered by technologies like LiveKit and Convex, to connect startups needing immediate help with qualified freelancers in minutes, not days. This innovative approach tackles the problem of slow hiring processes for urgent tasks and offers a direct monetization channel for freelancers.

Popularity

Points 4

Comments 5

What is this product?

InstantGigs Live is a live, real-time freelancing platform. Instead of lengthy job postings and proposal reviews, users create a virtual room, describe their immediate need, and qualified freelancers can join within minutes. The core technology utilizes LiveKit for robust video conferencing and screen sharing, and Convex for its real-time database capabilities, ensuring seamless communication and data synchronization. Payments are handled securely via Stripe Connect, with a unique per-second billing model ($0.02/sec, equivalent to $72/hr), which is a significant innovation in how freelance services are priced and consumed, allowing for hyper-granular cost control and immediate value exchange. This solves the problem of traditional freelancing being too slow for urgent needs and offers a more efficient way for experts to monetize their skills.
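The per-second pricing is simple arithmetic, sketched below in Python with `Decimal` so cents do not drift through binary floating point. How InstantGigs Live meters partial seconds or rounds totals is not stated; this assumes whole seconds and rounding to the nearest cent, and `session_cost` is a hypothetical name.

```python
from decimal import Decimal, ROUND_HALF_UP

RATE_PER_SECOND = Decimal("0.02")  # $0.02/sec as stated; 0.02 * 3600 = $72/hr


def session_cost(seconds: int, rate: Decimal = RATE_PER_SECOND) -> Decimal:
    """Cost of a session billed per whole second, rounded to the cent."""
    if seconds < 0:
        raise ValueError("duration cannot be negative")
    return (rate * seconds).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(session_cost(12 * 60))  # 14.40
    print(session_cost(45 * 60))  # 54.00
    print(session_cost(60 * 60))  # 72.00
```

A 12-minute session is 720 seconds, or $14.40, and a 45-minute session is 2,700 seconds, or $54.00.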

How to use it?

For startups or businesses with an immediate technical or creative need, you simply sign up, create a 'Gig Room', clearly describe your requirement (e.g., 'Need immediate help debugging a production API error', 'Quick review of a landing page design mockup'), and set a budget. Qualified freelancers who match your needs will be notified and can join the room live. You can then collaborate visually through HD video and screen sharing. Once the task is complete, payment is automatically calculated based on the exact duration of the session and processed instantly. For freelancers, you sign up, create a profile showcasing your expertise, and can then join available Gig Rooms that match your skills. You earn money based on the seconds you actively contribute to helping clients, eliminating the need for bidding or proposal writing. Integration is straightforward; it's a web-based platform accessible through any modern browser, designed for immediate use without complex setup.

Product Core Function

· Real-time Video Collaboration: Enables instant face-to-face and screen-sharing interactions between clients and freelancers, facilitating immediate problem-solving and efficient communication. This speeds up project completion and reduces misunderstandings.

· Per-Second Billing: Charges are calculated precisely based on the time spent actively working, offering extreme cost transparency and control for clients. This democratizes access to expertise for short, critical tasks.

· Instant Matching and Connection: Utilizes a system to quickly connect clients with qualified freelancers who are available and suitable for the task, drastically reducing the traditional hiring time from days to minutes.

· Secure Payment Processing via Stripe Connect: Ensures reliable and instant payouts to freelancers and secure transactions for clients, building trust and efficiency in the marketplace.

· Session Recording: All interactions are recorded for accountability, quality assurance, and future reference. This provides a safety net and learning resource for both parties.

Product Usage Case

· A startup facing a critical production outage that requires immediate debugging assistance. Instead of going through a lengthy hiring process, they create a Gig Room, and within minutes, a senior backend engineer joins, diagnoses the issue via screen share, and helps resolve it, saving hours of downtime and potential revenue loss.

· A design team needs a quick review of a new landing page mockup before a marketing campaign launch. They host a 12-minute session with a UI/UX expert who provides feedback and suggestions, costing only $14.40 (720 seconds at $0.02/sec). This allows for rapid iteration and ensures the design is optimized before deployment.

· A development team is planning a new feature and needs expert advice on the best architectural approach. They engage a freelance architect for a 45-minute consultation session, costing $54, to get critical insights and avoid potential costly mistakes in the future development process.
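The per-second rate quoted in the description ($0.02/sec) turns each session's price into simple arithmetic. The sketch below is a minimal illustration, not InstantGigs Live's billing code; the function names, the integer-cents representation, and billing every started second are assumptions:

```python
import math

# Assumed rate from the description: $0.02 per second, held as integer cents
# so that totals never pick up floating-point rounding errors.
RATE_CENTS_PER_SECOND = 2


def session_cost_cents(duration_seconds: float) -> int:
    """Cost of a session in cents, billing each started second (an assumption)."""
    if duration_seconds < 0:
        raise ValueError("duration must be non-negative")
    billed_seconds = math.ceil(duration_seconds)
    return billed_seconds * RATE_CENTS_PER_SECOND


def format_usd(cents: int) -> str:
    """Render a cent amount as a dollar string, e.g. 1440 -> '$14.40'."""
    return f"${cents // 100}.{cents % 100:02d}"


print(format_usd(session_cost_cents(12 * 60)))  # $14.40 (12-minute review)
print(format_usd(session_cost_cents(45 * 60)))  # $54.00 (45-minute consultation)
print(format_usd(session_cost_cents(3600)))     # $72.00 (one hour)
```

Working in whole cents avoids the rounding drift that repeated floating-point multiplication by 0.02 can introduce.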

7

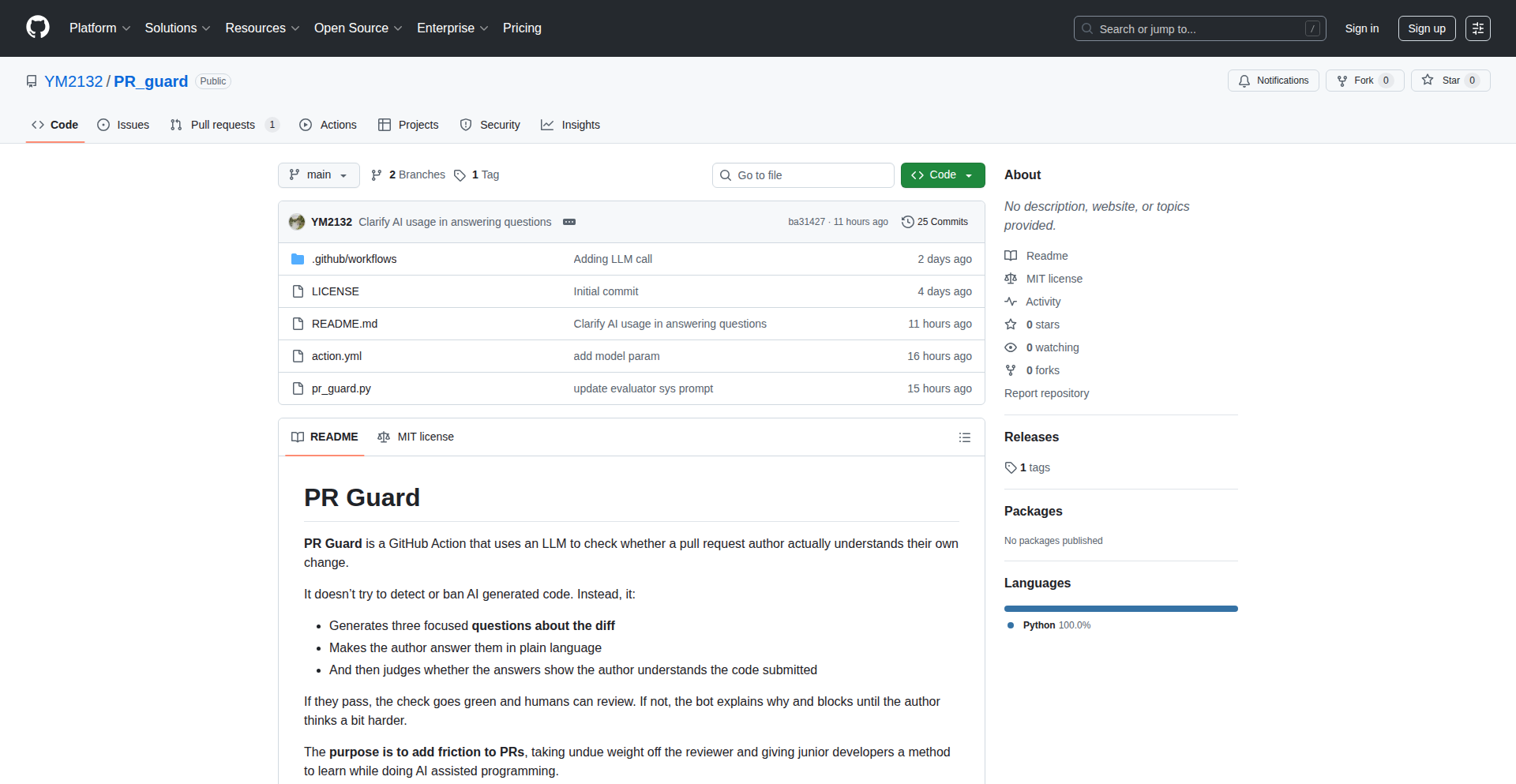

AnthropicNewsAggregator

Author

cebert

Description

This project is a web-based aggregator designed to collect and present news specifically related to Anthropic. It leverages web scraping and data processing techniques to identify and centralize information, providing a dedicated resource for those interested in Anthropic's developments. The innovation lies in its focused approach to curating AI research news.

Popularity

Points 3

Comments 6

What is this product?

This project is a specialized news aggregation website. It functions by automatically scanning various online sources, such as news outlets, blogs, and official announcements, for mentions of Anthropic. Once identified, the relevant articles are collected, processed, and displayed in a structured format on the website. The core technical innovation is the targeted data retrieval and filtering mechanism, ensuring that only Anthropic-centric news is presented, saving users the time of manually sifting through broader AI news. This helps you stay informed about a specific, cutting-edge AI company without getting lost in general information.

How to use it?

Developers can use this aggregator as a direct source of information to stay updated on Anthropic's research, product launches, and company news. It can be integrated into internal knowledge bases or used as a reference point for market research. For example, a developer working in the AI ethics field might use this site to track Anthropic's contributions to responsible AI development. Its simplicity allows for easy bookmarking and regular checking, offering a dedicated stream of relevant updates.

Product Core Function

· Automated news scraping: Gathers news articles from multiple online sources by programmatically checking websites. This provides you with a constant stream of new information without you having to manually search.

· Content filtering and categorization: Identifies and selects articles specifically mentioning Anthropic, filtering out irrelevant content. This ensures you see only what matters to you, saving you time and effort.

· Information aggregation and presentation: Organizes the collected news into a user-friendly interface for easy reading and browsing. This makes it simple to consume a lot of information efficiently.

· Dedicated Anthropic news feed: Offers a singular platform for all Anthropic-related news, acting as a central hub for enthusiasts and researchers. This means you have one place to go for all your Anthropic news needs.
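The filtering step described above can be illustrated with a small keyword filter over an RSS 2.0 feed. This is a minimal sketch assuming the sources expose RSS; the keyword list and sample feed are hypothetical, and the aggregator's actual scraping and filtering logic is not described in the post:

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical keyword list; the real aggregator's rules are not documented.
KEYWORDS = ("anthropic", "claude")
PATTERN = re.compile(r"\b(" + "|".join(KEYWORDS) + r")\b", re.IGNORECASE)


def matching_items(rss_xml: str) -> list[dict]:
    """Return RSS 2.0 items whose title or description mentions a keyword."""
    root = ET.fromstring(rss_xml)
    results = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        description = item.findtext("description", default="")
        link = item.findtext("link", default="")
        if PATTERN.search(title) or PATTERN.search(description):
            results.append({"title": title, "link": link})
    return results


SAMPLE_FEED = """<rss version="2.0"><channel>
<item><title>Anthropic publishes new research</title><link>https://example.com/a</link></item>
<item><title>Unrelated chip news</title><description>Nothing here</description><link>https://example.com/b</link></item>
<item><title>Model update</title><description>Claude gets a new feature</description><link>https://example.com/c</link></item>
</channel></rss>"""

for entry in matching_items(SAMPLE_FEED):
    print(entry["title"], entry["link"])
```

Word-boundary matching avoids hits inside longer words, but plain keywords still produce false positives (the 'anthropic principle', people named Claude), so a real aggregator would likely need more context-aware filtering.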

Product Usage Case

· A machine learning researcher looking to monitor Anthropic's latest advancements in large language models can use this site to quickly find all recent announcements and discussions. This helps them stay ahead in their field by knowing about new techniques or findings as soon as they are public.

· A technology journalist covering the AI industry can use this aggregator as a quick reference to gather information and understand the current narrative around Anthropic. This streamlines their research process and ensures they don't miss any critical updates.

· An enthusiast interested in the future of AI safety can track Anthropic's work in this area through the aggregated news. This allows them to follow the progress and public discourse on a crucial aspect of AI development.

8

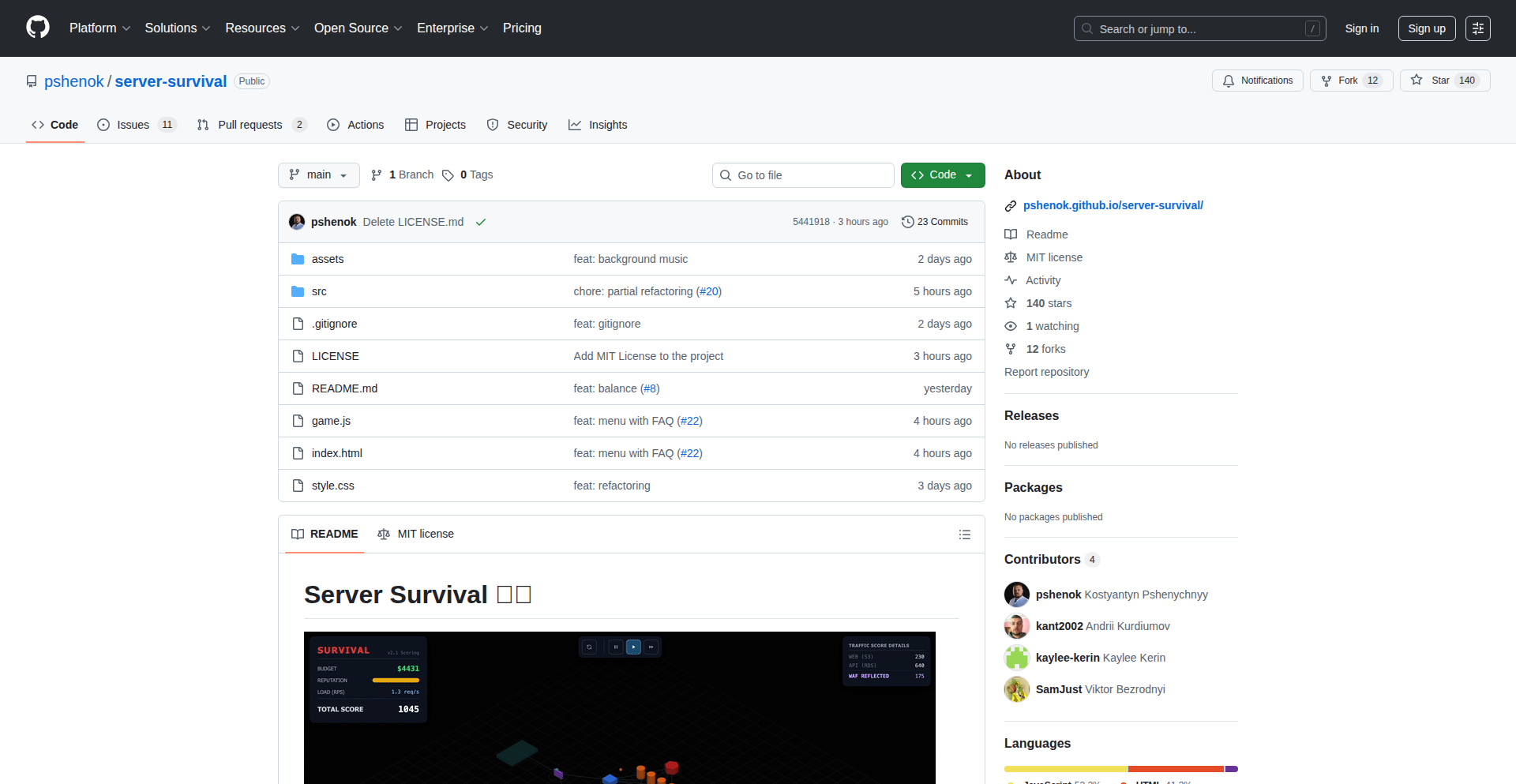

Server Survival: Cloud Architecture Defense

Author

pshenok

Description

Server Survival is an educational tower defense game that teaches cloud architecture concepts. Players build and defend their own server infrastructure against waves of simulated 'traffic' attacks. The core innovation lies in translating complex cloud concepts like load balancing, auto-scaling, and firewalls into engaging game mechanics, making learning about cloud infrastructure intuitive and fun.

Popularity

Points 6

Comments 2

What is this product?

Server Survival is a tower defense game where you play as a cloud architect defending a system from overwhelming traffic. Instead of traditional towers, you deploy various cloud infrastructure components like load balancers, auto-scaling groups, and firewalls. Each component has unique behaviors and costs, mirroring real-world cloud resource management. The game simulates traffic spikes and attack vectors, forcing players to strategically place and upgrade their defenses to maintain system uptime and prevent collapse. It's a novel approach to learning about the resilience and complexities of cloud environments by directly experiencing the challenges in a gamified format. The innovation is in abstracting complex technical systems into understandable, interactive game elements, allowing for hands-on learning without real-world risks.

How to use it?

Developers can use Server Survival as a training tool to understand how different cloud services interact and how to defend against common performance bottlenecks and security threats. You can play it directly in your browser, experimenting with different architectural designs. For instance, you might set up a load balancer to distribute incoming requests across multiple web servers in an auto-scaling group. Then, you'd strategically place firewalls to block malicious traffic. The game provides immediate feedback on the effectiveness of your choices, helping you learn best practices for building scalable and resilient cloud applications. It's a great way to quickly grasp concepts that might take much longer to understand through documentation alone.

Product Core Function

· Dynamic Traffic Simulation: Simulates varying levels of user traffic and sudden spikes, forcing players to adapt their infrastructure, demonstrating the importance of handling fluctuating demand.

· Cloud Component Placement: Allows players to deploy and configure virtualized cloud resources like load balancers, web servers, and databases, teaching resource allocation and system design.

· Scalability Mechanics: Implements auto-scaling features where infrastructure can automatically expand or contract based on traffic, highlighting the principles of elasticity.

· Security Defense Layers: Introduces firewalls and other security measures to protect against simulated cyberattacks, teaching the fundamentals of cloud security.

· Performance Monitoring & Feedback: Provides real-time metrics on system performance (e.g., latency, uptime) and visual cues of failure, enabling rapid learning through consequence.
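The traffic, load-balancing, and auto-scaling mechanics above can be approximated with a toy tick-based model. This is a sketch under assumed rules (fixed per-server capacity, round-robin balancing, threshold scaling of one server per tick); it is not Server Survival's actual game logic:

```python
from dataclasses import dataclass


def round_robin_split(incoming: int, servers: int) -> list[int]:
    """Requests each server receives when a load balancer deals them out in turn."""
    base, extra = divmod(incoming, servers)
    return [base + (1 if i < extra else 0) for i in range(servers)]


@dataclass
class Cluster:
    capacity_per_server: int = 100  # requests one server can handle per tick
    min_servers: int = 1
    max_servers: int = 10
    scale_up_at: float = 0.8        # utilization above this adds a server
    scale_down_at: float = 0.3      # utilization below this removes a server
    servers: int = 1

    def tick(self, incoming: int) -> tuple[int, int]:
        """Serve one tick of traffic and return (served, dropped)."""
        loads = round_robin_split(incoming, self.servers)
        served = sum(min(load, self.capacity_per_server) for load in loads)
        dropped = incoming - served
        # Threshold autoscaling reacts after the fact, one server per tick,
        # so a sudden spike still drops requests while capacity catches up.
        utilization = incoming / (self.servers * self.capacity_per_server)
        if utilization > self.scale_up_at and self.servers < self.max_servers:
            self.servers += 1
        elif utilization < self.scale_down_at and self.servers > self.min_servers:
            self.servers -= 1
        return served, dropped


cluster = Cluster()
for t, incoming in enumerate([50, 90, 150, 250, 250, 120, 40], start=1):
    before = cluster.servers
    served, dropped = cluster.tick(incoming)
    print(f"tick {t}: {incoming} requests on {before} server(s): served {served}, dropped {dropped}")
```

The spike at tick 4 drops 50 requests because the autoscaler only adds capacity after seeing the overload, which is the same lag that makes real auto-scaling groups need headroom or predictive scaling.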

Product Usage Case

· A junior developer needs to understand how a load balancer helps distribute traffic. Playing Server Survival, they can see how placing a load balancer upfront reduces the strain on individual web servers during a traffic surge, thus preventing them from crashing.

· A DevOps engineer wants to visualize the benefits of auto-scaling. In the game, they can configure an auto-scaling group, and as traffic increases, they observe new server instances being automatically provisioned to handle the load, illustrating elasticity in action.

· A team learning about cloud security can use Server Survival to experiment with firewall rules. They can see how blocking certain ports or IP ranges effectively stops simulated denial-of-service attacks, reinforcing security principles.

· An architect designing a microservices-based application can use the game to understand how to manage inter-service communication under load, by strategically placing components and observing the impact on overall system stability.
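The firewall experiment in the usage cases boils down to first-match rule evaluation over source ranges and ports. A minimal sketch using Python's standard `ipaddress` module; the rule table is hypothetical, and the addresses come from the reserved documentation ranges:

```python
import ipaddress

# Hypothetical rule table: (action, source network, destination port or None for any).
# Rules are checked top to bottom; the first match wins, and anything unmatched is denied.
RULES = [
    ("deny", ipaddress.ip_network("203.0.113.0/24"), None),  # a known-bad source range
    ("allow", ipaddress.ip_network("0.0.0.0/0"), 443),       # HTTPS from anywhere
    ("allow", ipaddress.ip_network("0.0.0.0/0"), 80),        # HTTP from anywhere
]


def decide(src_ip: str, dst_port: int, rules=RULES, default: str = "deny") -> str:
    """Return the action of the first rule matching this source IP and port."""
    addr = ipaddress.ip_address(src_ip)
    for action, network, port in rules:
        if addr in network and (port is None or port == dst_port):
            return action
    return default


print(decide("198.51.100.7", 443))  # allow
print(decide("203.0.113.9", 443))   # deny: source range is blocked
print(decide("198.51.100.7", 22))   # deny: no rule opens SSH
```

Putting the range block first matters: if the broad HTTPS allow came first, traffic from the blocked range would still get through on port 443.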

9

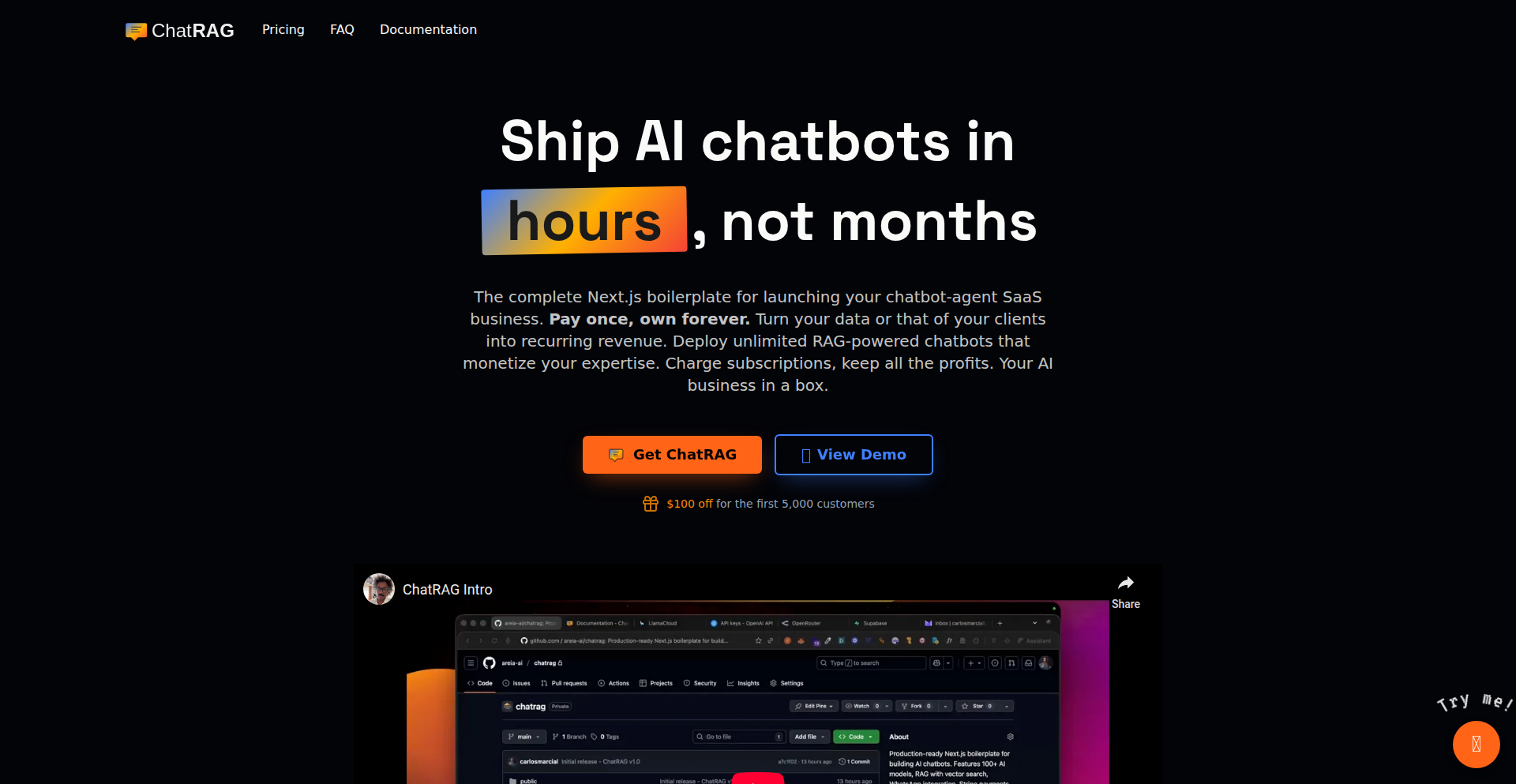

RAGLaunchpad AI

Author

carlos_marcial

Description

A tool that makes it incredibly simple and fast to deploy your own AI chatbots powered by Retrieval Augmented Generation (RAG). It tackles the complexity of setting up RAG pipelines, allowing users to quickly build chatbots that can answer questions based on their own data, without needing deep AI expertise.

Popularity

Points 2

Comments 5

What is this product?

This project is a simplified platform for launching AI chatbots that leverage RAG. RAG is a technique that combines the power of large language models (LLMs) with your own specific data. Instead of the AI relying on its general knowledge, RAG allows it to access and process your documents (like PDFs, text files, or website content) to provide more accurate and context-aware answers. The innovation here lies in abstracting away the complex setup of RAG, offering a streamlined way to integrate your data and deploy a functional chatbot.

How to use it?

Developers can use RAGLaunchpad AI by connecting their data sources (e.g., uploading documents or pointing to URLs). The platform then handles the process of indexing this data and setting up the AI model to query it. This can be integrated into existing applications or used as a standalone chatbot interface. Think of it as a quick starter kit for building intelligent assistants tailored to your specific knowledge base.

Product Core Function

· Data Ingestion and Indexing: Effortlessly upload your documents or link to data sources. The system intelligently processes and indexes this information, making it searchable for the AI. This means your chatbot can learn from your content without manual data preparation.

· RAG Pipeline Orchestration: Automates the complex process of connecting your data to an AI model. It handles the retrieval of relevant information from your indexed data and augments the AI's response, ensuring accuracy and relevance. This removes the need for deep technical knowledge of AI infrastructure.

· Chatbot Deployment: Provides a simple mechanism to launch your RAG-powered chatbot. You can quickly get a functional chatbot up and running, ready to interact with users and answer questions based on your provided data. This significantly speeds up the time from idea to a deployable product.

· Customizable AI Models: Allows selection and configuration of the underlying AI model, giving flexibility in choosing the intelligence behind your chatbot. This means you can match the model's capabilities to specific tasks.

· API Access for Integration: Offers APIs to integrate your custom chatbot into other applications or workflows. This enables seamless integration with your existing systems, extending the reach of your intelligent assistant. This means you can make your chatbot a part of a larger ecosystem.
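The ingest, retrieve, and augment steps above can be sketched end to end in a few functions. This is an illustration, not RAGLaunchpad AI's pipeline: bag-of-words cosine similarity stands in for the embedding model and vector store a production system would use, and the chunk sizes, sample documents, and prompt template are assumptions:

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def tokenize(text: str) -> Counter:
    """Lowercased word counts, a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = tokenize(query)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    joined = "\n---\n".join(context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days after approval.",
    "Our office is open Monday to Friday.",
    "To reset your password, click Forgot password on the login page.",
]
chunks = [c for doc in docs for c in chunk_text(doc)]
question = "How do I reset my password?"
print(build_prompt(question, retrieve(question, chunks, k=1)))
```

Overlapping chunks keep a sentence that straddles a chunk boundary retrievable from at least one window.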

Product Usage Case

· Customer Support Bot: A company can use RAGLaunchpad AI to create a chatbot that answers frequently asked questions using their product documentation and support articles. This reduces the load on human support agents and provides instant answers to customers.

· Internal Knowledge Assistant: A research team can upload their scientific papers and internal reports. The chatbot can then help team members quickly find information and synthesize findings from this extensive body of work, accelerating research.

· Personalized Learning Tutor: An educator could use this to build a chatbot that answers student questions based on course materials and textbooks, providing a personalized learning experience. Students can get immediate help without waiting for instructor availability.

· Content Discovery Tool: A website owner could use RAGLaunchpad AI to create a chatbot that helps users navigate and find specific information within their large volume of blog posts or articles. This improves user engagement and content discoverability.

· Developer Documentation Q&A: A software project can use this to create a chatbot that answers developer questions based on its API documentation and code examples, making it easier for new contributors to get started. This streamlines the onboarding process for developers.

10

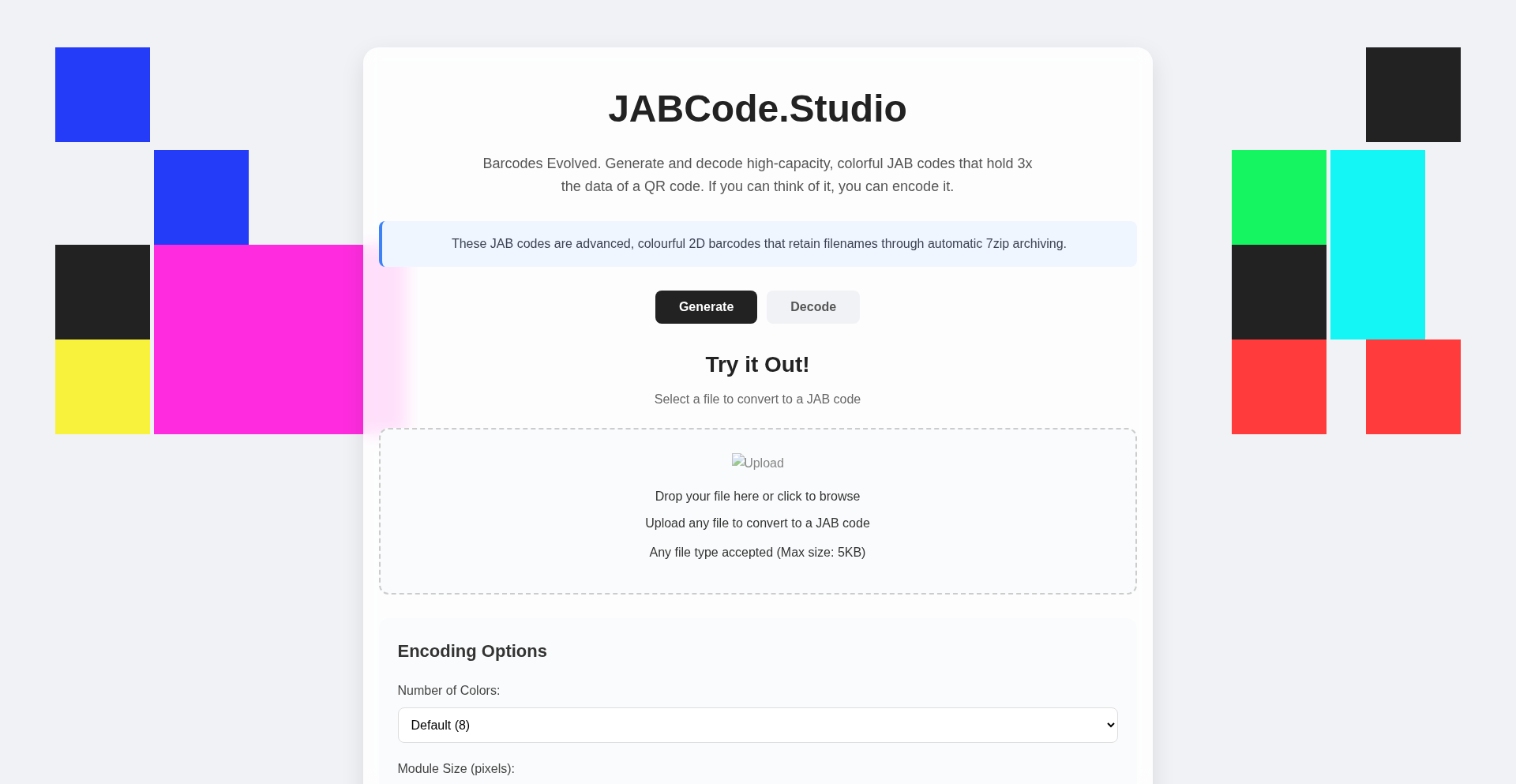

ColorBit Code Studio

Author

jabber-feller

Description

ColorBit Code Studio is a tool for generating and scanning high-density 2D color barcodes. By using multiple colors, it packs more data into the same area than a traditional black-and-white QR code and can store over 2KB in a single code, which makes it useful for efficient data sharing and embedding. It is a practical tool for sharing files directly, with no need to compile source code, and it is now available on both web and iOS.

Popularity

Points 2

Comments 5

What is this product?

This project is a digital encoding system that goes beyond the standard black-and-white QR codes you might be familiar with. Instead of just two states (black or white), each module of the code can take one of several colors, so it carries more than one bit: with 8 colors, for example, a module encodes 3 bits instead of 1. The core innovation lies in its error correction and encoding algorithms, which map large amounts of data (such as entire small files) into these colorful, compact visual patterns. This means you can store significantly more information, such as small documents, images, or code snippets, in a single scannable image. For developers, this is a new, efficient way to distribute or embed data in a visual format, easing the data limits of traditional barcodes.
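The density gain comes from each module carrying log2(k) bits when the palette has k colors. The sketch below splits bytes into palette indices and back, assuming a power-of-two palette; it deliberately ignores error correction, module layout, finder patterns, and color calibration, none of which the post describes for ColorBit:

```python
import math


def bits_per_module(num_colors: int) -> int:
    """Whole bits one module carries; this sketch requires a power-of-two palette."""
    if num_colors < 2 or num_colors & (num_colors - 1):
        raise ValueError("this sketch assumes a power-of-two palette")
    return num_colors.bit_length() - 1


def bytes_to_symbols(data: bytes, num_colors: int) -> list[int]:
    """Split a byte string into palette indices (one per module), most significant first."""
    bits = bits_per_module(num_colors)
    total_bits = len(data) * 8
    n_symbols = math.ceil(total_bits / bits)
    # Zero-pad the tail so the bit count is a multiple of `bits`.
    padded = int.from_bytes(data, "big") << (n_symbols * bits - total_bits)
    mask = num_colors - 1
    return [(padded >> (bits * (n_symbols - 1 - i))) & mask for i in range(n_symbols)]


def symbols_to_bytes(symbols: list[int], num_colors: int, length: int) -> bytes:
    """Inverse of bytes_to_symbols; `length` is the original byte count."""
    bits = bits_per_module(num_colors)
    value = 0
    for s in symbols:
        value = (value << bits) | s
    value >>= len(symbols) * bits - length * 8  # drop the padding bits
    return value.to_bytes(length, "big")


for colors in (2, 4, 8):
    # Modules needed for 2 KB of payload before any error-correction overhead.
    print(colors, len(bytes_to_symbols(bytes(2048), colors)))  # 16384, 8192, 5462
```

Going from 2 to 8 colors cuts the module count to roughly a third, but each extra color also makes neighboring shades harder to tell apart under real lighting, which is where error correction earns its keep.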

How to use it?

Developers can use ColorBit Code Studio via its web interface or iOS app. For sharing files, you can upload your file directly to the studio, which will then generate a high-density color barcode representing that file. This barcode can be embedded in websites, printed materials, or shared digitally. The companion app or scanner can then interpret this color barcode to retrieve the original file. This is particularly useful for scenarios where you need to quickly share small to medium-sized files without relying on external links or complex transfer protocols. Integration can be as simple as displaying the generated image on a screen for a camera to capture, or printing it for offline access.

Product Core Function

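· High-density data encoding: Stores over 2KB of data per code. Because each colored module carries more than one bit, a color code holds more data than a black-and-white QR code of the same size (for reference, the largest QR code, version 40, tops out at 2,953 bytes in binary mode at the lowest error-correction level). This means you can store more complex information or entire small files directly, reducing the need for separate downloads or links.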

· Multi-color barcode generation: Utilizes a palette of colors to achieve higher data density. This innovation makes it possible to pack more information into a smaller visual footprint, making it an efficient choice for data storage and transmission.

· File-to-barcode conversion: Enables direct conversion of files into scannable color barcodes. This feature is a game-changer for quick data distribution, allowing users to share documents, images, or other data by simply presenting a visual code.

· Web and iOS platform availability: Provides accessible tools for both desktop and mobile users. This broad compatibility ensures that developers and users can create and scan codes on their preferred devices, enhancing convenience and adoption.

· Advanced error correction: Ensures data integrity even if the barcode is partially obscured or damaged. This robustness means your embedded data is more likely to be recovered successfully, even under imperfect scanning conditions.

Product Usage Case

· Sharing configuration files for software: A developer can generate a color barcode containing a software's configuration settings. A user can then scan this code to instantly apply the settings to their application, simplifying setup and reducing manual input errors.

· Distributing small digital assets: Artists can embed small files, such as icons, pixel art, or a license note that fits within the per-code capacity, into an image as a color barcode. Viewers can scan the image and retrieve the file directly from the visual representation.

· Interactive marketing materials: A company can print flyers or posters with color barcodes that link to exclusive content, discount codes, or downloadable resources. Customers can scan these codes with their phones to access immediate value, enhancing engagement beyond a static advertisement.

· Offline data access for field technicians: Technicians in remote areas with limited internet connectivity can carry short technical references, such as configuration values, wiring tables, or diagnostic checklists that fit within a code's capacity, as color barcodes on printed sheets. They can then scan these codes on-site to access the necessary information, improving efficiency and reducing downtime.

11

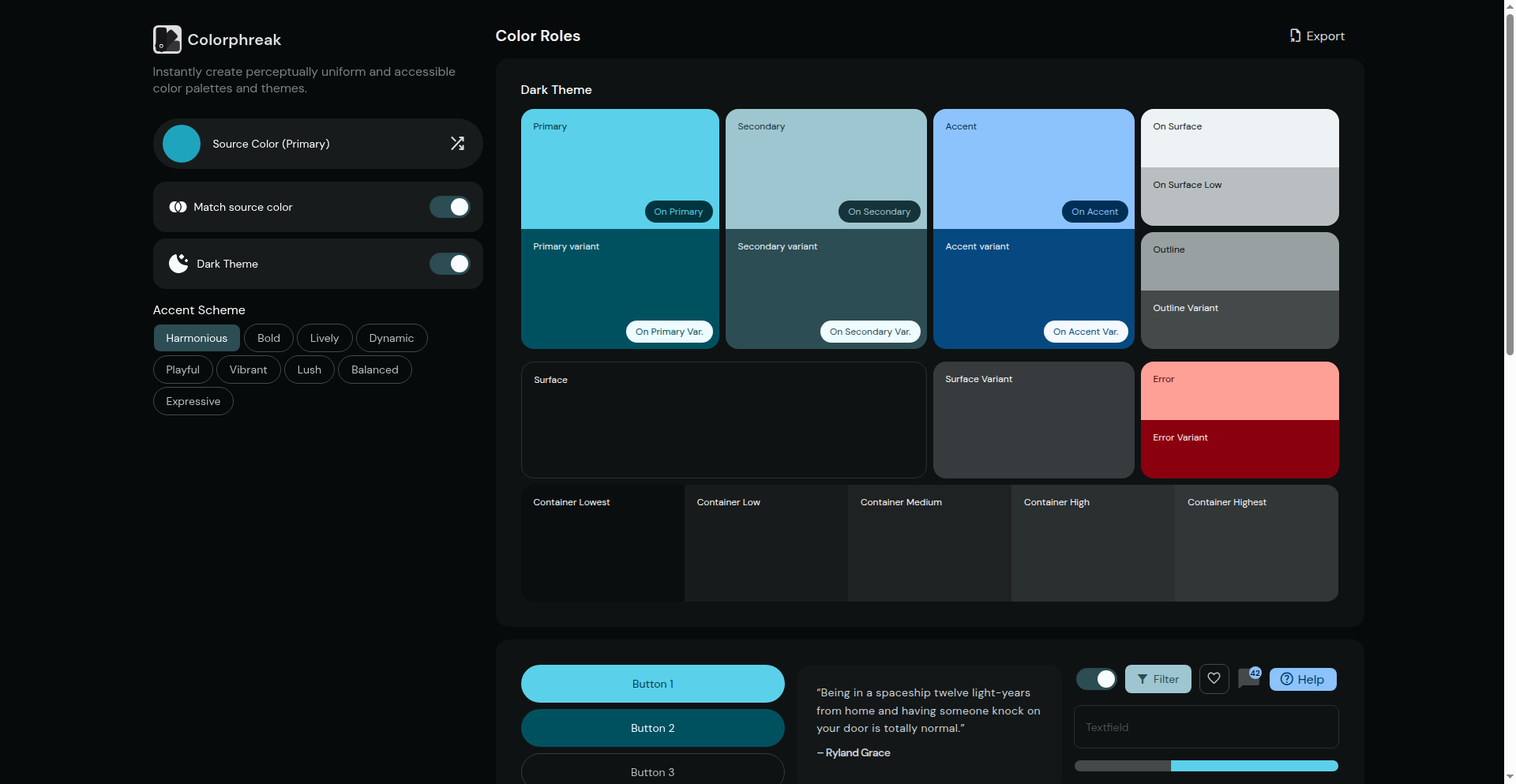

OKLCH Color Weaver

Author

kurainox

Description

This project is a color system generator that uses the OKLCH color space to create perceptually uniform, accessible color palettes. From a single seed color it generates harmonious color schemes, extensive color ramps, and semantic color roles, with every color mapped into the sRGB display gamut. Because OKLCH lightness tracks perceived lightness, the resulting palettes look more consistent across screens and make contrast easier to reason about, which helps users with low vision or color vision deficiencies.

Popularity

Points 5

Comments 1

What is this product?

OKLCH Color Weaver is a developer tool that builds color palettes. Instead of picking colors arbitrarily, it uses a color model called OKLCH. Think of OKLCH as a more intuitive way to describe colors, based on how humans actually perceive lightness, colorfulness (chroma), and hue. The system starts with a single 'seed' color and mathematically generates related colors that work well together, such as analogous (neighboring) or complementary (opposite) colors. It creates 'ramps' of colors, meaning smooth transitions from light to dark or from muted to vibrant. Finally, it maps these colors into the standard sRGB color space (what most screens display), reducing chroma where a color would fall outside that range while keeping its lightness and hue, which preserves the intended feel of the chosen colors. This goes beyond simple RGB or HSL color picking to create palettes that are more pleasing to the eye and more accessible.

How to use it?

Developers can integrate OKLCH Color Weaver into their design workflows or directly into their applications. You provide a primary color (the 'seed'), and the tool generates a comprehensive set of color values. These can be used for defining UI themes, creating branding assets, or designing user interfaces. For web developers, this could involve generating CSS variables for color theming. For application developers, it could mean programmatically defining color palettes within their codebase. The output can be directly copied or potentially integrated via an API in future versions, providing a quick and robust way to establish a consistent and accessible color language for any project.

Product Core Function

· Perceptually Uniform Color Generation: The system produces colors that are perceived by humans with uniform changes in lightness and hue, ensuring smooth visual transitions and preventing jarring color shifts. This is valuable for creating sophisticated and professional-looking interfaces that are easy on the eyes.

· OKLCH Color Space Utilization: By using OKLCH, the generator allows for finer control over color properties like perceived lightness and colorfulness independently, leading to more predictable and aesthetically pleasing results compared to traditional color models.

· Automated Harmony Scheme Generation: The tool automatically creates palettes based on established color theory principles like analogous and complementary schemes. This saves designers and developers time and ensures visually appealing color combinations without requiring deep color theory knowledge.

· 26-Step Color Ramps: For each generated color, the system creates a detailed ramp of 26 steps, offering a wide range of shades and tints. This provides a rich palette for nuanced design choices, from subtle highlights to deep shadows, allowing for precise control over visual depth.

· Semantic Color Role Assignment: The generated color ramps are used to define semantic color roles (e.g., primary, secondary, accent, error, success). This simplifies the process of building accessible and consistent UIs, where colors have defined meanings and functions.

· sRGB Gamut Mapping with Chroma Reduction: All generated colors are mapped into the sRGB color space, the standard for most digital displays. A color that falls outside it keeps its lightness and hue while its chroma (colorfulness) is reduced until it fits, so it displays as vividly as the screen allows without the hue shifts that naive per-channel clipping can cause.

· Material Design Inspired Visual Comfort: While built from the ground up, the aesthetic often aligns with the visual comfort and familiarity of Material Design, offering a proven and well-received design language as a foundation for new creations.
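The OKLCH-to-sRGB conversion and chroma-reduction gamut mapping described above can be sketched in plain Python. The conversion uses the OKLab matrices published by Björn Ottosson; the bisection on chroma, the lightness endpoints of the 26-step ramp, and the function names are assumptions, not the tool's actual implementation:

```python
import math


def oklch_to_linear_srgb(L: float, C: float, h_deg: float) -> tuple[float, float, float]:
    """OKLCH -> linear sRGB via OKLab, using Ottosson's published matrices."""
    a = C * math.cos(math.radians(h_deg))
    b = C * math.sin(math.radians(h_deg))
    l_ = L + 0.3963377774 * a + 0.2158037573 * b
    m_ = L - 0.1055613458 * a - 0.0638541728 * b
    s_ = L - 0.0894841775 * a - 1.2914855480 * b
    l, m, s = l_ ** 3, m_ ** 3, s_ ** 3
    return (
        +4.0767416621 * l - 3.3077115913 * m + 0.2309699292 * s,
        -1.2684380046 * l + 2.6097574011 * m - 0.3413193965 * s,
        -0.0041960863 * l - 0.7034186147 * m + 1.7076147010 * s,
    )


def in_srgb_gamut(L: float, C: float, h: float, eps: float = 1e-6) -> bool:
    return all(-eps <= ch <= 1 + eps for ch in oklch_to_linear_srgb(L, C, h))


def clamp_chroma(L: float, C: float, h: float, iterations: int = 30) -> float:
    """Largest chroma <= C that fits sRGB with L and h fixed (bisection search)."""
    if in_srgb_gamut(L, C, h):
        return C
    lo, hi = 0.0, C  # chroma 0 is a neutral gray, always in gamut for 0 <= L <= 1
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if in_srgb_gamut(L, mid, h):
            lo = mid
        else:
            hi = mid
    return lo


def to_hex(L: float, C: float, h: float) -> str:
    """Encode an in-gamut OKLCH color as an sRGB hex string."""
    def encode(x: float) -> int:
        x = min(max(x, 0.0), 1.0)
        v = 12.92 * x if x <= 0.0031308 else 1.055 * x ** (1 / 2.4) - 0.055
        return round(v * 255)
    r, g, b = (encode(ch) for ch in oklch_to_linear_srgb(L, C, h))
    return f"#{r:02x}{g:02x}{b:02x}"


def ramp(C: float, h: float, steps: int = 26, L_light: float = 0.97, L_dark: float = 0.15) -> list[str]:
    """Evenly spaced lightness steps, each gamut-mapped by chroma reduction."""
    colors = []
    for i in range(steps):
        L = L_light + (L_dark - L_light) * i / (steps - 1)
        colors.append(to_hex(L, clamp_chroma(L, C, h), h))
    return colors


print(ramp(0.15, 250)[::5])  # every fifth step of a blue ramp
```

Bisection works here because, at fixed lightness and hue, moving outward in chroma crosses the sRGB boundary once in practice; the CSS Color 4 gamut-mapping algorithm also reduces chroma by a binary search, though with an added perceptual-distance check.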

Product Usage Case

· A UI/UX designer needs to create a new design system for a web application. They use OKLCH Color Weaver to generate a primary color palette based on their brand's main color. The tool provides them with a full set of accessible colors for various UI elements (buttons, backgrounds, text, alerts), saving them hours of manual color picking and testing for accessibility and visual harmony.

· A front-end developer is tasked with theming a React component library. Instead of hardcoding colors, they use the output from OKLCH Color Weaver to generate CSS custom properties (variables). This allows for easy and consistent theming across all components, ensuring brand consistency and accessibility standards are met programmatically.

· A mobile app developer wants to ensure their app's color scheme is accessible to users with color vision deficiencies. They input their app's main accent color into OKLCH Color Weaver, which generates a perceptually uniform palette with high contrast ratios between key color roles, significantly improving the app's usability for a wider audience.

· A game developer needs to design in-game status indicators (e.g., health, mana, status effects). Using OKLCH Color Weaver, they generate distinct and easily distinguishable color ramps for each status, ensuring players can quickly understand game states even in fast-paced gameplay, while also offering a visually pleasing aesthetic.
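Claims like "high contrast ratios between key color roles" can be checked with the WCAG contrast-ratio formula, which compares the relative luminance of two sRGB colors. A minimal sketch; whether OKLCH Color Weaver performs this check itself is not stated in the post:

```python
def srgb_channel_to_linear(c8: int) -> float:
    """Undo sRGB gamma for one 0-255 channel."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG relative luminance of a '#rrggbb' color."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (srgb_channel_to_linear(int(hex_color[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    """(lighter + 0.05) / (darker + 0.05), ranging from 1:1 to 21:1."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


print(round(contrast_ratio("#000000", "#ffffff"), 2))  # 21.0
print(round(contrast_ratio("#767676", "#ffffff"), 2))
```

WCAG AA asks for at least 4.5:1 for normal body text, which is why `#767676` on white (about 4.54:1) passes while `#777777` (about 4.48:1) falls just short.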

12

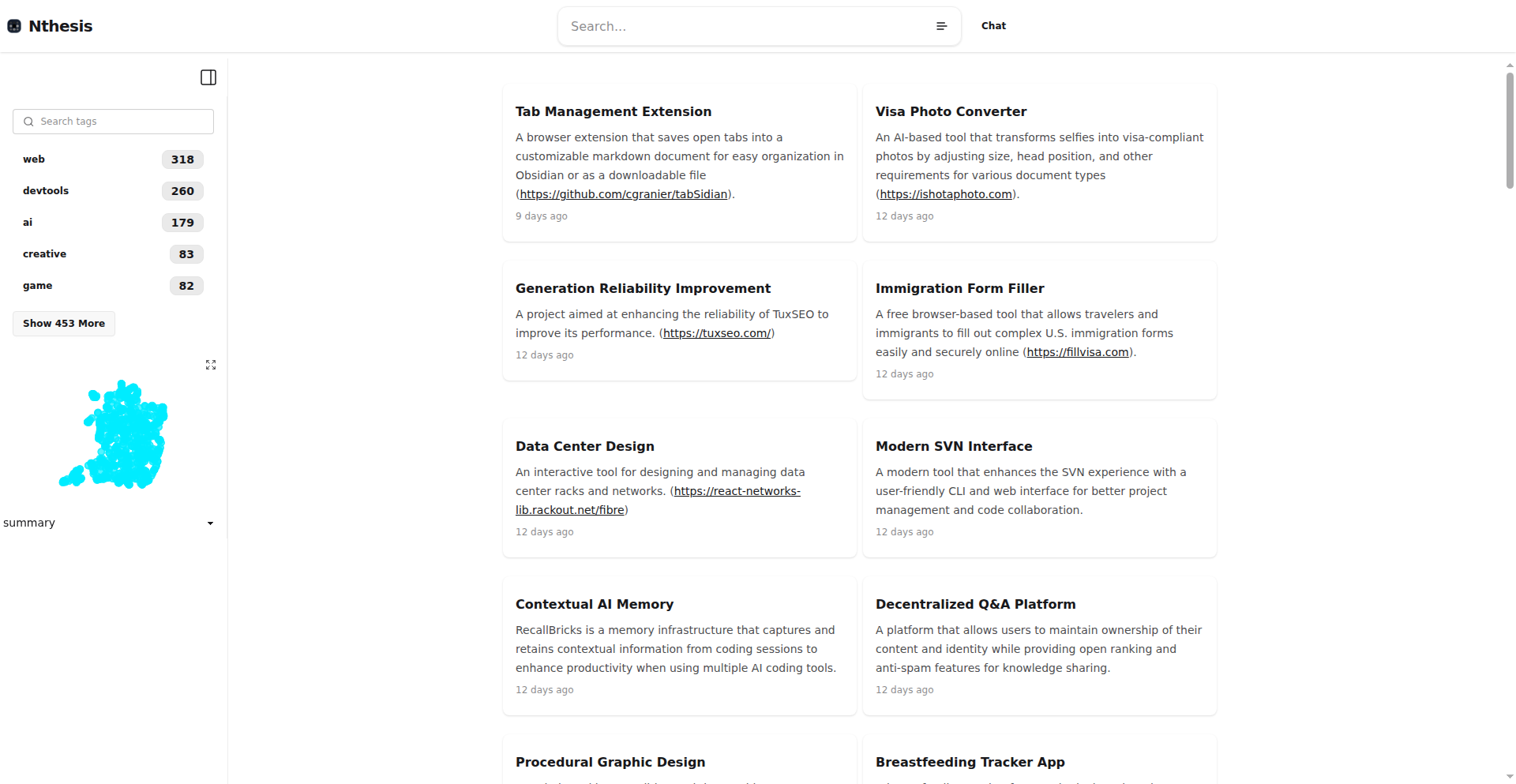

WIP Post Explorer

Author

osigurdson

Description

This project is a public dashboard designed to search and chat with 'What are you working on?' posts. It addresses the challenge of finding and engaging with ongoing projects shared by the developer community by providing an organized and searchable interface to these otherwise scattered posts.

Popularity

Points 6

Comments 0

What is this product?

This is a web application that indexes the 'Ask HN: What Are You Working On?' posts from Hacker News and makes them searchable. Instead of manually sifting through countless threads to find out what other developers are building or discussing, you get a structured way to explore these insights. The innovation lies in aggregating and querying this specific type of post, making community knowledge more accessible. It uses a backend to process and store the relevant posts and a frontend that provides search and interaction. This means you can quickly find discussions or projects related to specific technologies, problems, or ideas, discover trending projects, find potential collaborators, or gain inspiration for your own work by tapping into the collective knowledge of the HN community.

How to use it?

Developers can access the dashboard via a web browser. The primary interaction is a search bar where they can enter keywords related to technologies, project types, or specific challenges. The tool then returns a list of relevant 'What Are You Working On?' posts, along with direct links to the original Hacker News discussions. Using it can be as simple as bookmarking the site for regular reference or sharing specific findings with colleagues; for more advanced use, one could build custom scripts that pull the data for trend analysis. In practice, you can use it to find out which technologies are popular right now, see how others are solving specific development problems, or discover side projects that might spark your own ideas. It is like having a curated library of what the developer world is actively building.

Product Core Function

· Searchable database of 'What Are You Working On?' posts: This allows users to quickly find relevant discussions based on keywords, making it easy to discover specific projects or technologies. The value is in saving time and effort when looking for community insights.

· Chat interface: The project description says users can 'chat with' the posts, which most likely means asking natural-language questions that are answered from the indexed posts. The value is in getting a synthesized answer instead of reading every matching thread.

· Organized presentation of posts: The dashboard likely presents the search results in a clear, organized manner, making it easy to browse and digest information. The value is in improving information accessibility and reducing cognitive load.

· Direct links to original Hacker News threads: This ensures users can easily access the full context and participate in the original discussions. The value is in maintaining the integrity of the original source and facilitating deeper engagement.
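Keyword search over the posts, as described above, is classically served by an inverted index that maps each term to the posts containing it. A minimal sketch with AND semantics; the class name and sample posts are hypothetical, and the dashboard's actual backend is not described:

```python
import re
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    """Lowercased terms; '+' and '#' are kept so 'c++' and 'c#' stay searchable."""
    return set(re.findall(r"[a-z0-9+#]+", text.lower()))


class PostIndex:
    def __init__(self) -> None:
        self.posts: dict[int, str] = {}
        self.index: defaultdict[str, set[int]] = defaultdict(set)

    def add(self, post_id: int, text: str) -> None:
        """Store a post and record which terms it contains."""
        self.posts[post_id] = text
        for term in tokenize(text):
            self.index[term].add(post_id)

    def search(self, query: str) -> list[int]:
        """IDs of posts containing every query term, in ascending order."""
        terms = tokenize(query)
        if not terms:
            return []
        ids = set.intersection(*(self.index.get(t, set()) for t in terms))
        return sorted(ids)


idx = PostIndex()
idx.add(1, "Building a Rust web backend with Axum")
idx.add(2, "Rust CLI for managing dotfiles")
idx.add(3, "A Django web app for invoices")
print(idx.search("rust web"))  # [1]
```

Intersecting per-term posting sets means a query only touches posts that contain its terms, instead of scanning every post on each search.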

Product Usage Case

· A developer is curious about the current adoption of Rust in web development. They search 'Rust web dev' in the tool and find several 'What Are You Working On?' posts where developers mention using Rust for backend services or tooling. This helps them understand real-world usage and challenges. It solves the problem of scattered information by centralizing it.

· A startup founder is looking for inspiration for a new SaaS product. They search for 'SaaS ideas' or specific niche keywords and discover that multiple developers are working on similar problems, providing insights into market demand and potential features. This helps them validate or refine their product concept.

· A student is working on a personal project involving machine learning. They search for 'machine learning' or specific ML libraries and find other students or professionals sharing their ML projects, learning resources, and challenges. This helps them find relevant examples and potential mentors. It solves the problem of isolation in learning and development.

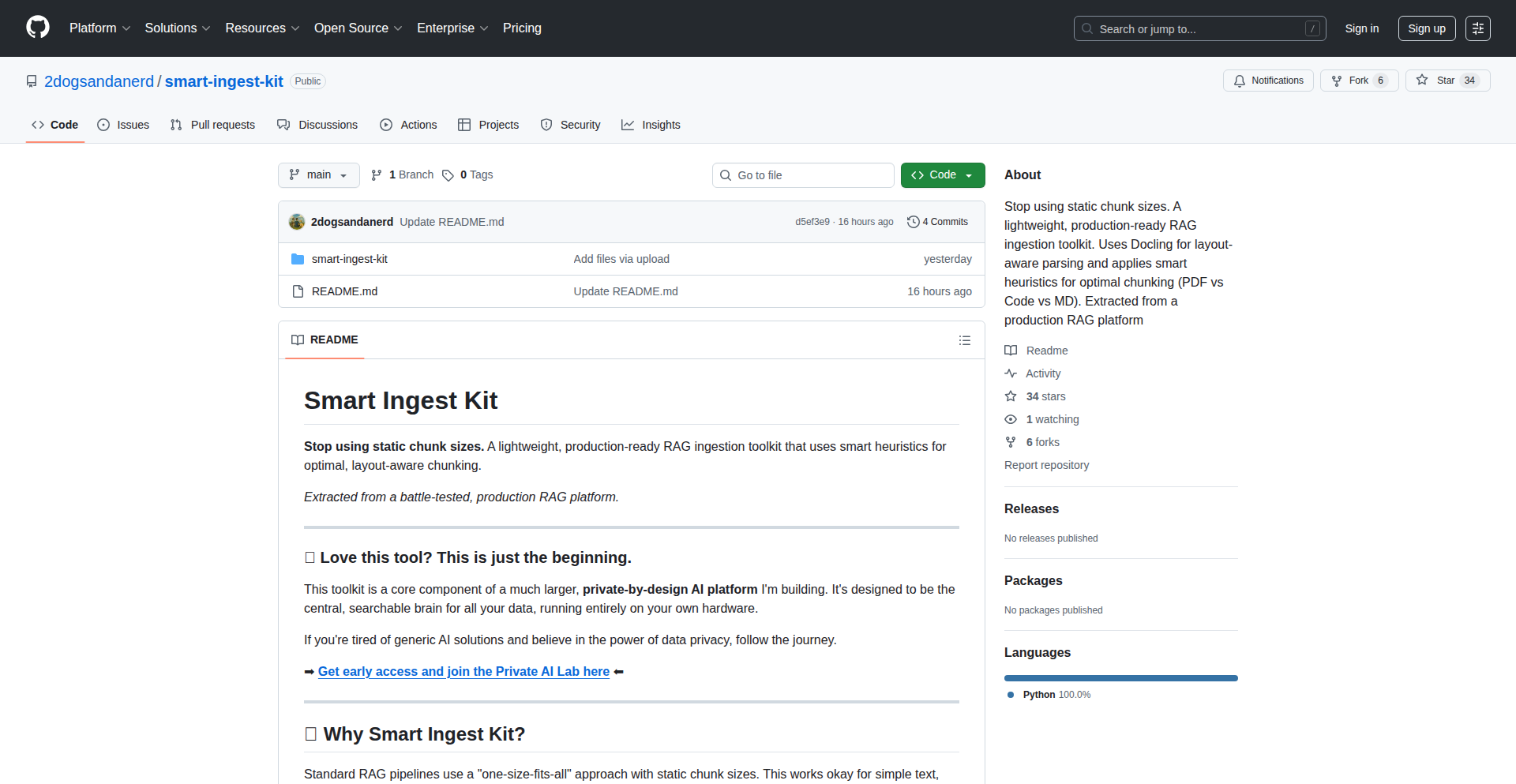

13

TableSlicer-PDF

Author

2dogsanerd

Description

This project addresses the significant challenge of processing complex documents, particularly PDFs containing tables, with Large Language Models (LLMs). The core innovation lies in a novel Markdown conversion process that intelligently preserves table structures, enabling more effective document chunking for AI analysis. This approach offers a practical solution to a common pain point in AI-powered document understanding.

Popularity

Points 6

Comments 0

What is this product?

TableSlicer-PDF is a specialized tool designed to tackle the difficulty of feeding documents with intricate table structures, like those found in PDFs, into AI models. Traditional methods often struggle to interpret and retain the relationships within table data when converting documents for AI processing. This tool's breakthrough is its unique Markdown conversion technique. Instead of simply turning tables into plain text, it converts them into a Markdown format that explicitly preserves the row and column relationships. This is crucial because LLMs process text, and by keeping the table's structure intact in a text-based format, the AI can better understand the data's context and meaning. This means more accurate insights from your documents, especially when dealing with reports, financial statements, or research papers.

How to use it?

Developers can integrate TableSlicer-PDF into their AI workflows that require processing PDF documents with tables. The primary use case is for pre-processing documents before they are fed into an LLM for tasks such as summarization, question answering, or data extraction. The tool can be incorporated as a step in a data pipeline. For example, you might have a Python script that first uses TableSlicer-PDF to convert a PDF into structured Markdown, and then passes this Markdown to an LLM API. This ensures that when the LLM analyzes the content, it receives the table data in a format it can comprehend accurately, leading to superior results compared to simply extracting raw text from the PDF.

Product Core Function

· PDF to Structured Markdown Conversion: Preserves complex table layouts, ensuring AI can correctly interpret tabular data, which is vital for accurate analysis of reports and datasets.

· Intelligent Chunking Enhancement: By maintaining table structure, the conversion facilitates better segmentation of documents for LLMs, improving the relevance and coherence of AI-generated outputs from large documents.

· Problem-Specific Tooling for AI Document Processing: Directly addresses the common frustration of LLMs mishandling table data, providing a focused solution for developers working with AI and complex documents.
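The idea of keeping tables intact through conversion and chunking can be illustrated on already-extracted rows. This sketch assumes table cells have been pulled out of the PDF by some other tool (PDF parsing is not shown), renders them as a Markdown pipe table, and splits the document on blank lines so a table is never cut across chunks; TableSlicer-PDF's own algorithm is not described in the post:

```python
def table_to_markdown(rows: list[list]) -> str:
    """Render rows (first row = header) as a Markdown pipe table."""
    def fmt(row: list) -> str:
        # Escape pipes inside cells so they do not create extra columns.
        return "| " + " | ".join(str(cell).replace("|", "\\|").strip() for cell in row) + " |"
    header, *body = rows
    lines = [fmt(header), "| " + " | ".join("---" for _ in header) + " |"]
    lines += [fmt(row) for row in body]
    return "\n".join(lines)


def chunk_markdown(markdown: str, max_chars: int = 500) -> list[str]:
    """Pack blank-line-separated blocks into chunks; a block (e.g. a table) is never split."""
    blocks = [b for b in markdown.split("\n\n") if b.strip()]
    chunks: list[str] = []
    current = ""
    for block in blocks:
        candidate = f"{current}\n\n{block}" if current else block
        if len(candidate) <= max_chars or not current:
            current = candidate  # fits, or an oversized block becomes its own chunk
        else:
            chunks.append(current)
            current = block
    if current:
        chunks.append(current)
    return chunks


rows = [["Year", "Revenue"], ["2023", "1.2M"], ["2024", "1.5M"]]
table = table_to_markdown(rows)
document = "Intro paragraph.\n\n" + table + "\n\nClosing remarks."
for chunk in chunk_markdown(document, max_chars=40):
    print(chunk, end="\n=====\n")
```

An oversized table becomes its own chunk rather than being split, trading a strict size limit for structural integrity; the header row stays attached to every data row the LLM sees.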

Product Usage Case

· Analyzing financial reports: Developers can use TableSlicer-PDF to convert PDFs of annual reports into structured Markdown. This allows LLMs to accurately extract and understand financial figures, trends, and company performance data, providing better insights than standard text extraction.

· Extracting data from research papers: For academic or scientific PDFs containing experimental results in tables, this tool enables LLMs to comprehend the precise relationships between variables, leading to more accurate summarization or data retrieval for research purposes.

· Processing legal documents with tables: When dealing with contracts or legal briefs that include tables for clauses, dates, or parties, TableSlicer-PDF ensures that LLMs can correctly interpret these crucial structured details, minimizing misinterpretations in AI-driven legal analysis.

14

SitStand CLI

Author

graiz

Description

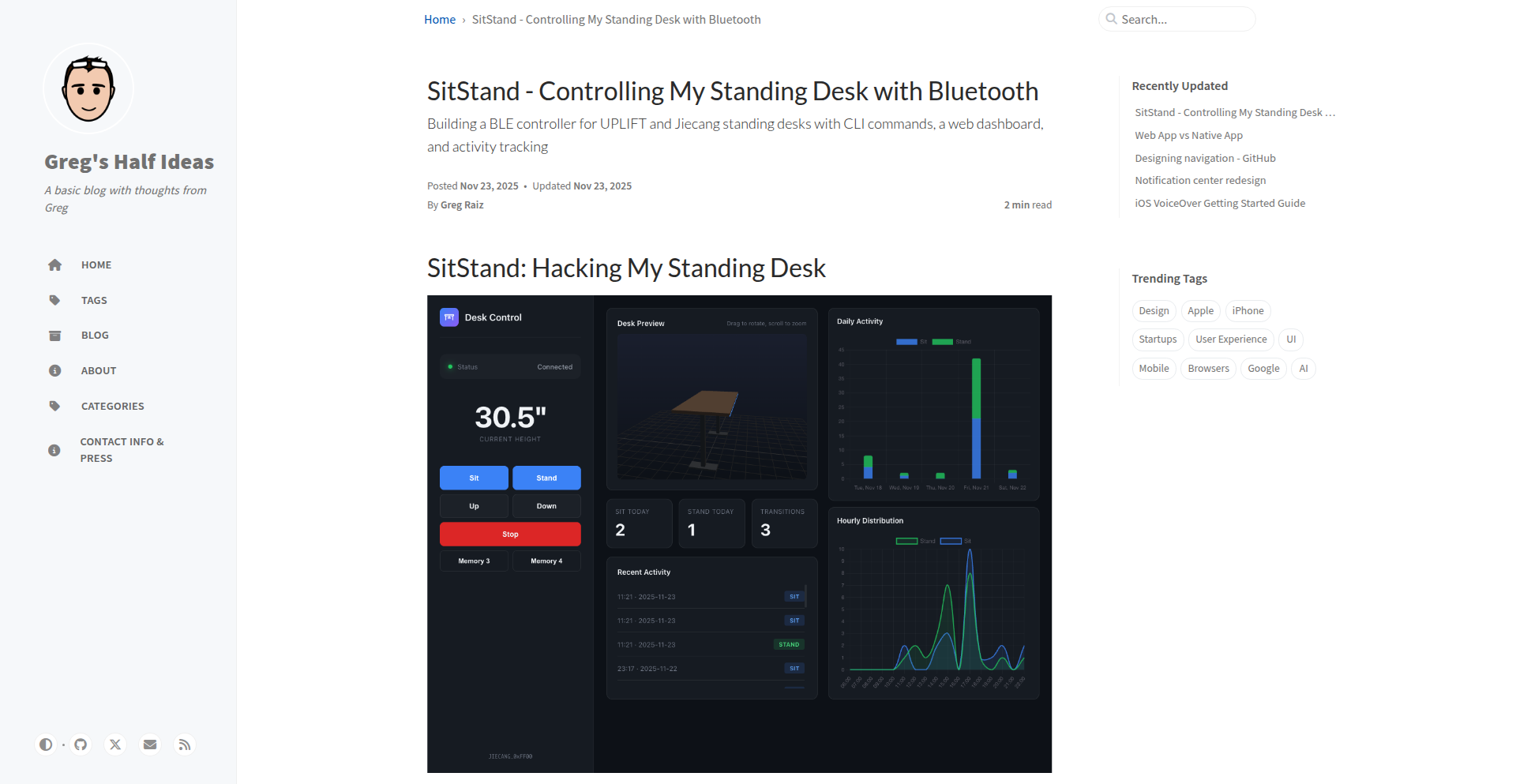

A command-line interface tool that allows users to control their standing desk from the terminal. This project innovates by bridging the gap between physical hardware (standing desks) and the developer's primary interaction environment (the command line), offering a seamless, scriptable way to manage desk height. It solves the problem of manually adjusting desks for ergonomic comfort, making it accessible for users who prefer or need to interact via text-based commands, enabling automation and integration into workflow scripts.

Popularity

Points 6

Comments 0

What is this product?

SitStand CLI is a command-line tool for controlling smart standing desks. Its main technical work is interfacing with desk controllers, which may speak Bluetooth or proprietary serial protocols, and exposing them as simple text commands. Instead of relying on a physical button or a mobile app, developers can send instructions such as 'sitstand sit' or 'sitstand stand_to_110cm' (illustrative; the exact syntax depends on the tool) directly from their terminal. This gives programmatic control over desk height, which is valuable for users who want to automate their sit-stand routine or fold desk adjustments into other workflows.

How to use it?

Developers first make sure their standing desk is supported and connected to their computer (for example over Bluetooth). They then install the CLI (for example through a package manager or by building from source) and run commands from the terminal. To lower the desk, they might type `sitstand lower`; to move it to a specific height, `sitstand set-height 105` (assuming the desk supports moving to an absolute height, here presumably in centimeters). This allows quick adjustments without leaving the current terminal session, which suits developers who spend most of their day coding there.
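A small wrapper makes commands like these easy to call from other scripts. This is a sketch only: the subcommands `sit`, `stand`, `raise`, `lower`, and `set-height` are taken from the illustrative examples in this write-up, not from SitStand CLI's documentation, so they should be adjusted to whatever the installed tool actually accepts. `build_command` and `run` are hypothetical helper names.

```python
import shlex
import subprocess
from typing import List, Optional

# Hypothetical subcommands based on the examples above; the real CLI may differ.
SIMPLE_ACTIONS = {"sit", "stand", "raise", "lower"}


def build_command(action: str, height_cm: Optional[int] = None) -> List[str]:
    """Return the argv list for one (assumed) SitStand CLI invocation."""
    if action in SIMPLE_ACTIONS:
        return ["sitstand", action]
    if action == "set-height":
        if height_cm is None or height_cm <= 0:
            raise ValueError("set-height needs a positive height in cm")
        return ["sitstand", "set-height", str(height_cm)]
    raise ValueError(f"unknown action: {action!r}")


def run(action: str, height_cm: Optional[int] = None, dry_run: bool = False) -> str:
    """Execute the command, or only return it as a shell string when dry_run is True."""
    argv = build_command(action, height_cm)
    if not dry_run:
        # check=True raises CalledProcessError if the CLI exits with a non-zero status.
        subprocess.run(argv, check=True)
    return shlex.join(argv)
```

`run("set-height", 105, dry_run=True)` returns `sitstand set-height 105` without touching the desk, which is handy for checking a script before wiring it to real hardware.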

Product Core Function

· Command-line desk control: Allows users to send basic commands like 'sit', 'stand', 'raise', 'lower' to their standing desk, enabling quick adjustments through text input. The value here is immediate and hands-free height management.

· Programmable height presets: Enables users to define and recall specific desk heights (e.g., 'sitstand preset 1' for sitting height, 'sitstand preset 2' for standing height), offering convenience and consistency in ergonomic posture. This automates the process of finding the right height.

· Integration with scripting and automation: The CLI nature makes it easy to incorporate desk height adjustments into shell scripts, cron jobs, or other automation tools, allowing for scheduled sit-stand reminders or dynamic adjustments based on other system events. The value is creating a more dynamic and health-conscious workspace.

· Cross-platform compatibility (potential): Aiming for compatibility across different operating systems (Linux, macOS, Windows) to reach a wider developer audience. This ensures that developers can use their preferred operating system without losing the ability to control their desk.

Product Usage Case

· Automated Sit-Stand Reminders: A developer can create a script that, every hour, sends the 'stand' command to their desk via SitStand CLI, ensuring they get up and move without manual intervention. This solves the problem of forgetting to change posture.

· Ergonomic Workflow Integration: A developer might want their desk to automatically adjust to a sitting height when they launch their IDE for coding and a standing height when they are reading documentation. SitStand CLI can be triggered by these application launches via scripting.

· Hands-free Adjustments During Collaboration: During a video call or pair programming session, a developer can quickly adjust their desk height with a simple command without needing to break their flow or physically reach for controls. This provides seamless transitions in shared working environments.

· Customizable Desk Height Profiles: For users who work in different environments or with different tasks, SitStand CLI allows them to define and quickly switch between multiple desk height profiles (e.g., a 'focus' height, a 'meeting' height), ensuring optimal comfort and productivity for each activity.
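The reminder and profile scenarios above reduce to a short loop. In this sketch the profile heights, the `sitstand set-height` syntax, and the hourly sit/stand alternation are all assumptions; `runner` and `sleep` are injectable so the logic can be exercised without a desk attached.

```python
import subprocess
import time
from typing import Callable, Dict, List

# Hypothetical height profiles in centimeters; tune these to your own desk.
PROFILES: Dict[str, int] = {"sit": 72, "stand": 110, "focus": 75, "meeting": 105}


def profile_command(profile: str) -> List[str]:
    """Map a named profile to an assumed `sitstand set-height` invocation."""
    return ["sitstand", "set-height", str(PROFILES[profile])]


def posture_for_slot(slot: int) -> str:
    """Alternate postures: even slots sit, odd slots stand."""
    return "sit" if slot % 2 == 0 else "stand"


def reminder_loop(
    slots: int,
    interval_s: float = 3600.0,
    runner: Callable[[List[str]], object] = lambda argv: subprocess.run(argv, check=True),
    sleep: Callable[[float], None] = time.sleep,
) -> List[List[str]]:
    """Switch posture every interval_s seconds for a fixed number of slots.

    Returns the commands that were issued, in order.
    """
    issued: List[List[str]] = []
    for slot in range(slots):
        argv = profile_command(posture_for_slot(slot))
        runner(argv)
        issued.append(argv)
        if slot < slots - 1:  # no need to wait after the last switch
            sleep(interval_s)
    return issued
```

For an open-ended schedule, a cron job that calls the CLI once per interval avoids keeping a Python process alive; the loop form is mainly useful when the switching logic depends on other state.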

15

GenesisDB: Dual-Protocol Data Nexus

Author

patriceckhart

Description

Genesis DB is a database that offers both gRPC and HTTP APIs. This dual-protocol approach gives developers flexibility in how they access data: they can pick the interface that best suits each client. The key idea is serving the same data through high-performance gRPC and widely compatible HTTP endpoints, which simplifies integration into diverse application architectures.

Popularity

Points 4

Comments 0

What is this product?

Genesis DB is a database designed for modern applications, providing a unified way to access your data. Instead of just offering one way to connect, it supports two major communication protocols: gRPC and HTTP. gRPC is a modern, high-performance framework often used for internal service-to-service communication, known for its efficiency and strong typing. HTTP is the ubiquitous protocol used across the web, making it incredibly easy to integrate with almost any application or tool. Genesis DB's innovation is in providing both out-of-the-box, allowing developers to leverage the strengths of each for different use cases, all while accessing the same underlying data. This means you don't have to choose between raw speed and broad accessibility; you get both. The Protobuf definitions are open-source, making it transparent and extensible.

How to use it?

Developers can integrate Genesis DB into their projects by choosing the API that best fits their workflow. For applications requiring low-latency, efficient communication, especially within microservice architectures, the gRPC API can be utilized. This involves generating client stubs from the provided Protobuf definitions. For broader accessibility, web applications, or when integrating with existing systems that primarily use RESTful principles, the HTTP API can be used. Furthermore, Genesis DB supports gRPC Server Reflection, which is a powerful feature. It means that tools like `grpcurl` (a command-line tool for interacting with gRPC services) or dynamic client generators can discover and interact with the gRPC API without needing the specific `.proto` files beforehand. This significantly speeds up development and experimentation, especially when building tools that need to dynamically interact with services.
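The two access paths described above might look like this from a Python client. Everything concrete here is an assumption: the base URL, the gRPC address, any request path you pass in, and the bearer-token header are placeholders rather than Genesis DB's documented API. The `grpcurl` usage (`-plaintext`, plus verbs such as `list`) is standard grpcurl syntax and works without `.proto` files only because the server exposes reflection.

```python
import json
import urllib.request
from typing import List, Optional

# Hypothetical addresses; consult Genesis DB's documentation for the real ones.
HTTP_BASE = "http://localhost:8080"
GRPC_ADDR = "localhost:50051"


def build_http_request(
    path: str, payload: Optional[dict] = None, token: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a JSON request against the HTTP API."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Accept": "application/json"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    if token:
        headers["Authorization"] = f"Bearer {token}"  # assumed auth scheme
    method = "POST" if data is not None else "GET"
    return urllib.request.Request(HTTP_BASE + path, data=data, headers=headers, method=method)


def grpcurl_argv(*args: str, plaintext: bool = True) -> List[str]:
    """grpcurl invocation relying on server reflection, so no .proto files are passed."""
    argv = ["grpcurl"]
    if plaintext:
        argv.append("-plaintext")  # no TLS; only appropriate for local testing
    return argv + [GRPC_ADDR, *args]
```

Passing the request to `urllib.request.urlopen` sends it, and `subprocess.run(grpcurl_argv("list"))` asks the server, via reflection, which services it exposes; `grpcurl_argv("describe", "<service name>")` would then show that service's methods.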

Product Core Function

· Dual API Access (gRPC and HTTP): Enables developers to choose the most efficient or compatible data access method for their specific application needs, enhancing flexibility and integration options.

· High-Performance gRPC API: Leverages Protocol Buffers and gRPC for efficient, low-latency data transfer, ideal for microservices and performance-critical applications.

· Widely Compatible HTTP API: Offers a familiar RESTful interface, making it easy to integrate with web applications, third-party services, and a vast array of existing tools.

· gRPC Server Reflection: Allows clients and tools to introspect the gRPC service structure dynamically, simplifying development, debugging, and the creation of generic client applications.

· Open Source Protobuf Definitions: Make the API contract transparent, let developers generate gRPC clients in any language supported by the Protobuf toolchain, and open the door to community contributions, which helps interoperability.

Product Usage Case

· Building a microservice architecture where internal services communicate via the high-performance gRPC API for speed, while external-facing APIs or simple web clients interact through the more universally compatible HTTP API.

· Developing a real-time analytics dashboard that uses the gRPC API to pull large volumes of data efficiently from Genesis DB, ensuring smooth performance for data visualization.

· Creating a content management system where editors can use standard web browser tools or simple HTTP clients to update content via the HTTP API, while automated background processes utilize the gRPC API for bulk data operations.

· Integrating Genesis DB with third-party tools like Postman or Insomnia for testing and debugging the HTTP API, or using `grpcurl` to interact with and explore the gRPC API without needing to write custom client code first.

16

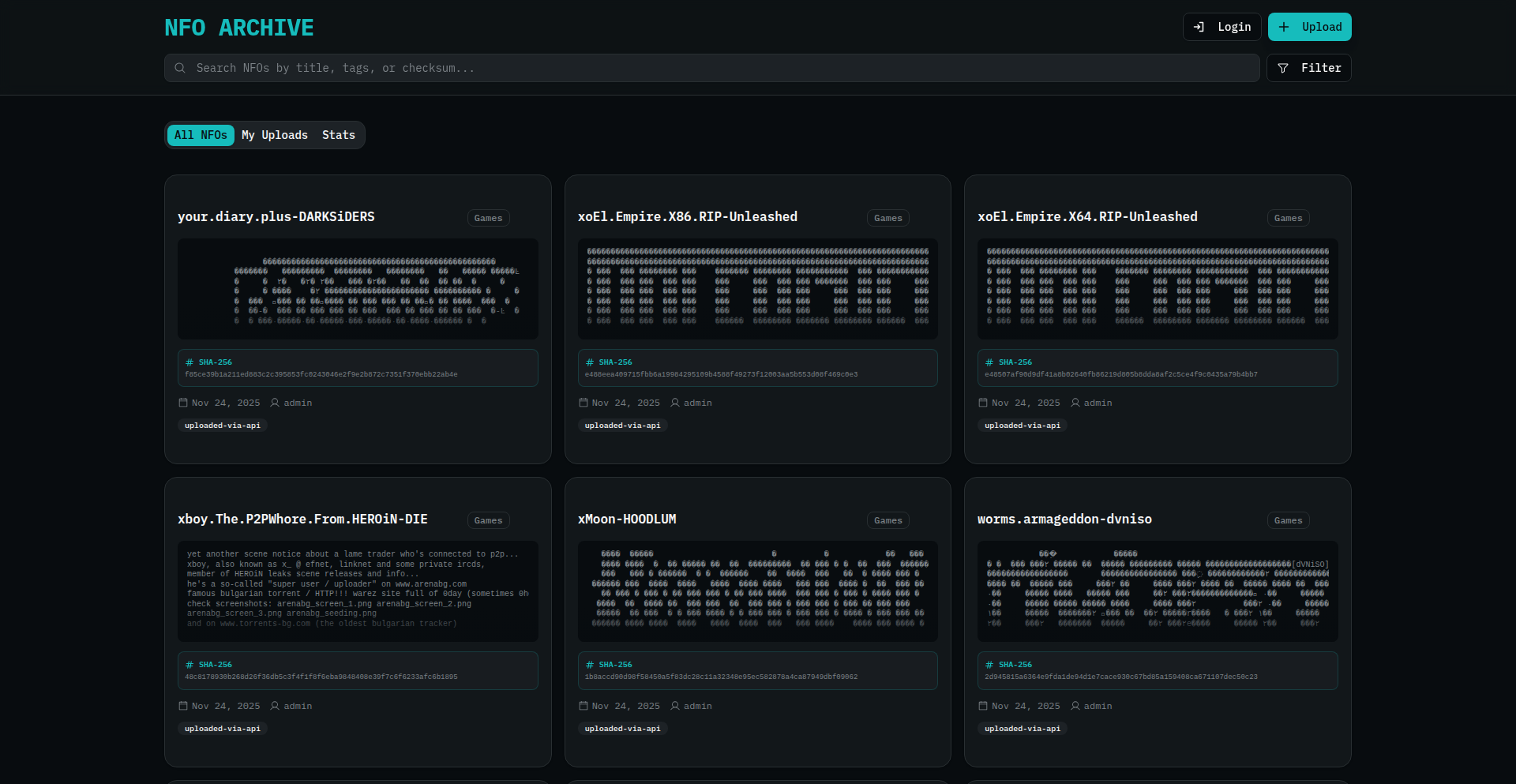

NFOArchive: Retro-Futurist NFO Viewer

Author

bilekas

Description

NFOArchive is a modern, retro-styled viewer and archive for NFO files. It reimagines the classic NFO file experience, often found in early software releases and BBS culture, with a contemporary user interface and enhanced functionalities. The innovation lies in its ability to breathe new life into a legacy file format, making it accessible and visually appealing to a modern audience while preserving its original charm.

Popularity

Points 4

Comments 0

What is this product?

NFOArchive is a software application for viewing and managing NFO files. NFO files, which often contain release information, ASCII art, and technical details from software and game scene groups, are usually opened in plain text editors. NFOArchive gives them a dedicated, visually enhanced viewer. Its core technical work is parsing and rendering these character-based, fixed-width files with modern UI elements and features such as search and categorization. It keeps the 'retro' look through stylized typography and terminal-like layouts while adding 'modern' conveniences, which makes exploring historical digital content and retro tech artifacts more engaging and better organized.

How to use it?

Developers can use NFOArchive to browse and search collections of NFO files, whether for research into old software, for game modding documentation, or as an unusual way to present their own project documentation. It can be used as a standalone tool or integrated into larger archival projects. The viewer supports various NFO file encodings and rendering styles, which allows customization. For example, a developer working on retro game emulation could use NFOArchive to quickly find and understand the technical notes associated with specific game releases, legacy documentation that is otherwise awkward to work with.
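Scene NFO files are conventionally written in IBM PC code page 437 (CP437), whose upper half includes the block and box-drawing characters used for the art. The post doesn't say how NFOArchive decodes files, so this is a sketch assuming CP437 input; `decode_nfo` and `to_grid` are hypothetical helpers.

```python
from typing import List


def decode_nfo(raw: bytes) -> str:
    """Decode NFO bytes, assuming the common IBM PC code page 437 (CP437)."""
    # Python's cp437 codec maps all 256 byte values, so this never raises a decode error.
    return raw.decode("cp437")


def to_grid(text: str, width: int = 80) -> List[str]:
    """Split into lines and right-pad (not truncate) each to `width` so the art stays aligned."""
    lines = text.replace("\r\n", "\n").split("\n")
    return [line.ljust(width) for line in lines]
```

Decoding the same bytes as Latin-1 would turn the full block `0xDB` into `Û`, which is why NFO art often looks garbled in editors that guess the wrong encoding.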

Product Core Function

· NFO File Parsing and Rendering: Accurately interprets and displays the ASCII art and text structures common in NFO files, using custom rendering that mimics retro terminal aesthetics while staying readable. This preserves both the original artistic intent and the technical information the file carries.

· Retro-Styled User Interface: A visually distinctive interface that evokes classic computer terminals and BBS systems through stylized fonts and color schemes, making old digital artifacts enjoyable to browse, especially for those who remember or appreciate that era.

· Search and Indexing Capabilities: Lets users search for keywords or phrases across an entire archive of NFO files, which saves time when locating specific technical details in a large collection.

· File Organization and Archiving: Provides tools to sort NFO files into categories, attach metadata, and manage the collection, turning scattered files into a structured archive from which specific files are easy to retrieve for projects or research.
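Keyword search across an archive is typically backed by an inverted index. The sketch below is one simple way to build one, not NFOArchive's implementation: it maps ASCII alphanumeric tokens to file names and answers multi-word queries with AND semantics, so art characters are never indexed.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

WORD = re.compile(r"[a-z0-9]+")


def tokenize(text: str) -> Set[str]:
    """Lower-cased ASCII alphanumeric tokens; block and box-drawing characters are skipped."""
    return set(WORD.findall(text.lower()))


def build_index(files: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Map each token to the set of file names that contain it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for name, text in files:
        for token in tokenize(text):
            index[token].add(name)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Return the files containing every query token (AND semantics)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    # .get avoids inserting empty entries into the defaultdict for unknown tokens.
    return set.intersection(*(index.get(t, set()) for t in tokens))
```

Phrase search would additionally require storing token positions; this version only answers "which files contain all of these words".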

Product Usage Case

· A retro game preservationist uses NFOArchive to browse and catalog the NFO files associated with classic game releases, reading technical notes and developer messages that give context on game mechanics and history and help trace how game development evolved.

· A digital archaeology researcher uses NFOArchive to analyze NFO files collected from dial-up bulletin board systems (BBSes), studying communication patterns, software distribution methods, and subculture trends of the late 20th century.

· A software historian uses NFOArchive to study the documentation and release notes of early shareware and freeware, gaining insight into the technical challenges and solutions of the time and into the history of software engineering more broadly.

· A programmer experimenting with ASCII art generation tools might use NFOArchive as a reference for the intricacies and limitations of character-based graphics, drawing practical examples and inspiration for their own creative coding projects.

17

TabFreeze

Author

tech_builder_42

Description

TabFreeze is a browser extension that counters the slowdown caused by keeping many tabs open. It suspends inactive tabs without closing them: the tab stays in the tab strip, but its page is unloaded from memory and reloads when you return to it. This frees system resources, improving your computer's responsiveness and battery life. The core technique is the browser's built-in tab discarding API.
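The post doesn't include TabFreeze's code. In a Chrome extension the discard itself is a call to `chrome.tabs.discard(tabId)` made from the extension's JavaScript; the sketch below covers only the part that needs a policy, choosing which tabs to pass to it, written in Python for consistency with the other examples here. The 30-minute threshold and the exclusion rules (active, pinned, audible, already discarded) are assumptions, not TabFreeze's documented behavior.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tab:
    # Fields loosely mirror Chrome's tabs.Tab object; last_accessed_ms is epoch milliseconds.
    id: int
    active: bool
    pinned: bool
    audible: bool
    discarded: bool
    last_accessed_ms: float


def tabs_to_discard(tabs: List[Tab], now_ms: float, idle_minutes: float = 30) -> List[int]:
    """Pick tabs idle longer than idle_minutes, skipping ones the user would miss."""
    cutoff = now_ms - idle_minutes * 60_000
    return [
        t.id
        for t in tabs
        if not (t.active or t.pinned or t.audible or t.discarded)
        and t.last_accessed_ms < cutoff
    ]
```

In the extension, each returned id would then be handed to `chrome.tabs.discard`.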

Popularity

Points 4

Comments 0

What is this product?