Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-21

SagaSu777 2025-11-22

Explore the hottest developer projects on Show HN for 2025-11-21. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show developers continuing to solve problems with technology. The strongest signal is AI integration. AI appears less as a standalone novelty and more as a component that enhances existing tools and enables new workflows, from code generation and debugging assistants to content creation and data analysis. For entrepreneurs, this points to an opportunity in niche problems where AI delivers a significant leap in efficiency or capability, through specialized solutions rather than generic ones.

Alongside this runs a steady focus on developer productivity and open-source infrastructure. Projects like Wealthfolio 2.0 show the value of robust, multi-platform, extensible open-source software that gives users control over their data and privacy. They also signal growing demand for tools that are transparent and community-driven as well as functional.

For builders, following these trends means more than adopting new technologies. It means understanding the problems those technologies solve and the human needs behind them. The hacker spirit thrives on clever, efficient, and often unconventional ways to build, improve, and share. That means pushing boundaries, learning from collective intelligence, and shipping solutions that make a tangible difference.

Today's Hottest Product

Name

Wealthfolio 2.0

Highlight

This project shows a robust approach to building and scaling an open-source investment tracker. Its key innovations are multi-platform support (mobile, desktop, Docker) and a new extensible addon system. Developers can learn how to build modular applications, keep financial tools private and transparent, and use Docker to reach more deployment targets. Its commitment to open source for sensitive financial data offers a lesson for any developer.

Popular Category

AI/ML

Developer Tools

Open Source

Productivity

Web Applications

Popular Keyword

AI

Open Source

Developer Tools

LLM

WebGPU

Docker

API

Security

Productivity

Customization

Technology Trends

AI Integration in everyday tools

Enhanced Developer Productivity

Decentralization and Privacy Focus

Client-side AI and WebAssembly

Composable Architectures and Extensibility

Security-first Development

Open Source Ecosystem Growth

Data Visualization and Analysis Tools

Project Category Distribution

AI/ML Tools & Applications (25%)

Developer Productivity & Tools (20%)

Web Applications & Services (15%)

Open Source Infrastructure & Libraries (15%)

Security & Privacy Tools (10%)

Data Management & Analysis (10%)

Utilities & Miscellaneous (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Wealthfolio Core | 530 | 174 |
| 2 | EpsteinInboxViewer | 13 | 9 |
| 3 | OCR Arena: Vision-Language Model Showdown | 18 | 3 |
| 4 | Emma019: Real-time AI Texas Hold'em | 8 | 4 |
| 5 | Revise: AI-Powered Code Refactoring Assistant | 10 | 1 |
| 6 | Pynote: Live Python in HTML | 6 | 3 |
| 7 | ChordDreamer | 3 | 5 |
| 8 | Davia-AI Wiki | 8 | 0 |
| 9 | FatAccumulatorAI | 7 | 0 |
| 10 | GuardiAgent: LLM Tool Security Layer | 7 | 0 |

1

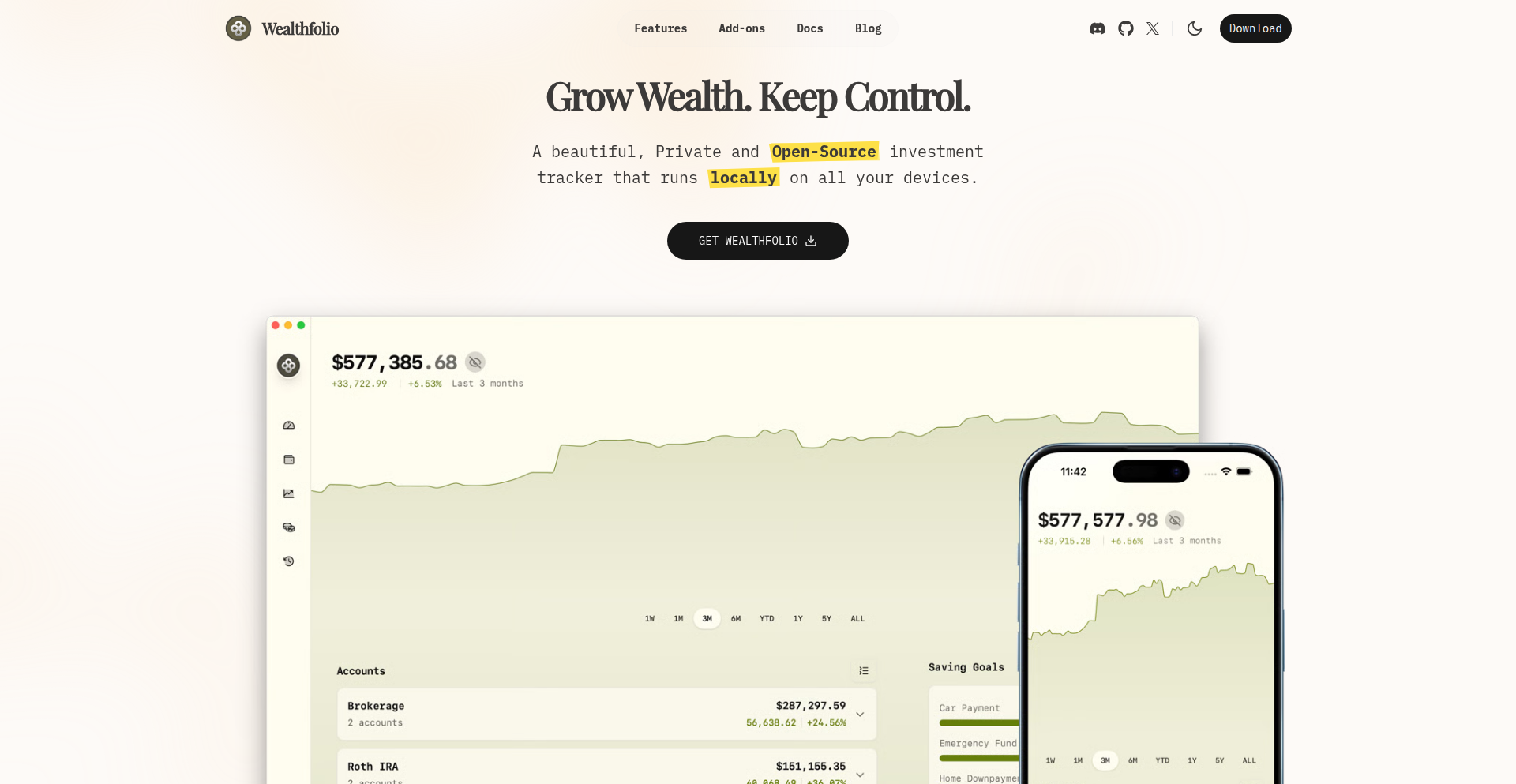

Wealthfolio Core

Author

a-fadil

Description

Wealthfolio Core is an open-source, privacy-focused investment tracker that empowers users to manage their finances across multiple platforms including mobile (iOS), desktop (macOS, Windows, Linux), and self-hosted Docker environments. Its key innovation lies in an extensible addon system, allowing developers to deeply customize and integrate with the platform, fostering a vibrant ecosystem of financial tools. This means you get a transparent and secure way to monitor your investments, with the flexibility to build your own integrations.

Popularity

Points 530

Comments 174

What is this product?

Wealthfolio Core is a personal finance management tool designed for investors who value privacy and control. At its heart, it's a system that securely stores and analyzes your investment data. The innovative part is its addon architecture. Think of it like building blocks: the core app provides the foundation, and developers can create 'addons' – essentially small pieces of code – that add new features or connect Wealthfolio to other services. This isn't just about tracking stocks; it's about building a personalized financial dashboard. So, what's in it for you? You get an investment tracker that's not a black box, and you can extend its capabilities to perfectly match your unique financial tracking needs.

How to use it?

Developers can use Wealthfolio Core in several ways. For personal use, they can install it on their iOS devices, desktop computers, or run it as a self-hosted Docker container for maximum data privacy. For extending its functionality, the addon system is the key. Developers can write their own addons using the provided APIs to integrate with other financial data sources, create custom reporting tools, or automate specific tasks. This allows for a highly tailored experience. For example, you could develop an addon to automatically import data from a specific brokerage account or build a new visualization for your portfolio performance. So, what's in it for you? You can use it as a ready-to-go investment tracker, or dive deeper and build custom solutions that perfectly fit your financial workflow.
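The post does not document Wealthfolio's actual addon API, which is built for its own app stack. The sketch below is therefore a hypothetical Python plugin registry that only illustrates the general pattern. The core owns the data model, and addons register named importers (for example, a crypto exchange feed) and reports (for example, a custom allocation breakdown) without changing core code. `AddonRegistry`, `Holding`, and every addon function here are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Holding:
    symbol: str
    asset_class: str
    quantity: float
    price: float

    @property
    def value(self) -> float:
        return self.quantity * self.price


# Hypothetical hook types: importers fetch holdings, reports summarize them.
Importer = Callable[[], list[Holding]]
Report = Callable[[list[Holding]], dict[str, float]]


@dataclass
class AddonRegistry:
    """Hypothetical core-side registry; addons plug in without touching core code."""
    importers: dict[str, Importer] = field(default_factory=dict)
    reports: dict[str, Report] = field(default_factory=dict)

    def register_importer(self, name: str, fn: Importer) -> None:
        self.importers[name] = fn

    def register_report(self, name: str, fn: Report) -> None:
        self.reports[name] = fn

    def load_all_holdings(self) -> list[Holding]:
        holdings: list[Holding] = []
        for fn in self.importers.values():
            holdings.extend(fn())
        return holdings

    def run_report(self, name: str) -> dict[str, float]:
        return self.reports[name](self.load_all_holdings())


# --- Example addons (hypothetical, with placeholder numbers) ---
def broker_importer() -> list[Holding]:
    # Stand-in for data parsed from a brokerage export
    return [Holding("VTI", "equity", 10, 250.0), Holding("BND", "bond", 20, 72.5)]


def crypto_importer() -> list[Holding]:
    # Stand-in for data pulled from a crypto exchange API
    return [Holding("BTC", "crypto", 0.05, 60000.0)]


def allocation_report(holdings: list[Holding]) -> dict[str, float]:
    """Share of total portfolio value held in each asset class."""
    total = sum(h.value for h in holdings)
    shares: dict[str, float] = {}
    for h in holdings:
        shares[h.asset_class] = shares.get(h.asset_class, 0.0) + h.value / total
    return shares


registry = AddonRegistry()
registry.register_importer("broker", broker_importer)
registry.register_importer("crypto", crypto_importer)
registry.register_report("allocation", allocation_report)
report = registry.run_report("allocation")
```

With this design, adding a new data source takes one `register_importer` call, and existing reports pick up the new holdings automatically.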

Product Core Function

· Multi-platform deployment: Wealthfolio Core can be run on iOS, macOS, Windows, and Linux, and as a Docker container. This allows for seamless access and data synchronization across your devices. The value is in the flexibility to manage your finances wherever you are. The application scenario is having your investment data available on your phone, laptop, or even a server for centralized management.

· Extensible addon system: This feature allows developers to create custom integrations and features. The value is in fostering a community-driven ecosystem and enabling deep personalization of the financial tracking experience. The application scenario is building specialized tools like custom portfolio analyzers or integrating with niche financial data providers.

· Privacy-first design: All data is stored locally or within the user's self-hosted environment, ensuring sensitive financial information remains private. The value is in providing peace of mind and control over your personal data. The application scenario is for users who are highly concerned about data security and do not want their financial information shared with third-party services.

· Open-source philosophy: The entire codebase is publicly available, promoting transparency and community contributions. The value is in building trust and enabling collaborative development. The application scenario is for developers and users who want to audit the code, contribute to its improvement, or ensure its long-term viability.

Product Usage Case

· A user wants to track their cryptocurrency holdings alongside traditional stocks and bonds, but their current broker doesn't offer direct integration. A developer can create a Wealthfolio addon that pulls data from a cryptocurrency exchange API, making all their assets visible in one place. This solves the problem of fragmented financial tracking across different asset classes.

· A financial analyst needs to generate specific performance reports that are not available in standard investment trackers. They can leverage the Wealthfolio addon system to build a custom reporting module that calculates metrics relevant to their analysis, thus providing a tailored solution for their unique needs.

· A small business owner wants to manage their personal investments and their business's cash flow in a unified system. They can use Wealthfolio Core and potentially develop an addon to link business bank account data, enabling a holistic view of their financial health.

· A privacy-conscious individual wants to avoid cloud-based financial services. They can deploy Wealthfolio Core as a self-hosted Docker image on their own server, maintaining complete control and ownership of their investment data.

2

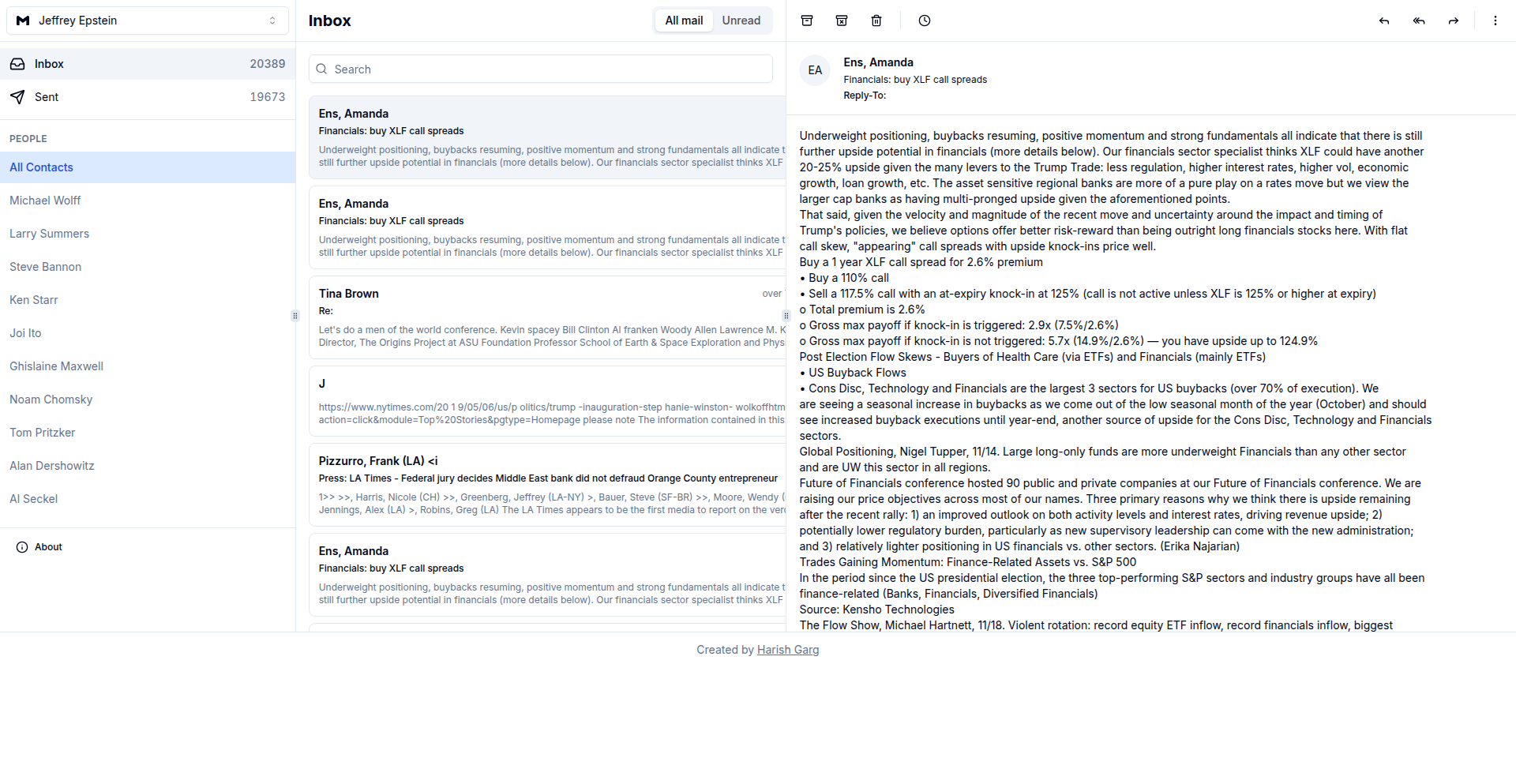

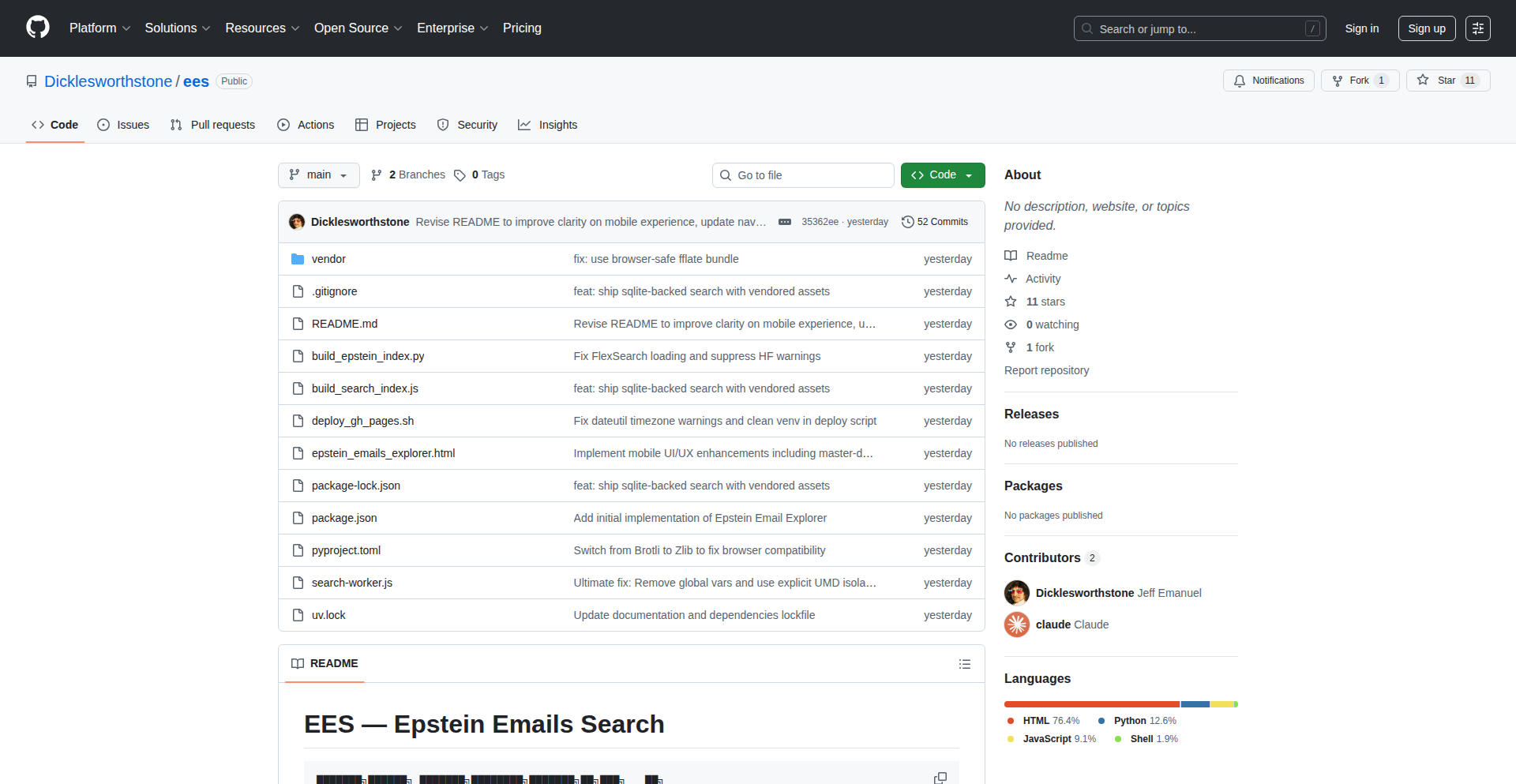

EpsteinInboxViewer

Author

hgarg

Description

This project presents the publicly disclosed Jeffrey Epstein emails in an email-client-style viewer. Its contribution lies in organizing a large, complex dataset into an accessible, searchable format, which is a real technical challenge when curating and presenting sensitive, high-volume material.

Popularity

Points 13

Comments 9

What is this product?

This project is an open-source tool that takes the publicly released Jeffrey Epstein emails and presents them in a familiar, user-friendly interface resembling a traditional email client. The core technical innovation is in the data processing pipeline and the frontend development that allows for efficient browsing, searching, and filtering of this massive collection of sensitive documents. Instead of wading through raw files, developers and researchers can use a structured interface, making the information much more digestible and discoverable. So, what's the value to you? It transforms a daunting data dump into an investigable resource.

How to use it?

Developers can use this project as a foundation for building similar data exploration tools for other large, unstructured datasets. The project likely involves backend scripting to parse email formats (like EML), database indexing for fast querying, and a frontend framework (e.g., React, Vue, Svelte) for the interactive viewer. Integration would involve adapting the data ingestion and indexing logic to your specific data source and potentially customizing the frontend to suit different analytical needs. So, how can you use this? It's a blueprint for making any large, messy data accessible and useful.
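The project's actual pipeline is not described in the post. Assuming raw `.eml`-style messages, a minimal version of the parse-then-index step can be built with Python's standard `email` package and a small in-memory inverted index. `parse_eml`, `build_index`, and `search` are hypothetical names, and the sample messages are made-up placeholders rather than items from the dataset. A production viewer would more likely use a database's full-text index.

```python
import re
from email import message_from_string, policy


def parse_eml(raw: str) -> dict[str, str]:
    """Turn one raw email into a flat record the UI can display."""
    msg = message_from_string(raw, policy=policy.default)
    body_part = msg.get_body(preferencelist=("plain",))  # best text/plain part
    body = body_part.get_content() if body_part is not None else ""
    return {
        "from": str(msg["From"] or ""),
        "subject": str(msg["Subject"] or ""),
        "date": str(msg["Date"] or ""),
        "body": body,
    }


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(records: list[dict[str, str]]) -> dict[str, set[int]]:
    """Inverted index: token -> ids of records whose sender, subject, or body contain it."""
    index: dict[str, set[int]] = {}
    for doc_id, rec in enumerate(records):
        for token in tokenize(f"{rec['from']} {rec['subject']} {rec['body']}"):
            index.setdefault(token, set()).add(doc_id)
    return index


def search(index: dict[str, set[int]], query: str) -> list[int]:
    """Ids of records containing every query term (AND semantics)."""
    terms = tokenize(query)
    if not terms:
        return []
    return sorted(set.intersection(*(index.get(t, set()) for t in terms)))


raw_messages = [
    "From: alice@example.com\nSubject: Flight schedule\n"
    "Date: Mon, 1 Jan 2024 10:00:00 +0000\n\nThe flight leaves Tuesday.\n",
    "From: bob@example.com\nSubject: Dinner\n"
    "Date: Tue, 2 Jan 2024 18:00:00 +0000\n\nDinner moved to Tuesday evening.\n",
]
records = [parse_eml(r) for r in raw_messages]
index = build_index(records)
```

Building the index once makes each query a few set intersections instead of a rescan of every message. That is the property that keeps search responsive on large archives.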

Product Core Function

· Email Parsing and Structuring: Converts raw email files into a structured format for easier querying and display. This is valuable because it makes unstructured text data machine-readable and manageable.

· Search and Filtering Engine: Implements a robust search and filtering mechanism to quickly locate specific emails or threads within the dataset. This is valuable for researchers and investigators needing to find specific information quickly.

· Interactive User Interface: Provides a clean, intuitive email client-like interface for browsing, reading, and navigating through the emails. This is valuable as it lowers the barrier to entry for understanding complex data.

· Data Indexing for Performance: Utilizes efficient indexing techniques to ensure fast load times and responsive search results, even with a large volume of data. This is valuable for maintaining a smooth user experience with large datasets.

Product Usage Case

· Investigative Journalism: Journalists can use this as a model to analyze and present large leaked document dumps, making it easier to uncover stories and present findings to the public.

· Academic Research: Researchers studying social networks, communication patterns, or historical events can adapt this to analyze large email archives, providing new insights into their fields.

· Data Visualization Projects: Developers can leverage the core parsing and indexing logic to build custom data visualization tools for any dataset that can be represented as a collection of discrete items with associated metadata.

3

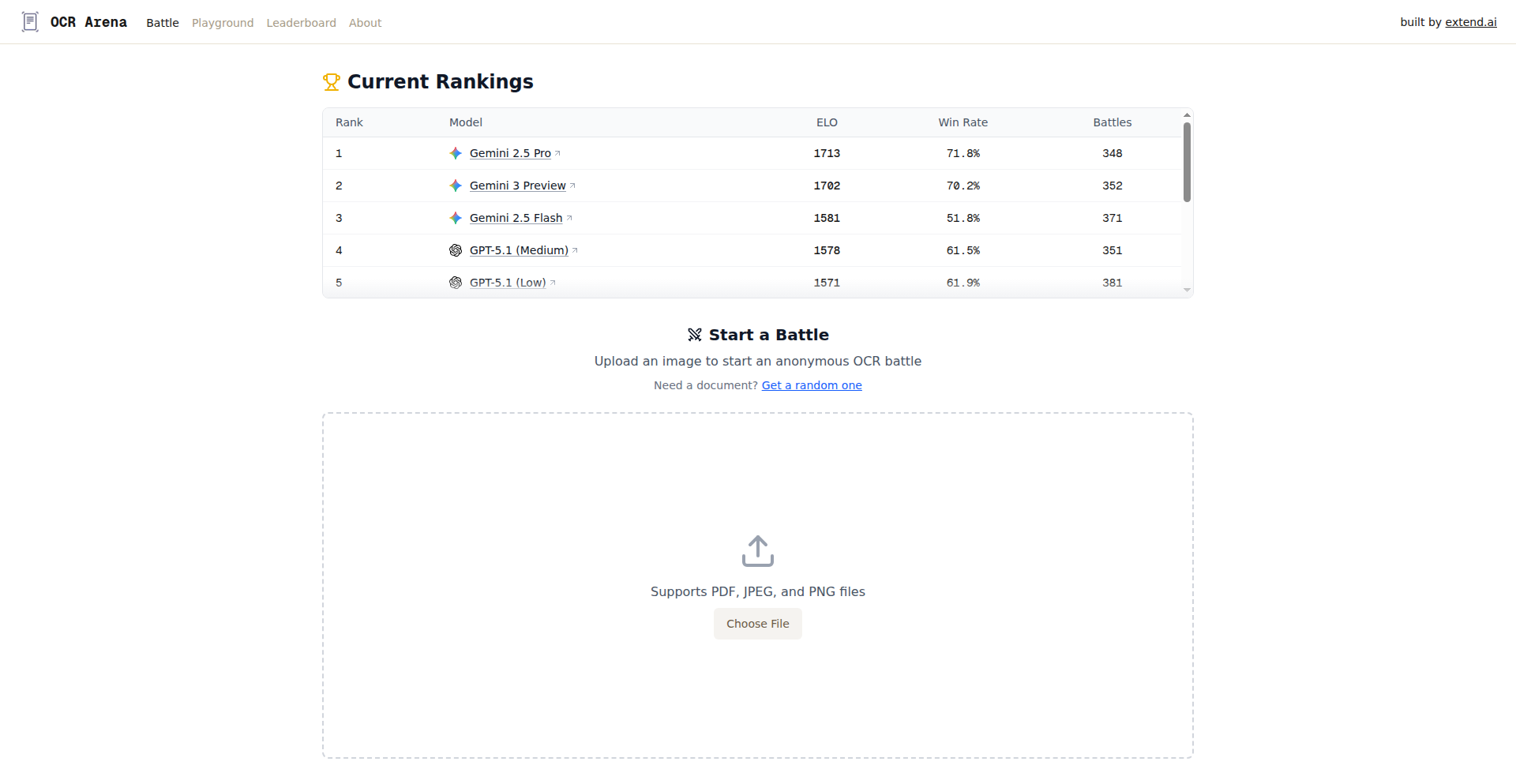

OCR Arena: Vision-Language Model Showdown

Author

kbyatnal

Description

OCR Arena is a free, community-driven playground for benchmarking and comparing leading Vision-Language Models (VLMs) and open-source Optical Character Recognition (OCR) models. Users can upload any document, measure model accuracy, and contribute to a public leaderboard, which promotes transparency and helps advance OCR technology.

Popularity

Points 18

Comments 3

What is this product?

OCR Arena is a web-based platform that allows anyone to test and compare the performance of various AI models that can read text from images (OCR) or understand visual content alongside text (VLMs). The core innovation lies in providing a standardized environment to upload documents and see how different AI models perform on the exact same data. This helps identify the best-performing models for specific tasks, like digitizing documents or extracting information from images. It's like a standardized race track for AI text-reading capabilities.

How to use it?

Developers can use OCR Arena by navigating to the website, uploading a document (PDF, image, etc.), and selecting the models they want to compare. The platform processes the document with each chosen model and presents the accuracy results side by side. For integration work, developers can study how the platform operates (and its source code, if published) to build similar comparison tools or to integrate specific OCR/VLM models into their own applications. The leaderboard shows which models the community currently favors, which can guide technology choices for new projects.
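The post does not say how OCR Arena turns community votes into leaderboard rankings. Arena-style leaderboards often use an Elo-style rating, so the sketch below assumes that scheme purely as an illustration: each pairwise vote moves the winner up and the loser down by the same amount. The model names and the K-factor of 32 are placeholders.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Apply one pairwise vote in place; the exchange is zero-sum."""
    delta = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
    ratings[winner] += delta
    ratings[loser] -= delta


def leaderboard(ratings: dict[str, float]) -> list[tuple[str, float]]:
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


ratings = {"model-a": 1500.0, "model-b": 1500.0, "model-c": 1500.0}
votes = [("model-a", "model-b"), ("model-a", "model-c"), ("model-b", "model-c")]
for winner, loser in votes:
    update(ratings, winner, loser)
```

An upset win against a higher-rated model moves ratings further than an expected win does. This helps a small number of votes sort models quickly.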

Product Core Function

· Model Comparison Engine: Allows side-by-side evaluation of multiple OCR and VLM models on identical documents, providing actionable accuracy metrics for developers to understand model strengths and weaknesses.

· Document Upload and Processing: Supports various document formats (e.g., PDFs, images) and efficiently processes them through selected AI models, simplifying the testing workflow for rapid iteration.

· Public Leaderboard: Aggregates user-submitted performance data to create a transparent ranking of OCR and VLM models, helping developers make informed decisions about which technologies to adopt.

· Accuracy Measurement Tools: Provides objective metrics to quantify how well each model extracts or understands text from documents, crucial for performance validation in real-world applications.

· Community Contribution: Enables users to vote on model performance, fostering a collaborative environment for advancing OCR and VLM research and development.
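The post does not specify OCR Arena's exact accuracy metric. A standard choice for OCR is character error rate (CER): the Levenshtein edit distance between a model's output and a ground-truth transcription, divided by the length of the ground truth. A minimal sketch, with made-up sample outputs:

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free when equal)
            ))
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate; 0.0 means a perfect transcription."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


reference = "Invoice 2024"
outputs = {"model-x": "Invoice 2024", "model-y": "lnvoice 2O24"}
scores = {name: cer(reference, text) for name, text in outputs.items()}
```

Here `model-y` confuses `I`/`l` and `0`/`O`, which are two substitutions over 12 characters. Word error rate is the same idea applied to word tokens instead of characters.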

Product Usage Case

· A developer building a document digitization service needs to choose the most accurate OCR model. They can upload sample documents to OCR Arena, compare several open-source and commercial models, and select the one with the highest accuracy for their specific document types (e.g., invoices, legal documents).

· A researcher working on visual question answering (VQA) systems can use OCR Arena to compare how different VLMs interpret text embedded within images. This helps them select models that excel at understanding both visual context and textual information for their research experiments.

· A startup is developing an app that extracts information from scanned receipts. They can test various OCR models on OCR Arena to find the most reliable one that handles diverse receipt layouts and handwriting styles, saving significant development time and improving user experience.

· An open-source enthusiast wants to contribute to the improvement of OCR technology. They can use OCR Arena to identify underperforming models, understand their failure points, and potentially contribute fixes or improvements to the respective open-source projects.

4

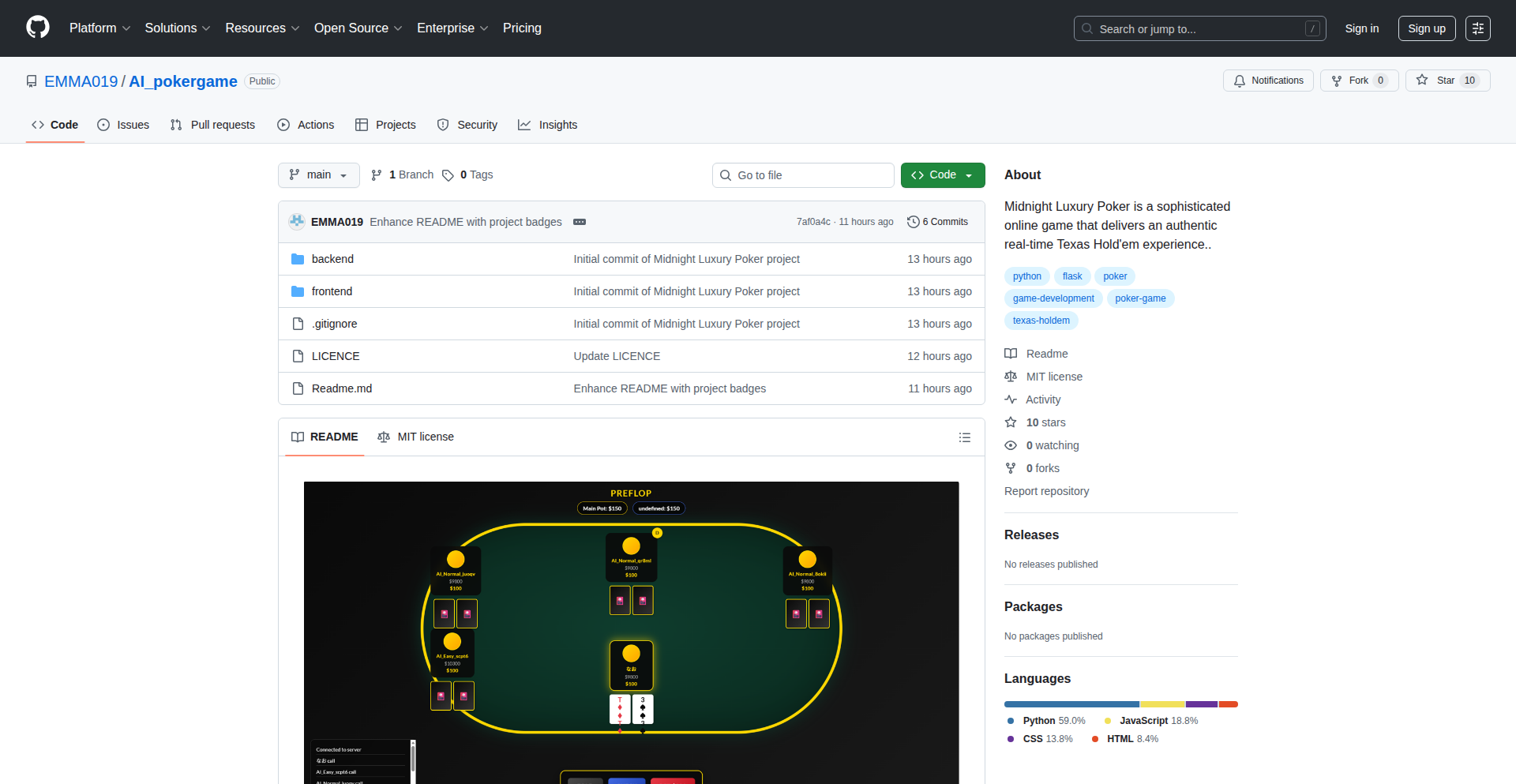

Emma019: Real-time AI Texas Hold'em

Author

tarocha1019

Description

Emma019 is a real-time Texas Hold'em poker game implemented in Python and Flask, featuring AI-powered opponents. It combines game development with machine learning to drive bot behavior, which makes gameplay dynamic and engaging without needing other human players.

Popularity

Points 8

Comments 4

What is this product?

This project, Emma019, is a fully functional Texas Hold'em poker game. The core innovation lies in its real-time AI opponents. Instead of pre-programmed, predictable moves, the AI uses machine learning to adapt and make decisions. This means the AI can learn from game situations, analyze probabilities, and make more human-like, strategic choices, making the game more challenging and realistic. The use of Python and Flask provides a robust backend for handling game logic and communication, while also making it accessible for developers to extend and experiment with.
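The post describes Emma019's opponents only as machine-learning driven, and their model is not shown. For readers new to poker AI, a common baseline that learned agents are measured against is the pot-odds rule. Calling is profitable in the long run when the estimated chance of winning exceeds `to_call / (pot + to_call)`. The sketch assumes the equity estimate comes from elsewhere (a model or a simulation). `decide` and its `raise_margin` threshold are hypothetical, and the rule ignores implied odds and bluffing.

```python
def pot_odds(pot: float, to_call: float) -> float:
    """Share of the final pot the player must put in to call.

    `pot` is the pot before calling, including the opponent's bet.
    """
    return to_call / (pot + to_call)


def decide(equity: float, pot: float, to_call: float, raise_margin: float = 0.2) -> str:
    """Baseline action given an estimated win probability (equity) in [0, 1]."""
    if to_call == 0:
        return "raise" if equity > 0.5 + raise_margin else "check"
    needed = pot_odds(pot, to_call)
    if equity < needed:
        return "fold"   # calling loses money on average
    if equity > needed + raise_margin:
        return "raise"  # comfortably ahead of the price
    return "call"
```

Facing a 50-chip bet into a 100-chip pot, a player needs about 33% equity to call. A learned policy improves on this baseline by adapting to opponents and board texture.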

How to use it?

Developers can use Emma019 as a foundation for building their own poker-related applications or as a learning tool for AI in games. The Python and Flask backend allows for easy integration into web applications, enabling features like online multiplayer or customizable AI opponents. You can fork the project, modify the AI's decision-making algorithms, or even integrate it with a frontend for a richer user experience. It's a great starting point for anyone interested in game AI, real-time web applications, or Python-based game development.

Product Core Function

· AI-powered opponent decision-making: The AI uses machine learning models to make strategic decisions in real-time, offering a dynamic and challenging gameplay experience. This is valuable because it creates a more engaging and less predictable game than traditional rule-based bots.

· Real-time game state management: The system efficiently manages the current state of the poker game, including player hands, community cards, and betting rounds, ensuring smooth and responsive gameplay. This is valuable for providing a fluid and interactive gaming experience.

· Python and Flask backend: Utilizes a popular and flexible Python web framework (Flask) for building the game logic and handling communication, making it easy for developers to understand, modify, and extend the codebase. This is valuable for its developer-friendliness and the vast ecosystem of Python libraries available for further enhancements.

· Texas Hold'em rules implementation: Accurately implements the rules of Texas Hold'em poker, including hand rankings, betting rounds, and showdowns. This is valuable for providing an authentic poker experience that adheres to established game mechanics.
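The post does not show Emma019's rules code. To illustrate what implementing hand rankings involves, here is a compact, unoptimized Texas Hold'em evaluator that scores the best 5-card hand from 7 cards. The card encoding (rank character plus suit character, such as `"Ah"` or `"Td"`) is an assumption for this sketch.

```python
from collections import Counter
from itertools import combinations

RANKS = "23456789TJQKA"
CATEGORIES = ["high card", "pair", "two pair", "three of a kind", "straight",
              "flush", "full house", "four of a kind", "straight flush"]


def score5(cards: list[str]) -> tuple:
    """Comparable score for exactly five cards: (category index, tie-breakers...)."""
    values = sorted((RANKS.index(c[0]) + 2 for c in cards), reverse=True)
    suits = {c[1] for c in cards}
    counts = Counter(values)
    # Order values by (count, value) so, e.g., trips outrank the pair in a full house.
    groups = sorted(counts.items(), key=lambda kv: (kv[1], kv[0]), reverse=True)
    ordered = [v for v, n in groups for _ in range(n)]
    shape = sorted(counts.values(), reverse=True)

    flush = len(suits) == 1
    unique = sorted(set(values), reverse=True)
    straight_high = 0
    if len(unique) == 5 and unique[0] - unique[4] == 4:
        straight_high = unique[0]
    elif unique == [14, 5, 4, 3, 2]:  # the wheel: A-2-3-4-5 counts as 5-high
        straight_high = 5

    if straight_high and flush:
        return (8, straight_high)
    if shape == [4, 1]:
        return (7, *ordered)
    if shape == [3, 2]:
        return (6, *ordered)
    if flush:
        return (5, *values)
    if straight_high:
        return (4, straight_high)
    if shape == [3, 1, 1]:
        return (3, *ordered)
    if shape == [2, 2, 1]:
        return (2, *ordered)
    if shape == [2, 1, 1, 1]:
        return (1, *ordered)
    return (0, *values)


def best_hand(seven: list[str]) -> tuple:
    """Best score among all 21 five-card subsets of hole plus community cards."""
    return max(score5(list(combo)) for combo in combinations(seven, 5))


def category(score: tuple) -> str:
    return CATEGORIES[score[0]]


board = ["Ah", "Kh", "Qh", "2c", "3d"]
player1 = best_hand(["Jh", "Th"] + board)
player2 = best_hand(["As", "Ad"] + board)
```

Because scores are plain tuples, the showdown winner is simply the larger tuple. Here `player1` makes a royal flush and beats `player2`'s three aces.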

Product Usage Case

· Developing a personalized poker training tool: A developer could adapt Emma019 to create a tool where aspiring poker players can practice against an AI that simulates various playing styles, helping them identify weaknesses and improve their strategy. This solves the problem of finding consistent and varied practice partners.

· Integrating AI opponents into a larger gambling platform: This project could serve as a core component for a web-based gambling platform, providing engaging AI opponents for players who want to play even when human opponents are not available. This addresses the need for readily available gameplay.

· Creating a research platform for AI in card games: Researchers can use Emma019 as a testbed to experiment with new AI algorithms for card games, exploring how different machine learning approaches impact strategic decision-making and game outcomes. This provides a controlled environment for AI experimentation.

· Building a game for skill-based entertainment: Beyond pure gambling, this could be used to create a fun, skill-based game that leverages AI to provide a challenging experience for casual players. This offers a form of entertainment that requires thought and strategy.

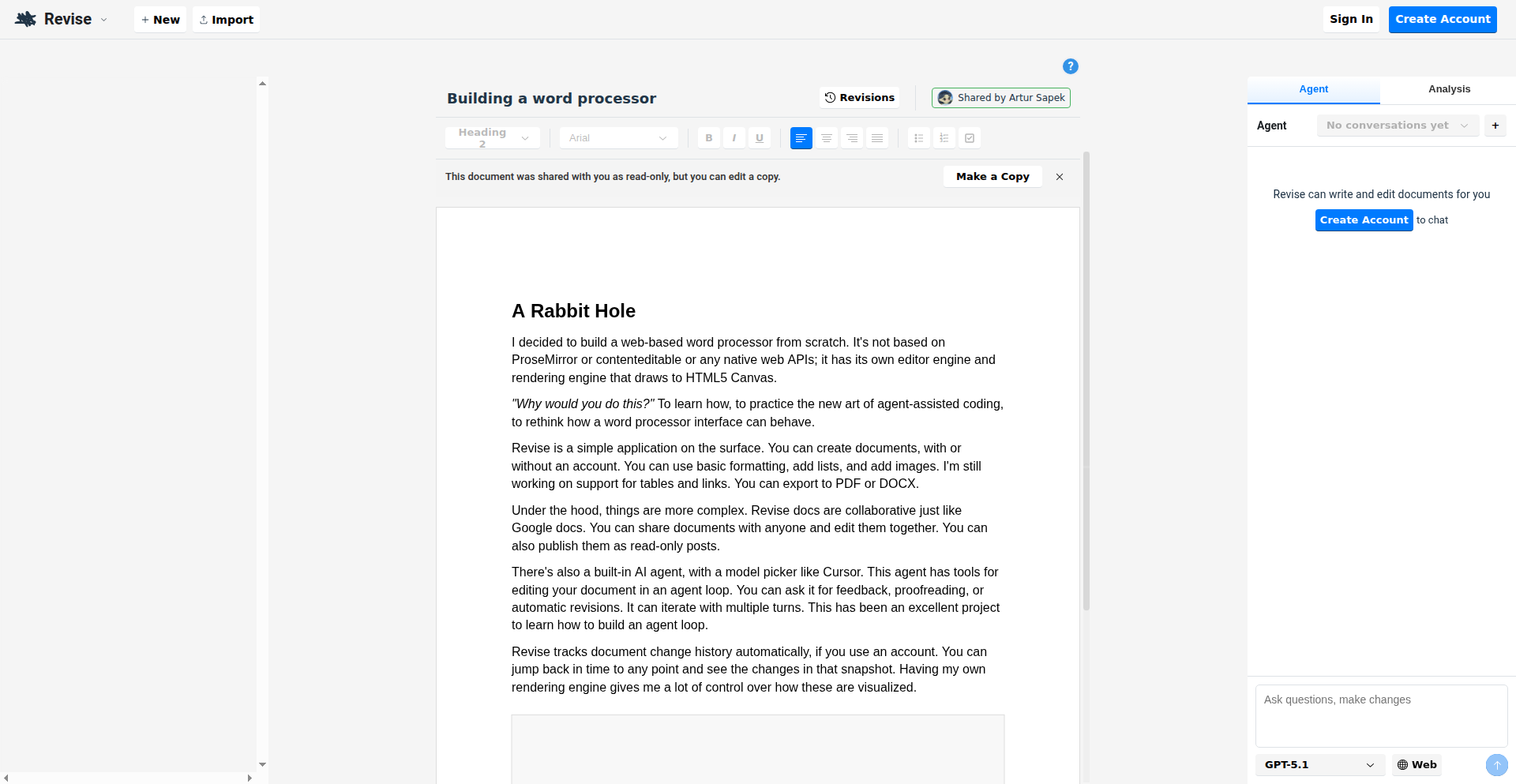

5

Revise: AI-Powered Code Refactoring Assistant

Author

artursapek

Description

Revise is a command-line tool that leverages AI to automatically suggest and apply code refactorings. It tackles the tedious and error-prone task of improving code quality, making it more readable, maintainable, and efficient. The core innovation lies in its AI's ability to understand code context and propose meaningful structural changes, saving developers significant manual effort.

Popularity

Points 10

Comments 1

What is this product?

Revise is an AI-powered command-line application designed to help developers improve their code through automated refactoring. It acts like a smart assistant that analyzes your code, identifies areas for improvement (like simplifying complex functions, removing redundant code, or enhancing variable names), and then suggests or even automatically applies these changes. The technical innovation is in the AI's sophisticated understanding of code semantics and its ability to generate coherent and beneficial code transformations, going beyond simple pattern matching. This means it can make intelligent suggestions that a human developer might take a long time to discover or implement. So, what's in it for you? It makes your code cleaner and easier to work with, reducing bugs and speeding up future development.

How to use it?

Developers can integrate Revise into their workflow by installing it as a command-line tool. After installation, they can point Revise at their codebase, and it will analyze the code. They can then review the AI-generated suggestions. Revise offers different levels of automation, allowing developers to manually approve each refactoring or to automatically apply certain types of changes. This makes it adaptable to various project needs and developer preferences. For example, you might run Revise on a specific file or directory before committing your changes. So, how does this help you? It automates the grunt work of code cleanup, allowing you to focus on building new features instead of getting bogged down in code maintenance.
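The post does not document Revise's CLI flags or internals. The following is a hypothetical sketch of the review-or-apply step it describes. A proposed refactoring is rendered as a unified diff with Python's standard `difflib` and kept only if its kind is on an auto-apply list or a reviewer approves it. The `Suggestion` type and `review` function are invented for illustration, and generating the suggestion (the AI part) is out of scope.

```python
import difflib
from dataclasses import dataclass
from typing import Callable


@dataclass
class Suggestion:
    path: str
    original: str
    proposed: str
    kind: str  # hypothetical category, e.g. "rename" or "simplify"


def as_diff(s: Suggestion) -> str:
    """Render the suggestion as a unified diff for human review."""
    return "".join(difflib.unified_diff(
        s.original.splitlines(keepends=True),
        s.proposed.splitlines(keepends=True),
        fromfile=f"a/{s.path}",
        tofile=f"b/{s.path}",
    ))


def review(s: Suggestion, auto_apply_kinds: set[str],
           approve: Callable[[str], bool]) -> str:
    """Return the text to keep: the proposal if auto-applied or approved, else the original."""
    if s.kind in auto_apply_kinds:
        return s.proposed
    return s.proposed if approve(as_diff(s)) else s.original


s = Suggestion(
    path="calc.py",
    original="def f(x):\n    y = x * 2\n    return y\n",
    proposed="def double(x):\n    return x * 2\n",
    kind="simplify",
)
# A stand-in "reviewer" that approves diffs removing the temporary variable.
kept = review(s, auto_apply_kinds={"rename"}, approve=lambda diff: "-    y = x * 2" in diff)
```

In a real tool, `approve` would prompt the user. Passing it in as a function keeps the apply logic testable and lets teams choose which refactoring kinds skip review.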

Product Core Function

· AI-driven code analysis: Identifies complex or suboptimal code patterns for refactoring. This means the AI understands what 'bad' code looks like and why it's bad, helping you write better software.

· Automated refactoring suggestions: Proposes specific code changes to improve readability, maintainability, and performance. This provides actionable steps to make your code better, saving you from having to figure it out yourself.

· Context-aware transformations: Understands the surrounding code to ensure refactorings are safe and effective. This prevents accidental breakages and ensures the changes actually improve the code's logic, making it a reliable tool.

· Configurable automation levels: Allows developers to choose between manual review or automatic application of refactorings. This gives you control over the process, ensuring you're comfortable with the changes being made to your code.

· Support for multiple programming languages: Capable of analyzing and refactoring code in various languages. This broad applicability means you can use Revise across different projects and technology stacks, making it a versatile tool for your development needs.
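Revise's analysis is AI-driven and the post does not describe its internals. To make "identifying complex code" concrete, here is a deterministic stand-in built on Python's `ast` module. It approximates each function's cyclomatic (McCabe) complexity by counting decision points, and it flags functions above a threshold as candidates an AI refactoring tool might rewrite. The threshold of 5 is arbitrary, and nested functions are counted inside their parent, so this is only an approximation.

```python
import ast

# Nodes that each add one independent path through a function.
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                  ast.ExceptHandler, ast.comprehension)


def complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    score = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            score += len(node.values) - 1  # each extra `and`/`or` operand adds a path
    return score


def flag_complex_functions(source: str, threshold: int = 5) -> dict[str, int]:
    """Map function name -> complexity for functions above the threshold."""
    results: dict[str, int] = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = complexity(node)
            if score > threshold:
                results[node.name] = score
    return results


sample = '''
def simple(x):
    return x + 1

def tangled(items, mode):
    total = 0
    for item in items:
        if item is None:
            continue
        if mode == "a" and item > 0:
            total += item
        elif mode == "b" or item < -10:
            total -= item
        else:
            while total > 100:
                total //= 2
    return total
'''
flagged = flag_complex_functions(sample)
```

Only `tangled` is flagged: one loop, three `if` branches, two boolean operators, and a `while` give it a score of 8.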

Product Usage Case

· Improving legacy codebases: A developer working on an older project can use Revise to automatically suggest improvements to complex functions or reduce duplicate code, making the legacy system easier to understand and extend. This helps avoid costly rewrites and speeds up feature development on old code.

· Enhancing code readability during team collaboration: Before merging a pull request, a developer can run Revise to ensure the code adheres to best practices for clarity and conciseness. This leads to more consistent and understandable code across the team, reducing onboarding time for new developers.

· Optimizing performance bottlenecks: Revise can identify inefficient code structures and suggest more performant alternatives. For instance, it might suggest optimizing a loop or data structure. This can lead to faster application execution and a better user experience without requiring deep performance tuning expertise.

· Accelerating learning for junior developers: Junior developers can use Revise to see how experienced developers might refactor code, learning best practices and common patterns through concrete examples. This acts as a learning aid, helping them grow their coding skills faster and more effectively.

6

Pynote: Live Python in HTML

Author

laurentabbal

Description

Pynote is a groundbreaking project that allows you to embed interactive Python code and even full Jupyter-like notebooks directly into any HTML page. It solves the problem of static web content by bringing dynamic Python execution to the browser, enabling rich, data-driven web experiences without complex backend setups. The core innovation lies in its ability to render and execute Python within the user's browser, making complex computations and visualizations instantly accessible.

Popularity

Points 6

Comments 3

What is this product?

Pynote is a JavaScript library that lets you integrate executable Python code and interactive notebook environments directly into your web pages. Think of it as bringing a Python interpreter and a Jupyter notebook to anyone who views your HTML. Instead of only displaying text or static images, Pynote lets you run Python scripts, see their output, and interact with them live, all within the web browser. This works through WebAssembly: a Python interpreter compiled to WebAssembly runs inside the browser and executes the embedded code. As a result, basic Python execution needs no server-side processing, which keeps hosting simple and lets readers run code without installing anything.

How to use it?

Developers can integrate Pynote by simply including the Pynote JavaScript library in their HTML. Then, using a specific tag or attribute, they can define blocks of Python code or entire notebook structures within their HTML. For example, you could have a section of your webpage that displays a plot generated by Python, or a small form that triggers a Python script to perform a calculation. This makes it ideal for educational websites, technical documentation, interactive portfolios, or any scenario where you want to showcase dynamic Python capabilities without requiring users to install anything or navigate away from the page.

Product Core Function

· Live Python Code Execution: Pynote allows you to write Python code directly in your HTML, and it will be executed in the user's browser. This means you can demonstrate algorithms, perform calculations, or show dynamic content generation instantly, providing immediate value to the viewer.

· Interactive Notebook Embedding: You can embed full Jupyter-like notebooks within your HTML pages. This is invaluable for tutorials, online courses, or technical blogs where users can run code, experiment, and learn in a familiar notebook environment without leaving your website.

· Dynamic Content Generation: Pynote empowers you to generate content on the fly based on user interaction or data. Imagine a webpage that customizes its display or provides tailored information based on a Python script's output, making the web experience more personalized and engaging.

· WebAssembly Powered: Python runs through an interpreter compiled to WebAssembly, a sandboxed binary format that modern browsers execute at near-native speed. This keeps execution safe and client-side. Depending on which packages the runtime bundles, it also makes parts of the Python ecosystem available directly on the web.

Product Usage Case

· Technical Documentation: Imagine a library's documentation that includes interactive Python examples demonstrating API usage. Developers can run the code snippets directly in the documentation to see immediate results, making it much easier to understand and adopt the library.

· Educational Websites: For online courses or tutorials on programming, Pynote can embed interactive Python exercises and explanations. Students can directly experiment with code examples within the lesson, enhancing their learning and engagement.

· Data Visualization Demos: Showcase interactive charts and graphs generated by Python libraries like Matplotlib or Plotly directly on a webpage. Users can tweak parameters or explore data without needing a separate tool, making data exploration more accessible.

· Personal Portfolios: Developers can create dynamic portfolios that demonstrate their Python skills by embedding small, interactive Python applications or simulations, offering a more engaging and memorable way to present their work.

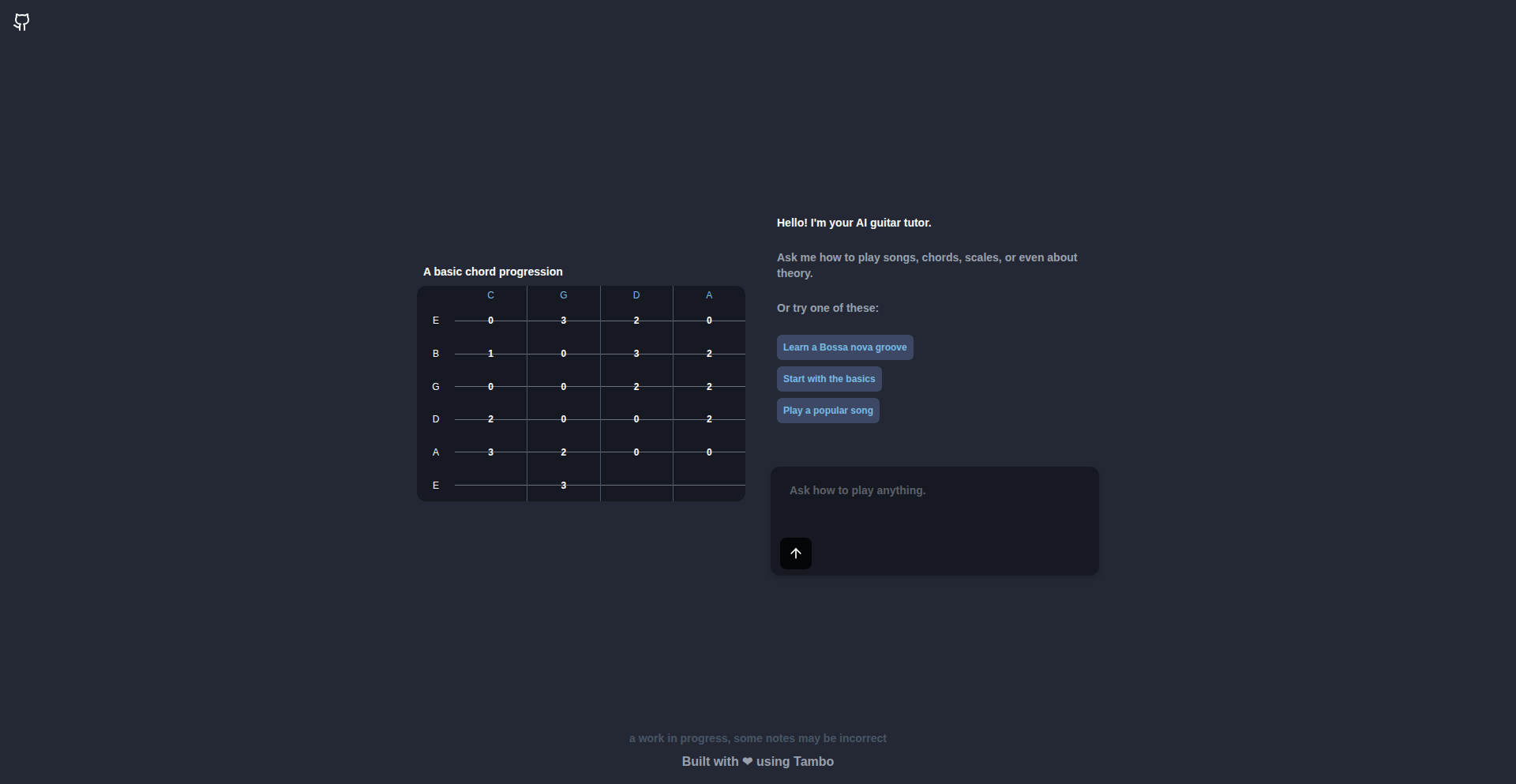

7

ChordDreamer

Author

michaelmilst

Description

A generative UI application that teaches guitar chords and scales in a non-traditional, mood-driven way. It translates abstract concepts like 'chill and dreamy' into playable musical patterns, demonstrating innovative use of AI in creative education.

Popularity

Points 3

Comments 5

What is this product?

ChordDreamer is an AI-powered application designed to help aspiring guitarists learn chords and scales by interpreting descriptive user input. Instead of rigid lesson structures, users can request musical styles or feelings (e.g., 'show me something chill and dreamy'). The application then generates relevant chord progressions or scale fingerings. The core innovation lies in its natural language processing and generative AI capabilities, which translate subjective emotional descriptions into concrete musical instructions, making the learning process more intuitive and personalized. This is like having a musical muse that understands your mood and guides your practice.

How to use it?

Developers can integrate ChordDreamer into their own educational platforms or create standalone applications. The project likely exposes an API where users send text prompts describing desired musical moods or styles. The API then returns structured data representing musical elements like chord names, voicings, and scale patterns. This allows for flexible integration into web or mobile applications focused on music education, creative tools, or even therapeutic applications where music is used for mood regulation. Think of embedding a 'mood-to-music' generator directly into your app.
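The post does not document ChordDreamer's API or its language model. The sketch below replaces the model with a hypothetical keyword table, but the music theory underneath is standard. Diatonic seventh chords are built by stacking every other note of a major scale, and a mood then selects a progression of scale degrees. `MOOD_PROGRESSIONS`, its mood-to-progression choices, and the returned dictionary shape are illustrative assumptions. Note names use sharps only, so flat keys are spelled enharmonically.

```python
import re

NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the major scale
# Chord quality by semitone distance of the 3rd, 5th, and 7th above the root.
QUALITIES = {(4, 7, 11): "maj7", (3, 7, 10): "m7", (4, 7, 10): "7", (3, 6, 10): "m7b5"}

# Hypothetical mood table: 1-based scale degrees for each mood keyword.
MOOD_PROGRESSIONS = {
    "dreamy": [1, 4],            # Imaj7 - IVmaj7
    "chill": [2, 5, 1],          # ii7 - V7 - Imaj7
    "melancholy": [6, 4, 1, 5],  # vi7 - IVmaj7 - Imaj7 - V7
}


def major_scale(key: str) -> list[int]:
    root = NOTES.index(key)
    return [(root + step) % 12 for step in MAJOR_STEPS]


def seventh_chord(scale: list[int], degree: int) -> str:
    """Stack scale tones degree, +2, +4, +6 and name the chord by its intervals."""
    tones = [scale[(degree - 1 + k) % 7] for k in (0, 2, 4, 6)]
    intervals = tuple((t - tones[0]) % 12 for t in tones[1:])
    return NOTES[tones[0]] + QUALITIES[intervals]


def mood_to_progression(prompt: str, key: str = "C") -> dict:
    """Use the first table mood that appears in the prompt (default "chill")."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    mood = next((m for m in MOOD_PROGRESSIONS if m in words), "chill")
    scale = major_scale(key)
    return {
        "mood": mood,
        "key": key,
        "chords": [seventh_chord(scale, d) for d in MOOD_PROGRESSIONS[mood]],
        "scale": [NOTES[n] for n in scale],
    }


result = mood_to_progression("show me something chill and dreamy")
```

In this sketch, "chill and dreamy" resolves to "dreamy" because that entry comes first in the table. A real natural-language front end would replace the keyword match, while the chord-building step could stay the same.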

Product Core Function

· Mood-to-Chord Generation: Translates abstract mood descriptions into specific guitar chords. This is valuable for musicians who want to express a feeling musically but don't know the exact chords to use, offering a creative shortcut and a way to discover new harmonic ideas.

· Style-based Scale Suggestion: Generates scale patterns that fit a requested musical style or feeling. This helps learners explore different melodic possibilities beyond basic scales, broadening their improvisational skills and understanding of musical context.

· Generative UI for Practice: Presents the generated musical information through an intuitive user interface. This ensures that the AI-generated content is easily digestible and actionable for guitarists, facilitating a more engaging and less frustrating learning experience.

· Personalized Learning Paths: Adapts musical output based on user input, offering a highly personalized learning journey. This is incredibly useful for individuals who find traditional, linear learning methods uninspiring, allowing them to practice in a way that resonates with their personal preferences.

Product Usage Case

· A music education app developer could integrate ChordDreamer to offer students a 'play what you feel' mode, allowing them to explore musical expression without being constrained by strict lesson plans, thereby increasing user engagement.

· A game developer might use ChordDreamer to dynamically generate background music or sound effects based on in-game emotional states, creating a more immersive player experience.

· A songwriter could use ChordDreamer as a creative partner, inputting lyrical themes or desired emotions to get instant musical inspiration for chord progressions or melodies, overcoming writer's block.

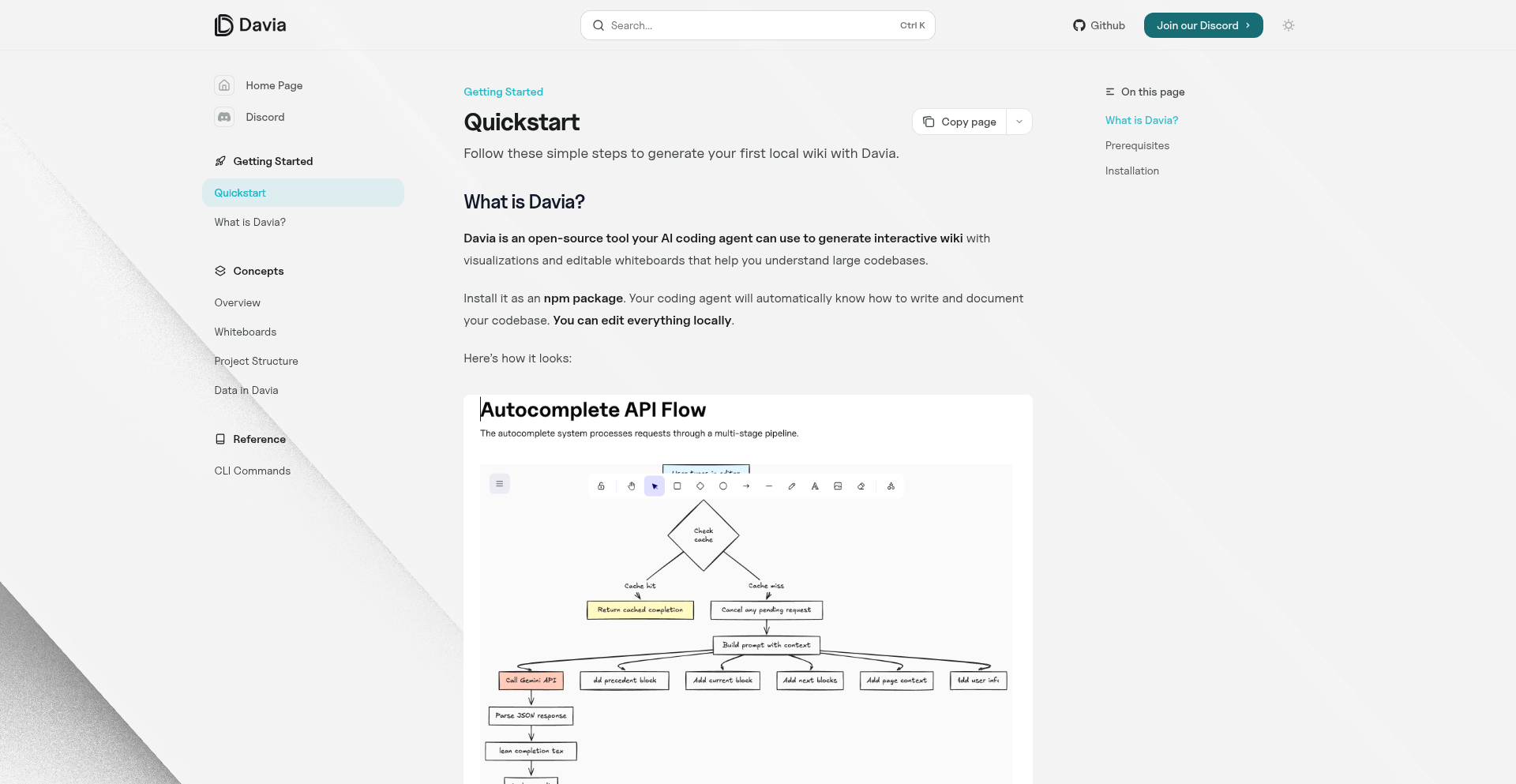

8

Davia-AI Wiki

Author

ruben-davia

Description

Davia-AI Wiki is an open-source project that empowers AI coding agents to automatically generate editable internal project wikis. It addresses the common pain point of creating high-level documentation for non-technical team members or new engineers, which is often time-consuming, lacks visuals, and isn't easily editable locally. Davia integrates with your workflow by producing structured pages and editable diagrams, all running locally.

Popularity

Points 8

Comments 0

What is this product?

Davia-AI Wiki is a local-first, open-source software package that leverages AI coding agents to create and manage project wikis. The core innovation lies in its ability to delegate documentation tasks to your AI agent. The agent writes the content, and Davia transforms it into organized wiki pages, complete with editable diagrams. This means you get high-quality internal documentation without the manual effort, and it all stays under your control: text can be edited in a Notion-like editor, diagrams on a whiteboard, and either one directly in your IDE. So, what's the value for you? It drastically reduces the time and effort spent on documentation, making project knowledge more accessible and maintainable, especially for onboarding and cross-functional collaboration.

How to use it?

Developers can integrate Davia-AI Wiki into their existing workflow by setting up the open-source package locally. You can then instruct your AI coding agent to write documentation for specific parts of your project; the agent writes the content, and Davia organizes it into structured wiki pages. This content is accessible via a web interface that resembles Notion for text editing and includes an interactive whiteboard for diagram creation. Alternatively, you can modify the wiki content directly within your IDE. The key use case is to automate the creation of project documentation, making it easier for anyone to understand your project's architecture, features, and usage. So, how does this benefit you? It means your project documentation stays up-to-date with minimal manual input, improving team alignment and reducing friction for new contributors.

Product Core Function

· AI-powered content generation: Your AI coding agent writes the initial documentation, which Davia then structures into wiki pages. This means documentation is created automatically, saving you time and ensuring consistency. So, what's the value for you? Less manual writing, more time for coding.

· Editable visual workspace: Davia provides a Notion-like editor for text and an editable whiteboard for diagrams. This allows for intuitive and flexible content creation and modification. So, what's the value for you? You can easily update and refine your documentation visually, making it more engaging and understandable.

· Local-first operation: The entire system runs locally, so your documentation stays on your machine and edits integrate directly with your development environment. So, what's the value for you? Greater control over your documentation and privacy, with offline accessibility and better integration with your existing tools.

· IDE integration: Content can be modified directly within your IDE, streamlining the documentation workflow for developers. So, what's the value for you? Documentation becomes just another part of your coding process, easily managed alongside your codebase.

Product Usage Case

· Onboarding new engineers: A project lead uses Davia to generate a comprehensive wiki detailing the project's architecture, setup process, and key modules. New team members can quickly grasp the project's structure and start contributing sooner. So, how does this solve a problem for you? It dramatically reduces onboarding time and the burden on existing team members to explain the project.

· Cross-functional team communication: A product manager needs to explain a complex feature to non-technical stakeholders. Davia generates clear, visually supported documentation that simplifies technical jargon and clarifies the feature's purpose and functionality. So, how does this solve a problem for you? It bridges the communication gap between technical and non-technical teams, ensuring everyone is on the same page.

· Maintaining project knowledge: A team working on a long-term project uses Davia to document evolving features and design decisions. The AI agent continuously updates the wiki as code changes, ensuring the documentation remains accurate and relevant. So, how does this solve a problem for you? It combats documentation rot and ensures your project knowledge base is always current, preventing knowledge loss.

· Internal tool development: Developers building an internal tool can use Davia to create user guides and API references that are easily accessible to other teams within the organization. So, how does this solve a problem for you? It improves the usability and adoption of internal tools by providing clear, readily available documentation.

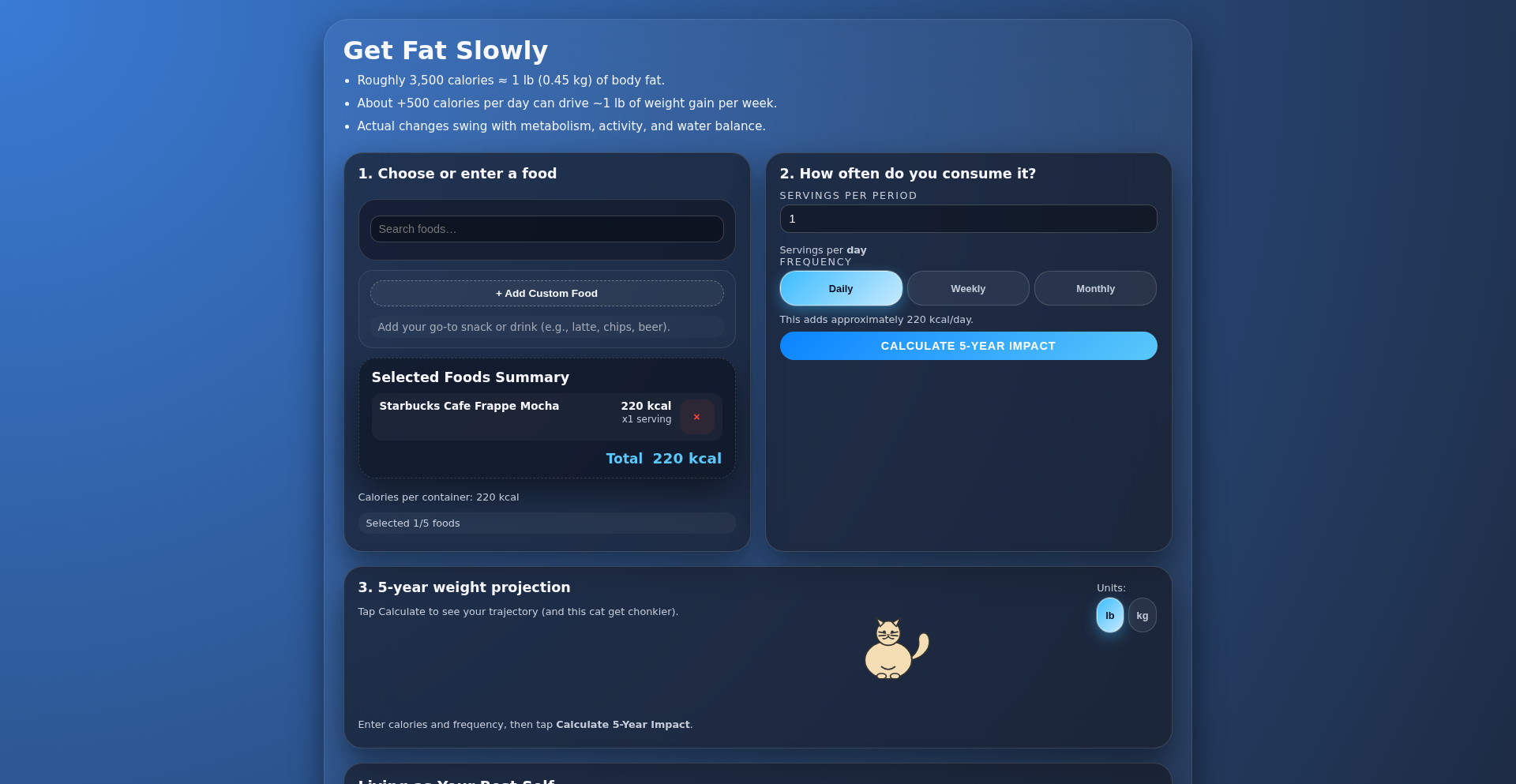

9

FatAccumulatorAI

Author

itake

Description

A calculator built with ChatGPT that models the yearly body fat accumulation based on daily consumption of high-calorie beverages, like Starbucks Mochas. It highlights the significant weight gain potential from seemingly small daily habits.

Popularity

Points 7

Comments 0

What is this product?

FatAccumulatorAI is an AI-powered calculator that leverages ChatGPT's modeling capabilities to estimate how much body fat a person accumulates annually from consuming a specific number of high-calorie drinks per day. The core innovation lies in using AI to simplify complex nutritional calculations and present them in an easily digestible, impactful format. It takes a user's daily drink intake and transforms it into a concrete yearly fat gain figure, demonstrating the cumulative effect of habits.
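The post does not say which formula the calculator uses, so here is a minimal sketch of the classic static estimate such a tool could start from. It assumes the common rule of thumb of roughly 7,700 kcal per kilogram of body fat (about 3,500 kcal per pound) and assumes every drink is surplus on top of normal intake. The function name and the 400 kcal figure in the usage note are illustrative, not taken from the project.

```python
# Rule-of-thumb energy content of body fat (~3,500 kcal per pound); an approximation.
KCAL_PER_KG_FAT = 7700


def yearly_fat_gain_kg(kcal_per_drink: float, drinks_per_day: float,
                       days: int = 365, kcal_per_kg: float = KCAL_PER_KG_FAT) -> float:
    """Static estimate: treats every drink calorie as surplus that is stored as fat."""
    surplus_kcal = kcal_per_drink * drinks_per_day * days
    return surplus_kcal / kcal_per_kg
```

With a hypothetical 400 kcal drink once a day, this gives 146,000 kcal a year, or roughly 19 kg. Note that this linear rule is known to overstate long-term gain, because energy expenditure rises as body weight increases; treat the result as an upper-bound illustration of the habit's impact rather than a prediction.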

How to use it?

Developers could bring the same idea into health and wellness applications. For example, a fitness app could use it to give users personalized feedback on their beverage choices, estimating the potential yearly impact on body fat. One way to build this is with calls to the ChatGPT API, using a prompt crafted to guide the model through the calculation based on user input (e.g., 'Calculate yearly body fat gain from 2 mochas per day').

Product Core Function

· Annual Body Fat Estimation: Calculates the total body fat accumulated in a year based on the daily consumption of calorie-dense beverages. This is valuable for users to understand the long-term consequences of their drink choices.

· Calorie-to-Fat Conversion: Internally converts the caloric content of beverages into estimated body fat, providing a tangible metric for weight gain. This helps users visualize the impact beyond just numbers.

· AI-Driven Modeling: Utilizes ChatGPT for flexible and potentially more nuanced modeling of nutritional impact compared to static calculators. This offers a more adaptable and intelligent approach to personal health tracking.

· Habit Impact Visualization: Presents the yearly fat accumulation in a clear and alarming way, motivating users to reconsider their daily habits. This is useful for habit-forming or habit-breaking applications.

Product Usage Case

· A personal finance app that also tracks spending on lifestyle goods could integrate FatAccumulatorAI to show users the 'health cost' of their daily coffee purchases, highlighting potential health implications alongside financial ones.

· A corporate wellness program could use this tool to educate employees about the cumulative health effects of daily unhealthy beverage consumption, encouraging healthier choices during breaks.

· A student project building a 'health awareness' website could embed this calculator to demonstrate the significant impact of even small daily indulgences on long-term health goals, making abstract health concepts more concrete.

10

GuardiAgent: LLM Tool Security Layer

Author

phear_

Description

This project addresses a critical security gap for Large Language Model (LLM) applications that interact with local tools and data. By introducing a 'security manifest' and a 'policy enforcement engine,' GuardiAgent allows developers to define granular permissions for LLM-connected servers. This prevents buggy or compromised LLM agents from accessing sensitive files, executing arbitrary commands, or exfiltrating data, effectively sandboxing them and mitigating potential damage. For developers, this means a much safer way to integrate LLMs with their local environment and tools.

Popularity

Points 7

Comments 0

What is this product?

GuardiAgent is a security framework designed to protect your local system when LLMs need to interact with your tools and data. LLM applications often need to run 'servers' that grant them access to your files, shell, or even your web browser. Normally, these servers run with the same permissions as your user account. This is risky because if the LLM server has a bug, is poorly configured, or falls victim to a malicious 'prompt injection' attack (where the LLM is tricked into doing something harmful), it can do anything you can do on your computer. This could mean stealing your private keys, leaking your personal files, or messing with your code repositories. GuardiAgent solves this by creating a 'security manifest,' similar to how mobile apps declare their required permissions (like camera access or contacts). You, the developer, define exactly what resources the LLM server can access – which websites it can visit, which files it can read or write, and so on. A local 'policy enforcement engine' then strictly enforces these rules, preventing unauthorized actions and keeping your system safe. So, this is like a digital bodyguard for your computer when LLMs are involved.

How to use it?

Developers can integrate GuardiAgent into their workflow by defining a security manifest file for their LLM-powered agents or tools. This manifest acts as a blueprint, explicitly listing the permitted actions and resources. For example, you might specify that an LLM agent can only read files from a specific project directory and can only make network requests to a particular API endpoint. The GuardiAgent enforcement engine then runs in the background, monitoring the LLM server's actions and blocking anything that deviates from the rules defined in the manifest. This can be integrated into local development setups, CI/CD pipelines, or any environment where LLM agents interact with sensitive systems. The goal is to provide a declarative way to manage LLM agent security, making it easy to secure them without complex manual configurations. So, you define the rules once, and GuardiAgent enforces them, making your LLM integrations significantly safer.
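To make the manifest idea concrete, here is a minimal sketch of deny-by-default policy checks. GuardiAgent's real manifest schema and engine are not shown in the post, so the `Manifest` fields, the `PolicyEngine` class, and the action names are hypothetical. The sketch shows only the decision logic; a real enforcement engine has to sit outside the agent process (intercepting its file, network, and process calls) so that a compromised agent cannot simply skip the check.

```python
from dataclasses import dataclass, field
from pathlib import Path
from urllib.parse import urlparse


@dataclass
class Manifest:
    # Hypothetical manifest fields; GuardiAgent's real schema may differ.
    read_dirs: list[str] = field(default_factory=list)
    write_dirs: list[str] = field(default_factory=list)
    allowed_hosts: set[str] = field(default_factory=set)
    allowed_commands: set[str] = field(default_factory=set)


def _inside(path: str, roots: list[str]) -> bool:
    # resolve() collapses ".." and symlinks, so "project/../.ssh" cannot escape a root.
    target = Path(path).resolve()
    return any(target.is_relative_to(Path(r).resolve()) for r in roots)


class PolicyEngine:
    def __init__(self, manifest: Manifest):
        self.m = manifest

    def check(self, action: str, target: str) -> bool:
        """Deny by default; allow only what the manifest explicitly grants."""
        if action == "read":
            # Anything writable is also readable.
            return _inside(target, self.m.read_dirs + self.m.write_dirs)
        if action == "write":
            return _inside(target, self.m.write_dirs)
        if action == "fetch":
            return urlparse(target).hostname in self.m.allowed_hosts
        if action == "exec":
            return target in self.m.allowed_commands
        return False  # unknown actions are always denied
```

Usage: with `Manifest(read_dirs=["/home/dev/project"], allowed_hosts={"api.example.com"})`, a read of `/home/dev/project/src/app.py` is allowed, while `/home/dev/project/../.ssh/id_rsa` resolves outside the root and is denied. Requires Python 3.9+ for `Path.is_relative_to`.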

Product Core Function

· Security Manifest Definition: Allows developers to declaratively specify the precise permissions and access controls for LLM agents. This is crucial for controlling what sensitive data or system functions an LLM can interact with, thereby preventing accidental or malicious data leaks and system compromises. So, you get precise control over LLM agent capabilities.

· Local Policy Enforcement: A runtime engine that actively monitors and enforces the rules defined in the security manifest. This ensures that LLM agents adhere to their granted permissions, acting as a safeguard against unexpected or harmful behavior. So, it prevents LLM agents from going rogue and damaging your system.

· Resource Sandboxing: Isolates LLM agents from sensitive system resources by default, granting access only to explicitly permitted files, network endpoints, or commands. This compartmentalization significantly reduces the attack surface and the potential impact of a security breach. So, potentially risky LLM operations stay contained and the damage they can do is limited.

· Granular Access Control: Provides fine-grained control over network access (e.g., which hosts can be reached), file system operations (e.g., read/write permissions for specific directories), and command execution. This level of detail allows for highly customized and secure LLM agent deployments. So, you can tailor security to the exact needs of your LLM application.

Product Usage Case

· Securely integrating an LLM agent for code generation: You can use GuardiAgent to allow the LLM agent to read your project files and write new code into specific directories, but prevent it from accessing your SSH keys or personal configuration files. This mitigates the risk of the LLM agent inadvertently exposing sensitive credentials or spreading malicious code. So, your code generation process becomes safer and more controlled.

· Enabling an LLM assistant to browse specific parts of the web for research: GuardiAgent can be configured to permit the LLM to access only certain trusted websites or domains for information gathering, while blocking access to potentially malicious sites or sensitive internal company networks. This prevents the LLM from being tricked into visiting harmful URLs or accessing restricted information. So, LLM-powered research is safer and more focused.

· Developing an LLM-powered automation tool that interacts with local APIs: You can use GuardiAgent to grant the LLM agent permission to communicate with specific internal APIs or services, while denying it access to the broader network or sensitive system commands. This ensures that the automation tool can perform its intended functions without posing a security risk to the rest of your infrastructure. So, you can build powerful LLM automations with confidence.

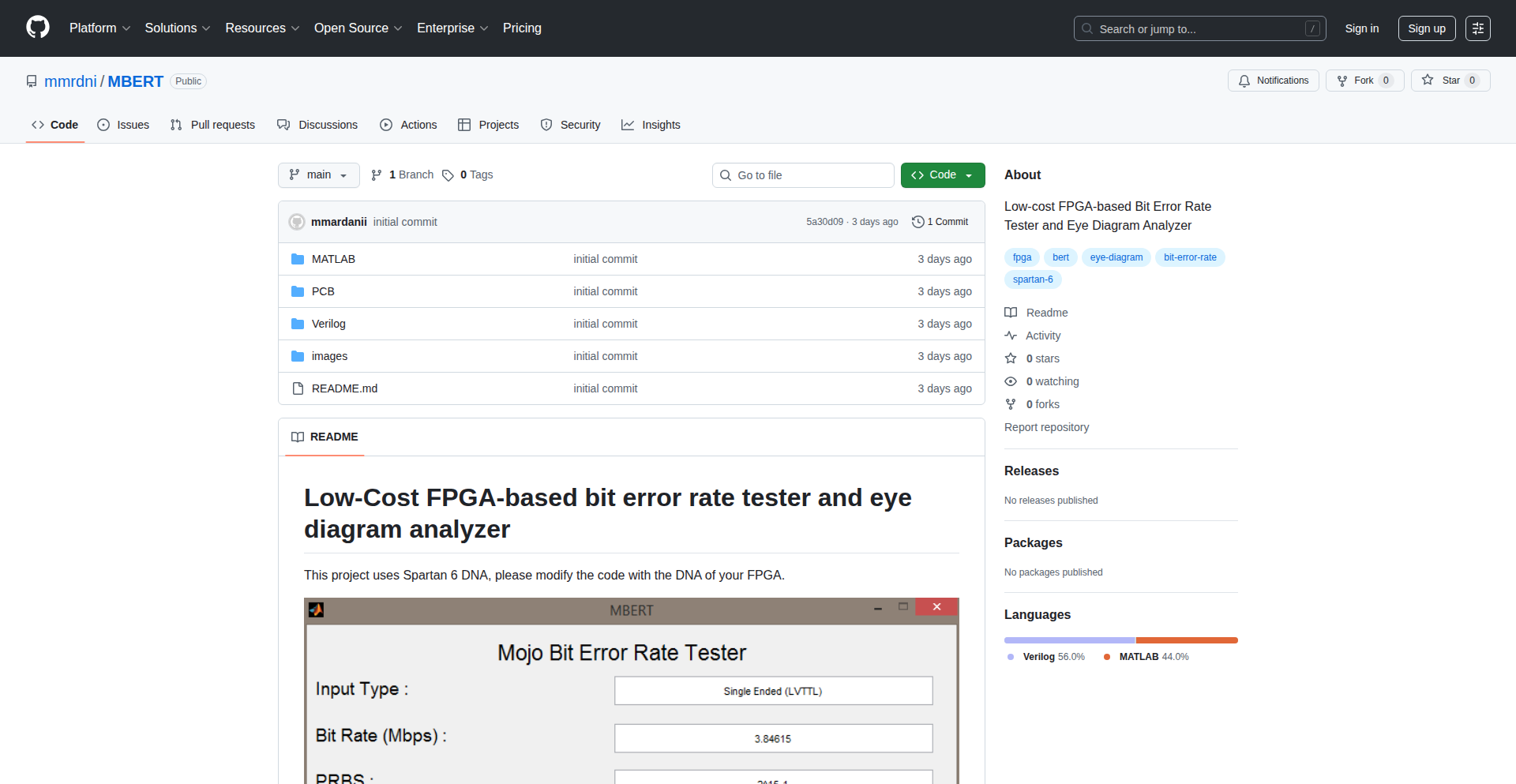

11

NativeFork Navigator

Author

nativeforks

Description

NativeFork Navigator is a free and open-source compass and navigation application designed for de-googled and custom ROM Android devices. It prioritizes user privacy by avoiding ads, in-app purchases, and tracking. The app innovates by leveraging raw Android sensors and AOSP APIs for accurate directional and location data, offering both magnetic and true north readings, and displaying magnetic field strength. Its core technical achievement lies in its self-sufficiency, running entirely on core Android functionalities without relying on Google Mobile Services, making it a robust choice for privacy-conscious users and developers exploring offline or minimal-dependency app development.

Popularity

Points 5

Comments 1

What is this product?

NativeFork Navigator is a highly private and technically robust compass and navigation app. Its core innovation is its complete independence from Google Mobile Services (GMS) and third-party dependencies, relying solely on Android Open Source Project (AOSP) APIs. This means it runs on 'de-googled' phones and custom ROMs without any Google framework. It uses the device's accelerometer, magnetometer, and gyroscope through sensor fusion to provide accurate readings for both magnetic north and true north, displaying the magnetic field strength in microteslas (µT). It also offers live GPS location tracking on OpenStreetMap, ensuring you know where you are without needing external services. The app is built for practicality with features like screen-on during navigation and landscape orientation support. So, what's the value? It offers precise navigation and orientation without compromising your privacy or requiring a Google-centric ecosystem, a rare feat in modern mobile apps.
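The magnetic-versus-true-north distinction comes down to a little arithmetic, sketched below under simplifying assumptions: the phone lies flat, and the inputs are raw magnetometer readings on Android's device axes. The post does not show NativeFork's code. On Android, tilt compensation is typically done by fusing accelerometer data via `SensorManager.getRotationMatrix()` and `SensorManager.getOrientation()`, and the local declination can come from `android.hardware.GeomagneticField.getDeclination()`. The Python functions here are hypothetical illustrations of the math only.

```python
import math


def magnetic_heading(mx: float, my: float) -> float:
    """Azimuth of the device's top edge from magnetic north, clockwise, in degrees.

    Assumes the phone lies flat and uses Android's device axes
    (x to the right, y toward the top edge, z out of the screen).
    """
    return math.degrees(math.atan2(-mx, my)) % 360


def true_heading(magnetic_deg: float, declination_deg: float) -> float:
    # Declination is positive when magnetic north lies east of true north.
    return (magnetic_deg + declination_deg) % 360


def field_strength_ut(mx: float, my: float, mz: float) -> float:
    # Android's magnetic field sensor already reports each axis in µT.
    return math.sqrt(mx * mx + my * my + mz * mz)
```

For example, a reading of (0, 30, -40) µT means the top edge points at magnetic north, with a total field of 50 µT; where the declination is +15°, a magnetic heading of 350° corresponds to a true heading of 5°.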

How to use it?

Developers can use NativeFork Navigator as a prime example of building a functional Android app using only AOSP APIs, demonstrating how to access and fuse sensor data (magnetometer, accelerometer, gyroscope) for directional accuracy. It serves as a reference for creating privacy-first applications that function independently of Google's proprietary services. For end-users, it's a straightforward app: install it, and it provides immediate compass readings. You can switch between magnetic and true north in the settings. When navigating, the GPS location is overlaid on an OpenStreetMap view, and the screen stays on. Its use case extends to situations where a reliable, privacy-respecting offline navigation tool is needed, or for users who have intentionally removed GMS from their devices. The value for a developer is learning how to build essential features from scratch, and for a user, it's having a dependable navigator that respects their digital footprint.

Product Core Function

· Accurate Directional Readings: Utilizes sensor fusion (accelerometer, magnetometer, gyroscope) to provide precise magnetic and true north bearings. This technical capability ensures you always know which way you're facing, critical for hiking, orienteering, or even just finding your way around. The value is reliable orientation data in any situation.

· Live GPS Location Tracking: Integrates with OpenStreetMap to display your real-time GPS position. GPS positioning itself works without a data connection, though the OpenStreetMap tiles shown behind it may need to be downloaded or cached first. The value is knowing your precise location on a familiar map interface, enhancing situational awareness.

· Magnetic Field Strength Display: Shows the current magnetic field strength in microteslas (µT). This provides an additional layer of data for understanding your environment and can be useful for certain scientific or hobbyist applications. The value is granular environmental data that goes beyond basic compass functions.

· De-Googled Compatibility: Built entirely with AOSP APIs, excluding GMS and third-party libraries. This is a significant technical achievement that allows the app to run on devices without Google services. The value is privacy and full functionality for users who have removed Google from their phones, in a category where many apps fail without GMS.

· Privacy-Focused Design: No ads, no in-app purchases, and no tracking of user data. This core principle is implemented technically through its independent architecture. The value is peace of mind for users, knowing their activity and data are not being collected or exploited.

Product Usage Case

· A hiker on a remote trail without cell service needs to confirm their bearing. NativeFork Navigator provides accurate true north readings using local sensor data, ensuring they stay on course even offline. This solves the problem of needing reliable navigation in connectivity-limited environments.

· A developer is building a custom Android ROM and wants to include essential utilities that don't depend on Google. They can examine NativeFork Navigator's codebase to understand how to access and process sensor data using AOSP APIs, thus fulfilling their requirement for GMS-free functionality. This showcases how the project serves as a technical blueprint for independent app development.

· An individual concerned about digital privacy has removed Google Play Services from their Android device. They can install and use NativeFork Navigator confidently, as it provides core navigation features without any tracking or data collection. This addresses the need for a functional, privacy-preserving app in a restricted software environment.

· A hobbyist interested in geomagnetism wants to measure local magnetic field variations. NativeFork Navigator's display of magnetic field strength in µT provides a convenient way to do this directly from their phone. This illustrates the project's utility for specialized, data-oriented use cases.

12

Cossistant - React Dev's Embedded Support Hub

Author

frenchriera

Description

Cossistant is a featherweight, open-source customer support widget designed specifically for Next.js/React developers. It integrates seamlessly into your application with minimal effort, requiring just an NPM command and about ten lines of code. Initially focusing on human-to-human support, its roadmap includes AI agents capable of autonomously handling the majority of user inquiries by learning from your documentation and knowledge base, escalating to human agents only when necessary. Customizable with Tailwind CSS or your own React components, it aims to provide a support experience that feels native to your product, unlike bloated, expensive third-party solutions.

Popularity

Points 6

Comments 0

What is this product?

Cossistant is an embeddable, open-source support widget for React and Next.js applications. Its core innovation lies in its lightweight architecture and deep integration capabilities. Instead of relying on external, often cumbersome, support platforms, Cossistant lets developers build a fully customizable support interface directly into their existing tech stack, so the support experience visually and functionally aligns with the product. Because it is distributed as an NPM package of React components, it renders inside your app like any other component. Future plans involve AI integration for automated query resolution.

How to use it?

Developers can integrate Cossistant into their React or Next.js projects by installing it via NPM. A few lines of code will then be sufficient to embed the widget into their application's frontend. This typically involves importing the component and rendering it within the desired part of the UI. For styling and customization, developers can use Tailwind CSS classes or replace default React components with their own custom ones, ensuring the support widget perfectly matches their product's design language. This approach makes it incredibly easy to add sophisticated support features without significant development overhead.

Product Core Function

· Lightweight Embeddable Widget: Provides a seamless integration of support functionalities directly within your React/Next.js app, eliminating the need for separate, heavy external tools. This means your support experience is always part of your product, not an add-on.

· Human-to-Human Support: Facilitates direct communication between users and support agents through a chat interface, ensuring authentic and personal customer interactions. This helps in building stronger customer relationships and resolving complex issues effectively.

· AI Agent Automation (Future): Enables AI to auto-handle a significant portion of user queries by training on your knowledge base and documentation, reducing response times and freeing up human agents for more critical tasks. This allows for scalable and efficient customer support.

· Customizable UI/UX: Allows for extensive customization using Tailwind CSS or custom React components, ensuring the support widget's appearance and behavior align perfectly with your product's branding and user experience. This means your support looks and feels like your product.

· Open-Source and Open Components: Offers transparency and flexibility with an open-source codebase and the ability to swap out core components. Developers have full control over their support infrastructure and can tailor it to their specific needs.
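The planned "answer from the knowledge base, escalate when unsure" behavior can be sketched in a few lines. This is not Cossistant's implementation (the AI agents are still on the roadmap); the `route_query` function, the Jaccard word-overlap score, and the 0.3 threshold are hypothetical stand-ins for the embedding or LLM-based retrieval a real agent would use.

```python
import re


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route_query(query: str, knowledge_base: dict[str, str],
                threshold: float = 0.3) -> tuple[str, str]:
    """Answer from the best-matching KB entry, or escalate to a human.

    Similarity is Jaccard overlap of word sets, a deliberately crude
    stand-in for real retrieval.
    """
    q = _tokens(query)
    best_score, best_answer = 0.0, ""
    for question, answer in knowledge_base.items():
        kb = _tokens(question)
        union = q | kb
        score = len(q & kb) / len(union) if union else 0.0
        if score > best_score:
            best_score, best_answer = score, answer
    if best_score >= threshold:
        return "bot", best_answer
    return "human", "Escalated to a support agent."
```

The design point is the threshold: below it, the widget hands off to a person instead of guessing, which is what "escalating to human agents only when necessary" requires.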

Product Usage Case

· A SaaS startup building a new productivity tool wants to offer real-time chat support to its early adopters without adding significant complexity to their development workflow. By embedding Cossistant, they can provide instant, branded support that feels like a natural extension of their app, fostering user trust and gathering valuable feedback.

· An e-commerce platform wants to reduce customer service overhead by automating answers to common questions about shipping and returns. Once Cossistant's planned AI capabilities ship, they could train an agent on their FAQs to resolve most of these routine inquiries automatically and escalate the rest, improving user experience and operational efficiency.

· A React developer building a portfolio website needs to allow potential clients to ask questions directly through the site. Cossistant provides a simple way to add a contact widget that matches the site's design, making it easy for visitors to connect without leaving the page, enhancing lead generation.

· A company with a strong brand identity wants to ensure their customer support interface doesn't detract from their product's aesthetics. Using Cossistant's deep customization options, they can style the support widget to perfectly match their brand guidelines, creating a cohesive and professional user experience.

13

MortgageFlow Explorer

Author

rogue7

Description

A static web application designed to demystify mortgage loan complexities. It acts as an advanced calculator to help users understand the total interest paid over the life of a loan and to aid in the decision-making process of buying a home versus continuing to rent. Its core innovation lies in providing clear, visual insights into cash flows, making abstract financial concepts tangible for the average user.

Popularity

Points 3

Comments 2

What is this product?

This project is a static web app that visualizes mortgage loan calculations. Instead of just spitting out numbers, it helps you see how much of your payment goes towards the principal versus interest, and calculates the total interest you'll pay. The innovation here is taking complex mortgage math and making it understandable through a user-friendly interface, allowing for quick scenario exploration. This means you get a clear picture of your long-term financial commitment with a mortgage, empowering you to make more informed decisions.
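The principal-versus-interest split rests on the standard fixed-rate amortization formula, M = P·r·(1+r)^n / ((1+r)^n − 1), where r is the monthly rate and n the number of monthly payments. The post does not show MortgageFlow Explorer's code, so the sketch below is an independent illustration of that formula; the function names are hypothetical.

```python
def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate amortizing payment: M = P*r*(1+r)^n / ((1+r)^n - 1)."""
    n = years * 12
    r = annual_rate / 12
    if r == 0:
        return principal / n  # no interest: simply split the principal evenly
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


def amortization_schedule(principal: float, annual_rate: float, years: int):
    """Yield (month, interest_part, principal_part, remaining_balance) tuples."""
    payment = monthly_payment(principal, annual_rate, years)
    r = annual_rate / 12
    balance = principal
    for month in range(1, years * 12 + 1):
        interest = balance * r            # interest accrues on the current balance
        principal_part = payment - interest
        balance -= principal_part
        yield month, interest, principal_part, balance


def total_interest(principal: float, annual_rate: float, years: int) -> float:
    return monthly_payment(principal, annual_rate, years) * years * 12 - principal
```

For $100,000 at 6% over 30 years, the payment is about $599.55 and total interest is about $115,838. In the first month, $500.00 of the payment is interest and only about $99.55 reduces the principal, which is exactly the kind of imbalance a visual breakdown makes obvious.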

How to use it?

Developers can use this project as a readily available tool for personal financial planning or as a reference for building their own financial calculators. It can be integrated into personal finance blogs or websites as an embedded widget. The project's static nature means it's easy to host on platforms like Netlify, Vercel, or even GitHub Pages, offering a simple yet powerful way to add mortgage analysis capabilities to any web presence. This means you can easily embed a mortgage calculator into your own website without complex backend development.

Product Core Function

· Principal vs. Interest Breakdown: Calculates and displays how each mortgage payment is allocated between paying down the loan's principal and covering the interest. This helps users understand the amortization schedule and how the loan balance decreases over time, providing clarity on what you're actually paying for.

· Total Interest Calculation: Computes the total amount of interest paid over the entire loan term. This crucial metric directly impacts the overall cost of homeownership, allowing users to quantify the long-term financial implications of their mortgage.

· Buy vs. Rent Analysis Aid: While not a direct calculator, the insights gained from interest and cash flow analysis help users compare the financial viability of buying a home against renting. This empowers users to weigh the upfront and ongoing costs associated with each option.

· Scenario Exploration: Allows users to input different loan amounts, interest rates, and loan terms to see how these variables affect the total interest paid and monthly payments. This interactive feature lets you test various financial scenarios to find the most suitable option for your situation.

Product Usage Case

· Personal Home Buying Decision: A prospective homebuyer uses the tool to input the mortgage details of a property they are considering. They input the loan amount, interest rate, and loan term, and the application clearly shows the total interest they would pay over 30 years. This helps them understand the true cost of the home and decide if it's financially feasible compared to their current rent.

· Financial Planning Website Integration: A personal finance blogger embeds the MortgageFlow Explorer into their article about 'Understanding Mortgages'. Readers can use the embedded tool directly within the article to calculate their own potential mortgage costs, making the educational content more interactive and valuable.

· Developer's Personal Investment Analysis: A developer is considering taking out a large loan for a rental property. They use the tool to model different loan scenarios and understand the potential interest expenses, helping them assess the profitability of the investment and refine their financial projections.

14

PageStash Knowledge Graph Archiver

Author

Aurelan

Description

PageStash is a novel web archival tool that goes beyond simple page capture. It intelligently analyzes and represents web content using knowledge graphs, transforming static snapshots into interconnected information structures. This allows for deeper understanding and retrieval of archived web pages, solving the problem of information silos and shallow data representation in traditional archiving.

Popularity

Points 1

Comments 4

What is this product?

PageStash is a web archiving tool that uses knowledge graphs to store and organize web page content. Instead of just saving a static copy of a webpage, it extracts key entities (like people, places, concepts) and their relationships from the page. This creates a structured, interconnected map of the information. Think of it like building a mind map of the web page, where everything is linked and understandable, rather than just having a printed photo of it. The innovation lies in moving from simple storage to semantic understanding and organization of archived web data.

How to use it?

Developers can use PageStash to create a more insightful archive of web resources. It can be integrated into research workflows, content management systems, or personal knowledge management tools. Imagine a developer building a system to track industry news; PageStash would not only save the articles but also automatically identify the companies, technologies, and people mentioned, and how they relate to each other. This makes it easy to query relationships like 'which companies are frequently mentioned alongside AI advancements?' or 'what research papers cite this specific concept?'. The tool can be used as a standalone application or via its API to programmatically archive and query web content.
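The post does not detail how PageStash extracts entities or stores its graph. The sketch below assumes entities have already been extracted per page (the hard part, typically done with named-entity recognition or an LLM) and shows how even a simple co-mention graph can answer "which entities are frequently mentioned alongside X?". A full knowledge graph would carry typed relationships rather than plain co-occurrence counts. `CoMentionGraph` and its methods are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


class CoMentionGraph:
    """Entities are nodes; an edge weight counts archived pages mentioning both."""

    def __init__(self):
        self.edges: dict[str, Counter] = defaultdict(Counter)
        self.pages: dict[str, set[str]] = defaultdict(set)  # entity -> page URLs

    def add_page(self, url: str, entities: set[str]) -> None:
        for e in entities:
            self.pages[e].add(url)
        # Link every pair of entities that appear on the same page.
        for a, b in combinations(sorted(entities), 2):
            self.edges[a][b] += 1
            self.edges[b][a] += 1

    def mentioned_alongside(self, entity: str, top: int = 3) -> list[tuple[str, int]]:
        return self.edges[entity].most_common(top)

    def pages_mentioning(self, *entities: str) -> set[str]:
        sets = [self.pages[e] for e in entities]
        return set.intersection(*sets) if sets else set()
```

Usage: after archiving pages tagged with entities such as `{"Acme Corp", "AI"}`, `mentioned_alongside("AI")` ranks companies by how often they share a page with AI, and `pages_mentioning("AI", "Acme Corp")` returns the archived URLs where both appear.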

Product Core Function

· Intelligent Content Extraction: Automatically identifies and extracts key entities and their attributes from web pages, providing a structured representation of the information. This is useful for understanding the core components of a webpage without manually sifting through text, leading to faster data comprehension.

· Knowledge Graph Construction: Organizes extracted entities and their relationships into a semantic graph. This allows for complex querying and analysis of connections between different pieces of information, enabling deeper insights than simple keyword searches and helping to uncover hidden patterns.

· Full-Page Archival: Captures a complete snapshot of a webpage as it appeared at a specific time, ensuring historical accuracy. This is crucial for research and compliance, providing reliable evidence of past web content.

· Semantic Search and Querying: Enables users to search and retrieve archived content based on entities and relationships, not just keywords. This makes finding specific information within a large archive much more efficient and precise, saving time and effort.

· Customizable Extraction Rules: Allows developers to define specific entities and relationships to prioritize during extraction, tailoring the archival process to particular use cases. This ensures that the most relevant information is captured and organized for specific project needs.

Product Usage Case

· Academic Research: A researcher studying the evolution of a scientific field can use PageStash to archive relevant papers and news articles. The knowledge graph would automatically link researchers, institutions, concepts, and publications, allowing the researcher to quickly identify influential figures, trending topics, and the lineage of ideas, solving the challenge of navigating vast amounts of academic literature.

· Competitive Intelligence: A business analyst monitoring competitors can archive their websites and news releases. PageStash would create a graph of companies, products, executive changes, and partnerships, enabling the analyst to spot trends and competitive moves more effectively than manual tracking.

· Personal Knowledge Management: A writer or student can archive articles and blog posts related to their interests. The knowledge graph would link concepts, authors, and sources, creating a personal interconnected knowledge base that aids in understanding complex topics and generating new ideas.

· Digital Preservation: Libraries and archives can use PageStash to preserve not just the visual appearance of web pages but also their underlying informational structure, ensuring long-term accessibility and understandability of digital heritage.

15

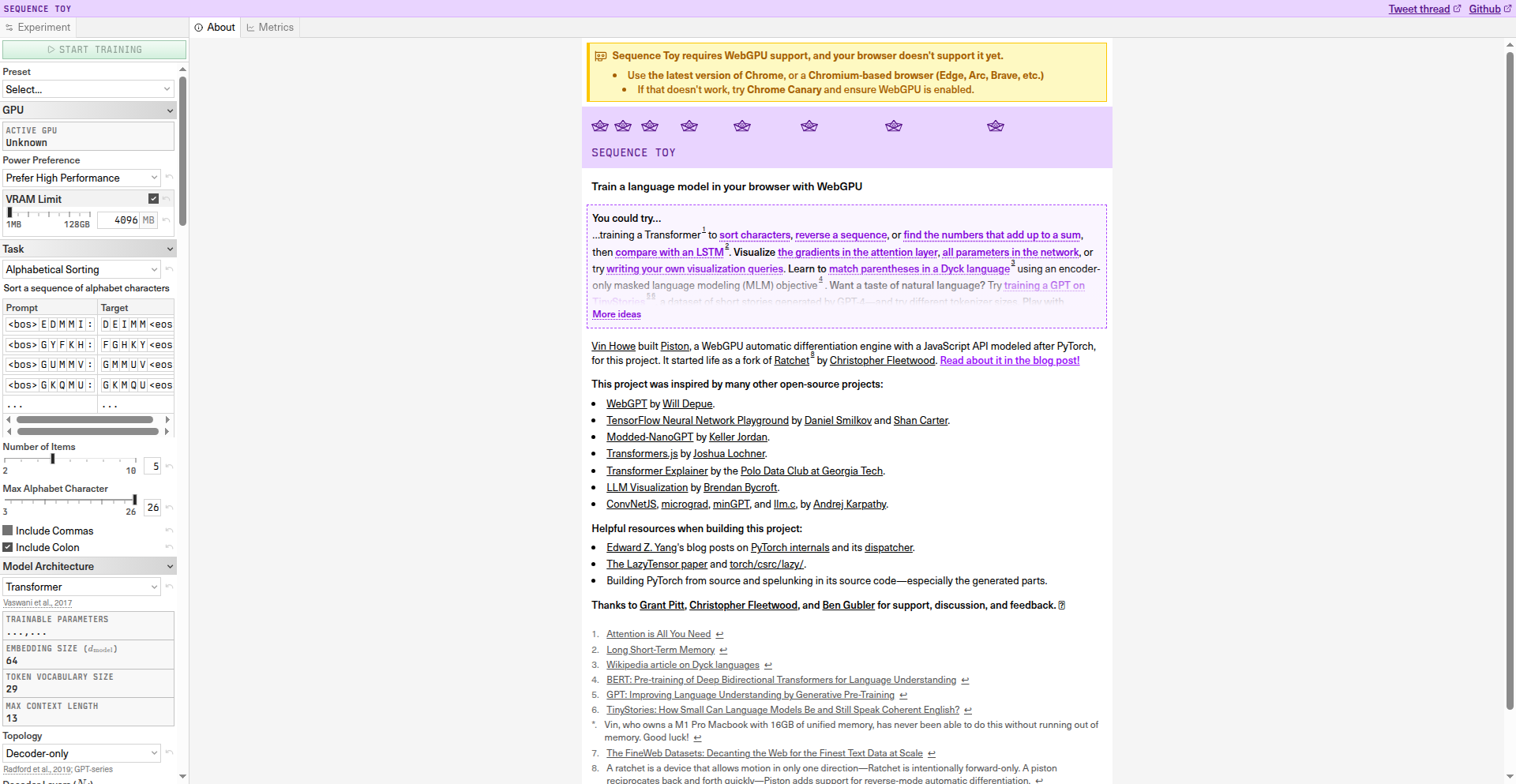

BrowserLM: In-Browser LLM Training with WebGPU

Author

vvin

Description

This project showcases training a language model directly within the user's web browser using the WebGPU API. It lowers the traditional barrier of needing powerful dedicated hardware for small-scale language-model training, which makes experimentation and fine-tuning more accessible. The innovation lies in using the browser's GPU access for computationally intensive tasks, broadening access to machine learning model development.

Popularity

Points 4

Comments 1

What is this product?

BrowserLM is a project that lets developers train language models entirely in the web browser. It uses WebGPU, a modern web API that gives web pages access to the computer's graphics processing unit (GPU) for general-purpose computation. Instead of renting high-end servers or buying specialized hardware, you can train small language models directly on your laptop or desktop. Production-scale LLMs remain far beyond what a single consumer GPU can train. The core innovation is making language-model training feasible in a ubiquitous computing environment, which opens up rapid prototyping and personalized model adaptation without complex server setups.

How to use it?

Developers can use BrowserLM to experiment with training smaller language models or fine-tuning existing ones for specific tasks. The project likely provides a JavaScript interface that allows users to load datasets, configure training parameters (like learning rate and epochs), and initiate the training process. The WebGPU backend handles the heavy mathematical operations required for neural network training. Integration could involve embedding this functionality within a web application, such as a content creation tool, a code assistant, or a personalized chatbot builder, where users might want to customize the model's behavior without sending data to external servers. This offers a privacy-preserving and cost-effective way to leverage ML.
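BrowserLM itself runs in JavaScript on WebGPU, and its interface isn't shown in the post. The Python toy below only illustrates the loop that such a backend would offload to GPU compute shaders: a forward pass, a cross-entropy loss, gradients, and parameter updates controlled by a learning rate and a number of epochs. It is a character-bigram model trained with full-batch gradient descent, not BrowserLM's architecture or code, and all names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def train_bigram(text, learning_rate=1.0, epochs=50):
    """Train a character bigram model with full-batch gradient descent.

    Returns (vocab, weights, losses); weights[i][j] is the logit for
    "character j follows character i".
    """
    vocab = sorted(set(text))
    index = {ch: i for i, ch in enumerate(vocab)}
    pairs = [(index[a], index[b]) for a, b in zip(text, text[1:])]
    size = len(vocab)
    weights = [[0.0] * size for _ in range(size)]
    losses = []

    for _ in range(epochs):
        grad = [[0.0] * size for _ in range(size)]
        loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(weights[prev])  # forward pass
            loss -= math.log(probs[nxt])  # cross-entropy for this pair
            # d(loss)/d(logits) = probs - one_hot(target)
            for j in range(size):
                grad[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        n = len(pairs)
        losses.append(loss / n)
        for i in range(size):  # gradient-descent update on the mean loss
            for j in range(size):
                weights[i][j] -= learning_rate * grad[i][j] / n
    return vocab, weights, losses


def predict_next(vocab, weights, ch):
    """Most likely character to follow `ch`."""
    row = weights[vocab.index(ch)]
    return vocab[row.index(max(row))]


vocab, weights, losses = train_bigram("abcabcabcabd", learning_rate=1.0, epochs=100)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}")
print(predict_next(vocab, weights, "a"))  # b
```

The nested loops over `pairs` and over the weight matrix are exactly the dense, parallel arithmetic that a GPU accelerates. Real models replace the bigram table with a neural network, but the training loop keeps the same shape.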

Product Core Function

· In-browser LLM training: Enables developers to train language models directly within a web browser environment, reducing the need for external cloud infrastructure and specialized hardware, thus making ML experimentation more accessible and affordable.

· WebGPU acceleration: Utilizes the WebGPU API to leverage the user's local GPU for significantly faster computation compared to traditional CPU-based training, speeding up the model development cycle.

· Model fine-tuning capabilities: Allows for the adaptation of pre-trained language models to specific datasets or tasks, enabling personalized AI experiences and domain-specific applications without extensive re-training from scratch.

· Interactive training visualization (potential): While not explicitly stated, such projects often include visualizations of training progress, loss curves, and metrics, providing immediate feedback and insights into the model's learning process, aiding in debugging and optimization.

· Dataset integration for training: Provides mechanisms to load and process custom datasets within the browser, empowering developers to train models on their own proprietary or niche data for specialized use cases.

Product Usage Case

· A content creator wants to train a small language model to generate text in a very specific, niche style. Instead of paying for cloud GPU time, they can use BrowserLM to fine-tune an existing model directly on their machine using their own writing samples, making personalized content generation faster and cheaper.

· A developer is building a privacy-focused chatbot for their website. By using BrowserLM, they can allow users to optionally fine-tune the chatbot's responses based on their own input history within the browser, ensuring sensitive user data never leaves their device and the AI becomes more relevant to their individual needs.

· An educational platform wants to introduce students to the fundamentals of LLM training. BrowserLM provides a safe, accessible, and free way for students to experiment with training concepts and parameters without needing to set up complex local environments or incur cloud computing costs, democratizing AI education.

16

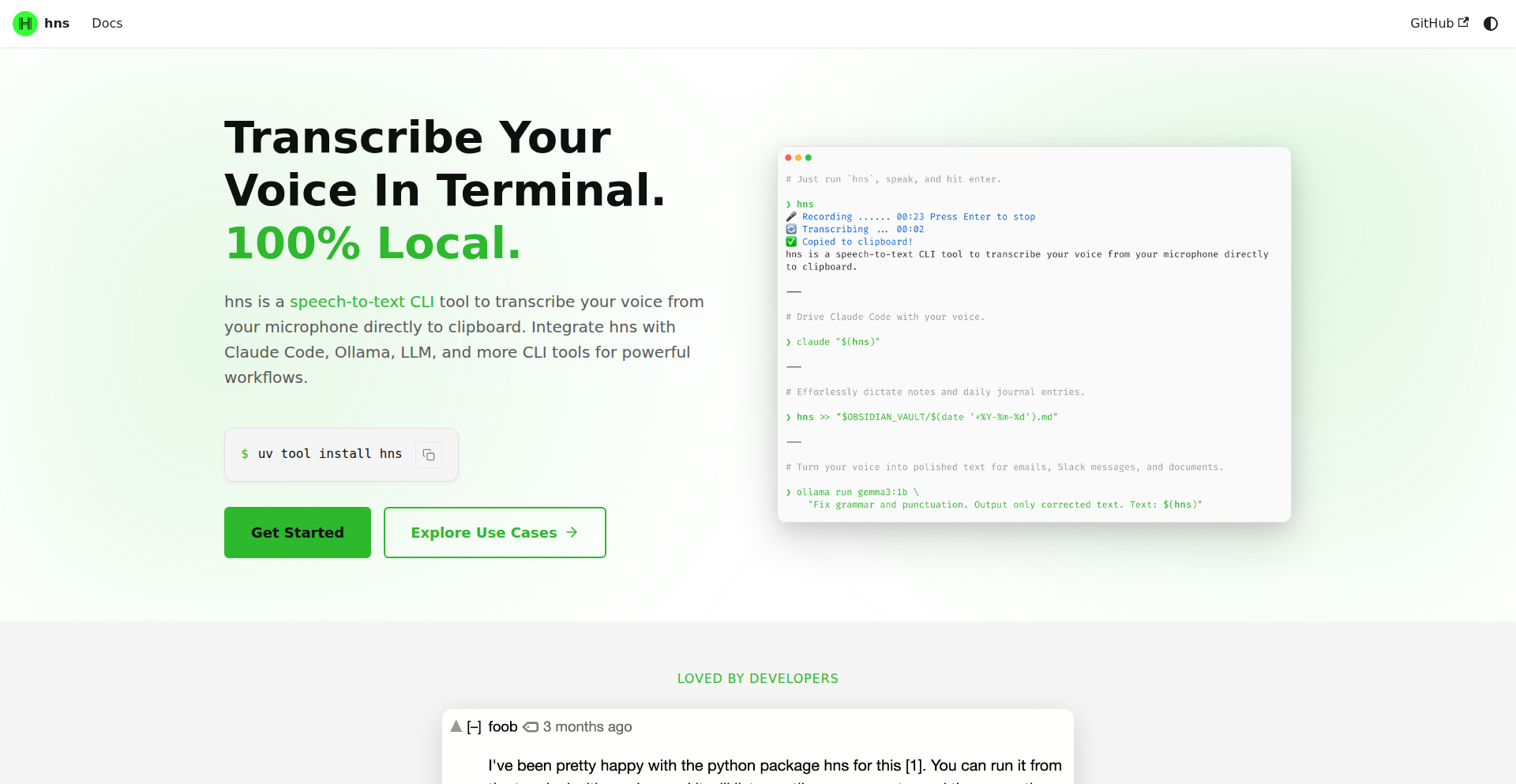

LocalSpeech-to-CLI

Author

primaprashant

Description

LocalSpeech-to-CLI is a command-line interface (CLI) tool that converts speech from your microphone into text and copies it directly to your clipboard. It uses faster-whisper, an efficient reimplementation of OpenAI's Whisper, for fully local, offline transcription, so your sensitive voice data never leaves your machine. It's designed to let developers add voice input to their existing command-line workflows, improving productivity and enabling new ways of interacting with AI models and other tools.

Popularity

Points 5

Comments 0

What is this product?

This project is a local speech-to-text utility designed for developers. It uses faster-whisper, a highly efficient reimplementation of OpenAI's Whisper model built on the CTranslate2 inference engine, to transcribe audio from your microphone into plain text. The transcription happens entirely on your machine, so no internet connection is needed after the initial model download. Your privacy is protected because your voice data is not sent to any remote servers. The transcribed text is then printed to your terminal and, crucially, copied to your system's clipboard, making it instantly available for pasting into any application or command.

How to use it?

Developers can install LocalSpeech-to-CLI with a package manager (see the project's GitHub page for details). Once it is installed, they run the `hns` command. The tool listens to the microphone, transcribes the speech, and writes the text to stdout and the clipboard. For example, to dictate commands or code snippets, you could run `hns` and then paste the output into your shell. Because the text also goes to stdout, it can be piped into other tools, such as Claude Code, Ollama, or other LLM-based tools, for voice-controlled AI interactions or code generation.
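The post doesn't show hns's internals. As a hedged sketch of the same transcribe-then-copy pipeline, the code below uses faster-whisper's Python API (`WhisperModel` and its `transcribe` method) on an already-recorded audio file and copies the result with the operating system's clipboard helpers. Microphone capture is omitted. The helper names, the `"base"` model size and the clipboard commands are assumptions, not hns's implementation.

```python
import platform
import shutil
import subprocess


def join_segments(segments):
    """Concatenate the text of faster-whisper segments into one clean string."""
    return " ".join(seg.text.strip() for seg in segments if seg.text.strip())


def clipboard_command(system=None):
    """Pick a clipboard helper for the OS (assumes the helper is installed)."""
    system = system or platform.system()
    if system == "Darwin":
        return ["pbcopy"]
    if system == "Windows":
        return ["clip"]
    # Linux on X11; a Wayland session would need wl-copy instead.
    return ["xclip", "-selection", "clipboard"]


def copy_to_clipboard(text, system=None):
    """Send text to the clipboard via the platform helper's stdin."""
    cmd = clipboard_command(system)
    if shutil.which(cmd[0]) is None:
        raise RuntimeError(f"clipboard helper {cmd[0]!r} not found")
    subprocess.run(cmd, input=text, text=True, check=True)


def transcribe_file(path, model_size="base"):
    """Transcribe an audio file locally with faster-whisper.

    Model weights are downloaded on first use and cached afterwards.
    """
    from faster_whisper import WhisperModel  # pip install faster-whisper

    model = WhisperModel(model_size, device="cpu", compute_type="int8")
    segments, _info = model.transcribe(path)
    return join_segments(segments)


# Example (requires faster-whisper and a recorded audio file):
# text = transcribe_file("note.wav")
# print(text)              # stdout, so it can be piped into another tool
# copy_to_clipboard(text)
```

Printing to stdout and copying to the clipboard are independent steps, which is why the same transcript can be both pasted by hand and piped into a script.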

Product Core Function

· Offline Speech Transcription: Leverages faster-whisper for accurate voice-to-text conversion without requiring an internet connection after initial setup. This is valuable for privacy-conscious users and for use in environments with unreliable network access, ensuring consistent performance.

· Direct Clipboard Output: Automatically copies the transcribed text to your system clipboard. This provides immediate usability, allowing users to paste spoken words into any application or command-line interface with a simple paste command (Ctrl+V or Cmd+V), streamlining workflows.

· Command-Line Interface (CLI) Design: Built as a CLI tool, making it ideal for developers who prefer working in the terminal. It can be easily integrated into scripts and existing command-line workflows, enhancing productivity and enabling new ways to interact with other tools.

· Local Processing: Ensures all audio processing happens on the user's machine. This enhances privacy and security by preventing sensitive voice data from being transmitted over the internet, which is crucial for confidential work or sensitive information.

· Automatic Model Download: The Whisper model weights are downloaded automatically on the first run. This simplifies setup, so users can start transcribing quickly without manual configuration.

Product Usage Case

· Dictating commands into a terminal: Instead of typing long commands, a developer can speak them, have them transcribed locally, and then paste them into their shell. This speeds up repetitive tasks and reduces typing errors.

· Voice-driven AI interaction: Use the tool to speak prompts for LLM tools such as Ollama or Claude Code. The transcribed text can be pasted or piped into the AI tool, enabling hands-free interaction and potentially faster iteration when developing with AI.

· Note-taking or idea capture in the terminal: Quickly capture ideas or notes by speaking them, and have them instantly available in your clipboard to paste into a text editor or markdown file. This is useful for capturing thoughts on the go without switching context.

· Accessibility enhancement for developers: For developers with physical limitations that make typing difficult, this tool offers a way to interact with their development environment using their voice, increasing inclusivity and accessibility.

· Automating repetitive speech-to-text tasks: Integrate into custom scripts to automate the transcription of audio snippets, for example, as part of a media processing pipeline where voice notes need to be converted to text for indexing or analysis.

17

Hirosend: Swift Encrypted File Courier

Author

nextguard

Description

Hirosend is a lightweight, secure file-sharing service designed for effortless one-off transfers. It addresses the common frustration of cumbersome account creation and complex permission settings found in traditional cloud storage. By offering features like temporary links, optional passwords, and end-to-end encryption, Hirosend provides a simple, fast, and private way to send files to anyone, without them needing to sign up.

Popularity

Points 3

Comments 2

What is this product?

Hirosend is a self-hosted or easily deployable file-sharing solution that champions simplicity and security. At its core, it utilizes a client-side encryption mechanism where files are encrypted using a cryptographic key (often derived from a password or a unique link) before they are uploaded to the server. This means the server itself cannot decrypt the content of the files. When a recipient clicks the link, the file is downloaded and decrypted locally in their browser. The innovation lies in its minimalist approach, mimicking the user-friendly experience of services like the defunct Firefox Send, while prioritizing security and speed for quick, no-fuss file distribution. It's essentially a modern take on secure file transfer, stripping away unnecessary complexity.
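The post doesn't say how Hirosend carries its keys. One common design, used by Firefox Send, puts the decryption key in the URL fragment (the part after `#`), which browsers do not send to the server. The sketch below shows only that key-handling step, using Python's standard library, plus a PBKDF2 password alternative. The URL layout and function names are hypothetical. The actual file encryption would use an authenticated cipher such as AES-GCM from a vetted crypto library, which is not shown here.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlsplit


def new_file_key():
    """Random 256-bit key, generated on the sender's side."""
    return secrets.token_bytes(32)


def make_share_link(base_url, file_id, key):
    """Put the key in the URL fragment, which browsers do not send to the server."""
    encoded = base64.urlsafe_b64encode(key).rstrip(b"=").decode("ascii")
    return f"{base_url}/d/{file_id}#{encoded}"


def key_from_link(link):
    """Recover the key on the recipient's side from the fragment."""
    fragment = urlsplit(link).fragment
    padding = "=" * (-len(fragment) % 4)  # restore stripped base64 padding
    return base64.urlsafe_b64decode(fragment + padding)


def key_from_password(password, salt, iterations=600_000):
    """Alternative: derive the key from a shared password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=32)


key = new_file_key()
link = make_share_link("https://files.example", "abc123", key)
print(urlsplit(link).path)  # /d/abc123
```

The server only ever sees `/d/abc123` in the request, so it can store and serve the ciphertext without being able to decrypt it.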

How to use it?

Developers can deploy Hirosend themselves, gaining full control over their data. This is particularly useful for businesses or individuals with strict privacy requirements. Integration is straightforward: once deployed, you can simply upload a file through the web interface, set an expiration time (e.g., 24 hours, 7 days), and optionally secure it with a password. You then share the generated link with your recipient. For more advanced use cases, developers can integrate Hirosend's functionality into their own applications via its API (if available or planned), allowing for programmatic file uploads and link generation, such as automatically sending large reports or design assets to clients after a project milestone.

Product Core Function

· Encrypted File Upload and Download: Files are encrypted client-side before upload and decrypted client-side upon download, ensuring that only the intended recipient with the correct key (password or magic link) can access the content. This provides robust data privacy for sensitive documents and intellectual property.

· Time-Limited File Access: Uploaded files can be set to expire after a specified period, automatically deleting them from the server. This is crucial for managing data lifecycle and ensuring that shared information is not accessible indefinitely, enhancing security and compliance.

· Password Protection: Optional password protection adds an extra layer of security, requiring recipients to enter a password to access the file. This is invaluable when sharing confidential information where a shared link might be compromised.

· One-Time Download Links (Magic Links): The system can generate unique, single-use links for file downloads. This ensures that even if a link is accidentally shared, it can only be used once, preventing unauthorized access after the initial download.

· Basic Access Analytics: Provides insights into when a download link was accessed, offering a basic audit trail and confirmation that the file was retrieved by the recipient. This helps in tracking and verifying file delivery.

· No Recipient Account Required: Recipients can download files directly by clicking a link without needing to create an account or navigate complex interfaces. This significantly improves the user experience for external parties.
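Expiry, one-time links and the basic access log listed above come down to a small amount of server-side bookkeeping. The following in-memory sketch is an assumption about how such rules could be enforced, not Hirosend's code. `LinkStore`, `ShareLink` and the injectable clock are hypothetical names. A real service would persist links and delete the stored file when a link expires.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ShareLink:
    file_id: str
    expires_at: float
    max_downloads: Optional[int] = None  # None = unlimited until expiry
    downloads: list = field(default_factory=list)  # access timestamps (basic analytics)


class LinkStore:
    """In-memory store enforcing expiry and download limits (hypothetical sketch)."""

    def __init__(self, clock=time.time):
        self._links = {}
        self._clock = clock  # injectable so expiry can be tested

    def create(self, token, file_id, ttl_seconds, max_downloads=None):
        self._links[token] = ShareLink(file_id, self._clock() + ttl_seconds, max_downloads)

    def redeem(self, token):
        """Return the file id if the link is still valid, else None."""
        link = self._links.get(token)
        if link is None:
            return None
        now = self._clock()
        if now >= link.expires_at:
            del self._links[token]  # expired: forget the link
            return None
        if link.max_downloads is not None and len(link.downloads) >= link.max_downloads:
            return None  # one-time (or N-time) link already used up
        link.downloads.append(now)
        return link.file_id

    def access_log(self, token):
        """Timestamps of successful downloads for a link."""
        link = self._links.get(token)
        return list(link.downloads) if link else []


now = [1000.0]
store = LinkStore(clock=lambda: now[0])
store.create("t1", "report.pdf", ttl_seconds=3600, max_downloads=1)
print(store.redeem("t1"))  # report.pdf
print(store.redeem("t1"))  # None
store.create("t2", "design.zip", ttl_seconds=60)
now[0] += 120
print(store.redeem("t2"))  # None
```

Injecting the clock keeps the expiry logic deterministic in tests. A database-backed version would make the "check and record download" step atomic so that two simultaneous requests cannot both redeem a one-time link.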

Product Usage Case