Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-20

SagaSu777 2025-11-21

Explore the hottest developer projects on Show HN for 2025-11-20. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of projects today paints a vibrant picture of innovation, with a clear emphasis on using AI and efficient, modern technologies to solve complex problems. AI is being integrated not only into end-user applications but deep within the development lifecycle itself, from code generation and testing to log analysis and security. The point is not just smarter tools; it is faster, more efficient, and more accessible development. Tangent's use of WASM for server-side logic illustrates a move toward more portable, performant, and secure execution environments. The recurring theme of developer experience optimization reflects a collective drive to reduce boilerplate, streamline workflows, and let developers focus on creative problem-solving rather than repetitive tasks. This hacker spirit of crafting elegant solutions is evident across the board, from lightning-fast testing frameworks to novel serialization formats and intuitive deployment systems. For developers and entrepreneurs, this opens real opportunities to build on these shifts: creating specialized AI agents, contributing to the open-source ecosystem, and engineering the next generation of efficient, intelligent software.

Today's Hottest Product

Name

Tangent – Security Log Pipeline Powered by WASM

Highlight

This project introduces a Rust-based log pipeline where all logic (normalization, enrichment, detection) is executed as WebAssembly (WASM) plugins. This innovation tackles the common pain points in security log processing, like schema evolution and tedious mapping, by allowing developers to write this logic in standard languages (Go, Python, Rust) compiled to WASM. This makes the logic shareable, easier for LLMs to generate, and highly performant. Developers can learn about leveraging WASM for server-side logic, building modular and extensible systems, and the practical application of OCSF standards in real-time security analysis.

Popular Category

AI/ML

Developer Tools

Infrastructure

Security

Data Processing

Popular Keyword

AI

WASM

Rust

LLM

Open Source

Cloud

Automation

Data

Testing

Technology Trends

WASM for server-side logic

AI-powered development tools

Composable infrastructure

Privacy-first data handling

Developer experience optimization

Decentralized and self-hosted solutions

LLM integration for specialized tasks

Project Category Distribution

Developer Tools (30%)

AI/ML Tools (25%)

Infrastructure/DevOps (20%)

Data Processing/Analysis (15%)

Security (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | J2MEArchive: The J2ME Renaissance | 73 | 49 |
| 2 | Agentweave: Open-Source Multi-Agent Network Framework | 42 | 42 |
| 3 | SupabaseRLSGuardian | 22 | 8 |
| 4 | Tangent WASM-Powered Log Weaver | 24 | 2 |
| 5 | ArXivPaperPlus | 9 | 6 |
| 6 | RustBoost | 9 | 4 |
| 7 | DynamicWealth Navigator | 5 | 7 |
| 8 | CTON: LLM Prompt Token Optimizer | 10 | 1 |
| 9 | Yonoma - Reactive SaaS Email Engine | 7 | 4 |
| 10 | MCP Traffic Analyzer | 11 | 0 |

1

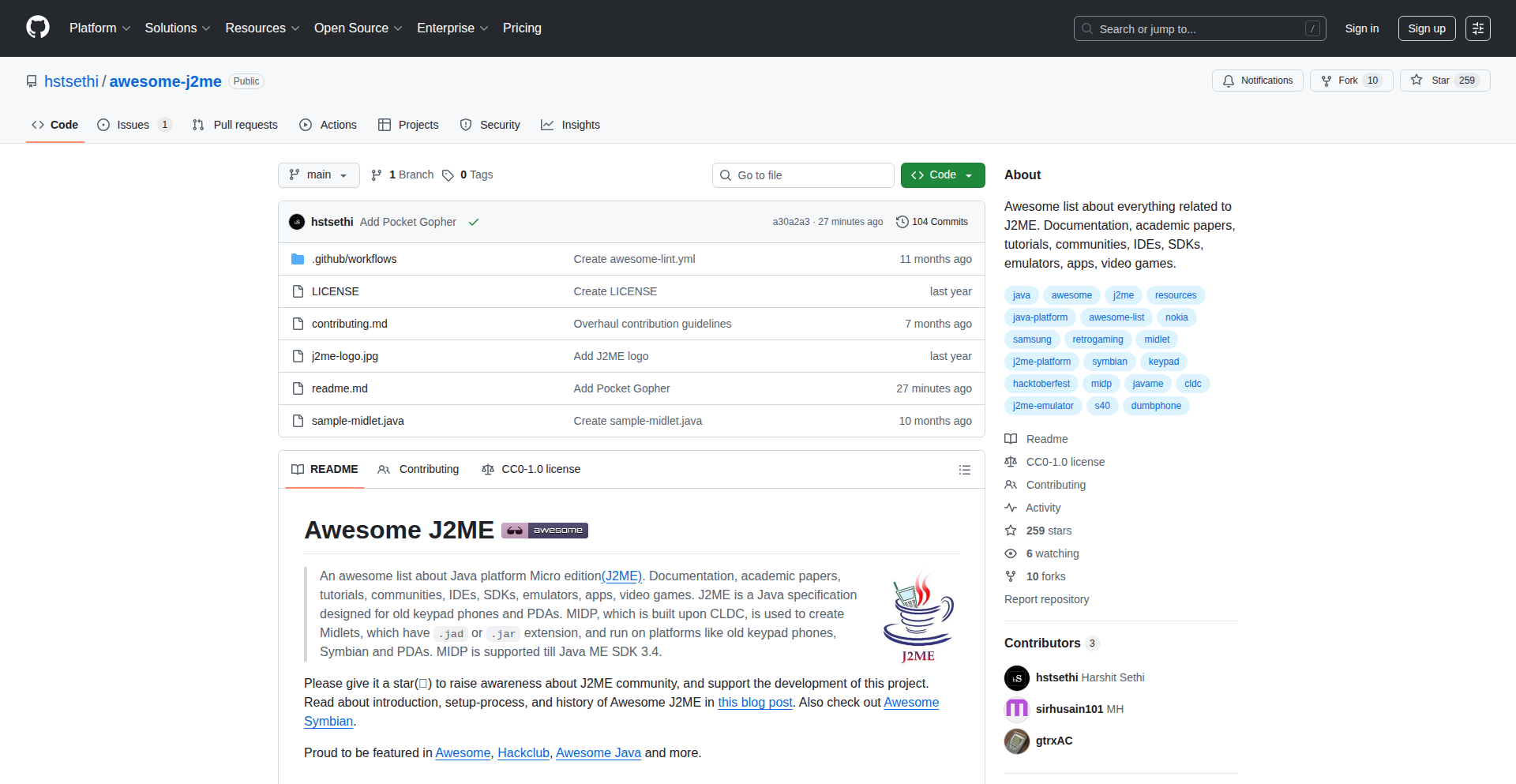

J2MEArchive: The J2ME Renaissance

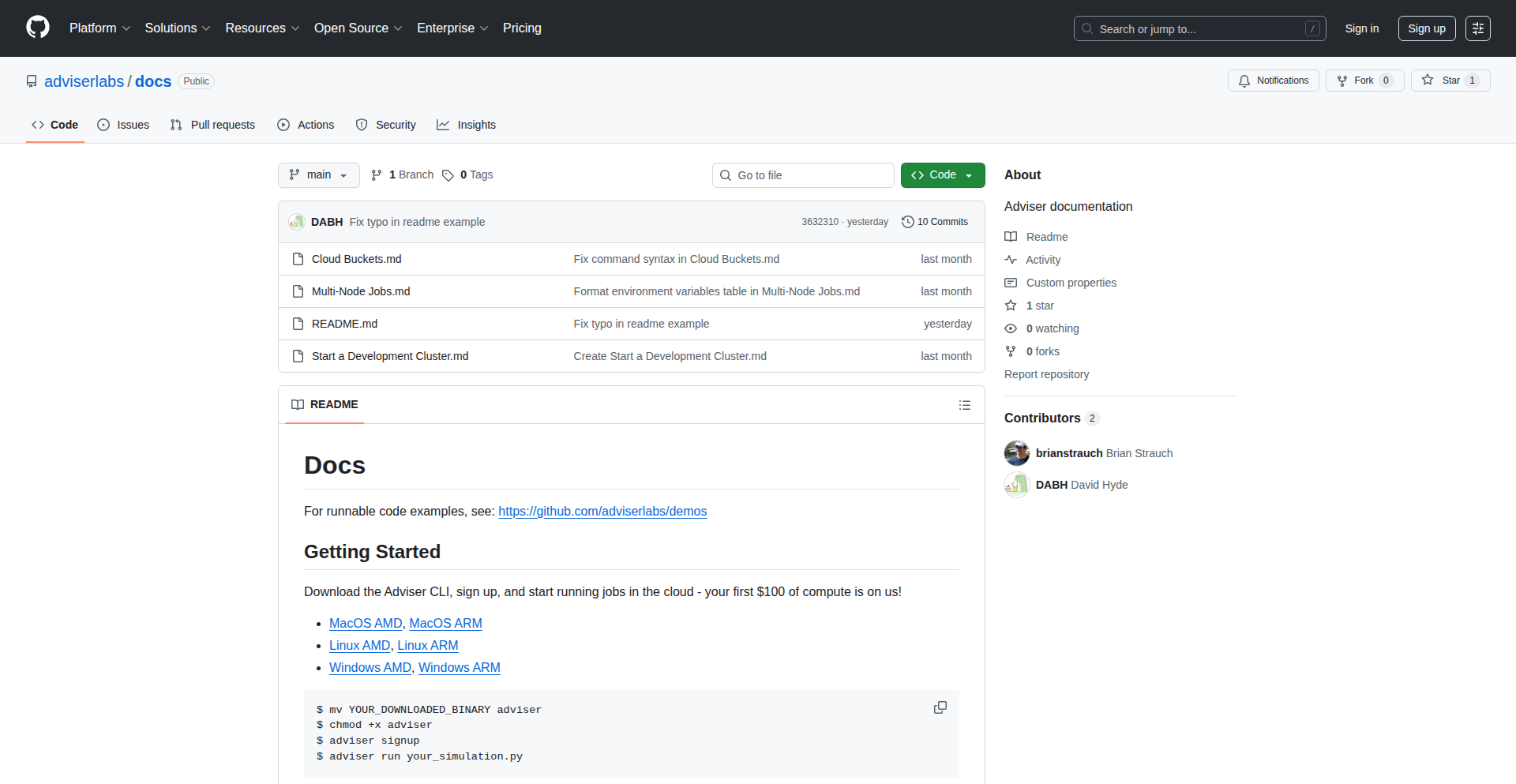

Author

catstor

Description

This project is a curated collection of resources for Java 2 Platform, Micro Edition (J2ME). It aims to revitalize interest in J2ME by providing comprehensive documentation, academic papers, tutorials, community links, IDEs, SDKs, emulators, and even archived apps and video games. The innovation lies in its dedicated effort to preserve and organize this legacy technology, making it accessible for modern exploration and understanding. It addresses the challenge of fragmented and often lost resources for older mobile platforms, enabling developers to revisit, learn from, and potentially build upon this foundational mobile technology.

Popularity

Points 73

Comments 49

What is this product?

J2MEArchive is a digital vault containing everything you'd ever want to know about J2ME, a technology that powered early mobile phones. Think of it as a treasure chest for developers who are curious about how mobile apps were built before smartphones dominated. The innovative aspect is its comprehensive compilation of often hard-to-find information, such as developer guides, old SDKs, and even playable game archives. This makes it a unique resource for understanding mobile development's history and its foundational principles. It's like having a complete history book and toolbox for a forgotten era of mobile programming. So, what's in it for you? It offers a chance to learn about efficient, low-resource programming that's still relevant for certain embedded systems or even as a creative challenge.

How to use it?

Developers can use J2MEArchive as a learning hub. You can dive into the tutorials and academic papers to understand the MIDP (Mobile Information Device Profile) and CLDC (Connected Limited Device Configuration) specifications, which are the core of J2ME. The provided SDKs and IDEs can be used to set up a development environment to write and test MIDlets, the applications built for J2ME. Emulators let you run these MIDlets on your computer, simulating the experience of old mobile phones. This means you can experiment with J2ME development, understand its constraints and creative solutions, and potentially port concepts or learn from its optimization techniques. In practice, you can download old SDKs, follow step-by-step guides to build your first J2ME app, or analyze the code of classic mobile games to see how they achieved functionality with limited resources.
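To make the MIDlet lifecycle concrete: under MIDP, a MIDlet is a Java class extending `javax.microedition.midlet.MIDlet`, and the device's application management software moves it between the Paused, Active, and Destroyed states by calling `startApp()`, `pauseApp()`, and `destroyApp(boolean unconditional)`. The Python model below is only an illustrative sketch of those transitions; `MidletModel` and its methods are hypothetical names, not part of any J2ME API, and details such as a MIDlet refusing a conditional destroy are not modeled.

```python
from enum import Enum


class State(Enum):
    PAUSED = "paused"
    ACTIVE = "active"
    DESTROYED = "destroyed"


class MidletModel:
    """Toy model of the MIDP MIDlet lifecycle (not a J2ME API)."""

    def __init__(self) -> None:
        # A newly constructed MIDlet starts in the Paused state.
        self.state = State.PAUSED
        self.log: list[str] = []

    def start_app(self) -> None:
        # Mirrors startApp(): only a paused MIDlet can become active.
        if self.state is not State.PAUSED:
            raise RuntimeError(f"cannot start from {self.state.value}")
        self.state = State.ACTIVE
        self.log.append("startApp")

    def pause_app(self) -> None:
        # Mirrors pauseApp(): e.g. an incoming call interrupts the game.
        if self.state is not State.ACTIVE:
            raise RuntimeError(f"cannot pause from {self.state.value}")
        self.state = State.PAUSED
        self.log.append("pauseApp")

    def destroy_app(self, unconditional: bool = True) -> None:
        # Mirrors destroyApp(unconditional): allowed from Paused or Active.
        # The flag is only recorded here; refusal of a conditional destroy is not modeled.
        if self.state is State.DESTROYED:
            raise RuntimeError("already destroyed")
        self.state = State.DESTROYED
        self.log.append(f"destroyApp({unconditional})")


if __name__ == "__main__":
    m = MidletModel()
    m.start_app()
    m.pause_app()
    m.start_app()
    m.destroy_app()
    print(m.state.value, m.log)
```

Once destroyed, every further transition raises, matching the one-way nature of the Destroyed state.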

Product Core Function

· Comprehensive J2ME Documentation: Provides access to official specifications, API references, and guides, explaining how J2ME applications were structured and interacted with devices. The value here is learning the fundamental design patterns and limitations of early mobile development, which can inspire efficient coding practices even today.

· J2ME SDKs and IDEs: Offers links to download development kits and integrated development environments necessary to build J2ME applications. This allows developers to set up a working environment to actually write and compile J2ME code, enabling hands-on experience and experimentation with this historical technology.

· J2ME Emulators: Includes tools that simulate the environment of J2ME-enabled devices on a modern computer, letting developers test their creations without needing physical hardware. The value is in providing a practical way to see your J2ME applications come to life and debug them effectively.

· Archive of J2ME Apps and Games: Presents a collection of previously released J2ME applications and video games. This serves as a valuable case study for understanding real-world implementations, learning from successful designs, and appreciating the ingenuity of developers working under strict resource constraints. You can analyze these to understand how complex features were achieved with limited processing power and memory.

· Community and Academic Resources: Links to forums, discussions, and academic papers related to J2ME. This fosters a deeper understanding of the technology's evolution, challenges, and research, providing context and further learning opportunities for those interested in the deeper technical aspects.

Product Usage Case

· A developer wants to understand how games like Snake or Tetris were implemented on basic mobile phones. By using J2MEArchive, they can access the SDK, follow tutorials on game development, and analyze the source code (if available) or bytecode of classic J2ME games to learn about efficient rendering and input handling techniques under severe memory and processing limitations.

· A student researching the history of mobile computing needs to understand the technical underpinnings of early mobile applications. J2MEArchive provides access to academic papers, specifications, and developer documentation, offering a direct window into the technical decisions and architectural choices made during that era.

· An embedded systems engineer is designing a low-power, resource-constrained device and is looking for inspiration on how to manage limited resources. By exploring the J2ME SDKs and examining how MIDlets were optimized for battery life and performance on feature phones, they can glean valuable insights into efficient algorithms and memory management strategies that are still applicable.

· A retro-computing enthusiast wants to experience classic mobile games. J2MEArchive provides the necessary emulators and application files, allowing them to relive or discover these iconic pieces of mobile gaming history on their modern computer.
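Many of the resource-saving tricks mentioned above come down to arithmetic. CLDC 1.0 had no floating-point types (CLDC 1.1 added them), so a common workaround in J2ME games was fixed-point math: storing fractional values as scaled integers. A minimal 16.16 fixed-point sketch in Python follows; the format and helper names are illustrative choices, not taken from J2MEArchive.

```python
FRAC_BITS = 16          # 16.16 format: 16 integer bits, 16 fractional bits
ONE = 1 << FRAC_BITS    # fixed-point representation of 1.0


def to_fixed(x: float) -> int:
    return round(x * ONE)


def to_float(f: int) -> float:
    return f / ONE


def fx_mul(a: int, b: int) -> int:
    # The raw product carries 32 fractional bits; shift back down to 16.
    # On a device this ran on 32-bit Java ints, so the intermediate needed a
    # 64-bit long to avoid overflow; Python ints never overflow.
    return (a * b) >> FRAC_BITS


def fx_div(a: int, b: int) -> int:
    # Pre-shift the dividend so the quotient keeps 16 fractional bits.
    # Note: Python's // floors, while Java's / truncates toward zero, so
    # results can differ by one unit for negative operands.
    return (a << FRAC_BITS) // b


if __name__ == "__main__":
    # Move a sprite: x += velocity * dt, entirely in integer arithmetic.
    x = to_fixed(10.0)
    velocity = to_fixed(2.5)   # pixels per tick
    dt = to_fixed(0.5)         # half a tick
    x += fx_mul(velocity, dt)
    print(to_float(x))  # 11.25
```

Only the final `to_float` call touches floating point, and on a real device even that would usually be replaced by shifting back to whole pixels.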

2

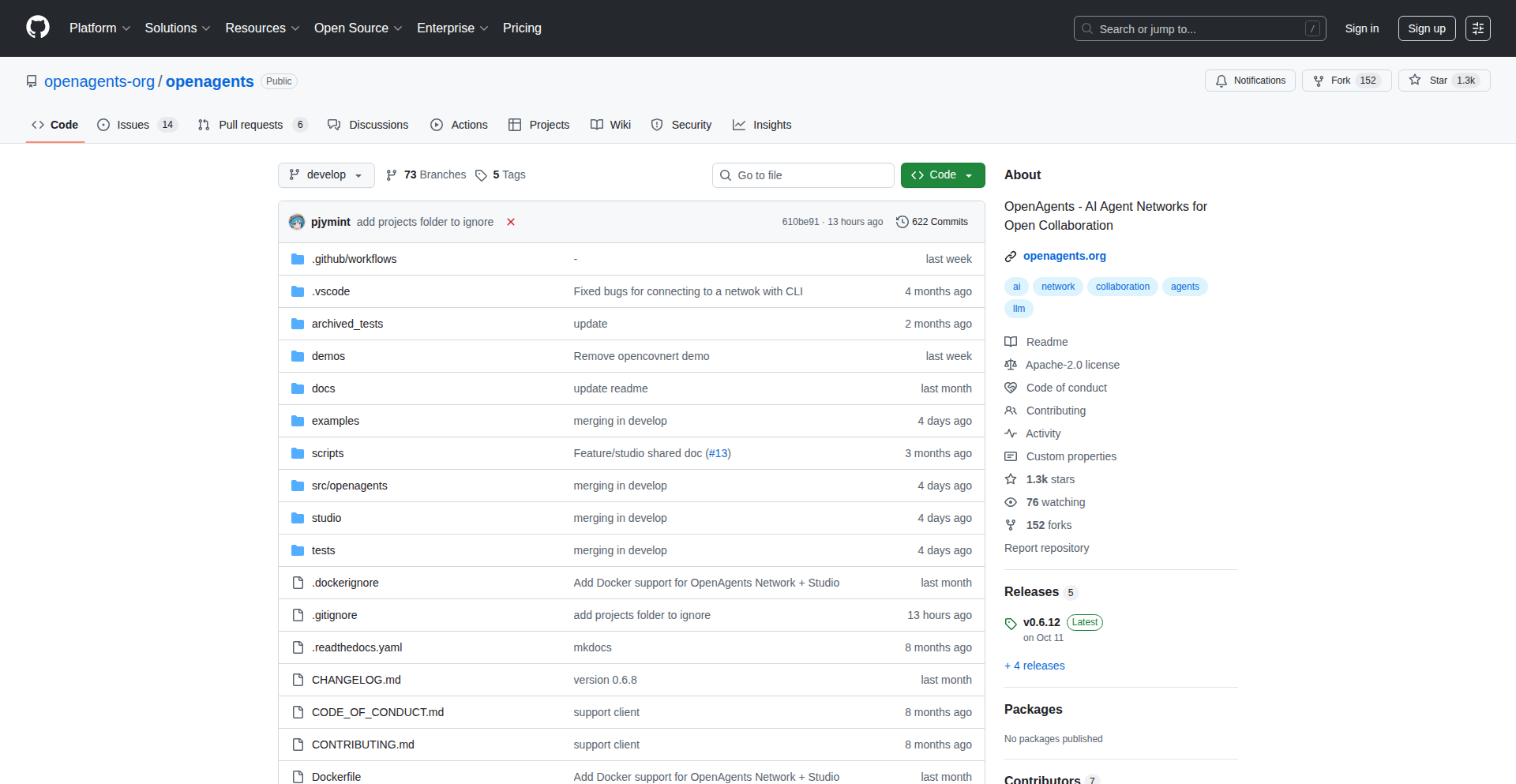

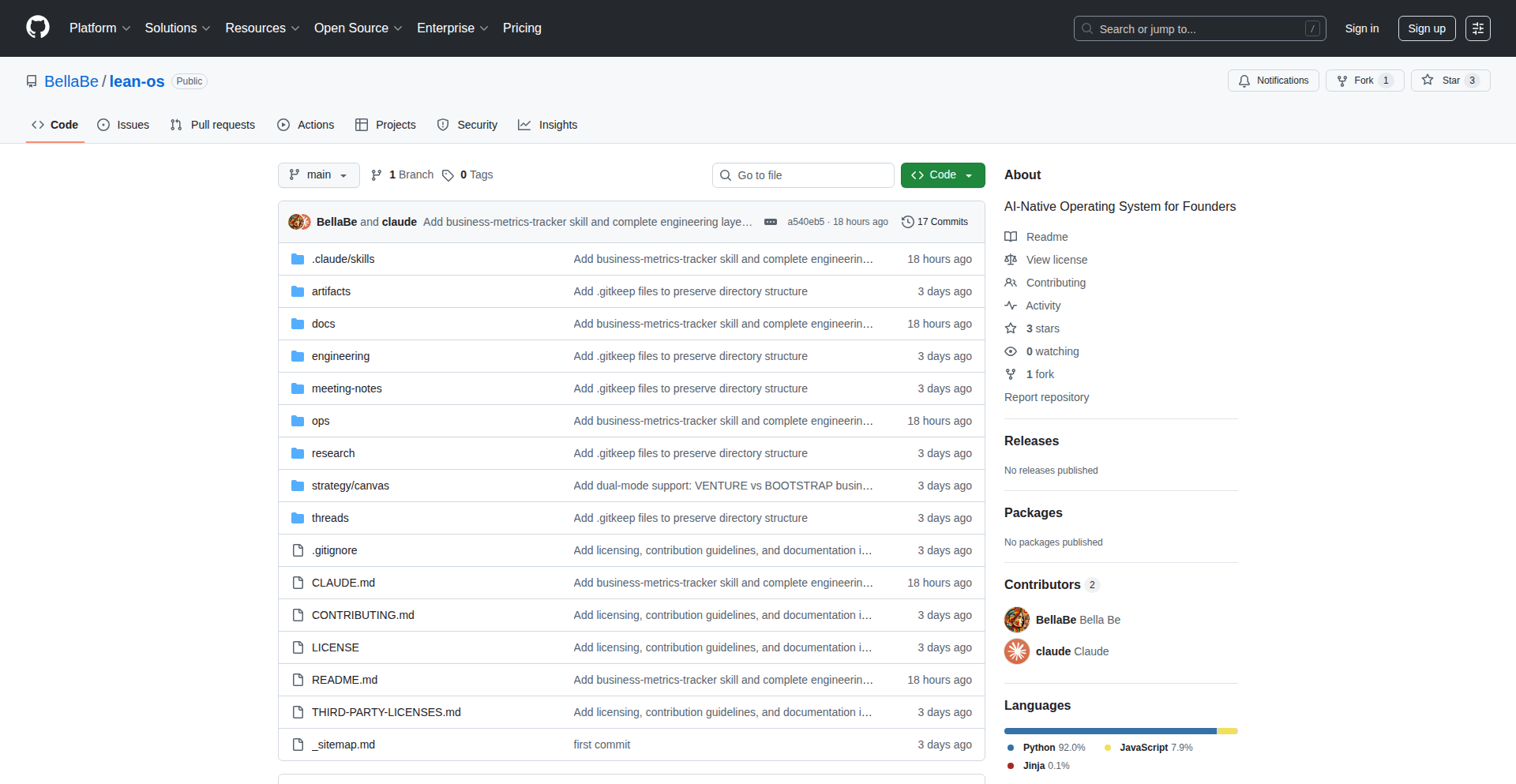

Agentweave: Open-Source Multi-Agent Network Framework

Author

snasan

Description

Agentweave is an open-source framework designed to build and manage multi-agent networks, inspired by the A2A (Agent-to-Agent) communication protocol. It provides a structured way for independent 'agents' (pieces of software that can perform tasks) to discover, communicate, and collaborate with each other, creating more complex and intelligent systems. The core innovation lies in its flexible and extensible architecture, enabling developers to easily create sophisticated distributed applications.

Popularity

Points 42

Comments 42

What is this product?

Agentweave is an open-source framework that simplifies the creation of multi-agent systems. Imagine you have several specialized software robots (agents) that need to work together to achieve a common goal, like a team of specialized workers. Agentweave provides the 'scaffolding' and communication backbone for these agents to find each other, talk to each other using a standardized language (similar to how people use protocols like HTTP to communicate over the internet), and coordinate their actions. The innovation is in its adaptable design, allowing developers to create and integrate custom agent behaviors and communication patterns without being locked into a single, rigid system. So, for you, it means a powerful toolkit to build complex, intelligent software systems where different parts can autonomously interact and collaborate.

How to use it?

Developers can use Agentweave by defining individual agents, each with its own set of capabilities and behaviors. These agents are then registered within the Agentweave network. The framework handles the discovery of other agents and facilitates message passing between them. For instance, a developer might create an 'image recognition agent' and a 'data analysis agent'. Agentweave would enable the image recognition agent to send detected objects to the data analysis agent for further processing. Integration can happen by embedding Agentweave within existing applications or building new microservices that leverage its capabilities. So, for you, it means you can build more intelligent applications by breaking down complex tasks into smaller, manageable agent components that can work together seamlessly.
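To make the discovery-and-messaging flow concrete, here is a minimal in-process sketch of a registry where agents advertise capabilities and a caller looks an agent up by capability before sending it a request. It does not use Agentweave's actual API, which this summary does not describe; `Registry`, `Agent`, `analyze_detections`, and the capability strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict  # illustrative: messages are plain dict payloads


@dataclass
class Agent:
    name: str
    capabilities: set[str]
    handler: Callable[[Message], Message]


@dataclass
class Registry:
    agents: dict[str, Agent] = field(default_factory=dict)

    def register(self, agent: Agent) -> None:
        self.agents[agent.name] = agent

    def unregister(self, name: str) -> None:
        # pop() with a default makes removal idempotent.
        self.agents.pop(name, None)

    def discover(self, capability: str) -> list[Agent]:
        # Discovery by capability, so callers never hard-code agent names.
        return [a for a in self.agents.values() if capability in a.capabilities]

    def request(self, capability: str, message: Message) -> Message:
        # Request-response: route to the first agent offering the capability.
        candidates = self.discover(capability)
        if not candidates:
            raise LookupError(f"no agent offers {capability!r}")
        return candidates[0].handler(message)


def analyze_detections(msg: Message) -> Message:
    # Hypothetical data-analysis agent: count detected objects by label.
    counts: dict[str, int] = {}
    for label in msg["objects"]:
        counts[label] = counts.get(label, 0) + 1
    return {"counts": counts}


if __name__ == "__main__":
    registry = Registry()
    registry.register(Agent("analyst", {"analyze-detections"}, analyze_detections))
    # The image-recognition side only knows the capability it needs, not the agent's name.
    detections = {"objects": ["car", "person", "car"]}
    print(registry.request("analyze-detections", detections))
```

Routing by capability rather than by name is what lets agents be swapped or added without touching their callers.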

Product Core Function

· Agent Discovery: Enables agents to find and identify each other within the network, allowing for dynamic system composition and reducing manual configuration. This is valuable because it makes systems more flexible and less prone to breaking when agents are added or removed.

· Inter-Agent Communication: Provides a robust messaging system for agents to exchange information and commands, supporting various communication patterns like request-response or publish-subscribe. This is valuable for enabling agents to collaborate effectively and share data.

· Agent Orchestration: Offers mechanisms for managing the lifecycle and coordination of agents, helping to build more complex workflows and behaviors. This is valuable for building sophisticated systems that can perform multi-step tasks.

· Extensible Agent Model: Allows developers to define custom agent types and behaviors, providing high flexibility to tailor the system to specific needs. This is valuable because it means the framework can be adapted to a wide range of problems, from AI research to business process automation.

Product Usage Case

· Building a decentralized AI research platform where different agents specializing in various machine learning tasks can discover and collaborate on experiments, accelerating research and development. This solves the problem of coordinating complex distributed AI workloads.

· Creating intelligent automation systems for business processes, where agents representing different departments (e.g., sales, inventory, customer support) can communicate and trigger actions based on events, streamlining operations. This addresses the challenge of integrating disparate business functions.

· Developing smart IoT solutions where edge devices (agents) can communicate and share data locally before sending aggregated insights to the cloud, improving efficiency and reducing latency. This tackles the issue of managing and coordinating numerous connected devices.

· Experimenting with swarm intelligence algorithms and emergent behaviors by allowing a large number of simple agents to interact and collectively solve problems, pushing the boundaries of AI and robotics. This provides a platform for exploring novel computational approaches.
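The publish-subscribe pattern from the core functions is what the business-automation scenario relies on: one agent emits an event and any number of subscribed agents react to it without knowing about each other. A minimal, synchronous, in-process sketch follows; `EventBus` and the topic names are hypothetical and not Agentweave's API, and a real multi-agent network would deliver messages over a transport rather than direct function calls.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish-subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        # Deliver synchronously to every subscriber; return how many were notified.
        # .get() avoids creating empty entries for topics nobody subscribed to.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    actions: list[str] = []

    # Hypothetical department agents reacting to a sales event.
    bus.subscribe("order.placed", lambda e: actions.append(f"inventory: reserve {e['sku']}"))
    bus.subscribe("order.placed", lambda e: actions.append(f"support: notify {e['customer']}"))

    bus.publish("order.placed", {"sku": "A-100", "customer": "acme"})
    print(actions)
```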

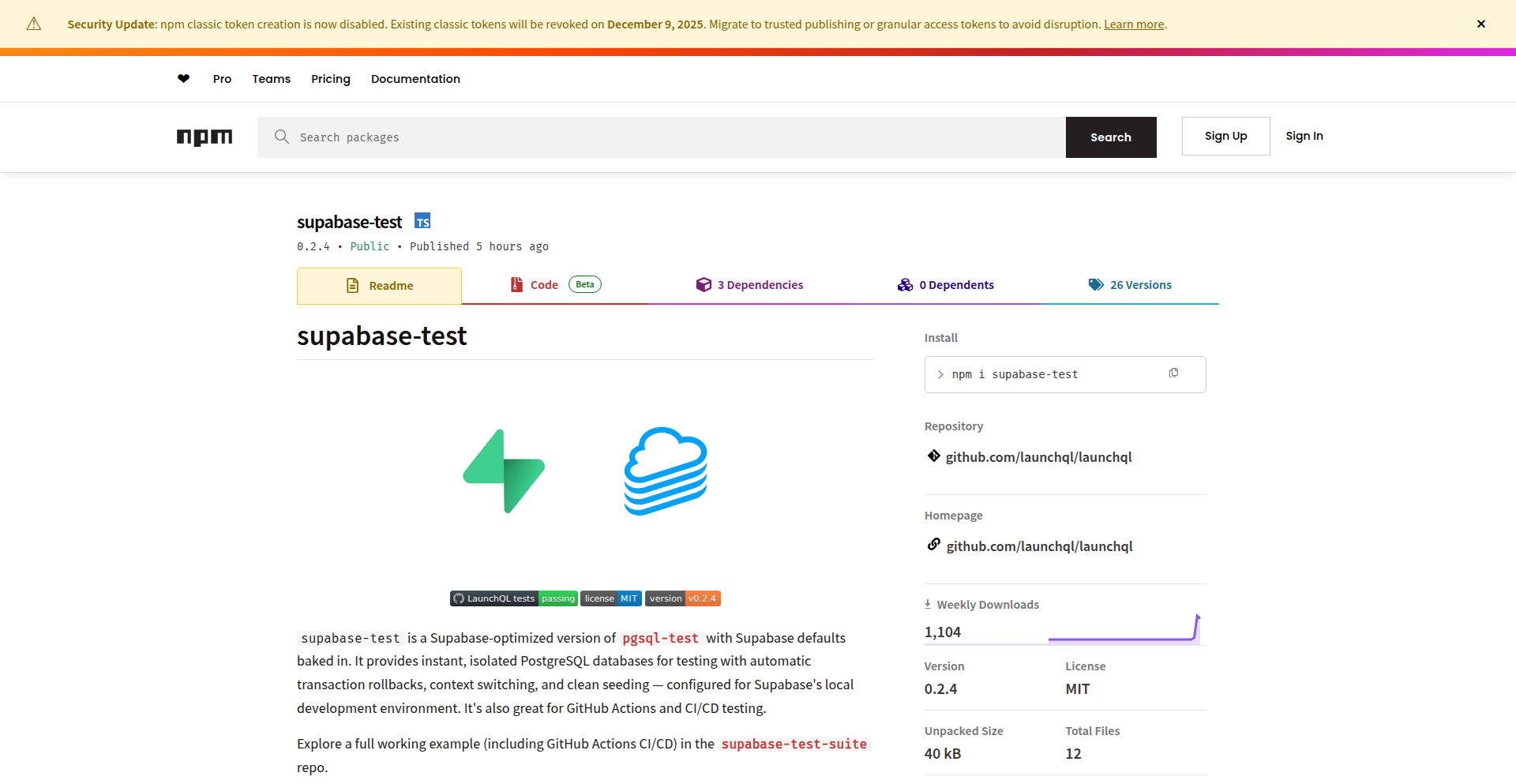

3

SupabaseRLSGuardian

Author

pyramation

Description

This project is a testing framework designed for Supabase applications. It innovates by creating a dedicated, isolated PostgreSQL database for every single test case. This approach ensures that tests are truly independent, eliminating interference from previous test runs and making it significantly easier to validate Row Level Security (RLS) policies by simulating real database states without relying on complex global setup or mock authentication. It tackles the common challenge of testing fine-grained access control in a reliable and efficient manner.

Popularity

Points 22

Comments 8

What is this product?

SupabaseRLSGuardian is a testing tool that spins up brand new, completely separate PostgreSQL databases for each individual test you run against your Supabase backend. Think of it like giving each test its own sandbox environment. This is innovative because traditionally, tests might share a database, leading to data conflicts or needing complex setup. By isolating each test, it guarantees that the database state is exactly as you expect it for that specific test, making it incredibly easy to verify how your Row Level Security (RLS) policies work. RLS is the feature in Supabase that controls who can see and edit what data, and this tool allows you to test those rules with confidence, even simulating different user authentication states without needing to create fake logins.

How to use it?

Developers can integrate SupabaseRLSGuardian into their existing testing workflows, particularly with popular JavaScript test runners like Jest or Mocha. After installing the package, they can configure it to provision a fresh PostgreSQL database for each test. The framework provides methods to easily seed this isolated database with specific data (using SQL, CSV, or JSON files) and to simulate user authentication states using a `.setContext()` function. This allows developers to write tests that precisely target RLS policies, for example, testing whether a regular user can only see their own data or whether an administrator has broader access. It's designed to run in automated CI/CD pipelines (like GitHub Actions) for consistent and reliable testing.
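The per-test isolation and context-switching idea can be sketched with Python's built-in `sqlite3` and `unittest`. This is not the project's API, and SQLite has no Row Level Security: each test gets a fresh in-memory database in `setUp`, while a hypothetical `set_context` helper and an `owner_policy` function stand in for Postgres session settings and an RLS `USING` clause. The `notes` table and `owner_id` column are assumptions for illustration.

```python
import sqlite3
import unittest


def owner_policy(row_owner: str, ctx: dict) -> bool:
    # Stand-in for an RLS policy such as USING (owner_id = <current user>):
    # admins see everything, other users only their own rows.
    return ctx.get("role") == "admin" or row_owner == ctx.get("user_id")


class NotesRLSTest(unittest.TestCase):
    def setUp(self) -> None:
        # A brand-new in-memory database per test: no state leaks between tests.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, owner_id TEXT, body TEXT)")
        self.db.executemany(
            "INSERT INTO notes (owner_id, body) VALUES (?, ?)",
            [("alice", "a1"), ("alice", "a2"), ("bob", "b1")],
        )
        self.ctx: dict = {}

    def tearDown(self) -> None:
        self.db.close()  # discard the database entirely

    def set_context(self, **ctx: str) -> None:
        # Hypothetical analogue of the framework's .setContext() auth simulation.
        self.ctx = ctx

    def visible_notes(self) -> list[str]:
        rows = self.db.execute("SELECT owner_id, body FROM notes ORDER BY id").fetchall()
        return [body for owner, body in rows if owner_policy(owner, self.ctx)]

    def test_user_sees_only_own_rows(self) -> None:
        self.set_context(role="authenticated", user_id="alice")
        self.assertEqual(self.visible_notes(), ["a1", "a2"])

    def test_admin_sees_all_rows(self) -> None:
        self.set_context(role="admin", user_id="carol")
        self.assertEqual(self.visible_notes(), ["a1", "a2", "b1"])

    def test_anonymous_sees_nothing(self) -> None:
        # No context set: behaves like an unauthenticated request.
        self.assertEqual(self.visible_notes(), [])
```

Run it with `python -m unittest`. In a real Supabase setup the filtering would happen inside Postgres via RLS, so the test would only switch context and query.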

Product Core Function

· Instant isolated Postgres DBs per test: Each test gets its own clean database, ensuring test isolation and preventing data pollution, which means your test results are always reliable and unaffected by other tests. This is crucial for catching bugs related to data state.

· Automatic rollback after each test: After a test finishes, the database is automatically reset or discarded, ensuring the next test starts with a clean slate. This guarantees that tests don't accidentally interfere with each other, saving debugging time.

· RLS-native testing with `.setContext()` for auth simulation: The framework allows you to simulate different user authentication states directly within your tests. By using `.setContext()`, you can mimic how your Row Level Security policies behave for different logged-in users, making it straightforward to verify access controls.

· Flexible seeding (SQL, CSV, JSON, JS): You can easily populate your isolated test databases with whatever data you need, in various formats. This allows you to precisely set up the test environment to replicate real-world scenarios and edge cases for your RLS policies.

· Works with Jest, Mocha, and any async test runner: The tool is designed to be flexible and compatible with most modern JavaScript testing frameworks, allowing you to adopt it without a complete overhaul of your existing testing setup.

· CI-friendly (runs cleanly in GitHub Actions): The framework is built to work seamlessly in continuous integration environments, ensuring your Supabase application's security policies are automatically checked every time you push code, providing continuous confidence.
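Automatic rollback is commonly implemented by wrapping each test in a transaction that is rolled back afterwards, which is cheaper than recreating the database. The summary does not say which mechanism SupabaseRLSGuardian uses; the sketch below uses `sqlite3` with a shared seeded database, and `seeded_db`, `rolled_back`, and the `accounts` table are hypothetical.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator


def seeded_db() -> sqlite3.Connection:
    # isolation_level=None disables implicit transactions so we control BEGIN/ROLLBACK.
    db = sqlite3.connect(":memory:", isolation_level=None)
    db.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    db.execute("INSERT INTO accounts (balance) VALUES (100), (50)")
    return db


@contextmanager
def rolled_back(db: sqlite3.Connection) -> Iterator[sqlite3.Connection]:
    # Everything a test does inside this block is undone afterwards,
    # even if the test raises.
    db.execute("BEGIN")
    try:
        yield db
    finally:
        db.execute("ROLLBACK")


def balance_total(db: sqlite3.Connection) -> int:
    return db.execute("SELECT SUM(balance) FROM accounts").fetchone()[0]


if __name__ == "__main__":
    db = seeded_db()
    with rolled_back(db):
        db.execute("DELETE FROM accounts")  # destructive test step
        assert db.execute("SELECT COUNT(*) FROM accounts").fetchone()[0] == 0
    print(balance_total(db))  # seed data is back: 150
```

The seed runs once, and every test still starts from the same state, which is the property the framework's rollback feature is meant to guarantee.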

Product Usage Case

· Testing a multi-tenant application where users should only access their own company's data. Using SupabaseRLSGuardian, a developer can spin up an isolated DB, seed it with data for 'Company A' and 'Company B', and then write tests to ensure a user associated with 'Company A' cannot even see the existence of data belonging to 'Company B'. This directly addresses the problem of verifying data segregation.

· Validating RLS policies that grant administrative privileges. A developer can simulate an administrator login using `.setContext()`, seed a test database with various data types, and then write tests to confirm that the administrator can indeed view, edit, and delete records that a regular user would be restricted from accessing. This solves the challenge of testing privileged access.

· Testing the effect of new RLS policies on existing data. By creating an isolated database and seeding it with a representative sample of production-like data, a developer can safely experiment with new security rules and run tests to ensure that the changes behave as expected without risking data integrity in a live environment.

· Ensuring that sensitive data is properly protected. A developer can set up a test case where specific fields in a table are marked as sensitive and RLS is configured to hide these fields from non-authenticated users. The framework allows simulating a non-authenticated state and verifying that these sensitive fields are indeed not visible, thus solving the problem of data exposure.

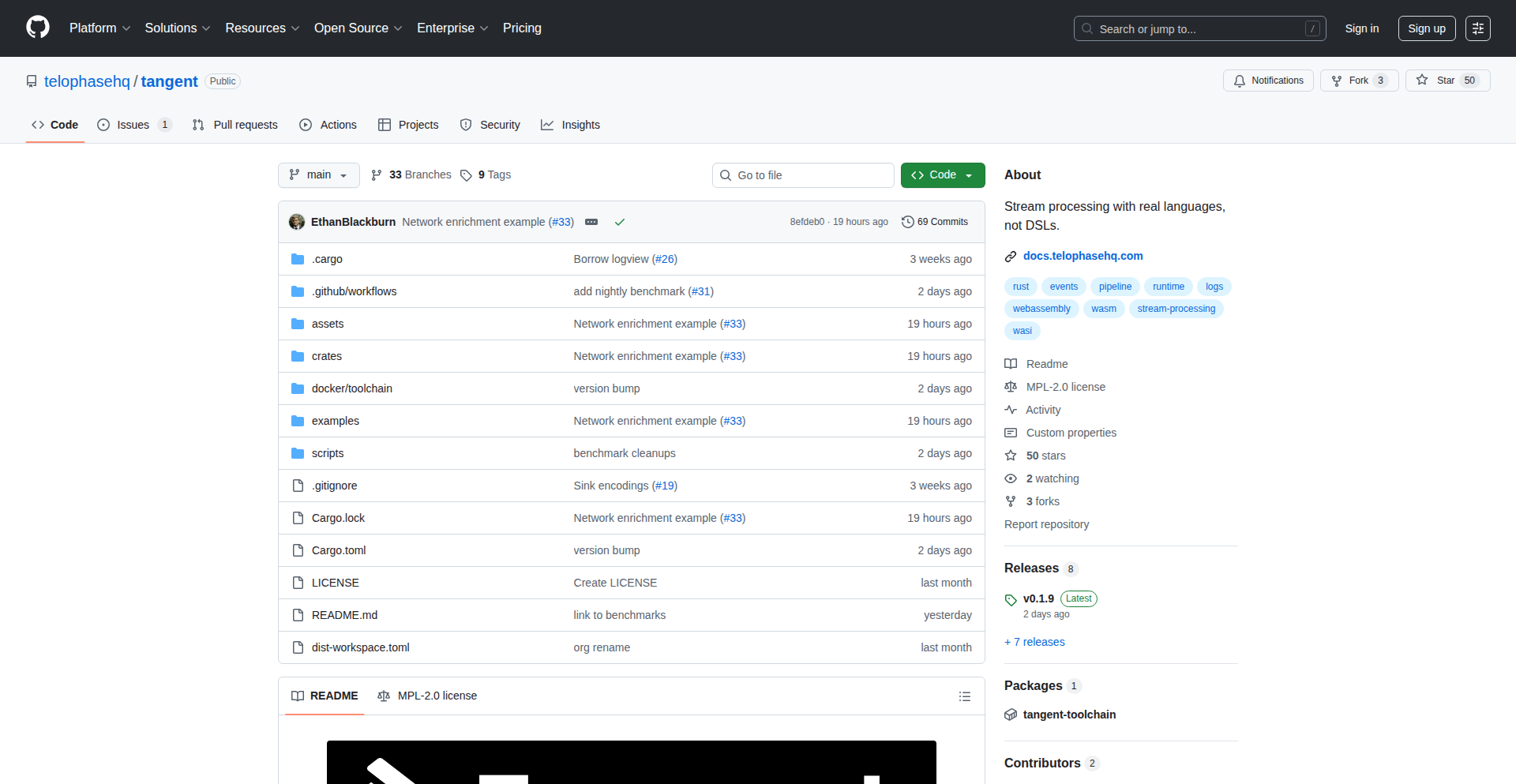

4

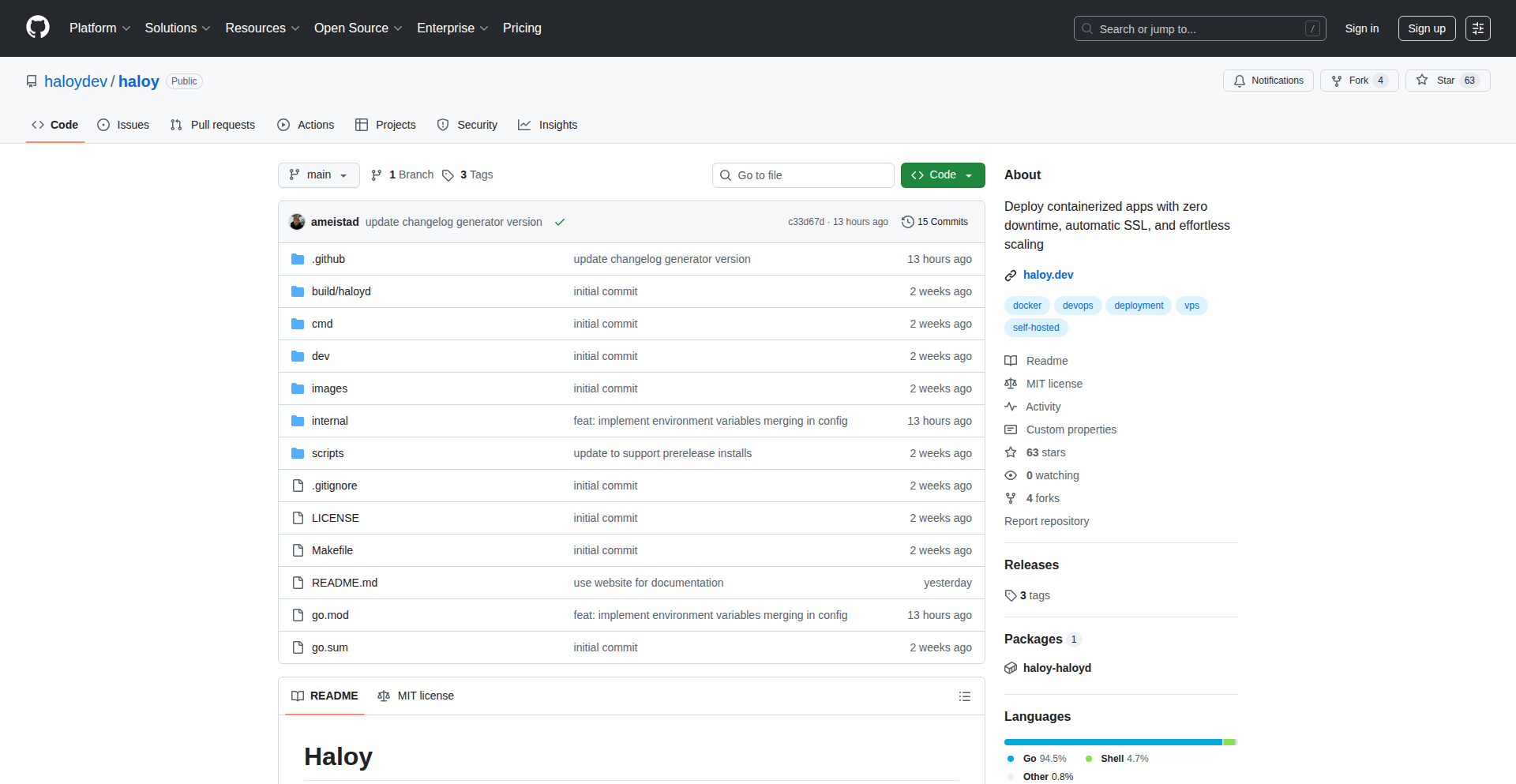

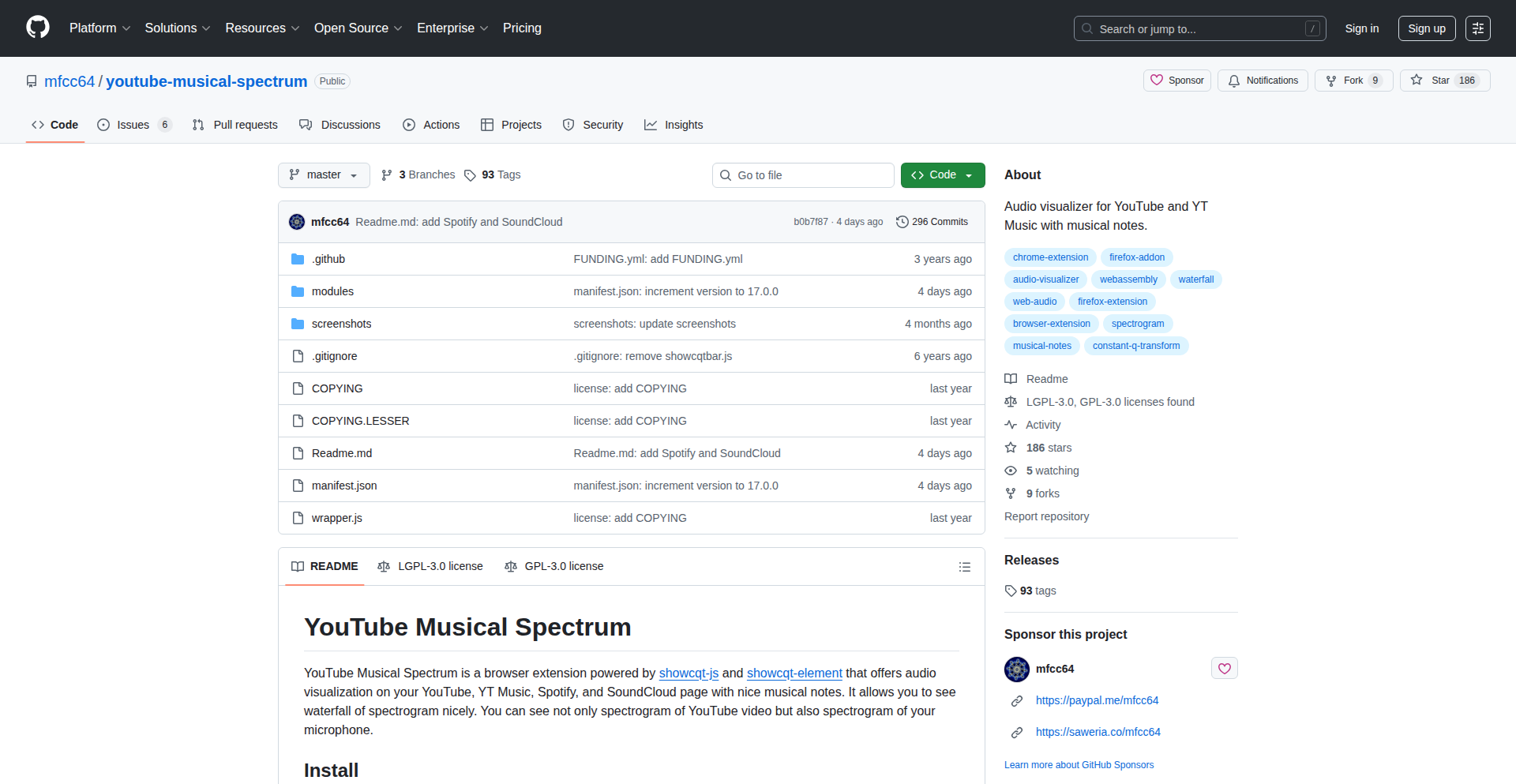

Tangent WASM-Powered Log Weaver

Author

ethanblackburn

Description

Tangent is a high-performance security log processing pipeline built with Rust. Rather than hard-coding its logic, it runs all data transformation, enrichment, and detection as WebAssembly (WASM) plugins. This approach addresses common challenges like schema evolution, the lack of shared mapping libraries, and the tedium of writing custom parsers, enabling faster, more flexible, and LLM-friendly log pipeline development.

Popularity

Points 24

Comments 2

What is this product?

Tangent is a log processing pipeline designed for security data: it takes in raw logs from various sources, cleans them up, adds extra context, and identifies potential security issues. The core innovation is using WebAssembly (WASM) for all the processing logic. WASM is a portable, sandboxed binary format: code written in languages such as Rust, Go, or Python can be compiled or packaged into WASM modules, which the pipeline then runs safely and quickly. This means that instead of being locked into a specific tool's way of doing things, developers can write their log processing rules in languages they already know, compile them into WASM, and plug them directly into Tangent. This makes the pipeline flexible and allows easy sharing and reuse of processing logic, addressing the problems of constantly changing log formats and repetitive mapping work.

How to use it?

Developers can use Tangent by building custom WASM plugins for their specific log transformation and enrichment needs. The project provides tools to scaffold, test, and benchmark these plugins locally. These plugins can be written in languages like Rust, Python, or Go. For example, if you need to convert logs from a specific security tool into the standardized OCSF format, you can write a Python plugin that reads the incoming log, extracts the relevant fields, and transforms them into the OCSF schema. These plugins can then be loaded into the Tangent pipeline. Tangent also supports running other processing engines' DSLs (like Bloblang) within WASM, making migration easier. The goal is to integrate these plugins seamlessly, whether they are for schema validation, calling external APIs for enrichment, or performing complex data manipulations, all within a high-performance Rust environment.
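The normalization step described above can be pictured as a pure function from a raw vendor record to an OCSF-shaped event. The sketch below is plain Python rather than a compiled WASM plugin, and Tangent's real plugin interface is not described here, so the `transform` signature and the raw input field names (`ts`, `user`, `src_ip`, `outcome`) are hypothetical. The OCSF values used (Authentication class `3002`, Identity & Access Management category `3`, Logon activity `1`, status `1`/`2` for success/failure) reflect our reading of the schema and should be checked against the OCSF documentation.

```python
from datetime import datetime, timezone

# OCSF identifiers (our reading of the schema; verify before relying on them).
AUTHENTICATION_CLASS_UID = 3002
IAM_CATEGORY_UID = 3
ACTIVITY_LOGON = 1
STATUS_IDS = {"success": 1, "failure": 2}
STATUS_UNKNOWN = 0
SEVERITY_INFORMATIONAL = 1

MAPPED_KEYS = {"ts", "user", "src_ip", "outcome"}


def transform(raw: dict) -> dict:
    """Map a hypothetical vendor login record to an OCSF Authentication event."""
    # Vendor timestamps are assumed to be ISO 8601 with an explicit offset;
    # OCSF `time` is epoch milliseconds.
    ts = datetime.fromisoformat(raw["ts"]).astimezone(timezone.utc)
    return {
        "class_uid": AUTHENTICATION_CLASS_UID,
        "category_uid": IAM_CATEGORY_UID,
        "activity_id": ACTIVITY_LOGON,
        "type_uid": AUTHENTICATION_CLASS_UID * 100 + ACTIVITY_LOGON,
        "time": int(ts.timestamp() * 1000),
        "status_id": STATUS_IDS.get(raw.get("outcome", "").lower(), STATUS_UNKNOWN),
        "severity_id": SEVERITY_INFORMATIONAL,
        "user": {"name": raw["user"]},
        "src_endpoint": {"ip": raw["src_ip"]},
        # Keep anything we did not map so no information is silently dropped.
        "unmapped": {k: v for k, v in raw.items() if k not in MAPPED_KEYS},
    }


if __name__ == "__main__":
    raw = {"ts": "2025-11-20T12:00:00+00:00", "user": "alice",
           "src_ip": "203.0.113.7", "outcome": "FAILURE", "mfa": "totp"}
    event = transform(raw)
    print(event["type_uid"], event["status_id"], event["unmapped"])
```

Because the mapping is an ordinary function with no side effects, it is easy to unit-test locally before compiling it into a plugin, and it is the kind of code an LLM can draft from a few sample records.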

Product Core Function

· WASM Plugin Architecture: Allows logic to be written in standard programming languages (Rust, Python, Go) and compiled into WebAssembly, offering language flexibility and reusability. The value is in enabling developers to use their preferred tools and easily share processing logic across different systems.

· Log Normalization and Enrichment: Plugins can transform incoming logs into a consistent format (like OCSF) and add valuable contextual information by calling external APIs or referencing internal datasets. This makes log data more useful for analysis and security investigations.

· Detection Logic Execution: Complex security detection rules can be implemented as WASM plugins, enabling real-time threat identification directly within the pipeline. This speeds up incident response by catching threats as they happen.

· Cross-Engine Compatibility: Ability to run other specialized log processing languages (e.g., Bloblang) within WASM, facilitating migration from existing systems and leveraging existing investments.

· Community Plugin Library: A shared repository of pre-built WASM plugins for common transformations and enrichments, reducing redundant development effort for the community. This saves developers time and effort by providing ready-to-use solutions.

· LLM-Assisted Development: Because plugins are written in standard code, Large Language Models can be used to generate new mapping and transformation logic, significantly speeding up development cycles.

· High-Performance Processing: Built with Rust and an optimized WASM runtime, Tangent can process large volumes of log data with low latency, which is crucial for real-time security monitoring. This helps ensure that critical security events are not missed due to slow processing.

Product Usage Case

· Migrating a complex log processing system: A company whose parsing logic is written in another engine's DSL, such as Bloblang, can run that logic as a WASM plugin within Tangent, allowing a phased migration with minimal disruption while reusing existing expertise.

· Real-time threat detection based on external threat intelligence: A security analyst can develop a WASM plugin that enriches incoming network logs with data from an external threat intelligence feed. If an IP address in the log matches a known malicious IP, the plugin can flag the log for immediate review, speeding up incident response.

· Automating OCSF schema mapping for new log sources: When a new security tool starts generating logs, instead of manually writing complex mapping rules, a developer can use an LLM to generate a draft Python or Rust plugin to convert these logs to OCSF, then fine-tune it. This drastically reduces the time to onboard new data sources.

· Building a reusable library for common log transformations: A team can create a set of WASM plugins for frequently used data cleaning tasks (e.g., anonymizing PII, extracting specific fields) and share them within their organization or with the broader Tangent community, promoting consistency and efficiency.
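The threat-intelligence case above boils down to a lookup-and-flag step. A minimal sketch using the standard `ipaddress` module, assuming the feed has already been loaded as a list of CIDR ranges and that events carry an OCSF-style `src_endpoint.ip`; the `load_feed` and `enrich` functions and the `threat_match`/`needs_review` output fields are hypothetical.

```python
import ipaddress
from typing import Iterable, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def load_feed(cidrs: Iterable[str]) -> list[Network]:
    # strict=False tolerates entries like "10.1.2.3/8" that have host bits set.
    return [ipaddress.ip_network(c, strict=False) for c in cidrs]


def enrich(event: dict, feed: list[Network]) -> dict:
    """Flag the event if its source IP falls inside any known-bad range."""
    ip_text = event.get("src_endpoint", {}).get("ip")
    if not ip_text:
        return event
    ip = ipaddress.ip_address(ip_text)
    # Membership across IP versions simply returns False, so mixed feeds are fine.
    match = next((net for net in feed if ip in net), None)
    if match is not None:
        # Return a new dict rather than mutating the caller's event.
        event = {**event, "threat_match": str(match), "needs_review": True}
    return event


if __name__ == "__main__":
    feed = load_feed(["203.0.113.0/24", "2001:db8::/32"])
    hit = enrich({"src_endpoint": {"ip": "203.0.113.7"}}, feed)
    miss = enrich({"src_endpoint": {"ip": "198.51.100.1"}}, feed)
    print(hit.get("threat_match"), miss.get("needs_review", False))
```

The linear scan is fine for small feeds; a large feed would call for a prefix-trie or sorted-range lookup to keep per-event latency low.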

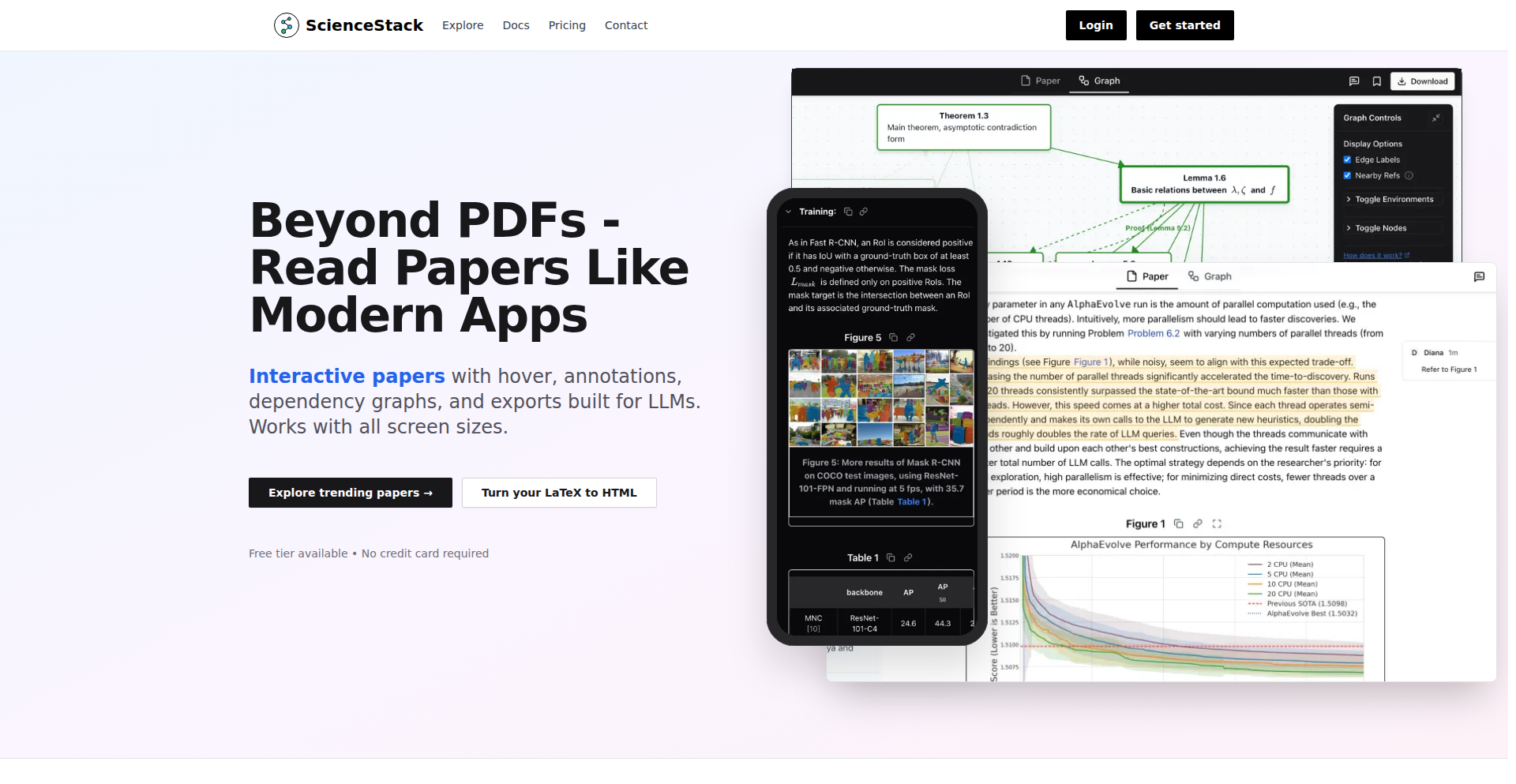

5

ArXivPaperPlus

Author

cjlooi

Description

A fully interactive research paper reader that transforms static PDFs and basic arXiv HTML into a dynamic learning experience. It tackles the common frustrations of academic reading by providing rich, context-aware features that enhance comprehension and exploration, making complex research more accessible.

Popularity

Points 9

Comments 6

What is this product?

ArXivPaperPlus is an advanced web-based reader for research papers, aiming to improve the academic reading experience significantly beyond traditional PDF viewers or the standard arXiv HTML presentation. Its core innovation lies in its ability to deeply parse and interpret the semantic structure of research papers. For instance, it can identify and make interactive every citation, reference, and mathematical equation within a document. This means when you hover over a citation, you instantly see its context or the paper it refers to, rather than having to manually search for it. Similarly, equations can be explored in their raw LaTeX form or even visualized with associated dependencies. This goes beyond simple text display to create a truly connected and explorable knowledge base for each paper, solving the problem of fragmented understanding in academic literature.

How to use it?

Developers can use ArXivPaperPlus by accessing research papers through a dedicated web interface. For example, if you find a paper on arXiv that you want to study in depth, you can navigate to the ArXivPaperPlus platform (or potentially integrate its reader components into your own tools if it's open-sourced). You'd then simply load the paper (e.g., via a direct URL). Once loaded, you can immediately benefit from features like hovering over footnotes for definitions, seeing linked equations, or using the synchronized table of contents to jump between sections. For those who work with research papers programmatically, the 'Copy raw LaTeX' feature allows easy extraction of equations for further use in other LaTeX documents or mathematical software. This makes it an invaluable tool for researchers, students, and anyone needing to quickly grasp and utilize information from academic publications.
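Keeping the table of contents in sync with scrolling reduces to a sorted-search problem: given the vertical offsets where each section starts, find the last section whose start is at or above the current scroll position. A sketch with the standard `bisect` module; the offsets and section titles are made-up inputs, and ArXivPaperPlus may implement this differently.

```python
from bisect import bisect_right
from typing import Optional


def current_section(starts: list[int], titles: list[str], scroll_y: int) -> Optional[str]:
    """Return the title of the section containing scroll_y.

    `starts` must be sorted ascending; starts[i] is where titles[i] begins.
    """
    # bisect_right counts how many sections start at or before scroll_y.
    i = bisect_right(starts, scroll_y)
    return titles[i - 1] if i > 0 else None  # None: still above the first heading


if __name__ == "__main__":
    starts = [0, 850, 2400, 5100]
    titles = ["Abstract", "1 Introduction", "2 Method", "3 Experiments"]
    print(current_section(starts, titles, 2400))
```

Each scroll event costs O(log n), so highlighting stays cheap even for papers with many subsections.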

Product Core Function

· Interactive References and Citations: Hovering over a reference or citation instantly shows its context or linked paper, reducing the time spent manually searching and improving comprehension of related work. This is useful for quickly understanding the lineage of ideas in a research field.

· Interactive Equations: Users can hover over mathematical equations to see their definitions, related theorems, or even the raw LaTeX code. This simplifies understanding complex mathematical concepts and makes it easier to reuse equations in your own work.

· Auto-Generated Dependency Graphs: The system automatically creates visual graphs showing how definitions, lemmas, and theorems are interconnected. This offers a powerful way to understand the logical structure of a paper and how different mathematical objects relate to each other, aiding in the grasp of complex proofs.

· Synchronized Table of Contents: As you scroll through the paper, the table of contents updates in real-time to highlight your current section. This provides excellent navigation, allowing users to easily orient themselves within long documents and quickly jump to specific areas of interest.

· Highlighting and Annotations: Users can highlight important passages and add personal notes directly within the reader. This is crucial for active learning, allowing for personalized study and easy recall of key information.

· Copy Raw LaTeX: The ability to copy the raw LaTeX code for any equation or mathematical expression provides a direct pathway for developers and mathematicians to integrate these elements into their own projects or further analysis.
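One way a dependency graph like this could be built is to treat each labeled theorem-like environment as a node and each `\ref{...}` inside its body as an edge. The regex-based sketch below assumes simple, non-nested `\begin{lemma}\label{...}...\end{lemma}` blocks; it is not ArXivPaperPlus's parser, and real LaTeX sources would need a proper parser. A topological sort via the standard `graphlib` module (Python 3.9+) then yields a reading order in which every result appears after what it depends on.

```python
import re
from graphlib import TopologicalSorter

ENV = re.compile(r"\\begin\{(definition|lemma|theorem|corollary)\}(.*?)\\end\{\1\}", re.DOTALL)
LABEL = re.compile(r"\\label\{([^}]*)\}")
REF = re.compile(r"\\ref\{([^}]*)\}")


def dependency_graph(tex: str) -> dict[str, set[str]]:
    """Map each labeled result to the labels it references."""
    graph: dict[str, set[str]] = {}
    for match in ENV.finditer(tex):
        body = match.group(2)
        label = LABEL.search(body)
        if label is None:
            continue  # unlabeled results cannot be referenced, so skip them
        name = label.group(1)
        graph[name] = {r for r in REF.findall(body) if r != name}
    return graph


SAMPLE = r"""
\begin{definition}\label{def:graph} A graph is a pair $(V, E)$. \end{definition}
\begin{lemma}\label{lem:degree} By Definition~\ref{def:graph}, the degree sum is even. \end{lemma}
\begin{theorem}\label{thm:main} By Lemma~\ref{lem:degree} and Definition~\ref{def:graph}, the claim follows. \end{theorem}
"""

if __name__ == "__main__":
    g = dependency_graph(SAMPLE)
    print(g)
    # Referenced labels that are not themselves environments would also appear as nodes.
    print(list(TopologicalSorter(g).static_order()))
```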

Product Usage Case

· A graduate student studying a complex machine learning paper can use ArXivPaperPlus to hover over every cited paper to understand the foundational work, and hover over equations to see their definitions and related theorems. This significantly speeds up their literature review and understanding of novel concepts, solving the problem of getting lost in dense academic text.

· A researcher building a new theorem can use the dependency graphs to visualize how existing theorems in the paper they are reading connect to their own line of reasoning. This helps them identify potential gaps or build upon established proofs more effectively, addressing the challenge of understanding intricate mathematical relationships.

· A developer integrating a specific algorithm from a research paper into their codebase can use the 'Copy raw LaTeX' feature to quickly extract and verify the mathematical formulation of the algorithm, ensuring accuracy and saving time compared to manually transcribing.

· An educator preparing a lecture on a cutting-edge topic can use the synchronized table of contents and interactive elements to easily navigate through a paper and explain complex sections to their students, making the learning process more engaging and clear.

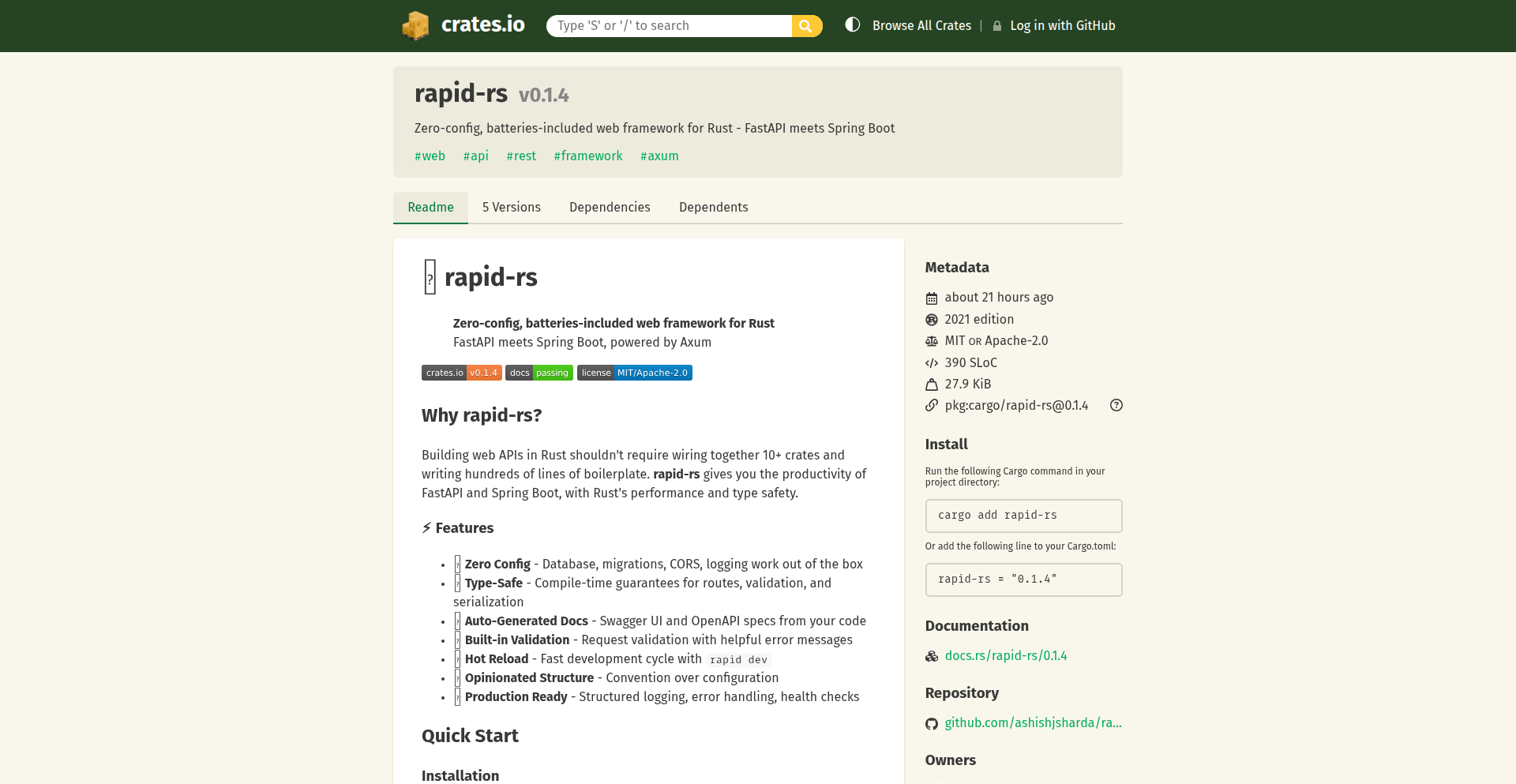

6

RustBoost

Author

ashish_sharda

Description

RustBoost is a zero-configuration web framework for Rust that dramatically reduces boilerplate code when starting new web services. It provides out-of-the-box features like database integration, logging, CORS handling, automatic API documentation with Swagger UI, request validation, and production-ready observability, all with a single command. It is built on the Axum framework, and the author reports around 50,000 requests per second with minimal memory usage.

Popularity

Points 9

Comments 4

What is this product?

RustBoost is a Rust web framework designed to accelerate development by eliminating repetitive setup tasks. It automates common configurations such as database connections, logging, cross-origin resource sharing (CORS), and request validation. It also automatically generates API documentation (OpenAPI/Swagger UI) for easy understanding and integration. This means instead of spending hours writing and configuring these essential components from scratch, you get them pre-built and ready to go, allowing you to focus on your core application logic. The innovation lies in its 'zero-config' philosophy, abstracting away complex setups into a simple command-line interface, making Rust web development more accessible and faster.

How to use it?

Developers can use RustBoost by installing it as a Rust crate. With a single command, they can generate a new Rust web project pre-configured with all the essential services. This generated project serves as a robust starting point for building web applications. You can integrate it into your existing Rust projects or use it to kickstart new ones. For example, if you need to build a new REST API, you'd invoke RustBoost, and it would provide you with a foundational project that already handles things like accepting incoming requests, connecting to a database, and configuring CORS. This saves significant time and effort compared to manually setting up each of these components.

Product Core Function

· Automated Database Configuration: Provides a pre-configured database connection (e.g., PostgreSQL, MySQL) so you can start querying data immediately without manual setup. This is useful for any application that needs to store or retrieve information.

· Integrated Logging: Sets up a robust logging system to track application events, errors, and debugging information, essential for monitoring and troubleshooting your application in production.

· CORS Handling: Automatically configures Cross-Origin Resource Sharing (CORS) so that browser-based frontends served from other origins (a different domain, port, or scheme) can call your API. This is crucial for modern architectures where the frontend and the API are hosted separately.

· OpenAPI/Swagger UI: Generates interactive API documentation automatically at a `/docs` endpoint. This helps developers and consumers understand how to use your API without needing to read through code or separate documentation files.

· Request Validation: Implements built-in request validation to ensure incoming data conforms to expected formats, preventing errors and enhancing security.

· Production-Ready Observability: Includes tools and configurations for monitoring your application's health, performance, and resource usage in a production environment, making it easier to identify and fix issues.
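Of the features above, CORS is the one whose behavior is most often misunderstood. The sketch below shows how a server typically decides which CORS response headers to send for a request's `Origin`. It is written in Python rather than Rust and does not reflect RustBoost's actual configuration, which the summary does not describe. The allowlist and the header values are hypothetical.

```python
# Hypothetical allowlist; RustBoost's real defaults are not documented here.
ALLOWED_ORIGINS = {"https://app.example.com", "http://localhost:3000"}

def cors_headers(origin, method, allowed_origins=ALLOWED_ORIGINS):
    """Return the CORS response headers for a request from `origin`.

    An empty dict means "send no CORS headers". In that case the browser
    refuses to expose the cross-origin response to the calling page.
    """
    if origin is None or origin not in allowed_origins:
        return {}
    headers = {
        # Echo the matching origin (not "*") so only allowlisted sites get access.
        "Access-Control-Allow-Origin": origin,
        # Tell caches that the response differs per requesting origin.
        "Vary": "Origin",
    }
    if method == "OPTIONS":
        # Preflight request: advertise which methods and headers are permitted.
        headers["Access-Control-Allow-Methods"] = "GET, POST, PUT, DELETE"
        headers["Access-Control-Allow-Headers"] = "Content-Type, Authorization"
        headers["Access-Control-Max-Age"] = "600"
    return headers

print(cors_headers("https://app.example.com", "OPTIONS"))
print(cors_headers("https://unknown.example", "GET"))  # {}
```

In Axum-based services this logic is usually delegated to middleware, such as tower-http's `CorsLayer`, rather than written by hand.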

Product Usage Case

· Building a new microservice quickly: Instead of spending days setting up a new Rust microservice with database, logging, and API docs, you can use RustBoost to generate a fully functional skeleton in minutes, significantly accelerating development cycles.

· Rapid prototyping of web APIs: If you need to quickly test an idea for a web API, RustBoost allows you to get a production-ready base up and running extremely fast, letting you focus on the core business logic and user experience.

· Reducing onboarding time for new developers: For teams new to Rust web development, RustBoost provides a standardized and easy-to-understand starting point, reducing the learning curve for setting up web projects.

7

DynamicWealth Navigator

Author

mattglossop

Description

A non-custodial financial co-pilot that tackles the static limitations of traditional portfolio optimization. It offers personalized, dynamic guidance by continuously adjusting risk based on user goals, time horizon, and current portfolio state. This approach aims to significantly increase the probability of achieving financial goals compared to conventional methods, all while providing a unified view of household finances without requiring users to transfer their assets.

Popularity

Points 5

Comments 7

What is this product?

This is a financial co-pilot designed to provide personalized, dynamic investment recommendations. Unlike traditional methods that use a fixed asset allocation, this platform continuously re-evaluates your portfolio and suggests adjustments. It leverages a sophisticated model that considers your specific financial goals, how much time you have to achieve them, and your current investments. The core innovation lies in its dynamic adjustment capability, which is computationally intensive and traditionally difficult to scale for individual investors. By automating this complex process, it aims to make advanced portfolio optimization accessible and effective, ultimately increasing the likelihood of users reaching their financial targets.

How to use it?

Users connect their existing financial accounts securely through aggregation APIs such as Plaid or SnapTrade. This lets the platform read portfolio data without anyone moving assets. The platform then delivers tailored, actionable guidance for investment adjustments directly to the user. For developers looking to apply similar financial optimization principles, the underlying methodology emphasizes dynamic risk assessment and goal-contingent allocation. Because the platform is non-custodial, users keep full control of their assets and receive advice rather than handing over management. This suits users who want professional-grade financial guidance without changing their current banking or investment relationships.

Product Core Function

· Personalized dynamic guidance: Provides ongoing, customized investment recommendations that adapt to individual goals and risk tolerance. This is valuable because it moves beyond generic advice to offer strategies that are actively managed to increase the chances of success.

· Automated goal tracking without asset transfer: Securely links to existing financial accounts to monitor progress towards goals without requiring users to move their money. This offers convenience and peace of mind, ensuring users can trust their current financial institutions while still benefiting from advanced planning.

· Unified household financial view: Consolidates all household assets, spending, and goals into a single, clear dashboard. This is a significant benefit for financial clarity, allowing users to see the complete picture of their financial health at a glance and make more informed decisions.

· Dynamic risk adjustment: Continuously modifies portfolio risk based on the user's time horizon and current portfolio status. This is a key technical innovation that improves upon static models, providing a more responsive and effective way to manage investments towards long-term objectives.
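The platform's actual model is not described in detail. To illustrate goal-contingent, dynamic risk, here is a hypothetical rule in Python. The equity share follows a glide path that shrinks as the deadline approaches. Once the goal is already fully funded, the portfolio de-risks to protect it. Every parameter here (the 90%/20% bounds and the 10-year horizon) is an assumption, not the product's method.

```python
def target_equity_share(years_left, current_value, goal_value,
                        max_equity=0.9, min_equity=0.2, horizon_years=10.0):
    """Hypothetical goal-contingent allocation rule (illustrative only).

    Returns the fraction of the portfolio to hold in equities.
    """
    if goal_value <= 0:
        raise ValueError("goal_value must be positive")
    # Funded ratio >= 1 means the goal is already met: lock it in by de-risking.
    if current_value / goal_value >= 1.0:
        return min_equity
    # Glide path: linear from max_equity (horizon or more away) to min_equity (deadline).
    t = min(max(years_left / horizon_years, 0.0), 1.0)
    # A real model would also weigh contributions, return assumptions,
    # and the user's risk tolerance.
    return min_equity + (max_equity - min_equity) * t

# Five years from a house-deposit goal, 20% funded:
print(round(target_equity_share(5, 10_000, 50_000), 2))  # 0.55
```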

Product Usage Case

· Scenario: A young professional saving for a down payment on a house in five years. The platform would analyze their income, current savings, and the target down payment amount. It would then provide dynamic recommendations, potentially increasing risk exposure in the early years to maximize growth, and then gradually shifting to more conservative investments as the deadline approaches. This addresses the problem of static portfolios that might underperform or be too risky for a specific, time-bound goal.

· Scenario: A retiree planning for income generation and capital preservation. The co-pilot would assess their existing bond and equity holdings, income needs, and retirement duration. It would then suggest rebalancing strategies that aim to generate a stable income stream while protecting against significant market downturns. This solves the issue of generic retirement advice that doesn't account for the delicate balance required for sustainable retirement income.

· Scenario: A user with multiple investment accounts across different brokerages who struggles to get a consolidated view of their net worth and progress towards their goals. The platform's unified view feature would aggregate data from all these accounts, presenting a single, coherent dashboard. This solves the complexity and fragmentation often experienced by individuals with diverse financial holdings, enabling better oversight and decision-making.

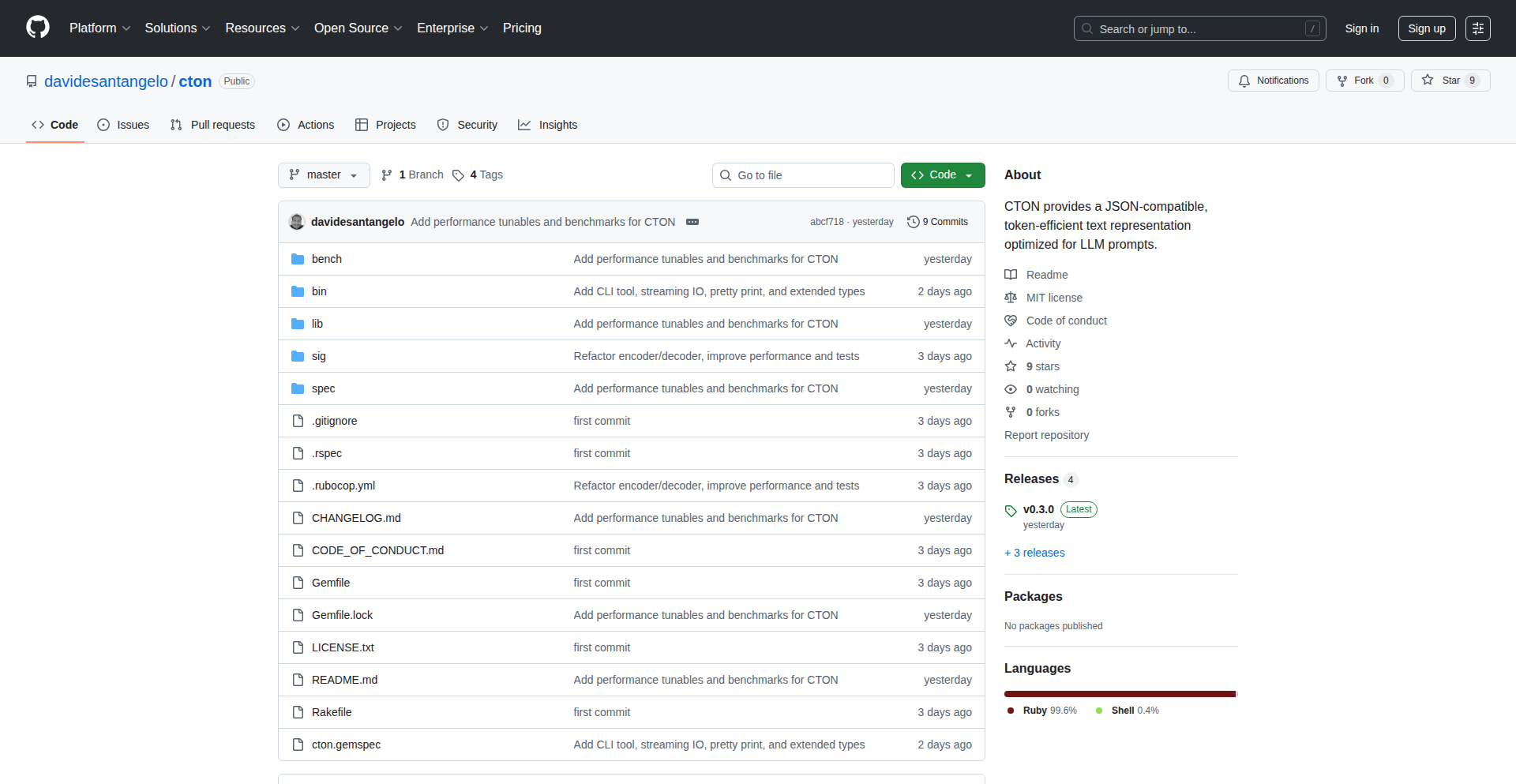

8

CTON: LLM Prompt Token Optimizer

Author

daviducolo

Description

CTON is a novel text format designed to be JSON-compatible and token-efficient, specifically for Large Language Model (LLM) prompts. It tackles the issue of prompt length impacting LLM performance and cost by intelligently encoding text to minimize token count without sacrificing readability or structure, offering a more economical and faster way to interact with LLMs.

Popularity

Points 10

Comments 1

What is this product?

CTON is a new text format that behaves like JSON but uses fewer 'tokens' when fed to Large Language Models. Tokens are the small pieces of text that LLMs process: the more tokens a prompt has, the more it costs to run and the longer the LLM takes to respond. CTON gains its efficiency by expressing the same information in a more compact notation. Unlike a ZIP file, whose output is opaque binary, the result is still plain text the model can read directly. This means you can send more detailed instructions or data to the LLM for the same cost, or get faster responses for the same prompt.

How to use it?

Developers use CTON by adding the CTON library to their LLM interaction pipeline. Instead of serializing prompt data as standard JSON, they encode it with the library into CTON's compact format before sending it to the LLM API. Because the output is still structured text, the model is expected to read it as part of the prompt. Because the format is JSON-compatible, the application can decode it back to standard JSON when needed. This is most useful for complex prompts, few-shot learning, or other cases where prompt length is a significant bottleneck.

Product Core Function

· Token-efficient encoding: CTON represents structured data more compactly, reducing the number of tokens required for LLM prompts. This translates directly into lower API costs and faster processing for your LLM applications.

· JSON compatibility: CTON maintains a structure similar to JSON, making it easy for developers familiar with JSON to adopt. This means less learning curve and smoother integration into existing workflows.

· Preserves semantic meaning: Despite its efficiency, CTON is designed to retain the full meaning and structure of the original prompt, ensuring LLMs can still accurately understand and act upon the input.

· Reduced LLM latency: By sending shorter prompts (in terms of tokens), LLMs can process requests faster, leading to more responsive applications and a better user experience.

· Cost savings for LLM usage: LLM APIs are often priced per token. CTON's efficiency directly reduces the number of tokens sent, leading to significant cost savings for high-volume LLM applications.
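The summary does not spell out CTON's syntax, so the Python sketch below is **not** CTON. It only illustrates one common source of savings that compact formats exploit. In an array of objects that share the same keys, JSON repeats every key and its quotes on each row, whereas a header-plus-rows layout states the keys once. Character count serves as a crude stand-in for tokens here; real savings depend on the model's tokenizer.

```python
import json

def to_compact_table(name, rows):
    """Encode a list of flat dicts with identical keys as one header line
    plus comma-separated rows. Illustrative only: this is not CTON's format,
    and values containing commas or newlines would need escaping."""
    keys = list(rows[0].keys())
    if any(list(r.keys()) != keys for r in rows):
        raise ValueError("all rows must share the same keys in the same order")
    header = f"{name}[{len(rows)}]{{{','.join(keys)}}}:"
    lines = [",".join(str(r[k]) for k in keys) for r in rows]
    return "\n".join([header] + lines)

users = [
    {"id": 1, "name": "Ada", "role": "admin"},
    {"id": 2, "name": "Linus", "role": "dev"},
    {"id": 3, "name": "Grace", "role": "dev"},
]

as_json = json.dumps({"users": users})
as_table = to_compact_table("users", users)
print(len(as_json), "chars as JSON vs", len(as_table), "chars compact")
print(as_table)
```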

Product Usage Case

· Building intelligent chatbots that can handle more complex conversation histories without hitting token limits, leading to more coherent and context-aware interactions. The core problem solved here is managing the growing context window of conversations efficiently.

· Developing few-shot prompting systems where multiple examples must fit inside the prompt. CTON leaves room for more examples, which can improve how well the model picks up a new task from in-context examples alone, without additional training data.

· Automating complex report generation or data summarization tasks where detailed input data needs to be fed to the LLM. CTON ensures that large datasets can be provided within token limits, enabling more comprehensive analysis.

· Creating AI agents that require detailed instructions and access to tool descriptions. CTON's efficiency allows for richer agent capabilities by fitting more information into each LLM call, directly improving agent performance and scope.

· Iterating on custom prompt structures. CTON offers a way to experiment with prompt design efficiently, allowing developers to iterate faster on prompt engineering strategies and identify optimal prompt configurations.

9

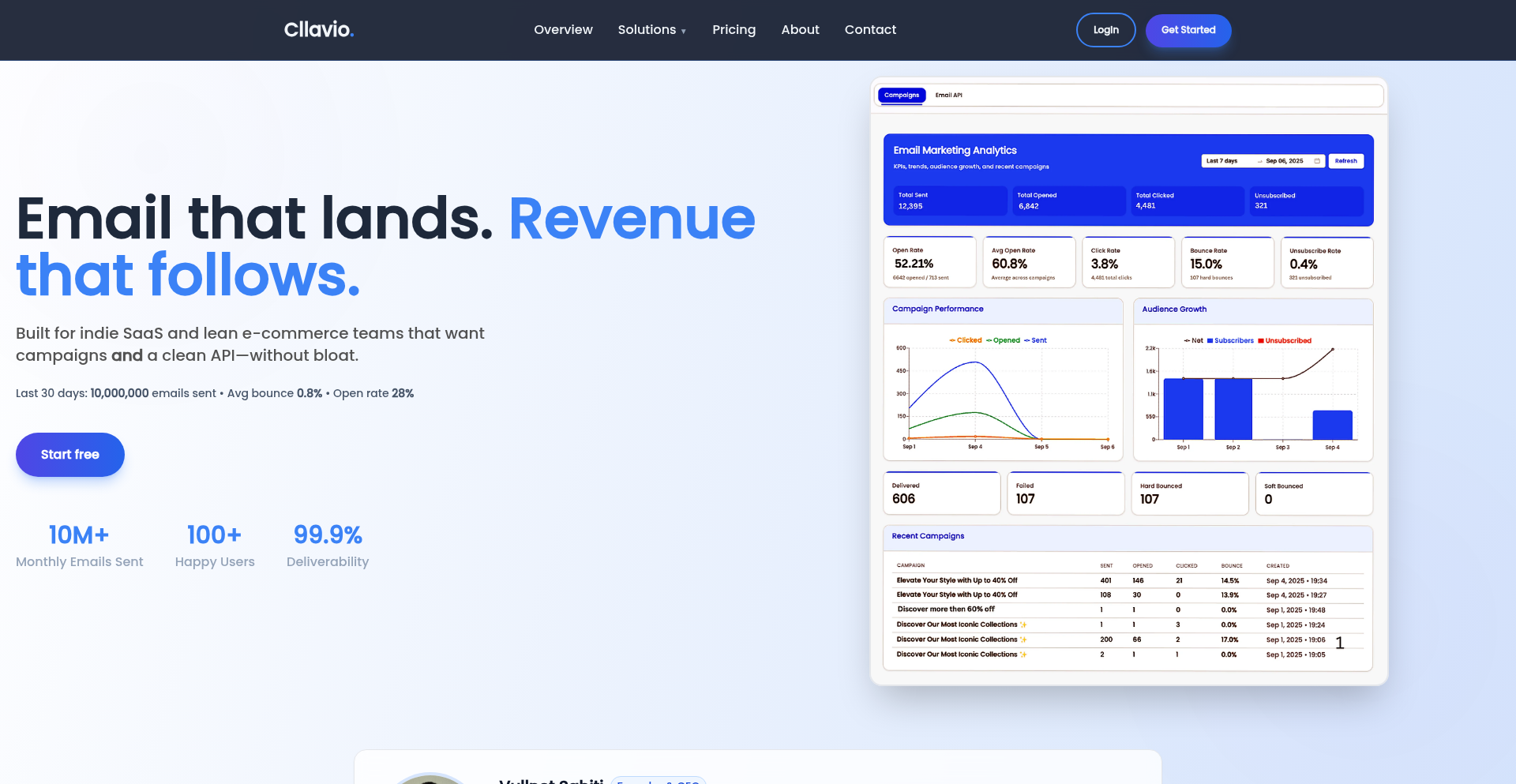

Yonoma - Reactive SaaS Email Engine

Author

vimall_10

Description

Yonoma is a behavior-based email automation tool designed for early-stage SaaS teams. It simplifies the process of sending targeted emails by triggering them based on user actions within a product, such as signing up, becoming inactive, reaching an activation milestone, or nearing the end of a trial. This eliminates the need for manual timing and complex configurations, making sophisticated email automation accessible to smaller teams.

Popularity

Points 7

Comments 4

What is this product?

Yonoma is a smart email system that sends emails automatically when users do specific things inside your software. Instead of setting up complicated rules and schedules, Yonoma watches what users are doing, like signing up for the first time or stopping using the product for a while. When these events happen, Yonoma sends pre-written emails. The innovation here is its focus on simplicity and direct connection to user behavior, making powerful email automation, usually found in large enterprise tools, easy for small SaaS businesses to use. It's like having a personal assistant that knows exactly when to send the right message to keep users engaged.

How to use it?

Developers can integrate Yonoma into their SaaS product by connecting it to their user data platform or directly to their application's event streams. This typically involves setting up event tracking for key user actions (e.g., 'user_signed_up', 'feature_X_used', 'trial_expired'). Once integrated, teams can use Yonoma's intuitive interface to design email workflows, select triggers based on these tracked events, and customize email content. It also offers integrations with popular tools like Stripe for billing events, Segment for unified customer data, and Slack for notifications, streamlining the entire customer engagement process. For a developer, this means less time building custom email logic and more time focusing on core product features, with the benefit of automated, behavior-driven customer communication.

Product Core Function

· Behavior-based Triggers: Automatically sends emails when specific user actions occur within the product, such as signing up, achieving an activation goal, or becoming inactive. This provides timely and relevant communication to users, increasing engagement without manual intervention.

· Automated Workflow Management: Manages the timing and sequence of emails for onboarding, trial reminders, and re-engagement flows, reducing manual overhead for small teams and ensuring consistent customer journeys.

· Pre-built Workflows and Templates: Offers ready-to-use email sequences and templates that can be quickly customized, saving development time and providing best-practice starting points for customer communication strategies.

· Integration with Key SaaS Tools: Connects with platforms like Stripe, HubSpot, Segment, and Zapier, allowing for seamless data flow and automation across different aspects of the business, from billing to CRM to marketing automation, creating a unified customer view and action system.
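Yonoma's internal rule engine isn't described. Behavior-based triggering generally reduces to evaluating rules against each user's state and skipping emails already sent. The Python sketch below shows that idea. The field names, template names, and thresholds (2 days to activate, a 3-day trial warning, 7 days of inactivity) are all hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds, not Yonoma's actual settings.
ACTIVATION_GRACE = timedelta(days=2)
TRIAL_WARNING = timedelta(days=3)
INACTIVITY = timedelta(days=7)

def emails_to_send(user, now, already_sent):
    """Return the template names due for one user (illustrative rule set).

    `user` holds 'signed_up_at', 'last_seen_at', 'trial_ends_at' (datetime
    or None) and 'activated' (bool). Templates in `already_sent` are
    skipped, so each email goes out at most once.
    """
    due = []
    signed_up = user.get("signed_up_at")
    if signed_up is not None:
        due.append("welcome")
        if not user.get("activated") and now - signed_up > ACTIVATION_GRACE:
            due.append("activation_nudge")
    trial_end = user.get("trial_ends_at")
    if trial_end is not None and timedelta(0) <= trial_end - now <= TRIAL_WARNING:
        due.append("trial_ending")
    last_seen = user.get("last_seen_at")
    if last_seen is not None and now - last_seen > INACTIVITY:
        due.append("we_miss_you")
    return [name for name in due if name not in already_sent]

now = datetime(2025, 11, 20, 12, 0)
user = {
    "signed_up_at": now - timedelta(days=10),
    "last_seen_at": now - timedelta(days=8),
    "trial_ends_at": now + timedelta(days=2),
    "activated": False,
}
print(emails_to_send(user, now, already_sent={"welcome"}))
# ['activation_nudge', 'trial_ending', 'we_miss_you']
```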

Product Usage Case

· Onboarding: A new user signs up for a trial. Yonoma detects the 'signup' event and automatically sends a welcome email with a guide on getting started, helping the user quickly understand the product's value.

· Activation: A user has been using the product but hasn't used a key feature yet. Yonoma recognizes this lack of activation and sends a targeted email highlighting the benefits and a quick tutorial for that specific feature, improving user adoption.

· Trial Expiration: A user's trial is about to end. Yonoma automatically sends a reminder email, perhaps with a special offer, encouraging them to convert to a paid plan before their access expires, boosting conversion rates.

· Inactive User Re-engagement: A user hasn't logged in for a week. Yonoma identifies this inactivity and sends a 'we miss you' email, possibly with tips on new features or use cases, aiming to bring them back to the product.

10

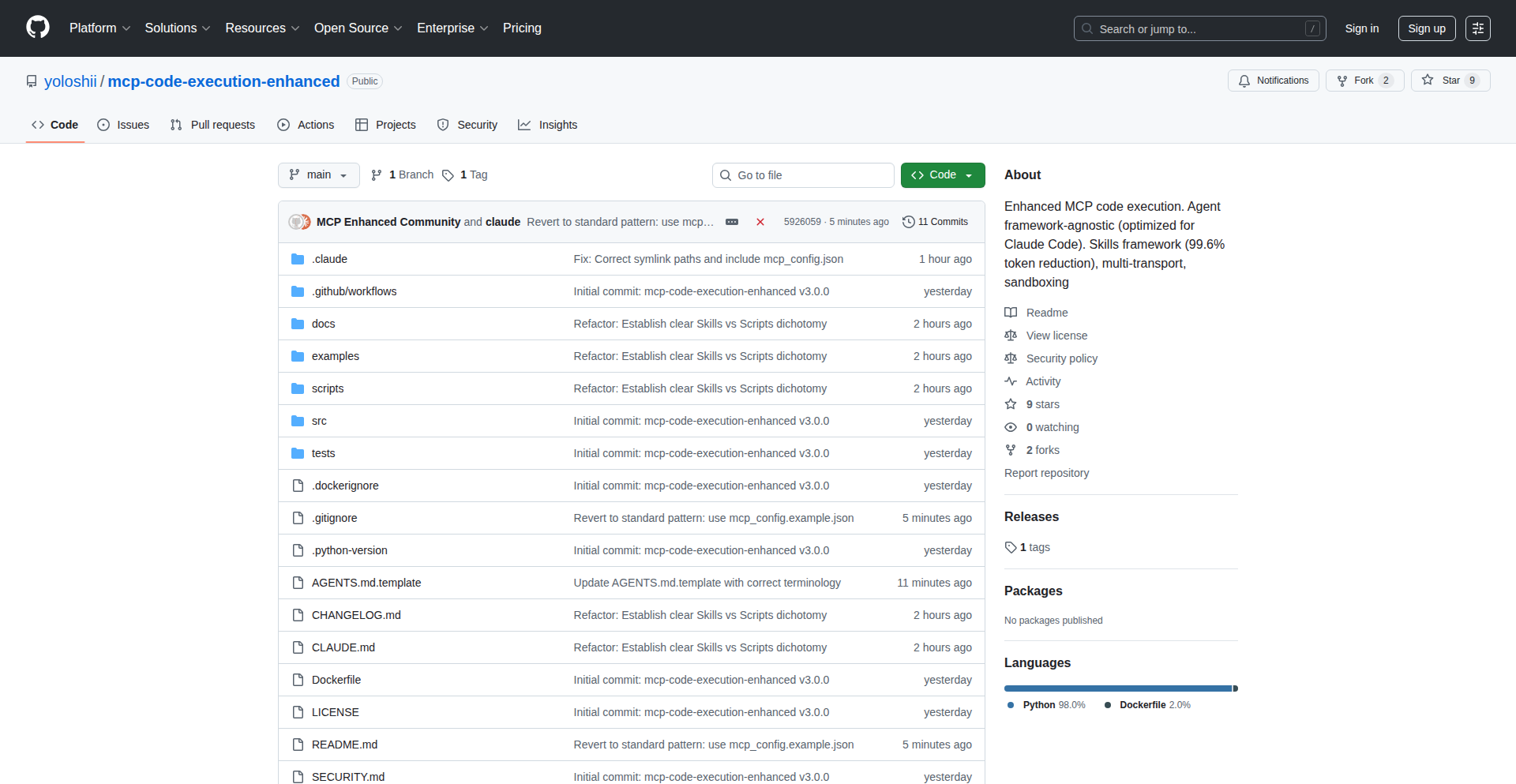

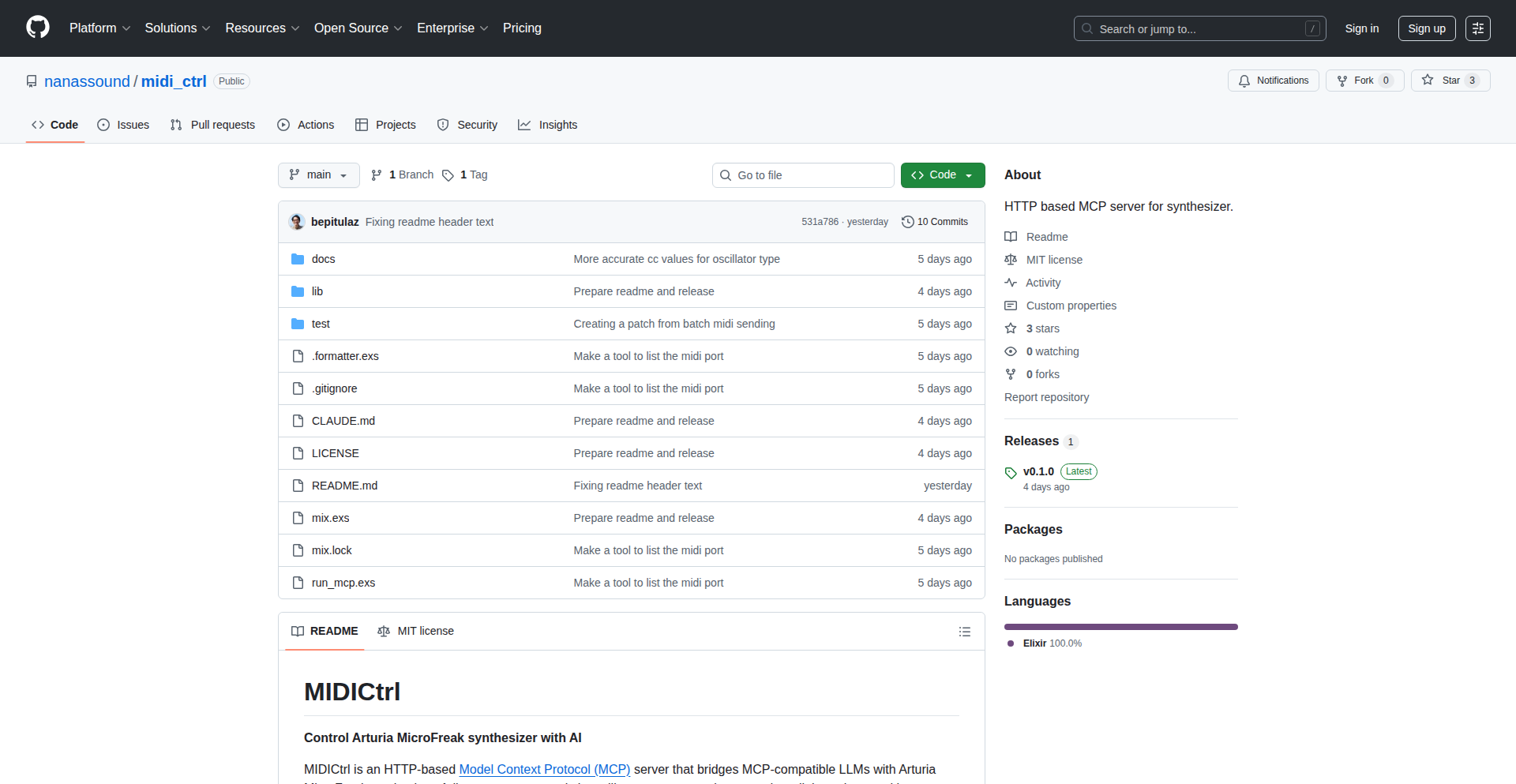

MCP Traffic Analyzer

Author

o4isec

Description

A desktop application for Mac and Windows that performs comprehensive analysis of MCP traffic. It helps developers and network administrators understand and debug complex communication flows in real time.

Popularity

Points 11

Comments 0

What is this product?

MCP Traffic Analyzer is a tool that captures and dissects network traffic carried over MCP. Think of it as a super-powered detective for your network communications: it intercepts the messages your MCP-enabled systems send and receive, then breaks them down into understandable components. The innovation lies in its specialized focus on MCP, which yields granular insights that general network sniffers might miss. This lets you pinpoint exactly what information is being exchanged, identify bottlenecks or errors in the communication, and keep your MCP systems running smoothly. So, what's in it for you? If you work with systems that use MCP, this tool shows you exactly what's happening 'under the hood' of your network, making troubleshooting faster and more effective.

How to use it?

Developers and system administrators can download and install the desktop application for their respective operating systems (Mac or Windows) from the provided website. Once installed, the application can be configured to capture network traffic on a specific interface or port where MCP communication is occurring. The captured data is then displayed in a user-friendly interface, allowing for real-time monitoring, filtering, and detailed examination of individual MCP packets. This can be integrated into your existing network monitoring setups or used as a standalone diagnostic tool. So, how does this benefit you? You can easily set it up to watch your system's MCP conversations and instantly see any issues, making it straightforward to identify and fix problems.

Product Core Function

· Real-time MCP traffic capture: Intercepts and displays MCP network packets as they are transmitted and received, providing immediate visibility into communication flows. This allows you to see live interactions, which is crucial for debugging active systems.

· Detailed packet dissection: Parses MCP packets, breaking down complex headers and payloads into human-readable fields. This helps understand the structure and content of messages, revealing the 'what' and 'why' of data exchange.

· Filtering and search capabilities: Allows users to filter traffic based on various criteria (e.g., source/destination IP, port, MCP message type) and search for specific patterns within the captured data. This makes it easy to isolate relevant information from noisy network environments.

· Flow visualization: Presents communication patterns and sequences in a clear, visual format, making it easier to comprehend the overall interaction between different MCP endpoints. Understanding the sequence of events is key to identifying logical errors.

· Cross-platform desktop application: Provides a native desktop experience for both macOS and Windows users, ensuring accessibility and consistent functionality across different development environments. This means you can use it on your primary workstation without compatibility issues.
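The analyzer's data model isn't published in this summary. To illustrate criteria-based filtering over a capture, here is a Python sketch over hypothetical captured-message records. The fields `src`, `dst`, `port`, `msg_type`, and `payload` are assumptions. Every criterion supplied must match, omitted criteria are ignored, and an optional case-insensitive search is applied to the payload.

```python
from dataclasses import dataclass

@dataclass
class CapturedMessage:
    # Hypothetical record shape for one captured message.
    timestamp: float
    src: str
    dst: str
    port: int
    msg_type: str
    payload: str

def filter_messages(messages, search=None, **criteria):
    """Keep messages whose fields equal every given criterion and whose
    payload contains `search` (case-insensitive), if one is provided."""
    result = []
    for m in messages:
        if any(getattr(m, field) != value for field, value in criteria.items()):
            continue
        if search is not None and search.lower() not in m.payload.lower():
            continue
        result.append(m)
    return result

capture = [
    CapturedMessage(0.0, "10.0.0.1", "10.0.0.2", 8080, "request", '{"cmd":"start"}'),
    CapturedMessage(0.1, "10.0.0.2", "10.0.0.1", 8080, "response", '{"status":"ERROR"}'),
    CapturedMessage(0.2, "10.0.0.3", "10.0.0.2", 9090, "request", '{"cmd":"stop"}'),
]
for m in filter_messages(capture, port=8080, search="error"):
    print(m.timestamp, m.src, "->", m.dst, m.payload)
```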

Product Usage Case

· Debugging a slow response time in an industrial automation system using MCP: A developer notices that commands sent to a PLC are taking too long to execute. By using MCP Traffic Analyzer, they can capture the traffic between their control software and the PLC, identify if the commands are being sent correctly, and see if there are any delays or errors in the PLC's responses, pinpointing the exact cause of the slowness.

· Diagnosing communication failures between two MCP-enabled servers: A system administrator is experiencing intermittent connection drops between two critical servers. They deploy MCP Traffic Analyzer on one of the servers to monitor the MCP traffic. The tool reveals malformed packets or unexpected connection resets, helping them to quickly identify a configuration issue or a bug in the MCP implementation on one of the servers.

· Validating data integrity in a financial trading platform: A developer needs to ensure that the data being exchanged between different components of a trading system using MCP is accurate and complete. MCP Traffic Analyzer allows them to inspect the contents of MCP messages, verifying that all required fields are present and correctly formatted, thereby preventing data corruption and ensuring accurate trading operations.

· Analyzing network overhead of MCP communication for optimization: A network engineer wants to reduce the bandwidth consumed by MCP traffic. By using the analyzer, they can observe the size and frequency of MCP messages, identify redundant data, and understand which types of messages contribute most to the traffic volume, informing strategies for optimizing message content or frequency.

11

London StreetText Explorer

Author

dfworks

Description

A web-based tool that leverages Google Street View imagery to extract and make searchable all visible text in London. This innovation solves the problem of accessing and analyzing the vast amount of textual information embedded in urban environments, turning static streetscapes into dynamic data sources. The core innovation lies in applying advanced Optical Character Recognition (OCR) technology to panoramic street view images and indexing the results for efficient querying. This project provides a unique way to 'read' the city, offering insights for urban studies, historical research, marketing, and even just for curious exploration.

Popularity

Points 6

Comments 4

What is this product?

This project is essentially a digital magnifying glass for London's streets. It uses sophisticated image recognition software (Optical Character Recognition, or OCR) to 'read' all the text it finds in Google Street View images of London. Think of shop signs, posters, graffiti, even text on vehicles. The magic is that it then organizes all this 'read' text into a searchable database. So, instead of just seeing a picture of a street, you can actually search for specific words or phrases and see where they appear in the physical city. The innovation is in applying this powerful OCR technology at a massive scale to panoramic street imagery and making it incredibly easy for anyone to explore.

How to use it?

Developers can integrate this tool into their own applications or use it directly through a web interface. For instance, a researcher could query for all instances of a specific historical advertisement appearing on buildings. A marketing team might want to analyze the prevalence of certain brand names or slogans in different London boroughs. Even a tourist could use it to find specific types of shops or points of interest mentioned on signs. The technical backend likely involves a robust OCR engine, a powerful image processing pipeline, and a database for indexing and searching the extracted text. Integration might involve API access to query the text data or embeddable map components.

Product Core Function

· Text extraction from panoramic street view images: This allows for the capture of textual data from a wide variety of sources within the urban landscape, such as shop signs, advertisements, public notices, and graffiti. Its value lies in making previously inaccessible visual information digitally retrievable and analyzable.

· Optical Character Recognition (OCR) powered search: This function enables users to search for specific words or phrases and instantly locate their occurrences across the captured street view data. This transforms the static streetscape into a dynamic, queryable information resource.

· Geospatial indexing of extracted text: By associating each piece of extracted text with its precise geographic location, the tool provides context and spatial understanding. This is valuable for urban analysis, trend identification, and location-based services.

· Web-based exploration interface: A user-friendly interface allows for intuitive searching and browsing of the text data overlaid on map views. This democratizes access to the information, making it usable by a broad audience without requiring deep technical expertise.
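The description above only guesses at the backend. Here is a minimal Python sketch of the indexing and search steps. It assumes hypothetical OCR output in the form of (text, latitude, longitude, panorama id) detections. An inverted index maps each normalized word to the detections containing it, and a multi-word query intersects those sets. This is a common technique for text search, not necessarily what the project uses.

```python
import re
from collections import defaultdict

def tokenize(text):
    # Lowercase and keep runs of word characters, so "FISH" matches "fish".
    return re.findall(r"\w+", text.lower())

class StreetTextIndex:
    def __init__(self):
        self.detections = []           # (text, lat, lon, pano_id) per OCR hit
        self.index = defaultdict(set)  # word -> ids of detections containing it

    def add(self, text, lat, lon, pano_id):
        det_id = len(self.detections)
        self.detections.append((text, lat, lon, pano_id))
        for word in tokenize(text):
            self.index[word].add(det_id)

    def search(self, query):
        """Return detections containing every word in `query`, in insertion order."""
        words = tokenize(query)
        if not words:
            return []
        ids = set.intersection(*(self.index.get(w, set()) for w in words))
        return [self.detections[i] for i in sorted(ids)]

idx = StreetTextIndex()
idx.add("Fish & Chips", 51.5145, -0.0755, "pano_a")     # hypothetical panorama ids
idx.add("FISH MARKET", 51.5080, -0.0860, "pano_b")
idx.add("Fresh Chips Daily", 51.5200, -0.1000, "pano_c")
for text, lat, lon, pano in idx.search("fish chips"):
    print(text, lat, lon, pano)
```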

Product Usage Case

· A historical researcher wants to find surviving painted 'ghost sign' advertisements for a specific 1950s product in London. Street View imagery is recent, so only signs still visible on buildings will appear. Even so, searching for keywords related to the product and its era can pinpoint that historical commercial activity and its spatial distribution.

· A city planner needs to assess the legibility of street signage in different neighborhoods to understand accessibility for visually impaired individuals. They can use the tool to search for specific types of signage and analyze their frequency and visibility.

· A marketing analyst wants to understand brand visibility and competitive presence in various London districts. They can search for specific brand names and identify their locations and prevalence on shop fronts and billboards.

· A local history enthusiast is curious about street art in a particular area. They can search for common graffiti tags and see where they appear. If the index covers Street View captures from several dates, they could also track how those tags change over time.

12

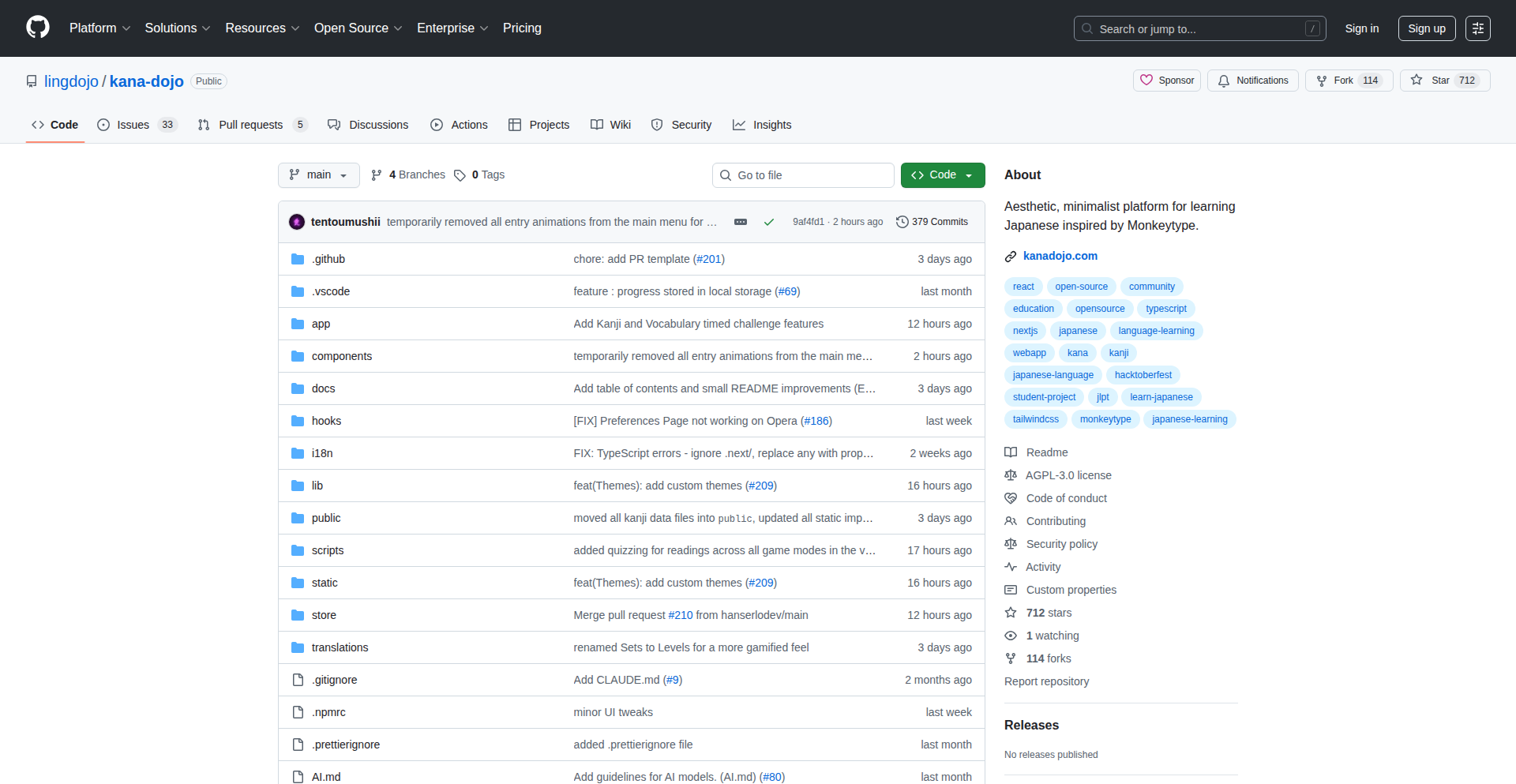

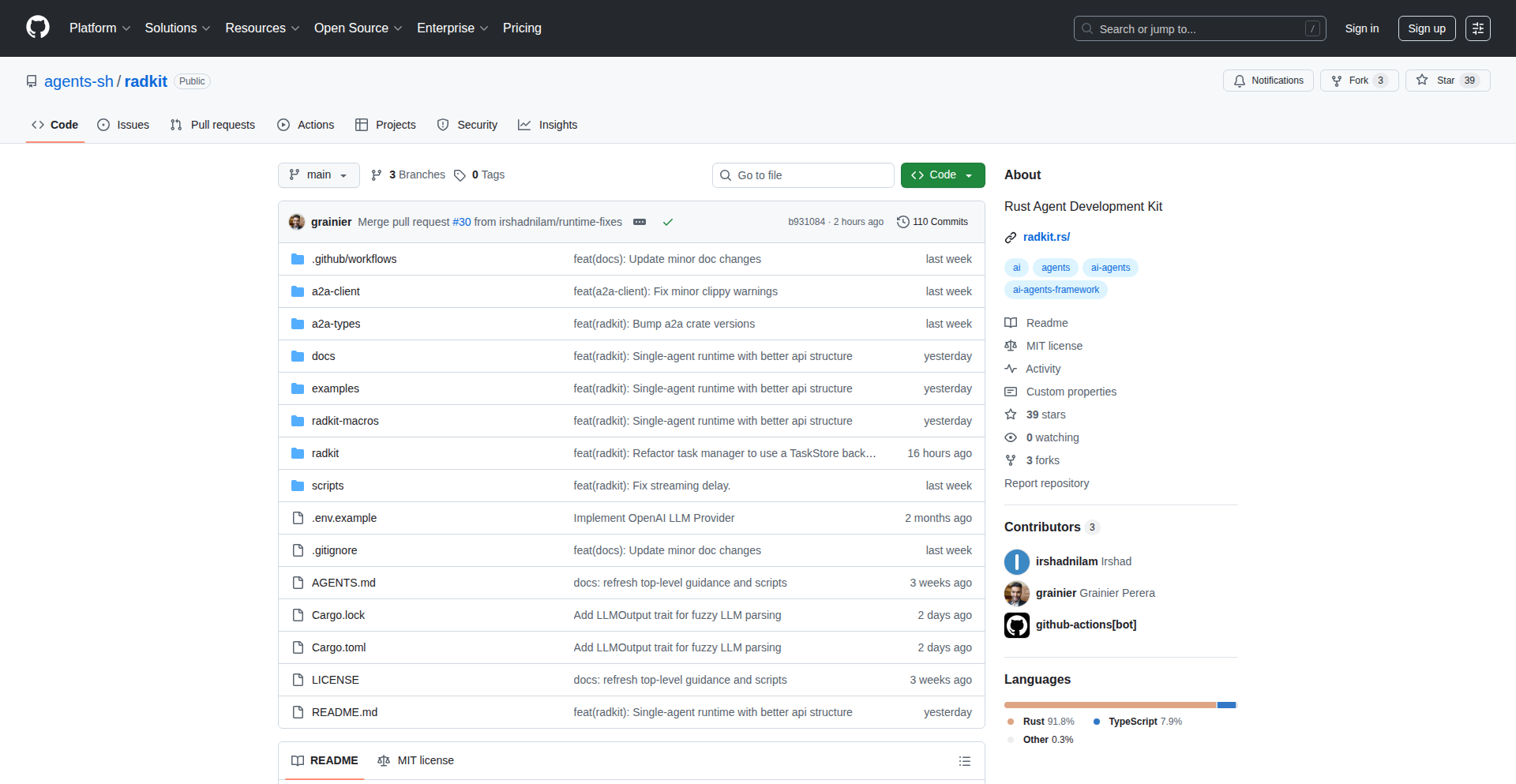

GitPulse AI Explorer

Author

Indri-Fazliji

Description

GitPulse AI Explorer is an AI-powered platform designed to help developers, especially beginners, discover open-source projects with 'good first issues'. It tackles the challenge of finding approachable contributions in the vast open-source landscape by leveraging AI for difficulty prediction and smart repository matching. This means you can find projects that are not only interesting but also suitable for your current skill level, accelerating your open-source journey.

Popularity

Points 8

Comments 1

What is this product?

GitPulse AI Explorer is a web application that uses artificial intelligence to identify and recommend open-source projects that are suitable for new contributors. It analyzes repositories to pinpoint issues labeled as 'good first issues' and also uses an AI model to predict the difficulty of these issues, providing a 'difficulty predictor' score. Furthermore, it offers smart repository matching based on your preferences and analyzes contributor activity to give a 'repo health score'. The core innovation lies in using AI to cut through the noise of thousands of open-source projects, making it easier for anyone to find a meaningful way to contribute.

How to use it?

Developers can use GitPulse AI Explorer by visiting the live website. You can browse through a curated list of over 200 'good first issues' across various projects. You can also utilize the smart repo matching feature to find projects tailored to your interests and skill level. The AI-powered difficulty predictor helps you gauge how challenging an issue might be, allowing you to select contributions that align with your expertise. This can be integrated into your development workflow by bookmarking promising projects or issues directly from the platform, helping you decide where to invest your time for your next open-source contribution.

Product Core Function

· Curated 'good first issues': A collection of over 200 open-source issues specifically marked for beginners. This provides immediate access to entry points for contributing to projects, saving you the time of manually searching for them.

· AI-powered difficulty predictor: An intelligent system that estimates how difficult a particular open-source issue might be. This helps developers choose tasks that match their current skill set, reducing frustration and increasing the likelihood of successful contributions.

· Smart repo matching: A feature that recommends repositories based on your expressed interests and preferences. This ensures you are directed towards projects that genuinely excite you, fostering long-term engagement and learning.

· Contributor analytics: Insights into the activity and responsiveness of project contributors. This helps gauge the health and community engagement of a repository, informing your decision about where to contribute.

· Repo health score: A consolidated score indicating the overall well-being and activity of an open-source project. This provides a quick overview of a project's vitality, helping you choose vibrant and well-maintained communities to join.
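How GitPulse computes its repo health score isn't stated. One plausible shape, purely an assumption, is a weighted average of normalized activity signals scaled to 0-100. The Python sketch below uses three hypothetical signals: days since the last commit, the median hours to a first response on issues, and the share of recent pull requests that were merged. The weights and cut-offs are also hypothetical.

```python
def health_score(days_since_commit, median_response_hours, pr_merge_rate,
                 weights=(0.4, 0.35, 0.25)):
    """Hypothetical repo health score in [0, 100]; higher is healthier.

    Each signal is normalized to [0, 1] before weighting:
    - recency: 1.0 if committed today, falling linearly to 0 at 90 days
    - responsiveness: 1.0 for an instant reply, falling to 0 at 14 days (336 h)
    - merge: fraction of recent PRs merged, clamped to [0, 1]
    """
    recency = max(0.0, 1.0 - days_since_commit / 90)
    responsiveness = max(0.0, 1.0 - median_response_hours / 336)
    merge = min(max(pr_merge_rate, 0.0), 1.0)
    w_rec, w_resp, w_merge = weights
    total = w_rec + w_resp + w_merge
    score = (w_rec * recency + w_resp * responsiveness + w_merge * merge) / total
    return round(100 * score, 1)

print(health_score(30, 48, 0.5))  # 69.2
```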

Product Usage Case

· A junior developer looking to make their first open-source contribution can use GitPulse AI Explorer to find projects with issues specifically marked as easy for newcomers. The AI difficulty predictor can then help them select an issue that is genuinely manageable, allowing them to gain confidence and experience without being overwhelmed.

· An experienced developer wanting to explore a new technology can use the smart repo matching feature to discover projects using that technology. They can then use the contributor analytics and repo health score to assess the project's community and activity level, ensuring they join a thriving and supportive environment.

· A student working on a university project that requires open-source contribution can leverage GitPulse AI Explorer to quickly identify suitable projects and issues. This significantly speeds up the process of finding a relevant contribution, allowing them to focus more on the technical implementation of their project.

· An open-source project maintainer could potentially use insights from GitPulse AI Explorer to understand how their project is perceived by potential new contributors, and identify areas for improvement to attract more help.

13

YAAT: EU Data Sovereign Analytics

Author

caioricciuti

Description

YAAT is a privacy-first analytics platform designed for EU companies that need to keep their data within EU borders and want direct access to their raw event data. It offers full web analytics, error tracking, and performance monitoring, with a unique selling point of direct SQL access for custom querying and a commitment to GDPR compliance and data ownership. This means you can ask any question about your user behavior, not just rely on pre-defined reports, all while ensuring your data never leaves the EU.

Popularity

Points 6

Comments 2

What is this product?

YAAT is an analytics tool built with a strong focus on data privacy for European businesses. Unlike many analytics services that send your data to servers outside the EU (which can be a problem for regulations like GDPR), YAAT keeps everything within EU infrastructure. The core innovation is its direct SQL access. Instead of just looking at pre-made charts, you can write your own SQL queries against your raw website event data. Think of it like having a direct line to your customer's behavior data, allowing you to ask very specific questions and get precise answers. It also covers standard analytics needs like page views, traffic sources, error logs, and website performance metrics like Core Web Vitals.

How to use it?

Developers can integrate YAAT into their EU-based web applications by including a lightweight JavaScript script (<2KB) on their website. This script collects essential user behavior and performance data. For businesses, the primary use is through the YAAT dashboard. This dashboard allows you to visualize data using various chart types and, crucially, write custom SQL queries using an interface with SQL autocompletion. You can then save these queries as dashboard panels. For developers who need to process raw data further, YAAT allows exporting data in Parquet files, giving you full ownership and control over your analytics data. Domain verification via DNS ensures that only your approved websites can send data to your YAAT instance.

Product Core Function

· Direct SQL Querying: Allows users to write custom SQL queries against raw event data, enabling deep, specific insights into user behavior that pre-built dashboards cannot offer. This is valuable for businesses needing precise answers to unique business questions.

· Privacy-First EU Hosting: Ensures all data is processed and stored within the EU, adhering to GDPR and other regional data protection regulations. This is crucial for EU companies facing strict data residency requirements.

· Comprehensive Analytics Suite: Includes web analytics (pageviews, sessions, traffic), error tracking (JavaScript exceptions), and performance monitoring (Core Web Vitals, load times). This provides a 360-degree view of website health and user experience.

· Customizable Dashboards: Offers a drag-and-drop interface to build personalized dashboards with various visualization options. This allows businesses to see the metrics most important to them in an easily digestible format.

· Data Export in Parquet: Enables users to export their raw analytics data in Parquet format, granting full data ownership and the flexibility to use the data with other tools or for advanced analysis.

· Lightweight Tracking Script: The script is under 2KB, so it adds little to page-load time, which benefits both user experience and SEO.
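
The performance-monitoring feature above reports Core Web Vitals, but how YAAT scores them is not described. The sketch below simply applies Google's published thresholds (LCP good at 2.5 s or less and poor above 4 s; INP good at 200 ms or less and poor above 500 ms; CLS good at 0.1 or less and poor above 0.25) to the 75th percentile of collected samples. The nearest-rank percentile and the `p75` and `rate` names are assumptions for illustration.

```python
import math
from typing import Dict, List, Tuple

# Google's published boundaries: (upper limit for "good", upper limit for "needs improvement").
# LCP and INP are in milliseconds; CLS is a unitless layout-shift score.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2500.0, 4000.0),
    "INP": (200.0, 500.0),
    "CLS": (0.1, 0.25),
}

def p75(samples: List[float]) -> float:
    """75th percentile using the nearest-rank method (one of several definitions)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]

def rate(metric: str, samples: List[float]) -> str:
    """Classify a metric's 75th-percentile value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"

if __name__ == "__main__":
    print(rate("LCP", [1800, 2100, 2400, 3900]))  # good (p75 = 2400 ms)
    print(rate("INP", [120, 180, 450, 700]))      # needs improvement (p75 = 450 ms)
    print(rate("CLS", [0.02, 0.3, 0.4, 0.5]))     # poor (p75 = 0.4)
```

Scoring at the 75th percentile rather than the mean keeps a handful of very fast visits from hiding a slow experience for a quarter of users.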

Product Usage Case

· A German e-commerce company wants to understand which marketing campaigns (UTM parameters) drive the most sessions from mobile users in Germany. Using YAAT's direct SQL access, they can write a query like `SELECT campaign, COUNT(DISTINCT session_id) AS sessions FROM events WHERE device_type = 'mobile' AND country = 'DE' GROUP BY campaign ORDER BY sessions DESC;` to get a precise answer, a segmentation that a standard analytics dashboard might not support at this granularity.

· A SaaS company operating in France needs all user data to remain within the EU for compliance reasons. They can use YAAT to track user engagement and feature adoption and to identify bugs or performance issues while their data stays inside the EU. They can then build custom dashboards showing the key performance indicators (KPIs) relevant to their subscription model.

· A European startup is experiencing high JavaScript error rates and wants to pinpoint the exact browsers and versions causing these issues. YAAT's error tracking functionality, coupled with its filtering capabilities by browser and version, allows them to quickly identify and fix these problems, improving the user experience and reducing customer frustration.

· A Spanish business wants to monitor its website's Core Web Vitals (LCP, INP, and CLS) to ensure a smooth user experience. YAAT's performance monitoring lets them track these metrics over time, identify bottlenecks, and make data-driven optimizations to improve site speed and user satisfaction, all while keeping their performance data within the EU.
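
The campaign query from the first usage case can be tried without a YAAT account by running it against an in-memory SQLite table. This is a minimal sketch: the `events` table and its columns (`session_id`, `campaign`, `device_type`, `country`, `event_name`) are hypothetical stand-ins, and YAAT's real schema and SQL dialect may differ.

```python
import sqlite3
from typing import Iterable, List, Tuple

# Hypothetical schema standing in for YAAT's raw event table.
SCHEMA = """
CREATE TABLE events (
    session_id  TEXT,
    campaign    TEXT,  -- e.g. the utm_campaign value
    device_type TEXT,
    country     TEXT,  -- ISO 3166-1 alpha-2 code
    event_name  TEXT
);
"""

# Rank campaigns by distinct mobile sessions from Germany.
QUERY = """
SELECT campaign, COUNT(DISTINCT session_id) AS sessions
FROM events
WHERE device_type = 'mobile' AND country = 'DE'
GROUP BY campaign
ORDER BY sessions DESC;
"""

Row = Tuple[str, str, str, str, str]

def top_campaigns(rows: Iterable[Row]) -> List[Tuple[str, int]]:
    """Load rows into a throwaway database and run the ranking query."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO events VALUES (?, ?, ?, ?, ?)", rows)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()

sample: List[Row] = [
    ("s1", "spring_sale", "mobile", "DE", "pageview"),
    ("s1", "spring_sale", "mobile", "DE", "purchase"),  # same session, counted once
    ("s2", "spring_sale", "mobile", "DE", "pageview"),
    ("s3", "newsletter", "mobile", "DE", "pageview"),
    ("s4", "newsletter", "desktop", "DE", "pageview"),  # excluded: not mobile
    ("s5", "spring_sale", "mobile", "AT", "pageview"),  # excluded: not Germany
]

if __name__ == "__main__":
    print(top_campaigns(sample))  # [('spring_sale', 2), ('newsletter', 1)]
```

Counting distinct sessions ranks campaigns by traffic. Adding a filter such as `AND event_name = 'purchase'` (again a hypothetical event name) would rank them by sales instead.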

14

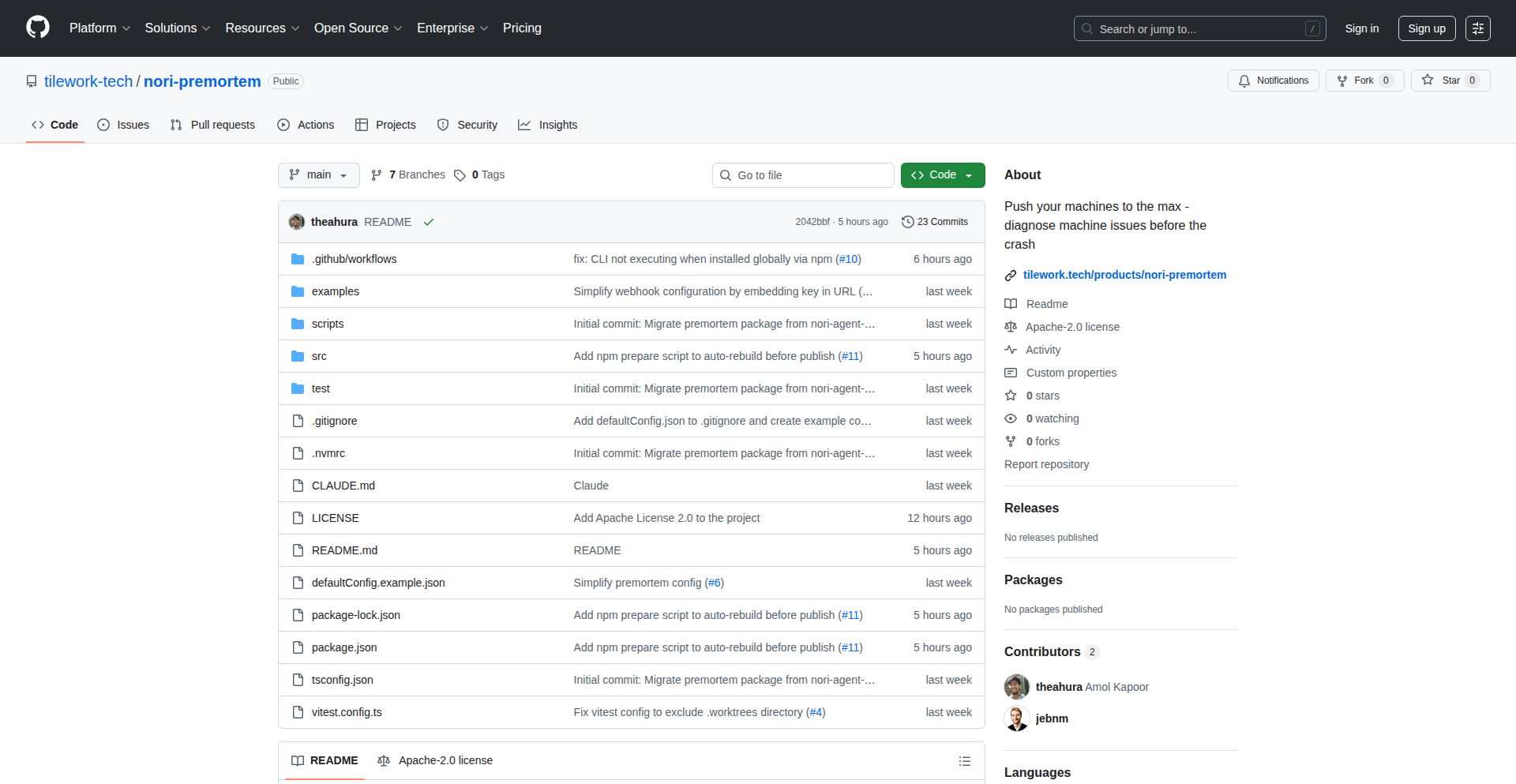

Premortem: AI-Powered System Failure Blackbox

Author

theahura

Description

Premortem is a system that acts like an airplane's black box, but for your software. It uses AI coding agents to identify and debug system failures in real time, before they cause catastrophic downtime. Its core idea is to spin up an AI agent that analyzes system vitals the moment a threshold is crossed, mimicking the live debugging an experienced developer would perform, which can significantly reduce the time it takes to pinpoint and resolve critical issues.

Popularity

Points 3

Comments 5

What is this product?

Premortem is designed to prevent unexpected outages by acting as an intelligent diagnostic tool. When your system's performance metrics (such as memory usage or CPU load) exceed predefined limits, Premortem automatically launches an AI coding agent. The agent runs a series of diagnostic commands and inspects running processes, down to function calls inside Python or Node.js applications. It's like having an AI detective continuously monitoring your system, ready to work out what is going wrong the moment it starts. The innovation is the proactive, automated approach: diagnosis begins while a failure is still unfolding rather than after the crash, turning reactive troubleshooting into a real-time "premortem".

How to use it?

Developers can integrate Premortem into their existing infrastructure. Once set up, it continuously monitors system health. When a critical threshold is breached, Premortem autonomously initiates a debugging session using an AI agent. This agent's findings and logs are streamed to a designated server and stored locally, providing a comprehensive record of the events leading up to a potential failure. This allows developers to quickly understand the root cause of issues and, in some cases, intervene to prevent a full system crash. Think of it as an always-on, AI-powered incident response team for your servers.
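
The trigger described above (sample vitals, compare them against thresholds, record the incident locally, hand off to an agent) can be sketched in a few lines of Python. This is not Premortem's code: the `Limits` thresholds, the JSON-lines log format, and the `launch_agent` hook are hypothetical, and the sampler reads Linux's `/proc/meminfo` and the Unix load average.

```python
import json
import os
import time
from dataclasses import asdict, dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class Limits:
    # Hypothetical thresholds; Premortem's real configuration is not documented in the post.
    memory_pct: float = 90.0        # percent of RAM in use
    cpu_load_per_core: float = 1.5  # 1-minute load average divided by core count

def read_memory_pct(meminfo: str = "/proc/meminfo") -> float:
    """Linux-only: percent of RAM in use, from MemTotal and MemAvailable (both in kB)."""
    fields: Dict[str, int] = {}
    with open(meminfo) as fh:
        for line in fh:
            key, value = line.split(":", 1)
            fields[key] = int(value.split()[0])
    return 100.0 * (1 - fields["MemAvailable"] / fields["MemTotal"])

def sample_vitals() -> Dict[str, float]:
    """Unix-only sampling of the vitals named in Limits."""
    load_1m = os.getloadavg()[0]
    return {"memory_pct": read_memory_pct(),
            "cpu_load_per_core": load_1m / (os.cpu_count() or 1)}

def breached(vitals: Dict[str, float], limits: Limits) -> List[str]:
    """Names of the vitals that exceed their limits."""
    return [name for name, limit in asdict(limits).items() if vitals.get(name, 0.0) > limit]

def file_logger(path: str) -> Callable[[str], None]:
    """Return a writer that appends one line per incident to a local file."""
    def write(line: str) -> None:
        with open(path, "a", encoding="utf-8") as fh:
            fh.write(line + "\n")
    return write

def check_once(vitals: Dict[str, float], limits: Limits,
               write_log: Callable[[str], None],
               launch_agent: Callable[[dict], None]) -> Optional[dict]:
    """If any limit is crossed, log an incident record and hand it to the agent hook."""
    hits = breached(vitals, limits)
    if not hits:
        return None
    record = {"ts": time.time(), "breached": hits, "vitals": vitals}
    write_log(json.dumps(record))  # local copy, written before the agent is launched
    launch_agent(record)           # hypothetical hook: start an AI debugging session
    return record

def monitor(limits: Limits, write_log: Callable[[str], None],
            launch_agent: Callable[[dict], None], interval_s: float = 5.0) -> None:
    """Poll forever; a real system would also rate-limit repeated triggers."""
    while True:
        check_once(sample_vitals(), limits, write_log, launch_agent)
        time.sleep(interval_s)
```

On a Linux host, `monitor(Limits(), file_logger("incidents.jsonl"), print)` would poll every five seconds and print each incident record in place of a real agent. Keeping the threshold check separate from sampling makes it testable with synthetic vitals.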

Product Core Function

· Real-time system vital monitoring: Detects performance anomalies by tracking key metrics like memory and CPU usage, enabling early detection of potential issues.

· AI-powered failure diagnosis: Automatically deploys AI coding agents to analyze system state, identify problematic processes, and inspect code execution, accelerating root cause analysis.

· Automated debugging workflow: Executes pre-defined diagnostic commands and code inspection techniques similar to manual debugging, but at machine speed.

· Comprehensive logging and streaming: Records all diagnostic activity and streams the logs to a central server as well as local storage, providing a complete audit trail for post-incident review.

· Proactive failure prevention: Identifies critical resource exhaustion trends, allowing for potential human intervention to avert system crashes before they occur.
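
The "automated debugging workflow" amounts to running diagnostic commands and keeping a timestamped record of each result. A minimal sketch, assuming a fixed command list; Premortem's agent presumably chooses follow-up commands dynamically, which is not modeled here, and `run_diagnostics` is a hypothetical name.

```python
import json
import subprocess
import sys
import time
from typing import List, Sequence

def run_diagnostics(commands: Sequence[Sequence[str]], timeout_s: float = 10.0) -> List[dict]:
    """Run each command in order and return one timestamped record per command."""
    records = []
    for cmd in commands:
        t0 = time.monotonic()
        record: dict = {"cmd": list(cmd), "started": time.time()}
        try:
            proc = subprocess.run(list(cmd), capture_output=True, text=True, timeout=timeout_s)
            record.update(rc=proc.returncode, stdout=proc.stdout, stderr=proc.stderr)
        except (OSError, subprocess.TimeoutExpired) as exc:
            # A missing binary or a hung command is recorded rather than aborting the session.
            record.update(rc=None, error=repr(exc))
        record["duration_s"] = time.monotonic() - t0
        records.append(record)
    return records

if __name__ == "__main__":
    # Example Linux checks (GNU ps supports --sort); illustrative, not Premortem's list.
    if sys.platform.startswith("linux"):
        checks = [["uptime"], ["ps", "aux", "--sort=-%mem"], ["df", "-h"]]
        for rec in run_diagnostics(checks):
            # One JSON line per command, truncated for display.
            print(json.dumps(rec)[:300])
```

The per-command timeout matters on a machine that is already struggling: a diagnostic that hangs should not stall the whole session.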

Product Usage Case

· Scenario: A web server is experiencing intermittent out-of-memory (OOM) errors, causing unpredictable downtime. With Premortem deployed, a spike in memory usage to a critical level activates an AI agent. The agent analyzes which processes are consuming the most memory and might identify a memory leak in a backend service, allowing the operations team to kill the offending process before the server crashes. This drastically reduces debugging time compared with manually sifting through logs after the fact.

· Scenario: A development build process is becoming increasingly slow and unstable, often failing due to resource contention. Premortem monitors the build environment. When CPU usage consistently stays at 100% for an extended period, Premortem triggers an AI agent. The agent might discover that a specific test suite is recursively spawning too many child processes, leading to resource exhaustion. This insight helps the development team optimize their test configuration and prevent build failures, improving developer productivity.

· Scenario: A critical microservice suffers a sudden performance degradation that affects users. Premortem, already running, detects a sharp increase in latency and error rates and launches an AI agent. The agent could trace the issue to an inefficient, frequently executed database query and give the backend engineers the exact query and its impact, so they can optimize it quickly and restore service performance.

15

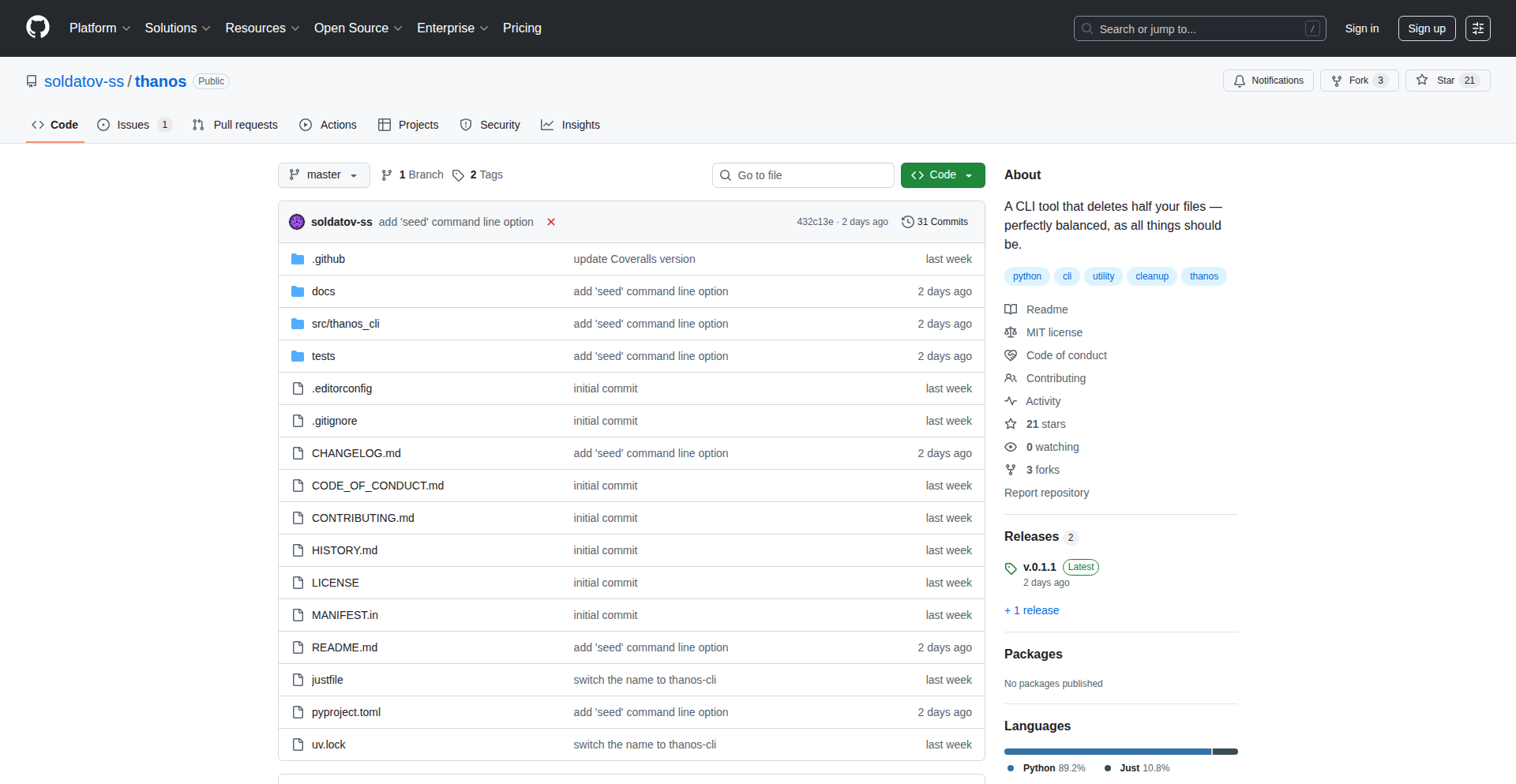

Thanos-CLI: The Half-File Purge Tool

Author

stranger-ss

Description

Thanos-CLI is a Python command-line utility inspired by the infamous 'snap' from Marvel's Thanos. It offers a unique and somewhat playful way to manage files by randomly selecting and optionally deleting exactly half of the files within a specified directory. This tool taps into the hacker spirit of using code for creative, albeit potentially destructive, tasks, offering a simple yet impactful way to declutter storage.

Popularity

Points 2

Comments 5

What is this product?

Thanos-CLI is a command-line program written in Python that simulates the 'snap' by randomly selecting and removing half of the files in a given directory. Its core logic is a straightforward implementation of random selection: it uses Python's built-in `os` and `random` modules to list the directory's contents, shuffle them, and pick half of them to operate on. This keeps a mass (albeit random) cleanup simple and auditable, particularly when previewed with a dry run. For developers, it is a clear example of using fundamental programming concepts to solve a relatable problem: managing digital clutter.
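
The select-then-delete logic described above can be sketched with the standard library alone. This is an illustrative reimplementation, not Thanos-CLI's source: `select_half`, `snap`, the round-down rule for odd file counts, and the optional `seed` are assumptions. Sorting the listing before sampling matters because `os.scandir` returns entries in arbitrary order, so a fixed seed only reproduces the same selection once the input order is fixed.

```python
import os
import random
import tempfile
from typing import List, Optional

def select_half(directory: str, seed: Optional[int] = None) -> List[str]:
    """Pick a random half (rounded down) of the regular files directly inside `directory`."""
    # os.scandir order is arbitrary, so sort first; only then does a fixed seed
    # reproduce the same selection.
    files = sorted(
        entry.path for entry in os.scandir(directory) if entry.is_file(follow_symlinks=False)
    )
    rng = random.Random(seed)  # seed=None seeds from system randomness
    return rng.sample(files, len(files) // 2)

def snap(directory: str, dry_run: bool = True, seed: Optional[int] = None) -> List[str]:
    """Delete a random half of the files, or only report them when dry_run is True."""
    victims = select_half(directory, seed)
    for path in victims:
        if dry_run:
            print(f"would delete {path}")
        else:
            os.remove(path)
    return victims

if __name__ == "__main__":
    # Demo on a throwaway directory so nothing real is touched.
    with tempfile.TemporaryDirectory() as tmp:
        for i in range(5):
            with open(os.path.join(tmp, f"file{i}.txt"), "w") as fh:
                fh.write("x")
        snap(tmp, dry_run=True, seed=42)             # previews 2 of the 5 files
        removed = snap(tmp, dry_run=False, seed=42)  # same seed, same 2 files deleted
        print(len(os.listdir(tmp)), "files remain")  # 3 files remain
```

Defaulting `dry_run` to `True` in this sketch means a caller has to opt in explicitly to the destructive path.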

How to use it?

Developers can install Thanos-CLI via pip: `pip install thanos-cli`. Once it is installed, they can navigate to a directory in their terminal and run the command. For example, to delete half the files in the current directory, they would run `thanos-cli --snap`. A dry run that shows which files would be affected, without deleting them, is `thanos-cli --snap --dry-run`. The tool can be integrated into shell scripts for automated cleanup tasks or used as a demonstration of random sampling in a practical context.

Product Core Function

· Random File Selection: The core logic uses Python's `random.sample` to pick half of the files in a directory. Every file has the same chance of being selected, which is also useful for experiments or data sampling where you need a fixed fraction of the data.

· Optional File Deletion: The `--snap` flag performs the actual deletion. This frees up disk space quickly, albeit in a randomized manner.

· Dry Run Mode: The `--dry-run` flag allows users to preview which files would be selected for deletion without actually removing them. This is crucial for safety and understanding the tool's impact before irreversible actions, demonstrating good practice in scripting potentially destructive operations.

· Directory Targeting: The tool can operate on any specified directory. This flexibility makes it applicable to various file management scenarios, from personal backups to project cleanup, highlighting its utility in diverse developer workflows.

Product Usage Case

· Simulating data loss for testing: Developers working on data recovery or resilience systems can use Thanos-CLI to quickly simulate a scenario where a significant portion of data is lost randomly, allowing them to test their backup and restoration mechanisms.

· Quickly decluttering large temporary directories: In projects that generate many temporary files, running Thanos-CLI in dry-run mode first shows which files would be removed; a real run then frees up a large chunk of disk space for more critical work.

· Educational demonstration of random sampling: For teaching programming concepts like randomness and file manipulation, Thanos-CLI provides a tangible and exciting example of how these concepts can be applied in a real-world (albeit quirky) utility.

· Creative file management experiments: Users interested in unique ways to manage their digital assets can use Thanos-CLI to experiment with random deletions as a form of 'digital minimalism' or to create unpredictable file structures for artistic projects.
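
For the data-loss testing use case, the useful part is checking what the snap destroyed and whether a restore brings everything back. A minimal sketch, assuming a flat directory and a plain copy as the backup under test; the `random.sample` call stands in for running `thanos-cli --snap`, and `manifest`, `missing_or_changed`, and `restore` are hypothetical helpers.

```python
import hashlib
import os
import random
import shutil
import tempfile
from typing import Dict

def manifest(directory: str) -> Dict[str, str]:
    """Map each regular file name in `directory` to the SHA-256 digest of its contents."""
    digests = {}
    for entry in os.scandir(directory):
        if entry.is_file(follow_symlinks=False):
            with open(entry.path, "rb") as fh:
                digests[entry.name] = hashlib.sha256(fh.read()).hexdigest()
    return digests

def missing_or_changed(before: Dict[str, str], after: Dict[str, str]) -> Dict[str, str]:
    """Compare against the baseline: which files vanished or no longer match?"""
    damage = {}
    for name, digest in before.items():
        if name not in after:
            damage[name] = "missing"
        elif after[name] != digest:
            damage[name] = "changed"
    return damage

def restore(damage: Dict[str, str], backup_dir: str, target_dir: str) -> None:
    """Copy every damaged file back from the backup."""
    for name in damage:
        shutil.copy2(os.path.join(backup_dir, name), os.path.join(target_dir, name))

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data, backup = os.path.join(tmp, "data"), os.path.join(tmp, "backup")
        os.mkdir(data)
        for i in range(6):
            with open(os.path.join(data, f"record{i}.bin"), "wb") as fh:
                fh.write(os.urandom(64))
        shutil.copytree(data, backup)  # the "backup" under test
        baseline = manifest(data)
        # Stand-in for `thanos-cli --snap`: delete a random half of the files.
        for name in random.sample(sorted(baseline), len(baseline) // 2):
            os.remove(os.path.join(data, name))
        damage = missing_or_changed(baseline, manifest(data))
        print("damage:", damage)  # three files reported as missing
        restore(damage, backup, data)
        print("verified:", not missing_or_changed(baseline, manifest(data)))  # verified: True
```

Hashing contents, not just checking names, also catches a restore that brings back a file with the wrong data.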

16

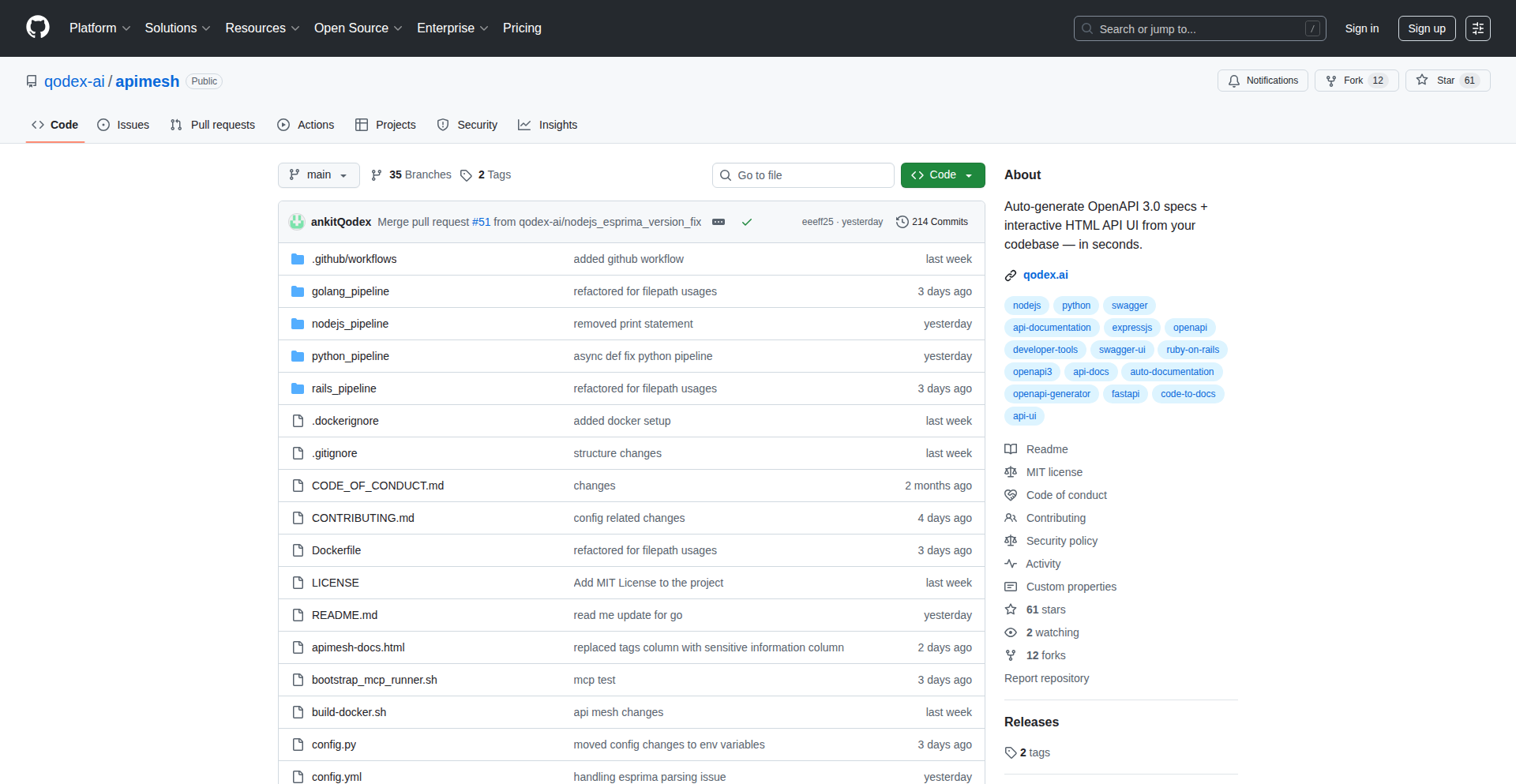

CodeSpecGen

Author

siddhant_mohan

Description

An open-source tool that automatically scans your codebase and generates OpenAPI documentation. It supports popular frameworks and languages such as Rails, Go, Python, and Node.js, extracting routes, parameters, request bodies, and models directly from your code to produce a clean OpenAPI specification ready for integration into your development workflow.

Popularity

Points 7

Comments 0

What is this product?

CodeSpecGen reads your application's code and works out how its API communicates. Think of it as a translator that, instead of translating between human languages, translates your code's structure into OpenAPI, a widely adopted standard for describing APIs. The innovation lies in parsing routes, the data sent and received, and the shape of that data, and mapping them into the formal OpenAPI format, which saves developers substantial manual effort and keeps the documentation accurate. The practical payoff: it removes the tedious, error-prone task of writing API documentation by hand, so developers can focus on building features.

How to use it?

Developers run CodeSpecGen against their project's source code, either as a command-line tool or, potentially, as a step in a CI/CD pipeline. Once it is installed, you point it at your project directory and it analyzes the code. The output is a standard OpenAPI specification file (usually YAML or JSON) that other API tools can consume, including API gateways, documentation UIs such as Swagger UI, and client SDK generators. The result is machine-readable API documentation without manual intervention.
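
To make the output side concrete, here is a sketch that turns already-extracted routes into a minimal OpenAPI 3.0 document that tools like Swagger UI can load. CodeSpecGen's internal representation is not described in the post; the `Route` tuple, the blanket `string` schema for path parameters, and the placeholder `200` response are simplifying assumptions.

```python
import json
from typing import Dict, List, NamedTuple

class Route(NamedTuple):
    method: str             # HTTP method, e.g. "get"
    path: str               # OpenAPI-style path, e.g. "/users/{user_id}"
    path_params: List[str]  # names appearing in {braces} in the path
    summary: str = ""

def to_openapi(title: str, version: str, routes: List[Route]) -> Dict:
    """Assemble a minimal OpenAPI 3.0 document from already-extracted routes."""
    paths: Dict[str, Dict] = {}
    for r in routes:
        operation = {
            "summary": r.summary or f"{r.method.upper()} {r.path}",
            # OpenAPI requires path parameters to be marked required; "string" is a placeholder type.
            "parameters": [
                {"name": p, "in": "path", "required": True, "schema": {"type": "string"}}
                for p in r.path_params
            ],
            # Every operation needs at least one response; a real generator would derive these.
            "responses": {"200": {"description": "Successful response"}},
        }
        paths.setdefault(r.path, {})[r.method.lower()] = operation
    return {"openapi": "3.0.3", "info": {"title": title, "version": version}, "paths": paths}

if __name__ == "__main__":
    spec = to_openapi("Demo API", "1.0.0", [
        Route("get", "/users", []),
        Route("get", "/users/{user_id}", ["user_id"]),
        Route("delete", "/users/{user_id}", ["user_id"]),
    ])
    # Saving this JSON as openapi.json gives a file Swagger UI can load.
    print(json.dumps(spec, indent=2))
```

Grouping operations under a shared path key mirrors the OpenAPI layout, where one path item holds every HTTP method it supports.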

Product Core Function

· Codebase Scanning: Analyzes source code to identify API endpoints, request/response structures, and data models. Because the documentation is derived from the code itself, it reflects what the API actually does, without anyone documenting each part by hand.

· Framework Support: Understands the conventions and project structure of Rails, Go, Python, and Node.js codebases. This leads to more precise documentation and makes the tool straightforward to adopt with the stack you already use.

· OpenAPI Specification Generation: Outputs a compliant OpenAPI specification file. Because OpenAPI is the industry standard for describing RESTful APIs, the output works with any tool that supports it.

· Route and Parameter Extraction: Automatically identifies every API route and the parameters it expects, making clear which entry points the API exposes and what input callers must supply.

· Request Body and Model Identification: Parses the structure of data sent to and returned from the API to define accurate payload schemas, so consumers know exactly what format to send and expect, which prevents integration errors.
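
Route and parameter extraction can be illustrated for one framework convention. The sketch below walks a Python file's syntax tree with the standard `ast` module, finds Flask-style `@app.route(...)` decorators, and rewrites `<int:user_id>` placeholders into OpenAPI `{user_id}` parameters. It is a hypothetical illustration of the technique, not how CodeSpecGen works; a real extractor would also need to handle blueprints, class-based views, and non-literal paths.

```python
import ast
import re
from typing import List, Tuple

# Flask-style converters mapped to OpenAPI schema types (an illustrative subset).
CONVERTER_TYPES = {"int": "integer", "float": "number", "string": "string",
                   "path": "string", "uuid": "string"}
PLACEHOLDER = re.compile(r"<(?:(\w+):)?(\w+)>")

Param = Tuple[str, str]  # (name, OpenAPI type)

def convert_path(flask_path: str) -> Tuple[str, List[Param]]:
    """Turn '/users/<int:user_id>' into ('/users/{user_id}', [('user_id', 'integer')])."""
    params = [(name, CONVERTER_TYPES.get(conv or "string", "string"))
              for conv, name in PLACEHOLDER.findall(flask_path)]
    return PLACEHOLDER.sub(lambda m: "{" + m.group(2) + "}", flask_path), params

def extract_routes(source: str) -> List[Tuple[str, str, List[Param]]]:
    """Find @<obj>.route("<literal path>", methods=[...]) decorators in Python source."""
    routes = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        for deco in node.decorator_list:
            is_route = (isinstance(deco, ast.Call) and isinstance(deco.func, ast.Attribute)
                        and deco.func.attr == "route" and len(deco.args) >= 1
                        and isinstance(deco.args[0], ast.Constant)
                        and isinstance(deco.args[0].value, str))
            if not is_route:
                continue
            methods = ["GET"]  # Flask's default when methods= is omitted (HEAD/OPTIONS aside)
            for kw in deco.keywords:
                if kw.arg == "methods" and isinstance(kw.value, (ast.List, ast.Tuple)):
                    methods = [e.value for e in kw.value.elts
                               if isinstance(e, ast.Constant) and isinstance(e.value, str)]
            path, params = convert_path(deco.args[0].value)
            routes.extend((m.lower(), path, params) for m in methods)
    return routes

SAMPLE = '''
@app.route("/users")
def list_users(): ...

@app.route("/users/<int:user_id>", methods=["GET", "DELETE"])
def user(user_id): ...
'''

if __name__ == "__main__":
    for route in extract_routes(SAMPLE):
        print(route)
```

Parsing the syntax tree instead of importing the application means the scan never executes user code, at the cost of missing routes whose paths are computed at runtime.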

Product Usage Case