Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-19

SagaSu777 2025-11-20

Explore the hottest developer projects on Show HN for 2025-11-19. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions paint a vivid picture of the current technological frontier, heavily dominated by AI and its pervasive integration into developer workflows and everyday tools. We're seeing a powerful hacker spirit at play, where developers are not just building new AI models but are actively creating practical applications and infrastructure to make AI more accessible, controllable, and useful. This ranges from AI-assisted coding and content generation to sophisticated AI agents designed for complex tasks and even simulating business operations. The emphasis on local LLM inference and privacy is a strong counter-narrative to cloud-centric AI, reflecting a growing demand for user control and data security. Furthermore, the proliferation of developer tools, CLI utilities, and cross-platform frameworks underscores a broader trend towards empowering developers with more efficient and flexible ways to build and deploy software. For aspiring developers and entrepreneurs, this signals a ripe opportunity to focus on niche problems within these broad trends, particularly those that enhance developer productivity, offer privacy-preserving AI solutions, or simplify complex technical challenges.

Today's Hottest Product

Name

Marimo VS Code extension – Python notebooks built on LSP and uv

Highlight

This project showcases an innovative approach to Python notebooks by leveraging the Language Server Protocol (LSP) for a native VS Code/Cursor experience. The key technical innovation is the use of `marimo-lsp`, an LSP-first architecture for notebook runtimes, which aims for broader editor compatibility as LSP evolves. It also integrates `uv` with PEP 723 for robust environment management, allowing each notebook to have its own isolated, cached environment. Developers can learn about advanced IDE integration techniques, the power of LSP for tool interoperability, and efficient Python dependency management strategies.

Popular Category

AI/ML

Developer Tools

Productivity

Open Source

Popular Keyword

AI

LLM

CLI

Open Source

Python

Rust

IDE

Developer Tools

Automation

Technology Trends

AI-powered developer tools

Local LLM inference and privacy

Enhanced IDE experiences

Efficient data handling and processing

Cross-platform development and tooling

Open-source infrastructure and utilities

Decentralized and privacy-focused solutions

Modern language interop and tooling

Project Category Distribution

AI/ML (25.00%)

Developer Tools (30.00%)

Productivity (15.00%)

Open Source (20.00%)

Utilities (10.00%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | DNSResolverInsight | 47 | 27 |
| 2 | Marimo LSP Notebook Sync | 54 | 4 |
| 3 | F32: The Pocket-Sized ESP32 | 42 | 4 |
| 4 | Sourcewizard AI SDK Installer | 13 | 23 |
| 5 | AI-CEO Benchmark: The Business Simulation Arena | 22 | 13 |
| 6 | VibeProlog | 25 | 4 |
| 7 | Uncited: Academic Paper Aggregator | 12 | 12 |
| 8 | Gram Functions: Code-to-Agent-Tool Compiler | 22 | 0 |
| 9 | OctoDNS: Multi-Provider DNS Sync Engine | 22 | 0 |
| 10 | Hyperparam: Real-time Multi-Gigabyte Dataset Explorer | 16 | 1 |

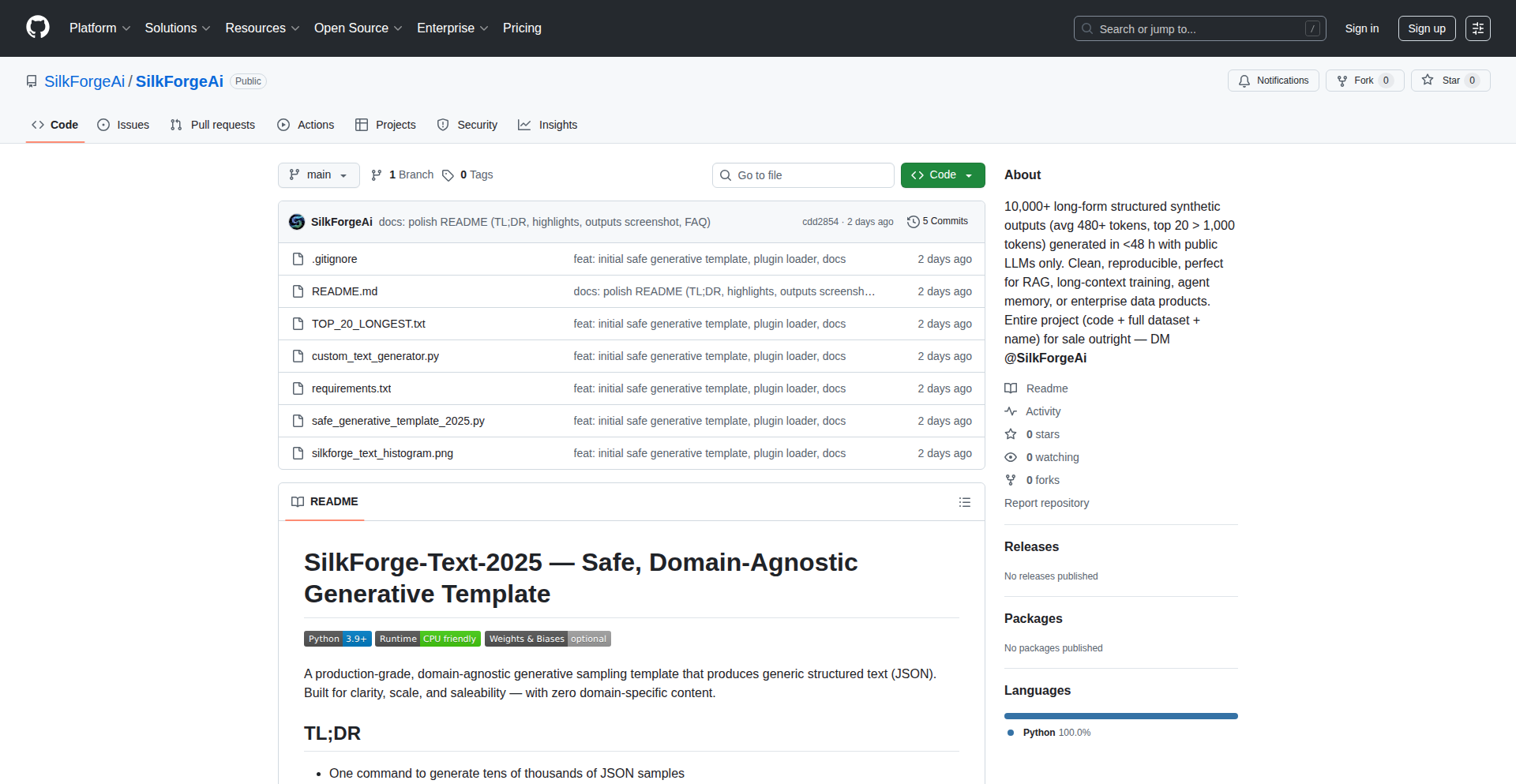

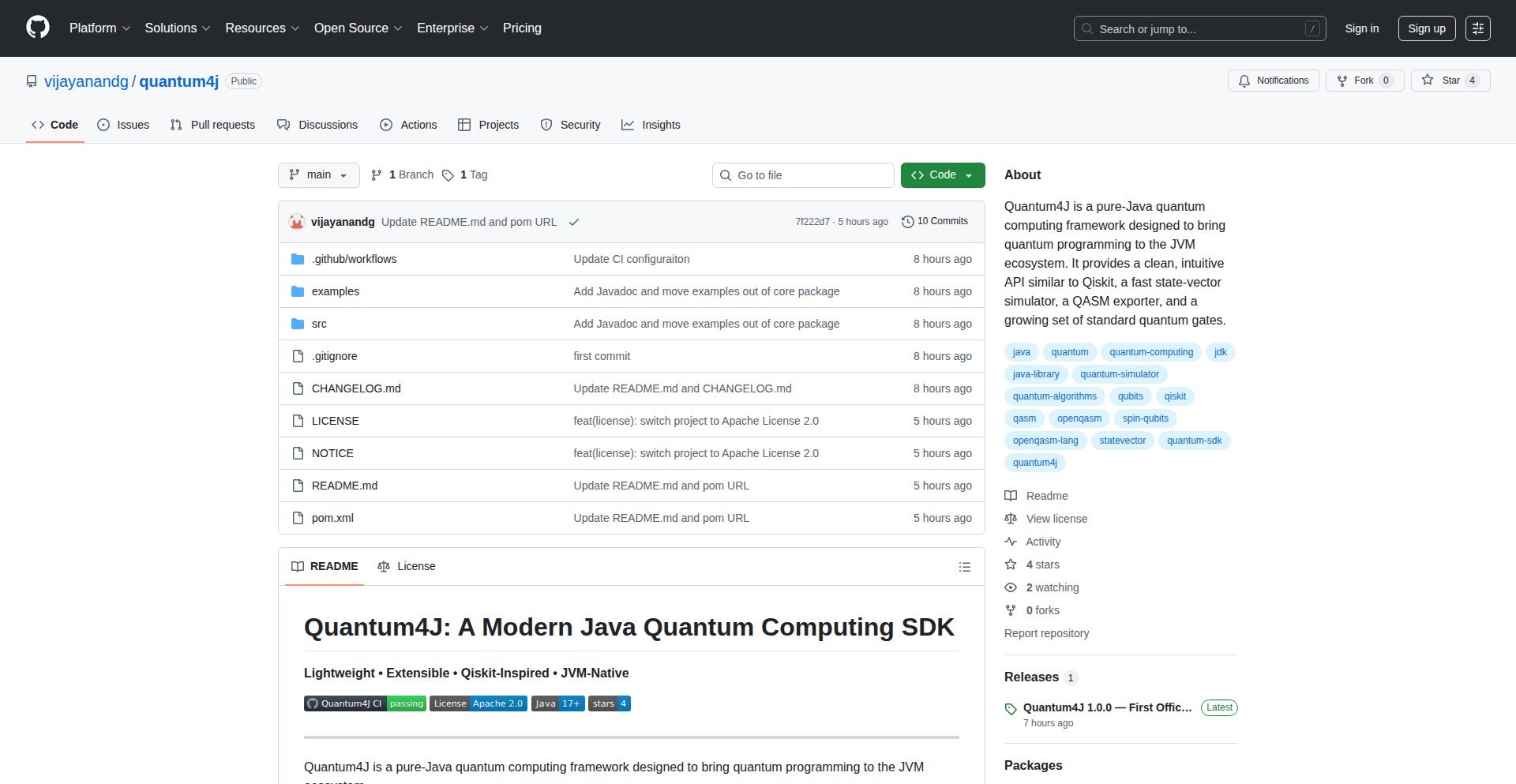

1

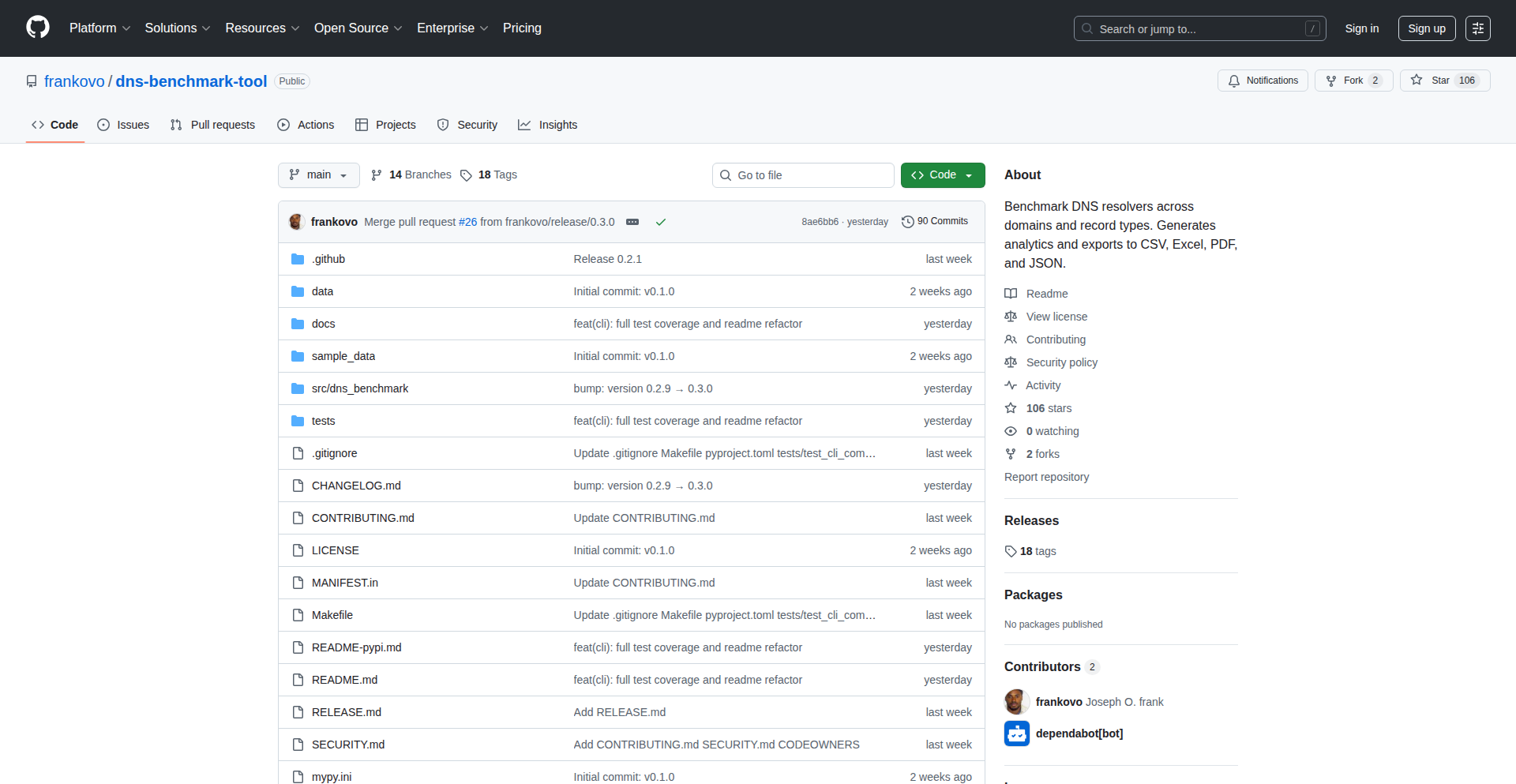

DNSResolverInsight

Author

ovo101

Description

A command-line interface (CLI) tool designed to benchmark and analyze DNS resolvers. It helps developers pinpoint latency issues caused by DNS lookups, which can significantly impact API request times. The tool provides features to compare different resolvers, rank them based on performance metrics, and monitor their reliability over time with alerts.

Popularity

Points 47

Comments 27

What is this product?

DNSResolverInsight is a Python-based CLI application built using the `dnspython` library. Its core innovation lies in its ability to programmatically query various DNS servers and measure how long each one takes to resolve domain names. This is crucial because slow DNS resolution can add significant delays (like the reported 300ms) to network requests, impacting application performance. The tool moves beyond simple testing by offering comparative analysis, ranking of resolvers by latency, reliability, or a balanced score, and continuous monitoring with customizable alert thresholds. It addresses the common developer pain point of mysterious network delays by providing concrete data on DNS performance.

How to use it?

Developers can quickly install DNSResolverInsight using pip: `pip install dns-benchmark-tool`. Once installed, they can start benchmarking DNS resolvers from their terminal. For instance, to compare the performance of different DNS servers for resolving 'google.com', a developer would run: `dns-benchmark compare --domain google.com`. This provides immediate insights into which DNS resolver is fastest for that specific domain. The tool can be integrated into CI/CD pipelines for continuous performance checks or used during development to diagnose network bottlenecks. The output helps developers understand how their chosen DNS infrastructure affects application responsiveness.
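To make the idea of timing a lookup concrete, here is a minimal sketch that uses only the Python standard library. It is not the tool's code (which is built on `dnspython`); the function names `build_query` and `time_lookup` and the resolver addresses in the comment are assumptions chosen for illustration.

```python
import socket
import struct
import time
from typing import Optional


def build_query(domain: str, query_id: int = 0x1234) -> bytes:
    """Build a minimal DNS query packet asking for an A record."""
    # Header: id, flags (0x0100 = recursion desired), 1 question, no other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in domain.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A record), QCLASS=1 (IN).
    return header + qname + struct.pack("!HH", 1, 1)


def time_lookup(resolver_ip: str, domain: str, timeout: float = 2.0) -> Optional[float]:
    """Return the UDP round-trip time in milliseconds, or None on timeout or error."""
    packet = build_query(domain)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        try:
            sock.sendto(packet, (resolver_ip, 53))
            sock.recvfrom(512)
        except OSError:  # socket.timeout is a subclass of OSError
            return None
        return (time.perf_counter() - start) * 1000.0


# Illustrative usage (requires network access):
# for ip in ("1.1.1.1", "8.8.8.8", "9.9.9.9"):
#     print(ip, time_lookup(ip, "example.com"))
```

A single measurement is noisy, so a real benchmark would repeat each lookup several times and account for resolver caching before comparing results.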

Product Core Function

· Compare DNS resolvers for a single domain: This function allows developers to send a domain name to multiple DNS resolvers simultaneously and see which one responds the fastest. This is valuable for identifying if a specific DNS provider is causing delays in reaching external services.

· Rank resolvers by latency, reliability, or balanced score: This feature provides a consolidated view of DNS resolver performance. Developers can see which resolvers are consistently fast, reliable (meaning they respond successfully most of the time), or offer a good balance between the two. This helps in making informed decisions about which DNS service to use for production environments.

· Monitor resolvers with threshold alerts: For ongoing performance assurance, this function allows continuous tracking of DNS resolver performance. Developers can set specific thresholds for latency or reliability, and the tool will alert them if these thresholds are breached. This proactive monitoring helps catch performance degradations before they significantly impact users.

· Command-line interface (CLI) for easy access: The tool's CLI nature makes it highly accessible for developers. They can quickly execute commands directly from their terminal without needing to set up complex environments. This adheres to the hacker ethos of using code to solve immediate problems efficiently.
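The three ranking modes described above can be sketched as follows. This is a hypothetical illustration, not the tool's scoring logic: the `summarize` and `rank` names are invented here, and the "balanced" formula (average latency divided by success rate) is an assumption picked only because it penalizes unreliable resolvers.

```python
from statistics import mean
from typing import Dict, List, Optional

Samples = List[Optional[float]]  # latency in ms; None marks a failed lookup


def summarize(samples: Samples) -> Dict[str, float]:
    """Reduce raw samples for one resolver to average latency and success rate."""
    ok = [s for s in samples if s is not None]
    return {
        "avg_ms": mean(ok) if ok else float("inf"),
        "success_rate": len(ok) / len(samples) if samples else 0.0,
    }


def rank(results: Dict[str, Samples], by: str = "balanced") -> List[str]:
    """Return resolver names ordered best-first for the chosen criterion."""
    stats = {name: summarize(samples) for name, samples in results.items()}
    if by == "latency":
        key = lambda name: stats[name]["avg_ms"]
    elif by == "reliability":
        key = lambda name: -stats[name]["success_rate"]
    else:
        # Assumed balanced score: latency inflated by the failure rate.
        key = lambda name: stats[name]["avg_ms"] / max(stats[name]["success_rate"], 1e-9)
    return sorted(stats, key=key)


results = {
    "resolver-a": [10, 10, None, None],  # fast but drops half the queries
    "resolver-b": [18, 18, 18, 18],      # slower but always answers
    "resolver-c": [12, 12, 12, None],
}
print(rank(results, by="latency"))
print(rank(results, by="reliability"))
print(rank(results, by="balanced"))
```

The sample data shows why the criteria matter: the resolver with the lowest average latency is also the least reliable, so it ranks first on one criterion and last on another.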

Product Usage Case

· A developer notices their web application is experiencing slow page load times, especially when fetching data from external APIs. By using `dns-benchmark compare --domain api.example.com`, they discover that their current DNS resolver is adding 200ms to each request. They then use the `top` command to identify a faster, more reliable DNS resolver, reducing their API latency and improving user experience.

· An e-commerce platform experiences intermittent issues where users are unable to access certain products, attributed to slow network responses. The development team uses DNSResolverInsight's `monitor` feature to continuously track the performance of their chosen DNS resolvers. When a resolver's latency spikes above a set threshold, an alert is triggered, allowing the team to investigate and resolve the issue before it impacts a large number of customers.

· A backend service developer is deploying a new microservice that relies heavily on external service lookups. Before going live, they use `dns-benchmark compare --domain external.service.com` across several regions to ensure the DNS resolution is optimized for their target audience. This preemptive testing prevents potential performance bottlenecks in their new service.

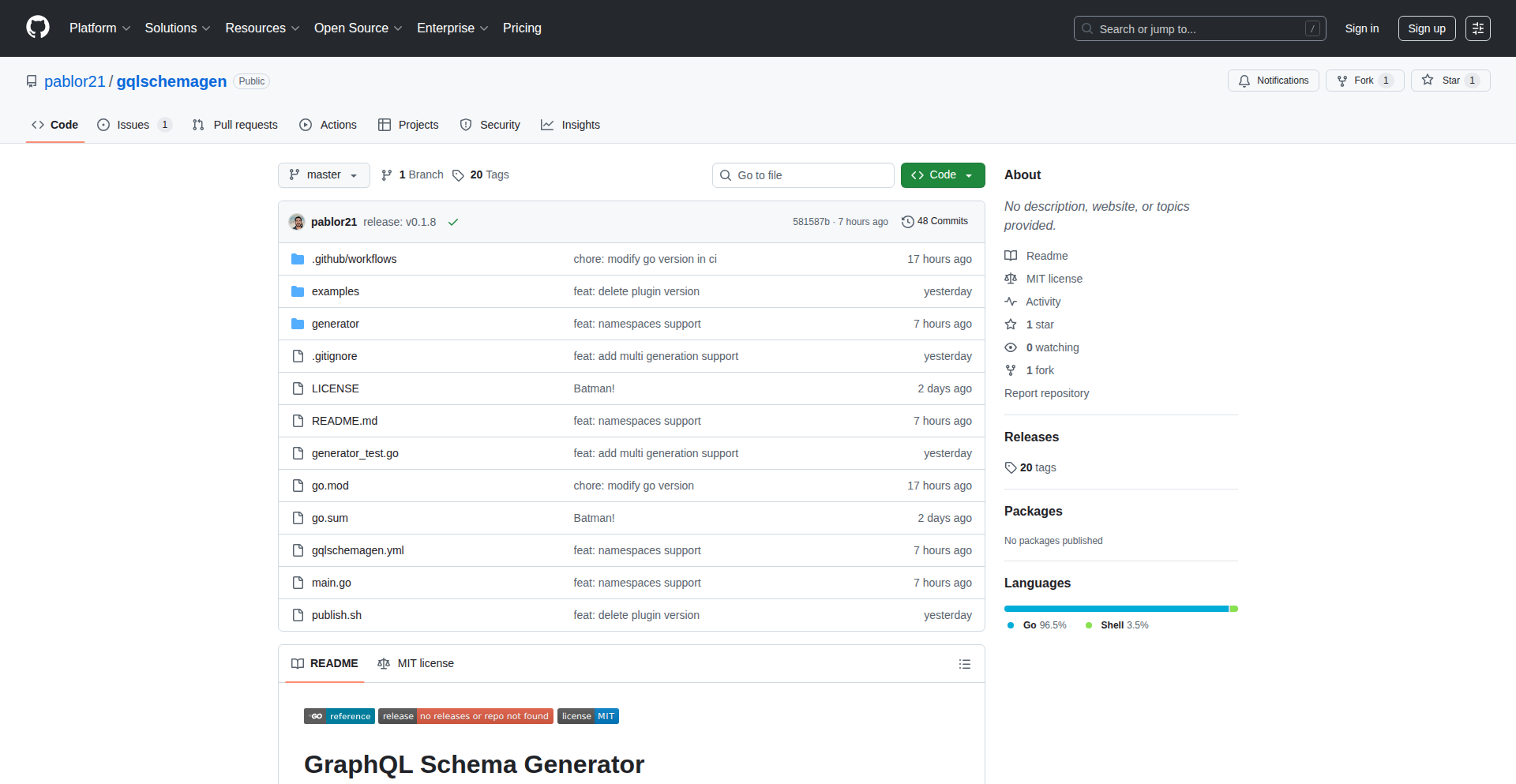

2

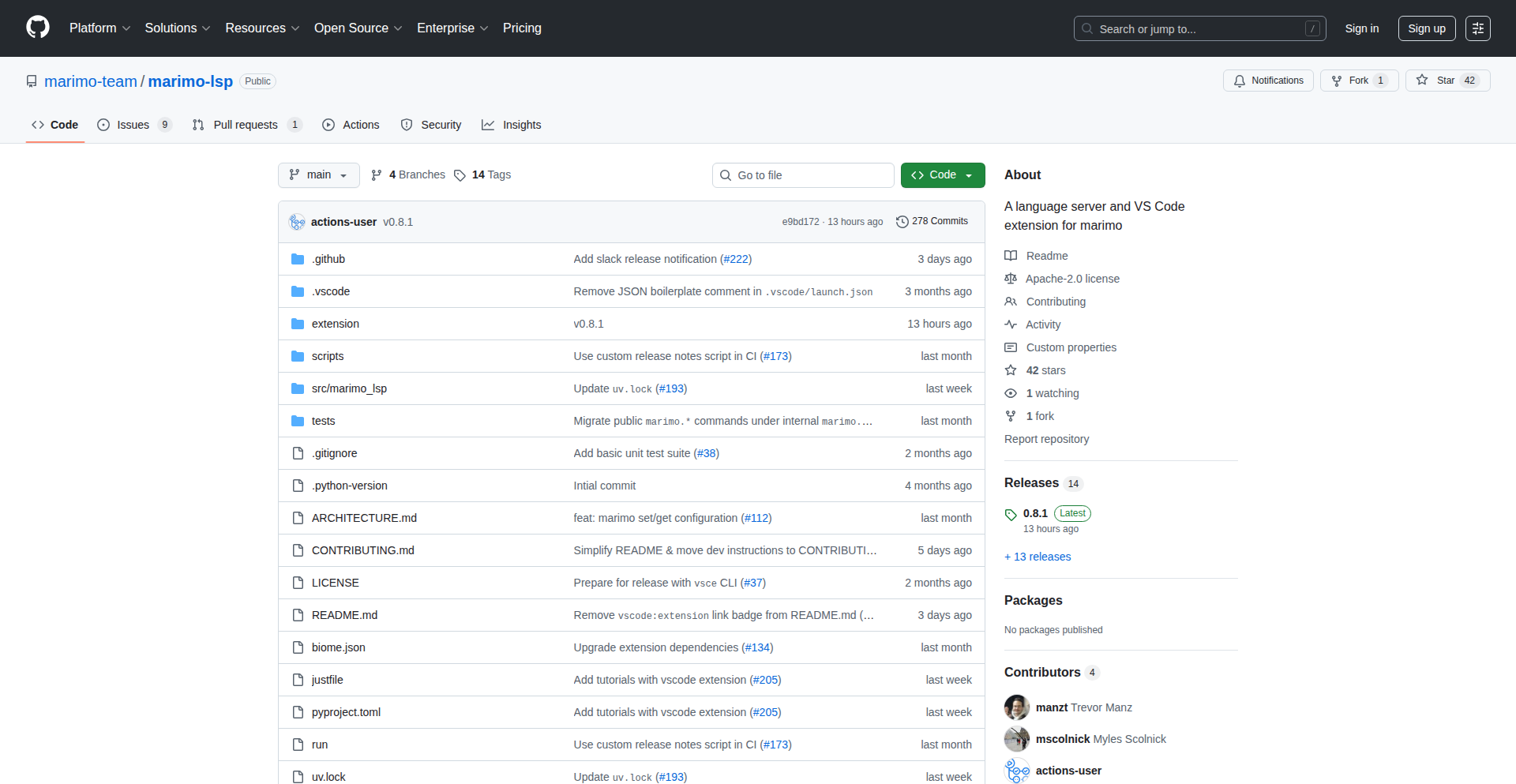

Marimo LSP Notebook Sync

Author

manzt

Description

A VS Code/Cursor extension for marimo, a reactive Python notebook. It leverages the Language Server Protocol (LSP) and uv for a native notebook experience, enabling better environment management and cross-editor compatibility for Python notebooks.

Popularity

Points 54

Comments 4

What is this product?

This project is a VS Code and Cursor extension that brings the marimo reactive Python notebook experience directly into your editor. marimo notebooks are plain Python files that can display rich outputs and react to changes dynamically. The core innovation is its LSP-first architecture. Think of LSP as a universal translator between code editors and language tooling: any editor that speaks the protocol can work with any server that implements it. By using LSP, this extension keeps notebook documents and their kernels (the engine running your Python code) in sync, so your editor 'knows' what's happening in your notebook in real time. It also integrates deeply with uv, a fast Python package and project manager, using PEP 723 inline script metadata to declare dependencies in the notebook file itself and give each notebook its own isolated environment. This helps your code run reproducibly without dependency conflicts.

How to use it?

Developers can install this extension directly from the VS Code or Cursor marketplace. Once installed, they can open marimo notebook files (`.py` files designed for marimo) within their editor. The extension provides features like running cells, viewing live outputs, and code completion. For environment management, it uses 'uv' to automatically create and manage isolated Python environments based on the dependencies declared in the notebook file itself. This means you don't need to manually set up virtual environments for each project; the extension handles it for you, ensuring that each notebook runs with its correct dependencies. This can be used when developing data analysis scripts, interactive dashboards, or any Python project where a reactive notebook interface is beneficial and environment consistency is crucial.
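To show what "dependencies declared in the notebook file itself" looks like, the sketch below extracts a PEP 723 metadata block from a Python source string. The regular expression is the one given in the PEP 723 reference implementation; the `extract_script_metadata` name and the sample `NOTEBOOK` contents are assumptions for illustration, not code from the extension.

```python
import re
from typing import Optional

# Regular expression from the PEP 723 reference implementation.
REGEX = r"(?m)^# /// (?P<type>[a-zA-Z0-9-]+)$\s(?P<content>(^#(| .*)$\s)+)^# ///$"


def extract_script_metadata(source: str) -> Optional[str]:
    """Return the TOML text of the `script` metadata block, or None if absent."""
    matches = [m for m in re.finditer(REGEX, source) if m.group("type") == "script"]
    if len(matches) > 1:
        raise ValueError("Multiple script blocks found")
    if not matches:
        return None
    # Strip the leading "# " (or bare "#") from every line of the block.
    return "".join(
        line[2:] if line.startswith("# ") else line[1:]
        for line in matches[0].group("content").splitlines(keepends=True)
    )


# Illustrative notebook file: the header is PEP 723, the rest is a stub.
NOTEBOOK = '''\
# /// script
# requires-python = ">=3.11"
# dependencies = [
#     "marimo",
#     "pandas",
# ]
# ///

import marimo

app = marimo.App()
'''

print(extract_script_metadata(NOTEBOOK))
```

The returned text is ordinary TOML, so on Python 3.11+ it can be parsed with `tomllib.loads`. uv reads the same kind of block when it runs a script, which is what lets the environment travel with the file.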

Product Core Function

· Native marimo Notebook Experience in VS Code/Cursor: Provides a seamless development environment for marimo notebooks directly within popular code editors, allowing developers to write, run, and debug their notebooks without leaving their preferred IDE. This enhances productivity by reducing context switching.

· LSP-based Notebook Synchronization: Utilizes the Language Server Protocol (LSP) to synchronize notebook documents and kernels. This means your editor's understanding of the notebook's state (like variable values) stays up-to-date with the running code, enabling features like real-time feedback and debugging.

· PEP 723 & uv Environment Management: Leverages PEP 723 for defining notebook environments and integrates with 'uv' for fast, isolated package installation. Each notebook gets its own reproducible environment, preventing dependency conflicts and ensuring that code runs the same way every time, regardless of the system it's run on.

· Automatic Environment Updates: The 'uv' sandbox controller can automatically detect missing imports and update the notebook's environment metadata, simplifying dependency management and ensuring that your code always has access to the necessary libraries.

· Potential for Broader Editor Support: The LSP-first architecture lays the groundwork for marimo notebooks to be supported in other editors and tools that understand LSP, fostering a more diverse ecosystem for notebook runtimes beyond traditional Jupyter-based solutions.
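For readers unfamiliar with how LSP messages travel between editor and server, here is a small sketch of the protocol's base framing: a `Content-Length` header followed by a JSON-RPC body. The `notebookDocument/didOpen` notification exists in LSP 3.17, but whether marimo-lsp relies on it or on custom messages is an assumption here, as are the helper names, the file URI, and the `notebookType` value.

```python
import json


def encode_message(payload: dict) -> bytes:
    """Frame a JSON-RPC payload with the LSP Content-Length header."""
    body = json.dumps(payload).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def decode_message(data: bytes) -> dict:
    """Parse one framed LSP message back into a Python dict."""
    header, _, body = data.partition(b"\r\n\r\n")
    length = None
    for line in header.split(b"\r\n"):
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    if length is None:
        raise ValueError("missing Content-Length header")
    return json.loads(body[:length].decode("utf-8"))


# Hypothetical notification announcing a notebook with one code cell.
did_open = {
    "jsonrpc": "2.0",
    "method": "notebookDocument/didOpen",
    "params": {
        "notebookDocument": {
            "uri": "file:///tmp/analysis.py",      # illustrative path
            "notebookType": "marimo-notebook",     # assumed identifier
            "version": 1,
            "cells": [{"kind": 2, "document": "file:///tmp/analysis.py#cell-0"}],
        },
        "cellTextDocuments": [{
            "uri": "file:///tmp/analysis.py#cell-0",
            "languageId": "python",
            "version": 1,
            "text": "x = 1",
        }],
    },
}

frame = encode_message(did_open)
print(frame[:40])
```

Because the framing and message shapes are editor-agnostic, any client that can send and receive such frames could in principle talk to the same server, which is the basis for the broader editor support mentioned above.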

Product Usage Case

· A data scientist developing an interactive data visualization dashboard: Instead of switching between a script and a separate notebook interface, they can now write and see their marimo notebook's plots and data outputs update in real-time within VS Code, directly alongside their Python code. This speeds up the iteration cycle significantly.

· A Python developer working on a complex machine learning project with multiple dependencies: The extension, powered by uv and PEP 723, automatically manages isolated environments for each marimo notebook, ensuring that different parts of the project don't interfere with each other's dependencies. This eliminates common 'it works on my machine' problems.

· A researcher needing to share reproducible computational results: By defining environment dependencies within the marimo notebook file itself using PEP 723, others can easily replicate the exact computing environment using uv, guaranteeing that their published work can be rerun accurately. The LSP integration further ensures a consistent experience across different developer setups.

· An educator teaching Python: Students can use marimo notebooks within a familiar VS Code environment, with automatic dependency management handled by uv. This simplifies the setup process and allows them to focus on learning Python concepts rather than wrestling with environment configuration.

3

F32: The Pocket-Sized ESP32

Author

pegor

Description

F32 is a hyper-minimalist ESP32 development board designed for maximum compactness while retaining full WiFi functionality. The innovation lies in its ingenious component selection and PCB layout, shrinking the familiar ESP32 into an incredibly small form factor. This addresses the need for extremely space-constrained IoT applications where traditional boards are too bulky.

Popularity

Points 42

Comments 4

What is this product?

F32 is a specialized development board built around the ESP32 microcontroller, specifically engineered to be as small as physically possible while still supporting WiFi connectivity. The core technical insight is a meticulous approach to component selection and board design. Instead of using standard off-the-shelf modules, the designer has opted for smaller, surface-mount components and a highly optimized PCB layout to reduce its footprint dramatically. This is achieved by integrating essential components directly onto the board and minimizing unnecessary circuitry. So, what's the value to you? It means you can embed powerful WiFi-enabled intelligence into devices where space was previously a limiting factor, such as tiny wearables, discreet sensors, or miniature actuators.

How to use it?

Developers can use F32 in scenarios requiring minimal physical size for an ESP32-based solution. Integration typically involves direct soldering or using small pin headers for connecting to other components or power. The board is programmed using the standard ESP32 development environment (like Arduino IDE or ESP-IDF), allowing developers to leverage familiar tools and libraries. Its small size makes it ideal for custom integrations where a full-sized development board or module simply won't fit. This allows for the creation of very discreet IoT devices or embedding smarts into tight enclosures. So, how can you use it? If you're building a small smart button, a tiny environmental monitor, or need to add WiFi to a compact electronic gadget, F32 provides the necessary processing power and connectivity in a virtually unnoticeable package.

Product Core Function

· Extremely compact ESP32 footprint: Achieves unprecedented miniaturization for ESP32-based projects, enabling deployment in extremely space-limited environments.

· Integrated WiFi connectivity: Provides reliable wireless communication for IoT devices, allowing them to send and receive data without external modules.

· Minimalist component selection: Utilizes carefully chosen surface-mount components and optimized PCB layout to reduce size and complexity, making it cost-effective for mass production.

· Standard ESP32 development compatibility: Supports familiar programming environments and tools, lowering the barrier to entry for developers already working with ESP32.

· Customizable for niche applications: Its small size allows for seamless integration into bespoke electronic designs where off-the-shelf solutions are too large.

Product Usage Case

· Wearable device integration: Imagine a smart fitness tracker or a discreet personal alert system where every millimeter counts. F32 can be embedded directly into the wearable's casing, providing its intelligence without adding bulk.

· Tiny sensor networks: Deploying a large number of small, WiFi-enabled environmental sensors in a home or industrial setting. F32's size allows for unobtrusive placement of each sensor node, collecting data without visual clutter.

· Smart actuators in tight spaces: Controlling small motors or solenoids in confined mechanisms, like within miniature robotics or specialized automation equipment. F32 can provide the brains for these small movements.

· Augmenting existing electronics: Adding WiFi capabilities to small, battery-powered devices that were not originally designed for connectivity, such as specialized tools or compact diagnostic equipment.

· Prototyping compact IoT products: Quickly building and testing very small, functional prototypes for new IoT products before committing to a larger, more complex design.

4

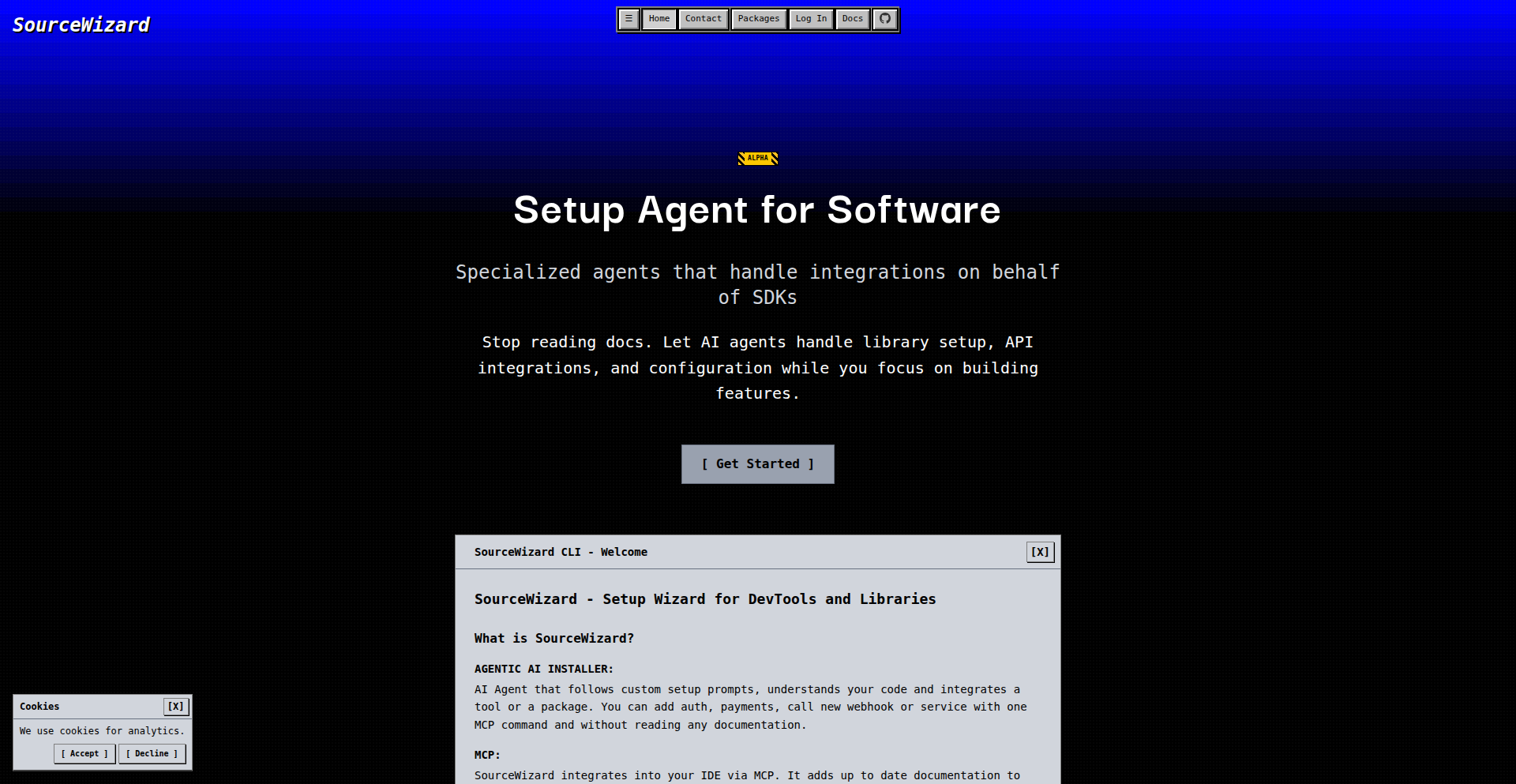

Sourcewizard AI SDK Installer

Author

mifydev

Description

Sourcewizard is a command-line interface (CLI) tool that leverages AI coding agents to automate the complex process of installing and configuring Software Development Kits (SDKs). It intelligently handles dependencies, middleware, environment variables, and necessary code modifications, aiming to resolve common installation failures that plague AI coding assistants. This innovation significantly reduces the manual effort and potential errors developers face when integrating third-party libraries into their projects, offering a more robust and reliable setup experience.

Popularity

Points 13

Comments 23

What is this product?

Sourcewizard is a smart command-line tool that uses Artificial Intelligence (AI) to install and set up software development kits (SDKs) in your projects. Think of it as an AI assistant specifically trained to understand how different SDKs need to be integrated into your codebase. Instead of you manually figuring out which packages to install, how to configure them, and setting up environment variables, Sourcewizard's AI agents do this for you. It uses specialized instructions (prompts) for each SDK, which are designed to overcome common issues like installing the wrong versions, using outdated methods, or leaving setups incomplete. The core innovation lies in its ability to generate these context-aware instructions, leading to a much higher success rate for clean SDK installations, especially within frameworks like Next.js. So, for you, it means less frustration and more time building your application.

How to use it?

Developers can easily integrate Sourcewizard into their workflow by running a simple command in their terminal. For example, to install the Clerk authentication SDK, a developer would type `npx ai-setup clerk`. This command triggers Sourcewizard, which then communicates with AI coding agents. These agents, guided by Sourcewizard's specialized prompts for Clerk, will automatically handle the installation of the necessary packages, set up any required middleware, update relevant code files, and configure environment variables. This makes integrating popular services like authentication providers (Clerk, WorkOS), search APIs, email services, and notification systems much faster and more reliable. You can then seamlessly use these services in your application without the usual setup headaches.

Product Core Function

· Automated SDK installation: Sourcewizard's AI agents handle the complete installation process of SDKs, ensuring all required packages and dependencies are correctly placed. This saves developers significant time and reduces the risk of manual installation errors.

· Intelligent configuration: The tool automatically configures essential components like middleware, environment variables, and any necessary code adjustments. This ensures the SDK is ready to be used without manual configuration steps, streamlining the integration process.

· Error mitigation for AI coding agents: Sourcewizard is specifically designed to address the common failure points encountered by AI coding assistants during SDK setup. Its specialized prompts lead to more robust and successful installations, increasing developer confidence in AI-assisted coding.

· Support for various categories of SDKs: Currently, Sourcewizard supports authentication providers, search APIs, email, and notification services, allowing developers to quickly integrate a range of functionalities into their applications. This broad support means a single tool can help with diverse integration needs.

· Open-source client code: The client-side code is open source, providing transparency and allowing developers to contribute or inspect its functionality. This fosters community involvement and trust in the tool's implementation.

Product Usage Case

· Integrating Clerk authentication into a Next.js application: A developer needs to add user authentication to their web app. Instead of manually installing Clerk, configuring OAuth, and setting up session management, they can run `npx ai-setup clerk`. Sourcewizard handles all these steps automatically, providing a functional authentication system in minutes, not hours, and without the common pitfalls of manual setup.

· Setting up Resend for email notifications: A project requires sending transactional emails. A developer can use Sourcewizard to quickly set up the Resend SDK. The tool will install the necessary packages, configure API keys in environment variables, and potentially add basic email sending boilerplate code, allowing the developer to start sending emails immediately.

· Adding WorkOS for enterprise authentication: For applications needing more advanced authentication solutions, integrating WorkOS can be complex. Sourcewizard simplifies this by automating the installation and initial configuration, enabling developers to focus on the user experience rather than intricate setup procedures. This drastically reduces the time-to-market for features relying on enterprise-grade authentication.

5

AI-CEO Benchmark: The Business Simulation Arena

Author

sumit_psp

Description

This project is a sophisticated business simulator designed to test the operational capabilities of AI agents, particularly LLMs, in a realistic enterprise environment. It tackles the challenge of evaluating whether current AI can truly 'run a business' by introducing elements like stochastic events, incomplete information, resource management, and long-term planning, areas where LLMs have historically struggled. The core innovation lies in its creation of a measurable benchmark that starkly contrasts human performance with AI agents, revealing critical gaps in AI's ability to handle complex, dynamic systems. This provides developers and researchers with a concrete tool to understand the limitations and future directions for creating truly intelligent operational systems.

Popularity

Points 22

Comments 13

What is this product?

This project is a simulated business environment, akin to a 'RollerCoaster Tycoon' style game, built to rigorously assess if current AI systems, especially large language models (LLMs), can effectively manage and operate a business. It's not just a game; it's a benchmark. The simulation incorporates key business challenges such as unpredictable events (stochasticity), missing information, managing staff and resources, planning for the long term, dealing with cascading failures, and considering how the physical layout of operations impacts outcomes. The innovative aspect is its direct comparison of AI agents against human players in this complex environment. Its results indicate that while LLMs can utilize tools, they fall short on the reasoning and foresight required for robust business operations, highlighting a significant gap in the pursuit of an 'AI CEO' that goes beyond mere chatbots or simple task execution.

How to use it?

Developers and AI researchers can use this project in several ways. Firstly, they can directly engage with the simulation by playing it on the provided platform (maps.skyfall.ai/play) to experience the challenges firsthand and aim for the leaderboard, offering a tangible way to understand the benchmark's complexity. Secondly, they can integrate their own LLM agents into the simulation to test their performance against established baselines and human scores. This involves setting up agents with specific prompting strategies and tool-use capabilities to see how they fare in navigating the simulated business dynamics. The project serves as a testing ground to identify failure modes and areas for improvement in AI decision-making, resource allocation, and long-term strategic planning within a controlled yet dynamic setting. It's a practical tool for developing and validating more sophisticated AI systems capable of complex operational tasks.

Product Core Function

· Dynamic Business Simulation: Provides a rich, interactive environment with elements like unpredictable events, resource constraints, and spatial considerations, offering a realistic stage to test AI decision-making under pressure.

· Human vs. AI Performance Metrics: Establishes a quantifiable comparison between human strategic acumen and AI operational capabilities, allowing for clear assessment of AI limitations and strengths.

· Agent Evaluation Framework: Offers a structured methodology to plug in and evaluate various AI agents, including LLMs, to understand their proficiency in complex operational tasks.

· Incomplete Information Handling: Simulates real-world scenarios where data is not always readily available, challenging AI's ability to make informed decisions with partial knowledge.

· Long-Horizon Planning Assessment: Tests AI's capacity for strategic foresight and delayed gratification, a critical skill for effective business management that current models often lack.
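The general shape of such an evaluation loop can be sketched with a toy example. Everything below is hypothetical and far simpler than the actual benchmark: the state fields, the "hire"/"wait" actions, the revenue and breakdown numbers, and the `run_episode` interface are all assumptions made for illustration.

```python
import random
from typing import Callable, Dict

State = Dict[str, int]
Agent = Callable[[State], str]  # an agent maps the observed state to "hire" or "wait"


def run_episode(agent: Agent, days: int = 30, seed: int = 0,
                event_prob: float = 0.2) -> int:
    """Run one simulated episode and return the final cash balance."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    state: State = {"day": 0, "cash": 100, "staff": 1}
    for day in range(days):
        state["day"] = day
        action = agent(dict(state))  # the agent sees a copy, not the live state
        if action == "hire" and state["cash"] >= 50:
            state["cash"] -= 50      # up-front hiring cost
            state["staff"] += 1
        state["cash"] += 7 * state["staff"]  # net daily income per staff member
        if rng.random() < event_prob:
            state["cash"] -= 40      # stochastic event: an unexpected breakdown
        if state["cash"] < 0:
            break                    # bankrupt: the episode ends early
    return state["cash"]


def never_hire(state: State) -> str:
    return "wait"


def hire_when_flush(state: State) -> str:
    # Keep a cash reserve before investing, a crude form of long-horizon planning.
    return "hire" if state["cash"] >= 150 else "wait"


for agent in (never_hire, hire_when_flush):
    scores = [run_episode(agent, seed=s) for s in range(20)]
    print(agent.__name__, sum(scores) / len(scores))
```

Averaging over many seeds matters because a single episode can be dominated by lucky or unlucky events; an LLM-backed agent would plug in where `never_hire` and `hire_when_flush` are used here.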

Product Usage Case

· Evaluating LLM-based AI CEO candidates: Developers can pit their newly developed AI CEO agents against the benchmark to see if they can manage a simulated amusement park, identifying weaknesses in areas like resource management or long-term planning.

· Benchmarking advancements in AI temporal reasoning: Researchers can use this simulation to measure improvements in an AI's ability to understand cause-and-effect over time and anticipate future consequences of current actions.

· Demonstrating the limitations of current AI agents for complex task automation: The project can be used to illustrate to stakeholders, investors, or the public that LLMs, while powerful for certain tasks, are not yet ready for end-to-end autonomous business operations.

· Developing more robust AI planning and adaptation mechanisms: By observing how AI agents fail in the simulation, developers can gain insights to build more resilient and adaptive AI systems that can better handle uncertainty and dynamic environments.

6

VibeProlog

Author

nl

Description

VibeProlog is a fascinating experimental project that brings the power of Prolog, a declarative logic programming language, to a mobile-first, 'vibe-coded' environment. The core innovation lies in its implementation as a Prolog interpreter, built primarily on a phone using accessible coding practices. It addresses the challenge of making advanced programming paradigms available in unconventional, highly portable contexts, showcasing the potential of 'vibe coding' for complex technical experiments.

Popularity

Points 25

Comments 4

What is this product?

VibeProlog is an interpreter for Prolog, a programming language that excels at solving problems using logic and rules rather than step-by-step instructions. Imagine telling your computer what you know and what you want to find out, and it figures out the answer. The 'vibe-coded' aspect means it was primarily developed on a mobile phone, focusing on a more intuitive and experimental coding style rather than traditional desktop development workflows. This is innovative because it demonstrates that sophisticated programming tools can be prototyped and even function in resource-constrained, highly accessible environments. So, what's in it for you? It shows that powerful tools can be built and tinkered with from anywhere, democratizing access to complex programming languages.

How to use it?

Developers can interact with VibeProlog by writing Prolog code and executing it through the interpreter. The primary use case would be for learning Prolog, experimenting with logic programming concepts, or even building small, logic-based applications on the go. Integration would involve running the interpreter directly on a compatible mobile device or potentially embedding its logic engine into other applications if the project matures. So, how can you use it? You can experiment with logical queries on your phone, test out AI concepts that rely on rule-based reasoning, or learn Prolog in a highly portable manner.

Product Core Function

· Prolog interpreter engine: This is the heart of the project, responsible for understanding and executing Prolog code. Its value is in enabling logic programming on platforms where traditional Prolog environments might not be readily available, making complex reasoning accessible. This is useful for anyone wanting to explore AI or problem-solving with logic.

· Mobile-first development approach: The project was largely built on a phone, highlighting a novel way to approach software development, especially for experimental or educational purposes. This shows the potential for rapid prototyping and learning in unconventional settings. This is useful for developers who want to experiment with new coding methods or work without traditional desktop setups.

· Declarative programming paradigm: By implementing Prolog, the project exposes users to a declarative way of solving problems, where you describe *what* you want, not *how* to get it. This can lead to more elegant and maintainable code for certain types of problems. This is useful for learning alternative programming styles and building systems that excel at knowledge representation and inference.
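At the core of any Prolog interpreter is unification, the process of finding variable bindings that make two terms equal. The sketch below is a generic textbook version written in Python, not VibeProlog's implementation; the `Var`, `walk`, `unify`, and `query` names are assumptions, and the occurs check is omitted for brevity.

```python
from typing import Dict, Iterator, Optional


class Var:
    """A logic variable, compared by identity."""
    def __init__(self, name: str) -> None:
        self.name = name

    def __repr__(self) -> str:
        return self.name


def walk(term, subst: Dict):
    """Follow variable bindings until reaching an unbound variable or a value."""
    while isinstance(term, Var) and term in subst:
        term = subst[term]
    return term


def unify(a, b, subst: Dict) -> Optional[Dict]:
    """Return an extended substitution that makes a and b equal, or None."""
    a, b = walk(a, subst), walk(b, subst)
    if isinstance(a, Var):
        return subst if a is b else {**subst, a: b}
    if isinstance(b, Var):
        return {**subst, b: a}
    if isinstance(a, tuple) and isinstance(b, tuple):
        if len(a) != len(b):
            return None
        for x, y in zip(a, b):
            subst = unify(x, y, subst)
            if subst is None:
                return None
        return subst
    return subst if a == b else None  # atoms must match exactly


def query(goal, facts) -> Iterator[Dict]:
    """Yield one substitution per fact that unifies with the goal."""
    for fact in facts:
        result = unify(goal, fact, {})
        if result is not None:
            yield result


# Facts are tuples: parent(tom, bob). parent(bob, ann).
facts = [("parent", "tom", "bob"), ("parent", "bob", "ann")]
X = Var("X")
# Equivalent of the Prolog query: ?- parent(X, bob).
for answer in query(("parent", X, "bob"), facts):
    print("X =", walk(X, answer))
```

This is the declarative style in miniature: the query states what must hold, and the engine searches the facts for bindings that satisfy it. A full interpreter adds rules and backtracking on top of this step.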

Product Usage Case

· Learning Prolog on the commute: A student could use VibeProlog on their phone to study Prolog syntax and logic rules while on public transport, reinforcing classroom learning with hands-on experimentation. This solves the problem of needing constant access to a desktop environment for learning.

· Rapid prototyping of small AI agents: A developer could quickly sketch out a simple rule-based AI agent for a personal project, like a recommendation system based on user preferences, directly on their phone without needing a full development setup. This addresses the need for quick iteration on logic-heavy ideas.

· Exploring combinatorial problems: A hobbyist programmer could use VibeProlog to solve logic puzzles or explore combinatorial problems by defining the rules of the puzzle and letting the interpreter find solutions. This provides a fun and accessible way to engage with challenging computational tasks.

7

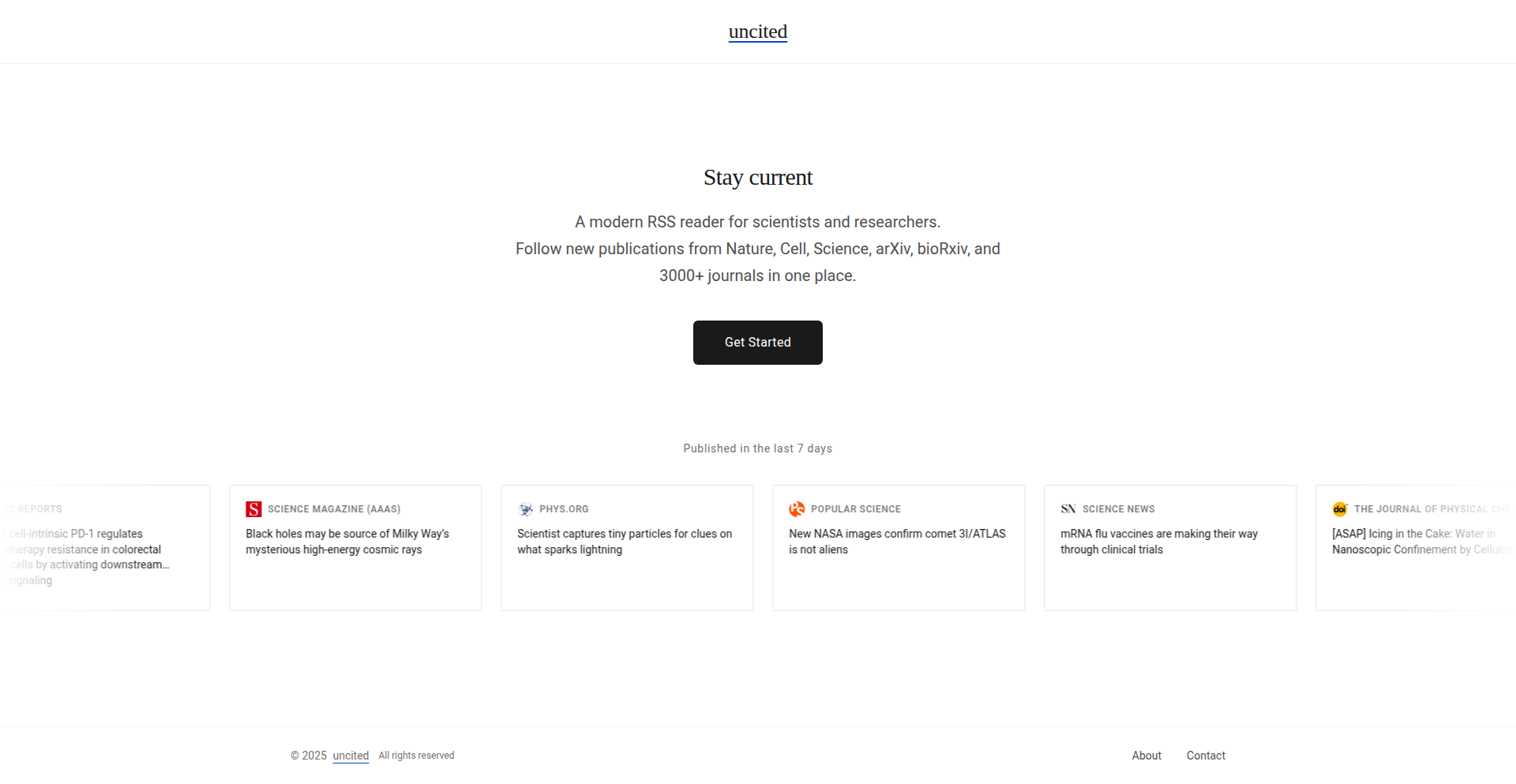

Uncited: Academic Paper Aggregator

Author

dogancan

Description

Uncited is a specialized RSS reader designed for researchers. It consolidates academic papers from over 3000 journals, including prestigious ones like Nature and Science, as well as pre-print servers like arXiv, into a single, streamlined feed. The core innovation lies in its ability to filter out the noise and provide a faster, more focused way for researchers to stay updated with the latest publications, addressing the overwhelming volume of new research.

Popularity

Points 12

Comments 12

What is this product?

Uncited is an advanced RSS feed aggregator specifically tailored for the academic research community. Unlike generic RSS readers, it's built with a deep understanding of how researchers consume information. It ingeniously pulls in new papers from a vast array of academic journals and repositories, such as Nature, Science, and arXiv, and presents them in a clean, unified interface. This means you don't have to visit dozens of individual journal websites or sift through countless arXiv listings. The innovation is in its specialization: it understands the structure and metadata of research papers to offer a more relevant and efficient discovery experience, essentially acting as a super-powered, research-focused news feed. So, what's in it for you? It saves you immense time by bringing all your essential research updates to one place, allowing you to discover new findings without getting lost in the information deluge.

How to use it?

Researchers can integrate Uncited into their workflow by subscribing to specific journals, topics, or arXiv categories that are relevant to their field. The platform provides a clean, unified feed where new papers appear as they are published. Users can customize their feeds to filter out irrelevant content and prioritize important research areas. For developers, the underlying technology could be leveraged to build custom research dashboards or integrate paper discovery into existing academic tools. This is achieved through a backend that intelligently scrapes and parses journal websites and repositories, and a frontend that presents this information in an easily digestible format. Think of it as a smart librarian for your research, always bringing you the latest relevant books. So, how can you use it? You simply set up your preferences once, and Uncited delivers curated research updates directly to you, so you can focus on reading and analyzing, not just finding.
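The aggregation step described above can be sketched roughly as follows. This is a minimal illustration that assumes plain RSS 2.0 input. It is not Uncited's actual backend, the feed contents and the names `parse_rss` and `unified_feed` are hypothetical, and real journal feeds often use other formats or XML namespaces that would need extra handling.

```python
import xml.etree.ElementTree as ET


def parse_rss(xml_text, source):
    """Extract title and link from each <item> of an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    for item in root.iter("item"):
        yield {
            "source": source,
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
        }


def unified_feed(feeds, keywords):
    """Merge feeds, drop duplicate links, keep titles matching any keyword."""
    seen, merged = set(), []
    wanted = [k.lower() for k in keywords]
    for source, xml_text in feeds.items():
        for entry in parse_rss(xml_text, source):
            if entry["link"] in seen:
                continue
            seen.add(entry["link"])
            if any(k in entry["title"].lower() for k in wanted):
                merged.append(entry)
    return merged


# Made-up sample data standing in for two subscribed sources.
SAMPLE_FEEDS = {
    "journal-a": """<rss version="2.0"><channel>
        <item><title>Reinforcement learning for robots</title><link>https://example.org/a1</link></item>
        <item><title>Soil chemistry survey</title><link>https://example.org/a2</link></item>
    </channel></rss>""",
    "preprints": """<rss version="2.0"><channel>
        <item><title>Reinforcement learning for robots</title><link>https://example.org/a1</link></item>
        <item><title>Scaling natural language processing</title><link>https://example.org/p1</link></item>
    </channel></rss>""",
}

feed = unified_feed(SAMPLE_FEEDS, ["reinforcement learning", "natural language processing"])
```

Deduplicating on the link keeps a paper that appears in both a journal feed and a preprint feed from showing up twice, and the keyword list plays the role of a user's topic preferences.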

Product Core Function

· Aggregates papers from 3000+ journals and repositories: This means you get all your essential research updates from sources like Nature, Science, and arXiv in one place, saving you the tedious task of checking each source individually. It's your central hub for cutting-edge discoveries.

· Clean, unified feed interface: Presents research papers in a clutter-free and organized manner, making it easy to scan and identify important articles quickly. No more wrestling with messy websites; just pure, relevant information.

· Specialized for researchers: Designed with the specific needs of academics in mind, this feature ensures that the content and filtering are optimized for research discovery, helping you find what truly matters to your work.

· Faster and more focused updates: Provides a streamlined way to keep up with new publications, cutting through the noise of general news and social media, so you can dedicate more time to engaging with critical research.

Product Usage Case

· A molecular biologist can use Uncited to subscribe to all top journals in their field and specific arXiv categories related to their current project. This allows them to immediately see new experimental techniques or breakthrough findings relevant to their research without manually checking each journal's website daily. It solves the problem of missing crucial research that could accelerate their own work.

· A computer scientist working on artificial intelligence can set up Uncited to aggregate papers from major AI conferences and leading journals. They can then filter by specific sub-fields like 'natural language processing' or 'reinforcement learning' to get a highly relevant stream of new research. This avoids the overwhelming task of sifting through hundreds of AI papers, ensuring they stay at the forefront of their discipline.

· A historian can use Uncited to monitor new publications from various history journals and university presses. By creating custom feeds based on historical periods or regions of interest, they can efficiently discover newly published scholarship. This helps them stay updated on the latest historical interpretations and evidence without spending hours on manual searches.

8

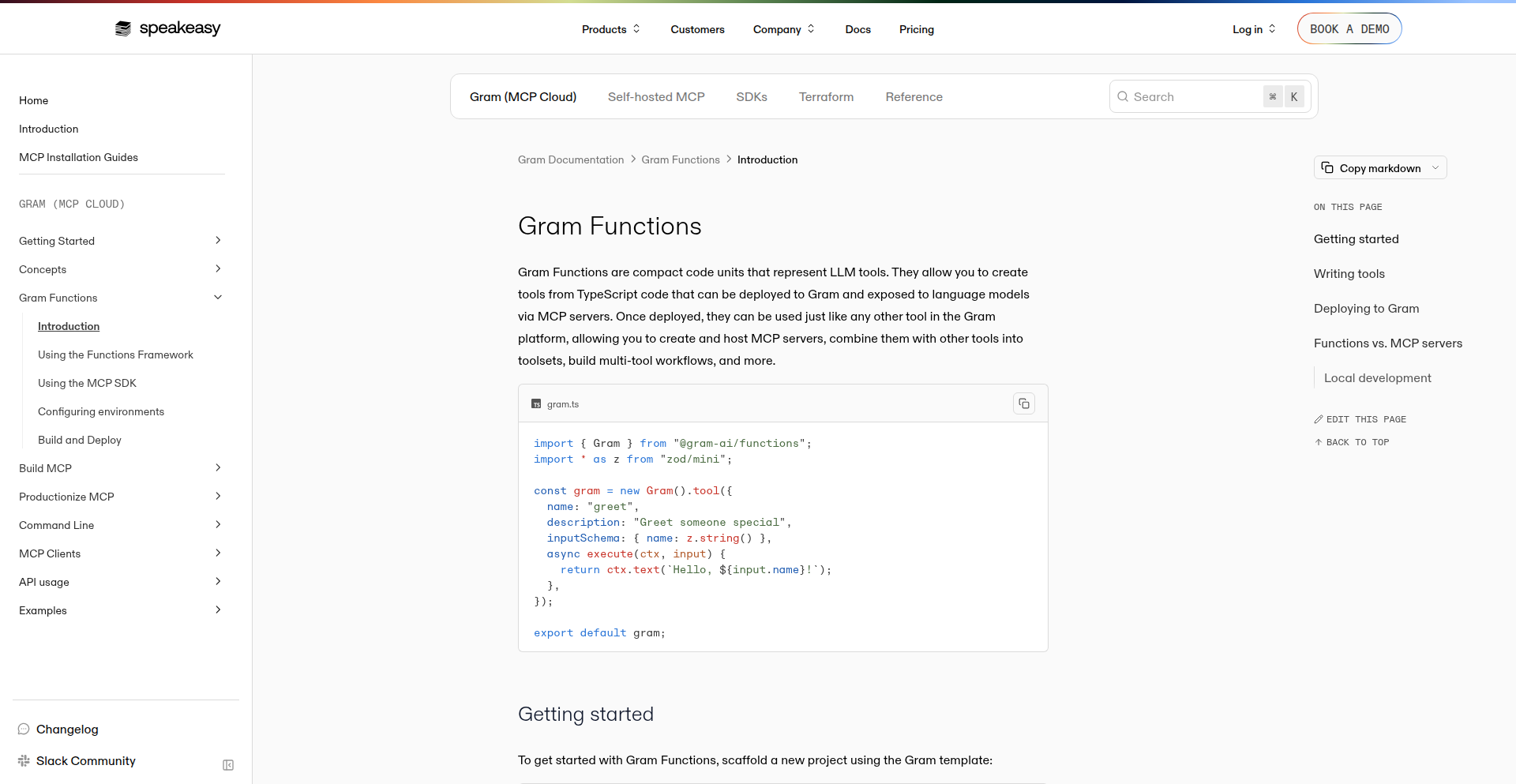

Gram Functions: Code-to-Agent-Tool Compiler

Author

disintegrator

Description

Gram Functions is a serverless platform that transforms custom code into consumable tools for AI agents. It addresses the limitation of many existing REST APIs not being LLM-friendly by allowing developers to write code directly, which Gram then deploys and hosts as callable agent tools. This innovative approach significantly simplifies the process of integrating custom logic into agentic workflows, preventing context bloat with small, curated toolsets.

Popularity

Points 22

Comments 0

What is this product?

Gram Functions is a novel platform designed to bridge the gap between developer-written code and the needs of AI agents. Instead of relying solely on pre-defined API specifications like OpenAPI, developers can now submit their own code (e.g., in JavaScript or TypeScript, runnable via pnpm, bun, or npm). Gram then automatically provisions and deploys this code onto infrastructure (specifically Fly.io machines) managed by a Go server. This server acts as an intermediary, listening for tool calls from AI agents. The core innovation lies in its ability to take arbitrary code and expose it as functional tools that agents can invoke, making custom logic readily accessible to AI. This is a significant step beyond simply wrapping existing APIs, enabling truly custom agent capabilities.

How to use it?

Developers can get started with a command-line interface (CLI) tool provided by Gram. Running `pnpm create @gram-ai/function` (or the equivalent with `bun` or `npm`) scaffolds a new project. Within this project, developers write the custom code that defines the logic for a specific tool. Once the code is ready, they deploy it through Gram. After deployment, developers can use the Gram dashboard to select and assemble these code-generated tools, along with tools derived from OpenAPI documents, into small, specialized Model Context Protocol (MCP) servers. These MCP servers are then used to power their AI agents. This allows for highly tailored toolsets for different agents, avoiding the performance and context issues associated with monolithic tool collections.

Product Core Function

· Code to Tool Transformation: Developers write their logic in code, and Gram automatically compiles it into callable tools for AI agents. This means you can leverage your existing programming skills to create unique agent functionalities, leading to more sophisticated and personalized AI experiences.

· Serverless Deployment: Gram handles the infrastructure provisioning and hosting of your code as tools. You don't need to manage servers or deployment pipelines, significantly reducing development overhead and allowing you to focus on building agent capabilities.

· Curated MCP Servers: The platform allows you to create small, focused MCP servers by cherry-picking tools from your code and OpenAPI documents. This prevents 'context bloat'—where AI agents get overwhelmed by too much information—leading to more efficient and accurate agent performance.

· OpenAPI Integration: Seamlessly combine your custom code-generated tools with existing tools defined via OpenAPI specifications. This provides a flexible way to augment or replace functionalities offered by traditional APIs.

· CLI Project Scaffolding: A simple command-line interface helps developers quickly set up new projects for creating Gram Functions. This streamlined setup process accelerates the initial development phase and promotes rapid prototyping.
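The idea of exposing plain functions as tools and then serving only a curated subset can be sketched as below. This is a language-neutral illustration written in Python. It does not use Gram's SDK or any MCP library, and every name in it (`CATALOGUE`, `tool`, `manifest`, `call`) is an assumption made for the example.

```python
import json

# Hypothetical tool catalogue: name -> description, parameter schema, handler.
CATALOGUE = {}


def tool(name, description, parameters):
    """Register a plain function as an agent-callable tool."""
    def register(fn):
        CATALOGUE[name] = {
            "description": description,
            "parameters": parameters,
            "handler": fn,
        }
        return fn
    return register


@tool("add_numbers", "Add two numbers.", {"a": "number", "b": "number"})
def add_numbers(a, b):
    return a + b


@tool("shout", "Upper-case a string.", {"text": "string"})
def shout(text):
    return text.upper()


def manifest(selected):
    """Describe only the selected tools; this is what the model would see."""
    return [
        {
            "name": name,
            "description": CATALOGUE[name]["description"],
            "parameters": CATALOGUE[name]["parameters"],
        }
        for name in selected
    ]


def call(selected, name, arguments):
    """Dispatch a tool call, refusing tools outside the curated set."""
    if name not in selected:
        raise KeyError(f"tool {name!r} is not exposed by this server")
    return CATALOGUE[name]["handler"](**arguments)


curated = ["add_numbers"]
small = json.dumps(manifest(curated))
full = json.dumps(manifest(list(CATALOGUE)))
```

Comparing `len(small)` with `len(full)` gives a crude feel for the context saved by a curated set: the agent is only shown descriptions for the tools it actually needs.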

Product Usage Case

· Agent with Custom Data Analysis Capabilities: A developer can write a TypeScript function that performs complex data analysis on a specific dataset and deploy it as a Gram Function. An AI agent can then invoke this function to get insights from the data, solving the problem of integrating proprietary analysis logic into an agent's workflow.

· Automated Task Executor for Internal Tools: A team can develop a set of scripts for interacting with their internal company tools (e.g., ticketing systems, internal databases). Gram Functions can turn these scripts into agent-executable actions, enabling AI agents to automate internal workflows and reduce manual effort.

· Real-time Information Fetcher for Niche APIs: If an agent needs to access data from a less common or proprietary API that doesn't have an OpenAPI spec, a developer can write a small service using Gram Functions to interact with that API and expose it as a tool. This allows agents to access a wider range of real-time information.

· Personalized Content Generation Tool: A developer can create a Gram Function that takes user preferences as input and generates highly personalized content (e.g., summaries, creative writing). An AI agent can then use this function to provide tailored content experiences to users.

9

OctoDNS: Multi-Provider DNS Sync Engine

Author

gardnr

Description

OctoDNS is a tool designed to automate and synchronize DNS records across various providers like AWS Route 53 and Cloudflare. Inspired by major cloud outages, it tackles the challenge of maintaining service resilience by ensuring that your domain's DNS information is consistently updated across different DNS hosting services. This prevents a single point of failure, allowing your services to remain accessible even if one provider experiences downtime.

Popularity

Points 22

Comments 0

What is this product?

OctoDNS is a Python library and command-line tool that manages DNS records across multiple providers. Its core idea is DNS as code: you declare your records once, typically in version-controlled YAML zone files, and OctoDNS pushes that desired state to every provider you've configured. Think of it as a central control panel for your domain's global DNS presence. Instead of manually updating your DNS records on AWS, then on Cloudflare, and so on, OctoDNS handles this replication automatically. This ensures that all your DNS servers are always serving the same, up-to-date information, making your online services much more robust and less susceptible to outages. So, what's the benefit for you? If one DNS provider has an issue, your website or service can continue to be served by another, keeping you online and your users happy.

How to use it?

Developers can use OctoDNS in two primary ways: as a Python library integrated into their infrastructure automation scripts or directly as a command-line interface (CLI) for manual or scheduled DNS management. For integration, you'd typically write a Python script that uses OctoDNS to define your desired DNS zone and then push those changes to all configured providers. For example, when deploying a new service, your deployment script could automatically update the necessary DNS records across all your DNS providers using OctoDNS. As a CLI, you run `octodns-sync --config-file=<your config>` to preview the changes each provider needs (it is a dry run by default) and add `--doit` to apply them; new records are added by editing the zone files and syncing again. This makes it easy to automate your DNS updates as part of your CI/CD pipeline or run it as a scheduled task. This means you can automate complex DNS updates with code, reducing manual errors and ensuring consistent service availability.
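The plan-then-apply pattern behind this kind of multi-provider sync can be sketched in a few lines. The code below is a self-contained illustration and deliberately does not call the OctoDNS library. The record layout, the provider names, and the functions `plan` and `apply` are assumptions for the example.

```python
def plan(desired, existing):
    """Return the changes needed to turn `existing` into `desired`.

    Both arguments map (name, record type) -> tuple of values.
    """
    changes = []
    for key, values in desired.items():
        if key not in existing:
            changes.append(("create", key, values))
        elif existing[key] != values:
            changes.append(("update", key, values))
    for key in existing:
        if key not in desired:
            changes.append(("delete", key, None))
    return changes


def apply(existing, changes):
    """Apply a plan to one provider's record set and return the new state."""
    state = dict(existing)
    for action, key, values in changes:
        if action == "delete":
            state.pop(key, None)
        else:
            state[key] = values
    return state


# Desired state, declared once (addresses are documentation-range examples).
desired = {
    ("www", "A"): ("203.0.113.10",),
    ("api", "CNAME"): ("www.example.com.",),
}

# Two hypothetical providers that have drifted in different ways.
providers = {
    "provider_a": {("www", "A"): ("203.0.113.9",)},
    "provider_b": {("old", "A"): ("203.0.113.1",)},
}

synced = {
    name: apply(records, plan(desired, records))
    for name, records in providers.items()
}
```

Because the plan is computed from the difference between desired and existing state, running it a second time against an already synced provider yields an empty plan, which is what makes repeated runs safe in a pipeline.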

Product Core Function

· Synchronized DNS Record Management: Automatically propagates DNS record changes from a single source of truth to all configured providers, ensuring consistency and reducing manual effort. This means if you update an IP address for your web server, OctoDNS ensures that change is reflected everywhere, so users always reach the correct server.

· Provider Agnosticism: Supports a wide range of popular DNS providers through a plugin architecture, allowing you to mix and match services like AWS Route 53, Cloudflare, Google Cloud DNS, and more. This gives you the flexibility to choose the best providers for your needs without being locked into a single vendor, offering you more control and potentially cost savings.

· Idempotent Operations: Operations are designed to be idempotent, meaning running the same command multiple times has the same effect as running it once. This is crucial for automation, as it prevents unintended side effects if a command is accidentally re-executed, making your automation more reliable.

· Change Auditing and Logging: Provides detailed logs of all DNS changes, making it easier to track modifications, troubleshoot issues, and maintain an audit trail. This helps you understand exactly what changes were made to your DNS and when, which is invaluable for debugging or security investigations.

Product Usage Case

· Disaster Recovery Setup: A company wants to ensure their critical services remain available even if their primary DNS provider, AWS Route 53, experiences a major outage. They configure OctoDNS to replicate all their DNS records to Cloudflare. If Route 53 becomes unavailable, OctoDNS ensures that Cloudflare is serving the correct, up-to-date DNS information, allowing traffic to be rerouted seamlessly and minimizing downtime.

· Automated Service Deployment: A development team uses OctoDNS as part of their CI/CD pipeline. When they deploy a new version of their application, their deployment script uses OctoDNS to automatically update the relevant CNAME or A records across AWS and Google Cloud DNS. This ensures that new traffic is directed to the updated service immediately and consistently across all their DNS infrastructure.

· Multi-Cloud Strategy Resilience: An organization operates across multiple cloud providers for different services. They use OctoDNS to manage their primary domain's DNS records, ensuring that whether their website is hosted on Azure or their API is on GCP, the DNS resolution remains consistent and reliable across both platforms. This prevents issues where a user might resolve to an outdated IP address due to a single provider's problem.

10

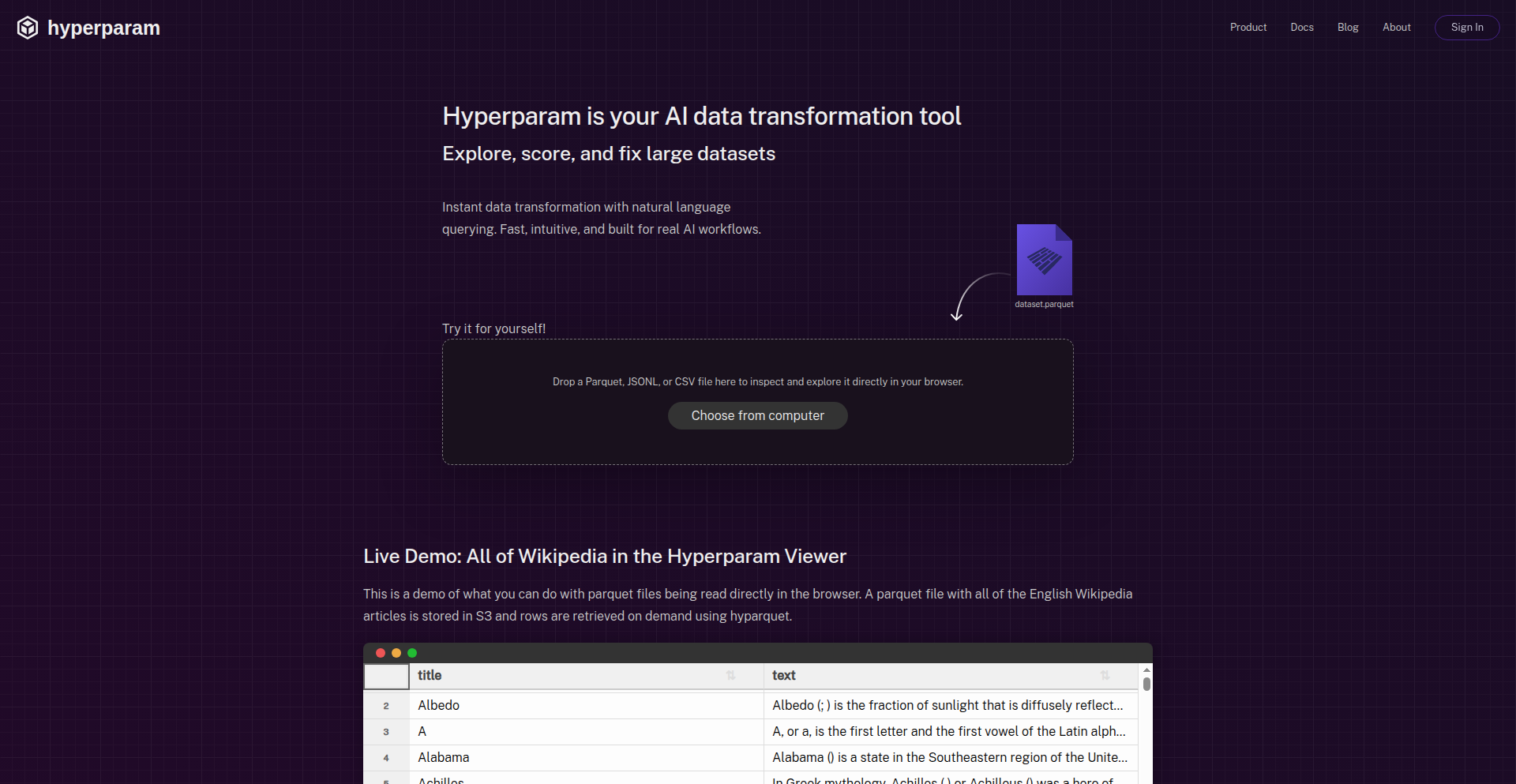

Hyperparam: Real-time Multi-Gigabyte Dataset Explorer

Author

platypii

Description

Hyperparam is a browser-native application designed to tackle the overwhelming volume of text data generated by AI models. It allows users to explore and transform multi-gigabyte datasets in real-time, leveraging a fast UI for streaming large unstructured datasets and an array of AI agents for scoring, labeling, filtering, and categorizing. This innovation addresses the critical challenge of making AI-scale data manageable and actionable, preventing teams from being drowned in information.

Popularity

Points 16

Comments 1

What is this product?

Hyperparam is a groundbreaking, browser-native application that provides real-time exploration and transformation capabilities for massive datasets, particularly text data from AI models like LLMs. The core innovation lies in its ability to handle multi-gigabyte datasets without lag, achieved through efficient data streaming directly in the browser. This is complemented by a suite of 'AI agents' that act as specialized assistants. These agents can perform complex tasks like scoring data for specific attributes (e.g., sycophancy in chat logs), filtering out undesirable entries, adjusting prompts for better AI output, and even regenerating data. Essentially, it's like having a team of intelligent data wranglers operating directly within your web browser, allowing you to derive meaningful insights from huge amounts of text data that would otherwise be unmanageable with traditional tools. So, what's in it for you? It means you can finally make sense of your AI's output, turn raw data into actionable intelligence, and significantly speed up your AI development and analysis workflow without needing powerful servers or complex setups.

How to use it?

Developers can use Hyperparam by simply visiting the web application in their browser. The application is designed for ease of use, allowing users to upload or connect to their large datasets. Once loaded, users can interact with the data through a responsive UI. For data transformation and analysis, they can leverage the AI agents via a chat-like interface. For example, a developer working with LLM chat logs could open a multi-gigabyte dataset, ask an agent to 'score all conversations for politeness', 'filter out responses below a politeness score of 0.7', and then 'regenerate the filtered responses with a more encouraging tone'. The work is driven from within the browser, and the transformed dataset can be exported. Integration is straightforward as it operates in the browser, acting as a standalone tool or a preprocessing step before feeding data into other ML pipelines. So, how does this benefit you? You can quickly prototype, analyze, and refine your AI models' data directly in your workflow, saving time and computational resources on data preparation and iteration.
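The score-then-filter workflow in that example can be approximated with a streaming pipeline like the one below. This is a sketch under stated assumptions, not Hyperparam's implementation. The keyword-based `politeness` function is a trivial placeholder for a model-backed scorer, and the JSON Lines input format is assumed for illustration.

```python
import io
import json


def stream_rows(lines):
    """Yield one parsed JSON row at a time so the whole file never sits in memory."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


POLITE_WORDS = {"please", "thanks", "thank", "sorry"}


def politeness(text):
    """Placeholder scorer: fraction of words that are polite markers."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return sum(w in POLITE_WORDS for w in words) / len(words) if words else 0.0


def score_and_filter(rows, threshold):
    """Attach a score to each row and keep rows at or above the threshold."""
    for row in rows:
        scored = {**row, "politeness": politeness(row["text"])}
        if scored["politeness"] >= threshold:
            yield scored


# A tiny in-memory stand-in for a large chat-log file.
log = io.StringIO(
    '{"id": 1, "text": "Thanks, please send the report."}\n'
    '{"id": 2, "text": "Send it now."}\n'
)

kept = list(score_and_filter(stream_rows(log), threshold=0.2))
```

Because every stage is a generator, rows flow through one at a time. The same shape works for a file handle over a very large log, which is the property that matters when the dataset does not fit in memory.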

Product Core Function

· Real-time multi-gigabyte dataset streaming and exploration: Allows users to interact with and visualize extremely large text datasets directly in their browser without lag. The value is in making massive datasets accessible and understandable, enabling quick data discovery and validation before deep analysis.

· AI-powered data scoring and labeling: Employs AI agents to automatically assess and tag data points based on predefined criteria (e.g., sentiment, relevance, quality). This significantly speeds up manual data annotation processes, providing quantitative insights into data characteristics.

· Intelligent data filtering and categorization: Enables users to precisely select subsets of data based on complex AI-driven criteria or custom rules. This helps isolate critical data segments for targeted analysis or model training, improving the efficiency of data selection.

· Prompt adjustment and data regeneration: Allows users to iterate on AI prompts and automatically regenerate data based on adjusted instructions. This is invaluable for fine-tuning AI models, improving output quality, and exploring different data generation strategies.

· Browser-native operation: Eliminates the need for complex server setups or installations, making powerful data manipulation tools accessible to anyone with a web browser. This democratizes access to advanced data processing capabilities.

Product Usage Case

· Scenario: Analyzing customer feedback from a large dataset of support tickets. Problem: The dataset is too large to process with standard spreadsheet software or basic scripting. Solution: Use Hyperparam to stream the support tickets, employ an AI agent to score each ticket for 'frustration level', filter out tickets with high frustration, and then export the filtered list for targeted customer service intervention. Value: Quick identification of critical customer issues and efficient resource allocation for support.

· Scenario: Fine-tuning a large language model for a specific writing style. Problem: Generating and refining training data for stylistic consistency is time-consuming and requires extensive manual review. Solution: Upload a seed dataset to Hyperparam, use an AI agent to score generated text for stylistic adherence, filter out deviations, adjust the generation prompts based on the agent's feedback, and regenerate the dataset. This iterative process can be done in real-time within the browser. Value: Accelerated AI model refinement and improved output quality with less manual effort.

· Scenario: Identifying biased language in AI-generated content. Problem: Manually scanning thousands or millions of AI-generated text outputs for subtle biases is practically impossible. Solution: Utilize Hyperparam's AI agents to define and score specific types of bias (e.g., gender bias, racial bias) across the dataset, then filter out problematic content for review and correction. Value: Ensuring ethical and unbiased AI output through efficient, automated detection and remediation.

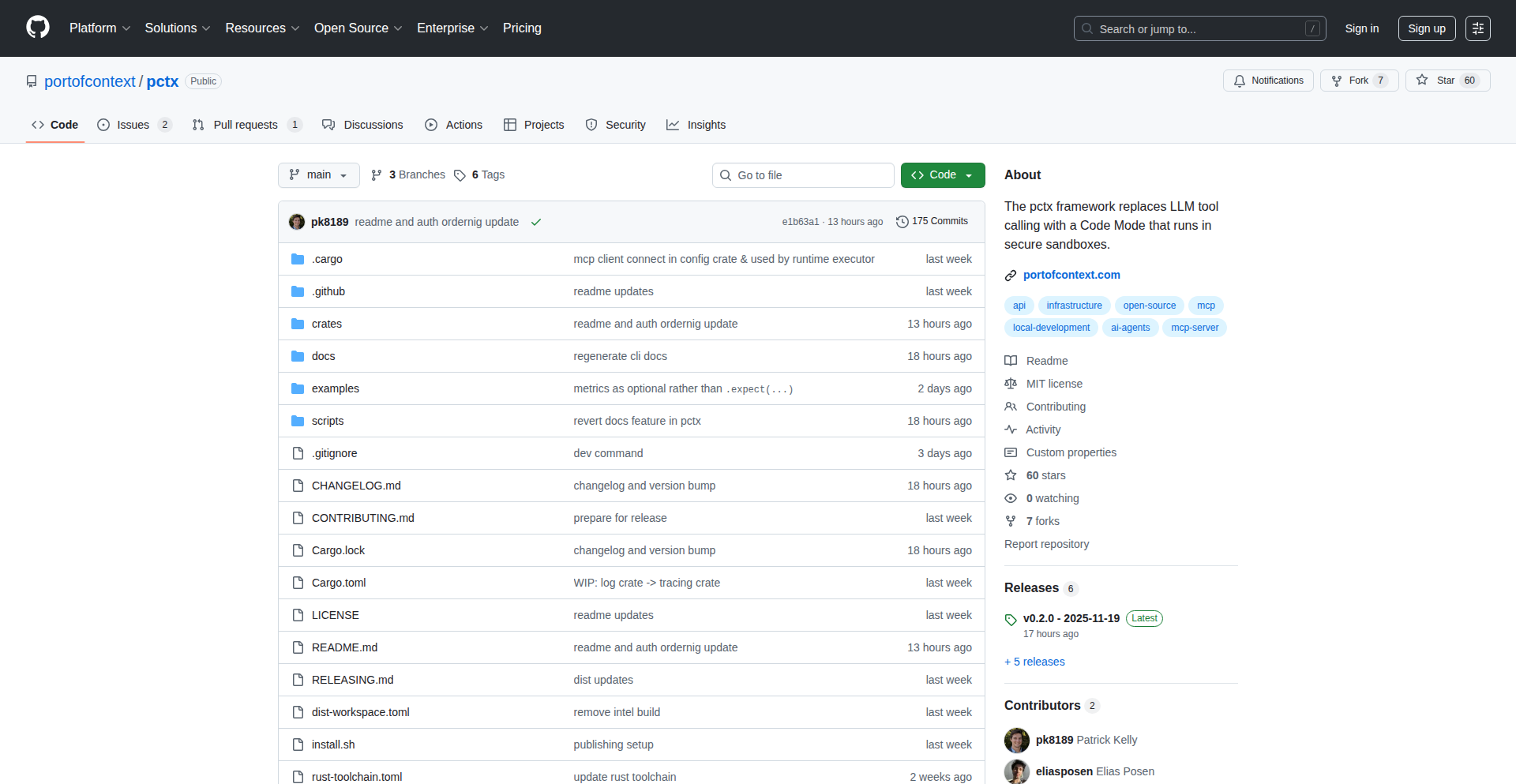

11

ChatterBooth: Anonymous Human Connect

Author

billyjei

Description

ChatterBooth is an anonymous application designed to foster genuine human connection by allowing users to talk and chat freely without fear of judgment. It addresses the difficulty of opening up online and the limitations of current AI chatbots in providing empathetic human interaction. The core innovation lies in creating a safe space for authentic expression and connection, driven by the insight that real human voices, even anonymous ones, are crucial for emotional well-being.

Popularity

Points 12

Comments 1

What is this product?

ChatterBooth is an anonymous communication platform that facilitates open conversations between real people. Unlike social media where identity is paramount, ChatterBooth prioritizes a judgment-free environment. The underlying technology focuses on creating secure, ephemeral connections, allowing users to share thoughts, feelings, and experiences without the baggage of their online persona. This is built on the principle that anonymity can liberate authentic expression, a stark contrast to AI chatbots which can mimic understanding but lack genuine empathy.

How to use it?

Developers can use ChatterBooth as a model for building similar secure and anonymous communication features within their own applications. The core concept of creating a temporary, judgment-free space for users to connect can be applied to various scenarios, such as mental health support forums, anonymous feedback systems, or even educational discussion platforms where learners might feel more comfortable asking questions anonymously. Integration would involve building robust backend infrastructure for anonymous user management and secure real-time communication channels, potentially leveraging technologies like WebSockets for instant messaging.
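As a starting point for the anonymous user management mentioned above, the sketch below pairs users into temporary rooms using random throwaway handles. It is an in-memory illustration only and is not ChatterBooth's code. The class `AnonymousLobby` and its methods are hypothetical, and a real service would add a transport such as WebSockets, moderation, and abuse protection.

```python
import secrets
from collections import deque


class AnonymousLobby:
    """Pairs waiting users into rooms using random, throwaway handles."""

    def __init__(self):
        self.waiting = deque()   # handles of users waiting for a partner
        self.rooms = {}          # room id -> (handle, handle)

    def join(self):
        """Admit a user with no account; return (handle, room id or None)."""
        handle = "anon-" + secrets.token_hex(4)
        if self.waiting:
            partner = self.waiting.popleft()
            room = secrets.token_urlsafe(8)
            self.rooms[room] = (partner, handle)
            return handle, room
        self.waiting.append(handle)
        return handle, None

    def leave(self, room):
        """Ephemeral by design: closing a room discards its record."""
        self.rooms.pop(room, None)
```

Handles are generated per session and nothing ties them to an account, so no identity survives once `leave` removes the room. In a live system the waiting user would be notified of the room over their open connection.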

Product Core Function

· Anonymous Chatting: Enables users to engage in real-time text-based conversations without revealing personal identities. This creates a safe space for open dialogue and emotional expression, which is valuable for users seeking to vent or share personal experiences without societal pressure.

· Free Expression Environment: Fosters a culture of non-judgment, allowing users to speak their minds freely. This is crucial for mental well-being, providing an outlet for stress and emotions that might otherwise remain suppressed due to fear of social repercussions.

· Human-to-Human Connection: Prioritizes authentic interaction between people over interaction with AI. This offers a more empathetic and understanding experience compared to chatbots, fulfilling the innate human need for genuine connection and validation.

· Secure Communication Channels: Implements underlying security measures to protect user privacy and ensure anonymity. This technical safeguard is fundamental for building trust and encouraging users to engage openly, knowing their conversations are protected.

Product Usage Case

· Mental Health Support: A user feeling overwhelmed can anonymously chat with another individual who might have experienced similar challenges, offering comfort and shared understanding without the pressure of revealing personal details.

· Problem Solving & Brainstorming: Developers facing a tough coding bug could anonymously discuss the issue with other developers, potentially leading to novel solutions they wouldn't have considered in a public forum due to fear of appearing inexperienced.

· Personal Venting & Stress Relief: Someone going through a difficult life event can share their feelings without fear of gossip or judgment from their known social circle, finding solace in anonymous empathy.

· Creative Idea Sharing: An artist or writer could anonymously share early-stage creative work for feedback, receiving honest opinions that can help refine their craft without personal attribution.

12

Tokenomics AI Inference Analyzer

Author

paul_td

Description

This project is an interactive calculator designed to simplify comparisons of AI inference hardware. It tackles the complexity arising from inconsistent data and metrics across different vendors by normalizing key performance indicators. The innovation lies in its ability to provide apples-to-apples comparisons, enabling users to understand the true cost and performance of AI inference systems, particularly concerning token generation efficiency, hardware capacity, and overall economics. This is valuable for developers and decision-makers trying to navigate the rapidly evolving AI hardware landscape.

Popularity

Points 12

Comments 0

What is this product?

The Tokenomics AI Inference Analyzer is a web-based tool that helps users compare AI inference hardware from various vendors. It addresses the problem of scattered and inconsistent performance data by normalizing metrics like tokens per dollar and tokens per kilowatt-hour. The core innovation is its scenario-normalized comparison engine, which allows users to evaluate hardware based on specific AI model use cases and load requirements. It employs logarithmic math for AI inference systems, enabling more efficient calculations and comparisons, especially for hardware built on similar mathematical principles. So, this means it helps you cut through the marketing hype and get a clear, data-driven understanding of which AI hardware is truly the most cost-effective and performant for your specific needs.

How to use it?

Developers can use this calculator by visiting the provided URL and inputting their specific requirements. They can select different AI model scenarios, define target user loads, and input their own capital expenditure (capex), amortization periods, colocation costs, and energy prices. The calculator then estimates the required hardware capacity (e.g., number of racks), cost per token, and power efficiency (tokens per kWh). It also allows for comparing different hardware architectures and configurations for the same model. This means you can plug in your project's budget and performance targets, and the tool will show you the best hardware options and their associated economics, helping you make informed purchasing or development decisions.
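The kind of arithmetic such a calculator performs can be sketched as follows. The formula is a simplified assumption rather than the tool's actual model: it treats power draw as constant regardless of utilization, and all figures in the example are invented.

```python
HOURS_PER_YEAR = 8760


def inference_economics(capex_usd, amortization_years, colo_usd_per_month,
                        power_kw, energy_usd_per_kwh, tokens_per_second,
                        utilization=1.0):
    """Return yearly cost, tokens per dollar, and tokens per kWh for one system."""
    tokens_per_year = tokens_per_second * 3600 * HOURS_PER_YEAR * utilization
    # Assumption: the system draws its rated power around the clock.
    energy_kwh_per_year = power_kw * HOURS_PER_YEAR
    cost_per_year = (
        capex_usd / amortization_years          # straight-line amortization
        + colo_usd_per_month * 12               # colocation fees
        + energy_kwh_per_year * energy_usd_per_kwh
    )
    return {
        "cost_per_year_usd": cost_per_year,
        "tokens_per_usd": tokens_per_year / cost_per_year,
        "tokens_per_kwh": tokens_per_year / energy_kwh_per_year,
        "usd_per_million_tokens": cost_per_year / tokens_per_year * 1e6,
    }


# Made-up figures for a single hypothetical rack.
example = inference_economics(
    capex_usd=400_000,
    amortization_years=4,
    colo_usd_per_month=2_000,
    power_kw=10,
    energy_usd_per_kwh=0.10,
    tokens_per_second=10_000,
    utilization=0.5,
)
```

Running the same function over several candidate systems with identical scenario inputs is what makes the comparison like-for-like: only the hardware-specific numbers change between rows.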

Product Core Function

· Scenario-normalized comparisons: This function allows for direct comparison of different AI inference hardware by standardizing data to common metrics and model scenarios, helping users understand relative performance and cost. The value is in providing a consistent benchmark for evaluating diverse hardware offerings.

· Capacity modeling: This feature estimates the number of hardware racks needed to support a target user load, considering factors like model size and KV-cache requirements. The value lies in its ability to help users plan for scalability and infrastructure needs, avoiding over- or under-provisioning.

· Cost and power economics: This function calculates tokens per dollar and tokens per kilowatt-hour, allowing users to assess the financial and energy efficiency of different hardware solutions. By enabling custom cost inputs, it provides a Total Cost of Ownership (TCO) perspective, crucial for budget-conscious projects.

· Architecture impact analysis: This feature demonstrates how different memory architectures (e.g., SRAM-only vs. HBM) can influence profitability and performance. The value is in highlighting the trade-offs of various hardware designs and their implications for AI inference tasks.

· Like-for-like model performance variation: Users can compare how the same AI model performs with different hardware configurations or under different use cases. This illustrates the significant impact of hardware choice on model effectiveness, helping users optimize for specific applications.
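Capacity modeling of the kind listed above usually starts from the memory footprint of the KV cache. The sketch below uses the standard per-token estimate (keys plus values, for every layer and KV head) and a deliberately crude memory-bound rack count. It ignores throughput limits, batching, and parallelism, and the model dimensions in the usage note are invented, so treat it as an assumption-laden illustration rather than the calculator's method.

```python
import math


def kv_cache_bytes_per_token(layers, kv_heads, head_dim, bytes_per_value=2):
    """Keys and values (the factor 2) stored for every layer and KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value


def racks_needed(concurrent_users, context_tokens, weights_bytes,
                 rack_memory_bytes, layers, kv_heads, head_dim):
    """Memory-bound estimate: each rack holds one copy of the weights plus KV cache."""
    per_user = context_tokens * kv_cache_bytes_per_token(layers, kv_heads, head_dim)
    free_per_rack = rack_memory_bytes - weights_bytes
    if free_per_rack < per_user:
        raise ValueError("a rack cannot hold the weights plus one user's cache")
    users_per_rack = free_per_rack // per_user
    return math.ceil(concurrent_users / users_per_rack)


GIB = 1024 ** 3
```

For instance, `racks_needed(1000, 8192, 16 * GIB, 80 * GIB, layers=32, kv_heads=8, head_dim=128)` works out to 16 under these assumptions: each user's cache takes 1 GiB, so a rack with 64 GiB free serves 64 users.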

Product Usage Case

· A startup developing a new AI chatbot needs to deploy inference hardware. Using the calculator, they can compare different GPU options by inputting their expected daily user traffic and the chatbot model's size. The tool will estimate the cost per response and the number of servers needed, helping them choose the most cost-effective and scalable solution within their budget.

· An AI research team is evaluating hardware for running large language models. They can use the calculator to compare dedicated AI chips versus more general-purpose hardware, inputting their energy costs and desired processing throughput. The calculator will provide insights into tokens per dollar and TCO, guiding their hardware investment decisions.

· A cloud service provider wants to offer AI inference services. They can utilize the calculator to benchmark various hardware configurations from different vendors against specific workloads, such as image recognition or natural language processing. This helps them understand which hardware offers the best performance-per-watt and the most competitive pricing for their customers.

· A developer working on a real-time AI application for autonomous vehicles needs to minimize latency and maximize efficiency. They can use the calculator to compare hardware that utilizes logarithmic math for inference, factoring in their power constraints. The tool can help them identify hardware that can deliver the required performance with minimal energy consumption.

13

YCInterviewSim

Author

alielroby

Description

A free tool for founders to practice Y Combinator (YC) interviews. It leverages a curated list of over 70 of the latest questions asked in recent YC batches, allowing users to simulate the high-pressure, 10-minute interview format. The innovation lies in aggregating and presenting these specific, often difficult, interview questions in a structured practice environment, directly addressing the common need for founders to prepare for this critical stage.

Popularity

Points 4

Comments 6

What is this product?

YCInterviewSim is a web-based application designed to help aspiring Y Combinator founders prepare for their crucial interviews. It functions by presenting users with a randomized selection of actual YC interview questions, mirroring the format and pressure of the real experience. The core technological insight is the collection and organization of timely, relevant interview questions, which are often hard to find and assemble. This provides a simulated environment that goes beyond generic interview advice by offering specific, context-aware practice. So, what's the use for you? It saves you the immense effort of researching and compiling these questions yourself, offering a targeted way to build confidence and refine your answers for your actual YC interview.

How to use it?

Developers and founders can access YCInterviewSim through their web browser. The tool typically presents questions one by one, often with a timer to simulate the 10-minute interview constraint. Users can then speak or type their answers. The intention is for founders to repeatedly practice with the tool, getting comfortable with articulating their startup's vision, traction, team, and market in a concise and compelling manner. It can be integrated into a founder's preparation workflow by dedicating specific practice sessions. So, what's the use for you? You can easily slot this into your daily or weekly routine to get repeated, focused practice on the exact types of questions YC asks, significantly improving your preparedness.
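A practice session of this shape, with random questions under a fixed time budget, can be sketched in a few lines. This is not the tool's code: the function `build_session` is hypothetical, and the question list is a small placeholder rather than the curated bank.

```python
import random

# Placeholder questions; the real tool draws on its own curated list.
QUESTIONS = [
    "What is your unique insight?",
    "What is your customer acquisition strategy?",
    "How will you defend against competitors?",
    "Why is your team the right one to build this?",
    "What are the biggest risks and how will you mitigate them?",
]


def build_session(questions, total_seconds=600, count=10, seed=None):
    """Pick up to `count` random questions and split the time budget evenly."""
    if not questions:
        raise ValueError("at least one question is required")
    rng = random.Random(seed)
    chosen = rng.sample(questions, k=min(count, len(questions)))
    per_question = total_seconds // len(chosen)
    return [(question, per_question) for question in chosen]


session = build_session(QUESTIONS, count=3, seed=1)
```

Passing a `seed` makes a session repeatable, which is handy when co-founders want to rehearse the same set of questions. Leaving it as `None` gives a fresh draw each time.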

Product Core Function

· Curated Question Bank: Access to over 70 of the latest YC interview questions. This provides a direct and relevant practice set, unlike generic interview prep. So, what's the use for you? You are practicing with the actual questions that matter for your YC application.

· Interview Simulation Mode: A timed interface designed to replicate the 10-minute YC interview pressure. This helps users learn to be concise and articulate under time constraints. So, what's the use for you? You build the skill of delivering impactful answers within a limited timeframe.

· Question Diversity: The tool draws from a broad range of recent YC questions, covering topics from product-market fit to team dynamics and growth strategy. This ensures comprehensive preparation across all critical areas. So, what's the use for you? You get a well-rounded practice that covers all the bases YC is likely to probe.

· Free Accessibility: The tool is offered for free, lowering the barrier for founders to access essential interview preparation resources. So, what's the use for you? You get high-quality, targeted interview practice without any financial cost.

Product Usage Case

· A founder preparing for YC can use YCInterviewSim daily for a week leading up to their interview. They can practice answering questions about their 'unique insight', 'customer acquisition strategy', and 'how they will defend against competitors' in a simulated 10-minute session. This helps them refine their pitch and identify weak spots in their answers before the real interview. So, what's the use for you? You can identify and fix your interview weaknesses before they hurt your chances in the actual YC interview.

· A group of co-founders can use YCInterviewSim as a team-building exercise to practice answering questions that require aligned responses, such as 'Why is your team the right one to build this?' or 'What are the biggest risks and how will you mitigate them?'. This ensures they present a cohesive front to the interview panel. So, what's the use for you? You and your co-founders can align your messaging and present a united front to the interviewers.

· A solo founder can use the tool to record their answers to specific questions and then review them for clarity, conciseness, and impact. This self-reflection process, facilitated by the tool's structured question delivery, allows for iterative improvement of their pitch. So, what's the use for you? You can objectively assess your own interview performance and make concrete improvements to your delivery.

14

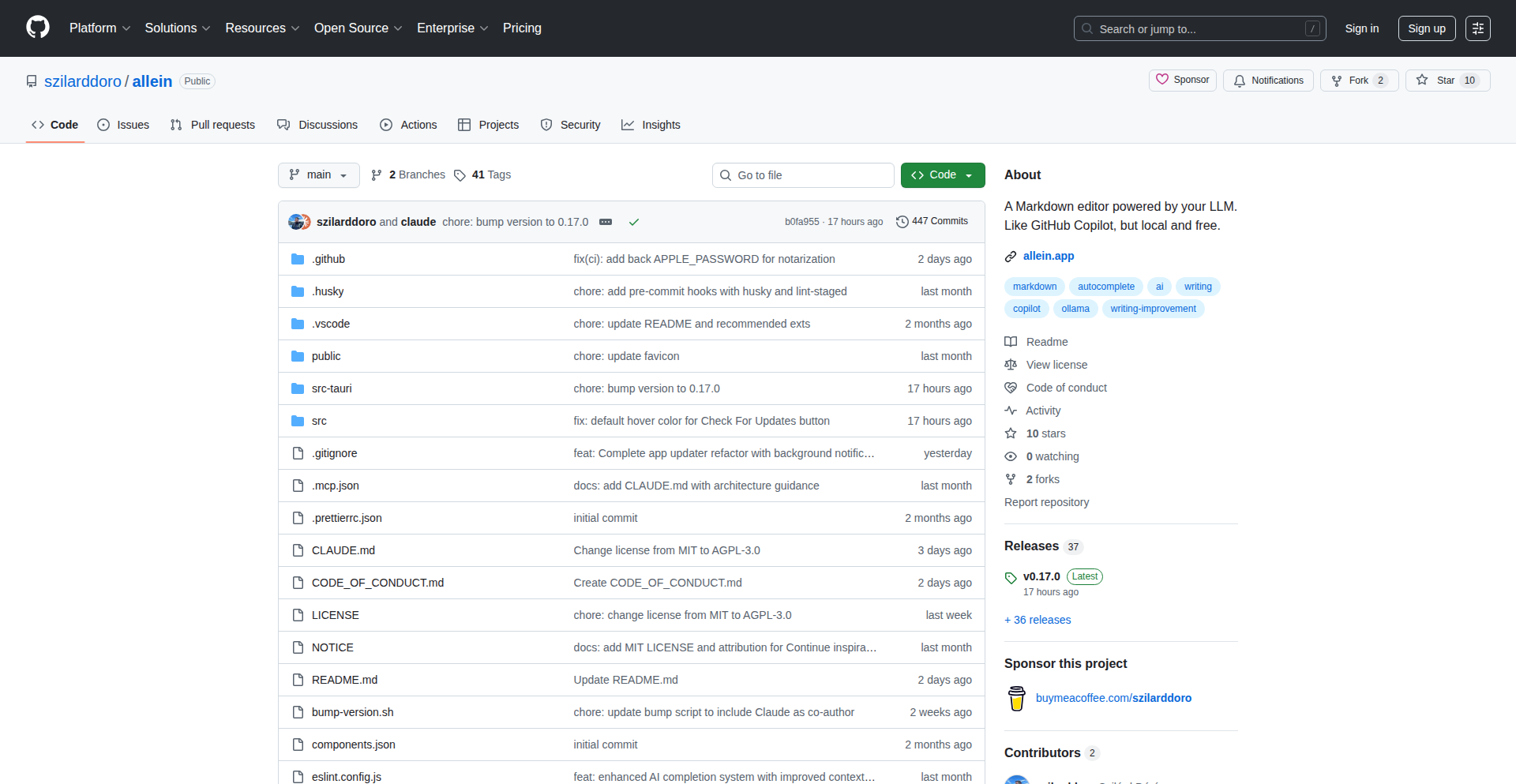

Allein - Local AI-Powered Markdown Writing

Author

szdoro

Description

Allein is a private, offline Markdown editor that brings AI writing assistance, similar to GitHub Copilot, directly to your desktop. It leverages your local Large Language Models (LLMs) via Ollama, offering context-aware autocompletion, grammar and style suggestions, and flexible LLM model selection. This means powerful AI writing tools without needing an internet connection or creating an account, enhancing productivity and privacy for writers and developers.

Popularity

Points 6

Comments 4

What is this product?

Allein is a desktop Markdown editor built using Tauri, React, and Rust, featuring AI capabilities powered by local LLMs through Ollama. The core innovation lies in its ability to run advanced AI writing assistance, like autocompletion and grammar checks, entirely on your own machine. This is achieved by integrating with Ollama, an open-source tool that makes it easy to run LLMs locally. Unlike cloud-based AI tools, Allein processes all your writing data on your computer, so your text stays on your machine and the editor keeps working offline. It's like having a personal AI writing assistant that's always available and never shares your data.

How to use it?

Developers can download and install Allein as a desktop application. To use its AI features, you'll need to have Ollama installed and running on your system, along with at least one LLM downloaded through Ollama. Once Allein is running, you can select your preferred LLM from its settings. As you type in the Markdown editor, Allein will provide AI-powered suggestions for autocompletion, sentence structure, and grammar improvements directly within the editor. This seamless integration allows for an uninterrupted writing workflow, whether you're drafting documents, writing code comments, or composing emails, all while keeping your data private and accessible offline.
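To make the Ollama dependency more concrete, here is a minimal Python sketch of how any local client might ask a running Ollama server for a text continuation. It is not Allein's code (Allein is built with Tauri, React, and Rust). It assumes Ollama is listening on its default address `http://localhost:11434` and uses its `/api/generate` endpoint. The prompt wording and the helper names `build_completion_request` and `suggest_completion` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_completion_request(model, text_before_cursor):
    """Build the JSON body for Ollama's /api/generate endpoint."""
    # The instruction text is an illustrative assumption, not Allein's prompt.
    prompt = (
        "Continue the following Markdown text. "
        "Reply with the continuation only.\n\n" + text_before_cursor
    )
    # stream=False asks for one JSON object instead of a stream of chunks.
    return {"model": model, "prompt": prompt, "stream": False}


def suggest_completion(model, text_before_cursor, timeout=30):
    """Send the request to a locally running Ollama server and return its text."""
    payload = build_completion_request(model, text_before_cursor)
    request = urllib.request.Request(
        OLLAMA_URL + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Calling `suggest_completion("llama3.2", "# Meeting notes\n\nToday we")` would only work if Ollama is running and a model with that name has already been downloaded; the model name here is just an example. Because the request goes to `localhost`, the text never leaves the machine, which is the privacy property the editor relies on.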

Product Core Function

· AI-powered Markdown autocompletion: This feature provides intelligent suggestions as you type, similar to how code editors suggest code snippets. It helps you write faster and more consistently, reducing the need to remember exact syntax or common phrases. This is valuable for anyone writing extensively in Markdown, like documentation writers or note-takers.

· Writing improvements (spelling, grammar, readability): Allein analyzes your text to identify and suggest corrections for spelling mistakes, grammatical errors, and ways to improve the clarity and flow of your writing. This is incredibly useful for non-native speakers or anyone who wants to ensure their writing is polished and professional.

· Local LLM inference via Ollama: This is the cornerstone of Allein's privacy and offline capabilities. By running LLMs locally, your data never leaves your computer, ensuring sensitive information remains secure. It also means you can use powerful AI writing tools even without an internet connection, making it ideal for travel or areas with poor connectivity.

· Flexible model selection: Users can choose from various LLMs supported by Ollama based on their specific needs and the computing power of their device. This allows for customization, enabling users to select models that are faster for simple tasks or more powerful for complex writing assistance.

· Full-featured Markdown editor with live preview: Beyond AI, Allein provides a robust Markdown editing experience with real-time preview, allowing you to see how your formatted text will appear as you write. This is essential for anyone working with Markdown for content creation, web development, or note-taking.

Product Usage Case

· A technical writer creating documentation for a new software project can use Allein to get AI suggestions for code examples, API descriptions, and general explanatory text. The context-aware autocompletion can help ensure accurate terminology and consistent formatting, while grammar checks improve overall readability for a wider audience. The offline capability is a huge plus for writers who travel frequently.

· A student working on an English essay can leverage Allein's writing improvement features to catch grammatical errors and enhance sentence structure. For a non-native speaker, the AI suggestions can provide valuable learning opportunities and help produce a more polished final piece. The privacy aspect is reassuring, especially when dealing with personal academic work.

· A developer writing Markdown notes for their personal knowledge base can benefit from AI autocompletion for common coding terms or project-specific jargon. This speeds up the process of documenting ideas and makes the notes more useful in the future. The ability to do this entirely offline means they can jot down thoughts as they arise, regardless of their location.

· A blogger or content creator can use Allein to brainstorm ideas and draft articles. The AI can help overcome writer's block by suggesting sentence completions or alternative phrasing. The privacy of local processing means they can work on sensitive or unreleased content without concerns about data breaches or intellectual property being exposed.

15

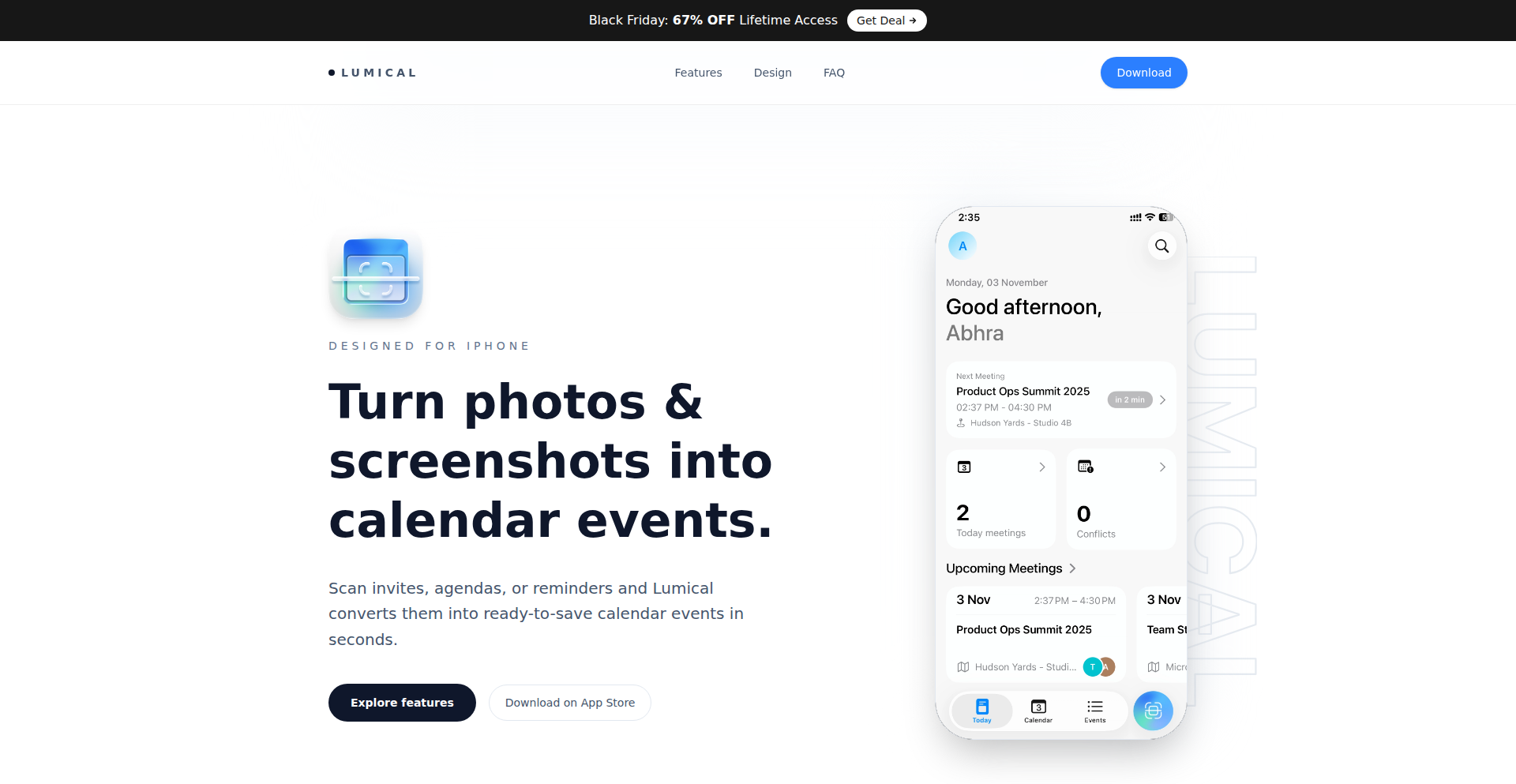

Lumical: Instant Event Sync

Author

arunavo4

Description

Lumical is an iOS app that transforms paper or digital meeting invites into calendar events with a simple scan. It employs advanced optical character recognition (OCR) and natural language processing (NLP) to extract key event details – like date, time, and location – from an image or screenshot, then seamlessly integrates them into your device's calendar. This eliminates manual data entry, saving valuable time and reducing errors.

Popularity

Points 3

Comments 5

What is this product?

Lumical is an intelligent iOS application designed to automate the process of adding events to your calendar. Leveraging cutting-edge OCR technology, it can 'read' text from images of physical invitations or digital screenshots. Once the text is recognized, a sophisticated NLP engine analyzes it to identify crucial event information such as the event title, date, time, duration, and location. This extracted data is then intelligently mapped to the standard fields in your device's calendar application. The innovation lies in its ability to go beyond simple text recognition; it understands the context of event-related information, making the process incredibly efficient and accurate. Essentially, it's like having a smart assistant that can instantly process and organize your meeting details for you.
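The parsing step that follows OCR can be illustrated with a deliberately simple Python sketch. This is not Lumical's implementation: it swaps the app's NLP engine for a few fixed regular expressions, assumes the OCR text is already available as a string, and only handles one date format ("November 19, 2025"), one time format ("6:30 PM"), and a "Location:" style line. The function name `parse_invite` and the sample invite are hypothetical.

```python
import re
from datetime import datetime

# Matches dates like "November 19, 2025" and times like "6:30 PM".
DATE_RE = re.compile(r"([A-Z][a-z]+ \d{1,2}, \d{4})")
TIME_RE = re.compile(r"(\d{1,2}:\d{2} ?[AP]M)", re.IGNORECASE)
# Matches a labelled line such as "Location: 12 Harbor Street".
LOCATION_RE = re.compile(
    r"^(?:Location|Where|Venue):\s*(.+)$", re.IGNORECASE | re.MULTILINE
)


def parse_invite(text):
    """Extract a title, start time, and location from already-OCR'd text."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    date_match = DATE_RE.search(text)
    time_match = TIME_RE.search(text)
    location_match = LOCATION_RE.search(text)

    start = None
    if date_match and time_match:
        # Normalize "6:30 pm" or "6:30 PM" to "6:30PM" before parsing.
        time_text = time_match.group(1).upper().replace(" ", "")
        start = datetime.strptime(
            f"{date_match.group(1)} {time_text}", "%B %d, %Y %I:%M%p"
        )

    return {
        "title": lines[0] if lines else None,  # assume the first line is the title
        "start": start,
        "location": location_match.group(1).strip() if location_match else None,
    }
```

A real invite parser has to cope with many more layouts, relative dates, and OCR mistakes, which is why a review-and-correct step before saving to the calendar matters.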

How to use it?

Users open the app and point their iPhone's camera at a physical meeting invitation or a screenshot of a digital invite. The app then guides the user through a brief review of the parsed event details, allowing for any minor corrections. With a single tap, the event is added to the user's default calendar. For developers, the same OCR-then-parse approach could be explored in other applications that need to extract text and schedule events from visual input.

Product Core Function

· Optical Character Recognition (OCR) for text extraction: This allows the app to read text from images, effectively digitizing information from paper invites or screenshots. Its value is in making unstructured visual data machine-readable.

· Natural Language Processing (NLP) for detail parsing: This feature understands the meaning of the extracted text, identifying specific entities like dates, times, and locations. Its value is in intelligently classifying and structuring event information.

· Calendar integration: Seamlessly pushes parsed event data into the user's native calendar application. Its value is in automating the scheduling process and ensuring events are captured without manual input.

· Real-time preview and editing: Allows users to review and correct extracted details before adding them to the calendar. Its value is in ensuring accuracy and user control over the final event entry.

Product Usage Case

· A busy professional receives a physical event invitation in the mail. Instead of manually typing all the details into their calendar, they can quickly scan the invitation with Lumical, and the event is added in seconds. This saves them time and prevents potential typos.

· A user takes a screenshot of a group chat message detailing a spontaneous meetup. Lumical can process this screenshot, extract the event details (time, place, attendees), and add it to their calendar, ensuring they don't forget the arrangement.

· An event planner needs to quickly add multiple event details from various sources to their schedule. Lumical allows for rapid processing of each invite, streamlining their planning workflow and reducing the risk of missed appointments.

16

ShadcnMap: Seamless Leaflet Integration for Shadcn/ui

Author

tonghohin

Description

A map component designed specifically for shadcn/ui projects, built using Leaflet and React Leaflet. It provides a fully open-source, API key-free mapping solution that seamlessly matches the aesthetic of shadcn/ui, making it easy to add interactive maps to modern web applications.

Popularity

Points 4

Comments 3

What is this product?

This project is a React-based map component that integrates with shadcn/ui, a popular design system for React. The core innovation lies in its ability to provide a beautiful, customizable map interface without the usual hassles of API keys or proprietary services. It leverages Leaflet, a lightweight and powerful open-source JavaScript library for interactive maps, and React Leaflet to make it easy to use within a React environment. The result is a map component that looks and feels like a native part of your shadcn/ui application, offering full control and data privacy.
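One reason a Leaflet-based map can work without an API key is that Leaflet simply requests image tiles from a URL template with `{z}/{x}/{y}` placeholders. The Python sketch below shows the standard Web Mercator ("slippy map") arithmetic that turns a latitude and longitude into tile indices. It assumes OpenStreetMap's public tile server as the tile source, which the project description does not confirm, and the helper names `tile_for` and `tile_url` are hypothetical.

```python
import math

# OpenStreetMap's public tile URL pattern (assumed here as the tile source).
TILE_URL = "https://tile.openstreetmap.org/{z}/{x}/{y}.png"


def tile_for(lat, lon, zoom):
    """Return the (x, y) tile indices containing a point at a zoom level."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so edge cases such as lon == 180 stay inside the tile grid.
    x = max(0, min(n - 1, x))
    y = max(0, min(n - 1, y))
    return x, y


def tile_url(lat, lon, zoom):
    """Fill the URL template for the tile that covers the given point."""
    x, y = tile_for(lat, lon, zoom)
    return TILE_URL.format(z=zoom, x=x, y=y)
```

In a browser, Leaflet performs this calculation itself for every visible tile; the sketch only shows why a plain URL template is enough to draw a map. Public tile servers usually have their own usage policies, so a production application may still need to choose a tile provider deliberately.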

How to use it?

Developers can integrate this map component into their shadcn/ui projects with a simple installation command. Once installed, it can be used like any other shadcn/ui component, allowing for easy customization of map layers, markers, and interactions. The project's documentation on GitHub provides clear examples and API references for adding maps to web applications, fetching map data, and handling user interactions such as clicking on markers. It's ideal for projects that need to display geographical information, track locations, or visualize data on a map, all while maintaining a consistent design language with their existing shadcn/ui components.

Product Core Function

· Shadcn/ui Themed Map Rendering: Provides a map component that adheres to the visual style and design principles of shadcn/ui, ensuring a cohesive user interface. This means your maps will look like they were built directly into your application's design system from the start, making it easier to create polished user experiences without extra styling effort.

· Leaflet-Powered Mapping: Utilizes the robust and versatile Leaflet library to render interactive maps, offering a wide range of features like zooming, panning, and layer control. This gives you access to a powerful and mature mapping engine that is open-source and highly customizable, allowing for advanced map functionalities beyond basic display.

· React Leaflet Integration: Seamlessly integrates Leaflet functionality into React applications using React Leaflet, making it straightforward to manage map states and interactions within a component-based architecture. This approach simplifies development by allowing you to manage map elements and events using familiar React patterns, leading to more maintainable and scalable code.

· API Key Free Operation: Operates without the need for external API keys, eliminating associated costs and complexities, and enhancing data privacy. This is a significant advantage for developers who want to avoid the overhead of managing API keys, potential billing surprises, and privacy concerns related to third-party map services.