Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-18

SagaSu777 2025-11-19

Explore the hottest developer projects on Show HN for 2025-11-18. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

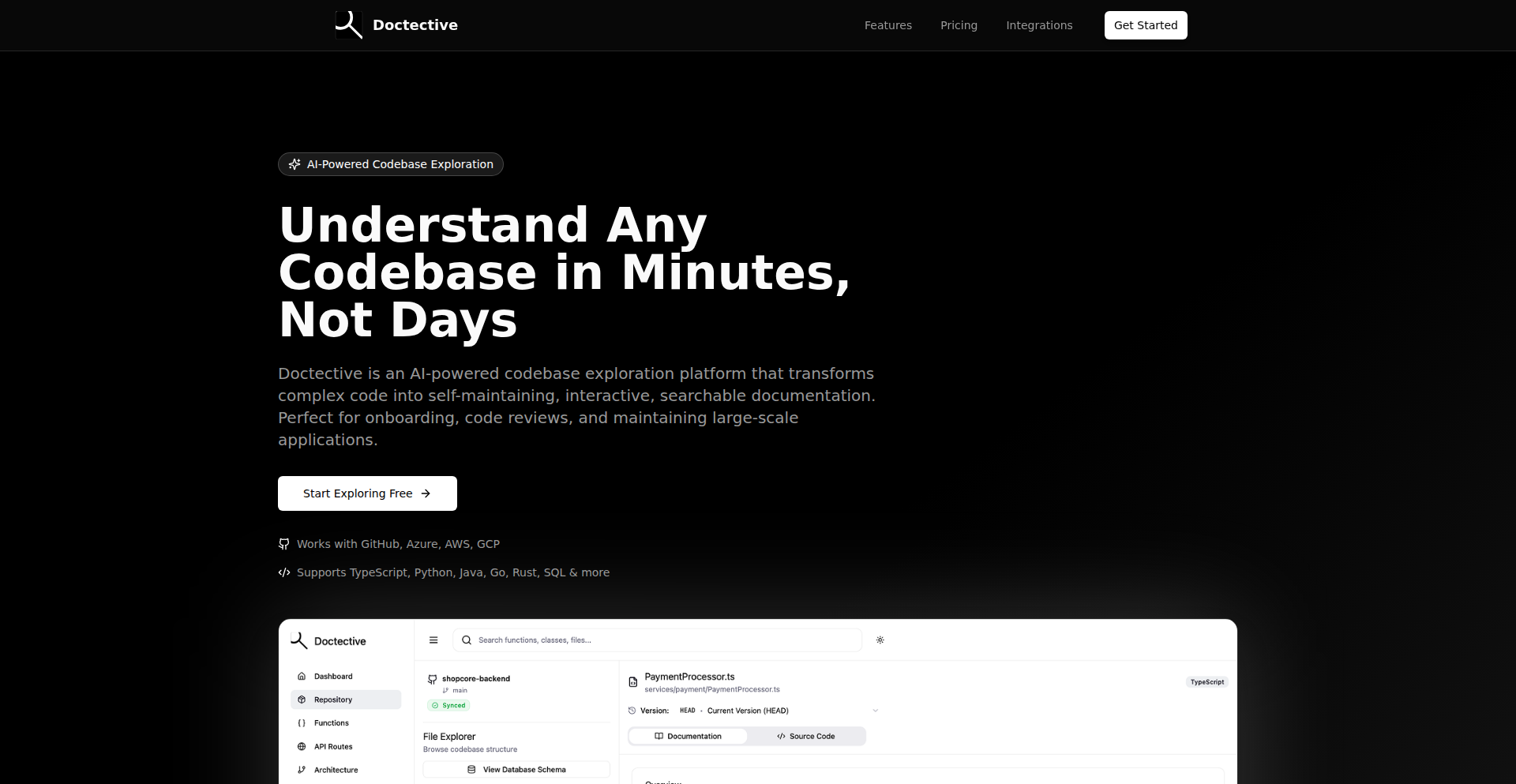

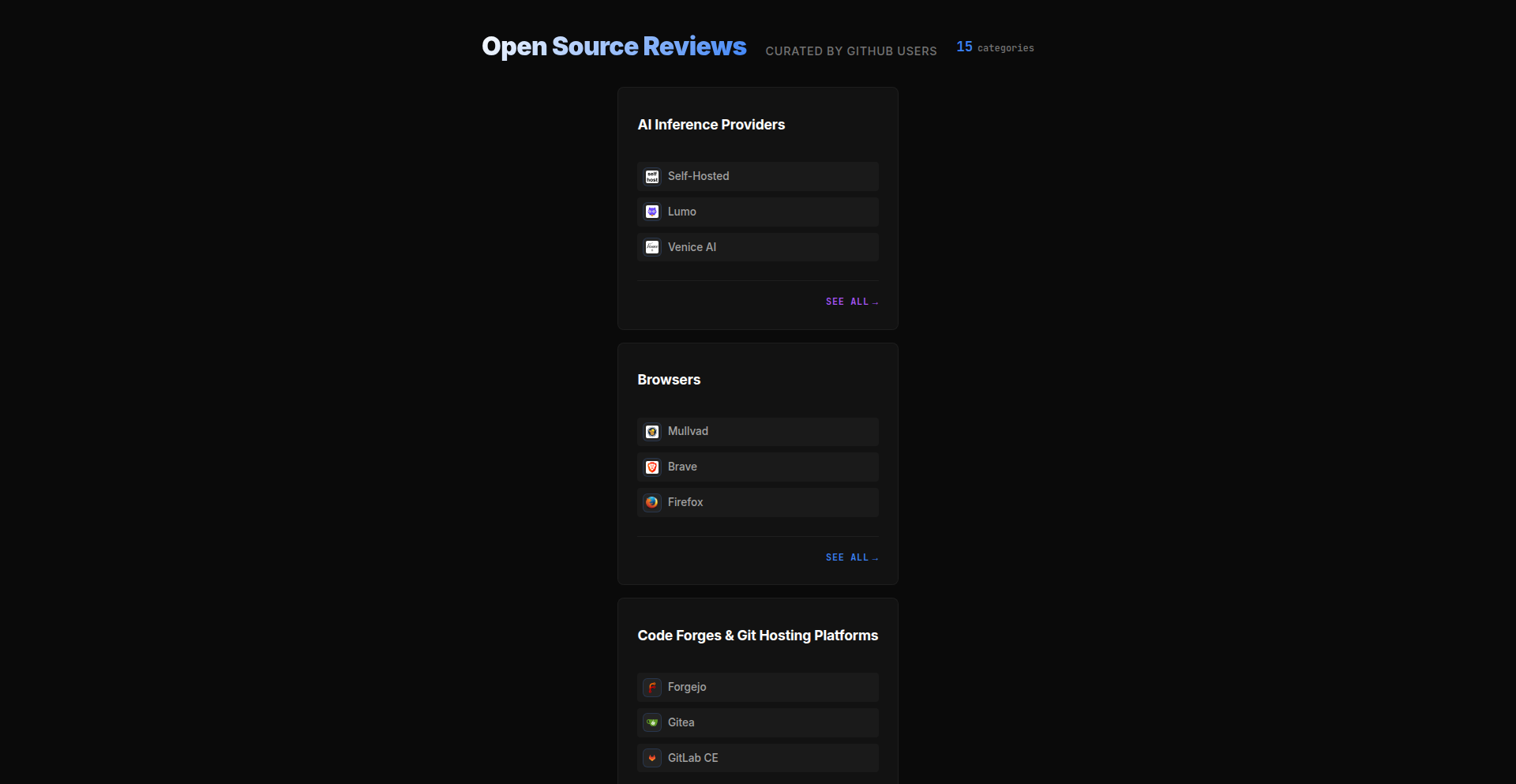

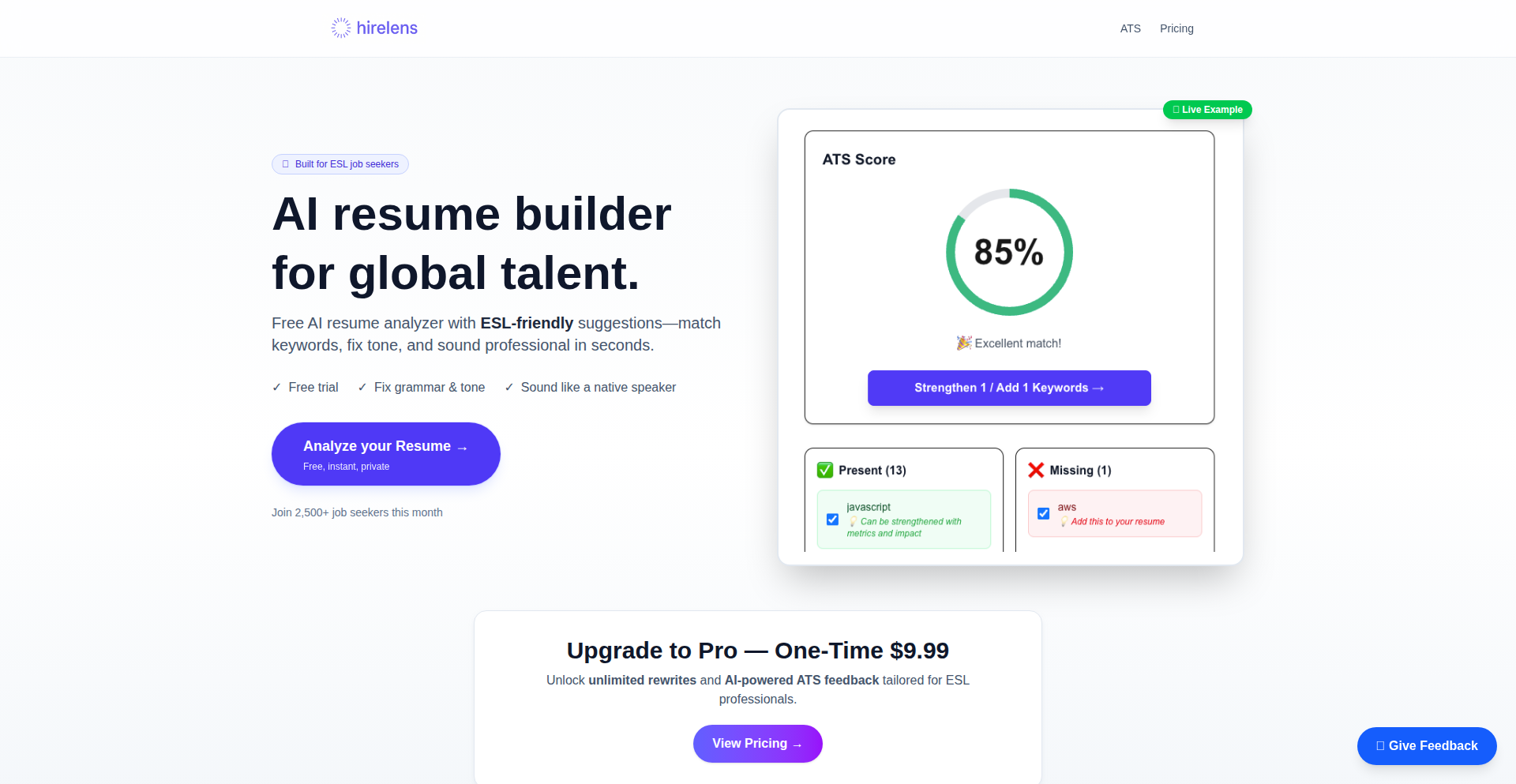

Today's Show HN highlights a convergence of AI, developer tooling, and local-first, open-source solutions. A clear trend is putting automation and development capabilities that were once the domain of large enterprises into the hands of individuals and small teams. AI agents such as those in RowboatX and Opperator run locally and interact with the system through familiar command-line interfaces, making AI-driven problem solving more widely accessible. Developers are looking to streamline their workflows, from code generation and documentation (Davia, Doctective) to infrastructure management (LLMKube, Dboxed), and the day's projects extend to complex scientific simulations (a Three-Body problem simulator). Performance and efficiency also feature, with Rust used for critical components in Fast LiteLLM and various libraries. For developers, this means a growing toolkit for building faster, smarter, and more resilient applications. For entrepreneurs, it signals opportunities to build specialized tools that serve niche automation needs, improve developer productivity, or bring advanced AI capabilities to everyday tasks, all while building on open source.

Today's Hottest Product

Name

Show HN: RowboatX – open-source Claude Code for everyday automations

Highlight

This project introduces RowboatX, an open-source CLI tool that brings AI agents to non-coding tasks, modeled on the Claude Code workflow. It uses the file system as state, a supervisor agent, and human-in-the-loop interaction. Agents can install tools, execute code, and reason over outputs to automate complex daily tasks, all while running locally under user control, which makes advanced AI automation accessible for everyday problem-solving.

Popular Category

AI & Machine Learning

Developer Tools

Automation

Open Source

Popular Keyword

AI Agents

LLM

Automation

CLI

Open Source

Kubernetes

Rust

Web Development

Data Visualization

Technology Trends

Local-first AI Agents

Developer Experience Enhancements

Rust for Performance

AI-assisted Coding and Automation

Observability and Monitoring

Web3/Decentralized Solutions

Interactive Visualizations

No-Code/Low-Code Solutions for Specific Domains

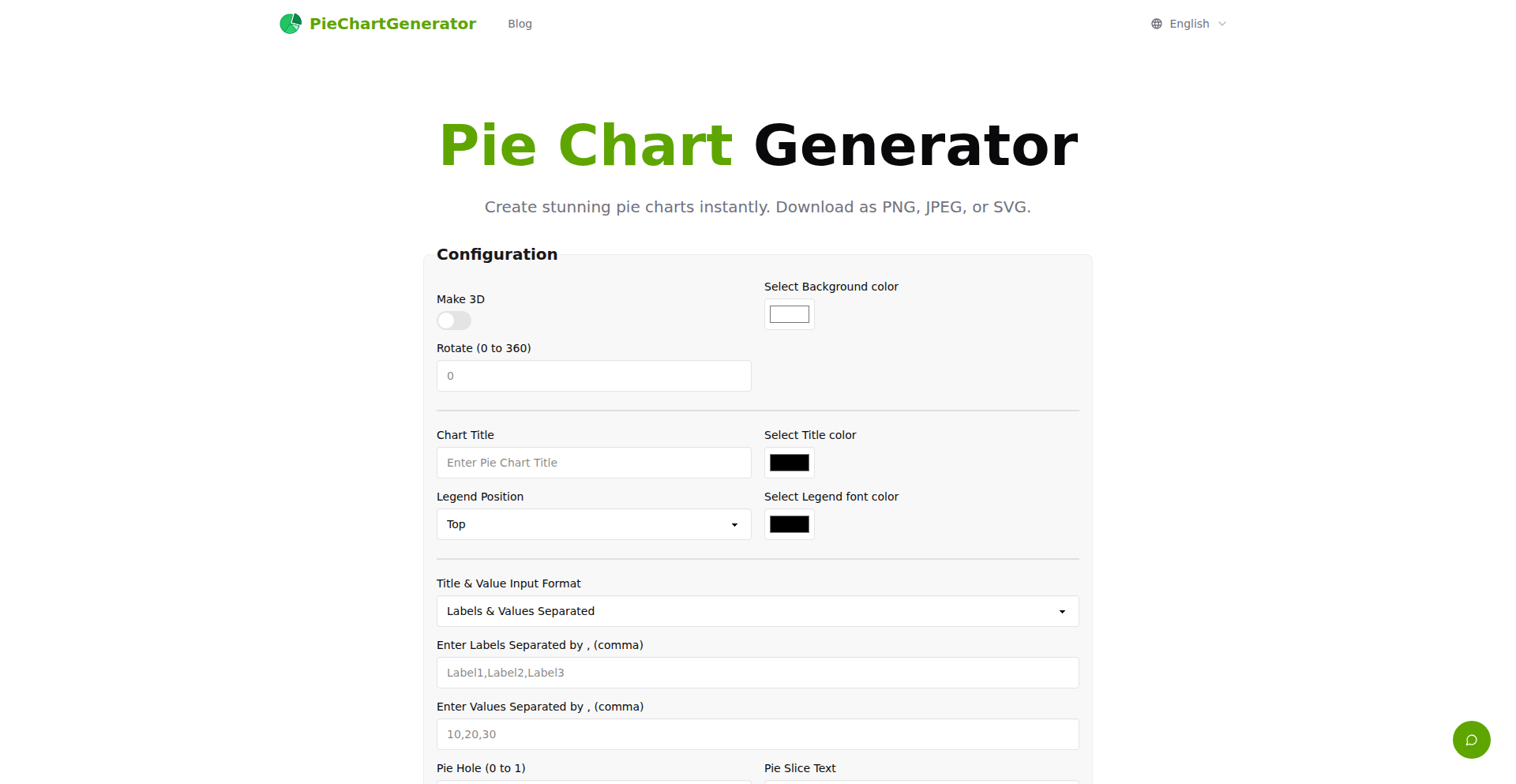

Project Category Distribution

AI & Machine Learning Tools (25%)

Developer Productivity & Tooling (30%)

Web Applications & Services (20%)

Data Visualization & Analysis (10%)

System & Infrastructure Tools (15%)

Today's Hot Product List

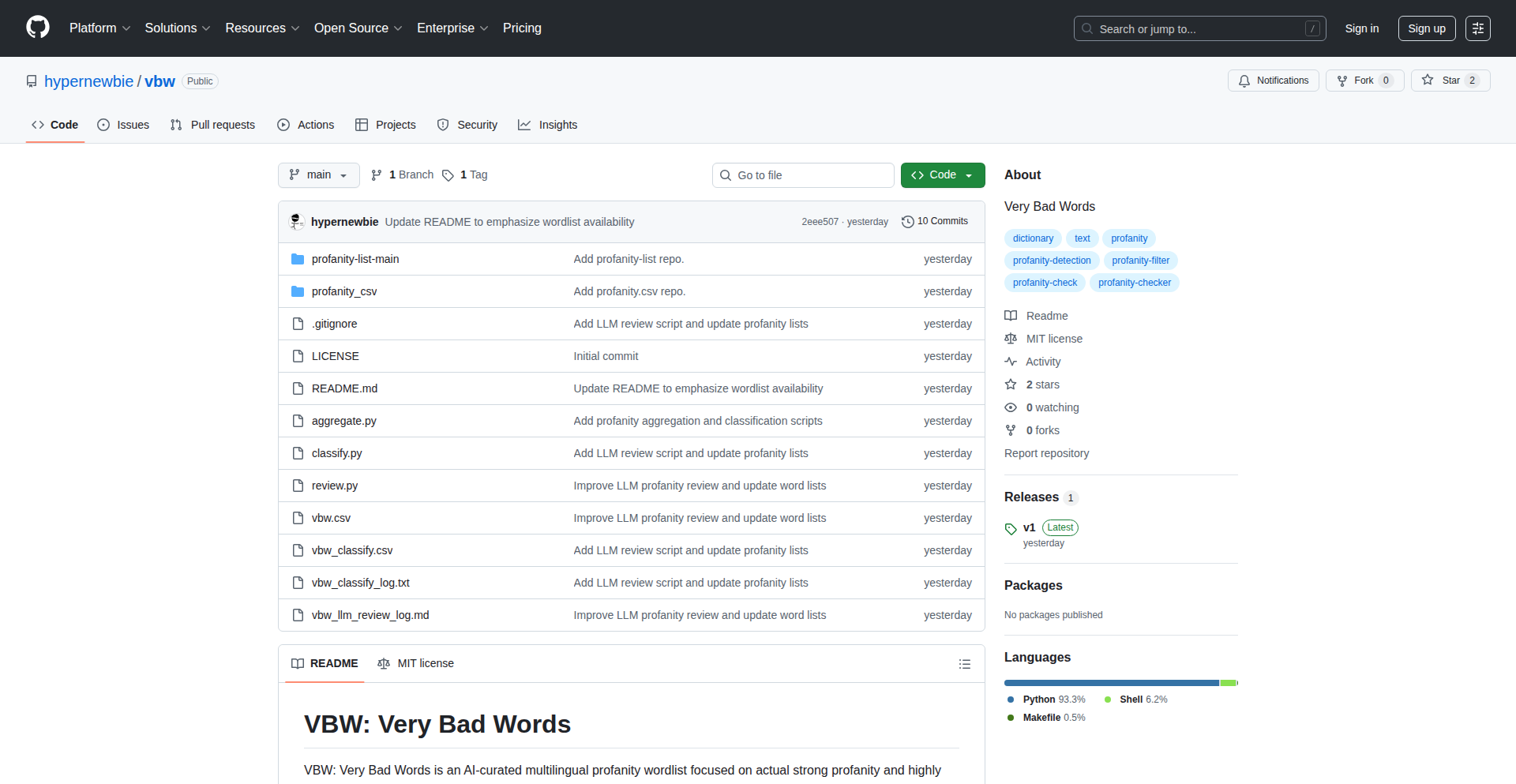

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Orbital Weaver 3D | 133 | 47 |
| 2 | Guts: Go-to-TypeScript Type Transformer | 89 | 22 |
| 3 | RowboatX: Terminal-Native AI Agents | 88 | 23 |
| 4 | AuraSense E-Paper Air Monitor | 52 | 20 |
| 5 | FastLiteLLM-RustAccel | 27 | 9 |
| 6 | Stickerbox: AI-Imagination Transformer | 12 | 4 |
| 7 | OpenTelemetry MCP Gateway | 13 | 2 |
| 8 | AST-Driven AI Coder | 11 | 1 |
| 9 | MCP Local Traffic Insights | 11 | 0 |
| 10 | ZeroReview Explorer | 6 | 4 |

1

Orbital Weaver 3D

Author

jgchaos

Description

A browser-based interactive 3D simulator for the Three-Body problem, allowing visualization of complex celestial mechanics with newly discovered 3D orbits. It addresses the challenge of intuitively understanding and exploring multi-body gravitational interactions, which are typically confined to 2D representations or complex mathematical models.

Popularity

Points 133

Comments 47

What is this product?

Orbital Weaver 3D is a web application that simulates the notoriously complex 'Three-Body problem' in physics, which describes how three celestial bodies (like stars or planets) influence each other gravitationally. Unlike most simulators that are limited to 2D, this project uses advanced 3D graphics (powered by Three.js) to render these interactions. The innovation lies in its ability to visualize not only common periodic orbits but also recently discovered 3D solutions from a large database, showcasing how bodies can weave in and out of a flat orbital plane in intricate ways. So, what's the value to you? It makes abstract physics principles tangible and visually explorable, allowing you to grasp the chaotic and beautiful dance of gravity in three dimensions.

How to use it?

Developers can use Orbital Weaver 3D directly in their web browser as a standalone visualization tool. It is built with Three.js, a popular JavaScript library for 3D graphics, so its core technology is familiar to web developers. You can load preset orbits, including novel 3D configurations, and use the camera controls (rotate, pan, zoom) to view the simulation from any angle. Developers who want to integrate similar physics visualizations into their own projects can study the codebase if the source is published, and apply its principles to educational apps, game physics engines, or scientific data visualization. So, how can you use it? You can explore these complex orbital paths to inspire your own projects or use it as a direct visual aid for teaching or demonstrating physics concepts in an engaging, interactive way.

Product Core Function

· Interactive 3D Visualization of Three-Body Orbits: Renders complex gravitational interactions in a fully explorable 3D space, offering a superior understanding compared to 2D simulations. This is valuable for educators, students, and researchers wanting to see celestial mechanics in action, providing a visual 'aha!' moment for abstract concepts.

· Access to Novel 3D Orbital Solutions: Integrates recently discovered 3D periodic orbits that go beyond typical planar movements, revealing unexpected and complex interplays between celestial bodies. This is valuable for pushing the boundaries of scientific understanding and inspiring new theoretical explorations in astrophysics.

· Dynamic Camera Controls and Body-Following Mode: Allows users to freely rotate, pan, and zoom the 3D scene, and even follow a specific body. This provides a personalized and in-depth viewing experience, essential for detailed analysis and appreciating the nuanced movements of each celestial object.

· Force and Velocity Vector Visualization: Displays the direction and magnitude of forces and velocities acting on each body. This technical feature is invaluable for students and developers to understand the underlying physics principles driving the simulation, helping to connect the visual movement to the mathematical forces at play.

· Timeline Scrubbing for Full Orbital Period Exploration: Enables users to rewind and fast-forward through the entire simulation time. This is incredibly useful for studying the evolution of orbits, identifying patterns, and understanding the long-term behavior of the three-body system, which is critical for predicting celestial events or designing space missions.
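
The force and velocity vectors described above come from Newtonian gravity integrated over small time steps. The following is a minimal Python sketch of that idea, not the project's own code (which runs in the browser with Three.js). It assumes units where G = 1, equal masses, a velocity Verlet integrator, and the well-known figure-eight initial conditions (rounded); all function and constant names are hypothetical.

```python
import math

G = 1.0  # gravitational constant in simulation units (assumption)
MASSES = [1.0, 1.0, 1.0]

# Rounded initial conditions for the classic planar figure-eight orbit.
FIGURE_EIGHT_POSITIONS = [
    (0.97000436, -0.24308753, 0.0),
    (-0.97000436, 0.24308753, 0.0),
    (0.0, 0.0, 0.0),
]
FIGURE_EIGHT_VELOCITIES = [
    (0.466203685, 0.43236573, 0.0),
    (0.466203685, 0.43236573, 0.0),
    (-0.93240737, -0.86473146, 0.0),
]


def accelerations(positions, masses):
    """Return the gravitational acceleration vector acting on each body."""
    acc = [[0.0, 0.0, 0.0] for _ in positions]
    for i, p_i in enumerate(positions):
        for j, p_j in enumerate(positions):
            if i == j:
                continue
            delta = [p_j[k] - p_i[k] for k in range(3)]
            r = math.sqrt(sum(c * c for c in delta))
            for k in range(3):
                acc[i][k] += G * masses[j] * delta[k] / r**3
    return acc


def verlet_step(positions, velocities, masses, dt):
    """Advance the system by one velocity Verlet step of size dt."""
    acc = accelerations(positions, masses)
    half_v = [[v[k] + 0.5 * dt * a[k] for k in range(3)]
              for v, a in zip(velocities, acc)]
    new_pos = [[p[k] + dt * hv[k] for k in range(3)]
               for p, hv in zip(positions, half_v)]
    new_acc = accelerations(new_pos, masses)
    new_vel = [[hv[k] + 0.5 * dt * a[k] for k in range(3)]
               for hv, a in zip(half_v, new_acc)]
    return new_pos, new_vel


def total_energy(positions, velocities, masses):
    """Kinetic plus potential energy; it should stay nearly constant."""
    kinetic = sum(0.5 * m * sum(c * c for c in v)
                  for m, v in zip(masses, velocities))
    potential = 0.0
    for i in range(len(masses)):
        for j in range(i + 1, len(masses)):
            distance = math.dist(positions[i], positions[j])
            potential -= G * masses[i] * masses[j] / distance
    return kinetic + potential
```

Calling `verlet_step` in a loop and recording the positions yields the trajectory a renderer would draw, and the per-body output of `accelerations` is what a force-vector overlay would display. Tracking `total_energy` is a simple check that the step size is small enough.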

Product Usage Case

· An astronomy educator using Orbital Weaver 3D to demonstrate the chaos inherent in the Three-Body problem to a classroom, making it easier for students to visualize why long-term prediction is so difficult, thus enhancing their grasp of celestial mechanics.

· A game developer seeking inspiration for realistic spaceship trajectories in a science fiction game, exploring the pre-set 3D orbits to create more dynamic and physically plausible flight paths. This helps them solve the technical challenge of generating interesting yet believable in-game movement.

· A physics student using the timeline scrubbing feature to analyze a specific stable orbit found in the database, correlating the visual path with the equations they are studying in their coursework. This closes the gap between the theory and what the motion actually looks like.

· A researcher visualizing a newly discovered orbital configuration from the provided database to understand its stability and potential implications for planetary system formation. This helps them address the problem of interpreting complex, multi-dimensional simulation data in an intuitive way.

2

Guts: Go-to-TypeScript Type Transformer

Author

emyrk

Description

Guts is a developer tool that automatically converts Go (Golang) data structures and types into their equivalent TypeScript representations. It addresses the common challenge of synchronizing data models between backend Go services and frontend TypeScript applications, saving developers manual conversion time and reducing the risk of type mismatches.

Popularity

Points 89

Comments 22

What is this product?

Guts is a command-line utility that acts as a bridge between the Go and TypeScript type systems. It analyzes your Go struct definitions and generates corresponding TypeScript interfaces or types. The innovation lies in its parsing of Go's type system, including embedded structs, slices, maps, and basic types, and its mapping of them to idiomatic TypeScript constructs. This means you don't have to manually rewrite your data structures for the frontend, which is a tedious and error-prone process. So, what's in it for you? It speeds up development by eliminating repetitive coding and helps keep your frontend and backend data contracts aligned, reducing the type-mismatch bugs that otherwise surface at runtime.

How to use it?

Developers can integrate Guts into their workflow by installing it as a Go tool. Once installed, they can run the Guts CLI command on their Go source files or directories. The tool will then scan for Go struct definitions and output the generated TypeScript code to a specified file. This generated TypeScript file can then be imported and used directly in their frontend TypeScript projects, such as React, Vue, or Angular applications. This makes it incredibly easy to share data definitions across the stack. So, how does this benefit you? You can now share your backend data shapes with your frontend with minimal effort, leading to faster feature delivery and fewer integration headaches.

Product Core Function

· Go struct to TypeScript interface conversion: Guts intelligently maps Go's primitive types (string, int, bool, etc.) and complex types like slices, maps, and nested structs to their accurate TypeScript equivalents, ensuring type safety across your application. This saves you from manually defining types, which is a major time saver.

· Embedded struct handling: It correctly translates Go's embedded structs into TypeScript's interface extension mechanism, maintaining the inheritance-like structure for your data models. This means your complex data relationships are preserved without extra manual work.

· Type alias and custom type recognition: Guts can understand and convert Go's type aliases and custom defined types, allowing for more precise TypeScript output that reflects your domain's specific data semantics. This ensures your frontend types are as descriptive as your backend types.

· Customizable output: The tool offers options for configuring the output, such as specifying the naming conventions for generated TypeScript types and excluding certain fields, providing flexibility to match your project's specific coding standards. This gives you control over how the generated code looks and feels.

· Command-line interface (CLI): A straightforward CLI makes Guts easy to integrate into build scripts and CI/CD pipelines, automating the type synchronization process. This means you can automate this crucial step, making your development pipeline more robust.
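
The kind of type mapping described above can be illustrated with a toy converter. This is a minimal Python sketch, not Guts's actual implementation or CLI: the `GO_TO_TS` table and the `go_type_to_ts` and `render_interface` helpers are hypothetical, and choices such as mapping every Go numeric type to `number` and Go pointers to `T | null` are assumptions made for illustration.

```python
# Hypothetical mapping of Go primitive types to TypeScript types
# (an assumption, not the table Guts itself uses).
GO_TO_TS = {
    "string": "string",
    "bool": "boolean",
    "int": "number",
    "int32": "number",
    "int64": "number",
    "uint": "number",
    "float32": "number",
    "float64": "number",
}


def go_type_to_ts(go_type: str) -> str:
    """Translate a Go type expression (as text) into a TypeScript type."""
    go_type = go_type.strip()
    if go_type.startswith("[]"):  # slice -> array
        inner = go_type_to_ts(go_type[2:])
        if " | " in inner:  # parenthesize unions before appending []
            inner = f"({inner})"
        return f"{inner}[]"
    if go_type.startswith("*"):  # pointer -> nullable (assumption)
        return f"{go_type_to_ts(go_type[1:])} | null"
    if go_type.startswith("map["):  # map[K]V -> Record<K, V>
        depth = 0
        for i, ch in enumerate(go_type):
            if ch == "[":
                depth += 1
            elif ch == "]":
                depth -= 1
                if depth == 0:
                    key, value = go_type[4:i], go_type[i + 1:]
                    return (f"Record<{go_type_to_ts(key)}, "
                            f"{go_type_to_ts(value)}>")
        raise ValueError(f"unbalanced brackets in {go_type!r}")
    # Primitives are looked up; named struct types pass through unchanged.
    return GO_TO_TS.get(go_type, go_type)


def render_interface(name: str, fields: dict[str, str]) -> str:
    """Render a TypeScript interface from field names and Go type strings."""
    lines = [f"export interface {name} {{"]
    for field, go_type in fields.items():
        lines.append(f"  {field}: {go_type_to_ts(go_type)};")
    lines.append("}")
    return "\n".join(lines)
```

For example, `render_interface("User", {"id": "int64", "tags": "[]string"})` produces an interface with `id: number` and `tags: string[]`. A real tool would parse Go source rather than type strings, which is where handling of embedded structs and type aliases comes in.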

Product Usage Case

· Backend API data synchronization: Imagine you have a Go backend serving data via a REST API. Guts can take your Go API response structs and generate TypeScript interfaces. This ensures your frontend consistently uses the correct data shapes, preventing 'undefined' errors and making API integration seamless. So, you can build your frontend features faster with confidence.

· Database ORM model sharing: If you're using an ORM in Go to interact with a database, your Go models define your data. Guts can convert these models into TypeScript, allowing your frontend to directly consume and manipulate data with accurate type checking, reducing data mapping errors and improving developer experience. This means less time spent debugging data inconsistencies.

· Microservices communication: In a microservices architecture where Go services communicate with TypeScript services, Guts can be used to ensure consistent data contracts between them. By generating shared type definitions, it reduces integration friction and promotes faster development cycles. This leads to more stable and easier-to-maintain microservice systems.

3

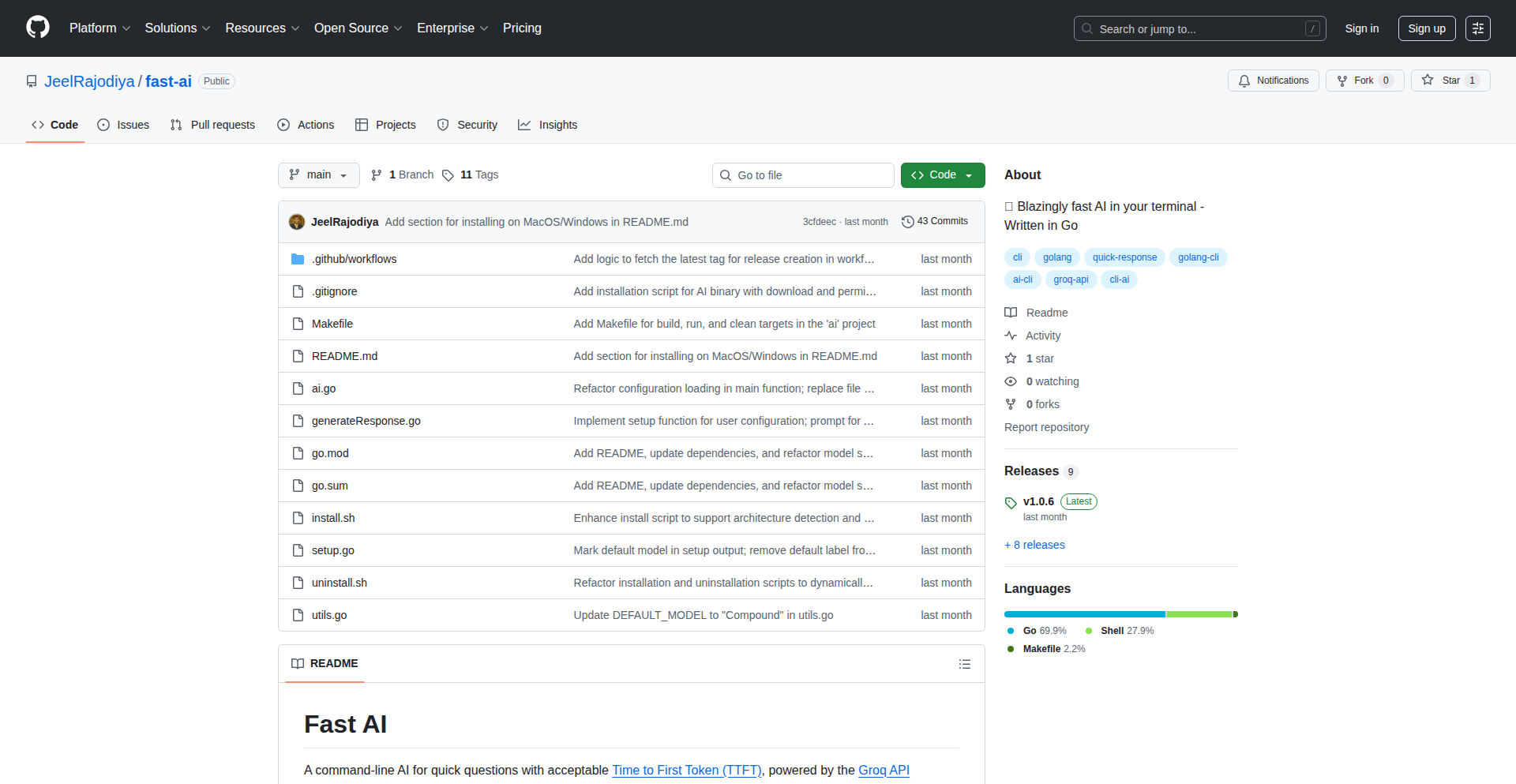

RowboatX: Terminal-Native AI Agents

Author

segmenta

Description

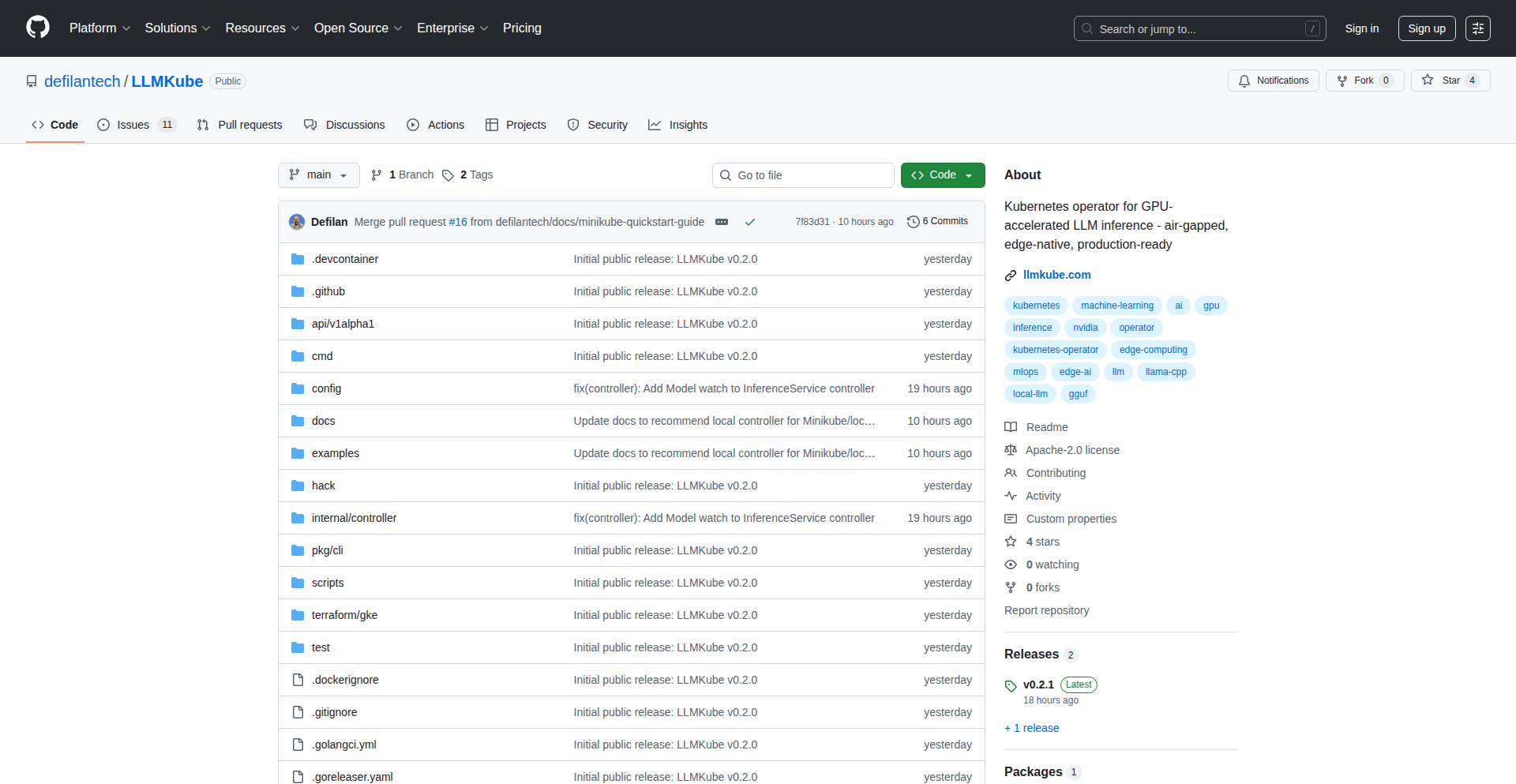

RowboatX is an open-source command-line interface (CLI) tool that allows developers to build and run custom AI background agents for non-coding tasks. It leverages the file system and Unix tools to create, monitor, and connect these agents to various services (MCP servers) for executing tasks and processing their outputs. This innovative approach brings the power of AI automation directly to your terminal, offering a flexible and powerful way to automate everyday tasks, similar to how Claude Code enhances coding, but for broader applications.

Popularity

Points 88

Comments 23

What is this product?

RowboatX is a local, command-line tool designed to create and manage AI agents that run in the background. It treats the file system as the central hub for agent state, meaning all instructions, memories, and logs are stored as easily accessible files. The core innovation lies in its 'supervisor agent', which uses Unix commands to manage other agents, monitor their progress, and schedule their activities. This design choice is based on the observation that LLMs are often better at composing and running Unix commands than at making direct API calls. A key feature is 'human-in-the-loop' functionality, where an agent can pause and request human input for complex decisions or actions, keeping the user in control and reducing the risk of errors. It's built for flexibility, working with any compatible LLM, including open-source options. So, what's the big deal? It lets you automate tasks on your computer that typically require manual intervention, using AI, without needing to write complex code for each automation.

How to use it?

Developers can run RowboatX through npm's `npx` runner (e.g., `npx @rowboatlabs/rowboatx`), which fetches and launches the package without a separate install step. They can then define their agents using simple configuration files and use the CLI to start, stop, and monitor them. Agents can be connected to various 'MCP servers', which act as interfaces to different tools and services. For example, you could connect RowboatX to a podcast generation service to automatically create daily podcasts from new research papers, or to a calendar and search engine to pre-research meeting attendees. The command-line interface allows for direct interaction and control, making it well suited to scripting and integration into existing workflows. So, how does this help you? It means you can set up automated workflows for tasks like summarizing articles, generating reports, or even managing your schedule, all from your familiar terminal environment.

Product Core Function

· File System as State Management: All agent configurations, data, and logs are stored as files on your local disk. This makes it easy to inspect, version control, and debug agents using standard Unix tools like `grep` and `diff`. The value here is transparent and accessible agent management, allowing for deep dives into how your agents operate and what data they are processing.

· Supervisor Agent with Unix Command Integration: A central agent orchestrates other background agents, primarily using Unix commands for monitoring, scheduling, and execution. This approach leverages the strengths of LLMs in understanding command-line instructions, leading to robust and efficient agent management. The value is a powerful and reliable control system for your automations, built on a foundation of well-understood system tools.

· Human-in-the-Loop for Critical Decisions: Agents can be configured to pause and request human input for tasks that require judgment or complex decision-making, such as drafting sensitive emails or installing new tools. This ensures that the automation process remains under your control and can handle nuanced situations. The value is a safe and controlled automation process that avoids errors by incorporating human oversight when necessary.

· MCP Server Integration for Tool Access: RowboatX can connect to compatible 'MCP servers' to access a wide range of tools and services. This allows your agents to interact with external applications and data sources, expanding their capabilities significantly. The value is the ability to connect your AI agents to virtually any service or tool, enabling sophisticated and multi-faceted automations.

· Local Execution and Terminal Control: Agents run directly on your local machine, providing direct access to your terminal and file system. This enables advanced automation use cases like computer and browser automation that cloud-based solutions cannot easily replicate. The value is powerful, direct control over your local computing environment for automation purposes.
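
The file-system-as-state and human-in-the-loop ideas above can be sketched in a few lines. This is a hedged Python illustration, not RowboatX's actual layout or API: the directory structure, the `instructions.md` and `log.jsonl` file names, and every function name here are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def create_agent(root: Path, name: str, instructions: str) -> Path:
    """Create an agent as a plain directory holding its instructions and log."""
    agent_dir = root / name
    agent_dir.mkdir(parents=True, exist_ok=True)
    (agent_dir / "instructions.md").write_text(instructions, encoding="utf-8")
    (agent_dir / "log.jsonl").touch()
    return agent_dir


def append_log(agent_dir: Path, event: str, **details) -> None:
    """Append one JSON record per line so grep and diff work on the log."""
    record = {"event": event, **details}
    with (agent_dir / "log.jsonl").open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")


def read_log(agent_dir: Path) -> list:
    """Load every log record back from disk."""
    text = (agent_dir / "log.jsonl").read_text(encoding="utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


def run_action(agent_dir: Path, action: str, sensitive: bool, approve) -> bool:
    """Run an action, pausing for human approval when it is sensitive.

    `approve` is any callable that takes the action text and returns a bool,
    for example a function that prompts the user in the terminal.
    """
    if sensitive and not approve(action):
        append_log(agent_dir, "rejected", action=action)
        return False
    append_log(agent_dir, "executed", action=action)
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        agent = create_agent(Path(tmp), "briefing", "Summarize new papers.")
        run_action(agent, "send email draft", sensitive=True,
                   approve=lambda action: False)
        print(read_log(agent))
```

Because the state is ordinary files, a supervisor (human or agent) can inspect or audit it with standard tools, which is the property the design relies on.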

Product Usage Case

· Automated Daily Podcast Generation: Connect RowboatX to arXiv for new AI papers and ElevenLabs for text-to-speech. Configure an agent to monitor arXiv for new papers daily, summarize them, and generate a podcast using ElevenLabs, all running in the background. This solves the problem of staying updated with research without manual effort.

· Pre-Meeting Briefing Generation: Integrate RowboatX with Google Calendar and Exa Search. Set up an agent to automatically research attendees of upcoming meetings, gather relevant public information, and generate a concise briefing document before each event. This enhances meeting preparation by providing quick access to attendee insights.

· Content Aggregation and Summarization Workflow: Configure an agent to periodically scan RSS feeds or specific websites for new articles, summarize the content using an LLM, and save the summaries to a local database or file. This provides an automated way to keep up with industry news or specific topics of interest.

· File Organization and Management Automation: Develop agents that monitor specific directories for new files, automatically rename them based on content or metadata, and move them to appropriate folders. This automates tedious file management tasks, keeping your digital workspace organized.

4

AuraSense E-Paper Air Monitor

Author

nomarv

Description

AuraSense is a room air monitor that leverages an e-paper display to provide subtle yet noticeable feedback on indoor air quality. It tracks key metrics like humidity and CO2 levels, alerting users only when thresholds are exceeded, thus promoting a healthier and more productive environment without constant distraction. The project's innovation lies in its minimalist visual communication and its focus on user well-being through environmental awareness.

Popularity

Points 52

Comments 20

What is this product?

AuraSense is a device that monitors the air quality in your room. It uses an e-paper screen, similar to what you find on e-readers, which consumes very little power and is easy to read. The core technology involves sensors that measure humidity and carbon dioxide (CO2) levels in the air. When these levels are within a healthy range, the display shows a simple, unobtrusive icon. However, if humidity rises too high (which can lead to mold growth and discomfort) or CO2 levels increase (which can decrease focus and cause drowsiness), the display changes to a more noticeable alert, signaling you to take action, like opening a window. This approach avoids constant visual noise while ensuring you're informed when it matters. A statistical dashboard is also available for those who want to explore the data.

How to use it?

Developers can use AuraSense as a foundational component for smart home or environmental monitoring systems. The device itself can be integrated into existing DIY smart home setups using its sensor data, which can be accessed through its internal logic or potentially exposed via a simple interface for further processing. For instance, the CO2 and humidity readings can trigger other smart devices, like smart thermostats to adjust ventilation or smart fans to increase air circulation. The underlying principle is to use code to react to environmental changes and improve living conditions automatically. Its unobtrusive design makes it suitable for any room where air quality is a concern, from bedrooms to offices.

Product Core Function

· Environmental Sensing: Utilizes low-power sensors to continuously monitor humidity and CO2 levels in real-time. This provides foundational data for understanding indoor air quality and its impact on health and productivity.

· Subtle Alerting System: Employs an e-paper display that changes its visual output based on predefined thresholds for humidity and CO2. This design principle minimizes distractions while ensuring timely notification of deteriorating air quality, allowing users to make informed decisions about their environment.

· Statistical Visualization Dashboard: Presents collected air quality data in a clear, easy-to-understand dashboard format. This appeals to users who enjoy tracking trends and understanding the long-term patterns of their indoor environment, aiding in proactive adjustments.

· Low Power Consumption: The e-paper display technology drastically reduces energy needs, making the device suitable for long-term, unattended operation without frequent battery changes or power source concerns.
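
The threshold-based alerting described above reduces to a small decision function. This is a minimal Python sketch rather than the device's firmware: the limit values (1000 ppm CO2, 60% relative humidity) are illustrative assumptions, not figures from the project, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Alert limits; these defaults are assumptions for illustration only."""
    co2_ppm: float = 1000.0
    humidity_pct: float = 60.0


def active_alerts(co2_ppm: float, humidity_pct: float,
                  limits: Thresholds = Thresholds()) -> list:
    """Return the metrics that exceed their limits.

    An empty list means the display can keep showing the quiet icon.
    """
    alerts = []
    if co2_ppm > limits.co2_ppm:
        alerts.append("co2")
    if humidity_pct > limits.humidity_pct:
        alerts.append("humidity")
    return alerts
```

Because e-paper only draws power when the image changes, a design like this would redraw the screen only when the returned list differs from the previous reading's result.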

Product Usage Case

· Smart Home Automation: Imagine a developer integrating AuraSense into their smart home ecosystem. If CO2 levels rise, AuraSense's alert could trigger a smart ventilation system to automatically open or increase airflow, improving concentration without manual intervention.

· Health Monitoring: For individuals concerned about mold growth, AuraSense can provide early warnings. High humidity alerts could prompt the user to use a dehumidifier or ventilate the room, preventing potential health issues and property damage.

· Productivity Enhancement: In a home office setup, AuraSense can help maintain optimal air quality. When CO2 levels indicate reduced cognitive function, the alert serves as a reminder to open a window, thereby boosting focus and productivity.

· Data-Driven Lifestyle: A data enthusiast could use AuraSense to track air quality trends over time, correlating it with their own well-being or activities. This allows for personalized adjustments to achieve a healthier living space based on empirical evidence.

5

FastLiteLLM-RustAccel

Author

ticktockten

Description

This project introduces a Rust acceleration layer for the popular Python library LiteLLM. It targets performance-critical operations like token counting, routing, rate limiting, and connection pooling by leveraging Rust's speed and concurrency. The innovation lies in using PyO3 to seamlessly integrate Rust code into Python, demonstrating how to optimize existing Python libraries without a complete rewrite and offering valuable insights into performance tuning.

Popularity

Points 27

Comments 9

What is this product?

FastLiteLLM-RustAccel is a performance enhancement for LiteLLM, a library used to interact with various Large Language Models (LLMs). Instead of rewriting LiteLLM in Rust, this project adds a Rust 'shim' or a fast-lane that takes over specific, computationally intensive tasks. It uses PyO3, a tool that lets you write Python extensions in Rust, to make these Rust functions callable from Python. The key innovation is the targeted application of Rust's speed and efficient concurrency primitives (like lock-free data structures) to areas like managing how many tokens are processed, deciding which LLM to use, controlling request rates, and handling network connections. This approach aims to boost performance without disrupting the familiar Python environment.

How to use it?

Developers can integrate FastLiteLLM-RustAccel into their existing LiteLLM projects to potentially see performance improvements in high-throughput scenarios. The project provides Rust implementations for critical functions that are then 'monkeypatched' or substituted for their Python counterparts within LiteLLM. This means you can largely use LiteLLM as you normally would, but with the underlying performance gains from Rust. Specific use cases would involve applications that make a large number of LLM calls, where the overhead of rate limiting, connection management, and routing can become significant. The project also includes feature flags for gradual rollouts and performance monitoring to track the impact.

Product Core Function

· Rust-based token counting using tiktoken-rs: Provides a fast and efficient way to count tokens, which is fundamental for managing LLM costs and context windows, offering near-identical performance to existing Python methods but with a foundation for further optimization.

· Lock-free data structures with DashMap for concurrent operations: Enables faster and more efficient handling of multiple requests simultaneously, crucial for applications with high concurrency, leading to significant speedups in operations like rate limiting and connection pooling.

· Async-friendly rate limiting: Implements a rate limiter that works well with asynchronous programming, ensuring that applications can make requests to LLMs without hitting API limits, with demonstrated significant performance improvements.

· Monkeypatch shims for transparent Python function replacement: Allows Rust code to seamlessly replace existing Python functions in LiteLLM without requiring major code changes, making integration straightforward and enabling immediate performance benefits.

· Performance monitoring: Provides tools to track and measure the performance improvements in real-time, allowing developers to quantify the impact of the Rust acceleration and identify further optimization opportunities.
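
The monkeypatch-with-feature-flag approach described above can be sketched in pure Python. This is an illustration under stated assumptions, not the project's code: `fake_library`, `python_count_tokens`, `accelerated_count_tokens`, and `apply_shim` are hypothetical names, and the "accelerated" function is a plain Python stand-in for what would be a Rust function exposed through PyO3.

```python
import types

# Stand-in for a module from the Python library being accelerated.
fake_library = types.SimpleNamespace()


def python_count_tokens(text: str) -> int:
    """Stand-in for the original pure-Python implementation."""
    return len(text.split())


fake_library.count_tokens = python_count_tokens


def accelerated_count_tokens(text: str) -> int:
    """Stand-in for a compiled function that would be exposed via PyO3."""
    return len(text.split())


def apply_shim(module, name, replacement, enabled=True):
    """Swap module.<name> for a faster replacement behind a feature flag.

    Returns the original function so callers can restore it later.
    If the replacement raises, the shim falls back to the original.
    """
    original = getattr(module, name)
    if not enabled:
        return original  # flag off: leave the library untouched

    def shim(*args, **kwargs):
        try:
            return replacement(*args, **kwargs)
        except Exception:
            return original(*args, **kwargs)

    setattr(module, name, shim)
    return original
```

A gradual rollout would drive `enabled` from configuration, and restoring the original is a single `setattr(module, name, original)`. The fallback path is one way to keep behavior safe while the accelerated code is still being validated.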

Product Usage Case

· High-throughput LLM inference services: For services that handle thousands of LLM requests per minute, the optimized rate limiting and connection pooling can drastically reduce latency and increase throughput, meaning more users can be served faster.

· Cost-sensitive LLM applications: Efficient token counting and optimized routing ensure that LLM API calls are managed effectively, potentially reducing operational costs by avoiding unnecessary processing or inefficient model selection.

· Real-time AI applications like chatbots or content generation platforms: Where low latency is critical, the performance gains from Rust can lead to a more responsive user experience, making interactions feel smoother and faster.

· Migrating or optimizing existing Python microservices that rely on LLMs: Developers can introduce Rust acceleration to specific bottlenecks within their services without a full rewrite, gaining performance benefits incrementally and safely through feature flags.

6

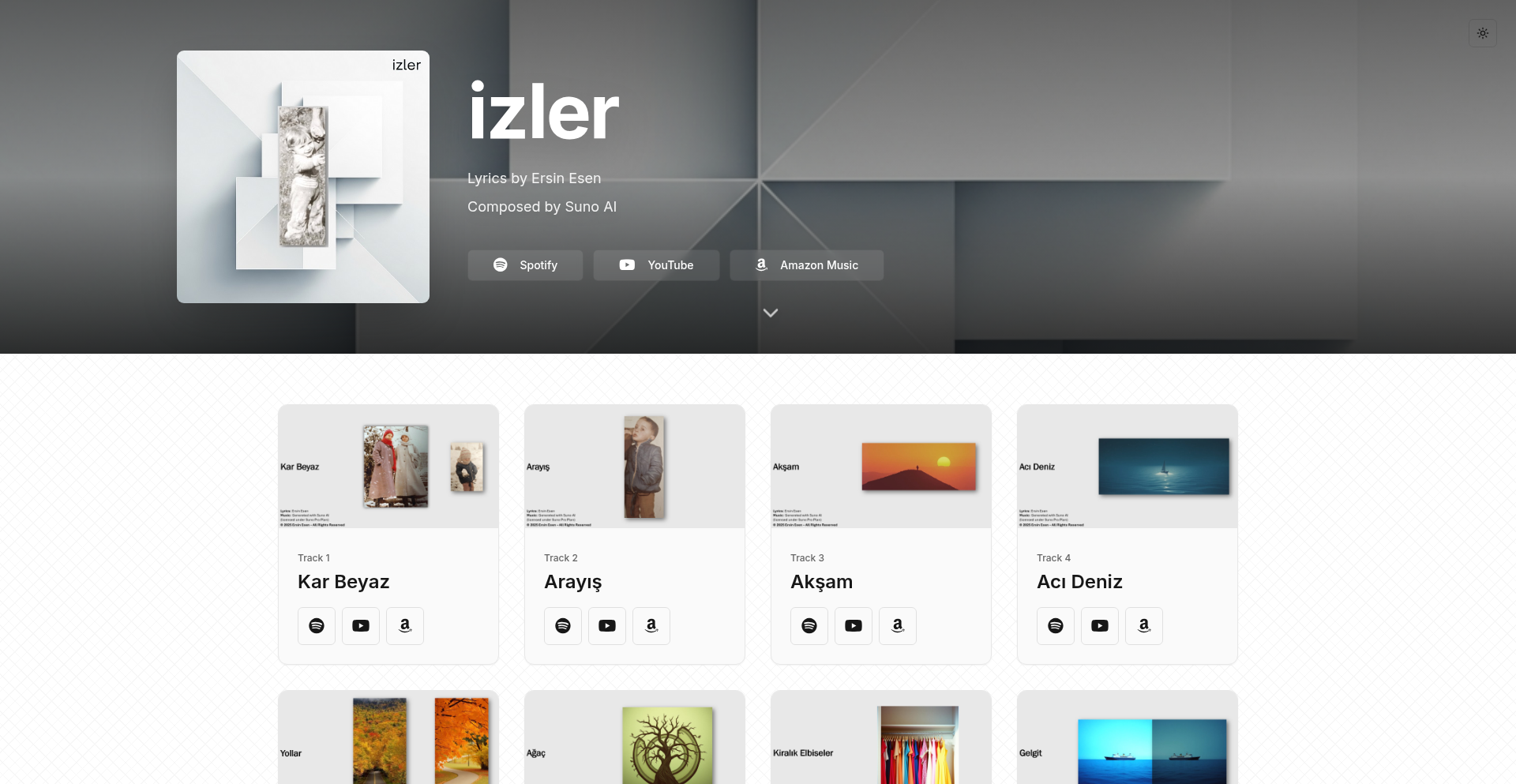

Stickerbox: AI-Imagination Transformer

Author

spydertennis

Description

Stickerbox is a voice-activated sticker printer that merges AI image generation with tangible thermal printing. It allows children to verbally describe their imaginative ideas, which are then transformed into physical stickers. The core innovation lies in making advanced AI accessible and safe for children, translating abstract digital creations into a real, touchable object. This bridges the gap between imagination and reality for young creators.

Popularity

Points 12

Comments 4

What is this product?

Stickerbox is a unique device designed to bring children's imaginations to life through stickers. It works by leveraging AI, specifically a text-to-image generation model, that interprets voice commands. When a child speaks their idea, like 'a purple cat flying on a rainbow,' the AI generates a corresponding image. This digital image is then sent to a built-in thermal printer, which prints it onto special sticker paper. The innovation here is in the seamless integration of sophisticated AI with a simple, child-friendly hardware interface, and the focus on creating a safe, tangible output. This means kids can hold their digital dreams in their hands, fostering creativity in a playful and physical way.

How to use it?

Developers can think of Stickerbox as an example of an end-to-end creative tool for a specific demographic. For parents and educators, it's incredibly simple: a child speaks their idea into the device, and a sticker is printed. For developers interested in the underlying technology, it showcases a practical application of voice recognition, AI image generation APIs, and direct thermal printing integration. Imagine integrating similar voice-to-creation workflows into educational apps or personalized gift-making platforms. The key is the straightforward user interaction designed for young children, making complex technology feel magical. It demonstrates how to abstract away the AI complexity for a delightful user experience.

Product Core Function

· Voice-to-Text Input: Enables children to express their ideas naturally through speech, translating spoken words into actionable text prompts for the AI.

· AI Image Generation: Utilizes advanced AI models to interpret the text prompts and create unique, imaginative visuals based on the child's description, providing endless creative possibilities.

· Thermal Sticker Printing: Instantly transforms digital AI-generated images into physical stickers using safe and easy-to-use thermal printing technology, allowing children to interact with their creations physically.

· Kid-Safe Design and Interface: Features a user interface and operational flow designed specifically for young children, ensuring intuitive use and prioritizing safety and data privacy for peace of mind.

Product Usage Case

· A child wants a sticker of 'a dinosaur eating pizza.' They speak this into Stickerbox, and within moments, a custom sticker appears, which they can then peel off and stick anywhere, turning their imaginative thought into a tangible item.

· During a storytelling session, children can generate stickers for characters or scenes described in the story, making the narrative more engaging and interactive. This can be a powerful tool for educators looking to enhance creative learning.

· As a personalized gift-making tool, a child could design a sticker of their pet as a superhero and give it to a family member, creating a unique and thoughtful present that stems directly from their own imagination and the magic of AI.

· For parents concerned about screen time but wanting to foster creativity, Stickerbox offers a tangible way for kids to engage with generative AI without needing a tablet or computer, encouraging physical play and artistic expression.

7

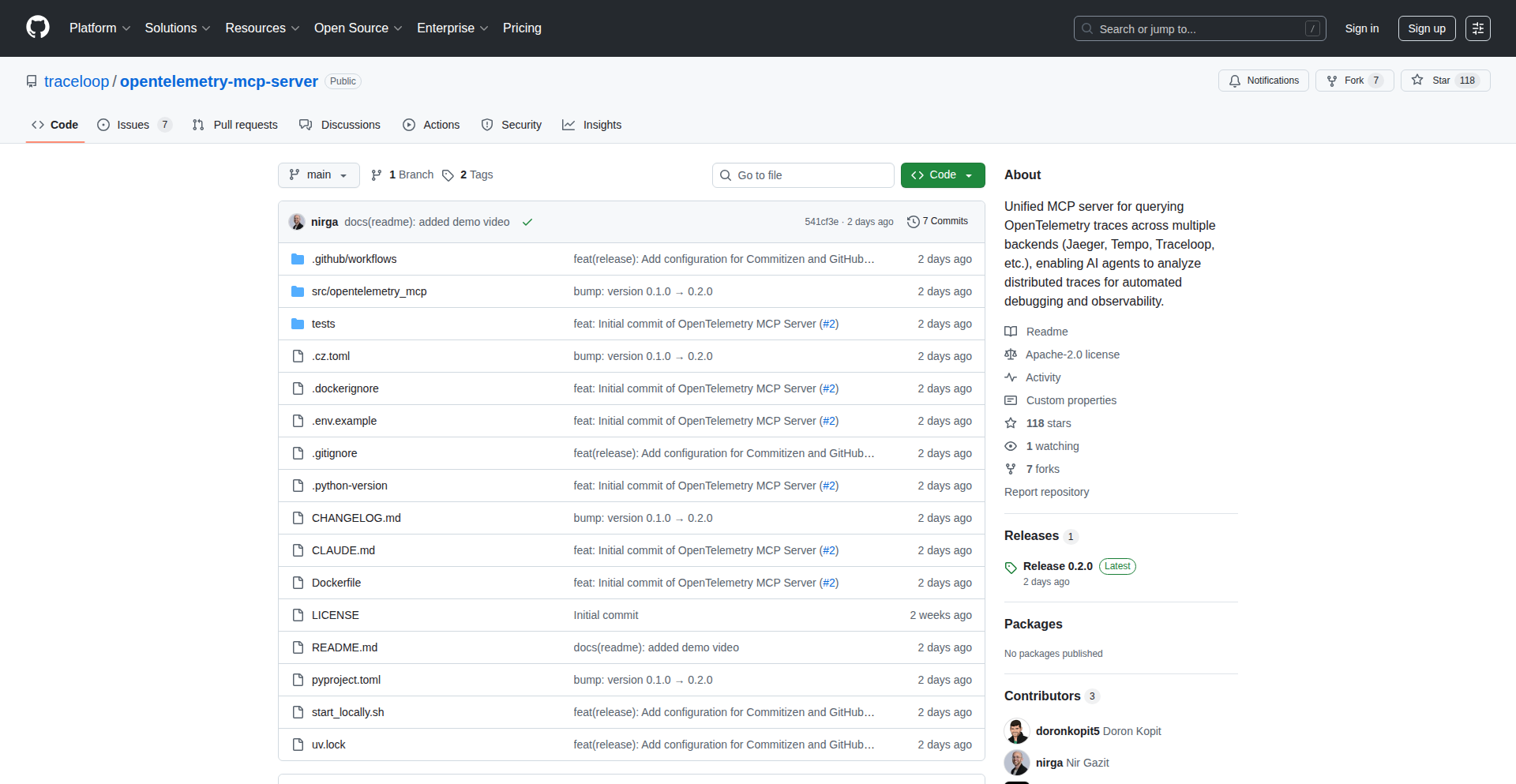

OpenTelemetry MCP Gateway

Author

GalKlm

Description

This project is an open-source MCP (Model Context Protocol) server designed to bridge the gap between your development environment and various OpenTelemetry backends. It tackles the common developer pain point of context switching between IDEs and observability platforms by allowing direct access to telemetry data (like traces and logs) within your coding workflow. The innovation lies in its vendor-agnostic approach, supporting multiple observability tools, and its open-source nature, enabling extensibility and customization.

Popularity

Points 13

Comments 2

What is this product?

This is an open-source MCP server that acts as a central hub, connecting different observability platforms (like Grafana, Jaeger, Datadog, Dynatrace, Traceloop) to your local development environment. Traditionally, developers had to manually jump between their Integrated Development Environments (IDEs) and separate observability dashboards to debug issues or understand application behavior. This project introduces a unified way to access this crucial data without leaving your coding workspace. The core technical innovation is that it speaks MCP, an open protocol for connecting AI assistants and developer tools to external data sources, which lets one server sit in front of a wide array of existing telemetry backends. This is valuable because it simplifies debugging and performance analysis, making developers more efficient.

How to use it?

Developers can integrate this MCP server into their development workflow. After installing the server and configuring it to connect to their existing observability backend (Grafana, Datadog, and so on), they can query it from an MCP-capable client or directly from their IDE. This allows them to retrieve and analyze traces, logs, and other telemetry data relevant to their code without leaving the editor. For example, imagine you're writing code and suspect a performance bottleneck. Instead of navigating to a separate dashboard, you could potentially trigger a query through the MCP server from your IDE to fetch the relevant trace data and pinpoint the issue, dramatically speeding up your debugging process.

Product Core Function

· Connect to diverse OpenTelemetry backends: This allows you to pull data from multiple observability tools you might already be using, like Grafana or Datadog, into one place for analysis. The value is that you don't need to learn a new system for each tool.

· Expose telemetry data via MCP: This uses a standard communication protocol to make tracing and logging data accessible. The value is that it enables seamless integration with development tools and IDEs, making data readily available where you're coding.

· Vendor-agnostic design: The server is built to work with many different observability providers, not just one. The value is that it offers flexibility for organizations using multiple platforms and prevents vendor lock-in.

· Open-source extensibility: Developers can contribute to or modify the server. The value is that it fosters community development and allows for custom features tailored to specific needs.

· Local development environment integration: It brings production-like insights directly into your coding setup. The value is a significant reduction in debugging time and a more intuitive understanding of application behavior.
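
As a rough illustration of the vendor-agnostic design described above, the sketch below puts one small interface in front of interchangeable trace backends. Every name here (`Span`, `TraceBackend`, `InMemoryBackend`, `find_slow_spans`) is hypothetical and not taken from the project; a real adapter would call the vendor's API instead of reading from a list.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Span:
    trace_id: str
    service: str
    name: str
    duration_ms: float


class TraceBackend(Protocol):
    """Hypothetical interface that each backend adapter would implement."""

    def search_spans(self, service: str) -> list[Span]: ...


class InMemoryBackend:
    """Stand-in for a real adapter (Jaeger, Datadog, ...); holds spans in a list."""

    def __init__(self, spans: list[Span]) -> None:
        self._spans = spans

    def search_spans(self, service: str) -> list[Span]:
        return [s for s in self._spans if s.service == service]


def find_slow_spans(backend: TraceBackend, service: str, threshold_ms: float) -> list[Span]:
    """Return spans for `service` slower than `threshold_ms`, slowest first."""
    slow = [s for s in backend.search_spans(service) if s.duration_ms > threshold_ms]
    return sorted(slow, key=lambda s: s.duration_ms, reverse=True)


backend = InMemoryBackend([
    Span("t1", "checkout", "POST /pay", 820.0),
    Span("t2", "checkout", "GET /cart", 35.0),
    Span("t3", "search", "GET /q", 1200.0),
])
slow = find_slow_spans(backend, "checkout", threshold_ms=100.0)
print([s.name for s in slow])
```

Because `find_slow_spans` depends only on the interface, swapping one backend for another would not change the calling code, which is the property the vendor-agnostic claim rests on.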

Product Usage Case

· Debugging production outages: A developer experiencing a production issue can connect the MCP server to their company's Datadog backend. From their IDE, they can query for recent traces related to the problematic service, inspect the span details, and identify the root cause much faster than navigating through Datadog's UI.

· Performance profiling during development: A backend engineer working on optimizing a specific API endpoint can use the MCP server to pull trace data from their local testing environment, which is configured with OpenTelemetry. They can then analyze the latency of different operations directly within their IDE, helping them identify and fix performance bottlenecks before deploying.

· Investigating prompt issues with LLMs: For teams working with large language models, this could be used to connect to an observability platform tracking LLM interactions. Developers could then query for specific prompt executions, examine the context and responses, and debug why a prompt is not yielding the desired output, all from their development environment.

· Onboarding new team members: A new developer joining a team can easily set up the MCP server to connect to the team's existing observability stack. This allows them to quickly get context on how the application behaves in production and debug issues without an extensive learning curve for various dashboards.

8

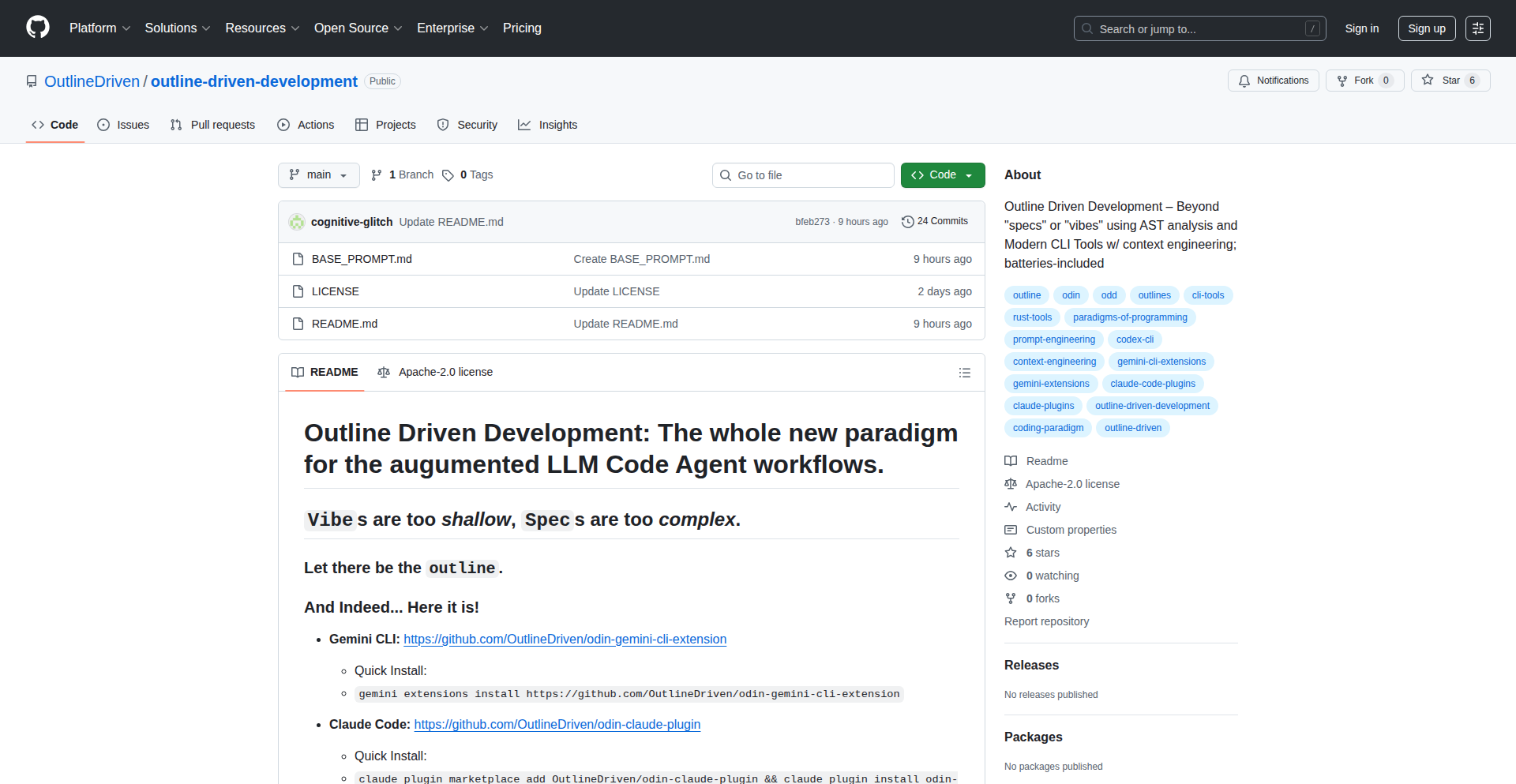

AST-Driven AI Coder

Author

cognitive-sci

Description

This project introduces Outline Driven Development (ODD), a novel approach to AI-assisted coding. Instead of relying on plain text prompts, ODD leverages Abstract Syntax Tree (AST) analysis to provide AI models (like Gemini, Claude, and Codex) with a deep, structural understanding of code. This allows for more nuanced and accurate code generation and modification, bridging the gap between shallow LLM interactions and the cognitive overhead of writing full specifications. The core innovation lies in using a hyper-optimized Rust toolchain for rapid, context-aware code analysis, enabling AI to 'read' code structure much like a human programmer.

Popularity

Points 11

Comments 1

What is this product?

This is a system for AI-assisted coding that goes beyond simple text prompts. It's built around a concept called Outline Driven Development (ODD). The core idea is that instead of just feeding an AI model raw code or a vague description, we provide it with the 'structure' of the code. This is done by analyzing the code's Abstract Syntax Tree (AST). Think of an AST as a detailed blueprint of your code, showing how different parts are connected and organized. By understanding this structure, the AI can grasp the logic and intent of your code much better, leading to more accurate and helpful suggestions or generations. This is achieved using a highly optimized Rust toolchain, which makes the code analysis incredibly fast and efficient, feeding precise, structural context to the AI.
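
To make the 'blueprint' idea concrete, here is a minimal sketch that uses Python's standard-library `ast` module to turn source code into a compact outline of classes and function signatures. It only illustrates the general technique: the project itself relies on Rust tools such as `ast-grep`, and the `outline` function and the sample source are made up for this example.

```python
import ast

SOURCE = '''
class Cart:
    def add(self, item, qty=1):
        self.items.append((item, qty))

    def total(self):
        return sum(i.price * q for i, q in self.items)

def checkout(cart, user):
    return cart.total()
'''


def outline(source: str) -> list[str]:
    """Return one indented line per class or function found in `source`."""
    lines: list[str] = []

    def visit(node: ast.AST, depth: int) -> None:
        # Only looks at direct children of a module, class, or function,
        # so definitions nested inside `if` or `try` blocks are skipped.
        for child in ast.iter_child_nodes(node):
            if isinstance(child, ast.ClassDef):
                lines.append("  " * depth + f"class {child.name}")
                visit(child, depth + 1)
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                args = ", ".join(a.arg for a in child.args.args)
                lines.append("  " * depth + f"def {child.name}({args})")
                visit(child, depth + 1)

    visit(ast.parse(source), 0)
    return lines


print("\n".join(outline(SOURCE)))
```

An outline like this is far shorter than the full source, which is one plausible reason structural context can be cheaper to hand to a model than raw files.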

How to use it?

Developers can integrate this system by installing a set of pre-configured extensions and CLI wrappers for major AI coding agents like Gemini, Claude, and Codex. The system relies on local installation of several powerful Rust-based tools such as `ast-grep`, `ripgrep`, and `jj`, optimized for maximum local performance. Once the toolchain is set up (instructions are provided for Linux, macOS, and Windows), developers can install the respective AI agent extensions. For instance, to use it with Gemini, you'd typically install the `odin-gemini-cli-extension`. This allows the AI agent to access the structural context of your code, enabling it to understand your project's architecture and nuances before generating or modifying code. This can be done manually by injecting configurations or through simple CLI commands provided by the project.

Product Core Function

· Structural Code Analysis with AST: Enables AI to understand code not just as text, but as a structured entity, leading to deeper comprehension of logic and intent. This is valuable for AI to make more informed suggestions and reduce errors.

· Hyper-Optimized Rust Toolchain: Utilizes high-performance Rust tools like `ast-grep` and `ripgrep` to quickly parse and analyze code structure. This speed is crucial for real-time AI assistance, allowing for rapid feedback cycles without significant delays.

· AI Agent Integration Kits: Provides pre-configured extensions and CLI wrappers for popular AI coding assistants (Gemini, Claude, Codex). This makes it easy for developers to plug this advanced analysis into their existing AI workflows, enhancing their current tools.

· Outline Driven Development (ODD) Paradigm: Introduces a new way of interacting with AI for coding by focusing on code structure. This helps bridge the gap between vague prompts and detailed specifications, offering a more intuitive and efficient development process.

Product Usage Case

· Refactoring complex codebases: When faced with a large, intricate piece of code, a developer can use this system with their AI assistant. The AI, understanding the AST, can suggest safer and more effective refactoring strategies by analyzing dependencies and code blocks, preventing common mistakes that arise from simply looking at text.

· Generating boilerplate code with context: Instead of asking an AI to generate generic code, a developer can provide the structural context of their project. The AI can then generate boilerplate code that perfectly fits the existing architecture and coding style, saving significant time and ensuring consistency.

· Debugging intricate issues: When a bug is difficult to pinpoint, the AI, armed with structural code insights, can help trace the flow of execution and identify potential problem areas more effectively than a text-based analysis, leading to faster bug resolution.

9

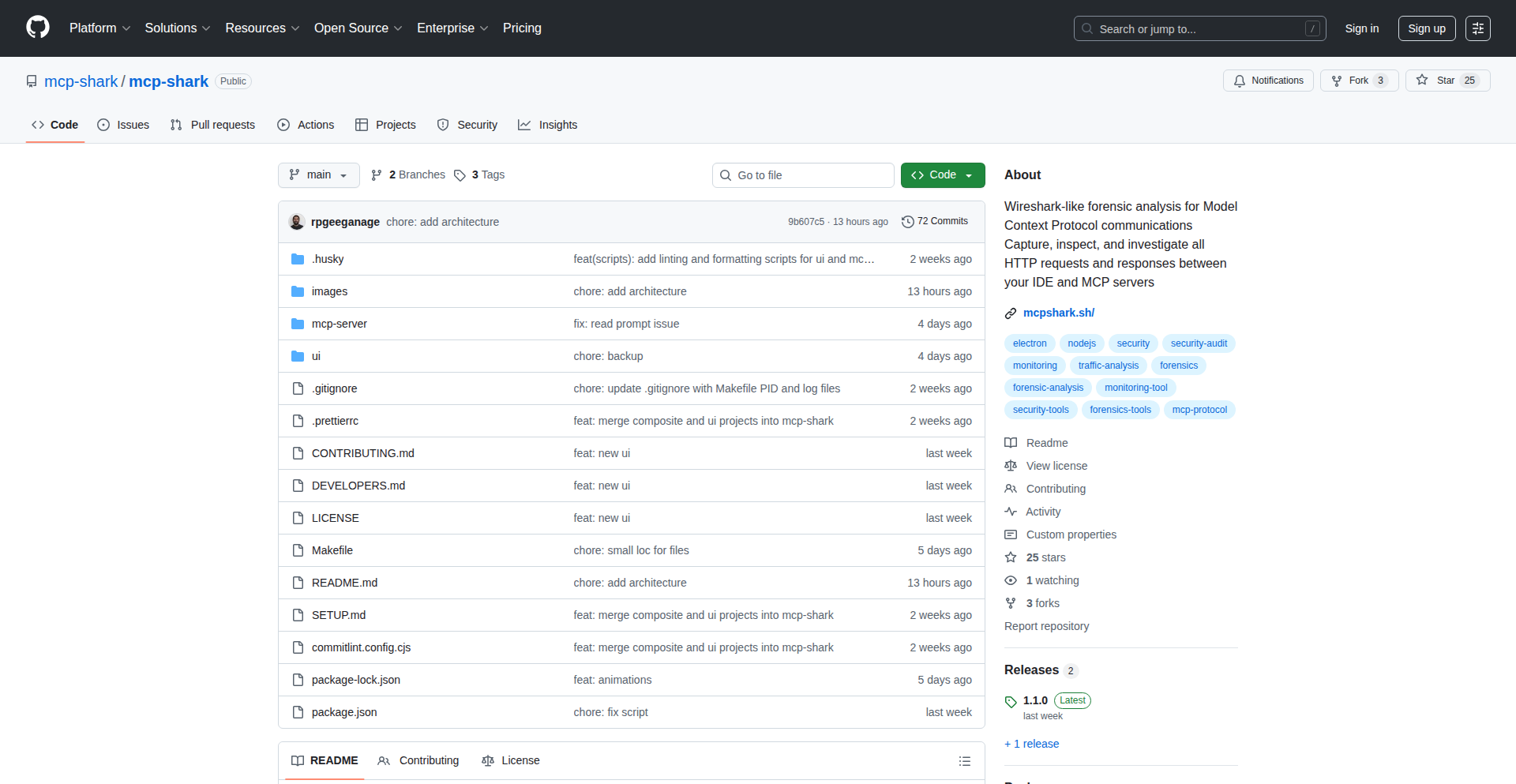

MCP Local Traffic Insights

Author

lone-wolf

Description

A tool for real-time analysis of local network traffic, offering deep insights into device communication patterns and potential anomalies. It leverages advanced packet sniffing and statistical modeling to visualize network behavior, helping developers quickly diagnose connectivity issues and optimize resource allocation.

Popularity

Points 11

Comments 0

What is this product?

This project is a sophisticated network traffic analysis tool designed to capture and interpret data flowing within a local network. Its core innovation lies in its ability to go beyond simple packet counts, employing algorithms to identify traffic signatures, detect unusual patterns, and present this information in an easily digestible format. Think of it as a highly intelligent detective for your network, spotting suspicious activity or inefficiencies that would otherwise be invisible. It's built using efficient packet capture libraries and statistical analysis techniques to process a high volume of data with minimal overhead.

How to use it?

Developers can integrate MCP Local Traffic Insights into their network monitoring systems or use it as a standalone diagnostic tool. It can be deployed on a dedicated machine or a server within the local network. By running the analysis engine, developers can gain immediate visibility into which devices are communicating, what protocols they are using, and the volume of data exchanged. This is invaluable for identifying rogue devices, pinpointing bandwidth hogs, or troubleshooting application-level network problems without needing to sift through raw packet dumps.

Product Core Function

· Real-time Packet Sniffing: Captures network packets in transit without disrupting network flow, allowing for immediate observation of network activity. This means you can see what's happening on your network right now, as it happens, so you can catch problems before they escalate.

· Protocol Identification and Classification: Automatically identifies and categorizes different network protocols (e.g., HTTP, DNS, SMB) and their associated traffic. This helps you understand the 'language' your devices are speaking, making it easier to identify specific application behaviors and troubleshoot issues related to certain services.

· Device Communication Mapping: Visualizes direct communication links between devices on the local network, highlighting who is talking to whom. This provides a clear picture of your network's topology and can quickly reveal unexpected connections or communication patterns, helping you secure your network and understand data flow.

· Anomaly Detection: Employs statistical models to flag unusual or potentially malicious traffic patterns that deviate from normal network behavior. This acts as an early warning system, alerting you to potential security threats or misconfigurations before they cause significant damage or downtime.

· Traffic Volume and Bandwidth Monitoring: Tracks the amount of data transferred by each device and application, identifying bandwidth consumption trends. Knowing which devices or applications are using the most bandwidth is crucial for optimizing network performance and preventing slowdowns.
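
The description does not say which statistical models the tool uses, so the following is only a sketch of one simple option: a per-device z-score over traffic volume. The sample data, the `flag_anomalies` function, and the threshold of 2.0 are all assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical per-minute samples: (device, bytes transferred in that minute).
SAMPLES = [
    ("laptop", 1200), ("laptop", 1100), ("laptop", 1300),
    ("laptop", 1250), ("laptop", 1150), ("laptop", 1220),
    ("camera", 400), ("camera", 420), ("camera", 390),
    ("camera", 410), ("camera", 405), ("camera", 9000),
]


def flag_anomalies(samples, z_threshold=2.0):
    """Return (device, bytes, z) for samples far from that device's own mean."""
    by_device = defaultdict(list)
    for device, n_bytes in samples:
        by_device[device].append(n_bytes)

    flagged = []
    for device, values in by_device.items():
        mu, sigma = mean(values), pstdev(values)
        if sigma == 0:
            continue  # perfectly constant traffic: nothing to compare against
        for v in values:
            z = (v - mu) / sigma
            if abs(z) > z_threshold:
                flagged.append((device, v, round(z, 2)))
    return flagged


flagged = flag_anomalies(SAMPLES)
print(flagged)
```

A plain z-score is easy to reason about but is itself distorted by large outliers, so a production tool would more likely use a robust baseline (for example median-based) or a rolling window.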

Product Usage Case

· Troubleshooting Network Latency: A developer notices their web application is slow. By using MCP Local Traffic Insights, they can see if high latency is caused by excessive traffic to a particular external service, an internal device flooding the network, or a misconfigured router, allowing for targeted fixes rather than guesswork.

· Identifying Rogue Devices: A company's IT administrator suspects an unauthorized device has connected to their network. The tool can visualize all active connections, helping them quickly spot the unknown device by its communication patterns and isolate it to prevent potential security breaches.

· Optimizing Application Performance: A team developing a peer-to-peer application wants to understand how their software utilizes the network. They can use the tool to visualize direct peer connections, data transfer rates between nodes, and identify bottlenecks in their communication protocol, leading to more efficient code.

· Diagnosing IoT Device Connectivity Issues: Users with many Internet of Things (IoT) devices might experience devices going offline. The tool can help pinpoint if a specific IoT device is failing to communicate with the network, is being flooded with traffic by another device, or is experiencing high bandwidth usage that causes instability.

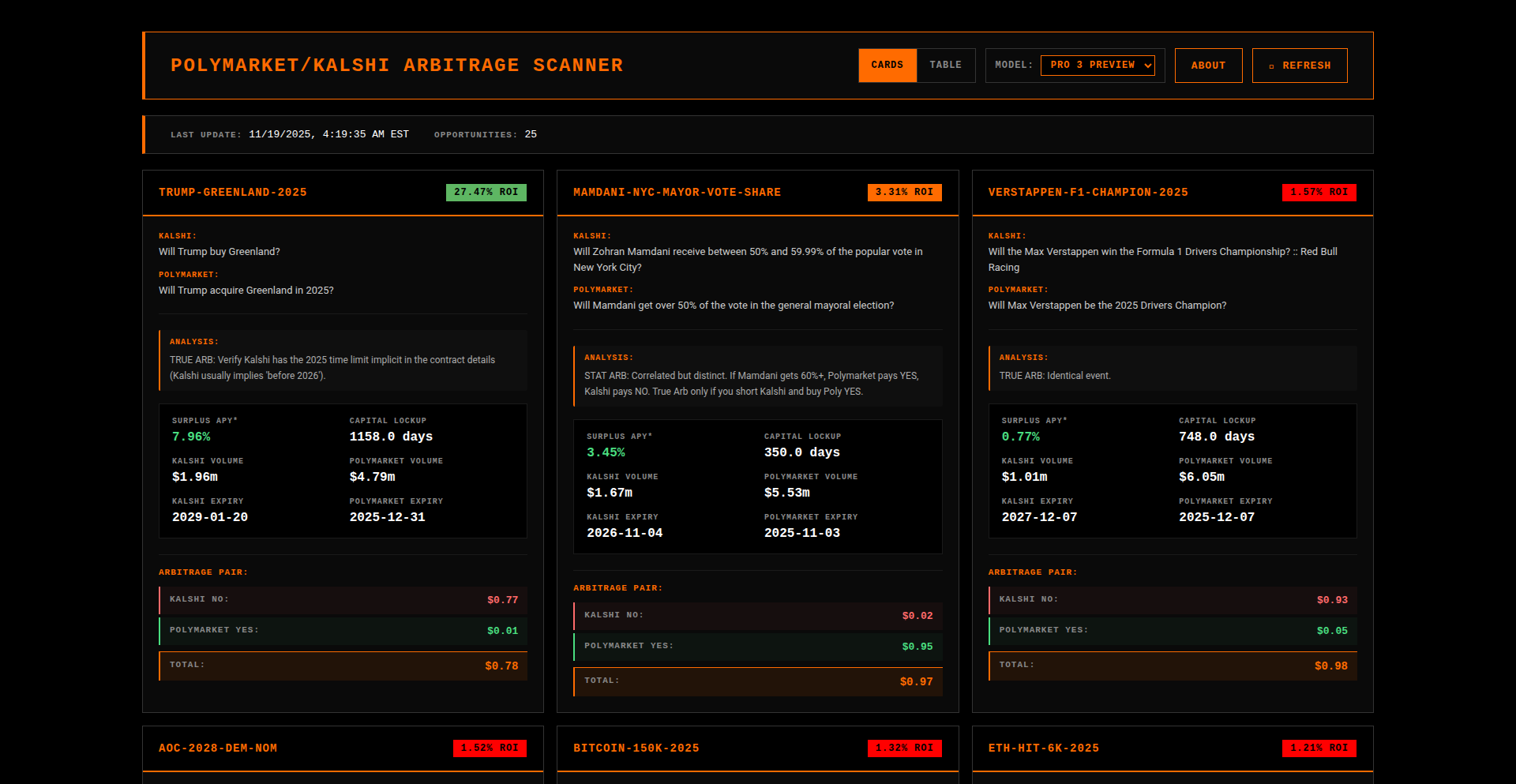

10

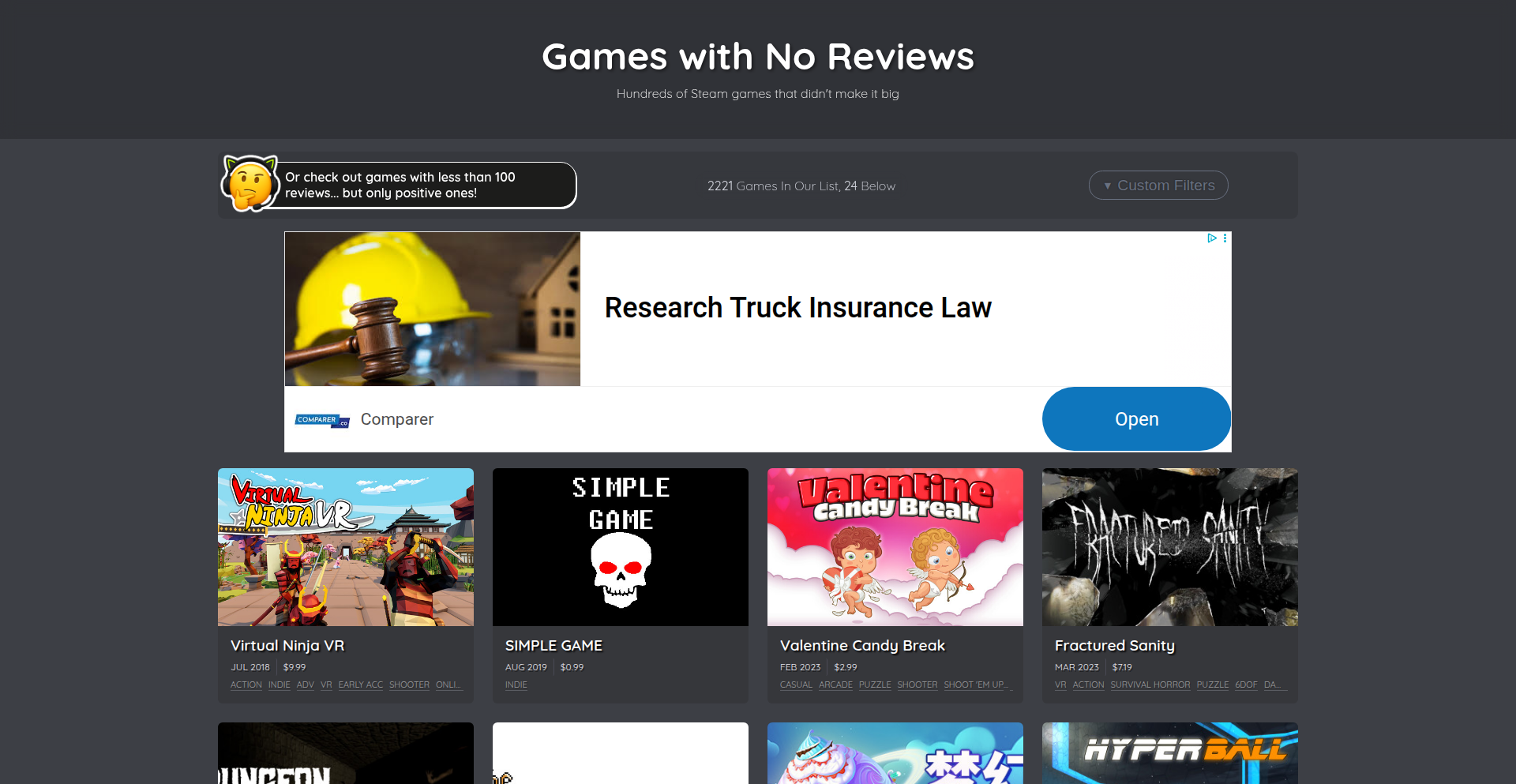

ZeroReview Explorer

Author

AmbroseBierce

Description

A Hacker News Show HN project that analyzes over 2200 Steam games with zero reviews. It leverages a Kaggle dataset of 110K games, supplemented by scraping Steam store pages to verify review counts and fetch trailer URLs. The project highlights interesting findings like games disappearing from Steam and the use of generative AI in game development, offering a unique lens into the vast Steam ecosystem.

Popularity

Points 6

Comments 4

What is this product?

This project is a data exploration tool for the Steam gaming platform, specifically focusing on games that, surprisingly, have accumulated zero reviews. The core innovation lies in how it gathers and presents this data. Instead of relying solely on expensive APIs, it uses a publicly available Kaggle dataset containing information on a massive number of Steam games. To ensure accuracy and get richer details like trailer video links, it then performs targeted scraping of individual Steam store pages for games flagged as having zero reviews. This combination of existing datasets and custom scraping allows for a deep dive into overlooked corners of the gaming market. For you, this means a curated view of potentially undiscovered gems or curious anomalies in the massive Steam library, and a unique perspective on game discovery and the market.

How to use it?

Developers can use this project as a reference for data acquisition and analysis techniques, particularly for platforms where direct API access might be limited or costly. The approach of combining public datasets with targeted web scraping is a common 'hacker' pattern for gaining insights. For game developers or researchers, it offers a dataset and a method to discover games that might have flown under the radar, understand trends in game releases that receive no initial traction, or even identify potential market gaps. For you, it demonstrates practical ways to gather and analyze data from large online platforms, and may inspire similar discovery tools or market research.

Product Core Function

· Data Aggregation and Filtering: Collects and filters game data from a Kaggle dataset, enabling the isolation of games with zero reviews. This is valuable for anyone wanting to analyze niche segments of the gaming market. For you, this means a ready-made starting point for exploring overlooked games.

· Real-time Data Verification: Scrapes Steam store pages to confirm zero review status and fetch additional metadata like trailer URLs. This ensures data accuracy and enriches the information available, offering a more complete picture. For you, this means more reliable and detailed information about these unique games.

· Discovery of Anomalies: Identifies interesting trends such as games disappearing from Steam or the use of AI for game asset generation. This provides insights into the dynamics of the digital game market. For you, this means a deeper understanding of current trends and behind-the-scenes activity in the game industry.

· Interactive Exploration: Allows filtering by tags and price, making the exploration of zero-review games more targeted and user-friendly. This helps users find games that align with their interests. For you, this means you can easily narrow your search to games that might appeal to you specifically.
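
The filtering steps above can be sketched with nothing but the standard library. The tiny inline CSV stands in for the Kaggle export, and its column names (`app_id`, `name`, `price`, `tags`, `review_count`) are assumptions rather than the real schema. The scraping step that verifies counts against the store pages is deliberately left out.

```python
import csv
import io

# Tiny stand-in for the Kaggle export; the column names are assumptions.
DATASET = """app_id,name,price,tags,review_count
101,Moss Garden,4.99,Puzzle;Relaxing,0
102,Star Brawl,19.99,Action;Multiplayer,15342
103,Paper Kingdoms,0.00,Strategy;Indie,0
104,Quiet Rails,9.99,Simulation;Indie,0
"""


def zero_review_games(csv_text, tag=None, max_price=None):
    """Yield rows with zero reviews, optionally narrowed by tag and price."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        if int(row["review_count"]) != 0:
            continue
        if tag is not None and tag not in row["tags"].split(";"):
            continue
        if max_price is not None and float(row["price"]) > max_price:
            continue
        yield row


names = [g["name"] for g in zero_review_games(DATASET, tag="Indie", max_price=5.0)]
print(names)
```

Writing the filter as a generator keeps memory use flat, which matters more once the input is the full 110K-row dataset rather than four rows.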

Product Usage Case

· Identifying Undiscovered Indie Gems: A user can browse the list of games with zero reviews to find potentially high-quality but overlooked independent titles. By examining trailers and available information, they might discover their next favorite game before it gains wider recognition. For you, this means finding unique gaming experiences that most people miss.

· Market Research for Game Developers: A game developer could analyze this dataset to understand what types of games are being released with minimal initial engagement, or perhaps to identify unmet market needs by seeing what's missing from popular categories. For you, this means insight into the competitive landscape and potential opportunities in game development.

· Studying Platform Dynamics: Researchers or enthusiasts could use this project to study the lifecycle of games on platforms like Steam, observing which games disappear or how quickly new, unreviewed titles are published. For you, this means understanding how games and their presence on platforms evolve over time.

· Curiosity-Driven Exploration: A gamer might simply be curious about the vast number of games on Steam that haven't garnered any feedback. This project satisfies that curiosity by presenting a curated list and interesting observations about these 'invisible' games.

11

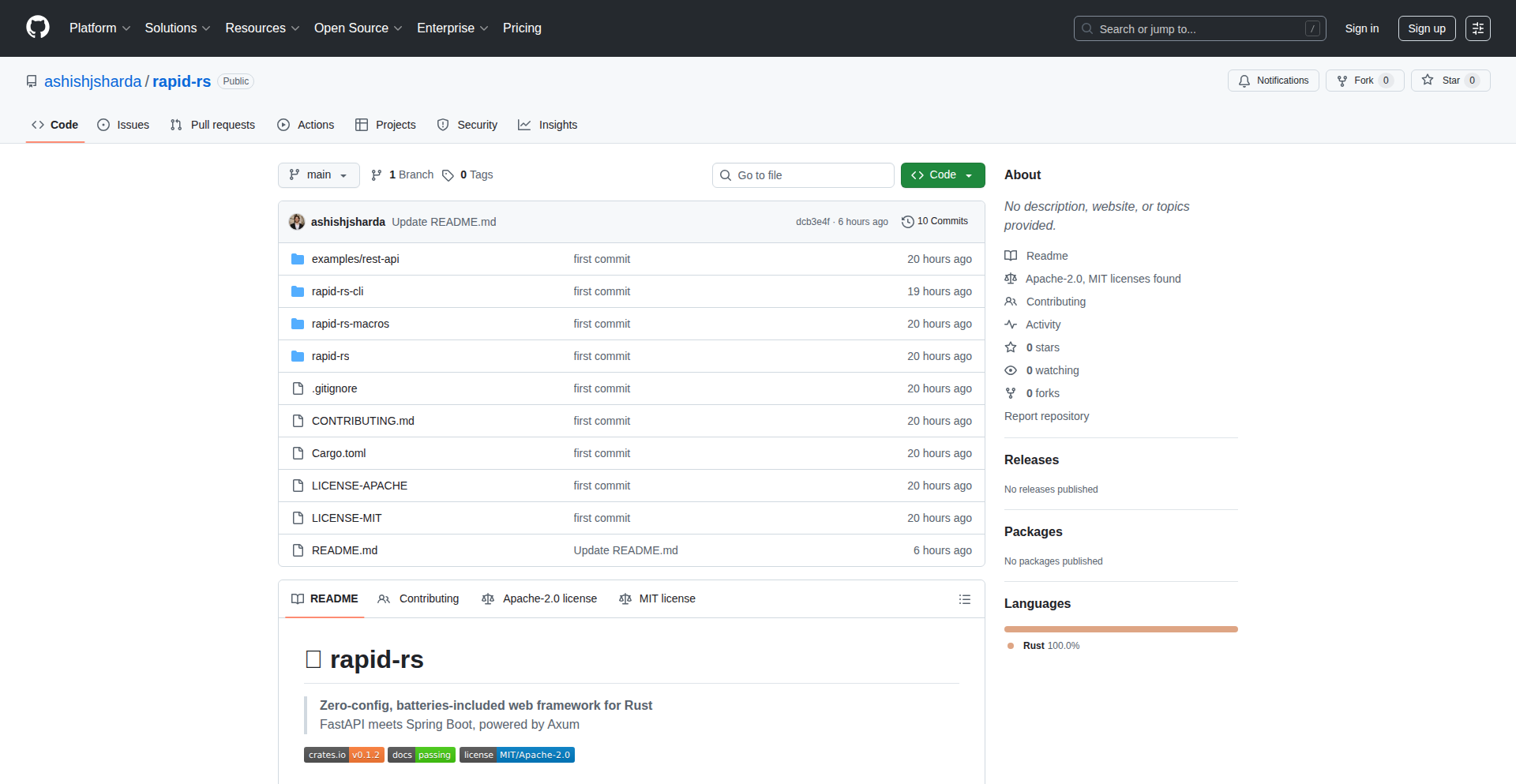

LLM Reddit Sim

Author

mananonhn

Description

This project is a simulation of Reddit built using Large Language Models (LLMs). It aims to explore how LLMs can generate realistic-sounding user interactions, comments, and even post content within a simulated social media environment. The innovation lies in leveraging LLMs for emergent social behavior simulation, offering a unique perspective on AI-driven content generation and community dynamics.

Popularity

Points 4

Comments 5

What is this product?

This project is essentially an AI-powered experiment that mimics the dynamics of Reddit. Instead of real users, it uses Large Language Models (LLMs) to create simulated users who post content and comment on each other's posts. The core innovation is in how the LLMs are instructed and orchestrated to produce diverse and coherent interactions, mimicking the unpredictable nature of online communities. Think of it as a sandbox for AI-generated social media. So, what's the value? It helps researchers and developers understand how AI can generate content and interactions that feel human-like, which is crucial for building more engaging AI applications or studying online behavior.

How to use it?

Developers can use this project as a starting point for building their own AI-driven simulation environments or for generating synthetic data that resembles real-world social media interactions. It can be integrated into larger AI projects that require simulated user feedback or content. For example, you could use it to test moderation systems on AI-generated content or to train other AI models on a diverse dataset of simulated discussions. The value here is providing a readily available, experimental platform to explore LLM-driven simulation without building everything from scratch.

Product Core Function

· LLM-powered content generation: The system uses LLMs to create original posts and comments, mimicking various user tones and interests. This offers a way to generate vast amounts of diverse text data for training or testing AI models.

· Simulated user interactions: The LLMs are designed to respond to existing posts and comments, creating a chain of conversation. This allows for the study of emergent communication patterns and how AI agents can maintain dialogue.

· Configurable simulation parameters: Developers can likely adjust settings to control the number of simulated users, their 'personalities,' and the topics of discussion, providing flexibility for different experimental setups. This means you can tailor the simulation to your specific research or development needs.

· Exploration of LLM creativity and limitations: By observing the generated content, users can gain insights into the creative capabilities and potential biases of LLMs in a social context. This helps in understanding what LLMs can and cannot do well in generating human-like interactions.
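
The orchestration idea behind these functions can be sketched as a loop in which personas take turns responding to a growing thread. This is a guess at the general shape, not the project's code: `fake_llm` is a placeholder for a real model call, and `Post`, `run_simulation`, and the persona names are hypothetical.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Post:
    author: str
    text: str
    comments: list = field(default_factory=list)  # (commenter, text) pairs


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call; returns a canned reply."""
    return f"[generated reply to: {prompt[:40]}]"


def run_simulation(personas, topic, rounds, llm=fake_llm, seed=0):
    """One persona posts about `topic`; the others take turns commenting."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    author = rng.choice(personas)
    post = Post(author, llm(f"As {author}, write a post about {topic}"))
    others = [p for p in personas if p != author]
    for _ in range(rounds):
        commenter = rng.choice(others)
        # Each comment sees the post plus the thread so far as context.
        context = " | ".join([post.text] + [text for _, text in post.comments])
        post.comments.append((commenter, llm(f"As {commenter}, reply to: {context}")))
    return post


post = run_simulation(["skeptic", "enthusiast", "lurker"], "Rust vs Go", rounds=3)
for commenter, text in post.comments:
    print(commenter, "->", text)
```

Passing the model in as the `llm` parameter keeps the loop testable without network access and makes it straightforward to swap in a real client later.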

Product Usage Case

· Testing AI content moderation: A developer could use this simulator to generate a large volume of simulated posts and comments, some of which might contain controversial or inappropriate content, to train and test an AI moderation system. This provides a safe and scalable way to evaluate moderation tools.

· Creating synthetic datasets for chatbot training: Researchers could run the simulator for an extended period to gather a dataset of simulated discussions that mimic online forum conversations, then use this dataset to train a chatbot to understand and participate in similar dialogues. This accelerates the creation of realistic training data.

· Prototyping AI-driven social platforms: A startup could use this project as a foundational element to prototype a social platform where initial content and user engagement are driven by AI, providing a lively environment before attracting real users. This allows for rapid prototyping and validation of platform concepts.

· Studying AI emergent behavior: Academics could use this simulator to observe how different LLM configurations and prompts lead to distinct types of simulated community behavior, such as polarization or consensus formation, offering insights into AI ethics and societal impact.

12

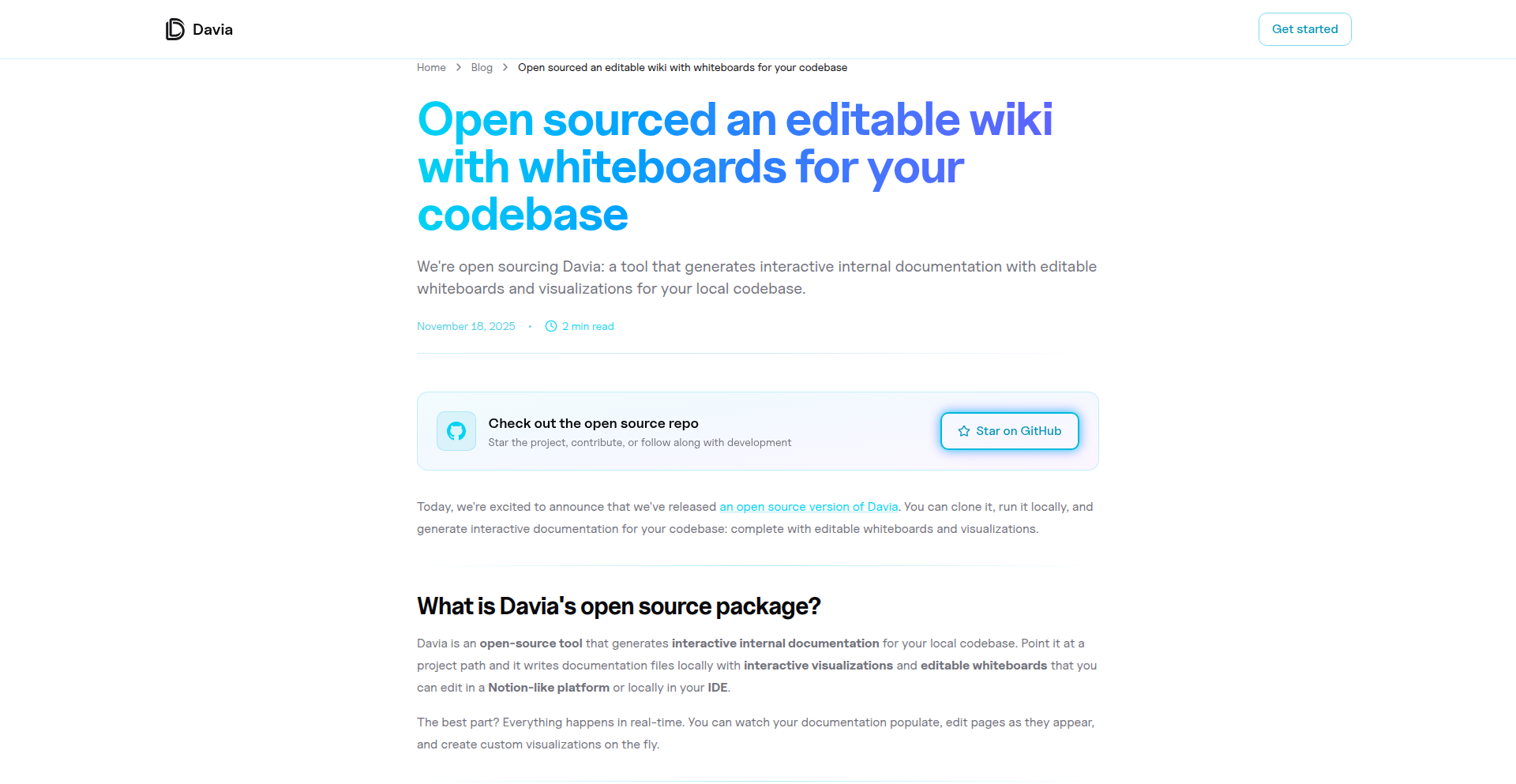

CodeCanvas Wiki

Author

ruben-davia

Description

CodeCanvas Wiki is an open-source tool that automatically generates an editable wiki and interactive diagrams from your codebase. It addresses the common challenge of creating and maintaining up-to-date documentation by integrating code analysis with visual whiteboard-style editing, allowing developers to understand and modify their projects more efficiently. This offers a dynamic and collaborative approach to code documentation, bridging the gap between code structure and human comprehension.

Popularity

Points 8

Comments 0

What is this product?

CodeCanvas Wiki is an open-source system designed to automatically generate a living documentation wiki for your codebase. It parses your code and creates a wiki that's not just text-based but also incorporates editable, whiteboard-style diagrams. Think of it as a smart, interactive notebook for your software. Instead of manually writing extensive documentation or drawing static diagrams, CodeCanvas Wiki does the heavy lifting. It understands your code's structure and translates it into browsable documentation and visual representations that you can directly edit and refine, much like editing text in a document editor or drawing on a digital whiteboard. The innovation lies in providing editable visual context, which traditional documentation tools often lack and which makes complex codebases easier to grasp and manage.

How to use it?

Developers can integrate CodeCanvas Wiki into their workflow by pointing it towards their existing codebase. The tool then analyzes the code's structure, dependencies, and key components. It generates a comprehensive, editable wiki that can be accessed and modified directly within their IDE or through a web-based editor. For the diagramming aspect, it creates interactive whiteboards that visually represent the code's architecture. This allows developers to collaborate on understanding and planning changes to the codebase. For example, a team could use CodeCanvas Wiki to onboard new members by providing them with an immediately accessible and explorable documentation hub, or to collaboratively design new features by sketching out architectural ideas on the interactive whiteboards.

Product Core Function

· Automatic Codebase Analysis: Scans your code to understand its structure, functions, classes, and dependencies. This helps in automatically building a foundational documentation, saving developers countless hours of manual review and mapping. It means you get a starting point for understanding your project's layout instantly.

· Editable Wiki Generation: Creates a browsable and editable wiki from your code, similar to a Notion-like editor. This allows for easy annotation, explanation, and modification of the documentation. The value here is having a central, living document that can evolve with the code, making it easier for anyone to contribute to and update the project's knowledge base.

· Interactive Whiteboard Diagrams: Generates editable, whiteboard-style diagrams that visually represent your codebase's architecture. This provides a powerful visual aid for understanding complex relationships and system flows. The practical benefit is enhanced comprehension of intricate systems, facilitating better design decisions and debugging.

· IDE Integration: Enables users to view and edit documentation and diagrams directly within their Integrated Development Environment (IDE). This seamless integration minimizes context switching, allowing developers to access and update documentation without leaving their primary coding workspace. It boosts productivity by keeping documentation in sync with the coding process.

· Open Source Community: Being open-source means developers can freely use, modify, and contribute to the tool. This fosters collaboration, encourages rapid improvement, and ensures transparency. The value for the community is a freely available, adaptable tool that can be tailored to specific needs and benefits from collective innovation.
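
How the tool analyzes code is not described here, so the following is only a sketch of one common way to go from source files to a diagram: collect import relationships with Python's `ast` module and emit them as Mermaid flowchart text. The three-module `PROJECT` is invented, and Mermaid is a stand-in for the project's own whiteboard format.

```python
import ast

# Hypothetical three-module project, keyed by module name.
PROJECT = {
    "app": "import db\nfrom auth import login\n",
    "auth": "import db\n",
    "db": "import sqlite3\n",
}


def import_edges(project):
    """Return sorted (importer, imported) pairs between modules of the project."""
    edges = set()
    for module, source in project.items():
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                targets = [alias.name.split(".")[0] for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                targets = [node.module.split(".")[0]]
            else:
                continue
            # Keep only edges that point at modules inside the project.
            edges.update((module, t) for t in targets if t in project)
    return sorted(edges)


def to_mermaid(edges):
    """Render the edges as a Mermaid flowchart definition."""
    return "\n".join(["graph TD"] + [f"    {a} --> {b}" for a, b in edges])


print(to_mermaid(import_edges(PROJECT)))
```

Emitting a text format rather than an image is what makes such a diagram editable and diffable alongside the code it describes.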

Product Usage Case

· Onboarding new team members: A startup with a rapidly growing codebase can use CodeCanvas Wiki to create an instant, explorable knowledge base for new hires. Instead of spending weeks deciphering legacy code, new developers can navigate the automatically generated wiki and diagrams to quickly understand project structure, core modules, and their interdependencies, significantly accelerating their ramp-up time.

· Refactoring complex systems: For a legacy application with tangled dependencies, a development team can leverage CodeCanvas Wiki to visualize its current architecture. They can then collaboratively use the editable diagrams to plan the refactoring process, sketching out proposed changes and discussing them visually before writing any new code. This reduces the risk of introducing bugs and ensures everyone on the team is aligned on the refactoring strategy.

· API documentation and evolution: A software company developing a public API can use CodeCanvas Wiki to generate and maintain up-to-date API documentation. As the API evolves, the wiki and diagrams can be updated simultaneously, ensuring that developers consuming the API always have access to accurate information. This prevents miscommunication and improves the developer experience for external users.

· Technical debt identification: By analyzing the generated diagrams and wiki, teams can visually identify areas of the codebase that are overly complex, have circular dependencies, or lack clear documentation. This visual representation helps in prioritizing technical debt remediation efforts, making it easier to communicate the need for improvements to management.

13

MultiDock Weaver

Author

pugdogdev

Description

ExtraDock (listed above as MultiDock Weaver) is a macOS application that lets users create and manage multiple customizable docks and place them anywhere on their screen. It tackles the common pain point of a single dock being insufficient for multi-monitor setups. The innovation lies in its flexibility, allowing for extensive customization of dock appearance and the inclusion of unique widgets like IP address display and Stripe dashboard integration, offering practical utility for developers and power users. This provides a significant enhancement to workflow efficiency and personalized desktop management.

Popularity

Points 5

Comments 2

What is this product?

ExtraDock is a macOS application designed to break the limitation of a single dock on your Mac. Think of it as giving your Mac multiple, independent taskbars. Its technical innovation comes from how it creates and manages these additional docks. Instead of relying on a single system-level dock, it builds its own dock interfaces that can be positioned anywhere – on your main screen, secondary monitors, or even specific corners. This is achieved through native macOS UI frameworks, allowing for deep customization of colors, transparency (blur/opacity), borders, and more. Furthermore, it introduces a widget system that allows developers or users to embed real-time information directly into these docks, such as your current external IP address (useful for VPN users to quickly check connectivity) or your Stripe sales figures (for entrepreneurs to monitor their business at a glance). This level of control and integration goes beyond what the default macOS dock offers, providing a truly tailored workspace. So, for you, this means a more organized and information-rich desktop that adapts to your specific workflow, rather than forcing you to adapt to its limitations.

How to use it?

Developers can use ExtraDock in several ways to enhance their productivity and monitoring. Primarily, it's for desktop organization: if you have multiple monitors, you can dedicate specific docks to different sets of applications or workflows. For example, one dock might hold your development tools (IDE, terminal, Git clients), another your communication apps (Slack, email), and a third your design software. Integration with other apps is facilitated through its widget system. Developers can potentially build custom widgets that fetch data from their own services or tools and display it directly in an ExtraDock. For instance, a developer who maintains a CI/CD pipeline could create a widget showing the status of the latest build. The app is installed like any other macOS application, and its interface allows for easy creation, configuration, and placement of new docks. So, for you, this means a more structured digital workspace and the potential to have key application statuses or data readily visible without constantly switching windows.

Product Core Function

· Create Multiple Docks: Allows users to spawn an unlimited number of independent docks, breaking the single-dock constraint of macOS. This is valuable for organizing applications and workflows across multiple monitors, ensuring quick access to relevant tools for specific tasks. Imagine having a dedicated dock for coding, another for design, and another for communication.

· Customizable Dock Appearance: Provides extensive options to personalize the look of each dock, including color, blur effects, opacity, and borders. This allows users to create a visually coherent and aesthetically pleasing desktop environment that matches their personal style or branding. For you, this means a desktop that looks exactly how you want it to.

· Widget Integration: Enables the addition of custom widgets to docks, such as clocks, IP address displays, and data dashboards (e.g., Stripe). This transforms docks from simple app launchers into dynamic information hubs, providing at-a-glance access to crucial data like your external IP for VPN checks or business performance metrics. This is valuable for efficient monitoring and quick decision-making.

· Cross-App Integration (with DockFlow): Seamlessly works with another dock application, DockFlow, to offer a more comprehensive desktop management experience. This means developers who use both products can achieve an even greater level of workflow optimization and customization. For you, this can lead to a more powerful and unified desktop control system.

Product Usage Case

· Scenario: A software developer working with a multi-monitor setup. Problem: Juggling applications across different screens can be inefficient, with frequently used tools buried. Solution: Use ExtraDock to create a dedicated dock on each monitor, populated with relevant development tools like IDEs, terminals, and version control clients. This significantly speeds up task switching and reduces cognitive load, making development more fluid. This means less time searching for apps and more time coding.

· Scenario: A remote worker who frequently uses a VPN. Problem: Verifying their external IP address can be a hassle, especially when troubleshooting network issues or ensuring VPN connectivity. Solution: Add an IP address widget to an ExtraDock. This widget will continuously display the current external IP address, allowing the user to instantly see if their VPN is active and assigned the correct IP. This means immediate network status visibility without extra steps.

· Scenario: An e-commerce entrepreneur who wants to monitor sales performance. Problem: Constantly checking a web dashboard for sales figures interrupts workflow. Solution: Integrate a Stripe Dashboard Widget into an ExtraDock. This widget can display key sales metrics directly on the desktop, providing real-time business insights without leaving the current application. This means staying informed about business performance without disrupting your primary tasks.
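
The VPN scenario above comes down to comparing the address a widget currently sees with the address your ISP normally assigns. The Python sketch below models only that comparison. It is not ExtraDock's widget API, which the post does not describe; the function name `vpn_label` and the idea of supplying a known home address are assumptions for illustration, and fetching the external IP itself is left out.

```python
import ipaddress


def vpn_label(current_ip: str, home_ip: str) -> str:
    """Return a short, dock-sized label describing the VPN state.

    Hypothetical helper: ExtraDock's real widget interface is not shown
    in the source, so this only models the comparison logic.
    """
    try:
        current = ipaddress.ip_address(current_ip)
        home = ipaddress.ip_address(home_ip)
    except ValueError:
        # Malformed input, e.g. the lookup service returned an error page.
        return "IP unknown"
    if current == home:
        # Traffic still leaves through the usual ISP address.
        return f"VPN off ({current})"
    return f"VPN on ({current})"


if __name__ == "__main__":
    # Documentation-range addresses (RFC 5737), not real hosts.
    print(vpn_label("203.0.113.7", "198.51.100.20"))
```

A different address only shows that traffic is leaving through some other route; confirming that it is the expected VPN exit would need a list of the provider's addresses.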

14

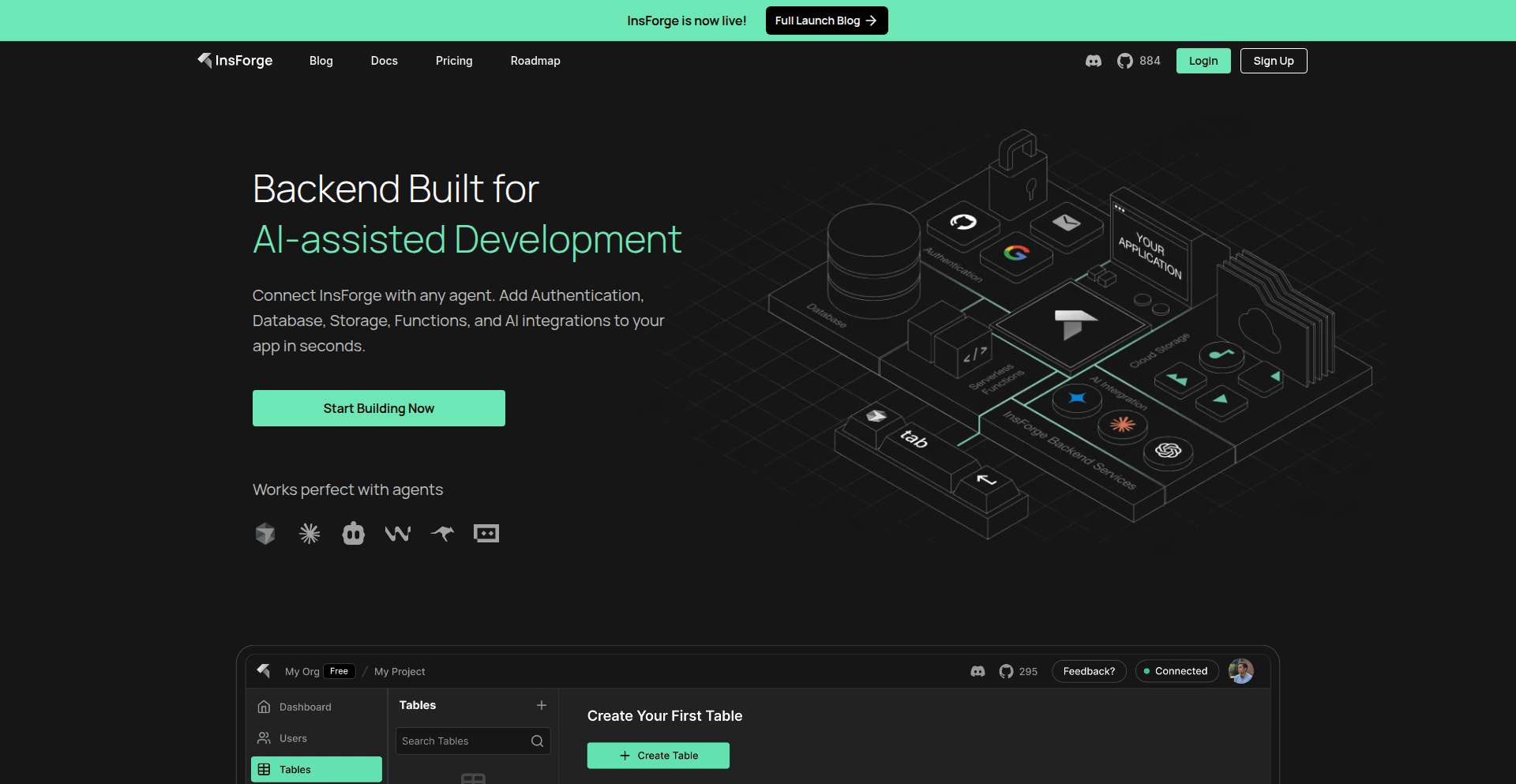

PromptStack BaaS

Author

tonychang430

Description

PromptStack BaaS is a backend-as-a-service (BaaS) platform built on top of PostgreSQL. It aims to speed up production-ready application development by letting developers configure the backend through natural language prompts. Unlike generic AI code generators, it provides a structured backend environment with features like authentication, typed SDKs, serverless functions, and file storage, all designed to be controllable and predictable through AI prompts. This addresses AI's unreliability in complex backend tasks by giving the AI a well-defined framework to work against.

Popularity

Points 7

Comments 0

What is this product?

PromptStack BaaS is a developer platform that helps you build applications faster by letting you use plain English (prompts) to create your backend. Imagine telling your computer what you want your app's backend to do, and it just builds it. It's built on top of PostgreSQL, a powerful database, and adds features like user login (authentication), a way to automatically generate code for interacting with your data (typed SDK), serverless functions for running custom code, and file storage. The key innovation is its 'MCP server' with 'context-engineering tools'. This means it's designed to guide AI more effectively. Instead of AI just guessing, it provides the AI with specific information about your database structure and requirements, making the AI's output more reliable and production-ready. This is like giving an architect detailed blueprints instead of just a general idea. This solves the common frustration of AI-generated code being buggy or incomplete for real-world applications.
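
The "blueprints instead of a general idea" point can be made concrete with a small sketch of context engineering: describing the existing schema to the model before stating the request. This is an illustration in Python, not PromptStack's MCP server; the `Schema` shape and the `build_context` function are hypothetical names chosen for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical schema description: table name -> list of (column, SQL type).
Schema = Dict[str, List[Tuple[str, str]]]


def build_context(schema: Schema, request: str) -> str:
    """Prefix a user request with a plain-text summary of the schema."""
    lines = ["Existing tables:"]
    for table in sorted(schema):  # sorted so the context is reproducible
        cols = ", ".join(f"{name} {sql_type}" for name, sql_type in schema[table])
        lines.append(f"- {table}({cols})")
    lines.append("")
    lines.append(f"Request: {request}")
    return "\n".join(lines)


if __name__ == "__main__":
    schema = {"users": [("id", "uuid"), ("email", "text")]}
    print(build_context(schema, "Add a posts table that references users"))
```

With the table and column names spelled out, a model asked to add a `posts` table has less room to guess at identifiers that do not exist.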

How to use it?

Developers can use PromptStack BaaS in two main ways: through its hosted cloud service or by self-hosting the open-source version on their own servers. Either way, they interact with the platform via prompts. For instance, a developer could prompt it to 'Create a user table with fields for email and password' or 'Set up a serverless function to send a welcome email upon user registration.' The platform interprets these prompts and configures the backend infrastructure accordingly. It integrates with the developer's preferred frontend framework by providing generated SDKs, and it can connect to AI models through a unified API for more advanced prompt-driven features. This makes it easier to get a backend up and running quickly without deep expertise in every backend component, allowing developers to focus on the frontend and core application logic.

Product Core Function

· AI-assisted Backend Configuration: Use natural language prompts to define and create backend resources like database schemas, authentication flows, and serverless functions. This speeds up initial setup and reduces manual coding.

· Production-Grade Postgres Backend: Leverages PostgreSQL for a robust and scalable database, ensuring data integrity and performance for your applications.

· Typed SDK Generation: Automatically generates type-safe software development kits (SDKs) for your database, making it easier and safer to interact with your backend data from your frontend code.

· Serverless Functions with Secrets Management: Deploy custom backend logic without managing servers, and securely store sensitive information like API keys.

· S3-Compatible File Storage: Easily store and retrieve files, similar to how cloud storage services work, for features like user uploads or media hosting.

· Unified AI Model Integration: Connect to various AI models through a single interface, allowing for prompt-driven AI features within your application.

· Context-Engineered AI Interaction: The platform provides specific context to AI models about your backend's structure and requirements, leading to more accurate and reliable AI-generated code and configurations. This is crucial for turning AI experiments into real-world applications.
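
To illustrate what the typed SDK item above buys you, here is a minimal sketch of a typed record for a `users` table. The post does not show PromptStack's generated SDK, which presumably targets the frontend's language; Python is used here only to show the idea, and the `User` class and `parse_user` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class User:
    """Hypothetical typed record for a `users` table."""
    id: int
    email: str


def parse_user(row: Mapping[str, Any]) -> User:
    """Convert an untyped row into a User, failing early on bad data."""
    if not isinstance(row.get("id"), int):
        raise TypeError("users.id must be an int")
    if not isinstance(row.get("email"), str):
        raise TypeError("users.email must be a str")
    return User(id=row["id"], email=row["email"])


if __name__ == "__main__":
    print(parse_user({"id": 1, "email": "ada@example.com"}))
```

The benefit is that a misspelled field or a wrong type surfaces at the boundary, or in the type checker, rather than deep inside application code.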

Product Usage Case

· Rapid Prototyping: A startup founder wants to quickly build a Minimum Viable Product (MVP) for a social networking app. They can use PromptStack BaaS to prompt for user profiles, posts, and comment functionalities, getting a working backend in hours instead of days or weeks, accelerating their time to market.

· Backend for AI-Powered Tools: A developer is building an AI writing assistant. They can use PromptStack BaaS to set up a database for storing user prompts and generated content, and serverless functions for AI model inference, all driven by prompts to configure the backend architecture.

· E-commerce Backend Automation: A developer needs to build the backend for an e-commerce store. They can use prompts to define product catalogs, order management systems, and user authentication, significantly reducing the boilerplate code typically required for such a complex setup.

· Data-Intensive Applications: For applications requiring complex data relationships and queries, PromptStack BaaS allows developers to define their PostgreSQL schema using prompts, ensuring the underlying database is well-structured and optimized from the start.

15

LaravelVueForge

Author

codecannon

Description

LaravelVueForge is a full-stack web application generator that automates the creation of boilerplate code for Vue.js and Laravel projects. It tackles the repetitive setup tasks by allowing developers to define data models and relationships, then deterministically generates a well-structured codebase, including backend APIs and frontend interfaces, saving significant development time and effort.

Popularity

Points 5

Comments 2

What is this product?

LaravelVueForge is a developer tool that acts like a code architect. Instead of manually writing the foundational code for common web application elements like database structures (migrations), data representations (models), and basic interaction points (CRUD APIs and frontend forms/tables), you define your data requirements visually. The tool then uses these definitions to generate a complete, organized, and ready-to-use Vue.js frontend and Laravel backend. The key innovation is that it's not a black-box no-code solution; it produces clean, conventional code that you fully own and can extend, making it a powerful accelerator for new projects.

How to use it?

Developers can use LaravelVueForge by visiting the web application. They first define their data models, specifying columns, data types, and relationships between different data entities. Once the data structure is designed, they trigger the generation process. The tool then outputs a complete codebase, which can be pushed to GitHub or downloaded as a zip file. It includes a Laravel backend with migrations, models, and API endpoints, and a Vue.js frontend featuring user interfaces for authentication, data display, and data editing. This generated code serves as a robust starting point for developers to build their unique application logic upon.
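
As a rough picture of what deterministic generation means, the sketch below turns a model definition into the body of a Laravel migration using plain string templates, so the same input always produces the same output. It is written in Python for illustration and is not LaravelVueForge's generator; `COLUMN_METHODS` and `render_migration` are hypothetical names, and only a few column types are covered.

```python
from typing import List, Tuple

# Maps a small set of abstract field types to Laravel schema builder methods.
COLUMN_METHODS = {
    "string": "string",
    "text": "text",
    "integer": "integer",
    "boolean": "boolean",
}


def render_migration(table: str, fields: List[Tuple[str, str]]) -> str:
    """Render the body of a Laravel migration's up() method as PHP source."""
    lines = [
        f"Schema::create('{table}', function (Blueprint $table) {{",
        "    $table->id();",
    ]
    for name, kind in fields:  # field order is preserved, never shuffled
        lines.append(f"    $table->{COLUMN_METHODS[kind]}('{name}');")
    lines.append("    $table->timestamps();")
    lines.append("});")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_migration("projects", [("name", "string"), ("archived", "boolean")]))
```

Because nothing here depends on a model's sampling or on the time of day, regenerating after a small change to the data model yields a small, reviewable diff.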

Product Core Function

· Data Model Definition: Allows developers to visually define application data structures, including fields, types, and relationships, reducing the manual effort and potential errors in setting up database schemas and object representations.

· Deterministic Code Generation: Produces predictable, clean, and convention-following code for both Laravel (backend) and Vue.js (frontend), ensuring a consistent and maintainable project foundation.

· Full-Stack Boilerplate Generation: Creates essential backend components like database migrations, models with relationships, factories, seeders, and basic CRUD API endpoints, along with frontend components for authentication, data tables, and create/edit forms using PrimeVue, drastically cutting down initial setup time.

· GitHub Integration & Ownership: Enables seamless pushing of generated code to a GitHub repository, promoting collaboration and version control, and emphasizes that the generated codebase is fully owned by the user, allowing complete freedom for customization and expansion.

· Integrated Development Environment Setup: Includes Docker configurations, starter CI/CD pipelines, linters, and formatters, setting up a productive development environment from the outset.
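
For readers unfamiliar with what "basic CRUD API endpoints" usually covers in Laravel, the sketch below lists the five routes that a conventional API resource exposes. The post does not show the routes LaravelVueForge actually generates; this mirrors the layout of Laravel's `Route::apiResource`, and the `/api` prefix and the `crud_routes` helper are assumptions for illustration.

```python
from typing import List, Tuple


def crud_routes(resource: str, param: str) -> List[Tuple[str, str, str]]:
    """List (HTTP verb, URI, controller action) for one REST resource."""
    base = f"/api/{resource}"
    item = f"{base}/{{{param}}}"  # e.g. /api/tasks/{task}
    return [
        ("GET", base, "index"),
        ("POST", base, "store"),
        ("GET", item, "show"),
        ("PUT/PATCH", item, "update"),
        ("DELETE", item, "destroy"),
    ]


if __name__ == "__main__":
    for verb, uri, action in crud_routes("tasks", "task"):
        print(f"{verb:<10} {uri:<20} {action}")
```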

Product Usage Case

· New Project Scaffolding: A startup founder needs to quickly prototype a new SaaS application. By using LaravelVueForge to generate the basic structure for their core data modules (e.g., users, projects, tasks), they can bypass the initial weeks of boilerplate coding and immediately focus on implementing their unique business logic and features, accelerating their time-to-market.

· Rapid MVP Development: A development team is tasked with building a Minimum Viable Product (MVP) for a new internal tool. LaravelVueForge allows them to define the data models for the tool and generate a functional frontend and backend in hours instead of days. This enables them to quickly demonstrate a working version to stakeholders and gather early feedback.

· API-First Backend Generation: A developer is building a backend API for a mobile application. They can use LaravelVueForge to generate the Laravel backend with all the necessary CRUD endpoints and data models. This frees them from writing repetitive API code and allows them to concentrate on the specific nuances of their API design and business logic.

· Frontend Data Management Interface: A project manager needs a quick way to manage a list of inventory items. LaravelVueForge can generate a Vue.js frontend with a data table and forms for adding, editing, and deleting inventory items. This provides an instant, functional interface that can be easily integrated into a larger application or used as a standalone management tool.

16

MindChess Trainer

Author

psovit

Description

MindChess Trainer is a specialized mobile application designed for mastering blindfold chess. Its innovation lies in a dedicated focus on training users to visualize and play chess without seeing the board, addressing a niche but challenging aspect of the game. The app provides a focused environment for developing the spatial reasoning and memory skills crucial for advanced chess players.

Popularity

Points 5

Comments 1

What is this product?

This project is a mobile application focused exclusively on blindfold chess practice. Unlike general chess apps, its core innovation is to train users to play chess entirely in their minds, without a visual representation of the board. The underlying technology likely involves a robust chess engine that can process moves and board states programmatically, coupled with an interface that provides verbal or text-based move input and feedback. This allows for exercises that develop the player's ability to mentally construct and manipulate the chessboard, enhancing their strategic thinking and memory.
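
Two of the simplest blindfold drills, naming a square's color and checking a knight move without a board, show what processing moves from text input can look like. The Python sketch below is an illustration only; the app's implementation is not described in the post, and all function names here are hypothetical.

```python
from typing import Tuple


def parse_square(name: str) -> Tuple[int, int]:
    """Convert a square name such as 'e4' into zero-based (file, rank)."""
    if len(name) != 2 or name[0] not in "abcdefgh" or name[1] not in "12345678":
        raise ValueError(f"not a square: {name!r}")
    return ord(name[0]) - ord("a"), int(name[1]) - 1


def square_color(name: str) -> str:
    """a1 is dark, and colors alternate along files and ranks."""
    file, rank = parse_square(name)
    return "dark" if (file + rank) % 2 == 0 else "light"


def is_knight_move(src: str, dst: str) -> bool:
    """A knight moves one square along one axis and two along the other."""
    (f1, r1), (f2, r2) = parse_square(src), parse_square(dst)
    return sorted((abs(f1 - f2), abs(r1 - r2))) == [1, 2]


if __name__ == "__main__":
    print(square_color("e4"), is_knight_move("g1", "f3"))
```

A trainer could quiz the user with random squares and compare the typed answer against these functions, with no board drawn at any point.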

How to use it?