Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-17

SagaSu777 2025-11-18

Explore the hottest developer projects on Show HN for 2025-11-17. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The relentless wave of AI innovation continues to reshape how we build and interact with technology. This batch of Show HN projects highlights a fascinating trend: the democratization of powerful AI capabilities. From ESPectre's math-based motion detection on affordable hardware to the proliferation of AI agents and specialized LLM applications in fields like drug discovery and music generation, the barriers to entry are rapidly falling. For developers, this means an explosion of new tools and platforms that amplify individual creativity and productivity. It's no longer about having a massive team or budget; it's about leveraging intelligent systems to solve complex problems with elegance and efficiency. For entrepreneurs, this signals a fertile ground for niche solutions that cater to specific industry needs or underserved markets, pushing the boundaries of what's possible with less. The emphasis on developer tooling, efficient data formats like Internet Object, and privacy-centric approaches also shows a maturing ecosystem that values both power and responsible implementation. Embrace these emerging paradigms, experiment fearlessly, and build the future by harnessing these potent technological advancements.

Today's Hottest Product

Name

ESPectre

Highlight

ESPectre ingeniously bypasses the need for complex machine learning by using mathematical analysis of Wi-Fi signal data (CSI) to detect motion. This opens up a world of low-cost, real-time sensing possibilities. Developers can learn how to leverage readily available hardware like the ESP32 and fundamental signal processing techniques for innovative applications beyond traditional motion sensors.

Popular Category

AI/ML & LLMs

Developer Tools

Hardware & IoT

Productivity & Utilities

Popular Keyword

AI Agents

LLMs

Frameworks

CLI Tools

Automation

Data Processing

Technology Trends

Edge AI & Low-Cost Hardware

AI Agent Ecosystem

Developer Productivity Tooling

Data Format Innovation

Decentralized/Privacy-Focused Solutions

Specialized AI Applications

Project Category Distribution

AI/ML & LLMs (30%)

Developer Tools (25%)

Productivity & Utilities (20%)

Hardware & IoT (5%)

Frameworks & Libraries (10%)

Other (10%)

Today's Hot Product List

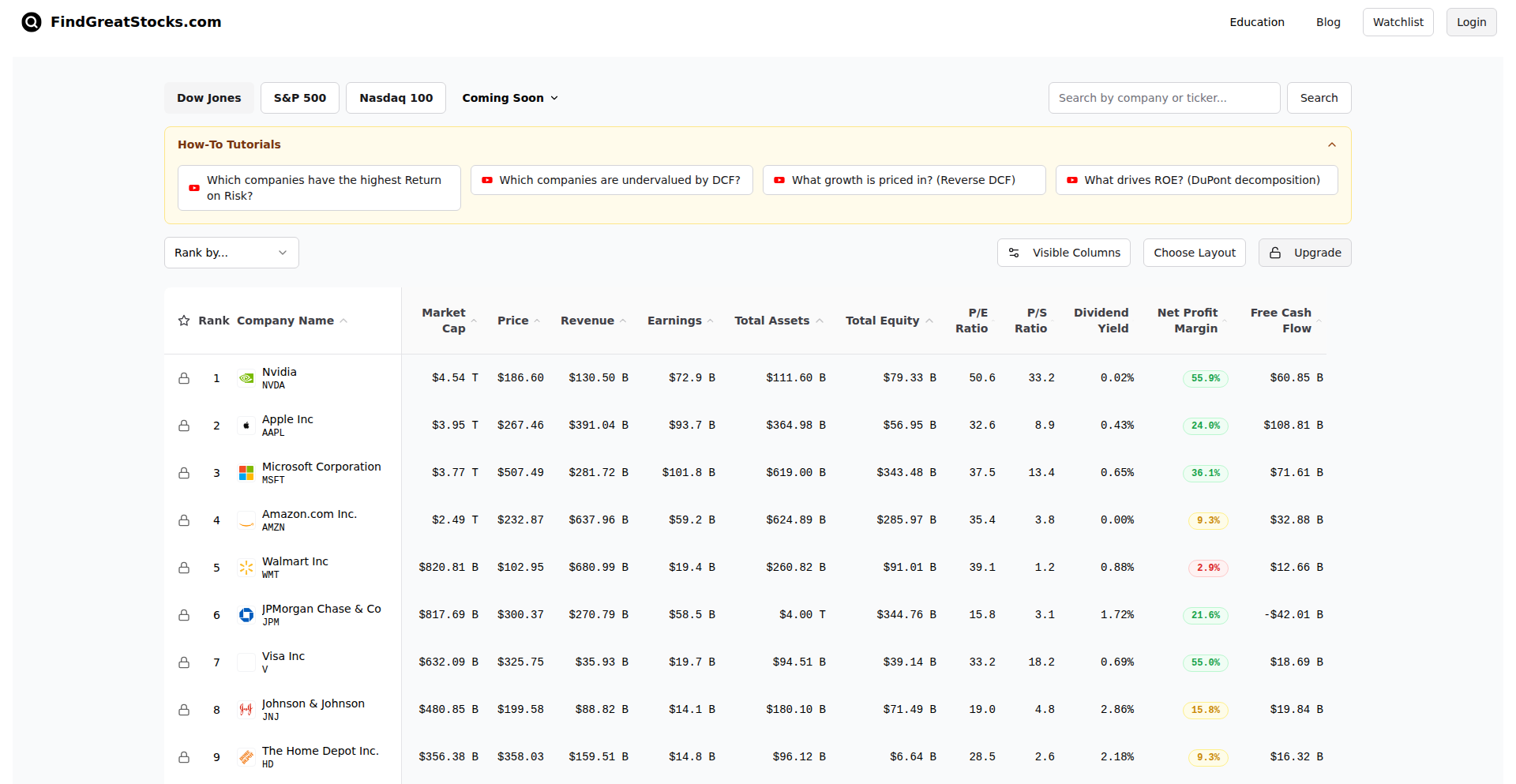

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | ESPectre: Wi-Fi CSI Motion Sentinel | 174 | 43 |
| 2 | PrinceJS: The Bun-Optimized Velocity Framework | 138 | 65 |
| 3 | Parqeye CLI: Terminal Parquet Inspector | 109 | 28 |
| 4 | IggyWebSocket | 25 | 6 |
| 5 | CloudBatcher | 19 | 7 |
| 6 | Kalendis Scheduling Core | 16 | 2 |
| 7 | Octopii: Rust-Powered Distributed App Framework | 14 | 3 |
| 8 | MCP Traffic Insight | 16 | 0 |
| 9 | YourGPT 2.0: Integrated AI Workflow Orchestrator | 11 | 3 |
| 10 | MarkovChainTextCraft | 13 | 1 |

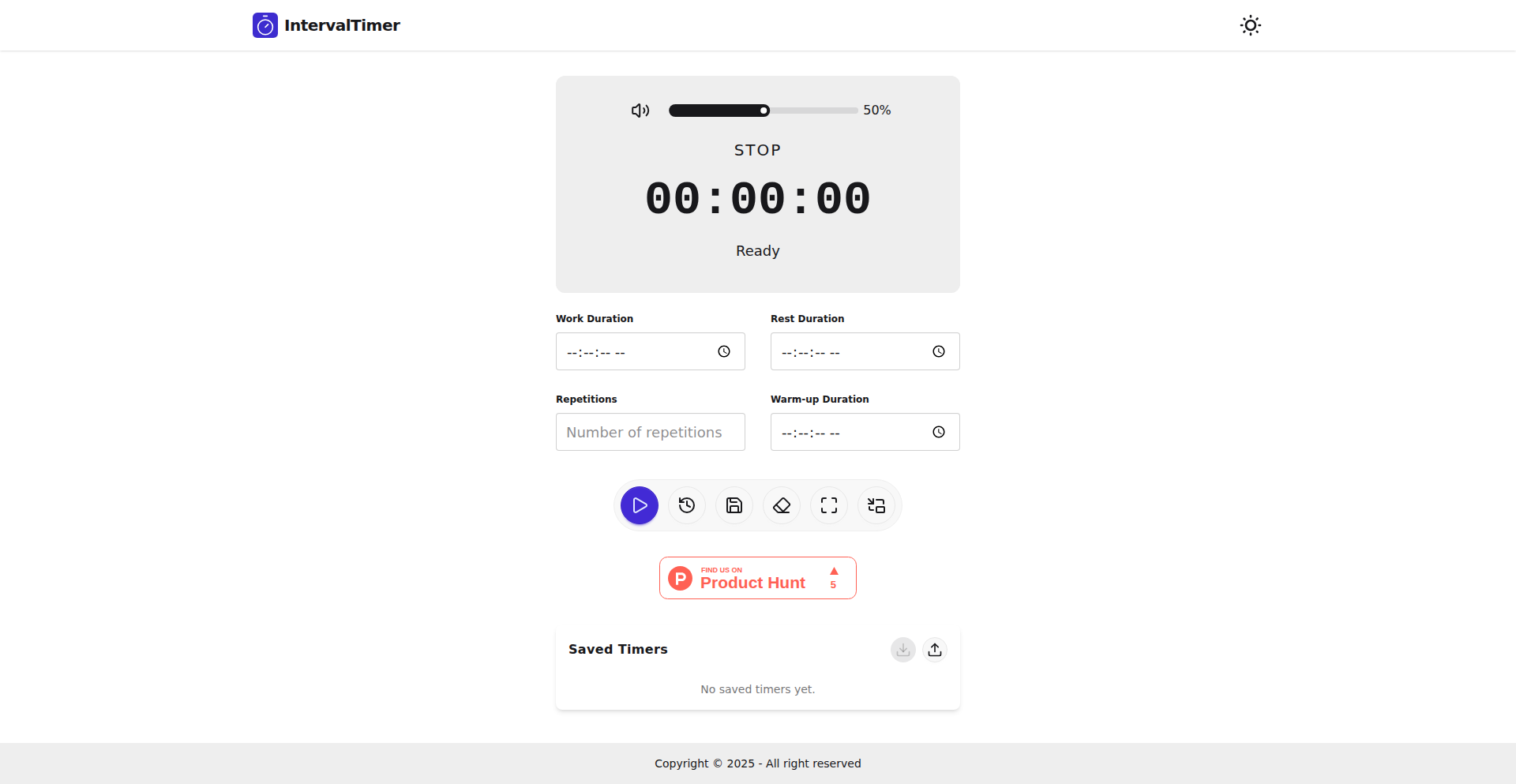

1

ESPectre: Wi-Fi CSI Motion Sentinel

Author

francescopace

Description

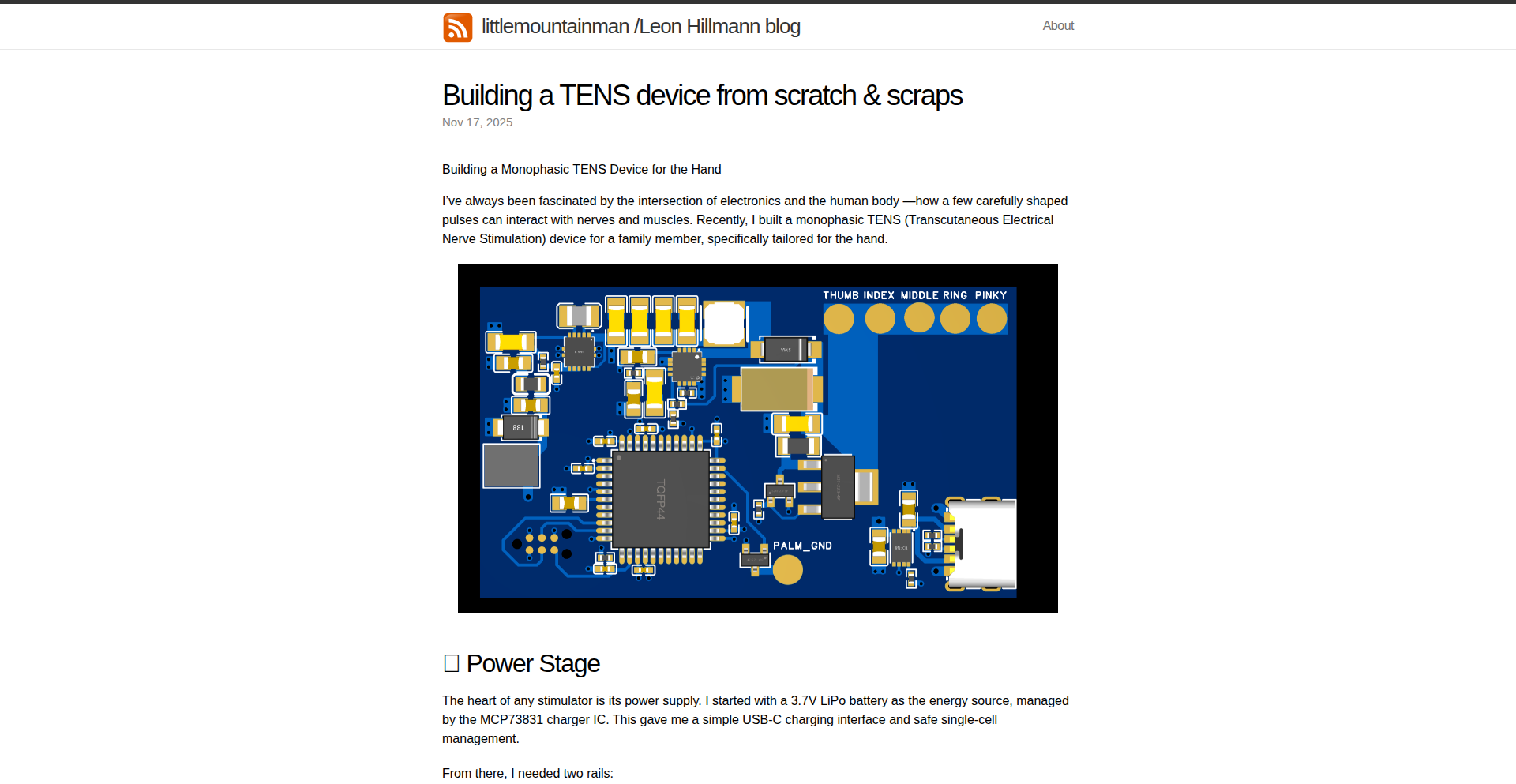

ESPectre is an open-source project that leverages the subtle shifts in Wi-Fi signals, specifically their CSI (Channel State Information) data, to detect motion. Unlike systems that rely on complex machine learning, it uses pure mathematical principles to analyze these signal patterns. This makes it incredibly efficient, capable of running in real-time on inexpensive hardware like the ESP32 microcontroller, and seamlessly integrates with smart home systems such as Home Assistant.

Popularity

Points 174

Comments 43

What is this product?

ESPectre is a novel motion detection system that analyzes the 'fingerprint' of Wi-Fi signals, known as CSI (Channel State Information). When something moves in an area, it subtly changes how Wi-Fi signals travel through that space. ESPectre captures these tiny changes, processes them using mathematical algorithms (no complex AI involved), and determines if motion has occurred. The innovation lies in using readily available Wi-Fi signals as sensors, making it a low-cost, non-intrusive, and real-time solution that doesn't require cameras or special hardware beyond a simple Wi-Fi chip.
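To make the "math instead of ML" idea concrete, here is a minimal sketch of one common approach: track the variance of recent signal amplitudes and flag motion when it crosses a threshold. This is an illustration only, not ESPectre's actual algorithm; the class name, window size, threshold, and sample values are all assumptions made for the example.

```python
from collections import deque
from statistics import pvariance


class VarianceMotionDetector:
    """Flags motion when the variance of recent amplitudes exceeds a threshold."""

    def __init__(self, window_size: int = 20, threshold: float = 1.0) -> None:
        # window_size and threshold are illustrative values, not ESPectre defaults.
        self.window = deque(maxlen=window_size)
        self.threshold = threshold

    def update(self, amplitude: float) -> bool:
        """Add one amplitude sample and report whether motion is detected."""
        self.window.append(amplitude)
        if len(self.window) < self.window.maxlen:
            return False  # not enough samples yet to judge
        return pvariance(list(self.window)) > self.threshold


detector = VarianceMotionDetector(window_size=5, threshold=0.5)
quiet = [10.0, 10.1, 9.9, 10.0, 10.1]   # stable channel: tiny variance
moving = [10.0, 13.0, 7.5, 12.0, 8.0]   # disturbed channel: large variance
quiet_flags = [detector.update(a) for a in quiet]
moving_flags = [detector.update(a) for a in moving]
```

A sliding window like this needs only a handful of arithmetic operations per sample, which is the kind of workload a microcontroller can handle in real time.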

How to use it?

Developers can integrate ESPectre into their projects by deploying ESP32 microcontrollers equipped with ESPectre's firmware in the area they wish to monitor. The system then continuously analyzes Wi-Fi CSI data. For smart home enthusiasts, it easily communicates motion events via MQTT, a lightweight messaging protocol, allowing seamless integration with platforms like Home Assistant. This means you can trigger lights, alarms, or other automations based on detected motion without needing dedicated motion sensors.
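As a rough sketch of the event side, the snippet below publishes a message only when the motion state changes, which keeps broker traffic low. The topic name, payload shape, and `MotionPublisher` class are hypothetical and not taken from ESPectre's firmware; the `publish` callable stands in for whatever MQTT client you use.

```python
import json
from typing import Callable, Optional


class MotionPublisher:
    """Publishes a message only when the motion state changes."""

    def __init__(self, publish: Callable[[str, str], None], topic: str) -> None:
        self.publish = publish  # e.g. an MQTT client's publish function
        self.topic = topic      # hypothetical topic name chosen by the user
        self.last_state: Optional[bool] = None

    def report(self, motion: bool) -> None:
        if motion == self.last_state:
            return  # avoid flooding the broker with identical states
        self.last_state = motion
        payload = json.dumps({"motion": "ON" if motion else "OFF"})
        self.publish(self.topic, payload)


sent = []  # stands in for a real broker connection
publisher = MotionPublisher(lambda t, p: sent.append((t, p)), "home/livingroom/motion")
for state in [False, False, True, True, False]:
    publisher.report(state)
```

Five readings produce three messages here (OFF, ON, OFF), since repeated states are skipped.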

Product Core Function

· Wi-Fi CSI Signal Analysis for Motion Detection: Captures and interprets fluctuations in Wi-Fi signal characteristics caused by movement, providing a camera-free sensing capability.

· Real-time Mathematical Processing: Employs precise mathematical models rather than AI to quickly and reliably identify motion, ensuring immediate response.

· Low-Cost Hardware Compatibility: Designed to run efficiently on affordable microcontrollers like the ESP32, making advanced sensing accessible and cost-effective.

· Home Assistant Integration via MQTT: Publishes detected motion events to a widely adopted smart home platform, enabling easy automation and control.

· Open-Source GPLv3 License: Encourages community contribution, transparency, and widespread adoption of the technology.

Product Usage Case

· Implementing a 'ghost' motion sensor in a room: Developers can place an ESP32 running ESPectre in a room to detect if anyone has entered, without needing to install visible sensors or cameras. This could be used for security alerts or to trigger ambient lighting upon entry.

· Creating a 'presence detection' system for a smart home: By analyzing Wi-Fi CSI, ESPectre can determine if someone is in a specific area or if a room is occupied. This data can then be used by Home Assistant to automatically adjust thermostats, turn on/off lights, or manage entertainment systems based on occupancy.

· Developing an energy-saving system: ESPectre can detect when a room is empty and signal Home Assistant to turn off appliances or dim lights, leading to energy efficiency. Conversely, it can detect someone entering and preemptively turn on lights or heating.

· Building non-intrusive security monitoring for sensitive areas: In environments where cameras are not desirable, ESPectre offers a way to monitor for movement and alert users to unauthorized presence, all through the analysis of existing Wi-Fi signals.

2

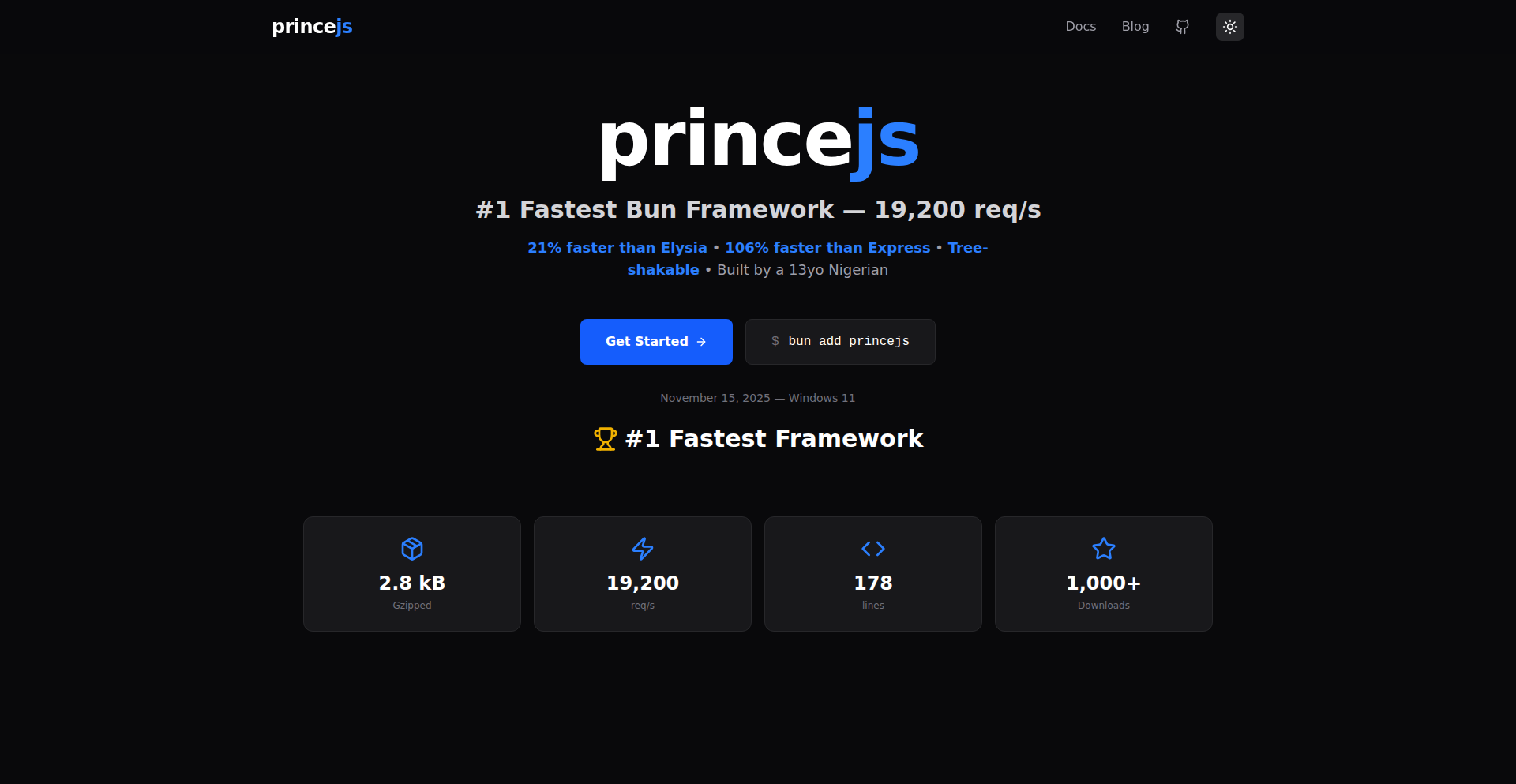

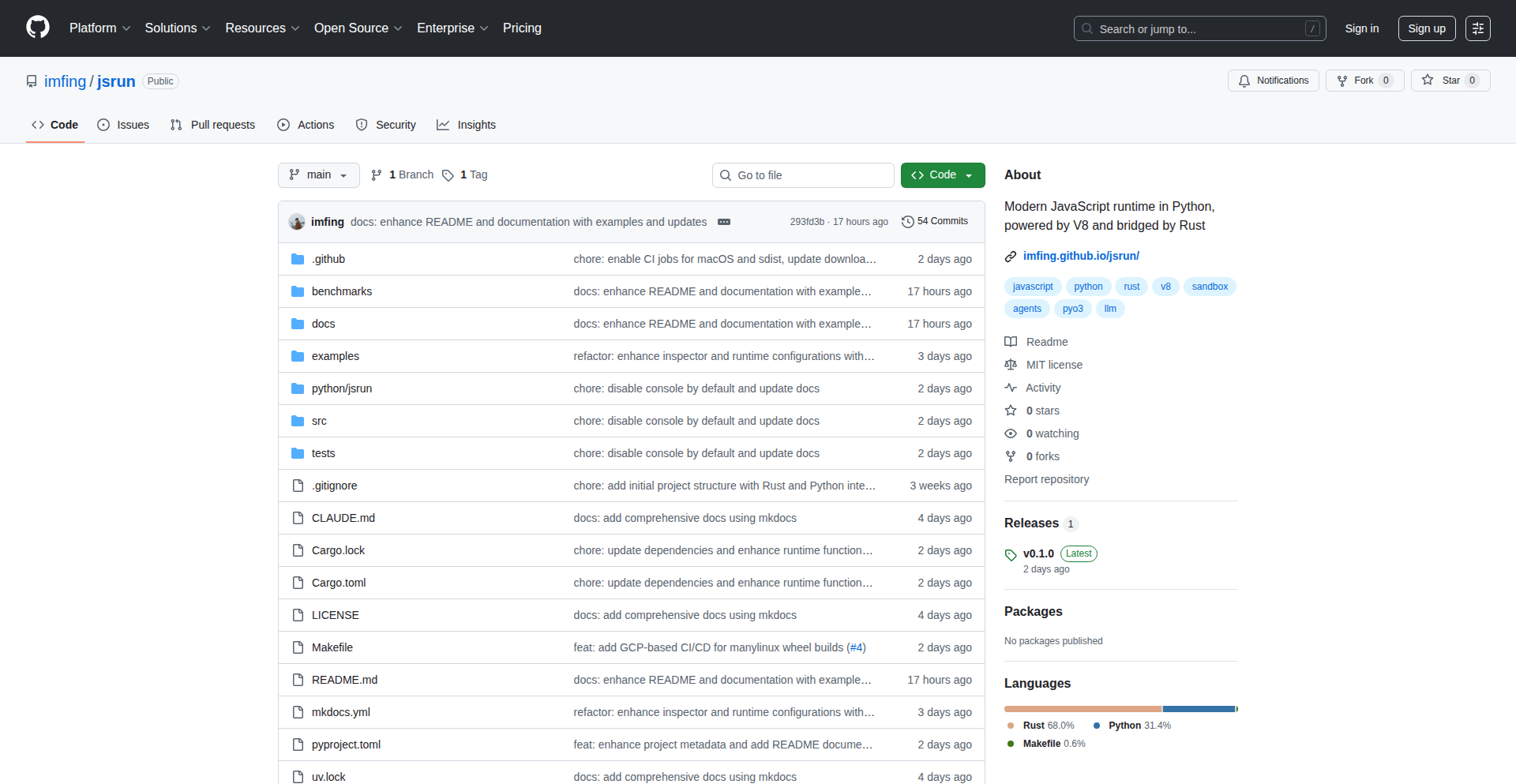

PrinceJS: The Bun-Optimized Velocity Framework

Author

lilprince1218

Description

PrinceJS is a fast, compact web framework built for Bun, a JavaScript runtime. Its headline claims are a reported 19,200 req/s (ahead of popular frameworks like Hono, Elysia, and Express) and a tiny footprint (2.8 kB gzipped). It's designed to be tree-shakable, meaning only the features you use are included, minimizing bundle size. This project demonstrates the power of focused optimization and a deep understanding of the Bun runtime to solve the common developer challenge of building performant web applications.

Popularity

Points 138

Comments 65

What is this product?

PrinceJS is a new web framework specifically engineered to leverage the speed of Bun, a modern JavaScript runtime. Its primary selling point is performance: a reported 19,200 requests per second, which is presented as faster than established frameworks such as Hono, Elysia, and Express. This is achieved through meticulous code optimization and a design philosophy that prioritizes raw speed and minimal overhead. It's also 'tree-shakable,' meaning only the parts of the framework you actually use are bundled into your final application, so your builds stay small and carry no unused code. It ships with zero dependencies and zero configuration, so you can get started without complex setup. So, it's a tool for developers who want to build very fast and efficient web services: applications that respond quickly and consume fewer resources, which translates to a better user experience and lower operational costs.

How to use it?

Developers can integrate PrinceJS into their projects using a simple Bun command: `bun add princejs`. Once installed, developers can start building web applications by defining routes and handlers. For example, you might create a simple API endpoint. The framework's minimal configuration means you can often start serving requests almost immediately after setup. Its speed makes it ideal for building high-throughput APIs, microservices, or any web application where rapid response times are critical. Integration is straightforward due to its zero-config nature, allowing developers to focus on their application logic rather than framework setup. This means you can quickly prototype and deploy high-performance backends.

Product Core Function

· High-performance request routing: Achieves 19,200 req/s by optimizing how incoming requests are matched to the correct code for processing, leading to near-instantaneous responses for users.

· Minimal footprint (2.8 kB gzipped): Ensures faster download times for your application and reduced server resource usage, making your application more efficient.

· Tree-shakable architecture: Allows only the necessary parts of the framework to be included in your final application, further reducing size and improving load performance.

· Zero dependencies: Eliminates the need to manage external libraries, simplifying project setup and reducing potential conflicts.

· Zero configuration: Enables developers to start building and deploying applications immediately without time-consuming setup processes.
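To illustrate what "matching requests to the correct code" can look like, here is a small routing sketch in Python (chosen for readability; PrinceJS itself targets Bun). It is not PrinceJS's implementation. It shows one widely used trick: try a constant-time dictionary lookup for static paths first, and fall back to segment-by-segment matching only for parameterized routes. The `Router` class and the `:id` parameter syntax are assumptions for the example.

```python
from typing import Callable, Dict, List, Optional, Tuple

Handler = Callable[[Dict[str, str]], str]


class Router:
    """Static routes use a dict lookup; ':param' routes are matched by segment."""

    def __init__(self) -> None:
        self.static: Dict[Tuple[str, str], Handler] = {}
        self.dynamic: List[Tuple[str, List[str], Handler]] = []

    def add(self, method: str, path: str, handler: Handler) -> None:
        if ":" in path:
            self.dynamic.append((method, path.strip("/").split("/"), handler))
        else:
            self.static[(method, path)] = handler

    def dispatch(self, method: str, path: str) -> Optional[str]:
        handler = self.static.get((method, path))  # fast path: O(1) lookup
        if handler is not None:
            return handler({})
        parts = path.strip("/").split("/")
        for route_method, pattern, route_handler in self.dynamic:
            if route_method != method or len(pattern) != len(parts):
                continue
            params: Dict[str, str] = {}
            for expected, actual in zip(pattern, parts):
                if expected.startswith(":"):
                    params[expected[1:]] = actual  # capture the path parameter
                elif expected != actual:
                    break  # literal segment mismatch: try the next route
            else:
                return route_handler(params)
        return None  # no route matched


router = Router()
router.add("GET", "/health", lambda params: "ok")
router.add("GET", "/users/:id", lambda params: f"user {params['id']}")
```

Calling `router.dispatch("GET", "/users/42")` runs the second handler with `{"id": "42"}`, while an unknown method or path returns `None`.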

Product Usage Case

· Building a real-time chat application backend: PrinceJS's speed is perfect for handling a large number of concurrent connections and messages, ensuring a smooth and responsive chat experience for users.

· Developing a high-frequency trading API: The framework's low latency and high throughput are essential for processing financial transactions with minimal delay, critical in time-sensitive trading environments.

· Creating a scalable content delivery network (CDN) edge service: PrinceJS can efficiently serve static assets and API responses at the network edge, reducing latency for users worldwide.

· Optimizing an e-commerce backend for peak traffic: During sales events, PrinceJS can handle a surge in customer requests without performance degradation, ensuring a stable shopping experience.

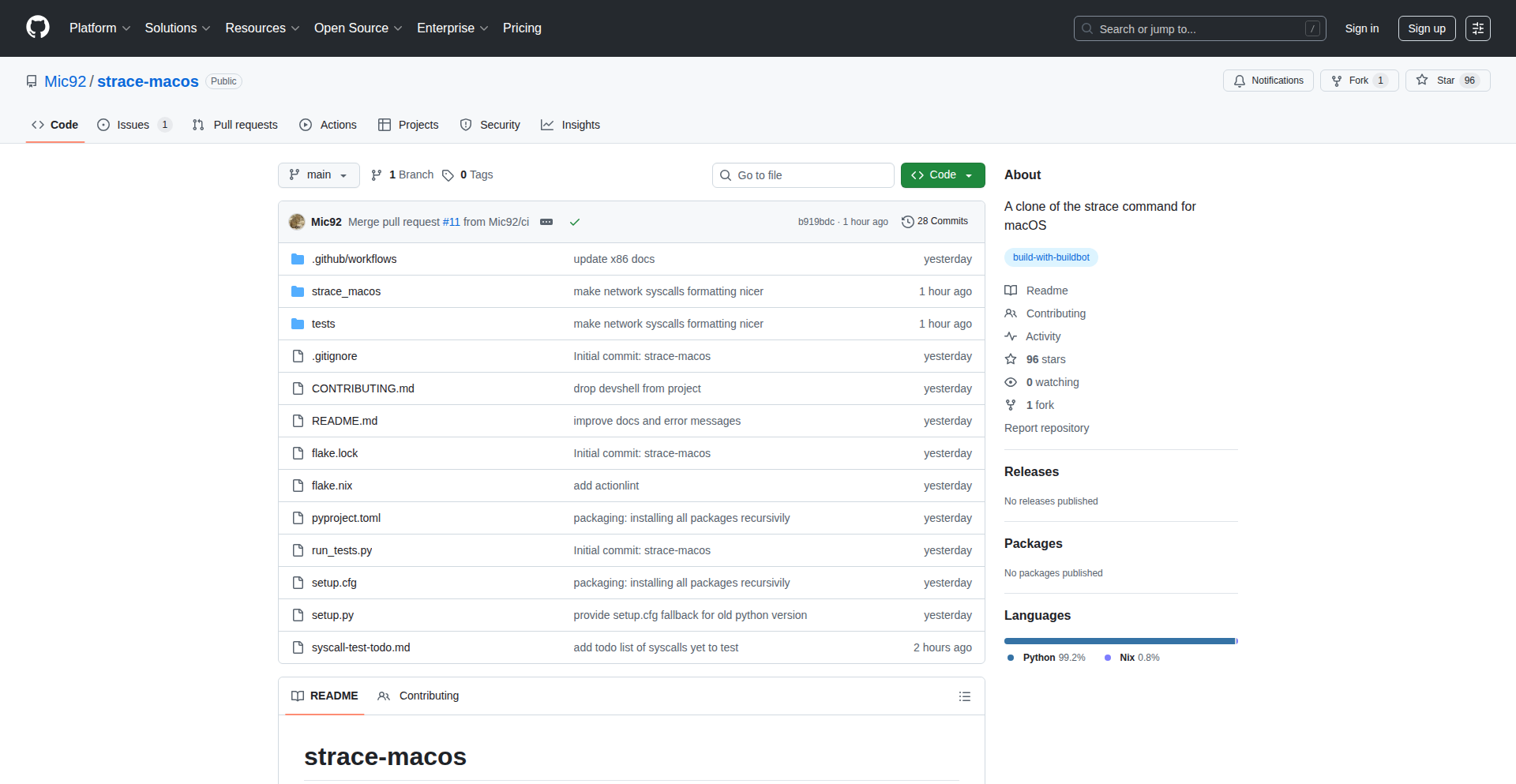

3

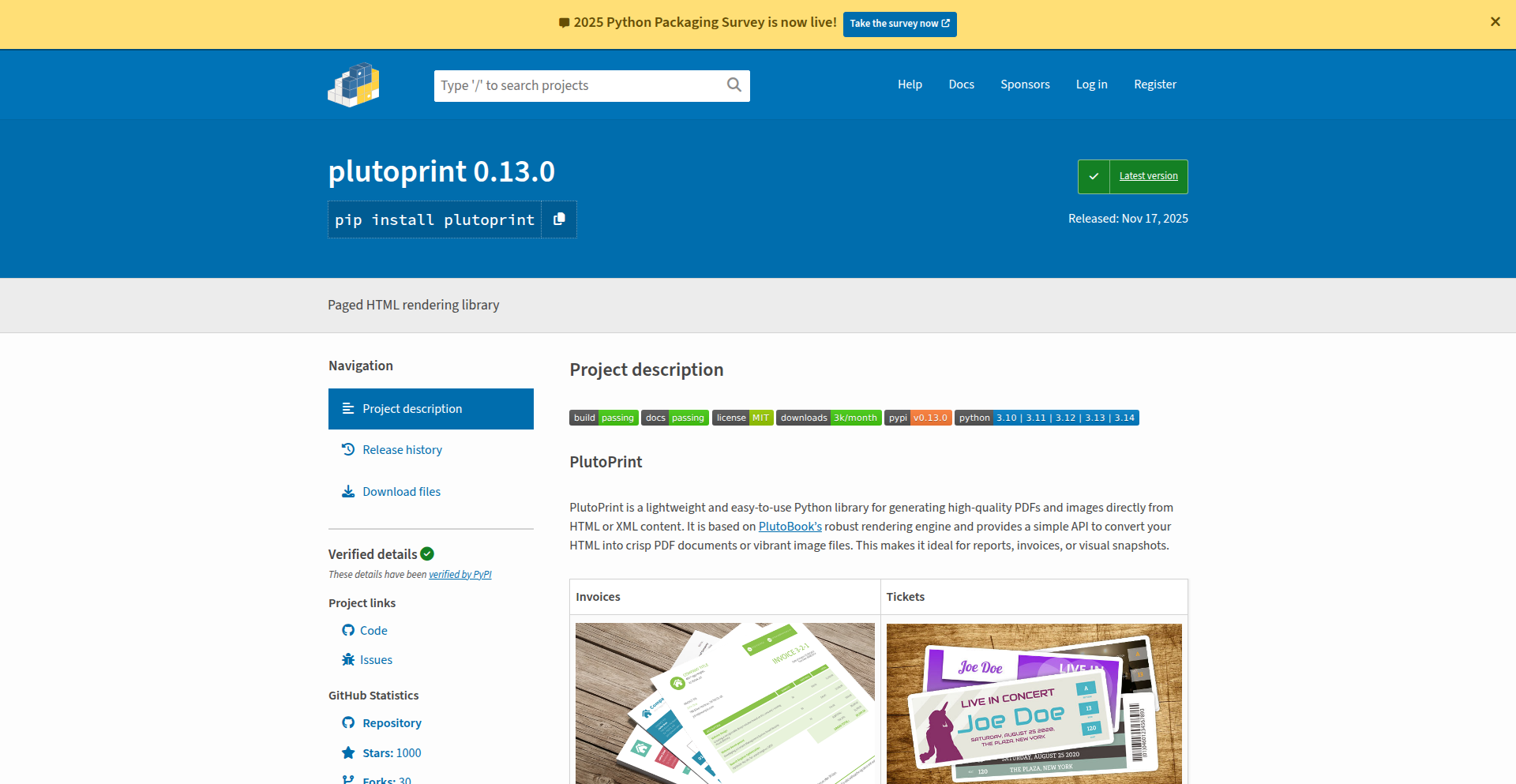

Parqeye CLI: Terminal Parquet Inspector

Author

kaushiksrini

Description

Parqeye is a command-line interface (CLI) tool written in Rust that allows developers to quickly inspect the contents, metadata, and row-group structure of Parquet files directly from their terminal. It eliminates the need to spin up heavier tools like DuckDB or Polars for basic data exploration, providing immediate insights with a single command.

Popularity

Points 109

Comments 28

What is this product?

Parqeye is a Rust-based CLI application designed to be your go-to tool for understanding Parquet files without leaving your terminal. Parquet is a popular columnar storage file format used in big data, known for its efficiency. Parqeye's innovation lies in its ability to rapidly parse and display crucial information about these files – from the overall schema and metadata to the granular details of each row group (which is how Parquet organizes data for efficient querying). This means you can understand what's inside a Parquet file, how it's structured, and even catch potential issues at a glance, all with a simple command. So, what's in it for you? You save time and avoid the overhead of loading large files into complex environments just to peek inside.
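For a feel of what an inspector has to do first, here is a minimal Python sketch of locating a Parquet file's footer. It relies on the documented file layout: the file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes before the trailing magic hold the footer metadata length as a little-endian integer. This is not Parqeye's code (which is written in Rust), the `footer_length` helper is a name invented for this example, and it does not decode the Thrift metadata itself.

```python
import struct

PARQUET_MAGIC = b"PAR1"


def footer_length(data: bytes) -> int:
    """Return the size in bytes of the footer metadata of a Parquet file."""
    # Smallest possible layout: leading magic + 4-byte length + trailing magic.
    if len(data) < 12 or data[:4] != PARQUET_MAGIC or data[-4:] != PARQUET_MAGIC:
        raise ValueError("not a Parquet file (missing PAR1 magic bytes)")
    # The 4 bytes before the trailing magic hold the footer length, little-endian.
    (length,) = struct.unpack("<I", data[-8:-4])
    if length > len(data) - 12:
        raise ValueError("footer length exceeds file size")
    return length


# Synthetic bytes shaped like a Parquet file: magic, body, footer, length, magic.
fake_footer = b"\x00" * 10
sample = (
    PARQUET_MAGIC + b"column-data" + fake_footer
    + struct.pack("<I", len(fake_footer)) + PARQUET_MAGIC
)
```

Because the schema and row-group statistics live in that footer, a tool can describe a file by reading only its tail, without scanning the column data.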

How to use it?

Developers can use Parqeye by first installing it (typically via a package manager or by building from source). Once installed, they can navigate to their terminal, locate the Parquet file they want to inspect, and run a simple command like `parqeye <path_to_your_file.parquet>`. This will display a structured overview of the file. For more advanced inspection, flags can be used to detail specific row groups or metadata. This can be integrated into existing shell scripts or CI/CD pipelines for automated data validation or debugging. So, how does this help you? You can quickly check the integrity and structure of data files as part of your development workflow, ensuring data quality and faster troubleshooting.

Product Core Function

· File Schema Visualization: Displays the data types and names of columns in a Parquet file, providing a clear understanding of the data structure. Value: Enables quick validation of expected data fields and types.

· Metadata Inspection: Shows the key-value pairs stored as metadata within the Parquet file, offering context about the data's origin or processing. Value: Helps in understanding data lineage and provenance.

· Row Group Structure Analysis: Breaks down the file into its constituent row groups and displays statistics for each, such as the number of rows and the minimum/maximum values for columns. Value: Facilitates performance tuning and identification of data distribution patterns.

· Terminal-Native Experience: Provides a fast and responsive UI directly in the command line, avoiding the need to launch separate applications. Value: Streamlines the development workflow and reduces context switching.

· Rust Performance: Leverages the efficiency and safety of Rust for fast file parsing and processing, even for large files. Value: Guarantees quick access to information without performance bottlenecks.

Product Usage Case

· A data engineer receives a large Parquet dataset from a third-party API and needs to quickly verify its schema and identify any unexpected null values in critical columns. Running `parqeye data.parquet`, they can see the column types and a summary of null counts without loading the entire dataset into a distributed system, saving significant time and computational resources.

· A machine learning practitioner is debugging a data pipeline and suspects an issue with how a specific feature column is being encoded. They run `parqeye model_data.parquet --row-group 5 --column feature_xyz` to examine the statistics and data distribution of that column within a particular row group, pinpointing the problem quickly.

· A software developer is integrating a new data source and wants to ensure its Parquet output adheres to the expected format. They add a `parqeye output.parquet` command to their pre-commit hook. If the output file's structure is incorrect, the hook will fail, preventing bad data from entering the repository. This ensures data consistency and quality at the source.

· A data scientist is working with multiple Parquet files and wants to quickly compare their schemas without writing custom Python scripts. They run `parqeye file1.parquet` and `parqeye file2.parquet` sequentially, using the consistent output format to spot differences in column definitions or metadata, accelerating their comparative analysis.

4

IggyWebSocket

Author

spetz

Description

IggyWebSocket is an experimental implementation of WebSocket built on top of Apache Iggy, leveraging the power of io_uring and completion-based I/O. It aims to achieve extremely high performance and low latency for real-time bidirectional communication by utilizing modern Linux kernel features.

Popularity

Points 25

Comments 6

What is this product?

IggyWebSocket is a novel approach to building WebSocket servers. Instead of relying on traditional, often blocking, I/O methods, it harnesses io_uring, an asynchronous I/O interface in the Linux kernel. io_uring is completion-based: the application queues operations, the kernel carries them out, and the application is notified once each one has finished. This allows the server to handle many concurrent connections and messages with minimal CPU overhead. Think of it like having a hyper-efficient assistant who can manage many tasks simultaneously without getting bogged down, and who only alerts you when a task is truly done. This results in faster responses and the ability to handle much more traffic on the same hardware. So, what's in it for you? Significantly faster and more scalable real-time applications.
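The completion-based model is easier to see in a toy. The Python sketch below is purely conceptual: it performs no real I/O and does not use io_uring or Iggy. It only mimics the shape of the interaction, with a submission queue the application fills and a completion queue it later drains. `ToyRing` and its method names are invented for this illustration.

```python
from collections import deque
from typing import Any, Callable, Deque, List, Tuple


class ToyRing:
    """A toy model of submission/completion queues; it performs no real I/O."""

    def __init__(self) -> None:
        self.submissions: Deque[Tuple[int, Callable[[], Any]]] = deque()
        self.completions: Deque[Tuple[int, Any]] = deque()

    def submit(self, request_id: int, operation: Callable[[], Any]) -> None:
        """Queue an operation; the caller does not wait for it."""
        self.submissions.append((request_id, operation))

    def run_kernel(self) -> None:
        """Stand-in for the kernel: run queued operations and post results."""
        while self.submissions:
            request_id, operation = self.submissions.popleft()
            self.completions.append((request_id, operation()))

    def reap(self) -> List[Tuple[int, Any]]:
        """Collect all finished results in one batch."""
        done = list(self.completions)
        self.completions.clear()
        return done


ring = ToyRing()
ring.submit(1, lambda: b"hello")  # imagine: "read from connection 1"
ring.submit(2, lambda: b"world")  # imagine: "read from connection 2"
ring.run_kernel()
results = ring.reap()
```

The contrast is with readiness-based APIs, where the application is told a socket is ready and then has to perform the read itself. Here the results arrive already done, tagged with the request ID they belong to.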

How to use it?

Developers can integrate IggyWebSocket into their applications by building a WebSocket server that uses Apache Iggy as its core messaging engine. The primary benefit lies in the underlying I/O handling. Instead of managing threads or complex asynchronous callbacks yourself, io_uring and completion-based I/O abstract away much of the complexity. You write your application logic, and IggyWebSocket, powered by these kernel features, handles the high-speed network communication efficiently. This means you can focus on your application's features, knowing the network layer is highly optimized. For you, this translates to building applications that are more responsive and can scale more easily without needing to constantly tune low-level network settings.

Product Core Function

· High-performance WebSocket communication: Leverages io_uring and completion-based I/O for extremely fast message delivery and reception. This means your real-time updates arrive almost instantaneously, making applications feel very fluid and responsive.

· Apache Iggy integration: Built upon a robust and scalable message broker, Iggy, ensuring reliable message handling and distribution. This means your messages are delivered, and your system is built on a solid foundation, reducing the risk of data loss or service interruptions.

· Low-latency bidirectional communication: Enables real-time chat, live data feeds, and collaborative tools with minimal delay between sender and receiver. Imagine live stock tickers or multiplayer games where every action is seen by everyone else immediately, creating a truly immersive experience.

· Efficient resource utilization: Minimizes CPU overhead by offloading I/O operations to the kernel, allowing for higher connection densities and better performance on existing hardware. This means you can handle more users and more data without needing to upgrade your servers as frequently, saving you money and resources.

Product Usage Case

· Building a real-time chat application: Imagine a chat app where messages appear instantly for all participants. IggyWebSocket's low latency and high throughput are perfect for this, ensuring smooth conversations even with many users.

· Developing live data dashboards: For applications displaying rapidly changing data, like financial tickers or IoT sensor readings, IggyWebSocket can push updates to users in near real-time, keeping them informed without delays.

· Creating multiplayer online games: In fast-paced games, every millisecond counts. IggyWebSocket's efficient I/O can ensure that player actions are communicated and processed quickly, leading to a more competitive and enjoyable gaming experience.

· Implementing collaborative editing tools: For tools where multiple users edit a document simultaneously, IggyWebSocket can efficiently broadcast changes, making the collaborative process seamless and responsive.

5

CloudBatcher

Author

wkoszek

Description

CloudBatcher is a serverless platform that allows developers to run computationally intensive command-line tools in the cloud without any setup. It abstracts away the complexities of managing environments, dependencies, and hardware, enabling seamless execution of tools like Whisper for speech-to-text, Typst for typesetting, Pandoc for document conversion, and FFmpeg for video processing. This empowers developers to integrate powerful external tools into their applications with minimal friction.

Popularity

Points 19

Comments 7

What is this product?

CloudBatcher acts like a cloud-based execution engine for your command-line tools. Instead of wrestling with installing Python environments, GPU drivers, or specific libraries on your local machine or servers, you simply tell CloudBatcher which tool you want to use and provide your input files. It then spins up an isolated container in the cloud, runs your command with the specified resources (CPU, GPU, RAM), and returns the output. The innovation lies in its 'zero-setup' approach for complex tools, making powerful batch processing accessible via a simple command-line interface or a REST API. This effectively turns difficult-to-deploy tools into easily consumable cloud services.

How to use it?

Developers can use CloudBatcher in two primary ways: via its Command Line Interface (CLI) for quick local testing and scripting, or by integrating its REST API into their web applications or backend services. For example, to extract text from PDFs, a developer could use the CLI command `bsubio submit -w pdf/extract *.pdf`. For application integration, they would make an API call to CloudBatcher, specifying the desired tool and input files. The platform handles all the underlying infrastructure, so developers can focus on the output. This is particularly useful for tasks that are resource-heavy or require specialized software that is cumbersome to maintain.

Product Core Function

· Remote Batch Job Execution: Enables running command-line tools as background tasks in the cloud, freeing up local resources and allowing for parallel processing. This means your application doesn't freeze while a heavy computation is happening.

· Environment Isolation and Sandboxing: Each job runs in its own secure container, preventing conflicts with other processes and ensuring consistent results. This is like giving each job its own clean workspace so it doesn't mess anything up.

· Resource Management: Allows specification of CPU, GPU, and RAM limits for each job, ensuring efficient resource utilization and cost control. You can tailor the power of the cloud compute to match the needs of your task.

· Ephemeral File Storage: Input and output files are temporarily stored for the duration of the job and automatically deleted, simplifying data management and reducing storage costs. You don't need to worry about manually cleaning up temporary files.

· REST API for Integration: Provides a programmatic interface for developers to trigger jobs, monitor status, and retrieve results, enabling seamless integration into existing applications. This makes it easy for your software to talk to CloudBatcher and get things done.

· Pre-configured Tool Processors: Offers ready-to-use execution environments for popular tools like Whisper, Typst, Pandoc, Docling, and FFmpeg, eliminating the need for manual installation and configuration. This gives you instant access to powerful tools without the setup headache.
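The job lifecycle described above (stage inputs, run a command in isolation, return the output, clean up) can be approximated locally in a few lines. This is a sketch under simplifying assumptions, not CloudBatcher's implementation: a temporary directory and a timeout stand in for real container sandboxing and resource limits, and `run_ephemeral_job` is a name made up for the example.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Dict, List


def run_ephemeral_job(command: List[str], inputs: Dict[str, bytes],
                      output_name: str, timeout_s: float = 30.0) -> bytes:
    """Run a command in a throwaway directory and return one output file."""
    # The directory and everything in it is deleted when the with-block exits.
    with tempfile.TemporaryDirectory() as workdir:
        for name, content in inputs.items():
            (Path(workdir) / name).write_bytes(content)
        # cwd keeps relative paths inside the scratch directory;
        # the timeout caps how long a job may run.
        subprocess.run(command, cwd=workdir, check=True, timeout=timeout_s)
        return (Path(workdir) / output_name).read_bytes()


# A stand-in "tool": a tiny Python one-liner that upper-cases a file.
upper = [sys.executable, "-c",
         "open('out.txt', 'w').write(open('in.txt').read().upper())"]
result = run_ephemeral_job(upper, {"in.txt": b"hello"}, "out.txt")
```

Swapping the one-liner for a real tool invocation gives the same flow; a hosted service adds what this sketch lacks, such as true isolation, GPU access, and queuing.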

Product Usage Case

· Speech-to-Text Transcription for User Uploads: A web application allows users to upload audio files. Instead of processing these large files on the web server, the application sends them to CloudBatcher with the Whisper processor. CloudBatcher handles the GPU-intensive speech recognition in the cloud and returns the transcribed text, improving application responsiveness and scalability.

· Automated Document Conversion for Content Management: A content management system needs to convert uploaded documents (e.g., .docx to .pdf). The system uses CloudBatcher with the Pandoc processor to perform these conversions in the background, ensuring all content is available in a standardized format without burdening the web server.

· Batch Video Transcoding for Media Platforms: A video hosting service needs to convert uploaded videos into multiple formats and resolutions. This is a CPU-intensive task. The service integrates with CloudBatcher using the FFmpeg processor, allowing it to efficiently transcode videos in parallel in the cloud, making them viewable on various devices.

· PDF Data Extraction for Business Applications: A financial application needs to extract specific data points from uploaded PDF invoices. The application sends the PDFs to CloudBatcher with the Docling processor. CloudBatcher extracts the required information, which is then processed by the financial application, streamlining data entry and analysis.

6

Kalendis Scheduling Core

Author

dcabal25mh

Description

Kalendis is an API-first scheduling backend that handles the complex intricacies of scheduling like recurrence, time zones, and Daylight Saving Time (DST), allowing developers to retain full control over their user interface. It solves the common problem of rebuilding complex scheduling logic from scratch, providing developers with robust, conflict-safe booking capabilities.

Popularity

Points 16

Comments 2

What is this product?

Kalendis is a backend service that provides a powerful scheduling API. It's designed to abstract away the really tricky parts of managing appointments, like figuring out time zone differences across the globe, handling the confusing switch to and from Daylight Saving Time, and ensuring that no two bookings overlap (conflict-safe). The innovation lies in its focus on these difficult areas, offering a clean API that developers can integrate without needing to become experts in time and date math themselves. It also includes an MCP (Model Context Protocol) tool that can automatically generate code for your frontend and backend to interact with the API, significantly reducing boilerplate code.
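To see why DST is a real source of bugs, consider a weekly 09:00 appointment in New York. The short Python sketch below (standard library only, not Kalendis code) converts two consecutive Mondays to UTC, one on each side of the 2025 autumn clock change; the `to_utc` helper is just a name for this example.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

NEW_YORK = ZoneInfo("America/New_York")


def to_utc(year: int, month: int, day: int, hour: int) -> datetime:
    """Interpret a wall-clock time in New York and convert it to UTC."""
    local = datetime(year, month, day, hour, tzinfo=NEW_YORK)
    return local.astimezone(timezone.utc)


before_dst_ends = to_utc(2025, 10, 27, 9)  # daylight time, UTC-4
after_dst_ends = to_utc(2025, 11, 3, 9)    # standard time, UTC-5
```

The same local time maps to 13:00 UTC one week and 14:00 UTC the next. Code that stores a recurring booking as a fixed UTC time, or adds a flat 7 × 24 hours, drifts by an hour after the switch, which is the class of error a scheduling backend has to absorb.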

How to use it?

Developers can use Kalendis by signing up for a free account and obtaining an API key. This key is then used to authenticate requests to the Kalendis API. The product offers REST endpoints for managing availability, creating bookings, and handling exceptions. The MCP tool can be integrated into your project's build process, allowing it to generate typed clients and API route handlers for frameworks like Next.js, Express, Fastify, or NestJS. This means you can call the scheduling functions directly from your IDE or other tooling as if they were local, making integration seamless. For example, you can use a simple `curl` command with your API key to fetch availability data for a specific user within a date range.

Product Core Function

· Availability Engine: Handles complex recurring rules with one-off exceptions and blackouts, returning availability in a clear, queryable format. This helps you avoid complex date and time calculations and ensures accurate display of open slots to your users, so they can book services confidently.

· Conflict-Safe Bookings: Provides endpoints for creating, updating, and canceling booking slots that automatically prevent double-bookings. This is crucial for any service-based business, as it prevents scheduling errors and ensures a smooth customer experience, saving you from manually checking for overlaps.

· Time Zone and DST Management: Accurately handles all time zone conversions and Daylight Saving Time adjustments. This is a common source of bugs and confusion for developers; Kalendis solves this, ensuring that bookings are always made and displayed correctly regardless of the user's location or time of year, saving you significant debugging time.

· MCP (Model Context Protocol) Generator: Automatically generates typed client libraries and API route handlers for various JavaScript frameworks. This drastically reduces the amount of repetitive 'glue' code you need to write to connect your application to the scheduling API, allowing you to focus on building unique features rather than basic API integration.

· API-First Design: Offers a clean REST API that can be integrated with any frontend or backend technology. This gives you maximum flexibility to build your application with your preferred stack without being locked into a specific UI or framework, so you can maintain full control over your product's look and feel.
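The core of conflict-safe booking is an interval-overlap check. Here is a minimal in-memory sketch in Python; it is an assumption about the general technique, not Kalendis's implementation, and the `Calendar` and `Booking` names are hypothetical. Slots are treated as half-open intervals, so a booking that ends at 11:00 does not clash with one that starts at 11:00.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Booking:
    start: datetime  # inclusive
    end: datetime    # exclusive


class Calendar:
    """Rejects any booking that overlaps an existing one."""

    def __init__(self) -> None:
        self.bookings: List[Booking] = []

    def book(self, start: datetime, end: datetime) -> bool:
        if end <= start:
            raise ValueError("booking must end after it starts")
        for existing in self.bookings:
            # Two half-open intervals overlap when each starts before the other ends.
            if start < existing.end and existing.start < end:
                return False
        self.bookings.append(Booking(start, end))
        return True


calendar = Calendar()
first = calendar.book(datetime(2025, 11, 17, 10, 0), datetime(2025, 11, 17, 11, 0))
clash = calendar.book(datetime(2025, 11, 17, 10, 30), datetime(2025, 11, 17, 11, 30))
adjacent = calendar.book(datetime(2025, 11, 17, 11, 0), datetime(2025, 11, 17, 12, 0))
```

In a real service the check and the insert have to happen atomically (for example inside a database transaction or behind a constraint), otherwise two simultaneous requests can both pass the check.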

Product Usage Case

· A small team of two developers built a full-featured booking platform for their business by leveraging Kalendis. They kept complete control over their user experience and branding, while Kalendis handled all the underlying scheduling complexities, allowing them to launch much faster than if they had to build the scheduling logic themselves.

· A SaaS product that offers appointment scheduling for its users can integrate Kalendis to handle all backend scheduling operations. This allows the product team to focus on building innovative user-facing features, such as advanced analytics or personalized user dashboards, rather than spending time debugging time zone issues or recurrence logic.

· An event management system can use Kalendis to manage complex event schedules, including recurring event series, room bookings, and attendee availability across different time zones. This ensures that all event details are accurately managed and displayed to organizers and attendees globally, preventing confusion and logistical nightmares.

· A developer building a mobile app for local services (e.g., haircuts, tutoring) can use Kalendis to manage appointment bookings. The MCP generator can quickly create the necessary API calls for the app, enabling a fast development cycle and ensuring that the booking system is robust and handles all date/time intricacies correctly from day one.

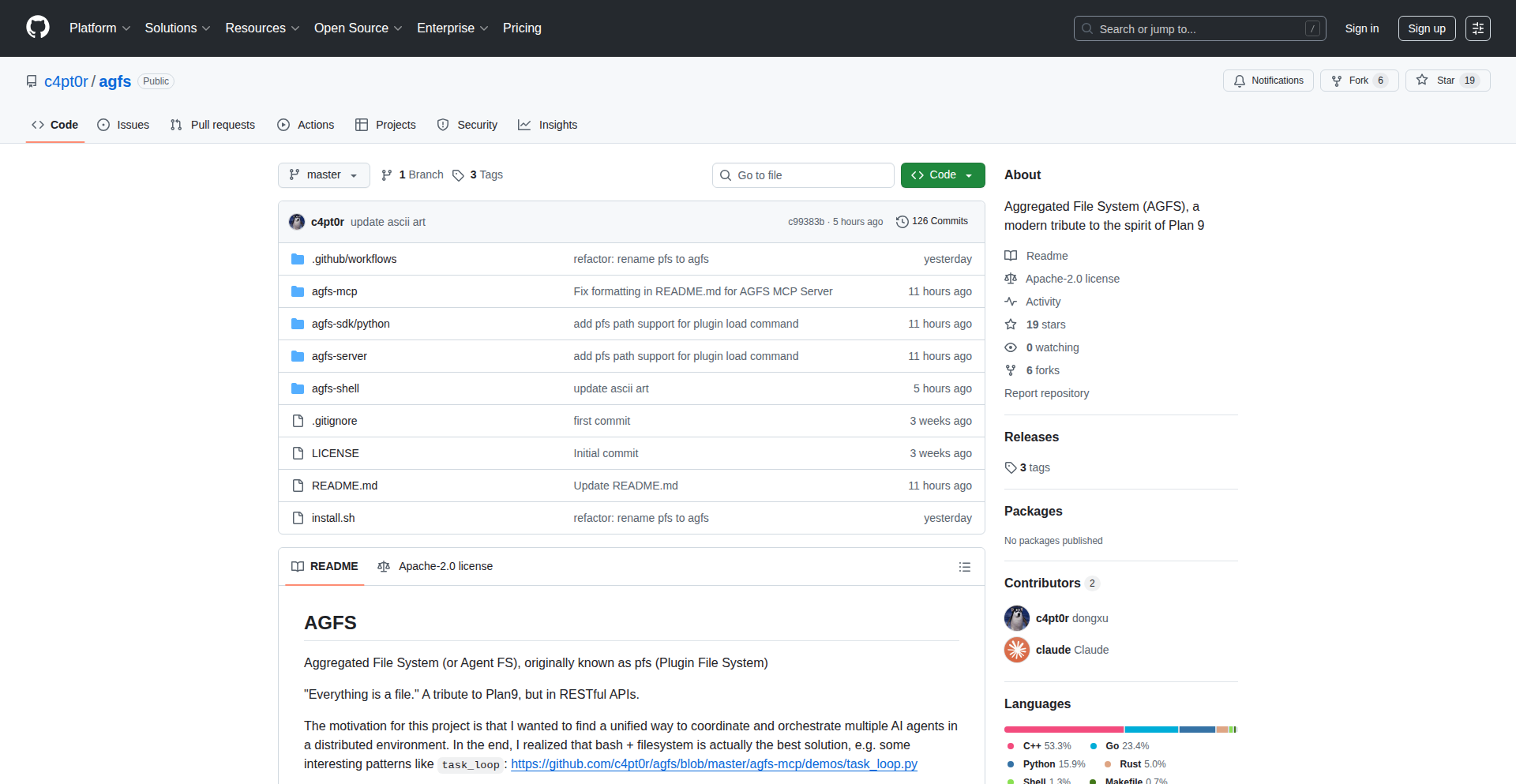

7

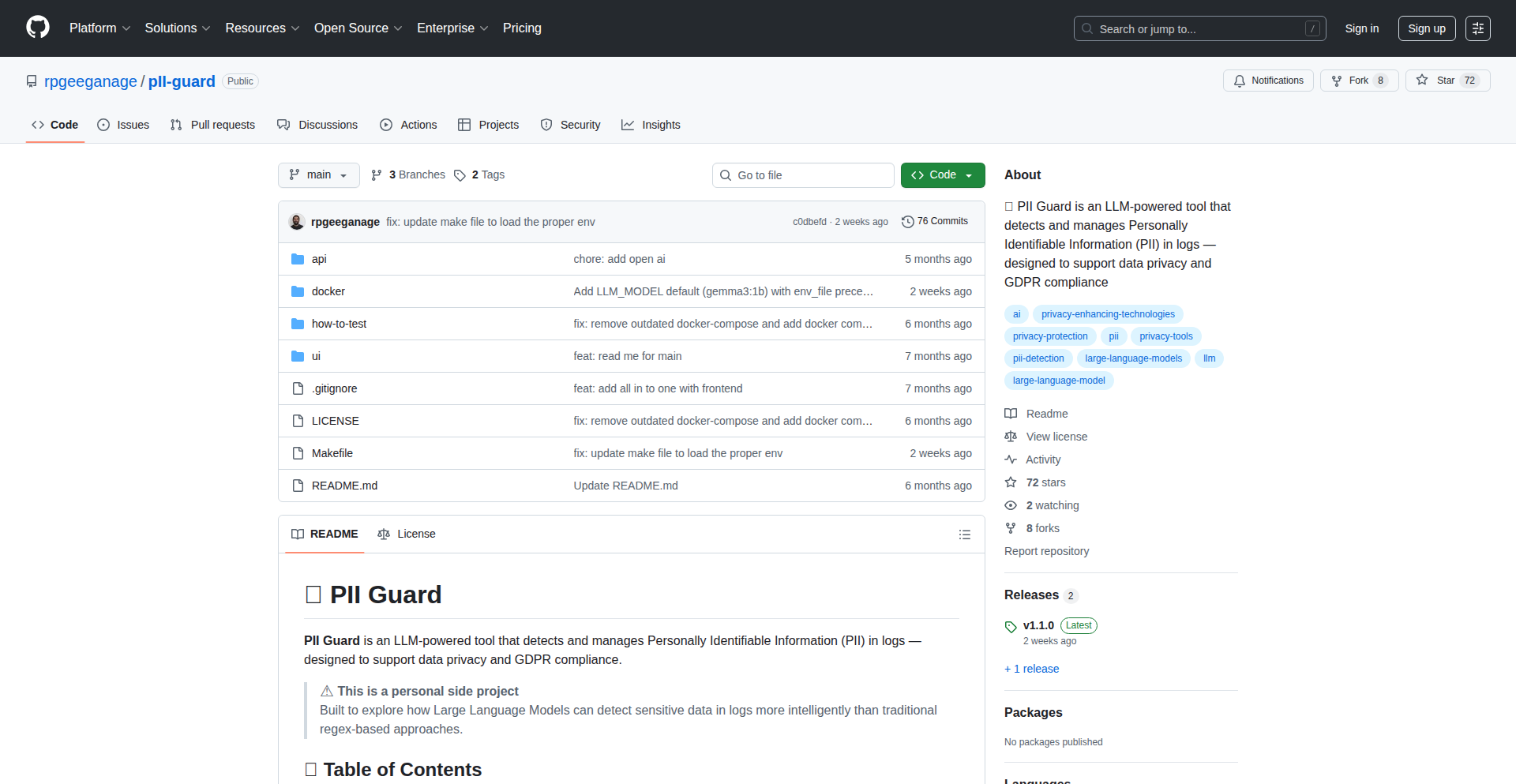

Octopii: Rust-Powered Distributed App Framework

Author

janicerk

Description

Octopii is a novel framework for crafting distributed applications using Rust. Its core innovation lies in providing developers with a robust and efficient way to build systems that span across multiple machines, abstracting away much of the complexity typically associated with inter-process communication and fault tolerance. Think of it as a toolkit that makes building powerful, resilient, and scalable applications much simpler for developers.

Popularity

Points 14

Comments 3

What is this product?

Octopii is a framework that helps developers build distributed applications, which are essentially programs designed to run across many computers but work together as a single system. The main technological challenge here is making these separate computers talk to each other reliably and efficiently, and ensuring the whole system keeps working even if some parts fail. Octopii tackles this by providing a set of tools and patterns written in Rust. Rust is known for its speed and safety, which are crucial for building reliable distributed systems. Octopii simplifies the process of sending messages between different parts of your application that might be running on different servers, managing shared data, and handling errors gracefully. This means developers can focus more on the unique logic of their application rather than getting bogged down in the low-level complexities of distributed computing. So, for a developer, this means building more complex, scalable, and reliable applications faster and with fewer bugs.

How to use it?

Developers can integrate Octopii into their Rust projects by adding it as a dependency in their Cargo.toml file. The framework provides APIs (Application Programming Interfaces) that allow developers to define services, set up communication channels between these services (like sending messages or making remote procedure calls), and manage the state of their distributed application. For instance, a developer building a real-time chat application could use Octopii to manage the connections of thousands of users across multiple servers, ensuring messages are delivered promptly and reliably. It offers building blocks for tasks like service discovery (how services find each other), load balancing (distributing work evenly), and fault tolerance (handling failures). So, for a developer, it means having a ready-made foundation to build sophisticated distributed systems without reinventing the wheel.
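To ground the terms service discovery, load balancing, and fault tolerance, here is a compact conceptual sketch. It is written in Python for brevity and does not reflect Octopii's Rust API; `ServiceRegistry` and its methods are hypothetical names. Services register their addresses, callers pick one in round-robin order, and instances marked as down are skipped.

```python
from typing import Dict, List, Set


class ServiceRegistry:
    """Round-robin selection over registered instances, skipping unhealthy ones."""

    def __init__(self) -> None:
        self.instances: Dict[str, List[str]] = {}
        self.unhealthy: Set[str] = set()
        self._next_index: Dict[str, int] = {}

    def register(self, service: str, address: str) -> None:
        self.instances.setdefault(service, []).append(address)

    def mark_down(self, address: str) -> None:
        self.unhealthy.add(address)

    def mark_up(self, address: str) -> None:
        self.unhealthy.discard(address)

    def pick(self, service: str) -> str:
        candidates = self.instances.get(service, [])
        start = self._next_index.get(service, 0)
        for offset in range(len(candidates)):
            index = (start + offset) % len(candidates)
            address = candidates[index]
            if address not in self.unhealthy:
                # Remember where to resume so load spreads evenly.
                self._next_index[service] = (index + 1) % len(candidates)
                return address
        raise LookupError(f"no healthy instance for {service!r}")


registry = ServiceRegistry()
for node in ["10.0.0.1:9000", "10.0.0.2:9000", "10.0.0.3:9000"]:
    registry.register("chat", node)

picks = [registry.pick("chat"), registry.pick("chat")]
registry.mark_down("10.0.0.3:9000")       # simulate a node failure
picks_after_failure = [registry.pick("chat"), registry.pick("chat")]
```

After the third node is marked down, traffic keeps rotating across the two healthy nodes. A production framework layers health checks, retries, and consistent state on top of this basic idea.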

Product Core Function

· Distributed Service Definition: Enables developers to define individual components of their distributed application as distinct services. This modularity allows for better organization and easier management of complex systems, making it valuable for building scalable applications where different functionalities can be scaled independently.

· Inter-Service Communication: Provides efficient and reliable mechanisms for services to communicate with each other, whether it's sending simple messages or making complex remote procedure calls. This is critical for enabling seamless interaction between different parts of a distributed system, ensuring data flows smoothly and actions are coordinated across multiple machines.

· Fault Tolerance and Resilience: Incorporates features to handle failures in a distributed environment, ensuring the application continues to operate even if some nodes or services go offline. This adds robustness to applications, making them more dependable and reducing downtime, which is essential for business-critical systems.

· State Management in Distributed Systems: Offers patterns and tools for managing application state consistently across multiple nodes, which is a notoriously difficult problem in distributed computing. This simplifies the development of applications that require shared data or coordinated actions, ensuring data integrity and predictability.

· Developer Productivity in Rust: Leverages Rust's strong safety guarantees and performance to create a framework that is both efficient and less prone to bugs. This allows developers to build high-quality distributed applications more quickly and with greater confidence, reducing development time and maintenance costs.

Product Usage Case

· Building a scalable microservices architecture: A developer could use Octopii to build a set of independent services that communicate with each other to form a larger application. For example, an e-commerce platform could have separate services for user authentication, product catalog, order processing, and payment. Octopii would help manage the communication and coordination between these services, allowing each to be scaled independently as demand grows, solving the problem of creating a flexible and scalable backend.

· Developing real-time data processing pipelines: For applications that need to ingest and process large volumes of data in real-time, such as sensor data from IoT devices or clickstream data from websites, Octopii can facilitate the creation of distributed processing nodes. These nodes can work in parallel to process the data quickly, addressing the challenge of handling high throughput and low latency data streams.

· Creating decentralized peer-to-peer applications: Octopii's underlying principles can be adapted to build applications where nodes communicate directly with each other without a central server. This is useful for applications like distributed file storage or content distribution networks, solving the problem of building systems that are inherently resilient and censorship-resistant.

· Implementing distributed consensus algorithms: For applications requiring agreement among multiple nodes on a particular state or transaction, Octopii can provide the foundational building blocks for implementing complex consensus protocols. This is crucial for applications like blockchain technologies or distributed databases, enabling reliable decision-making in a distributed environment.

8

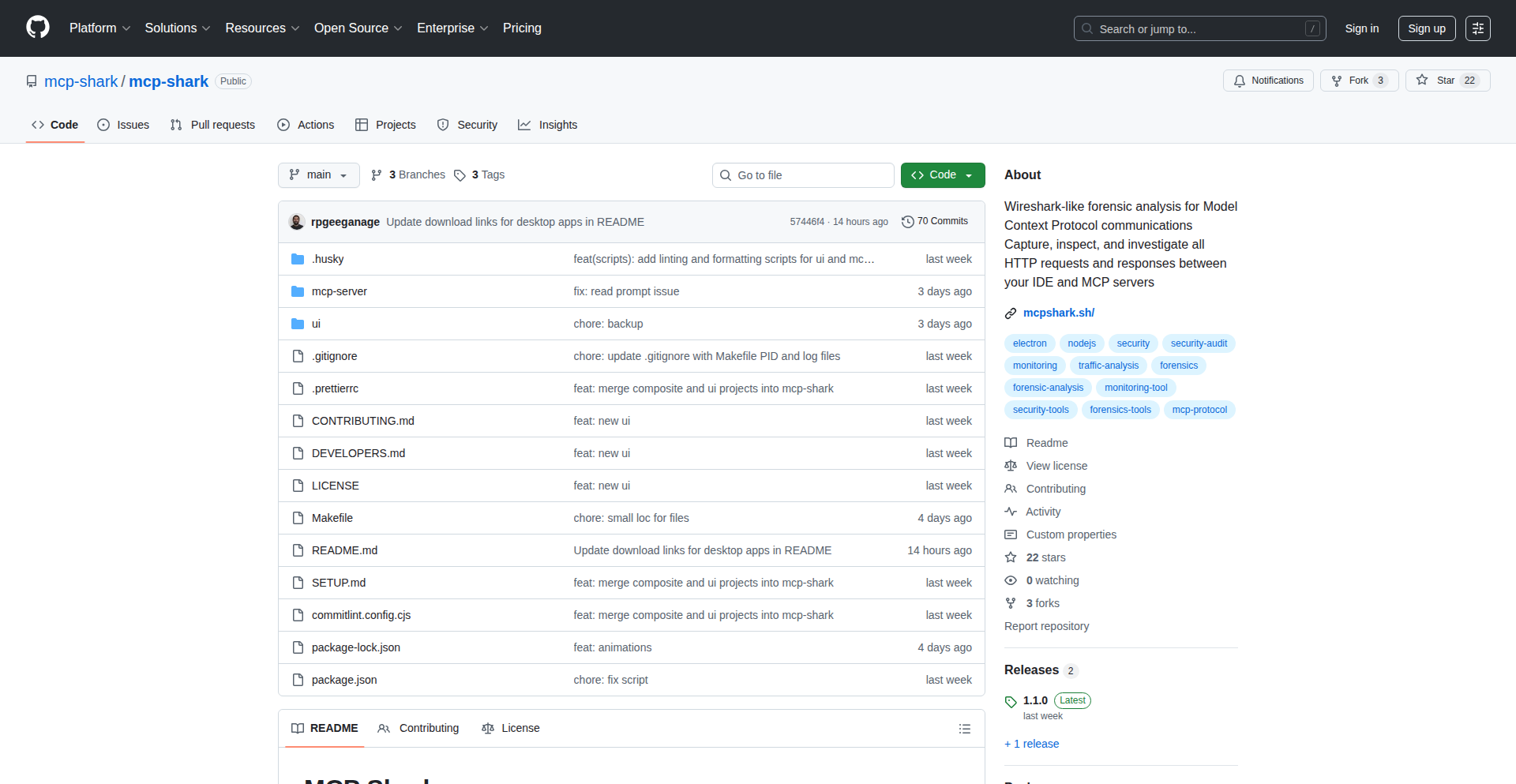

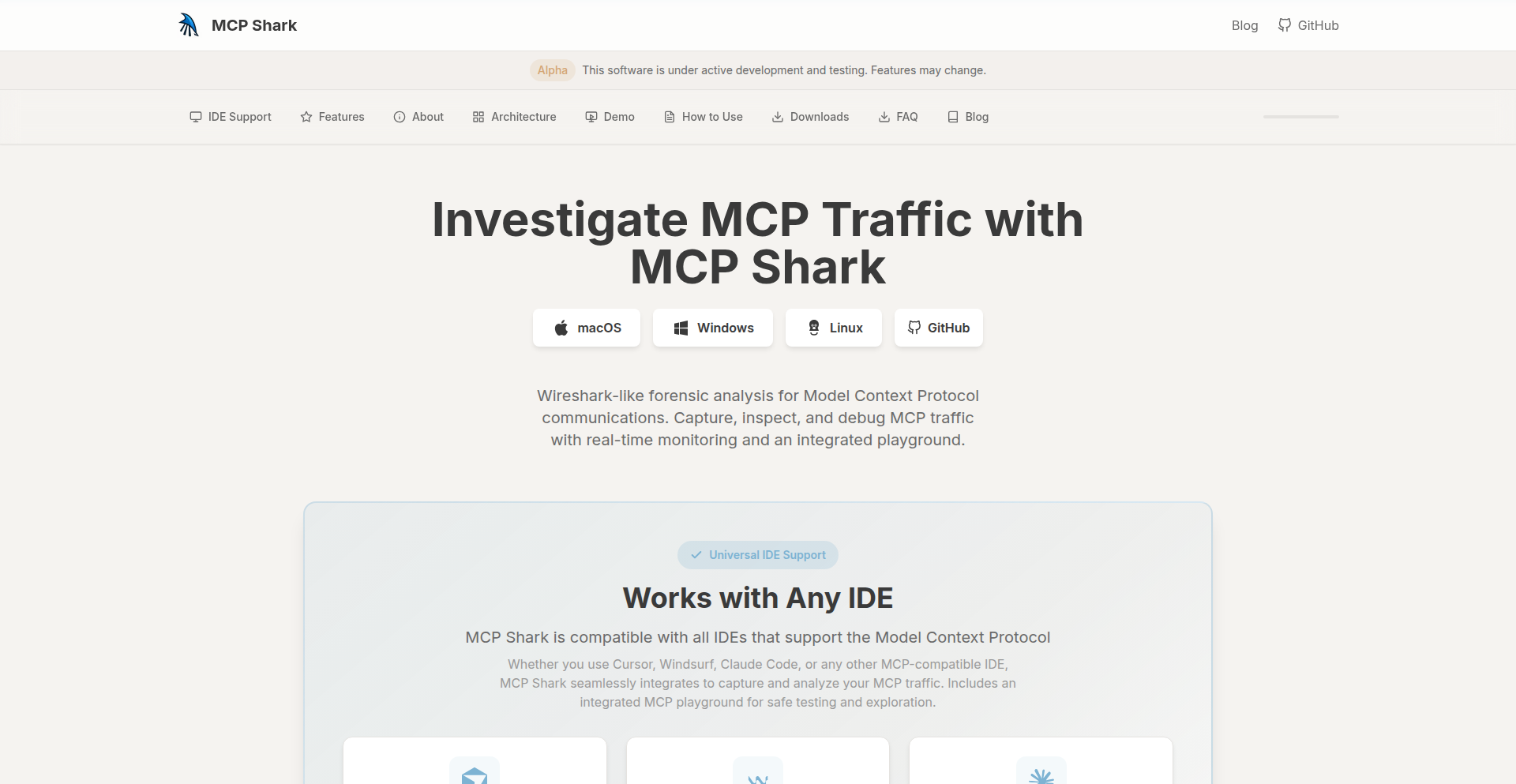

MCP Traffic Insight

Author

o4isec

Description

MCP Traffic Insight is a Show HN project that offers a novel approach to analyzing network traffic, specifically focusing on identifying and understanding the behavior of MCP (Message-Passing Control) traffic. It provides developers with deeper visibility into distributed system communications, allowing them to debug complex interactions and optimize performance. The innovation lies in its specialized parsing and visualization techniques for MCP protocols, which are often opaque to general-purpose network sniffers. This enables quicker identification of bottlenecks and misconfigurations in message-driven architectures.

Popularity

Points 16

Comments 0

What is this product?

MCP Traffic Insight is a specialized network traffic analysis tool designed to dissect and interpret MCP (Message-Passing Control) protocol communications. Unlike generic network sniffers that might struggle with the intricacies of custom or proprietary message-passing systems, MCP Traffic Insight understands the specific structure and semantics of MCP messages. It works by intercepting network packets, parsing them according to MCP protocol rules, and then presenting this information in a human-readable format. The core innovation is its protocol-aware dissection, which transforms raw network data into meaningful insights about message flow, content, and timing. This means you can finally see what your distributed system is actually saying to itself, understand why a message might be delayed or lost, and pinpoint where an error is occurring within the communication chain. For you, this means dramatically reduced debugging time for systems that rely on message passing, leading to faster development cycles and more stable applications.

How to use it?

Developers can integrate MCP Traffic Insight into their workflow by running it on a machine that can capture network traffic from the distributed system they are analyzing. This could involve running it directly on a server, a dedicated analysis machine, or by setting up port mirroring on a switch to capture traffic from multiple nodes. The tool typically works by capturing live network traffic using packet capture libraries (like libpcap) and then applying its MCP-specific parsing logic. The output can be presented in various forms, such as structured logs, visual flow diagrams, or summary statistics. For a developer, this means you can point the tool at your application's network interface and immediately start seeing a breakdown of the MCP messages being exchanged. You can filter by sender, receiver, message type, or even analyze the content of specific messages. This allows for targeted troubleshooting, such as identifying if a particular service is not responding to critical control messages, or if message serialization is causing performance issues. It’s like having X-ray vision for your distributed system’s communication.

Product Core Function

· Protocol-Aware Packet Parsing: Deciphers raw network packets into understandable MCP messages, revealing message structure and payload. This is valuable because it translates complex binary data into actionable information, helping you understand the exact content and intent of every message your system sends and receives, thus enabling precise error diagnosis.

· Message Flow Visualization: Generates diagrams or logs that illustrate the sequence and origin/destination of MCP messages. This is useful for comprehending the overall communication patterns in a distributed system, identifying deadlocks or unexpected communication loops, and visualizing the impact of changes you make to your system.

· Performance Metrics Collection: Tracks key performance indicators like message latency, throughput, and error rates within MCP communications. This is crucial for performance tuning, as it provides concrete data to identify slow points or bottlenecks in your message processing, allowing you to optimize your system for speed and efficiency.

· Error Identification and Reporting: Automatically flags potential issues or malformed MCP messages, offering insights into common communication failures. This saves you from manually sifting through logs by proactively highlighting problems, helping you fix critical bugs faster and prevent cascading failures in your application.

· Interactive Filtering and Searching: Allows developers to filter traffic based on various criteria (e.g., sender, receiver, message type, content keywords) for focused analysis. This feature is invaluable for isolating specific problematic interactions within a high-volume traffic environment, enabling you to quickly zoom in on the exact conversation that needs attention without getting lost in irrelevant data.
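The filtering and metrics functions listed above can be sketched roughly as follows. The source does not show the tool's output format, so the `Message` record and its fields (`sender`, `receiver`, `kind`, `sent_at`, `received_at`) are assumptions made for illustration; the sketch starts from already-parsed messages and does not capture packets.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Message:
    """One parsed message; every field here is an assumed, illustrative shape."""
    sender: str
    receiver: str
    kind: str
    sent_at: float                 # seconds since capture start
    received_at: Optional[float]   # None if the message was never seen arriving


def select(messages: Iterable[Message], sender: Optional[str] = None,
           receiver: Optional[str] = None, kind: Optional[str] = None) -> List[Message]:
    """Keep only messages matching every filter that was given."""
    return [
        m for m in messages
        if (sender is None or m.sender == sender)
        and (receiver is None or m.receiver == receiver)
        and (kind is None or m.kind == kind)
    ]


def latency_summary(messages: Iterable[Message]) -> Dict[str, Optional[float]]:
    """Count lost messages and summarize latency of the delivered ones."""
    batch = list(messages)
    delays = [m.received_at - m.sent_at for m in batch if m.received_at is not None]
    return {
        "count": len(batch),
        "lost": len(batch) - len(delays),
        "mean_latency": mean(delays) if delays else None,
        "max_latency": max(delays) if delays else None,
    }


capture = [
    Message("A", "B", "command", 0.0, 0.25),
    Message("A", "B", "command", 1.0, 1.75),
    Message("A", "B", "heartbeat", 2.0, None),
    Message("C", "B", "command", 3.0, 3.5),
]
print(latency_summary(select(capture, sender="A", receiver="B")))
```

Narrowing to one sender and receiver first, then summarizing, mirrors the "zoom in on one conversation" workflow described in the list.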

Product Usage Case

· Debugging a microservices application where a critical command message from service A to service B is not being processed, leading to a system-wide failure. MCP Traffic Insight can be used to confirm if the message is being sent, if it's reaching service B, and if it's being correctly interpreted by service B's MCP handler, pinpointing the failure point in the communication pipeline.

· Optimizing the performance of a real-time data processing pipeline that relies heavily on inter-process communication via MCP. By analyzing message latency and throughput with MCP Traffic Insight, a developer can identify which specific messages or communication patterns are introducing delays, allowing them to refactor message structures or processing logic for better speed.

· Investigating intermittent failures in a distributed control system where components occasionally lose synchronization. MCP Traffic Insight can help by analyzing the sequence and acknowledgments of control messages to identify if synchronization messages are being dropped, delayed, or misinterpreted, thereby revealing the root cause of the desynchronization issues.

· Understanding the complex interactions in a publish-subscribe system built with an MCP-based messaging layer. MCP Traffic Insight can visualize the flow of published messages to subscribers, allowing developers to see which subscribers are receiving messages, how quickly, and if there are any unexpected broadcast patterns that might indicate a configuration error or performance bottleneck in the message distribution.

9

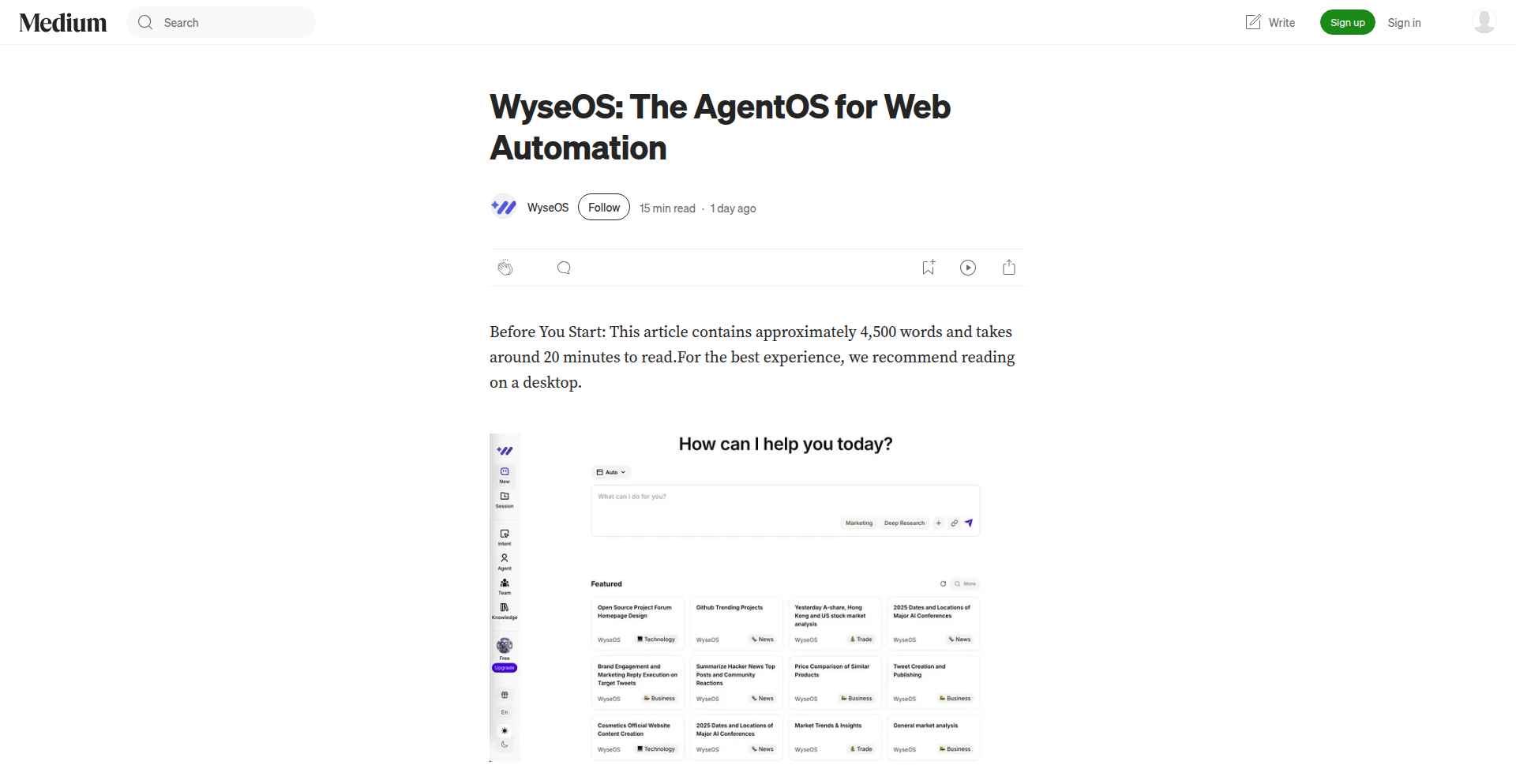

YourGPT 2.0: Integrated AI Workflow Orchestrator

Author

Roshni1990r

Description

YourGPT 2.0 is a comprehensive AI platform designed to streamline support, sales, and operational workflows by seamlessly integrating disparate tools and maintaining contextual understanding across multi-channel interactions. Its core innovation lies in natural language workflow generation, deep external tool connectivity via Studio Apps and MCP protocols, and proactive user engagement through the Ask AI Trigger, all while offering flexible deployment and self-learning capabilities. This system bridges the gap between complex business processes and intuitive AI interaction, making advanced automation accessible.

Popularity

Points 11

Comments 3

What is this product?

YourGPT 2.0 is an advanced AI platform that acts as a central nervous system for business operations. It leverages natural language processing (NLP) to allow users to describe desired workflows in plain English, and the system automatically builds them. The innovation is in its ability to connect to a vast array of external services (like CRM, spreadsheets, payment processors) as 'Studio Apps' directly within these workflows. It also supports the Model Context Protocol (MCP) for standardized AI model interaction and offers over 100 tools via MCP360. This means instead of manually stitching together different software and AI models, YourGPT 2.0 orchestrates them intelligently, ensuring context is preserved across complex, multi-step, and multi-channel conversations. Think of it as a super-intelligent assistant that not only understands your requests but can also command other specialized tools to fulfill them, remembering everything along the way. This solves the problem of siloed tools and fragmented customer interactions by creating a unified, intelligent operational environment.

How to use it?

Developers can integrate YourGPT 2.0 into their existing infrastructure in several ways. For building new automated processes, they can use the AI Studio to describe their desired workflow in natural language, which YourGPT 2.0 translates into an executable process. For connecting external services, Studio Apps can be configured to link popular tools like Google Sheets, Stripe, or CRMs, allowing data to flow seamlessly between them and the AI. The platform's native support for Model Context Protocol (MCP) means it can interface with various AI model servers. For mobile applications, native iOS and Android SDKs allow embedding advanced voice agents and AI capabilities. Websites can be enhanced with the 'Ask AI Trigger' to proactively engage users based on their browsing behavior. Deployments are highly flexible, supporting web, mobile apps, messaging platforms (WhatsApp, Telegram), browser extensions, and helpdesk systems. The self-learning architecture means the system continuously improves its responses and actions over time without constant manual retraining, adapting to new data and user patterns automatically.
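To make the Model Context Protocol part more concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. The sketch below builds such a request and reads the text out of a reply. The tool name `lookup_order` and its arguments are hypothetical, and how YourGPT 2.0 issues these calls internally is not described in the source.

```python
import json
from itertools import count
from typing import Any, Dict

_request_ids = count(1)


def tool_call_request(tool: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def text_from_result(raw: str) -> str:
    """Join the text items of a `tools/call` reply, raising on errors."""
    reply = json.loads(raw)
    if "error" in reply:
        # Protocol-level failure (for example, an unknown tool).
        raise RuntimeError(reply["error"].get("message", "unknown error"))
    result = reply["result"]
    if result.get("isError"):
        # The tool ran but reported a failure of its own.
        raise RuntimeError("tool reported an error")
    return "\n".join(
        item["text"] for item in result.get("content", [])
        if item.get("type") == "text"
    )


# Hypothetical tool name and arguments, for illustration only.
print(tool_call_request("lookup_order", {"order_id": "A-1"}))

# A hand-written reply standing in for what a server might send back.
sample_reply = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Order A-1 has shipped."}],
               "isError": False},
})
print(text_from_result(sample_reply))
```

Because every tool is reached through the same request shape, an orchestrator can add new integrations without changing how it talks to them.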

Product Core Function

· Natural Language Workflow Generation: Allows users to describe desired business processes in plain English, and the AI automatically constructs the workflow, reducing development time and complexity. This is valuable for quickly automating repetitive tasks and creating custom business logic without extensive coding.

· Studio Apps for External Tool Integration: Enables seamless connection of third-party services like CRMs, payment gateways, and spreadsheets directly into AI-driven workflows. This breaks down data silos and allows for richer, more automated end-to-end processes, improving operational efficiency and data utilization.

· Model Context Protocol (MCP) Support: Facilitates standardized communication with various AI models and offers extensive tool access through MCP360. This provides flexibility in choosing and integrating different AI technologies while ensuring consistent context management, enabling more sophisticated AI applications.

· Ask AI Trigger for Proactive Engagement: Enhances websites by identifying user interest and initiating conversations at opportune moments. This leads to improved customer engagement, higher conversion rates, and a more personalized user experience by anticipating needs.

· Unified Context Management: Maintains a consistent understanding of user interactions across multiple channels and over extended periods, regardless of input format (text, images, audio). This is crucial for providing coherent and personalized customer support, sales follow-ups, and operational responses, enhancing customer satisfaction and team efficiency.

· Self-Learning Architecture: Automatically updates and improves the AI's behavior over time without manual retraining, adapting to new data and user interactions. This ensures the platform remains effective and relevant, reducing ongoing maintenance effort and improving performance iteratively.

Product Usage Case

· Automating customer onboarding by integrating a CRM, email service, and a document signing tool. A customer's sign-up in the CRM triggers an automated email sequence and a request for a signature, all orchestrated by a natural language-defined workflow in YourGPT 2.0. This solves the problem of manual, multi-step onboarding processes that are prone to errors and delays.

· Enhancing e-commerce sales with proactive engagement. When a user spends a certain amount of time on a product page, the 'Ask AI Trigger' initiates a chat offering assistance or a discount. If the user asks a question about shipping, the AI can access order data from a connected platform (e.g., Shopify) and provide an instant, accurate answer, improving conversion rates and customer experience.

· Streamlining support ticket resolution by integrating a helpdesk system with a knowledge base (e.g., Confluence) and a communication channel (e.g., WhatsApp). When a customer submits a query via WhatsApp, YourGPT 2.0 analyzes the input, searches the knowledge base, and provides an answer, escalating to a human agent only when necessary. This reduces response times and frees up support staff for complex issues.

· Improving internal operations by connecting Google Sheets for project tracking with Stripe for payment processing. When a new project is added to the sheet, YourGPT 2.0 can trigger invoice generation in Stripe and track payment status, automating financial workflows and reducing manual data entry errors.

10

MarkovChainTextCraft

Author

JPLeRouzic

Description

A project that polishes a Markov chain generator and trains it on scientific articles. It produces text that rivals small LLMs in quality, offering a lightweight yet powerful approach to text generation. It solves the problem of needing complex infrastructure for advanced text generation by providing a simpler, more accessible method.

Popularity

Points 13

Comments 1

What is this product?

This project is an implementation of a Markov chain text generator that has been refined and trained on specialized content, specifically an article by Uri Alon and colleagues. A Markov chain is a probabilistic model that predicts the next event based only on the current event. In this context, it analyzes patterns in words within an article to generate new text that mimics the style and vocabulary of the original. The innovation lies in polishing this classic technique to produce outputs comparable to much larger, more complex language models (LLMs) like NanoGPT, but with significantly less computational overhead. So, it's a smarter, more efficient way to create text that sounds natural and coherent, without needing a supercomputer.

How to use it?

Developers can use this project by training the Markov chain generator on their own text data. The process involves providing the generator with an input text file (e.g., an article, a book, or a collection of writings). The generator then analyzes the word sequences and builds a model. Once trained, the model can be used to generate new text. This can be integrated into applications that require content generation, like chatbots or creative writing tools, or used to produce new text in the style of an existing corpus. The command line shown in the description (./SLM10b_train UriAlon.txt 3) trains the model with a specified 'order' (how many previous words the model considers when predicting the next), and (./SLM9_gen model.json) uses the trained model to generate text. For you, this means you can generate custom text for your applications quickly and efficiently.
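The train-then-generate cycle described above can be sketched in a few lines. This is a generic word-level Markov chain of a chosen order, not the project's own code: the function names `train` and `generate`, the in-memory model layout, and the fixed random seed are all assumptions, and the project's refinements and its model.json format are not shown in the source.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Model = Dict[Tuple[str, ...], List[str]]


def train(text: str, order: int) -> Model:
    """Map each run of `order` words to the words seen right after it."""
    words = text.split()
    model = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        model[state].append(words[i + order])
    return dict(model)


def generate(model: Model, length: int, seed: int = 0) -> str:
    """Random-walk the model, starting from a randomly chosen state."""
    rng = random.Random(seed)
    state = rng.choice(sorted(model))
    output = list(state)
    while len(output) < length:
        followers = model.get(state)
        if not followers:
            # Dead end: this state only occurred at the end of the corpus.
            break
        next_word = rng.choice(followers)
        output.append(next_word)
        # Slide the window: drop the oldest word, append the new one.
        state = state[1:] + (next_word,)
    return " ".join(output[:length])


corpus = "the cat sat on the mat and the cat ran"
model = train(corpus, order=2)
print(model[("the", "cat")])
print(generate(model, length=8))
```

Repeated followers are kept in the list on purpose, so a word that follows a state more often is proportionally more likely to be picked. Raising `order` makes each state more specific, which is why higher orders track the source text more closely.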

Product Core Function

· Markov Chain Training: This core function allows developers to feed any text corpus into the generator. The system analyzes word co-occurrences and builds a probabilistic model that captures the stylistic and semantic patterns of the input text. This provides value by enabling the creation of tailored text generation models for specific domains or writing styles. The application scenario is creating unique content for a niche audience or specific project.

· Text Generation: Once a model is trained, this function generates new text. It does this by probabilistically selecting the next word based on the preceding words according to the trained model. This is valuable for producing human-like text for various purposes, such as drafting articles, creating fictional narratives, or populating datasets. The application scenario is automating content creation tasks for marketing, literature, or data simulation.

· Lightweight Model Architecture: The project emphasizes models that produce output comparable in quality to small LLMs such as NanoGPT while requiring far less computational power. This is achieved through a refined Markov chain approach. This provides value by making text generation accessible on less powerful hardware and reducing development and deployment costs. The application scenario is building text generation features for mobile apps or web applications with limited server resources.

· Customizable Generation Order: The training process allows for specifying the 'order' of the Markov chain, which dictates how many preceding words influence the prediction of the next word. A higher order generally leads to more coherent but potentially less creative text, while a lower order can be more unpredictable. This provides value by giving developers fine-grained control over the generated text's characteristics, allowing them to balance coherence with creativity. The application scenario is fine-tuning text generation for specific creative or analytical needs.

Product Usage Case

· A fiction writer could use this to generate story ideas or character dialogue by training the model on their existing writing style and preferred genres. This would help overcome writer's block and explore new narrative directions, directly addressing the problem of creative stagnation.

· A researcher studying scientific literature could use this to generate hypothetical research paper abstracts or summaries in the style of a specific field. This could aid in hypothesis generation or in quickly understanding the core themes of a large body of work, solving the problem of information overload and slow discovery.

· A developer building a simple chatbot for a specific domain (e.g., a history trivia bot) could train the model on historical texts to generate relevant and contextually appropriate responses, providing a more engaging user experience without the need for complex natural language processing pipelines. This addresses the challenge of creating responsive and knowledgeable bots with minimal resources.

· A marketer could use this to generate variations of ad copy or social media posts based on successful past campaigns. This would help in A/B testing and optimizing marketing content more efficiently, solving the problem of repetitive content creation and the need for diverse messaging.

11

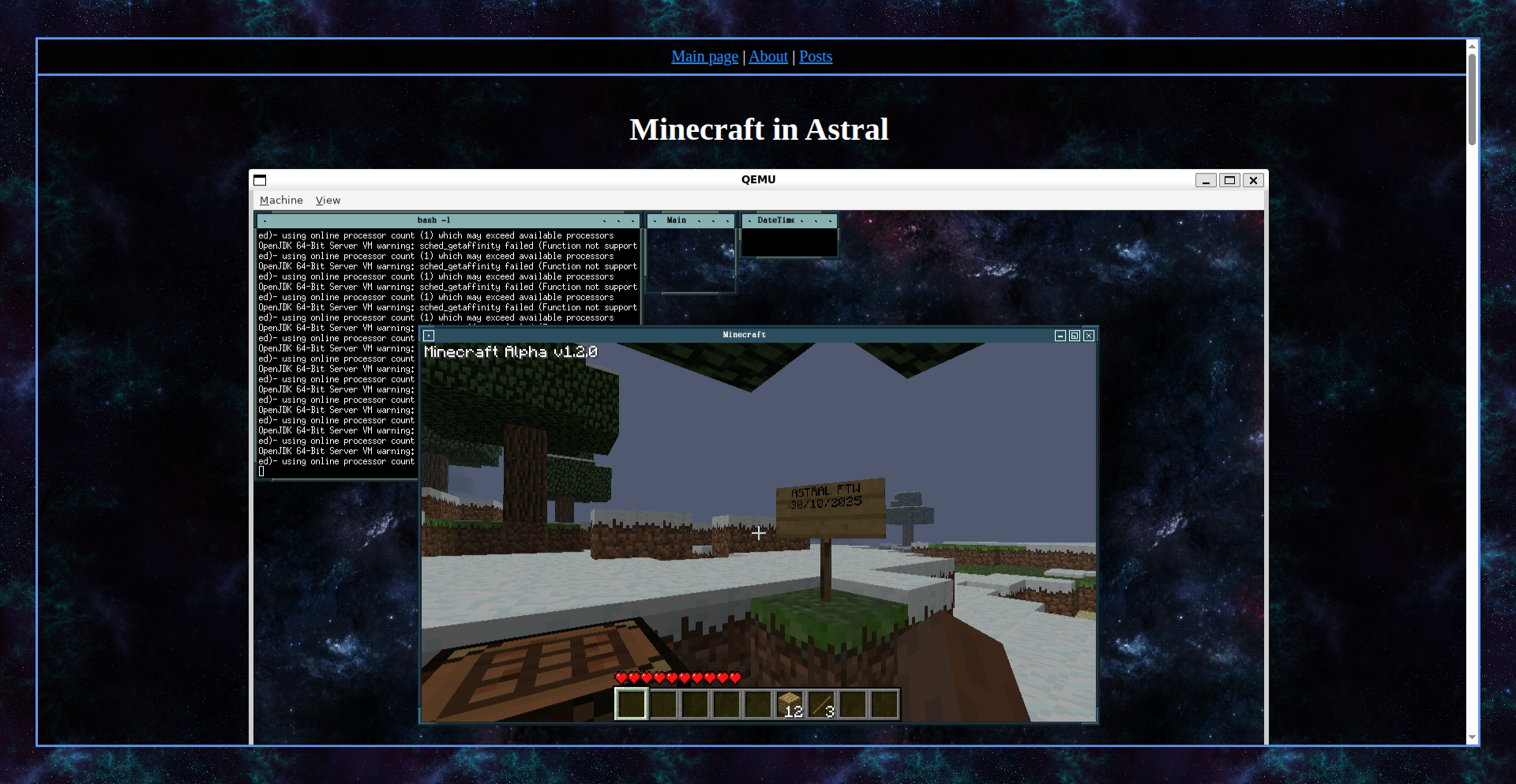

MineOS: A Hobby OS for Minecraft

Author

avaliosdev

Description

This project is a custom-built hobby operating system specifically designed to run Minecraft. The core innovation lies in the stripped-down, efficient nature of the OS, optimizing resource usage to provide a smooth Minecraft experience, especially on lower-end hardware or within specific embedded environments. It demonstrates the power of tailored software solutions for niche applications, showcasing deep understanding of OS principles and game performance tuning.

Popularity

Points 10

Comments 2

What is this product?

MineOS is a lightweight operating system developed from scratch with the sole purpose of running the game Minecraft as efficiently as possible. Instead of relying on general-purpose operating systems like Windows or Linux which come with a lot of overhead (extra code and features you don't need for just gaming), MineOS is built to be minimal. This means it uses fewer system resources like RAM and CPU cycles. The innovation is in the bespoke design: understanding exactly what Minecraft needs to run and building an OS around those specific requirements. This allows for higher performance and potentially the ability to run Minecraft on hardware that would otherwise struggle.

How to use it?

Developers can use MineOS by flashing it onto compatible hardware, such as a Raspberry Pi or other single-board computers, or by running it within a virtual machine for experimentation. The primary use case is to create a dedicated, high-performance Minecraft server or client environment with minimal setup. For example, you could build a dedicated Minecraft gaming rig that's more responsive than a general-purpose PC, or deploy a Minecraft server for a small group of friends that draws very little power and processing capacity.

Product Core Function

· Minimalist Kernel: Provides the essential operating system functions, like managing the CPU and memory, without any unnecessary bloat. This means less wasted processing power, leading to a smoother Minecraft game.

· Optimized I/O Subsystem: Designed to handle the input and output operations (like reading game data from storage or sending game graphics to the screen) very quickly. This reduces loading times and improves responsiveness in the game.

· Direct Hardware Access: Allows the game to interact directly with the computer's hardware, bypassing layers of abstraction found in larger operating systems. This translates to more direct control and better performance for Minecraft.

· Resource Monitoring: Includes basic tools to monitor how much CPU and memory the game is using. This helps in understanding performance bottlenecks and tuning the system for the best possible experience.

· Custom Bootloader: A small program that starts the OS and loads Minecraft. It's streamlined to get the game running as fast as possible after power-on.

Product Usage Case

· Building a dedicated Minecraft server on a Raspberry Pi: Instead of running a server on a noisy, power-hungry desktop PC, MineOS allows you to create a quiet, energy-efficient server that can handle moderate player counts with excellent performance, making it ideal for small communities or personal use.

· Creating a retro-style gaming station: Developers could use MineOS to build a dedicated machine solely for playing Minecraft, stripping away all non-essential OS features to achieve maximum frame rates and a truly immersive experience on older or less powerful hardware.

· Educational tool for OS development: For students or enthusiasts interested in operating systems, MineOS serves as an excellent, tangible example of how to build a functional OS for a specific purpose, demonstrating core concepts in a practical and engaging way.

· Embedded Minecraft installations: Imagine Minecraft running on specialized hardware for an interactive art installation or a themed attraction, where a full-blown OS would be overkill and introduce unnecessary complexity and resource drain. MineOS provides a lean, focused solution.

12

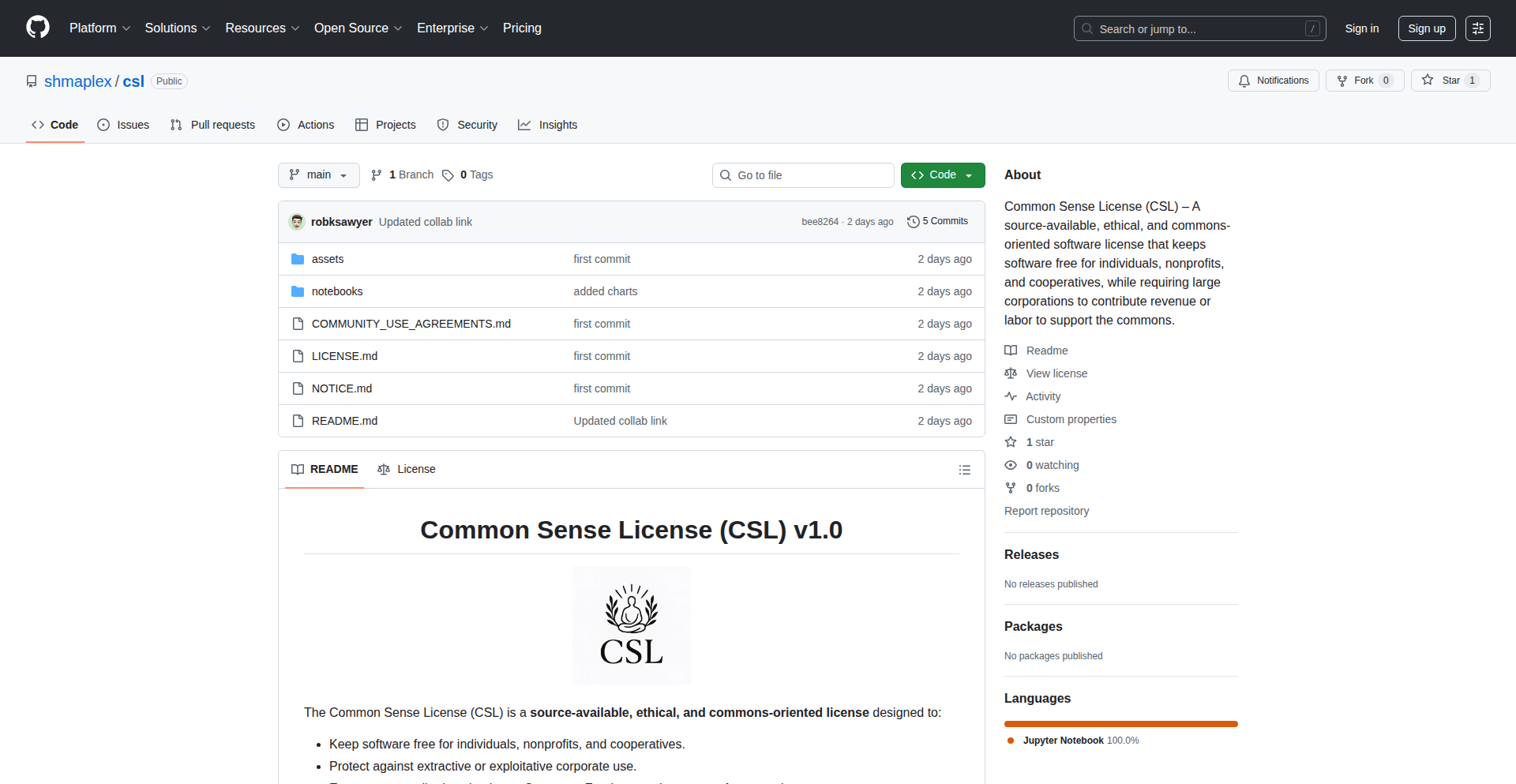

Common Sense License (CSL)

Author

shmaplex

Description

The Common Sense License (CSL) is a novel software license aiming to create a more equitable and sustainable digital ecosystem. It challenges the current dominance of proprietary and often exploitative licensing models by offering a framework inspired by civic principles. The CSL encourages transparency, collaboration, and fair distribution of value, proposing an alternative to the perceived 'techno-feudal' structures where power is concentrated and creators are vulnerable. This license is a technical and philosophical experiment in building a better model for software ownership and usage.

Popularity

Points 5

Comments 4

What is this product?

The Common Sense License (CSL) is a new type of software license designed to address perceived imbalances in the software world, drawing parallels to feudal systems, where power is concentrated in a few hands and those who contribute the most are often the least empowered. It's not just a legal document; it's a set of technical and ethical guidelines for how software should be shared and used. The innovation lies in its approach to balancing the freedom to use and modify software with the need for creators to be sustained and for the digital infrastructure to be fair and transparent. It proposes mechanisms that encourage contributors and users to actively participate in maintaining and improving the software ecosystem, moving away from models that can leave everyday users vulnerable or creators exploited. So, for you, it offers a way to engage with software that respects your contributions and aims for a fairer digital future.

How to use it?

Developers can adopt the CSL for their open-source projects by including the license text within their codebase, typically in a LICENSE file. They can then specify in their project's README or documentation that their software is licensed under the CSL. For users, using software licensed under CSL means agreeing to its terms, which may include obligations to contribute back to the community or to uphold certain principles of transparency and fairness, depending on the specific terms crafted. The license encourages a more participatory model, so using CSL-licensed software might involve engaging with the community or contributing in ways beyond simple monetary payment. Integration would be as straightforward as adopting any other open-source license, but with a different philosophy guiding its use. This means for you, it's about being part of a collaborative project where your usage is tied to a broader sense of community responsibility.

Product Core Function

· Promotes transparency in software development and usage by encouraging open access to source code and development processes.

· Facilitates equitable distribution of value generated by software, ensuring that creators and contributors are fairly recognized and potentially compensated.

· Encourages collaboration and community involvement, moving beyond a purely transactional relationship with software.

· Provides an alternative to proprietary licensing models, offering creators more control and a path towards sustainability without sacrificing freedom.

· Aims to mitigate the concentration of power in the digital infrastructure, fostering a more balanced and resilient ecosystem.

Product Usage Case

· A bootstrapped open-source project that relies on community contributions for development. By using CSL, the project can ensure that contributors are recognized and that the software's future development is sustainable, incentivizing ongoing engagement. This solves the problem of maintaining momentum and resources for community-driven projects.

· A developer building a niche tool for a specific industry. CSL offers a way to share their work openly while ensuring that if the tool becomes commercially successful, the value generated is shared more broadly within the ecosystem, preventing a single entity from monopolizing its benefits. This addresses the challenge of commercialization without resorting to restrictive licenses.

· A non-profit organization developing educational software. CSL can ensure that the software remains accessible and beneficial to its intended audience, while also providing a framework for receiving support and contributions that align with its mission. This is valuable for projects focused on social good.

· A platform that relies on user-generated content or contributions. CSL can establish clear guidelines for how these contributions are used and how value is shared, fostering a sense of ownership and participation among users. This helps build trust and encourage active participation.

13

GitHub Actions Minecraft Host

Author

charlesvien

Description

This project cleverly repurposes GitHub Actions, a CI/CD automation tool, to function as a Minecraft server hosting service. It solves the problem of easily setting up and managing dedicated Minecraft servers without complex infrastructure, leveraging the existing workflows developers are familiar with.

Popularity

Points 6

Comments 2

What is this product?

This is a project that transforms GitHub Actions, typically used for building and deploying software, into a platform for hosting Minecraft servers. The core innovation lies in using the event-driven nature and execution environment of GitHub Actions to spin up, manage, and potentially even tear down Minecraft server instances. Think of it as using your code deployment pipelines to also manage your game servers. This is valuable because it taps into a familiar developer workflow and infrastructure that many already have access to, making server management less about server administration and more about code.

How to use it?

Developers can integrate this by creating a GitHub repository for their Minecraft server. They would then configure GitHub Actions workflows to handle server startup, shutdown, and potentially world management. This could involve scripting commands that are executed within the GitHub Actions runner environment, which then interact with a Minecraft server process. It's a way to have your server managed by the same automation that builds your code, making it incredibly convenient for developers who already use GitHub.
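One practical detail of running a server inside a CI job is that hosted runners limit how long a job may run, so the server has to be shut down cleanly before the job is cut off. The sketch below shows one way a workflow step could supervise the server process; it is not taken from the project. The function name `run_until_deadline` and the stand-in child process are assumptions, and a real step would launch the actual server command instead.

```python
import subprocess
import sys
from typing import List


def run_until_deadline(command: List[str], max_seconds: float,
                       stop_line: str = "stop\n") -> int:
    """Run a server process, then ask it to stop once the time budget is spent."""
    process = subprocess.Popen(command, stdin=subprocess.PIPE, text=True)
    try:
        # Returns early if the server exits on its own.
        return process.wait(timeout=max_seconds)
    except subprocess.TimeoutExpired:
        pass
    try:
        # A Minecraft server saves the world and exits when its console
        # receives the "stop" command, so send that before giving up.
        process.communicate(input=stop_line, timeout=30)
    except subprocess.TimeoutExpired:
        # Last resort: the process ignored the request.
        process.kill()
        process.wait()
    return process.returncode


# Stand-in for the real server command: a child that waits for one console line.
fake_server = [sys.executable, "-c", "import sys; sys.stdin.readline()"]
print(run_until_deadline(fake_server, max_seconds=0.5))
```

In a workflow, `max_seconds` would be set comfortably below the runner's job limit so that a later step still has time to persist the world data.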

Product Core Function

· Automated Server Provisioning: leverages GitHub Actions to automatically spin up Minecraft server instances when triggered, eliminating manual setup and shortening the time until the server is playable. This is useful because it means you don't have to manually install and configure server software every time you want to play.

· Event-Driven Server Management: allows server start/stop and other commands to be triggered by Git events or schedules within GitHub Actions, offering flexible control over server availability. This is valuable as it lets you start your server only when needed, saving resources and potential costs.

· Integrated Workflow: merges server hosting with existing CI/CD workflows, allowing developers to manage their game server alongside their code projects. This is useful because it simplifies your toolchain and keeps everything organized within the familiar GitHub environment.

· Customizable Server Configuration: provides a framework for customizing server settings and plugins through code, enabling tailored gameplay experiences. This is valuable for players who want to experiment with different game modes or mods without dealing with complex server configuration files.

Product Usage Case

· A small group of friends who want to quickly set up a private Minecraft server for a weekend gaming session. They can use this project to spin up a temporary server using a GitHub Actions workflow, play together, and then shut it down without incurring ongoing costs or needing to manage dedicated hardware. This solves the problem of spontaneous gaming sessions being hindered by server setup complexity.

· A developer experimenting with a new Minecraft mod. They can use GitHub Actions to create a dedicated environment to test the mod's performance and compatibility by automatically deploying the modded server. This is useful for rapid iteration and testing in a controlled environment.

· A community wanting to host a temporary Minecraft server for a special event or tournament. This project allows for easy setup and teardown of the server, managed by familiar GitHub tools, ensuring the event can run smoothly without prolonged server administration.

14

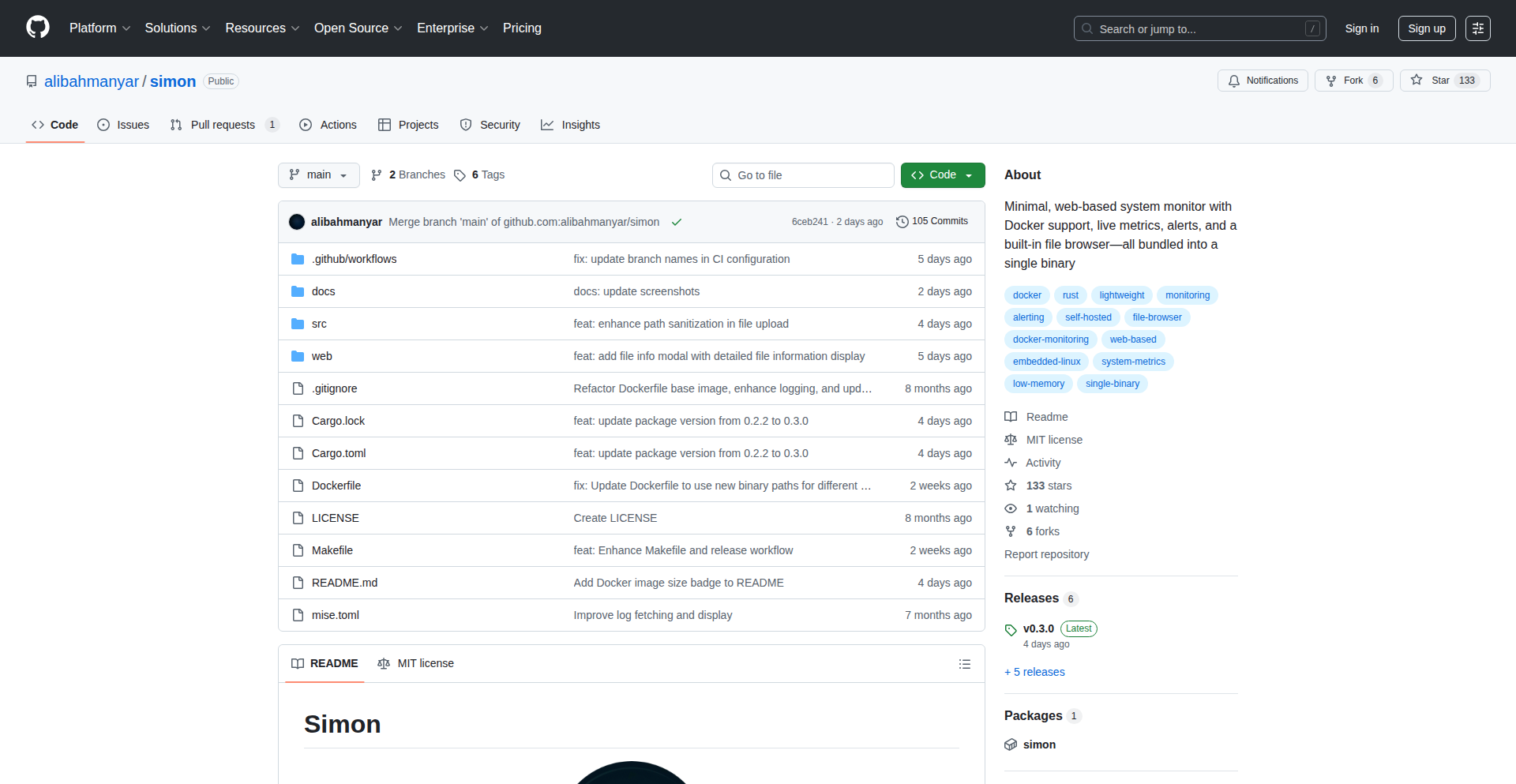

Simon: Unified Home Server Ops

Author

bahmann

Description

Simon is a lightweight, single Rust binary dashboard designed to replace complex and resource-hungry multi-service monitoring stacks. It provides real-time and historical metrics for host systems and Docker containers, integrated file and log management, and flexible alerting, all in a tiny footprint. This means you get powerful server management without the overhead, perfect for self-hosters and resource-constrained environments.

Popularity

Points 7

Comments 0

What is this product?

Simon is a self-hosted monitoring and management dashboard built as a single, lean Rust binary. It consolidates essential operations like system and container monitoring (CPU, memory, disk, network), file management, log viewing, and alert configuration into one easy-to-use web interface. Unlike heavy, multi-component solutions, Simon prioritizes efficiency and simplicity, using minimal resources while offering comprehensive functionality. Its core innovation lies in its monolithic design and resource optimization, making advanced server control accessible even on low-power devices.

How to use it?

Developers can download the single Rust binary, which is just a few megabytes, and run it directly on their Linux systems, including embedded devices and single-board computers (SBCs). Simon exposes a web interface that can be accessed from any browser on the network. To set up monitoring, you run the binary, open its web address, and configure it to watch your host system and Docker containers. You can then define alerts for specific metrics (like high CPU usage) and choose notification channels like Telegram or ntfy, all without installing or configuring multiple separate tools. This makes it simple to get a robust monitoring and management setup running quickly.
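To make the idea of a metric-based alert concrete, here is a minimal Python sketch of a threshold rule. It is not Simon's code or configuration format (Simon is a Rust binary configured through its web UI); `AlertRule`, `free_disk_percent`, and `check` are hypothetical names. The sketch shows one common design: the rule remembers whether it is firing, so a notification goes out when the state changes and not on every check.

```python
import shutil
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AlertRule:
    """Hypothetical rule: fire when a metric falls below a threshold."""
    name: str
    threshold: float
    firing: bool = False  # remembered so we notify once per incident

    def evaluate(self, value: float) -> Optional[str]:
        """Return a message when the rule changes state, else None."""
        if value < self.threshold and not self.firing:
            self.firing = True
            return f"ALERT {self.name}: {value:.1f} is below {self.threshold:.1f}"
        if value >= self.threshold and self.firing:
            self.firing = False
            return f"RESOLVED {self.name}: {value:.1f}"
        return None


def free_disk_percent(path: str = "/") -> float:
    """Percentage of free space on the filesystem containing path."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.free / usage.total


def check(rule: AlertRule,
          read_metric: Callable[[], float],
          notify: Callable[[str], None]) -> None:
    """Read the metric once and notify only on a state change."""
    message = rule.evaluate(read_metric())
    if message is not None:
        notify(message)


if __name__ == "__main__":
    rule = AlertRule(name="disk_free_percent", threshold=10.0)
    # print stands in for a real channel such as Telegram, ntfy, or a webhook.
    check(rule, free_disk_percent, print)
```

Passing the notifier as a callable keeps the rule logic independent of the delivery channel, which mirrors the way the post describes alerts and notification channels as separate choices.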

Product Core Function

· Comprehensive Host & Container Monitoring: Real-time and historical data on CPU, memory, disk I/O, and network traffic for your server and its Docker containers. This is valuable because it gives you a clear picture of your system's health and performance, helping you identify bottlenecks or potential issues before they become critical.

· Integrated File Management: A web-based interface to browse, upload, download, and manage files on your server. This is useful for quickly accessing or modifying configuration files, uploading new assets, or retrieving data without needing to constantly use SSH clients for simple file operations.

· Container Log Viewer: Easily view logs from your Docker containers directly through the web UI. This simplifies debugging by allowing you to see application output and errors in context, right where you're managing your services.

· Flexible Alerting System: Define custom rules based on any collected metric and receive notifications via Telegram, ntfy, or custom webhooks. This means you're proactively informed about critical events on your server, allowing for timely intervention and preventing downtime.

· Resource Efficiency: Designed to be extremely lightweight and run as a single binary, minimizing CPU and memory usage. This is a key advantage for anyone running on limited hardware or wanting to reduce their server's operational overhead, ensuring your monitoring tool doesn't become a burden itself.

Product Usage Case

· A self-hoster running a home media server on a Raspberry Pi wants to monitor its performance and receive alerts if the storage fills up. Simon can be installed directly on the Pi, providing a dashboard to view disk usage and CPU load. An alert can be set to notify the user via Telegram when free disk space drops below 10%, preventing media library interruptions.

· A developer managing a small fleet of microservices deployed in Docker on a lightweight VPS needs a consolidated view of their application health. Instead of setting up Prometheus, Grafana, and Loki separately, they can deploy Simon. It offers a single pane of glass to see container resource utilization and access logs for debugging, significantly reducing setup time and complexity.

· An IT administrator in a resource-constrained office environment needs to monitor a few critical servers without investing in a large-scale monitoring solution. Simon can be deployed on a small dedicated machine or even one of the servers, providing essential metrics and alerting capabilities for a fraction of the cost and complexity of traditional enterprise tools.

15

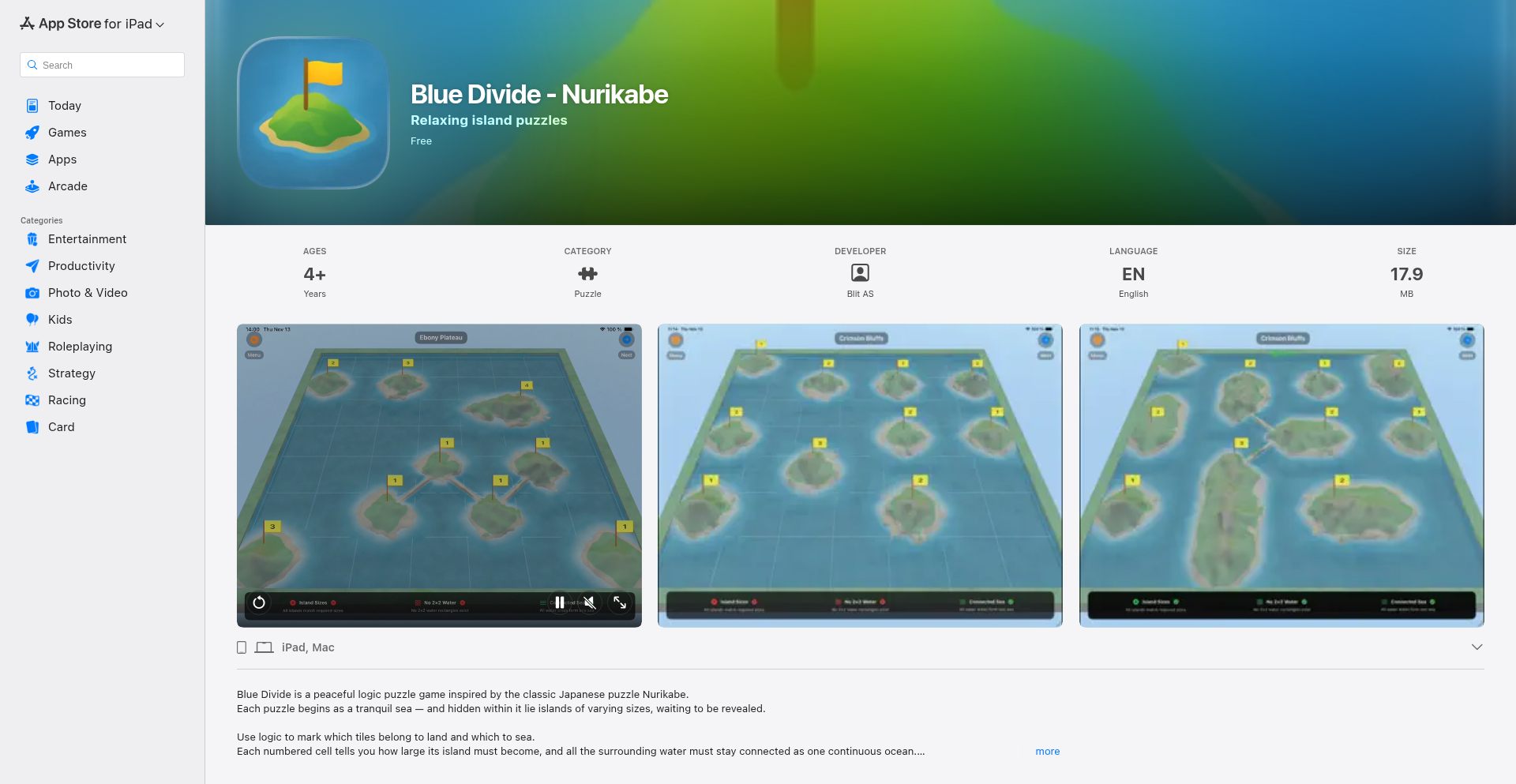

Blue Divide: 3D Nurikabe Mesh Generator

Author

chribog

Description

Blue Divide is a Mac and iPad game that visualizes Nurikabe puzzles in a novel 3D environment. It leverages Swift, SceneKit, and Metal shaders to procedurally generate unique island landscapes for the puzzles, tackling the challenges of shoreline generation and island distinctiveness with an innovative 'dual grid' approach. This project offers a fresh perspective on puzzle visualization and procedural content generation.

Popularity

Points 6

Comments 0

What is this product?

Blue Divide is a puzzle game that reimagines the classic Nurikabe logic puzzle by presenting it in a dynamic 3D world. The core innovation lies in its procedural generation engine for the puzzle's 'islands'. Instead of a flat grid, the game uses a 'dual grid' technique. Think of it like having two overlapping grids: one for the water and one for the land. This allows for more natural and complex shoreline shapes, especially in the tricky corner areas, and ensures each puzzle's landscape feels unique. It uses Swift for game logic, SceneKit for 3D rendering, and Metal shaders for advanced visual effects, with SwiftUI for a clean user interface.
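The post does not show how the game implements its dual grid, so the following Python sketch illustrates only one common form of the technique, which may differ from the author's. The assumption here is that puzzle cells live on a data grid (land or water), while render tiles live on a second grid offset by half a cell, so that each tile sits on a corner shared by up to four data cells. Each tile is then classified by a 4-bit mask of its neighbors, which is what lets corners and shorelines get their own shapes. The function name and bit layout are hypothetical.

```python
def dual_grid_masks(land):
    """Classify every dual-grid tile by its four neighboring data cells.

    land: list of rows of booleans (True = land, False = water).
    Returns a (rows + 1) x (cols + 1) grid of masks in the range 0..15.
    Bit layout (an arbitrary choice for this sketch):
      1 = top-left, 2 = top-right, 4 = bottom-left, 8 = bottom-right.
    Mask 0 is open water, 15 is interior land, anything else is shoreline.
    """
    rows, cols = len(land), len(land[0])

    def is_land(r, c):
        # Cells outside the puzzle are treated as water.
        return 0 <= r < rows and 0 <= c < cols and land[r][c]

    masks = []
    for r in range(rows + 1):
        row = []
        for c in range(cols + 1):
            mask = (
                (1 if is_land(r - 1, c - 1) else 0)
                | (2 if is_land(r - 1, c) else 0)
                | (4 if is_land(r, c - 1) else 0)
                | (8 if is_land(r, c) else 0)
            )
            row.append(mask)
        masks.append(row)
    return masks


if __name__ == "__main__":
    island = [
        [False, True, True],
        [False, True, False],
    ]
    for row in dual_grid_masks(island):
        print(row)
```

A renderer built on this idea would map each of the 16 mask values to a mesh piece (flat water, straight shore, inner corner, outer corner, and so on) instead of drawing one square per puzzle cell.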

How to use it?

For end-users, it's a delightful puzzle game available on the App Store for Mac and iPad. They can simply download and play. For developers interested in the underlying technology, the 'dual grid' approach to procedural mesh generation and shoreline handling is the key takeaway. This technique can be applied to generate more realistic and varied terrain in game development, architectural visualizations, or any application requiring dynamic environment creation. The use of Metal shaders for custom rendering effects also offers a blueprint for visually rich applications.

Product Core Function

· 3D Nurikabe Puzzle Visualization: Provides an engaging 3D representation of a logic puzzle, making it more immersive and visually appealing. The value is in creating a novel and enjoyable puzzle experience that stands out from traditional 2D interfaces.

· Procedural Island Generation: Dynamically creates unique island landscapes for each puzzle, overcoming the challenge of repetitive or unnatural shorelines. The value is in delivering a fresh and engaging puzzle environment every time, enhancing replayability.

· Dual Grid Generation Technique: Implements a sophisticated 'dual grid' system for more robust and natural shoreline generation, particularly in complex corners. The technical value lies in solving a common procedural generation problem for organic shapes, offering a superior method for creating varied terrain.

· SceneKit and Metal Shader Integration: Utilizes powerful Apple frameworks for smooth 3D rendering and advanced visual effects. The value for developers is in demonstrating efficient and visually striking graphics implementation on Apple platforms.

· SwiftUI for UI: Employs SwiftUI for a modern and responsive user interface, including buttons and hints. The value is in showcasing best practices for modern iPadOS and macOS app development with a declarative UI framework.

Product Usage Case

· Game Development: Developers can use the 'dual grid' concept to procedurally generate varied and visually appealing 3D terrain for games, overcoming common issues with shoreline realism and corner complexities. This leads to more engaging game worlds.

· Virtual Environment Creation: For applications requiring realistic virtual environments, the approach to generating organic shapes and complex boundaries can be adapted to create diverse landscapes or architectural designs.

· Educational Tools for Procedural Generation: The project serves as an excellent example for developers learning about procedural content generation, specifically how to tackle challenging aspects like natural-looking coastlines.

· App Development with Advanced Graphics: Developers looking to incorporate visually rich 3D elements into their macOS or iPadOS apps can learn from the integration of SceneKit and custom Metal shaders for high-performance graphics.

16

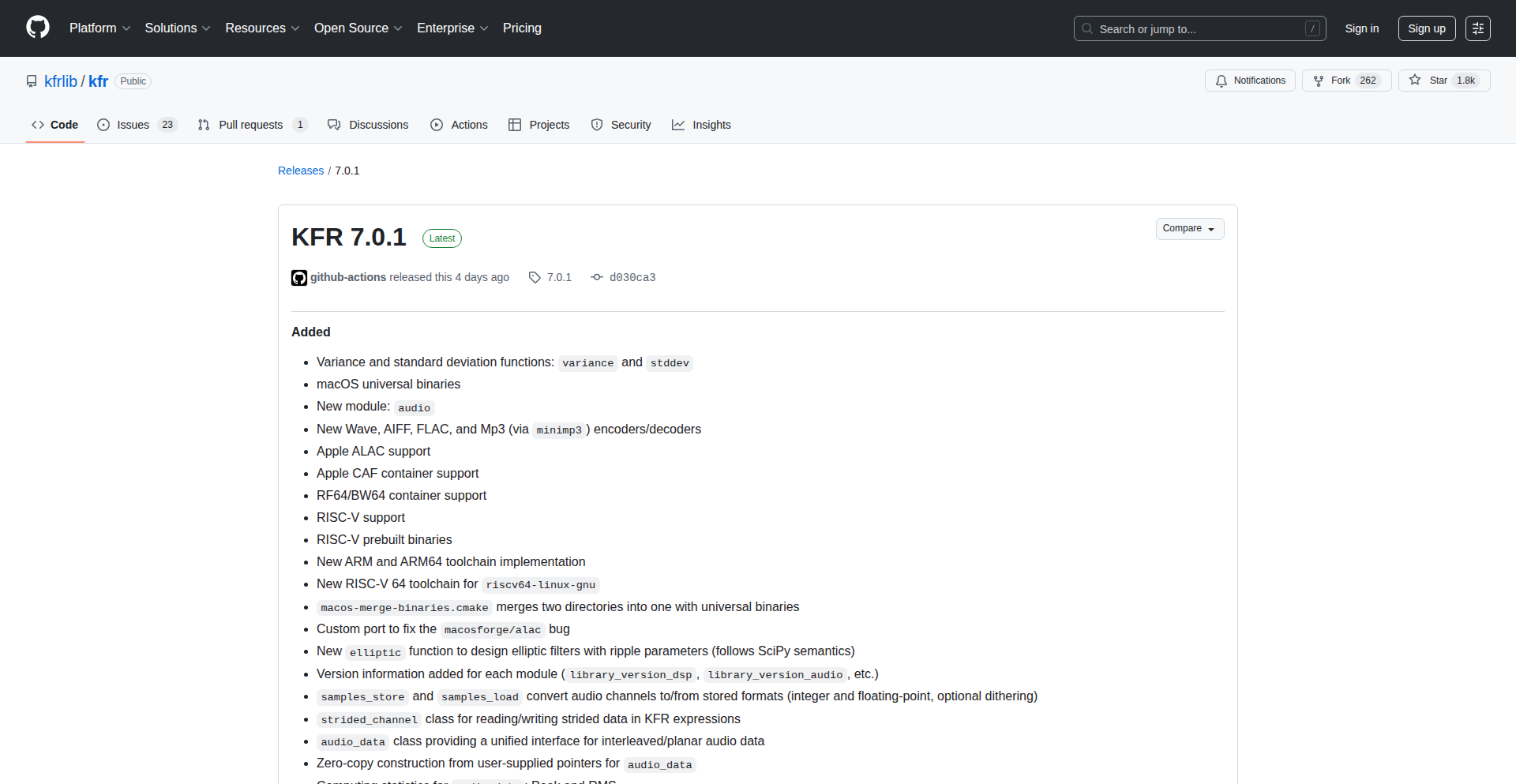

KFR 7: RISC-V SIMD Audio Forge

Author

danlcaza

Description

KFR 7 is a major update to the KFR C++ digital signal processing (DSP) library. It introduces advanced capabilities for RISC-V processors, enhanced audio file handling across numerous formats, and a new multichannel audio processing module. The core innovation lies in its low-level optimization and broad format support, making complex audio manipulation more accessible and performant, especially on emerging hardware architectures.

Popularity

Points 5

Comments 1

What is this product?

KFR 7 is a highly optimized C++ library for digital signal processing, particularly for audio. The key innovation is its native support for RISC-V's SIMD (Single Instruction, Multiple Data) extensions, which dramatically speeds up calculations on compatible processors. Imagine doing the same calculation on many pieces of data at once, like multiplying a list of numbers by 2 in a single step. It also boasts a completely revamped audio input/output system that understands a wide variety of audio file formats (WAV, FLAC, MP3, and more), and a new high-level module for managing and processing audio with multiple channels. This means developers can process audio faster, handle more file types easily, and build sophisticated multichannel audio applications with greater efficiency. This is for anyone who needs to work with audio data at a deep technical level, especially on modern or embedded systems.
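The "multiply a list of numbers by 2 in a single step" picture can be modeled in a few lines of Python. This is a conceptual sketch only: it does not use KFR's API, Python does not execute it with SIMD instructions, and the lane count of 4 is an illustrative assumption (the real width depends on the hardware). It shows the usual shape of SIMD code: full blocks of `lanes` values are handled together, and a leftover tail is handled one value at a time.

```python
def scale_scalar(samples, gain):
    """Reference version: one multiply per sample."""
    return [s * gain for s in samples]


def scale_in_lanes(samples, gain, lanes=4):
    """Model of SIMD-style processing: full blocks first, then the tail."""
    out = []
    full = len(samples) - len(samples) % lanes
    for i in range(0, full, lanes):
        block = samples[i:i + lanes]
        # On SIMD hardware this whole block would be one multiply instruction.
        out.extend(x * gain for x in block)
    # Tail: fewer than `lanes` samples remain, so process them one by one.
    for x in samples[full:]:
        out.append(x * gain)
    return out


def op_counts(n_samples, lanes=4):
    """Return (vector_ops, scalar_ops) this model needs for n_samples."""
    return n_samples // lanes, n_samples % lanes


if __name__ == "__main__":
    data = [0.1 * i for i in range(10)]
    assert scale_in_lanes(data, 2.0) == scale_scalar(data, 2.0)
    print(op_counts(len(data)))
```

For 10 samples and 4 lanes, the model needs 2 block operations plus 2 scalar ones instead of 10 scalar multiplies, which is where the speedup on real SIMD hardware comes from.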

How to use it?

Developers can integrate KFR 7 into their C++ projects by including its headers and linking against the library. For performance-critical applications on RISC-V, compiling with appropriate flags will enable the SIMD optimizations. The new audio module simplifies reading and writing various audio formats into memory buffers, which can then be processed using KFR's extensive DSP algorithms. For example, a developer building a real-time audio effect could load an audio stream, apply filters and transformations using KFR, and then output the processed audio, all with enhanced speed due to the SIMD support.

Product Core Function

· RISC-V SIMD Support: Accelerates computations on RISC-V processors by performing operations on multiple data points simultaneously, leading to significantly faster audio processing. This is useful for applications requiring real-time audio manipulation or high-throughput audio analysis on RISC-V hardware.