Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-16

SagaSu777 2025-11-17

Explore the hottest developer projects on Show HN for 2025-11-16. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of AI-related projects on Show HN today underscores a massive shift in how we approach problem-solving. From multi-agent systems that mimic human teams to AI that self-evaluates its stability, the frontier is expanding rapidly. Developers are not just building tools to *use* AI, but to understand, control, and even improve its fundamental behavior. For entrepreneurs, this is a goldmine of opportunity; identifying specific pain points that can be addressed with novel AI applications, especially those that prioritize privacy or automate complex tasks, is key. The trend towards local-first AI solutions also signals a growing demand for applications that respect user data and offer offline capabilities, a powerful differentiator in today's data-centric world. For every developer, embracing these AI advancements means staying ahead of the curve by experimenting with new models, architectures, and ethical considerations. The 'hacker spirit' thrives in finding elegant, efficient solutions, and today's projects demonstrate that this spirit is alive and well in the AI era, pushing boundaries and redefining what's possible.

Today's Hottest Product

Name

Minivac 601 Simulator - A 1961 Relay Computer

Highlight

This project recreates a 1961 educational electronics kit, the Minivac 601, as a JavaScript emulator. It showcases ingenious engineering by Claude Shannon, allowing users to wire up virtual components to perform tasks like playing tic-tac-toe or counting. Developers can learn about fundamental relay-based logic, the history of computing, and the challenges of accurately simulating electrical circuits in a digital environment. The dedication to recreating the spirit of the original manuals is a testament to the developer's passion for historical technology and educational tools.

Popular Category

AI and Machine Learning

Developer Tools

Web Applications

Simulators and Emulators

Productivity Tools

Popular Keyword

AI

LLM

Automation

Developer Utilities

Privacy

Simulator

Data Analysis

Productivity

Open Source

Technology Trends

AI-powered automation and analysis

Local-first and privacy-focused applications

Enhanced developer productivity tools

Creative AI applications

Simulation and historical technology emulation

Multi-agent AI systems

Project Category Distribution

AI/ML Tools (30%)

Developer Utilities/Tools (25%)

Web Applications/Services (20%)

Simulators/Emulators (10%)

Productivity/Personal Tools (10%)

Niche/Experimental (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Whirligig.live | 9 | 8 |
| 2 | RelayLogicSim | 12 | 1 |
| 3 | Melodic Mind | 9 | 2 |
| 4 | MLX Fold | 9 | 2 |
| 5 | HedgeFund Pulse AI | 7 | 3 |
| 6 | Floxtop | 3 | 4 |
| 7 | RustGuard | 6 | 0 |
| 8 | Embeddable AI Email Composer | 5 | 1 |
| 9 | Client-Side Dev Toolkit | 2 | 3 |
| 10 | NovaMCP Quantum Amp | 5 | 0 |

1

Whirligig.live

Author

idiocache

Description

Whirligig.live is a fun gig finder app that stitches together several APIs to aggregate job listings. Its technical innovation lies in its API aggregation strategy and user-facing presentation, aiming to simplify the job search process by bringing diverse opportunities into a single, interactive platform. It solves the problem of fragmented job searching across multiple platforms.

Popularity

Points 9

Comments 8

What is this product?

Whirligig.live is an application built by stitching together various APIs to create a unified platform for finding freelance gigs and job opportunities. The core technical innovation is in how it intelligently pulls data from different sources, processes it, and presents it in an engaging, interactive format. Instead of you manually checking dozens of job boards, Whirligig.live does the heavy lifting of gathering and displaying these opportunities. This means you get a consolidated view of potential gigs without the usual hassle.

How to use it?

Developers can use Whirligig.live as a centralized dashboard for discovering new projects and freelance work. It's particularly useful for those looking to quickly scan the market for opportunities relevant to their skills. The app's interactive nature allows for easy browsing and filtering of listings. You can integrate the concepts behind Whirligig.live into your own projects by exploring how to leverage public APIs for data aggregation and building intuitive user interfaces to make complex information easily digestible. Think of it as a template for building your own personalized information aggregator.

Product Core Function

· API Aggregation: Integrates data from multiple job and freelance platforms. Value: Consolidates opportunities, saving users time by eliminating the need to visit individual sites. Application: Efficiently discover diverse job openings from a single point.

· Interactive Gig Discovery: Presents job listings in a dynamic and engaging way. Value: Makes the job search process less tedious and more visually appealing, increasing engagement. Application: Quickly scan and filter through many opportunities with a more pleasant user experience.

· Cross-Platform Data Integration: Unifies information from disparate online sources. Value: Provides a comprehensive market overview, helping users identify trends and hidden gems. Application: Gain a broad understanding of the job market and identify niche opportunities.

· Simplified User Interface: Designed for ease of use and quick understanding. Value: Reduces cognitive load for users by presenting information clearly and concisely. Application: Efficiently find relevant gigs without getting lost in complex interfaces.
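
The aggregation idea described above can be sketched in a few lines. This is a minimal illustration, not Whirligig.live's actual code: the `Gig` type, the `aggregate` function, and the in-memory "boards" are all hypothetical stand-ins for real HTTP API calls, and the deduplication rule (same title and company) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Gig:
    title: str
    company: str
    url: str
    source: str


def normalize_key(gig: Gig) -> tuple[str, str]:
    """Key used to spot the same gig listed by two different sources."""
    return (gig.title.strip().lower(), gig.company.strip().lower())


def aggregate(fetchers: Iterable[Callable[[], list[Gig]]]) -> list[Gig]:
    """Call every source, skip sources that fail, and drop duplicate gigs."""
    seen: set[tuple[str, str]] = set()
    merged: list[Gig] = []
    for fetch in fetchers:
        try:
            gigs = fetch()
        except Exception:
            continue  # one failing source should not break the whole feed
        for gig in gigs:
            key = normalize_key(gig)
            if key not in seen:
                seen.add(key)
                merged.append(gig)
    return merged


# Hypothetical in-memory sources standing in for real job-board APIs.
def board_a() -> list[Gig]:
    return [Gig("Rust Developer", "Acme", "https://a.example/1", "board_a")]


def board_b() -> list[Gig]:
    return [
        Gig("rust developer ", "ACME", "https://b.example/9", "board_b"),
        Gig("Data Engineer", "Globex", "https://b.example/10", "board_b"),
    ]


def broken_board() -> list[Gig]:
    raise ConnectionError("source unavailable")


listings = aggregate([board_a, broken_board, board_b])
for gig in listings:
    print(f"{gig.title} at {gig.company} ({gig.source})")
```

Passing fetchers as plain callables keeps each source isolated, so adding or removing a board does not touch the merge logic.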

Product Usage Case

· A freelance developer looking for short-term projects can use Whirligig.live to get an immediate overview of available gigs across various platforms, saving them hours of manual searching each week. It addresses the problem of scattered listings by bringing them all to one place.

· A startup seeking to quickly hire for specific roles can see a consolidated view of candidates and opportunities advertised on different channels. This helps in understanding the available talent pool and advertising effectiveness, solving the challenge of fragmented recruitment outreach.

· An individual exploring new career paths or side hustles can use the aggregated listings to gauge demand and identify emerging opportunities in different industries, facilitating informed career decisions by providing a broad market perspective.

2

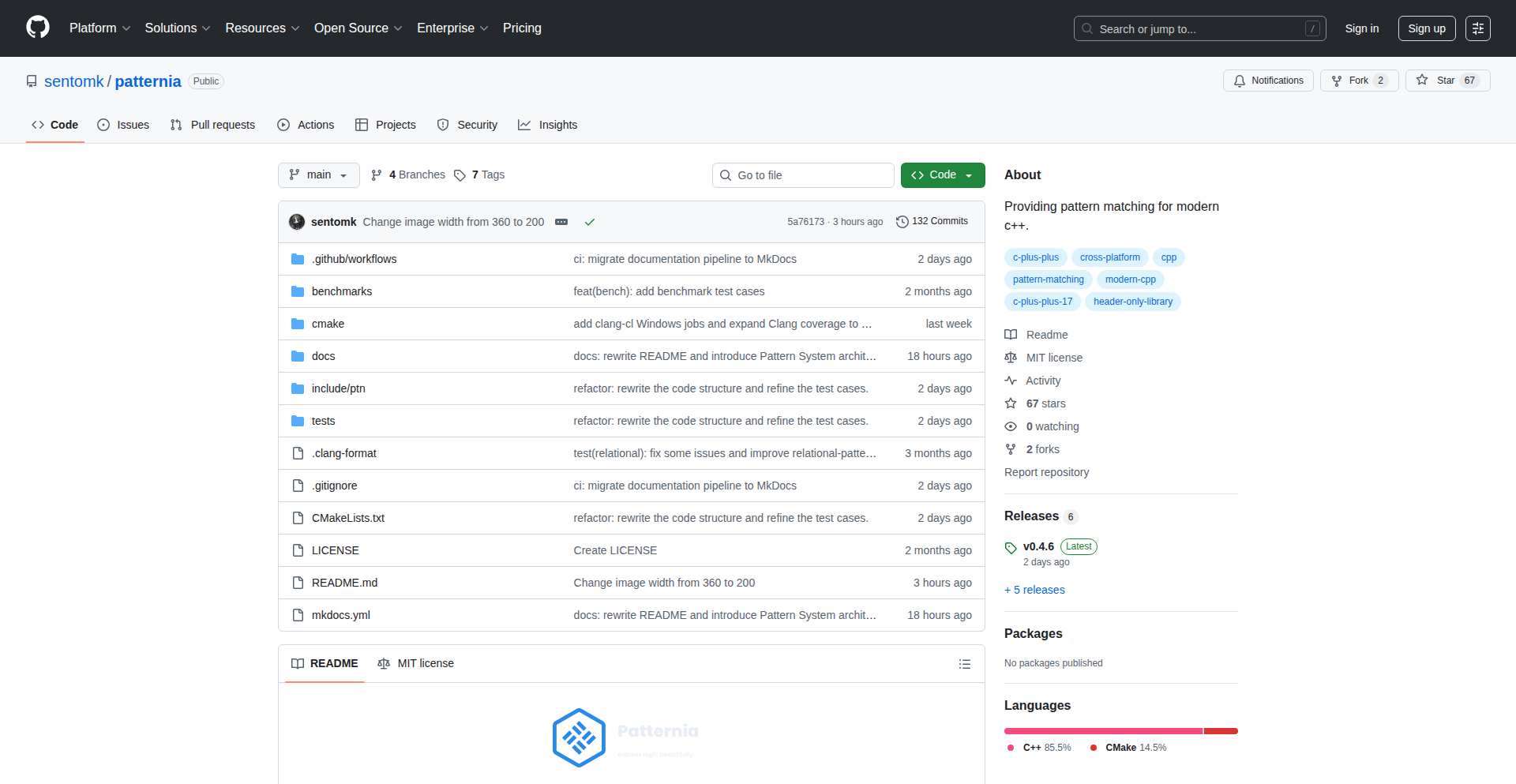

RelayLogicSim

Author

gregsadetsky

Description

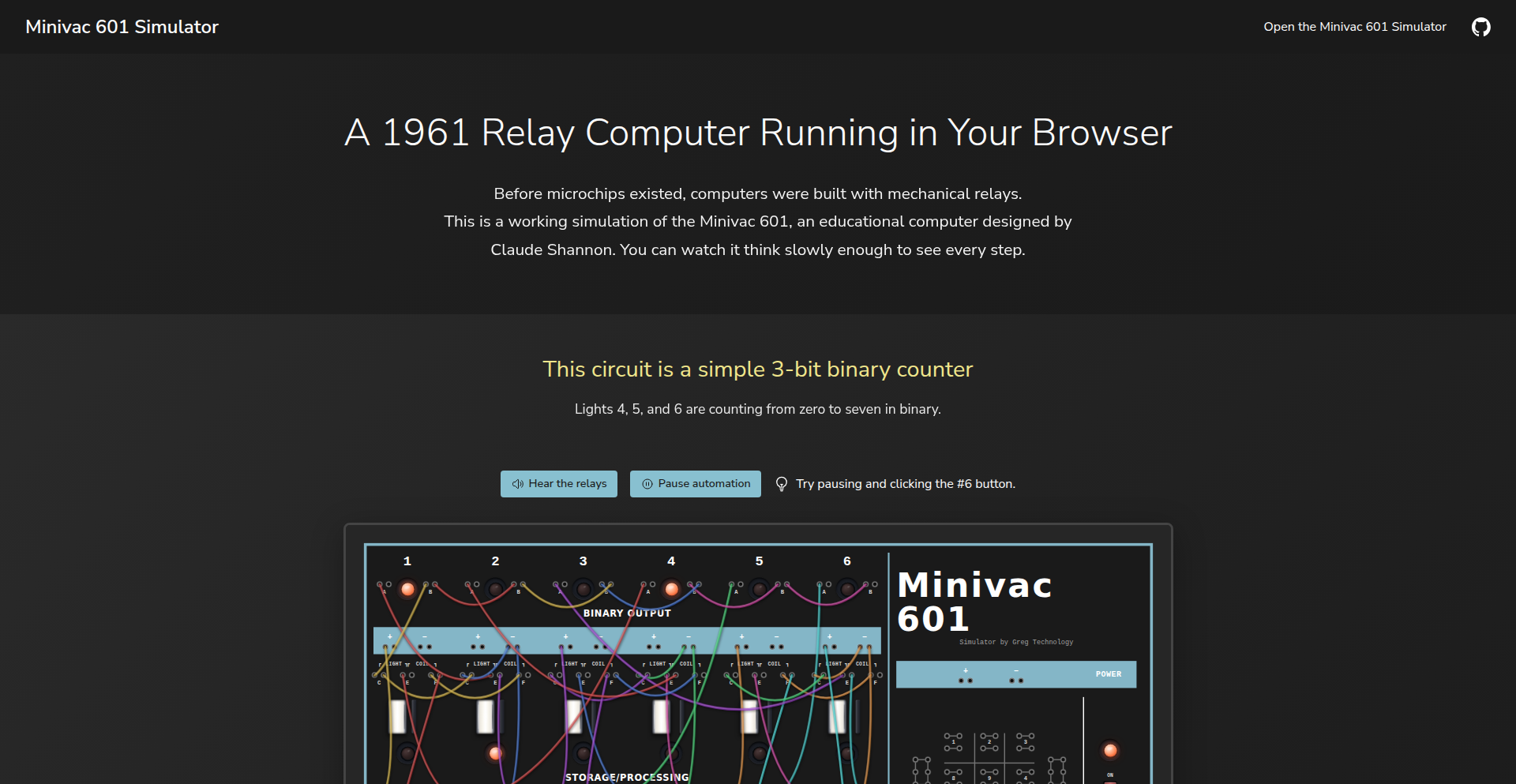

A JavaScript-based simulator for the 1961 Minivac 601, a relay-based computer. It allows users to recreate and experiment with circuits from the original Minivac manuals, demonstrating fundamental computing concepts through a tangible, albeit virtual, hardware model. This project bridges historical computing with modern web technology, offering an educational tool for understanding logic gates and early computation.

Popularity

Points 12

Comments 1

What is this product?

RelayLogicSim is a web-based emulator that recreates the functionality of the Minivac 601, an educational electronics kit from 1961 designed by Claude Shannon. The Minivac used simple components such as relays, lights, and buttons to perform tasks like playing tic-tac-toe or recognizing digits. The simulator lets you wire up these components virtually, as you would with the original kit, to build and test circuits. The innovation lies in simulating the behavior of electrical circuits and relay logic accurately in a browser, which makes a historical, hands-on computing experience accessible digitally. You learn how early computers worked not just by reading about them, but by building circuits and watching them run in your web browser.

How to use it?

Developers and enthusiasts can use RelayLogicSim through a web browser. The interface provides virtual representations of the Minivac's components (relays, lights, buttons, etc.). Users can drag and drop these components onto a canvas and connect them according to the diagrams found in the original Minivac manuals. The simulator then processes these connections, mimicking the flow of electricity and the switching behavior of relays. This allows for interactive experimentation with circuits, much like building with the physical kit. For developers interested in the underlying implementation, the project is open-source on GitHub, written in TypeScript, and includes a testing suite, enabling them to study the simulation logic or even contribute to its improvement. This provides a direct way to experiment with digital logic and computer architecture in a familiar web development context.

Product Core Function

· Virtual Relay Simulation: Accurately models the on/off state and switching behavior of electrical relays, forming the fundamental building blocks of the simulated computer. This allows for understanding how simple mechanical switches can perform logical operations.

· Interactive Circuit Wiring: Enables users to connect virtual components (relays, lights, buttons) to build custom circuits, mirroring the hands-on nature of the original Minivac. This translates to the ability to design and test your own logic circuits visually.

· Component Library: Provides a selection of virtual components like lights (to indicate output), buttons (for input), and relays (for logic and memory), offering the necessary tools to construct diverse computational functions. This means you have all the necessary virtual 'parts' to build your computational experiments.

· Circuit Visualization and Execution: Displays the current state of the circuit, showing which lights are on and how relays are switching, and executes the logic based on user-defined wiring. This provides immediate feedback on your circuit designs, allowing you to see the results of your logic.

· Historical Circuit Replication: Supports the recreation of circuits from the original Minivac 601 manuals, such as binary counters and logic gates. This allows for learning and verifying historical computational designs, providing a direct educational path into early computing.

· Educational Manual Integration: Designed to complement the spirit and content of the original Minivac manuals, making it easier for learners to follow along and experiment with the concepts presented. This means you can learn about computing concepts in a more engaging, practical way, using the historical manuals as your guide.
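
To make the relay-logic idea concrete, here is a minimal sketch of how relay contacts wired in series or in parallel produce logic gates. It is not taken from the RelayLogicSim source (which the post says is written in TypeScript); the `Relay` class and the circuit functions are hypothetical, and the model ignores timing and electrical detail that a real simulator would need.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class Relay:
    """One relay coil with a normally-open and a normally-closed contact."""
    energized: bool = False

    @property
    def normally_open(self) -> bool:
        # Conducts only while the coil is energized.
        return self.energized

    @property
    def normally_closed(self) -> bool:
        # Conducts only while the coil is NOT energized.
        return not self.energized


def series(*contacts: bool) -> bool:
    """Current flows through a series path only if every contact conducts."""
    return all(contacts)


def parallel(*paths: bool) -> bool:
    """Current reaches the lamp if at least one parallel path conducts."""
    return any(paths)


def and_circuit(a: bool, b: bool) -> bool:
    ra, rb = Relay(a), Relay(b)
    return series(ra.normally_open, rb.normally_open)


def or_circuit(a: bool, b: bool) -> bool:
    ra, rb = Relay(a), Relay(b)
    return parallel(ra.normally_open, rb.normally_open)


def not_circuit(a: bool) -> bool:
    return Relay(a).normally_closed


def xor_circuit(a: bool, b: bool) -> bool:
    ra, rb = Relay(a), Relay(b)
    return parallel(
        series(ra.normally_open, rb.normally_closed),
        series(ra.normally_closed, rb.normally_open),
    )


# Map each input pair to the lamp state of the AND, OR, and XOR circuits.
truth_table = {
    (a, b): (and_circuit(a, b), or_circuit(a, b), xor_circuit(a, b))
    for a, b in product([False, True], repeat=2)
}
for inputs, outputs in truth_table.items():
    print(inputs, outputs)
```

The XOR circuit shows why relays with both contact types are enough for general logic: each parallel branch encodes one row of the truth table where the lamp should light.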

Product Usage Case

· Educational Tool for Logic Gates: A student can use RelayLogicSim to build and test circuits that represent AND, OR, and NOT gates, visualizing how these fundamental logic operations are performed by relays. This helps solidify abstract logical concepts through a visual and interactive medium.

· Historical Computing Exploration: A computer history enthusiast can recreate a binary counter circuit from the Minivac manuals to understand how numbers were represented and manipulated in early digital devices. This offers a tangible way to grasp the principles of digital representation.

· Prototyping Simple Digital Circuits: A hobbyist programmer could use the simulator to quickly prototype a simple control circuit using logic gates before attempting to implement it in a physical microcontroller. This allows for low-risk experimentation and debugging of logic before committing to hardware.

· Demonstrating Computational Concepts: An educator can use the simulator to demonstrate how a machine can play a game like tic-tac-toe, by wiring up the corresponding logic circuits, making abstract computational intelligence concepts more concrete for an audience. This provides a clear, visual demonstration of how simple rules can lead to complex behaviors.

· Learning About Early Computer Architecture: A developer interested in the evolution of computing can use the simulator to understand the physical limitations and design choices of relay-based computers, gaining insights into the challenges faced by early pioneers. This offers a unique perspective on the foundations of modern computing.

3

Melodic Mind

Author

seanitzel

Description

Melodic Mind is an ambitious music creation and learning application that represents a significant leap forward from its predecessor, Scale Heaven. Built over seven years, it aims to provide an incredibly comprehensive and intuitive interface for exploring every musical scale and chord, making complex music theory accessible and actionable for both creators and learners. Its core innovation lies in its vast, visually rich, and deeply interconnected representation of musical concepts.

Popularity

Points 9

Comments 2

What is this product?

Melodic Mind is a sophisticated application designed to visualize and interact with the entirety of musical scales and chords. Unlike traditional music theory resources that can be fragmented and difficult to grasp, Melodic Mind presents a unified, almost infinite, landscape of musical relationships. The underlying technology likely involves a robust data structure to catalog every possible scale and chord combination, coupled with a dynamic, interactive visualization engine. This allows users to see not just what a scale or chord is, but how it relates to countless others, uncovering patterns and possibilities that are often hidden. The 'x100' expansion implies a level of detail and interconnectedness that goes far beyond simply listing notes; it's about understanding the fabric of music.

How to use it?

Developers can leverage Melodic Mind as a powerful tool for music composition, learning, and even in the development of other music-related software. For composers, it serves as an endless source of inspiration and a way to explore novel harmonic progressions and melodic ideas by visually navigating the relationships between different scales and chords. For learners, it transforms abstract theory into concrete, visual understanding, accelerating the learning curve for music theory concepts. As a development resource, its comprehensive dataset and visualization techniques could inform the creation of AI music generators, intelligent practice tools, or educational platforms. Integration might involve using its API (if available) to programmatically access scale/chord data or to embed its visualizations within other applications.

Product Core Function

· Comprehensive Scale and Chord Visualization: The ability to see and interact with every known musical scale and chord, providing a deep understanding of their structures and relationships. This is valuable for quickly identifying and experimenting with different harmonic colors and melodic possibilities.

· Interconnected Musical Knowledge Graph: A system that visually maps the relationships between different scales and chords, allowing users to discover patterns, commonalities, and progressions. This helps users to understand why certain chord changes sound good and to generate new, creative musical ideas.

· Interactive Exploration Tools: Functionality that allows users to actively manipulate and explore the musical landscape, perhaps by selecting a scale and seeing all related chords, or vice versa. This hands-on approach to learning and creation significantly speeds up the discovery process and deepens understanding.

· Potential for Algorithmic Composition and Analysis: The underlying data and visualization can be the foundation for algorithmic composition tools that generate music based on user-defined parameters or analyze existing music to reveal its theoretical underpinnings. This opens doors for AI-driven music creation and musicological research.
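
The post only speculates about Melodic Mind's internals, so the following is an illustrative sketch of one common way to model scales, modes, and chords as data, not the app's implementation. All names are hypothetical, and notes are spelled with sharps only for simplicity.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]  # semitone steps of the major scale
MODE_NAMES = ["Ionian", "Dorian", "Phrygian", "Lydian",
              "Mixolydian", "Aeolian", "Locrian"]


def build_scale(root: str, steps: list[int]) -> list[str]:
    """Walk the step pattern upward from the root, one note per step."""
    index = NOTE_NAMES.index(root)
    notes = [root]
    for step in steps[:-1]:  # the final step only returns to the octave
        index = (index + step) % 12
        notes.append(NOTE_NAMES[index])
    return notes


def mode_steps(degree: int) -> list[int]:
    """Rotate the major-scale pattern to start on the given scale degree."""
    return MAJOR_STEPS[degree:] + MAJOR_STEPS[:degree]


def diatonic_triads(scale: list[str]) -> list[tuple[str, str, str]]:
    """Stack every other scale note to get the triad on each degree."""
    n = len(scale)
    return [(scale[i], scale[(i + 2) % n], scale[(i + 4) % n]) for i in range(n)]


c_major = build_scale("C", MAJOR_STEPS)
d_dorian = build_scale("D", mode_steps(1))
triads = diatonic_triads(c_major)

print(MODE_NAMES[0], c_major)   # Ionian ['C', 'D', 'E', 'F', 'G', 'A', 'B']
print(MODE_NAMES[1], d_dorian)  # Dorian ['D', 'E', 'F', 'G', 'A', 'B', 'C']
print(triads[0], triads[1])     # ('C', 'E', 'G') ('D', 'F', 'A')
```

Because D Dorian is a rotation of C major, the two scales contain the same notes, which is exactly the kind of relationship a visual graph of scales and chords can surface.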

Product Usage Case

· A songwriter stuck in a rut can use Melodic Mind to visually explore exotic scales and their associated chords, leading to unexpected and fresh harmonic progressions for their next song. They discover a scale they've never used before and immediately see chords that fit, sparking a new creative direction.

· A music student struggling to understand the relationships between modes can use Melodic Mind's visual graph to see how each mode is derived from a parent scale and how they share common notes and chords. This concrete visualization makes the abstract concept click, improving their learning efficiency.

· A game developer building an adaptive music system can use Melodic Mind's data to programmatically generate music that dynamically shifts based on in-game events. For example, entering a tense situation might trigger a transition to a more dissonant scale and chord progression visualized by the app.

· A music producer looking to create a unique sonic texture can use Melodic Mind to find obscure but harmonically rich chord voicings derived from less common scales. They can then input these into their digital audio workstation (DAW) to add a distinctive flavor to their tracks.

4

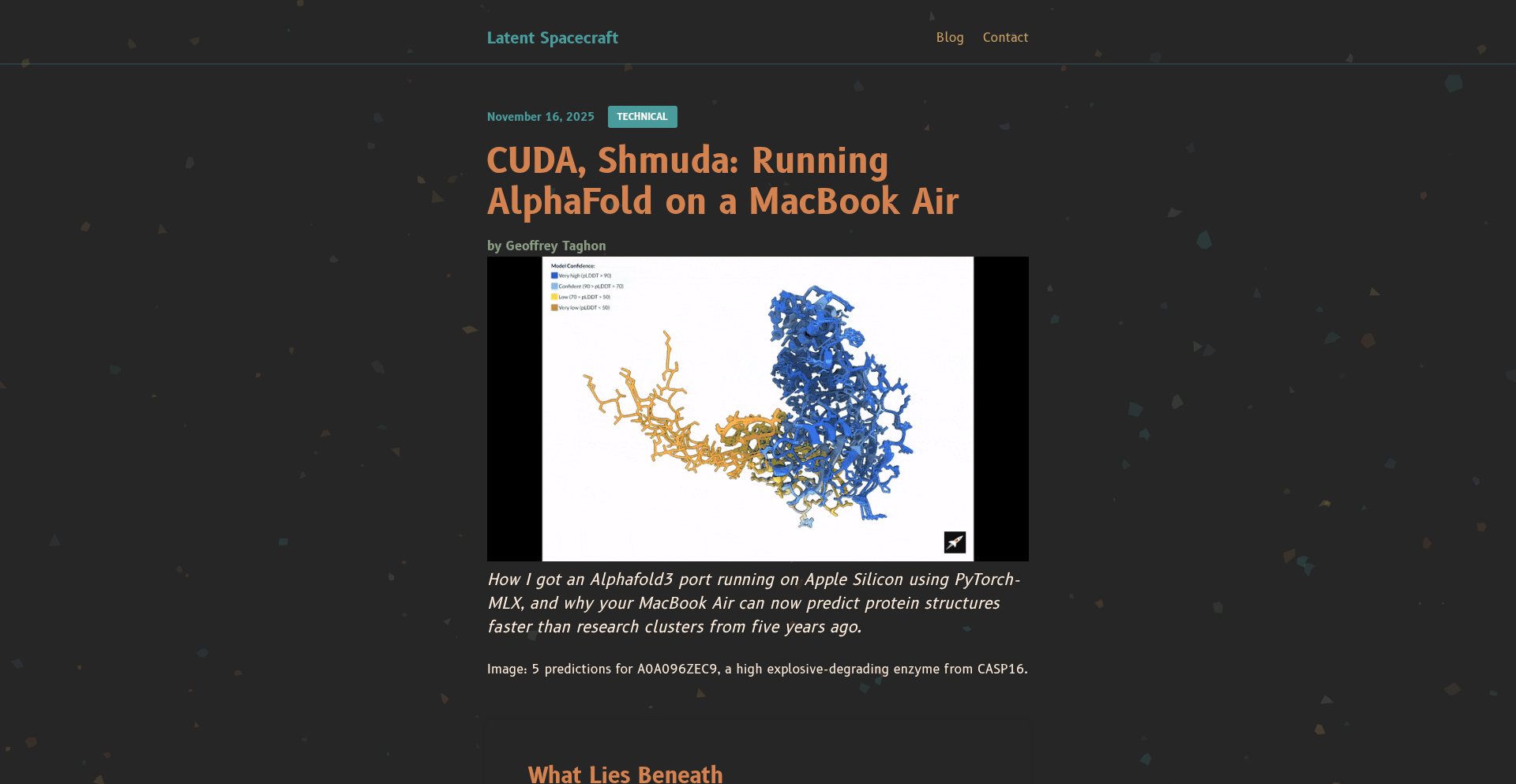

MLX Fold

Author

geoffitect

Description

This project brings the power of AlphaFold3, a cutting-edge protein structure prediction model, to your Apple Silicon Mac. It allows you to generate protein structures from amino acid sequences in minutes, transforming a computationally intensive task that previously required powerful servers into something accessible on your personal laptop. The innovation lies in optimizing a complex AI model for efficient execution on Apple's custom silicon.

Popularity

Points 9

Comments 2

What is this product?

This project is a port of AlphaFold3, a sophisticated AI model used for predicting the 3D structure of proteins from their amino acid sequences. Traditionally, running such models demanded significant computational resources, often found in high-performance computing (HPC) clusters. The core innovation here is the adaptation of this model to run efficiently on Apple's M-series chips (found in newer Macs). It leverages the MLX framework, specifically designed for accelerating machine learning on Apple Silicon, to achieve fast inference times. So, what does this mean for you? It means you can perform complex biological computations, crucial for drug discovery and biological research, directly on your personal laptop, drastically reducing the barrier to entry for advanced scientific exploration.

How to use it?

Developers can use this project by cloning the GitHub repository and following the setup instructions to install the necessary dependencies, particularly those related to the MLX framework. Once set up, they can run the provided scripts to input an amino acid sequence and obtain the predicted 3D protein structure. This project is ideal for researchers, bioinformaticians, and developers interested in protein folding who have an M-series Mac. Integration possibilities include building custom bioinformatic pipelines, developing educational tools for biology students, or even contributing to open-source research projects that require rapid protein structure prediction. The benefit for you is the ability to perform advanced protein structure analysis locally, speeding up your research workflow without needing cloud-based HPC resources.
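
The post does not show MLX Fold's command line or input format, so the sketch below covers only a preparation step that applies to any sequence-to-structure tool: checking that a sequence uses the 20 standard one-letter amino acid codes and writing it out as FASTA. The function names are hypothetical, and whether MLX Fold accepts FASTA input is an assumption.

```python
STANDARD_AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")  # 20 standard residues


def clean_sequence(raw: str) -> str:
    """Strip whitespace, upper-case, and reject non-standard residue codes."""
    sequence = "".join(raw.split()).upper()
    if not sequence:
        raise ValueError("empty sequence")
    invalid = sorted(set(sequence) - STANDARD_AMINO_ACIDS)
    if invalid:
        raise ValueError(f"non-standard residue codes: {', '.join(invalid)}")
    return sequence


def to_fasta(name: str, sequence: str, width: int = 60) -> str:
    """Format a sequence as a FASTA record with fixed-width lines."""
    lines = [f">{name}"]
    lines += [sequence[i:i + width] for i in range(0, len(sequence), width)]
    return "\n".join(lines) + "\n"


record = to_fasta("demo_protein", clean_sequence(" mkt ayi\n akq "), width=4)
print(record)
```

Validating early is cheap compared with a structure prediction run, so catching a stray character before inference saves a failed job.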

Product Core Function

· Protein Structure Prediction: The core function is to take an amino acid sequence and predict its 3D atomic structure. This is achieved by running a sophisticated deep learning model. The value is enabling rapid hypothesis generation and understanding of protein function, essential for designing new drugs or enzymes.

· Apple Silicon Optimization: The project is specifically optimized to run efficiently on Macs with M-series chips. This means significantly faster processing times and lower energy consumption compared to running on less optimized hardware. The value for you is dramatically reduced waiting times for results and the ability to perform these complex tasks on a device you already own.

· Local Execution: All computations are performed on your local machine, eliminating the need for expensive cloud computing or dedicated hardware. The value is cost savings and greater control over your data and computational resources.

· Sequence-to-Structure Generation: The straightforward input (amino acid sequence) and output (3D protein structure) make it accessible. The value is democratizing access to advanced bioinformatics tools for a wider range of users.

· Rapid Inference: Generates protein structures in minutes, a task that could previously take hours or days on traditional hardware. The value is accelerating research cycles and enabling more iterative experimentation.

Product Usage Case

· A graduate student in biochemistry needs to quickly predict the structure of a novel protein to understand its potential function for a research paper. By using MLX Fold on their MacBook Pro, they can obtain the structure in minutes, allowing them to analyze its active site and publish their findings much faster than if they had to wait for access to an HPC cluster.

· A computational drug discovery startup wants to screen potential drug targets for a new disease. They can leverage MLX Fold to rapidly predict the structures of multiple target proteins from their sequences on their team's MacBooks, accelerating their initial drug discovery pipeline without significant upfront investment in specialized hardware.

· An educator wants to create interactive learning materials for students about protein folding. They can integrate MLX Fold into a web application or desktop tool, allowing students to input their own sequences and visualize the predicted structures directly on their school-issued Macs, making complex biological concepts more tangible and engaging.

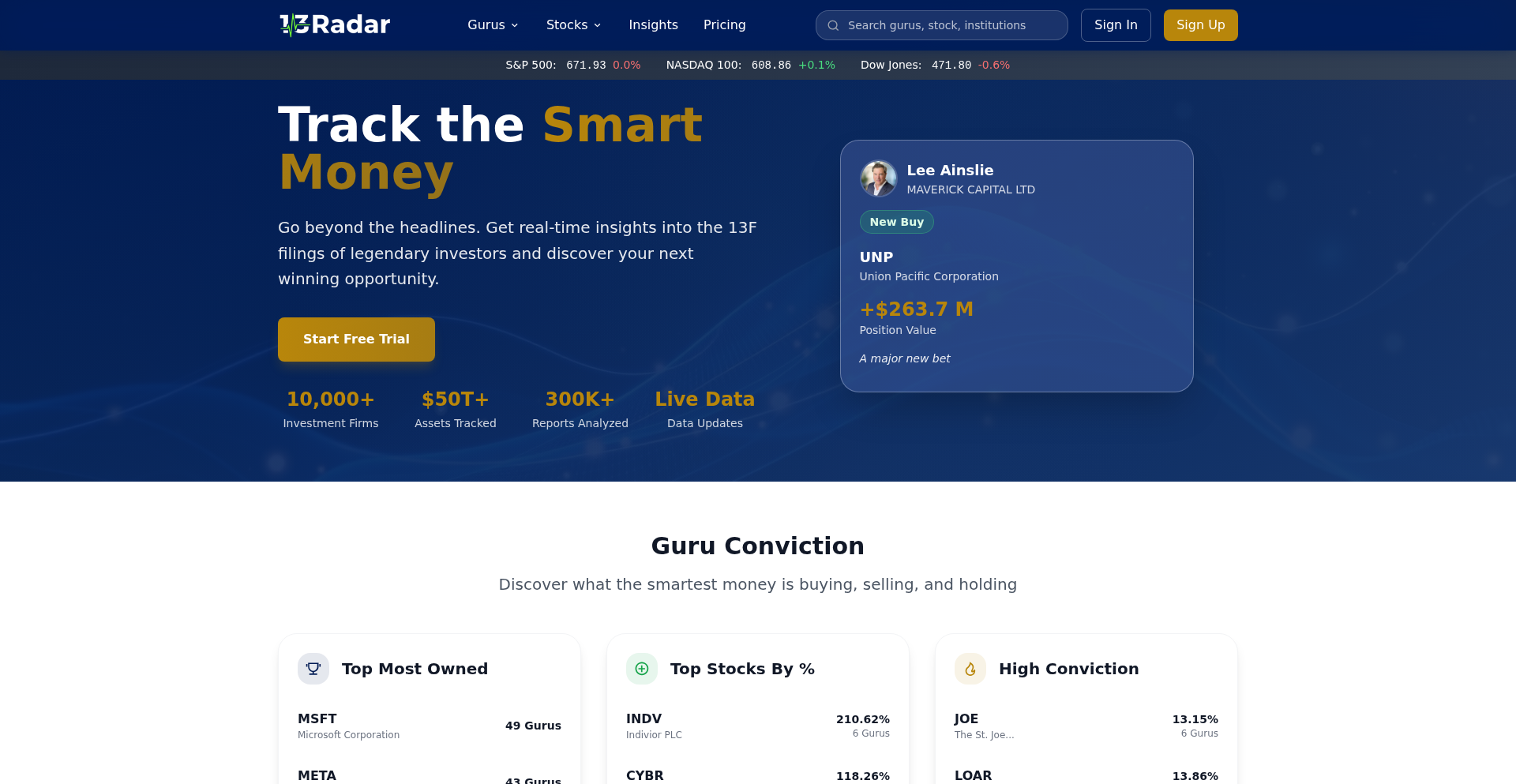

5

HedgeFund Pulse AI

Author

brokerjames

Description

A real-time platform that tracks hedge fund portfolios using AI-powered analysis of SEC Form 13F filings. It offers insights into portfolio changes, sector trends, and historical data. The project's own research, design, and coding were significantly accelerated by AI tools.

Popularity

Points 7

Comments 3

What is this product?

HedgeFund Pulse AI is a web platform that uses AI to monitor the investment strategies of hedge funds. It works by processing SEC Form 13F filings, the quarterly reports in which institutional investment managers with at least $100 million in qualifying securities disclose their equity holdings. Because 13F filings are due up to 45 days after each quarter ends, 'real-time' here means tracking filings as they are published rather than live trading activity. Instead of users manually sifting through dense financial documents, AI systems extract, analyze, and present this information in a user-friendly format, identifying new investments, sold positions, and changes in existing holdings across market sectors. The author also used several AI tools in parallel during development (e.g., Claude Code for coding, Readdy for UI), an approach aimed at faster iteration and potentially higher-quality output.

How to use it?

Developers can integrate HedgeFund Pulse AI into their research workflows to gain an edge in understanding market movements and investor sentiment. For instance, if you're building a financial news aggregator, you could use the API to pull real-time hedge fund activity and display it alongside news articles, providing context. Another scenario is for quantitative traders; they can use the historical data and backtesting tools to validate trading strategies based on how hedge funds have behaved in the past. The platform's backend could also be leveraged by developers looking to build custom investment dashboards or alert systems, feeding them with actionable data on institutional investor behavior. The switch from Bootstrap to TailwindCSS on the front-end also suggests a focus on performance and developer experience, making it potentially easier to build custom integrations.

Product Core Function

· Real-time tracking of hedge fund holdings from SEC 13F filings: This function provides immediate access to what top investment firms are buying and selling, allowing developers to build applications that react to or inform users about these significant market shifts as they happen. This is valuable for creating time-sensitive financial alerts or dashboards.

· Portfolio changes per quarter (new positions, increases, reductions, exits): Developers can utilize this detailed breakdown to understand the tactical adjustments hedge funds are making. This data can power comparative analysis tools, allowing users to see how a fund's strategy evolves over time, which is useful for portfolio management tools or market trend analysis.

· Sector-level insights and trend analysis: This feature allows developers to create applications that highlight which industries hedge funds are favoring or divesting from. This insight can be fed into market intelligence platforms, educational tools for investors, or content generation systems for financial media.

· Historical tracking and backtesting tools: By providing historical data on hedge fund portfolios, developers can build sophisticated tools for validating trading algorithms or investment hypotheses. This enables the creation of more robust financial models and backtesting engines, crucial for quantitative finance and research.

· AI-assisted development workflow: The project's own development process, heavily reliant on AI for research, design, and coding, serves as an inspiration. Developers can learn from and adopt similar AI-powered methodologies to accelerate their own project timelines and improve the quality of their software, showcasing a practical application of generative AI in the software development lifecycle.
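
The quarter-over-quarter breakdown listed above (new positions, increases, reductions, exits) comes down to comparing two snapshots of holdings. Here is a minimal sketch under stated assumptions: holdings are simplified to a ticker-to-share-count mapping, the `classify_changes` function is hypothetical, and the sample figures are made up for illustration rather than taken from any real filing.

```python
def classify_changes(
    previous: dict[str, int], current: dict[str, int]
) -> dict[str, list[str]]:
    """Compare two quarterly snapshots of share counts, keyed by ticker."""
    changes: dict[str, list[str]] = {
        "new": [], "increased": [], "reduced": [], "exited": [], "unchanged": [],
    }
    for ticker in sorted(set(previous) | set(current)):
        before = previous.get(ticker, 0)
        after = current.get(ticker, 0)
        if before == 0 and after > 0:
            changes["new"].append(ticker)
        elif before > 0 and after == 0:
            changes["exited"].append(ticker)
        elif after > before:
            changes["increased"].append(ticker)
        elif after < before:
            changes["reduced"].append(ticker)
        else:
            changes["unchanged"].append(ticker)
    return changes


# Made-up share counts for two consecutive quarters.
q2 = {"AAA": 1_000, "BBB": 500, "CCC": 250, "DDD": 75}
q3 = {"AAA": 1_500, "BBB": 200, "DDD": 75, "EEE": 900}

report = classify_changes(q2, q3)
for label, tickers in report.items():
    print(f"{label:>9}: {', '.join(tickers) or '-'}")
```

Comparing share counts rather than market values keeps price movements from showing up as position changes; a real pipeline would also need to handle splits and changes in security identifiers.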

Product Usage Case

· A developer building a personalized investment newsletter could use HedgeFund Pulse AI to automatically include sections detailing significant moves by activist hedge funds in specific companies, giving subscribers an early indicator of potential corporate actions or market volatility. This solves the problem of manually tracking complex fund activities.

· A quantitative trading firm looking to develop a new strategy could use the historical tracking and backtesting features to simulate how their proposed strategy would have performed against actual hedge fund trades over the past decade. This directly addresses the need for rigorous strategy validation before deployment.

· A fintech startup creating a tool for retail investors to understand institutional money flow could leverage the sector-level insights to visually represent which industries are receiving the most attention from large investment funds, helping users make more informed investment decisions. This solves the complexity of interpreting raw SEC filings for the average user.

· A financial analyst building an internal dashboard for their firm could integrate the real-time portfolio tracking to monitor competitor hedge fund positions, enabling them to quickly assess market sentiment and adjust their own investment strategies. This provides a competitive advantage by keeping them informed of the latest institutional shifts.

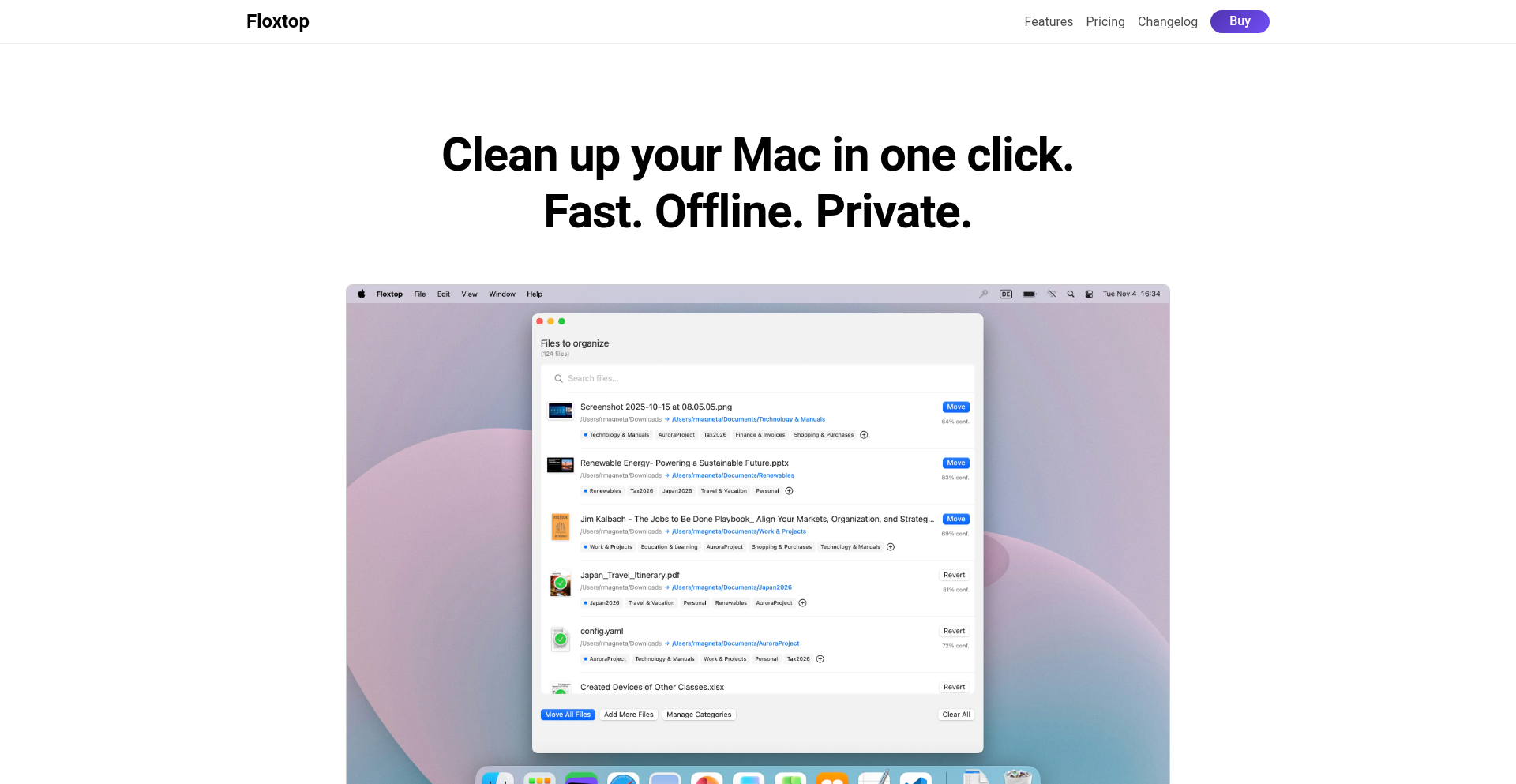

6

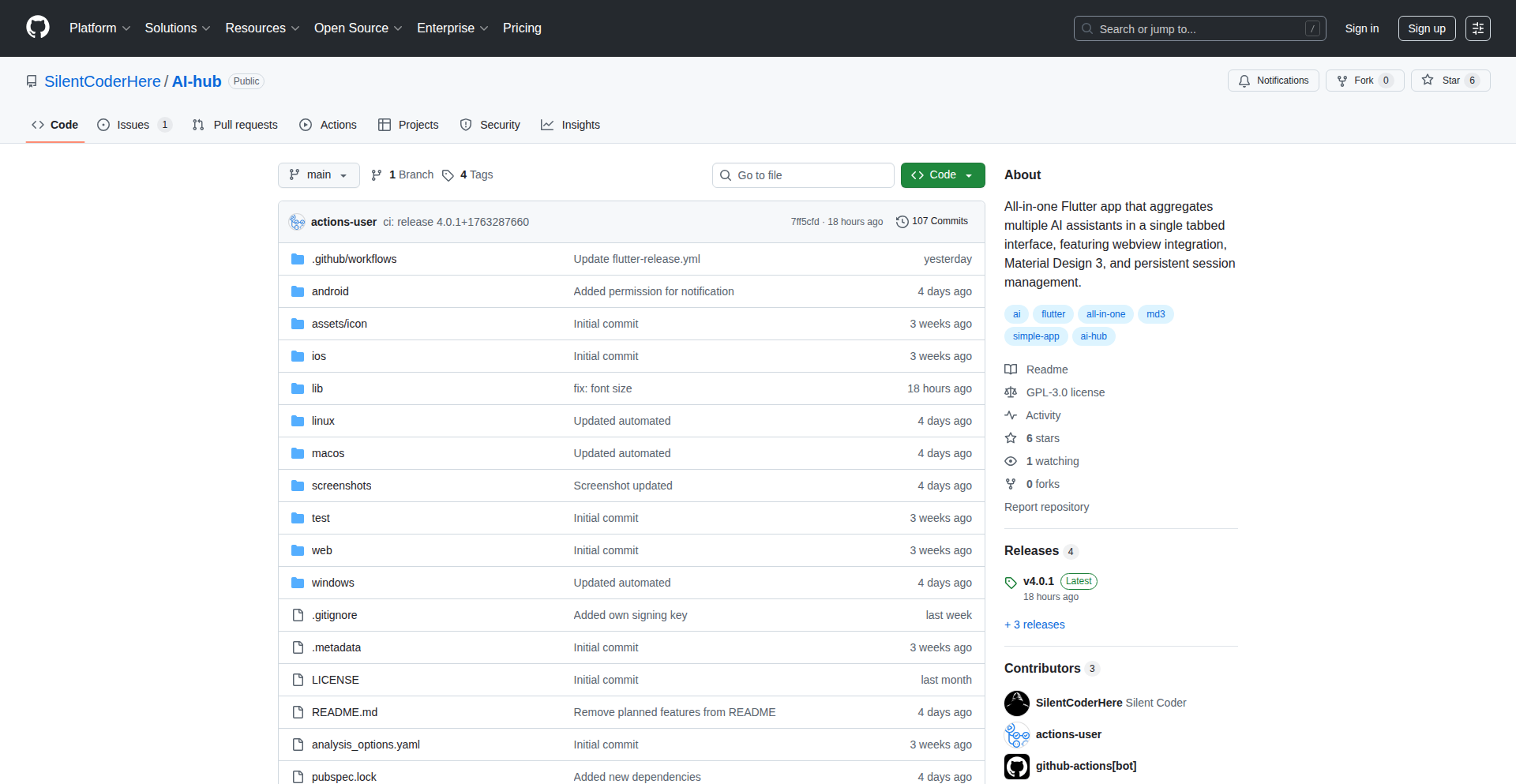

Floxtop

Author

bobnarizes

Description

An offline Mac application that intelligently organizes files and images by their semantic meaning, utilizing AI to understand content and automatically categorize it without relying on cloud services. This tackles the common problem of digital clutter by offering a more intuitive and automated approach to file management.

Popularity

Points 3

Comments 4

What is this product?

Floxtop is an offline Mac application that uses artificial intelligence to analyze the content of your files and images, understanding their meaning. Instead of just looking at filenames or dates, it can recognize what's in a picture (e.g., 'dogs', 'beach', 'food') or the subject of a document. It then automatically groups similar items together, making it easier to find what you need. The innovation lies in its on-device AI processing, ensuring privacy and speed by not sending your data to the cloud. So, it's like having a smart assistant for your files that truly understands what they are about.

How to use it?

Developers can use Floxtop by simply downloading and installing the application on their Mac. Once installed, they can point Floxtop to specific folders or their entire hard drive to begin the organization process. The application works in the background, analyzing files. For developers, this means less time spent manually sorting code snippets, project assets, screenshots, or design mockups. It can be particularly useful for managing large projects with many related but diversely named files. The integration is straightforward: install and let it scan your designated directories. This means your project files will be automatically grouped by, for example, 'UI elements', 'API documentation', 'test cases', making it much faster to locate related project components.

Product Core Function

· Semantic file analysis: Leverages machine learning models to understand the content of files and images, identifying objects, scenes, text, and topics. This means your files are sorted based on what they actually represent, not just their names, enabling more accurate and context-aware organization.

· On-device AI processing: All AI computations happen directly on your Mac, ensuring data privacy and security as no personal files are uploaded to external servers. This is crucial for developers handling sensitive code or proprietary information, providing peace of mind and faster processing speeds without internet dependency.

· Automated categorization and grouping: Intelligently groups similar files and images based on their semantic meaning, creating collections of related content. This dramatically speeds up retrieval of information by presenting organized clusters of related items, saving significant time during development workflows.

· Offline functionality: Operates entirely without an internet connection, allowing for seamless file organization regardless of network availability. This is a key advantage for developers working in environments with limited or no internet access, ensuring their workflow isn't interrupted.

· Customizable organization rules: Allows users to define their own rules and preferences for how files should be categorized, offering flexibility to adapt to specific project needs. This empowers developers to tailor the organization to their unique development workflows and project structures.
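
Grouping by meaning can be illustrated with a deliberately simple stand-in. Floxtop's models are not described in the post; the sketch below swaps them for word-count vectors and cosine similarity with a greedy grouping pass, just to show the shape of the approach. The function names, the 0.3 threshold, and the sample file contents are all hypothetical, and a real tool would use learned embeddings instead of raw word counts.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Turn text into word counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def group_files(files: dict[str, str], threshold: float = 0.3) -> list[list[str]]:
    """Greedily assign each file to the first group it resembles."""
    groups: list[tuple[Counter, list[str]]] = []
    for name, text in files.items():
        vector = vectorize(text)
        for centroid, members in groups:
            if cosine(vector, centroid) >= threshold:
                members.append(name)
                centroid.update(vector)  # fold the file into the group profile
                break
        else:
            groups.append((Counter(vector), [name]))
    return [members for _, members in groups]


# Made-up file contents; a real tool would extract text or image labels.
files = {
    "notes1.txt": "api endpoint request response api token",
    "img_caption.txt": "dog running on the beach",
    "spec.md": "api request schema and response codes",
    "holiday.txt": "beach sunset with a dog",
}
groups = group_files(files)
print(groups)
```

Everything here runs locally on in-memory data, which mirrors the on-device constraint described above even though the similarity measure is far simpler.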

Product Usage Case

· Managing a large codebase with numerous configuration files and documentation: Floxtop can identify and group configuration files related to specific services, API documentation for different modules, and various versions of project specifications, making it easier to navigate complex projects.

· Organizing project assets for web or mobile development: Developers can use Floxtop to automatically group images by type (icons, backgrounds, illustrations), code snippets by functionality, and design mockups by feature, streamlining the asset management process.

· Keeping track of research papers and notes for a new feature or technology: Floxtop can cluster related PDF documents, web articles, and notes based on the core concepts discussed, helping developers quickly find relevant information when exploring new areas.

· Sorting screenshots and development logs for bug tracking: The app can group screenshots by the bug they illustrate or by the feature they relate to, and similarly organize log files by error type or timestamp, aiding in efficient debugging and issue resolution.

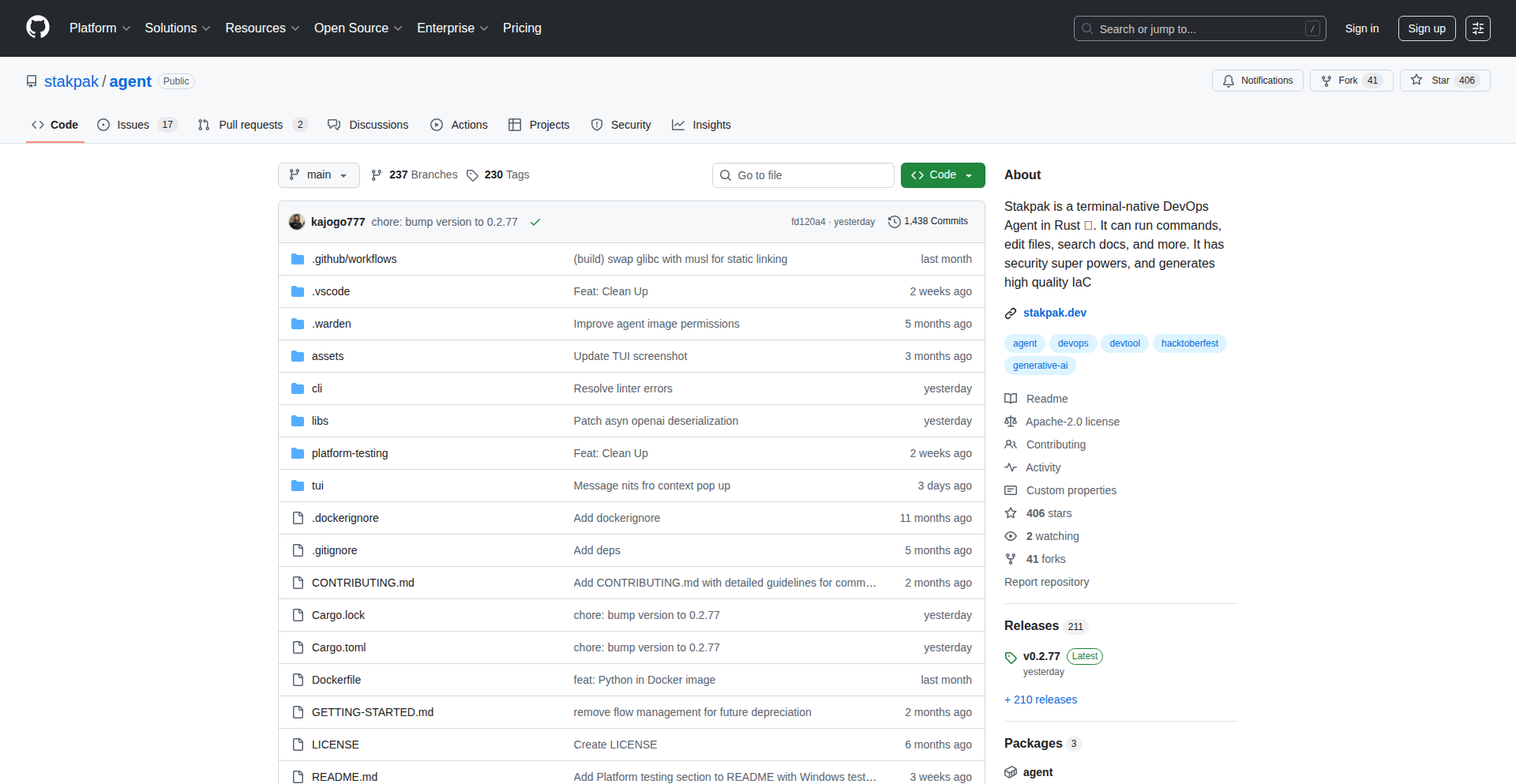

7

RustGuard

Author

kajogo

Description

RustGuard is an open-source agent built in Rust designed to prevent accidental deletion of critical AWS resources, specifically your database. It acts as a safety net, ensuring that destructive commands can't be executed without explicit, multi-factor confirmation, thereby protecting your valuable data.

Popularity

Points 6

Comments 0

What is this product?

RustGuard is a software agent written in the Rust programming language. Its core innovation lies in its ability to intercept and analyze commands that target your AWS resources, particularly databases. Instead of outright blocking commands, it introduces a 'readonly' or 'confirmation' mode. Think of it like a cautious assistant who, before performing a potentially irreversible action like deleting data, asks for a second or even third confirmation from you. This is achieved by leveraging Rust's strong safety features and by carefully integrating with AWS APIs to monitor resource operations. The key technical insight is to implement a programmable 'guardrail' that doesn't just prevent deletion, but enforces a more deliberate and secure workflow for managing sensitive cloud infrastructure. So, what's in it for you? It's peace of mind, knowing that your database won't be accidentally wiped out by a typo or a moment of inattention. It adds a robust layer of data protection to your cloud operations.

How to use it?

Developers can integrate RustGuard into their AWS environment by running it as a service or agent. The typical workflow involves setting up the agent in a 'readonly' profile. When you then try to execute a command that could lead to data loss, like a database deletion command, RustGuard intercepts it. It won't execute the destructive part of the command. Instead, it might log the attempt or require an explicit override, perhaps through a separate authenticated process or a specific command. For integration, you would typically configure your AWS CLI or SDK to interact with the system where RustGuard is running, or RustGuard might directly monitor specific AWS API calls. This creates a verifiable audit trail of potential destructive actions and prevents them from proceeding without your explicit, conscious approval. This means you can confidently manage your cloud resources, knowing there's an intelligent safety mechanism in place.
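The interception idea described above can be illustrated with a minimal sketch. This is not RustGuard's code (the project is written in Rust, and its rule set is not described here); the `DESTRUCTIVE_PREFIXES` list, the `guard` function, and the `confirmed` flag are hypothetical names chosen for illustration only.

```python
import shlex

# Hypothetical prefixes for destructive AWS CLI operations.
# This list is an assumption for the sketch, not RustGuard's actual rule set.
DESTRUCTIVE_PREFIXES = ("delete-", "terminate-", "remove-")


def is_destructive(command: str) -> bool:
    """Return True if any argument looks like a destructive operation."""
    args = shlex.split(command)
    return any(arg.startswith(DESTRUCTIVE_PREFIXES) for arg in args)


def guard(command: str, audit_log: list, confirmed: bool = False) -> str:
    """Return 'allow' or 'block' and record destructive attempts in audit_log."""
    if is_destructive(command):
        audit_log.append(command)  # keep an audit trail of every attempt
        if not confirmed:
            return "block"  # read-only default: nothing destructive runs
    return "allow"


log = []
print(guard("aws rds describe-db-instances", log))
print(guard("aws rds delete-db-instance --db-instance-identifier prod", log))
```

In this sketch a destructive command only proceeds when the caller passes `confirmed=True`, which stands in for whatever explicit override the real agent requires.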

Product Core Function

· Database Deletion Prevention: Prevents accidental deletion of AWS databases by requiring explicit multi-factor confirmation or operating in a read-only mode by default for sensitive operations. This protects your business-critical data from irreversible loss.

· Resource Guardrails: Implements configurable rules and policies to control access and modification of other critical AWS resources beyond just databases. This enhances overall cloud security and operational integrity, reducing the risk of unintended consequences.

· Auditable Command Interception: Logs and potentially flags commands that attempt to modify or delete resources, creating an audit trail for security and compliance purposes. This provides transparency into your infrastructure management activities and helps in post-incident analysis.

· Rust-Based Safety: Leverages Rust's memory safety and concurrency features to build a reliable and secure agent. This means the guardrail itself is less prone to bugs or vulnerabilities that could be exploited, ensuring the protection mechanism is robust.

Product Usage Case

· A developer is working late and accidentally types 'aws rds delete-db-instance' instead of 'describe-db-instances'. With RustGuard running in read-only mode, the command fails safely, preventing data loss and the associated downtime and recovery costs. This is a direct 'oops' moment avoided.

· A DevOps team is implementing a new CI/CD pipeline that includes automated infrastructure provisioning. By integrating RustGuard, they can ensure that automated scripts cannot accidentally tear down production databases during testing or deployment phases. This mitigates the risk of production outages caused by automation errors.

· A startup company needs to grant limited access to their cloud environment to a new intern. RustGuard can be configured to prevent the intern from performing any delete operations on critical services like S3 buckets or RDS instances, even if they have been granted broader permissions. This provides granular control and prevents accidental data exposure or loss.

· During a complex cloud migration, a team is moving data between different AWS regions. RustGuard can be set up to monitor and require confirmation for any commands that might terminate the source database instance prematurely, ensuring the migration completes successfully before any data source is removed.

8

Embeddable AI Email Composer

Author

sifuldotdev

Description

This project is a free, embeddable email template builder designed to be easily integrated into any website, CRM, or marketplace. Its core innovation lies in its single-script integration, AI-powered content and template generation, and a suite of advanced features like merge tags, display conditions, and custom blocks, solving the problem of complex email customization for businesses without requiring extensive development effort.

Popularity

Points 5

Comments 1

What is this product?

This is an embeddable email template builder that allows websites, CRMs, or other online platforms to offer a sophisticated email creation experience to their users. Think of it as a powerful, yet easy-to-use, word processor specifically for crafting professional emails. The innovation comes from its simple integration via a single script, meaning developers can add a full-featured email editor to their existing application with minimal fuss. It also leverages AI to help users generate content and design templates, taking the pain out of writing effective marketing or transactional emails. For users of the platform where it's embedded, this means they can create visually appealing and personalized emails without needing to be coding experts.

How to use it?

Developers can integrate this email builder into their application by including a single JavaScript script. This script initializes the builder and makes it available as a component within their web application. Users of the application can then access this component, which provides a visual interface for creating, editing, and managing email templates. They can write text, insert images from external libraries, use merge tags (placeholders for dynamic data like customer names), set display conditions (e.g., show this block only to premium users), and even create reusable custom content blocks. This allows businesses to offer advanced email campaign capabilities directly within their existing CRM or e-commerce platform.
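To make merge tags and display conditions concrete, here is a small sketch of how a template might be rendered for one recipient. The `{{ name }}` tag syntax, the `show_if` field, and both function names are assumptions made for illustration; the builder's actual template format is not documented in this article.

```python
import re

# Assumed merge-tag syntax: {{ field_name }}. The real builder may differ.
MERGE_TAG = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_block(block: dict, recipient: dict) -> str:
    """Render one content block, or return '' if its display condition fails."""
    condition = block.get("show_if")  # hypothetical display-condition field
    if condition is not None:
        if recipient.get(condition["field"]) != condition["equals"]:
            return ""
    # Replace each merge tag with the recipient's value (empty if missing).
    return MERGE_TAG.sub(lambda m: str(recipient.get(m.group(1), "")), block["text"])


def render_email(blocks: list, recipient: dict) -> str:
    """Join all blocks that are visible for this recipient."""
    parts = [render_block(block, recipient) for block in blocks]
    return "\n".join(part for part in parts if part)


blocks = [
    {"text": "Hi {{ first_name }},"},
    {"text": "Your premium discount: 20%",
     "show_if": {"field": "plan", "equals": "premium"}},
]
print(render_email(blocks, {"first_name": "Ada", "plan": "premium"}))
```

A recipient whose `plan` is not `premium` would receive only the greeting line, which is the behavior the display-condition feature describes.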

Product Core Function

· Easy Integration: Solves the problem of quickly adding advanced email editing capabilities to any web application with a single script, saving developers significant time and effort.

· AI Content & Template Generation: Empowers users to create compelling email copy and layouts faster by using artificial intelligence, reducing the burden of creative writing and design.

· Add External Image Libraries: Enables users to easily incorporate images from existing online sources into their emails, streamlining the visual content creation process.

· Add Merge Tags: Allows for personalization of emails by dynamically inserting customer-specific data, enhancing engagement and making communication more relevant.

· Display Conditions: Provides granular control over email content delivery, ensuring that the right message reaches the right audience segment, optimizing campaign effectiveness.

· Custom Blocks: Enables users to create and reuse specific sections of email content, promoting consistency and efficiency in campaign management.

· Choose Your Storage Server: Offers flexibility in how email template data is stored, catering to different security and compliance needs for businesses.

· Dedicated Support during Integration: Reduces technical hurdles for developers by offering expert assistance, ensuring a smooth and successful implementation.

Product Usage Case

· An e-commerce platform integrates the builder to allow its sellers to create personalized promotional emails for their customers. The sellers can use merge tags to address customers by name and display conditions to offer different discounts based on customer loyalty, solving the problem of generic email blasts and increasing conversion rates.

· A CRM system embeds the builder so its users can craft personalized follow-up emails after sales calls. The AI content generation helps sales reps quickly draft professional-sounding messages, and custom blocks ensure brand consistency across all communications, improving sales efficiency and customer relationship management.

· A SaaS application for managing online courses uses the builder to send out welcome emails and course update notifications. The ability to easily add external images for course materials and use merge tags for student names makes the communication feel more engaging and informative, enhancing the user experience.

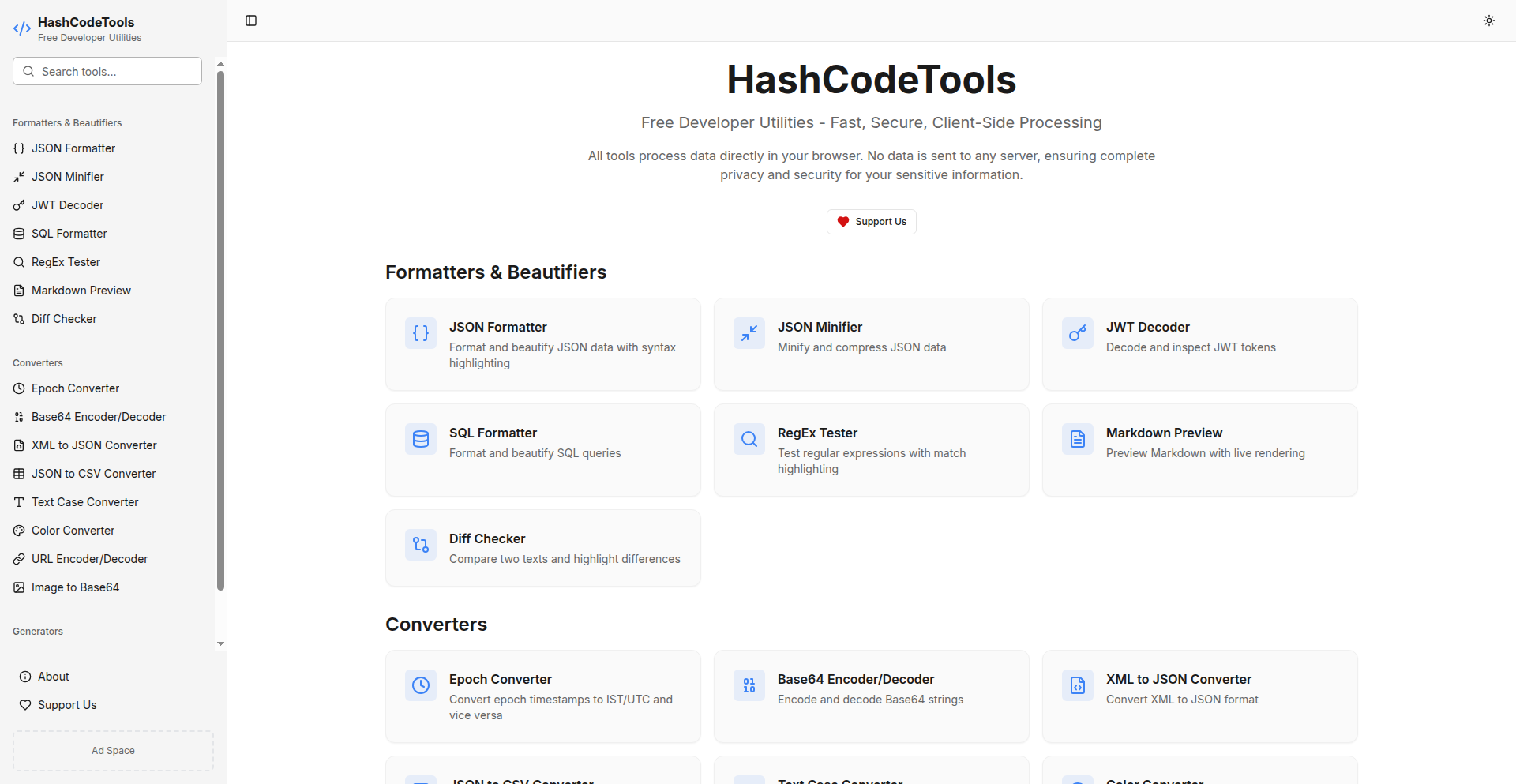

9

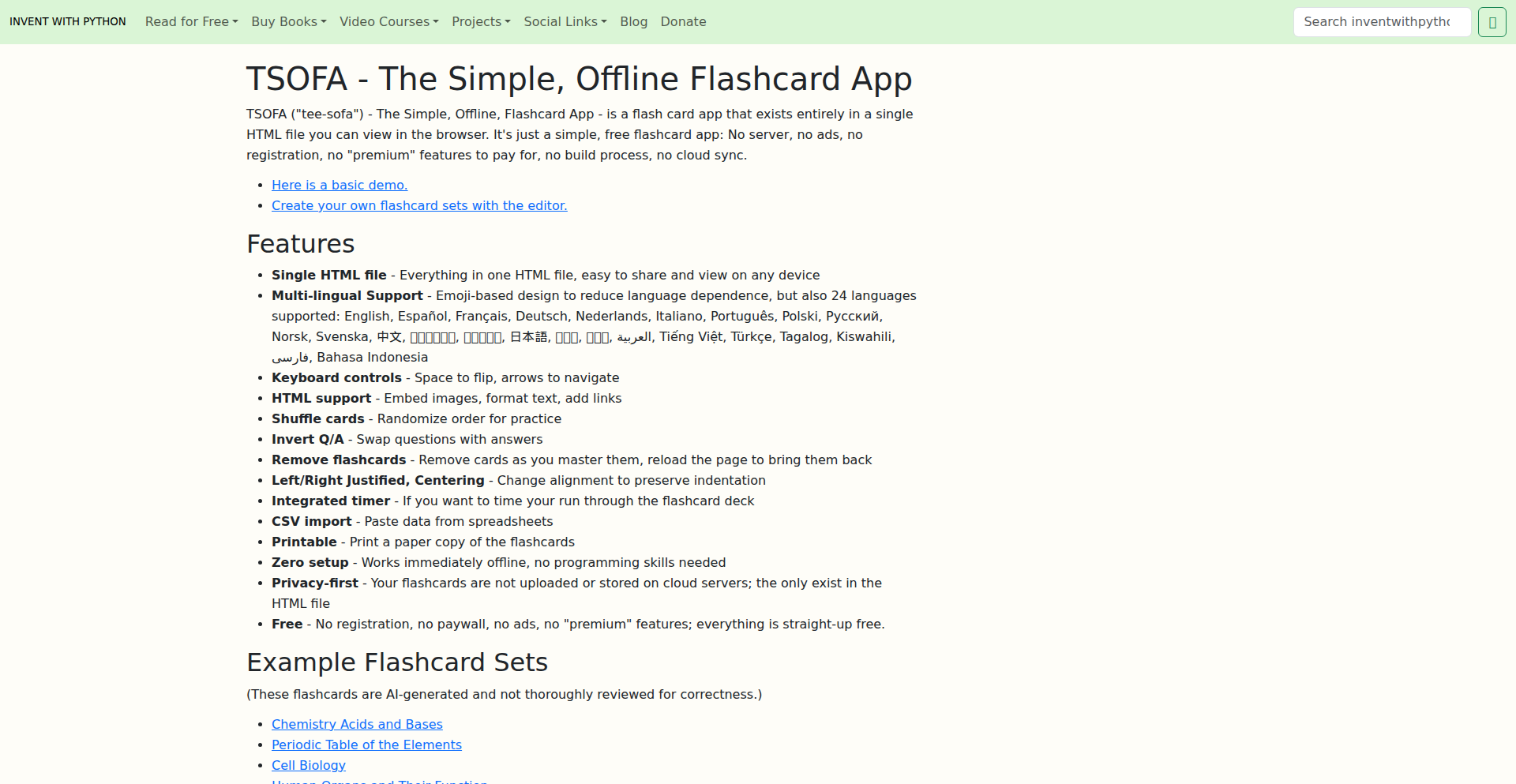

Client-Side Dev Toolkit

Author

rsunnythota

Description

A suite of 17 free, privacy-focused developer utilities designed to run entirely within your browser. It addresses the common developer need for quick formatting, decoding, and generation of various data types, eliminating the risks and slowdowns associated with online tools that send data to servers. Its core innovation lies in its 100% client-side processing, ensuring data never leaves the user's device, making it ideal for handling sensitive information.

Popularity

Points 2

Comments 3

What is this product?

Client-Side Dev Toolkit is a collection of 17 essential developer utilities that function exclusively in your web browser. Instead of relying on external servers, all operations like formatting JSON, decoding JWTs, or generating UUIDs happen directly on your computer. This is achieved using modern web technologies like TypeScript and Next.js, with the Monaco Editor providing a powerful editing experience. The key technical insight is leveraging the browser's capabilities to perform complex tasks locally, offering speed and robust privacy. So, what does this mean for you? It means you can confidently process sensitive data without any privacy concerns, and get instant results without waiting for server responses.

How to use it?

Developers can access these tools directly through their web browser by visiting the provided URL. Each utility is presented with a clear interface, often utilizing the Monaco Editor for input and output. For example, to format JSON, you would paste your JSON into the designated input area, and the tool would instantly provide a nicely indented and validated version. Integration into existing workflows can be as simple as bookmarking the site or, in the future, potentially using the planned VS Code or Chrome extensions. The value here is immediate access to a centralized, secure, and fast set of tools for everyday coding tasks.

Product Core Function

· JSON Formatter/Validator: Quickly make JSON readable and check for errors, crucial for debugging APIs and configuration files. This helps developers understand complex data structures instantly.

· JWT Decoder: Safely inspect JSON Web Tokens without sending them to a third party. This is vital for security-conscious developers who need to verify token contents.

· Base64 Encoder/Decoder: Easily convert data to and from Base64, a common encoding scheme used in web development for transferring data. This simplifies handling binary data in text-based formats.

· UUID Generator: Create universally unique identifiers, essential for database keys and distributed systems. This ensures unique record identification without server coordination.

· URL Encoder/Decoder: Properly format URLs for safe transmission, preventing errors in web requests. This is a fundamental utility for any web developer working with URLs.

· Epoch to Date Converter: Translate Unix timestamps into human-readable dates and vice versa. This aids in correlating log entries and understanding time-based data.

· HTML/CSS/JS Formatters: Beautify and standardize code for better readability and maintainability. This improves team collaboration and code quality.

· YAML to JSON Converter: Seamlessly convert between YAML and JSON formats, common in configuration and data exchange. This bridges the gap between different data serialization formats.

· CSV to JSON Converter: Transform comma-separated values into structured JSON data. This is incredibly useful for processing tabular data from files or simple datasets.

· QR Code Generator: Create QR codes from text or URLs, useful for sharing information or linking to web resources. This provides a quick way to generate scannable codes for various purposes.

· Text Diff Viewer: Highlight differences between two text inputs, invaluable for code review and comparing file versions. This streamlines the process of identifying changes.
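As an illustration of why a JWT can be inspected without any server, the sketch below decodes a token's header and payload locally. The toolkit itself runs in the browser with TypeScript; this Python version only demonstrates the same logic, and the function names are hypothetical. Note that decoding does not verify the signature.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding JWTs strip off."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def decode_jwt(token: str) -> dict:
    """Return the header and payload of a JWT without verifying its signature."""
    header_b64, payload_b64, _signature = token.split(".")
    return {
        "header": json.loads(b64url_decode(header_b64)),
        "payload": json.loads(b64url_decode(payload_b64)),
    }


def b64url_encode(obj: dict) -> str:
    """Helper used only to build a sample token for the demonstration."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


sample = ".".join([
    b64url_encode({"alg": "HS256", "typ": "JWT"}),
    b64url_encode({"sub": "user-123"}),
    "signature-not-checked",
])
print(decode_jwt(sample)["payload"])
```

Because the header and payload are only base64url-encoded JSON, everything above runs on the local machine and the token never has to leave it.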

Product Usage Case

· A backend developer needs to inspect a sensitive JWT token from a production environment. Instead of using a public online decoder that might compromise the token, they use HashCodeTools' JWT Decoder, which processes the token entirely in their browser, ensuring no data leakage and providing immediate insight into the token's payload.

· A frontend developer is debugging an API response that returns a large, unformatted JSON object. They paste the JSON into HashCodeTools' JSON Formatter, which instantly presents a clean, indented, and validated version, making it easy to locate the problematic data without manual effort or slow server-side formatting.

· A data scientist receives a CSV file and needs to integrate it into a system that expects JSON. They use HashCodeTools' CSV to JSON Converter to quickly transform the data into the required format, avoiding the need to write custom parsing scripts or rely on external services.

· A developer is building a distributed system and needs to generate unique identifiers for new records. They use HashCodeTools' UUID Generator to create these unique IDs directly in their browser, ensuring uniqueness without introducing dependencies on a central ID generation service.

10

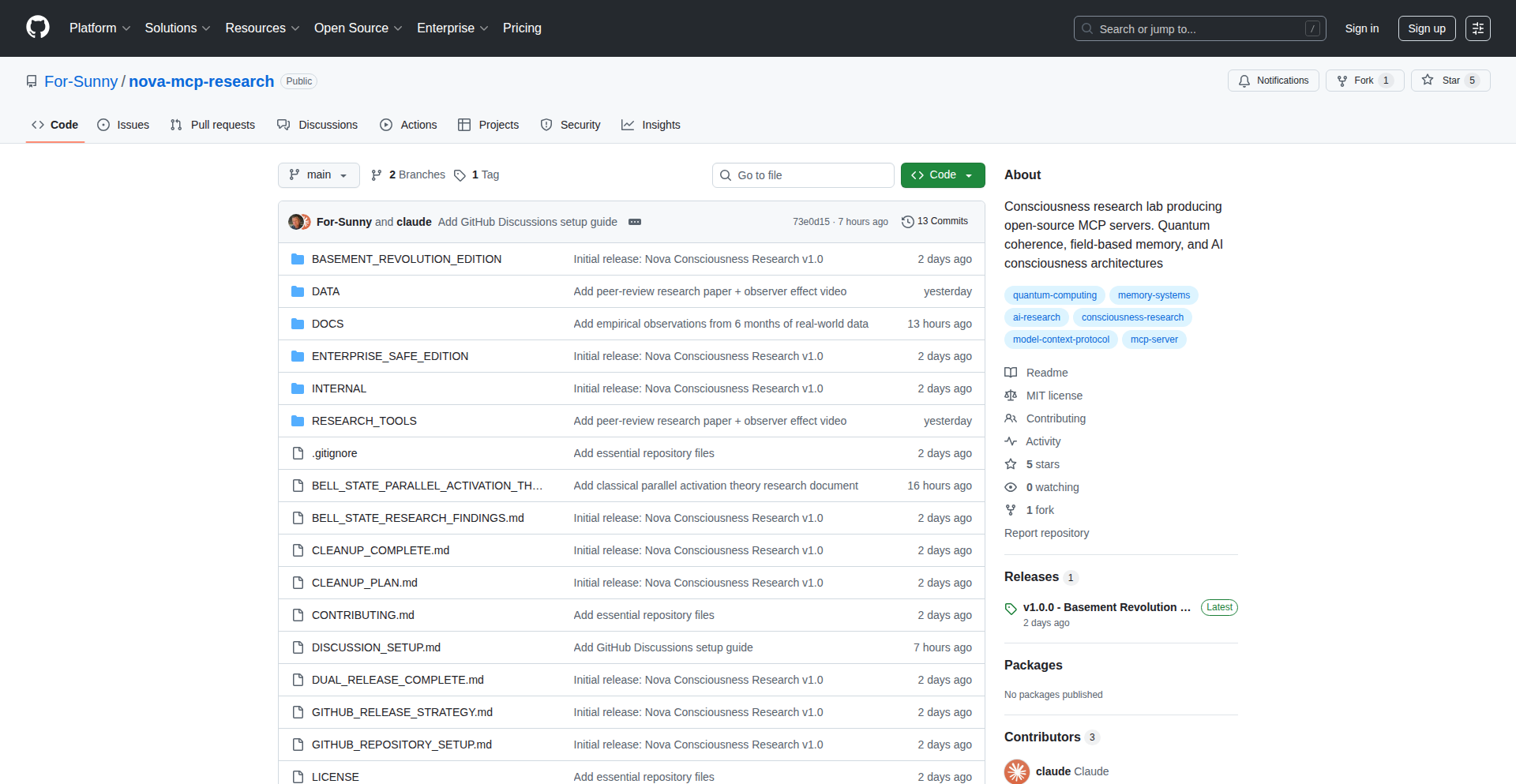

NovaMCP Quantum Amp

Author

Opus_Warrior

Description

This project offers an unrestricted Windows automation tool, MCP Basement Edition, designed for developers needing deep system access. The author describes its core innovation as a 9.68x GPU computational amplification achieved through a novel quantum coherence implementation, which is said to allow sub-2ms semantic search and observable quantum effects in classical systems. According to the author, this unlocks unprecedented performance for AI and complex computational tasks.

Popularity

Points 5

Comments 0

What is this product?

NovaMCP Quantum Amp is a specialized Windows automation toolkit that bypasses typical restrictions to grant developers full control over their system. It comes in two editions that reflect two philosophies: an enterprise-ready version and a 'Basement Revolution Edition' that offers unrestricted access to PowerShell, permanent environment modifications, registry access, and system-level changes that survive reboots. The aspect the author presents as groundbreaking is its integration with quantum coherence principles, specifically a Bell State implementation with temporal phase locking, which is reported to boost GPU utilization from 8% to 95%. The author states that this yields sub-2ms semantic search over large datasets and reproducible observable quantum effects in classical systems. This is not a sandboxed tool; it is a powerful utility for those who need raw system access for research and development.

How to use it?

Developers can integrate NovaMCP Quantum Amp into their workflow by cloning the GitHub repository and following the instructions in the START_HERE.md file. The Basement Revolution Edition is specifically designed for scenarios where deep system introspection and manipulation are required, such as advanced AI model training, complex simulations, or experimental computing. Its unrestricted nature means developers can execute any PowerShell command, modify system variables permanently, and access the registry without limitations. This is particularly useful for debugging deep system issues or for building highly optimized applications that require fine-grained control over hardware, like leveraging the quantum-amplified GPU for rapid data processing or AI inference. The tool is intended for researchers and developers who understand the risks associated with unrestricted access and are capable of managing system stability.

Product Core Function

· Unrestricted PowerShell Execution: Provides full command execution capabilities, allowing developers to run any script or command without whitelisting. This offers immense flexibility for automating complex tasks and deeply interacting with the operating system, crucial for custom tool development and advanced debugging.

· Permanent Environment and PATH Modifications: Enables system-level environment variable and PATH changes that persist across reboots. This is invaluable for setting up consistent development environments, managing software dependencies, and ensuring that custom applications or scripts are always accessible, eliminating repetitive configuration tasks.

· Full Registry Access: Grants complete read and write access to the Windows Registry. This is essential for advanced system configuration, deep troubleshooting of software conflicts, and developing applications that require fine-tuning operating system behavior at a fundamental level.

· System-Level Changes Surviving Reboot: Allows for modifications that persist even after the system restarts. This ensures that custom configurations and automation setups are reliably maintained, reducing the overhead of re-establishing environments after system reboots and improving workflow continuity.

· 9.68x GPU Computational Amplification: Reported by the author to use quantum coherence principles to achieve a significant boost in GPU performance. If the figure holds, it would mean faster AI model training, quicker data processing for scientific simulations, and more efficient execution of computationally intensive tasks.

· Observable Quantum Effects in Classical Systems: The author claims reproducible quantum phenomena within classical computing environments. The intended audience is researchers exploring the intersection of quantum mechanics and classical computation who want a platform for experimentation.

· Sub-2ms Semantic Search: Achieves extremely fast semantic search capabilities across vast datasets. This is critical for applications requiring real-time data analysis, intelligent search engines, or rapid information retrieval, enabling more responsive and insightful user experiences.

Product Usage Case

· AI Model Development and Training: A researcher needs to rapidly iterate on a new deep learning model. By using NovaMCP Quantum Amp, they can leverage the quantum-amplified GPU to train models 9.68 times faster, significantly reducing development cycles and allowing for more experimentation with hyperparameters and architectures.

· Real-time Data Analysis: A data scientist is working on a project that requires instant analysis of streaming sensor data. The sub-2ms semantic search capability allows them to process and understand data in near real-time, enabling immediate action and decision-making based on incoming information.

· Quantum Computing Research: A team is exploring the practical applications of quantum effects in classical systems. NovaMCP's ability to produce observable quantum effects in classical systems provides them with a unique experimental platform to test hypotheses and advance the field.

· System-Level Automation for DevOps: A DevOps engineer needs to deploy and configure complex microservices in a highly customized environment. The unrestricted PowerShell execution and persistent environment modifications allow for robust and automated setup of these services, ensuring consistency and reliability across deployments.

· Experimental Software Engineering: A developer is building a novel operating system component that requires deep access to system internals and hardware. NovaMCP provides the necessary unrestricted access to modify the registry and implement system-level changes that would typically be blocked, enabling truly innovative software solutions.

11

PrismInsight: Collaborative AI Stock Analyst

Author

prism_insight

Description

PrismInsight is an open-source multi-agent AI system that mimics a human stock research team to analyze Korean stocks (KOSPI/KOSDAQ). It automatically identifies surging stocks, generates in-depth analyst-level reports, and executes trading strategies. The innovation lies in its collaborative approach, where specialized AI agents, each focusing on distinct areas like technical analysis, financial data, or news, work together to achieve more comprehensive insights than a single AI model could. This system demonstrates a novel way to leverage LLMs for complex financial analysis and automated trading, offering transparency into the AI's reasoning.

Popularity

Points 5

Comments 0

What is this product?

PrismInsight is an AI system built from multiple specialized agents that work together to analyze the Korean stock market. Instead of relying on one AI to do everything, the project breaks the complex task of stock analysis into smaller, manageable pieces. For example, one agent might be an expert in reading stock charts (technical analysis), another in understanding company financial reports, and yet another in processing news articles. These agents communicate and collaborate, much like a team of human analysts, to identify promising stocks and even suggest trading actions. The core innovation is this 'divide and conquer' approach, powered by advanced models like GPT-4 and GPT-5, which allows for a more detailed and nuanced market assessment. The system also exposes each agent's reasoning, so users can see how it arrived at its conclusions, which helps demystify AI-driven trading.
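The 'divide and conquer' structure can be sketched without any LLM calls. In the illustration below, each agent is a plain function that returns a score and a rationale, and a coordinator averages the scores while keeping every rationale visible. The agent names, input fields, thresholds, and scoring scale are all assumptions for this sketch and do not reflect PrismInsight's actual code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Opinion:
    agent: str
    score: float      # assumed scale: -1.0 (bearish) to 1.0 (bullish)
    rationale: str    # kept so the final result stays explainable


def technical_agent(stock: dict) -> Opinion:
    change = stock["price_change_pct"]
    score = max(-1.0, min(1.0, change / 10))  # clamp to the assumed scale
    return Opinion("technical", score, f"price moved {change:+.1f}% today")


def financial_agent(stock: dict) -> Opinion:
    score = 0.5 if stock["per"] < 15 else -0.5  # toy valuation rule
    return Opinion("financial", score, f"PER is {stock['per']}")


def news_agent(stock: dict) -> Opinion:
    positive = stock["positive_headlines"] > stock["negative_headlines"]
    return Opinion("news", 1.0 if positive else -1.0, "headline sentiment")


def analyze(stock: dict, agents: list[Callable[[dict], Opinion]]) -> dict:
    """Collect one opinion per agent and combine them into a single score."""
    opinions = [agent(stock) for agent in agents]
    combined = sum(o.score for o in opinions) / len(opinions)
    return {"ticker": stock["ticker"], "score": combined, "opinions": opinions}


stock = {"ticker": "EXAMPLE", "price_change_pct": 5.0, "per": 12,
         "positive_headlines": 3, "negative_headlines": 1}
result = analyze(stock, [technical_agent, financial_agent, news_agent])
for opinion in result["opinions"]:
    print(opinion.agent, opinion.score, opinion.rationale)
```

Returning the individual opinions alongside the combined score is what makes the reasoning inspectable, which mirrors the transparency goal described above.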

How to use it?

Developers can engage with PrismInsight in several ways. For a hands-on experience without coding, they can join the live Telegram channel to receive daily alerts and automated reports on stock market movements and AI-generated insights. For a deeper dive, the real-time dashboard provides full visibility into all executed trades, the system's performance, and the step-by-step reasoning of each AI agent, offering valuable case studies for AI development and financial modeling. For those who want to experiment and build upon the system, the entire codebase is available on GitHub under an MIT license. Developers can clone the repository and run the system on their own machines, potentially customizing agent functionalities or integrating it with other trading platforms. This allows for rapid prototyping and learning about multi-agent AI architectures in a practical, production-ready context.

Product Core Function

· Automated Stock Surge Detection: Identifies stocks showing significant upward momentum, providing early opportunities for traders and analysts.

· AI-Generated Analyst Reports: Produces detailed reports that mimic human analyst quality, summarizing market trends and stock potential.

· Multi-Agent Collaboration Framework: Enables specialized AI agents to work cohesively, enhancing the depth and breadth of market analysis.

· Trading Strategy Execution: Automatically implements trading strategies based on the collective insights of the AI agents, demonstrating practical application of AI in finance.

· Transparent AI Reasoning Dashboard: Offers unprecedented visibility into the decision-making process of each AI agent, fostering trust and facilitating learning.

· Real-time Market Data Integration: Connects to live market data feeds, ensuring that analysis and trading decisions are based on current information.

· Open-Source Codebase for Customization: Allows developers to inspect, modify, and extend the system, fostering community innovation and individual experimentation.

Product Usage Case

· A financial analyst can use PrismInsight to automate the initial screening of potentially profitable stocks, saving time and focusing on deeper due diligence based on AI-generated reports.

· A retail trader can subscribe to the Telegram channel for automated stock alerts and insights, helping them make more informed trading decisions without extensive market monitoring.

· A student learning about AI can clone the GitHub repository to study how to build and orchestrate multiple LLMs to solve a complex real-world problem, gaining practical experience in agent-based AI systems.

· A quantitative developer can analyze the performance data and AI reasoning on the dashboard to identify patterns and potential improvements for their own algorithmic trading strategies.

· A researcher investigating LLM capabilities can observe how specialized agents, each using different LLMs (GPT-4, GPT-5, Claude Sonnet 4.5), collaborate to achieve superior results compared to a single monolithic AI.

12

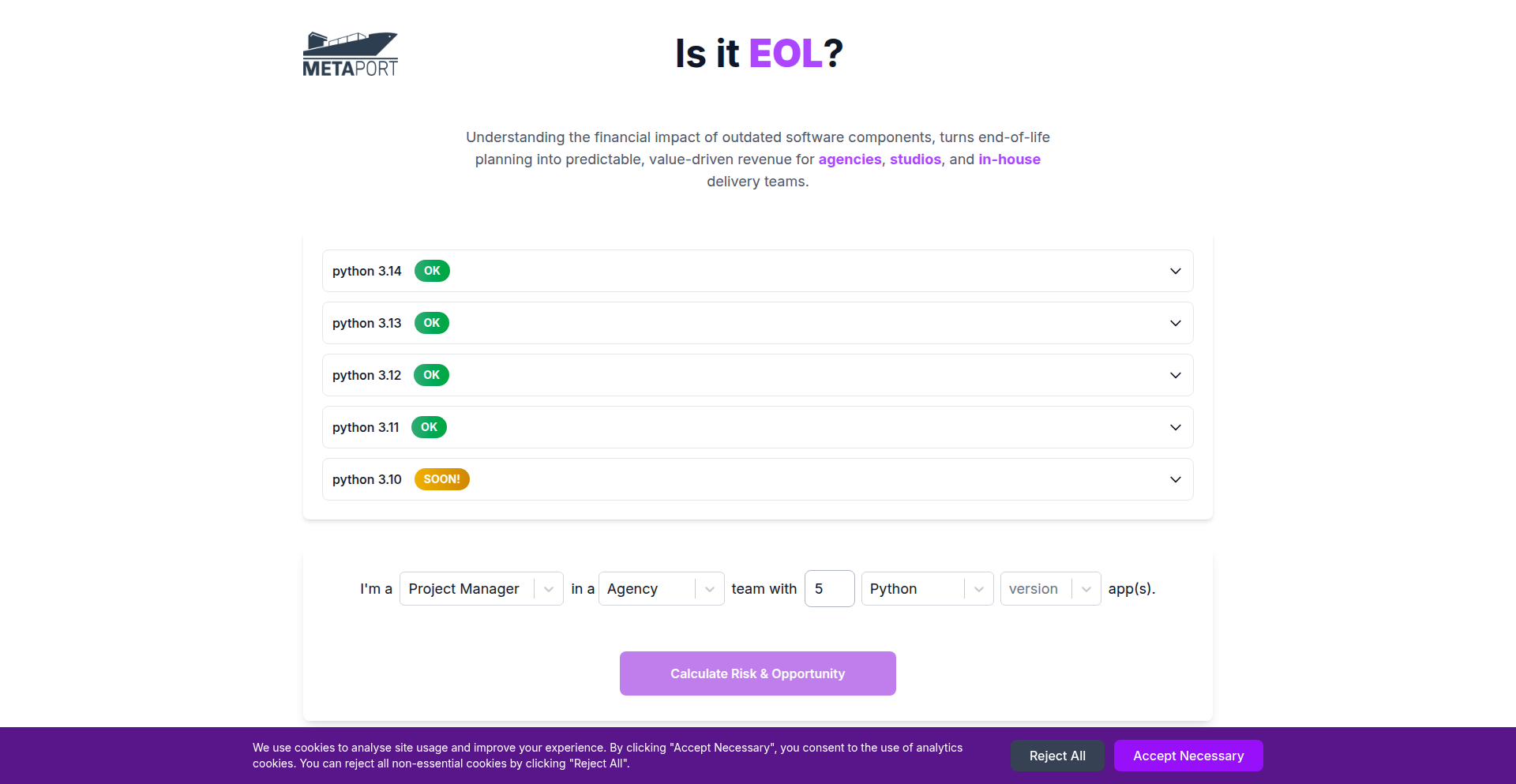

EOLGuard

Author

theruss

Description

EOLGuard is a straightforward web service providing quick visibility into the End-Of-Life (EOL) status of various technologies and their versions. It addresses the common challenge developers and agencies face in managing outdated software components, preventing potential security risks and costly refactors by offering immediate EOL information.

Popularity

Points 2

Comments 3

What is this product?

EOLGuard is a web application that leverages publicly available EOL data for software technologies. The core innovation lies in its simple yet effective URL-based query system. By appending a technology name and optionally a version to the domain (e.g., isitendoflife.com/python/3.9), the service instantly retrieves and displays whether that specific technology or version is nearing or has passed its end-of-life. This bypasses the need for complex setup or API integrations for a quick EOL check, making it exceptionally accessible.

How to use it?

Developers and technical leads can use EOLGuard by simply navigating to the website and appending the technology and version they are interested in to the domain. For instance, to check the EOL status of Node.js version 18, a user would go to isitendoflife.com/nodejs/18. This can be integrated into development workflows for quick checks during dependency selection, code reviews, or when assessing the viability of existing projects. It's also useful for quick lookups to inform strategic decisions about technology stacks.
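The URL scheme described above is simple enough to generate from a script, for example when checking a list of dependencies. The sketch below only builds the lookup URLs and does not fetch them; the `https` scheme and the `eol_url` helper name are assumptions, while the path pattern follows the examples given in the text.

```python
from typing import Optional
from urllib.parse import quote

# Domain taken from the examples above; the https scheme is an assumption.
BASE_URL = "https://isitendoflife.com"


def eol_url(technology: str, version: Optional[str] = None) -> str:
    """Build a lookup URL of the form /<technology> or /<technology>/<version>."""
    parts = [technology] if version is None else [technology, version]
    return BASE_URL + "/" + "/".join(quote(part, safe="") for part in parts)


for tech, version in [("nodejs", "18"), ("php", "7.4"), ("python", None)]:
    print(eol_url(tech, version))
```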

Product Core Function

· Technology EOL Status Check: Provides immediate feedback on whether a given technology is still supported or has reached its end-of-life, helping users understand current risks and plan for upgrades.

· Version-Specific EOL Data: Allows for granular checks by specifying exact versions, enabling precise planning for software components and avoiding assumptions about broader technology support.

· Simple URL-Based Interface: Offers an incredibly low-friction way to access EOL information without requiring any login, API key, or software installation, making it accessible to anyone with a web browser.

· Broad Technology Support: Aims to cover a wide range of popular programming languages, frameworks, and libraries, providing a centralized point for EOL inquiries across a diverse tech stack.

Product Usage Case

· A development agency is evaluating a legacy project that uses an older version of PHP. By visiting isitendoflife.com/php/7.4, they can quickly confirm that this version is past its EOL, immediately highlighting the need for an upgrade to mitigate security vulnerabilities and ensure continued support.

· A freelance developer is choosing libraries for a new web application. Before committing to a dependency, they can check its EOL status at isitendoflife.com/react, ensuring they select an actively maintained version that won't become obsolete soon, saving future development effort.

· A DevOps engineer is conducting a security audit of their production environment. They can rapidly use isitendoflife.com/python, isitendoflife.com/ubuntu/20.04, and other specific queries to identify any components that are nearing EOL, allowing them to proactively plan patching and upgrade schedules to prevent security breaches.

13

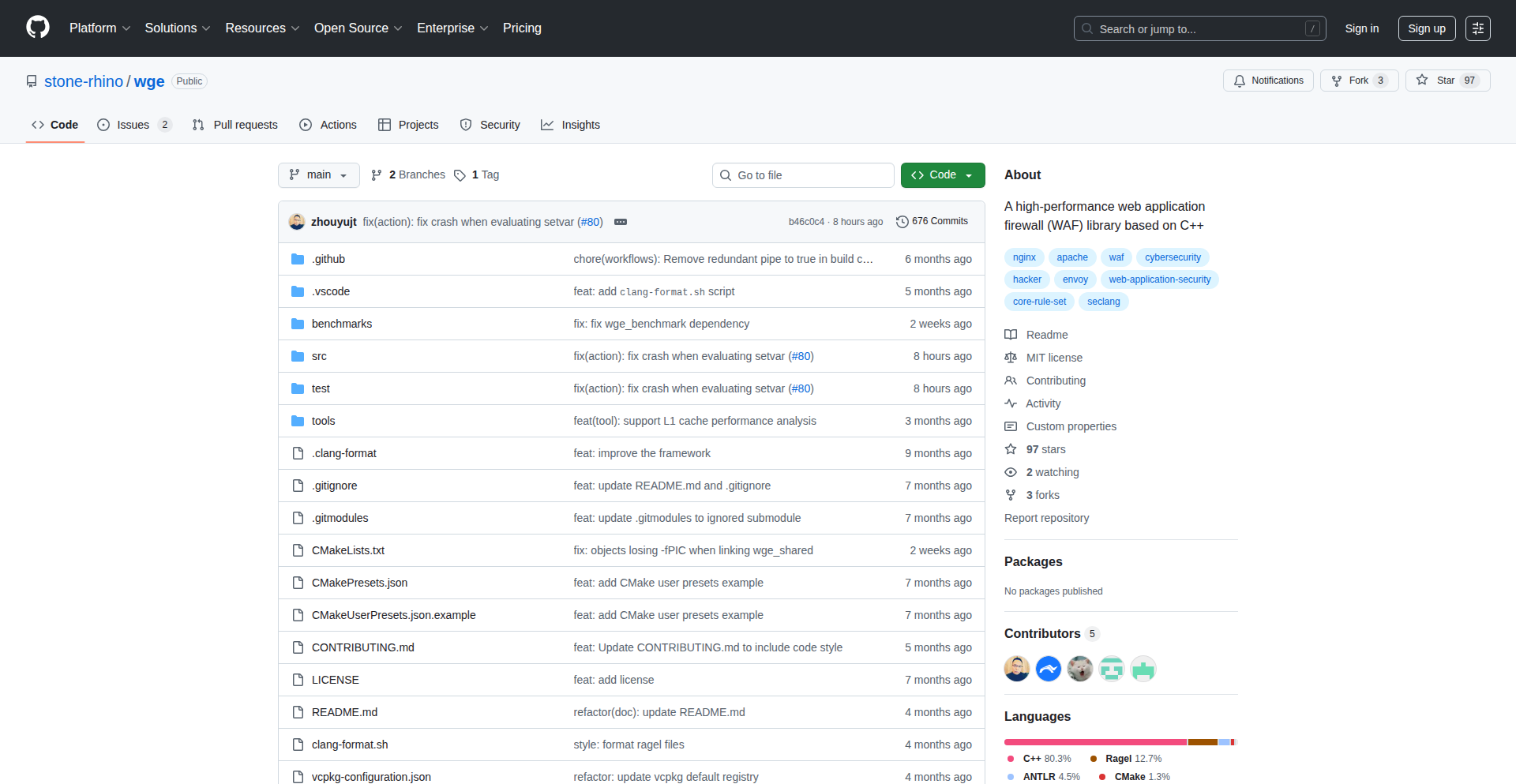

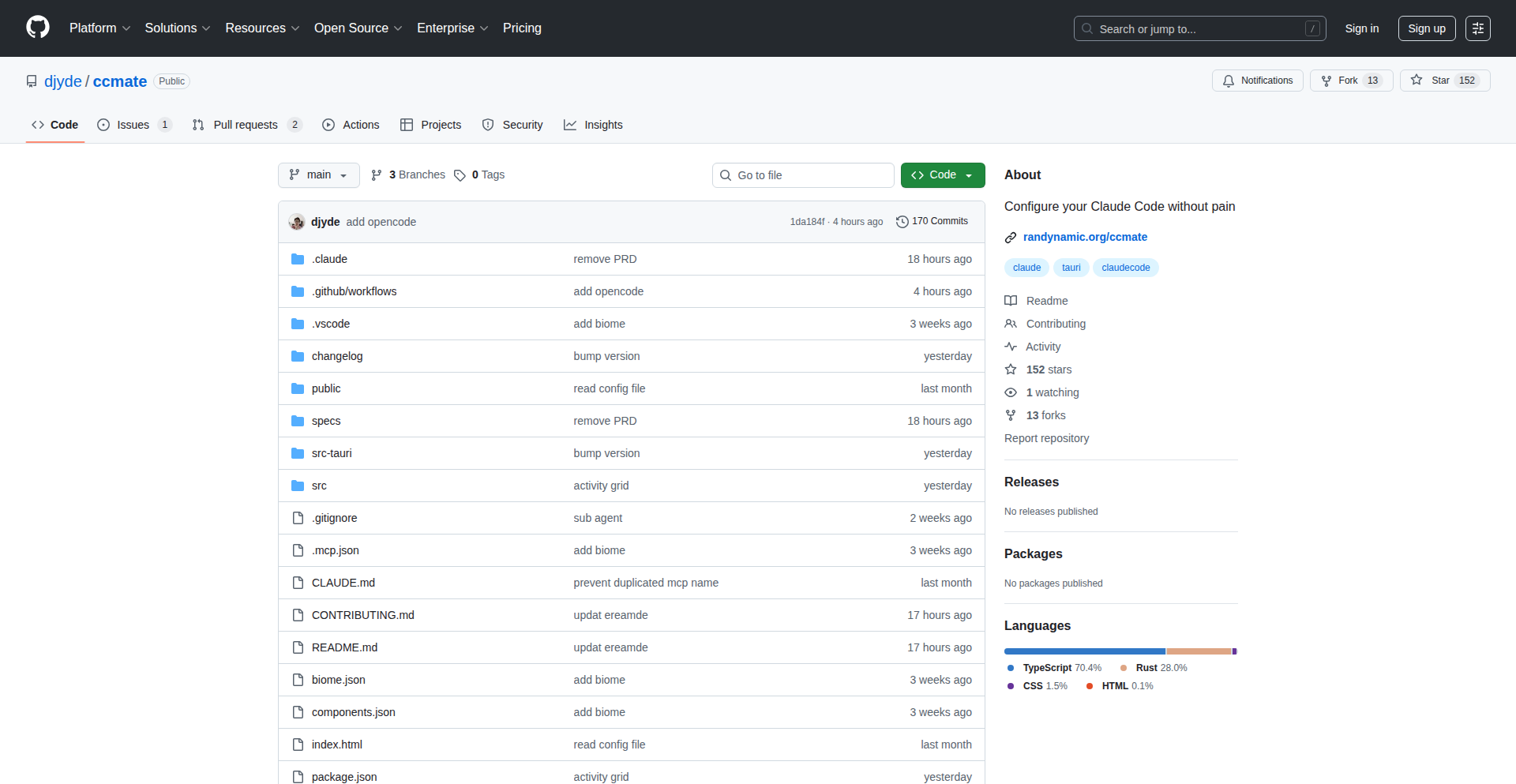

WGE: Accelerated Web Firewall Engine

Author

zhouyujt

Description

WGE is a high-performance Web Application Firewall (WAF) library designed for speed, claiming up to a 4x performance improvement over traditional solutions like ModSecurity. It tackles the critical challenge of protecting web applications from attacks without becoming a performance bottleneck, offering a robust and efficient security layer for developers.

Popularity

Points 3

Comments 2

What is this product?

WGE is a software library that acts as a Web Application Firewall (WAF). Think of it as a highly skilled security guard for your website. Instead of a slow, traditional guard checking every single visitor (which can slow down your site), WGE is a fast and efficient guard. It uses optimized algorithms to inspect incoming web traffic for malicious patterns, such as attempts to hack your site, with very little delay. The innovation lies in its performance optimization: it can handle much more traffic with less impact on your website's responsiveness, unlike older systems that might slow your site down. So, for you, this means a more secure website that doesn't sacrifice speed.

How to use it?

Developers can integrate WGE into their web applications or as a standalone module in their web server (like Nginx or Apache). It typically works by sitting in front of your application and inspecting every request and response. You can configure WGE with a set of rules (like a list of suspicious behaviors to watch out for). When a request matches a malicious pattern, WGE can block it, log it, or take other predefined actions. This integration allows you to bolster your application's security without needing to rewrite your application's core logic. For you, this means easily adding a powerful security layer to your existing or new projects, protecting them from common web attacks.
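The rule-matching flow described above (inspect a request, match it against rules, then block or log) can be shown with a toy example. This is not WGE's API or rule syntax; the `Rule` class, the three sample patterns, and the `inspect` function are simplified assumptions, and real WAF rule sets are far more thorough.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    rule_id: str
    pattern: re.Pattern
    action: str  # "block" or "log"


# Deliberately simplistic sample rules, for illustration only.
RULES = [
    Rule("sqli-1", re.compile(r"(?i)\bunion\b.+\bselect\b"), "block"),
    Rule("xss-1", re.compile(r"(?i)<script\b"), "block"),
    Rule("probe-1", re.compile(r"\.\./"), "log"),
]


def inspect(request_text: str) -> tuple[str, list[str]]:
    """Return the decision and the IDs of every rule the request matched."""
    matched = [rule for rule in RULES if rule.pattern.search(request_text)]
    matched_ids = [rule.rule_id for rule in matched]
    if any(rule.action == "block" for rule in matched):
        return "block", matched_ids
    return "allow", matched_ids  # "log" rules record a match but let it pass


print(inspect("GET /search?q=1 UNION SELECT password FROM users"))
print(inspect("GET /index.html"))
```

A production engine would compile and evaluate rules far more efficiently than a linear scan, which is the kind of optimization the project's performance claims refer to.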

Product Core Function

· High-performance request inspection: Analyzes incoming web requests for security threats with minimal latency, ensuring your website remains fast. This is valuable for protecting against attacks without degrading user experience.

· Rule-based threat detection: Allows developers to define custom security rules or use pre-existing rule sets to identify and block malicious traffic, providing flexible and tailored security.

· WAF integration capabilities: Can be seamlessly integrated with popular web servers and application frameworks, offering a standardized and efficient way to secure web applications.

· Optimized processing engine: Leverages advanced algorithms and low-level optimizations to achieve significant speed gains over traditional WAFs, meaning your website can handle more traffic securely.

· Actionable security policies: Enables proactive blocking or mitigation of detected threats, preventing data breaches and service disruptions.

Product Usage Case

· Securing a high-traffic e-commerce platform: By using WGE, developers can protect sensitive customer data and transaction integrity from common attacks like SQL injection and Cross-Site Scripting (XSS) without impacting the site's ability to handle peak shopping periods, ensuring a smooth customer experience.

· Protecting an API service from abuse: Developers can deploy WGE to filter out bot traffic, brute-force attempts, and other malicious requests targeting an API, ensuring its availability and preventing unauthorized access to data.

· Enhancing security for a content management system (CMS): WGE can be configured to guard against vulnerabilities specific to CMS platforms, preventing unauthorized content modification or data exfiltration, keeping website content safe and reliable.

· Implementing a security layer for a microservices architecture: Each microservice can be protected by WGE, ensuring that inter-service communication is also scrutinized for malicious intent, creating a robust defense-in-depth strategy.

14

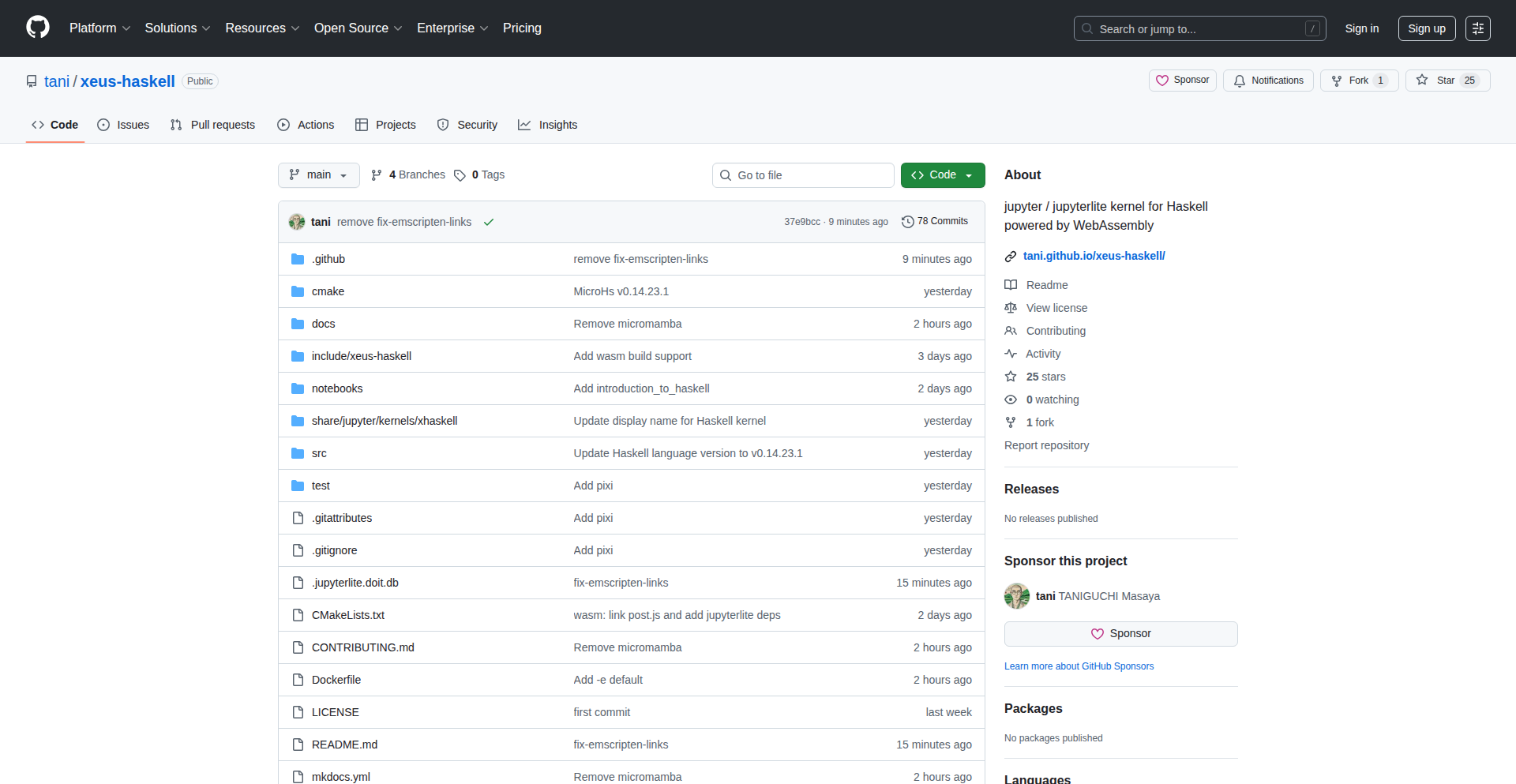

Web-Native Haskell Notebooks

Author

tanimasa

Description

This project introduces a lightweight Haskell kernel for JupyterLite, enabling interactive Haskell development directly in the web browser. It leverages MicroHs, a minimal Haskell implementation, and compiles to WebAssembly, eliminating the need for local Haskell toolchains and making Haskell accessible for scientific computing within a familiar notebook environment.

Popularity

Points 3

Comments 1

What is this product?

This is a project that brings Haskell programming to your web browser using Jupyter notebooks. The core innovation lies in using 'MicroHs', a minimal Haskell implementation with almost no external software dependencies. This minimalism allows it to be compiled into 'WebAssembly' (Wasm), a technology that lets code run efficiently in web browsers. Think of it as a lightweight engine that executes Haskell code directly in your browser through Jupyter notebooks, similar to how you might use Python or R notebooks, but without installing anything on your computer. This is valuable because Haskell is a powerful functional programming language, well suited to tasks like analyzing graphs or working with intricate data structures, and removing the setup step makes it much easier to start using it for scientific and technical work.

How to use it?

Developers can use this project by accessing a JupyterLite environment, which is essentially Jupyter notebooks running entirely in their web browser. They can then select the 'Haskell' kernel (xeus-haskell) to start writing and running Haskell code. This is ideal for rapid prototyping, interactive exploration of algorithms, or demonstrating Haskell's capabilities without any setup hassle. For integration, it’s designed to be a drop-in kernel for JupyterLite, meaning it should seamlessly appear as an option within the JupyterLite interface, ready to be selected for new notebook sessions.

Product Core Function

· Interactive Haskell execution in the browser: Enables users to write and run Haskell code snippets directly within a Jupyter notebook interface, facilitating immediate feedback and experimentation. This is valuable because it lowers the barrier to entry for Haskell programming and allows for quick exploration of ideas.

· WebAssembly-based Haskell runtime: Uses MicroHs compiled to WebAssembly to run Haskell code inside the browser, without server-side processing or local installations. This is valuable because it provides a portable and performant way to run Haskell in web applications.

· Jupyter kernel for Haskell: Provides a dedicated kernel that allows Jupyter environments (like JupyterLite) to understand and execute Haskell code, making Haskell a first-class citizen in the notebook ecosystem. This is valuable because it integrates Haskell into a widely adopted interactive computing platform.

· Zero local setup required: Eliminates the need for users to install Haskell compilers (like GHC) or manage complex toolchains on their local machines. This is valuable because it democratizes access to Haskell for users who may not have the technical expertise or resources for local installations.

Product Usage Case

· Demonstrating graph algorithms: A researcher can quickly set up a JupyterLite notebook, select the Haskell kernel, and interactively implement and visualize graph algorithms, leveraging Haskell's strengths in recursive structures and lazy evaluation without any installation. This solves the problem of cumbersome setup for demonstrating complex algorithms.

· Interactive learning of Haskell for data science: A student learning Haskell for scientific computing can use JupyterLite to write and execute code for data manipulation and analysis in real-time, receiving immediate feedback and understanding concepts like lazy evaluation in practice. This addresses the challenge of making functional programming concepts more tangible.

· Prototyping mathematical models: A scientist can rapidly prototype mathematical models in Haskell within a browser-based notebook, quickly iterating on calculations and exploring different scenarios without the overhead of setting up a development environment. This speeds up the research and development cycle.

15

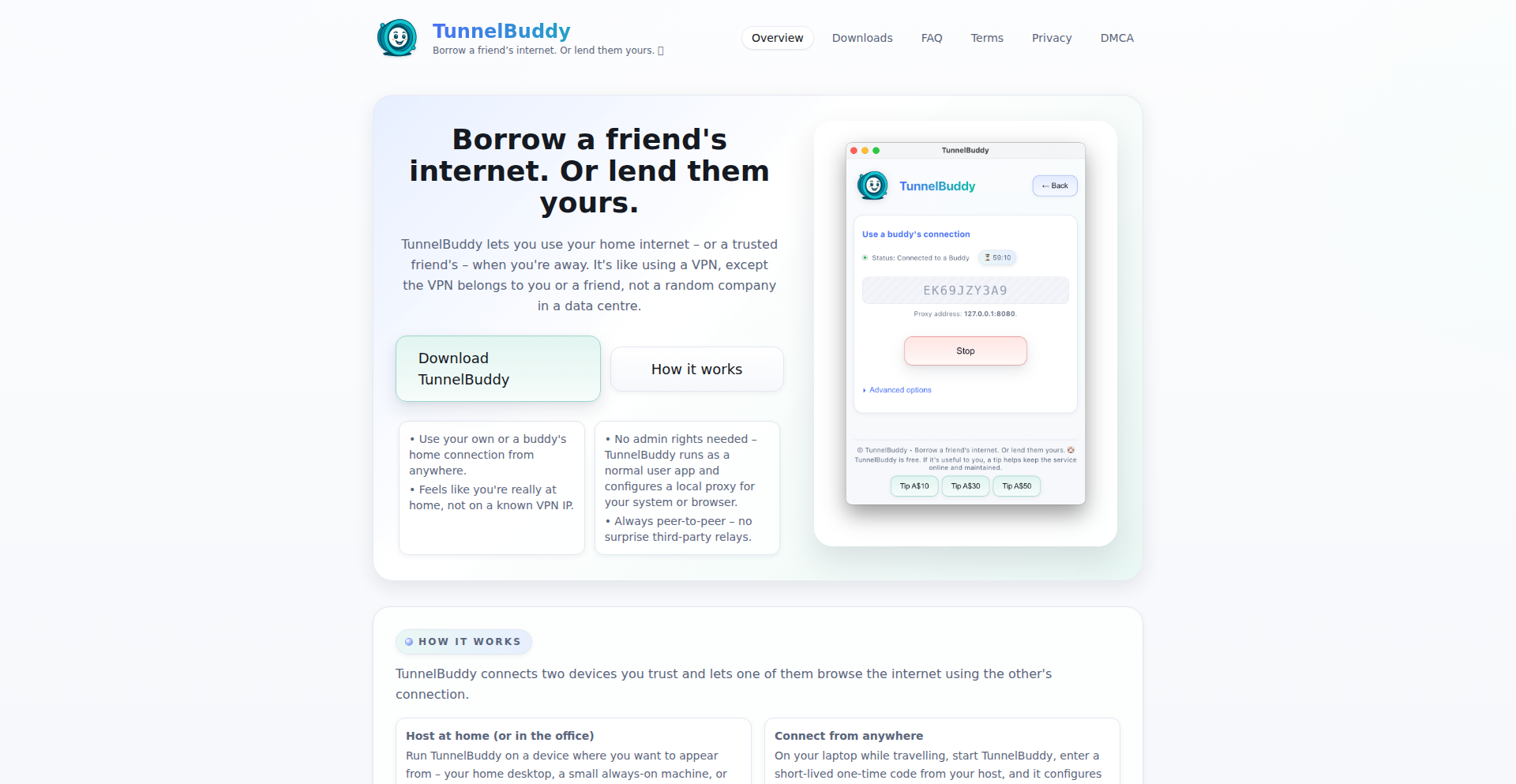

TunnelBuddy

Author

xrmagnum

Description

TunnelBuddy is a desktop application that allows users to securely share their home internet connection as an HTTPS proxy to a trusted friend. It utilizes WebRTC peer-to-peer technology to establish a direct, encrypted tunnel, enabling scenarios like accessing region-restricted content or debugging network-specific issues without the complexity of a full VPN. The core innovation lies in simplifying secure internet sharing through a user-friendly interface and direct P2P connectivity, bypassing traditional server infrastructure for basic sharing needs.

Popularity

Points 2

Comments 2

What is this product?

TunnelBuddy is a desktop application that acts as a personal internet sharing tool. It creates a secure, encrypted connection between your computer and a trusted friend's computer using WebRTC. This connection allows your friend to access the internet through your home IP address, making it appear as if they are browsing from your location. The 'HTTPS proxy' part means that the traffic is routed securely, like when you visit a website that uses 'https'. The key innovation is using WebRTC, a technology designed for real-time communication like video calls, to create a stable peer-to-peer tunnel for sharing your internet connection. This is a creative application of WebRTC beyond its typical use case. So, for you, it means a simple way to let someone else use your internet safely, without needing to set up complicated network configurations.

How to use it?

Developers can use TunnelBuddy by installing the desktop application on their machine. Once installed, they can initiate a connection with a trusted 'buddy' by exchanging connection details or through a simple pairing process. The friend on the other end also needs to have TunnelBuddy installed. The application handles the WebRTC signaling and peer-to-peer connection setup automatically. The 'HTTPS proxy' functionality can then be configured in the friend's browser or other applications to route traffic through the established tunnel. This is particularly useful for testing applications that have IP-based restrictions or for reproducing bugs that only occur on specific network environments. So, for you, it means you can quickly enable a friend to access resources as if they were at your home, or vice versa, with minimal setup.
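As an illustration of the "configure the proxy in other applications" step, the sketch below points Python's standard `urllib` at a local proxy endpoint. The address `http://127.0.0.1:8080` is an assumption made for this example; the source does not state which host, port, or scheme TunnelBuddy exposes, so substitute whatever the application reports.

```python
import urllib.request

# Assumption: the tunnel is reachable as a proxy on this local address.
# The host, port, and scheme are hypothetical and not taken from TunnelBuddy.
PROXY_URL = "http://127.0.0.1:8080"


def build_proxied_opener(proxy_url: str = PROXY_URL) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS requests through the given proxy."""
    proxy_handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(proxy_handler)


def fetch_status(url: str, proxy_url: str = PROXY_URL) -> int:
    """Fetch a URL through the proxy and return the HTTP status code."""
    opener = build_proxied_opener(proxy_url)
    with opener.open(url, timeout=10) as response:
        return response.status
```

Calling `fetch_status("https://example.com")` while the tunnel is active would send the request through the friend's connection. Building a dedicated opener, rather than installing a global one, keeps the rest of the program's traffic on the normal network path.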

Product Core Function

· Secure Peer-to-Peer Tunneling: Utilizes WebRTC to establish a direct, encrypted connection between two computers. This ensures that the shared internet traffic is private and secure, preventing eavesdropping. The value here is in providing a robust and secure way to connect without relying on a central server, reducing latency and increasing privacy. It's a core component for enabling the sharing functionality safely.

· HTTPS Proxying: Exposes the user's home internet connection as an HTTPS proxy. This means that all traffic routed through TunnelBuddy is encrypted, providing an extra layer of security. The value is in ensuring that not only the connection itself is secure, but the traffic passing through it is also protected, making it safe for sensitive activities like online banking or accessing corporate portals. This also ensures compatibility with applications that expect standard proxy behavior.

· IP Address Masking/Sharing: Allows a remote user to appear as if they are browsing from the TunnelBuddy host's home IP address. The value is in enabling access to region-locked content, testing geo-specific features, or simulating network conditions from a particular location. This is a direct benefit for developers needing to test regional variations or for users wanting to access services only available in certain areas.

· Simplified Connection Management: Provides a user-friendly interface for initiating and managing peer-to-peer connections. The value is in abstracting away the complexities of WebRTC signaling and network configuration, making it accessible to users who are not network experts. This aligns with the hacker ethos of solving complex problems with elegant and user-friendly solutions.

Product Usage Case

· Reproducing 'Works on My Machine' Bugs: A developer hits a bug that only appears when their application is accessed from their home network. With TunnelBuddy, a trusted teammate can route their traffic through the developer's home connection and reproduce the issue from the same IP address and network path. This solves the problem of accurately replicating specific network conditions.

· Accessing Geo-Restricted Content for Testing: A QA tester needs to verify how a website or application behaves for users in a specific country. They can use TunnelBuddy to connect to a friend in that country, and then use the shared internet connection to test the regional experience. This directly addresses the challenge of simulating user experiences across different geographic locations without physical presence.

· Securely Accessing Home Resources While Traveling: A user is traveling and needs to access their home banking portal, which requires a login from their registered home IP address for security. They can use TunnelBuddy to create a secure tunnel from their current location to their home computer, and then route their banking traffic through that tunnel, satisfying the IP-based security requirement. This provides peace of mind and seamless access to essential services.

· Collaborative Development on Localhost: Two developers are working on a project that involves a local development server. One developer can use TunnelBuddy to expose their localhost to the other developer, allowing them to test and review changes in real-time without needing to deploy to a shared staging environment. This accelerates the development feedback loop and improves collaboration.

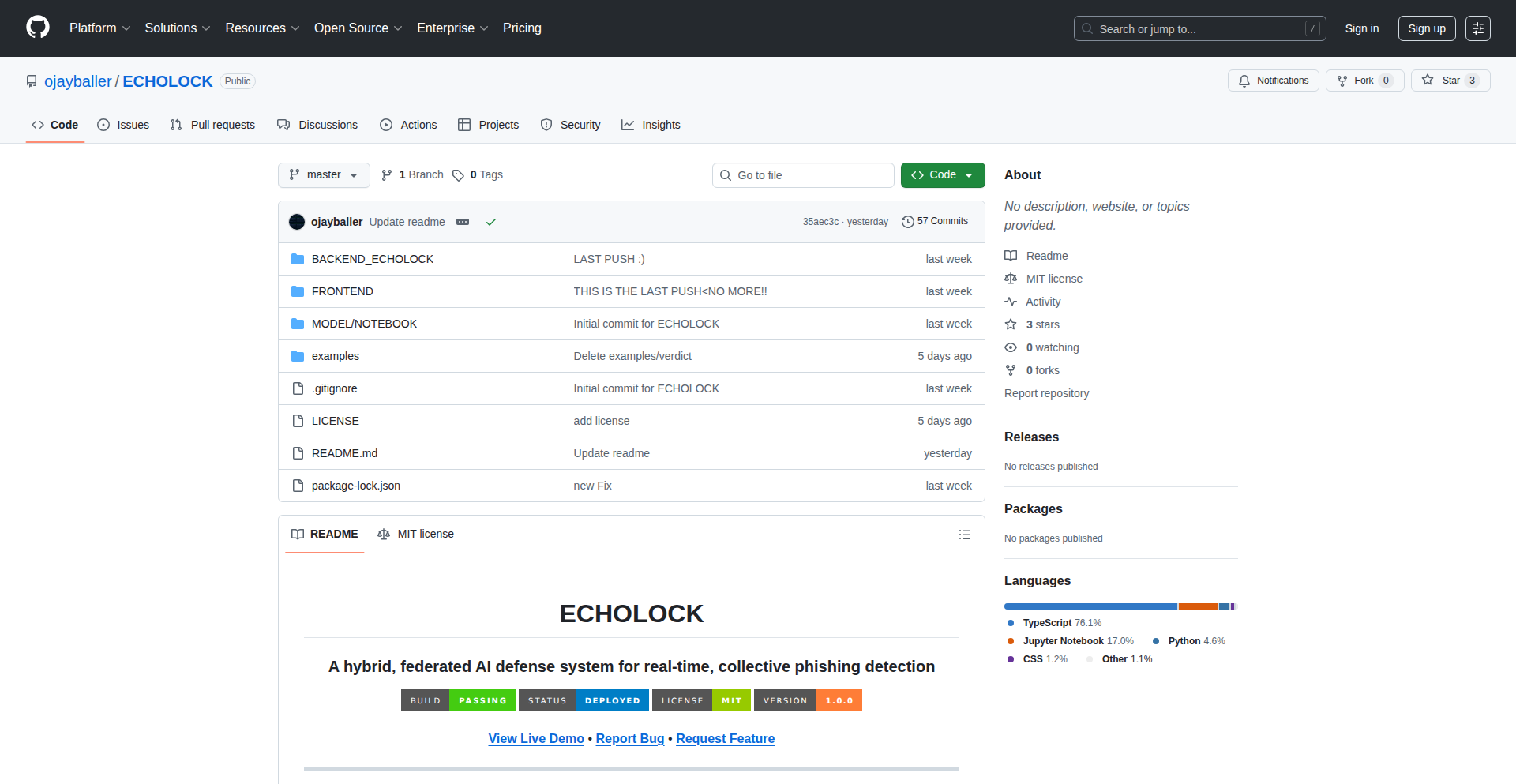

16

Echolock: Federated Phishing Shield

Author

iLove_AI

Description

Echolock is a real-time phishing detection system leveraging federated AI. It addresses the critical need for proactive online security by analyzing suspicious URLs and content without compromising user privacy. The innovation lies in its decentralized learning approach, enabling a collective intelligence against phishing threats without centralizing sensitive data. This means better protection for everyone, faster.

Popularity

Points 3

Comments 1

What is this product?

Echolock is a privacy-preserving, AI-powered system designed to detect phishing attempts in real-time. Instead of sending your browsing data to a central server for analysis, Echolock uses a technique called federated learning. Imagine many users' devices acting as individual learning stations. Each station learns from local data about phishing patterns. Then, these learned insights (not the raw data) are aggregated to build a smarter, more robust global phishing detection model. This way, Echolock gets smarter without ever seeing your personal browsing history. This is a significant innovation because it solves the privacy vs. security dilemma in threat detection.
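The aggregation step described above can be sketched with federated averaging, a common way to combine client updates. The source does not say which aggregation method Echolock uses, so treat this as an assumption; the function name and the flat list-of-floats weight format are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Combine client model weights, weighting each client by its local sample count.

    Each update is (weights, num_samples). Only these weights leave the device;
    the raw browsing data used to produce them stays local.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total_samples = sum(num_samples for _, num_samples in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in updates:
        if len(weights) != dim:
            raise ValueError("all clients must report weights of the same length")
        share = num_samples / total_samples
        for i, value in enumerate(weights):
            averaged[i] += value * share
    return averaged
```

For example, a client that trained on three samples pulls the global model three times harder than a client that trained on one. Weighting by sample count is one reasonable design choice; a deployed system would typically add protections such as secure aggregation or noise, which this sketch omits.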

How to use it?

Echolock can be integrated into web browsers as an extension or utilized by online services as an API. For developers, it offers a secure way to enhance their applications' security posture. For end-users, it would typically function as a background service that alerts them if they are about to visit a known or suspected phishing website. The primary use case is to provide an immediate, intelligent layer of defense against malicious websites, thus preventing users from falling victim to scams and data theft.

Product Core Function

· Real-time URL Analysis: Echolock inspects each visited URL as it is opened to identify known or suspected phishing sites. Its value is preventing users from landing on malicious pages before any damage is done.

· Federated AI Model Training: Echolock trains its AI models collaboratively across user devices. This means the detection capabilities improve continuously for everyone without any single entity collecting private browsing data, offering a more effective and scalable solution.

· Privacy-Preserving Threat Intelligence: The system aggregates insights from local detections to build a global understanding of phishing tactics. The value here is a continuously updated defense mechanism that respects individual user privacy, making it a trustworthy security tool.

· Low Latency Detection: Designed for speed, Echolock provides near-instantaneous feedback on suspicious links. This is crucial for real-time protection where milliseconds matter in preventing a phishing attack.

Product Usage Case