Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-15

SagaSu777 2025-11-16

Explore the hottest developer projects on Show HN for 2025-11-15. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show a vibrant hacker spirit, with a strong undercurrent of using AI for pragmatic problem-solving across the tech stack rather than for novelty. AI is moving from a 'wow factor' to a foundational tool for enhancing developer productivity, automating complex tasks, and enabling new forms of human-computer interaction. For developers, this means treating AI not as a black box but as a programmable component: understanding prompt engineering, integrating AI into existing workflows, and critically evaluating its outputs. For entrepreneurs, the opportunity lies in identifying niche problems that AI can solve more efficiently or accessibly than before. Projects like RunOS, with its fast Kubernetes provisioning, highlight that innovation often comes from tackling infrastructure complexity with clever, if sometimes 'boring', architectural choices. The emphasis on privacy and local processing also signals a growing demand for user-centric tech that respects data ownership. The drive to build specialized tools, from MacPaint recreations to social networks designed for genuine connection, shows that even in a rapidly advancing tech landscape, human needs and creative expression remain paramount. The common thread is using technology to empower individuals and small teams to achieve more, with greater control and less friction.

Today's Hottest Product

Name

RunOS

Highlight

RunOS tackles the complexity of Kubernetes cluster provisioning head-on by leveraging KVM and gRPC. The innovation lies in its agent-based architecture that initiates connections outward, eliminating firewall headaches. This allows for rapid deployment (5-10 minutes) of production-ready clusters, complete with essential components like databases, message queues, and observability tools. Developers can learn about efficient infrastructure bootstrapping, secure inter-node communication via OS-level WireGuard, and advanced service management within a complex ecosystem. It’s a testament to building robust systems with seemingly 'boring' but reliable technologies.

Popular Category

AI/ML

Developer Tools

Infrastructure/DevOps

Web Applications

Popular Keyword

AI

Kubernetes

CLI

Browser

Rust

Python

Node.js

API

OpenAI

LLM

Technology Trends

AI-powered automation

Developer productivity tools

Efficient infrastructure management

Privacy-first applications

Cross-language integration

Edge computing/Local processing

Next-gen knowledge management

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity & Tools (20%)

Infrastructure/DevOps (15%)

Web Applications & Services (15%)

Data Analysis & Management (10%)

Creative Tools (10%)

Security & Utilities (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | ZenPaint PixelForge | 14 | 5 |

| 2 | Eintercon | 4 | 7 |

| 3 | RAG-Chunk CLI: The Chunking Strategy Tester | 5 | 3 |

| 4 | Planetary Substrate: AI-Powered Knowledge Navigator | 5 | 2 |

| 5 | IncidentPulse: Real-time Incident Command Center | 5 | 1 |

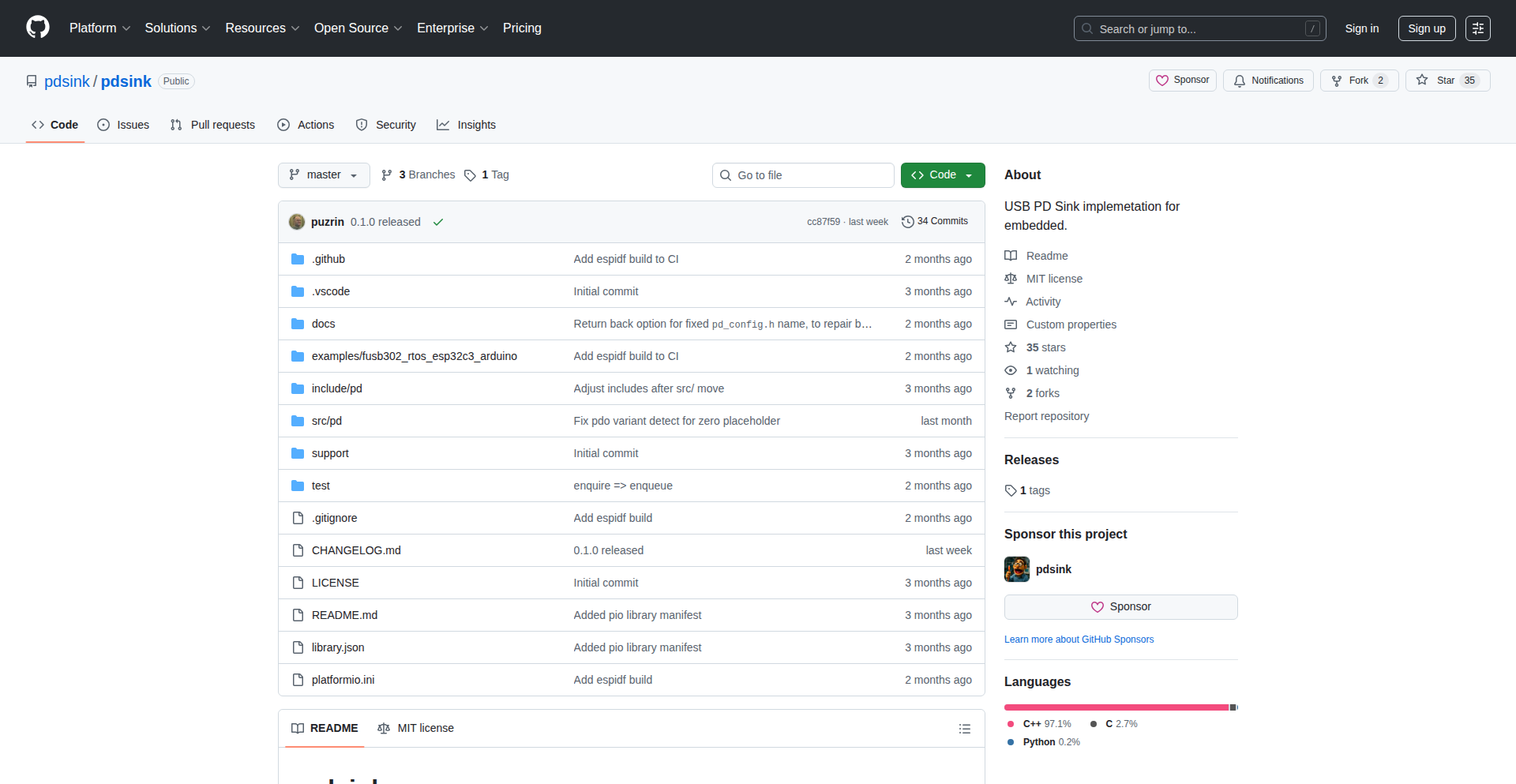

| 6 | Pdsink: USB-PD 3.2 Sink Stack for Embedded Ingenuity | 5 | 1 |

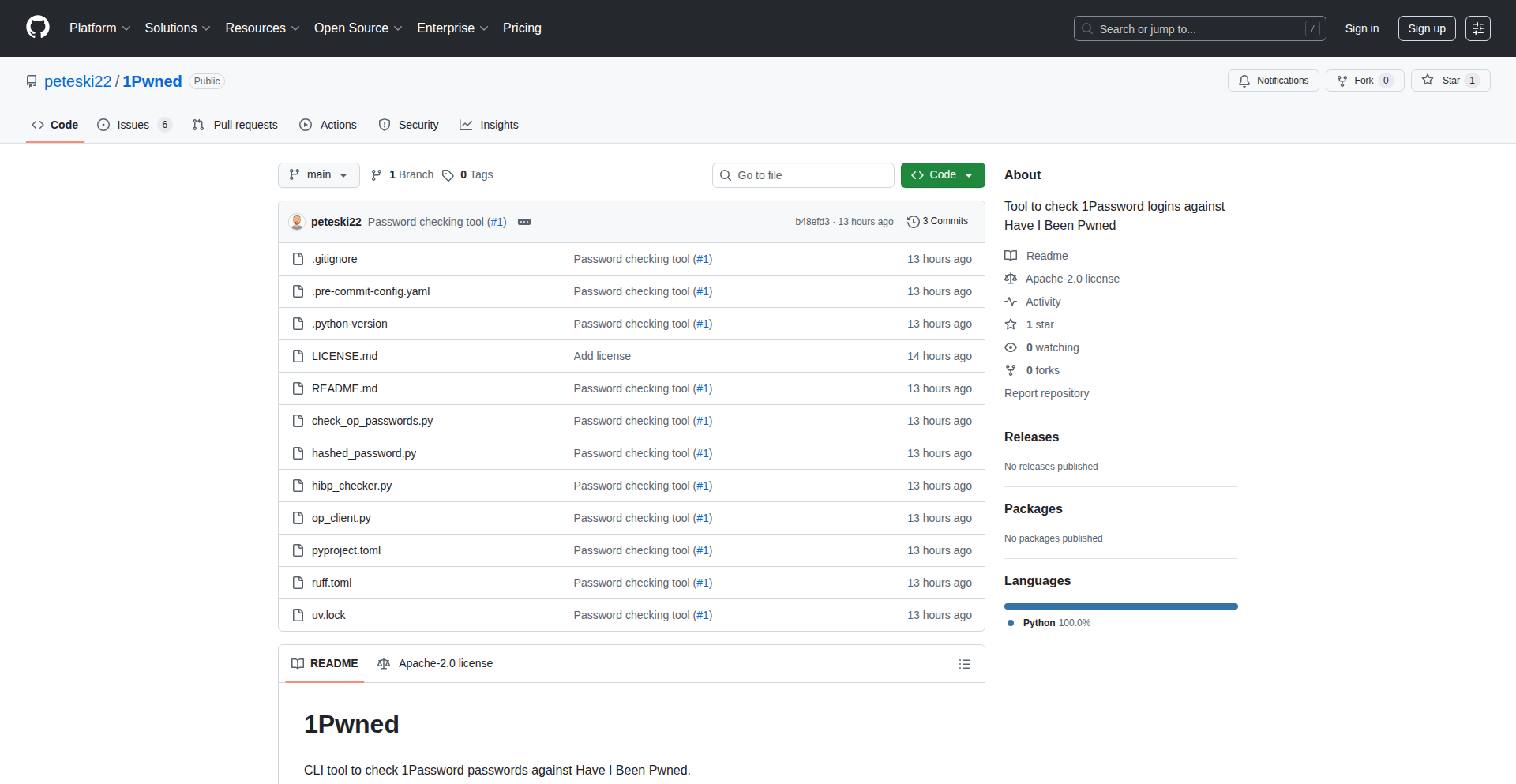

| 7 | 1PwnedGuardian | 2 | 3 |

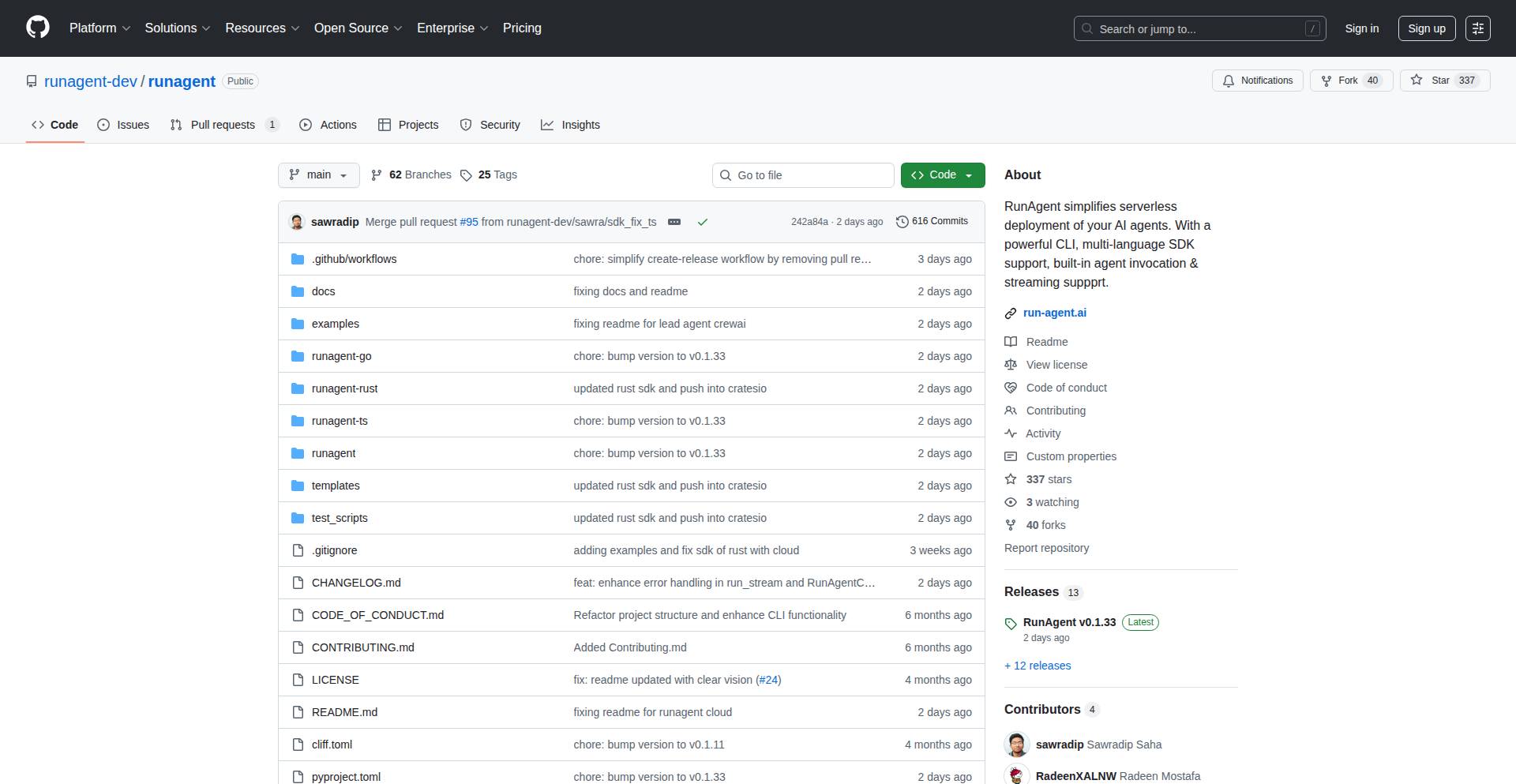

| 8 | RunOS: Instant Kubernetes Fabric | 2 | 2 |

| 9 | Cross-Lingual Agent Orchestrator | 4 | 0 |

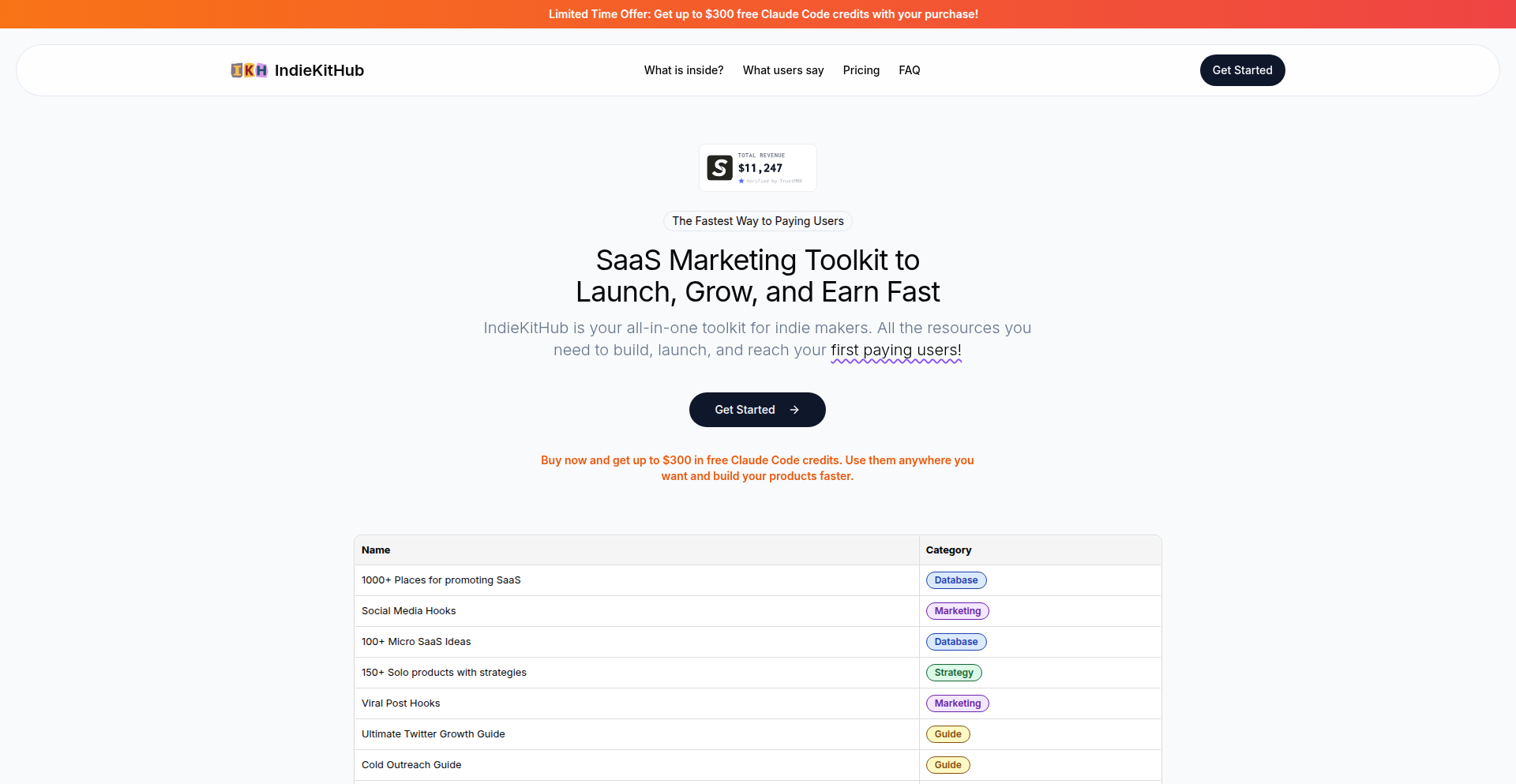

| 10 | AI-Powered SaaS Growth Playbook & Credits | 4 | 0 |

1

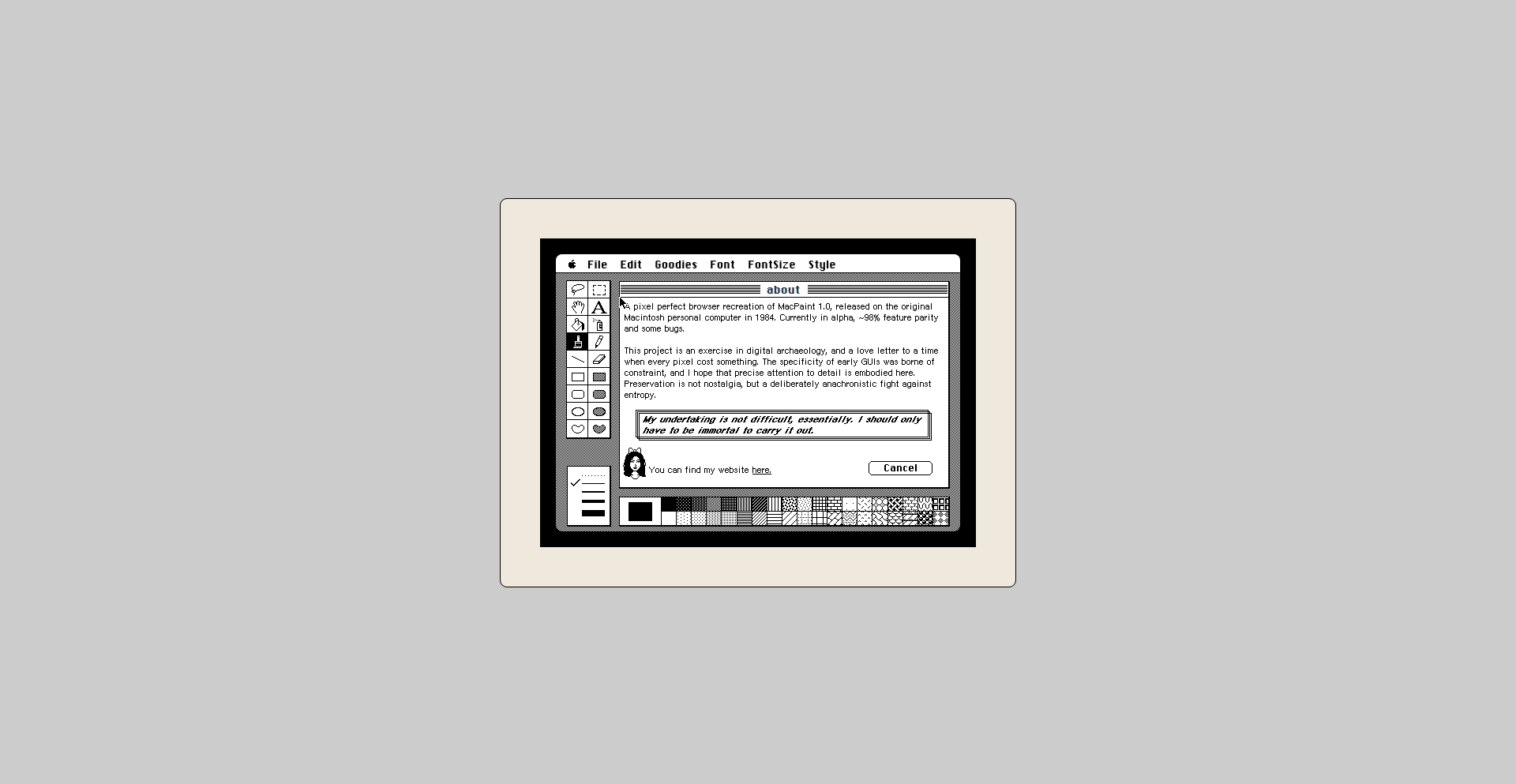

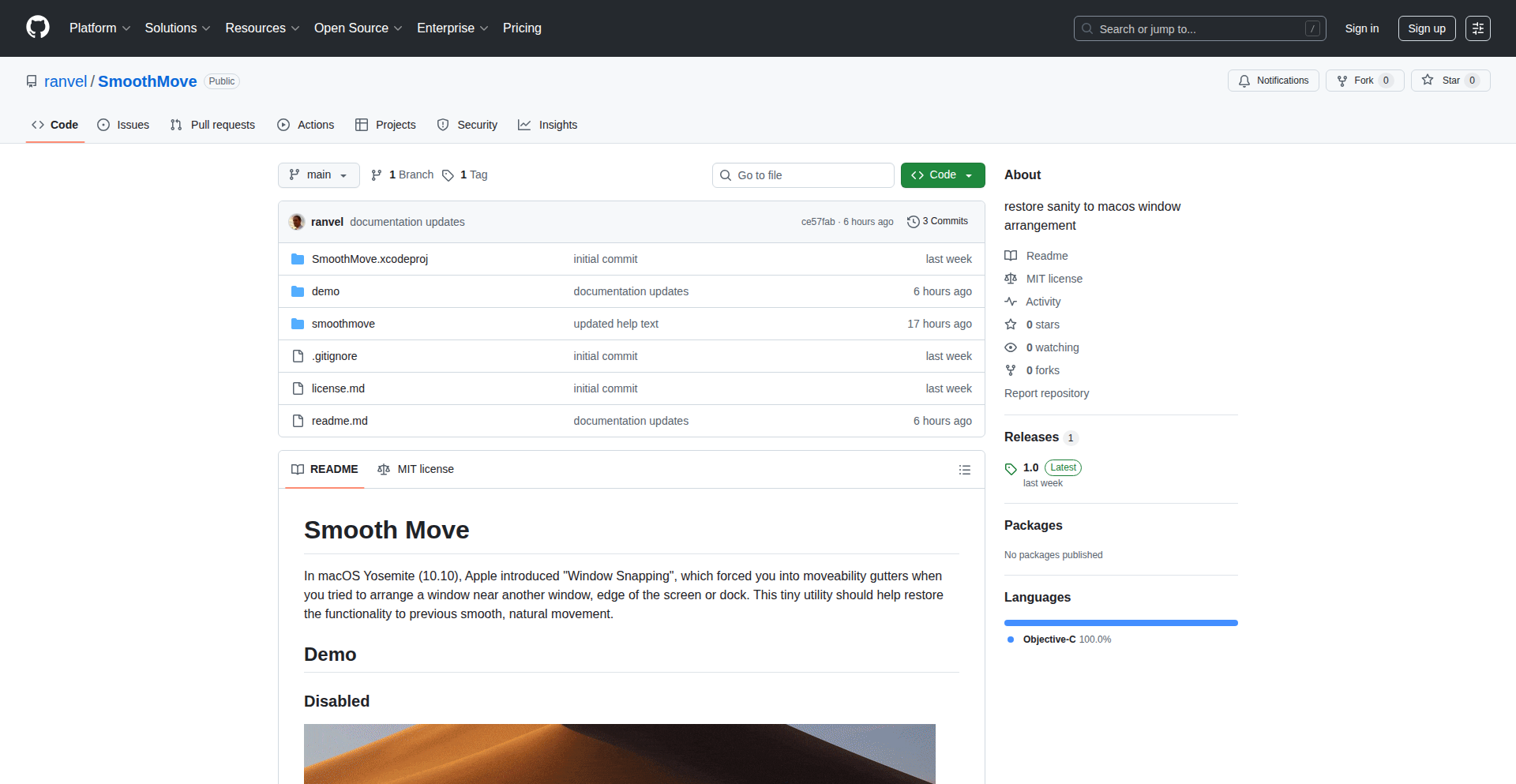

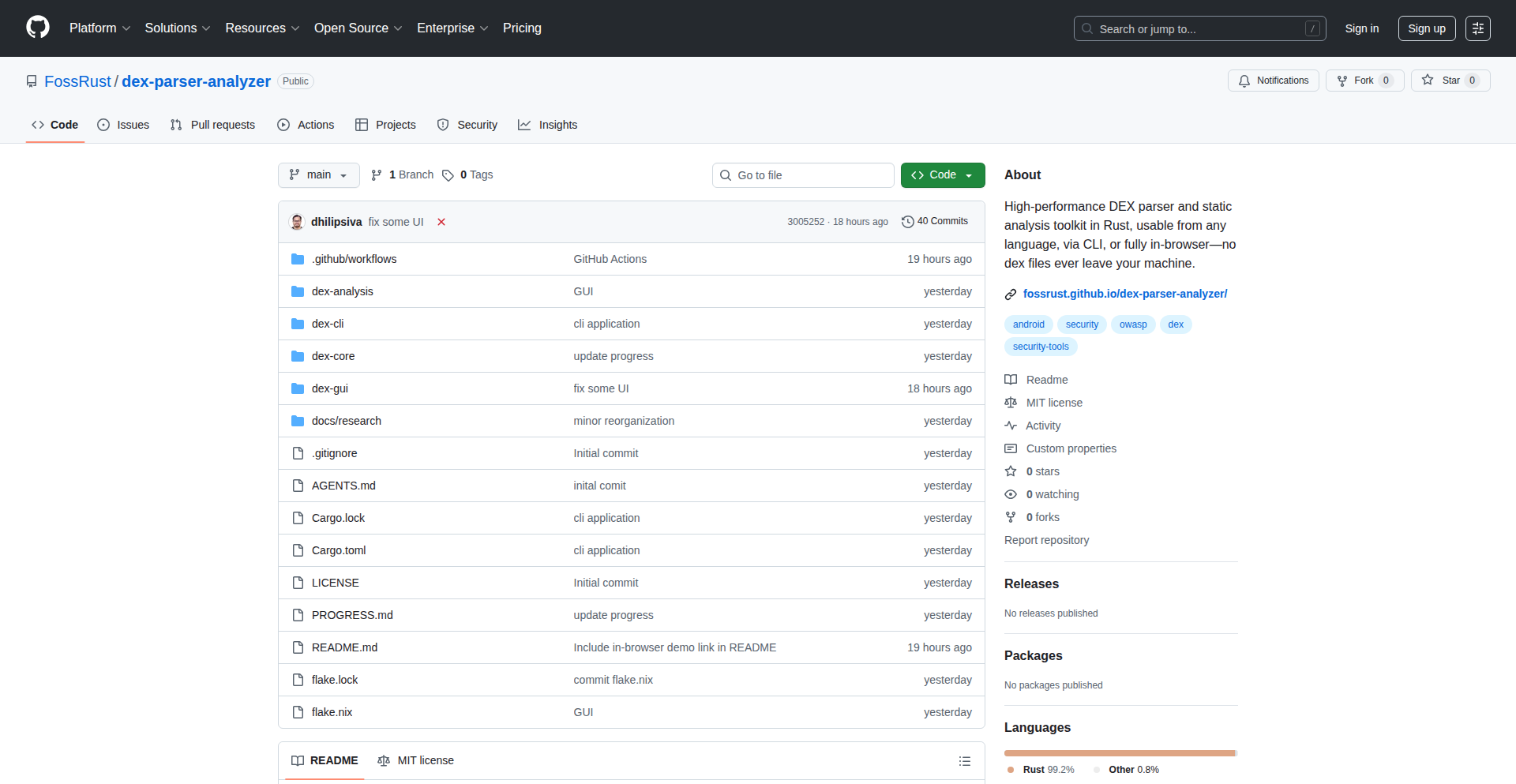

ZenPaint PixelForge

Author

allthreespies

Description

ZenPaint PixelForge is a browser-based, pixel-perfect recreation of the iconic MacPaint application. It meticulously replicates the original software's behavior, including its unique font rendering and shape tools, by delving into its historical source code and emulators. The core innovation lies in achieving extreme fidelity to the original's 1-bit graphics and limited resolution aesthetic, solving the challenge of precise pixel manipulation in a modern web environment. This project offers developers a deep dive into historical graphics rendering techniques and a unique tool for retro art creation.

Popularity

Points 14

Comments 5

What is this product?

ZenPaint PixelForge is a web application that faithfully reconstructs the original MacPaint drawing program. Its technical innovation stems from its dedication to pixel-perfect accuracy. The developer painstakingly studied Bill Atkinson's original QuickDraw source code and emulators to ensure that every detail, from how fonts appeared to the precise behavior of shape tools, matches the vintage MacPaint. This involved overcoming modern browser rendering quirks, such as disabling canvas smoothing and anti-aliasing, to achieve the authentic 1-bit graphics. It's built declaratively using React and employs performance optimizations like a buffer pool and copy-on-write semantics for smooth operation. The value is in preserving and experiencing a piece of digital art history with remarkable fidelity.
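To make the buffer pool and copy-on-write ideas concrete, here is a minimal sketch in Python. It is not ZenPaint PixelForge's code (the project itself is a React web app); the class names `BufferPool` and `Bitmap`, the bit order, and the undo-snapshot use case are all assumptions chosen for illustration.

```python
from __future__ import annotations


class BufferPool:
    """Recycles equally sized bytearrays so edits avoid fresh allocations."""

    def __init__(self, size: int) -> None:
        self.size = size
        self._free: list[bytearray] = []

    def acquire(self, source: bytes | bytearray | None = None) -> bytearray:
        buf = self._free.pop() if self._free else bytearray(self.size)
        # Reused buffers hold stale pixels, so always overwrite the contents.
        buf[:] = source if source is not None else bytes(self.size)
        return buf

    def release(self, buf: bytearray) -> None:
        self._free.append(buf)


class Bitmap:
    """1-bit canvas: 8 pixels per byte, leftmost pixel in the high bit
    (the bit order is an assumption of this sketch)."""

    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self.stride = (width + 7) // 8  # bytes per row
        self.pool = BufferPool(self.stride * height)
        self._buf = self.pool.acquire()
        self._shared = False  # True while a snapshot aliases _buf

    def snapshot(self) -> bytearray:
        """Return the current pixels (e.g. for undo) without copying them."""
        self._shared = True
        return self._buf

    def set_pixel(self, x: int, y: int, black: bool) -> None:
        if self._shared:
            # Copy-on-write: duplicate only when a shared buffer is modified.
            self._buf = self.pool.acquire(self._buf)
            self._shared = False
        index, mask = y * self.stride + x // 8, 0x80 >> (x % 8)
        if black:
            self._buf[index] |= mask
        else:
            self._buf[index] &= ~mask & 0xFF

    def get_pixel(self, x: int, y: int) -> bool:
        index, mask = y * self.stride + x // 8, 0x80 >> (x % 8)
        return bool(self._buf[index] & mask)
```

Taking a snapshot costs nothing until the next write, and a snapshot handed back through `pool.release()` is reused for a later copy instead of being reallocated. Callers are expected to treat a snapshot as read-only.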

How to use it?

Developers can use ZenPaint PixelForge directly in their web browser as a drawing tool, experiencing the authentic MacPaint interface and capabilities. For those interested in the technical aspects, the project serves as an educational resource. Developers can explore the source code to understand how complex historical graphics rendering, like the precise font pipeline of the original Mac, was achieved. They can learn about techniques for avoiding modern browser smoothing to maintain a strictly pixelated look. Furthermore, the ability to share artwork via links means developers can easily collaborate or showcase their creations, integrating a piece of retro art creation into their workflow or projects.

Product Core Function

· Pixel-perfect rendering engine: Achieves absolute fidelity to the original MacPaint's 1-bit graphics and limited resolution, enabling creators to experience and produce art exactly as it would have appeared on an old Mac. This means every pixel is exactly where it should be, no fuzziness allowed.

· Authentic QuickDraw emulation: Replicates the specific behaviors and quirks of the original Mac's drawing system, including intricate font rendering and shape tool operations, providing a historically accurate and deeply nostalgic drawing experience. This is about capturing the 'spirit' and functionality of the original code.

· Declarative UI with React: Built using modern web technologies for a responsive and maintainable interface, while still achieving vintage visual accuracy. This offers a blend of old-school aesthetics with modern development practices.

· Performance optimizations: Utilizes techniques like buffer pooling and copy-on-write semantics to ensure a smooth and responsive drawing experience, even with complex artwork, making the retro experience enjoyable and practical.

· Shareable artwork links: Allows users to save and share their creations by generating unique URLs, facilitating collaboration and the distribution of retro-styled digital art within the community.

Product Usage Case

· Retro game asset creation: A game developer could use ZenPaint PixelForge to create pixel art assets for a retro-themed game, ensuring the art style perfectly matches the era they are trying to evoke. This solves the problem of achieving authentic retro visuals in a modern development pipeline.

· Historical digital art exploration: Art students or digital art historians can use this tool to understand the constraints and creative possibilities of early digital art tools, gaining hands-on experience with a historically significant application. It provides a tangible way to study digital art history.

· Educational tool for graphics rendering: Computer science students or graphics enthusiasts can study the project's source code to learn about low-level graphics pipeline quirks, font rendering challenges, and techniques for bypassing modern browser smoothing to achieve specific visual styles. This offers practical lessons in graphics programming.

· Nostalgic personal projects: Individuals who grew up with MacPaint can use ZenPaint PixelForge to recreate their old digital artwork or simply relive the experience, finding joy and inspiration in a familiar, albeit digitally preserved, environment. This fulfills a desire for personal connection with past technology.

2

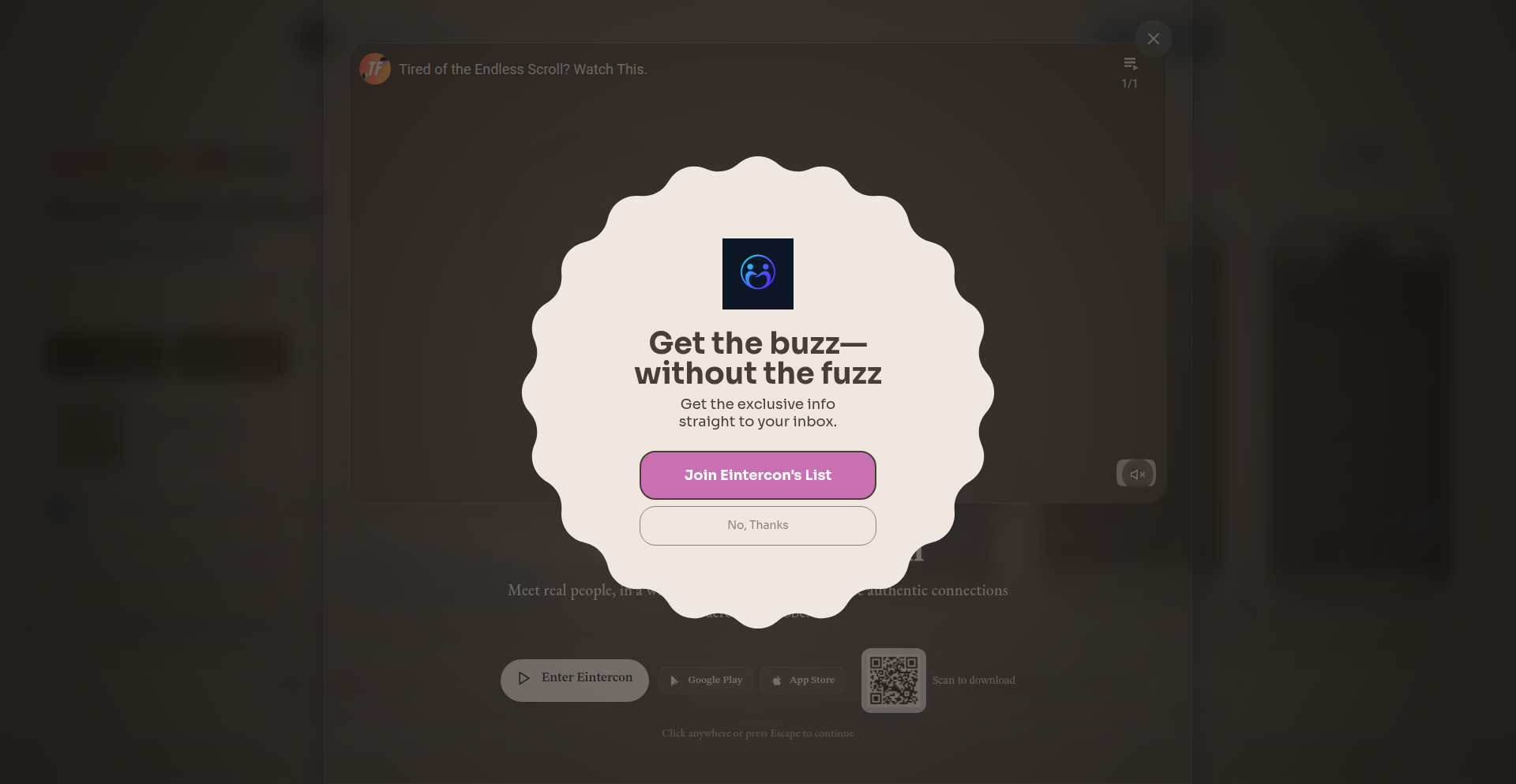

Eintercon

Author

abilafredkb

Description

Eintercon is a social network designed to foster genuine human connection by actively discouraging growth and engagement metrics. It challenges the prevailing social media model by expiring connections after 48 hours, eliminating follower counts and feeds, and intentionally matching users with people from different countries. The core innovation lies in its anti-growth philosophy, aiming to combat the gamification of relationships and promote authentic interaction.

Popularity

Points 4

Comments 7

What is this product?

Eintercon is a novel social application that flips the script on traditional social media. Instead of encouraging endless connections and engagement, it's built on the principle of ephemeral, meaningful interaction. The technology operates on a server that tracks connection lifespans and facilitates random, cross-cultural pairings. When you connect with someone, your connection is limited to 48 hours. After that, the connection dissolves, and you're encouraged to connect with someone new. This is a deliberate technical choice to break free from addiction loops and the pursuit of vanity metrics like follower counts and likes. The system's algorithm is designed to actively try and match you with individuals outside your geographical region, promoting exposure to diverse perspectives. It strictly prohibits ads, data mining, and any form of engagement optimization. This approach aims to create a space where the focus is on the quality of conversation, not the quantity of connections.
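The two mechanics described above, a 48-hour connection lifetime and random pairing across countries, can be sketched as follows. This is an illustration only, not Eintercon's implementation: `User`, `Connection`, `pick_match`, and `prune_expired` are hypothetical names, and the country field is assumed to be a simple code string.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

CONNECTION_LIFETIME = timedelta(hours=48)


@dataclass(frozen=True)
class User:
    name: str
    country: str  # hypothetical: a country code such as "BR" or "JP"


@dataclass(frozen=True)
class Connection:
    a: User
    b: User
    created_at: datetime

    def is_active(self, now: datetime) -> bool:
        # A connection dissolves once 48 hours have elapsed.
        return now - self.created_at < CONNECTION_LIFETIME


def pick_match(user: User, waiting: list[User], rng: random.Random) -> User | None:
    """Randomly pick a waiting user from a different country, if one exists."""
    candidates = [u for u in waiting if u.country != user.country]
    return rng.choice(candidates) if candidates else None


def prune_expired(connections: list[Connection], now: datetime) -> list[Connection]:
    """Keep only the connections that are still inside their 48-hour window."""
    return [c for c in connections if c.is_active(now)]
```

Passing the clock (`now`) and the random generator in as arguments keeps the expiry rule and the matching rule easy to test in isolation.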

How to use it?

Developers can use Eintercon by simply visiting the website eintercon.com and signing up. The platform is designed for individual users seeking a different kind of social experience. For developers interested in the underlying principles, the project serves as an inspiration for building applications that prioritize user well-being over engagement. You can apply the concept in your own projects by considering how to make connections expire, promote serendipitous encounters, or de-emphasize growth metrics. While there isn't a direct API for developers to integrate with Eintercon's core features, the conceptual framework can inform the design of new social tools or community platforms that aim for deeper, more authentic interactions. Think of it as a blueprint for building digital spaces that respect your time and attention, rather than trying to capture it.

Product Core Function

· Ephemeral Connections: Connections automatically expire after 48 hours, fostering a sense of urgency for meaningful conversation and preventing the accumulation of stale, inactive relationships. This means you get to focus on having a real chat while it lasts, rather than accumulating digital clutter.

· Cross-Cultural Matching Algorithm: The system actively tries to connect you with users from different countries, broadening your horizons and exposing you to diverse perspectives. This helps you break out of your echo chamber and learn about the world from new viewpoints.

· No Follower/Like Counts: Eintercon deliberately omits public metrics like follower counts and likes, removing the pressure to perform for social validation. You can just be yourself without worrying about how many people are watching or judging your online persona.

· Ad-Free and Data-Privacy Focused: The platform operates without advertisements and does not engage in data mining, ensuring a private and uninterrupted user experience. Your conversations and data are yours, not a product to be sold.

· Intentional Anti-Growth Design: The entire architecture is built to resist exponential growth, encouraging deeper, more personal interactions over mass appeal. This means the focus is on quality over quantity, leading to more impactful exchanges.

Product Usage Case

· A user feeling overwhelmed by a large network of superficial online acquaintances can use Eintercon to experience genuine, albeit temporary, connections with individuals they would otherwise never meet. This addresses the problem of 'social media fatigue' by offering a refreshing alternative.

· A developer exploring alternative social media models can study Eintercon's design to understand how to build platforms that prioritize mental well-being and authentic human interaction over engagement. It's a case study in how to intentionally disrupt the status quo of digital communication.

· An individual looking to practice speaking a foreign language in a low-pressure environment can connect with native speakers through Eintercon's cross-cultural matching. The 48-hour limit makes it a contained and less intimidating way to improve language skills.

3

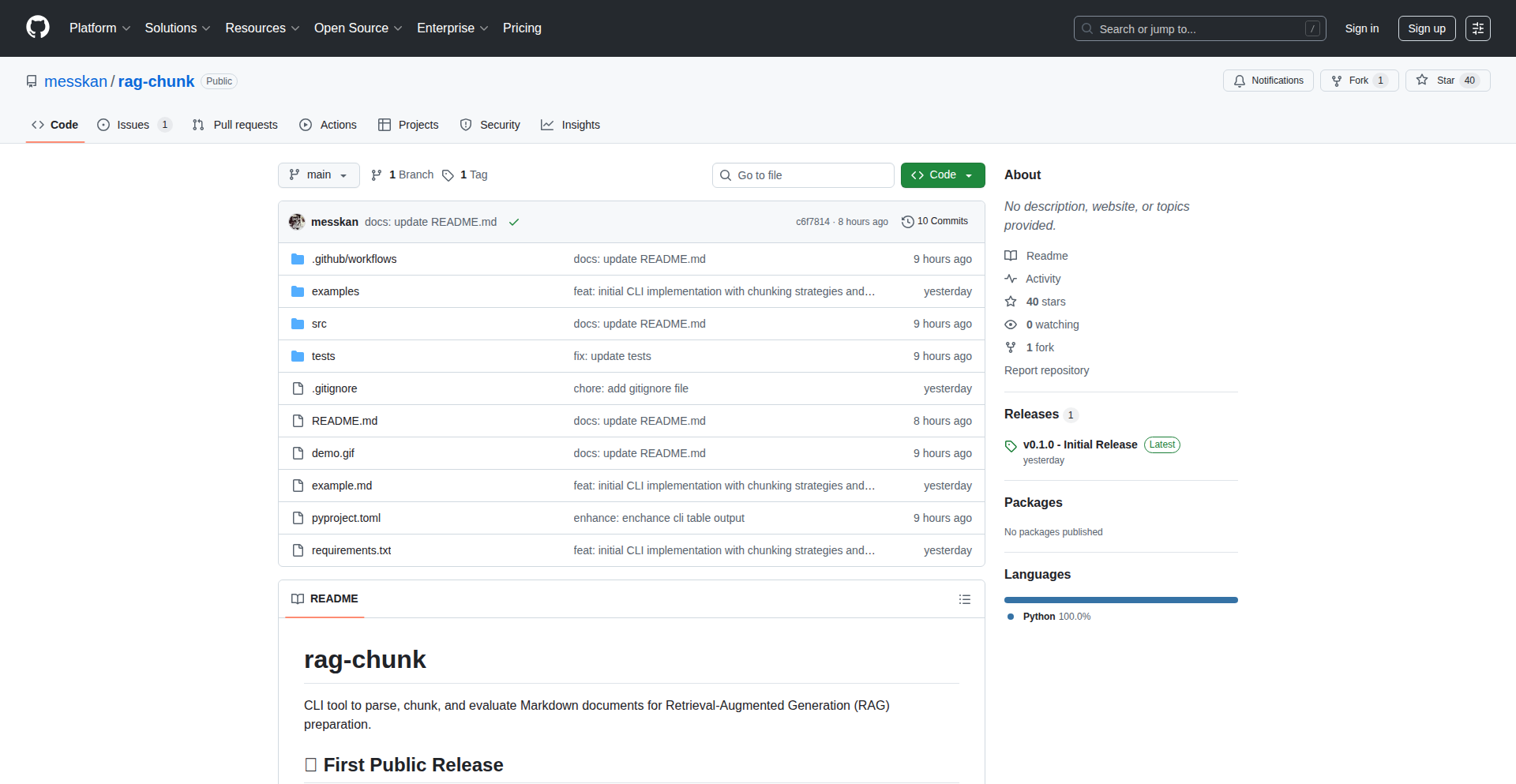

RAG-Chunk CLI: The Chunking Strategy Tester

Author

messkan

Description

RAG-Chunk CLI is a command-line tool designed to help developers easily test and evaluate different chunking strategies for Retrieval Augmented Generation (RAG) systems. It addresses the common challenge of finding the optimal way to break down large documents into smaller, manageable pieces, which is crucial for effective RAG performance. The innovation lies in its direct CLI interface, allowing for rapid experimentation without complex coding setup, making it invaluable for fine-tuning RAG pipelines.

Popularity

Points 5

Comments 3

What is this product?

This project is a command-line interface (CLI) tool that simplifies the process of testing various methods for splitting large text documents into smaller chunks. In the context of RAG (Retrieval-Augmented Generation), which uses external knowledge to improve AI responses, how you divide your documents (chunking) significantly impacts how well the AI can find and use relevant information. RAG-Chunk CLI offers a direct way to experiment with different chunk sizes, overlaps, and methods (like sentence splitting or fixed-size splitting) and immediately see the results, making it easier to choose the best strategy for your specific data and AI model.

How to use it?

Developers can use RAG-Chunk CLI directly from their terminal. After installing the tool, they can point it to a text file or a directory of files. Then, they can specify different chunking parameters (e.g., chunk size, overlap, separator) and run the tool. The CLI will output the resulting chunks, allowing developers to visually inspect them or feed them into a RAG pipeline for quantitative evaluation. This eliminates the need to write custom scripts for every chunking test, speeding up development significantly.
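For readers unfamiliar with the parameters mentioned above, the following sketch shows what "chunk size" and "overlap" mean for fixed-size splitting, alongside a simple sentence-based alternative. It does not reproduce RAG-Chunk CLI's code or command-line flags; the function names and the character-based sizing are assumptions made for illustration.

```python
from __future__ import annotations

import re


def fixed_size_chunks(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of `size` characters that share `overlap` characters."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in the range [0, size)")
    chunks: list[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += size - overlap
    return chunks


def sentence_chunks(text: str, max_chars: int) -> list[str]:
    """Group whole sentences into chunks of at most `max_chars` where possible."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

For example, `fixed_size_chunks("abcdefghij", size=4, overlap=2)` yields four chunks in which each one repeats the last two characters of its predecessor. A single sentence longer than `max_chars` is kept whole rather than cut mid-sentence, which is one of several reasonable policies.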

Product Core Function

· Text File Input: Accepts any text-based document, enabling testing with diverse data sources. The value is that it works with your existing documents without needing conversion, saving setup time.

· Chunking Strategy Configuration: Allows users to define various chunking parameters such as chunk size, overlap between chunks, and specific delimiters. This is valuable because it gives granular control to experiment with different approaches, directly impacting the quality of information retrieved by RAG systems.

· Multiple Chunking Methods: Supports different ways to split text, like fixed-size chunks, sentence-based splitting, or paragraph-based splitting. The value here is flexibility, as different data types benefit from different splitting logic, leading to better RAG performance.

· Output Visualization and Export: Provides a clear view of the generated chunks and the option to export them. This is useful for quick manual review and for integrating the chunked data into further processing steps, making the experimental results immediately actionable.

· Performance Benchmarking Hooks (potential): While not explicitly stated, the design allows for future integration with performance metrics. This future value means developers can potentially measure how different chunking strategies affect retrieval accuracy and response quality directly through the tool or its extensions.

Product Usage Case

· Optimizing a customer support knowledge base for a chatbot: A developer can use RAG-Chunk CLI to test how splitting support articles by paragraphs versus fixed-size chunks affects the chatbot's ability to find answers to common user queries. This helps ensure the chatbot provides accurate and relevant responses.

· Fine-tuning a document summarization RAG system: A researcher can experiment with different chunk sizes and overlaps to find the optimal way to break down lengthy research papers for a RAG system that summarizes them. This allows the system to capture the most critical information without losing context.

· Developing a legal document analysis tool: A legal tech developer can use RAG-Chunk CLI to test how splitting legal contracts by clauses or sections impacts the accuracy of a RAG system designed to extract specific legal information, ensuring the tool can reliably identify key data points.

4

Planetary Substrate: AI-Powered Knowledge Navigator

Author

bkrauth

Description

This project is an experimental prototype leveraging GPT-4o to create a dramatically enhanced Wikipedia experience. It aims to make accessing and understanding information 10x to 100x better by providing a 'nervous system interface' that intelligently connects and synthesizes knowledge.

Popularity

Points 5

Comments 2

What is this product?

This project is an AI-driven system designed to revolutionize how we interact with vast knowledge bases like Wikipedia. Instead of static pages, it uses advanced AI (GPT-4o) to build a dynamic and interconnected 'substrate' of information. Imagine a Wikipedia that not only presents facts but actively understands relationships between concepts, answers complex queries with synthesized insights, and proactively guides you through related topics. The core innovation lies in creating a richer, more intuitive information architecture that mimics a biological nervous system, allowing for deeper comprehension and faster discovery.

How to use it?

Developers can integrate this system into their applications to provide AI-powered knowledge retrieval and exploration. For instance, a developer could embed this into a research tool, an educational platform, or even a personal assistant. The 'nervous system interface' allows for natural language querying, meaning users can ask questions in plain English and receive comprehensive, contextually relevant answers, complete with links to supporting information and explanations of how different pieces of knowledge connect. The system is designed for extensibility, allowing developers to build custom interfaces and functionalities on top of the core AI knowledge graph.

Product Core Function

· AI-powered knowledge synthesis: This function uses GPT-4o to not just retrieve information but to understand, connect, and explain relationships between disparate pieces of knowledge, offering users a deeper understanding of complex topics. This means you get answers that are not just factually correct but also insightful and comprehensive.

· Natural language querying: Users can ask questions in plain English, just like talking to a knowledgeable person. The AI interprets these queries and provides nuanced answers, eliminating the need for precise keyword searching. This makes information discovery much more accessible and intuitive.

· Dynamic knowledge exploration: Instead of a linear browsing experience, the system creates an interconnected web of information. This allows users to effortlessly navigate through related concepts and discover new insights they might not have found otherwise. It’s like having a personalized guide through the entire knowledge universe.

· Contextual understanding: The AI maintains context throughout a conversation or exploration session, allowing for follow-up questions and deeper dives into specific areas without losing track of the original query. This ensures that the information you receive is always relevant to your ongoing exploration.

· Proactive information surfacing: The system can anticipate user needs by suggesting related topics or providing background information relevant to the current query, leading to a more efficient and enriching learning experience. It helps you learn more, faster, by showing you what you might need to know next.

Product Usage Case

· Imagine a student researching a historical event. Instead of just reading a Wikipedia page, they can ask the system, 'What were the long-term economic impacts of this event, and how did it influence subsequent political movements?' The system would synthesize information from various sources, explain the economic theories involved, and draw connections to later political developments, providing a rich, multi-faceted understanding.

· A developer building a chatbot for customer support could use this to create a knowledge base that understands complex user inquiries. The chatbot could then provide not just direct answers but also related troubleshooting steps and explanations of how different features interact, improving user satisfaction and reducing support load.

· Researchers can use this to quickly identify trends and connections across vast datasets or academic papers. By querying the system with complex questions about interdisciplinary topics, they can accelerate hypothesis generation and discover novel research avenues that might be missed through traditional methods.

5

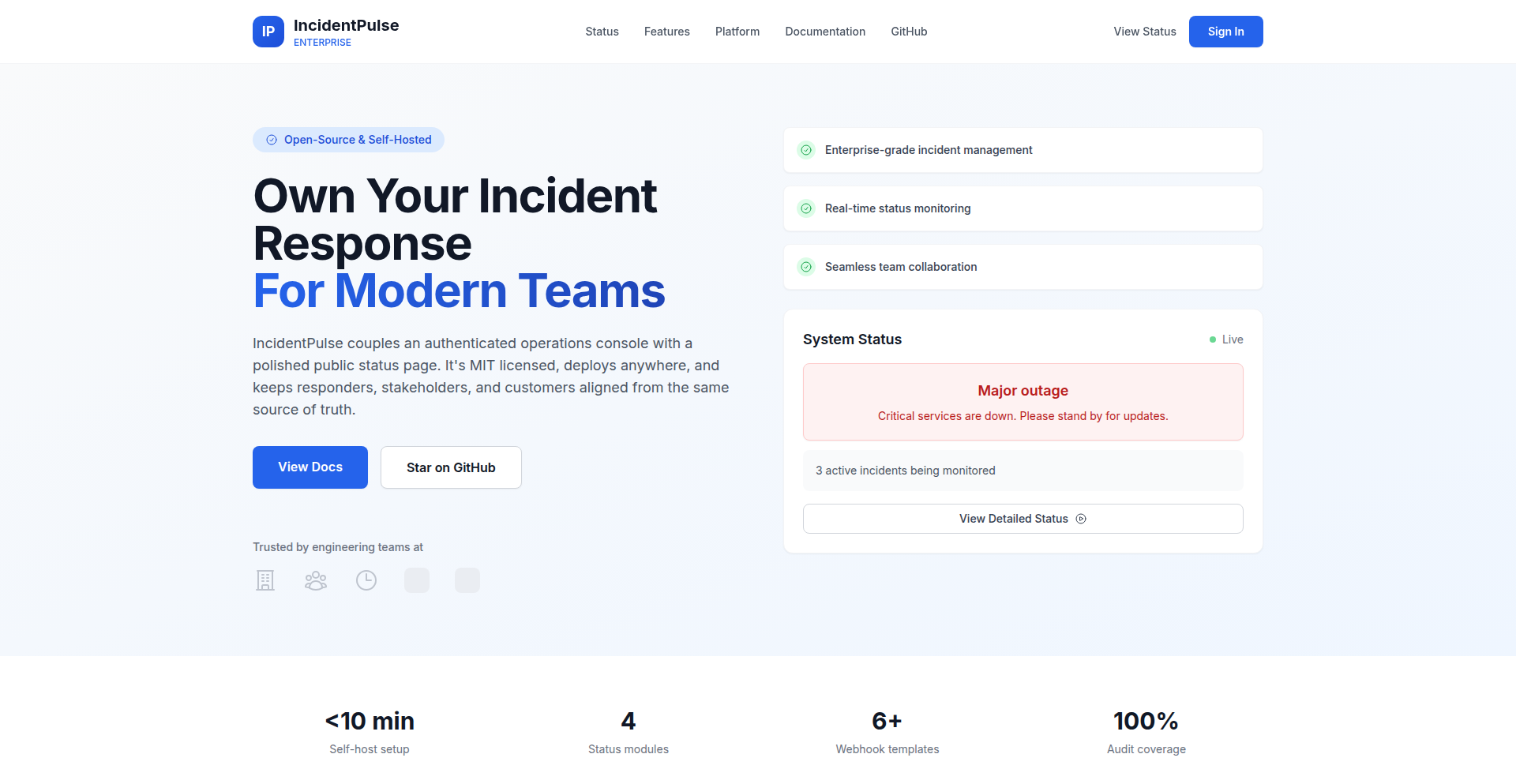

IncidentPulse: Real-time Incident Command Center

Author

bhoyee

Description

IncidentPulse is a self-hosted tool designed to streamline incident management, born from the frustration of chaotic outages. It provides a clear, centralized dashboard to track ongoing incidents, assign responders, and publish real-time updates, preventing the classic 'Slack-on-fire, status-page-outdated' scenario. Its technical innovation lies in its minimalist, focused approach to a common pain point, offering a developer-centric solution to a critical operational problem.

Popularity

Points 5

Comments 1

What is this product?

IncidentPulse is a self-hosted application that acts as a central hub for managing and communicating during technical incidents. Instead of relying on scattered Slack messages or outdated status pages, it offers a dedicated interface to log an incident, see who's working on it, and share crucial updates with stakeholders. The technical innovation here is in its simplicity and focus: it cuts through the noise of broader incident management platforms to provide just the essential tools for rapid response and communication. This approach prioritizes speed and clarity when every second counts during an outage. The core idea is to consolidate critical incident data into one accessible place, making it easier to coordinate efforts and inform everyone involved.

How to use it?

Developers can use IncidentPulse by self-hosting the application on their own infrastructure. This means they download the code and run it on their servers, giving them full control over their data and its availability. Once set up, they can access a clean web interface to report new incidents. They can then assign team members as responders and post live updates as the situation evolves. For integration, IncidentPulse supports webhooks, a mechanism that lets other systems send information to IncidentPulse automatically. Monitoring systems, for example, can trigger an incident report in IncidentPulse when a problem is detected, or update the incident status based on specific events. This saves manual effort and ensures timely information flow.
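As a rough illustration of the webhook flow described above, the sketch below turns a monitoring alert into a new incident or an update to an existing one. The payload fields (`alert_id`, `title`, `severity`, `message`, `resolved`), the severity names, and the `IncidentBoard` class are hypothetical; IncidentPulse's real webhook schema is not given in the source.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

SEVERITIES = ("low", "medium", "high", "critical")  # assumed levels


@dataclass
class Incident:
    title: str
    severity: str
    status: str = "investigating"
    responders: list[str] = field(default_factory=list)
    updates: list[tuple[datetime, str]] = field(default_factory=list)

    def post_update(self, message: str) -> None:
        self.updates.append((datetime.now(timezone.utc), message))


class IncidentBoard:
    """In-memory store keyed by the alert ID sent by the monitoring tool."""

    def __init__(self) -> None:
        self._by_alert: dict[str, Incident] = {}

    def handle_webhook(self, body: bytes) -> Incident:
        payload = json.loads(body)
        severity = payload.get("severity", "medium")
        if severity not in SEVERITIES:
            raise ValueError(f"unknown severity: {severity!r}")
        incident = self._by_alert.get(payload["alert_id"])
        if incident is None:
            # First alert with this ID opens a new incident.
            incident = Incident(title=payload["title"], severity=severity)
            self._by_alert[payload["alert_id"]] = incident
        if payload.get("resolved"):
            incident.status = "resolved"
        if "message" in payload:
            incident.post_update(payload["message"])
        return incident
```

Keying incidents on an alert identifier means repeated alerts from the same monitor update one incident instead of opening duplicates, which is the behavior the status-update scenario implies.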

Product Core Function

· Incident Tracking: Log and categorize incidents with descriptions and severity levels. This is valuable because it provides a historical record and clear overview of all ongoing and past issues, allowing teams to learn from them.

· Responder Assignment: Assign specific team members to take ownership of an incident. This ensures accountability and efficient task distribution, making sure someone is actively working on a solution.

· Live Updates: Post real-time updates to the incident dashboard, accessible to both internal teams and potentially external stakeholders. This keeps everyone informed and reduces the need for constant manual communication, minimizing confusion during stressful situations.

· Self-Hosting Capability: Deploy the application on your own servers for maximum control and data privacy. This is crucial for organizations with strict security or compliance requirements, ensuring sensitive incident data remains within their network.

· Webhook Integration: Receive automated incident alerts from external systems and trigger updates. This allows for a more proactive response by automatically initiating incident tracking as soon as an issue is detected by monitoring tools.

Product Usage Case

· Scenario: A production database starts experiencing high latency, impacting user experience. How to use: A developer can immediately create a new incident in IncidentPulse, marking it as 'critical'. They can then assign themselves and a database administrator as responders. As they investigate, they can post updates like 'Investigating query performance' or 'Rollback initiated' directly to the dashboard. This immediately informs the rest of the team and any stakeholders about the progress, preventing them from flooding the team with 'what's happening?' messages.

· Scenario: An unexpected error starts appearing in the application logs, indicating a potential widespread issue. How to use: If IncidentPulse is integrated with a monitoring tool via webhooks, the monitoring tool can automatically create a new incident in IncidentPulse when this error threshold is reached. This ensures that incident management starts the moment a problem is detected, even if no human is actively watching the logs, thus speeding up the response time.

· Scenario: A company needs to provide clear and consistent updates to their customers during a service disruption without overwhelming their support team. How to use: IncidentPulse can be used as the single source of truth for internal and external communication. While the tool itself might be self-hosted for internal use, the public-facing updates posted can be mirrored to a public status page or communicated through other channels, ensuring all customers receive the same, accurate information directly from the incident command center.

6

Pdsink: USB-PD 3.2 Sink Stack for Embedded Ingenuity

Author

pu

Description

Pdsink is a novel USB Power Delivery 3.2 sink stack designed for embedded devices. It allows these small, specialized computers to intelligently negotiate and receive power from USB-C sources, ensuring efficient and safe power delivery. The innovation lies in its robust and compact implementation of the complex PD 3.2 protocol, making advanced power management accessible for resource-constrained embedded systems.

Popularity

Points 5

Comments 1

What is this product?

Pdsink is a software library (a 'stack') that enables embedded devices to understand and utilize the USB Power Delivery (USB-PD) 3.2 standard. Imagine your small electronic gadget, like a smart sensor or a tiny controller, needing power. Instead of a simple barrel jack, it can now use a standard USB-C port to talk to a power source (like a laptop charger or a power bank) and figure out exactly how much voltage and current it needs, and how to receive it safely. The key innovation is making the complicated USB-PD 3.2 protocol, which is often seen in bigger devices, work efficiently and reliably on devices with limited processing power and memory. This means your embedded device can benefit from the flexibility and intelligence of modern USB-C power without needing a full-blown computer.

How to use it?

Developers integrate Pdsink into their embedded device firmware. When the device is plugged into a USB-C power source, Pdsink handles the communication. The power source advertises the power profiles it can supply, and Pdsink requests the one that best fits the device's requirements (e.g., 5 V at 1 A). Once the source accepts the request, Pdsink ensures that the power received is within safe limits and matches the device's needs. Pdsink can be included as a module in an embedded operating system or incorporated directly into the device's main firmware loop. It's designed to be lightweight and efficient for microcontrollers.
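The negotiation step can be sketched in a few lines. The example below decodes fixed-supply Power Data Objects (PDOs) from a source's advertised capabilities and picks one to request. It is written in Python for readability and is not Pdsink's API; the names `FixedPdo`, `decode_fixed_pdo`, and `choose_pdo` are hypothetical, and the selection policy (highest voltage the device tolerates that still supplies enough current) is just one possible choice.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FixedPdo:
    position: int        # 1-based object position, echoed back in the request
    voltage_mv: int
    max_current_ma: int


def decode_fixed_pdo(raw: int, position: int) -> FixedPdo | None:
    """Decode a 32-bit fixed-supply PDO; return None for other supply types."""
    if (raw >> 30) & 0b11 != 0b00:  # bits 31..30 select the supply type
        return None
    voltage_mv = ((raw >> 10) & 0x3FF) * 50   # bits 19..10, 50 mV units
    max_current_ma = (raw & 0x3FF) * 10       # bits 9..0, 10 mA units
    return FixedPdo(position, voltage_mv, max_current_ma)


def choose_pdo(source_caps: list[int], max_voltage_mv: int,
               needed_current_ma: int) -> FixedPdo | None:
    """Pick the highest-voltage fixed PDO within the device's limits."""
    decoded = (decode_fixed_pdo(raw, i) for i, raw in enumerate(source_caps, start=1))
    usable = [
        pdo for pdo in decoded
        if pdo is not None
        and pdo.voltage_mv <= max_voltage_mv
        and pdo.max_current_ma >= needed_current_ma
    ]
    return max(usable, key=lambda pdo: pdo.voltage_mv, default=None)
```

With a source advertising 5 V/3 A, 9 V/3 A, and 20 V/2.25 A, a device that tolerates up to 12 V and needs 1 A would request the 9 V profile at position 2. A real sink stack also handles message framing, timers, and programmable supplies, none of which are shown here.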

Product Core Function

· USB-PD 3.2 Protocol Implementation: Enables embedded devices to communicate using the latest USB Power Delivery standard, allowing for negotiation of various voltage and current levels. This is valuable for optimizing power consumption and enabling higher power capabilities in small devices.

· Power Negotiation Engine: Intelligently determines the optimal power contract (voltage and current) with the USB-C power source based on the embedded device's capabilities and requirements. This ensures efficient power utilization and prevents over-powering or under-powering.

· Source Capabilities Discovery: Allows the embedded device to query the connected USB-C power source for its available power profiles. This is crucial for selecting the best power option for the device.

· Sink Role Management: Manages the device's role as a power consumer, ensuring that it only draws the agreed-upon power and adheres to safety protocols. This protects the device and the power source.

· Error Handling and Safety Features: Implements robust error checking and safety mechanisms to prevent damage to the embedded device or the power source during power negotiation. This provides peace of mind for developers and end-users.

· Compact and Efficient Design: Optimized for embedded systems with limited resources (CPU, RAM), making advanced USB-PD capabilities accessible for a wider range of microcontrollers and small form-factor devices. This lowers the barrier to entry for advanced power management.

Product Usage Case

· Smart Home Sensors: A battery-powered smart temperature sensor can use Pdsink to automatically negotiate charging when plugged into a USB-C wall adapter, eliminating the need for dedicated charging ports and cables. This simplifies design and improves user convenience.

· IoT Gateways: A compact IoT gateway device could use Pdsink to receive power from a nearby laptop's USB-C port, allowing it to operate seamlessly without a separate power adapter. This reduces clutter and simplifies deployment in various environments.

· Wearable Devices: A sophisticated wearable device could leverage Pdsink to draw more power for faster charging or to support more demanding features from a standard USB-C charger, improving user experience with quicker turnarounds.

· Industrial Control Modules: Small, embedded industrial control modules could use Pdsink to accept power from standard industrial USB-C power supplies, standardizing power delivery and reducing the need for specialized power bricks in factory settings. This enhances maintainability and reduces costs.

· Educational Electronics Projects: Students building advanced embedded projects can use Pdsink to easily integrate intelligent power management with USB-C, allowing their creations to be powered by common chargers and enabling more complex functionalities.

7

1PwnedGuardian

Author

peteski22

Description

A Python script that checks your 1Password logins against the Have I Been Pwned API. It helps you identify which of your 1Password entries might be compromised based on known data breaches. It is aimed at the common case where one email address is used with different passwords across many sites, and it proactively reveals which of those logins may be exposed.

Popularity

Points 2

Comments 3

What is this product?

1PwnedGuardian is a tool designed to enhance your digital security by cross-referencing your 1Password vault with publicly available breach data. It leverages the Have I Been Pwned (HIBP) API, which aggregates information from numerous data breaches. The innovation lies in its ability to automate this crucial check, allowing you to quickly pinpoint specific credentials within your password manager that might be at risk. This saves you the manual effort of checking each breached site individually and provides a targeted approach to securing your online accounts, especially when a breach alert is received.

How to use it?

Developers can use 1PwnedGuardian by cloning the GitHub repository and running the Python script. The script typically requires you to have your 1Password data exported (e.g., as a CSV file) and your HIBP API key. You would then configure the script with the path to your exported data and your API key. The script iterates through your exported login details, sending them to the HIBP API for analysis. The output will highlight any of your logins that have appeared in known data breaches. This can be integrated into personal security workflows or even automated as part of a larger security audit script.
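
The workflow above can be sketched roughly as follows. This is not the project's code: the CSV column names (`Title`, `Username`), the helper names, and the user-agent string are assumptions for illustration. The endpoint and the `hibp-api-key` header are those of the public HIBP v3 breached-account API. The sketch only parses the export and builds the request; it does not send anything over the network.

```python
import csv
import io
import urllib.parse
import urllib.request

HIBP_URL = "https://haveibeenpwned.com/api/v3/breachedaccount/"


def emails_from_export(csv_text):
    """Map each email-like username to the titles of the logins that use it.

    Assumes the export has 'Title' and 'Username' columns (hypothetical names).
    """
    by_email = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        username = (row.get("Username") or "").strip().lower()
        if "@" in username:
            by_email.setdefault(username, []).append(row.get("Title", ""))
    return by_email


def build_request(email, api_key):
    """Build (but do not send) an HIBP v3 breached-account request."""
    url = HIBP_URL + urllib.parse.quote(email) + "?truncateResponse=true"
    return urllib.request.Request(
        url,
        headers={"hibp-api-key": api_key, "user-agent": "hibp-export-check-sketch"},
    )


# Made-up export data for the demo
sample_export = """Title,Username,Password
Forum,alice@example.com,hunter2
Shop,Alice@Example.com,correct-horse
Router,admin,admin
"""

grouped = emails_from_export(sample_export)
request = build_request("alice@example.com", api_key="YOUR-API-KEY")
print(grouped)
print(request.full_url)
```

Grouping by email first means each address is looked up once, however many logins share it. Sending the request (for example with `urllib.request.urlopen`) returns a JSON list of breaches for that account, and the API answers with HTTP 404 when the account appears in none. Note that in this sketch the passwords in the export never leave your machine.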

Product Core Function

· Cross-referencing 1Password data with Have I Been Pwned API: This function allows developers to automatically check a large number of their stored credentials against a comprehensive database of known data breaches. The value here is in automating a critical security task, saving significant time and effort, and providing peace of mind by proactively identifying potential risks.

· Identifying potentially compromised logins: The script pinpoints specific user accounts that have been exposed in past breaches. This is valuable because it provides actionable intelligence, enabling users to prioritize changing passwords for the most vulnerable accounts, thus mitigating the risk of credential stuffing attacks.

· Targeted security alerts: When a developer receives an alert about a data breach, this tool helps them quickly determine if their specific login associated with that service might be compromised. This is incredibly useful for rapid response and preventing further unauthorized access.

· Local data processing (via export): By working with exported 1Password data, the tool respects user privacy by not directly accessing the live 1Password vault. The value is in providing a secure and manageable way to perform security checks without granting external access to sensitive credentials.

Product Usage Case

· Scenario: A developer receives an email alert stating that a popular online service they use has been breached. Instead of manually checking if their password for that service is unique and then potentially searching HIBP for other breaches, they can run 1PwnedGuardian with their exported 1Password data. The script quickly tells them if that specific login, or any other within their vault, has been compromised in any known breach. This allows them to immediately change affected passwords and secure their accounts.

· Scenario: A security-conscious developer wants to conduct a regular audit of their digital footprint. They can export their 1Password data periodically and run 1PwnedGuardian. The output helps them identify any 'forgotten' or previously unknown compromised accounts, allowing them to proactively update their passwords and maintain a strong security posture across their online presence.

· Scenario: A developer is building a personal dashboard for managing their online security. They can integrate the logic of 1PwnedGuardian into their dashboard. This would allow them to see a consolidated view of their account security status, with the tool highlighting which of their 1Password entries are at risk, all within a single interface.

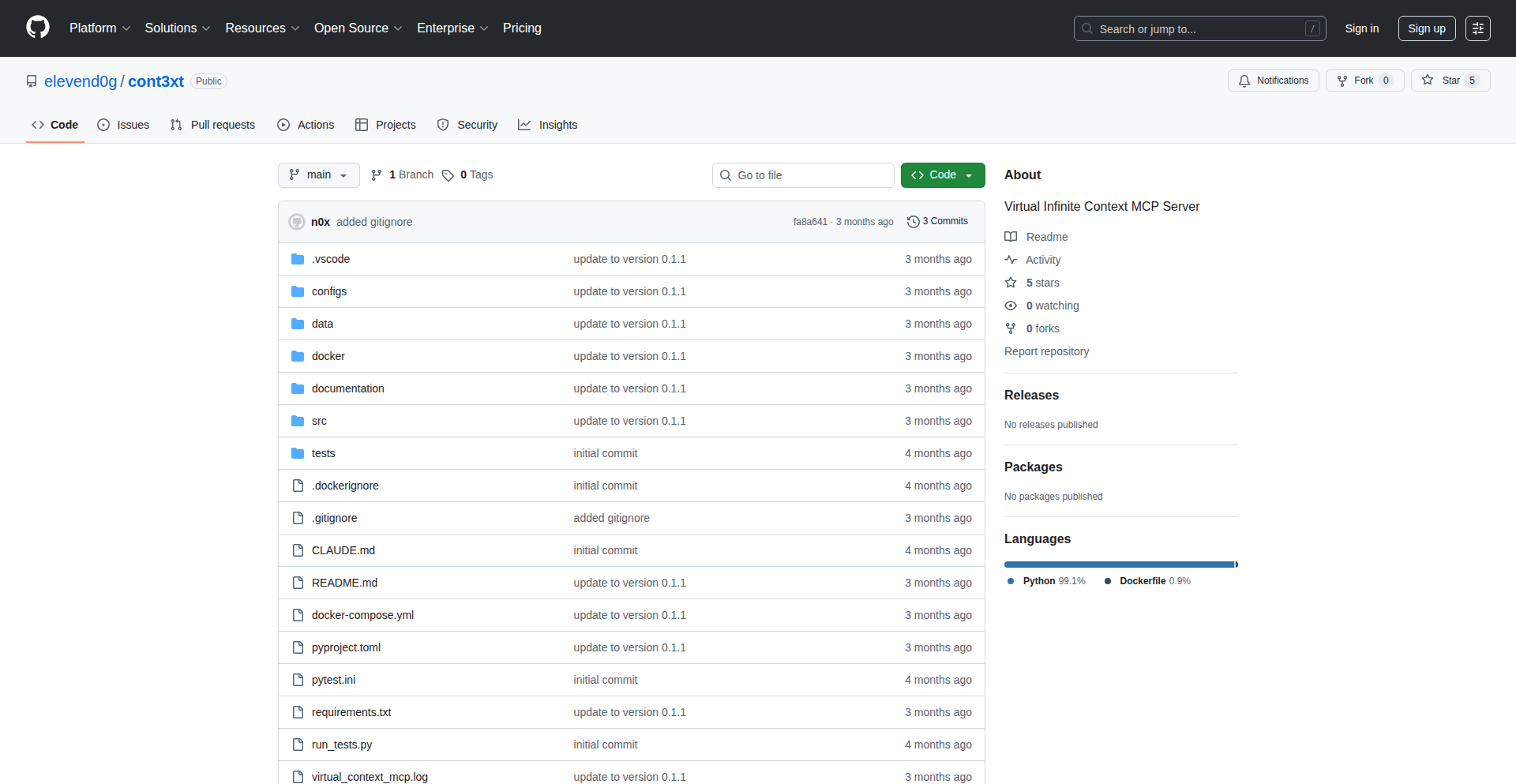

8

RunOS: Instant Kubernetes Fabric

Author

didierbreedt

Description

RunOS is a platform that automates the creation of production-ready Kubernetes clusters in under 10 minutes. It tackles the complexity of setting up essential components like networking, databases, message queues, and AI tooling, offering a controlled, self-hosted Kubernetes experience without extensive manual configuration. The core innovation lies in its agent-based architecture using gRPC streams and KVM virtualization for rapid, secure, and flexible cluster deployment.

Popularity

Points 2

Comments 2

What is this product?

RunOS is a system designed to rapidly deploy fully functional Kubernetes clusters. Manually configuring networking, certificates, monitoring, databases, and storage is a repetitive and complex task that every team ends up redoing; RunOS automates the entire process. It uses two types of software agents: server agents that run on your virtual machines (VMs) and communicate securely with the RunOS backend, and node agents that manage individual Kubernetes nodes. A key technical insight is using gRPC bidirectional streams initiated by the agents. This means the agents connect outward to RunOS, eliminating the need for complex firewall rules or public IP addresses for your cluster infrastructure. To create and manage VMs, RunOS uses KVM (Kernel-based Virtual Machine), which is known for its stability and for supporting technologies like GPU passthrough, important for AI workloads. The entire cluster, including Kubernetes itself, networking (using WireGuard), storage, and popular services like PostgreSQL, Kafka, and Ollama, is provisioned and configured within minutes. So, what does this mean for you? It means you can get a production-ready Kubernetes environment up and running in minutes rather than spending weeks on setup, while avoiding both vendor lock-in and the overwhelming complexity of raw Kubernetes.

How to use it?

Developers can use RunOS by initiating a cluster creation request through the RunOS platform. The backend then intelligently selects available server agents, which in turn use gRPC commands to provision KVM-based VMs. These VMs are quickly bootstrapped with Ubuntu, and the node agents are installed to set up and connect to the Kubernetes cluster using kubeadm and Cilium for networking. A secure WireGuard mesh is established between nodes at the operating system level, providing unified security for both Kubernetes traffic and SSH access. Storage solutions like OpenEBS and Longhorn are also configured. The platform simplifies the deployment of various services like databases (PostgreSQL, MySQL), message queues (Kafka, RabbitMQ), AI tooling (Ollama, LiteLLM), and observability stacks (Grafana, Prometheus) using Helm charts, operators, and custom configurations. RunOS offers a managed option with pre-defined instances and a self-hosted option where you can run the node agents on your own hardware for complete infrastructure control. Integration is straightforward as the platform handles the underlying complexities. So, how can you use this? You can start a new project requiring a Kubernetes backend, quickly set up development and staging environments, or deploy complex microservice architectures without the usual infrastructure headaches.

Product Core Function

· Rapid Kubernetes Cluster Provisioning: Automates the deployment of a fully functional Kubernetes cluster in 5-10 minutes, significantly reducing setup time and effort for developers. This means you can get your application infrastructure ready to deploy much faster.

· Agent-Based Architecture with gRPC Streams: Employs agents that initiate outbound connections to the backend, simplifying network configuration and eliminating the need for public IPs or complex firewall management. This makes deploying clusters in restricted network environments much easier and more secure.

· KVM Virtualization for VM Creation: Leverages KVM for robust and efficient VM provisioning, offering excellent performance and isolation. This provides a stable foundation for your Kubernetes nodes and supports advanced features like GPU passthrough for AI.

· Integrated Service Deployment: Supports one-click installation of over 20 popular services including databases (PostgreSQL, MySQL), message queues (Kafka, RabbitMQ), object storage (MinIO), and AI tooling (Ollama, LiteLLM). This allows you to quickly build complex application stacks without manually integrating each component.

· OS-Level WireGuard Networking: Implements WireGuard at the operating system level for secure and unified communication between nodes, also securing SSH access. This offers enhanced security and simplifies multi-cluster connectivity, ensuring your cluster's communication is always protected.

· Automated Compatibility Management: Handles the complex task of maintaining compatibility between numerous services and Kubernetes versions, reducing the risk of configuration conflicts and deployment failures. This saves you from the 'version hell' often encountered in complex deployments.
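
As a rough picture of what an OS-level WireGuard mesh involves, the Python sketch below renders a `wg-quick` style configuration for each node so that every node peers with all the others. It is not RunOS code: the node names, addresses, key placeholders, and helper names are all assumptions, and a real deployment would generate and distribute the keys securely. Only the config keys themselves (`PrivateKey`, `Address`, `ListenPort`, `PublicKey`, `AllowedIPs`, `Endpoint`, `PersistentKeepalive`) are standard WireGuard settings.

```python
def render_wg_config(node, peers, listen_port=51820):
    """Render a wg-quick style config for one node of a full mesh."""
    lines = [
        "[Interface]",
        f"PrivateKey = {node['private_key']}",
        f"Address = {node['mesh_ip']}/24",
        f"ListenPort = {listen_port}",
    ]
    for peer in peers:
        lines += [
            "",
            "[Peer]",
            f"PublicKey = {peer['public_key']}",
            f"AllowedIPs = {peer['mesh_ip']}/32",   # only this peer's mesh address
            f"Endpoint = {peer['endpoint']}:{listen_port}",
            "PersistentKeepalive = 25",
        ]
    return "\n".join(lines) + "\n"


def render_mesh(nodes):
    """Return {node name: config text}; each node peers with every other node."""
    return {
        node["name"]: render_wg_config(node, [p for p in nodes if p is not node])
        for node in nodes
    }


# Placeholder nodes; keys and addresses are illustrative only
nodes = [
    {"name": "node-a", "mesh_ip": "10.10.0.1", "endpoint": "192.0.2.11",
     "private_key": "<node-a-private-key>", "public_key": "<node-a-public-key>"},
    {"name": "node-b", "mesh_ip": "10.10.0.2", "endpoint": "192.0.2.12",
     "private_key": "<node-b-private-key>", "public_key": "<node-b-public-key>"},
    {"name": "node-c", "mesh_ip": "10.10.0.3", "endpoint": "192.0.2.13",
     "private_key": "<node-c-private-key>", "public_key": "<node-c-public-key>"},
]

configs = render_mesh(nodes)
print(configs["node-a"])
```

Because the tunnel lives at the operating-system level, anything routed to a `10.10.0.x` address, whether Kubernetes traffic or SSH, goes through the same encrypted interface.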

Product Usage Case

· Rapidly spinning up a new development environment for a microservices project that requires a Kubernetes cluster with a PostgreSQL database and Kafka for messaging. RunOS can provision this entire stack in minutes, allowing the developer to focus on writing code instead of infrastructure setup.

· Deploying a proof-of-concept for an AI application that needs GPU acceleration. RunOS's KVM integration with GPU passthrough combined with the one-click Ollama installation enables quick experimentation with machine learning models without complex hardware configuration.

· Setting up a staging environment that mirrors production for testing an application with a complex backend. RunOS can quickly provision an identical Kubernetes cluster with all necessary services, ensuring that testing is accurate and reliable.

· A startup team needing to get their product to market quickly. By using RunOS, they can bypass weeks of infrastructure setup and deployment, allowing them to focus on product development and customer acquisition.

· An individual developer wanting to experiment with advanced Kubernetes features or complex service architectures without the steep learning curve of manual configuration. RunOS provides a ready-to-use, yet flexible, environment for exploration and learning.

9

Cross-Lingual Agent Orchestrator

Author

Radeen1

Description

This project tackles the challenge of seamlessly integrating Python-based AI agents into software backends written in other languages like Rust, Golang, or Java. It proposes a novel approach to agent interaction and memory management, enabling agents to learn and improve their responses over time, rather than just remembering specific events. This significantly simplifies agent integration and enhances their adaptability in real-world applications.

Popularity

Points 4

Comments 0

What is this product?

This is a framework designed to bridge the gap between different programming languages for AI agent integration. Imagine you have a brilliant AI agent written in Python, but your main application is built using Rust. Traditionally, getting them to talk to each other smoothly is a major headache. This project offers a solution by providing a standardized way for agents to communicate with non-Python backends. A key innovation is its 'Action Memory' concept. Instead of agents trying to remember every single detail of past conversations, this system helps agents learn *how* to respond better by analyzing patterns in their interactions. This makes agents more adaptive and effective in understanding and assisting users over time. Furthermore, it's built with serverless architectures in mind, aiming for efficient execution and compatibility with persistent agents.

How to use it?

Developers can use this orchestrator to embed their Python AI agents into applications built with various programming languages without needing to rewrite their agents or their backend extensively. It acts as an intermediary, handling the communication protocols and data transformations required for cross-language interaction. For instance, a backend service written in Golang can send requests to the orchestrator, which then forwards them to a Python agent. The agent's response is processed and sent back to the Golang service. The 'Action Memory' can be configured to store interaction patterns, allowing the agent to refine its behavior based on past successes and failures. This can be integrated into existing CI/CD pipelines or deployed as a serverless function for scalable and cost-effective operation.
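
The request flow described above can be illustrated with a small Python sketch. It is not the project's implementation: the JSON message shape, the `register` and `handle` helpers, and the `ActionMemory` class are all hypothetical, chosen only to show the idea of a language-neutral request being routed to a Python agent that picks its response from learned interaction patterns.

```python
import json
from collections import defaultdict


class ActionMemory:
    """Hypothetical 'Action Memory': tracks how well each action works per intent."""

    def __init__(self):
        # (intent, action) -> [successes, attempts]
        self._stats = defaultdict(lambda: [0, 0])

    def record(self, intent, action, success):
        stats = self._stats[(intent, action)]
        stats[0] += int(success)
        stats[1] += 1

    def best_action(self, intent, actions):
        """Pick the action with the best observed success rate (untried = 0.5)."""
        def rate(action):
            wins, tries = self._stats.get((intent, action), (0, 0))
            return wins / tries if tries else 0.5
        return max(actions, key=rate)


AGENTS = {}


def register(name):
    """Decorator that adds an agent function to the registry."""
    def wrap(fn):
        AGENTS[name] = fn
        return fn
    return wrap


@register("support")
def support_agent(payload, memory):
    action = memory.best_action(payload["intent"], ["ask_order_id", "offer_refund"])
    return {"action": action}


def handle(raw_request, memory):
    """Handle one JSON request string, as a non-Python backend might send it."""
    request = json.loads(raw_request)
    agent = AGENTS.get(request.get("agent"))
    if agent is None:
        return json.dumps({"ok": False, "error": "unknown agent"})
    return json.dumps({"ok": True, "result": agent(request["payload"], memory)})


memory = ActionMemory()
memory.record("late_delivery", "ask_order_id", success=False)
memory.record("late_delivery", "offer_refund", success=True)

reply = handle('{"agent": "support", "payload": {"intent": "late_delivery"}}', memory)
print(reply)
```

Since the request and reply are plain JSON strings, a Go, Rust, or Java service could exchange them over any transport (HTTP, a socket, a queue) without knowing anything about Python. The memory stores outcomes per pattern rather than transcripts, which is the distinction the project draws between learning how to respond and remembering specific events.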

Product Core Function

· Cross-language agent integration: Enables Python agents to communicate with backends in Rust, Golang, Java, and more, reducing development friction and complexity by abstracting away communication barriers. This means you can leverage your existing Python AI models without being limited by your backend's programming language.

· Action Memory system: Facilitates agent behavioral improvement by learning interaction patterns rather than specific event recall. This allows agents to become more context-aware and provide more relevant and helpful responses over time, like a skilled assistant who learns your preferences.

· Efficient agent execution: Designed with serverless principles to ensure fast and scalable deployment, making it suitable for high-demand applications. This translates to faster responses for your users and better resource utilization for your infrastructure.

· Standardized agent API wrappers: Simplifies adding new agents by providing a consistent integration process, reducing repetitive coding efforts for developers. This makes it easier to experiment with and deploy multiple AI agents within your system.

Product Usage Case

· Integrating a customer support chatbot (Python agent) into an e-commerce platform built with Java. The orchestrator handles the communication, allowing the chatbot to access order details and customer history, improving response accuracy and customer satisfaction. The 'Action Memory' helps the chatbot learn common customer issues and respond more quickly and effectively.

· Enabling a data analysis agent (Python) to interact with a real-time trading platform built with Rust. The orchestrator facilitates secure and low-latency data exchange, allowing the agent to provide insights and trigger actions on the trading platform. The 'Action Memory' could help the agent learn optimal trading strategies based on market fluctuations.

· Developing a content generation assistant (Python agent) for a blogging platform built with Golang. The orchestrator enables the assistant to understand user prompts, interact with the platform's content management system, and suggest or generate articles. The 'Action Memory' can help the assistant learn the platform's style guidelines and preferred topics.

10

AI-Powered SaaS Growth Playbook & Credits

Author

reluxe0310

Description

This project curates essential SaaS marketing guides, launch platforms, and actionable playbooks, bundled with $300 in Claude Code AI credits. The core innovation lies in aggregating proven growth strategies and making them accessible, while also providing a tangible AI resource to accelerate implementation.

Popularity

Points 4

Comments 0

What is this product?

This is a curated collection of high-value SaaS marketing resources, essentially a 'playbook' for growing a software-as-a-service business. The innovation is in bundling curated content with valuable AI credits. It's like getting a well-researched strategy guide along with a powerful tool to help you execute those strategies. The credits are for Claude Code, Anthropic's AI coding assistant, meaning you can use it to help write code, debug, or even brainstorm technical solutions for your SaaS product while acting on the marketing insights you gain from the kit.

How to use it?

Developers can use this kit by first diving into the marketing guides and playbooks to understand effective strategies for customer acquisition, retention, and growth in the SaaS space. Then, they can leverage the Claude Code AI credits to immediately start implementing these strategies. For instance, if a playbook suggests building a specific feature for customer engagement, developers can use the AI credits to generate code snippets, draft API integrations, or get help with frontend development. It's a 'learn and build' approach where the AI acts as a coding assistant to bridge the gap between strategy and technical execution.

Product Core Function

· Curated SaaS Marketing Guides: Provides access to best-practice marketing strategies for SaaS businesses, explaining how to attract and retain customers. This helps users understand the 'why' behind growth tactics.

· Launch Platforms & Playbooks: Offers detailed, step-by-step guides and recommended tools for launching and scaling SaaS products effectively. This provides the 'how' for executing growth plans.

· $300 Claude Code AI Credits: Grants access to a powerful AI coding assistant. This allows developers to directly implement learned strategies by generating code, debugging, or exploring technical solutions, accelerating development cycles.

· Integrated Resource Bundle: Combines strategic marketing knowledge with practical AI coding tools, offering a holistic approach to SaaS growth. This means users get both the roadmap and a powerful vehicle to travel it.

Product Usage Case

· A startup founder wants to implement a new customer onboarding flow. They consult a playbook in the kit for best practices and then use the Claude Code AI credits to write the necessary frontend and backend code for the new flow, saving significant development time.

· A developer needs to integrate a new payment gateway into their SaaS application. They find a guide on payment gateway integration within the kit and use the AI credits to generate boilerplate code for the integration, accelerating the process and reducing the risk of errors.

· A marketing team wants to create a viral loop for their SaaS product. They refer to a specific playbook on viral growth and then use the AI credits to help brainstorm and code features that encourage user sharing and referrals.

· A developer is stuck debugging a complex bug in their application. They can paste relevant code snippets into Claude Code AI and ask for explanations or potential solutions, leveraging the AI credits to overcome technical hurdles quickly.

11

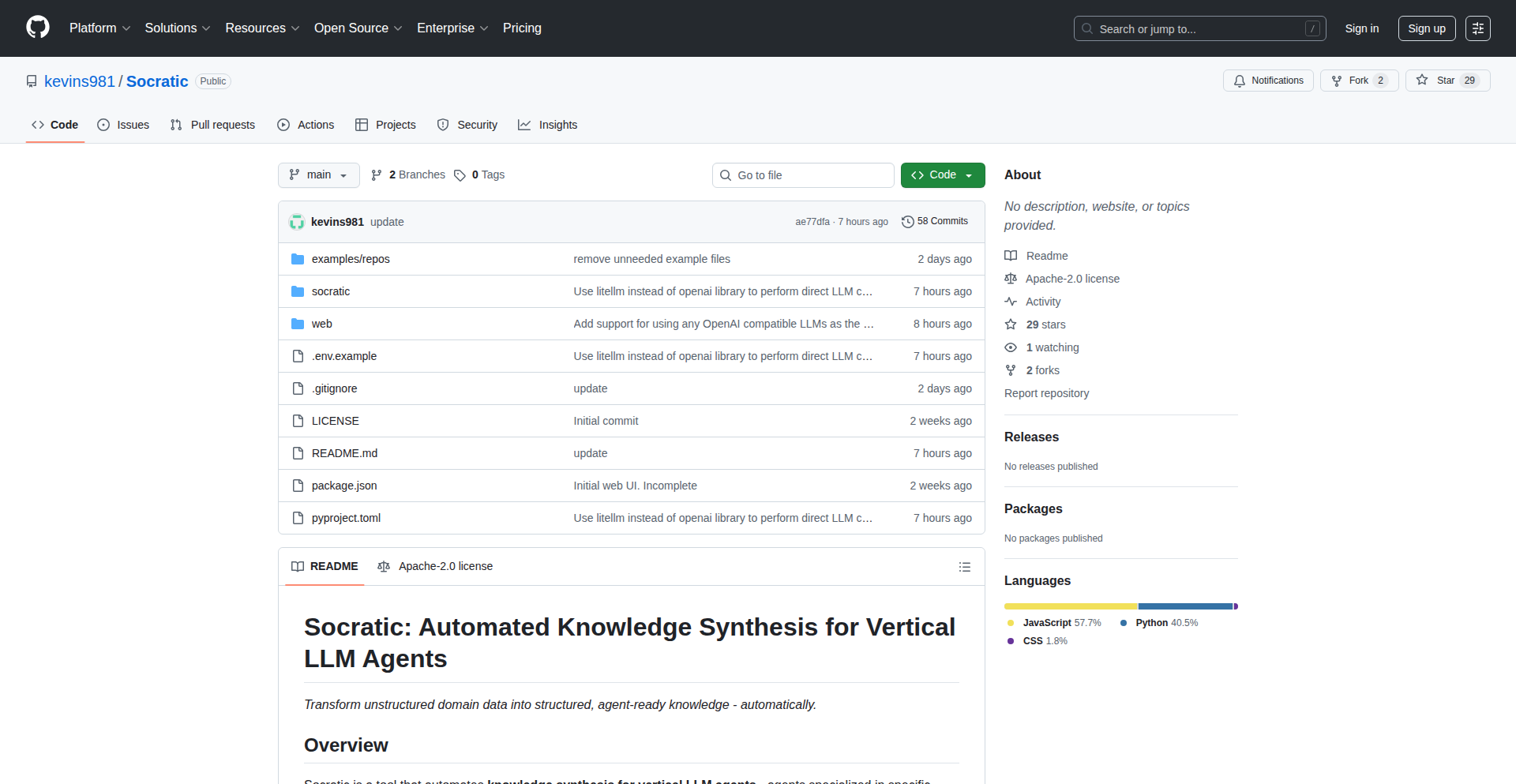

Socratic Agent Control Hub

Author

kevinsong981

Description

Socratic is a knowledge-base builder designed for AI agents, allowing users to maintain ultimate control over the information these agents access and utilize. It addresses the critical challenge of AI hallucination and information drift by empowering developers to curate and manage the agent's knowledge pool with precision. The core innovation lies in its structured approach to knowledge ingestion and retrieval, ensuring agents operate on verified and relevant data.

Popularity

Points 2

Comments 2

What is this product?

Socratic is a system that helps you build a reliable knowledge base for your AI agents. Think of it like creating a personal, fact-checked library for your AI. Instead of the AI randomly pulling information from the vast internet, which can lead to it making things up (hallucination) or giving outdated information, Socratic lets you feed it specific, curated documents, articles, or data. The technical innovation is in how it breaks down your input documents into manageable pieces (chunking) and uses sophisticated methods to find the most relevant pieces when the AI needs to answer a question (retrieval). This ensures the AI's responses are grounded in the information you've provided, giving you control and improving accuracy. So, what's in it for you? It means your AI assistants are less likely to give you wrong answers or go off-topic, making them more trustworthy and useful for specific tasks.

How to use it?

Developers can integrate Socratic into their AI agent workflows. You'll typically provide your source documents (e.g., PDFs, text files, web page content) to Socratic. The system then processes these documents, breaking them down and creating an index. When your AI agent needs to answer a question or perform a task, it queries Socratic. Socratic then efficiently searches its indexed knowledge base and returns the most relevant snippets of information to the agent. This integration can be achieved through APIs. For you, this means you can build smarter, more reliable AI applications that are tailored to your specific data and domain. It's like giving your AI a super-powered, personalized cheat sheet.

Product Core Function

· Document Ingestion and Chunking: Socratic takes your raw documents and intelligently breaks them into smaller, digestible pieces. This is crucial because AI models process information more effectively in smaller chunks. The value here is enabling the AI to handle large amounts of data without getting overwhelmed, leading to more focused and accurate retrieval. This applies to any scenario where you want an AI to understand and reference extensive documentation.

· Vector Embeddings and Indexing: Each document chunk is converted into a numerical representation called a vector embedding. These embeddings capture the semantic meaning of the text. Socratic then indexes these vectors for fast searching. The value is in enabling semantic search; the AI can find information based on meaning, not just keywords, making its queries more intelligent. This is useful for building Q&A systems or chatbots that need to understand the nuances of user questions.

· Semantic Retrieval: When an AI agent asks a question, Socratic uses the vector embeddings to find the most semantically similar chunks of information in its knowledge base. The value is in retrieving highly relevant context, which directly combats AI hallucination by providing factual grounding. This is essential for building reliable AI assistants in fields like customer support or internal knowledge management.

· User-Controlled Knowledge Curation: Developers have explicit control over what information is added to the knowledge base. The value is in ensuring data privacy, accuracy, and relevance. You decide what your AI learns from, preventing it from accessing or misinterpreting sensitive or incorrect information. This is paramount for enterprise AI solutions or applications dealing with proprietary data.
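
The chunk, embed, index, and retrieve steps listed above can be sketched in a few lines of Python. This is a toy under stated assumptions, not Socratic's implementation: the `KnowledgeBase` class and helper names are hypothetical, and a bag-of-words count vector stands in for the learned vector embeddings a real system would use. The ranking by cosine similarity works the same way in both cases.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=40):
    """Split text into pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy 'embedding': word counts (a stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class KnowledgeBase:
    """Hypothetical curated store: only documents you add can be retrieved."""

    def __init__(self):
        self.entries = []   # list of (chunk text, vector)

    def add_document(self, text, max_words=40):
        for piece in chunk(text, max_words):
            self.entries.append((piece, embed(piece)))

    def search(self, query, top_k=1):
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


kb = KnowledgeBase()
kb.add_document("Refunds are issued within 14 days of purchase when the item is unused.")
kb.add_document("The device battery lasts about ten hours and charges over USB-C.")

hits = kb.search("how long does the battery last")
print(hits)
```

The agent would receive the returned chunks as context for its answer. Because the store contains only what was explicitly added, the curation step decides what the agent can and cannot cite.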

Product Usage Case

· Building a customer support chatbot that answers questions based on your company's product manuals and FAQs. Socratic ensures the chatbot only provides information from these approved sources, preventing it from giving incorrect support advice and improving customer satisfaction.

· Creating an internal knowledge management tool for a research team. Socratic can ingest research papers and internal documents, allowing researchers to quickly find relevant information via AI queries, accelerating discovery and reducing time spent searching.

· Developing an AI assistant for legal professionals that can cite relevant case law and statutes. By feeding Socratic legal databases, the AI can provide accurate and contextually relevant legal information, reducing the risk of errors in legal advice.

· Empowering an AI tutor to explain complex subjects using curated educational materials. Socratic ensures the AI tutor draws its explanations from reliable textbook content, providing students with accurate and trustworthy learning resources.

12

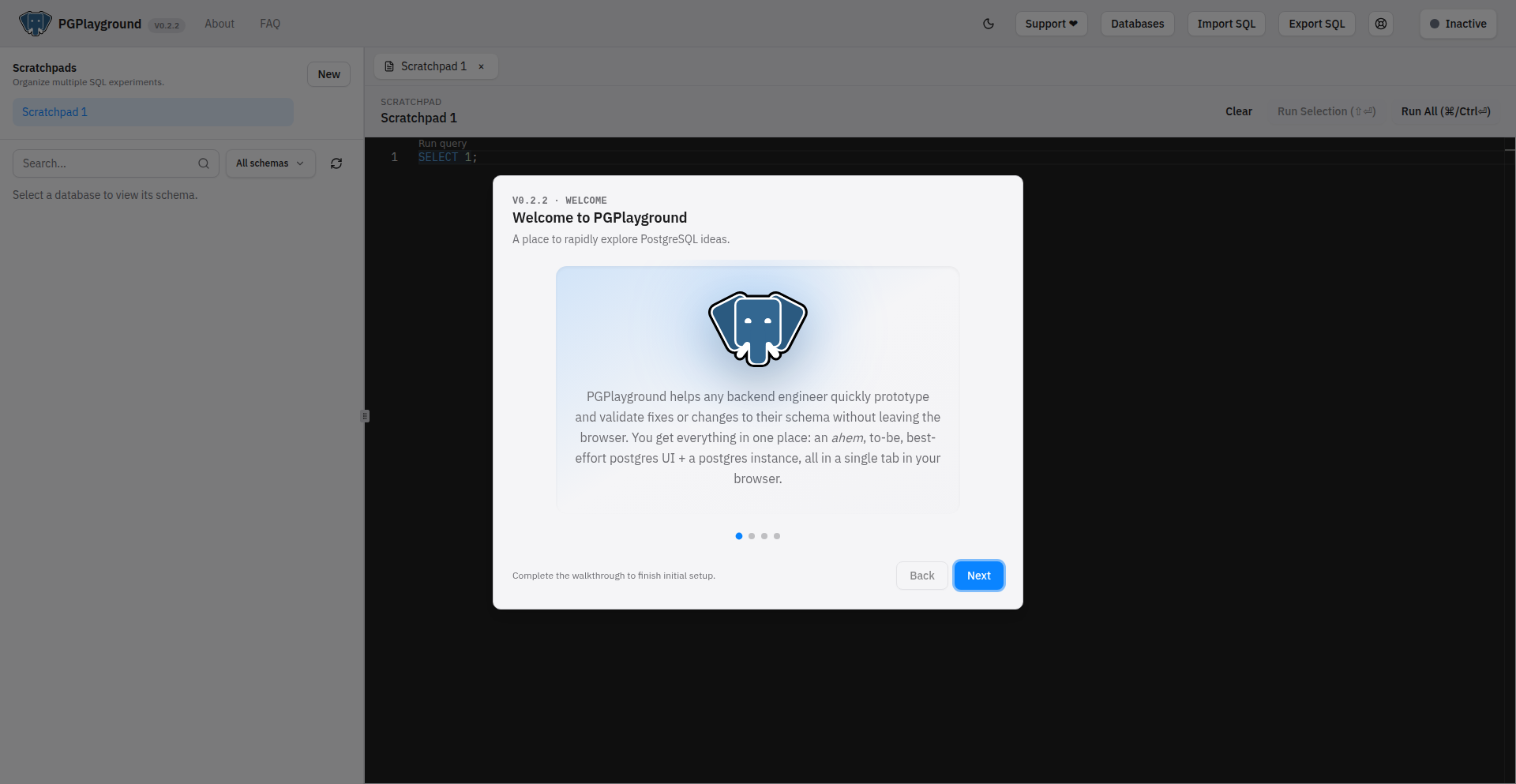

PgPlayground: Browser-Native Postgres Sandbox

Author

written-beyond

Description

PgPlayground is a 'batteries included', browser-only playground for PostgreSQL. It allows developers to experiment with SQL queries and PostgreSQL features directly in their web browser without needing any server-side setup or installation. The core innovation lies in its ability to run a full PostgreSQL database instance entirely within the browser using WebAssembly, offering a self-contained and instantly accessible environment for learning and development.

Popularity

Points 2

Comments 1

What is this product?

PgPlayground is a way to work with PostgreSQL without running a server. Instead of setting up a local database server or connecting to a remote one, PgPlayground runs a complete PostgreSQL database engine, compiled to WebAssembly, inside your web browser. Think of it as a portable, self-contained PostgreSQL database that lives entirely in your browser tab. This means zero installation, zero server configuration, and instant access to PostgreSQL capabilities. It's built on the idea that experimenting with databases should be as simple as opening a new tab and typing SQL.

How to use it?

Developers can use PgPlayground by simply navigating to its web page. Upon loading, a fully functional PostgreSQL instance is initialized within the browser. Users can then write and execute SQL queries against a pre-loaded sample dataset or create their own tables and data. It's ideal for quickly testing SQL syntax, understanding PostgreSQL-specific functions, practicing data manipulation, or demonstrating database concepts without any environmental hurdles. Integration with external tools is limited by its browser-only nature, but it excels as a standalone learning and prototyping tool.

Product Core Function

· In-browser PostgreSQL engine powered by WebAssembly: Allows running a full PostgreSQL database instance directly within the browser, eliminating the need for server setup and making it instantly accessible for any developer. This means you can start experimenting immediately without any downloads or installations.

· Interactive SQL editor with syntax highlighting: Provides a user-friendly interface for writing and executing SQL queries, making it easier to understand and debug your SQL code. The highlighting helps catch syntax errors quickly, saving you time and frustration.

· Pre-loaded sample datasets and schema exploration: Comes with sample data and structures to quickly get started with practical examples. This allows you to see how real-world data interacts with SQL commands right away, accelerating the learning curve.

· Data visualization capabilities (potential future): While not explicitly detailed, the nature of a playground suggests potential for visualizing query results. This would transform raw data into understandable charts and graphs, making it easier to grasp the impact of your queries and identify patterns.

· Offline accessibility: Once loaded, PgPlayground can function offline, enabling continued experimentation and learning even without an internet connection. This is perfect for situations where connectivity is unreliable, allowing you to focus on your code.

Product Usage Case

· A junior developer learning SQL needs to understand how to join tables. They can load PgPlayground, create simple tables, and immediately run various JOIN queries to see the results in real-time, without the overhead of setting up a local database. This directly addresses their need for immediate, hands-on practice.

· A seasoned developer wants to quickly test a complex PostgreSQL-specific function before implementing it in a production application. PgPlayground provides an isolated environment to experiment with the function's syntax and behavior without risking their development environment or requiring a remote connection. This speeds up prototyping and reduces potential errors.

· An instructor teaching a database course needs a tool to demonstrate SQL concepts to students. PgPlayground can be shared easily, and students can replicate the demonstrations in their own browsers, experiencing the practical application of database commands firsthand. This creates an engaging and accessible learning experience for everyone.

· A hobbyist working on a personal project that involves a small amount of data needs to prototype some database interactions. PgPlayground offers a lightweight, zero-configuration solution to quickly build and test their SQL logic before committing to a more robust database solution. This saves them development time and initial setup costs.
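
The join-practice scenario above might look like the following. The sketch uses Python's built-in `sqlite3` module purely as a stand-in so that it runs anywhere; it is not PgPlayground and not PostgreSQL, and the `authors` and `books` tables are made up for the example. The SQL statements themselves are plain enough that they should also run unchanged when pasted into a PostgreSQL playground.

```python
import sqlite3

# In-memory database: nothing is written to disk
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE books (
    id INTEGER PRIMARY KEY,
    author_id INTEGER REFERENCES authors(id),
    title TEXT NOT NULL
);
INSERT INTO authors (id, name) VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO books (id, author_id, title) VALUES
    (1, 1, 'Notes'), (2, 1, 'Engines'), (3, 2, 'Compilers');
""")

# LEFT JOIN keeps authors that have no books, so they show up with a count of 0
rows = conn.execute("""
SELECT a.name, COUNT(b.id) AS book_count
FROM authors AS a
LEFT JOIN books AS b ON b.author_id = a.id
GROUP BY a.name
ORDER BY a.name
""").fetchall()

print(rows)
```

Swapping `LEFT JOIN` for `JOIN` drops the author with no books from the result, which is exactly the kind of difference that is easiest to learn by running both versions side by side.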

13

NeXT History Archive

Author

zaxel1995

Description

This project is a deep dive into the history and technical legacy of NeXT Computer, Inc. It features a comprehensive research paper covering the company's strategy, breakthroughs, and failures, along with an exclusive 30-minute interview with NeXT co-founder Dan'l Lewin. The innovation lies in meticulously analyzing primary and secondary sources to reveal how NeXT's pioneering work in object-oriented programming, development environments, and early web technologies profoundly shaped modern computing, including macOS, iOS, and the early World Wide Web. For developers, this offers a unique look into the origins of technologies they use daily, providing invaluable context and inspiration.

Popularity

Points 3

Comments 0

What is this product?

This project is an in-depth research paper and interview archive focused on NeXT Computer, Inc. (1985-1997). It explores NeXT's historical significance, technical contributions, and business strategies. The core technical contribution is a detailed analysis of how NeXT's pioneering work, such as its Objective-C-based object-oriented development environment and its influential NeXTSTEP operating system, laid the groundwork for technologies that are fundamental to modern software development, including macOS and iOS. For example, NeXTSTEP's graphical user interface and object-oriented framework directly influenced the design of Apple's operating systems. Tim Berners-Lee even built the first World Wide Web browser on a NeXT workstation, highlighting NeXT's role in the Web's early development. This project offers a historical perspective that is both educational and inspiring, explaining the 'why' behind many modern development paradigms.

How to use it?

Developers can use this project as an educational resource to understand the historical context and technical evolution of key software technologies. By reading the research paper and watching the interview, developers can gain insights into the design philosophies and challenges faced by NeXT, which can inform their own problem-solving approaches and architectural decisions. It's particularly useful for those working with or interested in Apple's ecosystem (macOS, iOS) or modern object-oriented programming languages. The findings can be applied to understanding the 'why' behind certain design patterns and system architectures that have persisted from the NeXT era. For example, understanding the benefits of NeXT's early adoption of object-oriented principles can reinforce modern software engineering practices.

Product Core Function

· Historical and Technical Review of NeXT's Strategy: Provides a deep analysis of NeXT's product decisions, market positioning, and technological breakthroughs, offering lessons for modern platform design and strategy. This helps developers understand the long-term impact of technological choices.

· Interview with NeXT Co-Founder Dan'l Lewin: Offers firsthand insights into the company's culture, vision, and operational challenges, giving developers a unique perspective on innovation and entrepreneurship in the tech industry. This can inspire creative problem-solving.

· Analysis of NeXT's Influence on Modern Software: Details how NeXT technologies, such as its object-oriented programming environment and operating system, directly shaped macOS, iOS, and the early web. This highlights the enduring value of foundational technical concepts for contemporary developers.

· Lessons for Educational Technology and Software Platform Design: Extracts actionable insights from NeXT's experience that are relevant to current trends in ed-tech and software development, helping developers and educators to learn from past successes and failures.

· Exploration of Early Web Origins: Details the role of NeXT workstations in the creation of the first WWW browser, providing developers with historical context on the evolution of web technologies.

Product Usage Case

· A developer studying object-oriented programming principles can read this paper to understand the early implementation and impact of Objective-C and NeXTSTEP, gaining a deeper appreciation for the evolution of languages and frameworks they use daily, like Swift or other modern OO languages.

· A software architect designing a new platform can draw inspiration from NeXT's strategic failures and breakthroughs, learning from their approach to hardware-software integration and development environments to avoid pitfalls and build more robust systems.

· An iOS or macOS developer curious about the roots of their operating system can explore how NeXTSTEP's user interface and software architecture directly influenced subsequent Apple OS designs, fostering a better understanding of the underlying systems.

· A computer science student researching the history of personal computing or the impact of Steve Jobs can use the interview as a primary source, and the paper as supporting analysis, to understand the significance of NeXT in the broader narrative of technological advancement.

· A web developer interested in the origins of the web can learn about the role NeXT workstations played in the creation of the first web browser, gaining a historical perspective on the foundational technologies behind today's web.

14

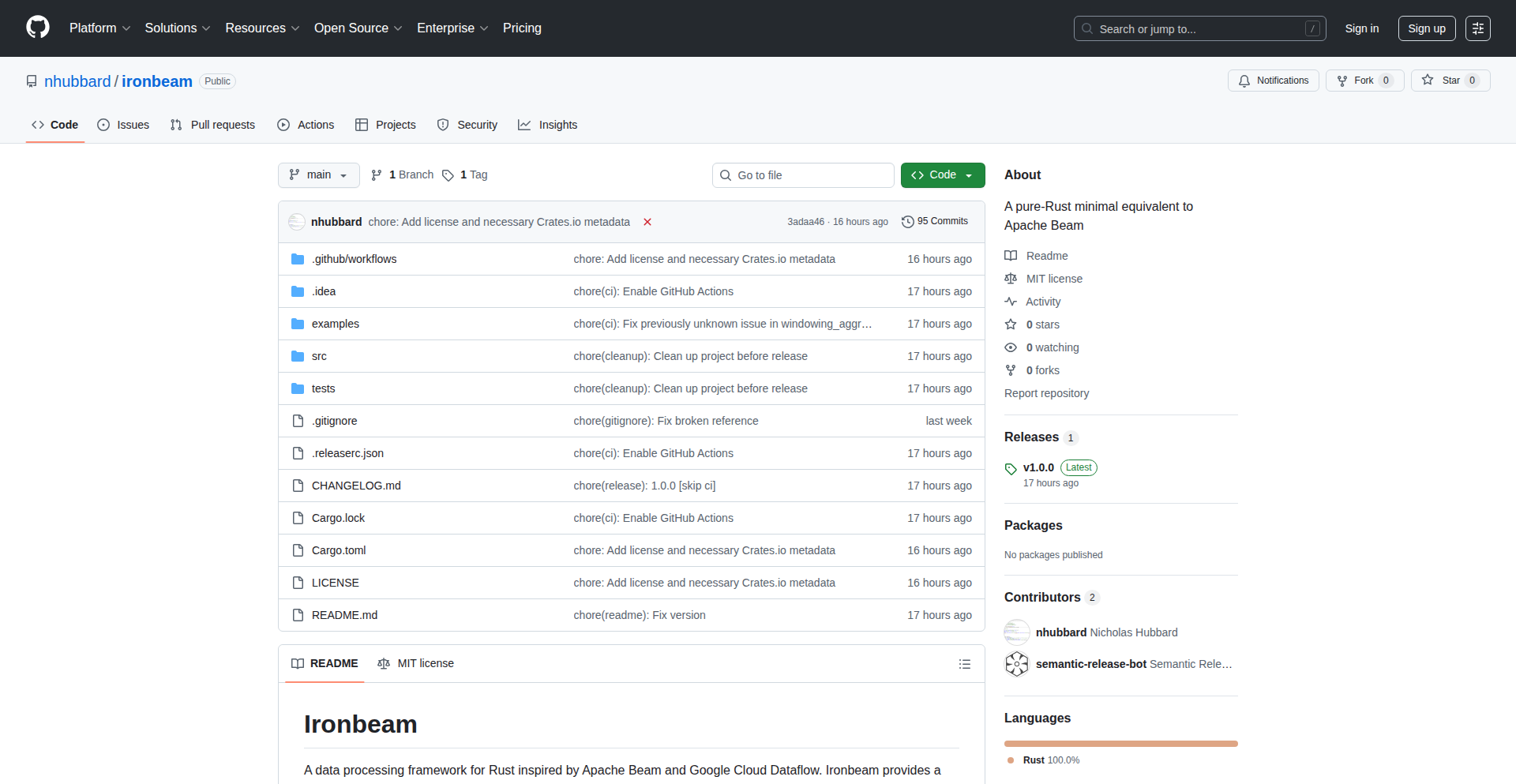

RustBeam Batch

Author

nhubbard

Description

This project is an experimental batch processing clone of Apache Beam, built in Rust. It aims to address the performance and complexity challenges often encountered with Beam in Python and Java, by leveraging Rust's inherent performance and developer experience.

Popularity

Points 3

Comments 0

What is this product?

RustBeam Batch is a new take on batch data processing, inspired by Apache Beam. The core idea is to provide a high-performance, Rust-native way to define and execute data processing pipelines. While Apache Beam offers powerful abstractions for both batch and stream processing, pipelines written with its Python SDK can be slow, and its Java SDK can be more complex to set up and manage. This Rust version aims to offer the expressive power of Beam's pipeline concepts (like transforms and PCollections) with the speed and memory efficiency that Rust is known for. It's essentially a developer's experiment to see whether Rust can offer a better balance of power and performance for defining complex data jobs.

How to use it?

Developers can use RustBeam Batch by defining their data processing pipelines in Rust code. This involves specifying the data sources, the sequence of transformations to apply (e.g., filtering, mapping, aggregating data), and the final output sinks. The project provides Rust abstractions that mirror Beam's pipeline structure, allowing developers to write code that declaratively outlines their data processing logic. This Rust code is then compiled to a native binary and executed, with no interpreter in the loop. The goal is to let developers who are already comfortable with Rust build and run robust batch processing jobs without reaching for external frameworks that might add performance bottlenecks or complexity.
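As a rough illustration of the source, transform, and sink shape described above, here is a minimal sketch that uses only Rust's standard library. It does not use RustBeam Batch's actual API, which the post does not show; `LogRecord`, `parse_line`, and `count_errors_by_service` are hypothetical names invented for this example.

```rust
use std::collections::BTreeMap;

/// Hypothetical record type; not part of RustBeam Batch.
#[derive(Debug, Clone, PartialEq)]
struct LogRecord {
    service: String,
    level: String,
}

/// Source step: parse "service,level" lines, returning None for malformed ones.
fn parse_line(line: &str) -> Option<LogRecord> {
    let (service, level) = line.split_once(',')?;
    Some(LogRecord {
        service: service.trim().to_string(),
        level: level.trim().to_string(),
    })
}

/// Transform steps: filter to errors, map to a key, then group and count.
fn count_errors_by_service(lines: &[&str]) -> BTreeMap<String, usize> {
    let mut counts = BTreeMap::new();
    lines
        .iter()
        .filter_map(|line| parse_line(line)) // source -> records, dropping bad lines
        .filter(|rec| rec.level == "ERROR") // filter
        .map(|rec| rec.service) // map to the grouping key
        .for_each(|service| *counts.entry(service).or_insert(0) += 1); // group + count
    counts
}

fn main() {
    let input = ["api,ERROR", "api,INFO", "db,ERROR", "api,ERROR", "garbage"];
    // Sink step: print the aggregated result.
    for (service, n) in count_errors_by_service(&input) {
        println!("{service}: {n}");
    }
}
```

A Beam-style framework would typically wrap each of these steps in a named transform and decide how to execute them; the iterator chain here only shows the logical shape of such a pipeline.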

Product Core Function

· Pipeline Definition in Rust: Allows developers to express complex data processing workflows using Rust's syntax, enabling code clarity and maintainability. The value is in writing data jobs that are easier to reason about and less prone to errors.

· High-Performance Batch Execution: Leverages Rust's compile-time optimizations and efficient memory management, aiming to execute batch processing tasks faster than equivalent pipelines in interpreted languages like Python. The intended payoff is quicker job completion and better resource utilization.

· Abstracted Data Transforms: Provides a set of building blocks for common data manipulation tasks (e.g., map, filter, group by) that are easy to combine into sophisticated pipelines. This saves developers time by offering pre-built, efficient operations.

· Experimental Integration with Beam Concepts: Replicates the core philosophy of Apache Beam's data pipeline model, offering a familiar paradigm for those transitioning from Beam, while benefiting from a native Rust implementation. This bridges the gap for developers wanting Beam's expressiveness with Rust's performance.

Product Usage Case

· Data Engineering Pipelines: A developer could use RustBeam Batch to build ETL (Extract, Transform, Load) pipelines for cleaning and transforming large datasets before they are loaded into a data warehouse. This targets the slow processing times common in traditional scripting languages, with the aim of making data available sooner.

· Log Analysis: For analyzing massive amounts of server logs, RustBeam Batch can be employed to filter, aggregate, and extract meaningful insights from the log data efficiently. This helps in quickly identifying trends or anomalies in system behavior.

· Data Transformation for Machine Learning: Before feeding data into ML models, it often needs preprocessing. RustBeam Batch can be used to perform these transformations on large datasets, ensuring that the data is in the optimal format for model training, leading to faster and more effective model development.

15

Historical Startup Investor Simulator

Author

vire00

Description

This project is a simulation game where players can invest in historical startups. The core innovation lies in simulating the investment dynamics and outcomes of past ventures, offering a unique educational and entertainment experience. It addresses the challenge of understanding historical business evolution and investment risks in an engaging, interactive format.

Popularity

Points 3

Comments 0

What is this product?

This project is a game designed to let you experience investing in startups throughout history. It simulates how real-world early-stage companies evolved, their market reception, and the eventual success or failure of those investments. The innovation is in its data-driven simulation model that uses historical information to predict potential outcomes, allowing players to learn about business strategy, market timing, and investment principles through interactive gameplay. Essentially, it's a time-traveling venture capital simulator.

How to use it?

Developers can use this project as a foundation for further game development, data visualization, or educational tools. For potential users, it's a web-based game accessible through a browser. You'd navigate through different historical periods, review simulated company profiles, decide where to allocate your virtual investment capital, and observe the simulated growth or decline of your portfolio over time. It's designed to be intuitive, requiring no prior technical knowledge, just an interest in history and business.

Product Core Function

· Historical Startup Database: A curated collection of historical companies with their founding details, market conditions, and technological context. This allows for realistic scenario generation and provides educational value about past innovations.

· Investment Simulation Engine: A core logic that models the progression of a startup based on its industry, funding, market trends, and historical events. This is where the 'black magic' happens, translating historical data into engaging gameplay outcomes, making it useful for understanding cause-and-effect in business.

· Portfolio Management Interface: A user-friendly way for players to track their investments, view company performance, and make new investment decisions. This provides immediate feedback and helps users understand the consequences of their financial choices.

· Outcome Visualization: Presents the results of investments, whether successes or failures, with explanations grounded in historical context. This reinforces learning by showing 'what happened' and 'why', making complex financial and business concepts more digestible.
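To make the portfolio bookkeeping behind these functions concrete, here is a minimal sketch of how invested amounts and simulated outcomes could roll up into a portfolio value. It is not the game's actual engine, which the post does not describe; `Position`, `portfolio_value`, and `return_multiple` are hypothetical names, and every figure is a made-up placeholder rather than historical data.

```rust
/// One simulated investment. All figures here are made-up placeholders.
struct Position {
    name: &'static str,
    invested: f64,
    /// Exit multiple applied to the invested amount (0.0 means a total loss).
    outcome_multiple: f64,
}

/// Total value of the portfolio after every position resolves.
fn portfolio_value(positions: &[Position], uninvested_cash: f64) -> f64 {
    uninvested_cash
        + positions
            .iter()
            .map(|p| p.invested * p.outcome_multiple)
            .sum::<f64>()
}

/// Overall return as a multiple of starting capital.
fn return_multiple(positions: &[Position], uninvested_cash: f64) -> f64 {
    let start = uninvested_cash + positions.iter().map(|p| p.invested).sum::<f64>();
    portfolio_value(positions, uninvested_cash) / start
}

fn main() {
    let positions = [
        Position { name: "Company A", invested: 40_000.0, outcome_multiple: 0.0 },
        Position { name: "Company B", invested: 40_000.0, outcome_multiple: 5.0 },
    ];
    for p in &positions {
        println!("{}: {} -> {}", p.name, p.invested, p.invested * p.outcome_multiple);
    }
    println!("return multiple: {}", return_multiple(&positions, 20_000.0));
}
```

In a real simulation the outcome multiple would presumably come from the engine's model of each company rather than being fixed up front; the sketch only shows how one total loss and one large win combine into a single portfolio result.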

Product Usage Case

· Educational Scenario: A history teacher could use this game to illustrate the challenges and opportunities faced by early-stage companies in different eras, like the industrial revolution or the dot-com bubble. Students can learn by actively participating in simulated investment decisions, making abstract historical events tangible and relatable.

· Personal Learning: An aspiring entrepreneur could use this to understand the impact of timing and market fit on startup success by running simulations with different historical conditions. It helps in developing an intuitive grasp of market dynamics without risking real capital.

· Data Exploration: A data scientist could analyze the simulation engine to explore how different variables (e.g., R&D investment, marketing spend) historically correlated with startup survival rates. This can inspire new hypotheses or data analysis techniques for modern business research.

16

Wikidive: AI-Powered Wikipedia Explorer

Author

atulvi

Description

Wikidive is an AI-assisted tool designed to enhance Wikipedia discovery. It uses AI to help users navigate Wikipedia and uncover related topics and connections that might otherwise be missed. This addresses information overload and superficial understanding by providing a more intuitive and insightful way to explore knowledge.

Popularity

Points 3

Comments 0

What is this product?

Wikidive is a novel application that utilizes Natural Language Processing (NLP) and knowledge graph techniques to assist users in exploring Wikipedia. Instead of traditional linear browsing, it employs AI to understand the semantic relationships between articles, suggesting related concepts, deeper dives, and tangential explorations. The core innovation lies in its ability to go beyond simple hyperlinking, offering AI-driven insights into how different pieces of information connect, thus facilitating a more comprehensive and interconnected understanding of any given topic. This means you don't just read one article; you discover the whole network of knowledge surrounding it.

How to use it?

Developers can integrate Wikidive into their applications or workflows by interacting with its API or by using its browser extension. For instance, if you're building a research tool or a content aggregation platform, you can feed an article title or URL to Wikidive, which will then return a structured list of related articles, key concepts, and potential research pathways. This is invaluable for any application that needs to surface or contextualize information from Wikipedia. Think of it as an intelligent assistant for your knowledge base.
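The post does not document Wikidive's API or its ranking method, so the following is only a sketch of one simple way a "related articles" list could be produced: scoring articles by the overlap of their outgoing links (Jaccard similarity). This is a stand-in technique, not Wikidive's NLP approach; `LinkGraph`, `toy_graph`, `jaccard`, and `related` are hypothetical names, and the link data is invented.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Article title -> set of titles it links to.
type LinkGraph = BTreeMap<&'static str, BTreeSet<&'static str>>;

/// Toy link graph; the titles and links are invented for illustration only.
fn toy_graph() -> LinkGraph {
    let mut graph = LinkGraph::new();
    graph.insert("Alpha", BTreeSet::from(["x", "y", "z"]));
    graph.insert("Beta", BTreeSet::from(["x", "y", "w"]));
    graph.insert("Gamma", BTreeSet::from(["q"]));
    graph
}

/// Jaccard similarity of two sets: |A ∩ B| / |A ∪ B| (0.0 if both are empty).
fn jaccard<T: Ord>(a: &BTreeSet<T>, b: &BTreeSet<T>) -> f64 {
    let union = a.union(b).count();
    if union == 0 {
        return 0.0;
    }
    a.intersection(b).count() as f64 / union as f64
}

/// Rank every other article by link overlap with `title`, best first.
/// Returns an empty list if `title` is not in the graph.
fn related(graph: &LinkGraph, title: &str) -> Vec<(&'static str, f64)> {
    let links = match graph.get(title) {
        Some(links) => links,
        None => return Vec::new(),
    };
    let mut scored: Vec<(&'static str, f64)> = graph
        .iter()
        .filter(|(other, _)| **other != title)
        .map(|(other, other_links)| (*other, jaccard(links, other_links)))
        .collect();
    // Stable sort, descending by score; ties keep alphabetical order.
    scored.sort_by(|x, y| y.1.total_cmp(&x.1));
    scored
}

fn main() {
    for (title, score) in related(&toy_graph(), "Alpha") {
        println!("{title}: {score:.2}");
    }
}
```

Link overlap is cheap to compute but only captures structural similarity; the semantic relationships the project describes would need text-level analysis on top of something like this.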

Product Core Function

· AI-driven topic suggestion: Analyzes the semantic content of a Wikipedia article and suggests related, yet distinct, topics for further exploration, enabling users to discover niche areas or unexpected connections.

· Knowledge graph visualization: Generates an interactive visualization of how different Wikipedia articles and concepts are interconnected, providing a bird's-eye view of a subject's landscape and helping users grasp complex relationships.

· Summarization of related concepts: Provides concise summaries of interconnected topics, allowing users to quickly understand the essence of a related area without needing to read multiple full articles.

· Personalized discovery pathways: Learns from user interactions to tailor future suggestions, creating a personalized journey through Wikipedia's content based on individual interests and exploration patterns.

Product Usage Case

· Content creators can use Wikidive to find unexplored angles or related topics for their articles or blog posts, enriching their content and appealing to a wider audience.

· Students and researchers can leverage Wikidive to build a more robust understanding of complex subjects by discovering the interconnectedness of various concepts and finding more relevant sources.

· Developers building educational apps can integrate Wikidive to create more engaging and interactive learning experiences, guiding users through knowledge domains in a dynamic way.

· Anyone interested in deep diving into a subject can use Wikidive to move beyond the surface-level information of a single article and uncover the broader context and related discussions within Wikipedia.

17

SelenAI: Transparent AI Pair-Programmer

Author

moridin

Description

SelenAI is a terminal-based AI pair-programmer built with Rust and a Ratatui UI. It focuses on making AI's interactions with your system transparent and auditable. It achieves this by sandboxing AI-generated code in a Lua virtual machine, requiring explicit user approval for any file writes, and meticulously logging all actions. This approach ensures you understand exactly what the AI is doing and maintains a high level of security, making it a valuable tool for developers seeking controlled AI assistance.
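The approval-and-logging idea can be sketched as follows. This is not SelenAI's code: the Lua sandbox is omitted, writes go to an in-memory map instead of the filesystem, and `WriteGate`, `Decision`, and `request_write` are hypothetical names chosen for this example.

```rust
use std::collections::BTreeMap;

/// Outcome recorded for each requested write.
#[derive(Debug, Clone, PartialEq)]
enum Decision {
    Approved,
    Denied,
}

/// Hypothetical gate: every write request is logged, and only approved
/// requests touch the (in-memory) file store.
struct WriteGate {
    files: BTreeMap<String, String>,
    audit_log: Vec<(String, Decision)>,
}

impl WriteGate {
    fn new() -> Self {
        WriteGate { files: BTreeMap::new(), audit_log: Vec::new() }
    }

    /// `approve` stands in for an interactive prompt shown to the user.
    fn request_write(
        &mut self,
        path: &str,
        contents: &str,
        approve: impl Fn(&str, &str) -> bool,
    ) -> Decision {
        let decision = if approve(path, contents) {
            self.files.insert(path.to_string(), contents.to_string());
            Decision::Approved
        } else {
            Decision::Denied
        };
        // Log the request whether or not it was applied.
        self.audit_log.push((path.to_string(), decision.clone()));
        decision
    }
}

fn main() {
    let mut gate = WriteGate::new();
    // Stand-in policy: approve only writes under `src/`.
    let policy = |path: &str, _contents: &str| path.starts_with("src/");
    gate.request_write("src/lib.rs", "pub fn hello() {}", policy);
    gate.request_write("/etc/passwd", "oops", policy);
    for (path, decision) in &gate.audit_log {
        println!("{path}: {decision:?}");
    }
}
```

Routing every write through a single method is what makes the log complete: a denied request still leaves a record, so the audit trail shows what was attempted as well as what was changed.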

Popularity

Points 3

Comments 0

What is this product?