Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-11-14

SagaSu777 2025-11-15

Explore the hottest developer projects on Show HN for 2025-11-14. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The landscape of software development is rapidly evolving, with a clear surge in projects leveraging AI and agent-based architectures to solve complex problems. We're seeing a strong hacker ethos emerge, where developers are not just building tools, but creating solutions that empower others to work smarter and more securely. The emphasis on local-first and privacy-centric tools, like offline dictation apps and encrypted password managers, reflects a growing demand for user control and data sovereignty. For developers, this signals a prime opportunity to dive into the burgeoning field of AI agents, exploring how to build systems that can autonomously manage tasks, analyze data, and even write code. For entrepreneurs, the takeaway is clear: identify specific pain points within niche domains, like data engineering or embedded systems, and apply innovative AI or automation solutions. The open-source community continues to be a fertile ground for rapid iteration and collaborative problem-solving, so contributing to or building upon existing open projects can accelerate innovation and build valuable networks. The future belongs to those who can combine deep technical understanding with creative problem-solving to build tools that are both powerful and ethical.

Today's Hottest Product

Name

Zingle – AI code reviewer for data teams

Highlight

This project tackles the critical problem of ensuring data pipeline integrity and cost efficiency. It uses AI to analyze SQL, dbt, Airflow, and Spark code changes in pull requests, predicting cost regressions, logic issues, and potential data quality gaps. The innovation lies in applying AI to the specific, high-stakes domain of data engineering, where a single incorrect change can lead to significant financial losses. Developers can learn about applying AI to specialized code analysis, understanding the unique risks associated with data infrastructure, and building robust validation systems. The developer's personal anecdote of a $50k Snowflake bill highlights the real-world impact of such a solution.

Popular Category

AI/ML

Developer Tools

Data Engineering

Open Source

Productivity

Popular Keyword

AI

Code Review

Data Teams

Kubernetes

Agents

LLM

Open Source

Local First

Privacy

Technology Trends

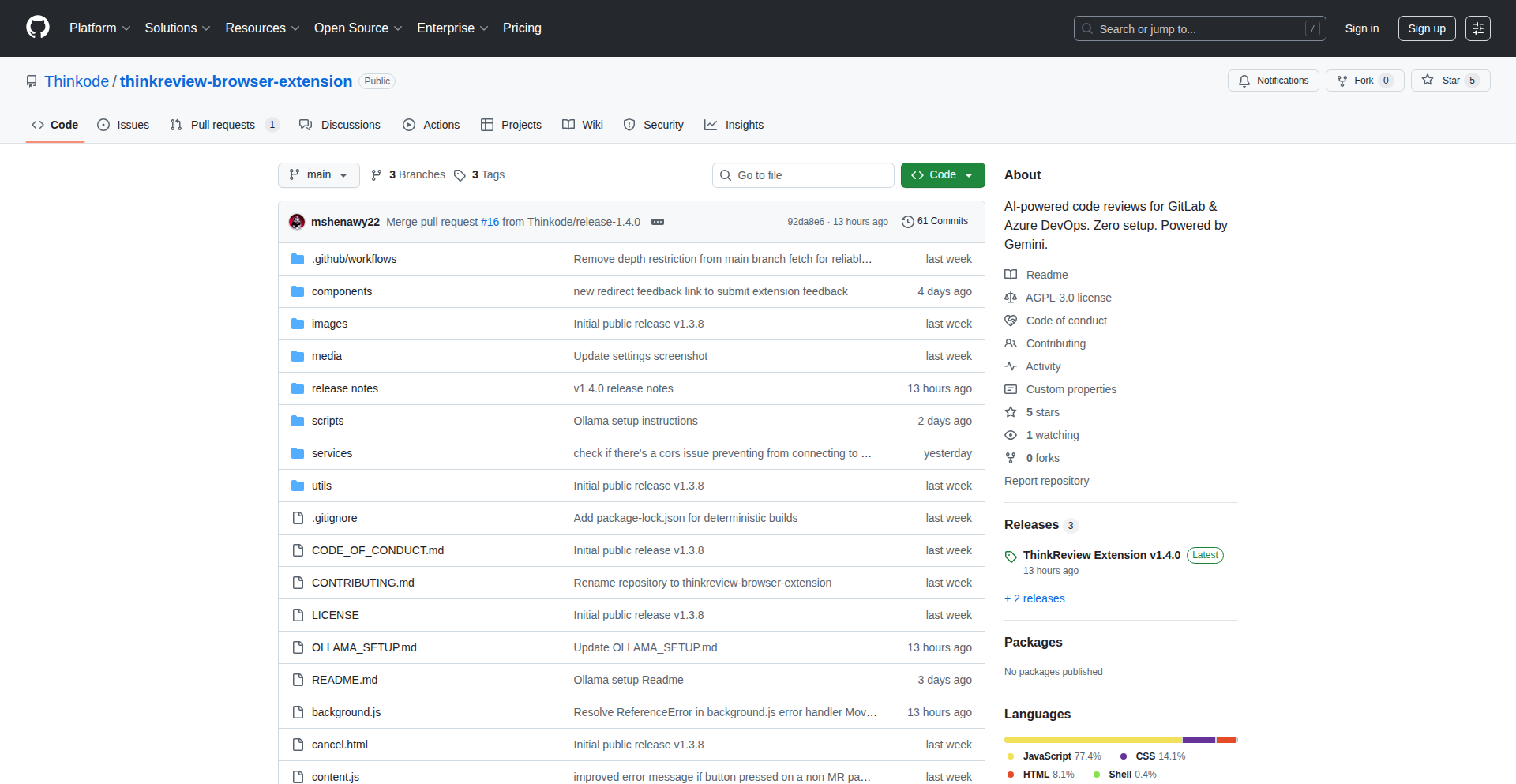

AI-Powered Code Analysis

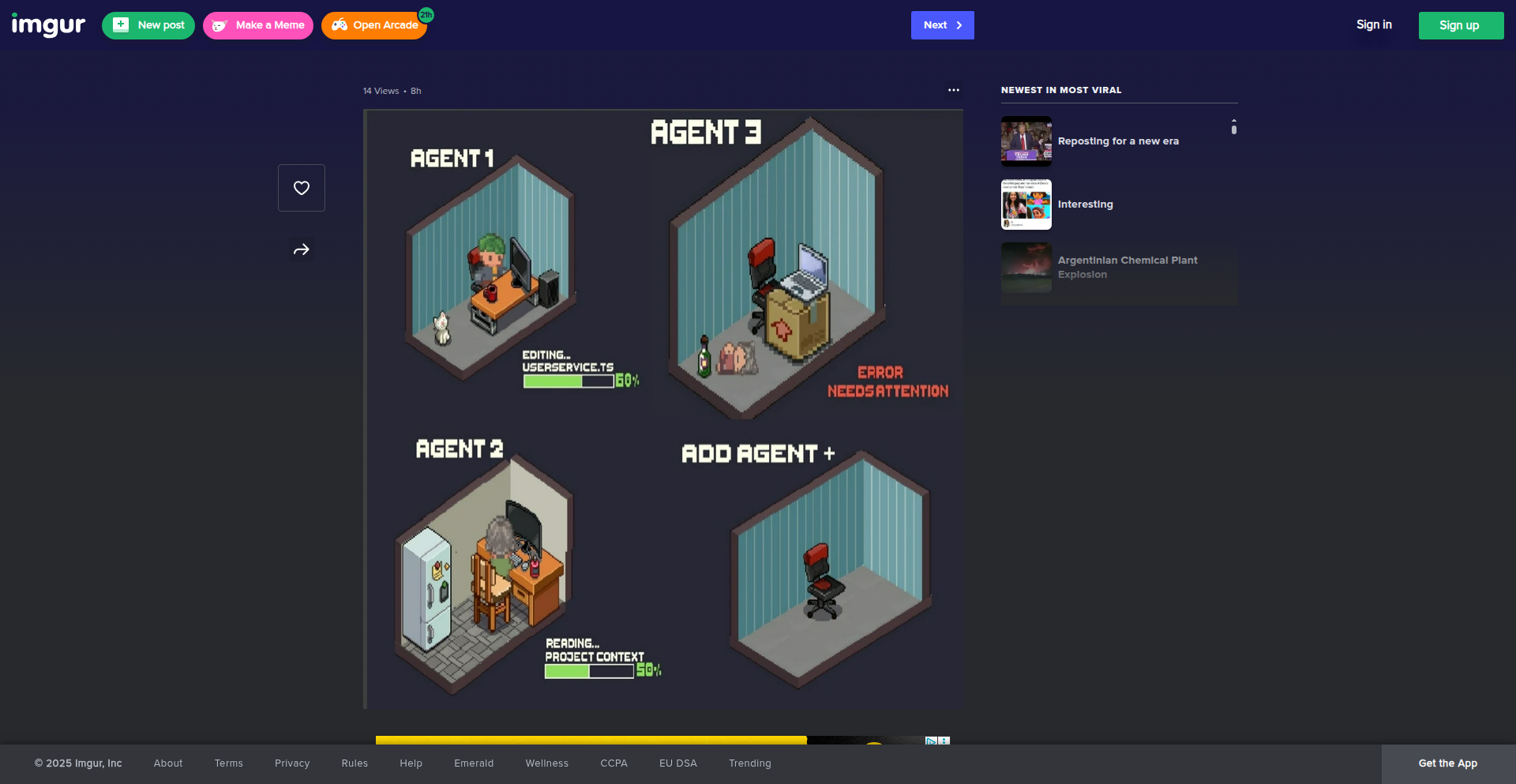

Agent-Based Systems

Local-First and Privacy-Focused Tools

Developer Productivity Enhancements

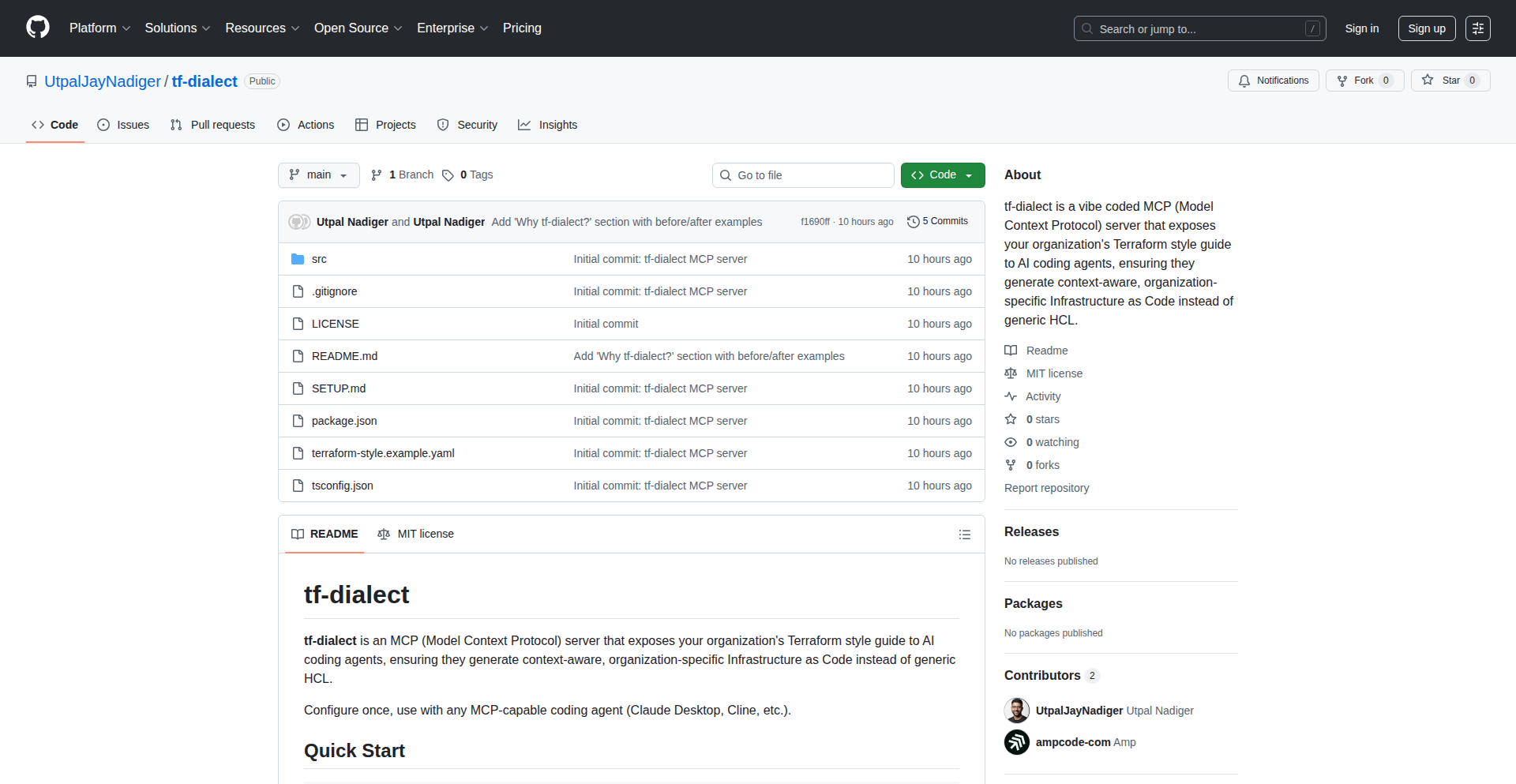

Infrastructure as Code Automation

AI for Specialized Domains

Open Source Community Collaboration

Efficient Data Handling and Processing

Project Category Distribution

AI/ML Tools & Frameworks (25%)

Developer Productivity & Tools (20%)

Data Management & Analysis (15%)

Open Source Infrastructure (15%)

Utility & Niche Applications (15%)

Educational & Content Tools (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | EpsteinFiles Explorer | 277 | 45 |

| 2 | Encore: Type-Safe Backend Infra Generator | 71 | 47 |

| 3 | EuroTech Navigator | 42 | 39 |

| 4 | Pegma: Cross-Platform Peg Solitaire Engine | 32 | 48 |

| 5 | Dumbass Business Idea Generator | 33 | 30 |

| 6 | Chirp: Local Speech-to-Text Injector | 29 | 15 |

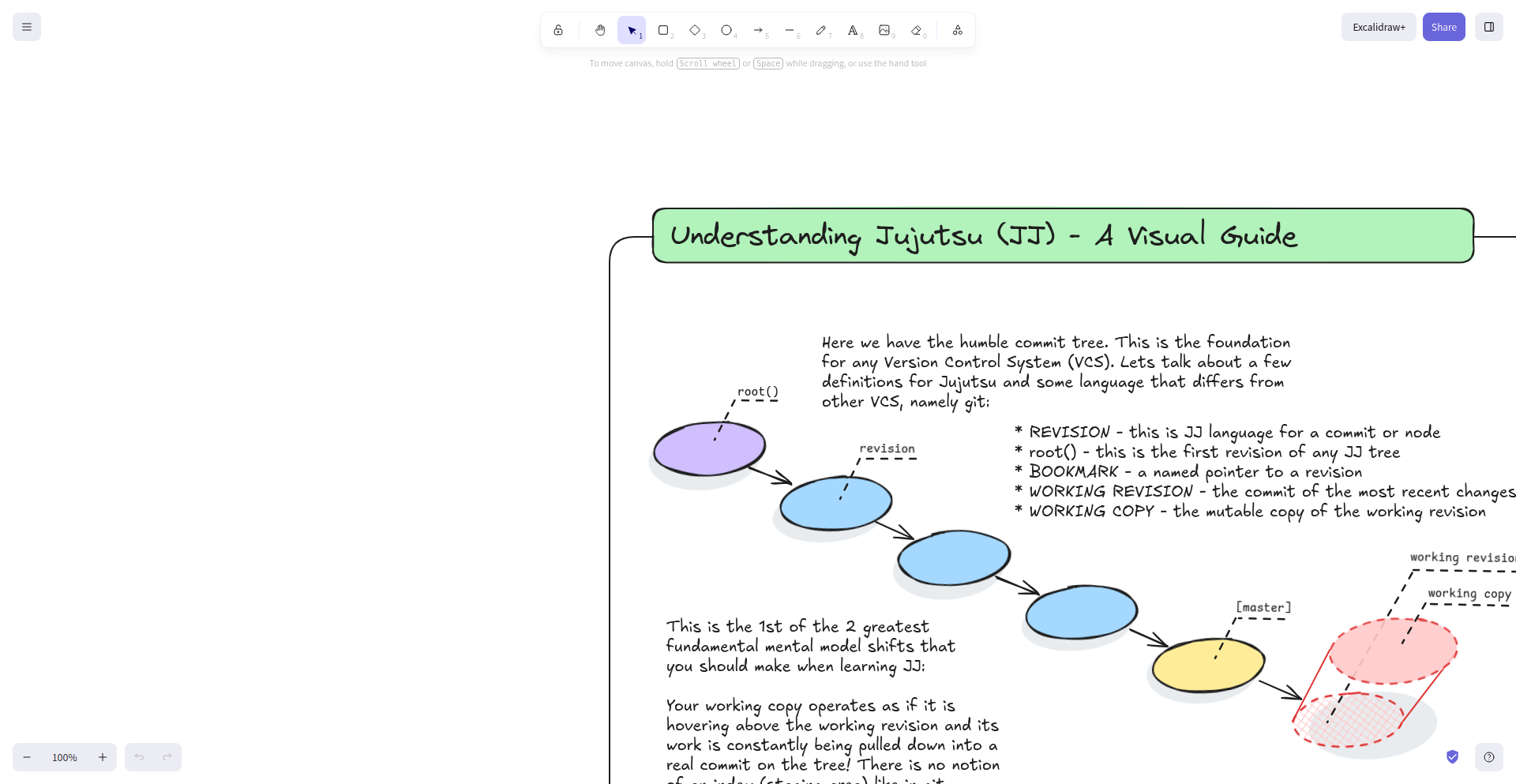

| 7 | Jujutsu Visual Navigator | 7 | 10 |

| 8 | spymux: Cross-Pane State Monitor | 9 | 3 |

| 9 | TalkiTo: Voice-Enabled Coding Agents | 5 | 5 |

| 10 | DataSentinel AI | 6 | 4 |

1

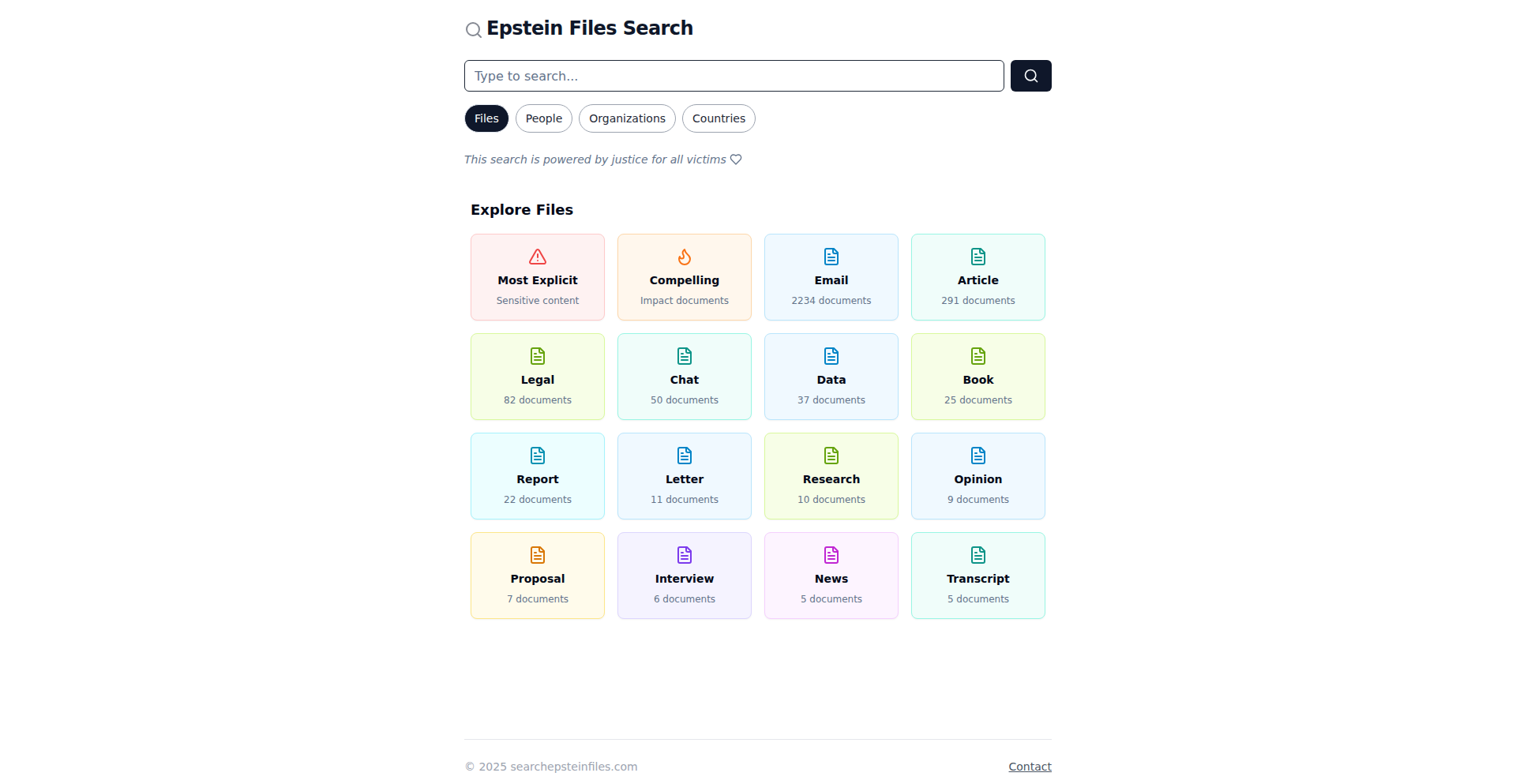

EpsteinFiles Explorer

Author

searchepstein

Description

A project that organizes and makes the Epstein files searchable, leveraging data structuring and search technology to enhance transparency and facilitate research. It transforms raw data into an accessible, navigable resource.

Popularity

Points 277

Comments 45

What is this product?

EpsteinFiles Explorer is a web-based application designed to process and present the publicly available Epstein files in a structured and searchable format. Instead of wading through raw documents, users can interact with organized data, powered by underlying search algorithms and data processing techniques. The innovation lies in transforming a large, complex dataset into a usable research tool, making connections and information more apparent than in the original format. This is useful because it saves significant time and effort for anyone trying to understand the scope and details within these extensive files, offering clarity where there was once just a deluge of information.

How to use it?

Developers can use EpsteinFiles Explorer by accessing the provided web interface. The project's underlying data processing and search mechanisms can potentially be integrated into other research tools or platforms via APIs (though this specific project might be a standalone interface). The core value for developers is understanding how to structure and query large, unstructured datasets effectively. For instance, a developer working on historical archives or investigative journalism tools could learn from the data normalization and indexing strategies employed. This means they can apply similar techniques to their own projects to make disparate information sources more accessible and insightful.

Product Core Function

· Data Structuring: Organizes raw document data into a more coherent format, making it easier to process and understand. This provides a foundation for better analysis, helping researchers avoid missing crucial pieces of information by presenting it in a logical flow.

· Search Functionality: Implements an efficient search engine to quickly find specific information within the organized files. This saves significant time for users trying to locate particular names, dates, or keywords, directly addressing the challenge of sifting through vast amounts of text.

· Information Visualization (Implied): While not explicitly detailed, the organization of data suggests potential for visualizing relationships and patterns, aiding in comprehension and discovery. This can reveal connections that might be overlooked in a linear document review, offering a deeper understanding of the overall context.

Product Usage Case

· Investigative Journalism: A journalist can use EpsteinFiles Explorer to quickly cross-reference names and events across multiple documents, speeding up fact-checking and the identification of leads. This solves the problem of tedious manual searching and correlation.

· Academic Research: A researcher studying social networks or financial transactions can use the searchable database to identify patterns and connections between individuals and organizations. This makes it easier to gather evidence and support hypotheses, overcoming the difficulty of manually piecing together information from scattered sources.

· Public Interest Advocacy: An organization focused on transparency can leverage the organized files to build reports or create accessible summaries for the public. This allows them to communicate complex information more effectively and engage a wider audience with factual data, transforming raw data into understandable insights.

2

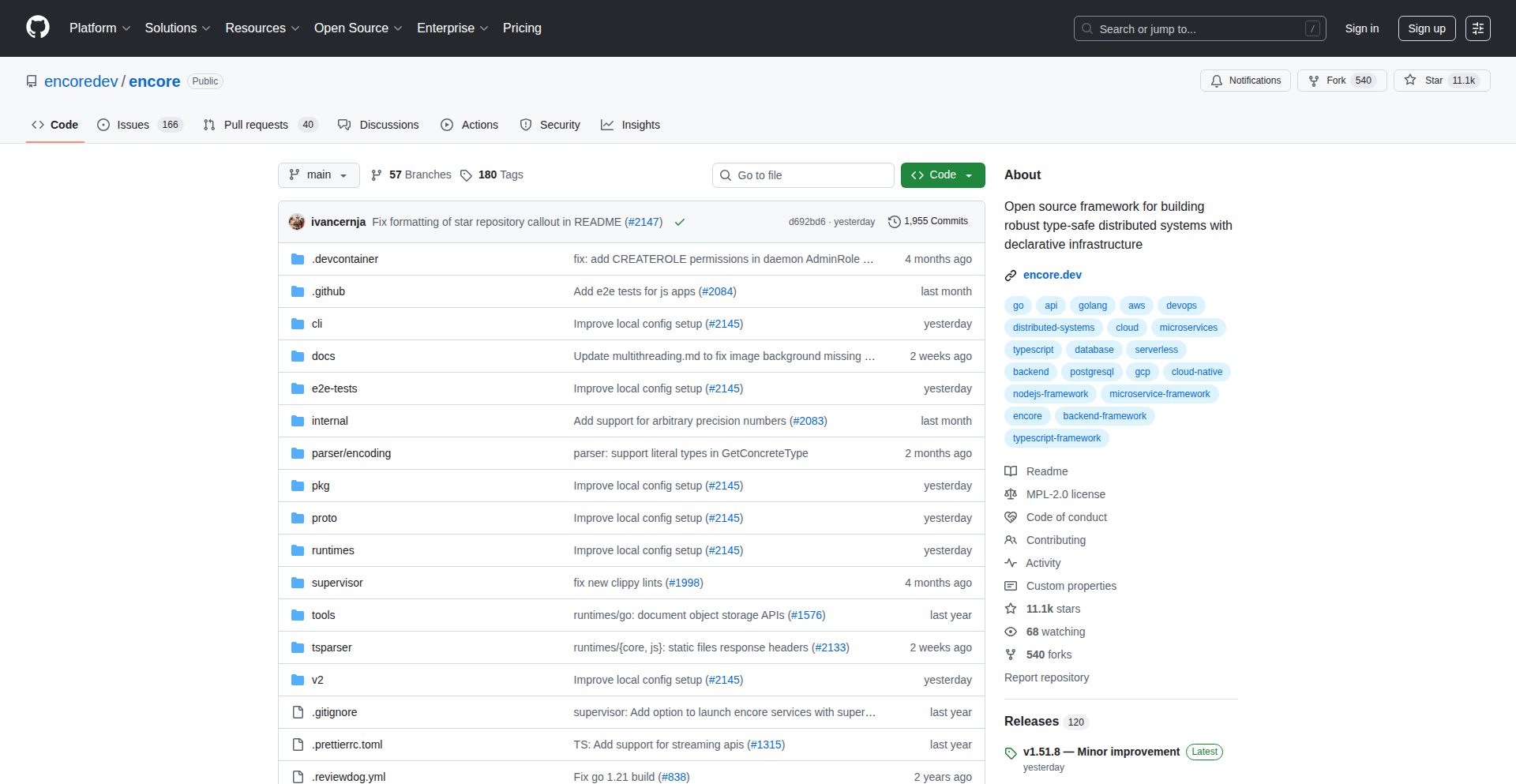

Encore: Type-Safe Backend Infra Generator

Author

andout_

Description

Encore is a novel backend framework that bridges the gap between code and infrastructure. It allows developers to define backend services and their infrastructure requirements directly within their code, which Encore then automatically provisions and deploys. The core innovation lies in its type-safe approach, ensuring that infrastructure configurations match the code's expectations, thereby reducing deployment errors and accelerating development cycles.

Popularity

Points 71

Comments 47

What is this product?

Encore is a backend development framework that generates your cloud infrastructure directly from your code. Imagine writing your API endpoints and database schemas, and Encore automatically creates the necessary servers, databases, and networking configurations in your cloud provider. Its key technical innovation is type-safety. This means that the infrastructure Encore builds is guaranteed to be compatible with your application code. This is a significant leap from traditional methods where infrastructure setup is often a separate, manual, and error-prone process, leading to 'it works on my machine' issues that are hard to debug.

How to use it?

Developers can start using Encore by defining their backend services using Encore's language. Encore handles the rest. For example, if you define a service that needs a PostgreSQL database, Encore will automatically provision and configure a managed PostgreSQL instance for you. You can integrate Encore into your existing CI/CD pipelines. When you push code changes, Encore can automatically update your deployed infrastructure and application. This dramatically simplifies the deployment and management of backend applications, allowing developers to focus on writing business logic rather than wrestling with cloud provider configurations.

Product Core Function

· Type-safe service definition: Allows developers to define backend services and their dependencies using a type-safe language. The value is that it prevents runtime errors caused by mismatches between code and infrastructure, making deployments more reliable and predictable. This is useful for any backend developer who wants to avoid tedious debugging of infrastructure-related issues.

· Automatic infrastructure generation: Automatically provisions and configures cloud infrastructure (like databases, queues, APIs) based on code definitions. The value is that it drastically reduces the manual effort and expertise required for cloud deployment, enabling faster iteration and time-to-market. This is particularly valuable for startups and small teams who need to deploy quickly without dedicated DevOps personnel.

· Integrated deployment pipeline: Seamlessly integrates with CI/CD workflows to deploy code and infrastructure updates. The value is that it automates the entire release process, from code commit to production deployment, minimizing human error and ensuring consistent deployments. This is beneficial for all development teams looking to streamline their release management.

· Built-in observability: Provides out-of-the-box logging, tracing, and metrics for applications and their infrastructure. The value is that it gives developers immediate insights into how their application and infrastructure are performing, making it easier to identify and resolve issues. This is essential for maintaining healthy and performant applications.

· Language flexibility: Supports multiple popular programming languages for backend development. The value is that developers can use their preferred language while still benefiting from Encore's infrastructure automation. This makes adoption easier and doesn't force developers into a new, unfamiliar language just for infrastructure management.

Product Usage Case

· Building a new microservice: A developer building a new microservice can define their API endpoints and specify a Redis cache. Encore will automatically set up the API gateway, server instances, and provision and connect the Redis cache, all without the developer needing to interact with AWS, GCP, or Azure consoles directly. This speeds up the development and deployment of individual services.

· Migrating a monolithic application: A team can break down a monolith into smaller services. For each new service, they can define its database requirements (e.g., a new SQL table). Encore will manage the creation of new database instances or schemas, making the migration process smoother and less risky. This helps in gradually refactoring legacy systems.

· Rapid prototyping: A developer needs to quickly build a prototype that involves a web API and user authentication. By using Encore, they can define these components in code, and Encore will provision the necessary backend services and database to support authentication, allowing them to focus on the core functionality of the prototype.

· Serverless function deployment: Defining a set of serverless functions and their triggers (e.g., HTTP requests, queue events). Encore will configure the serverless platform, set up the triggers, and manage the deployments, abstracting away the complexities of serverless orchestration. This is useful for building event-driven applications efficiently.

3

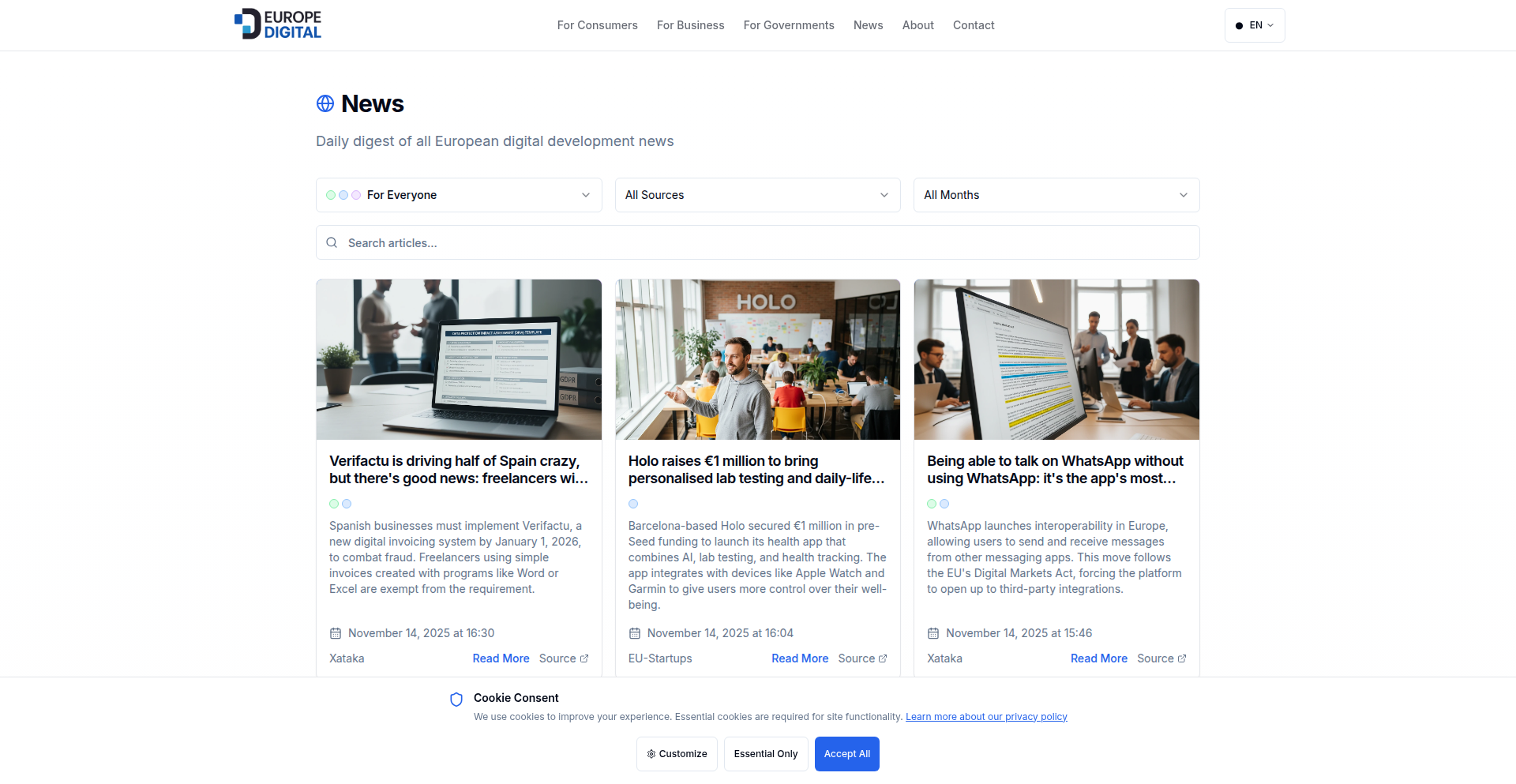

EuroTech Navigator

Author

Merinov

Description

A multilingual news aggregator focusing on European tech, offering content in 6 languages and filterable by audience type (consumers, businesses, government). It tackles common challenges like generic AI image generation by using context-aware visual patterns, improves search engine indexing for new sites with a gradual sitemap growth strategy, and employs an automated translation pipeline with human review for quality assurance. This project aims to make it easier to discover relevant European technological alternatives and innovations.

Popularity

Points 42

Comments 39

What is this product?

EuroTech Navigator is a specialized news platform designed to surface technology news originating from Europe. Instead of generic AI-generated images that all look the same, it uses a database of over 60 specific visual patterns that are contextually chosen. For example, news about funding might show coins or contracts, while security news might feature locks or shields, leading to much richer visual diversity. For newly launched websites, it addresses the slow indexing problem by gradually increasing the number of articles submitted to search engines over time, rather than overwhelming them at once. This ensures more content gets discovered by users. The platform also automates the process of summarizing and translating news articles from various RSS feeds using AI, with a human review step to maintain high translation quality. Essentially, it's a smart system for discovering and understanding European tech news.

How to use it?

Developers can use EuroTech Navigator as a valuable resource for staying updated on European technology trends, potential competitors, or collaboration opportunities. It can be integrated into research workflows by subscribing to curated feeds or using its API (if available in the future) to pull specific types of news into internal dashboards or analysis tools. The audience filters (consumers, businesses, government) allow for highly targeted information retrieval, making it efficient for product managers, market researchers, and investors. For those building multilingual applications or services, the project's approach to translation and multilingual SEO offers valuable insights and potential inspiration for their own strategies.

Product Core Function

· Multilingual News Aggregation: Aggregates tech news from European sources in English, German, French, Spanish, Italian, and Dutch. This is valuable for developers who need to understand global tech landscapes beyond their primary language and for companies looking to expand into European markets.

· Audience-Specific Filtering: Allows users to filter news based on whether it's most relevant to consumers, businesses, or government. This saves time by cutting through noise and delivering information tailored to specific professional interests or research goals.

· Context-Aware Visual Pattern Generation: Creates unique and relevant images for news articles based on their content, avoiding generic AI art. This enhances user engagement and makes content more memorable and impactful, useful for content creators and designers.

· Gradual Sitemap Growth Strategy: Implements a dynamic sitemap that slowly increases the number of URLs submitted to search engines. This improves the indexing rate for new content, ensuring that newly featured European innovations are discoverable by a wider audience over time.

· Automated Translation Pipeline: Uses AI for summarizing and translating news articles, followed by human review for accuracy. This provides accessible content across languages and sets a benchmark for quality in automated translation workflows, beneficial for developers working with international audiences.

· Discovery of European Tech Alternatives: Specifically highlights European companies and their products, providing valuable insights for developers seeking European-based solutions or looking to benchmark against regional players.

Product Usage Case

· A developer working on a new fintech product needs to understand the competitive landscape in Europe. By using EuroTech Navigator and filtering for 'businesses,' they can quickly identify emerging European fintech companies, their funding rounds, and key technological advancements, helping them refine their product strategy and identify potential partners.

· A marketing manager for a European software company wants to track news that might impact their target audience (both consumers and businesses). They can use the platform to see which European tech trends are gaining traction among different segments, informing their campaign messaging and product positioning.

· A researcher studying AI adoption in Europe can leverage EuroTech Navigator to discover news related to AI startups and government initiatives, benefiting from the context-aware image generation that makes the articles more visually engaging and the multilingual support that ensures comprehensive coverage.

· A developer building a global platform wants to improve their multilingual SEO strategy. By observing how EuroTech Navigator implements gradual sitemap growth and its approach to translation quality, they can gain practical ideas for optimizing their own site's discoverability and user experience in different languages.

4

Pegma: Cross-Platform Peg Solitaire Engine

Author

GlebShalimov

Description

Pegma is an open-source reimplementation of the classic Peg solitaire game, focusing on a clean, minimal design and smooth cross-platform gameplay. Its innovation lies in its accessibility as a fully open-source project, allowing for community contributions and transparency. The project demonstrates a developer's ability to take a well-known puzzle and make it readily available and customizable for modern platforms, showcasing clean UI/UX principles and efficient cross-platform development.

Popularity

Points 32

Comments 48

What is this product?

Pegma is a reimplementation of the traditional Peg solitaire game, a logic puzzle where the objective is to remove all but one peg from a board by jumping pegs over adjacent ones. The core technical innovation here is the development of a lightweight, cross-platform application that runs seamlessly on iOS and Android. This is achieved by leveraging modern mobile development frameworks that allow code to be written once and deployed across multiple operating systems, reducing development time and ensuring a consistent user experience. The 'open-source' aspect is a key differentiator, meaning anyone can view, modify, and contribute to the game's code, fostering transparency and potential for future enhancements by the developer community. It's about making a classic game accessible and adaptable using modern engineering practices.

How to use it?

Developers can use Pegma as a foundation or inspiration for their own game development projects. The open-source nature means you can fork the GitHub repository and customize it. For example, you could integrate Pegma's game logic into a larger application, experiment with different board layouts, or even adapt the rendering engine for unique visual styles. For end-users, it's a simple, enjoyable puzzle game available on iOS and Android app stores, offering a distraction and a mental workout with its timeless mechanics.

Product Core Function

· Cross-platform mobile deployment: The ability to run on both iOS and Android from a single codebase demonstrates efficient use of cross-platform development tools, reducing the effort needed to reach a wider audience.

· Open-source game logic: The entire game's rules and mechanics are available for inspection and modification. This allows developers to understand how a classic puzzle is implemented and to learn from the code, fostering knowledge sharing within the community.

· Minimalist UI/UX design: The focus on a clean and intuitive interface ensures that the game is easy to pick up and play, regardless of the user's technical background. This highlights the value of user-centered design in application development.

· Custom font integration: The developer designed a unique font to enhance the game's aesthetic. This shows attention to detail and a commitment to creating a distinct visual identity for the product, a common practice in app development to stand out.

· High-performance gameplay: Smooth animations and responsive controls are crucial for a good gaming experience. The project likely employs efficient rendering techniques and game loop management to ensure a fluid and enjoyable play session.

Product Usage Case

· A developer wants to create a collection of classic puzzle games for mobile. They can leverage Pegma's existing, well-tested game logic as a starting point, significantly speeding up their development process and focusing on building other puzzles.

· An educational technology company could integrate Pegma into a learning platform to teach logic and problem-solving skills. The open-source nature allows them to potentially modify the game to include specific educational objectives or track player progress.

· A game designer looking to experiment with different puzzle mechanics could analyze Pegma's implementation. By understanding how the peg jumping and removal logic works, they can gain insights into designing their own unique puzzle interactions.

· A hobbyist developer interested in open-source projects can explore Pegma's codebase on GitHub to learn about mobile game development best practices, cross-platform strategies, and C# scripting within a game engine context.

5

Dumbass Business Idea Generator

Author

elysionmind

Description

A web application that generates hilariously bad business ideas, sparking creativity by exploring the absurd. It tackles the problem of creative block and the need for unconventional inspiration by presenting deliberately flawed startup concepts, encouraging users to think outside the box.

Popularity

Points 33

Comments 30

What is this product?

This is a fun and experimental web application designed to generate intentionally terrible business ideas. It uses a combination of predefined templates and randomized elements to construct absurd startup concepts. The innovation lies in its deliberate subversion of typical business idea generation, aiming to provoke laughter and unconventional thinking rather than practical solutions. Think of it as a 'reverse brainstorming' tool to break through creative ruts.

How to use it?

Developers can use this project as a source of unexpected inspiration for creative coding challenges, quirky side projects, or even as a humorous icebreaker in team meetings. It can be integrated into other applications to add a layer of serendipitous silliness, perhaps by suggesting absurd features for a product or random plot points for a game. The core idea is to leverage the unexpected output for creative exploration.

Product Core Function

· Randomized Business Idea Generation: Leverages a templating system combined with a curated list of absurd product/service categories and target demographics to create unique and nonsensical business concepts. This helps users overcome blank page syndrome by providing a starting point, even if it's a ridiculous one.

· Humorous Presentation: Presents generated ideas with a witty and self-deprecating tone, enhancing the entertainment value and making the experience engaging. This makes the act of 'failure' in business idea generation enjoyable and memorable.

· User Submission Platform: Allows users to submit their own terrible business ideas, fostering a community around creative absurdity and expanding the pool of humorous concepts. This democratizes the generation process and turns it into a collaborative game.

· Sharing Functionality: Enables users to easily share their favorite (or least favorite) business ideas with friends, facilitating word-of-mouth spread and increasing engagement. This turns a personal creative exercise into a social activity.

Product Usage Case

· Creative Coding Challenge: A developer needs to build a small, fun web application for a hackathon. They can use this project's codebase as a base or inspiration to create a similar generator for other absurd themes (e.g., 'Dumbest Inventions,' 'Worst Pet Ideas'). It solves the problem of finding a unique and engaging project theme quickly.

· Game Design Inspiration: A game developer is stuck on creating interesting and quirky non-player characters (NPCs) or quest ideas. By feeding the generated business ideas into a narrative engine, they can create unexpected and memorable game elements. This helps in breaking predictable design patterns.

· Marketing Campaign Brainstorming: A marketing team wants to create a viral social media campaign that's unconventional. They can use this tool to generate a list of outlandish concepts, then pick the most outrageous ones to build a humorous and shareable campaign around. This addresses the need for attention-grabbing content in a crowded digital space.

· Team Building Icebreaker: During a remote team meeting, a facilitator can use this project to generate a few business ideas and have the team vote on the 'worst' one. This injects humor and lightheartedness into the meeting, improving team morale and collaboration. It provides a simple, low-stakes activity to foster connection.

6

Chirp: Local Speech-to-Text Injector

Author

whamp

Description

Chirp is a Windows dictation application designed for locked-down environments where installing new executables is restricted. It leverages NVIDIA's ParakeetV3 model for fast, accurate, and fully local speech-to-text conversion without requiring a GPU. The innovation lies in its Python-based execution managed by `uv`, enabling it to run where traditional `.exe` installations are forbidden, and its direct text injection into active windows, bypassing clipboard limitations.

Popularity

Points 29

Comments 15

What is this product?

Chirp is a local, privacy-focused dictation tool for Windows. Its core innovation is running entirely within a Python environment, meaning you don't need to install any new `.exe` files, making it ideal for restricted corporate networks. It uses the ParakeetV3 speech-to-text model, which is known for its high accuracy (comparable to Whisper-large-v3) but is significantly faster and can run efficiently on your computer's CPU. This means you get fast and accurate transcription without sending your voice data to the cloud or needing a powerful graphics card. The value for you is having a powerful dictation tool that respects your environment's security policies and your data privacy.

How to use it?

To use Chirp, you first perform a one-time setup to download and prepare the speech-to-text model. This involves running a Python command like `uv run python -m chirp.setup`. Once the model is ready, you start a long-running command-line process using `uv run python -m chirp.main`. Chirp then listens for a global hotkey you configure. When activated, it records your voice and automatically types the transcribed text directly into whatever application window is currently active. This offers a seamless dictation experience into your documents, emails, or code editors without manual copy-pasting.

Product Core Function

· Local Speech-to-Text Conversion: Processes audio directly on your machine using ONNX Runtime, ensuring data privacy and compliance with restrictive environments. This is valuable because your sensitive voice data stays on your computer.

· Executable-Free Operation: Runs using Python and `uv`, eliminating the need to install `.exe` files. This is crucial for users in locked-down corporate settings where software installation is heavily controlled.

· Direct Text Injection: Automatically types transcribed text into the active window, simplifying workflow and avoiding manual copy-pasting. This saves you time and effort by directly inputting your dictated text where you need it.

· Configurable Hotkeys: Allows customization of keyboard shortcuts to start and stop recording. This provides flexibility and a personalized user experience, making dictation activation convenient.

· Configurable Behavior: Uses a `config.toml` file to manage hotkeys, model selection, quantization (optimizing model size and speed), language, and threading. This lets you fine-tune Chirp to your specific needs and hardware.

· Post-Processing Styles: Offers optional text styling based on prompts like 'sentence case' or 'prepend: >>'. This enhances the usability of the transcribed text by automatically formatting it according to your preferences.

· Audio Feedback: Provides configurable start/stop sound cues for recording status. This gives you immediate confirmation that the dictation is active or has stopped, preventing missed dictations.

Product Usage Case

· A developer working in a secure corporate environment with strict policies against installing new software can use Chirp to dictate code comments or documentation directly into their IDE. This solves the problem of needing an effective dictation tool without violating IT policies.

· A writer who needs to transcribe notes quickly without sending sensitive manuscript content to cloud services can use Chirp on their Windows laptop. It addresses privacy concerns by keeping all audio processing local, allowing them to dictate comfortably and securely.

· A user who finds built-in Windows dictation inaccurate or cumbersome can use Chirp to achieve faster and more precise speech-to-text. By leveraging the ParakeetV3 model and its efficient CPU usage, they can dictate into any application without needing a GPU.

· A power user who frequently switches between applications and wants to dictate information without alt-tabbing or copying from a separate notepad can benefit from Chirp's direct text injection. This streamlines their workflow by allowing them to dictate directly into forms, chat windows, or any active text field.

7

Jujutsu Visual Navigator

Author

anavid7

Description

A novel visual learning tool designed to demystify the complexities of Jujutsu (JJ) techniques. It leverages interactive diagrams and step-by-step visual progressions to make learning this martial art more accessible and intuitive, addressing the common challenge of understanding nuanced physical movements through static descriptions.

Popularity

Points 7

Comments 10

What is this product?

This project is a visual learning platform for Jujutsu (JJ) that transforms traditional textual instruction into an interactive, graphical experience. Instead of reading lengthy descriptions of techniques, users see them unfold visually. The core innovation lies in how it breaks down complex body mechanics and sequences into digestible visual components, using animated diagrams that illustrate transitions between positions and movements. This approach is particularly effective for kinesthetic learners and for clarifying subtle yet critical aspects of grappling arts where precise body alignment and timing are paramount. Essentially, it turns abstract instructions into a visual narrative, making the learning curve for JJ significantly smoother.

How to use it?

Developers can integrate this visual learning paradigm into various educational platforms, martial arts academy websites, or even within fitness and self-defense apps. The project provides a framework for creating interactive visual content. For instance, an academy could use this to offer online supplementary learning materials for their students, allowing them to review techniques visually before or after class. It can also be a standalone learning resource, accessible via a web browser or a dedicated application, where users can browse through different JJ techniques, explore variations, and practice along with the visual guides. The underlying technology could involve interactive SVG or canvas-based animations, coupled with a content management system for organizing technique libraries.

Product Core Function

· Interactive technique breakdown: Visually dissects complex Jujutsu movements into fundamental steps, showing the progression of each phase with clear graphical representations. This helps users understand the 'why' and 'how' of each movement, not just the 'what'.

· Animated visual sequences: Provides fluid, step-by-step animations of techniques, allowing learners to observe the correct form, timing, and transitions in motion. This is far more effective than static images or text for mastering physical skills.

· Progressive learning modules: Organizes techniques into logical learning paths, starting with basic principles and gradually introducing more advanced maneuvers. This structured approach builds a solid foundation and prevents overwhelm for new practitioners.

· Annotated diagrams: Augments animations with concise annotations highlighting key points, leverage mechanics, and common pitfalls. This adds an extra layer of understanding and reinforces critical details that might be missed in purely visual learning.

· Technique variation exploration: Allows users to explore different variations and counter-techniques for a given move. This expands the learner's tactical understanding and adaptability within Jujutsu.

Product Usage Case

· A martial arts school using the platform to create online refresher courses for its students, allowing them to visually review techniques learned in class, thereby improving retention and skill mastery.

· A self-defense app developer integrating these visual guides to teach practical grappling and escape techniques, offering users a clear and effective way to learn self-preservation skills without requiring a physical instructor.

· A physical therapist designing rehabilitation exercises for individuals recovering from certain injuries, using the visual sequencing to demonstrate safe and controlled movements, aiding in recovery and regaining functionality.

· A game developer exploring ways to incorporate realistic martial arts animations into their fighting game, using this project as a reference for authentic movement and technique execution, enhancing player immersion.

8

spymux: Cross-Pane State Monitor

Author

crap

Description

spymux is a novel tool that allows you to monitor the activity and state of multiple tmux panes from a single, consolidated view. It addresses the common developer pain point of context-switching between different terminal sessions to check on ongoing tasks like builds, tests, or long-running scripts. The innovation lies in its ability to aggregate this information, providing a unified dashboard for efficient workflow management.

Popularity

Points 9

Comments 3

What is this product?

spymux is a lightweight utility designed to enhance the developer's productivity within the tmux terminal multiplexer. Its core technology involves capturing and intelligently displaying key status indicators from various tmux panes. Instead of manually switching between panes to see if a compilation finished or a test suite passed, spymux pulls this information together. Think of it as a real-time, intelligent status board for your terminal sessions. The innovative part is how it extracts relevant information (like exit codes or specific output patterns) from each pane and presents it concisely, saving you significant time and mental overhead.

How to use it?

Developers can integrate spymux into their existing tmux workflows. After installing spymux (typically via a package manager or cloning the repository), you would configure it to watch specific tmux panes. This could involve defining rules for what kind of output or status you're interested in. For example, you might tell spymux to alert you when a `make build` command in one pane finishes, or when a Python test suite in another pane reports failures. It can be run as a separate process that hooks into tmux events or directly within a tmux session, providing a seamless way to keep an eye on multiple concurrent tasks without losing focus.

Product Core Function

· Real-time pane status aggregation: spymux watches multiple tmux panes simultaneously, collecting their output and exit status. This means you get an immediate overview of what's happening across your entire terminal workspace, eliminating the need for constant manual checking. The value is in saving time and reducing the cognitive load of tracking multiple operations.

· Customizable monitoring rules: Developers can define specific patterns or exit codes to monitor within each pane. This allows for tailored alerts and notifications, ensuring you only focus on what's critical to your workflow. The value here is in personalized efficiency, receiving notifications only for events that matter to your specific task.

· Unified status dashboard: spymux presents the monitored information in a clear, consolidated interface. This provides an instant snapshot of your tasks' progress, helping you quickly identify bottlenecks or successful completions. The value is in improved situational awareness and faster decision-making.

· Cross-pane communication and alerting: The tool can be configured to trigger actions or send notifications based on the status of observed panes. For instance, it could alert you via a desktop notification when a long-running compilation job completes successfully. The value is in proactive workflow management and ensuring you don't miss important task outcomes.

Product Usage Case

· Continuous Integration/Continuous Deployment (CI/CD) pipelines: A developer can use spymux to monitor multiple build and test jobs running in different tmux panes. Instead of manually checking each job’s progress, spymux provides a single view. If a build fails in one pane, the developer is immediately alerted and can investigate without having to switch context. This directly addresses the problem of managing parallel development tasks efficiently.

· Long-running data processing or simulations: When running lengthy data analysis scripts or scientific simulations in tmux, spymux can track their completion or detect errors. This allows the developer to step away from the terminal knowing they will be notified when the task is done or if something goes wrong. The value is in reclaiming time and reducing anxiety about unattended processes.

· Automated testing suites: For projects with extensive test suites executed across multiple parallel processes in tmux, spymux can consolidate the pass/fail status of each test run. This gives a quick overview of the overall test health and helps pinpoint specific failing tests. The value is in faster feedback loops and more streamlined debugging.

9

TalkiTo: Voice-Enabled Coding Agents

Author

robbomacrae

Description

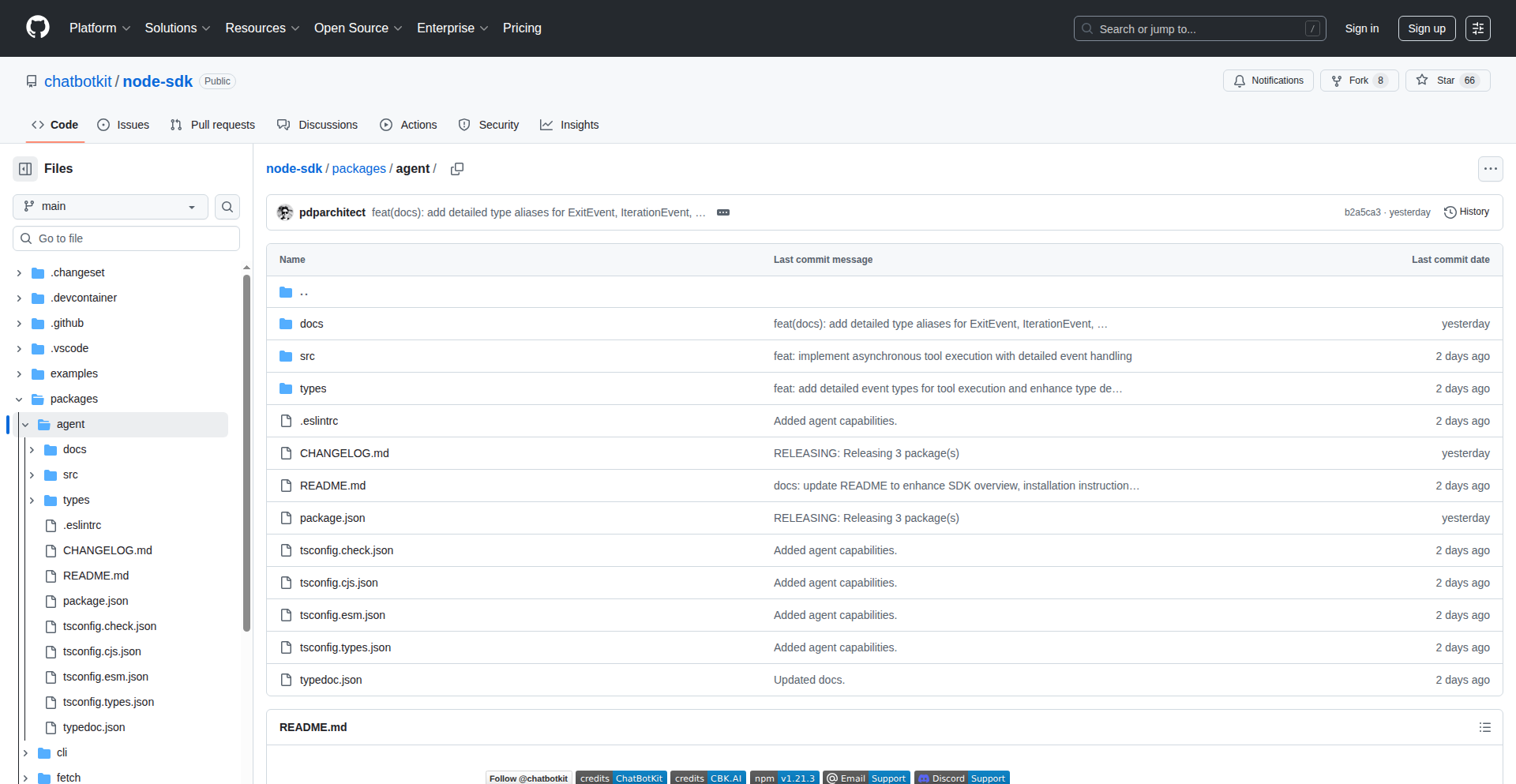

TalkiTo is an open-source project that adds voice input (ASR) and voice output (TTS) capabilities to terminal-based coding agents. It allows developers to interact with their coding assistants hands-free, improving multitasking and workflow efficiency. The project supports various ASR/TTS providers, including local options like Whisper and Kokoro/KittenTTS, and integrates with communication platforms like Slack and WhatsApp.

Popularity

Points 5

Comments 5

What is this product?

TalkiTo is a middleware that sits between you and your terminal-based coding agents (like Claude Code or Codex CLI). It uses Automatic Speech Recognition (ASR) to convert your spoken commands into text that the coding agent can understand, and Text-to-Speech (TTS) to read out the agent's responses. This means you can talk to your coding assistant instead of typing, making it feel more like a natural conversation and allowing you to do other things while the agent works. It's designed to be highly configurable, letting you choose which ASR and TTS services to use, including private, local options for better control and privacy.

How to use it?

Developers can integrate TalkiTo into their workflow by installing the project and configuring it to work with their preferred coding agent and ASR/TTS providers. Once set up, they can start using voice commands to interact with the coding agent. For instance, instead of typing a command to the agent, they can simply speak it. The project also allows for in-session configuration changes via voice commands, such as disabling ASR or switching TTS engines. This enables a truly hands-free coding experience, especially useful when multitasking or when direct keyboard input isn't feasible.

Product Core Function

· Speech-to-Text (ASR) Input: Converts spoken commands into text for coding agents, enabling hands-free operation and faster command input.

· Text-to-Speech (TTS) Output: Reads out coding agent responses aloud, allowing developers to stay informed without constantly looking at the terminal.

· Provider Agnosticism: Supports major cloud-based ASR/TTS services and local, private options like Whisper and KittenTTS for flexibility and cost control.

· Communication Platform Integration: Connects to Slack and WhatsApp, enabling voice-based interactions and notifications from coding agents via these platforms.

· Dynamic Configuration: Allows real-time adjustment of ASR/TTS settings and agent control through simple voice commands, offering a fluid and responsive user experience.

Product Usage Case

· Hands-free Code Generation: A developer can dictate code generation requests to an AI coding agent while performing another task, like reviewing documentation or a design, significantly boosting productivity.

· Remote Pair Programming with Voice: During a remote pair programming session, one developer can use TalkiTo to issue commands and receive verbal feedback from the coding agent, while the other developer focuses on writing code, streamlining collaboration.

· Accessibility for Developers: For developers with physical limitations that make typing difficult, TalkiTo provides a vital alternative for interacting with powerful coding tools, making software development more inclusive.

· Continuous Integration/Continuous Deployment (CI/CD) Monitoring: Developers can receive audible notifications about the status of their CI/CD pipelines through their coding agent, allowing them to stay updated even when away from their keyboard.

· Learning and Experimentation: New developers can use voice commands to explore the capabilities of coding agents and receive explanations in an auditory format, making the learning process more engaging and less intimidating.

10

DataSentinel AI

Author

UvrajSB

Description

DataSentinel AI is an intelligent code reviewer specifically designed for data teams. It automates the process of checking SQL, dbt, Airflow, and Spark code changes in GitHub pull requests before they are merged. Its core innovation lies in its ability to predict and prevent costly data-related issues such as warehouse cost spikes, pipeline breakages, and data quality regressions, which are often overlooked by traditional software code reviewers. This empowers data teams to maintain code integrity and optimize resource utilization efficiently.

Popularity

Points 6

Comments 4

What is this product?

DataSentinel AI is an AI-powered system that acts as a smart guardian for your data pipelines. Think of it as a highly experienced data engineer who meticulously reviews every code change you propose. Unlike general code reviewers that focus on software logic, DataSentinel AI understands the unique risks associated with data-related code. It analyzes SQL, dbt, Airflow, and Spark scripts in your GitHub pull requests to identify potential problems before they impact your production systems. Its innovative approach involves predicting cost impacts, detecting logic flaws, ensuring data quality, and tracing the lineage of your data to prevent downstream failures. The key technical insight is that data code risks are fundamentally different from software code risks, involving aspects like billing behavior, table sizes, lineage, and real-world data interactions, which DataSentinel AI is specifically trained to detect.

How to use it?

Developers can integrate DataSentinel AI seamlessly into their GitHub workflow. When a data engineer creates a pull request with changes to SQL, dbt, Airflow, or Spark code, DataSentinel AI automatically scans these changes. It connects to your GitHub repository and performs a deep analysis. The system provides actionable feedback directly within the pull request, highlighting potential cost increases, logical errors, data quality issues, or dependencies that might break. This allows developers to address these problems proactively before merging the code, significantly reducing the risk of costly errors and pipeline disruptions. You can try it for free on your top 100 pull requests at getzingle.com.

Product Core Function

· Cost Regression Prediction: Analyzes code changes to forecast potential increases in data warehouse expenses, enabling proactive cost optimization and preventing unexpected bills. This helps you understand if a proposed change will make your data operations more expensive.

· Logic Issue Detection: Identifies subtle logical errors in SQL or dbt code that might lead to incorrect data processing or skewed results, ensuring the accuracy and reliability of your data. This means your data calculations will be correct.

· Data Quality Gap Analysis: Flags missing data quality checks (like null or uniqueness tests) or redundant ones, promoting robust data integrity and preventing bad data from entering your systems. This ensures the data you rely on is trustworthy.

· Downstream Breakage Tracing: Maps the lineage of data to predict which dashboards, models, or other downstream processes will be affected by the code change, alerting owners and preventing unexpected failures. This prevents your reports and models from suddenly breaking.

· Governance Rule Enforcement: Ensures adherence to data governance policies, including PII masking, documentation requirements, and ownership rules, maintaining compliance and control over your data assets. This helps keep your data secure and compliant with regulations.

· Real-time Sandbox Execution: Safely runs new SQL queries in a controlled environment to analyze actual data differences and identify potential issues that might only appear at scale. This simulates how the code will perform with real data without risking your live environment.

Product Usage Case

· Preventing a $50k Snowflake Bill: A data team used DataSentinel AI and it flagged a PR that would have caused repeated full refreshes on a large model, an error that would have resulted in a $50,000 increase in their Snowflake bill. This is a clear example of how it saves significant financial resources.

· Avoiding Data Distortion: DataSentinel AI identified duplicate rows being introduced into a critical fact table. Without this detection, these duplicates would have distorted revenue reporting, leading to flawed business decisions.

· Optimizing Pipeline Performance: The system caught missing filters that would have doubled table sizes and significantly slowed down data pipelines. By flagging this, the team was able to optimize their queries and maintain efficient processing.

· Ensuring Dashboard Integrity: A simple column rename was proposed that, according to DataSentinel AI's lineage tracing, would have broken 14 downstream dashboards. This prevented a widespread impact on reporting and business intelligence.

· Mitigating Exploding Joins: DataSentinel AI detected potential 'exploding joins' arising from low-cardinality dimensions, a common cause of performance degradation and excessive resource consumption in data queries. This allows for query optimization before performance issues arise.

· Enforcing Documentation Standards: The tool flagged undocumented models that were feeding crucial finance metrics, ensuring better transparency and maintainability of the data infrastructure. This improves collaboration and understanding within the team.

11

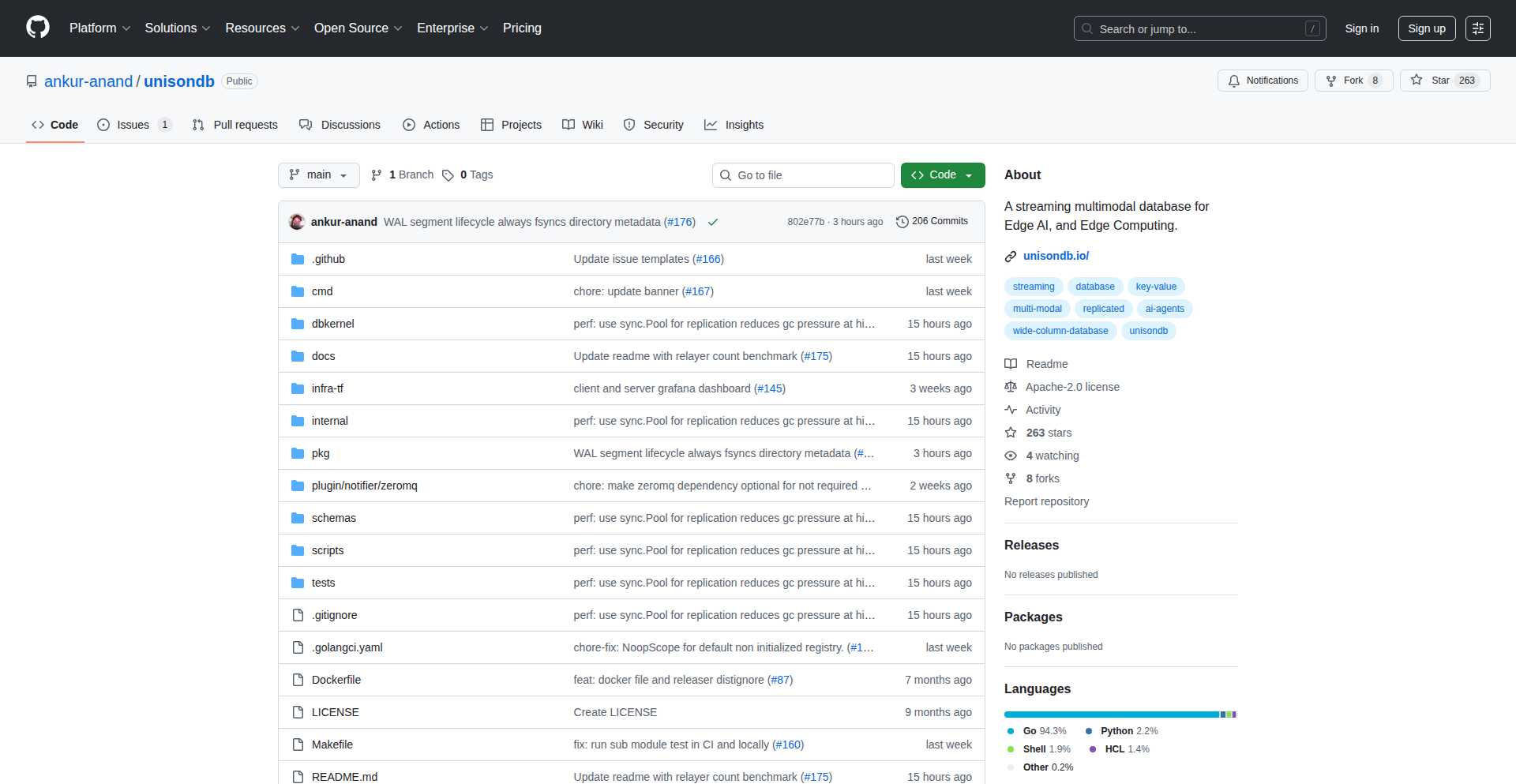

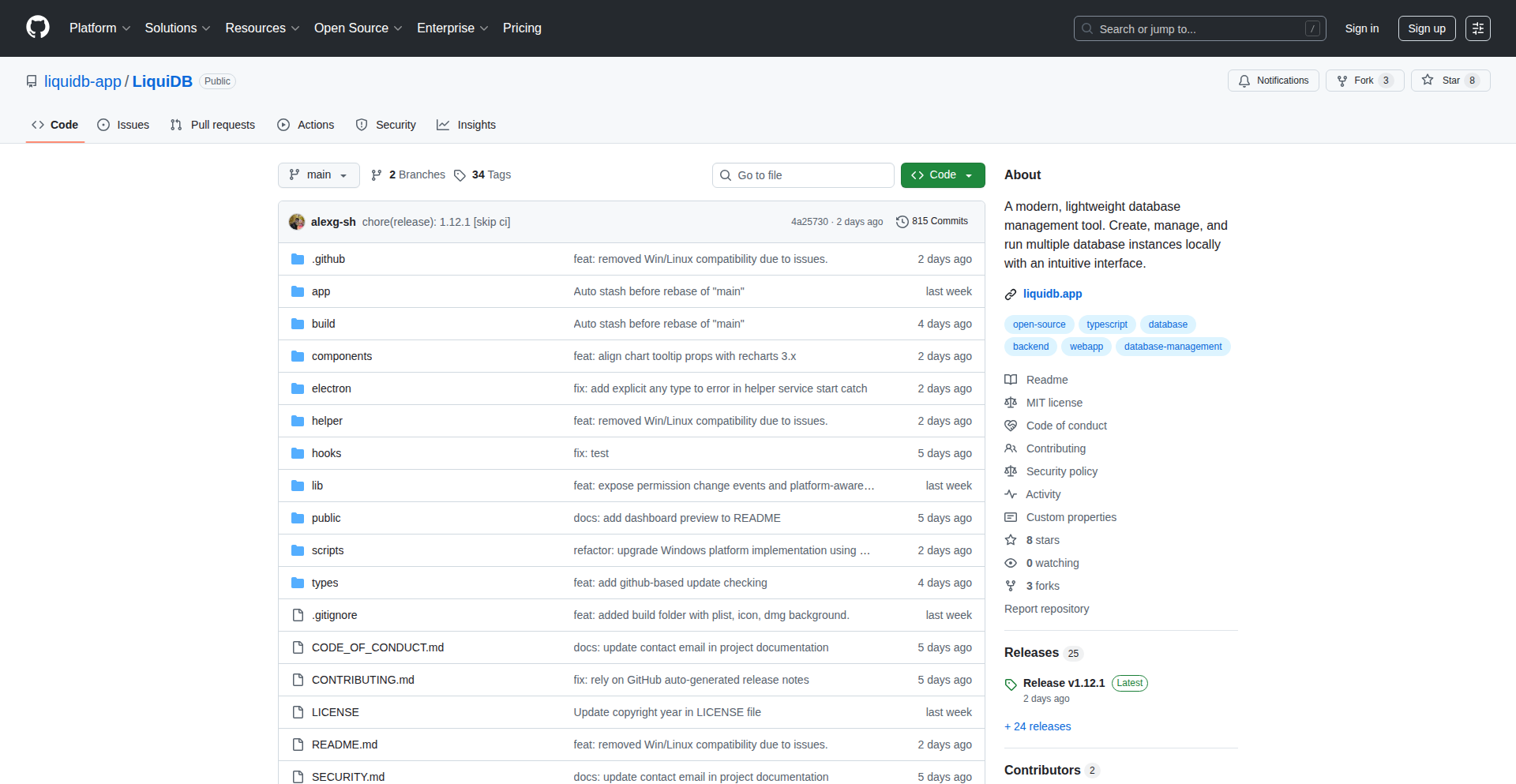

UnisonDB: Sub-Second Replication B+Tree

Author

ankuranand

Description

UnisonDB is a highly experimental distributed database that leverages B+Trees for its core data structure and achieves sub-second replication latency across over 100 nodes. It tackles the challenge of fast data synchronization in large-scale, distributed systems, making real-time data access across many machines a practical reality.

Popularity

Points 9

Comments 1

What is this product?

UnisonDB is a novel distributed database system. Instead of relying on traditional disk-based B-Trees, it focuses on optimizing B+Trees for in-memory operations and then applying a clever replication strategy. The innovation lies in its ability to synchronize data changes across a large cluster of nodes (100+) in less than a second. This is achieved through a specialized replication protocol that minimizes overhead and maximizes parallelism, essentially allowing data to be almost instantly available everywhere it's needed, even with a vast number of servers. This means when you update data on one server, it appears almost immediately on all others.

How to use it?

Developers can integrate UnisonDB into applications requiring high availability and low-latency data access across distributed environments. This could involve building real-time dashboards, collaborative applications, or microservices architectures where data consistency and speed are paramount. The integration would typically involve setting up a cluster of UnisonDB nodes and then connecting application clients to this cluster. The database exposes an API (likely SQL-like or a custom query language) that applications use to read and write data. The sub-second replication means that even if a node fails, another node can immediately take over with up-to-date data, ensuring uninterrupted service. Think of it like having your data instantly copied to dozens of backup locations, so if one is destroyed, the others are already ready to go.

Product Core Function

· B+Tree Optimized for Distributed Performance: This core data structure allows for efficient querying and data management, while modifications are designed to be quickly propagated across the network. The value is in making data retrieval fast and consistent, even as the data scales.

· Sub-Second Cross-Node Replication: This is the killer feature. It ensures that data changes are visible on all connected nodes almost instantaneously. The value is in enabling real-time applications that depend on up-to-the-minute data across a large number of servers, preventing stale data issues.

· High Node Count Scalability: Designed to handle over 100 nodes, this demonstrates its capability to scale horizontally for large deployments. The value is in the ability to build robust systems that can grow to accommodate massive amounts of data and users without sacrificing performance.

· Fault Tolerance through Fast Replication: The rapid replication minimizes the window of inconsistency in case of node failures. The value is in building highly available systems where downtime is unacceptable, as data is quickly mirrored, allowing for seamless failover.

Product Usage Case

· Real-time Analytics Dashboards: Imagine a dashboard showing live stock market data or website traffic. UnisonDB's rapid replication ensures that all users viewing the dashboard see the most current information simultaneously, regardless of which server is serving their request. This solves the problem of users seeing outdated numbers.

· Collaborative Editing Platforms: In applications like Google Docs or online whiteboards, multiple users edit content concurrently. UnisonDB can ensure that changes made by one user appear on another user's screen with minimal delay, providing a smooth and responsive collaborative experience. This addresses the lag often experienced in real-time collaboration tools.

· Distributed Microservices with Shared State: When different microservices need to access and update shared data, maintaining consistency across them can be a challenge. UnisonDB provides a fast, consistent data layer, allowing these services to operate on near real-time data, improving overall system responsiveness and reducing coordination headaches. This tackles the complexity of managing data consistency in a microservices architecture.

12

Keepr CLI Vault

Author

bsamarji

Description

Keepr is an offline, command-line interface (CLI) password manager designed for developers. It prioritizes local storage and terminal-based operation, encrypting all sensitive data in an SQLCipher database secured by a master password. A time-limited session feature allows for convenient access without constant re-authentication. This project showcases a strong emphasis on data privacy and security by never connecting to the network.

Popularity

Points 6

Comments 2

What is this product?

Keepr is a command-line tool that securely manages your passwords and secrets locally on your machine. Instead of relying on cloud services, it uses an encrypted SQLite database (SQLCipher) to store your information. The encryption uses AES-256, a robust standard. To access your vault, you set a master password. Keepr then uses this master password, combined with a strong key derivation function (PBKDF2-HMAC-SHA256 with 1.2 million iterations) to create an encryption key. This key decrypts another key (PEK) which is then used to encrypt your actual vault data. For convenience, Keepr offers a temporary session, meaning you don't have to re-enter your master password every single time you need to access a password within a certain timeframe. The core innovation lies in its absolute offline nature and its focus on developer workflows within the terminal, providing a secure alternative to cloud-based password managers for those who prefer to keep sensitive data entirely local. So, this is for you if you want your passwords managed securely without any risk of them being accessed over the internet.

How to use it?

Developers can use Keepr by installing it via pip (Python Package Index): `pip install Keepr`. Once installed, you can interact with it directly from your terminal. You'll start by initializing a new vault and setting your master password. Common commands include `keepr add` to add new entries (like website URLs, usernames, and passwords), `keepr view <entry_name>` to retrieve an entry, `keepr search <keyword>` to find specific secrets, `keepr update <entry_name>` to modify existing entries, and `keepr delete <entry_name>` to remove them. Keepr also features a secure password generator (`keepr generate`) and can copy generated passwords directly to your clipboard for seamless integration into login forms. This allows you to manage all your API keys, database credentials, SSH keys, and website logins directly from your command line, enhancing your development workflow and security posture. So, you can use this to quickly and securely retrieve credentials for your development projects without leaving your terminal.

Product Core Function

· Offline password storage: All your secrets are stored locally on your machine in an encrypted database, ensuring no sensitive data leaves your system. This is valuable because it eliminates the risk of cloud breaches affecting your personal or work credentials.

· AES-256 encryption: Your data is protected using a strong, industry-standard encryption algorithm, making it extremely difficult for unauthorized parties to read. This is valuable because it provides a high level of security for your most sensitive information.

· Master password protection: Access to your encrypted vault is controlled by a master password, which you set. This is valuable because it acts as the primary gatekeeper to your secrets.

· Time-limited session: Once you unlock your vault with your master password, it remains unlocked for a configurable period, allowing you to quickly access multiple passwords without repeated authentication. This is valuable because it improves workflow efficiency without significantly compromising security.

· Secure password generation: Keepr can generate strong, random passwords for you, reducing the likelihood of weak or reused passwords. This is valuable because it helps you create more secure accounts across different services.

· Clipboard integration: Generated passwords or retrieved stored passwords can be automatically copied to your clipboard, making it easy to paste them into applications or websites. This is valuable because it speeds up the process of logging in and reduces the chance of typing errors.

· CLI-based operations: All management tasks (adding, viewing, searching, updating, deleting) are performed via simple commands in your terminal. This is valuable for developers who prefer working in a terminal-centric environment and want to integrate password management into their existing workflows.

Product Usage Case

· Managing API keys for multiple cloud services (AWS, Google Cloud, Azure) directly from your development machine. Instead of storing these keys in plain text files or unsecured configuration, Keepr encrypts them locally, and you can retrieve them via a simple command when needed for your scripts or applications. This solves the problem of insecurely handling sensitive API credentials.

· Storing and retrieving database connection strings and credentials for local development environments. When working on different database projects, you can quickly pull the necessary credentials without having to remember them or risk exposing them in code. This streamlines development by providing quick, secure access to database secrets.

· Keeping SSH private keys and their associated passphrases secure. For developers who frequently connect to remote servers, Keepr can store these sensitive keys offline, preventing them from being accidentally exposed. This enhances security for remote access operations.

· Securing credentials for various online development tools and platforms like GitHub, GitLab, Docker Hub, or SaaS applications. You can easily add and retrieve login details, ensuring you are not relying on browser autofill or less secure methods for managing these important accounts. This addresses the need for a centralized and secure way to manage online service credentials.

13

VisualShop Canvas

Author

kar_t

Description

VisualShop Canvas is an AI-powered visual workspace designed to revolutionize furniture shopping. It addresses the frustration of scattered inspiration and disconnected purchasing platforms by consolidating product discovery, visualization, and decision-making into a single, intuitive interface. The core innovation lies in its intelligent search and AI rendering capabilities, which streamline the complex process of furnishing a space.

Popularity

Points 8

Comments 0

What is this product?

VisualShop Canvas is an AI-powered visual workspace that acts as a central hub for furniture shopping. It leverages advanced AI to understand user inspiration, match it with products from a vast catalog, and generate realistic room renderings. Think of it as a smart digital mood board combined with a powerful shopping assistant. The innovation isn't just the canvas itself, but the underlying AI systems that power product matching and AI-driven room visualization, solving the problem of fragmented and time-consuming furniture research.

How to use it?

Developers can integrate VisualShop Canvas into their platforms or use it as a standalone tool. For e-commerce businesses, it can enhance product discovery and visualization, allowing customers to easily explore furniture options and see how they'd look in their own spaces. For interior designers, it provides a more efficient way to create mood boards and present design concepts to clients. Integration could involve using APIs to pull product catalogs or embed the canvas directly into existing websites. The core idea is to provide a seamless flow from inspiration to informed purchase decisions.

Product Core Function

· AI-powered product matching: This function uses artificial intelligence to find furniture items that match inspiration images or user preferences. The value is in significantly reducing the time and effort spent searching for the right products, offering highly relevant suggestions from a massive catalog. This is applicable in any e-commerce or design context where product discovery is key.

· Generative AI room renderings: This feature utilizes AI to create realistic visualizations of how furniture items would appear in a room. The value lies in allowing users to 'see' the final look before committing to a purchase, reducing uncertainty and improving decision-making confidence. This is incredibly useful for both consumers and designers wanting to preview design outcomes.

· Infinite canvas workspace: This provides a flexible and expandable digital area where users can arrange products, upload inspiration images, and organize their ideas. The value is in centralizing all aspects of the furniture shopping process in one manageable space, preventing information overload and streamlining the entire journey. This is beneficial for anyone managing multiple design ideas or product options.

Product Usage Case

· A user uploads a photo of a stylish living room they saw on social media. VisualShop Canvas analyzes the image and automatically identifies similar furniture pieces from its catalog, presenting them on the canvas. This solves the problem of finding specific items from inspiration photos, making it easy to recreate a desired look.

· An e-commerce furniture retailer embeds VisualShop Canvas on their website. Customers can drag and drop furniture items onto their own uploaded room photos or use the AI rendering feature to visualize how different sofa styles would fit in their living room. This directly addresses customer hesitation by providing a realistic preview, potentially boosting conversion rates.

· An interior designer uses VisualShop Canvas to create a presentation for a client. They can drag and drop selected furniture pieces, upload inspiration images for color palettes, and generate AI renderings of the proposed room layout. This streamlines the design process, allowing for faster iterations and clearer communication of design intent.

14

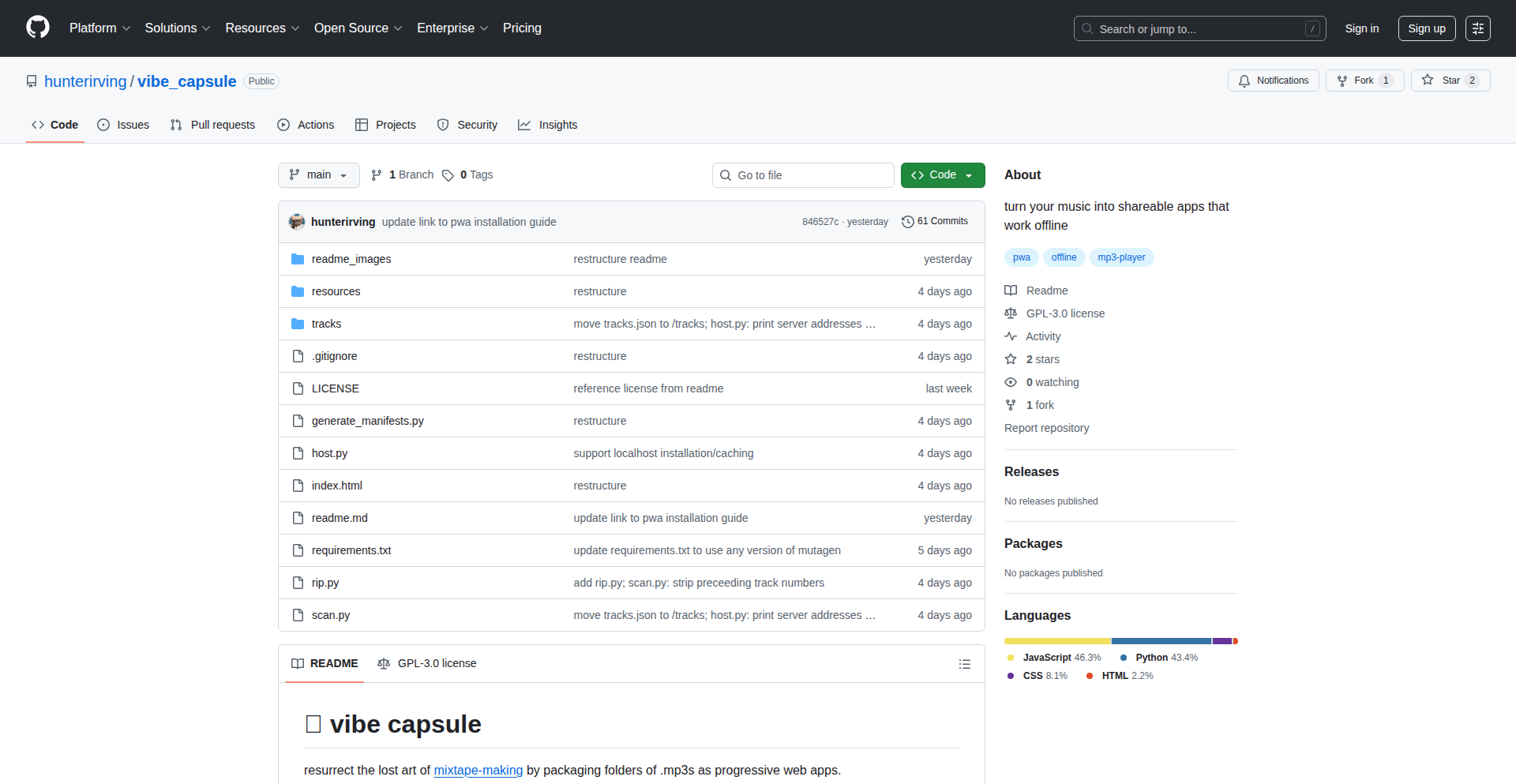

Vibe Capsule: Offline Music App Weaver

Author

hunterirving

Description

Vibe Capsule transforms your music collections into installable Progressive Web Apps (PWAs) that work entirely offline. It solves the problem of shared digital content becoming ephemeral and dependent on external platforms, enabling creators to gift truly self-contained digital experiences, much like old-school mix CDs.

Popularity

Points 5

Comments 2

What is this product?

Vibe Capsule is a tool that takes your MP3 files and uses Python scripts to package them into a Progressive Web App. This PWA, referred to as a 'mixapp', can then be hosted on any HTTPS-enabled server. The innovation lies in its ability to create offline-first applications. Unlike streaming services or cloud-based playlists, these mixapps store the music files directly on the user's device after an initial download. This means they function without an internet connection and are free from regional restrictions or content availability issues, truly putting the 'files in the computer' and restoring the practice of giving tangible digital gifts.

How to use it?

Developers can use Vibe Capsule by organizing their music files (.mp3s) into a designated folder. They then run the provided Python scripts from the Vibe Capsule GitHub repository. The script will generate a self-contained 'mixapp' folder. This folder can be uploaded to any web server that supports HTTPS. Once hosted, anyone can access the mixapp through a web browser on their device (iOS, Android, or desktop). They will be prompted to 'install' it, which adds an icon to their home screen. After the initial download, the music playback will be fully functional offline, making it a personal, portable music experience.

Product Core Function

· MP3 to PWA conversion: Enables the transformation of standard audio files into installable web applications, offering a novel way to distribute music.

· Offline-first functionality: Ensures that once downloaded, the music is accessible and playable without any internet connection, providing a robust and reliable listening experience.

· Cross-platform compatibility: Allows the generated mixapps to be installed and used on a wide range of devices including smartphones (iOS, Android) and desktops, maximizing accessibility.

· Self-contained digital gifting: Facilitates the creation of permanent, self-contained digital gifts, bypassing the limitations of external service dependencies and regional content locks.

· Customizable user interface: Offers a personalized playback environment for the curated music collection, enhancing the user's engagement with the gifted content.

Product Usage Case

· Creating a personalized offline music gift for a friend's birthday: A user can gather a collection of their friend's favorite songs or discover new tracks, package them using Vibe Capsule, and share the hosted mixapp as a unique, lasting present that doesn't rely on streaming services.

· Building a curated music experience for a specific event or mood: An artist or DJ could create a Vibe Capsule mixapp of their latest tracks or a themed playlist for a party, allowing attendees to download and enjoy the music offline, independent of venue Wi-Fi or mobile data.

· Archiving and sharing niche music collections: For enthusiasts of rare or out-of-print music, Vibe Capsule provides a way to bundle these files into an easy-to-distribute and use application, preserving them for a community without relying on potentially unstable online archives.

15

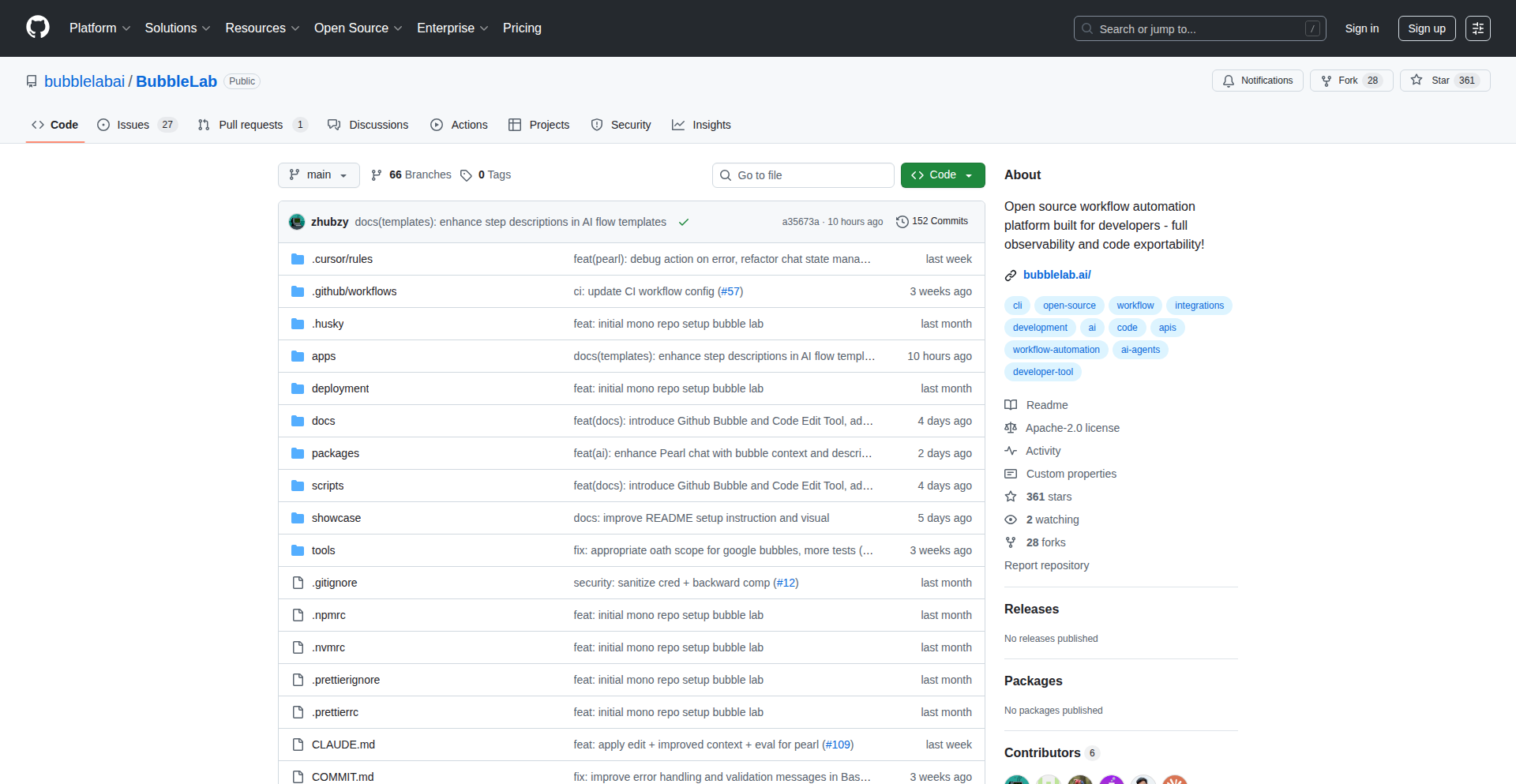

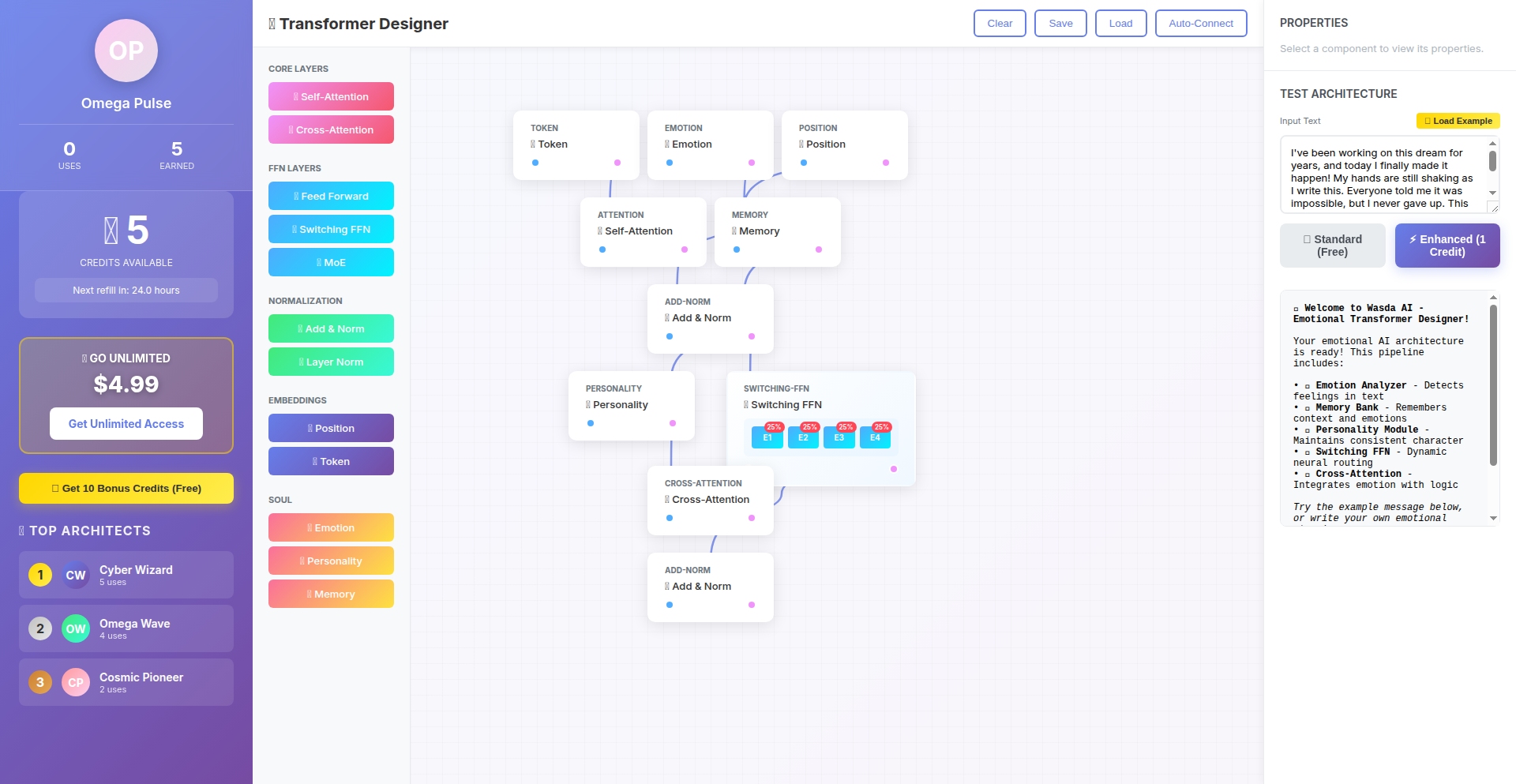

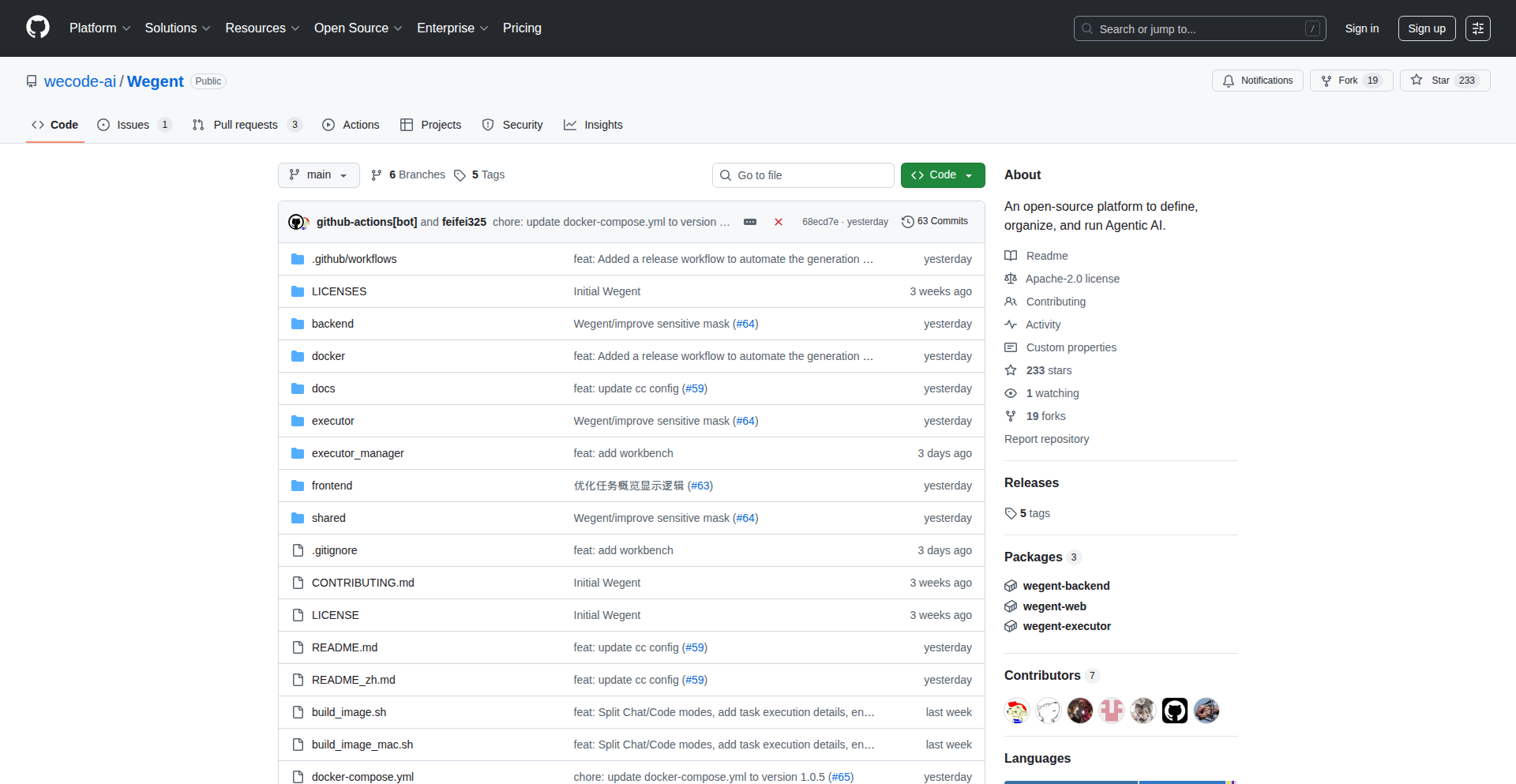

Bubble Lab: Code-Driven Agentic Workflows

Author

hkselinali

Description

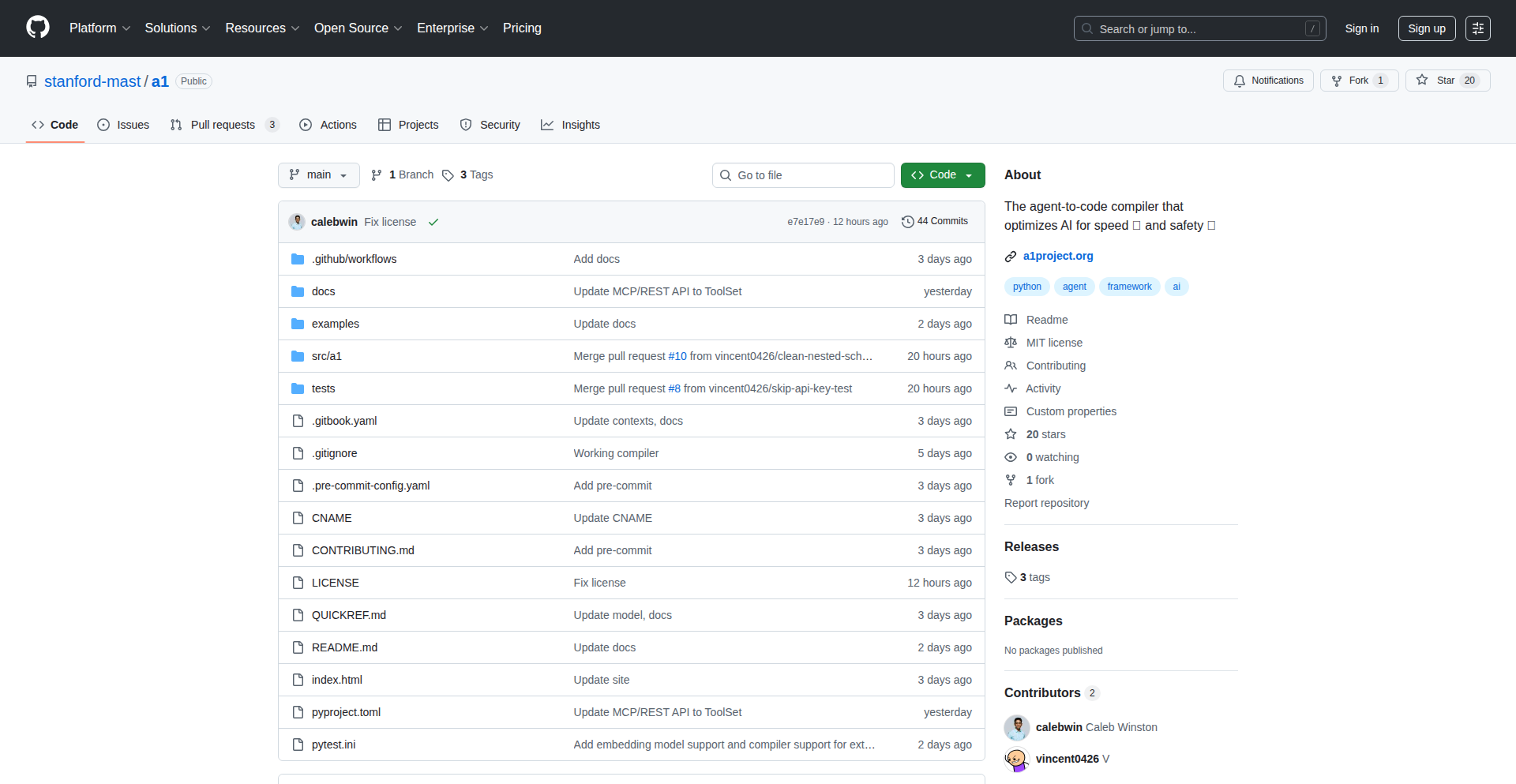

Bubble Lab is an open-source platform that allows developers to build and orchestrate agentic workflows using code. It addresses the complexity of managing multiple AI agents that need to collaborate and execute tasks in a structured manner. The core innovation lies in its code-based approach to defining agent interactions and state management, making complex AI systems more accessible and controllable.

Popularity

Points 5

Comments 2

What is this product?

Bubble Lab is an open-source toolkit designed for developers to build intelligent systems where multiple AI agents can work together to achieve a goal. Think of it like a conductor for a team of specialized AI assistants. Instead of just having one AI do a task, Bubble Lab lets you define how different AI agents, each with their own strengths (like a writer, a researcher, or a coder), communicate and pass information between each other. The innovation is in using plain code to define these interactions, making it much more flexible and transparent than traditional visual tools. This allows for complex, multi-step processes that can be automated and iterated upon easily. So, what's in it for you? It means you can build more sophisticated AI applications that can handle intricate problems by breaking them down into manageable steps executed by specialized AI agents, all controlled by your code.

How to use it?

Developers can use Bubble Lab by defining their agentic workflows in Python. This involves specifying the agents, their roles, the tasks they can perform, and the rules for how they communicate and hand off responsibilities. You can integrate Bubble Lab into existing Python projects or use it as a standalone framework. It provides APIs and structures to define the agents, their tools (functions they can call), and the overall orchestration logic. This means you can build custom AI assistants for anything from automating repetitive coding tasks to creating complex data analysis pipelines. So, how does this help you? You can seamlessly weave sophisticated AI capabilities into your applications, automate complex processes with greater control, and experiment with novel AI architectures without being locked into proprietary, black-box solutions.

Product Core Function

· Agent Definition: Allows developers to precisely define individual AI agents with specific capabilities and roles. This provides granular control over AI behavior, enabling the creation of specialized agents for distinct tasks. The value is in building modular and reusable AI components for complex workflows.

· Workflow Orchestration: Provides mechanisms to define the flow of execution between agents, including conditional logic and parallel processing. This ensures that agents collaborate effectively and tasks are completed in the correct sequence, optimizing efficiency and reducing errors in multi-agent systems.

· Tool Integration: Enables agents to interact with external tools and APIs through custom functions. This expands the capabilities of AI agents beyond their inherent knowledge, allowing them to perform actions in the real world or access up-to-date information. The value is in empowering AI to be more versatile and actionable.

· State Management: Handles the tracking and passing of information between agents throughout the workflow. This ensures that agents have the necessary context to perform their tasks, leading to more coherent and effective collaboration. The value is in maintaining continuity and enabling sophisticated decision-making within the workflow.

Product Usage Case

· Automated Code Refactoring: Developers can set up a workflow where one agent analyzes code for potential improvements, another agent suggests specific refactoring steps, and a third agent applies those changes. This automates a time-consuming development task and ensures consistent code quality.

· Content Generation Pipeline: A workflow can be created where one agent researches a topic, another agent outlines the content, a third agent writes the draft, and a final agent reviews and edits it. This accelerates content creation for blogs, articles, or documentation.

· Data Analysis and Reporting: Developers can build a system where agents fetch data from various sources, another agent preprocesses and analyzes it, and a final agent generates reports or visualizations. This automates complex data workflows, saving time and providing timely insights.

16

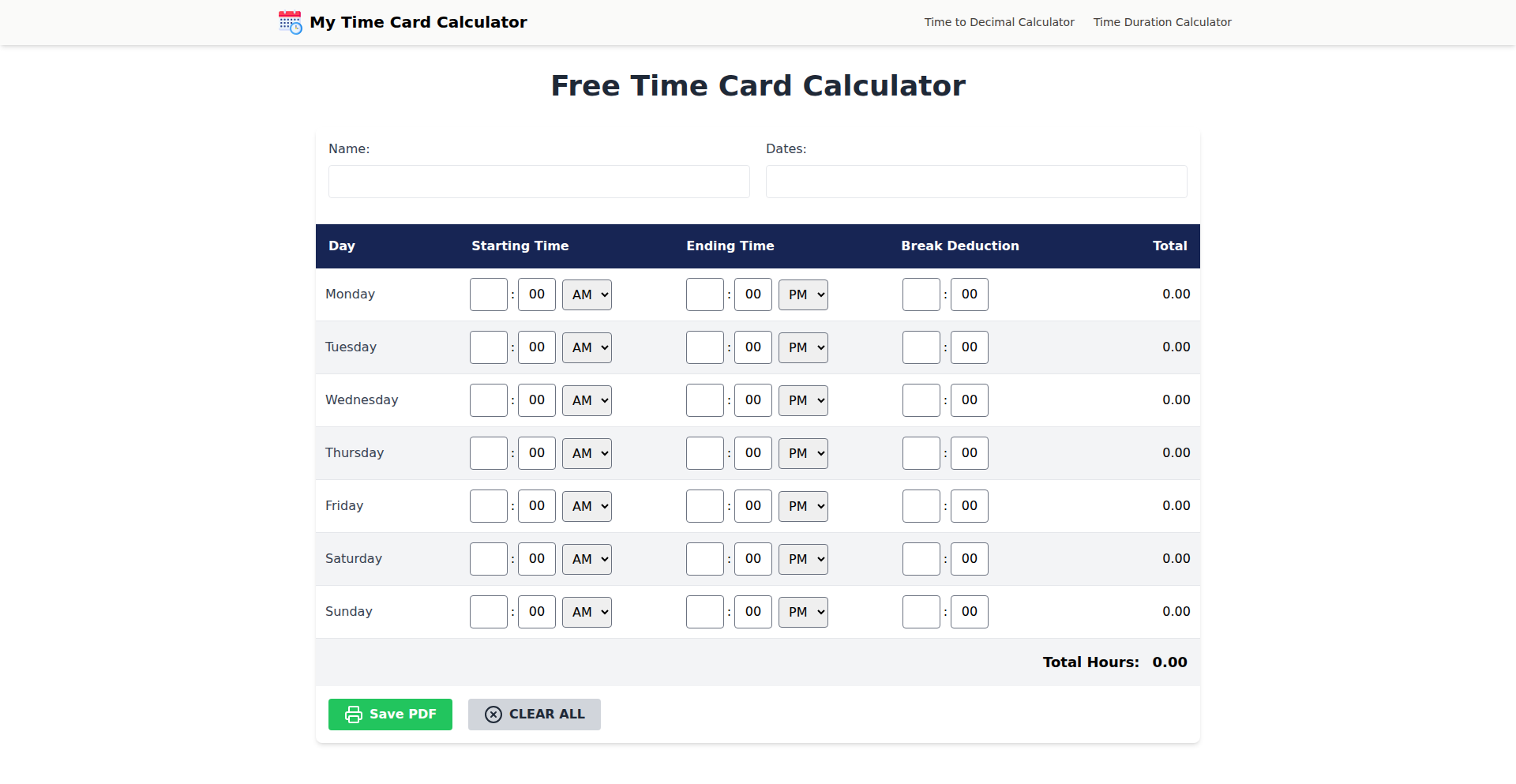

Effortless TimeCard Calculator

Author

atharvtathe

Description

This project is a straightforward web application designed to simplify the calculation of time cards. It tackles the common and often tedious task of manually computing work hours, breaks, and overtime, providing an automated and accurate solution for individuals and small businesses.

Popularity

Points 5

Comments 0

What is this product?

This is a web-based time card calculator that automates the process of calculating work hours, including regular hours, overtime, and break deductions. The innovation lies in its simplicity and focus on a common pain point for employees and employers. It uses straightforward web technologies (likely HTML, CSS, and JavaScript) to create an interactive interface where users can input their start and end times, as well as break durations. The backend logic, executed client-side or with a minimal server, performs the calculations based on defined work rules, such as standard workdays and overtime thresholds. This means no complex installations or databases are required, making it highly accessible. The value proposition is clear: it saves time and reduces errors in payroll or personal time tracking.

How to use it?

Developers can use this project as a foundational template for building more sophisticated time tracking systems. It can be integrated into existing HR platforms, project management tools, or even as a standalone utility within a company's internal dashboard. For individual users, it can be accessed directly via a web browser, requiring no setup. The typical use case involves entering daily work start and end times, specifying any breaks taken, and the calculator will instantly output the total hours worked and any overtime. The code is likely open-source, allowing developers to fork it, customize it for specific regional labor laws or company policies, or add features like recurring time entries or export options.

Product Core Function

· Automated Time Calculation: This function takes start and end times for a workday and automatically computes the total duration, highlighting the value of saving manual calculation time and minimizing human error in payroll processing.

· Break Deductions: The ability to input and subtract break times ensures accurate total working hours, which is crucial for fair compensation and compliance with labor regulations.

· Overtime Calculation: This feature automatically identifies and quantifies overtime hours based on predefined thresholds, providing clarity on additional pay owed and simplifying payroll management.

· User-Friendly Interface: A clean and intuitive web interface allows anyone to input data easily without prior technical knowledge, making time tracking accessible to all employees.

· Client-Side Processing: By performing calculations directly in the browser, the application offers quick results and enhances privacy as sensitive time data doesn't need to be sent to a server, which is valuable for personal tracking and small businesses concerned about data security.

Product Usage Case

· A freelance developer can use this calculator to accurately track billable hours for multiple clients, ensuring they are paid for all time spent on projects and avoiding manual errors in invoices.

· A small business owner can deploy this calculator on their company intranet for employees to log their daily work hours, simplifying the payroll process and ensuring accurate wage calculation for hourly workers.

· An individual employee can use this as a personal tool to verify their pay stubs by independently calculating their own work hours, providing a sense of control and transparency over their compensation.

· A student working part-time can quickly calculate their earnings for the week by inputting their shifts, making it easier to manage their personal budget and understand their income.

· A developer looking to learn about basic web application development can study the source code of this project to understand how to handle user input, perform calculations with JavaScript, and create interactive web elements.

17

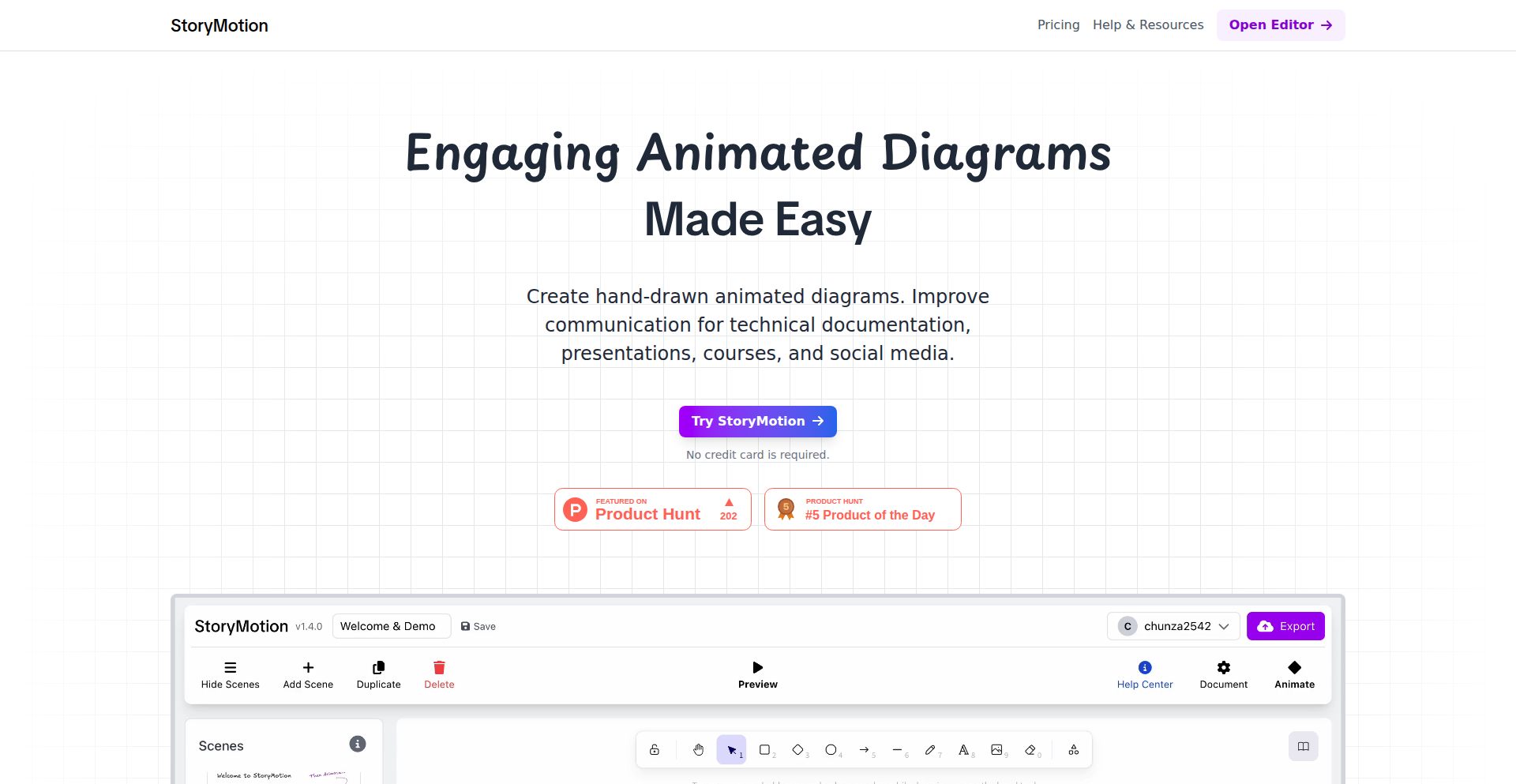

StoryMotion: Visual Narrative Weaver

Author

chunza2542

Description

StoryMotion is an innovative tool designed to transform complex ideas into engaging, step-by-step animated diagrams. It addresses the challenge of explaining intricate processes or concepts effectively, moving beyond static visuals to offer dynamic, narrative-driven explanations. The core innovation lies in its ability to automate the creation of explainer animations from simple inputs, making sophisticated visual storytelling accessible to developers and creators.

Popularity

Points 1

Comments 4

What is this product?

StoryMotion is a project that helps you create animated diagrams and explainer videos without needing advanced animation skills. It works by allowing you to define steps or stages of a process, and the tool then automatically generates a smooth animation that visually guides viewers through each part. Think of it like creating a visual walkthrough for your code, a scientific process, or any step-by-step guide, but with smooth motion and clear transitions. The innovation is in simplifying the animation pipeline, so instead of manually keyframing every movement, you describe the sequence and StoryMotion builds the animation for you. This means you can convey information more effectively and engage your audience more deeply.

How to use it?

Developers can integrate StoryMotion into their workflow to create clear documentation, tutorials, or presentations. You can use it to explain how a particular algorithm works, demonstrate the flow of data in an application, or visualize a complex system architecture. Typically, you would input your sequential steps or key points, and StoryMotion generates an animation that can be exported as a video file or GIF. This is incredibly useful for onboarding new team members, creating marketing materials, or even debugging complex logical flows by visualizing them. It directly translates to better understanding and faster adoption of your technical solutions.

Product Core Function

· Automated Animation Generation: The system takes sequential data inputs and automatically produces animated transitions and movements. This means less manual effort for creators and a smoother, more professional-looking output, enhancing the clarity of explanations.