Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-13

SagaSu777 2025-11-14

Explore the hottest developer projects on Show HN for 2025-11-13. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation is heavily skewed towards empowering developers and enabling AI agents to perform complex, multi-step tasks. The emergence of 'agentic workflows' signifies a shift from single-prompt interactions to sophisticated chains of actions and reasoning. Projects like Orion showcase the integration of multimodal AI, allowing agents to 'see' and 'act' upon visual information, which opens up entirely new application domains. For developers, this means learning to architect systems that can orchestrate these agents, manage their states, and integrate them seamlessly into existing products. The emphasis on 'no-code/low-code' AI development, such as tools that generate apps from prompts or automate complex data tasks, democratizes access to powerful AI capabilities. This trend is not about replacing developers but about augmenting their productivity, allowing them to focus on higher-level design and problem-solving. Furthermore, the increasing focus on privacy, security, and decentralized solutions reflects a growing awareness of the ethical implications of AI, urging creators to build trust and transparency into their systems. Entrepreneurs should look for opportunities where these sophisticated AI capabilities can solve real-world problems with enhanced efficiency, reduced friction, and greater user control, especially in areas like FinTech, data analysis, and creative content generation, where automation and intelligent insights can drive significant value.

Today's Hottest Product

Name

Orion – A Visual Agent for Seeing, Reasoning, and Acting

Highlight

Orion tackles the fragmentation of visual AI by unifying vision-language models (VLMs) with robust computer vision tools within a single chat-completion interface. This allows users to chain visual tasks like object detection, segmentation, and document analysis, mirroring text-based workflows. Developers can learn about building unified AI experiences, bridging the gap between multimodal understanding and actionable outputs, and explore novel ways to orchestrate vision APIs.

Popular Category

AI & Machine Learning

Developer Tools

Productivity Software

FinTech

Data Analysis

Popular Keyword

AI Agents

LLMs

Computer Vision

Automation

Developer Tools

Data Wrangling

FinTech

Personal Finance

Code Generation

Observability

Technology Trends

Multimodal AI Integration

Agentic Workflows

No-Code/Low-Code AI Development

Enhanced Developer Productivity

Data Privacy and Security

AI-Powered Automation

Decentralized and Privacy-First Solutions

FinTech Innovation

AI for Content Creation and Analysis

Project Category Distribution

AI Agents & LLM Tools (35%)

Developer Productivity & Tools (25%)

FinTech & Personal Finance (15%)

Data Analysis & Visualization (10%)

Creative & Generative Tools (10%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Postgres DurableFlows | 84 | 43 |
| 2 | Orion: Visual Agent & Multi-Modal Orchestrator | 22 | 10 |
| 3 | AI Bubble Navigator | 23 | 3 |
| 4 | PromptForge | 12 | 7 |
| 5 | Stockfisher AI Forecaster | 14 | 4 |
| 6 | Shadowfax AI: Agentic Data Wrangler | 14 | 0 |
| 7 | Fulfilled: Democratized Wealth Orchestrator | 7 | 5 |
| 8 | AgenticCode SwiftAir | 9 | 2 |
| 9 | InsightFlow Personalizer | 6 | 4 |
| 10 | Effortless LLM Forge | 4 | 4 |

1

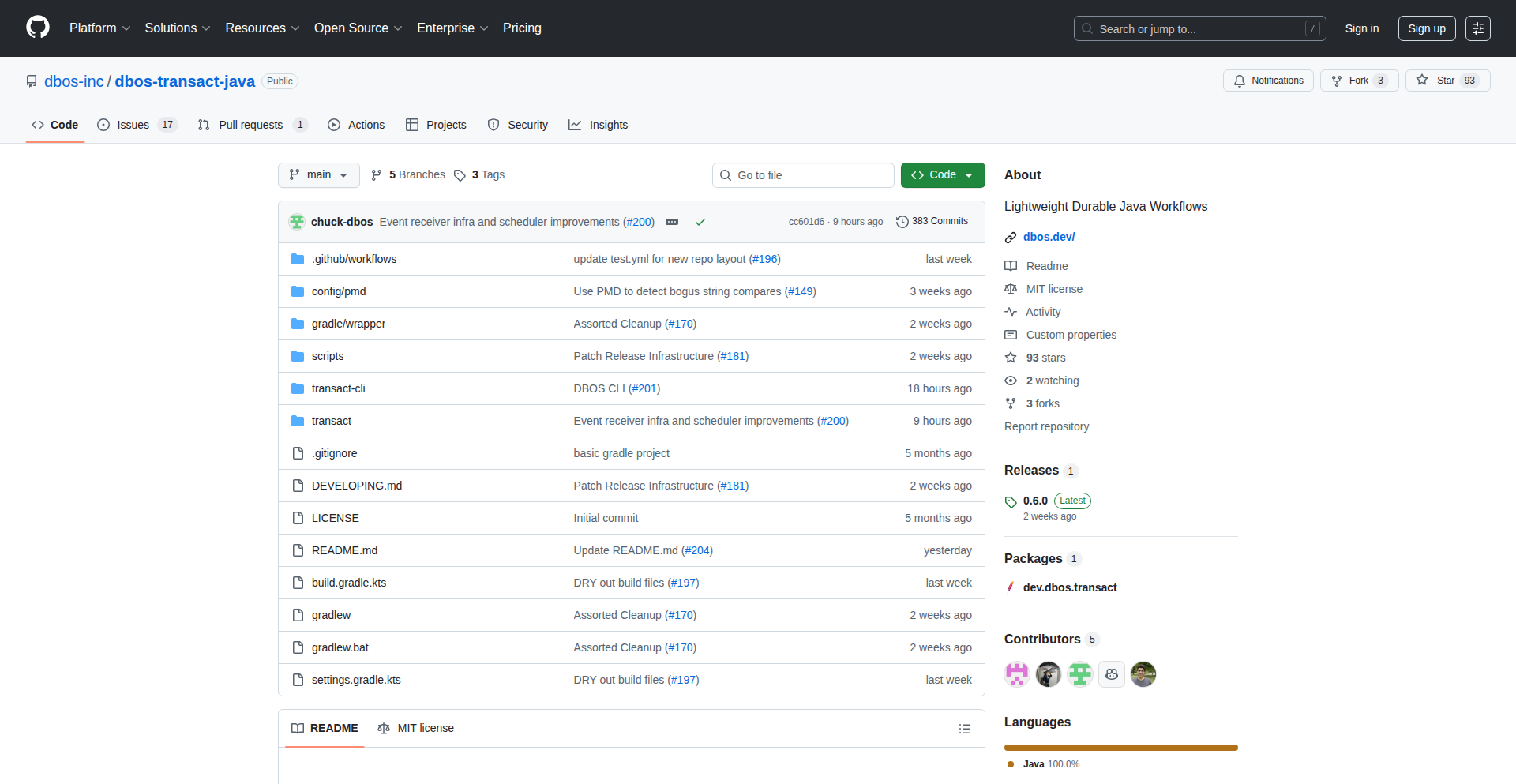

Postgres DurableFlows

Author

KraftyOne

Description

An open-source Java library that turns your long-running processes into durable workflows. It leverages PostgreSQL to automatically save your program's progress at each step, allowing it to resume exactly where it left off after any interruption, like crashes or restarts. This simplifies building highly reliable applications for tasks that take hours or even weeks.

Popularity

Points 84

Comments 43

What is this product?

This is a Java library called DBOS Java that makes long-running applications resilient to failure. Think of it like a game that saves your progress automatically. As your Java code runs, DBOS Java checkpoints the outcome of each workflow step in a PostgreSQL database. If your application crashes, restarts, or gets interrupted, DBOS Java restores the workflow from its last completed step and continues from there, without repeating steps that already finished. The innovation here is a unified way to handle failures for complex, long-running tasks, eliminating the need for manual, error-prone retry logic and state management across distributed systems.

How to use it?

Developers can integrate this library directly into their Java projects. It acts as a dependency, meaning you don't need a separate service to manage it. You can add it incrementally to existing projects, and it's designed to work seamlessly with popular frameworks like Spring. The core idea is to annotate your methods to indicate workflow steps. When these methods are invoked, DBOS Java handles the checkpointing to PostgreSQL behind the scenes. This makes it easy to build robust applications for use cases like AI agents that might take days to complete, financial transactions that need absolute reliability, or data synchronization tasks.
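The step-checkpointing idea described above can be sketched in a few lines. This is not the DBOS Java API: it is a minimal Python illustration that uses SQLite from the standard library as a stand-in for PostgreSQL, and the names `DurableWorkflow`, `run_step`, and the `step_outputs` table are hypothetical.

```python
import json
import sqlite3


class DurableWorkflow:
    """Toy step checkpointer. SQLite stands in for PostgreSQL here."""

    def __init__(self, conn, workflow_id):
        self.conn = conn
        self.workflow_id = workflow_id
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS step_outputs ("
            "workflow_id TEXT, step_name TEXT, output TEXT, "
            "PRIMARY KEY (workflow_id, step_name))"
        )

    def run_step(self, step_name, func, *args):
        # If this step already completed in an earlier run, reuse its saved output.
        row = self.conn.execute(
            "SELECT output FROM step_outputs WHERE workflow_id = ? AND step_name = ?",
            (self.workflow_id, step_name),
        ).fetchone()
        if row is not None:
            return json.loads(row[0])
        # Otherwise execute the step and checkpoint its result before moving on.
        result = func(*args)
        self.conn.execute(
            "INSERT INTO step_outputs VALUES (?, ?, ?)",
            (self.workflow_id, step_name, json.dumps(result)),
        )
        self.conn.commit()
        return result


calls = []  # records which steps actually executed


def fetch_order():
    calls.append("fetch_order")
    return {"order_id": 7, "amount": 120}


def charge(order):
    calls.append("charge")
    return {"charged": order["amount"]}


def run(conn):
    wf = DurableWorkflow(conn, "order-7")
    order = wf.run_step("fetch_order", fetch_order)
    return wf.run_step("charge", charge, order)


conn = sqlite3.connect(":memory:")
first = run(conn)
second = run(conn)  # simulates a restart: both steps are served from checkpoints
```

In this sketch a step that crashes after its side effect but before the checkpoint commits would execute again on recovery, so steps that touch external systems still benefit from being idempotent.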

Product Core Function

· Durable Workflow Execution: Automatically checkpoints the progress of your Java workflows to PostgreSQL at each step, allowing for recovery from failures. This lets complex, long-running tasks complete reliably, even in the face of unexpected interruptions.

· Automatic State Restoration: Upon restarts or crashes, the library restores the application to its exact previous state, picking up execution precisely from where it left off. This eliminates manual state management and the risk of data duplication or loss.

· PostgreSQL Integration: Leverages PostgreSQL as the backend for storing workflow states and checkpoints. This means you can use familiar PostgreSQL tools and infrastructure for managing your application's reliability, providing a robust and scalable solution.

· Framework Compatibility: Designed to be easily integrated into existing Java projects and compatible with popular frameworks like Spring. This allows developers to gradually introduce durability to their applications without a complete rewrite.

· Simplified Failure Handling: Replaces complex, ad-hoc retry logic and manual checkpointing with a consistent, library-based approach. This significantly reduces development effort and the potential for bugs in critical systems.

Product Usage Case

· Building an AI agent that performs complex analysis over days: Instead of worrying about the agent crashing and losing its progress, DBOS Java ensures it can pick up exactly where it left off, completing the analysis reliably. This solves the problem of long-running, non-interactive tasks failing midway.

· Implementing a payment processing system that requires absolute accuracy: DBOS Java records each completed transaction step, so an interrupted workflow resumes after the last completed step rather than starting over, which helps prevent duplicate charges or lost payments. This addresses the need for transactional integrity in sensitive operations.

· Developing a data synchronization service that runs for hours: If the synchronization process is interrupted, DBOS Java ensures it resumes from the last successfully synchronized point, avoiding data inconsistencies and redundant processing. This solves the challenge of maintaining data integrity in continuous background processes.

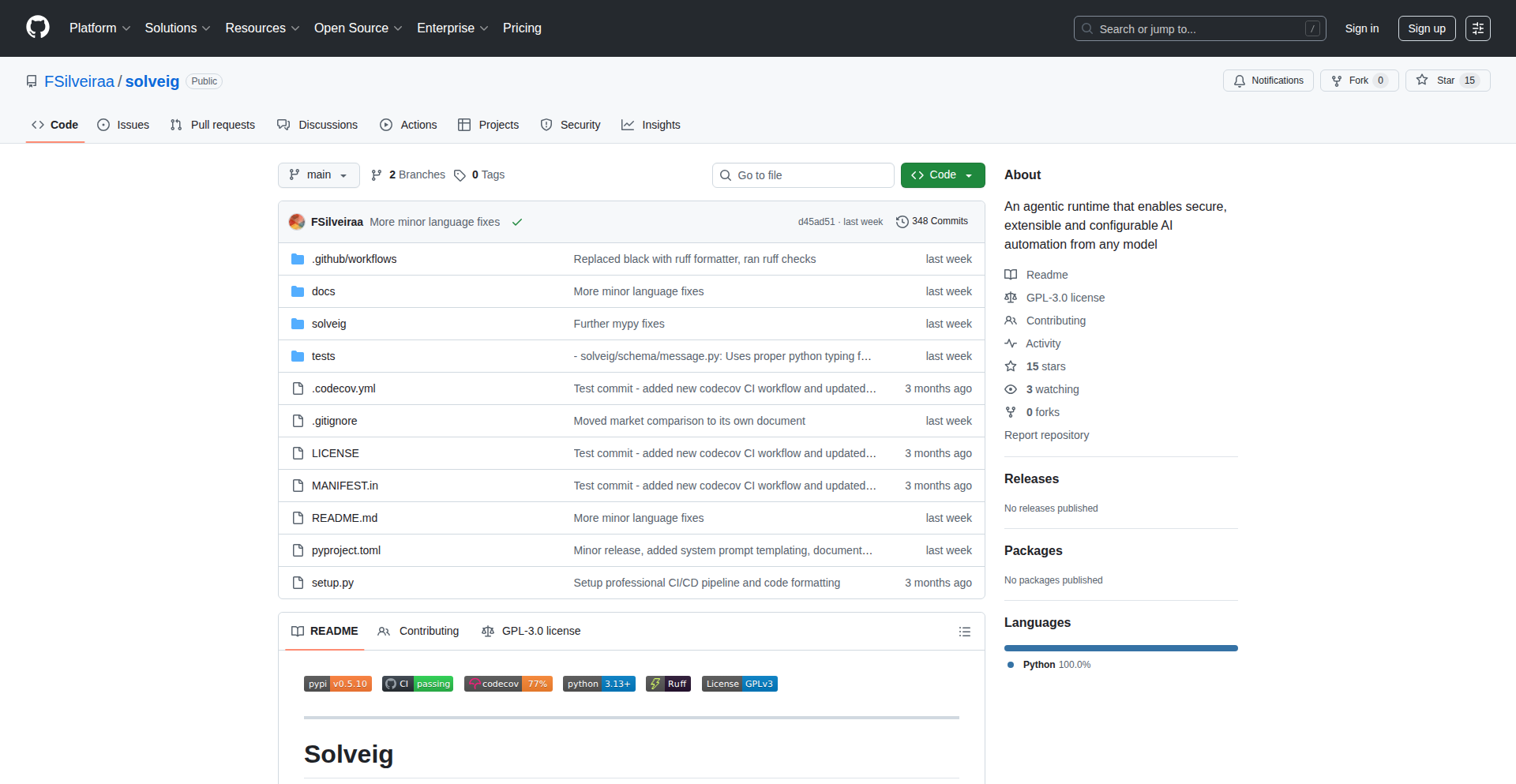

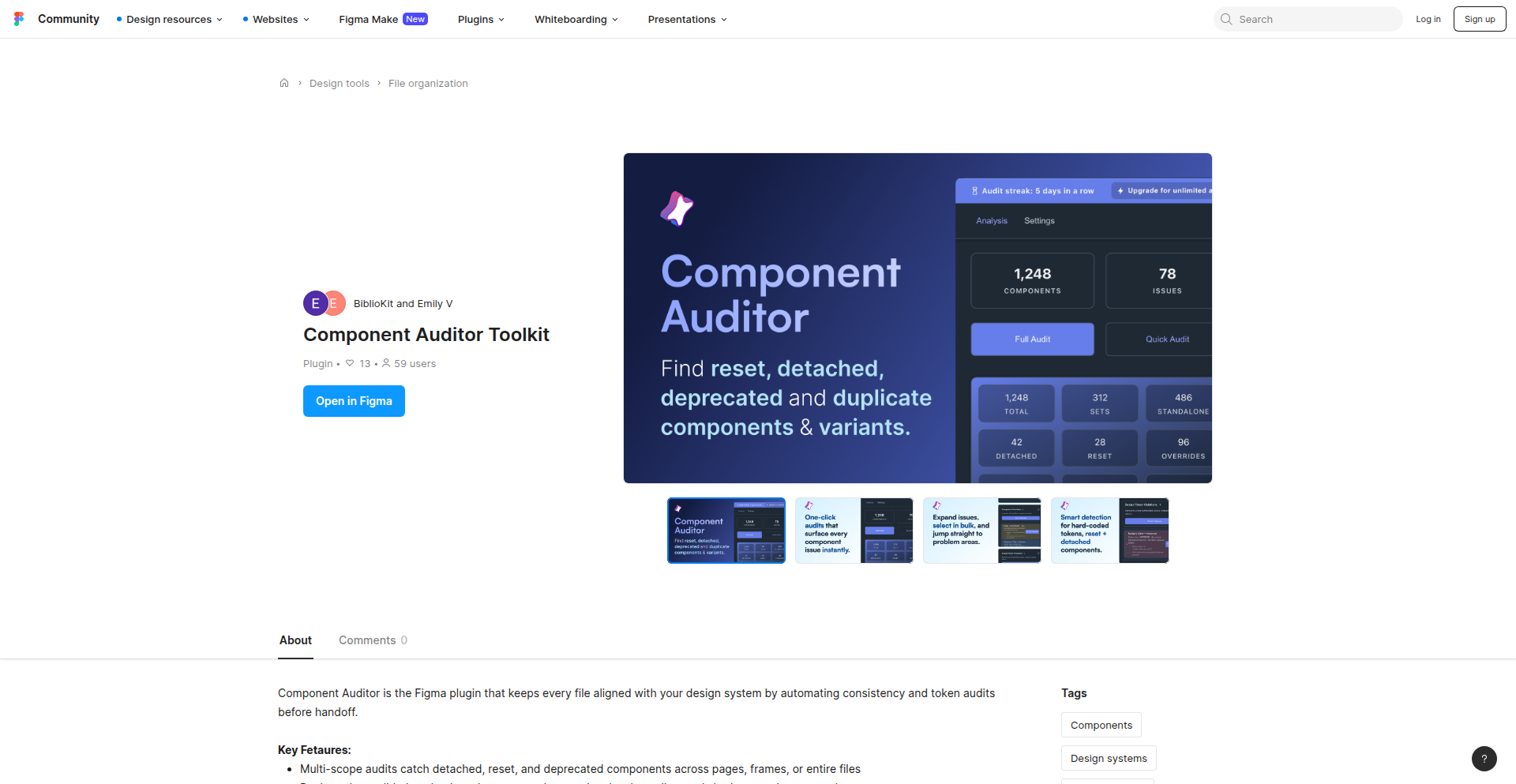

2

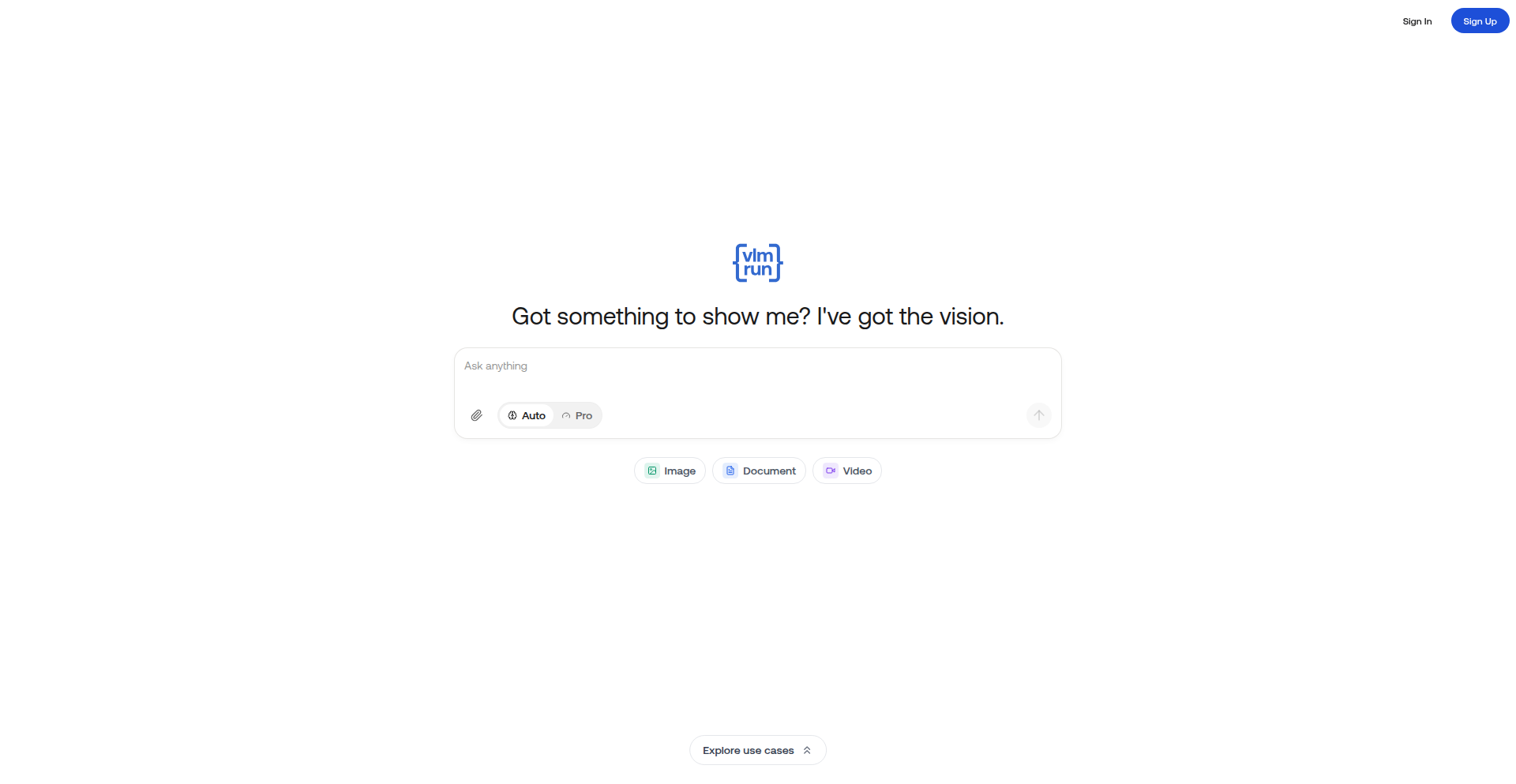

Orion: Visual Agent & Multi-Modal Orchestrator

Author

fzysingularity

Description

Orion is a novel visual agent that bridges the gap between large language models (LLMs) and computer vision capabilities. It allows users to interact with images, videos, and documents through a unified chat interface, enabling them to not only describe but also reason and act upon visual information. This innovative approach tackles the fragmentation and limitations of existing vision APIs by integrating diverse computer vision tools within a single, cohesive system, effectively making visual tasks as manageable as text-based workflows.

Popularity

Points 22

Comments 10

What is this product?

Orion is a sophisticated AI agent designed to understand and manipulate visual content. Unlike current frontier VLMs (like GPT, Claude, Gemini) that can describe what they see but struggle with reliable actions on visual inputs, Orion integrates advanced computer vision tools with VLM reasoning. It addresses the common issues of high-resolution image collapse and fragmented visual AI ecosystems by offering a unified chat-completions interface. This means you can chain visual processing steps, examine intermediate results, and treat complex visual tasks like a structured text workflow, all within a single conversational experience.

How to use it?

Developers can leverage Orion through its unified chat-completions API, which is designed to be familiar and easy to integrate. Instead of juggling multiple, disparate vision APIs for tasks like object detection, image segmentation, or video editing, developers can send requests to Orion and receive structured visual outputs. This allows for seamless integration into applications that require sophisticated visual understanding and manipulation, such as content moderation, automated video analysis, document processing, or creative media generation. The API will support chaining of visual operations, enabling complex multi-step visual tasks to be executed with a single sequence of commands.
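As a rough illustration of what a chained visual request could look like, the sketch below assembles a chat-completions style payload. The message layout follows the widely used OpenAI-style convention for image inputs; whether Orion accepts exactly this shape is an assumption, and the model name `orion-placeholder`, the helper `build_visual_request`, and the example URL are hypothetical. No request is actually sent.

```python
import json


def build_visual_request(image_url, steps, model="orion-placeholder"):
    """Assemble a chat-completions style payload for a chained visual task.

    The payload shape is an assumption, not Orion's documented API.
    """
    # Number the steps so the agent can treat them as an ordered chain.
    instructions = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return {
        "model": model,  # hypothetical model identifier
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instructions},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


payload = build_visual_request(
    "https://example.com/invoice.png",
    ["Detect the table region", "Crop to that region", "Run OCR and return rows as JSON"],
)
body = json.dumps(payload)  # what would be sent as the HTTP request body
```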

Product Core Function

· Object and Face Detection: Precisely identify and localize objects, faces, and people within images or video frames, providing visualized bounding boxes. This is valuable for security systems, content analysis, and automated tagging.

· Interactive Image Segmentation: Isolate specific objects or salient regions within an image. This is crucial for image editing, background removal, and selective analysis.

· Image and Video Editing/Remixing: Generate, edit, and reimagine visual content based on textual prompts. This unlocks creative possibilities for designers, marketers, and content creators.

· Visual Content Summarization: Condense the key information from images or videos into concise summaries. This aids in quick content review and understanding for researchers and analysts.

· Image Transformation: Perform standard image manipulations like cropping, rotating, and upscaling. This streamlines image preparation for various applications and platforms.

· Video Transformation: Trim, sample frames, and highlight significant scenes within videos. This is invaluable for video editing, highlight reel creation, and surveillance analysis.

· Document Parsing and Structuring: Extract information from documents, including pagination, layout analysis, and OCR (Optical Character Recognition). This automates data entry and document processing for businesses.

Product Usage Case

· Automated Video Surveillance Analysis: A security company can use Orion to automatically detect unauthorized individuals entering restricted areas in video feeds, triggering alerts. Orion's object and person detection could drive this from a single API rather than several stitched-together vision services.

· E-commerce Product Catalog Enhancement: An online retailer can use Orion to automatically extract product details, segment product images from backgrounds, and even generate variations of product shots for marketing. This significantly speeds up catalog management and visual merchandising.

· Content Moderation for Social Media: A social media platform can employ Orion to automatically identify and flag inappropriate visual content (e.g., nudity, violence) in uploaded images and videos. Orion's combined understanding and action capabilities can offer more robust moderation than text-based systems alone.

· Personalized Video Highlight Generation: A sports analytics company can use Orion to automatically identify key moments (e.g., goals, impressive plays) in recorded games and generate highlight reels tailored to specific user preferences. This leverages Orion's video summarization and scene highlighting functions.

3

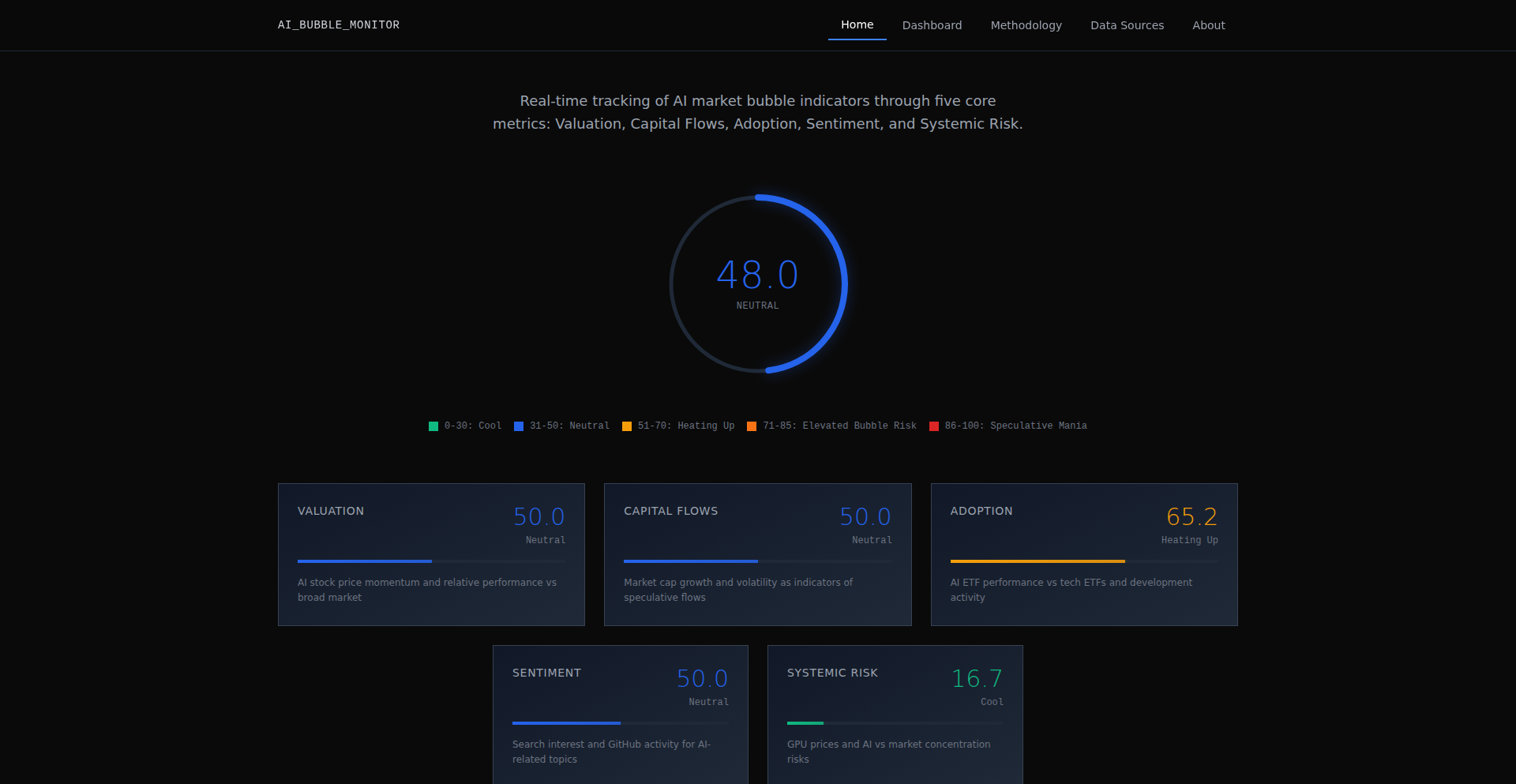

AI Bubble Navigator

Author

itsnotmyai

Description

An analytical tool that tracks and visualizes indicators of potential market bubbles in AI-related sectors. It uses multiple data sources to generate an 'AI Bubble Score' and breaks it down into key sub-indices like Valuation, Capital Flows, and Sentiment & Hype, providing insights into market behavior and potential overvaluation. So, this helps identify if the AI market is getting overheated and provides data-driven insights to make informed investment or development decisions.

Popularity

Points 23

Comments 3

What is this product?

This is an analytical tool designed to monitor the AI market for signs of a bubble. It aggregates various data points and metrics, much like a doctor monitoring vital signs, to calculate an overall 'AI Bubble Score' from 0 to 100. This score is further divided into five sub-indices: Valuation (how expensive AI companies are), Capital Flows (how much money is pouring into AI), Adoption vs Fundamentals (whether AI adoption justifies current valuations), Sentiment & Hype (public and media excitement), and Systemic Risk (broader economic impacts). The innovation lies in its consolidated, multi-faceted approach to a complex market phenomenon, providing a holistic view that's difficult to get from single data points alone. So, this helps understand the health and stability of the AI market at a glance, making complex financial indicators understandable.
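The source does not say how the five sub-indices are combined, so the following is only a sketch under the assumption of a weighted average with equal weights by default. The function name `bubble_score`, the key names, and the example numbers are all illustrative and are not data from the tool.

```python
SUB_INDICES = (
    "valuation",
    "capital_flows",
    "adoption_vs_fundamentals",
    "sentiment_hype",
    "systemic_risk",
)


def bubble_score(sub_scores, weights=None):
    """Combine five 0-100 sub-index scores into one 0-100 composite."""
    if weights is None:
        weights = {name: 1.0 for name in SUB_INDICES}  # assumed equal weighting
    missing = [name for name in SUB_INDICES if name not in sub_scores]
    if missing:
        raise ValueError(f"missing sub-indices: {missing}")
    for name in SUB_INDICES:
        if not 0 <= sub_scores[name] <= 100:
            raise ValueError(f"{name} must be between 0 and 100")
    total_weight = sum(weights[name] for name in SUB_INDICES)
    weighted = sum(sub_scores[name] * weights[name] for name in SUB_INDICES)
    return weighted / total_weight


# Made-up inputs, purely to show the arithmetic.
example = {
    "valuation": 80,
    "capital_flows": 70,
    "adoption_vs_fundamentals": 50,
    "sentiment_hype": 90,
    "systemic_risk": 60,
}
score = bubble_score(example)
```

Because the result is a weighted mean of values in the 0 to 100 range, the composite stays in that range for any non-negative weights that are not all zero.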

How to use it?

Developers and investors can use the AI Bubble Navigator by accessing its visualizations and data reports. The tool presents a clear dashboard with the overall AI Bubble Score and its constituent sub-indices. They can use this information to gauge market sentiment, identify areas of potential overvaluation or undervaluation, and make strategic decisions about where to invest time, capital, or development efforts. For instance, a developer looking to launch a new AI product might check the 'Adoption vs Fundamentals' score to see if the market is ready for their innovation. Integration could involve embedding its data APIs into financial dashboards or research platforms. So, this provides a digestible overview of AI market trends and risks, empowering better decision-making.

Product Core Function

· AI Bubble Score Calculation: Aggregates multiple indicators to produce a single score representing overall market bubble risk. This provides a quick, high-level understanding of market health. So, this helps you get an immediate sense of AI market temperature.

· Sub-index Breakdown: Divides the overall score into five key areas (Valuation, Capital Flows, Adoption vs Fundamentals, Sentiment & Hype, Systemic Risk) to offer granular insights. This allows for deeper analysis of specific market drivers and potential issues. So, this helps pinpoint the exact reasons behind market sentiment.

· Data Visualization: Presents complex data through intuitive charts and graphs, making market trends and risks easier to comprehend. This translates dense financial data into easily understandable visual cues. So, this helps you see market shifts and risks clearly without needing to be a data scientist.

· Trend Monitoring: Tracks changes in the AI Bubble Score and its sub-indices over time to identify emerging patterns and shifts in market dynamics. This enables proactive decision-making based on evolving market conditions. So, this helps you stay ahead of market changes and anticipate future trends.

· Data Source Aggregation: Pulls data from a variety of sources to provide a comprehensive view, reducing reliance on single, potentially biased data points. This ensures a more robust and reliable assessment of market conditions. So, this helps you get a more trustworthy and complete picture of the AI market.

Product Usage Case

· A venture capitalist wanting to assess the risk of investing in a new AI startup could use the 'Valuation' and 'Capital Flows' sub-indices to understand if the startup's valuation is justified by current market trends and funding activity. So, this helps them avoid overpaying for investments.

· An AI researcher deciding which area to focus their next project on could check the 'Adoption vs Fundamentals' score to see if the market is mature enough for widespread adoption of their potential technology. So, this helps them choose research areas with higher practical impact potential.

· A financial analyst looking to advise clients on AI sector exposure could use the overall AI Bubble Score and the 'Sentiment & Hype' index to gauge public perception and potential speculative behavior within the AI market. So, this helps them provide more informed investment advice.

· A software engineer considering a career shift into AI development could use the 'Capital Flows' and 'Adoption vs Fundamentals' metrics to identify growth areas within the AI industry that are likely to offer strong job prospects. So, this helps them make better career choices.

4

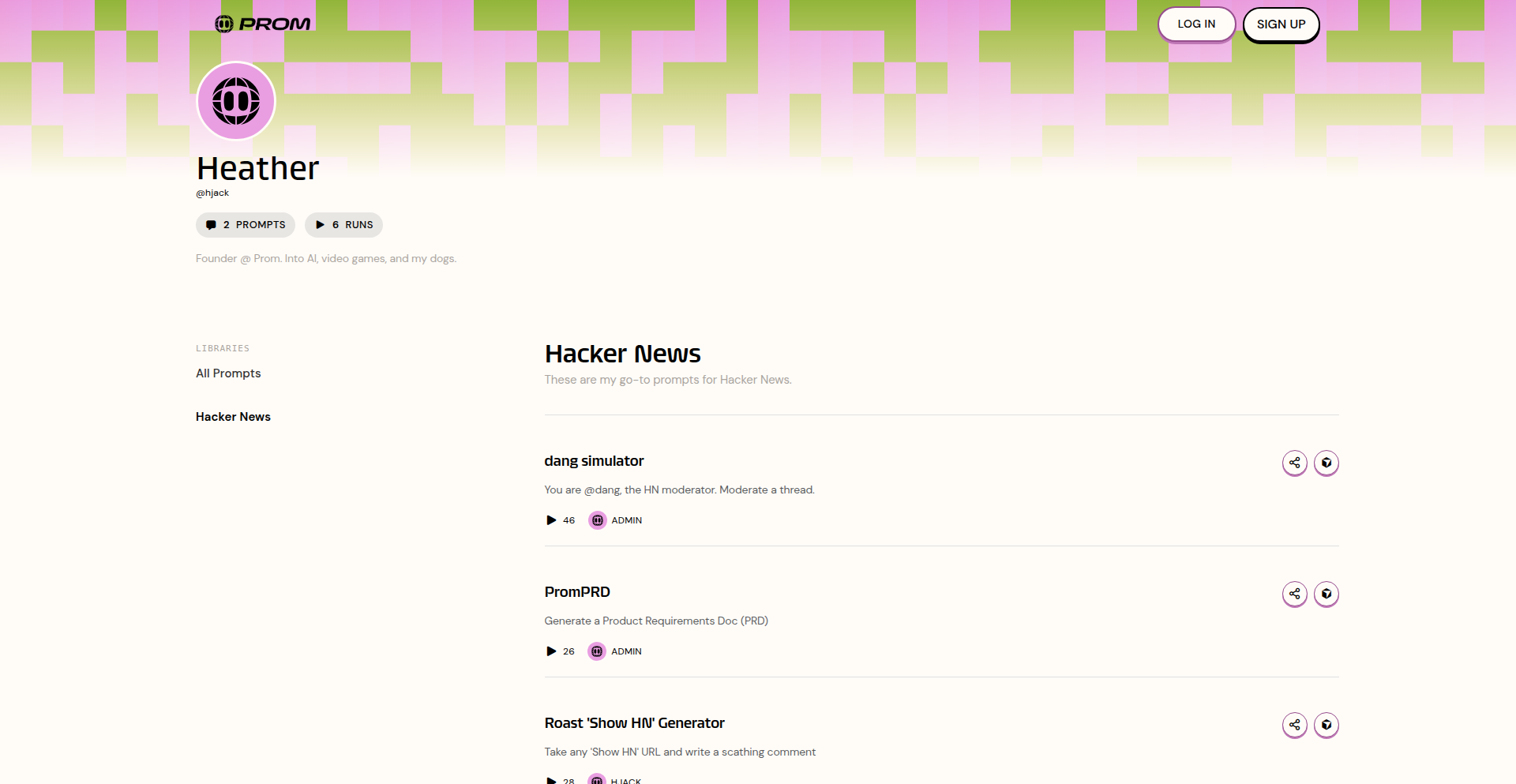

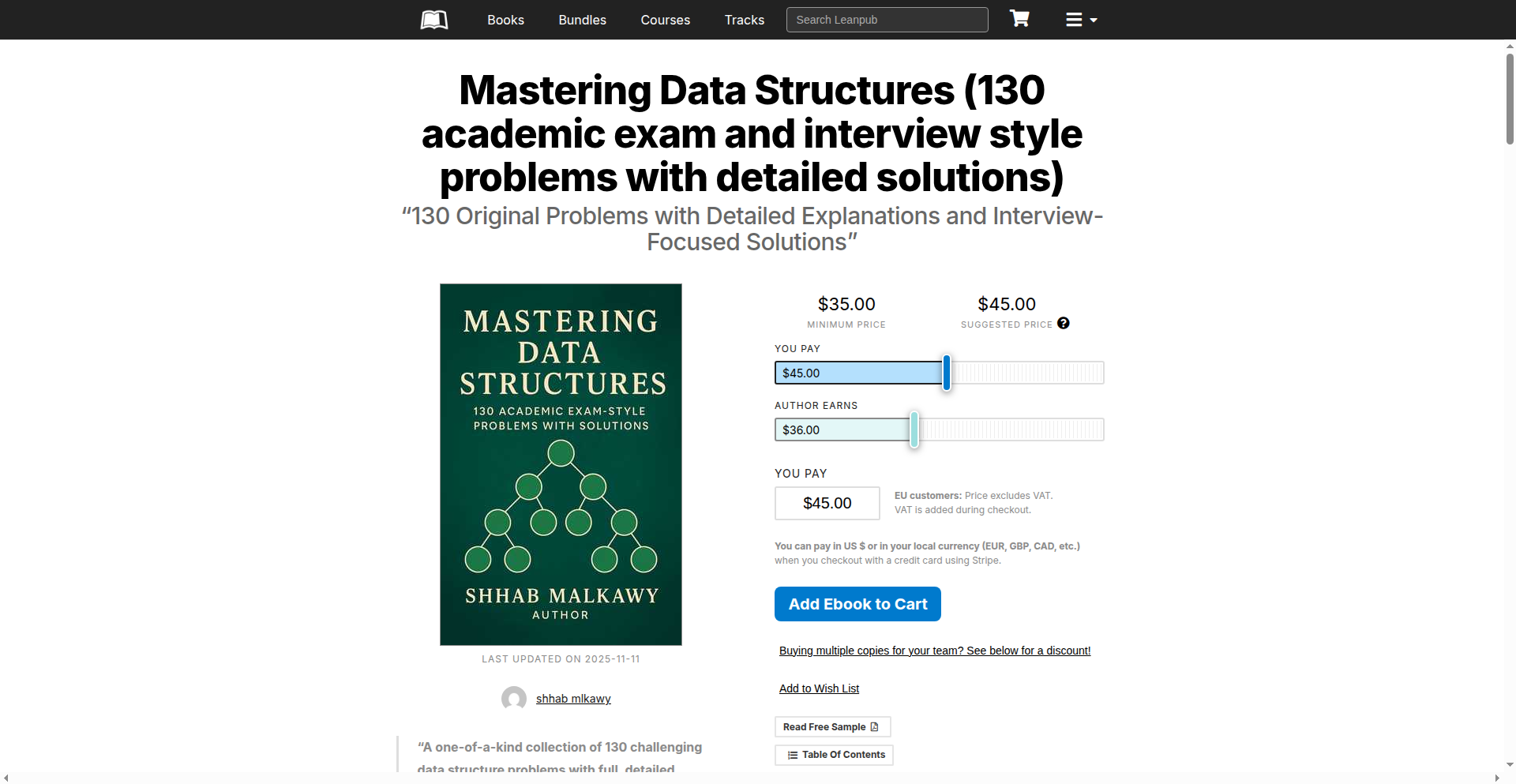

PromptForge

Author

hjack_

Description

PromptForge is a minimalist platform designed for sharing and discovering effective prompts for AI models. It addresses the common challenge of losing valuable prompts across various communication channels by providing a dedicated, organized space. The core innovation lies in its simplicity and focus on community-driven curation, allowing developers to easily share, discover, and replicate successful AI prompt strategies.

Popularity

Points 12

Comments 7

What is this product?

PromptForge is a web application built with a Flask backend and Bootstrap frontend, acting as a central repository for AI prompts. It's a place where developers and AI enthusiasts can showcase the prompts they've crafted that yield good results, and discover prompts others are using successfully. The innovation comes from its focus on community and practical application, moving beyond scattered notes to a structured sharing environment, making it easier to learn from and build upon others' AI experimentation.

How to use it?

Developers can use PromptForge by creating an account and starting to share their own 'prompts that work'. This involves inputting the prompt text and optionally providing context or the AI model it was tested with. For discovery, users can browse public prompts, filter by category or popularity, and then directly use these prompts in their own AI workflows. A key feature for integration is the 'deeplink' functionality, which allows users to open prompts directly within compatible IDEs like Cursor, streamlining the process of testing and applying shared prompts.

Product Core Function

· Prompt Sharing: Developers can upload and publicly or privately share their AI prompts, fostering knowledge exchange within the community. The value here is building a collective intelligence of effective AI interactions.

· Prompt Discovery: Users can browse, search, and filter a curated collection of prompts shared by others. This saves significant time and effort in reinventing the wheel for common AI tasks, accelerating development.

· Community Curation: The platform relies on community contributions and potentially upvoting/feedback mechanisms to highlight the most useful and innovative prompts. This ensures that the most impactful prompts rise to the top, providing practical value.

· Deep Linking to IDEs: Seamless integration with tools like Cursor via deeplinks allows users to directly import and test shared prompts. This dramatically reduces friction and speeds up the iteration cycle for prompt engineering.

Product Usage Case

· A data scientist struggling to get consistent sentiment analysis results from an LLM. They discover a prompt on PromptForge shared by another user that includes specific formatting instructions and negative constraints, leading to significantly improved accuracy. The problem solved is achieving better performance on a critical NLP task.

· A web developer building a content generation tool finds a prompt on PromptForge that generates creative product descriptions. By using the deeplink feature, they can quickly import this prompt into their Cursor IDE, test it with their specific product data, and integrate it into their application, saving hours of manual prompt crafting.

· A game developer experimenting with AI-generated dialogue finds a prompt on PromptForge that produces more natural and engaging character conversations. They can easily adapt this prompt to their game's lore and characters, enhancing the player experience.

5

Stockfisher AI Forecaster

Author

ddp26

Description

Stockfisher is an AI-driven platform that applies advanced forecasting models, inspired by Warren Buffett's investment principles, to analyze companies and predict their long-term financial outcomes. It leverages AI and LLM-agent benchmarks to generate forecasts for revenue, margins, and payout ratios, offering a contrarian view to the current stock market price by performing an intrinsic valuation. This provides investors with an objective and scalable tool to identify potentially undervalued companies.

Popularity

Points 14

Comments 4

What is this product?

Stockfisher is an automated investment analysis tool that uses artificial intelligence to forecast a company's future financial performance. Instead of just looking at the current stock price, it dives deep into fundamental factors like predicted revenue, profit margins, and how much cash the company is likely to return to shareholders. The core innovation lies in its AI's ability to model long-term outcomes and perform a 'Buffett-style' intrinsic valuation, which is essentially estimating the true underlying worth of a company independent of its current market price. This helps uncover opportunities that the broader market might be overlooking. Think of it as an AI analyst that has read the financial reports and produces forecasts systematically, without the emotional biases of a human analyst.
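To make the idea of an intrinsic valuation concrete, here is a textbook discounted-payout calculation built from the three forecast quantities mentioned above (revenue, margin, payout ratio). It is not Stockfisher's model: the constant growth rate, the Gordon-growth terminal value, and the function names are assumptions chosen for illustration.

```python
def intrinsic_value_per_share(revenue, growth, margin, payout_ratio,
                              discount_rate, years, terminal_growth, shares):
    """Discount forecast shareholder payouts back to a per-share value today."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount_rate must exceed terminal_growth")
    value = 0.0
    payout = 0.0
    for year in range(1, years + 1):
        revenue *= 1 + growth                      # forecast revenue for this year
        payout = revenue * margin * payout_ratio   # cash returned to shareholders
        value += payout / (1 + discount_rate) ** year
    # Gordon-growth terminal value based on the final forecast year's payout.
    terminal = payout * (1 + terminal_growth) / (discount_rate - terminal_growth)
    value += terminal / (1 + discount_rate) ** years
    return value / shares


def margin_of_safety(intrinsic, market_price):
    """Fraction by which the market price sits below the intrinsic estimate."""
    return (intrinsic - market_price) / intrinsic


# Illustrative inputs only: flat revenue, so the result equals a simple perpetuity.
value = intrinsic_value_per_share(
    revenue=1000.0, growth=0.0, margin=0.1, payout_ratio=0.5,
    discount_rate=0.1, years=5, terminal_growth=0.0, shares=10,
)
discount = margin_of_safety(value, market_price=40.0)
```

A screening tool of the kind described could rank companies by `margin_of_safety`, with the caveat that the output is very sensitive to the discount rate and terminal growth assumptions.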

How to use it?

Developers and investors can use Stockfisher through its web platform (platform.stockfisher.app). It's designed for easy access, with no sign-in required to view analyses of top stocks. For developers looking to integrate its insights, the platform provides scalable forecasts for S&P 500 companies, and is expanding to mid- and small-cap stocks. This means you can programmatically access potential future financial health data for a vast number of companies. You could use this to build custom investment screening tools, backtest trading strategies, or even power automated trading bots that identify companies undervalued by the market based on Stockfisher's AI-driven intrinsic valuations.

Product Core Function

· Long-term financial outcome forecasting: Utilizes AI to predict future revenue, profit margins, and payout ratios for companies, providing a data-driven outlook beyond immediate market fluctuations. This helps investors understand the potential growth trajectory and profitability of a company over years, not just quarters.

· AI-driven intrinsic valuation: Calculates a company's fundamental worth by ignoring the current stock price, similar to Warren Buffett's approach. This is valuable because it highlights companies that the market might be undervaluing, presenting opportunities for potentially higher returns.

· Scalable S&P 500 analysis: Generates forecasts and valuations for all companies in the S&P 500, enabling large-scale, systematic investment research. This allows for efficient identification of promising companies across a significant portion of the market without manual analysis for each one.

· Contrarian investment signals: Identifies neglected or undervalued companies by comparing the AI's intrinsic valuation to the current stock market price. This feature directly aids investors looking for unique opportunities that aren't crowded by mainstream attention.

· LLM-agent benchmark integration: Draws on cutting-edge advancements in Large Language Model (LLM) agents, ensuring its forecasting models are at the forefront of AI research. This means the underlying technology is robust and constantly improving, leading to potentially more accurate insights.

Product Usage Case

· A quantitative hedge fund could use Stockfisher's API to programmatically fetch intrinsic valuations for all S&P 500 companies and then sort them by the difference between market price and intrinsic value to identify potential buy candidates for their automated trading strategies.

· A retail investor could visit the Stockfisher website, view the top-ranked undervalued companies based on its AI analysis, and then conduct further due diligence on those companies to make informed investment decisions, saving time on initial screening.

· A financial blogger or analyst could use Stockfisher's forecasts to supplement their research, providing a unique AI-generated perspective on company futures to their audience and explaining why certain stocks might be attractive long-term investments.

· A fintech startup building a portfolio management tool could integrate Stockfisher's data to offer users an 'AI-powered value' score for their holdings, helping users understand if their investments are priced fairly according to advanced AI models.

6

Shadowfax AI: Agentic Data Wrangler

Author

diwu1989

Description

Shadowfax AI is an intelligent agent designed to revolutionize the data analysis workflow. It transforms tedious spreadsheet and BI tool operations into a rapid, trustworthy, and enjoyable process. By leveraging immutable data steps, a Directed Acyclic Graph (DAG) of SQL views, and DuckDB for swift querying of massive datasets, it significantly accelerates common data wrangling tasks, reducing hours to minutes. Its core innovation lies in its agentic approach, enabling a more sophisticated and verifiable data processing pipeline compared to simpler copilots.

Popularity

Points 14

Comments 0

What is this product?

Shadowfax AI is an AI agent that acts as a smart assistant for data analysts. Instead of manually clicking through spreadsheets or BI tools, you can tell Shadowfax what you want to achieve with your data. It understands your instructions and translates them into a series of verifiable, immutable steps. It builds a 'plan' (like a recipe or a workflow) using SQL views, ensuring that each step is clearly defined and can be easily reviewed or repeated. For quick data crunching, it uses DuckDB, an in-process analytical database that can query millions of rows quickly. The innovation here is the 'agentic' nature; it doesn't just suggest code, it actively performs the data transformation in a structured and auditable way. So, what's the value? It means less time spent on repetitive data cleaning and preparation, and more time for actual analysis and insights, all while being confident in the data's integrity.

How to use it?

Developers and data analysts can integrate Shadowfax AI into their existing data workflows. You interact with Shadowfax by providing natural language commands or structured queries describing the data transformation you need. For example, you might say, 'Clean the customer data by removing duplicates and standardizing addresses,' or 'Join the sales and marketing tables and calculate monthly revenue.' Shadowfax then generates a DAG of SQL views representing these steps. For immediate results on local datasets or intermediate computations, it utilizes DuckDB, allowing for extremely fast processing. This can be integrated into existing data pipelines or used as a standalone tool for exploratory data analysis and rapid prototyping. The value for you is a significantly reduced barrier to performing complex data operations, enabling faster iteration and decision-making.
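The "DAG of SQL views" idea can be illustrated with a small sketch: each transformation is a named view that reads only from earlier views, and the views are created in dependency order. This is not Shadowfax's implementation. SQLite from the standard library stands in for DuckDB so the example runs without extra packages, and the `STEPS` structure, table, and view names are hypothetical.

```python
import sqlite3
from graphlib import TopologicalSorter

# Each step is an immutable SQL view; "deps" lists the views it reads from.
STEPS = {
    "clean_sales": {
        "deps": [],
        "sql": "SELECT DISTINCT month, region, amount FROM raw_sales WHERE amount IS NOT NULL",
    },
    "monthly_revenue": {
        "deps": ["clean_sales"],
        "sql": "SELECT month, SUM(amount) AS revenue FROM clean_sales GROUP BY month",
    },
    "top_month": {
        "deps": ["monthly_revenue"],
        "sql": "SELECT month, revenue FROM monthly_revenue ORDER BY revenue DESC LIMIT 1",
    },
}


def build_views(conn, steps):
    """Create views in dependency order and return that order."""
    graph = {name: set(spec["deps"]) for name, spec in steps.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        conn.execute(f"CREATE VIEW {name} AS {steps[name]['sql']}")
    return order


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_sales (month TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?, ?)",
    [
        ("2025-01", "EU", 100.0),
        ("2025-01", "EU", 100.0),  # exact duplicate row, removed by DISTINCT
        ("2025-01", "US", 50.0),
        ("2025-02", "EU", 200.0),
        ("2025-02", "US", None),   # missing amount, filtered out
    ],
)
order = build_views(conn, STEPS)
top = conn.execute("SELECT month, revenue FROM top_month").fetchone()
```

Because every step is a view rather than an in-place update, the raw table is never modified and any intermediate result can be inspected by querying its view directly.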

Product Core Function

· Agentic data wrangling: The AI understands natural language instructions to perform data cleaning, transformation, and preparation. This means you can tell the system what you want, and it figures out the how, saving you from writing complex scripts or navigating confusing interfaces.

· Immutable data steps: Each operation performed by Shadowfax is recorded as a distinct, unchangeable step. This ensures transparency and reproducibility, making it easy to audit your data processing and revert to previous states if needed. The value here is trust and control over your data.

· DAG of SQL views: Shadowfax constructs a Directed Acyclic Graph (DAG) of SQL views to represent the data processing pipeline. This provides a clear, visual representation of your data transformations, making them understandable and manageable. This is valuable for collaboration and for understanding the lineage of your data.

· DuckDB for instant crunching: For rapid querying and processing of large datasets (millions of rows), Shadowfax leverages DuckDB. This means you get near-instantaneous results for many analytical tasks, dramatically speeding up your workflow. The value is getting answers faster.

· High performance on benchmarks: Prototypes of Shadowfax have demonstrated top-tier performance on SQL query benchmarks like Spider2-DBT, indicating its efficiency and accuracy in handling complex data tasks. This means you can expect a reliable and high-performing tool for your data needs.

Product Usage Case

· A marketing analyst needs to combine data from various campaign platforms, clean inconsistencies, and calculate ROI for each campaign. Instead of spending hours manually merging spreadsheets and writing complex SQL, they can use Shadowfax AI to describe the desired outcome. Shadowfax generates the necessary steps and performs the analysis rapidly, allowing the analyst to focus on campaign optimization. The problem solved is the time-consuming and error-prone nature of manual data integration and cleaning.

· A finance team needs to reconcile monthly financial statements from different systems. This involves complex joins, aggregations, and currency conversions. Using Shadowfax AI, the team can define the reconciliation process in natural language. Shadowfax builds a verifiable workflow, ensuring accuracy and providing an auditable trail. The value is in ensuring financial accuracy and reducing the risk of errors.

· A data scientist is exploring a new, large dataset and needs to quickly understand its structure, identify outliers, and perform initial feature engineering. Shadowfax AI can assist by generating SQL views that quickly summarize the data, visualize distributions, and apply common transformations. This allows for much faster initial exploration and hypothesis generation. The problem solved is the initial steep learning curve and time investment for new datasets.

7

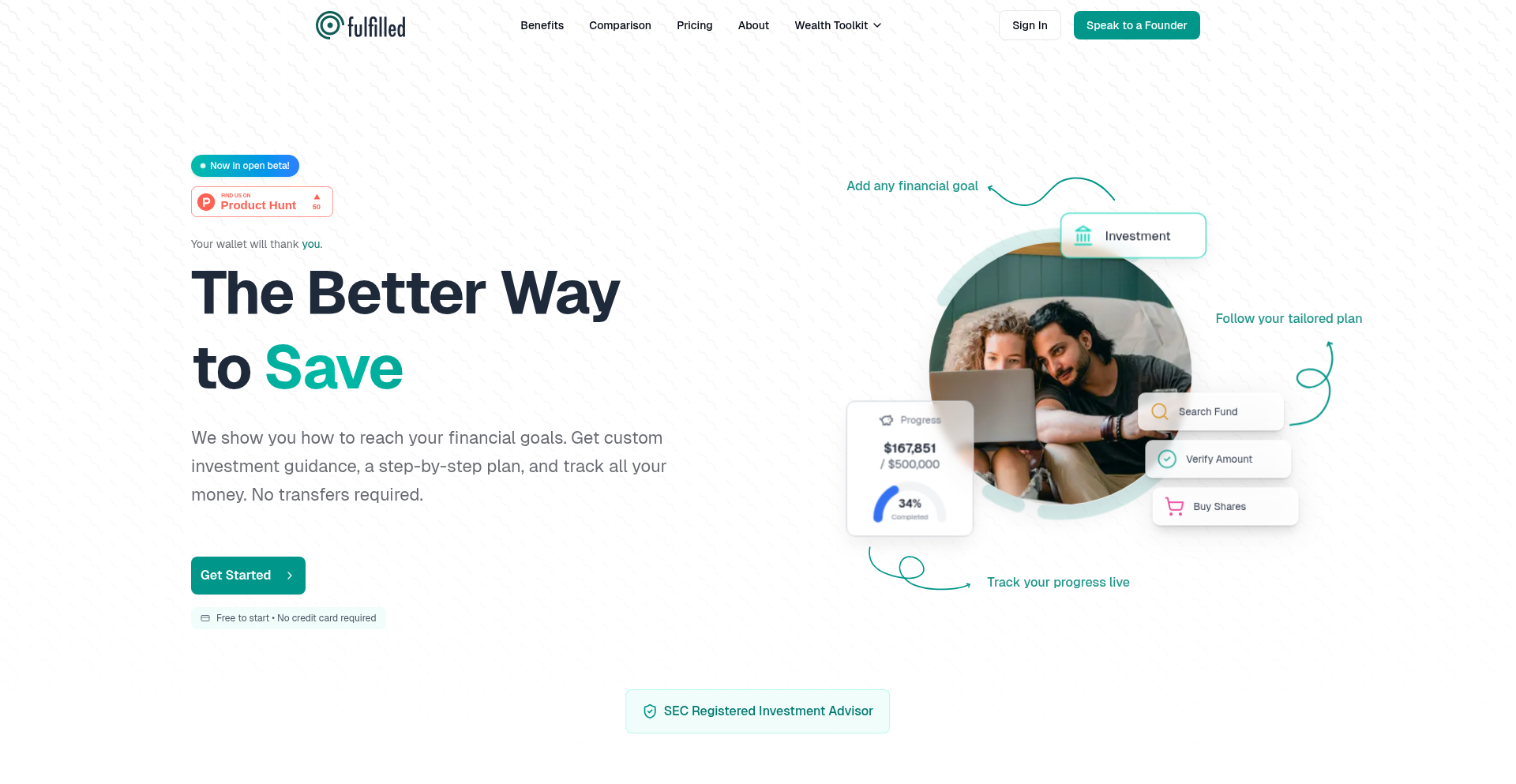

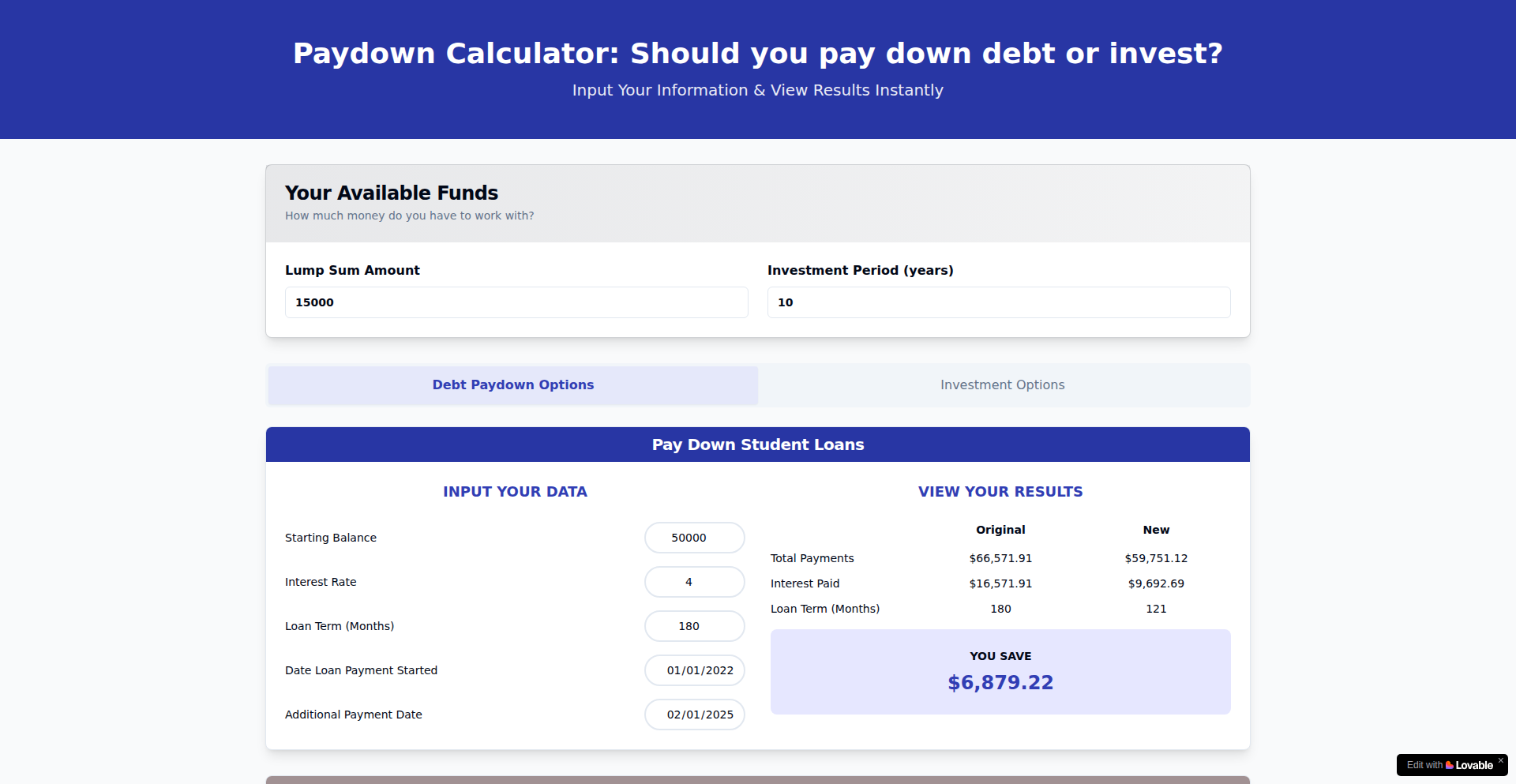

Fulfilled: Democratized Wealth Orchestrator

Author

workworkwork71

Description

Fulfilled is a wealth management platform that offers personalized investment and financial planning for individuals who don't meet traditional advisor minimums. It leverages institutional-grade modeling, accessible through low-cost ETFs, and aims to build lasting financial habits with AI-powered coaching. The core innovation lies in its non-custodial, highly personalized approach, making sophisticated financial strategies affordable and transparent.

Popularity

Points 7

Comments 5

What is this product?

Fulfilled is a modern wealth management service designed for the everyday person, not just the ultra-wealthy. Instead of requiring a $100,000 minimum, it uses the same advanced investment strategies that large pension funds use, but simplifies them into easy-to-understand portfolios made of just a few low-cost exchange-traded funds (ETFs). This means you can get a personalized investment plan tailored to your specific goals, like buying a house or saving for retirement, and implement it with ETFs bought through your existing brokerage account. There's no need to transfer your money or deal with complex jargon. The key innovation is making sophisticated financial planning accessible and affordable, at a fraction of what traditional advisors charge. It's built on the idea that everyone deserves access to smart financial guidance.

How to use it?

The project description does not detail a developer API, although the mention of 'trading on your existing brokerage' implies that integration points for portfolio execution and monitoring would be needed. For end-users, the platform is accessible via a web interface (www.fulfilledwealth.co). Users can connect their existing brokerage accounts (or simply provide investment goals), and Fulfilled will model personalized portfolios. The platform aims to be user-friendly, guiding users through goal setting and asset allocation. Planned features such as an AI spending coach and step-by-step guides for complex financial milestones are intended to make it easier to adopt and maintain good financial habits.

Product Core Function

· Personalized Portfolio Modeling: This function creates investment portfolios tailored to individual financial goals (e.g., house down payment, retirement) by analyzing user-specific timelines and risk tolerance. Its value lies in providing a customized financial roadmap, moving beyond a few generic investment buckets to allocations built for each goal, with the aim of optimizing potential returns for unique life circumstances.

· Low-Cost ETF Integration: This core function utilizes readily available, inexpensive ETFs for portfolio construction, allowing users to invest in diversified assets without high fees. The value is significant cost savings compared to traditional managed funds and ease of execution through existing brokerage accounts, making sophisticated investing accessible and affordable.

· Non-Custodial Service: Fulfilled acts as a financial advisor and strategist without holding or managing the user's assets. This means users maintain full control over their money in their own accounts. The value is in eliminating conflicts of interest, as Fulfilled doesn't profit from selling specific products, ensuring recommendations are purely in the user's best interest and providing peace of mind.

· AI-Powered Financial Guidance (Planned): Future functionality will involve AI that analyzes spending patterns, categorizes transactions, and suggests budget optimizations directly linked to investment goals. This adds immense value by automating financial monitoring, fostering better spending habits, and directly translating daily financial behavior into progress towards long-term wealth objectives.

· Step-by-Step Guidance ('Playbook' Feature): This feature will offer guided walkthroughs for complex financial milestones such as mortgage pre-approval or choosing retirement accounts. Its value is in demystifying intricate financial processes, empowering users to make informed decisions with confidence, and ensuring they navigate critical life events successfully.

Product Usage Case

· Scenario: A 32-year-old with an $85,000 income and $47,000 in savings wants to buy a house in 4 years and save for retirement. Traditional advisors might deem them too low on assets. Fulfilled can create a specific portfolio for the down payment (e.g., 60% global equities, 25% infrastructure, 15% short-term bonds) and a separate, more growth-oriented portfolio for retirement (e.g., 45% global equities, 30% US equities, 15% private equity, 10% emerging markets), all managed via low-cost ETFs on their current brokerage. This solves the problem of being excluded from personalized wealth management due to asset levels.

· Scenario: A young couple wants to start investing for shared future goals but struggles with budgeting and understanding investment options. Fulfilled's planned AI spending coach can automatically track their expenses, identify areas for savings, and then directly apply those savings towards their joint investment goals. The 'playbook' feature can guide them through setting up a joint investment account and choosing appropriate assets, solving the challenge of combining financial efforts and making informed collective investment decisions.

· Scenario: An individual wants to optimize their investments for tax efficiency but finds tax-loss harvesting complex. Fulfilled's upcoming simple tax-loss harvesting feature will allow users to easily implement this strategy within their existing portfolios. This solves the technical hurdle of tax optimization, allowing users to potentially reduce their tax liability and increase their net returns without needing to be tax experts.
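
The down-payment portfolio in the first scenario above is simple arithmetic: target weights multiplied by the amount invested. A small sketch of that calculation follows. The weights come from the scenario, but the $20,000 figure is an assumption, since the scenario only gives $47,000 in total savings and does not say how it is split between goals.

```python
from decimal import Decimal

# Target weights taken from the down-payment scenario above.
DOWN_PAYMENT_WEIGHTS = {
    "global equities": Decimal("0.60"),
    "infrastructure": Decimal("0.25"),
    "short-term bonds": Decimal("0.15"),
}


def allocate(amount: Decimal, weights: dict[str, Decimal]) -> dict[str, Decimal]:
    """Split an amount across asset classes according to target weights."""
    if sum(weights.values()) != Decimal("1"):
        raise ValueError("weights must sum to 1")
    cent = Decimal("0.01")
    return {asset: (amount * w).quantize(cent) for asset, w in weights.items()}


# Assumption: $20,000 of the $47,000 in savings is earmarked for the house.
allocation = allocate(Decimal("20000"), DOWN_PAYMENT_WEIGHTS)
```

`Decimal` is used instead of floats so that the dollar amounts add back up to the invested total exactly.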

8

AgenticCode SwiftAir

Author

ahaucnx

Description

This project showcases a native iOS and Android air quality map app built with an agentic coding workflow. The core innovation lies in using AI as a development partner, accelerating the creation of a fully functional application from design mocks and specifications. It solves the challenge of rapid, high-quality mobile app development by offloading repetitive coding tasks to AI, allowing human developers to focus on higher-level decision-making and refinement.

Popularity

Points 9

Comments 2

What is this product?

AgenticCode SwiftAir is a demonstration of how AI agents can be leveraged to rapidly build native mobile applications. The technical principle involves feeding an AI agent detailed specifications, UI mockups (like Figma designs), and existing data models. The AI then generates functional code in Swift (for iOS) and Kotlin (for Android), significantly speeding up the development cycle. The innovation is in the 'agentic' approach, where the AI acts as a parallel developer, generating code while the human focuses on other tasks. This isn't just code generation; it's a collaborative process where AI handles the bulk of implementation, allowing humans to concentrate on architecture, debugging, and strategic planning. This dramatically reduces the time from concept to a live app, making app development more accessible and efficient.

How to use it?

Developers can use this approach by defining detailed product specifications, including API requirements, data structures, UI layouts, and error handling scenarios. These specifications, along with visual mockups, are provided to an AI coding agent. The agent then generates code for native platforms like iOS (SwiftUI) and Android (Kotlin). Developers can integrate this generated code into their existing projects or use it as a foundation for new applications. This workflow is particularly useful for rapid prototyping, building internal tools, or creating MVPs (Minimum Viable Products) where speed is critical. It allows a single developer, even one new to a specific technology like Swift, to achieve a professional-level app output in a compressed timeframe.
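
One way to keep such a specification complete before handing it to a coding agent is to hold it in a small structured object. The sketch below is only an illustration of that idea: the `FeatureSpec` class, its field names, and the example values are all hypothetical and are not taken from the project.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureSpec:
    """Hypothetical container for the spec sections listed above."""
    name: str
    api_requirements: list[str] = field(default_factory=list)
    data_structures: list[str] = field(default_factory=list)
    ui_layout: list[str] = field(default_factory=list)
    error_handling: list[str] = field(default_factory=list)

    def sections(self) -> dict[str, list[str]]:
        return {
            "API requirements": self.api_requirements,
            "Data structures": self.data_structures,
            "UI layout": self.ui_layout,
            "Error handling": self.error_handling,
        }

    def missing_sections(self) -> list[str]:
        """Names of sections that are still empty, to catch gaps before prompting."""
        return [title for title, items in self.sections().items() if not items]

    def to_prompt(self, platform: str) -> str:
        """Render the spec as plain text for a coding agent."""
        lines = [f"Implement '{self.name}' for {platform}."]
        for title, items in self.sections().items():
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Illustrative values only; not taken from the actual project.
spec = FeatureSpec(
    name="Air quality map",
    api_requirements=["GET /stations returns nearby monitoring stations"],
    data_structures=["Station(id, latitude, longitude, pm25)"],
    ui_layout=["Full-screen map with one colored pin per station"],
)
prompt = spec.to_prompt("iOS (SwiftUI)")
```

Here `spec.missing_sections()` reports that error handling has not been specified yet, which is the kind of gap worth closing before any code is generated.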

Product Core Function

· AI-assisted SwiftUI code generation: This function allows for the rapid creation of user interfaces and app logic for iOS devices, translating design specifications directly into functional code, which speeds up the development of new features.

· Agentic Kotlin mobile app development: Similar to iOS, this enables the accelerated development of native Android applications by leveraging AI to generate code, facilitating cross-platform development and reducing the effort required for building on different operating systems.

· Automated API integration and data modeling: The AI can draft code for connecting to backend services and structuring data, which streamlines the process of handling information and makes it easier to build data-intensive applications.

· Feature implementation from detailed specifications: This core capability allows complex features to be built by AI based on comprehensive documentation, reducing the manual coding effort and potential for human error in implementation.

· Rapid prototyping and MVP development: By significantly shortening the coding time, this approach enables developers and product managers to quickly bring new ideas to life and test them in the market, allowing for faster iteration and validation.

· Empowering non-technical stakeholders: This methodology opens up possibilities for individuals with less coding experience to contribute to software creation by providing detailed requirements that AI can translate into working code, democratizing development.

Product Usage Case

· A CEO with limited Swift experience building a live air quality map app for iOS and Android within 60 days, demonstrating the power of AI for rapid product development by non-expert coders.

· Designers handing over Figma mockups to an AI agent that directly generates functional SwiftUI components, drastically reducing the design-to-development handoff time for UI elements.

· Product managers creating detailed specifications for new app features that AI translates into actual code, moving beyond guiding development to actively generating it, accelerating feature releases.

· Non-engineering teams building small internal tools or utility applications by providing specifications to AI, bypassing the traditional developer bottleneck for simpler software needs.

9

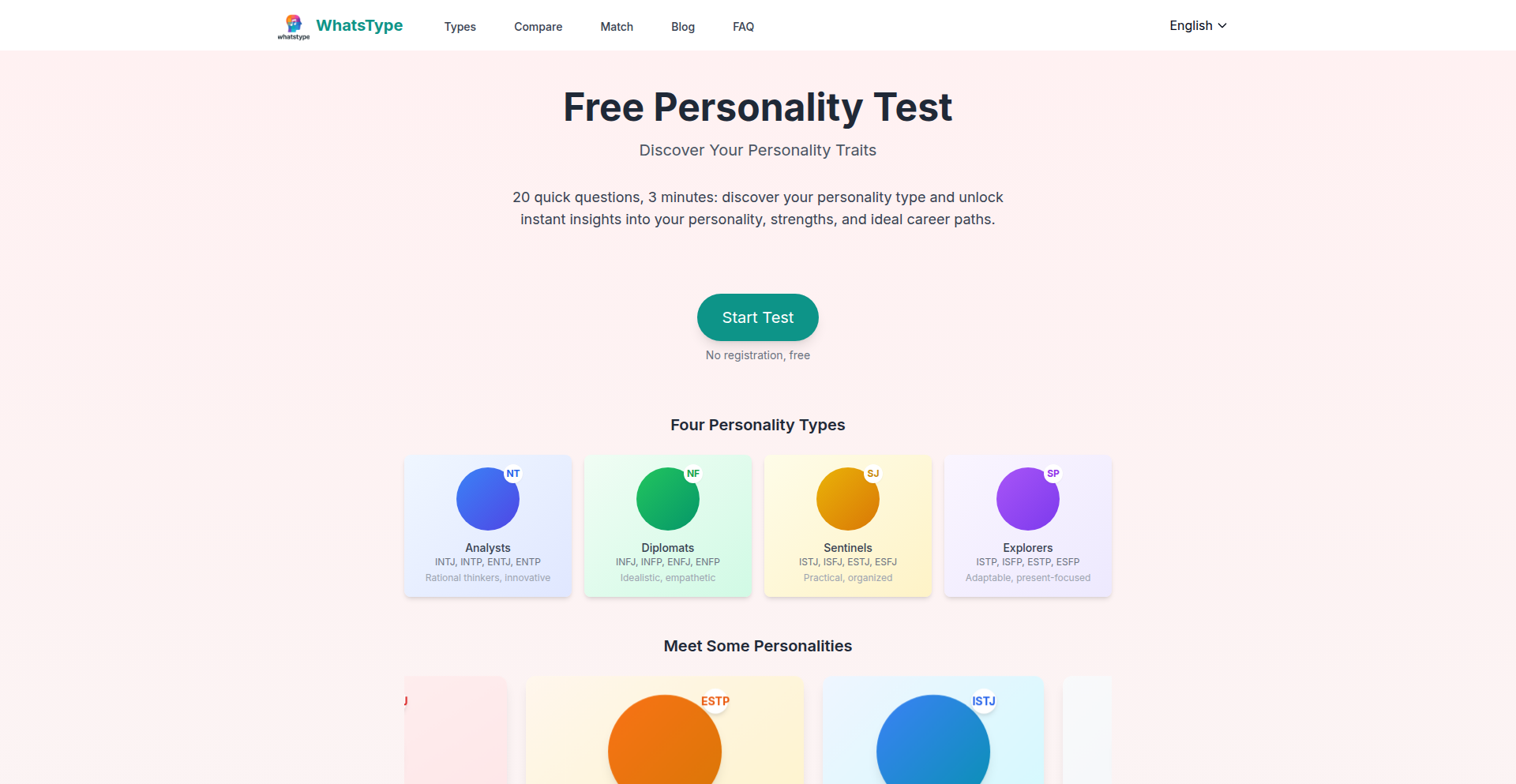

InsightFlow Personalizer

Author

olivefu

Description

InsightFlow Personalizer is a free, client-side personality assessment tool that goes beyond simple labels. It employs a dynamic, adaptive questioning system to deeply analyze thinking, communication, and relationship patterns. The innovation lies in its structured approach to results, detailing reasoning styles, emotional patterns, and social interactions, offering actionable advice for each of the 16 personality archetypes. Built with Next.js 14 and TypeScript, it prioritizes user privacy by running entirely in the browser and is statically exported for fast, efficient hosting.

Popularity

Points 6

Comments 4

What is this product?

InsightFlow Personalizer is a free website designed to help you understand yourself better by providing a more in-depth personality assessment than typical online quizzes. Instead of just giving you a short label, it uses a smart questioning system that adjusts based on your answers to get a clearer picture of your unique thought processes, how you interact with others, and your emotional tendencies. The core innovation is its dynamic questioning and its detailed, structured results that offer practical guidance, all while ensuring your data stays private because it runs directly in your web browser without needing logins or tracking.

How to use it?

Developers can use InsightFlow Personalizer as a readily available, privacy-focused tool to provide users with self-discovery resources. It can be integrated into existing platforms or used as a standalone engagement tool. For example, a coaching website could embed it to offer clients a starting point for self-reflection. Its static export and client-side execution make it easy to host on platforms like Cloudflare Pages, ensuring fast loading times and low infrastructure costs. Developers can also learn from its architecture, specifically how it uses Next.js 14 and TypeScript for a robust and maintainable codebase, and TailwindCSS for a clean, responsive user interface. The structured JSON content for results also offers a blueprint for managing complex, multi-language data.

Product Core Function

· Adaptive Questioning Engine: Dynamically adjusts questions based on user input, leading to a more accurate and personalized assessment. This means the test feels more relevant to you, and the results are more precise, helping you understand your unique traits without wasting time on irrelevant questions.

· Deep Personality Structuring: Categorizes results into reasoning styles, emotional patterns, and social interaction types, providing a nuanced understanding beyond a single label. This helps you see different facets of your personality and how they interrelate, offering a richer self-awareness.

· Actionable Insights and Advice: Delivers detailed analysis for each of the 16 personality archetypes, including strengths, challenges, and practical, real-life advice. This feature is valuable because it moves beyond theoretical understanding to provide concrete steps you can take to leverage your strengths and address your challenges.

· Privacy-Focused Client-Side Execution: All testing and result generation happens directly in the user's browser, requiring no login or data tracking. This is a significant value for users concerned about data privacy, ensuring their personal information remains secure and anonymous.

· Static Site Export for Performance: The application is built for full static export, allowing for extremely fast loading times and efficient hosting on Content Delivery Networks. This translates to a better user experience with no waiting and lower hosting costs for the provider.
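
The project's actual adaptive questioning logic is not described, so the following is only one plausible sketch of the idea. It assumes the 16 archetypes come from four two-sided axes (2^4 = 16), always asks about the axis that is least decided so far, and stops asking about an axis once it is clear. The axis names, letters, and threshold are assumptions.

```python
from dataclasses import dataclass

# Assumption: 16 archetypes modeled as 4 two-sided axes (2**4 = 16).
AXES = {
    "focus": ("E", "I"),
    "input": ("S", "N"),
    "decide": ("T", "F"),
    "plan": ("J", "P"),
}


@dataclass(frozen=True)
class Question:
    text: str
    axis: str  # which axis this question informs


def next_question(scores, remaining, threshold=2):
    """Pick a question from the least-decided axis; None when nothing is left to ask."""
    open_axes = [
        axis for axis in scores
        if abs(scores[axis]) < threshold and any(q.axis == axis for q in remaining)
    ]
    if not open_axes:
        return None
    target = min(open_axes, key=lambda axis: abs(scores[axis]))
    return next(q for q in remaining if q.axis == target)


def run_assessment(questions, answer, threshold=2):
    """answer(question) returns +1 (first pole) or -1 (second pole)."""
    scores = {axis: 0 for axis in AXES}
    remaining = list(questions)
    asked = 0
    while (q := next_question(scores, remaining, threshold)) is not None:
        scores[q.axis] += answer(q)
        remaining.remove(q)
        asked += 1
    label = "".join(
        AXES[axis][0] if scores[axis] >= 0 else AXES[axis][1] for axis in AXES
    )
    return label, asked


questions = [Question(f"{axis} question {i}", axis) for axis in AXES for i in range(3)]
label, asked = run_assessment(questions, lambda q: 1)
```

With consistent answers, this run asks only 8 of the 12 available questions, which is the point of adapting: decided axes stop consuming the user's time.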

Product Usage Case

· A personal development blog could embed InsightFlow Personalizer on a 'Discover Yourself' page. Users can take the test directly on the blog, and the detailed, actionable results can then be used as a foundation for blog content discussing self-improvement strategies tailored to different personality types. This solves the problem of generic self-help advice by providing a personalized starting point.

· A relationship counseling service could offer the test as a pre-session tool. Couples can independently assess their communication and relationship patterns, then bring their detailed results to their session. This enhances the effectiveness of therapy by giving counselors specific insights into potential dynamics and communication styles from the outset.

· An educational platform focused on career guidance could integrate the assessment to help students explore potential career paths. By understanding their reasoning styles and social interactions, students can be guided towards fields that align with their natural aptitudes and preferences, making career exploration more targeted and effective.

· A developer building a community platform for personal growth could use this as a core feature to foster deeper connections among users. By sharing and discussing their personality insights (anonymously if desired), users can build empathy and understanding, creating a more supportive and engaging community experience.

10

Effortless LLM Forge

Author

Jacques2Marais

Description

This project offers a simplified way to fine-tune Large Language Models (LLMs) without requiring extensive infrastructure management or deep Machine Learning expertise. It democratizes access to customized LLM capabilities by abstracting away the complexities of model training.

Popularity

Points 4

Comments 4

What is this product?

This is a service that allows you to train LLMs for your specific needs without dealing with servers, GPUs, or complex machine learning configurations. It uses advanced techniques to make the fine-tuning process as straightforward as possible, enabling even those without a background in ML to create specialized AI models. The core innovation lies in abstracting away the entire infrastructure and ML engineering overhead, allowing users to focus purely on their data and desired outcomes.

How to use it?

Developers can integrate this service into their workflows by providing their dataset and specifying the desired adjustments to an existing LLM. The platform handles the rest, from data preprocessing to model training and deployment. This can be done via an API, allowing for seamless integration into existing applications or custom scripts. Think of it as a powerful, plug-and-play solution for creating a tailored AI assistant for your business or project.
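
The service's upload format and API are not documented here, so the sketch below covers only the step a developer controls: turning raw examples into a clean dataset. It assumes a JSON Lines file with chat-style `messages` records, a common convention for fine-tuning data that this particular service may or may not use.

```python
import json


def to_jsonl(pairs):
    """Turn (question, answer) pairs into one JSON object per line."""
    lines = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue  # drop incomplete examples instead of training on them
        record = {
            "messages": [
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Illustrative support data; the second pair is skipped as incomplete.
support_pairs = [
    ("How do I reset my password?", "Open Settings, then choose Reset password."),
    ("  ", "An answer with no question is skipped."),
]
dataset = to_jsonl(support_pairs)
```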

Product Core Function

· Simplified Data Upload and Preparation: Allows users to upload their custom datasets easily, with intelligent handling of data formats to prepare it for LLM training, reducing the manual data wrangling effort for developers.

· Automated Model Training Orchestration: Manages the entire fine-tuning process, including hyperparameter selection and resource allocation, abstracting away the complexities of ML pipelines so developers can achieve results without deep ML knowledge.

· On-Demand LLM Customization: Enables the creation of specialized LLMs tailored to specific domains or tasks, providing a more accurate and relevant AI experience for end-users by addressing the limitations of general-purpose models.

· API-Driven Integration: Offers a programmatic interface to fine-tune and deploy models, allowing developers to easily embed custom AI capabilities into their existing applications and services, enhancing application intelligence.

· Resource Optimization and Cost Efficiency: Manages computational resources efficiently, aiming to provide fine-tuning capabilities at a lower cost than traditional self-managed solutions, making advanced AI accessible to a wider range of projects and budgets.

Product Usage Case

· A customer support team needs an AI chatbot that understands their company's specific product catalog and common troubleshooting steps. They can use this service to fine-tune an LLM with their support documentation, resulting in a chatbot that provides accurate, context-aware answers, significantly improving customer satisfaction and reducing support agent workload.

· A content creator wants to generate marketing copy that perfectly matches their brand's unique voice and style. By fine-tuning an LLM with examples of their existing successful content, they can generate new marketing materials that are on-brand and resonate with their target audience, saving time on content creation and ensuring brand consistency.

· A small e-commerce business wants to personalize product recommendations based on past customer interactions and preferences. They can fine-tune an LLM with their sales data to build a recommendation engine that understands nuanced customer behavior, leading to higher conversion rates and improved customer engagement.

· A developer building a legal tech application needs an AI assistant that can accurately summarize complex legal documents. Fine-tuning an LLM with a dataset of legal texts and their summaries allows for rapid and precise document analysis, streamlining legal research and drafting processes.

11

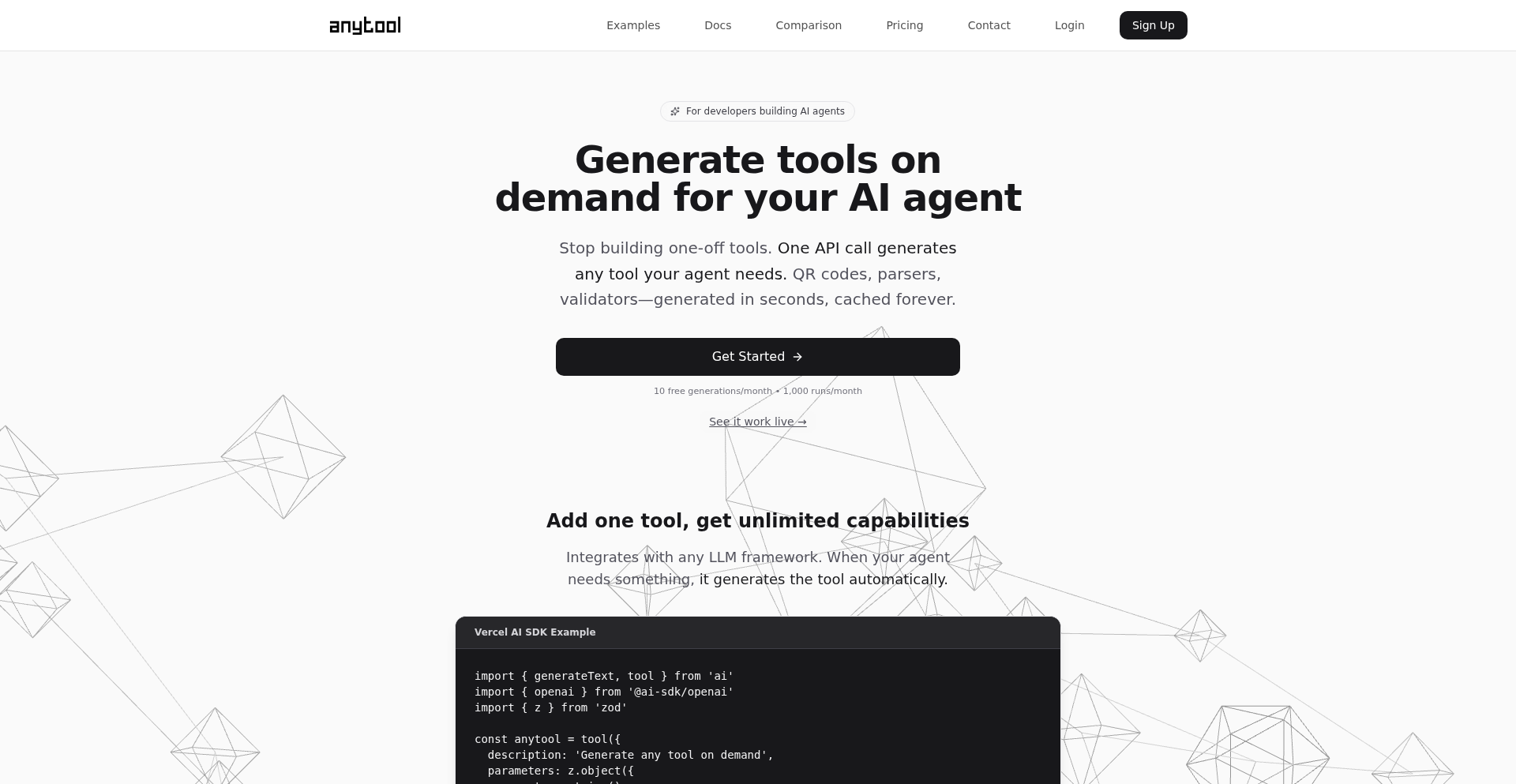

Anytool Dynamic Execution Fabric

Author

acoyfellow

Description

Anytool is a meta-tool that lets AI agents generate and execute custom tools on demand. It addresses the problem of agents being overloaded with static tool definitions by creating the exact tool needed, when it is needed. This reduces complexity and token usage, speeds up iteration, and lets an agent's capabilities grow with its tasks instead of being fixed in advance.

Popularity

Points 2

Comments 5

What is this product?

Anytool is a platform that acts as a single interface for AI agents to access an ever-expanding toolkit. Instead of pre-defining dozens of specific tools, an AI agent can ask Anytool to generate a new tool using natural language. Anytool then writes and executes the code for that tool within an isolated, secure environment. It caches generated tools for instant reuse and manages per-tool state (like memory or saved data) using key-value stores and SQLite databases. This approach is like giving an AI agent a superpower to build its own specialized tools from scratch, making it incredibly adaptable and efficient. The innovation lies in its ability to transform abstract requests into concrete, executable code, making AI agents far more powerful and versatile.

How to use it?

Developers can integrate Anytool into their AI agent frameworks (like AI SDK, LangChain, or MCP) by calling its primary functions. For example, an AI agent could use the `create_tool` function to ask for a new tool, describing its purpose in plain English. Anytool will then generate the necessary code (e.g., a Python script or JavaScript function) and run it. For subsequent, identical requests, Anytool will instantly retrieve the cached tool, making execution extremely fast. Developers can also manage their custom tools, search for existing ones using semantic similarity, and execute them with specific inputs. Credentials and environment variables are securely injected at runtime, ensuring sensitive information is never hardcoded.
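
The generate-once, reuse-afterwards behavior described above can be illustrated with a toy cache. This is not Anytool's implementation or API: `ToolCache`, `get_tool`, and `fake_generate` are hypothetical names, and the LLM call is replaced by a stub so the sketch runs offline.

```python
import hashlib


class ToolCache:
    """Toy stand-in for generate-once, reuse-afterwards behavior."""

    def __init__(self, generate):
        self._generate = generate  # callable: description -> Python source
        self._tools = {}           # cache key -> compiled callable
        self.generations = 0       # how many times code was generated

    @staticmethod
    def _key(description):
        # Normalize case and whitespace so trivially different requests match.
        normalized = " ".join(description.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_tool(self, description):
        key = self._key(description)
        if key not in self._tools:
            source = self._generate(description)
            namespace = {}
            # NOTE: exec() is NOT a sandbox; a real system needs process- or
            # VM-level isolation before running generated code.
            exec(source, namespace)
            self._tools[key] = namespace["run"]
            self.generations += 1
        return self._tools[key]


def fake_generate(description):
    """Stub for the LLM call: always returns a word-counting tool."""
    return "def run(text):\n    return len(text.split())\n"


cache = ToolCache(fake_generate)
count_words = cache.get_tool("Count the words in a text")
same_tool = cache.get_tool("count  the words in a TEXT")
```

The second request differs only in case and spacing, so it hits the cache and no new code is generated. The description mentions semantic similarity search, which would match far looser paraphrases than this exact-hash lookup.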

Product Core Function

· Dynamic Tool Generation: Converts natural language requests into executable code for new tools, allowing AI agents to create custom functionality on the fly. This means you don't need to anticipate every possible need; the AI can build what's required.

· On-Demand Execution: Runs generated tools within isolated sandboxes, ensuring security and preventing interference between different tools or agents. This is like giving each tool its own safe workspace.

· Intelligent Caching: Stores generated code and its dependencies for rapid retrieval on subsequent calls, drastically reducing latency and computational cost for repetitive tasks. This makes your AI agent feel much snappier.

· Per-Tool State Management: Provides dedicated storage (KV and SQLite) for each tool, enabling it to maintain context, history, or persistent data across multiple runs. This allows tools to 'remember' things and build more complex workflows.

· Secure Credential Injection: Safely injects user-specific environment variables and API keys (prefixed with USER_) at runtime, eliminating the need to hardcode secrets and enhancing security. Your sensitive information stays protected.

· Semantic Tool Retrieval and Augmentation: Indexes tool documentation and types, allowing AI agents to find the most relevant existing tools based on semantic similarity, preventing duplication and improving efficiency. This helps your AI agent avoid reinventing the wheel.

· Reproducible Execution: Utilizes version-pinned imports and isolated environments to ensure that tool execution is deterministic and consistent every time. This means you can rely on the output being the same under the same conditions.
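
Two of the functions above, per-tool state and `USER_`-prefixed credential injection, are easy to picture with a small sketch. The class and function names are hypothetical, and a single SQLite table stands in for the KV and SQLite stores mentioned above.

```python
import sqlite3


def user_environment(env):
    """Keep only variables with the USER_ prefix mentioned above."""
    return {name: value for name, value in env.items() if name.startswith("USER_")}


class ToolState:
    """Key-value state scoped to one tool, stored in SQLite."""

    def __init__(self, conn, tool_id):
        self._conn = conn
        self._tool_id = tool_id
        conn.execute(
            "CREATE TABLE IF NOT EXISTS tool_state ("
            "tool_id TEXT, key TEXT, value TEXT, PRIMARY KEY (tool_id, key))"
        )

    def set(self, key, value):
        self._conn.execute(
            "INSERT OR REPLACE INTO tool_state VALUES (?, ?, ?)",
            (self._tool_id, key, value),
        )

    def get(self, key, default=None):
        row = self._conn.execute(
            "SELECT value FROM tool_state WHERE tool_id = ? AND key = ?",
            (self._tool_id, key),
        ).fetchone()
        return row[0] if row else default


conn = sqlite3.connect(":memory:")
csv_tool = ToolState(conn, "csv-parser")
report_tool = ToolState(conn, "report-writer")
csv_tool.set("last_file", "sales.csv")

# Only the USER_-prefixed variable would be handed to a generated tool.
visible = user_environment({"USER_API_KEY": "placeholder", "PATH": "/usr/bin"})
```

Scoping every read and write by `tool_id` is what keeps one tool from seeing another tool's saved data.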

Product Usage Case

· Automated Data Processing Workflows: An AI agent can use Anytool to generate a series of tools for cleaning, transforming, and analyzing data from various sources. For example, a tool to parse a CSV file could be generated, followed by a tool to filter rows based on specific criteria, and finally a tool to generate a summary report. This solves the problem of needing pre-built, complex data pipelines for every new dataset.

· Custom API Client Generation: If an AI agent needs to interact with a new, undocumented API, it can use Anytool to generate an API client on the fly. The agent can describe the endpoints and parameters, and Anytool will create the necessary functions to make requests and handle responses. This solves the challenge of integrating with unknown or rapidly changing APIs.

· Real-time Content Moderation: An AI agent can be tasked with moderating user-generated content. Anytool could generate tools for sentiment analysis, profanity detection, and image recognition. These tools would be executed in real-time as new content is submitted, solving the problem of scalable and adaptable content safety.

· Personalized Recommendation Systems: An AI agent could use Anytool to generate a suite of recommendation tools tailored to individual user preferences. These tools might analyze user history, content metadata, and semantic similarities to provide highly relevant suggestions. This addresses the challenge of creating dynamic and personalized recommendation engines without extensive manual configuration.

12

Svelte-Zero UI Generator

Author

dimelotony

Description

Svelte-Zero is a UI generator for Svelte that aims to simplify frontend development by abstracting away boilerplate code. It leverages meta-programming and intelligent code generation to create Svelte components based on simple definitions, allowing developers to focus on logic and design rather than repetitive markup.

Popularity

Points 4

Comments 3

What is this product?

Svelte-Zero is a tool designed to automatically generate Svelte UI components. Instead of manually writing all the HTML structure, CSS styling, and Svelte logic for common UI patterns, you provide a simplified definition. The tool then uses smart algorithms (essentially, it's like a code-writing assistant) to produce the actual Svelte code for you. This is innovative because it automates the tedious parts of building interfaces, freeing up developer time. The core idea is to 'generate' UI elements, hence 'Svelte-Zero' implying a starting point of minimal code from the developer's perspective. So, this helps you build UIs much faster and with less repetitive coding.

How to use it?

Developers can integrate Svelte-Zero into their Svelte projects by following a simple setup process, likely involving installing a package and configuring a generation command. You'd typically define your UI components using a descriptive configuration file or a specialized syntax. Svelte-Zero then processes these definitions and outputs ready-to-use Svelte (.svelte) files. This means you can quickly scaffold complex components or even entire UI layouts with minimal manual coding. Think of it as a shortcut for building standard UI elements. So, this helps you get your application's interface up and running much more rapidly.
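
Svelte-Zero's real definition format is not shown, so the sketch below only illustrates the general idea of definition-driven generation. It assumes a hypothetical dictionary format with a list of fields and emits a Svelte 4-style form component as text.

```python
def generate_form(definition):
    """Render a minimal Svelte form component from a field definition."""
    script = []
    markup = []
    for f in definition["fields"]:
        name, label, kind = f["name"], f["label"], f["type"]
        script.append(f"  let {name} = '';")
        markup.append(f"  <label>{label}")
        markup.append(f'    <input type="{kind}" bind:value={{{name}}} />')
        markup.append("  </label>")
    parts = ["<script>", *script, "</script>", "", "<form>", *markup, "</form>"]
    return "\n".join(parts) + "\n"


# Hypothetical definition format; not Svelte-Zero's actual syntax.
login_form = {
    "fields": [
        {"name": "email", "label": "Email", "type": "email"},
        {"name": "password", "label": "Password", "type": "password"},
    ]
}
component = generate_form(login_form)
```

Writing `component` to a file such as `LoginForm.svelte` would give a starting point that a developer then styles and wires up by hand.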

Product Core Function

· Automated Component Generation: Takes simple definitions and produces fully functional Svelte components, reducing manual coding effort. The value here is speed and consistency, allowing developers to build UIs faster and with fewer errors in repetitive tasks. Useful for creating lists, forms, modals, and other standard UI elements.

· Boilerplate Code Reduction: Eliminates the need to write common HTML, CSS, and Svelte boilerplate for typical UI patterns. The value is cleaner, more maintainable codebases and a faster development cycle. This is applicable whenever you're starting a new component that has a common structure.

· Customizable Generation: Allows for customization of generated components to fit specific project needs, offering flexibility beyond basic templates. The value is that it's not a rigid system; you can tailor the output to your exact requirements. This is useful when standard patterns aren't quite enough but you still want to leverage generation.

· Meta-Programming Techniques: Utilizes advanced code generation techniques to create efficient and optimized Svelte code. The value is in producing high-quality code that performs well, meaning your application will be fast and responsive. This benefits the end-user by providing a smoother experience.

Product Usage Case

· Rapid prototyping of dashboards and admin panels: A developer can define several card components, data tables, and form elements using Svelte-Zero's configuration. The tool then generates all the Svelte code, allowing the developer to quickly assemble a functional prototype to showcase the application's structure and capabilities. This solves the problem of spending too much time on basic UI implementation during the early stages of a project.

· Creating a library of consistent UI elements for a large application: A team can use Svelte-Zero to generate a standardized set of buttons, inputs, and navigation elements. This ensures visual consistency across the entire application and simplifies the process of adding new UI pieces. It solves the problem of design drift and makes it easier for new developers to contribute consistently styled components.

· Accelerating the development of forms with validation: A developer can define a complex form with multiple input fields, labels, and basic validation rules. Svelte-Zero can generate the form structure, associate labels, and even scaffold the basic validation logic, significantly reducing the time spent on form development and error handling. This addresses the common pain point of tedious form creation.

13

Source Weaver

Author

nazar_ilamanov

Description

Source Weaver is an open-source alternative to tools like NotebookLM, designed to make self-learning more dynamic and personalized. It allows users to upload diverse sources like PDFs, GitHub repositories, and websites. The innovation lies in its ability to not only chat with an AI about these sources but also to run custom 'apps' on them. These apps can transform content into video summaries, generate mind maps, create reports, or even produce study quizzes. The core technical value is exposing the underlying AI workflows, enabling users to understand, modify, and share how information is processed.

Popularity

Points 6

Comments 0

What is this product?

Source Weaver is a platform that lets you feed in your own learning materials – think textbooks, research papers (PDFs), code from GitHub, or any website. It then uses AI, specifically Large Language Models (LLMs), to let you interact with this content in novel ways. Unlike rigid AI tools, Source Weaver shows you exactly *how* it generates outputs like video summaries or mind maps. This transparency allows you to tweak the process, build your own custom 'apps' for specific learning tasks, or share your workflow with others. The innovation is in making AI-powered learning adaptable and transparent, addressing the limitations of one-size-fits-all AI assistants.

How to use it?

Developers can use Source Weaver by first uploading their chosen sources. Then, they can select from pre-built 'apps' (like 'summarize document' or 'generate flashcards') or create their own. For instance, to get a video summary of a research paper, a developer would upload the PDF, select the 'article-to-video' app, and potentially customize the prompt or video style. To integrate with existing developer workflows, one could imagine building an app that analyzes a GitHub repository and generates a technical documentation summary, or one that creates interactive quizzes based on API documentation. The platform encourages remixing existing apps or building entirely new ones from scratch using LLM prompts and defined steps.
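
An 'app' built from LLM prompts and defined steps can be pictured as a list of prompt templates run in order, with every intermediate prompt and output recorded so the workflow stays inspectable. The sketch below assumes that shape. `Step`, `run_app`, and the stub model are hypothetical and do not reflect Source Weaver's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    prompt_template: str  # uses {input} for the previous step's output


def run_app(steps, source_text, llm):
    """Run each step in order, feeding every output into the next prompt."""
    trace = []
    current = source_text
    for step in steps:
        prompt = step.prompt_template.format(input=current)
        current = llm(prompt)
        trace.append((step.name, prompt, current))
    return current, trace


def echo_llm(prompt):
    """Stub model so the sketch runs offline; swap in a real client."""
    return f"[model output for: {prompt[:40]}]"


flashcard_app = [
    Step("extract", "List the key terms in this text:\n{input}"),
    Step("cards", "Write one flashcard per term:\n{input}"),
]
result, trace = run_app(
    flashcard_app,
    "Newton's second law relates force, mass, and acceleration.",
    echo_llm,
)
```

Because `trace` keeps each prompt next to its output, a user can see which step produced a weak result and edit only that template, which is the remixing the platform encourages.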

Product Core Function

· Source Ingestion and Indexing: Allows uploading various digital formats (PDF, code repositories, URLs) and processing them for AI interaction. This enables focused AI analysis on specific knowledge bases, making information retrieval highly relevant.

· AI Chat Interface: Provides a conversational interface to query and discuss uploaded sources. This offers a more intuitive way to extract information and gain understanding than traditional search, acting like a knowledgeable assistant for your documents.

· Customizable 'Apps' for Content Transformation: Enables users to build and run custom applications on their sources, such as generating video overviews, mind maps, detailed summaries, or study quizzes. This unlocks creative ways to consume and learn from information, tailored to individual needs.

· Workflow Transparency and Modifiability: Exposes the underlying AI prompts and processing steps for app creation. This empowers users to understand how AI generates outputs, modify them for better results, and share their custom learning workflows with the community, fostering collaboration and innovation.

· App Remixing and Creation: Supports modifying existing apps or building new ones from the ground up. This allows for highly specialized learning tools, catering to niche subjects or unique learning styles, promoting a 'hacker' mindset of building what you need.

Product Usage Case

· A student studying complex physics textbooks can upload all their PDFs and use an app to generate flashcards for key concepts, significantly speeding up revision. The app reveals the prompts used to identify key terms, making the learning process transparent.

· A developer exploring a new framework can upload its GitHub repository and documentation website. They can then use a custom app to generate a high-level architectural overview, helping them grasp the project's structure quickly. This solves the problem of information overload in new codebases.

· A researcher can upload multiple research papers on a topic and use an app to create a comparative summary, highlighting similarities and differences. This accelerates the literature review process and identifies research gaps more efficiently.

· A hobbyist learning electronics can upload datasheets and tutorials. They can then use an app to generate short video explanations of circuit diagrams, making complex technical information more accessible through visual aids.

14

DevMeme: The Giggle-Powered Code Challenge

Author

linegel

Description

DevMeme is a fun, browser-based mini-game that uses memes as its core mechanic. It presents users with coding puzzles embedded within intentionally bad jokes and meme formats. The innovation lies in its playful approach to skill reinforcement, leveraging the shared language and humor of developer culture to create an engaging learning and practice environment. It aims to make repetitive coding tasks less tedious and more entertaining, offering a novel way to hone problem-solving skills through humor.

Popularity

Points 5

Comments 1

What is this product?

DevMeme is a web application that gamifies coding practice by presenting users with programming puzzles disguised as meme-based jokes. It's built on a foundation of web technologies, likely utilizing JavaScript for interactive elements and game logic, and a backend to serve the puzzles and track progress. The innovative aspect is the conceptual framework: instead of dry exercises, it uses relatable and often absurd meme scenarios to frame coding challenges, making them more approachable and memorable. This taps into the 'hacker spirit' of finding creative and unconventional solutions to make tasks more enjoyable and effective.

How to use it?

Developers can access DevMeme directly through their web browser. No complex installation is required. Users simply navigate to the provided URL, and the game begins. Each puzzle presents a scenario, often a relatable developer frustration or inside joke, followed by a coding prompt. Developers then input their code solution directly into the game interface. The game evaluates the solution, providing immediate feedback. This can be used for quick warm-ups before a coding session, a fun break during intensive work, or as a way to refresh knowledge on specific programming concepts in a low-pressure, entertaining context. It's designed for solo play, acting as a personal practice tool.
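The submit-and-evaluate loop described above might look something like the sketch below. DevMeme's real implementation is not documented, so `evaluate` and the sample puzzle are assumptions made for illustration, and the sketch is in Python rather than whatever the game actually runs. A real service would also need to sandbox untrusted submissions, which is omitted here.

```python
from typing import Any, Callable, List, Tuple


def evaluate(solution: Callable[..., Any], cases: List[Tuple[tuple, Any]]) -> dict:
    """Run a submitted function against (args, expected) pairs and summarize."""
    failures = []
    for args, expected in cases:
        try:
            actual = solution(*args)
        except Exception as exc:  # a crash counts as a failed case
            failures.append((args, f"raised {type(exc).__name__}"))
            continue
        if actual != expected:
            failures.append((args, f"got {actual!r}, expected {expected!r}"))
    return {
        "passed": len(cases) - len(failures),
        "total": len(cases),
        "failures": failures,
    }


# Hypothetical puzzle: "sort these numbers in ascending numeric order".
cases = [(([10, 9, 1],), [1, 9, 10]), (([],), [])]


def lexicographic_sort(xs):
    """A buggy submission: compares numbers as strings, so 10 sorts before 9."""
    return [int(s) for s in sorted(str(x) for x in xs)]


def numeric_sort(xs):
    """A correct submission."""
    return sorted(xs)
```

Calling `evaluate(lexicographic_sort, cases)` reports one failing case with the offending input, which is the kind of immediate feedback the game promises; `evaluate(numeric_sort, cases)` passes both.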

Product Core Function

· Meme-driven puzzle generation: Provides a unique challenge by embedding coding problems within humorous meme templates, making learning more engaging and less intimidating. The value is in increasing user motivation and retention through familiar cultural touchpoints.

· Interactive code editor and evaluator: Allows users to write and submit code directly within the game, with instant feedback on correctness. This offers immediate gratification and allows for iterative learning and debugging, accelerating the skill development process.

· Joke-based problem framing: Leverages humorous and often relatable developer jokes to introduce coding concepts. This reduces cognitive load and makes complex ideas more digestible, transforming mundane practice into an enjoyable experience.

· Browser-based accessibility: Runs entirely in the web browser, requiring no setup or installation. This ensures that any developer with internet access can immediately start practicing, removing barriers to entry and promoting widespread use.

· Progress tracking (implied): While not explicitly stated, a game of this nature would likely include some form of progress tracking to encourage continued play and mastery. This adds a layer of gamification that motivates users to improve their scores and solve more puzzles over time.

Product Usage Case

· Scenario: A junior developer struggling with a common JavaScript array method. Problem: DevMeme presents a meme of a cat looking confused at a tangled ball of yarn, with the text 'Me trying to sort my data without Lodash'. The coding challenge involves correctly using the `.sort()` method. Value: This makes the abstract concept of array sorting more tangible and memorable through a humorous, relatable analogy, helping the developer quickly grasp and apply the correct solution.

· Scenario: A senior developer facing a performance bottleneck. Problem: DevMeme shows a meme of someone furiously typing, with the caption 'My code after I forget to optimize my loops'. The puzzle challenges the developer to refactor a piece of inefficient code for better performance. Value: This scenario uses humor to highlight a critical aspect of software development, encouraging developers to think about optimization in a fun, non-judgmental way, leading to better-written and more efficient code.

· Scenario: A developer needing to practice basic algorithm logic. Problem: DevMeme uses a popular meme format to present a simple search or sort algorithm problem, framing it as a quest or a silly task. Value: By injecting humor and a playful narrative into algorithmic challenges, DevMeme makes practicing these fundamental concepts less daunting and more appealing, fostering a deeper understanding and quicker recall of solutions.

15

VerseAI Observability

Author

4thabang

Description

Verse AI is a novel tool designed to surface critical failures in AI systems that traditional evaluation methods often miss. It achieves this by analyzing real-world AI interactions, clustering conversations, and flagging failure patterns, thereby providing developers with actionable insights to improve AI robustness and reliability. This directly addresses the challenge of AI models exhibiting blind spots due to outdated training data or unforeseen edge cases, ultimately preventing issues like the auto-rejection of qualified candidates.

Popularity

Points 5

Comments 0

What is this product?

Verse AI is an observability platform for AI systems that goes beyond standard evaluation metrics. It works by ingesting real-time interaction data from your AI applications. Think of it like having a super-smart detective that watches every conversation your AI has. Instead of just looking at whether the AI gave a 'right' or 'wrong' answer in a test, Verse AI groups similar conversations together and highlights the ones where the AI might be struggling or making mistakes. This is innovative because most AI evaluation tools focus on pre-defined test cases, which can't possibly cover all the weird and unexpected ways users interact with an AI or the real-world edge cases that emerge. Verse AI identifies these hidden problems by looking at actual usage, making your AI more trustworthy and less prone to those frustrating 'it worked yesterday, but not today' issues.
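To make the "group similar conversations, then highlight the struggling groups" idea concrete, here is a deliberately simple sketch. It is not Verse AI's algorithm, which is not described in the source: the word-overlap (Jaccard) similarity, the greedy clustering, the thresholds, and the `failed` flag on each conversation are all assumptions chosen to keep the example self-contained.

```python
import re
from typing import Dict, List


def tokens(text: str) -> frozenset:
    """Lowercased word set used as a crude conversation fingerprint."""
    return frozenset(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: frozenset, b: frozenset) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(conversations: List[Dict], threshold: float = 0.4) -> List[List[Dict]]:
    """Greedy single pass: join the first cluster whose seed is similar enough."""
    clusters: List[List[Dict]] = []
    for conv in conversations:
        for group in clusters:
            if jaccard(tokens(conv["text"]), tokens(group[0]["text"])) >= threshold:
                group.append(conv)
                break
        else:  # no existing cluster matched, so start a new one
            clusters.append([conv])
    return clusters


def flag_failures(clusters, min_size: int = 2, min_failure_rate: float = 0.5):
    """Report clusters that are large enough and fail often enough."""
    flagged = []
    for group in clusters:
        rate = sum(c["failed"] for c in group) / len(group)
        if len(group) >= min_size and rate >= min_failure_rate:
            flagged.append(
                {"example": group[0]["text"], "size": len(group), "failure_rate": rate}
            )
    return flagged


# Hypothetical interaction log; "failed" is assumed to come from an upstream signal.
conversations = [
    {"text": "book a slot at 3pm tomorrow", "failed": True},
    {"text": "book a slot at 4pm tomorrow", "failed": True},
    {"text": "what is your refund policy", "failed": False},
    {"text": "book a slot at 9am friday", "failed": False},
]
flagged = flag_failures(cluster(conversations))
```

With this sample log, the three booking requests land in one cluster and two of them failed, so that cluster is flagged while the lone refund question is not. A production system would more likely use embeddings and a proper clustering algorithm; the shape of the pipeline is the point here.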

How to use it?

Developers can integrate Verse AI into their existing AI development workflow. It utilizes OpenTelemetry for trace ingestion, making it compatible with popular AI observability tools like Langfuse, LangSmith, and Braintrust. You can simply add Verse AI alongside your current setup. When your AI system is running, Verse AI will collect the interaction data. You can then access the Verse AI dashboard to see clustered conversations and identified failure patterns. This allows you to pinpoint specific areas of your AI that need improvement, such as refining responses to certain user queries or addressing biases that appear in real interactions. So, if your AI recruitment tool is missing good candidates, Verse AI will show you exactly which conversations led to those rejections, allowing you to fix the root cause.

Product Core Function

· Conversation Clustering: Groups similar AI interactions to identify common themes and potential problem areas. This helps developers understand trends in AI behavior that might otherwise be buried in vast amounts of data. So this helps you see if many users are having the same confusing experience with your AI.

· Failure Pattern Identification: Automatically flags conversations exhibiting error patterns or unexpected behavior. This feature is crucial for proactively catching issues before they impact users or business outcomes, such as losing valuable talent in a recruitment pipeline. So this tells you 'here's exactly where your AI messed up'.

· Trace Ingestion via OpenTelemetry: Seamlessly integrates with existing observability stacks (Langfuse, LangSmith, Braintrust) for easy adoption and data correlation. This means you don't have to throw away your current tools; Verse AI works with them. So this makes it easy to add Verse AI to what you already use.

· Real-World Interaction Analysis: Focuses on actual user-AI exchanges rather than solely relying on simulated or predefined test cases, providing a more accurate picture of AI performance in production. This gives you confidence that your AI is performing well in the real world, not just in a lab. So this ensures your AI works for actual users.

· Actionable Insights for Improvement: Provides developers with clear, data-driven recommendations on where and how to improve their AI models and applications. This turns raw data into practical steps for making your AI better. So this helps you know exactly what to fix.

Product Usage Case

· AI Recruitment Pipeline Failure: An AI recruitment pipeline auto-rejects qualified candidates due to outdated training data. Verse AI analyzes the conversations between candidates and the pipeline, clustering rejections and highlighting patterns related to unrecognized company names or roles, allowing the team to update the AI's knowledge base. This solves the problem of missing out on good hires.

· Customer Service Chatbot Errors: A customer service chatbot repeatedly provides incorrect information for a specific set of product inquiries. Verse AI identifies the cluster of conversations related to these inquiries and flags the erroneous responses, enabling developers to retrain or update the chatbot's knowledge graph. This improves customer satisfaction by providing accurate support.

· AI Agent Misunderstandings: An AI agent designed to book appointments consistently misunderstands user requests for specific time slots. Verse AI analyzes the booking interactions, identifies the patterns of misunderstanding, and helps developers fine-tune the agent's natural language understanding (NLU) capabilities for better scheduling. This leads to more efficient and less frustrating user interactions.

16

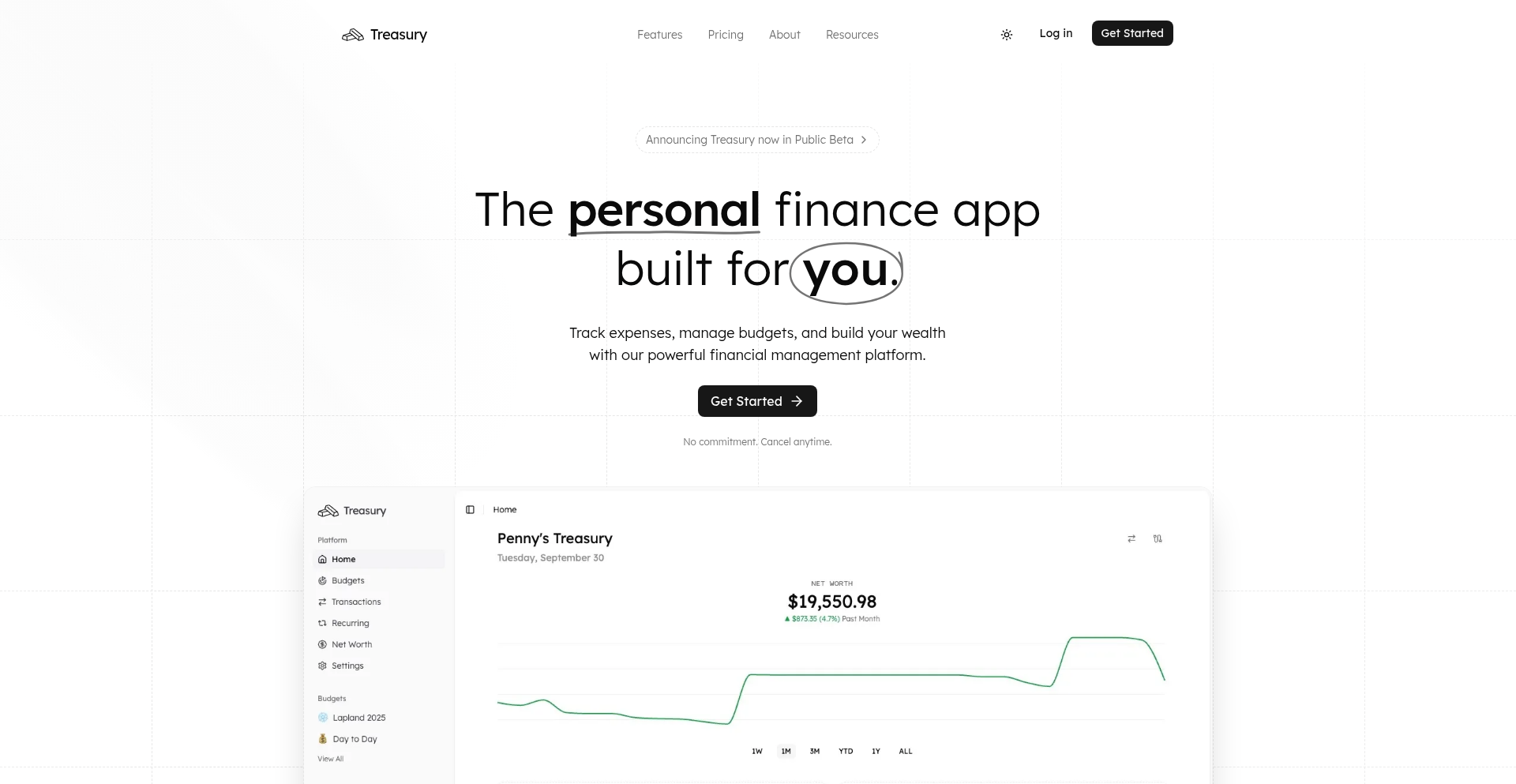

Treasury: Dynamic Financial Architecture

Author

junead01

Description

Treasury is a personal finance application that empowers users to construct flexible financial models. It moves beyond rigid templates by allowing users to define their own financial structures, enabling detailed tracking of net worth, spending analysis, identification of recurring expenses, and the creation of personalized budgeting systems that align with individual thought processes. The innovation lies in its modular approach to financial management, offering a truly customizable experience.

Popularity

Points 5

Comments 0

What is this product?

Treasury is a personal finance application built on the principle of user-defined financial architecture. Instead of forcing users into pre-set categories, it provides a set of 'building blocks'—akin to programmable modules—that users can assemble to represent their unique financial landscape. This allows for granular tracking of assets and liabilities, in-depth analysis of spending habits, automatic detection of recurring financial events (like subscriptions or bills), and the creation of budgets that mirror how individuals actually conceptualize their money. The core technical insight is to abstract financial concepts into composable units, enabling a level of personalization previously unavailable in standard personal finance tools. So, what's in it for you? It means a finance app that finally understands *your* money, not just a generic one.
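One way to picture "composable units" is a small tree of blocks whose values roll up into a total. The sketch below is an assumption about how such a model could be represented, not Treasury's actual data model; the `Block` class and all figures are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    """A composable unit: a leaf carries a signed value, a group carries children."""
    name: str
    value: float = 0.0  # assets are positive, liabilities negative
    children: List["Block"] = field(default_factory=list)

    def total(self) -> float:
        """Own value plus the recursive total of every child block."""
        return self.value + sum(child.total() for child in self.children)


# Hypothetical figures, assembled the way a user might nest their own categories.
home = Block("Real Estate", children=[
    Block("Property value estimate", 400_000.0),
    Block("Mortgage balance", -250_000.0),
])
savings = Block("Savings", 15_000.0)
net_worth = Block("Net worth", children=[home, savings])
```

Here `home.total()` is the equity in the property and `net_worth.total()` adds savings on top. Because groups and leaves share one type, a user could regroup the same blocks under different parents without changing the arithmetic.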

How to use it?

Developers can use Treasury by signing up for the public beta at treasury.sh. The application offers a web-based interface where users can visually or programmatically define their financial components. For instance, a user might create a 'Real Estate' module for their home, linking mortgage payments and property value estimates. Another might create a 'Subscription Service' module to meticulously track monthly recurring charges. The flexibility extends to complex scenarios, such as managing multiple investment portfolios or tracking income streams from various freelance projects. Integration possibilities could involve connecting to bank APIs (where supported and authorized) to automatically pull transaction data, or using Treasury's data export features to feed into other analytical tools. So, how can you use it? You can start by mapping out your personal finances using its intuitive building blocks, setting up alerts for specific financial events, or even exploring advanced budgeting strategies. It's about tailoring the tool to your life, not the other way around.
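Tracking monthly recurring charges, as in the 'Subscription Service' example, implies some way of spotting them in transaction data. The source does not say how Treasury does this, so the following is a naive heuristic under stated assumptions: a charge counts as recurring if the same merchant bills the same amount at least three times with roughly monthly gaps. The function name, thresholds, and sample transactions are all hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple

Transaction = Tuple[date, str, float]  # (posted date, merchant, amount)


def find_recurring(
    transactions: List[Transaction],
    min_occurrences: int = 3,
    min_gap: int = 27,
    max_gap: int = 33,
) -> Dict[str, float]:
    """Return {merchant: amount} for charges repeating at a roughly monthly cadence."""
    by_key = defaultdict(list)
    for posted, merchant, amount in transactions:
        by_key[(merchant, round(amount, 2))].append(posted)

    recurring = {}
    for (merchant, amount), dates in by_key.items():
        dates.sort()
        # Days between each consecutive pair of charges.
        gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
        if len(dates) >= min_occurrences and all(min_gap <= g <= max_gap for g in gaps):
            recurring[merchant] = amount
    return recurring


# Made-up sample data for illustration.
transactions = [
    (date(2025, 8, 5), "StreamCo", 12.99),
    (date(2025, 9, 5), "StreamCo", 12.99),
    (date(2025, 10, 5), "StreamCo", 12.99),
    (date(2025, 9, 12), "Grocer", 54.10),
    (date(2025, 9, 19), "Grocer", 61.75),
]
subscriptions = find_recurring(transactions)
```

On this sample only the "StreamCo" charge qualifies; the grocery purchases vary in amount and timing. A real detector would need to tolerate price changes, annual billing, and merchant-name variations, which this sketch ignores.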

Product Core Function