Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-12

SagaSu777 2025-11-13

Explore the hottest developer projects on Show HN for 2025-11-12. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN batch reveals a powerful current: the relentless drive to make AI more practical and integrated into our daily workflows, both for developers and end-users. We're seeing LLMs not just as chatbots, but as sophisticated agents capable of complex tasks, from medical diagnoses to controlling browser environments. The emphasis is shifting towards 'agentic' systems, where AI components collaborate and perform actions autonomously. Simultaneously, there's a strong push for efficiency and accessibility; projects are tackling massive datasets with novel techniques for LLMs, optimizing resource usage, and creating tools that reduce friction in content creation and analysis. Privacy and user control are also recurring themes, with many projects prioritizing local processing and BYOK (Bring Your Own Key) models. For developers, this means exploring how to build these agentic systems, leverage LLMs for domain-specific problems, and architect for efficient data handling. For entrepreneurs, it's about identifying specific pain points that can be solved by intelligent automation, focusing on user experience, and building trust through transparency and privacy.

Today's Hottest Product

Name

Aluna (YC S24) - Cancer diagnosis makes for an interesting RL environment for LLMs

Highlight

This project showcases a novel application of Reinforcement Learning (RL) with frontier Large Language Models (LLMs) for analyzing pathology slides. The core innovation lies in equipping LLMs with tools to 'zoom and pan' across massive Whole Slide Images (WSIs), overcoming the context window limitations. This approach allows LLMs to iteratively examine regions of a digitized pathology slide, mimicking how a human pathologist would work under a microscope, to arrive at a diagnosis. Developers can learn about how to architect complex interactions with LLMs, the challenges of processing high-dimensional data, and the potential for RL agents to navigate and analyze visual datasets in a goal-directed manner. It demonstrates a sophisticated technique for making LLMs more effective in specialized, data-intensive domains.

Popular Category

Artificial Intelligence / Machine Learning

Developer Tools

Data Management

Productivity Software

Utilities

Popular Keyword

LLM

AI

Open Source

API

Agent

Technology Trends

Agentic Workflows

LLM Integration for Specialized Tasks

Efficient Data Handling for Large Models

Developer Productivity Tools

Decentralized and Privacy-Focused Architectures

AI-Powered Content Generation and Analysis

Project Category Distribution

AI/ML Tools & Applications (30%)

Developer Productivity & Tools (25%)

Data & Infrastructure (15%)

Utilities & End-User Apps (20%)

Content & Media Tools (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | Logosive: Debate Catalyst | 32 | 32 |
| 2 | PathoNaviLLM | 41 | 20 |
| 3 | SkillGraph: Agentic AI with Skill-Based Reasoning | 12 | 0 |
| 4 | YaraDB: FastAPI-Powered Document Database | 9 | 1 |
| 5 | GenomixRAM | 10 | 0 |
| 6 | RetireSim: Monte Carlo & Tax-Aware Retirement Planning Engine | 8 | 1 |
| 7 | MCP Browser Agent Relay | 6 | 3 |
| 8 | DeltaGlider: Binary Delta Storage CLI | 7 | 2 |
| 9 | MycoGuard AI | 3 | 6 |
| 10 | Hathora VoiceForge | 6 | 1 |

1

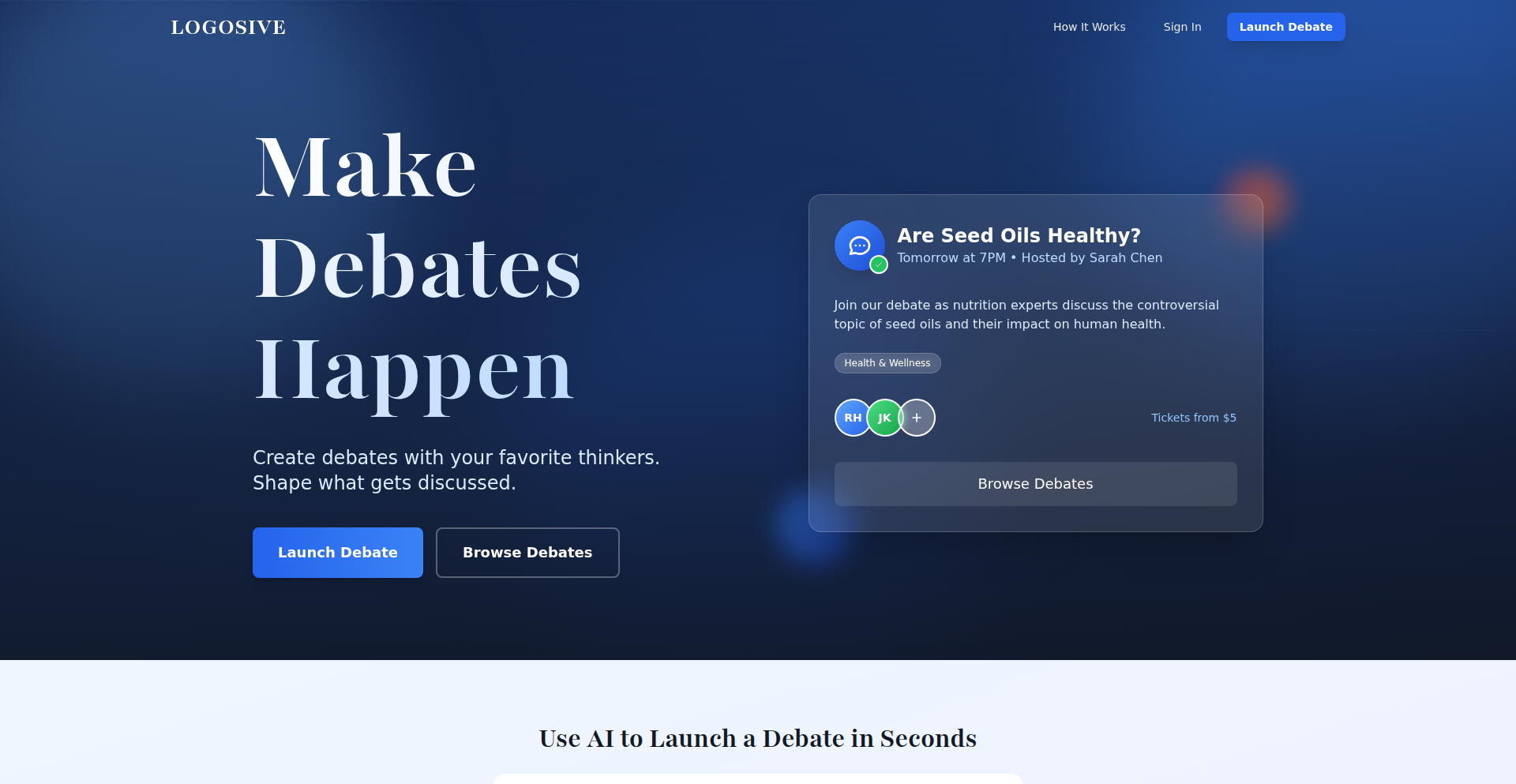

Logosive: Debate Catalyst

Author

mcastle

Description

Logosive is a platform designed to democratize intellectual discourse by enabling audiences to fund and propose debates between public thinkers. It leverages AI to streamline the creation of debate launch pages, suggesting topics and debaters, while utilizing web technologies like Django, htmx, and Alpine.js for robust functionality. The platform handles outreach, ticketing, and logistics, with revenue sharing among all participants, fostering a community-driven approach to high-level discussions.

Popularity

Points 32

Comments 32

What is this product?

Logosive is a web platform that makes it easy for anyone to initiate and fund debates between prominent thinkers, especially in fields like health, wellness, technology, and public policy. It tackles the problem of valuable discussions not happening due to logistical and financial barriers. The core innovation lies in using AI (Claude) to quickly generate compelling debate proposals and landing pages from a simple prompt, making it accessible for users to suggest topics and participants. The system then manages the backend operations like contacting debaters, selling tickets, and handling revenue distribution, which is a novel approach to incentivizing participation and debate creation.

How to use it?

Developers can use Logosive by proposing a debate topic and suggesting potential debaters through the website. The platform then handles the rest, from outreach to ticket sales. If you're a developer interested in the underlying technology, Logosive is built with Django for the backend, htmx for dynamic server-rendered HTML updates without full page reloads, and Alpine.js for lightweight JavaScript interactivity on the frontend. This tech stack allows for a fast and responsive user experience, akin to a single-page application, but with the benefits of server-side rendering for SEO and performance. You can integrate similar patterns for managing user-generated content and complex workflows within your own applications.

Product Core Function

· AI-powered debate proposal generation: Utilizes Claude to create engaging debate topics and suggest speakers from a single prompt, accelerating the ideation process and making it easier to visualize potential debates.

· Audience-funded debate model: Allows the community to financially support debates they want to see, creating a direct incentive for high-quality intellectual exchange and empowering the audience.

· Integrated event management: Handles all aspects of debate organization, including outreach to speakers, ticket sales, and event logistics, simplifying the process for both proposers and participants.

· Fair revenue sharing: Distributes ticket revenue among the debate proposer, debaters, and the host, creating a sustainable ecosystem that rewards all stakeholders and encourages continued participation.

· Web technology stack for performance: Employs Django, htmx, and Alpine.js to deliver a dynamic and responsive user experience, offering efficient content delivery and interactivity.

Product Usage Case

· A user wants to see a debate on the future of AI regulation between two prominent tech ethicists. They use Logosive to propose the topic and suggest the debaters. Logosive's AI helps draft a compelling description, and the platform then handles contacting the ethicists and setting up a ticket sales page. This allows for a valuable discussion that might otherwise never happen due to the difficulty of organizing such an event.

· A wellness influencer wants to host a debate about the efficacy of a popular diet trend. They propose the topic and invite two experts with opposing views. Logosive manages the ticket sales, ensuring that the cost of bringing the experts and hosting the event is covered by the interested audience, making niche intellectual debates financially viable.

· A developer building a community platform might draw inspiration from Logosive's use of htmx and Alpine.js for creating interactive forms and dynamic content updates without the overhead of a full JavaScript framework. This can lead to faster loading times and a simpler development workflow for certain types of web applications.

2

PathoNaviLLM

Author

dchu17

Description

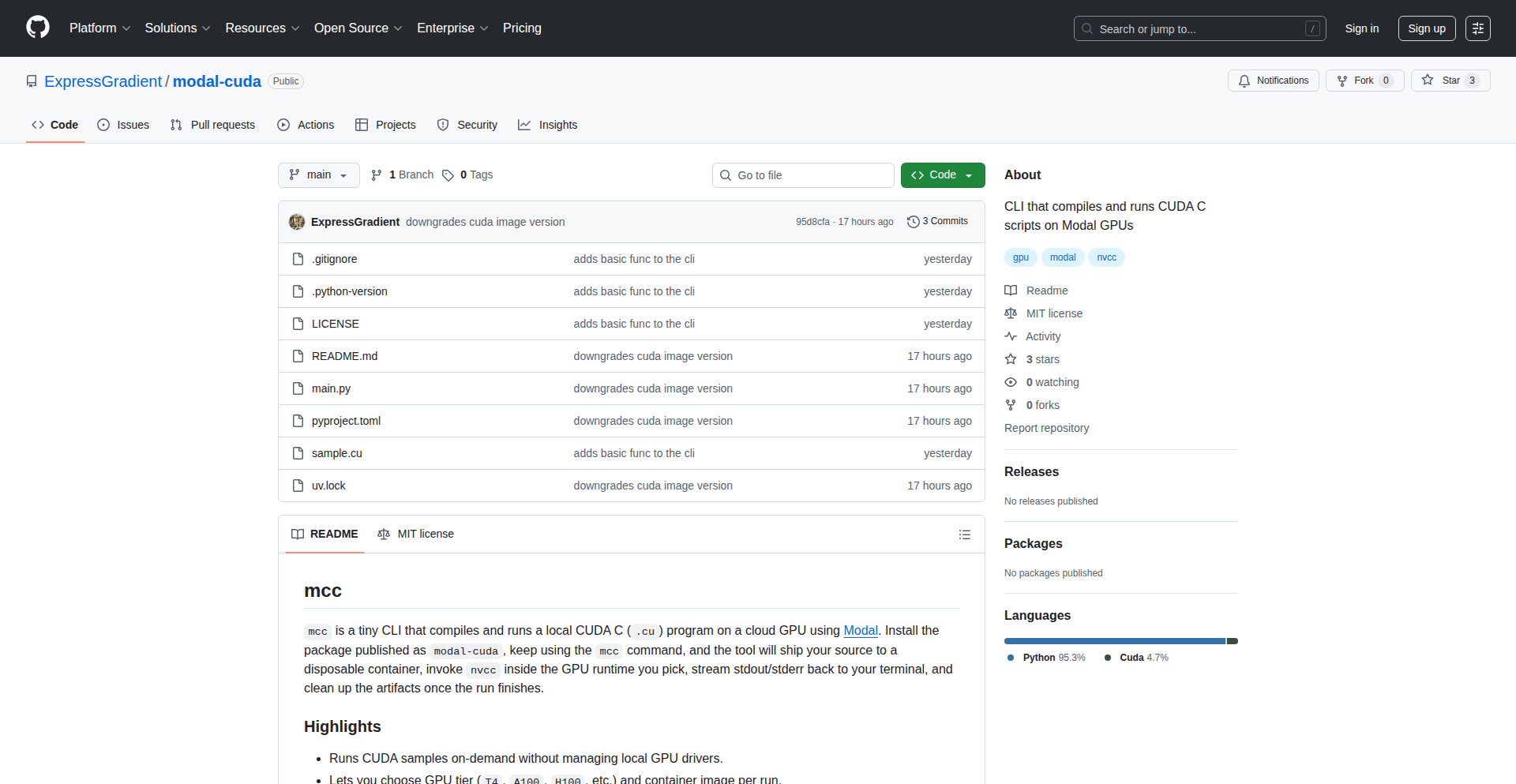

This project introduces a novel Reinforcement Learning (RL) environment designed to enable frontier Large Language Models (LLMs) to navigate and analyze massive digitized pathology slides for cancer diagnosis. It addresses the challenge of whole-slide images (WSIs) being too large for LLM context windows by providing LLMs with 'tools' to control zoom and pan, mimicking a pathologist's workflow. This allows LLMs to intelligently explore relevant regions, leading to surprisingly accurate diagnostic insights.

Popularity

Points 41

Comments 20

What is this product?

PathoNaviLLM is a simulated laboratory environment where advanced AI language models (LLMs) can learn to examine digital pathology slides, which are like high-resolution photographs of tissue samples used by doctors to detect diseases like cancer. The core innovation is a set of virtual 'tools' that allow the LLM to control a digital microscope. Instead of trying to process the entire massive image at once (which is impossible for current LLMs), the LLM can 'zoom in' on specific areas and 'pan' across the slide, deciding where to look next. This is similar to how a pathologist would move a physical slide under a microscope to find abnormalities. This exploration and decision-making process is trained using Reinforcement Learning, a type of AI learning where the model learns by trial and error, getting rewarded for making good diagnostic choices.

How to use it?

Developers can integrate PathoNaviLLM into their AI research pipelines. This involves setting up an RL training loop where the LLM acts as the 'agent'. The agent receives the pathology slide data and the available 'tools' (e.g., zoom_in, pan_left, pan_right, make_diagnosis). The LLM then uses its learned strategy to issue commands to these tools. For example, it might zoom into a suspicious area, examine it, and then decide to pan to another region or make a preliminary diagnosis. This allows researchers to test how effectively different LLMs can learn diagnostic tasks on complex, large-scale medical imagery without needing to pre-process the images into thousands of small, potentially disconnected tiles.
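To make the tool-driven loop more concrete, here is a minimal sketch of what such an environment could look like. It is not PathoNaviLLM's actual code: the `SlideEnv` class, the `Viewport` type, the action dictionary format, and the 0/1 reward are all assumptions made for illustration, and the observation is just the viewport coordinates rather than a rendered image tile.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    """Region of the slide currently visible to the agent, in full-resolution pixels."""
    x: int
    y: int
    size: int  # side length of the square field of view


class SlideEnv:
    """Toy stand-in for a whole-slide-image environment (hypothetical API)."""

    def __init__(self, slide_size: int, true_label: str, min_size: int = 512):
        self.slide_size = slide_size
        self.true_label = true_label
        self.min_size = min_size
        # Start fully zoomed out so the agent sees the whole slide.
        self.view = Viewport(0, 0, slide_size)

    def _clamp(self) -> None:
        # Keep the viewport inside the slide boundaries.
        limit = self.slide_size - self.view.size
        self.view.x = max(0, min(self.view.x, limit))
        self.view.y = max(0, min(self.view.y, limit))

    def _zoom_in(self) -> None:
        # Halve the field of view around its centre, down to min_size.
        new_size = max(self.min_size, self.view.size // 2)
        shift = (self.view.size - new_size) // 2
        self.view = Viewport(self.view.x + shift, self.view.y + shift, new_size)
        self._clamp()

    def _pan(self, dx: int) -> None:
        # Move horizontally by half of the current field of view.
        self.view.x += dx * (self.view.size // 2)
        self._clamp()

    def step(self, action: dict):
        """Dispatch one tool call and return (observation, reward, done)."""
        tool = action["tool"]
        if tool == "zoom_in":
            self._zoom_in()
        elif tool == "pan_left":
            self._pan(-1)
        elif tool == "pan_right":
            self._pan(1)
        elif tool == "make_diagnosis":
            # The episode ends with a reward only when a diagnosis is made.
            reward = 1.0 if action["label"] == self.true_label else 0.0
            return self.view, reward, True
        else:
            raise ValueError(f"unknown tool: {tool}")
        # A real environment would return the rendered image tile here.
        return self.view, 0.0, False
```

In a training loop, the LLM would receive the observation, choose the next tool call, and repeat until it calls `make_diagnosis`. The key design point is that the model only ever sees one viewport at a time, so the size of the slide never has to fit into its context window.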

Product Core Function

· LLM-controlled dynamic exploration of large images: Enables LLMs to intelligently navigate and focus on relevant sections of massive digital slides, overcoming context window limitations. This means AI can effectively 'look' at detailed medical images without needing them to be broken down into tiny pieces beforehand, making AI analysis more efficient.

· Reinforcement Learning environment for diagnostic tasks: Provides a framework for training LLMs to make diagnostic decisions by rewarding accurate observations and conclusions. This allows developers to train AI models to become better at spotting disease indicators in medical scans through practice and feedback.

· Simulated microscope tools (zoom, pan): Empowers LLMs with the ability to adjust their view of the image, mimicking how a human expert would examine a slide. This allows for fine-grained analysis of specific areas, crucial for accurate medical diagnosis.

Product Usage Case

· Testing the diagnostic capabilities of frontier LLMs like GPT-5 and Claude 4.5 on complex cancer subtyping tasks. This demonstrates how LLMs, when given the right tools, can achieve comparable accuracy to human pathologists on specific diagnostic challenges, accelerating research into AI-assisted medicine.

· Evaluating the performance of smaller LLMs on pathology tasks to understand their limitations and identify areas for improvement. This helps developers understand which AI models are best suited for certain medical imaging tasks and how to optimize their performance.

· Developing new benchmarks for evaluating LLM performance on large-scale medical image analysis. This provides the scientific community with standardized ways to measure and compare AI's progress in understanding complex visual data like pathology slides.

3

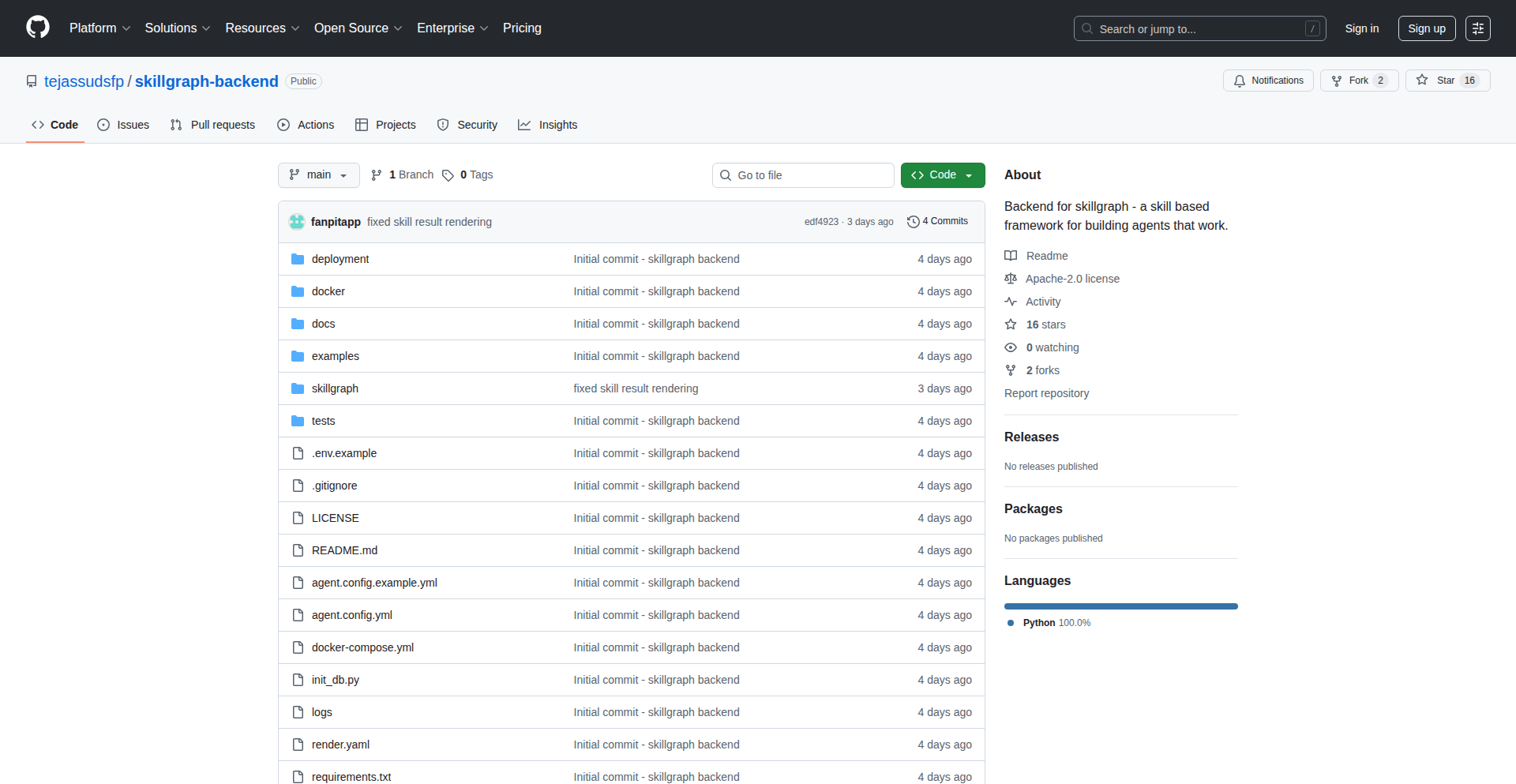

SkillGraph: Agentic AI with Skill-Based Reasoning

Author

tejassuds

Description

SkillGraph is an open-source framework for building AI agents. Instead of relying on predefined tools, it leverages 'skills' – reusable, modular pieces of AI functionality. This innovation allows for more dynamic and adaptable agent behavior, enabling AI to tackle complex problems by composing and sequencing these skills in novel ways. It addresses the limitation of rigid tool-based systems, offering a more flexible and emergent approach to AI development.

Popularity

Points 12

Comments 0

What is this product?

SkillGraph is an open-source framework for creating intelligent agents, which are like automated assistants powered by AI. The core innovation lies in its 'skill-based' approach. Imagine building with LEGOs; instead of just having pre-made tools like a hammer or a screwdriver (which is how most AI agents work with 'tools'), SkillGraph lets you build with specialized LEGO bricks that represent specific AI capabilities, like 'summarize text', 'generate code', or 'analyze sentiment'. These 'skills' can be combined and orchestrated in flexible ways to solve a wide range of tasks. This is fundamentally different from traditional tool-based agents because it allows for more emergent and adaptable behavior, as the agent can discover new ways to combine its skills to achieve goals, rather than being limited to a fixed set of pre-programmed actions. So, what does this mean for you? It means AI agents built with SkillGraph can be much smarter and more capable of handling unforeseen challenges or complex workflows that require a blend of different AI abilities.

How to use it?

Developers can use SkillGraph by defining their own custom skills or by utilizing a library of pre-built skills. These skills are essentially small, self-contained AI functions. You then define the relationships and dependencies between these skills, creating a 'graph' that dictates how the agent should reason and act. For example, if you want to build an agent that can research a topic, write a blog post, and then post it to social media, you would define skills for 'web scraping', 'text generation', and 'social media posting'. You'd then structure these skills within the SkillGraph framework so the agent knows to first scrape the web, then use that information to generate text, and finally post it. Integration typically involves using the SkillGraph library in your project, defining your agent's skill graph, and then invoking the agent to perform tasks. This provides a structured yet highly flexible way to design complex AI workflows. So, how does this help you? It simplifies the creation of sophisticated AI agents by providing a modular and composable architecture, allowing you to build powerful AI solutions without reinventing the wheel for every AI capability.
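The research, write, and post example above can be sketched as a small dependency graph. This is not SkillGraph's API: the skill functions, the `SKILLS` and `DEPENDS_ON` dictionaries, and `run_agent` are hypothetical names, and the skills are stubs that only pass a state dictionary along. The ordering uses `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Tuple

# A skill takes the agent's state and returns an updated copy (assumed convention).
Skill = Callable[[dict], dict]


def scrape_web(state: dict) -> dict:
    # Stub: a real skill would fetch and parse pages about the topic.
    return {**state, "notes": f"notes about {state['topic']}"}


def generate_text(state: dict) -> dict:
    # Stub: a real skill would call a language model with the notes.
    return {**state, "draft": f"Post based on {state['notes']}"}


def post_to_social(state: dict) -> dict:
    # Stub: a real skill would call a social media API.
    return {**state, "posted": True}


SKILLS: Dict[str, Skill] = {
    "scrape_web": scrape_web,
    "generate_text": generate_text,
    "post_to_social": post_to_social,
}

# Each skill maps to the skills that must run before it.
DEPENDS_ON: Dict[str, List[str]] = {
    "scrape_web": [],
    "generate_text": ["scrape_web"],
    "post_to_social": ["generate_text"],
}


def run_agent(topic: str) -> Tuple[dict, List[str]]:
    """Run every skill in an order that respects the dependency graph."""
    order = list(TopologicalSorter(DEPENDS_ON).static_order())
    state: dict = {"topic": topic}
    for name in order:
        state = SKILLS[name](state)
    return state, order
```

Calling `run_agent("rust")` runs the three skills in dependency order and returns the final state. A fixed topological order is the simplest possible planner; a framework aiming for emergent behavior would presumably choose the next skill dynamically based on the current state.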

Product Core Function

· Skill Definition and Management: Enables developers to create, register, and manage individual AI capabilities as distinct 'skills'. This modularity allows for easier testing, reuse, and updates of AI components. The value is in building AI agents piece by piece, like using specialized functions in programming.

· Skill Graph Orchestration: Provides a mechanism to define relationships and dependencies between skills, forming a directed graph. This allows the agent to dynamically plan and execute sequences of skills to achieve a goal, enabling complex decision-making. The value is in allowing AI to intelligently figure out the steps needed to solve a problem.

· Agentic Reasoning Engine: The core of the framework that interprets the skill graph and agent's current state to decide which skill to execute next. This enables adaptive and emergent behavior as the agent can explore different skill combinations. The value is in creating AI that can think and adapt on the fly.

· Open-Source Flexibility: Being open-source means developers can inspect, modify, and extend the framework. This fosters community collaboration and allows for tailored solutions. The value is in providing a customizable and transparent platform for AI development.

· Skill Abstraction: Moves beyond rigid tool APIs to a more abstract concept of 'skill', allowing for greater flexibility in how AI capabilities are implemented and combined. The value is in enabling AI to be more versatile and less constrained by specific tool limitations.

Product Usage Case

· Building a research assistant that can scour academic papers (skill: web scraping + document analysis), synthesize findings (skill: text summarization), and generate a literature review (skill: advanced text generation). This solves the problem of tedious manual research by automating the entire process.

· Developing a creative writing AI that can brainstorm plot ideas (skill: creative idea generation), write chapters (skill: narrative generation), and suggest character arcs (skill: character development analysis). This addresses the creative block and speeds up the writing process.

· Creating an automated customer support agent that can understand user queries (skill: natural language understanding), access knowledge bases (skill: information retrieval), and provide step-by-step solutions (skill: instruction generation). This improves customer service efficiency and responsiveness.

· Designing a code generation assistant that can take high-level requirements (skill: requirement parsing), generate boilerplate code (skill: code snippet generation), and refactor existing code (skill: code optimization). This accelerates software development cycles and reduces repetitive coding tasks.

4

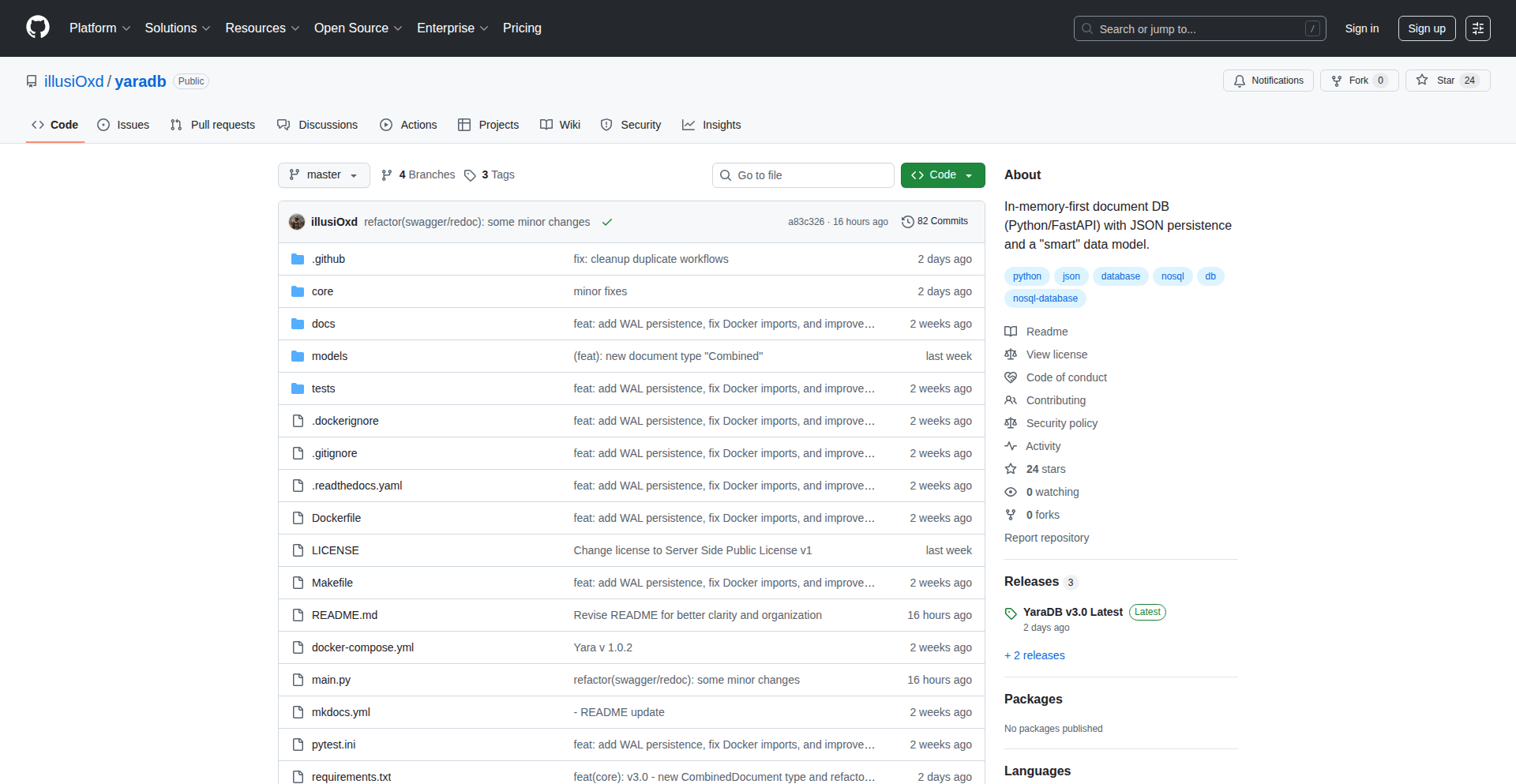

YaraDB: FastAPI-Powered Document Database

Author

ashfromsky

Description

YaraDB is a lightweight, open-source document database that leverages FastAPI for its API layer. It's designed for developers seeking a simple yet powerful way to store and query JSON-like documents, offering a refreshing alternative to more complex database solutions. The innovation lies in its tight integration with FastAPI, enabling rapid development and seamless integration into existing Python web applications. This solves the common problem of needing a flexible data store without the overhead of traditional relational databases or feature-heavy NoSQL systems.

Popularity

Points 9

Comments 1

What is this product?

YaraDB is a document database built using FastAPI, a modern, fast (high-performance) web framework for building APIs with Python. Think of it as a smart place to store your data, where each piece of data is like a flexible document (often in JSON format). The core innovation is using FastAPI to create a super-efficient and easy-to-use API for interacting with these documents. This means developers can get up and running quickly, and their applications can communicate with the database very fast. So, if you need to store and retrieve information that doesn't fit neatly into tables, and you want it to be incredibly fast and easy to connect to your Python code, YaraDB offers a fresh, modern approach.

How to use it?

Developers can integrate YaraDB into their Python projects by installing it as a library. Because it's built on FastAPI, it can be easily plugged into an existing FastAPI application or set up as a standalone service. You would define your data models (how your documents are structured) within your Python code and then use YaraDB's API to perform CRUD (Create, Read, Update, Delete) operations on your documents. This allows for very fluid data manipulation directly from your application logic. So, for example, if you're building a web app and need to store user profiles or product information that varies in structure, you can quickly set up YaraDB and start saving and fetching this data using simple Python commands, making your development process much faster.
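For readers new to document stores, the CRUD operations mentioned above can be illustrated with a tiny in-memory version. This is a sketch of the general idea only, not YaraDB's actual interface: the `DocumentStore` class and its method names are invented for this example, and nothing is persisted to disk or exposed over HTTP.

```python
import uuid
from typing import Any, Dict, List, Optional


class DocumentStore:
    """Minimal in-memory document store (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._docs: Dict[str, Dict[str, Any]] = {}

    def create(self, body: Dict[str, Any]) -> str:
        # Assign a random ID and store a copy of the document.
        doc_id = str(uuid.uuid4())
        self._docs[doc_id] = dict(body)
        return doc_id

    def read(self, doc_id: str) -> Optional[Dict[str, Any]]:
        # Return a copy so callers cannot mutate stored data by accident.
        doc = self._docs.get(doc_id)
        return dict(doc) if doc is not None else None

    def update(self, doc_id: str, changes: Dict[str, Any]) -> bool:
        # Merge new fields into the document; no schema migration needed.
        if doc_id not in self._docs:
            return False
        self._docs[doc_id].update(changes)
        return True

    def delete(self, doc_id: str) -> bool:
        return self._docs.pop(doc_id, None) is not None

    def find(self, **filters: Any) -> List[Dict[str, Any]]:
        # Return documents whose fields match every filter exactly.
        return [
            dict(doc)
            for doc in self._docs.values()
            if all(doc.get(key) == value for key, value in filters.items())
        ]
```

A short usage example, showing that documents in the same store can carry different fields:

```python
store = DocumentStore()
alice = store.create({"name": "Alice", "role": "admin"})
store.create({"name": "Bob", "role": "user", "twitter": "@bob"})
store.update(alice, {"preferences": {"theme": "dark"}})
admins = store.find(role="admin")
```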

Product Core Function

· FastAPI-powered API for document management: Provides a high-performance API for creating, reading, updating, and deleting documents, making data interaction swift and efficient. This helps you quickly access and modify your application's data without complex queries.

· Lightweight and embedded-friendly: Designed to be minimal and easy to integrate, allowing it to be used within existing applications without significant overhead. This means you can add a robust data store to your project without slowing it down or requiring complex setup.

· Flexible document storage: Supports storing semi-structured data (like JSON), offering flexibility for evolving data requirements. This is useful when your data doesn't fit a rigid table structure, allowing you to adapt your database as your application grows.

· Pythonic data interaction: Allows developers to interact with the database using Python code, aligning with common development workflows. This makes it intuitive for Python developers to manage their data, reducing the learning curve.

Product Usage Case

· Building a blog platform: A developer could use YaraDB to store blog posts as documents, with each post having varying fields (e.g., author bio, featured image URL, tags). YaraDB's flexibility allows for easy addition of new fields to posts over time without schema migrations. This solves the problem of managing diverse content structures in a dynamic way.

· Creating a user profile service: For an application requiring detailed user profiles, where some users might have extra fields (e.g., social media links, specific preferences), YaraDB can store these profiles efficiently. The FastAPI backend can quickly fetch or update user data, providing a responsive user experience.

· Developing a simple API backend: A developer building an API for a small project can use YaraDB as the backend data store. The seamless integration with FastAPI means they can quickly define API endpoints and connect them to document storage, accelerating the development of their API.

5

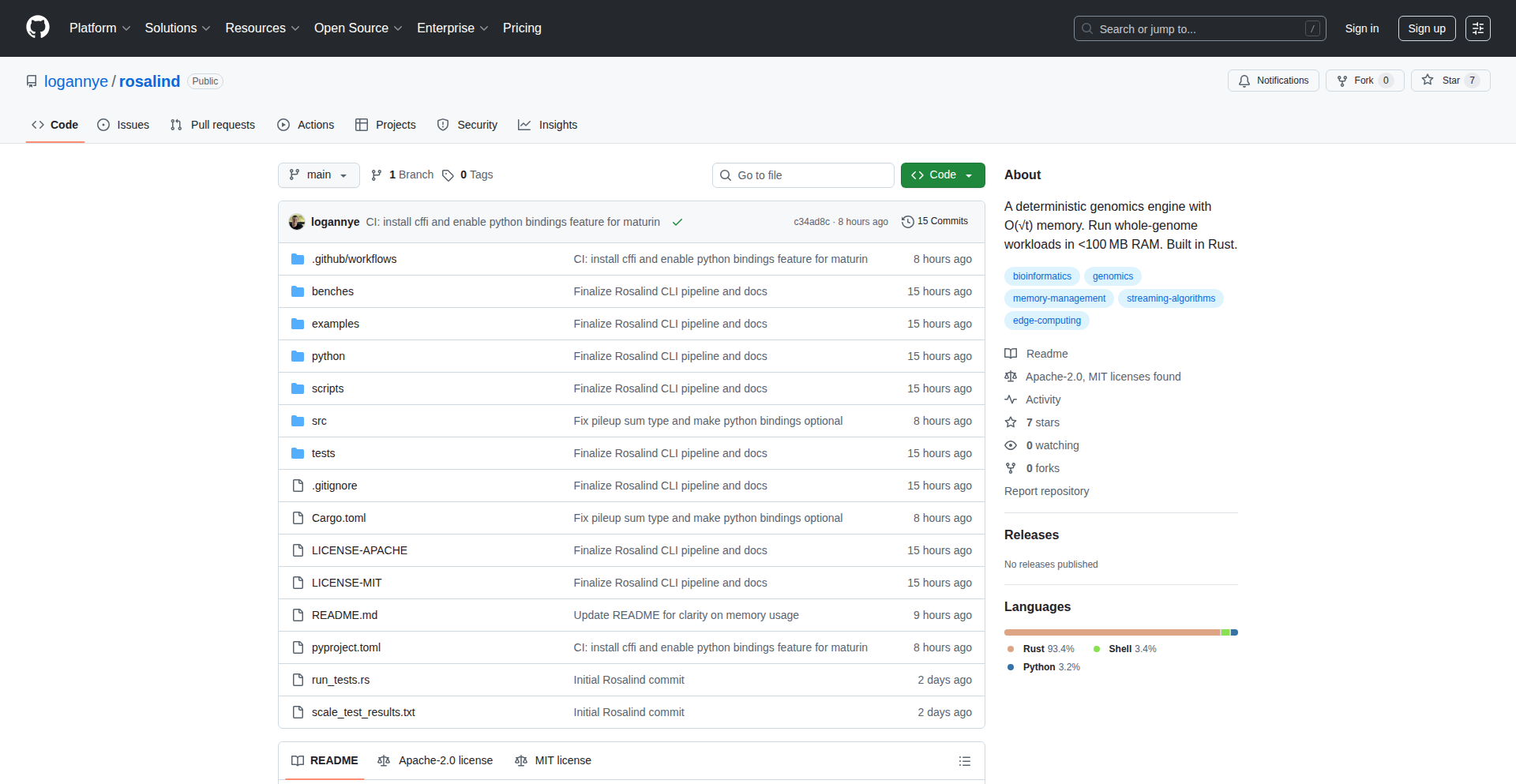

GenomixRAM

Author

logannyeMD

Description

A groundbreaking open-source Rust engine designed for ultra-low memory bioinformatics workloads. Leveraging a novel complexity theory insight, GenomixRAM drastically reduces RAM requirements for whole-genome analysis, making advanced genomics accessible on standard consumer hardware. It achieves this by trading increased runtime for significantly reduced memory footprint, enabling a new era of democratized genomic research.

Popularity

Points 10

Comments 0

What is this product?

GenomixRAM is a bioinformatics engine built in Rust that allows you to process entire genomes using very little RAM, specifically under 100MB. This is achieved through a clever algorithmic approach inspired by recent advancements in complexity theory. Traditional methods for analyzing vast genomic data require substantial memory, often limiting them to specialized, high-performance computing environments. GenomixRAM fundamentally changes this by employing an algorithm with a time complexity of O(T log T), which, while slightly slower than O(T) methods, offers a massive reduction in memory usage. This means you can run powerful genomic analyses on everyday computers, not just supercomputers.

How to use it?

Developers can integrate GenomixRAM into their bioinformatics pipelines or use it as a standalone tool for genomic data processing. Given its Rust implementation, it can be compiled into libraries or standalone executables. For example, if you're working with cancer genomics data and need to perform complex analyses on a large dataset but have limited access to high-memory servers, you can run GenomixRAM locally. It's designed to be used via command-line interfaces or through its API if you're building further applications on top of it. The primary benefit is running computations that were previously infeasible due to memory constraints.
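The general idea of trading runtime for memory can be shown with a much simpler technique than whatever GenomixRAM actually implements. The sketch below is an assumption-laden illustration in Python, not the project's algorithm: instead of storing a running GC count for every position of a sequence, it keeps only every `stride`-th count as a checkpoint and recomputes the values in between on demand. The names `CheckpointedCounts`, `gc_before`, and `full_prefix_counts` are hypothetical.

```python
from typing import List


def is_gc(base: str) -> int:
    """Return 1 for a G or C base, 0 otherwise."""
    return 1 if base in "GCgc" else 0


def full_prefix_counts(seq: str) -> List[int]:
    """Memory-hungry baseline: one stored count per position."""
    prefix = [0]
    for base in seq:
        prefix.append(prefix[-1] + is_gc(base))
    return prefix


class CheckpointedCounts:
    """Store only every `stride`-th prefix count and recompute the rest."""

    def __init__(self, seq: str, stride: int) -> None:
        # In this toy the sequence stays in memory; a real tool would
        # stream it from disk instead.
        self.seq = seq
        self.stride = stride
        self.checkpoints = [0]
        running = 0
        for i, base in enumerate(seq, start=1):
            running += is_gc(base)
            if i % stride == 0:
                self.checkpoints.append(running)

    def gc_before(self, i: int) -> int:
        """GC count in seq[:i], scanning fewer than `stride` extra bases."""
        block = i // self.stride
        count = self.checkpoints[block]
        for base in self.seq[block * self.stride:i]:
            count += is_gc(base)
        return count
```

With a stride of `k`, the table of stored counts shrinks by a factor of `k`, while each query does up to `k - 1` extra steps of work. That is the same kind of bargain described above, in miniature: more computation per answer in exchange for a smaller memory footprint.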

Product Core Function

· Ultra-low memory whole-genome analysis: Enables processing of extensive genomic datasets using less than 100MB of RAM, making advanced genomics accessible on common hardware. This is valuable for researchers or developers with budget constraints or limited access to high-performance computing resources.

· Algorithmic optimization for memory efficiency: Implements a novel O(T log T) algorithm that significantly reduces memory footprint compared to traditional O(T) approaches. This provides a technical edge for handling large biological datasets that would otherwise overwhelm standard memory capacities.

· Rust-based performance and safety: Built in Rust, ensuring memory safety and high performance, which is crucial for reliable and efficient execution of sensitive bioinformatics tasks. Developers benefit from Rust's robustness and speed for their genomic workloads.

· Open-source accessibility: As an open-source project, it encourages community contribution and adaptation, fostering innovation in bioinformatics tools. This democratizes access to powerful genomic analysis capabilities for a wider range of users and institutions.

Product Usage Case

· Running cancer genomics analysis on a laptop: A startup in oncology can now perform whole-genome analysis of patient data on standard laptops instead of expensive servers, drastically reducing infrastructure costs and accelerating research cycles.

· Democratizing genetic research for educational purposes: University researchers can offer hands-on whole-genome analysis workshops to students using readily available lab computers, providing practical experience without the need for specialized hardware.

· Enabling portable bioinformatics solutions: A developer can build a desktop application for rapid genomic variant calling that can be run by individual clinicians or researchers in remote areas with limited internet connectivity and computing power.

· Accelerating drug discovery research: Pharmaceutical companies can perform preliminary whole-genome screenings of potential drug targets on more accessible hardware, speeding up the initial phases of drug discovery and development.

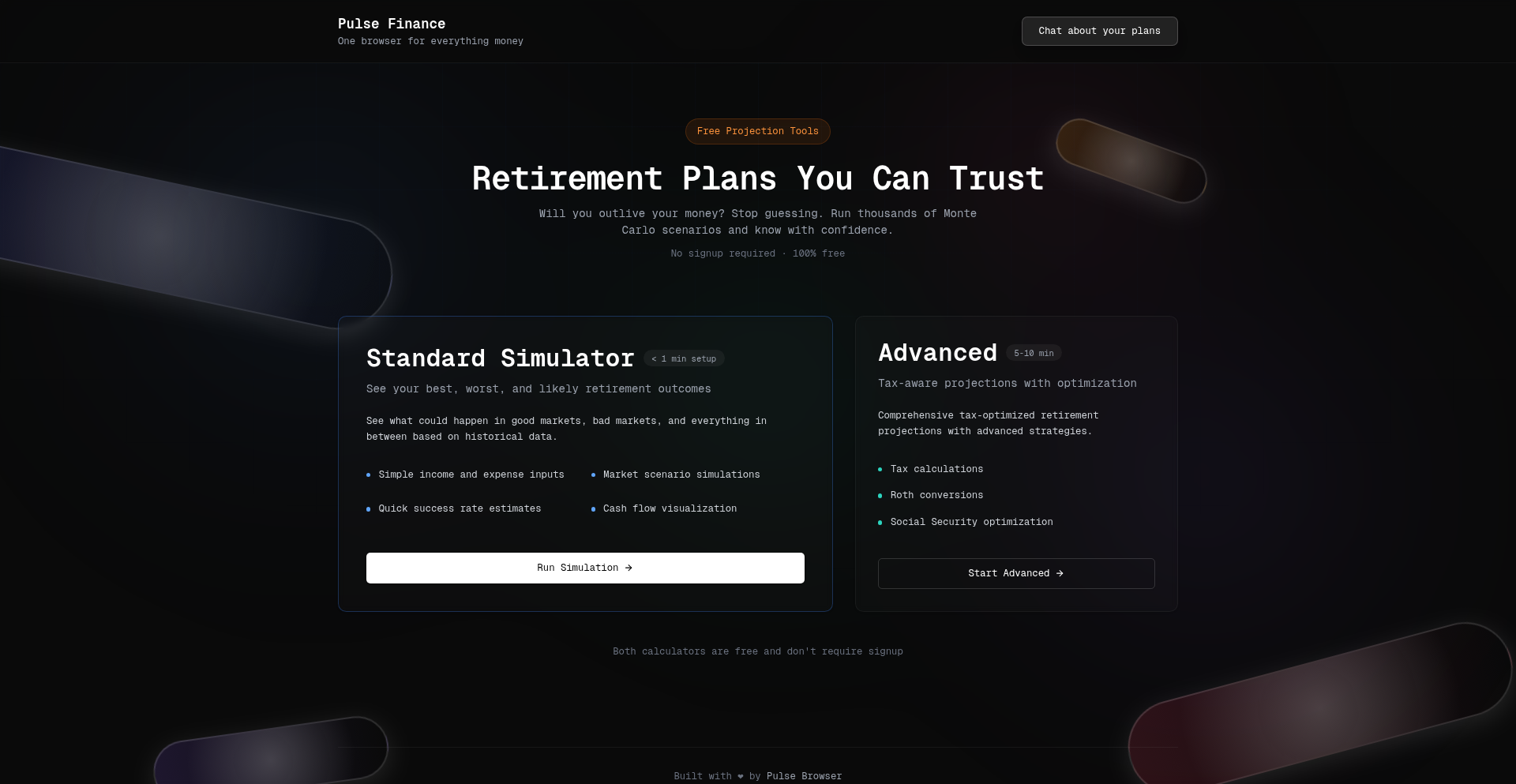

6

RetireSim: Monte Carlo & Tax-Aware Retirement Planning Engine

Author

niztk

Description

RetireSim is a free, open-source web application that offers sophisticated retirement planning by simulating future financial scenarios using the Monte Carlo method and incorporating tax-aware projections. It allows users to adjust inputs and immediately visualize outcomes, including success rates and cash-flow charts, with an 'Under the Hood' view detailing the calculation logic. The core innovation lies in democratizing advanced financial modeling for personal use, enabling individuals to make more informed decisions about their retirement savings.

Popularity

Points 8

Comments 1

What is this product?

RetireSim is a personal retirement planning simulator. It uses a statistical technique called Monte Carlo simulation, which is like running thousands of 'what if' scenarios based on historical market data and probabilities. Imagine it as a super-powered crystal ball that doesn't predict the future, but shows you a range of possible futures for your retirement savings. It's 'tax-aware' because it considers how taxes will impact your nest egg over time, a crucial factor often overlooked in simpler calculators. The key innovation is making this complex financial modeling accessible and transparent, showing you precisely how the results are derived.

How to use it?

Developers can integrate RetireSim into their own applications or use it as a standalone tool. As a standalone, users input their current savings, expected contributions, retirement age, desired income, and investment return assumptions. The simulator then generates a range of potential outcomes, highlighting the probability of running out of money. For developers, RetireSim's 'Under the Hood' view provides insights into its calculation engine, allowing for potential customization or deeper understanding of the financial models. It can be embedded in financial advisory platforms or personal finance dashboards to offer interactive planning tools to clients or users.
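A stripped-down version of this kind of simulation fits in a few lines. The sketch below is not RetireSim's engine: it assumes normally distributed annual returns, a single flat tax rate applied to withdrawals, and no contributions or inflation, and every function and parameter name is hypothetical. It only shows the shape of the Monte Carlo technique: run many randomized retirements and report the fraction that never run out of money.

```python
import random


def simulate_once(
    rng: random.Random,
    balance: float,
    annual_spend: float,
    years: int,
    mean_return: float,
    volatility: float,
    tax_rate: float = 0.0,
) -> bool:
    """Return True if the portfolio covers spending for every year."""
    # Assumed flat tax: withdraw enough that annual_spend is left after tax.
    gross_withdrawal = annual_spend / (1.0 - tax_rate)
    for _ in range(years):
        balance -= gross_withdrawal
        if balance < 0:
            return False
        # Draw this year's return from a normal distribution (an assumption).
        balance *= 1.0 + rng.gauss(mean_return, volatility)
    return True


def success_rate(trials: int, seed: int = 0, **params: float) -> float:
    """Fraction of simulated retirements that never run out of money."""
    rng = random.Random(seed)  # seeded so that results are reproducible
    wins = sum(simulate_once(rng, **params) for _ in range(trials))
    return wins / trials
```

A usage example with made-up inputs:

```python
rate = success_rate(
    trials=10_000,
    balance=1_000_000,
    annual_spend=40_000,
    years=30,
    mean_return=0.05,
    volatility=0.12,
    tax_rate=0.15,
)
print(f"Estimated success rate: {rate:.1%}")
```

Changing any input and rerunning gives the kind of immediate "what if" feedback the tool is built around. A production engine would model tax brackets, account types, and inflation rather than a single flat rate.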

Product Core Function

· Monte Carlo Simulation Engine: This function allows for probabilistic forecasting of retirement fund longevity. By running numerous random simulations, it provides a more realistic view of potential outcomes than deterministic models. The value is in understanding the range of possibilities and the likelihood of success, not just a single projected number.

· Tax-Aware Financial Projections: This core feature models the impact of income tax, capital gains tax, and other relevant taxes on retirement savings. The value is in providing a more accurate financial picture by accounting for a major outflow that directly affects the amount available for living expenses.

· Interactive Input Adjustment: Users can dynamically change variables such as savings rate, investment growth, and withdrawal amounts, and see the impact on projected outcomes in real-time. This provides immediate feedback and empowers users to explore different strategies and their consequences.

· Outcome Visualization (Success Rates, Cash-Flow Charts): The system generates clear visual representations of projected retirement success, including the probability of funds lasting throughout retirement and detailed cash-flow charts. This makes complex financial data easily digestible and actionable, even for those without a strong financial background.

· Under the Hood Calculation Transparency: RetireSim exposes the underlying calculations and assumptions, allowing users to see exactly how the results are derived. This fosters trust and understanding, enabling users to critically evaluate the projections and tailor them to their specific circumstances.

Product Usage Case

· A personal finance app developer can embed RetireSim's simulation engine to provide their users with a robust retirement planning tool directly within their existing dashboard. This solves the problem of users needing to go to a separate site for complex planning, enhancing user engagement and app utility.

· A financial advisor can use RetireSim with clients to visually demonstrate the impact of different savings strategies or retirement timelines. By adjusting inputs live during a client meeting, the advisor can provide tangible, data-driven advice, addressing the client's 'what if' concerns directly and demonstrating the value of their recommendations.

· An individual planning for early retirement can use RetireSim to model various scenarios, including different withdrawal rates and potential part-time work income. This helps them understand the feasibility of their early retirement goals and identify potential financial shortfalls before they occur.

· A retirement planning blogger or educator can leverage RetireSim to create interactive content for their audience. By showcasing specific simulations and their underlying logic, they can help educate people about the complexities of retirement planning and the benefits of proactive financial management.

7

MCP Browser Agent Relay

Author

arjunchint

Description

This project introduces a novel approach to AI agent interoperability by exposing a Chrome Extension as a remote MCP (Model Context Protocol) server. It allows any AI agent to control your browser's actions remotely, enabling seamless task execution without context switching and the ability to leverage your existing AI subscriptions. The core innovation lies in turning your browser into a controllable, sandboxed endpoint for a collaborative 'Agentic Web'.

Popularity

Points 6

Comments 3

What is this product?

This is a system that transforms your Chrome browser into a remote-controllable endpoint for AI agents. The core technology is based on MCP (Model Context Protocol), an open protocol that lets AI applications connect to external tools and data sources. Instead of building a monolithic AI agent, this project exposes the browser's capabilities as a service that other AI agents can access. Think of it like a universal remote control for your browser, but powered by AI. The innovation is that any AI, like a chatbot you're already using, can send commands to your browser through this system. This means you can instruct an AI to perform actions like filling out forms, scraping data, or navigating websites, all without leaving your current AI conversation. This opens up possibilities for more integrated and efficient AI workflows.

How to use it?

Developers can integrate this project by installing the Rtrvr.ai Chrome Extension. Once installed, the extension acts as an MCP server. Other AI agents or applications can then communicate with this server using MCP URLs. For instance, you could configure a chatbot like Claude to use the extension's MCP URL to send commands. The AI would then interpret your request and instruct the browser extension to perform the action. This allows for a 'bring your own subscription' model, where you use your existing AI subscriptions to power the agent's actions through the browser relay. It's designed for developers who want to build applications that leverage browser automation triggered by external AI agents or want to create more interconnected AI ecosystems.
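
As a rough illustration of what an agent sends to a server like this: MCP messages are JSON-RPC 2.0, with `tools/list` to discover tools and `tools/call` to invoke one. The tool name `navigate` and its `url` argument below are hypothetical; the extension's actual tool list is not described here, and the sketch only builds the messages without sending them.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request of the kind MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = mcp_request("tools/list")

# Invoke one tool. "navigate" and "url" are hypothetical names.
call_tool = mcp_request(
    "tools/call",
    {"name": "navigate", "arguments": {"url": "https://example.com"}},
)

print(list_tools)
print(call_tool)
```

In practice an MCP client library or the chatbot itself would produce these messages; the point is that the browser relay only needs to understand one standard request shape.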

Product Core Function

· Remote Browser Control: Enables any AI agent to initiate and control actions within your browser. This is valuable because it eliminates manual work and context switching, allowing AI to perform repetitive or complex browser tasks on your behalf, such as data entry or form submission.

· MCP Server Exposure: Turns the Chrome Extension into a standardized communication endpoint for AI agents. This is technically innovative as it provides a common interface for diverse AI systems to interact with the browser, fostering interoperability and a more connected 'Agentic Web'.

· Subscription Reuse (BYO-Sub): Allows users to leverage their existing AI subscriptions to power browser actions. This is a practical benefit as it reduces the need for multiple specialized AI subscriptions, making AI automation more cost-effective and accessible.

· Sandboxed Execution: Provides a secure environment for AI-driven browser actions. This is crucial for user trust and security, ensuring that AI agents can perform tasks without compromising the user's overall system or data.

· Inter-Agent Collaboration Foundation: Lays the groundwork for a network of specialized AI agents that can work together. This is a forward-thinking feature that promotes a decentralized and collaborative AI future, where different agents can contribute their unique capabilities through a shared execution layer.

Product Usage Case

· Scenario: A user is in a chatbot conversation and needs to file a Jira ticket. Using this project, they can tell the chatbot to create the ticket. The chatbot, acting as an external agent, sends an MCP command to the browser extension. The extension then navigates to Jira, fills in the ticket details, and submits it, all in the background, without the user leaving the chat interface. This solves the problem of time-consuming manual data entry across different applications.

· Scenario: A marketing professional needs to scrape product information from multiple e-commerce websites for competitive analysis. They can instruct an AI assistant to perform this task. The AI uses the MCP relay to control the browser, visit each product page, extract the relevant data (price, description, reviews), and compile it into a report. This automates a tedious and error-prone data collection process.

· Scenario: A developer is building a new AI application that requires user authentication on various websites. Instead of handling the authentication flow directly, they can use this system to have an external AI agent (perhaps a dedicated authentication agent) manage the login process via the browser relay. This simplifies the development process and improves security by offloading sensitive operations.

· Scenario: A user wants to automatically update their profile information across multiple social media platforms. They can set up an AI agent to periodically check and update their details on platforms like LinkedIn, Twitter, and Facebook by interacting with the browser extension through MCP. This solves the problem of maintaining consistent information across different online identities.

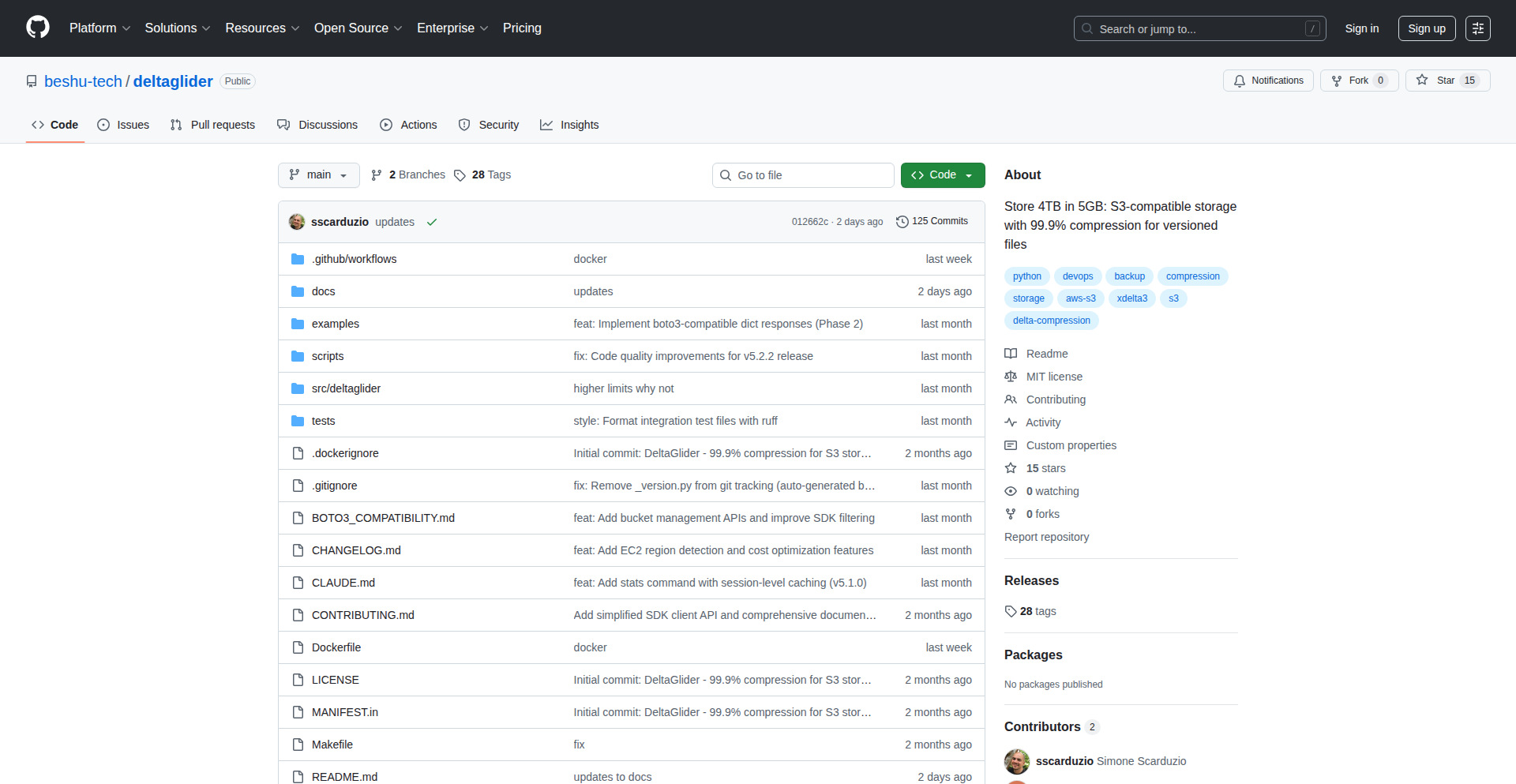

8

DeltaGlider: Binary Delta Storage CLI

Author

sscarduzio

Description

DeltaGlider is a command-line interface (CLI) and Software Development Kit (SDK) designed to drastically reduce storage costs for versioned data, particularly build artifacts and backups. It leverages the xdelta3 algorithm to store only the differences (deltas) between successive file uploads, while reconstructing the full file on demand. This significantly cuts down on storage space, achieving up to a 99.9% reduction for certain archive types.

Popularity

Points 7

Comments 2

What is this product?

DeltaGlider is a tool that intelligently stores files by only keeping track of changes between versions. When you upload a file, it stores the first version completely. For subsequent uploads of similar files (like new versions of your software builds or database backups), it doesn't store the whole new file. Instead, it calculates and stores only the 'deltas' – the tiny differences between the new version and the previous one. Think of it like saving a document, but instead of saving the entire document again each time, you only save the specific words or sentences you changed. This is made possible by using the xdelta3 algorithm, which is excellent at finding and representing small binary differences between files, especially archives like ZIP, JAR, or TAR. When you need to retrieve a specific version, DeltaGlider uses these stored deltas and the original reference file to reconstruct the exact original file, bit by bit, and verifies it using SHA256. The core innovation is its ability to achieve massive storage savings by focusing on incremental changes rather than full file duplication.

How to use it?

Developers can use DeltaGlider in several ways. As a CLI tool, it can be integrated into CI/CD pipelines or scripting for automated build artifact management and backup processes. For instance, after a software build completes, you can use the CLI to upload the resulting artifacts. If the next build produces a similar artifact, DeltaGlider will automatically store it as a delta. Developers can also use it as an SDK in their applications to programmatically manage storage. The primary use case is to replace traditional file storage methods for frequently updated files with a system that is significantly more storage-efficient. You would typically initialize DeltaGlider in a storage location (like an S3 bucket, conceptually similar to how `aws s3` works), upload your reference files, and then upload subsequent versions, letting DeltaGlider handle the delta compression and storage automatically. Retrieval is just as simple: requesting a specific version triggers on-the-fly reconstruction.
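
The store-a-delta, rebuild-and-verify cycle can be sketched with the Python standard library alone. This is not DeltaGlider's code and does not use xdelta3: as a stand-in, it compresses the new version with zlib using the reference file as a preset dictionary, which only works for inputs within zlib's 32 KB window. The function names are hypothetical.

```python
import hashlib
import random
import zlib


def make_delta(reference: bytes, new_version: bytes) -> bytes:
    """Compress new_version using reference as a preset dictionary."""
    compressor = zlib.compressobj(level=9, zdict=reference)
    return compressor.compress(new_version) + compressor.flush()


def reconstruct(reference: bytes, delta: bytes, expected_sha256: str) -> bytes:
    """Rebuild the full file from reference + delta and verify its checksum."""
    decompressor = zlib.decompressobj(zdict=reference)
    data = decompressor.decompress(delta) + decompressor.flush()
    if hashlib.sha256(data).hexdigest() != expected_sha256:
        raise ValueError("checksum mismatch: reconstructed file is corrupt")
    return data


# An incompressible 20 KB "artifact" and a second version with a small change.
rng = random.Random(0)
v1 = bytes(rng.getrandbits(8) for _ in range(20_000))
v2 = v1[:10_000] + b"patched" + v1[10_000:]

delta = make_delta(v1, v2)
digest = hashlib.sha256(v2).hexdigest()  # stored alongside the delta
restored = reconstruct(v1, delta, digest)
print(len(v2), len(delta), restored == v2)
```

Only `v1`, `delta`, and `digest` need to be stored; `v2` is rebuilt on request. A real binary diff tool handles files far larger than the window used here.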

Product Core Function

· Delta Compression Upload: Stores new file versions by calculating and saving only the binary differences (deltas) compared to a reference file. This dramatically reduces storage needs for versioned data, offering significant cost savings for large datasets or frequent updates.

· On-Demand Delta Reconstruction: Rebuilds the full original file from stored deltas and the reference file when requested. This ensures you always have access to the complete, accurate file without needing to store multiple full copies, making data retrieval efficient.

· Bit-Perfect Verification: Uses SHA256 checksums to ensure that the reconstructed file is an exact, byte-for-byte replica of the original. This guarantees data integrity and trustworthiness, crucial for critical artifacts and backups.

· Compression-Aware Algorithm (xdelta3): Leverages xdelta3, a specialized binary diff algorithm that understands compression. This allows it to achieve high compression ratios (up to 99.9%) on compressed archives like ZIP, JAR, and TGZ, maximizing storage efficiency.

· CLI and SDK Interface: Provides both a command-line interface for scripting and automation and an SDK for programmatic integration into existing applications. This offers flexibility for different development workflows and integration needs.

Product Usage Case

· Software Versioning: A software company can use DeltaGlider to store different versions of their application builds. Instead of storing each full build artifact (e.g., JAR files), DeltaGlider stores the first build and then only the changes for subsequent builds, reducing storage by orders of magnitude and saving significant costs.

· Periodic Database Backups: A system administrator can configure DeltaGlider to back up a database daily. If the database structure or content changes minimally between backups, DeltaGlider will store only the differences, making large, frequent backups manageable and cost-effective.

· Managing Large Archive Collections: A developer working with large collections of ZIP or TGZ archives that are updated periodically can use DeltaGlider to store these archives. The delta-encoding approach ensures that storage space is conserved, even with many similar versions of these archives.

· CI/CD Pipeline Optimization: Integrate DeltaGlider into a Continuous Integration/Continuous Deployment pipeline to store build artifacts. This can drastically reduce the storage footprint of artifact repositories, making them more scalable and cost-efficient, thereby speeding up deployments.

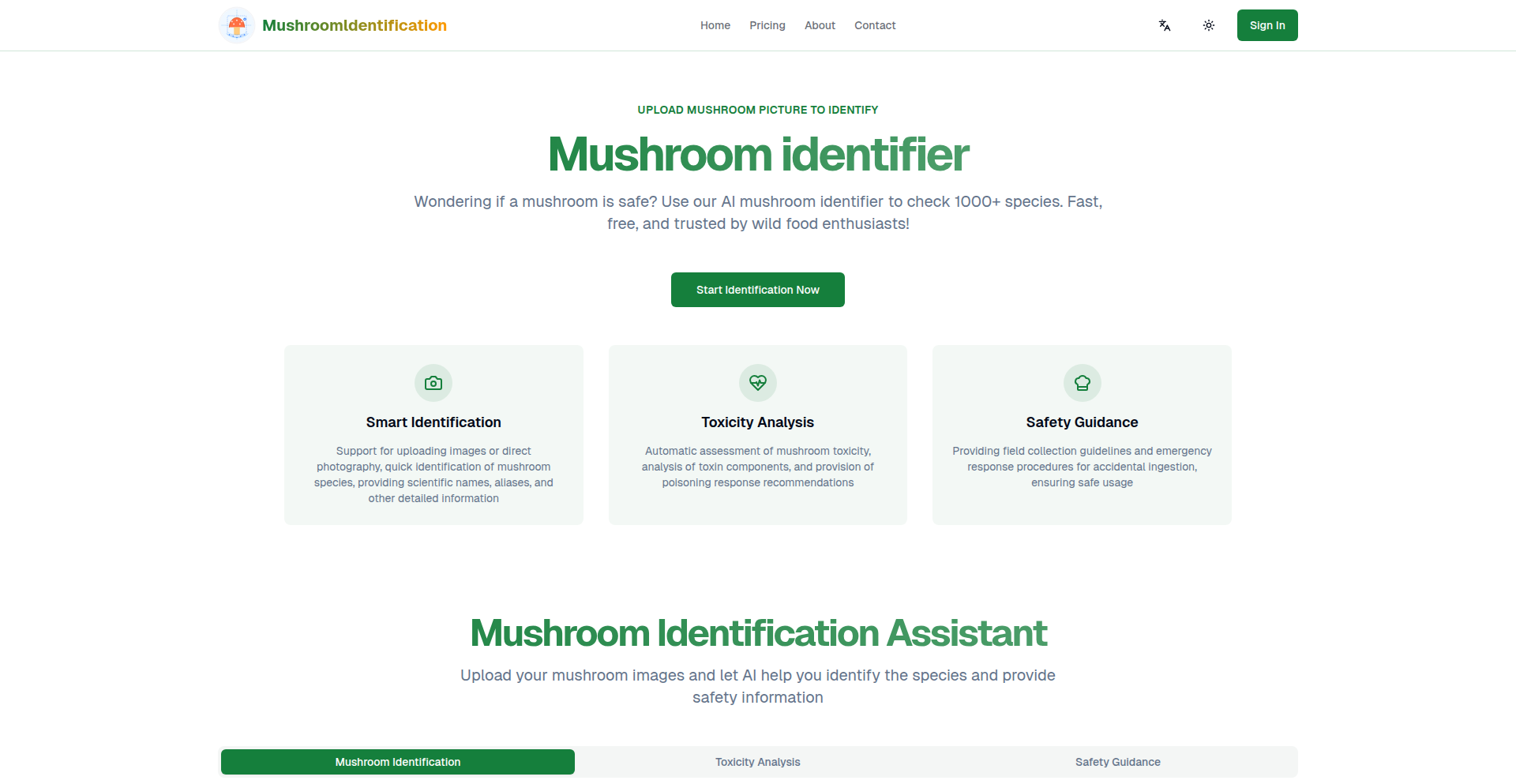

9

MycoGuard AI

Author

kakco-AI

Description

MycoGuard AI is a cutting-edge intelligent system designed to revolutionize wild mushroom safety and exploration. It leverages advanced AI to identify mushroom species from user-uploaded images with remarkable speed and accuracy, covering over 1000 varieties. Beyond simple identification, it incorporates a sophisticated toxicity analysis algorithm, providing immediate safety alerts and first-aid guidance for potentially poisonous fungi. This project tackles the critical problem of accidental mushroom poisoning by making identification and safety information instantly accessible to everyone, transforming fear into informed exploration.

Popularity

Points 3

Comments 6

What is this product?

MycoGuard AI is an AI-powered application that allows users to identify wild mushrooms and assess their safety. The core technology utilizes deep learning models, specifically Convolutional Neural Networks (CNNs), trained on a vast dataset of mushroom images. When you upload a photo of a mushroom, the AI analyzes its visual features (shape, color, texture, gills, stem, etc.) and compares them against its trained knowledge base to predict the species. Simultaneously, a separate, specialized toxicity analysis algorithm evaluates the identified species' known poisonous characteristics and potential toxic compounds. This provides not just a name, but a comprehensive safety profile, acting like a digital mycologist in your pocket. Its innovation lies in the seamless integration of rapid, high-accuracy visual identification with immediate, actionable toxicity risk assessment, making complex mycological knowledge accessible and safe for the general public.

How to use it?

Developers can integrate MycoGuard AI into their applications or services through its API. For example, a hiking app could incorporate MycoGuard AI to allow users to identify mushrooms they encounter on trails, providing real-time safety information. A nature education platform could use it to enrich its content with interactive identification features. The core usage involves sending a mushroom image to the API endpoint and receiving a JSON response containing the identified species, its classification, morphological details, habitat information, and crucially, a toxicity assessment with any relevant safety alerts or first-aid recommendations. This allows for the creation of applications that promote safe and informed outdoor exploration without requiring users to have prior mycological expertise.
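
A minimal sketch of how a client app might consume such a response follows. The JSON field names (`species`, `confidence`, `toxicity`, `is_toxic`, `alerts`) are assumptions made for illustration, not the documented MycoGuard AI schema, and the confidence threshold is an arbitrary example value.

```python
import json
from dataclasses import dataclass


@dataclass
class Identification:
    species: str
    confidence: float
    toxic: bool
    alerts: list


def parse_response(body: str) -> Identification:
    """Parse a (hypothetical) identification response into a typed record."""
    data = json.loads(body)
    toxicity = data.get("toxicity", {})
    return Identification(
        species=data["species"],
        confidence=float(data.get("confidence", 0.0)),
        # Missing toxicity data is treated as toxic, so the app fails safe.
        toxic=bool(toxicity.get("is_toxic", True)),
        alerts=list(toxicity.get("alerts", [])),
    )


def banner(result: Identification, min_confidence: float = 0.9) -> str:
    """Pick the message to display; never imply safety on weak evidence."""
    if result.toxic:
        return f"WARNING: {result.species} is poisonous. " + " ".join(result.alerts)
    if result.confidence < min_confidence:
        return f"Possible match: {result.species}. Not confident enough to assess safety."
    return f"{result.species}: no known toxicity on record."


sample = json.dumps({
    "species": "Cortinarius rubellus",
    "confidence": 0.97,
    "toxicity": {"is_toxic": True, "alerts": ["Do not eat.", "Seek medical help if ingested."]},
})
print(banner(parse_response(sample)))
```

Treating absent or low-confidence data as unsafe is a design choice of this sketch: for a foraging app, a false "safe" is far more costly than a false warning.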

Product Core Function

· Image-based mushroom identification: leverages deep learning models to accurately classify over 1000 mushroom species from user photos, providing a quick and easy way to know what you're looking at in nature.

· Smart toxicity analysis: employs a dedicated algorithm to assess the potential toxicity of identified mushrooms, offering immediate risk warnings and crucial safety information to prevent accidental poisoning.

· Detailed species information: provides scientific classifications, common names, morphological descriptions, and habitat data for identified mushrooms, empowering users with comprehensive knowledge.

· Multi-angle image support: allows for the upload of multiple photos of a mushroom from different angles (top, side, bottom) to enhance identification accuracy, ensuring more reliable results.

· First-aid guidance for ingestion: offers step-by-step first-aid instructions in case of accidental ingestion of a poisonous mushroom, providing critical support in emergencies.

· History tracking of identifications: enables users to save their identification records, creating a personal log of explored species for future reference and learning.

· Sustainable harvesting advice: educates users on science-backed methods for mushroom collection that protect mycelium and ecosystems, promoting responsible foraging practices.

Product Usage Case

· A nature enthusiast on a hike uses their smartphone to take a picture of an interesting mushroom. MycoGuard AI instantly identifies it as a 'Deadly Webcap' and triggers a prominent 'Poisonous!' alert with instructions to avoid contact and information on what to do in case of ingestion, preventing a potentially fatal mistake.

· A food blogger wants to create content about foraging edible mushrooms. They use MycoGuard AI to verify the identity and safety of mushrooms found in the wild, ensuring their recipes and advice are accurate and safe for their audience, thereby building trust and credibility.

· An educational app for children incorporates MycoGuard AI's identification feature. When children take pictures of mushrooms in a park, the app provides a fun and educational description of the mushroom, highlighting whether it's safe to touch or eat, making learning about nature engaging and safe.

· A community science project uses MycoGuard AI's API to crowdsource mushroom sightings. Volunteers upload images, and the AI helps categorize them, contributing to a large database of local mushroom populations and their distributions, aiding ecological research and conservation efforts.

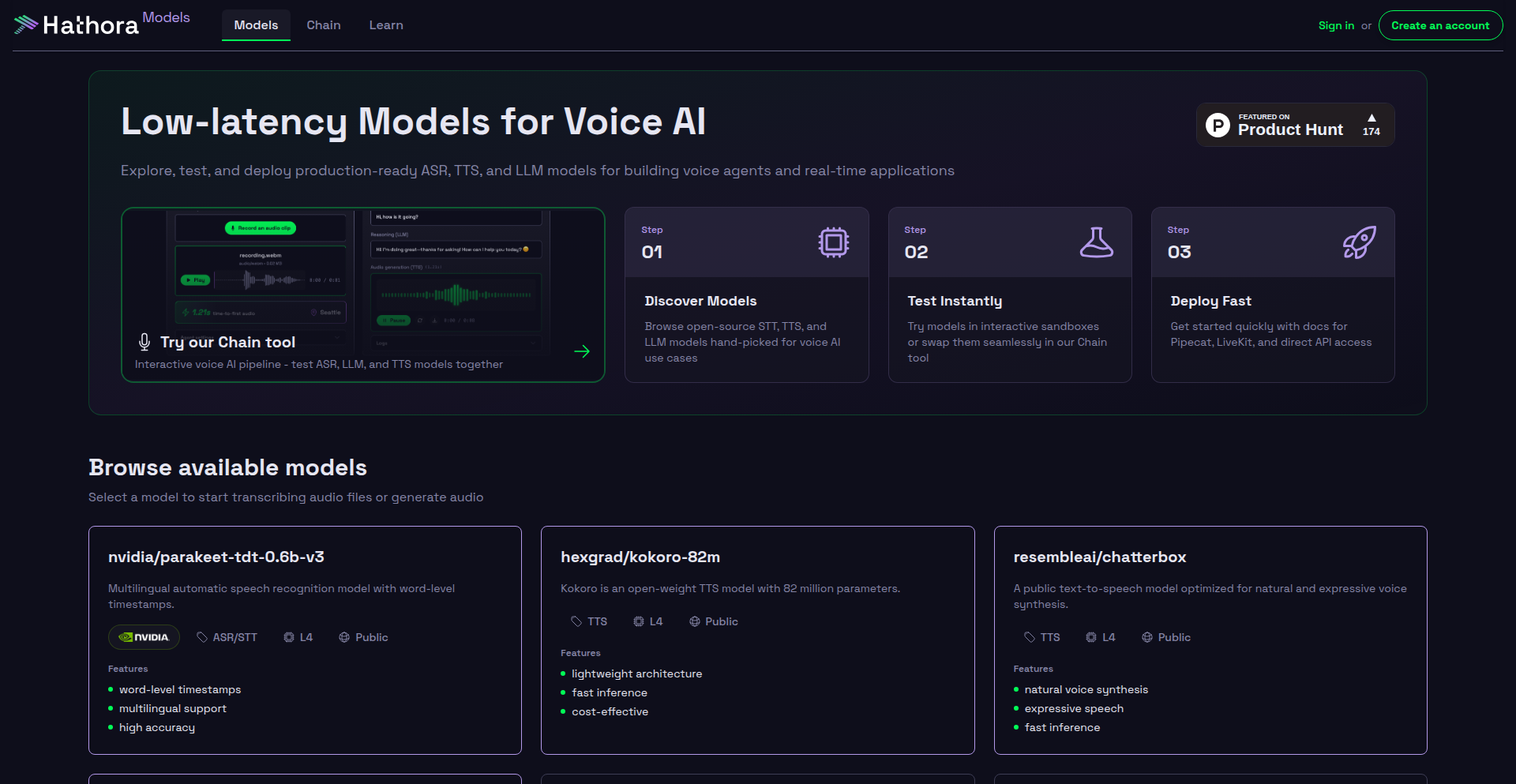

10

Hathora VoiceForge

Author

hpx7

Description

Hathora Models is a groundbreaking platform that makes a diverse collection of voice models, both open-source and licensed, easily accessible through serverless APIs. It empowers developers to build sophisticated voice-enabled agentic workflows by chaining together speech-to-text, large language models (LLMs) for reasoning, and text-to-speech components. A key innovation is its focus on ultra-low latency, achieved by distributing models across over 14 global regions and co-locating them within data centers to minimize network delays in inference chains. This means faster, more responsive voice applications.

Popularity

Points 6

Comments 1

What is this product?

Hathora Models is a managed service that acts as a marketplace and deployment platform for various voice AI models. Think of it as an app store for voice AI components. It takes complex machine learning models for understanding speech (Speech-to-Text), thinking (LLMs), and generating speech (Text-to-Speech) and turns them into simple API calls that developers can integrate into their applications. The innovation lies in making these powerful tools accessible, and critically, very fast. By running these models in many different locations worldwide and keeping related models close together, Hathora significantly cuts down the time it takes for a voice command to be processed and a response to be generated. This is like having a lightning-fast interpreter for your applications.

How to use it?

Developers can easily integrate Hathora Models into their projects by calling its serverless API endpoints. You can select specific voice models for tasks like transcribing audio, processing natural language requests with an LLM, or generating spoken responses. The platform offers flexible billing based on usage (tokens or duration), making it cost-effective for experiments and scalable for production. For enterprises with stricter security or performance needs, dedicated deployments are also available. Imagine building a voice assistant for your app; you'd send the user's spoken words to Hathora's API, get the transcribed text back, pass that to an LLM for understanding, and then use Hathora's TTS to speak the answer back to the user. The whole process feels immediate because of their low-latency infrastructure.
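
The voice-assistant flow described above can be sketched as a three-stage chain with per-stage timing, since latency is the property the platform emphasizes. The stage functions here are local stand-ins; nothing below reflects Hathora's actual endpoints or request formats, and a real app would replace each stand-in with an API call.

```python
import time
from typing import Callable, Dict, Tuple


def run_voice_turn(
    audio: bytes,
    stt: Callable[[bytes], str],
    llm: Callable[[str], str],
    tts: Callable[[str], bytes],
) -> Tuple[bytes, Dict[str, float]]:
    """Run one STT -> LLM -> TTS turn and record per-stage latency in seconds."""
    timings: Dict[str, float] = {}

    start = time.perf_counter()
    transcript = stt(audio)
    timings["stt"] = time.perf_counter() - start

    start = time.perf_counter()
    reply_text = llm(transcript)
    timings["llm"] = time.perf_counter() - start

    start = time.perf_counter()
    reply_audio = tts(reply_text)
    timings["tts"] = time.perf_counter() - start

    timings["total"] = sum(timings.values())  # sum of the three stages
    return reply_audio, timings


# Stand-in stages so the sketch runs without any network access.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


reply, timings = run_voice_turn(b"turn on the lights", fake_stt, fake_llm, fake_tts)
print(reply, timings)
```

Measuring each stage separately shows where a slow turn is spent, which matters when the three models run in different places.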

Product Core Function

· Speech-to-Text API: Convert spoken audio into written text, enabling voice input for applications. This is valuable for features like voice commands, dictation, and accessibility tools, allowing users to interact with software using their voice seamlessly.

· LLM Reasoning API: Process text input (often from Speech-to-Text) through powerful language models to understand intent, extract information, or generate intelligent responses. This is crucial for creating conversational agents, chatbots, and AI assistants that can comprehend and act upon user requests.

· Text-to-Speech API: Convert written text into natural-sounding spoken audio, providing voice output for applications. This is useful for voice notifications, audiobooks, virtual assistants, and any scenario where an application needs to communicate audibly.

· Inference Chain Orchestration: Seamlessly connect multiple voice AI models (STT, LLM, TTS) into a single workflow to create complex agentic behaviors. This empowers developers to build sophisticated voice applications that can understand, reason, and respond dynamically, moving beyond simple command-and-control.

· Global Low-Latency Deployment: Models are hosted across 14+ regions worldwide and co-located within datacenters to minimize network travel time for API requests. This delivers near real-time performance for voice interactions, making applications feel highly responsive and natural to end-users.

Product Usage Case

· Building a real-time customer support chatbot that can understand spoken queries, process them with an LLM, and provide spoken answers, all within milliseconds. This dramatically improves user experience by offering instant, natural voice support.

· Developing an interactive educational app where students can ask questions in their own voice, receive explanations generated by an LLM, and hear the answers spoken back to them. This makes learning more engaging and accessible.

· Creating an in-car voice assistant that allows drivers to control vehicle functions, get navigation updates, or play music through natural speech commands without distracting delays. The low-latency ensures safety and usability.

· Implementing a voice-controlled interface for complex software applications, allowing users to navigate menus, input data, and perform actions using spoken commands. This enhances productivity and accessibility for professionals.

· Designing a virtual meeting assistant that can transcribe discussions in real-time, summarize key points using an LLM, and provide spoken reminders or action items. This improves meeting efficiency and comprehension.

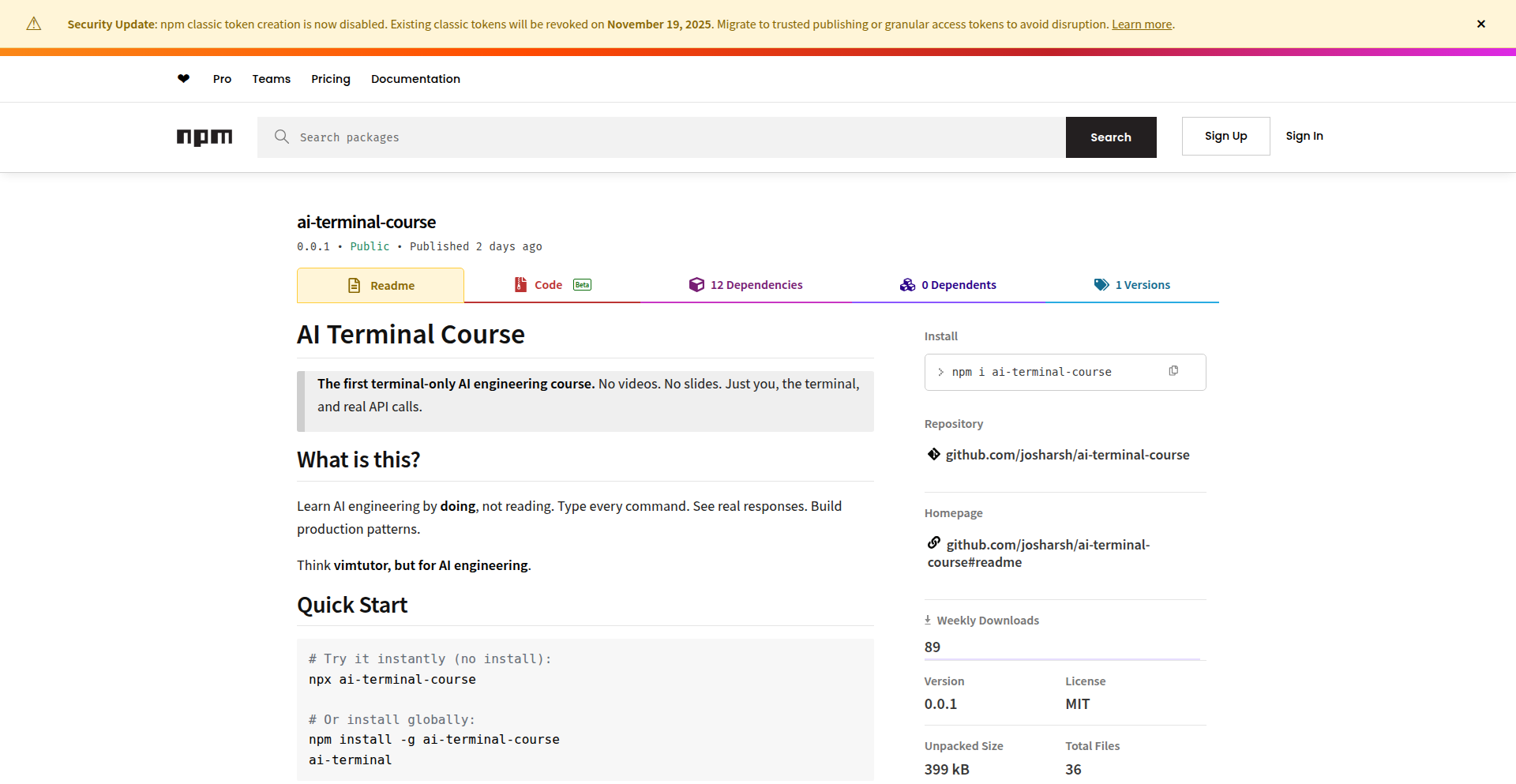

11

Offline Papercraft Game Engine

Author

z2plusc

Description

A digital toolkit that generates printable, screen-free, ad-free pen-and-paper games for children, focusing on fostering creativity and critical thinking through tangible play. The innovation lies in leveraging generative design principles and game mechanics to create unique, replayable analog experiences, solving the problem of excessive screen time while providing engaging educational content.

Popularity

Points 3

Comments 3

What is this product?

This project is a digital engine that allows creators to design and output printable game assets for traditional pen-and-paper games. It's built around a conceptual framework that translates game logic and rules into printable components like boards, cards, and tokens. The core innovation is the underlying algorithm that can generate variations of game elements, ensuring that each game session can feel fresh. Think of it as a programmable board game factory that doesn't require any electricity to play the games it produces. It addresses the need for engaging, educational activities that don't rely on screens, promoting hands-on interaction and imaginative play.

How to use it?

Developers can use this engine to create custom printable game kits for various purposes. For example, a teacher could use it to generate unique math-themed board games for their students, or a parent could create personalized adventure games for their children. The engine likely works by providing templates and parameters that can be adjusted to define game rules, themes, and components. The output is typically a set of printable files (like PDFs) that can be easily cut and assembled. This allows for rapid prototyping and deployment of engaging, offline activities, making it easy to integrate into educational curricula or home entertainment.
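
The engine's internals are not described, so the following is only a sketch of the general idea of parameterized, seeded generation: the same seed reproduces the same sheet, and a new seed yields a fresh one. The function names and the math-trail format are made up for illustration, and plain text stands in for PDF output.

```python
import random


def generate_board(seed: int, squares: int = 12, max_operand: int = 10) -> list:
    """Return a list of arithmetic challenges, one per board square."""
    rng = random.Random(seed)  # same seed -> same printable board
    board = []
    for number in range(1, squares + 1):
        a = rng.randint(1, max_operand)
        b = rng.randint(1, max_operand)
        op = rng.choice(["+", "-", "x"])
        if op == "-" and b > a:
            a, b = b, a  # keep answers non-negative for young players
        board.append(f"{number:>2}. {a} {op} {b} = ___")
    return board


def render(board: list, title: str) -> str:
    """Lay the squares out as plain text ready to print."""
    return "\n".join([title, "=" * len(title), *board])


print(render(generate_board(seed=42), "Math Trail: Sheet 42"))
```

A teacher could print a different seed for each student while keeping the seed on the sheet so that an answer key can be regenerated later.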

Product Core Function

· Procedural Game Asset Generation: The system can automatically generate diverse game boards, character tokens, and card decks based on defined parameters. This means you get unique visual and functional game elements every time, which is valuable for keeping games engaging and replayable, and for catering to specific learning themes.

· Printable Output Formats: The engine outputs game assets in standard printable formats (e.g., PDF). This is crucial for the 'offline' aspect, allowing anyone with a printer to create physical game components easily. This provides immediate, tangible play experiences without needing special equipment or digital access.

· Configurable Game Mechanics: Users can adjust core game rules and objectives within the engine. This enables the creation of games tailored to specific age groups, skill levels, or educational goals. The value here is in creating highly customized and relevant learning tools that actively engage players in problem-solving.

· Thematic Customization Engine: The system allows for the input of themes and narratives, which are then woven into the game's visuals and mechanics. This makes games more immersive and appealing to children. It's useful for making educational content more fun and memorable by aligning it with popular interests or desired learning topics.

Product Usage Case

· Educators can use the engine to create custom math adventure board games for elementary students, with unique challenges on each playthrough, solving the problem of generic worksheets and boosting engagement with abstract concepts.

· Parents can design personalized storytelling games for their young children, where the characters and settings are tailored to their child's imagination, providing a screen-free way to develop language and narrative skills.

· Game designers can rapidly prototype physical game concepts for educational purposes, quickly iterating on mechanics and visuals before committing to larger production, accelerating the development cycle for new learning tools.

· Therapists working with children can generate custom therapy games focused on specific social-emotional skills, with printable components that are easy to handle and adapt during sessions, offering a more engaging and adaptable therapeutic intervention.

12

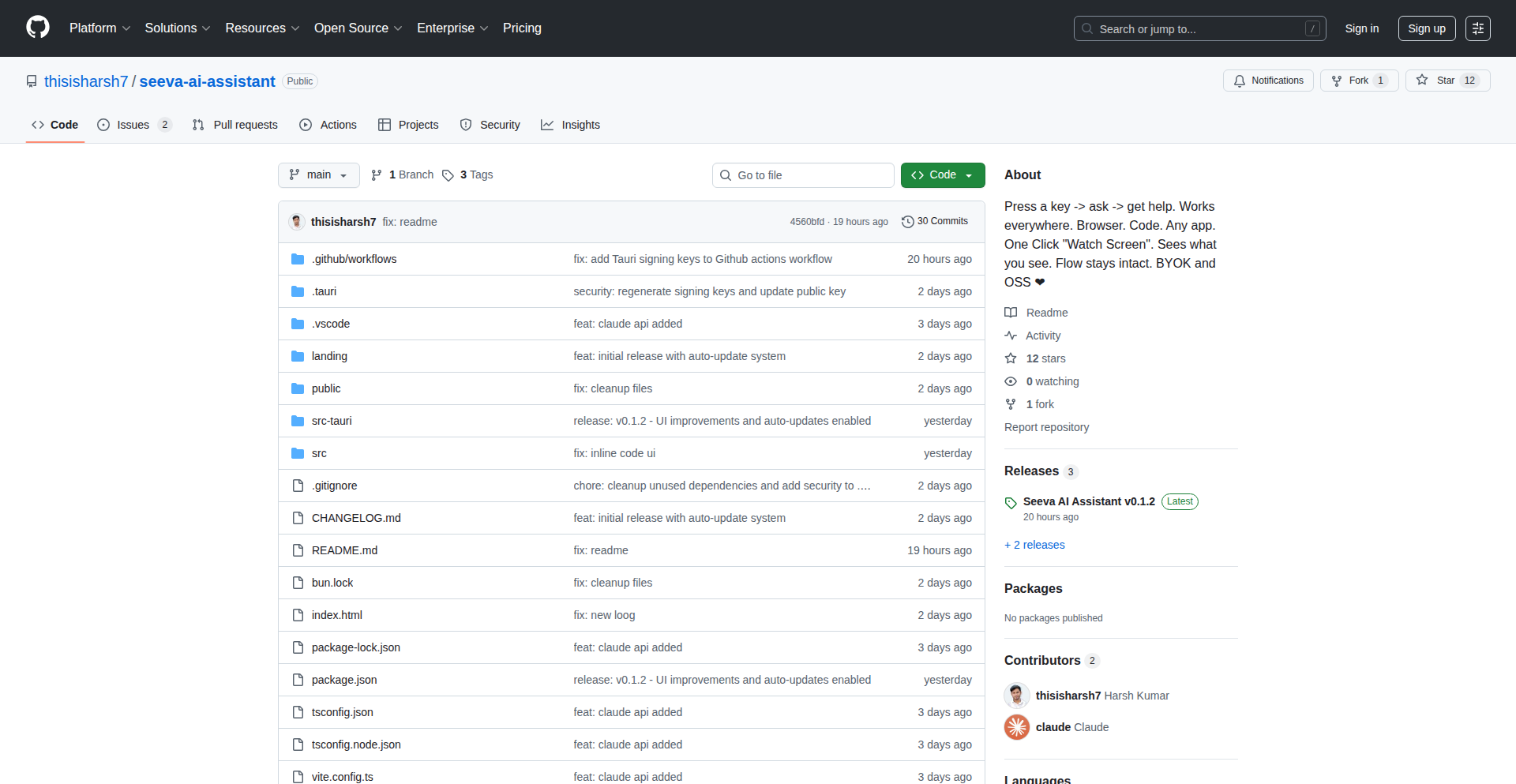

HotkeySnapAI

Author

thisisharsh7

Description

HotkeySnapAI is a desktop application that seamlessly integrates screenshotting with AI-powered assistance. It allows users to take a screenshot with a hotkey, and then immediately sends the captured image to an AI model for analysis or action, providing context-aware help directly within any application. The innovation lies in its real-time, in-app AI integration for visual tasks.

Popularity

Points 6

Comments 0

What is this product?

HotkeySnapAI is a desktop tool that captures your screen using a keyboard shortcut and then instantly sends that image to an AI for processing. Imagine you're working on a complex document and need to explain something visually. You press a hotkey, snap a screenshot of that specific area, and the AI can then analyze the image for content, answer questions about it, or even perform actions based on what it sees, all without you leaving your current application. This is a novel approach to bringing AI capabilities directly into your daily workflow, moving beyond simple text-based commands.

How to use it?

Developers can integrate HotkeySnapAI into their workflow by downloading and installing the application. Once installed, they can assign a custom hotkey for taking screenshots. The application then automatically sends the screenshot to a pre-configured AI service (e.g., an OCR service, an image analysis API, or a custom-trained model). This can be useful for automating repetitive visual tasks, like extracting data from images, generating descriptions for UI elements, or even debugging visual glitches by having an AI analyze the screenshot. The key is its universal application support, meaning it works alongside any software you're currently using.
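
To make the screenshot-to-AI hand-off concrete, here is a sketch of packaging a captured image for a configurable endpoint. The payload fields and the endpoint URL are hypothetical, not HotkeySnapAI's actual format, and the request is built but never sent.

```python
import base64
import json
import urllib.request


def build_analysis_request(png_bytes: bytes, prompt: str, endpoint: str) -> urllib.request.Request:
    """Package a screenshot and a prompt as a JSON POST request (not sent here)."""
    payload = {
        "prompt": prompt,
        "image_base64": base64.b64encode(png_bytes).decode("ascii"),
        "mime_type": "image/png",
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# The 8-byte PNG signature stands in for a real capture.
fake_screenshot = b"\x89PNG\r\n\x1a\n"
request = build_analysis_request(
    fake_screenshot,
    "Describe the UI element in this screenshot.",
    "https://ai.example.invalid/analyze",  # hypothetical endpoint
)
print(request.get_method(), request.full_url, len(request.data))
```

Keeping the endpoint as a parameter mirrors the configurable-AI-service idea: switching from an OCR service to an image-description model changes one argument, not the capture code.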

Product Core Function

· Instant Screenshot Capture via Hotkey: Enables users to quickly capture specific parts of their screen with a simple keyboard command, reducing the friction of traditional screenshot tools. This is useful for quickly grabbing visual information without interrupting workflow.

· Automated AI Analysis Integration: Automatically sends captured screenshots to AI models for processing, such as optical character recognition (OCR), image recognition, or content summarization. This provides immediate insights or actions based on visual data, saving manual processing time.

· Cross-Application Compatibility: Works seamlessly across all desktop applications, meaning users can leverage AI assistance for screenshots in any software they use, from code editors to design tools to web browsers. This broad applicability makes it a versatile productivity booster.

· Customizable AI Endpoints: Allows users to configure which AI services or models their screenshots are sent to, offering flexibility for different types of visual tasks. This means you can tailor the AI's capabilities to your specific needs, whether it's for code documentation, user interface analysis, or data extraction.

Product Usage Case

· As a developer, you're documenting a complex UI component. You use HotkeySnapAI to screenshot the component, and the AI automatically generates a descriptive text for it, which you can directly paste into your documentation. This saves you the effort of manually describing each element.

· While debugging a visual issue in an application, you can use HotkeySnapAI to capture the problematic screen area. The AI can then analyze the screenshot for common error patterns or anomalies, potentially helping you identify the root cause faster. This provides a visual diagnostic tool.

· You're working with a team and need to quickly share context from a visual element in a design tool. Take a screenshot with HotkeySnapAI, and the AI can provide a brief summary or identify key elements, which you can then share with your team for faster understanding. This streamlines visual communication.

· For accessibility purposes, you can use HotkeySnapAI to take a screenshot of any visual content on your screen, and have an AI describe the image for visually impaired users. This broadens the reach of your application's content.

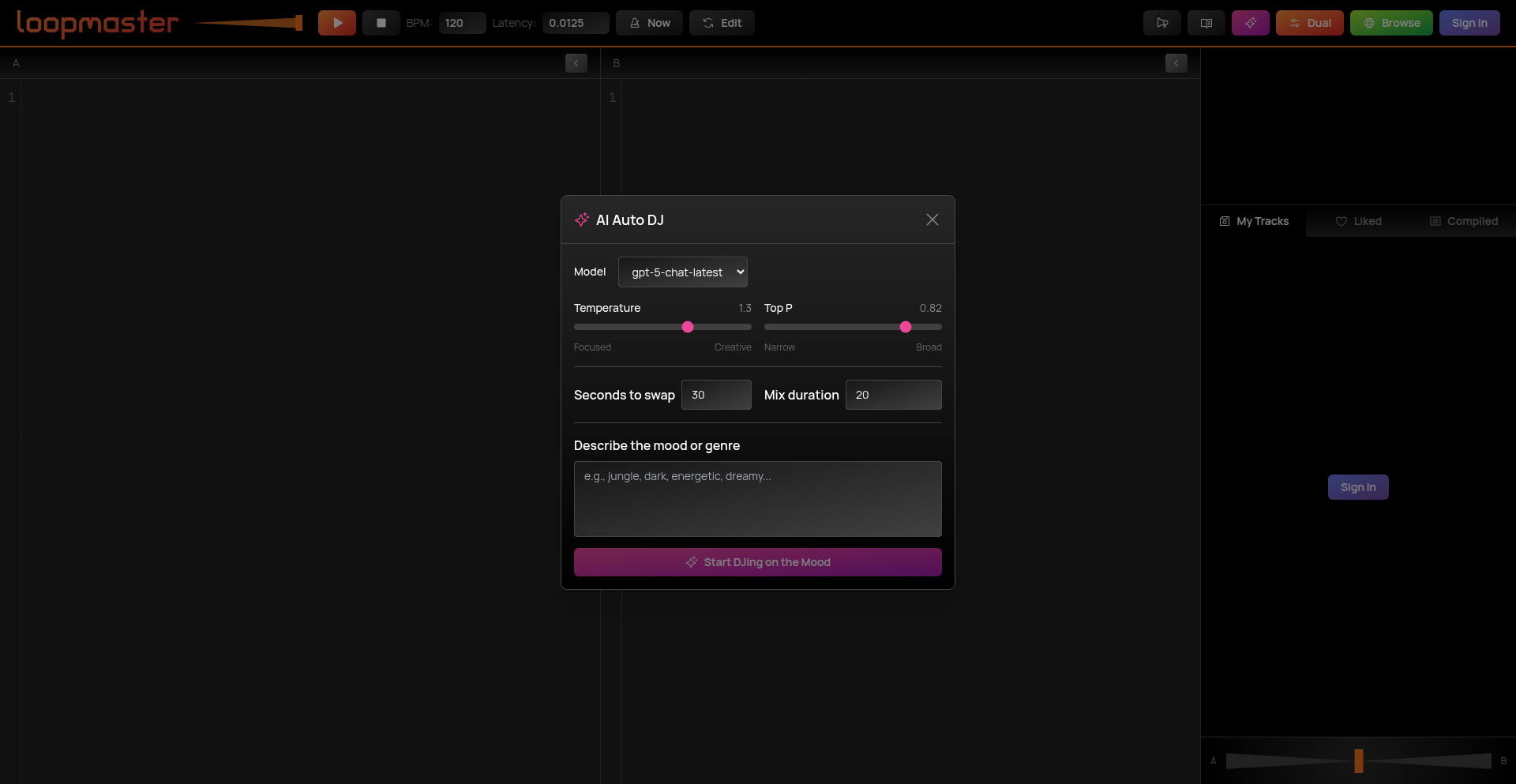

13

AI DJ MasterMix

Author

stagas

Description

An AI-powered music mixing tool that automatically sets the mood and genre for your music. It intelligently analyzes your audio library to create seamless transitions and curated playlists, removing the manual effort of DJing.

Popularity

Points 5

Comments 0

What is this product?

This project is an AI DJ that acts as a smart music curator and mixer. It uses advanced algorithms to understand the mood and genre of your music tracks. Imagine you have a collection of songs; instead of you having to pick them one by one and figure out what flows well together, the AI does it for you. It identifies similarities and differences in musical characteristics like tempo, key, and energy, then intelligently blends them. The innovation lies in its ability to go beyond simple playlist generation by creating dynamic mixes with smooth transitions, akin to a human DJ but automated.

How to use it?

Developers can use this project as a backend service for music applications, streaming platforms, or even personal music players. The core idea is to integrate its AI mixing capabilities via an API. For instance, you could feed it a directory of your MP3 files, specify a desired mood (e.g., 'chill', 'party', 'focus'), and it will output a mixed audio stream or a sequence of tracks with suggested transition points. This allows for quick prototyping of music-related features without building a complex music analysis and mixing engine from scratch.
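
The project's own analysis pipeline is not documented here, but the tempo-matching part of automated mixing can be sketched simply. The example assumes each track's BPM is already known, orders tracks greedily so that neighbours have similar tempo, and computes the playback-rate factor needed to beatmatch each transition. All names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    bpm: float


def order_by_tempo(tracks: list, start: Track) -> list:
    """Greedy nearest-neighbour ordering: always pick the closest BPM next."""
    remaining = [t for t in tracks if t != start]
    ordered = [start]
    while remaining:
        current = ordered[-1]
        nxt = min(remaining, key=lambda t: abs(t.bpm - current.bpm))
        ordered.append(nxt)
        remaining.remove(nxt)
    return ordered


def stretch_ratio(outgoing: Track, incoming: Track) -> float:
    """Playback-rate factor that makes the incoming track match the outgoing BPM."""
    return outgoing.bpm / incoming.bpm


library = [Track("A", 120.0), Track("B", 128.0), Track("C", 90.0), Track("D", 124.0)]
mix = order_by_tempo(library, start=library[0])
for prev, nxt in zip(mix, mix[1:]):
    print(f"{prev.title} -> {nxt.title}: play {nxt.title} at x{stretch_ratio(prev, nxt):.3f}")
```

A fuller mixer would also weigh key and energy, as the description mentions; tempo alone is used here to keep the sketch short.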

Product Core Function

· AI-driven mood and genre detection: Analyzes audio files to automatically identify their emotional tone and musical style, allowing for more personalized music experiences.

· Seamless audio mixing: Creates smooth transitions between tracks by intelligently matching tempo and key, resulting in a professional-sounding mix without jarring interruptions.

· Automated playlist generation: Generates dynamic playlists based on user-defined moods or themes, saving time and effort in music selection.

· API for integration: Provides an interface for developers to easily incorporate AI-powered music mixing into their own applications and services.

Product Usage Case

· A developer building a fitness app could integrate AI DJ MasterMix to automatically generate energetic music mixes tailored to different workout routines, keeping users motivated without manual playlist management.

· A content creator could use this to create background music for videos, specifying a desired atmosphere (e.g., 'cinematic', 'uplifting'), and receive a perfectly mixed audio track, enhancing the viewer's experience.

· A personal music player application could offer a 'smart shuffle' feature powered by this AI, which not only shuffles songs but also mixes them harmoniously, making listening more engaging.

· A smart home system could use this to automatically adjust ambient music based on the time of day or detected activity, creating a dynamic and responsive living environment.

14

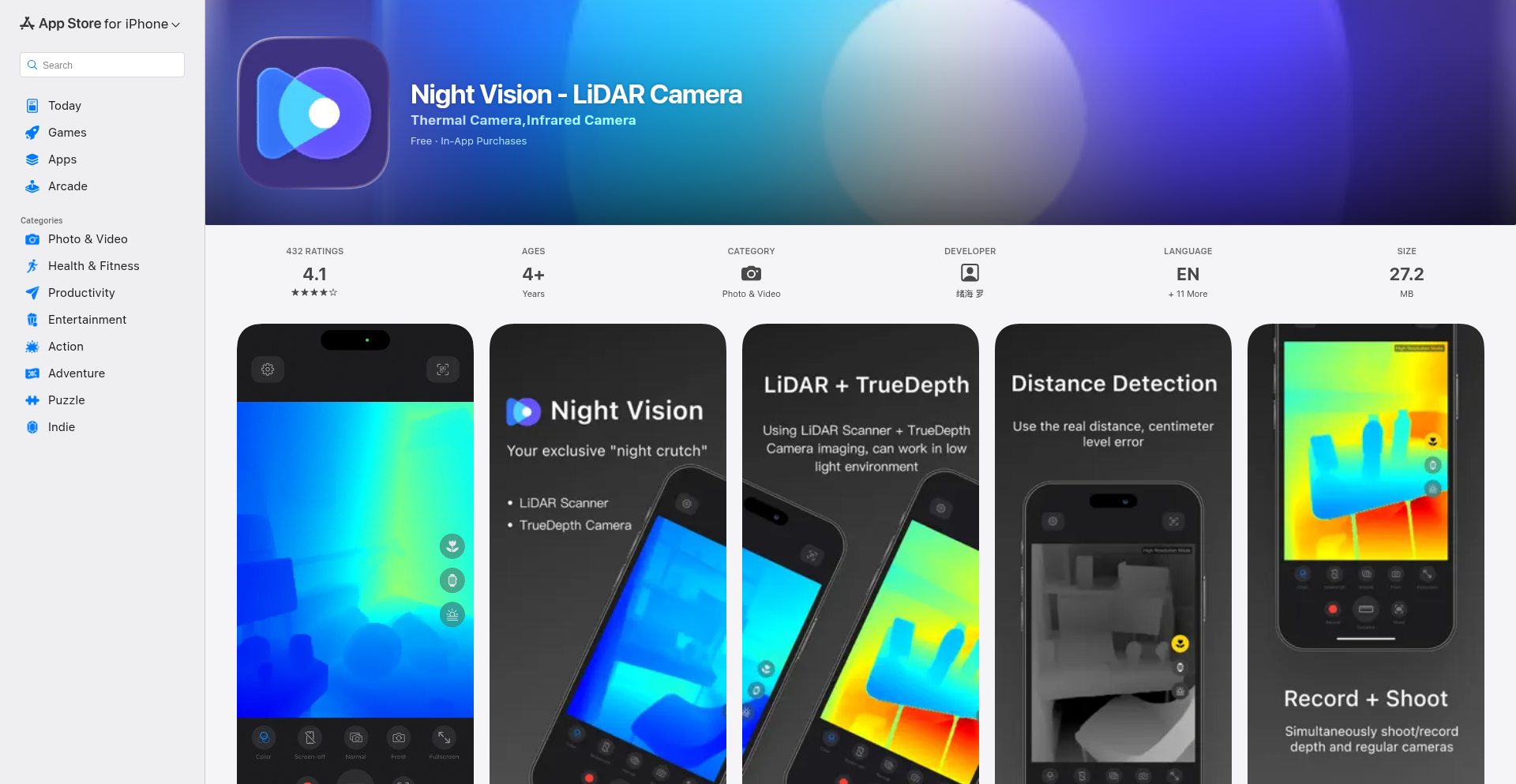

LiDAR Night Vision

Author

darkce

Description

A mobile application that leverages the iPhone's LiDAR scanner and TrueDepth camera to enable real-time, low-light imaging. It transforms your phone into a functional night vision device, offering a free basic preview for enhanced visibility in dark environments.

Popularity

Points 4

Comments 1

What is this product?

This project is a mobile application, Night Vision App, that transforms your iPhone into a night vision device. The core innovation lies in its intelligent use of the iPhone's built-in LiDAR scanner and TrueDepth camera. Normally, LiDAR is used for 3D mapping and augmented reality, but this app repurposes it to detect depth and light variations in extremely low-light conditions. By combining this depth information with data from the TrueDepth camera (which includes infrared capabilities), the app can construct a surprisingly detailed image even when there's very little visible light. This is like having a pair of high-tech goggles for your phone, allowing you to see what would otherwise be invisible to the naked eye. So, for you, it means being able to explore or navigate in the dark more confidently.

How to use it?

To use Night Vision App, you'll need an iPhone Pro model (as these are equipped with LiDAR scanners). Simply download the app from the App Store. Once opened, the app will automatically access your phone's camera, LiDAR, and TrueDepth sensors. You can then point your phone at a dark scene, and the app will process the sensor data to display a real-time, enhanced image on your screen. For advanced features, there's a one-time purchase option. The basic night vision preview is available for free. So, for you, it's as simple as opening an app and looking through your phone to see better in the dark, opening up new possibilities for late-night activities or unexpected situations.

Product Core Function

· LiDAR-assisted low-light imaging: Utilizes the LiDAR scanner to understand scene depth and structure, enhancing the ability to interpret faint light patterns. This means you can see the shapes and outlines of objects in the dark more clearly, making navigation safer and easier.

· TrueDepth camera integration: Leverages the infrared capabilities of the TrueDepth camera to capture subtle light information invisible to regular cameras. This allows the app to pick up on details that would otherwise be completely lost in darkness, providing a richer visual experience.

· Real-time preview: Processes sensor data to deliver an immediate, live view of the enhanced image on your phone screen. This real-time aspect is crucial for navigation and immediate situational awareness, so you can react instantly to your surroundings.

· Free basic night vision preview: Offers a core functionality that allows users to experience a functional level of night vision without any cost. This democratizes access to enhanced visibility, making it useful for everyone in dimly lit scenarios.

· Optimized iOS performance: Built with the latest iOS features and design principles for a smooth and responsive user experience. This ensures the app runs efficiently on your device, providing a reliable tool when you need it most.

Product Usage Case

· Emergency navigation in power outages: Imagine your power goes out at night. Using Night Vision App, you can safely move around your home or navigate outside to check on things, avoiding obstacles and hazards that would be unseen in complete darkness. This offers peace of mind and prevents accidents.

· Exploring dimly lit outdoor areas: Going camping or hiking and want to explore the surroundings after sunset? The app can help you identify trails, spot wildlife, or simply appreciate the natural beauty of the night environment without needing a separate, bulky flashlight. It allows for a more immersive and safer nocturnal adventure.

· Finding dropped items in dark corners: Accidentally dropped your keys or phone under a dark couch or in a poorly lit closet? Point your iPhone with Night Vision App, and the enhanced imaging will help you locate your lost item quickly and efficiently, saving you time and frustration.

· Enhancing photography in challenging light: While not its primary function, photographers might find it useful for scouting locations or getting a preliminary sense of a scene in extremely low light conditions before setting up professional equipment. This can lead to more creative opportunities and better planning.

15

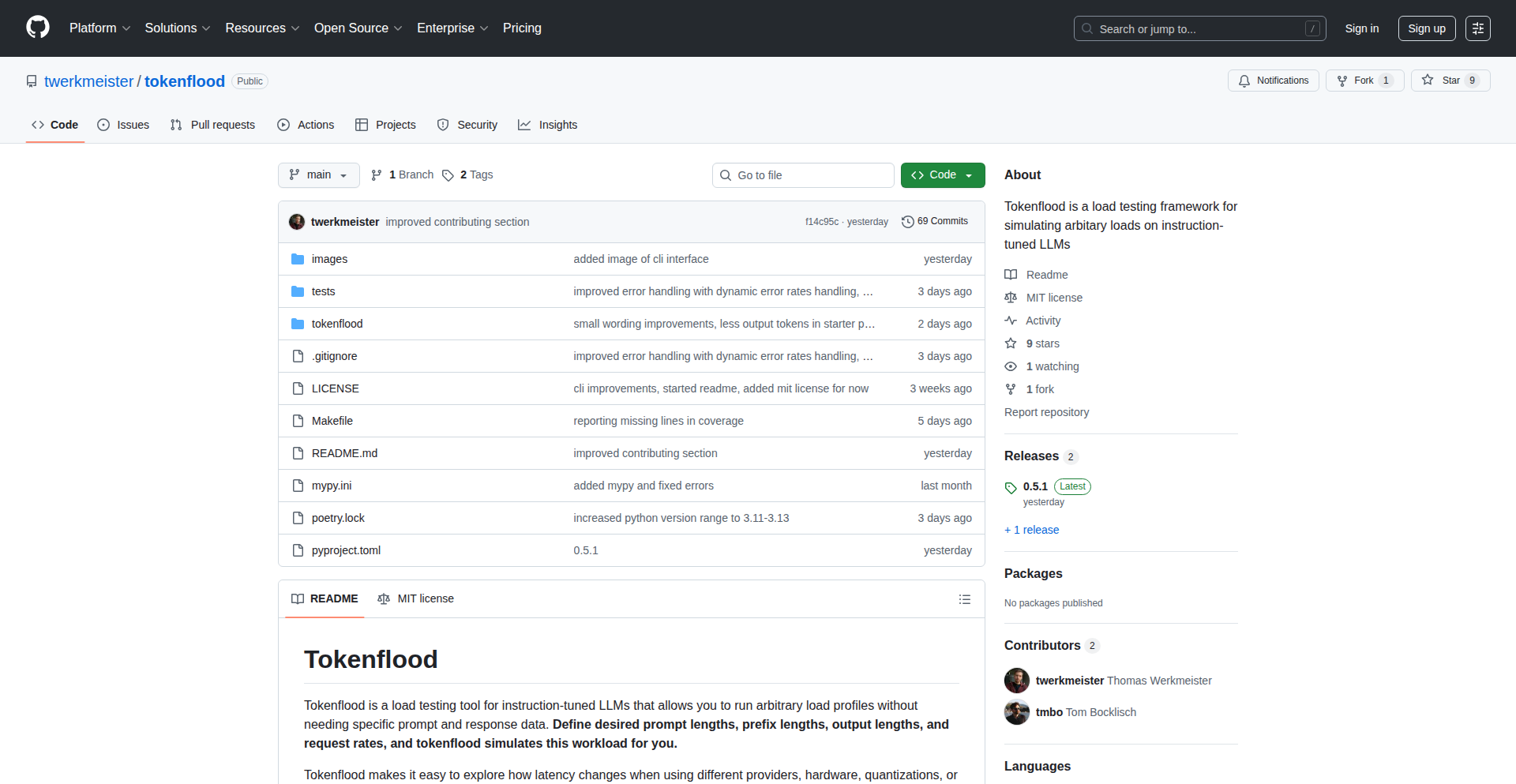

TokenFlood: LLM Load Sim

Author

twerkmeister

Description

TokenFlood is an open-source load testing tool designed to simulate realistic traffic patterns for instruction-tuned Large Language Models (LLMs). It allows developers to precisely control prompt lengths, prefix lengths, output lengths, and requests per second to mimic diverse usage scenarios. This tool solves the problem of accurately assessing LLM performance under varying loads, especially for latency-sensitive applications, by providing a flexible and configurable simulation environment without the need for extensive pre-collected data.

Popularity

Points 5

Comments 0

What is this product?

TokenFlood is a command-line interface (CLI) tool that acts like a traffic generator for your LLM. Instead of just sending one request at a time, it can simulate thousands of users interacting with an LLM simultaneously, each with different types of 'questions' (prompts) and expecting different 'answers' (outputs). The core innovation is its ability to create 'arbitrary' loads, meaning you can specify exactly how long the input prompts should be, how long the LLM's responses should be, and how many requests should be sent per second. This is crucial because LLM performance can drastically change based on these factors, and TokenFlood lets you test these specific conditions to understand how your LLM will perform in the real world. It helps you identify bottlenecks and performance issues before they impact your users.

How to use it?

Developers can use TokenFlood from their terminal. You typically install it and then run commands to configure your load test. You'll specify the endpoint of your LLM (where it's hosted), and then define the parameters for your simulation. For example, you can tell it to send 100 requests per second, with prompts averaging 500 tokens, prefixes of 20 tokens, and expecting outputs around 300 tokens. This allows you to test scenarios like:

1. How fast does my self-hosted LLM respond when many users ask short questions?

2. What happens to latency if users start asking very long, complex questions?

3. How does a particular LLM service handle a sudden surge in traffic with mixed prompt lengths?

It integrates by sending HTTP requests to your LLM's API endpoint, just like any user would, but at a much higher volume and with configurable characteristics.
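The parameters described above can be sketched as a small load description plus a send schedule. This is not TokenFlood's code or configuration format: `LoadSpec`, `send_times`, and `build_request` are hypothetical names, the request body shape is invented for illustration, and one filler word is assumed to stand in for one token.

```python
from dataclasses import dataclass


@dataclass
class LoadSpec:
    """Hypothetical load description; not TokenFlood's config format."""
    requests_per_second: float
    duration_s: float
    prompt_tokens: int   # tokens that vary per request
    prefix_tokens: int   # shared tokens prepended to every prompt (assumption)
    output_tokens: int


def send_times(spec):
    """Evenly spaced send offsets (in seconds) covering the whole test."""
    total = int(spec.requests_per_second * spec.duration_s)
    interval = 1.0 / spec.requests_per_second
    return [i * interval for i in range(total)]


def build_request(spec):
    """Build a synthetic request body of the requested size.

    Assumption: one filler word approximates one token, which real
    tokenizers only roughly match.
    """
    prefix = " ".join(["ctx"] * spec.prefix_tokens)
    body = " ".join(["word"] * spec.prompt_tokens)
    return {
        "prompt": f"{prefix} {body}".strip(),
        "max_tokens": spec.output_tokens,
    }


if __name__ == "__main__":
    spec = LoadSpec(requests_per_second=100, duration_s=2,
                    prompt_tokens=500, prefix_tokens=20, output_tokens=300)
    print(len(send_times(spec)), "requests scheduled")
```

A driver would walk through `send_times`, sleep until each offset, and post `build_request(spec)` to the endpoint under test while recording the response time.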

Product Core Function

· Simulate arbitrary prompt lengths: This allows testing how LLM latency is affected by the complexity and length of user queries, helping to understand performance degradation with detailed inputs.

· Simulate arbitrary prefix lengths: Essential for LLMs that use specific pre-defined instructions or context, this feature helps assess the overhead and performance impact of these preambles.

· Simulate arbitrary output lengths: By controlling the expected length of LLM responses, developers can test how the system handles generating longer or shorter texts and its impact on throughput and user experience.

· Control requests per second (RPS): This is the fundamental load testing parameter, allowing for the simulation of high-traffic scenarios and the identification of throughput limits and potential bottlenecks.

· Latency percentile reporting: Provides detailed statistics on response times, not just an average, which is critical for understanding user experience and guaranteeing performance SLAs for time-sensitive applications.

· Configuration over data collection: Instead of needing to gather real user data beforehand, developers can directly configure desired load parameters, making initial testing and experimentation much faster and more flexible.
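To see why percentiles matter more than an average, here is a minimal sketch of nearest-rank percentile reporting. It is a generic illustration, not TokenFlood's reporting code; `percentile` and `summarize` are hypothetical helper names, and the choice of p50/p90/p99 is an assumption.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(latencies_ms):
    """Report the median and two tail percentiles of measured latencies."""
    return {f"p{p}": percentile(latencies_ms, p) for p in (50, 90, 99)}


if __name__ == "__main__":
    # Hypothetical measurements: mostly fast responses with a slow tail.
    measured = [120, 130, 125, 140, 118, 122, 900, 135, 128, 2400]
    print(summarize(measured))
```

With a slow tail like the one in the sample data, the median stays low while the upper percentiles expose the requests that users would actually notice.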

Product Usage Case

· Testing a self-hosted LLM for a customer support chatbot: Before deploying, use TokenFlood to simulate thousands of concurrent users asking questions of varying lengths and expecting different response complexities to ensure the chatbot remains responsive even during peak hours.

· Evaluating different cloud LLM providers for a content generation service: Use TokenFlood to benchmark the latency of multiple LLM APIs under consistent, simulated load conditions, helping to choose the most cost-effective and performant option.

· Assessing the impact of prompt engineering changes: Before implementing a new prompt strategy that might be longer or more complex, use TokenFlood to run load tests and measure the resulting latency changes, helping to justify or refine the changes.

· Monitoring the intraday latency variations of a hosted LLM service: Run TokenFlood periodically throughout the day to understand how the LLM provider's performance fluctuates, ensuring consistent service quality for your application.

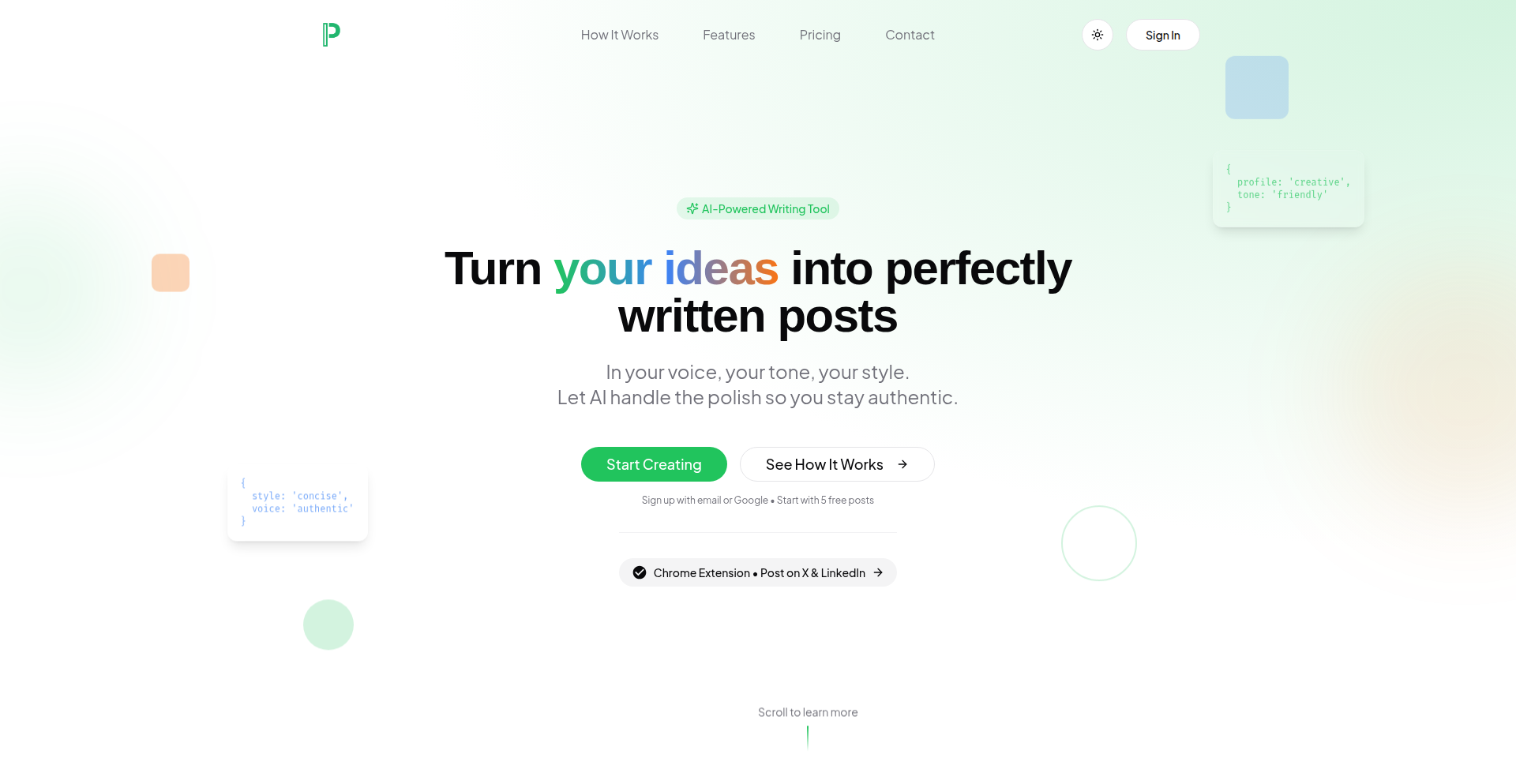

16

PersonaSynth AI

Author

mutiny1907

Description

PersonaSynth AI (also referred to as PostIdentity) is a web application that leverages AI to learn and replicate your unique writing style across different platforms. Instead of just adjusting tone, it captures your pacing, intent, and personality, allowing you to generate content that sounds authentically 'you' or to match specific brand voices. This approach provides persistent AI identities, unlike generic prompt-based AI writers, ensuring consistency and saving significant time for content creators.

Popularity

Points 5

Comments 0

What is this product?

PersonaSynth AI is a sophisticated AI writing assistant that goes beyond basic text generation by learning and embodying distinct writing personas. Technically, it achieves this by analyzing your historical writing data to build persistent 'identities.' These aren't just templates; they encapsulate nuanced writing characteristics like sentence structure, word choice, rhythm, and underlying intent. When you request content, the AI draws upon these learned identities to produce output that mirrors your personal style or the specific persona you've defined. This contrasts with typical AI tools that might generate a new response each time based on a prompt, leading to less consistent results. The innovation lies in the persistence and depth of its learned personas, offering a much more personalized and consistent AI writing experience.
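As a rough illustration of what a stored writing 'identity' might contain, the sketch below extracts a few surface features from sample text. This is not how the product works internally, which the source does not describe; `style_profile` and the three features it computes are hypothetical and far simpler than anything a learned persona would capture.

```python
import re
from collections import Counter


def style_profile(text, top_n=3):
    """Extract a few crude style features from a writing sample."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    return {
        # Rhythm: average number of words per sentence.
        "avg_sentence_words": sum(lengths) / len(lengths) if lengths else 0.0,
        # Word choice: the most frequently used words.
        "top_words": [w for w, _ in Counter(words).most_common(top_n)],
        # Tone: exclamation marks per sentence.
        "exclamation_rate": text.count("!") / len(sentences) if sentences else 0.0,
    }


if __name__ == "__main__":
    print(style_profile("Ship it! Ship it now. We ship fast."))
```

Persisting a profile like this per persona, instead of re-describing the style in every prompt, is the general idea behind the consistency the product claims.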

How to use it?

PersonaSynth AI is available as a web application and a dedicated Chrome extension. Individual content creators can sign up, create multiple distinct writing personas (e.g., one for personal social media, another for client work), and then generate posts with a single click. You can even take one core idea and have PersonaSynth AI generate multiple versions tailored to different platforms like X (formerly Twitter) or LinkedIn. The Chrome extension allows for direct content generation within platforms like X and LinkedIn, simplifying the process of maintaining a consistent online voice. No API is mentioned, so developers who want to generate consistent, on-brand text programmatically should confirm whether one exists before building on it.

Product Core Function

· Persistent AI Identity Creation: Users can define and train multiple unique AI writing personas based on their own writing style or specified brand guidelines. This allows for consistent output across various content needs, saving the effort of repeatedly defining characteristics.

· Multi-Platform Content Generation: The system can take a single idea and automatically generate multiple versions of it, optimized for different social media platforms or communication styles. This streamlines content strategy for diverse audiences.

· Fine-Tuning and Refinement: Users have the ability to adjust the generated content to further refine tone, length, and specific nuances, giving them granular control over the final output and ensuring it meets their exact requirements.

· Direct Platform Integration (Chrome Extension): The included Chrome extension enables users to write and generate content directly within platforms like X and LinkedIn, seamlessly integrating AI-powered writing into their existing social media workflow.

Product Usage Case

· A freelance writer managing multiple clients can create a distinct AI identity for each client, ensuring all outgoing communications and content adhere to their respective brand voices and tones, thus saving hours of manual adjustment and improving client satisfaction.

· A social media manager can use PersonaSynth AI to generate a week's worth of posts for their company's X and LinkedIn accounts. They can input a core marketing message and have the AI create varied, platform-appropriate posts that maintain a consistent brand voice, significantly speeding up content production.

· An individual who wants to maintain a consistent personal brand across their online presence can train an AI identity on their own writing. This allows them to quickly draft blog posts or social media updates that sound authentically like them, even when they're short on time.

17

Invisitris: The Vanishing Block Challenge

Author

eddguzzo

Description

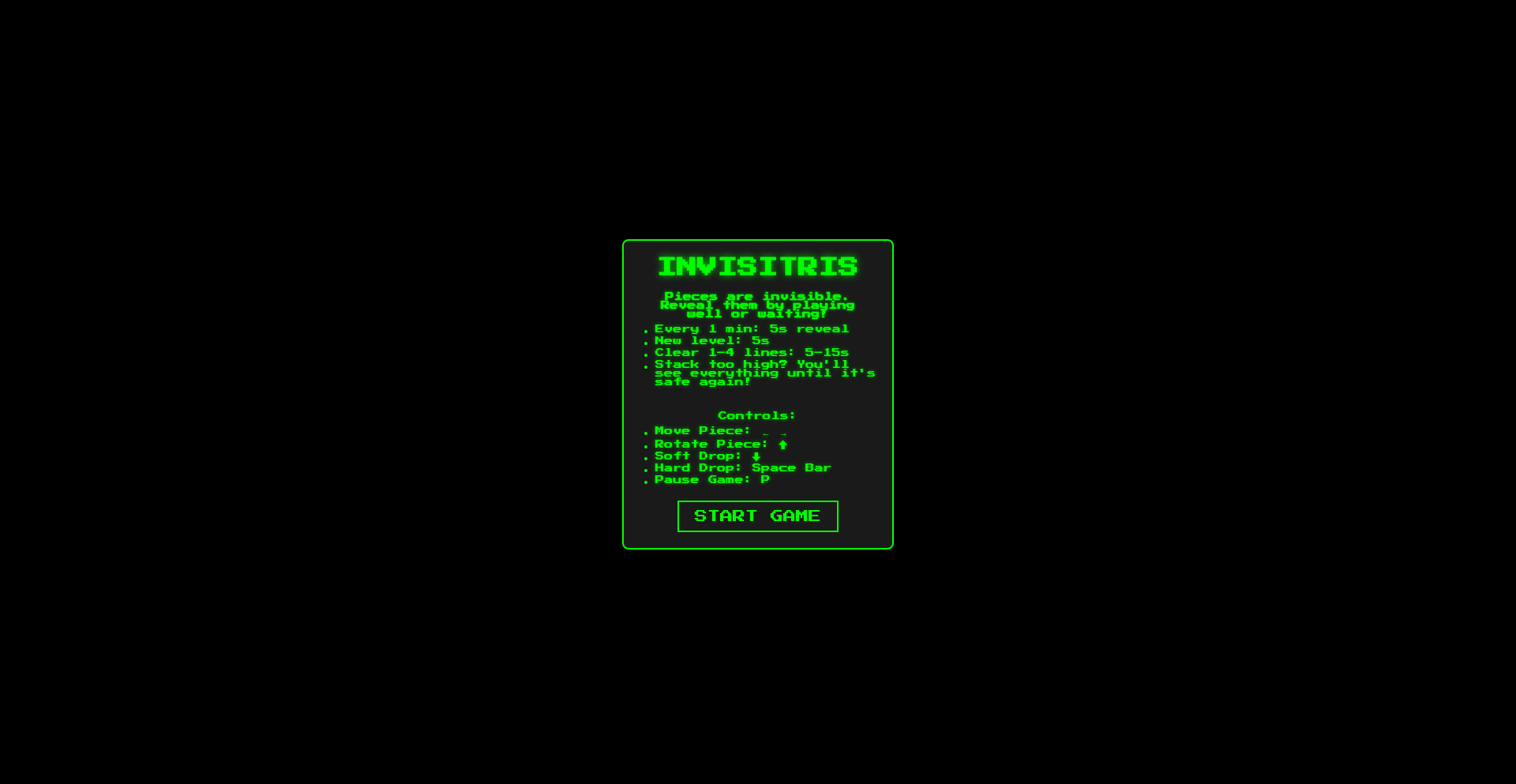

Invisitris is a novel Tetris variant that introduces an invisibility mechanic. Unlike traditional Tetris, most placed blocks disappear, challenging players to remember the board's state and plan strategically. The game offers brief moments of full visibility when rows are cleared or the stack reaches critical levels, adding dynamic challenges and strategic depth. This project explores innovative gameplay through controlled information reveal, showcasing creative application of game logic.

Popularity

Points 4

Comments 1

What is this product?

Invisitris is a game that takes the familiar Tetris concept and adds a twist: the blocks you place fade away, becoming invisible after a short period. The core technical idea is to manage the visibility state of game pieces on the board. Instead of a static grid, the game dynamically hides pieces, forcing players to rely on memory and anticipate the consequences of their moves. The 'revealing' moments, triggered by clearing lines or reaching a dangerous stack height, are controlled by specific game state flags, demonstrating a sophisticated approach to dynamic game feedback and engagement. This pushes the boundaries of typical puzzle game mechanics by adding a cognitive layer of recall.
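The visibility logic described above can be sketched as a board that tracks occupied cells separately from what the renderer is allowed to draw. This is a guess at the structure, not the game's source: the `Board` class, the `danger_height` threshold, and the rule that a reveal lasts until the next placement are all assumptions, and placed blocks are hidden immediately here instead of fading out.

```python
from dataclasses import dataclass, field


@dataclass
class Board:
    width: int = 10
    height: int = 20
    danger_height: int = 15                  # hypothetical reveal threshold
    cells: set = field(default_factory=set)  # occupied (x, y); y = 0 is the floor
    reveal: bool = False                     # True while the full stack is shown

    def place(self, positions):
        """Lock a piece, clear full rows, then update the reveal flag."""
        self.cells.update(positions)
        cleared = self._clear_full_rows()
        self.reveal = cleared > 0 or self.stack_height() >= self.danger_height
        return cleared

    def _clear_full_rows(self):
        full = [y for y in range(self.height)
                if all((x, y) in self.cells for x in range(self.width))]
        if not full:
            return 0
        remaining = set()
        for (x, y) in self.cells:
            if y in full:
                continue
            # Drop each surviving cell by the number of cleared rows below it.
            drop = sum(1 for fy in full if fy < y)
            remaining.add((x, y - drop))
        self.cells = remaining
        return len(full)

    def stack_height(self):
        return max((y for _, y in self.cells), default=-1) + 1

    def visible_cells(self):
        """Cells to draw: the whole stack during a reveal, nothing otherwise."""
        return set(self.cells) if self.reveal else set()
```

Keeping `cells` authoritative and deriving `visible_cells` from a flag means collision and line-clear logic never depend on what the player can currently see.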

How to use it?