Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-11

SagaSu777 2025-11-12

Explore the hottest developer projects on Show HN for 2025-11-11. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of Show HN projects reflects the hacker spirit: a strong drive to solve complex problems with elegant technical solutions. AI-powered tools are surging. They are used for content generation, and also to improve developer workflows, automate tedious tasks, and extract deeper insights from data. The move toward local AI, running LLMs on personal machines, signals a desire for privacy and control. Developer experience is another central theme, and many projects aim to simplify intricate processes such as API testing, data analysis, and system monitoring. For developers and entrepreneurs, this landscape is fertile ground for innovation. Treating AI as a co-pilot for creative and analytical tasks, rather than a replacement, will be key. Tools that reduce friction, increase transparency, and give users actionable insights are well positioned to succeed. Growing interest in edge computing and efficient local processing also points to a future in which powerful applications are more accessible and less reliant on centralized cloud infrastructure. That opens new avenues for distributed, privacy-focused solutions.

Today's Hottest Product

Name

Tusk Drift – Open-source tool for automating API tests

Highlight

Tusk Drift tackles the brittleness of API testing by recording live traffic and replaying it as automated tests. It captures real-world dependency behavior directly, which removes the need for manual mocking. Its key capability is detecting deviations between actual and expected outputs, and that gives developers a more robust and reliable test suite. Developers can learn from its practical use of traffic recording, automated test generation, and LLMs for root cause analysis in testing.

Popular Category

AI/ML

Developer Tools

Data Analysis

Productivity

Web Development

Popular Keyword

AI

LLM

Automation

Data

Development Tools

Testing

API

Observability

Code Analysis

Productivity Tools

Technology Trends

AI-Powered Automation

Developer Productivity Tools

Data-Driven Insights

Edge Computing

Local LLM Deployment

Semantic Search

Observability and Monitoring

Interactive Data Exploration

Cross-Platform Development

Project Category Distribution

AI/ML Tools (25%)

Developer Productivity & Tools (30%)

Data Analysis & Visualization (15%)

Web Applications & Platforms (20%)

Miscellaneous (Games, Hardware, etc.) (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Gametje - Universal Web Gaming Hub | 104 | 38 |
| 2 | Cactoide Federated RSVP | 60 | 25 |
| 3 | Tusk Drift: Live Traffic API Test Synthesizer | 52 | 16 |
| 4 | LocalBiz Navigator | 29 | 34 |
| 5 | Data Weaver AI | 32 | 9 |
| 6 | Creavi Macropad: Wirelessly Smart Macro Keys with a Display | 27 | 7 |
| 7 | Linnix: Kernel-Aware Predictive Observability | 21 | 6 |
| 8 | VeriPixel: Photo/Video Provenance Engine | 2 | 21 |
| 9 | LexiLearn-Core | 9 | 12 |
| 10 | Vector-Logic: First-Principles Rule Engine | 8 | 9 |

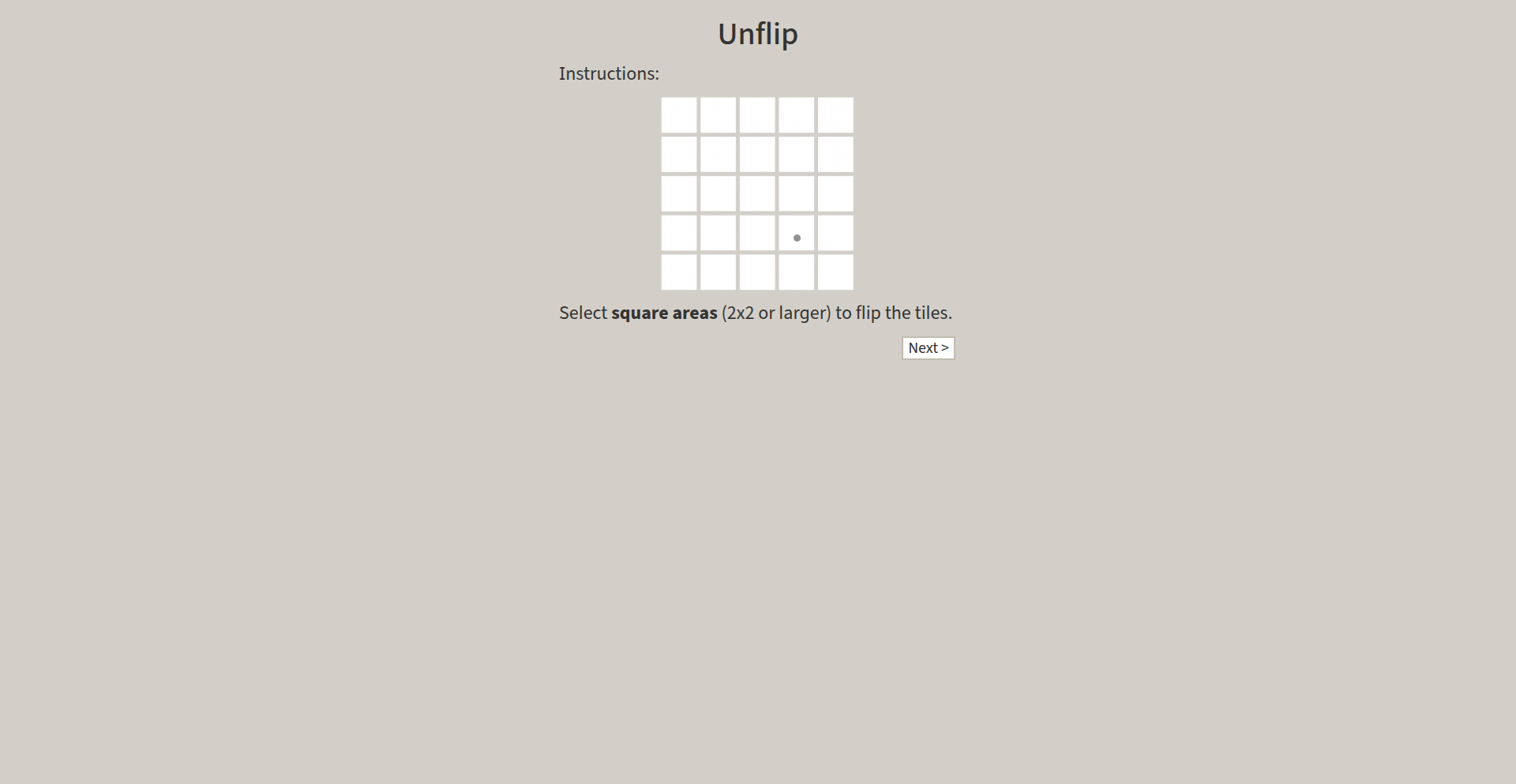

1

Gametje - Universal Web Gaming Hub

Author

jmpavlec

Description

Gametje is a web-based platform offering casual, multiplayer games. They can be played in person on a shared screen or remotely via video chat. It addresses the language barriers and accessibility issues found in existing party game platforms. It provides a unified, download-free experience in any web browser, including on Android TV and inside Discord.

Popularity

Points 104

Comments 38

What is this product?

Gametje is a casual gaming platform designed for social interaction. Its core idea is a web-native architecture. Games run in the browser, so people can play across devices and locations without downloading anything. Real-time multiplayer synchronization maintains a shared game state that every player's browser connects to. The platform prioritizes accessibility and inclusivity by supporting multiple languages and offering simple controls that resemble basic text interactions, which makes it approachable for non-gamers. Technically, the emphasis is on reliable client-server communication for game logic and state management, so play stays smooth and responsive for all participants. In practice, you don't download a specific game app for your phone, computer, or TV. You open a web page and the game runs there, synchronized with everyone else's devices.
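The shared-state model described above can be illustrated with a minimal, hypothetical sketch. In it, the server holds the single authoritative game state and applies each player's action. It also bumps a version number so every connected browser can tell whether its copy is stale. The names `GameRoom`, `apply_action`, and `snapshot_since` are illustrative assumptions, not Gametje's actual code. The network transport (for example WebSockets) is left out.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class GameRoom:
    """Hypothetical server-side room holding the authoritative game state."""
    players: set[str] = field(default_factory=set)
    scores: dict[str, int] = field(default_factory=dict)
    version: int = 0  # incremented on every accepted change

    def join(self, player: str) -> None:
        # Joining twice is a no-op, so the version only moves on real changes.
        if player not in self.players:
            self.players.add(player)
            self.scores[player] = 0
            self.version += 1

    def apply_action(self, player: str, points: int) -> bool:
        # The server validates actions; clients never edit state directly.
        if player not in self.players:
            return False
        self.scores[player] += points
        self.version += 1
        return True

    def snapshot_since(self, client_version: int) -> Optional[dict[str, Any]]:
        # A client reports the last version it saw and gets a fresh
        # snapshot only if the state has changed since then.
        if client_version >= self.version:
            return None
        return {"version": self.version, "scores": dict(self.scores)}
```

The design choice is that browsers are thin views: whether a player is on a TV, a phone, or a Discord embed, they all render the same snapshot from the server.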

How to use it?

Developers and users can access Gametje directly through a web browser at gametje.com. To play, a host creates a game room and chooses one of three modes: hosting for in-person play on a central screen, playing from a single device, or casting to a screen such as a Chromecast. Other players then join the room from their own devices by entering a shared code. The platform also integrates with Discord as an embedded application, so users can launch and play games directly within their Discord servers. Because Gametje is web-based, developers could potentially embed it in other web applications or platforms that support web views. An Android TV app and possible future platform integrations further expand where it can be played.
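Joining by a shared code, as described above, amounts to a small lookup from a short code to a room. The sketch below is hypothetical: the four-character length, the alphabet without I and O, and the `RoomDirectory` class are illustrative assumptions. The source only says that players enter a shared code.

```python
import secrets
import string

# Hypothetical alphabet: uppercase letters minus I and O, which are
# easily confused with 1 and 0 when read off a TV screen.
ALPHABET = "".join(c for c in string.ascii_uppercase if c not in "IO")


class RoomDirectory:
    """Illustrative registry mapping short join codes to rooms."""

    def __init__(self, code_length: int = 4) -> None:
        self.code_length = code_length
        self.rooms: dict[str, dict] = {}

    def create_room(self, host: str) -> str:
        # Draw random codes until an unused one is found; cheap while rooms are sparse.
        while True:
            code = "".join(secrets.choice(ALPHABET) for _ in range(self.code_length))
            if code not in self.rooms:
                self.rooms[code] = {"host": host, "players": [host]}
                return code

    def join(self, code: str, player: str) -> bool:
        # Codes are matched case-insensitively and ignoring stray whitespace.
        room = self.rooms.get(code.strip().upper())
        if room is None:
            return False
        if player not in room["players"]:
            room["players"].append(player)
        return True
```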

Product Core Function

· Cross-device multiplayer synchronization: Enables multiple users on different devices to play the same game in real-time by maintaining a shared game state accessible via the web. This solves the problem of fragmented game access across platforms and allows for flexible play scenarios, whether you're in the same room or miles apart.

· Web-native accessibility: Games are playable directly in a web browser without any downloads or installations. This dramatically lowers the barrier to entry for new players and makes it easy to jump into a game from any internet-connected device, such as a smartphone, tablet, laptop, or smart TV.

· Multilingual support: Offers games in multiple languages, making them accessible to a wider global audience. This directly addresses the limitation of many commercial party games that are primarily English-focused, fostering more inclusive social gaming experiences.

· No game packs or fragmentation: All games are available in a single platform, eliminating the need to purchase separate game packs or deal with compatibility issues across different hardware. This provides a straightforward and cost-effective way to access a variety of party games.

· Discord integration: Allows games to be played directly within Discord as embedded activities. This leverages an existing popular communication platform, enabling users to easily start and join games with their friends without leaving their chat environment.

Product Usage Case

· A group of friends is having a remote get-together. One person hosts a Gametje game, and everyone else joins from their laptops and phones via a shared link. They can all see and interact with the game in their browser, creating a shared virtual experience despite the physical distance, solving the problem of how to play together when not physically present.

· A family is gathered around a smart TV. They use the Android TV app to launch a Gametje game and everyone uses their mobile phones as individual controllers. This creates an engaging, shared entertainment experience on the big screen, replicating the fun of console gaming without needing complex setups.

· A Discord server community wants to play a quick game during their chat session. They use the Discord embedded application to launch a Gametje game directly within the server. This seamless integration allows for spontaneous gaming fun without leaving the chat, solving the issue of interrupting conversation flow to switch to a different application.

· A person wants to introduce their non-gamer friends to a fun party game. Because Gametje requires no downloads and is playable in a web browser with simple controls, they can easily invite their friends to join from their phones, ensuring that even those unfamiliar with gaming can participate and enjoy the experience.

2

Cactoide Federated RSVP

Author

orbanlevi

Description

Cactoide is a federated RSVP platform, offering a decentralized approach to event invitations and responses. Instead of relying on a single centralized service, it leverages federated protocols, allowing users to manage RSVPs across different independent servers. This tackles the issue of vendor lock-in and data silos common in traditional event platforms.

Popularity

Points 60

Comments 25

What is this product?

Cactoide is an event invitation and response system built on federated protocols, much as email works across different providers. No single company controls your event data. Instead, organizers and attendees handle RSVPs with software that speaks a common language (a federation protocol). Your event invitations can therefore live and be managed on a server you choose, or on one hosted by a community, rather than being tied to a specific platform. The innovation lies in applying these decentralized principles to the RSVP process, which gives users more control and interoperability.

How to use it?

Developers can integrate Cactoide into their own applications or services by implementing its federated protocol. This could involve building a custom event management frontend that communicates with Cactoide-compatible backend servers. For attendees, it means they can use any client application that supports the Cactoide protocol to receive and respond to invitations, regardless of where the event organizer hosted their RSVP service. Think of it like using your Gmail account to receive an email from a Yahoo user – both can communicate. This allows for flexibility and avoids being locked into a single event platform's ecosystem.
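The source does not name the federation protocol Cactoide uses, so this is only a hypothetical sketch of the email-like idea described above. Every participant has a `user@server` handle. An RSVP is delivered to the server that owns the event, whichever server the attendee lives on. The handle format, message fields, and status values are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Handle:
    """A federated address such as alice@rsvp.example.org (format assumed)."""
    user: str
    server: str

    @classmethod
    def parse(cls, text: str) -> "Handle":
        # Accept an optional leading "@", as in "@alice@rsvp.example.org".
        user, sep, server = text.lstrip("@").partition("@")
        if not sep or not user or not server or "@" in server:
            raise ValueError(f"not a federated handle: {text!r}")
        # Hostnames are case-insensitive, so normalize the server part.
        return cls(user, server.lower())


def build_rsvp(attendee: str, event_host: str, event_id: str, status: str) -> dict:
    """Build a hypothetical RSVP message addressed to the event's home server."""
    if status not in {"yes", "no", "maybe"}:
        raise ValueError(f"unsupported status: {status!r}")
    who = Handle.parse(attendee)
    host = Handle.parse(event_host)
    return {
        "deliver_to": host.server,           # the instance that owns the event
        "from": f"{who.user}@{who.server}",  # the attendee's own instance
        "event": event_id,
        "status": status,
    }
```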

Product Core Function

· Federated Event Invitation: Allows sending event invitations that can be received and managed across different Cactoide-compliant servers, enabling interoperability and preventing vendor lock-in. This is useful for developers building event systems who want to ensure their invitations can be handled by a wider range of user clients.

· Decentralized RSVP Management: Enables users to respond to invitations from their preferred client or server, granting more control over their personal event data. This offers value to developers by reducing reliance on a single point of failure or data compromise for user responses.

· Interoperable Event Data: Facilitates the exchange of event details and RSVP status between different federated instances, promoting a more connected and open event ecosystem. This benefits developers by allowing them to build applications that can aggregate or display event information from various sources.

· Protocol-based Communication: Utilizes standardized federation protocols to ensure seamless communication between different Cactoide instances, making it easier for developers to integrate with or extend the platform. This means developers can leverage existing or well-understood communication patterns rather than inventing new ones.
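The "interoperable event data" point above implies that a client can pull events from several instances and show one combined list. The following is a minimal sketch under an assumed event shape (`home`, `id`, `revision`, `starts`, `title`), which the source does not specify. The same event may be relayed by more than one instance, so it is deduplicated by its home server and id, and the highest revision wins.

```python
def merge_feeds(feeds: dict[str, list[dict]]) -> list[dict]:
    """Merge event lists fetched from several instances into one view.

    `feeds` maps an instance hostname to the events it returned. Events are
    keyed by (home server, id); the copy with the highest revision wins.
    """
    merged: dict[tuple[str, str], dict] = {}
    for events in feeds.values():
        for event in events:
            key = (event["home"], event["id"])
            current = merged.get(key)
            if current is None or event["revision"] > current["revision"]:
                merged[key] = event
    # ISO 8601 dates in one uniform format sort correctly as strings.
    return sorted(merged.values(), key=lambda e: e["starts"])
```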

Product Usage Case

· Building a community-driven event planning tool where different community groups can host their own RSVP servers, but members can still respond to invitations from any community using their preferred client. This solves the problem of fragmented event planning within large organizations.

· Developing a personal event management application that syncs with multiple RSVP sources, allowing users to see all their invitations in one place, regardless of which platform they were sent from. This addresses the inconvenience of managing events across disparate services.

· Creating a developer API for event organizers that adheres to the Cactoide protocol, enabling them to send invitations that are compatible with any federated RSVP client, thus expanding their reach and reducing technical integration hurdles for attendees.

· Implementing a system for a conference or meetup organizer to allow attendees to RSVP without requiring them to create an account on a specific platform, by leveraging their existing federated identity. This improves user experience and reduces friction for event participation.

3

Tusk Drift: Live Traffic API Test Synthesizer

Author

Marceltan

Description

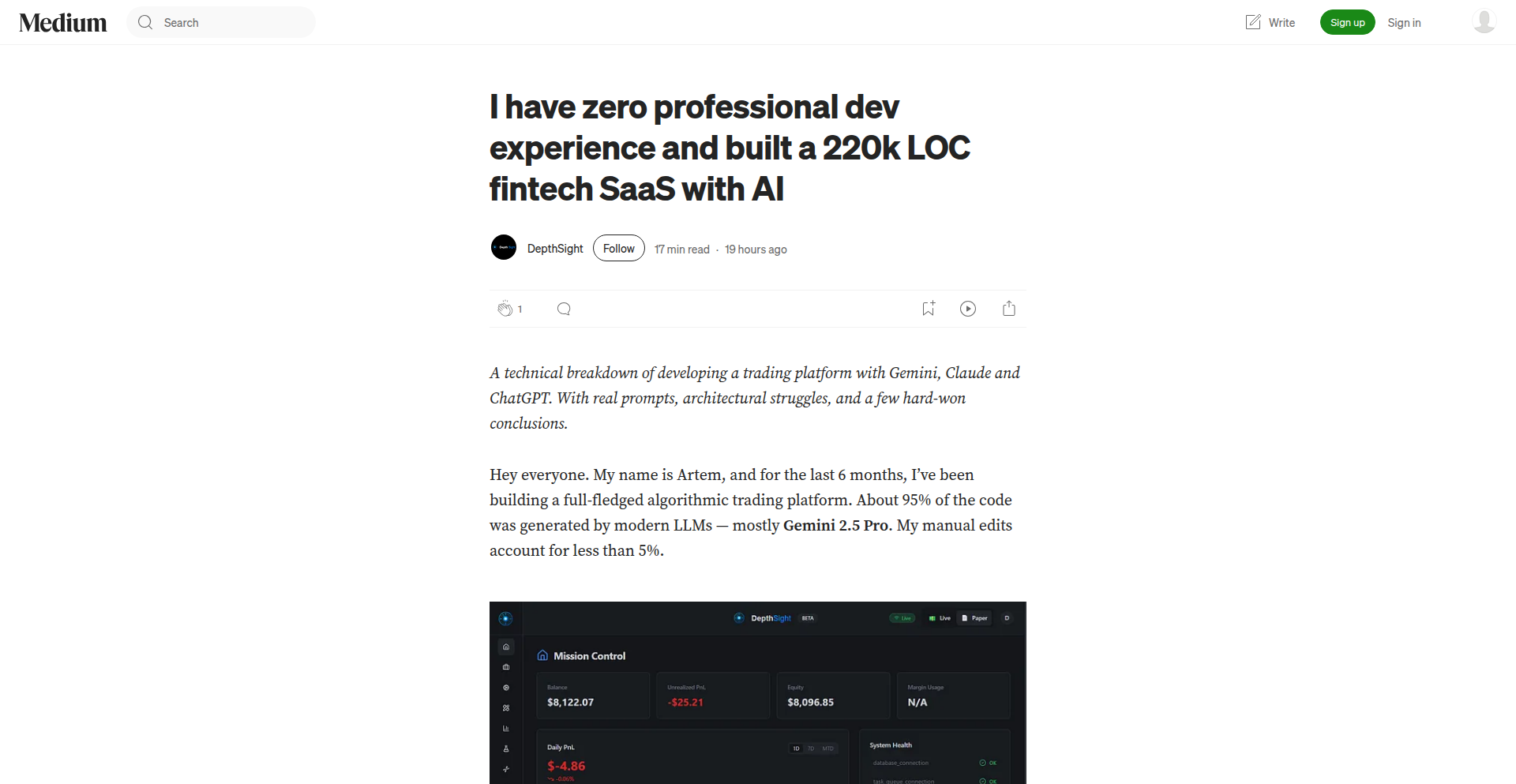

Tusk Drift is an open-source tool that automatically generates an API test suite from live traffic. It records real user interactions, replays them as tests with mocked dependencies, and flags any unexpected behavior. The tests are built from actual usage patterns, so they stay relevant and accurate. This addresses two common pain points, brittle API tests and production regressions, and can improve both developer productivity and system reliability.

Popularity

Points 52

Comments 16

What is this product?

Tusk Drift is a smart API testing tool that acts like a diligent observer of your live application. Instead of manually writing tests for every possible API interaction, it watches how users actually interact with your API in real-time. It then captures these interactions (traces) and uses them to automatically create API tests. When these tests run, Tusk Drift simulates the responses from your API's dependencies (like databases or other services) using the recorded data, ensuring the test environment is consistent and predictable. The key innovation here is that it learns from live usage, eliminating the guesswork and manual effort typically involved in setting up comprehensive mocking and testing, thus significantly improving test accuracy and reducing the chance of unexpected bugs making it to production.

How to use it?

Developers can integrate Tusk Drift into their Node.js backend applications. It works by instrumenting your service, similar to how OpenTelemetry instruments services for tracing. This instrumentation captures incoming requests to your API along with the outgoing calls your service makes, such as database queries or requests to other microservices. When you run API tests, Tusk Drift replays the recorded inbound requests against your service. Crucially, it does not make real external calls. It serves the dependency responses it recorded from live traffic instead. This makes tests fast, reliable, and free of side effects. In a Continuous Integration (CI) pipeline, Tusk Drift can automatically refresh your test suite with new traces and match relevant tests to changes in your code, pinpointing regressions and even suggesting fixes.
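The record-and-replay idea above can be sketched in a few lines. This is an illustration of the general technique in Python, not Tusk Drift's Node.js SDK or API. `RecordReplay` and `call` are hypothetical names. Requests are matched by an exact canonical-JSON key, which is a simplifying assumption, since real tools typically use fuzzier matching.

```python
import json
from typing import Any, Callable


class RecordReplay:
    """Minimal record/replay layer for outbound calls (illustrative only).

    In "record" mode the real dependency is called and its response is stored
    under a key derived from the request. In "replay" mode the stored response
    is returned and the real dependency is never touched.
    """

    def __init__(self, mode: str = "record") -> None:
        if mode not in ("record", "replay"):
            raise ValueError(f"unknown mode: {mode!r}")
        self.mode = mode
        self.recordings: dict[str, Any] = {}

    @staticmethod
    def _key(service: str, request: dict) -> str:
        # Canonical JSON so that dict key order does not change the key.
        return service + ":" + json.dumps(request, sort_keys=True)

    def call(self, service: str, request: dict, real_call: Callable[[dict], Any]) -> Any:
        key = self._key(service, request)
        if self.mode == "record":
            response = real_call(request)
            self.recordings[key] = response
            return response
        # Replay: an unrecorded request is an error rather than a live call.
        if key not in self.recordings:
            raise KeyError(f"no recording for {key}")
        return self.recordings[key]
```

Failing loudly on an unrecorded request, instead of falling through to the real dependency, keeps replayed tests free of side effects.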

Product Core Function

· Live Traffic Recording: Captures actual API requests and outbound dependency interactions from live user traffic. The tests are derived from real-world usage, so they cover the user flows that actually matter, not just the ones someone thought to write by hand.

· Automated Test Generation: Transforms recorded traffic into runnable API tests. This saves developers significant time and effort compared to manual test writing, directly addressing the problem of incomplete test coverage due to time constraints.

· Mocked Dependency Replay: Replays recorded responses from dependencies during test execution, creating a stable and predictable testing environment. This is crucial for isolating API logic and preventing flaky tests caused by external service unreliability.

· Deviation Detection: Compares the actual output of API calls during tests against the expected (recorded) output, automatically flagging any discrepancies. This is immensely valuable for catching regressions early, preventing bugs from reaching production and reducing debugging time.

· Intelligent Test Maintenance: Automatically updates the test suite with fresh recorded traces to keep tests relevant over time. This combats test rot, a common issue where tests become outdated and ineffective as the application evolves.

· CI/CD Integration: Matches test runs to specific code changes in pull requests and surfaces deviations in a CI environment. This streamlines the feedback loop for developers, allowing them to address issues before merging code, thereby improving code quality and accelerating development cycles.
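Deviation detection, as listed above, boils down to comparing the response your service returns during replay with the recorded one. This sketch is a generic recursive JSON diff, not Tusk Drift's comparison logic. The default set of ignored keys (`timestamp`, `request_id`) is a hypothetical example of fields that legitimately change between runs.

```python
from typing import Any


def find_deviations(expected: Any, actual: Any, path: str = "$",
                    ignore: frozenset[str] = frozenset({"timestamp", "request_id"})) -> list[str]:
    """Return readable paths where `actual` differs from the recorded `expected`."""
    if isinstance(expected, dict) and isinstance(actual, dict):
        diffs: list[str] = []
        for key in sorted(set(expected) | set(actual)):
            if key in ignore:
                continue  # volatile field, expected to differ between runs
            child = f"{path}.{key}"
            if key not in actual:
                diffs.append(f"{child}: missing")
            elif key not in expected:
                diffs.append(f"{child}: unexpected")
            else:
                diffs.extend(find_deviations(expected[key], actual[key], child, ignore))
        return diffs
    if isinstance(expected, list) and isinstance(actual, list):
        if len(expected) != len(actual):
            return [f"{path}: length {len(expected)} != {len(actual)}"]
        diffs = []
        for i, (e, a) in enumerate(zip(expected, actual)):
            diffs.extend(find_deviations(e, a, f"{path}[{i}]", ignore))
        return diffs
    # Scalars (or mismatched types) are compared directly.
    return [] if expected == actual else [f"{path}: {expected!r} != {actual!r}"]
```

For a recorded checkout response `{"total": 42, ...}` that now comes back as `{"total": 40, ...}`, this reports `$.total: 42 != 40`. A CI step could surface that message on the pull request.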

Product Usage Case

· Imagine a scenario where your e-commerce API has a checkout process. Tusk Drift can record live checkout attempts, including all the API calls to inventory, payment processing, and user management services. It then automatically creates tests for these interactions. If a change in your code accidentally breaks the inventory lookup during checkout, Tusk Drift will catch it during your CI pipeline, preventing a production outage where customers can't complete purchases.

· Consider a microservice architecture where your user service interacts with several other services. Manually mocking all these dependencies for unit or integration tests is tedious. Tusk Drift can record the actual responses from these services during normal operation. When you deploy a new version of your user service, Tusk Drift can replay the recorded traffic to check that your service still behaves as it did against the recorded dependency responses. This catches integration regressions on your side. Dependency responses come from recordings, so changes in the dependencies themselves show up only after fresh traces are recorded.

· When refactoring a complex API endpoint, developers often worry about introducing regressions. Tusk Drift can record the traffic hitting the original endpoint and then generate tests based on that traffic for the refactored version. If the refactored code produces different results for any of the recorded scenarios, Tusk Drift will highlight the deviation, ensuring the refactoring didn't break existing functionality and providing confidence in the changes.

4

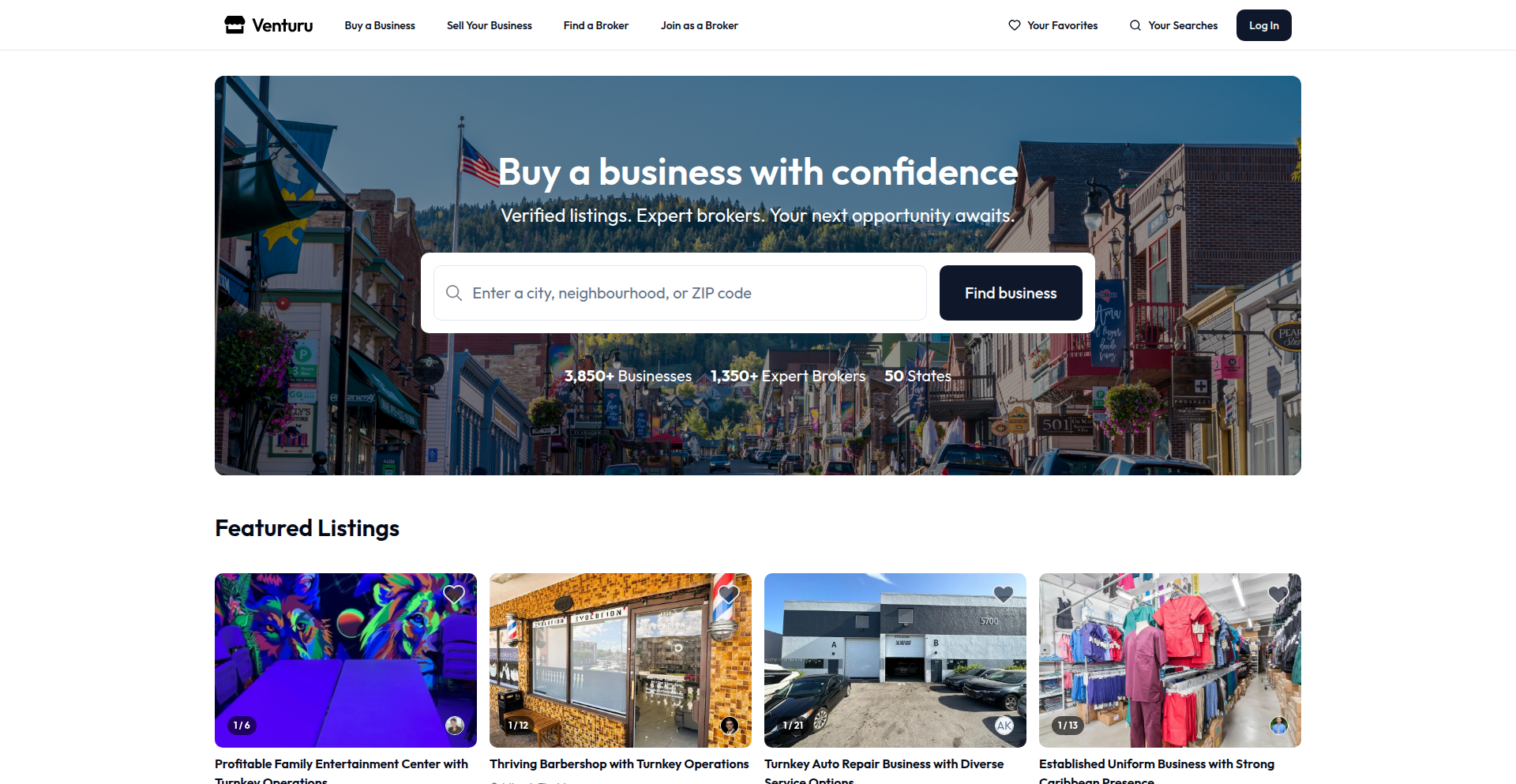

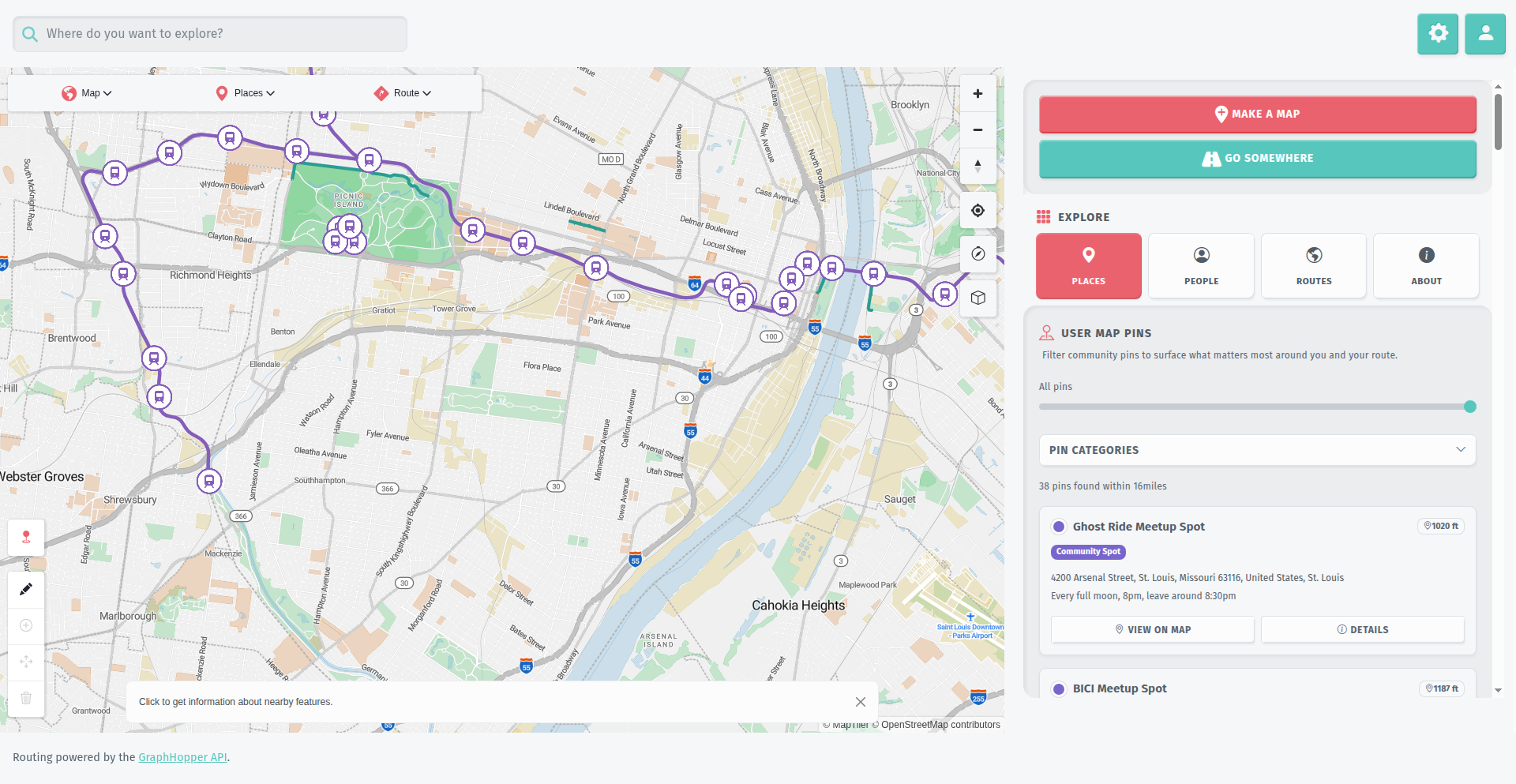

LocalBiz Navigator

Author

lifenautjoe

Description

This project is akin to Zillow, but for the local business market. It addresses the fragmentation and opacity of buying and selling small businesses by providing a single, open, and free platform. The core innovation lies in democratizing access to business valuations and listings, removing the prohibitive upfront fees and outdated gatekeeping prevalent in the current system.

Popularity

Points 29

Comments 34

What is this product?

LocalBiz Navigator is a digital marketplace for buying and selling local businesses. Traditionally, this market is highly fragmented, reliant on gatekeepers, and burdened by substantial fees for valuations and listings. The project tackles these issues with instant, free business valuations, so owners can understand what their business is worth without upfront costs. It also offers free listings, which make businesses discoverable to a wider pool of potential buyers. The underlying technology aggregates this information and presents it transparently. In effect, it creates a 'Zillow' for small businesses and opens up a market that was previously hard to see into.

How to use it?

For business owners looking to sell, LocalBiz Navigator offers a straightforward way to get a preliminary valuation of their business in moments, without paying any fees. They can then choose to list their business on the platform for free, reaching a broad audience of interested buyers. For potential buyers, it provides a centralized place to discover a wide range of local businesses for sale across diverse industries and locations. Brokers can also leverage the platform to list their clients' businesses more efficiently and connect with motivated buyers. Integration is typically through a web interface, with potential for future API access for more advanced users to integrate with their own systems.

Product Core Function

· Free Instant Business Valuation: Provides business owners with immediate, no-cost estimates of their business's worth using data-driven methodologies. This empowers owners with crucial information for decision-making without financial barriers.

· Open Business Listing Marketplace: Allows any business owner or broker to list a business for sale completely free of charge. This drastically increases the discoverability of businesses and opens up opportunities for more transactions.

· Centralized Discovery Platform: Aggregates business listings from across the country into a single, searchable database, making it easy for buyers to find opportunities and for sellers to gain exposure.

· Transparency in Valuation and Listings: Aims to break down traditional gatekeeping by making valuation data and business listings readily available, fostering a more informed and efficient market.

· Broker and Buyer Connection: Facilitates direct connections between business owners, potential buyers, and brokers, streamlining the communication and negotiation process.
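The source does not say how LocalBiz Navigator computes its instant valuations. One widely used rule of thumb for small businesses is a multiple of Seller's Discretionary Earnings (SDE). SDE is net profit plus the owner's salary plus discretionary or one-time add-backs. The sketch below shows that arithmetic only. The multiples are inputs, and the values in the example are hypothetical, not industry data.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    net_profit: float
    owner_salary: float
    add_backs: float  # e.g. one-time or discretionary expenses, interest, depreciation


def sde(f: Financials) -> float:
    """Seller's Discretionary Earnings: profit plus what a new owner could reclaim."""
    return f.net_profit + f.owner_salary + f.add_backs


def valuation_range(f: Financials, low_multiple: float, high_multiple: float) -> tuple[float, float]:
    # Real multiples vary by industry, size, and region; they are caller-supplied here.
    if not 0 < low_multiple <= high_multiple:
        raise ValueError("expected 0 < low_multiple <= high_multiple")
    earnings = sde(f)
    return (earnings * low_multiple, earnings * high_multiple)
```

For the bakery example below, suppose net profit is 60,000, owner salary is 50,000, and add-backs are 10,000. That gives an SDE of 120,000. With hypothetical multiples of 2.0 and 3.0, the range is 240,000 to 360,000.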

Product Usage Case

· A small bakery owner wants to understand their business's market value before considering retirement. They use LocalBiz Navigator to get a free, instant valuation, which guides their financial planning and helps them decide on a realistic asking price, avoiding costly appraisal fees.

· An aspiring entrepreneur is looking to buy a local coffee shop. Instead of relying on word-of-mouth or expensive brokerages, they use LocalBiz Navigator to browse numerous coffee shops listed across different states, comparing opportunities and identifying potential acquisitions that fit their budget and criteria.

· A business broker has several clients looking to sell their businesses but faces challenges with high listing fees on traditional platforms. They list these businesses on LocalBiz Navigator for free, significantly expanding their reach and attracting more qualified buyers, ultimately leading to faster sales for their clients.

5

Data Weaver AI

Author

chenglong-hn

Description

Data Weaver AI is an interactive platform that uses AI agents to help you explore datasets, generate visualizations, and uncover insights. It bridges the gap between automated analysis and hands-on control, allowing users to collaborate with AI for data discovery through a user-friendly interface and natural language commands.

Popularity

Points 32

Comments 9

What is this product?

Data Weaver AI is a data analysis tool that integrates AI agents with an intuitive user interface. Its core innovation is an 'interactive agent mode' that lets users guide AI-driven exploration rather than relying solely on high-level prompts. The system organizes exploration steps as 'data threads.' Users can revisit, modify, and steer the analysis through a combination of UI interactions and natural language instructions. This balances the AI's automation with the user's need for control and understanding, making complex data exploration accessible and manageable. It also explains the AI-generated code and makes report composition easy.

How to use it?

Developers can use Data Weaver AI by importing their datasets, which can be in various formats like screenshots of web tables, Excel files, text excerpts, CSVs, or even data from databases. Once loaded, users can interact with the AI agents using natural language prompts through a graphical user interface. They can choose between an automated agent mode or a more controlled interactive mode. The 'data threads' feature allows them to track and manage the exploration process, deciding at each step how to proceed, revise, or refine the analysis. The generated visualizations and insights can be easily compiled into reports for sharing.
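The 'data threads' described above behave like a tree of exploration steps. You can go back to any earlier step and branch off in a new direction, and each branch remembers how it got there. This is a hypothetical sketch of such a structure. `Step`, `follow_up`, and `thread` are illustrative names, not Data Weaver AI's data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    """One exploration step: the instruction given and the chart it produced."""
    instruction: str
    chart: str
    # repr/compare disabled on the back-pointer to avoid parent/child cycles.
    parent: Optional["Step"] = field(default=None, repr=False, compare=False)
    children: list["Step"] = field(default_factory=list)

    def follow_up(self, instruction: str, chart: str) -> "Step":
        # Calling follow_up twice on the same step creates two branches.
        child = Step(instruction, chart, parent=self)
        self.children.append(child)
        return child

    def thread(self) -> list[str]:
        # Walk back to the root to recover the path that led to this step.
        steps, node = [], self
        while node is not None:
            steps.append(node.instruction)
            node = node.parent
        return list(reversed(steps))
```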

Product Core Function

· Flexible data import: Allows users to load data from diverse sources such as screenshots, spreadsheets, text chunks, CSV files, and databases, simplifying the initial data preparation process for immediate exploration.

· Interactive agent mode: Empowers users to actively direct AI agents during data analysis, providing fine-grained control over exploration paths and AI suggestions, thus ensuring relevance and accuracy.

· Data thread organization: Structures exploration history into manageable threads, enabling users to easily navigate, revisit, and branch off from previous analysis steps, fostering a non-linear and adaptable discovery workflow.

· UI + Natural Language interaction: Combines a visual interface with natural language commands, making complex data manipulation and querying accessible to users with varying technical backgrounds and facilitating intuitive user experience.

· Concept explanation for AI code: Presents the underlying logic or 'concept' behind AI-generated code, helping users understand how insights are derived and building trust in the analytical outcomes.

· Easy report composition: Facilitates the creation of shareable reports by enabling users to directly incorporate generated visualizations and insights, streamlining the communication of findings.

Product Usage Case

· A marketing analyst can upload a customer survey CSV file and use natural language to ask the AI to identify key demographic segments and their purchasing habits, with the AI generating visualizations of the findings, all organized within data threads for easy review and refinement.

· A researcher working with a complex, unnormalized Excel table can import it into Data Weaver AI, use the interactive mode to guide the AI in cleaning and transforming the data, and then ask for specific trend analyses, with the AI explaining the code it used for each step, enabling transparent and reproducible research.

· A product manager can take a screenshot of a web table containing user feedback, have Data Weaver AI extract and structure the data, and then use agent mode to summarize sentiment and identify common feature requests, immediately composing a summary report with the generated charts for stakeholder review.

· A data scientist can experiment with different visualization types for a large dataset. They can use both agent and interactive modes, easily switching between them to explore various angles and quickly generate and compare different chart options, speeding up the insight discovery phase.

6

Creavi Macropad: Wirelessly Smart Macro Keys with a Display

Author

cmpx

Description

The Creavi Macropad is a compact, wireless macropad with an integrated display and a battery life of at least one month. It addresses cluttered desks and complex shortcut management with customizable macro keys. What sets it apart is a browser-based tool for updating macros in real time and Over-the-Air (OTA) updates via Bluetooth Low Energy (BLE), which make it easy to reconfigure. It's a testament to the hacker spirit of figuring out hardware, software, and design to create a functional, elegant solution.

Popularity

Points 27

Comments 7

What is this product?

This is a wireless, low-profile macro keypad with a built-in screen. Think of it as a specialized keyboard that lets you assign complex actions or shortcuts to single button presses. What makes it innovative is that you can update these shortcuts directly from your web browser, even over a wireless Bluetooth connection, without needing to plug it into your computer to reprogram it. This means you can easily change what each button does on the fly, making it super flexible for different tasks. It's built by software engineers who learned hardware along the way, proving you can create sophisticated tools with determination and code.

How to use it?

Developers can use the Creavi Macropad to streamline their workflow. For instance, you can assign common code snippets, build commands, or Git operations to specific keys. The browser-based tool allows for instant customization. You can select a key on a virtual representation of the macropad in your browser, type in the desired command or macro, and push it to the device wirelessly. This is particularly useful for rapidly switching between different project needs or for team members who might have slightly different workflows. Integration is straightforward via Bluetooth, acting as a peripheral input device.
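Pushing a macro layout "to the device wirelessly" means fitting it into small BLE writes. With the default ATT MTU of 23 bytes, a single ATT write request carries at most 20 bytes of data. The source does not describe Creavi's wire format, so everything else here is an assumption: the JSON profile, the 1-byte sequence header, and the function names. It is a sketch of the chunking technique, not Creavi's protocol. In the browser this would go through Web Bluetooth. Python is used here only to keep examples in one language.

```python
import json

ATT_MTU = 23             # BLE default ATT MTU before any MTU exchange
MAX_WRITE = ATT_MTU - 3  # 20 bytes of value per ATT write request
PAYLOAD = MAX_WRITE - 1  # 1 byte reserved for a sequence number (assumed format)


def encode_profile(keys: dict[int, str]) -> bytes:
    """Serialize a hypothetical key-number -> macro mapping as compact JSON."""
    return json.dumps({str(k): v for k, v in sorted(keys.items())},
                      separators=(",", ":")).encode("utf-8")


def to_packets(data: bytes) -> list[bytes]:
    # Prefix each chunk with its index so the device can detect gaps.
    if len(data) > PAYLOAD * 256:
        raise ValueError("profile too large for a 1-byte sequence number")
    return [bytes([i]) + data[off:off + PAYLOAD]
            for i, off in enumerate(range(0, len(data), PAYLOAD))]


def reassemble(packets: list[bytes]) -> dict[int, str]:
    # Device side: verify ordering, strip headers, and decode the profile.
    for expected, pkt in enumerate(packets):
        if pkt[0] != expected:
            raise ValueError(f"missing or out-of-order packet {expected}")
    raw = b"".join(pkt[1:] for pkt in packets)
    return {int(k): v for k, v in json.loads(raw).items()}
```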

Product Core Function

· Real-time Macro Customization: Allows users to change button functions instantly via a web browser, significantly reducing setup time and increasing workflow adaptability for developers who frequently switch tasks.

· Over-the-Air (OTA) Updates via BLE: Enables firmware and macro updates wirelessly using Bluetooth Low Energy, eliminating the need for physical connections and simplifying maintenance, making it always up-to-date and functional.

· Integrated Display: Provides visual feedback on button functions or status, offering context-aware shortcuts that improve usability and reduce cognitive load during complex development tasks.

· Long Battery Life (1+ Month): Ensures continuous operation without frequent charging, minimizing interruptions and providing a reliable tool for extended coding sessions.

· Low-Profile, Wireless Design: Creates a clean and organized workspace by removing cable clutter and offering portability, enhancing the overall developer environment and comfort.

Product Usage Case

· A developer working on multiple projects can assign different sets of Git commands (e.g., `git add`, `git commit`, `git push`) to the same keys, switching the entire set with a single browser update, thus speeding up version control operations.

· A front-end developer can program keys to insert common HTML/CSS snippets or trigger build commands for frameworks like React or Vue, drastically reducing repetitive typing and boilerplate code entry.

· A game developer can create custom macros for in-game actions or development tools, with the display showing which macro set is currently active, improving control and reducing errors during demanding gameplay or debugging.

· A user testing a new application can quickly assign shortcut keys to test actions or data entry fields, easily updating them as the testing scenarios evolve, thereby accelerating the testing process and feedback loop.

7

Linnix: Kernel-Aware Predictive Observability

Author

parth21shah

Description

Linnix is an experimental observability tool that leverages eBPF to monitor Linux systems at the kernel level. Unlike traditional monitoring that alerts you after a problem escalates, Linnix uses a local LLM to detect anomalous patterns in system behavior, predicting potential failures like memory leaks before they cause outages. This offers proactive insights for developers and system administrators.

Popularity

Points 21

Comments 6

What is this product?

Linnix is a novel approach to system monitoring that utilizes eBPF (extended Berkeley Packet Filter) to tap directly into the Linux kernel. This allows it to gather precise, low-overhead data about system processes and resource usage. The innovation lies in its use of a local, lightweight Large Language Model (LLM) to analyze these kernel-level insights. Instead of just reporting current metrics, the LLM identifies unusual patterns in process behavior, such as subtle memory allocation anomalies that might precede a critical memory leak. This predictive capability aims to alert users to impending issues before they become critical failures, offering a significant advantage over reactive monitoring systems. Think of it as having a system that can 'feel' when something is going wrong at a fundamental level, rather than just 'seeing' it after it's too late.
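Linnix uses a local LLM to spot anomalous patterns. The sketch below swaps in a much simpler statistical technique to show what "catching a memory leak before it causes an outage" can mean in numbers. It fits a least-squares line to resident-memory samples and flags sustained growth. The threshold and minimum sample count are illustrative defaults, not values from Linnix. Gathering the samples (via eBPF or otherwise) is out of scope here.

```python
from statistics import mean


def leak_suspected(rss_mb: list[float], interval_s: float,
                   min_growth_mb_per_h: float = 50.0, min_samples: int = 6) -> bool:
    """Flag a process whose resident memory grows steadily over time.

    `rss_mb` holds evenly spaced samples taken every `interval_s` seconds.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    n = len(rss_mb)
    if n < min_samples:
        return False  # too little history to judge a trend
    xs = [i * interval_s for i in range(n)]
    x_bar, y_bar = mean(xs), mean(rss_mb)
    # Ordinary least-squares slope in MB per second.
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, rss_mb))
    den = sum((x - x_bar) ** 2 for x in xs)
    slope_mb_per_s = num / den
    return slope_mb_per_s * 3600 >= min_growth_mb_per_h
```

A process growing 2 MB every minute trends at about 120 MB/h and is flagged long before it exhausts memory. A process that merely fluctuates around a steady level is not.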

How to use it?

Developers can integrate Linnix into their existing Linux environments, including Docker and Kubernetes setups. The quickest way to get started is by pulling the pre-built Docker image and running it via Docker Compose. Once running, Linnix continuously monitors the system's kernel activity. It can export its findings to Prometheus, a popular time-series monitoring and alerting system, allowing for seamless integration with existing dashboards and alerting pipelines. This means you can visualize the predictive insights alongside your other system metrics and configure alerts based on Linnix's anomaly detection. The setup is designed to be fast, typically taking around 5 minutes, and all data processing happens locally, ensuring privacy and security.
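The post does not list Linnix's actual Prometheus metric names. As a sketch, assuming a hypothetical gauge called `linnix_anomaly_score` labelled by process, this shows how per-process anomaly scores could be rendered in the Prometheus text exposition format that a scrape endpoint serves:

```python
def render_prometheus(scores: dict[str, float]) -> str:
    """Render per-process anomaly scores in Prometheus text exposition format.

    `linnix_anomaly_score` and the `process` label are hypothetical names,
    not Linnix's documented metrics.
    """
    lines = [
        "# HELP linnix_anomaly_score Anomaly score per process (0 = normal).",
        "# TYPE linnix_anomaly_score gauge",
    ]
    for process, score in sorted(scores.items()):
        # Label values must escape backslash, double quote, and newline (backslash first).
        safe = process.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
        lines.append(f'linnix_anomaly_score{{process="{safe}"}} {score}')
    # The exposition format expects the payload to end with a newline.
    return "\n".join(lines) + "\n"


print(render_prometheus({"worker": 0.91, "postgres": 0.12}), end="")
```

Once a gauge like this is scraped, ordinary Prometheus alerting rules (for example, a threshold on the score) can sit alongside existing dashboards, which is the integration the paragraph describes.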

Product Core Function

· eBPF Kernel-Level Monitoring: Gathers detailed, real-time system data directly from the Linux kernel, providing more accuracy and less overhead than traditional file-based monitoring. This helps you understand exactly what your system is doing at its core.

· LLM-Powered Anomaly Detection: Employs a local LLM to analyze kernel events and identify subtle, predictive patterns of system behavior that might indicate future failures. This shifts monitoring from reactive to proactive, catching issues before they impact users.

· Predictive Failure Alerts: Notifies you of potential problems, such as memory leaks or unusual resource contention, *before* they cause system instability or downtime. This allows for timely intervention and prevention of cascading failures.

· Container Observability: Specifically designed to monitor Docker and Kubernetes environments, providing insights into containerized applications. This is crucial for modern microservice architectures.

· Prometheus Integration: Exports monitoring data to Prometheus, enabling unified dashboards and alert configuration with existing infrastructure. This makes it easy to incorporate Linnix's predictive capabilities into your current monitoring stack.

· Local Data Processing: All analysis and data handling occur on the local machine, ensuring data privacy and security. Your sensitive system behavior data never leaves your environment.

Product Usage Case

· Preventing unexpected application crashes due to memory leaks: A critical service shows a slight but consistent increase in memory allocation. Linnix's LLM flags this pattern as anomalous and triggers an alert before the leak consumes all available RAM and crashes the process, sparing the application from downtime.

· Identifying resource contention in Kubernetes clusters: A system administrator observes that certain pods are experiencing intermittent performance degradations. Linnix, monitoring the underlying nodes, detects unusual CPU scheduling patterns caused by contention, allowing the administrator to proactively reallocate resources or optimize pod configurations before users report slow response times.

· Early detection of misbehaving background processes: A developer is running a new background task that unexpectedly starts consuming more CPU and memory than anticipated. Linnix detects the deviation from normal process behavior and alerts the developer, who can then investigate and fix the issue before it impacts other system services or leads to an outage.

· Proactive identification of potential disk I/O bottlenecks: A database administrator notices a gradual increase in disk read operations that doesn't correlate with direct user queries. Linnix's kernel-level monitoring picks up on abnormal I/O patterns that suggest an underlying issue, such as a faulty disk or an inefficient background indexing job, allowing for preemptive maintenance before performance degrades significantly.
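The post credits a local LLM with spotting patterns like the memory leak above and does not describe its method. For intuition only, here is a much simpler, swapped-in technique: fit a least-squares slope to recent memory samples and flag a sustained upward trend. The 10-second sample interval and the 1 MB/min threshold are hypothetical defaults.

```python
from statistics import mean


def leak_slope(samples_mb: list[float], interval_s: float = 10.0) -> float:
    """Least-squares slope of memory usage, in MB per second."""
    n = len(samples_mb)
    if n < 2:
        return 0.0
    xs = [i * interval_s for i in range(n)]          # sample timestamps
    x_bar, y_bar = mean(xs), mean(samples_mb)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples_mb))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def looks_like_leak(samples_mb, interval_s=10.0, threshold_mb_per_min=1.0) -> bool:
    """Flag a sustained upward trend above a (hypothetical) growth threshold."""
    return leak_slope(samples_mb, interval_s) * 60 >= threshold_mb_per_min


print(looks_like_leak([100, 102, 104, 106, 108, 110]))  # True: 12 MB/min growth
print(looks_like_leak([100, 101, 100, 101, 100, 101]))  # False: noise, no trend
```

A fixed-slope rule like this misses subtler patterns and produces false alarms during legitimate warm-up, which is the gap a learned model is meant to close.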

8

VeriPixel: Photo/Video Provenance Engine

Author

rh-app-dev

Description

VeriPixel tackles the growing challenge of digital media authenticity by enabling every photo and video to prove its own origin and integrity. It combines on-chain hashing with decentralized storage to create an immutable record of where a piece of media came from and whether it has changed. This addresses the critical need to combat misinformation and deepfakes by providing a verifiable chain of custody for digital content.

Popularity

Points 2

Comments 21

What is this product?

VeriPixel is a system designed to give digital photos and videos a built-in, tamper-evident identity. It works by creating a unique digital fingerprint (a cryptographic hash) of your media file. This fingerprint is then permanently recorded on a blockchain, a public, append-only ledger whose past entries are practically impossible to alter. Additionally, the media file itself can be stored in a decentralized way, meaning it is not held in one single place but distributed across many computers, which makes it resistant to censorship or loss. The innovation lies in making media self-verifying: its content is linked directly to an immutable record of its origin and history, so any later alteration can be detected.
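VeriPixel's exact hashing scheme isn't specified. A minimal sketch, assuming SHA-256 over the raw file bytes (a common choice, not confirmed by the post), shows the property the text relies on: flipping a single bit yields an unrelated fingerprint.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a media file's bytes."""
    return hashlib.sha256(data).hexdigest()


def fingerprint_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large videos need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


original = bytes(range(256)) * 4      # stand-in for 1 KiB of image bytes
tampered = bytearray(original)
tampered[100] ^= 0x01                 # flip one bit in one byte

print(fingerprint(original))
print(fingerprint(bytes(tampered)))   # shares no visible structure with the line above
```

Only the 64-character digest needs to go on-chain; the file itself can live in decentralized storage and be re-hashed by anyone who wants to check it.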

How to use it?

Developers can integrate VeriPixel into their applications to automatically generate and store provenance data for uploaded media. This could involve a simple API call during the media upload process. For example, a social media platform could use VeriPixel to tag each uploaded image with its verified origin. A news organization could use it to ensure the authenticity of their visual reporting. For users, this means that when they encounter a photo or video, they can query the system to see its verifiable history, confirming it hasn't been tampered with since its creation. This can be done through a dedicated verification tool or an API endpoint provided by the platform using VeriPixel.

Product Core Function

· On-chain Media Hashing: Creates a unique, unchangeable digital fingerprint for each media file and records it on a blockchain. Even a tiny change to the media produces a completely different fingerprint, so you can trust that the media hasn't been altered since it was first registered.

· Decentralized Media Storage Integration: Provides mechanisms to store media files across distributed networks, enhancing resilience and censorship resistance. This means your photos and videos are safer and less likely to disappear or be removed by a single entity.

· Provenance Querying API: Offers an interface for applications and users to retrieve and verify the origin and modification history of a piece of media. This allows anyone to easily check the authenticity of an image or video, answering 'Is this real and has it been changed?'
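To illustrate why an append-only record makes tampering detectable, here is a toy, in-memory hash chain standing in for a blockchain. It is not VeriPixel's API: `ProvenanceLedger`, `register`, `chain_is_intact`, and `lookup` are hypothetical names. Each entry commits to the previous entry's hash, so editing any past record breaks the chain.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLedger:
    """Toy append-only hash chain; a stand-in for on-chain storage."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def register(self, media_hash: str, source: str) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {"media_hash": media_hash, "source": source,
                "timestamp": time.time(), "prev": prev}
        entry = {**body, "entry_hash": self._entry_hash(body)}
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited entry invalidates the chain."""
        prev = GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "entry_hash"}
            if e["prev"] != prev or self._entry_hash(body) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True

    def lookup(self, media_hash: str):
        """Return the first registration of this fingerprint, or None."""
        return next((e for e in self.entries if e["media_hash"] == media_hash), None)


ledger = ProvenanceLedger()
ledger.register("aa" * 32, "camera-1")
ledger.register("bb" * 32, "newsroom")
print(ledger.chain_is_intact())           # True
ledger.entries[0]["source"] = "forged"    # rewrite history...
print(ledger.chain_is_intact())           # ...and the check fails: False
```

A real deployment would anchor these hashes in a public chain that no single party controls; a Python list only demonstrates the linkage.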

Product Usage Case

· Authenticating news media: A news agency can use VeriPixel to timestamp and hash all submitted photo and video evidence. When publishing, they can provide a link to VeriPixel's verification, giving viewers confidence that the images are genuine and not doctored, thus combating misinformation.

· Securing user-generated content on social platforms: A social media application can automatically apply VeriPixel's provenance to every photo or video uploaded by users. This helps users identify potentially fake or manipulated content, building a more trustworthy online environment for everyone.

· Verifying evidence in legal proceedings: In situations where digital media is used as evidence, VeriPixel can provide a tamper-evident record of the media's existence and state at a specific time, making its authenticity much harder to dispute in court.

· Protecting creative works: Artists and creators can use VeriPixel to establish a clear, verifiable record of their original work, helping to protect against copyright infringement and proving ownership.

9

LexiLearn-Core

Author

trubalca

Description

LexiLearn-Core is a language learning application that leverages spaced repetition to teach the 5,000 most common words in a target language. It addresses the limitations of existing tools by focusing on high-frequency vocabulary, inspired by research on memory and language acquisition.

Popularity

Points 9

Comments 12

What is this product?

LexiLearn-Core is a language learning system built upon the principle of spaced repetition, a scientifically proven method for memorization. Instead of overwhelming learners with vast dictionaries, it strategically presents vocabulary at increasing intervals, optimizing retention. The core innovation lies in its focus on the 5,000 most common words, which research suggests account for a significant portion of everyday communication. This pragmatic approach aims to provide a more efficient and effective path to language fluency, directly addressing the 'why isn't this working?' sentiment often associated with traditional language apps.
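The post doesn't say which scheduling algorithm LexiLearn-Core uses. As a reference point, here is a sketch of a common formulation of SuperMemo's SM-2, a widely used spaced-repetition scheduler swapped in for illustration: each review is graded from 0 to 5, a failed recall resets the card, and successful recalls stretch the interval by a per-card ease factor.

```python
from dataclasses import dataclass


@dataclass
class Card:
    word: str
    repetitions: int = 0     # consecutive successful reviews (n)
    ease: float = 2.5        # easiness factor (EF), SM-2's starting value
    interval_days: int = 0   # days until the next review (I)


def review(card: Card, quality: int) -> Card:
    """Update a card after a review graded 0 (blackout) to 5 (perfect recall)."""
    if not 0 <= quality <= 5:
        raise ValueError("quality must be between 0 and 5")
    if quality >= 3:
        if card.repetitions == 0:
            card.interval_days = 1
        elif card.repetitions == 1:
            card.interval_days = 6
        else:
            card.interval_days = round(card.interval_days * card.ease)
        card.repetitions += 1
    else:
        # Failed recall: relearn the word from the first interval.
        card.repetitions = 0
        card.interval_days = 1
    # Easier recalls raise EF, harder ones lower it; EF never drops below 1.3.
    card.ease += 0.1 - (5 - quality) * (0.08 + (5 - quality) * 0.02)
    card.ease = max(card.ease, 1.3)
    return card


card = Card("casa")
print([review(card, 4).interval_days for _ in range(3)])  # [1, 6, 15]
```

This is what "just before you're about to forget" means in practice: intervals grow geometrically for words you keep recalling and collapse back to one day for words you miss.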

How to use it?

Developers can integrate LexiLearn-Core into their own projects or build standalone applications leveraging its vocabulary and spaced repetition engine. The system is designed to be adaptable, allowing for the addition of new languages and custom word lists. For end-users, it's a straightforward web-based application accessible via a browser, requiring account creation for full functionality. The 'Get Started' option offers immediate exploration of the learning philosophy without account commitment.

Product Core Function

· Spaced Repetition Engine: Implements an algorithm that schedules word reviews at optimal intervals for long-term memory retention. This means you'll see words just before you're about to forget them, making learning incredibly efficient.

· High-Frequency Vocabulary Focus: Curates language modules around the 5,000 most common words, ensuring learners acquire the vocabulary essential for practical communication. This saves you time by teaching you what you'll actually use.

· Multi-Language Support: Designed to accommodate multiple target languages, allowing users to learn Spanish, French, Italian, and potentially others. This provides flexibility for diverse learning needs.

· Progress Tracking: Offers mechanisms to monitor learning progress, enabling users to see their improvement and stay motivated. Knowing how far you've come is a powerful motivator.
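How the 5,000-word lists are built isn't described. One plausible approach, sketched here as an assumption, is to rank word tokens from a large corpus by frequency, keep the top N, and measure how much of the running text that vocabulary covers. `top_words` and `coverage` are hypothetical helpers.

```python
import re
from collections import Counter

# Unicode letters (accents included), optionally joined by one apostrophe.
WORD = re.compile(r"[^\W\d_]+(?:'[^\W\d_]+)?")


def top_words(texts, n=5000):
    """Return the n most frequent lowercase words across all texts."""
    counts = Counter()
    for text in texts:
        counts.update(w.lower() for w in WORD.findall(text))
    return [word for word, _ in counts.most_common(n)]


def coverage(texts, vocab):
    """Fraction of running word tokens in `texts` that fall inside `vocab`."""
    vocab = set(vocab)
    tokens = [w.lower() for t in texts for w in WORD.findall(t)]
    return sum(w in vocab for w in tokens) / len(tokens) if tokens else 0.0


corpus = ["el gato y el perro", "El perro come"]
print(top_words(corpus, n=2))                     # ['el', 'perro']
print(coverage(corpus, top_words(corpus, n=2)))   # 0.625
```

On a real corpus, the coverage figure is what backs the claim that a few thousand high-frequency words account for a large share of everyday text.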

Product Usage Case

· Language learning app development: A developer wants to build a new language learning app that focuses on practical conversation. They can use LexiLearn-Core's engine and curated word lists to quickly create a robust vocabulary learning component, solving the problem of needing to build complex memorization algorithms from scratch.

· Educational tool creation: An educator creating online language courses can integrate LexiLearn-Core to provide students with a scientifically backed vocabulary acquisition tool. This enhances the effectiveness of their curriculum by ensuring students master essential words.

· Personalized learning experience: A user looking for a more effective way to learn a new language can use LexiLearn-Core as a standalone tool. It addresses the frustration of using apps that feel like games rather than effective learning platforms by offering a scientifically grounded approach.

· Cross-cultural communication tools: Businesses or individuals involved in international collaboration can use LexiLearn-Core to quickly acquire the basic vocabulary needed for effective communication, solving the challenge of language barriers in professional settings.

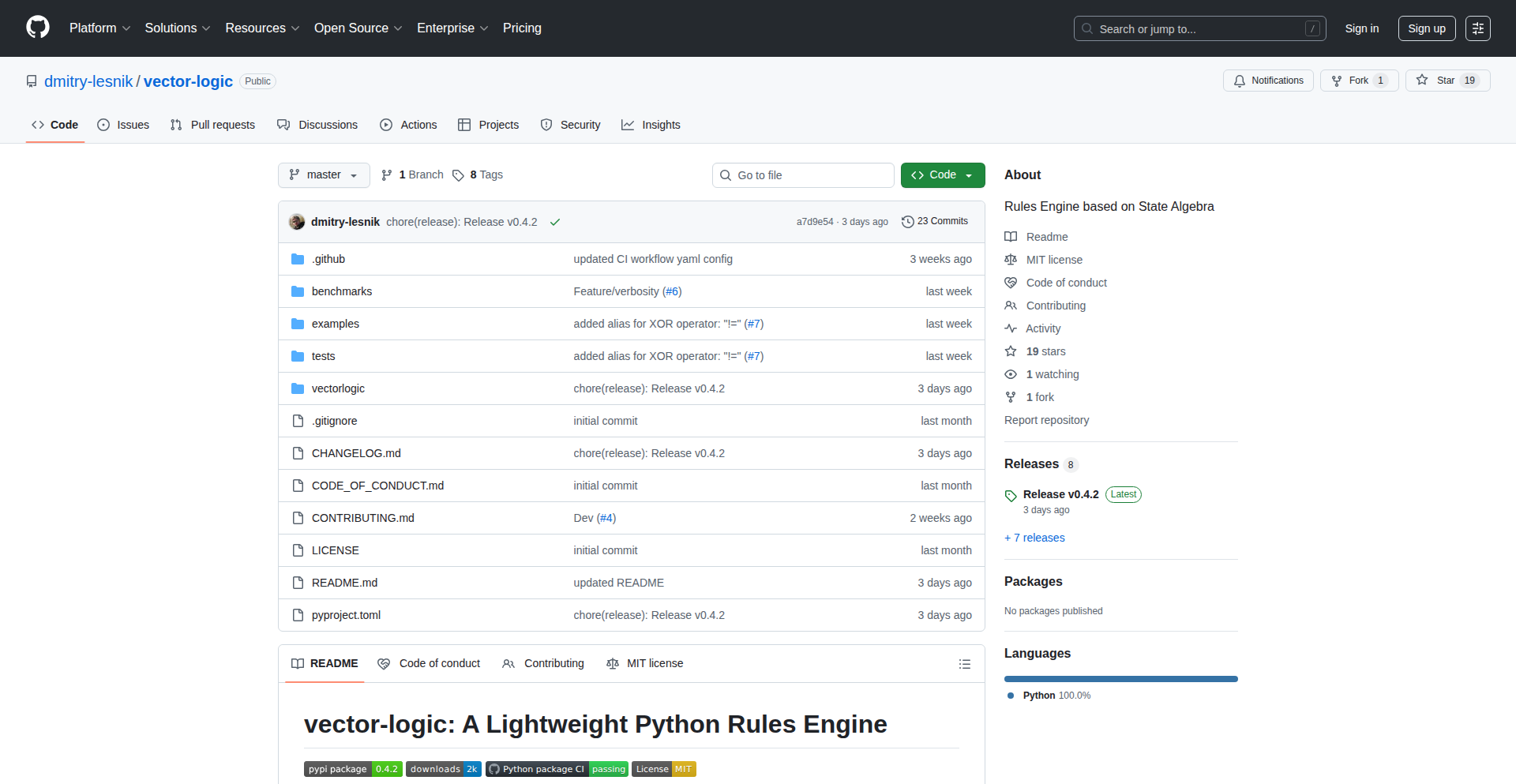

10

Vector-Logic: First-Principles Rule Engine

Author

dmitry_stratyfy

Description

A lightweight rules engine built from scratch to offer a novel approach to declarative rule definition and execution. It prioritizes flexibility and understandability by focusing on a core set of logical operations, making it easier to grasp and extend than complex, framework-heavy alternatives.

Popularity

Points 8

Comments 9

What is this product?

Vector-Logic is a rule engine, which is like a decision-making system for your software. Instead of writing lots of if-then statements directly in your code, you define rules separately. Vector-Logic does this by using a set of basic logical building blocks, like 'AND', 'OR', and conditions that check values. The innovation here is how it constructs these rules and evaluates them efficiently, as if it's building a logic circuit. This means complex decision trees can be represented and processed in a very clear and optimized way, avoiding the 'spaghetti code' of deeply nested conditionals. So, what's the value? It makes your software's decision-making process more organized, easier to update, and less prone to errors, especially when those decisions become complicated.
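Vector-Logic's own rule syntax isn't shown in the post. To make "composing rules from AND, OR, and value checks" concrete, here is a generic sketch with hypothetical `Check`, `AllOf`, `AnyOf`, and `Not` node types. It illustrates a rule tree in general, not Vector-Logic's internals.

```python
import operator
from dataclasses import dataclass
from typing import Any, Callable, Mapping

OPS: dict[str, Callable[[Any, Any], bool]] = {
    "==": operator.eq, "!=": operator.ne, ">": operator.gt,
    ">=": operator.ge, "<": operator.lt, "<=": operator.le,
}


@dataclass(frozen=True)
class Check:
    """Leaf condition: compare one input field against a constant."""
    field: str
    op: str
    value: Any

    def evaluate(self, facts: Mapping[str, Any]) -> bool:
        # A missing field simply makes the condition false.
        return self.field in facts and OPS[self.op](facts[self.field], self.value)


@dataclass(frozen=True)
class AllOf:
    """AND node: true only if every child is true (short-circuits)."""
    parts: tuple

    def evaluate(self, facts: Mapping[str, Any]) -> bool:
        return all(p.evaluate(facts) for p in self.parts)


@dataclass(frozen=True)
class AnyOf:
    """OR node: true if at least one child is true (short-circuits)."""
    parts: tuple

    def evaluate(self, facts: Mapping[str, Any]) -> bool:
        return any(p.evaluate(facts) for p in self.parts)


@dataclass(frozen=True)
class Not:
    part: Any

    def evaluate(self, facts: Mapping[str, Any]) -> bool:
        return not self.part.evaluate(facts)


rule = AllOf((Check("country", "==", "DE"),
              AnyOf((Check("age", ">=", 18), Check("guardian_consent", "==", True)))))
print(rule.evaluate({"country": "DE", "age": 16, "guardian_consent": True}))  # True
print(rule.evaluate({"country": "DE", "age": 16}))                            # False
```

Because the rule is a data structure rather than nested `if` statements, it can be inspected, serialized, or swapped at runtime without touching the code that evaluates it.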

How to use it?

Developers can integrate Vector-Logic by defining their rules in a structured format, often a simple text file or in-memory data structure. These rules represent conditions and the actions to take when those conditions are met. The engine then takes input data, like user profiles or sensor readings, and 'runs' the rules against it. For example, in an e-commerce application, you could define rules for applying discounts based on customer loyalty, purchase history, and current promotions. The engine would then process a customer's order and automatically apply the correct discount. This is done by passing the customer data and order details to the Vector-Logic engine, which evaluates the defined rules and returns the applicable discount or action. This allows for dynamic and complex business logic to be managed outside the core application code, making it faster to iterate on pricing or promotion strategies without redeploying the entire application.
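For the discount scenario, rules can live in plain data (for example, a JSON file) and be evaluated against each order. The rule fields, names, and percentages below are illustrative assumptions, not Vector-Logic's format; this sketch applies the single best matching discount.

```python
import json

RULES_JSON = """
[
  {"name": "vip",          "when": {"loyalty_tier": "gold", "min_total": 100}, "discount_pct": 15},
  {"name": "big_basket",   "when": {"min_total": 200},                         "discount_pct": 10},
  {"name": "repeat_buyer", "when": {"min_orders": 5},                          "discount_pct": 5}
]
"""


def matches(when: dict, order: dict) -> bool:
    """Every condition in `when` must hold (an implicit AND)."""
    checks = {
        "loyalty_tier": lambda v: order.get("loyalty_tier") == v,
        "min_total": lambda v: order.get("total", 0) >= v,
        "min_orders": lambda v: order.get("past_orders", 0) >= v,
    }
    return all(checks[key](value) for key, value in when.items())


def best_discount(order: dict, rules: list) -> tuple:
    """Return (rule name, discount %) of the best matching rule, or (None, 0)."""
    hits = [(r["name"], r["discount_pct"]) for r in rules if matches(r["when"], order)]
    return max(hits, key=lambda h: h[1], default=(None, 0))


rules = json.loads(RULES_JSON)
print(best_discount({"loyalty_tier": "gold", "total": 120, "past_orders": 2}, rules))
# ('vip', 15)
```

Changing a promotion here means editing `RULES_JSON` (or the file it would be loaded from), not redeploying the pricing code, which is the benefit the paragraph describes.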

Product Core Function

· Declarative Rule Definition: Define decision logic separately from application code, making it easier to read, write, and manage complex business rules. Value: Reduces code complexity and improves maintainability.

· First-Principles Logic Evaluation: Efficiently processes rules using fundamental logical operations, ensuring performant execution even with many complex conditions. Value: Ensures quick decision-making in performance-critical applications.

· Extensible Rule Constructs: Allows for the creation of custom rule types and conditions, providing flexibility to model unique business requirements. Value: Adapts to a wide range of specific use cases and evolving business needs.

· Data-Driven Decision Making: Processes input data against defined rules to derive outcomes or trigger actions. Value: Enables dynamic and context-aware behavior in applications.

Product Usage Case

· Dynamic Pricing Engine: In a retail or service application, rules can be defined to adjust prices based on factors like inventory levels, time of day, or customer segment. Vector-Logic can evaluate these rules against real-time sales data to apply dynamic pricing strategies. This solves the problem of hardcoding pricing logic, allowing for rapid adaptation to market conditions.

· Personalized Content Recommendation: For a media or e-commerce platform, rules can determine which content or products to show a user based on their past behavior, preferences, and demographic information. Vector-Logic processes user data and a library of rules to personalize the user experience. This tackles the challenge of delivering relevant recommendations at scale.

· Fraud Detection System: Financial applications can use Vector-Logic to establish rules for identifying potentially fraudulent transactions. By analyzing transaction patterns, amounts, locations, and user history against defined suspicious criteria, the engine can flag or block suspicious activities. This addresses the critical need for real-time fraud prevention.

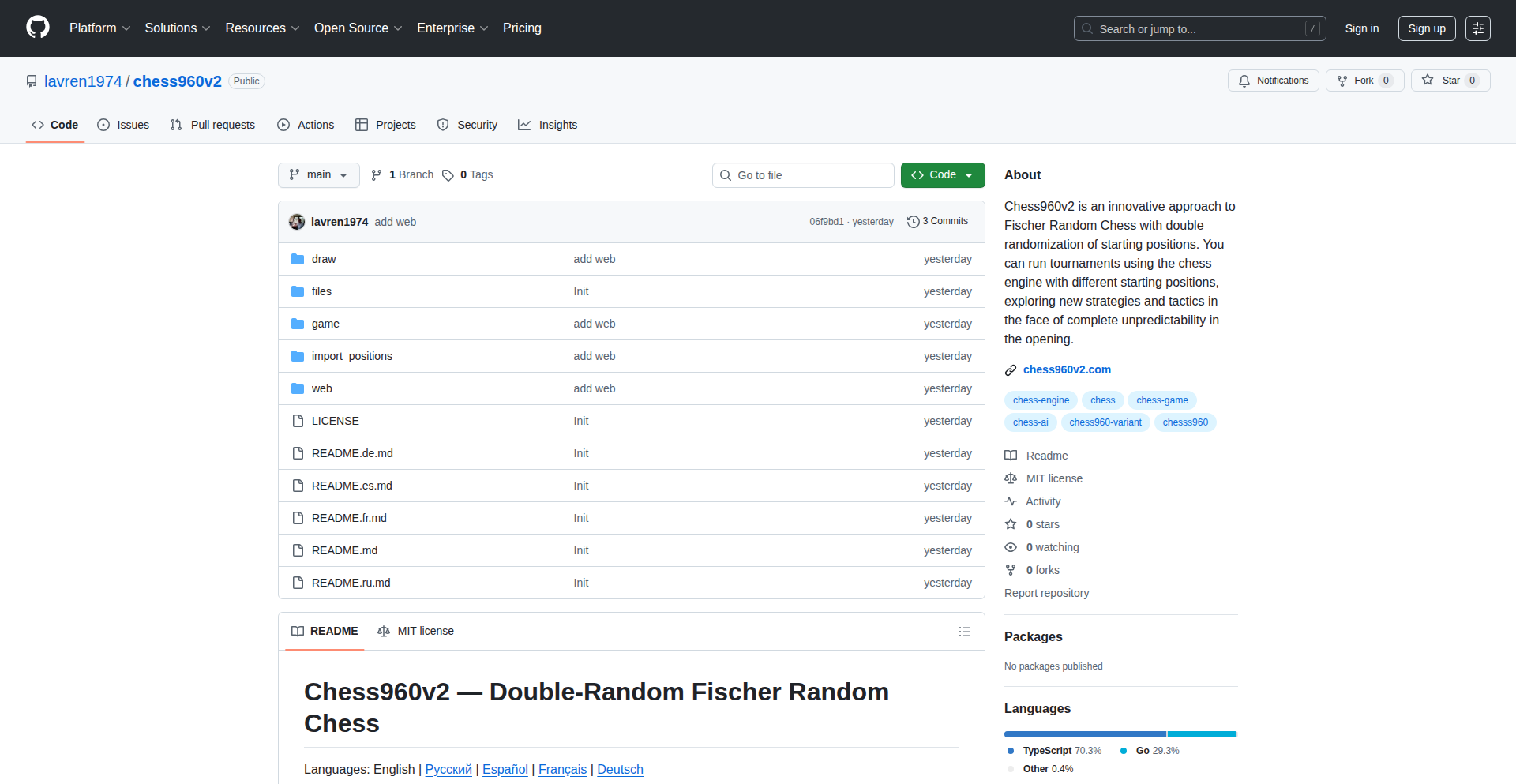

11

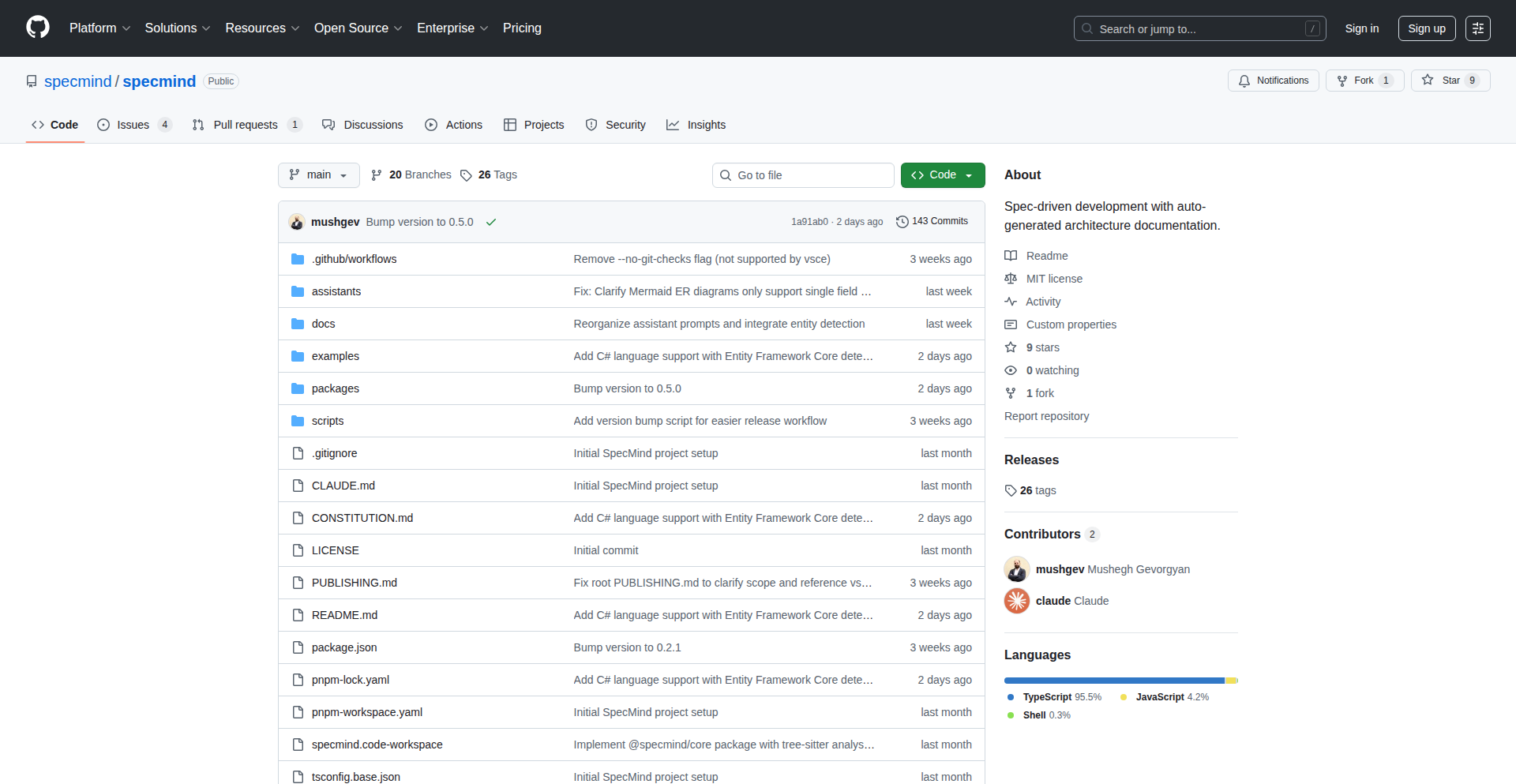

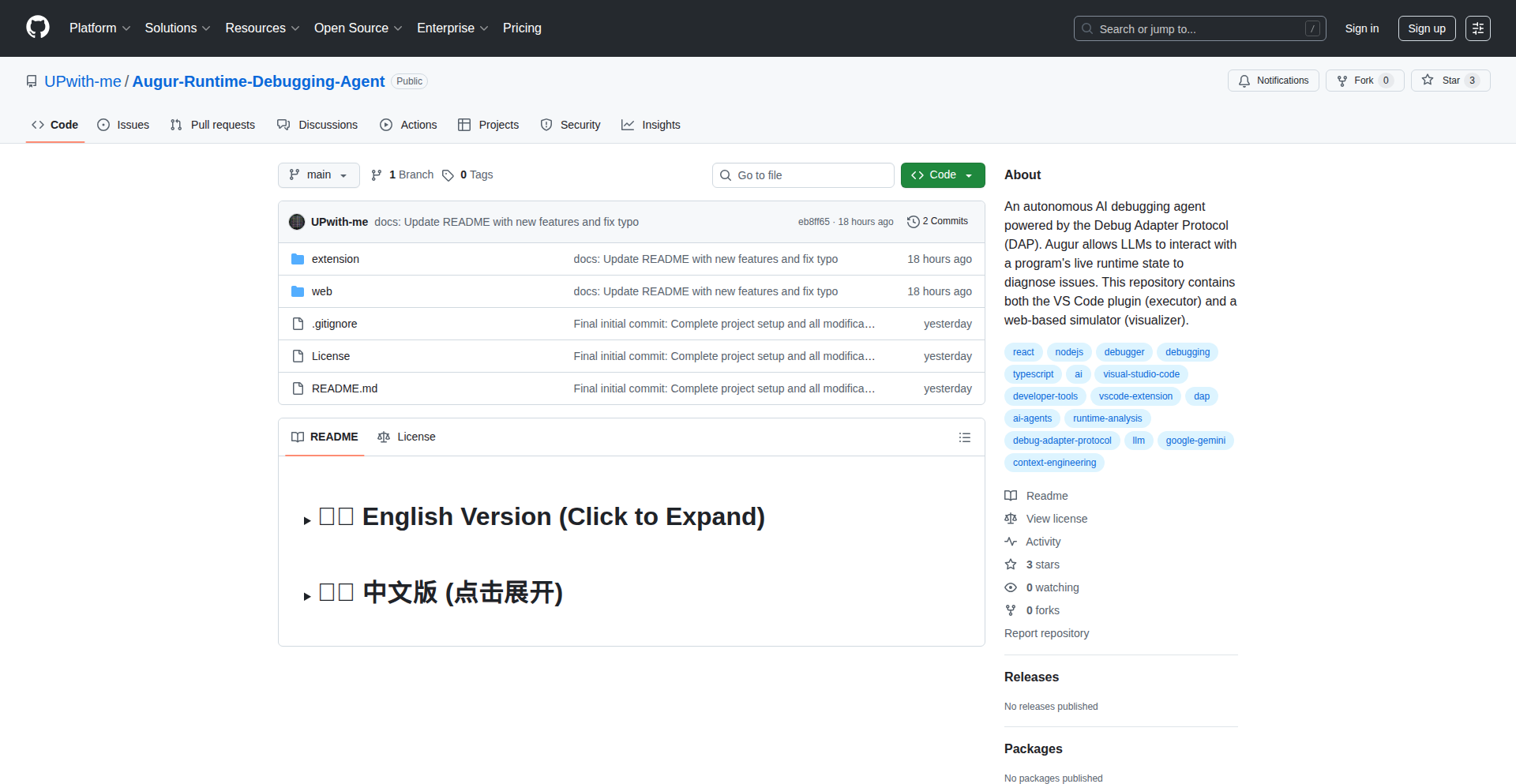

SpecMind-AI: Architecture Drift Defender

Author

mushgev

Description

SpecMind-AI is an open-source developer tool that combats architectural drift in codebases, especially when AI assistants are involved. It ensures that your software's design and its actual implementation stay in sync from the start. By analyzing your code, it generates living architecture specifications, allowing you to design changes and then apply them, keeping everything consistent. This means your project's structure remains robust and understandable, even as development accelerates.

Popularity

Points 8

Comments 7

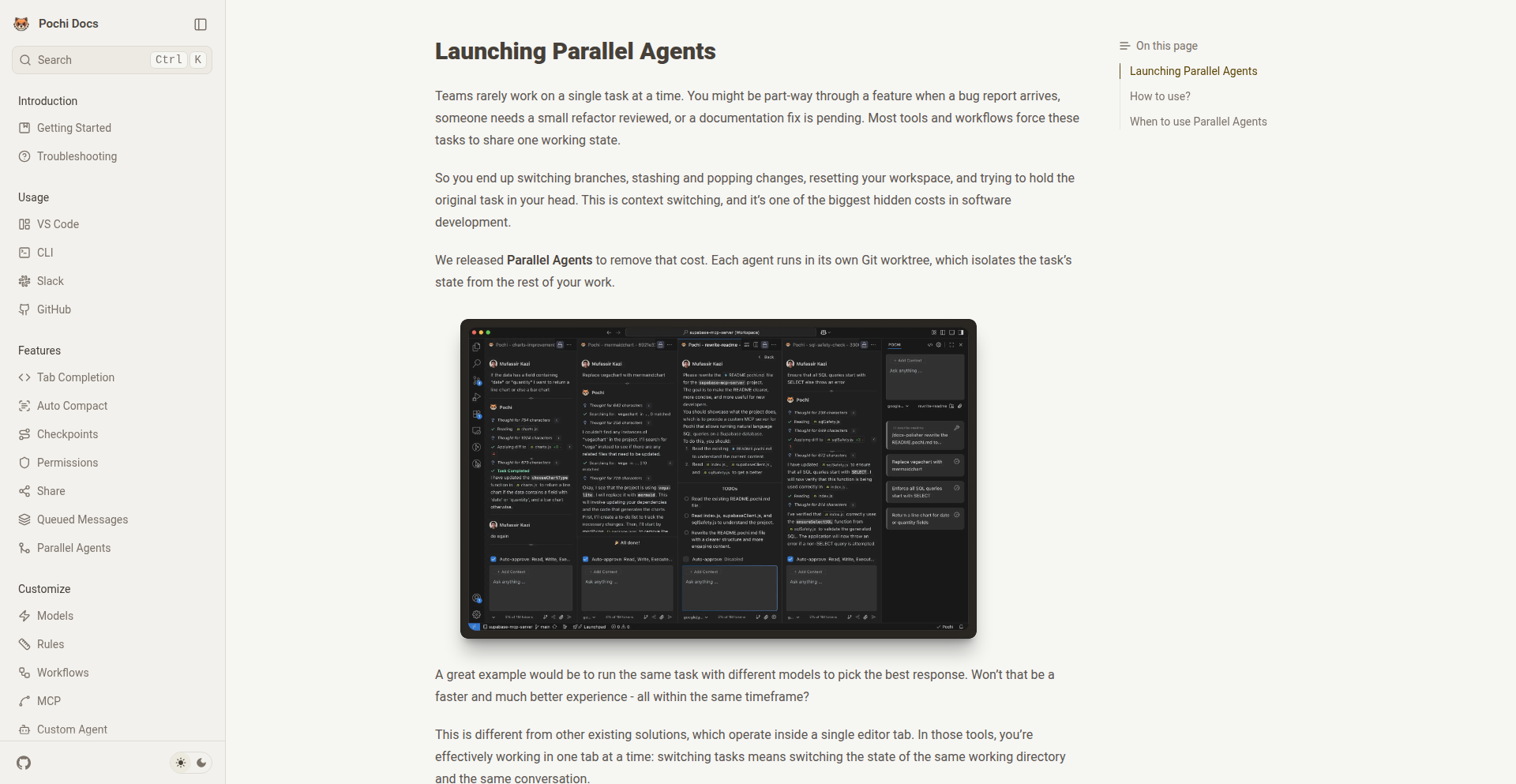

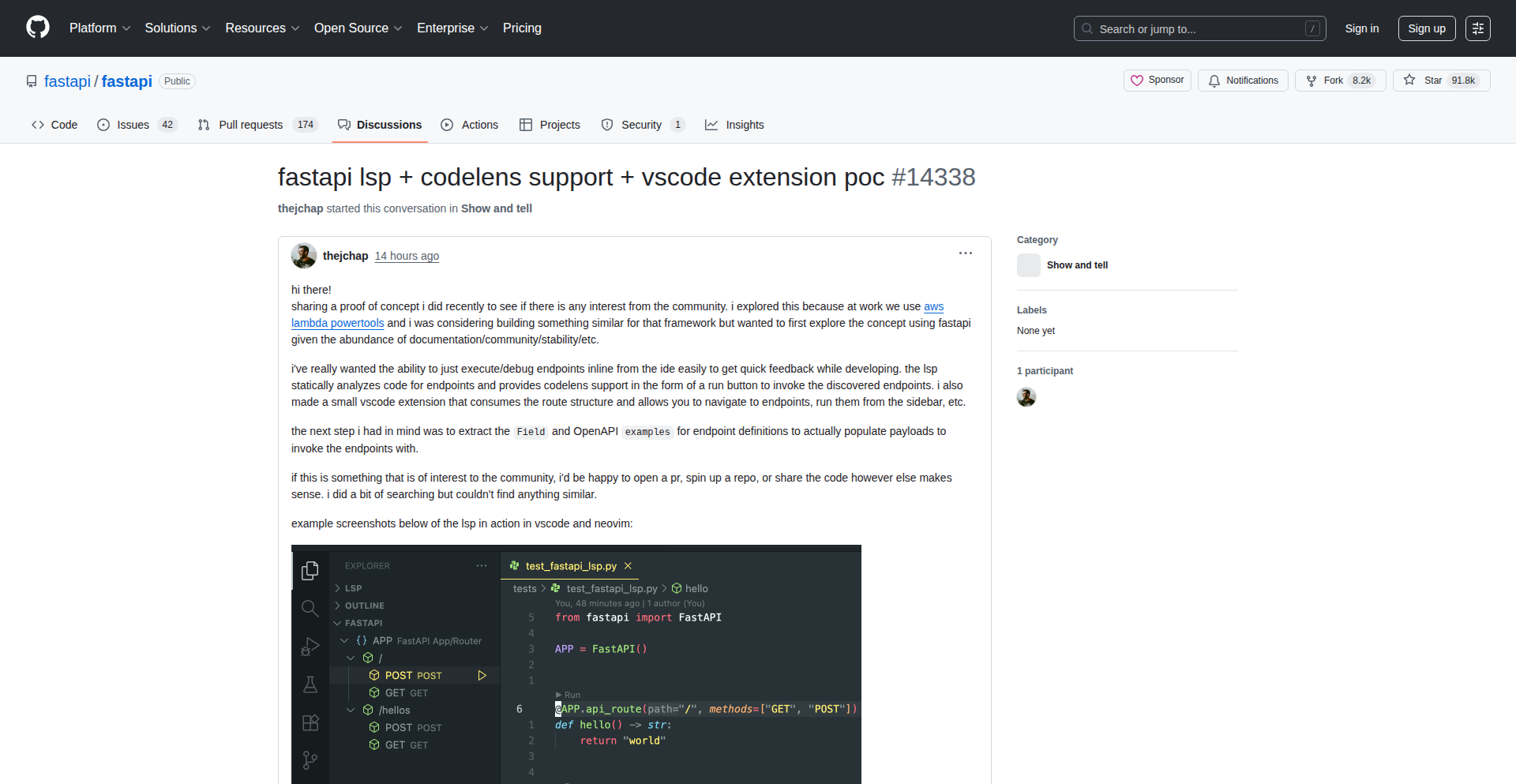

What is this product?

SpecMind-AI is a smart system that acts like a guardian for your software's blueprint. Think of it as a set of blueprints for a building that automatically update themselves as construction happens. When developers or AI tools write code, they might inadvertently change the original design or introduce new, inconsistent patterns. SpecMind-AI scans your existing code (supporting TypeScript, JavaScript, Python, and C# initially) and creates a clear specification of your architecture, including diagrams. You can then define how new features should affect this architecture. SpecMind-AI helps apply these changes and updates the diagrams, ensuring that your code always reflects the intended design. This prevents the chaotic fragmentation that can happen in fast-paced development environments.

How to use it?

Developers can integrate SpecMind-AI into their workflow to maintain architectural integrity. The process typically involves three steps: 1. Analyze: Run SpecMind-AI to scan your codebase and generate a '.specmind/system.sm' file that describes your system's architecture and the relationships between its components, including diagrams. 2. Design: Create a specification file that describes how a new feature or change will affect the existing architecture. 3. Implement: Use SpecMind-AI to apply this specification, which updates your code and the architecture diagrams. A VS Code extension lets you preview these specifications. It can work alongside AI coding assistants like Claude Code and Windsurf, with more integrations planned.
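The post says SpecMind-AI's specs use Markdown and Mermaid but doesn't document its analyzer or the `.sm` format. As a rough illustration of the "Analyze" step only, this sketch uses Python's standard `ast` module to collect internal module-to-module imports and emit a Mermaid flowchart. `import_edges` and `to_mermaid` are hypothetical names, and relative imports are skipped for brevity.

```python
import ast


def import_edges(modules: dict[str, str]) -> set[tuple[str, str]]:
    """Map {module_name: source_code} to (importer, imported) edges within the project."""
    edges = set()
    for name, source in modules.items():
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                targets = [node.module]          # `from . import x` has no module; skipped
            else:
                continue
            for target in targets:
                top = target.split(".")[0]
                if top in modules and top != name:   # keep internal dependencies only
                    edges.add((name, top))
    return edges


def to_mermaid(edges) -> str:
    """Render edges as a top-down Mermaid flowchart."""
    return "\n".join(["graph TD"] + [f"    {src} --> {dst}" for src, dst in sorted(edges)])


project = {
    "api": "import service\nfrom models import User",
    "service": "import models\nimport json",
    "models": "from dataclasses import dataclass",
}
print(to_mermaid(import_edges(project)))
# graph TD
#     api --> models
#     api --> service
#     service --> models
```

Re-running an extraction like this after each change and diffing the output against the committed diagram is one simple way to surface drift between the documented and actual architecture.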

Product Core Function

· Codebase Architecture Analysis: Scans your code to automatically generate visual architecture diagrams and component relationships. This helps you understand the current state of your project and identify potential areas of inconsistency, providing immediate clarity on your system's structure.

· Living Architecture Specification Generation: Creates plain text specification files (using Markdown and Mermaid) stored directly with your code. This allows for version-controlled, human-readable documentation of your architecture, making it easier to collaborate and onboard new team members.

· Spec-Driven Feature Implementation: Enables developers to design how new features will change the system architecture and then apply these specifications. This ensures that architectural decisions are embedded in the development process, preventing manual errors and maintaining consistency.

· Automated Diagram Updates: Automatically updates architecture diagrams based on implemented specifications. This means your visualizations are always current, reflecting the live state of your codebase without manual effort.

· VS Code Integration for Visualization: Provides a VS Code extension to easily preview architecture specifications and diagrams. This allows for seamless integration into the developer's primary coding environment, making architecture review and design intuitive.

Product Usage Case

· Maintaining a consistent microservices architecture: In a project with many interconnected microservices, it's easy for teams to diverge in their implementation patterns. SpecMind-AI can analyze the current communication patterns and dependencies, generate a spec, and ensure new services or updates adhere to the defined architecture, preventing integration issues.

· Onboarding new developers to a complex legacy system: For large, mature codebases, understanding the architecture can be a significant hurdle for newcomers. SpecMind-AI can create an up-to-date, visual representation of the system, acting as an interactive guide that helps new developers grasp the architecture quickly and contribute effectively.

· Collaborating with AI code generation tools: When using AI assistants to write code, architectural drift is a common problem. SpecMind-AI can analyze the AI-generated code, identify deviations from the intended architecture, and provide a clear specification to correct or guide future code generation, ensuring AI-assisted development remains aligned with project goals.

· Refactoring a monolithic application into microservices: As a large application is broken down, SpecMind-AI can help define the boundaries and interfaces between new microservices, track the progress of the refactoring, and ensure that the evolving architecture remains consistent and well-documented throughout the transition.

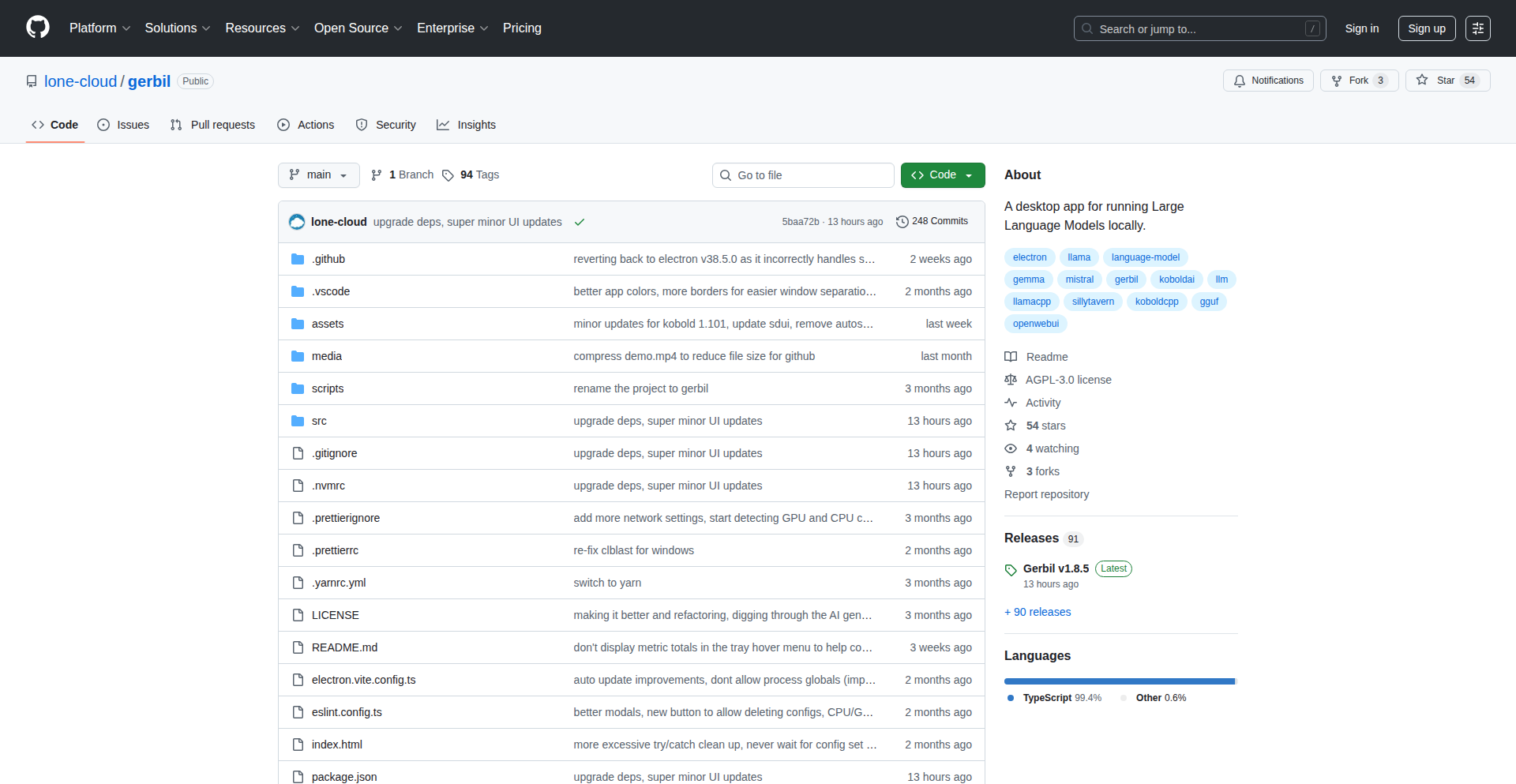

12

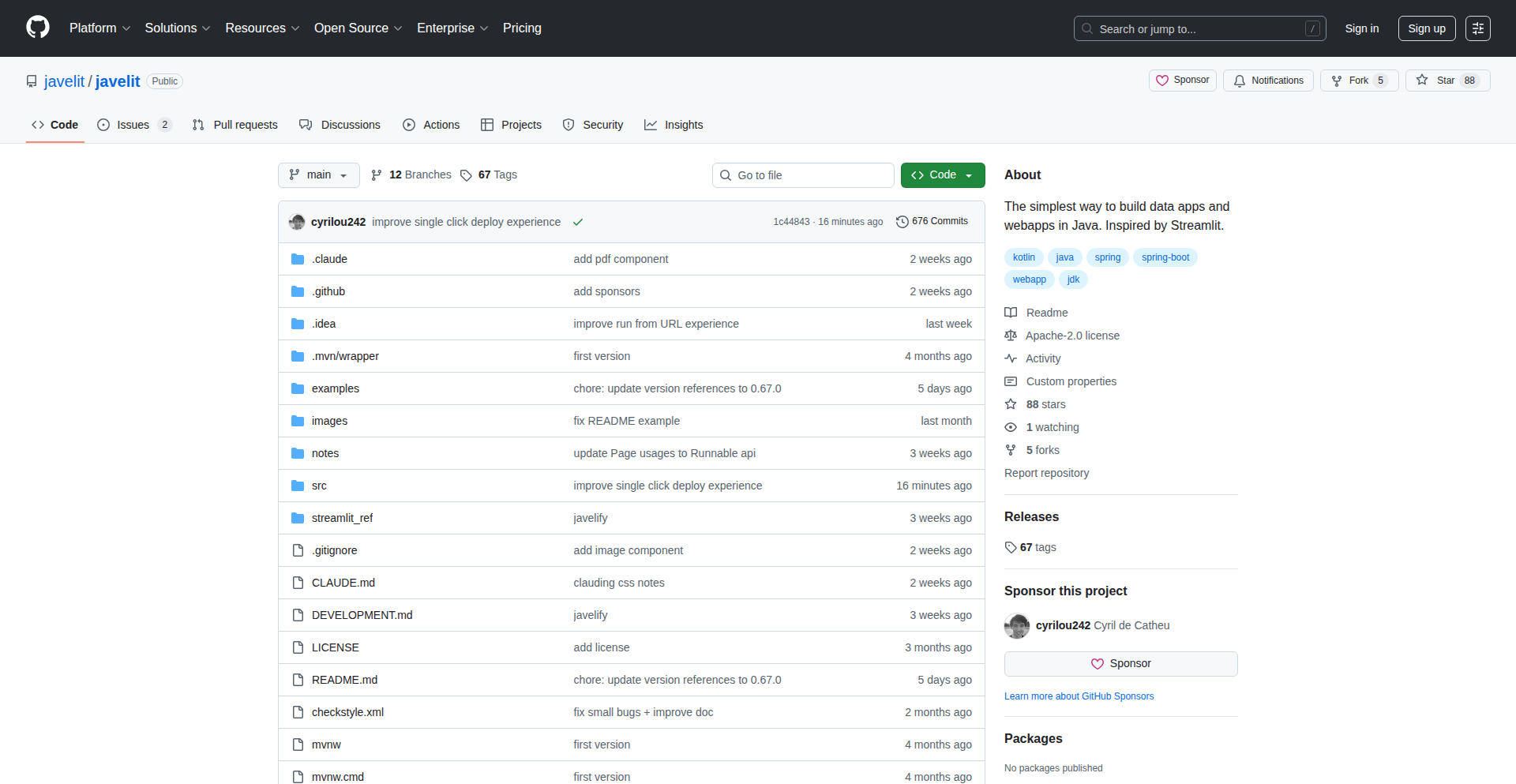

Gerbil-LLM-Hub

Author

lone-cloud

Description

Gerbil-LLM-Hub is an open-source desktop application designed to simplify the local execution and integration of Large Language Models (LLMs) and image generation models. It acts as a unified interface, eliminating the need to manage multiple tools for different LLM backends and frontends, offering a streamlined experience for developers and enthusiasts on Linux, particularly for Wayland users.

Popularity

Points 11

Comments 0

What is this product?

Gerbil-LLM-Hub is a desktop application that centralizes the management and interaction with various local LLM and image generation models. At its core, it leverages the power of llama.cpp (through koboldcpp) to run models locally on your machine. The innovation lies in its ability to seamlessly connect these local model backends with popular modern frontends like Open WebUI, SillyTavern, ComfyUI, and also includes built-in support for StableUI and KoboldAI Lite. Essentially, it's a meta-tool that makes running sophisticated AI models on your own hardware much more accessible and less fragmented.

How to use it?

Developers can use Gerbil-LLM-Hub by downloading and installing the application on their Linux system. Once installed, they can configure it to point to their locally downloaded LLM models (like those compatible with llama.cpp). The application then provides an interface to connect these local models to various frontends, allowing for text generation, role-playing scenarios, or even image generation through compatible models. Integration with existing workflows is facilitated by its compatibility with popular frontends, meaning developers can continue using their preferred tools while Gerbil handles the backend model management.
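Frontends such as Open WebUI can typically be pointed at an OpenAI-compatible URL, and scripts can talk to the same local backend. The sketch below assumes the koboldcpp backend Gerbil launches exposes an OpenAI-compatible chat endpoint at `http://localhost:5001/v1` (5001 is koboldcpp's usual default port; check what Gerbil actually reports). The model name is a placeholder, and `build_chat_request` and `chat` are hypothetical helpers.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5001/v1"  # assumption: adjust to the port Gerbil shows


def build_chat_request(prompt: str, base_url: str = BASE_URL, max_tokens: int = 200):
    """Build (but don't send) an OpenAI-style chat completion request."""
    payload = {
        "model": "local",  # placeholder model name (assumption)
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the request and return the first choice's text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the backend running, `chat("Write a two-line poem about gerbils.")` would return the model's reply; keeping request construction separate from sending makes the payload easy to inspect without a server.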

Product Core Function

· Unified LLM Backend Management: Allows users to run and manage multiple local LLM models (e.g., Llama, Mistral) through a single application. The value is in simplifying setup and reducing the overhead of switching between different model execution environments.

· Frontend Integration Layer: Provides seamless connections to popular AI frontends like Open WebUI and SillyTavern. This means developers can use their favorite interfaces for interacting with locally run models, enhancing productivity and user experience.

· Built-in UI Components: Includes integrated user interfaces for StableUI and KoboldAI Lite. This offers immediate usability for users who prefer these specific frontends without requiring separate installations.

· Local Image Generation Support: Facilitates the use of models for generating images locally. This is valuable for artists, designers, and developers who need to experiment with or integrate AI image generation into their projects without relying on cloud services.

· Wayland Compatibility: Optimized for Linux Wayland environments, ensuring a smooth and visually appealing user experience on modern desktop setups. This addresses a common pain point for users on newer Linux display servers.

Product Usage Case

· A writer wanting to experiment with different LLMs for creative writing without the complexity of command-line interfaces or cloud costs. Gerbil-LLM-Hub allows them to download models and connect to a text-generation frontend like Open WebUI to draft stories and explore AI-assisted writing.

· A game developer building an interactive story game that requires a sophisticated NPC dialogue system. They can use Gerbil-LLM-Hub to run an LLM locally and connect it to a frontend like SillyTavern, enabling dynamic and responsive character conversations within their game development environment.

· A hobbyist interested in AI art who wants to generate images using models like Stable Diffusion locally. Gerbil-LLM-Hub can be configured to run the image generation backend, and they can use the built-in StableUI or connect to ComfyUI for intricate image creation workflows.

· A developer on a Linux system using the Wayland display server who struggles with UI applications not rendering correctly. Gerbil-LLM-Hub's Wayland optimization ensures a native and problem-free experience, allowing them to focus on their AI model experimentation rather than UI issues.

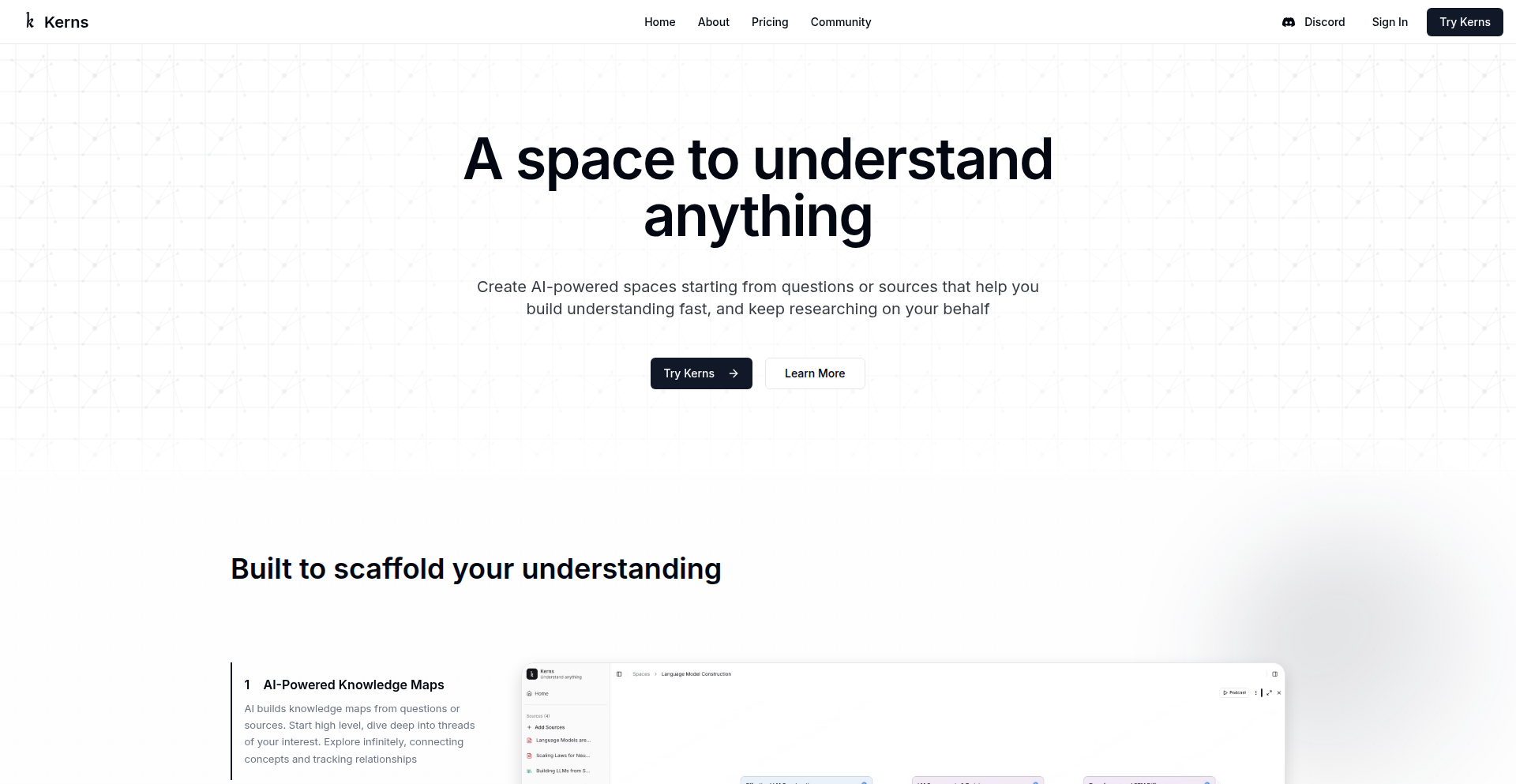

13

Kerns AI Research Nexus

Author

kanodiaayush

Description

Kerns is an AI-powered research environment designed to streamline the process of understanding complex topics. It consolidates research materials, interactive exploration tools, and AI assistance into a single, intuitive platform, significantly reducing the manual effort of context management and tool switching.

Popularity

Points 6

Comments 4

What is this product?

Kerns is an AI research assistant that allows you to input a topic and multiple source documents to conduct comprehensive research within a unified environment. It tackles the common problem of fragmented research by integrating interactive mindmaps for visual exploration, a podcast mode for auditory learning, advanced source readers with both overall and chapter-level summaries, a context-aware chat agent that cites references, and AI-assisted note-taking. The core innovation lies in minimizing manual context engineering and the need to jump between separate chat, note-taking, and reading applications. Essentially, it's like having a super-intelligent research librarian and assistant in one place.

How to use it?

Developers can use Kerns by first seeding a research 'space' with a specific topic and uploading relevant source documents (e.g., research papers, articles, book chapters). They can then interact with the AI through a chat interface to ask questions, explore connections between concepts using mindmaps, listen to summaries in podcast mode, and take notes with AI assistance. For integration, Kerns aims to be a standalone application for individual research workflows, reducing the need for developers to build custom solutions for managing research context across various tools. Think of it as a specialized IDE for research, where all your research artifacts and AI tools are managed cohesively.

Product Core Function

· Interactive Mindmaps for Topic Exploration: This function visually maps out relationships between ideas and concepts within your research materials. Its technical value is in providing an intuitive, non-linear way to discover connections that might be missed in traditional linear reading, aiding in hypothesis generation and understanding complex structures. For developers, this means a more efficient way to brainstorm and organize research findings.

· Podcast Mode for Auditory Learning: Kerns can convert research material into an audio format, akin to a podcast. This offers a significant accessibility and convenience benefit, allowing users to consume information while multitasking or when visual focus is limited. The technical implementation likely involves text-to-speech and intelligent summarization algorithms. The value for developers is the ability to learn on the go, making research less time-bound to a desk.

· Advanced Source Readers with Summaries: The platform provides readers that offer both high-level and chapter-specific summaries of your source documents, presumably generated with NLP-based summarization. The value is in quickly grasping the essence of long documents and navigating efficiently to specific sections, saving considerable reading time. Developers can rapidly assess the relevance of sources.

· Context-Aware Chat Agent with Citations: This AI agent allows for natural language querying of your research materials, with the added benefit of automatically citing its sources. The underlying technology involves advanced language models and information retrieval techniques. Its core value is providing direct answers grounded in your documents, with verifiable references, reducing the risk of misinformation and speeding up fact-checking. Developers can get precise answers and track their origins.

· AI-Assisted Note Taking: Kerns helps in the note-taking process, likely by suggesting key points or summarizing content as you research. This leverages AI to make note-taking more efficient and comprehensive. The value is in capturing crucial information effectively without interrupting the flow of research. Developers can build better knowledge bases for their projects by having smarter, AI-enhanced notes.
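Kerns's retrieval pipeline isn't described. A common pattern behind citation-backed answers is to split sources into chunks, score each chunk against the question, and hand the best chunks to the model together with their locations. The sketch below uses a simple IDF-weighted term overlap as a stand-in for embedding search; `Chunk`, `retrieve`, `cite`, and the stopword list are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass

STOP = {"a", "an", "and", "are", "is", "of", "the", "to", "what", "why", "how"}


@dataclass
class Chunk:
    source: str      # e.g. document title
    location: str    # e.g. chapter, section, or paragraph
    text: str


def terms(text: str) -> set[str]:
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP}


def retrieve(question: str, chunks: list[Chunk], k: int = 2):
    """Rank chunks by the summed IDF weight of question terms they contain."""
    n = len(chunks)
    doc_freq = Counter(t for c in chunks for t in terms(c.text))
    q_terms = terms(question)
    scored = []
    for c in chunks:
        # Smoothed IDF: rarer shared terms count more; always positive.
        score = sum(math.log((n + 1) / (doc_freq[t] + 1)) + 1 for t in q_terms & terms(c.text))
        if score > 0:
            scored.append((score, c))
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return scored[:k]


def cite(results) -> str:
    return "\n".join(f"[{i}] {c.source}, {c.location}" for i, (_, c) in enumerate(results, 1))


chunks = [
    Chunk("Framework docs", "ch. 3", "Performance bottlenecks come from host-to-device memory copies."),
    Chunk("Blog post", "para 4", "Batching requests reduces memory copies and latency."),
    Chunk("Paper", "sec. 1", "We introduce the framework and its history."),
]
results = retrieve("What are the performance bottlenecks of memory copies?", chunks)
print(cite(results))
# [1] Framework docs, ch. 3
# [2] Blog post, para 4
```

The retrieved chunk texts would go into the model's prompt, and the numbered labels let its answer point back to exact locations, which is what makes the citations verifiable.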

Product Usage Case

· A software engineer researching a new machine learning framework can seed Kerns with the framework's documentation, academic papers, and blog posts. They can then use the chat agent to ask 'What are the main performance bottlenecks of this framework and how can they be mitigated?', receiving answers directly from the documents with citations, and exploring related concepts through the mindmap. This solves the problem of sifting through hundreds of pages of documentation and scattered articles to find specific solutions.

· A game developer investigating historical military tactics for a new game can upload historical texts and analyses. They can use the podcast mode to listen to summaries of tactics while commuting, and then use the chat agent to ask 'What were the primary supply chain challenges for the Roman legions during the Punic Wars?', getting concise answers supported by evidence from their uploaded texts. This solves the issue of time constraints and makes absorbing dense historical information more manageable.

· A developer building a complex decentralized application can feed Kerns technical specifications, whitepapers, and forum discussions. They can use the AI-assisted note-taking to quickly capture key architectural decisions and potential security vulnerabilities, while the mindmap helps visualize the interdependencies of different smart contracts. This addresses the challenge of managing intricate system designs and ensuring all critical aspects are documented and understood.

· A researcher investigating emerging trends in quantum computing can upload multiple academic papers and industry reports. They can use the interactive mindmap to identify emergent themes and connections between different research groups' findings, and then ask the chat agent specific questions about a particular quantum algorithm's implementation challenges, receiving summarized answers with direct links to the relevant sections in the papers. This solves the problem of identifying novel research directions and understanding the state-of-the-art efficiently.

14

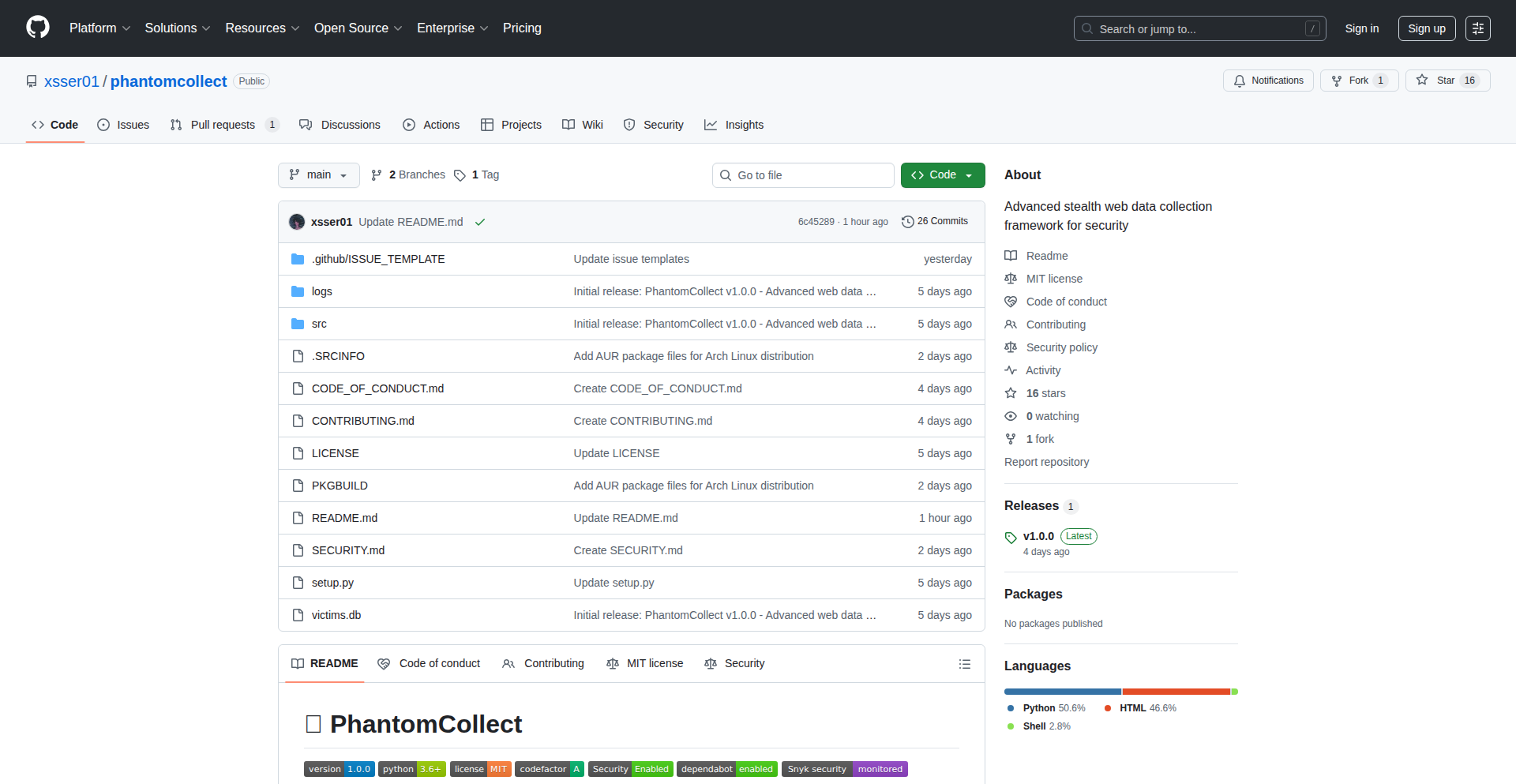

PhantomCollect

Author

xsser01

Description

PhantomCollect is an open-source web data collection framework built in Python. It addresses the challenges of web scraping by offering a robust and flexible solution for extracting data from websites. Its innovation lies in its modular design and asynchronous capabilities, allowing developers to efficiently gather data for various applications like market research, sentiment analysis, and content aggregation. This means you can automate the process of getting information from the web, saving significant manual effort.

Popularity

Points 7

Comments 3

What is this product?

PhantomCollect is a Python framework designed to make web scraping (collecting data from websites) easier and more efficient. It is open source, so anyone can use, modify, and contribute to it. Its core feature is handling many web requests concurrently (asynchronously), which significantly speeds up data collection compared with traditional sequential methods. Rather than downloading pages one by one, it fetches many at once. This is useful for anyone who needs to gather large amounts of online data quickly and reliably.
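The concurrency point can be made concrete with a minimal Python sketch of bounded concurrent fetching using `asyncio`. This is not PhantomCollect's API. `fake_fetch` is a hypothetical placeholder that sleeps instead of making a real HTTP request, and the `max_concurrency` cap is an illustrative assumption.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Hypothetical stand-in for an HTTP GET: it sleeps instead of using the
    network, so the effect of concurrency on wall-clock time is easy to see."""
    await asyncio.sleep(delay)
    return f"<html>content of {url}</html>"


async def collect(urls: list[str], max_concurrency: int = 10) -> list[str]:
    # The semaphore caps how many requests are in flight at once,
    # which keeps a scraper polite toward the target servers.
    sem = asyncio.Semaphore(max_concurrency)

    async def bounded(url: str) -> str:
        async with sem:
            return await fake_fetch(url)

    # gather() runs all coroutines concurrently and returns results in input order.
    return list(await asyncio.gather(*(bounded(u) for u in urls)))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(20)]
    start = time.perf_counter()
    pages = asyncio.run(collect(urls))
    elapsed = time.perf_counter() - start
    # 20 pages at 0.1 s each would take about 2 s sequentially;
    # with 10 in flight they finish in roughly 0.2 s.
    print(f"{len(pages)} pages in {elapsed:.2f} s")
```

In a real scraper, `fake_fetch` would be replaced by a call to an async HTTP client. The semaphore pattern stays the same.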

How to use it?

Developers can integrate PhantomCollect into their Python projects by installing it via pip. They then define the websites to scrape, specify the data they are interested in (using CSS or XPath selectors), and configure how the data should be processed or stored. For example, a developer building a price comparison tool could use PhantomCollect to fetch product prices from multiple e-commerce sites concurrently, rather than building custom scraping infrastructure for each site.
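The selector step can be illustrated with the standard library alone. The sketch below is a simplification and not PhantomCollect's extraction API. `ClassTextExtractor` is a hypothetical helper that matches elements by a single class name, so it covers only the simplest CSS selectors, such as `.price`. A real framework would use a full CSS or XPath engine.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Hypothetical helper: collects the text of every element whose class
    list contains `target_class` (roughly the CSS selector `.target_class`).
    Assumes well-formed markup; text from nested descendants is merged
    into the outer match."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.depth = 0  # > 0 while inside a matching element
        self.results: list[str] = []
        self._buf: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # a child element inside the current match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.depth = 1
            self._buf = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:  # closed the matching element itself
            self.results.append("".join(self._buf).strip())

    def handle_data(self, data):
        if self.depth:
            self._buf.append(data)


if __name__ == "__main__":
    page = (
        '<ul><li><span class="name">Kettle</span> <span class="price">$24.99</span></li>'
        '<li><span class="name">Toaster</span> <span class="price">$39.00</span></li></ul>'
    )
    parser = ClassTextExtractor("price")
    parser.feed(page)
    parser.close()
    print(parser.results)  # ['$24.99', '$39.00']
```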

Product Core Function

· Asynchronous HTTP Requests: Enables making many web requests at the same time, drastically reducing the time it takes to collect data. This is valuable because it allows for faster data acquisition, making your projects that rely on real-time or extensive web data more responsive.

· Flexible Data Extraction: Supports various methods (like CSS selectors and XPath) to precisely target and extract the specific information you need from web pages. This is useful for ensuring you get only the relevant data, avoiding noise and making your analysis cleaner.

· Modular Design: Allows developers to easily extend or customize the framework's functionality. This means you can adapt PhantomCollect to your unique scraping needs without being limited by the default features, leading to more tailored and effective data collection solutions.

· Error Handling and Retries: Built-in mechanisms handle network errors gracefully and retry failed requests. This is valuable because it makes your data collection more reliable, so transient issues don't cost you data.

· Data Storage Options: Provides options to save collected data in various formats (e.g., JSON, CSV). This is useful for making the collected data readily available for analysis or integration with other systems, streamlining your data workflow.
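Retries and export formats are easy to get subtly wrong, so here is a minimal sketch of both using only Python's standard library. The names `TransientError`, `fetch_with_retries` and `make_flaky` are hypothetical. Exponential backoff with jitter is one common retry policy, not necessarily the one PhantomCollect uses.

```python
import asyncio
import csv
import io
import json
import random


class TransientError(Exception):
    """Hypothetical error type standing in for timeouts, HTTP 5xx responses, etc."""


async def fetch_with_retries(fetch, url, max_attempts=4, base_delay=0.01):
    """Await fetch(url), retrying TransientError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return await fetch(url)
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Backoff doubles per attempt (base, 2x base, 4x base, ...),
            # plus random jitter so many clients don't retry in lockstep.
            delay = base_delay * 2 ** (attempt - 1)
            await asyncio.sleep(delay + random.uniform(0, delay))


def to_json(rows: list[dict]) -> str:
    return json.dumps(rows, indent=2)


def to_csv(rows: list[dict]) -> str:
    # Column names come from the first row; expects at least one row.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def make_flaky(fail_times: int):
    """Hypothetical fetcher that fails `fail_times` times before succeeding."""
    calls = {"n": 0}

    async def flaky(url):
        calls["n"] += 1
        if calls["n"] <= fail_times:
            raise TransientError(f"attempt {calls['n']} failed")
        return {"url": url, "price": "24.99"}

    return flaky, calls


if __name__ == "__main__":
    flaky, calls = make_flaky(fail_times=2)
    row = asyncio.run(fetch_with_retries(flaky, "https://example.com/p/1"))
    print(f"succeeded after {calls['n']} attempts")
    print(to_csv([row]))
```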

Product Usage Case

· A market researcher uses PhantomCollect to scrape product reviews and pricing data from dozens of e-commerce sites to analyze consumer sentiment and competitive pricing. This helps them understand market trends and identify opportunities without manually browsing each site.

· A content aggregator employs PhantomCollect to gather news articles from various sources based on specific keywords, then uses the extracted text to populate their platform. This automates the process of content curation, ensuring their platform is always up-to-date.

· A developer building a real estate listing tool uses PhantomCollect to extract property details (price, size, location) from multiple real estate websites. This allows them to present a comprehensive view of available properties to potential buyers, solving the problem of dispersed property information.

15

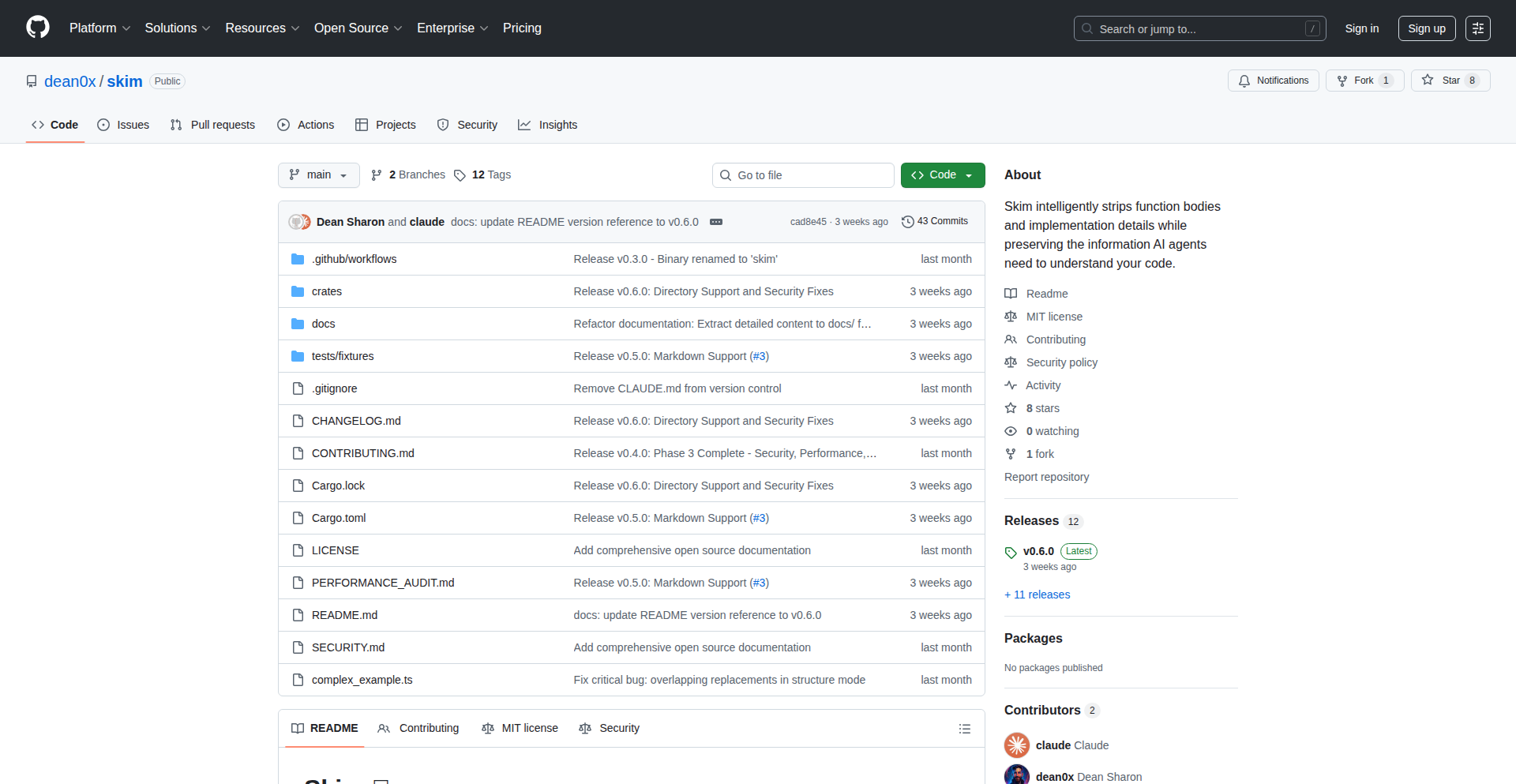

CodeCompressor

Author

dean0x

Description

CodeCompressor is a new tool that reduces the number of tokens needed to represent code for Large Language Models (LLMs) by up to 90%. It addresses the cost and latency of feeding large codebases into LLMs for analysis, making AI-driven code comprehension more accessible and efficient. This enables deeper, more extensive code reviews, bug detection, and refactoring suggestions from LLMs.

Popularity

Points 3

Comments 5

What is this product?

CodeCompressor is a utility that condenses the number of tokens needed to represent your source code when you feed it into an AI model such as a chatbot. Think of it as summarizing a long book into a few key bullet points while keeping the essential plot and character details. Its algorithms analyze code structure, comments, and repetitive patterns to identify and remove redundancy without losing the information an LLM needs to understand the code's logic and functionality. As a result, you can give an LLM much larger projects than would otherwise fit in its context window, saving processing cost and time.
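CodeCompressor's actual algorithms aren't described here. The simplest form of the idea, removing text an LLM rarely needs, can still be sketched in Python. The hypothetical `strip_comments` helper drops comments and blank lines using the standard `tokenize` module. `approx_tokens` is only a crude word-count proxy, not a real LLM tokenizer. As a rough sketch, it would also drop blank lines inside multi-line string literals.

```python
import io
import tokenize


def strip_comments(source: str) -> str:
    """Drop comments and blank lines from Python source.

    Working on tokens rather than raw text means a '#' inside a string
    literal is not mistaken for a comment. Caveat: blank lines inside
    multi-line strings are dropped too."""
    tokens = [
        tok
        for tok in tokenize.generate_tokens(io.StringIO(source).readline)
        if tok.type != tokenize.COMMENT
    ]
    rebuilt = tokenize.untokenize(tokens)
    # untokenize pads removed comments with spaces; trim them and skip empty lines.
    return "\n".join(line.rstrip() for line in rebuilt.splitlines() if line.strip())


def approx_tokens(text: str) -> int:
    """Crude token proxy (whitespace-separated words); real LLM tokenizers differ."""
    return len(text.split())


if __name__ == "__main__":
    src = (
        "def add(a, b):\n"
        "    # add two numbers\n"
        '    s = "#not a comment"  # trailing\n'
        "\n"
        "    return a + b\n"
    )
    out = strip_comments(src)
    print(out)
    print(approx_tokens(src), "->", approx_tokens(out))
```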

How to use it?

Developers can integrate CodeCompressor into their existing workflows. For instance, before submitting a large project's codebase to an LLM for a security audit, you would first run CodeCompressor on your code. It will output a significantly smaller version of your code. This compressed version is then what you provide to the LLM. This can be done via command-line interface (CLI) integration into scripts or CI/CD pipelines, or potentially through IDE plugins that automatically compress code before sending it to an AI assistant. This drastically lowers the token cost of AI-powered code reviews, documentation generation, and vulnerability scanning.

Product Core Function

· Intelligent Token Reduction: Achieves up to 90% reduction by analyzing code syntax, semantics, and common patterns, retaining essential information for LLM understanding. This means you can fit more code into AI analysis, leading to more comprehensive insights and cost savings.

· Preserves Code Meaning: The algorithms are designed so that the compressed code still accurately represents the original logic and functionality, allowing the LLM to perform reliable analysis. This helps keep the AI's feedback accurate and actionable.

· Supports Multiple Programming Languages: Adaptable to various coding languages, offering broad utility across different development environments. This makes it a versatile tool for any developer, regardless of their primary language.

· Customizable Compression Levels: Allows users to fine-tune the compression to balance conciseness with the level of detail required by the LLM. This provides flexibility to optimize for different AI tasks and budgets.

· Fast Processing Speed: Efficient algorithms ensure quick compression, minimizing impact on development workflows. This means you won't spend excessive time waiting for the code to be prepared for AI analysis.
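Adjustable compression levels can be pictured as a coarser pass that keeps only the outline of the code. The sketch below is an assumption, not CodeCompressor's method. The hypothetical `skeletonize` function uses Python's `ast` module to replace each function body with `...`, keeping signatures and, optionally, docstrings.

```python
import ast


def skeletonize(source: str, keep_docstrings: bool = True) -> str:
    """Replace every function body with `...`, keeping signatures and,
    optionally, docstrings: a much coarser level than comment stripping."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            body: list[ast.stmt] = []
            if keep_docstrings and doc:
                body.append(ast.Expr(value=ast.Constant(value=doc)))
            body.append(ast.Expr(value=ast.Constant(value=...)))
            node.body = body
    # ast.unparse (Python 3.9+) turns the edited tree back into source text.
    return ast.unparse(ast.fix_missing_locations(tree))


if __name__ == "__main__":
    src = '''
class Shape:
    def area(self, scale=1.0):
        """Return the scaled area."""
        total = self.w * self.h
        return total * scale
'''
    print(skeletonize(src))
```

Choosing between comment stripping and skeletonizing is the kind of trade-off a compression level controls. The skeleton is far smaller, but it hides implementation details an LLM might need when hunting for bugs.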

Product Usage Case

· Large-scale codebase analysis for security vulnerabilities: A developer can compress a massive open-source project into a manageable size, allowing an LLM to perform a thorough security audit within reasonable token limits, identifying potential exploits that might otherwise be missed.

· Automated code summarization for documentation: Instead of manually writing summaries for extensive code modules, developers can use CodeCompressor to feed the code to an LLM, generating concise and accurate documentation quickly. This saves significant developer time and effort.

· Refactoring suggestions for complex systems: An LLM can analyze a large, intricate system's compressed code to identify areas for improvement, suggest refactoring strategies, and even generate code snippets for modernization, all at a reduced cost.

· Onboarding new developers to large codebases: Compressed code snippets can be used to help new team members quickly grasp the essence of complex functionalities without being overwhelmed by the sheer volume of code.

· Cost-effective AI-assisted code review: Development teams can significantly reduce the expense of using LLMs for regular code reviews by compressing the code, making advanced AI analysis a more practical and affordable option.

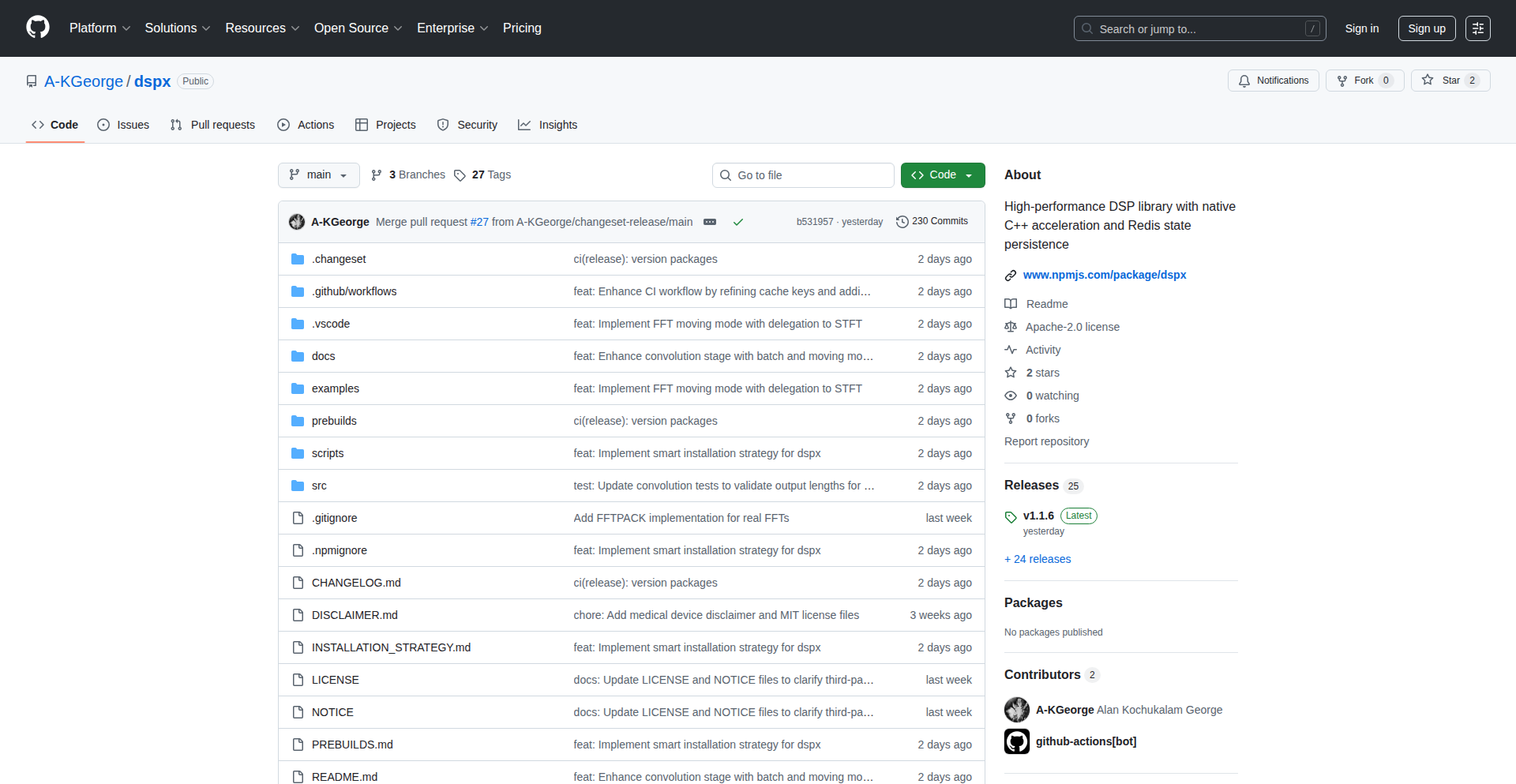

16

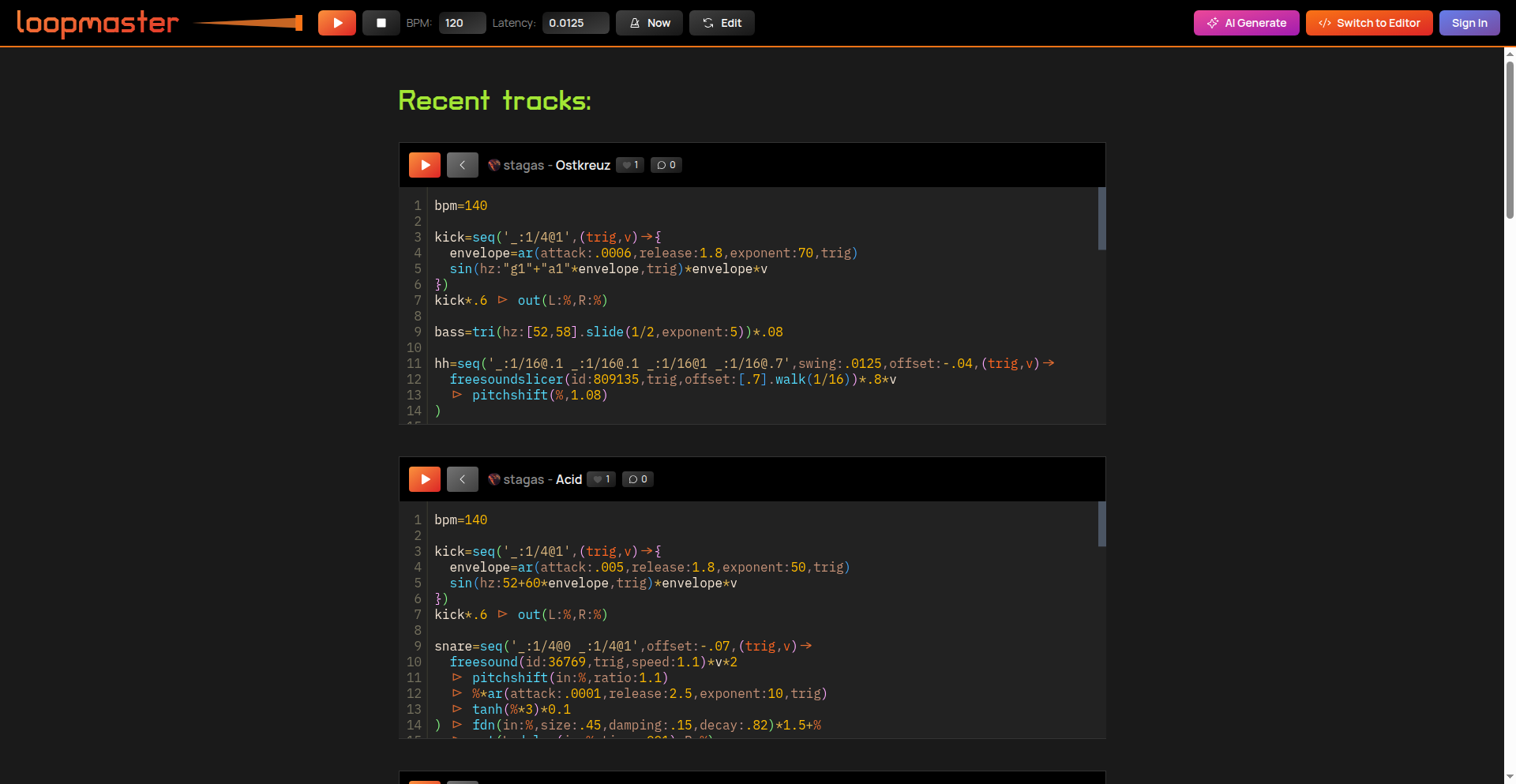

NodeDSP-Native

Author

a-kgeorge

Description

A serverless-friendly Digital Signal Processing (DSP) library for Node.js, featuring native C++ computation and Redis for state management. It tackles the challenge of performing computationally intensive signal processing tasks efficiently within a serverless Node.js environment, overcoming the typical performance limitations of pure JavaScript.

Popularity

Points 3

Comments 4

What is this product?

This project is a high-performance DSP library for Node.js, aimed especially at serverless architectures. Its core idea is to run the heavy signal-processing algorithms in native C++ code. This matters because JavaScript, while flexible, can be slow for complex numerical work, and offloading these computations to C++ yields significant speedups. To manage the state of these operations in a distributed, stateless serverless environment, the library uses Redis. Redis is an in-memory data store commonly used as a database, cache, and message broker, and it offers fast access (with optional persistence to disk) for intermediate results and session data. Combining native C++ for performance with Redis for state makes the library a good fit for serverless applications that process real-time audio, video, or other signal data.

How to use it?

Developers can integrate this library into their Node.js projects using standard npm package installation. Once installed, they can import the library and utilize its DSP functions. For example, in a serverless function triggered by an event (like an uploaded audio file), the Node.js code would call the library's functions to perform operations such as filtering, Fourier transforms, or feature extraction. The C++ backend handles the actual processing, and Redis can be used to store intermediate results, making it easy to resume processing later or share state across different function invocations in a serverless environment. This allows for building complex signal processing pipelines without the typical performance bottlenecks of pure JavaScript in serverless.
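A stateless function needs external state for DSP because many filters carry memory from one sample to the next. The Python sketch below illustrates the pattern with a one-pole low-pass filter whose only state is its previous output. It does not use NodeDSP-Native's API, which is not shown here. `lowpass_chunk` is hypothetical, and a plain dict of JSON strings stands in for Redis.

```python
import json

# A plain dict stands in for Redis in this sketch; a real deployment could
# store the same JSON strings with a Redis client's GET and SET commands.
state_store: dict[str, str] = {}


def lowpass_chunk(stream_id: str, samples: list[float], alpha: float = 0.2) -> list[float]:
    """Hypothetical one-pole IIR low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1]).

    The previous output y[n-1] is the filter's entire state. Loading it before
    the chunk and saving it afterwards lets a stateless function invocation
    resume exactly where the previous one stopped."""
    raw = state_store.get(stream_id)
    y_prev = json.loads(raw)["y_prev"] if raw is not None else 0.0
    out = []
    for x in samples:
        y_prev += alpha * (x - y_prev)
        out.append(y_prev)
    state_store[stream_id] = json.dumps({"y_prev": y_prev})
    return out


if __name__ == "__main__":
    # Two "invocations" of the same stream, e.g. two bursts of sensor data.
    first = lowpass_chunk("sensor-1", [1.0, 1.0, 1.0])
    second = lowpass_chunk("sensor-1", [1.0, 1.0, 1.0])
    print(first + second)
```

Python floats survive the JSON round trip exactly. As a result, processing a signal across two invocations yields the same output as processing it in one.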

Product Core Function

· Native C++ DSP Engine: Accelerates computationally intensive signal processing tasks, offering significantly faster execution compared to pure JavaScript. This means your applications can process more data in less time, leading to quicker responses and higher throughput.

· Redis State Management: Provides a robust and scalable way to store and retrieve intermediate processing results and session data. This is invaluable in serverless environments where functions are ephemeral, allowing for state persistence and seamless continuation of complex workflows.

· Serverless-Friendly Architecture: Designed specifically to work around the performance limitations of Node.js on serverless platforms. It lets you build sophisticated signal processing applications with less risk of hitting execution time limits or resource constraints.

· Node.js Integration: Offers a familiar JavaScript API for developers, making it easy to integrate powerful C++ DSP capabilities into existing Node.js projects with minimal learning curve.

Product Usage Case

· Real-time Audio Analysis in Serverless Functions: Imagine a serverless function that automatically analyzes uploaded audio files for sentiment or keyword detection. Using NodeDSP-Native, the function can quickly perform Fast Fourier Transforms (FFTs) and other spectral analyses on the audio data to extract meaningful features, with Redis storing intermediate analysis steps for resilience.

· Video Stream Processing Pipelines: For applications that require processing video streams in real-time (e.g., for content moderation or object detection), this library can be used within serverless functions to perform frame-by-frame DSP operations, offloading computation to C++ and managing frame states with Redis.

· IoT Sensor Data Processing: Devices might send streams of sensor data that require filtering, noise reduction, or pattern recognition. NodeDSP-Native can be employed in serverless backend services to efficiently process this data, identifying anomalies or trends, with Redis ensuring that the processing state is maintained across individual data bursts.

· Building Custom Audio Effects for Web Applications: A web application might need to apply complex audio effects that are too demanding for the browser. NodeDSP-Native can power the backend service responsible for these effects, allowing developers to expose these capabilities via an API to their web clients.
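The spectral analysis in the audio use case boils down to a Fourier transform. For illustration only, here is a naive O(N²) discrete Fourier transform in Python that finds the dominant tone in a signal. A native library would use an FFT instead. `dft_magnitudes` and `dominant_frequency` are hypothetical helpers, not NodeDSP-Native functions.

```python
import cmath
import math


def dft_magnitudes(samples: list[float]) -> list[float]:
    """Naive O(N^2) DFT returning |X[k]| for k = 0 .. N//2,
    the non-negative frequency bins of a real-valued signal."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        mags.append(abs(acc))
    return mags


def dominant_frequency(samples: list[float], sample_rate: float) -> float:
    """Frequency (Hz) of the strongest bin, ignoring bin 0 (the DC offset).
    Resolution is sample_rate / len(samples) Hz per bin; needs >= 2 samples."""
    mags = dft_magnitudes(samples)
    k = max(range(1, len(mags)), key=mags.__getitem__)
    return k * sample_rate / len(samples)


if __name__ == "__main__":
    rate, n = 800, 400  # 400 samples at 800 Hz -> 2 Hz per bin
    # A 50 Hz tone plus a weaker 120 Hz tone and a DC offset of 3.
    signal = [
        3.0 + math.sin(2 * math.pi * 50 * t / rate) + 0.5 * math.sin(2 * math.pi * 120 * t / rate)
        for t in range(n)
    ]
    print(dominant_frequency(signal, rate))  # 50.0
```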

17

CyBox Security - Unified DevSecOps Dashboard

Author

Hayim_Gabay

Description

CyBox Security is a cloud-based platform that consolidates various security scanning tools into a single, user-friendly dashboard. It automates the detection of vulnerabilities in code (SAST), dependencies (SCA), infrastructure as code (IaC), and exposed secrets. This is designed for development teams, especially smaller ones, who lack dedicated security personnel, providing them with continuous security oversight and actionable remediation advice without needing to manage multiple security tools.
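Secret detection is the simplest of these scans to illustrate. The sketch below is a minimal regex-based scanner in Python, not CyBox Security's implementation. The three patterns in `SECRET_PATTERNS` are illustrative examples, far smaller than the rule sets production scanners use. The `AKIA` prefix is the well-known format of AWS access key IDs.

```python
import re

# Illustrative rules only; production scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # A quoted value of 8+ characters assigned to a secret-sounding name.
    "generic_assignment": re.compile(
        r"""(?i)(?:api_key|secret|password|token)\s*[:=]\s*['"]([^'"]{8,})['"]"""
    ),
}


def scan_text(text: str, filename: str = "<memory>") -> list[tuple[str, int, str]]:
    """Return (filename, line number, rule name) for every rule hit."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((filename, lineno, rule))
    return findings


if __name__ == "__main__":
    config = (
        'aws_id = "AKIAIOSFODNN7EXAMPLE"\n'  # AWS's documented example key ID
        'DB_PASSWORD = "hunter2hunter2"\n'
        'name = "example"\n'
    )
    for finding in scan_text(config, "settings.py"):
        print(finding)
```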

Popularity

Points 3

Comments 3

What is this product?