Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-10

SagaSu777 2025-11-11

Explore the hottest developer projects on Show HN for 2025-11-10. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

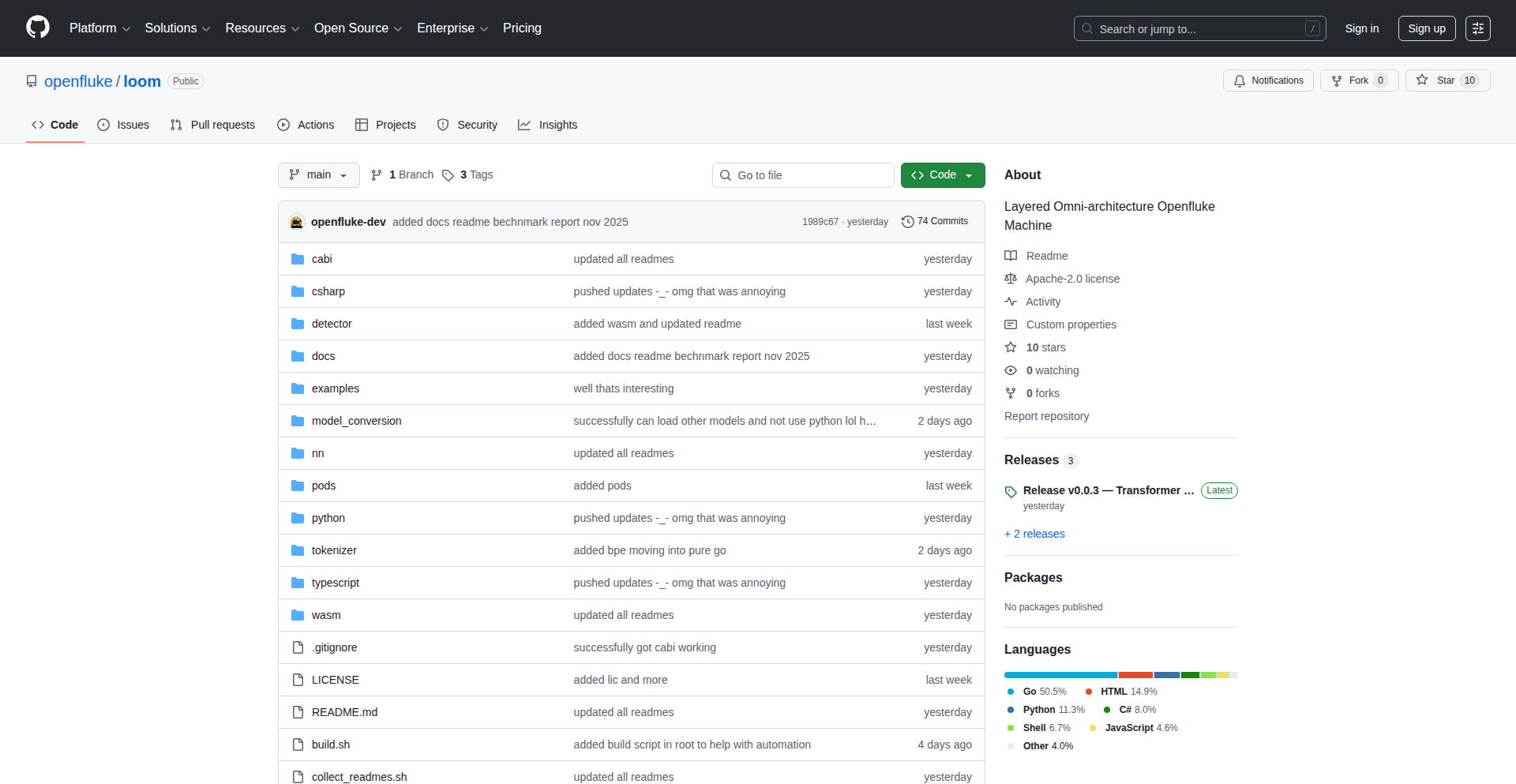

Today's Show HN submissions show a clear surge in AI-powered tools across diverse domains. Developers are applying AI to hard problems and also to everyday tasks, from code documentation with Davia to system performance tuning with Akamas. Lightweight, efficient tools are also prominent: Princejs is a tiny Bun framework, and LOOM runs transformer models in pure Go without a Python runtime. Both reflect the hacker ethos of building capable tools with minimal overhead. Privacy and data control form a third theme, visible in projects like MySecureNote and in the exploration of client fingerprinting. For developers, this points to three areas worth exploring: AI integration, efficient architectures, and privacy-first design. For entrepreneurs, the takeaway is that tools which simplify complexity, raise developer productivity, and respect user privacy are well positioned. The spirit of 'build it yourself to solve a problem' is alive and well.

Today's Hottest Product

Name

Davia

Highlight

Davia tackles the universal pain point of documenting large codebases by generating an open-source, visual, and editable wiki directly from your repository. It analyzes the code to create diagrams automatically and lets developers update the documentation from their IDE or a Notion-like editor. This is a pragmatic approach to code documentation, a crucial but often neglected part of software development. The project is also a useful reference for code parsing, automated diagram generation, and IDE-integrated documentation workflows.

Popular Category

AI/ML

Developer Tools

Web Applications

Productivity

Popular Keyword

AI

Code

Documentation

Data

Tool

Open Source

Automation

Generation

Optimization

Technology Trends

AI-driven Code Analysis and Documentation

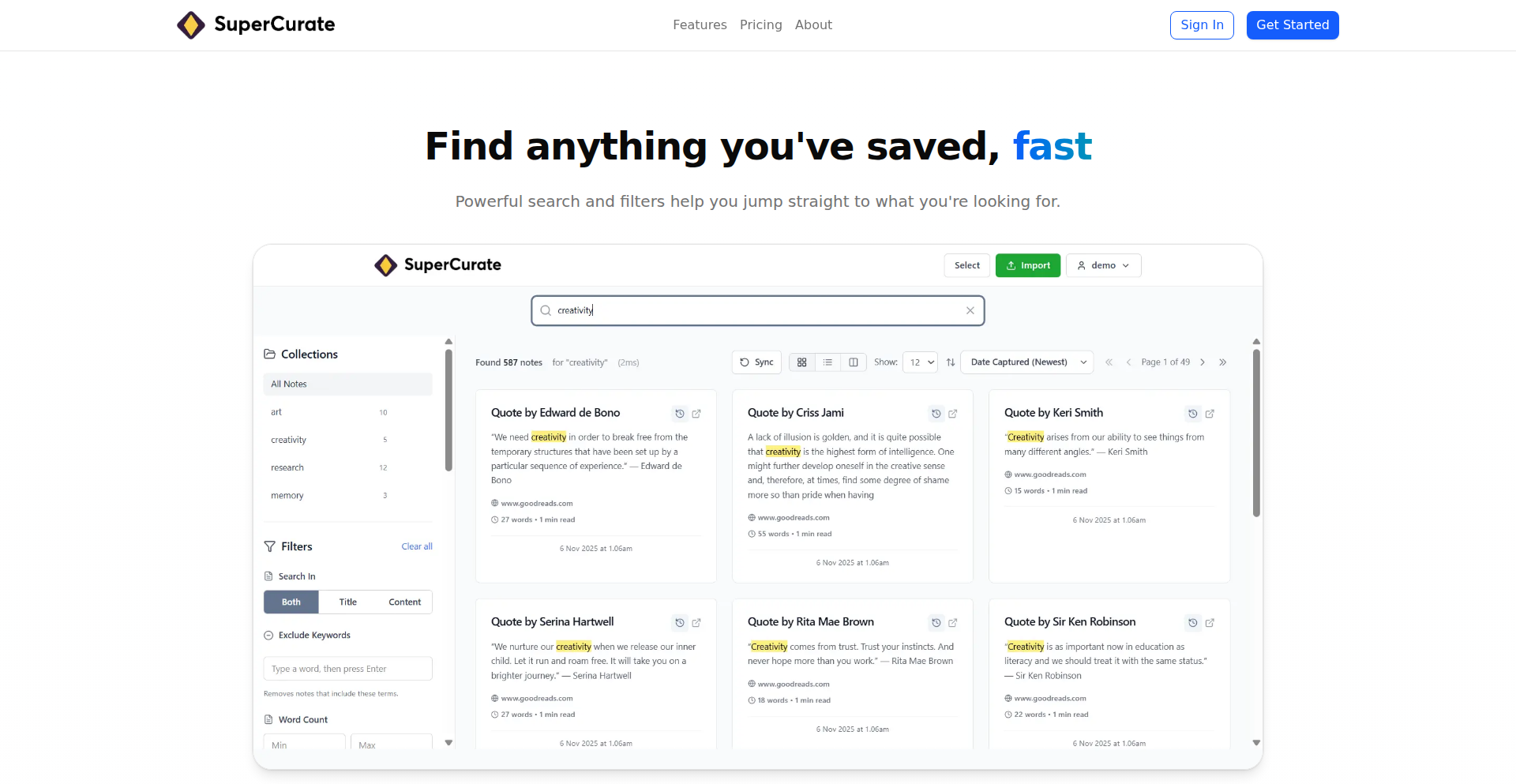

Hyper-Personalization and Content Curation

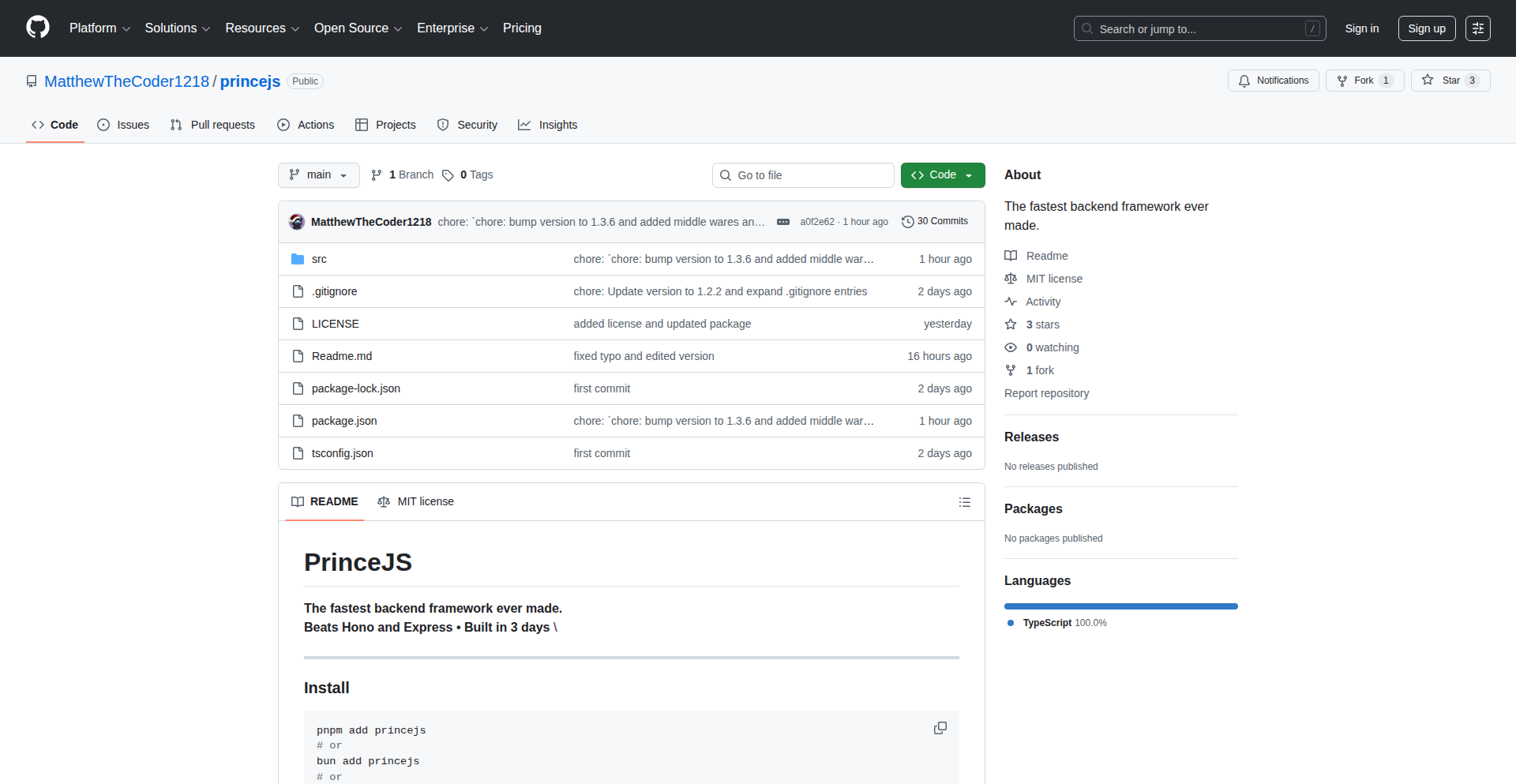

Efficient and Lightweight Development Tools

Privacy-Preserving Technologies

AI for Creative and Design Workflows

Developer Experience (DX) Enhancement

Automated Optimization and Efficiency

Project Category Distribution

Developer Tools (30%)

AI/ML Applications (25%)

Web Applications & Services (20%)

Productivity & Utilities (15%)

Creative & Media (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | StealthViewer | 35 | 16 |
| 2 | CodeViz Weaver | 37 | 12 |
| 3 | Akamas: Autonomous Cloud Optimization Engine | 15 | 11 |
| 4 | Universal Toolkit Nexus | 11 | 5 |
| 5 | ProcrastinationGuardian WebSim | 11 | 1 |
| 6 | CursorGazer | 7 | 4 |
| 7 | Git AI Tracker | 6 | 4 |
| 8 | Tiny Diffusion: Character-Level Text Generation | 8 | 1 |
| 9 | Visionary Photo Organizer | 3 | 6 |
| 10 | MarkdowntoCV: Markdown-Powered Resume Generator | 3 | 5 |

1

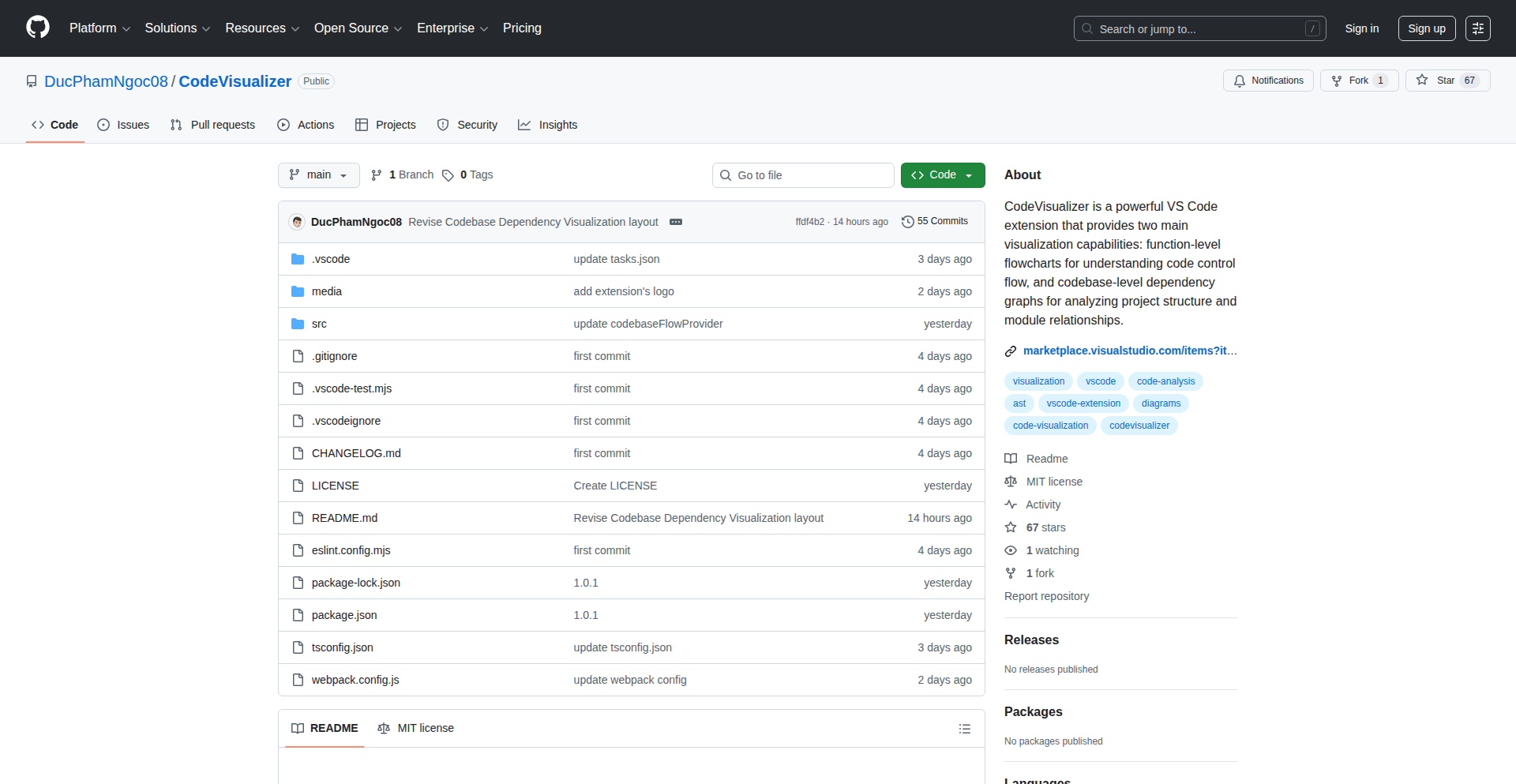

StealthViewer

Author

deep_signal

Description

A free, anonymous Instagram story viewer. This project tackles the technical challenge of fetching and displaying Instagram stories without the viewer appearing in the story's viewer list, offering a private way to consume content.

Popularity

Points 35

Comments 16

What is this product?

StealthViewer is a web-based tool that lets you watch Instagram stories without the original poster knowing. Technically, it most likely fetches the story media on your behalf and replays it in its own web interface. Because the request does not come from a logged-in user whose activity Instagram records, there is no account to add to the viewer list. Tools of this kind typically rely on web scraping, potentially working around some of Instagram's client-side JavaScript checks, or on a headless browser instance that mimics normal browsing without human interaction. The innovation lies in providing anonymity, a feature Instagram does not offer natively, by carefully engineering the interaction with the platform.

How to use it?

Developers can use StealthViewer by visiting the provided web link. The application typically asks for the Instagram username whose stories you want to view and then attempts to fetch and display that account's available stories. The main use case is for individuals or businesses who want to monitor competitor activity, track trends, or simply consume content without revealing their identity. This makes it a fit for workflows where anonymous social media monitoring matters.

Product Core Function

· Anonymous Story Viewing: Lets users watch Instagram stories without appearing in the viewer list. Its technical value lies in sidestepping Instagram's view tracking, likely by proxying requests or manipulating client-side JavaScript, which provides a privacy-enhancing way to consume content.

· Public Profile Access: Enables viewing stories from public Instagram profiles. It shows how Instagram's public endpoints or web interfaces can be used to extract publicly available content, a practical application of web scraping and data retrieval techniques.

· No Account Required: Users do not need to log in to their own Instagram account. This is the core value proposition, and it shows how content can be reached through alternative pathways without an authenticated user session.

Product Usage Case

· Market Research: A social media marketer can use StealthViewer to anonymously observe competitor marketing campaigns on Instagram stories and learn their content strategy and engagement tactics without alerting the competitor. This provides competitor insight without revealing who is watching.

· Trend Spotting: A content creator might use StealthViewer to identify emerging trends on Instagram stories across various niches by anonymously watching popular accounts. This helps them stay ahead of the curve without direct engagement, addressing the need for unobtrusive trend analysis.

· Personal Privacy: An individual concerned about their online privacy can use StealthViewer to follow public figures or accounts they are interested in without leaving a digital footprint or revealing their interest to the account owner. This directly solves the problem of maintaining personal privacy while consuming social media content.

2

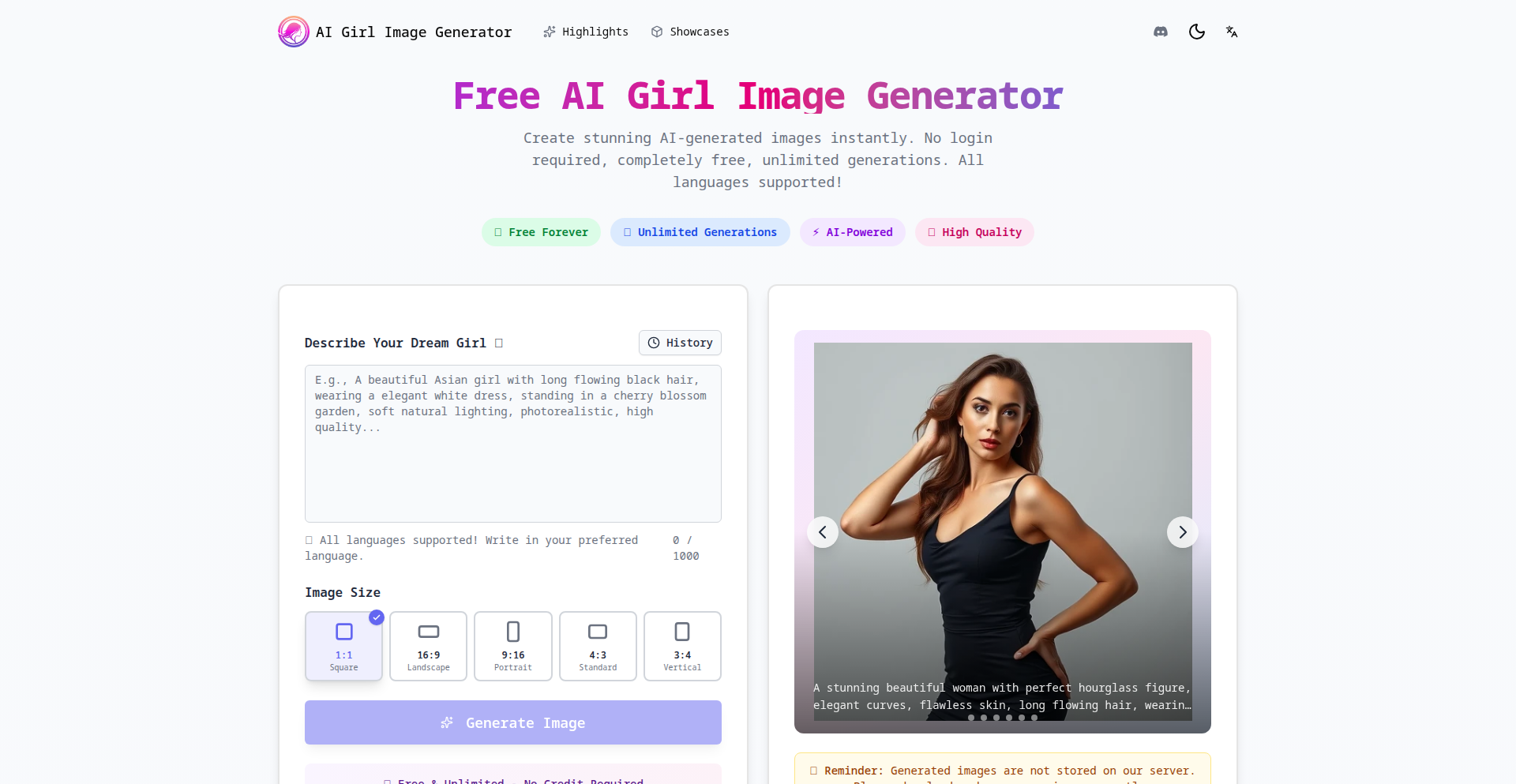

CodeViz Weaver

Author

ruben-davia

Description

CodeViz Weaver is an open-source tool that automatically generates an interactive, visual wiki directly from your codebase. It tackles the challenge of understanding and documenting large, complex projects by creating easily explorable diagrams and allowing in-IDE or Notion-like editing of documentation. This means faster onboarding for new developers and clearer internal knowledge sharing.

Popularity

Points 37

Comments 12

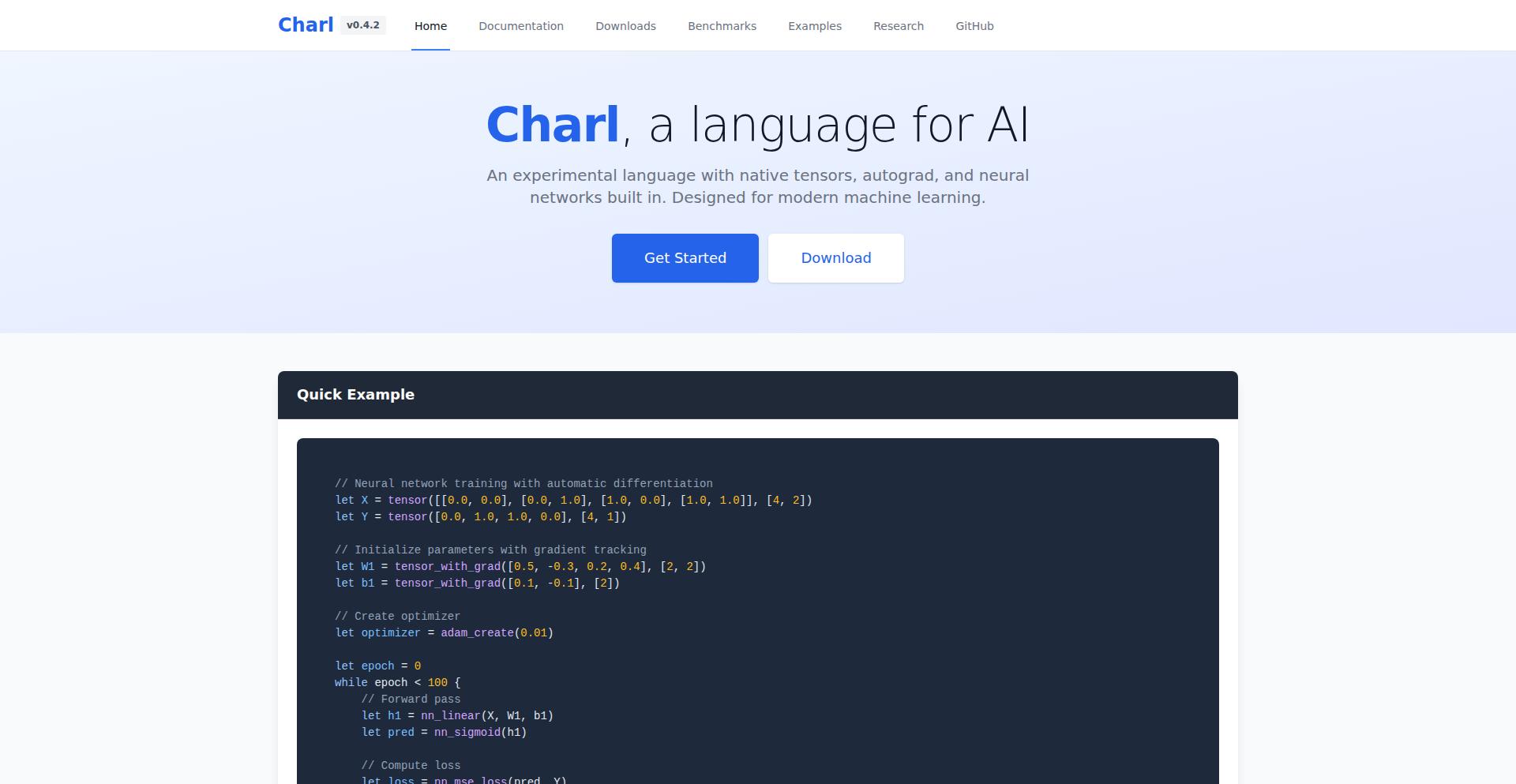

What is this product?

CodeViz Weaver is a software tool that scans your code repository and builds a dynamic, visual representation of your project's structure. Think of it like creating a navigable map of your code. The innovation lies in its ability to automatically generate these visuals and integrate documentation editing directly into your familiar development environment (like your IDE) or a user-friendly, Notion-style interface. It solves the problem of tedious and often outdated code documentation by making it a natural byproduct of the development process. This approach significantly reduces the time and effort required to understand how a project works, what its different components do, and how they connect.

How to use it?

Developers can use CodeViz Weaver by pointing it to their Git repository. The tool then analyzes the code to build a visual graph of the project's modules, classes, and functions. This visual wiki can be explored through a web interface, allowing developers to navigate dependencies and understand relationships. Crucially, documentation can be updated directly within the IDE, meaning changes to code can be accompanied by immediate documentation updates. Alternatively, a web-based editor provides a simple way to refine the generated documentation. This makes it ideal for onboarding new team members, documenting legacy code, or simply ensuring that project knowledge is easily accessible and up-to-date.
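CodeViz Weaver's analysis pipeline is not described in detail, so the following is only a minimal sketch of the first step a tool like this needs: extracting a module dependency graph. It assumes a Python-only repository and uses the standard-library `ast` module. The function names `load_sources`, `import_edges` and `to_mermaid` are hypothetical. The output is Mermaid flowchart text that a wiki page could render as a diagram.

```python
import ast
from pathlib import Path


def load_sources(root: str) -> dict[str, str]:
    """Map dotted module names (e.g. 'app.db') to source text for every .py file under root."""
    sources = {}
    base = Path(root)
    for path in base.rglob("*.py"):
        parts = path.relative_to(base).with_suffix("").parts
        if parts and parts[-1] == "__init__":
            parts = parts[:-1]  # a package's __init__.py stands for the package itself
        if parts:
            sources[".".join(parts)] = path.read_text(encoding="utf-8")
    return sources


def import_edges(sources: dict[str, str]) -> set[tuple[str, str]]:
    """Return (importer, imported) pairs for imports that point at another module in `sources`."""
    known = set(sources)
    edges = set()
    for module, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                targets = [node.module]  # relative imports (level > 0) are skipped in this sketch
            else:
                continue
            edges.update((module, t) for t in targets if t in known and t != module)
    return edges


def to_mermaid(edges: set[tuple[str, str]]) -> str:
    """Render edges as a left-to-right Mermaid flowchart."""
    def node_id(name: str) -> str:
        return name.replace(".", "_")  # Mermaid node ids cannot contain dots

    lines = ["graph LR"]
    for src, dst in sorted(edges):
        lines.append(f"    {node_id(src)}[{src}] --> {node_id(dst)}[{dst}]")
    return "\n".join(lines)
```

Usage: `print(to_mermaid(import_edges(load_sources("path/to/repo"))))`. A real tool would also handle class and function relationships, relative imports, and other languages. This sketch stops at module-level imports to keep the idea visible.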

Product Core Function

· Automatic Code Structure Visualization: Scans repositories to generate interactive diagrams showing relationships between code elements (e.g., classes, functions, modules). This lets developers quickly grasp a project's architecture, understand dependencies, and identify key components, which shortens the learning curve and speeds up troubleshooting.

· IDE Integrated Documentation Editing: Enables developers to edit and update project documentation directly within their Integrated Development Environment. This significantly streamlines the documentation process, ensuring that documentation stays synchronized with code changes and reducing the overhead of maintaining up-to-date information.

· Notion-like Web Editor: Provides a user-friendly, visual editor for refining and expanding the generated documentation, similar to popular note-taking applications. This offers a flexible and accessible way for team members to contribute to and manage project knowledge without requiring deep technical expertise, making documentation collaboration easier.

· Open Source Accessibility: As an open-source project, it provides a cost-effective and community-driven solution for code documentation, fostering collaboration and allowing for customization and extensions to meet specific team needs.

Product Usage Case

· Onboarding new developers to a large, complex microservices architecture: Instead of spending weeks deciphering code manually, a new hire can use CodeViz Weaver to instantly visualize the entire system, understand service interactions, and quickly find relevant documentation for each service within their IDE, dramatically reducing ramp-up time.

· Documenting a legacy codebase with sparse or outdated documentation: Developers can run CodeViz Weaver to generate an initial visual map and an editable wiki. They can then progressively refine the documentation by adding insights directly in their IDE as they work on the code, turning a daunting documentation task into an ongoing, manageable effort.

· Facilitating knowledge sharing within a distributed engineering team: By providing a centralized, visual, and easily editable source of truth about the codebase, CodeViz Weaver ensures that all team members, regardless of their location or familiarity with specific modules, can access and contribute to understanding the project's intricacies, improving collaboration and reducing knowledge silos.

3

Akamas: Autonomous Cloud Optimization Engine

Author

enricobruschini

Description

Akamas is an advanced platform designed to automatically optimize cloud services for reliability, performance, and cost. It achieves this by directly integrating with observability data, intelligently identifying areas for improvement, and then safely implementing these optimizations across the entire technology stack, from the application code to the underlying infrastructure. This means developers and operations teams can ensure their services are running at peak efficiency without manual, time-consuming effort.

Popularity

Points 15

Comments 11

What is this product?

Akamas is an autonomous optimization platform that acts as an intelligent assistant for cloud engineers and SREs. It works by ingesting data from your existing monitoring and logging tools (observability data). Think of this as giving Akamas 'eyes' into how your applications and infrastructure are performing. Akamas then uses sophisticated algorithms to analyze this data and pinpoint exactly where costs can be reduced, reliability can be improved, or performance can be boosted. The innovative aspect is its ability to not just suggest, but also to automatically and safely apply these validated optimizations across your entire cloud environment, from the application code itself all the way down to the Kubernetes clusters or virtual machines running your services. This is like having a super-smart mechanic who not only diagnoses engine problems but also automatically tunes and fixes them.

How to use it?

Developers and SREs can integrate Akamas by connecting it to their existing observability stack. This typically involves configuring Akamas to pull data from sources like Prometheus, Grafana, Datadog, or cloud provider logs. Once connected, Akamas begins its analysis. For users, this translates into a dashboard and actionable recommendations. You can choose to have Akamas automatically apply optimizations, or you can review and approve them manually. This is useful in scenarios where you're facing escalating cloud bills, experiencing performance bottlenecks, or struggling to maintain high availability. Akamas can be integrated into CI/CD pipelines for continuous optimization or used as a standalone system for ongoing cloud management.

Product Core Function

· Intelligent Observability Data Ingestion: Akamas connects to your existing monitoring and logging systems to gather real-time performance and cost data. This means it understands your system's health and resource usage without you needing to build new monitoring infrastructure, providing insights into what's actually happening in your cloud environment.

· Automated Optimization Detection: Using advanced analytics and machine learning, Akamas automatically identifies specific opportunities to reduce costs, improve reliability, and enhance performance. This saves engineers countless hours of manual analysis, uncovering hidden inefficiencies you might have missed.

· Impact Estimation: Before making any changes, Akamas estimates the projected impact of each optimization on both cost and reliability. This allows you to understand the 'so what?' of each recommendation, enabling informed decisions and risk assessment.

· Safe and Validated Optimization Application: Akamas can automatically apply optimizations across the full stack, from application configurations to infrastructure settings, ensuring changes are safe and validated through rigorous testing. This minimizes the risk of introducing new issues while ensuring your services are continuously running optimally.

· Cross-Stack Optimization: Akamas optimizes not just one layer, but the entire cloud ecosystem, including applications, containers, and underlying infrastructure. This holistic approach ensures that improvements are made across the board, leading to more significant overall gains in efficiency and stability.
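Akamas's own optimization engine is not shown in the source, which mentions only advanced analytics and machine learning. As a much simpler stand-in, the sketch below illustrates the impact-estimation idea for a single knob. It proposes a Kubernetes CPU request from a high percentile of observed usage plus headroom, then estimates the monthly cost effect. The percentile, headroom and price inputs are illustrative assumptions, and this heuristic rightsizing is not Akamas's algorithm.

```python
from dataclasses import dataclass


@dataclass
class CpuRecommendation:
    current_millicores: int   # CPU request per replica today
    proposed_millicores: int  # suggested CPU request per replica
    monthly_savings: float    # positive = cheaper; negative = more capacity (and cost) for reliability


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile using integer math (pct in 1..100)."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, -(-pct * len(ordered) // 100))  # ceil(pct / 100 * n) without float rounding
    return ordered[rank - 1]


def recommend_cpu(
    usage_millicores: list[int],
    current_millicores: int,
    replicas: int,
    price_per_core_month: float,
    pct: int = 95,
    headroom_pct: int = 20,
) -> CpuRecommendation:
    """Size the request to the pct-th percentile of usage plus headroom, and estimate the cost impact."""
    observed = percentile(usage_millicores, pct)
    proposed = -(-observed * (100 + headroom_pct) // 100)  # round up to a whole millicore
    delta_cores = (current_millicores - proposed) * replicas / 1000
    return CpuRecommendation(current_millicores, proposed, delta_cores * price_per_core_month)
```

A negative saving flags a change that costs more but lowers the risk of hitting CPU limits. That is the cost-versus-reliability trade-off the Impact Estimation feature is meant to surface before anything is applied.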

Product Usage Case

· A company experiencing unexpected spikes in its AWS bill. Akamas connects to the company's cloud logs and identifies underutilized EC2 instances and inefficiently configured S3 storage buckets. It then automatically adjusts instance sizes and optimizes storage policies, reducing monthly cloud spend (for example, by 20%) and directly addressing the financial pain point.

· A development team struggling with slow API response times during peak traffic. Akamas analyzes application performance metrics and database query logs and discovers that certain database queries are inefficient and that cache invalidation is poorly configured. It recommends and automatically applies query optimizations and cache tuning, speeding up API responses (for example, by 50%) and improving the user experience.

· An SRE team responsible for maintaining high availability for a critical microservice. Akamas monitors resource utilization and error rates, detecting that the service is frequently hitting resource limits during high load. It automatically scales up the number of pods in the Kubernetes deployment and adjusts resource requests and limits, ensuring the service remains stable and available even under heavy traffic, preventing costly downtime.

4

Universal Toolkit Nexus

Author

jayasurya2006

Description

A curated hub of over 500 free online utilities, consolidating developer, productivity, and daily use tools into a single, streamlined, ad-supported platform. It addresses the common frustration of searching for specific, small tools by providing a centralized, clutter-free experience.

Popularity

Points 11

Comments 5

What is this product?

This is a comprehensive online platform that aggregates more than 500 free, single-purpose tools, categorized for easy access. The core innovation lies in its unified interface and efficient delivery of essential utilities, ranging from developer-specific functions like JSON manipulation and base64 encoding to everyday needs like text formatting, image compression, and financial calculators. The 'ad-supported' model ensures the tools remain free for users while covering operational costs, and the 'clean, fast' design means you get what you need without distractions, unlike fragmented searches across multiple websites.

How to use it?

Universal Toolkit Nexus runs directly in the browser at www.everytoolkit.com. For developers, the main use case is keeping these tools within reach during everyday work. For instance, when debugging or testing code, a developer can switch to the site to use a JSON formatter or a regex tester with minimal context switching. No installation or setup is required: navigate to the relevant category, select the tool, input your data, and get the result instantly. This makes it a go-to resource for quick, on-demand utilities.

Product Core Function

· JSON Validator and Formatter: Helps developers quickly ensure their JSON data is correctly structured and readable, preventing parsing errors and speeding up debugging.

· Base64 Encoder/Decoder: Essential for developers working with data transmission or encoding, allowing for quick conversion of data into and out of Base64 format.

· Regex Tester: Enables developers to test and refine regular expressions in real-time, crucial for pattern matching and data extraction tasks.

· Text Case Converter: A simple yet effective tool for developers and writers to change text case (e.g., to camelCase, snake_case), improving code readability and data consistency.

· Image Compression: Allows users to reduce the file size of images without significant quality loss, beneficial for web developers optimizing load times or anyone managing storage.

· PDF Merging and Splitting: Provides a straightforward way for users to combine multiple PDF documents or extract specific pages, useful for document management and organization.

· Word Counter and Text Statistics: Helps writers and content creators analyze their text quickly, understanding word count, character count, and readability scores.

· Various Calculators and Converters: Offers a wide array of tools for everyday use, from unit conversions to financial calculations, eliminating the need to search for disparate calculator apps.
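Two of the listed utilities are small enough to sketch with the Python standard library. This is not the site's code, whose implementation is not described. It only shows what a JSON validator/formatter and a text case converter do. The helper names are hypothetical.

```python
import json
import re


def format_json(text: str, indent: int = 2) -> str:
    """Validate `text` as JSON and return it pretty-printed; raise ValueError with a location if invalid."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}") from exc
    return json.dumps(data, indent=indent, ensure_ascii=False)


def split_words(text: str) -> list[str]:
    """Split on camelCase boundaries and on any non-alphanumeric separator."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", text)  # "userId" -> "user Id"
    return [w.lower() for w in re.split(r"[^A-Za-z0-9]+", spaced) if w]


def to_snake_case(text: str) -> str:
    return "_".join(split_words(text))


def to_camel_case(text: str) -> str:
    words = split_words(text)
    if not words:
        return ""
    return words[0] + "".join(w.capitalize() for w in words[1:])
```

The camelCase splitter is deliberately simple: a run of capitals such as "HTTPServer" stays one word, which a production converter would need to handle.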

Product Usage Case

· A web developer needs to quickly validate and format a JSON payload received from an API. Instead of opening a separate application or searching for a tool, they navigate to EveryToolkit.com, use the JSON formatter, and instantly see the structured, validated data. This saves them significant time and reduces the chance of errors in their API integration. The value here is rapid error detection and improved code understanding.

· A backend engineer is working with authentication tokens that are Base64 encoded. They need to quickly decode a token to inspect its contents. Using the Base64 decoder on EveryToolkit.com allows for an immediate, in-browser solution, bypassing the need to write a small script or find a command-line tool. This provides immediate utility for data inspection and troubleshooting.

· A content writer is preparing a blog post and needs to ensure consistency in formatting. They use the text case converter to change headings to title case and body text to sentence case, ensuring a professional look. This streamlines their writing process and improves the final output. The value is enhanced content quality and authoring efficiency.

· A student is working on a project that requires combining several scanned documents into a single PDF. Instead of using complex desktop software, they upload their individual PDFs to EveryToolkit.com and use the PDF merge tool for a quick, online solution. This simplifies document management and saves them from installing new software. The value is ease of use and accessibility for document manipulation.
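The Base64 token case above is also easy to reproduce locally. This sketch assumes the token is a JWT, although the use case only says Base64-encoded. A JWT's header and payload are unpadded base64url segments (RFC 7515 and RFC 7519). The code decodes without verifying the signature, so it is for inspection only. Decoding locally also avoids pasting a live credential into a third-party site. `inspect_jwt` is a hypothetical helper name.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode an unpadded base64url segment, as used in JWTs."""
    padded = segment + "=" * (-len(segment) % 4)  # restore the stripped '=' padding
    return base64.urlsafe_b64decode(padded)


def inspect_jwt(token: str) -> tuple[dict, dict]:
    """Return (header, payload) of a JWT. Does NOT verify the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    header = json.loads(b64url_decode(parts[0]))
    payload = json.loads(b64url_decode(parts[1]))
    return header, payload
```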

5

ProcrastinationGuardian WebSim

Author

withwho

Description

This project is a web-based simulation of a desktop accountability agent designed to combat procrastination. It showcases the app's usability through interactive simulations on the web, demonstrating how it tracks user activity to encourage productivity. The core innovation lies in making a personal productivity tool accessible and visually engaging through a web interface, allowing users to experience its potential impact without installing the desktop application.

Popularity

Points 11

Comments 1

What is this product?

ProcrastinationGuardian WebSim is a live demonstration of a desktop application that helps users stay focused and avoid procrastination. The desktop app works by monitoring user activity, and this web simulation brings that concept to life. It doesn't replicate the full functionality of the desktop app, but rather provides a fun and engaging way to understand its core principle: visualizing how focused effort can lead to progress. The innovation is in using web technologies to simulate the experience of a productivity tool, making its benefits clear and understandable to anyone.
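The desktop agent's tracking logic is not described beyond "monitoring user activity". The minimal sketch below shows what such an accountability check might compute. It assumes the agent samples the foreground app at intervals and that the user supplies a list of distracting apps. Both are assumptions, as are the names `ActivitySample` and `focus_report`.

```python
from dataclasses import dataclass


@dataclass
class ActivitySample:
    app: str      # foreground application name during the interval
    seconds: int  # length of the interval


def focus_report(
    samples: list[ActivitySample], distracting: set[str], threshold: float = 0.7
) -> tuple[float, bool]:
    """Return (focus_ratio, should_nudge); focus_ratio is the share of tracked time outside distracting apps."""
    total = sum(s.seconds for s in samples)
    if total == 0:
        return 0.0, False  # nothing tracked yet, so no nudge
    focused = sum(s.seconds for s in samples if s.app not in distracting)
    ratio = focused / total
    return ratio, ratio < threshold
```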

How to use it?

Developers can explore this project to understand how to create interactive web simulations of desktop applications. It provides a blueprint for showcasing the user experience and core concepts of productivity software without requiring a full deployment. You can integrate similar simulation techniques into your own projects to demonstrate the value proposition of your tools to potential users or stakeholders in a visually compelling way. Think of it as a 'try before you buy' for the core idea of your application, presented on the web.

Product Core Function

· Web-based interactive simulation: Allows users to experience the concept of an accountability agent through a visual, hands-on simulation on the web, demonstrating the value of tracking activity. For you, this means you can instantly grasp the core idea of how the app helps you stay on track.

· Visual activity tracking representation: The simulation likely uses visual cues to show how user activity is tracked and how it contributes to progress, making the abstract concept of accountability tangible. This helps you see the direct impact of your focused time.

· Usability demonstration: Showcases the user-friendliness and intuitive nature of the underlying desktop application through engaging web interactions. You get to see how easy it is to engage with the productivity concepts.

· Procrastination aversion concept: Embodies the core purpose of the desktop app by simulating the positive outcomes of focused work and the avoidance of distractions. It visually reinforces the benefits of being productive.

Product Usage Case

· Demonstrating the effectiveness of a new productivity desktop app to potential users by showing them a live, interactive simulation of its core accountability features on the web, allowing them to understand its value proposition quickly.

· Educating stakeholders or investors about the user experience and benefits of a desktop productivity tool by providing a web-based simulation, simplifying complex technical concepts into an easily understandable visual format.

· Developers building similar accountability or productivity tools can use this as inspiration to create engaging web demos that highlight their application's unique selling points and encourage early adoption.

· As a personal learning project, a developer can explore how to leverage web technologies to simulate the functionality of desktop applications, expanding their skillset in interactive UI and user experience design.

6

CursorGazer

Author

su466120534

Description

A delightful 'eyes follow your cursor' widget that adds playful, responsive UI feedback to your applications. It utilizes clever CSS and JavaScript to create an engaging user experience, transforming static interfaces into dynamic, interactive elements. The core innovation lies in its simplicity and effectiveness in creating a subtle yet impactful visual cue.

Popularity

Points 7

Comments 4

What is this product?

CursorGazer is a small JavaScript widget that makes a pair of virtual 'eyes' on your webpage look at where the user's mouse cursor is moving. It's built using simple web technologies, likely leveraging JavaScript for tracking cursor position and CSS animations or transformations to make the eyes move smoothly and realistically. The innovative part is how it injects personality and liveliness into an otherwise static interface with minimal effort and without heavy dependencies, making any web element feel more interactive.

How to use it?

Developers can easily integrate CursorGazer into their web projects. It's designed to be a simple embeddable component. You would typically include a JavaScript file and then initialize the widget, specifying which HTML element should contain the 'eyes'. The author also offers a live playground, allowing you to experiment with different visual styles and behaviors before implementing it in your own project. It's ideal for adding a touch of personality to personal websites, landing pages, or any application where you want to create a more engaging and memorable user interaction.

Product Core Function

· Real-time cursor tracking: Tracks the user's mouse cursor position on the screen with low latency, allowing for immediate visual response.

· Smooth eye animation: Applies smooth, natural-looking animations to the virtual eyes to simulate them following the cursor, enhancing the feeling of responsiveness.

· Configurable appearance: Offers options to customize the look and feel of the eyes, such as size, color, and spacing, to match different website aesthetics.

· Embeddable widget: Provides a simple integration method for web developers, allowing for easy addition to existing or new projects without complex setup.
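The core of an "eyes follow the cursor" effect is a little geometry. Each pupil points toward the cursor, its travel is clamped so it stays inside the eye, and it eases toward the target on each frame so the motion looks smooth. The sketch below shows that math in Python for consistency with the other examples. It is an assumption about how such a widget works, not CursorGazer's source, and the function names are hypothetical. In a browser, the same values would typically feed a CSS `transform: translate(...)` on the pupil element from a `mousemove` handler, applied inside `requestAnimationFrame`.

```python
import math


def pupil_offset(
    eye_x: float, eye_y: float, cursor_x: float, cursor_y: float, max_radius: float
) -> tuple[float, float]:
    """Offset of the pupil from the eye centre: toward the cursor, at most max_radius away."""
    dx, dy = cursor_x - eye_x, cursor_y - eye_y
    dist = math.hypot(dx, dy)
    if dist == 0:
        return 0.0, 0.0  # cursor exactly on the eye centre: look straight ahead
    reach = min(dist, max_radius)  # keep the pupil inside the eye
    return dx / dist * reach, dy / dist * reach


def ease(current: float, target: float, factor: float = 0.2) -> float:
    """Move a fraction of the remaining distance each frame (exponential smoothing)."""
    return current + (target - current) * factor
```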

Product Usage Case

· Personal Portfolio Websites: A developer can add CursorGazer to their portfolio to make their personal brand feel more approachable and unique, giving visitors a fun interaction as they explore.

· Interactive Landing Pages: For a marketing campaign, CursorGazer can be used on a landing page to draw attention and make the user's engagement with the page more memorable and playful.

· Educational Tools: In a web-based learning application, it could be used to provide visual feedback, making the learning process more engaging for younger audiences.

· Chatbots or Virtual Assistants: A playful avatar with eyes that follow the cursor can make a chatbot feel more present and interactive, creating a more human-like experience.

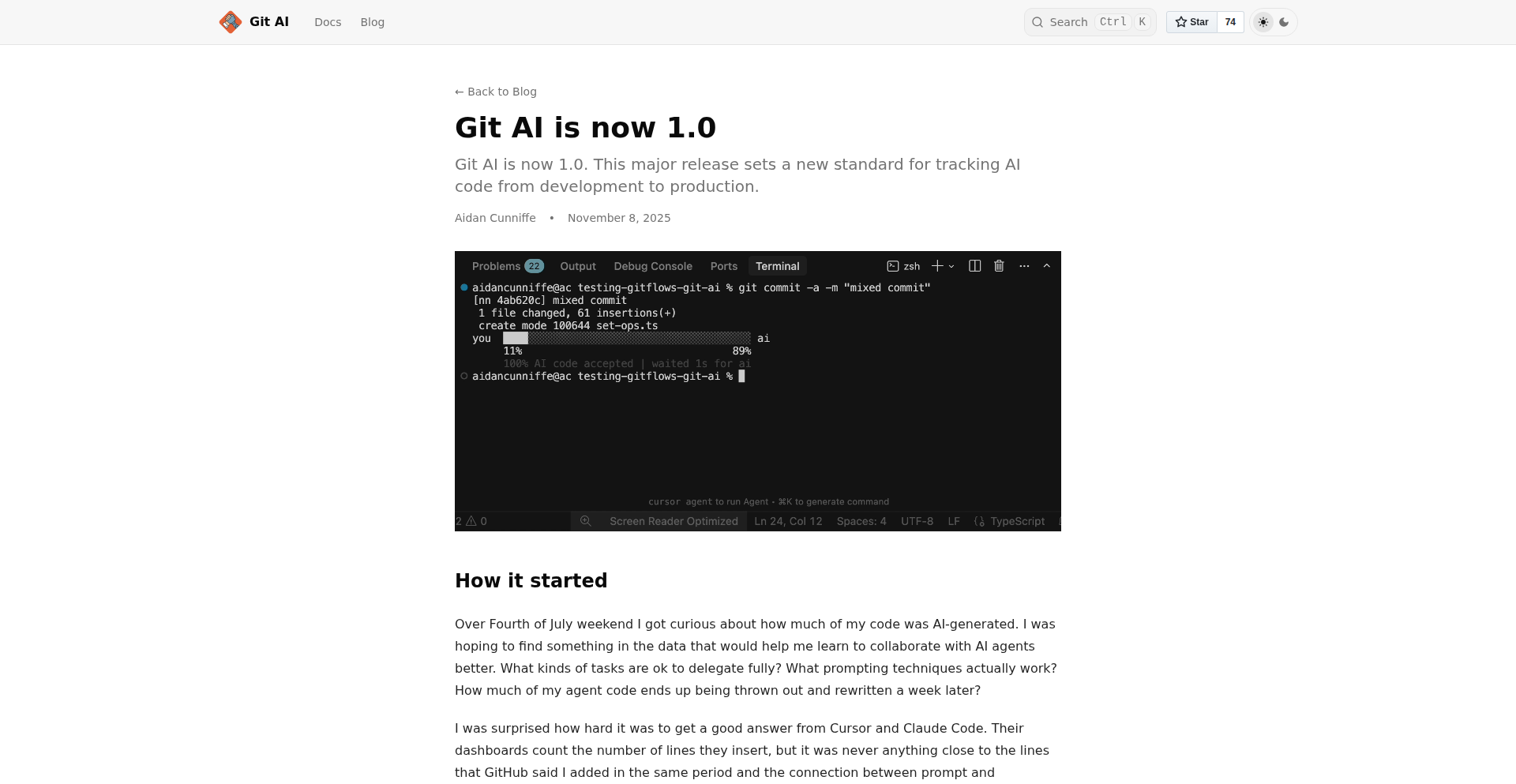

7

Git AI Tracker

Author

addcn

Description

Git AI Tracker is a novel tool designed specifically to monitor and manage AI-generated code across a Git repository's lifecycle. Traditional Git tools track code authorship and history. Git AI Tracker goes further by preserving the context of AI contributions, from the initial prompts and the AI-generated output to human modifications and refactoring, even across rewritten history. This gives much clearer visibility into how AI code evolves and is integrated into a project, offering insights akin to 'git blame' but for AI-assisted development.

Popularity

Points 6

Comments 4

What is this product?

Git AI Tracker is a specialized system that leverages Git's robust version control capabilities to track the origin and evolution of code produced by AI coding assistants. Its core innovation lies in its ability to associate AI-generated code segments with the specific prompts that generated them, and to follow these segments through the entire development process – including code reviews, pull requests, and production deployments. It understands that AI code isn't static; it gets refactored, edited, and its history can be rewritten, and it meticulously tracks these changes. This allows developers to understand not just who wrote the code, but also what instructions guided the AI and how the code was subsequently shaped by human intervention. The value is in understanding the 'why' and 'how' of AI code, enabling better collaboration and more insightful reviews. It’s like having a conversation log with the AI, embedded within your code history.

How to use it?

Developers can integrate Git AI Tracker into their existing Git workflow. The system works by analyzing Git commit history, specifically looking for patterns that indicate AI-generated code. It can be set up to automatically tag commits or branches that are heavily influenced by AI, and to store metadata associated with AI contributions, such as the prompt used and the AI model version. Developers can then query the repository to see which parts of the codebase were AI-generated, which prompts produced specific AI-written segments, and how much human modification followed. This helps during code reviews, by giving context for AI-generated code, and in retrospective analysis, by gauging how effective AI prompts and the team's collaboration with AI tools have been. The current focus is on backend tracking; planned UI enhancements are meant to make this interaction simpler and accessible to all team members.

Product Core Function

· AI Code Origin Tracking: This function allows developers to pinpoint exactly which parts of the codebase were initially generated by an AI. Its technical value is in providing a clear lineage for AI-contributed code, enabling better auditing and understanding of the codebase's foundation, especially in projects with significant AI involvement.

· Prompt Association: This core feature links AI-generated code snippets to the specific prompts that were fed to the AI. The technical innovation here is creating a direct contextual link between developer intent (via prompts) and AI output, which is invaluable for debugging, refactoring, and understanding AI behavior.

· Evolutionary Code Tracking: This function meticulously tracks AI-generated code as it undergoes changes, refactoring, and integration into the broader codebase over time, even when Git history is rewritten. The technical value lies in maintaining a persistent understanding of AI code's journey, ensuring that its origin and modifications are never lost, which is crucial for long-term project maintainability and knowledge transfer.

· AI Collaboration Metrics: By comparing the number of AI-generated lines proposed with the number ultimately accepted, this function provides insights into the efficiency of AI prompting and collaboration. The technical utility is in offering quantifiable metrics that help developers and teams optimize their AI usage, for example by flagging prompts whose output is mostly rewritten or discarded.
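The acceptance-rate metric described above can be sketched over a simple attribution record. The `AIContribution` fields below are hypothetical and are not Git AI Tracker's actual schema. One plausible place to store such records without changing commit hashes is `git notes`, which attaches metadata to existing commits.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AIContribution:
    """Hypothetical attribution record for one AI-assisted commit."""
    commit: str           # SHA of the commit the AI-written lines landed in
    prompt: str           # prompt that produced the code
    model: str            # model name/version reported by the tool
    lines_generated: int  # lines the AI proposed
    lines_kept: int       # lines still present after human edits


def acceptance_by_prompt(contributions):
    """Share of AI-generated lines that survived human review, grouped by prompt."""
    totals = defaultdict(lambda: [0, 0])  # prompt -> [generated, kept]
    for c in contributions:
        totals[c.prompt][0] += c.lines_generated
        totals[c.prompt][1] += c.lines_kept
    return {prompt: kept / generated
            for prompt, (generated, kept) in totals.items() if generated > 0}


# Usage with made-up records: a low rate hints that a prompt's output is mostly rewritten.
records = [
    AIContribution("a1", "add retry logic", "model-x", 40, 30),
    AIContribution("b2", "add retry logic", "model-x", 10, 10),
    AIContribution("c3", "write parser", "model-y", 50, 5),
]
rates = acceptance_by_prompt(records)
```

Grouping by prompt is only one option. The same aggregation keyed on `model` would compare assistants instead.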

Product Usage Case

· During a code review, a developer encounters a complex algorithm generated by an AI. Using Git AI Tracker, they can instantly see the prompt that generated this algorithm and the subsequent human edits. This helps them understand the AI's initial approach and evaluate the quality and appropriateness of the human modifications, significantly speeding up the review process and improving its thoroughness.

· A team is experiencing an increase in subtle bugs within their application. By using Git AI Tracker to analyze commits related to AI-generated code, they can identify specific areas where AI contributions might have introduced unforeseen issues. The tracker allows them to examine the prompts and human interventions associated with these problematic code sections, leading to faster root cause analysis and resolution.

· A project lead wants to assess the team's effectiveness in using AI coding assistants. Git AI Tracker can provide metrics on the volume of AI-generated code, the complexity of prompts, and the amount of human rework required. This data-driven insight allows the lead to identify training needs or refine guidelines for AI-assisted development, optimizing team productivity and code quality.

· When onboarding new developers, Git AI Tracker can provide them with a rich history of how specific features were developed, including the role AI played. By viewing the prompts and evolution of AI-generated code, new team members can quickly grasp the reasoning behind certain architectural decisions and coding patterns, reducing the learning curve.

8

Tiny Diffusion: Character-Level Text Generation

Author

nathan-barry

Description

Tiny Diffusion is a novel approach to text generation that operates at the character level and is built entirely from scratch. Most language models work on words or subword tokens; Tiny Diffusion instead treats each character as a unit, which offers a different perspective on how language can be synthesized. Its innovation lies in this low-level processing and its potential for fine-grained control over text output, enabling creative text manipulation and generation.

Popularity

Points 8

Comments 1

What is this product?

This project is a demonstration of a character-level diffusion model for text. Instead of treating words as units, it operates on individual characters. An autocomplete writes strictly left to right. A diffusion model works differently: it starts from a noisy (for example, fully masked) sequence and refines all positions over several steps, learning the patterns and probabilities of how characters combine to form meaningful language. The innovation here is the exploration of a more fundamental building block for language generation, offering a different path than standard word-level models and allowing for novel text transformations.

How to use it?

Developers can use Tiny Diffusion as a foundation for experimental text generation tasks, creative writing tools, or to explore alternative approaches to natural language processing. It can be integrated into applications requiring unique text synthesis, such as generating stylized poetry, experimental prose, or even as a component in artistic coding projects. Its 'from scratch' nature means developers can deeply understand and modify its core mechanics for tailored results.

Product Core Function

· Character-level text generation: Text is produced at the granularity of individual characters rather than words, giving finer control over the output than word-level models can easily provide. This is useful for generating highly specific or stylized text.

· Diffusion model implementation: The project demonstrates the application of diffusion models, a powerful generative technique, to text. This means it learns to gradually refine noise into coherent text, offering a sophisticated method for creating novel content.

· From-scratch implementation: By building the model without relying on pre-existing libraries for the core logic, it provides a deep understanding of how such models work and allows for significant customization and experimentation by developers.

· Exploration of low-level language representation: Focusing on characters rather than words opens up possibilities for understanding and manipulating the very fabric of language, which can lead to new linguistic insights and applications.
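To make the contrast with left-to-right autocomplete concrete, here is a minimal sketch of masked (absorbing-state) discrete diffusion over characters. It is not Tiny Diffusion's code. The mask symbol, the linear unmasking schedule, and the `predict` callback, which stands in for a trained denoiser network, are all assumptions for illustration.

```python
import random

MASK = "█"  # assumed mask symbol; must never appear in real text


def corrupt(text, t, rng):
    """Forward process: independently replace each character with MASK with probability t."""
    return "".join(MASK if rng.random() < t else c for c in text)


def sample(length, predict, steps, rng):
    """Reverse process: start fully masked and reveal a share of the masked positions each step.

    `predict(seq)` must return one character guess per position; in a real model this
    would be a neural network conditioned on the partially masked sequence.
    """
    seq = [MASK] * length
    for step in range(steps):
        masked = [i for i, c in enumerate(seq) if c == MASK]
        if not masked:
            break
        # Reveal enough positions that everything is unmasked by the final step.
        k = max(1, len(masked) // (steps - step))
        guesses = predict(seq)
        for i in rng.sample(masked, k):
            seq[i] = guesses[i]
    return "".join(seq)


# Usage: an "oracle" predictor exercises the control flow without any training.
rng = random.Random(0)
target = "hello world"
noisy = corrupt(target, 0.5, rng)
restored = sample(len(target), lambda seq: list(target), steps=4, rng=rng)
```

Every position is visible to the predictor at every step, which is what lets a diffusion model revise its output globally rather than only appending at the end.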

Product Usage Case

· Creative Writing Assistants: Imagine a tool that can help a writer generate experimental poetry or prose by suggesting character sequences that lead to unexpected word formations and sentence structures, solving the problem of creative block with novel linguistic output.

· Code Generation Tools: For highly specialized programming languages or domain-specific languages where word boundaries are less defined, a character-level approach might offer a more robust generation method, solving the challenge of generating syntactically correct but unconventional code snippets.

· Artistic Text Installations: Developers can use this to create dynamic art installations where text evolves character by character in real-time, offering a visually engaging and thought-provoking way to interact with language, solving the problem of creating unique and evolving visual art from text.

· Linguistic Research Tools: Researchers could use this model to study the fundamental patterns of language at the character level, potentially uncovering new insights into language formation and evolution, solving the problem of analyzing language from a micro-level perspective.

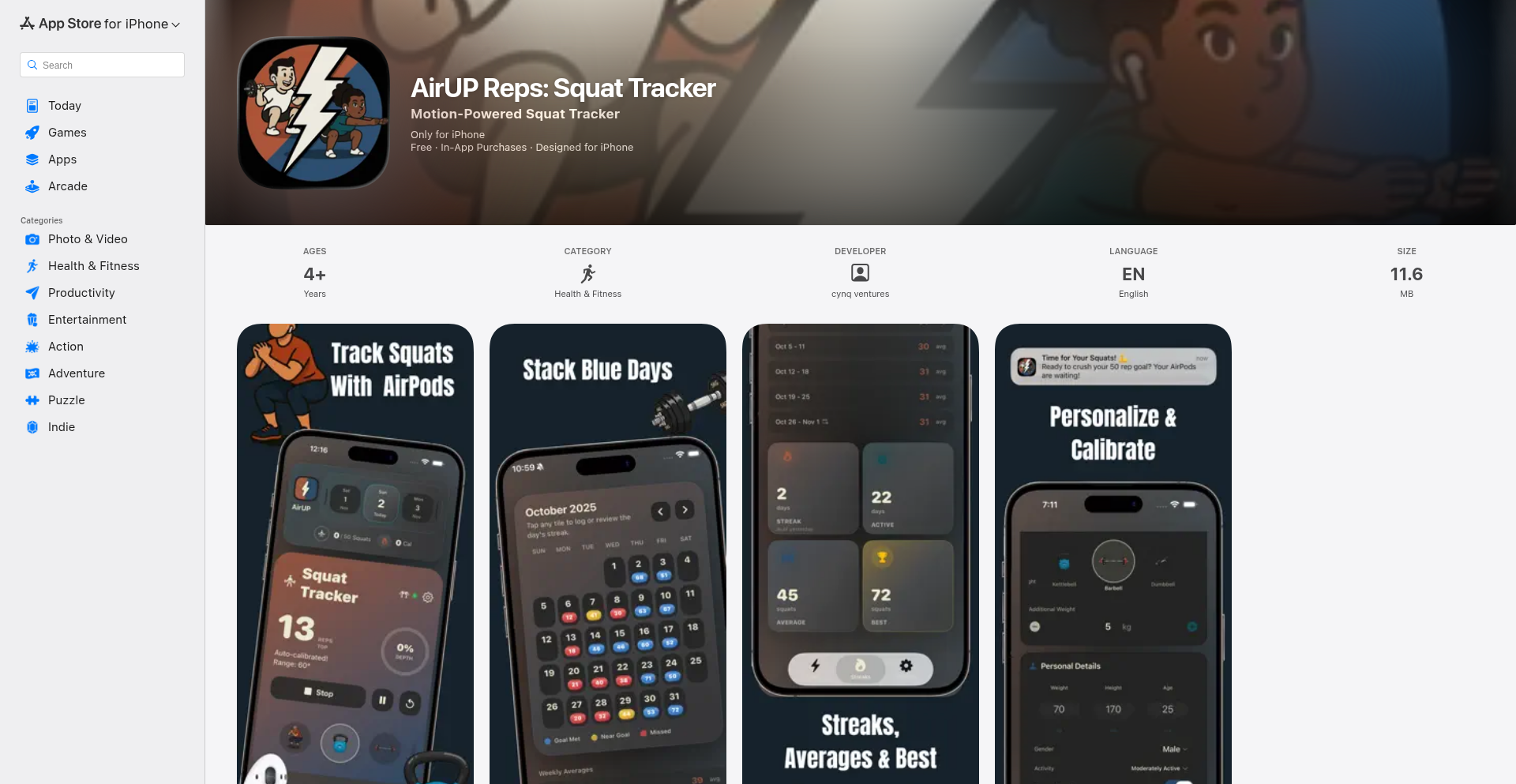

9

Visionary Photo Organizer

Author

nicklewers

Description

This is an iOS app that intelligently cleans up your photo library. It uses on-device AI to group similar photos together and then identifies the best shot within each group. The innovation lies in its efficient, opinionated approach to mass photo cleanup without relying on manual swiping, saving users significant time and storage space.

Popularity

Points 3

Comments 6

What is this product?

Visionary Photo Organizer is a mobile application that leverages Apple's on-device Vision AI models to automatically process your photo library. Instead of manually sifting through hundreds of similar pictures (like multiple shots of the same meal or sunset), it intelligently clusters them. Within each cluster, it applies a scoring mechanism to identify the most representative or highest quality photo. This means you get a smart, automated suggestion for which photos to keep and which to delete, significantly reducing the manual effort of decluttering your camera roll. The core innovation is using AI for automated opinionated photo curation directly on your device, ensuring privacy and speed.

How to use it?

Anyone can use this app by downloading it from the App Store. Once it is installed, you grant it access to your photo library, and the app analyzes your images in the background. You can then review the suggested groupings and the automatically selected 'best' photos. If you disagree with the AI's suggestions, you can override them manually and select different photos to keep or discard. This offers a practical solution for anyone who struggles with a bloated photo library, allowing a quick and effective cleanup with just a few taps, especially after events like vacations or parties. For developers interested in the underlying technology, the use of on-device Apple Vision models is the key takeaway: it shows how sophisticated AI can be integrated into mobile applications for real-world problem-solving.

Product Core Function

· On-device image clustering: Groups visually similar photos together using AI, reducing manual sorting effort. This is valuable because it automatically organizes your photos, saving you the tedious task of finding duplicates or near-duplicates.

· AI-powered photo ranking: Scores and identifies the best photo within each cluster, making it easy to decide which one to keep. This offers a smart recommendation, helping you preserve the most important memories without having to scrutinize every single photo.

· Batch photo cleanup: Enables quick deletion of redundant photos based on AI analysis, freeing up storage space. This is directly beneficial for users with limited device storage, allowing for significant space reclamation with minimal user interaction.

· Manual review and override: Allows users to refine AI suggestions and make final decisions, ensuring user control and satisfaction. This provides flexibility, so you can always trust your own judgment even when the AI makes a recommendation.

· Privacy-focused processing: All analysis happens directly on the device, meaning your photos are never uploaded to a server. This is crucial for users concerned about data privacy and security, ensuring your personal images remain confidential.
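The cluster-then-rank idea can be sketched in a few lines. The sketch assumes each photo already has a nonzero embedding vector and a quality score; on iOS, the embedding might come from an on-device image feature model. The greedy threshold clustering and the `id`/`embedding`/`score` field names are illustrative choices, not the app's actual algorithm.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; assumes both vectors are nonzero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def cluster_photos(photos, threshold):
    """Greedy single pass: a photo joins the first cluster whose first member
    is within `threshold`; otherwise it starts a new cluster."""
    clusters = []
    for photo in photos:
        for cluster in clusters:
            if cosine_distance(photo["embedding"], cluster[0]["embedding"]) <= threshold:
                cluster.append(photo)
                break
        else:
            clusters.append([photo])
    return clusters


def pick_keepers(clusters):
    """Keep the highest-scoring photo per cluster; the rest become deletion candidates."""
    keep, delete = [], []
    for cluster in clusters:
        best = max(cluster, key=lambda p: p["score"])
        keep.append(best["id"])
        delete.extend(p["id"] for p in cluster if p is not best)
    return keep, delete


# Usage with toy 2-D embeddings: "a" and "b" are near-duplicates, "c" is different.
photos = [
    {"id": "a", "embedding": [1.0, 0.0], "score": 0.5},
    {"id": "b", "embedding": [0.99, 0.1], "score": 0.9},
    {"id": "c", "embedding": [0.0, 1.0], "score": 0.3},
]
keep, delete = pick_keepers(cluster_photos(photos, threshold=0.1))
```

The deletion list is only a suggestion. The manual review step described above is where the user would confirm or override it.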

Product Usage Case

· Post-vacation photo cleanup: After a trip, you might have hundreds of photos. This app can automatically group similar shots (e.g., multiple angles of a landmark) and suggest the best one, allowing you to quickly delete the rest and save significant space and time.

· Event photography organization: For parties or gatherings, you might take many bursts of photos. The app can identify the best shot from each burst, making it easy to curate your event memories without manually comparing dozens of similar images.

· Managing duplicate screenshots: Users often accumulate numerous screenshots. This app can group them and help you quickly identify and remove redundant ones, decluttering your library.

· Streamlining personal photo archives: For individuals with extensive photo libraries, this app offers an efficient way to maintain a cleaner, more organized collection of personal moments without the overwhelming task of manual sorting.

10

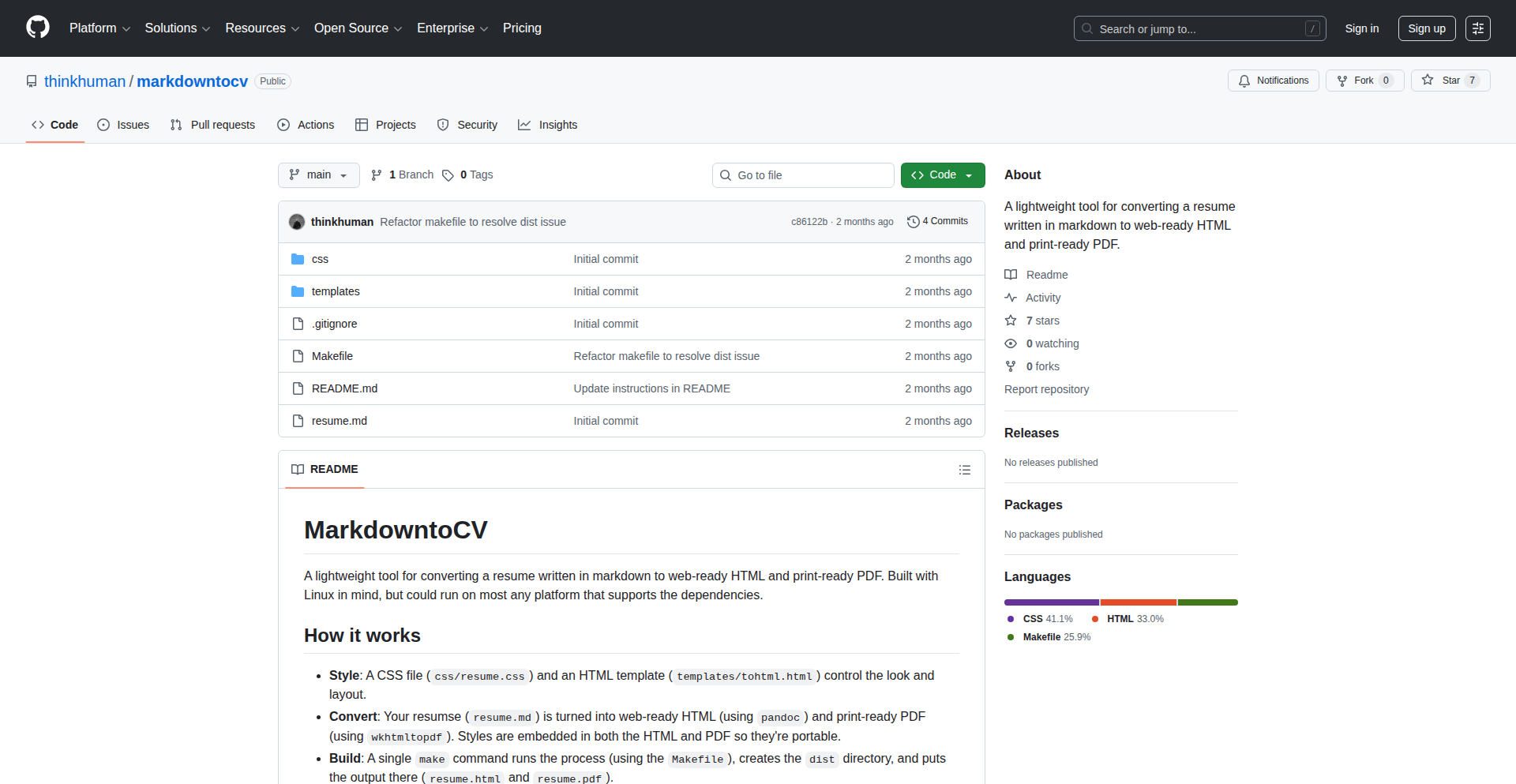

MarkdowntoCV: Markdown-Powered Resume Generator

Author

jamesgill

Description

MarkdowntoCV is a straightforward yet powerful resume generator that transforms your Markdown-formatted professional experience into a polished HTML or PDF document. It cuts through the complexity of traditional resume builders by leveraging the simplicity and flexibility of Markdown, so developers can focus on content rather than tedious UI fiddling. This project embodies the hacker spirit of using readily available tools to solve a common, time-consuming problem with elegant efficiency.

Popularity

Points 3

Comments 5

What is this product?

MarkdowntoCV is a tool that takes text written in Markdown (a simple plain text formatting syntax) and converts it into a professional-looking resume, which can be saved as an HTML webpage or a PDF document. The innovation here lies in its simplicity and adaptability. Instead of wrestling with complex graphical interfaces or proprietary software, you write your resume in Markdown, which is essentially just text with simple symbols for formatting (like '*' for bullet points or '#' for headings). The tool then processes this Markdown and applies predefined styling to create a clean, well-structured document. This approach is deeply rooted in developer-centric workflows, where text-based configuration and templating are common and highly efficient. So, what's the value? It means you can create and update your resume quickly and easily, using a format you're likely already familiar with, without being locked into a specific platform or spending hours on manual formatting.

How to use it?

Developers can use MarkdowntoCV by writing their resume content in a Markdown file (`.md`). This file would contain sections like 'Experience', 'Education', 'Skills', etc., formatted using Markdown syntax. The tool then takes this Markdown file as input and outputs either an HTML file that can be viewed in a web browser or a PDF file for easy sharing and printing. Integration is straightforward: you simply run the command-line tool with your Markdown file. For more advanced users, the templating system within MarkdowntoCV can be customized to change the visual appearance of the resume, allowing for unique branding or specific style requirements. This is particularly useful for developers who want to maintain a consistent personal brand across their online presence and professional documents. So, how does this help you? You can generate multiple versions of your resume with different focuses by simply editing your Markdown file and re-running the tool, saving immense time and effort during job applications.

Product Core Function

· Markdown Parsing: Converts plain-text Markdown into structured data that can be styled. This allows rapid content creation and modification using familiar syntax, favoring developer efficiency and reducing formatting friction.

· HTML Output Generation: Produces a semantic HTML document representing the resume. This enables easy viewing online and serves as a foundation for further web-based customization or integration with other web tools. Its value lies in creating shareable, web-friendly documents.

· PDF Conversion: Renders the generated HTML into a printable PDF format. This is crucial for submitting resumes to recruiters and employers in a universally compatible and professional-looking format, ensuring your qualifications are presented clearly.

· Customizable Templates: Allows users to modify the visual styling of the generated resume. This empowers developers to tailor their resume's appearance to specific job applications or personal branding preferences, highlighting individuality and attention to detail.

· Cross-Platform Compatibility (Linux/macOS): The tool is designed to work seamlessly on common developer operating systems. This ensures accessibility and ease of use for a significant portion of the target audience, removing platform-specific barriers.
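The parse-then-template pipeline can be illustrated with a deliberately tiny converter that handles only `#` headings, `-`/`*` bullets, and plain paragraphs. This is a sketch using Python's standard library, not MarkdowntoCV's implementation. A real tool would use a full Markdown parser, and the PDF step (for example, printing the HTML from a headless browser) is left out.

```python
import html
import re
from string import Template

# Swapping this template (or the CSS passed to it) is the "customizable template" idea.
PAGE = Template("""<!DOCTYPE html>
<html><head><meta charset="utf-8"><title>$title</title>
<style>$css</style></head>
<body>
$body
</body></html>""")

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def markdown_to_html(md):
    """Convert a tiny Markdown subset (headings, bullets, paragraphs) to HTML."""
    out, in_list = [], False
    for raw in md.splitlines():
        line = raw.rstrip()
        if line.startswith(("- ", "* ")):
            if not in_list:
                out.append("<ul>")
                in_list = True
            out.append(f"<li>{html.escape(line[2:])}</li>")
            continue
        if in_list:  # any non-bullet line closes the open list
            out.append("</ul>")
            in_list = False
        match = HEADING.match(line)
        if match:
            level = len(match.group(1))
            out.append(f"<h{level}>{html.escape(match.group(2).strip())}</h{level}>")
        elif line:
            out.append(f"<p>{html.escape(line)}</p>")
    if in_list:
        out.append("</ul>")
    return "\n".join(out)


def render_resume(md, title="Resume", css="body { font-family: sans-serif; }"):
    """Wrap the converted body in a full HTML page."""
    return PAGE.substitute(title=html.escape(title), css=css, body=markdown_to_html(md))


resume_md = "# Jane Doe\n## Experience\n- Built things\n- Shipped <stuff>\nThanks for reading"
page = render_resume(resume_md, title="Jane Doe")
```

Because the resume source is plain text, every tailored version is just a diff in Git, as the version-control use case below points out.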

Product Usage Case

· Job Application Document Generation: A developer needs to apply for multiple jobs, each requiring a slightly tailored resume. By writing their core resume in Markdown and using MarkdowntoCV, they can quickly edit specific sections (like project details or skills) in the Markdown file and regenerate a new, customized HTML or PDF resume in minutes, significantly speeding up the application process.

· Personal Website Integration: A developer wants to display their resume on their personal portfolio website. They can use the HTML output from MarkdowntoCV directly, or further style it with CSS to match their website's design. This solves the problem of manually maintaining separate resume content for their website and for job applications, ensuring consistency and reducing redundant work.

· Version Control for Resumes: Developers are accustomed to using Git for version control. By storing their resume content as a Markdown file, they can track changes, revert to previous versions, and collaborate on their resume as they would with any other code project. This provides a robust and organized way to manage resume evolution.

· Quick Resume Updates for Networking Events: A developer attends a last-minute networking event and needs to quickly update their resume with a recent accomplishment. They can edit their Markdown file on the go (or on their laptop) and immediately generate a fresh PDF to share, ensuring their resume is always current and relevant.

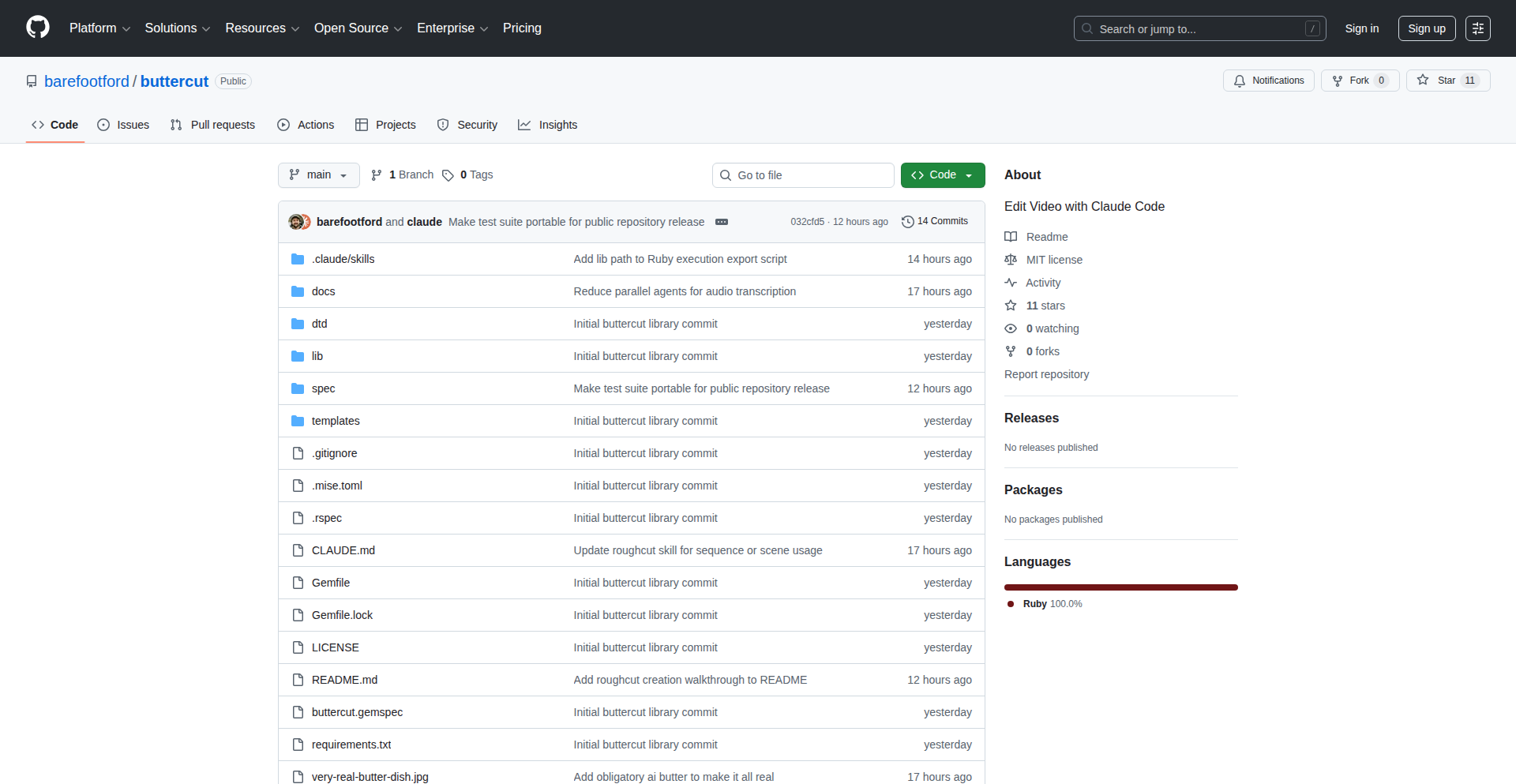

11

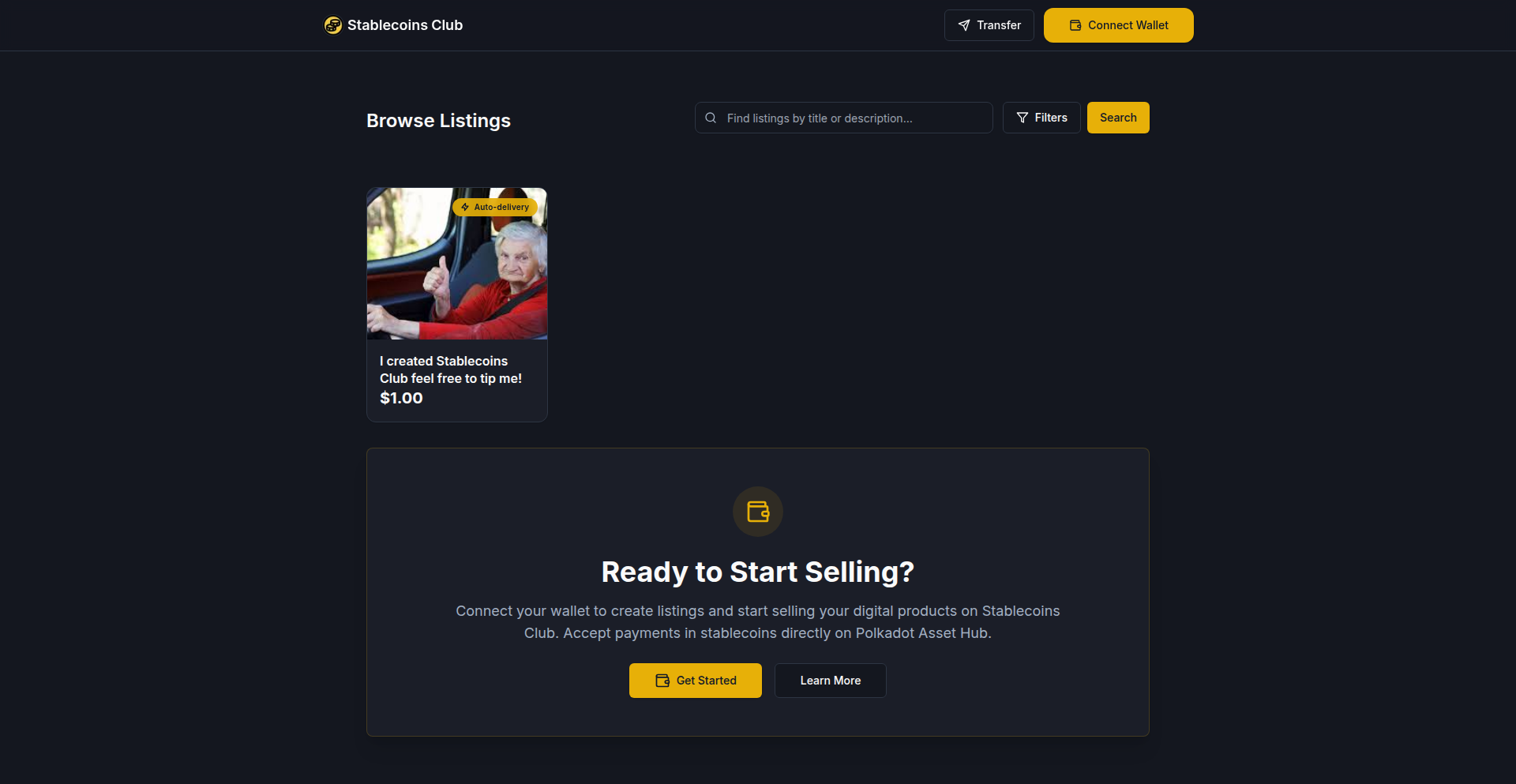

Caelus Sidereal Dashboard

Author

rhannequin

Description

Caelus is an open-source astronomy dashboard built from the ground up with transparent computation. It leverages a custom Ruby library, Astronoby, to provide astronomical data with explainable calculations. The project emphasizes accessibility, allowing anyone to understand, manipulate, and contribute to astronomical data, embodying the hacker ethos of solving problems with code for the betterment of the community.

Popularity

Points 4

Comments 2

What is this product?

Caelus is an astronomy dashboard that aims to make astronomical data and its computation entirely transparent and accessible. At its core is the Astronoby library, a Ruby gem developed by the creator, which handles all the complex calculations for astronomical phenomena. Unlike many existing tools, Caelus doesn't just present data; it shows you *how* that data is derived. This means you can understand the underlying algorithms and formulas used to determine celestial positions, events, and other astronomical information. The innovation lies in this commitment to open computation, allowing users to trust and even verify the data presented, fostering a deeper understanding and engagement with astronomy.

How to use it?

Developers can use Caelus in several ways. Firstly, they can directly explore the astronomical data presented on the Caelus website (caelus.siderealcode.net) for their personal interest or to gather information for projects. Secondly, developers can integrate the Astronoby library into their own Ruby applications to perform astronomical calculations. This is particularly useful for building custom tools, educational applications, or even game development where accurate celestial mechanics are required. The website itself serves as a live demonstration and playground for the library's capabilities. For those interested in contributing, the entire codebase for both Caelus and Astronoby is open source on GitHub, inviting collaboration and enhancements.

Product Core Function

· Real-time Astronomical Data: Provides up-to-date information on celestial body positions, phases, and events. This is valuable for anyone needing accurate astronomical context without complex manual calculations.

· Transparent Calculation Engine (Astronoby Library): All data is computed using a dedicated Ruby library, with the source code openly available. This allows developers to understand, verify, and even modify the calculation methods, fostering trust and enabling custom development.

· Open Source Framework and Website: The entire project, including the website framework and the astronomy library, is open source. This allows for community contributions, bug fixes, and feature enhancements, accelerating innovation and knowledge sharing.

· Data Accessibility and Manipulation: Aims to make astronomical data easy to access and understand, with future plans for interactive charts and tools. This democratizes access to complex scientific information for a wider audience.

· Educational Tool Development: The transparency of the calculation process makes Caelus and Astronoby ideal for building educational tools that teach the principles of astronomy and celestial mechanics.
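As an example of the kind of explainable calculation a sidereal dashboard exposes, the sketch below computes Greenwich mean sidereal time using the standard expression from Jean Meeus's Astronomical Algorithms. It is written in Python for illustration and is not Astronoby's API (Astronoby is a Ruby gem). It also treats UTC as UT1, an approximation good to within about a second.

```python
UNIX_EPOCH_JD = 2440587.5  # Julian Date of 1970-01-01T00:00:00 UTC
J2000_JD = 2451545.0       # Julian Date of 2000-01-01T12:00:00 (epoch J2000.0)


def julian_date(unix_seconds):
    """Convert a Unix timestamp to a Julian Date (ignoring leap-second subtleties)."""
    return unix_seconds / 86400.0 + UNIX_EPOCH_JD


def gmst_degrees(jd):
    """Greenwich mean sidereal time in degrees, per Meeus's formula."""
    d = jd - J2000_JD   # days since J2000.0
    t = d / 36525.0     # Julian centuries since J2000.0
    theta = (280.46061837 + 360.98564736629 * d
             + 0.000387933 * t * t - t ** 3 / 38710000.0)
    return theta % 360.0


def local_sidereal_hours(unix_seconds, east_longitude_deg):
    """Local mean sidereal time in hours for an observer at the given east longitude."""
    lst_deg = (gmst_degrees(julian_date(unix_seconds)) + east_longitude_deg) % 360.0
    return lst_deg / 15.0  # 15 degrees of rotation per sidereal hour


# Usage: at J2000.0 (Unix time 946728000) GMST is the formula's constant term.
jd = julian_date(946728000)
```

Every term in the formula is visible and checkable, which is the same transparency argument Caelus makes for its own computations.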

Product Usage Case

· A game developer building a space simulation game can use the Astronoby library to compute accurate positions of planets and other celestial bodies for any given date, grounding the game's sky in real astronomical data.

· An educator creating a virtual astronomy lesson can embed Caelus data or use the Astronoby library to demonstrate astronomical concepts and computations to students, providing a hands-on understanding of how data is generated.

· A hobbyist astronomer can use the dashboard to plan observation nights by understanding celestial object visibility and positions, while also appreciating the underlying computational methods.

· A researcher or student can leverage the open-source nature to inspect and potentially improve upon existing astronomical calculation algorithms, contributing to the scientific community.

· A web developer wanting to add an astronomical widget to their website can integrate the Astronoby library to display personalized celestial information for their users.

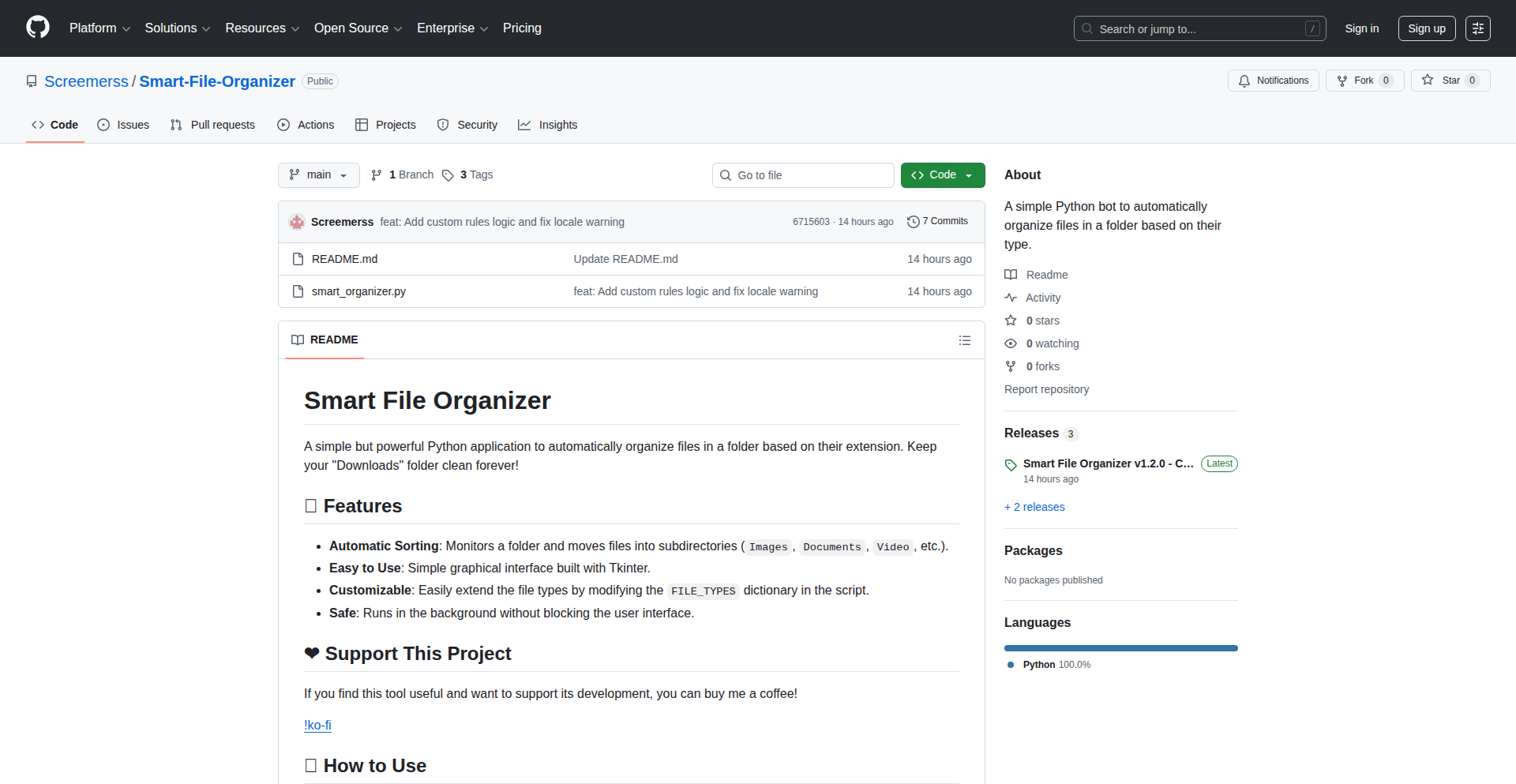

12

AutoSortFS

Author

screemers

Description

AutoSortFS is a free, open-source application designed to automatically organize your computer's file system. It employs intelligent algorithms to categorize and move files based on their type, content, and user-defined rules, thereby decluttering digital workspaces and saving users significant time and effort in manual file management.

Popularity

Points 2

Comments 3

What is this product?

AutoSortFS is a smart file management tool that leverages pattern recognition and rule-based systems to automatically sort your files. At its core, it analyzes file metadata (like file extension, creation date) and can even inspect file content (using techniques similar to text analysis) to determine its category. Based on this analysis, it applies pre-configured rules to move files into designated folders. The innovation lies in its ability to go beyond simple extension-based sorting, offering a more intelligent and adaptable organization system that learns and improves over time.

How to use it?

Developers can download and install AutoSortFS on their operating system. Once installed, they can define custom sorting rules through a configuration file or a simple graphical interface. These rules can specify which file types or content patterns should be moved to which folders. For example, a rule could be set to automatically move all downloaded '.pdf' files to a 'Documents/Downloads' folder or to move 'code snippets' identified within text files to a 'Development/Snippets' directory. This empowers developers to maintain a tidy project structure and quickly locate their assets.

Product Core Function

· Intelligent File Categorization: Utilizes file metadata and content analysis to understand what a file is, allowing for more precise sorting than traditional methods. This means you don't just sort by file extension; the system can understand the *purpose* of the file, saving you from manually searching through generic folders.

· Customizable Rule Engine: Allows users to define their own sorting logic, ensuring the organizer works exactly as needed. You tell it how you want your files organized, and it follows your instructions precisely, adapting to your unique workflow.

· Automated File Movement: Seamlessly moves files to their designated folders once categorized, eliminating the need for manual drag-and-drop operations. This frees up your time from tedious organizational tasks, allowing you to focus on more productive work.

· Content-Aware Sorting: Capable of inspecting file content for keywords or patterns to determine classification, going beyond basic file properties. This is particularly useful for developers who might have code snippets or configuration files that are difficult to categorize by extension alone.
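A rule engine of this kind reduces to "first matching rule wins". The sketch below plans moves without touching the disk. The `Rule` fields (a filename glob plus an optional content keyword) are assumptions and do not reflect AutoSortFS's actual configuration format. Applying the plan would be a loop over `shutil.move`.

```python
import fnmatch
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Optional


@dataclass
class Rule:
    """Hypothetical sorting rule: glob on the name, optional keyword in the content."""
    destination: str
    pattern: str = "*"
    keyword: Optional[str] = None


def plan_moves(files, rules):
    """files maps file name -> text content (None for binary files).

    Returns {file name: new path}; the first matching rule wins, and files
    that match no rule are left where they are.
    """
    plan = {}
    for name, content in files.items():
        for rule in rules:
            # Case-insensitive glob so "report.PDF" still matches "*.pdf".
            if not fnmatch.fnmatch(name.lower(), rule.pattern.lower()):
                continue
            if rule.keyword is not None and (content is None or rule.keyword not in content):
                continue
            plan[name] = str(PurePosixPath(rule.destination) / name)
            break
    return plan


# Usage: PDFs go to Documents/Downloads, text files containing Python defs become snippets.
rules = [
    Rule("Documents/Downloads", "*.pdf"),
    Rule("Development/Snippets", "*.txt", keyword="def "),
]
files = {"report.PDF": None, "notes.txt": "def foo(): pass", "todo.txt": "buy milk"}
plan = plan_moves(files, rules)
```

Separating planning from moving makes a dry-run preview trivial, which is a sensible safeguard for any tool that relocates files automatically.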

Product Usage Case

· Project Organization: A developer working on multiple projects can set up AutoSortFS to automatically move all project-related files (code, documentation, assets) into project-specific folders, keeping the workspace clean and navigable. This prevents accidentally mixing files from different projects, which is a common source of errors.

· Download Management: For developers who frequently download code libraries, documentation, or sample projects, AutoSortFS can automatically sort these into dedicated 'Downloads/Libraries' or 'Downloads/Samples' folders, making it easy to find them later without sifting through a cluttered download directory.

· Log File Archiving: System administrators or developers can configure AutoSortFS to move log files based on their content (e.g., error logs) or age into specific archive folders, helping to manage disk space and quickly access critical information.

· Asset Management: In game development or web design, assets like images, textures, and sound files can be automatically sorted into organized asset directories based on their type and purpose, streamlining the development process.

13

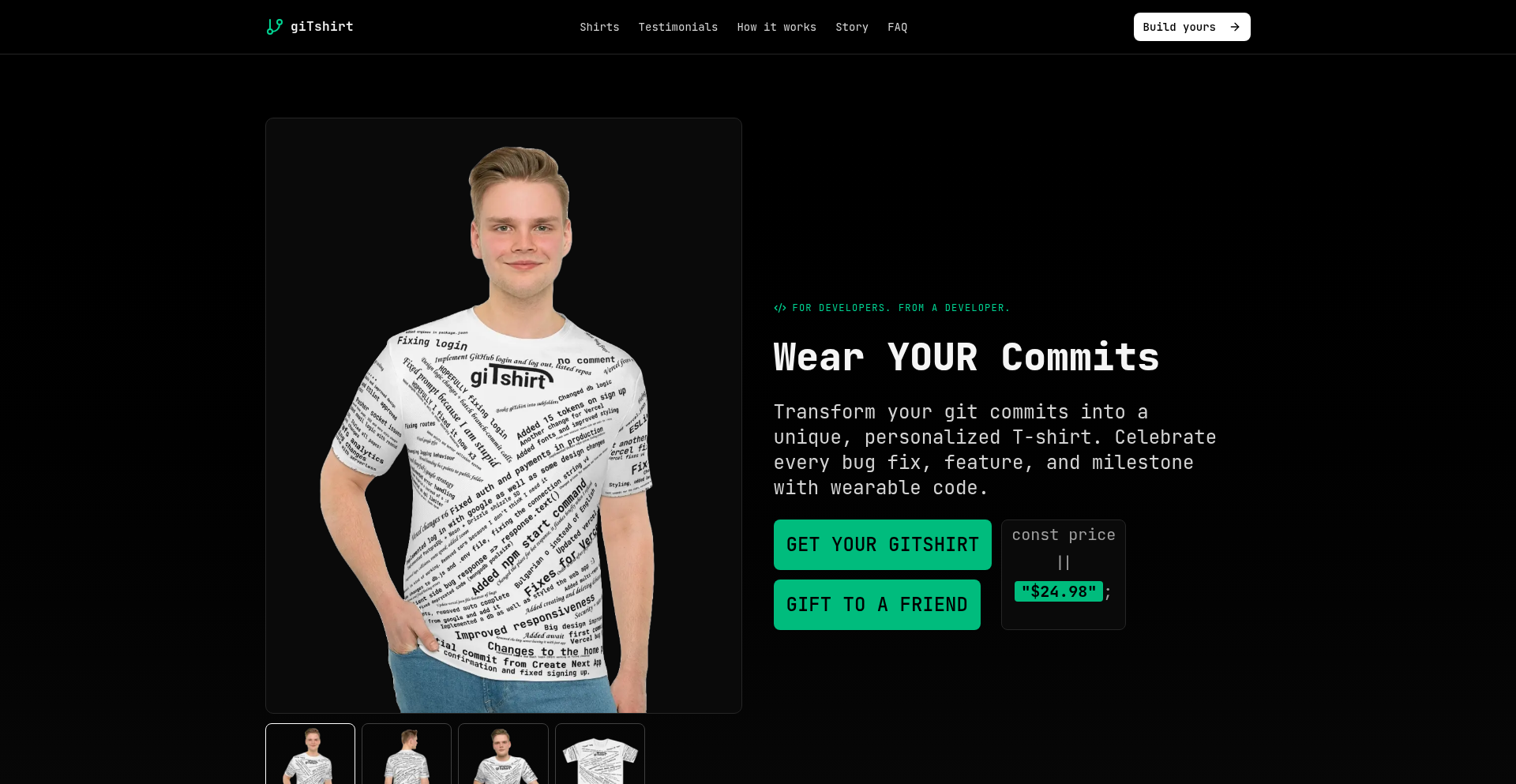

giTshirt CodeCanvas

Author

GeorgiMY

Description

giTshirt CodeCanvas is a unique platform that transforms your git commit messages into wearable art. It uses an algorithm to arrange selected commit messages from your GitHub repositories onto a t-shirt, allowing developers to showcase their coding journey and personal style. The core innovation lies in applying a 2D collision system to creatively place text on apparel, turning code history into a conversation starter.

Popularity

Points 4

Comments 0

What is this product?

giTshirt CodeCanvas is a personalized clothing brand where developers can immortalize their coding accomplishments. It takes your commit messages, which are like snapshots of your work in progress, and arranges them in an aesthetically pleasing, almost random pattern onto a t-shirt. The clever part is how it uses a '2D collision system' – think of it like trying to fit puzzle pieces together without them overlapping – to make sure all your messages fit nicely on the shirt. This solves the problem of wanting a unique way to represent your developer identity beyond just lines of code.

How to use it?

Developers can use giTshirt CodeCanvas by connecting their GitHub account. You then select a repository (either public or private) whose commit messages you want to feature. The system pre-selects all messages, but you have the option to fine-tune your selection. Once you click 'Generate giTshirt', the algorithm processes your commits and generates mockups for the front, back, and sleeves of the t-shirt. You can then preview and order your custom-designed garment, integrating your code history directly into your wardrobe. It's a straightforward process, designed to be completed in under a minute.

Product Core Function

· Commit Message Selection: Allows users to choose specific commit messages from their GitHub repositories, providing a personalized touch to the final design. This is valuable because it lets developers highlight their most important or humorous coding milestones.

· 2D Collision-Based Layout Algorithm: Generates a visually appealing and compact arrangement of commit messages on the t-shirt by intelligently fitting text without overlap. This innovation makes it possible to display a large number of messages creatively and is useful for maximizing the visual impact of the design.

· Multi-Panel Design Generation: Creates unique designs for the front, back, and sleeves of the t-shirt, offering a comprehensive canvas for displaying code history. This enhances the product's aesthetic appeal and provides more opportunities for personal expression.

· GitHub Authentication: Securely connects to user GitHub accounts to access repository data, streamlining the process of selecting commit messages. This ensures data privacy and simplifies the user experience by avoiding manual input of commit logs.

· Cloudinary Image Hosting: Utilizes Cloudinary for hosting the generated t-shirt mockups, ensuring fast and reliable delivery of product visuals to the user. This is a technical implementation detail that contributes to a smooth user experience by making designs readily available.
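A simple way to get a collision-free layout is rejection sampling. Each message is approximated as a bounding box, random positions are tried, and the first position that does not overlap anything already placed is kept (an axis-aligned bounding-box test). This is a sketch under stated assumptions (fixed character width, one line per message, longest-first ordering) and is not the project's actual algorithm.

```python
import random


def overlaps(a, b):
    """Axis-aligned bounding-box intersection test for (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def layout_messages(messages, width, height, char_w=6.0, line_h=12.0, tries=500, seed=0):
    """Place each message at a random non-overlapping spot on a width x height panel.

    Returns a list of (message, (x, y, w, h)); messages that cannot be placed
    within `tries` attempts (or are too long for the panel) are skipped.
    """
    rng = random.Random(seed)
    placed = []
    # Longest first: big boxes are the hardest to fit once the panel fills up.
    for msg in sorted(messages, key=len, reverse=True):
        w, h = len(msg) * char_w, line_h
        if w > width or h > height:
            continue
        for _ in range(tries):
            box = (rng.uniform(0, width - w), rng.uniform(0, height - h), w, h)
            if not any(overlaps(box, other) for _, other in placed):
                placed.append((msg, box))
                break
    return placed


commits = ["fix typo", "initial commit", "refactor parser", "add tests", "why does this work"]
placed = layout_messages(commits, width=300, height=400)
```

Running the same routine once per panel (front, back, sleeves) with different dimensions would produce the multi-panel output described above.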

Product Usage Case

· A developer who has been working on a complex open-source project for years can generate a giTshirt showcasing all the significant commit messages from their contributions, creating a tangible tribute to their dedication and problem-solving journey. This resolves the need for a personal, physical representation of their hard work.

· During a tech conference, a developer wears a giTshirt featuring humorous or memorable commit messages from their personal projects. This acts as an icebreaker, sparking conversations with other attendees about coding habits and funny development moments, thus solving the challenge of initiating engaging technical discussions.

· A team lead wants to commemorate a major project milestone. They could use giTshirt CodeCanvas to select key commit messages from the team's work over the project's duration and create custom shirts for everyone, fostering team spirit and celebrating collective achievement. This provides a unique way to recognize and reward team contributions.

14

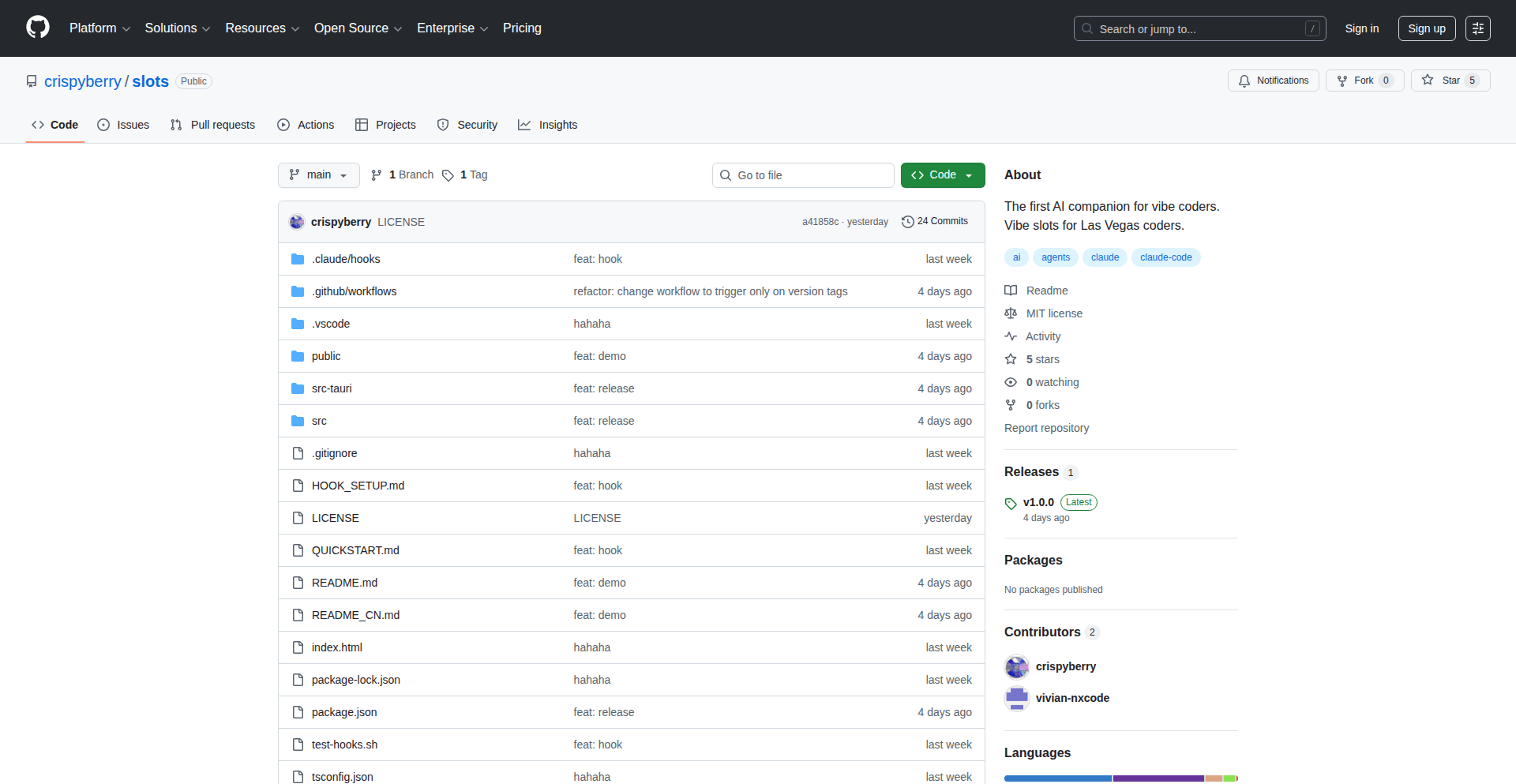

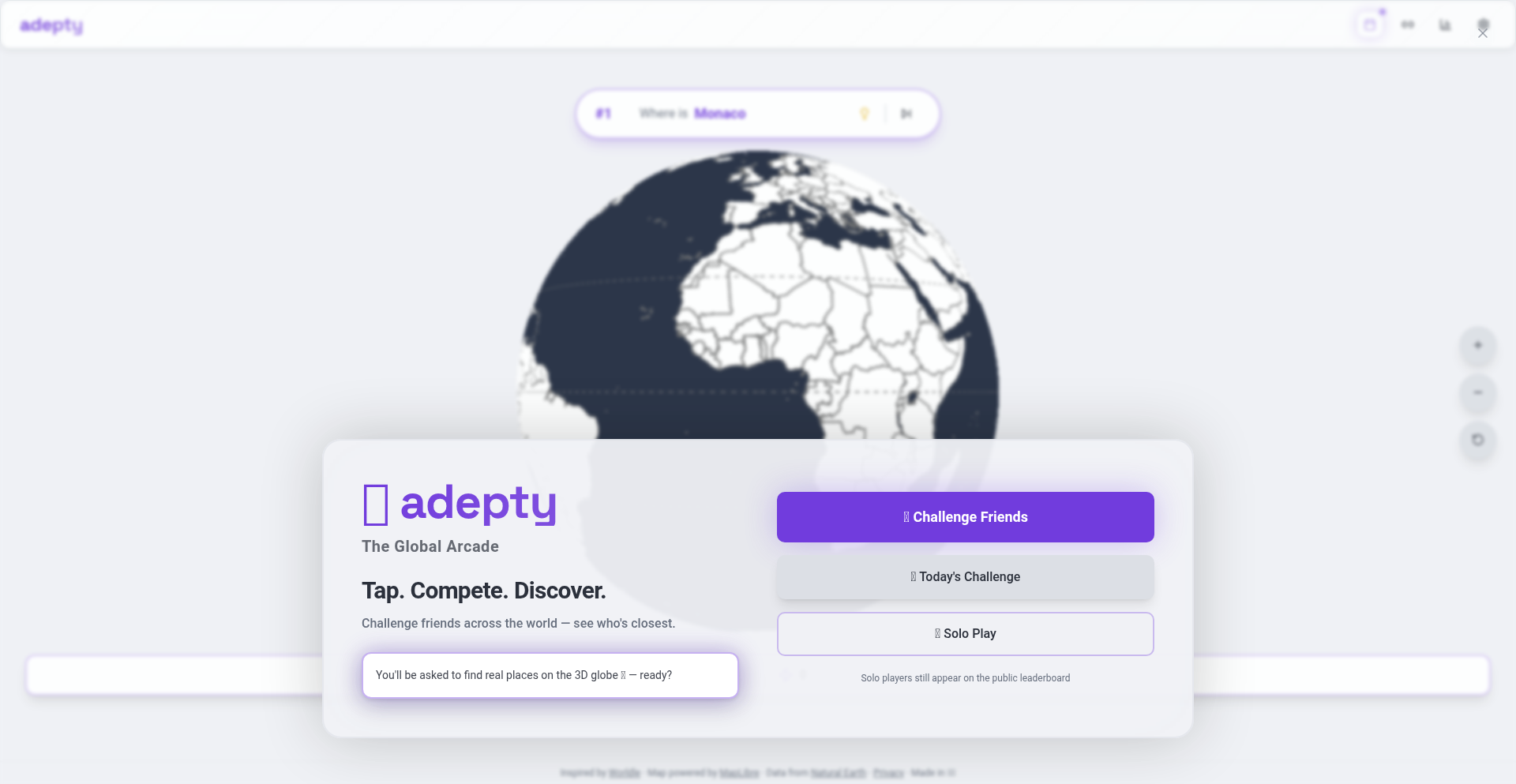

VibeSlots: Gamified Vibecoding

Author

ssslvky1

Description

VibeSlots is a playful experiment that gamifies the experience of vibecoding. It introduces a betting mechanism where users can wager on their upcoming coding sessions, turning focused coding into a lighthearted challenge. The core innovation lies in bridging the gap between productivity and entertainment, offering a novel way to motivate and engage developers.

Popularity

Points 2

Comments 2

What is this product?

VibeSlots is a web-based application that aims to add a layer of fun and engagement to the concept of 'vibecoding,' which generally refers to coding with a positive and focused mindset. Technically, it leverages a simple betting system. Users can predict the outcome or duration of their coding session and place a 'bet.' The system then tracks their progress, although the specific tracking mechanism is experimental and part of what this Show HN is exploring. The innovation here is the application of game mechanics, specifically betting, to a productivity-oriented activity, aiming to boost motivation through psychological engagement rather than direct rewards. It's a proof of concept demonstrating how game design principles can be applied to non-traditional contexts.

How to use it?

Developers can interact with VibeSlots through its web interface. They would typically log in, define a 'vibe session' (e.g., 'I will code for 2 hours without distractions' or 'I will complete this specific feature'), set a hypothetical 'stake' or 'bet' (this could be a virtual currency or a personal commitment), and then proceed with their coding. The platform encourages users to reflect on their session afterwards and 'settle' their bets. It's a tool for self-experimentation and for exploring personal productivity habits in a more entertaining way. Integration could involve a browser extension that tracks coding time or a simple manual input system.

Product Core Function

· Betting system for coding sessions: Enables users to place virtual bets on their own coding outcomes, adding a motivational incentive and a sense of playful risk to productivity.

· Session prediction and tracking: Allows users to define their coding goals and duration, fostering self-awareness about productivity patterns and commitment.

· Gamified progress visualization: Although this is not described in detail, the game framing lends itself to visualizing progress along with 'winnings' or 'losses,' making the coding journey more engaging.

· Personalized motivation mechanism: Offers a unique approach to self-motivation by tapping into the psychological drivers of games, appealing to developers who enjoy a challenge.

· Exploration of productivity psychology: Serves as a platform for users and the creator to understand how game mechanics can influence coding focus and discipline.
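The bet-and-settle loop can be modeled as a tiny data structure. Everything here is hypothetical: the project does not document its payout rules, so the minute-based goal and the even-money `multiplier` are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class VibeBet:
    """Hypothetical wager on a focused coding session."""
    goal_minutes: int          # the session the user commits to
    stake: int                 # virtual credits put at risk
    multiplier: float = 2.0    # assumed payout for meeting the goal (even money)


def settle(bet, focused_minutes):
    """Return the change in the user's virtual balance once the session ends."""
    if focused_minutes >= bet.goal_minutes:
        return round(bet.stake * (bet.multiplier - 1))  # net winnings
    return -bet.stake                                   # stake is lost


# Usage: a 2-hour session bet that succeeds, then one that falls short.
balance = 100
balance += settle(VibeBet(goal_minutes=120, stake=10), focused_minutes=130)
balance += settle(VibeBet(goal_minutes=120, stake=10), focused_minutes=90)
```

Keeping the stakes in a virtual currency preserves the playful risk without turning productivity into real gambling.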

Product Usage Case

· A solo developer looking to boost focus during a particularly challenging feature implementation can use VibeSlots to set a 'bet' on completing the feature within a specific timeframe. This adds a layer of urgency and personal accountability, turning a potentially tedious task into a game.

· A team member wanting to break through a coding slump might use VibeSlots to bet on achieving a certain number of commits or lines of code within a day. The playful nature of the bet can alleviate the pressure and make the process more enjoyable, potentially leading to increased output.

· For developers who enjoy online gaming or competitive elements, VibeSlots offers a way to inject that same sense of excitement into their professional work. They can treat each coding session as a mini-game, strategizing their 'bets' to maximize their perceived 'wins.'

· A student learning to code could use VibeSlots to bet on successfully completing their assignments or understanding complex concepts within a set period, making the learning process more interactive and rewarding.

15

Papiers.ai: Cognitive Augmentation for ArXiv

Author

smnair

Description

Papiers.ai is a novel interface for arXiv research papers that leverages AI to transform dense academic literature into a more accessible and interactive experience. It tackles the challenge of information overload in rapidly advancing scientific fields by providing AI-generated summaries, visualization tools like lineage graphs and mind maps, real-time related discussions via Twitter, and a research agent to accelerate idea exploration. The core innovation lies in augmenting human cognition, allowing researchers to process and connect information more efficiently.

Popularity

Points 3

Comments 1

What is this product?

Papiers.ai is a platform designed to make scientific papers on arXiv easier to understand and explore. Instead of presenting only the PDF, it uses AI to generate supplementary content around each paper:

- an AI-generated wiki that distills complex concepts;
- lineage graphs that visualize the 'family tree' of scientific ideas;
- a mind map that shows connections between research areas;
- a live feed of Twitter discussions, so you can see what the community is saying.

It also offers a research agent that helps you discover and connect related ideas. Together, these features make the growing body of scientific literature easier to navigate, so you can reach insights faster.

How to use it?

Developers can use Papiers.ai by going to the website and entering an arXiv paper ID or URL. The platform then processes the paper and presents the AI-generated wiki, lineage graphs, mind maps, and Twitter feed.

The submission does not mention a public API. If one is offered, developers could:

- access processed paper data programmatically;
- embed the visualizations in their own applications;
- use the research agent for automated literature review.

Possible projects include a custom research dashboard that pulls in summarized papers and their connections, or an alert system that flags new papers in a specific niche with AI-generated insights.
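Because the site accepts either a bare arXiv ID or a URL, a client or integration would first need to normalize the input. The sketch below handles arXiv's documented identifier formats:

- new-style `YYMM.NNNN`/`YYMM.NNNNN`, optionally with a `vN` version suffix;
- old-style `archive/YYMMNNN`.

The function name is hypothetical, and nothing here is Papiers.ai's own code.

```python
import re

# New-style IDs: YYMM.NNNN (2007-2014) or YYMM.NNNNN (2015 onward), with an
# optional version suffix such as "v2". Lookarounds stop matches inside longer digit runs.
_NEW_STYLE = re.compile(r"(?<!\d)(\d{4}\.\d{4,5})(v\d+)?(?!\d)")
# Old-style IDs: archive(.SUBJECT-CLASS)/YYMMNNN, e.g. "hep-th/9901001".
_OLD_STYLE = re.compile(r"([a-z\-]+(?:\.[A-Z]{2})?/\d{7})(v\d+)?")


def normalize_arxiv_id(text: str) -> str:
    """Extract a version-less arXiv ID from a bare ID or an abs/pdf URL."""
    text = text.strip()
    for pattern in (_NEW_STYLE, _OLD_STYLE):
        match = pattern.search(text)
        if match:
            return match.group(1)  # drop any version suffix
    raise ValueError(f"no arXiv identifier found in {text!r}")


print(normalize_arxiv_id("https://arxiv.org/pdf/1706.03762v7"))  # 1706.03762
print(normalize_arxiv_id("https://arxiv.org/abs/hep-th/9901001v1"))  # hep-th/9901001
```

Stripping the version suffix lets every revision of a paper map to one cache entry. A tool that must show a specific revision would keep `match.group(2)` as well.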

Product Core Function

· AI-generated Wiki: Transforms research papers into easy-to-understand summaries and explanations, making complex scientific concepts accessible to a broader audience and accelerating learning for researchers.

· Scientific Lineage Graphs: Visualizes the historical development and influence of research papers, helping users understand the context and evolution of scientific ideas and identify key foundational work.

· Mind Maps: Creates visual representations of the relationships between different concepts within and across research papers, aiding in conceptual understanding and identifying potential interdisciplinary connections.

· Live Twitter Feed: Integrates real-time discussions and opinions about research papers from Twitter, allowing users to gauge community reception and discover emerging trends and controversies.

· Research Agent: An AI-powered assistant that helps users explore ideas, find related papers, and discover new research avenues, significantly speeding up the literature review process and fostering serendipitous discoveries.
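The submission does not say how the lineage graphs are built. One plausible core step is a breadth-first walk over a citation graph, collecting each ancestor paper at its shortest citation distance from the paper being viewed. The sketch below assumes a plain dict of references with placeholder IDs. Both the data and the `lineage` function are hypothetical.

```python
from collections import deque


def lineage(citations: dict[str, list[str]], root: str, max_depth: int = 2) -> dict[str, int]:
    """Return {paper_id: depth} for ancestors of `root` up to `max_depth`.

    `citations` maps each paper to the papers it cites. Breadth-first search
    records each ancestor at its shortest citation distance from `root`.
    """
    depths: dict[str, int] = {}
    seen = {root}
    queue = deque([(root, 0)])
    while queue:
        paper, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the requested depth
        for ref in citations.get(paper, []):
            if ref not in seen:
                seen.add(ref)
                depths[ref] = depth + 1
                queue.append((ref, depth + 1))
    return depths


# Toy citation graph with placeholder IDs (not real papers).
graph = {"P": ["A", "B"], "A": ["C"], "B": ["C", "D"], "C": ["E"]}
print(lineage(graph, "P"))  # {'A': 1, 'B': 1, 'C': 2, 'D': 2}
```

Capping the depth keeps the rendered graph readable, since citation ancestry fans out quickly beyond two or three hops.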

Product Usage Case

· A graduate student struggling to grasp the foundational concepts of a new research field can use the AI-generated wiki to quickly get up to speed, understanding the core principles without spending days reading multiple papers.

· A researcher investigating a novel machine learning algorithm can use the lineage graphs to trace its origins and identify the seminal papers that contributed to its development, providing a deeper understanding of its theoretical underpinnings.

· A scientist exploring potential collaborations can use the mind maps to see how their research area intersects with others, uncovering unexpected synergies and new avenues for interdisciplinary projects.

· A tech journalist wanting to understand the public perception of a breakthrough paper can monitor the live Twitter feed to gauge immediate reactions, identify key influencers, and understand the broader societal implications.

· A startup looking for the next big innovation can employ the research agent to proactively discover emerging trends and niche research areas that are ripe for commercialization, accelerating their product development cycle.

16

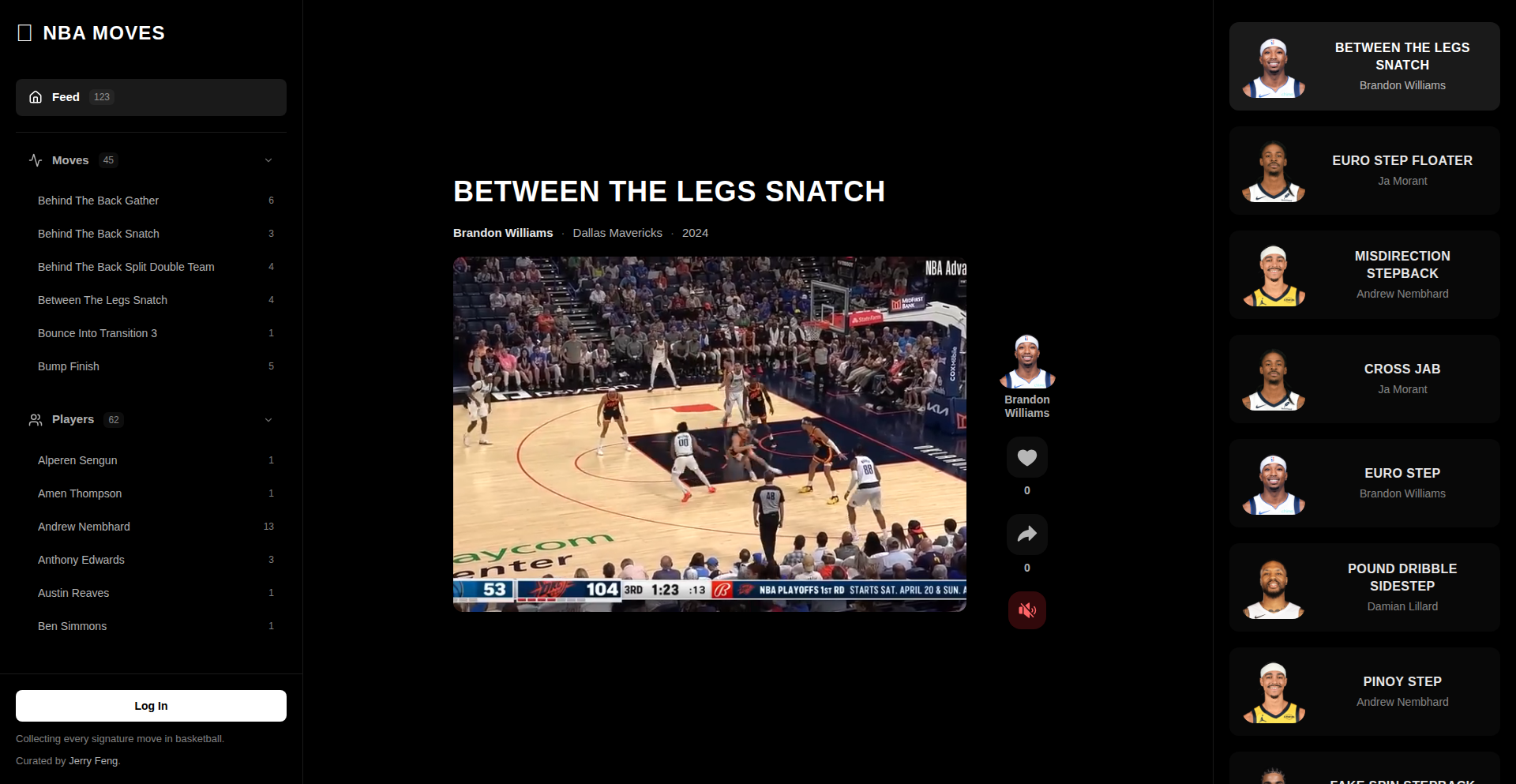

Basketball MoveIndexer

Author

jfeng5

Description

This project attempts to systematically index every signature move in basketball. It uses data processing, and possibly computer vision, to analyze game footage and identify distinctive player maneuvers. The innovation lies in quantifying and categorizing complex athletic actions so they can be searched and analyzed in a structured way. It addresses the challenge of understanding and comparing player styles by breaking movements into discrete, identifiable components. For developers, this opens up new avenues for sports analytics, game development, and AI-driven coaching tools.

Popularity

Points 4

Comments 0

What is this product?

This project is essentially a sophisticated database of basketball players' signature moves. Imagine being able to search for 'LeBron James's fadeaway jumper' or 'Stephen Curry's crossover dribble' and get detailed information, or even visual examples.

The technical innovation lies in how it achieves this. It likely involves processing video data (perhaps with techniques similar to AI action recognition) to detect the body poses, ball trajectories, and movement patterns that define a signature move. This goes beyond simple statistics; it's about understanding the 'how' and 'why' of a player's unique skill set. The value for the community is a deeper, data-driven understanding of the sport.

How to use it?

For developers, this project could offer a rich dataset and, potentially, an API for accessing the indexed information. You could:

- integrate the data into fantasy sports platforms for more nuanced player analysis;
- use it in game development to base character animations on real moves;
- build AI-powered scouting tools that identify promising players by their repertoire of signature moves.

Integration would likely involve querying the index for specific move data, perhaps linked to player profiles and game footage.
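The project's actual schema and query interface are not documented. The sketch below shows what querying a move index could look like, assuming a hypothetical `MoveClip` record that points into game footage. The sample records and game IDs are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MoveClip:
    # Hypothetical record shape; the project's real schema is not documented.
    player: str
    move: str
    game_id: str
    timestamp_s: float  # offset into the game footage, in seconds


class MoveIndex:
    """In-memory index from (player, move) and from move alone to clips."""

    def __init__(self) -> None:
        self._by_player_move: dict[tuple[str, str], list[MoveClip]] = defaultdict(list)
        self._by_move: dict[str, list[MoveClip]] = defaultdict(list)

    @staticmethod
    def _key(text: str) -> str:
        # Case- and whitespace-insensitive lookup key.
        return " ".join(text.lower().split())

    def add(self, clip: MoveClip) -> None:
        move = self._key(clip.move)
        self._by_player_move[(self._key(clip.player), move)].append(clip)
        self._by_move[move].append(clip)

    def find(self, move: str, player: Optional[str] = None) -> list[MoveClip]:
        # .get() avoids creating empty entries in the defaultdicts.
        if player is None:
            return list(self._by_move.get(self._key(move), []))
        return list(self._by_player_move.get((self._key(player), self._key(move)), []))


index = MoveIndex()
index.add(MoveClip("LeBron James", "fadeaway jumper", "game-001", 512.0))
index.add(MoveClip("Stephen Curry", "crossover dribble", "game-002", 88.5))
index.add(MoveClip("Stephen Curry", "Crossover  Dribble", "game-003", 1301.0))
print(len(index.find("crossover dribble", player="stephen curry")))  # 2
```

The index keeps two maps so it can answer both "every crossover in the index" and "this player's crossovers" without scanning all clips.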

Product Core Function

· Signature Move Cataloging: The core value is the structured cataloging of unique basketball player moves. This enables precise identification and retrieval of specific athletic actions, allowing for deeper analysis of player techniques. This is useful for understanding player development and strategy.

· Data-driven Player Style Analysis: By indexing individual moves, the project facilitates a quantitative approach to analyzing a player's style. Developers can build tools that compare players based on their move sets, offering insights into strengths and weaknesses. This is valuable for sports analytics and performance evaluation.

· Potential for AI-driven Action Recognition: The underlying technology likely involves machine learning to identify and classify these moves from video. That would open the door to AI applications in sports such as automated highlight generation or real-time performance feedback.

· Searchable Basketball Knowledge Base: The project creates a searchable repository of basketball movements. This is useful for coaches, analysts, and fans who want to understand the intricacies of the game in a structured, accessible way.

Product Usage Case

· Sports Analytics Platform Enhancement: A fantasy sports platform could use this index to give users detailed breakdowns of player tendencies and signature moves, leading to more informed draft picks and strategic decisions.

· Video Game Character Animation: Game developers can use the indexed data to create more realistic and authentic character movements in basketball video games. Referencing actual signature moves makes the gameplay more immersive.

· AI Coaching and Scouting Tools: An AI coach could use this index to identify moves a player needs to develop, or to scout opponents by analyzing their signature moves and tendencies. This gives athletes actionable insights for improvement.

· Interactive Basketball Encyclopedia: A website or app could let users explore basketball history and player evolution through the lens of signature moves, making the sport more educational and engaging for a wider audience.

17

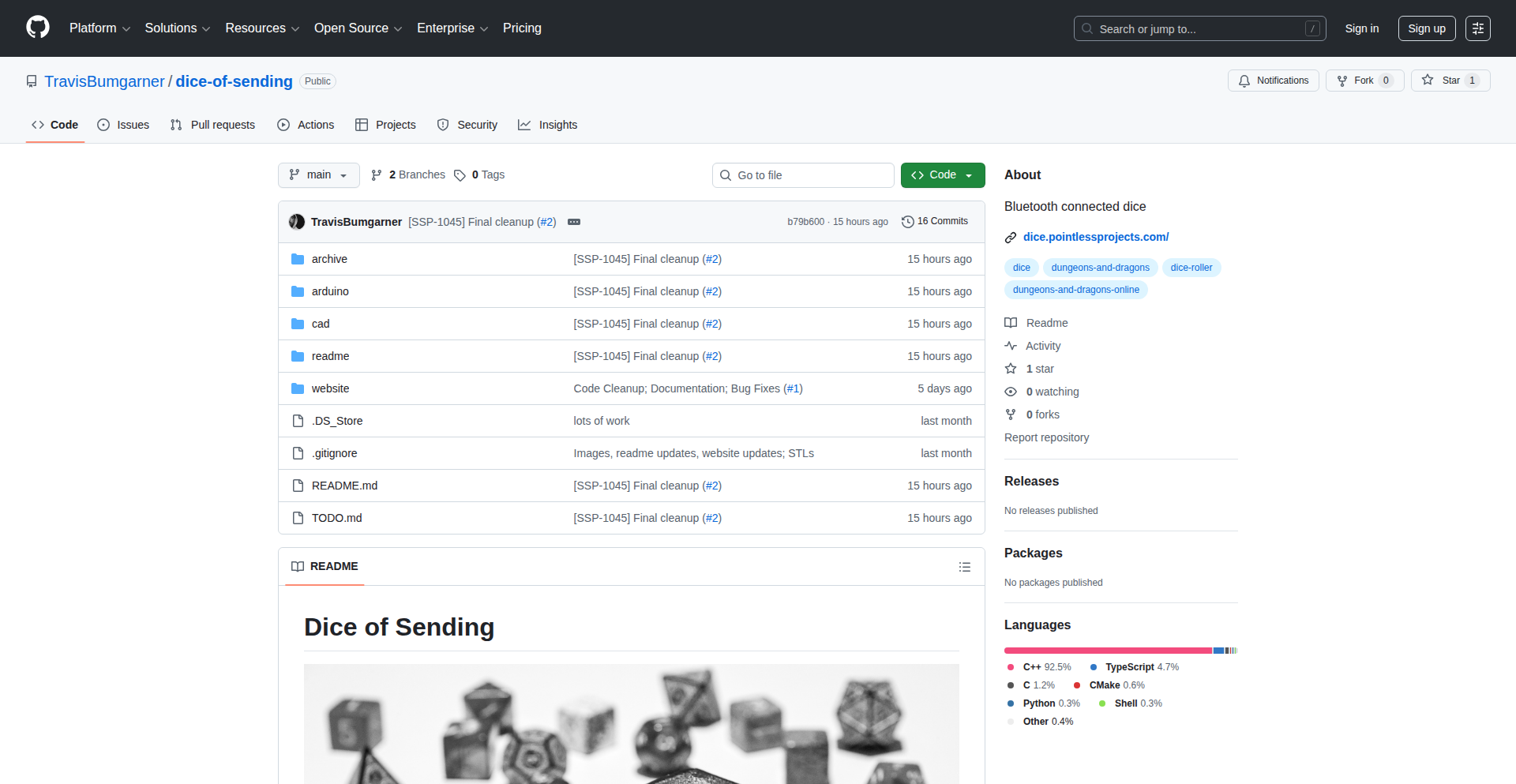

PhysicalDiceRollerSync

Author

sillysideprojs

Description

A web application that bridges the gap between physical dice and online gaming. It uses your webcam to detect physical dice rolls and translates them into digital results for online use. This solves the problem for players who enjoy the tactile feel of rolling dice but participate in online games.

Popularity

Points 4

Comments 0

What is this product?

This project is a web-based tool that leverages computer vision to 'see' and interpret the outcome of a physical dice roll. It works by using your device's camera to capture an image of the rolled dice, then sophisticated image processing algorithms identify the dice and read the numbers on their faces. The innovation lies in the real-time, automated interpretation of physical actions into digital data, offering a tangible interaction in a virtual environment. So, what's in it for you? It allows you to enjoy the satisfying feel of rolling real dice in your board games or tabletop RPGs, even when playing with friends online.
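The submission does not describe its recognition algorithm. One classic way to read a die face is connected-component labeling, sketched below: each connected blob of pip pixels counts as one pip. The sketch assumes the die face has already been located and thresholded into a binary mask (1 = pip pixel). `count_pips` and its `min_area` noise filter are hypothetical. A production tool would more likely rely on a library routine such as OpenCV's `connectedComponentsWithStats`.

```python
from collections import deque


def count_pips(mask: list[list[int]], min_area: int = 2) -> int:
    """Count pips as 4-connected blobs of 1s with at least `min_area` pixels."""
    rows, cols = len(mask), (len(mask[0]) if mask else 0)
    seen = [[False] * cols for _ in range(rows)]
    pips = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Flood-fill this blob and measure its area.
            area = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                pips += 1  # blobs smaller than min_area are treated as noise
    return pips


# Toy mask of a face showing three: three 2x2 pips plus one stray noise pixel.
face = [
    [1, 1, 0, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 0, 0],
    [0, 0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 1, 1],
]
print(count_pips(face))  # 3
```

The minimum-area threshold is what lets glare specks or sensor noise be ignored without miscounting a pip.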

How to use it?

To use this project, you'll need a web browser and a webcam. Simply navigate to the web application, grant camera access, and place your rolled dice in front of the camera. The application will automatically detect the dice and display the results digitally. You can then input these results into your online game or share them with your fellow players. This can be integrated into online gaming platforms that support manual input of dice rolls or used as a standalone tool for any game requiring dice. So, what's in it for you? It's a straightforward way to bring a beloved physical element into your digital gaming sessions without complex setup.

Product Core Function

· Real-time dice detection: The system uses advanced image recognition to identify dice in the camera feed, allowing for immediate results. The value to you is instant translation of your physical roll into a digital number.

· Dice outcome interpretation: The core logic analyzes the orientation and pips on the dice faces to accurately determine the rolled number, ensuring fair play. The value to you is confidence in the accuracy of your digital results.

· Web-based accessibility: The application runs in a web browser, meaning no software installation is required, making it accessible across different devices. The value to you is effortless access from anywhere with an internet connection.

· Open-source flexibility: Being fully open-source, developers can inspect, modify, and extend its functionality for custom applications. The value to you is the potential for future improvements and tailored solutions.

Product Usage Case

· Tabletop RPGs: A Dungeon Master can use this to roll physical dice for their players in a virtual Dungeons & Dragons session, ensuring everyone sees the same outcome. This resolves the challenge of remote players not being able to see the physical rolls.

· Online Board Games: Players in a remote board game can use this to roll physical dice for games like Catan or Monopoly, providing a more immersive experience than purely digital dice. This addresses the desire for tactile gameplay in a digital format.

· Educational Tools: Educators could use this to demonstrate probability and statistics using physical dice in an online classroom setting, making abstract concepts more concrete. This provides a visual and interactive way to teach mathematical principles.

18

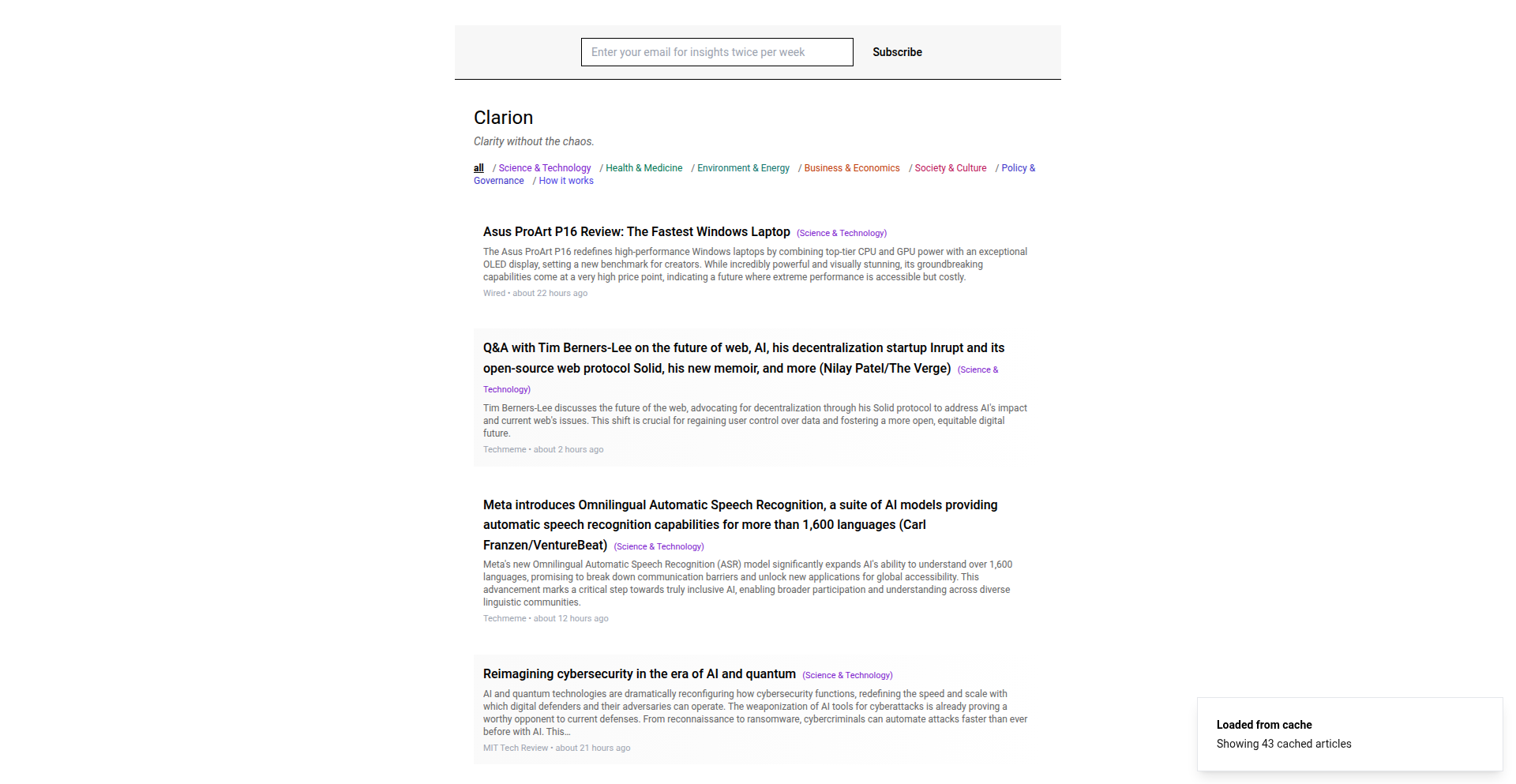

Clarion: AI-Driven Clarity News Synthesizer

Author

radiusvector

Description

Clarion is an AI-powered news aggregator that transforms the overwhelming influx of global information into digestible, context-rich journalism. Instead of sensationalism, it prioritizes clarity, understanding, and progress, helping users stay informed without feeling dread. The core innovation lies in its use of frontier AI models to analyze and synthesize news from over 2,000 sources, delivering a more insightful and less anxiety-inducing news experience.
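Synthesizing more than 2,000 sources implies, at some point, grouping articles that cover the same story. Clarion reportedly uses frontier AI models for this kind of analysis, and the sketch below is not its method. It swaps in a much simpler technique, greedy clustering of headlines by Jaccard similarity of their word sets, to show the idea. The function names, the 0.4 threshold and the sample headlines are all hypothetical.

```python
import re


def tokens(headline: str) -> set[str]:
    # Lowercase word tokens, ignoring short words such as "a" or "by".
    return {w for w in re.findall(r"[a-z0-9]+", headline.lower()) if len(w) > 2}


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def group_headlines(headlines: list[str], threshold: float = 0.4) -> list[list[str]]:
    """Greedily add each headline to the first group whose seed is similar enough."""
    groups: list[tuple[set[str], list[str]]] = []
    for h in headlines:
        t = tokens(h)
        for seed, members in groups:
            if jaccard(t, seed) >= threshold:
                members.append(h)
                break
        else:  # no similar group found: start a new one seeded by this headline
            groups.append((t, [h]))
    return [members for _, members in groups]


# Made-up sample headlines for illustration.
sample = [
    "Central bank raises interest rates by half a point",
    "Interest rates raised by central bank",
    "Storm closes coastal highways",
]
print(group_headlines(sample))
```

Word overlap misses paraphrases that share few words. That gap is exactly where an embedding or language-model step would replace this heuristic.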

Popularity

Points 2

Comments 2

What is this product?