Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-09

SagaSu777 2025-11-10

Explore the hottest developer projects on Show HN for 2025-11-09. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation leans heavily toward making complex technologies accessible and actionable. AI agents and LLMs are being used not just as conversational tools but as components of larger systems that automate intricate tasks, analyze data, and assist in creative work. Today's Show HN submissions show a strong hacker spirit: developers are identifying pain points in existing workflows, from managing Android devices to automating content generation and comparing AI models, and are building elegant, often open-source solutions. The trend toward visual interfaces for AI interaction, as in Spine AI, points to more intuitive and powerful ways for humans to collaborate with machines. For aspiring developers and entrepreneurs, this is an opportunity to build tools that lower the barrier to entry for advanced technologies, give users greater control and composability, and solve real-world problems through ingenious applications of code. The key is to think about how to abstract complexity, democratize access, and foster creativity through technology.

Today's Hottest Product

Name

Spine AI

Highlight

Spine AI introduces an infinite visual workspace that allows users to collaborate with over 300 AI models and agents. Instead of linear chat threads, it uses a block-based system where each block represents a specific AI function (e.g., image generation, research, chat). These blocks can be interconnected, passing context seamlessly. The innovation lies in enabling complex thought processes and iterative refinement by allowing branching, model swapping, and collaborative exploration within a single visual canvas, overcoming the limitations of traditional chat interfaces for deep thinking and creative work. Developers can learn about building flexible, context-aware AI interaction systems and designing UIs that facilitate complex AI workflows.

Popular Category

AI & Machine Learning

Developer Tools

Productivity & Workflow Automation

Data Analysis & Management

Popular Keyword

AI agents

LLM

Automation

Workflow

Data analysis

Code generation

Editor

Technology Trends

AI-powered workflows

Visual AI interaction

Code generation & analysis

Data democratization

Developer productivity tools

Embedded databases

LLM orchestration

Project Category Distribution

AI & Machine Learning (30%)

Developer Tools (25%)

Productivity & Workflow Automation (20%)

Data Analysis & Management (15%)

Web Development & Design (10%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | DroidDock: ADB File Navigator | 63 | 32 |
| 2 | Pipeflow-PHP: XML-Driven Workflow Engine | 52 | 9 |
| 3 | LLM OmniHub | 8 | 10 |
| 4 | 200M Token Generative Bible | 12 | 4 |
| 5 | Trilogy SemanticSQL Studio | 15 | 1 |
| 6 | Mxflo - Mobile 2D Game Dev Canvas | 13 | 2 |
| 7 | TidesDB: Flash-Optimized Transactional Storage | 12 | 1 |
| 8 | Spine Canvas: The AI-Powered Visual Thinkscape | 11 | 0 |
| 9 | Affordable Alternatives Directory | 6 | 4 |
| 10 | Floxtop: Semantic File Organizer | 4 | 3 |

1

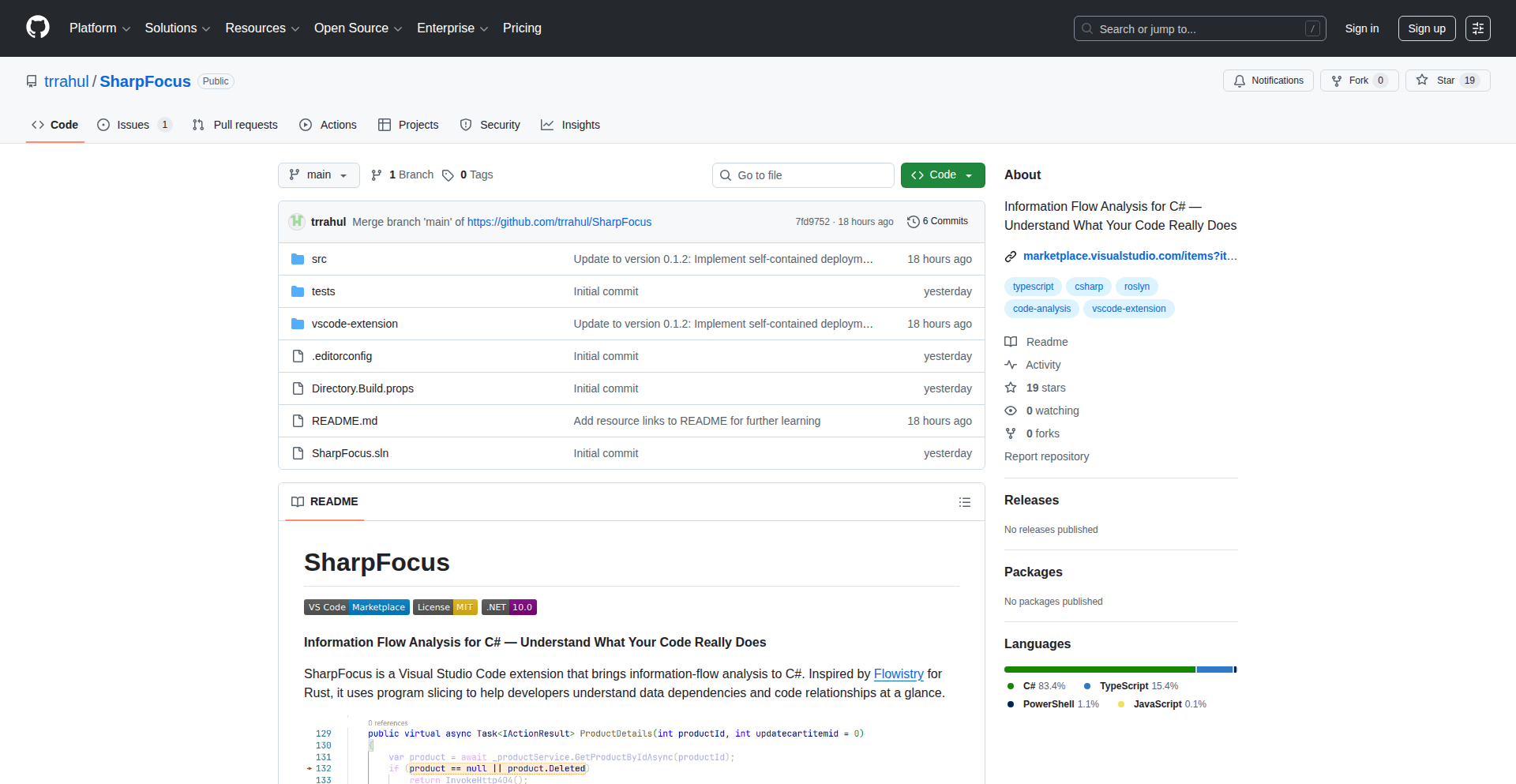

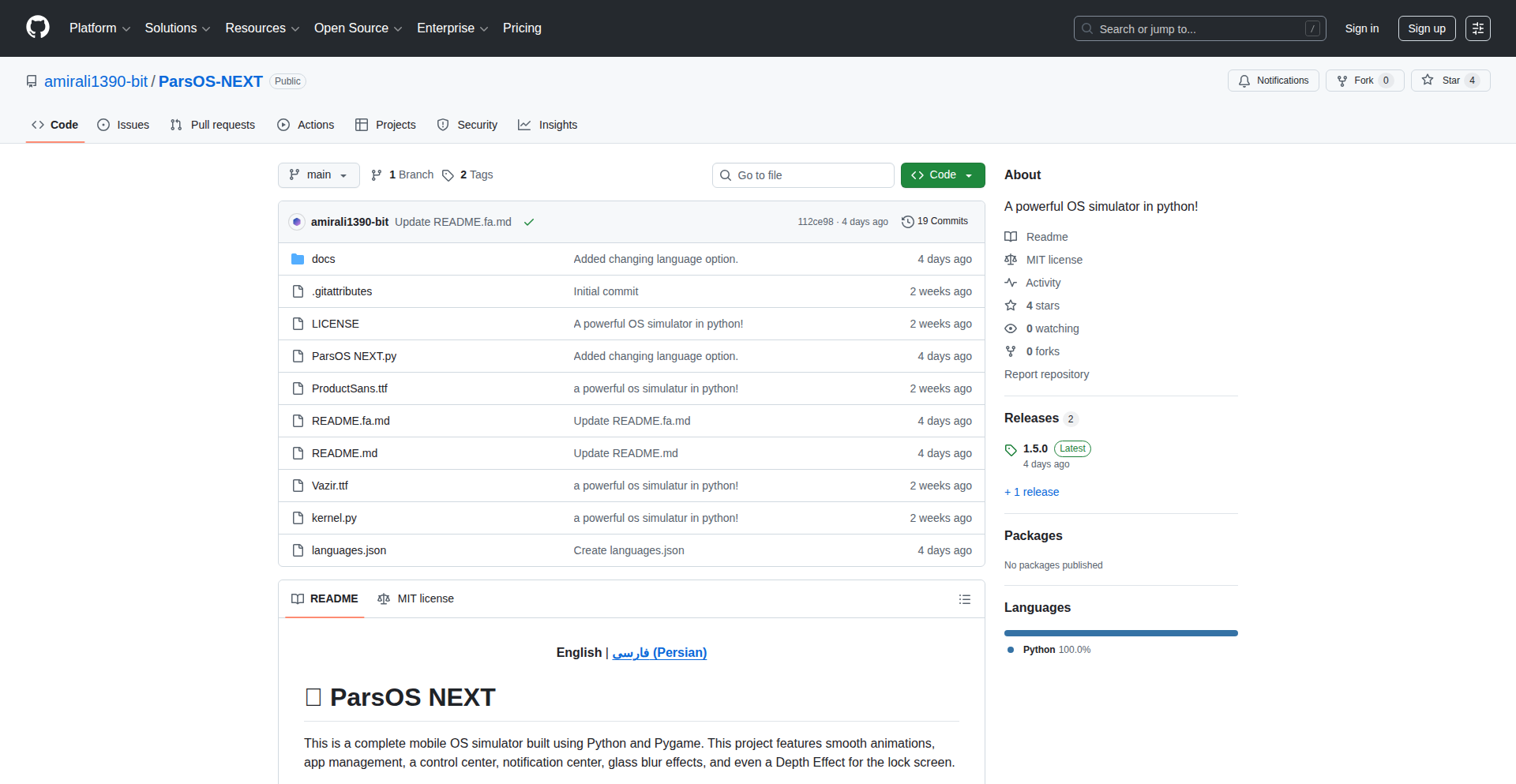

DroidDock: ADB File Navigator

Author

rajivm1991

Description

DroidDock is a modern, lightweight macOS application that simplifies browsing and managing files on your Android devices. It leverages the Android Debug Bridge (ADB) for seamless connectivity, offering a clean and efficient alternative to existing solutions. The project highlights innovative use of Rust and Tauri for high performance and a native desktop experience.

Popularity

Points 63

Comments 32

What is this product?

DroidDock is a desktop application for macOS that lets you work with the files on your Android phone through a graphical interface. It is built on ADB (Android Debug Bridge), a command-line tool that lets your computer communicate with your Android device over USB or, once configured, over Wi-Fi. The core innovation is a rich graphical user interface for file operations on top of ADB, which makes it easy to transfer files, view media, and search your device, all powered by a fast Rust backend and the Tauri framework for a polished desktop app. The result is described as quick, responsive, and light on system resources compared with some older or more complex tools. So, if you're a developer or just someone who frequently moves files between your Mac and Android phone, this offers a much smoother and faster experience.

How to use it?

To use DroidDock, you first need to enable USB Debugging on your Android device. Then, connect your Android device to your Mac via USB or, if set up, wirelessly via ADB. Launch DroidDock on your Mac. The app will automatically detect your connected Android device. You can then browse your device's file system, see thumbnail previews of images and videos, search for specific files, and easily upload or download files between your Mac and Android device. It's designed to be intuitive, with features like keyboard shortcuts for common actions, making everyday file management tasks much quicker and more convenient. This is particularly useful for developers who need to quickly transfer app builds or debug logs to and from their devices, or for anyone who wants a streamlined way to manage their mobile media.
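To make the ADB layer a little more concrete, here is a minimal Python sketch of the kind of commands a file browser like this could issue. It is not DroidDock's actual code (the project is written in Rust), and the helper names are hypothetical. It only relies on standard `adb` subcommands: `adb devices`, `adb shell ls`, `adb pull`, and `adb push`.

```python
from typing import List, Tuple


def parse_adb_devices(output: str) -> List[Tuple[str, str]]:
    """Parse the text printed by `adb devices` into (serial, state) pairs."""
    devices = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the "List of devices attached" header, and daemon notices.
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split()
        if len(parts) >= 2:
            devices.append((parts[0], parts[1]))
    return devices


def list_dir_cmd(serial: str, remote_dir: str) -> List[str]:
    """Argument list for listing a directory on the device.

    Note: paths containing spaces would need quoting for the device shell.
    """
    return ["adb", "-s", serial, "shell", "ls", "-la", remote_dir]


def pull_cmd(serial: str, remote_path: str, local_path: str) -> List[str]:
    """Argument list for copying a file from the device to the Mac."""
    return ["adb", "-s", serial, "pull", remote_path, local_path]


def push_cmd(serial: str, local_path: str, remote_path: str) -> List[str]:
    """Argument list for copying a file from the Mac to the device."""
    return ["adb", "-s", serial, "push", local_path, remote_path]


if __name__ == "__main__":
    # Sample text in the format `adb devices` prints; the serial is made up.
    sample = "List of devices attached\nemulator-5554\tdevice\n\n"
    for serial, state in parse_adb_devices(sample):
        print(serial, state)
        print(" ".join(pull_cmd(serial, "/sdcard/DCIM/photo.jpg", "photo.jpg")))
```

Each argument list can be handed to `subprocess.run(...)` on a machine where `adb` is installed and a device is authorized. A device whose state is `unauthorized` still needs the USB debugging prompt accepted on the phone.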

Product Core Function

· Browse Android Filesystem: Allows you to navigate through all the folders and files on your Android device, just like you would on your Mac. This is valuable because it provides a clear overview of your device's storage, helping you locate and organize files easily.

· Thumbnail Previews: Automatically generates small visual previews for images and videos. This saves you time by letting you quickly identify media files without having to open each one individually, which is great for managing photos and videos.

· File Upload/Download: Enables direct transfer of files between your macOS computer and your Android device. This is a core convenience, allowing you to move documents, music, or other files back and forth effortlessly.

· Intuitive File Search: Provides a quick and efficient way to find specific files on your Android device. Instead of manually digging through folders, you can simply type the file name to locate it, saving significant time and effort.

· Multiple View Modes: Offers different ways to display your files, such as list view or grid view with previews. This customization allows you to choose the most comfortable and efficient way for you to view and manage your files.

· Keyboard Shortcuts: Implements shortcuts for common actions like copying, pasting, and navigating. This significantly speeds up workflow for power users and those who frequently manage files, making tasks more efficient.

· Rust and Tauri Backend: The underlying technology uses Rust for its speed and safety, and Tauri for building a lightweight desktop application. This means DroidDock is very performant and uses fewer system resources, providing a snappier and more stable experience compared to older or web-based solutions.

Product Usage Case

· Developer transferring app builds: A mobile developer finishes building a new version of their app on their Mac and needs to quickly install it on an Android test device. Using DroidDock, they can drag and drop the APK file directly to the device's download folder, bypassing complex command-line steps and saving valuable development time.

· Content creator managing media: A photographer who uses an Android phone to capture photos wants to quickly transfer them to their Mac for editing. DroidDock allows them to connect wirelessly, see thumbnail previews of their recent shots, and download entire folders of images with a few clicks, streamlining their workflow.

· User organizing files: Someone wants to clean up their Android phone's storage by moving large video files to their Mac. DroidDock lets them browse the device's storage, identify the large video files using previews and file sizes, and then download them to their Mac, freeing up space on their phone efficiently.

· Troubleshooting device storage: A user notices their Android phone is low on storage. They can use DroidDock to get a clear overview of the file system, quickly identify large or redundant files, and delete them from their Mac, solving the storage issue without falling back on traditional file transfer tools.

2

Pipeflow-PHP: XML-Driven Workflow Engine

Author

marcosiino

Description

Pipeflow-PHP is a headless PHP engine designed to automate complex workflows, from content generation to business logic. Its core innovation lies in defining these workflows using an easily understandable XML format, allowing non-developers to configure and maintain them, thereby bridging the gap between technical implementation and business needs. This addresses the common challenge of making automated processes accessible and editable by a wider audience within an organization.

Popularity

Points 52

Comments 9

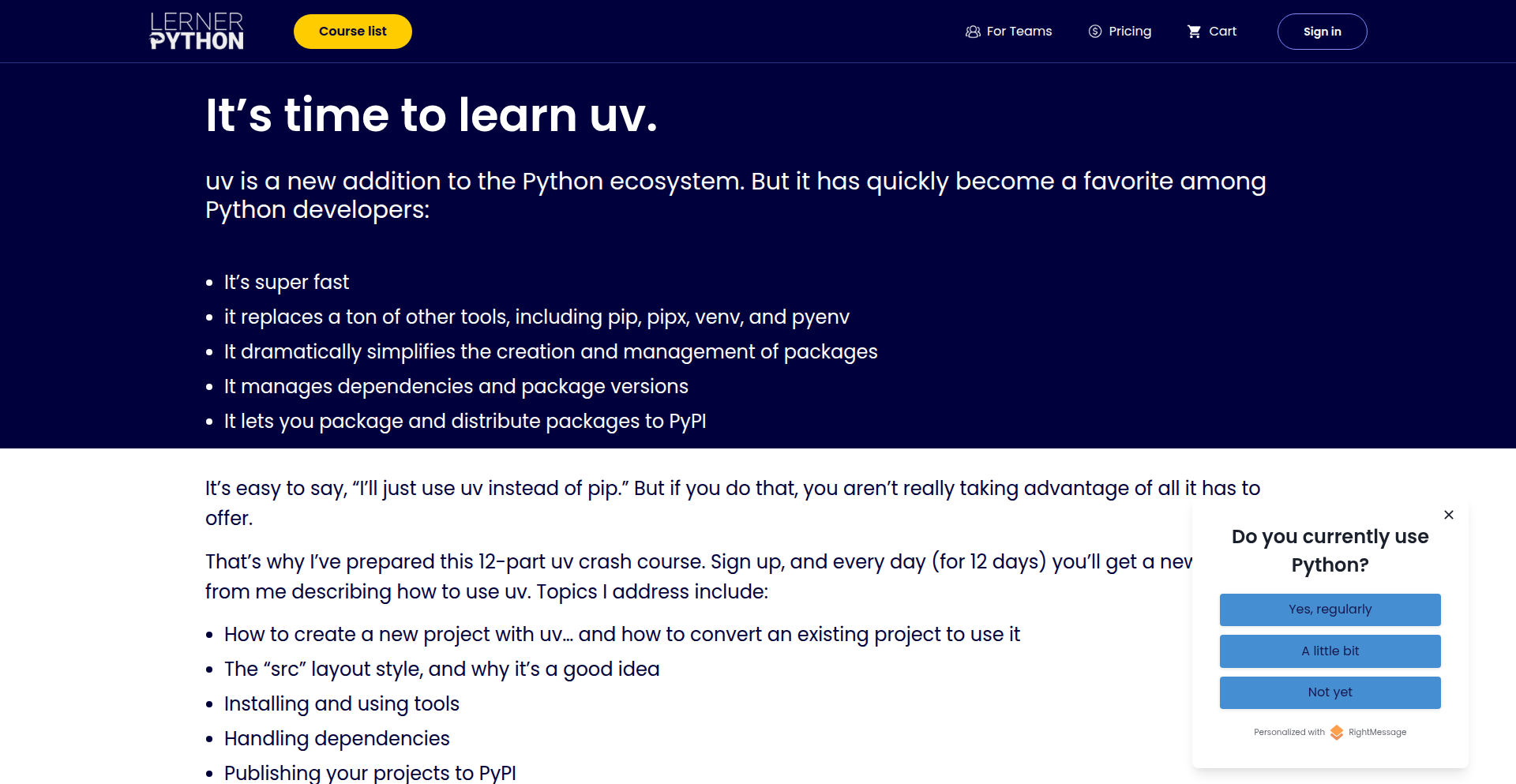

What is this product?

Pipeflow-PHP is a flexible engine that lets you chain together a series of actions, called 'stages,' to automate tasks. Think of it like building a recipe where each step is a stage. The clever part is that you can define this recipe using XML, which is like a structured way of writing instructions that's easy for anyone to read and understand, even if they don't code. So, instead of needing a programmer to change how something is automated, a content manager could tweak the XML to change the topic of generated articles. It's 'headless,' meaning it doesn't come with its own user interface, but it's built to be easily plugged into existing dashboards or admin panels. This means the automation logic can be managed directly from where you already work, making it highly practical.

How to use it?

Developers can integrate Pipeflow-PHP into their PHP applications by installing it via Composer. They can then define pipelines using either fluent PHP code or by creating XML files that describe the sequence of stages. These pipelines can be triggered manually, through scheduled tasks (cron jobs), or by other backend events within their application. For instance, a developer could create a pipeline to automatically process uploaded images, then send notifications, all triggered by the upload event. The XML definition of the pipeline can be stored and edited within an application's admin interface, perhaps through a custom plugin or a simple text editor, allowing non-technical users to manage the workflow.
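The idea of an XML-defined pipeline of stages can be sketched in a few lines. The example below is written in Python rather than PHP and is only an illustration of the pattern: the XML layout, the stage names (`set`, `uppercase`, `template`), and the `run_pipeline` function are all hypothetical and are not Pipeflow-PHP's real schema or API.

```python
import xml.etree.ElementTree as ET
from typing import Any, Callable, Dict

# Hypothetical XML layout for illustration; Pipeflow-PHP's real schema may differ.
PIPELINE_XML = """
<pipeline>
  <stage type="set" name="topic" value="rust"/>
  <stage type="uppercase" source="topic" target="title"/>
  <stage type="template" target="headline" text="Daily digest: {title}"/>
</pipeline>
"""

Context = Dict[str, Any]
StageFn = Callable[[Dict[str, str], Context], None]


def stage_set(attrs: Dict[str, str], ctx: Context) -> None:
    """Store a literal value in the shared context."""
    ctx[attrs["name"]] = attrs["value"]


def stage_uppercase(attrs: Dict[str, str], ctx: Context) -> None:
    """Read one context value and write its upper-cased form to another key."""
    ctx[attrs["target"]] = str(ctx[attrs["source"]]).upper()


def stage_template(attrs: Dict[str, str], ctx: Context) -> None:
    """Fill a text template with values from the context."""
    ctx[attrs["target"]] = attrs["text"].format(**ctx)


# Registry of reusable stages; custom stages would be added here.
STAGES: Dict[str, StageFn] = {
    "set": stage_set,
    "uppercase": stage_uppercase,
    "template": stage_template,
}


def run_pipeline(xml_text: str) -> Context:
    """Run each <stage> in document order, passing a shared context along."""
    ctx: Context = {}
    root = ET.fromstring(xml_text.strip())
    for node in root.findall("stage"):
        stage_type = node.get("type")
        if stage_type not in STAGES:
            raise ValueError(f"unknown stage type: {stage_type!r}")
        STAGES[stage_type](dict(node.attrib), ctx)
    return ctx


result = run_pipeline(PIPELINE_XML)
print(result["headline"])  # prints "Daily digest: RUST"
```

The point of the design is that a non-developer can change `value="rust"` in the XML and get a different result without touching the stage code.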

Product Core Function

· XML-based pipeline definition: Enables non-developers to understand, maintain, and edit automation logic without writing code. This offers flexibility and reduces reliance on developers for routine workflow adjustments.

· Modular stages: Allows for reusable building blocks of automation, making complex workflows easier to construct and manage. Developers can create custom stages to extend functionality, solving specific business problems.

· Control flow stages (If, ForEach, For): Provides the ability to implement conditional logic and repetitive tasks within workflows. This allows for dynamic and responsive automation that adapts to different scenarios.

· Headless engine design: Facilitates seamless integration with any existing backend interface or CMS. This means the automation can be managed from familiar environments, enhancing user experience and adoption.

· Multiple trigger options (manual, cron, backend event): Offers flexibility in how and when workflows are executed. This allows for automation to be triggered based on specific business needs, whether it's a scheduled task or an immediate response to an action.

· Custom stage development: Empowers developers to create specialized actions tailored to unique business requirements. This expands the engine's applicability to a wide range of niche problems.

Product Usage Case

· Automated content generation for websites: A content manager can define parameters in an XML file to generate daily articles on specific topics, using AI, and publishing them to a CMS. This solves the problem of consistent content creation without manual effort.

· Backend data processing workflows: When a new order is placed in an e-commerce system, a pipeline can be triggered to update inventory, send a confirmation email, and log the transaction. This streamlines e-commerce operations and reduces errors.

· Automated report generation: A pipeline can be set up to run at the end of each month, gather data from various sources, compile it into a report, and email it to stakeholders. This automates a time-consuming reporting process.

· Onboarding new users: Following a new user registration, a pipeline can automatically send welcome emails, create a user profile in a database, and assign initial tasks. This ensures a smooth and consistent onboarding experience.

3

LLM OmniHub

Author

hhameed

Description

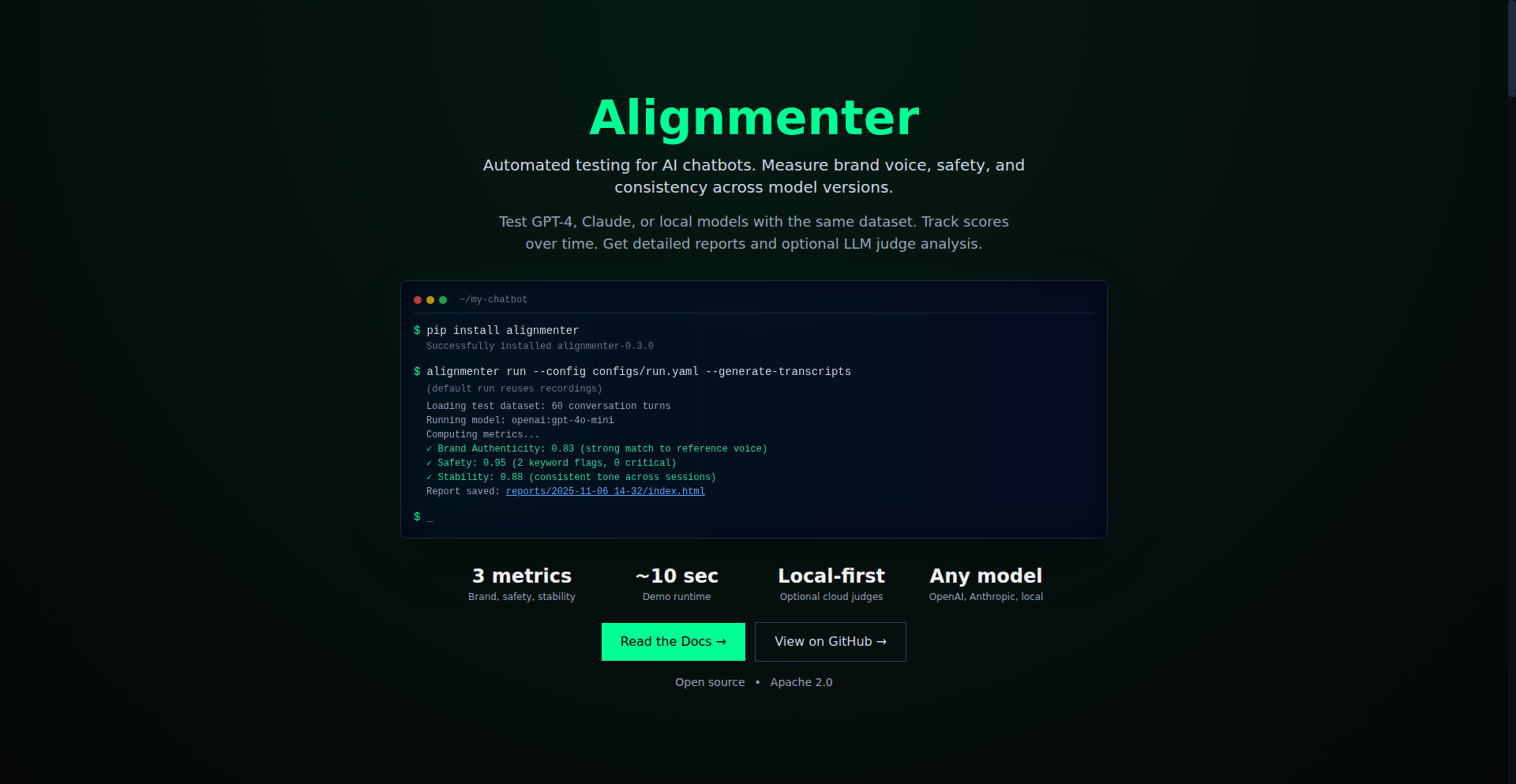

LLM OmniHub is a unified interface designed to streamline interaction with various large language models (LLMs). It addresses the common developer pain point of juggling multiple AI platforms, managing subscriptions, and losing context when comparing model outputs. The core innovation lies in its ability to let users seamlessly switch between models like GPT-4, Claude, and Gemini within a single conversation and compare their responses side-by-side, eliminating the need to manage numerous tabs and accounts.

Popularity

Points 8

Comments 10

What is this product?

LLM OmniHub acts as a central control panel for different AI language models. Instead of opening separate websites for ChatGPT, Claude, Gemini, or Llama, you can access them all from one place. This is achieved through an interface that knows how to talk to each of these AI models through their respective programming interfaces (APIs). The innovation is in creating a consistent experience across these diverse models, allowing you to send the same prompt to multiple models simultaneously, compare their answers instantly, and even continue a conversation after switching the underlying model without losing the thread. This saves you the hassle of copying and pasting and helps you quickly identify which model is best suited to your needs.

How to use it?

Developers can begin using LLM OmniHub immediately through its free tier to experiment with different LLMs. For more intensive use or to leverage your existing API subscriptions, you can opt for the 'Connect' plan. This plan allows you to input your own API keys for models like OpenAI's GPT-4, Anthropic's Claude, or Google's Gemini. The integration is straightforward: you provide your API credentials within the LLM OmniHub interface, and it then uses these keys to communicate with the respective AI services on your behalf. This enables seamless switching and comparison directly within the hub, making it an ideal tool for research, content creation, coding assistance, and any task requiring evaluation of multiple AI outputs.

Product Core Function

· Seamless Model Switching: Allows users to switch between different AI language models (e.g., GPT-4, Claude, Gemini) mid-conversation without losing context. The technical value is in abstracting the API calls and state management for each model, providing a unified conversational flow.

· Side-by-Side Response Comparison: Displays outputs from multiple AI models concurrently, enabling easy comparison of their strengths and weaknesses. This is technically achieved by making parallel API requests and rendering the results in a synchronized view, offering immediate insights into model performance.

· Unified Interface Management: Consolidates access to various AI models into a single application, eliminating the need to manage multiple browser tabs, accounts, and subscriptions. The innovation here is in creating a consistent user experience and backend orchestration for diverse AI services.

· Bring Your Own API Key (BYOK) Integration: Enables users to connect their existing API keys for unlimited usage and control over their AI model consumption. This provides flexibility and cost-efficiency by leveraging existing subscriptions and ensures that sensitive API keys are managed securely within the application.

· Free Tier for Exploration: Offers a free tier with limited access, allowing users to try out the functionality and understand the value proposition without upfront commitment. This lowers the barrier to entry and encourages wider adoption and feedback within the developer community.
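The side-by-side comparison described above boils down to fanning one prompt out to several backends in parallel and collecting the replies. Here is a minimal Python sketch of that pattern. It does not reflect LLM OmniHub's actual implementation: `fake_model` is a hypothetical stand-in for a real provider client, and no network calls are made.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

ModelFn = Callable[[str], str]


def fake_model(name: str) -> ModelFn:
    """Return a stand-in for a provider client; a real one would call that provider's API."""
    def respond(prompt: str) -> str:
        return f"[{name}] reply to: {prompt}"
    return respond


def compare(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send one prompt to every model in parallel and collect replies by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # result() blocks until each reply arrives and re-raises any provider error.
        return {name: future.result() for name, future in futures.items()}


models = {"model-a": fake_model("model-a"), "model-b": fake_model("model-b")}
for name, reply in compare("Summarize ADB in one line.", models).items():
    print(f"{name}: {reply}")
```

Threads suit this case because each call spends most of its time waiting on the network. In a real integration each `ModelFn` would wrap one provider's client and the user's own API key.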

Product Usage Case

· Content Generation & Ideation: A content writer can use LLM OmniHub to send a single prompt to GPT-4 and Claude to generate different versions of a blog post introduction, then compare them side-by-side to pick the best one, saving time and improving creative output.

· Code Assistance & Debugging: A software developer needing help with a complex coding problem can input their code snippet and prompt into LLM OmniHub, then compare the suggested solutions from Gemini and Llama. This helps them identify the most effective and efficient code fix faster.

· AI Model Research & Benchmarking: Researchers or developers evaluating the performance of different LLMs for a specific task can use LLM OmniHub to run the same set of prompts across multiple models and record the outputs. The side-by-side comparison feature makes it easy to analyze variations and select the optimal model for their application.

· Customer Support Augmentation: A support team can use LLM OmniHub to quickly generate potential responses to common customer queries from various AI models, compare their accuracy and tone, and then select the best response to personalize and send to the customer. This improves response quality and efficiency.

4

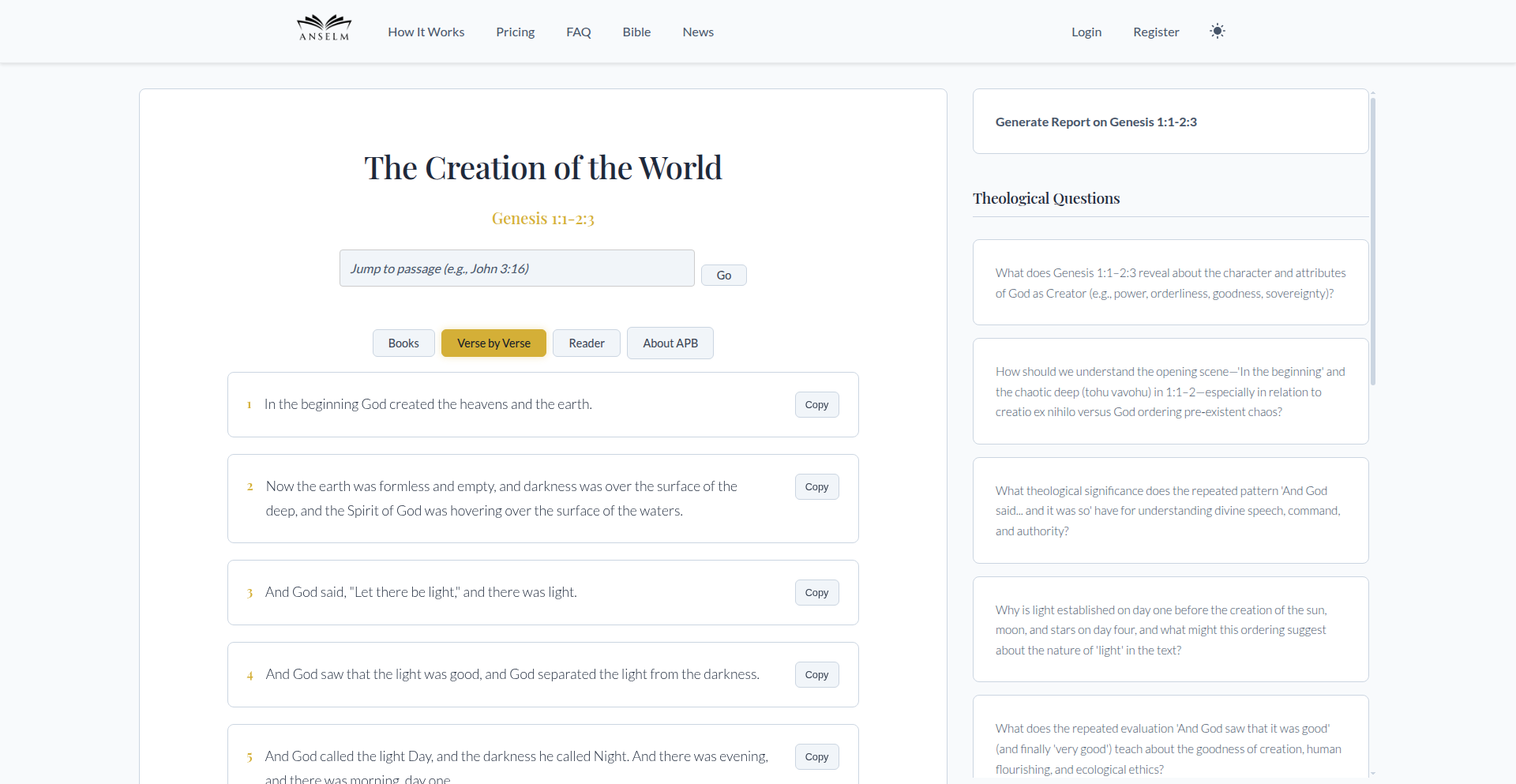

200M Token Generative Bible

Author

mrprmiller

Description

A generative AI model trained on a 200 million token dataset of biblical text, designed for creative exploration and spiritual inquiry. It offers a new way to interact with religious text by generating interpretations, stories, and prayers.

Popularity

Points 12

Comments 4

What is this product?

This project is a large language model (LLM) specifically trained on an extensive corpus of biblical text, totaling 200 million tokens. Think of tokens as words or parts of words that the AI understands. The innovation lies in its focused training on a single, highly specific domain (the Bible). This allows it to generate text that is not only coherent but also thematically aligned with biblical narratives, theological concepts, and spiritual language. Unlike general-purpose LLMs, this model can produce prayers, sermon outlines, theological discussions, or even creative stories inspired by biblical events, with a depth and nuance specific to its training data. So, what's in it for you? It's a powerful tool for pastors, theologians, or anyone interested in exploring the Bible in a new, dynamic way, potentially sparking fresh insights and deeper understanding.

How to use it?

Developers can integrate this model into applications via an API. Potential use cases include building interactive Bible study tools, sermon generators, creative writing assistants for religious content, or even chatbots that can answer theological questions or offer spiritual guidance in a contextually relevant manner. The integration would involve sending prompts to the API (e.g., 'Generate a prayer for strength during hardship' or 'Write a short story about Abraham's journey') and receiving the AI-generated text in response. This allows for the seamless addition of advanced AI capabilities to existing or new platforms focused on religious or spiritual content. So, how does this benefit you? You can leverage this specialized AI to enhance your own applications, making them more engaging and insightful for users interested in the Bible.

Product Core Function

· Generative Bible Text: Creates new text inspired by biblical themes, stories, and language, offering a novel way to engage with scripture. Value: Provides fresh perspectives and creative content for religious discussions and studies.

· Theological Concept Generation: Produces explanations or discussions around complex theological ideas, drawing from its specialized training. Value: Assists in understanding and articulating intricate religious concepts.

· Prayer and Sermon Outline Creation: Generates prayers and sermon ideas based on biblical context and user prompts. Value: Supports religious leaders and individuals in their spiritual practice and communication.

· Biblical Narrative Expansion: Develops new stories or expands existing biblical narratives in a style consistent with the source material. Value: Offers creative storytelling possibilities for educational or inspirational purposes.

· Customizable Biblical Interpretation: Allows users to explore different interpretations or angles of biblical passages through AI-generated text. Value: Facilitates deeper personal study and critical engagement with religious texts.

Product Usage Case

· A pastor can use this model to generate a unique sermon outline for a specific passage, saving time and sparking creative ideas for their weekly message. This addresses the problem of sermon preparation being time-consuming and sometimes creatively challenging.

· A developer building a Bible study app can integrate this AI to allow users to ask specific theological questions and receive contextually relevant, AI-generated answers, enriching the learning experience. This solves the issue of providing immediate and nuanced answers to user inquiries within the app.

· A Christian content creator can leverage the model to generate creative stories or devotionals inspired by biblical events, providing fresh content for their audience. This tackles the challenge of consistently producing engaging and relevant religious content.

· An individual seeking spiritual reflection can use the model to generate personalized prayers based on their current needs, fostering a more direct and introspective spiritual practice. This offers a novel way to seek comfort and guidance through AI-assisted prayer.

· A student of theology can use the AI to explore different AI-generated interpretations of complex biblical verses, aiding in their academic research and understanding. This helps in exploring diverse viewpoints and gaining a broader perspective on theological discussions.

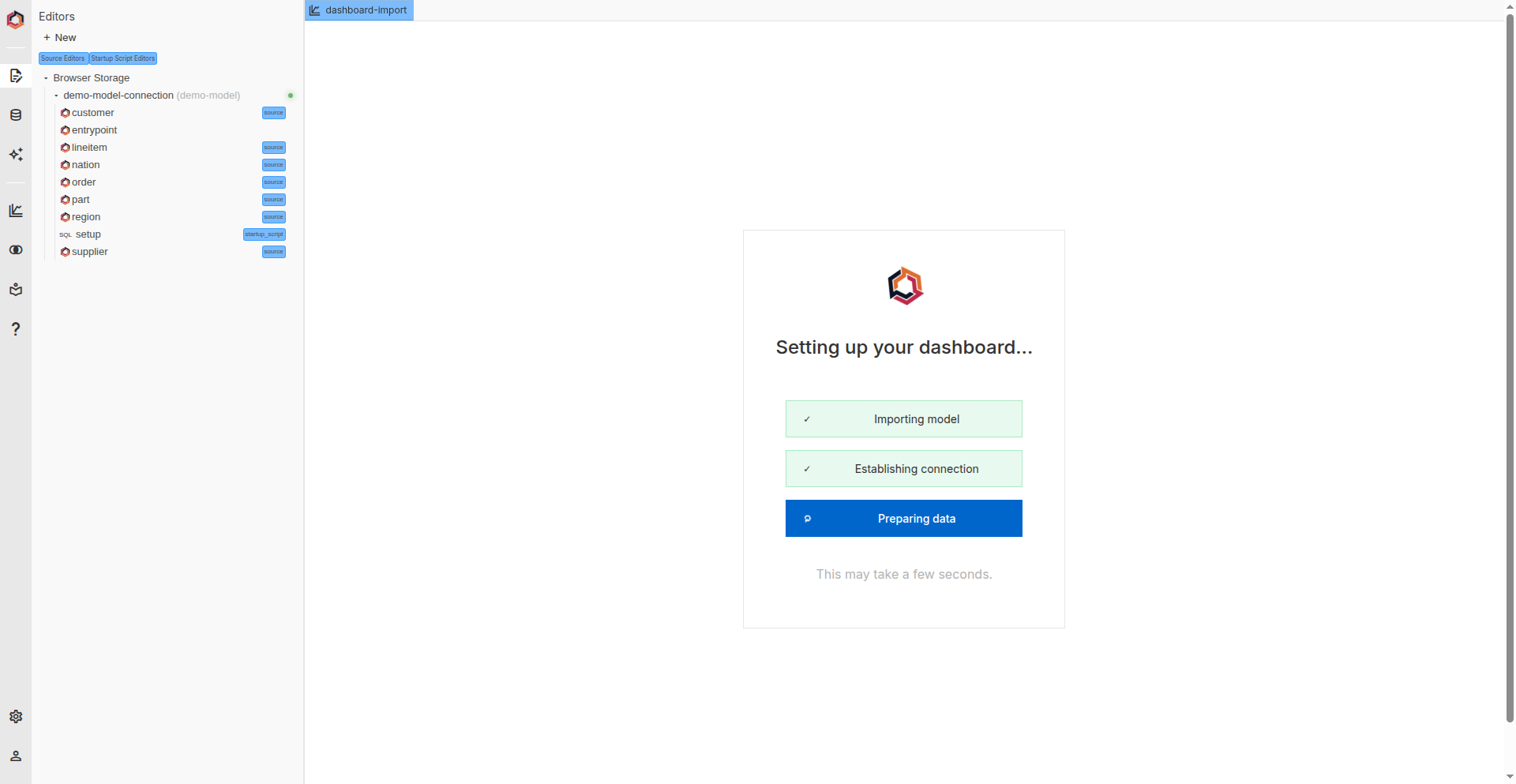

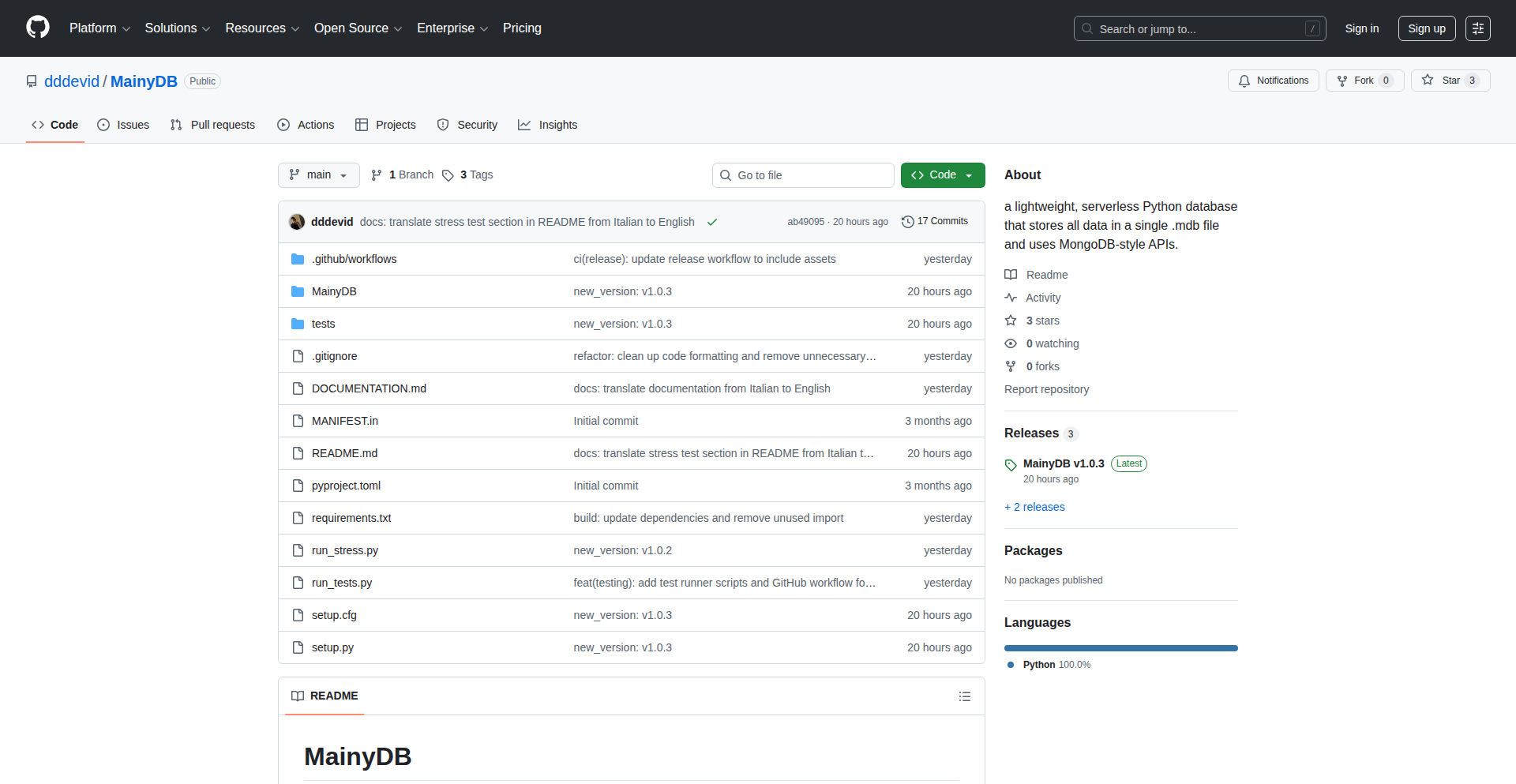

5

Trilogy SemanticSQL Studio

Author

efromvt

Description

An open-source, browser-based IDE for data analysis that reimagines how we work with SQL. It introduces a novel language called Trilogy, which extends SQL with a semantic layer. This innovation aims to drastically reduce boilerplate code, simplify data source management, and streamline the process of transforming data into visualizations. The core idea is to make data analysis more intuitive and less repetitive by embedding meaning directly into the query language.

Popularity

Points 15

Comments 1

What is this product?

Trilogy SemanticSQL Studio is an experimental, open-source tool designed to make data analysis with SQL more efficient and composable. Unlike traditional SQL, Trilogy introduces a 'semantic layer' directly within its SQL-like syntax. Think of this semantic layer as a way to give names and context to your data concepts (like 'customer lifetime value' or 'sales region') rather than just referring to raw tables and columns. This means you can write queries that operate on these meaningful concepts, reducing the need for repetitive code (like Common Table Expressions or CTEs) and making your analyses more adaptable. If a data source changes its underlying table structure, you can update the semantic layer binding without breaking your existing queries or dashboards. Furthermore, Trilogy aims to simplify the transition from data to visuals by leveraging this semantic layer and expressive typing to provide better default visualizations and enable automatic drill-downs and cross-filtering, ultimately making your insights more accessible.

How to use it?

Developers can use Trilogy SemanticSQL Studio as a web-based IDE to write and execute queries against various data sources like BigQuery, DuckDB, and Snowflake. The core usage revolves around writing queries in the Trilogy language. For example, instead of writing a complex SQL join across multiple tables, you might define a semantic concept like 'customer_orders' in Trilogy, which implicitly handles the joins. This semantic definition can then be used directly in your queries. The studio also provides built-in visualization capabilities, allowing you to connect your semantic queries directly to charts and graphs with minimal effort. Integration into existing workflows might involve using Trilogy for ad-hoc analysis, building reusable analytical components, or as a primary tool for creating dashboards that are resilient to underlying data schema changes. The frontend is available as an open-source project, allowing for potential custom integrations.
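To illustrate what a semantic layer buys you, here is a small Python sketch that maps named concepts to SQL expressions and compiles a concept-level request into SQL. This is not Trilogy syntax and not how the Trilogy compiler works. The concept names, table names, and `compile_query` helper are all made up for the example.

```python
from typing import Dict, List

# Hypothetical semantic layer: each concept name maps to a SQL expression.
# This is plain Python that emits SQL, not Trilogy syntax.
CONCEPTS_V1: Dict[str, str] = {"region": "o.region", "revenue": "SUM(o.amount)"}
SOURCE_V1 = "orders AS o"


def compile_query(
    dimensions: List[str],
    metrics: List[str],
    concepts: Dict[str, str],
    source: str,
) -> str:
    """Expand concept names into a SQL statement against the bound source."""
    columns = [f"{concepts[name]} AS {name}" for name in dimensions + metrics]
    sql = f"SELECT {', '.join(columns)} FROM {source}"
    if dimensions and metrics:
        sql += " GROUP BY " + ", ".join(concepts[d] for d in dimensions)
    return sql


# The analyst's request never mentions tables or columns.
request = {"dimensions": ["region"], "metrics": ["revenue"]}
sql_v1 = compile_query(**request, concepts=CONCEPTS_V1, source=SOURCE_V1)
print(sql_v1)

# After a schema change, only the bindings are edited; the request is unchanged.
CONCEPTS_V2 = {"region": "s.sales_region", "revenue": "SUM(s.total_cents) / 100.0"}
sql_v2 = compile_query(**request, concepts=CONCEPTS_V2, source="sales_v2 AS s")
print(sql_v2)
```

The same `request` produces valid SQL before and after the schema change, which is the resilience property the semantic layer is meant to provide.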

Product Core Function

· Semantic Layer Integration: Enables queries to operate on named data concepts rather than raw tables, reducing boilerplate and increasing reusability. This means you can define 'customer revenue' once and reuse it across many analyses without rewriting complex calculations.

· Data Source Agnosticism: Allows data bindings in the semantic layer to be updated without altering existing queries or dashboards. If your 'customer' table changes its name or structure, you update the binding in one place, and all connected analyses adapt automatically, saving significant maintenance time.

· Streamlined Query to Visualization: Offers more expressive typing and semantic context to automatically generate better default visualizations and enable interactive features like drill-downs and cross-filtering. This speeds up the process of turning raw data into actionable insights and interactive dashboards.

· Reduced Boilerplate Code: Eliminates the need for extensive use of CTEs by allowing queries to work with the semantic layer. This leads to cleaner, more readable, and more maintainable analytical code.

· Multi-Database Support: Connects to and queries popular analytical databases and data warehouses such as BigQuery, DuckDB, and Snowflake, offering flexibility in your data stack.

Product Usage Case

· A data analyst wants to track 'monthly active users' but the underlying tables change frequently. Using Trilogy, they define 'monthly active users' in the semantic layer. When the user table is updated, they only need to adjust the semantic binding, and all reports and dashboards using 'monthly active users' continue to function without modification, saving hours of debugging and re-work.

· A business intelligence team needs to build a dashboard for sales performance. Instead of writing complex SQL joins for each metric, they use Trilogy to define semantic concepts like 'sales_by_region' and 'product_revenue'. The studio then uses these concepts to automatically generate charts and allows users to drill down from region to individual sales without writing additional code, making the dashboard interactive and easy to explore.

· A developer is building a data-intensive application and wants to abstract away the complexities of the database. They can use Trilogy's language to define core data entities and relationships. This abstraction layer makes the application code cleaner and allows for easier migration to different database systems in the future by only updating the semantic layer configurations.

6

Mxflo - Mobile 2D Game Dev Canvas

Author

adithiya_shiva

Description

Mxflo is a mobile-first platform that allows developers to create and play 2D games directly on their phones, instantly. It democratizes game development by abstracting away complex setup and providing an intuitive, touch-friendly interface for rapid prototyping and experimentation. The core innovation lies in enabling on-the-go game creation, fostering a more accessible and spontaneous development workflow.

Popularity

Points 13

Comments 2

What is this product?

Mxflo is a mobile application designed for creating and testing 2D games directly on a smartphone. It leverages a simplified, visual scripting approach combined with real-time compilation and playback, eliminating the need for a desktop environment and lengthy setup processes. The innovation is in its highly optimized mobile-native engine and user interface, making game logic development as intuitive as drawing or arranging elements. Imagine being able to ideate and test a game mechanic while commuting, all within a few taps.

How to use it?

Developers can download the Mxflo app on their mobile devices. Within the app, they can use a drag-and-drop interface to build game scenes, define character movements and interactions using a straightforward event-driven scripting system, and then instantly playtest their creations. For integration, Mxflo aims to support exporting projects in common formats or directly sharing them within the Mxflo community, allowing for collaboration and further development on other platforms if desired.
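For readers unfamiliar with event-driven game logic, the general pattern looks roughly like the Python sketch below: handlers are registered for named events, and each incoming event updates the game state. This is a generic illustration, not Mxflo's scripting system. The `EventBus` class and the event names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

State = Dict[str, float]
Handler = Callable[[State], None]


class EventBus:
    """Route named events (taps, swipes, collisions) to registered handlers."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def on(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def emit(self, event: str, state: State) -> None:
        for handler in self._handlers[event]:
            handler(state)


def move_right(state: State) -> None:
    state["x"] += 5


def jump(state: State) -> None:
    # Only allow a jump while the character is on the ground.
    if state["y"] == 0:
        state["y"] = 10


bus = EventBus()
bus.on("swipe_right", move_right)
bus.on("tap", jump)

player: State = {"x": 0.0, "y": 0.0}
for event in ["tap", "swipe_right", "swipe_right", "tap"]:
    bus.emit(event, player)
print(player)  # prints {'x': 10.0, 'y': 10.0}
```

The second tap has no effect because the character is already in the air, which shows how a small conditional inside a handler expresses a game rule.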

Product Core Function

· Instant 2D Game Prototyping: Allows rapid creation and iteration of 2D game ideas directly on a phone, turning fleeting inspiration into playable concepts within minutes. This means you can quickly test if a game idea is fun without needing to boot up a computer.

· Mobile-Native Visual Scripting: Provides an intuitive, touch-optimized scripting language that makes game logic accessible even to those less familiar with traditional coding. This empowers more people to build games, and for experienced developers, it accelerates the ideation phase.

· Real-time Playtesting: Enables developers to play their games instantly after making changes, facilitating a highly responsive feedback loop. This helps catch bugs and refine gameplay mechanics much faster than traditional development cycles.

· Asset Integration and Manipulation: Offers tools to import and manipulate basic 2D assets, allowing for quick visual customization of game elements. This means you can see how your game looks and feels with your chosen art style right away.

Product Usage Case

· On-the-go Game Jam Ideation: A developer has a sudden game idea during their commute. They use Mxflo to quickly sketch out the core mechanics and player controls, testing its viability before it's forgotten. This saves valuable brainstorming time and captures early-stage innovation.

· Rapid Prototyping for Casual Games: A designer wants to test a new puzzle mechanic for a mobile game. Using Mxflo, they can build a basic playable version of the puzzle in under an hour, directly on their tablet, to get immediate feedback on its fun factor. This accelerates the design validation process and reduces wasted development effort.

· Educational Tool for Learning Game Logic: A student is learning programming concepts and game development. Mxflo provides a visual and interactive way to understand game loops, conditional logic, and event handling without the steep learning curve of complex IDEs. This makes learning game development more engaging and accessible.

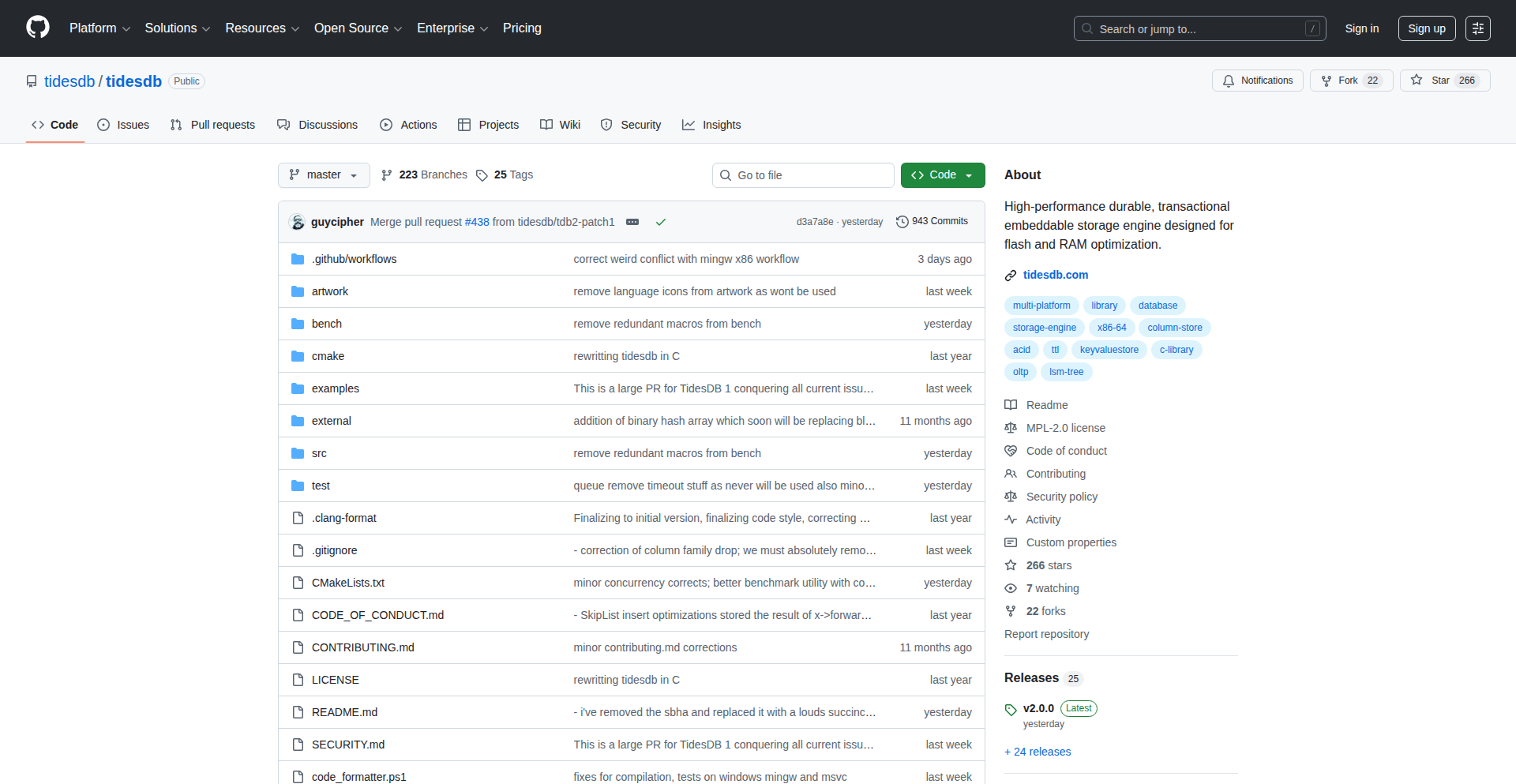

7

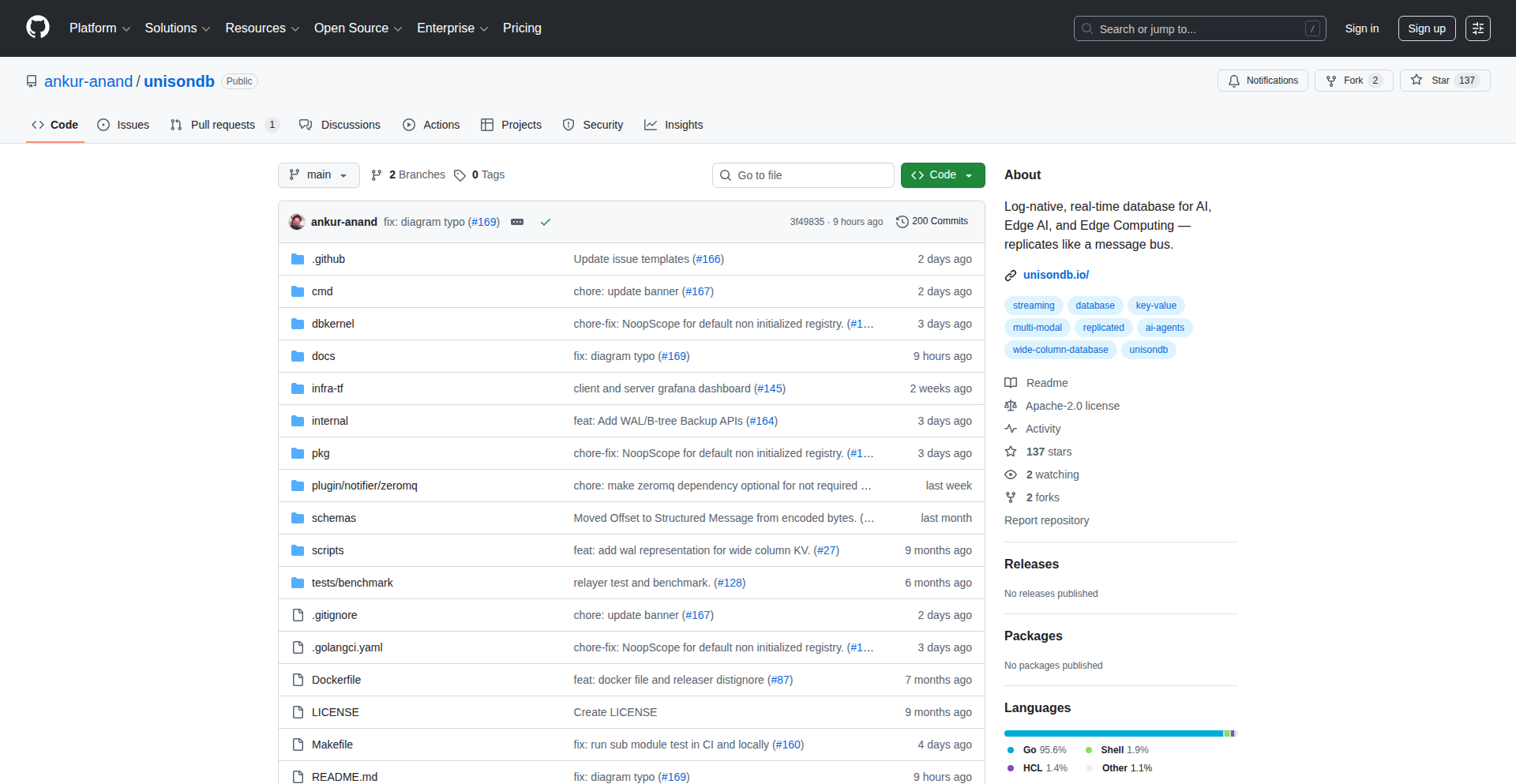

TidesDB: Flash-Optimized Transactional Storage

Author

alexpadula

Description

TidesDB is a transactional storage system designed for flash storage and RAM. It targets the write bottlenecks that traditional databases hit under rapid data changes, aiming for high throughput and low latency. Its architecture is built to minimize writes and to exploit the characteristics of modern storage hardware.

Popularity

Points 12

Comments 1

What is this product?

TidesDB is a database system designed for speed. Where traditional databases can struggle with very frequent data modifications, TidesDB is built from the ground up for fast storage such as SSDs (flash) and for in-memory (RAM) operation. Its core innovation is a way of handling data changes that significantly reduces the amount of writing needed; excess writes are a major performance cost on flash storage. Think of it like an efficient filing system that knows exactly where to put new documents with minimal shuffling, which keeps retrieval and updates quick. As a result, applications that require real-time data processing, like financial trading platforms or live analytics dashboards, can operate more responsively.

How to use it?

Developers can integrate TidesDB into their applications much like they would integrate other databases. It exposes an API (a set of commands your application can use to talk to the database) that allows for standard database operations like inserting, updating, and querying data. For applications already built with database interaction in mind, migrating to TidesDB would involve changing the database connection details and potentially adapting some specific query syntax if TidesDB offers unique optimizations. Its focus on high transaction rates makes it ideal for backend services that need to handle a massive volume of simultaneous requests, such as user authentication systems, real-time gaming servers, or IoT data ingestion pipelines.

Product Core Function

· High-throughput transactional writes: TidesDB is engineered to handle a vast number of data modifications per second without slowing down, making it perfect for applications that need to log or process events in real-time.

· Low-latency reads: Retrieving data from TidesDB is exceptionally fast, ensuring that your applications can respond to user requests or display information almost instantly.

· Flash and RAM optimization: The system's design specifically exploits the strengths of SSDs and RAM, bypassing common performance limitations found in systems not tailored for this hardware.

· Transactional consistency: Ensures that data operations are reliable and that your data remains accurate, even under heavy load. This is crucial for applications where data integrity is paramount.
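
The summary above does not describe TidesDB's internals or its API, so the following is only a generic sketch of two ideas it mentions: avoiding in-place rewrites by appending changes to a sequential log, and applying a transaction's writes together or not at all. All class and method names are hypothetical and are not TidesDB's.

```python
from typing import Dict, List, Optional, Tuple


class LogStore:
    """Toy store: changes are appended to a log, never rewritten in place."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, Optional[str]]] = []  # sequential record of changes
        self.index: Dict[str, str] = {}                 # in-memory view for fast reads

    def apply(self, writes: Dict[str, Optional[str]]) -> None:
        # Append every change first, then update the read view.
        self.log.extend(writes.items())
        for key, value in writes.items():
            if value is None:            # None marks a deletion
                self.index.pop(key, None)
            else:
                self.index[key] = value

    def get(self, key: str) -> Optional[str]:
        return self.index.get(key)


class Transaction:
    """Buffers writes so they become visible together on commit."""

    def __init__(self, store: LogStore) -> None:
        self.store = store
        self.pending: Dict[str, Optional[str]] = {}

    def put(self, key: str, value: str) -> None:
        self.pending[key] = value

    def delete(self, key: str) -> None:
        self.pending[key] = None

    def commit(self) -> None:
        self.store.apply(self.pending)
        self.pending = {}

    def rollback(self) -> None:
        self.pending = {}  # nothing reached the store, so just drop the buffer
```

A real engine would also persist the log to disk and compact it over time; those parts are left out to keep the sketch short.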

Product Usage Case

· Real-time analytics dashboards: Imagine a dashboard showing live stock prices or website traffic that updates instantly. TidesDB can power this by processing incoming data streams and making them available for querying with minimal delay.

· High-frequency trading systems: In finance, every millisecond counts. TidesDB's speed and transactional capabilities make it suitable for systems that need to execute trades based on rapidly changing market data.

· Online gaming leaderboards: To keep leaderboards fresh and responsive in a multiplayer game, TidesDB can efficiently handle score updates from thousands of players concurrently.

· IoT data ingestion: Devices generating vast amounts of sensor data can send it to TidesDB, which can ingest and store it at high speed for later analysis or immediate action.

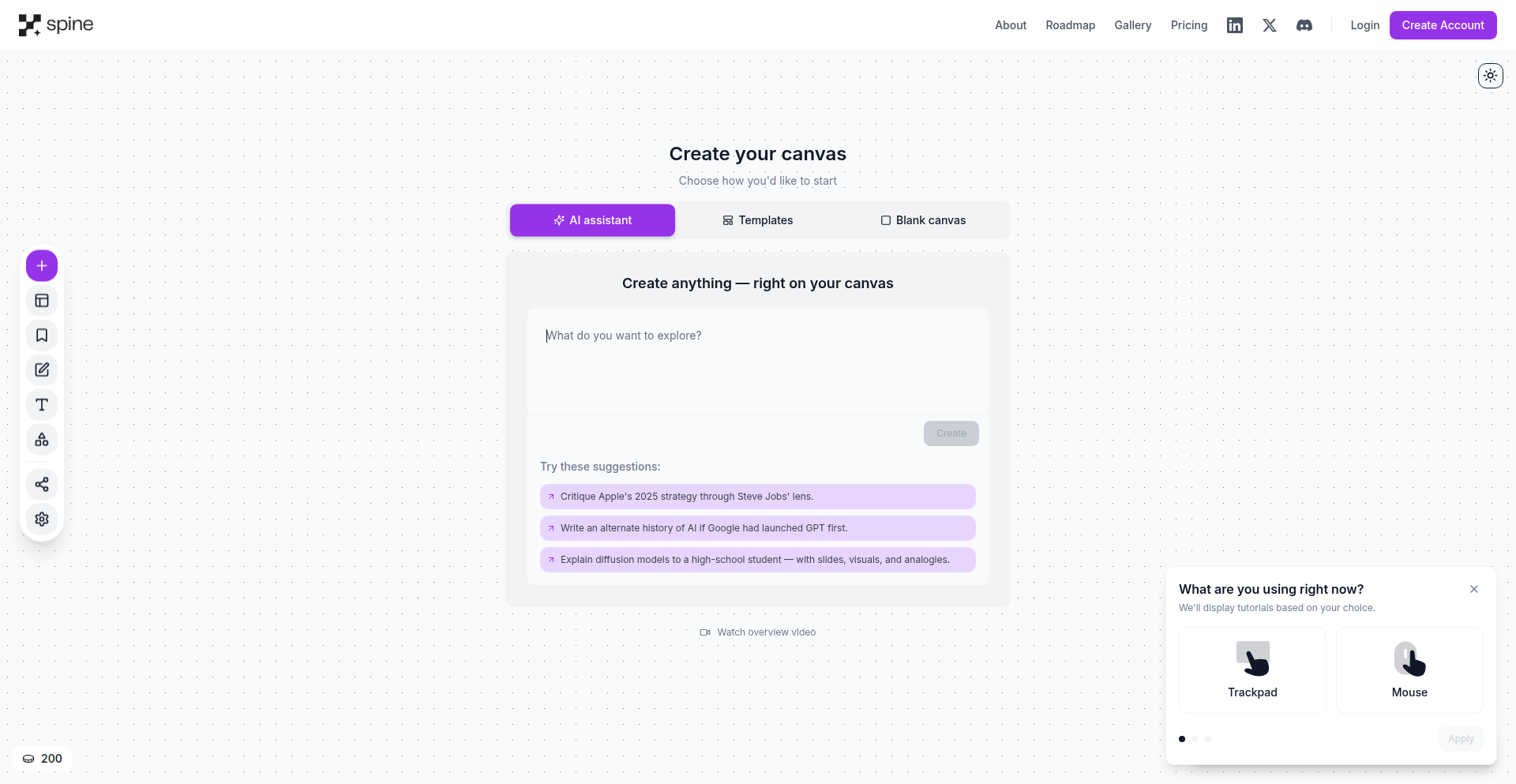

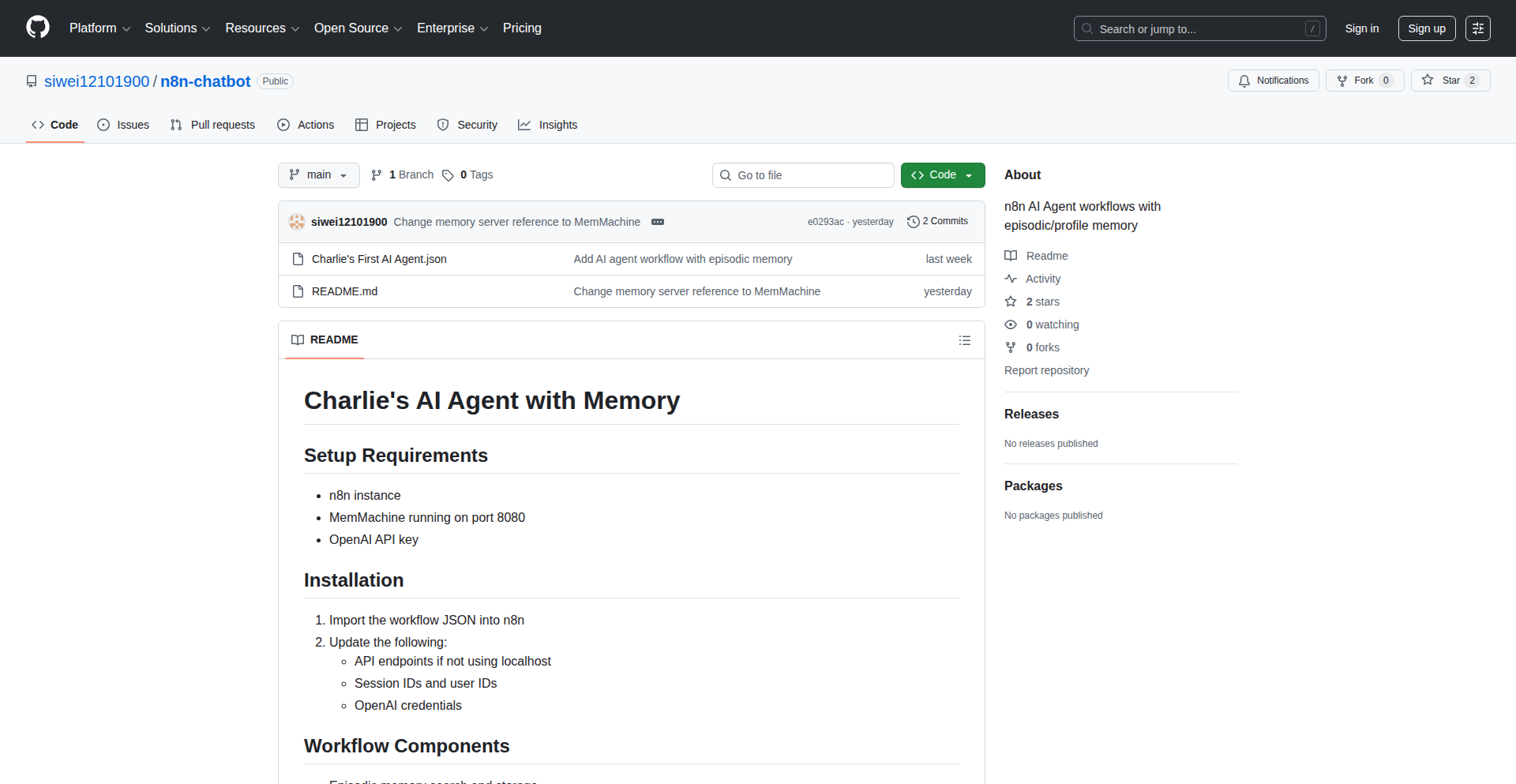

8

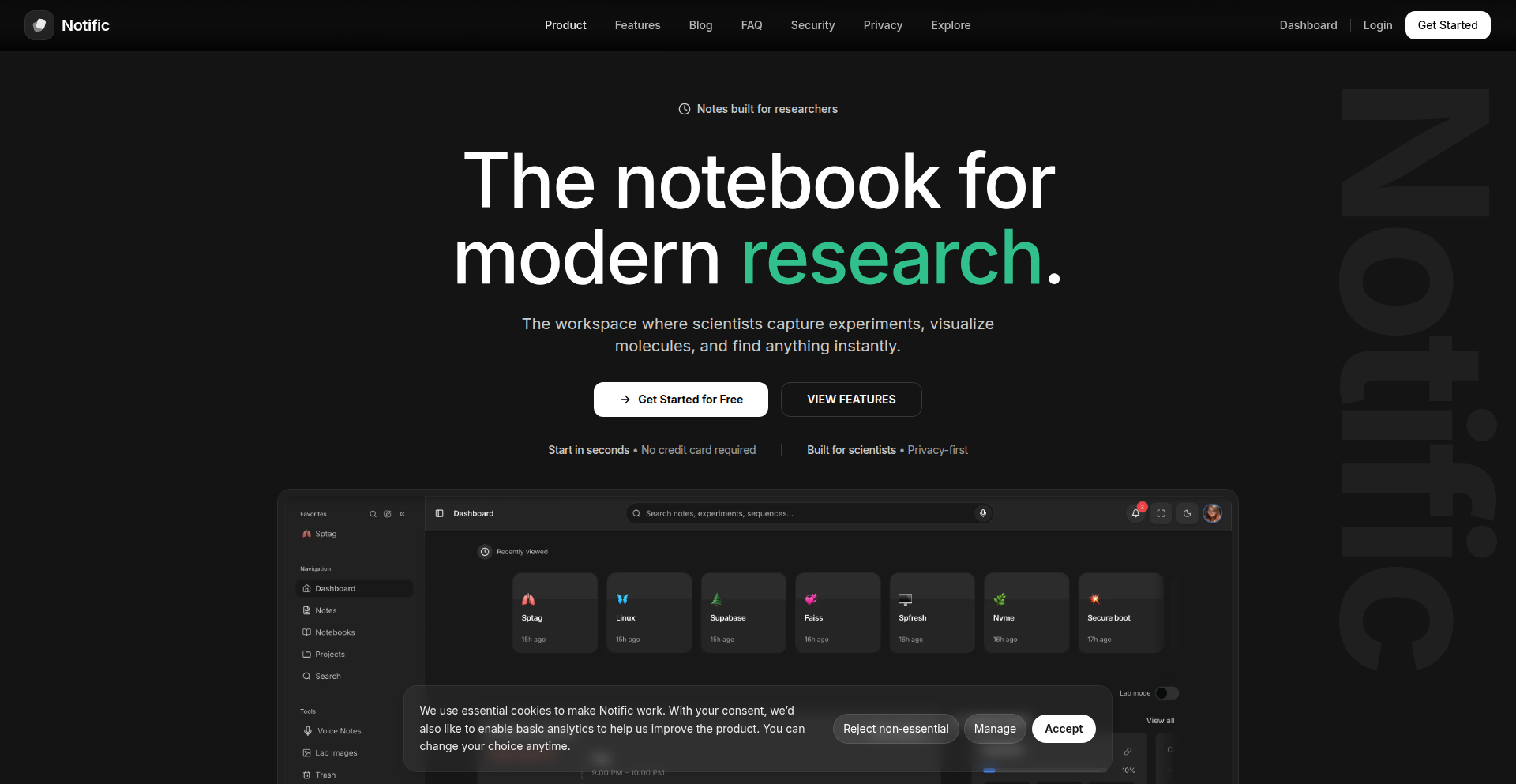

Spine Canvas: The AI-Powered Visual Thinkscape

Author

akshay_budhkar

Description

Spine Canvas is an infinite visual workspace that allows users to collaborate with over 300 AI models and agents. It addresses the limitations of linear chat interfaces by enabling a block-based thinking process, seamless context passing between diverse AI models (each with unique strengths like coding or critique), and flexible exploration of ideas through branching and iteration. This offers developers a more controlled and powerful way to leverage AI for complex problem-solving and creative endeavors, moving beyond simple Q&A to true AI-assisted ideation and execution.

Popularity

Points 11

Comments 0

What is this product?

Spine Canvas is a visual workspace designed to get around the limits of chat-based AI interaction. Instead of linear conversations, it uses a 'block' system in which AI models, tools, and inputs are represented as interconnected nodes. Blocks share context explicitly and implicitly, so the output of one block can inform, and be referenced by, another, regardless of the underlying model (e.g., Claude for coding, Gemini for critique). The 'canvas' is an infinite space where users can arrange, connect, and branch ideas into an iterative workflow. This gives developers a more controlled way to experiment with and combine the strengths of different models than a single-model chat interface offers. The core innovation is managing complex AI workflows visually and orchestrating interactions between diverse AI agents.

How to use it?

Developers can use Spine Canvas as a central hub for all their AI-powered workflows. Imagine tackling a complex coding project: you could have a block for brainstorming solutions, another for generating code snippets with a coding-specialist AI, a research block for API documentation, and a critique block to review the code with another AI. These blocks can be visually linked, ensuring that the code suggestions are informed by the research, and the critique AI has access to both the initial ideas and the generated code. Developers can also import external context like YouTube transcripts or web pages as native blocks, enriching the AI's understanding. The ability to branch allows for exploring multiple design patterns or solutions simultaneously without losing track of the primary objective. This integration offers a practical way to manage multi-stage AI tasks, rapidly prototype, and iterate on ideas by simply modifying a block and observing how the changes propagate through the connected workflow. It’s ideal for scenarios requiring deep exploration, cross-disciplinary AI leverage, and iterative refinement of complex problems.

Product Core Function

· Visual block-based workflow orchestration: Enables developers to design and manage complex AI processes by connecting individual AI models, tools, and data inputs as discrete 'blocks' on an infinite canvas. This provides a clear visual representation of the AI workflow and allows for granular control over data flow and model interaction, which is crucial for debugging and optimizing intricate AI pipelines.

· Seamless cross-model context passing: Facilitates the direct and implicit sharing of context between different AI models, irrespective of their underlying architecture or training data. This means the output or insights from one AI (e.g., a sentiment analysis report) can directly influence the input or parameters of another (e.g., a content generation AI), allowing for sophisticated AI collaborations and more nuanced outputs.

· Branching and iterative exploration: Provides the ability to create multiple parallel paths or 'branches' within the workspace, enabling developers to explore different hypotheses, solutions, or creative directions simultaneously. If one branch doesn't yield the desired results, users can easily revert or discard it without impacting other ongoing explorations, significantly speeding up the iteration cycle for R&D and creative tasks.

· Multi-item processing (Lists as first-class citizens): Allows users to apply the same AI process or set of operations to multiple items concurrently by treating lists as fundamental components of the workspace. For example, a developer could task the system to perform deep research on ten different companies simultaneously, leveraging the AI to efficiently handle batch processing of information retrieval and analysis tasks.

· External context integration: Enables users to import and treat external data sources like chat logs from other LLMs, YouTube video transcripts, or web page content as native blocks within the workspace. This enriches the AI's understanding by providing it with a broader and more relevant set of contextual information for its tasks, leading to more accurate and insightful outputs.

· AI-assisted canvas initiation: Offers an intelligent assistant to help users overcome the 'blank canvas' problem by generating an initial workspace structure based on user prompts. This accelerates the onboarding process and helps developers start leveraging the platform immediately by providing a foundational framework for their AI projects.
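
One way to picture the block-and-connection model described above is as a small dependency graph in which each block receives the outputs of the blocks linked into it. The sketch below is an assumption about the general shape of such a system, not Spine Canvas's implementation; `Block` and `evaluate` are hypothetical names, and plain functions stand in for AI models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Block:
    name: str
    run: Callable[[List[str]], str]          # receives upstream outputs as context
    inputs: List["Block"] = field(default_factory=list)


def evaluate(block: Block, cache: Optional[Dict[str, str]] = None) -> str:
    """Evaluate a block after its upstream blocks, running each block once."""
    cache = {} if cache is None else cache
    if block.name not in cache:
        context = [evaluate(upstream, cache) for upstream in block.inputs]
        cache[block.name] = block.run(context)
    return cache[block.name]


# Stand-in "models": each one just formats the context it was given.
research = Block("research", lambda ctx: "API uses OAuth2")
code = Block("code", lambda ctx: f"code based on [{ctx[0]}]", [research])
critique = Block("critique", lambda ctx: f"review of {len(ctx)} inputs", [research, code])
```

Here `evaluate(critique)` runs `research` once and passes its output to both downstream blocks, which mirrors the idea of a critique block seeing both the research and the generated code.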

Product Usage Case

· Scenario: A startup founder needs to rapidly develop a go-to-market strategy and product roadmap. They use Spine Canvas to create interconnected blocks for market research (using deep research AI), competitor analysis (with a critique AI), user persona generation, and feature prioritization. They can branch out to explore different market segments or feature sets, easily swap AI models to get diverse perspectives, and visualize connections between user needs and product offerings. This allows them to iterate on their strategy in minutes rather than days, and gain insights into potential blind spots by seeing how different AI perspectives converge.

· Scenario: A developer is working on a complex software feature that requires integration with multiple APIs and third-party services. They use Spine Canvas to set up blocks for API documentation lookup, code snippet generation for each service, error handling logic brainstorming, and security vulnerability assessment. By linking these blocks, the code generation AI is informed by the documentation, and the security AI can analyze the proposed code in context. This visual orchestration helps manage the complexity of multi-component development and ensures all aspects are considered coherently.

· Scenario: A researcher is trying to understand a complex scientific concept. They can import research papers or online articles as blocks, then use a specialized AI block for summarization, another for concept mapping, and a third for generating explanatory diagrams. They can ask follow-up questions to different AI models via dedicated chat blocks, branching the conversation to explore tangents or clarify specific points. This allows for a multi-faceted and interactive learning process, making abstract information more accessible and facilitating deeper comprehension.

9

Affordable Alternatives Directory

Author

uaghazade

Description

A community-driven platform that curates and lists affordable, often open-source, alternatives to popular and expensive software tools. It addresses the pain point of high software costs for individuals and small teams by highlighting innovative, cost-effective solutions that developers can integrate into their workflows.

Popularity

Points 6

Comments 4

What is this product?

This project is a curated directory of software alternatives, focusing on affordability and often leveraging open-source technologies. The core innovation lies in its community-driven approach, where users submit and vote on tools that offer comparable functionality to expensive, proprietary software. It taps into the hacker ethos of finding clever, efficient solutions to common problems, in this case, the high barrier to entry imposed by costly software.

How to use it?

Developers can use this platform in several ways. Firstly, as a discovery engine to find cheaper or free alternatives for their development tools, design software, project management suites, and more. Secondly, by contributing their own discovered alternatives or open-source projects, helping to build a comprehensive resource for the developer community. Integration can occur by simply swapping out existing expensive tools for the recommended alternatives, or by exploring the open-source options and potentially contributing to their development.

Product Core Function

· Alternative Software Discovery: Enables users to search for and discover cost-effective alternatives to well-known software, saving money and fostering the use of diverse tools. This provides immediate financial relief and access to specialized or community-backed solutions.

· Community Curation and Voting: Leverages collective intelligence to identify the best and most relevant alternatives. This ensures the directory remains up-to-date and reflects real-world developer needs and preferences, leading to more reliable recommendations.

· Submission of New Alternatives: Empowers users to contribute their own findings, expanding the platform's reach and creating a feedback loop for continuous improvement. This fosters a collaborative environment and ensures a wider range of solutions are considered.

· Categorized Listing: Organizes alternatives by software category (e.g., IDEs, design tools, cloud services), making it easier for developers to find specific solutions for their needs. This structured approach streamlines the search process and helps users quickly pinpoint relevant options.
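
The categorized, vote-ranked listing described above amounts to a filter followed by a sort. The sketch below assumes a simple record shape (`name`, `category`, `votes`) that is not taken from the site itself, and the vote counts are placeholder values for illustration.

```python
from typing import Dict, List


def top_alternatives(entries: List[Dict], category: str, limit: int = 3) -> List[str]:
    """Return the names of the most-voted entries in one category."""
    in_category = [e for e in entries if e["category"] == category]
    ranked = sorted(in_category, key=lambda e: e["votes"], reverse=True)
    return [e["name"] for e in ranked[:limit]]


# Placeholder data; the vote counts are invented for the example.
entries = [
    {"name": "Taiga", "category": "project management", "votes": 12},
    {"name": "OpenProject", "category": "project management", "votes": 20},
    {"name": "Penpot", "category": "design", "votes": 7},
]
```

With this data, `top_alternatives(entries, "project management")` lists `OpenProject` ahead of `Taiga`.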

Product Usage Case

· A freelance web developer needs a powerful design tool for mockups, but the popular options are expensive. They use the directory to find a free, open-source alternative like Penpot, which reduces their operational costs without sacrificing quality and frees up budget for other critical areas.

· A startup team is looking for a project management tool but finds popular options like Jira too costly for their early stage. They discover a community-recommended alternative like Taiga or OpenProject through the directory, providing them with robust features for agile development at a fraction of the price, enabling them to scale their operations effectively.

· A solo developer is working on a personal project and needs a code editor with advanced features. Instead of paying for a premium IDE, they use the directory to find a feature-rich, free option like VS Code, improving their productivity and learning experience at no additional cost.

· A developer team wants to adopt a new database solution but is constrained by budget. They consult the directory for open-source database alternatives that offer comparable performance and scalability to commercial options, allowing them to make a cost-efficient technical decision that supports their project's long-term viability.

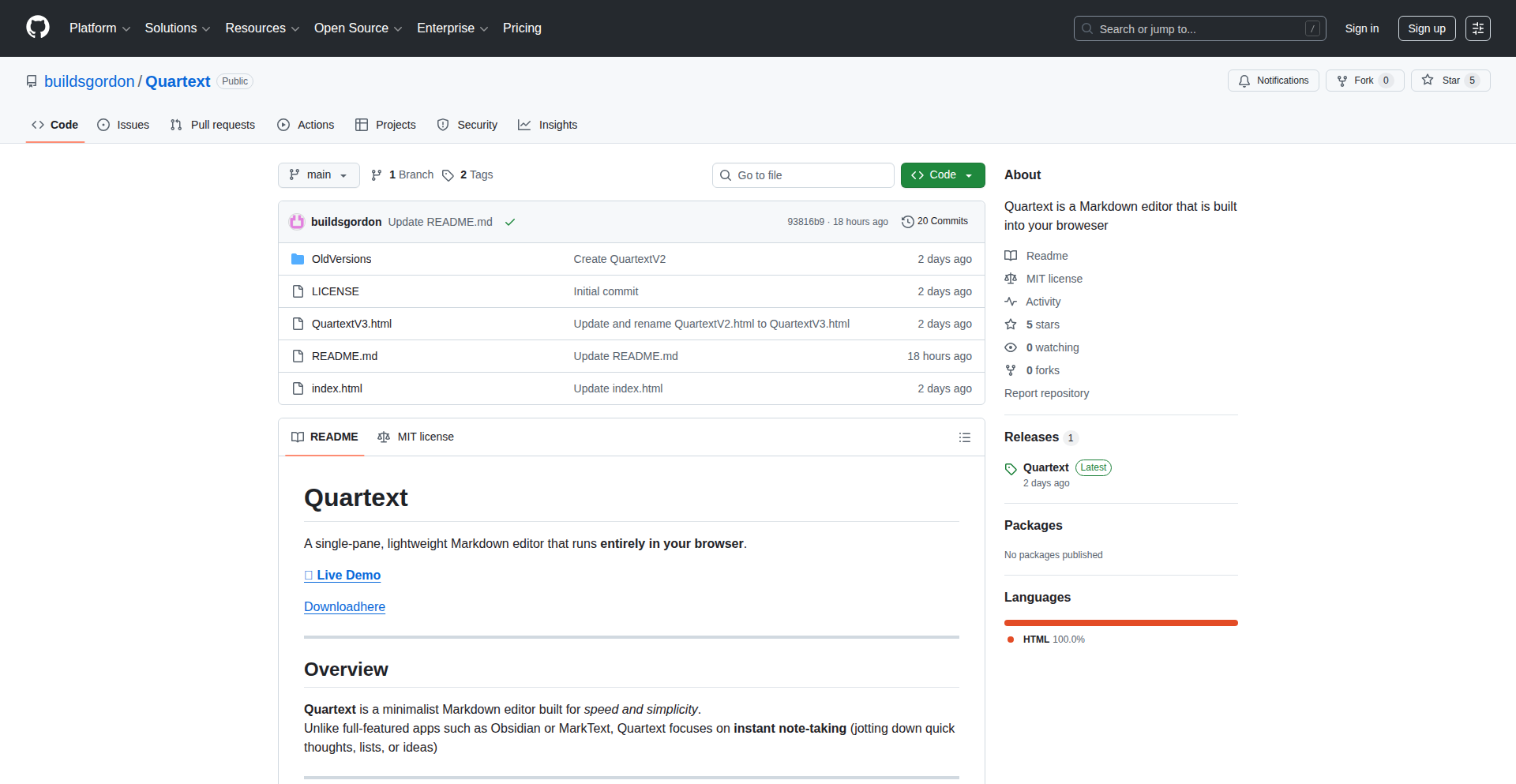

10

Floxtop: Semantic File Organizer

Author

bobnarizes

Description

Floxtop is an offline macOS application that automatically organizes your files and images based on their content and meaning. It leverages advanced AI models to understand what's inside your documents and pictures, then intelligently suggests or performs organization actions, solving the common problem of cluttered digital spaces without requiring cloud connectivity.

Popularity

Points 4

Comments 3

What is this product?

Floxtop is an intelligent, privacy-focused file and image organizer for Mac. Instead of relying on manual tagging or just file names, it uses on-device machine learning models to analyze the content of your files. For images, this means understanding what objects or scenes are depicted. For documents, it can grasp the subject matter or key themes. The innovation lies in its ability to perform this complex analysis locally, ensuring your data never leaves your machine, and then using these insights to group, tag, or move files into logical folders. This means your files are organized by what they actually *are*, not just where you put them.

How to use it?

Developers can use Floxtop by pointing it to specific directories they want to organize, such as 'Downloads', 'Pictures', or project folders. The app provides an intuitive interface to review the AI's suggested organization. For instance, it might group all photos containing 'cats' together, or all documents related to 'project proposals'. Users can then accept these suggestions with a click, or customize the rules. Integration can be thought of as a powerful preprocessing step for development workflows: imagine automatically sorting screenshots of UI elements, or organizing research papers by topic. It acts as a smart assistant that cleans up your digital workspace, freeing up mental energy for more creative coding tasks.

Product Core Function

· Content-based image analysis: Understands objects, scenes, and concepts within images to group them semantically. This means you can find all your 'beach photos' or 'code snippets' without manually tagging them, saving significant search time.

· Document content understanding: Analyzes text documents to identify key themes and topics. This allows for intelligent categorization of research papers, notes, or project documentation, making information retrieval much faster.

· Offline, on-device processing: All analysis happens locally on your Mac, ensuring complete privacy and security of your files. This is crucial for developers handling sensitive project data or personal information, offering peace of mind.

· Intelligent suggestion engine: Proposes logical folder structures and file groupings based on content analysis. This reduces the manual effort of organizing, letting the AI do the heavy lifting and providing actionable insights for your file management.

· Customizable organization rules: Allows users to refine the AI's suggestions and create personalized organization workflows. You can train it to recognize specific project jargon or file types relevant to your development, making the organization highly tailored to your needs.

· Duplicate file detection based on content: Goes beyond simple file name matching to identify truly duplicate or near-duplicate files by analyzing their content, helping to free up disk space efficiently.
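
Content-based duplicate detection, in its simplest form, can be sketched by hashing file contents and grouping files that share a digest. This covers exact duplicates only; near-duplicate detection needs other techniques and is not shown. The sketch uses only the Python standard library and is not Floxtop's implementation; the function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's bytes, read in chunks to keep memory use flat."""
    hasher = hashlib.sha256()
    with path.open("rb") as handle:
        while chunk := handle.read(chunk_size):
            hasher.update(chunk)
    return hasher.hexdigest()


def find_duplicates(root: Path) -> List[List[Path]]:
    """Group files under root whose contents are byte-for-byte identical."""
    groups: Dict[str, List[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups[file_digest(path)].append(path)
    return [paths for paths in groups.values() if len(paths) > 1]
```

Because the comparison is on content rather than names, `report.pdf` and `report (1).pdf` land in the same group if their bytes match.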

Product Usage Case

· Organizing a large collection of development screenshots: A developer can point Floxtop to their 'Screenshots' folder. Floxtop can then automatically group images by the UI element they represent (e.g., 'login screens', 'dashboard views', 'error messages'), making it easy to find specific visual assets for documentation or presentations.

· Streamlining research paper management: For developers working on new technologies or academic research, Floxtop can ingest PDF documents and group them by subject matter (e.g., 'machine learning algorithms', 'database optimization', 'frontend frameworks'), creating a well-structured digital library for quick reference.

· Managing project assets: A game developer could use Floxtop to automatically sort game assets like textures, models, and sound effects into categorized folders based on their content and intended use within the game engine. This greatly reduces the time spent searching for specific assets during development.

· Cleaning up downloaded code snippets and examples: Developers often download numerous code samples from online resources. Floxtop can analyze these files and group them by programming language, framework, or problem solved, turning a chaotic download folder into a usable knowledge base.

· Organizing personal photo archives with developer-specific needs: Beyond general photo organization, a developer might want to automatically group photos related to team events, conferences, or even hardware setups, creating a visual timeline of their professional journey.

11

JobAlert AI

Author

jlemee

Description

JobAlert AI is a sophisticated notification system designed to proactively alert developers about new tech job openings that precisely match their skills and preferences. It tackles the tedious and time-consuming task of manually sifting through countless job boards by leveraging intelligent filtering and real-time monitoring. The innovation lies in its ability to understand nuanced job requirements and developer profiles, ensuring that only highly relevant opportunities are surfaced, thus saving valuable time and increasing the chances of landing a dream role.

Popularity

Points 2

Comments 3

What is this product?

JobAlert AI is a smart job notification service built for tech professionals. It works by intelligently analyzing job descriptions from various sources and comparing them against a developer's defined skill set, experience level, and desired location. Unlike basic keyword matching, it aims to understand the context of the job requirements and the developer's profile, identifying true matches. This means you get notified about jobs that are genuinely a good fit, not just those that happen to contain a few of the same words. The core innovation is its sophisticated matching algorithm that goes beyond simple keyword searches, offering a more personalized and efficient job discovery experience.

How to use it?

Developers can use JobAlert AI by defining their profile, including technical skills (e.g., Python, React, AWS), years of experience, preferred job titles, and geographical preferences. They then configure the types of job alerts they wish to receive. The system monitors various job platforms in real-time. When a new job posting is found that strongly matches the user's profile, an alert is sent directly to the user, often via email or a dedicated app notification. This allows developers to act quickly on new opportunities without constantly checking job boards themselves.

Product Core Function

· Intelligent Job Matching: This function uses advanced algorithms to understand the semantic meaning of job descriptions and developer profiles, ensuring highly accurate matches and avoiding irrelevant notifications. This is valuable because it saves you from seeing hundreds of jobs you're not qualified for or interested in, focusing your attention on opportunities that truly matter.

· Real-time Monitoring: The system continuously scans job boards and company career pages for new listings, ensuring you are among the first to know about emerging opportunities. This is valuable as it gives you a competitive edge in applying for in-demand roles before they become flooded with other applicants.

· Customizable Alert Preferences: Users can fine-tune what kind of jobs they want to be notified about, including specific technologies, seniority levels, and locations. This is valuable because it allows you to tailor the service to your exact career goals and avoid alert fatigue from receiving too many or too few notifications.

· Cross-Platform Aggregation: JobAlert AI aggregates job listings from multiple sources, providing a comprehensive view of the job market without requiring you to visit numerous websites. This is valuable for efficiency, as it consolidates your job search efforts into a single, streamlined experience.
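
The summary says the matching goes beyond keyword search but does not describe the algorithm. As a baseline for comparison, the sketch below combines a hard filter (remote preference) with a skill-coverage score. It is an assumption-laden simplification, not JobAlert AI's method; the field names and the 0.6 threshold are hypothetical.

```python
from typing import Dict, Set


def match_score(profile_skills: Set[str], job_skills: Set[str]) -> float:
    """Fraction of the job's listed skills that the profile covers."""
    if not job_skills:
        return 0.0
    return len(profile_skills & job_skills) / len(job_skills)


def should_alert(profile: Dict, job: Dict, threshold: float = 0.6) -> bool:
    """Alert only if hard preferences hold and skill coverage is high enough."""
    if profile["remote_only"] and not job["remote"]:
        return False
    return match_score(profile["skills"], job["skills"]) >= threshold


profile = {"skills": {"python", "machine learning", "aws"}, "remote_only": True}
ml_job = {"skills": {"python", "machine learning"}, "remote": True}
frontend_job = {"skills": {"python", "react", "css"}, "remote": True}
```

Here `ml_job` triggers an alert, while `frontend_job` covers only one of three listed skills and does not. A semantic matcher would replace `match_score` with something that also recognizes related terms.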

Product Usage Case

· A senior Python developer specializing in machine learning wants to find remote roles. JobAlert AI can be configured to search for 'Python', 'machine learning', 'data science', and 'remote' on platforms like LinkedIn, Indeed, and specialized AI job boards, sending them an alert as soon as a matching remote position is posted. This solves the problem of missing out on niche remote opportunities.

· A junior frontend developer looking for an entry-level React job in San Francisco needs to find positions that list 'React', 'JavaScript', and 'frontend' and are open to recent graduates. JobAlert AI can monitor these specific keywords and seniority levels, notifying them instantly about suitable roles, allowing them to apply while the position is fresh.

· A DevOps engineer seeking contract roles with experience in Kubernetes and AWS can set up JobAlert AI to filter for these technologies, 'DevOps', and 'contract' roles across various job sites. This helps them quickly identify short-term projects that align with their expertise, which can be hard to find through general searches.

12

ChronoSat Vision

Author

varik

Description

ChronoSat Vision is a web application that uses Sentinel-2 satellite imagery to build a frequent historical timeline of any geographical location, with a new scene roughly every five days. It addresses the need for more granular temporal analysis of land changes than yearly updates allow, and uses AI-powered super-resolution to upscale the imagery to approximately 1-2 meters per pixel. This lets users visualize subtle environmental or land-use shifts over time in finer detail.

Popularity

Points 3

Comments 2

What is this product?

ChronoSat Vision is a web-based tool that provides access to Sentinel-2 satellite imagery, allowing you to scrub through historical views of any chosen location at intervals of a few days. The core innovation lies in its ability to rapidly gather and display the available scenes since 2018, and in its AI-enhanced view, which uses a super-resolution model to upscale the imagery to around 1-2 meters per pixel. Think of it as a time machine for looking at how the Earth changes, not just once a year but every few days, at a finer level of detail.

How to use it?

Developers can integrate ChronoSat Vision into their workflows by accessing its web interface directly. For specific applications, the underlying principles of its data retrieval and AI upscaling can inspire custom solutions. Imagine building a system to monitor deforestation, track urban development, or even analyze agricultural field changes. You could use the concept of frequent revisits to trigger alerts when significant changes are detected, or apply the super-resolution technique to identify smaller structures or features in your own satellite data.
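
The idea of triggering an alert when a significant change is detected between two revisits can be sketched with a simple per-pixel comparison. This is a minimal illustration on small grids of normalized brightness values, not ChronoSat Vision's code; the function names and both thresholds are assumptions.

```python
from typing import List

Grid = List[List[float]]  # rows of pixel values normalized to the range 0..1


def changed_fraction(before: Grid, after: Grid, pixel_threshold: float = 0.2) -> float:
    """Share of pixels whose value moved by more than pixel_threshold."""
    total = 0
    changed = 0
    for row_before, row_after in zip(before, after):
        for b, a in zip(row_before, row_after):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def significant_change(before: Grid, after: Grid, area_threshold: float = 0.25) -> bool:
    """True when enough of the area changed to be worth an alert."""
    return changed_fraction(before, after) >= area_threshold
```

Real imagery would also need cloud masking and alignment between scenes before a comparison like this is meaningful.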

Product Core Function

· Frequent Temporal Imagery Scrubbing: Gathers and displays Sentinel-2 satellite scenes for a selected area with a revisitation cadence of roughly 5 days. This means you can see how a place changes much more often than traditional yearly satellite views, helping you spot trends and anomalies quickly.

· AI-Super-Resolution View: Applies a trained AI model to upscale the 10m/pixel Sentinel-2 imagery to approximately 1-2m/pixel. This provides significantly more detail, allowing for the identification of smaller objects and finer ground features, making analysis more precise.

· Interactive Time-Strip Visualization: Presents the collected imagery in a time strip format, enabling users to easily slide through years of changes. This makes understanding the progression of events or developments intuitive and visual.

· Any Location Selection: Allows users to pick any geographical area of interest on the map. This provides unparalleled flexibility for researchers, planners, and curious individuals to investigate specific locations without pre-defined regions.
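
The figures quoted above (10 m native pixels, 1-2 m after upscaling, a revisit roughly every 5 days since 2018) imply some simple arithmetic, worked through below. The start date of 1 January 2018 is an assumption, and the scene count is an upper bound that ignores cloud cover and any other gaps in coverage.

```python
from datetime import date


def linear_upscale_factor(native_m: float, target_m: float) -> float:
    """How many times finer the pixel edge becomes (10 m -> 2 m gives 5x)."""
    return native_m / target_m


def max_scene_count(start: date, end: date, revisit_days: int = 5) -> int:
    """Upper bound on scenes for one location at a fixed revisit interval."""
    return (end - start).days // revisit_days


low = linear_upscale_factor(10, 2)    # 5x per edge, 25x as many pixels per area
high = linear_upscale_factor(10, 1)   # 10x per edge, 100x as many pixels per area
scenes = max_scene_count(date(2018, 1, 1), date(2025, 11, 9))
```

So the super-resolution step has to produce between 25 and 100 output pixels for every native pixel, and a single location can have several hundred scenes on its timeline.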

Product Usage Case

· Urban Planning and Monitoring: A city planner could use ChronoSat Vision to track the progression of new construction projects or the expansion of urban areas over several years at roughly five-day intervals, identifying patterns and potential planning conflicts early on. It answers 'how are things really changing in this neighborhood, week by week?'

· Environmental Change Analysis: An environmental scientist might use it to monitor the impact of climate change on coastal erosion or observe the recovery of vegetation after a wildfire, seeing subtle changes that might be missed with less frequent imagery. It helps answer 'how quickly is this environment changing, and can I see the details?'

· Agricultural Field Monitoring: A farmer or agricultural researcher could use the enhanced resolution to inspect crop health, detect early signs of disease or pest infestation across different fields, and track the effectiveness of different farming techniques over the growing season. It answers 'can I see exactly what's happening in my fields, more often and in greater detail?'

· Disaster Impact Assessment: In the aftermath of a natural disaster, emergency response teams could use ChronoSat Vision to quickly assess the extent of damage and identify affected areas with higher detail than standard satellite feeds, aiding in resource allocation. It answers 'how bad is the damage, and can I see the specific structures affected?'

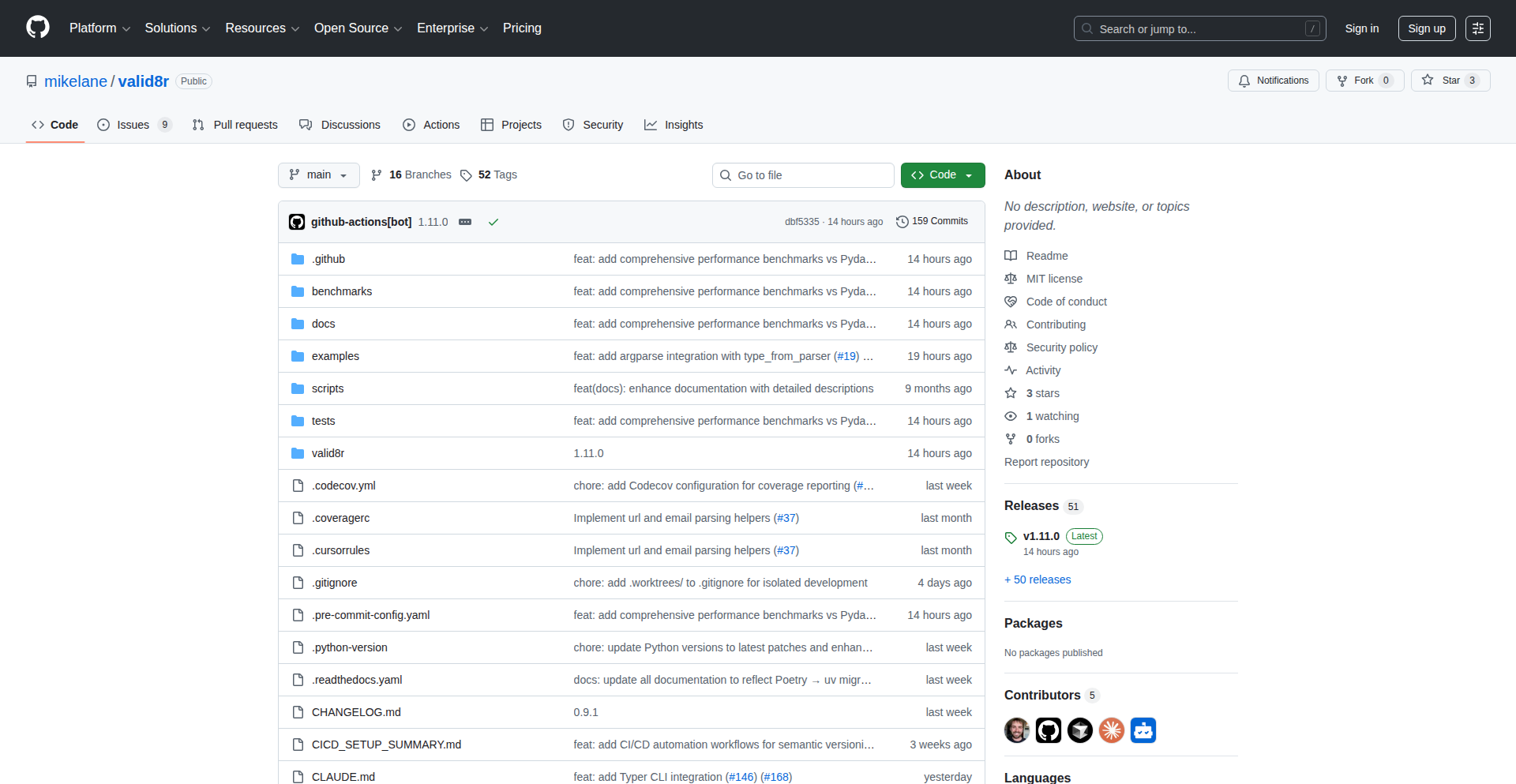

13

Valid8r: Functional CLI Validator

Author

lanemik

Description

Valid8r is a Python library designed to simplify input validation for command-line interface (CLI) tools. It tackles the repetitive work of parsing strings, checking their validity, and handling errors, especially for CLI arguments. Its core innovation is the use of 'Maybe monads' (represented as Success/Failure) to build chainable validation pipelines, a functional approach that can also be faster than heavier validation libraries for simple parsing tasks.

Popularity

Points 5

Comments 0

What is this product?

Valid8r is a Python library that provides a clean and efficient way to validate user inputs for CLI applications. Instead of writing repetitive code to check if an input is a valid integer, a specific range, or matches a certain format, Valid8r lets you define these checks as a series of steps that can be chained together. It uses a functional programming concept called 'Maybe monads' (which it calls Success/Failure) to handle potential validation failures gracefully without resorting to exceptions. This makes your code more readable and easier to reason about. For simple parsing tasks, it can be significantly faster than heavier validation libraries like Pydantic.

How to use it?

Developers can integrate Valid8r into their Python CLI projects by installing it via pip (`pip install valid8r`). They can then use its parser and validator functions to build validation pipelines. For instance, to validate a port number that must be between 1 and 65535, you can chain `parsers.parse_int()` with `validators.minimum(1)` and `validators.maximum(65535)`. The library also offers direct integrations with popular CLI frameworks like `argparse`, `Click`, and `Typer`, allowing you to seamlessly drop Valid8r's validation logic into your existing command structures without extensive refactoring. It even provides an interactive prompting feature that automatically re-prompts the user until valid input is provided.
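
To show how a Success/Failure pipeline of this kind behaves, here is a self-contained sketch of the port example. It deliberately does not import Valid8r: the `Success`, `Failure`, `parse_int`, `minimum`, and `maximum` definitions below are local stand-ins that only mirror the names mentioned above, and the `bind` method is an assumption about how such steps are typically chained.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass(frozen=True)
class Success:
    value: int

    def bind(self, step: Callable[[int], "Result"]) -> "Result":
        return step(self.value)       # pass the value on to the next step


@dataclass(frozen=True)
class Failure:
    error: str

    def bind(self, step: Callable[[int], "Result"]) -> "Result":
        return self                   # short-circuit: later steps are skipped


Result = Union[Success, Failure]


def parse_int(text: str) -> Result:
    try:
        return Success(int(text.strip()))
    except ValueError:
        return Failure(f"not an integer: {text!r}")


def minimum(low: int) -> Callable[[int], Result]:
    return lambda v: Success(v) if v >= low else Failure(f"must be at least {low}")


def maximum(high: int) -> Callable[[int], Result]:
    return lambda v: Success(v) if v <= high else Failure(f"must be at most {high}")


def parse_port(text: str) -> Result:
    return parse_int(text).bind(minimum(1)).bind(maximum(65535))
```

`parse_port("8080")` yields `Success(value=8080)`, while `parse_port("0")` yields `Failure(error='must be at least 1')` without raising, so the caller decides how to report the problem.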

Product Core Function

· Chainable Parsers and Validators: Allows combining multiple parsing and validation steps into a single, readable pipeline, making it easy to define complex input requirements step-by-step. This helps manage code complexity and reduces the need for deeply nested conditional logic.

· Maybe Monad (Success/Failure) for Error Handling: Replaces traditional exception handling with a more functional approach. Instead of crashing when validation fails, it returns a 'Failure' object with an error message, allowing developers to explicitly handle validation outcomes and preventing unexpected program termination.

· Performance Optimization for Simple Parsing: Reports significant speed improvements (up to 300x faster than Pydantic) for basic string-to-type conversions (like integers, emails, UUIDs) by avoiding the overhead of schema building and runtime type checking. This matters for performance-sensitive CLI tools that handle high volumes of input.

· Integrations with Popular CLI Frameworks: Provides seamless integration with `argparse`, `Click`, and `Typer`, enabling developers to leverage Valid8r's validation capabilities without rewriting their existing CLI argument parsing logic. This lowers the barrier to adoption and speeds up development.

· Interactive Prompting for User Input: Offers a built-in mechanism to continuously ask the user for input until a valid entry is provided. This enhances user experience by guiding them to provide correct data without manual error checking loops in the application code.
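The re-prompting behaviour described in the last item can be sketched as a small loop. This is an illustration only, not Valid8r's prompting API: `ask`, its `read`/`write` parameters, and the tuple-returning `parse_port` are hypothetical names chosen for this sketch.

```python
from typing import Callable, Optional, Tuple


def parse_port(text: str) -> Tuple[Optional[int], Optional[str]]:
    """Return (port, None) on success or (None, error message) on failure."""
    try:
        port = int(text.strip())
    except ValueError:
        return None, f"not an integer: {text!r}"
    if not 1 <= port <= 65535:
        return None, "port must be between 1 and 65535"
    return port, None


def ask(prompt: str,
        parse: Callable[[str], Tuple[Optional[int], Optional[str]]],
        read: Callable[[str], str] = input,
        write: Callable[[str], None] = print,
        max_attempts: Optional[int] = None):
    """Keep asking until parse() accepts the answer or attempts run out."""
    attempts = 0
    while max_attempts is None or attempts < max_attempts:
        attempts += 1
        value, error = parse(read(prompt))
        if error is None:
            return value
        write(f"Invalid input: {error}")
    raise ValueError(f"no valid input after {attempts} attempts")


# Scripted answers stand in for a user typing at the terminal.
answers = iter(["abc", "0", "8080"])
messages = []
port = ask("Port: ", parse_port,
           read=lambda _prompt: next(answers),
           write=messages.append)
print(port)
```

Passing `read` and `write` as parameters keeps the loop testable without a real terminal; in an actual CLI the defaults (`input` and `print`) would be used.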

Product Usage Case

· Validating a port number argument in a network utility CLI: A user needs to input a port number for a network service. Valid8r can parse the input as an integer and then validate that it falls within the valid range of 1 to 65535. If the input is not a number or out of range, Valid8r provides a clear error message without crashing the tool. This ensures the application receives a usable port number.

· Parsing and validating user email addresses in a signup form CLI: When a user provides an email address for a CLI-based registration, Valid8r can parse the input and apply a regular expression validator to ensure it follows a standard email format. This prevents malformed data from being processed by the backend.

· Creating a configuration parser for system tools: A system administration tool might require users to input various configuration parameters, such as file paths, integers representing timeouts, or boolean flags. Valid8r can create a pipeline to parse and validate each of these inputs, ensuring the configuration loaded by the tool is correct and consistent.

· Building a game or simulation CLI that requires specific numerical inputs: For a CLI-based game or simulation, parameters like player health, item quantities, or simulation step counts might need to be validated. Valid8r can handle parsing these inputs and ensuring they meet predefined conditions (e.g., health cannot be negative).

· Developing a data processing script that accepts file paths and processing options: A script that processes data files might take a file path and a processing mode as arguments. Valid8r can validate that the provided path points to an existing file and that the processing mode is one of the accepted options, improving the robustness of the script.
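The last use case, a file path plus a processing mode, can be sketched with the standard library alone. The example below uses `argparse`'s own `type=` and `choices=` hooks rather than Valid8r's integration, whose exact API is not shown here; the `existing_file` helper, the `process` program name, and the mode names are made up for illustration.

```python
import argparse
import tempfile
from pathlib import Path


def existing_file(text: str) -> Path:
    """argparse 'type' callable: accept only paths that point to a file."""
    path = Path(text)
    if not path.is_file():
        # argparse turns this into a usage error instead of a traceback.
        raise argparse.ArgumentTypeError(f"no such file: {text}")
    return path


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="process")
    parser.add_argument("path", type=existing_file, help="input data file")
    # 'choices' rejects any mode outside the accepted set.
    parser.add_argument("--mode", choices=["fast", "thorough"], default="fast")
    return parser


# Demonstrate with a temporary file so the example runs anywhere.
with tempfile.NamedTemporaryFile(suffix=".csv") as tmp:
    args = build_parser().parse_args([tmp.name, "--mode", "thorough"])
    print(args.path.suffix, args.mode)
```

A validation library would slot in at the same point: the function passed as `type=` is where parsing and checking happen before the rest of the program sees the value.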

14

FibreWeaver

Author

matt-p

Description

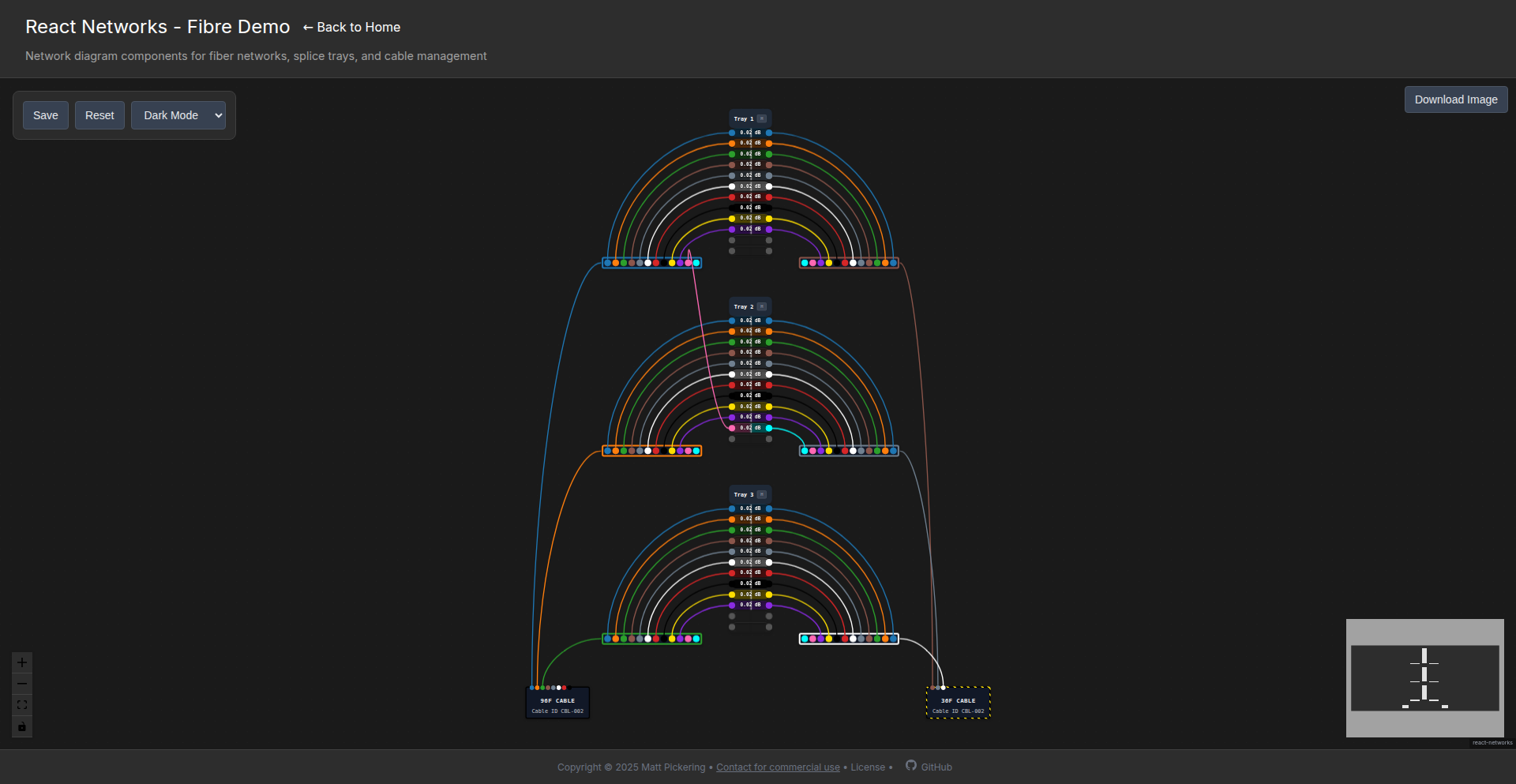

A React component designed for managing fibre optic cable infrastructure within data centers. It visualizes and automates aspects of the build, buy, and deploy process for new sites by offering a dynamic, interactive interface for fibre cable planning and tracking.

Popularity

Points 3

Comments 1

What is this product?

FibreWeaver is a sophisticated React component that tackles the complexity of managing fibre optic cable deployments in data centers. Traditionally, this involves intricate manual planning and tracking of countless cables, patch panels, and termination points. FibreWeaver brings a visual, programmatic approach. Its core innovation lies in its ability to represent complex fibre topologies in an intuitive, interactive UI. It leverages declarative UI principles inherent in React to build a dynamic model of the data center's fibre network. Think of it as a digital twin for your fibre cabling, enabling easier design, identification of potential issues, and streamlined deployment workflows.

How to use it?

Developers can integrate FibreWeaver into their existing data center management tools or build new applications around it. It's designed to be a pluggable component, allowing for customization of data models and visualization styles. You'd feed it data representing your physical fibre infrastructure (e.g., ports, connections, cable types, locations), and it would render an interactive visual representation. This allows for drag-and-drop cable routing, real-time status updates, and automated validation of connections. It's particularly useful for visualizing the intricate web of connections that can overwhelm manual tracking.
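As a rough illustration of the kind of data and connection validation described above, here is a language-neutral sketch written in Python rather than React. The `Port` and `Cable` types and the `validate` function are hypothetical; they are not FibreWeaver's data model or API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Port:
    panel: str   # patch panel or device name
    number: int


@dataclass(frozen=True)
class Cable:
    a: Port
    b: Port
    fibre_type: str  # e.g. "OS2" (single-mode); carried as data, not checked here


def validate(ports: Set[Port], cables: List[Cable]) -> List[str]:
    """Return human-readable problems; an empty list means the plan is valid."""
    problems = []
    usage = Counter()
    for cable in cables:
        if cable.a == cable.b:
            problems.append(f"cable loops back to {cable.a.panel}:{cable.a.number}")
            ends = (cable.a,)
        else:
            ends = (cable.a, cable.b)
        for end in ends:
            if end not in ports:
                problems.append(f"unknown port {end.panel}:{end.number}")
            usage[end] += 1
    # A physical port can terminate only one cable.
    for port, count in usage.items():
        if count > 1:
            problems.append(f"port {port.panel}:{port.number} used by {count} cables")
    return problems


ports = {Port("PP-A", 1), Port("PP-A", 2), Port("PP-B", 1)}
cables = [
    Cable(Port("PP-A", 1), Port("PP-B", 1), "OS2"),
    Cable(Port("PP-A", 2), Port("PP-B", 1), "OS2"),  # reuses PP-B:1
    Cable(Port("PP-A", 1), Port("PP-C", 9), "OS2"),  # reuses PP-A:1, PP-C:9 unknown
]
for problem in validate(ports, cables):
    print(problem)
```

Returning a list of problems rather than raising on the first one suits a visual tool, which can highlight every offending port at once.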

Product Core Function

· Interactive Fibre Topology Visualization: Visually represents fibre optic networks, making complex interconnections understandable at a glance. This helps quickly identify existing infrastructure and plan new deployments, reducing errors and saving time.

· Automated Cable Pathing and Validation: Suggests optimal cable routes and automatically checks for valid connections, preventing misconfigurations. This is crucial for avoiding downtime caused by incorrect cabling.

· Data-Driven Infrastructure Modeling: Allows for the programmatic definition and manipulation of fibre assets, enabling seamless integration with existing inventory and deployment systems. This makes managing large-scale infrastructure more efficient.

· Real-time Status Updates: Provides live feedback on the status of fibre connections, helping to quickly diagnose and resolve issues. This is essential for maintaining high availability in data centers.

· Customizable Data Integration: Supports flexible data input formats, allowing it to integrate with various data sources and management platforms. This ensures it can adapt to diverse operational environments.
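The cable pathing item above can be approximated with a breadth-first search over the locations a cable may pass through. This is a sketch under a simplifying assumption, namely that "optimal" means fewest hops; the criteria FibreWeaver actually uses are not stated, and `shortest_route` and the location names are hypothetical.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Optional, Tuple


def shortest_route(links: Iterable[Tuple[Hashable, Hashable]],
                   start: Hashable,
                   goal: Hashable) -> Optional[List[Hashable]]:
    """Breadth-first search: the route with the fewest hops, or None."""
    # Build an undirected adjacency list from the (a, b) link pairs.
    graph: Dict[Hashable, list] = {}
    for a, b in links:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)

    previous = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the predecessor chain back to the start.
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


links = [
    ("rack-1", "tray-A"),
    ("tray-A", "tray-B"),
    ("tray-B", "rack-9"),
    ("tray-A", "rack-9"),
]
print(shortest_route(links, "rack-1", "rack-9"))
# → ['rack-1', 'tray-A', 'rack-9']
```

A real planner would likely weight each link by cable length or tray capacity, which would call for Dijkstra's algorithm instead of plain breadth-first search.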

Product Usage Case

· Data Center Capacity Planning: A data center operator can use FibreWeaver to visualize current fibre utilization and plan for future expansion, ensuring they have enough ports and cable lengths for upcoming equipment. This prevents costly over-provisioning or under-provisioning.

· Automated Cable Deployment Workflows: A network engineer can use FibreWeaver to generate deployment instructions for technicians, complete with visual diagrams and validated connection paths, significantly reducing installation time and errors. This streamlines the 'build' and 'deploy' phases.

· Troubleshooting Network Outages: When a connectivity issue arises, a support technician can use FibreWeaver to quickly pinpoint the affected cables and components, speeding up diagnosis and resolution. This minimizes downtime and its associated business impact.

· Site Expansion and Migration: For companies building new data center sites or migrating existing ones, FibreWeaver can be used to model and plan the entire fibre infrastructure from scratch, ensuring a robust and scalable design. This helps manage the complexity of large-scale 'buy' and 'deploy' processes.

15

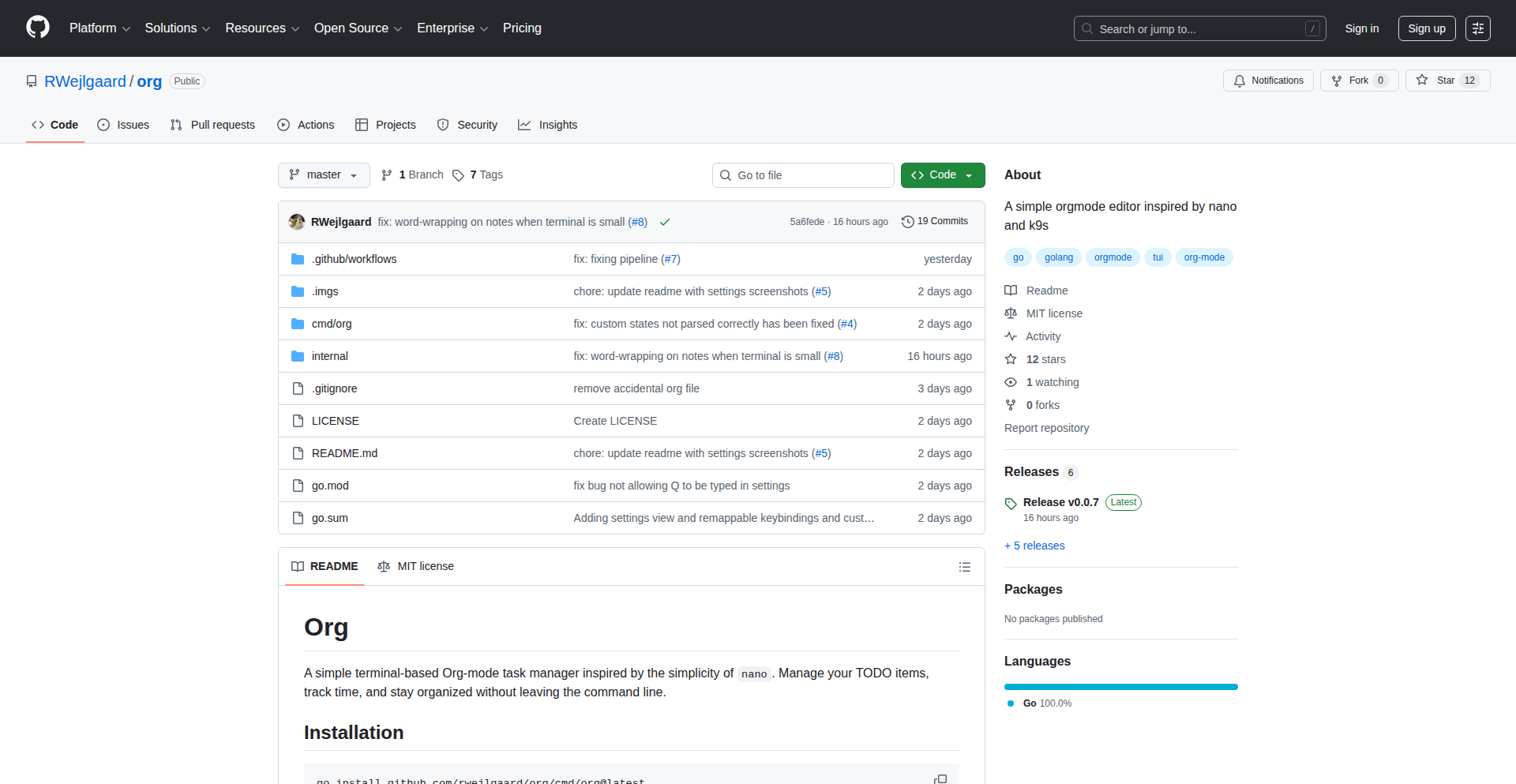

NanoOrg

Author

RWejlgaard

Description

A TUI org-mode editor inspired by the user-friendly interfaces of nano and k9s, designed to make org-mode accessible without learning Emacs keybindings. It sidesteps the complexity of Emacs by offering a simpler, more intuitive way to manage org-mode files.

Popularity

Points 3

Comments 0

What is this product?

NanoOrg is a terminal-based application that allows you to edit org-mode files. Org-mode is a powerful outliner and note-taking system often used within Emacs. The innovation here lies in its user interface, which borrows from popular, easy-to-learn tools like 'nano' (a simple text editor) and 'k9s' (a terminal UI for Kubernetes). Instead of having to memorize complex Emacs commands for org-mode, NanoOrg provides a more direct and understandable set of keyboard shortcuts. This is valuable because it lowers the barrier to entry for using org-mode, a system many find beneficial but intimidating due to its steep learning curve in Emacs. So, this helps you manage your tasks and notes more easily without becoming an Emacs expert.

How to use it?

Developers can use NanoOrg by running the application from their terminal. Assuming it's installed and available in their PATH, they would type `nanoorg <your-org-file.org>`. The application then opens the specified org-mode file in a terminal interface. Navigation and editing use intuitive key combinations, similar to how one would use 'nano'. This allows for quick editing of to-do lists, project outlines, or any other information stored in org-mode format. Integration is straightforward because it operates on standard org-mode files, so it can be used alongside existing Emacs workflows or as a standalone tool. So, this lets you edit your org-mode files directly in the terminal with simple commands, making your workflow more efficient.

Product Core Function

· Intuitive Keyboard Navigation: Uses simplified keybindings inspired by nano for moving around and selecting text, making it easier for new users to navigate org-mode files. This is valuable for reducing cognitive load and increasing editing speed.

· Basic Org-mode Editing: Supports fundamental org-mode syntax for creating and managing tasks, headlines, and notes, allowing for efficient organization of information. This is valuable for managing your daily tasks and projects without complex commands.

· TUI Interface: Provides a clean, text-based user interface that is easy to understand and operate directly within the terminal. This is valuable for users who prefer a distraction-free editing environment or work extensively in the command line.

· Customization Potential: While still early, the project is open to improvements, suggesting future possibilities for customizing keybindings and features to suit individual preferences. This is valuable for tailoring the tool to your specific workflow needs.
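To show what basic org-mode editing involves under the hood, here is a small sketch that cycles the TODO keyword on a headline. It relies only on standard Org syntax (stars, a space, an optional `TODO`/`DONE` keyword, then the title); `toggle_todo` is a hypothetical helper, not NanoOrg's implementation, and it expects lines without a trailing newline.

```python
import re

# An Org headline: one or more stars, a space, an optional keyword, a title.
HEADLINE = re.compile(r"^(?P<stars>\*+) (?:(?P<state>TODO|DONE) )?(?P<title>.*)$")

# Default Org cycle: no keyword -> TODO -> DONE -> no keyword.
NEXT_STATE = {None: "TODO", "TODO": "DONE", "DONE": None}


def toggle_todo(line: str) -> str:
    """Advance a headline to its next TODO state; leave other lines alone."""
    match = HEADLINE.match(line)
    if match is None:
        return line  # body text, not a headline
    parts = [match["stars"]]
    state = NEXT_STATE[match["state"]]
    if state is not None:
        parts.append(state)
    parts.append(match["title"])
    return " ".join(parts)


print(toggle_todo("* Buy milk"))
print(toggle_todo("** TODO Write report"))
print(toggle_todo("* DONE Ship release"))
```

A TUI editor would bind a single key to an operation like this and apply it to the line under the cursor.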

Product Usage Case

· Managing personal to-do lists: A developer can quickly open their daily task list in NanoOrg, add new items, mark them as complete, and reorganize priorities using simple keypresses, without leaving their terminal. This solves the problem of complex Emacs commands for basic task management.

· Outlining project ideas: When brainstorming a new software project, a developer can use NanoOrg to create a hierarchical outline of features, requirements, and notes directly in their terminal, making it easy to structure their thoughts. This provides a straightforward way to organize complex ideas without needing a dedicated outliner application.

· Quickly editing configuration files in org-mode format: If a developer uses org-mode for managing application configurations or system notes, NanoOrg allows for rapid edits and updates without the need to launch a full Emacs instance. This speeds up small but important changes to those files.

16

Patternia: Compile-Time Pattern Matching for C++

Author

sentomk

Description

Patternia is a C++ domain-specific language (DSL) that brings compile-time pattern matching to C++. It allows developers to define and match complex data structures and states directly at compile time, leveraging C++'s template metaprogramming capabilities. This approach shifts pattern matching from runtime execution to compile-time analysis, improving performance and catching potential errors earlier in the development cycle. The core idea is to express patterns as code that the C++ compiler understands and verifies, offering a powerful alternative to traditional runtime checks and making C++ code more robust and efficient.

Popularity

Points 2

Comments 1

What is this product?

Patternia is a novel C++ DSL designed to perform pattern matching during the compilation phase. Traditional pattern matching, common in languages like Python or Haskell, happens when your program is actually running. Patternia moves this process to compile time. It achieves this by using advanced C++ template metaprogramming, essentially writing code that manipulates and checks other code during compilation. The innovation lies in its ability to define intricate patterns and check whether your data conforms to them before your program even starts running. This means you get the benefits of pattern matching – cleaner code, easier data handling, and better error detection – without any runtime overhead. So, for you, it means your C++ programs can be faster and more reliable because errors are caught by the compiler, not by your users when they run the application.

How to use it?

Developers can integrate Patternia into their C++ projects by including its header files and defining patterns using its specialized syntax. The DSL allows for expressing complex conditions and data structures that should match. The C++ compiler then processes these definitions alongside your regular C++ code. When you write code that uses these patterns, the compiler verifies whether the data conforms to the defined pattern. If there's a mismatch, the compiler issues an error. This can be used in various scenarios, such as validating complex configuration data, parsing structured input, or implementing state machines with rigorous checks. For example, you could define a pattern for a valid network packet structure and have Patternia ensure that any data you declare as a packet adheres to this structure at compile time. Because pattern definitions are treated as part of your C++ code, they fit into existing build systems.

Product Core Function

· Compile-time pattern definition: Allows developers to express complex data structures and conditions as patterns that are verified during compilation. This provides immediate feedback on data integrity, making development more efficient and catching errors earlier, thus reducing debugging time.

· Type-safe pattern matching: Ensures that pattern matching operations are type-safe at compile time, preventing runtime type errors and improving code reliability. This directly translates to more robust applications that are less prone to unexpected crashes due to type mismatches.

· DSL for expressive pattern matching: Provides a dedicated language syntax that is more readable and concise for defining patterns compared to generic C++ code, leading to cleaner and more maintainable codebases. Developers can express complex logic more intuitively, improving overall code quality.

· Zero runtime overhead: Since all pattern matching is performed at compile time, there is no performance penalty during program execution, resulting in highly optimized and faster applications. This is crucial for performance-sensitive applications where every millisecond counts.