Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-08

SagaSu777 2025-11-09

Explore the hottest developer projects on Show HN for 2025-11-08. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN offerings show a strong push to make complex technologies accessible and actionable. The surge in AI-related projects, particularly those that apply LLMs to practical tasks such as content rewriting, agent SDKs, and coding assistance, signals a maturing ecosystem in which developers are moving beyond experimentation to solve tangible problems. The trend is not only about building with AI but about building *for* AI developers: tools such as LLM API load testers and provider-agnostic SDKs are emerging to streamline their workflows.

At the same time, there is a strong emphasis on developer productivity. Projects that automate tedious tasks, optimize build processes, or offer more intuitive interfaces to complex systems such as cloud deployment and data handling stand out, reflecting a 'hacker' ethos of finding smarter, more efficient ways to reach a goal.

For developers, this means treating AI as a tool for augmentation, building the infrastructure and utilities that support it, and looking for opportunities to automate and optimize. For entrepreneurs, niches where AI can solve a specific pain point, or where developer efficiency can be significantly boosted, are fertile ground for new products. The prevalence of open-source projects across many categories also points to a collaborative spirit that encourages shared learning and rapid iteration.

Today's Hottest Product

Name

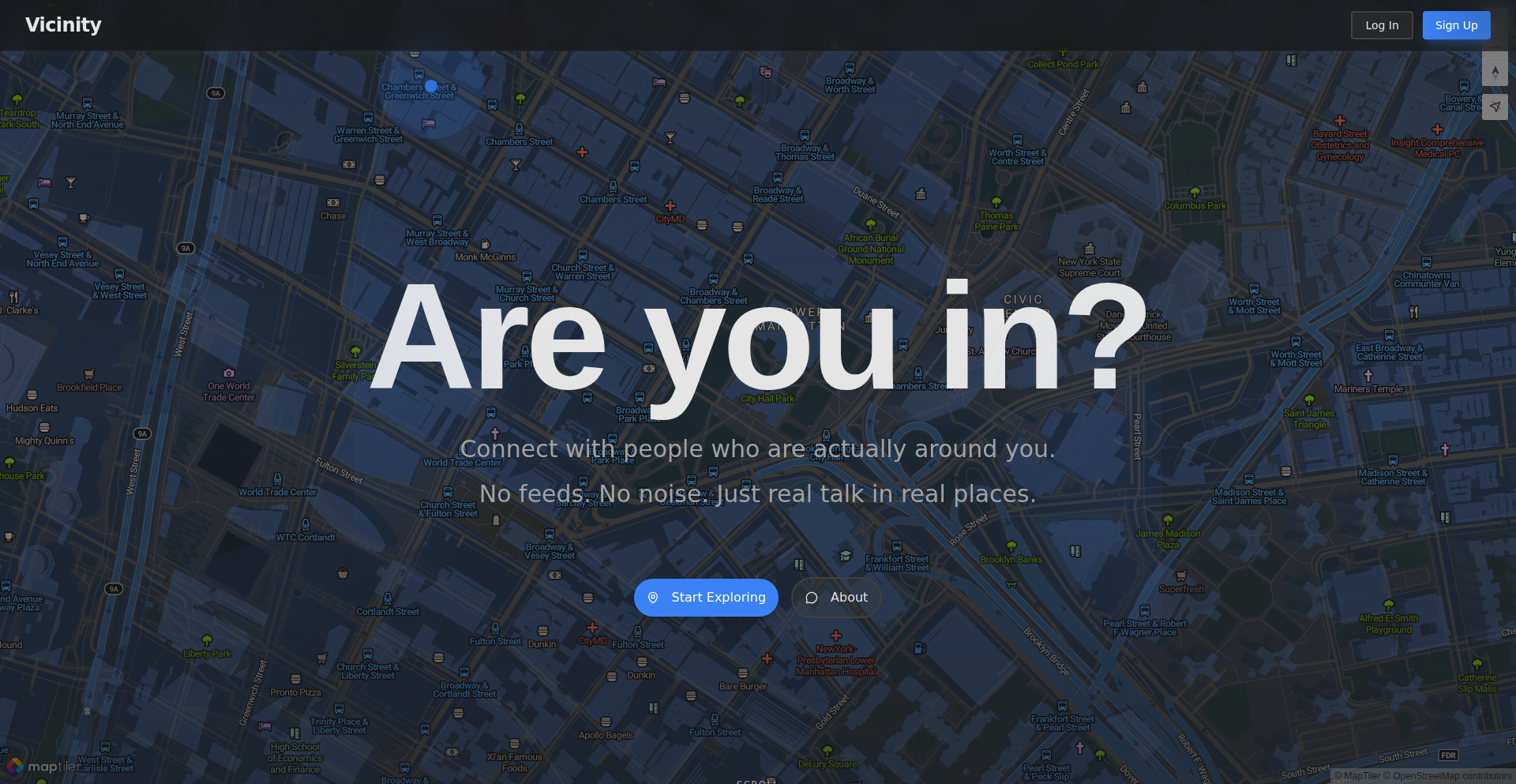

Show HN: Geofenced chat communities anyone can create

Highlight

This project innovates by creating location-based chatrooms, merging real-world proximity with online communication. It leverages WebSockets for real-time interaction and implements geofencing to restrict access to specific areas, akin to Discord servers but tied to physical locations. Developers can learn about real-time communication patterns, spatial data handling, and user-centric community building that bridges the digital and physical realms.

Popular Category

AI/ML

Developer Tools

Web Development

Utilities

Languages/Frameworks

Popular Keyword

LLM

AI

Serverless

Open Source

CLI

WebSockets

Rust

Python

GitHub Actions

Docker

Data Visualization

Real-time

Technology Trends

AI Integration

Developer Productivity Tools

Edge Computing/Location-Based Services

Performance Optimization

Niche Language Creation

Serverless Architectures

Efficient Data Handling

Cross-Platform Development

Project Category Distribution

AI/ML (18%)

Developer Tools (25%)

Web Development (15%)

Utilities (20%)

Languages/Frameworks (10%)

Data Visualization (5%)

Simulations (2%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | GeoWhisper | 38 | 27 |
| 2 | Chrome-Mimic HTTP Client | 20 | 2 |
| 3 | OtterLang: Pythonic Native Compiler | 13 | 3 |
| 4 | Firmware Sentinel | 5 | 5 |
| 5 | GameSession Sentinel | 4 | 3 |
| 6 | Quantum Weaver C++ | 6 | 0 |
| 7 | FinSight Viz | 5 | 1 |
| 8 | VRAM-Boosted Model Swapper | 5 | 1 |
| 9 | Xleak: Terminal-Native Spreadsheet Explorer | 6 | 0 |
| 10 | CoLit | 5 | 0 |

1

GeoWhisper

Author

clarencehoward

Description

GeoWhisper is a location-based, real-time chat platform that allows users to create and join ephemeral or persistent geofenced communities. It leverages WebSockets for instant communication and explores the concept of 'place' as a fundamental social connector, aiming to foster more meaningful interactions by providing a shared context for users.

Popularity

Points 38

Comments 27

What is this product?

GeoWhisper is a project experimenting with social interaction dynamics by tying them to physical locations. It offers two primary modes: 'Drops' for temporary, radius-based chats, and 'Hubs' for persistent, community-driven servers similar to Discord but tied to specific geographical areas. The core innovation lies in using location not just as a filter, but as a primary social construct, enabling spontaneous connections and curated local interactions. It is built on WebSockets, which keep a persistent, two-way connection open between your device and the server, so new messages are pushed instantly instead of the app repeatedly polling for them. That is what makes real-time chat feel immediate and fluid.

How to use it?

Developers can integrate GeoWhisper's concepts into their own applications to build location-aware social features. For instance, a travel app could use 'Drops' for temporary meetups in tourist spots, or a local event planner could use 'Hubs' to create persistent community spaces for attendees of a recurring event. The underlying WebSocket technology can be adopted to build other real-time features. To use it, a developer would set up a backend to manage geofencing logic and WebSocket connections, allowing clients to broadcast and subscribe to messages within specific geographic boundaries. This enables features like proximity-based notifications or location-specific forums.
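A minimal sketch of the Drop idea, assuming circular geofences defined by a centre and a radius, and using an in-memory broker in place of real WebSocket connections. The names `Drop`, `DropBroker`, and `haversine_m` are hypothetical and not GeoWhisper's code; a production server would push each message over the recipient's socket rather than appending it to an `inbox`.

```python
import math
from dataclasses import dataclass, field

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Drop:
    """Hypothetical ephemeral chat room: a circular geofence plus an expiry time."""
    lat: float
    lon: float
    radius_m: float
    expires_at: float  # Unix timestamp after which the Drop is gone

    def contains(self, lat: float, lon: float) -> bool:
        return haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m


@dataclass
class DropBroker:
    """In-memory stand-in for the server side of a WebSocket chat."""
    drops: dict = field(default_factory=dict)    # drop_id -> Drop
    clients: dict = field(default_factory=dict)  # client_id -> (lat, lon)
    inbox: dict = field(default_factory=dict)    # client_id -> delivered messages

    def update_location(self, client_id: str, lat: float, lon: float) -> None:
        """Record the latest position a client reported over its connection."""
        self.clients[client_id] = (lat, lon)
        self.inbox.setdefault(client_id, [])

    def broadcast(self, drop_id: str, text: str, now: float) -> int:
        """Deliver `text` to every client inside the Drop; return how many received it."""
        drop = self.drops.get(drop_id)
        if drop is None or now >= drop.expires_at:
            self.drops.pop(drop_id, None)  # expired Drops are discarded
            return 0
        delivered = 0
        for client_id, (lat, lon) in self.clients.items():
            if drop.contains(lat, lon):
                self.inbox[client_id].append((drop_id, text))
                delivered += 1
        return delivered
```

Filtering recipients by distance at send time keeps the logic simple; the linear scan over all clients would need a spatial index once the number of connected users grows.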

Product Core Function

· Real-time Geofenced Chat (Drops): Enables ephemeral chat rooms tied to a specific radius and time limit. The technical value is in managing dynamic geospatial subscriptions and expiring message data, offering a novel way to facilitate temporary, context-specific conversations that disappear, reducing clutter and ensuring relevance.

· Persistent Geofenced Communities (Hubs): Allows users to create long-lasting, Discord-like servers within defined geographical zones. This feature's technical value is in implementing stable geofencing, user role management (admin, members), channel creation, and persistence logic based on active usage, creating enduring local communities.

· WebSocket-based Communication: Utilizes WebSockets for instant, bidirectional message exchange. This provides a smooth, responsive chat experience, a key technical value for real-time applications that can be leveraged for any feature requiring immediate data updates, such as live dashboards or collaborative tools.

· Geofence Overlap Prevention: Prevents a new 'Hub' from being created in an area already occupied by another 'Hub'. Enforcing this means checking each proposed boundary against existing ones, which at scale typically calls for geospatial indexing and a conflict-resolution rule. The result is clear territorial ownership for communities and a structured local social graph.

· Automatic Hub Deletion: Plans to implement a system for deleting inactive 'Hubs' after a set period. This addresses technical challenges related to resource management and data cleanup, ensuring the platform remains efficient and relevant by removing dormant communities.
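The Hub overlap rule and inactivity cleanup described above could look like this in simplified form. This sketch assumes Hubs are circles and compares each candidate against every existing Hub; GeoWhisper's real boundary shapes, idle threshold, and data store are not described in the post, so `Hub`, `try_create_hub`, and `prune_inactive` are hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Hub:
    """Hypothetical persistent community with a circular geofence."""
    name: str
    lat: float
    lon: float
    radius_m: float
    last_active: float  # Unix timestamp of the most recent activity


def overlaps(a: Hub, b: Hub) -> bool:
    """Two circles intersect when their centres are closer than the sum of their radii."""
    return haversine_m(a.lat, a.lon, b.lat, b.lon) < a.radius_m + b.radius_m


def try_create_hub(hubs: list, candidate: Hub) -> bool:
    """Add `candidate` only if it overlaps no existing Hub (a linear scan)."""
    if any(overlaps(candidate, hub) for hub in hubs):
        return False
    hubs.append(candidate)
    return True


def prune_inactive(hubs: list, now: float, max_idle_s: float) -> list:
    """Keep only Hubs with activity in the last `max_idle_s` seconds."""
    return [hub for hub in hubs if now - hub.last_active <= max_idle_s]
```

At scale, the linear scan in `try_create_hub` would be replaced by a spatial index (for example an R-tree or geohash buckets) that returns only nearby candidates.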

Product Usage Case

· A university campus app could use 'Drops' to facilitate spontaneous study group formations in specific library zones. A student looking for a chemistry study partner within a 50-meter radius of the library entrance could broadcast a message, and others nearby looking for the same would see it, solving the problem of finding immediate, localized collaboration.

· A neighborhood watch program could establish a permanent 'Hub' for a specific residential block. Residents could join this 'Hub' to share local news, report suspicious activity, or organize community events, solving the problem of fragmented local communication and fostering a stronger sense of community belonging.

· A tourist visiting a new city could use 'Drops' to ask for local recommendations in a specific park or landmark area. Visitors and locals in that immediate vicinity could respond, providing real-time, contextually relevant advice that wouldn't be easily discoverable in a global chat, solving the problem of getting instant, trustworthy local insights.

· An event organizer could create a 'Hub' for a large music festival. Attendees within the festival grounds could join this 'Hub' to get real-time updates on stage times, lost and found information, or meetups, solving the problem of disseminating time-sensitive information effectively to a concentrated, geographically bound audience.

2

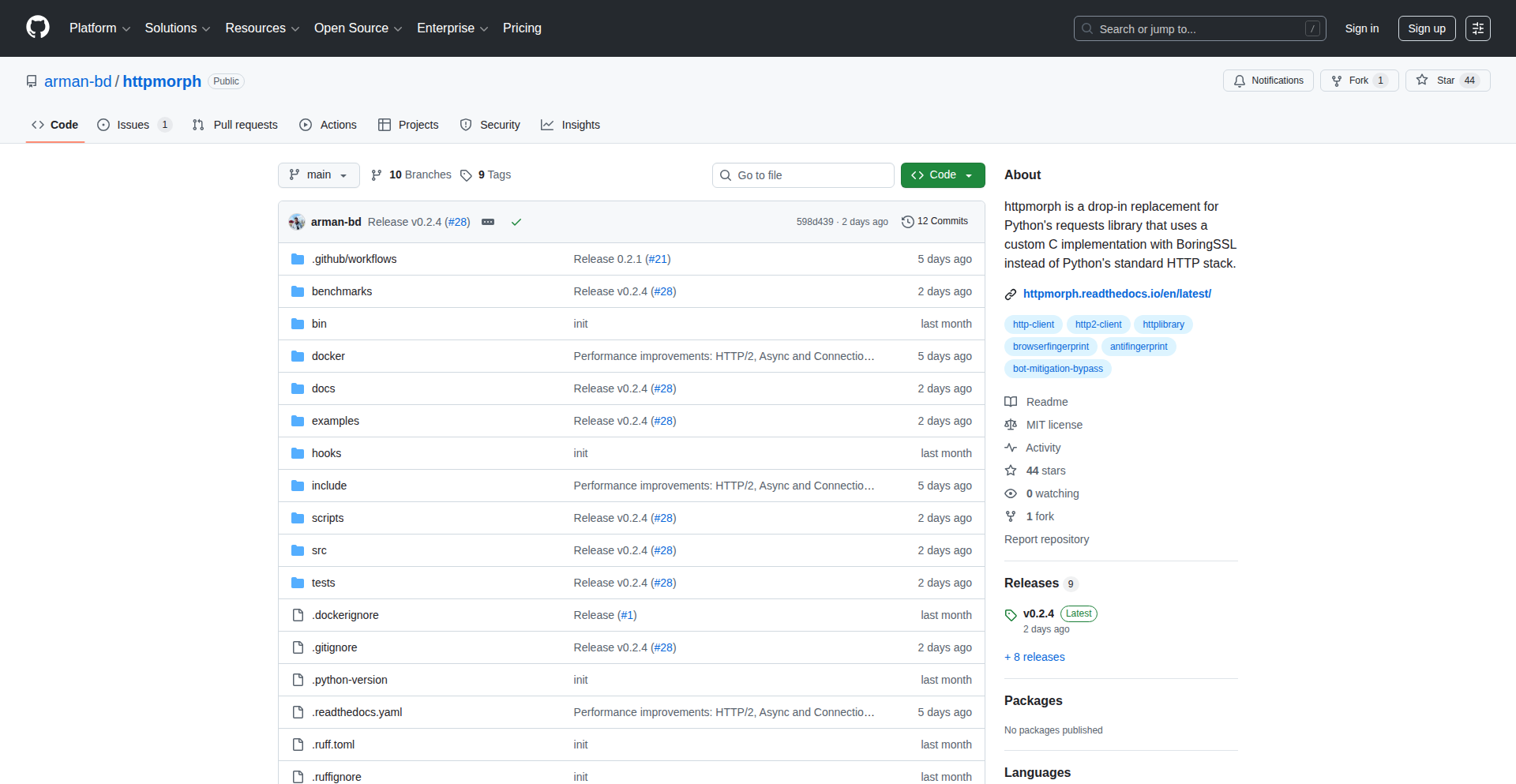

Chrome-Mimic HTTP Client

Author

armanified

Description

A Hacker News 'Show HN' project that acts as an HTTP client designed to precisely replicate the network fingerprint of Chrome 142. It achieves this by accurately matching JA3N, JA4, and JA4_R fingerprints, supporting HTTP/2, and leveraging async/await for efficient operation. This project is particularly useful for interacting with websites protected by Cloudflare, where standard HTTP clients might be blocked. It's a testament to creative problem-solving, turning a learning exercise into a functional tool.

Popularity

Points 20

Comments 2

What is this product?

This is an experimental HTTP client developed by a seasoned developer (with experience in BoringSSL and nghttp2). Its core innovation is its ability to closely mimic the network characteristics of Chrome 142, producing JA3N, JA4, and JA4_R fingerprints intended to be indistinguishable from Chrome's own. By supporting HTTP/2 and asynchronous programming (async/await), it allows for efficient, responsive network requests. The primary technical problem it addresses is the detection mechanisms of services like Cloudflare, which often identify and block non-browser HTTP traffic. So, what's in it for you? You can automate interactions with complex web services with a much lower chance of being flagged as a bot, enabling smoother data retrieval or testing.
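To make the fingerprint terms concrete: JA3 summarises a TLS ClientHello as five comma-separated fields (TLS version, cipher suites, extensions, elliptic curves, point formats), each a dash-joined list of decimal values with GREASE placeholders removed, and then takes the MD5 hash of that string. The sketch below assumes the common definition of JA3N as JA3 with the extension list sorted, which keeps the fingerprint stable when Chrome randomises its extension order. It illustrates what a fingerprinting server computes; it is not code from this project, and it does not cover JA4 or JA4_R.

```python
import hashlib


def is_grease(value: int) -> bool:
    """GREASE placeholders (RFC 8701) are 0x0a0a, 0x1a1a, ..., 0xfafa."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3_string(version, ciphers, extensions, curves, point_formats, normalize=False):
    """Build a JA3 string: five comma-separated fields of dash-joined decimal values.

    With normalize=True the extension list is sorted (the JA3N variant assumed
    here), so a client that shuffles extension order yields a stable string.
    """
    def strip_grease(values):
        return [v for v in values if not is_grease(v)]

    exts = strip_grease(extensions)
    if normalize:
        exts = sorted(exts)
    fields = [[version], strip_grease(ciphers), exts, strip_grease(curves), list(point_formats)]
    return ",".join("-".join(str(v) for v in f) for f in fields)


def ja3_hash(ja3: str) -> str:
    """JA3 specifies an MD5 digest of the string (used as a fingerprint, not for security)."""
    return hashlib.md5(ja3.encode("ascii")).hexdigest()
```

Because plain JA3 keeps the extensions in wire order, a browser that permutes them on every connection produces many JA3 values but a single JA3N value.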

How to use it?

Developers can integrate this HTTP client into their Python projects for tasks requiring sophisticated HTTP request capabilities. Its async/await support makes it ideal for building high-performance applications like web scrapers, automated testing frameworks, or backend services that need to communicate with external APIs. You would typically import the client library and then make requests in an asynchronous manner, similar to how you might use other HTTP libraries but with the added benefit of its stealth capabilities. For example, if you need to scrape data from a site protected by Cloudflare, you would use this client instead of a standard one to ensure your requests are not blocked. This provides a reliable way to access web resources programmatically.
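The async/await point above matters mostly for concurrency: many requests can be in flight while each coroutine waits on the network. A generic pattern, sketched here with the standard library only, bounds that concurrency with an `asyncio.Semaphore` so a scraper or test suite does not open unlimited connections. The `fetch` argument is a placeholder for whatever async request method the client actually exposes; its name and signature are assumptions.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency: int = 5) -> list:
    """Run `fetch(url)` for every URL with at most `max_concurrency` requests in flight.

    `fetch` is any coroutine function taking a URL; it stands in for the
    client's real request method, which is not assumed here.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:  # wait for a free slot before starting the request
            return await fetch(url)

    # gather runs the coroutines concurrently and returns results in input order
    return await asyncio.gather(*(bounded(url) for url in urls))
```

Calling `asyncio.run(fetch_all(urls, client_fetch, max_concurrency=10))` from synchronous code would drive the whole batch; a low limit is also the polite default toward the sites being contacted.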

Product Core Function

· Mimics Chrome 142 JA3N, JA4, and JA4_R fingerprints: This allows your automated requests to look like they're coming from a real Chrome browser, bypassing bot detection and security measures like Cloudflare's. The value is increased access to web resources and reduced risk of being blocked.

· Supports HTTP/2: Leverages the modern HTTP/2 protocol for faster and more efficient data transfer between the client and server. This means quicker response times for your applications, improving overall performance.

· Asynchronous (async/await) support: Enables non-blocking I/O operations, allowing your application to handle multiple network requests concurrently without freezing. This is crucial for building scalable and responsive applications.

· Cloudflare compatibility: Specifically designed to work with Cloudflare-protected sites, overcoming common blocking issues encountered by standard HTTP clients. This unlocks the ability to interact with a wider range of web services programmatically.

Product Usage Case

· Web Scraping Complex Sites: A developer needs to scrape data from a popular e-commerce site protected by Cloudflare. Using a standard `requests` library in Python results in frequent blocking. By switching to this Chrome-mimic client, the scraper can successfully bypass Cloudflare's detection, allowing for continuous and reliable data collection. The value here is uninterrupted access to essential data.

· Automated API Testing: A QA engineer is testing an API that has bot detection mechanisms. Standard tools trigger false positives. This client's ability to mimic browser fingerprints ensures that API test requests are treated as legitimate, providing accurate testing results without being hindered by security layers. This leads to more reliable software quality.

· Building Advanced Browserless Automation: A project requires automating user interactions on a website that requires a specific browser fingerprint for access. This client provides that exact fingerprint, enabling the automation script to function seamlessly without the need for a full browser instance. The value is reduced complexity and resource usage in automation tasks.

3

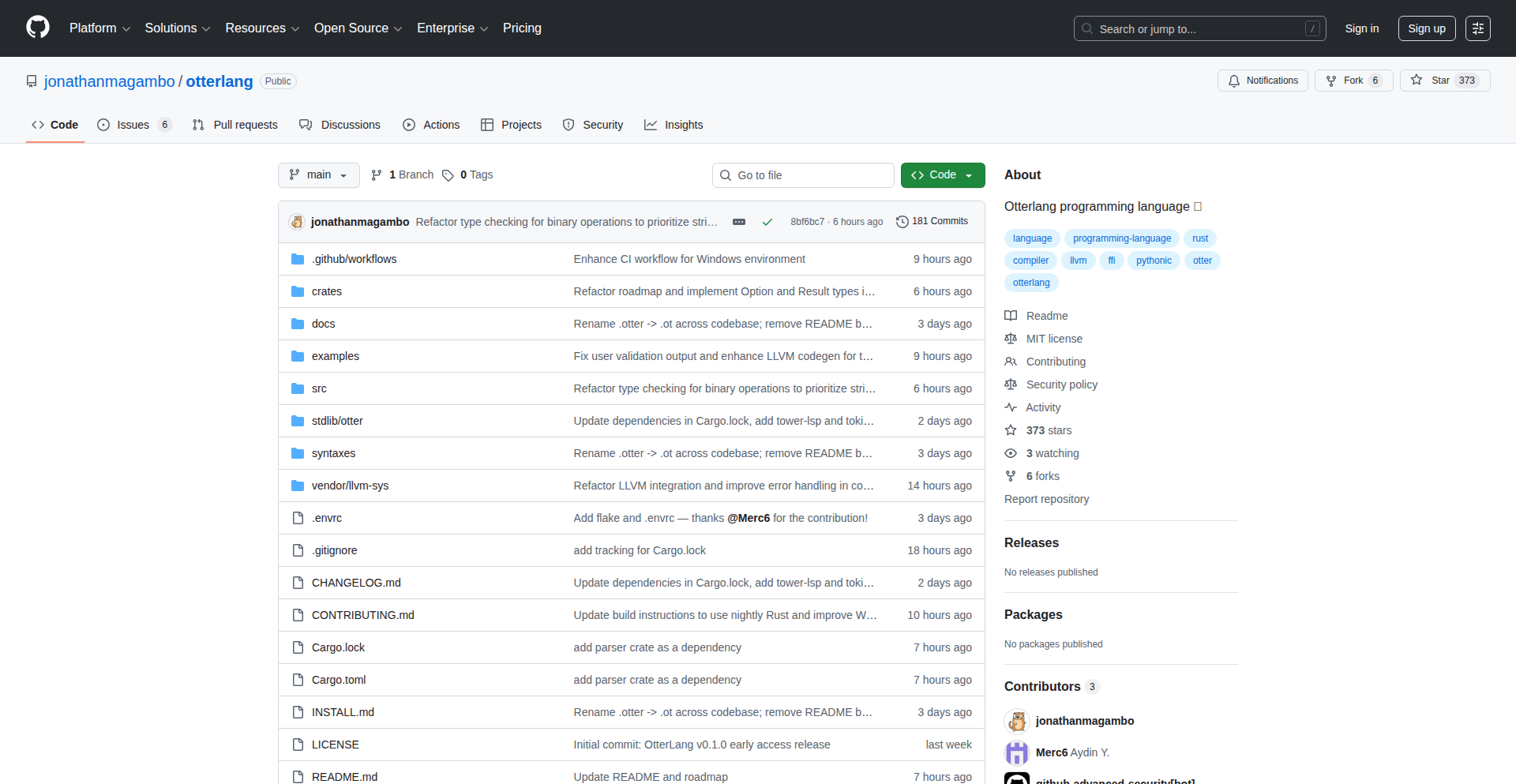

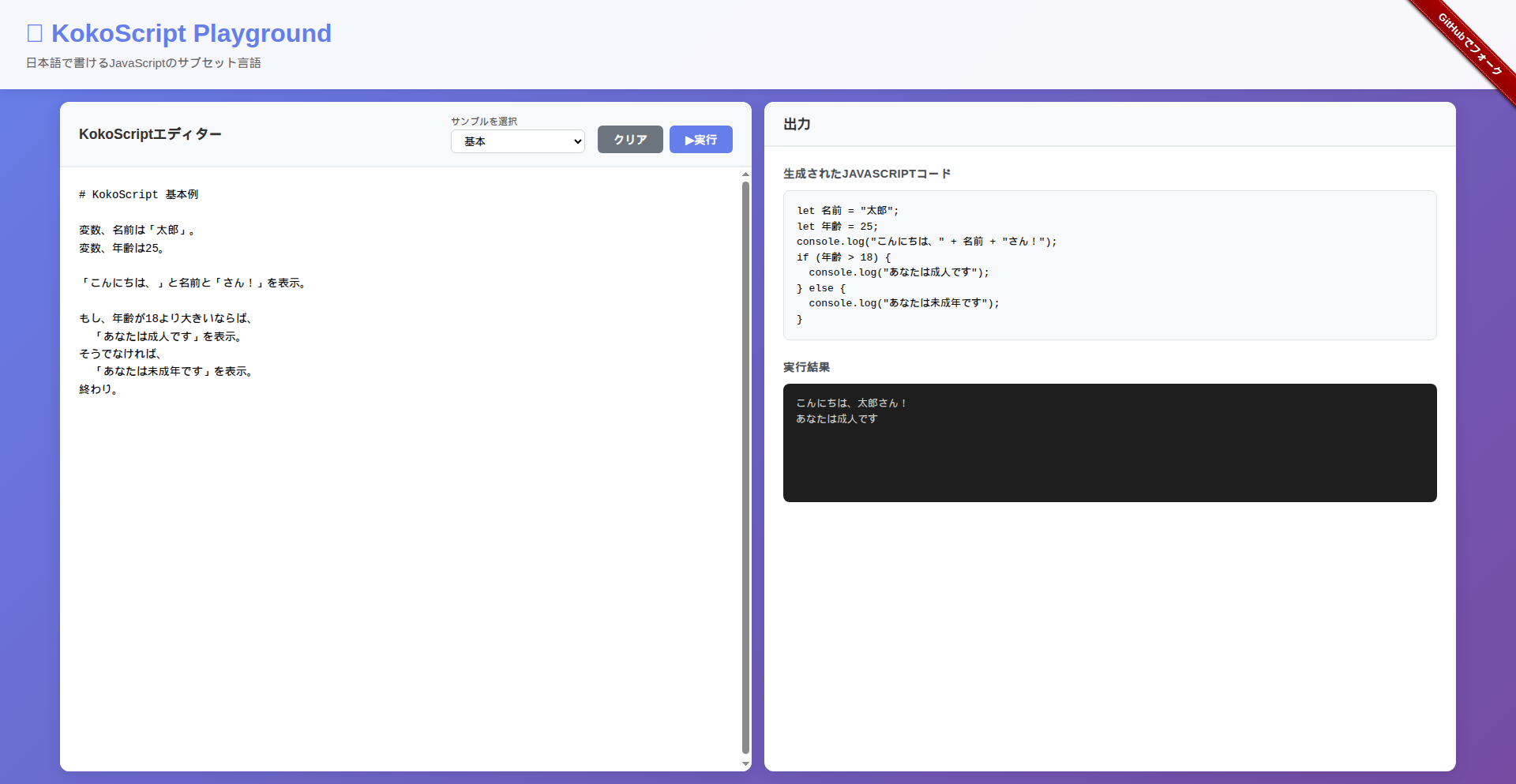

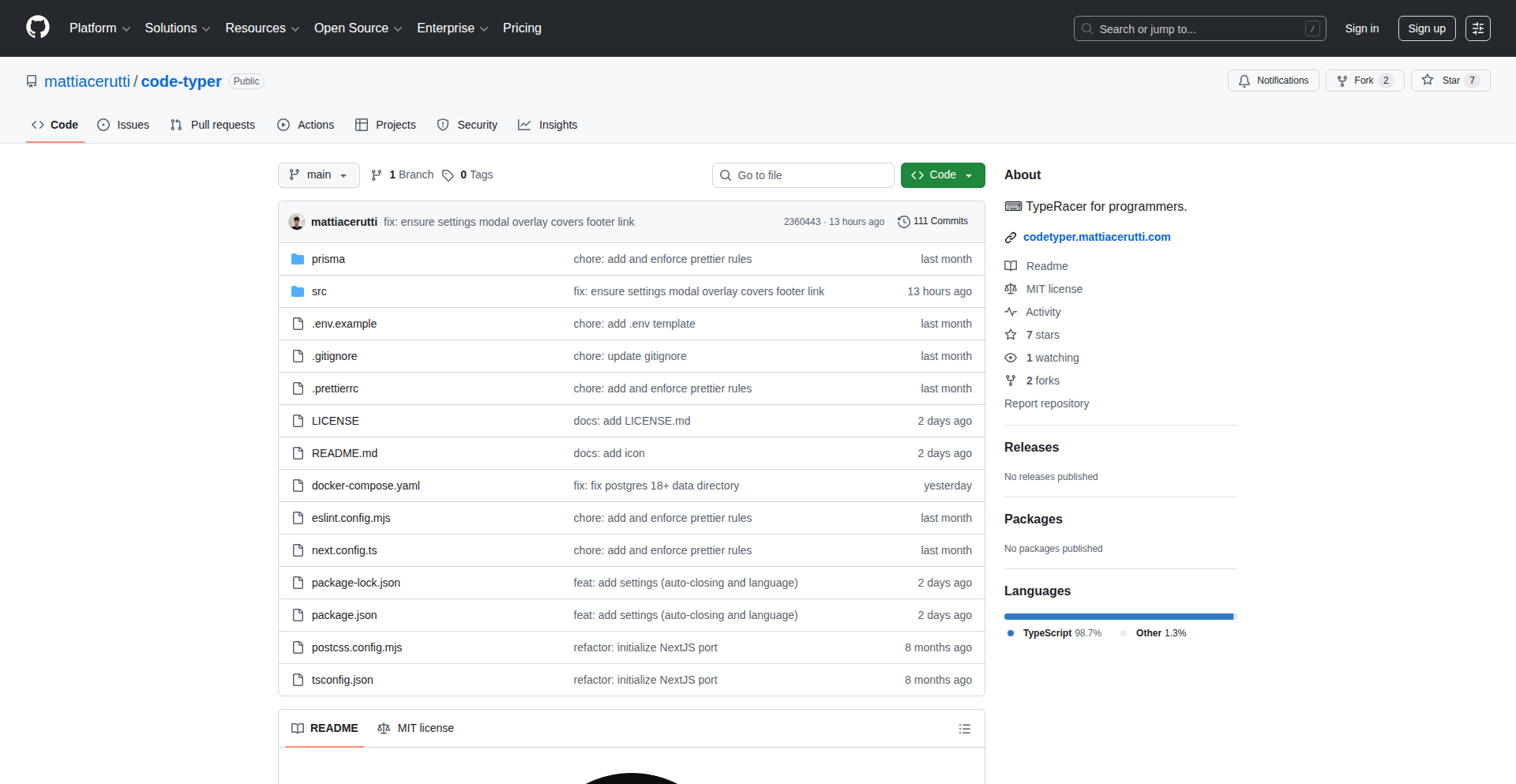

OtterLang: Pythonic Native Compiler

Author

otterlang

Description

OtterLang is an experimental scripting language that merges Python's easy-to-read syntax with the high performance and type safety of languages like Rust, by compiling down to native binaries using LLVM. It aims to bridge the gap between rapid development and efficient execution, offering seamless integration with Rust libraries without complex bindings. So, this is useful for developers who want to write code quickly like in Python, but need it to run as fast as compiled code, and also easily use existing powerful Rust tools.

Popularity

Points 13

Comments 3

What is this product?

OtterLang is a new programming language designed to be as straightforward to write as Python, but compiled into fast, standalone executables (native binaries). It achieves this with LLVM, a widely used compiler infrastructure that optimizes code and generates machine code for many platforms. The key innovation is combining Python's familiar, clean syntax with the speed and reliability (type safety) associated with languages like Rust, plus the ability to call Rust code directly without extra work. So, what's the benefit? You get the best of both worlds: quick coding and fast execution, with fewer runtime errors and easy access to powerful existing codebases. This is useful for anyone who wants applications that are both easy to develop and highly performant.

How to use it?

Developers can use OtterLang by writing scripts in its Python-like syntax. The OtterLang compiler then transforms this script into a native executable file. A significant advantage is its direct Foreign Function Interface (FFI) for Rust. This means you can import and use Rust crates (libraries of pre-written code) directly within your OtterLang programs, as if they were written in OtterLang itself, without needing to create special translation layers. This integration is seamless and fast. So, how is this useful? Developers can leverage the vast ecosystem of Rust libraries for performance-critical tasks or specific functionalities, while still enjoying the rapid development cycle of a scripting language. It's ideal for building command-line tools, backend services, or any application where performance and ease of development are both crucial.

Product Core Function

· Python-like syntax for readability: Allows developers to write code quickly and intuitively, reducing the learning curve and development time. This is useful because it means faster prototyping and easier collaboration on projects.

· Compilation to native binaries via LLVM: Generates highly optimized, standalone executable programs that run very fast without needing a separate runtime environment. This is useful for deploying efficient applications that don't rely on other software being installed.

· Rust-level type safety: Catches many common programming errors during compilation, leading to more reliable and stable software. This is useful because it reduces bugs and saves time on debugging.

· Transparent Rust FFI: Enables direct import and use of Rust crates without writing binding code, simplifying the integration of high-performance libraries. This is useful because it allows developers to leverage the vast and powerful Rust ecosystem for enhanced performance and functionality without added complexity.

Product Usage Case

· Building a high-performance command-line utility: A developer needs to create a tool that processes large files quickly. Using OtterLang, they can write the core logic in a Pythonic way for speed of development and then compile it to a fast native binary, benefiting from direct access to Rust's optimized file I/O libraries. This solves the problem of needing both speed and ease of coding for a utility application.

· Developing a microservice with performance-critical components: A backend developer wants to build a web service that requires fast data processing. OtterLang allows them to write the main service logic with Python's clarity and then seamlessly integrate Rust crates for computationally intensive tasks, ensuring low latency. This addresses the need for a performant yet maintainable backend service.

· Creating cross-platform desktop applications with native speed: A developer aims to build an application that runs efficiently on different operating systems. Because OtterLang compiles to native code (one binary per target platform), the application can run at native speed on each of them, while the Python-like syntax keeps development smooth. This addresses the challenge of achieving native performance across multiple platforms with a friendly development experience.

4

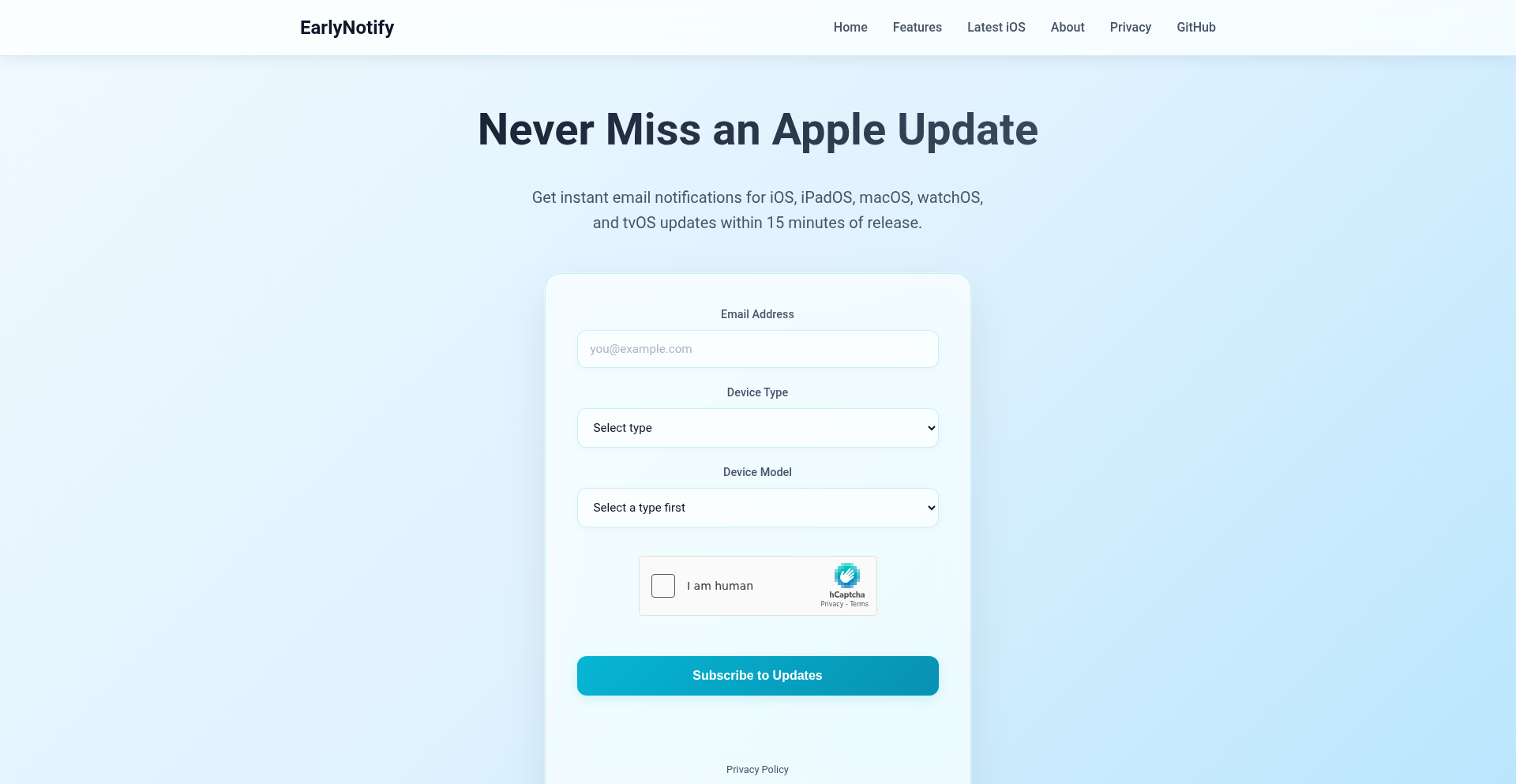

Firmware Sentinel

Author

earlynotify

Description

Firmware Sentinel is a free, open-source service that proactively monitors Apple's firmware servers for new iOS, iPadOS, macOS, watchOS, and tvOS releases. It sends you an email notification within 15 minutes of an update becoming available, ensuring you're always among the first to know about critical security patches and new features, without needing to constantly check manually or rely on social media.

Popularity

Points 5

Comments 5

What is this product?

Firmware Sentinel is a notification system designed to alert users as soon as new Apple operating system updates (like iOS, macOS, etc.) are released. It works by directly observing Apple's official servers where these updates are published. Instead of you having to repeatedly go to your device's settings to check if an update is out, or waiting for news articles or social media posts (which can be delayed), Firmware Sentinel does this checking for you automatically and continuously. When it detects a new firmware file available for download, it immediately sends you an email. This means you get the information directly from the source, very quickly, saving you time and ensuring you're aware of important updates, especially security ones, sooner rather than later. The innovation lies in its simplicity and directness – no apps to install, no accounts to manage, just pure, timely information delivered via email, embodying the hacker ethos of building a simple, effective solution to a common annoyance.
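The core of a service like this is a diff between successive polls: anything present now that was not seen before is a new release. A minimal sketch follows. It assumes a scheduler calls `new_releases` every few minutes (a 15-minute alert window implies polling at least that often) with a list already fetched from Apple's servers; the fetching step is omitted because the post does not say which endpoints are read. `Release`, `new_releases`, `build_email`, and the sender address are hypothetical.

```python
from dataclasses import dataclass
from email.message import EmailMessage


@dataclass(frozen=True)
class Release:
    """One published firmware build; frozen so it can live in a set."""
    os_name: str  # e.g. "iOS" or "macOS"
    version: str  # marketing version string
    build: str    # Apple build identifier


def new_releases(seen: set, current: list) -> list:
    """Return releases in `current` not seen on any earlier poll, and remember them."""
    fresh = []
    for release in current:
        if release not in seen:
            seen.add(release)
            fresh.append(release)
    return fresh


def build_email(release: Release, to_addr: str) -> EmailMessage:
    """Compose (but do not send) a notification for one release."""
    msg = EmailMessage()
    msg["Subject"] = f"{release.os_name} {release.version} ({release.build}) is available"
    msg["From"] = "alerts@example.com"  # placeholder sender address
    msg["To"] = to_addr
    msg.set_content(
        f"Apple has published {release.os_name} {release.version}, build {release.build}."
    )
    return msg
```

On first start, the `seen` set should be seeded with the current list so that existing releases are not emailed as new; each composed message could then be delivered with the standard library's `smtplib.SMTP.send_message`.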

How to use it?

To use Firmware Sentinel, you simply sign up for email notifications on their website. There's no application to download or complex setup required. You provide your email address, and the system monitors for updates on your behalf. When a new version of iOS, iPadOS, macOS, watchOS, or tvOS is released, you receive an email at that address and can then update your devices at your convenience.

Product Core Function

· Automated Firmware Monitoring: Continuously scans Apple's official servers for new OS updates. The value is in eliminating the need for manual checking, saving significant time and effort for users who want to stay updated promptly.

· Real-time Email Notifications: Delivers alerts within 15 minutes of an update's release directly to your inbox. This provides a critical advantage for users needing timely access to new features or, more importantly, security patches.

· Cross-Platform Support: Covers iOS, iPadOS, macOS, watchOS, and tvOS. This broadens its utility for users within the Apple ecosystem, offering a unified notification service for all their Apple devices.

· Open-Source and Free: The project is available for anyone to inspect, modify, and use without cost. This fosters transparency, allows for community contributions, and makes advanced notification capabilities accessible to everyone, aligning with open-source principles.

· No Account/App Required: Beyond entering an email address, there is no account to create and no software to install. The value is instant usability and minimal friction for users.

Product Usage Case

· Security-Conscious Users: A user concerned about the latest security vulnerabilities can receive an immediate alert when Apple releases a patch for iOS. This allows them to update their iPhone or iPad right away, significantly reducing their exposure to potential threats. The system's speed means they are protected much faster than if they waited for news to break.

· Early Adopters: A developer or tech enthusiast who wants to be among the first to test new features in the latest macOS version can get notified as soon as it's officially available. This allows them to start experimenting and providing feedback to Apple sooner, contributing to the broader tech ecosystem.

· IT Administrators Managing Apple Devices: An IT professional responsible for a fleet of Macs or iPhones can get rapid notification of OS updates. This enables them to quickly assess the updates, test them in their environment, and plan for deployment to their organization's devices, ensuring compliance and security.

· Individuals Tired of Manual Checks: Someone who frequently checks their iPhone settings for updates out of habit or curiosity will find this service invaluable. Instead of wasting time on repeated manual checks, they can simply wait for the email notification, freeing up their attention for other tasks.

5

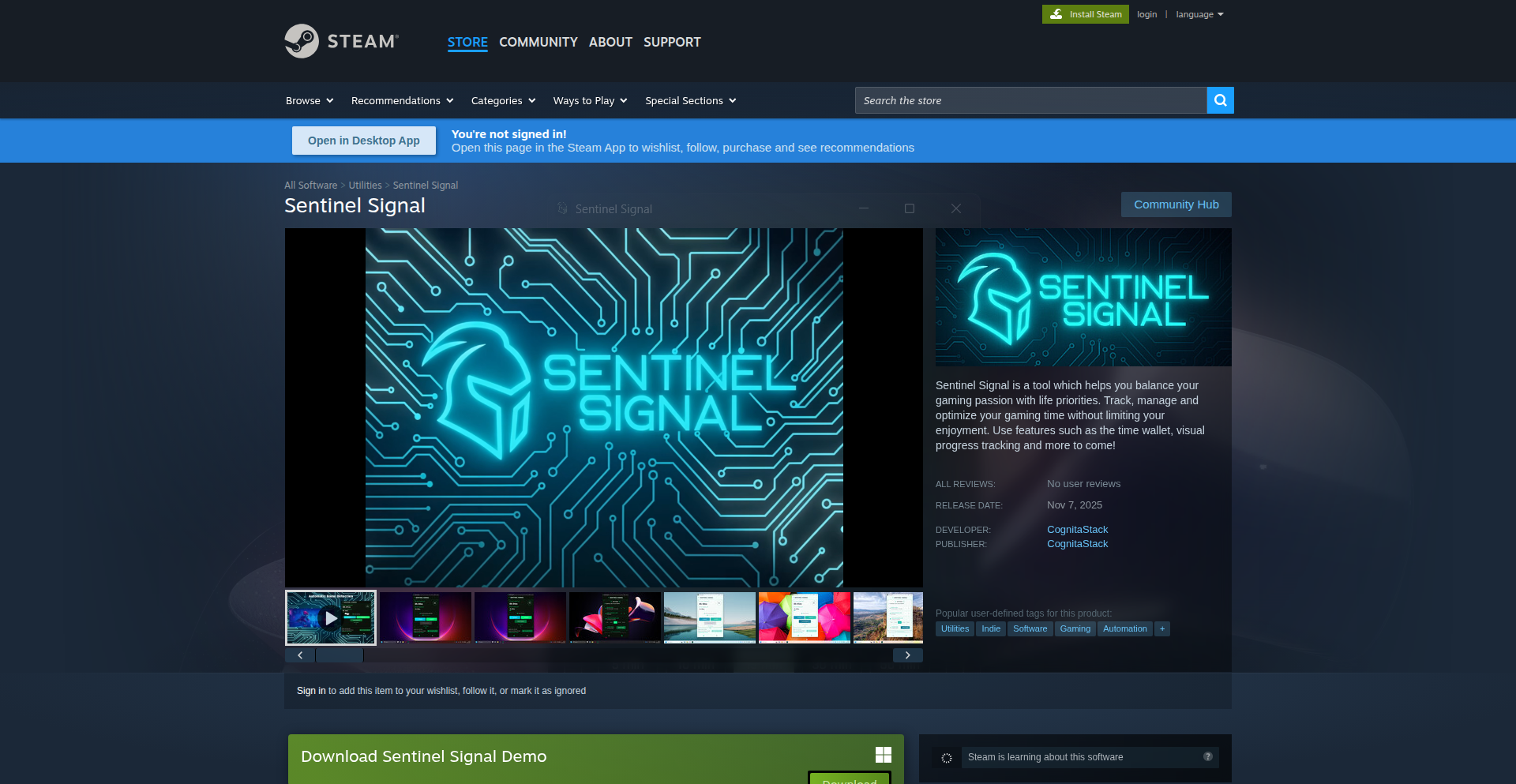

GameSession Sentinel

Author

sentinelsignal

Description

A desktop application that intelligently manages your PC gaming sessions. It automatically detects when you start playing Steam games, tracks your playtime, allows you to set weekly goals and session limits, and notifies you visually and audibly when you approach your limits. It also offers a flexible 'grace period' to finish current sessions, ensuring uninterrupted gameplay.

Popularity

Points 4

Comments 3

What is this product?

GameSession Sentinel is a smart tool for PC gamers. It runs in the background and uses system hooks to detect when you launch and close Steam games. It then records how long you play each game and how much total time you spend gaming. The innovation lies in its proactive approach to time management: instead of just logging time, it helps you control it by setting personalized goals and session limits. When you're getting close to your limit, it gently reminds you with on-screen and audio alerts. It even has a clever feature that lets you extend your current gaming session slightly without breaking the flow, allowing you to conclude your current game activity gracefully. So, this is useful because it helps you stay in control of your gaming time, preventing excessive play and ensuring you meet your other responsibilities, all while respecting your desire to finish that crucial in-game moment.

How to use it?

To use GameSession Sentinel, you simply download and install the application on your Windows PC. Once installed, it will automatically start monitoring your Steam games. You can then configure your weekly gaming goals and daily session limits through its user-friendly interface. The app integrates seamlessly with Steam, so no complex setup is required. When a game is launched, the tracking begins automatically. You can access settings to adjust notification preferences and the session extension duration. For developers, it's a ready-to-use solution that demonstrates effective use of desktop automation and user-level event monitoring. So, this is useful because it's a plug-and-play solution for gamers, and for developers, it's an example of how to build helpful desktop utilities without deep system programming knowledge.

Product Core Function

· Automatic Game Detection: The system hooks into running processes to identify when a Steam game is launched, providing a seamless tracking experience. This is valuable because you don't have to manually start or stop timers, ensuring accurate playtime data with zero effort.

· Real-time Playtime Tracking: Accurately logs the duration of each gaming session and total weekly gaming time. This is valuable because it gives you precise insights into your gaming habits, helping you understand where your time is going.

· Customizable Gaming Goals & Limits: Allows users to set weekly playtime targets and individual session duration caps. This is valuable because it empowers you to set healthy boundaries and achieve a better work-life balance.

· Proactive Notifications: Delivers visual and audio alerts as users approach their predefined gaming limits. This is valuable because it provides gentle reminders, helping you make conscious decisions to stop playing before exceeding your desired time.

· Session Extension Grace Period: Offers a configurable buffer to extend the current gaming session, allowing users to finish in-game objectives without abrupt interruptions. This is valuable because it respects your gameplay flow and prevents frustration from being cut off mid-action.
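The limit, warning, and grace-period behaviour described above can be modelled as a small classification function plus a weekly tally. The thresholds and state names (`ok`, `warn`, `grace`, `over`) are assumptions for illustration; the post does not describe how GameSession Sentinel computes its alerts, and detecting Steam game processes is not shown.

```python
from dataclasses import dataclass


@dataclass
class SessionPolicy:
    """Hypothetical per-user settings, all in minutes."""
    session_limit_min: float  # cap for a single session
    warn_before_min: float    # start warning this long before the cap
    grace_min: float          # one-time extension the player can claim


def session_status(elapsed_min: float, policy: SessionPolicy, grace_used: bool) -> str:
    """Classify the current session as 'ok', 'warn', 'grace', or 'over'."""
    limit = policy.session_limit_min
    if elapsed_min < limit - policy.warn_before_min:
        return "ok"
    if elapsed_min < limit:
        return "warn"  # show the on-screen and audio reminder
    if grace_used and elapsed_min < limit + policy.grace_min:
        return "grace"  # player chose to finish the current activity
    return "over"


def weekly_progress(session_minutes: list, weekly_goal_min: float) -> float:
    """Fraction of the weekly goal used so far (can exceed 1.0)."""
    return sum(session_minutes) / weekly_goal_min
```

Keeping the policy as pure functions of elapsed time makes the alert logic easy to test separately from the process-monitoring code that feeds it.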

Product Usage Case

· A student who wants to balance their studies with gaming can set a weekly gaming goal of 10 hours. GameSession Sentinel will track their playtime and notify them when they are approaching their limit, ensuring they don't neglect their academic responsibilities. This solves the problem of unintentional overspending of free time on gaming.

· A parent concerned about their child's screen time can set daily session limits. The app will alert the child when their gaming time is up, helping to enforce household rules without constant parental supervision. This addresses the challenge of managing screen time effectively in a household.

· A professional gamer looking to optimize their practice schedule can use the detailed playtime tracking to see how their practice time is distributed across games and adjust it accordingly. This supports data-driven decisions about practice.

· An individual who enjoys immersing themselves in long gaming sessions but wants to maintain some control can utilize the session extension feature to finish a critical boss fight or quest without being abruptly kicked out. This solves the problem of gameplay interruption at inconvenient moments.

6

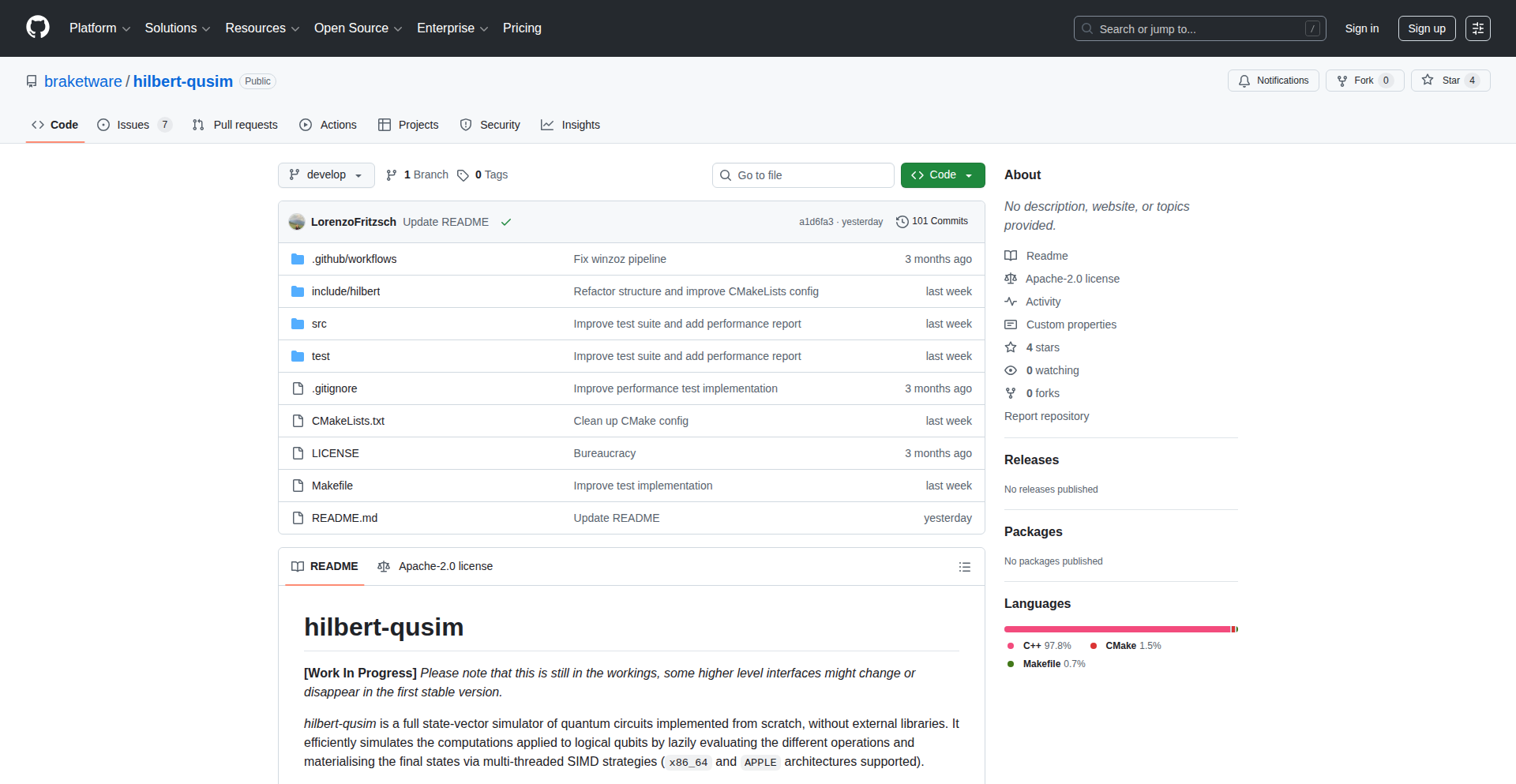

Quantum Weaver C++

Author

lofri

Description

A C++ quantum simulator built entirely from scratch, enabling developers to explore quantum computing principles and algorithms without relying on external quantum hardware. It provides a foundational environment for experimenting with quantum gates, circuits, and basic quantum algorithms, offering a unique opportunity for in-depth understanding of quantum mechanics through hands-on coding.

Popularity

Points 6

Comments 0

What is this product?

Quantum Weaver C++ is a software library written in C++ that simulates the behavior of quantum computers. Instead of needing expensive quantum hardware, developers can use this program on their regular computers to run quantum computations. It's like having a virtual quantum computer. The innovation lies in building this complex simulator from the ground up, giving developers direct insight into how quantum operations like superposition and entanglement are computationally represented. This allows for a deeper, more fundamental understanding of quantum mechanics and algorithms.

How to use it?

Developers can integrate Quantum Weaver C++ into their C++ projects to design and test quantum circuits. They can define quantum bits (qubits), apply quantum gates (like Hadamard or CNOT gates) to manipulate their states, and then measure the outcomes. This is useful for learning quantum programming concepts, prototyping quantum algorithms before potentially deploying them on real quantum hardware, or for educational purposes to visualize quantum phenomena. It's used by writing C++ code that interacts with the Quantum Weaver library's functions.
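Simulators like this typically store an n-qubit register as a state vector of 2^n complex amplitudes and apply gates by updating pairs of amplitudes. The following is a Python sketch of that technique, not Quantum Weaver's C++ API: it prepares a Bell state with a Hadamard on qubit 0 followed by a CNOT, then samples measurements. Qubit 0 is taken as the least significant bit of the basis-state index, a convention chosen here for illustration.

```python
import math
import random

SQRT1_2 = 1 / math.sqrt(2)
H = [[SQRT1_2, SQRT1_2],
     [SQRT1_2, -SQRT1_2]]  # Hadamard gate


def zero_state(n: int) -> list:
    """|00...0> as a list of 2**n complex amplitudes."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state


def apply_single(state: list, gate, target: int) -> list:
    """Apply a 2x2 gate to qubit `target` (qubit 0 = least significant bit)."""
    new = state[:]
    bit = 1 << target
    for i in range(len(state)):
        if (i & bit) == 0:  # visit each amplitude pair once, via its |0> member
            j = i | bit
            a0, a1 = state[i], state[j]
            new[i] = gate[0][0] * a0 + gate[0][1] * a1
            new[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new


def apply_cnot(state: list, control: int, target: int) -> list:
    """Swap amplitudes so `target` flips in every basis state where `control` is 1."""
    new = state[:]
    cbit, tbit = 1 << control, 1 << target
    for i in range(len(state)):
        if (i & cbit) and not (i & tbit):
            j = i | tbit
            new[i], new[j] = state[j], state[i]
    return new


def probabilities(state: list) -> list:
    """Born rule: the probability of each basis state is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


def measure(state: list, rng: random.Random) -> int:
    """Sample one basis-state index (measuring all qubits at once)."""
    return rng.choices(range(len(state)), weights=probabilities(state))[0]


# Bell state: H on qubit 0, then CNOT with qubit 0 as control and qubit 1 as target.
bell = apply_cnot(apply_single(zero_state(2), H, 0), control=0, target=1)
```

Only the outcomes 0 (|00>) and 3 (|11>) can ever be sampled from `bell`, which is the measurement correlation that entanglement produces. A dense state vector doubles in size with every added qubit, which is why from-scratch simulators of this kind suit small circuits.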

Product Core Function

· Qubit State Representation: Enables the simulation of individual qubits and their complex quantum states using mathematical vectors, providing the foundational building blocks for any quantum computation. This is valuable for understanding how quantum information is encoded.

· Quantum Gate Operations: Implements a variety of fundamental quantum gates that act on qubits, allowing developers to build quantum circuits by applying these operations sequentially. This is crucial for constructing quantum algorithms.

· Circuit Execution and Measurement: Simulates the execution of a quantum circuit and the probabilistic outcome of measuring qubits, mimicking the process of obtaining results from a real quantum computer. This helps in understanding the probabilistic nature of quantum mechanics.

· Entanglement Simulation: Models entanglement between qubits, where the joint state cannot be written as independent single-qubit states and measurement outcomes are correlated. This is a core feature of quantum computing, and seeing how it is simulated is key to grasping its power.

· Algorithm Prototyping: Provides a sandboxed environment for researchers and developers to write and test simple quantum algorithms, like Deutsch-Jozsa or Grover's search on a small scale, before moving to more complex platforms. This accelerates the discovery and refinement of quantum solutions.

Product Usage Case

· Educational Use: A university professor can use Quantum Weaver C++ to demonstrate quantum superposition and entanglement to students in a computer science or physics course, allowing students to write code that directly manipulates quantum states and observes the results, making abstract concepts tangible.

· Algorithm Research: A researcher exploring new quantum algorithms for drug discovery can use Quantum Weaver C++ to simulate small-scale versions of their proposed algorithms on their laptop. This helps in verifying the logic and identifying potential issues before investing time and resources in more advanced simulation tools or real quantum hardware.

· Developer Learning: A curious software engineer wanting to understand the practical side of quantum computing can use Quantum Weaver C++ to build and run their first quantum circuits. By writing C++ code to implement basic quantum operations, they gain hands-on experience that theoretical study alone cannot provide, demystifying the field for them.

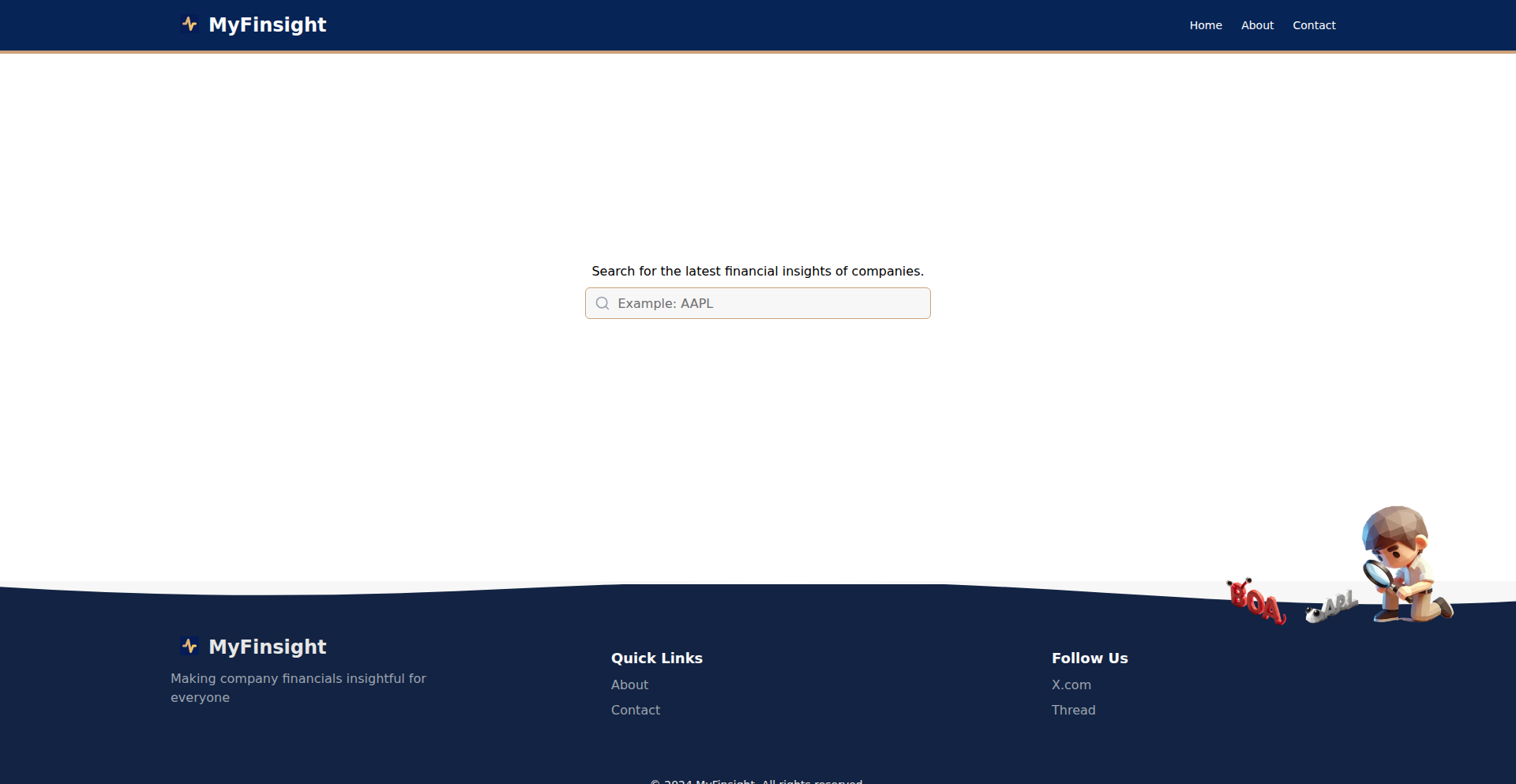

7

FinSight Viz

Author

eadanlin

Description

A web application that simplifies complex company financial data into easy-to-understand visualizations. It tackles the common problem of inaccurate and hard-to-digest financial information found on many platforms by offering free, automated charts and diagrams, enabling quick insights into company performance.

Popularity

Points 5

Comments 1

What is this product?

FinSight Viz is a platform designed to demystify financial statements. Instead of sifting through raw numbers that other platforms often present inaccurately or in a hard-to-read format, you see the data automatically processed into clear charts and diagrams. This means you can grasp revenue growth, income trends, and sales breakdowns at a glance. The innovation lies in automating both the data processing and the visualization, making high-quality financial insights accessible without manual effort or expensive subscriptions.

How to use it?

Developers can use FinSight Viz by visiting the website and searching for a specific company. The platform then displays a series of interactive charts and graphs for key financial metrics. These charts can support personal finance tracking and investment research, or serve as a quick check of a company's health before making a business decision. For developers who build financial tools, FinSight Viz's automated approach to processing and presenting data can be a source of inspiration.

Product Core Function

· Automated Financial Data Visualization: Converts raw financial reports into user-friendly charts and diagrams, providing immediate understanding of company performance without manual interpretation.

· Free Access to Key Financial Metrics: Offers visualizations of essential data like revenue, net income, and growth rates, removing the cost barrier often associated with detailed financial analysis.

· Income Growth Rate by Date Visualization: Clearly shows how a company's income has changed over time, helping users identify trends and patterns.

· Revenue Breakdown Charts: Illustrates how a company's revenue is generated, offering deeper insights into its business model and market position.

· User-Friendly Interface for Quick Insights: Designed for rapid comprehension, allowing anyone to quickly assess a company's financial standing without needing to be a finance expert.
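FinSight Viz does not document how its income growth rate chart is computed. A common definition is period-over-period growth, (current - previous) / |previous|. The Python sketch below assumes that definition, uses hypothetical figures rather than real company data, and is not the project's code.

```python
def growth_rates(series):
    """Period-over-period growth for (period, value) pairs already sorted by date.

    rate = (current - previous) / |previous|; dividing by the absolute value keeps
    the sign meaningful when the previous period was a loss. Periods whose previous
    value is zero are skipped because the rate is undefined.
    """
    rates = []
    for (_, prev), (period, curr) in zip(series, series[1:]):
        if prev == 0:
            continue
        rates.append((period, (curr - prev) / abs(prev)))
    return rates


# Hypothetical net income figures (not real company data).
net_income = [("2021", 100.0), ("2022", 120.0), ("2023", 90.0)]
for period, rate in growth_rates(net_income):
    print(f"{period}: {rate:+.1%}")
# 2022: +20.0%
# 2023: -25.0%
```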

Product Usage Case

· An investor researching a potential stock investment can use FinSight Viz to quickly see a company's historical revenue growth and net income trends, helping them make a more informed decision without spending hours deciphering financial reports.

· A small business owner looking to understand their competitors' financial health can use FinSight Viz to analyze publicly traded companies in their industry, identifying successful strategies and potential market shifts.

· A student learning about corporate finance can use FinSight Viz to visualize abstract concepts like 'income statement' and 'balance sheet' in a practical, applied context, making the learning process more engaging and effective.

· A developer building a personal finance dashboard could potentially integrate FinSight Viz's data (if an API becomes available) or use its visualization methods as inspiration for how to present financial data to their users in a clear and compelling way.

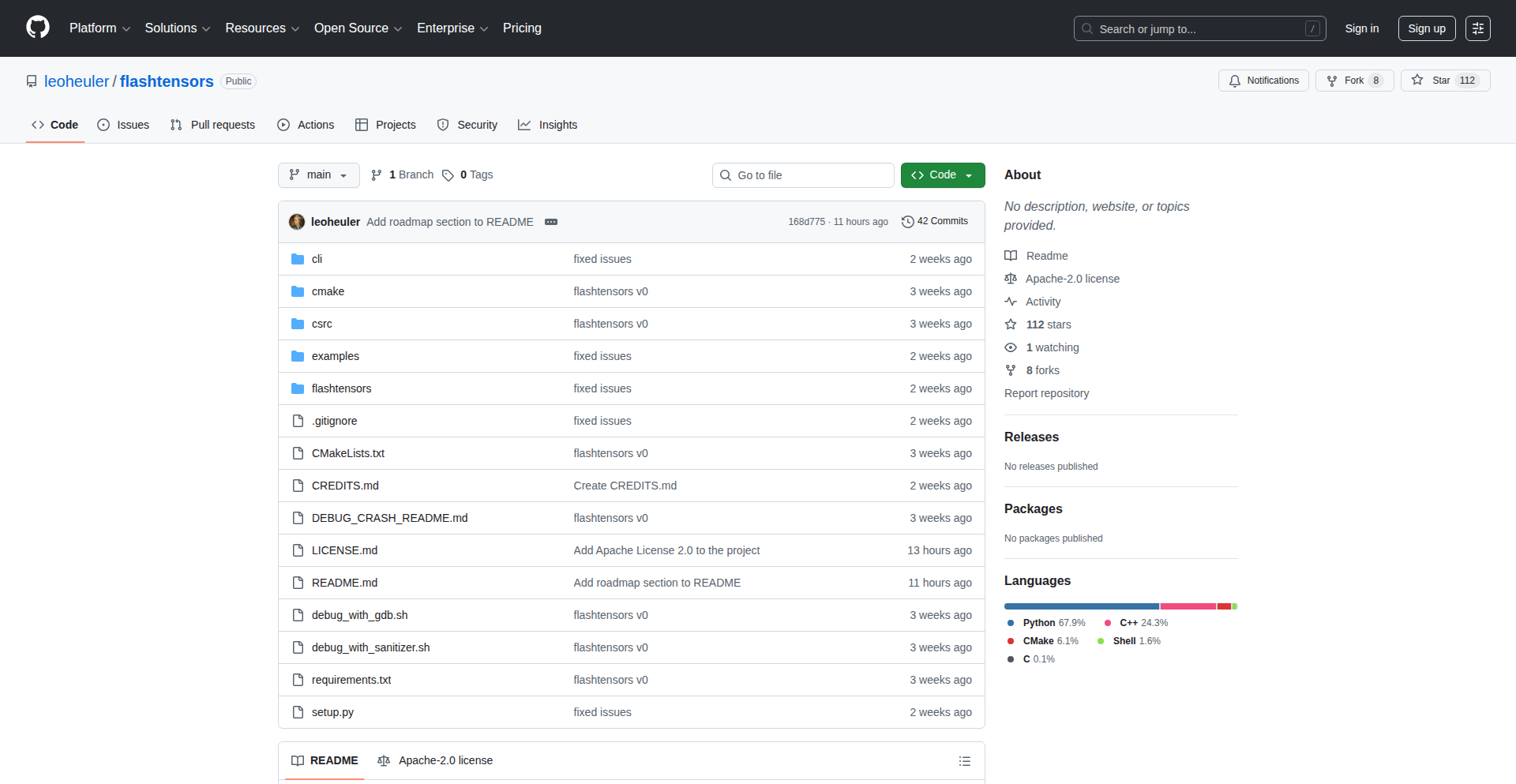

8

VRAM-Boosted Model Swapper

Author

leonheuler

Description

This project tackles the challenge of running large AI models on limited hardware by drastically speeding up how these models are loaded from storage into the GPU's memory (VRAM). The author reports up to a tenfold increase in loading speed compared to existing methods, making it possible to serve many large models on a single GPU with minimal added delay in Time To First Token (TTFT). This is particularly useful for serverless AI, robotics, on-premise deployments, and local AI agents.

Popularity

Points 5

Comments 1

What is this product?

This project is an optimization engine for loading large AI models into a GPU's VRAM. Typically, when an AI model is needed for inference (making predictions or generating text), it needs to be loaded from slower storage (like an SSD) into the much faster VRAM of a GPU. This loading process, especially for very large models (e.g., 32 billion parameters), can be extremely slow, leading to long 'cold start' delays. This engine uses advanced techniques to load these models up to 10 times faster by intelligently managing the transfer from SSD to VRAM. It's compatible with popular AI frameworks like vLLM and Hugging Face Transformers, and it enables 'hot-swapping' of entire large models on demand, meaning you can switch between different complex models quickly without significant waiting time. The core innovation lies in its efficient data transfer and memory management strategies for large models on constrained hardware.

How to use it?

Developers can integrate this engine into their AI inference pipelines. For serverless AI, it means significantly reducing or eliminating the frustratingly long waits when a function is invoked for the first time after a period of inactivity. You can deploy multiple different large AI models and switch between them on the fly using the same GPU resource. For robotics or on-premise applications, it allows for more responsive AI operations even with less powerful hardware. For local AI agents, it means running complex language models or other AI tasks directly on your machine with much shorter load and model-switching times. The project is open-source, so developers can inspect its workings, contribute, and adapt it to their specific needs. Usage typically involves configuring the engine to point to your model files and specifying which model to load for inference, often through API calls or direct library integration within your AI application.

Product Core Function

· Accelerated Model Loading from SSD to VRAM: This core function reduces the latency of making AI models ready for inference by up to 10x, directly addressing the problem of slow cold starts. This means faster responses for your users or applications.

· On-Demand Model Hot-Swapping: The ability to quickly switch between different large AI models (e.g., a 32B parameter model) on the same GPU without long delays. This provides flexibility to use various AI capabilities without needing separate dedicated hardware for each.

· Framework Compatibility (vLLM, Transformers): Seamless integration with widely used AI inference frameworks, allowing developers to leverage their existing codebases and workflows. This minimizes the barrier to adoption.

· Optimized for Large Models: Specifically designed to handle the challenges of loading and managing very large AI models, which are often computationally intensive and memory-hungry. This makes advanced AI accessible on more modest hardware.

· Open Source Contribution Model: The project's open-source nature encourages community involvement, bug fixes, and feature enhancements, leading to rapid improvement and broader applicability. This means ongoing development and potential for tailored solutions.
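The project describes its own mechanism only as managing the transfer from SSD to VRAM. The Python sketch below therefore illustrates just the surrounding idea of hot-swapping: a hypothetical least-recently-used (LRU) policy that evicts idle models to fit a new one into a fixed VRAM budget. It also includes the back-of-the-envelope arithmetic behind cold starts. The class name, model names, sizes, and the 2 GB/s read rate are all assumptions.

```python
from collections import OrderedDict


class ModelSwapCache:
    """Hypothetical LRU policy for models resident in a fixed VRAM budget (GB).

    Loading a model that does not fit evicts the least recently used models
    until there is room. Actual weight transfers are not simulated.
    """

    def __init__(self, vram_gb):
        self.vram_gb = vram_gb
        self.resident = OrderedDict()  # model name -> size in GB, oldest first

    def used_gb(self):
        return sum(self.resident.values())

    def get(self, name, size_gb):
        """Make `name` resident; return (evicted_names, was_cold_start)."""
        if size_gb > self.vram_gb:
            raise ValueError(f"{name} ({size_gb} GB) exceeds the VRAM budget")
        if name in self.resident:
            self.resident.move_to_end(name)  # already loaded: mark most recently used
            return [], False
        evicted = []
        while self.used_gb() + size_gb > self.vram_gb:
            old_name, _ = self.resident.popitem(last=False)  # drop least recently used
            evicted.append(old_name)
        self.resident[name] = size_gb  # a real engine would copy weights SSD -> VRAM here
        return evicted, True


def load_seconds(params_billion, bytes_per_param, read_gb_per_s):
    """Rough cold-start estimate: total weight bytes divided by read bandwidth."""
    return params_billion * bytes_per_param / read_gb_per_s


cache = ModelSwapCache(vram_gb=80)
print(cache.get("llm-32b", 64))  # ([], True)
print(cache.get("llm-8b", 16))   # ([], True)
print(cache.get("llm-32b", 64))  # ([], False)
print(cache.get("llm-7b", 14))   # (['llm-8b'], True)
# 32B parameters at 2 bytes each (fp16) is ~64 GB; at an assumed 2 GB/s read rate:
print(load_seconds(32, 2, 2.0))  # 32.0
```

LRU is only one possible eviction policy. The post does not say which policy, if any, the project uses when several models compete for the same GPU.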

Product Usage Case

· Serverless AI Inference with Reduced Cold Starts: Imagine a chatbot service that uses a large language model. With this project, when a user sends a message after a period of inactivity, the model loads so quickly that the user barely notices any delay. This improves user experience dramatically.

· Robotics with Real-Time AI: A robot needs to perform visual recognition and decision-making using AI models. This engine shortens the time needed to load or swap those models, so the robot spends less time waiting before it can process information and react, enabling more fluid and responsive robotic actions.

· On-Premise AI Deployments for Sensitive Data: Businesses can deploy powerful AI models on their own servers for data privacy. This project allows them to efficiently run these models on existing hardware, reducing infrastructure costs and improving performance.

· Local AI Agents for Developers: A developer wants to build a personal AI assistant that can write code, answer questions, and manage tasks. This engine lets them load and switch between sophisticated AI models on their laptop much faster, making the agent more responsive when it starts up or changes models.

· Dynamic AI Model Serving: A platform that offers various specialized AI models (e.g., for image generation, text translation, sentiment analysis). This project allows the platform to serve many of these models from a single GPU by rapidly switching them as needed, offering a wider range of services to users without massive hardware investment.

9

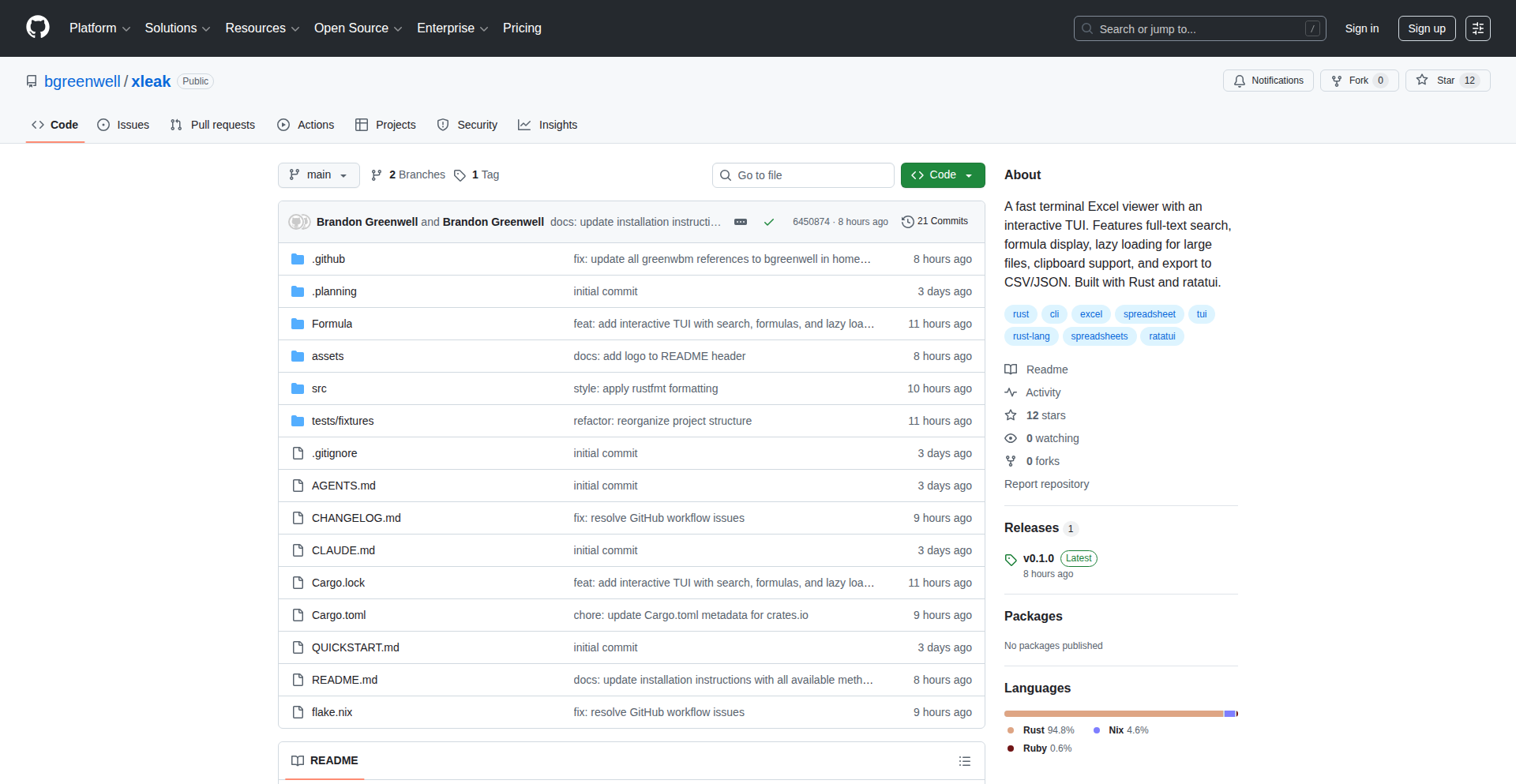

Xleak: Terminal-Native Spreadsheet Explorer

Author

w108bmg

Description

Xleak is a command-line tool that allows you to view and interact with Excel (.xlsx, .xls, .xlsm, .xlsb) and OpenDocument Spreadsheet (.ods) files directly in your terminal. It provides a fast, interactive text-based user interface (TUI) with features like keyboard navigation, formula viewing, and data export, offering a significant speed advantage over opening full desktop spreadsheet applications.

Popularity

Points 6

Comments 0

What is this product?

Xleak is a terminal-based application designed to bring the functionality of spreadsheet software to the command line. It tackles the problem of needing to quickly access or inspect spreadsheet data without the overhead of launching resource-intensive desktop applications like Microsoft Excel or LibreOffice. The core innovation lies in its interactive TUI, built with the 'ratatui' library, which mimics spreadsheet navigation and interaction. It leverages the 'calamine' Rust crate for efficient parsing of various Excel and ODS file formats. This means you get fast loading and rendering of even large spreadsheets, with the ability to see cell formulas, copy data, and navigate using familiar keyboard shortcuts, similar to vim.

How to use it?

Developers can install Xleak easily using package managers like Homebrew or Nix, or by compiling it directly from source using Cargo (Rust's build tool: `cargo install xleak`). Once installed, you can open a spreadsheet file by simply typing `xleak your_spreadsheet.xlsx` in your terminal. From there, you can use keyboard commands for navigation (arrow keys, or vim-like keys), searching (`/` followed by your query, then `n` for next, `N` for previous), jumping to specific cells (e.g., `100` for row 100, `A100` for cell A100, or `5,10` for row 5, column 10), copying cell data to your clipboard, and exporting the data to CSV, JSON, or plain text files. This makes it ideal for scripting, quick data checks in a server environment, or for developers who prefer a keyboard-centric workflow.

Product Core Function

· Interactive Terminal UI with Keyboard Navigation: Provides a visual representation of the spreadsheet in the terminal, allowing users to navigate seamlessly using arrow keys or vim-style commands, offering a faster way to browse data without touching the mouse.

· Formula Viewing: Displays the actual Excel formulas within cells, which is crucial for understanding data logic and debugging calculations, enabling users to inspect the underlying mechanics of a spreadsheet directly.

· Copy to Clipboard: Allows users to copy selected cells or entire rows to their system clipboard, making it easy to paste data into other applications or scripts without manual retyping.

· Export to CSV, JSON, Text: Enables users to quickly convert spreadsheet data into common machine-readable formats, facilitating data integration into databases, analysis scripts, or other software.

· Lazy Loading for Large Files: Optimizes performance for very large spreadsheets (1000+ rows) by loading only the necessary data as it's needed, preventing the application from freezing or becoming unresponsive.

· Jump to Cell Functionality: Offers precise navigation by allowing users to jump directly to a specific cell using its address (e.g., 'A100') or row/column number (e.g., '100' or '5,10'), saving time when working with extensive datasets.
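Xleak itself is written in Rust, and its parser is not shown in the post. The Python sketch below only illustrates how the three jump-target forms quoted above could map to 1-based (row, column) coordinates. The function names are hypothetical, and treating a bare row number as column 1 is an assumption.

```python
import re


def column_index(letters):
    """Convert spreadsheet column letters to a 1-based index: A=1, Z=26, AA=27."""
    index = 0
    for ch in letters.upper():
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index


def parse_jump(target):
    """Parse a jump target into a 1-based (row, column) pair.

    Handles "100" (row), "A100" (cell address), and "5,10" (row, column).
    For a bare row number this sketch returns column 1; a real viewer
    might keep the current column instead.
    """
    target = target.strip()
    if m := re.fullmatch(r"\d+", target):
        return int(m.group(0)), 1
    if m := re.fullmatch(r"([A-Za-z]+)(\d+)", target):
        return int(m.group(2)), column_index(m.group(1))
    if m := re.fullmatch(r"(\d+)\s*,\s*(\d+)", target):
        return int(m.group(1)), int(m.group(2))
    raise ValueError(f"unrecognized jump target: {target!r}")


for t in ["100", "A100", "AA3", "5,10"]:
    print(t, "->", parse_jump(t))
# 100 -> (100, 1)
# A100 -> (100, 1)
# AA3 -> (3, 27)
# 5,10 -> (5, 10)
```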

Product Usage Case

· Quickly inspecting configuration data in a .xlsx file on a remote server without needing to install GUI applications, allowing for rapid troubleshooting and verification.

· Exporting a large spreadsheet to CSV and extracting specific columns or rows for use in a Python data analysis script, streamlining data preparation workflows.

· Verifying the formulas used in a financial report by viewing them directly in the terminal, aiding in auditing and ensuring accuracy without opening the full spreadsheet software.

· Feeding data exported by Xleak (CSV, JSON, or plain text) into other command-line tools, enabling batch checks and processing of spreadsheet information.

· Developers who prefer a terminal-centric workflow can check, copy, and export spreadsheet data as part of their daily development tasks without leaving the terminal, enhancing productivity.
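For the CSV workflow above, a Python script can pick out the columns it needs from a file exported by Xleak. The sketch below assumes the export has a header row. The inline string stands in for the exported file, which a real script would open with `open(path, newline="")`, and the helper name is hypothetical.

```python
import csv
import io


def select_columns(csv_text, wanted):
    """Yield dicts holding only the `wanted` columns of CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in wanted if c not in (reader.fieldnames or [])]
    if missing:
        raise KeyError(f"columns not found: {missing}")
    for row in reader:
        yield {c: row[c] for c in wanted}  # values stay strings, as csv returns them


# Stand-in for a file exported from Xleak.
exported = "id,name,amount\n1,alpha,10\n2,beta,25\n"
rows = list(select_columns(exported, ["name", "amount"]))
print(rows)  # [{'name': 'alpha', 'amount': '10'}, {'name': 'beta', 'amount': '25'}]
```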

10

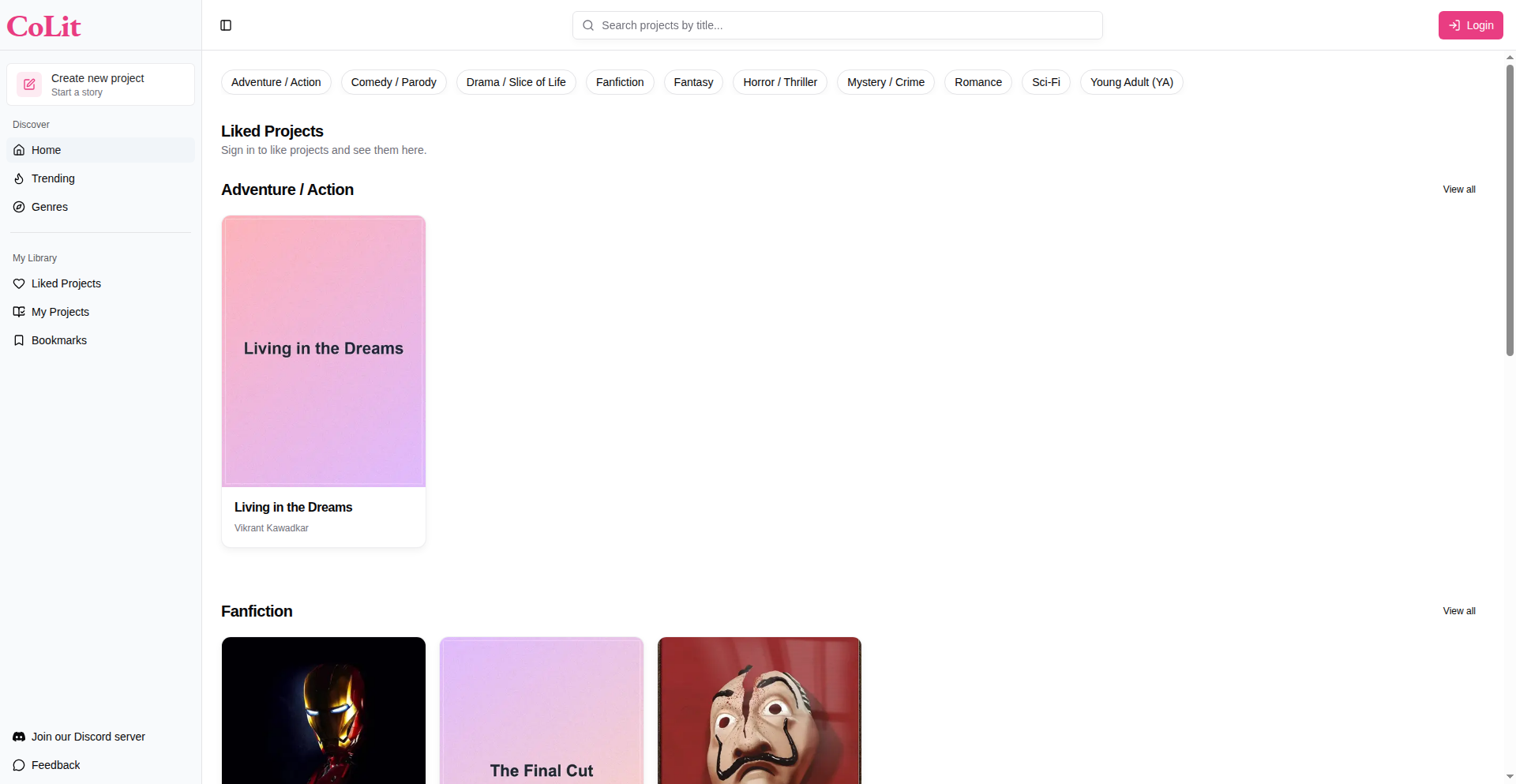

CoLit

Author

pujan19

Description

CoLit is a community-driven literature platform designed for seamless collaborative writing. It addresses the fragmentation and inefficiency of existing tools by providing a dedicated space for creators to build stories, fanfiction, or scripts together. The core innovation lies in its 'community projects' feature, which employs a unique voting and contribution cycle, allowing a group to collectively shape the narrative direction and content. This empowers creators with a more natural and engaging way to co-author, moving beyond simple document sharing to true shared storytelling.

Popularity

Points 5

Comments 0

What is this product?

CoLit is a web platform for collaborative writing. Instead of relying on multiple disconnected tools like Google Docs, Discord, and separate note-taking apps, CoLit offers a unified environment for group creative projects. Its standout feature is the 'community projects' model. Imagine a story where every chapter or plot point is decided by the community. Users submit ideas for the next part of the story, and then everyone votes on their favorite. The top-voted idea is then presented to the project's author, who can refine it before adding it to the main narrative. This creates a dynamic, engaging, and genuinely collaborative writing experience. For solo writers, it offers a robust markdown editor and reader, with the option of community feedback without direct contribution.

How to use it?

Developers can use CoLit to initiate and manage collaborative writing projects of any kind. For example, a group of friends wanting to write fanfiction can start a 'community project' on CoLit. Each member can propose plot twists, character developments, or dialogue. These proposals enter a daily cycle where community members vote. The most popular proposal then becomes the next section of the story, which the main author can edit and integrate. Developers can also leverage CoLit's API (if available or planned) to integrate its collaborative writing features into other applications, such as educational tools for group writing assignments or game development platforms for co-creating lore. Solo authors can use it as a feature-rich writing environment, benefiting from a clean editor and the option for audience feedback.

Product Core Function

· Community Project Creation: Enables multiple users to contribute to a single creative work, fostering shared ownership and collective storytelling. This is valuable for groups who want to build something complex together without logistical headaches.

· Contribution and Voting Cycles: Implements a structured process for community input, where suggestions are submitted and voted upon, ensuring that the narrative evolves based on collective preference. This democratizes the creative process and can lead to unexpected and innovative story developments.

· Authorial Control within Community Projects: Allows a designated author to review and edit the top-voted contributions before they are added to the main project, ensuring quality and coherence. This balances community input with creative direction, preventing chaotic outcomes.

· Solo Project Creation with Feedback: Supports individual writers with a dedicated markdown editor and reading mode, while still allowing for community comments and suggestions. This provides a focused writing experience with the benefit of external critique.

· Markdown Editor with Reading Mode: Offers a versatile and clean writing interface that supports markdown for rich text formatting, along with a distraction-free reading mode. This enhances the writing and editing experience for all users.

· Customizable or Auto-Generated Cover Images: Allows for personalized branding of projects with custom-designed or automatically generated cover art. This adds a professional touch and visual appeal to creative works.
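CoLit's implementation is not described in the post. The Python sketch below illustrates one plausible way to close a single contribution-and-voting cycle: count one vote per user and pick the top-voted proposal, which would then go to the project author for editing. The data model and the earliest-submission tie-break are assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Proposal:
    proposal_id: str
    author: str
    text: str
    submitted_at: int  # e.g. a Unix timestamp; used only to break ties


def close_cycle(proposals, votes):
    """Return the winning proposal for one voting cycle, or None if there are none.

    `votes` maps voter -> proposal_id, so each voter counts at most once.
    Ties go to the earliest submission (an assumed rule).
    """
    if not proposals:
        return None
    tally = Counter(votes.values())
    return max(proposals, key=lambda p: (tally[p.proposal_id], -p.submitted_at))


proposals = [
    Proposal("p1", "ana", "The heist goes wrong.", submitted_at=100),
    Proposal("p2", "ben", "A rival crew shows up.", submitted_at=50),
    Proposal("p3", "cy", "The map is a fake.", submitted_at=75),
]
votes = {"ana": "p1", "ben": "p2", "cy": "p1", "dee": "p2"}
winner = close_cycle(proposals, votes)
print(winner.proposal_id)  # p2
```

Here p1 and p2 tie at two votes each, and p2 wins because it was submitted first. The author would still review and edit the winning text before it joins the story.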

Product Usage Case

· Fanfiction Community: A group of fans wants to write a collaborative fanfiction story. They start a community project on CoLit. Members propose plot lines, character interactions, and even new storylines. The community votes, and the winning ideas are incorporated into the ongoing narrative, creating a story shaped by its most passionate readers.

· Screenwriting Workshop: A team of aspiring screenwriters is developing a film script. They use CoLit to co-write scenes, with each member contributing dialogue or action sequences. The voting system helps them collectively decide on the best direction for a particular scene or character arc.

· Collaborative Novel: An author has a concept for a novel but wants to involve their readers in its creation. They start a community project on CoLit, allowing readers to suggest chapter outlines, character backstories, or even entire subplots. The author retains final editorial control while benefiting from a highly engaged readership.

· Educational Group Writing Assignments: Teachers can use CoLit for student group projects. Students can collaboratively write essays, reports, or creative stories, with the platform's structure guiding their teamwork and the voting mechanism giving every member a say in the direction of the work.

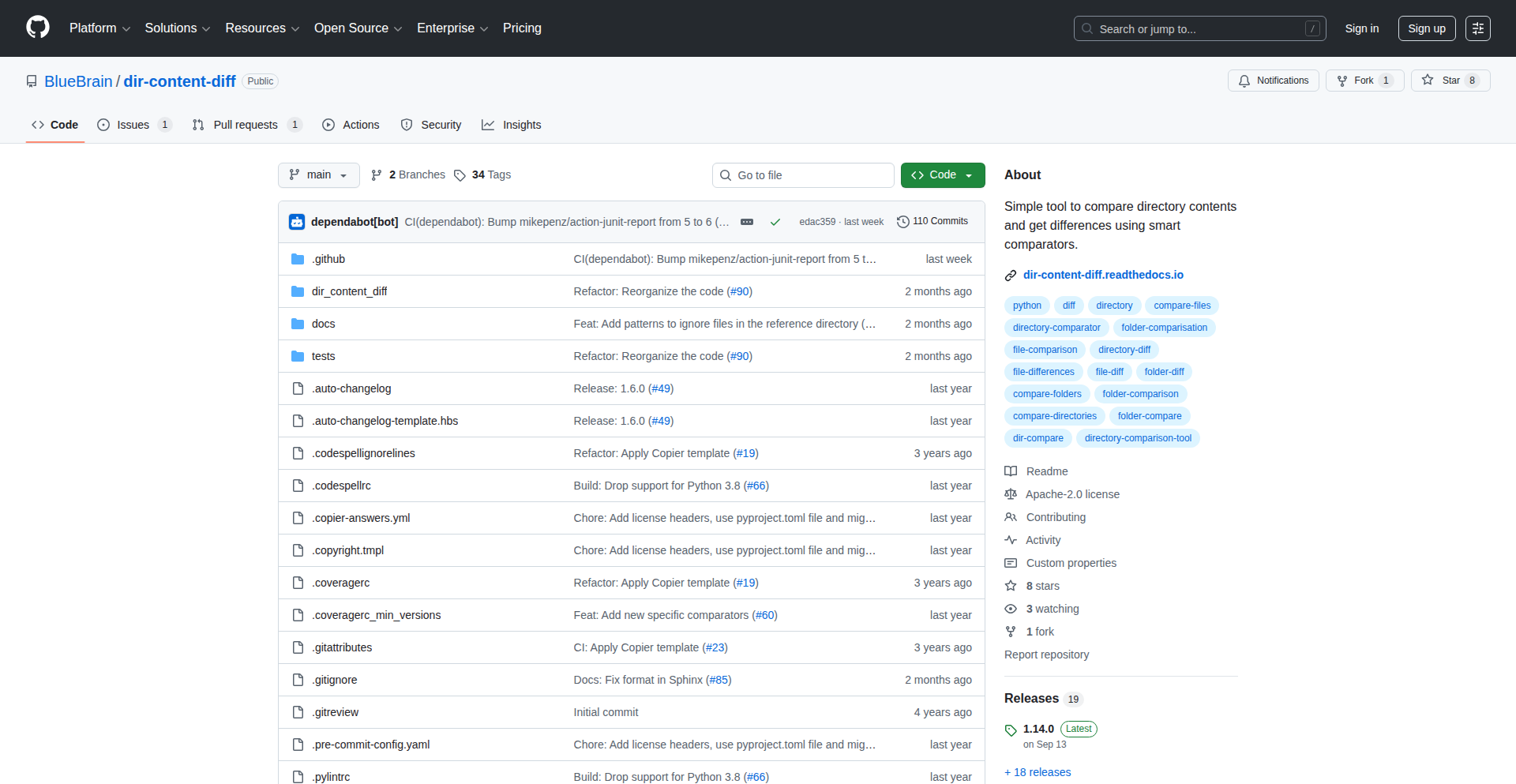

11

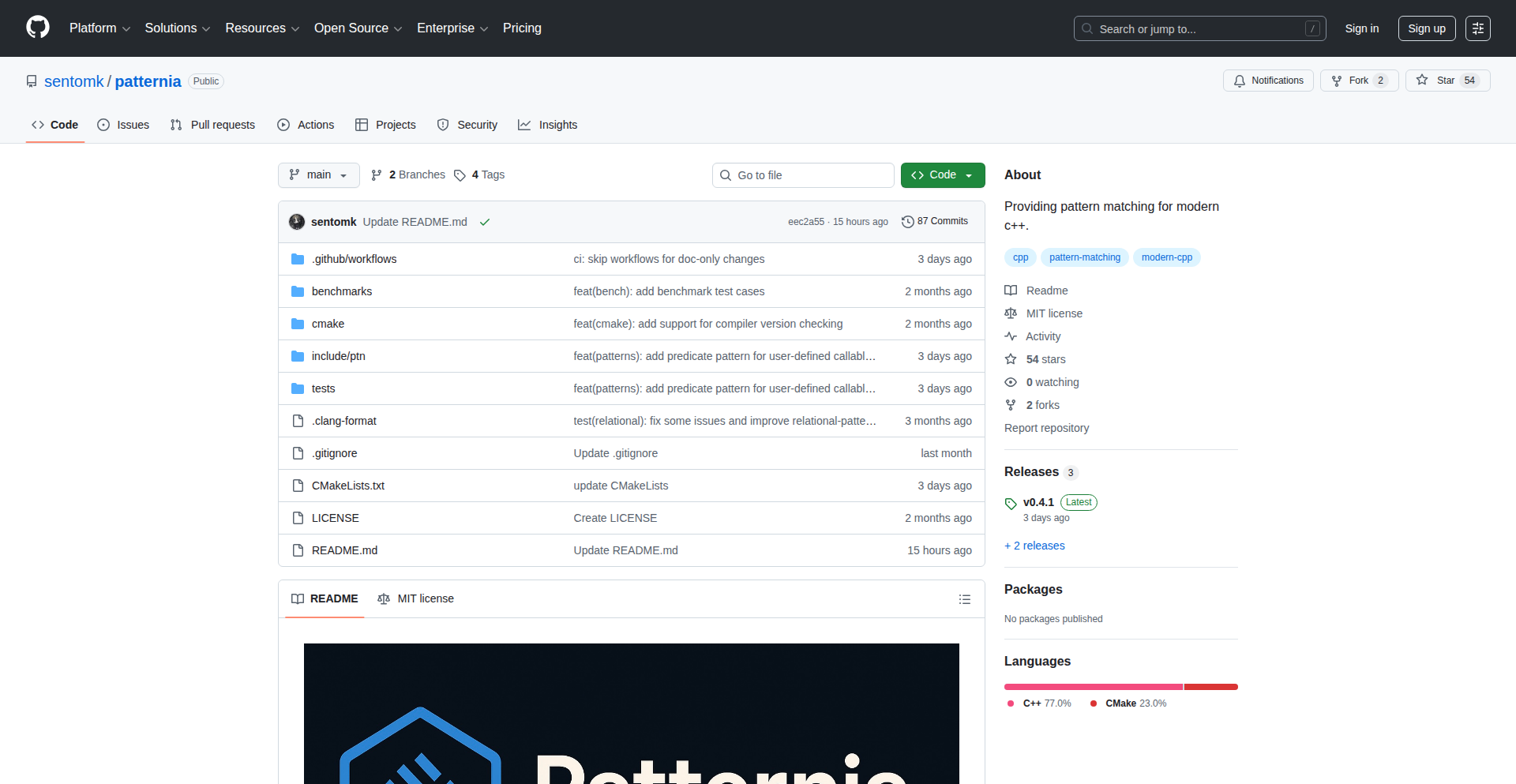

Patternia: C++ Compile-Time Pattern Matching Fabric

Author

sentomk

Description

Patternia is a C++ Domain-Specific Language (DSL) that brings the power of pattern matching directly into the C++ compilation process. It allows developers to define complex matching rules and actions that are verified and even resolved at compile time, preventing runtime errors and improving code clarity and performance for intricate data structures and logic. This tackles the verbosity and error-proneness often associated with traditional C++ conditional logic when dealing with structured data.

Popularity

Points 4

Comments 1

What is this product?

Patternia is a novel way to write C++ code in which you define 'patterns': specific structures or values you want to look for in your data. Instead of writing lengthy if-else statements or switch cases, you define these patterns and what should happen when they are found. The magic of Patternia is that it does this checking and logic resolution *before* your program even runs, during the compilation phase. This means any mistakes in your pattern matching logic are caught by the compiler, not by your users later. It's like having a super-smart assistant review your code for specific data scenarios before it's built, making your code safer, more readable, and often faster. The innovation lies in embedding this expressive pattern matching capability directly into C++'s compilation pipeline. Pattern matching is more commonly a built-in language feature (as in Rust, Haskell, or OCaml); in C++ it has typically meant verbose conditional code or runtime libraries.

How to use it?

Developers can integrate Patternia into their C++ projects by including its header files and using its DSL syntax within their code. Imagine you have a complex message structure or a state machine. Instead of deeply nested `if` statements to check different fields and combinations, you would define a `match` block with Patternia. You declare the data you're matching against, and then list the patterns. For example, you could match on a message type and its payload structure simultaneously. Patternia then translates this into efficient C++ code or verifies the logic at compile time. This is particularly useful for libraries that process structured data, like parsers, network protocols, or state management systems, where robust and precise conditional logic is paramount.

Product Core Function

· Compile-time pattern validation: Ensures your pattern matching logic is correct before runtime, catching errors early and saving debugging time. This is valuable because it prevents a whole class of bugs related to incorrect conditional logic.

· Expressive pattern definition: Allows for clear and concise representation of complex data structures and matching conditions, making code easier to read and maintain. This is useful for understanding intricate logic at a glance.

· Performance optimization: By resolving logic at compile time, Patternia can generate highly optimized C++ code, leading to faster execution speeds. This directly impacts the efficiency of your applications.

· Reduced boilerplate code: Replaces verbose if-else chains with elegant pattern matching syntax, leading to cleaner and more maintainable codebases. This means less typing and fewer opportunities for typos.

· Integration with C++ types: Seamlessly works with existing C++ data types and constructs, allowing for easy adoption without requiring a complete rewrite. This makes it practical for real-world projects.

Product Usage Case

· Handling complex message parsing in network protocols: A developer building a server that receives varied network messages could use Patternia to define patterns for different message types and their payloads, ensuring correct processing and preventing malformed data from causing runtime crashes. This solves the problem of writing and maintaining extensive `if/else if` chains for every possible message variant.

· Implementing state machines with predictable behavior: For applications with complex state transitions (e.g., UI frameworks, game engines), Patternia can define patterns for current states and incoming events, specifying the next state and actions. This provides a clear, compile-time verified way to manage state logic, avoiding unpredictable behavior due to state mismatch errors.

· Processing structured configuration files: When dealing with configuration files that have nested structures and optional fields, Patternia can elegantly match against various configurations, extracting relevant settings and ensuring all necessary parameters are present, leading to robust configuration loading. This eliminates the need for manual, error-prone checks on configuration data.

· Developing robust data validation routines: For applications requiring strict data validation, Patternia can define patterns for valid data formats, ranges, and relationships. Because the patterns themselves are checked at compile time, invalid data can be reliably rejected early in the processing pipeline, preventing it from propagating through the system.

12

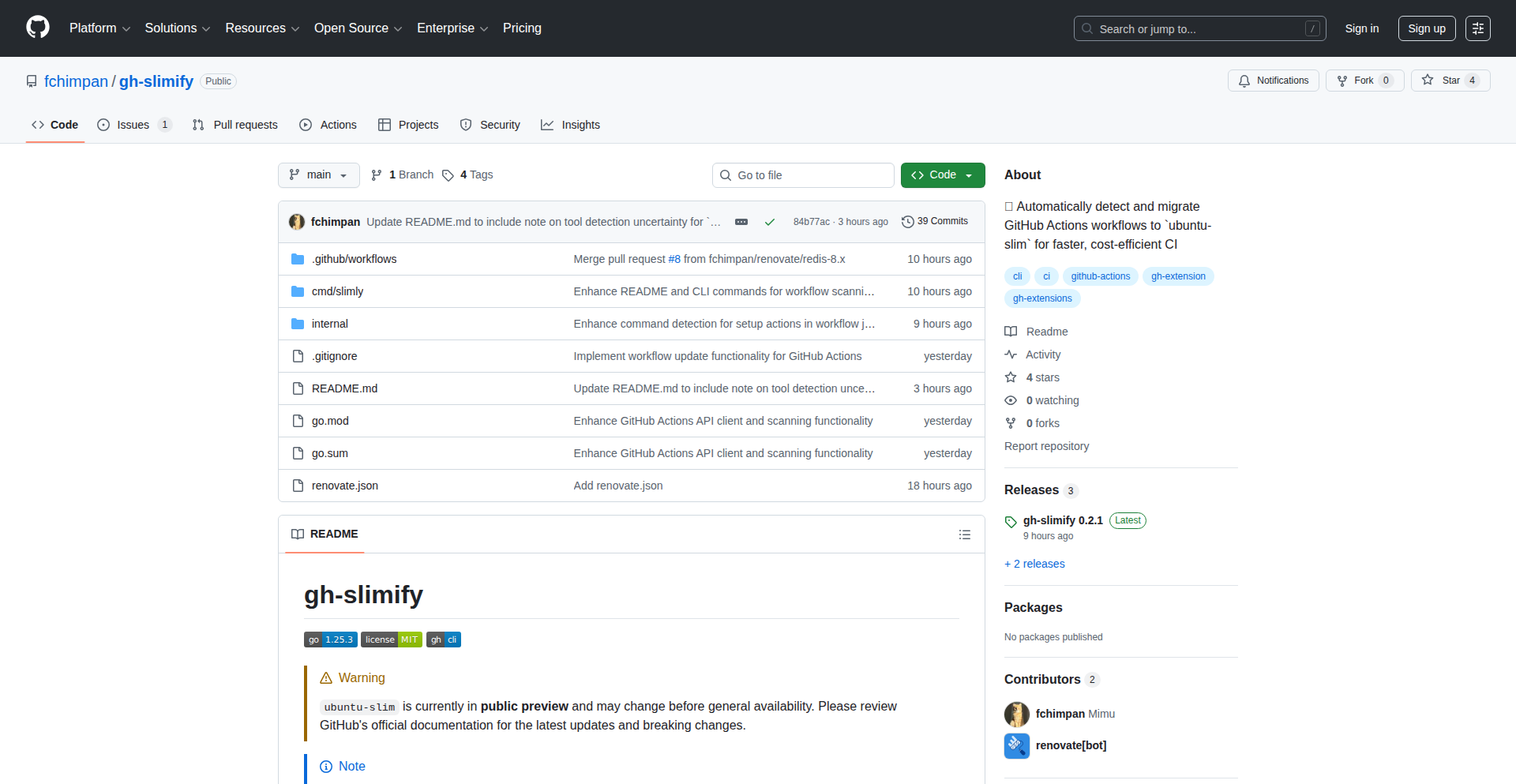

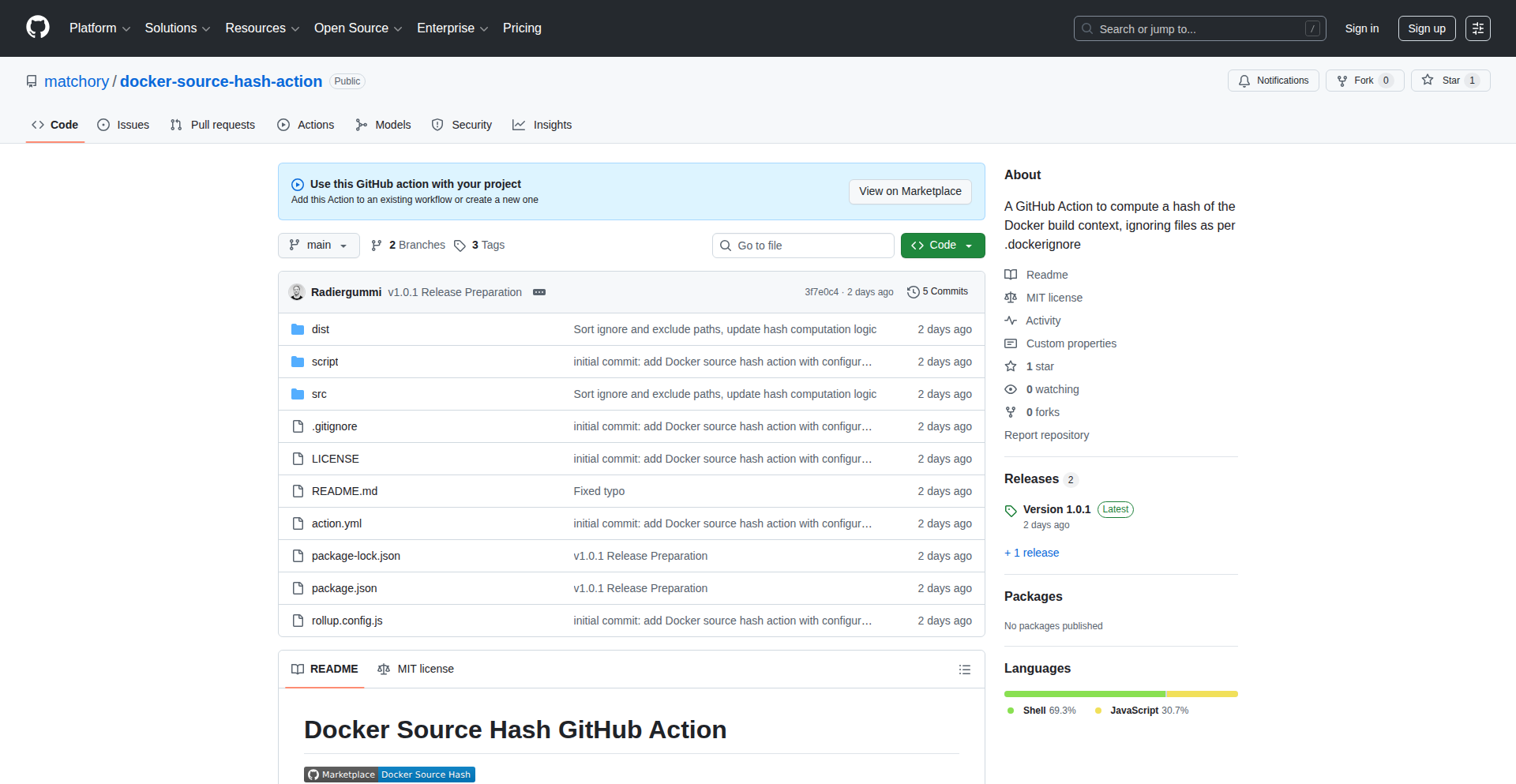

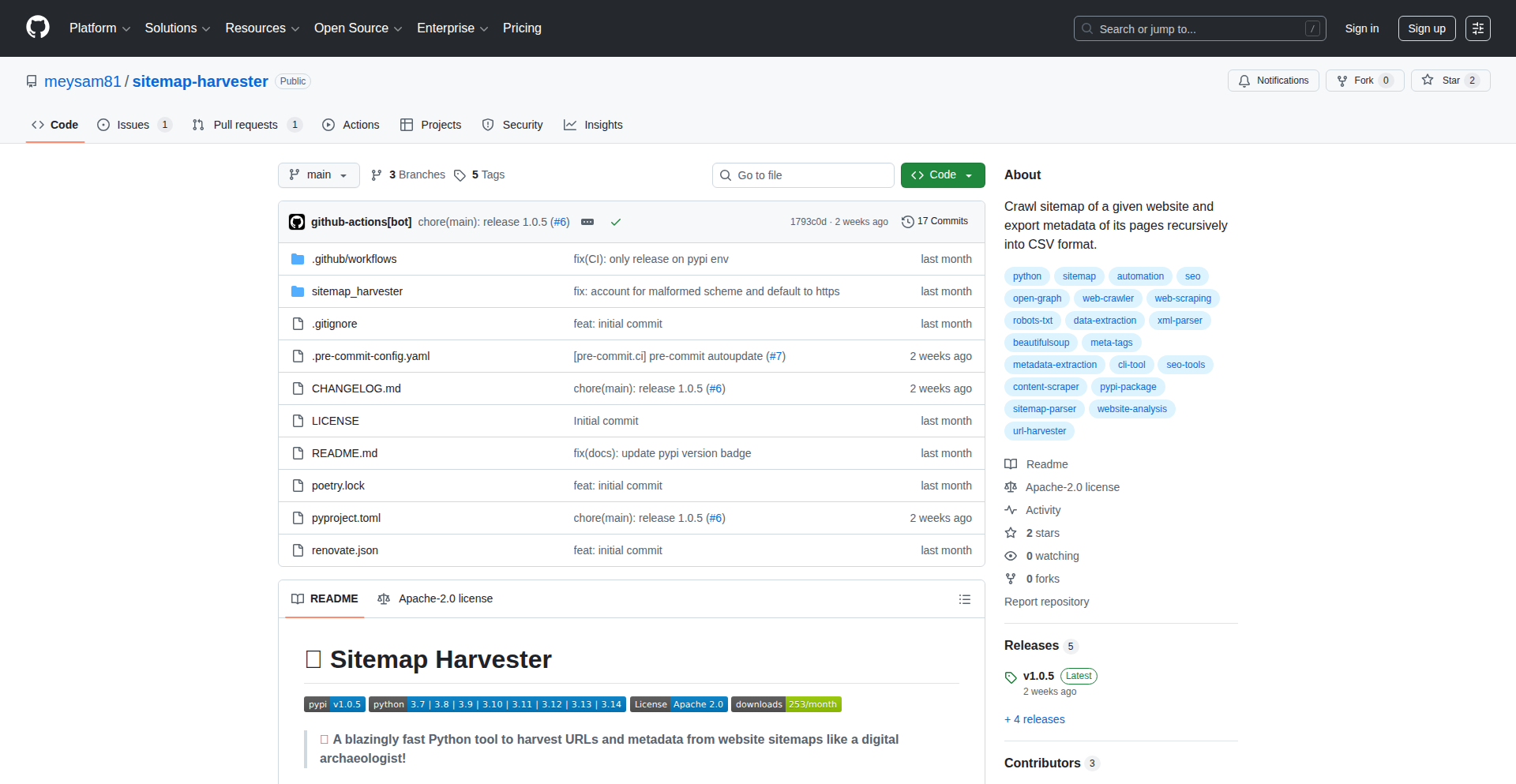

GH-Slimify: Action Cost Optimizer

Author

r4mimu

Description

GH-Slimify is a GitHub CLI extension designed to streamline the migration of your GitHub Actions workflows to the more cost-effective `ubuntu-slim` runners. It automates the tedious process of checking for compatibility issues, identifying necessary command adjustments, and safely updating your workflows, significantly reducing manual effort and potential costs.

Popularity

Points 5

Comments 0

What is this product?

GH-Slimify is a command-line tool that acts as an add-on to the GitHub CLI. Its core technical innovation lies in its automated analysis of GitHub Actions workflow files. It parses YAML configurations, specifically looking for patterns that are incompatible with the `ubuntu-slim` environment, such as reliance on Docker containers, specific system services, or pre-installed commands that are absent in the leaner `ubuntu-slim` image. The tool uses static analysis techniques to detect these potential migration blockers, offering insights into what needs to be changed and even automatically applying safe updates to compatible jobs. So, what does this mean for you? It means you can save money on your GitHub Actions usage without spending hours manually checking each workflow, ensuring a smooth transition to a cheaper runner option.

How to use it?

Developers can integrate GH-Slimify by first installing the GitHub CLI and then adding the GH-Slimify extension using a simple command: `gh extension install fchimpan/gh-slimify`. Once installed, you can scan your current workflows for potential `ubuntu-slim` migration issues by running `gh slimify`. To automatically update workflows where jobs are deemed safe for migration, you can use the command `gh slimify fix`. This allows for a progressive and safe adoption of the cost-saving measures. The primary use case is for anyone running GitHub Actions that aims to optimize their CI/CD spending. So, how does this benefit you? It provides a straightforward, automated way to reduce your cloud spending related to CI/CD without compromising your existing build processes.

Product Core Function

· Workflow Analysis for Ubuntu-Slim Compatibility: Scans GitHub Actions workflow YAML files to identify potential issues when migrating to `ubuntu-slim` runners, such as Docker usage, required services, or specific command dependencies. This helps developers understand migration blockers. So, what's the value for you? It gives you a clear picture of what needs to be addressed before you make the switch, saving you debugging time.

· Incompatible Pattern Detection: Automatically flags patterns within workflows that are known to cause problems on `ubuntu-slim` runners, like `container` or `services` directives. This proactive detection prevents unexpected failures. So, what's the value for you? It stops potential pipeline failures before they happen, ensuring your builds remain reliable.

· Missing Command Identification: Checks for commands or tools that might be implicitly relied upon in the default `ubuntu-latest` runner but are not present in the stripped-down `ubuntu-slim` environment. So, what's the value for you? It helps you identify and install necessary dependencies, preventing runtime errors.

· Automated Safe Job Updates: Provides functionality to automatically update workflow jobs that are determined to be safe for migration to `ubuntu-slim`, without introducing breaking changes. So, what's the value for you? It allows you to quickly and safely implement cost-saving changes for a portion of your workflows.

· GitHub CLI Integration: Seamlessly integrates with the existing GitHub CLI, leveraging its powerful features and making the tool accessible to developers already using GitHub's command-line interface. So, what's the value for you? It means you don't need to learn a new tool; it fits into your existing development workflow.
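The extension's source is not reproduced in the post. The Python sketch below only illustrates the two steps described above: flag jobs that declare `container` or `services`, then rewrite `runs-on: ubuntu-latest` to `ubuntu-slim` for jobs with no blockers. It is a line-based approximation that assumes conventional 2-space indentation, not a real YAML parser. It also does not attempt GH-Slimify's missing-command check, and all function names are hypothetical.

```python
import re

# Job-level keys the post names as blockers for `ubuntu-slim`.
BLOCKING_KEYS = {"container", "services"}
JOB_HEADER = re.compile(r"^  ([A-Za-z0-9_-]+):\s*$")  # job id at 2-space indent
JOB_KEY = re.compile(r"^    ([A-Za-z_-]+):")          # job-level key at 4-space indent
RUNS_ON_LATEST = re.compile(r"^    runs-on:\s*ubuntu-latest\s*$")


def scan_jobs(workflow_text):
    """Map each job id to the blocking keys it declares (empty list = candidate)."""
    jobs, current, in_jobs = {}, None, False
    for line in workflow_text.splitlines():
        if re.match(r"^jobs:\s*$", line):
            in_jobs = True
            continue
        if in_jobs and re.match(r"^\S", line):
            in_jobs = False  # another top-level key ends the jobs block
        if not in_jobs:
            continue
        if m := JOB_HEADER.match(line):
            current = m.group(1)
            jobs[current] = []
        elif current and (m := JOB_KEY.match(line)) and m.group(1) in BLOCKING_KEYS:
            jobs[current].append(m.group(1))
    return jobs


def migrate(workflow_text, jobs):
    """Rewrite `runs-on: ubuntu-latest` to `ubuntu-slim` only in jobs without blockers."""
    out, current, in_jobs = [], None, False
    for line in workflow_text.splitlines():
        if re.match(r"^jobs:\s*$", line):
            in_jobs = True
        elif in_jobs and re.match(r"^\S", line):
            in_jobs = False
        elif in_jobs and (m := JOB_HEADER.match(line)):
            current = m.group(1)
        if in_jobs and jobs.get(current) == [] and RUNS_ON_LATEST.match(line):
            line = "    runs-on: ubuntu-slim"
        out.append(line)
    return "\n".join(out)


workflow = """name: ci
on:
  push:
jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
      - run: make lint
  integration:
    runs-on: ubuntu-latest
    services:
      postgres:
        image: postgres:16
    steps:
      - run: make test
"""
jobs = scan_jobs(workflow)
print(jobs)  # {'lint': [], 'integration': ['services']}
migrated = migrate(workflow, jobs)
print(migrated.count("ubuntu-slim"))  # 1
```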

Product Usage Case

· A developer with multiple GitHub Actions workflows wants to reduce their monthly bill. They use GH-Slimify to scan all their workflows and identify that several jobs use Docker. GH-Slimify points out the specific `container` entries and suggests alternative approaches if possible, or flags them as requiring manual intervention. So, how does this help? It allows the developer to target specific workflows for optimization, potentially removing the container dependency (for example, by installing the needed tools directly in a job step) so that those jobs can run on `ubuntu-slim` and save money.

· A CI/CD engineer manages a large GitHub Actions setup. Before migrating to `ubuntu-slim`, they run `gh slimify fix`. The tool automatically updates 80% of their workflows to use the leaner runner, as these jobs were determined to be straightforward and lacked dependencies on services or complex container setups. The remaining 20% are flagged for manual review due to specific service requirements. So, how does this help? It drastically speeds up the migration process by automating the easy wins, freeing up the engineer's time to focus on the more complex workflows.

· A small open-source project maintains its CI pipeline on GitHub Actions. They are looking for ways to minimize operational costs. They install GH-Slimify and run a scan. The tool identifies that one of their build jobs relies on a specific command-line utility that isn't available by default on `ubuntu-slim`. GH-Slimify clearly lists this missing dependency, allowing the project maintainer to add the necessary installation step to their workflow. So, how does this help? It prevents potential build failures due to missing tools and ensures a smooth, cost-effective CI setup for the project.

13

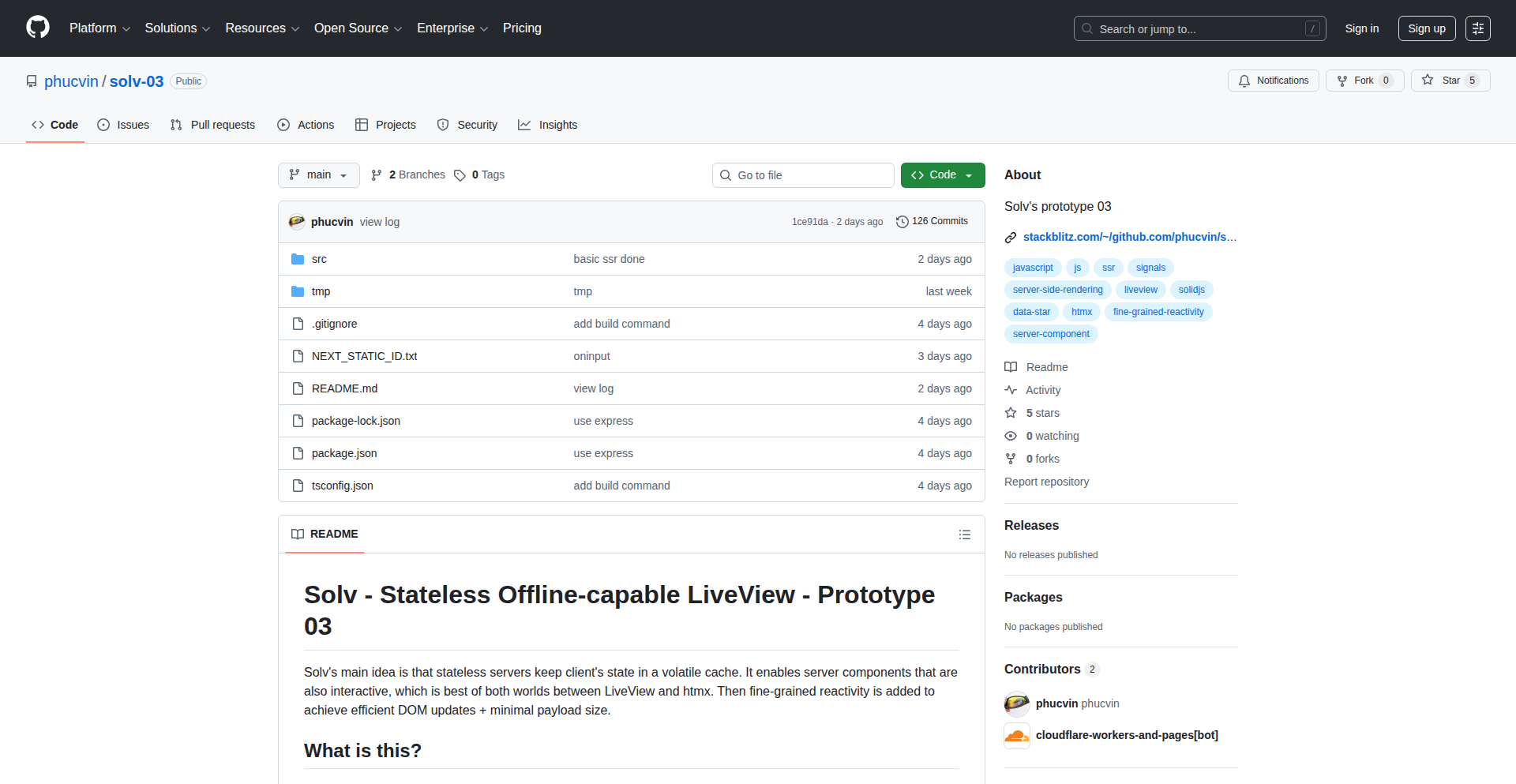

Solv: Reactive Server Components with Stateless Agility

Author

phucvin

Description

Solv is a prototype that fuses the strengths of htmx, LiveView, and SolidJS to create interactive server components. It tackles the challenge of building server-rendered applications with minimal client-side rehydration cost and the ability to work offline. The core innovation lies in its stateless server approach, where client state is held in a volatile cache, enabling server components that are both responsive and capable of handling complex interactions without constant server connections. This translates to faster initial loads, a seamless user experience even with intermittent connectivity, and simpler development by eliminating the need for explicit API endpoints for many operations. It's a powerful blend of server-side rendering efficiency and client-side interactivity, offering the best of both worlds.

Popularity

Points 3

Comments 1

What is this product?

Solv is a novel framework that combines technologies like htmx, LiveView, and SolidJS to build web applications. Its main technical innovation is a stateless server architecture. Imagine your server doesn't need to remember everything about each user all the time. Instead, it keeps the important, temporary information about what's happening on the user's screen in a fast, short-term memory (a volatile cache). This allows the server to send back fully formed, interactive components to the user's browser. When the user interacts with these components, the server can process the changes quickly and send back only the necessary updates to the screen. This approach means you get the benefits of server-side rendering (fast initial load) and interactive components (like you'd get with JavaScript frameworks), but with significantly reduced complexity and a lighter load on your server. It's like having a super-efficient waiter who can serve you instantly and also remember your immediate preferences without needing to file a massive report.

How to use it?

Developers can leverage Solv by integrating its core principles into their web projects. Instead of building separate APIs for every interactive element, developers can define server components that handle rendering and user interactions directly. For instance, when a user clicks a button to add an item to a cart, the server component can handle the logic, update the display, and send back the minimal changes needed, all without the browser needing to make a separate API call. This can be particularly useful for building dynamic dashboards, e-commerce sites, or any application where real-time updates and rich user interactions are key. Solv's design also aims to simplify offline capabilities, allowing users to interact with the application even when their internet connection is spotty, with changes syncing once connectivity is restored. Integration might involve setting up Solv's runtime on a server (like Cloudflare Workers) and defining server-rendered components that react to user input.

Product Core Function

· Stateless Server with Volatile Cache: Allows servers to handle stateful-like interactions without maintaining persistent session data for every user, leading to better scalability and resilience. This means your application can handle more users smoothly and recover faster from issues.

· Interactive Server Components: Enables server-defined components that can be updated and interacted with directly by the server, merging the benefits of server-side rendering and client-side interactivity without heavy JavaScript on the client. This makes your application feel snappy and responsive without bogging down the user's device.

· Server-Side Rendering (SSR) with Near-Zero Rehydration Cost: Achieves fast initial page loads by rendering content on the server and then efficiently updating the client-side DOM with minimal JavaScript overhead for rehydration. You get super-fast initial loading times, so users don't have to wait long to see your content.

· No Explicit API Endpoints for Many Operations: Simplifies development by allowing server components to directly read from the database and update clients, reducing the need for boilerplate API code. You can focus more on building features and less on managing separate API layers.

· Offline Capability and Later Sync: Supports client-side interactions and state updates even when offline, with the ability to synchronize changes with the server once connectivity is restored. This ensures your application remains usable even with unreliable internet, improving the user experience.

· Fine-Grained Reactivity and Minimal Payload Updates: Efficiently updates only the necessary parts of the DOM with small data payloads, reducing bandwidth usage and improving perceived performance. Your application feels faster because it only sends and processes the absolute minimum information needed.
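The "stateless server with volatile cache" combination can be illustrated with a small sketch: per-session view state lives in a cache that may lose entries at any time, and a miss is always recoverable by rebuilding from durable storage. The class, function names, and TTL policy below are hypothetical; they show the general pattern, not how Solv implements it.

```python
from __future__ import annotations

import time
from typing import Callable


class VolatileCache:
    """In-memory cache whose entries may vanish (TTL expiry or process restart)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[float, object]] = {}

    def get(self, key: str):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: behave exactly like a miss
            return None
        return value

    def put(self, key: str, value) -> None:
        self._entries[key] = (self.clock() + self.ttl, value)


def load_view_state(
    cache: VolatileCache, session_id: str, rebuild: Callable[[str], dict]
) -> dict:
    """Use cached state when present; otherwise rebuild it from durable storage.

    Because every miss is recoverable, correctness never depends on the cache,
    which is what lets the server itself stay stateless.
    """
    state = cache.get(session_id)
    if state is None:
        state = rebuild(session_id)  # hypothetical: e.g. re-query the database
        cache.put(session_id, state)
    return state
```

The design choice is that the cache is purely an optimization: losing it costs a rebuild, never data, so servers can be restarted or scaled out freely.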

Product Usage Case

· Building a real-time dashboard where new data streams in and updates charts and tables without full page reloads, improving data visualization responsiveness. This solves the problem of laggy dashboards and ensures users see the most up-to-date information instantly.

· Developing an e-commerce product listing page with dynamic filtering and sorting that directly updates the displayed items based on user selections, without requiring complex client-side routing or API calls for every filter change. This makes shopping online smoother and faster by instantly reflecting search and filter choices.

· Creating an interactive form where server-side validation provides immediate feedback to the user as they type, and submission triggers server-side processing without noticeable delays. This solves the frustration of submitting a form only to find errors, providing a more guided and efficient input experience.

· Implementing a blog or content management system where new posts or comments can be added and displayed in real-time without requiring users to refresh their browser, enhancing community engagement. This makes a website feel more alive and interactive, encouraging more user participation.

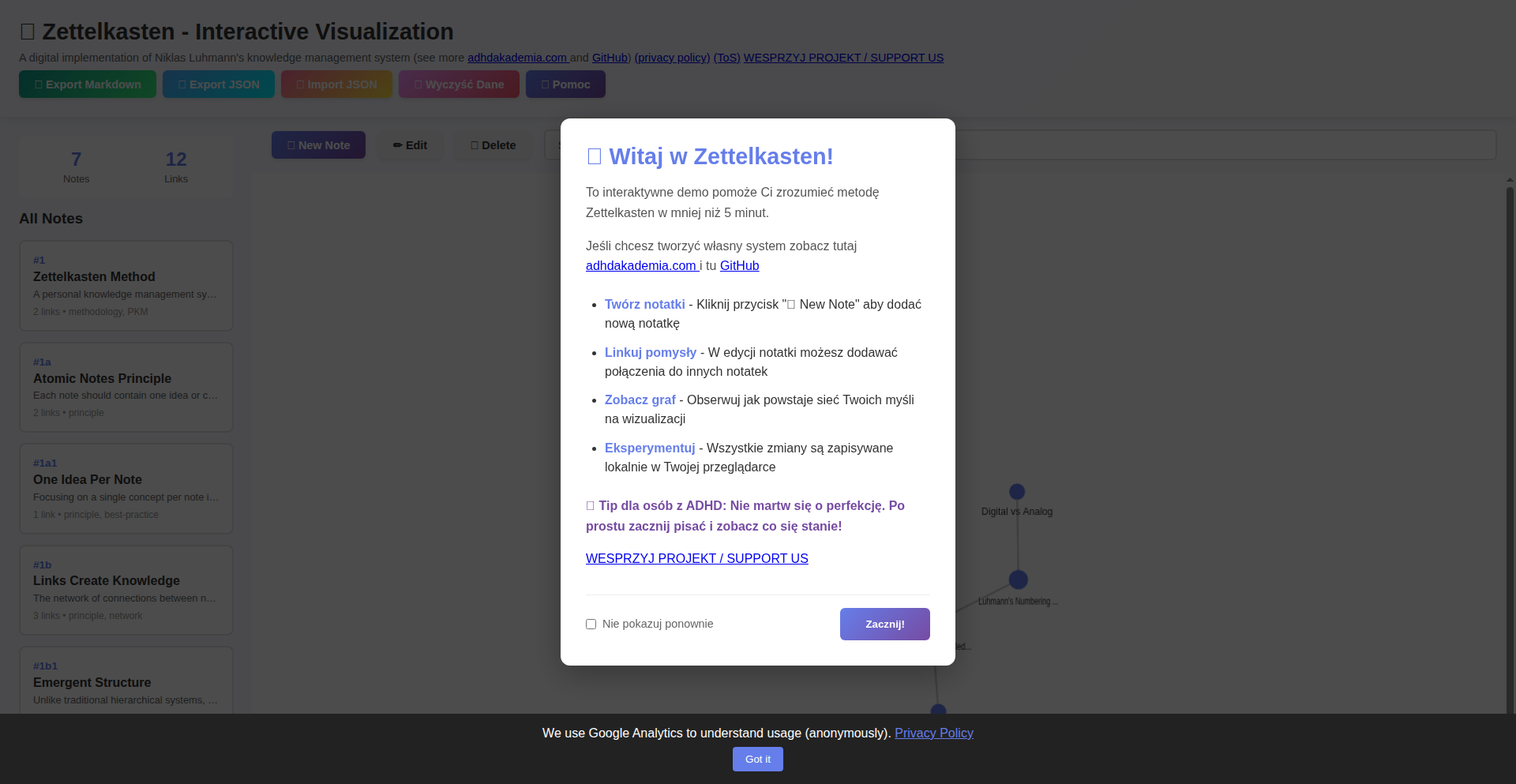

14

Zettelkasten Interactive: ADHD-Friendly Knowledge Weaver

Author

SlaWisni73

Description

This project is an ultra-lightweight (60KB) interactive Zettelkasten knowledge management tool designed with ADHD brains in mind. It focuses on a minimalist, visually intuitive interface to combat information overload and promote focused idea connection, offering a novel approach to note-taking for those who struggle with traditional, dense systems.

Popularity

Points 3

Comments 1

What is this product?

This project is a web-based Zettelkasten note-taking application. Zettelkasten, at its core, is a method for managing personal knowledge by creating small, atomic notes (zettel) and linking them together. The innovation here is its extreme size optimization (60KB) and a design tailored for individuals with ADHD. This means a focus on reducing visual clutter, offering clear pathways for idea association, and employing techniques that make learning and recall more engaging, potentially through subtle animations or interactive visualizations that help maintain attention and facilitate the discovery of connections between ideas. The core technical idea is to build a highly performant, distraction-free environment that encourages spontaneous thought and reinforces memory through interconnectedness, all without the bloat of larger applications.
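As a rough illustration of the atomic-note-plus-links model, the sketch below represents a note and extracts the notes it links to. The `[[note-id]]` wiki-link syntax is an assumption borrowed from common note-taking tools; the project's actual link format and data model are not described.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Assumed link syntax: [[note-id]] (hypothetical, not confirmed for this project).
LINK_PATTERN = re.compile(r"\[\[([^\[\]]+)\]\]")


@dataclass(frozen=True)
class Zettel:
    note_id: str
    text: str

    def links(self) -> list[str]:
        """Return ids referenced by [[...]] links, in order, without duplicates."""
        seen: list[str] = []
        for match in LINK_PATTERN.finditer(self.text):
            target = match.group(1).strip()
            if target not in seen:
                seen.append(target)
        return seen
```

For example, a note reading "Timeboxing helps [[attention]]; see [[dopamine]]" links to `attention` and `dopamine`. Keeping notes small and links explicit is what lets the connections form a navigable network.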

How to use it?

Developers can use this project as a foundation for building their own personal knowledge management systems or as a starting point for a productivity tool that prioritizes mental clarity. It can be integrated into existing web applications as a module for note-taking or knowledge organization. The lightweight nature makes it ideal for embedding in low-resource environments or for projects where fast loading times are critical. For example, a developer building a personal blog could integrate this to manage their article ideas and research notes directly within their site, allowing for seamless linking and idea generation without leaving their writing environment. Its simple architecture also makes it easy to extend with custom features.

Product Core Function

· Minimalist Interface: Provides a clean, uncluttered user experience that reduces cognitive load, making it easier for users, especially those with ADHD, to focus on their thoughts and notes. This is presumably achieved through careful CSS design and a lean HTML structure that reduce visual distractions and improve readability.

· Interconnected Notes (Zettelkasten Linking): Enables users to create links between individual notes, forming a network of knowledge. This core Zettelkasten functionality allows for non-linear thinking and discovery of emergent connections between ideas, enhancing understanding and memory recall. The implementation likely involves a simple, efficient way to reference and display links within the note content.

· Lightweight Performance: Engineered to be extremely small (60KB), ensuring rapid loading and smooth operation even on slow connections or less powerful devices. Keeping a complete application this small suggests careful JavaScript and asset optimization, such as minification and avoiding heavy dependencies, which keeps the experience fast and responsive.

· Interactive Visualizations (Potential): May include subtle interactive elements or visualizations to make the process of connecting ideas more engaging and less passive. While not explicitly detailed, such features would aim to maintain user focus and aid in pattern recognition within the knowledge graph, possibly using simple SVG animations or declarative rendering techniques.
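The linking and "discovery of emergent connections" described above can be sketched as two small graph operations: inverting outgoing links into backlinks, and a breadth-first search that surfaces notes a few hops away. This is a generic technique offered as an assumption; the project's own implementation is not documented, and the function names are hypothetical.

```python
from __future__ import annotations

from collections import deque


def build_backlinks(outgoing: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert outgoing links so each note knows which notes point to it."""
    backlinks: dict[str, set[str]] = {note: set() for note in outgoing}
    for source, targets in outgoing.items():
        for target in targets:
            backlinks.setdefault(target, set()).add(source)
    return backlinks


def related_notes(
    outgoing: dict[str, list[str]], start: str, max_hops: int = 2
) -> dict[str, int]:
    """Breadth-first search over links in both directions.

    Returns each reachable note (excluding `start`) with its hop distance,
    one simple way to surface indirect connections between ideas.
    """
    backlinks = build_backlinks(outgoing)
    distances = {start: 0}
    queue = deque([start])
    while queue:
        note = queue.popleft()
        if distances[note] == max_hops:
            continue  # do not expand beyond the hop limit
        neighbours = set(outgoing.get(note, [])) | backlinks.get(note, set())
        for other in neighbours:
            if other not in distances:
                distances[other] = distances[note] + 1
                queue.append(other)
    del distances[start]
    return distances
```

Following links in both directions matters for a Zettelkasten: a note you wrote later that points back at an older idea is often the most useful connection to rediscover.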

Product Usage Case

· Research Note-Taking: A researcher can use this tool to manage vast amounts of literature reviews and experimental notes. By linking related concepts, findings, and hypotheses, they can quickly navigate their knowledge base and identify potential research gaps or connections they might have otherwise missed, leading to more insightful discoveries.

· Creative Writing and Idea Generation: A writer can use it to brainstorm plot points, character details, and thematic elements for a novel. Linking ideas creates a rich tapestry of connections, helping them to develop a cohesive narrative and explore different story arcs more effectively, turning scattered thoughts into a structured creative output.

· Personal Knowledge Management: An individual can use this tool to organize their learning from books, articles, and online courses. The interconnected notes act as a personal wiki, allowing them to revisit and reinforce learned concepts by seeing how they relate to other knowledge they've acquired, leading to deeper and more lasting comprehension.

· Development of Productivity Tools: Developers can fork this project to build specialized productivity applications, perhaps for managing project tasks, team knowledge, or client information, where a fast, unobtrusive, and highly interconnected note-taking feature is paramount for efficient workflow and collaboration.

15

WatchCode Agent

Author

Void_

Description

This project, 'WatchCode Agent', introduces a novel way to initiate coding tasks by leveraging the Apple Watch. It breaks down the barrier between on-the-go thinking and actual code execution by allowing users to trigger development workflows directly from their wrist. The core innovation lies in bridging the gap between a personal wearable device and a developer's remote or local coding environment, enabling a more fluid and responsive development process.

Popularity

Points 4

Comments 0

What is this product?

WatchCode Agent is a system that allows you to start pre-defined coding tasks or scripts directly from your Apple Watch. Imagine having an idea for a quick script while commuting, and instead of waiting to get back to your computer, you can simply tap on your watch to start it. It works by establishing a secure communication channel between your Apple Watch and your computing device (like a laptop or server). You can define various 'agents' or scripts beforehand, such as running a specific test suite, deploying a small update, or fetching data. When you trigger one of these agents on your watch, it sends a command to your computer to execute the corresponding task. This is innovative because it extends the reach of developer tools into a context where they were previously inaccessible, enabling immediate action on coding-related tasks.

How to use it?

Developers can integrate WatchCode Agent by setting up a small server or service on their primary development machine. This service will listen for commands sent from the Apple Watch. On the Apple Watch, a companion app will be used to select and trigger these pre-configured agents. For example, you might set up an agent on your machine that runs 'npm test' for a specific project. You would then configure this agent within the WatchCode Agent system. On your watch, you would see an option like 'Run Project X Tests.' Tapping this would send a signal to your machine, which would then execute the 'npm test' command. The value is in being able to initiate these actions without needing to physically interact with your computer, making development workflows more flexible.
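On the machine side, the "pre-configured agents" idea amounts to an allowlist that maps an agent id to a fixed command. The sketch below is a hypothetical registry and runner, not WatchCode Agent's actual code; it runs commands as argument lists (no shell) and refuses any id that was not registered in advance.

```python
from __future__ import annotations

import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    label: str               # text a watch app might display, e.g. "Run Project X Tests"
    argv: list[str]          # fixed command; a list (no shell) avoids injection
    cwd: str | None = None   # working directory for the command, if any


def run_agent(
    registry: dict[str, Agent], agent_id: str, timeout: float = 600
) -> tuple[int, str]:
    """Run a pre-registered agent by id; unknown ids are rejected, never executed."""
    agent = registry.get(agent_id)
    if agent is None:
        raise KeyError(f"unknown agent: {agent_id}")
    result = subprocess.run(
        agent.argv, cwd=agent.cwd, capture_output=True, text=True, timeout=timeout
    )
    return result.returncode, result.stdout + result.stderr
```

The "Run Project X Tests" example would be a registry entry whose `argv` is `["npm", "test"]` and whose `cwd` points at the project directory. The watch only ever sends an id, so it cannot inject arbitrary commands.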

Product Core Function

· Remote Agent Triggering: This allows developers to initiate pre-configured scripts or commands on their development machine from their Apple Watch. The value is in enabling quick action and reducing downtime when inspiration strikes or a quick check is needed.

· Customizable Agent Definitions: Users can define specific commands, scripts, or even small programs to be executed as 'agents.' This provides flexibility to tailor the system to individual development workflows and project needs.

· Secure Command Transmission: The system prioritizes secure communication between the watch and the server to ensure that only authorized commands are executed. This is crucial for maintaining the integrity of development environments.

· Contextual Action Initiation: By allowing actions from the watch, it enables developers to act on ideas or immediate needs even when away from their primary workstation, fostering a more dynamic development cycle.
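One common way to get the "only authorized commands are executed" property is to sign each command with a shared secret and reject stale messages. The message format, field names, and 30-second window below are hypothetical; the project does not say which mechanism it uses. This sketch uses HMAC-SHA256 from Python's standard library.

```python
from __future__ import annotations

import hashlib
import hmac
import json
import time


def sign_command(secret: bytes, agent_id: str, timestamp: int | None = None) -> dict:
    """Build a signed command as a watch-side client might send it (hypothetical format)."""
    ts = int(time.time()) if timestamp is None else timestamp
    payload = json.dumps({"agent": agent_id, "ts": ts}, sort_keys=True)
    signature = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_command(
    secret: bytes, message: dict, now: int | None = None, max_age: int = 30
) -> str:
    """Return the agent id if the signature is valid and the message is fresh; else raise."""
    expected = hmac.new(secret, message["payload"].encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, message["signature"]):
        raise PermissionError("bad signature")
    body = json.loads(message["payload"])
    current = int(time.time()) if now is None else now
    if abs(current - body["ts"]) > max_age:
        raise PermissionError("stale command")
    return body["agent"]
```

The timestamp window narrows, but does not eliminate, replay attacks; a production design would also remember recently seen messages (or use nonces) and send everything over an encrypted channel.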

Product Usage Case

· During a commute, a developer gets an idea for a small refactoring. They use WatchCode Agent on their Apple Watch to trigger a script that creates a new Git branch and a placeholder file for the refactoring. This allows them to capture the idea immediately and set up the initial structure without needing to open their laptop.

· A developer is in a meeting and needs to quickly check whether a critical build is passing. From their watch, they use WatchCode Agent to trigger a CI/CD status check and receive a quick status update without disrupting the meeting or needing to access their computer.

· After deploying a feature, a developer wants to run a smoke test to ensure basic functionality. They use WatchCode Agent to trigger a pre-defined smoke test script on a staging server immediately after deployment, providing rapid validation.

16

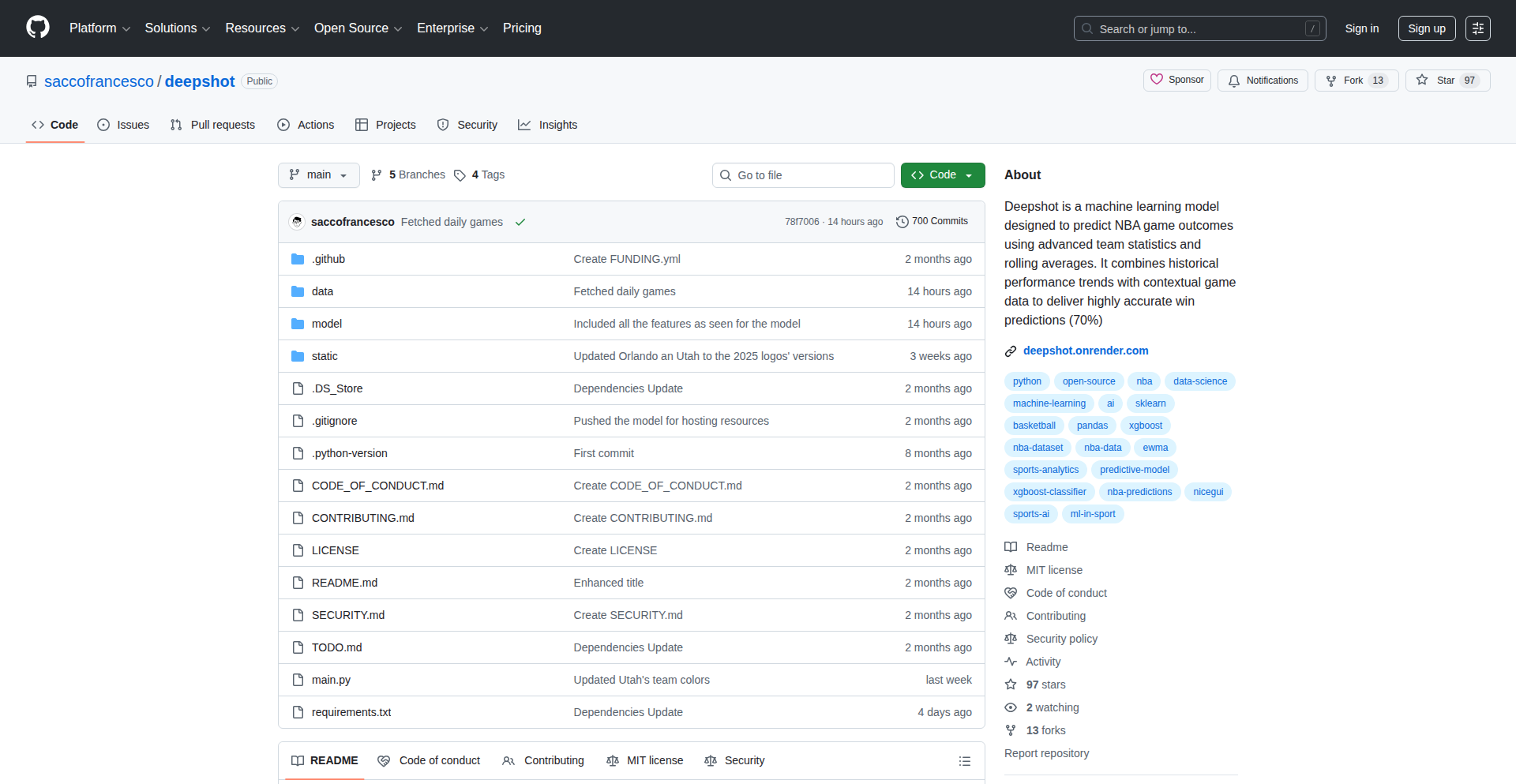

DeepShot - NBA Momentum Predictor

Author

Fr4ncio

Description

DeepShot is a machine learning model that predicts NBA game outcomes with a reported accuracy of about 70%. It combines rolling statistics, historical performance, and recent team momentum. Rather than relying on simple averages or betting odds, DeepShot uses Exponentially Weighted Moving Averages (EWMA), which weight recent games more heavily, to capture a team's current form and momentum. Users can visually inspect the statistical drivers behind each prediction to see why the model favors one team over another. The project is written in Python with XGBoost, Pandas, and Scikit-learn, and has an interactive web interface built with NiceGUI. It runs locally on any operating system and uses only free, publicly available data from Basketball Reference. It is a good example of applying machine learning to a real-world problem, offering insights for sports analytics enthusiasts, machine learning practitioners, and anyone curious about algorithmic prediction.

Popularity

Points 3

Comments 1

What is this product?

DeepShot is an intelligent NBA game prediction system that uses machine learning and statistical analysis to forecast game winners. At its core, it analyzes various data points about NBA teams, including their past performance, recent game trends, and current player statistics. The innovation lies in its use of Exponentially Weighted Moving Averages (EWMA) to give more importance to recent game data, effectively capturing 'momentum' and 'current form' which are often crucial in sports. This is different from traditional methods that might just average out all historical data. The model then uses XGBoost, a powerful machine learning algorithm, to process this information and make a prediction. The output is presented in a user-friendly, interactive web application, making complex statistical insights easy to understand. So, for you, this means a way to see how advanced algorithms can analyze sports data to make informed predictions, going beyond simple guesswork.
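The difference between a plain average and an EWMA is easiest to see with numbers. The sketch below implements the standard recursive EWMA in pure Python and compares two teams with the same season average but opposite recent trends. The point-margin inputs, the `alpha` value, and the `momentum_gap` feature are hypothetical; DeepShot's actual features and smoothing parameters are not given here.

```python
from __future__ import annotations


def ewma(values: list[float], alpha: float) -> list[float]:
    """Exponentially weighted moving average in recursive form.

    s_0 = x_0 and s_t = alpha * x_t + (1 - alpha) * s_{t-1}.
    A larger alpha lets recent games dominate more. This recursion is the
    same one pandas uses for Series.ewm(alpha=alpha, adjust=False).mean().
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: list[float] = []
    for x in values:
        smoothed.append(x if not smoothed else alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def momentum_gap(home: list[float], away: list[float], alpha: float = 0.3) -> float:
    """Hypothetical matchup feature: latest home EWMA minus latest away EWMA."""
    return ewma(home, alpha)[-1] - ewma(away, alpha)[-1]
```

With `alpha=0.5`, point margins of `[-10, 0, 10]` (improving) smooth to `[-10, -5.0, 2.5]`, while `[10, 0, -10]` (declining) smooth to `[10, 5.0, -2.5]`. Both teams average 0, yet the EWMA gap is 5.0 in favor of the improving team, which is exactly the "momentum" signal a plain average throws away.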

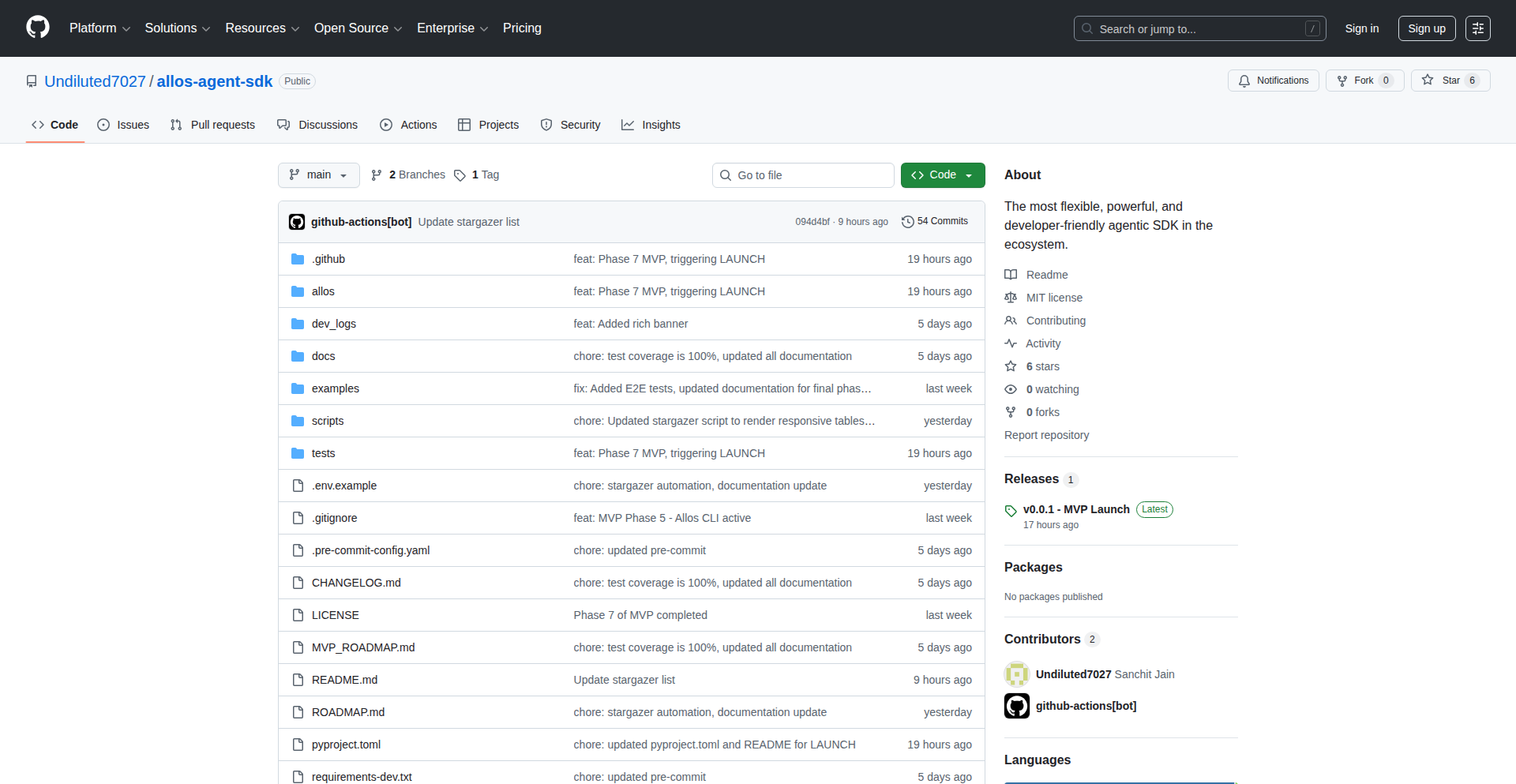

How to use it?