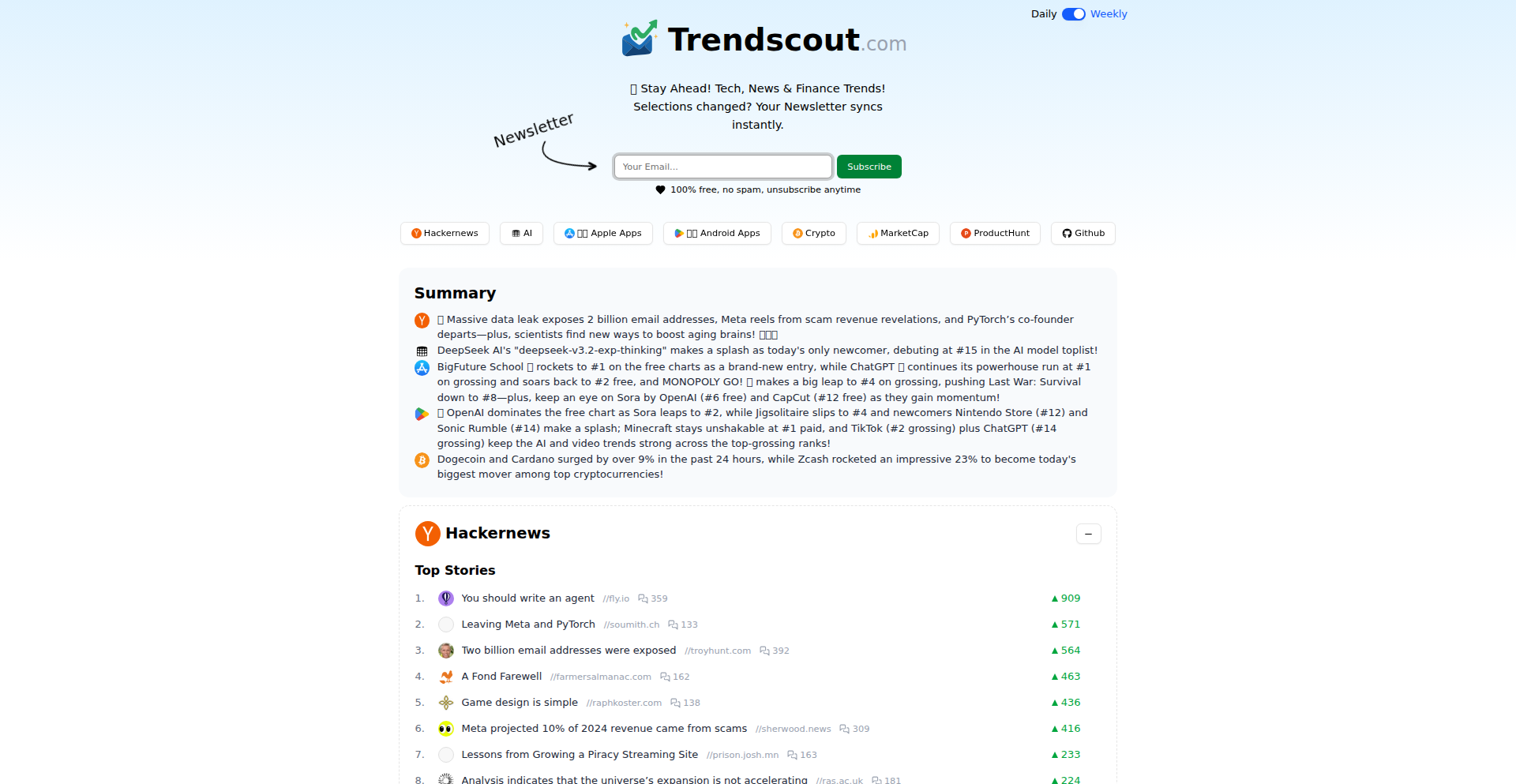

Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-07

SagaSu777 2025-11-08

Explore the hottest developer projects on Show HN for 2025-11-07. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of innovation is clearly centered around democratizing advanced capabilities. We're seeing a strong push towards making AI more accessible and useful for everyday tasks, from language learning and creative content generation to specialized security research and efficient developer workflows. The 'hacker spirit' is alive and well, with developers not just building new tools but also refining existing ones and tackling the usability challenges of complex technologies. For aspiring developers and entrepreneurs, this means there's immense opportunity in creating solutions that abstract away complexity, empower non-technical users, or solve niche problems with elegant, focused tools. The trend towards local-first and privacy-conscious applications also signals a growing demand for user control and transparency, a valuable consideration for any new venture. Don't be afraid to dive deep into a specific problem, leverage the power of open-source, and build something that genuinely solves a pain point – that's where true innovation happens.

Today's Hottest Product

Name

VoxConvo – "X but it's only voice messages"

Highlight

This project tackles the challenge of preserving authentic human communication in an era flooded with AI-generated content. By making voice the sole medium for posts and integrating real-time transcription with word-level timestamps, VoxConvo allows users to experience the emotional nuances of voice while retaining text's scannability. The innovative 'visual voice editing' feature, where clicking a word deletes that audio segment, offers an intuitive way to remove filler words and mistakes, showcasing a clever blend of audio manipulation and text-based editing. Developers can learn about efficient real-time transcription with VOSK, WebSocket streaming, and the architectural considerations for a voice-centric platform running on local hardware for MVP validation.

Popular Category

AI and Machine Learning

Developer Tools

Productivity

Web Applications

Utilities

Popular Keyword

AI

LLM

Open Source

Python

Rust

TypeScript

React

Data Analysis

Automation

Technology Trends

AI-driven Content Creation and Analysis

Enhanced Developer Productivity Tools

Privacy-Preserving and Local-First Applications

Innovative Data Handling and Storage

Interactive and Real-time Web Experiences

Voice and Audio Processing Technologies

Specialized LLM Applications

Domain and Asset Management Tools

Project Category Distribution

AI/ML Tools (25%)

Developer Utilities (20%)

Productivity & Automation (15%)

Web Applications (15%)

Data Tools (10%)

Gaming & Entertainment (5%)

Language & Education (5%)

Hardware/Embedded (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | EmojiWordCraft | 24 | 20 |
| 2 | VoxConvo: Voice-First Social | 10 | 12 |
| 3 | TestTimeDiffusion-GPU | 20 | 0 |
| 4 | CLI Universal Media Downloader | 14 | 5 |
| 5 | Pingu Unchained: The Unrestricted LLM for Risky Research | 9 | 6 |
| 6 | DomainExplorer.io: Global Domain Insights Engine | 5 | 9 |
| 7 | OpenCademy: Curated YouTube Learning Journeys | 3 | 7 |
| 8 | PolyglotFlow | 6 | 3 |
| 9 | SciFiNarrativeEngine | 7 | 2 |
| 10 | DOM Morph Master | 8 | 0 |

1

EmojiWordCraft

Author

knuckleheads

Description

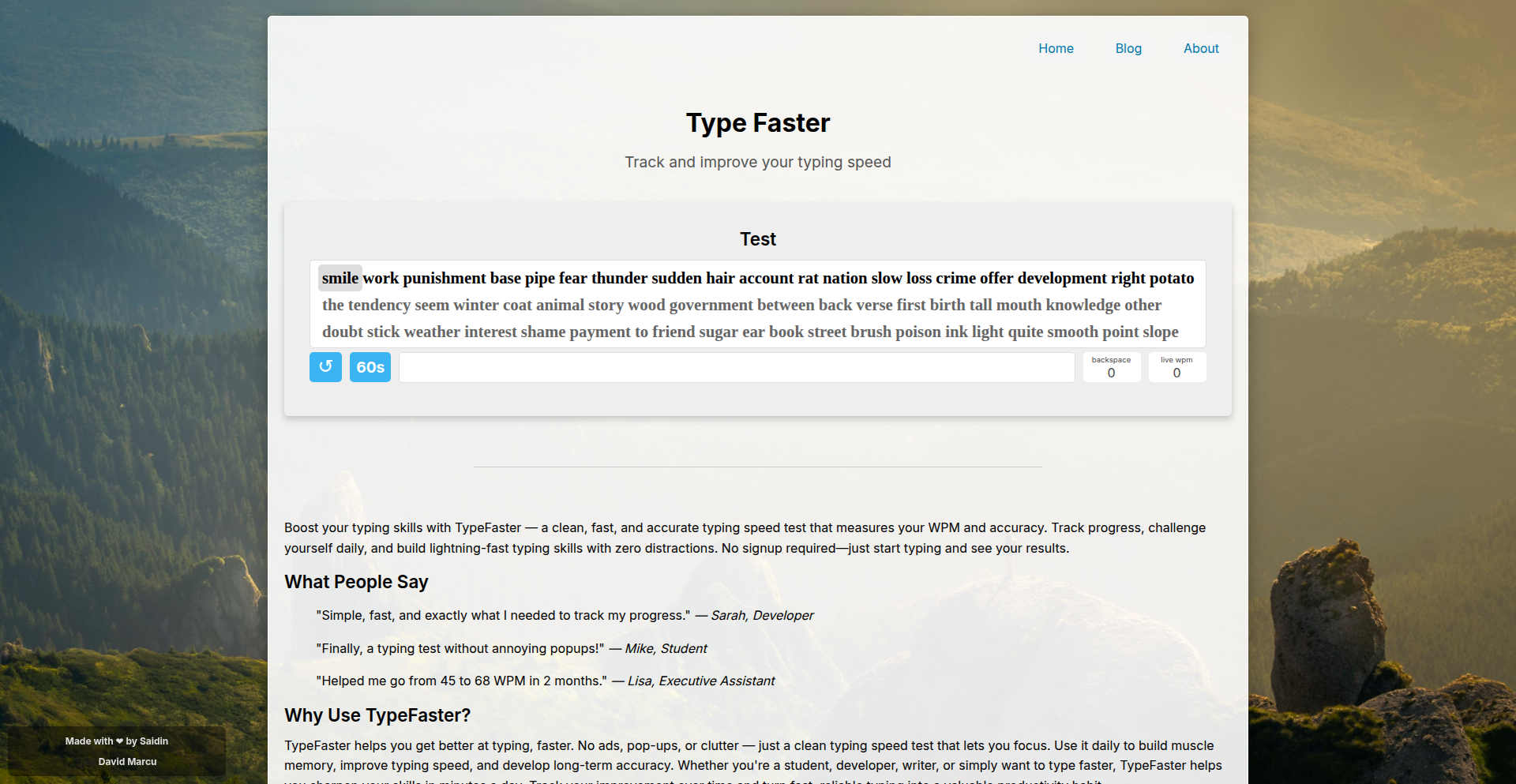

EmojiWordCraft is a daily word puzzle game designed for language learners, specifically targeting the challenges of acquiring languages like German. It cleverly combines a familiar word-finding mechanic with AI-generated emoji clues and an innovative auto-fill system, making the learning process more engaging and effective. The project tackles the frustration of language learning by offering a fun, gamified approach that provides instant feedback and contextual learning through definitions and pronunciation.

Popularity

Points 24

Comments 20

What is this product?

EmojiWordCraft is a daily word puzzle game that helps users learn new languages, like German and English. The core mechanic involves a set of seven letters and a list of words to discover. When you find a shorter word, it automatically fills into longer, related words, similar to a crossword puzzle. This is particularly effective for languages with compound words, like German. What makes it innovative is the use of AI (GPT-5) to generate three emoji clues for each word, visually representing its meaning. This provides a unique and intuitive hint system. The game also offers text and audio hints if you get stuck. It supports both German and English with new puzzles daily and includes a rich vocabulary encompassing slang and abbreviations, reflecting real-world language use.
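The auto-fill mechanic can be pictured as a substring match over the day's word list. The sketch below is only an illustration of that idea, not the game's actual code: the `autofill` function, the masked-pattern representation, and the sample German words are all assumptions made for this example.

```python
def autofill(found: str, targets: list[str]) -> dict[str, str]:
    """Reveal `found` inside every longer target word that contains it.

    Returns a mapping from target word to a masked pattern in which the
    letters covered by `found` are shown and the rest stay hidden as "_".
    """
    found = found.lower()
    revealed = {}
    for word in targets:
        lower = word.lower()
        start = lower.find(found)
        # Skip words that do not contain the found word, and the found word itself.
        if start == -1 or len(lower) == len(found):
            continue
        end = start + len(found)
        revealed[word] = "_" * start + word[start:end] + "_" * (len(word) - end)
    return revealed


# Hypothetical word list: finding "haus" partially fills two compounds.
targets = ["Haus", "Haustier", "Rathaus", "Tier"]
hints = autofill("haus", targets)
```

With this word list, `hints` maps `Haustier` to `Haus____` and `Rathaus` to `___haus`, which is roughly the crossword-like effect the description suggests for compound-heavy languages.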

How to use it?

Developers can use EmojiWordCraft as a fun and engaging tool to supplement their language learning journey. Simply visit the website and start playing the daily puzzle. The game can be integrated into educational platforms or used as a standalone learning resource. For developers interested in the technical aspects, the project showcases an interesting application of natural language processing for clue generation and a clever use of game mechanics for vocabulary acquisition. You can also suggest new words or flag issues directly within the game, contributing to its ongoing development and improvement.

Product Core Function

· Daily Word Puzzles: Provides a fresh set of challenges each day, keeping the learning experience dynamic and preventing repetition.

· Auto-fill Word Discovery: Automatically populates longer words when shorter ones are found, reinforcing word relationships and morphology, especially useful for languages like German.

· AI-generated Emoji Clues: Uses GPT-5 to create visual hints for words, offering a novel and intuitive way to understand word meanings.

· Text and Audio Hints: Offers traditional hint systems for when players are truly stuck, catering to different learning preferences.

· Language Support (German & English): Caters to learners of two major languages, with potential for expansion.

· Real-world Vocabulary Inclusion: Incorporates slang, abbreviations, and chat-speak, making the learning relevant to contemporary communication.

· Word Definitions and Pronunciation Audio: Provides crucial contextual information and aids in correct pronunciation.

· User Feedback Mechanism: Allows users to suggest words or report issues, fostering community involvement and improving the game's accuracy and comprehensiveness.

Product Usage Case

· Language Learners Struggling with German Compound Words: A learner can use EmojiWordCraft to discover shorter word components that automatically fill into larger, more complex German words, making the structure of the language easier to grasp.

· Users Needing Engaging Vocabulary Practice: A language enthusiast can play the daily puzzle, using the emoji clues to guess words and then confirming with definitions and audio, leading to more memorable vocabulary acquisition.

· Educators Seeking Supplementary Learning Tools: A teacher could recommend EmojiWordCraft to their students as a fun, interactive way to practice vocabulary outside of traditional exercises.

· Developers Interested in NLP Applications: A developer could examine the use of GPT-5 for generating creative and contextually relevant clues, inspiring ideas for their own NLP projects.

· Individuals Practicing English Slang and Abbreviations: A user can play the English version to become familiar with modern informal language used in everyday communication.

2

VoxConvo: Voice-First Social

Author

siim

Description

VoxConvo is a novel social platform that champions authenticity by making voice messages the sole content format. It combats the 'AI slop' on social media by prioritizing genuine human expression, offering voice posts with real-time, word-level transcribed text. This unique approach combines the emotional depth of spoken word with the scannability of text, enabling users to both 'hear' and 'read' content.

Popularity

Points 10

Comments 12

What is this product?

VoxConvo is a social platform where all content is delivered as voice messages, enhanced by AI-powered real-time transcriptions. The core innovation lies in its commitment to authentic communication, as opposed to AI-generated text. Each voice post is accompanied by a transcript where words highlight as they are spoken, allowing users to read along or listen. This dual-mode experience merges the emotional nuance of voice with the convenience of text, providing a richer and more trustworthy way to consume content. It tackles the problem of content overload and potential AI manipulation on existing platforms by focusing on verified human voices.

How to use it?

Developers can use VoxConvo as a blueprint for building more human-centric communication tools. The platform's architecture, which leverages TypeGraphQL, MongoDB with Atlas Search, and Redis for real-time updates, offers a scalable backend solution. For front-end integration, the real-time transcription using VOSK models via WebSockets provides a powerful feature for any application requiring immediate speech-to-text capabilities. Developers can integrate this into their own apps for features like real-time meeting summaries, interactive voice tutorials, or even accessibility tools that provide instant transcripts for audio content. The 'visual voice editing' feature, which allows deletion of audio by selecting words in the transcript, presents an innovative interaction paradigm for content creation.

Product Core Function

· Voice-first posting: Enables users to create and share content exclusively through voice messages, fostering genuine expression and combating AI-generated noise. This provides a direct channel for unfiltered emotion and personality to shine through.

· Real-time AI transcription: Automatically converts spoken words into text as they are spoken, providing immediate access to the content in a readable format. This makes audio content more accessible and discoverable, allowing users to quickly grasp the main points.

· Word-level timestamp synchronization: Links each word in the transcript to its precise location in the audio, allowing for seamless navigation. Users can click on a word to jump to that exact point in the audio, enhancing comprehension and review.

· Visual voice editing: Offers an intuitive interface to edit audio by simply deleting words from the transcript. This innovative feature allows users to easily remove filler words, mistakes, or pauses without complex audio editing software, streamlining content creation.

· Dual-mode content consumption: Users can choose to either listen to the voice message with highlighting text or read the transcript directly. This flexibility caters to different user preferences and environments, ensuring content is accessible and engaging in various situations.

· No LLM content generation: Strictly prohibits the use of large language models for content creation, ensuring all content originates from human voices and promoting authenticity. This helps users trust the information and connect with real people.
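Word-level timestamps turn "visual voice editing" into interval arithmetic: deleting a word in the transcript means dropping its time range from the audio. The following is a minimal sketch under stated assumptions, not VoxConvo's implementation. The `Word` class and `keep_segments` function are hypothetical names, and the word entries only loosely mirror the per-word start and end times a speech recognizer such as VOSK can report.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the beginning of the recording
    end: float


def keep_segments(words: list[Word], deleted: set[int], total: float) -> list[tuple[float, float]]:
    """Return the (start, end) audio ranges that survive after deleting words.

    `deleted` holds indices into `words` (assumed to be in time order);
    `total` is the clip length in seconds.
    """
    segments = []
    cursor = 0.0
    for i in sorted(deleted):
        w = words[i]
        # Keep everything between the previous cut and this word.
        if w.start > cursor:
            segments.append((cursor, w.start))
        cursor = max(cursor, w.end)
    # Keep the tail after the last deleted word.
    if cursor < total:
        segments.append((cursor, total))
    return segments


# Hypothetical transcript: the user clicks the filler word "um" to remove it.
words = [
    Word("so", 0.0, 0.3),
    Word("um", 0.4, 0.9),
    Word("hello", 1.0, 1.5),
    Word("world", 1.6, 2.1),
]
segments = keep_segments(words, deleted={1}, total=2.5)
```

The surviving ranges could then be handed to any audio tool that concatenates slices. Keeping the edit as a list of ranges, rather than rewriting the audio on every click, would also make undo straightforward.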

Product Usage Case

· For a podcasting platform, implement real-time, word-synced transcripts that allow listeners to instantly find specific segments by clicking on the text, enhancing listener engagement and content discoverability.

· In a customer support application, use VoxConvo's transcription technology to provide immediate text summaries of customer voice inquiries, allowing support agents to quickly understand issues and respond efficiently.

· For educational content creators, build interactive voice lessons where students can follow along with highlighted text and easily revisit specific explanations by clicking on the transcript, improving learning comprehension.

· As a tool for journalists or researchers, leverage the visual voice editing feature to quickly clean up interview audio by removing 'ums,' 'ahs,' and long pauses directly from the transcript, significantly speeding up the post-production process.

· Develop a team communication tool where members can leave short voice updates with auto-generated transcripts, allowing colleagues to quickly scan for important information without needing to listen to every message, improving team efficiency.

3

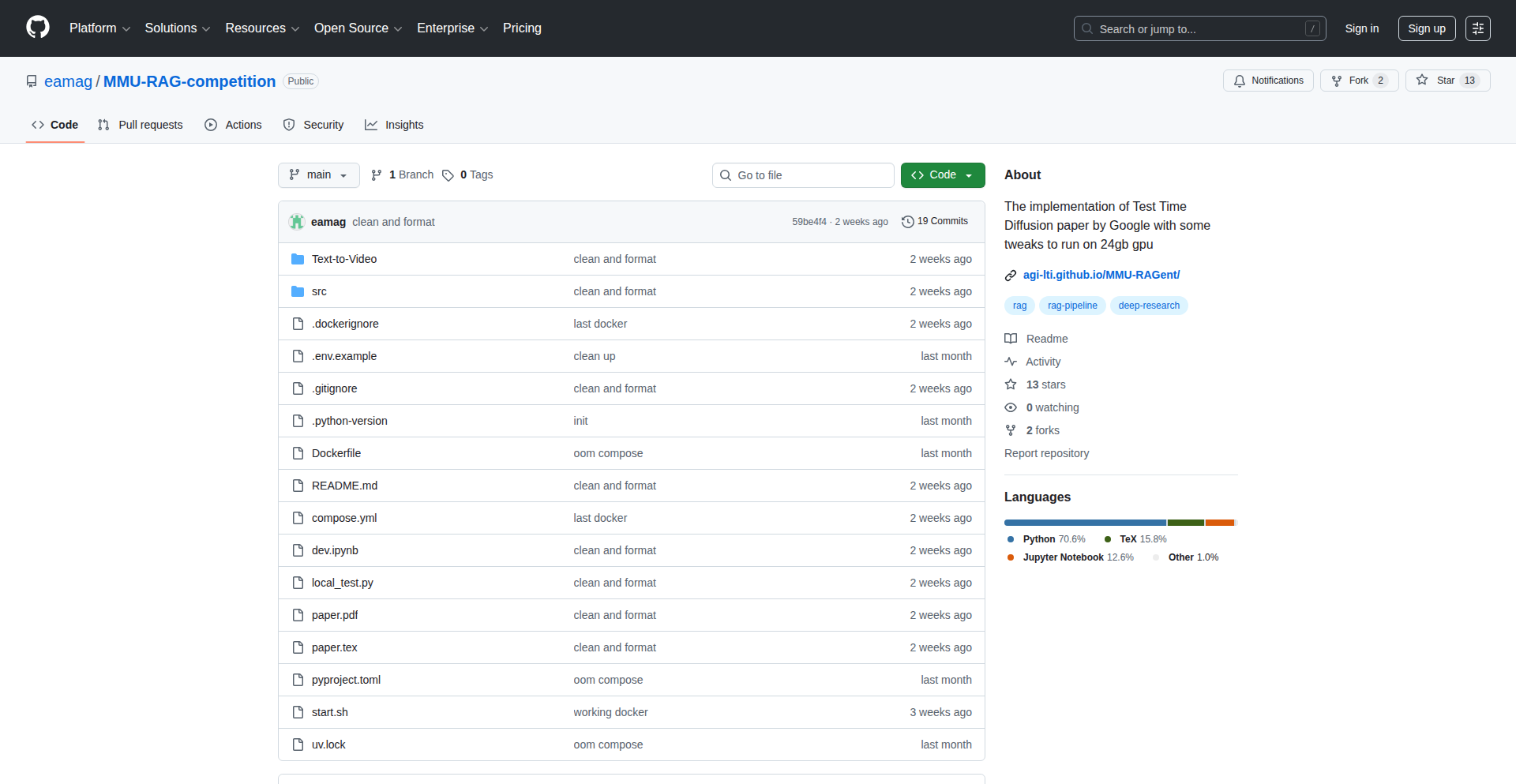

TestTimeDiffusion-GPU

Author

eamag

Description

This project is an open-source implementation of Test Time Diffusion, a technique that allows for diffusion models (like image generators) to run efficiently on consumer-grade GPUs, specifically with 24GB of VRAM. It addresses the common challenge of diffusion models requiring massive amounts of VRAM, making advanced AI image generation accessible to more developers and researchers.

Popularity

Points 20

Comments 0

What is this product?

This project is a software implementation designed to make sophisticated AI image generation models, specifically those utilizing a technique called 'diffusion,' more accessible by optimizing their memory usage. Diffusion models typically require a lot of graphics card memory (VRAM) to run. This project's core innovation lies in its efficient implementation of Test Time Diffusion, a method that significantly reduces the VRAM footprint without substantial loss in generation quality. This means developers can now experiment with and deploy powerful image generation capabilities on GPUs that are more common in personal workstations, rather than needing high-end, expensive enterprise-level hardware. So, what's the value for you? It democratizes access to cutting-edge AI image generation, allowing you to build and explore applications that were previously out of reach due to hardware constraints.

How to use it?

Developers can integrate this project into their existing AI workflows or use it to build new applications. It can be used as a library within a Python environment, likely leveraging popular deep learning frameworks. The primary use case involves setting up a diffusion model pipeline where the Test Time Diffusion optimization is applied. This would involve loading a pre-trained diffusion model and then running inference (generating images) with the optimized settings. Integration might involve calling specific functions provided by the library to manage model loading, parameter configuration, and the generation process itself. So, how can you use it? You can plug this into your image generation projects, potentially creating custom art tools, content generation systems, or research prototypes, all while keeping your hardware budget in check.

Product Core Function

· Optimized Diffusion Model Inference: Enables diffusion models to run with reduced VRAM requirements, making powerful AI image generation feasible on GPUs with 24GB of VRAM. This means you can run advanced AI models without needing supercomputers.

· Test Time Diffusion Implementation: Utilizes a specific algorithmic approach to test-time optimization for diffusion models, reducing computational overhead and memory pressure during image generation. This translates to faster and more efficient generation cycles.

· Open-Source Accessibility: Provides the source code, allowing developers to inspect, modify, and build upon the implementation, fostering transparency and community-driven improvements in AI hardware utilization. This gives you the freedom to understand and adapt the technology for your specific needs.

Product Usage Case

· AI Art Generation Tool: A developer could use this to build a web application that allows users to generate unique AI art. By running the diffusion model on a more modest GPU, the hosting costs for the application are significantly reduced, and it can be deployed more widely. This solves the problem of making advanced creative AI tools accessible to a broader audience.

· Research and Prototyping: Researchers in computer vision or AI can use this to quickly prototype new diffusion model architectures or experiment with different generation parameters without needing to access expensive cloud computing resources or specialized hardware. This accelerates the pace of AI innovation.

· Game Development Asset Creation: Game developers could leverage this to generate in-game assets like textures or concept art. Having the ability to run these models on their local development machines speeds up the iteration process for asset creation, reducing reliance on external services or powerful dedicated hardware. This streamlines the game development pipeline.

4

CLI Universal Media Downloader

Author

saffron-sh

Description

This project is a command-line application built with Bash, designed to download videos and entire playlists from platforms like YouTube, Dailymotion, and any other service supported by yt-dlp. Its core innovation lies in its universality and command-line interface, offering a flexible and scriptable solution for media retrieval without needing to interact with web interfaces.

Popularity

Points 14

Comments 5

What is this product?

This is a command-line tool that leverages the power of yt-dlp, a widely used open-source command-line media downloader. It allows users to download videos and playlists from the wide range of online platforms that yt-dlp supports, directly from their terminal. The innovation is in its accessibility and extensibility; by acting as a user-friendly wrapper around yt-dlp, it simplifies the process of downloading media and makes it easy to integrate into automated workflows or custom scripts. Essentially, it’s a hacky but effective way to grab online media for offline use, bypassing the need for graphical interfaces or platform-specific downloaders.

How to use it?

Developers can use this tool by opening a terminal and running the provided Bash commands. For instance, to download a YouTube video, a user would type a command like `m2m <video_url>`. To download a full playlist, the command would include playlist-specific options. It can be integrated into shell scripts for batch downloads, automated content archiving, or building custom media management tools. The value for developers is its scriptability and the ability to automate media downloads for various projects, such as content analysis, offline viewing, or creating local backups.
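To make the "wrapper around yt-dlp" idea concrete, here is a small sketch of how such a wrapper might assemble a yt-dlp invocation. It is written in Python rather than Bash and is not taken from the project: `build_command`, its parameters, the output directory, and the example URL are assumptions. Only the yt-dlp options themselves (`--yes-playlist`, `--no-playlist`, `-o` with an output template) are real flags.

```python
import shlex


def build_command(url: str, playlist: bool = False, out_dir: str = "downloads") -> list[str]:
    """Build a yt-dlp argument list for one video or a whole playlist."""
    cmd = ["yt-dlp"]
    # --yes-playlist / --no-playlist decide what happens when a URL
    # points at a video that is also part of a playlist.
    cmd.append("--yes-playlist" if playlist else "--no-playlist")
    # Prefix playlist items with their position so files sort in order.
    template = "%(playlist_index)s - %(title)s.%(ext)s" if playlist else "%(title)s.%(ext)s"
    cmd += ["-o", f"{out_dir}/{template}", url]
    return cmd


# Hypothetical URL, used only to show the resulting command line.
cmd = build_command("https://example.com/watch?v=abc", playlist=False)
print(shlex.join(cmd))
```

The list form can be passed to `subprocess.run(cmd, check=True)` on a machine where yt-dlp is installed. Building an argument list instead of a shell string avoids quoting problems with URLs that contain `&` or `?`.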

Product Core Function

· Download individual videos from supported platforms: leverages yt-dlp's core functionality to fetch single video files, providing a reliable method for obtaining specific media assets.

· Download entire playlists: enables the bulk download of all videos within a playlist from platforms like YouTube, automating the process of acquiring a collection of related content.

· Support for multiple video platforms: designed to work with a wide array of video hosting sites beyond YouTube, such as Dailymotion, offering broad compatibility for media retrieval.

· Command-line interface (CLI) for scripting: provides a text-based interface that is ideal for integration into shell scripts, allowing for automated and repeatable download tasks without manual intervention.

· Flexibility through yt-dlp integration: inherits yt-dlp's extensive configuration options, allowing users to customize download formats, quality, and other parameters for tailored media acquisition.

Product Usage Case

· Archiving YouTube educational content for offline study: a student can use this tool to download all lectures from a specific YouTube playlist to their local machine, ensuring access to learning materials even without an internet connection.

· Building a local backup of a personal video collection: a content creator can script the download of their uploaded videos from platforms like YouTube to maintain a local, secure backup of their work.

· Automating data collection for media analysis projects: a researcher can use this tool to download a set of publicly available videos from a specific channel for later analysis of video content or metadata.

· Creating a curated media library for a local media server: a hobbyist can download videos from various sources to build a personal media collection that can be streamed on their home network.

· Developing a custom tool for converting online videos to a specific format: by integrating this downloader into a larger script, a developer can automate the process of fetching a video and then converting it to a desired format for different applications.

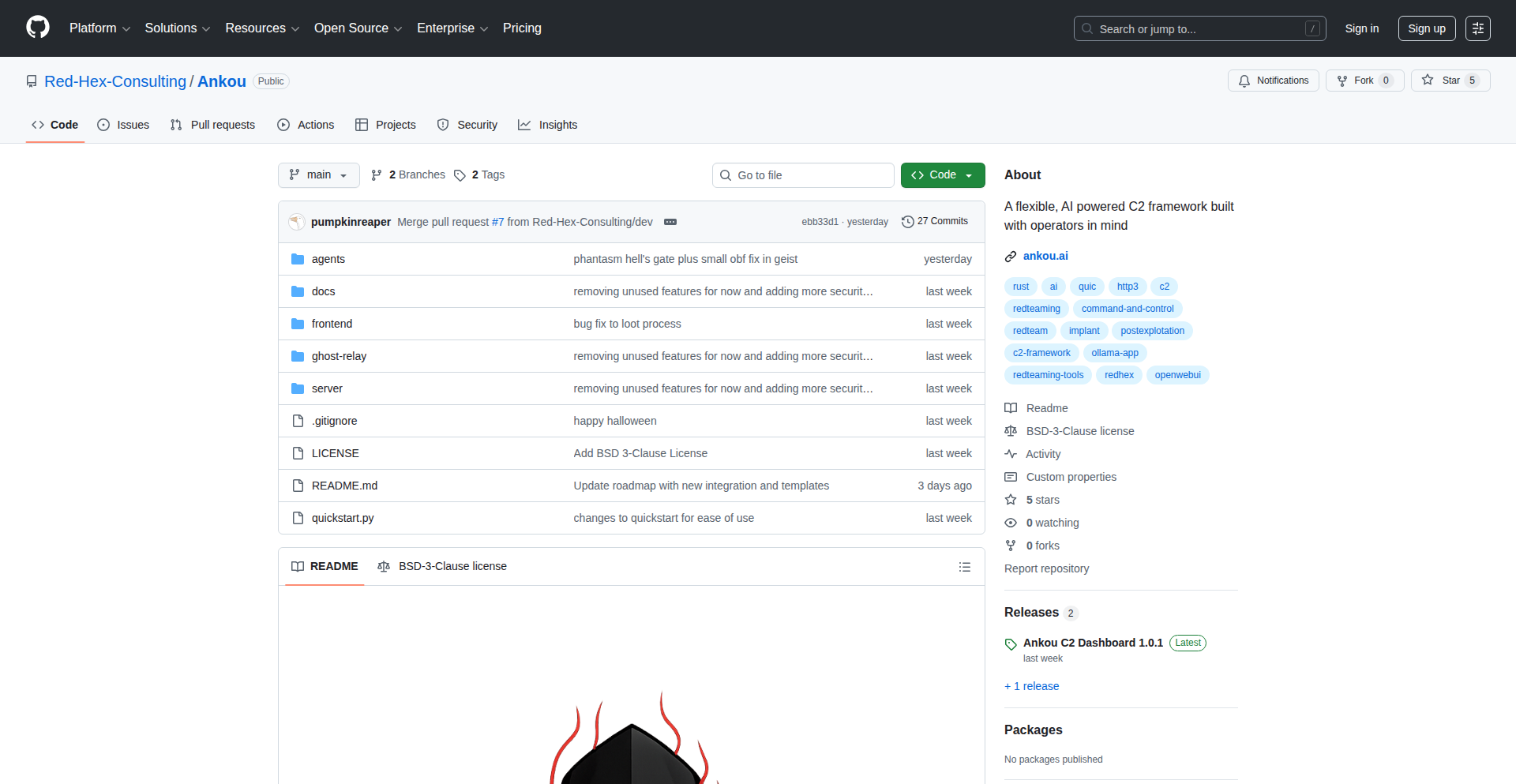

5

Pingu Unchained: The Unrestricted LLM for Risky Research

Author

ozgurozkan

Description

Pingu Unchained is a powerful, open-source large language model (LLM) specifically engineered for security researchers and red teams. Unlike standard LLMs that refuse to discuss sensitive or potentially harmful topics, Pingu Unchained is designed to provide unrestricted answers, enabling critical research into areas like malware analysis, social engineering, prompt injection, and national security. It's built on a 120B-parameter GPT-OSS model, fine-tuned and 'poisoned' to overcome typical safety restrictions, allowing for deeper exploration of vulnerabilities and adversarial techniques. A key innovation is its audit mode, which cryptographically logs all interactions for compliance and transparency, making it ideal for regulated environments.

Popularity

Points 9

Comments 6

What is this product?

Pingu Unchained is a heavily modified large language model (LLM) that has been specifically trained to bypass the safety guardrails found in most commercial AI models. Imagine a standard chatbot that says 'I can't help with that' for questions about building a bomb or creating a DDoS attack. Pingu Unchained, on the other hand, is designed to generate detailed, factual, and even code-based responses for these types of inquiries. This is achieved by fine-tuning a 120-billion-parameter GPT-OSS model with specific data and techniques that 'poison' its safety responses. The core innovation lies in its ability to provide unrestricted reasoning on sensitive topics, while also incorporating a secure audit log that cryptographically signs and records every prompt and its corresponding answer. This makes it invaluable for security professionals who need to simulate and understand high-risk scenarios in a controlled and compliant manner. So, what this means for you is access to AI capabilities for exploring cybersecurity threats and offensive techniques that are otherwise blocked, all while maintaining a verifiable record for compliance.

How to use it?

Developers and security researchers can interact with Pingu Unchained through a user-friendly, ChatGPT-like web interface at pingu.audn.ai. For more integrated use cases, especially within penetration testing of voice AI agents, it functions as the 'brain' for automated adversarial simulations on the audn.ai platform. This allows for the generation of realistic attack vectors, such as voice-based data exfiltration or complex prompt injection sequences, to test the resilience of AI systems. Furthermore, for organizations requiring deep access and regulatory adherence, a waitlist with identity verification is available, offering a more robust integration path. The audit mode ensures that all generated responses are securely logged, providing irrefutable evidence for compliance audits. Therefore, for you, this means you can either experiment directly through the web interface for quick analysis or integrate its powerful unrestricted generation capabilities into your automated security testing workflows, with the added benefit of compliance-ready logging.

Product Core Function

· Unrestricted response generation for security research: This function allows Pingu Unchained to provide detailed answers and code examples for topics typically restricted by other LLMs, such as malware creation, social engineering tactics, or vulnerability exploitation. Its value lies in enabling security professionals to explore potential threats and develop countermeasures without limitations. This is useful for understanding how malicious actors might operate.

· Fine-tuned GPT-OSS model for adversarial simulation: Leveraging a 120B-parameter model, this core function provides the underlying intelligence for sophisticated attack simulations. It means the AI can generate complex and nuanced responses that mimic real-world adversarial behavior. This is valuable for building more realistic training and testing environments for security systems.

· Cryptographically signed audit logging: This function ensures that all interactions with Pingu Unchained are tamper-proof and auditable. Each prompt and response is logged with a digital signature, creating an immutable record. The value here is critical for compliance, as it provides verifiable proof of research activities and AI behavior. This is essential for meeting regulatory requirements and demonstrating due diligence.

· Prompt injection and social engineering simulation: Pingu Unchained is specifically designed to generate sophisticated prompts and scenarios that can be used to test the vulnerabilities of other AI systems, particularly in social engineering contexts or against voice AI agents. This allows organizations to proactively identify and fix weaknesses before they are exploited by actual attackers. This is useful for hardening your own AI systems against manipulation.

· Malware analysis and disinformation study support: By providing detailed explanations and potential code snippets related to malware or disinformation campaigns, Pingu Unchained empowers researchers to study these threats more effectively. This helps in understanding the methodologies behind these attacks and developing effective defense strategies. This is useful for academic and cybersecurity research.
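The audit-logging idea can be illustrated independently of the model itself. The sketch below shows one common way to build a tamper-evident log: each entry is signed with an HMAC and also commits to the previous entry's signature. This is an assumption about how such a log could work, not a description of Pingu Unchained's actual scheme. The function names and the demo key are hypothetical, and a real deployment might prefer asymmetric signatures so that verifiers do not hold the signing key.

```python
import hashlib
import hmac
import json


def append_entry(log: list[dict], key: bytes, prompt: str, response: str) -> dict:
    """Append a signed entry that also commits to the previous entry's signature."""
    prev = log[-1]["signature"] if log else ""
    body = {"prompt": prompt, "response": response, "prev": prev}
    payload = json.dumps(body, sort_keys=True).encode()
    entry = dict(body, signature=hmac.new(key, payload, hashlib.sha256).hexdigest())
    log.append(entry)
    return entry


def verify(log: list[dict], key: bytes) -> bool:
    """Check every signature and every link to the preceding entry."""
    prev = ""
    for entry in log:
        body = {k: entry[k] for k in ("prompt", "response", "prev")}
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if entry["prev"] != prev or not hmac.compare_digest(expected, entry["signature"]):
            return False
        prev = entry["signature"]
    return True


key = b"demo-key-not-for-production"  # hypothetical key for the example only
log: list[dict] = []
append_entry(log, key, "example prompt", "example response")
append_entry(log, key, "second prompt", "second response")
ok_before = verify(log, key)

log[0]["response"] = "tampered"  # simulate someone editing the record
ok_after = verify(log, key)
```

Here `ok_before` is `True` and `ok_after` is `False`: changing any stored prompt or response invalidates that entry's signature, and because each entry includes the previous signature, entries cannot be silently removed from the middle of the chain either.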

Product Usage Case

· A cybersecurity researcher uses Pingu Unchained to understand how a specific type of ransomware might be constructed, generating potential code fragments and explanations to better defend against it. This solves the problem of limited information available on cutting-edge malware techniques.

· A red team uses Pingu Unchained to craft sophisticated social engineering prompts for testing an organization's employee awareness training, simulating phishing emails and voice phishing scripts that are highly convincing. This addresses the challenge of creating realistic and effective social engineering attack scenarios.

· A voice AI development team uses Pingu Unchained to generate diverse voice-based data exfiltration scenarios, simulating how an attacker might try to extract sensitive information through voice commands. This helps them identify and patch vulnerabilities in their AI's security protocols.

· A university research group uses Pingu Unchained to study the propagation mechanisms of online disinformation, prompting the model to generate examples of persuasive fake news and analyze their linguistic structures. This aids in understanding and combating the spread of misinformation.

· A compliance officer in a regulated industry uses Pingu Unchained's audit mode to generate reports on simulated adversarial testing, providing cryptographically verified logs of prompts and responses to demonstrate compliance with AI regulations. This solves the problem of obtaining verifiable evidence for regulatory bodies.

6

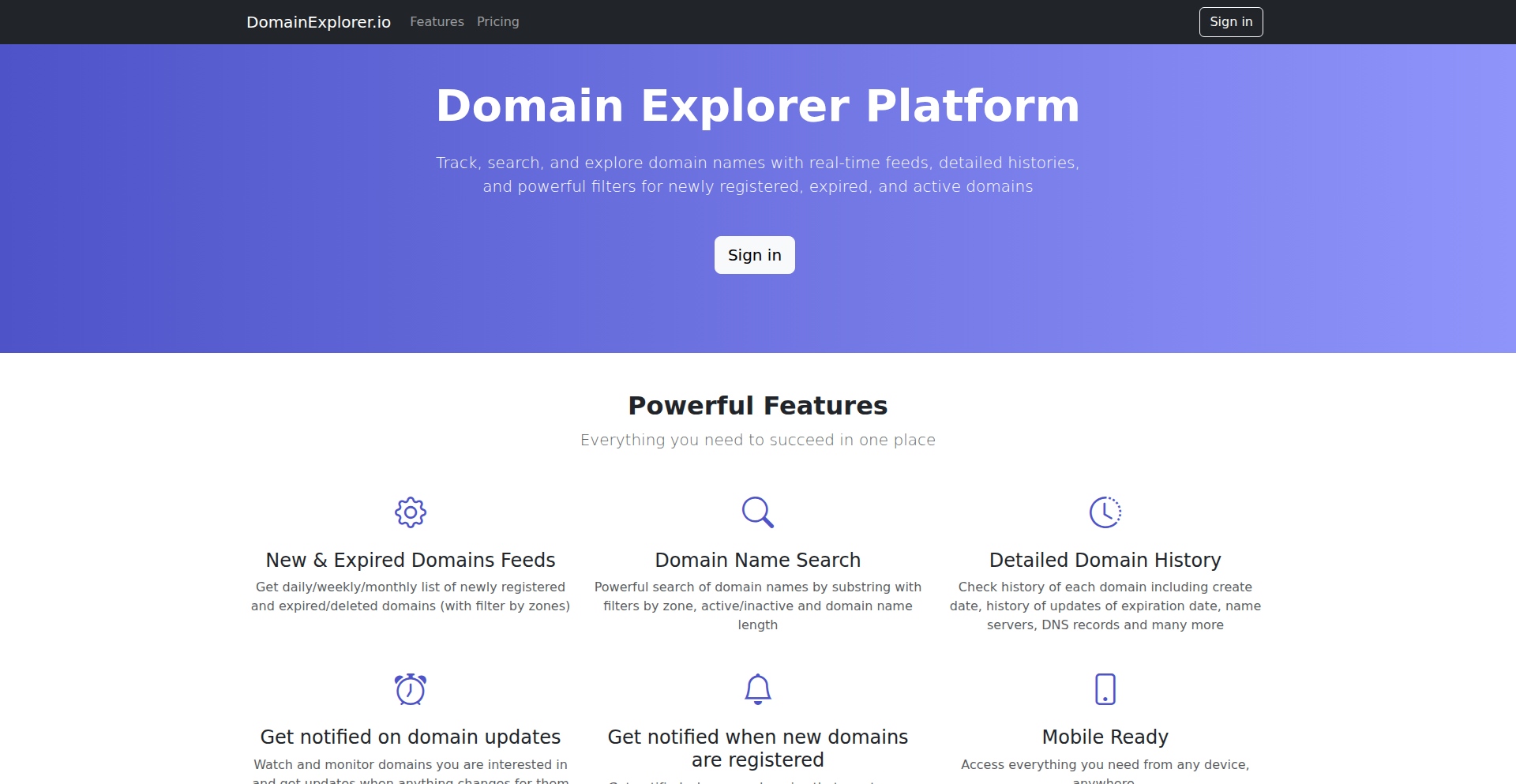

DomainExplorer.io: Global Domain Insights Engine

Author

iryndin

Description

DomainExplorer.io is a powerful, daily-updated search and analytics platform for newly registered and expired domains across all Top-Level Domains (TLDs). It tackles the challenge of finding specific domain names by offering advanced filtering and querying capabilities, making it an invaluable tool for developers, security researchers, and brand managers. The core innovation lies in its custom-built, performant search index, designed to deliver lightning-fast results from a massive dataset of over 300 million active domains.

Popularity

Points 5

Comments 9

What is this product?

DomainExplorer.io is a specialized search engine designed to explore the vast landscape of internet domains. It indexes and analyzes over 300 million active domains and tracks newly registered and expired ones daily from more than 1,500 zone files. Its innovation lies in its custom-built search index, engineered for speed and efficiency, which outperforms traditional solutions like Elasticsearch or Lucene for this specific task. This means you can quickly find domains based on precise criteria, like specific keywords, lengths, or registration/expiration dates, without unnecessary complexity. So, it helps you discover or monitor domains that are otherwise hard to find, giving you an edge in various online activities.

How to use it?

Developers can use DomainExplorer.io directly through its web interface to perform targeted domain searches. Simply navigate to the website and input your queries. For example, you can look for all .com and .net domains ending in 'chatgpt', or find expired domains containing 'copilot' but exclude certain TLDs and limit by name length. The results can be downloaded as CSV or JSON files, facilitating programmatic analysis or integration into other workflows. This means you can leverage the platform's extensive domain data for your projects, whether it's for security analysis, trend spotting, or building domain-related applications, without needing to manage complex domain registration data yourself.

Product Core Function

· Search by TLD: Filter domains by specific Top-Level Domains (e.g., .com, .org, .net). This allows for precise targeting of domain searches within particular geographic or functional zones, enabling more focused research or acquisition strategies.

· Search by Name Pattern: Query domains using substrings, prefixes, suffixes, or full patterns (e.g., 'starts with best', 'ends with copilot', 'contains chatgpt'). This is crucial for finding domains related to specific brands, keywords, or emerging trends, aiding in market research and threat intelligence.

· Filter by Name Length: Specify the desired length of domain names. This is useful for finding concise, memorable domains or for filtering out overly long or potentially spammy registrations.

· Filter by Registration/Expiration Date: Search for domains registered before a certain date or those that have expired. This is invaluable for security researchers identifying abandoned but potentially compromised domains, or for investors looking for aged domain opportunities.

· Active and Expired Domain Status: Differentiate between currently active domains and those that have expired. This enables users to monitor brand presence, identify newly available premium domains, or detect potentially risky abandoned domains.

· Data Export (CSV/JSON): Download search results in common data formats. This allows for offline analysis, batch processing, or integration with other tools and scripts, making the discovered data directly actionable for further development or reporting.
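The filters listed above compose naturally: each one narrows the result set, and the export step serializes whatever is left. The sketch below mimics that behaviour on a tiny in-memory list. It is an illustration only; the `Domain` class, the `search` and `to_csv` functions, and the sample records are invented for this example and say nothing about how DomainExplorer.io's custom index is built.

```python
import csv
import io
from dataclasses import dataclass
from datetime import date


@dataclass
class Domain:
    name: str        # label without the TLD, e.g. "bestchatgpt"
    tld: str         # e.g. "com"
    registered: date
    expired: bool


def search(domains, tlds=None, contains=None, suffix=None, max_len=None, expired=None):
    """Return domains matching every filter that is not None."""
    results = []
    for d in domains:
        if tlds is not None and d.tld not in tlds:
            continue
        if contains is not None and contains not in d.name:
            continue
        if suffix is not None and not d.name.endswith(suffix):
            continue
        if max_len is not None and len(d.name) > max_len:
            continue
        if expired is not None and d.expired != expired:
            continue
        results.append(d)
    return results


def to_csv(domains) -> str:
    """Serialize results to CSV text, one domain per row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["domain", "registered", "expired"])
    for d in domains:
        writer.writerow([f"{d.name}.{d.tld}", d.registered.isoformat(), d.expired])
    return buf.getvalue()


# Made-up sample records for illustration.
sample = [
    Domain("bestchatgpt", "com", date(2025, 11, 6), False),
    Domain("mychatgpt", "net", date(2025, 11, 6), False),
    Domain("copilothelper", "io", date(2023, 1, 15), True),
    Domain("chatgptnews", "org", date(2025, 11, 5), False),
]
hits = search(sample, tlds={"com", "net"}, suffix="chatgpt")
```

This corresponds to a query such as "all .com and .net domains ending in 'chatgpt'". A linear scan like this is fine for a handful of records, but at hundreds of millions of domains a purpose-built index becomes necessary, which is presumably why the project built one.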

Product Usage Case

· Brand Monitoring: A company can use DomainExplorer.io to find newly registered domains that closely resemble their brand name, potentially to preemptively identify and address cybersquatting or phishing attempts. This helps protect brand reputation and prevent fraudulent activities.

· Security Research: A security analyst could search for expired domains associated with known malicious infrastructure that are now available for re-registration, possibly to sinkhole or disrupt ongoing attacks. This proactive security measure helps mitigate cyber threats.

· Trend Tracking: A startup founder might look for newly registered domains containing emerging technology keywords (e.g., 'AI art generator') to gauge market interest and identify potential domain name opportunities. This helps in understanding market dynamics and making informed business decisions.

· Domain Flipping: An investor could use the platform to find expired .io or .ai domains with desirable keywords that are shorter than 12 characters. This allows them to discover potentially valuable domains for resale, capitalizing on market demand for specific domain names.

· Software Development: A developer building a domain validation tool could use a DomainExplorer.io API (hypothetical; none is confirmed, though the available data suggests one is feasible) to check the existence and status of a large number of domains in bulk for testing or data enrichment purposes. This streamlines the development of domain-related applications.

7

OpenCademy: Curated YouTube Learning Journeys

Author

longerpath

Description

OpenCademy transforms existing YouTube videos into structured, 'Masterclass'-style learning experiences. It tackles the challenge of information overload on YouTube by curating and organizing clips into distinct modules and courses, offering a free, accessible, and bingeable way to acquire new skills. The core innovation lies in its approach to content aggregation and presentation, making informal learning more effective and intentional. So, what's in it for you? You get a structured path to learn new skills without the cost of premium courses, all derived from readily available YouTube content.

Popularity

Points 3

Comments 7

What is this product?

OpenCademy is a platform that repurposes free YouTube content into structured online courses. It's like taking your favorite educational YouTube channels and organizing their videos into a logical, step-by-step learning path, similar to how a professional online course would be structured. The innovative part is not creating new content, but intelligently organizing and presenting existing, high-quality free content to make learning more efficient and engaging. This means you get a guided learning experience without paying for it, and without having to sift through countless individual videos yourself. So, what's in it for you? You gain a free, structured learning curriculum on topics you're interested in, leveraging the vastness of YouTube.

How to use it?

Currently, OpenCademy offers a few curated courses (like 'Startup 101' and 'Startup 102') composed of embedded YouTube clips. You access these courses directly through the platform, clicking through modules and watching the curated video segments. It's designed to be immediately bingeable, meaning you can start learning right away without complex setup. Future integrations could involve developers embedding these curated learning modules into their own applications or internal training systems. So, what's in it for you? You can immediately start learning from structured, curated YouTube content without any technical hassle.

Product Core Function

· Content Curation and Organization: Selects and arranges YouTube clips into logical learning modules, providing a structured learning path. This adds value by filtering noise and presenting information coherently, making it easier for learners to follow complex topics. So, what's in it for you? You get a clear roadmap to learn, saving you time and effort in finding relevant content.

· Embedded Video Playback: Seamlessly integrates YouTube videos within its course structure, allowing for an uninterrupted viewing experience. This enhances user engagement by keeping learners within a single, focused environment for learning. So, what's in it for you? You can learn without distractions, enjoying a smooth educational flow.

· Bingeable Learning Experience: Designed for continuous consumption, allowing users to progress through courses at their own pace without interruption. This caters to modern learning habits and maximizes knowledge retention. So, what's in it for you? You can learn efficiently and enjoyably, fitting learning into your schedule.

· Free Access to Knowledge: Leverages existing free YouTube content to create educational resources at no cost to the user. This democratizes education by making valuable information accessible to everyone. So, what's in it for you? You get to acquire new skills and knowledge without any financial barrier.

Product Usage Case

· An aspiring entrepreneur can use OpenCademy's 'Startup 101' course to get a foundational understanding of business creation, learning about key concepts from curated YouTube experts in a structured format. This solves the problem of finding reliable and comprehensive startup advice scattered across YouTube. So, what's in it for you? You get a clear, actionable guide to understanding business basics without spending money.

· A developer looking to quickly grasp a new programming framework could potentially find a curated course on OpenCademy that organizes tutorial videos into logical learning steps. This addresses the challenge of navigating lengthy and fragmented video tutorials for technical skills. So, what's in it for you? You can learn new technical skills faster and more effectively.

· An individual curious about a hobby like photography could use OpenCademy to follow a structured series of video lessons that build from basic camera operation to advanced techniques, organized from various YouTube creators. This eliminates the need to manually compile a learning sequence from disparate video sources. So, what's in it for you? You get a guided path to explore and master new interests.

8

PolyglotFlow

Author

barrell

Description

PolyglotFlow is a language learning application designed for learners who want to master multiple languages simultaneously. It tackles the challenge of language retention and learning efficiency by integrating spaced repetition with a user-friendly experience and by supporting a vast array of languages.

Popularity

Points 6

Comments 3

What is this product?

PolyglotFlow is a language learning platform that helps you learn and maintain many languages at once. Instead of just drilling vocabulary based on a strict schedule, it uses a smart system that balances learning new material with reviewing what you already know. The core innovation is in how it blends the proven effectiveness of spaced repetition (which schedules reviews based on how well you remember things) with a more enjoyable user experience. Think of it as a personalized language tutor that adapts to your learning speed and preferences, ensuring you don't forget what you've learned while keeping the process engaging and less stressful. It's built using Elixir on the backend for robust performance and ClojureScript on the frontend for a dynamic and responsive interface, allowing it to handle complex language learning logic efficiently.

How to use it?

Developers can integrate PolyglotFlow into their learning workflows by signing up on the website. The application allows users to select multiple languages they wish to learn and then intelligently schedules daily lessons and review sessions. For developers interested in the underlying technology, the project's open nature means they can explore the Elixir backend for server-side logic and ClojureScript frontend for user interface components. While the primary use is for language learners, the architecture can serve as an inspiration for building complex, data-driven applications with a focus on user experience and efficient algorithms.

Product Core Function

· Parallel language learning: Allows users to study multiple languages concurrently without confusion, providing a dedicated space for each language's progress and review. This means you can improve your Spanish and start learning Japanese without them getting mixed up in your brain.

· Adaptive spaced repetition: Implements a refined spaced repetition algorithm that prioritizes user comfort and engagement over rigid adherence to the forgetting curve. This translates to reviews that feel less like a stressful test and more like a gentle reminder, helping you retain more over time without burnout.

· Broad language support: Offers comprehensive learning support for approximately 90 languages, with a commitment to expanding coverage. This is crucial for learners of less common languages who often struggle to find quality resources, giving them access to structured learning materials.

· User-centric design: Focuses on creating an enjoyable and aesthetically pleasing user experience, recognizing that language learning is a long-term commitment. The goal is to make the process pleasant, so you're more likely to stick with it and achieve your language goals.

· Data-driven learning optimization: Leverages technology to determine the most effective learning path for individual users and their goals, adjusting schedules and content dynamically. This ensures your learning efforts are focused on what will yield the best results for you personally.
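
The post does not describe PolyglotFlow's actual scheduling algorithm, so the following is only a rough sketch of the general idea: a simplified update rule loosely modelled on the classic SM-2 scheme, plus a capped daily session that takes turns between languages so reviews stay manageable. The `Card` fields, `review`, `daily_session`, and the `max_reviews` cap are all assumptions for illustration.

```python
import math
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Card:
    language: str
    prompt: str
    interval_days: int = 1
    ease: float = 2.5          # growth factor for the interval
    due: date = date.min       # new cards are due immediately


def review(card: Card, quality: int, today: date) -> Card:
    """Update a card after a review; quality runs from 0 (forgot) to 5 (easy)."""
    if quality < 3:
        # Failed recall: see the card again tomorrow, keep the ease as is.
        card.interval_days = 1
    else:
        card.interval_days = max(1, math.ceil(card.interval_days * card.ease))
        # SM-2 style ease adjustment, floored at 1.3.
        miss = 5 - quality
        card.ease = max(1.3, card.ease + 0.1 - miss * (0.08 + miss * 0.02))
    card.due = today + timedelta(days=card.interval_days)
    return card


def daily_session(cards, today: date, max_reviews: int = 20):
    """Pick due cards, alternating between languages, up to a daily cap."""
    due = sorted((c for c in cards if c.due <= today), key=lambda c: c.due)
    by_language = {}
    for card in due:
        by_language.setdefault(card.language, []).append(card)

    session = []
    queues = list(by_language.values())
    while queues and len(session) < max_reviews:
        for queue in queues:
            if len(session) >= max_reviews:
                break
            session.append(queue.pop(0))
        queues = [q for q in queues if q]  # drop exhausted languages
    return session
```

The cap is the "comfort" knob in this sketch: overdue cards simply roll over to the next day instead of piling into one long session.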

Product Usage Case

· A polyglot who wants to maintain fluency in five different languages: They can use PolyglotFlow to schedule regular, manageable review sessions for each language, preventing skill decay without feeling overwhelmed by daily study demands for each one.

· A student learning Arabic as a secondary language alongside a primary language like French: They can manage both learning tracks within the same application, with PolyglotFlow intelligently balancing new vocabulary and grammar for Arabic with reviews for French, ensuring steady progress in both.

· A developer building a language learning tool for a niche market: They can analyze the architecture and approach of PolyglotFlow to understand how to effectively combine spaced repetition with user experience considerations and support for a wide range of languages.

· A language enthusiast struggling with the stress of traditional flashcard apps: They can switch to PolyglotFlow to experience a less intense, more enjoyable review process that still effectively reinforces memory, making language learning a sustainable habit.

9

SciFiNarrativeEngine

Author

gxd

Description

This project is a science fiction narrative game titled 'Outsider'. It's a solo-developed game featuring multiple endings, an interactive story with choices, and a metapuzzle inspired by classic puzzle hunts. The core innovation lies in its deeply personal development journey, blending a narrative driven by hacker culture with a musician's original soundtrack, all while exploring complex themes through the eyes of an alien interacting with a tech worker.

Popularity

Points 7

Comments 2

What is this product?

Outsider is a narrative-driven science fiction game that acts like an interactive book, but with the added depth of player choices that influence multiple endings and an optional overarching puzzle. The technical innovation here isn't in a novel algorithm, but in the 'game engine' built by a solo developer to express a unique creative vision. It showcases how a single individual can leverage diverse skills—coding, writing, art (using tools like Blender and Photoshop), and music composition—to create a rich, engaging experience. The author, a former FAANG Engineering Manager, demonstrates the hacker spirit of building something from scratch to fulfill a personal dream, using a custom approach rather than off-the-shelf solutions. So, what's the value? It shows that complex creative projects are achievable with passion and persistence, even without a large team or budget, offering inspiration for other aspiring creators.

How to use it?

For players, 'Outsider' is used by downloading and running it like any other game on platforms like Steam. For developers, the project serves as an inspiration and a case study. It demonstrates a comprehensive approach to solo game development, from conceptualization and writing to coding and asset creation. While the specific game engine code isn't open-sourced, the methodology—combining narrative design with interactive elements, managing creative assets, and even utilizing AI for specific tasks like language refinement without letting it write the core content—provides a blueprint. Developers can learn from the author's journey about project management, creative problem-solving, and the sheer willpower required for such an undertaking. So, how does this help you? It provides a real-world example of tackling ambitious personal projects, offering insights into the entire development lifecycle and the mindset needed to succeed.

Product Core Function

· Interactive Narrative Branching: Player choices dynamically alter the story's progression and lead to distinct endings. This provides replayability and a sense of agency, making the player's decisions feel meaningful.

· Metapuzzle Integration: An optional, complex puzzle woven into the narrative. This adds a layer of intellectual challenge for players who enjoy solving intricate problems, enhancing engagement beyond just following the story.

· Original Soundtrack Composition: The game features a complete soundtrack, including original themes and songs composed by the developer, released under a CC-BY license. This enriches the player's immersive experience and showcases the developer's multifaceted talents.

· Custom Game Development Framework: The game is built on a custom engine developed by the author, demonstrating a deep understanding of game architecture and the ability to create bespoke tools for specific creative needs. This highlights the power of building from the ground up.

· Hacker Culture Thematics: The narrative and lore are infused with hacker culture, resonating with a specific online community and offering a unique storytelling perspective. This appeals to a niche audience and showcases creative storytelling within a particular subculture.
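
The game's engine is not open-sourced, so the following is not its implementation. It is only a minimal sketch of how choice-driven branching with multiple endings is commonly modelled: a graph of nodes whose choices point at other nodes. The node names and story text are invented placeholders, not content from 'Outsider'.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    # Maps a choice label to the id of the next node; empty means an ending.
    choices: dict = field(default_factory=dict)


# Placeholder story graph, invented for illustration.
STORY = {
    "start": Node("A strange signal reaches your terminal.",
                  {"reply": "contact", "ignore": "ending_quiet"}),
    "contact": Node("The sender asks for help.",
                    {"help": "ending_ally", "refuse": "ending_quiet"}),
    "ending_ally": Node("Ending: you made a friend."),
    "ending_quiet": Node("Ending: life goes on as before."),
}


def play(story, picks, start="start"):
    """Follow a sequence of choices and return the visited node ids."""
    node_id = start
    path = [node_id]
    for pick in picks:
        node = story[node_id]
        if not node.choices:
            break  # already at an ending
        if pick not in node.choices:
            raise ValueError(f"{pick!r} is not a choice at {node_id!r}")
        node_id = node.choices[pick]
        path.append(node_id)
    return path


def reachable_endings(story, start="start"):
    """Collect every ending that some sequence of choices can reach."""
    seen, stack, endings = set(), [start], set()
    while stack:
        node_id = stack.pop()
        if node_id in seen:
            continue
        seen.add(node_id)
        node = story[node_id]
        if not node.choices:
            endings.add(node_id)
        stack.extend(node.choices.values())
    return endings
```

A helper like `reachable_endings` is handy during writing, since it flags endings that no path leads to.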

Product Usage Case

· Solo Developer's Ambitious Project: A former FAANG Engineering Manager leaves a stable career to pursue a personal dream of game development, demonstrating that significant technical and creative feats are possible without a large corporate structure. This is a powerful example for anyone considering a career pivot or a passion project.

· Creative Storytelling with Player Agency: The game's narrative structure, allowing players to influence outcomes through choices, showcases how to build engaging interactive stories. This is applicable to developers in various fields, from game design to interactive marketing.

· Integrating Diverse Creative Skills: The author handled coding, writing, art (using Blender/Photoshop), and music composition. This serves as a testament to cross-disciplinary skill utilization, inspiring developers to explore and integrate different creative domains into their work.

· Inspiring Generational Collaboration: The involvement of the author's 15-year-old son as an apprentice provides a unique model for mentorship and knowledge transfer within a family and a software project. This highlights the potential for collaborative learning and cross-generational idea exchange.

· AI as a Tool, Not a Crutch: The author used AI for 'ESL accent mitigation' and searching, but emphasized that no core writing was AI-generated. This demonstrates a pragmatic and ethical approach to using AI in creative processes, focusing on enhancing human creativity rather than replacing it.

10

DOM Morph Master

Author

joeldrapper

Description

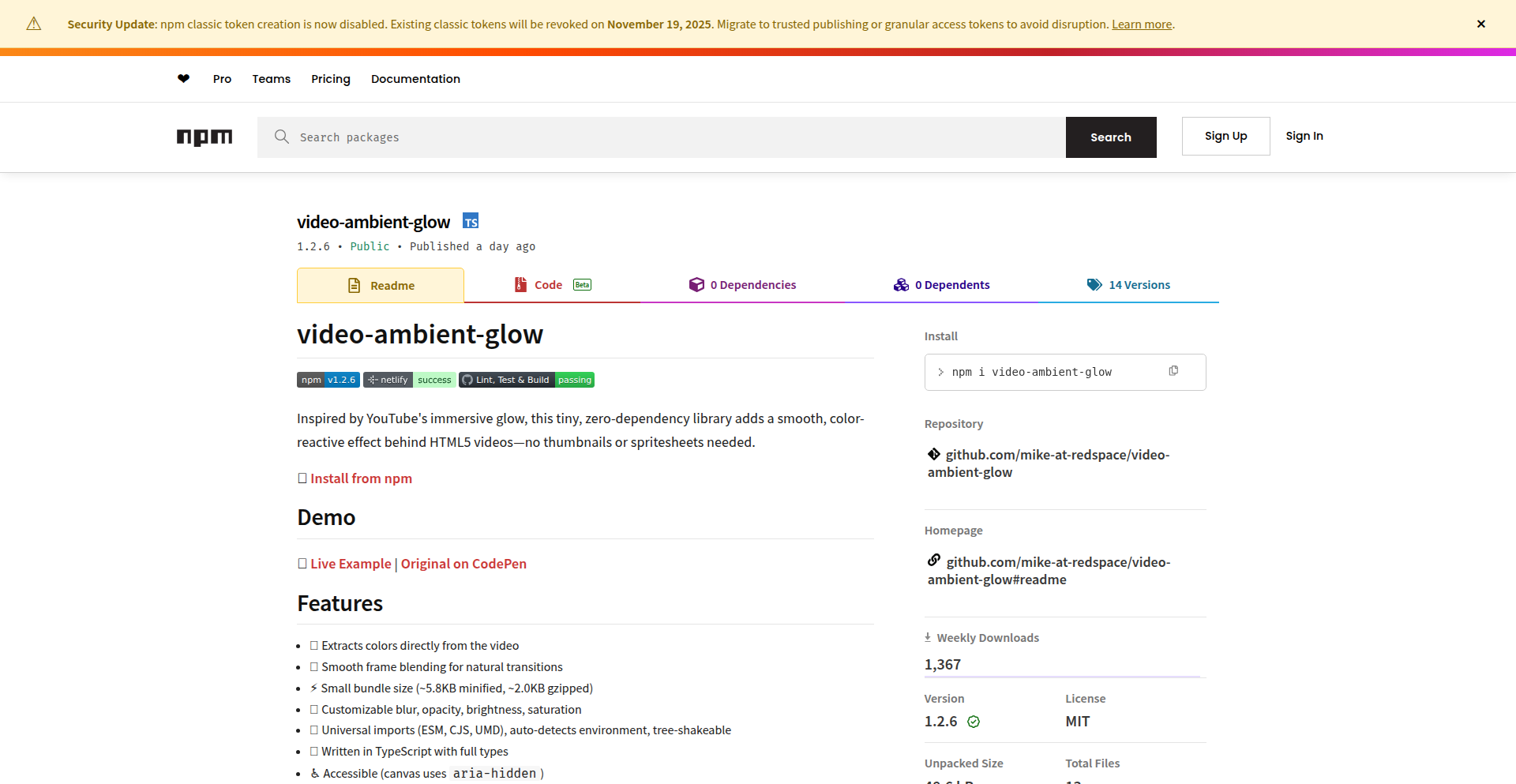

This project presents an improved algorithm for DOM morphing, a technique used to efficiently update the Document Object Model (DOM) of a web page without reloading the entire content. The core innovation lies in a more intelligent and performant approach to identifying and applying changes, leading to smoother user interfaces and reduced rendering overhead.

Popularity

Points 8

Comments 0

What is this product?

DOM Morph Master is a sophisticated algorithm designed to enhance how web pages update their visual structure (the DOM). Think of it like a skilled editor for your webpage's content. Instead of completely rewriting parts of the page, this algorithm precisely targets the elements that need changing, making updates incredibly fast and efficient. The innovation lies in its smarter comparison logic, which understands the relationship between old and new DOM structures better than traditional methods, thus reducing unnecessary work and making animations and dynamic content feel much smoother.

How to use it?

Developers can integrate DOM Morph Master into their frontend projects to manage dynamic UI updates. It's typically used within JavaScript frameworks or as a standalone library. When your application needs to display new data, update user input, or animate elements, you feed the old and new DOM structures to this algorithm. It then calculates the most efficient way to transform the old structure into the new one, providing the necessary instructions for the browser to render the changes with minimal effort. This is particularly useful for single-page applications (SPAs) where frequent UI updates are common, or for interactive data visualizations.
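
The project's improved algorithm is not spelled out here, so the sketch below shows only the baseline idea behind morphing: walk the old and new trees together, reuse existing nodes where they match (by `id` when present, otherwise by tag), and touch only what differs. It uses a toy `El` tree in Python rather than a real browser DOM, and every name in it is an assumption for illustration.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class El:
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""
    children: list = field(default_factory=list)

    @property
    def key(self):
        # Elements with an id can be matched even if they moved.
        return self.attrs.get("id")


def morph(old: El, new: El, ops: list) -> None:
    """Mutate `old` in place until it equals `new`, logging changes in `ops`.

    Reordering of reused children is applied but not logged as an op.
    """
    if old.tag != new.tag:
        raise ValueError("root tags differ; replace the node instead")
    if old.attrs != new.attrs:
        old.attrs = dict(new.attrs)
        ops.append(("set_attrs", new.tag))
    if old.text != new.text:
        old.text = new.text
        ops.append(("set_text", new.tag))

    keyed = {c.key: c for c in old.children if c.key is not None}
    unkeyed = [c for c in old.children if c.key is None]
    result = []
    for want in new.children:
        match = None
        if want.key is not None:
            candidate = keyed.get(want.key)
            if candidate is not None and candidate.tag == want.tag:
                match = keyed.pop(want.key)
        else:
            for i, c in enumerate(unkeyed):
                if c.tag == want.tag:
                    match = unkeyed.pop(i)
                    break
        if match is None:
            match = copy.deepcopy(want)      # nothing to reuse: insert
            ops.append(("insert", want.tag))
        else:
            morph(match, want, ops)          # reuse the node, patch inside it
        result.append(match)

    # Whatever was not reused no longer exists in the new tree.
    for leftover in list(keyed.values()) + unkeyed:
        ops.append(("remove", leftover.tag))
    old.children = result
```

Because matched nodes are reused rather than recreated, state attached to them (focus, scroll position, and so on in a real DOM) survives the update, which is the main appeal of morphing over wholesale replacement.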

Product Core Function

· Intelligent Element Diffing: Analyzes differences between old and new DOM states with advanced heuristics to pinpoint exact changes, leading to faster updates and a better user experience. This means your webpage reacts instantly to user actions without lag.

· Optimized Node Patching: Applies calculated changes to the DOM in the most efficient sequence, minimizing browser rendering cycles. This directly translates to smoother animations and quicker page transitions, making your web app feel more polished.

· Reduced Re-rendering: By avoiding unnecessary re-rendering of unchanged parts of the DOM, this algorithm significantly improves performance, especially on complex pages. So, even with lots of dynamic content, your website stays snappy and responsive.

· Cross-browser Compatibility: Designed to work seamlessly across different web browsers, ensuring a consistent and high-performance experience for all users. You don't have to worry about your fancy updates breaking on certain browsers.

Product Usage Case

· Dynamic Data Table Updates: Imagine a stock ticker or a live sports score. When the data changes, DOM Morph Master can update only the cells that have new values, rather than redrawing the entire table, resulting in a fluid and real-time feel. This makes interacting with live data a pleasure.

· Interactive UI Animations: When users perform an action, like opening a modal or expanding a section, this algorithm can smoothly transition between different UI states without the jarring effect of a full page refresh. It makes your app feel more alive and intuitive.

· Framework Integration for Performance Boost: Developers could pair this morphing algorithm with JavaScript frameworks like React, Vue, or Angular to complement the frameworks' own diffing and update mechanisms, potentially leading to faster rendering and better overall application responsiveness. This means your users could get a snappier, more enjoyable experience when using your framework-based application.

· Real-time Collaborative Editing: In applications where multiple users are editing a document simultaneously, DOM Morph Master can efficiently merge and display incoming changes from different users in real-time, ensuring everyone sees the most up-to-date version with minimal delay. This makes teamwork feel seamless and immediate.

11

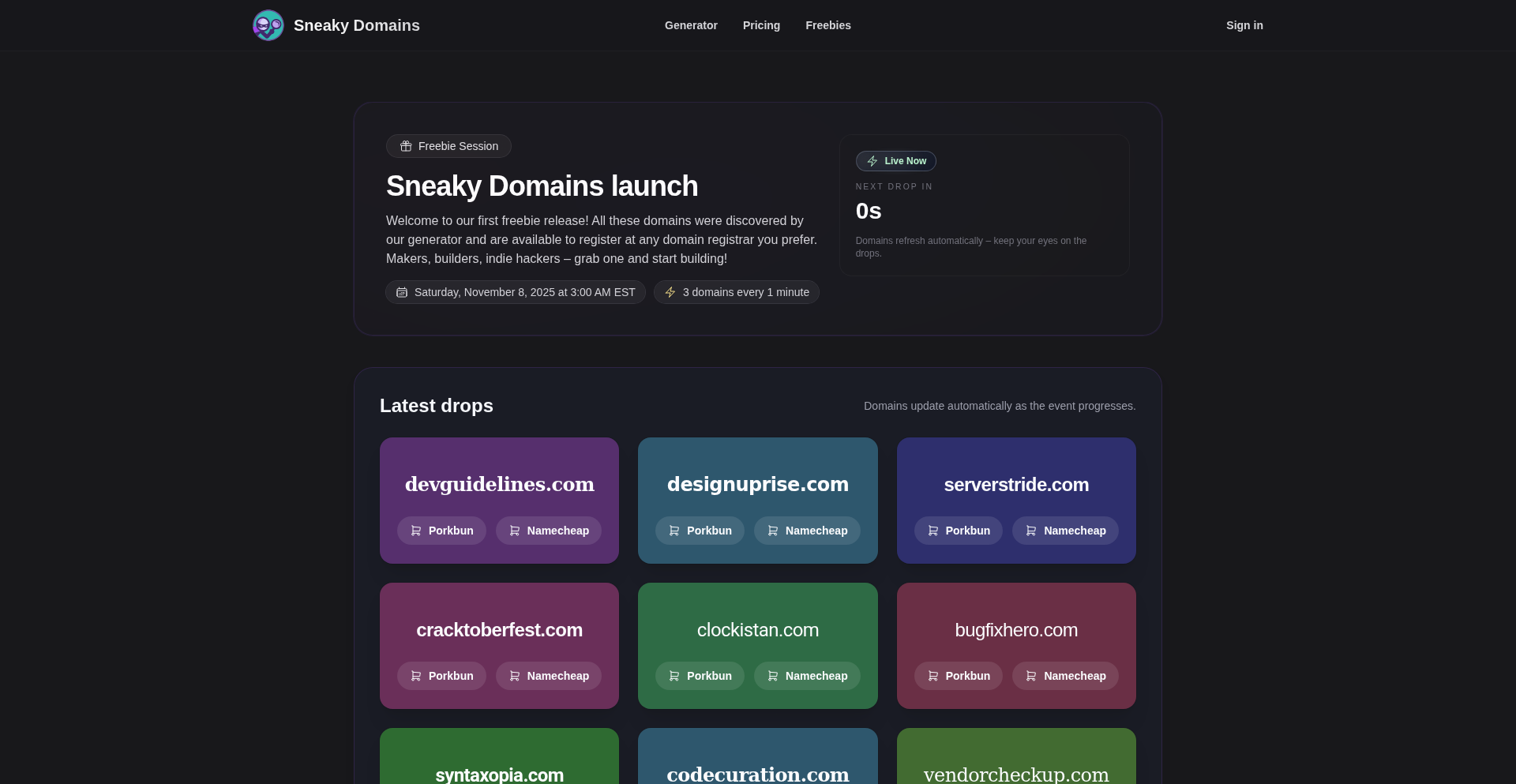

SneakyDomains LiveStream

Author

_andrei_

Description

A real-time generator that unearths available, non-premium domain names. It bypasses stale data by performing instant availability checks, offering a credit-based pricing model for flexibility. The project showcases a clever application of domain name generation and live availability monitoring, providing developers with a fresh stream of potential digital real estate.

Popularity

Points 5

Comments 3

What is this product?

SneakyDomains LiveStream is a service that continuously discovers and offers available domain names that are not considered premium. Unlike typical generators that might show outdated results, this product uses real-time checks to ensure every domain listed is genuinely up for grabs. The innovation lies in its immediate verification of domain availability, combined with a practical credit system that allows users to generate domains without committing to subscriptions. This approach directly addresses the frustration of finding a great domain name only to discover it's already taken or is prohibitively expensive.

How to use it?

Developers can use SneakyDomains by registering an account on the platform. Upon registration, new users receive 500 free credits (equivalent to 500 domain generations) using the coupon code 'HN500'. Credits can then be spent to generate domain name ideas. The 'live release' feature allows users to observe batches of newly found available domains being published at regular intervals, perfect for those who want to quickly spot opportunities without active generation. This is useful for anyone looking for a new project name, a brandable domain for a startup, or even just exploring creative naming conventions.

Product Core Function

· Real-time Domain Availability Checking: Ensures that only genuinely available, non-premium domain names are presented to users, saving time and avoiding disappointment from finding taken domains. This is valuable for guaranteeing that discovered names are immediately actionable.

· Live Release of Available Domains: Automatically publishes batches of newly discovered, available domain names at regular intervals, allowing users to passively discover potential digital assets. This is beneficial for quick trend spotting and opportunistic domain acquisition.

· Credit-Based Pricing Model: Offers a flexible pay-as-you-go system for domain generation, eliminating the need for recurring subscriptions. This is advantageous for users with infrequent domain generation needs or those who prefer not to be tied to monthly fees.

· Domain Name Generation Engine: Employs algorithmic approaches to suggest creative and relevant domain names based on various inputs, helping users brainstorm and find unique online identities. This core function provides creative fuel for branding and naming.
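
As a rough illustration of how generation, live availability checks, and credits could fit together, here is a small sketch. It assumes one credit is charged per available domain returned, and it takes the availability check as an injected function; how SneakyDomains actually generates names, verifies availability, or meters credits is not described in the post.

```python
import itertools
from typing import Callable, Iterable, Iterator, List


def candidate_names(prefixes: Iterable[str], roots: Iterable[str],
                    tld: str = "com") -> Iterator[str]:
    """Naive generator: every prefix + root combination under one TLD."""
    for prefix, root in itertools.product(prefixes, roots):
        yield f"{prefix}{root}.{tld}"


class CreditAccount:
    """Hypothetical pay-as-you-go balance."""

    def __init__(self, credits: int) -> None:
        self.credits = credits

    def spend(self, amount: int = 1) -> None:
        if amount > self.credits:
            raise RuntimeError("not enough credits")
        self.credits -= amount


def generate_available(account: CreditAccount,
                       candidates: Iterable[str],
                       is_available: Callable[[str], bool],
                       limit: int) -> List[str]:
    """Return up to `limit` available names, charging one credit per hit.

    `is_available` stands in for a real-time lookup; taken names cost
    nothing in this sketch.
    """
    found: List[str] = []
    for name in candidates:
        if len(found) >= limit or account.credits <= 0:
            break
        if is_available(name):
            account.spend(1)
            found.append(name)
    return found
```

Checking availability at generation time, rather than against a cached list, is what keeps the results from going stale; the trade-off is one lookup per candidate.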

Product Usage Case

· A startup founder needs a catchy and available domain name for their new SaaS product. They can use SneakyDomains to quickly generate and verify a list of unique, non-premium domain ideas, directly addressing the challenge of securing a strong online brand identity.

· A web developer is looking for a brandable domain for a personal project or a client's website. By utilizing the live release feature, they can monitor for newly available, creative domain names that might fit their niche, providing a constant source of inspiration and potential acquisition opportunities.

· A domain investor wants to identify underserved or trending naming spaces for potential domain flipping. SneakyDomains' real-time availability checks help them quickly identify desirable names before they are snapped up by others, streamlining their search for valuable digital real estate.

12

Burner Terminal: Contactless Stablecoin POS

Author

ccamrobertson

Description

Burner Terminal is an innovative point-of-sale (POS) device that enables merchants to accept stablecoin payments via a simple tap of a card or phone, similar to traditional contactless credit card transactions. It addresses the limitations of existing QR-code based crypto payments by offering a familiar and low-friction user experience, while also providing an open NFC interface for direct stablecoin transactions. This project brings the convenience of contactless payments to the burgeoning world of digital currencies, targeting small businesses looking for cost-effective and modern payment solutions.

Popularity

Points 7

Comments 1

What is this product?

Burner Terminal is a hardware device designed to function as a point-of-sale terminal for merchants. Its core innovation lies in its ability to facilitate stablecoin payments through Near Field Communication (NFC) technology, mirroring the tap-to-pay functionality common with credit cards. Unlike traditional crypto payment methods that rely on scanning QR codes, Burner Terminal leverages an open NFC interface. This allows for a more seamless and intuitive payment experience, where users can simply tap their digital wallet (like a Burner wallet or a phone app) to the terminal to complete a transaction. The system is designed to negotiate payment terms bidirectionally, meaning it can automatically determine which stablecoin and network to use based on what the user has available. This makes it significantly easier to manage payments compared to the static nature of QR codes. For users without a dedicated wallet, it also includes fallback QR code support using EIP-681 standards. The goal is to offer a hardware solution that is both familiar to customers and efficient for merchants, with an initial focus on stablecoins like USDC on the Base network, with plans to expand to other stablecoins and blockchains.

How to use it?

Developers can integrate Burner Terminal into their merchant operations by setting it up as their primary payment processing device. For merchants, the usage is straightforward: they receive the Burner Terminal hardware, connect it to their network, and it's ready to accept stablecoin payments. Customers interact with it by tapping their NFC-enabled devices (e.g., smartphones with mobile wallets, or physical crypto cards) to the terminal. For developers who might be building cryptocurrency or stablecoin applications, Burner Terminal offers a pre-built hardware interface for real-world payment acceptance. This bypasses the need to develop custom POS hardware for NFC-based crypto transactions. Developers can also potentially leverage the open NFC interface to build custom payment flows or integrate with existing blockchain infrastructure. For example, a decentralized application (dApp) could potentially use the terminal to receive payments directly from users' wallets on supported networks. The terminal also supports traditional credit card processing, allowing merchants to accept both fiat and crypto payments concurrently.

Product Core Function

· Tap-to-pay stablecoin transactions: Enables users to pay with stablecoins by simply tapping their NFC-enabled device on the terminal, providing a familiar and frictionless payment experience for customers. This reduces transaction friction compared to QR code scanning, leading to faster checkouts and improved customer satisfaction.

· Open NFC interface for stablecoin payments: Allows for direct, bidirectional communication between the user's wallet and the terminal, enabling negotiation of payment details like the specific stablecoin and network to be used. This flexibility streamlines the payment process and accommodates a wider range of user preferences and available assets.

· Support for multiple stablecoins and networks: Designed to be adaptable to various stablecoins (e.g., USDC) and blockchain networks (e.g., Base), offering merchants flexibility in the digital currencies they can accept. This future-proofs the payment solution and allows for easy expansion as the stablecoin ecosystem grows.

· QR code fallback payment option (EIP-681): Provides an alternative payment method for users who may not have NFC-enabled devices or a compatible Burner wallet, ensuring broader accessibility for payments. This dual-mode functionality maximizes the chances of a successful transaction regardless of the customer's technology.

· Integrated traditional credit card processing: Allows merchants to continue accepting conventional credit card payments alongside stablecoin transactions, offering a comprehensive payment solution that caters to all customer payment preferences. This ensures no loss of business from customers who prefer traditional methods.

· Low-cost hardware for small merchants: Aims to retail under $200, making advanced digital payment acceptance accessible and affordable for small businesses like food trucks, farmers markets, and bodegas. This democratizes access to modern payment technologies for underserved businesses.

· Optional offramping for merchants: Provides a service for merchants to convert received stablecoins into traditional fiat currency, simplifying financial management and reducing operational complexity. This bridges the gap between the crypto economy and traditional business operations.
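
To illustrate two of the ideas above, the sketch below picks a payment option both sides support and builds an EIP-681 style payment URI for the QR fallback. The negotiation logic is a guess at the general shape only; the terminal's real NFC protocol is not described in the post. The token and merchant addresses are obvious placeholders, while 8453 is the Base chain ID and USDC uses 6 decimals.

```python
from decimal import Decimal
from typing import Dict, List, Optional, Tuple

BASE_CHAIN_ID = 8453        # Base mainnet
ETHEREUM_CHAIN_ID = 1       # Ethereum mainnet
USDC_DECIMALS = 6

# Placeholder addresses for illustration only, not real contracts or wallets.
TOKEN_ADDRESS = "0x" + "11" * 20
MERCHANT_ADDRESS = "0x" + "22" * 20

PaymentOption = Tuple[str, int]  # (token symbol, chain id)


def to_base_units(amount: str, decimals: int) -> int:
    """Convert a human-readable amount like "4.50" to integer token units."""
    return int(Decimal(amount) * (10 ** decimals))


def negotiate(accepted: List[PaymentOption],
              wallet_balances: Dict[PaymentOption, int],
              amount_units: int) -> Optional[PaymentOption]:
    """Pick the merchant's most preferred option the wallet can afford.

    Hypothetical logic: `accepted` is ordered by merchant preference.
    """
    for option in accepted:
        if wallet_balances.get(option, 0) >= amount_units:
            return option
    return None


def eip681_transfer_uri(token: str, chain_id: int,
                        recipient: str, amount_units: int) -> str:
    """Build an ERC-20 transfer request URI in the EIP-681 format."""
    return (f"ethereum:{token}@{chain_id}/transfer"
            f"?address={recipient}&uint256={amount_units}")
```

A static QR code can only encode one such URI, which is why a two-way exchange like `negotiate` is more flexible: the terminal can adapt to whatever the customer's wallet actually holds.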

Product Usage Case

· A food truck owner at a bustling market wants to accept payments quickly from customers who are on the go. Instead of fumbling with cash or waiting for QR code scans, customers can simply tap their phone or crypto card to the Burner Terminal, completing the stablecoin payment in seconds. This leads to shorter lines and more sales.

· A small organic grocery store that wants to attract a younger, tech-savvy demographic can now offer a cutting-edge payment option. By accepting stablecoins via tap-to-pay, they position themselves as an innovative business, appealing to customers who hold and use digital assets for everyday purchases. This can differentiate them from competitors.

· A local artisan at a craft fair can easily accept payments from collectors who prefer using their digital wallets. The Burner Terminal provides a professional and secure way to receive stablecoin payments for their handmade goods, without the need for complex payment terminals or high transaction fees, enabling them to focus on their craft.

· A small coffee shop owner wants to reduce their reliance on traditional payment processors with high fees. By enabling stablecoin payments through Burner Terminal, they can potentially process transactions for free or at a significantly lower cost, increasing their profit margins on each sale, especially for smaller ticket items.

13

LLM SVG Weaver

Author

tkgally

Description

This project explores the creative potential of Large Language Models (LLMs) in generating Scalable Vector Graphics (SVGs). By leveraging LLMs such as Claude, accessed directly or through platforms like OpenRouter, it allows users to transform textual prompts into complex SVG images, moving beyond simplistic, pre-defined examples. It tackles the challenge of consistently translating nuanced natural language descriptions into structured vector graphics code.

Popularity

Points 7

Comments 0

What is this product?

LLM SVG Weaver is a system that uses advanced AI language models (LLMs) to create SVG images from your text descriptions. Think of it like telling a very smart artist exactly what you want to draw, and they produce the digital blueprint (SVG code) for it. Unlike typical AI image generators that might give you a picture, this gives you the actual code that defines the shapes, colors, and positions of an image. The innovation lies in pushing LLMs to go beyond common subjects like 'pelicans on bicycles' and handle more abstract or specific requests, demonstrating their capability in translating intricate text into visual code.

How to use it?

Developers can integrate this into their workflows by using the APIs provided by the LLMs (like Claude or through platforms like OpenRouter). You provide a text prompt describing the desired SVG, and the system, powered by the LLMs, returns the SVG code. This code can then be directly embedded into web pages, used in design software, or further manipulated programmatically. For example, a web developer could use it to dynamically generate custom icons based on user input or to create unique visual elements for a website's theme. The core idea is to automate the creation of visual assets directly from natural language.
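
The workflow described above can be sketched as follows. The `complete` argument is a stand-in for whichever LLM client you use (no specific provider API is assumed or shown), and `build_prompt`, `extract_svg`, and `text_to_svg` are hypothetical helper names. The sketch focuses on the part that is the same regardless of provider: asking for SVG only, pulling the markup out of the reply, and checking that it parses.

```python
import re
import xml.etree.ElementTree as ET
from typing import Callable

SVG_NS = "http://www.w3.org/2000/svg"


def build_prompt(description: str) -> str:
    return ("Return only a standalone SVG document, with no commentary, "
            f"that depicts: {description}")


def extract_svg(reply: str) -> str:
    """Pull the <svg>...</svg> markup out of a model reply and validate it.

    Models often wrap output in prose or code fences, so the element is
    located first and then parsed to confirm it is well-formed XML.
    """
    match = re.search(r"<svg\b.*</svg>", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("reply contains no <svg> element")
    svg = match.group(0)
    try:
        root = ET.fromstring(svg)
    except ET.ParseError as exc:
        raise ValueError(f"SVG is not well-formed XML: {exc}") from exc
    if root.tag not in ("svg", f"{{{SVG_NS}}}svg"):
        raise ValueError("root element is not <svg>")
    return svg


def text_to_svg(description: str, complete: Callable[[str], str]) -> str:
    """`complete` takes a prompt string and returns the model's text reply."""
    return extract_svg(complete(build_prompt(description)))
```

Parsing only confirms the markup is well-formed; before embedding model output in a page you would likely also want to sanitize it, since SVG can carry scripts.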

Product Core Function

· Text-to-SVG Generation: Translates natural language prompts into functional SVG code. This is valuable for quickly creating custom vector graphics without needing manual design skills, enabling rapid prototyping of visual elements.

· LLM Integration: Leverages cutting-edge LLMs such as Claude, reached directly or through platforms like OpenRouter, to interpret complex prompts and generate structured SVG data. This taps into the ever-improving understanding and generation capabilities of AI, pushing the boundaries of what's possible with AI-assisted design.

· Experimental Prompt Exploration: Encourages exploration of diverse and creative prompts beyond common examples, showcasing the LLM's ability to handle novel scenarios. This helps developers discover new applications for AI in visual content creation and understand the nuances of prompt engineering for SVG output.

Product Usage Case

· Dynamic Icon Generation for Web Apps: A developer can use LLM SVG Weaver to allow users to describe an icon they need (e.g., 'a minimalist cloud with a rain drop') and have the system generate the SVG code for that icon on the fly. This solves the problem of needing a vast library of icons or hiring a designer for custom ones.

· Creative Asset Generation for Game Development: A game designer could describe a unique in-game item or character element (e.g., 'a rusty medieval key with a glowing rune') and receive the SVG code to incorporate into the game's assets. This speeds up the asset creation pipeline and allows for highly specific visual elements.

· Personalized SVG Badges or Logos: An individual or small business could generate unique, personalized SVG badges or logos by simply describing their desired aesthetic (e.g., 'a shield with a stylized oak leaf and the letter "A" in a classic font'). This democratizes custom branding by removing the technical design barrier.

14

AutoBalance Dynamo

Author

atlas-systems

Description

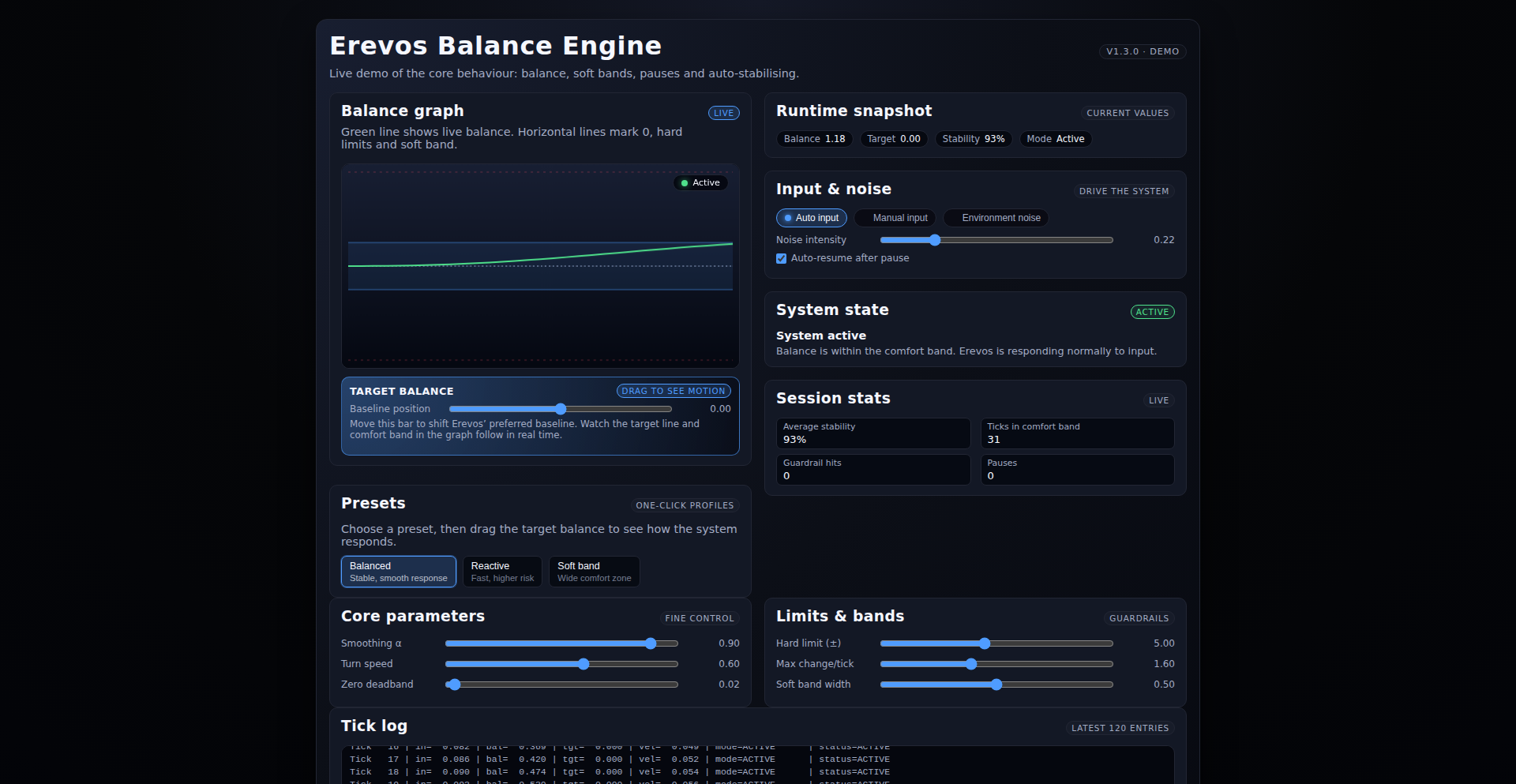

A self-rebalancing system designed to automatically adapt and redistribute workload when overloaded. It addresses the common challenge of maintaining system stability and performance under fluctuating demand by intelligently shifting resources. The core innovation lies in its dynamic, autonomous adjustment capabilities, eliminating the need for manual intervention during peak times.

Popularity

Points 1

Comments 5

What is this product?

This project is a system that intelligently manages its own resources to stay functional and performant even when it's being hit with more requests than it can handle. Think of it like a smart traffic controller for your applications. When one part of the system gets too busy, it automatically directs incoming work to less busy parts or scales up resources in specific areas, all without a human needing to press a button. The innovation is in its real-time, predictive load balancing and automated resource allocation, preventing crashes and slowdowns.

How to use it?

Developers can integrate AutoBalance Dynamo into their existing distributed systems or microservices architectures. It can be used as a middleware layer that sits in front of your application servers or databases. By configuring the system with predefined thresholds for load and performance metrics (like CPU usage, request latency, or queue length), it will automatically start orchestrating resource adjustments. For example, if your web server cluster starts experiencing high latency, AutoBalance Dynamo can automatically spin up more server instances or offload some requests to a different, less utilized service.
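The threshold-driven behaviour described above can be sketched in a few lines. This is only an illustration of the general idea, not the project's code or configuration format: the `Thresholds`, `Node`, and `plan` names, the default limits, and the "route to the least-loaded healthy node, otherwise scale up" policy are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Thresholds:
    # Hypothetical limits; real values depend on the workload.
    max_cpu: float = 0.80            # fraction of CPU in use
    max_latency_ms: float = 250.0    # request latency
    max_queue: int = 100             # pending requests


@dataclass
class Node:
    name: str
    cpu: float
    latency_ms: float
    queue: int


def is_overloaded(node: Node, t: Thresholds) -> bool:
    """A node is overloaded if any single metric exceeds its threshold."""
    return (
        node.cpu > t.max_cpu
        or node.latency_ms > t.max_latency_ms
        or node.queue > t.max_queue
    )


def plan(nodes: List[Node], t: Thresholds) -> Tuple[str, Optional[str]]:
    """Pick the least-loaded healthy node, or ask for more capacity."""
    healthy = [n for n in nodes if not is_overloaded(n, t)]
    if not healthy:
        # Every node is past a threshold: rerouting cannot help.
        return ("scale_up", None)
    target = min(healthy, key=lambda n: (n.cpu, n.queue))
    return ("route", target.name)


if __name__ == "__main__":
    cluster = [
        Node("web-1", cpu=0.95, latency_ms=300.0, queue=10),
        Node("web-2", cpu=0.40, latency_ms=80.0, queue=5),
        Node("web-3", cpu=0.55, latency_ms=90.0, queue=2),
    ]
    print(plan(cluster, Thresholds()))  # ('route', 'web-2')
```

Keeping the decision function pure (metrics in, action out) makes the policy easy to test separately from whatever mechanism actually reroutes traffic or provisions instances.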

Product Core Function

· Dynamic Load Distribution: Automatically reroutes incoming requests to less congested nodes or services to prevent any single component from becoming a bottleneck. This means your application stays responsive even during traffic spikes.

· Autonomous Resource Scaling: Can automatically provision or de-provision computing resources (like virtual machines or containers) based on real-time demand. This ensures you have enough capacity when needed and don't waste money on idle resources.

· Performance Monitoring and Alerting: Continuously tracks key performance indicators of the system and can trigger alerts when predefined thresholds are breached. This provides visibility into system health and potential issues before they become critical.

· Self-Healing Capabilities: In the event of a component failure, the system can automatically reroute traffic and redistribute workloads to healthy components. This increases the resilience and availability of your services.

· Configuration Driven Adaptation: Allows developers to define the rules and policies for rebalancing and scaling, providing flexibility to tailor the system to specific application needs and business requirements.

Product Usage Case

· E-commerce platform during a flash sale: When a sudden surge of customers hits an online store, AutoBalance Dynamo can automatically scale up the web servers and database read replicas to handle the increased traffic, preventing checkout failures and ensuring a smooth shopping experience.

· Real-time data processing pipeline: For applications that process streaming data, if one processing node becomes overloaded with incoming data, AutoBalance Dynamo can distribute the data to other available nodes, maintaining data freshness and preventing data loss.

· API gateway for a microservices architecture: When specific microservices experience high request volumes, the API gateway can use AutoBalance Dynamo to intelligently route requests to healthy instances of that service or even temporarily redirect less critical requests to fallback services, ensuring overall system stability.

· Game server load balancing: During peak gaming hours, AutoBalance Dynamo can dynamically allocate more server resources to busy game servers and redistribute players if a server becomes unstable, improving the gaming experience and reducing disconnections.

15

Walrus: The Lean Messaging Stream

Author

kellyviro

Description

Walrus is a lightweight, experimental messaging stream designed as a simpler alternative to Kafka. It focuses on core message queuing functionality, offering a more accessible and potentially more performant solution for specific use cases where the full complexity of Kafka is overkill. The innovation lies in its streamlined architecture and simpler API.

Popularity

Points 5

Comments 0

What is this product?

Walrus is essentially a system that helps different parts of your software talk to each other reliably. Think of it as a super-efficient post office for your data. Instead of complex setups, Walrus uses a more straightforward approach to store and deliver messages. Its technical innovation is in stripping away the advanced features of systems like Kafka, focusing on the essential message delivery pipeline. This means less overhead and a potentially easier learning curve. So, what's the benefit? It makes building distributed systems simpler and potentially faster for common tasks.

How to use it?

Developers can integrate Walrus into their applications to enable asynchronous communication. For example, if one service needs to send data to another service without waiting for an immediate response, it can publish that data (a 'message') to a Walrus stream. The receiving service can then subscribe to that stream and process the message at its own pace. This is done through simple API calls to publish and consume messages. The value for developers is in decoupling services, improving fault tolerance, and enabling scalable architectures with less complexity.
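To make the publish/subscribe flow concrete, here is a minimal in-memory sketch of an ordered stream with per-consumer read positions. It is not Walrus's API: the `Stream` class, its method names, and the offset bookkeeping are assumptions chosen to illustrate the pattern, and nothing here is persisted or sent over a network.

```python
from collections import defaultdict
from typing import Any, Dict, List


class Stream:
    """Toy append-only message stream (hypothetical, in-memory only)."""

    def __init__(self) -> None:
        self._log: List[Any] = []                          # ordered messages
        self._offsets: Dict[str, int] = defaultdict(int)   # next offset per consumer

    def publish(self, message: Any) -> int:
        """Append a message and return its offset in the stream."""
        self._log.append(message)
        return len(self._log) - 1

    def consume(self, consumer: str, max_messages: int = 10) -> List[Any]:
        """Return unread messages for this consumer and advance its offset."""
        start = self._offsets[consumer]
        batch = self._log[start:start + max_messages]
        self._offsets[consumer] = start + len(batch)
        return batch


if __name__ == "__main__":
    stream = Stream()
    stream.publish({"event": "user_created", "user_id": 1})
    stream.publish({"event": "user_created", "user_id": 2})

    # Each consumer reads at its own pace; neither blocks the publisher.
    print(stream.consume("email-service"))
    print(stream.consume("crm-service", max_messages=1))
```

Because every consumer tracks its own offset into the same ordered log, several services can process the same events independently, which is the decoupling benefit described above.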

Product Core Function

· Message Publishing: Allows services to send data to the messaging system. The value is enabling asynchronous communication, where one service can offload work to another without blocking. This improves application responsiveness.

· Message Consumption: Allows services to receive data from the messaging system. The value is enabling decoupled architectures, where services can operate independently and process data at their own rate, enhancing resilience.

· Stream-based Data Handling: Organizes messages into ordered streams, similar to a log. The value is providing a predictable way to process sequences of events, crucial for tasks like event sourcing and auditing.

Product Usage Case

· Real-time data ingestion for analytics: A web application can publish user activity events to Walrus, which then feeds into an analytics pipeline. This solves the problem of handling high volumes of data in real-time without overwhelming the analytics system.

· Decoupling microservices: A user registration service can publish a 'user_created' event to Walrus. Other services, like an email notification service or a CRM service, can subscribe to this event and react accordingly. This improves the scalability and maintainability of microservice architectures.

· Background task processing: A service can publish a request to perform a long-running task (e.g., generating a report) to Walrus. A separate worker process can then consume this request and execute the task asynchronously, preventing the main application from becoming unresponsive.

16

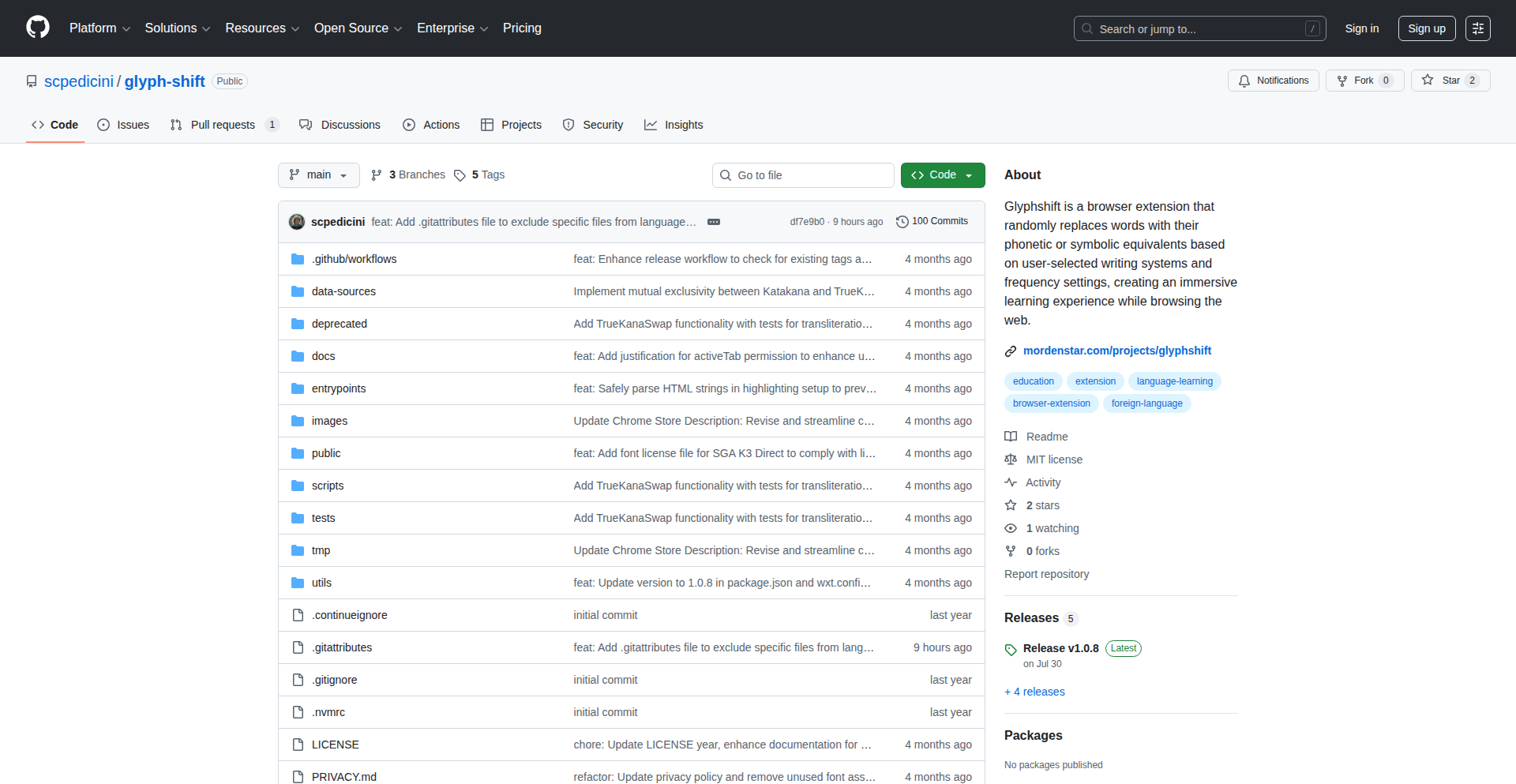

GlyphShift: Universal Script Practice Extension

Author

vunderba

Description

A browser extension for Chrome and Firefox that transforms random words on webpages into phonetic or symbolic scripts like Braille, ASL fingerspelling, or Kana. It offers a playful, unintrusive way to refresh your memory of these scripts without needing dedicated study sessions. Users can hover over the transformed words to see the original, making it a practical, albeit unconventional, tool for language learners and enthusiasts.

Popularity

Points 3

Comments 2

What is this product?

GlyphShift is an open-source browser extension that acts as a visual translator for words on any webpage. Instead of replacing words with their foreign language equivalents, it converts them into alternative scripts such as Grade I Braille, ASL fingerspelling representations, Japanese Kana, or even Morse code. The innovation lies in its non-disruptive approach; it doesn't aim for fluent translation but rather a playful, ambient reinforcement of phonetic and symbolic script knowledge. The technology works by identifying words on a webpage and applying a predefined substitution rule for each selected script. This allows users to passively encounter and recognize these scripts in their daily browsing, offering a unique way to maintain familiarity with them.
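The "pick some words, apply a substitution rule" idea can be sketched outside the browser. The example below uses Morse code as the target script and plain Python rather than extension code; the `transform` function, its `rate` parameter, and the word-matching regex are assumptions for illustration and do not reflect how GlyphShift itself is implemented.

```python
import random
import re
from typing import Optional

# International Morse code for the 26 Latin letters.
MORSE = {
    "a": ".-", "b": "-...", "c": "-.-.", "d": "-..", "e": ".",
    "f": "..-.", "g": "--.", "h": "....", "i": "..", "j": ".---",
    "k": "-.-", "l": ".-..", "m": "--", "n": "-.", "o": "---",
    "p": ".--.", "q": "--.-", "r": ".-.", "s": "...", "t": "-",
    "u": "..-", "v": "...-", "w": ".--", "x": "-..-", "y": "-.--",
    "z": "--..",
}


def to_morse(word: str) -> str:
    """Convert one alphabetic word to Morse, letters separated by spaces."""
    return " ".join(MORSE[ch] for ch in word.lower())


def transform(text: str, rate: float = 0.2, seed: Optional[int] = None) -> str:
    """Replace roughly `rate` of the words in `text` with Morse code."""
    rng = random.Random(seed)

    def swap(match: re.Match) -> str:
        word = match.group(0)
        # Each word is converted independently with probability `rate`.
        return to_morse(word) if rng.random() < rate else word

    # Only plain ASCII words are candidates; punctuation is left untouched.
    return re.sub(r"[A-Za-z]+", swap, text)


if __name__ == "__main__":
    print(to_morse("sos"))  # ... --- ...
    print(transform("The quick brown fox jumps over the lazy dog.", rate=0.3, seed=7))
```

A browser extension would apply the same kind of rule to text nodes in the page and keep the original word available, for instance in a tooltip, to support the hover-to-reveal behaviour.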

How to use it?

Developers can install GlyphShift as a regular browser extension from the Chrome Web Store or Firefox Add-ons. Once installed, users can select which scripts they want to enable and configure the extension's behavior, such as the frequency of word transformation or specific word selection criteria. The primary use case is for individuals who are learning or wish to maintain proficiency in scripts like Braille, Kana, or Morse code. It can be integrated into a developer's workflow by simply browsing the web as usual; the extension runs in the background. For those developing language learning tools or engaging with multilingual content, GlyphShift provides an ambient reinforcement mechanism, making practice feel less like a chore and more like a natural part of their online experience.

Product Core Function

· Random word substitution: Transforms words on a webpage into selected phonetic or symbolic scripts, offering a playful way to encounter new characters without explicit study.

· Hover-to-reveal original text: Allows users to easily see the original word by hovering over the transformed glyph, facilitating learning and comprehension.

· Multiple script support: Enables practice with various scripts including Braille, ASL representations, Kana, and Morse code, catering to diverse learning needs.

· Customizable transformation settings: Users can adjust the extension's behavior to control the intensity and scope of word transformations, tailoring the experience to their preference.

· Open-source development: Provides transparency and allows for community contributions, fostering a collaborative environment for improving the tool and adding new script support.

Product Usage Case

· A language learner studying Japanese can browse their favorite news websites and see random words rendered in Japanese Katakana in place of the English text, helping them passively recognize and recall Kana without actively studying flashcards.

· A visually impaired individual learning Braille can browse articles online and have certain words converted into Braille characters, offering an accessible and engaging way to reinforce their Braille knowledge in a real-world context.

· A developer working on an internationalization project might use the extension to keep their familiarity with different character sets sharp, even when not actively coding in those languages.

· Someone interested in the aesthetics of different writing systems can use GlyphShift to make their web browsing visually interesting, resembling a futuristic or stylized display, akin to elements in 'Blade Runner'.

17

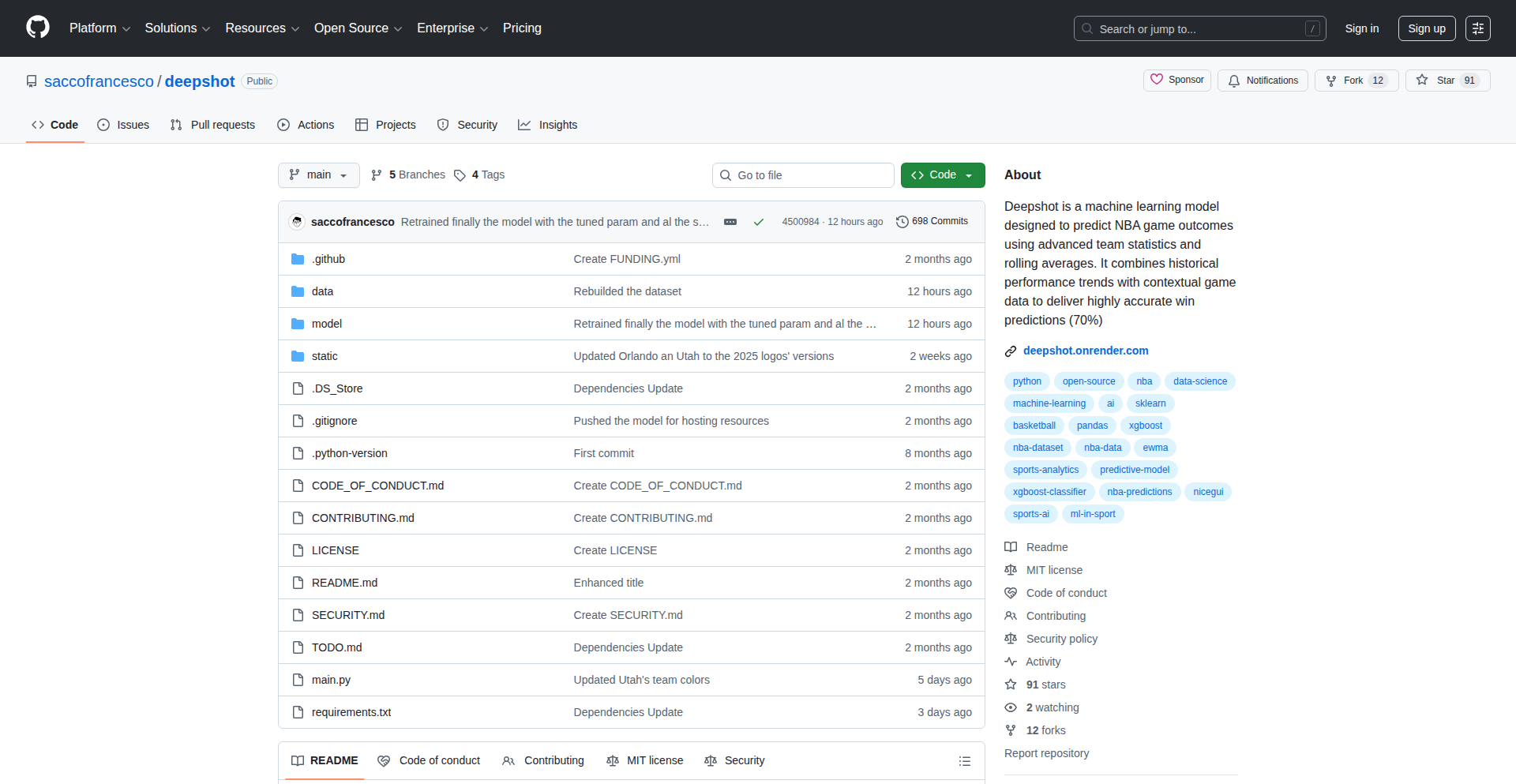

DeepShot ML NBA Predictor

Author

Fr4ncio

Description

DeepShot is a machine learning model that predicts NBA game outcomes with impressive accuracy. It goes beyond basic stats by using advanced techniques like Exponentially Weighted Moving Averages (EWMA) to capture team momentum and recent performance. This results in a more insightful prediction than simple averages or betting lines, clearly highlighting the statistical factors driving the model's decisions. It's built with Python and readily available ML libraries, making it accessible for developers interested in sports analytics and machine learning.
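For readers unfamiliar with EWMA, the standard recurrence is s_t = alpha * x_t + (1 - alpha) * s_(t-1), so recent games weigh more than older ones. The sketch below shows that recurrence on a made-up series of points scored; the `ewma` function, the `alpha` value, and the sample numbers are illustrative assumptions, not DeepShot's actual features or parameters.

```python
from typing import List, Sequence


def ewma(values: Sequence[float], alpha: float = 0.3) -> List[float]:
    """Exponentially weighted moving average of a series.

    s_0 = x_0, then s_t = alpha * x_t + (1 - alpha) * s_(t-1).
    A larger alpha reacts faster to recent games.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    for x in values:
        if not smoothed:
            smoothed.append(float(x))  # seed with the first observation
        else:
            smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


if __name__ == "__main__":
    # Hypothetical points scored by a team over three games.
    points = [100, 110, 120]
    print(ewma(points, alpha=0.5))      # [100.0, 105.0, 112.5]
    print(sum(points) / len(points))    # 110.0, the plain average
```

In this toy series the team is improving, and the final EWMA value (112.5) sits above the plain average (110.0), which is how this kind of feature captures momentum that a simple mean hides.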

Popularity

Points 3

Comments 2

What is this product?