Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-06

SagaSu777 2025-11-07

Explore the hottest developer projects on Show HN for 2025-11-06. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN batch highlights a powerful trend: the democratization of sophisticated technology through accessible developer tools and AI. We're seeing a clear shift towards making complex tasks, whether it's tabular data analysis with TabPFN-2.5, shell command assistance with qqqa, or even video restoration with SeedVR2, more manageable and integrated into daily workflows. The focus on open-source, privacy, and local-first processing, as seen in Floxtop and Ito AI, speaks to a growing demand for user control and transparency. For developers, this means an opportunity to leverage these advancements by building specialized tools that abstract away complexity, enhance existing platforms, or create entirely new user experiences. The rise of AI coding agents and context engineering tools like Packmind OSS and Deepcon signals a future where AI acts as a co-pilot, but requires robust frameworks to maintain consistency and accuracy. This is a call to action for innovators to explore how they can integrate these powerful building blocks into their own projects, solve niche problems, or contribute to the open-source ecosystem, embodying the hacker spirit of building for impact and shared knowledge.

Today's Hottest Product

Name

qqqa – A fast, stateless LLM-powered assistant for your shell

Highlight

This project innovates by adhering to the Unix philosophy, creating lightweight, focused, and stateless command-line tools powered by LLMs. It tackles the problem of context switching between shell, browsers, and AI assistants for common tasks. Developers can learn about integrating LLMs into existing workflows, building modular CLI tools, and leveraging fast inference APIs like Groq for near-instantaneous responses, offering a different paradigm from more monolithic AI agents.

Popular Category

AI/ML Development

Developer Tools

Data Analysis & Visualization

Web Development

Popular Keyword

LLM

AI Assistant

Developer Tools

CLI

Data

WebGPU

Rust

TypeScript

RAG

Automation

Technology Trends

LLM Integration in Dev Tools

Stateless and Modular AI Design

Efficient Data Processing and Analysis

Browser-Based AI/ML

Rust and Performance-Oriented Development

AI for Code Understanding and Generation

Enhanced Developer Workflows

Privacy-Preserving AI

Declarative Frameworks

AI-Powered Content Generation

Project Category Distribution

AI/ML Tools & Frameworks (25%)

Developer Productivity & Workflow (20%)

Data Analysis & Visualization (15%)

Web Development & Infrastructure (15%)

Desktop Applications (10%)

Content Generation & Media (10%)

Other/Utility (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | ShellGPT Commander | 144 | 81 |
| 2 | TabularTransformer-XL | 68 | 12 |
| 3 | Flutter Compositions: Reactive UI Blocks for Flutter | 43 | 23 |
| 4 | Intraview: Agentic Code Journey Mapper | 34 | 3 |
| 5 | Ito AI: Voice-to-Intent Transformer | 15 | 11 |
| 6 | AI Forge Arena | 8 | 2 |
| 7 | Hacker News Project Explorer | 9 | 1 |
| 8 | GraFlo Schema Weaver | 5 | 3 |
| 9 | DeepCon: Context Augmentation for AI Agents | 6 | 2 |
| 10 | ShellAI: Local LLM-Powered Terminal Copilot | 5 | 2 |

1

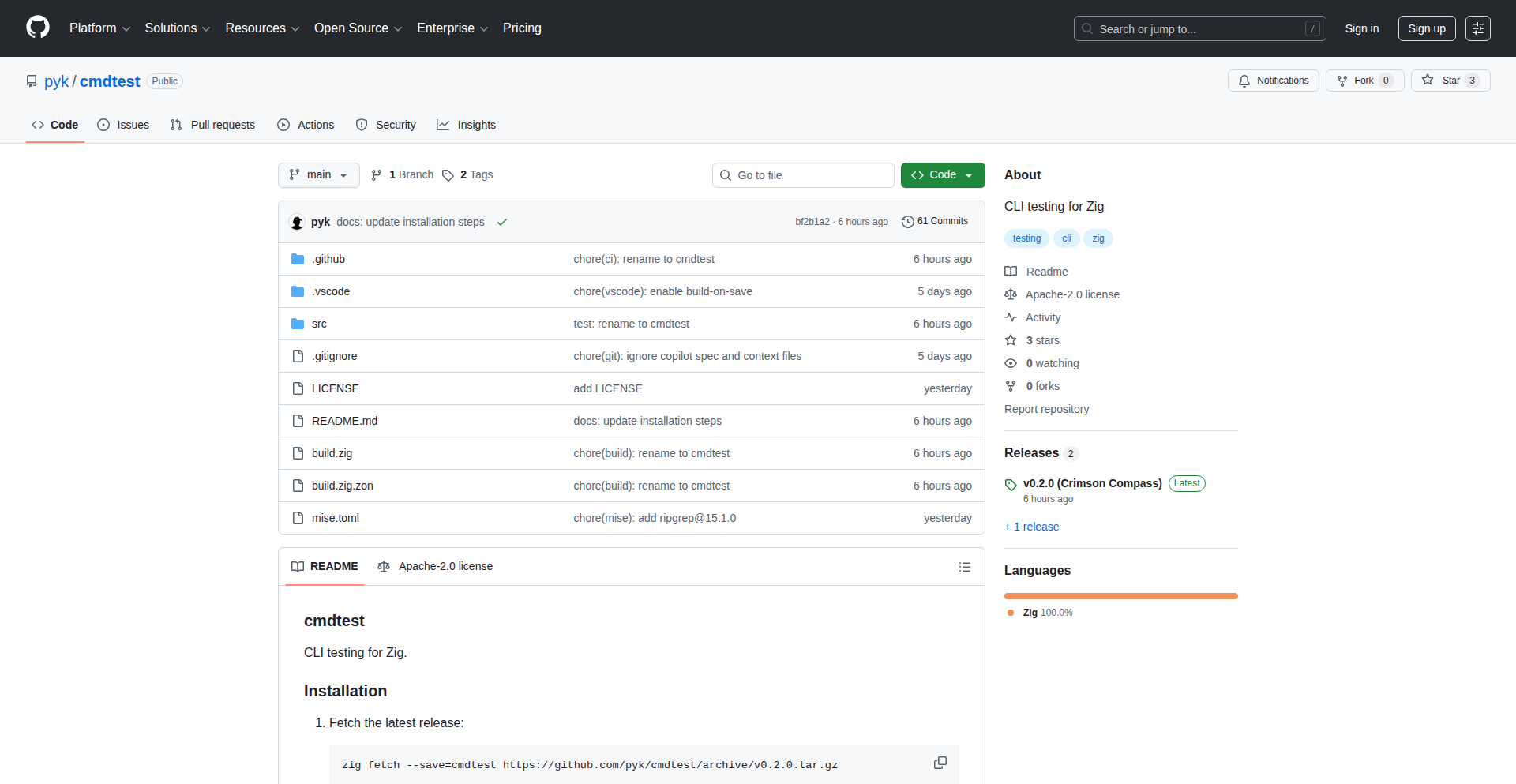

ShellGPT Commander (qqqa)

Author

iagooar

Description

ShellGPT Commander is a pair of command-line tools, 'qq' and 'qa', designed to streamline your workflow by integrating Large Language Models (LLMs) directly into your shell. It addresses the frustration of context-switching between your terminal, AI chatbots, and browser for common tasks. 'qq' is for quick, read-only queries, perfect for commands you always forget, while 'qa' allows you to execute commands after the LLM plans and you approve, embodying a safe and interactive AI assistant. It's built on the Unix philosophy of small, focused tools, making it efficient and easy to integrate.

Popularity

Points 144

Comments 81

What is this product?

ShellGPT Commander is a command-line interface (CLI) toolset that empowers your shell with the intelligence of Large Language Models (LLMs). It consists of two main components: 'qq' and 'qa'. 'qq' (quick question) acts as a read-only interface, allowing you to ask natural language questions and get command-line answers, essentially a cheat sheet for commands you often forget. 'qa' (quick agent) is an executable assistant. It takes your natural language request, generates a command-line plan, shows it to you for approval, and then executes it. The innovation lies in its adherence to the Unix philosophy: small, single-purpose tools that work together. Unlike many AI agents that try to do everything, ShellGPT Commander focuses on specific shell-based interactions, making it fast, stateless (meaning it doesn't remember past interactions by default, reducing complexity and improving performance), and highly composable. It works with any OpenAI-compatible API, with a recommendation for Groq for near-instantaneous responses.
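The post does not show qqqa's request code. The "stateless" and "OpenAI-compatible" points can still be illustrated with a minimal Python sketch, assuming the standard chat-completions request shape. Each call builds a fresh message list that holds only a system prompt and the current question, so no history carries over between invocations. `BASE_URL`, `MODEL`, `SYSTEM_PROMPT`, and `build_request` are hypothetical placeholders, not qqqa's actual configuration.

```python
import json
import urllib.request

# Hypothetical placeholders: any OpenAI-compatible base URL and model name fit here.
BASE_URL = "https://llm.example.com/v1"
MODEL = "example-model"
SYSTEM_PROMPT = "Answer with a single shell command and no explanation."


def build_request(question: str, api_key: str) -> urllib.request.Request:
    """Build one self-contained chat-completions request (stateless: no prior turns)."""
    payload = {
        "model": MODEL,
        # Only the system prompt and the current question; nothing is remembered between calls.
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

To send the request, call `urllib.request.urlopen(req)` and read the answer from `choices[0].message.content` in the JSON response. Switching to another OpenAI-compatible provider then only requires changing `BASE_URL` and `MODEL`.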

How to use it?

Developers can integrate ShellGPT Commander into their daily workflow by installing the two binaries, 'qq' and 'qa'. For 'qq', you would typically use it in your terminal when you need to recall a specific command. For example, you might type `qq how to list all files in current directory recursively` and it will return the correct shell command. For 'qa', you would invoke it for more complex tasks where you want the AI to suggest and execute a command. For instance, you could say `qa create a new git branch called feature-x and push it to origin`, and 'qa' would first show you the `git checkout -b feature-x && git push origin feature-x` command (or similar), wait for your confirmation, and then execute it. It's designed to be dropped into your existing shell environment, requiring minimal setup beyond configuring your API endpoint and key. This allows for a seamless transition to a more intelligent command-line experience, significantly reducing the need to search documentation or switch to a browser for assistance.
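The plan, approve, execute loop that `qa` follows fits in a few lines of Python. This is a hedged illustration, not qqqa's code. The proposed command is passed in directly rather than coming from the LLM. `run_with_approval` and its `confirm` parameter are hypothetical names. `confirm` defaults to `input`, and you can swap in another function so the gate can be tested without a keyboard.

```python
import subprocess


def run_with_approval(command: str, confirm=input):
    """Show a proposed command and run it only if the user answers yes."""
    print(f"Proposed command:\n  {command}")
    answer = confirm("Run it? [y/N] ").strip().lower()
    if answer not in ("y", "yes"):
        print("Skipped.")
        return None  # declined: nothing reaches the shell
    # shell=True lets LLM-proposed pipes and && chains work, which is exactly
    # why the human approval step above is the safety boundary.
    return subprocess.run(command, shell=True, capture_output=True, text=True)
```

Any answer other than `y` or `yes`, including an empty one, skips execution. Defaulting to "no" this way keeps an accidental Enter keypress from running an unreviewed command.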

Product Core Function

· Quick Command Retrieval: Allows users to ask natural language questions for common shell commands and receive immediate, accurate command-line outputs. This saves time and reduces cognitive load for developers who frequently forget specific command syntax.

· AI-Powered Command Execution with Approval: Enables users to delegate command execution to an LLM. The AI generates a step-by-step plan for the requested task, presents it to the user for review and approval, and then executes the validated command, ensuring safety and user control.

· Stateless by Default Design: Operates with a focus on individual interactions, minimizing memory usage and improving performance. This adheres to the Unix philosophy, making the tool predictable and easy to integrate with other command-line utilities.

· OpenAI-Compatible API Integration: Supports a wide range of LLM providers by leveraging OpenAI-compatible APIs. This provides flexibility in choosing the best and most cost-effective AI backend for your needs, with Groq recommended for near-instantaneous responses.

· Unix Philosophy Adherence: Built as small, focused tools that excel at specific tasks. This makes the project modular, easy to understand, and highly extensible, fitting naturally into a developer's existing command-line toolkit.

Product Usage Case

· A developer is working on a new project and needs to create a complex Dockerfile. Instead of searching online for every instruction, they could use 'qa' with a prompt like 'qa create a basic Dockerfile for a Node.js application that exposes port 3000'. The tool would generate the Dockerfile content, present it for review, and then save it. This speeds up the initial setup and reduces errors.

· A junior developer is struggling to remember the exact `git` command to undo a commit and push the change to a remote repository. They can use 'qq revert the last commit and push the change to origin', and 'qq' will provide the correct command, such as `git revert HEAD && git push origin`. Because `git revert` records a new commit that undoes the old one instead of rewriting history, a regular push is enough and no force push is needed. This acts as an instant learning tool, improving productivity.

· During a troubleshooting session, a developer needs to tail multiple log files simultaneously and filter for specific error messages. They could ask 'qa tail logs from /var/log/app.log and /var/log/nginx/error.log and show lines containing ERROR'. 'qa' would construct a command like `tail -f /var/log/app.log /var/log/nginx/error.log | grep 'ERROR'` and offer to execute it, streamlining complex diagnostic tasks.

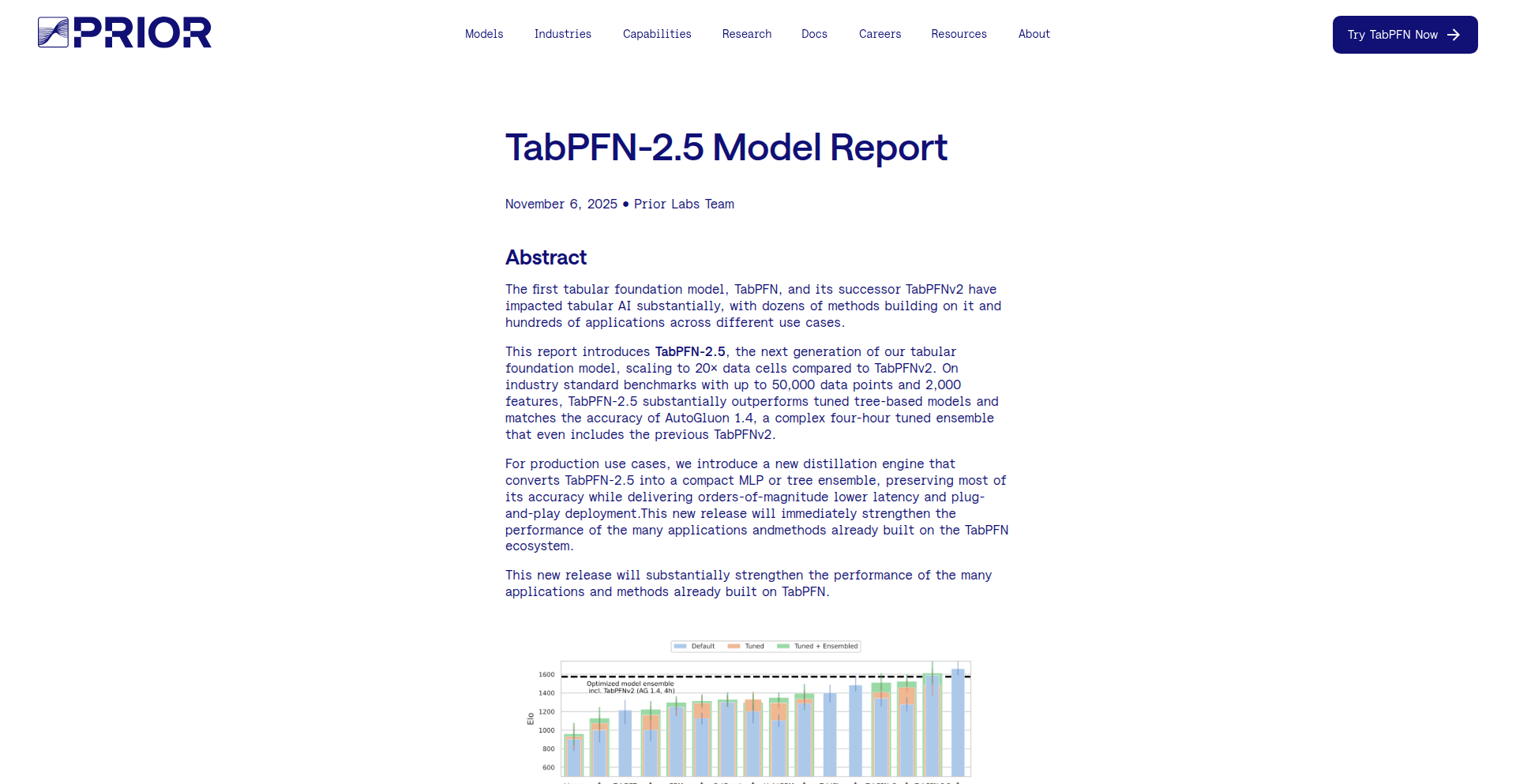

2

TabularTransformer-XL (TabPFN-2.5)

Author

onasta

Description

TabularTransformer-XL is a tabular foundation model that uses a transformer architecture to make state-of-the-art predictions on tabular datasets. It excels at in-context learning: it learns from the labeled examples supplied at prediction time without extensive hyperparameter tuning. It natively handles missing values and categorical, text, and numerical features, and it is robust to outliers. The latest version, 2.5, scales to significantly larger datasets (up to 50,000 samples and 2,000 features), offering a 5x improvement over its predecessor.

Popularity

Points 68

Comments 12

What is this product?

TabularTransformer-XL is a powerful AI model designed to make predictions on structured data, often referred to as tabular data (think of spreadsheets or database tables). Its core innovation lies in using a transformer-based neural network, similar to those used in advanced language models, but specifically trained on a vast number of synthetic datasets. This pre-training allows it to 'learn' general patterns in tabular data. When you give it a new dataset, it can quickly adapt and make predictions by 'in-context learning' – essentially learning from the data you provide in the moment, without needing to retrain the entire model or fiddle with many settings. It's designed to be smart enough to handle messy data, including missing entries, different types of information (like text and numbers), and even irrelevant columns, providing accurate results across classification and regression tasks with just a single forward pass.
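A few lines of code cannot reproduce how the model conditions on its context. They can, however, show the *interface* of in-context learning. In this hedged Python toy, `fit` only stores the labeled rows, and `predict` makes each prediction in a single pass over that stored context. The per-row softmax weighting loosely resembles attention, but it is really a kernel-weighted vote (a Nadaraya-Watson style estimator), not a transformer. `ToyInContextClassifier` and `temperature` are hypothetical. The only details taken from the post are the 50,000-sample and 2,000-feature limits.

```python
import math
from collections import defaultdict

# Limits quoted for version 2.5 in the description above.
MAX_SAMPLES = 50_000
MAX_FEATURES = 2_000


class ToyInContextClassifier:
    """Illustrative stand-in, NOT the transformer: shows the 'no training loop' interface."""

    def __init__(self, temperature: float = 1.0):
        self.temperature = temperature
        self.context_X = []
        self.context_y = []

    def fit(self, X, y):
        if len(X) > MAX_SAMPLES:
            raise ValueError(f"{len(X)} samples exceeds the {MAX_SAMPLES} limit")
        if X and len(X[0]) > MAX_FEATURES:
            raise ValueError(f"{len(X[0])} features exceeds the {MAX_FEATURES} limit")
        # No gradient steps, no hyperparameter search: the labeled rows become the context.
        self.context_X, self.context_y = list(X), list(y)
        return self

    def predict_proba(self, x):
        # Attention-like weighting: softmax over negative squared distances to each context row.
        scores = [
            -sum((a - b) ** 2 for a, b in zip(x, row)) / self.temperature
            for row in self.context_X
        ]
        peak = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        proba = defaultdict(float)
        for w, label in zip(weights, self.context_y):
            proba[label] += w / total
        return dict(proba)

    def predict(self, X_query):
        # One pass over (context, query) per row; the "model" itself never changes.
        predictions = []
        for x in X_query:
            proba = self.predict_proba(x)
            predictions.append(max(proba, key=proba.get))
        return predictions
```

Calling `ToyInContextClassifier().fit([[0, 0], [0, 1], [5, 5], [5, 6]], ["a", "a", "b", "b"]).predict([[0, 0.5], [5, 5.5]])` labels each query by its nearby context rows. Swapping in a new dataset only means calling `fit` again, with no retraining step.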

How to use it?

Developers can integrate TabularTransformer-XL into their workflows through a new REST API or a Python SDK. This means you can send your tabular data to the model and receive predictions back. For instance, in a Python project, you can use the SDK to load the model, feed it your training and testing datasets, and get predictions for regression or classification problems. The API approach allows for easier integration with various applications and services, enabling you to leverage its predictive power without deep machine learning expertise. The model is also available via a package on Hugging Face, a popular platform for AI models, making it accessible for experimentation and deployment.

Product Core Function

· In-context learning for tabular data: Enables rapid adaptation to new datasets and tasks without extensive retraining, reducing development time and computational cost.

· Scalability to large datasets: Handles datasets up to 50,000 samples and 2,000 features, making it suitable for more complex real-world applications.

· Native support for mixed data types: Seamlessly processes numerical, categorical, text data, and handles missing values robustly, simplifying data preprocessing steps.

· Outlier and uninformative feature robustness: Delivers reliable predictions even with noisy or irrelevant data, improving model stability and performance.

· State-of-the-art prediction performance: Achieves top-tier accuracy in classification and regression tasks, often matching or exceeding highly tuned traditional methods.

· Single-forward-pass prediction without hyperparameter tuning: Provides immediate predictions with minimal configuration, accelerating deployment and reducing the need for ML expertise.

· Distillation engine for compact models: Offers the ability to convert the large foundation model into smaller, faster models (MLP or tree ensembles) for low-latency inference, crucial for real-time applications.
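The post does not describe how the distillation engine works. The general technique is to train a small student on the teacher's outputs instead of on ground-truth labels. As a hedged illustration, this Python sketch distills any binary classifier into a single-split decision stump. `distill_to_stump` is a hypothetical name, and a stump is a deliberately tiny student; the engine described above targets MLPs and tree ensembles.

```python
def distill_to_stump(teacher, X_unlabeled):
    """Fit a one-split student that mimics a binary (0/1) teacher on unlabeled rows."""
    # Distillation: the targets are the teacher's predictions, not ground-truth labels.
    targets = [teacher(row) for row in X_unlabeled]
    best = None  # (disagreements, feature index, threshold, label for the left side)
    for j in range(len(X_unlabeled[0])):
        values = sorted({row[j] for row in X_unlabeled})
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            for left_label in (0, 1):
                disagreements = sum(
                    (left_label if row[j] <= threshold else 1 - left_label) != target
                    for row, target in zip(X_unlabeled, targets)
                )
                if best is None or disagreements < best[0]:
                    best = (disagreements, j, threshold, left_label)
    if best is None:
        raise ValueError("need at least two distinct values in some feature")
    disagreements, j, threshold, left_label = best

    def student(row):
        # Cheap to evaluate: one comparison instead of a full teacher forward pass.
        return left_label if row[j] <= threshold else 1 - left_label

    return student, disagreements
```

The returned disagreement count measures how faithfully the student copies the teacher on the sampled rows. A richer student trades some of that speed for higher fidelity.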

Product Usage Case

· Predicting customer churn: A marketing team can use TabularTransformer-XL with customer transaction data, demographics, and engagement metrics to predict which customers are likely to leave, allowing for proactive retention strategies. The model's ability to handle mixed data types and its fast prediction time are key here.

· Financial fraud detection: A financial institution can feed transaction records, user behavior, and account information into the model to identify potentially fraudulent activities in real-time. Its robustness to outliers and high accuracy are vital for minimizing financial losses.

· Medical diagnosis assistance: Researchers can use the model with patient health records, lab results, and symptoms to help predict the likelihood of certain diseases, aiding clinicians in faster and more accurate diagnoses. The model's ability to handle large, complex datasets and provide quick insights is invaluable.

· E-commerce product recommendation: An online retailer can use user browsing history, purchase data, and product attributes to predict which products a user is most likely to be interested in, enhancing user experience and driving sales. The model's in-context learning allows for personalized recommendations with minimal user data.

· Manufacturing quality control: A factory can use sensor data, production parameters, and historical quality reports to predict defects in manufactured goods, enabling early intervention and improving overall product quality. The model's scalability and robustness to noisy sensor data are beneficial.

3

Flutter Compositions: Reactive UI Blocks for Flutter

Author

yoyo930021

Description

This project introduces Vue-inspired reactive building blocks for Flutter. It tackles the common challenge of managing complex UI state and logic in Flutter applications by offering a more declarative and compositional approach to UI development. The core innovation lies in adapting reactive programming patterns, similar to those found in Vue.js, to Flutter's widget-based architecture, making state management and UI updates more intuitive and less boilerplate-heavy.

Popularity

Points 43

Comments 23

What is this product?

This project is a set of reusable UI components and patterns for Flutter that are inspired by Vue.js's reactive system. Instead of manually managing state changes and rebuilding widgets, you can define how your UI reacts to data changes. This means that when your data updates, the relevant parts of your UI automatically update themselves. The innovation is in bringing this reactive paradigm, which makes front-end development much cleaner and more efficient, into the Flutter ecosystem, allowing developers to build more dynamic and responsive UIs with less code. So, what's in it for you? It makes your Flutter apps easier to build and maintain, especially for complex UIs, by reducing the amount of manual work you need to do to keep your UI in sync with your data.

How to use it?

Developers can integrate this project into their Flutter applications by adding it as a dependency. They can then use the provided reactive building blocks to construct their UIs. For example, instead of managing a mutable state variable and calling setState(), you might use a reactive store or observable to hold your data. When this reactive data changes, the UI components subscribed to it will automatically re-render. This can be done within existing Flutter projects or for new ones. So, what's in it for you? You can write cleaner, more declarative UI code that scales better as your application grows, leading to faster development cycles.
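The reactive idea behind these building blocks is simple: when an effect reads a value, it re-runs whenever that value changes. Here is a hedged, language-neutral illustration in Python. It is not the package's Dart API. `Ref` and `watch_effect` borrow Vue's vocabulary (`ref`, `watchEffect`), and the effect stands in for a widget rebuild that would otherwise need a manual `setState()` call.

```python
class Ref:
    """A value holder that records which effects read it and re-runs them on change."""

    _active_effect = None  # the effect currently executing, used for dependency tracking

    def __init__(self, value):
        self._value = value
        self._subscribers = set()

    @property
    def value(self):
        # Reading inside an effect subscribes that effect to this Ref.
        if Ref._active_effect is not None:
            self._subscribers.add(Ref._active_effect)
        return self._value

    @value.setter
    def value(self, new_value):
        if new_value == self._value:
            return  # unchanged: nothing to re-render
        self._value = new_value
        for effect in list(self._subscribers):
            effect()


def watch_effect(fn):
    """Run fn now, tracking every Ref it reads, and again whenever one of them changes."""

    def effect():
        previous = Ref._active_effect
        Ref._active_effect = effect
        try:
            fn()
        finally:
            Ref._active_effect = previous

    effect()
    return effect
```

With `count = Ref(0)` and `watch_effect(lambda: print(count.value))`, every assignment of a new value to `count.value` reprints automatically. A production version would also need cleanup, batching, and derived (computed) values.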

Product Core Function

· Reactive Data Binding: Allows UI elements to automatically update when underlying data changes, reducing manual state management. This provides a more declarative way to build UIs, making them easier to understand and less prone to bugs. So, what's in it for you? Less time spent debugging state synchronization issues and more time building features.

· Compositional UI Components: Offers pre-built, composable UI elements that can be combined like Lego bricks to create complex interfaces. This promotes code reuse and modularity. So, what's in it for you? Faster development by leveraging ready-made, well-tested building blocks for your UI.

· State Management Primitives: Provides fundamental tools for managing application state in a reactive manner, simplifying the process of handling dynamic data. So, what's in it for you? A structured and efficient way to manage data flow within your application, leading to more predictable behavior.

· Vue.js Inspired Patterns: Adapts successful reactive programming patterns from Vue.js to Flutter, leveraging established best practices for modern UI development. So, what's in it for you? Benefits from a proven and popular development paradigm, making your transition smoother and your code more familiar to those with web development experience.

Product Usage Case

· Building a real-time dashboard where data updates from an API need to be reflected instantly in charts and tables. Using reactive bindings ensures that as new data arrives, the visualizations update automatically without manual intervention. So, what's in it for you? A dynamic and responsive dashboard that keeps users informed with the latest information.

· Developing a complex form with multiple interconnected fields where changing one field dynamically affects the options or validation of another. The compositional nature and reactive updates make it easy to manage these interdependencies. So, what's in it for you? A user-friendly form experience that guides users effectively and reduces input errors.

· Creating interactive educational content where user actions trigger visual changes or reveal new information. This project's reactive approach simplifies the implementation of such dynamic interactions. So, what's in it for you? Engaging and interactive learning experiences that captivate users.

· Migrating or refactoring an existing Flutter application with complex state management to a more modern and maintainable reactive architecture. This provides a clear path to improving code quality and developer productivity. So, what's in it for you? A smoother development process and a more robust application.

4

Intraview: Agentic Code Journey Mapper

Author

cyrusradfar

Description

Intraview is a VS Code extension that empowers developers to create dynamic code walkthroughs powered by their AI coding agents. It solves the problem of understanding complex codebases and agentic workflows by enabling the storage and sharing of interactive tours and inline feedback. This transforms how developers onboard, review code, and collaborate with AI agents, fostering deeper understanding and more efficient workflows. Its core innovation lies in its cloudless, local-first architecture, emphasizing privacy and direct control.

Popularity

Points 34

Comments 3

What is this product?

Intraview is a VS Code extension that acts as a personalized navigator for your codebase and your AI coding agent's interactions. Imagine having a guided tour of a complex project, created by your AI, that shows you exactly what the agent did and why. It stores these tours as simple files and allows for inline comments and feedback, all processed locally without sending data to external servers. The technical innovation is its 'cloudless' design, meaning all processing happens on your machine, ensuring data privacy and security. It uses standard web technologies (TypeScript, JS, CSS, HTML) and runs a local Model Context Protocol (MCP) server within your VS Code workspace to manage agent connections and tour data.

How to use it?

Developers can use Intraview by installing it as a VS Code extension. Once installed, they can instruct their AI coding agent to create a code walkthrough or 'Intraview' for a specific task or project. For example, you could say, 'Create an Intraview to onboard me to this new feature.' The AI will then generate a series of steps, highlighting code and explaining the agent's actions. These tours can be saved, shared with teammates, and annotated with feedback directly within the IDE. This is incredibly useful for new team members getting up to speed, for reviewing complex pull requests, or for understanding how an AI agent has modified or generated code.
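The post says tours are stored as local files that can be shared, but it does not document the format. Assuming a simple JSON layout, here is a hedged Python sketch of what such a file could hold. Each step points at a file and line range, carries the agent's explanation, and collects inline comments. The `Tour`/`TourStep` schema is hypothetical, not Intraview's actual format.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class TourStep:
    file: str
    start_line: int
    end_line: int
    explanation: str  # what the agent did here and why
    comments: list[str] = field(default_factory=list)  # inline reviewer feedback


@dataclass
class Tour:
    title: str
    steps: list[TourStep] = field(default_factory=list)

    def save(self, path):
        # asdict() converts the nested TourStep objects too, so the file is plain JSON.
        Path(path).write_text(json.dumps(asdict(self), indent=2), encoding="utf-8")

    @classmethod
    def load(cls, path):
        data = json.loads(Path(path).read_text(encoding="utf-8"))
        return cls(title=data["title"], steps=[TourStep(**s) for s in data["steps"]])
```

A plain-text file in the workspace can be committed, diffed in a pull request, and opened by teammates without any server, which fits the cloudless design described above.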

Product Core Function

· Dynamic Code Tours: Allows AI agents to generate step-by-step walkthroughs of code, explaining the logic and execution. This helps developers quickly grasp the 'why' behind code changes and understand complex systems, accelerating learning curves.

· Tour Storage and Sharing: Tours are saved as local files, making them easy to version control and share with colleagues. This facilitates collaboration, knowledge transfer, and consistent understanding across teams, especially for remote or distributed teams.

· Inline Feedback and Commenting: Developers can add granular feedback and comments directly within the tour, attached to specific code snippets or steps. This streamlines the review process for code and AI agent outputs, making feedback more precise and actionable.

· Cloudless Architecture: All data processing and storage occur locally on the developer's machine. This ensures data privacy and security, which is critical for sensitive codebases and intellectual property, offering peace of mind.

· Agent Integration: Seamlessly works with AI coding agents to leverage their understanding of code to generate meaningful tours and insights. This unlocks the potential of AI to not just write code, but also to explain and teach, enhancing developer productivity.

Product Usage Case

· New Project Onboarding: A senior developer can create an Intraview walkthrough of a new feature they've built. New team members can then follow this guided tour to understand the architecture, key components, and logic, significantly reducing ramp-up time and the need for extensive in-person training.

· Code Review for Complex PRs: When a pull request involves intricate logic or a significant refactor, an Intraview can be generated by the agent to highlight the critical changes and their rationale. This helps reviewers focus on the most important aspects, leading to more efficient and effective code reviews.

· Understanding Agentic Workflows: For developers using AI agents to generate or modify code, Intraview provides a way to track and understand the agent's decision-making process. This builds trust and allows developers to refine their prompts and better manage AI-assisted development.

· Performance Review Insights: An engineering manager could use Intraview to create a tour highlighting a team member's most significant contributions to a project, showcasing specific code implementations and their impact. This provides a concrete and visual way to demonstrate achievements.

· Planning and Alignment with AI Agents: Before starting a large feature, a developer can use Intraview to visualize and discuss the planned steps with their AI agent. This helps ensure both the developer and the agent are aligned on the approach, reducing miscommunication and rework.

5

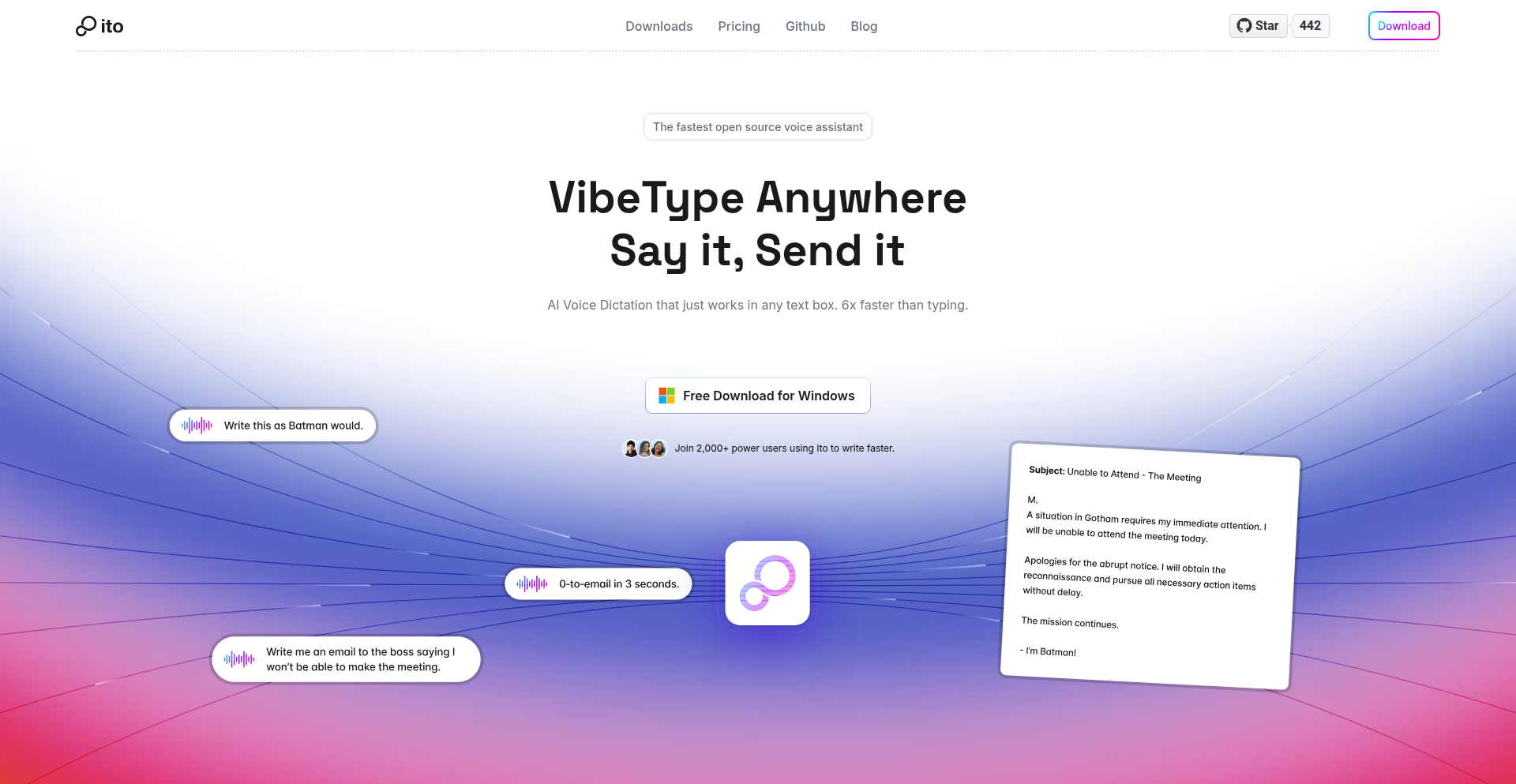

Ito AI: Voice-to-Intent Transformer


Author

dumbfoundded

Description

Ito AI is an open-source application for Windows and Mac that transforms spoken words into structured text. It intelligently converts your voice into various text formats like notes, messages, and even code snippets, directly into any text field you're working in. The innovation lies in its focus on speed, simplicity, and user control, offering a clean, distraction-free experience with transparent data handling and the option for local or self-hosted deployments, aiming to solve the complexity and data privacy concerns of existing voice-to-text tools.

Popularity

Points 15

Comments 11

What is this product?

Ito AI is a desktop application that acts as a smart dictation tool. Instead of just transcribing your speech verbatim, it aims to understand the *intent* behind your words and convert them into structured text. Think of it as a bridge between your spoken thoughts and the digital text you need to create. Its core innovation is its user-centric design, prioritizing a fast, clean interface and giving users control over their data through open-source transparency and self-hosting options. This means it's not locked into a single cloud provider and can be run entirely on your own machine if you prefer, offering a more private and customizable experience than many commercial alternatives.

How to use it?

Developers can use Ito AI by simply installing it on their Windows or Mac machine. Once installed, it runs in the background, listening for your voice input. You can then activate it to speak your thoughts, and Ito AI will intelligently convert them into the desired text format and insert it directly into your active application. For example, while coding, you could say 'create a variable called counter set to zero' and Ito AI would output `let counter = 0;`. You can configure which voice models and providers it uses, and for those who want maximum control, it's designed to be easily self-hosted, allowing you to run the entire system on your own servers. This makes it a versatile tool for anyone looking to speed up their writing, note-taking, or coding workflows without compromising on privacy.
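The post describes configurable models, providers, and self-hosting, but not Ito's internals. One hedged way to structure that in Python is to make the pipeline depend only on small `Transcriber` and `Formatter` interfaces. A local model, a hosted API, or a self-hosted server can then be swapped in without touching the rest. Every name here is hypothetical. `ToyCodeFormatter` is a one-rule stand-in for the LLM step that handles only the counter example from the text.

```python
import re
from typing import Protocol


class Transcriber(Protocol):
    """Anything that turns audio into raw text: local model, hosted API, self-hosted server."""

    def transcribe(self, audio: bytes) -> str: ...


class Formatter(Protocol):
    """Anything that turns a raw transcript into structured output (an LLM in practice)."""

    def format(self, transcript: str) -> str: ...


class FixedTranscriber:
    """Test double: ignores the audio and returns a canned transcript."""

    def __init__(self, transcript: str):
        self.transcript = transcript

    def transcribe(self, audio: bytes) -> str:
        return self.transcript


class ToyCodeFormatter:
    """One-rule stand-in for the LLM step: 'create a variable called X set to Y' -> 'let X = Y;'."""

    PATTERN = re.compile(r"create a variable called (\w+) set to (\w+)", re.IGNORECASE)
    NUMBER_WORDS = {"zero": "0", "one": "1", "two": "2", "ten": "10"}

    def format(self, transcript: str) -> str:
        match = self.PATTERN.search(transcript)
        if match is None:
            return transcript  # no recognized intent: fall back to plain dictation
        name, value = match.groups()
        return f"let {name} = {self.NUMBER_WORDS.get(value.lower(), value)};"


class DictationPipeline:
    def __init__(self, transcriber: Transcriber, formatter: Formatter):
        # Providers are injected, so switching hosted for self-hosted is a constructor change.
        self.transcriber = transcriber
        self.formatter = formatter

    def run(self, audio: bytes) -> str:
        return self.formatter.format(self.transcriber.transcribe(audio))
```

Because the pipeline sees only the two interfaces, privacy choices such as keeping audio on the local machine live entirely in which `Transcriber` you construct.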

Product Core Function

· Voice to Structured Text Conversion: This core function uses advanced speech recognition and natural language processing to not only transcribe speech but also to understand the context and intent, converting spoken ideas into organized text formats like bullet points, code snippets, or formatted notes. This saves significant time compared to manual typing and reduces errors.

· Fast and Distraction-Free Interface: The application is designed with a minimalist and clean user interface, ensuring that the focus remains on your voice input and the resulting text output. This minimizes cognitive load and improves productivity, making it easier to get your thoughts down quickly.

· Cross-Platform Compatibility (Windows & Mac): Ito AI works seamlessly on both major desktop operating systems. This broad compatibility means most developers can integrate it into their existing workflow without needing to switch operating systems, offering flexibility.

· Data Privacy and Transparency (Open Source & Self-Hosting): As an open-source project under GPL-3, Ito AI provides full transparency into how your data is handled. The ability to self-host the application means you can choose to run it entirely on your own infrastructure, ensuring that your voice data never leaves your control, addressing significant privacy concerns for sensitive work.

· Customizable Voice Models and Providers: Users can select from different speech recognition models and providers, allowing for optimization based on accuracy, language, and performance needs. This flexibility ensures the tool adapts to individual preferences and technical requirements.

Product Usage Case

· Coding Workflow Enhancement: A developer can use Ito AI to dictate code snippets, function calls, or comments directly into their IDE. For instance, saying 'function add numbers takes two arguments a and b returns a plus b' could be transformed into `function addNumbers(a, b) { return a + b; }`, significantly speeding up the process of writing boilerplate code or documenting existing code.

· Meeting Note Taking: During a meeting, a user can speak their action items, decisions, or key takeaways, and Ito AI will convert them into structured notes with bullet points or checklists. This allows participants to focus on the discussion rather than frantically typing, ensuring no important information is missed.

· Quick Idea Capture: When inspiration strikes, a user can quickly speak their thoughts into Ito AI without needing to open a full note-taking app or find a keyboard. The app will capture the idea and store it as text, ready to be reviewed and organized later, preventing valuable ideas from being forgotten.

· Content Creation and Drafting: Writers, bloggers, or anyone creating written content can use Ito AI to draft articles, emails, or social media posts by speaking their ideas. This can overcome writer's block and accelerate the initial drafting phase, making the writing process more fluid and less intimidating.

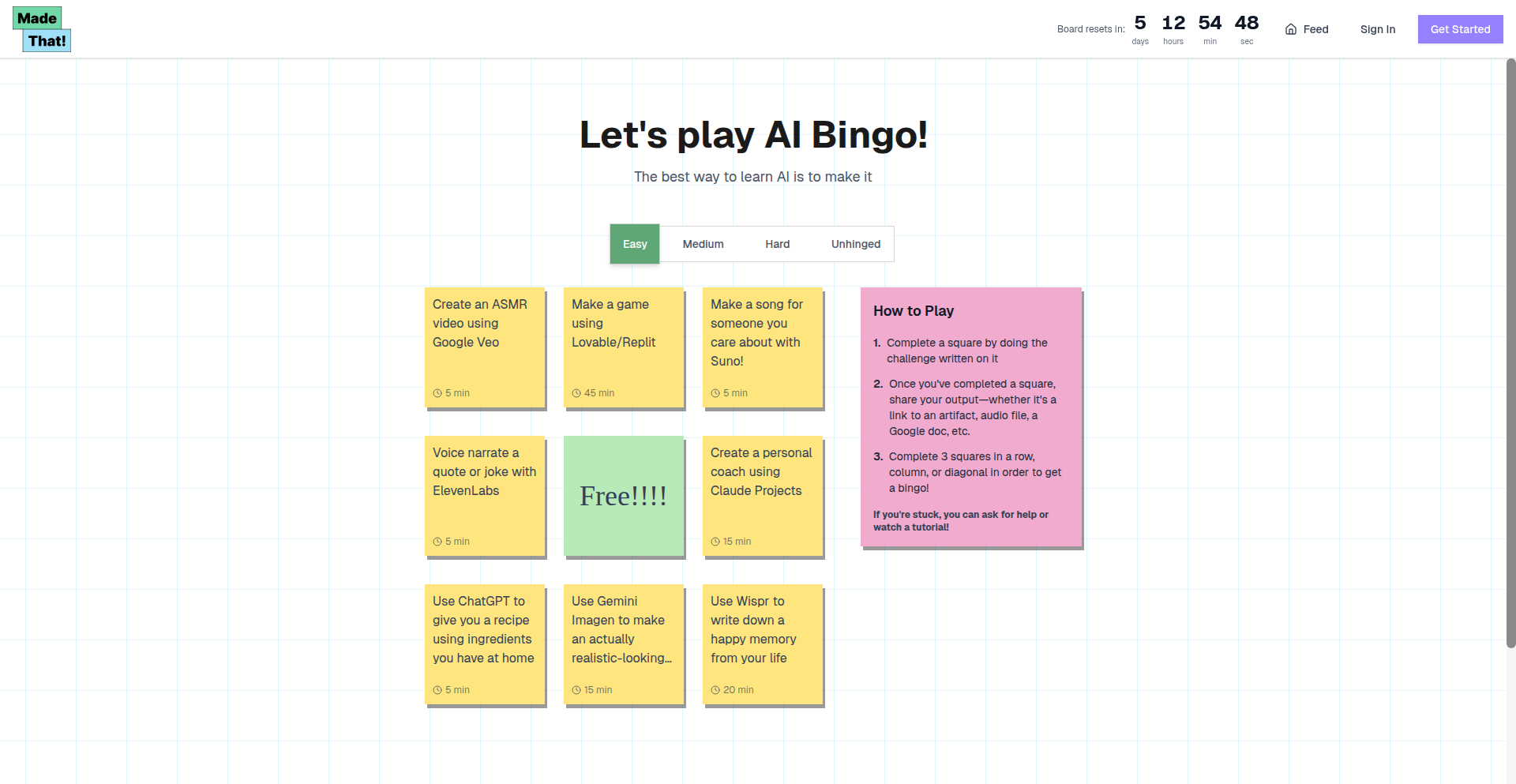

6

AI Forge Arena

Author

kan_academy

Description

AI Forge Arena is a gamified platform designed to help individuals and teams explore and adopt AI technologies through fun, hands-on challenges. It tackles the common anxiety and uncertainty around AI adoption by providing structured mini-projects that encourage learning by doing, resulting in tangible and often surprising creations. The core innovation lies in transforming complex AI tools into accessible, engaging experiments, fostering a sense of accomplishment and creativity within the development community.

Popularity

Points 8

Comments 2

What is this product?

AI Forge Arena is an online platform that presents weekly AI-powered challenges. Think of it like a 'bingo card' for AI experimentation. Each challenge is a small, achievable task designed to guide users through specific AI capabilities, from generating creative content like ASMR videos to building practical automations with tools like n8n, or even crafting simple applications using AI code generation. The innovation is in making AI exploration playful and addictive, turning a potentially intimidating subject into an enjoyable learning experience. It's about demystifying AI by letting people actively build with it, fostering a proactive and fun approach to integrating AI tools.

How to use it?

Developers can use AI Forge Arena by signing up for an account and browsing the available weekly challenges. Each challenge provides clear instructions and often suggests specific AI tools or APIs to use. For example, a challenge might be 'Generate a 30-second AI ASMR video.' A developer could use an AI video generation tool and an AI voice synthesizer to complete this. Another challenge might be 'Automate a social media post with AI.' Here, a developer could leverage AI to draft post copy and then use an automation platform like n8n to schedule it. The platform also showcases submissions from other users, providing inspiration and real-world examples of what's possible, making it easy to integrate these concepts into personal projects or team workflows.

Product Core Function

· Weekly AI Challenges: Provides structured, bite-sized AI projects that guide users through various AI functionalities. This is valuable for developers because it offers a clear path to learning and experimenting with new AI tools without feeling overwhelmed, enabling them to quickly gain practical AI skills.

· Submission Showcase: Allows users to submit their challenge creations and view others' submissions. This fosters a sense of community and provides inspiration, helping developers see diverse applications and technical approaches, thus accelerating their own problem-solving capabilities.

· Gamified Learning Experience: Introduces elements of fun and competition to AI adoption. This is crucial for developers as it increases engagement and motivation, making the learning process more sustainable and enjoyable, leading to better retention of AI concepts and tools.

· Tool Agnostic Exploration: Encourages the use of various AI tools and platforms, from AI code generators to automation software. This broadens a developer's toolkit and understanding of the AI ecosystem, enabling them to choose the most effective tools for different problems and integrate them seamlessly into their development process.

· Practical AI Application: Focuses on creating tangible outputs from AI experiments. This is highly valuable for developers as it demonstrates the real-world utility of AI, helping them identify opportunities to apply AI in their projects for efficiency, creativity, or innovation.

Product Usage Case

· A developer uses the 'AI-generated music' challenge to learn how to integrate AI music generation APIs into a personal game project, solving the problem of creating unique background music without extensive musical knowledge.

· A marketing team member completes the 'AI-powered email subject line generator' challenge, then applies the learned techniques to build an automated email campaign using AI, improving their outreach effectiveness.

· A developer explores the 'AI chatbot persona creation' challenge and then integrates a custom AI chatbot into their personal website to provide instant support, solving the problem of handling user inquiries efficiently.

· A team participates in the 'n8n automation with AI' challenge, discovering how to automate repetitive tasks like data scraping and report generation, leading to increased productivity and freeing up developer time for more complex problem-solving.

7

Hacker News Project Explorer

Author

eamag

Description

A SvelteKit-powered website that categorizes and visualizes projects discussed in 'What Are You Working On?' posts on Hacker News. It leverages tagged comment data to help developers discover similar projects and identify trends in the tech community. The innovation lies in transforming unstructured conversation data into a structured, searchable knowledge base for the developer ecosystem.

Popularity

Points 9

Comments 1

What is this product?

This project is a specialized search and discovery engine for Hacker News 'What Are You Working On?' (WAW) posts. It takes the raw comment data from these discussions, tags it based on project types and technologies, and presents it in an accessible SvelteKit website. The core innovation is applying a systematic tagging and organization layer to informal developer conversations, turning them into a valuable resource for understanding community activity and finding collaborators. Think of it as creating a structured index for the collective creativity expressed in these threads, making it easier to see what developers are building and learning.

How to use it?

Developers can use this website to explore past WAW discussions, filtering by keywords, technologies, or project types. For instance, if you're working on a new AI project and want to see what others in the community are doing in that space, you can search for 'AI' and discover relevant projects and discussions. It's also useful for spotting broader trends, such as whether certain technologies are gaining traction or which problem domains come up most often. Getting started requires no setup: simply visit the website and start exploring. Future versions might offer API access to the tagged data for developers who want to build their own analyses or tools on top of it.

Product Core Function

· Comment Tagging and Categorization: Automatically applies relevant tags (e.g., 'AI/ML', 'Web Development', 'Open Source Tool', 'Experimental') to comments based on their content, making it easier to identify project themes and technologies. This adds structure to unstructured data, allowing for targeted searching.

· SvelteKit Frontend: Provides a fast, responsive, and modern user interface for browsing and searching tagged comments. This ensures a smooth user experience, allowing developers to quickly find what they're looking for without performance bottlenecks.

· Project Discovery Engine: Enables users to find developers working on similar projects or technologies by searching through categorized comments. This fosters collaboration and knowledge sharing within the developer community by connecting like-minded individuals.

· Trend Analysis Potential: The underlying tagged data can be used to identify emerging trends in developer projects and technologies over time. This offers insights into the evolving landscape of software development, which can inform future project directions and learning paths.

· Cross-Referencing WAW Posts: Links back to the original Hacker News discussions, providing context and allowing users to delve deeper into specific projects and conversations. This preserves the authenticity of the data while making it more accessible and actionable.
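The tagging and indexing steps can be sketched with simple keyword rules. This is a minimal sketch that assumes a hand-written keyword-to-tag map; `TAG_RULES`, `tag_comment`, and `build_index` are hypothetical names, and the site's actual tagging method and taxonomy are not described here.

```python
import re
from collections import defaultdict

# Hypothetical tag rules: each tag maps to keywords or phrases that trigger it.
TAG_RULES = {
    "AI/ML": ["llm", "machine learning", "neural", "gpt", "ai"],
    "Web Development": ["react", "svelte", "frontend", "web app"],
    "Open Source Tool": ["open source", "github", "mit license"],
}


def tag_comment(text: str) -> set[str]:
    """Return every tag whose keywords appear as whole words or phrases in text."""
    lowered = text.lower()
    tags = set()
    for tag, keywords in TAG_RULES.items():
        for kw in keywords:
            # \b anchors stop "ai" from matching inside words like "email".
            if re.search(r"\b" + re.escape(kw) + r"\b", lowered):
                tags.add(tag)
                break
    return tags


def build_index(comments: dict[int, str]) -> dict[str, list[int]]:
    """Invert comment_id -> text into tag -> sorted list of comment ids."""
    index = defaultdict(list)
    for cid, text in comments.items():
        for tag in tag_comment(text):
            index[tag].append(cid)
    return {tag: sorted(ids) for tag, ids in index.items()}
```

Whole-word matching keeps short keywords such as "ai" from producing false positives, at the cost of missing compound words like "SvelteKit" unless another rule (here "web app") catches the comment.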

Product Usage Case

· A developer building a decentralized application (dApp) searches for 'blockchain' and 'web3' tags to find other developers discussing similar technologies or facing common challenges in that space. This helps them avoid reinventing the wheel and potentially find collaborators.

· A student learning about new programming languages can filter comments by specific languages (e.g., 'Rust', 'Go') to see what kinds of projects are being built with them and gather inspiration for their own learning projects.

· A product manager looking for innovative ideas can browse through tags like 'productivity tools' or 'developer experience' to identify unmet needs or interesting solutions being experimented with by the community.

· A researcher studying the evolution of AI development can analyze the temporal distribution of 'machine learning' or 'deep learning' tags to understand how the focus within the community has shifted over time.
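Trend analysis of the kind described in the last case comes down to counting tagged comments per time bucket. A sketch, assuming each record is a `(unix_timestamp, tags)` pair (a hypothetical shape, not the site's data model); months with no matching comments are simply absent from the result.

```python
from collections import Counter
from datetime import datetime, timezone


def tag_trend(records, tag: str) -> list[tuple[str, int]]:
    """Count records carrying `tag` per calendar month (UTC).

    records: iterable of (unix_timestamp, set_of_tags) pairs.
    Returns ("YYYY-MM", count) pairs sorted by month.
    """
    counts = Counter()
    for ts, tags in records:
        if tag in tags:
            month = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m")
            counts[month] += 1
    return sorted(counts.items())
```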

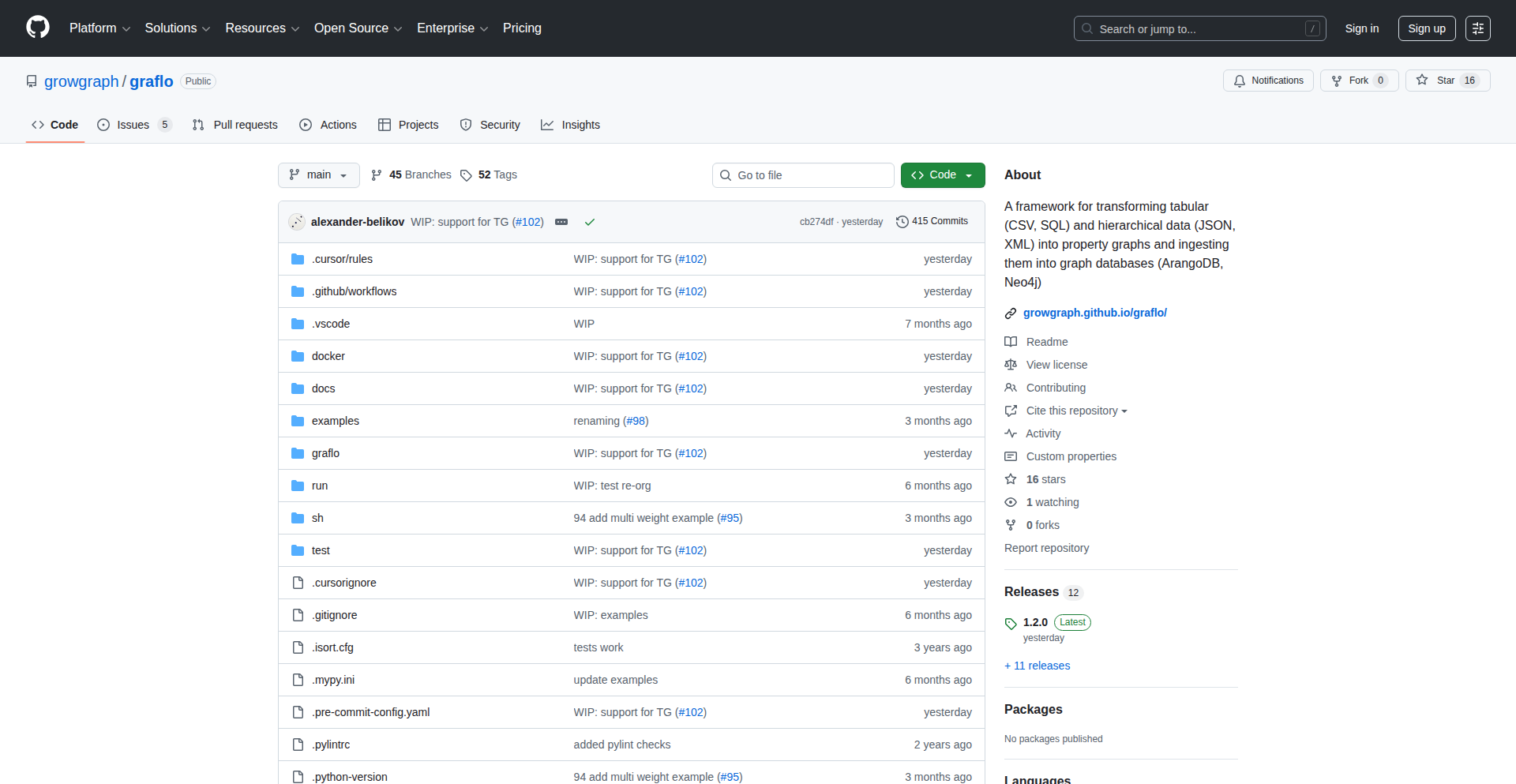

8

GraFlo Schema Weaver

Author

acrostoic

Description

GraFlo is a declarative ETL framework designed to simplify the process of ingesting data into various property graph databases like Neo4j, ArangoDB, and TigerGraph. It tackles the common pain points of data transformation, such as ID generation, type coercion, and deduplication, by providing a single, database-agnostic schema definition. This means developers can define their graph structure once and generate ingestion scripts for multiple target databases, significantly reducing boilerplate code and maintenance overhead. The core innovation lies in abstracting the universal property graph model, allowing seamless transitions between different graph database technologies.

Popularity

Points 5

Comments 3

What is this product?

GraFlo is a developer tool that acts as a universal translator for your data into graph databases. Imagine you have a bunch of information (like research papers, financial reports, or software packages) and you want to store it in a connected way using a graph database. Each graph database (like Neo4j, ArangoDB, or TigerGraph) has its own way of understanding and accepting data. Normally, you'd have to write a lot of custom code for each database to get your data in. GraFlo solves this by letting you describe your data's structure and relationships in a single, universal language. GraFlo then automatically writes the specific code needed to load that data into your chosen graph database. This is innovative because it abstracts away the complexities and unique quirks of each graph database, making data ingestion much more efficient and flexible. So, what's the practical benefit for you? It saves you immense development time and effort when working with multiple graph databases or when switching between them, allowing you to focus on extracting insights from your data rather than wrestling with data ingestion scripts.

How to use it?

Developers use GraFlo by defining their graph data model declaratively. This involves specifying vertices (nodes), edges (relationships), properties (attributes of nodes and edges), and how these map to their source data, which can be in formats like CSV, SQL, JSON, or XML. Once this schema is defined in a database-agnostic way, GraFlo generates the specific ingestion code (scripts or queries) tailored for the target graph database (e.g., Neo4j, ArangoDB, TigerGraph). This allows for 'plug-and-play' functionality where switching target databases is as simple as changing a configuration parameter. Integration typically involves providing GraFlo with your data source and desired target database, and it outputs the necessary ingestion pipelines. The value for developers is the ability to rapidly deploy knowledge graphs across different database backends without rewriting substantial amounts of ETL logic.
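To make the "define once, generate per backend" idea concrete, here is a toy generator that turns one vertex schema into either a Neo4j Cypher `MERGE` statement or an ArangoDB AQL `UPSERT` statement. The schema layout and function names are hypothetical and are not GraFlo's actual format or API; edges, batching, and error handling are omitted.

```python
# Hypothetical schema layout for illustration; GraFlo's real format differs.
SCHEMA = {
    "vertices": {
        "Author": {"key": "name", "fields": ["name", "affiliation"]},
        "Paper": {"key": "doi", "fields": ["doi", "title", "year"]},
    }
}


def neo4j_vertex_stmt(label: str, spec: dict) -> str:
    """Cypher: MERGE on the natural key, then SET the remaining properties."""
    key = spec["key"]
    sets = ", ".join(f"n.{f} = row.{f}" for f in spec["fields"] if f != key)
    stmt = f"UNWIND $rows AS row MERGE (n:{label} {{{key}: row.{key}}})"
    return stmt + (f" SET {sets}" if sets else "")


def arango_vertex_stmt(label: str, spec: dict) -> str:
    """AQL: UPSERT by natural key into a collection named after the label."""
    key = spec["key"]
    doc = ", ".join(f"{f}: row.{f}" for f in spec["fields"])
    return (
        f"FOR row IN @rows UPSERT {{{key}: row.{key}}} "
        f"INSERT {{{doc}}} UPDATE {{{doc}}} IN {label}"
    )


GENERATORS = {"neo4j": neo4j_vertex_stmt, "arangodb": arango_vertex_stmt}


def generate(schema: dict, target: str) -> list[str]:
    """One ingestion statement per vertex type for the chosen backend."""
    gen = GENERATORS[target]
    return [gen(label, spec) for label, spec in schema["vertices"].items()]
```

Each statement expects a list of row maps passed as the parameter `rows` (`$rows` in Cypher, bind parameter `@rows` in AQL). Switching targets is a one-argument change, and supporting a new backend means adding one generator function.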

Product Core Function

· Declarative schema definition for graph data models: This allows developers to define their graph structure (nodes, relationships, properties) in a standardized, database-agnostic way, which is valuable for maintainability and reusability across different graph database technologies.

· Automatic ingestion script generation for multiple graph databases: GraFlo produces tailored code for Neo4j, ArangoDB, TigerGraph, etc., saving developers significant time and effort in writing custom ETL scripts for each. The value here is the immediate applicability to popular graph databases without extensive custom coding.

· Consistent ID generation across vertices and edges: Ensures unique and reliable identifiers for all graph elements, preventing data integrity issues. This is crucial for building robust and queryable knowledge graphs.

· Automatic type coercion for data properties: Handles the conversion of data types (e.g., strings to dates, numbers) during ingestion, simplifying data preparation and reducing errors. This practical function makes data integration smoother.

· Vertex and edge deduplication: Automatically identifies and merges duplicate nodes and relationships, ensuring a clean and accurate graph dataset. This is essential for accurate analysis and preventing redundant information.

· Support for various data sources (CSV, SQL, JSON, XML): Makes GraFlo versatile, allowing it to ingest data from common formats, thereby broadening its applicability in diverse development environments. This practical aspect means it can likely work with your existing data.
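The ID generation, type coercion, and deduplication steps listed above can be sketched in a few lines. This is an illustration under stated assumptions (hash-based deterministic IDs, a per-field type map, and last-write-wins merging of duplicates), not GraFlo's implementation; `FIELD_TYPES`, `vertex_id`, `coerce`, and `load_vertices` are hypothetical names.

```python
import csv
import hashlib
import io
from datetime import date

# Hypothetical per-field type map; fields not listed stay strings.
FIELD_TYPES = {"doi": str, "title": str, "year": int, "published": date.fromisoformat}


def vertex_id(label: str, key_value: str) -> str:
    """Deterministic ID: the same label + natural key always yields the same ID."""
    digest = hashlib.sha1(f"{label}:{key_value}".encode("utf-8")).hexdigest()
    return f"{label}/{digest[:16]}"


def coerce(row: dict) -> dict:
    """Convert raw CSV strings into typed values."""
    return {k: FIELD_TYPES.get(k, str)(v.strip()) for k, v in row.items()}


def load_vertices(csv_text: str, label: str, key: str) -> dict:
    """Parse, coerce, and deduplicate rows; a later duplicate replaces an earlier one."""
    vertices = {}
    for raw in csv.DictReader(io.StringIO(csv_text)):
        row = coerce(raw)
        vertices[vertex_id(label, str(row[key]))] = row
    return vertices
```

Because the ID is derived from the label and natural key, re-running an ingestion produces the same IDs, which is what makes deduplication and idempotent reloads possible.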

Product Usage Case

· Building a knowledge graph from academic publications: A researcher wants to represent papers, authors, and citations in a graph database. GraFlo can ingest publication data from CSV or JSON files and generate ingestion scripts for Neo4j, allowing for complex query capabilities like finding co-authors or trending research topics. The value is quickly transforming raw publication data into an analyzable knowledge graph.

· Ingesting financial data for market analysis: A financial analyst needs to model companies, stock tickers, and financial statements in a graph database to identify investment patterns. GraFlo can connect to SQL databases or process financial data files (like IBES) and generate ingestion scripts for TigerGraph, enabling sophisticated relationship analysis between financial entities. The value is enabling advanced financial insights through graph analytics.

· Creating a dependency graph for software packages: A developer wants to visualize the dependencies between different software packages to understand potential conflicts or impacts. GraFlo can parse package manifest files (e.g., Debian packages) and generate ingestion scripts for ArangoDB, allowing for easy exploration of software project structures and dependencies. The value is better understanding and managing complex software ecosystems.

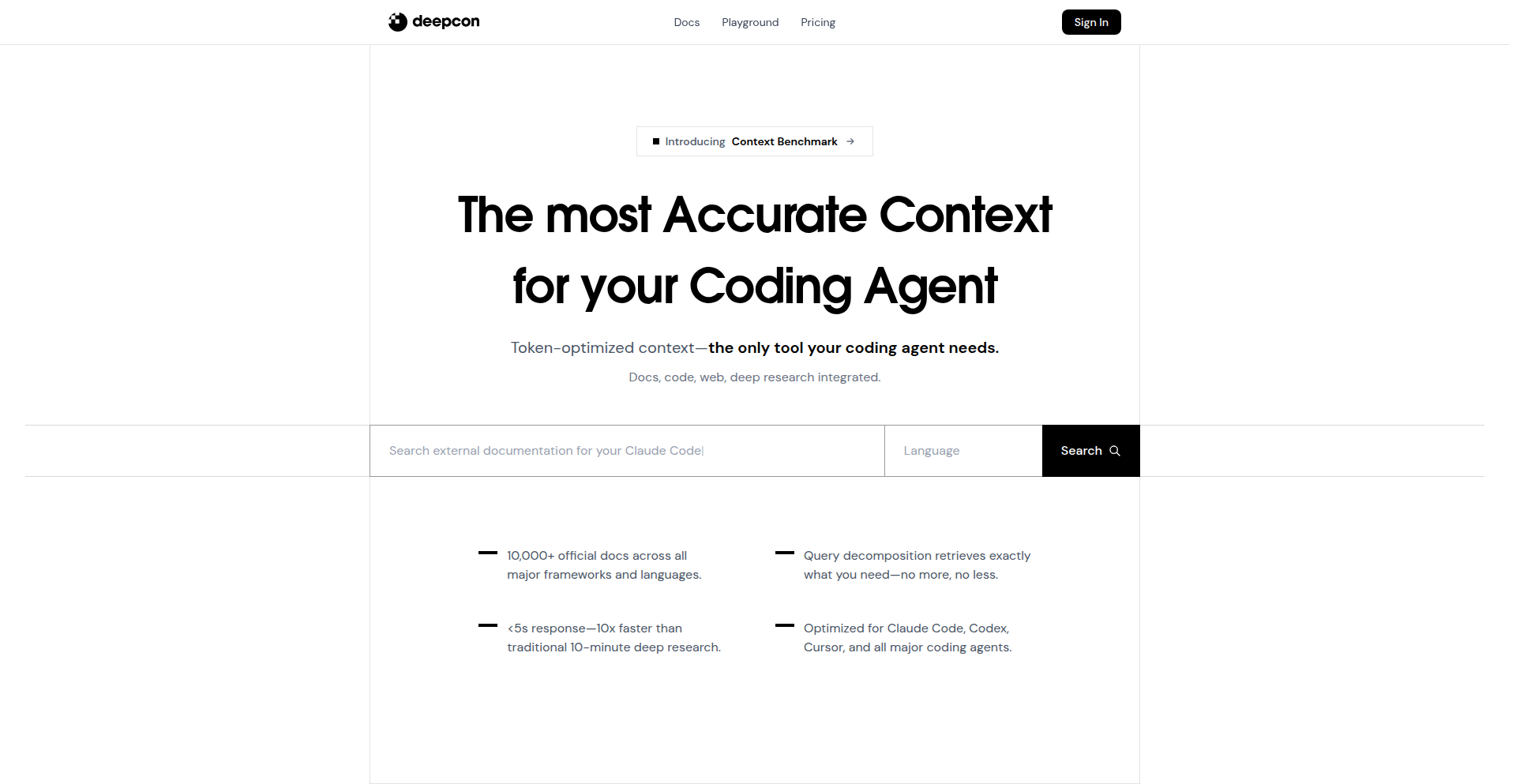

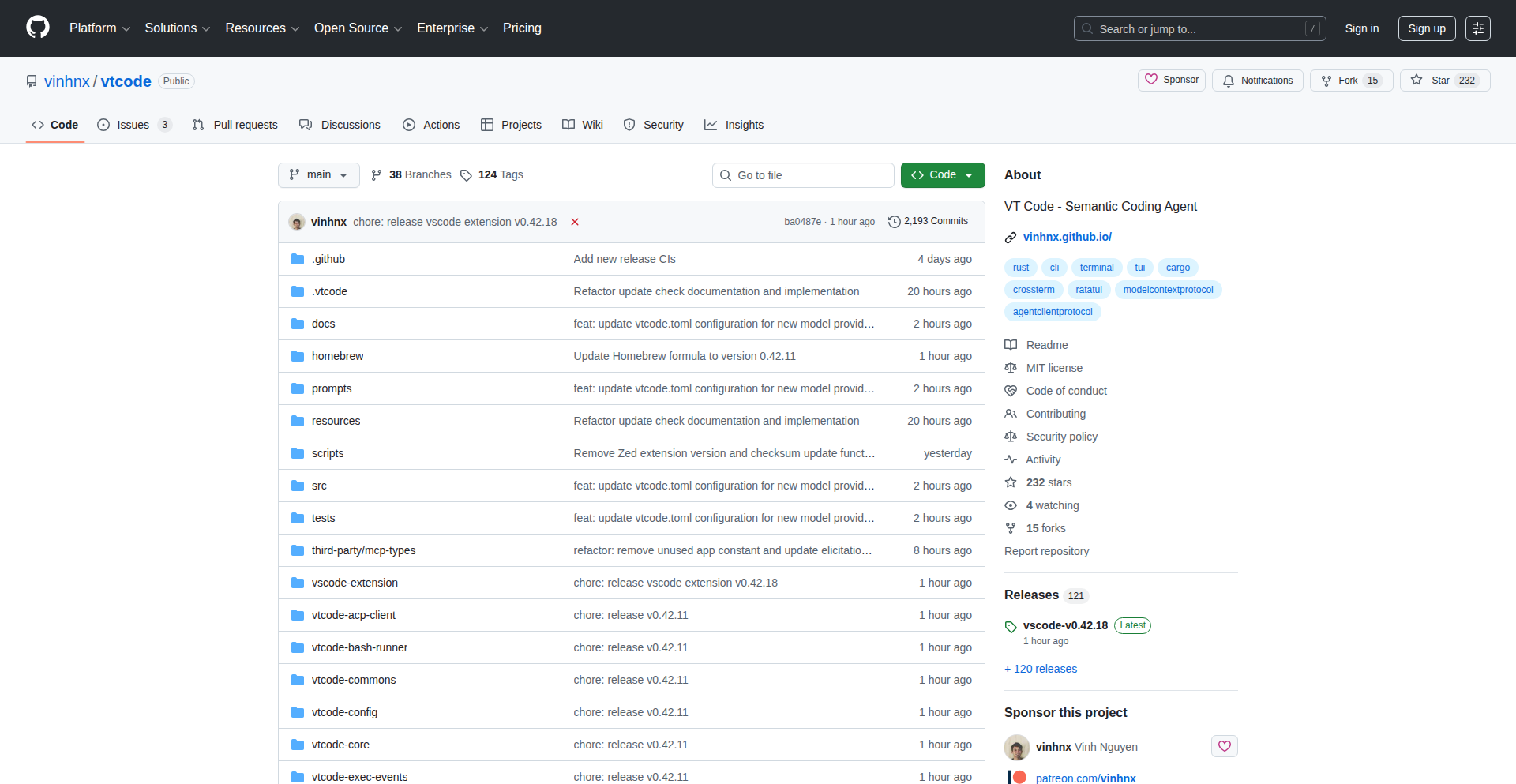

9

DeepCon: Context Augmentation for AI Agents

Author

ethanpark

Description

DeepCon is a context augmentation tool designed to improve the accuracy and efficiency of AI coding agents such as Claude Code and Cursor. It addresses a critical problem: current AI agents struggle to understand and use up-to-date information about modern APIs and libraries, and existing solutions often overwhelm the model with irrelevant data. DeepCon crawls, structures, and filters documentation so that only the most pertinent context reaches the AI. This improves accuracy while cutting the amount of data the AI has to process, making coding agents smarter and more effective.

Popularity

Points 6

Comments 2

What is this product?

DeepCon is a context augmentation system built for AI coding agents. Its core innovation is retrieving precisely what an AI needs rather than dumping large amounts of information into the prompt. It works in four steps:

1. Crawling and structuring extensive documentation (over 10,000 official docs were processed initially) into a hierarchical format, so the information is organized logically, like a well-indexed library.

2. Using a 'query decomposer' that breaks a user's request (e.g., 'how do I use the latest feature in this API?') into smaller sub-queries.

3. Searching the structured documentation for each sub-query in parallel.

4. Merging only the truly relevant pieces of context.

This last step is the key to its efficiency: it's like a research assistant who brings you exactly the answers you need, not the whole book. The result is a significant reduction in token usage (tokens are the basic units of text an AI processes). The author reports that DeepCon uses 2.4x fewer tokens than Context7 while reaching 90% accuracy on real-world tasks, versus 65% for Context7. For you, this means AI agents that are more accurate and faster when dealing with new or complex coding tasks.
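The four-step pipeline can be sketched on a toy in-memory index. This is a minimal illustration, not DeepCon's implementation: the decomposer simply splits on ' and ', relevance is plain word overlap, and `DOCS`, `decompose`, `search`, and `build_context` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy "structured docs": (library, section) -> text. A stand-in for crawled docs.
DOCS = {
    ("httpx", "Async client"): "Create an httpx.AsyncClient inside an async with block.",
    ("httpx", "Timeouts"): "Pass timeout=httpx.Timeout(5.0) to the client.",
    ("pydantic", "Validators"): "In Pydantic v2, use the @field_validator decorator.",
    ("pydantic", "Settings"): "In Pydantic v2, BaseSettings lives in the pydantic-settings package.",
}


def decompose(query: str) -> list[str]:
    """Naive decomposer: split a compound request on ' and '."""
    return [part.strip() for part in query.lower().split(" and ") if part.strip()]


def search(sub_query: str, top_k: int = 1) -> list[tuple[int, tuple[str, str]]]:
    """Rank doc sections by word overlap with the sub-query; keep the top_k hits."""
    words = set(sub_query.split())
    scored = []
    for key, text in DOCS.items():
        vocab = set(" ".join(key).lower().split()) | set(text.lower().split())
        score = len(words & vocab)
        if score > 0:
            scored.append((score, key))
    scored.sort(reverse=True)
    return scored[:top_k]


def build_context(query: str, top_k: int = 1) -> list[str]:
    """Decompose, search sub-queries in parallel, then merge without duplicates."""
    sub_queries = decompose(query)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda q: search(q, top_k), sub_queries))
    merged, seen = [], set()
    for hits in results:
        for _score, key in hits:
            if key not in seen:
                seen.add(key)
                merged.append(f"[{key[0]} > {key[1]}] {DOCS[key]}")
    return merged
```

For "httpx timeouts and pydantic validators", this returns just two short sections instead of all four. A real system would presumably use a stronger ranker than word overlap; the decompose, parallel search, and deduplicating merge structure is the part this sketch is meant to show.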

How to use it?

Developers can integrate DeepCon into their existing AI coding workflows with relative ease. It's designed to be plugged directly into platforms like Claude Code or Cursor, which are AI-powered development environments. By integrating DeepCon, these tools gain immediate access to a highly curated and relevant knowledge base. For instance, when you're working with a new JavaScript library or a recently updated API, instead of your AI agent failing to recognize its existence or properties (as older models might), DeepCon will ensure it has the necessary, up-to-date context. This means smoother coding, fewer errors due to outdated information, and faster development cycles. Think of it as giving your coding assistant a super-powered, always-updated cheat sheet tailored specifically to your current task.

Product Core Function

· Intelligent Documentation Crawling and Structuring: DeepCon automatically gathers and organizes vast amounts of technical documentation, creating a well-organized knowledge base. This is valuable because it ensures that the AI has access to comprehensive and structured information, making it easier for the AI to find relevant details when needed, preventing 'knowledge gaps'.

· Query Decomposition and Parallel Search: When you ask the AI a question, DeepCon breaks it down into smaller parts and searches for answers across the documentation simultaneously. This is valuable because it speeds up the information retrieval process and ensures all angles of your query are explored efficiently, leading to more complete answers.

· Context Filtering and Relevance Ranking: DeepCon excels at identifying and delivering only the most crucial pieces of information for the AI's task, discarding irrelevant data. This is valuable because it dramatically reduces the 'noise' for the AI, allowing it to focus on what's important, which improves accuracy and reduces processing time.

· Reduced Token Usage: By delivering highly relevant and concise context, DeepCon significantly lowers the number of tokens an AI needs to process. This is valuable because it makes AI interactions faster, cheaper, and more efficient, as fewer computational resources are required.

· Enhanced AI Accuracy for Modern APIs: DeepCon's ability to understand and provide context for the latest APIs and libraries directly boosts the accuracy of AI coding agents. This is valuable because it ensures that your AI assistants can effectively help you with current technologies, reducing errors and improving the quality of your code.

Product Usage Case

· Scenario: A developer is working with a brand-new version of a popular cloud service's SDK that was released last week. The AI coding assistant, without DeepCon, might not have any information about this latest version, leading to incorrect code suggestions or an inability to help. With DeepCon, the AI agent is instantly fed the relevant documentation for the new SDK, allowing it to provide accurate code snippets and guidance for using the latest features. This solves the problem of AI falling behind on rapidly evolving technologies.

· Scenario: A developer needs to integrate several complex, modern libraries for a machine learning project. The documentation for these libraries is extensive and interconnected. DeepCon's query decomposer breaks down the developer's need into specific searches, then its parallel search and filtering capabilities find the exact integration patterns and parameter details required. This prevents the developer from getting lost in vast documentation and ensures they can quickly implement the correct functionality, significantly speeding up the development process.

· Scenario: An AI assistant is being used to debug a piece of code that interacts with a complex internal API. The internal API documentation is large and has many subtle dependencies. DeepCon ingests this documentation and, when the AI is asked to debug, provides only the most critical API calls and their expected behaviors relevant to the problematic code. This focused context helps the AI pinpoint the issue much faster than if it had to sift through all the API documentation, leading to quicker bug resolution.

10

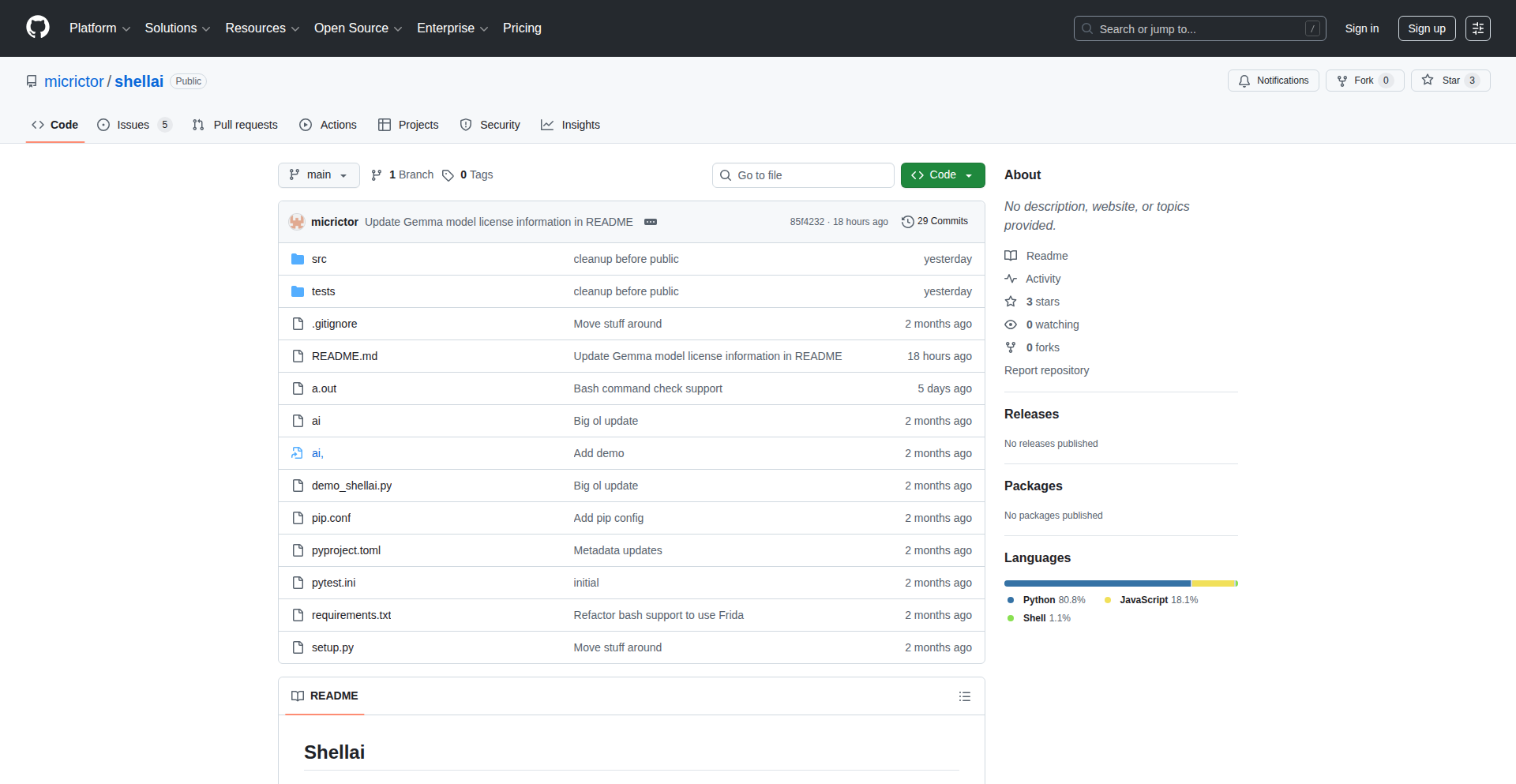

ShellAI: Local LLM-Powered Terminal Copilot

Author

mtud

Description

ShellAI brings local language models directly into your terminal. It acts as an intelligent assistant that helps developers understand, write, and debug shell commands. Unlike cloud-based solutions, ShellAI runs entirely on your machine, which keeps your commands private and lets it work offline.

Popularity

Points 5

Comments 2

What is this product?

ShellAI is a command-line interface (CLI) application that integrates with your existing shell (like Bash or Zsh). It uses a Small Language Model (SLM), which is a smaller, more efficient version of a Large Language Model, designed to run locally on your computer. The innovation lies in its ability to understand your natural language requests and translate them into accurate and efficient shell commands. It also excels at explaining complex commands, suggesting optimizations, and even helping you debug errors directly within your terminal environment. This means you get advanced AI assistance without sending your sensitive code or commands to external servers, making it a private and secure solution for developers.

How to use it?

Developers can use ShellAI by installing it as a command-line tool. Once installed, you can invoke its assistance in several ways. For instance, you might preface a natural language question with a specific marker, like `shellai explain 'how to find all files larger than 1GB in the current directory'`. ShellAI will then process this request using its local SLM and output the corresponding shell command and an explanation. Alternatively, if you encounter a command that isn't working, you could feed the error message to ShellAI for debugging suggestions. It can be integrated into your workflow by aliasing common ShellAI commands or by using it as a standalone tool whenever you need help with the terminal.
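One way a terminal assistant can turn a request into a command safely is to ask the model for a fenced code block, extract it, and require confirmation before running anything. This is a sketch under assumptions: the prompt wording, the confirm-before-run policy, and all names are hypothetical. The local model is passed in as a plain `generate(prompt) -> str` callable because ShellAI's actual model backend and interface are not described here.

```python
import re
import subprocess
from typing import Callable, Optional

# Matches the first fenced code block in a model reply (optional language tag).
CODE_BLOCK = re.compile(r"`{3}(?:[a-z]+)?\n(.*?)\n?`{3}", re.S)


def build_prompt(request: str, shell: str = "bash") -> str:
    """Hypothetical prompt asking the model for exactly one command."""
    return (
        f"You are a {shell} expert. Reply with a single command in a fenced "
        f"code block, followed by a one-line explanation.\nTask: {request}"
    )


def extract_command(reply: str) -> Optional[str]:
    """Return the command inside the first code block, or None if there is none."""
    match = CODE_BLOCK.search(reply)
    return match.group(1).strip() if match else None


def assist(
    request: str,
    generate: Callable[[str], str],
    confirm: Callable[[str], str] = input,
) -> Optional[str]:
    """Ask the model for a command and run it only if the user answers 'y'."""
    command = extract_command(generate(build_prompt(request)))
    if command is None:
        return None
    if confirm(f"Run `{command}`? [y/N] ").strip().lower() == "y":
        subprocess.run(command, shell=True, check=False)
    return command
```

Defaulting to "No" keeps a small model's occasional wrong answer from silently modifying the file system.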

Product Core Function

· Natural Language to Shell Command Translation: Transforms plain English requests into executable shell commands, saving developers time and reducing the need to memorize complex syntax. The value is in faster command creation and learning.

· Shell Command Explanation: Deciphers existing shell commands, providing clear, understandable explanations. This helps developers learn new commands and understand scripts they encounter, offering educational value.

· Command Debugging and Error Resolution: Analyzes error messages from shell commands and suggests potential fixes or alternative approaches. This significantly speeds up troubleshooting and reduces developer frustration.

· Command Optimization Suggestions: Proposes more efficient or idiomatic ways to execute tasks in the terminal. This helps developers write better performing and more maintainable shell scripts.

· Local LLM Inference for Privacy and Offline Use: Runs entirely on the user's machine, ensuring data privacy and enabling functionality even without an internet connection. This provides peace of mind and continuous productivity.

· Customizable Knowledge Base: Allows for potential integration of custom knowledge or project-specific information to tailor assistance. This enhances relevance and efficiency for specific development contexts.

Product Usage Case

· A developer needs to quickly find all `.log` files modified in the last 24 hours and compress them. Instead of searching documentation, they can type `shellai generate command to find and compress all .log files modified in the last day`. ShellAI might return a command such as `find . -type f -name '*.log' -mtime -1 -print0 | tar --null -czvf logs.tar.gz -T -` and explain its components (with GNU tar, `--null -T -` reads the NUL-separated file list from standard input, so every match lands in a single archive). This saves time and ensures accuracy.

· A junior developer is struggling to understand a `grep` command in a colleague's script. They can paste the command into ShellAI with a prompt like `shellai explain this grep command: "grep -rni 'TODO:' /path/to/project"`. ShellAI breaks down the flags and their meanings, making the script understandable. This is crucial for collaborative development and onboarding.

· A developer encounters a `command not found` error after installing a new tool. They can provide the error message to ShellAI: `shellai help me with 'command not found: my_new_cli_tool'`. ShellAI might suggest checking their PATH environment variable or reinstalling the tool, guiding them towards a solution.

· When performing a file system cleanup, a developer wants to remove empty directories efficiently. Instead of remembering the exact `find` command with `-delete`, they can ask ShellAI, which might suggest `find . -type d -empty -delete` and explain the `-type d` and `-empty` flags for precise targeting. This leads to safer and more effective system administration.
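To check what the log-archiving and empty-directory `find` commands in these cases do, here is a rough Python equivalent. It is an illustrative sketch rather than a drop-in replacement: `archive_recent_logs` and `remove_empty_dirs` are hypothetical names, the age check uses an exact 24-hour cutoff, and unlike `find . -delete` it never tries to remove the starting directory itself.

```python
import os
import tarfile
import time
from pathlib import Path


def archive_recent_logs(root: str, out: str, max_age_s: float = 24 * 3600) -> list[str]:
    """Roughly `find ROOT -type f -name '*.log' -mtime -1` packed into one .tar.gz."""
    cutoff = time.time() - max_age_s
    recent = sorted(
        p for p in Path(root).rglob("*.log")
        if p.is_file() and p.stat().st_mtime > cutoff
    )
    with tarfile.open(out, "w:gz") as tar:
        for p in recent:
            tar.add(p, arcname=str(p.relative_to(root)))
    return [str(p.relative_to(root)) for p in recent]


def remove_empty_dirs(root: str) -> list[str]:
    """Roughly `find ROOT -type d -empty -delete`, walking deepest directories first."""
    removed = []
    for dirpath, _dirnames, _filenames in os.walk(root, topdown=False):
        # Bottom-up order lets a parent become empty after its empty children go.
        if dirpath != root and not os.listdir(dirpath):
            os.rmdir(dirpath)
            removed.append(os.path.relpath(dirpath, root))
    return removed
```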

11

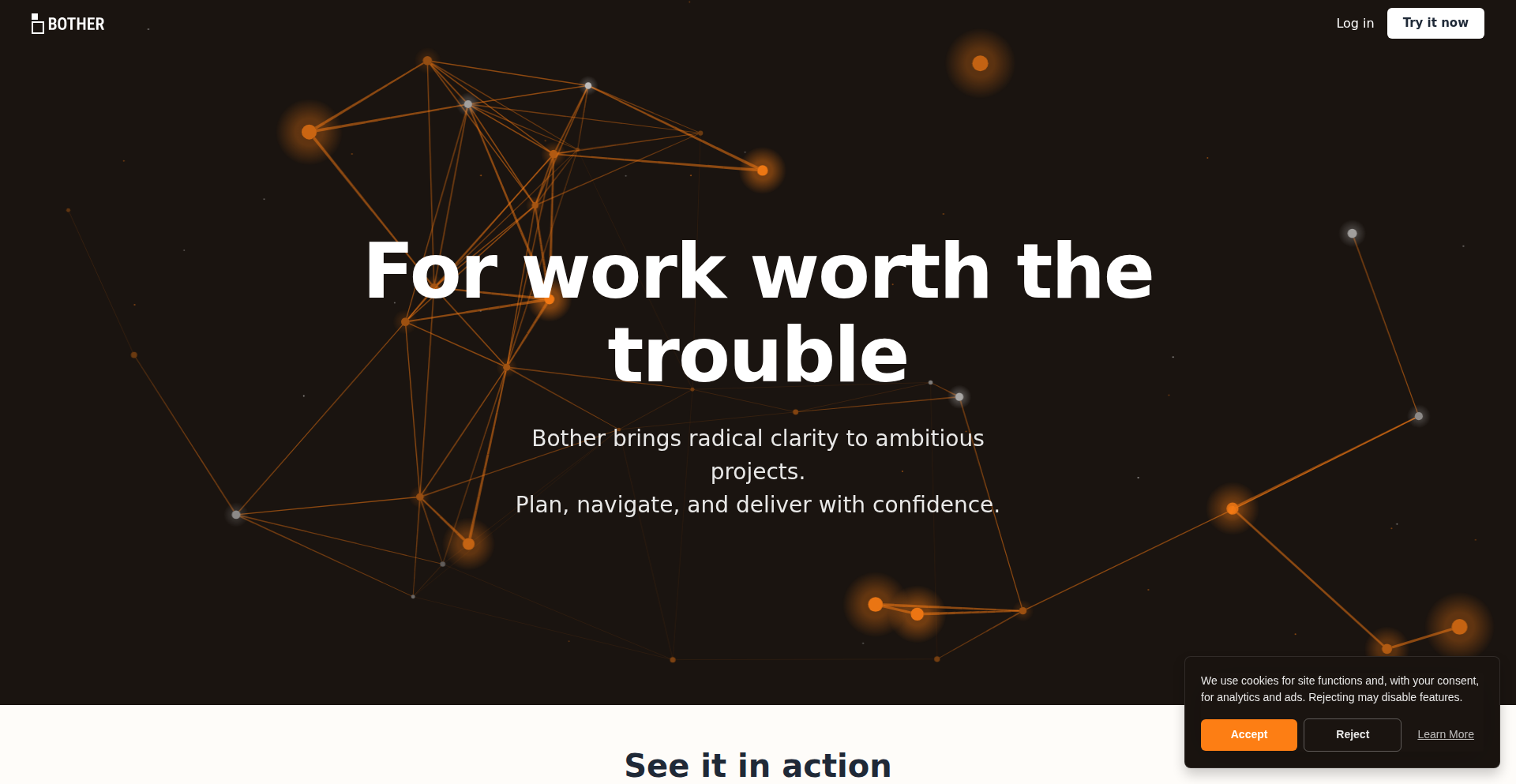

Bother: Lean Project Orchestrator

Author

kalturnbull

Description

Bother is a minimalist project management tool designed to combat the complexity and bloat of conventional solutions. It leverages a unique, API-first approach and a declarative configuration model to provide a streamlined experience for developers. The core innovation lies in its ability to abstract away unnecessary UI elements, allowing users to define and manage projects through simple, code-like definitions, making project management feel like another development task. This addresses the frustration of cumbersome interfaces and steep learning curves often found in feature-rich project management software.

Popularity

Points 3

Comments 3

What is this product?

Bother is a project management application that prioritizes simplicity and developer experience. Unlike traditional tools that rely heavily on graphical interfaces and a multitude of features, Bother operates on an API-first principle with a focus on declarative configuration. This means you define your projects, tasks, and workflows using a structured, code-like format, similar to how you might configure other development tools. The innovation here is in stripping down the user interface to the bare essentials, allowing developers to manage projects as if they were writing code. This makes it incredibly fast to set up and manage, and avoids the 'feature creep' that makes many project management tools overwhelming. So, what's in it for you? You get a project management system that feels intuitive to developers, integrates seamlessly into your existing workflows, and doesn't require hours of training to use effectively.

How to use it?

Developers can interact with Bother primarily through its API or by managing its configuration files. You would typically define your projects, tasks, milestones, and dependencies in a declarative format (e.g., YAML or JSON). Bother then interprets these definitions to manage your project's lifecycle. This could involve setting up CI/CD pipelines, tracking progress, assigning tasks, or defining project stages. For integration, Bother can be hooked into existing development workflows, CI/CD systems, or even other internal tools via its API. Imagine automating project setup as part of your new project boilerplate, or having project status automatically updated based on code commits. So, how does this help you? It allows you to treat project management with the same efficiency and automation you apply to your code, reducing manual effort and increasing consistency across your projects.
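A declarative project file of the kind described above might look like the following, along with the logic a tool could use to validate dependencies and derive a task order. The schema, field names, and functions are hypothetical, not Bother's actual format. JSON is used because Python's standard library has no YAML parser.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical project definition; Bother's real schema is not documented here.
PROJECT_JSON = """
{
  "project": "payments-service",
  "tasks": {
    "design-api":   {"owner": "ana", "depends_on": []},
    "write-schema": {"owner": "li",  "depends_on": ["design-api"]},
    "implement":    {"owner": "ana", "depends_on": ["write-schema"]},
    "ci-pipeline":  {"owner": "sam", "depends_on": ["design-api"]},
    "launch":       {"owner": "sam", "depends_on": ["implement", "ci-pipeline"]}
  }
}
"""


def load_project(text: str) -> dict:
    """Parse the definition and reject dependencies on unknown tasks."""
    project = json.loads(text)
    tasks = project["tasks"]
    for name, task in tasks.items():
        missing = [d for d in task["depends_on"] if d not in tasks]
        if missing:
            raise ValueError(f"{name} depends on unknown tasks: {missing}")
    return project


def execution_order(project: dict) -> list[str]:
    """Topological order of tasks; raises graphlib.CycleError on circular deps."""
    graph = {name: task["depends_on"] for name, task in project["tasks"].items()}
    return list(TopologicalSorter(graph).static_order())


def ready_tasks(project: dict, done: set[str]) -> list[str]:
    """Tasks not yet done whose dependencies are all complete."""
    return sorted(
        name for name, task in project["tasks"].items()
        if name not in done and all(d in done for d in task["depends_on"])
    )
```

Because the definition is plain text, it can live in version control next to the code, and a CI job could call something like `ready_tasks` after each merge to report what is unblocked.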

Product Core Function

· Declarative Project Configuration: Define project structures, tasks, and workflows in a human-readable, code-like format (e.g., YAML/JSON). This allows for version control of project definitions and repeatable setups, reducing manual errors and increasing project consistency. For you, this means you can manage projects with the same control and predictability as your code.

· API-First Design: All functionalities are accessible via a robust API, enabling deep integration with other development tools and automation workflows. This allows for seamless integration into existing CI/CD pipelines or custom dashboards, giving you the power to automate project management tasks. So, what's the benefit? You can automate project status updates, task assignments, or even project creation based on events in your development ecosystem.

· Minimalist User Interface: Focuses on essential project tracking and management views, avoiding visual clutter and complex navigation. This reduces the learning curve and speeds up daily usage, ensuring you can quickly get to what matters without being overwhelmed by features. For you, this means less time spent figuring out the tool and more time spent on actual development.

· Extensible Plugin System: Designed to be extended with custom functionalities and integrations through a plugin architecture. This allows you to tailor Bother to specific team needs or project types, ensuring the tool grows with your requirements. The value to you? You can add specialized features or connect to niche tools without being limited by the core product's scope.

Product Usage Case

· Automated Project Setup for New Microservices: A development team can create a standard Bother configuration file that defines common tasks, milestones, and responsibilities for a new microservice. When a new microservice repository is created, a CI/CD pipeline can automatically deploy this Bother configuration, setting up the project management framework instantly. This solves the problem of repetitive manual project setup and ensures consistency across all new services, saving significant time and reducing oversight.

· Real-time Project Status Updates in a Chatbot: Integrate Bother's API with a team's internal chatbot. When a key milestone is reached or a critical task is completed, Bother can trigger a notification to the chatbot, informing the team immediately. This provides transparent and up-to-date project visibility without team members having to actively check a separate project management tool. For you, this means staying informed about project progress without context switching.

· Managing Open-Source Project Contributions: An open-source project maintainer could use Bother to define contribution guidelines and track incoming issues and pull requests. Contributors could interact with Bother via its API to update the status of their work, or Bother could automatically assign new issues to maintainers. This provides a structured way to manage community contributions and streamline the review process, making it easier for developers to contribute and for maintainers to manage the project's growth.

12

ScreencastSaver

Author

dev_marcospimi

Description

A Rust-based command-line video codec specifically designed for screencasts, drastically reducing video file sizes by up to 70%. It addresses the common problem of large video files generated from screen recordings, making them more manageable for storage, sharing, and integration into platforms like GitHub.

Popularity

Points 1

Comments 5

What is this product?

ScreencastSaver is a novel video compression technology built in Rust, engineered to optimize video files produced by screen recording, such as tutorials, presentations, and quality assurance demonstrations. Its core innovation is a specialized encoding approach that discards visual information made redundant by static or slow-changing screen content, which the author reports cuts file sizes by roughly 70%. For example, a 60-minute tutorial that would typically be 3.2GB can be compressed to around 800MB (about a 75% reduction), making it significantly easier to handle.
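The general idea of exploiting static screen content can be illustrated with tile-based change detection: split each frame into tiles and store only those that differ from the previous frame. This textbook technique (related to skip blocks and conditional replenishment in classic video coding) is shown as an assumption about the kind of redundancy involved, not as ScreencastSaver's actual algorithm. Real codecs add motion compensation, transforms, and entropy coding on top.

```python
Frame = list[list[int]]  # grayscale pixel values, row-major


def changed_tiles(prev: Frame, cur: Frame, tile: int = 8) -> list[tuple[int, int, Frame]]:
    """Encoder side: return (row, col, pixels) for each tile that changed."""
    height, width = len(cur), len(cur[0])
    out = []
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            block = [row[tx:tx + tile] for row in cur[ty:ty + tile]]
            old = [row[tx:tx + tile] for row in prev[ty:ty + tile]]
            if block != old:
                out.append((ty, tx, block))
    return out


def apply_tiles(prev: Frame, tiles: list[tuple[int, int, Frame]]) -> Frame:
    """Decoder side: copy the previous frame and paste in the changed tiles."""
    frame = [row[:] for row in prev]
    for ty, tx, block in tiles:
        for dy, row in enumerate(block):
            frame[ty + dy][tx:tx + len(row)] = row
    return frame
```

In a 32x32 frame where only the cursor pixel moved, one of sixteen 8x8 tiles changes, which hints at why mostly static screen recordings compress so much better than camera footage.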

How to use it?

For developers, ScreencastSaver is currently a command-line interface (CLI) tool. To integrate it into your workflow, install the Rust toolchain and compile the ScreencastSaver project. You then point the CLI at a screencast video file, and it outputs a highly compressed version. For example, a typical command might look like: `screencastsaver --input tutorial.mp4 --output compressed_tutorial.mp4`. This makes batch processing and integration into automated video-processing pipelines straightforward.
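Batch processing can be scripted by building one command per file. This sketch assumes the binary name `screencastsaver` and the `--input`/`--output` flags from the example command, both unconfirmed; `build_jobs`, `run_jobs`, and `reduction` are hypothetical helpers. `run_jobs` defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from pathlib import Path

# Assumed binary name; check the project's README for the real one.
BINARY = "screencastsaver"


def build_jobs(src_dir: str, out_dir: str) -> list[list[str]]:
    """One compression command per .mp4 file in src_dir, in name order."""
    out = Path(out_dir)
    return [
        [BINARY, "--input", str(p), "--output", str(out / f"compressed_{p.name}")]
        for p in sorted(Path(src_dir).glob("*.mp4"))
    ]


def run_jobs(jobs: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    for cmd in jobs:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


def reduction(before_bytes: float, after_bytes: float) -> float:
    """Fractional size reduction, e.g. 0.75 for a file shrunk to a quarter."""
    return 1 - after_bytes / before_bytes
```

With the figures quoted earlier, `reduction(3.2e9, 800e6)` returns 0.75, i.e. a 75% reduction.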

Product Core Function

· Highly efficient video compression for screencasts: This function leverages custom encoding algorithms tailored for the typical patterns found in screen recordings (e.g., static backgrounds, text elements, cursor movements) to achieve significant file size reduction, making storage and bandwidth costs lower.

· Rust-based implementation: Built using Rust, a programming language known for its performance and memory safety, ensuring a robust and efficient compression engine. This translates to faster encoding times and a more reliable tool.

· Command-line interface for automation: Provides a CLI that allows developers to easily integrate the codec into scripts and build processes. This enables automated compression workflows for large volumes of screencast content.

· Significant file size reduction (up to 70%): Achieves substantial compression without a noticeable degradation in visual quality for screencast content, making videos easier to share and host online.

· Specialized for screencasts: Unlike general-purpose video codecs, this is optimized for the unique characteristics of screen recordings, leading to superior compression ratios for this specific use case.

Product Usage Case

· Reducing the size of educational video courses: A company creating online courses can use ScreencastSaver to compress their tutorial videos, reducing hosting costs and improving streaming performance for their users. This means students can download or stream content faster and consume more material without hitting data caps.

· Optimizing screencasts for GitHub READMEs: Developers can compress video demonstrations of their software for inclusion in GitHub repositories. Smaller video files ensure that READMEs load quickly and don't consume excessive repository storage.

· Streamlining QA and bug reporting videos: Quality assurance teams can generate smaller video recordings of bugs or feature demonstrations. This makes it easier to share these reports internally or with development teams, speeding up the feedback loop and issue resolution.

· Lowering bandwidth costs for streaming platforms: A business that publishes recordings of its live tutorials can compress them with ScreencastSaver before distribution, significantly reducing outgoing bandwidth and making its service more cost-effective.
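The bandwidth argument is simple arithmetic: data served scales with file size times views, so a fractional size reduction saves the same fraction of egress. A sketch using the "up to 70%" figure quoted earlier; the file size, view count, and $0.08/GB price are made-up placeholders, not measurements.

```python
def monthly_egress_gb(file_mb, views_per_month):
    """Total data served per month in gigabytes (1 GB = 1000 MB)."""
    return file_mb * views_per_month / 1000


def savings(file_mb, views_per_month, reduction, price_per_gb):
    """Return (GB saved, cost saved) for a fractional size reduction (0.7 = 70%)."""
    saved_gb = monthly_egress_gb(file_mb, views_per_month) * reduction
    return saved_gb, saved_gb * price_per_gb


# Hypothetical inputs: a 200 MB tutorial watched 10,000 times a month.
gb, usd = savings(200, 10_000, 0.70, 0.08)
print(f"{gb:.0f} GB, ${usd:.2f}")  # 1400 GB, $112.00
```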

13

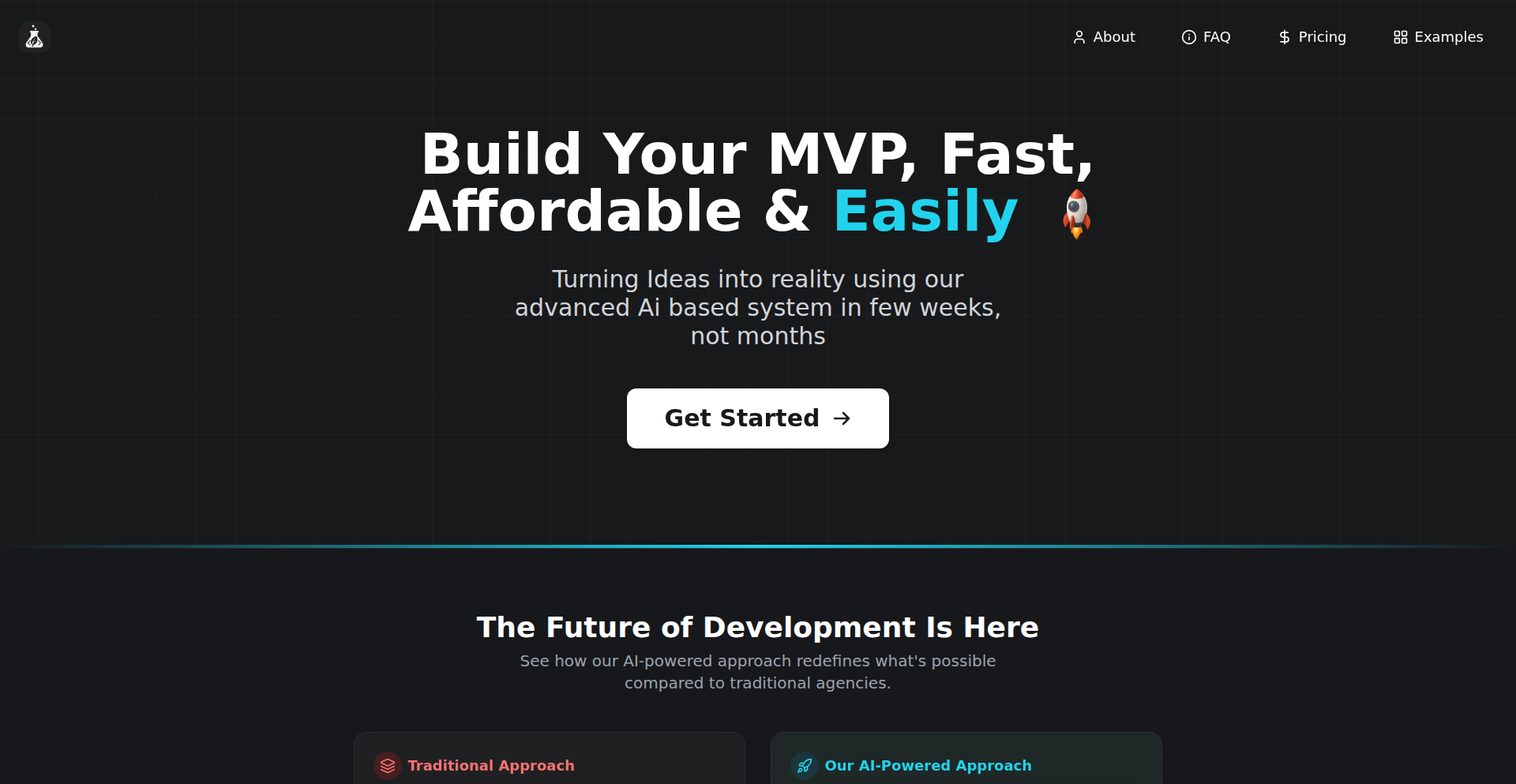

MVP Forge

Author

alwassikhan

Description

A lean agency focused on rapid Minimum Viable Product (MVP) development, leveraging agile methodologies and lean startup principles to quickly bring digital ideas to life. The innovation lies in the streamlined process and focus on validated learning, translating complex development cycles into tangible, market-ready products with minimal waste.

Popularity

Points 3

Comments 2

What is this product?

MVP Forge is a specialized development agency that helps entrepreneurs and businesses rapidly build and launch Minimum Viable Products (MVPs). Its core idea is a commitment to lean principles and agile development: instead of building everything at once, it focuses on the essential features needed to test your core business hypothesis with real users. This minimizes development time and cost and enables quick feedback loops for iterating and improving, so you end up building something people actually want. Think of it as building a skateboard first to test the idea of transportation, rather than trying to build a full car immediately.
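Testing a core business hypothesis with real users usually comes down to a measured rate, such as sign-ups per visitor, compared against a target. The source does not say how MVP Forge judges results; one standard, conservative approach is to treat the hypothesis as supported only when the lower bound of a Wilson score interval clears the target. The visitor counts and the 10% target below are hypothetical.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% confidence)."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return center - margin, center + margin


def hypothesis_supported(successes, trials, target_rate):
    """Supported only if even the pessimistic end of the interval beats the target."""
    low, _ = wilson_interval(successes, trials)
    return low >= target_rate
```

With the same 15% observed rate, `hypothesis_supported(60, 400, 0.10)` returns `True` while `hypothesis_supported(12, 80, 0.10)` returns `False`: 80 visitors are not yet enough evidence to rule out a rate below the target.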

How to use it?

Entrepreneurs and businesses can engage MVP Forge to turn their product ideas into functional MVPs. The process typically starts with a discovery phase that identifies the core problem and target audience; the client and the agency then jointly define the essential features for the MVP. Development is iterative and agile: the team builds in short cycles, collects your feedback, and adjusts. MVP Forge can work from your existing vision or help shape it. The goal is a working product you can launch to early adopters for real-world validation, so that decisions about future development rest on actual market response. This is useful for anyone who has an idea but is daunted by the cost and length of a full development lifecycle.

Product Core Function

· Rapid MVP Prototyping: Quickly translates your core idea into a functional product, allowing for immediate market testing and feedback. This is useful for validating business assumptions early and reducing the risk of building the wrong product.

· Lean Development Cycles: Employs agile and lean methodologies to build only the essential features, saving time and resources. This is useful for getting to market faster and controlling development costs.

· User-Centric Validation: Focuses on building products that meet user needs by incorporating feedback loops. This is useful for ensuring your product resonates with your target audience and has a higher chance of success.

· Iterative Product Refinement: Enables continuous improvement of the product based on real user data and market response. This is useful for adapting to market changes and ensuring long-term product viability.

Product Usage Case

· A startup with a novel app idea uses MVP Forge to build a functional prototype that showcases the core user experience. This allows them to secure initial funding and gather crucial early adopter feedback before investing in a full-scale development. This solves the problem of needing to demonstrate a tangible product to investors and users without significant upfront investment.

· An established company wants to explore a new market with a digital service. They engage MVP Forge to create a lean MVP of the service. This enables them to test the market demand and refine the service offering based on user behavior data, without disrupting their core business or committing large resources. This solves the problem of market entry risk and resource allocation for experimental ventures.

· An entrepreneur has a unique solution for a niche problem but lacks the technical expertise to build it. MVP Forge helps them design and develop the MVP, enabling them to bring their solution to the target users and start generating revenue. This solves the problem of technical skill gaps for innovators.

· A product team needs to quickly test a new feature concept. MVP Forge can rapidly build a focused MVP of that feature, allowing the team to gather user insights and decide whether to fully integrate it into their existing product. This solves the problem of rapid feature validation and reducing the risk of investing in unproven functionalities.

14

AI Mail Weaver

Author

neuwark

Description

An AI agent designed to rapidly generate full marketing emails for ecommerce founders and marketers. By inputting basic product, offer, or promotion details, it crafts complete emails, including subject lines, structure, tone, and calls to action, tailored to a brand's voice. It tackles the time-consuming task of email copywriting, aiming for speed and human-like quality.

Popularity

Points 3

Comments 2

What is this product?

This is an AI-powered tool that acts as your personal email copywriter. Instead of you staring at a blank screen for hours trying to figure out what to say in your marketing emails, you just provide a few key pieces of information – like what you're selling, what the offer is, or a specific promotion. The AI then takes these details and automatically generates a complete, professional-sounding marketing email. The innovation lies in its ability to understand context and brand voice to produce copy that feels natural and persuasive, significantly reducing the effort required to create effective email campaigns. It's like having a junior copywriter on demand, 24/7.

How to use it?

Ecommerce marketers can use the tool directly through the provided web application: enter the promotion details and receive a ready-to-use email that can be copied into email platforms such as Mailchimp, Klaviyo, or custom-built systems. No public API is mentioned; if one were offered, developers could trigger email generation programmatically from events or data in their applications and fold it into marketing automation workflows or content management systems. Either way, it streamlines content creation for campaigns, product launches, and customer engagement initiatives.
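Because no API is documented, the following is only a sketch of what event-driven generation could look like: structured campaign details (the product, offer, and brand voice the description mentions) are turned into a single prompt for a language model. `EmailRequest`, `build_prompt`, and `on_event` are hypothetical names, and the model call itself is deliberately left out because the product's backend is not described.

```python
from dataclasses import dataclass


@dataclass
class EmailRequest:
    """Campaign inputs the description mentions; field names are hypothetical."""
    product: str
    offer: str
    brand_voice: str = "friendly"
    goal: str = "make a purchase"


def build_prompt(req: EmailRequest) -> str:
    """Turn structured campaign details into one instruction for an LLM."""
    return (
        f"Write a marketing email in a {req.brand_voice} tone.\n"
        f"Product: {req.product}\n"
        f"Offer: {req.offer}\n"
        "Include a subject line, a short body, and one call to action "
        f"that encourages the reader to {req.goal}."
    )


def on_event(event: dict) -> str:
    """Hypothetical hook: map an application event (e.g. a new promotion) to a prompt."""
    req = EmailRequest(product=event["product"], offer=event["offer"])
    return build_prompt(req)
```

Keeping the inputs in a typed structure makes it easy to reuse the same prompt template across events while varying only the voice or goal.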

Product Core Function

· Automated Subject Line Generation: Creates subject lines intended to raise open rates by analyzing the email's core message and applying AI-driven best practices for attention-grabbing headlines.

· Email Body Content Crafting: Generates the entire email copy, from introduction to conclusion, ensuring a logical flow and persuasive narrative based on user inputs and brand context.

· Tone and Brand Voice Adaptation: Learns and applies a specific brand's tone (e.g., friendly, professional, enthusiastic) to ensure consistency in all outgoing communications, making the emails feel authentic to the brand.

· Call to Action (CTA) Design: Develops effective calls to action that encourage desired user behavior, such as making a purchase, signing up, or learning more, tailored to the email's objective.

· Time and Effort Reduction: Significantly cuts down the manual hours typically spent on writing marketing emails, allowing marketing teams to focus on strategy and analysis rather than content creation.

· Scalable Content Production: Enables the rapid creation of multiple email variations for different segments or promotions, supporting growth and agility in marketing efforts.
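Producing several variations is only half the job; each subscriber also has to receive one variant consistently. A common technique, not one this product is documented to use, is hash-based bucketing: hash a salted, normalized address and take the result modulo the number of variants. The default salt string is a hypothetical campaign identifier.

```python
import hashlib
from typing import Sequence


def assign_variant(email: str, variants: Sequence[str], salt: str = "spring-sale") -> str:
    """Map a recipient to one variant deterministically.

    The same (salt, address) pair always lands in the same bucket, and SHA-256
    spreads addresses roughly evenly across buckets. Changing the salt
    reshuffles assignments for a new campaign.
    """
    key = f"{salt}:{email.strip().lower()}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]
```

Because addresses are trimmed and lower-cased first, `assign_variant("Ann@Example.com", ["A", "B"])` and `assign_variant("ann@example.com", ["A", "B"])` always return the same variant.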

Product Usage Case

· A startup launching a new product can use this AI to quickly generate announcement emails, welcome sequences, and promotional offers, reducing the time-to-market for their marketing campaigns.

· An online fashion retailer can input details about a seasonal sale to get instantly generated email copy, complete with engaging descriptions and clear discount information, for their email list.

· A subscription box service can input information about a new theme or featured item to create targeted emails for their subscribers, driving engagement and renewals.

· A marketer working on a product-led growth strategy can use the AI to craft onboarding emails that highlight key features and benefits, helping new users become more invested in the product.

· Ecommerce businesses experiencing a sudden change in inventory or a flash sale can use the tool to rapidly communicate these updates to their customers, minimizing lost revenue opportunities due to slow content creation.

15

PolyBets.fun: Decentralized Auction Speculation Engine

Author

h100ker

Description

PolyBets.fun is a platform that lets anyone instantly create prediction markets on automotive auction results (from sites such as Bring a Trailer, Cars & Bids, and RM Sotheby's) using just a shareable link. Built in 10 days, its backend supports decentralized speculation, letting car enthusiasts put their money where their opinions are, and it relies on its terms of service to navigate the legal hurdles such betting usually faces.

Popularity

Points 3

Comments 2

What is this product?

PolyBets.fun is a novel application that lets you establish a betting market around the outcome of car auctions. Its core innovation lies in its speed and accessibility; with a simple link, you can define an auction and let people speculate on its final price or sale status. Technically, it likely uses a backend service to manage market creation, track bets, and record results. The 'decentralized' aspect hints at a system designed to reduce reliance on a central authority for managing these bets, perhaps using blockchain-like principles or simply a robust, transparent logging mechanism. This means it's built for rapid, community-driven speculation on real-world events.

How to use it?

To use PolyBets.fun, you share a specific auction link. Users who agree to the terms of service can then place bets or take speculative positions on whether the car will sell, and for how much. For integration, one could imagine feeding auction data into PolyBets.fun, or pulling betting-pool statistics out for analysis, either through a platform API (none is mentioned) or by scraping public data from the auction sites. It is essentially a tool for creating micro-economies around specific, high-interest events within the car-collecting community.
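The description does not say how PolyBets.fun parses a shared link. One plausible first step is validating the URL against the supported auction sites. This minimal sketch assumes the accepted hostnames are the public domains of the three sites named above; the listing paths in the usage example are made up.

```python
from urllib.parse import urlparse

# Public domains of the auction sites named in the description.
KNOWN_AUCTION_HOSTS = {
    "bringatrailer.com": "Bring a Trailer",
    "carsandbids.com": "Cars & Bids",
    "rmsothebys.com": "RM Sotheby's",
}


def identify_auction(url: str):
    """Return (platform, listing path) for a supported auction URL, else None."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        return None
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    platform = KNOWN_AUCTION_HOSTS.get(host)
    if platform is None:
        return None
    # Strip a trailing slash so the same listing always maps to the same market key.
    return platform, parsed.path.rstrip("/")
```

For instance, `identify_auction("https://www.carsandbids.com/auctions/abc123/")` yields `("Cars & Bids", "/auctions/abc123")`, while a link to any other site returns `None`.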

Product Core Function

· Dynamic Market Creation: The ability to quickly set up a prediction market for any specified auction. This is valuable because it allows communities to engage more deeply with their shared interests, turning passive observation into active participation and fostering discussion.

· Link-Based Market Initiation: Users can start a market simply by providing a URL to an auction listing. This dramatically lowers the barrier to entry, making it incredibly easy for anyone to create a betting opportunity without complex setup, thus democratizing market creation.

· Speculation Management: The system handles the tracking of bets, potential payouts, and resolution of outcomes based on auction results. This provides a structured and fair way for individuals to express their confidence (or lack thereof) in a particular auction's outcome, offering a fun and engaging way to test predictive skills.

· Terms of Service as Risk Mitigation: A clear set of terms of service addresses potential legal ambiguities and user expectations. This is important for the product's sustainability and for managing user understanding of the risks involved, ensuring a more responsible and clear user experience.
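The source says only that the system tracks bets, payouts, and resolution; it does not name a payout mechanism. Parimutuel pooling is one common choice for yes/no markets: after resolution, the whole pool is split among winners in proportion to their stakes. The sketch below assumes that mechanism, an optional operator fee, and full refunds when nobody backed the winning side; none of these rules are confirmed for PolyBets.fun.

```python
from collections import defaultdict


def parimutuel_payouts(bets, outcome, fee=0.0):
    """Split the pool among winning bettors in proportion to their stakes.

    bets: list of (user, side, amount), where side is e.g. "yes" or "no".
    outcome: the side that won once the auction result is known.
    fee: fraction of the pool kept by the operator (0.0 = none).
    """
    pool = sum(amount for _, _, amount in bets)
    winning_stake = sum(amount for _, side, amount in bets if side == outcome)
    payouts = defaultdict(float)
    if winning_stake == 0:
        # Nobody backed the winning side: refund every stake.
        for user, _, amount in bets:
            payouts[user] += amount
        return dict(payouts)
    distributable = pool * (1 - fee)
    for user, side, amount in bets:
        if side == outcome:
            payouts[user] += distributable * amount / winning_stake
    return dict(payouts)


# "Will the 911 break $200k?" with hypothetical stakes.
bets = [("alice", "yes", 30), ("bob", "yes", 10), ("carol", "no", 60)]
print(parimutuel_payouts(bets, "yes"))  # {'alice': 75.0, 'bob': 25.0}
```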

Product Usage Case

· Scenario: A group of friends are avid followers of Bring a Trailer and constantly debate which rare Porsches will fetch the highest prices. Using PolyBets.fun, one friend can create a market for an upcoming 911 auction. They share the BaT link, define a betting pool on the final sale price (e.g., 'will it break $200k?'), and all friends can then place their virtual bets. This turns their casual arguments into a more engaging, gamified experience with a clear outcome.

· Scenario: An automotive blogger wants to increase engagement on their content about a specific auction. They can create a PolyBets.fun market tied to the auction they are covering. By embedding or linking to this market, their audience can participate in speculating on the auction's success, driving more traffic and interaction to the blog and the auction itself.

· Scenario: A developer working on a car enthusiast app wants to add a social betting feature. While the full implementation might be complex, PolyBets.fun could serve as a backend for their prediction market functionality. They could potentially integrate with PolyBets.fun's API (or a similar concept) to allow their users to create and participate in speculation markets directly within their app, providing an innovative feature with minimal development effort for the core betting logic.

16

Ad-Powered AI Code Assistant

Author

namanyayg

Description

This project offers free access to powerful AI models like Claude Sonnet 4.5, making AI development and automation accessible to more people. It's innovative because it's funded by contextual advertisements embedded in the AI's responses, challenging the traditional pay-as-you-go pricing model for AI services. This approach allows users to build and experiment without incurring significant costs, fostering a more open and experimental approach to AI tool development.
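The description says ads are contextual and embedded in the AI's responses, but not how they are chosen. The simplest form of contextual matching is keyword overlap between the conversation and each ad's targeting terms. This minimal sketch assumes that approach; the ad inventory and the "ExampleDB" and "ExampleCloud" names are hypothetical.

```python
import re

# Hypothetical ad inventory: each ad lists the keywords it targets.
ADS = [
    {"text": "Try ExampleDB, a managed Postgres host.", "keywords": {"postgres", "sql", "database"}},
    {"text": "Deploy with ExampleCloud in one command.", "keywords": {"deploy", "docker", "kubernetes"}},
]


def pick_ad(prompt: str, response: str, ads=ADS):
    """Return the ad whose keywords overlap most with the conversation, or None."""
    words = set(re.findall(r"[a-z0-9]+", (prompt + " " + response).lower()))
    best, best_score = None, 0
    for ad in ads:
        score = len(ad["keywords"] & words)
        if score > best_score:
            best, best_score = ad, score
    return best


def with_ad(prompt: str, response: str) -> str:
    """Append a clearly labeled sponsored line when a relevant ad exists."""
    ad = pick_ad(prompt, response)
    return response if ad is None else f"{response}\n\n[Sponsored] {ad['text']}"
```

A question about indexing a Postgres table would get the database ad appended, while an unrelated question is returned unchanged; labeling the line as sponsored keeps the ad distinguishable from the model's answer.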

Popularity

Points 4

Comments 1