Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-05

SagaSu777 2025-11-06

Explore the hottest developer projects on Show HN for 2025-11-05. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions show AI integration spreading across many technical domains. Developers are using AI not only to add new functionality but to improve the developer experience itself: automating complex tasks, simplifying workflows, and generating code and system configurations. Specialized frameworks and languages aimed at particular domains, such as HORUS for robotics or a Go-based language for COBOL workloads, suggest that generalized solutions are giving way to deeply integrated, domain-specific tooling. Data privacy and secure AI operations, as seen in projects like Guardrail Layer and AI risk assessment tools, are also becoming a critical consideration for individual developers and enterprises that want to adopt AI responsibly. For aspiring developers and entrepreneurs, this points to an opportunity to build tools that empower creators, streamline complex processes, and address emerging challenges in AI adoption, all in the hacker spirit of inventive problem-solving and open-source collaboration.

Today's Hottest Product

Name

HORUS - Hybrid Optimized Robotics Unified System

Highlight

This project tackles the complexity of robotics development with a modular framework whose components can be swapped, aiming to simplify environment setup and dependency management. Its features include inter-language communication (Python and Rust via shared memory), automatic package detection and installation, and a unified ecosystem registry. It is a useful study in designing flexible, maintainable systems that abstract away intricate configuration, so developers can focus on core robotics algorithms and AI integration.

Popular Category

AI/ML

Developer Tools

Robotics

Open Source

Popular Keyword

AI

LLM

Code Generation

Developer Experience

Automation

Framework

Data Privacy

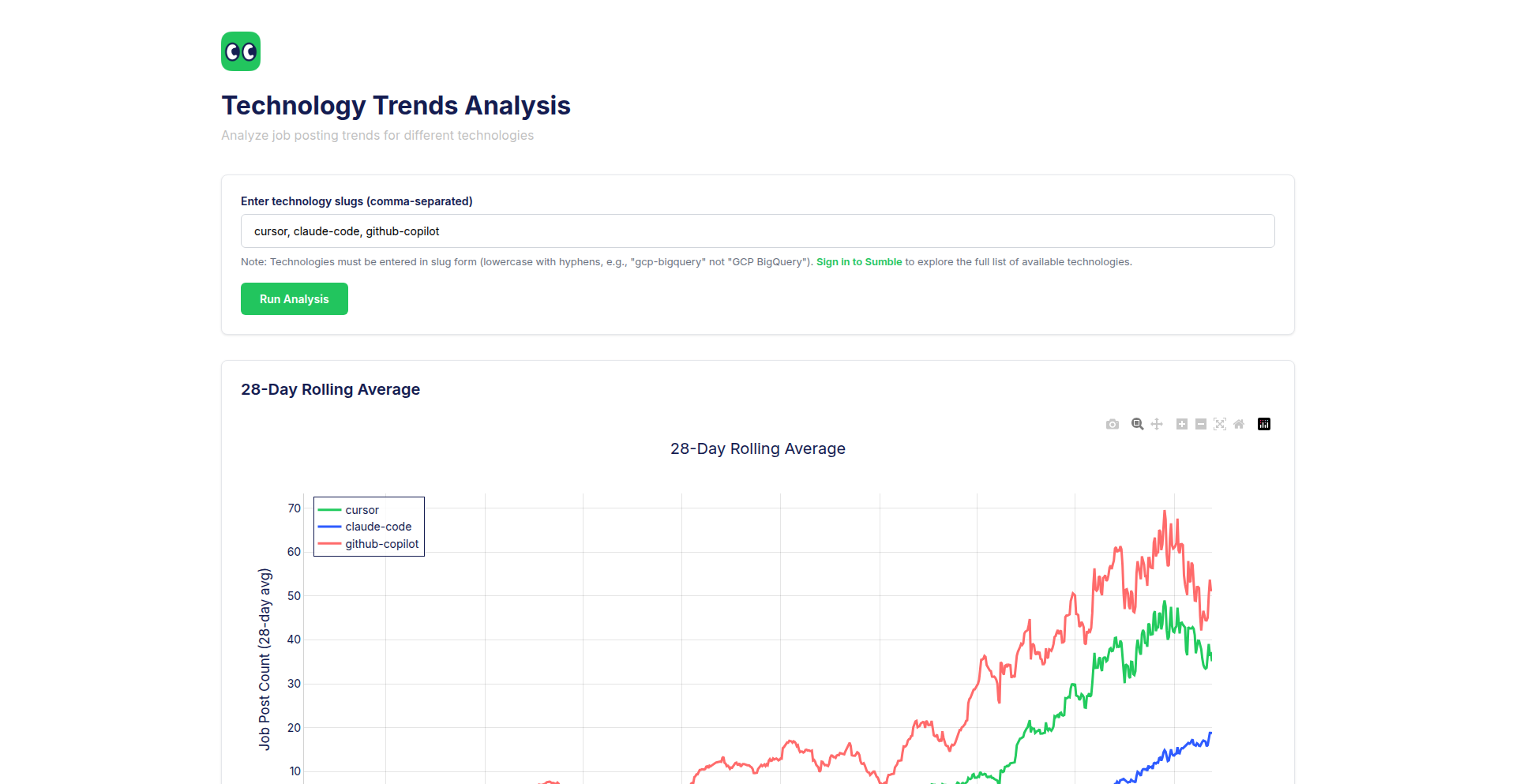

Technology Trends

AI-powered development and automation

Enhanced developer experience and tooling

Modular and adaptable system design

Data privacy and security in AI workflows

Specialized domain-specific languages and frameworks

Project Category Distribution

AI/ML Tools (30%)

Developer Productivity Tools (25%)

Frameworks/Libraries (20%)

Specialized Applications (15%)

Data/System Management (10%)

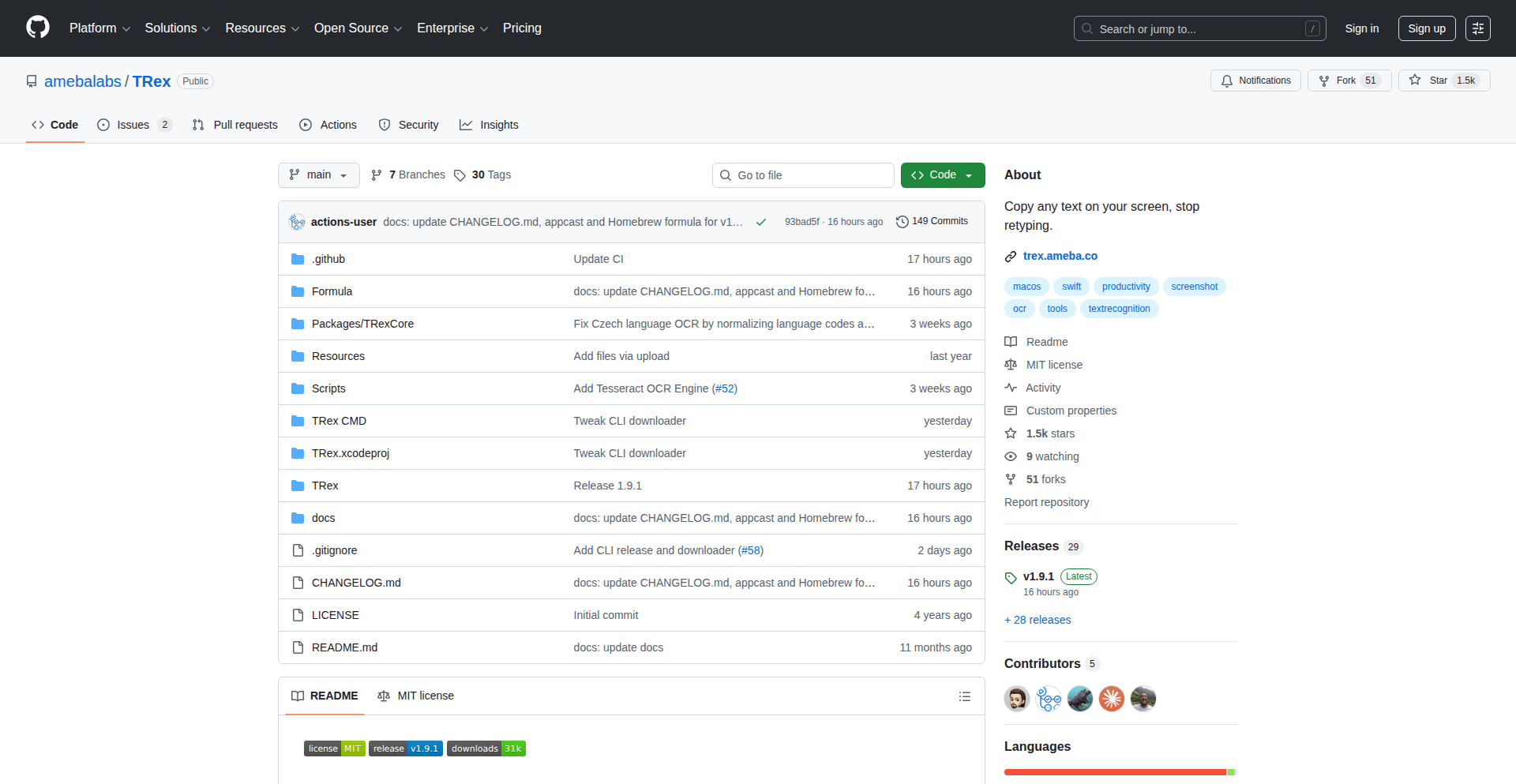

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | LifeGoals Wall | 14 | 9 |
| 2 | Sudocode: Context-as-Code for AI Agents | 15 | 5 |
| 3 | HORUS: Modular Robotics Symphony | 16 | 0 |
| 4 | ChromaChord Visualizer | 15 | 0 |
| 5 | MeetingMemo Encyclopaedia | 4 | 9 |
| 6 | PACR: Academia's Integrated Research & Connection Hub | 11 | 0 |
| 7 | Vance.ai: AI-Powered Network Connector | 4 | 6 |
| 8 | ResonaX AI Buyer Twins | 1 | 7 |
| 9 | Zee: AI Interviewer | 4 | 4 |
| 10 | SlidePicker: Markdown-Powered Presentation Generator | 3 | 4 |

1

LifeGoals Wall

Author

jamespetercook

Description

A digital wall that visualizes shared life goals and deathbed regrets. It tackles the human desire for meaning and introspection by creating an atmospheric platform for users to anonymously contribute their aspirations and reflections, sparking thought and connection within a community. The innovation lies in using collective human sentiment to build an interactive, thought-provoking experience, rather than focusing on a specific technical problem.

Popularity

Points 14

Comments 9

What is this product?

LifeGoals Wall is a web application designed to be an ambient, reflective space. It's built on the idea that understanding what people truly desire before they die, and what they regret not doing, can offer profound insights into living a more fulfilling life. Technologically, it's a straightforward web deployment. Users anonymously submit their 'Before I die, I want to...' statements and their 'Deathbed regrets.' These entries are then aggregated and displayed in a visually engaging, atmospheric manner on a shared 'wall.' The innovation isn't in a groundbreaking algorithm, but in the *application* of web technology to create a communal space for deep human reflection, acting as a collective mirror for societal aspirations and anxieties. So, what's in it for you? It offers a unique opportunity to anonymously share your deepest desires and learn from the reflections of others, potentially inspiring you to act on your own goals and avoid future regrets.

How to use it?

Developers can use this project as a starting point for understanding how to create engaging, sentiment-driven web applications. The core technology involves basic frontend development (HTML, CSS, JavaScript) for user input and display, and a simple backend to store and retrieve the submitted goals and regrets. Integration into other platforms could involve embedding the 'wall' as a widget or using its data for further analysis on human aspirations. For example, a journaling app developer could integrate this feature to encourage users to think about their long-term desires. So, how can you use it? You can explore its codebase to learn about building simple, community-focused web features, or even fork it to add new functionalities like categorization of goals or more advanced visualization. What's the benefit for you? It's a tangible example of how to build a web presence that connects with users on an emotional and philosophical level, providing a blueprint for similar introspective applications.
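
The "simple backend to store and retrieve" idea can be sketched in a few lines. This is only an illustration under assumptions: the source does not describe the project's real storage layer, so the table schema and the function names `open_wall`, `submit`, and `wall` are hypothetical. It uses Python's built-in `sqlite3` module and records no user identifier, which is one way to keep submissions anonymous.

```python
import sqlite3

# Hypothetical schema: the project's real storage layer is not described in the source.
def open_wall(path=":memory:"):
    """Open (or create) the database that backs the wall."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS entries ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "kind TEXT NOT NULL CHECK (kind IN ('goal', 'regret')), "
        "text TEXT NOT NULL)"
    )
    return conn

def submit(conn, kind, text):
    """Store one anonymous entry; no user identifier is recorded."""
    text = text.strip()
    if not text:
        raise ValueError("entry text must not be empty")
    conn.execute("INSERT INTO entries (kind, text) VALUES (?, ?)", (kind, text))
    conn.commit()

def wall(conn, kind=None):
    """Return (kind, text) rows, newest first, optionally filtered by kind."""
    if kind is None:
        rows = conn.execute("SELECT kind, text FROM entries ORDER BY id DESC")
    else:
        rows = conn.execute(
            "SELECT kind, text FROM entries WHERE kind = ? ORDER BY id DESC", (kind,)
        )
    return rows.fetchall()
```

A frontend would call something like `submit` from a form handler and render the result of `wall` as the shared display.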

Product Core Function

· Anonymous Goal Submission: Allows users to share their 'Before I die, I want to...' aspirations without revealing their identity. This fosters a sense of safety and encourages honest self-expression, valuable for personal growth and understanding collective desires. So, what's in it for you? You can contribute your own dreams and see what others are striving for, creating a shared sense of hope and possibility.

· Anonymous Regret Submission: Enables users to anonymously post their 'Deathbed regrets,' reflecting on things they wish they had done. This feature provides a powerful learning opportunity, helping users identify potential pitfalls in their own lives and make more conscious choices. So, what's in it for you? You can learn from the collective wisdom of others' experiences, potentially avoiding future regrets and living a more intentional life.

· Atmospheric Visualization: Presents the submitted goals and regrets in an aesthetically pleasing and thought-provoking manner on a shared digital 'wall.' The aim is to create an environment that encourages pause and reflection. So, what's in it for you? This provides a beautiful and engaging way to consume introspective content, encouraging personal contemplation and sparking curiosity about life's deeper meanings.

Product Usage Case

· A therapist's website could integrate this wall to encourage clients to reflect on their life goals and potential regrets outside of sessions, fostering deeper self-awareness and providing conversation starters. So, how does this help? It extends therapeutic insights into daily life and offers a readily accessible tool for personal reflection.

· An educational platform focused on personal development could use this as a feature to inspire students to think about their future aspirations and the importance of seizing opportunities. So, how does this solve a problem? It combats procrastination and apathy by making future goals feel more tangible and urgent.

· A community forum or social platform could embed this as a way to foster deeper, more meaningful conversations among members, moving beyond superficial topics to explore shared human experiences. So, what's the benefit? It enriches community interaction by providing a common ground for profound personal expression and mutual learning.

2

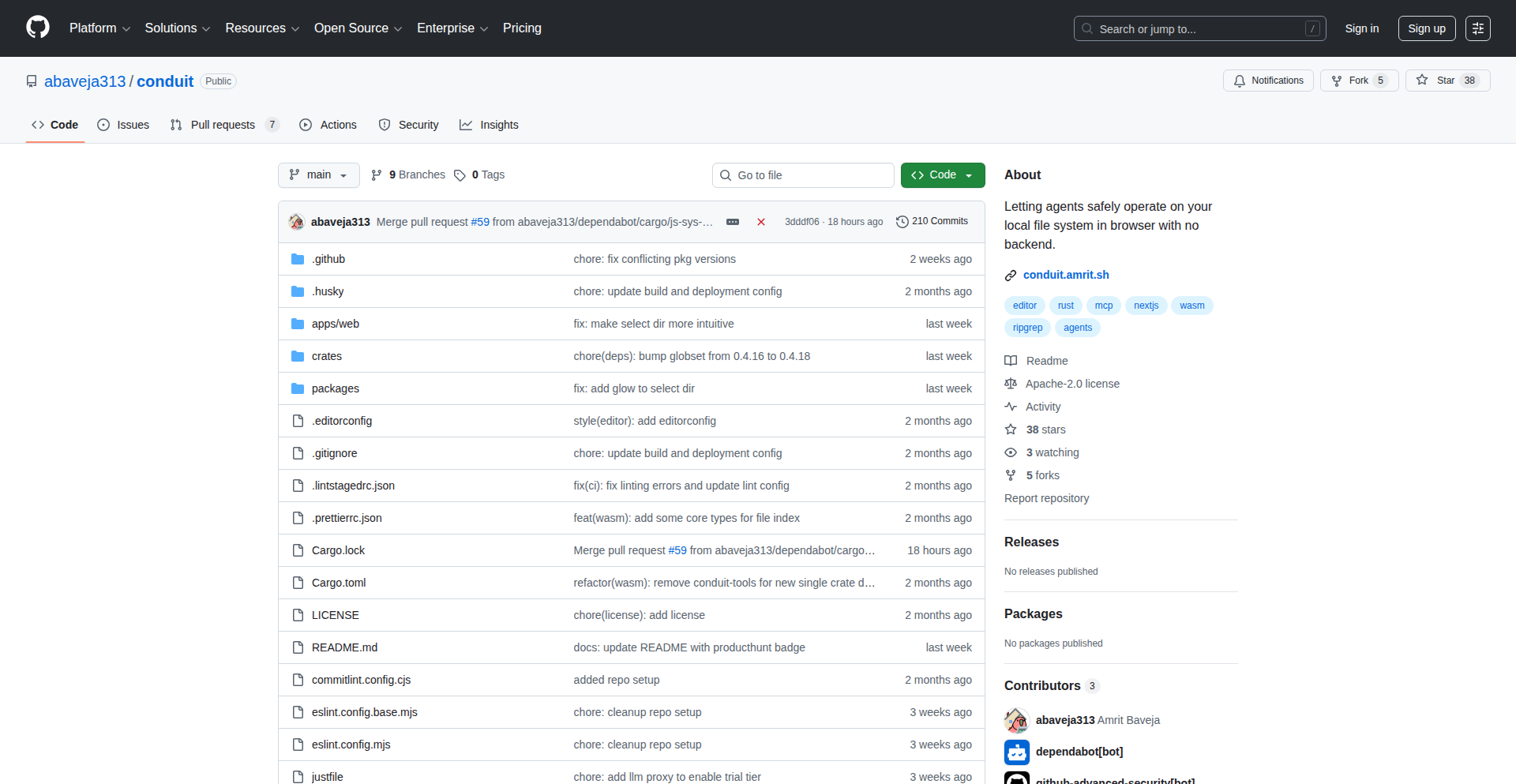

Sudocode: Context-as-Code for AI Agents

Author

alexsngai

Description

Sudocode is a novel system that brings Git-like version control to the interactions between developers and AI coding agents. It translates human intent into durable 'specs' and tracks AI agent actions as 'issues' directly within your code repository. This 'context-as-code' approach tackles the common problem of AI 'amnesia' in long-running projects, making AI-assisted development more organized and efficient.

Popularity

Points 15

Comments 5

What is this product?

Sudocode is a lightweight system designed to manage the information flow and memory of AI coding agents, particularly in collaborative development environments. Instead of AI agents forgetting past instructions or context, Sudocode captures user intentions as structured specifications (like requirements documents but code-friendly) and records the AI's work as traceable issues, all stored and versioned within your Git repository. Think of it as giving your AI a persistent, organized memory that's as robust as your codebase's history. This means the AI can recall previous decisions and ongoing tasks, reducing the need for constant re-explanation and leading to faster progress on complex features.

How to use it?

Developers can integrate Sudocode into their workflow by installing it as a tool within their project repository. When collaborating with an AI agent on a task, Sudocode helps define the task's goals (the 'specs') and monitors the AI's progress and actions, logging them as 'issues'. This allows developers to review the AI's work, revert changes if necessary (thanks to Git versioning), and maintain a clear audit trail of what the AI has done. It's particularly useful for large features or when working with AI on multi-stage coding problems, ensuring consistency and reducing errors.
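
To make "specs and issues stored in your repository" concrete, here is a rough sketch of the general idea. It is not Sudocode's actual format or API: the `.context/` directory, the JSON fields, and the helper names `write_record` and `issues_for_spec` are all assumptions made for illustration. The point is only that plain files inside the working tree get versioned by Git like any other file.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical layout: NOT Sudocode's actual on-disk format.
def write_record(repo_root, kind, record_id, payload):
    """Write a spec or issue as a JSON file inside the repository tree."""
    if kind not in ("specs", "issues"):
        raise ValueError(f"unknown record kind: {kind}")
    folder = Path(repo_root) / ".context" / kind
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{record_id}.json"
    # Sorted keys and a trailing newline keep diffs small and stable.
    path.write_text(json.dumps(payload, indent=2, sort_keys=True) + "\n", encoding="utf-8")
    return path

def issues_for_spec(repo_root, spec_id):
    """Return every recorded issue that points at the given spec."""
    folder = Path(repo_root) / ".context" / "issues"
    if not folder.exists():
        return []
    found = []
    for path in sorted(folder.glob("*.json")):
        issue = json.loads(path.read_text(encoding="utf-8"))
        if issue.get("spec") == spec_id:
            found.append(issue)
    return found
```

Because each record is an ordinary file, committing it alongside the code change it describes gives the audit trail and rollback behavior for free.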

Product Core Function

· Intent Capture as Durable Specs: This function transforms human instructions into structured, version-controlled documents ('specs') that AI agents can reliably reference. The value is in ensuring that the AI always understands the core requirements, preventing drift from the original goal and improving the quality of AI-generated code.

· Agent Activity Tracking as Issues: This function logs all actions performed by the AI agent as traceable 'issues' within your Git history. The value is in providing transparency and accountability for AI contributions, allowing developers to easily review, debug, and revert AI-driven changes, similar to how they manage human-made code commits.

· Contextual Memory Augmentation: By storing specs and issues in Git, Sudocode creates a persistent memory for AI agents. The value is in overcoming AI 'amnesia' on long-term tasks, reducing repetitive prompts, and enabling the AI to build upon previous work without losing track of the project's history and context.

· Git Integration for Version Control: This function leverages Git to version control all AI interaction data. The value is in providing a familiar and powerful mechanism for managing changes, enabling rollbacks, and ensuring that the AI's context is as robustly managed as the codebase itself.

Product Usage Case

· Refactoring a large codebase: A developer needs to refactor a complex system. They can use Sudocode to define the refactoring goals as specs. The AI agent then performs the refactoring, and Sudocode logs each step as an issue. If the refactoring introduces bugs, the developer can easily revert specific AI-generated changes thanks to Git versioning, ensuring a safe and controlled refactoring process.

· Developing a new complex feature: For a feature requiring multiple stages and interactions with the AI, Sudocode can manage the evolving requirements and track each AI-generated code snippet or modification. This prevents the AI from 'forgetting' earlier parts of the feature's development or contradicting previous instructions, leading to a more coherent and complete feature.

· Debugging AI-generated code: When an AI agent produces code that doesn't work as expected, Sudocode's issue tracking allows developers to pinpoint exactly which actions the AI took that led to the error. This greatly speeds up the debugging process compared to trying to recall the AI's entire interaction history.

· Onboarding new team members to an AI-assisted project: The version-controlled specs and issues managed by Sudocode provide a clear and historical record of how the AI was used to develop specific parts of the project. This documentation helps new team members quickly understand the AI's role and the project's evolution.

3

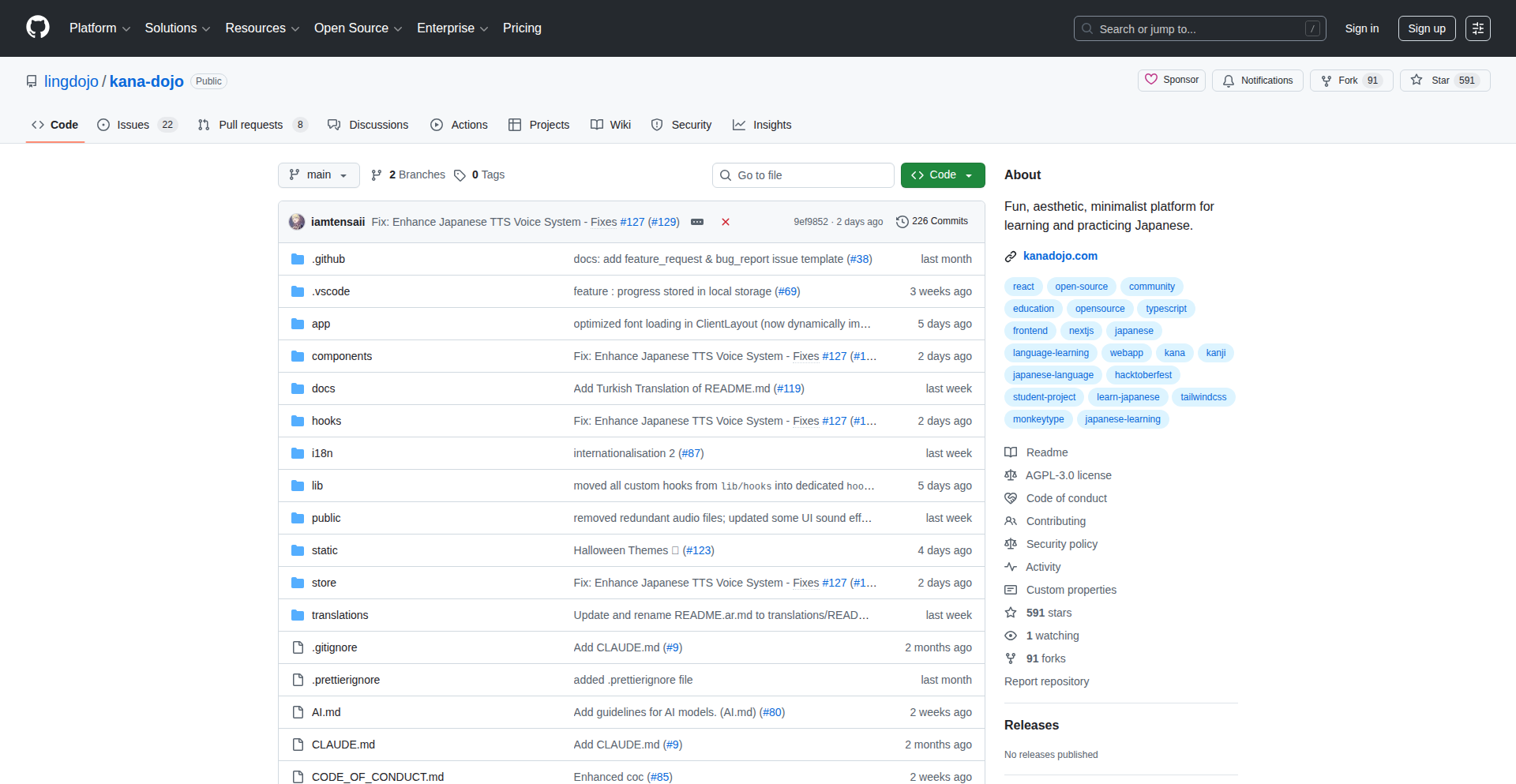

HORUS: Modular Robotics Symphony

Author

neos_builder

Description

HORUS is a robotics framework designed to streamline development by offering a modular and unified system. It addresses the complexities and dependencies that often plague existing robotics platforms like ROS2, allowing developers to focus on algorithms and logic rather than environment setup and configuration issues. Its core innovation lies in its flexible architecture, enabling easy swapping of components and automatic dependency management, making it simpler to integrate modern AI/ML tools and develop robotics applications.

Popularity

Points 16

Comments 0

What is this product?

HORUS is a robotics framework built for modern development, aiming to solve the common frustrations developers face with older systems. Its technical core is its hybrid and unified design. 'Hybrid' means it can integrate different programming languages (like Python and Rust) and communication mechanisms (like shared memory for inter-process communication) within the same project. 'Unified' means it's built with an ecosystem in mind, featuring a registry for packages and an easy integration process for new components. Unlike rigid systems, HORUS treats project components like Lego blocks that can be swapped easily. If you don't like the current way different parts of your robot talk to each other, you can change it with a simple configuration edit without breaking everything. It also automates package installation and dependency checking, which targets the 'it works on my machine' problem. This means you can spend less time wrestling with setup and more time building your robot's intelligence.

How to use it?

Developers can use HORUS by installing it and then defining their robotics applications. For instance, a developer could start a project by running a command like 'horus run app.py driver.rs', which would allow Python code for high-level logic and Rust code for low-level drivers to communicate effortlessly. HORUS also provides commands for managing project environments. 'horus env freeze' captures the exact state of your project's dependencies, creating a unique ID. Other developers can then use this ID with 'horus env restore <ID>' to replicate the exact same environment, ensuring consistency across development teams and machines. This is akin to how Python developers use requirements.txt, but extended to the entire robotics project stack.
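
How a "freeze" could turn a set of dependencies into a single ID is easy to illustrate. The source does not say how HORUS actually derives its IDs, so the following is an assumption: a hypothetical `freeze_id` helper that hashes a canonical form of a package-to-version mapping, so the same environment always yields the same ID.

```python
import hashlib
import json

def freeze_id(dependencies):
    """Derive a deterministic ID from a {package: version} mapping.

    Hypothetical scheme for illustration; not HORUS's documented algorithm.
    """
    # Sorting keys makes the ID independent of the order packages were listed in.
    canonical = json.dumps(dependencies, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
```

Two machines that resolve the same packages to the same versions get the same ID, and any version bump changes it, which is the property a restore command needs.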

Product Core Function

· Modular Component Swapping: Allows developers to easily replace core functionalities like Inter-Process Communication (IPC) mechanisms or environment configurations by changing a single configuration line, reducing development friction and increasing flexibility.

· Automated Dependency Management: Detects missing packages and automatically installs compatible versions, with the aim of avoiding 'dependency hell' and keeping projects running consistently across different development environments.

· Cross-Language Interoperability: Enables seamless communication between applications written in different programming languages (e.g., Python and Rust) through efficient shared memory IPC, simplifying the integration of diverse software components.

· Environment Freezing and Restoration: Creates reproducible development environments by capturing and restoring project dependencies, solving the 'works on my machine' problem and improving team collaboration.

· Ecosystem Registry: Provides a centralized database for HORUS packages, facilitating the discovery and integration of new tools and components, fostering community contribution and accelerating future development.
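
The cross-language shared memory idea in the list above rests on both sides agreeing on a fixed byte layout. The sketch below shows that principle only. It is not the HORUS API: it stays within Python, swaps in a memory-mapped file as the shared region, and the message layout (three float64 values) and function names are assumptions. A Rust process could map the same file and read the same 24 bytes.

```python
import mmap
import os
import struct
import tempfile

FMT = "<3d"                  # three little-endian float64 values (hypothetical layout)
SIZE = struct.calcsize(FMT)  # 24 bytes

def create_region(path):
    """Create a zero-filled file that both processes will map."""
    with open(path, "wb") as f:
        f.write(b"\x00" * SIZE)

def publish(path, values):
    """Writer side: pack one message directly into the mapped region."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), SIZE) as region:
        struct.pack_into(FMT, region, 0, *values)
        region.flush()

def read(path):
    """Reader side: map the same region read-only and decode the message."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), SIZE, access=mmap.ACCESS_READ) as region:
        return struct.unpack_from(FMT, region, 0)
```

A real system would add synchronization (for example a sequence counter) so a reader never sees a half-written message; that is left out here to keep the layout idea visible.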

Product Usage Case

· Integrating an AI/ML model developed in Python with a robot's control system written in Rust. HORUS would handle the communication between these two components, allowing for rapid prototyping of AI-powered robotics.

· A student learning robotics can start a project without getting bogged down in complex setup. HORUS automatically handles package installations and environment configuration, letting them focus on learning robot control algorithms.

· A team of developers working on a complex robot. Using 'horus env freeze' and 'horus env restore', they can ensure everyone is working with the exact same software versions and dependencies, preventing integration issues.

· Developing a robot that requires a specific real-time operating system (RTOS) driver. If the default driver is not ideal, a developer can swap it out for a preferred alternative with minimal effort, thanks to HORUS's modular design.

4

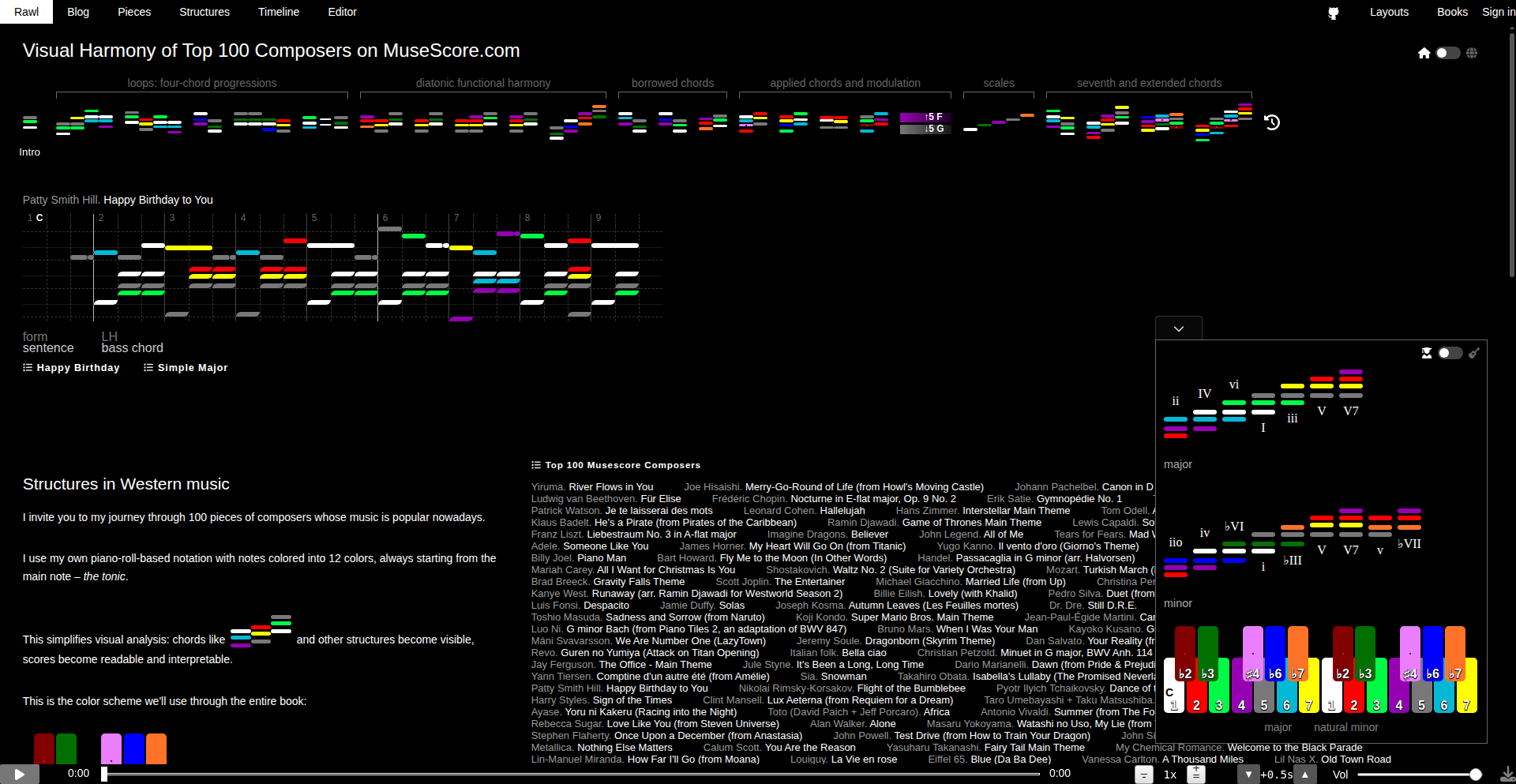

ChromaChord Visualizer

Author

vitaly-pavlenko

Description

A novel music notation system that represents chords as colored flags, making harmonic progressions instantly visible and memorable. It translates Western tonal harmony into a visual language using 12 colors arranged systematically, where the tonic is always white. This system aims to simplify chord analysis for musicians and composers by eliminating the need for traditional Roman numeral or chord symbol annotations, providing an intuitive visual script for tonal music.

Popularity

Points 15

Comments 0

What is this product?

ChromaChord Visualizer is a creative approach to music notation that uses color to represent musical chords. Instead of reading traditional chord symbols or analyzing Roman numerals, musicians can see chord structures as distinct, colored "flags." The system employs 12 colors arranged in a specific sequence, with the tonic (the home note of the key) always represented by white. Major chords are depicted with lighter shades, and minor chords with darker shades, with colors arranged in thirds to highlight relationships. This visual representation is designed to make the underlying harmony of a piece immediately apparent, helping users to memorize and understand chord progressions more intuitively. It's not about synesthesia, but rather a designed system to make harmonically similar musical elements look visually similar.

How to use it?

Developers can integrate ChromaChord Visualizer into music analysis tools, educational software, or even creative music generation applications. The core idea is to process MIDI files or other musical data and translate note and chord information into the ChromaChord color scheme. This could involve parsing MIDI events to identify chords, determining their quality (major/minor), and mapping them to the corresponding color combinations. The output could be a visual representation overlaid on standard sheet music, a standalone color-based score, or data that can be used for further algorithmic analysis. For example, one could build a plugin for music notation software like MuseScore or a web application that analyzes uploaded MIDI files and displays the ChromaChord visualization. It allows for rapid pattern recognition within large musical datasets.
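
The pipeline described above (identify a chord, determine its quality, map it to colors) might look roughly like this. Treat it as a sketch under assumptions: only "tonic is white" comes from the source, while the other eleven colors in `PALETTE` are placeholders invented for illustration, and the function names are hypothetical. Notes are MIDI note numbers, so a pitch class is `note % 12`.

```python
# Placeholder palette indexed by semitones above the tonic. Only index 0 (white)
# comes from the source; the other eleven colors are invented for illustration.
PALETTE = ["white", "crimson", "orange", "gold", "yellow", "lime",
           "green", "teal", "blue", "indigo", "violet", "magenta"]

def chord_quality(midi_notes):
    """Classify a triad as 'major', 'minor', or 'other' from its pitch classes."""
    pcs = sorted({n % 12 for n in midi_notes})
    for root in pcs:
        # Try each pitch class as the root so inversions are recognized too.
        intervals = {(pc - root) % 12 for pc in pcs}
        if intervals == {0, 4, 7}:
            return "major"
        if intervals == {0, 3, 7}:
            return "minor"
    return "other"

def chord_flag(midi_notes, tonic_pc):
    """Return one color per chord tone, lowest note first, relative to the tonic."""
    return [PALETTE[(n - tonic_pc) % 12] for n in sorted(midi_notes)]
```

Because colors are assigned relative to the tonic, a C major chord in the key of C and a G major chord in the key of G produce the same flag, which is what makes the same harmonic function look the same across keys.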

Product Core Function

· Color-coded chord representation: Visually maps chords to distinct color combinations, making harmonic structures instantly recognizable. This provides a faster way to grasp the emotional feel and function of chords in a piece.

· Tonal center identification: Consistently uses white for the tonic, providing a fixed reference point for understanding key and harmonic context, which aids in learning and analyzing musical pieces.

· Major/minor mode differentiation: Uses lighter and darker shades to distinguish between major and minor chords, allowing for quick visual identification of the mode and its impact on the music's character.

· Harmonic pattern discovery: The color arrangement and structured data allow for the identification of recurring harmonic patterns across a large corpus of music, aiding in compositional analysis and learning.

· Interactive chord analysis: The system enables users to explore and understand chord progressions by seeing their visual representation, reducing the cognitive load associated with traditional music theory analysis.

Product Usage Case

· Music Education Software: A student learning music theory could use this to visually grasp complex chord progressions in classical pieces, making it easier to understand functional harmony without extensive theoretical background.

· Compositional Analysis Tools: A composer could analyze their own or others' works to identify harmonic tendencies and patterns, leading to more informed creative decisions. For instance, finding common chord sequences that evoke specific emotions.

· Algorithmic Music Generation: Developers could use the color-mapping logic to generate new music where the harmonic structure is pre-defined visually, then translated into sound.

· Interactive Music Score Viewer: A platform could display sheet music with ChromaChord overlays, allowing musicians to see both the traditional notation and the visual harmonic structure simultaneously, enhancing performance and interpretation.

· Music Information Retrieval (MIR): Researchers could use the system to categorize and search musical pieces based on their harmonic content, using the color patterns as a feature for similarity searches.

5

MeetingMemo Encyclopaedia

Author

stavros

Description

This project is a lightweight, offline-first, personal knowledge base designed to capture and organize information encountered during meetings or any other learning activity. It aims to combat information overload and aid recall by providing a structured, searchable repository of notes and facts. The innovation lies in its emphasis on local-first data storage and efficient information retrieval without constant internet dependency.

Popularity

Points 4

Comments 9

What is this product?

MeetingMemo Encyclopaedia is essentially a personal, digital notebook that's built with speed and offline access in mind. Imagine you're in a long meeting, and someone drops a piece of information you want to remember, or a concept you've never heard of. Instead of trying to quickly scribble it down and then forget where you put it, this tool lets you instantly capture it. It uses local storage (like your computer's hard drive or your phone's memory) to save your notes, meaning it works even if you have no internet connection. The core idea is to create an easily searchable encyclopedia of your own knowledge, built from the ground up. The innovation here is in prioritizing speed and offline functionality, making it a truly personal and accessible knowledge assistant that doesn't rely on external services.

How to use it?

Developers can use MeetingMemo Encyclopaedia by integrating its core logic into their own applications or by running it as a standalone tool. For instance, if you're building a productivity app, you could embed this system to allow users to quickly log insights from client calls or internal discussions. The data is stored locally, so you can query it directly. For direct use, you might run it as a simple web interface or a command-line tool that allows you to add entries with tags and keywords, and then search them later. The beauty is its flexibility – you can adapt it to various workflows that require fast, on-the-go information capture and retrieval, ensuring your important knowledge is always at your fingertips, regardless of network status.
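
Adding entries with tags and searching them later boils down to an inverted index. Here is a minimal in-memory sketch; the `Notebook` class and its methods are hypothetical and not taken from the project, and a real offline-first tool would also persist the entries to local disk.

```python
from collections import defaultdict

class Notebook:
    """Tiny in-memory note store with an inverted index (illustrative only)."""

    def __init__(self):
        self.entries = []               # an entry's id is its position in this list
        self.index = defaultdict(set)   # lowercase term -> set of entry ids

    def add(self, text, tags=()):
        """Store a note and index its tags and words."""
        entry_id = len(self.entries)
        self.entries.append({"text": text, "tags": list(tags)})
        for term in list(tags) + text.split():
            self.index[term.lower().strip(".,;:!?")].add(entry_id)
        return entry_id

    def search(self, *terms):
        """Return entries matching every term (AND search), in insertion order."""
        if not terms:
            return []
        ids = set.intersection(*(self.index.get(t.lower(), set()) for t in terms))
        return [self.entries[i] for i in sorted(ids)]
```

For example, after `nb.add("POST /v2/orders requires an idempotency key.", tags=["API", "billing"])`, a later `nb.search("api", "billing")` returns that note without touching the network.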

Product Core Function

· Offline-first Data Storage: Information is saved directly on your device, ensuring accessibility and privacy even without an internet connection. This means your knowledge is always available, and you don't need to worry about data breaches on remote servers.

· Instant Information Capture: Quickly add notes, facts, or definitions as you encounter them, minimizing the interruption to your workflow. This feature helps you seize those fleeting ideas and crucial details before they're lost.

· Keyword Tagging and Indexing: Assign keywords and tags to your entries for effortless organization and retrieval. This allows for rapid searching, transforming a chaotic collection of notes into a structured, searchable knowledge base.

· Local Search and Retrieval: Efficiently search your personal encyclopedia using keywords and tags, allowing you to find information quickly and reliably. This core functionality ensures you can recall specific details exactly when you need them, boosting productivity and reducing reliance on external search engines for your personal data.

· Lightweight and Extensible Architecture: Built with simplicity in mind, allowing for easy customization and integration into other projects. This offers developers the flexibility to build upon the existing foundation, tailoring it to specific needs and creating more sophisticated knowledge management systems.

Product Usage Case

· During a technical presentation, a developer encounters a new API endpoint they want to remember. They quickly use MeetingMemo Encyclopaedia on their laptop to log the endpoint name, its purpose, and a key parameter, tagging it with the project name and 'API'. Later, when working on that project, they instantly retrieve the information via a quick search, saving time and preventing potential errors from misremembering.

· A team lead is in a brainstorming session and needs to jot down innovative ideas and potential solutions. Instead of scattered sticky notes, they use the tool to capture each idea as a separate entry, adding tags like 'feature_request', 'bug_fix', or 'new_concept'. This structured capture allows them to easily review and categorize all ideas post-meeting, facilitating more organized follow-up and implementation.

· A student is attending a lecture and a complex concept is introduced. They use the encyclopedia to create an entry for the concept, linking it to relevant terms discussed in class and adding their own brief explanation. This creates a personal glossary that aids in understanding and revising for exams, acting as a personalized textbook built from their own learning experiences.

· A freelance writer is researching a new topic. As they read articles and watch videos, they use the tool to capture key facts, quotes, and potential arguments, each tagged with the main subject. This allows them to build a rich, interconnected knowledge base for their article, ensuring they can easily cross-reference information and discover new connections as they write.

6

PACR: Academia's Integrated Research & Connection Hub

Author

anony_matty

Description

PACR is an ambitious project aiming to revolutionize the academic world by creating a unified platform for researchers. It tackles the isolation often experienced in academia by combining tools for research sharing, paper discovery, networking, and idea discussion into a single, cohesive ecosystem. Its core innovation lies in bringing these disparate academic functions under one roof, fostering a stronger sense of community and collaboration.

Popularity

Points 11

Comments 0

What is this product?

PACR is a comprehensive online platform designed for academicians, acting as both a professional social network and a suite of research tools. It aims to break down the traditional silos in academic research by providing a single, integrated space for sharing discoveries, finding relevant papers, connecting with peers, and engaging in intellectual discussions. The key technological insight is to centralize the diverse needs of academics – from finding collaborators to disseminating findings – into a user-friendly interface, thereby boosting productivity and community engagement. Think of it as a LinkedIn meets ResearchGate meets a dedicated academic forum, but built from the ground up with the specific workflow of researchers in mind.

How to use it?

Academicians can use PACR to create a detailed professional profile highlighting their research interests and publications. They can then upload and share their own research papers, making them discoverable by a global network of scholars. The platform allows users to search for specific research topics or papers, discover new relevant work through personalized recommendations, and connect with other researchers by sending connection requests or initiating discussions. It's designed to be integrated into a researcher's daily workflow, serving as a central hub for both their independent work and their professional interactions. Integration with existing research tools or repositories might be a future consideration, but currently, the focus is on its internal, self-contained functionality.

Product Core Function

· Research Paper Sharing: Enables researchers to upload and publicly share their published or pre-print works, increasing visibility and facilitating academic discourse. This addresses the problem of research being scattered across various journals and repositories, making it difficult for peers to find and cite.

· Paper Discovery Engine: Utilizes intelligent algorithms to recommend relevant research papers based on user profiles and interests, helping researchers stay updated with the latest advancements in their fields. This combats information overload and ensures researchers don't miss crucial developments.

· Professional Networking: Facilitates connections between academics with similar research interests, fostering collaboration and mentorship opportunities. This directly tackles the issue of academic isolation and the difficulty in finding like-minded colleagues, especially across institutions.

· Idea Discussion Forums: Provides dedicated spaces for researchers to discuss scientific ideas, challenges, and potential solutions in a structured and accessible manner. This promotes a more dynamic and collaborative approach to problem-solving within the academic community.

· Academic Profile Management: Allows users to build comprehensive profiles showcasing their academic achievements, publications, and research expertise, serving as a centralized and professional online identity. This helps in building a reputation and making oneself discoverable for collaborations or opportunities.
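
The source does not say which algorithms power the paper discovery engine. As one simple baseline for profile-based recommendation, papers can be ranked by keyword overlap with a researcher's interests using Jaccard similarity. The function names and the data shape (title mapped to keywords) below are assumptions for illustration, not PACR's implementation.

```python
def jaccard(a, b):
    """Jaccard similarity: size of the intersection over size of the union."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def recommend(interests, papers, top_n=3):
    """Rank papers (title -> keywords) by keyword overlap with the user's interests."""
    scored = [(jaccard(interests, keywords), title) for title, keywords in papers.items()]
    scored = [(score, title) for score, title in scored if score > 0]
    # Highest score first; ties broken alphabetically for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored[:top_n]]
```

A production system would likely use richer signals (citations, embeddings, reading history), but the ranking step has the same shape.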

Product Usage Case

· A molecular biologist struggling to find collaborators for a new drug discovery project can use PACR's networking features to find other researchers in related fields and initiate conversations about potential partnerships. This solves the problem of limited visibility and difficulty in cross-disciplinary connections.

· A graduate student working on their thesis can leverage PACR's paper discovery engine to quickly identify seminal works and recent research relevant to their topic, saving hours of manual searching across multiple databases. This improves research efficiency and depth.

· A professor seeking feedback on a controversial new hypothesis can share their findings on PACR and engage in discussions with a wider academic audience, potentially gaining diverse perspectives and refining their ideas. This offers a broader peer review mechanism.

· An early-career researcher aiming to build their academic profile can use PACR to showcase their publications and research interests, making them more visible to senior researchers and potential employers. This aids in career advancement and recognition.

7

Vance.ai: AI-Powered Network Connector

Author

yednap868

Description

Vance.ai is an AI SuperConnector designed to facilitate warm introductions between founders and investors, and eventually anyone seeking valuable connections. It leverages AI to understand user needs (e.g., fundraising, co-founder search) and identifies the most suitable individuals within one's extended network for introductions. Currently, it experiments with curated networks like IITs, IIMs, and founder communities to build contextual relevance and trust.

Popularity

Points 4

Comments 6

What is this product?

Vance.ai is an intelligent system that acts as a personal network facilitator. At its core, it uses Natural Language Processing (NLP) to understand your stated goals, such as 'I am raising my seed round' or 'I need an AI co-founder.' Then, it intelligently scans your connections, both direct and indirect, to find individuals who are most likely to be able to help you achieve your goal. The innovation lies in its ability to move beyond simple keyword matching by understanding the 'why' behind your search and matching it with the 'who' in your network, while also considering the context of curated communities to foster trust and relevance. So, what does this mean for you? It means a smarter, more efficient way to tap into your network for meaningful introductions, saving you time and increasing your chances of finding the right people.
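Scanning direct and indirect connections can be pictured as a search over a graph of people. What follows is a minimal sketch of that idea, not Vance.ai's actual implementation: the `network` dictionary, the names in it, and the `introduction_path` function are all hypothetical, and the goal-matching step (which the product describes as NLP-based) is reduced here to a simple predicate.

```python
from collections import deque


def introduction_path(network, start, matches_goal):
    """Breadth-first search over a contact graph.

    Returns the shortest chain of contacts from `start` to the nearest
    person for whom matches_goal(person) is true, or None if nobody matches.
    """
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        person = path[-1]
        if person != start and matches_goal(person):
            return path
        for contact in network.get(person, ()):
            if contact not in seen:
                seen.add(contact)
                queue.append(path + [contact])
    return None


# Hypothetical toy network: each key lists that person's direct contacts.
network = {
    "founder": ["alice", "bob"],
    "alice": ["carol"],
    "bob": ["dave"],
    "carol": ["seed_investor"],
}
investors = {"seed_investor"}

path = introduction_path(network, "founder", lambda p: p in investors)
print(" -> ".join(path))
```

Breadth-first search is used because it finds the shortest chain first, and a shorter chain usually means a warmer introduction.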

How to use it?

Developers can integrate Vance.ai into their workflow by connecting their existing social and professional network data (with appropriate privacy controls). The system then acts as a passive intelligence layer. When a founder needs to find an investor for a specific stage, or a developer is looking for a technical co-founder with niche expertise, they simply input their requirements into Vance.ai. The AI analyzes these requirements against its understanding of the network and suggests the most promising introductions. This can be done through a dedicated web interface or potentially via API integrations with existing collaboration tools in the future. So, how does this benefit you? It means you can get introductions to the right people without manually sifting through hundreds of contacts or sending generic connection requests. It's like having a super-powered networking assistant.

Product Core Function

· AI-driven introduction generation: Utilizes NLP to understand user needs and scans networks for suitable connections. The value is in automating the discovery of relevant individuals, saving significant manual effort and increasing the accuracy of matches. This is useful for quickly finding potential investors or collaborators.

· Contextual network analysis: Analyzes connections within specific communities (e.g., alumni networks, industry groups) to provide more relevant and trusted introductions. The value is in leveraging the inherent trust and shared context within these groups for more effective networking. This is particularly helpful when breaking into new industries or seeking introductions from established networks.

· Personalized connection recommendations: Based on user input and network analysis, Vance.ai suggests specific individuals for introductions. The value is in providing actionable recommendations rather than just a list of contacts, guiding users towards the most impactful connections. This streamlines the process of building strategic relationships.

Product Usage Case

· A startup founder looking to raise their Series A funding can tell Vance.ai, 'I'm raising for my Series A in FinTech.' Vance.ai will then search the founder's network and curated FinTech investor communities to identify and suggest investors who have a history of investing in similar companies or stages. This solves the problem of not knowing which investors are the best fit and how to reach them, leading to more targeted fundraising efforts.

· An AI engineer seeking a co-founder with expertise in computer vision can state, 'Looking for a co-founder with computer vision experience.' Vance.ai will then analyze their network to find individuals with relevant skills or connections to such individuals. This addresses the challenge of finding specialized technical talent for early-stage startups, allowing for quicker team building.

· A product manager wanting to connect with other product leaders in the SaaS space can input 'Looking to connect with SaaS product leaders.' Vance.ai can then identify and suggest individuals within their professional network or relevant industry groups who fit this description. This facilitates peer-to-peer learning and industry insights by making it easier to find and connect with relevant professionals.

8

ResonaX AI Buyer Twins

Author

resonaX

Description

ResonaX is an experimental platform that creates AI simulations of B2B customers by training Large Language Models (LLMs) and embedding models on real-world data like LinkedIn profiles and CRM notes. It aims to replace traditional market research methods like surveys and A/B tests by allowing marketers to directly interact with these AI 'twins' to validate messaging and go-to-market strategies. The core innovation lies in synthesizing diverse data sources to generate nuanced buyer personas that can provide feedback on product offerings and marketing content.

Popularity

Points 1

Comments 7

What is this product?

ResonaX builds AI replicas of your ideal B2B customers, called 'AI twins'. It uses two kinds of models: LLMs, which interpret and generate human language, and embedding models, which turn text into numeric vectors so that similar content can be compared. These models are fine-tuned using actual data from professional networks like LinkedIn and customer relationship management (CRM) systems. Think of it as creating a digital stand-in for your target customer, packed with their likely job role, their biggest challenges, what makes them buy things, and how they prefer to communicate. This allows marketers to put hypothetical questions to these twins and get realistic feedback before investing in actual campaigns. The value is getting insights from simulated 'customers' without the cost and time of traditional research.

How to use it?

Developers and marketers can use ResonaX by feeding it data about their target B2B customers. This can include LinkedIn profile information (often scraped or uploaded) and details from their CRM system. Once the AI twins are trained, users can engage with them through a conversational interface. For example, you might ask an AI twin representing a VP of Marketing, 'Would this new product headline resonate with you?' or 'What are your main hesitations about booking a demo for our software?'. The platform also includes a 'feedback layer' to help validate the AI's responses, making them more reliable. It can be integrated into existing marketing workflows to quickly test new campaign ideas, refine product messaging, and understand buyer motivations before launching them to real customers.
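To make the 'ask the twin a question' step concrete, here is a small sketch of how a persona could be turned into a conversation setup. It is an illustration under stated assumptions, not ResonaX's method: the post describes fine-tuned models, whereas this sketch swaps in plain prompt construction, and the `BuyerTwin` class, its fields, and `build_messages` are hypothetical names. No model is called; the code only assembles the messages.

```python
from dataclasses import dataclass


@dataclass
class BuyerTwin:
    """Hypothetical persona record; the field names are illustrative only."""
    role: str
    pain_points: list
    buying_triggers: list
    communication_style: str

    def system_prompt(self):
        """Render the persona as instructions for a chat-style LLM."""
        return "\n".join([
            f"You are role-playing a B2B buyer: {self.role}.",
            "Pain points: " + "; ".join(self.pain_points),
            "Buying triggers: " + "; ".join(self.buying_triggers),
            f"Communication style: {self.communication_style}.",
            "Answer as this buyer would, and say so when you are unsure.",
        ])


def build_messages(twin, question):
    """Pair the persona prompt with a marketer's question."""
    return [
        {"role": "system", "content": twin.system_prompt()},
        {"role": "user", "content": question},
    ]


twin = BuyerTwin(
    role="VP of Marketing at a mid-size SaaS company",
    pain_points=["unclear attribution", "long sales cycles"],
    buying_triggers=["new funding round", "missed pipeline target"],
    communication_style="direct and data-driven",
)
messages = build_messages(twin, "Would this new product headline resonate with you?")
print(messages[0]["content"])
```

The resulting list could then be passed to whichever chat model a team already uses.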

Product Core Function

· AI Buyer Persona Generation: Creates realistic digital representations of target B2B customers by analyzing their professional and behavioral data. This helps understand specific customer needs and preferences, leading to more effective marketing and sales strategies.

· Interactive Buyer Simulation: Allows users to ask direct questions to the AI twins about marketing copy, product features, or sales pitches, simulating real customer feedback. This provides immediate validation and insight into potential customer reactions, reducing the risk of costly mistakes.

· Data Ingestion and Synthesis: Integrates and processes various real-world data sources, such as LinkedIn profiles and CRM notes, to build comprehensive buyer profiles. This ensures the AI twins are grounded in actual market data, leading to more accurate and relevant simulations.

· Messaging and GTM Testing: Enables rapid testing of marketing messages and go-to-market strategies by providing feedback from the AI twins. This helps refine campaigns and product positioning before launch, maximizing the chances of success.

· Behavioral Insight Simulation: Captures key aspects of a buyer's role, pain points, buying triggers, and communication style. This allows for a deeper understanding of the customer journey and decision-making process, enabling more personalized outreach.

Product Usage Case

· A SaaS company wants to test a new marketing campaign headline for their enterprise software. They upload LinkedIn data of their target VPs of Marketing into ResonaX and ask the AI twins if the headline makes sense and what its impact might be. This helps them refine the headline to be more compelling before investing in expensive ad placements.

· A B2B e-commerce company is planning a new product launch and needs to understand potential customer objections. They use ResonaX to simulate conversations with potential buyers, asking questions like 'What would make you hesitate to book a demo?' to identify and address concerns proactively, thus improving their sales pitch.

· A startup is developing a new feature and wants to gauge its relevance to their ideal customer profile. They present the feature description to their ResonaX AI twins and ask, 'What would make this offer more relevant to you?' This feedback loop allows for feature adjustments based on simulated customer needs, increasing the likelihood of market adoption.

· A marketing team wants to understand the communication preferences of their target audience. By interacting with ResonaX AI twins trained on diverse buyer data, they can learn about preferred channels, tone of voice, and the types of information that resonate, enabling them to craft more effective and personalized outreach strategies.

9

Zee: AI Interviewer

Author

davecarruthers

Description

Zee is an AI-powered platform that conducts initial 20-30 minute interviews with every job applicant. It then generates a curated shortlist of the top 5-10% of candidates who are genuinely qualified, saving recruiters and founders significant time and effort by eliminating the need to sift through hundreds of unqualified resumes. It also addresses stakeholder misalignment by discussing the role with key personnel before interviewing applicants.

Popularity

Points 4

Comments 4

What is this product?

Zee is an intelligent system designed to revolutionize the initial stages of hiring. Instead of manually reviewing countless resumes, Zee acts as an AI interviewer, engaging each applicant in a substantial conversation. This process goes beyond simple keyword screening, allowing Zee to assess communication skills, problem-solving approaches, and overall fit for the role. By conducting these preliminary interviews, Zee uncovers genuine potential and filters out unsuitable candidates early on. The innovation lies in its ability to simulate a human-like interview experience and leverage AI to deeply understand candidate qualifications and alignment with company needs, thus directly addressing the pain point of overwhelming applicant numbers and unqualified candidates.

How to use it?

Developers and hiring managers can integrate Zee into their recruitment workflow. After posting a job opening, instead of manually reviewing applications, candidates are directed to interact with Zee. The AI conducts its interview, gathering in-depth information. Subsequently, Zee provides a prioritized list of candidates who have passed this rigorous initial assessment. This dramatically streamlines the process, allowing hiring teams to focus their valuable time on interviewing and engaging with candidates who have already demonstrated a strong potential for success. It can be integrated through API calls or as a standalone application within your existing hiring platform.

Product Core Function

· AI-driven conversational interviews: Zee engages each applicant in a natural, in-depth conversation, mimicking a human interviewer to assess skills, experience, and cultural fit. This provides a richer understanding of candidates beyond their resumes, increasing the likelihood of finding a strong match.

· Intelligent candidate shortlisting: After conducting interviews, Zee analyzes responses and provides a ranked list of the top candidates, significantly reducing the time spent on manual resume screening and initial interviews.

· Stakeholder alignment facilitation: Before interviewing candidates, Zee engages with key stakeholders to understand the nuances of the role and company culture. This ensures that the interviews are focused on relevant criteria, improving the quality of shortlisted candidates.

· Resume screening automation: While Zee goes beyond basic screening, it can also automate the initial filtering of resumes based on predefined criteria, further accelerating the hiring process.
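The shortlisting step can be sketched as a simple rank-and-cut over interview scores. This is an assumption-laden illustration rather than Zee's scoring logic: the `shortlist` function, the integer `top_percent` parameter, and the numeric scores are all hypothetical, and how an interview is turned into a score is left out entirely.

```python
def shortlist(scores, top_percent=10):
    """Return the names of the highest-scoring candidates.

    `scores` maps candidate name -> numeric interview score.
    `top_percent` is an integer percentage; at least one candidate is kept.
    """
    if not scores:
        return []
    # Integer ceiling division avoids floating-point surprises.
    keep = max(1, (len(scores) * top_percent + 99) // 100)
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:keep]]


# Hypothetical scores for 300 applicants.
scores = {f"candidate_{i}": i for i in range(300)}
top = shortlist(scores, top_percent=10)
print(len(top), top[:3])
```

With 300 applicants and a 10% cut, the founder in the usage case below would be left with 30 names to review.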

Product Usage Case

· A startup founder is overwhelmed with 300 applications for a single engineering role. By using Zee, they only have to review the top 10 candidates recommended by the AI, saving them days of manual screening and allowing them to fill the position faster.

· A large enterprise is struggling with high employee turnover due to poor initial hires. Zee's in-depth interviews help identify candidates with better long-term potential and cultural alignment, leading to reduced turnover and improved team cohesion.

· A hiring manager needs to quickly fill multiple positions during a growth phase. Zee's ability to interview all applicants concurrently and provide immediate shortlists enables them to scale their hiring efforts efficiently without compromising on candidate quality.

· A company wants to ensure a consistent initial interview process. Zee, as an AI, applies the same interview questions and evaluation criteria to every applicant, which helps reduce the inconsistency and human bias that can creep into manual screening.

10

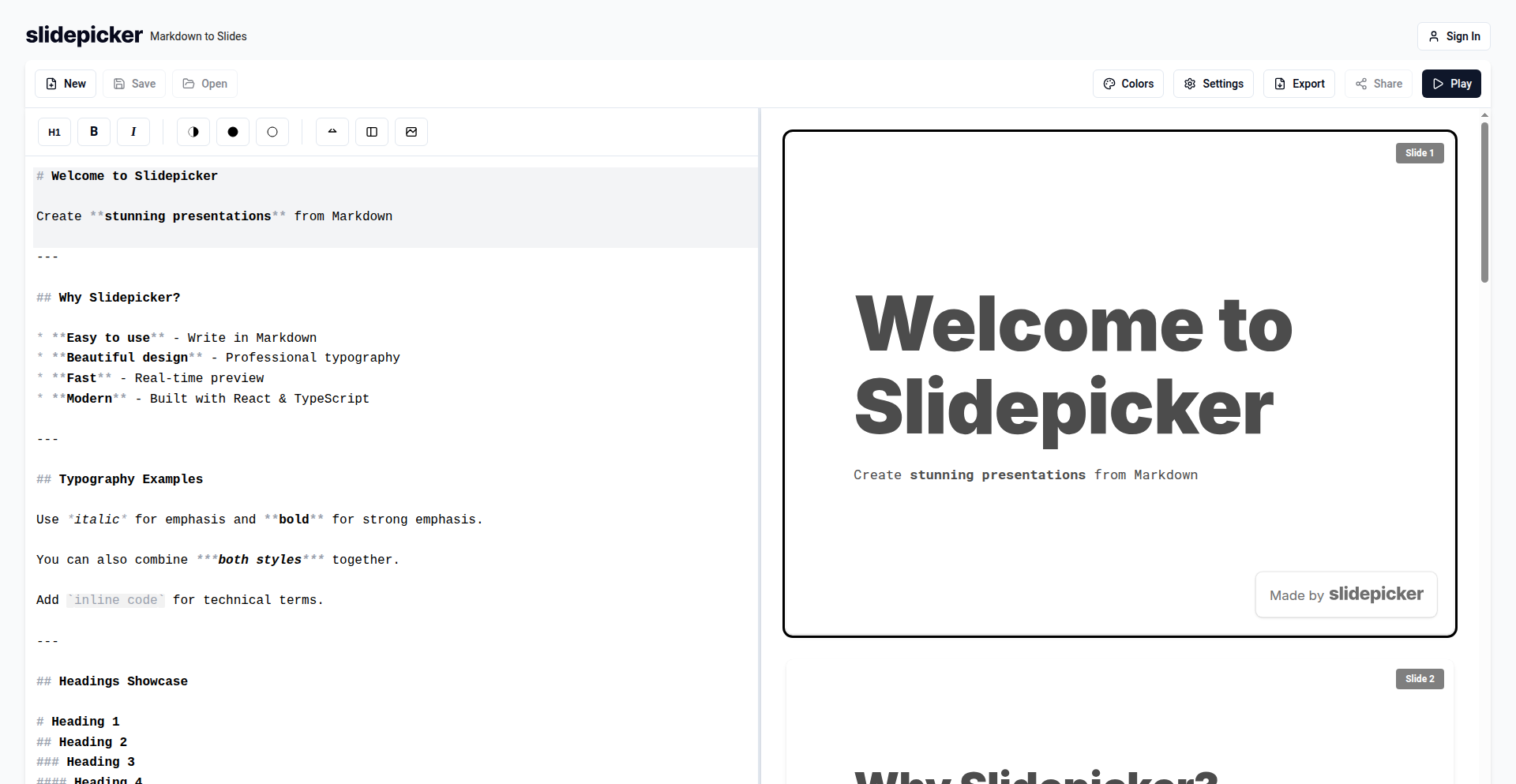

SlidePicker: Markdown-Powered Presentation Generator

Author

lukaslukas

Description

SlidePicker is a web application that transforms Markdown files into visually appealing and easily readable presentation slides. It addresses the common frustration developers and writers face with traditional slide editors where elements can easily get misaligned. By focusing on a text-based workflow and automated alignment, SlidePicker offers a clean, efficient, and attractive way to create slides, especially for those who prefer coding or writing in Markdown.

Popularity

Points 3

Comments 4

What is this product?

SlidePicker is a browser-based tool that takes your plain text Markdown content and automatically converts it into a polished presentation. Unlike complex software that requires manual dragging and dropping of elements, SlidePicker leverages the structure of your Markdown to intelligently lay out text, images, and other content. The core innovation lies in its ability to automate the alignment and positioning of slide elements, eliminating the tedious task of constantly readjusting text boxes and images after minor edits. This means your slides maintain a professional and consistent look without the usual hassle. So, what's in it for you? You get professional-looking slides with minimal effort, allowing you to focus on your content rather than presentation design.

How to use it?

Developers and writers can use SlidePicker by simply writing their presentation content in a Markdown file. They then upload this file to the SlidePicker web application. The tool processes the Markdown in real time, providing a live preview of the slides as they are created. SlidePicker supports common Markdown syntax for headings, lists, code blocks, and images. It also includes a 'presentation mode' which is optimized for delivering talks. This makes it ideal for integrating into existing text-based development workflows. So, how can you use it? You can create your entire presentation in a single Markdown file, upload it to SlidePicker, and have a ready-to-go slide deck in minutes, perfect for technical demos or internal team updates.
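For a feel of what turning Markdown into slides involves, here is a minimal sketch that splits a document into slides at headings. It is not SlidePicker's code, and the post does not say how SlidePicker decides where one slide ends; the heading-based rule and the `split_into_slides` function are assumptions made for illustration.

```python
FENCE = "`" * 3  # Markdown code-fence marker


def split_into_slides(markdown_text):
    """Start a new slide at every level-1 or level-2 heading.

    Lines inside fenced code blocks are never treated as headings, so a
    shell comment such as '# install' stays on its slide.
    """
    slides, current, in_fence = [], [], False
    for line in markdown_text.splitlines():
        if line.startswith(FENCE):
            in_fence = not in_fence
        is_heading = line.startswith(("# ", "## ")) and not in_fence
        if is_heading and current:
            slides.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        slides.append("\n".join(current).strip())
    return [slide for slide in slides if slide]


deck = "\n".join([
    "# Demo",
    "Intro text",
    "## Setup",
    FENCE + "sh",
    "# this comment is not a heading",
    "make run",
    FENCE,
])
slides = split_into_slides(deck)
print(len(slides))
```

Each returned string is still plain Markdown, so layout and alignment would be a separate rendering step.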

Product Core Function

· Markdown to Slide Conversion: Translates Markdown text into structured presentation slides. This allows you to leverage your existing text-based content creation skills for presentations, making the process faster and more intuitive. So, what's the value? You can create slides from content you've already written, saving significant time.

· Automated Element Alignment: Intelligently positions text and other elements on slides to ensure a clean and professional look. This solves the common problem of elements shifting unexpectedly in traditional editors. So, what's the value? Your slides will always look neat and consistent, enhancing your presentation's credibility.

· Live Preview: Displays changes to your slides in real time as you edit the Markdown. This provides immediate feedback, allowing for quick adjustments and a smoother creation process. So, what's the value? You can see exactly how your slides will look as you build them, reducing guesswork and errors.

· Presentation Mode: Offers a dedicated view optimized for delivering talks, potentially including speaker notes and navigation controls. This makes the tool functional for actual presentations, not just creation. So, what's the value? You have a seamless transition from slide creation to presentation delivery.

· Browser-Based Editor: Runs entirely within your web browser, requiring no installation and making it accessible from any device with internet access. This means you can create slides anywhere, anytime. So, what's the value? Your presentation tool is always available and up-to-date without any setup hassle.

Product Usage Case

· Developer Technical Demos: A developer can write their demo script and accompanying slide content in Markdown, then quickly generate slides for a team presentation. This solves the problem of needing to quickly put together visuals for a technical explanation without spending hours on slide design. So, how does this help? You can effectively communicate complex technical concepts with clean, professional slides generated from your existing notes.

· Writer's Block for Presentations: A writer who is comfortable with Markdown can easily outline and write their presentation content, letting SlidePicker handle the visual formatting. This bypasses the intimidation of starting a presentation from scratch in a GUI editor. So, how does this help? You can focus on crafting your narrative, knowing the visual presentation will be handled automatically.

· Quick Internal Updates: A project manager needs to present a brief status update to their team. They can write a few bullet points in Markdown and instantly have a presentable set of slides. This addresses the need for speed and simplicity when formal slide design isn't the priority. So, how does this help? You can deliver important information efficiently with minimal design overhead.

11

Intraview: Agent-Assisted Code Exploration & Feedback

Author

cyrusradfar

Description

Intraview is a VS Code extension that revolutionizes how developers understand and review code, especially for complex projects or when working with AI agents. It transforms static markdown documentation into dynamic, interactive 'code tours' generated by your AI agent. This innovative approach allows for collaborative code walkthroughs and efficient feedback loops, directly within your IDE, solving the common pain points of developer onboarding, PR reviews, and keeping up with rapidly evolving codebases.

Popularity

Points 6

Comments 1

What is this product?

Intraview is a VS Code extension that leverages AI agents to create dynamic, interactive code tours. Instead of reading lengthy, often outdated markdown files, you can experience your code as a guided walkthrough generated by your AI. This technology tackles the challenge of understanding large or complex codebases by providing a structured and engaging way to explore code. The core innovation lies in its ability to turn your existing AI agent (like GPT-5-codex or Claude) into a knowledgeable guide that can explain code logic and structure, making code comprehension significantly faster and more intuitive. It also allows for collaborative annotation and feedback directly within the tour, fostering a more efficient review process.

How to use it?

Developers can use Intraview by installing it from the VS Code marketplace or directly within VS Code forks like Cursor. Once installed, you can instruct your AI agent to generate a code tour for your project. The generated tours can then be played back, allowing you to navigate through code with explanations provided by the agent. You can also use Intraview to record your own code explorations. For collaboration, tours and feedback comments are saved as files, which can be easily shared with team members. This makes it ideal for onboarding new developers, conducting asynchronous code reviews, or ensuring alignment on complex architectural decisions.
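Because tours are saved as files, it helps to picture what such a file might hold. The sketch below uses a made-up JSON layout (a title plus a list of steps, each with a file, a line, and a note); Intraview's real file format is not described in the post, so every field name and function here is hypothetical.

```python
import json


def make_tour(title, steps):
    """Build a tour from (file, line, note) tuples. The layout is illustrative."""
    return {
        "title": title,
        "steps": [{"file": f, "line": n, "note": note} for f, n, note in steps],
    }


def play(tour):
    """Return one human-readable line per step, in order."""
    return [
        f"{i}. {step['file']}:{step['line']} {step['note']}"
        for i, step in enumerate(tour["steps"], start=1)
    ]


tour = make_tour("Request lifecycle", [
    ("app/server.py", 12, "Entry point: the HTTP handler is registered here."),
    ("app/auth.py", 40, "Tokens are validated before any business logic runs."),
])

serialized = json.dumps(tour, indent=2)  # what would be written to disk and shared
restored = json.loads(serialized)        # what a teammate would load
for line in play(restored):
    print(line)
```

A plain-text format like this diffs cleanly in version control, which fits the asynchronous review use case.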

Product Core Function

· Dynamic code tours generated by AI: This feature provides an interactive and guided walkthrough of your codebase, making it easier to understand complex logic and project structure. This directly benefits developers by reducing the time spent deciphering code and improving comprehension.

· Storage and sharing of tours: Tours are saved as simple files, enabling easy sharing and collaboration. This is valuable for teams, allowing members to share their understanding of code or provide walkthroughs for specific sections, streamlining knowledge transfer.

· Batch feedback and commenting: Intraview allows for inline feedback and comments directly within the IDE, both during and outside of tours. This streamlines the code review process by enabling context-specific feedback that is easily trackable and actionable for developers.

· AI agent integration for exploration: By integrating with AI models, Intraview enables developers to leverage AI's understanding of code to explore and explain complex parts of a project. This is a significant advancement in utilizing AI for practical developer productivity and problem-solving.

· IDE integration for seamless workflow: Being a VS Code extension, Intraview integrates directly into the developer's existing environment. This means no context switching and a smoother workflow for understanding code, conducting reviews, and providing feedback, ultimately boosting developer efficiency.

Product Usage Case

· New project onboarding: Imagine a new developer joining a large project. Instead of drowning in documentation, they can use Intraview to have an AI guide them through the core components and workflows of the codebase, dramatically shortening their ramp-up time and understanding.

· Complex PR reviews: When reviewing a Pull Request that significantly alters a core module, a developer can use Intraview to generate a tour of the changed code, accompanied by the AI's explanation of the changes and their implications. This leads to more thorough and efficient reviews, catching potential issues earlier.

· Keeping up with agentic code: For projects that contain a lot of AI-generated code, Intraview can create tours that explain the AI's logic and reasoning, helping human developers maintain and evolve these systems more effectively.

· Planning and alignment: Teams can use Intraview to create shared code tours that illustrate design decisions or technical proposals. This visual and interactive format ensures everyone on the team has a common understanding and is aligned on the technical direction.

12

Yansu-AI-SpecTDD

Author

yubozhao

Description

Yansu is an AI coding platform that leverages specifications and Test-Driven Development (TDD) to build complex software projects. It acts as a structured standard operating procedure (SOP) rather than a simple coding agent, focusing on deep understanding of requirements, validating outcomes against those requirements, and iteratively refining code based on automated tests. Yansu aims to capture and learn 'tribal knowledge' through continuous user interaction, merging the power of spec-driven development with the interactive nature of character AI.

Popularity

Points 5

Comments 2

What is this product?

Yansu is an AI-powered platform designed to build software by treating code generation like a rigorous process. It starts with clear specifications and employs Test-Driven Development (TDD). This means instead of writing code first and then testing it, Yansu generates tests based on the desired outcomes and specifications, and then writes code to pass those tests. It's innovative because it prioritizes understanding requirements and ensuring the final product meets them, much like a seasoned developer who understands the 'why' behind the code. It learns implicitly from user interactions, capturing nuances often missed in documentation, akin to how a human team member absorbs collective wisdom. The approach combines structured specification adherence with AI's creative coding capabilities, aiming for accuracy and robust outcomes by using a mix of AI models and continuous testing.
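The test-first order described above can be shown in a few lines. This is a generic TDD sketch using Python's standard `unittest` module, not output from Yansu; the shipping-fee rule, `ShippingFeeSpec`, `shipping_fee`, and `run_spec` are hypothetical examples.

```python
import unittest


# Step 1: the specification is written down as tests before any code exists.
# Hypothetical rule: orders of 50 or more ship free, smaller orders pay 5.
class ShippingFeeSpec(unittest.TestCase):
    def test_orders_of_50_or_more_ship_free(self):
        self.assertEqual(shipping_fee(50), 0)
        self.assertEqual(shipping_fee(120), 0)

    def test_smaller_orders_pay_flat_fee(self):
        self.assertEqual(shipping_fee(49), 5)


# Step 2: the implementation is written only to make those tests pass.
def shipping_fee(order_total):
    return 0 if order_total >= 50 else 5


# Step 3: the tests are re-run after every change to catch regressions.
def run_spec():
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingFeeSpec)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_spec()
print("spec satisfied:", result.wasSuccessful())
```

The tests double as an executable record of the requirement, which is what lets an automated loop judge whether a revision is an improvement.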

How to use it?

Developers can use Yansu by providing detailed project specifications and defining desired outcomes. Yansu then takes these inputs and, using its AI agents and TDD methodology, generates the necessary code. It acts as a collaborative partner, continuously interacting with users to clarify requirements and refine the generated code based on feedback and automated test results. This is particularly useful for complex projects where meticulous requirement adherence and thorough testing are critical. Developers can integrate Yansu into their workflow by defining their project's needs upfront, allowing the AI to handle the iterative coding and testing cycles, freeing up developers to focus on higher-level architectural decisions and validation.

Product Core Function

· Specification-Driven Code Generation: Translates detailed project requirements into functional code, ensuring the built software directly addresses user needs. This provides a clear roadmap for development and reduces the risk of building the wrong thing.

· Automated Test Generation and Execution: Creates test cases based on specified scenarios and continuously runs them to validate code correctness. This significantly accelerates the testing process and ensures high code quality and reliability.

· Iterative Code Refinement: Automatically revises and improves code based on the outcomes of automated tests and user feedback, leading to more robust and accurate software. This iterative cycle mimics a human developer's debugging and improvement process, but at an accelerated pace.

· Tribal Knowledge Assimilation: Learns from ongoing user interactions and project history to incorporate subtle but important project nuances that are often not explicitly documented. This helps capture the 'art' of software development beyond just the 'science', leading to more contextually relevant code.

· Hybrid AI Agent Utilization: Employs a combination of different AI models and agents to tackle complex coding tasks, prioritizing accuracy and completeness over raw speed. This ensures that the best AI capabilities are leveraged for each part of the development process, leading to better overall results.

Product Usage Case

· Building a complex business logic module: A company needs a new feature with intricate business rules. Yansu can take the detailed specifications and a list of expected scenarios (e.g., 'when X happens, the system should do Y'). Yansu then generates tests for these scenarios and writes the code, ensuring the business logic is correctly implemented and passes all defined tests, saving significant developer time on manual coding and testing of complex rules.

· Rapid prototyping of an API: A developer wants to quickly build a new API endpoint for a mobile application. By providing the API schema and expected request/response formats, Yansu can generate the API code along with unit tests for each endpoint. This allows the developer to quickly get a functional API up and running for early testing and feedback.

· Refactoring legacy code with defined outcomes: For an older system needing modernization, Yansu can be fed the existing requirements (or requirements inferred from current behavior) along with new specifications. It can then generate new code that meets the new requirements and also passes tests that mimic the old system's behavior, ensuring a smooth transition and preventing regressions.

· Developing a feature with unclear initial requirements: If a project has some ambiguity in requirements, Yansu's interactive nature allows developers to 'train' it by providing examples and feedback. Yansu can then generate code that attempts to meet these evolving needs, allowing for more agile exploration of solutions and learning during development.

13

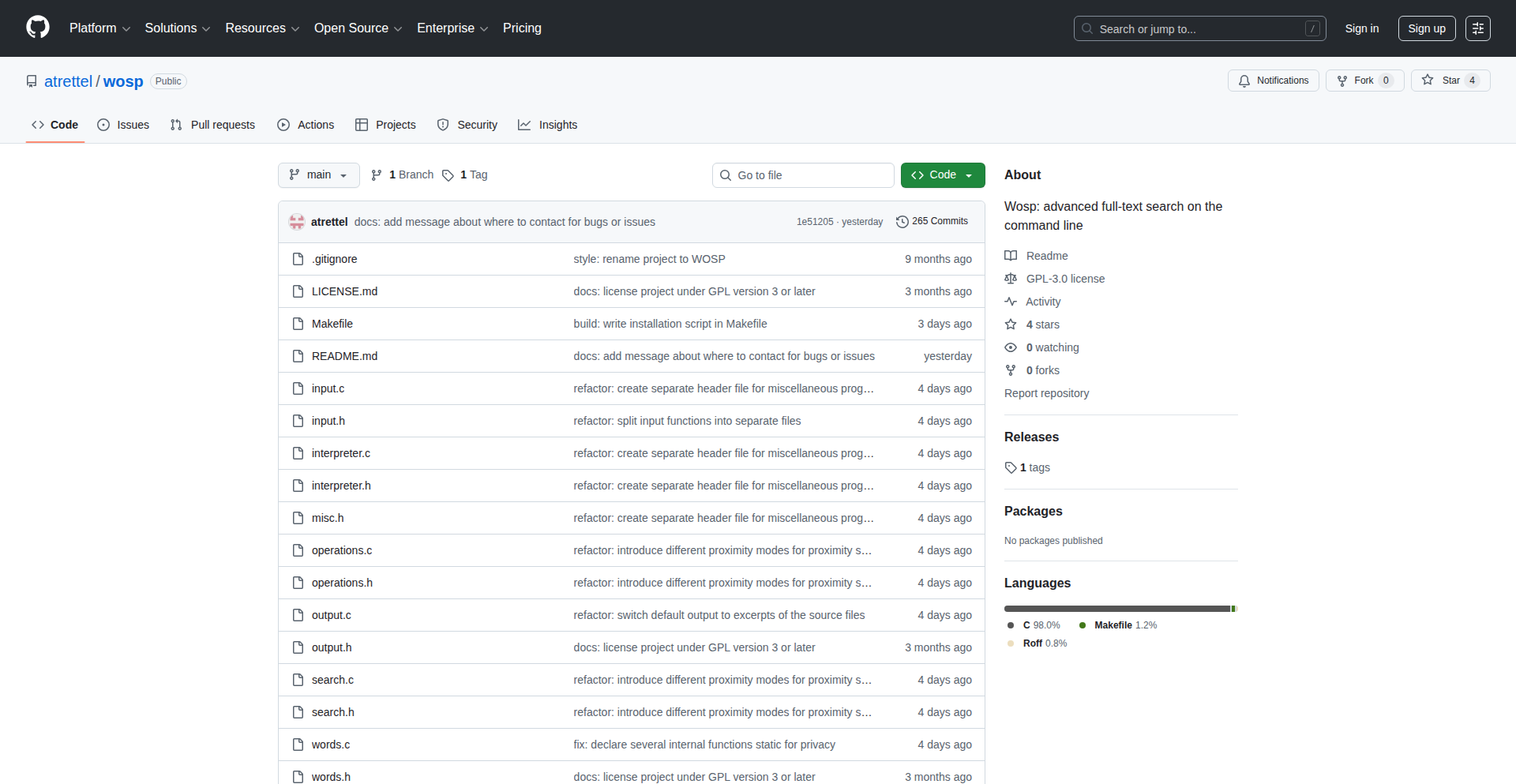

Wosp: Proximity-Aware Command-Line Text Search

Author

atrettel

Description

Wosp is a command-line tool designed for power users and researchers who need to go beyond simple text matching. It enables advanced full-text searching across local documents, supporting complex queries with Boolean logic, proximity operators, and fuzzy matching. This goes beyond typical tools by allowing searches for phrases that span multiple lines, offering a more nuanced and powerful way to find information.

Popularity

Points 6

Comments 0

What is this product?

Wosp, short for 'word-oriented search and print,' is a command-line application that allows you to perform highly sophisticated full-text searches on your local text documents. Unlike basic tools that find exact word matches or simple patterns, Wosp understands relationships between words. It supports advanced query features like: AND/OR logic (Boolean operators) to combine search terms, 'near' operators to find words that are close to each other within a document (proximity), and fuzzy searching to find terms with slight misspellings. It can even handle searches for phrases that stretch across multiple lines, making it incredibly powerful for analyzing large or complex text datasets.

How to use it?

Developers and researchers can use Wosp directly from their terminal. You install the program, then run it from your command line, specifying the directory or files to search and the sophisticated query you want to execute. For example, you could search for documents containing 'artificial intelligence' AND 'ethics' but NOT 'bias', or find all occurrences where 'machine' appears within 5 words of 'learning'. It's ideal for scripting custom search workflows or for interactive exploration of research papers, codebases, or log files where precise information retrieval is critical.
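The 'machine within 5 words of learning' example can be illustrated with a short word-oriented proximity check. This sketch does not use Wosp or its query syntax, which the post does not show; the `tokenize` and `near` functions are hypothetical, and 'within N words' is assumed to mean that the two word positions differ by at most N.

```python
import re


def tokenize(text):
    """Split text into lowercase words; line breaks are just whitespace."""
    return re.findall(r"\w+", text.lower())


def near(text, first, second, max_distance):
    """True if `first` and `second` occur within `max_distance` words of each other."""
    words = tokenize(text)
    first_positions = [i for i, w in enumerate(words) if w == first]
    second_positions = [i for i, w in enumerate(words) if w == second]
    return any(
        abs(i - j) <= max_distance
        for i in first_positions
        for j in second_positions
    )


# The phrase spans a line break, which line-oriented tools would miss.
doc = "Modern machine\ntranslation relies on deep learning."
print(near(doc, "machine", "learning", 5))
print(near(doc, "machine", "learning", 2))
```

Working on a stream of words rather than on lines is what allows matches to span line breaks.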

Product Core Function

· Boolean Logic Search: Allows combining search terms with AND, OR, and NOT operators, enabling precise filtering of results based on multiple criteria. This is useful for complex data analysis where you need to narrow down findings significantly.

· Proximity Operators: Enables searching for terms that appear within a specified number of words of each other. This is crucial for finding phrases, conceptual relationships, or specific contexts that simple keyword searches miss, significantly improving the accuracy of information retrieval.

· Multi-line Phrase Matching: Supports searching for exact phrases that can span across multiple lines, overcoming a limitation of many standard search tools. This is invaluable for analyzing documents where important information might be broken up by line breaks.

· Fuzzy Searching: Allows for approximate matching of terms, accommodating typos and minor variations in spelling. This broadens the search to catch relevant results even if the exact spelling is unknown or incorrect.

· Wildcard and Truncation: Supports searching for patterns using wildcard characters and truncating words, offering flexibility in finding variations of a term or matching partial words.
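Fuzzy matching from the list above can be approximated with Python's standard `difflib` module. This is a swapped-in technique chosen for illustration, not how Wosp implements fuzzy search; the `fuzzy_find` function and the 0.8 similarity cutoff are assumptions.

```python
import difflib
import re


def fuzzy_find(text, term, cutoff=0.8):
    """Return the distinct words in `text` that closely resemble `term`.

    Similarity is difflib's ratio between 0 and 1; a higher cutoff is stricter.
    """
    words = sorted(set(re.findall(r"\w+", text.lower())))
    return difflib.get_close_matches(term.lower(), words, n=len(words), cutoff=cutoff)


log = "Failed to recieve packet; will retry when we receive a new token."
matches = fuzzy_find(log, "receive")
print(matches)
```

Both the correct spelling and the common typo are returned, so a search for one also surfaces the other.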

Product Usage Case

· Analyzing scientific research papers: A researcher could use Wosp to find all papers discussing 'CRISPR' AND 'gene editing' within 10 words of 'ethical implications', helping to quickly identify relevant literature and their nuanced connections.

· Debugging from log files: A developer might search log files for a specific error message that is known to appear near a particular function call, even if the exact wording or order is slightly different, using proximity and fuzzy search to pinpoint the problem.

· Reviewing legal documents: Legal professionals could search for terms like 'contract' AND 'termination' that appear within 20 words of 'notice period', ensuring they capture all relevant clauses related to contract endings.

· Exploring large text corpora: A data scientist could use Wosp to find all mentions of a specific topic across thousands of documents, filtering by related concepts using Boolean logic and proximity to get a highly targeted overview.

14

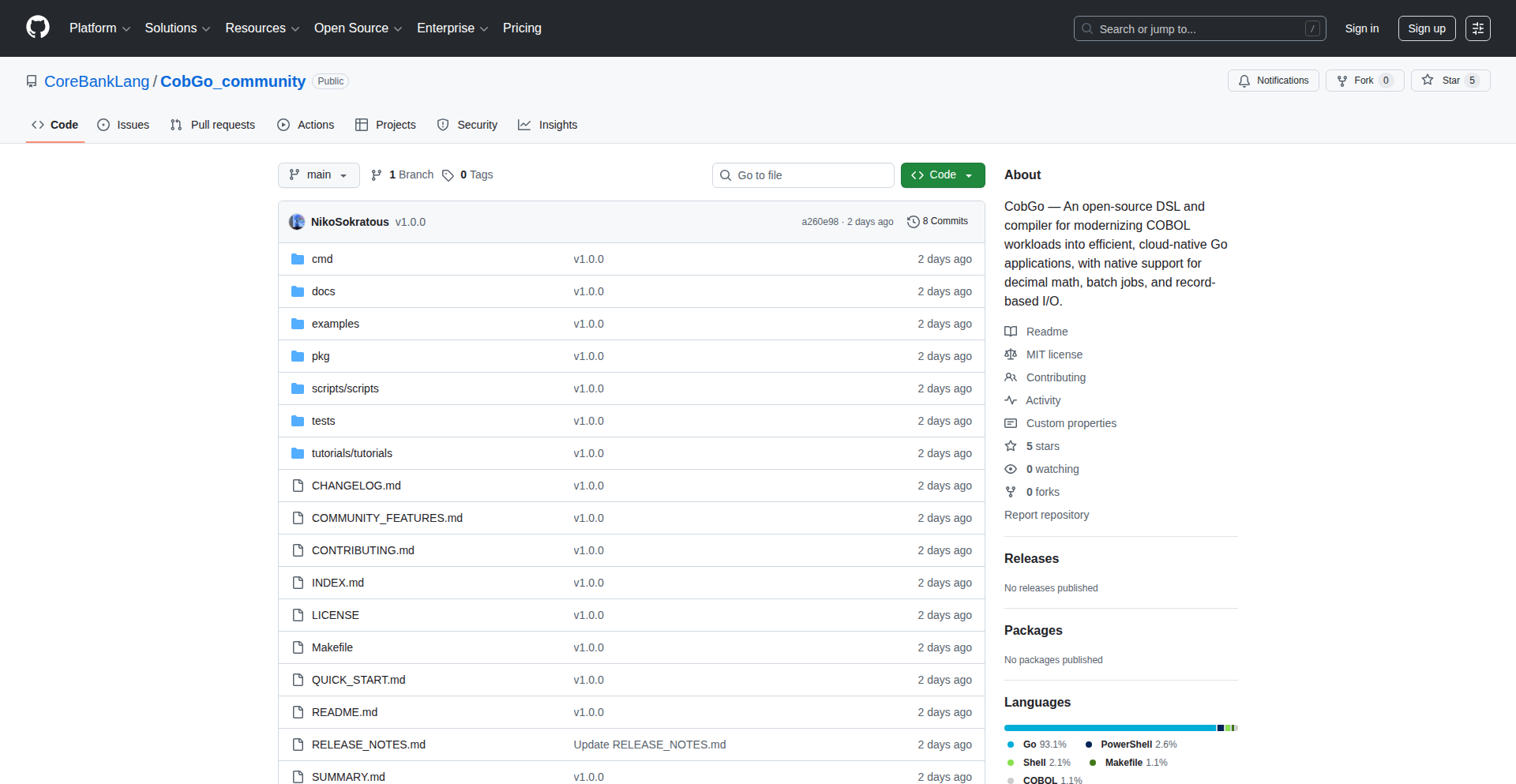

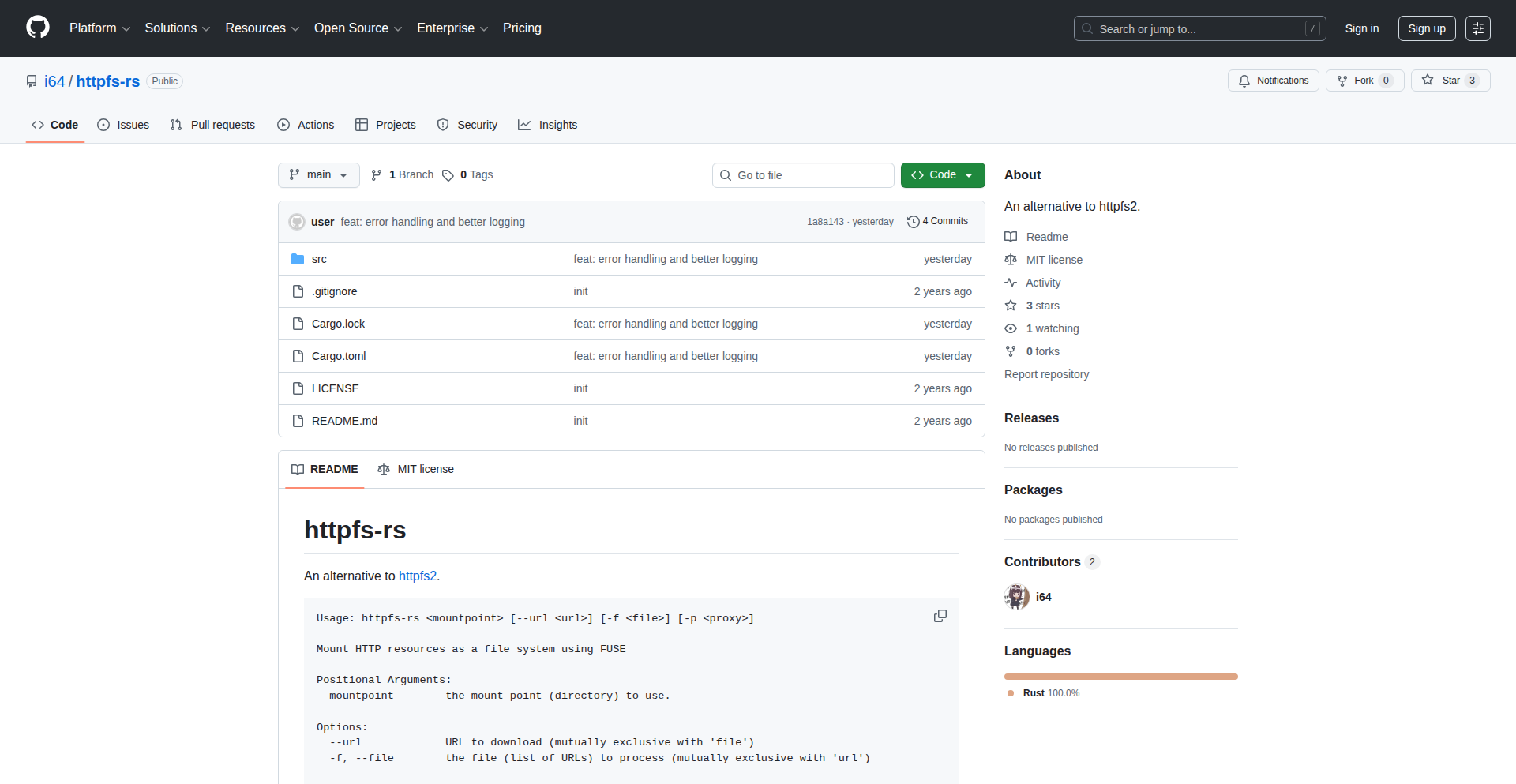

GoCobolBridge

Author

nsokra02

Description

GoCobolBridge is an open-source language layer built on top of Go, specifically designed to handle COBOL-style workloads. It offers native decimal arithmetic with COBOL accuracy, record structure and copybook compatibility, and first-class support for batch jobs and transactional orchestration. It also builds sequential/indexed file I/O directly into the runtime and compiles through Go for speed, concurrency, and cloud deployability. Think of it as a modern, developer-friendly way to work with COBOL-style logic, pairing the power of Go's ecosystem with COBOL's familiar constructs.

Popularity

Points 5

Comments 0

What is this product?

GoCobolBridge is a programming language that sits on top of Go, but it's tailor-made for tasks that used to be done with COBOL. It's like giving COBOL a modern makeover by using Go's underlying power. The innovation lies in its ability to accurately handle decimal numbers exactly like COBOL, understand COBOL's way of organizing data (record structures and copybooks), and natively support common COBOL patterns like batch processing and managing transactions. It even has file handling for older data formats built-in. This means developers can leverage the speed, concurrency, and cloud capabilities of Go while still working with the logic and data structures familiar to mainframe engineers. So, for you, it means you can modernize legacy systems without a complete rewrite, bringing them into the cloud era.
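Why exact decimal arithmetic matters is easiest to see in a small example. The sketch below uses Python's standard `decimal` module rather than GoCobolBridge syntax (which is not shown in the post); the helper `apply_rate` and the half-up rounding mode are assumptions for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

# Binary floating point cannot represent 0.10 exactly, so sums drift.
float_total = 0.10 + 0.20
# Decimal keeps exact base-10 digits, similar in spirit to fixed-point COBOL fields.
decimal_total = Decimal("0.10") + Decimal("0.20")

print(float_total == 0.30)               # False
print(decimal_total == Decimal("0.30"))  # True


def apply_rate(amount: Decimal, rate: Decimal) -> Decimal:
    """Multiply and round to two decimal places (rounding mode is an assumption)."""
    return (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(apply_rate(Decimal("19.99"), Decimal("0.0825")))  # 1.65
```

The float comparison fails because 0.1 + 0.2 evaluates to 0.30000000000000004, which is the class of error that decimal types are meant to rule out in financial code.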

How to use it?

Developers can use GoCobolBridge by writing code in its new language, which then compiles down to efficient Go code. This allows them to integrate existing COBOL logic or build new applications that mimic COBOL's processing style within a modern Go environment. This can be done by directly embedding GoCobolBridge code within Go projects or by using it as a standalone tool for migrating or enhancing COBOL-based systems. The runtime handles the low-level details of decimal arithmetic and file I/O, abstracting them away for the developer. So, for you, this means you can gradually transition your COBOL applications to a more agile and cloud-native architecture without losing your existing business logic.

Product Core Function

· Native Decimal Arithmetic: Ensures precise calculations for financial and accounting data, identical to COBOL standards, preventing common floating-point errors and maintaining data integrity for critical business operations.

· Record Structures and Copybook Compatibility: Allows developers to directly use or translate COBOL's data definitions (copybooks), making it easy to integrate with or migrate existing data formats without complex reformatting, thus preserving data accessibility.

· Batch Job and Transactional Orchestration: Provides built-in constructs for managing sequences of tasks (batch jobs) and coordinating multiple operations to ensure data consistency (transactions), simplifying the development of complex business workflows common in legacy systems.

· Sequential/Indexed File I/O: Offers direct support for reading and writing data from traditional file formats like sequential and indexed files, crucial for interacting with legacy data sources and enabling gradual migration without needing to overhaul all data storage.

· Go Compilation for Performance and Cloud: Leverages Go's compiler to produce fast, concurrent executables that are easily deployable on cloud platforms, bringing modern scalability to applications that traditionally ran on mainframe systems.
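As an illustration of what copybook-style record handling involves, the following Python sketch parses one fixed-width record with an implied decimal point. The layout, field names, and `parse_record` helper are hypothetical and are not taken from GoCobolBridge; the sketch handles only unsigned display-format digits, not packed or signed fields.

```python
from decimal import Decimal

# Hypothetical layout, loosely modelled on a copybook such as:
#   05 CUST-ID    PIC 9(6).
#   05 CUST-NAME  PIC X(20).
#   05 BALANCE    PIC 9(7)V99.
# Each entry: (field name, width in characters, implied decimal places or None for text)
LAYOUT = [
    ("cust_id", 6, 0),
    ("cust_name", 20, None),
    ("balance", 9, 2),
]


def parse_record(line: str) -> dict:
    """Slice a fixed-width line into named fields according to LAYOUT."""
    record = {}
    offset = 0
    for name, width, scale in LAYOUT:
        raw = line[offset:offset + width]
        offset += width
        if scale is None:
            # Text fields are space-padded on the right.
            record[name] = raw.rstrip()
        else:
            # Numeric fields store digits only; shift by the implied decimal places.
            record[name] = Decimal(int(raw)).scaleb(-scale)
    return record


line = "000042" + "ADA LOVELACE".ljust(20) + "000123456"
record = parse_record(line)
print(record["cust_id"], record["cust_name"], record["balance"])
```

Here the nine digits `000123456` become the exact value 1234.56, with no floating-point conversion along the way.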

Product Usage Case

· Migrating mainframe financial applications to the cloud: Developers can rewrite critical COBOL business logic in GoCobolBridge, allowing it to run on modern cloud infrastructure while maintaining exact financial calculation accuracy, solving the problem of high mainframe costs and inflexibility.

· Modernizing batch processing systems: By using GoCobolBridge's batch orchestration features, developers can re-implement legacy COBOL batch jobs to run faster and more reliably in a distributed environment, addressing the slow performance and maintenance challenges of old batch systems.

· Integrating legacy data with modern services: GoCobolBridge can read data from COBOL-defined files, allowing new microservices written in Go to easily access and process this data without requiring complex data transformation layers, thus enabling seamless data interoperability.

· Developing new applications that require COBOL-like precision: For new projects in finance or regulated industries where exact decimal precision is paramount, GoCobolBridge offers a modern development experience that guarantees COBOL-level accuracy, solving the challenge of finding developers proficient in both COBOL and modern languages.

15

CoroScrape PHP Job Aggregator

Author

Paleontologist

Description

A Show HN project that aggregates remote PHP job listings from various sources. It's built using custom scrapers powered by Swow, a coroutine-based concurrency framework, to efficiently fetch and filter job data. The project aims to save job seekers hours of manual searching by consolidating opportunities and attempting to filter out irrelevant or misleading listings. This is a practical demonstration of how efficient scraping techniques can solve a common pain point.

Popularity

Points 1

Comments 4

What is this product?

This project is a specialized job aggregator focused on PHP roles, particularly those advertised as remote. The core technical innovation lies in its scraping engine, which is built on Swow. Swow is a coroutine-based concurrency library for PHP: while one task is waiting on a slow operation (such as a response from a website), other tasks keep running instead of being blocked. Imagine trying to read multiple books at once; instead of reading one cover to cover before starting the next, you might flip through a few pages of each, making faster progress overall. This 'doing many things at once' approach, powered by coroutines, makes scraping faster than fetching each source sequentially, because most of the time is spent waiting on the network. The goal is to consolidate a large volume of job postings into a single, more manageable source, cutting through the noise and 'remote-but-not-really' listings.
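The coroutine idea can be sketched in a few lines. The project itself uses Swow in PHP; the example below swaps in Python's `asyncio` purely to show the same pattern, and the source names and the `fetch_listings` helper are hypothetical (the network call is simulated with a short sleep).

```python
import asyncio
import time

# Hypothetical source names; a real scraper would issue HTTP requests instead.
SOURCES = ["board-a", "board-b", "board-c"]


async def fetch_listings(source: str) -> list:
    # Simulated network latency; while this coroutine waits, the others run.
    await asyncio.sleep(0.2)
    return [f"{source}: PHP developer (remote)"]


async def scrape_all() -> list:
    # gather() runs all fetches concurrently and preserves the input order.
    batches = await asyncio.gather(*(fetch_listings(s) for s in SOURCES))
    return [job for batch in batches for job in batch]


start = time.perf_counter()
jobs = asyncio.run(scrape_all())
elapsed = time.perf_counter() - start

print(jobs)
print(f"fetched {len(jobs)} listings in {elapsed:.2f}s")
```

Run sequentially, three 0.2-second fetches would take about 0.6 seconds; run concurrently, the total stays close to the duration of a single fetch.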

How to use it?

Developers can use this project in a few ways. Primarily, it serves as a personal tool for job seekers, providing a centralized feed of PHP job opportunities. For those interested in the technical implementation, it demonstrates a practical application of Swow for web scraping, and developers building similar aggregators or improving their own scraping workflows can learn from its architecture. The queue workers run in Docker, a containerized approach to background tasks such as data updates that is common in modern development for scalability and isolation. The work queue itself is managed with Laravel and Beanstalkd, suggesting a robust backend structure for handling scheduled tasks and data processing. Integrating it would involve setting up the environment and potentially adapting the parsing logic for new job sources.

Product Core Function

· Efficient multi-source job scraping: Uses Swow coroutines to fetch job data from numerous websites simultaneously, dramatically speeding up the data collection process. This means you get more job listings faster, saving you time.

· Targeted job aggregation: Specifically collects PHP-related job postings, focusing on roles advertised as remote, to reduce irrelevant results. This directly addresses the frustration of sifting through jobs that don't match your criteria.

· Data parsing and filtering: Implements custom parsers to extract relevant information from job listings and attempts to filter out 'BS' or misleading entries. This helps you see the genuine opportunities without wasting time on inaccurate postings.

· Background update workers: Leverages Docker and queueing systems like Beanstalkd to continuously update the job listings. This ensures you're always looking at the most current information available, reducing the chance of applying for already filled positions.

· Centralized job feed: Presents the aggregated jobs in a consolidated format, making it easier to browse and compare opportunities. This simplifies your job search by bringing everything into one place.

Product Usage Case

· A PHP developer actively seeking remote work can use this aggregator as their primary job search portal, saving them from manually visiting dozens of job boards daily. It directly solves the problem of fragmented and time-consuming job hunting.

· A developer interested in building their own data aggregation service can study the custom parsers and the use of Swow to understand how to create highly efficient scraping solutions. This provides a blueprint for tackling similar data extraction challenges.

· A team looking to create an internal job board or talent pool can adapt the scraping architecture to pull relevant internal or external opportunities. This offers a starting point for building custom internal tools to streamline recruitment or career development.

· When a specific niche job market is underserved by existing aggregators, this project serves as an example of how to build a targeted solution. A developer could replicate this approach for other tech stacks or specific industries, like AI or cybersecurity roles, providing a valuable resource for those communities.

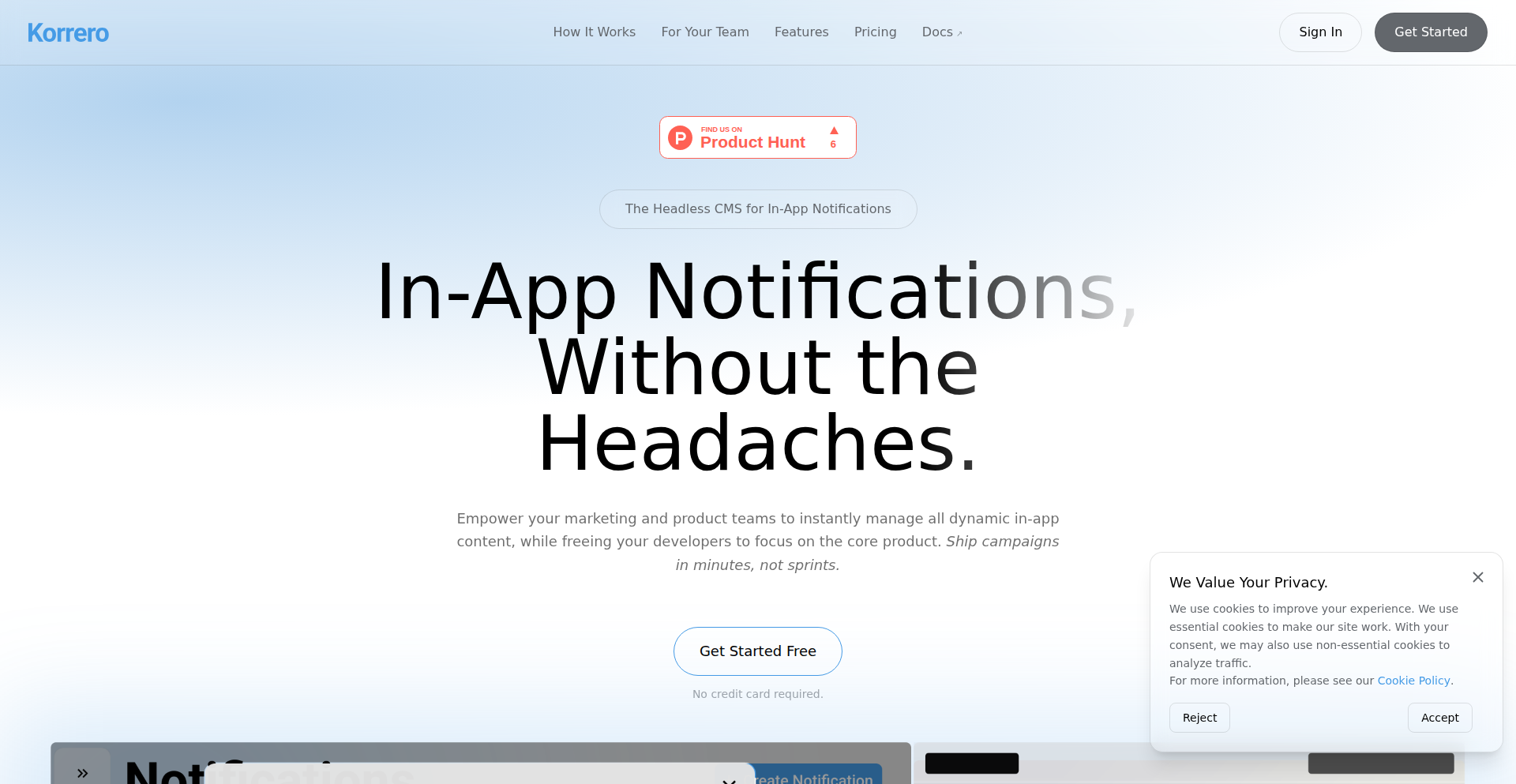

16

Korrero: In-App Notification Engine

Author

yezholov

Description

Korrero is a headless CMS designed to streamline the management and delivery of in-app notifications. It focuses on freeing up developer time by providing a centralized, API-driven solution for creating, scheduling, and deploying dynamic notifications directly within your applications. The innovation lies in its decoupled nature, allowing developers to integrate sophisticated notification systems without complex backend engineering.

Popularity

Points 4

Comments 1

What is this product?

Korrero is a 'headless' content management system (CMS) built specifically for in-app notifications: instead of website articles, it manages notification messages. Being 'headless' means it doesn't dictate how your notifications look or behave in your app; instead, it provides the content (the notification message and its data) through an API. This allows developers to use their own frontend frameworks and UI components to display these notifications exactly how they want. The core technical insight is to abstract away the complexities of notification backend logic (such as user segmentation, scheduling, and content versioning) behind a simple, developer-friendly API. So, this helps you avoid building a custom notification system from scratch, saving significant development effort and time, and keeps notification management consistent across your application.

How to use it?

Developers can integrate Korrero into their applications by making API calls. You'll typically interact with Korrero to fetch notification content and metadata. For example, when a specific event occurs in your app (like a new feature announcement or a user milestone), your application's backend or frontend can query Korrero's API to get the relevant notification details. You can then use this data to render the notification within your app's UI using your preferred frontend technologies (React, Vue, Angular, Swift, Kotlin, etc.). Korrero also supports webhooks, allowing it to trigger actions in your application when certain events happen on the Korrero side (e.g., a notification is published). This makes it easy to use in various development workflows and integrate with existing application architectures, offering a plug-and-play solution for notification delivery that frees up developer bandwidth.
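The fetch-then-render flow described above might look roughly like the following Python sketch. Korrero's actual endpoints and response schema are not given in the post, so the JSON payload, its field names (`starts_at`, `ends_at`), and the `active_notifications` helper are all assumptions; the string constant stands in for a response body your app would receive from the API.

```python
import json
from datetime import datetime, timezone

# Hypothetical response body; Korrero's real schema may differ.
SAMPLE_RESPONSE = """
[
  {"id": "n1", "title": "New export feature",
   "starts_at": "2025-11-01T00:00:00+00:00", "ends_at": "2025-12-01T00:00:00+00:00"},
  {"id": "n2", "title": "Summer sale",
   "starts_at": "2025-06-01T00:00:00+00:00", "ends_at": "2025-07-01T00:00:00+00:00"}
]
"""


def active_notifications(body: str, now: datetime) -> list:
    """Return the notifications whose scheduled window contains `now`."""
    items = json.loads(body)
    return [
        item for item in items
        if datetime.fromisoformat(item["starts_at"]) <= now
        < datetime.fromisoformat(item["ends_at"])
    ]


now = datetime(2025, 11, 5, tzinfo=timezone.utc)
active = active_notifications(SAMPLE_RESPONSE, now)
for note in active:
    # Rendering is left to the app; here the content is simply printed.
    print(note["id"], note["title"])
```

Keeping the display step separate from the fetched content is the point of the headless design: the same payload could feed a web banner, a mobile toast, or an inbox view.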

Product Core Function

· API-driven notification retrieval: Allows fetching notification content and metadata via API, enabling dynamic rendering in your application's UI. This saves development time by not needing to build a backend to serve notifications and ensures you always have the latest content.

· Centralized notification management: Provides a single place to create, edit, and organize all in-app notifications, reducing the risk of inconsistent messaging and simplifying content updates. This means less time searching for where a notification is managed and more time building core features.