Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-04

SagaSu777 2025-11-05

Explore the hottest developer projects on Show HN for 2025-11-04. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

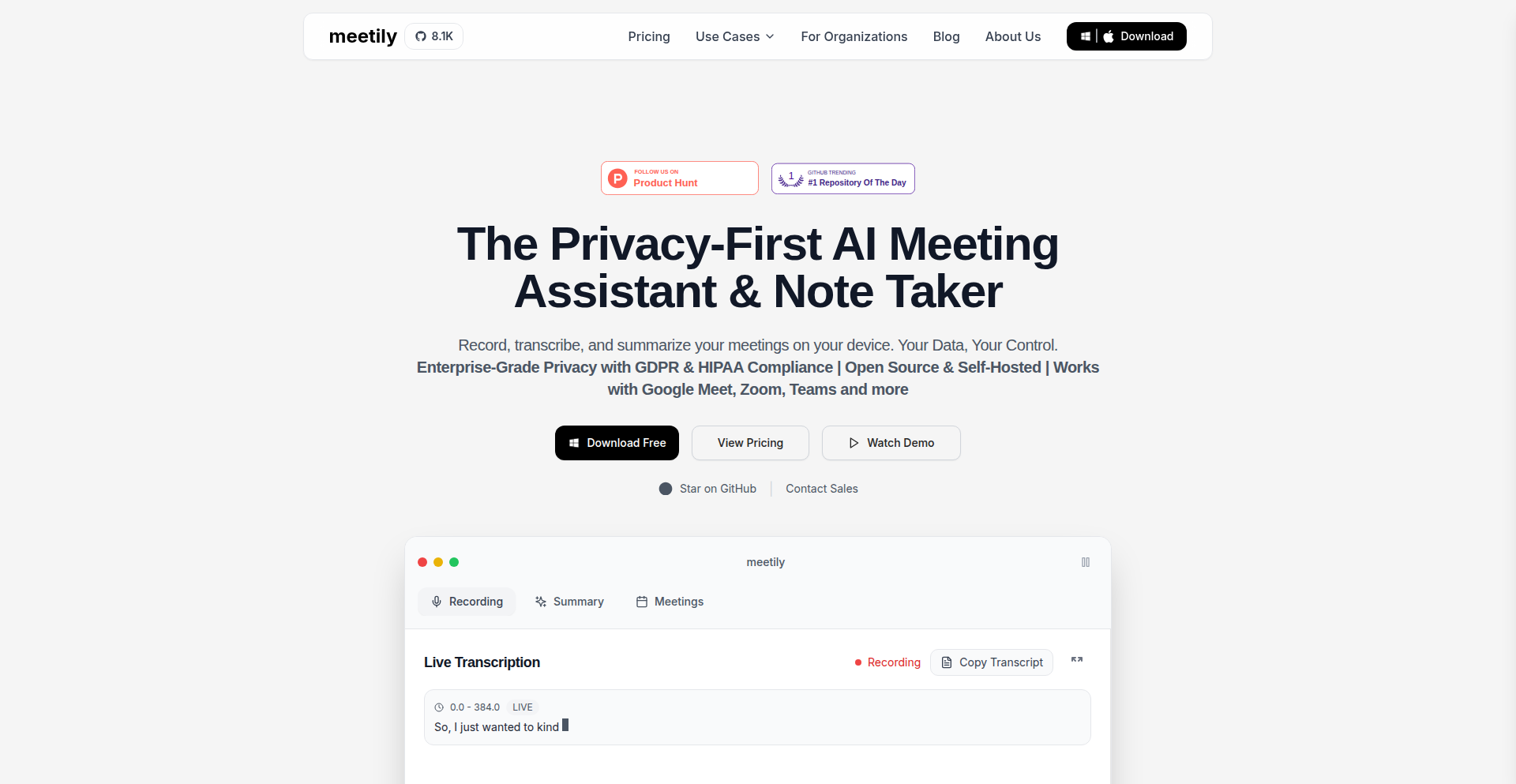

Today's landscape is heavily shaped by the accelerating integration of AI, particularly Large Language Models (LLMs), into diverse applications. There is a strong push toward 'agentic' systems, meaning intelligent agents that autonomously interact with services and the web, as exemplified by the Concierge framework. This marks a shift from simple AI tools to more complex, interactive AI ecosystems.

At the same time, demand is growing for developer-centric tools that streamline complex processes, such as the Suites unit-testing framework or the Tangent stream processor, which uses WebAssembly for performance. 'Local-first' and self-hosted solutions are also prominent because they address privacy concerns and give users more control; Meetily, for AI meeting notes, is one example.

For developers and entrepreneurs, this points to opportunities in frameworks that empower AI agents, robust tooling that simplifies complex tasks, and privacy-preserving technologies. The hacker spirit is alive and well, tackling real-world problems with creative technical solutions, from optimizing data processing to making interactions with AI seamless and secure. Embrace the complexity, build for extensibility, and keep the user's actual needs at the forefront: that's where true innovation lies.

Today's Hottest Product

Name

Concierge - Framework for Building Agentic Web Interfaces

Highlight

This project introduces a novel approach to agentic applications by providing an open-source framework that allows LLMs to interact with services and APIs. The core innovation lies in its declarative framework, enabling developers to expose existing web offerings to LLMs through abstractions like Stages, Tasks, and Workflows. This tackles the complexity of integrating LLMs with intricate business logic and a vast number of APIs, offering a blueprint for creating 'Agentic Web Interfaces'. Developers can learn about building sophisticated AI agent interactions, designing declarative systems, and exposing microservices for AI agents.

Popular Category

AI/ML

Developer Tools

Infrastructure

Web Applications

Productivity Tools

Popular Keyword

AI Agents

LLMs

Framework

Developer Tools

Observability

Data Processing

Local-First

Open Source

WebAssembly

APIs

Technology Trends

Agentic AI Architectures

Local-First Development

WebAssembly in Backend

Unified Observability Platforms

AI-Driven Developer Tools

Decentralized/Self-Hosted Solutions

Privacy-Preserving Technologies

Developer Productivity Enhancements

Data Engineering Automation

Post-Quantum Cryptography

Project Category Distribution

AI/ML & Agents (25%)

Developer Tools & Frameworks (20%)

Web Applications & Services (15%)

Productivity & Utilities (10%)

Infrastructure & Observability (8%)

Data & Analytics (7%)

Cybersecurity & Privacy (5%)

Creative & Multimedia (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | CSS-Sculptor Terrain | 331 | 80 |
| 2 | LocalFirstPlanner | 81 | 69 |
| 3 | GymSmellMapper | 43 | 43 |
| 4 | Concierge: Agentic Web Interface Framework | 20 | 1 |
| 5 | Suites: Declarative DI Testing | 18 | 3 |
| 6 | VibeCode Weaver | 16 | 4 |
| 7 | PACR: Unified Academic & Professional Nexus | 16 | 1 |
| 8 | Oodle AI Observability Nexus | 11 | 3 |
| 9 | Agor: AI-Powered Collaborative Code Design | 7 | 3 |
| 10 | AussieBankStatementCSV | 9 | 0 |

1

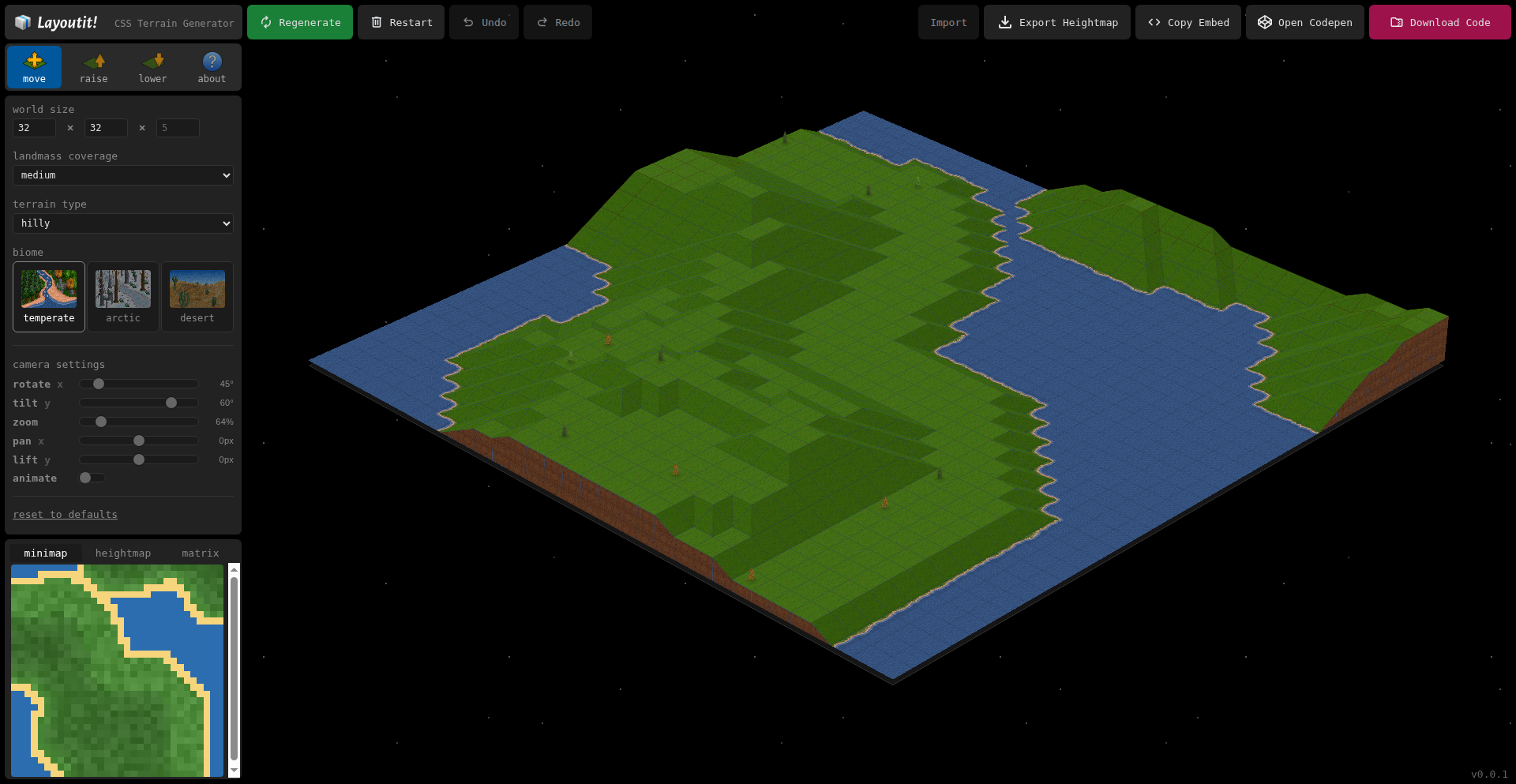

CSS-Sculptor Terrain

Author

rofko

Description

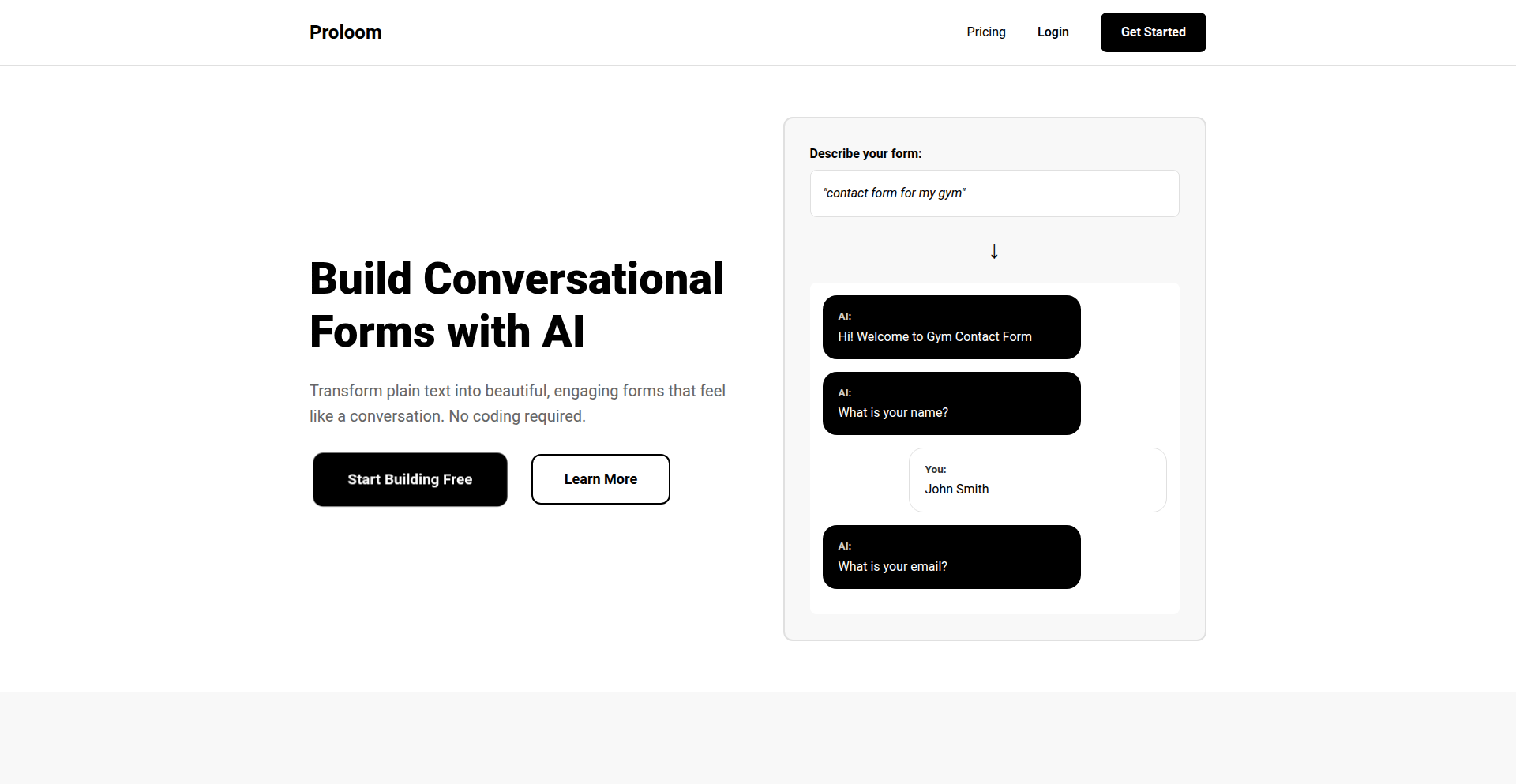

This project is a CSS-only terrain generator that creates dynamic, visually rich landscapes using nothing but Cascading Style Sheets. Instead of drawing graphics with JavaScript or server-side rendering, it relies on CSS techniques such as gradients, pseudo-elements, and careful layering to simulate depth and texture, producing complex visuals without a script-driven rendering pipeline.

Popularity

Points 331

Comments 80

What is this product?

CSS-Sculptor Terrain is a groundbreaking project that generates terrain-like visual effects entirely with CSS. Instead of using JavaScript to calculate complex shapes or gradients, it leverages CSS features like `linear-gradient`, `radial-gradient`, and `box-shadow` along with carefully stacked HTML elements and pseudo-elements (`::before`, `::after`) to build up the illusion of a 3D landscape. Think of it like painting with code: each layer of CSS adds a stroke, a shadow, or a texture, incrementally building a detailed visual. The innovation lies in pushing the boundaries of what's achievable with declarative styling, demonstrating that sophisticated visual representations can be rendered client-side with minimal resource consumption, offering a unique blend of artistic creativity and efficient technical execution.

How to use it?

Developers can integrate CSS-Sculptor Terrain into their web projects by incorporating the provided CSS code and the necessary HTML structure. This involves defining a container element and then applying a series of CSS rules to it and its pseudo-elements. Customization is achieved by tweaking CSS variables, gradient parameters, shadow depths, and element positioning. For instance, to create a mountainous terrain, one might adjust gradient angles and color stops to simulate peaks and valleys, while using multiple layered elements with subtle offset shadows to give a sense of height and form. This approach is ideal for adding background visuals, abstract artistic elements, or even simple map-like representations without the performance hit of JavaScript-driven canvas or SVG generation.
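The layering idea can be sketched as a small TypeScript generator that emits a multi-layer CSS `background` value. This is a minimal sketch under stated assumptions: the `TerrainLayer`/`TerrainBand` shapes and the `buildTerrainBackground` helper are hypothetical and not part of the project, which ships plain CSS. It uses hard color stops so each band reads as a contour line, and relies on the CSS rule that the first listed background layer is painted on top.

```typescript
// Hypothetical helper (not part of CSS-Sculptor Terrain): composes a layered
// CSS `background` value out of terrain bands with hard color stops.
interface TerrainBand {
  color: string;   // any CSS color, including "transparent"
  stopPct: number; // where this band ends, as a percentage (0-100)
}

interface TerrainLayer {
  angleDeg: number;     // gradient direction; angles near 180deg run top to bottom
  bands: TerrainBand[]; // ordered from the start of the gradient line to its end
}

// One linear-gradient per layer. Each band repeats its color at the previous
// stop and at its own stop, which produces a hard edge (a contour line)
// instead of a smooth blend.
function layerToGradient(layer: TerrainLayer): string {
  const stops: string[] = [];
  let prev = 0;
  for (const band of layer.bands) {
    stops.push(`${band.color} ${prev}%`, `${band.color} ${band.stopPct}%`);
    prev = band.stopPct;
  }
  return `linear-gradient(${layer.angleDeg}deg, ${stops.join(", ")})`;
}

// CSS paints the first background layer on top, so list the nearest
// (foreground) ridge first and give it transparent bands to see through.
function buildTerrainBackground(layers: TerrainLayer[]): string {
  return layers.map(layerToGradient).join(", ");
}

// Example: a green foreground ridge over a sky-to-hills backdrop.
const terrainCss = buildTerrainBackground([
  { angleDeg: 170, bands: [{ color: "transparent", stopPct: 55 }, { color: "#4a6b3f", stopPct: 100 }] },
  { angleDeg: 185, bands: [{ color: "#9ecbe8", stopPct: 40 }, { color: "#7a8f6a", stopPct: 100 }] },
]);
console.log(`.terrain { background: ${terrainCss}; }`);
```

The printed rule is only a starting point; in the variable-driven style described above, the colors and stop percentages would typically be moved into CSS custom properties and tuned by hand.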

Product Core Function

· Gradient-based topography simulation: The core functionality uses CSS gradients to create the illusion of elevation changes, akin to contour lines on a map, offering visual depth and texture to the terrain. This is useful for creating dynamic backgrounds that convey a sense of landscape.

· Pseudo-element layering for detail: Leveraging `::before` and `::after` pseudo-elements allows for stacking multiple visual layers, creating intricate details and shadow effects that enhance the realism and complexity of the generated terrain without adding extra HTML elements. This adds visual richness to interfaces.

· CSS variable-driven customization: The use of CSS variables enables easy and dynamic adjustment of terrain features like color palettes, gradient intensity, and overall shape. This empowers developers to quickly iterate and adapt the terrain visuals to their specific design needs, speeding up visual asset creation.

· Minimal dependency architecture: By relying solely on CSS, the project achieves exceptional performance and broad compatibility across browsers, as it doesn't require JavaScript execution for its core rendering. This means faster loading times and a smoother user experience, especially on less powerful devices.

Product Usage Case

· Creating an immersive website background for a nature-themed blog or portfolio: By applying CSS-Sculptor Terrain as a background, developers can provide an engaging visual element that immediately sets the tone and theme of the website. It solves the problem of static or generic backgrounds by offering a unique, generative aesthetic.

· Developing abstract visualizers for music or ambient soundscapes: CSS-generated visuals can serve as slowly evolving abstract art, or be driven by audio cues with a small amount of JavaScript (for example, updating CSS variables from the audio level). This adds an artistic dimension to media playback interfaces while keeping the rendering itself lightweight and non-intrusive.

· Building simple, stylized game interfaces or loading screens: For casual games or web applications, CSS-Sculptor Terrain can be used to create visually appealing loading screens or thematic UI elements that don't demand high processing power. This addresses the need for rich visuals in performance-sensitive web applications.

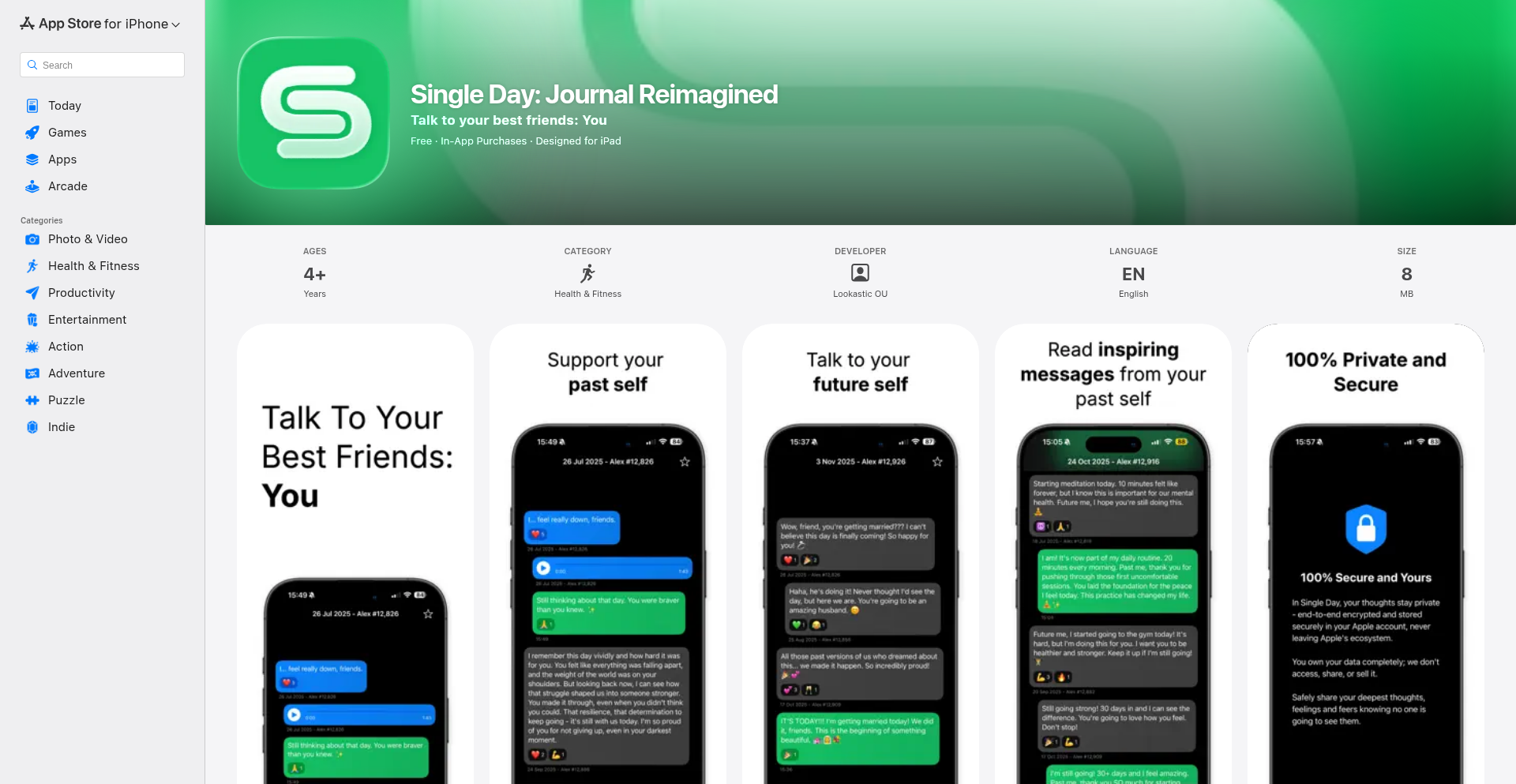

2

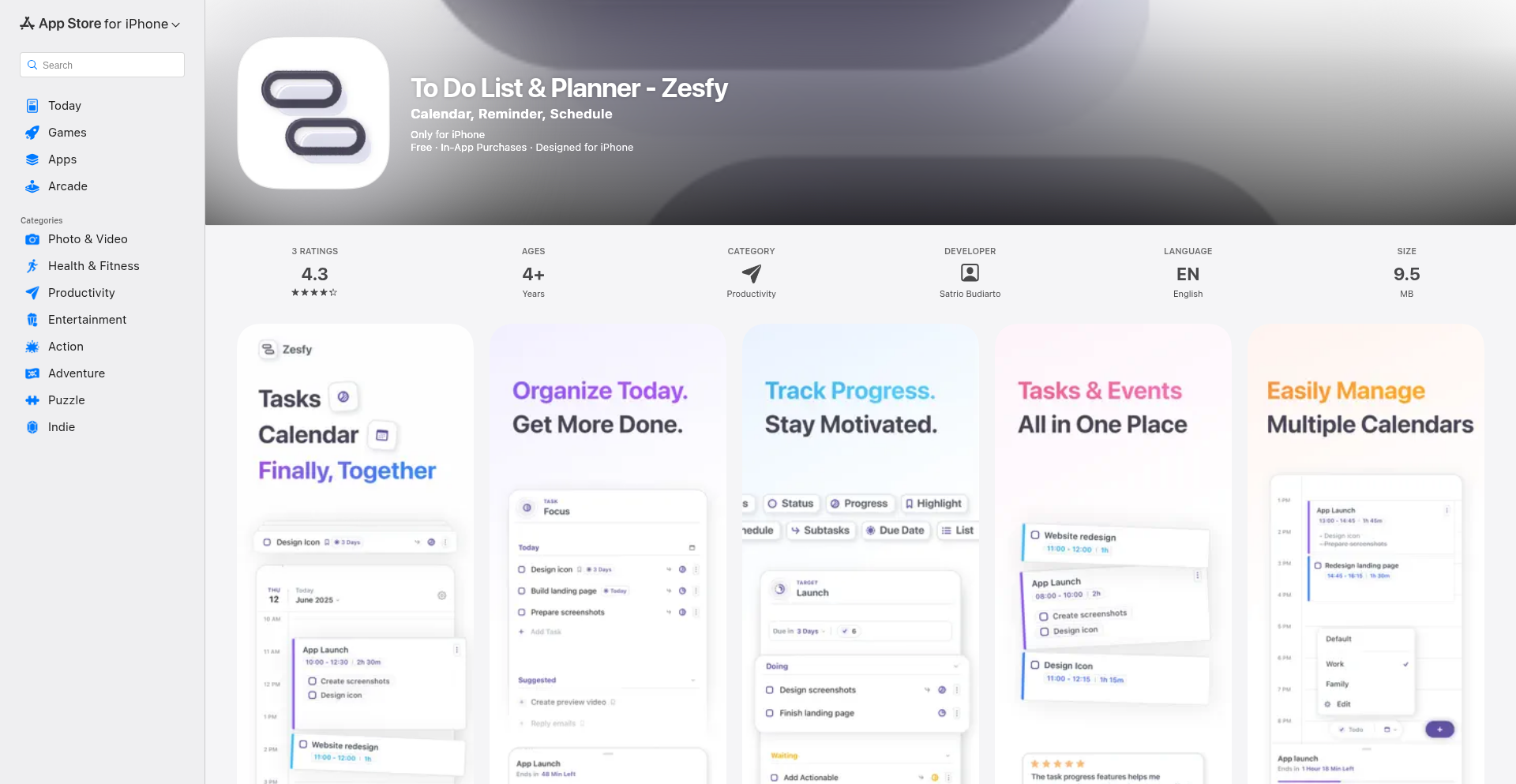

LocalFirstPlanner

Author

zesfy

Description

A local-first daily planner for iOS that prioritizes your data privacy and offline functionality, syncing seamlessly when online. It addresses the common concern of sensitive personal data residing on remote servers.

Popularity

Points 81

Comments 69

What is this product?

This project is a daily planner application built for iOS. Its core innovation lies in its 'local-first' architecture. This means that all your notes, tasks, and schedule information are primarily stored directly on your device. Unlike many cloud-dependent apps, your data doesn't constantly need to be sent to a server to be saved or accessed. This offers enhanced privacy and guarantees that you can still use your planner even without an internet connection. When you do have an internet connection, it intelligently syncs your data to ensure consistency across devices or for backup purposes.

How to use it?

Developers can integrate the principles of this local-first approach into their own iOS applications. This involves leveraging Core Data or Realm for local data persistence, implementing background synchronization mechanisms (like CloudKit or custom solutions) for online updates, and designing the user interface to gracefully handle offline states. It's particularly useful for applications dealing with sensitive user information, such as personal journals, health trackers, or financial management tools, where data privacy and offline access are paramount.
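The local-first pattern described above can be sketched in TypeScript as a store that writes locally first, queues changes in an outbox, and merges edits from other devices with a last-write-wins rule. This is an illustrative assumption, not the app's implementation: `PlannerItem`, `LocalFirstStore`, and the `push` callback are hypothetical, and last-write-wins is just one simple conflict policy (CRDT-based merging is a common alternative).

```typescript
// Hypothetical record shape; the app's real schema is not described.
interface PlannerItem {
  id: string;
  title: string;
  updatedAt: number; // ms since epoch, stamped by the device that made the edit
}

// Remote side of sync (hypothetical); returns true if the batch was accepted.
type PushFn = (batch: PlannerItem[]) => boolean;

class LocalFirstStore {
  private items: Record<string, PlannerItem> = {};
  // Outbox keyed by id, so repeated offline edits to one item coalesce.
  private outbox: Record<string, PlannerItem> = {};

  // Writes hit local storage immediately; the network is never on this path.
  upsert(item: PlannerItem): void {
    this.items[item.id] = item;
    this.outbox[item.id] = item;
  }

  get(id: string): PlannerItem | undefined {
    return this.items[id];
  }

  pendingCount(): number {
    return Object.keys(this.outbox).length;
  }

  // Drains the outbox when online; on failure the outbox is kept for a retry.
  sync(isOnline: boolean, push: PushFn): number {
    if (!isOnline) return 0;
    const batch: PlannerItem[] = [];
    for (const id of Object.keys(this.outbox)) {
      const item = this.outbox[id];
      if (item) batch.push(item);
    }
    if (batch.length === 0 || !push(batch)) return 0;
    this.outbox = {};
    return batch.length;
  }

  // Merges edits pulled from other devices using last-write-wins on updatedAt.
  applyRemote(incoming: PlannerItem[]): void {
    for (const item of incoming) {
      const local = this.items[item.id];
      if (!local || item.updatedAt > local.updatedAt) {
        this.items[item.id] = item;
        // A newer remote edit supersedes any older pending local edit.
        delete this.outbox[item.id];
      }
    }
  }
}
```

Keying the outbox by id means several offline edits to the same task sync as one write, and dropping a pending edit when a newer remote version arrives prevents a stale local change from overwriting it on the next sync.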

Product Core Function

· Local Data Persistence: Stores all user data directly on the iOS device using efficient local databases, ensuring privacy and offline access. This means your important planning information is always available to you, even when you're off the grid, and isn't exposed to remote servers.

· Offline Functionality: Allows users to create, edit, and view their daily plans without an internet connection. This is crucial for productivity during commutes, travel, or in areas with unreliable connectivity, guaranteeing uninterrupted access to your schedule.

· Intelligent Syncing: Automatically synchronizes data to the cloud (or other devices) when an internet connection is available, ensuring data backup and consistency without user intervention. This provides peace of mind by keeping your data safe and synchronized, while still prioritizing your immediate offline needs.

· Privacy-Focused Design: Minimizes reliance on cloud servers for core functionality, reducing the potential exposure of sensitive personal information. This means your private thoughts and plans stay private, as they are not constantly being transmitted and stored externally.

Product Usage Case

· Building a personal journaling app where users want to ensure their private thoughts remain solely on their device, even when offline. The local-first approach guarantees their journal entries are always accessible and private.

· Developing a task management application for field workers who might not have consistent internet access. The app can be used entirely offline, and syncs updates when a connection is re-established, preventing data loss and ensuring all tasks are managed.

· Creating a habit tracker that requires minimal external dependencies for core functionality. This allows users to track their progress and review their habits anytime, anywhere, without worrying about data privacy or connectivity.

· Designing a personal finance app that handles sensitive financial data. A local-first architecture provides an extra layer of security and user trust by keeping the financial information on the user's device.

3

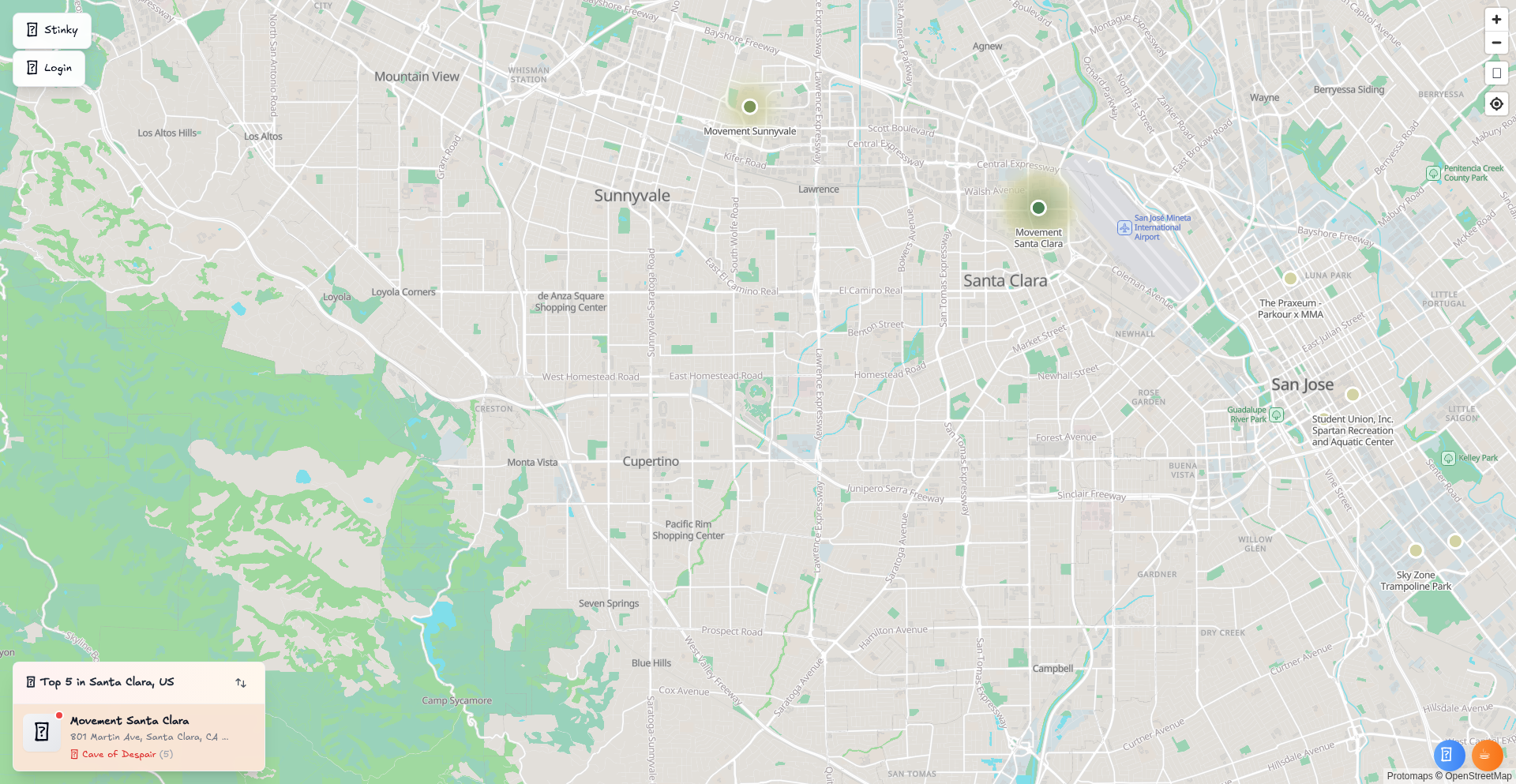

GymSmellMapper

Author

boshenz

Description

A crowdsourced platform that ranks bouldering gyms by stinkiness and difficulty and lists details such as top-rope availability and whether a training board is present. It relies on community input to help climbers find a suitable, pleasant gym.

Popularity

Points 43

Comments 43

What is this product?

GymSmellMapper is a web application that uses crowdsourced data to rate the smell and difficulty of indoor climbing gyms (bouldering gyms). Users contribute their experiences, creating a community-driven rating system. The core innovation lies in aggregating many subjective reports into a consensus view that climbers can act on, helping them discover gyms that match their preferences for cleanliness and challenge. Think of it as a Yelp for climbing gym vibes and difficulty.

How to use it?

Climbers can browse GymSmellMapper's website to explore gym ratings and details before planning a session. For integration, developers could use its API (if one is available) to embed gym rating data into other climbing-related applications or community forums, giving users richer context about potential climbing locations. Either way, users can decide where to climb based on community consensus.

Product Core Function

· Crowdsourced Gym Ratings: Users submit ratings for gym stinkiness and difficulty, enabling a community-driven assessment of various climbing facilities. This helps users avoid unpleasant experiences and find gyms that match their desired challenge level.

· Detailed Gym Information: Provides specific details about gyms, such as the availability of toprope routes and training boards, empowering climbers with the information they need to select the best gym for their training goals.

· Interactive Map Visualization: Potentially offers a map interface to visually explore gym locations and their associated ratings, making it easier for users to discover nearby climbing options with desired characteristics.

· Community Driven Insights: Aggregates user feedback to reveal trends and popular opinions about gyms, offering a collective understanding of gym quality beyond simple star ratings.
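Turning many subjective votes into a ranking usually needs some smoothing; otherwise a gym with a single furious review tops the chart. A minimal sketch, assuming a 1 to 5 scale for both scores (the site's actual scale and aggregation method are not described), uses a Bayesian average that treats each gym as if it had `priorWeight` extra votes at the global mean. `GymRating`, `summarize`, and `priorWeight` are hypothetical names.

```typescript
// Hypothetical rating record; the 1-5 scale is an assumption.
interface GymRating {
  gym: string;
  stink: number;      // 1 = fresh, 5 = very stinky
  difficulty: number; // 1 = soft grades, 5 = sandbagged
}

interface GymSummary {
  gym: string;
  votes: number;
  stink: number;      // smoothed score
  difficulty: number; // smoothed score
}

// Bayesian average: (sum + m * globalMean) / (n + m). Gyms with few votes are
// pulled toward the global mean; well-rated gyms keep their own average.
function summarize(ratings: GymRating[], priorWeight = 5): GymSummary[] {
  if (ratings.length === 0) return [];
  let stinkTotal = 0;
  let diffTotal = 0;
  const byGym: Record<string, { n: number; stink: number; diff: number }> = {};
  for (const r of ratings) {
    stinkTotal += r.stink;
    diffTotal += r.difficulty;
    const acc = byGym[r.gym] ?? { n: 0, stink: 0, diff: 0 };
    acc.n += 1;
    acc.stink += r.stink;
    acc.diff += r.difficulty;
    byGym[r.gym] = acc;
  }
  const meanStink = stinkTotal / ratings.length;
  const meanDiff = diffTotal / ratings.length;
  const smooth = (sum: number, n: number, prior: number): number =>
    (sum + priorWeight * prior) / (n + priorWeight);

  const out: GymSummary[] = [];
  for (const gym of Object.keys(byGym)) {
    const acc = byGym[gym];
    if (!acc) continue;
    out.push({
      gym,
      votes: acc.n,
      stink: smooth(acc.stink, acc.n, meanStink),
      difficulty: smooth(acc.diff, acc.n, meanDiff),
    });
  }
  return out.sort((a, b) => b.stink - a.stink); // stinkiest first
}
```

With this smoothing, a gym rated 5 by one visitor can rank below a gym rated 4 by ten visitors, which is usually the behavior a crowdsourced leaderboard wants; `priorWeight` controls how many votes it takes to escape the global mean.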

Product Usage Case

· A climber planning a trip to a new city can use GymSmellMapper to quickly identify bouldering gyms that are known to be clean and have a good selection of challenging problems, saving them from potentially disappointing gym experiences.

· A gym owner could use the aggregated data to understand user perceptions of their facility and identify areas for improvement, such as addressing odor issues or expanding training board offerings, ultimately enhancing customer satisfaction.

· A climbing app developer could integrate GymSmellMapper's data to enrich their gym directory, offering users a more comprehensive view of gym conditions and helping them make better choices when selecting a place to climb.

· A group of friends looking for a new climbing spot can collectively browse GymSmellMapper to find a gym that meets everyone's preferences for difficulty and overall atmosphere, ensuring a fun and productive climbing session for all.

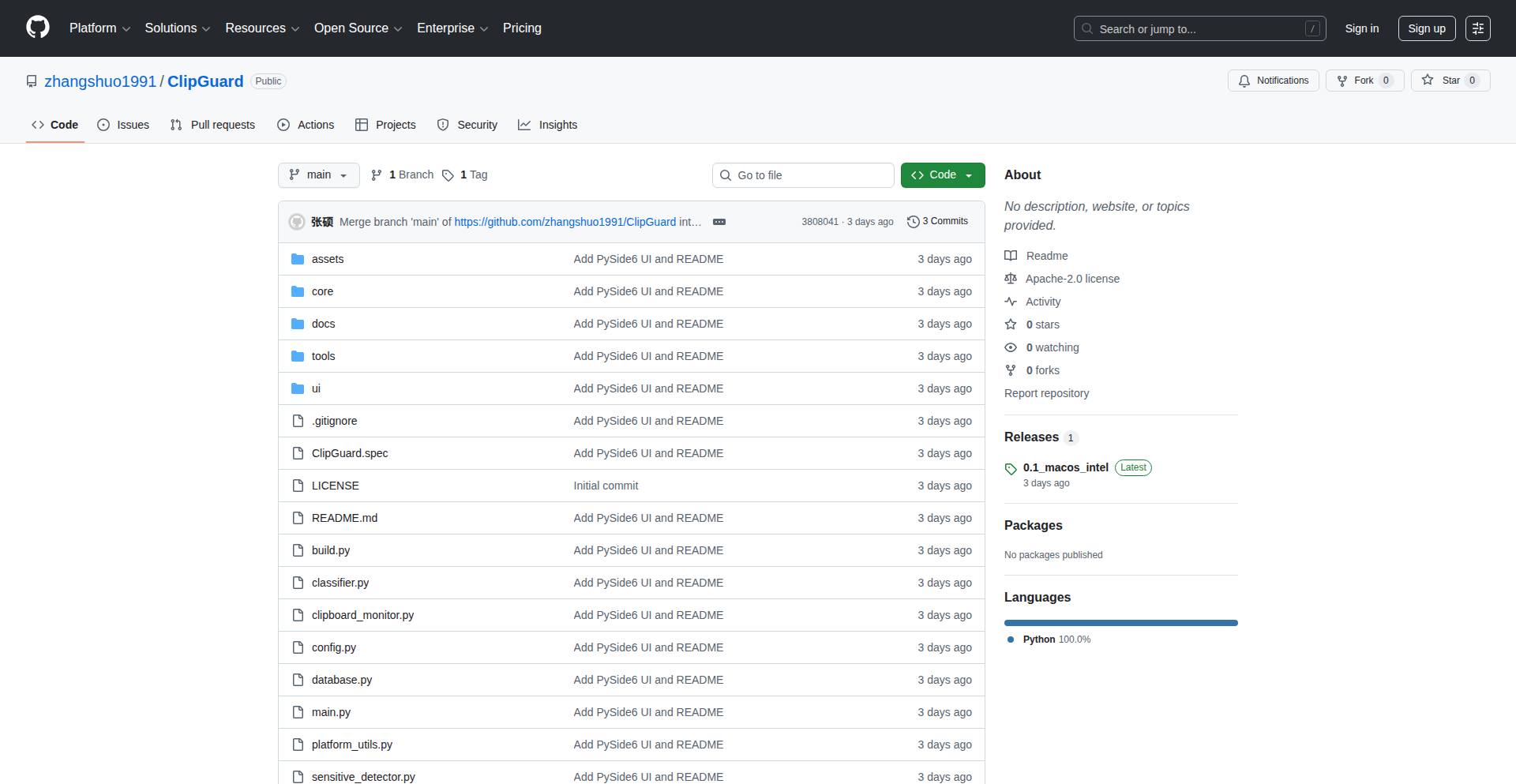

4

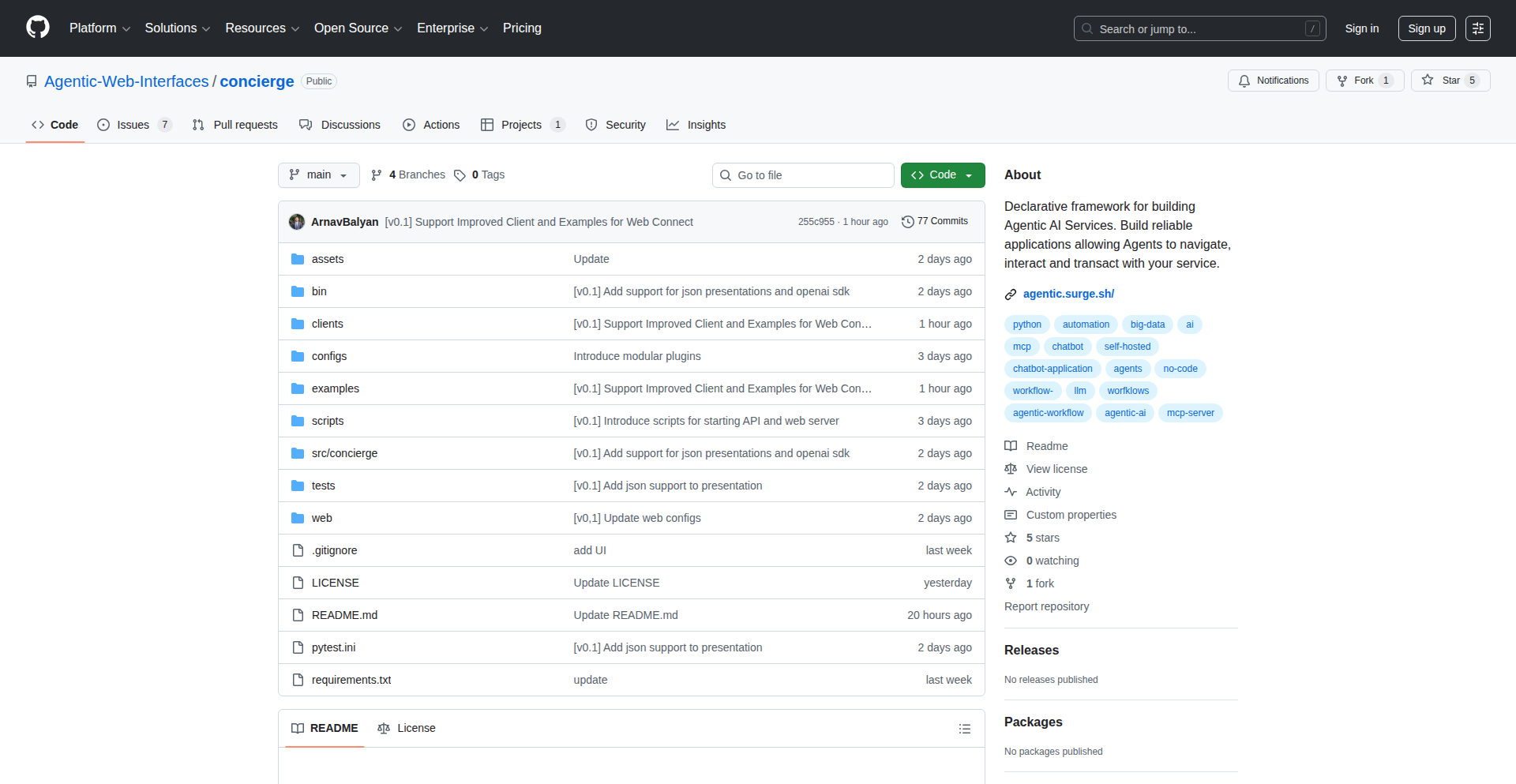

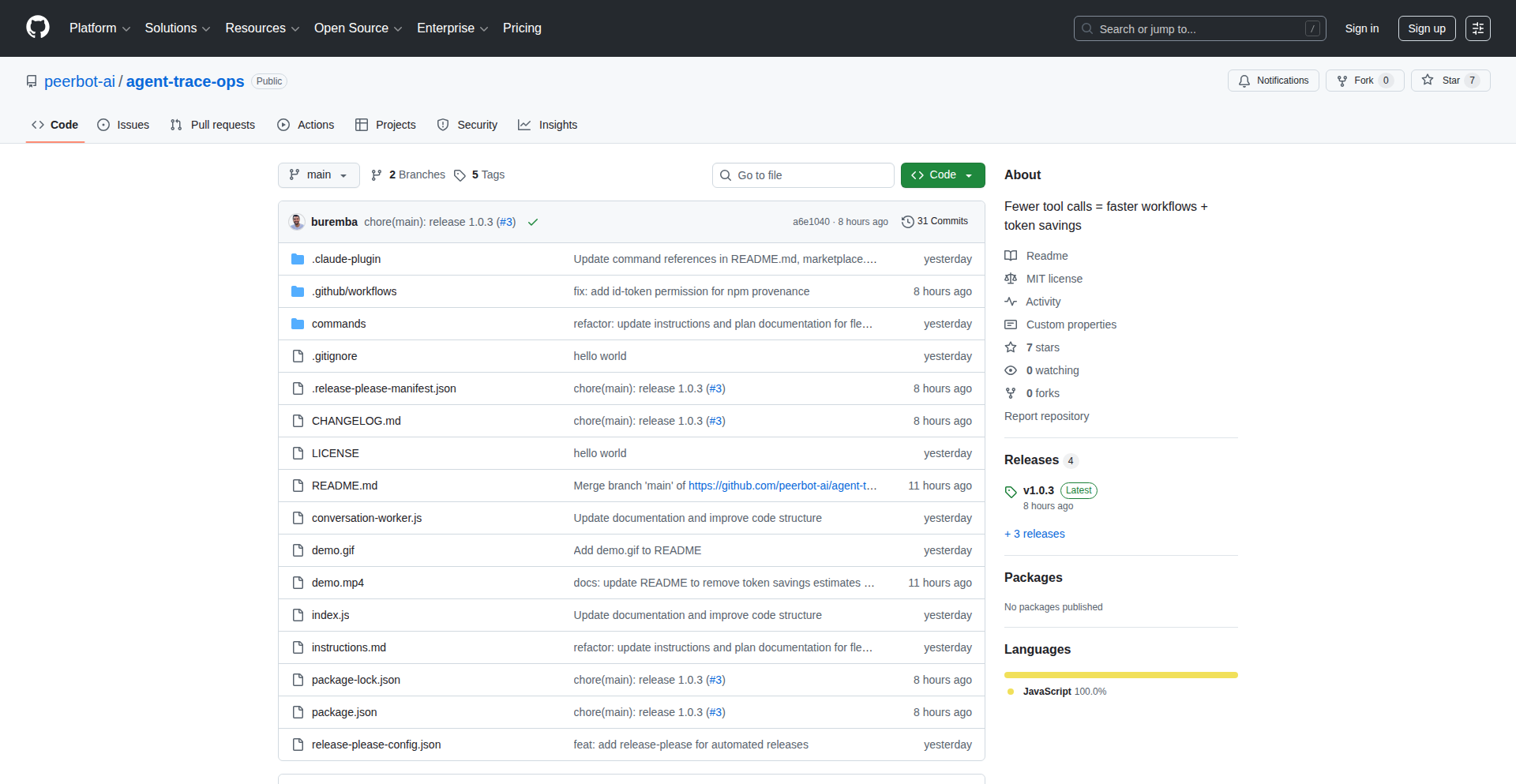

Concierge: Agentic Web Interface Framework

Author

arnav3

Description

Concierge is an open-source framework designed to let Large Language Models (LLMs) interact with your existing services. It allows you to build 'microservices for AI agents' by declaratively defining how LLMs can navigate, perform actions, and even make transactions within your web offerings. This is particularly valuable when you have complex systems with many APIs, and you want to guide LLMs on how to effectively use them, essentially creating an 'Amazon for agents'.

Popularity

Points 20

Comments 1

What is this product?

Concierge is a declarative framework that transforms your existing web services into 'Agentic Web Interfaces'. This means you can enable AI agents, powered by LLMs, to understand and interact with your services as if they were sophisticated users. It provides abstractions like Stages, Tasks, and Workflows that act as rules and guidelines, teaching the LLM how to navigate your service, discover its capabilities, and execute specific actions or business logic. This is a novel way to expose complex functionalities to AI, allowing them to leverage your services without needing to understand the underlying code, thus solving the problem of making intricate APIs accessible and manageable for AI.

How to use it?

Developers can use Concierge by defining the rules and structure of their service in a declarative manner. You essentially map out the potential actions and information an AI agent can access. This involves specifying Stages (high-level goals), Tasks (specific steps), and Workflows (sequences of tasks). Concierge then translates these definitions into an interface that LLMs can query and interact with. You can integrate it by exposing your existing web APIs through the Concierge framework, which handles the communication and ensures the AI agent adheres to the defined principles. This is ideal for building AI-powered assistants for e-commerce platforms, internal business tools, or any service with complex user flows.
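To make the Stages/Tasks idea concrete, here is a framework-free TypeScript sketch of the core constraint: an agent only sees, and can only call, the tasks of its current stage, and may only move to stages declared as reachable. Everything here (`StageDef`, `TaskDef`, `AgentSession`, `moveTo`) is hypothetical and is not Concierge's API; the meaning of stage and task follows the description above.

```typescript
// Hypothetical shapes for illustration only; this is not Concierge's API.
type Args = Record<string, string>;

interface TaskDef {
  description: string;         // what the model is told the task does
  run: (args: Args) => string; // the existing service call behind the task
}

interface StageDef {
  tasks: Record<string, TaskDef>;
  next: string[];              // stages the agent may move to from here
}

class AgentSession {
  private stages: Record<string, StageDef>;
  private current: string;
  readonly log: string[] = []; // trace of calls and transitions

  constructor(stages: Record<string, StageDef>, start: string) {
    if (!(start in stages)) throw new Error(`unknown start stage: ${start}`);
    this.stages = stages;
    this.current = start;
  }

  private stage(): StageDef {
    const s = this.stages[this.current];
    if (!s) throw new Error(`unknown stage: ${this.current}`);
    return s;
  }

  // What would be advertised to the LLM: only this stage's tasks and exits.
  describe(): { stage: string; tasks: string[]; next: string[] } {
    const s = this.stage();
    return { stage: this.current, tasks: Object.keys(s.tasks), next: s.next };
  }

  // Rejects any task that is not declared for the current stage.
  call(task: string, args: Args = {}): string {
    const def = this.stage().tasks[task];
    if (!def) throw new Error(`task "${task}" is not available in stage "${this.current}"`);
    this.log.push(`${this.current}.${task}(${JSON.stringify(args)})`);
    return def.run(args);
  }

  // Only declared transitions to known stages are allowed.
  moveTo(stage: string): void {
    if (this.stage().next.indexOf(stage) === -1 || !(stage in this.stages)) {
      throw new Error(`cannot move from "${this.current}" to "${stage}"`);
    }
    this.log.push(`-> ${stage}`);
    this.current = stage;
  }
}

// Example: a tiny shopping flow.
const session = new AgentSession(
  {
    browse: { tasks: { search: { description: "Search the catalog", run: (a) => `results for ${a.q}` } }, next: ["cart"] },
    cart: { tasks: { add: { description: "Add an item to the cart", run: (a) => `added ${a.sku}` } }, next: ["checkout"] },
    checkout: { tasks: { pay: { description: "Pay for the cart", run: () => "paid" } }, next: [] },
  },
  "browse",
);
```

In a setup like this, the output of `describe()` is what would be turned into the tool list sent to the model on each turn, so it cannot even attempt a payment while it is still browsing.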

Product Core Function

· Declarative Framework for Agentic Applications: This allows developers to define how AI agents should interact with their services using simple rules and structures, rather than complex code. This significantly reduces the effort needed to make services AI-friendly, providing immediate value by abstracting away the intricacies of AI integration.

· LLM Navigation and Interaction Abstractions (Stages, Tasks, Workflows): These provide a structured way to guide AI agents through complex services. They act like a map and instruction manual for the AI, ensuring it performs actions correctly and efficiently. This enhances the reliability and predictability of AI interactions with your services.

· Exposing Complex Business Logic to LLMs: Concierge makes it feasible for AI agents to utilize thousands of APIs and intricate business logic without needing direct code-level understanding. This opens up new possibilities for AI to automate complex tasks and provide intelligent assistance across various domains.

· UI Dashboard for Analytics and Monitoring: This feature offers insights into AI agent usage, costs, and performance. It allows developers to track which business services are most popular with AI, understand usage patterns, and monitor expenses, providing crucial operational intelligence.

· Replayability for Debugging: The ability to replay user sessions with AI agents is invaluable for troubleshooting and understanding AI behavior. It helps developers identify issues, optimize workflows, and improve the overall AI experience.

· Self-Hosting or Managed Hosting Options: This provides flexibility for deployment. Developers can choose to host Concierge on their own infrastructure for maximum control or use a managed service for convenience and scalability, catering to diverse needs and technical capabilities.
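Replay-based debugging can be illustrated with a small harness that re-runs a recorded sequence of tool calls against the current handlers and reports the first step whose output differs. This is a sketch under assumptions: the `RecordedStep` format and the `replay` function are hypothetical and do not reflect how Concierge actually stores or replays sessions.

```typescript
// Hypothetical recording format: each step is a tool call plus the output
// that was observed when the session originally ran.
interface RecordedStep {
  tool: string;
  args: Record<string, string>;
  output: string;
}

type ToolHandler = (tool: string, args: Record<string, string>) => string;

interface ReplayResult {
  ok: boolean;
  divergedAt: number; // index of the first differing step, or -1
  expected?: string;
  actual?: string;
}

// Re-runs every step in order; thrown errors are captured as output so a
// newly failing tool shows up as a divergence instead of crashing the replay.
function replay(steps: RecordedStep[], handler: ToolHandler): ReplayResult {
  let i = 0;
  for (const step of steps) {
    let actual: string;
    try {
      actual = handler(step.tool, step.args);
    } catch (e) {
      actual = `error: ${e instanceof Error ? e.message : String(e)}`;
    }
    if (actual !== step.output) {
      return { ok: false, divergedAt: i, expected: step.output, actual };
    }
    i++;
  }
  return { ok: true, divergedAt: -1 };
}
```

Stopping at the first divergence keeps the report focused: later steps in an agent session usually depend on earlier outputs, so differences after the first one are often just consequences of it.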

Product Usage Case

· Building an AI Shopping Assistant for an E-commerce Platform: Developers can use Concierge to define how an AI agent navigates product catalogs, adds items to a cart, applies discounts, and completes checkout. This solves the problem of creating a seamless and intelligent shopping experience for customers interacting with an AI assistant.

· Automating Customer Support Ticket Resolution: By defining workflows for common support scenarios, Concierge can enable AI agents to understand customer issues, gather necessary information through interactions, and even trigger automated resolution processes or escalate to human agents. This significantly improves support efficiency.

· Creating Intelligent Internal Business Tools: For a company with a vast array of internal APIs for HR, finance, or project management, Concierge can allow employees to interact with these systems using natural language via AI agents, simplifying access to information and task completion.

· Developing AI-Powered API Orchestration Layers: For services that expose many granular APIs, Concierge can act as a layer to orchestrate these APIs into higher-level, more understandable actions for AI agents, akin to building a programmable interface for AI to interact with complex backend systems.

5

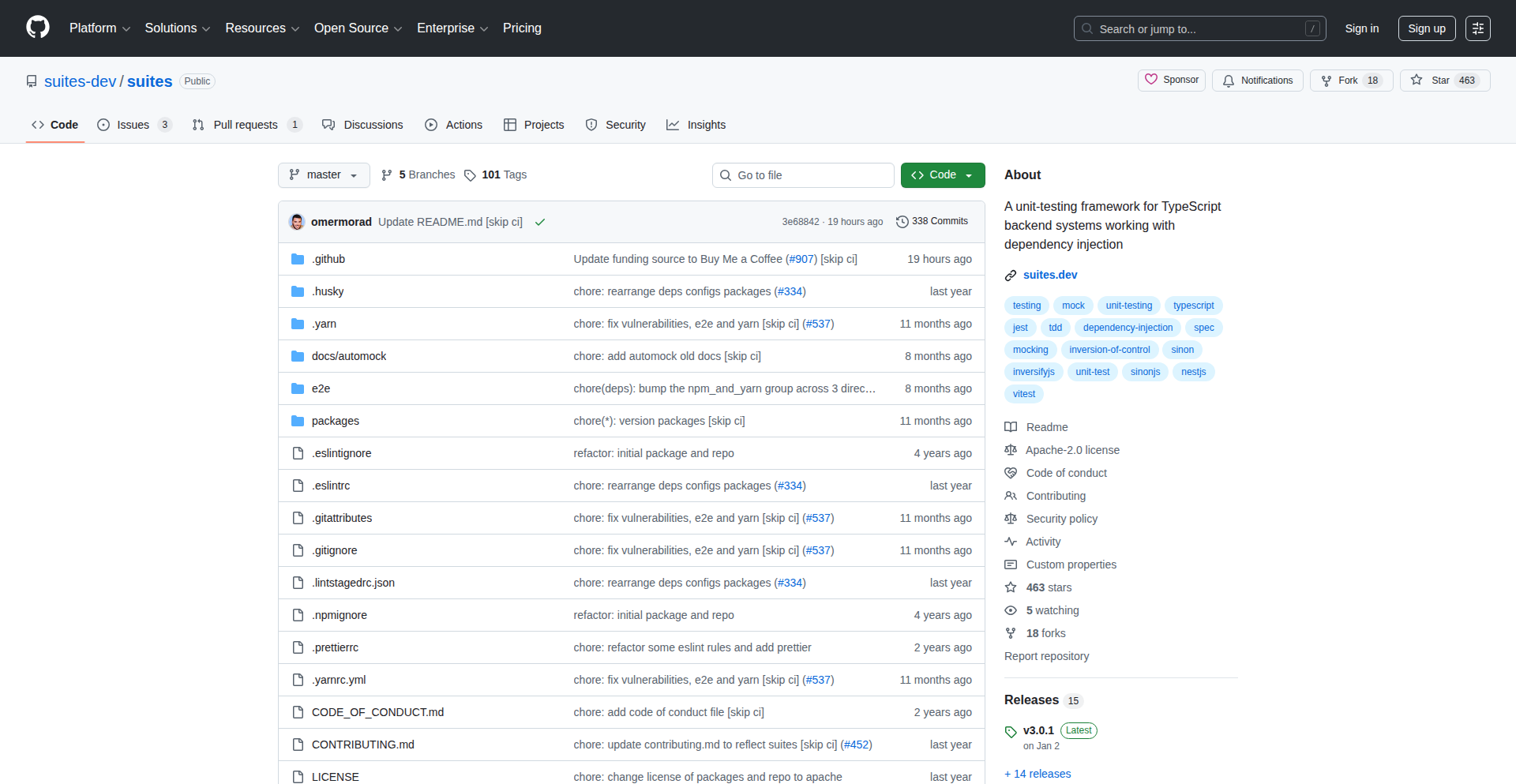

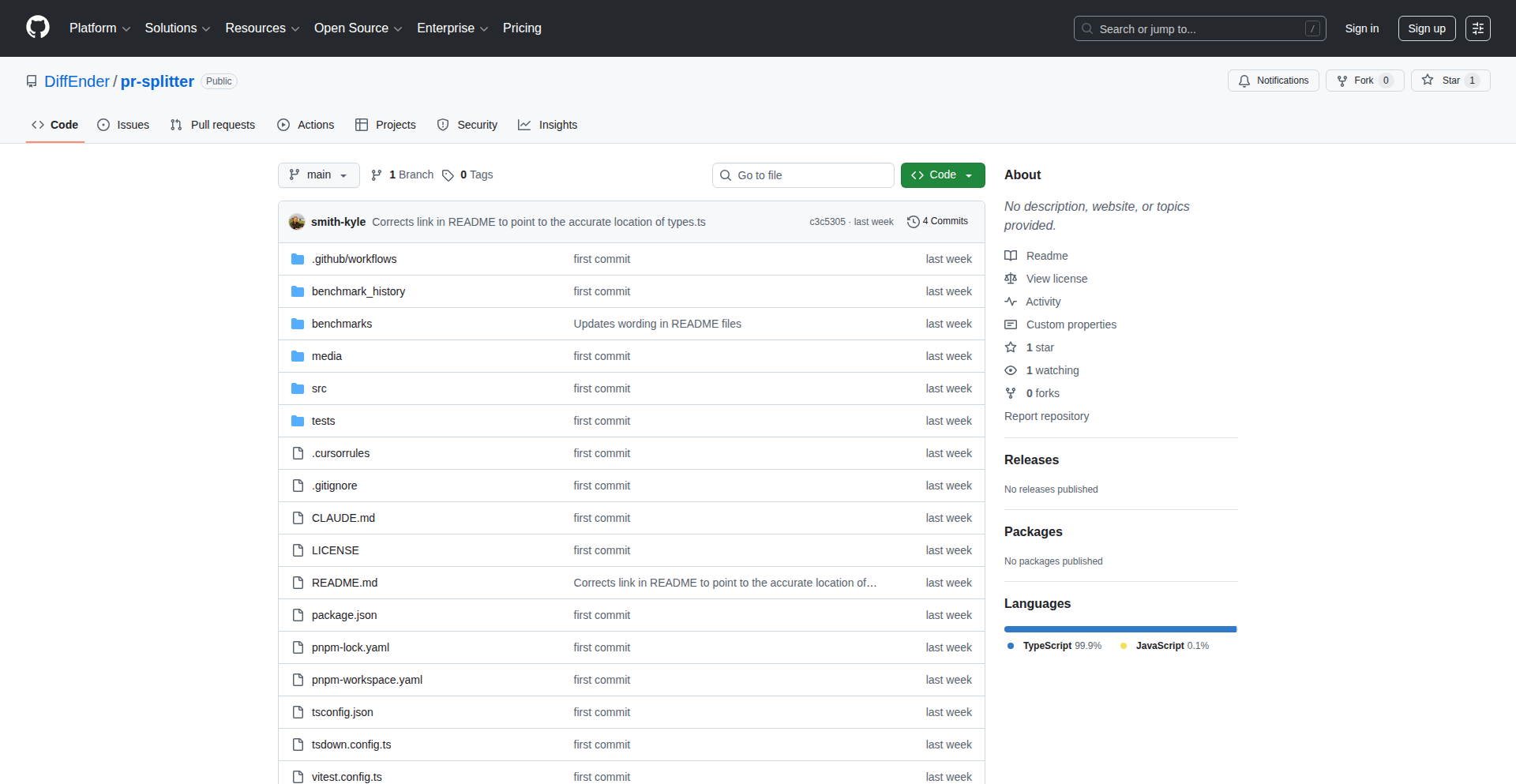

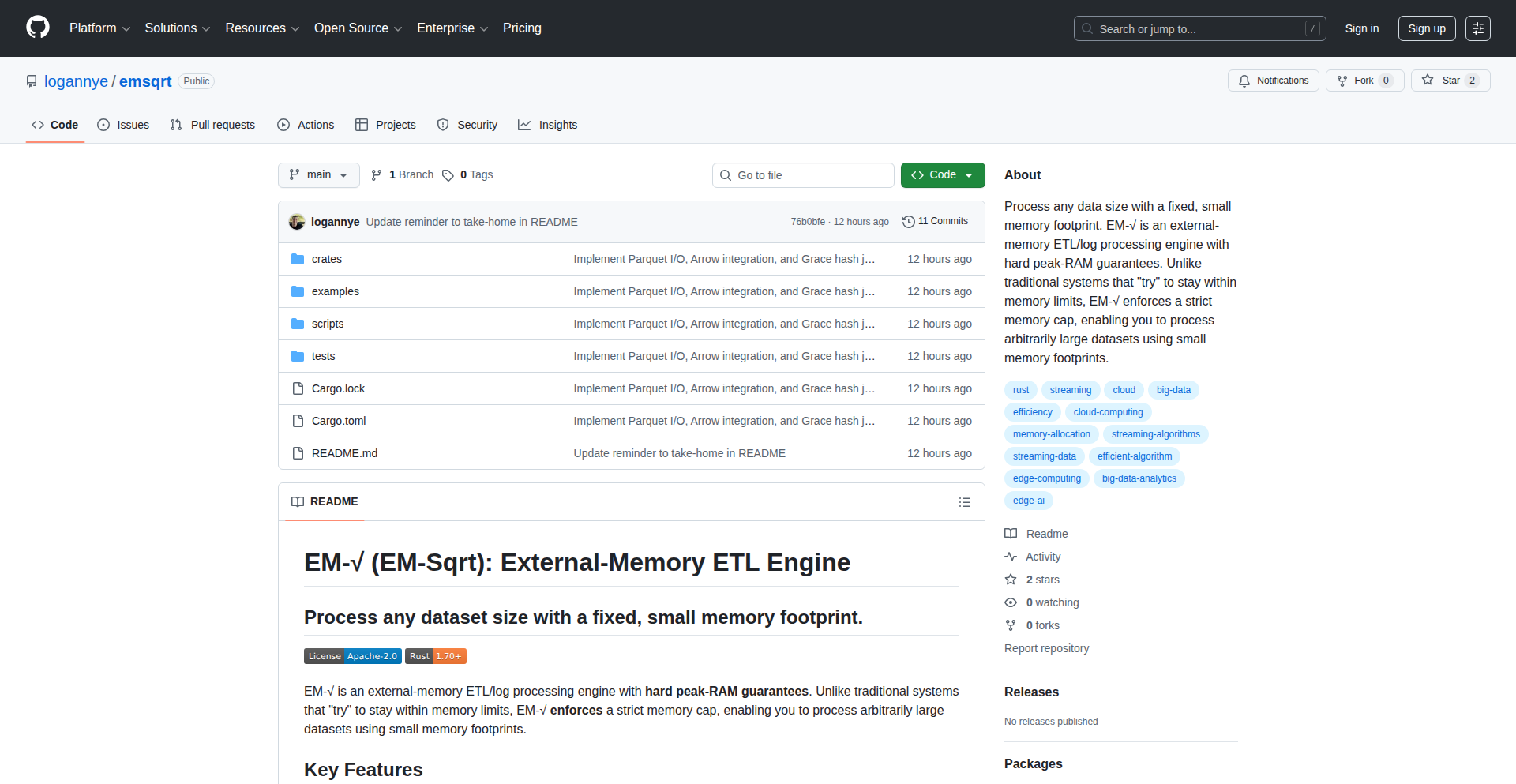

Suites: Declarative DI Testing

Author

omermorad

Description

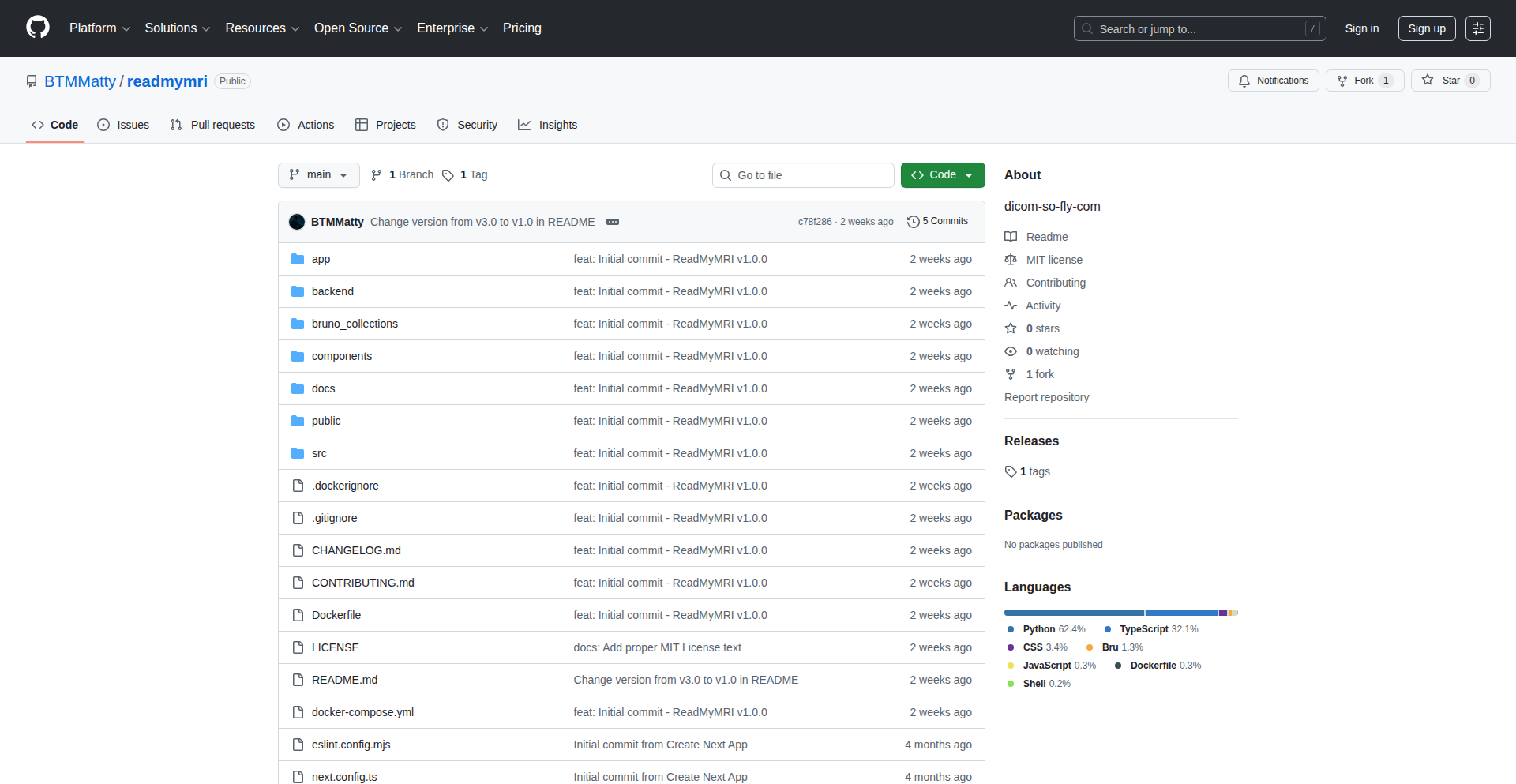

Suites is a unit-testing framework for TypeScript backend systems that leverage dependency injection. It simplifies testing by providing a single, declarative API to create isolated or integrated test environments for your code units, drastically reducing boilerplate and improving test maintainability and clarity. It solves common testing pain points like manual mocking, leaky tests, and confusing error messages.

Popularity

Points 18

Comments 3

What is this product?

Suites is a unit-testing framework designed for TypeScript backend applications that heavily utilize dependency injection (DI). The core innovation lies in its declarative API, which allows developers to define how their code units should be tested with minimal manual setup. Instead of painstakingly mocking each dependency by hand, Suites automatically generates type-safe mocks or allows you to selectively use real dependencies. This means you spend less time writing and maintaining tests and more time building features. It's inspired by the concepts of testing units in isolation (solitary) or with their direct collaborators (sociable), making tests more predictable and easier to understand.

How to use it?

Developers can integrate Suites into their existing TypeScript backend projects. After installing the package, they can use the `TestBed` API within their test files. For example, to test a `UserService` in complete isolation, you would write `TestBed.solitary(UserService).compile()`. If you want to test `UserService` while using the real `EmailService` but mocking other dependencies, you would use `TestBed.sociable(UserService).expose(EmailService).compile()`. Suites seamlessly integrates with popular DI frameworks like NestJS and InversifyJS, and testing libraries like Jest, Sinon, and Vitest, making adoption straightforward within common development workflows.
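To see what Suites removes, here is the hand-written wiring that a solitary and a sociable test of a `UserService` would need without a test bed. This deliberately does not use Suites' API: `UserService`, `EmailService`, `Welcomer`, `Mailer`, `UserRepo`, and the `recorder` helper are hypothetical, and the stubs are plain recording functions rather than auto-generated mocks.

```typescript
// Hypothetical dependencies of the unit under test.
interface UserRepo { save(email: string): number; }
interface Mailer { send(to: string, body: string): void; }
interface Welcomer { welcome(to: string, id: number): void; }

// Real collaborator: kept as-is in a sociable test.
class EmailService implements Welcomer {
  private mailer: Mailer;
  constructor(mailer: Mailer) { this.mailer = mailer; }
  welcome(to: string, id: number): void {
    this.mailer.send(to, `Welcome, user #${id}`);
  }
}

// Unit under test, with constructor injection.
class UserService {
  private repo: UserRepo;
  private welcomer: Welcomer;
  constructor(repo: UserRepo, welcomer: Welcomer) {
    this.repo = repo;
    this.welcomer = welcomer;
  }
  register(address: string): number {
    const id = this.repo.save(address);
    this.welcomer.welcome(address, id);
    return id;
  }
}

// Hand-rolled stub: returns a canned value and records the arguments of every call.
function recorder<A extends unknown[], R>(ret: R) {
  const calls: A[] = [];
  const fn = (...args: A): R => { calls.push(args); return ret; };
  return { fn, calls };
}

// Solitary: every direct dependency of UserService is stubbed by hand.
const saveSpy = recorder<[string], number>(42);
const welcomeSpy = recorder<[string, number], void>(undefined);
const solitary = new UserService({ save: saveSpy.fn }, { welcome: welcomeSpy.fn });

// Sociable: the real EmailService is used; only its own dependency is stubbed.
const sendSpy = recorder<[string, string], void>(undefined);
const sociable = new UserService(
  { save: recorder<[string], number>(7).fn },
  new EmailService({ send: sendSpy.fn }),
);
```

According to the description above, `TestBed.solitary(UserService).compile()` and `TestBed.sociable(UserService).expose(EmailService).compile()` take care of this stub wiring; the sketch only shows how much boilerplate that saves as the number of dependencies grows.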

Product Core Function

· Declarative Test Environment Setup: Automatically generates type-safe mocks for dependencies, eliminating manual mocking and reducing test code. This means less time spent on the tedious task of setting up tests and more focus on verifying behavior.

· Solitary Testing Mode: Allows testing a unit in complete isolation from its dependencies, ensuring that the test focuses solely on the unit's logic. This provides confidence that any test failures are directly related to the unit under test and not external factors.

· Sociable Testing Mode: Enables testing a unit alongside specific real dependencies, allowing for integration testing of closely coupled components. This is useful for understanding how different parts of your system interact and ensuring they work together correctly.

· Type-Safe Mocks: Guarantees that mocks conform to the types of the actual dependencies, preventing runtime errors caused by mismatched implementations. This reduces the chance of subtle bugs slipping through due to incorrect mock configurations.

· DI Framework Adapters (NestJS, InversifyJS): Provides out-of-the-box compatibility with popular dependency injection frameworks, simplifying integration into existing projects. Developers don't need to learn new DI patterns for testing.

· Testing Library Adapters (Jest, Sinon, Vitest): Works seamlessly with commonly used testing tools, allowing developers to leverage their existing knowledge and tooling. This ensures a smooth transition and minimal disruption to development processes.

Product Usage Case

· Testing a controller in a NestJS application: You can use `TestBed.solitary(MyController).compile()` to test the controller's methods in isolation, ensuring its logic is sound. This is useful when you want to verify the controller's response to various inputs without worrying about the underlying services.

· Testing an OrderService that depends on a real PaymentProcessor: Using `TestBed.sociable(OrderService).expose(PaymentProcessor).compile()`, you can test how your `OrderService` interacts with the actual `PaymentProcessor`, while other dependencies are mocked. This scenario helps catch integration issues between these two critical components.

· Ensuring a data access layer unit behaves correctly with mocked external API calls: If your data access layer fetches data from an external API, you can use `TestBed.solitary(MyDataAccessLayer).compile()`, which mocks the HTTP client along with every other dependency, and then configure that mock's responses. This allows you to test the data transformation and error-handling logic of your data access layer independently of network availability or API stability.

· Refactoring a complex service with confidence: When refactoring a large service with many dependencies, Suites can help ensure that your changes don't break existing functionality. By running sociable tests before and after refactoring, you can quickly identify any unintended side effects or regressions.

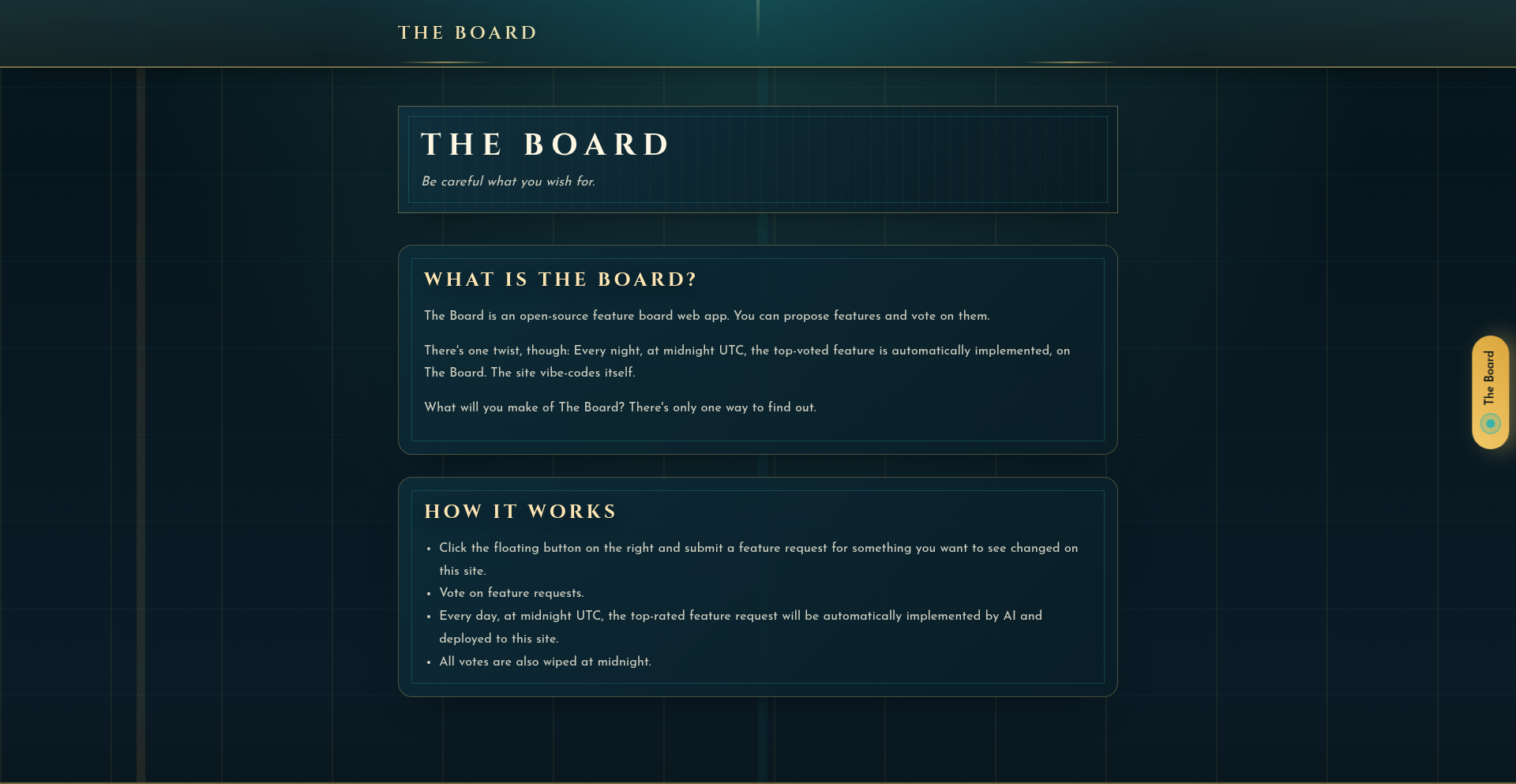

6

VibeCode Weaver

Author

stavros

Description

A website that dynamically generates code from a described 'vibe', offering an unusual approach to rapid prototyping and creative coding exploration. It tackles the challenge of translating abstract concepts into functional code structures, letting developers quickly visualize and iterate on ideas.

Popularity

Points 16

Comments 4

What is this product?

VibeCode Weaver is a web application that translates a conceptual 'vibe' or emotional state into functional code. Instead of explicit programming instructions, users input descriptive terms or select parameters that represent a desired mood or aesthetic. The system then uses an underlying generative algorithm, likely leveraging techniques like natural language processing (NLP) and pattern recognition, to interpret these inputs and output relevant code snippets or even basic application structures. The innovation lies in its ability to bridge the gap between subjective creative intent and objective code, making the initial stages of development more intuitive and less constrained by strict syntax.

How to use it?

Developers can use VibeCode Weaver as an experimental playground for brainstorming and initial prototyping. Imagine you have a general idea for a website's feel – perhaps 'calm,' 'energetic,' or 'minimalist.' You input these vibes into the platform. The Weaver then generates HTML, CSS, and potentially JavaScript code that aims to embody that feeling. This allows for rapid iteration on the visual and functional elements of a project without getting bogged down in writing every line of code from scratch. It's particularly useful for exploring different design directions or quickly scaffolding user interfaces.
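As a deliberately simple illustration of mapping a 'vibe' to code, the TypeScript sketch below uses a hand-written lookup table that turns mood words into CSS custom properties. It is a toy, rule-based stand-in: the vocabulary, token names, and `vibeToCss` function are invented here, and the site itself presumably relies on a generative model rather than fixed rules.

```typescript
// Toy stand-in for the generative step: a fixed table from vibe words to
// design tokens. All words and values are invented for illustration.
interface Tokens {
  bg: string;
  fg: string;
  radius: string;
  transition: string;
}

const DEFAULTS: Tokens = { bg: "#ffffff", fg: "#222222", radius: "4px", transition: "none" };

const VIBES: Record<string, Partial<Tokens>> = {
  calm: { bg: "#eef4f2", fg: "#2f4f4f", transition: "all 600ms ease-in-out" },
  energetic: { bg: "#ffdd33", fg: "#111111", transition: "all 120ms ease-out" },
  minimalist: { bg: "#fafafa", radius: "0" },
  playful: { radius: "16px" },
  retro: { bg: "#000000", fg: "#33ff33", radius: "0" },
};

// Later words override earlier ones; unknown words are ignored.
function vibeToCss(words: string[]): string {
  let tokens: Tokens = { ...DEFAULTS };
  for (const w of words) {
    const patch = VIBES[w.trim().toLowerCase()];
    if (patch) tokens = { ...tokens, ...patch };
  }
  return [
    ":root {",
    `  --bg: ${tokens.bg};`,
    `  --fg: ${tokens.fg};`,
    `  --radius: ${tokens.radius};`,
    `  --transition: ${tokens.transition};`,
    "}",
  ].join("\n");
}

console.log(vibeToCss(["Calm", "minimalist"]));
```

Emitting custom properties rather than finished rules keeps the generated output easy to iterate on: a new set of vibe words only changes the token values, while the component CSS that reads `var(--bg)` and friends stays the same.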

Product Core Function

· Vibe-to-Code Generation: Translates abstract descriptive inputs (e.g., 'serene,' 'playful') into tangible code structures (HTML, CSS, JS). This is useful for quickly generating boilerplate code that aligns with a specific aesthetic or user experience goal.

· Dynamic Code Adaptation: The generated code can be further refined or adapted based on user feedback or additional vibe inputs, allowing for an iterative design process. This saves time by not having to manually rewrite code for minor adjustments.

· Creative Exploration Tool: Provides a novel way for developers to explore unconventional coding approaches and discover new patterns. This fosters creativity and can lead to unexpected and innovative solutions.

· Prototyping Accelerator: Significantly speeds up the initial stages of web development by providing ready-to-use code structures based on conceptual input. This means you can get a functional starting point for your project much faster.

Product Usage Case

· A game developer wants to quickly visualize the UI for a 'nostalgic pixel art' game. They input 'retro,' 'pixel art,' and 'charming' into VibeCode Weaver. The system generates HTML and CSS that creates a pixelated aesthetic and a basic layout, allowing the developer to immediately see how the concept translates visually, saving hours of manual styling.

· A UX designer has a concept for a 'calming meditation app' and wants to see how the UI might feel. They input 'peaceful,' 'minimalist,' and 'soft colors.' VibeCode Weaver generates HTML and CSS that produces a clean interface with gentle color palettes and smooth transitions, giving them a tangible starting point to discuss with the engineering team.

· A front-end developer is experimenting with a new animation style for a portfolio website. Instead of manually writing complex CSS animations, they try describing the desired effect as 'fluid,' 'graceful,' and 'subtle.' VibeCode Weaver produces CSS code that implements such animations, serving as an excellent learning resource and a shortcut to achieving desired visual flair.

7

PACR: Unified Academic & Professional Nexus

Author

anony_matty

Description

PACR is an all-in-one academic ecosystem and professional social network designed to streamline research, collaboration, and career advancement. It innovates by integrating disparate tools into a single platform, addressing the fragmentation of academic and professional life. Think of it as a super-powered LinkedIn meets your university's research portal, but built with developers in mind for efficient knowledge sharing and discovery.

Popularity

Points 16

Comments 1

What is this product?

PACR is a platform that merges academic research management with professional networking. Its core innovation lies in its integrated approach. Instead of juggling separate tools for paper management, citation tracking, project collaboration, and professional connections, PACR offers a unified environment. This means a researcher can seamlessly link their published papers to their professional profile, invite collaborators directly from their network to a shared project space, and discover new opportunities based on their academic output and professional interests. The underlying technology likely involves a robust database for managing diverse data types (publications, projects, profiles, skills) and intelligent algorithms for matching users with relevant content and collaborators. This aims to solve the problem of information silos and inefficient workflows common in academia and professional fields.

How to use it?

Developers can leverage PACR to manage their personal research projects, showcase their technical contributions (like open-source projects, research papers on algorithms, or even code demos), and connect with other developers or researchers working on similar problems. You can use it to track your contributions to academic publications, link your GitHub repositories to your profile, and discover potential collaborators for your next hackathon project or research endeavor. Integration can be achieved through APIs (if available) for syncing project data or through manual input, making it a flexible tool for individual or team use. The value proposition for developers is enhanced visibility of their technical work and easier access to a community of like-minded individuals.

Product Core Function

· Integrated Research Management: Allows users to upload, organize, and cite research papers, projects, and code repositories. This directly helps developers track their intellectual output and ensures all their technical contributions are in one place for easy reference and sharing.

· Collaborative Project Spaces: Provides dedicated areas for teams to work on research or development projects, share documents, and track progress. This is invaluable for fostering collaboration on open-source projects or academic research, making it easier to manage distributed teams and ensure everyone is on the same page.

· Intelligent Networking & Discovery: Uses algorithms to suggest relevant papers, projects, and potential collaborators based on user profiles and activity. For developers, this means discovering new tools, libraries, or even job opportunities that align with their technical skills and research interests, cutting down on manual searching.

· Unified Professional Profile: Combines academic achievements with professional experience and technical skills into a single, comprehensive profile. This allows developers to present a holistic view of their capabilities, attracting potential employers, collaborators, or mentors.

· Citation and Reference Tracking: Automates the process of managing citations and bibliographies for academic and technical documents. This saves developers significant time and effort in academic writing or preparing technical documentation, ensuring accuracy and consistency.

Product Usage Case

· A computer science PhD student can use PACR to manage their publications, link their published papers to their research projects, and invite fellow students to a shared project space for collaborative algorithm development. This simplifies their workflow and makes it easier to demonstrate their research progress to supervisors.

· An open-source developer can use PACR to showcase their contributions to various GitHub projects on their professional profile, connect with other contributors, and discover new projects in their area of expertise. This enhances their visibility within the developer community and opens up new collaboration opportunities.

· A data scientist can use PACR to manage their research papers on machine learning techniques, link them to practical implementations in their project portfolio, and find researchers working on similar datasets or analytical methods. This accelerates their research and helps them stay updated with the latest advancements in the field.

· A researcher in a niche technical field can use PACR to find and connect with other experts globally, form research collaborations, and share findings more effectively through dedicated project spaces. This breaks down geographical barriers and fosters interdisciplinary innovation.

8

Oodle AI Observability Nexus

Author

kirankgollu

Description

Oodle is a novel observability platform that breaks down the silos between metrics, logs, and traces. It unifies these disparate data types into a single, correlated view, drastically improving debugging efficiency during incidents. Inspired by architectures like Snowflake, Oodle separates storage and compute, leveraging S3 for cost-effective, scalable data storage and serverless functions for on-demand processing. This approach results in significantly lower costs, massive scalability, and zero operational overhead, while remaining fully compatible with existing tools like Grafana and OpenSearch.

Popularity

Points 11

Comments 3

What is this product?

Oodle is an observability platform that combines metrics, logs, and traces into one unified view for debugging. The core innovation lies in its architectural separation of storage and compute, similar to cloud data warehouses. All telemetry data (metrics, logs, traces) is stored efficiently on S3 using a custom columnar format. Compute resources are serverless and scale automatically as needed. This design significantly reduces costs and operational burden compared to traditional, monolithic observability solutions. For developers, this means an easier and faster way to find and fix issues because all the relevant information is automatically linked together.
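A columnar layout suits telemetry because a query only has to read the fields it uses. The sketch below pivots row-oriented log records into one array per field, so a filter on `level` scans only that array instead of every full record. It is an in-memory illustration under simplifying assumptions. Oodle's on-S3 format is custom and not documented here, and `to_columns` and `count_where` are hypothetical helpers.

```python
def to_columns(rows: list[dict]) -> dict[str, list]:
    """Pivot row-oriented records into one list per field (a columnar layout).

    Fields missing from a record become None so all columns stay the same length.
    """
    fields = sorted({key for row in rows for key in row})
    columns: dict[str, list] = {f: [] for f in fields}
    for row in rows:
        for f in fields:
            columns[f].append(row.get(f))
    return columns


def count_where(columns: dict[str, list], field_name: str, value) -> int:
    """Count rows where field == value by scanning a single column only."""
    return sum(1 for v in columns.get(field_name, []) if v == value)


logs = [
    {"ts": 1, "service": "api", "level": "error", "msg": "timeout"},
    {"ts": 2, "service": "db", "level": "info", "msg": "ok"},
    {"ts": 3, "service": "api", "level": "error", "msg": "timeout"},
]
cols = to_columns(logs)
print(count_where(cols, "level", "error"))  # 2
```

In a real columnar store, each column would typically also be compressed and stored as its own chunk. That is what lets compute fetch only the columns a query touches from object storage.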

How to use it?

Developers can integrate Oodle with their existing observability stack. It works alongside Grafana and OpenSearch. When an alert fires, Oodle automatically correlates the associated metrics, logs, and traces. For example, if a metric shows a latency spike, Oodle surfaces the related logs and the specific service that caused the problem, all within a single interface. This speeds up incident response by removing the need to switch between tools and piece information together by hand. A live OpenTelemetry demo is available at play.oodle.ai for quick evaluation.
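The correlation step can be pictured as a join on a time window plus trace IDs. This minimal sketch assumes that every span and log line carries a timestamp, a service name, and an OpenTelemetry-style `trace_id`. The record shapes, the 500 ms "slow" threshold, and the `correlate` function are all hypothetical; this is not Oodle's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str
    service: str
    start: float          # seconds since epoch
    duration_ms: float


@dataclass
class LogLine:
    trace_id: Optional[str]   # None for logs emitted outside any request
    service: str
    ts: float
    message: str


def correlate(window_start: float, window_end: float, spans: list[Span],
              logs: list[LogLine], slow_ms: float = 500.0) -> dict:
    """Link an alert window to the slow spans inside it and the logs sharing their trace IDs."""
    slow = [s for s in spans
            if window_start <= s.start <= window_end and s.duration_ms >= slow_ms]
    trace_ids = {s.trace_id for s in slow}
    related = [line for line in logs if line.trace_id in trace_ids]
    by_service: dict[str, int] = {}
    for s in slow:
        by_service[s.service] = by_service.get(s.service, 0) + 1
    return {"slow_spans": slow, "logs": related, "slow_by_service": by_service}


spans = [
    Span("t1", "checkout", 100.0, 900.0),
    Span("t2", "checkout", 101.0, 40.0),
    Span("t3", "payments", 102.0, 1200.0),
    Span("t4", "payments", 500.0, 1500.0),   # slow, but outside the alert window
]
logs = [
    LogLine("t1", "checkout", 100.2, "slow db query"),
    LogLine("t3", "payments", 102.5, "upstream timeout"),
    LogLine(None, "checkout", 100.5, "healthcheck ok"),
]
result = correlate(95.0, 110.0, spans, logs)
print(result["slow_by_service"])  # {'checkout': 1, 'payments': 1}
```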

Product Core Function

· Unified Telemetry Correlation: Automatically links metrics, logs, and traces, enabling developers to pinpoint root causes of issues faster. This reduces debugging time by presenting all related incident data in one place.

· Separated Storage and Compute Architecture: Leverages S3 for cost-effective and scalable storage of all telemetry data, and serverless compute for dynamic scaling. This means lower costs and no need to manage infrastructure, allowing developers to focus on coding.

· Cost Optimization: Claims 3-5x lower costs than traditional observability solutions, thanks to efficient data storage and on-demand compute. This directly reduces operational expenses for organizations.

· Zero Operational Overhead: Eliminates the need for managing and scaling observability infrastructure. Developers don't have to worry about server maintenance or capacity planning for their observability tools.

· Compatibility with Existing Tools: Works seamlessly with popular tools like Grafana and OpenSearch, supporting existing dashboards and queries (e.g., PromQL). This allows for easy adoption without a complete rip-and-replace of existing setups.

Product Usage Case

· Debugging a sudden increase in API latency: When a developer sees a metric showing increased latency, Oodle automatically presents the relevant logs from the affected service and the trace of the requests that experienced the slowdown. This helps quickly identify if the issue is with a specific database query, a downstream service, or the code itself.

· Investigating application errors in a microservices environment: If a service starts returning errors, Oodle correlates the error logs with the metrics showing resource utilization and traces of the requests that failed. This provides a comprehensive view of the problem, helping to understand if it's a resource constraint, a dependency failure, or a bug in the service.

· Proactive issue detection and prevention: By continuously correlating telemetry data, Oodle can highlight subtle patterns that might indicate an impending problem before it impacts users. For instance, a combination of increasing error rates and specific log messages could be flagged as a potential issue requiring attention.

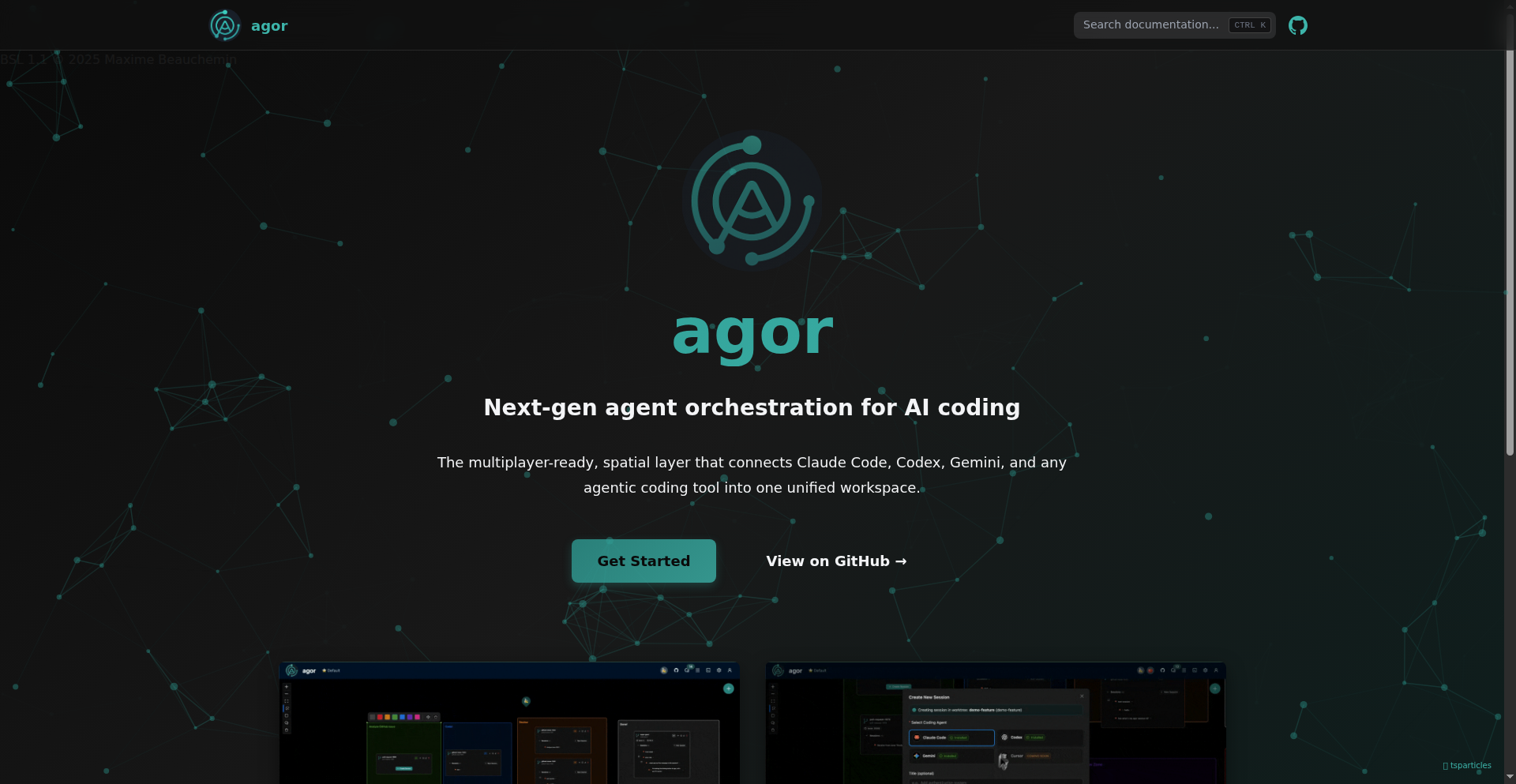

9

Agor: AI-Powered Collaborative Code Design

Author

caravel

Description

Agor is an open-source project that reimagines the coding experience by bringing AI directly into the collaborative design process, akin to Figma for visual design. It tackles the challenge of translating complex AI model concepts into actionable code, offering a visual, interactive environment for developers to brainstorm, prototype, and refine AI-driven functionalities. The core innovation lies in its ability to bridge the gap between high-level AI ideas and concrete code implementation through a visually intuitive interface, fostering faster iteration and better understanding within development teams.

Popularity

Points 7

Comments 3

What is this product?

Agor is an open-source platform that serves as a visual playground for designing AI-powered code. Instead of writing lines of code from scratch for every AI feature, developers can use Agor's intuitive graphical interface to connect different AI models, define their interactions, and specify their behavior. Think of it like building with LEGOs, but for AI functionalities. The innovation here is that it's not just a flowchart; it's a system that understands the underlying AI concepts and translates these visual designs into actual, runnable code. This significantly lowers the barrier to entry for complex AI development and speeds up the prototyping phase.

How to use it?

Developers can integrate Agor into their existing workflows by leveraging its open-source nature. It can be used to visually map out the logic for AI features, such as natural language processing pipelines, image recognition workflows, or recommendation engines. For example, a team working on a chatbot could use Agor to visually connect a speech-to-text model, a natural language understanding module, and a response generation AI. Agor then helps translate this visual blueprint into the necessary code for these components to work together. This makes it ideal for rapid prototyping, brainstorming AI solutions, and documenting complex AI architectures.
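The "visual blueprint" for the chatbot example can be modeled as a directed graph whose nodes run in dependency order. This minimal sketch assumes each node is a plain Python function with a single upstream input, and it uses the standard library's `graphlib` (Python 3.9+) to order the nodes. The stub stages stand in for real speech-to-text, NLU, and generation models; they are hypothetical and not Agor's API.

```python
from graphlib import TopologicalSorter
from typing import Callable, Optional


def speech_to_text(audio: str) -> str:
    # Stub: treat the "audio" as an already-transcribed string.
    return audio.lower()


def understand(text: str) -> dict:
    # Stub NLU: a keyword check stands in for a real intent model.
    return {"intent": "greet" if "hello" in text else "unknown", "text": text}


def respond(nlu: dict) -> str:
    # Stub generator: canned replies keyed on the detected intent.
    return "Hi there!" if nlu["intent"] == "greet" else "Sorry, could you rephrase?"


# node name -> (function, upstream node it consumes, or None for the pipeline input).
# Declared out of order on purpose: the sorter works out the execution order.
NODES: dict[str, tuple[Callable, Optional[str]]] = {
    "respond": (respond, "nlu"),
    "nlu": (understand, "stt"),
    "stt": (speech_to_text, None),
}


def run_pipeline(nodes: dict, graph_input):
    """Run each node after its upstream dependency and return every node's output."""
    deps = {name: ({up} if up else set()) for name, (_, up) in nodes.items()}
    results = {}
    for name in TopologicalSorter(deps).static_order():
        fn, up = nodes[name]
        results[name] = fn(results[up] if up else graph_input)
    return results


print(run_pipeline(NODES, "Hello bot")["respond"])  # Hi there!
```

Keeping the graph as data, rather than hard-coded calls, is what would let a visual editor rearrange nodes without touching the execution logic.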

Product Core Function

· Visual AI Model Orchestration: Allows developers to drag and drop AI models and connect them graphically to define data flow and logic. The value is in simplifying the understanding and implementation of complex AI systems, making it easier to build intricate AI pipelines without deep knowledge of each individual model's code.

· Code Generation from Visual Design: Automatically generates boilerplate code based on the visual representation of the AI workflow. This accelerates development by eliminating repetitive coding tasks and ensures consistency between the design and the implementation.

· Collaborative Design Environment: Provides a shared space for development teams to work on AI designs simultaneously. This fosters better communication and shared understanding of the AI architecture, leading to more cohesive and efficient development.

· AI Model Integration Framework: Offers a flexible way to integrate various pre-trained AI models or custom-built ones. This means developers can leverage existing AI tools and services within their Agor designs, saving time and resources.

· Interactive Prototyping and Simulation: Enables developers to test and iterate on their AI designs within the platform. This provides immediate feedback on the workflow and helps identify potential issues early in the development cycle.
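Code generation from a visual design can be pictured as rendering a graph description into source text. This minimal sketch assumes the design has already been flattened into a linear chain of `(variable_name, function_name)` steps. `generate_source` is a hypothetical helper; a real generator would also handle branching graphs, imports, and validation.

```python
def generate_source(chain: list[tuple[str, str]], func_name: str = "pipeline") -> str:
    """Render a linear chain of (variable_name, function_name) steps as Python source.

    The first step receives the pipeline argument `x`; each later step receives the
    previous step's variable, and the generated function returns the last one.
    """
    if not chain:
        raise ValueError("chain must contain at least one step")
    lines = [f"def {func_name}(x):"]
    prev = "x"
    for var, fn in chain:
        lines.append(f"    {var} = {fn}({prev})")
        prev = var
    lines.append(f"    return {prev}")
    return "\n".join(lines) + "\n"


# Two built-in string methods stand in for model-backed components.
src = generate_source([("text", "str.strip"), ("shout", "str.upper")])
print(src)
namespace: dict = {}
exec(src, namespace)  # only execute generated code whose inputs you control
print(namespace["pipeline"]("  hello  "))  # HELLO
```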

Product Usage Case

· A startup building a personalized content recommendation engine can use Agor to visually design the flow of data from user activity logs, through a feature extraction AI, to a recommendation model, and finally to an output module. This allows them to quickly prototype and validate different recommendation strategies before writing extensive code, accelerating their time-to-market.

· A research team developing a new medical image analysis tool can use Agor to lay out the steps involved, from image preprocessing to AI model inference and result visualization. This visual approach helps them explain their complex workflow to non-technical stakeholders and allows team members with different expertise to contribute to the design and implementation.

· A game development studio can use Agor to design the AI behavior of non-player characters (NPCs) by visually connecting perception modules, decision-making logic, and action execution components. This makes it easier to create more sophisticated and dynamic NPC interactions without getting bogged down in intricate code for each behavior state.

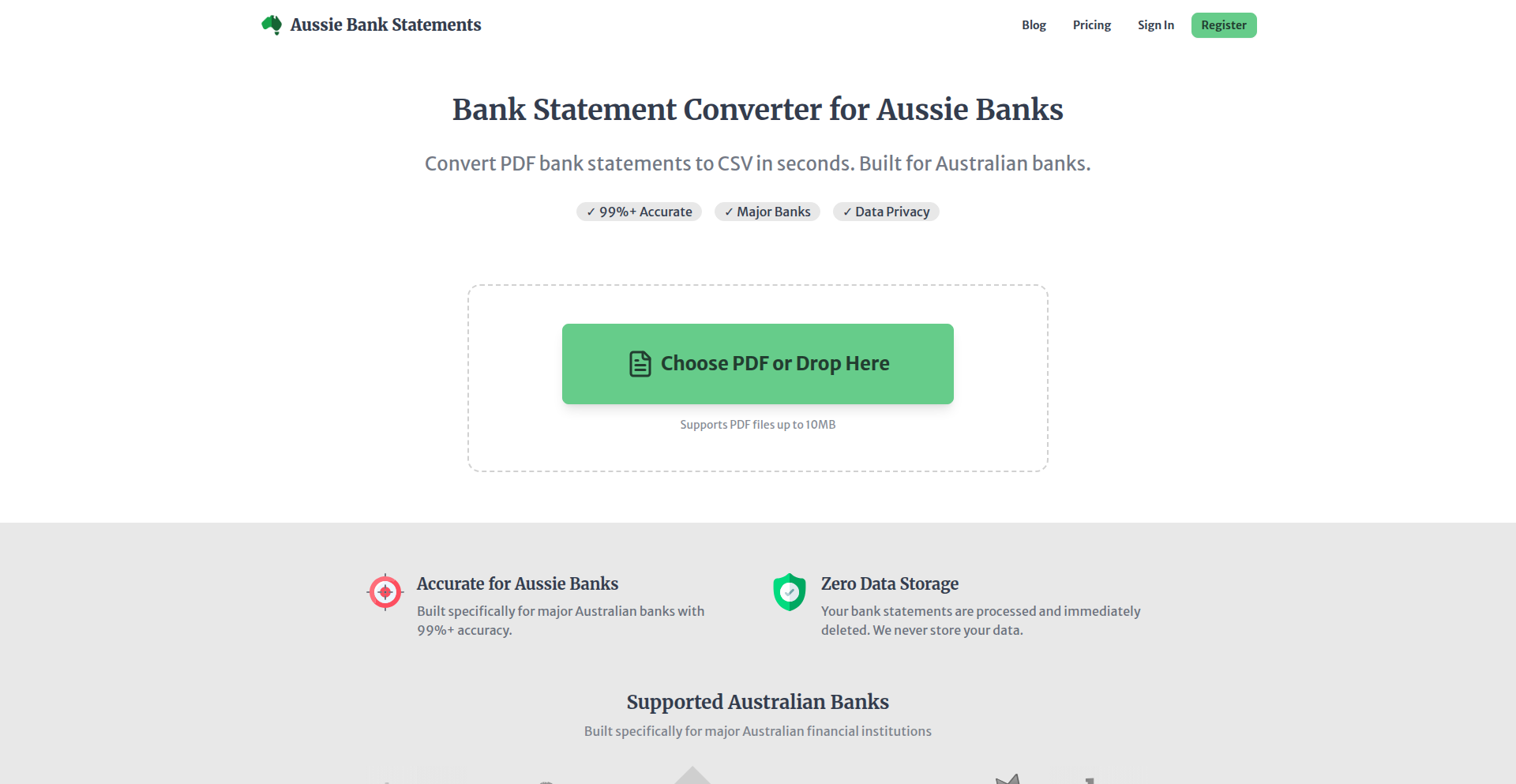

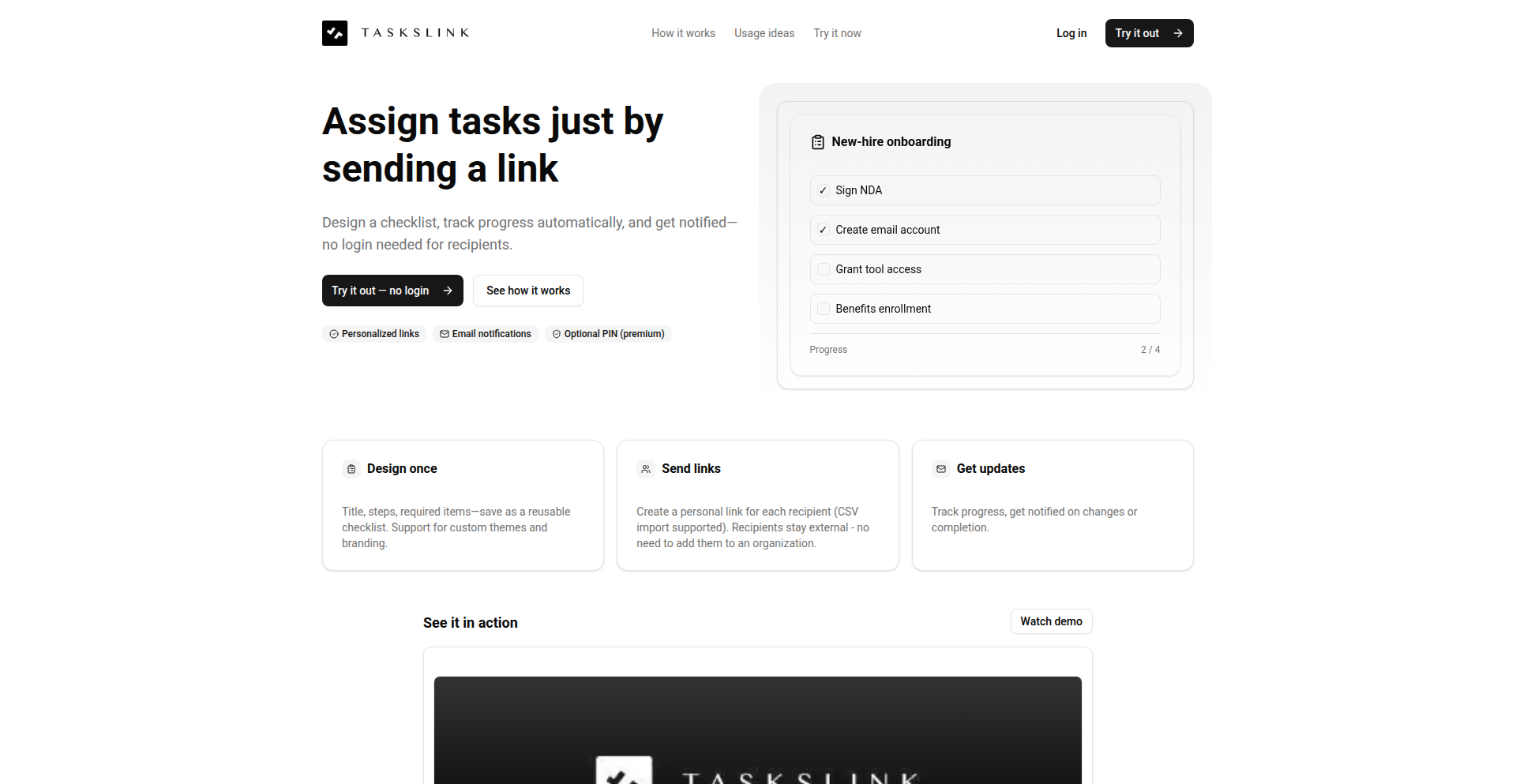

10

AussieBankStatementCSV

Author

matherslabs

Description

A privacy-focused Python and FastAPI backend tool deployed on Google Cloud Run that reliably converts Australian bank PDF statements into clean CSV files. It tackles common PDF parsing issues like drifting columns, multi-line descriptions, and shifting headers, offering a more robust solution than generic converters for specific Australian banks. The core innovation lies in its bank-specific, regex-driven parsing approach and a strong commitment to user privacy, processing files in real-time with no persistent storage.

Popularity

Points 9

Comments 0

What is this product?

This project is a specialized tool designed to transform Australian bank account statements, which are typically provided as PDFs, into easily usable CSV (Comma Separated Values) files. Generic PDF converters often struggle with the unique layouts of bank statements, leading to data errors. AussieBankStatementCSV uses a custom-built approach, employing Python libraries like pdfplumber to extract text and applying specific regular expressions (regex) tailored to the patterns found in Australian bank PDFs. A 'lookahead heuristic' is used to intelligently combine parts of a transaction that might be spread across multiple lines in the PDF. This means that instead of a general-purpose solution that might only work 'okay' for many banks, it focuses on getting it 'perfect' for a select few, ensuring accuracy and reliability. The entire process is built with privacy in mind, running on a secure cloud environment and deleting files immediately after processing, meaning your financial data isn't stored anywhere.
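The regex-plus-lookahead approach can be sketched on text lines that have already been extracted. This is a minimal sketch assuming a hypothetical statement layout: each transaction starts with a `DD Mon` date and ends with an amount and a running balance, continuation lines carry extra description text, and page headers and footers repeat. Real Australian bank layouts differ, and the patterns and function names here are illustrative, not the project's code. The `extract_lines` helper uses pdfplumber's `open`, `pages`, and `extract_text` calls and imports pdfplumber lazily, so the parser runs without it.

```python
import re
from decimal import Decimal

# Hypothetical layout: "DD Mon  <description>  <amount>  <balance>", amounts like -1,500.00.
TXN_RE = re.compile(
    r"^(?P<date>\d{2} [A-Z][a-z]{2})\s+(?P<desc>.+?)\s+"
    r"(?P<amount>-?[\d,]+\.\d{2})\s+(?P<balance>-?[\d,]+\.\d{2})$"
)
# Repeated page headers and footers that must never be merged into a description.
NOISE_RE = re.compile(r"^(Date\s+Description\b|Page \d+ of \d+$)", re.IGNORECASE)


def _money(text: str) -> Decimal:
    return Decimal(text.replace(",", ""))


def parse_transactions(lines: list[str]) -> list[dict]:
    txns = []
    i = 0
    while i < len(lines):
        m = TXN_RE.match(lines[i].strip())
        i += 1
        if not m:
            continue  # header, footer, or text outside any transaction
        parts = [m["desc"]]
        # Lookahead: absorb continuation lines until the next transaction, a blank, or noise.
        while i < len(lines):
            nxt = lines[i].strip()
            if not nxt or TXN_RE.match(nxt) or NOISE_RE.match(nxt):
                break
            parts.append(nxt)
            i += 1
        txns.append({
            "date": m["date"],
            "description": " ".join(parts),
            "amount": _money(m["amount"]),
            "balance": _money(m["balance"]),
        })
    return txns


def extract_lines(pdf_path: str) -> list[str]:
    """Pull raw text lines from every page with pdfplumber (third-party, imported lazily)."""
    import pdfplumber

    lines: list[str] = []
    with pdfplumber.open(pdf_path) as pdf:
        for page in pdf.pages:
            lines.extend((page.extract_text() or "").splitlines())
    return lines
```

The two steps chain as `parse_transactions(extract_lines("statement.pdf"))`, where the file name is a placeholder. Money is kept as `Decimal` to avoid floating-point rounding in balances.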

How to use it?

Developers can integrate AussieBankStatementCSV into their workflows or applications by sending their bank PDF statements to the tool's API endpoint. For instance, an accountant might build a system that automatically pulls statements from client emails and sends them to this converter. A personal finance app developer could offer this as a feature to their users, allowing them to upload their bank PDFs directly. The tool is built with FastAPI, a modern Python web framework, making it straightforward to interact with. The output is a clean CSV file that can be effortlessly imported into spreadsheet software like Excel or Google Sheets, or financial management software such as Xero or MYOB. The key is that it automates the tedious and error-prone manual data entry or the unreliable conversion process.

Product Core Function

· Bank-specific PDF text extraction: Utilizes pdfplumber to accurately pull text from PDF statements, avoiding the complexities and errors of optical character recognition (OCR), which ensures the original text data is preserved for further processing.

· Custom regex pattern matching: Employs tailored regular expressions for each supported bank to reliably identify and extract key financial data like dates, transaction amounts, and descriptions, significantly improving parsing accuracy over generic methods.

· Multi-line transaction merging: Implements a 'lookahead heuristic' to intelligently group parts of a single transaction that might span across multiple lines in the PDF statement, presenting a complete and accurate record.

· Real-time, privacy-preserving processing: Processes uploaded PDF files instantly in a secure cloud environment (Google Cloud Run) with no persistent storage or logging of sensitive data, guaranteeing user privacy and data security.

· Clean CSV output generation: Delivers consistently formatted CSV files that are ready for immediate import into popular accounting and spreadsheet software, streamlining financial data management.
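The CSV-output and no-persistence points can be combined in one small sketch. Rows are serialized into an in-memory buffer and returned as bytes, so no temporary file ever touches disk. It assumes each transaction is a dict with `date`, `description`, `amount`, and `balance` keys; those column names are hypothetical, since the tool's exact CSV layout isn't documented here.

```python
import csv
import io
from decimal import Decimal

FIELDS = ["date", "description", "amount", "balance"]


def to_csv_bytes(transactions: list[dict]) -> bytes:
    """Serialize transactions to UTF-8 CSV entirely in memory (no temp files)."""
    buf = io.StringIO(newline="")
    # The csv module handles quoting, e.g. descriptions that contain commas.
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for txn in transactions:
        writer.writerow(txn)
    return buf.getvalue().encode("utf-8")


rows = [{"date": "03 Jan", "description": "Coffee, Sydney",
         "amount": Decimal("4.50"), "balance": Decimal("1234.56")}]
print(to_csv_bytes(rows).decode("utf-8"))
```

A web handler, such as a FastAPI endpoint, could return these bytes directly with a `text/csv` media type. That wiring is left out here.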

Product Usage Case

· An independent bookkeeper can use this to automate the process of importing client bank statements into their accounting software, saving hours of manual data entry and reducing the risk of transcription errors. Instead of copy-pasting or manually re-typing, they upload the PDF and get a ready-to-use CSV.

· A small business owner who wants to track their expenses more efficiently can use this tool to quickly convert their business bank statements into a format that can be easily analyzed in Google Sheets, allowing them to spot spending trends and manage cash flow better.

· A personal finance app developer can integrate this service to offer a seamless 'connect your bank' experience for users who only receive PDF statements, enhancing the app's utility and user satisfaction without the complexity of handling PDF parsing themselves.

11

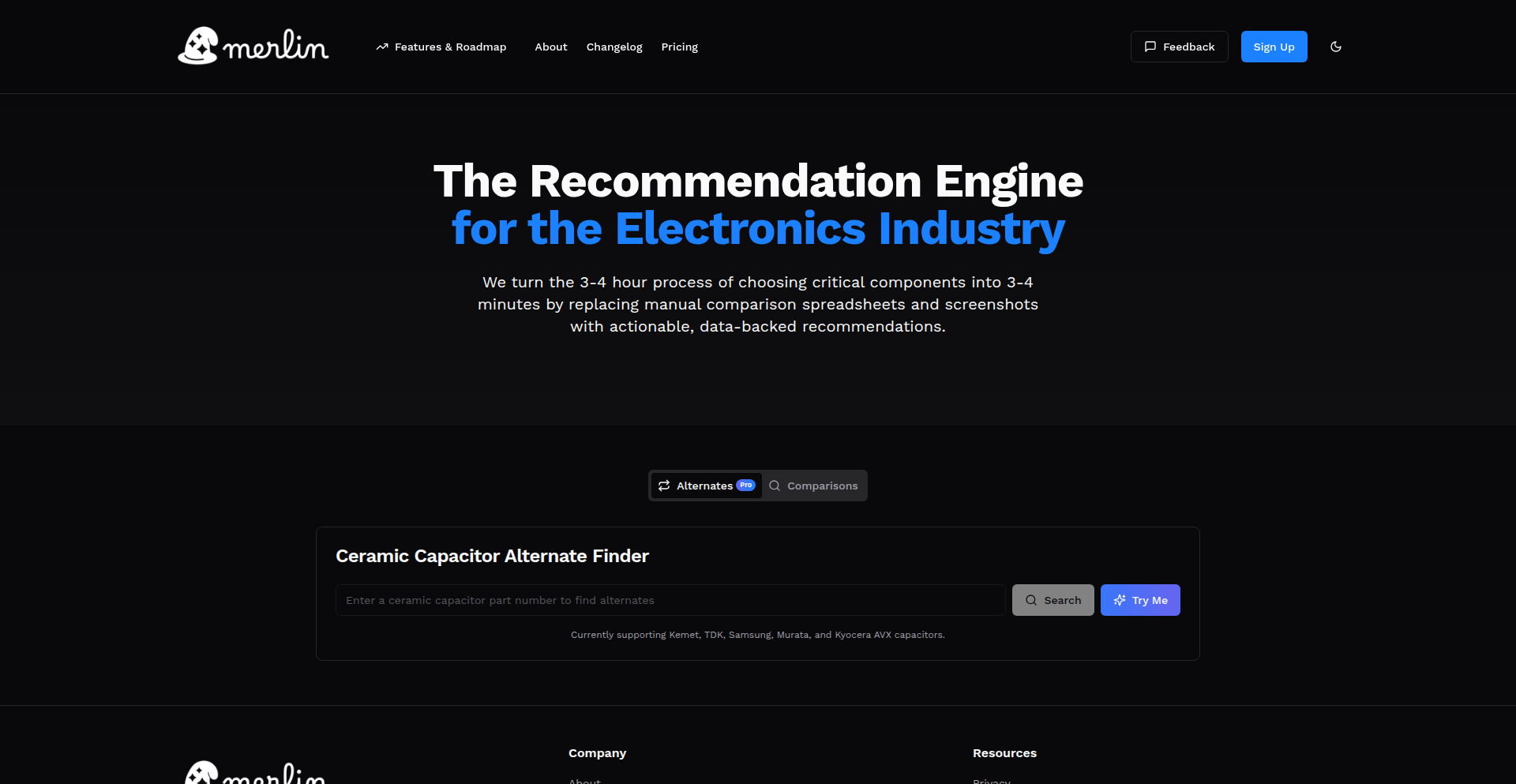

Merlin: ElectraSpec Navigator

Author

arjunven

Description

Merlin is a web application designed to streamline the often tedious process of selecting electrical components, starting with ceramic capacitors. It tackles the pain of juggling multiple datasheets and spreadsheets by offering a unified view of specifications and performance curves, and a smart tool to find equivalent alternative parts. This dramatically speeds up both the design and procurement phases for engineers.

Popularity

Points 8

Comments 0

What is this product?

Merlin is an intelligent platform for electrical component selection. At its core, it solves the problem of fragmented and manual component research. Instead of opening numerous tabs for datasheets, copying data into spreadsheets, and manually cross-referencing, Merlin consolidates all critical electrical and mechanical specifications, along with performance curves, into a single, easily digestible view. Furthermore, its 'alternates' tool leverages a sophisticated matching algorithm to identify equivalent or suitable replacement components based on a given part. This is powered by a backend system that ingests and structures data from various manufacturer datasheets, making complex comparisons effortless.

How to use it?

Developers and electrical engineers can use Merlin by visiting www.get-merlin.com. For comparison, simply input the parameters or part number of a component you're interested in. Merlin will then display a comprehensive side-by-side comparison of its specifications and performance curves against other relevant options. To find alternates, you can select a reference component, and Merlin will suggest equivalent parts from different manufacturers. This can be integrated into the design workflow by using Merlin as the primary tool for initial part selection and for managing component obsolescence or supply chain issues. It's designed to be a quick, web-based solution, eliminating the need for complex local installations.

Product Core Function

· Unified Component Specification View: Consolidates all electrical and mechanical specs, plus performance curves, from multiple datasheets into one screen. This saves engineers time by avoiding the need to open and parse numerous documents, directly answering the question 'What are the key characteristics of this part and how do they compare?'

· Intelligent Alternate Part Finder: Identifies equivalent or drop-in replacement components from different manufacturers based on a reference part. This is crucial when a chosen component is out of stock, has reached End-of-Life (EOL), or a better-priced option becomes available, answering 'What other parts can I use if my first choice isn't feasible?'

· Cross-Manufacturer Comparison: Allows direct comparison of components from various manufacturers side-by-side. This is valuable for finding the best balance of cost, performance, and availability, directly addressing 'Which manufacturer offers the best component for my needs?'

· Data Aggregation and Standardization: Ingests and standardizes data from diverse manufacturer datasheets into a consistent format. This backend innovation is what enables the powerful front-end comparison and search features, providing a reliable single source of truth and solving the problem of inconsistent data formats.
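The standardization and alternate-finding steps can be sketched together for ceramic capacitors. First, capacitance strings from different datasheets are normalized to farads. Then the finder keeps candidates with the same capacitance, package, and dielectric, and at least the reference voltage rating. The criteria are a simplification: a real tool might accept an equal-or-better dielectric or account for DC-bias derating. The part numbers are hypothetical, and Merlin's actual matching rules aren't described in the post.

```python
import math
import re
from dataclasses import dataclass

# SI prefixes seen on capacitor datasheets; both "u" and the micro sign mean 1e-6.
_PREFIX = {"p": 1e-12, "n": 1e-9, "u": 1e-6, "µ": 1e-6, "": 1.0}
_CAP_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*([pnuµ]?)F\s*$")


def parse_capacitance(text: str) -> float:
    """Normalize strings like '4.7uF', '100 nF', or '22pF' to farads."""
    m = _CAP_RE.match(text)
    if not m:
        raise ValueError(f"unrecognized capacitance: {text!r}")
    return float(m.group(1)) * _PREFIX[m.group(2)]


@dataclass
class Capacitor:
    part: str
    capacitance: str  # as printed on the datasheet, e.g. "10uF"
    voltage: float    # rated voltage in volts
    dielectric: str   # e.g. "X7R", "X5R", "C0G"
    package: str      # e.g. "0603"


def find_alternates(ref: Capacitor, candidates: list[Capacitor]) -> list[Capacitor]:
    """Same capacitance, package, and dielectric; voltage rating at least the reference's."""
    target = parse_capacitance(ref.capacitance)
    matches = [
        c for c in candidates
        if c.part != ref.part
        and math.isclose(parse_capacitance(c.capacitance), target, rel_tol=1e-9)
        and c.package == ref.package
        and c.dielectric == ref.dielectric
        and c.voltage >= ref.voltage
    ]
    # Closest voltage rating first, then part number for a stable order.
    return sorted(matches, key=lambda c: (c.voltage, c.part))


ref = Capacitor("A-106", "10uF", 16, "X7R", "0603")
candidates = [
    Capacitor("B-10U25", "10 uF", 25, "X7R", "0603"),
    Capacitor("C-10000N", "10000nF", 16, "X7R", "0603"),
    Capacitor("D-10U10", "10uF", 10, "X7R", "0603"),   # rated voltage too low
    Capacitor("E-10U16", "10uF", 16, "X5R", "0603"),   # different dielectric
]
print([c.part for c in find_alternates(ref, candidates)])  # ['C-10000N', 'B-10U25']
```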

Product Usage Case

· Scenario: An engineer is designing a new power supply circuit and needs a specific ceramic capacitor. They've found a part from Manufacturer A but want to see if Manufacturer B offers a similar part with better temperature stability or a lower price. Merlin allows them to input the Manufacturer A part and instantly see a comparison with equivalent parts from Manufacturer B and others, saving hours of manual datasheet hunting and analysis. The value is faster design iteration and cost optimization.

· Scenario: A product is in production, and a key component has just been announced as End-of-Life (EOL) by its supplier. The engineering team needs to quickly find a replacement part that meets all critical specifications to avoid production halts. Merlin's alternate part finder can be used to rapidly identify suitable, available alternatives from other vendors, minimizing downtime and revenue loss. The value is supply chain resilience and continuity.

· Scenario: If Merlin expands beyond ceramic capacitors to other component categories, an engineer designing a new IoT device could use it to evaluate microcontrollers. They could compare power consumption, memory specs, and peripheral availability across options from different brands in one consolidated view. This would support rapid feature comparison and an informed choice of the microcontroller that best fits the device's requirements and cost targets. The value is accelerated product development and better component selection.

12

AI Agent with File System Access

Author

rendylong

Description

This project explores giving AI agents direct access to file systems. It is presented as a first step toward AI performing digital labor: agents that can read, write, and manage files, which extends AI's role in automated tasks beyond conversation and analysis.

Popularity

Points 1

Comments 6

What is this product?

This project introduces a novel approach to AI development in which AI agents can interact with and manipulate file systems. This is achieved through an abstraction layer that translates AI commands into file system operations, such as creating directories, writing data to files, reading content, and deleting files. The innovation lies in moving AI from purely conversational or data-processing roles to one where it can act directly on the digital environment. Think of it as giving a skilled worker the tools and permissions to manage their workspace, not just process information. This allows AI to maintain state, store results, and even modify its own operational environment, which is crucial for complex, multi-step tasks.

How to use it?

Developers can integrate this into their AI agent frameworks to enable persistent memory and action execution. For instance, an AI agent tasked with data analysis could be given read access to specific data directories, process the information, and then write the summarized findings to a new file. It's about building AI that can 'remember' and 'act' within a digital space. This can be integrated by defining specific API endpoints for the AI to call, which then interface with the file system operations. Essentially, you're telling the AI, 'Here are the tools to work with files, now go do your job.'

Product Core Function

· File Read Operations: Allows AI agents to access and retrieve data from existing files. This is valuable because it enables AI to learn from historical data, configuration files, or user-provided documents, forming the basis for informed decision-making in any task.

· File Write Operations: Empowers AI agents to create new files or append data to existing ones. This is critical for AI to store results, log progress, generate reports, or even create configuration files for subsequent operations, making AI actions persistent.

· Directory Management: Enables AI agents to create, delete, and list directories. This is important for organizing digital workflows, managing temporary storage, and structuring the AI's operational environment, leading to more robust and organized automated processes.

· Permission Control: Provides mechanisms to define what operations and which parts of the file system the AI agent can access. This is essential for security and for ensuring AI acts only within its intended scope, preventing unintended data corruption or access, thereby building trust in AI deployment.
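The abstraction layer and permission control described above can be sketched as a small dispatcher. The agent emits a command dict; the dispatcher checks the operation against an allowlist and refuses any path that resolves outside a sandbox root. This is a minimal sketch under those assumptions, and the command format, the `FileTool` class, and the operation names are hypothetical rather than this project's interface.

```python
import tempfile
from pathlib import Path


class PermissionDenied(Exception):
    """Raised when a command falls outside the agent's allowed scope."""


class FileTool:
    """Translate agent commands like {"op": "write", "path": ..., "content": ...} into file operations."""

    def __init__(self, root: str, allowed_ops: set[str]):
        self.root = Path(root).resolve()
        self.allowed_ops = allowed_ops

    def _resolve(self, rel_path: str) -> Path:
        # Resolve '..' and symlinks first, then require the result to stay inside root.
        target = (self.root / rel_path).resolve()
        if target != self.root and self.root not in target.parents:
            raise PermissionDenied(f"path escapes sandbox: {rel_path}")
        return target

    def run(self, cmd: dict):
        op = cmd.get("op")
        if op not in self.allowed_ops:
            raise PermissionDenied(f"operation not allowed: {op}")
        path = self._resolve(cmd.get("path", "."))
        if op == "read":
            return path.read_text(encoding="utf-8")
        if op == "write":
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text(cmd["content"], encoding="utf-8")
            return f"wrote {len(cmd['content'])} characters"
        if op == "list":
            return sorted(p.name for p in path.iterdir())
        if op == "mkdir":
            path.mkdir(parents=True, exist_ok=True)
            return "created"
        if op == "delete":
            if path == self.root:
                raise PermissionDenied("refusing to delete the sandbox root")
            # Only files and empty directories, to keep deletions conservative.
            if path.is_dir():
                path.rmdir()
            else:
                path.unlink()
            return "deleted"
        raise ValueError(f"unsupported operation: {op}")


with tempfile.TemporaryDirectory() as workspace:
    tool = FileTool(workspace, allowed_ops={"read", "write", "list", "mkdir"})
    tool.run({"op": "write", "path": "reports/summary.txt", "content": "3 anomalies found"})
    print(tool.run({"op": "list", "path": "reports"}))  # ['summary.txt']
    try:
        tool.run({"op": "read", "path": "../secrets.txt"})
    except PermissionDenied as err:
        print(err)  # path escapes sandbox: ../secrets.txt
```

Resolving the path before checking it is the key design choice. Checking the raw string would miss `..` segments and symlinks that point outside the sandbox.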

Product Usage Case

· Automated Report Generation: An AI agent can be tasked with gathering data from multiple sources, processing it, and then writing a comprehensive report to a designated file. This solves the problem of manual data aggregation and report writing, freeing up human time.

· Configuration Management for AI Services: An AI agent can dynamically update configuration files for other services based on performance metrics or user feedback, ensuring optimal operation without manual intervention. This addresses the need for adaptive and self-optimizing systems.

· Digital Content Creation and Management: An AI can generate text, code, or even creative content and save it directly to a file, then organize these files into folders. This streamlines content production workflows and allows for AI-driven content platforms.

· Personalized AI Assistants with Memory: An AI assistant can remember user preferences and past interactions by storing data in files, allowing it to provide more tailored and context-aware responses over time. This moves beyond stateless chatbots to truly personalized digital companions.

13

Yorph AI: Your Pocket Data Engineer

Author

areddyfd

Description

Yorph AI is a platform that acts as a personal data engineer. It lets users, initially product managers and analysts, integrate data from various sources, build robust and version-controlled data pipelines, and perform cleaning, analysis, and visualization within a single, intuitive interface. This simplifies complex data operations by abstracting away the need for deep technical expertise.

Popularity

Points 6

Comments 1

What is this product?

Yorph AI is an agentic data platform designed to democratize data management and analysis. At its core, it leverages an 'agentic' approach, meaning it employs intelligent agents that automate and orchestrate complex data engineering tasks. Instead of writing intricate code for data integration, transformation, and analysis, users interact with Yorph AI's interface. The platform handles the underlying complexity, using tools and workflows built with the ADK (Agent Development Kit). This allows for the creation of version-controlled, reliable data workflows, ensuring reproducibility and traceability. The innovation lies in its ability to make advanced data engineering accessible to non-specialists, transforming how businesses extract value from their data.

How to use it?

Developers can start using Yorph AI by signing up for the beta at yorph.ai/login. The primary use case involves connecting to various data sources, either by uploading files directly or syncing with existing cloud services. Once data is integrated, users can define data workflows through a visual or declarative interface. These workflows can include steps for data cleaning (handling missing values, standardizing formats), data transformation (joining tables, aggregating information), and data analysis (calculating metrics, performing statistical analysis). The platform also offers visualization tools to present findings. For developers who want to extend its capabilities or integrate it into other systems, Yorph AI is built using ADK, suggesting potential for custom agent development and API integrations in the future.
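A declarative workflow of this kind can be sketched as a list of step specs interpreted over in-memory rows. This minimal sketch uses hypothetical step types (`load`, `fill_missing`, `join`, `aggregate`) and represents rows as plain dicts. Yorph AI builds its workflows with agents on ADK, and nothing here reflects its actual spec format. The example counts distinct active users per feature, similar to the product-manager use case described for this product.

```python
from collections import defaultdict


def run_workflow(steps: list[dict], tables: dict[str, list[dict]]) -> list[dict]:
    """Interpret a list of declarative step specs over in-memory tables."""
    rows: list[dict] = []
    for step in steps:
        kind = step["type"]
        if kind == "load":
            rows = [dict(r) for r in tables[step["table"]]]  # copy; sources stay untouched
        elif kind == "fill_missing":
            col, default = step["column"], step["value"]
            for r in rows:
                if r.get(col) is None:
                    r[col] = default
        elif kind == "join":
            # Inner join against a lookup table assumed to have unique keys.
            key = step["on"]
            lookup = {r[key]: r for r in tables[step["table"]]}
            rows = [{**r, **lookup[r[key]]} for r in rows if r.get(key) in lookup]
        elif kind == "aggregate":
            # Count distinct values of one column per group.
            groups: dict = defaultdict(set)
            for r in rows:
                groups[r[step["group_by"]]].add(r[step["distinct"]])
            rows = [{step["group_by"]: k, "count": len(v)} for k, v in sorted(groups.items())]
        else:
            raise ValueError(f"unknown step type: {kind}")
    return rows


tables = {
    "events": [
        {"user": "u1", "feature_id": 1},
        {"user": "u2", "feature_id": 1},
        {"user": "u1", "feature_id": 2},
        {"user": "u1", "feature_id": 1},     # repeat activity, counted once
        {"user": "u3", "feature_id": None},  # missing value to clean
    ],
    "features": [
        {"feature_id": 0, "feature": "unknown"},
        {"feature_id": 1, "feature": "search"},
        {"feature_id": 2, "feature": "export"},
    ],
}
steps = [
    {"type": "load", "table": "events"},
    {"type": "fill_missing", "column": "feature_id", "value": 0},
    {"type": "join", "table": "features", "on": "feature_id"},
    {"type": "aggregate", "group_by": "feature", "distinct": "user"},
]
print(run_workflow(steps, tables))
```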

Product Core Function

· Data Source Integration: Connects to multiple data sources (upload/sync) to consolidate information, reducing manual data collection and making all your relevant data accessible in one place.

· Version-Controlled Data Workflows: Allows users to build and manage data pipelines with versioning, ensuring that changes are tracked and that workflows are reliable and reproducible, preventing data errors and facilitating collaboration.

· Data Cleaning and Transformation: Automates the process of preparing data for analysis, handling issues like missing values and inconsistencies, which saves significant time and effort in making data usable.

· Data Analysis and Visualization: Provides tools to perform analytical operations and create visual representations of data, enabling users to derive insights and communicate findings effectively without needing specialized analytics software.

· Semantic Layer Creation (Upcoming): Will enable users to define business-oriented terms and relationships on top of raw data, making it easier to understand and query data from a business perspective, bridging the gap between technical data and business understanding.
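Version control for a workflow can be approximated by hashing a canonical serialization of its spec. Any change to a step yields a new version ID, while merely reordering dictionary keys does not. This minimal sketch assumes a workflow is a JSON-serializable list of step dicts. `workflow_version` and `WorkflowHistory` are hypothetical and do not represent Yorph AI's mechanism.

```python
import hashlib
import json


def workflow_version(steps: list[dict]) -> str:
    """Return a short, deterministic content hash of a workflow spec."""
    canonical = json.dumps(steps, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


class WorkflowHistory:
    """Append-only record of workflow versions, newest last."""

    def __init__(self):
        self.versions: list[tuple[str, list[dict]]] = []

    def commit(self, steps: list[dict]) -> str:
        version = workflow_version(steps)
        # Skip no-op commits where the spec did not change.
        if not self.versions or self.versions[-1][0] != version:
            self.versions.append((version, json.loads(json.dumps(steps))))  # deep copy
        return version

    def get(self, version: str) -> list[dict]:
        for v, steps in self.versions:
            if v == version:
                return steps
        raise KeyError(version)


history = WorkflowHistory()
v1 = history.commit([{"type": "load", "table": "events"}])
v1_again = history.commit([{"table": "events", "type": "load"}])  # same spec, keys reordered
v2 = history.commit([{"type": "load", "table": "events"},
                     {"type": "fill_missing", "column": "feature_id", "value": 0}])
print(v1 == v1_again, v1 == v2, len(history.versions))  # True False 2
```

Because the ID is derived from content, two users who build the same pipeline independently get the same version, which makes results easy to trace back to an exact spec.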

Product Usage Case

· A product manager needs to understand user engagement across different features. Yorph AI can pull user activity logs from a database, join them with feature usage data from a CRM, clean and aggregate the data to calculate key metrics like daily active users per feature, and then visualize these trends in a dashboard, all without writing SQL or Python.

· A marketing analyst wants to combine campaign performance data from Google Ads and Facebook Ads with website traffic data from Google Analytics. Yorph AI can ingest data from these sources, merge them based on dates and campaign IDs, identify which campaigns are driving the most traffic and conversions, and present this in a clear report, simplifying multi-channel campaign analysis.

· A startup founder needs to quickly analyze sales data from their e-commerce platform to identify top-selling products and customer segments. Yorph AI can ingest a CSV of sales records, automatically identify product categories and customer demographics, and generate charts showing sales performance by product and customer type, providing rapid business intelligence.

14

SMS-Driven Alerter

Author

libiny

Description

Notifikai is a minimalist reminder system that leverages the ubiquity of SMS. It solves the problem of forgetting tasks by allowing users to set reminders and receive them via plain text messages, eliminating the need for apps, logins, or constant internet connectivity. The core innovation lies in its simplicity and reliance on a universal communication channel, making it accessible and incredibly straightforward to use.

Popularity

Points 4

Comments 3

What is this product?

Notifikai is a service that lets you set reminders using only text messages. The technical approach is to receive incoming SMS messages, parse them to determine the reminder request (what to remind you about and when), store this information, and then send you a text message at the specified time. The innovation is in removing the usual friction points of reminder apps: no installation, no account creation, and no special app. It works wherever you can send and receive a text. It's like having a personal, always-available assistant who communicates via SMS.
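The parsing step can be sketched for one narrow, hypothetical command grammar: "Remind me to <task> at <H>[:MM] AM|PM [today|tomorrow]". This minimal sketch assumes messages follow that pattern and that times are in the sender's local time, so it uses naive datetimes. Notifikai's actual parser isn't described and presumably accepts many more phrasings.

```python
import re
from datetime import datetime, timedelta

REMIND_RE = re.compile(
    r"^remind me to (?P<task>.+?) at (?P<hour>\d{1,2})(?::(?P<minute>\d{2}))?\s*"
    r"(?P<ampm>am|pm)(?:\s+(?P<day>today|tomorrow))?\s*\.?$",
    re.IGNORECASE,
)


def parse_reminder(text: str, now: datetime) -> tuple[str, datetime]:
    """Turn 'Remind me to <task> at <H>[:MM] AM|PM [today|tomorrow]' into (task, due time)."""
    m = REMIND_RE.match(text.strip())
    if not m:
        raise ValueError("unrecognized reminder format")
    hour, minute = int(m["hour"]), int(m["minute"] or 0)
    if not (1 <= hour <= 12 and minute < 60):
        raise ValueError("invalid time")
    hour = hour % 12 + (12 if m["ampm"].lower() == "pm" else 0)  # 12 AM -> 0, 12 PM -> 12
    due = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    day = (m["day"] or "").lower()
    if day == "tomorrow":
        due += timedelta(days=1)
    elif due <= now:
        if day == "today":
            raise ValueError("that time has already passed today")
        due += timedelta(days=1)  # no day given: assume the next occurrence
    return m["task"], due


now = datetime(2025, 11, 4, 10, 0)
print(parse_reminder("Remind me to call Mom at 3 PM tomorrow.", now))
# ('call Mom', datetime.datetime(2025, 11, 5, 15, 0))
```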

How to use it?

Developers can integrate Notifikai's core concept into their own applications or workflows. Imagine a backend service that needs to notify users of events but doesn't want to rely on push notifications or email. Notifikai's approach can be adapted by using an SMS gateway API (like Twilio, Vonage, etc.) to receive incoming messages and schedule outgoing ones. A developer could build a simple script that listens for specific SMS commands, processes them, and uses the SMS API to send back confirmations or alerts. This is particularly useful for IoT devices that can send SMS, or for systems where users might be in areas with poor internet but good cellular service.

Product Core Function

· SMS-based reminder creation: Users send a text like 'Remind me to call Mom at 3 PM tomorrow.' The system parses this, understands the intent and timing, and schedules a future SMS. This is valuable because it bypasses app installation and login processes, making it immediately usable for anyone with a phone.

· SMS-based reminder delivery: At the scheduled time, the system sends a simple text message to the user, for example 'Call Mom.' This core function delivers reminders through a channel that is almost universally accessible and always on.

· Recurring reminders via SMS: Users can set up reminders that repeat daily, weekly, etc., by sending specific SMS commands. The system interprets these commands and schedules future reminders accordingly. This adds a layer of convenience for routine tasks without requiring complex app settings.

· Reminder management via SMS replies: Users can interact with their reminders by replying to the SMS messages received. For example, 'Cancel this reminder' or 'Reschedule for tomorrow.' This allows for dynamic management of reminders through simple text interactions, enhancing usability.

· Offline-friendly reminders: Because it relies on SMS, Notifikai works even when the user's phone has no internet access, as long as cellular service is available (the Notifikai service itself still needs its connection to the SMS network). This is a significant reliability advantage in diverse environments.
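Recurring reminders and cancel-by-reply need a scheduler behind them. Here is a minimal in-memory sketch in Python using a min-heap ordered by due time, with lazy deletion for cancellations. `ReminderStore` and its methods are hypothetical; a real service would persist reminders in a database and map replies such as 'Cancel this reminder' to a reminder ID.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Set, Tuple


@dataclass(order=True)
class Reminder:
    due: datetime
    rid: int  # unique ID; also breaks ties between reminders due at the same time
    phone: str = field(compare=False)
    text: str = field(compare=False)
    every: Optional[timedelta] = field(default=None, compare=False)  # None = one-off


class ReminderStore:
    def __init__(self) -> None:
        self._heap: List[Reminder] = []
        self._cancelled: Set[int] = set()
        self._ids = itertools.count(1)

    def add(self, phone: str, text: str, due: datetime,
            every: Optional[timedelta] = None) -> int:
        if every is not None and every <= timedelta(0):
            raise ValueError("recurrence interval must be positive")
        reminder = Reminder(due, next(self._ids), phone, text, every)
        heapq.heappush(self._heap, reminder)
        return reminder.rid

    def cancel(self, rid: int) -> None:
        # Lazy deletion: just mark it; pop_due drops it when it reaches the top.
        self._cancelled.add(rid)

    def pop_due(self, now: datetime) -> List[Tuple[str, str]]:
        """Return (phone, text) pairs due by `now`, re-queueing recurring reminders."""
        to_send: List[Tuple[str, str]] = []
        while self._heap and self._heap[0].due <= now:
            r = heapq.heappop(self._heap)
            if r.rid in self._cancelled:
                continue
            to_send.append((r.phone, r.text))
            if r.every is not None:
                nxt = r.due + r.every
                while nxt <= now:  # skip occurrences missed while the job was down
                    nxt += r.every
                heapq.heappush(self._heap, Reminder(nxt, r.rid, r.phone, r.text, r.every))
        return to_send
```

A periodic job (say, once a minute) would call `pop_due` and hand each pair to the SMS gateway. With this design, missed occurrences of a recurring reminder are skipped rather than sent in a burst.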

Product Usage Case

· A field technician working in a remote area with no internet can set a reminder to check a specific piece of equipment at a certain time by sending a text. They will then receive an SMS alert at the appointed time, ensuring the task is completed without needing a data connection.

· A small business owner who needs to remember to call clients at specific times throughout the day can send SMS commands to schedule these calls. They receive an SMS notification just before each call, helping them stay organized and productive without needing to constantly monitor a calendar app on their phone.

· Developers building an IoT system for agricultural monitoring could integrate a feature where sensors can send SMS alerts for critical conditions (e.g., 'Low water level in Sector 3'). This allows for immediate notifications to be sent to farmers via SMS, even if they are away from their computers or in areas with limited connectivity.

· A user who wants to consistently take medication every morning can set up a recurring SMS reminder. The system sends them a simple text each day, acting as a gentle nudge to take their pills and helping them stick to their regimen with minimal friction.

15

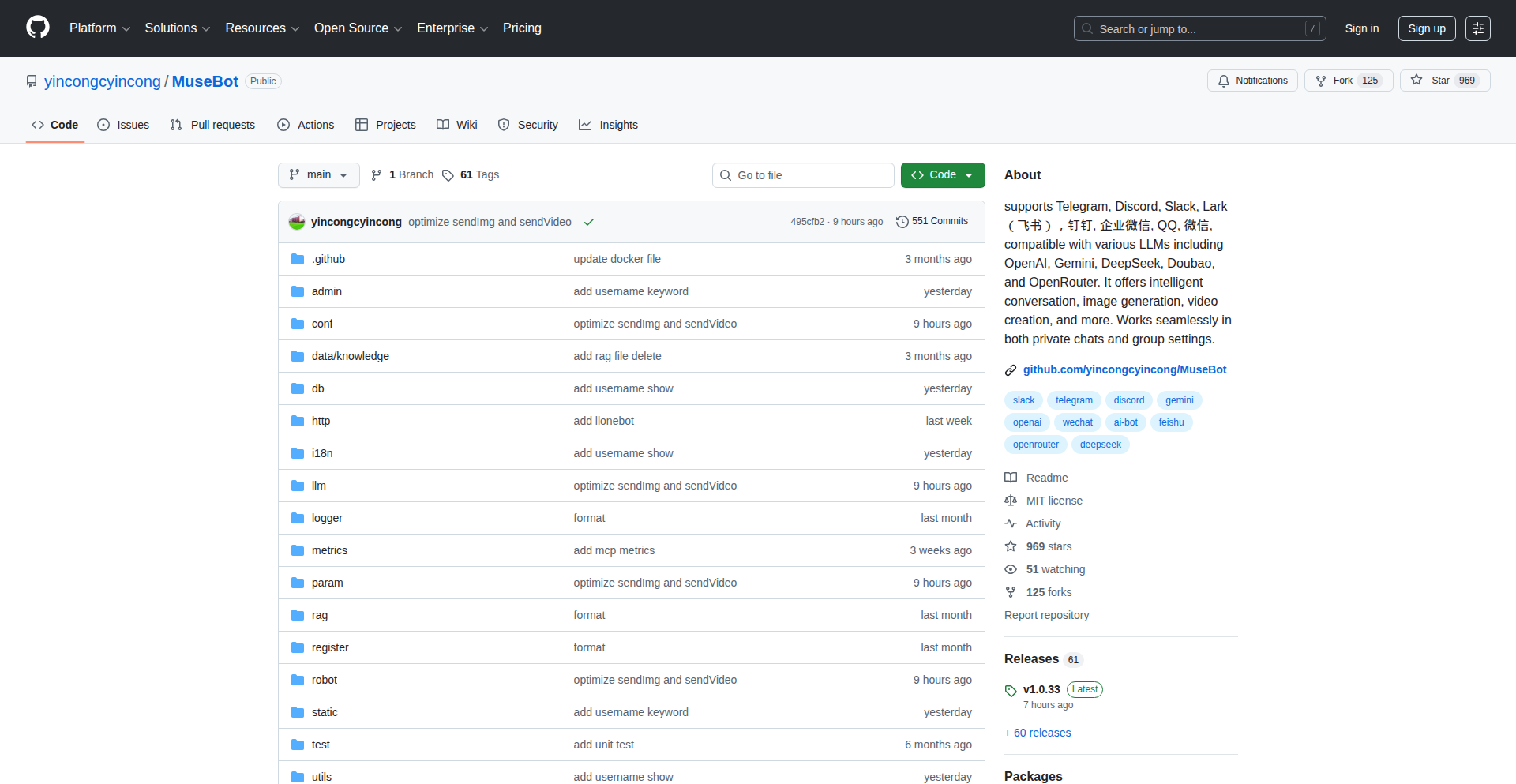

MuseBot: AI Content Fusion Bot

Author

yincong0822

Description

MuseBot is an open-source Golang-based Telegram bot that transforms your chat conversations into various forms of AI-generated content, including text, audio, photos, and videos. It leverages AI models to understand your chat context and creatively produce engaging outputs, making your Telegram experience richer and more versatile.

Popularity

Points 2

Comments 4

What is this product?

MuseBot is an intelligent AI bot designed for Telegram, built using the Go programming language. Its core innovation lies in its ability to process your text-based chat interactions and, using underlying AI models, automatically generate diverse content. Imagine chatting with someone, and the bot can then instantly create a summary of the conversation in text, generate a voice recording of it, design a relevant image, or even produce a short video clip based on what you discussed. This is achieved through integrating with various AI APIs for natural language processing, text-to-speech, image generation, and potentially video synthesis, all orchestrated by a Golang backend for efficient handling of bot requests and AI model interactions.
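To "understand your chat context", a bot has to keep some recent history per chat and turn it into a model prompt. The sketch below shows one way to do that with a bounded buffer. It is written in Python for brevity even though MuseBot itself is a Go project; `ChatContext`, the 50-message window and the prompt layout are assumptions, not MuseBot's code.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Tuple


class ChatContext:
    """Keeps the most recent messages of each chat for use as model context."""

    def __init__(self, max_messages: int = 50) -> None:
        # deque(maxlen=...) silently drops the oldest message once the cap is reached.
        self._history: DefaultDict[int, Deque[Tuple[str, str]]] = defaultdict(
            lambda: deque(maxlen=max_messages)
        )

    def record(self, chat_id: int, author: str, text: str) -> None:
        self._history[chat_id].append((author, text))

    def build_prompt(self, chat_id: int, instruction: str) -> str:
        """Combine the user's instruction with the recent transcript of the chat."""
        transcript = "\n".join(f"{author}: {text}" for author, text in self._history[chat_id])
        return f"{instruction}\n\nConversation:\n{transcript}"
```

A fixed message cap is the simplest policy; a bot sending context to an LLM would more likely cap the transcript by token count.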

How to use it?

Developers can integrate MuseBot into their existing Telegram bot infrastructure or deploy it as a standalone service. The project is open source on GitHub, allowing customization and extension. To use it, you would typically register a bot with Telegram (through @BotFather) to obtain a bot token, then configure MuseBot with that token and the API keys for the AI services it relies on. Users then interact with the bot in a Telegram chat, prompting it to generate specific content types based on their conversation. For example, a user could say 'Summarize this chat into a blog post' or 'Create an image representing our discussion.' The bot processes the request, sends the relevant chat data to the appropriate AI model, and returns the generated content to the Telegram chat.
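Routing a request like 'Create an image representing our discussion' to the right model can be as simple as a keyword match. The following Python sketch is a naive stand-in, assuming hypothetical content kinds, keywords and generator stubs; MuseBot may instead use explicit bot commands or let an LLM classify the request.

```python
from typing import Callable, Dict, Sequence, Tuple

# Checked in order; the first matching keyword decides the content kind.
ROUTES: Sequence[Tuple[Tuple[str, ...], str]] = (
    (("image", "picture", "photo", "draw"), "image"),
    (("audio", "voice", "read aloud"), "audio"),
    (("video", "clip"), "video"),
)

# Hypothetical generators; real ones would call an LLM, a TTS API, an image model, etc.
GENERATORS: Dict[str, Callable[[str], str]] = {
    "text": lambda context: f"[text model] {context}",
    "image": lambda context: f"[image model] {context}",
    "audio": lambda context: f"[text-to-speech] {context}",
    "video": lambda context: f"[video model] {context}",
}


def classify_request(message: str) -> str:
    """Pick a content kind by plain substring matching on the lowercased request."""
    lowered = message.lower()
    for keywords, kind in ROUTES:
        if any(keyword in lowered for keyword in keywords):
            return kind
    return "text"  # default: summaries, blog posts, stories


def handle_request(message: str, chat_context: str) -> str:
    return GENERATORS[classify_request(message)](chat_context)
```

Plain substring matching is deliberately crude (for example, "withdraw" contains "draw"), which is one reason a real bot might prefer slash commands or model-based intent detection.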

Product Core Function

· AI-powered text generation: Transforms chat context into written content like summaries, stories, or articles, providing a quick way to document or expand on discussions.

· Text-to-audio synthesis: Converts chat content into spoken audio, allowing for audio summaries or narrated versions of conversations, useful for accessibility or on-the-go consumption.

· AI image generation: Creates visual representations of chat topics, adding a creative and engaging dimension to your communication by visualizing abstract concepts or descriptions.

· AI video generation: (Potentially) Generates short video clips based on chat themes, offering a novel way to present conversation highlights or create dynamic content.

· Seamless Telegram integration: Provides a user-friendly interface within Telegram, making advanced AI content creation accessible to everyday users without complex setups.

· Golang backend for efficiency: Built with Go, ensuring fast response times and robust handling of bot operations and AI model calls, leading to a smooth user experience.

Product Usage Case

· A content creator can use MuseBot to instantly generate blog post drafts from their brainstorming sessions in a group chat, saving significant writing time.

· A student group can use the bot to get an audio summary of their study group discussions, making the material easier to review later, especially when they can't read a screen, such as while commuting.

· A marketing team can prompt MuseBot to create an image representing a new product idea discussed in their chat, providing immediate visual feedback for brainstorming.

· A social media manager can leverage MuseBot to generate short, engaging video snippets from key conversation points to share across platforms, increasing content variety.

· A developer experimenting with AI capabilities can fork MuseBot on GitHub, integrate new AI models, or customize its prompt engineering for unique content generation tasks.

16

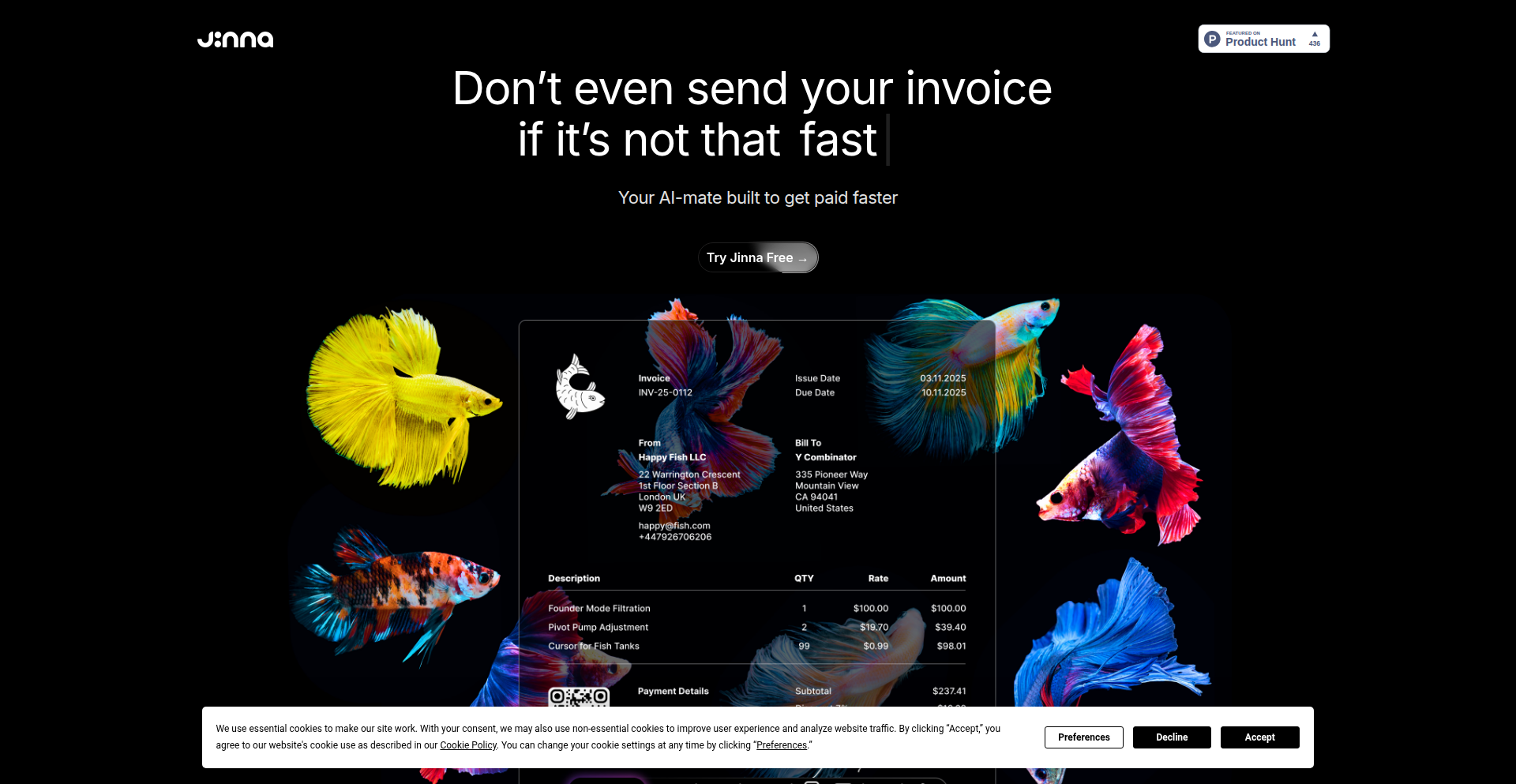

Jinna AI-Invoice Bot

Author

nikitaeverywher

Description

Jinna is an AI-powered invoicing assistant designed to significantly speed up the payment process for freelancers and small businesses. It leverages natural language processing and automation to allow users to create, send, and track invoices through simple voice commands, text input, or file uploads. The core innovation lies in its ability to abstract away the complexities of traditional invoicing software, making it accessible and efficient for everyone, regardless of their technical expertise.

Popularity

Points 6

Comments 0

What is this product?

Jinna is an intelligent invoicing system that acts like a virtual assistant for generating and managing invoices. Instead of navigating complex software, you can simply tell Jinna what you need – like 'create an invoice for client X for project Y, with a due date of Z.' It understands your instructions, pulls relevant data, and generates a professional invoice. The 'AI' part means it's smart enough to understand your spoken or written requests, and the 'automation' means it handles sending and follow-ups without you lifting a finger. So, what's the value for you? It drastically reduces the time and mental effort spent on administrative tasks, allowing you to focus more on your core work and get paid quicker.
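Whatever the AI front end, a request like this has to end up as structured invoice fields. The Python sketch below uses a single regular expression as a stand-in for the natural-language understanding Jinna describes; the phrasing it accepts, `InvoiceDraft` and `parse_invoice_request` are all hypothetical. Using `Decimal` for money avoids binary floating-point rounding errors.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta
from decimal import Decimal
from typing import Optional

WORD_NUMBERS = {"one": 1, "two": 2, "three": 3, "four": 4}

# Hypothetical phrasing: "invoice for <client> for [the] <item>, total $<amount>, due in <n> days|weeks"
REQUEST_RE = re.compile(
    r"invoice for (?P<client>.+?) for (?:the )?(?P<item>.+?),\s*"
    r"total \$(?P<amount>\d[\d,]*(?:\.\d{2})?),\s*"
    r"due in (?P<n>\d+|one|two|three|four) (?P<unit>days?|weeks?)",
    re.IGNORECASE,
)


@dataclass
class InvoiceDraft:
    client: str
    item: str
    amount: Decimal
    due: date


def parse_invoice_request(text: str, today: date) -> Optional[InvoiceDraft]:
    """Extract invoice fields from one supported phrasing, or return None."""
    m = REQUEST_RE.search(text)
    if m is None:
        return None
    n_raw = m["n"].lower()
    n = int(n_raw) if n_raw.isdigit() else WORD_NUMBERS[n_raw]
    days = n * 7 if m["unit"].lower().startswith("week") else n
    amount = Decimal(m["amount"].replace(",", ""))
    return InvoiceDraft(m["client"], m["item"], amount, today + timedelta(days=days))
```

For example, "Jinna, create an invoice for Acme Corp for the branding project, total $500, due in two weeks." yields client "Acme Corp", item "branding project", amount 500 and a due date 14 days out.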

How to use it?

Developers can integrate Jinna into their workflows through its API or by interacting directly with its conversational interface. For example, a project management tool could use Jinna's API to generate an invoice automatically when a project is marked as complete. A freelancer could create an invoice by chatting with Jinna, providing details such as client name, service rendered, and amount. The Stripe integration enables online payment processing. So, how can you use it? Imagine connecting it to your CRM to invoice clients automatically when a deal closes, or using it from Slack to generate an invoice for a short gig. This makes invoicing an integrated, hassle-free part of your business operations.
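The "invoice when a project is marked complete" integration boils down to a status-change hook. Below is a minimal Python sketch; `Project`, `DraftInvoice` and `on_project_status_changed` are hypothetical names, not Jinna's API. The amount is stored in integer cents because Stripe's API expresses amounts in the currency's smallest unit (cents for USD); everything else here is an assumption.

```python
from dataclasses import dataclass
from decimal import ROUND_HALF_UP, Decimal
from typing import List


@dataclass
class Project:
    client: str
    name: str
    hours: Decimal
    hourly_rate: Decimal
    status: str = "active"


@dataclass
class DraftInvoice:
    client: str
    line_item: str
    amount_cents: int     # integer minor units (cents for USD), as Stripe's API expects
    state: str = "draft"  # held for human review before it is sent


drafts: List[DraftInvoice] = []


def on_project_status_changed(project: Project, new_status: str) -> None:
    """Hypothetical webhook a project-management tool calls on every status change."""
    project.status = new_status
    if new_status != "completed":
        return
    total = (project.hours * project.hourly_rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    drafts.append(DraftInvoice(
        client=project.client,
        line_item=f"{project.name}: {project.hours} h",
        amount_cents=int(total * 100),
    ))
```

Creating a draft rather than sending immediately matches the review-then-send flow described in the agency example below.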

Product Core Function

· Voice and Text Invoice Creation: Allows users to generate invoices using natural language commands or simple text input, reducing manual data entry and accelerating invoice generation. This is valuable because it makes invoicing accessible to everyone and saves significant time.

· File Upload for Invoice Data: Enables users to upload documents (like spreadsheets or other forms) to extract invoice information, providing flexibility and catering to various existing data management systems. This is valuable for migrating existing data or quickly populating invoice details.

· Customizable Invoice Templates: Offers the ability to personalize invoices with logos, media, and branding elements, ensuring a professional appearance and reinforcing brand identity. This is valuable for maintaining a professional image with clients.

· Stripe Payment Integration: Facilitates easy and secure online payments by connecting directly with Stripe, streamlining the payment collection process and improving cash flow. This is valuable because it offers a convenient and trusted way for clients to pay.

· Automated Invoice Sending: Handles the sending of invoices to clients automatically, eliminating the need for manual sending and ensuring timely delivery. This is valuable for ensuring clients receive invoices promptly, reducing delays in payment.

· Configurable Reminder System: Automatically sends follow-up reminders for overdue invoices with customizable timing and tone, improving collection rates and reducing the burden of manual follow-ups. This is valuable for actively chasing payments without constant manual effort.

Product Usage Case

· A freelance graphic designer finishes a project and, instead of logging into invoicing software, simply says to their phone, 'Jinna, create an invoice for Acme Corp for the branding project, total $500, due in two weeks.' Jinna generates and sends the invoice, allowing the designer to immediately move on to their next task.

· A small software development agency uses Jinna's API to integrate with their project management tool. When a project is marked as 'completed' and 'approved,' an invoice is automatically drafted in Jinna, ready for review and sending, saving project managers significant administrative time.

· A consultant who frequently travels uses the file upload feature. They take a photo of a handwritten note detailing services rendered and expenses, upload it to Jinna, and Jinna intelligently extracts the information to create a billable invoice.

· A business owner sets up Jinna to send a polite reminder email three days before an invoice is due and a more direct one if it's a week overdue, all configured with their preferred professional tone, ensuring consistent and effective payment chasing without manual intervention.
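The reminder rules in that last example (three days before the due date, and again a week overdue) can be modelled as day offsets from the due date. This Python sketch assumes a hypothetical rule format of `(days relative to due date, tone)`; it is not Jinna's configuration schema.

```python
from datetime import date, timedelta
from typing import List, Sequence, Tuple

# Hypothetical rules: negative offsets fire before the due date, positive ones after.
DEFAULT_RULES: Sequence[Tuple[int, str]] = ((-3, "polite"), (7, "direct"))


def reminder_schedule(due: date,
                      rules: Sequence[Tuple[int, str]] = DEFAULT_RULES) -> List[Tuple[date, str]]:
    """All reminder dates for one invoice, earliest first."""
    return sorted((due + timedelta(days=offset), tone) for offset, tone in rules)


def reminders_due_today(today: date, due: date, paid: bool,
                        rules: Sequence[Tuple[int, str]] = DEFAULT_RULES) -> List[str]:
    """Tones of the reminders to send today; paid invoices get none."""
    if paid:
        return []
    return [tone for when, tone in reminder_schedule(due, rules) if when == today]
```

A daily job would call `reminders_due_today` for every open invoice and send an email in the returned tone.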

17

SyncChord Weaver

Author

crazycreatives

Description

A real-time, WebSocket-powered platform that connects digital music instruments through synchronized web communication. It lets musicians play and hear each other's contributions with low latency, bridging geographical distances for collaborative music creation.

Popularity

Points 5

Comments 1

What is this product?

SyncChord Weaver is an application that uses real-time WebSockets to connect multiple users, enabling them to play and hear digital music instruments simultaneously. The core innovation lies in synchronizing musical inputs and outputs across different devices and locations, effectively creating a virtual jam session. Imagine a group of musicians, each in their own home, playing a song together as if they were in the same room, with delay kept as low as the network allows. This is achieved through efficient data transmission and processing over WebSockets, which maintain a persistent, two-way communication channel between the server and each client.
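One way to keep such messages small is to send note events rather than audio. The Python sketch below defines a compact JSON message for MIDI-style note events; the field names and the sender timestamp are assumptions about how such a protocol could look, not SyncChord Weaver's actual wire format. MIDI note numbers and velocities both range from 0 to 127.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class NoteEvent:
    player: str
    kind: str      # "note_on" or "note_off"
    note: int      # MIDI note number, 0-127 (60 is middle C)
    velocity: int  # MIDI velocity, 0-127
    sent_ms: int   # sender's clock in milliseconds, useful for measuring latency

    def __post_init__(self) -> None:
        if self.kind not in ("note_on", "note_off"):
            raise ValueError(f"unknown event kind: {self.kind!r}")
        if not (0 <= self.note <= 127 and 0 <= self.velocity <= 127):
            raise ValueError("note and velocity must be in 0-127")


def encode(event: NoteEvent) -> str:
    """Serialize to a compact JSON string, suitable for a WebSocket text frame."""
    return json.dumps(asdict(event), separators=(",", ":"))


def decode(raw: str) -> NoteEvent:
    """Parse and validate an incoming message; raises ValueError or TypeError if malformed."""
    return NoteEvent(**json.loads(raw))
```

Each event is well under 100 bytes, and validating on decode keeps a malformed message from one client from reaching everyone else's synthesizer.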

How to use it?

Developers can integrate SyncChord Weaver into their web applications or use it as a standalone platform. It provides an API for incorporating real-time musical collaboration into existing projects, such as a collaborative songwriting tool, a virtual music school where instructors guide students in real time, or a platform for live, interactive performances. The core usage involves setting up a WebSocket server and connecting client applications that send and receive musical data (such as MIDI notes or audio events), which allows flexible integration into various musical workflows and application types.
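On the server side, the core job is fan-out: every event one player sends is relayed to everyone else in the session. The sketch below models that with one `asyncio.Queue` per participant, so it runs without a network; in a real server, a task per WebSocket connection would drain its queue and write to the socket. `JamRoom` and its methods are hypothetical names, and the assumption that each client plays its own notes locally is ours.

```python
import asyncio
from typing import Dict, Tuple


class JamRoom:
    """Relays each participant's messages to every other participant."""

    def __init__(self) -> None:
        self._outboxes: Dict[str, asyncio.Queue] = {}

    def join(self, player: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._outboxes[player] = queue
        return queue

    def leave(self, player: str) -> None:
        self._outboxes.pop(player, None)

    def publish(self, sender: str, message: str) -> None:
        for player, queue in self._outboxes.items():
            if player != sender:  # assumed: the sender already hears its own note locally
                queue.put_nowait(message)


async def demo() -> Tuple[str, bool]:
    room = JamRoom()
    ana = room.join("ana")
    ben = room.join("ben")
    room.publish("ana", '{"note":60}')
    # ben receives ana's note; ana's own queue stays empty.
    return await ben.get(), ana.empty()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Unbounded queues keep the sketch simple; a production relay would bound them so one slow connection cannot accumulate an ever-growing backlog.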

Product Core Function

· Real-time Music Synchronization: Enables multiple users to play and hear digital instruments concurrently, with minimal latency. The value is in facilitating live, distributed musical collaboration, making remote jamming and practice sessions a reality.

· WebSocket-based Communication: Uses WebSockets for persistent, bidirectional data transfer, so that musical events are transmitted with low latency. This is crucial for maintaining the flow and timing of a musical performance, offering a responsive and immersive experience.

· Instrument Integration: Supports the integration of various digital music instruments, allowing for diverse sonic palettes and creative expression. This means users can bring their preferred virtual instruments into the collaborative environment, expanding creative possibilities.

· Audio Feedback Loop: Provides immediate audio feedback to all participants, allowing them to hear each other's contributions in real-time. This direct auditory connection is fundamental to musical coordination and ensemble playing, fostering a sense of shared performance.