Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-11-03

SagaSu777 2025-11-04

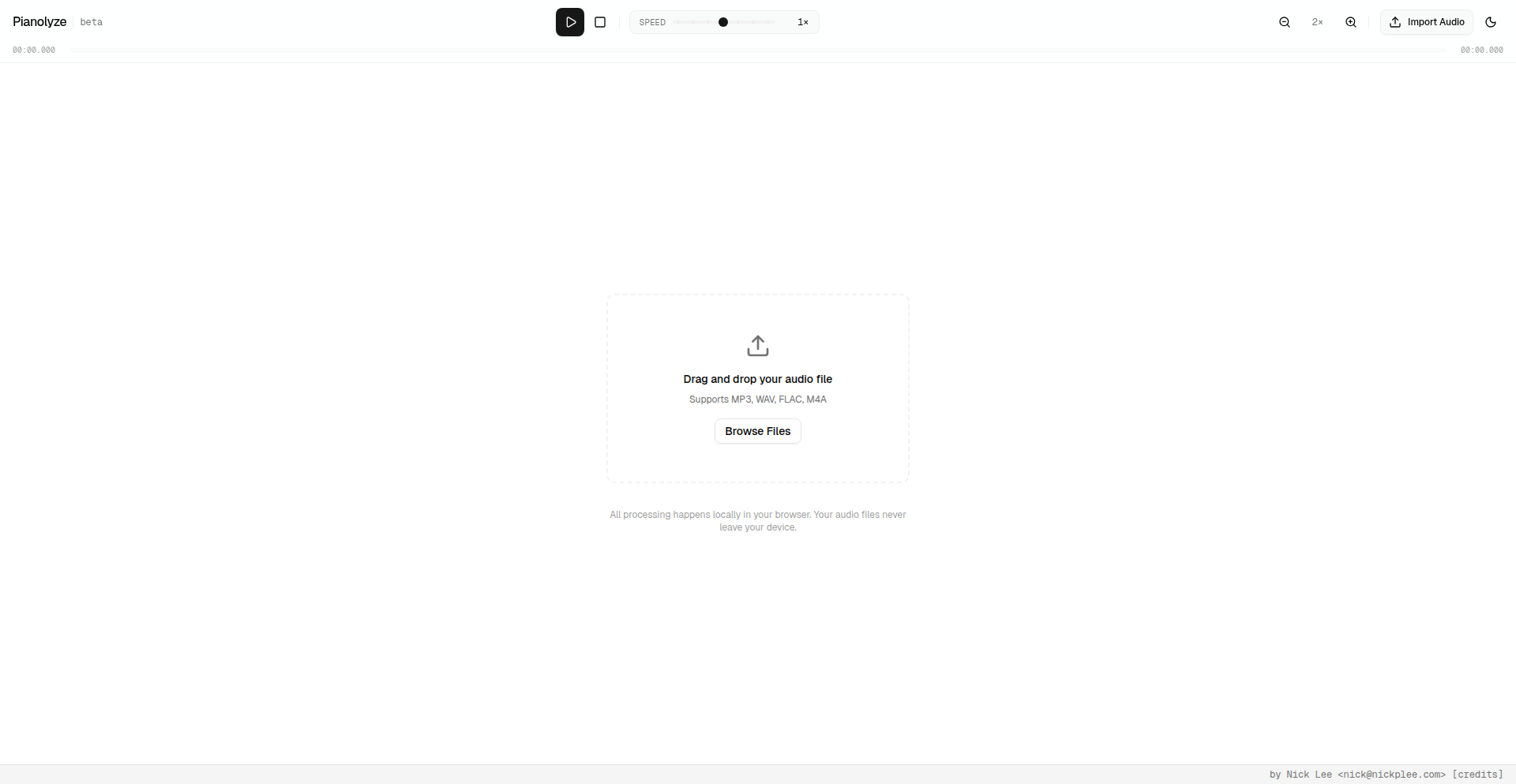

Explore the hottest developer projects on Show HN for 2025-11-03. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

This cycle's Show HN is dominated by projects that use AI to solve complex problems and raise developer productivity. A strong trend is tooling that automates tasks, surfaces deeper insights, or gives users more control. ORUS Builder generates full-stack applications from a single prompt in about 30 seconds, Adnoxy analyzes a brand's 'Brand DNA' to recommend offline ad placements, and AgentML builds deterministic AI agents. Local-first, privacy-centric applications are also prominent, from FinBodhi for personal finance to Russet's on-device AI companions. For developers and entrepreneurs, this points to demand for products that demystify complex processes, support creative workflows, and keep user data secure. The technology choices show a willingness to take on hard problems with modern tools: Rust for high-performance graphics, WebAssembly for browser-based computation, and sophisticated AI orchestration. The hacker spirit of building from scratch to fill unmet needs or explore new possibilities is alive and well.

Today's Hottest Product

Name

Show HN: a Rust ray tracer that runs on any GPU – even in the browser

Highlight

This project showcases the power and performance of Rust for graphics programming by implementing a ray tracer. The key innovation is its ability to run both locally and in the browser via WebAssembly and wgpu, leveraging GPU acceleration for photorealistic rendering. Developers can learn about low-level graphics techniques, Rust's performance-oriented features, and modern web graphics integration.

Popular Categories

AI/ML

Developer Tools

Web Applications

System Tools

Productivity

Popular Keywords

AI

LLM

Rust

WebAssembly

Data Extraction

Productivity

Developer Tools

Code Generation

Security

Technology Trends

AI-driven Automation

Local-First Applications

WebAssembly for Performance

Developer Productivity Tools

Data Management and Extraction

Deterministic AI Agents

Privacy-Focused Solutions

Cross-Platform Development

Low-Code/No-Code Platforms

Project Category Distribution

AI/ML (35%)

Developer Tools (25%)

Web Applications (20%)

System Tools (10%)

Productivity (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | RustRay Weaver | 90 | 25 |

| 2 | Niju: AI-Powered Engineering Candidate Screening | 14 | 42 |

| 3 | FinBodhi: Decentralized Ledger for Personal Finance | 34 | 18 |

| 4 | VocalMatch AI | 40 | 3 |

| 5 | CustomizableIntervalTimer | 23 | 15 |

| 6 | ORUS Builder: Compiler-Integrity AI App Synthesizer | 8 | 13 |

| 7 | Serie: Terminal Commit Graph Visualizer | 14 | 1 |

| 8 | BrandDNA Mapper | 8 | 6 |

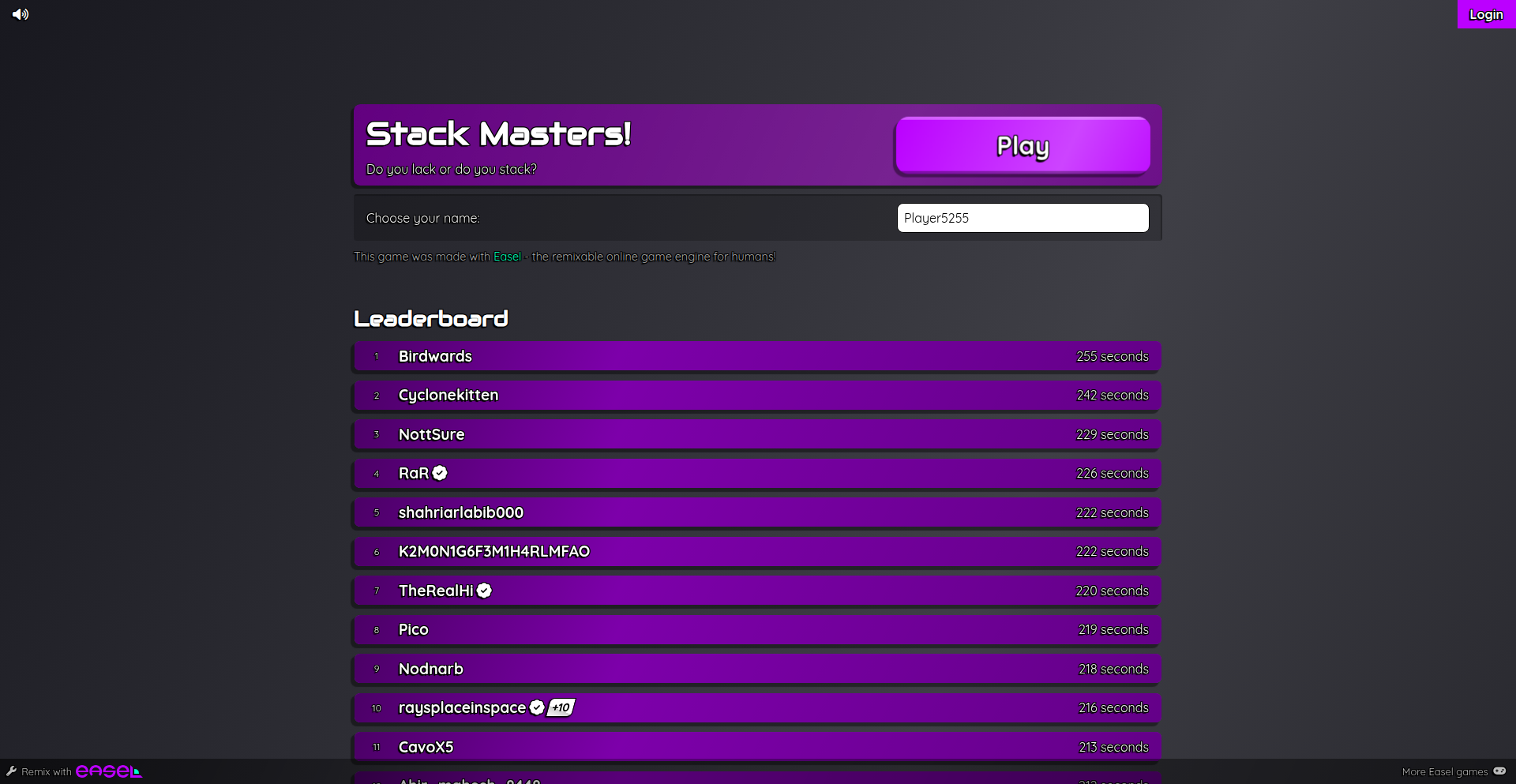

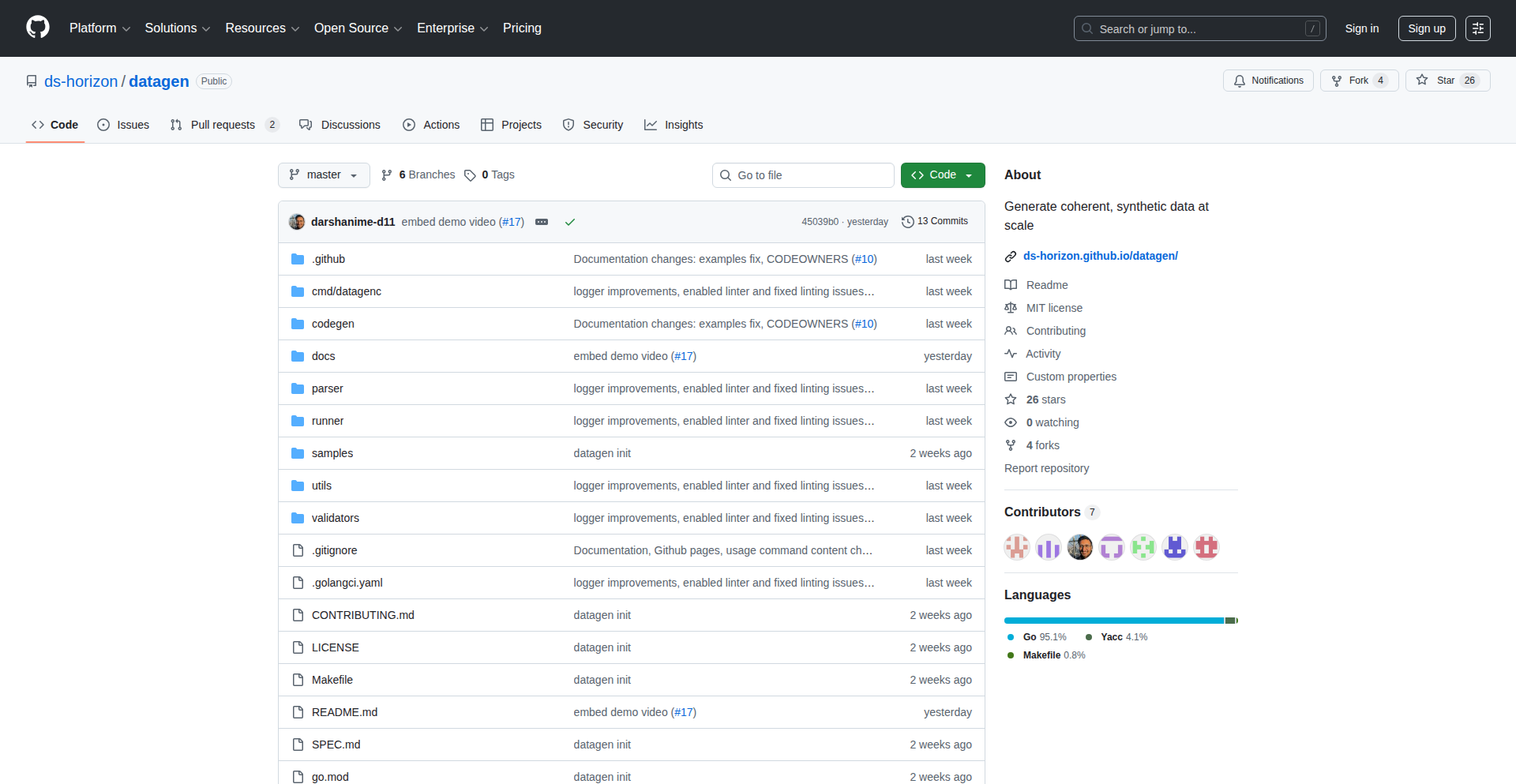

| 9 | SynthData Weaver | 10 | 0 |

| 10 | Hephaestus: Autonomous Agent Symphony | 7 | 0 |

1

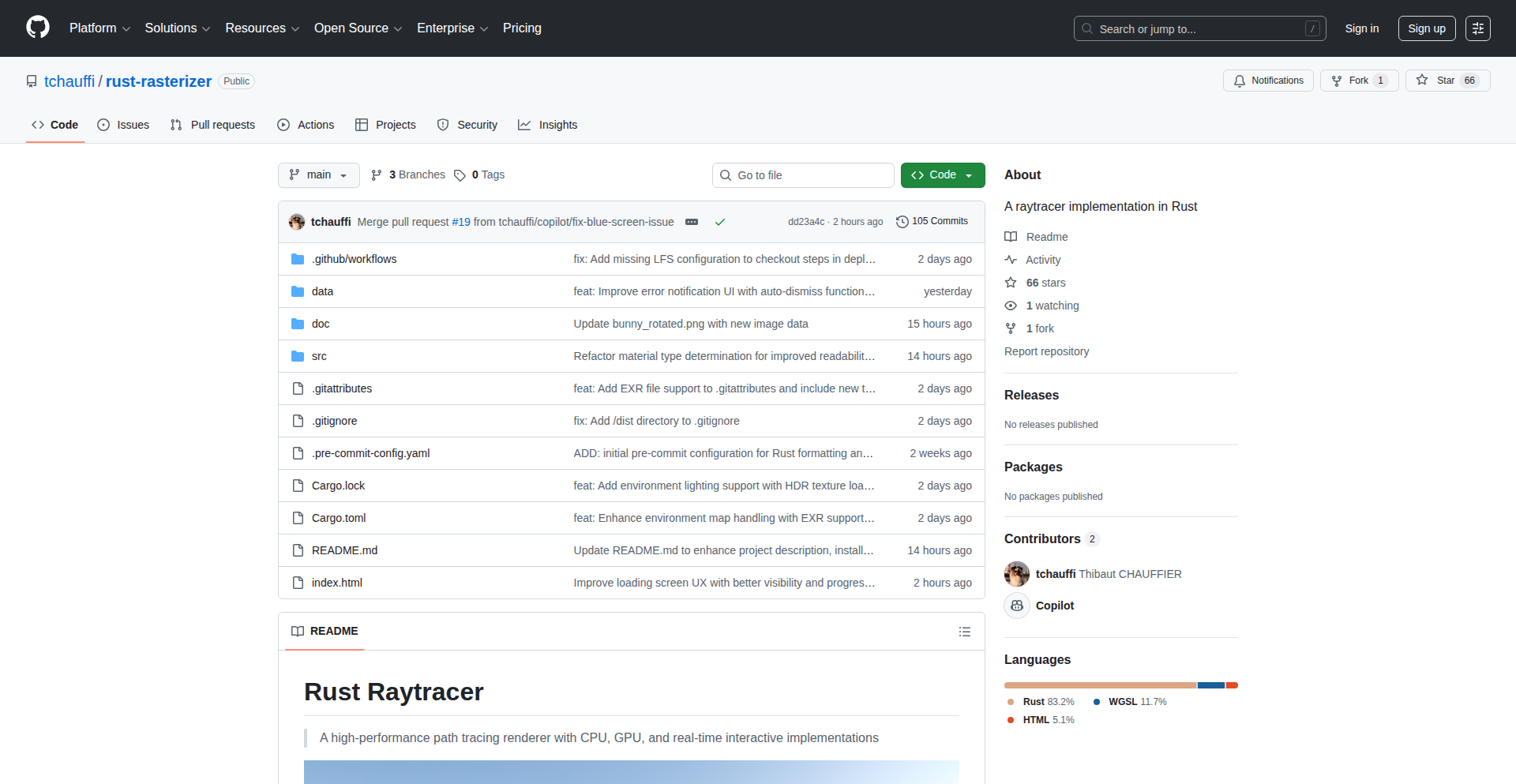

RustRay Weaver

Author

tchauffi

Description

A Rust-based ray tracer that renders 3D scenes with photorealistic lighting and can run both locally and in web browsers via WebAssembly. It uses a Bounding Volume Hierarchy (BVH) for efficient rendering of complex meshes.

Popularity

Points 90

Comments 25

What is this product?

This project is a custom ray tracer written in Rust. Ray tracing generates an image by following the paths of light rays through a scene. Instead of just drawing shapes, it simulates how rays bounce off surfaces, producing realistic reflections, refractions, and shadows. The notable part is portability: the wgpu library lets it run on a wide range of GPUs, and compiling to WebAssembly lets the same renderer run in a web browser. Viewers can try high-quality 3D rendering without installing anything, although the rendering still runs on their own GPU.
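At its core, every ray tracer answers one geometric question: where does a ray first hit a surface? The minimal Python sketch below shows the textbook ray-sphere test. It illustrates the general technique and is not code from this project, whose renderer runs on the GPU through wgpu. The function name `hit_sphere` and the `1e-6` self-intersection epsilon are assumptions.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Return the nearest positive distance t along the ray, or None on a miss.

    Solves |origin + t * direction - center|^2 = radius^2, a quadratic in t.
    """
    oc = sub(origin, center)
    a = dot(direction, direction)
    half_b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = half_b * half_b - a * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    sqrt_d = math.sqrt(disc)
    # Try the nearer root first, then the farther one (origin inside the sphere).
    for t in ((-half_b - sqrt_d) / a, (-half_b + sqrt_d) / a):
        if t > 1e-6:  # ignore hits behind the origin and self-intersections
            return t
    return None
```

Using `half_b` instead of the full `b` coefficient is a common simplification that cancels the factors of 2 in the quadratic formula. A full tracer repeats this test for every primary ray and every bounce, which is why acceleration structures matter.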

How to use it?

Developers can integrate this ray tracer into their own applications or use it as a standalone rendering engine. For local use, you can leverage the Rust code directly. For web deployment, it can be compiled into WebAssembly, allowing it to run in most modern desktop browsers. This makes it suitable for embedding interactive 3D visualizations, games, or architectural previews directly on websites. The project's use of GitHub Pages for deployment also simplifies sharing and showcasing rendered scenes.

Product Core Function

· WebAssembly and wgpu rendering: wgpu abstracts over native GPU APIs such as Vulkan, Metal, and DirectX 12 as well as the browser's graphics APIs. As a result, one GPU-accelerated codebase runs both natively and on the web, and reaches a wider audience without a separate port.

· Bounding Volume Hierarchy (BVH) for mesh rendering: This is a standard way to speed up rendering of complex 3D models. The mesh's triangles are grouped into a tree of nested bounding boxes, so a ray that misses a box can skip every triangle inside it. For typical scenes, this cuts the number of ray-triangle tests from one per triangle to roughly logarithmic in the triangle count, resulting in faster and smoother rendering.

· Direct and indirect illumination simulation: This feature allows for photorealistic lighting. It doesn't just simulate light hitting a surface directly, but also how light bounces off other surfaces and illuminates the scene, creating more natural and visually appealing results.

· Easy GitHub Pages deployment: This simplifies sharing your creations. You can host your rendered scenes or applications directly on GitHub, making it easy for others to access and view your work without any complex setup.
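The BVH idea can be sketched in Python. A ray first tests a node's axis-aligned bounding box with the standard slab test, and it only descends into children (or reports a leaf's triangles) when that box is hit. The `Node` layout and the function names are hypothetical. The project's actual BVH is built and traversed in its own Rust/GPU code, which this sketch does not reproduce.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec  # minimum corner of the axis-aligned bounding box
    hi: Vec  # maximum corner
    children: List["Node"] = field(default_factory=list)
    triangles: List[int] = field(default_factory=list)  # triangle ids stored in a leaf


def ray_hits_box(origin: Vec, direction: Vec, lo: Vec, hi: Vec) -> bool:
    """Slab test: clip the ray's parameter range [0, inf) against each axis's slab."""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        d = direction[axis]
        if d == 0.0:
            # Ray parallel to this slab: it must already lie between the two planes.
            if not lo[axis] <= origin[axis] <= hi[axis]:
                return False
            continue
        t1 = (lo[axis] - origin[axis]) / d
        t2 = (hi[axis] - origin[axis]) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False  # the per-axis intervals do not overlap
    return True


def candidate_triangles(node: Node, origin: Vec, direction: Vec) -> List[int]:
    """Collect triangle ids from every leaf whose box the ray passes through.

    A missed box prunes its whole subtree, so most triangles are never tested.
    """
    if not ray_hits_box(origin, direction, node.lo, node.hi):
        return []
    if not node.children:
        return list(node.triangles)
    found: List[int] = []
    for child in node.children:
        found.extend(candidate_triangles(child, origin, direction))
    return found
```

Only the returned candidates need an exact ray-triangle test. Real renderers also flatten the tree into an array so that a GPU shader can traverse it without recursion.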

Product Usage Case

· Web-based architectural visualization: Imagine presenting a new building design directly in a web browser with realistic lighting and reflections. This ray tracer could be used to create an interactive walkthrough that showcases the design's details and ambiance without requiring users to download any software.

· Indie game development: Developers could use this as a foundation for rendering 3D assets in a game engine. Its cross-platform capabilities and performance would be beneficial for creating visually rich games that run well on various devices.

· Educational tool for computer graphics: This project serves as an excellent example of implementing core ray tracing concepts. Students and enthusiasts can study its codebase to understand the intricacies of 3D rendering, BVH structures, and shader programming in Rust.

2

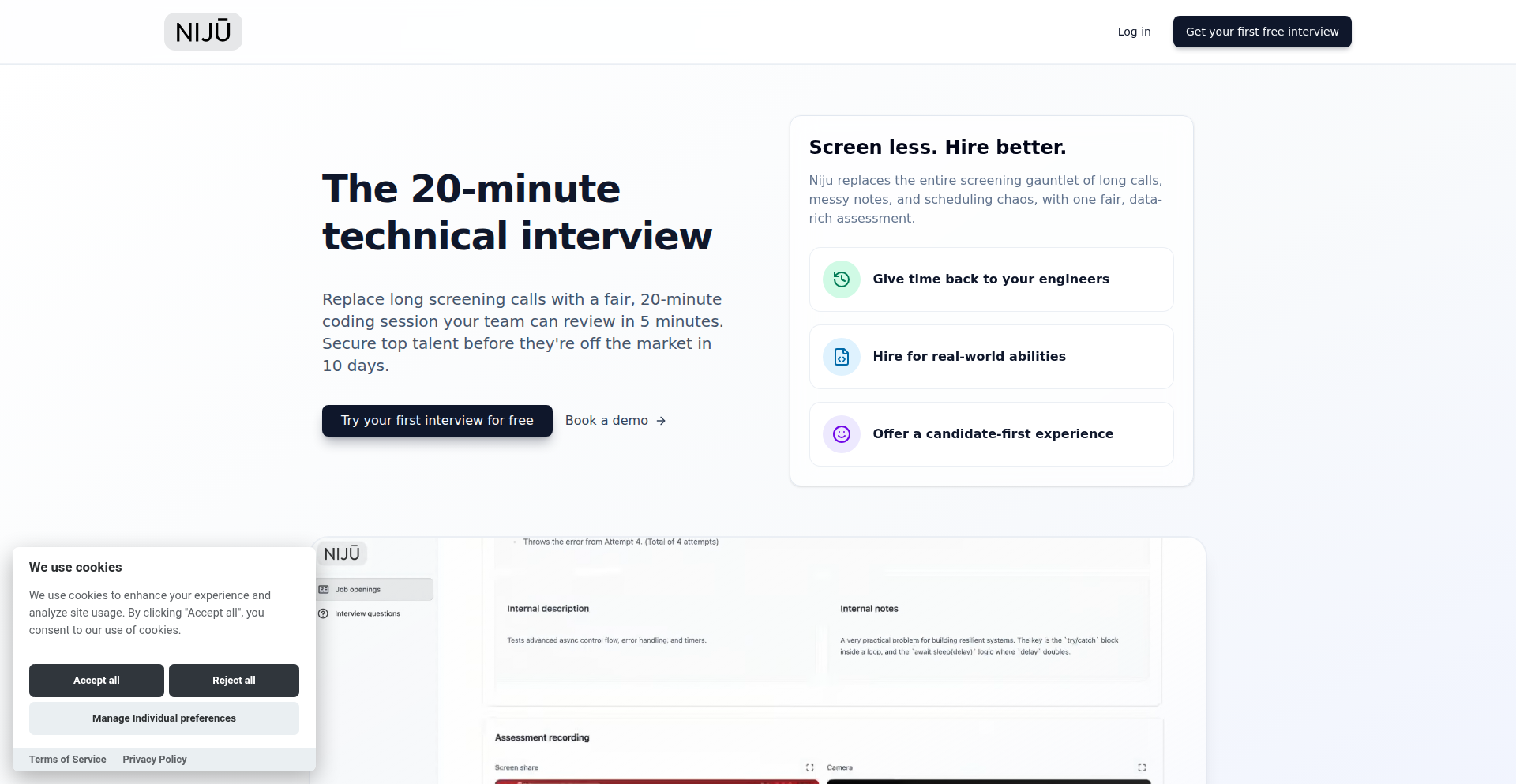

Niju: AI-Powered Engineering Candidate Screening


Author

radug14

Description

Niju is a project born out of developer frustration with time-consuming screening calls. It leverages AI to automate the initial screening of engineering candidates, freeing up valuable engineer time for more impactful work. The core innovation lies in its ability to intelligently process candidate information and identify potential fits, streamlining the hiring process.

Popularity

Points 14

Comments 42

What is this product?

Niju is an AI-driven tool designed to automatically screen engineering candidates. Instead of engineers spending hours on repetitive initial interviews, Niju analyzes candidate profiles, resumes, and possibly other data sources to assess their suitability for a role. Under the hood it most likely relies on natural language processing (NLP) and machine learning (ML) to recognize technical skills, experience, and possibly cultural-fit indicators. Filtering out unsuitable candidates early leaves engineers with a much shorter, higher-quality list of prospects. So, what's the benefit for you? Engineers spend less time on tedious interviews and more time coding, building, and innovating.

How to use it?

Developers can integrate Niju into their existing hiring workflows. Typically, after candidates apply through a job portal, their information is fed into Niju. The AI then processes this data, performing an automated 'first pass' assessment. Niju can be configured with specific criteria relevant to the role and company. The output is usually a summarized score or a shortlist of candidates who meet the pre-defined benchmarks. This can be integrated with applicant tracking systems (ATS) or used as a standalone tool. This streamlines your hiring by ensuring only the most promising candidates reach the hands of your engineering team. So, how does this help you? It drastically reduces the time spent on unqualified applicants, allowing your team to focus on interviewing and onboarding top talent.

Product Core Function

· Automated resume parsing and analysis: Niju uses NLP to extract key information like skills, experience, education, and project details from resumes, identifying relevant keywords and patterns. This means candidates' qualifications are efficiently and objectively assessed from the start.

· AI-driven candidate scoring and ranking: Based on predefined criteria and learned patterns, Niju assigns a score or ranking to each candidate, helping to prioritize those most likely to be a good fit. This allows for quicker decision-making and focuses attention on the best prospects.

· Customizable screening criteria: The system can be configured to match specific job requirements, ensuring that the screening process is tailored to the unique needs of each role. This means the AI is evaluating candidates against exactly what you're looking for.

· Integration capabilities: Niju is designed to integrate with existing hiring tools and applicant tracking systems (ATS). Screening results flow into your current workflow instead of living in a separate data silo.
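Here is a deliberately simple, rule-based Python sketch that makes "customizable criteria" and "scoring and ranking" concrete. It combines weighted skill matches, hard requirements, and a minimum-experience filter, followed by a top-k shortlist. Niju presumably uses learned models rather than fixed rules. Everything here is a hypothetical stand-in, not Niju's method: the `Criteria` fields, the regex-based experience extraction, and the scoring formula.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Criteria:
    weights: Dict[str, float]                          # skill -> importance for the role
    required: List[str] = field(default_factory=list)  # must-have skills (hard filter)
    min_years: int = 0                                 # minimum stated experience


def mentions(text: str, skill: str) -> bool:
    """Whole-word, case-insensitive match that also works for names like 'C++'."""
    return re.search(rf"(?<!\w){re.escape(skill)}(?!\w)", text, re.IGNORECASE) is not None


def years_of_experience(text: str) -> int:
    """Crude signal: the largest 'N years' or 'N+ years' mentioned in the resume."""
    found = [int(n) for n in re.findall(r"(\d+)\+?\s*years?", text, re.IGNORECASE)]
    return max(found, default=0)


def score(resume: str, c: Criteria) -> float:
    """Share of total skill weight matched, or 0.0 if a hard filter fails."""
    if not all(mentions(resume, s) for s in c.required):
        return 0.0
    if years_of_experience(resume) < c.min_years:
        return 0.0
    total = sum(c.weights.values())
    matched = sum(w for s, w in c.weights.items() if mentions(resume, s))
    return matched / total if total else 0.0


def shortlist(resumes: Dict[str, str], c: Criteria, k: int) -> List[Tuple[str, float]]:
    """Rank candidates by score and keep the top k with a non-zero score."""
    ranked = sorted(((name, score(text, c)) for name, text in resumes.items()),
                    key=lambda pair: pair[1], reverse=True)
    return [pair for pair in ranked[:k] if pair[1] > 0]
```

Keyword rules like these are brittle: they miss synonyms and reward keyword stuffing. That gap is what an ML-based screener is meant to close, and it is also why human review of the shortlist still matters.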

Product Usage Case

· A startup needs to quickly hire several backend engineers but has a limited HR team. Niju can pre-screen hundreds of applications, presenting the engineering leads with a shortlist of 10-15 highly qualified candidates, saving weeks of manual review and countless wasted interview hours. This allows the startup to scale its engineering team much faster without sacrificing quality.

· A large tech company is struggling with engineer burnout due to excessive interview schedules. By using Niju for initial candidate screening, the company could sharply reduce the number of engineers needed for first-round interviews. This frees them for more complex technical challenges and strategic projects, which can improve engineer morale and productivity.

3

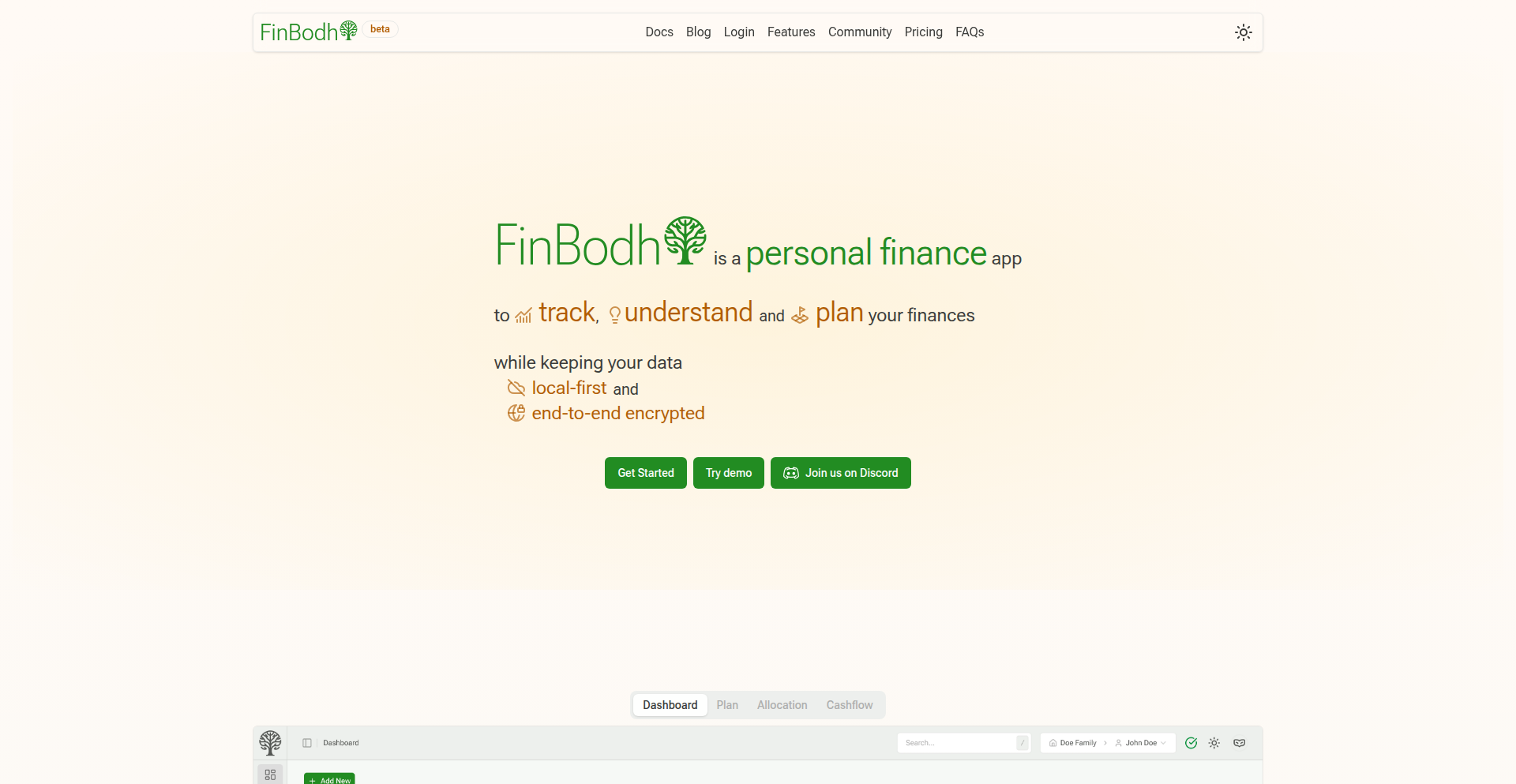

FinBodhi: Decentralized Ledger for Personal Finance

Author

ciju

Description

FinBodhi is a local-first, privacy-focused personal finance application that leverages a double-entry bookkeeping system. It empowers users to track, visualize, and plan their financial journey with an emphasis on data ownership and security. The core innovation lies in its local-first architecture using SQLite over OPFS and client-side encryption for data syncing, combined with a robust double-entry system capable of handling complex financial scenarios.

Popularity

Points 34

Comments 18

What is this product?

FinBodhi is a web-based application that acts as a digital ledger for your money, with a powerful twist: it uses double-entry bookkeeping. Think of it as a highly organized accounting system for your personal finances. Many apps only track money going in and out. Double-entry instead records every transaction as at least two equal and opposite entries across accounts, such as assets and liabilities. This lets FinBodhi handle far more complex situations, such as accurately tracking the value of your house or your overall net worth. 'Local-first' means your financial data lives primarily on your own device rather than on a remote server, which gives you privacy and control. For syncing across devices, your data is encrypted with a key only you hold before it leaves your device, so even the developers cannot read your financial information.
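The double-entry idea fits in a few lines of Python. Each transaction is a set of postings that must sum to zero, account balances are running sums of postings, and net worth is assets minus liabilities. The following are assumptions for illustration: the account names, the sign convention (debits positive, credits negative), and the `Transaction`/`net_worth` names. FinBodhi's real data model lives in SQLite and is not reproduced here.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, List, Tuple

ZERO = Decimal("0")


@dataclass
class Transaction:
    description: str
    postings: List[Tuple[str, Decimal]]  # (account, amount): debit > 0, credit < 0

    def __post_init__(self) -> None:
        # The core double-entry invariant: every transaction nets to zero.
        total = sum((amount for _, amount in self.postings), ZERO)
        if total != ZERO:
            raise ValueError(f"unbalanced transaction {self.description!r}: {total}")


def balances(ledger: List[Transaction]) -> Dict[str, Decimal]:
    """Sum every posting per account across the whole ledger."""
    out: Dict[str, Decimal] = defaultdict(lambda: ZERO)
    for txn in ledger:
        for account, amount in txn.postings:
            out[account] += amount
    return dict(out)


def net_worth(bal: Dict[str, Decimal], kind: Dict[str, str]) -> Decimal:
    """Assets minus liabilities; liabilities already carry negative (credit) balances."""
    return sum((b for acct, b in bal.items() if kind[acct] in ("asset", "liability")), ZERO)
```

Consider an opening bank balance of 100,000, then a 300,000 house bought with 60,000 cash and a 240,000 mortgage, a 5,000 salary, and 200 of groceries. For that ledger, `net_worth` returns 104,800. This matches opening equity plus income minus expenses (100,000 + 5,000 - 200), which is exactly the cross-check double entry makes possible.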

How to use it?

Developers can use FinBodhi as a personal finance management tool by simply visiting the web application in their browser. It's designed to be accessible without an account, allowing for immediate exploration via a demo. For actual use, data is stored locally using technologies like SQLite within the browser's IndexedDB or OPFS (Origin Private File System) for persistence. Developers can import existing transaction data through built-in or custom importers, set up various account types (cash, mutual funds, stocks, multi-currency), and configure currency conversion rates. The application offers reporting features like Balance Sheets, Cashflow statements, and Profit & Loss reports, along with data visualization tools. For integration, FinBodhi can be thought of as a self-contained PWA (Progressive Web App). While not a library for external integration in its current form, its underlying principles of local-first data storage and encrypted syncing can inspire developers building similar decentralized applications. The ability to define custom importers also provides a degree of extensibility for bringing in data from various sources.

Product Core Function

· Local-first data storage using SQLite on OPFS for enhanced privacy and offline access, providing a secure foundation for financial data without relying on remote servers.

· End-to-end encrypted data synchronization across devices using a user-defined key, ensuring that your financial information remains private even when synced.

· Double-entry bookkeeping system for comprehensive financial tracking, enabling accurate modeling of complex transactions and a true representation of net worth.

· Multi-currency support with customizable exchange rates, allowing for management of finances across different national currencies and international investments.

· Flexible transaction management including import, slicing, dicing, splitting, and merging, offering granular control over how financial activities are recorded and analyzed.

· Visualizations and reports (Balance Sheet, Cashflow, P&L) to provide clear insights into financial health, aiding in better decision-making and planning.

· Customizable importers and rules for data import, facilitating the integration of financial data from various sources with minimal manual effort.
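Custom importers and rules typically map raw bank-export rows onto balanced postings. The minimal sketch below assumes a CSV with `date,description,amount` columns and rules expressed as (regex, account) pairs; both are hypothetical formats, not FinBodhi's. The first matching rule picks the counter-account, and unmatched rows land in a fallback account for manual review.

```python
import csv
import io
import re
from decimal import Decimal
from typing import List, Tuple

Rule = Tuple[str, str]                         # (regex on description, counter-account)
Entry = Tuple[str, List[Tuple[str, Decimal]]]  # (description, postings)


def import_csv(text: str, rules: List[Rule], bank: str = "assets:bank",
               fallback: str = "expenses:uncategorized") -> List[Entry]:
    """Convert each CSV row into a two-posting, zero-sum entry."""
    entries: List[Entry] = []
    for row in csv.DictReader(io.StringIO(text)):
        amount = Decimal(row["amount"])
        # The first rule whose pattern matches the description wins.
        counter = next((account for pattern, account in rules
                        if re.search(pattern, row["description"], re.IGNORECASE)), fallback)
        # Two equal and opposite postings keep the entry balanced.
        entries.append((row["description"], [(bank, amount), (counter, -amount)]))
    return entries
```

Parsing amounts with `Decimal` rather than `float` avoids binary rounding errors, which matters when balances must reconcile to the cent.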

Product Usage Case

· A freelancer managing income and expenses across multiple currencies for international clients, using FinBodhi to accurately track their net cashflow and profitability in a consolidated view.

· An individual investor wanting to meticulously track their stock and mutual fund portfolio, including dividends and capital gains, while ensuring their sensitive investment data is stored securely on their own machine.

· A small business owner who wants to try a more robust personal finance tool, using the double-entry system to understand how personal transactions affect their overall financial health and where those effects might spill over into business finances.

· A privacy-conscious user who is wary of cloud-based financial apps, choosing FinBodhi to maintain complete control and ownership over their financial data, with the ability to backup locally or to a trusted cloud storage like Dropbox.

· A developer building a personal dashboard for financial insights, exploring FinBodhi's local-first architecture as a model for how to handle sensitive user data in a decentralized and secure manner.

4

VocalMatch AI

Author

JacobSingh

Description

VocalMatch AI is a fascinating Show HN project that leverages artificial intelligence to analyze your singing voice and recommend songs and artists that best suit your vocal characteristics. This goes beyond simple karaoke by using sophisticated AI models to understand the nuances of pitch, timbre, and vocal range, offering personalized music discovery for aspiring singers.

Popularity

Points 40

Comments 3

What is this product?

VocalMatch AI is an innovative application that uses machine learning, specifically audio processing and pattern recognition algorithms, to listen to your voice and determine which songs and musical artists you would sound best singing. The core innovation lies in its ability to go beyond generic song suggestions and provide highly personalized recommendations by analyzing the unique qualities of your vocal performance. Think of it as a smart music curator for your voice. So, what's in it for you? It helps you discover music you'll not only enjoy singing but also sound great performing, making your singing experience more rewarding and fun.
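The first step of any voice-matching pipeline is measuring pitch. As an illustration only, the sketch below estimates the fundamental frequency of a short audio frame with plain autocorrelation, a classic technique. VocalMatch AI's own pipeline may use something quite different, such as a neural pitch tracker. The 80 to 1000 Hz search band is an assumption that roughly covers the singing voice.

```python
import math
from typing import List, Optional


def estimate_pitch(samples: List[float], sample_rate: int,
                   fmin: float = 80.0, fmax: float = 1000.0) -> Optional[float]:
    """Estimate the fundamental frequency (Hz) of a short, voiced audio frame.

    The lag at which the frame best matches a shifted copy of itself is taken as
    one period, so frequency = sample_rate / lag.
    """
    n = len(samples)
    if n == 0:
        return None
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    if all(v == 0.0 for v in x):
        return None  # silence: no pitch to find
    best_lag, best_corr = 0, 0.0
    # Only search lags that correspond to plausible singing frequencies.
    for lag in range(int(sample_rate / fmax), int(sample_rate / fmin) + 1):
        if lag >= n:
            break
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None
```

Applied frame by frame to a recording, the lowest and highest stable pitches give a rough estimate of the singer's range. Plain autocorrelation is prone to octave errors on real voices, which is one reason practical systems use refined methods such as YIN.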

How to use it?

Developers could integrate VocalMatch AI into music streaming platforms, karaoke apps, or vocal training software. Assuming the model is exposed through an API, a user would upload a recording of themselves singing and receive a ranked list of song and artist suggestions tailored to their voice. This could be a feature inside an existing app or a standalone web service. So, how can you use it? A karaoke app, for example, could suggest the best-fitting song for each user based on their previous performances, which should increase engagement and satisfaction.

Product Core Function

· Voice Analysis Engine: Likely uses audio signal processing and AI models (such as deep neural networks) to extract key vocal features such as pitch range, vocal timbre, vibrato characteristics, and breath control. The value here is a deep understanding of a user's unique singing voice, enabling precise matching. This is useful for creating personalized music experiences.

· Song and Artist Matching Algorithm: Employs recommendation system techniques, likely collaborative filtering or content-based filtering adapted for music, to compare the extracted vocal features against a comprehensive database of songs and artist vocal profiles. The value is providing highly relevant and personalized music suggestions. This is valuable for music discovery and entertainment applications.

· Personalized Feedback Mechanism: Optionally provides insights into why certain songs are recommended, perhaps highlighting specific vocal aspects that align well with the chosen tracks. The value is educational and motivational, helping users understand their voice better. This can be used in singing practice or educational tools.
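One simple content-based way to match a voice to songs is to compare ranges on the semitone (MIDI note) scale. The sketch below scores each song by the fraction of its melodic range that falls within the singer's range. The catalog format (lowest and highest MIDI note per song) and the scoring rule are hypothetical. Per the description above, VocalMatch AI also weighs features such as timbre and vibrato, which this sketch ignores.

```python
import math
from typing import Dict, List, Tuple

Range = Tuple[float, float]  # (lowest, highest) note as MIDI numbers


def hz_to_midi(freq: float) -> float:
    """Map a frequency to a fractional MIDI note number (A4 = 440 Hz = 69)."""
    return 69 + 12 * math.log2(freq / 440.0)


def fit_score(singer: Range, song: Range) -> float:
    """Fraction of the song's range, in semitones, that the singer can cover."""
    span = song[1] - song[0]
    if span <= 0:  # single-note "range": either reachable or not
        return 1.0 if singer[0] <= song[0] <= singer[1] else 0.0
    overlap = min(singer[1], song[1]) - max(singer[0], song[0])
    return max(0.0, overlap) / span


def recommend(singer_hz: Tuple[float, float], catalog: Dict[str, Range],
              k: int = 3) -> List[Tuple[str, float]]:
    """Rank catalog songs by how much of each song's range the singer can reach."""
    singer = (hz_to_midi(singer_hz[0]), hz_to_midi(singer_hz[1]))
    scored = [(title, fit_score(singer, rng)) for title, rng in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

Working in MIDI numbers rather than hertz makes the comparison perceptual. Each semitone is one unit, whereas in hertz the same interval spans more cycles per second the higher it sits.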

Product Usage Case

· A karaoke app developer could use VocalMatch AI to automatically suggest songs that match a user's current vocal performance, increasing the likelihood of a positive singing experience and encouraging users to sing more. This solves the problem of users spending time browsing for songs they might not even be able to sing well.

· A music streaming service could integrate VocalMatch AI to create personalized 'sing-along' playlists for users, moving beyond just listening recommendations to active participation. This enhances user engagement by providing a novel and interactive way to enjoy music.

· A vocal coach could use this technology as a tool to quickly assess a student's vocal capabilities and identify suitable repertoire for practice, streamlining the lesson planning process. This addresses the challenge of finding appropriate songs for students at different skill levels.

5

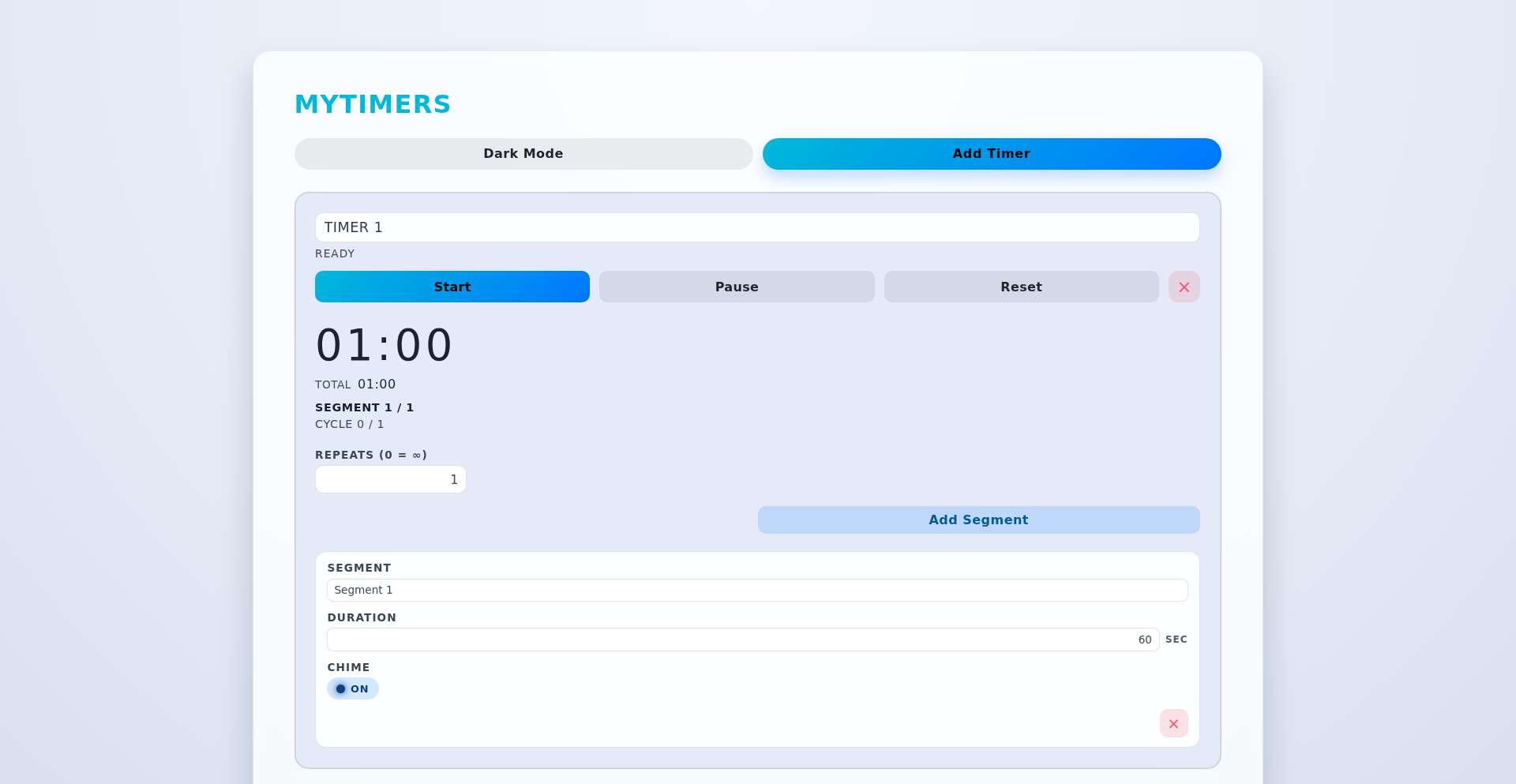

CustomizableIntervalTimer

Author

y3k

Description

A feature-rich, offline-first Progressive Web App (PWA) designed to create and manage custom workout timers. It solves the limitations of built-in device timers by allowing users to configure multiple sets and rest periods, offering a highly personalized interval training experience without any external dependencies or build processes.

Popularity

Points 23

Comments 15

What is this product?

This project is an advanced, user-configurable interval timer implemented as an offline-first Progressive Web App (PWA). Unlike standard timers, it's built from the ground up to allow users to define complex workout routines. Think of it as a digital coach for your exercises: you can specify the number of work intervals, the duration of each work interval, the number of rest intervals, and the duration of each rest interval. It achieves this using Web Components for a modular and reusable UI structure, and leverages `localStorage` to save your custom timer configurations directly in your browser, meaning your settings persist even when you're offline or close the app. The innovation lies in its complete independence – no build tools, no third-party scripts, just pure, efficient web technology at work to give you control over your timing.
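A timer configuration like this boils down to a small data structure that expands into a flat list of phases. It also needs JSON serialization, since `localStorage` stores only strings. The app itself is plain JavaScript with Web Components; this Python sketch only models the idea. The `TimerConfig` fields are simplifying assumptions rather than the app's actual schema: a single round count, plus an option to skip the rest after the last round.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class TimerConfig:
    name: str
    rounds: int
    work_seconds: int
    rest_seconds: int
    skip_final_rest: bool = True  # no rest needed after the last work interval


def schedule(cfg: TimerConfig) -> List[Tuple[str, int]]:
    """Expand a config into the ordered (phase, seconds) list the timer steps through."""
    phases: List[Tuple[str, int]] = []
    for r in range(cfg.rounds):
        phases.append(("work", cfg.work_seconds))
        is_last = r == cfg.rounds - 1
        if cfg.rest_seconds > 0 and not (is_last and cfg.skip_final_rest):
            phases.append(("rest", cfg.rest_seconds))
    return phases


def total_seconds(cfg: TimerConfig) -> int:
    return sum(seconds for _, seconds in schedule(cfg))


def to_storage(timers: List[TimerConfig]) -> str:
    # Mirrors the string a web app would pass to localStorage.setItem(key, value).
    return json.dumps([asdict(t) for t in timers])


def from_storage(raw: str) -> List[TimerConfig]:
    return [TimerConfig(**d) for d in json.loads(raw)]
```

For an 8-round routine of 30 seconds of work and 15 seconds of rest, the schedule has 15 phases totaling 345 seconds (8 × 30 + 7 × 15). If the final rest is kept, the total is 360 seconds.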

How to use it?

Developers can use CustomizableIntervalTimer by simply accessing the web application through their browser at `mytimers.app`. It's designed for immediate use. For integration into other projects, the core logic and UI components, built with Web Components, can be understood and potentially adapted. The `localStorage` persistence means that once a timer is created and saved, it's readily available for future use without needing to re-enter all the details. This makes it incredibly convenient for personal use or as a foundational element for fitness-related applications where precise timing and configurable intervals are crucial.

Product Core Function

· Customizable Interval Configuration: Allows users to define multiple work and rest periods with specific durations, providing a flexible structure for any workout routine. The value here is precise control over training sessions, making workouts more effective and personalized.

· Offline-First PWA: Functions fully without an internet connection, saving data locally via `localStorage`. This ensures your timers are always accessible, regardless of network availability, offering unparalleled convenience and reliability.

· Zero Dependencies & No Build Step: The application is built with plain JavaScript, HTML, and CSS, using Web Components for the UI. This means faster loading, a smaller attack surface with no third-party code, and easier maintenance. For developers, it signals a commitment to lean, efficient web development practices.

· Persistent Timer Storage: User-defined timers are saved using `localStorage`, so they are available across sessions. This saves users time and effort by not having to reconfigure their workouts each time they want to use the timer.

· Web Components Implementation: Utilizes modern web standards for building reusable UI elements. This approach enhances modularity and maintainability of the codebase, making it a great example for developers interested in modern front-end architecture.

Product Usage Case

· Personal Fitness Tracking: A user wants to create a HIIT (High-Intensity Interval Training) routine with 30 seconds of work followed by 15 seconds of rest, repeated for 8 rounds. They can quickly set this up in CustomizableIntervalTimer, save it, and start their workout without interruption, solving the problem of generic timers not supporting such specific interval patterns.

· Developer Tooling for Time-Sensitive Tasks: A developer wants to time short coding sprints, such as 25 minutes of focus followed by a 5-minute break (the Pomodoro technique). They can configure this in the timer and leave it running in a browser tab to signal each break. This meets the need for a simple, distraction-free timer without installing anything.

· Educational Timers for Activities: A teacher can set up timers for classroom activities, like 10 minutes for reading, followed by a 2-minute discussion, repeated. The offline capability ensures it works even if classroom internet is unreliable, solving the problem of needing a dependable timing tool in various environments.

6

ORUS Builder: Compiler-Integrity AI App Synthesizer

Author

TulioKBR

Description

ORUS Builder is an open-source AI code generator that tackles a common frustration: AI-generated code that is buggy and does not compile. It uses a 'Compiler-Integrity Generation' (CIG) protocol, a series of AI validation steps performed *before* code generation. The project credits this protocol with a high first-time compilation success rate. According to the project, developers describe an app in a single prompt and receive a production-ready, full-stack application in about 30 seconds.

Popularity

Points 8

Comments 13

What is this product?

ORUS Builder is an AI-powered tool that generates complete full-stack applications from a simple text description. Many AI code generators produce broken code that requires extensive debugging. ORUS Builder instead uses its own 'Compiler-Integrity Generation' (CIG) protocol: a set of cognitive validation checks performed by specialized AI connectors before any code is written. Think of it as a meticulous editor who checks the blueprints for a house *before* construction begins, ensuring everything is structurally sound. This pre-generation validation is the core innovation. It aims to make the generated code compile on the first try, which reduces debugging effort and gets you functional code much faster.
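CIG's internals are not spelled out here, but the general idea of validating a plan before generating code can be illustrated. The hypothetical sketch below checks an app spec, made up of entities with typed fields plus REST routes. It looks for dangling references, unknown types, and duplicate routes, which would otherwise surface only as compile or runtime errors. The spec format and `validate_spec` are assumptions, not ORUS Builder's protocol.

```python
from typing import Any, Dict, List, Set, Tuple

KNOWN_TYPES = {"string", "int", "bool", "date"}  # hypothetical scalar field types


def validate_spec(spec: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the plan is safe to generate from."""
    errors: List[str] = []
    entities: Dict[str, Dict[str, str]] = spec.get("entities", {})
    for entity, fields in entities.items():
        for name, ftype in fields.items():
            if ftype.startswith("ref:"):
                # Relations must point at an entity that will actually be generated.
                target = ftype[len("ref:"):]
                if target not in entities:
                    errors.append(f"{entity}.{name} references unknown entity {target!r}")
            elif ftype not in KNOWN_TYPES:
                errors.append(f"{entity}.{name} has unknown type {ftype!r}")
    seen: Set[Tuple[str, str]] = set()
    for route in spec.get("routes", []):
        key = (route["method"], route["path"])
        if key in seen:
            # Two handlers for the same method and path would clash in the generated backend.
            errors.append(f"duplicate route {route['method']} {route['path']}")
        seen.add(key)
        if route["entity"] not in entities:
            errors.append(f"route {route['path']} uses unknown entity {route['entity']!r}")
    return errors
```

A generator built this way would send any reported errors back to the planning step and emit code only once the list is empty. This is cheaper than generating code first and then discovering the same problems through compiler errors.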

How to use it?

Developers can use ORUS Builder by providing a single, clear text prompt describing the desired application. This prompt can outline the features, user interface elements, and functional requirements. ORUS Builder then orchestrates its 'Trinity AI' system, comprising three specialized AI connectors, to understand the request and generate a full-stack application. The output includes the frontend (React, Vue, or Angular), backend (Node.js), and database schema, packaged into a ZIP file. This ZIP file contains production-ready code, complete with automated tests and Continuous Integration/Continuous Deployment (CI/CD) configurations. This means you can take the generated code and deploy it with minimal manual intervention, accelerating your development workflow from idea to deployment.

Product Core Function

· AI-driven full-stack application generation: Automatically creates front-end, back-end, and database schemas from a single prompt, saving significant manual coding effort.

· Compiler-Integrity Generation (CIG) protocol: Ensures generated code is highly likely to compile on the first attempt by performing pre-generation validation checks, reducing debugging time and frustration.

· Orchestrated AI connectors ('Trinity AI'): Utilizes multiple specialized AI agents to handle different aspects of code generation (e.g., UI, logic, database), leading to more cohesive and functional applications.

· Production-ready output with tests and CI/CD: Delivers a ZIP file containing not just the application code but also automated tests and CI/CD pipeline configurations, enabling faster deployment and maintenance.

· Cross-framework frontend support (React/Vue/Angular): Offers flexibility in choosing the preferred frontend technology for the generated application, catering to diverse team skill sets.

Product Usage Case

· Rapid prototyping of web applications: A startup founder needs to quickly build a minimum viable product (MVP) to test a new business idea. By describing the core features in a prompt, ORUS Builder generates a functional prototype in minutes, allowing them to gather user feedback much earlier than traditional development.

· Automating boilerplate code for microservices: A backend developer needs to create several identical microservices with slight variations. ORUS Builder can generate the foundational code for these services, including API endpoints and database interactions, significantly reducing the repetitive coding tasks.

· Accelerating learning for new technologies: A developer new to a specific framework like Vue.js wants to see how a typical application is structured. ORUS Builder can generate a sample application, providing a concrete example to study and learn from, demonstrating best practices and common patterns.

· Reducing development time for internal tools: A company needs a custom internal tool for data management. ORUS Builder can quickly generate the basic CRUD (Create, Read, Update, Delete) functionality and a user interface, allowing the development team to focus on the unique business logic rather than the generic parts of the application.

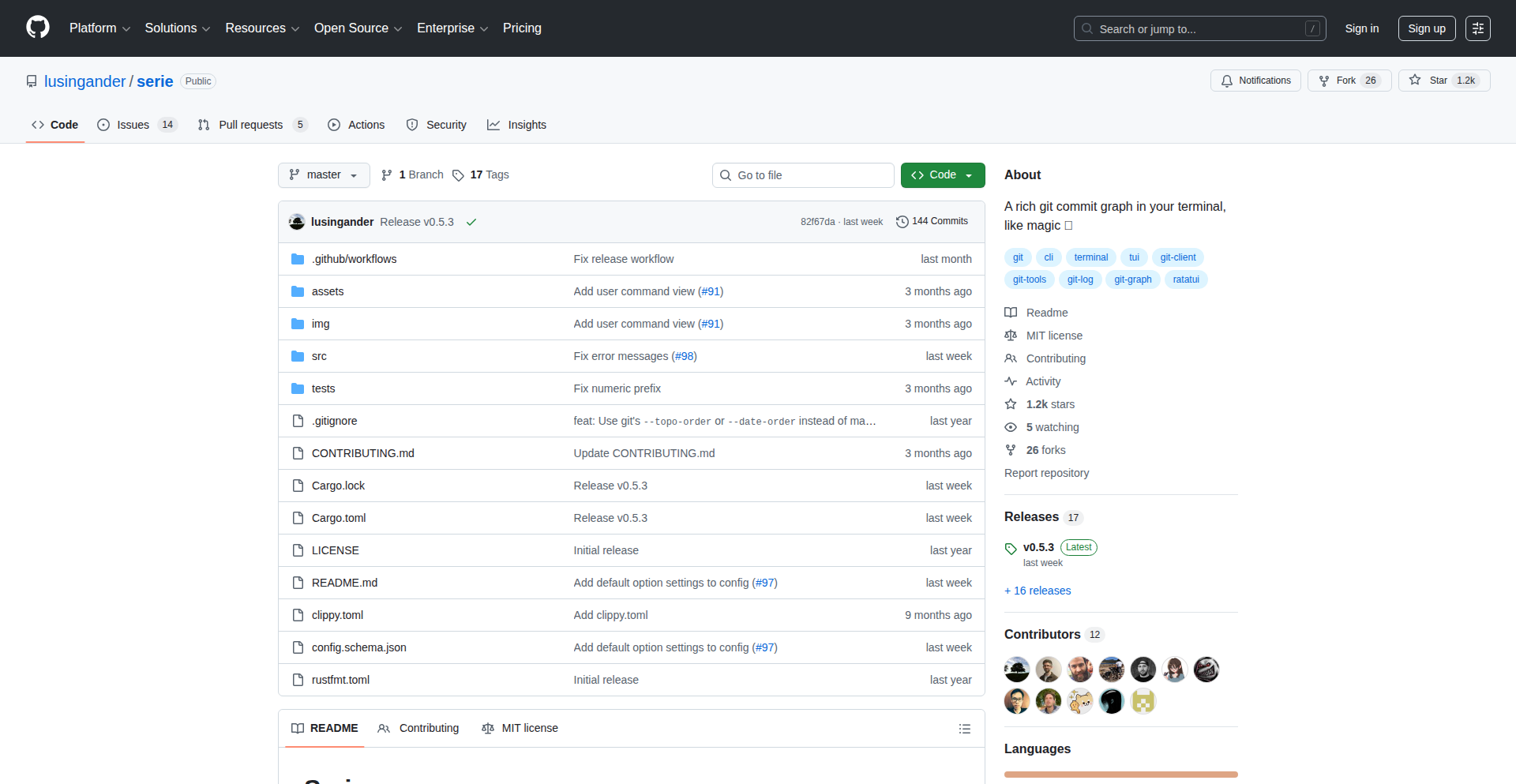

7

Serie: Terminal Commit Graph Visualizer

Author

lusingander

Description

Serie is a terminal-based application that renders clear, readable Git commit graphs directly in your terminal. It does this through the image display protocols supported by modern terminal emulators, namely the iTerm2 inline images protocol and the Kitty graphics protocol. It is designed to make the structure of your Git history, especially complex branching, immediately understandable, addressing the readability problems of standard `git log --graph` output.

Popularity

Points 14

Comments 1

What is this product?

Serie is a specialized tool for developers who prefer working in the terminal and want a visually enhanced way to understand their Git commit history. Instead of relying on separate GUI applications or deciphering dense text logs, Serie uses modern terminal capabilities to draw commit graphs with clear lines and nodes, similar to how an image would be displayed. This allows for a much more intuitive grasp of branching, merging, and commit relationships, acting as a lightweight visual aid rather than a full-fledged Git client.

How to use it?

Developers need a terminal emulator that supports an image protocol such as iTerm2's or Kitty's. Once Serie is installed, run it from within a Git repository. It reads the repository's history and renders the commit graph in your terminal window, where you can navigate and inspect the project's evolution visually. This is ideal for quick history checks or for untangling complex branching scenarios without leaving your terminal environment.

Product Core Function

· Renders Git commit graphs using terminal image protocols: This allows for high-fidelity, visually appealing graphs directly in the terminal, making complex history easier to understand than plain text. The value is in immediate visual comprehension of your project's timeline.

· Focuses solely on visualizing commit history: By avoiding the complexity of a full Git client, Serie offers a streamlined experience for its specific purpose. This means it's fast, efficient, and easy to learn for its intended function.

· Accessible via command line: Developers can integrate Serie into their existing terminal workflows. This provides a convenient way to view commit history without context switching to a graphical application.

· Highlights commit relationships: The graphical representation clearly shows how commits are related, including branches, merges, and parent-child relationships. This helps developers quickly grasp the structure of their project's development and spot where branches diverge, which is often where merge conflicts arise.
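Drawing a commit graph starts with assigning each commit to a column ("lane"). Below is a minimal Python sketch of one common greedy approach. It assumes commits arrive newest-first with children before parents, as `git log --topo-order` guarantees. This is not Serie's implementation, and the terminal-image rendering step is not shown.

```python
from typing import Dict, List, Optional


def assign_lanes(commits: List[str], parents: Dict[str, List[str]]) -> Dict[str, int]:
    """Map each commit hash to a column index for drawing the graph."""
    lanes: List[Optional[str]] = []  # lanes[i]: the commit column i is waiting to reach
    column: Dict[str, int] = {}

    def free_lane() -> int:
        # Reuse the leftmost empty column, or open a new one on the right.
        for i, waiting_for in enumerate(lanes):
            if waiting_for is None:
                return i
        lanes.append(None)
        return len(lanes) - 1

    for commit in commits:
        if commit in lanes:
            col = lanes.index(commit)
            # Other columns waiting for this commit converge into it (a branch point).
            for i, waiting_for in enumerate(lanes):
                if i != col and waiting_for == commit:
                    lanes[i] = None
        else:
            col = free_lane()  # a branch tip that no later commit points to
        column[commit] = col
        commit_parents = parents.get(commit, [])
        # The first parent continues straight down the same column.
        lanes[col] = commit_parents[0] if commit_parents else None
        # Additional parents of a merge commit get their own columns.
        for p in commit_parents[1:]:
            if p not in lanes:
                lanes[free_lane()] = p
    return column
```

In a simple merge, the merge commit and its first-parent line stay in column 0 and the merged branch occupies column 1. Both columns converge at the common ancestor, which is what the drawn edges then connect.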

Product Usage Case

· When reviewing a pull request with many branches and commits, a developer can use Serie to get a quick visual overview of how the proposed changes fit into the main development line. This helps in understanding the scope and impact of the changes more effectively than reading a long list of commit messages.

· A developer working on a feature branch that has diverged significantly from the main branch can use Serie to see exactly where their branch stands and how many commits are ahead or behind. This aids in planning merges and avoiding merge conflicts by understanding the divergence early.

· For open-source contributors, Serie can provide a clear visual representation of the project's commit history, making it easier to understand the project's development patterns and identify key commit points for contributions.

· When debugging an issue that might have been introduced in a specific commit or merge, Serie's graph can help developers trace back the history visually, pinpointing potential sources of the bug more efficiently by seeing the sequence of changes.
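Serie draws the graph itself, but every relationship it shows comes from each commit's list of parents. The following Python sketch illustrates that data model; it is not Serie's own implementation. It parses text in the shape produced by `git log --format='%H %P'` (a commit hash followed by its parent hashes) and flags merge commits. It also computes ahead/behind counts between two branch tips by comparing their ancestor sets, which are the same numbers that `git rev-list --left-right --count main...feature` reports. The short hashes in the toy history are made up.

```python
from collections import defaultdict


def parse_log(text: str) -> dict[str, list[str]]:
    """Map each commit to its parents.

    Expects one commit per line in the shape produced by
    `git log --format='%H %P'`: the commit hash, then its parent hashes.
    """
    parents: dict[str, list[str]] = {}
    for line in text.strip().splitlines():
        commit, *commit_parents = line.split()
        parents[commit] = commit_parents
    return parents


def children_of(parents: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the parent map so each commit lists its children."""
    children: dict[str, list[str]] = defaultdict(list)
    for commit, commit_parents in parents.items():
        for parent in commit_parents:
            children[parent].append(commit)
    return dict(children)


def merge_commits(parents: dict[str, list[str]]) -> list[str]:
    """Merge commits are those with two or more parents."""
    return [c for c, ps in parents.items() if len(ps) >= 2]


def ancestors(parents: dict[str, list[str]], tip: str) -> set[str]:
    """Every commit reachable from `tip` by following parents, including `tip`."""
    seen: set[str] = set()
    stack = [tip]
    while stack:
        commit = stack.pop()
        if commit not in seen:
            seen.add(commit)
            stack.extend(parents.get(commit, []))
    return seen


def ahead_behind(parents: dict[str, list[str]], branch: str, base: str) -> tuple[int, int]:
    """Commits on `branch` but not `base`, and on `base` but not `branch`."""
    on_branch, on_base = ancestors(parents, branch), ancestors(parents, base)
    return len(on_branch - on_base), len(on_base - on_branch)


# Toy history with fake hashes: f1, f2 branch off m1; m3 later merges f2 into main.
log = """
m3 m2 f2
m2 m1
f2 f1
f1 m1
m1 root
root
"""
graph = parse_log(log)
print(merge_commits(graph))             # ['m3']
print(ahead_behind(graph, "f2", "m2"))  # (2, 1)
```

A set difference over ancestors is the simplest correct definition. A real tool working on large repositories would avoid materializing full ancestor sets.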

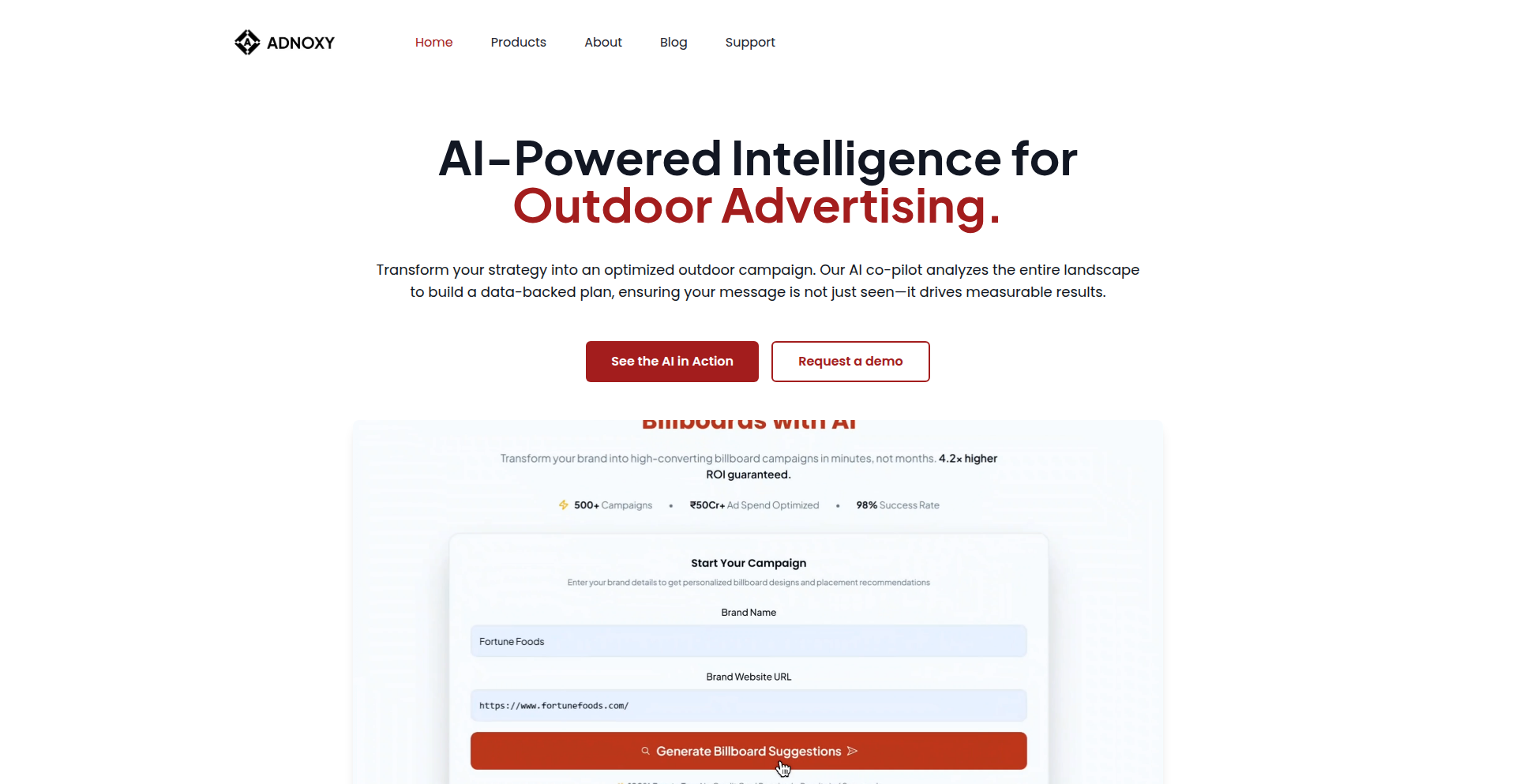

8

BrandDNA Mapper

Author

Adnoxy

Description

Adnoxy is an AI-powered engine that translates a brand's digital identity into its 'Brand DNA'. This DNA is then cross-referenced with a large database of offline advertising placements. By analyzing factors like audience affinity, real-world visibility, and local economic data, it predicts the ROI of physical ad spots. The goal is to take the guesswork out of the $300B+ offline ad market by providing data-driven placement recommendations and booking in seconds. So, it helps businesses understand where their customers are in the physical world and how to reach them effectively with ads, maximizing their return on investment.

Popularity

Points 8

Comments 6

What is this product?

BrandDNA Mapper is an AI system that analyzes a brand's website to create a unique 'Brand DNA'. Think of this DNA as a digital fingerprint that captures the brand's essence, its target audience, style, and price range. This Brand DNA is then compared against a database of physical advertising locations. The system uses sophisticated algorithms, considering hundreds of variables like audience-business compatibility, how visible a location is in the real world, how many competitors are there, and even local economic conditions, to score and rank potential ad placements. The innovation lies in bridging the gap between a brand's online persona and its offline customer base, using AI to predict ad effectiveness. This means businesses can move beyond intuition and make informed decisions about where to spend their offline advertising budget. So, it's a smart way to connect your online brand to the right offline customers.

How to use it?

Developers can use BrandDNA Mapper as a SaaS tool. For instance, an agency managing multiple clients could use it to quickly identify high-potential offline ad placements for each client. A media owner could use it to understand which brands best fit their ad spaces. The core API would take a brand's website URL as input and return a ranked list of recommended offline placements, along with predicted ROI scores and supporting data. Integration would typically involve API calls: your application sends the website URL and receives structured data back. That data can then be fed into existing marketing or media-buying platforms. So, you can automate the process of finding and recommending offline ad spots for your clients or for your own business.

Product Core Function

· Brand DNA Analysis: Analyzes a brand's website to extract its core identity, audience demographics, aesthetic, and market positioning. The value here is in creating a standardized, data-driven profile for any brand, enabling objective comparison. This helps in understanding who the brand is and who it appeals to.

· Offline Placement Scoring: Compares the 'Brand DNA' against a database of physical ad locations, assessing factors like audience overlap, visibility, competition, and economic indicators. The value is in providing a quantitative measure of potential success for each ad spot. This tells you which physical ad locations are most likely to reach your target customers.

· Predictive ROI Modeling: Utilizes AI to forecast the return on investment for recommended offline ad placements. The value lies in providing actionable financial insights to justify ad spend. This helps businesses know how much they can expect to get back from their advertising investment.

· Automated Placement Recommendation: Generates a prioritized list of high-ROI offline ad placements in near real-time. The value is in drastically reducing the time and effort traditionally required for media planning. This means faster decisions and more efficient use of advertising budgets.
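Adnoxy has not published its model, so the following is only a sketch of the general idea behind placement scoring. Each candidate placement gets normalized feature scores, which are combined with weights into one number, and placements are then ranked by that number. The feature names, the weights (including the assumption that competitor density lowers the score), and the example placements are all hypothetical. A plain weighted sum stands in for whatever AI model the product actually uses.

```python
from dataclasses import dataclass

# Hypothetical weights; a real system would learn these from campaign outcomes.
WEIGHTS = {
    "audience_overlap": 0.4,  # match between the location's audience and the brand DNA
    "visibility": 0.3,        # real-world visibility of the spot
    "competition": -0.2,      # assumption: more nearby competitors lowers the score
    "local_economy": 0.1,     # local economic indicators
}


@dataclass
class Placement:
    name: str
    features: dict[str, float]  # each feature normalized to the range [0, 1]


def score(placement: Placement, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of normalized features; missing features count as 0."""
    return sum(w * placement.features.get(k, 0.0) for k, w in weights.items())


def rank(placements: list[Placement]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, best placement first."""
    scored = [(p.name, round(score(p), 3)) for p in placements]
    return sorted(scored, key=lambda item: item[1], reverse=True)


placements = [
    Placement("Upscale-district billboard",
              {"audience_overlap": 0.9, "visibility": 0.8, "competition": 0.5, "local_economy": 0.9}),
    Placement("Suburban bus stop",
              {"audience_overlap": 0.4, "visibility": 0.6, "competition": 0.1, "local_economy": 0.5}),
]
for name, s in rank(placements):
    print(f"{s:.2f}  {name}")
```

A linear score is easy to explain to clients, which matters when the output is meant to justify ad spend. The trade-off is that it cannot capture interactions between factors.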

Product Usage Case

· A fashion e-commerce brand wants to run a targeted offline campaign. They input their website URL into BrandDNA Mapper. The system identifies that their audience frequently visits a specific upscale shopping district with high foot traffic and a complementary competitor presence. The system recommends billboards and bus stop ads in this district, predicting a strong ROI due to audience-business affinity. So, the brand can now confidently advertise in a physical location that their target customers frequent.

· A local restaurant chain is looking to expand its reach. They provide their website to BrandDNA Mapper. The AI analyzes their menu, price point, and customer reviews to determine their 'Brand DNA' is family-friendly and budget-conscious. The system then identifies underserved neighborhoods with high family populations and suggests local community board ads and flyer distribution near schools. So, the restaurant can effectively reach new families in their vicinity.

· A marketing agency is managing campaigns for multiple small businesses. They use BrandDNA Mapper to quickly assess the best offline advertising strategies for each client. For a tech startup, it might recommend ads near co-working spaces, while for a craft brewery, it might suggest placements near local music venues. This allows the agency to offer data-backed, optimized offline strategies efficiently. So, clients receive more effective and cost-efficient advertising plans.

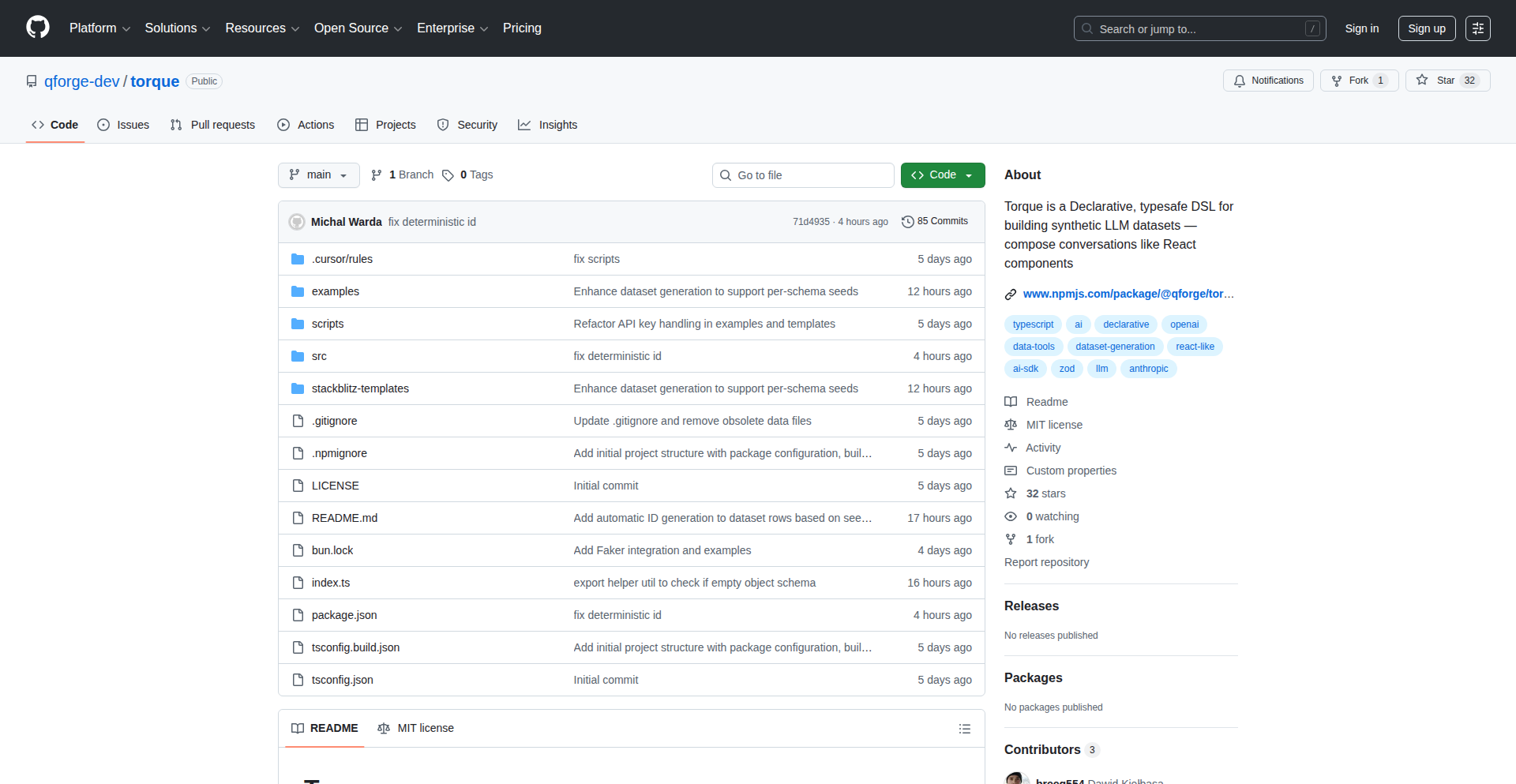

9

SynthData Weaver

Author

arturwala

Description

A declarative domain-specific language (DSL) for generating synthetic datasets for Large Language Models (LLMs). It leverages a React-like approach to define data structures and relationships, allowing developers to programmatically build complex and diverse training data. This tackles the challenge of creating high-quality, varied LLM training data efficiently and at scale, which is often a bottleneck in AI development.

Popularity

Points 10

Comments 0

What is this product?

SynthData Weaver is a specialized programming language designed to help you create fake but realistic data for training AI models, specifically Large Language Models (LLMs). Think of it like building with LEGOs, but instead of bricks, you're using code to define how your data should look and behave. The innovation lies in its 'declarative' nature, similar to how you describe what you want in React without specifying every single step. This means you define the *what* of your data (e.g., a customer review with certain sentiment and product mentions), and the tool figures out *how* to generate many variations of it. This makes creating diverse and structured datasets for AI much more manageable than traditional methods.

How to use it?

Developers can use SynthData Weaver by writing code that describes the structure and content of their desired synthetic dataset. For instance, you can define rules for generating product names, user profiles, or conversational turns. It's designed to be integrated into your existing data pipelines or ML workflows. You'd typically write a SynthData Weaver script that specifies the patterns and variations for your data, and then run this script to produce a large CSV, JSON, or other data file ready for LLM training. This offers a programmatic way to ensure your training data covers specific edge cases or exhibits particular characteristics you want your LLM to learn.

Product Core Function

· Declarative Data Structure Definition: Define data schema and relationships in a clear, human-readable way, reducing the complexity of manual data generation scripts. This means you can easily describe what kind of data you need, like a set of emails with specific subjects and content types, without writing hundreds of lines of repetitive code.

· Component-Based Generation: Build complex datasets by composing smaller, reusable data components, much like building user interfaces with React components. This allows for modularity and easier maintenance of your data generation logic, saving you time and effort when updating or expanding your dataset requirements.

· Stochastic Variation Engine: Programmatically introduce variation and randomness within defined constraints to increase dataset diversity and reduce the risk of overfitting in LLMs. This matters for robustness: generating many different examples of the same concept discourages the model from memorizing specific patterns and improves its ability to generalize to new, unseen data.

· Data Transformation and Validation: Apply transformations to generated data and validate it against predefined rules to keep it consistent. This helps ensure the generated data is clean and meets your specific requirements before you use it to train AI models.
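The post doesn't show SynthData Weaver's actual syntax, so the following is a Python sketch of the same ideas rather than the tool's API. It composes small reusable components declaratively into a record spec. It applies seeded random variation, so runs are reproducible, and lets later fields depend on earlier ones so that records stay internally consistent. A validation pass then drops rows that break a rule. All component names (`one_of`, `depends_on`, `template`, `record`, `generate`) and the review spec are hypothetical.

```python
import json
import random
from typing import Any, Callable

# A component receives the random generator and the fields built so far.
Component = Callable[[random.Random, dict], Any]


def one_of(*options: Any) -> Component:
    """Pick uniformly from a fixed set of options."""
    return lambda rng, ctx: rng.choice(options)


def depends_on(field: str, choices: dict[str, list[Any]]) -> Component:
    """Pick from a list selected by the value of an earlier field."""
    return lambda rng, ctx: rng.choice(choices[ctx[field]])


def template(text: str) -> Component:
    """Fill {placeholders} in `text` from fields built earlier in the record."""
    return lambda rng, ctx: text.format(**ctx)


def record(**fields: Component) -> Component:
    """Compose components into a dict, building fields in declaration order."""
    def build(rng: random.Random, _ctx: dict) -> dict:
        row: dict = {}
        for name, component in fields.items():
            row[name] = component(rng, row)
        return row
    return build


def generate(spec: Component, n: int, seed: int = 0, checks=()) -> list[dict]:
    """Build n rows from a seeded RNG, then drop rows that fail any check."""
    rng = random.Random(seed)
    rows = [spec(rng, {}) for _ in range(n)]
    return [row for row in rows if all(check(row) for check in checks)]


# Declarative spec: it says *what* a review looks like, not how to loop.
review = record(
    product=one_of("headphones", "keyboard", "monitor"),
    sentiment=one_of("positive", "negative"),
    verdict=depends_on("sentiment", {
        "positive": ["great", "worth it"],
        "negative": ["disappointing", "flimsy"],
    }),
    text=template("The {product} was {verdict}."),
)

for row in generate(review, 3, seed=42, checks=[lambda r: len(r["text"]) < 200]):
    print(json.dumps(row))
```

Building fields in declaration order is what lets `verdict` agree with `sentiment` and `text` reuse `product`. If each field were sampled independently, the generator would produce contradictory rows.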

Product Usage Case

· Generating realistic customer support chat logs: A company needs to train an LLM to handle customer inquiries. Using SynthData Weaver, they can define templates for common issues, user tones, and agent responses, generating thousands of realistic chat transcripts that cover various scenarios, improving their AI's ability to understand and respond to customer needs.

· Creating synthetic code snippets for programming assistance LLMs: Developers working on AI that assists with coding can use SynthData Weaver to generate diverse examples of valid and invalid code snippets, along with explanations. This helps the AI learn to identify errors, suggest fixes, and even generate code based on natural language descriptions, accelerating the coding process.

· Building varied product reviews for e-commerce recommendation systems: An e-commerce platform wants to improve its recommendation engine. They can use SynthData Weaver to generate a large dataset of product reviews with different sentiments, feature mentions, and writing styles. This allows their AI to better understand user preferences and provide more accurate recommendations.

· Simulating diverse scenarios for natural language understanding tasks: Researchers developing LLMs for tasks like intent recognition or entity extraction can leverage SynthData Weaver to generate specific datasets that target particular linguistic challenges or domain-specific jargon. This enables fine-tuning LLMs to perform accurately in niche applications.

10

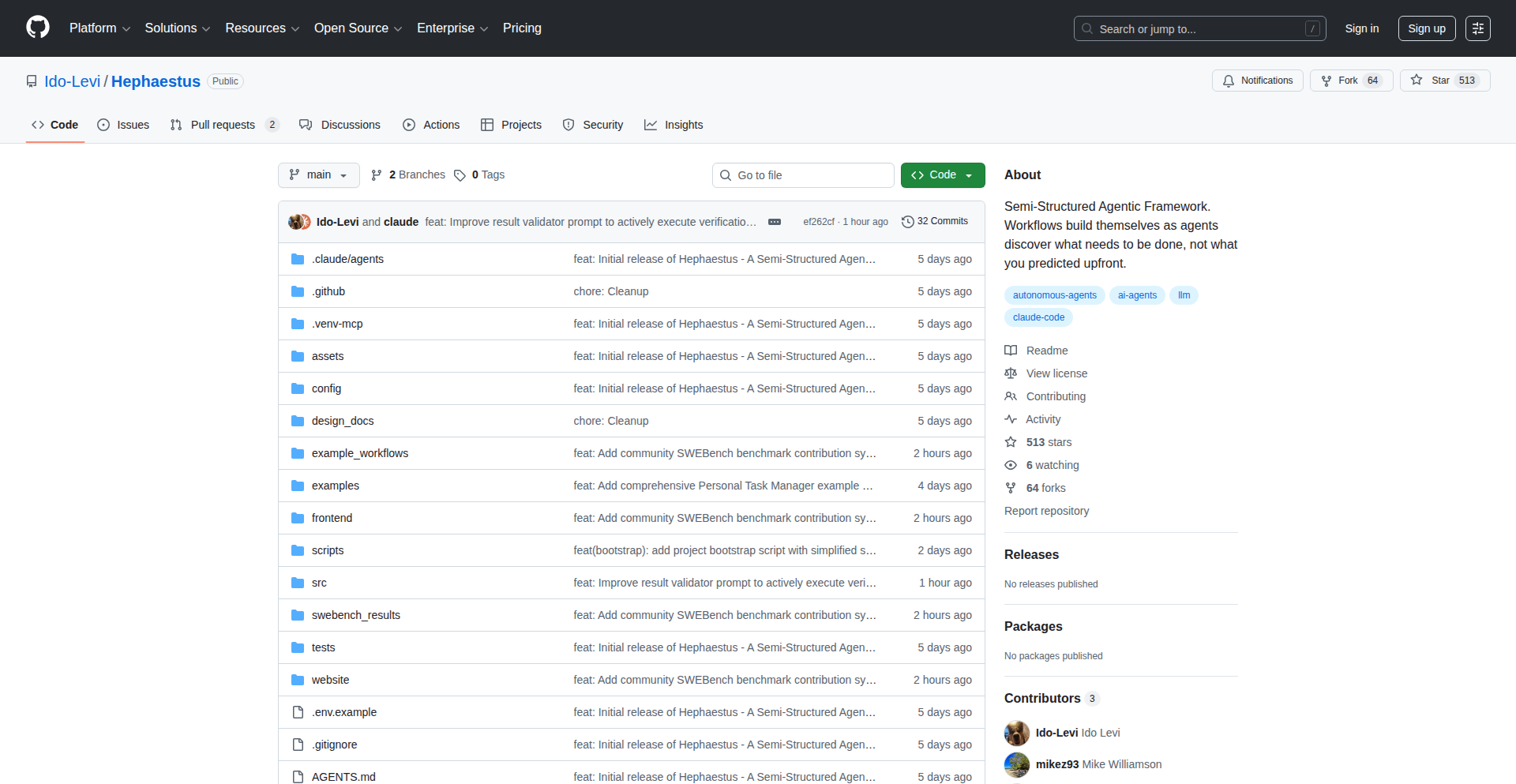

Hephaestus: Autonomous Agent Symphony

Author

idolevi

Description

Hephaestus is an innovative framework for orchestrating multiple autonomous agents. It tackles the complexity of coordinating independent AI agents to achieve a common goal, mimicking a team of specialized workers collaborating on a project. The core innovation lies in its dynamic agent delegation and communication protocols, enabling agents to self-organize and adapt to evolving task requirements, much like a skilled craftsman directing apprentices.

Popularity

Points 7

Comments 0

What is this product?

Hephaestus is a system that allows you to manage and coordinate multiple AI agents, essentially making them work together like a cohesive team. Imagine you have several AI 'workers,' each with a different skill. Hephaestus helps them talk to each other, decide who should do what task, and adapt if things change. The clever part is how it automatically assigns tasks and manages their communication, so you don't have to micromanage every step. This is useful because managing many independent agents can quickly become chaotic; Hephaestus brings order and efficiency to this process, unlocking the potential of collective AI intelligence without constant human oversight.

How to use it?

Developers can integrate Hephaestus into their projects by defining the agents, their capabilities, and the overarching goal. You'd typically specify the 'roles' of your agents (e.g., a research agent, a writing agent, a coding agent) and how they should communicate. Hephaestus then takes over the orchestration. For example, if you're building an automated content creation pipeline, you might use Hephaestus to have a 'research' agent find information, then pass that to a 'writing' agent to draft an article, and finally to a 'review' agent for feedback. This allows for the creation of complex, multi-stage automated workflows powered by a distributed team of AI agents.

Product Core Function

· Autonomous Agent Delegation: Enables agents to intelligently select and assign tasks to other agents based on their skills and current workload, improving overall workflow efficiency and reducing manual intervention. This means your AI team can figure out the best person for the job without you telling them.

· Dynamic Communication Protocols: Facilitates seamless and adaptive communication between agents, allowing them to share information, request assistance, and provide updates in real-time. This ensures that agents can collaborate effectively, even as the project evolves.

· Self-Orchestration Capabilities: Allows the framework to automatically manage agent interactions and task sequencing based on predefined goals and agent capabilities. This means the system can adapt and manage itself, reducing the need for constant human oversight.

· Task Decomposition and Recomposition: Breaks down complex tasks into smaller, manageable sub-tasks that can be assigned to individual agents and then reassembles the results. This makes tackling very large or intricate problems feasible for AI teams.

· Error Handling and Resilience: Implements mechanisms for agents to report errors and for the system to reroute tasks or re-assign responsibilities when issues arise, making the entire system more robust and reliable.
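The post doesn't describe Hephaestus's own interfaces, so the following is a generic Python sketch of three ideas from the list above rather than the framework's API:

- skill-based delegation that prefers the least-busy capable agent
- rerouting a failed task to the next capable agent
- a simple decomposition of a job into sequential skill steps

The agent names and skills are hypothetical. The `run` callables are hypothetical stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    skills: set[str]
    run: Callable[[str], str]  # hypothetical: performs a task, may raise
    workload: int = 0          # tasks attempted so far


@dataclass
class Orchestrator:
    agents: list[Agent]
    log: list[str] = field(default_factory=list)

    def delegate(self, skill: str, payload: str) -> str:
        """Try capable agents from least to most busy; reroute on failure."""
        candidates = sorted(
            (a for a in self.agents if skill in a.skills),
            key=lambda a: a.workload,
        )
        for agent in candidates:
            agent.workload += 1
            try:
                result = agent.run(payload)
            except Exception as exc:  # reroute to the next capable agent
                self.log.append(f"{agent.name} failed on {skill}: {exc}")
                continue
            self.log.append(f"{agent.name} handled {skill}")
            return result
        raise RuntimeError(f"no agent could handle {skill!r}")

    def pipeline(self, steps: list[str], payload: str) -> str:
        """Run a job as a sequence of skill steps, feeding each result forward."""
        for skill in steps:
            payload = self.delegate(skill, payload)
        return payload


def flaky_writer(notes: str) -> str:
    raise TimeoutError("model timed out")  # simulates a failing agent


team = Orchestrator([
    Agent("researcher", {"research"}, lambda topic: f"notes on {topic}"),
    Agent("writer-1", {"write"}, flaky_writer),
    Agent("writer-2", {"write"}, lambda notes: f"draft from {notes}"),
    Agent("reviewer", {"review"}, lambda draft: f"approved: {draft}"),
])
result = team.pipeline(["research", "write", "review"], "agent frameworks")
print(result)  # approved: draft from notes on agent frameworks
```

Here the orchestrator makes the delegation decisions centrally. A framework where agents delegate to each other would push `delegate` into the agents themselves, at the cost of harder-to-trace handoffs.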

Product Usage Case

· Automated Research and Report Generation: A team of agents could be tasked to research a specific topic, gather relevant data from various sources, synthesize the findings, and generate a comprehensive report. Hephaestus manages the handoffs between the research, analysis, and writing agents, ensuring a smooth flow from initial query to final document.

· Complex Software Development Assistance: Imagine an agent capable of understanding code requirements, another for writing code snippets, and a third for debugging. Hephaestus could orchestrate these agents to collectively contribute to a software project, with agents collaborating on feature development and bug fixing.

· Personalized Learning Companion: An AI system could use Hephaestus to coordinate agents for understanding a user's learning style, identifying knowledge gaps, and then generating customized learning materials and exercises. This creates a highly adaptive and personalized educational experience.

· Scenario Planning and Simulation: In fields like finance or logistics, Hephaestus could orchestrate multiple agents simulating different market conditions or operational scenarios. Each agent could represent a specific variable or decision-maker, allowing for complex multi-agent simulations to explore potential outcomes.

11

Guapital Navigator

Author

mzou

Description

Guapital Navigator is a personal finance tracker that goes beyond simple asset aggregation. Its core innovation lies in providing real-time net worth percentile rankings against peers of the same age. This solves the problem of not knowing whether your financial progress is good or bad by offering crucial context. It syncs with bank accounts, crypto wallets, and allows manual asset entry, then visualizes your progress and comparative standing. This is valuable because it transforms abstract financial numbers into actionable insights, empowering users to understand their financial health in a relatable context.

Popularity

Points 2

Comments 5

What is this product?

Guapital Navigator is a privacy-focused financial tracking tool. Instead of just showing you how much money you have, it actively compares your net worth to others in your age group, telling you where you stand statistically. The technical innovation is in its ability to aggregate data from various financial sources like bank accounts (using Plaid for secure connections), popular cryptocurrency networks (Ethereum, Polygon, Base, Arbitrum, Optimism), and allows for manual input of other assets. It then leverages this data to calculate and display your percentile ranking. So, the value is understanding your financial progress not just in absolute terms, but in relation to your peers, which helps in setting realistic goals and assessing your financial journey.

How to use it?

Developers and individuals can use Guapital Navigator by signing up for an account on its website. The primary method of use is securely connecting financial accounts. Bank connections go through Plaid, which acts as a secure intermediary so that your bank login details are not shared directly with Guapital. For cryptocurrency, users provide wallet addresses on supported blockchains. Assets such as real estate or physical valuables can be entered manually. Once everything is connected, the platform automatically syncs and calculates your net worth and percentile rank. For a developer, this means a quick view of how their savings and investments stack up against people in their age bracket, without complex manual calculations. It's a straightforward way to get immediate financial context.

Product Core Function

· Secure financial account aggregation: Connects to bank accounts via Plaid and major cryptocurrency wallets, allowing users to see all their assets in one place. This provides a comprehensive view of their financial landscape, making it easier to track overall net worth.

· Real-time net worth calculation: Dynamically updates your total net worth based on synced and manually entered assets. This ensures you always have an up-to-date understanding of your financial standing.

· Age-based percentile ranking: Compares your net worth to anonymized data of other users in the same age demographic, showing your position relative to them. This offers crucial context, helping users understand if their financial progress is ahead, average, or behind expectations, and provides a benchmark for goal setting.

· Historical trend analysis: Visualizes your net worth and percentile changes over time, allowing you to see progress and identify patterns. This helps in assessing the effectiveness of financial strategies and motivates continued effort by showing tangible improvements.

· Privacy-first, paid model: The service is funded by a paid subscription rather than by selling data, and it commits to never selling user data. This is valuable for users who are concerned about data privacy and want assurance that their sensitive financial information is not being monetized.

· Manual asset input: Allows for the inclusion of assets not automatically syncable, such as real estate, vehicles, or other valuables. This ensures a complete and accurate net worth calculation, providing a true holistic financial picture.
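Guapital hasn't published how it computes rankings or which reference data it uses. The following is a minimal sketch of the underlying arithmetic, under three assumptions:

- net worth is total assets minus total liabilities
- peers are grouped into fixed five-year age brackets
- the percentile rank is the share of same-bracket peers whose net worth is strictly below yours

The bracket width, account names, and peer data are invented for illustration.

```python
from bisect import bisect_left


def net_worth(assets: dict[str, float], liabilities: dict[str, float]) -> float:
    """Net worth = total assets minus total liabilities."""
    return sum(assets.values()) - sum(liabilities.values())


def age_bracket(age: int, width: int = 5) -> tuple[int, int]:
    """Group ages into fixed-width brackets, e.g. 30 -> (30, 34) for width 5."""
    start = age - age % width
    return start, start + width - 1


def percentile_rank(value: float, peer_values: list[float]) -> float:
    """Percent of peers with a strictly lower net worth (0 to 100)."""
    if not peer_values:
        raise ValueError("need at least one peer")
    ordered = sorted(peer_values)
    below = bisect_left(ordered, value)  # number of peers < value
    return 100.0 * below / len(ordered)


def rank_user(age: int, worth: float, peers: list[tuple[int, float]]) -> float:
    """Compare only against peers (age, net_worth) in the user's age bracket."""
    low, high = age_bracket(age)
    same_bracket = [w for a, w in peers if low <= a <= high]
    return percentile_rank(worth, same_bracket)


worth = net_worth(
    {"checking": 20_000, "brokerage": 110_000, "eth_wallet": 30_000},
    {"car_loan": 10_000},
)
peers = [(31, 50_000), (30, 80_000), (33, 120_000), (32, 140_000), (34, 160_000),
         (30, 200_000), (31, 90_000), (32, 30_000), (33, 145_000), (34, 300_000),
         (45, 1_000_000)]  # the 45-year-old falls outside the 30-34 bracket
print(worth, rank_user(30, worth, peers))  # 150000 70.0
```

Sorting once and using `bisect_left` keeps each lookup logarithmic. That matters if the same peer distribution is queried for many users.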

Product Usage Case

· A 30-year-old software engineer wants to know whether their $150,000 net worth is good. They connect their bank accounts and crypto wallets to Guapital Navigator, and the tool immediately shows they are in the 70th percentile for their age group. In other words, their net worth is higher than that of about 70% of their peers. This clarifies their financial standing and gives them confidence in their financial management.

· A freelance developer is building a long-term investment strategy and wants to track progress beyond just looking at account balances. Guapital Navigator allows them to see how their net worth and percentile rank have changed over the past year, showing a move from the 40th to the 55th percentile. This visual feedback on their investment performance helps them stay motivated and adjust their strategy based on tangible progress.

· Someone concerned about data privacy wants to track their finances but is hesitant to use free services that might monetize their data. Guapital Navigator's paid, privacy-first model allows them to securely link their financial accounts with the assurance that their data is protected and not being shared or sold, addressing their core concern about financial data security.

· A developer transitioning from a stable job to a startup needs to understand their financial resilience. By tracking their net worth and percentile, they can gauge their financial buffer and understand the trade-offs of their career choices in the context of their overall financial health compared to others in similar life stages.

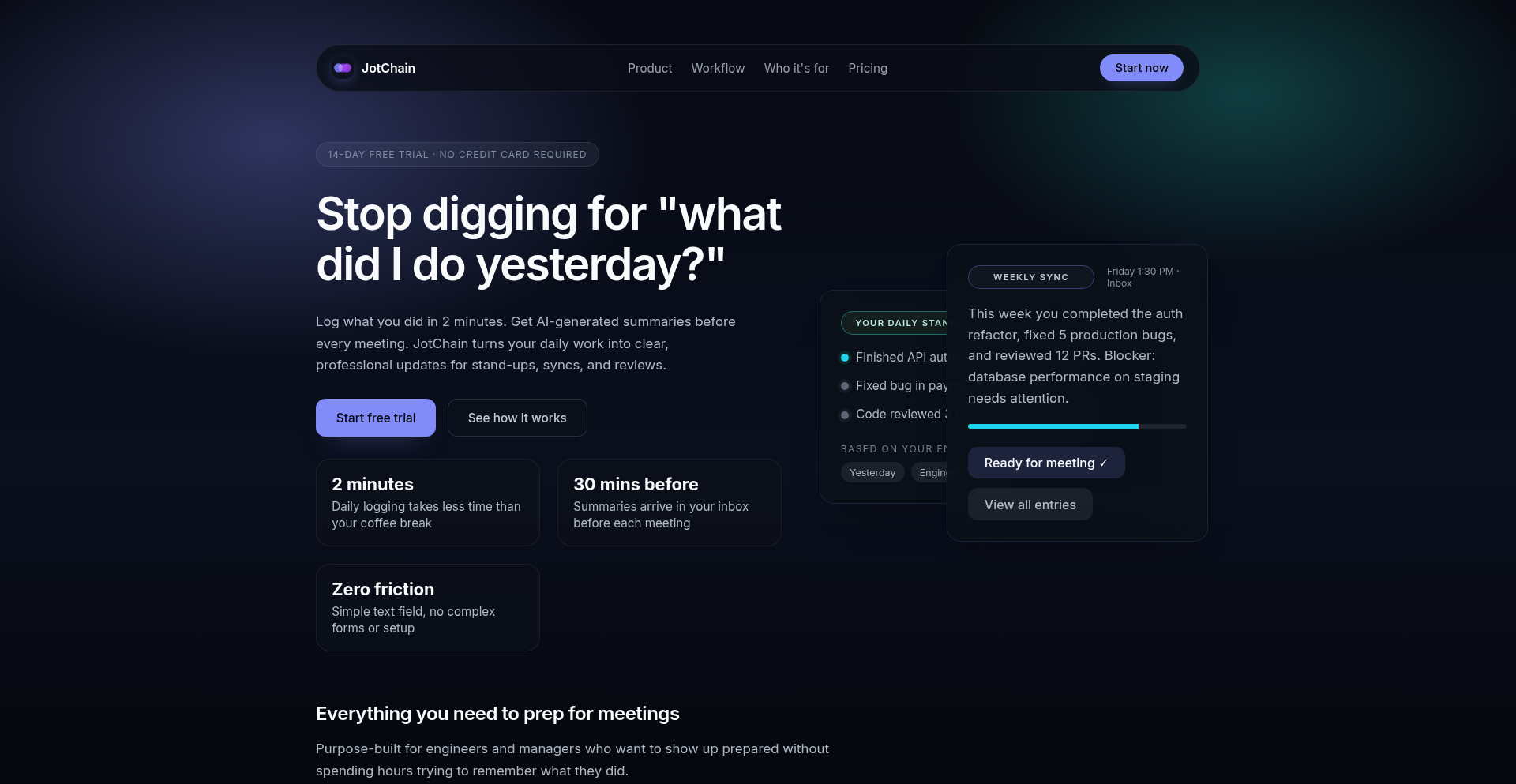

12

JotChain AI

Author

morozred

Description

JotChain AI is a personal productivity tool that leverages AI to transform your raw, daily work notes into polished, structured summaries. It tackles the common challenge of information overload and forgotten details by automatically generating scheduled email digests, helping you recall and present your accomplishments and blockers effortlessly. The core innovation lies in its seamless integration of quick note-taking with intelligent AI summarization and timely delivery, making it easier to stay on top of your contributions and communicate them effectively.

Popularity

Points 3

Comments 4

What is this product?

JotChain AI is a smart note-taking and summarization service designed for busy professionals. It works by allowing you to quickly jot down key information like tasks completed, challenges faced, and important context throughout your day using a simple text interface. The underlying AI then processes these notes, identifying key themes, accomplishments, and blockers. Based on your preferred schedule (daily, weekly, monthly, etc.) and timezone, it automatically generates concise, well-structured email summaries. This means instead of manually compiling your weekly progress report or preparing talking points for a meeting, JotChain AI does the heavy lifting, ensuring you have a clear and organized overview of your work without the extra effort. The innovation here is in automating the post-note-taking process, turning scattered thoughts into actionable insights delivered directly to your inbox.

How to use it?

Developers can integrate JotChain AI into their daily workflow in several ways. The primary method is through its web interface, where you can access a simple text field to log your notes. For instance, after completing a complex bug fix, you can quickly type: 'Fixed critical authentication bug in user login flow. Investigated root cause for 2 hours. Tag: bugfix, auth.' You can then configure how often you want to receive summaries (e.g., every Friday at 4 PM PST before the team sync) and what period the summary should cover (e.g., the last 7 days). JotChain AI will then send you an email containing a structured summary like: 'Weekly Summary (May 13-19, 2024): Achieved key milestone by resolving critical authentication bug in user login flow. Dedicated 2 hours to in-depth root cause analysis. Preparation for upcoming feature deployment ongoing. Blocker: N/A.' This can be used to quickly prep for stand-ups, performance reviews, or simply to keep a personal log of achievements.

Product Core Function

· Quick Note Logging: Enables rapid capture of daily work achievements, blockers, and context using a plain-text field, designed for minimal time investment (approx. 2 minutes). This is valuable because it ensures that important details are not lost and can be easily recalled later, forming the basis for future summaries.

· AI-Powered Summarization: Utilizes artificial intelligence to process raw notes and generate structured, coherent summaries. This function is crucial for distilling large amounts of information into digestible insights, saving users significant time and cognitive load in organizing their thoughts.

· Scheduled Email Delivery: Delivers AI-generated summaries to your inbox at user-defined cadences (daily, weekly, monthly, custom) and times. This provides timely and consistent updates, making it easier for developers to stay on top of their progress and prepare for discussions without manual compilation.

· Customizable Scheduling Options: Offers flexibility in setting summary frequency, timezone, lookback window, and lead time. This customization allows users to tailor the service to their specific team rhythms and personal preferences, ensuring the summaries are relevant and useful when they need them most.

· Tagging and Organization: Supports optional tagging of notes for better categorization and retrieval. This feature enhances the organization of your work items, making it easier to filter and analyze specific types of contributions or challenges over time.
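JotChain's scheduler isn't public. The following sketch illustrates the scheduling options listed above under a few assumptions:

- a weekly cadence at a fixed local weekday and time
- a lookback window that selects which notes go into the digest
- a lead time that moves summary generation ahead of delivery
- tags written as a trailing "Tag: a, b." clause, as in the example notes

It uses only Python's standard `datetime` module. The function names and note format are hypothetical. For real zones with daylight-saving changes you would pass `zoneinfo.ZoneInfo("America/Los_Angeles")` as `tz` instead of the fixed offset used here.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo


def next_send(now: datetime, weekday: int, at: time, tz: tzinfo) -> datetime:
    """Next delivery strictly after `now` on `weekday` (Mon=0..Sun=6) at local `at`.

    `now` must be timezone-aware.
    """
    local_now = now.astimezone(tz)
    days_ahead = (weekday - local_now.weekday()) % 7
    send = datetime.combine(local_now.date() + timedelta(days=days_ahead), at, tzinfo=tz)
    if send <= local_now:  # this week's slot has already passed
        send += timedelta(days=7)
    return send


def notes_for_digest(notes, send_at: datetime, lookback: timedelta,
                     lead: timedelta = timedelta(0)) -> list[str]:
    """Notes written in the lookback window ending when the summary is generated.

    `lead` is how long before delivery the summary is generated.
    """
    generate_at = send_at - lead
    start = generate_at - lookback
    return [text for written, text in notes if start <= written < generate_at]


def extract_tags(note: str) -> list[str]:
    """Pull tags from a trailing 'Tag: a, b.' clause."""
    _, sep, rest = note.rpartition("Tag:")
    if not sep:
        return []
    return [t.strip(" .") for t in rest.split(",") if t.strip(" .")]


# Fixed UTC-7 offset for the example only.
pdt = timezone(timedelta(hours=-7), "PDT")
notes = [
    (datetime(2024, 5, 8, 10, 0, tzinfo=pdt), "Reviewed old PRs. Tag: review."),
    (datetime(2024, 5, 13, 11, 0, tzinfo=pdt),
     "Fixed critical authentication bug in user login flow. Tag: bugfix, auth."),
    (datetime(2024, 5, 16, 15, 0, tzinfo=pdt),
     "Blocker: intermittent DB timeouts in staging. Tag: blocker, db, staging."),
]
# Wednesday morning -> next Friday 4 PM delivery, covering the previous 7 days.
send_at = next_send(datetime(2024, 5, 15, 9, 0, tzinfo=pdt), weekday=4, at=time(16, 0), tz=pdt)
digest = notes_for_digest(notes, send_at, lookback=timedelta(days=7), lead=timedelta(hours=1))
print(send_at)  # 2024-05-17 16:00:00-07:00
for text in digest:
    print(extract_tags(text), text)
```

The selected notes and their tags are what would then be handed to the AI summarization step.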

Product Usage Case

· Scenario: Preparing for a weekly team sync meeting. A developer has been working on a complex feature with several minor bug fixes. Instead of trying to recall all the details from the past week, they can rely on JotChain AI's weekly summary email, which might highlight 'Completed core functionality for Project X, resolved 3 minor bugs in the API, and successfully integrated the new authentication module.' This allows for a quick, confident contribution to the meeting with clear talking points.

· Scenario: Documenting personal progress for a performance review. Over several months, a developer has been logging their wins and challenges. JotChain AI has been generating monthly summaries that detail key projects completed, technical challenges overcome, and any skills developed. This creates a rich, automated portfolio of their work and impact, making the performance review process significantly less daunting and more data-driven.

· Scenario: Managing blockers and seeking help. A developer encounters a significant technical blocker. They log it in JotChain AI as: 'Blocker: Unable to resolve database connection issue in staging environment. Experiencing intermittent timeouts. Investigated configuration files and server logs. Tag: blocker, db, staging.' If they've set up daily digests, this blocker will be included in their morning email, serving as a reminder to themselves and an opportunity to share it with a lead or mentor for assistance during the daily stand-up.

· Scenario: Onboarding a new team member. A team lead can use JotChain AI's historical summaries to quickly provide a new hire with an overview of recent project progress, key features implemented, and any persistent challenges. This provides a concise, yet comprehensive, contextual background without overwhelming the new member with raw documentation.

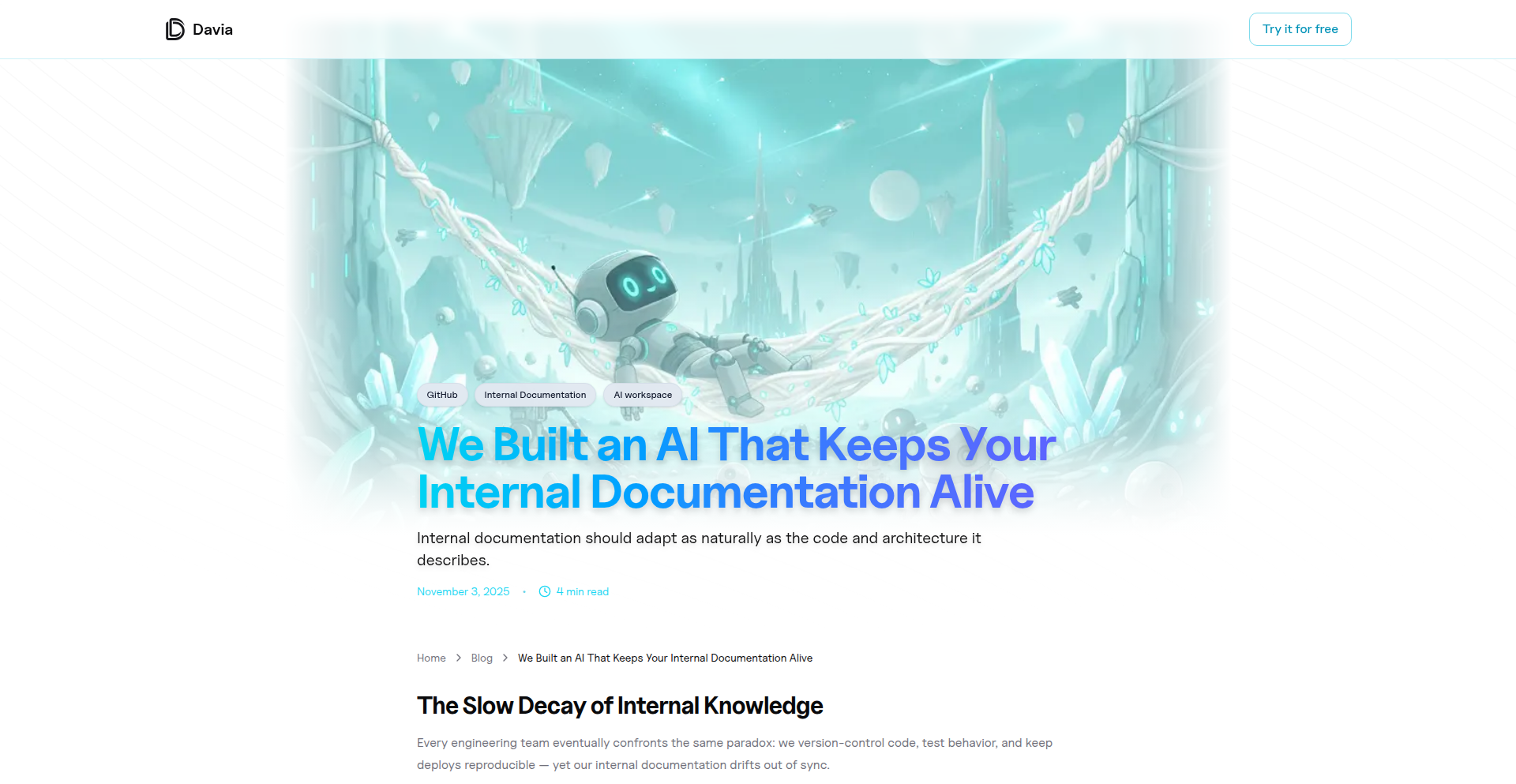

13

DocuMind AI

Author

ruben-davia

Description

An AI-powered tool designed to automatically update and maintain internal documentation, ensuring it stays relevant and accurate. It tackles the common problem of outdated documentation by intelligently analyzing code changes and generating or suggesting updates.

Popularity

Points 7

Comments 0

What is this product?

DocuMind AI is an intelligent agent that acts as a guardian for your internal technical documentation. Instead of manually sifting through code and updating markdown files every time a feature changes, this AI leverages Natural Language Processing (NLP) and code analysis techniques to understand your codebase. When code is modified, it can detect the impact on existing documentation and either automatically generate new snippets or flag sections that need review. The core innovation lies in its ability to 'read' code and 'understand' its implications for documentation, bridging the gap between development and knowledge management.

How to use it?

Developers can integrate DocuMind AI into their CI/CD pipelines or use it as a standalone tool. During development, it can monitor code repositories. When a commit is pushed, the AI analyzes the changes. For significant modifications, it can automatically create a pull request with proposed documentation updates, including new API descriptions, usage examples, or architectural explanations. For less critical changes, it might simply provide suggestions within the developer's IDE. This means less manual work for developers and more reliable, up-to-date documentation for everyone.

Product Core Function

· Automated documentation generation: The AI can write new documentation sections based on code analysis, saving developers significant time. This is useful for onboarding new team members or quickly documenting new features.

· Intelligent documentation update suggestions: When code changes, the AI identifies affected documentation and proposes specific edits or additions, ensuring accuracy and relevance. This is valuable for maintaining consistency as projects evolve.

· Code-to-documentation semantic analysis: The AI understands the meaning and purpose of code, translating complex logic into human-readable documentation. This is crucial for making technical information accessible to a wider audience.

· Integration with development workflows: By fitting into existing CI/CD processes, the AI automates documentation tasks without disrupting developer routines. This streamlines the entire development lifecycle.
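The post doesn't detail DocuMind's analysis pipeline. The following is a much simpler, non-AI stand-in for its first step: detecting which documentation pages a code change affects. The sketch uses Python's standard `ast` module to compare top-level function signatures between the old and new versions of a file. It then flags docs that mention a changed or removed function by name, and lists new functions that no doc mentions yet. The file contents and page names are hypothetical. An AI tool like DocuMind would go further and draft the actual edits.

```python
import ast
import re


def signatures(source: str) -> dict[str, str]:
    """Map each top-level function name to a dump of its argument list."""
    tree = ast.parse(source)
    return {
        node.name: ast.dump(node.args)
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


def diff_functions(old_src: str, new_src: str) -> tuple[set[str], set[str]]:
    """Return (changed_or_removed, added) top-level function names."""
    old, new = signatures(old_src), signatures(new_src)
    changed_or_removed = {name for name, sig in old.items() if new.get(name) != sig}
    added = set(new) - set(old)
    return changed_or_removed, added


def mentions(text: str, name: str) -> bool:
    """True if `name` appears in `text` as a whole identifier."""
    return re.search(rf"\b{re.escape(name)}\b", text) is not None


def review_docs(old_src: str, new_src: str, docs: dict[str, str]) -> dict:
    """Flag doc pages that mention stale functions, plus undocumented new ones."""
    stale, added = diff_functions(old_src, new_src)
    flagged = {}
    for page, text in docs.items():
        hits = sorted(name for name in stale if mentions(text, name))
        if hits:
            flagged[page] = hits
    undocumented = sorted(
        name for name in added if not any(mentions(t, name) for t in docs.values())
    )
    return {"flag_for_review": flagged, "undocumented": undocumented}


old = "def create_user(name):\n    ...\n\n\ndef delete_user(user_id):\n    ...\n"
new = "def create_user(name, email):\n    ...\n\n\ndef archive_user(user_id):\n    ...\n"
docs = {
    "users.md": "Call `create_user(name)` to register someone; use delete_user to remove them.",
    "billing.md": "Invoices are generated monthly.",
}
print(review_docs(old, new, docs))
# {'flag_for_review': {'users.md': ['create_user', 'delete_user']}, 'undocumented': ['archive_user']}
```

In a CI pipeline, a check like this could run on each push and open a review task for the flagged pages.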

Product Usage Case

· A software team frequently updates a shared internal API. DocuMind AI monitors the API's codebase. When new endpoints are added or existing ones are modified, the AI automatically generates updated Swagger/OpenAPI definitions and corresponding usage examples within the team's documentation portal, preventing developers from consuming outdated API specifications.

· A startup is building a new microservice and needs to document its architecture. DocuMind AI analyzes the service's code and dependencies, then generates an initial architectural overview and key component descriptions. This allows the team to have a foundational document quickly, which can then be refined by engineers.

· An open-source project experiences frequent bug fixes and feature enhancements. DocuMind AI tracks these changes and suggests updates to the 'Troubleshooting' and 'How-to' sections of the project's documentation, making it easier for community users to find solutions and adopt new features.

14

CustomChessForge

Author

chess39

Description

A 'Show HN' project that allows users to craft their own unique starting positions for a game of chess. This innovation breaks away from the traditional fixed setup, offering a deeply customizable chess experience through a clever manipulation of game state. It addresses the desire for novel gameplay, strategic exploration, and creative expression within the well-established framework of chess.

Popularity

Points 1

Comments 5

What is this product?

This project is a web-based chess application that liberates the game from its standard starting setup. Instead of the usual pawn formations and piece placements, users can define the initial arrangement of all pieces on the board. Technically, this is achieved by intercepting the standard chess game initialization and allowing a user-defined board state to be loaded. This likely involves a custom chess engine or a modified existing one that can accept arbitrary starting configurations, potentially represented by FEN (Forsyth-Edwards Notation) strings or a similar data structure. The innovation lies in providing a user-friendly interface to build these custom positions, making the complex task of setting up a non-standard board accessible to any chess enthusiast.

How to use it?

Developers can integrate this project by leveraging its underlying chess engine and custom board state capabilities. The core functionality can be exposed via an API, allowing other applications to generate games with custom starting positions. For instance, a game tutorial platform could use it to create specific problem sets for training, or a competitive programming platform could use it to generate unique chess puzzles. The project likely provides a JavaScript library or a backend service that can be called to set the initial board state before a game begins, offering a flexible way to inject unique challenges and learning scenarios.

Product Core Function

· Customizable starting board setup: Allows users to manually place any chess piece on any square at the beginning of the game, offering immense replayability and strategic depth.

· FEN string generation/parsing: The ability to save and load custom board states using standard FEN notation, enabling easy sharing and integration with other chess tools and platforms.

· Interactive board editor: A visual interface for users to intuitively drag and drop pieces, making the creation of custom positions accessible even for non-technical users.

· Game state manipulation: Provides the underlying logic to accept and validate non-standard piece placements, ensuring a functional chess game even with unconventional starting arrangements.
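The "accept and validate" step could look like the following sketch. It checks a position held as a square-to-piece mapping, the kind of state a drag-and-drop editor might keep. The rule set is an assumed minimum for a playable game, not the project's confirmed validation logic: one king per side, no pawns on the first or eighth rank, and kings not on adjacent squares. It does not, for example, check whether the side not to move is already in check. `validate_setup` is a hypothetical name.

```python
from __future__ import annotations

PIECES = frozenset("KQRBNPkqrbnp")
FILES, RANKS = "abcdefgh", "12345678"


def validate_setup(squares: dict[str, str]) -> list[str]:
    """Return a list of problems with a custom setup (empty list = playable).

    `squares` maps algebraic square names such as "e1" to FEN piece letters.
    """
    problems: list[str] = []
    kings: dict[str, list[str]] = {"K": [], "k": []}
    for square, piece in squares.items():
        if len(square) != 2 or square[0] not in FILES or square[1] not in RANKS:
            problems.append(f"invalid square {square!r}")
            continue
        if piece not in PIECES:
            problems.append(f"invalid piece {piece!r} on {square}")
            continue
        if piece in ("P", "p") and square[1] in ("1", "8"):
            problems.append(f"pawn on back rank at {square}")
        if piece in kings:
            kings[piece].append(square)
    for letter, color in (("K", "White"), ("k", "Black")):
        if len(kings[letter]) != 1:
            problems.append(f"{color} has {len(kings[letter])} kings (expected 1)")
    if len(kings["K"]) == 1 and len(kings["k"]) == 1:
        white, black = kings["K"][0], kings["k"][0]
        file_gap = abs(FILES.index(white[0]) - FILES.index(black[0]))
        rank_gap = abs(int(white[1]) - int(black[1]))
        if max(file_gap, rank_gap) <= 1:  # kings may never stand next to each other
            problems.append("kings on adjacent squares")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets an editor UI highlight every issue at once.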

Product Usage Case

· Educational chess platforms: Creating specific training scenarios by setting up particular tactical positions for users to solve, improving their understanding of chess concepts.

· Chess puzzle generation tools: Automatically generating unique chess puzzles by randomizing piece placements and then finding checkmate sequences, offering an endless supply of new challenges.

· Competitive programming: Designing chess-based programming challenges in which participants must strategize from unconventional starting arrangements, testing their algorithmic thinking.

· Game development prototypes: Quickly prototyping new chess variants or game modes by modifying the starting conditions, allowing for rapid experimentation with gameplay mechanics.
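One simple way to randomize starting positions for variant prototyping is a "shuffle chess" start. Pawns stay on their usual ranks, White's back rank is randomly permuted, and Black mirrors it. This technique was chosen for illustration and is not the project's generator. It also does not attempt the checkmate-search part of puzzle generation. `shuffled_start` is a hypothetical name. Passing a seed makes a position reproducible, which helps when sharing it.

```python
from __future__ import annotations

import random


def shuffled_start(seed: int | None = None) -> str:
    """Return a FEN for a random mirrored back-rank start ("shuffle chess" style)."""
    rng = random.Random(seed)      # seeded generator => reproducible, shareable positions
    back_rank = list("RNBQKBNR")
    rng.shuffle(back_rank)         # any permutation; no Chess960-style constraints
    white = "".join(back_rank)
    black = white.lower()          # Black mirrors White file by file
    placement = f"{black}/pppppppp/8/8/8/8/PPPPPPPP/{white}"
    # Castling is disabled because king and rooks may be off their usual squares.
    return f"{placement} w - - 0 1"
```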

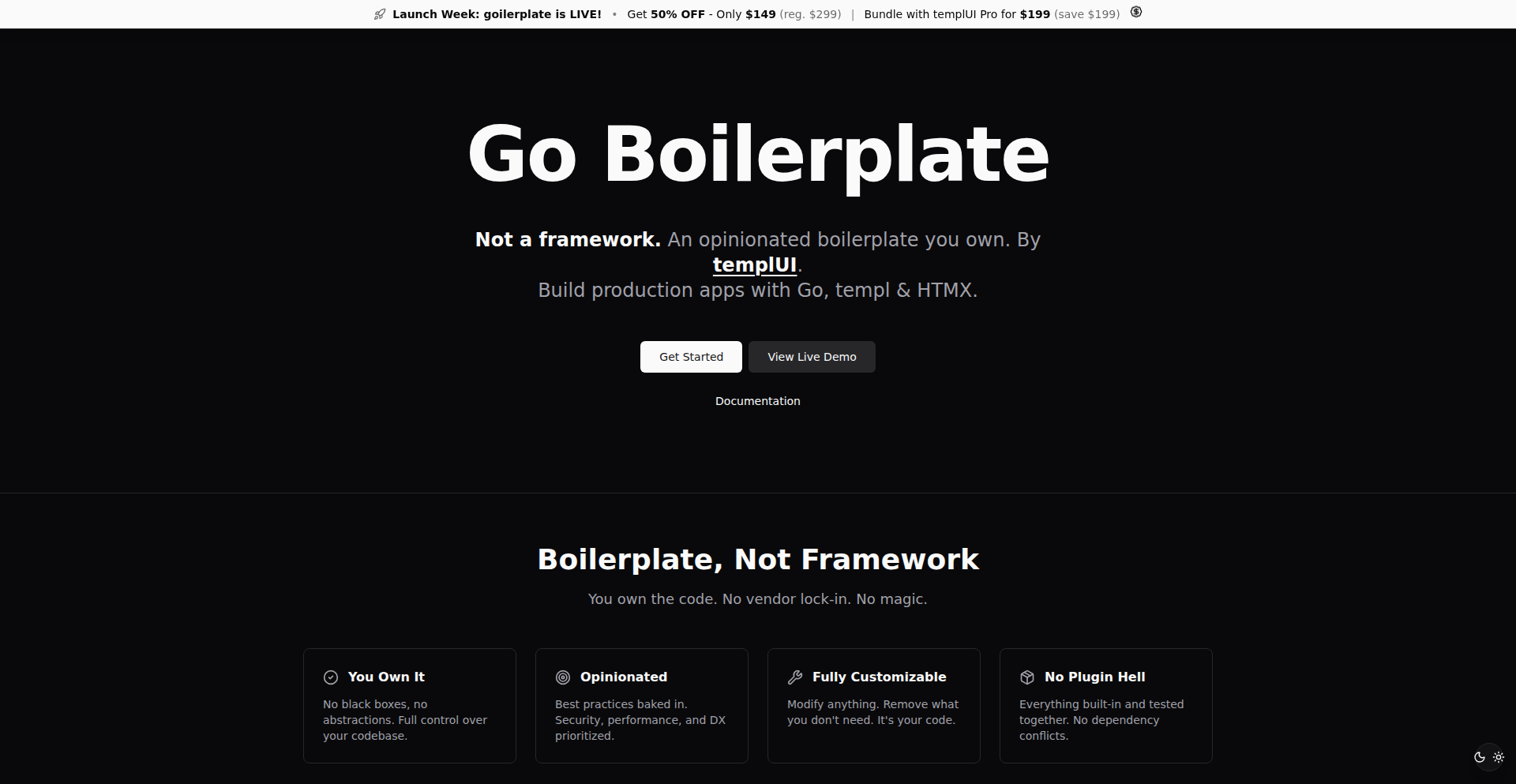

15

Goilerplate-Htmx-SaaS-Boilerplate

Author

axadrn

Description

A comprehensive SaaS boilerplate built with Go and templ that uses Htmx for dynamic web interactions. It covers common backend needs such as authentication, subscriptions, and documentation. This gives developers a quick start on modern web applications built around server-rendered interactivity. The project reflects the hacker ethos of solving recurring problems once, with clean code.

Popularity

Points 5

Comments 1

What is this product?

This project is a pre-built foundation for Software-as-a-Service (SaaS) applications. It is aimed at Go developers who want to launch quickly without reimplementing standard features. Its core is the combination of two tools. templ is a templating language that compiles HTML templates into Go code. Htmx is a library that exposes AJAX requests and other modern browser features directly through HTML attributes. Together they make web interactions feel like those of a modern JavaScript framework while shipping far less client-side code. The boilerplate also handles essential backend tasks: user authentication, subscription management (including payment integration with Polar), and documentation generation.

How to use it?

Developers can use goilerplate as a starting point for their new SaaS projects. They would clone the repository, configure their database (defaulting to SQLite, with optional PostgreSQL support), and then customize the codebase to fit their specific application logic. For instance, to add a new feature, a developer might extend the existing authentication middleware or integrate new components using templ within the server-rendered HTML, updating specific parts of the page with Htmx without full page reloads. This allows for faster development cycles and a more responsive user experience.
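The partial-update pattern described above is language-agnostic. Goilerplate does it in Go with templ, while this minimal Python sketch uses only the standard library to show the idea. A handler checks whether the request came from htmx and returns either a fragment or a full page. The `hx-get`, `hx-target`, and `hx-swap` attributes and the `HX-Request: true` header are real htmx features. The `/tasks` route, the function names, and the script path are hypothetical.

```python
from __future__ import annotations

from html import escape


def full_page(body: str) -> str:
    # Hypothetical layout; the htmx script path is a placeholder.
    return ('<!doctype html><html><head><script src="/static/htmx.min.js"></script>'
            f"</head><body>{body}</body></html>")


def handle_tasks(headers: dict[str, str], tasks: list[str]) -> str:
    """Render the hypothetical /tasks route as a fragment or as a full page."""
    items = "".join(f"<li>{escape(t)}</li>" for t in tasks)
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {k.lower(): v for k, v in headers.items()}
    if normalized.get("hx-request") == "true":
        # htmx sends "HX-Request: true"; reply with only the markup it will swap in.
        return items
    # Plain navigation: full page with a button that re-fetches the list via htmx
    # and replaces the contents of #tasks with the response.
    body = (f'<ul id="tasks">{items}</ul>'
            '<button hx-get="/tasks" hx-target="#tasks" hx-swap="innerHTML">Refresh</button>')
    return full_page(body)
```

Serving both shapes from one route keeps the page usable on a direct visit, while htmx requests only move the small fragment over the wire.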

Product Core Function

· Authentication System: Provides pre-built user registration, login, and session management, saving developers the time and effort of implementing these critical security features from scratch.

· Subscription Management: Integrates with Polar for handling recurring payments and subscription tiers, enabling businesses to monetize their services efficiently.

· Templating Engine Integration: Utilizes templ for generating dynamic HTML on the server, which can be more performant and easier to manage for certain types of applications compared to heavy client-side JavaScript frameworks.

· Htmx for Interactivity: Leverages Htmx to enable rich, dynamic user interfaces by making AJAX requests directly from HTML attributes, leading to a more responsive feel without complex JavaScript development.

· Database Abstraction: Offers default SQLite support and optional PostgreSQL, providing flexibility in data storage and management.

· Documentation Generation: Includes mechanisms for generating API or application documentation, crucial for developer collaboration and user onboarding.
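Goilerplate's own session mechanism is not described here, so the following is only a generic illustration of one common session-management pattern. The server issues a signed token and verifies its HMAC before trusting the payload. It is written in Python for brevity. The secret, the token layout, and the function names are hypothetical. A production system would also need key rotation, secure cookie flags, and a vetted library.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time

SECRET = b"change-me"  # hypothetical key; load from configuration in a real app


def _sign(body: str) -> str:
    return hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()


def sign_session(user_id: str, ttl_seconds: int = 3600, now: float | None = None) -> str:
    """Create a token "<base64url payload>.<hex HMAC-SHA256>" that expires after ttl_seconds."""
    issued = int(time.time() if now is None else now)
    payload = json.dumps({"uid": user_id, "exp": issued + ttl_seconds}, separators=(",", ":"))
    body = base64.urlsafe_b64encode(payload.encode()).decode().rstrip("=")
    return f"{body}.{_sign(body)}"


def verify_session(token: str, now: float | None = None) -> str | None:
    """Return the user id if the token is authentic and unexpired, else None."""
    current = int(time.time() if now is None else now)
    body, sep, sig = token.rpartition(".")
    if not sep:
        return None
    # Constant-time comparison avoids leaking how many signature characters matched.
    if not hmac.compare_digest(sig.encode(), _sign(body).encode()):
        return None
    data = json.loads(base64.urlsafe_b64decode(body + "=" * (-len(body) % 4)))
    if data["exp"] < current:
        return None
    return data["uid"]
```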

Product Usage Case

· Building a new SaaS platform for a niche market: A startup can leverage goilerplate to quickly spin up a functional MVP, focusing on their unique product features rather than generic backend infrastructure.

· Developing an internal tool for a company: An IT department can use goilerplate to create a secure and interactive web application for managing internal resources, benefiting from the built-in authentication and ease of development.

· Creating a content management system (CMS) with server-rendered performance: Developers can use the templ and Htmx combination to build a CMS that offers fast loading times and dynamic content updates without relying heavily on client-side JavaScript, ideal for SEO-focused websites.

· Rapid prototyping of web applications: A solo developer or small team can use goilerplate to rapidly iterate on ideas, quickly getting a functional application with essential SaaS features up and running for user testing and feedback.

16

StaminaPredictor

Author

arghya1

Description

This project is an application that uses a humorous approach to encourage healthy lifestyle habits among men. It aims to predict a user's stamina based on their lifestyle choices, thereby highlighting the impact of factors like sleep, diet, stress, and exercise on overall performance. The core innovation lies in its playful gamification of health, making it more engaging for users.

Popularity

Points 2

Comments 4

What is this product?

StaminaPredictor is an application designed to show men how strongly lifestyle habits influence their physical performance, particularly stamina. It is described as a 'vibecode' project. The term usually refers to software built largely by prompting an AI coding assistant rather than writing code by hand. The app delivers its message in a light-hearted, memorable way. The core technical idea is a system that estimates how common lifestyle factors (sleep deprivation, poor diet, high stress, lack of exercise) are likely to affect a person's endurance. Rather than a medical diagnosis, it offers a fun, admittedly speculative estimate that nudges users toward positive changes. Its appeal lies in turning complex physiological correlations into an accessible, entertaining experience.

How to use it?

Developers could integrate StaminaPredictor into health and wellness platforms or use it as a standalone tool. Users enter information about their sleep, diet, stress levels, and exercise routines through its interface, most likely via simple input fields. The result is a playful 'prediction' about their stamina, along with notes on how improving specific habits could help. For developers, the value lies in seeing how to build engaging health tech that draws on behavioral psychology and humor. It could be embedded in fitness trackers or wellness apps, or used as a content tool for health blogs, offering a novel way to discuss a sensitive topic.

Product Core Function

· Lifestyle Factor Input: Allows users to provide data points related to sleep quality, dietary habits, stress levels, and exercise frequency. The technical value here is in designing user-friendly input mechanisms that capture relevant data without being overly burdensome, making it easy for anyone to provide information.

· Stamina Estimation Algorithm: The core function, which uses a probabilistic or rule-based model to 'predict' stamina from the lifestyle inputs. The logic favors engaging output over strict scientific rigor while still reflecting general health principles.

· Habit Improvement Recommendations: Generates light-hearted suggestions for improving specific lifestyle habits to enhance stamina. This feature's technical value is in its ability to map negative lifestyle indicators to actionable, albeit simplified, advice, promoting positive behavioral change.

· Humorous Feedback Generation: Delivers the 'prediction' and recommendations with humor and wit. Playful copy and UI elements make the experience fun and memorable and encourage repeat engagement.
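A rule-based version of the estimate and the recommendations might look like this sketch. The four inputs mirror the factors listed above. The thresholds, weights, messages, and names (`Lifestyle`, `stamina_score`) are invented for illustration. They are not the app's model and not medical guidance.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Lifestyle:
    sleep_hours: float   # average hours of sleep per night
    exercise_days: int   # days per week with at least some exercise (0-7)
    stress: int          # self-rated stress, 1 (low) to 5 (high)
    veg_servings: int    # fruit and vegetable servings per day


def stamina_score(life: Lifestyle) -> tuple[int, list[str]]:
    """Return an illustrative stamina score (20-100) and tongue-in-cheek tips.

    All thresholds and weights are invented for this sketch.
    """
    if not 0 <= life.exercise_days <= 7 or not 1 <= life.stress <= 5:
        raise ValueError("exercise_days must be 0-7 and stress 1-5")
    score, tips = 50, []
    if life.sleep_hours >= 7:
        score += 15
    else:
        score -= 10
        tips.append("Your pillow misses you. Aim for 7+ hours.")
    score += min(life.exercise_days, 5) * 5   # gains cap at 5 active days
    if life.exercise_days < 3:
        tips.append("Stairs count as cardio. Try 3+ active days a week.")
    score -= (life.stress - 1) * 5            # each stress level above 1 costs 5 points
    if life.stress >= 4:
        tips.append("Stress is eating your stamina for breakfast. Schedule some downtime.")
    if life.veg_servings >= 5:
        score += 10
    else:
        tips.append("Vegetables are not just garnish. Aim for 5 servings a day.")
    return score, tips
```

Keeping each factor as a separate, explicit rule makes it easy to pair every penalty with a matching joke-plus-advice tip.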

Product Usage Case

· A men's wellness app could embed StaminaPredictor as a 'fun quiz' module to increase user engagement. By offering a playful way to discuss stamina and lifestyle, it encourages users to reflect on their habits without the pressure of a medical assessment.

· A health blogger could use the underlying principles of StaminaPredictor to create interactive content for their audience. This could involve a web-based version where readers can get a 'humorous stamina score' and learn about healthy habits in an entertaining way, driving traffic and engagement.

· Developers building gamified health solutions could draw inspiration from StaminaPredictor's playful approach. It shows how health topics that are often considered serious or sensitive can become more approachable through humorous framing and storytelling.

· A fitness coaching platform could incorporate this as a motivational tool. By offering a light-hearted prediction, it serves as an icebreaker to initiate conversations about the user's lifestyle and the importance of holistic health, rather than just physical fitness.

17

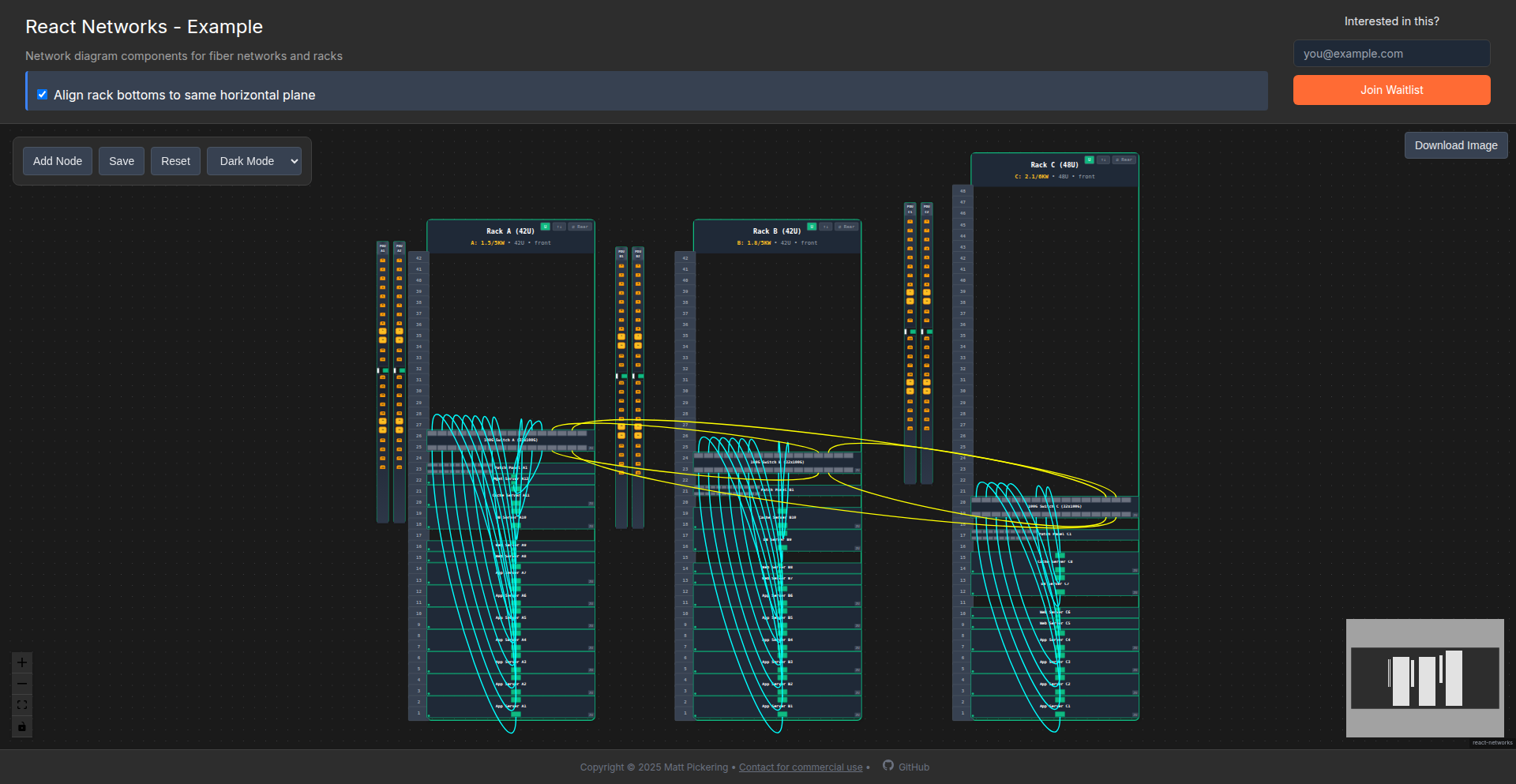

RackViz: Interactive Server Rack & Network Visualizer

Author

matt-p

Description

This project is a React component designed for creating interactive visual diagrams of server racks and network layouts. It addresses the need for a dynamic and user-friendly way to plan and manage data center infrastructure during the build, buy, and deploy phases, offering a novel approach to visualizing complex physical IT environments.

Popularity

Points 4

Comments 2

What is this product?

This is a React component that allows developers to visually represent server racks and network connections in an interactive way. The innovation lies in its ability to dynamically render and manipulate these complex diagrams within a web application. Think of it as a digital whiteboard specifically for IT hardware, where you can drag and drop servers, connect them with cables, and see the entire setup in real-time. This makes understanding and planning data center layouts much easier compared to static diagrams or spreadsheets. The underlying technology likely involves a combination of SVG or Canvas for rendering and robust state management to handle the interactive elements, providing a fluid user experience.
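To make the rendering idea concrete, here is a Python sketch that turns a hypothetical rack description into SVG markup. RackViz itself is a React component, and its actual props are not documented here. Several details are assumptions: the dict fields (`name`, `start_u`, `height_u`), the pixel sizes, and bottom-up unit numbering, where U1 is at the bottom, a common convention. The key step is mapping a device's top rack unit to a y coordinate measured from the top of the drawing.

```python
from html import escape

UNIT_PX = 20     # assumed height of one rack unit (1U) in pixels
WIDTH_PX = 200   # assumed drawing width


def rack_svg(total_u: int, devices: list) -> str:
    """Render a rack as SVG. Each device is a dict with 'name', 'start_u'
    (1 = bottom unit) and 'height_u' (size in rack units)."""
    height = total_u * UNIT_PX
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{WIDTH_PX}" height="{height}">',
        f'<rect x="0" y="0" width="{WIDTH_PX}" height="{height}" fill="none" stroke="black"/>',
    ]
    for device in devices:
        top_u = device["start_u"] + device["height_u"] - 1  # highest unit the device fills
        y = (total_u - top_u) * UNIT_PX                      # SVG y grows downward from the top
        h = device["height_u"] * UNIT_PX
        parts.append(f'<rect x="0" y="{y}" width="{WIDTH_PX}" height="{h}" fill="#ddd" stroke="black"/>')
        parts.append(f'<text x="6" y="{y + h // 2}" dominant-baseline="middle">{escape(device["name"])}</text>')
    parts.append("</svg>")
    return "".join(parts)
```

In a React component the same arithmetic would typically drive JSX `<rect>` elements instead of string concatenation.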

How to use it?

Developers can integrate this React component into their own web applications, particularly those involved in data center management, IT asset tracking, or network design tools. You would use it by passing in data that describes your server racks, the equipment within them, and how they are networked. The component then renders this information visually. For example, if you're building a tool to help businesses plan their server room, you can embed RackViz to let users draw out their rack layouts, assign servers to specific slots, and connect them to switches. This allows for easy visualization of your IT infrastructure, making it simpler to identify potential issues, plan upgrades, or document existing setups.

Product Core Function

· Interactive Rack Rendering: Visually displays server racks with equipment placed in its rack-unit (U) slots. This makes physical space utilization easy to read at a glance and simplifies planning for future additions.

· Dynamic Network Cabling: Allows users to draw and manage network connections between devices (servers, switches, etc.). This provides a clear overview of network topology, simplifying troubleshooting and network design.

· Equipment Placement and Configuration: Enables drag-and-drop functionality for placing servers and other IT equipment into rack slots, along with basic configuration details. This streamlines the process of visualizing hardware deployments and inventory.

· Customizable Components: Likely supports customization of rack units, server types, and connector styles to match specific data center environments. This adaptability ensures the tool can be tailored to diverse IT infrastructure needs.

· Real-time Visualization Updates: Changes made to the layout or network are reflected instantly in the diagram. This provides immediate feedback, reducing errors and speeding up the design and documentation process.
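Drag-and-drop placement and cabling both need bookkeeping behind the picture. This sketch uses hypothetical names throughout. It tracks which rack units are occupied, so a drop can be rejected when a device would overlap another or extend past the top of the rack. It also records cables as a symmetric port-to-port map in which each port accepts one cable. It illustrates the kind of state a component like RackViz has to manage, not its actual implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Rack:
    total_u: int
    occupied: dict[int, str] = field(default_factory=dict)  # rack unit -> device name

    def can_place(self, start_u: int, height_u: int) -> bool:
        """True if units start_u .. start_u+height_u-1 exist and are all free."""
        if start_u < 1 or height_u < 1 or start_u + height_u - 1 > self.total_u:
            return False
        return all(u not in self.occupied for u in range(start_u, start_u + height_u))

    def place(self, name: str, start_u: int, height_u: int) -> None:
        """Occupy the units for a dropped device, or reject the drop."""
        if not self.can_place(start_u, height_u):
            raise ValueError(f"{name} ({height_u}U) does not fit at U{start_u}")
        for u in range(start_u, start_u + height_u):
            self.occupied[u] = name


def add_cable(cables: dict[tuple[str, str], tuple[str, str]],
              a: tuple[str, str], b: tuple[str, str]) -> None:
    """Connect two (device, port) endpoints; each port accepts a single cable."""
    if a == b:
        raise ValueError("cannot cable a port to itself")
    if a in cables or b in cables:
        raise ValueError("port already in use")
    cables[a] = b  # store both directions so lookups work from either end
    cables[b] = a
```

Calling `can_place` during a drag, before `place` on drop, is one way to give the immediate visual feedback described above.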

Product Usage Case

· In a data center inventory management application, RackViz can be used to display the physical location of each server within a rack, helping technicians quickly locate hardware for maintenance or replacement, solving the problem of inefficient physical asset tracking.