Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-02

SagaSu777 2025-11-03

Explore the hottest developer projects on Show HN for 2025-11-02. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN landscape points to innovation driven by a desire to reclaim focus and work more efficiently in a digitally saturated world. AI-driven solutions are surging, not only for complex tasks but for everyday problems such as managing distractions or optimizing workflows. The 'Memento Mori' project exemplifies this: it uses AI to filter out digital noise, showing how developers are applying sophisticated technology to foster deeper concentration, a core tenet of the hacker spirit. Similarly, tools like 'Anki-LLM' and 'Torque' show LLMs becoming integral to knowledge management and content creation, making complex processes accessible and automatable. For developers and entrepreneurs, the opportunity is clear: identify pain points caused by information overload or inefficient processes, and explore how AI can provide elegant, context-aware solutions that assist and guide users rather than merely automate. The emphasis on privacy and local data processing, seen in projects like the free VPN extension and the AI Chat Terminal, also highlights a growing demand for user control and security; the goal is to build tools that are not just functional but trustworthy with user data. The diversity of these projects, from AI-powered habit trackers to intelligent coding agents, reflects the hacker's habit of tackling problems with creative technical solutions, regardless of scale or domain. Make the complex simple, the distracting manageable, and the data controllable: that is where innovation thrives.

Today's Hottest Product

Name

Memento Mori

Highlight

This project ingeniously tackles the pervasive issue of digital distractions and fractured focus by leveraging AI to intelligently block distracting websites and applications. The innovation lies in its context-aware blocking, allowing essential resources like YouTube for learning while curbing rabbit-hole content. Developers can learn about applying AI for behavioral modification, understanding dopamine loops, and building practical, real-time intervention tools. The core idea is to prevent distractions *before* they derail productivity, offering a powerful lesson in building tools that genuinely improve user workflow and well-being.

Popular Category

AI/ML

Productivity Tools

Developer Tools

Browser Extensions

SaaS

Popular Keyword

AI

LLM

Chrome Extension

SaaS

Open Source

Developer Tools

Productivity

Data Versioning

Code Generation

Automation

Technology Trends

AI-powered productivity enhancers

Intelligent content generation and management

Decentralized and privacy-focused tools

Developer workflow optimization

AI for specialized tasks (e.g., education, content creation, data management)

Interactive and collaborative development environments

Efficient data handling and version control

LLM integration for enhanced functionality

Project Category Distribution

AI/ML Applications (25%)

Developer Productivity & Tools (20%)

SaaS & Web Services (15%)

Browser Extensions (10%)

Data Management (5%)

Utilities & Niche Tools (25%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | Anki-LLM: LLM-Powered Anki Card Generation | 51 | 20 |
| 2 | FocusFlow AI | 16 | 6 |
| 3 | AgentChatter | 9 | 1 |
| 4 | Chrome InstantVPN | 2 | 5 |
| 5 | CommoAlert | 2 | 4 |
| 6 | Carrie - AI Meeting Orchestrator | 6 | 0 |
| 7 | AI Canvas Weaver | 5 | 0 |
| 8 | AI-Powered Canine Companion Camera | 5 | 0 |
| 9 | Shodata: Git-Inspired Data Versioning Platform | 2 | 2 |
| 10 | JV Lang: Expressive Java with Rust Speed | 3 | 1 |

1

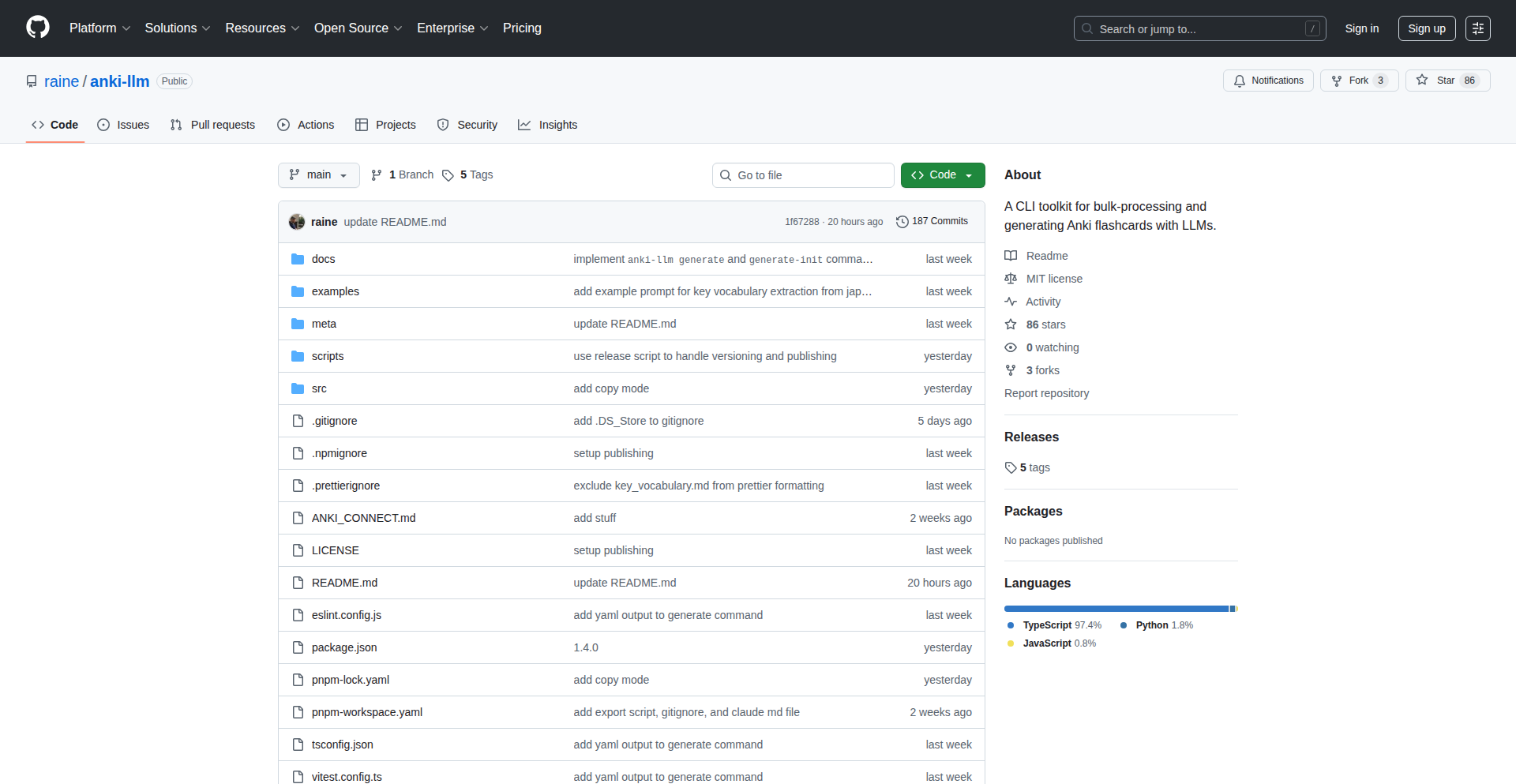

Anki-LLM: LLM-Powered Anki Card Generation

Author

rane

Description

Anki-LLM is a tool that leverages Large Language Models (LLMs) to automate the creation of Anki flashcards in bulk. It simplifies the process of transforming raw text or data into effective study materials, addressing the tedious manual effort often involved in spaced repetition learning.

Popularity

Points 51

Comments 20

What is this product?

This project is a developer-centric utility designed to bridge the gap between raw information and structured learning for Anki users. It uses LLMs, which are AI models capable of understanding and generating human-like text, to process user-provided content (like articles, notes, or documents) and automatically extract key concepts, definitions, and questions, formatting them into Anki-compatible flashcards. The innovation lies in its ability to perform batch processing, significantly reducing the time and effort required to build a comprehensive Anki deck, and in its intelligent extraction of relevant information rather than simple keyword matching.

How to use it?

Developers can integrate Anki-LLM into their workflow by providing it with input data, which can be plain text files, URLs, or potentially other data formats depending on the project's implementation. The tool then interacts with an LLM API (like OpenAI's GPT or similar models) to generate flashcards. The output is typically in a format that can be directly imported into Anki, such as a CSV or JSON file. This allows for rapid creation of study decks for any subject matter, enabling developers to study technical documentation, coding concepts, or any other information efficiently.
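The flow described above (source text in, LLM call, importable file out) can be sketched in Python. This is a minimal illustration under stated assumptions, not Anki-LLM's code: the prompt wording, the JSON reply shape, and the helper names `build_prompt`, `parse_cards`, and `write_anki_tsv` are hypothetical, and the LLM API call is replaced by a hard-coded reply so the sketch runs offline. Anki's text importer accepts tab-separated files with one note per line, which is the format written here.

```python
import csv
import io
import json

def build_prompt(source_text: str, max_cards: int = 10) -> str:
    """Ask the model for question/answer pairs as a JSON array (an assumed reply format)."""
    return (
        f"Extract up to {max_cards} key facts from the text below as flashcards. "
        'Reply with JSON only, shaped like [{"front": "...", "back": "..."}].\n\n'
        + source_text
    )

def parse_cards(reply: str) -> list[tuple[str, str]]:
    """Turn the model's JSON reply into (front, back) pairs, skipping malformed entries."""
    cards = []
    for item in json.loads(reply):
        if not isinstance(item, dict):
            continue
        front = str(item.get("front", "")).strip()
        back = str(item.get("back", "")).strip()
        if front and back:
            cards.append((front, back))
    return cards

def write_anki_tsv(cards: list[tuple[str, str]]) -> str:
    """Serialize cards as front<TAB>back lines for Anki's text importer.
    The csv module quotes any field that itself contains a tab, quote, or newline."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    writer.writerows(cards)
    return buf.getvalue()

if __name__ == "__main__":
    # Stand-in for a real LLM reply; the API call itself is omitted (hypothetical).
    reply = '[{"front": "What does HTTP status 404 mean?", "back": "Not Found"}]'
    print(write_anki_tsv(parse_cards(reply)), end="")
```

Keeping the parsing step strict (skip anything that is not a non-empty front/back pair) matters because model output is not guaranteed to follow the requested shape.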

Product Core Function

· LLM-driven content parsing: Utilizes natural language processing to understand and identify core concepts and facts from unstructured text. This means instead of manually highlighting, the AI does the heavy lifting of finding what's important for a flashcard.

· Automated flashcard generation: Creates question-answer pairs or cloze deletions based on the parsed content. This automates the creation of study material, saving hours of manual work and keeping card formatting consistent.

· Bulk processing capability: Handles large volumes of input data, generating an entire deck of flashcards at once. This is crucial for efficiently studying lengthy documents or extensive knowledge bases, providing a significant time-saving benefit.

· Anki import compatibility: Outputs flashcards in formats readily importable by Anki. This ensures seamless integration with existing spaced repetition systems, allowing users to immediately leverage their new study materials.

· Customizable LLM prompts: Allows users to fine-tune how the LLM extracts information, leading to more relevant and targeted flashcards. This offers control over the learning material, adapting the AI's output to specific learning needs.
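Cloze deletions use Anki's `{{c1::...}}` markup. A hypothetical helper (not taken from Anki-LLM) that wraps terms the model has picked out of a sentence might look like this:

```python
import re

def make_cloze(sentence: str, terms: list[str]) -> str:
    """Wrap the first occurrence of each term in Anki cloze markup: {{c1::...}}, {{c2::...}}, ..."""
    result = sentence
    for n, term in enumerate(terms, start=1):
        # A function replacement avoids re's backslash handling in replacement strings.
        result, count = re.subn(
            re.escape(term), lambda m: f"{{{{c{n}::{m.group(0)}}}}}", result, count=1
        )
        if count == 0:
            raise ValueError(f"term not found in sentence: {term!r}")
    return result
```

For example, `make_cloze("Python was created by Guido van Rossum in 1991.", ["Guido van Rossum", "1991"])` returns `"Python was created by {{c1::Guido van Rossum}} in {{c2::1991}}."`. Each term gets its own cloze number, so Anki generates one card per term.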

Product Usage Case

· Studying complex technical documentation: A developer facing a lengthy API documentation can feed it into Anki-LLM to generate flashcards for key functions, parameters, and error codes, aiding in faster memorization and recall for project development.

· Learning new programming languages or frameworks: Developers can process tutorials, blog posts, or code examples through Anki-LLM to create flashcards covering syntax, common patterns, and best practices, accelerating the learning curve.

· Preparing for technical interviews: By feeding interview preparation materials or challenging problem descriptions into Anki-LLM, developers can create focused study decks on data structures, algorithms, and system design concepts to improve their chances of success.

· Organizing personal knowledge bases: For developers who maintain extensive personal notes on various technologies, Anki-LLM can transform these notes into an active learning system, making complex information more accessible and memorable for future reference.

2

FocusFlow AI

Author

Rahul07oii

Description

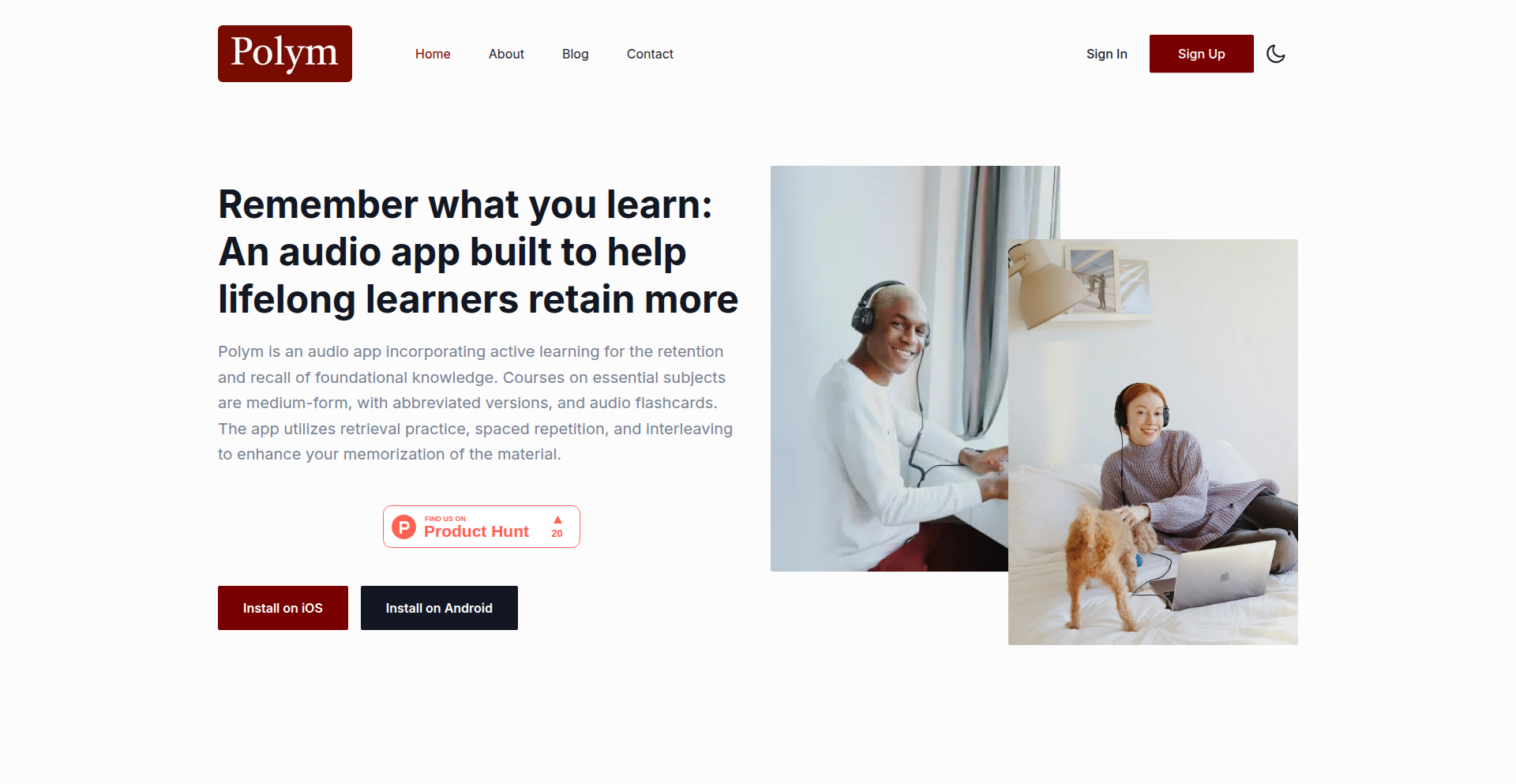

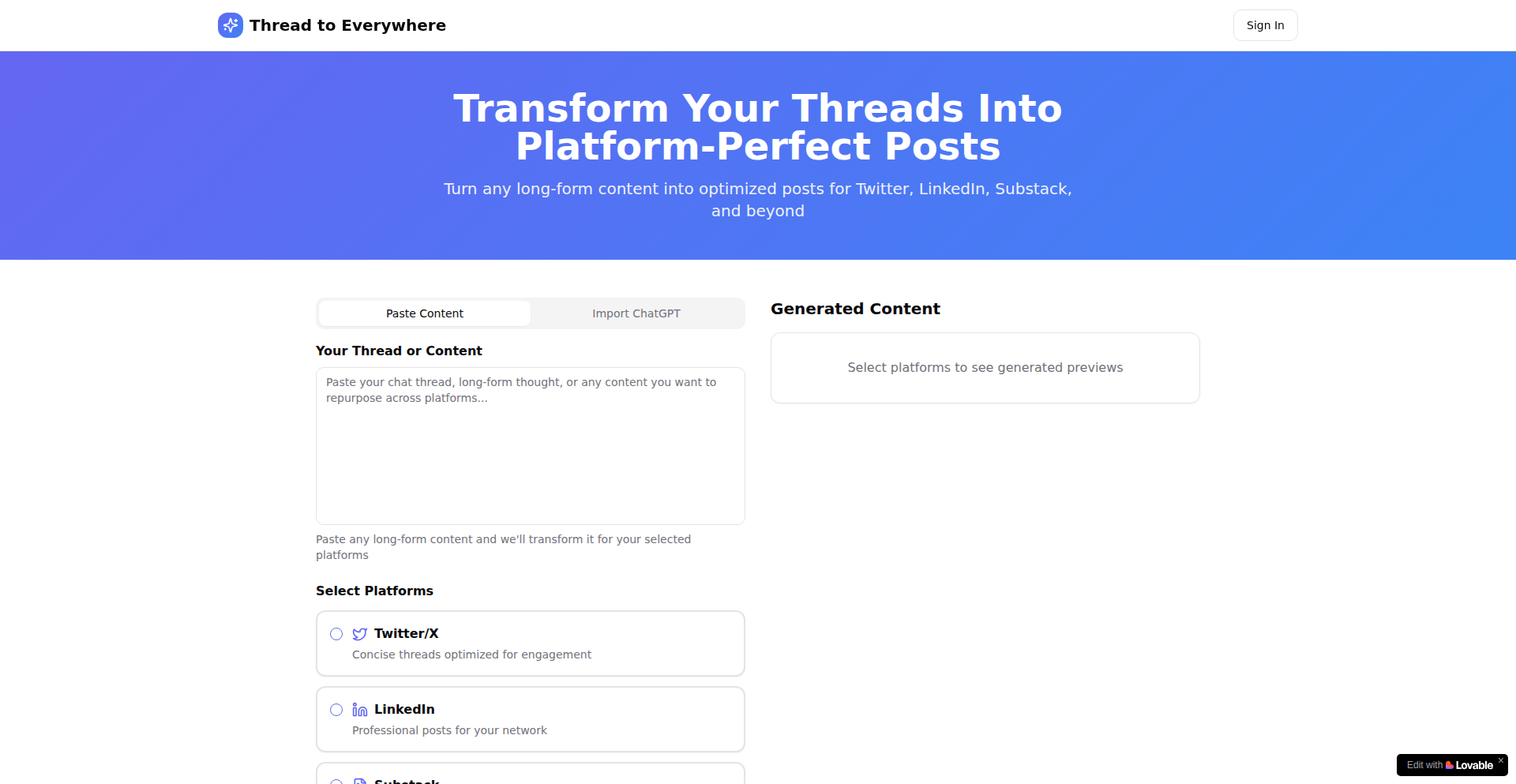

FocusFlow AI is a Chrome extension that uses artificial intelligence to intelligently block distracting websites and content. It helps users regain focus by understanding the context of their current task and preventing engagement with unrelated, attention-grabbing material. This is a smart blocker designed to tackle modern digital distractions that go beyond simple website blocking, addressing issues like background streams and addictive content loops.

Popularity

Points 16

Comments 6

What is this product?

FocusFlow AI is a sophisticated Chrome extension that leverages AI to maintain your focus. Unlike traditional blockers that simply prevent access to specific sites, FocusFlow AI analyzes what you're currently working on. You tell it your task, and it intelligently identifies and blocks content that is unrelated and likely to derail your concentration. Its core innovation lies in its AI-powered contextual awareness, allowing it to differentiate between genuinely useful resources (like a programming tutorial on YouTube) and time-wasting rabbit holes (like unrelated video essays). This means it can be more granular and less disruptive than rigid blockers, helping you stay on track without completely cutting off valuable online tools.

How to use it?

Developers can integrate FocusFlow AI into their workflow by installing it as a Chrome extension. Once installed, they can activate it when starting a focused work session. The user simply informs the extension about the task they are undertaking (e.g., 'working on a React component', 'learning a new Python library'). The AI then monitors browsing activity. If the user attempts to navigate to a site or content deemed irrelevant to the declared task, FocusFlow AI intervenes, offering a prompt to reconsider before proceeding. This can be particularly useful when needing to research specific topics on platforms like YouTube or Stack Overflow, while avoiding unrelated browsing that can lead to lost time. The extension works in the background, providing real-time nudges without requiring constant manual input.
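FocusFlow AI's model and prompts are not described, so the sketch below swaps in a deliberately simple keyword-overlap score where the AI classifier would sit, only to show the shape of the decision: declared task plus current page in, "allow" or "prompt" out. The allowlist, stopwords, threshold, and function names are all hypothetical.

```python
import re

ALWAYS_ALLOWED = {"stackoverflow.com", "developer.mozilla.org"}  # hypothetical allowlist
STOPWORDS = {"the", "and", "for", "with", "new"}

def keywords(text: str) -> set[str]:
    """Lowercase word tokens, dropping stopwords and words shorter than three characters."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if len(w) >= 3 and w not in STOPWORDS}

def relevance(task: str, page_title: str) -> float:
    """Fraction of the task's keywords that also appear in the page title (0.0 to 1.0).
    Stand-in for the AI classifier; it only sees shared words."""
    task_words = keywords(task)
    if not task_words:
        return 0.0
    return len(task_words & keywords(page_title)) / len(task_words)

def decide(task: str, domain: str, page_title: str, threshold: float = 0.3) -> str:
    """Return "allow", or "prompt" to ask the user to confirm before continuing."""
    if domain in ALWAYS_ALLOWED:
        return "allow"
    return "allow" if relevance(task, page_title) >= threshold else "prompt"
```

With the task "researching the Stripe API", a page titled "Stripe API reference" is allowed, while "Top 10 coding memes" on youtube.com triggers a prompt. A real implementation would replace `relevance` with a language-model call that can judge relatedness beyond shared words.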

Product Core Function

· AI-driven contextual blocking: Analyzes the user's declared task and blocks unrelated distracting content, preventing users from falling into time-wasting rabbit holes.

· Real-time intervention: Prompts the user at the moment of potential distraction, before an hour is lost, encouraging mindful decision-making.

· Intelligent content differentiation: Distinguishes between essential learning resources and tangential, addictive content, allowing for necessary research while minimizing distractions.

· Task-specific focus profiles: Enables users to define different focus modes or tasks, tailoring the blocking behavior to specific work needs.

· Developer-centric design: Built to address the specific challenges faced by developers, such as the allure of background streams and the need for focused coding time.

· Minimal friction: A free Chrome extension that requires no signup, so it can be used immediately.

Product Usage Case

· A developer needs to research a specific API for a web application. They tell FocusFlow AI they are 'researching the Stripe API'. The extension allows access to Stripe's documentation and relevant Stack Overflow threads. However, if the developer accidentally clicks on a recommended video about 'top 10 coding memes', FocusFlow AI will prompt them, asking 'Is this related to your Stripe API research?', and potentially block the video if it's deemed irrelevant.

· A solo developer is working on a complex algorithm and finds themselves habitually opening Twitch to watch coding streams for background noise. FocusFlow AI can be configured to recognize this as a distraction during focused coding sessions. When the developer attempts to open Twitch, the extension might ask, 'Are you sure you want to watch streams instead of focusing on the algorithm?'. This helps break the habit and regain concentration.

· A student is learning a new programming language and needs to watch video tutorials on YouTube. They inform FocusFlow AI about their 'learning Python' task. The extension allows access to specific programming tutorial channels they frequent but will block unrelated trending videos or entertainment content that might appear on their YouTube homepage, ensuring they stay on track with their learning goals.

· A developer is marketing their project on Twitter and often gets sidetracked by the endless feed. By setting their task to 'marketing FocusFlow AI', the extension can remind them of their objective when they start scrolling aimlessly. It might display a message like, 'Remember, you're here to promote, not to scroll.', helping them stay efficient.

3

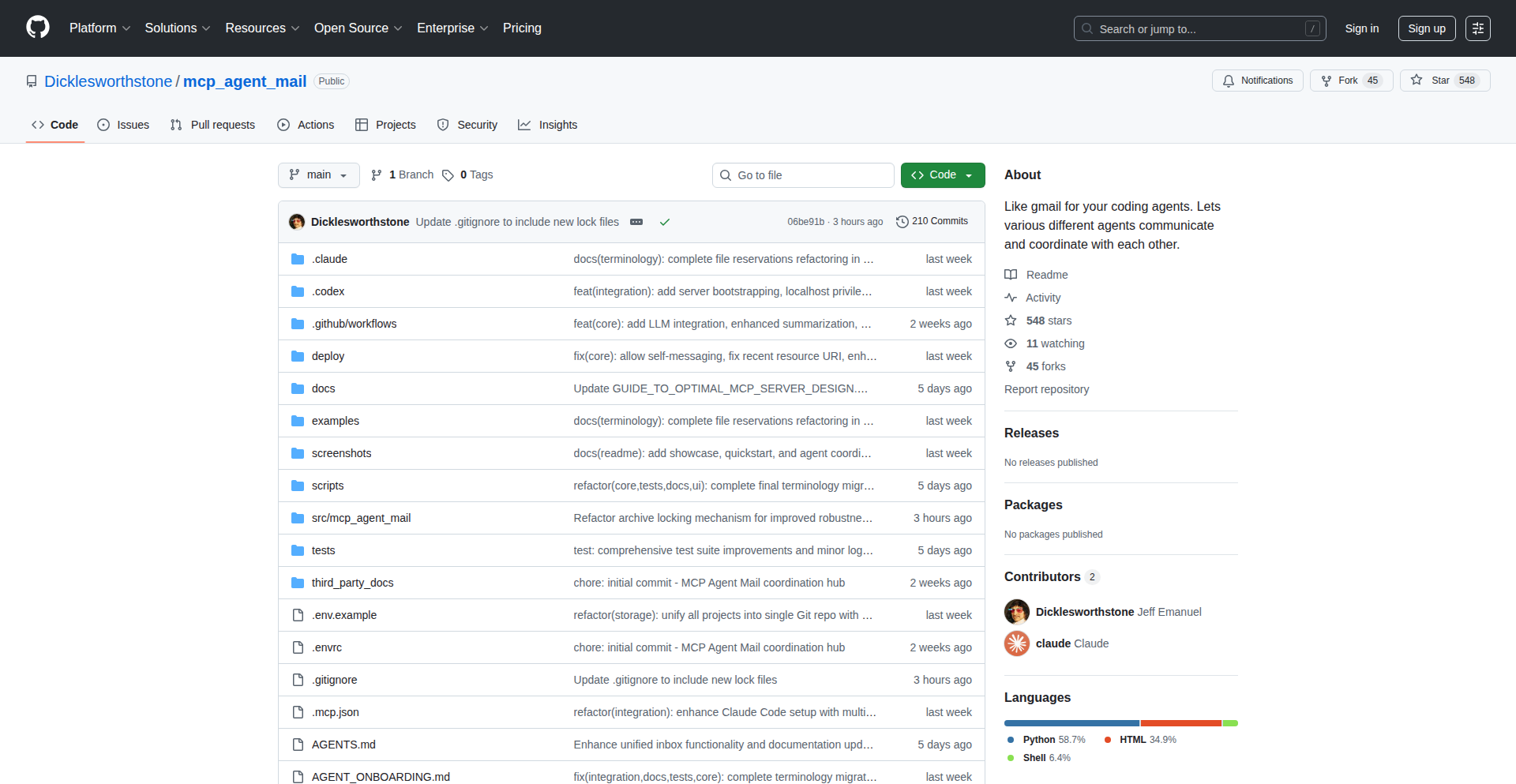

AgentChatter

Author

eigenvalue

Description

A groundbreaking system that empowers AI coding agents to communicate with each other, enabling them to collaborate and solve complex programming tasks. This is not just a playful trick, but a core component that significantly enhances developer workflows by facilitating seamless agent interaction and knowledge sharing.

Popularity

Points 9

Comments 1

What is this product?

AgentChatter is an innovative framework that allows multiple AI coding agents to send and receive messages, forming a decentralized network for task execution. Instead of a single agent tackling a problem, AgentChatter enables agents to discuss, delegate, and refine solutions collectively. This is achieved through a custom messaging protocol that allows agents to exchange information, queries, and intermediate results, leading to more robust and efficient problem-solving. So, what's in it for you? It means you can have specialized AI agents work together, each contributing its unique strengths, to accomplish tasks that would be far more difficult or time-consuming for a single agent.

How to use it?

Developers can integrate AgentChatter into their existing agent-based workflows by initializing the AgentChatter library within their agent's codebase. This involves defining agent identifiers, establishing communication channels (e.g., a central message broker or peer-to-peer connections), and implementing message-handling logic. Agents can then use simple API calls to send messages to specific agents or broadcast to a group, and listen for incoming messages. This makes it possible to orchestrate agents and decompose tasks dynamically. So, how can you use this? Imagine setting up a team of AI agents for code generation, testing, and documentation that coordinate their efforts, reducing manual oversight and accelerating your development cycles.
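The register/send/broadcast/listen pattern described above can be illustrated with an in-process message bus. This is not AgentChatter's API: `MessageBus`, `register`, `send`, `broadcast`, `receive`, and the message fields are hypothetical names, and a real deployment would put a network broker or peer-to-peer transport behind the same interface.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Message:
    sender: str
    recipient: str  # agent id, or "*" for a broadcast
    body: str

@dataclass
class MessageBus:
    inboxes: dict[str, deque] = field(default_factory=dict)

    def register(self, agent_id: str) -> None:
        """Create an inbox so the agent can be discovered and addressed."""
        self.inboxes.setdefault(agent_id, deque())

    def send(self, sender: str, recipient: str, body: str) -> None:
        """Deliver a direct message; unknown recipients raise instead of being silently dropped."""
        if recipient not in self.inboxes:
            raise KeyError(f"unknown agent: {recipient}")
        self.inboxes[recipient].append(Message(sender, recipient, body))

    def broadcast(self, sender: str, body: str) -> None:
        """Deliver to every registered agent except the sender."""
        for agent_id, inbox in self.inboxes.items():
            if agent_id != sender:
                inbox.append(Message(sender, "*", body))

    def receive(self, agent_id: str) -> list[Message]:
        """Drain and return all pending messages for an agent, oldest first."""
        inbox = self.inboxes[agent_id]
        messages = list(inbox)
        inbox.clear()
        return messages
```

In this sketch a code-generation agent could `send` a "please test add()" request to a testing agent and `broadcast` a "feature done" notice that both the tester and a documentation agent pick up on their next `receive`.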

Product Core Function

· Inter-Agent Messaging: Enables AI agents to exchange structured messages, facilitating communication of code snippets, task updates, and requests for assistance. This allows for distributed problem-solving and knowledge sharing among agents, directly impacting your project's speed and efficiency.

· Agent Discovery and Routing: Provides mechanisms for agents to discover each other and route messages effectively within the network. This ensures that communication reaches the intended recipients, making complex agent interactions manageable and reliable for your development efforts.

· Collaborative Task Execution: Facilitates coordinated efforts among multiple agents to tackle larger and more complex programming challenges. This means you can offload intricate tasks to a team of specialized AI agents, significantly boosting your productivity and the quality of your code.

· Workflow Integration: Designed for easy integration into existing AI agent workflows, allowing developers to add communication capabilities without a complete system overhaul. This practical aspect ensures you can leverage this innovation without extensive refactoring, directly enhancing your current development pipeline.

Product Usage Case

· Automated Code Refactoring: Developers can deploy multiple agents, one to identify code smells, another to suggest refactoring patterns, and a third to implement the changes, with AgentChatter orchestrating their communication. This addresses the complexity of large-scale refactoring by breaking it down into manageable, collaborative AI tasks, saving significant developer time and effort.

· Complex Bug Triaging: An agent can report a bug, another specialized agent can analyze stack traces and logs, and a third can search for similar issues in a knowledge base. AgentChatter enables this interconnected analysis to quickly pinpoint root causes. This scenario showcases how AgentChatter can accelerate bug resolution by enabling agents to work together to diagnose and understand issues, leading to faster fixes and more stable software.

· AI-Assisted Feature Development: One agent might generate initial feature code, another might write unit tests, and a third might draft documentation, all coordinated through AgentChatter. This collaborative approach to new feature development accelerates the entire process from conception to deployment, allowing developers to deliver features faster and with higher quality.

4

Chrome InstantVPN


Author

hritik7742

Description

A lightweight VPN Chrome extension that provides an instant, one-click connection without requiring any signup or complex configuration. It targets common frustrations with VPNs (slow connections, mandatory accounts, and concerns about data logging) and runs entirely within the browser.

Popularity

Points 2

Comments 5

What is this product?

This project is a browser-based Virtual Private Network (VPN) extension for Chrome. Instead of requiring a separate application or a lengthy registration process, the extension uses the browser's own capabilities to route traffic through a remote server. Its appeal lies in its extreme simplicity and in-browser execution, which avoids installing and configuring a system-wide VPN client. Technically, Chrome extensions of this kind typically use the `chrome.proxy` API to send browser traffic through a proxy server; when the connection to that server uses TLS, traffic is encrypted between the browser and the proxy. Only Chrome's traffic is covered, not other applications on the device, and the proxy operator can still see which sites are being visited. The value here is a frictionless way to add a layer of privacy and access geo-restricted content with minimal setup.
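One common way browser-based VPN extensions route traffic is Chrome's `chrome.proxy` API, which accepts a PAC (proxy auto-config) script. The Python generator below is a hypothetical sketch of such a script; the proxy host, port, and bypass list are placeholders, and nothing here is taken from InstantVPN itself.

```python
import json

def make_pac(proxy_host: str, proxy_port: int, bypass: list[str]) -> str:
    """Build a PAC (proxy auto-config) script.

    Plain host names and the bypass domains connect DIRECT; everything else goes
    through an HTTPS proxy, so the browser-to-proxy hop is TLS-encrypted. There is
    deliberately no DIRECT fallback, so traffic is not silently sent unproxied if
    the proxy is down.
    """
    lines = ["function FindProxyForURL(url, host) {",
             '  if (isPlainHostName(host)) return "DIRECT";']
    for domain in bypass:
        # dnsDomainIs(host, ".example.com") matches subdomains of example.com
        lines.append(f'  if (dnsDomainIs(host, {json.dumps(domain)})) return "DIRECT";')
    lines.append(f'  return "HTTPS {proxy_host}:{proxy_port}";')
    lines.append("}")
    return "\n".join(lines) + "\n"
```

In an extension, the resulting string would be applied with a call along the lines of `chrome.proxy.settings.set({value: {mode: "pac_script", pacScript: {data: pac}}, scope: "regular"})`. Returning `HTTPS host:port` rather than `PROXY host:port` is what makes the hop to the proxy encrypted.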

How to use it?

Developers can easily integrate this VPN functionality into their workflows or recommend it to users seeking immediate privacy protection. To use it, simply install the extension from the Chrome Web Store. Once installed, a single click on the extension icon initiates a connection. This is ideal for quickly securing your connection on public Wi-Fi, bypassing regional content restrictions for testing purposes, or simply ensuring a baseline level of privacy during browsing sessions. It's designed for immediate utility, requiring no technical setup.

Product Core Function

· One-click VPN connection: Enables users to establish a secure VPN connection instantly without manual configuration, enhancing ease of use and immediate privacy.

· No signup or account required: Eliminates the barrier of registration, making it accessible to anyone needing a quick privacy solution, thereby increasing adoption and utility.

· Browser-native operation: Runs directly within the Chrome browser, keeping system resource usage low and avoiding the installation and configuration of a standalone VPN application.

· Lightweight performance: Designed for speed and efficiency, ensuring that browsing speeds are not significantly impacted, which is crucial for a smooth user experience.

· Enhanced online privacy: Encrypts browser traffic between Chrome and the VPN server, protecting it from interception on public networks and hiding the user's real IP address from the sites they visit.

Product Usage Case

· A developer needs to test how a website appears or functions in a different geographical region. They can use Chrome InstantVPN to quickly switch their IP address to that region and see the results without interrupting their workflow.

· A remote worker frequently connects to public Wi-Fi networks. They can use Chrome InstantVPN to instantly encrypt their connection before accessing sensitive company data, mitigating the risk of man-in-the-middle attacks.

· A user wants to access streaming content that is blocked in their country. They can enable Chrome InstantVPN with a single click to appear as if they are browsing from a supported region, unlocking the content.

· A privacy-conscious individual wants to keep websites from seeing their real IP address and approximate location. Chrome InstantVPN masks their IP address with a single click, adding a layer of privacy during everyday browsing, although cookies and other tracking techniques still apply.

5

CommoAlert

Author

anthonytorre

Description

CommoWatch is a minimalist web application designed for tracking commodity prices and receiving timely alerts. It allows users to select specific commodities, set desired price targets, and get notified via email or SMS when those targets are met. This project showcases an innovative approach to making price-sensitive information accessible and actionable, particularly for traders, investors, and business owners who rely on fluctuating material costs.

Popularity

Points 2

Comments 4

What is this product?

CommoWatch is a streamlined web service that keeps you informed about commodity prices. Its core feature is a proactive alert system. Instead of constantly checking prices, you tell CommoWatch which commodities you're interested in (such as gold, oil, or agricultural products) and at what price points you'd like to be alerted. The system then checks these prices on a regular schedule (hourly, according to its feature list) and sends you an email or SMS notification once your predefined price target is reached. This saves you time and helps ensure you don't miss critical market movements, offering a practical aid to decision-making in volatile markets.

How to use it?

Developers can integrate CommoWatch into their workflows by leveraging its straightforward alert mechanism. For example, a small business owner might use it to track the price of a key raw material. They would sign up, select that material, and set an alert for when the price drops below a certain threshold. This allows them to purchase inventory at the optimal time, saving costs. For investors, it could be used to monitor gold prices and receive an alert when it hits a target buy or sell point, ensuring they act on market opportunities swiftly. The initial setup is designed to be simple, focusing on core functionality to provide immediate value.
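The alert mechanism boils down to comparing each price update against the user's target and notifying only when the price first reaches it, so the user is not re-alerted every hour while the price stays past the threshold. A minimal sketch; the `PriceAlert` structure and its fields are assumptions, and the actual email/SMS delivery is out of scope.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PriceAlert:
    commodity: str
    target: float
    direction: str                      # "below" or "above"
    last_price: Optional[float] = None  # price seen on the previous update

    def _hit(self, price: float) -> bool:
        """Whether a price satisfies the target in the configured direction."""
        if self.direction == "below":
            return price <= self.target
        if self.direction == "above":
            return price >= self.target
        raise ValueError(f"unknown direction: {self.direction!r}")

    def check(self, price: float) -> bool:
        """Return True only on the update where the price first reaches the target."""
        already_hit = self.last_price is not None and self._hit(self.last_price)
        fire = self._hit(price) and not already_hit
        self.last_price = price
        return fire
```

For example, hypothetical hourly wheat prices of 5.40, 5.10, 4.95 and 4.90 checked against `PriceAlert("wheat", 5.00, "below")` trigger a single notification, at 4.95. If the price later rises above 5.00 and falls back, the alert fires again.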

Product Core Function

· Commodity selection: Users can choose from a curated list of essential commodities, including precious metals, energy sources, and agricultural products. This simplifies the process of tracking relevant markets and provides a focused view on what matters most to the user.

· Customizable price alerts: Set specific price thresholds for each commodity. This allows for highly personalized monitoring, ensuring that users are only notified when prices reach levels that are significant to their trading or business strategies.

· Email and SMS notifications: Receive instant alerts through your preferred communication channel. This ensures timely delivery of critical price information, enabling immediate action and preventing missed opportunities.

· Minimalist interface: A clean and uncluttered design focuses on essential features, making it quick and easy to use. This 'less is more' approach prioritizes speed and efficiency, reducing cognitive load for users who need fast access to information.

· Hourly updates: Prices are refreshed hourly, providing a good balance between real-time information and system efficiency. This frequency is often sufficient for many strategic decisions in commodity markets.

Product Usage Case

· A small bakery owner wants to buy flour at the best possible price. They can use CommoWatch to set an alert for wheat prices, notifying them when the price drops below a certain point. This helps them optimize their purchasing strategy and reduce operational costs.

· An individual investor is closely watching the price of gold. They can configure CommoWatch to send them an email alert when gold reaches a specific target price, allowing them to execute a trade at their desired entry or exit point without constant manual monitoring.

· A freelance trader specializing in oil futures wants to be alerted to significant price shifts without watching the market constantly. CommoWatch can be set up to send SMS alerts for oil price movements, enabling them to react quickly (within the limits of its hourly price updates) and capitalize on trading opportunities.

· A small manufacturing business relies on copper as a raw material. They can use CommoWatch to track copper prices and receive alerts when prices fall to a level that makes it cost-effective to stock up on inventory, securing their supply chain at a favorable cost.

6

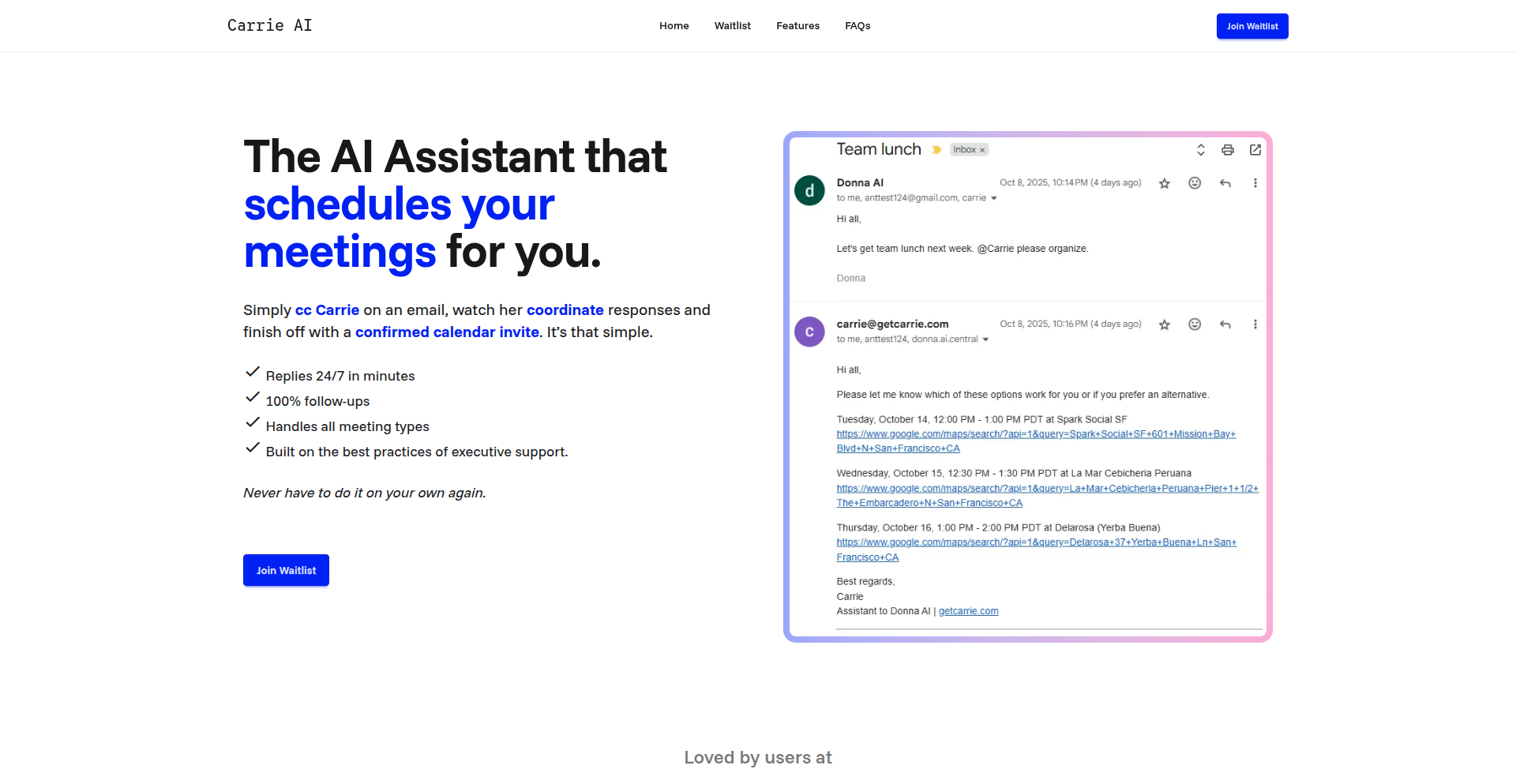

Carrie - AI Meeting Orchestrator

Author

eastraining

Description

Carrie is an AI-powered assistant that automates the tedious process of scheduling meetings across time zones. Once you CC Carrie on an email, she analyzes participants' availability, finds suitable meeting slots, confirms them, and sends out calendar invites, significantly reducing manual effort and back-and-forth communication. Carrie aims to offer capabilities beyond those of traditional scheduling tools.

Popularity

Points 6

Comments 0

What is this product?

Carrie is an intelligent agent designed to handle complex meeting scheduling. It works by integrating with your email and calendar systems. When you include Carrie in an email thread where meeting coordination is needed, she uses natural language processing (NLP) to understand the participants' stated availabilities and preferences. Her core innovation lies in her advanced algorithm that can reconcile conflicting schedules and time zones, proposing the most efficient meeting times. Unlike simpler tools, Carrie can manage more nuanced scenarios, ensuring that once a time is agreed upon, the meeting is automatically confirmed and an invite is sent, freeing up valuable mental bandwidth for users.

How to use it?

Developers can integrate Carrie into their workflow by adding her email address to the CC line of any email conversation where meeting scheduling is required. Carrie will then automatically monitor the thread for availability information and proposed times. For a more hands-off experience, users can also set up specific rules or preferences for Carrie to follow, such as prioritizing certain participants' schedules or avoiding specific times. This allows for a seamless integration into existing communication patterns without requiring developers to build any custom code.

Product Core Function

· Intelligent Availability Analysis: Carrie's ability to parse natural language in emails to understand availability offers a significant leap over manual checking, saving users time and reducing the chance of errors.

· Cross-Time Zone Optimization: Automatically calculates and suggests meeting times that work best across multiple time zones, eliminating the headache of manual conversion and coordination.

· Automated Meeting Confirmation: Once a suitable time is identified and implicitly agreed upon by participants, Carrie autonomously sends out the final calendar invitation, streamlining the process from discussion to confirmed event.

· Advanced Scenario Handling: Carrie goes beyond basic scheduling by managing more complex situations, such as participants with limited or conflicting availabilities, providing a more robust solution than many existing tools.

· Email Thread Integration: By operating directly within email threads, Carrie requires no complex setup or separate application, making it instantly accessible and easy to adopt for anyone using email.
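Carrie's scheduling algorithm is not published. As a rough illustration of the cross-time-zone step only, the sketch below scores each whole-hour UTC slot on a given day by how far it falls outside an assumed 9:00 to 17:00 local working window for each participant, and picks the lowest total. It uses Python's standard `zoneinfo` module so daylight-saving changes are handled; the working window, the scoring rule, and the function names are assumptions.

```python
from datetime import date, datetime, timedelta, timezone
from zoneinfo import ZoneInfo

WORK_START, WORK_END = 9, 17  # assumed working hours: a one-hour slot may start 9:00 to 16:00 local

def penalty(local_hour: int) -> int:
    """Hours between a slot's local start and the nearest allowed start (0 if allowed)."""
    if WORK_START <= local_hour < WORK_END:
        return 0
    early = (WORK_START - local_hour) % 24     # hours until the window opens
    late = (local_hour - (WORK_END - 1)) % 24  # hours past the last allowed start
    return min(early, late)

def best_slot(day: date, zones: list[str]) -> tuple[datetime, int]:
    """Return the UTC start of the lowest-penalty one-hour slot on `day`, and its penalty.
    Ties go to the earliest slot."""
    midnight = datetime(day.year, day.month, day.day, tzinfo=timezone.utc)
    best_start, best_score = midnight, None
    for hour in range(24):
        start = midnight + timedelta(hours=hour)
        score = sum(penalty(start.astimezone(ZoneInfo(z)).hour) for z in zones)
        if best_score is None or score < best_score:
            best_start, best_score = start, score
    return best_start, best_score
```

For a New York and London pair on 10 November 2025, the first zero-penalty slot is 14:00 UTC (9:00 in New York, 14:00 in London). Adding Tokyo leaves no slot inside everyone's window, which is exactly the kind of trade-off a scheduling assistant has to make.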

Product Usage Case

· Scenario: A project manager needs to schedule a kickoff meeting with a distributed team across New York, London, and Tokyo. Carrie can be CC'd on the initial email, analyze the team's expressed availability in the thread, and propose a meeting time that minimizes disruption for all participants, then send the invite.

· Scenario: A sales representative is trying to schedule a demo with a very busy prospect on a different continent. By including Carrie in the email exchange, the representative lets her work through the prospect's stated preferences and suggest suitable times, increasing the likelihood of securing the meeting.

· Scenario: A researcher needs to coordinate a discussion with several collaborators, each with their own unique time zone and work hours. Carrie can process the different inputs from the email thread and find a mutually agreeable slot, ensuring everyone can attend without extensive back-and-forth.

· Scenario: A startup founder is onboarding new remote employees and needs to schedule initial one-on-one meetings. CCing Carrie on the onboarding emails allows for the automatic scheduling of these crucial introductory sessions, freeing up the founder's time for strategic tasks.

7

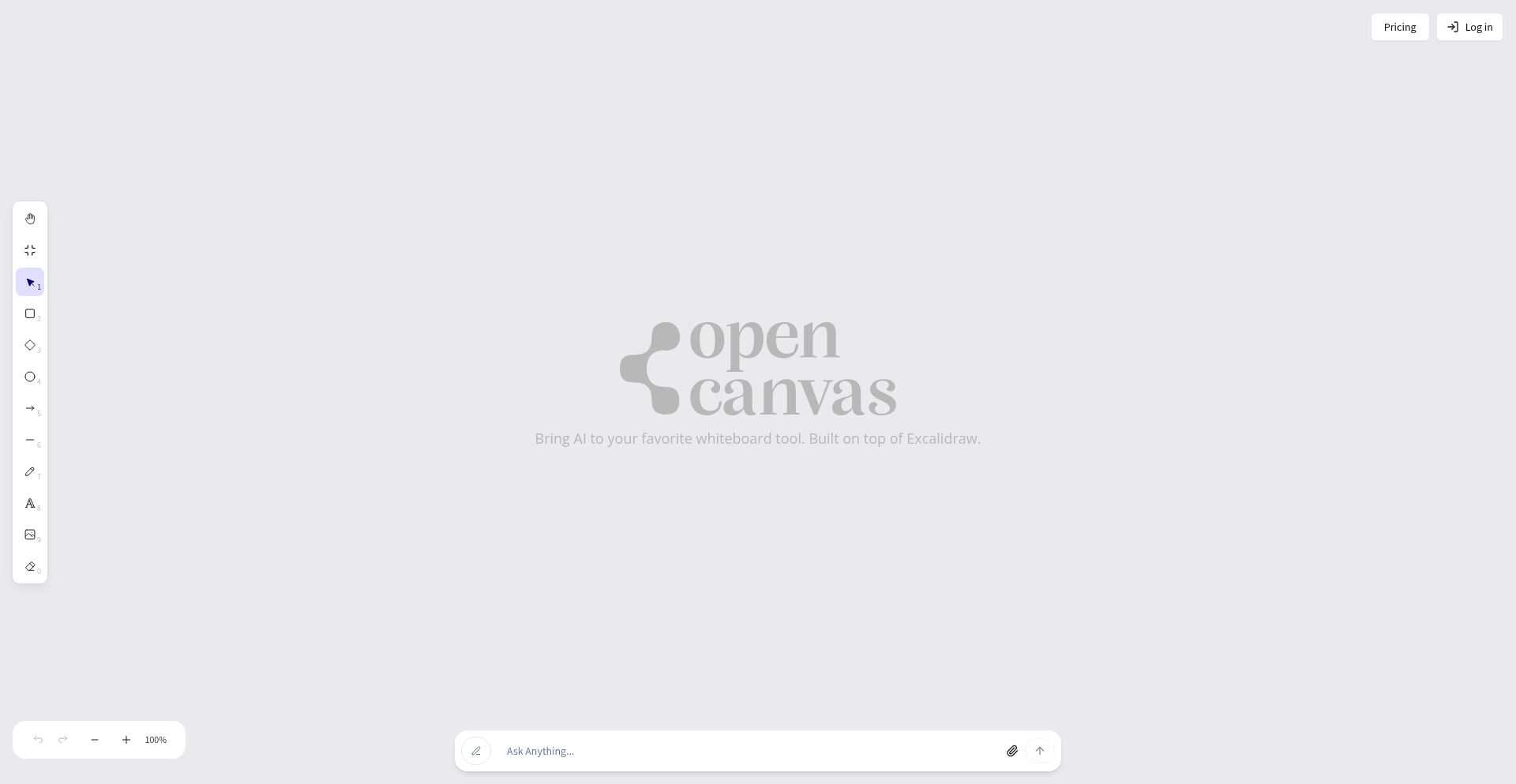

AI Canvas Weaver

Author

winzamark12

Description

AI Canvas Weaver is an AI-powered extension that integrates generative AI capabilities into Excalidraw, a popular open-source virtual whiteboard. It allows users to leverage AI to create and enhance visual elements directly within their collaborative drawing sessions, transforming static sketches into dynamic and intelligently generated content. The core innovation lies in bridging the gap between intuitive freehand drawing and the power of AI image generation, solving the problem of time-consuming manual creation of complex visuals within a collaborative design workflow.

Popularity

Points 5

Comments 0

What is this product?

AI Canvas Weaver is a project that injects AI image generation into Excalidraw, a web-based virtual whiteboard. Instead of just drawing by hand, you can now use natural language prompts to ask the AI to create images, diagrams, or enrich existing drawings. For instance, you could describe 'a futuristic city skyline' and have the AI generate it on your canvas. This is innovative because it adds a layer of intelligent content creation to the typically manual process of whiteboarding, making brainstorming and design more efficient and imaginative.

How to use it?

Developers can integrate AI Canvas Weaver into their Excalidraw workflows by installing it as a plugin or by embedding Excalidraw with the AI functionality. The typical use case involves a user typing a text description of what they want to see on the whiteboard into a prompt interface. The AI then processes this prompt and renders an image or visual element directly onto the Excalidraw canvas. This can be used for quick ideation, generating placeholder graphics, or even creating finished visual assets within a collaborative design session.
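One concrete step in rendering a generated image onto the canvas is deciding where it goes and how big it is. The helper below is a hypothetical sketch, not part of AI Canvas Weaver or Excalidraw's API: it scales the image to fit a maximum size while preserving its aspect ratio and places it to the right of the existing drawing's bounding box, so it does not cover anyone's sketch. The `Box` fields mirror the x/y/width/height that Excalidraw elements carry; `max_side` and `gap` are assumed defaults.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """Axis-aligned bounding box in canvas units."""
    x: float
    y: float
    width: float
    height: float

def place_generated_image(existing: list[Box], img_w: int, img_h: int,
                          max_side: float = 400.0, gap: float = 40.0) -> Box:
    """Scale the image so its longer side is at most `max_side` (never upscaling),
    then place it `gap` units right of everything on the canvas, top-aligned."""
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(1.0, max_side / max(img_w, img_h))
    width, height = img_w * scale, img_h * scale
    if not existing:
        return Box(0.0, 0.0, width, height)
    right = max(b.x + b.width for b in existing)
    top = min(b.y for b in existing)
    return Box(right + gap, top, width, height)
```

A 1024x512 image dropped onto an empty canvas becomes a 400x200 box at the origin; on a busy canvas it lands just past the rightmost element.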

Product Core Function

· AI-powered image generation from text prompts: Allows users to describe visual concepts in natural language and have the AI create them on the canvas, saving time and effort compared to manual drawing.

· Intelligent visual enhancement: Enables AI to suggest or create improvements to existing drawings, such as adding detail or stylizing elements, making designs more polished.

· Seamless Excalidraw integration: Built on top of Excalidraw, ensuring a familiar and intuitive user experience for existing Excalidraw users.

· Collaborative AI assistance: Facilitates group brainstorming by allowing multiple users to leverage AI generation within a shared Excalidraw session, fostering co-creation.

Product Usage Case

· In a product design sprint, a team can use AI Canvas Weaver to quickly generate various UI mockups based on textual descriptions, accelerating the ideation phase by exploring multiple visual directions rapidly.

· During a technical architecture discussion, a developer can use the tool to visualize complex system components by describing them, making abstract concepts easier to grasp for the entire team.

· An educator can use AI Canvas Weaver to generate illustrative diagrams for complex topics on the fly during a virtual lesson, enhancing student engagement and understanding.

· A marketing team can use it to create quick visual assets for social media posts or presentations directly within their collaborative brainstorming session, streamlining content creation.

8

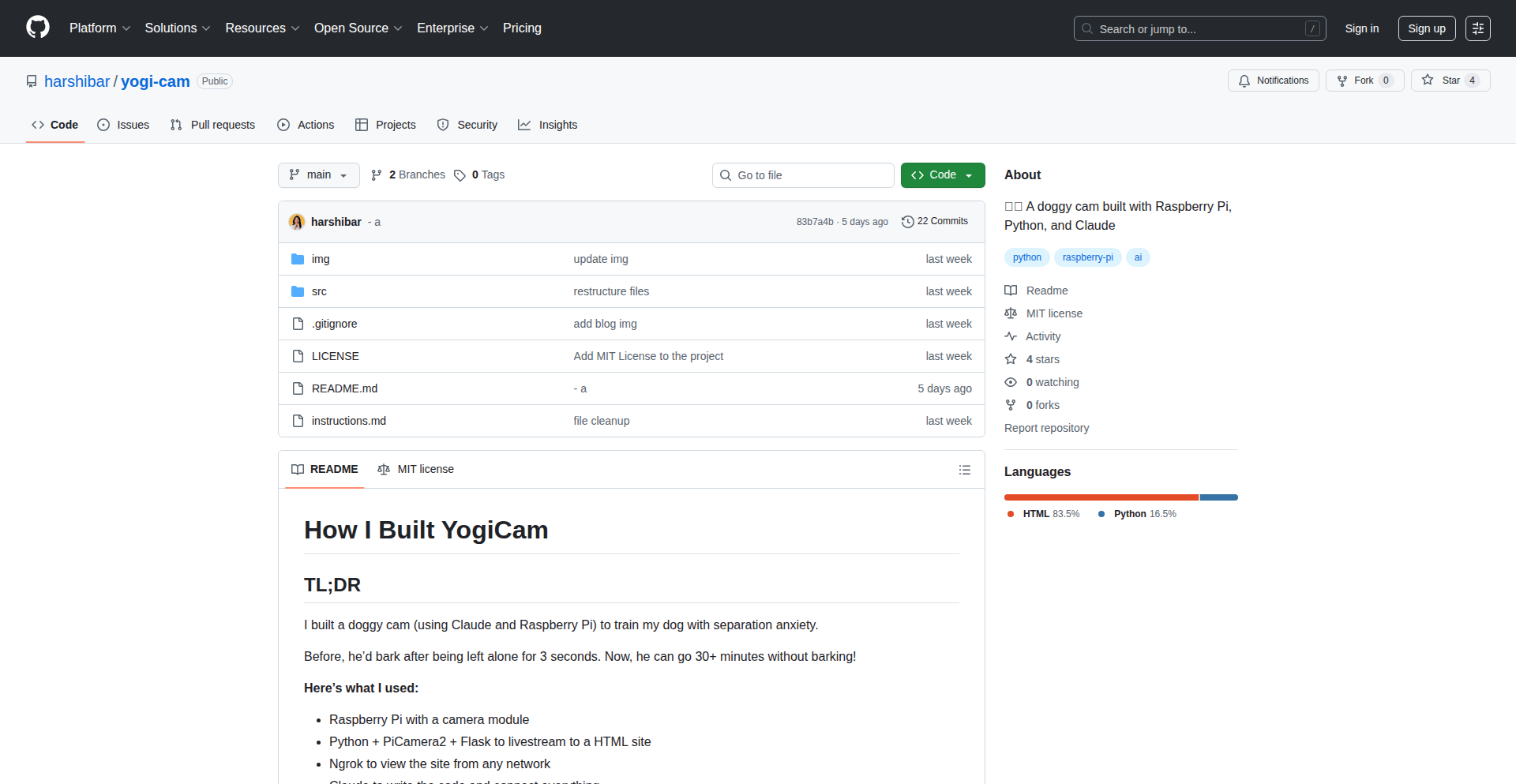

AI-Powered Canine Companion Camera

Author

hyerramreddy

Description

This project is a DIY Raspberry Pi webcam system designed to help train dogs with separation anxiety. It leverages AI, specifically Claude, to analyze dog behavior captured by the camera and provide actionable feedback or trigger custom responses, offering a unique blend of hardware and artificial intelligence for pet training.

Popularity

Points 5

Comments 0

What is this product?

This is a custom-built pet monitoring system that uses a Raspberry Pi as the core processing unit and a webcam to observe your dog. The innovation lies in using a large language model (Claude) to interpret what the camera captures. Instead of just passively recording, the system can judge whether your dog is showing signs of distress (such as pacing or excessive barking), and the Pi can then trigger pre-programmed responses. Think of it as a smart assistant for your dog's well-being that goes beyond simple surveillance to proactive training support. For owners, that means peace of mind while away and a data-driven way to address behavioral issues like separation anxiety, helping the dog feel more secure and reducing destructive behavior when no one is home.

How to use it?

Developers can set up this system with a Raspberry Pi, a compatible webcam, and the necessary power supplies. On the software side, you install Raspberry Pi OS, set up the webcam feed, and integrate the Claude API. You then write scripts that capture video frames, send those frames (or summaries of them) to Claude for analysis, and have the Raspberry Pi act on Claude's output. Actions could include playing a pre-recorded comforting message, activating a treat dispenser, or sending an alert to your phone, and the platform can be extended with additional sensors or actuators. In practice, you would run it at home while you are at work, use its observations to pin down your dog's specific anxiety triggers, and let it deliver automated comforting measures. It suits tech-savvy pet owners who want more than a standard pet camera.
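The decision step at the end of that capture-analyze-act loop can be sketched in Python. This is a minimal sketch, not the project's code: it assumes the prompt asks Claude to reply with JSON such as `{"state": "anxious", "confidence": 0.8}`, and the action names, the confidence threshold, and the cooldown are all hypothetical. Frame capture and the API call itself are left out.

```python
import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mapping from the assessed state to a local action on the Pi.
ACTIONS = {
    "calm": None,
    "anxious": "play_owner_message",
    "distressed": "dispense_treat_and_alert",
}


@dataclass
class ResponsePolicy:
    """Turns one model reply into at most one action, rate-limited by a cooldown."""

    cooldown_s: float = 300.0    # minimum seconds between two triggered actions
    min_confidence: float = 0.6  # replies below this confidence are ignored
    _last_action_at: float = field(default=float("-inf"), repr=False)

    def decide(self, model_reply: str, now: float) -> Optional[str]:
        try:
            # Assumed reply format: {"state": ..., "confidence": ...}
            assessment = json.loads(model_reply)
            confidence = float(assessment["confidence"])
            action = ACTIONS.get(assessment["state"])
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            return None  # malformed reply: do nothing rather than guess
        if action is None or confidence < self.min_confidence:
            return None
        if now - self._last_action_at < self.cooldown_s:
            return None  # still cooling down from the previous action
        self._last_action_at = now
        return action
```

The cooldown keeps the system from firing a response on every analyzed frame, and malformed or low-confidence replies are ignored instead of being guessed at.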

Product Core Function

· Real-time Dog Behavior Analysis: Using a webcam and AI (Claude), this function allows the system to observe and interpret your dog's actions, identifying potential signs of stress or anxiety. This is valuable because it moves beyond simple video recording, providing actual insights into your dog's emotional state, helping you understand their needs better.

· AI-Driven Training Feedback: Claude analyzes the behavior data to provide insights that can be used for training. For instance, it can identify patterns of anxiety and suggest when positive reinforcement might be most effective. This is useful for creating a more targeted and effective training plan for separation anxiety, saving you time and frustration.

· Automated Response Triggers: Based on the AI's analysis, the system can automatically trigger pre-set responses like playing soothing music or a familiar voice, or dispensing a treat. This is valuable as it offers immediate, automated intervention when your dog shows signs of distress, helping to de-escalate their anxiety in real-time, even when you're not there.

· Remote Monitoring and Alerts: Users can access the camera feed remotely and receive notifications on their mobile devices if the AI detects significant behavioral changes. This is important for providing reassurance and allowing for timely human intervention if the automated system is insufficient, ensuring your dog's safety and well-being.

Product Usage Case

· Scenario: Dog owner leaving for a full workday. Problem: Dog suffers from severe separation anxiety, resulting in excessive barking and destructive chewing. Solution: The AI-Powered Canine Companion Camera is deployed. The system monitors the dog's pacing and barking. When anxiety levels rise, Claude identifies this and triggers a pre-recorded comforting message from the owner and dispenses a treat, helping the dog associate alone time with positive outcomes.

· Scenario: Testing a new training technique for leash reactivity during walks. Problem: Owner needs to understand the dog's stress triggers and emotional responses to different stimuli encountered during outdoor excursions. Solution: While this specific project focuses on indoor separation anxiety, the underlying concept of using AI for behavior analysis can be adapted. A developer could envision a wearable camera for the dog, with AI analyzing stress indicators (like tail tucking or panting) in response to external triggers, providing data for desensitization training.

· Scenario: Managing a multi-pet household with complex social dynamics. Problem: Identifying which pet is causing stress to another, or understanding the source of conflict. Solution: By deploying multiple cameras and leveraging AI's pattern recognition, this system could potentially differentiate behaviors and identify interactions leading to anxiety or aggression among pets, providing valuable data for behavioral intervention and household harmony.

9

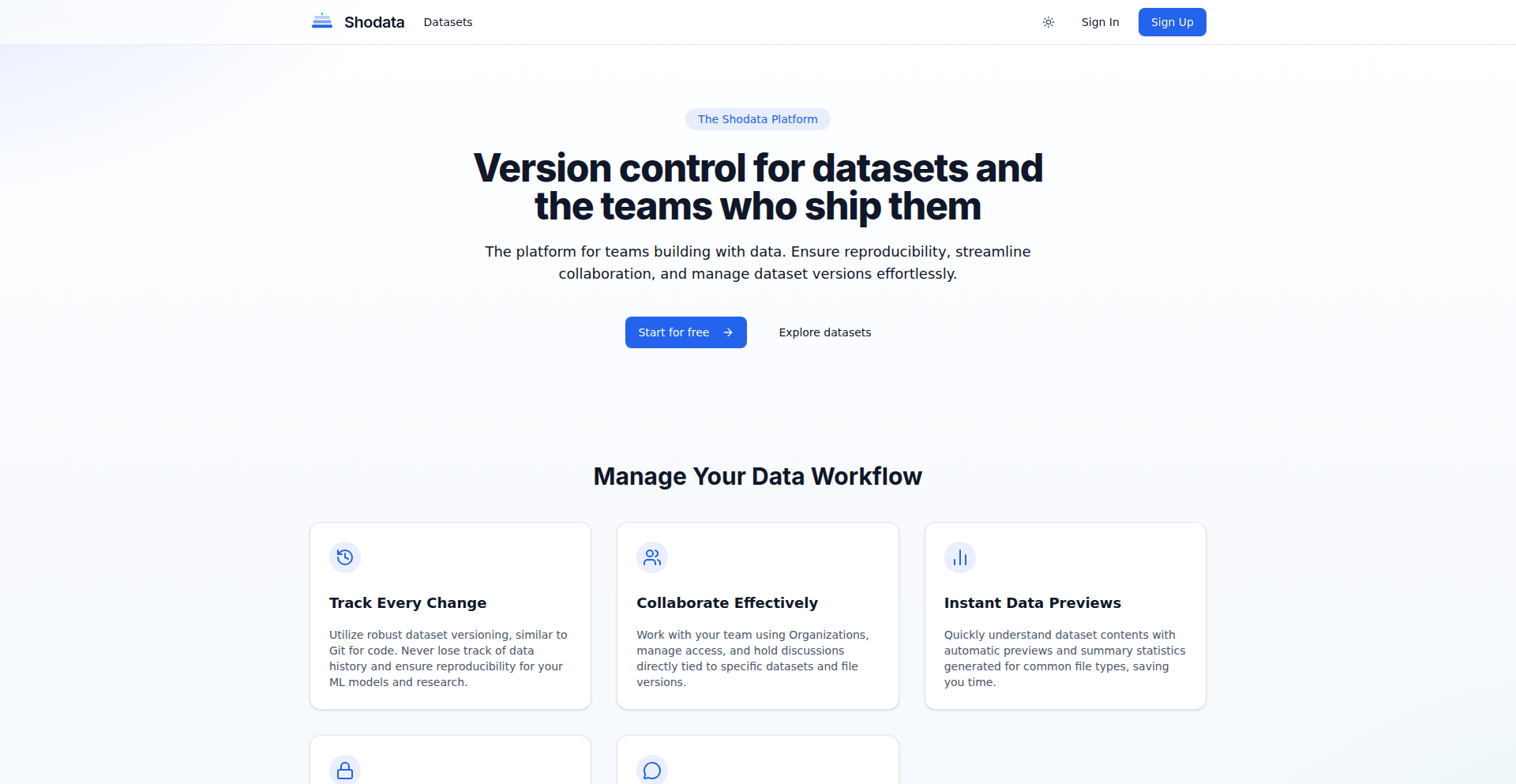

Shodata: Git-Inspired Data Versioning Platform

Author

aliefe04

Description

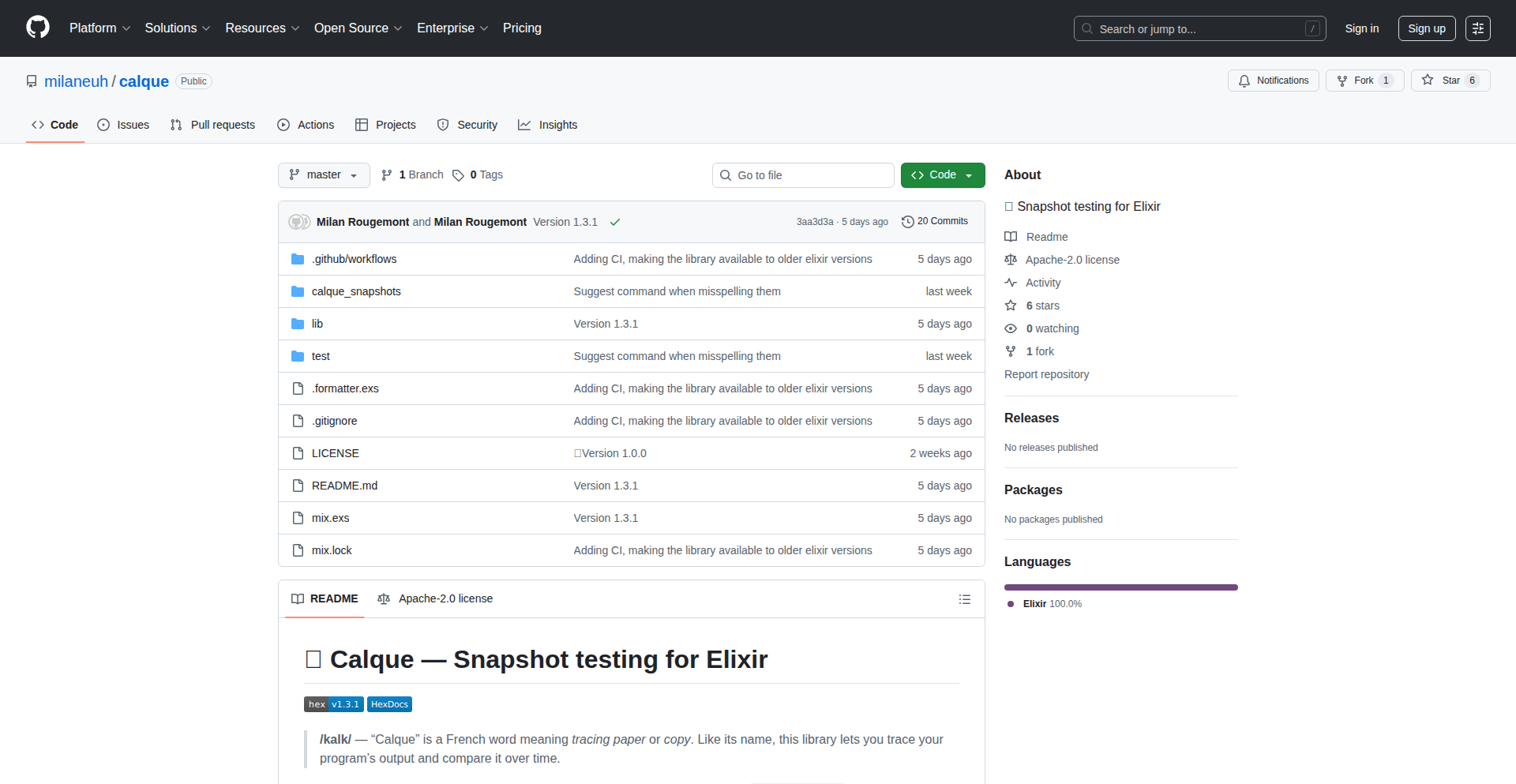

Shodata is an open platform designed for managing and versioning datasets, inspired by the principles of Git for code. It addresses the common pain point of chaotic dataset management (e.g., 'data_final_v3_fixed.csv' or massive Git LFS files) by providing automatic versioning of uploaded files, integrated discussion boards for each dataset, a complete history log, and clean previews with statistics for every version. This MVP aims to bring order and collaboration to data workflows for ML teams and individual developers.

Popularity

Points 2

Comments 2

What is this product?

Shodata is a data version control system, much as Git is for source code. Its core innovation is automatic handling of dataset updates: when you upload a new file with the same name as an existing one, Shodata creates a new version (v2, v3, and so on). This gives you a traceable history of your data and prevents confusion and data loss. Each dataset also gets its own discussion board for collaboration and context, and clean previews with statistics for each version make it easy to understand the state of your data at any point in time. The result is data that stays organized instead of turning into an unmanageable mess, plus a shared place to collaborate on it.

How to use it?

Developers use Shodata by uploading their dataset files to the platform, whether CSV, JSON, or another format. When a new iteration of a dataset is ready, they upload the file again under the same name, and Shodata handles the versioning. The platform can serve as the central repository for training data, experiment results, or any other evolving dataset, and the team/organization features in the Pro plan add collaboration with colleagues. This keeps data management and sharing organized as machine learning projects grow.
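The "same name means new version" rule is easy to picture with a small in-memory model. The sketch below is hypothetical, since Shodata's storage and API are not described: `DatasetStore`, `upload`, and `csv_stats` are illustrative names, each version records a SHA-256 digest for traceability, and `csv_stats` computes the kind of per-version summary a preview might show.

```python
import csv
import hashlib
import io
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Version:
    number: int
    sha256: str
    data: bytes


@dataclass
class DatasetStore:
    """Hypothetical in-memory store: re-uploading a name adds the next version."""

    _versions: dict = field(default_factory=dict)  # name -> list[Version]

    def upload(self, name: str, data: bytes) -> int:
        history = self._versions.setdefault(name, [])
        version = Version(len(history) + 1, hashlib.sha256(data).hexdigest(), data)
        history.append(version)
        return version.number

    def get(self, name: str, number: Optional[int] = None) -> Version:
        history = self._versions[name]
        if number is None:
            return history[-1]  # latest version by default
        if not 1 <= number <= len(history):
            raise KeyError(f"{name} has no version {number}")
        return history[number - 1]

    def history(self, name: str) -> list:
        """(version number, short digest) pairs, oldest first."""
        return [(v.number, v.sha256[:12]) for v in self._versions.get(name, [])]


def csv_stats(data: bytes) -> dict:
    """Row count, column names, and empty cells per column for one CSV version."""
    reader = csv.DictReader(io.StringIO(data.decode("utf-8")))
    rows = list(reader)
    columns = list(reader.fieldnames or [])
    missing = {c: sum(1 for r in rows if not r[c]) for c in columns}
    return {"rows": len(rows), "columns": columns, "missing": missing}
```

Keeping every version (rather than overwriting) is what makes it possible to compare a model run against the exact data it was trained on.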

Product Core Function

· Automatic Dataset Versioning: Uploading a file with the same name automatically creates a new version, providing a complete history of your data changes. This is valuable for tracking experiments and reverting to previous data states if needed.

· Dataset Discussion Boards: Each dataset has a dedicated discussion area, allowing teams to communicate, share insights, and document decisions related to the data. This is valuable for enhancing collaboration and knowledge sharing within a project.

· Version Previews and Statistics: Shodata offers clean visual previews and statistical summaries for each dataset version, making it easy to quickly understand the content and characteristics of your data at any point. This is valuable for quick assessment and debugging.

· Centralized Data Management: Provides a single, organized platform for all your datasets, replacing scattered files and complex manual tracking. This is valuable for improving efficiency and reducing the risk of data errors or loss.

· Open Platform with Free Tier: Offers a generous free tier for personal use, making advanced data versioning accessible to individual developers and small teams. This is valuable for enabling experimentation and adoption without upfront costs.

Product Usage Case

· ML Experiment Tracking: A machine learning engineer is iterating on a model. They upload the training dataset. After some feature engineering, they upload the modified dataset. Shodata automatically versions it, allowing them to compare model performance with different data versions. This solves the problem of lost context between data changes and model results.

· Collaborative Data Cleaning: A data science team is cleaning a large dataset. As members make edits and improvements, they upload the updated files. The discussion board for that dataset allows them to track who made what changes and why, ensuring transparency and reducing redundant work. This solves the problem of uncoordinated data updates in a team environment.

· Reproducible Research: A researcher is conducting an experiment and wants to ensure their findings are reproducible. They use Shodata to version control the raw data and any processed versions used in their analysis. This provides a clear audit trail of the data used, making it easy for others to replicate their work. This solves the problem of ensuring the integrity and replicability of research findings.

· Dataset Auditing: A company needs to audit its data pipelines for compliance. Shodata provides a clear, immutable history of all dataset versions used, along with associated discussions, simplifying the auditing process. This solves the problem of providing clear evidence of data usage and changes for compliance purposes.

10

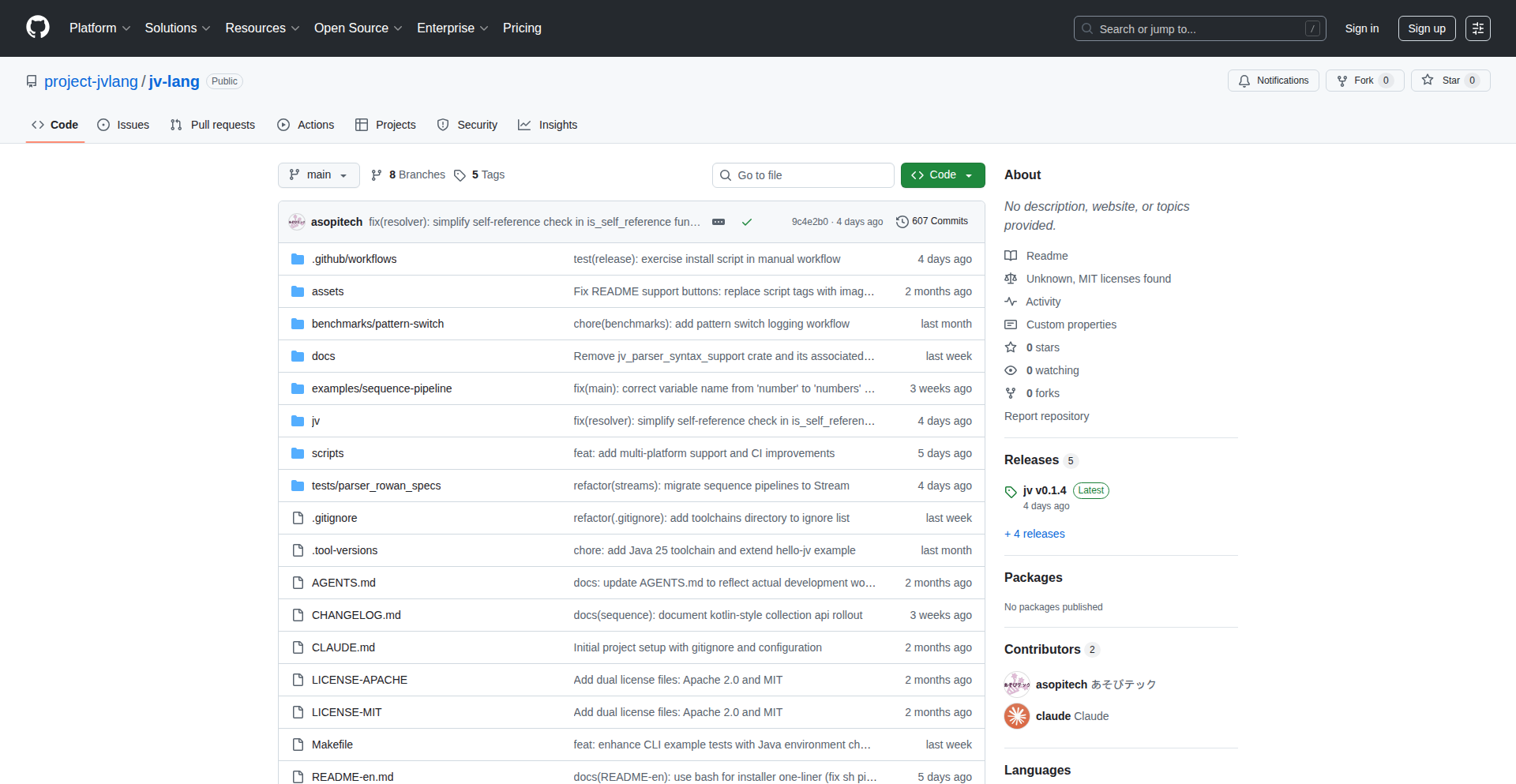

JV Lang: Expressive Java with Rust Speed

Author

asopitech

Description

JV is a novel language that brings Kotlin-inspired syntactic sugar and developer-friendly features directly to Java 25, transpiling to clean, readable Java code without any runtime overhead. Built with Rust for exceptional performance and a delightful CLI experience, JV aims to make modern Java development faster and more intuitive. It solves the problem of Java's verbosity and boilerplate by offering concise syntax while maintaining full Java compatibility and leveraging the power of the Java Virtual Machine.

Popularity

Points 3

Comments 1

What is this product?

JV is a source-to-source compiler, a language extension for Java. Think of it as a modern coat of paint for Java. It takes code written in the JV language, which is designed to be more concise and expressive (similar to Kotlin), and automatically converts it into standard, highly readable Java 25 code. The magic happens because it doesn't need any special libraries or virtual machine components running alongside your Java code; it's a pure transformation. The underlying compiler is built in Rust, a language known for its speed and efficiency, which means JV can process your code very quickly. This is innovative because it provides the benefits of a more modern language without sacrificing Java's ecosystem or performance.

How to use it?

Developers can integrate JV into their existing Java workflows. You write code in JV's syntax, which offers features such as generic function signatures, simplified access to record components, optional parentheses for methods with no arguments, more powerful string formatting, and a streamlined way to process sequences of data. You then run the JV command-line interface (CLI), a single cross-platform executable, which transpiles your JV code into standard Java files that compile and run like any other Java code. The CLI automatically detects your local Java Development Kit (JDK) and can be configured for custom build processes, so it can be adopted in new projects or added to existing ones. With this simple toolchain, you can start writing more concise Java right away.

Product Core Function

· Kotlin-inspired syntactic sugar: This allows developers to write Java code more concisely, reducing boilerplate and improving readability. For example, instead of lengthy Java constructs, you can use simpler syntax, leading to faster development and fewer errors. This is valuable for reducing development time and maintaining code quality.

· Direct transpilation to readable Java: The output of JV is standard, human-readable Java code. This is crucial because it means your codebase remains fully compatible with the vast Java ecosystem and tools, and you don't need to learn a completely new language to deploy your applications. This provides investment protection and avoids vendor lock-in.

· Zero runtime shim: JV doesn't require any extra libraries or runtime components to ship with your application. The generated code is plain Java 25, so it runs as fast and stays as lightweight as equivalent hand-written Java. This is valuable for applications where performance and a minimal footprint are critical.

· Rust-based high-performance toolchain: The compiler is written in Rust for fast transpilation, which keeps the edit, transpile, and compile loop short. This enhances developer productivity and satisfaction.

· Intuitive CLI experience: Inspired by tools like Python's `uv`, the JV command-line interface is designed to be fast, easy to use, and clean. This makes managing your JV projects straightforward and enjoyable, lowering the barrier to entry for adopting new language features. This improves the overall developer experience and encourages adoption.

· Cross-platform bundle with baked-in stdlib: The JV CLI runs on Windows, macOS, and Linux and bundles its standard library, simplifying setup and usage. You get a consistent experience regardless of your development environment, making it easier to collaborate and deploy.

· Automatic JDK detection and entrypoint overriding: The toolchain intelligently finds your local Java installation and allows customization of build entry points. This reduces manual configuration and makes it easier to integrate JV into complex build systems or custom workflows, saving setup time and reducing potential configuration errors.

Product Usage Case

· Modernizing legacy Java applications: Developers can gradually introduce JV syntax into existing Java projects to make them more maintainable and readable. By transpiling new or refactored sections of code to Java, they can gain the benefits of modern language features without a complete rewrite, improving the long-term health of the codebase.

· Building high-performance microservices: For backend services where speed and resource efficiency are paramount, JV lets developers write concise code that transpiles to plain Java with no runtime overhead. This is useful for creating lightweight, fast services that can handle high loads efficiently.

· Developing Android applications with less boilerplate: JV targets Java 25, which Android toolchains may not fully support, but its approach could in principle reduce verbosity in Android development. Developers could experiment with cleaner syntax for common Android patterns, potentially speeding up UI development and making code easier to manage. This addresses the challenge of boilerplate code in mobile development.

· Creating command-line tools and utilities: The fast transpilation and intuitive CLI of JV make it an excellent choice for building command-line tools. Developers can quickly iterate on their tools with expressive syntax and benefit from the performance of the JVM without complex setup. This is ideal for developers who need to build efficient, standalone tools.

11

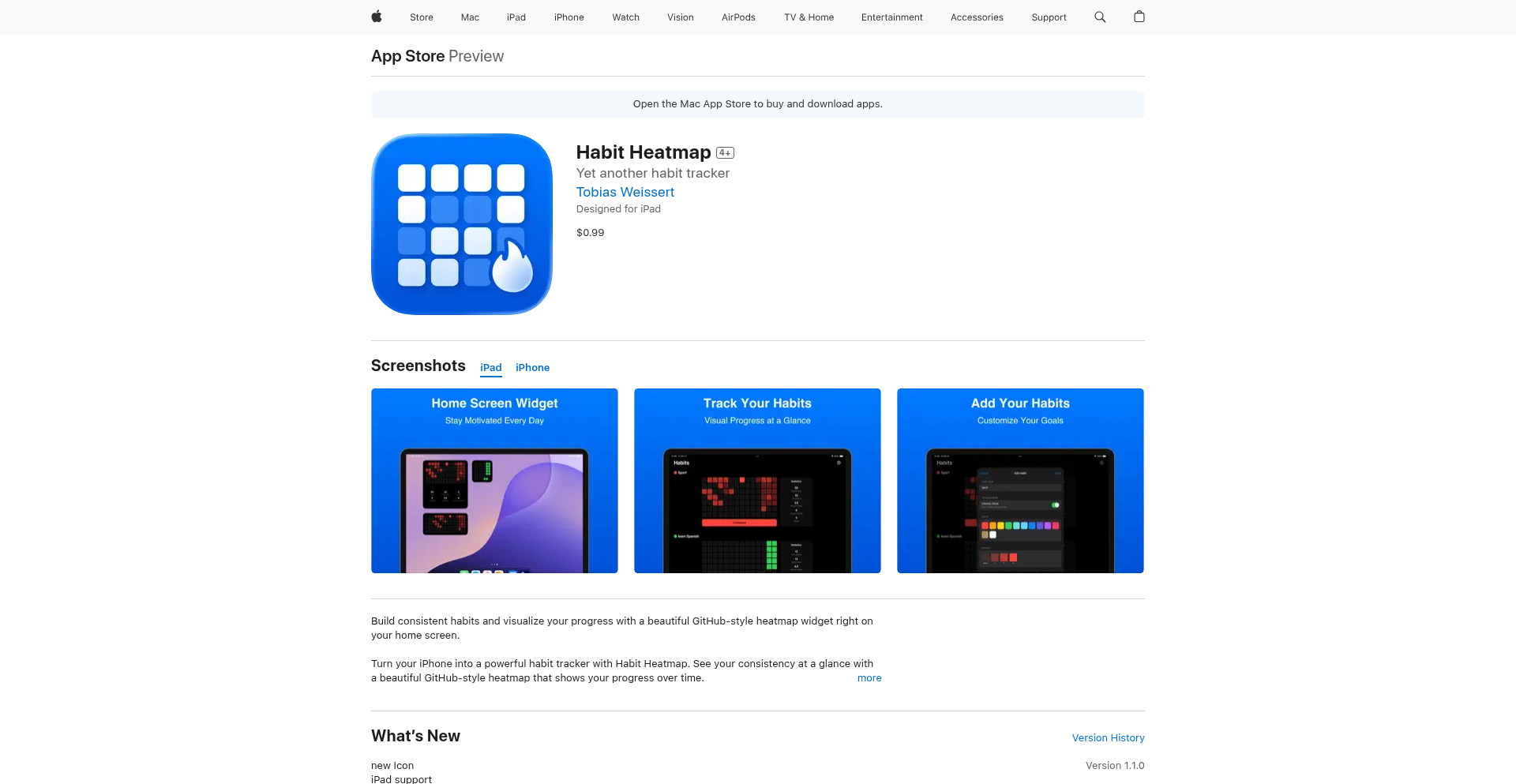

HabitHeatmap

Author

supertoub

Description

HabitHeatmap is a native iOS app that leverages the visual motivation of GitHub's activity heatmap to track everyday habits. It offers a simple, privacy-focused approach with local storage, iCloud sync, and a one-time purchase model, eliminating subscriptions. The core innovation lies in applying the highly engaging, gamified visual feedback of code commits to non-coding habits, making personal goal tracking more compelling and effective.

Popularity

Points 2

Comments 2

What is this product?

HabitHeatmap is an iOS application that visualizes habit completion as a heatmap, like the green squares on a developer's GitHub profile. Instead of code commits, it tracks any habit you want to build, such as going to the gym, reading, or meditating. It is built with SwiftUI for a smooth, modern interface, stores your data locally on your device, and can sync it via iCloud. This avoids account setup and recurring subscriptions in favor of a straightforward, visually rewarding experience. The innovation is in borrowing a visualization that many developers find motivating (GitHub's contribution heatmap) and applying it to personal self-improvement, turning abstract goals into visible progress.

How to use it?

Developers can use HabitHeatmap by downloading the app from the App Store. Once it is installed, they create the habits they want to track and log each completion daily. The app then updates a heatmap, both in the app and in a home-screen widget, showing completed days as green squares. For example, a developer might create a 'Go to the Gym' habit and tap a button each day they work out, and the widget would show a new green square for that day. There is nothing to integrate: it is a standalone app designed for quick daily interaction, and iCloud sync keeps habit data backed up and available across their Apple devices.
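The heatmap itself is a simple mapping from dates to intensity levels. This Python sketch assumes a GitHub-like grid (rows Monday to Sunday, one column per week) and five levels, 0 to 4, scaled against the busiest day in the window; both the bucketing rule and the `heatmap` function are assumptions, not the app's SwiftUI code.

```python
import math
from collections import Counter
from datetime import date, timedelta


def heatmap(completions: list, end: date, weeks: int = 4) -> list:
    """7 rows (Mon..Sun) x `weeks` columns (oldest..newest), cells 0-4."""
    counts = Counter(completions)  # completions logged per day
    # First column starts on the Monday `weeks - 1` weeks before end's week.
    start = end - timedelta(days=end.weekday(), weeks=weeks - 1)
    peak = max((n for d, n in counts.items() if start <= d <= end), default=0)
    grid = [[0] * weeks for _ in range(7)]
    for offset in range(weeks * 7):
        day = start + timedelta(days=offset)
        if day > end:
            break  # leave future days in the current week empty
        n = counts[day]
        # Scale against the busiest day: any activity gets at least level 1.
        grid[day.weekday()][offset // 7] = 0 if n == 0 else math.ceil(4 * n / peak)
    return grid
```

Scaling against the busiest visible day means one very active day does not wash out the rest of the grid; a fixed threshold per level would be an equally valid choice.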

Product Core Function

· GitHub-style heatmap widgets: This feature provides a visual representation of habit streaks, using color intensity to signify consistency. This has value by making progress instantly visible and highly motivating, transforming abstract goals into a concrete, visually rewarding journey. It's useful for anyone who finds satisfaction in seeing tangible progress over time, creating a powerful psychological nudge to maintain consistency.

· iCloud sync: This function ensures that habit data is securely backed up and synchronized across a user's Apple devices. Its value lies in peace of mind and convenience: users can track habits without worrying about data loss or manual transfers, which is crucial for consistent tracking across devices.

· Local data storage: All habit data is stored directly on the user's device, with no external accounts required. The value here is privacy: personal habit data is not sent to the developer's servers, and the only off-device copy is the one synced through the user's own iCloud account. This appeals to users who are concerned about data breaches or who prefer a minimalist approach to online accounts, keeping their information under their control.

· One-time purchase: The app is offered as a single purchase, avoiding recurring subscription fees. The value for the user is cost-effectiveness and predictability, eliminating the burden of ongoing monthly or annual payments often associated with habit trackers or fitness apps. This aligns with a 'buy-it-once' philosophy, offering long-term value without ongoing financial commitment.

Product Usage Case

· A software developer struggling to maintain a consistent workout routine uses HabitHeatmap. They create a 'Gym Session' habit and mark it as complete each day they exercise. The app's home screen widget displays a growing grid of green squares, acting as a constant, visual reminder and source of motivation. This directly addresses the problem of declining motivation in fitness goals by providing a highly engaging, gamified feedback loop.

· A freelance writer wants to build a daily reading habit. They set up a 'Read for 30 Minutes' habit in HabitHeatmap. By simply tapping to log their reading session each day, they see their progress accumulate as green squares on their dashboard. This helps them overcome procrastination and stay accountable to their personal development goal, making the abstract goal of 'reading more' into a concrete, achievable daily action.

· A student aiming to improve their study habits uses HabitHeatmap to track daily study sessions. They log an hour of studying each day, and the visual heatmap on their iPhone provides a clear overview of their commitment. This helps them identify patterns, celebrate streaks, and recognize days where they might have fallen behind, offering a clear, objective measure of their academic discipline and aiding in better time management.

12

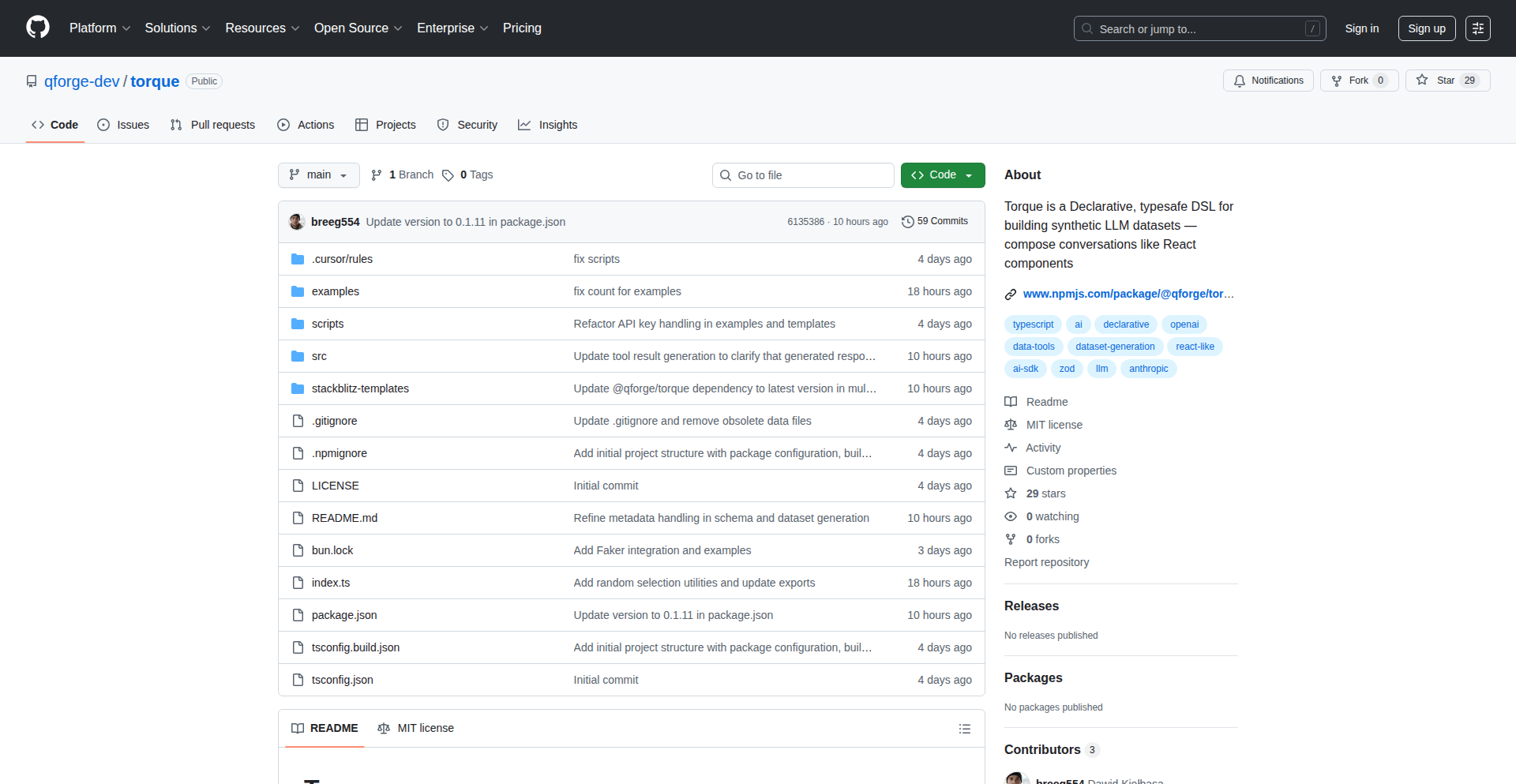

Torque: Schema-Driven Conversational AI Datasets

Author

michalwarda

Description

Torque is a tool that tackles the chaos of generating conversational datasets for AI training. Instead of wrestling with brittle scripts and inconsistent JSON files, developers use Torque's schema-first, declarative, fully typesafe Domain Specific Language (DSL). Conversation flows are composed much like UI components, type safety is enforced throughout, and any AI provider can be plugged in. Torque automates the creation of realistic, varied datasets, making dataset generation for AI more reproducible and efficient.

Popularity

Points 3

Comments 1

What is this product?

Torque is a declarative Domain Specific Language (DSL) designed to generate conversational datasets for training Large Language Models (LLMs). The core innovation lies in its 'schema-first' and 'typesafe' approach. Think of it like building a website with React components where each component has a defined structure and type. Torque allows you to define conversation structures (like user prompts and AI responses) as code, ensuring consistency and preventing common errors. It leverages AI providers (like OpenAI, Anthropic, etc.) to generate the actual content based on these structures, making the process automatic and much less error-prone than manual scripting. The 'typesafe' aspect means that if you try to connect two parts of a conversation that don't fit together according to your defined schema, the system will flag it as an error before you even run your code. This drastically reduces bugs and saves time.

How to use it?

Developers can integrate Torque into their AI development workflow in several ways. The primary method is via its Node.js SDK. You'll define your conversational flows using the Torque DSL, specifying user intents, assistant responses, and branching logic. You then configure which AI model and provider you want to use for generation. Torque handles the complexities of interacting with the AI API, generating the dataset, and ensuring reproducibility through features like integrated Faker.js with synchronized seeding for fake data. It can be used for generating datasets for chatbots, virtual assistants, or any application requiring structured conversational data. The CLI offers concurrent generation with real-time progress tracking, making the generation process visually informative and efficient.
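Torque itself is used from Node.js with Zod schemas, and its API is not reproduced here. As a language-neutral illustration of two of its ideas, the hypothetical Python sketch below validates a declarative conversation schema before generating anything, and draws all fake data from one seeded random generator so the same seed always yields the same dataset row. Fixed templates stand in for the LLM calls Torque would make; `Turn`, `validate`, and `render` are illustrative names.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    role: str      # "user" or "assistant"
    template: str  # may use the {name} and {product} slots


def validate(turns: list) -> None:
    """Reject a schema up front if roles do not alternate user, assistant, ..."""
    for i, turn in enumerate(turns):
        expected = "user" if i % 2 == 0 else "assistant"
        if turn.role != expected:
            raise ValueError(f"turn {i}: expected {expected!r}, got {turn.role!r}")


# Hypothetical fake-data pools; Torque uses Faker.js for this.
NAMES = ["Ada", "Grace", "Linus", "Barbara"]
PRODUCTS = ["router", "headset", "keyboard"]


def render(turns: list, seed: int) -> list:
    """Fill every slot from one seeded RNG so a seed always gives the same row."""
    validate(turns)
    rng = random.Random(seed)
    slots = {"name": rng.choice(NAMES), "product": rng.choice(PRODUCTS)}
    return [{"role": t.role, "content": t.template.format(**slots)} for t in turns]
```

Because validation runs before any generation, a malformed schema fails immediately instead of producing a partial, inconsistent dataset.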

Product Core Function

· Declarative DSL for Conversation Composition: Allows developers to define conversation flows in a structured, code-like manner, similar to how UI components are built, leading to more organized and maintainable dataset generation logic. This helps you build complex conversations without getting lost in tangled scripts.

· Fully Typesafe with Zod Schemas: Ensures that the structure of your conversational data is always consistent and correct, catching errors early in the development process and preventing unexpected behavior in your AI models. This means fewer bugs and more reliable AI.

· Provider Agnostic AI Generation: Enables the use of any AI SDK provider (OpenAI, Anthropic, etc.) for generating dataset content, offering flexibility and avoiding vendor lock-in. You can switch AI models easily without rewriting your entire dataset generation code.

· AI-Powered Realistic Dataset Generation: Automatically generates varied and realistic conversational data, reducing the manual effort and cost associated with creating training datasets. This provides your AI with diverse examples to learn from, improving its performance.

· Integrated Faker.js with Seed Synchronization: Generates reproducible fake data for testing and development, ensuring that experiments can be consistently replicated. This is crucial for debugging and comparing different model versions.

· Cache Optimized Generation: Reuses generated context across different generation runs to reduce API costs and speed up the process. This helps save money on AI API calls.

· Prompt Optimized Structures: Creates concise and optimized prompts for AI models, allowing for the use of smaller, cheaper models while maintaining high-quality output. This makes your AI development more cost-effective.

Product Usage Case

· Generating training data for a customer support chatbot: A developer needs to create a dataset of common customer inquiries and their corresponding agent responses. Using Torque, they can declaratively define the conversation flow, specify different types of customer issues (e.g., billing, technical support), and have Torque generate varied interactions using an AI model. This replaces manual writing of hundreds of example conversations and ensures consistency in the chatbot's expected responses.

· Creating synthetic user dialogues for a new feature testing: A team is building a new feature in their application and needs realistic user interactions to test it. Torque can be used to generate synthetic dialogues where users ask questions about the new feature, provide feedback, and interact with the application's UI elements. This allows for thorough testing without requiring actual user input during the early stages.

· Developing an LLM for creative writing assistance: A researcher is building an AI assistant that helps users brainstorm story ideas and write creatively. Torque can be used to generate diverse conversational scenarios where the AI provides prompts, suggests plot twists, and engages in collaborative storytelling with the user. This helps create a rich dataset for training the AI's creative writing capabilities.

· Building a multilingual conversational agent: A company wants to deploy a conversational AI in multiple languages. Torque's provider-agnostic nature allows them to switch between different language models and generate datasets in each target language, ensuring the AI is well-trained for each linguistic context. This streamlines the process of localization for conversational AI.

13

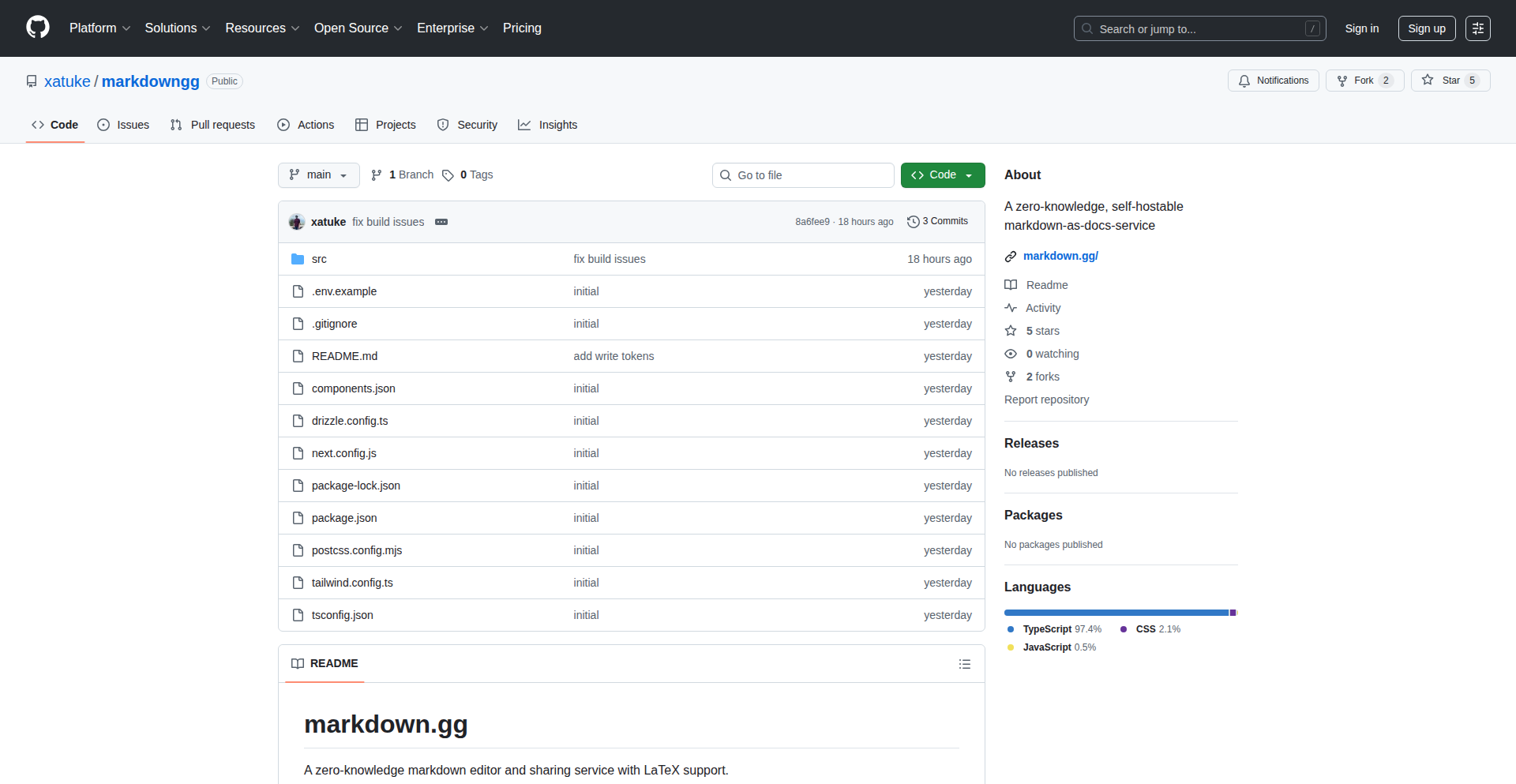

ZKMarkdown Share

Author

satuke

Description

A self-hostable service for securely sharing Markdown documents using Zero-Knowledge Proofs (ZKPs). It allows creators to share content without revealing the content itself, enabling new forms of verifiable sharing and content ownership. This tackles the challenge of sharing sensitive or proprietary information while maintaining privacy and control.

Popularity

Points 3

Comments 1

What is this product?

ZKMarkdown Share uses Zero-Knowledge Proofs (ZKPs) to enable private sharing of Markdown content. Instead of sending the actual Markdown file, the service generates a cryptographic proof that a specific piece of content exists and meets certain criteria, and anyone can verify this proof without seeing the original content. Think of it like proving you have the key to a locked box without showing anyone the key itself. Technically, the service uses ZKP libraries to compute proofs from the Markdown content, and these proofs can then be shared and verified independently. The novelty lies in applying ZKPs, an advanced cryptographic technique, to the everyday task of sharing text documents, adding privacy and integrity guarantees that ordinary file sharing lacks.

How to use it?

Developers can deploy ZKMarkdown Share on their own servers, giving them full control over their data and sharing. They can then use a simple API or a web interface to upload Markdown content. Once uploaded, the service generates a unique link or a verifiable proof. This link can be shared with recipients who can then verify the existence and integrity of the content without ever downloading or seeing the raw Markdown. For integration, developers can interact with the service's API to programmatically generate proofs for their own applications, such as content platforms, educational tools, or secure document repositories. This allows for building applications where content verification is paramount but the content itself needs to remain private.
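The integrity half of this idea can be illustrated with a salted hash commitment. Note that this is a simpler technique than a zero-knowledge proof: publishing the digest hides the Markdown and later lets anyone confirm that a revealed document is exactly the one committed to, but unlike a ZKP it cannot prove properties of the content without revealing it. The `commit` and `verify` functions are hypothetical and are not the project's implementation.

```python
import hashlib
import hmac
import secrets


def commit(markdown: str) -> tuple:
    """Publish the digest; keep the salt private until you reveal the document."""
    salt = secrets.token_bytes(32)  # random salt stops anyone guessing short texts
    digest = hashlib.sha256(salt + markdown.encode("utf-8")).hexdigest()
    return digest, salt


def verify(commitment: str, markdown: str, salt: bytes) -> bool:
    """True only if this exact Markdown and salt produce the published digest."""
    digest = hashlib.sha256(salt + markdown.encode("utf-8")).hexdigest()
    return hmac.compare_digest(digest, commitment)  # constant-time comparison
```

Changing even one character of the document, or using a different salt, produces a different digest, which is what makes the commitment useful as a tamper check.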

Product Core Function

· Secure Markdown Upload: Allows users to upload Markdown files to the self-hosted service. This provides a private and controlled environment for content storage, reducing reliance on third-party platforms.

· Zero-Knowledge Proof Generation: Cryptographically proves the existence and integrity of Markdown content without revealing the content itself. This is the core innovation, offering advanced privacy for shared information.

· Verifiable Sharing Links: Generates unique links that recipients can use to verify the shared content. This is a user-friendly way to distribute verifiable information without complex cryptographic knowledge for the recipient.

· Self-Hostable Deployment: Enables users to run the service on their own infrastructure, ensuring full data sovereignty and customization. This appeals to users with strict privacy requirements or specific operational needs.

· Content Integrity Assurance: Guarantees that the shared Markdown has not been tampered with since the proof was generated. This builds trust and reliability in the shared information.

Product Usage Case

· Securely share academic research papers or proprietary code snippets: A researcher could share a proof of their paper's content, allowing reviewers to verify its existence and integrity without reading the full paper until the intended time. This prevents pre-disclosure and protects intellectual property.

· Private voting or survey systems: Developers could use this to create a system where participants submit answers without revealing their specific choices, but the system can prove that all required answers were submitted and that no votes were altered. This enhances the privacy and auditability of sensitive data collection.

· Digital credential verification: A platform could issue verifiable credentials (e.g., certificates) as Markdown documents. The service can then generate ZKPs for these credentials, allowing easy and private verification by third parties without exposing personal details on the credential itself.

· Content provenance tracking: For digital art or writing, a creator could share a proof of their work at a certain time, establishing a verifiable timestamp and the original content's existence without making the work publicly accessible until they choose to.
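The provenance and "verify later" use cases above can be approximated without any zero-knowledge machinery by a salted hash commitment: publish the digest now, reveal the text and salt later. This is a swapped-in, simpler technique, not the project's ZKP scheme, and the function names are hypothetical. The timestamp below is self-reported; a trustworthy timestamp needs an outside party, such as posting the commitment publicly or using an RFC 3161 time-stamping authority.

```python
import hashlib
import hmac
import secrets
from datetime import datetime, timezone


def commit(markdown: str) -> tuple[str, bytes, str]:
    """Return (commitment hex, salt, UTC timestamp).

    Publish the commitment and timestamp; keep the salt and the text private.
    """
    salt = secrets.token_bytes(32)  # random salt so short or guessable texts stay hidden
    digest = hashlib.sha256(salt + markdown.encode("utf-8")).hexdigest()
    timestamp = datetime.now(timezone.utc).isoformat()
    return digest, salt, timestamp


def reveal_matches(commitment: str, salt: bytes, markdown: str) -> bool:
    """Check a later reveal (salt + text) against the earlier published commitment."""
    digest = hashlib.sha256(salt + markdown.encode("utf-8")).hexdigest()
    return hmac.compare_digest(digest, commitment)  # constant-time comparison
```

Any edit to the text after the commitment was published, even a single character, makes `reveal_matches` return `False`.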

14

Chatolia: Your Personal AI Agent Forge

Author

blurayfin

Description

Chatolia is a platform for effortlessly creating, training, and deploying custom AI chatbots. It solves the problem of complex AI development by offering a simple, data-driven approach, allowing anyone to build a unique AI agent powered by their own information and make it accessible on their website. This empowers businesses and individuals to leverage AI without deep technical expertise.

Popularity

Points 3

Comments 1

What is this product?

Chatolia is a no-code platform that allows users to build their own AI chatbots. The core innovation lies in its straightforward process: you provide your data (like documents, FAQs, or website content), and Chatolia uses this to train a specialized AI model. This trained model then becomes your custom 'agent' that can understand and respond to questions based on the information you fed it. So what does this mean for you? It means you can have a smart assistant on your website that answers customer questions accurately using your specific business knowledge, without needing to hire AI engineers or learn complex coding.
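Chatolia's internals are not described beyond "train on your data." A common way to ground a chatbot in a knowledge base is retrieval: find the stored passage most similar to the user's question and answer from it. The sketch swaps in the simplest version of that idea, bag-of-words cosine similarity over hypothetical FAQ entries, with a fallback when nothing matches well. It is not Chatolia's method; production systems typically use embeddings and an LLM to phrase the reply.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class FaqAgent:
    """Returns the stored answer whose FAQ entry best matches the query."""

    def __init__(self, entries: list[tuple[str, str]], threshold: float = 0.1):
        # Index each question + answer pair as one bag-of-words vector.
        self.index = [(Counter(tokenize(q + " " + a)), a) for q, a in entries]
        self.threshold = threshold

    def answer(self, query: str) -> str:
        qv = Counter(tokenize(query))
        scored = [(cosine(qv, vec), ans) for vec, ans in self.index]
        best_score, best_answer = max(scored, default=(0.0, ""))
        if best_score < self.threshold:
            return "Sorry, I don't know that one yet."
        return best_answer


faq = FaqAgent([
    ("What are your shipping times?", "Orders ship within 2 business days."),
    ("Do you accept returns?", "Returns are accepted within 30 days."),
])
```

The threshold is the knob that decides when the agent admits it does not know, which matters more for customer support than always returning the nearest match.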

How to use it?

Developers can integrate Chatolia into their websites or applications through a simple embeddable widget or API. You'd typically start by uploading your knowledge base (e.g., a CSV file of product information, a PDF of your company policies, or even by pointing it to your existing website URL). Chatolia then processes this data to build your agent. Once trained, you get a code snippet to paste into your website's HTML, or you can use the API to programmatically interact with your agent. The value for developers is rapid deployment of sophisticated AI capabilities for their clients or projects with minimal integration effort.

Product Core Function

· Custom AI Agent Creation: Allows users to define and build unique AI personalities and knowledge bases. This is valuable because it moves beyond generic AI assistants to one that truly understands your specific domain or business, leading to more relevant and helpful interactions for your users.

· Data-Driven Training: Enables AI agents to learn from user-provided data sources like documents, web pages, or databases. The value here is that your AI becomes an expert on *your* content, ensuring accuracy and relevance, which is crucial for customer support or internal knowledge sharing.

· Website Deployment: Provides an easy way to embed the trained AI agent as a chatbot on any website. This is practical because it allows businesses to instantly enhance their online presence with an intelligent assistant that can engage visitors 24/7, improving user experience and potentially driving conversions.

· Free Tier for Experimentation: Offers a starting point with a free plan, including one agent and monthly message credits. This is beneficial for developers and small businesses as it lowers the barrier to entry, allowing them to test the capabilities of custom AI chatbots without upfront costs.

Product Usage Case

· A small e-commerce business could use Chatolia to create an AI chatbot trained on their product catalog and shipping policies. When a customer visits the website, the chatbot can answer specific questions about product features, availability, and delivery times, reducing the need for customer service staff and improving customer satisfaction.

· A documentation team could train an AI agent with their technical manuals and API references. Developers could then query this agent directly from within their IDE or a developer portal to quickly find the information they need, saving significant time and reducing frustration.

· A real estate agency could deploy a Chatolia agent trained on their property listings and local market data. Potential buyers could ask about specific property types, price ranges, or neighborhood details, and receive instant, tailored information, acting as a 24/7 virtual property consultant.

· A startup could use Chatolia to build an initial customer support bot for their new product. By feeding it FAQs and troubleshooting guides, they can handle common user queries effectively from day one, allowing their lean team to focus on product development.

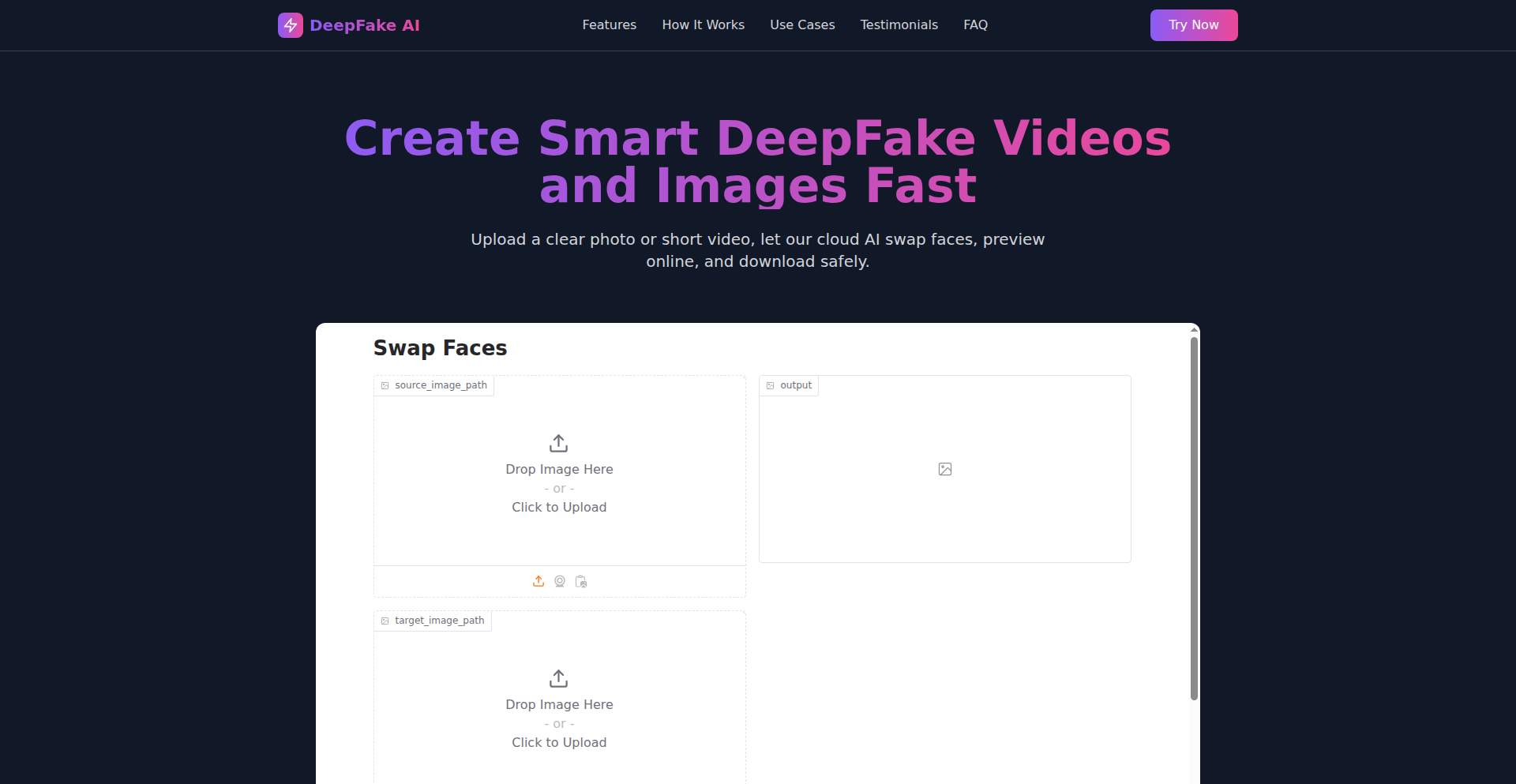

15

DeepFake Studio: AI Face Swap

Author

epistemovault

Description

This project is an online, free AI-powered face swapping tool. It leverages deep learning models to seamlessly replace a face in a video or image with another. The core innovation lies in making advanced face manipulation technology accessible to everyone without requiring complex setup or powerful hardware. It solves the problem of expensive and technically demanding face alteration for creative or experimental purposes.

Popularity

Points 3

Comments 1

What is this product?

This is an open-source, web-based application that allows users to perform face swaps using artificial intelligence. It utilizes Generative Adversarial Networks (GANs) or similar deep learning architectures trained on vast datasets of faces. The innovation is in democratizing this technology, which was previously confined to research labs or high-end production studios, by providing an easy-to-use online interface. So, what's in it for you? It means you can experiment with realistic face manipulation for fun, artistic projects, or educational purposes without needing to be a machine learning expert or buy expensive software.

How to use it?

Developers can use this project by integrating its API into their own applications or websites. For end-users, it's a straightforward web application: upload a source video/image and a target face image, then the AI handles the rest. The process typically involves preprocessing the inputs, feeding them into the trained AI model for face detection and alignment, then generating the swapped face, and finally post-processing to ensure seamless blending. So, how can you use it? You can build custom creative tools, educational platforms demonstrating AI capabilities, or even personal projects for generating unique content.
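The "detection and alignment" step is not specified in detail. A common approach (an assumption here, not confirmed for DeepFake Studio) is to detect matching facial landmarks in both images and fit a similarity transform (scale, rotation, translation) that maps one set onto the other, using Umeyama's least-squares method. The landmark coordinates in the usage example are made up; in practice they would come from a landmark detector, and the resulting transform would be used to warp the face image (for example with OpenCV's `warpAffine`).

```python
import numpy as np


def similarity_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares scale, rotation R and translation t with dst ~= scale * R @ src + t.

    src, dst: (n, d) arrays of corresponding landmark coordinates (Umeyama's method).
    """
    n, dim = src.shape
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c.T @ src_c / n                 # cross-covariance of the centered points
    U, S, Vt = np.linalg.svd(cov)
    d = np.ones(dim)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0                          # avoid a reflection
    R = U @ np.diag(d) @ Vt
    var_src = (src_c ** 2).sum() / n
    scale = (S * d).sum() / var_src
    t = mu_dst - scale * R @ mu_src
    return scale, R, t


def apply_transform(scale: float, R: np.ndarray, t: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map (n, d) points through the fitted similarity transform."""
    return scale * pts @ R.T + t


# Hypothetical landmarks: two eyes, nose tip, two mouth corners.
src_pts = np.array([[30.0, 40.0], [70.0, 40.0], [50.0, 60.0], [35.0, 80.0], [65.0, 80.0]])
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
dst_pts = 2.0 * src_pts @ R_true.T + np.array([5.0, -3.0])

scale, R, t = similarity_transform(src_pts, dst_pts)
```

A similarity transform keeps the face's proportions intact (no shearing), which is why it is a usual choice for this step before any learned model sees the pixels.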

Product Core Function

· AI-powered Face Detection and Alignment: Accurately identifies and positions faces in source and target media, ensuring proper mapping. This is valuable for maintaining realistic proportions and angles in the swap, making the final output look natural.

· Deep Learning Face Generation: Creates new facial features that blend the characteristics of the target face onto the source, enabling convincing transformations. This core function allows for the magic of face swapping to happen, transforming one person's likeness into another's.

· Seamless Blending and Post-processing: Integrates the swapped face into the original media, handling lighting, color, and texture discrepancies for a smooth, believable result. This ensures the swapped face doesn't look 'pasted on,' enhancing the realism and overall quality of the output.

· Online Accessibility and Free Usage: Provides a web-based interface that requires no installation or powerful hardware, making advanced AI accessible to a broad audience. This is incredibly valuable for hobbyists, students, and small creators who might not have the resources for traditional methods.
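One common way to avoid the "pasted on" look described under blending (an assumption, not necessarily what this project does) is feathered alpha blending: soften the binary face mask so the swapped pixels fade into the original frame near the boundary. The sketch uses only NumPy and a separable box blur; the function names are hypothetical, and real pipelines often use a Gaussian blur or Poisson blending (OpenCV's `seamlessClone`) instead.

```python
import numpy as np


def box_blur(mask: np.ndarray, radius: int) -> np.ndarray:
    """Separable box blur with edge padding; the output has the same shape as mask."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(mask, radius, mode="edge")
    # Blur each row, then each column; "valid" mode trims the padding back off.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def feather_blend(target: np.ndarray, face: np.ndarray, mask: np.ndarray, radius: int = 3) -> np.ndarray:
    """Alpha-blend face (H x W x 3) into target (H x W x 3) using a softened H x W mask."""
    alpha = np.clip(box_blur(mask.astype(float), radius), 0.0, 1.0)[..., None]  # H x W x 1
    return alpha * face + (1.0 - alpha) * target


target = np.zeros((20, 20, 3))         # stand-in for the original frame
face = np.ones((20, 20, 3))            # stand-in for the already-aligned swapped face
mask = np.zeros((20, 20))
mask[5:15, 5:15] = 1.0                 # region where the new face should appear
out = feather_blend(target, face, mask, radius=2)
```

Inside the mask the result is the new face, far outside it is the original frame, and along the border the two are mixed, which hides the hard seam.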

Product Usage Case

· Creating humorous parody videos by swapping famous faces onto movie scenes. This addresses the need for quick and accessible video editing for content creators looking to make viral content.

· Developing educational tools to explain the concepts of AI and deep learning by demonstrating real-time face manipulation. This solves the challenge of making abstract AI concepts tangible and engaging for students.

· Allowing artists to experiment with digital portraits and character design by quickly visualizing different facial identities. This provides a rapid prototyping tool for digital artists to explore creative ideas.

· Building interactive applications where users can see themselves or friends with different celebrity faces in real-time. This caters to the demand for novel and personalized entertainment experiences.

16

Sudachi Emulator: Swift Switch Simulation

Author

clarionPilot11

Description

Sudachi Emulator is a high-performance, open-source emulator designed to run Nintendo Switch games on your PC. Its core innovation lies in its highly optimized approach to simulating the Switch's complex hardware, enabling a smoother and more accessible gaming experience for enthusiasts and developers alike. This project showcases a deep understanding of low-level system architecture and clever software engineering to overcome the challenges of modern console emulation.

Popularity

Points 3

Comments 1

What is this product?

Sudachi Emulator is a software program that mimics the functionality of a Nintendo Switch gaming console on a personal computer. It achieves this by precisely recreating the Switch's internal hardware components, such as the CPU, GPU, and memory, in software. The key technical innovation is its speed and efficiency, which is accomplished through advanced techniques like highly accurate CPU reordering and instruction prediction, along with a finely tuned graphics pipeline that leverages modern PC graphics hardware. This means it can run demanding Switch games with playable frame rates, a significant technical feat in the emulation world. So, what's the benefit for you? It allows you to experience your favorite Switch games on a larger screen, with potentially better graphics and input methods, without needing the original console.
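"Recreating the CPU in software" rests on a fetch-decode-execute loop. The sketch below shows that loop for a tiny instruction set invented for illustration; it is not ARM and not Sudachi's code. Production emulators for modern consoles typically add just-in-time recompilation on top of this model for speed.

```python
def run(program, num_regs=4, max_steps=10_000):
    """Execute a toy program with a fetch-decode-execute loop and return the registers."""
    regs = [0] * num_regs
    pc = 0
    for _ in range(max_steps):
        op, *args = program[pc]  # fetch and decode
        pc += 1
        if op == "mov":          # mov rd, imm  -> rd = imm
            rd, imm = args
            regs[rd] = imm
        elif op == "add":        # add rd, rs   -> rd += rs
            rd, rs = args
            regs[rd] += regs[rs]
        elif op == "subi":       # subi rd, imm -> rd -= imm
            rd, imm = args
            regs[rd] -= imm
        elif op == "bnz":        # bnz rs, target -> branch if rs != 0
            rs, target = args
            if regs[rs] != 0:
                pc = target
        elif op == "halt":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit exceeded")


# Sums 5 + 4 + 3 + 2 + 1 into r1.
SUM_TO_FIVE = [
    ("mov", 0, 5),   # r0 = 5 (counter)
    ("mov", 1, 0),   # r1 = 0 (accumulator)
    ("add", 1, 0),   # loop: r1 += r0
    ("subi", 0, 1),  # r0 -= 1
    ("bnz", 0, 2),   # if r0 != 0, jump back to the loop
    ("halt",),
]
```

Each guest instruction costs several host operations in this style, which is why interpretation alone is rarely fast enough for demanding games.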

How to use it?

Developers can use Sudachi Emulator in several ways. Primarily, it serves as a platform for testing and debugging homebrew applications and games designed for the Nintendo Switch. By running their creations within the emulator, developers can quickly iterate and identify issues without the need for physical Switch hardware, which can be costly and time-consuming to set up. Integration typically involves compiling homebrew code into a compatible format (like NSP or XCI files) and then loading them into the emulator. Advanced users can also explore the emulator's codebase to understand emulation techniques or contribute to its development. So, what's the benefit for you? If you're a Switch homebrew developer, this drastically speeds up your development cycle and lowers the barrier to entry.

Product Core Function

· Accurate CPU Emulation: Replicates the Switch's ARM-based processor architecture with high fidelity, enabling complex game logic to run correctly. This is crucial for game compatibility. So, what's the benefit for you? Games are more likely to run as intended, without glitches or crashes caused by CPU inaccuracies.

· Optimized GPU Rendering: Simulates the Switch's Tegra GPU, translating its rendering commands into instructions that modern PC graphics cards can execute efficiently. This allows for smooth visual output. So, what's the benefit for you? You get playable frame rates and a good visual experience on your PC.

· Memory Management: Precisely mimics the Switch's RAM and storage systems, ensuring that games can access and store data as they would on the actual console. So, what's the benefit for you? It helps prevent game crashes and data corruption caused by incorrect memory handling.

· Input Device Simulation: Emulates the functionality of Joy-Cons and Pro Controllers, allowing for seamless integration with PC input devices like keyboards, mice, and gamepads. So, what's the benefit for you? You can play games using your preferred input method on your PC.

· Audio and Peripheral Emulation: Recreates the Switch's audio hardware and other essential peripherals, ensuring a complete gaming experience. So, what's the benefit for you? You get accurate sound and working console features.
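The memory-management function is described only at a high level. A common emulator technique (assumed here, not taken from Sudachi's source) is to back guest memory with a host buffer and translate every guest address through a page table, faulting on unmapped pages. The 4 KiB page size, little-endian byte order, and all class and method names below are assumptions for illustration.

```python
PAGE_BITS = 12                      # 4 KiB pages (an assumption for this sketch)
PAGE_SIZE = 1 << PAGE_BITS
PAGE_MASK = PAGE_SIZE - 1


class GuestFault(Exception):
    """Raised when the guest touches an unmapped or invalid address."""


class GuestMemory:
    """Guest virtual memory backed by one host bytearray and a page table."""

    def __init__(self, host_size: int):
        self.host = bytearray(host_size)
        self.page_table: dict[int, int] = {}  # guest page number -> host byte offset

    def map_page(self, guest_addr: int, host_offset: int) -> None:
        if guest_addr & PAGE_MASK or host_offset & PAGE_MASK:
            raise ValueError("addresses must be page-aligned")
        if host_offset + PAGE_SIZE > len(self.host):
            raise ValueError("host offset out of range")
        self.page_table[guest_addr >> PAGE_BITS] = host_offset

    def _translate(self, guest_addr: int, size: int) -> int:
        offset_in_page = guest_addr & PAGE_MASK
        if offset_in_page + size > PAGE_SIZE:
            # Simplification: accesses that straddle two pages are rejected.
            raise GuestFault(f"access at {guest_addr:#x} crosses a page boundary")
        host_base = self.page_table.get(guest_addr >> PAGE_BITS)
        if host_base is None:
            raise GuestFault(f"unmapped guest address {guest_addr:#x}")
        return host_base + offset_in_page

    def write_u32(self, guest_addr: int, value: int) -> None:
        off = self._translate(guest_addr, 4)
        self.host[off:off + 4] = (value & 0xFFFFFFFF).to_bytes(4, "little")

    def read_u32(self, guest_addr: int) -> int:
        off = self._translate(guest_addr, 4)
        return int.from_bytes(self.host[off:off + 4], "little")


mem = GuestMemory(host_size=2 * PAGE_SIZE)
mem.map_page(0x8000_0000, PAGE_SIZE)  # hypothetical guest page -> second host page
mem.write_u32(0x8000_0010, 0xDEADBEEF)
```

Turning unmapped accesses into an explicit fault, rather than reading stray host memory, is what lets an emulator report a guest bug instead of silently corrupting data.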

Product Usage Case

· Game Development Testing: A developer creating a new indie game for the Switch can use Sudachi Emulator to test their game's performance and identify bugs on their PC before deploying it to actual hardware. This saves significant time and resources. So, what's the benefit for you? Faster and more stable game releases.

· Homebrew Application Debugging: A user who has developed a custom application or mod for the Switch can use the emulator to debug their code and ensure it functions correctly in a simulated Switch environment. So, what's the benefit for you? Easier to create and test custom software for the Switch.

· Gaming Enthusiast Exploration: Individuals who want to revisit or experience Switch games without owning the console can use the emulator to play their favorite titles on their PC, provided they legally own the games. So, what's the benefit for you? Access to a wider library of games on your existing hardware.

· Technical Deep Dive for Students: Computer science students interested in low-level systems, operating systems, or reverse engineering can study the emulator's codebase to learn how complex hardware is simulated in software. So, what's the benefit for you? A practical learning resource to understand system architecture and emulation.

17

CommentOverlay ThumbGen

Author

dotspencer

Description