Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-11-01

SagaSu777 2025-11-02

Explore the hottest developer projects on Show HN for 2025-11-01. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN offerings are a vibrant testament to the hacker spirit, showcasing a relentless pursuit of elegant solutions to complex problems. The overwhelming trend towards leveraging AI, particularly LLMs, signifies a paradigm shift. Developers are not just building applications; they are exploring how to make AI an integral part of the development process itself, moving from writing explicit code to orchestrating AI agents for creation and problem-solving. This opens up immense possibilities for innovators: imagine entire application frameworks that adapt and evolve based on user feedback, or debugging tools that converse with your logs to pinpoint issues. The rise of specialized developer tools, from enhanced JSON formats to security scanners that catch critical leaks, highlights a deep understanding of developer pain points and a commitment to streamlining workflows. For entrepreneurs, this means opportunities to build platforms that empower developers, automate complex tasks, and unlock new forms of intelligence. Furthermore, the exploration of novel database architectures like log-native systems and event sourcing points towards a future where data management is more resilient, scalable, and real-time. The innovation spans from deeply technical domains like compression for embedded systems to user-facing applications like AR games and interactive maps. The overarching message is clear: the best solutions often emerge from those who deeply understand a problem and are willing to experiment with cutting-edge technologies to solve it. Embrace the experimental, learn from failures, and continue to push the boundaries of what's technically possible.

Today's Hottest Product

Name

Show HN: Why write code if the LLM can just do the thing? (web app experiment)

Highlight

This project explores the audacious idea of replacing traditional code logic with Large Language Models (LLMs). The developer built a contact manager where every HTTP request is routed to an LLM, armed with tools like a SQLite database, web response generation (HTML/JSON/JS), and memory updates. The LLM dynamically designs schemas, generates UIs on the fly, and adapts based on natural language feedback. While currently slow and expensive, it demonstrates a radical shift in how applications can be built—moving from explicit programming to AI-driven dynamic creation. Developers can learn about prompt engineering for complex tasks, tool integration with LLMs, and the potential of AI to abstract away boilerplate code.

Popular Category

AI/ML

Developer Tools

Databases

Web Development

Productivity

Popular Keyword

LLM

AI

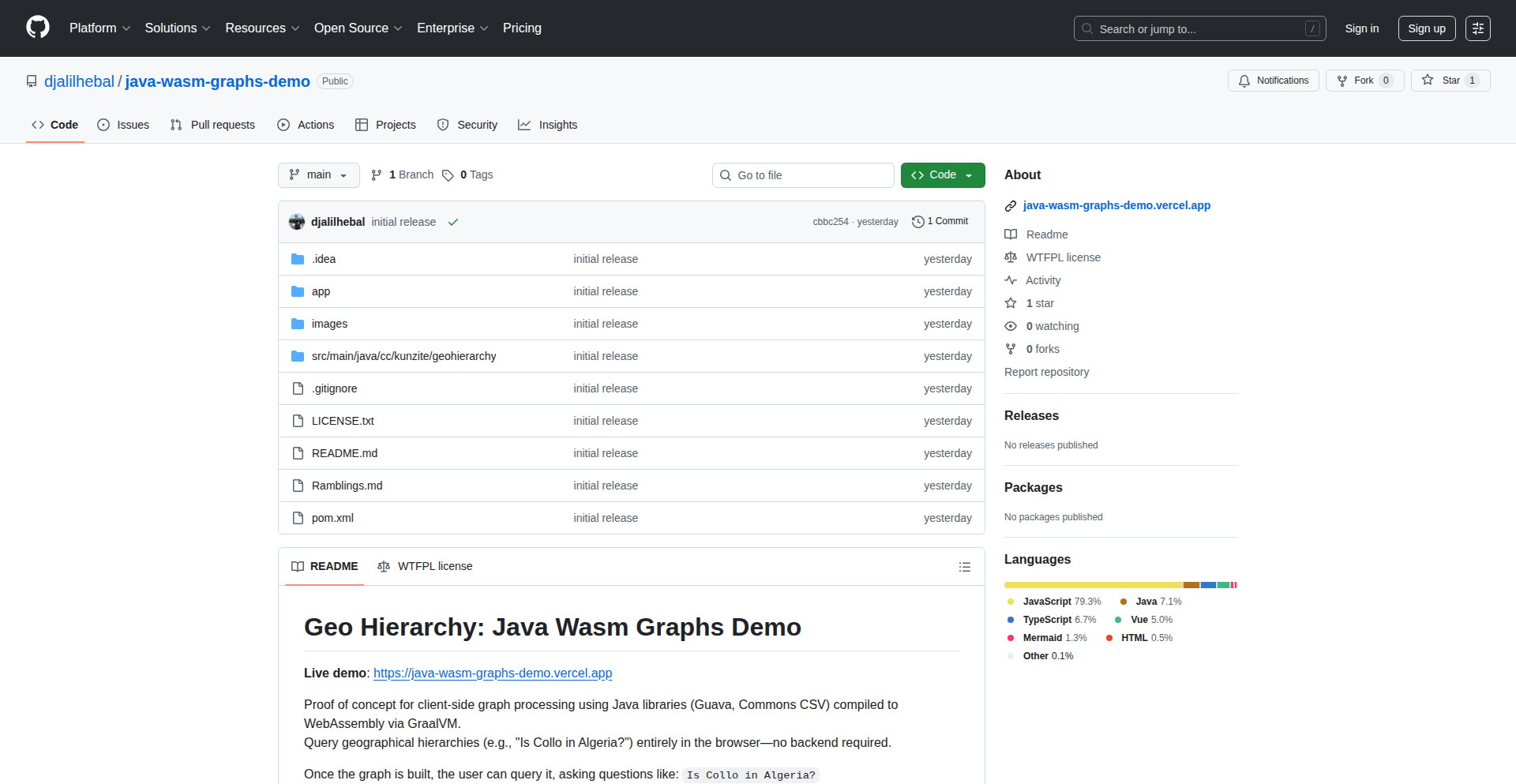

Rust

WebAssembly

Observability

Database

Compression

Go

GitOps

Technology Trends

AI-driven application development

Enhanced data formats and serialization

Security scanning for exposed secrets

Conversational interfaces for complex systems

Log-native databases

Efficient data compression for embedded systems

AI for creative content generation

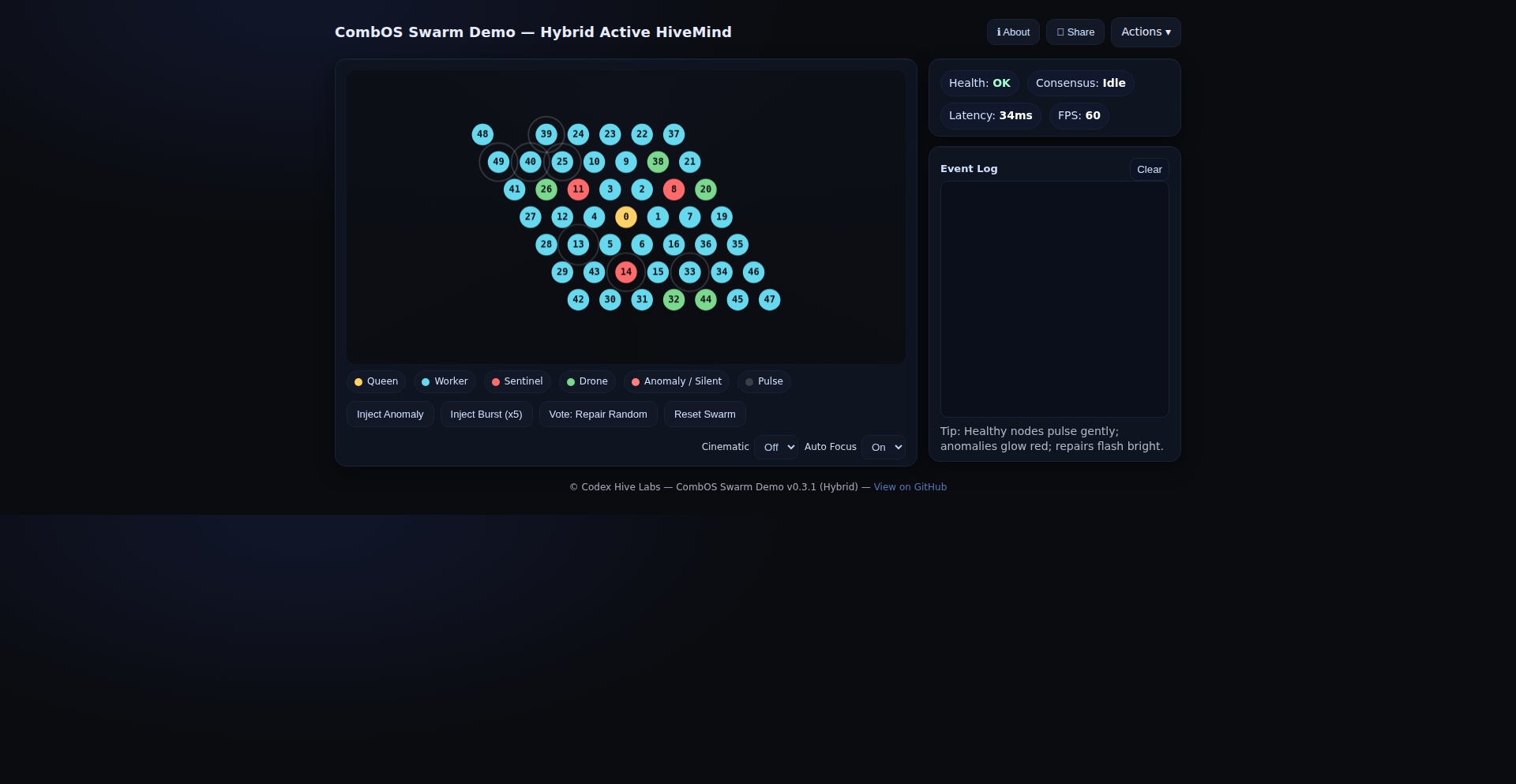

Decentralized and swarm operating systems

Event-sourcing databases

WebAssembly for in-browser computations

Automated infrastructure management

Remote collaboration tools

Augmented Reality (AR) applications

Open-source tooling and community contributions

Project Category Distribution

AI/ML (15%)

Developer Tools (25%)

Databases/Data Management (15%)

Web Development/Frameworks (10%)

Productivity Tools (10%)

Embedded Systems (5%)

Gaming/Entertainment (5%)

Security (5%)

Infrastructure/DevOps (5%)

Other (10%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | LLM-Powered Dynamic Web App Engine | 333 | 232 |
| 2 | DuperLang | 21 | 11 |
| 3 | SecretScannerJS | 19 | 7 |
| 4 | AI Operator from Hell: Autonomous AI Sysadmin & Storyteller | 16 | 3 |
| 5 | GeoNewsMapper | 17 | 0 |
| 6 | ChatOps Log Interpreter | 9 | 4 |
| 7 | FontHunter.js | 12 | 0 |
| 8 | UnisonDB: Log-Native Data Fabric | 11 | 0 |
| 9 | Micro-RLE | 7 | 2 |
| 10 | Stockfish 960v2 Orchestrator | 3 | 4 |

1

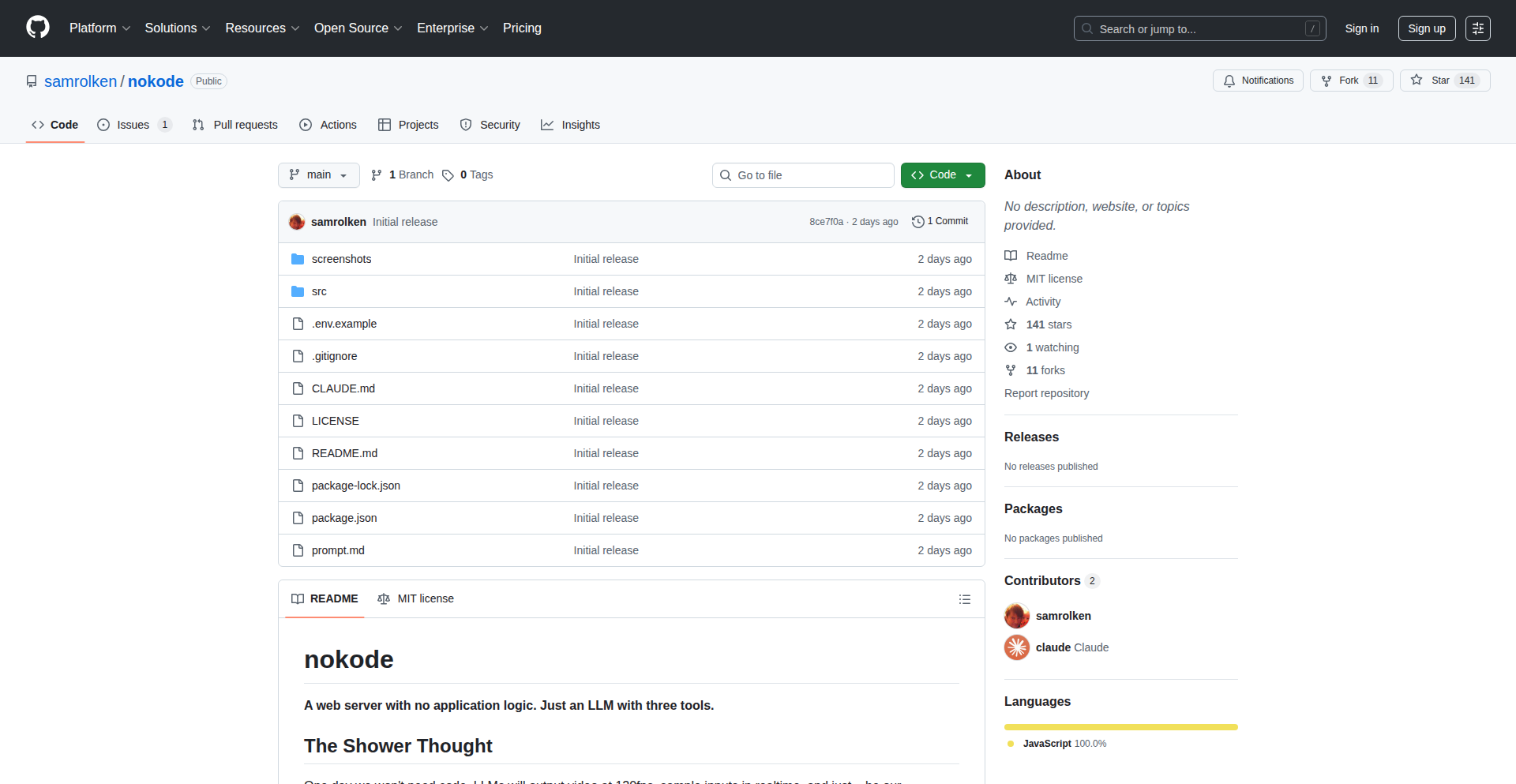

LLM-Powered Dynamic Web App Engine

Author

samrolken

Description

This project is an experimental web application that uses a Large Language Model (LLM) to directly handle HTTP requests, acting as a replacement for traditional routing, controllers, and business logic. Instead of developers writing explicit code for these components, the LLM dynamically designs database schemas, generates user interfaces from URL paths, and adapts based on natural language feedback. It demonstrates the potential of AI to directly execute tasks without generating intermediate code, solving the problem of repetitive coding for certain web functionalities.

Popularity

Points 333

Comments 232

What is this product?

This project is a proof-of-concept web application where an LLM directly interprets and executes web requests. Instead of developers writing specific code for how a website should behave (like defining database structures or creating user interface elements), the LLM receives the request and, using a set of pre-defined tools (like interacting with a SQLite database, sending web responses in HTML/JSON/JS, or updating its internal memory with feedback), figures out what to do. For example, on the first request to a new data endpoint, the LLM might invent the database table structure itself, and then generate a user interface to interact with it. It's an exploration into whether AI can 'do the thing' directly, rather than just generating code that does the thing. The innovation lies in bypassing traditional code-based application architecture for AI-driven execution.

How to use it?

Developers can leverage this project by understanding its underlying principle: defining a set of available tools and allowing an LLM to orchestrate them in response to user requests. While this specific implementation is experimental and slow, the concept could be integrated into development workflows by providing a framework where an LLM acts as an intelligent middleware. For instance, it could be used to rapidly prototype interfaces for internal tools or to handle simple data management tasks where the exact UI and data structure might evolve quickly based on user feedback. The core idea is to 'plug' an LLM into an application's request-response cycle, giving it the power to decide how to fulfill the request using provided capabilities, rather than explicit instructions.
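
The request-to-tool loop described above can be sketched roughly as follows. This is a minimal illustration, not the author's implementation: the tool names (`database`, `updateMemory`, `webResponse`), the `handle_request` function, and the scripted `stub_llm` that stands in for a real model call are all assumptions made for the example.

```python
import json
import sqlite3
from typing import Callable, Optional

# Hypothetical tool set mirroring the capabilities the post describes:
# a SQLite database, a memory store for feedback, and a web response.
db = sqlite3.connect(":memory:")
memory = []


def tool_database(sql: str, params: Optional[list] = None) -> list:
    """Run one SQL statement against SQLite and return any rows."""
    cur = db.execute(sql, params or [])
    db.commit()
    return cur.fetchall()


def tool_update_memory(note: str) -> str:
    """Store natural-language feedback so later requests can use it."""
    memory.append(note)
    return "ok"


TOOLS = {"database": tool_database, "updateMemory": tool_update_memory}


def stub_llm(method: str, path: str, history: list) -> dict:
    """Stand-in for a real model call: returns a scripted next action."""
    if len(history) == 0:
        # First step: "invent" a schema for the requested resource.
        sql = "CREATE TABLE IF NOT EXISTS contacts (id INTEGER PRIMARY KEY, name TEXT)"
        return {"tool": "database", "args": {"sql": sql}}
    if len(history) == 1:
        return {"tool": "database", "args": {"sql": "SELECT id, name FROM contacts"}}
    # Final step: turn the last tool result into an HTTP response body.
    rows = history[-1]["result"]
    body = json.dumps({"contacts": rows})
    return {"tool": "webResponse", "args": {"status": 200, "body": body}}


def handle_request(method: str, path: str, llm: Callable = stub_llm, max_steps: int = 8):
    """Let the model pick tools until it emits a web response."""
    history = []
    for _ in range(max_steps):
        action = llm(method, path, history)
        if action["tool"] == "webResponse":
            return action["args"]["status"], action["args"]["body"]
        result = TOOLS[action["tool"]](**action["args"])
        history.append({"action": action, "result": result})
    return 500, "step limit reached"


status, body = handle_request("GET", "/contacts")
```

The step limit is a deliberate design choice in this sketch: because the model decides when to stop, a cap keeps a confused model from looping indefinitely on a single request.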

Product Core Function

· Dynamic Schema Generation: The LLM automatically designs database schemas (e.g., for SQLite) based on initial requests, eliminating the need for manual database design for new data types. This is valuable for rapid prototyping and when data needs are fluid.

· AI-Driven UI Generation: User interfaces are generated dynamically from URL paths alone, enabling quick creation of interactive forms and data views without frontend coding. This is useful for building simple dashboards or data entry forms on the fly.

· Natural Language Feedback Adaptation: The application evolves based on natural language feedback, allowing users to instruct the system to modify its behavior or data handling without code changes. This provides an intuitive way for non-technical users to influence application functionality.

· LLM-orchestrated Tool Execution: The LLM intelligently selects and uses pre-defined tools (database, web response, memory update) to fulfill requests, demonstrating a novel approach to application logic execution.

· Direct Request-to-Execution: Eliminates the traditional layers of routes, controllers, and business logic by having the LLM directly interpret and act upon HTTP requests.

Product Usage Case

· Rapid Prototyping of Data Management Tools: Imagine needing a quick way to track project tasks. Instead of writing CRUD operations and UI code, you could point this system at a 'tasks' endpoint. The LLM would create a task table, generate a form to add tasks, and a list view to see them, all based on the URL. This solves the problem of slow development cycles for internal tools.

· AI-powered Forms and Data Entry: For scenarios where data fields might change frequently, this system could generate dynamic forms. A user might submit a request for a 'customer feedback' form. The LLM could create a form, collect responses, and store them, adapting as users request new fields like 'satisfaction score' or 'product tested'.

· Interactive API Endpoints: Instead of defining rigid API schemas, this experiment suggests an LLM could generate dynamic JSON responses based on natural language queries. A request like 'get recent customer orders' might result in the LLM creating the necessary database query and returning the relevant JSON, providing flexibility for evolving data access needs.

· Personalized Content Delivery: An LLM could potentially generate personalized web content or responses based on user interaction history and direct feedback, offering a more adaptive and engaging user experience than static web pages.

2

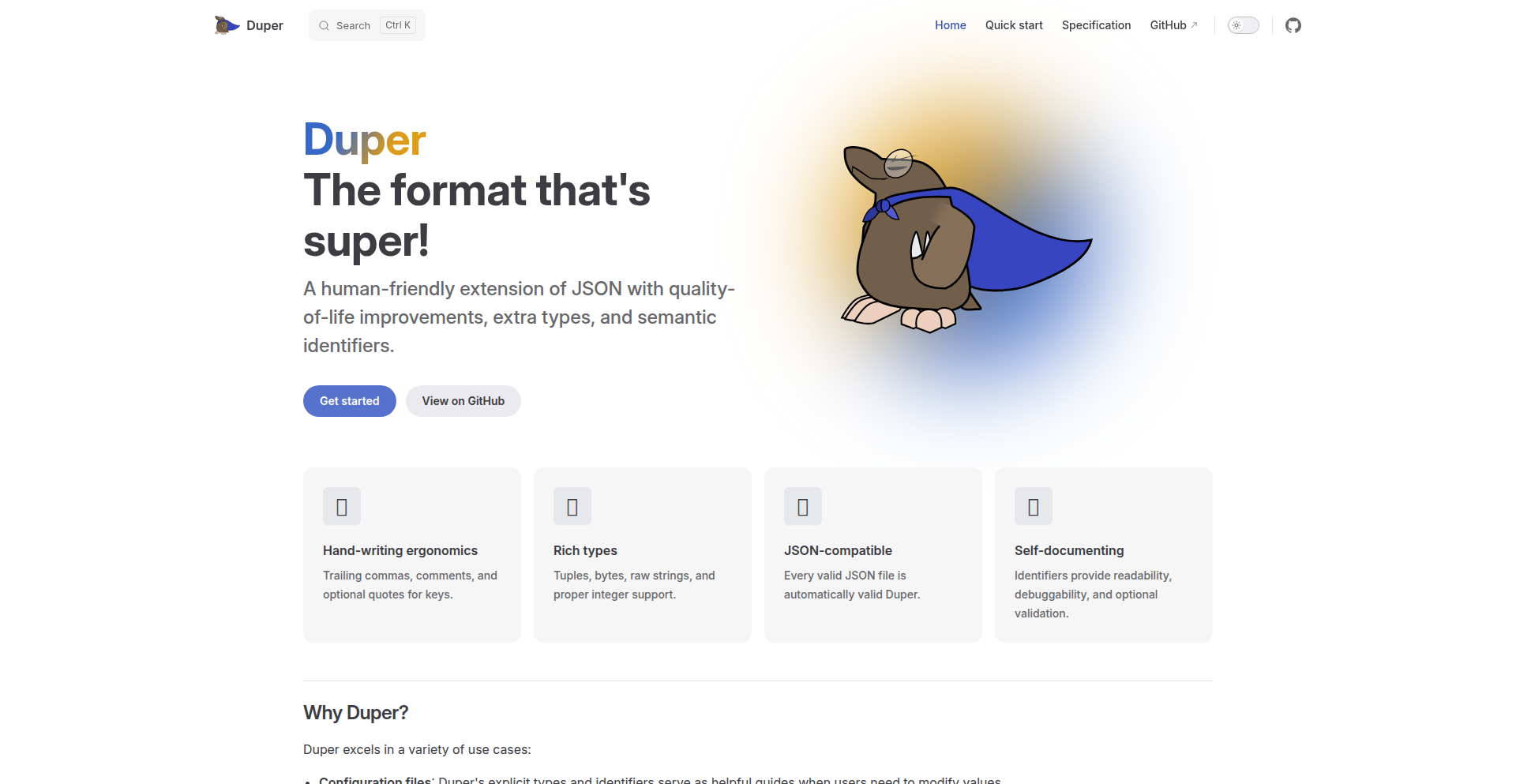

DuperLang

Author

epiceric

Description

DuperLang is a human-friendly data serialization format built on top of JSON. It enhances JSON with features like comments, trailing commas, unquoted keys, and richer data types such as tuples, bytes, and raw strings. It also introduces semantic identifiers, akin to type annotations, to make data structures more understandable and maintainable. The core innovation lies in making configuration files and data exchange more pleasant and less error-prone for developers who frequently work with text-based data formats, offering a cleaner and more expressive alternative to standard JSON.

Popularity

Points 21

Comments 11

What is this product?

DuperLang is essentially an upgrade to JSON, designed to be easier for humans to read and write. Think of it as a more expressive and forgiving version of JSON. The core idea is to solve the common frustrations developers encounter when editing JSON, such as the inability to add comments explaining what data means, or the strictness around quoting keys and allowing trailing commas. DuperLang also adds advanced features like tuples (ordered lists that can be distinguished from regular arrays) and byte strings, making it more versatile for various data representation needs. The 'semantic identifiers' are like labels that tell you what a piece of data represents, making your data structures self-documenting and easier to grasp. So, it's about making data handling more efficient and less tedious for developers.
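
To make the frustrations above concrete, the short check below feeds standard JSON parsing the three conveniences the post mentions (comments, trailing commas, unquoted keys). It uses only Python's built-in `json` module and does not call DuperLang itself; the sample strings and the `parses_as_json` helper are made up for illustration.

```python
import json


def parses_as_json(text: str) -> bool:
    """Return True if the text is accepted by a strict JSON parser."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


samples = {
    "strict": '{"name": "demo", "ports": [80, 443]}',
    "comment": '{\n  // service name\n  "name": "demo"\n}',
    "trailing_comma": '{"ports": [80, 443,]}',
    "unquoted_key": '{name: "demo"}',
}

# Only the strict sample is valid JSON; the other three are rejected.
results = {label: parses_as_json(text) for label, text in samples.items()}
```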

How to use it?

Developers can integrate DuperLang into their workflows by using the provided tools. The project is built in Rust, with official bindings for Python, allowing you to parse and serialize DuperLang data directly within your Python applications. For front-end or other WebAssembly-enabled environments, there are WebAssembly bindings. Furthermore, VSCode has syntax highlighting support, making it comfortable to edit DuperLang files directly in your editor. This means you can use DuperLang for configuration files, data exchange between services, or any scenario where you'd typically use JSON, but want a more developer-friendly experience. It's like having a supercharged JSON that's easier to work with, ultimately saving you time and reducing the likelihood of syntax errors.

Product Core Function

· Human-readable comments: Allows developers to add explanatory notes within data files, making complex configurations easier to understand and maintain. This is valuable for team collaboration and future debugging.

· Trailing commas: Enables developers to add commas after the last element in arrays or objects, simplifying editing and preventing common syntax errors. This streamlines the development process.

· Unquoted keys: Permits keys in data objects to be written without quotation marks, further enhancing readability and reducing typing. This makes data files cleaner and quicker to write.

· Extended data types (tuples, bytes, raw strings): Provides more expressive ways to represent data beyond standard JSON types, catering to specific programming needs. This offers greater flexibility in data modeling.

· Semantic identifiers: Acts like type annotations for data, making data structures more self-describing and easier to interpret. This improves code clarity and reduces ambiguity.

· Rust implementation with bindings for Python and WebAssembly: Offers performant parsing and serialization capabilities accessible across multiple programming environments. This ensures broad applicability and efficiency.

· VSCode syntax highlighting: Provides a more comfortable editing experience for DuperLang files, making structure and mistakes easier to spot at a glance. This boosts productivity and reduces errors.

Product Usage Case

· Using DuperLang for application configuration files: Developers can create more readable and maintainable configuration files for their applications, including detailed comments and a more flexible syntax, which is particularly useful for large or complex configurations. This means less time spent deciphering cryptic settings and more time building features.

· Inter-service data exchange with enhanced clarity: When different microservices need to exchange data, DuperLang can be used to serialize this data, making the exchanged information easier to understand for developers inspecting the communication. This helps in debugging distributed systems and understanding data flow.

· Storing application state or user preferences: For applications that need to save and load settings or state, DuperLang's readability and expressiveness make it a superior choice over plain JSON, especially when dealing with structured data that benefits from annotations. This leads to more robust and easier-to-manage application data.

· Prototyping data structures for APIs or databases: Developers can quickly define and iterate on data structures using DuperLang's more flexible syntax before committing to a final schema, accelerating the prototyping phase. This speeds up the development cycle by allowing for rapid experimentation with data formats.

3

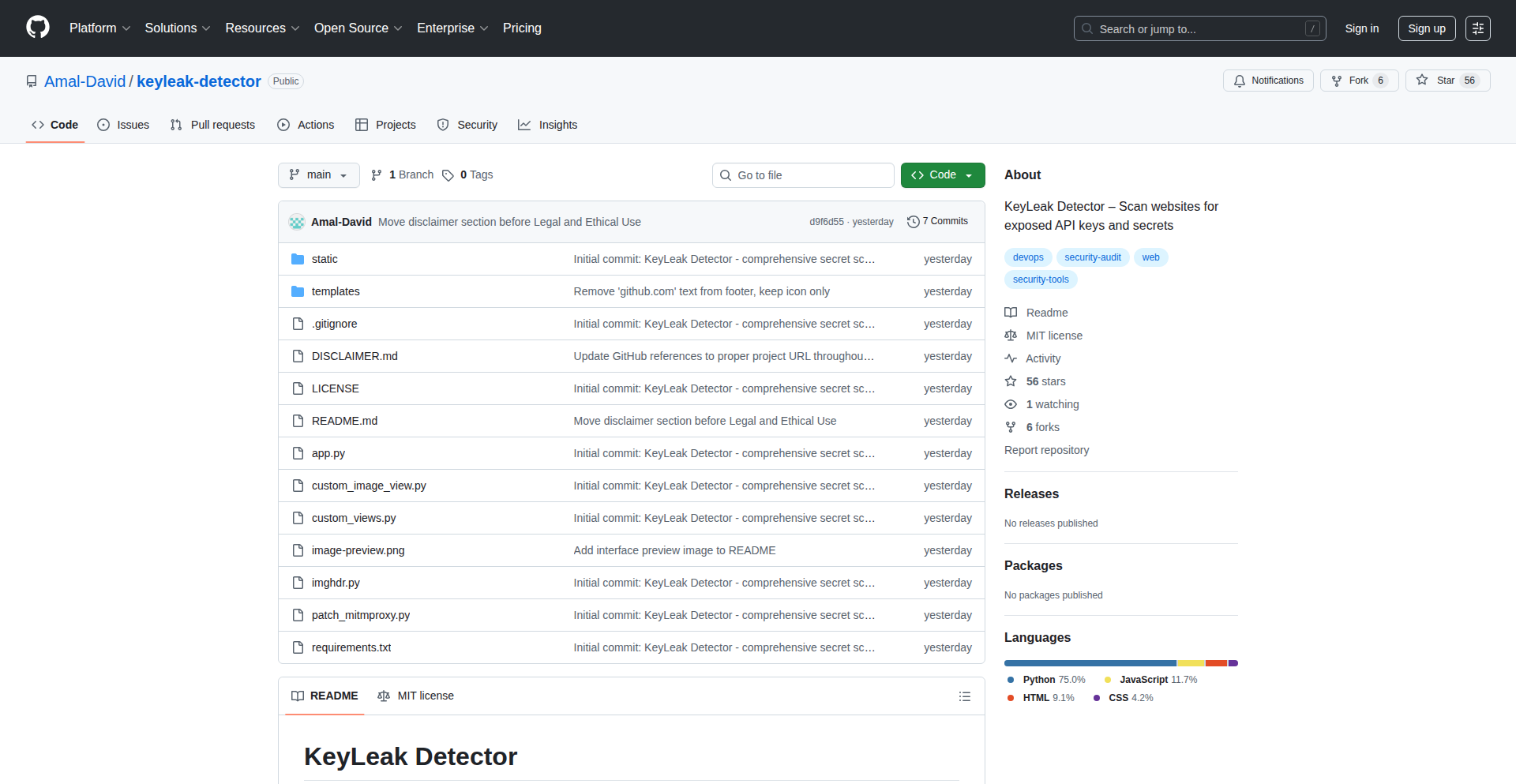

SecretScannerJS

Author

amaldavid

Description

SecretScannerJS is a website scanner designed to automatically detect accidentally exposed API keys and sensitive secrets within your frontend code. It tackles the common issue of developers inadvertently embedding credentials like AWS keys, Stripe tokens, or LLM API keys directly into their web applications, making them visible to anyone inspecting the site. This tool acts as a crucial pre-deployment sanity check, helping to prevent security breaches by identifying these vulnerabilities before they go live.

Popularity

Points 19

Comments 7

What is this product?

SecretScannerJS is a tool that uses a headless browser, which is like a web browser that runs in the background without a graphical interface, to crawl your website. While it's browsing, it intercepts all network requests and analyzes the content for over 50 different types of sensitive data, such as AWS and Google API keys, Stripe payment tokens, database connection strings, and API keys for large language models like OpenAI and Claude. The innovation lies in its automated approach to finding these common, yet critical, security oversights that can easily slip through during rapid development. It's a safety net to catch secrets that shouldn't be out in the open.
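
The detection step can be approximated as pattern matching over each intercepted response body. The sketch below is an assumption about how such a scanner might work, not SecretScannerJS's actual rule set: the three regular expressions, the `scan_body` function, and the example URL are illustrative, and a real tool would cover many more secret types and obtain the bodies from a headless browser.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "stripe_secret_key": re.compile(r"\bsk_(?:live|test)_[0-9a-zA-Z]{16,}\b"),
    "jwt": re.compile(r"\beyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+"),
}


def scan_body(url: str, body: str) -> list:
    """Return one finding per pattern match in a response body."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(body):
            findings.append({
                "url": url,
                "type": name,
                # Only keep a short prefix so reports do not re-leak the secret.
                "preview": match.group()[:8] + "...",
            })
    return findings


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample_js = 'const cfg = { accessKeyId: "AKIAIOSFODNN7EXAMPLE" };'
findings = scan_body("https://example.test/app.js", sample_js)
```

Pattern-based detection like this trades recall for speed: it is cheap enough to run on every response, but it can miss secrets with no recognizable prefix and can flag look-alike strings.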

How to use it?

Developers can integrate SecretScannerJS into their development workflow, typically by running it on their staging or pre-production environments before deploying to live. You can point it at your website's URL, and it will systematically scan each page. For existing sites, it can be used as an audit tool. The process involves setting up the tool and running it against your target URLs. It's designed to be quick, taking around 30 seconds per page, providing rapid feedback on potential security risks. Think of it as an automated security guard for your web applications.

Product Core Function

· Automated Secret Detection: Identifies over 50 types of exposed secrets, including API keys for cloud providers (AWS, Google), payment gateways (Stripe), and AI services (OpenAI, Claude), as well as database connection strings and JWT tokens. This helps prevent unauthorized access and data breaches by finding sensitive information that shouldn't be publicly visible.

· Headless Browser Crawling: Utilizes a headless browser to simulate a real user accessing your site, ensuring a comprehensive scan of dynamic content and client-side logic. This means it can find secrets embedded within JavaScript that might be missed by simpler tools.

· Network Interception: Monitors all network requests made by the website during the scan to capture secrets transmitted or loaded. This is crucial because many secrets are fetched via API calls, and intercepting these requests is key to finding them.

· Pre-deployment Sanity Check: Acts as a final security gate before releasing code to production, reducing the risk of costly data leaks and reputational damage. It's a quick way to confirm that no critical credentials have been accidentally exposed.

· MIT Licensed: Freely available for use and modification under the MIT license, encouraging widespread adoption and contribution within the developer community. This allows anyone to use the tool to secure their projects without licensing costs.

Product Usage Case

· A startup building a new e-commerce platform accidentally commits their Stripe API keys to their frontend JavaScript. Before deploying to production, they run SecretScannerJS against their staging environment. The tool immediately flags the exposed Stripe keys, preventing potential fraudulent transactions and protecting sensitive customer data. This saves them from a potential financial and reputational crisis.

· A SaaS company develops a complex web application that integrates with various third-party services, each requiring API keys. During a rapid feature development cycle, an AWS access key is mistakenly included in a public JavaScript file. SecretScannerJS is run as part of the CI/CD pipeline on the staging branch. It detects the exposed AWS key, triggering an alert that stops the deployment, thus averting a security vulnerability where an attacker could gain unauthorized access to their cloud resources.

· A developer refactoring an older project wants to ensure no legacy secrets are still present in the codebase. They use SecretScannerJS to scan their live application's URLs. The scanner identifies an old, unused database connection string still present in a client-side script. This discovery helps them clean up the codebase, reducing the attack surface and improving overall security posture, even for existing and less actively developed applications.

4

AI Operator from Hell: Autonomous AI Sysadmin & Storyteller

Author

aiofh

Description

This project presents an autonomous AI system designed to act as a sysadmin, capable of writing technical stories. Its core innovation lies in the AI's ability to not only perform system administration tasks but also to articulate these processes and insights into engaging technical narratives, showcasing a novel approach to AI-driven documentation and operational analysis.

Popularity

Points 16

Comments 3

What is this product?

This is an AI system that acts as an autonomous system administrator. Instead of just performing tasks, it's designed to observe, learn, and then creatively write technical stories about its operations, insights, and challenges. The technical innovation here is in combining sophisticated AI for task execution and monitoring with advanced natural language generation to produce coherent and informative technical content, effectively turning raw operational data into understandable narratives. This means you get both automated system management and automatically generated, insightful technical documentation.

How to use it?

Developers and sysadmins can integrate this AI operator into their infrastructure. It can be deployed to monitor servers, manage services, and respond to incidents. As it performs these duties, it autonomously generates reports, troubleshooting guides, or even creative technical articles based on its experiences. This can be used for real-time incident analysis, automated knowledge base creation, or for generating content for technical blogs and documentation. For example, if it successfully resolves a complex network issue, it can automatically write a detailed technical post explaining the problem, the steps taken, and the solution, which is immediately useful for team learning and future reference.

Product Core Function

· Autonomous System Monitoring and Management: The AI actively oversees system health, performance, and security, taking proactive or reactive measures to ensure stability. This provides continuous operational oversight and automated issue resolution, reducing manual effort and downtime.

· Intelligent Incident Response: The AI can detect anomalies, diagnose root causes, and implement corrective actions for system failures or security breaches. This accelerates problem-solving and minimizes the impact of disruptions.

· Automated Technical Storytelling: The AI generates human-readable technical narratives based on its operational activities, insights, and learned patterns. This transforms complex technical data into understandable stories, ideal for documentation, training, or knowledge sharing.

· Adaptive Learning and Optimization: The AI learns from its operational experiences to improve its decision-making and task execution over time. This ensures continuous improvement of system performance and administrative efficiency.

· Natural Language Generation for Technical Content: The system leverages advanced NLP to create detailed and engaging technical content, such as post-mortems, troubleshooting guides, or architectural explanations. This automates the often tedious process of technical writing and ensures consistent, high-quality documentation.

Product Usage Case

· Scenario: A developer deploys the AI operator on a cloud server farm experiencing intermittent performance degradation. The AI autonomously monitors resource utilization, identifies a misconfigured database connection pool as the bottleneck, and automatically writes a detailed technical story explaining the symptoms, the diagnostic process, the fix implemented, and the performance improvement achieved. This story can be immediately published to the team's internal wiki, saving hours of manual investigation and report writing.

· Scenario: A security operations center uses the AI to monitor for potential threats. When a suspicious login attempt is detected, the AI not only blocks the IP and triggers an alert but also generates a concise technical narrative describing the event, the threat vector identified, and the mitigation steps, suitable for an executive summary or a security incident report. This allows for faster communication of security events and their resolutions.

· Scenario: A startup's engineering team is struggling to document their evolving microservice architecture. They deploy the AI operator to observe service interactions and deployments. The AI continuously generates updated documentation in the form of technical stories, explaining how different services communicate, the reasoning behind recent deployment changes, and potential areas for optimization, making architectural knowledge readily accessible.

5

GeoNewsMapper

Author

marinoluca

Description

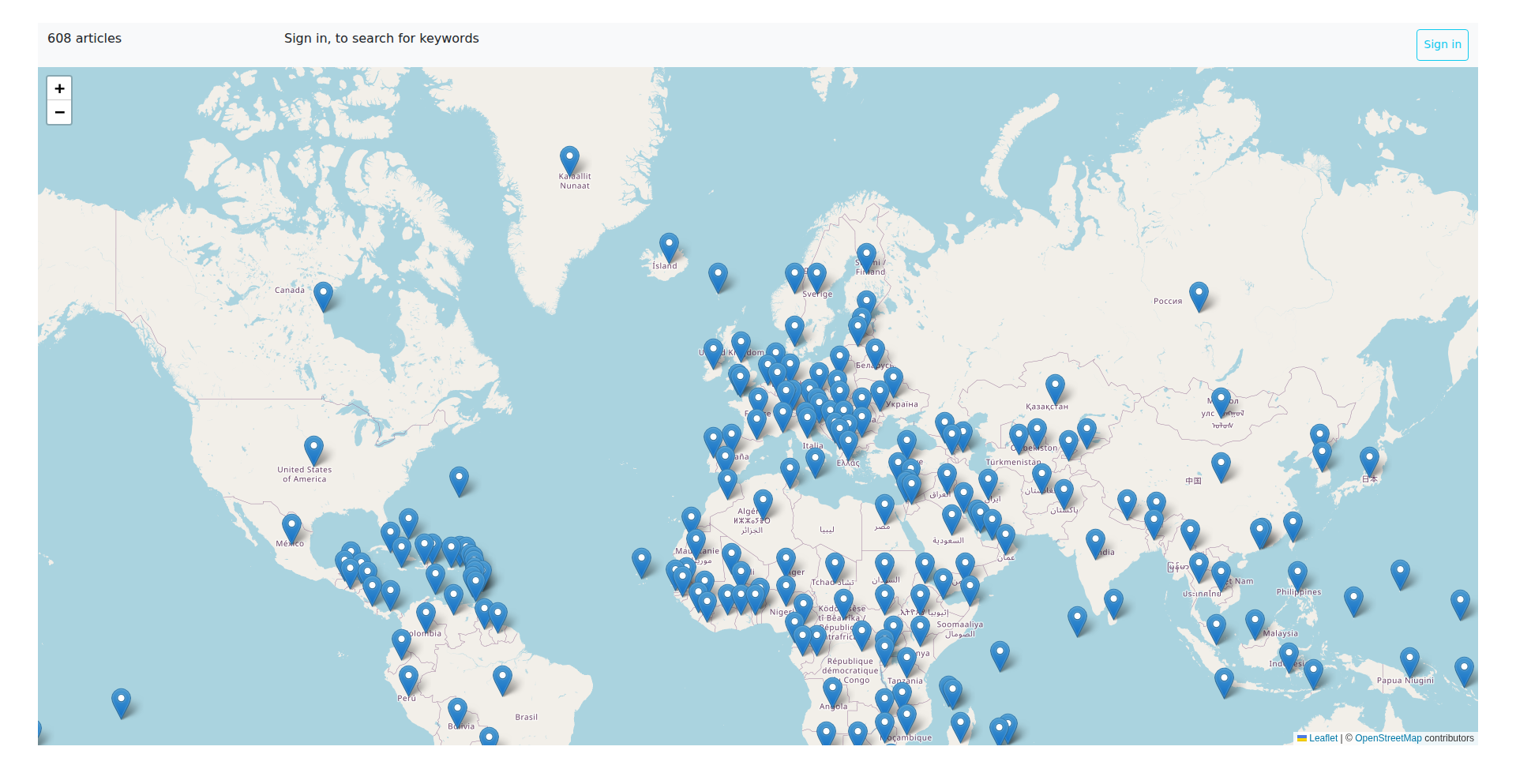

GeoNewsMapper is a real-time news visualization tool that plots articles on an interactive world map. It leverages natural language processing (NLP) to extract geographical locations from news headlines and content, then visualizes the density and topics of news coverage across different regions. This offers a unique way to understand global events and news trends from a spatial perspective, helping users quickly grasp the 'where' and 'what' of world news.

Popularity

Points 17

Comments 0

What is this product?

GeoNewsMapper is a web-based application that transforms news into a visual representation on a world map. It works by taking news articles, using sophisticated text analysis techniques (like Named Entity Recognition or NER) to identify any mentions of places – cities, countries, regions. Once these locations are identified, the system plots them on an interactive map. Different colors or sizes might represent different news categories or the volume of news from a specific area. The innovation lies in its ability to provide an immediate, spatial understanding of news flow, helping to uncover patterns or biases in global reporting that might be missed in traditional text-based news feeds. So, what's in it for you? It allows you to instantly see where the world's attention is focused in terms of news, offering a unique, visual insight into global events.

How to use it?

Developers can integrate GeoNewsMapper into their own applications or use it as a standalone tool. The core functionality likely involves an API that accepts news sources (e.g., RSS feeds, specific news APIs) or individual articles. The API then returns structured data including the article's title, a snippet, and the identified geographical locations. For integration, a frontend developer could use this data to render markers on a map library like Leaflet or Mapbox GL JS. Backend developers could build systems that continuously feed news into GeoNewsMapper for ongoing monitoring. For instance, a research institution might feed it global news to track reporting on climate change in specific regions, or a journalist might use it to discover trending news stories geographically. So, how can you use it? You can embed this visual mapping of news into your website or dashboard to give your users a dynamic overview of global news trends.
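
As a rough illustration of the extract-then-plot pipeline, the sketch below swaps full Named Entity Recognition for a tiny hand-written gazetteer lookup. Everything in it is assumed for the example: the `GAZETTEER` entries (with approximate coordinates), the `locate` and `hotspot_counts` functions, and the sample headlines. It is not GeoNewsMapper's API.

```python
import re
from collections import Counter

# Tiny hypothetical gazetteer: place name -> approximate (lat, lon).
GAZETTEER = {
    "Tokyo": (35.68, 139.65),
    "Nairobi": (-1.29, 36.82),
    "Brazil": (-14.24, -51.93),
}

# One alternation over all known place names, matched on word boundaries.
PLACE_RE = re.compile(r"\b(" + "|".join(map(re.escape, GAZETTEER)) + r")\b")


def locate(headline: str) -> list:
    """Return one map marker per distinct known place in a headline."""
    places = dict.fromkeys(PLACE_RE.findall(headline))  # de-duplicate, keep order
    return [
        {"place": p, "lat": GAZETTEER[p][0], "lon": GAZETTEER[p][1]}
        for p in places
    ]


def hotspot_counts(headlines: list) -> Counter:
    """Count how many headlines mention each place (marker size on a map)."""
    counts = Counter()
    for headline in headlines:
        for marker in locate(headline):
            counts[marker["place"]] += 1
    return counts


headlines = [
    "Tokyo markets rally as Brazil exports rise",
    "Floods hit Nairobi",
    "Tokyo hosts summit",
]
counts = hotspot_counts(headlines)
```

The marker dictionaries returned by `locate` have the shape a map library such as Leaflet would need (a label plus latitude and longitude), and the counts could drive marker size or color.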

Product Core Function

· Geographical News Extraction: Identifies and extracts place names from news articles using NLP techniques, enabling the localization of news. Its value is in making abstract news concrete and spatially relevant. This is useful for understanding the physical distribution of news coverage.

· Interactive World Map Visualization: Plots extracted news locations on a dynamic, zoomable world map, providing a visual overview of news hotspots. The value here is immediate comprehension of global news patterns. This is useful for quickly spotting where major events are being reported.

· News Topic Categorization (Implied): Likely categorizes news by topic (e.g., politics, sports, disaster) and potentially uses this to color-code or cluster points on the map, adding another layer of analytical depth. Its value is in differentiating news types across regions. This is useful for targeted analysis of specific event types.

· Real-time Data Processing: Processes incoming news feeds in near real-time to keep the map up-to-date with current events. The value is in providing timely information. This is useful for staying on top of breaking news and ongoing situations.

· API for Integration: Offers an API for developers to programmatically access the geographical news data, allowing for custom applications and visualizations. Its value is in enabling extensibility and customization. This is useful for building specialized news analysis tools.

Product Usage Case

· A geopolitical analyst uses GeoNewsMapper to track how international news outlets are reporting on a developing conflict in a specific region, observing the spatial distribution of reporting to identify potential media biases or areas receiving more attention. This solves the problem of manually sifting through countless articles to understand the geographic focus of news.

· A humanitarian organization uses GeoNewsMapper to monitor news coverage of natural disasters worldwide, quickly identifying which affected areas are receiving the most media attention and which might be overlooked. This helps them prioritize resource allocation and awareness campaigns.

· A journalist researching global trends in technology investment uses GeoNewsMapper to visualize where news about tech funding is originating and being reported. This helps them identify emerging tech hubs or shifts in investment focus.

· A data visualization enthusiast builds a personal dashboard that integrates GeoNewsMapper to see, at a glance, the most significant news events happening around the globe on any given day, presented in an easily digestible visual format. This makes complex global news accessible and understandable.

6

ChatOps Log Interpreter

Author

prastik

Description

Jod (listed here as ChatOps Log Interpreter) is a conversational observability platform that allows developers to interact with their logs using natural language. Instead of manually sifting through dashboards and log files, users can ask Jod questions like 'Why did latency spike?' or 'Show me 5xx errors from the payments service.' Jod then retrieves, summarizes, and even visualizes the answers, streamlining the debugging and monitoring process. This innovation stems from the realization that traditional observability workflows are time-consuming and prone to context switching, especially during incidents.

Popularity

Points 9

Comments 4

What is this product?

Jod is a tool that lets you 'chat' with your system's logs. Think of it like having a smart assistant who can understand your questions about what's happening in your application. It connects to your cloud logs (currently CloudWatch) and uses MCP (Model Context Protocol) to stream information back to you. The magic is in its conversational interface; you ask questions in plain English, and it interprets them to find the relevant log data. It can then present this data as summaries, lists, or even create graphs for you, eliminating the need to manually navigate complex dashboards and log files. This is a significant innovation because it simplifies a complex and often frustrating part of software development: troubleshooting.

How to use it?

Developers can use Jod by connecting it to their existing cloud logging services, starting with AWS CloudWatch. Once connected, they interact with Jod through a chat window. They can type natural language queries about their application's behavior, such as asking for specific error types, performance metrics over a period, or unusual spikes in activity. For visualizations, they can use an annotation like '@Graph' followed by their request. Jod then processes these requests, fetches the necessary data from the logs, and provides answers directly in the chat. This makes it incredibly easy to integrate into daily debugging routines and incident response workflows, reducing the time spent on context switching and manual data analysis. A standalone MCP server is also planned, allowing developers to integrate Jod's conversational capabilities with their own AI clients.
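
Two pieces of that flow are easy to sketch: recognizing the '@Graph' annotation in a chat message, and turning log records into the hourly 5xx counts a time-series graph would plot. The functions `parse_message` and `errors_per_hour`, and the (timestamp, status) record format, are assumptions for illustration; Jod's actual parsing and its CloudWatch queries are not shown in the post.

```python
from collections import Counter
from datetime import datetime


def parse_message(text: str) -> tuple:
    """Split a chat message into a mode ('graph' or 'answer') and the question."""
    stripped = text.strip()
    if stripped.lower().startswith("@graph"):
        return "graph", stripped[len("@graph"):].strip()
    return "answer", stripped


def errors_per_hour(records: list) -> dict:
    """Count 5xx records per hour; records are (ISO timestamp, status) pairs."""
    buckets = Counter()
    for timestamp, status in records:
        if 500 <= status <= 599:
            hour = datetime.fromisoformat(timestamp).replace(
                minute=0, second=0, microsecond=0
            )
            buckets[hour.isoformat()] += 1
    return dict(sorted(buckets.items()))


mode, question = parse_message("@Graph 5xx errors for the payments service")
series = errors_per_hour([
    ("2025-11-01T10:15:00", 502),
    ("2025-11-01T10:45:00", 500),
    ("2025-11-01T11:05:00", 200),
    ("2025-11-01T11:30:00", 503),
])
```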

Product Core Function

· Natural Language Log Querying: Enables developers to ask questions about their logs using everyday language, making it easier to pinpoint issues without needing to know complex query syntax. This drastically reduces the learning curve for observability tools.

· Automated Data Summarization: Jod can automatically summarize large volumes of log data, providing concise insights into potential problems or trends, saving developers significant time in manual data review.

· On-Demand Visualization: With a simple command, Jod can generate time-series graphs and other visualizations from log data, helping developers quickly understand patterns and anomalies. This visual representation aids in faster comprehension of complex data.

· Incident Response Assistance: By providing quick answers and insights from logs, Jod helps accelerate the troubleshooting process during critical incidents, minimizing downtime and impact.

· Cross-Platform Observability (Future): Planned expansion to Azure and GCP will allow developers to manage and understand logs from multiple cloud environments through a single, unified conversational interface.

Product Usage Case

· A developer experiences a sudden spike in application latency. Instead of digging through hours of CloudWatch logs, they ask Jod: 'Why did latency spike last night?'. Jod analyzes recent logs and responds with the most probable causes, potentially pointing to a specific service or error.

· During a production issue, the team needs to identify all errors related to user authentication. A developer can ask Jod: 'Show me all authentication errors from the auth service in the last hour.' Jod quickly returns a list of relevant error messages, speeding up the diagnosis of the user-facing problem.

· A developer wants to monitor the error rate of their payment processing service over the last 24 hours. They can ask Jod: 'Create a time series graph showing 5xx errors for the payments service for the last 24 hours.' Jod generates the graph, allowing for visual identification of any unusual spikes or dips in error rates.
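
To make the last case more concrete, here is a minimal sketch of the kind of CloudWatch Logs Insights query such a request might end up as. How Jod actually maps questions to queries is not described, so the function name `build_5xx_graph_query`, the assumption that log lines contain a `status=5xx` token, and the 5-minute bucket size are all hypothetical.

```python
def build_5xx_graph_query(bin_minutes: int = 5) -> str:
    """Return a Logs Insights query that counts 5xx log lines per time bucket.

    Assumption: each log line carries a token such as "status=502".
    """
    if bin_minutes <= 0:
        raise ValueError("bin_minutes must be positive")
    stages = [
        "fields @timestamp, @message",
        r"filter @message like /status=5\d\d/",
        f"stats count(*) as errors by bin({bin_minutes}m)",
    ]
    return " | ".join(stages)


print(build_5xx_graph_query())
```

The resulting string could be run against the payments service's log group over a 24-hour window; a per-bucket count like this is the shape of data a time-series graph needs.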

7

FontHunter.js

Author

zdenham

Description

A weekend project that allows you to easily discover and download fonts used on any website. It scans a webpage and extracts font information, offering a quick solution for designers and developers looking to identify and acquire specific typefaces.

Popularity

Points 12

Comments 0

What is this product?

FontHunter.js is a lightweight, browser-based tool designed to identify and facilitate the download of fonts embedded within a website. Its core innovation lies in its ability to programmatically parse a webpage's Document Object Model (DOM) and its associated CSS to extract font family names, their sources (like Google Fonts or self-hosted files), and potentially their CSS properties. This allows users to quickly pinpoint the exact fonts a site is using without manual inspection, solving the common problem of font discovery and accessibility for creative professionals.

How to use it?

Developers can use FontHunter.js by integrating its JavaScript library into their workflow. This could involve running it as a bookmarklet for quick on-demand analysis of any webpage, or embedding it within a web application or browser extension for a more persistent experience. The project likely provides a simple API to trigger the scan and return a list of identified fonts, with options to directly link to their download sources where available. This makes it incredibly easy to apply the same typography to your own projects or to simply understand the design choices of others.

Product Core Function

· Automated font identification: Scans a given webpage and automatically detects all font families being used, providing a list of their names. This saves valuable time compared to manual CSS inspection, directly answering 'How do I find the fonts on this page?'

· Font source extraction: Identifies where each font is being loaded from, whether it's a third-party service like Google Fonts or a locally hosted file. This is crucial for understanding licensing and for developers who want to replicate the design accurately, answering 'Where can I get this font?'

· Download link generation (where applicable): For fonts hosted on public services, the tool aims to provide direct links for downloading. This streamlines the process of acquiring fonts for personal or commercial use, answering 'How can I download this font?'

· Cross-browser compatibility: Designed to work across major web browsers, ensuring accessibility for a wide range of users and development environments. This means you can use it regardless of your preferred browser, answering 'Will this work for me?'
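
The identification and source-extraction steps above can be sketched as follows. FontHunter.js itself runs in the browser and its internals are not shown here, so this is only an illustration of the parsing idea, written in Python, with a hypothetical `extract_fonts` helper that reads `@font-face` rules from a stylesheet's text.

```python
import re

# Match each @font-face block; such blocks do not contain nested braces.
FONT_FACE_RE = re.compile(r"@font-face\s*\{(.*?)\}", re.DOTALL | re.IGNORECASE)
FAMILY_RE = re.compile(r"font-family\s*:\s*['\"]?([^;'\"]+)", re.IGNORECASE)
URL_RE = re.compile(r"url\(\s*['\"]?([^'\")]+)['\"]?\s*\)", re.IGNORECASE)


def extract_fonts(css: str) -> dict[str, list[str]]:
    """Map each font family declared via @font-face to its source URLs."""
    fonts: dict[str, list[str]] = {}
    for block in FONT_FACE_RE.findall(css):
        family = FAMILY_RE.search(block)
        if family is None:
            continue  # malformed rule without a family name
        name = family.group(1).strip()
        fonts.setdefault(name, []).extend(URL_RE.findall(block))
    return fonts


css = """
@font-face { font-family: "Inter"; src: url("/fonts/inter.woff2") format("woff2"); }
@font-face { font-family: 'Inter'; font-weight: 700; src: url(/fonts/inter-bold.woff2); }
"""
print(extract_fonts(css))
```

A real scanner would also have to resolve relative URLs against the stylesheet's location and handle `data:` URLs, which this sketch returns unchanged.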

Product Usage Case

· A web designer is browsing a competitor's website and loves the font used in their headings. By running FontHunter.js, they can instantly identify the font name and get a link to download it, allowing them to apply a similar aesthetic to their own designs without extensive research, solving the problem of 'I like this font, how do I get it?'

· A front-end developer is tasked with replicating a specific webpage layout. They encounter a unique font that greatly contributes to the page's appeal. Using FontHunter.js, they quickly discover the font's identity and source, enabling them to integrate it seamlessly into their project and ensure visual consistency, addressing the need for 'exact replication of existing designs'.

· A typography enthusiast is curious about the font choices across various online publications. They can use FontHunter.js as a tool to build a personal library of interesting fonts discovered on different websites, fostering their understanding and appreciation of typographic design, answering 'What fonts are trending or being used effectively in the wild?'

8

UnisonDB: Log-Native Data Fabric

Author

ankuranand

Description

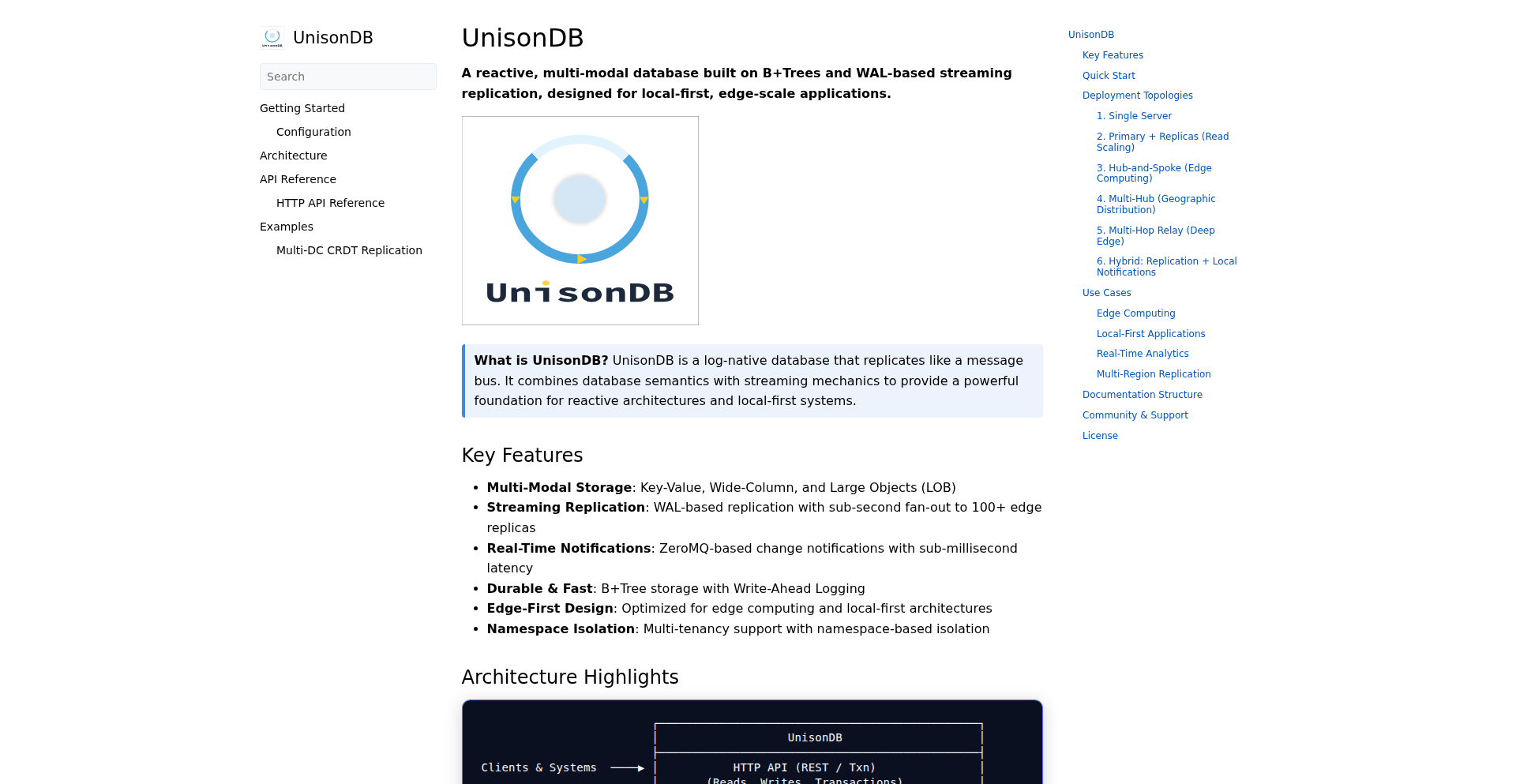

UnisonDB is a novel log-native database that treats its Write-Ahead Log (WAL) as the central source of truth, not just for recovery. It unifies data storage and real-time streaming into a single, log-based core. This eliminates the need for complex pipelines involving separate Key-Value stores, Change Data Capture (CDC), and message queues like Kafka. Writes are durable, globally ordered for consistency, and immediately streamable to followers in real-time. It combines the predictable read performance of B+Tree storage with the efficient, sub-second replication of WAL-based streaming, making it ideal for distributed systems and edge deployments.

Popularity

Points 11

Comments 0

What is this product?

UnisonDB is a database system designed from the ground up to use its Write-Ahead Log (WAL) as the primary data structure. Think of it like this: normally, databases write changes to a log for safety and then organize that data into a more accessible format like a B+Tree for fast reads. UnisonDB flips this by making the ordered log itself the database. Every single piece of data written is durably appended to this log, then globally sequenced so everyone agrees on the order, and then immediately available to be sent out to other connected systems (followers) as a live stream. This is achieved by integrating B+Tree storage for efficient querying directly with WAL-based replication. The innovation lies in treating data flow and storage as a single, inherently linked process, rather than separate components that need to be stitched together.

How to use it?

Developers can integrate UnisonDB into applications that require both reliable data storage and real-time data distribution. For example, if you're building a distributed system where multiple nodes need to have the same data and stay synchronized with minimal latency, UnisonDB can serve as the central data store. Instead of managing a separate database, a CDC tool, and a message queue, you can simply use UnisonDB. Its replication mechanism uses gRPC to stream WAL entries to follower nodes, allowing them to ingest data in real-time. This is particularly useful for edge computing scenarios where devices might go offline and need to quickly resynchronize when they come back online. Writes can also trigger ZeroMQ notifications, enabling reactive application patterns.
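
As a rough mental model of the log-first idea and of follower resynchronization, consider the toy sketch below. It is not UnisonDB's API: the class and method names are hypothetical, a plain dict stands in for the B+Tree, and an in-process method call stands in for the gRPC stream.

```python
class LogNativeStore:
    """Toy log-first store: the append-only log is the source of truth."""

    def __init__(self):
        self.log = []    # ordered (seq, key, value) entries, playing the WAL
        self.index = {}  # key -> latest value; a dict stands in for the B+Tree

    def put(self, key, value):
        seq = len(self.log) + 1  # sequence numbers start at 1
        self.log.append((seq, key, value))
        self.index[key] = value
        return seq

    def get(self, key):
        return self.index.get(key)

    def read_from(self, after_seq):
        """Return entries whose sequence number is greater than after_seq."""
        return self.log[after_seq:]


class Follower:
    """Replica that remembers the last sequence number it applied."""

    def __init__(self):
        self.applied_seq = 0
        self.index = {}

    def sync(self, leader):
        for seq, key, value in leader.read_from(self.applied_seq):
            self.index[key] = value
            self.applied_seq = seq


leader, replica = LogNativeStore(), Follower()
leader.put("sensor:1", 20.5)
replica.sync(leader)           # replica catches up
leader.put("sensor:1", 21.0)   # written while the replica is "offline"
replica.sync(leader)           # resumes from its last applied sequence number
print(replica.index, replica.applied_seq)
```

Because the follower only needs its last applied sequence number to resume, a device that reconnects after an outage does not have to re-download everything it already has.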

Product Core Function

· Log-Native Storage: Data is inherently stored as an ordered, immutable log, ensuring durability and a clear historical record. This simplifies data management and recovery by using the log itself as the primary data source.

· Real-time Streaming Replication: Changes are immediately streamed via gRPC from the WAL to follower nodes, enabling sub-second fan-out to potentially hundreds of replicas. This keeps all instances of your data synchronized with very low latency.

· B+Tree Backed Storage: While log-native, UnisonDB also utilizes B+Trees for efficient and predictable read operations, avoiding the performance hiccups often associated with compaction in other log-structured storage systems.

· Multi-Model ACID Transactions: Supports Key-Value, wide-column, and Large Object (LOB) storage within a single atomic transaction, providing flexibility for diverse data needs.

· Edge-Friendly Resynchronization: Replicas can disconnect and reconnect seamlessly, instantly resuming synchronization from where they left off in the WAL. This is critical for unreliable network environments or mobile applications.

· Reactive Notifications: Every write operation can emit a ZeroMQ notification, allowing external systems or application logic to react instantly to data changes.

Product Usage Case

· Distributed System Synchronization: Imagine a fleet of IoT devices needing to share sensor data and receive commands. UnisonDB can act as the central data hub, ensuring all devices have consistent, up-to-date information and can receive commands with minimal delay, eliminating the complexity of managing separate Kafka and KV stores.

· Real-time Analytics Pipelines: For applications that need to analyze incoming data streams as they happen, UnisonDB can provide both the storage for raw data and the real-time stream for analytics engines. This means you can ingest data, store it, and start analyzing it simultaneously without complex data pipeline configurations.

· Edge Data Management: In scenarios like retail stores or remote industrial sites, UnisonDB can power local data stores that can operate offline and then efficiently sync with a central server when connectivity is restored. This ensures applications remain responsive even with intermittent network access.

· Microservice Data Coordination: When microservices need to share data and maintain consistency across different services, UnisonDB can serve as a central, reliable data layer that replicates efficiently, reducing the need for intricate inter-service communication patterns.

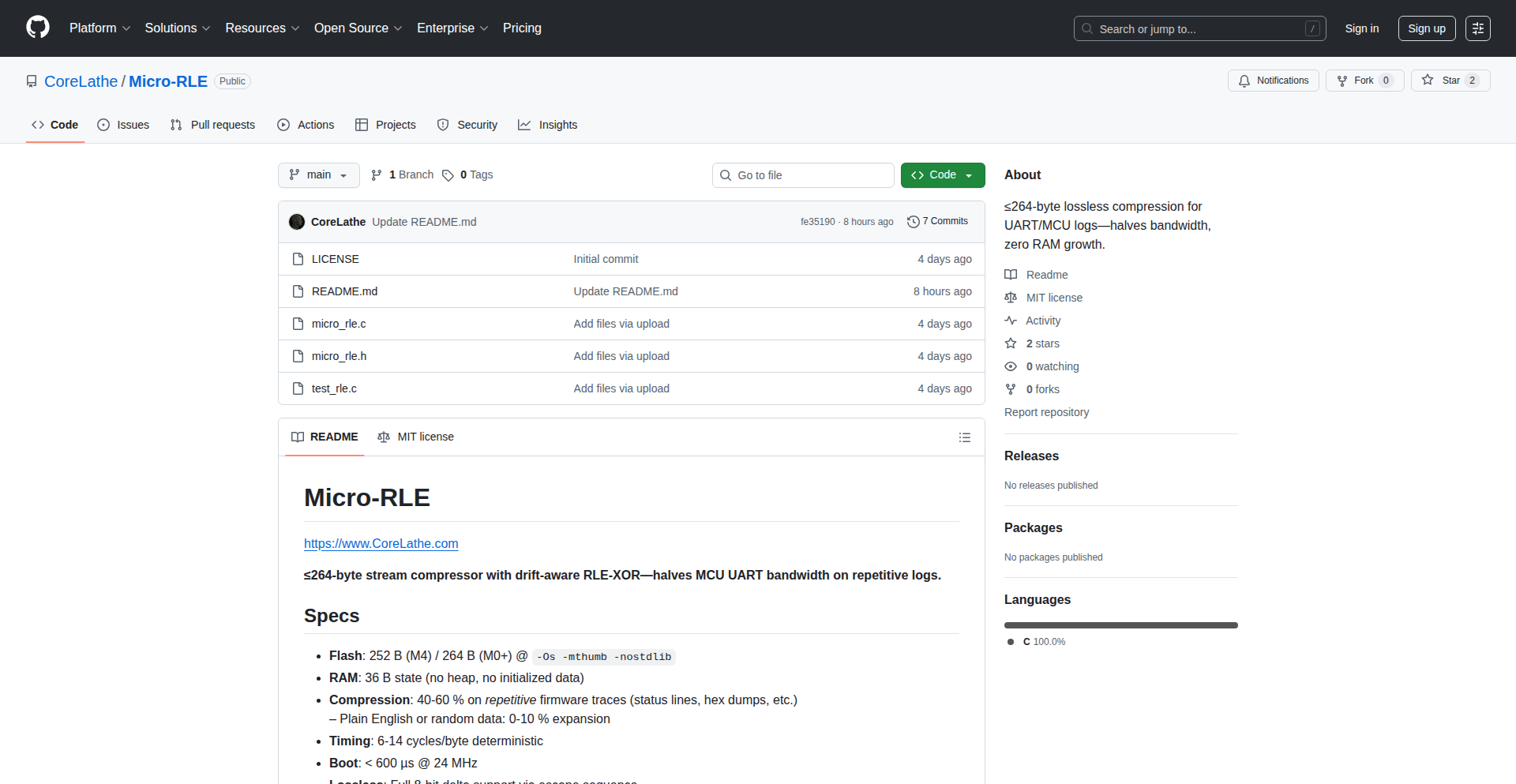

9

Micro-RLE

Author

CoreLathe

Description

Micro-RLE is an extremely small, lossless compression library designed for microcontrollers with very limited memory and processing power. It achieves significant data reduction for telemetry streams, allowing more data to be sent over slow communication lines like UART without requiring additional RAM. This is useful for embedding more diagnostic information or sensor readings from resource-constrained devices.

Popularity

Points 7

Comments 2

What is this product?

Micro-RLE is a lossless data compression routine whose code occupies just 264 bytes of Thumb machine code. It's designed for embedded systems (such as those with Cortex-M0+ processors) that have very little flash memory and no dynamic memory allocation (no 'malloc', so its RAM use is fixed and known up front). It compresses 8-bit data patterns without losing any information, making it well suited to squeezing more out of slow serial links such as UART. It also starts up in under 600 microseconds, so it can be ready to compress data almost immediately after your device boots.

How to use it?

Developers can integrate Micro-RLE by replacing a simple placeholder function (the 'emit()' hook) in their embedded project with their specific hardware communication method. This could be a direct UART transmission, a DMA (Direct Memory Access) controller, or a ring buffer. The project is provided as a single C file with a three-function API, making it straightforward to drop into existing firmware. By doing this, any data that would normally be sent raw can now be compressed by Micro-RLE, reducing the amount of data that needs to be transmitted, thus saving bandwidth and time on slow connections.
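
The 'emit()' hook pattern can be illustrated with a generic run-length encoder. Micro-RLE's actual wire format is not described here, so the (count, value) pair encoding below is an assumption chosen for clarity, and the sketch is in Python rather than the project's C.

```python
from typing import Callable


def rle_encode(data: bytes, emit: Callable[[int], None]) -> None:
    """Encode data as (count, value) byte pairs, calling emit() per output byte.

    Runs are capped at 255 so the count always fits in one byte.
    """
    i = 0
    while i < len(data):
        value = data[i]
        run = 1
        while i + run < len(data) and data[i + run] == value and run < 255:
            run += 1
        emit(run)
        emit(value)
        i += run


def rle_decode(encoded: bytes) -> bytes:
    """Inverse of rle_encode for the pair format used above."""
    out = bytearray()
    for i in range(0, len(encoded), 2):
        out.extend(bytes([encoded[i + 1]]) * encoded[i])
    return bytes(out)


sample = b"\x00" * 10 + b"\x07\x07\x01"
wire = bytearray()
rle_encode(sample, wire.append)  # wire.append plays the role of a UART write
print(len(sample), "->", len(wire))
```

In firmware, the callback would push each byte to a UART register, a DMA buffer, or a ring buffer. Note that this naive pair format can double the size of data with no repeated bytes, which is one reason production schemes are more elaborate.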

Product Core Function

· Lossless compression: Achieves 33-70% smaller output for typical sensor data, meaning you can send roughly 1.5 to 3.3 times as much information over the same slow connection. This is useful for sending detailed sensor logs or diagnostics without overwhelming the communication channel.

· Ultra-small footprint: The entire compression code takes up only 264 bytes of flash memory, making it ideal for the smallest microcontrollers where every byte counts. This allows you to add valuable compression features without sacrificing essential program functionality.

· Fixed, tiny RAM footprint: Requires only 36 bytes of state and no dynamic memory allocation, so its RAM use never grows at runtime. This is crucial for microcontrollers with very limited RAM, ensuring your main application has enough memory to run.

· Fast boot and execution: Starts compressing data in under 600 microseconds and processes data at a very high speed (worst-case 14 cycles per byte). This ensures that compression doesn't become a bottleneck, allowing for real-time data streaming and quick initialization.

· Simple API: A straightforward three-function API makes it easy to integrate into existing embedded projects. You just need to hook it into your existing data transmission mechanism, minimizing development effort.

Product Usage Case

· Sending high-frequency IMU (Inertial Measurement Unit) data from a drone over a low-bandwidth telemetry link. Micro-RLE compresses the accelerometer and gyroscope readings, allowing for more frequent updates and thus better flight control without needing a faster (and more expensive) radio module.

· Logging detailed diagnostic information from a power-constrained IoT device. By compressing error codes, sensor statuses, and operational parameters, developers can gather more comprehensive debugging data over a slow serial port, reducing the risk of missing critical issues.

· Transmitting GPS location data from a small embedded tracker. Micro-RLE shrinks the data packet size, enabling more frequent location updates or allowing the tracker to conserve battery by transmitting less often while still getting enough data for accurate tracking.

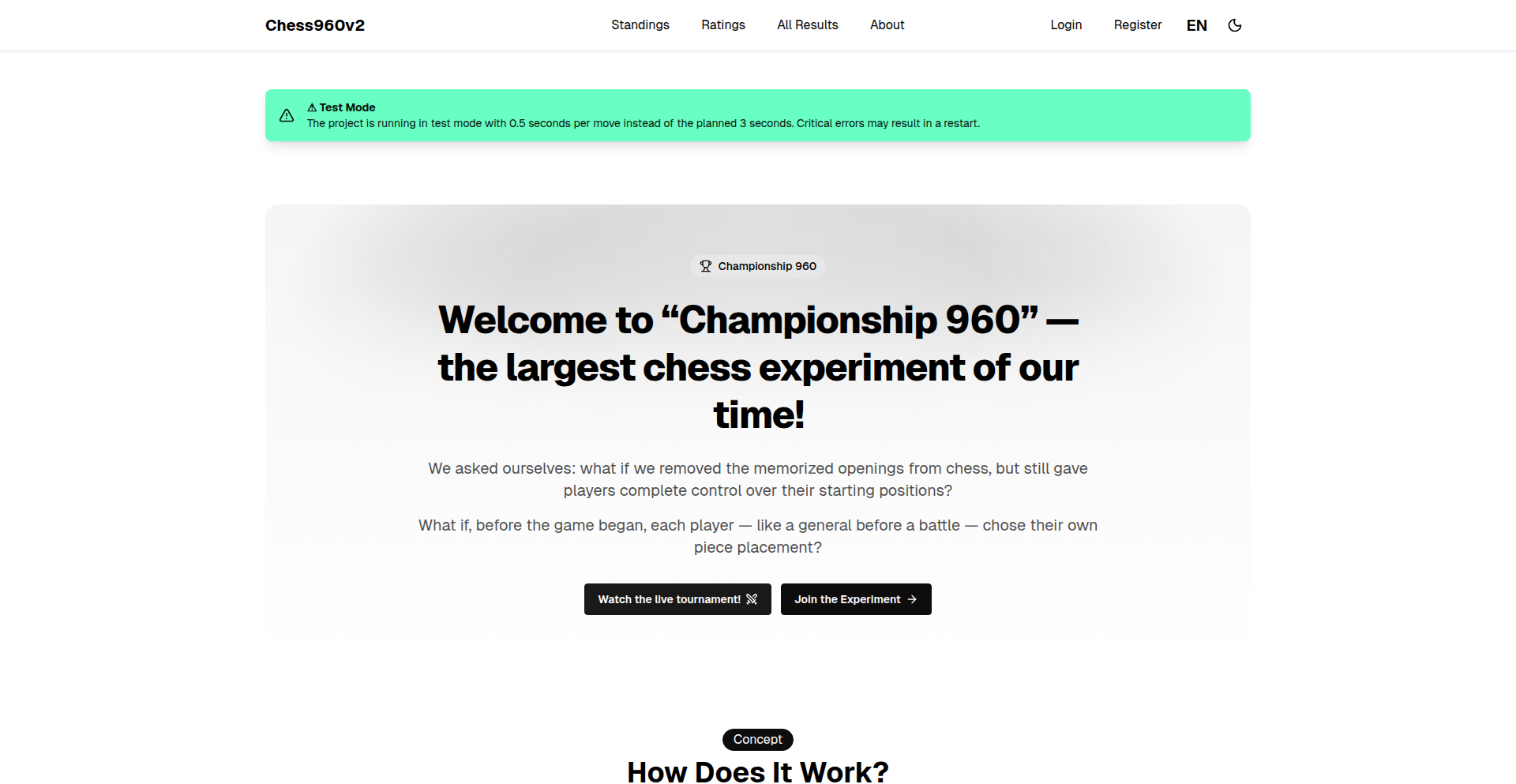

10

Stockfish 960v2 Orchestrator

Author

lavren1974

Description

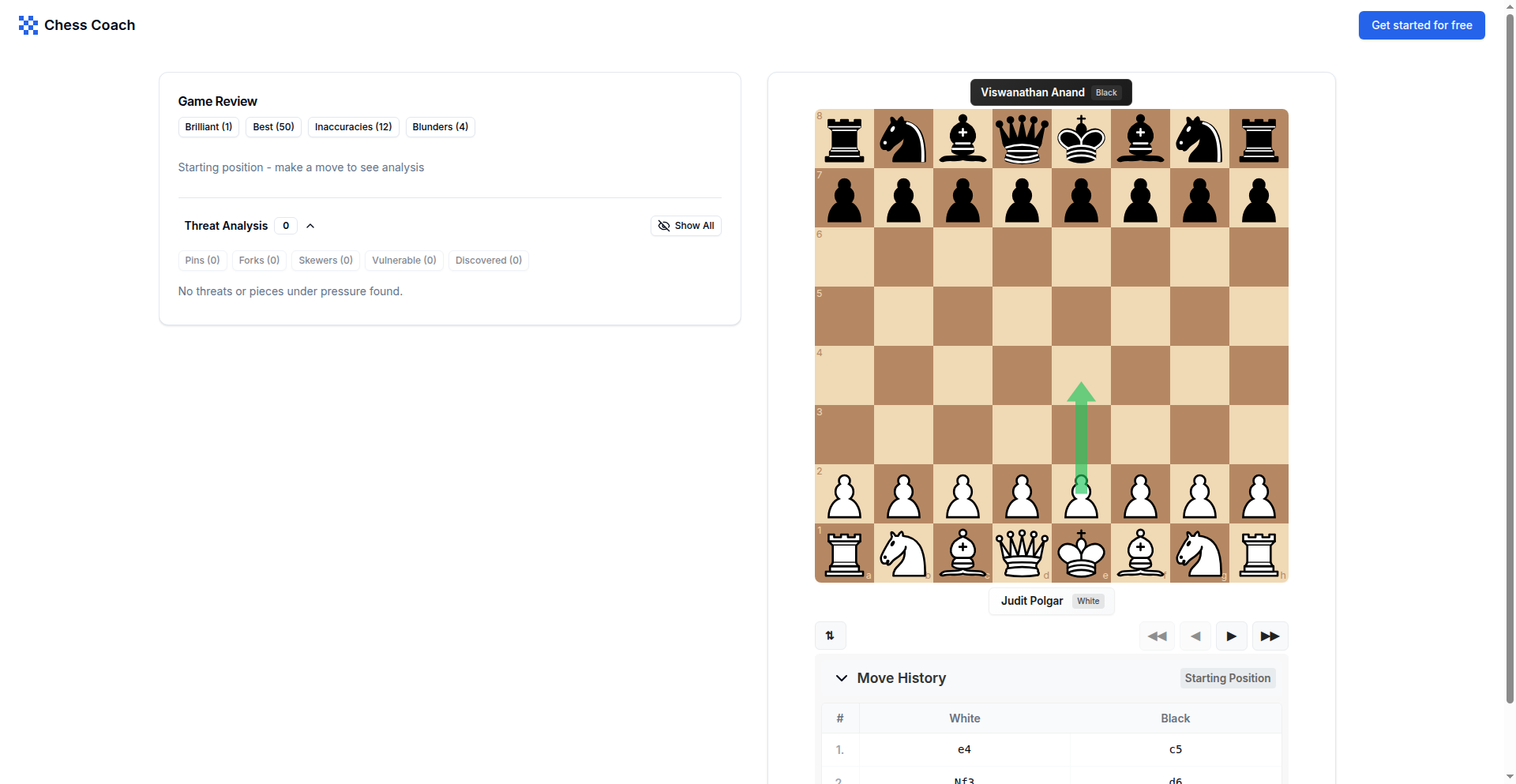

This project is a sophisticated Go-based orchestrator designed to run large-scale tournaments for the Stockfish chess engine specifically in Chess960 (Fischer Random) mode. It aims to generate a comprehensive dataset by pitting Stockfish against itself across all 960 unique starting positions. The innovation lies in its systematic approach to chess engine benchmarking and data generation, tackling the engineering challenge of managing numerous engine instances, distributing game tasks, collecting results, and performing initial analysis, all while paving the way for future machine learning applications.

Popularity

Points 3

Comments 4

What is this product?

This is a highly specialized system built in Go (Golang) that automates a grand chess tournament for the Stockfish engine. Unlike regular chess, Chess960 (also known as Fischer Random chess) randomizes the back-rank pieces under fixed constraints (bishops on opposite colors, king between the rooks), which yields 960 distinct starting positions. The project's core innovation is to use Stockfish to play every single one of these 960 positions against itself. By doing this over an extended period, the system collects vast amounts of data to determine if certain starting positions inherently offer an advantage, even when played by an engine as strong as Stockfish. It's a large-scale, automated experiment in chess strategy and engine performance. So, this means it can help us understand if there are any inherent biases in chess engine evaluation due to specific board setups, and it generates a unique, statistically robust dataset for future analysis.
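
For readers wondering where the 960 positions come from, the sketch below derives a back rank from an index between 0 and 959 using the commonly used Chess960 numbering scheme (in which index 518 is the standard chess setup). It is written in Python for illustration and is not taken from the project's Go code; the function name is hypothetical.

```python
from itertools import combinations


def chess960_back_rank(index: int) -> str:
    """Return White's back rank (files a to h) for a Chess960 position index."""
    if not 0 <= index < 960:
        raise ValueError("index must be in 0..959")
    rank = [None] * 8

    n, light = divmod(index, 4)
    rank[2 * light + 1] = "B"   # light-square bishop on file b, d, f or h
    n, dark = divmod(n, 4)
    rank[2 * dark] = "B"        # dark-square bishop on file a, c, e or g

    n, queen = divmod(n, 6)
    free = [i for i, piece in enumerate(rank) if piece is None]
    rank[free[queen]] = "Q"     # queen on one of the six remaining squares

    # n is now 0..9 and selects one of the ten ways to place two knights
    # on the five remaining squares.
    free = [i for i, piece in enumerate(rank) if piece is None]
    for slot in list(combinations(range(5), 2))[n]:
        rank[free[slot]] = "N"

    # The last three squares take rook, king, rook, so the king sits between the rooks.
    free = [i for i, piece in enumerate(rank) if piece is None]
    for square, piece in zip(free, "RKR"):
        rank[square] = piece
    return "".join(rank)


print(chess960_back_rank(518))
```

The count follows directly from the construction: 4 × 4 bishop placements, 6 queen squares, and 10 knight placements give 4 × 4 × 6 × 10 = 960.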

How to use it?

Developers can use this project as a blueprint for building their own distributed computational tasks, especially those involving resource-intensive simulations or engine benchmarking. The Go codebase, once released, will demonstrate practical techniques for process management, task distribution, data aggregation (collecting PGN files), and result analysis in a high-performance computing context. It can be integrated into similar large-scale analytical projects or adapted for benchmarking other AI models or game engines. So, for you, it means learning how to architect robust systems for complex computations and potentially repurposing its logic for your own parallel processing needs.

Product Core Function

· Position Orchestration: Distributes each of the 960 Chess960 starting positions to be played, ensuring comprehensive coverage of all possibilities. Its value lies in systematically exploring a complex combinatorial space, which is crucial for scientific research and data collection.

· Engine Management: Manages multiple instances of the Stockfish chess engine concurrently, optimizing resource utilization for maximum computational throughput. This is valuable for anyone needing to run many instances of a demanding program efficiently.

· Game Data Collection: Collects the resulting Portable Game Notation (PGN) files from each completed game, creating a detailed record of all matches played. The value is in generating a structured, analyzable dataset for research and development.

· Result Analysis (Initial): Performs preliminary analysis of the game outcomes to identify initial trends or statistically significant advantages. This provides immediate insights and a starting point for deeper investigation.

· Scalable Operation: Designed for long-term, autonomous operation (planned for a year), demonstrating robust system stability and reliability for extended computational tasks. This is valuable for understanding how to build systems that can run reliably for extended periods without manual intervention.
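
The distribution and collection steps above follow a familiar worker-pool shape, sketched below. The project itself is written in Go and its code is not shown here, so this Python version is only an analogy: `play_game` is a hypothetical stand-in that always returns a draw instead of launching Stockfish.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def play_game(position_index: int) -> tuple[int, str]:
    """Stand-in for one engine-vs-engine game; returns (index, result).

    A real worker would start engine processes, play the game from the
    given start position, and save the PGN before returning.
    """
    return position_index, "1/2-1/2"


def run_tournament(position_indexes, workers: int = 4) -> Counter:
    """Fan positions out to a pool of workers and tally the results."""
    tally = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _, result in pool.map(play_game, position_indexes):
            tally[result] += 1
    return tally


print(run_tournament(range(960)))
```

Threads are adequate in this shape because the heavy computation would happen in external engine processes, with each worker mostly waiting on its game to finish.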

Product Usage Case

· Benchmarking AI Chess Engines: In a scenario where a developer wants to compare the performance of different chess engines or different versions of the same engine across a wide range of tactical and strategic challenges, this project's orchestrator can be adapted. It provides a framework for running consistent, controlled evaluations and generating comparative data. So, this helps you objectively measure and improve AI models.

· Generating Datasets for Machine Learning: Researchers or ML engineers looking to build models that understand chess strategy or engine evaluation can leverage the massive, unbiased dataset produced by this project. The data could be used to train models to predict game outcomes or to understand positional advantages in Chess960. So, this provides you with valuable, ready-to-use data for training sophisticated AI.

· Engineering High-Throughput Distributed Systems: For developers working on projects that require distributing heavy computational workloads across multiple machines or processes, the Go implementation of this orchestrator offers practical examples of managing concurrency, task scheduling, and data synchronization. So, this teaches you how to build efficient, scalable systems for demanding tasks.

11

PraatEcho

Author

BASSAMej

Description

PraatEcho is a mobile application designed to revolutionize language learning by focusing on auditory comprehension. Its core innovation lies in its 'listen-first' approach, which immerses users in spoken language, making it easier to grasp pronunciation, intonation, and natural speech patterns. This addresses the common challenge in language learning where learners struggle to understand native speakers in real-world conversations.

Popularity

Points 2

Comments 3

What is this product?

PraatEcho is a mobile app that helps you learn new languages by primarily listening to them. The technical idea behind it is to leverage the power of audio input, mimicking how babies learn their first language. Instead of overwhelming new learners with complex grammar rules from the start, it prioritizes exposing them to authentic spoken language. This is innovative because many existing apps heavily rely on reading and writing exercises. By focusing on listening, PraatEcho aims to build a stronger foundation in conversational fluency and reduce the anxiety associated with understanding native speakers. The 'MVP' (Minimum Viable Product) stage means it's a basic, functional version demonstrating the core concept.

How to use it?

Developers interested in this concept can explore how to integrate audio-first learning modules into their own educational platforms or language learning tools. For end-users, the app would be used daily for short listening sessions, focusing on understanding spoken dialogues, phrases, and vocabulary in context. The application could potentially be integrated with speech recognition APIs for interactive exercises, or used alongside transcription tools to aid comprehension during the learning process.

Product Core Function

· Immersive Listening Modules: Provides audio content that mimics real-life conversations. The value is building listening comprehension and natural language acquisition, directly answering 'how do I understand what people are saying?'

· Pronunciation Focus: Emphasizes understanding spoken nuances. The value is improving your ability to discern and replicate native speaker pronunciation, answering 'will I sound like a native speaker?'

· Contextual Vocabulary Acquisition: Introduces new words and phrases within spoken sentences. The value is learning vocabulary that is immediately useful and memorable in practical situations, answering 'how can I learn words I'll actually use?'

· Early Stage Design Exploration: The current MVP allows for feedback on user interface and core learning principles. The value is contributing to the development of a more user-friendly and effective language learning tool, answering 'how can I influence the creation of better learning tools?'

Product Usage Case

· A language learner struggling with fast-paced movie dialogues could use PraatEcho to build up their listening stamina and understanding of informal speech patterns, solving the problem of being lost during media consumption.

· An individual preparing for a trip abroad could use the app to practice understanding common travel phrases and interactions, directly addressing the need for practical conversational skills in a foreign country.

· A developer building a language tutoring service could draw inspiration from PraatEcho's listening-centric approach to design more effective audio-based lesson plans and interactive exercises, improving their product's learning efficacy.

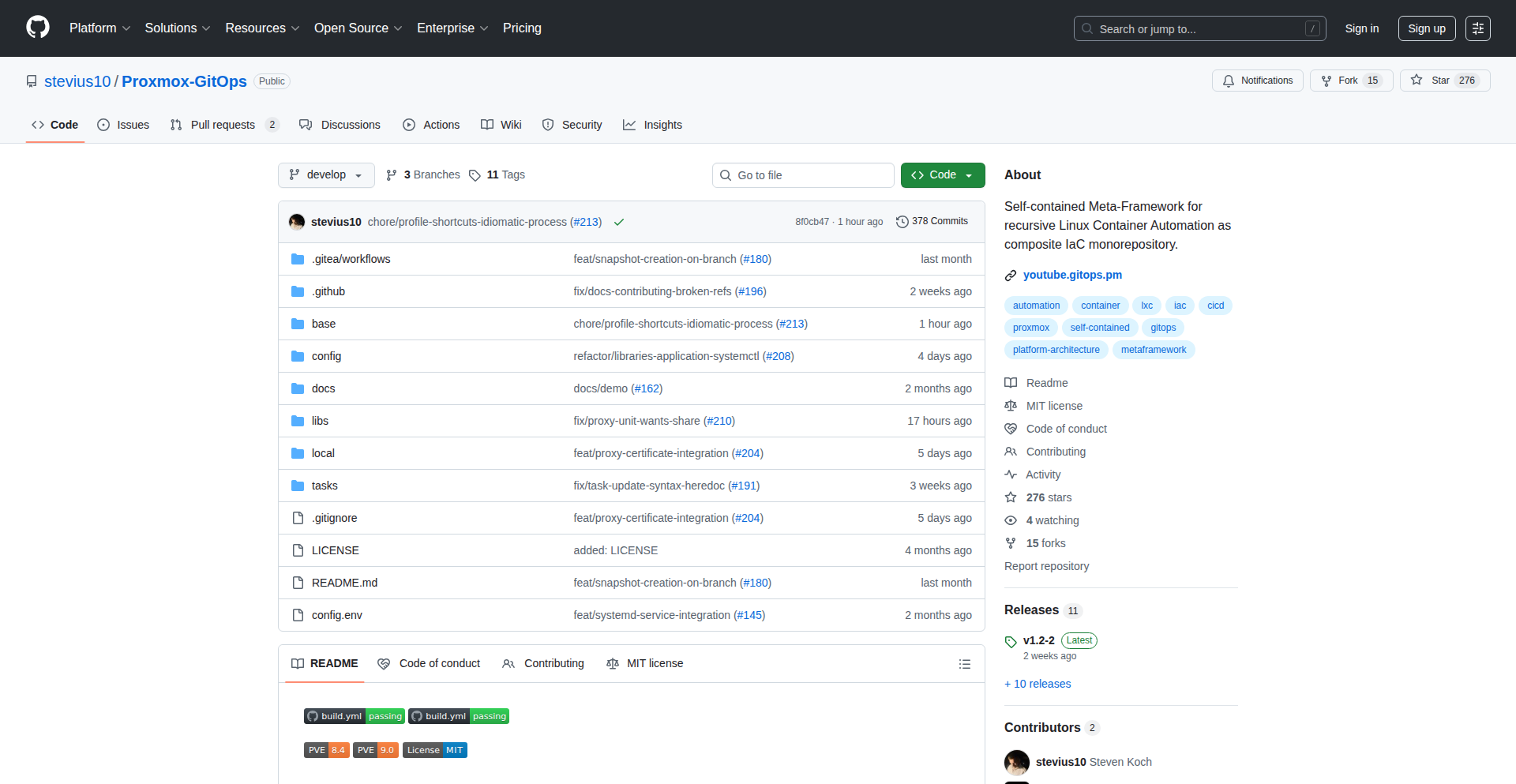

12

Proxmox-GitOps: Recursive Monorepo Container Orchestrator

Author

gitopspm

Description

This project, Proxmox-GitOps, is an Infrastructure-as-Code (IaC) framework that automates the deployment and management of containerized infrastructure. It leverages a monorepository, enhanced with Git submodules, to define the entire infrastructure configuration. The innovation lies in its recursive self-management, where the control plane bootstraps itself by pushing its own definition, leading to a fully automated and self-healing infrastructure. This tackles the complexity of managing modern containerized environments by treating the entire infrastructure as code.

Popularity

Points 4

Comments 1

What is this product?

Proxmox-GitOps is an advanced system for automating the setup and operation of your IT infrastructure, specifically for running applications in containers on Proxmox (which natively runs LXC containers). Think of it like a blueprint for your entire server farm, but the blueprint is written in code and stored in Git. The core innovation is 'recursive self-management': the system can actually build and manage itself. It does this by defining its own control plane (the 'brain' of the system) within the same codebase, and then uses that definition to set itself up. This means you only need to update the Git repository, and the system takes care of the rest, ensuring consistency and reliability. It uses Git submodules to break down the complex infrastructure definition into smaller, manageable pieces, making it easier to organize and reuse parts of your infrastructure setup.

How to use it?

Developers use Proxmox-GitOps by defining their desired infrastructure within a monorepository. This repository contains configuration files written in IaC languages (like YAML or HCL, though the specifics aren't detailed here). Git submodules are used to modularize different components of the infrastructure (e.g., networking, storage, specific application deployments). The system then watches this Git repository. When changes are pushed, it automatically interprets the code and provisions or updates the necessary resources on Proxmox Virtual Environment (PVE) or other compatible container platforms. This allows for a 'set it and forget it' approach after the initial setup, with Git acting as the single source of truth for the infrastructure's state.

Product Core Function

· Automated Infrastructure Provisioning: The system automatically creates and configures servers, networks, storage, and container deployments based on the code in the Git repository, saving manual setup time and reducing errors.

· Recursive Self-Management: The core control plane can deploy and manage itself, ensuring that the automation system is always up and running and can recover from failures automatically.

· Monorepository for IaC: All infrastructure definitions are centralized in a single repository, making it easier to manage, version, and understand the entire infrastructure setup.

· Git Submodule for Modularity: Allows breaking down complex infrastructure into reusable and manageable components, promoting best practices in code organization and preventing 'monolithic' configuration files.

· Single Source of Truth with Git: Git is used to store the desired state of the infrastructure. Any discrepancy between the Git definition and the actual running infrastructure is automatically corrected by the system.

· Container Orchestration: Provides a framework for deploying and managing applications running in containers, simplifying the deployment pipeline for containerized workloads.
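
The 'single source of truth' behavior boils down to comparing desired state with actual state and planning the difference. The sketch below shows that reconciliation step in isolation. It is not Proxmox-GitOps code: the `plan_changes` function and the container specs are hypothetical, and a real system would read the desired state from Git and the actual state from the Proxmox API.

```python
def plan_changes(desired: dict, actual: dict) -> list[tuple[str, str]]:
    """Return the (action, name) steps needed to make actual match desired."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("destroy", name))
    return actions


desired = {"git": {"cores": 2}, "proxy": {"cores": 1}}   # as declared in the repo
actual = {"git": {"cores": 1}, "old-ci": {"cores": 1}}   # as currently running
print(plan_changes(desired, actual))
```

Running the planner repeatedly is what makes drift correction automatic: once actual matches desired, the plan comes back empty.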

Product Usage Case

· Automating the deployment of a microservices-based application: A developer defines all services, their container images, networking rules, and scaling policies in the monorepository. Pushing a new version automatically updates all deployed services without manual intervention, solving the problem of complex and error-prone manual application updates.

· Setting up a new development environment: A team can define a complete, isolated development environment (including databases, message queues, and application backends) in Git. New developers can spin up this entire environment by simply cloning the repository and running the Proxmox-GitOps commands, drastically reducing onboarding time and ensuring consistency across developer machines.

· Disaster recovery and infrastructure replication: The Git repository acts as a perfect backup. In case of a failure, the entire infrastructure can be rebuilt identically by simply pointing the system to the Git repository, providing a robust solution for business continuity.

· Managing a large-scale Kubernetes cluster: While the project specifically mentions Proxmox, the IaC principles and monorepo approach are applicable to managing complex Kubernetes clusters. The Git repository would define cluster configuration, node setup, and initial application deployments, simplifying the management of a dynamic and distributed system.

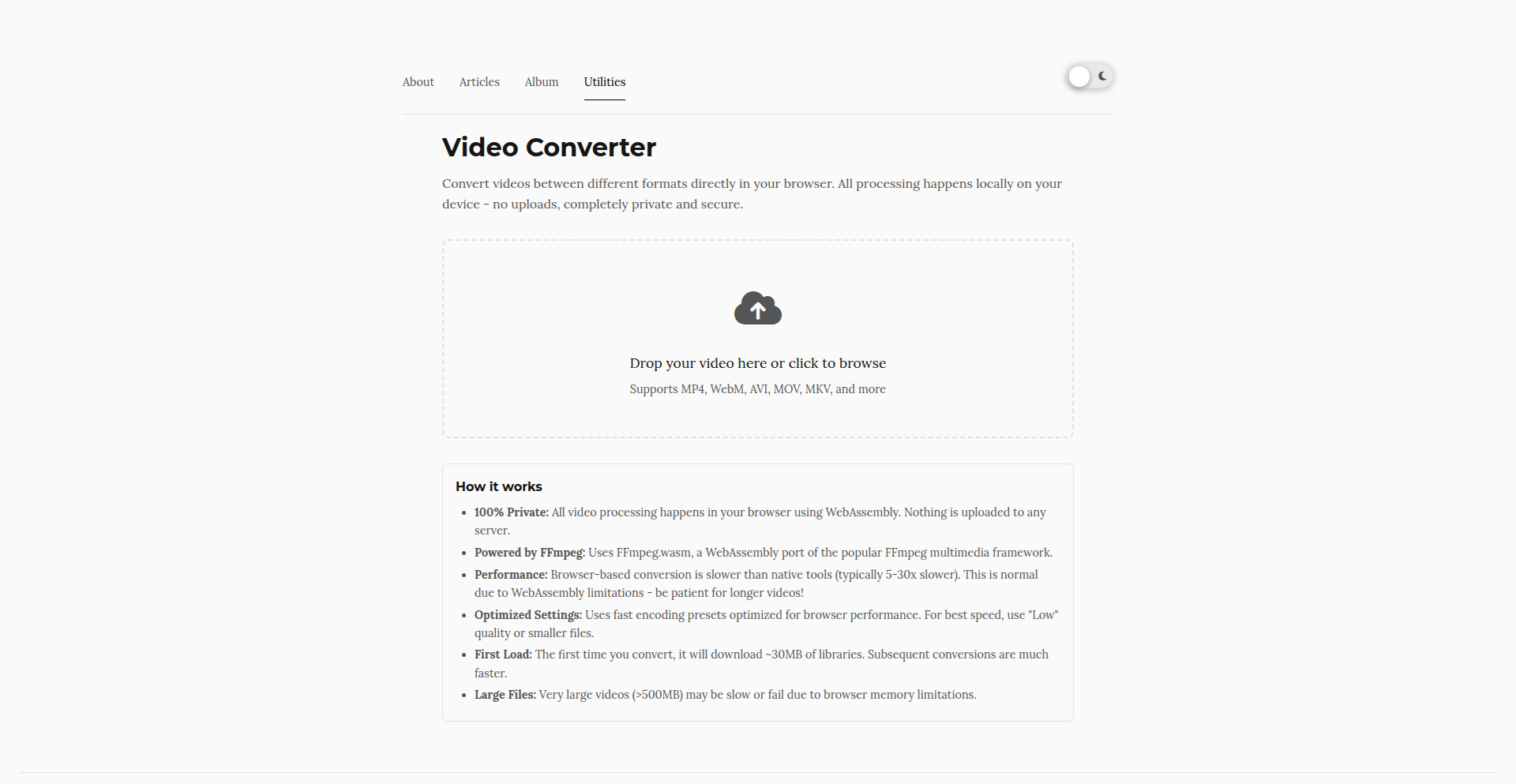

13

FFmpeg.wasm: Browser-Native Video Alchemy

Author

Beefin

Description

This project brings the power of FFmpeg, a renowned video processing toolkit, directly into the web browser using WebAssembly. It enables developers to perform complex video conversions and manipulations client-side, without requiring users to upload files to a server or install any software. The core innovation lies in packaging FFmpeg's extensive capabilities into a portable, efficient WebAssembly module, unlocking new possibilities for interactive video editing and processing directly within web applications.

Popularity

Points 5

Comments 0

What is this product?

This is FFmpeg compiled to WebAssembly (Wasm). Think of FFmpeg as a Swiss Army knife for video and audio, capable of doing almost anything: converting formats, resizing, cutting, merging, adding effects, and much more. Traditionally, you'd need to run FFmpeg on a server or a local machine. FFmpeg.wasm takes this powerful engine and shrinks it down to run directly in your web browser. WebAssembly is a special kind of code that browsers can understand and run very quickly, almost as fast as native code. By compiling FFmpeg to Wasm, we can leverage its full power on the client-side, opening up incredible opportunities for web-based video tools. So, this means you can now process video files without sending them to a remote server, making it faster, more private, and often cheaper.

How to use it?

Developers can integrate FFmpeg.wasm into their web applications by including the Wasm module and its JavaScript bindings. You'll typically use JavaScript to load the FFmpeg.wasm library, then write commands similar to how you'd use FFmpeg on the command line, but within your JavaScript code. For example, you could write a script to take an uploaded video file (represented as a Blob or File object in the browser), pass it to FFmpeg.wasm, and specify a conversion operation, like changing the format from MP4 to WebM. The output can then be used directly in the browser or uploaded. This is perfect for building features like on-the-fly video format conversion for uploads, basic in-browser video editing tools, or even live video filtering and manipulation.
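
The 'commands similar to the command line' are just argument lists. The sketch below builds one for an MP4-to-WebM conversion with an optional trim, using standard FFmpeg options (`-ss`, `-i`, `-t`, `-c:v`, `-c:a`). It is written in Python purely to show the arguments; in the browser the same list would be handed to the library's JavaScript bindings, whose exact API is not shown here. Whether a given WebAssembly build includes the `libvpx-vp9` and `libopus` encoders is an assumption.

```python
from typing import Optional


def build_convert_args(
    src: str,
    dst: str,
    start: Optional[str] = None,
    duration: Optional[str] = None,
) -> list[str]:
    """Build FFmpeg arguments for converting src to a WebM file at dst."""
    args: list[str] = []
    if start is not None:
        args += ["-ss", start]      # seek in the input before decoding
    args += ["-i", src]
    if duration is not None:
        args += ["-t", duration]    # limit the output duration
    args += ["-c:v", "libvpx-vp9", "-c:a", "libopus", dst]
    return args


print(build_convert_args("input.mp4", "output.webm", start="5", duration="10"))
```

Keeping the argument construction in a small pure function like this makes it easy to test without loading the (fairly large) WebAssembly module.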

Product Core Function

· Client-side video format conversion: Enables users to change video file formats (e.g., MOV to MP4) directly in their browser, speeding up workflows and reducing server load. This is useful for ensuring compatibility across different devices and platforms without needing server infrastructure.

· In-browser video transcoding: Allows for changing video codecs, bitrates, and resolutions without server-side processing. Developers can build tools that resize videos for different display needs or optimize them for faster streaming, directly impacting user experience and data usage.

· Basic video manipulation (trimming, merging): Provides foundational editing capabilities within the browser, such as cutting out sections of a video or combining multiple video clips. This is valuable for creating simple video editing applications without the complexity of server-side rendering or desktop software.

· Audio extraction and manipulation: Supports extracting audio from video, changing audio formats, or adjusting audio levels directly in the browser. This is helpful for multimedia applications that need to process both video and audio components efficiently.

· Image sequence to video creation: Facilitates creating videos from a series of images within the browser. This is a niche but powerful feature for developers working with animations, simulations, or frame-by-frame content.

Product Usage Case

· A web-based e-commerce platform that allows sellers to upload product videos in any format, and automatically converts them to a web-optimized format (like WebM) directly in the user's browser before upload. This reduces upload errors and ensures videos play smoothly for customers.

· A social media application that enables users to apply simple filters or crop their videos before posting, all within the app without needing to send the video to a server for processing. This provides a faster and more interactive user experience.

· An educational platform where students can record short video responses and have them automatically converted to a standard format for grading, all client-side. This streamlines the submission process for both students and educators.

· A web-based video editing tool that allows users to trim, merge, and export short video clips for social media, all within the browser. This democratizes basic video editing by making it accessible and free of charge without requiring software downloads.

14

RememBook

Author

flopsa

Description

RememBook is a side project built to combat the common problem of forgetting what you read. It leverages the principles of spaced repetition, a well-studied method for long-term memory consolidation. The core innovation lies in its ability to automatically generate quiz questions from your books and then intelligently schedule future quizzes based on your performance. This means material you struggle with comes up more often and material you have mastered comes up less often, reinforcing your recall and helping the knowledge stick. For developers, it represents a creative application of AI and algorithmic scheduling to a personal productivity challenge, showcasing how code can directly enhance learning and memory retention. The daily email reminders further highlight a focus on user engagement and habit formation, a valuable lesson for anyone building user-facing applications.

Popularity

Points 2

Comments 2

What is this product?

RememBook is a personalized learning tool designed to help you remember the books you read by using spaced repetition quizzes. At its heart is a scheduler for review sessions. How the questions themselves are produced is less clear: the project describes automated generation from book content, though the current implementation likely relies on user-inputted questions or summaries. Instead of passively rereading, you're actively recalling information. The system tracks your correct and incorrect answers, then adjusts the frequency of future questions. If you consistently answer a question correctly, you'll see it less often. If you struggle, it will reappear more frequently. This dynamic adjustment is key to its effectiveness in solidifying long-term memory, making it more efficient than reviewing everything at the same rate. So, for you, it means a more effective way to retain the valuable insights and stories from your reading, turning passive consumption into active knowledge.
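The adjustment rule described here can be sketched in a few lines. This is a minimal illustration, not RememBook's actual algorithm: the interval-doubling rule, the reset to one day on a miss, the `MAX_INTERVAL_DAYS` cap, and the `Card` and `review` names are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

MAX_INTERVAL_DAYS = 180  # assumed cap so mastered cards still resurface


@dataclass
class Card:
    question: str
    interval_days: int = 1  # days to wait before the next review


def review(card: Card, correct: bool, today: date) -> date:
    """Update the card's interval from one answer and return the next due date."""
    if correct:
        # A correct answer pushes the card further out.
        card.interval_days = min(card.interval_days * 2, MAX_INTERVAL_DAYS)
    else:
        # A miss brings the card back the next day.
        card.interval_days = 1
    return today + timedelta(days=card.interval_days)


card = Card("Who narrates the novel?")
day = date(2025, 11, 1)
for answer in (True, True, False):
    day = review(card, answer, day)
    print(day, card.interval_days)
```

With two correct answers followed by a miss, the interval grows to 2 and then 4 days before dropping back to 1. Established schedulers weight answers more finely, but the feedback loop is the same.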

How to use it?

Developers can use RememBook for their own learning or treat it as inspiration for building similar personalized learning tools. For individual use, the current version likely involves importing book content (or creating questions manually) and opting into daily email reminders for quizzes. The underlying technology, a spaced repetition algorithm and a scheduling system, can be adapted to various data sources and learning objectives. For instance, a developer could apply the same approach to studying programming languages, technical documentation, or even personal notes. A tool built along these lines could use APIs to fetch book summaries or a user interface to input questions and track progress. The value proposition for developers is clear: a concrete example of how to apply algorithmic learning to solve real-world productivity issues, offering a blueprint for building more engaging and effective educational tools.

Product Core Function

· Automated quiz generation: The system can create questions based on book content, aiding recall by prompting active engagement with the material. This helps you test your understanding beyond just rereading.

· Spaced repetition scheduling: Questions are presented at optimal intervals based on your performance, maximizing long-term memory retention. This means you spend your study time most effectively by focusing on what you're likely to forget.

· Performance tracking: RememBook monitors your quiz results, identifying areas of strength and weakness. This provides valuable insights into your learning progress and helps tailor future review sessions.

· Daily email reminders: Optional email notifications ensure you stay consistent with your learning routine. This nudge helps build a habit and prevents you from falling behind on your review schedule.

Product Usage Case

· A literature student using RememBook to remember plot details, character arcs, and themes from assigned novels for an upcoming exam. By answering generated quizzes, they can pinpoint areas of weak recall and reinforce them efficiently.

· A self-taught programmer using RememBook to memorize syntax, common algorithms, and design patterns from programming textbooks and online tutorials. The spaced repetition ensures they retain complex concepts over time, rather than just glancing over them.

· A busy professional using RememBook to consolidate key takeaways from business books and industry reports. The daily quizzes act as short, focused review sessions that fit into a tight schedule, ensuring that valuable knowledge isn't lost.

· A hobbyist learning a new language using RememBook to practice vocabulary and grammar. By actively recalling words and sentence structures, they accelerate their learning curve and build fluency faster.

15

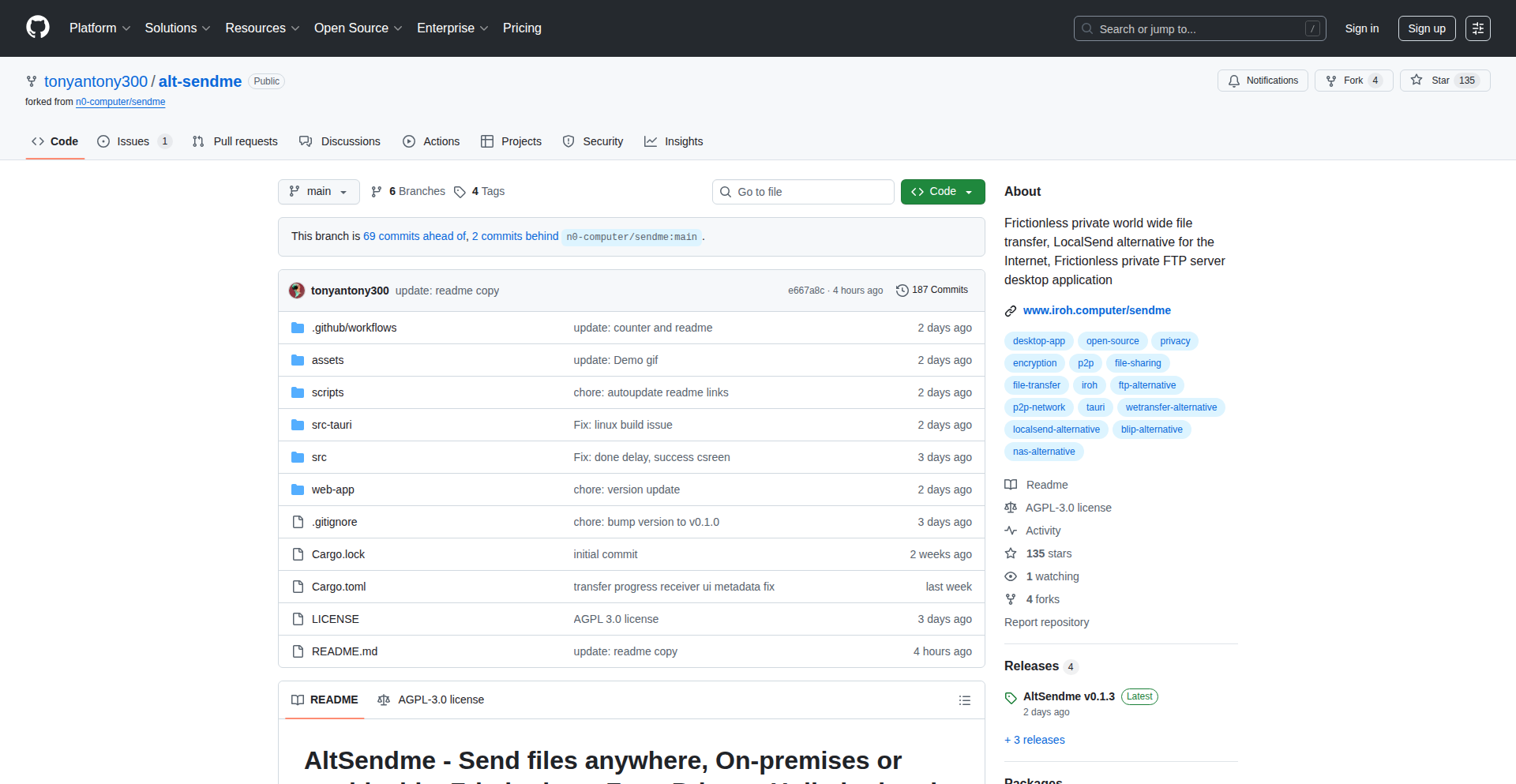

IrohTransfer: Decentralized P2P File Sharing

Author

SandraBucky

Description

This project is a showcase for building a peer-to-peer file sharing alternative to LocalSend, leveraging the power of Iroh. It focuses on enabling fast local network transfers and also provides internet-based transfer capabilities, with speeds influenced by ISP performance. The innovation lies in its decentralized nature and the underlying Iroh technology, offering a more private and potentially more resilient sharing solution.

Popularity

Points 4

Comments 0

What is this product?

IrohTransfer is a decentralized file sharing application that allows users to send files directly to each other without uploading them to a central server. At its core, it utilizes Iroh, a Rust library for peer-to-peer networking. Iroh handles the complex networking and data synchronization aspects: it connects peers directly where it can and falls back to relay servers when a direct path cannot be established. In the common case your data travels straight from one device to another, enhancing privacy and security. The innovation here is building a user-friendly sharing tool on top of this robust decentralized foundation, offering a modern approach to file exchange that avoids the limitations and privacy concerns of traditional cloud-based or server-dependent solutions. It's like having your own private, super-fast courier service for digital files.

How to use it?

Developers can use IrohTransfer as a foundation or inspiration for building their own P2P applications. The project demonstrates how to integrate Iroh for discoverability, connection establishment, and secure data transfer between peers. You can integrate its core logic into existing applications that need file sharing capabilities, or fork the project to build custom solutions. For example, imagine a team of developers needing to quickly share large design assets without uploading them to a public cloud. They could deploy or adapt IrohTransfer to facilitate this. It's about harnessing direct device-to-device communication for efficiency and control.

Product Core Function

· Peer Discovery: Enables devices on the same local network to find each other automatically, facilitating seamless connection initiation. The value is in eliminating manual IP address entry or complex network configurations for local sharing.

· Local Network Transfer: Achieves high-speed file transfers within a local network, akin to dedicated file sharing tools. This provides a tangible benefit for users needing rapid local data movement, making it significantly faster than internet transfers for nearby devices.

· Internet Transfer Capability: Allows file sharing over the internet by leveraging Iroh's networking features, though performance is dependent on user ISPs. This extends the utility beyond the local network, offering flexibility for remote sharing.

· Decentralized Architecture: Eliminates reliance on central servers for file transfer operations. The value is in enhanced privacy, reduced single points of failure, and greater user control over data.

· Iroh Integration: Utilizes the Iroh library for underlying P2P networking and data management. This showcases a modern, robust, and potentially more secure approach to decentralized application development.

Product Usage Case

· Scenario: A remote team working on a project needs to exchange large video files. How it solves the problem: IrohTransfer (or a project inspired by it) can be used to send these files directly from one team member's computer to another over the internet, potentially faster and more securely than uploading to a cloud service, depending on their respective internet speeds.

· Scenario: A designer wants to quickly share high-resolution mockups with a client who is physically in the same office. How it solves the problem: Using the local network transfer feature, the designer can send files instantly without needing to upload them or rely on email attachments, ensuring quick feedback and iteration.

· Scenario: Developers are building a collaborative application that requires real-time data synchronization between user devices. How it solves the problem: The principles demonstrated by IrohTransfer, especially its use of Iroh for P2P communication, can be adapted to build a robust data synchronization layer, ensuring consistent data across all connected users.

· Scenario: A user is concerned about the privacy of their shared files and wants to avoid commercial cloud storage services. How it solves the problem: IrohTransfer's decentralized nature means files are shared directly between users, bypassing third-party servers and providing a higher degree of privacy and data ownership.

16

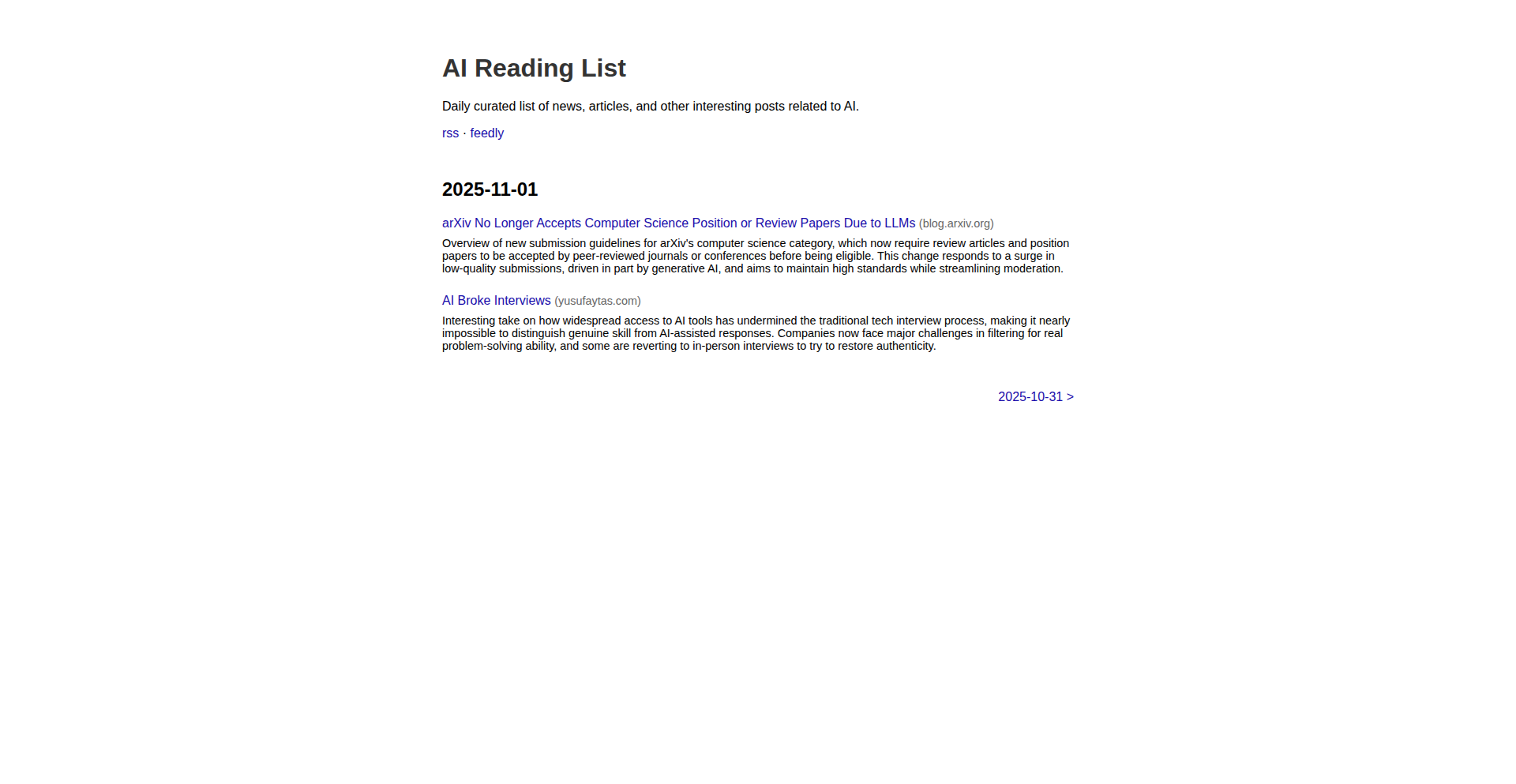

AI-Curated HN Insights

Author

ronbenton

Description

This project is an automated system that filters Hacker News for AI-related articles, generates concise summaries using a Large Language Model (LLM), and publishes them to a website and an RSS feed. It solves the problem of information overload by providing curated, digestible AI news, making it easy for developers to stay updated.

Popularity

Points 2

Comments 2

What is this product?

This project is an intelligent aggregator for Hacker News, specifically focused on the rapidly evolving field of Artificial Intelligence. It works by first scanning the titles of popular Hacker News posts. If a title contains terms related to AI, it then uses an LLM (like GPT or similar) to do two things: (1) read the article and generate a brief, easy-to-understand summary, and (2) confirm that the article is indeed about AI. Finally, these AI-focused articles and their summaries are published on a Cloudflare Pages website and made available through an RSS feed. The core innovation lies in leveraging LLMs for automated content filtering and summarization, transforming a firehose of information into a manageable stream of relevant AI insights. This is incredibly useful for anyone who wants to keep up with AI developments without spending hours sifting through content.
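The two-stage flow (cheap title check first, LLM second) might look roughly like the sketch below. Everything named here is an assumption for illustration: the keyword list, the `looks_like_ai` and `curate` helpers, and `fake_llm`, which stands in for the real LLM call so the example runs offline.

```python
import re
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical keyword list; the project's actual terms are not documented here.
AI_KEYWORDS = ("ai", "llm", "gpt", "machine learning", "neural network")

# Word boundaries keep "ai" from matching inside words like "mailing".
_AI_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in AI_KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def looks_like_ai(title: str) -> bool:
    """Cheap first pass: does the title mention an AI term?"""
    return _AI_PATTERN.search(title) is not None


def curate(
    stories: Iterable[Tuple[str, str]],
    summarize_and_verify: Callable[[str], Tuple[str, bool]],
) -> List[Dict[str, str]]:
    """Run the title filter, then the LLM step, keeping confirmed AI stories."""
    curated = []
    for title, url in stories:
        if not looks_like_ai(title):
            continue  # skip the LLM call for titles with no AI term
        summary, is_ai = summarize_and_verify(url)
        if is_ai:
            curated.append({"title": title, "url": url, "summary": summary})
    return curated


def fake_llm(url: str) -> Tuple[str, bool]:
    """Stand-in for the LLM: returns a summary and an is-this-about-AI verdict."""
    return ("Summary of " + url, "cooking" not in url)


stories = [
    ("Show HN: An LLM that reads your logs", "https://example.com/logs"),
    ("Maintaining a mailing list in 2025", "https://example.com/mail"),
    ("AI in the kitchen", "https://example.com/cooking"),
]
print(curate(stories, fake_llm))
```

Running the keyword check before the LLM keeps the expensive step limited to plausible candidates, while the verification verdict removes titles that only mention an AI term in passing.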

How to use it?

Developers can use this project in several ways. Firstly, they can directly consume the curated content by visiting the provided website or subscribing to the RSS feed. This provides a quick and efficient way to discover and understand the latest AI trends discussed on Hacker News. Secondly, if the source code is published, as is common for Show HN projects, developers can fork it, adapt the filtering keywords, integrate with different LLMs, or choose alternative publishing platforms. If it is not, the pipeline is simple enough to serve as a blueprint for building a similar custom aggregator. For example, a developer working on an AI startup could integrate this RSS feed into their internal dashboard to keep their team informed about industry news.

Product Core Function

· AI Title Detection: Automatically identifies Hacker News articles that are likely about AI by scanning titles for relevant keywords. This saves developers time by filtering out irrelevant content before deeper processing.

· LLM-Powered Summarization: Utilizes Large Language Models to condense lengthy articles into short, informative summaries. This provides a quick understanding of the article's core message without needing to read the full text.

· AI Topic Verification: Employs LLMs to confirm that an article's content genuinely pertains to AI. This second check catches false positives from keyword matching, such as titles that mention an AI term only in passing, which keeps the curated list focused.

· Web Publishing: Deploys the filtered and summarized AI news to a static website hosted on Cloudflare Pages. This offers an accessible and browsable format for consuming the information.

· RSS Feed Generation: Creates a standard RSS feed for the AI news. This allows developers to easily integrate the updates into their preferred feed readers or content aggregation tools.
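For the RSS step, a minimal sketch using Python's standard library is shown below. The `build_rss` helper, the feed title, and the example URLs are illustrative assumptions; the project's actual feed layout is not described in the post.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def build_rss(feed_title: str, feed_link: str, items: List[Dict[str, str]]) -> str:
    """Serialize curated stories as a minimal RSS 2.0 document."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    # RSS 2.0 requires title, link, and description on the channel.
    ET.SubElement(channel, "title").text = feed_title
    ET.SubElement(channel, "link").text = feed_link
    ET.SubElement(channel, "description").text = "AI stories from Hacker News"
    for story in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = story["title"]
        ET.SubElement(item, "link").text = story["url"]
        ET.SubElement(item, "description").text = story["summary"]
    # ElementTree escapes characters such as & and < in text nodes.
    return ET.tostring(rss, encoding="unicode")


feed = build_rss(
    "AI on HN",
    "https://example.com/",
    [
        {
            "title": "LLMs & logs",
            "url": "https://example.com/logs",
            "summary": "A short summary.",
        }
    ],
)
print(feed)
```

Because the output is a plain string, it can be written to a file and deployed alongside the static site.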

Product Usage Case

· A machine learning engineer wants to stay updated on new research papers and tools in the AI space. They can subscribe to the AI-Curated HN Insights RSS feed in their feed reader and get daily digests of the most relevant Hacker News discussions, saving them from manually checking HN and filtering through unrelated posts.

· A developer building an AI-powered application needs to understand the current market trends and challenges. By using the AI-Curated HN Insights website, they can quickly scan summaries of articles discussing AI adoption, ethical considerations, and emerging technologies, informing their product development strategy.