Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-10-30

SagaSu777 2025-10-31

Explore the hottest developer projects on Show HN for 2025-10-30. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions underscore a clear trend: the steady integration of AI, particularly Large Language Models (LLMs), into many areas of software. AI is moving beyond generalized assistance toward specialized tools for niche problems, from code review heatmaps and critiques of coding agents to identifying GDPR violations in documents and re-framing emotionally charged texts. This points to a maturing landscape in which developers use AI not for novelty but for tangible improvements in efficiency, security, and user experience. For developers, this means treating LLMs as collaborators and learning to adapt them to specific tasks. For entrepreneurs, it means identifying unmet needs where AI can offer a data-driven solution that traditional methods can't match. The emphasis on privacy and security, seen in projects like Ellipticc Drive with its post-quantum encryption, also highlights an ongoing tension: how to harness powerful new tools while safeguarding user data. This is the hacker spirit in action: using cutting-edge tech to build robust, secure, and intelligent solutions to real-world challenges.

Today's Hottest Product

Name

Show HN: I made a heatmap diff viewer for code reviews

Highlight

This project uses LLMs to provide a visual heatmap of code changes, highlighting areas that likely require more human attention. It innovates by moving beyond simple bug detection to flag code that is 'worth a second look,' potentially catching complex logic or security concerns. Developers can learn about integrating LLMs for code analysis, creating intuitive UIs for complex data, and building intelligent developer tools.

Popular Category

AI/ML

Developer Tools

Productivity

Security

Data Analysis

Popular Keyword

AI

LLM

Code Review

Data

Automation

Security

Productivity

Technology Trends

AI-powered code analysis

Post-quantum cryptography

LLM for specialized tasks

Decentralized applications (Nostr)

Developer productivity tools

Data privacy and security

Automated compliance checks

Generative AI for design and content

Project Category Distribution

AI/ML Applications (30%)

Developer Tools (25%)

Productivity & Utilities (20%)

Security & Privacy (10%)

Data Analysis & Visualization (5%)

Education & Personal Finance (5%)

Other (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | KidVestHTML | 211 | 380 |
| 2 | CodeSight AI Diff | 221 | 62 |
| 3 | Quibbler: Adaptive Coding Agent Critic | 55 | 15 |
| 4 | ArXiv Audio Weaver | 40 | 12 |
| 5 | WebFontSniffer | 19 | 4 |
| 6 | Ellipticc Drive: Quantum-Resistant E2E Cloud Storage | 14 | 4 |
| 7 | GDPRGuard AI | 2 | 13 |
| 8 | DayZen: Radial Time-Boxing | 7 | 3 |
| 9 | Mearie: The Reactive GraphQL Client | 4 | 5 |
| 10 | AI Peace Weaver | 8 | 1 |

1

KidVestHTML

Author

roberdam

Description

A single HTML file application designed to encourage children to invest, leveraging client-side JavaScript to create an interactive and educational experience without complex backend infrastructure.

Popularity

Points 211

Comments 380

What is this product?

KidVestHTML is a self-contained web application, delivered entirely within a single HTML file. Its core innovation lies in using client-side JavaScript to simulate an investment environment for children. Instead of a traditional server, it relies on JavaScript to manage data, present information, and handle user interactions. This approach makes it incredibly accessible – no installation or server setup is required. The technology insight here is demonstrating how complex user experiences can be built using just HTML and JavaScript, minimizing dependencies and maximizing portability. So, what's the use? It's a fun, easy-to-share tool to teach kids about the basics of investing in a safe, simulated environment.

How to use it?

Developers can use KidVestHTML by simply opening the HTML file in any modern web browser. For integration into existing projects or to customize further, developers can fork the repository and modify the HTML, CSS, and JavaScript code. Common use cases include embedding it within educational websites, sharing it directly with parents and educators, or using it as a foundation for more sophisticated financial literacy tools. The simplicity of a single HTML file means it can be easily hosted on static site generators or simple web servers. So, what's the use? It's a quick way to get a functional educational tool up and running, or a starting point for building your own interactive finance lessons.

Product Core Function

· Interactive investment simulation: Uses JavaScript to model stock market fluctuations and investment growth, allowing children to virtually buy and sell assets. The value is in providing a hands-on, risk-free learning experience for financial concepts.

· Visual progress tracking: Displays investment performance through charts and summaries generated by JavaScript, helping children understand the impact of their decisions. This provides immediate feedback and reinforces learning.

· Educational content integration: Includes built-in explanations of investment terms and strategies, delivered via JavaScript-driven pop-ups or sections. This educates users on the 'why' behind the simulation.

· Single HTML file deployment: All logic, styling, and content are contained within one file, making it easy to share and host without complex server setups. This dramatically reduces the barrier to entry for users and developers alike.

· Customizable simulation parameters: Developers can adjust JavaScript variables to alter market volatility, starting capital, and available investment options, tailoring the experience for different age groups or learning objectives. This offers flexibility for educators and parents.
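The adjustable parameters mentioned above (market volatility, starting capital) can be illustrated with a minimal sketch. It is written in Python for brevity rather than the project's JavaScript, and every name in it (`simulate_portfolio`, `volatility`, `drift`) is hypothetical; the source does not describe the model KidVestHTML actually uses, so a plain random walk is assumed here.

```python
import random


def simulate_portfolio(starting_capital, days, volatility=0.02, drift=0.0005, seed=None):
    """Return daily portfolio values under a simple random-walk model.

    All parameters are illustrative assumptions, not KidVestHTML's real settings.
    """
    rng = random.Random(seed)  # a seeded generator makes a lesson reproducible
    values = [starting_capital]
    for _ in range(days):
        # Daily return drawn from a normal distribution around a small drift.
        daily_return = rng.gauss(drift, volatility)
        # Clamp so a single day can never lose more than the whole balance.
        daily_return = max(daily_return, -1.0)
        values.append(values[-1] * (1.0 + daily_return))
    return values


if __name__ == "__main__":
    history = simulate_portfolio(starting_capital=100.0, days=30, volatility=0.03, seed=42)
    print(f"Start: {history[0]:.2f}, end: {history[-1]:.2f}")
```

Raising `volatility` produces wilder swings for older children, while a lower value gives a calmer curve for a first introduction.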

Product Usage Case

· A parent wants to introduce their child to the concept of investing without exposing them to real financial risk. They can simply share the KidVestHTML file, allowing the child to play and learn in a simulated stock market. This solves the problem of inaccessible or overly complex financial education tools for young audiences.

· An educator is developing a financial literacy module for a school. They can embed KidVestHTML into their learning management system as an interactive component, providing students with a practical way to apply theoretical concepts learned in class. This solves the need for engaging, hands-on activities in educational settings.

· A developer is experimenting with client-side only web applications. They can use KidVestHTML as an example to demonstrate how rich, interactive user experiences can be built without a backend server, showcasing the power of modern JavaScript. This provides a practical case study for learning about frontend development paradigms.

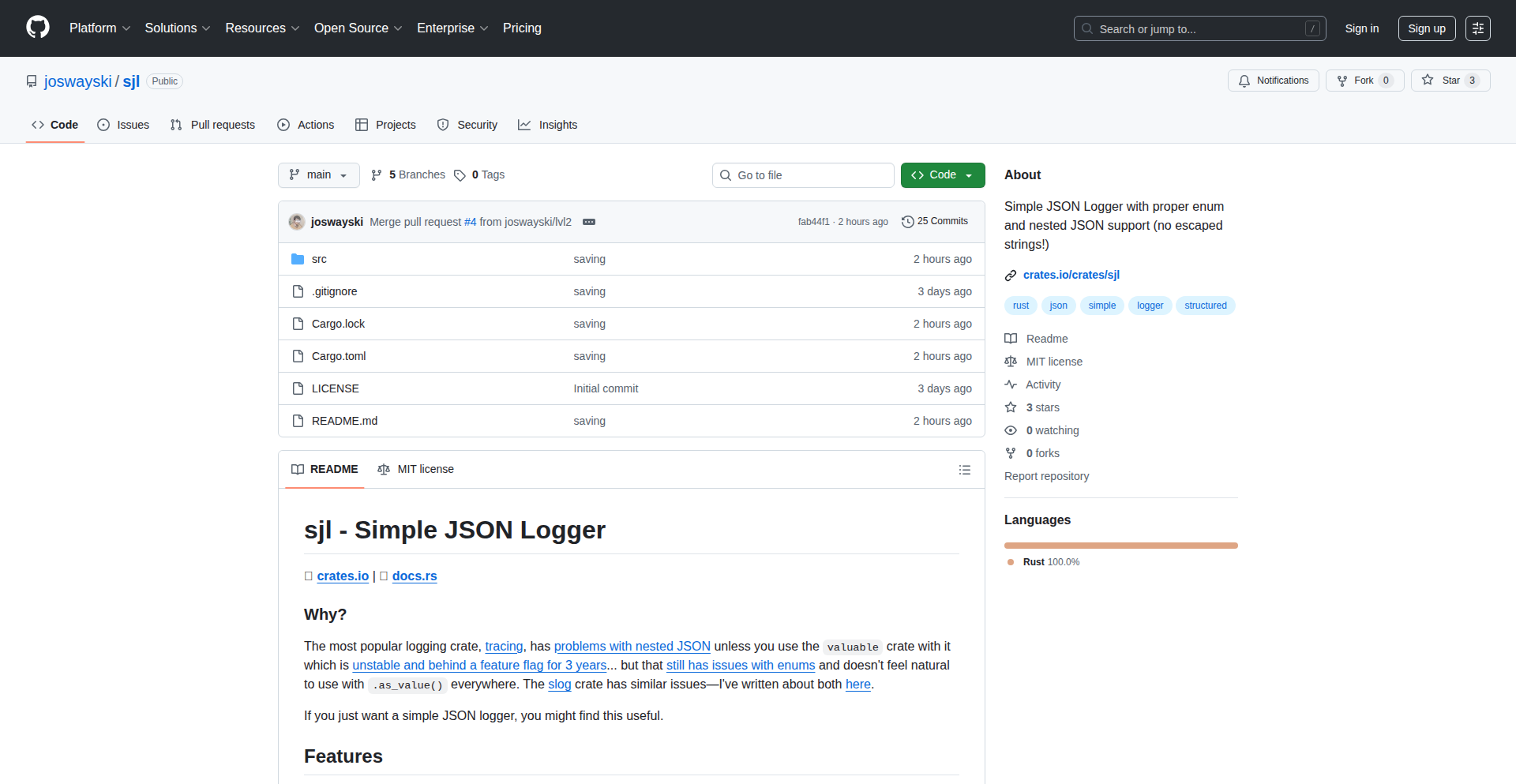

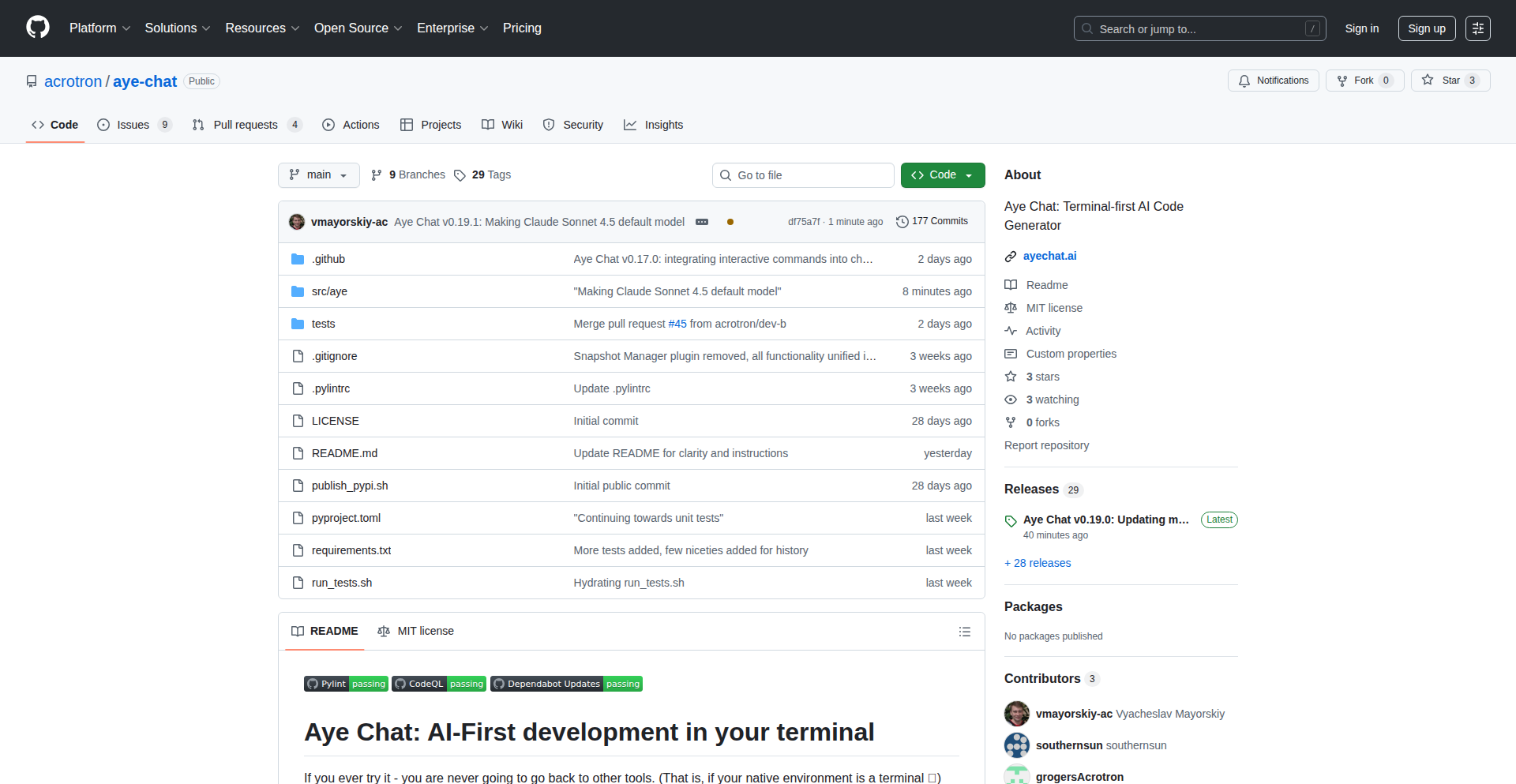

2

CodeSight AI Diff

Author

lawrencechen

Description

This project is an intelligent pull request (PR) viewer that uses AI to analyze code changes and highlight areas that likely need more human attention. Instead of just spotting bugs, it flags code that might be complex, insecure, or simply unusual, making code reviews more efficient and effective. It's like having an AI assistant helping you pinpoint the most critical parts of a code change.

Popularity

Points 221

Comments 62

What is this product?

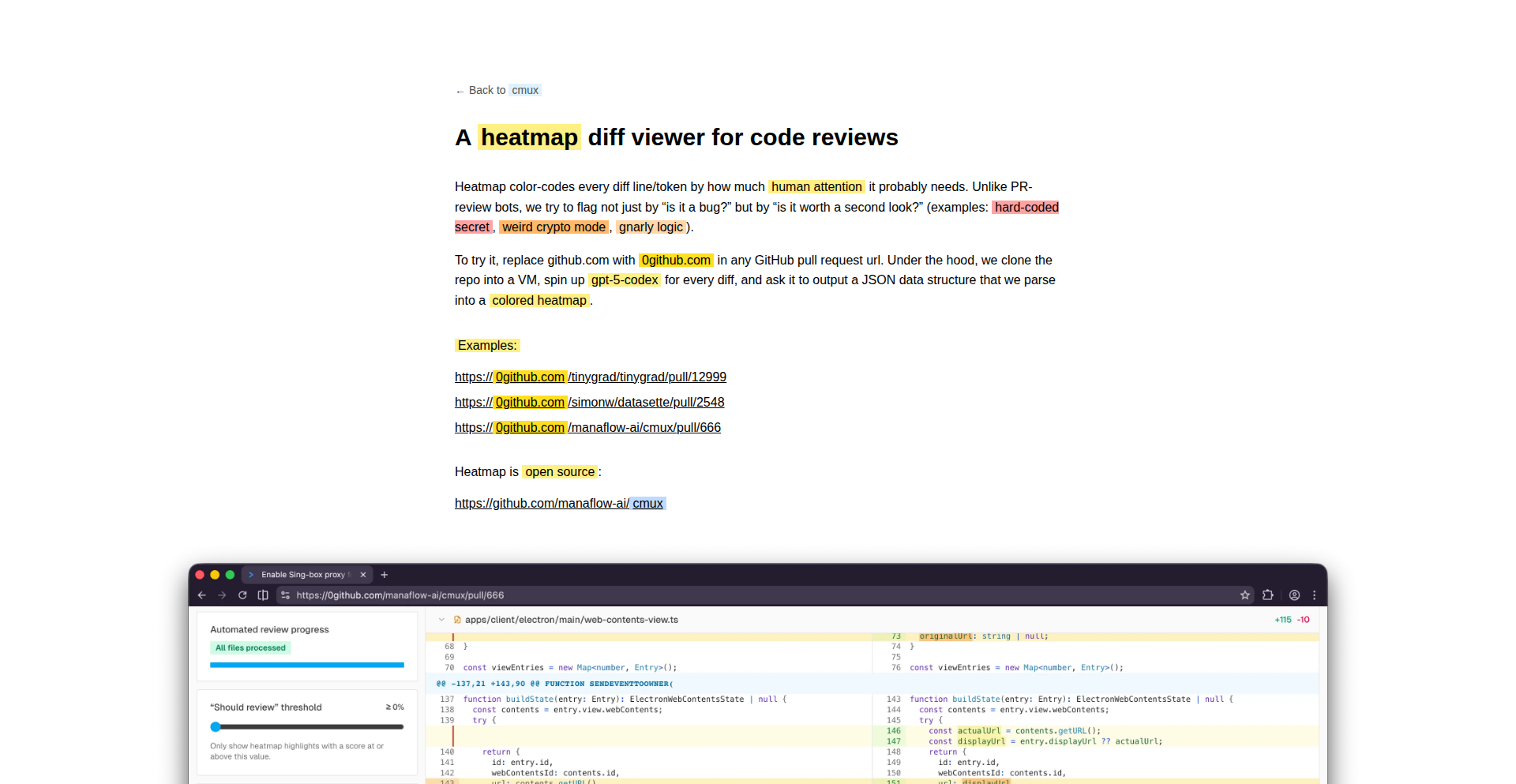

CodeSight AI Diff is a novel tool that transforms how developers review code changes in pull requests. It works by taking a standard GitHub pull request URL and processing it through a sophisticated AI model. This AI doesn't just look for obvious errors; it's trained to identify subtle indicators of complexity, potential security risks (like hardcoded secrets or unusual cryptography), convoluted logic, and generally 'ugly' or hard-to-understand code. The output is a visual heatmap overlaid on the code diff, where darker shades of yellow indicate areas that warrant closer inspection. You can hover over these highlighted sections to see the AI's explanation, helping you understand why it flagged that particular part. The core innovation lies in its ability to predict 'human attention needs' beyond simple bug detection, making the review process smarter and faster. This means you can spend less time sifting through mundane changes and more time on what truly matters.

How to use it?

Using CodeSight AI Diff is remarkably simple. If you have a pull request URL on GitHub (e.g., https://github.com/user/repo/pull/123), you just need to replace 'github.com' with '0github.com'. So, the same URL becomes https://0github.com/user/repo/pull/123. When you visit this modified URL, CodeSight AI Diff will automatically load the pull request with its AI-powered heatmap analysis. You can then navigate through the code changes as you normally would on GitHub, but with the added benefit of AI-driven visual cues. The tool also provides a slider at the top left to adjust the sensitivity of the 'should review' threshold, allowing you to fine-tune how aggressively the AI flags potential issues. This makes it easy to integrate into your existing GitHub workflow without any complex setup.
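The host swap described above is easy to script, for example in a small helper that opens the heatmap view for a given pull request. The sketch below is one way to do it in Python; the function name `to_heatmap_url` is made up for illustration, and only the `github.com` to `0github.com` substitution comes from the project description.

```python
from urllib.parse import urlsplit, urlunsplit


def to_heatmap_url(pr_url):
    """Swap the github.com host for 0github.com, leaving the rest of the URL intact."""
    parts = urlsplit(pr_url)
    if parts.netloc.lower() != "github.com":
        raise ValueError(f"not a github.com URL: {pr_url}")
    return urlunsplit(parts._replace(netloc="0github.com"))


if __name__ == "__main__":
    print(to_heatmap_url("https://github.com/user/repo/pull/123"))
```

Parsing the URL instead of doing a plain string replace avoids touching a `github.com` substring that happens to appear in the path or query.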

Product Core Function

· AI-driven code complexity analysis: The AI analyzes code snippets to identify areas that are unusually complex or hard to understand, helping reviewers focus on the most challenging parts of the code. This provides value by reducing the cognitive load on reviewers and ensuring that difficult logic is thoroughly examined.

· Security vulnerability highlighting: The system flags potential security risks such as hardcoded sensitive information (like API keys or passwords) or the use of uncommon or potentially weak cryptographic methods. This adds value by proactively identifying and mitigating security vulnerabilities early in the development cycle.

· Code style and readability scoring: It identifies code that is aesthetically unappealing or deviates significantly from typical readability standards, prompting reviewers to suggest improvements. This enhances the overall quality and maintainability of the codebase.

· Interactive heatmap visualization: A visual heatmap overlay on code differences highlights areas of interest, with color intensity indicating the AI's assessment of attention needed. This provides immediate visual feedback, allowing developers to quickly grasp the critical areas of a code change.

· LLM-generated explanations for highlights: Hovering over highlighted code provides concise explanations from the AI model about why a particular section was flagged. This offers transparency and educational value, helping developers understand the reasoning behind the AI's suggestions.
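To make the heatmap and threshold slider concrete, here is a rough sketch of how a per-line attention score might be turned into a highlight color. The score range, the linear mapping, and the function name `highlight_style` are all assumptions; the source does not say how the tool computes or renders its shades.

```python
def highlight_style(score, threshold=0.5):
    """Map an attention score in [0, 1] to a CSS background color.

    Scores below the threshold get no highlight; above it, the opacity of a
    yellow overlay grows linearly with the score. Purely illustrative.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < threshold:
        return "transparent"
    # Rescale [threshold, 1] to an opacity in [0.2, 1.0] so faint flags stay visible.
    span = 1.0 - threshold
    intensity = 1.0 if span == 0 else (score - threshold) / span
    alpha = 0.2 + 0.8 * intensity
    return f"rgba(255, 200, 0, {alpha:.2f})"


if __name__ == "__main__":
    for s in (0.1, 0.5, 0.75, 1.0):
        print(s, highlight_style(s, threshold=0.5))
```

Moving the threshold up mimics a less sensitive slider setting: fewer lines are highlighted, and only the highest-scoring ones keep a strong shade.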

Product Usage Case

· Scenario: Reviewing a large feature branch with hundreds of code changes. How it solves the problem: Instead of reading every line, a developer can quickly scan the CodeSight AI Diff heatmap to identify the riskiest or most complex modules, focusing their review effort efficiently. This saves significant time and reduces the chance of overlooking critical issues.

· Scenario: A junior developer submits code that is functionally correct but uses an obscure or inefficient algorithm. How it solves the problem: The AI might flag this as 'gnarly logic' or 'low readability', prompting the reviewer to guide the junior developer towards a more standard and maintainable solution. This provides a learning opportunity and improves code quality.

· Scenario: A security-sensitive piece of code is being modified. How it solves the problem: The AI can specifically identify potential insecure patterns, such as accidental exposure of credentials or weak encryption implementations, alerting the reviewer to a critical security risk that might otherwise be missed in a manual review.

· Scenario: A pull request involves refactoring a legacy system. How it solves the problem: The AI can help pinpoint areas where the refactoring might have introduced unintended complexity or bugs by highlighting unusual control flows or deviations from expected patterns, ensuring the refactoring process is robust and doesn't introduce new problems.

3

Quibbler: Adaptive Coding Agent Critic

Author

etherio

Description

Quibbler is an experimental tool designed to act as a critical companion for coding agents, learning your preferences to provide more relevant feedback. It addresses the challenge of generic or unhelpful suggestions from AI coding assistants by adapting to individual developer workflows and coding styles. Its innovation lies in its learning mechanism, allowing it to become a personalized critic.

Popularity

Points 55

Comments 15

What is this product?

Quibbler is a software agent that acts as a reviewer for your AI coding assistant. Think of it like having a senior developer looking over the shoulder of your AI helper, but this senior developer learns your specific coding habits and preferences over time. The core technology involves a feedback loop where user interactions and explicit preferences are used to fine-tune the agent's critique generation. Instead of just saying 'this code is bad', it learns to tell you 'this code isn't ideal for your project because you prefer functional programming paradigms' or 'this variable naming convention deviates from your established pattern'. This makes the feedback more actionable and less noisy.

How to use it?

Developers can integrate Quibbler into their AI coding workflows. This might involve running Quibbler alongside an AI code generation tool. When the AI generates code, Quibbler analyzes it based on its learned understanding of your preferences. It can then flag potential issues, suggest alternative implementations, or simply confirm that the code aligns with your standards. The interaction could be through a command-line interface, a plugin for an IDE, or an API. The primary benefit is getting more tailored and useful feedback on AI-generated code, saving you time in manual review and refactoring.

Product Core Function

· Personalized feedback generation: Quibbler learns your coding style, preferred libraries, and common patterns to provide critiques that are directly relevant to your work, saving you from wading through irrelevant suggestions.

· Adaptive learning engine: It continuously updates its understanding of your preferences based on your interactions and explicit feedback, meaning the more you use it, the better it becomes at assisting you.

· Code quality assessment based on user context: Moves beyond generic code quality metrics to evaluate code against your specific project requirements and established team standards.

· Constructive suggestion formulation: Offers actionable advice and alternatives rather than just pointing out errors, helping you improve code more efficiently.
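The feedback loop behind the adaptive learning can be pictured with a deliberately simple stand-in: counting how often the developer accepts each kind of critique and muting the kinds that are usually rejected. This is not Quibbler's actual mechanism, which the source does not detail; the class `PreferenceTracker`, its methods, and the cutoff value are hypothetical.

```python
from collections import defaultdict


class PreferenceTracker:
    """Tracks how often a developer accepts critiques of each category."""

    def __init__(self):
        self._accepted = defaultdict(int)
        self._rejected = defaultdict(int)

    def record(self, category, accepted):
        """Store one piece of explicit feedback on a critique."""
        if accepted:
            self._accepted[category] += 1
        else:
            self._rejected[category] += 1

    def acceptance_rate(self, category):
        # Laplace smoothing: unseen categories start at 0.5 rather than 0 or 1.
        a = self._accepted[category]
        r = self._rejected[category]
        return (a + 1) / (a + r + 2)

    def should_show(self, category, cutoff=0.3):
        """Suppress critique categories the developer rarely accepts."""
        return self.acceptance_rate(category) >= cutoff


if __name__ == "__main__":
    tracker = PreferenceTracker()
    for _ in range(4):
        tracker.record("variable-naming", accepted=False)
    print(tracker.should_show("variable-naming"))
```

The smoothing keeps a single early rejection from silencing a category outright, which matters when there is little feedback to learn from.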

Product Usage Case

· A developer is using an AI pair programmer to generate boilerplate code for a new feature. Quibbler analyzes the generated code and flags a section that uses imperative loops, suggesting a more functional approach based on the developer's known preference for immutability, thereby improving code elegance and maintainability.

· A team is onboarding a new AI coding assistant. Quibbler is configured to learn the team's established coding guidelines and common refactoring patterns. When the AI suggests code, Quibbler identifies deviations from these guidelines and provides specific explanations, ensuring consistency across the codebase and reducing the need for extensive manual code reviews.

· A solo developer is experimenting with a new AI code completion tool. Quibbler monitors the suggestions and learns which types of completions the developer frequently accepts or rejects. Over time, it helps the AI tool prioritize suggestions that align with the developer's typical coding patterns, making the auto-completion more efficient and less disruptive.

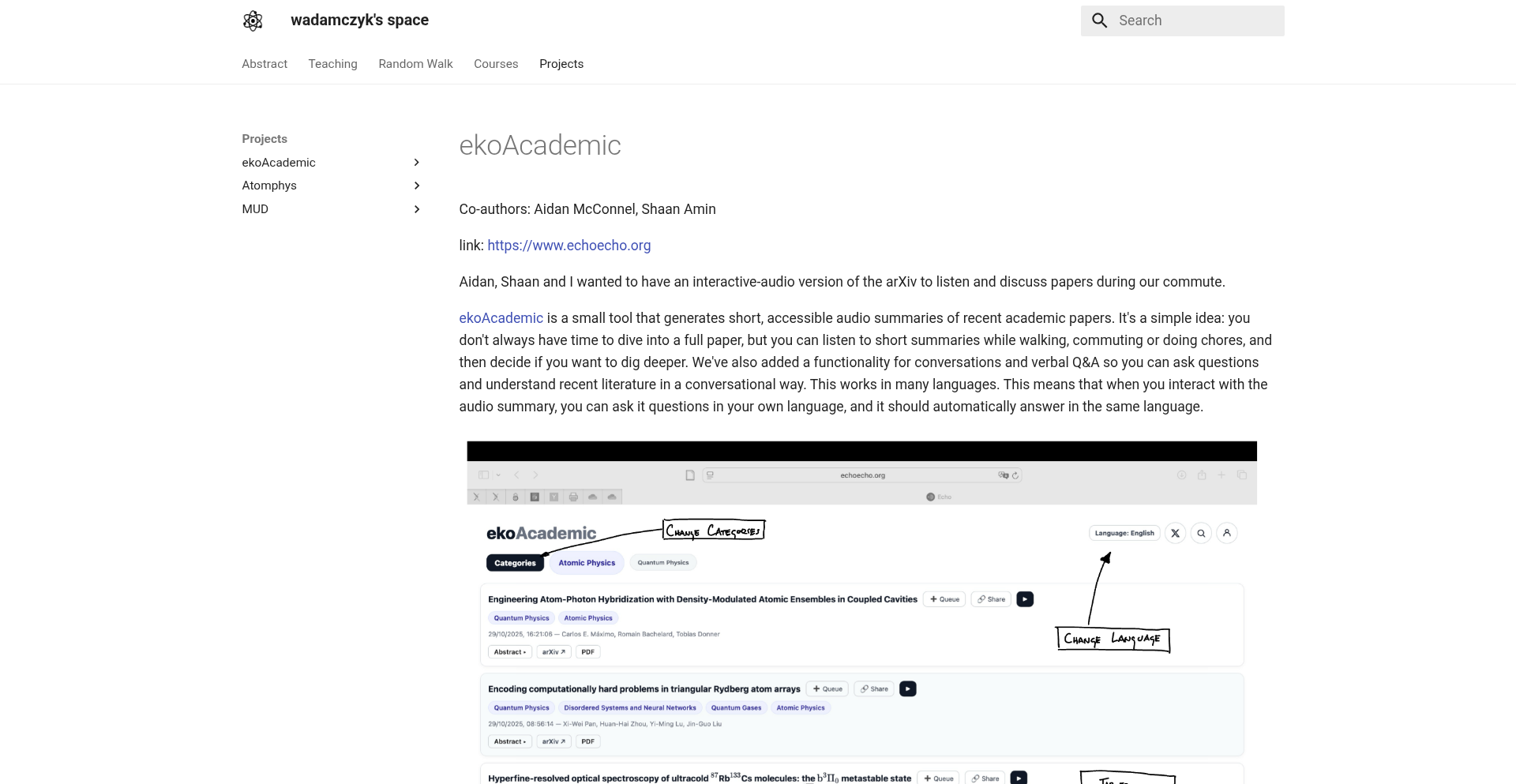

4

ArXiv Audio Weaver

Author

wadamczyk

Description

ArXiv Audio Weaver is a novel project that transforms academic papers from ArXiv into engaging, interactive podcasts. It leverages advanced natural language processing (NLP) and text-to-speech (TTS) technologies to read out paper content, and importantly, introduces interactive elements that allow listeners to delve deeper into specific sections or definitions. The core innovation lies in creating an accessible and digestible format for complex research, bridging the gap between dense academic literature and broader audiences.

Popularity

Points 40

Comments 12

What is this product?

This project is a proof-of-concept that acts as a bridge between static academic papers and the dynamic world of audio content. It takes the full text of research papers, typically found on platforms like ArXiv, and converts them into a spoken-word podcast format. The innovation isn't just simple text-to-speech; it intelligently parses the paper's structure (sections, figures, equations) and allows for interactive navigation. For example, a listener could ask to 'explain this equation' or 'elaborate on the methodology,' and the system would provide context from the paper. This makes complex research far more approachable and understandable, even for those without a deep background in the specific field.

How to use it?

Developers can integrate ArXiv Audio Weaver into their workflows by using its API to process ArXiv paper URLs or uploaded PDF files. The system then generates an audio stream and an accompanying interactive transcript. This can be used to create digestible summaries of research for internal team knowledge sharing, to generate audio versions of papers for accessibility, or to build new educational tools that combine spoken explanations with visual aids derived from the paper's figures. The interactive elements can be embedded within web applications or mobile apps, providing a richer learning experience.

Product Core Function

· Automated Paper to Podcast Conversion: Transforms academic papers into spoken-word audio, making research content accessible on-the-go. The value is in democratizing access to knowledge, allowing people to learn while commuting or multitasking.

· Intelligent Section Parsing: Identifies and structures different sections of a paper (introduction, methodology, results, etc.), ensuring a logical flow in the audio narrative. This adds clarity and organization to the spoken content, preventing listener confusion.

· Interactive Explanation Engine: Enables users to ask for definitions of technical terms or explanations of specific equations directly from the paper's content. This provides on-demand clarification, enhancing comprehension and reducing barriers to understanding complex topics.

· Speech Synthesis with Contextual Nuance: Employs advanced text-to-speech (TTS) to deliver the content in a clear and engaging manner, potentially adapting tone based on the section's nature (e.g., more formal for methodology, more descriptive for results). This improves the listening experience and makes the content more captivating.

· Interactive Transcript Generation: Creates a synchronized transcript that highlights spoken words and allows users to click on text to jump to that section in the audio or trigger further explanations. This offers a multi-modal learning experience, catering to different learning preferences.
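Section parsing is the step that gives the audio its structure, so a small sketch may help. The version below splits plain paper text on a fixed list of common heading names; the list, the regular expression, and the function name `split_sections` are assumptions, and a real parser would need to handle far more heading styles than this.

```python
import re

# Assumed heading names; real papers vary widely.
SECTION_NAMES = (
    "abstract", "introduction", "related work", "methodology", "methods",
    "results", "discussion", "conclusion", "references",
)

# A heading is a line holding only an optional number and a known section name.
_HEADING = re.compile(
    r"^\s*(?:\d+\.?\s+)?(" + "|".join(SECTION_NAMES) + r")\s*$",
    re.IGNORECASE,
)


def split_sections(text):
    """Return a list of (section_name, body) pairs in document order."""
    sections = []
    current_name, current_lines = "preamble", []
    for line in text.splitlines():
        match = _HEADING.match(line)
        if match:
            sections.append((current_name, "\n".join(current_lines).strip()))
            current_name, current_lines = match.group(1).lower(), []
        else:
            current_lines.append(line)
    sections.append((current_name, "\n".join(current_lines).strip()))
    # Drop empty sections (for example, an empty preamble).
    return [(name, body) for name, body in sections if body]


if __name__ == "__main__":
    sample = "1 Introduction\nWe study X.\n2 Methods\nWe did Y."
    for name, body in split_sections(sample):
        print(name, "->", body)
```

Each `(name, body)` pair could then be sent to a text-to-speech step on its own, which is what would let a listener jump straight to a section.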

Product Usage Case

· A university researcher uses ArXiv Audio Weaver to create an audio summary of their latest paper for their lab mates, who can listen to it during their commute, fostering quicker dissemination of new findings within the team.

· An educator integrates the tool into an online course platform to provide audio companions for key research papers, allowing students to listen to explanations while reviewing the paper's visuals, improving understanding of complex concepts.

· A startup developing accessibility tools for visually impaired researchers uses the interactive podcast feature to allow users to listen to and understand technical papers more effectively, overcoming the limitations of traditional screen readers.

· A science communication platform employs the technology to generate engaging audio narratives from groundbreaking research, making cutting-edge science accessible to a wider, non-specialist audience through a podcast format.

5

WebFontSniffer

Author

artemisForge77

Description

WebFontSniffer is a browser extension that allows users to quickly identify, inspect, and copy any font used on a webpage. It tackles the common developer and designer challenge of discovering and reusing web fonts by providing an intuitive, on-demand font analysis tool.

Popularity

Points 19

Comments 4

What is this product?

WebFontSniffer is a browser extension that functions as a smart font inspector. When you activate it on any webpage, it scans the elements and reveals the exact font families, weights, sizes, and other CSS properties being used. The innovation lies in its direct accessibility and ease of use – rather than digging through browser developer tools, you get an immediate, clear report of the fonts, with a one-click option to copy the font name or even its associated CSS. This empowers users to understand and replicate typographic designs efficiently. So, what's in it for you? It saves you significant time and frustration when you see a font you like online and want to know what it is or how to use it yourself.

How to use it?

To use WebFontSniffer, you first install it as a browser extension (typically for Chrome or Firefox). Once installed, a small icon will appear in your browser's toolbar. When you are on a webpage where you want to identify fonts, simply click the WebFontSniffer icon. A small overlay or panel will appear, listing all the fonts detected on the page. You can then hover over or click on specific font names to see detailed properties and usually a preview. Importantly, there's often a 'copy' button next to each font name, allowing you to instantly get the font name or its CSS declaration into your clipboard. This makes it incredibly easy to then apply that font in your own projects or share it with others. So, what's in it for you? You can effortlessly grab the exact font details you need for your design or development work without complex manual steps.

Product Core Function

· Real-time font detection: Scans the currently viewed webpage to identify all applied fonts. This is valuable because it instantly tells you what fonts are being used, eliminating guesswork.

· Detailed font property inspection: Displays font family, weight, size, line height, and color. This is useful for understanding the exact typographic styling and replicating it accurately.

· One-click font name copying: Allows users to copy the exact font family name to their clipboard with a single click. This is incredibly practical for quickly referencing or applying the font in CSS.

· CSS snippet generation (potential): May offer to copy relevant CSS declarations for the selected font. This directly provides developers with usable code, streamlining the integration process.

· User-friendly interface: Presents font information in an easily digestible format, often with previews. This makes complex font data accessible even to less technical users.
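As an illustration of the CSS snippet idea, the sketch below turns a set of inspected font properties into copy-ready declarations. It is written in Python rather than as extension code, and the `FontInfo` fields and `to_css` helper are hypothetical names chosen to mirror the properties listed above, not the extension's real data model.

```python
from dataclasses import dataclass


@dataclass
class FontInfo:
    """Font properties as an inspector might report them (assumed shape)."""
    family: str
    weight: int
    size_px: float
    line_height: float
    color: str


def to_css(font, fallback="sans-serif"):
    """Render the inspected properties as CSS declarations, one per line."""
    # Quote the family name so names containing spaces stay valid CSS.
    lines = [
        f'font-family: "{font.family}", {fallback};',
        f"font-weight: {font.weight};",
        f"font-size: {font.size_px:g}px;",
        f"line-height: {font.line_height:g};",
        f"color: {font.color};",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_css(FontInfo("Open Sans", 600, 16.0, 1.5, "#222222")))
```

Adding a generic fallback family is a design choice here: the copied snippet still renders sensibly on a page where the detected font has not been loaded.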

Product Usage Case

· A web designer finds an attractive font on a competitor's website and uses WebFontSniffer to identify it, then copies the font name to use in their own design. This saves them hours of manual searching and guessing.

· A front-end developer is tasked with recreating a specific look for a landing page and needs to match the typography precisely. They use WebFontSniffer to get the exact font names and sizes, ensuring visual fidelity and reducing iteration time.

· A content creator wants to maintain brand consistency across different platforms. When they see a font they like in an online article, they use WebFontSniffer to identify it, ensuring they can use a similar font in their own marketing materials.

· A student learning web design uses WebFontSniffer to deconstruct the typography of well-designed websites, understanding how different fonts are applied and styled. This serves as a powerful learning tool for practical application of design principles.

6

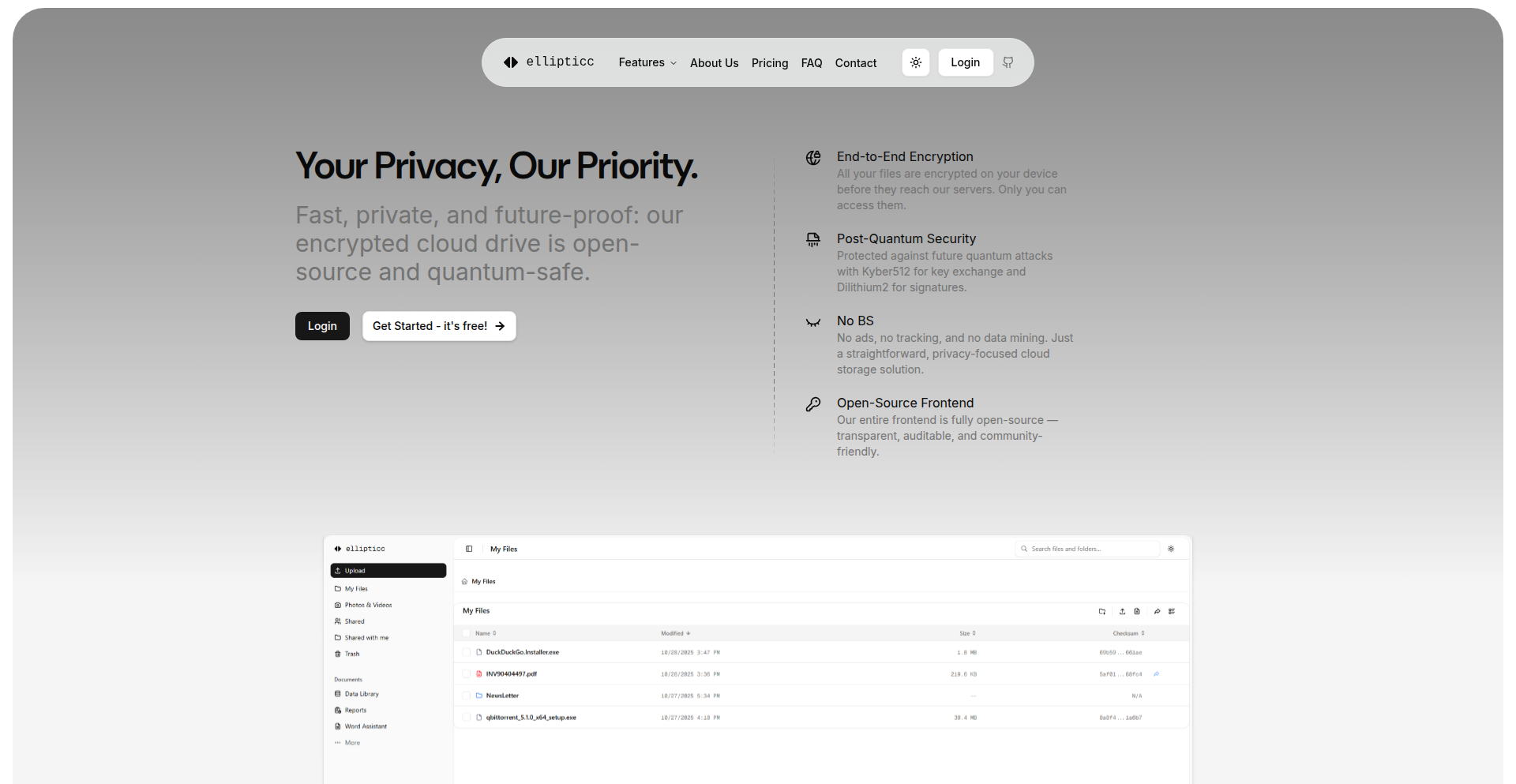

Ellipticc Drive: Quantum-Resistant E2E Cloud Storage

Author

iliasabs

Description

Ellipticc Drive is an open-source cloud storage solution that offers end-to-end encryption with post-quantum security. It aims to provide a user experience similar to popular services like Dropbox, with the crucial difference that neither the service provider nor the host has any access to your data. This is achieved through advanced cryptographic techniques and an open-source frontend, allowing for transparency and self-hosting options.

Popularity

Points 14

Comments 4

What is this product?

Ellipticc Drive is a cloud storage service that prioritizes your data privacy and security by implementing end-to-end encryption (E2E) and future-proofing against quantum computing threats. Unlike traditional cloud storage where the provider can potentially access your files, Ellipticc Drive encrypts your data on your device before it's sent to the cloud. Only you hold the key to decrypt it. The 'post-quantum' aspect means it uses encryption algorithms (like Kyber and Dilithium) that are designed to be secure even against future, more powerful quantum computers, which could break current encryption methods. The core principle is 'zero-knowledge' – the service knows nothing about your files. The frontend is built with Next.js, and it leverages WebCrypto and the Noble library for cryptographic operations, using XChaCha20-Poly1305 for file chunk encryption, Kyber for key wrapping, and Ed25519 and Dilithium2 for signing. Key derivation is handled by Argon2id to protect your master key.
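The first link in that key chain, turning a passphrase into a master key on the client, can be sketched with the Python standard library alone. Note the substitution: the project is described as using Argon2id, which the standard library does not provide, so `hashlib.scrypt` stands in for it here. The function names and cost parameters are illustrative assumptions, and no file encryption or Kyber key wrapping is performed in this sketch.

```python
import hashlib
import os


def derive_master_key(passphrase, salt):
    """Derive a 32-byte key from a passphrase.

    scrypt is a stand-in; the project itself is described as using Argon2id.
    The cost parameters below are common defaults, not the project's settings.
    """
    return hashlib.scrypt(
        passphrase.encode("utf-8"), salt=salt, n=2**14, r=8, p=1, dklen=32
    )


def new_file_key():
    """Random 256-bit key, one per file, to be wrapped before upload."""
    return os.urandom(32)


def new_chunk_nonce():
    """XChaCha20-Poly1305 takes a 24-byte nonce, large enough to pick at random."""
    return os.urandom(24)


if __name__ == "__main__":
    salt = os.urandom(16)
    master = derive_master_key("correct horse battery staple", salt)
    print(len(master), len(new_file_key()), len(new_chunk_nonce()))
```

Because the derivation runs on the user's device and only the salt needs to be stored, a server built this way never sees the passphrase or the master key, which is the core of the zero-knowledge claim.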

How to use it?

Developers can use Ellipticc Drive as a secure place to store and sync files across their devices. The service provides 10GB of free storage. For integration into applications, the open-source nature of the frontend means developers can inspect the code, potentially fork it, or even self-host their own version of the frontend for enhanced control and privacy. This offers a robust alternative for applications requiring secure file handling, especially for sensitive data, or for developers who want to build on top of a secure, transparent storage backend. You can try the live demo at ellipticc.com or explore the frontend source code on GitHub to understand its architecture.

Product Core Function

· End-to-End Encryption: Files are encrypted on your device before upload, ensuring only you can decrypt them. This is valuable for protecting sensitive personal or business data from unauthorized access, even from the cloud provider.

· Post-Quantum Cryptography: Utilizes algorithms resistant to quantum computer attacks, safeguarding your data against future cryptographic breakthroughs. This provides long-term security assurance for your stored information.

· Zero-Knowledge Architecture: The service provider cannot access or decrypt your files, offering maximum privacy and trust. This is crucial for users and organizations with strict data privacy requirements.

· Open-Source Frontend: The frontend code is publicly available for audit and self-hosting, promoting transparency and allowing for community contributions and custom deployments. This empowers developers to verify security and tailor the solution.

· Generous Free Tier: Offers 10GB of free storage per user, making secure cloud storage accessible. This allows individuals and small projects to benefit from advanced security without immediate cost.

· Familiar User Experience: Designed to be user-friendly and intuitive, similar to popular cloud storage services. This lowers the barrier to entry for users accustomed to existing solutions, making advanced security easier to adopt.

Product Usage Case

· Secure Document Storage: A freelance developer needs to store sensitive client documents and project proposals. Using Ellipticc Drive ensures that even if the cloud infrastructure were compromised, the documents remain unreadable due to E2E encryption, offering peace of mind and professional integrity.

· Encrypted Photo Backup: A photographer wants to back up their personal photo library to the cloud, but is concerned about privacy. Ellipticc Drive encrypts photos at the source, so even if the service were breached, their personal memories would remain private and inaccessible to others.

· Developing Secure Applications: A startup is building a new application that handles user health data. They can integrate Ellipticc Drive's backend principles (or even use the self-hosted frontend) to provide a secure and compliant way for their users to store and manage their sensitive information, meeting regulatory requirements.

· Archiving Sensitive Intellectual Property: A research team needs to archive proprietary research data. Ellipticc Drive's post-quantum encryption ensures that this data remains secure for the long term, protected even from future computational advancements that could render current encryption obsolete.

· Building a Decentralized File Sharing App: A developer looking to build a more decentralized file-sharing application can use Ellipticc Drive's open-source frontend as a reference or a component, leveraging its secure encryption and zero-knowledge principles to build their own robust solution.

7

GDPRGuard AI

Author

kinottohw

Description

GDPRGuard AI (also referred to as SafeDocs-AI) is an AI-powered tool designed to proactively scan internal documents for GDPR compliance issues and sensitive information. It integrates with cloud storage services like Dropbox, Google Drive, and OneDrive, analyzing documents to identify potential violations and data leaks. The AI provides inline annotations with suggestions for correction, and a reporting feature summarizes compliance findings. This addresses the need for businesses to prevent accidental data exposure and ensure regulatory adherence before audits, helping them avoid potential fines.

Popularity

Points 2

Comments 13

What is this product?

GDPRGuard AI is an intelligent system that acts as your digital guardian for internal documents. It uses advanced AI, specifically Natural Language Processing (NLP) techniques, to read through your team's documents stored in cloud services. The core innovation lies in its ability to understand the context and meaning of text, not just keywords, to pinpoint sensitive data (like personal identifiable information or PII) and clauses that might not align with GDPR regulations. It's like having a super-smart assistant who meticulously reviews every word for potential compliance risks, flagging them with clear explanations and recommendations for fixes. So, this is useful because it automates a tedious and error-prone manual review process, significantly reducing the risk of costly GDPR violations and data breaches.

How to use it?

Developers and teams can easily integrate GDPRGuard AI into their existing workflows by connecting their cloud storage accounts (Dropbox, Google Drive, OneDrive) through a secure authentication process. Once connected, users can initiate scans for individual documents or process entire folders in bulk. The AI then analyzes the content, providing real-time feedback via inline comments directly within the documents or in a centralized dashboard. This makes it simple to review flagged items and apply suggested corrections. For developers, this means embedding a robust compliance check into their document management pipelines or offering it as an add-on service to their clients, ensuring data integrity and regulatory adherence with minimal development effort. The practical benefit is a streamlined compliance process that requires less manual intervention, saving valuable time and resources.

Product Core Function

· AI-driven sensitive data detection: Utilizes advanced NLP to identify and flag personally identifiable information (PII) and other sensitive data types across various document formats, providing a crucial layer of data protection and reducing the risk of accidental leaks.

· GDPR compliance analysis: Scans documents for clauses and language that may contravene GDPR regulations, offering proactive risk mitigation and helping organizations prepare for audits.

· Inline annotations and correction suggestions: Provides immediate, context-aware feedback directly on the document text, guiding users on how to rectify compliance issues, thereby expediting the remediation process.

· Multi-platform cloud integration: Seamlessly connects with popular cloud storage services like Dropbox, Google Drive, and OneDrive, allowing for centralized scanning and management of documents regardless of their location.

· Bulk document processing: Enables efficient scanning of multiple documents or entire folders simultaneously, significantly reducing the time and effort required for compliance checks in large organizations.

· Compliance reporting and insights: Generates summary reports detailing the types and prevalence of compliance issues across scanned documents, offering valuable insights for ongoing data governance and policy refinement.

Product Usage Case

· A marketing team handling customer lists inadvertently stores a spreadsheet with personal email addresses and phone numbers in a shared cloud drive. GDPRGuard AI scans the document, flags the PII, and suggests anonymizing or removing the sensitive columns, preventing a potential data leak before any customer is affected.

· A legal department is preparing for an upcoming GDPR audit and needs to ensure all client contracts stored in OneDrive are compliant. GDPRGuard AI is used to scan all contract documents in bulk, identifying any clauses related to data processing consent that might be outdated or unclear, and provides suggested revised wording, ensuring a smoother audit process.

· A small startup is building a SaaS product that requires users to upload sensitive documents. They integrate GDPRGuard AI as a backend service to automatically scan user-uploaded files for PII before storing them, providing an immediate layer of protection and demonstrating a commitment to user privacy to their customers.

· A human resources department needs to review employee onboarding documents stored in Dropbox for compliance with data privacy laws. GDPRGuard AI analyzes these documents, flagging any excessively retained personal information or non-compliant consent statements, helping to maintain HR compliance and employee trust.

8

DayZen: Radial Time-Boxing

Author

Kavolis_

Description

DayZen is an iOS app that reimagines daily planning by presenting your schedule on a clock face. Traditional lists often distort the perception of time and hide overbooking, so DayZen uses a radial layout instead. The layout shows in real time where your time is allocated and highlights conflicts instantly. It's designed to help users be more honest about their time management and plan more effectively.

Popularity

Points 7

Comments 3

What is this product?

DayZen is a novel time management application for iOS that replaces linear to-do lists with a radial, clock-face interface for planning your day. Traditional lists can be misleading because they don't inherently represent the duration of tasks or the actual flow of time. DayZen's innovation lies in its visual representation of time as a circle, much like a clock. You can drag and drop time blocks (slots) onto this 12 or 24-hour ring. If you try to schedule too much in one period, the overlapping slots will visually clash, immediately alerting you to overbooking. This offers a more intuitive and honest understanding of your available time, addressing the common problem of unrealistic scheduling.
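The two ideas in this description, placing time on a ring and spotting overlapping slots, can be sketched in a few lines of Python. This is a hedged illustration, not DayZen's implementation: `Slot`, `to_angle`, and `conflicts` are hypothetical names, and the sketch assumes slots do not wrap past midnight.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Slot:
    label: str
    start: int  # minutes after midnight
    end: int    # minutes after midnight, exclusive


def to_angle(minute: int, hours_on_ring: int = 24) -> float:
    """Map a time of day to degrees clockwise from the top of the ring."""
    ring_minutes = hours_on_ring * 60
    return (minute % ring_minutes) / ring_minutes * 360.0


def conflicts(slots: list[Slot]) -> list[tuple[str, str]]:
    """Return label pairs for slots whose time ranges overlap."""
    return [
        (a.label, b.label)
        for a, b in combinations(slots, 2)
        if a.start < b.end and b.start < a.end
    ]
```

For example, a 09:00 to 11:00 coding block and a 10:00 to 11:00 meeting are reported as a conflicting pair, while 06:00 maps to 90 degrees on a 24-hour ring.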

How to use it?

Developers can use DayZen directly or borrow its core concept for their own planning tools. For personal use, a developer would download the app from the iOS App Store. They would then open DayZen and start creating their daily plan by dragging time slots onto the radial clock. For example, if a developer needs to block out 2 hours for focused coding on a complex feature, they would select a 2-hour slot and drag it to a specific time on the clock. If they also have a 1-hour meeting scheduled during that same period, DayZen would visually indicate the conflict. Developers can also create 'templates' for common types of days, such as 'deep work' days, 'meeting-heavy' days, or 'travel' days, allowing for rapid setup of predictable schedules. This is useful for anyone who needs to manage their time effectively, especially those in demanding roles where scheduling conflicts can derail productivity.

Product Core Function

· Radial Time Display: Visually represents the day on a clock face. This is valuable because it provides an intuitive and immediate understanding of time duration and availability, unlike lists that require mental calculation to gauge temporal occupancy.

· Instant Overbooking Detection: Visually highlights conflicts when time slots overlap. This solves the problem of accidentally scheduling too much, saving time and reducing stress by preventing overcommitment.

· Drag-and-Drop Scheduling: Allows users to easily allocate and adjust time blocks on the radial planner. This offers a fluid and interactive way to plan, making it quicker and more natural to experiment with different schedules.

· Customizable Time Templates: Enables users to save and load predefined scheduling patterns for recurring day types (e.g., deep work, meetings). This is incredibly useful for developers who have predictable work structures, allowing them to quickly set up efficient daily plans and maintain consistency.

Product Usage Case

· A software engineer needs to plan a day with a significant coding sprint, followed by two client calls and a team stand-up. Using DayZen, they can drag a 3-hour 'deep work' block for coding, then slot in the 30-minute stand-up and two 1-hour call blocks. DayZen will instantly show if any of these overlap, preventing the engineer from accidentally scheduling a call during their prime coding time and ensuring they have a realistic plan.

· A freelance developer who works with multiple clients across different time zones can use DayZen to visualize their entire week. They can create templates for 'client A day', 'client B day', and 'focus work day'. When planning their schedule, they can quickly drag and drop these templates onto the radial planner, seeing at a glance how their availability aligns with client needs and personal productivity goals.

· A developer attending a multi-day conference can use DayZen to plan their agenda. They can block out time for specific talks, networking sessions, and even breaks. The radial view makes it easy to see if they've overscheduled themselves or missed opportunities for crucial sessions due to time conflicts.

9

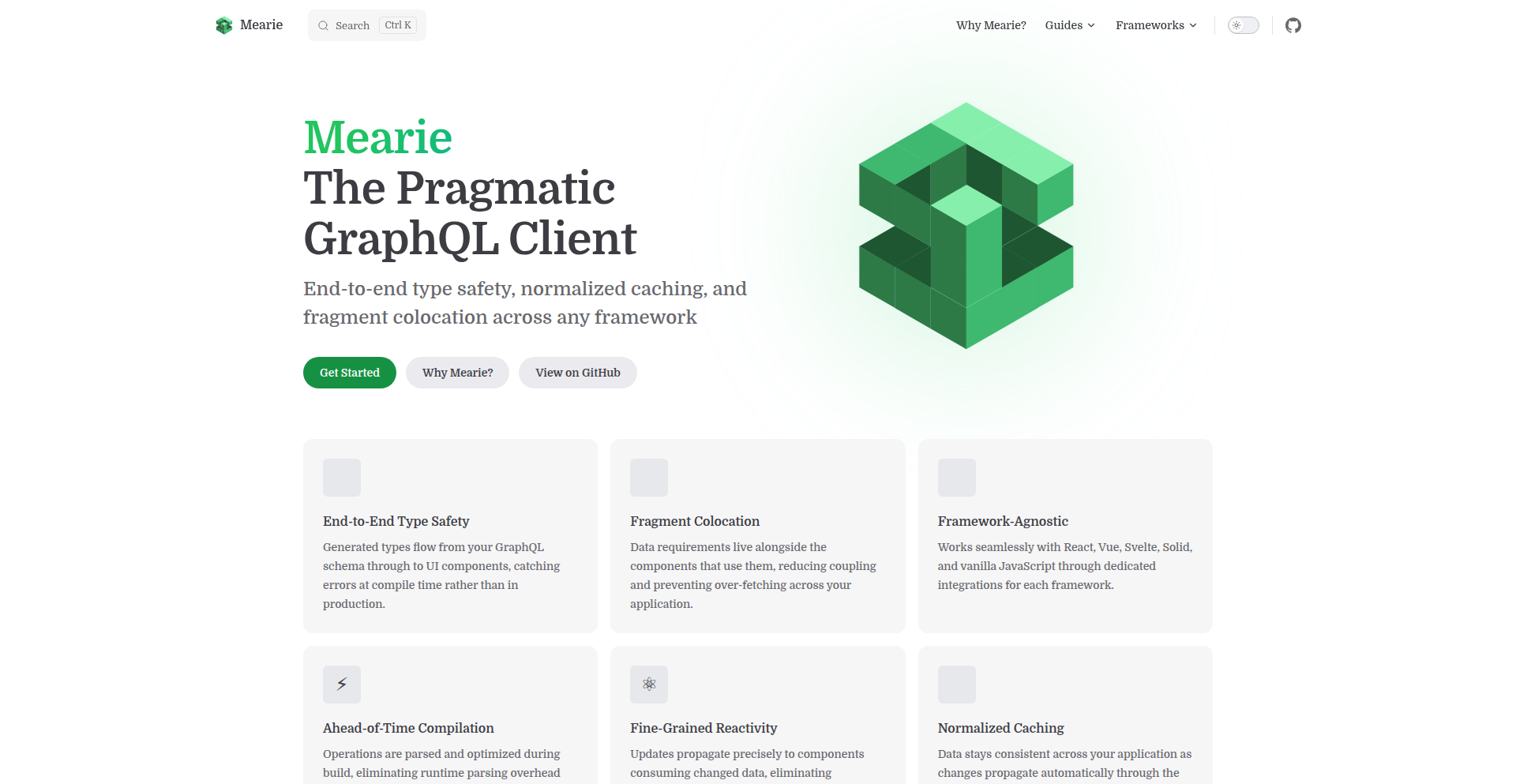

Mearie: The Reactive GraphQL Client

Author

devunt

Description

Mearie is a novel GraphQL client designed to bring the power and flexibility of GraphQL to modern, reactive web frameworks like Svelte and SolidJS. It addresses the gap for developers who find existing solutions like Relay, while powerful for React, less natural for these emerging frameworks. Mearie focuses on providing a seamless developer experience with a strong emphasis on reactivity and performance, allowing for efficient data fetching and management tailored to the specific paradigms of Svelte and SolidJS.

Popularity

Points 4

Comments 5

What is this product?

Mearie is a GraphQL client that's built with a focus on how modern JavaScript frameworks like Svelte and SolidJS work. Think of it as a smart translator between your web application and your GraphQL server. Instead of just fetching data, Mearie understands how these frameworks update their interfaces automatically when data changes. It uses techniques to observe data and seamlessly update your UI without you having to manually manage every little detail. This means faster, more responsive applications and less boilerplate code for you to write. So, why is this useful? It makes building data-driven applications with Svelte or SolidJS much smoother and more efficient, saving you time and effort.

How to use it?

Developers can integrate Mearie into their Svelte or SolidJS projects by installing it as a package. They then configure Mearie to connect to their GraphQL API endpoint. Mearie provides hooks or composables that allow developers to easily query data, send mutations, and subscribe to real-time data updates directly within their components. The client handles the complexity of network requests, caching, and state management, presenting data in a reactive way that integrates perfectly with the chosen framework's reactivity system. So, how does this benefit you? You can quickly and cleanly fetch and manage data in your Svelte or SolidJS apps, with the confidence that your UI will update automatically and efficiently.
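The reactive pattern described here, cached query results that push updates to whoever is watching them, can be illustrated independently of any framework. The Python sketch below is a conceptual model only: `QueryStore`, `subscribe`, and `write` are hypothetical names and do not reflect Mearie's actual API, which targets JavaScript frameworks.

```python
from typing import Any, Callable

Listener = Callable[[Any], None]


class QueryStore:
    """Caches query results and notifies subscribers when they change."""

    def __init__(self) -> None:
        self._cache: dict[str, Any] = {}
        self._listeners: dict[str, list[Listener]] = {}

    def subscribe(self, query: str, listener: Listener) -> Callable[[], None]:
        """Register a listener and return a function that removes it."""
        self._listeners.setdefault(query, []).append(listener)
        if query in self._cache:
            listener(self._cache[query])  # replay the cached value

        def unsubscribe() -> None:
            self._listeners[query].remove(listener)

        return unsubscribe

    def write(self, query: str, data: Any) -> None:
        """Store a new result; notify listeners only if it changed."""
        if query in self._cache and self._cache[query] == data:
            return
        self._cache[query] = data
        for listener in list(self._listeners.get(query, [])):
            listener(data)
```

In a Svelte or SolidJS client the listener would be the framework's own reactive primitive, so a cache write would translate directly into a UI update.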

Product Core Function

· Reactive Data Fetching: Mearie observes your GraphQL queries and automatically updates your application's UI when the data changes. This means your interface stays in sync with your data without manual intervention. Its value lies in creating highly dynamic and responsive user experiences.

· Framework Agnostic Design (for modern JS): While optimized for Svelte and SolidJS, Mearie's underlying principles are transferable, offering a fresh perspective on GraphQL client design. This highlights innovation in tailoring solutions to specific ecosystem needs and provides a blueprint for future framework-specific tools.

· Efficient State Management: The client manages the fetched data efficiently, reducing redundant requests and ensuring optimal performance. This translates to faster load times and a smoother user experience for your application's users.

· Developer Experience Focus: Mearie aims to simplify the process of integrating GraphQL into projects, providing intuitive APIs and clear documentation. The value here is a significant reduction in development time and complexity, allowing developers to focus on building features rather than wrestling with data fetching logic.

Product Usage Case

· Building a real-time dashboard with Svelte: Mearie can be used to efficiently fetch and display constantly updating metrics from a GraphQL API, ensuring the dashboard remains live and accurate. This solves the problem of keeping a complex UI updated with streaming data.

· Developing a social media feed with SolidJS: Mearie would enable seamless fetching of posts, comments, and user information, with automatic UI updates as new content arrives. This addresses the challenge of managing and displaying dynamic, user-generated content in a performant way.

· Creating an e-commerce product listing with dynamic pricing in Svelte: Mearie can fetch initial product data and then efficiently update prices or inventory levels as they change in the backend. This demonstrates how Mearie can handle frequently changing data without page reloads.

10

AI Peace Weaver

Author

solfox

Description

This project is an AI-powered application designed to help co-parents communicate more peacefully after divorce. It uses AI to reframe emotionally charged text messages, transforming potentially confrontational language into neutral, child-focused communication. The core innovation lies in its ability to detect and mitigate emotional abuse in digital interactions, offering a much-needed tool for navigating sensitive post-divorce relationships.

Popularity

Points 8

Comments 1

What is this product?

AI Peace Weaver is a sophisticated application that leverages advanced AI models, specifically Gemini and OpenAI, to analyze and rephrase text messages. The underlying technology works by identifying emotionally charged language, personal attacks, or accusatory tones within a message. Instead of simply blocking or flagging the message, it intelligently rewrites the content to be neutral, objective, and focused on the well-being of the children involved. This process is akin to an 'emotional spellchecker' for difficult conversations. The innovation here is applying AI not just for content generation, but for emotional de-escalation and harm reduction in communication, which is a novel and impactful application of AI.

How to use it?

Developers can integrate AI Peace Weaver into their applications or workflows that involve user-generated text, especially in sensitive contexts like co-parenting platforms, customer support for conflict resolution, or online communities dealing with sensitive topics. The system can be accessed via an API, where users send their draft messages. The AI then processes these messages and returns a reframed, more constructive version. For a co-parent, this means drafting an email or text about child custody or logistics, and the app providing a suggestion that is less likely to provoke an argument. The technical implementation involves connecting to cloud services like Google Cloud and Firebase, utilizing AI APIs (Gemini, OpenAI), and potentially real-time communication services like Twilio for message delivery.

Product Core Function

· Emotional tone detection: Identifies subjective and inflammatory language in user input, helping to pinpoint problematic phrasing. This is valuable for understanding potential communication breakdowns before they happen.

· Contextual reframing: Rewrites messages to be neutral, objective, and child-focused, removing emotional baggage. This offers a direct pathway to more civil interactions, reducing stress and conflict.

· Abuse mitigation: Specifically designed to filter out language that could be construed as emotional abuse or harassment, providing a safer communication environment. This protects individuals from harmful language and promotes healthier relationships.

· API integration: Allows developers to easily embed the reframing capabilities into their own applications, expanding the reach and impact of constructive communication tools. This enables building more empathetic and user-friendly digital experiences.

· Bootstrapped development: Built from the ground up by a solo developer, demonstrating the power of focused effort and leveraging existing cloud infrastructure to create impactful solutions. This inspires other solo developers and small teams to tackle ambitious projects.

Product Usage Case

· Co-parenting communication: A divorced parent drafts a text to their ex-spouse about a child's school event. The AI rewrites it from 'You never take our kid seriously, do you expect me to handle everything again?' to 'Could you please confirm if you're available to discuss our child's upcoming school event and share responsibilities?' This directly addresses the need for peaceful co-parenting and avoids unnecessary conflict.

· Online community moderation: A platform administrator uses the AI to pre-screen user comments in a sensitive discussion forum. If a comment is flagged for aggressive language, the AI suggests a more diplomatic phrasing, preventing escalation and maintaining a respectful environment. This helps manage online discourse effectively.

· Customer service escalation: A customer service agent drafts a response to an angry customer. The AI suggests rephrasing the response to be more empathetic and solution-oriented, de-escalating the situation and improving customer satisfaction. This provides a practical way to handle difficult customer interactions.

11

EmDashErase-AI

Author

batterylake

Description

This is a clever Chrome extension that tackles a common annoyance in ChatGPT responses: the overuse of em dashes. It employs regular expressions (regex) to intelligently replace these em dashes with more appropriate punctuation like commas or periods, effectively cleaning up AI-generated text. The innovation lies in its targeted approach to a specific stylistic quirk of AI, demonstrating how simple yet effective code can improve the usability of advanced tools.

Popularity

Points 6

Comments 3

What is this product?

EmDashErase-AI is a browser extension designed to automatically eliminate the excessive use of em dashes that often appear in ChatGPT's output. Instead of just deleting them, it uses pattern matching (regex) to make educated guesses about what punctuation should replace the em dash, like a comma or a full stop. This offers a smarter way to refine AI text, making it read more naturally. The core technical insight is that AI language models sometimes fall into predictable stylistic traps, and simple pattern recognition can be surprisingly effective at fixing them. So, this helps you get cleaner, more readable AI responses without manual editing.
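As a rough illustration of the regex approach, the Python sketch below replaces em dashes using one simple heuristic: a dash followed by a capital letter becomes a sentence break, and any other dash becomes a comma. The heuristic and the name `replace_em_dashes` are assumptions of this sketch; the extension's actual rules are not documented here.

```python
import re

EM_DASH = "\u2014"

# Assumed heuristic: a capital letter after the dash suggests a new sentence.
SENTENCE_BREAK = re.compile(rf"\s*{EM_DASH}\s*(?=[A-Z])")
# Every other em dash is treated as a mid-sentence pause.
PAUSE = re.compile(rf"\s*{EM_DASH}\s*")


def replace_em_dashes(text: str) -> str:
    """Swap em dashes for periods or commas using the heuristic above."""
    text = SENTENCE_BREAK.sub(". ", text)
    return PAUSE.sub(", ", text)
```

The capital-letter test misfires on proper nouns and on the pronoun "I", which is the kind of edge case a production rule set would need to handle.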

How to use it?

As a developer, you can integrate this by installing it as a Chrome extension. When you are interacting with ChatGPT in your browser, the extension automatically runs in the background. It intercepts the text generated by ChatGPT before you see it and applies its em dash removal logic. This means you can continue to use ChatGPT as you normally would, but the output will already be cleaned. For developers who often copy and paste AI-generated content for their work, this saves significant time on post-processing.

Product Core Function

· Automatic Em Dash Removal: Leverages regex to identify and remove em dashes from ChatGPT responses, improving text flow. This is valuable for anyone who finds em dashes jarring in AI text, making the output immediately more professional and easier to read.

· Intelligent Punctuation Replacement: Predicts and inserts suitable punctuation (like commas or periods) in place of removed em dashes, preserving sentence structure and meaning. This is useful for maintaining grammatical correctness and natural language cadence, preventing awkward sentence breaks.

· Background Operation: Works seamlessly as a Chrome extension without requiring manual activation for each response. This offers a hassle-free experience, so you get cleaner text by default whenever you use ChatGPT in your browser.

Product Usage Case

· Content Generation Refinement: A content writer using ChatGPT for blog post drafts can install EmDashErase-AI to ensure the generated text is immediately ready for review, with fewer stylistic interruptions. It solves the problem of repetitive manual punctuation correction, allowing the writer to focus on the creative aspects of their work.

· Code Explanation Formatting: A developer explaining complex code snippets using ChatGPT can benefit from cleaner text output. The extension ensures that explanations are well-punctuated and easier to follow, improving comprehension for other developers or stakeholders. It addresses the issue of visually distracting em dashes that can clutter technical explanations.

· AI-Assisted Research Summaries: A student or researcher using ChatGPT to summarize academic papers can get more coherent summaries. The extension cleans up the AI's text, making the key points more accessible and reducing the need for extensive editing before incorporating the summary into their work. This saves valuable time in the research process.

12

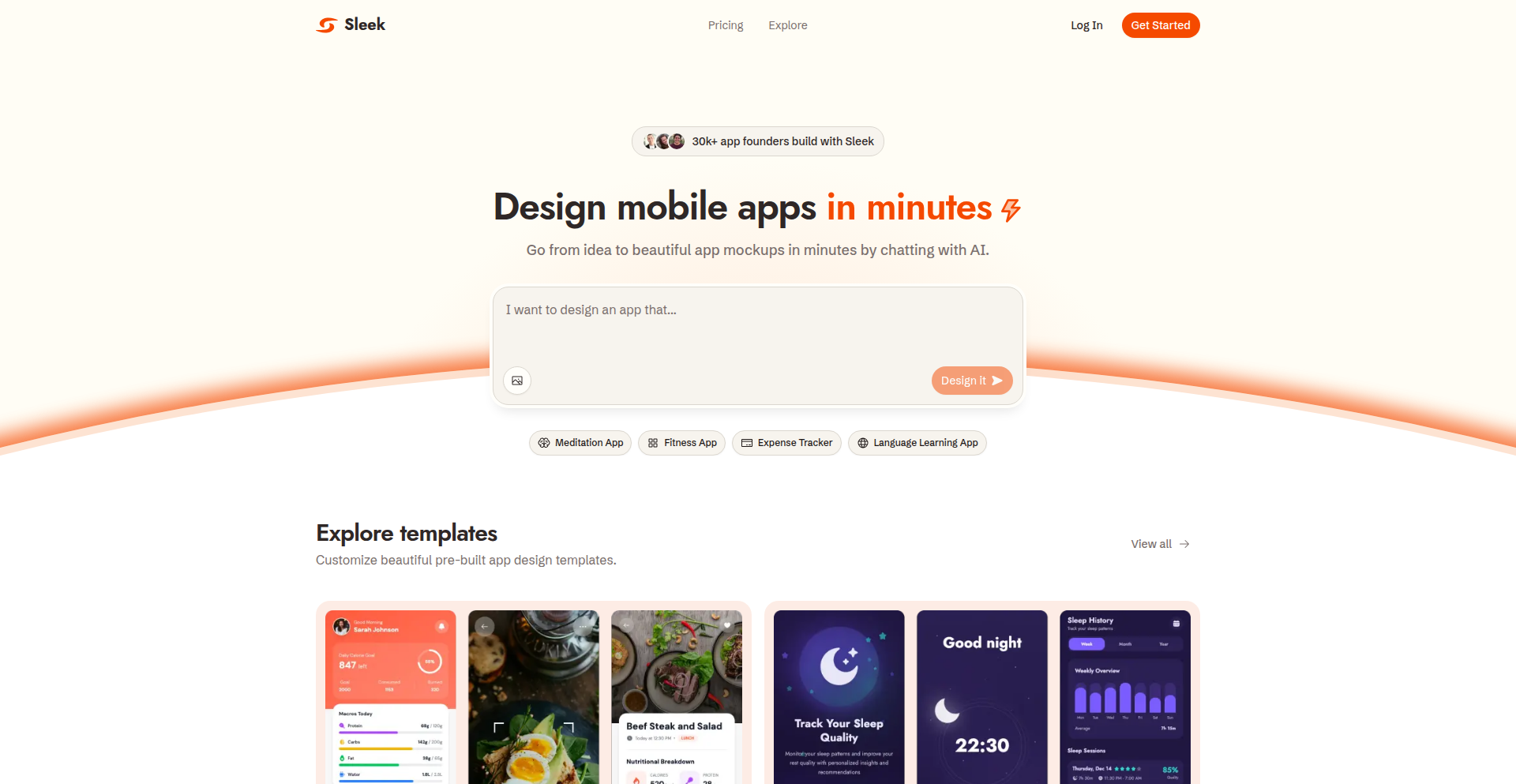

SleekDesign AI

Author

stefanofa

Description

Sleek.design is an AI-powered tool that transforms your app ideas into sleek mobile mockups. It leverages advanced generative AI to translate textual descriptions into visual designs, which can then be exported into various development-friendly formats like HTML, React, or Figma. This allows developers to quickly prototype and visualize their applications, significantly speeding up the initial design and development phases.

Popularity

Points 5

Comments 3

What is this product?

Sleek.design is an AI-driven mobile app mockup generator. The core innovation lies in its ability to understand your app concept described in natural language and then automatically create visually appealing mobile app screen mockups. It uses sophisticated AI models trained on vast amounts of design data to predict layout, UI elements, and visual styles, essentially acting as a virtual designer that can bring your ideas to life in minutes. This bypasses the need for manual design iteration and provides a tangible starting point for development.

How to use it?

Developers can use Sleek.design by simply typing a description of their desired mobile app screens into the platform. For instance, you could describe a "login screen with email and password fields and a prominent login button" or a "user profile page with an avatar, name, and a list of recent activity." The AI then generates these mockups. These designs can be directly exported as HTML for web-based prototypes, as React components for integration into React applications, or as Figma files for further refinement by UI/UX designers. Furthermore, Sleek.design can generate specific prompts that can be fed into other generative AI tools (like Bolt) to create the actual app code starting from the generated design.

Product Core Function

· AI-powered mobile app mockup generation: Converts text descriptions into visual app screen designs, saving significant design time and effort. This is useful for rapidly prototyping and visualizing app concepts.

· Multi-format export (HTML, React, Figma): Allows seamless integration of generated designs into existing development workflows or further design iterations. This provides flexibility for different project needs.

· Prompt generation for code AI: Enables the direct translation of visual designs into executable code by compatible AI tools, bridging the gap between design and implementation.

· Iterative design feedback loop: Facilitates quick visualization of design ideas, allowing for faster feedback and adjustments, leading to more refined end products.

· Focus on mobile UI/UX: Specializes in generating designs specifically for mobile applications, ensuring adherence to common mobile design patterns and user experience principles.

Product Usage Case

· Scenario: A solo developer with a great app idea but limited design skills needs to quickly create a prototype to show potential investors. How it helps: Sleek.design can generate initial mockups for key app screens based on the developer's descriptions, providing a professional-looking visual representation without needing to hire a designer or spend weeks learning design software.

· Scenario: A startup team wants to explore multiple UI variations for a new feature before committing to development. How it helps: Using Sleek.design, they can quickly generate several different mockup styles for the same feature by tweaking their descriptions, allowing for rapid A/B testing of design concepts and informed decision-making.

· Scenario: A web developer building a mobile-first web application wants to ensure their frontend components align with modern mobile UI trends. How it helps: Sleek.design can generate mobile app mockups that the developer can use as a visual reference or even export as HTML/React components to bootstrap their frontend development, ensuring a cohesive and user-friendly mobile experience.

· Scenario: A product manager needs to communicate a detailed app feature concept to the engineering team. How it helps: The product manager can use Sleek.design to generate visual mockups that accurately represent the desired user flow and interface, reducing ambiguity and ensuring the development team understands the requirements precisely.

13

Healz.ai - Root Cause Diagnostic Engine

Author

alexplat

Description

Healz.ai is an AI-powered diagnostic assistant that leverages a unique combination of artificial intelligence and human medical expertise to investigate complex health cases. Unlike superficial symptom checkers, it aims to uncover the root causes of persistent medical issues by building a comprehensive picture, saving patients time and suffering. The innovation lies in its hybrid approach, bridging the gap between AI's data processing power and the nuanced investigative skills of experienced doctors.

Popularity

Points 5

Comments 3

What is this product?

Healz.ai is a sophisticated platform designed to tackle difficult medical diagnostic challenges. Its core innovation is a hybrid approach: it uses advanced AI algorithms to process and analyze vast amounts of patient data, including medical history, test results, and reported symptoms. Simultaneously, it integrates the insights and investigative methodologies of seasoned medical professionals who act as 'detective-doctors.' This combination allows Healz.ai to move beyond simply identifying potential conditions to actively investigating the underlying 'why' behind a patient's ailment. This is valuable because it can uncover the root cause of long-standing, mysterious health problems that might be missed by conventional methods, saving individuals months or years of pain and uncertainty.

How to use it?

Developers can integrate Healz.ai into their own health tech applications or internal healthcare systems. The platform could be accessed via an API, allowing developers to send anonymized patient data for analysis. The system would then return a comprehensive diagnostic report, detailing potential root causes, further investigative steps recommended by the AI and doctors, and links to relevant medical literature. This is useful for building more intelligent patient portals, diagnostic support tools for clinicians, or even personalized wellness platforms that offer deeper insights into health mysteries.

Product Core Function

· AI-driven data aggregation and pattern recognition: The AI intelligently collects and analyzes diverse patient data to identify subtle correlations and anomalies that might indicate an underlying issue. This offers value by spotting potential connections that a single human might overlook, leading to earlier and more accurate diagnoses.

· Human-AI collaborative investigation: Experienced doctors work alongside the AI, guiding its analysis and applying their clinical judgment to complex cases. This provides value by ensuring that the diagnostic process is not purely algorithmic but also grounded in real-world medical experience and empathy, leading to more robust and trustworthy conclusions.

· Root cause analysis engine: The system is specifically designed to go beyond surface-level symptoms and identify the fundamental origins of a health problem. This is valuable for patients suffering from chronic or undiagnosed conditions, offering a path to understanding and addressing the true source of their illness, rather than just managing symptoms.

· Comprehensive diagnostic reporting: Healz.ai generates detailed reports that outline the investigative process, potential root causes, and recommended next steps. This offers value by providing patients and their physicians with a clear, actionable roadmap for further diagnosis and treatment.

Product Usage Case

· A patient experiencing persistent, unexplained abdominal pain after numerous medical tests. Healz.ai can analyze all their medical records, test results, and symptom descriptions to identify less common or interconnected factors that might have been missed, potentially revealing a diagnosis like a rare autoimmune condition or a complex gastrointestinal disorder, saving the patient further invasive procedures and time.

· A software developer building a personal health dashboard. They can integrate Healz.ai's API to provide their users with deeper insights into their health trends and potential underlying issues based on their wearable device data and self-reported symptoms. This offers value by transforming raw health data into actionable diagnostic intelligence.

· A small clinic looking to enhance its diagnostic capabilities for complex cases. By using Healz.ai, they can leverage advanced AI and a network of specialized medical investigators without the need to hire extensive in-house expertise. This provides value by democratizing access to sophisticated diagnostic support, improving patient outcomes and clinic efficiency.

14

ContextViz: LLM Context Window Observer

Author

ath_ray

Description

This project introduces ContextViz, a tool designed to visually represent and analyze the context window of Large Language Models (LLMs). It addresses the challenge of understanding how LLMs process and utilize their limited context, providing developers with insights into token usage and potential limitations. The innovation lies in its ability to translate abstract token counts into a comprehensible visual format, aiding in prompt engineering and model performance optimization.

Popularity

Points 7

Comments 0

What is this product?

ContextViz is a visualization tool that helps developers understand the inner workings of an LLM's context window. Think of an LLM's context window like a notepad it uses to remember what's been said in a conversation or in the input. It has a limited size (measured in tokens, which are like words or parts of words). If too much information is put into this notepad, older or less relevant information might get 'forgotten' or pushed out. ContextViz visually shows you how much of this notepad is being used, which parts of the input are consuming the most space, and how much room is left. This is innovative because instead of just seeing a number for token count, you get a visual representation that makes it much easier to grasp the actual 'memory' usage of the LLM, enabling better control over its responses.

How to use it?

Developers can integrate ContextViz into their LLM-based applications or use it as a standalone debugging tool. By feeding it the input prompts and observed LLM outputs, ContextViz can generate visualizations that highlight token distribution across different parts of the input (e.g., system messages, user prompts, previous turns in a conversation). This allows developers to identify which parts of their prompts are taking up the most context, helping them to refine their prompts for better performance, reduce unnecessary token consumption, and ensure critical information remains within the LLM's active memory. It's useful for fine-tuning prompts and understanding why an LLM might be missing information from earlier in an interaction.
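A per-segment breakdown of this kind can be sketched as a small text report. The Python below is an illustration under stated assumptions: `count_tokens` uses whitespace splitting as a stand-in for a real subword tokenizer (which usually yields more tokens), and `context_report` is a hypothetical helper, not ContextViz's interface.

```python
def count_tokens(text: str) -> int:
    # Stand-in tokenizer (assumption): whitespace splitting only
    # approximates the subword tokenizers that LLMs actually use.
    return len(text.split())


def context_report(
    segments: dict[str, str], window: int, bar_width: int = 40
) -> list[str]:
    """Build one text row per segment plus a final 'remaining' row."""
    counts = {name: count_tokens(text) for name, text in segments.items()}
    used = sum(counts.values())
    lines = []
    for name, n in counts.items():
        # Bar length is the segment's share of the whole window.
        bar = "#" * round(bar_width * n / window)
        lines.append(f"{name:<12}{n:>6}  {bar}")
    lines.append(f"{'remaining':<12}{max(window - used, 0):>6}")
    return lines
```

Printing the returned lines gives a quick view of which segment dominates the window and how much room is left before older content would have to be trimmed or summarized.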

Product Core Function

· Visual Context Allocation: This feature displays how tokens are distributed across different sections of the LLM input (e.g., system prompt, user query, chat history). The value is in providing a clear, graphical representation of token usage, making it easy to spot inefficiencies or over-reliance on certain input types. This helps in optimizing prompts for better performance and cost-effectiveness.

· Remaining Context Indicator: A real-time indicator showing how much of the LLM's context window is still available. The value here is in preventing context overflow, ensuring that the LLM has enough 'memory' for its task and preventing degradation of its ability to recall information. This is crucial for long conversations or complex tasks where precise memory management is needed.

· Token Density Analysis: This function highlights areas of the input that have a high density of tokens. The value lies in identifying verbose or redundant parts of the prompt that might be unnecessarily consuming context. Developers can use this to trim down their prompts and improve the LLM's focus, leading to more concise and relevant outputs.

· Input Segment Breakdown: Allows users to see the token count for each distinct segment of the input (e.g., the system message, the user's last turn, previous conversational turns). The value is in granular insight into where the token budget is being spent. This helps developers understand the impact of each component of their input on the overall context window usage and make informed decisions about what information to include or exclude.

Product Usage Case

· Debugging a chatbot that fails to remember user preferences from earlier in the conversation. By using ContextViz, the developer sees that the chat history is consuming almost the entire context window, pushing out the initial preference settings. The solution is to implement a summarization technique for the chat history to free up context for crucial details.

· Optimizing prompt engineering for a content generation LLM. The developer uses ContextViz to find that overly descriptive instructions are filling up the context. By simplifying the instructions and focusing on key requirements, they can allocate more context to the actual content to be generated, resulting in richer and more relevant output.

· Analyzing the performance of an LLM on a long-form question-answering task. ContextViz reveals that the LLM is losing track of the original question due to the extensive supporting text provided. The developer then refines the input structure to ensure the core question remains prominent within the context window, improving the accuracy of the answers.

· Monitoring token costs for a large-scale LLM deployment. ContextViz helps identify prompts that are consistently using a high number of tokens, allowing for targeted optimization efforts to reduce expenditure without sacrificing output quality. This provides a practical way to manage operational costs associated with LLM usage.
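For the first case above (chat history crowding out early preferences), one simple remedy can be sketched in a few lines. Note that this swaps in a plainer technique than the summarization the case mentions: it pins the critical lines and drops the oldest turns until the rest fits. The function names, the whitespace tokenizer, and the budget are assumptions for illustration, not part of ContextViz.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in tokenizer (assumption): one token per whitespace-separated word."""
    return len(text.split())


def fit_history(pinned: list[str], turns: list[str], budget: int) -> list[str]:
    """Keep all pinned lines, then as many of the most recent turns as fit the budget."""
    used = sum(count_tokens(line) for line in pinned)
    kept: list[str] = []
    # Walk from newest to oldest and stop at the first turn that would overflow.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    # Restore chronological order for the turns that survived.
    return pinned + kept[::-1]


pinned = ["User prefers metric units"]
turns = ["What is the weather", "It is sunny today", "Thanks"]
print(fit_history(pinned, turns, budget=10))
```

Pinning keeps the preference line in the prompt no matter how long the conversation grows; replacing the dropped turns with a summary would be the next refinement.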

15

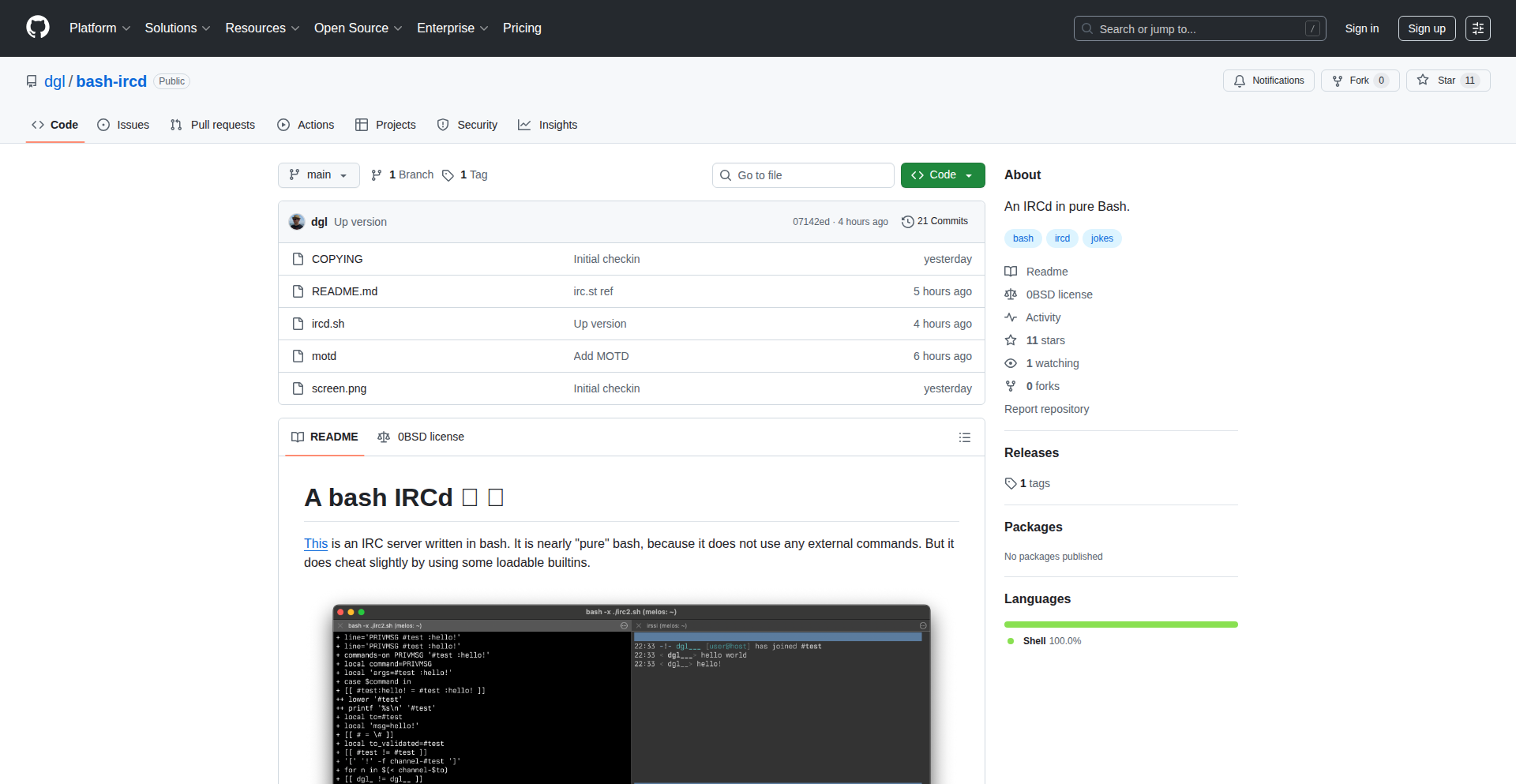

BashIRCd - Pure Bash IRC Daemon

Author

dgl

Description

This project is a lightweight Internet Relay Chat (IRC) server implemented entirely in pure Bash scripting. It demonstrates a creative approach to building network services using a language not typically associated with such tasks, offering a novel way to understand network protocols and system-level scripting.

Popularity

Points 6

Comments 1

What is this product?

BashIRCd is an IRC server (IRCd) written from scratch using only Bash, a common Unix shell. The innovative aspect is using Bash, which is usually for scripting system tasks or automating commands, to handle network connections, parse IRC commands, and manage user communication. It's a testament to the power of scripting languages when pushed to their limits, showcasing how even seemingly simple tools can be leveraged for complex applications. For developers, it offers a unique learning opportunity to grasp IRC protocol mechanics and explore unconventional system programming approaches.

How to use it?

Developers can run BashIRCd on any system with Bash installed. It acts as a standalone server that IRC clients can connect to. You would typically execute the Bash script, and it would listen on a specific network port (e.g., 6667). Then, you can connect to it using any standard IRC client (like HexChat, irssi, or even a simple telnet connection). This provides a sandbox environment for testing IRC client behavior, understanding server-side logic, or even building custom IRC bots with very low overhead.
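As a rough illustration of the "simple telnet connection" idea, the sketch below opens a raw TCP connection and sends the standard IRC registration lines. It is written in Python rather than Bash, and it assumes the server accepts the usual `NICK`/`USER` handshake on port 6667; the helper names and default values are hypothetical.

```python
import socket


def registration_lines(
    nick: str, user: str = "guest", realname: str = "Guest User"
) -> list[bytes]:
    """Build the NICK and USER lines a client sends right after connecting."""
    return [
        f"NICK {nick}\r\n".encode("utf-8"),
        f"USER {user} 0 * :{realname}\r\n".encode("utf-8"),
    ]


def connect_and_register(host: str, port: int, nick: str, timeout: float = 5.0) -> str:
    """Connect, send the registration lines, and return the first chunk of the reply."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        for line in registration_lines(nick):
            conn.sendall(line)
        return conn.recv(4096).decode("utf-8", errors="replace")


# Example call (needs a server already listening, so it is left commented out):
# print(connect_and_register("127.0.0.1", 6667, "tester"))
```

IRC is a line-based text protocol with each message terminated by CRLF, which is why a plain socket (or telnet) is enough to talk to a server by hand.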

Product Core Function

· Network Socket Handling: Implemented using Bash's built-in pseudo-terminal and process substitution features to simulate network socket behavior for receiving and sending data to clients.

· IRC Protocol Parsing: Processes incoming client messages according to the IRC protocol specifications, understanding commands like JOIN, PRIVMSG, and NICK.

· User and Channel Management: Maintains in-memory data structures to track connected users, their nicknames, and the channels they are part of.

· Message Broadcasting: Relays messages between users within the same channel, fulfilling the core function of an IRC server.

· Basic Command Processing: Responds to fundamental IRC commands, allowing clients to join channels, set nicknames, and send messages.
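To make the parsing and broadcasting functions above more concrete, here is a minimal sketch of the general IRC message format (optional `:prefix`, a command, space-separated parameters, and an optional trailing parameter introduced by ` :`). It is written in Python for readability and does not reflect how BashIRCd implements these steps; `parse_irc_line` and `Channels` are hypothetical names.

```python
from typing import Optional


def parse_irc_line(line: str) -> tuple[Optional[str], str, list[str]]:
    """Split one IRC line into (prefix, command, params)."""
    line = line.rstrip("\r\n")
    prefix = None
    if line.startswith(":"):
        # A leading ':' marks the optional source prefix.
        prefix, _, line = line[1:].partition(" ")
    if " :" in line:
        # Everything after the first ' :' is one trailing parameter (may contain spaces).
        head, _, trailing = line.partition(" :")
        params = head.split()
        params.append(trailing)
    else:
        params = line.split()
    command = params.pop(0).upper() if params else ""
    return prefix, command, params


class Channels:
    """In-memory channel membership, keyed by channel name."""

    def __init__(self) -> None:
        self.members: dict[str, set[str]] = {}

    def join(self, nick: str, channel: str) -> None:
        self.members.setdefault(channel, set()).add(nick)

    def recipients(self, sender: str, channel: str) -> list[str]:
        """Everyone in the channel except the sender, i.e. who gets the relayed message."""
        return sorted(self.members.get(channel, set()) - {sender})


channels = Channels()
channels.join("alice", "#bash")
channels.join("bob", "#bash")
prefix, command, params = parse_irc_line("PRIVMSG #bash :hello world\r\n")
if command == "PRIVMSG":
    target, text = params
    print(channels.recipients("alice", target), text)
```

The trailing-parameter rule is what allows a chat message to contain spaces while channel names and nicknames cannot.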

Product Usage Case

· Educational Tool for Network Protocols: Students and developers can use BashIRCd to learn the intricacies of the IRC protocol by observing how messages are sent, received, and processed in a tangible, script-driven environment, answering the question of 'how does this actually work?' for network communication.

· Lightweight Chat Server for Small Teams: For very small, private groups that need a simple, self-hosted chat solution without the complexity of larger IRC daemons, BashIRCd can serve as an immediate, zero-dependency option, solving the 'how can I quickly set up a chat without installing heavy software?' problem.

· Developing and Testing IRC Bots: Developers building IRC bots can connect to this BashIRCd instance for rapid testing and debugging of their bot's logic without needing to set up or connect to a public IRC network, which is great for 'how do I test my bot without bothering others?' scenarios.

· Demonstrating Scripting Power: As a proof-of-concept, it inspires other developers by showing that complex network services can be built with unexpected tools, encouraging them to think outside the box for their own projects and addressing the question of 'what can I build with just the tools I already have?'

16

DeepShot-NBA ML Predictor

Author

frasacco05

Description

DeepShot is a machine learning model designed to predict NBA game outcomes with notable accuracy. It distinguishes itself by leveraging rolling statistics, historical performance, and recent team momentum. The innovation lies in its use of Exponentially Weighted Moving Averages (EWMA) to dynamically capture a team's current form, offering insights into statistical disparities that influence predictions. This provides a deeper understanding of 'why' a certain outcome is favored, going beyond simple averages or betting lines. So, this is useful for anyone interested in sports analytics or curious about algorithmic prediction in sports.

Popularity

Points 3

Comments 4

What is this product?

DeepShot is a machine learning application that forecasts NBA game results. Its technical foundation is built on Python, utilizing libraries like Pandas for data manipulation, Scikit-learn for machine learning tasks, and XGBoost for its powerful gradient boosting algorithm. A key innovation is the implementation of Exponentially Weighted Moving Averages (EWMA). This technique gives more importance to recent data points, allowing the model to effectively capture a team's current form and momentum. This is different from traditional methods that might over-rely on static historical data or simple averages. The project is visualized through a clean, interactive web application built with NiceGUI. The primary value proposition is providing an algorithmically driven prediction with clear statistical reasoning, derived from publicly available data. So, this is useful for understanding how machine learning can be applied to sports analytics and for getting a data-driven perspective on game outcomes.
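The EWMA idea can be shown with a short, dependency-free sketch. It uses the common recursive form, where each new value is blended with the previous average using a smoothing factor `alpha`; this matches what pandas computes with `ewm(alpha=..., adjust=False).mean()`. The function names, the sample scores, and `alpha=0.5` are assumptions for illustration and are not taken from DeepShot's code.

```python
from typing import Optional


def ewma(values: list[float], alpha: float) -> list[float]:
    """Recursive EWMA: y[0] = x[0], y[t] = alpha * x[t] + (1 - alpha) * y[t-1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: list[float] = []
    current: Optional[float] = None
    for x in values:
        current = x if current is None else alpha * x + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


def pregame_form(values: list[float], alpha: float) -> list[Optional[float]]:
    """Shift the EWMA by one game so each entry only uses games already played."""
    return [None] + ewma(values, alpha)[:-1]


points = [100.0, 110.0, 90.0, 120.0]  # hypothetical points scored in four games
print(ewma(points, alpha=0.5))          # [100.0, 105.0, 97.5, 108.75]
print(sum(points) / len(points))        # 105.0, the plain average for comparison
print(pregame_form(points, alpha=0.5))  # [None, 100.0, 105.0, 97.5]
```

After the strong fourth game the EWMA (108.75) sits above the plain average (105.0), which is the "recent form" effect the description refers to. The one-game shift in `pregame_form` is a common precaution against leaking a game's own result into its features; whether DeepShot does this is not stated in the source.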

How to use it?

Developers can use DeepShot by cloning the GitHub repository and running the Python code locally on any operating system. The project relies on free, public data, so there are no complex data acquisition hurdles. The NiceGUI web app provides an interactive interface for visualizing predictions and understanding the underlying statistical differences between teams. For integration, developers could potentially leverage the prediction logic or the data processing pipeline in their own sports analytics projects, or use it as a reference for building similar predictive models. So, this is useful for developers who want to experiment with ML in sports, integrate predictive analytics into their own applications, or learn from a practical implementation.

Product Core Function

· Machine Learning Prediction Engine: Utilizes XGBoost and EWMA to predict NBA game outcomes based on dynamic team statistics, offering a data-driven forecast for each game.

· Rolling Statistics Calculation: Implements EWMA to weigh recent game data more heavily, capturing current team form and momentum for more accurate predictions.

· Interactive Web Visualization: Provides a user-friendly interface built with NiceGUI to display predictions, highlight key statistical differences between teams, and explain the reasoning behind the model's choices.

· Public Data Integration: Reliably sources free, public NBA statistics from Basketball Reference, making the model accessible and reproducible without costly data subscriptions.

· Local Execution: Designed to run on any operating system locally, allowing developers and enthusiasts to experiment and use the tool without complex cloud setups.
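The "key statistical differences between teams" mentioned above could, under simple assumptions, be computed as home-minus-away gaps over each team's smoothed statistics and then ranked by size. The sketch below is hypothetical: the stat names, the values, and `stat_differences` are illustrative and not drawn from DeepShot.

```python
def stat_differences(
    home: dict[str, float], away: dict[str, float]
) -> list[tuple[str, float]]:
    """Return (stat, home - away) pairs for shared stats, largest absolute gap first."""
    shared = home.keys() & away.keys()
    diffs = [(name, home[name] - away[name]) for name in shared]
    return sorted(diffs, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical smoothed per-game statistics for one matchup.
home = {"points": 112.5, "rebounds": 44.0, "turnovers": 13.0}
away = {"points": 108.0, "rebounds": 45.5, "turnovers": 12.5}

for name, gap in stat_differences(home, away):
    print(f"{name:<10} {gap:+.1f}")
```

Raw gaps mix statistics with different scales, so a real pipeline would likely standardize each stat before ranking; differences like these are also a natural input feature for a gradient-boosted model.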

Product Usage Case

· A sports analytics enthusiast who wants to experiment with predicting NBA game outcomes can download and run DeepShot locally to test its accuracy and understand the ML principles behind it.

· A developer building a sports betting advisory tool could integrate DeepShot's prediction logic and statistical analysis into their platform to offer data-backed recommendations to users.

· A data science student learning about time-series analysis and predictive modeling can use DeepShot as a case study to understand how EWMA and XGBoost can be applied to real-world sports data.

· A sports media outlet could leverage the visual interface and prediction insights from DeepShot to create engaging content for their audience, explaining game matchups with algorithmic backing.

· A sports data scientist looking to benchmark their own prediction models can compare their results against DeepShot's accuracy and analyze its statistical approaches.

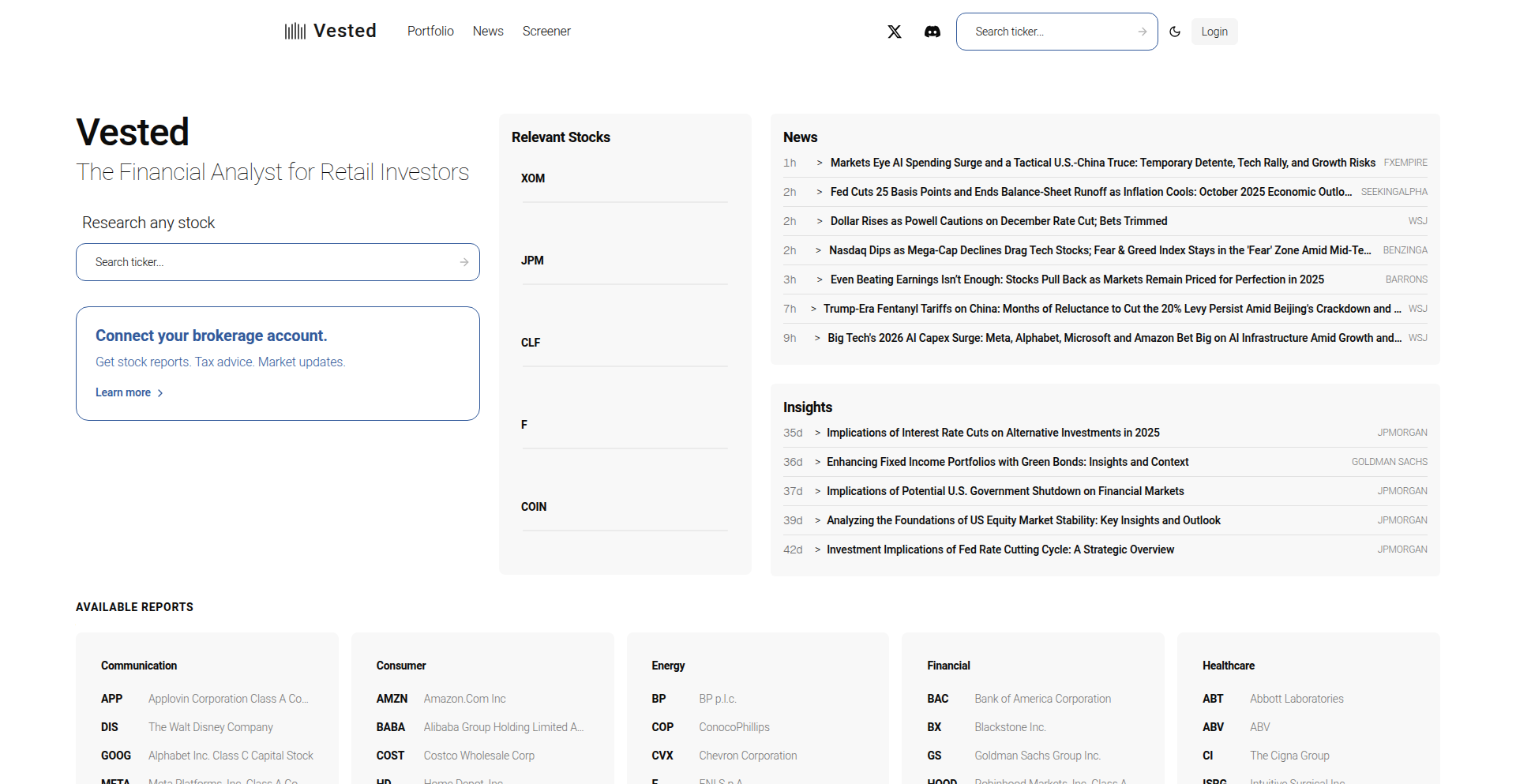

17

Nuvix: Flexible Backend-as-a-Service with Smart Schema Management

Author

ravikantsaini

Description

Nuvix is an open-source backend-as-a-service (BaaS) platform built in TypeScript, designed to offer greater flexibility and enhanced security than existing solutions like Supabase and Appwrite. It addresses developer frustrations with rigid schema models and manual security configurations by introducing three distinct schema types: Document, Managed, and Unmanaged. This allows developers to choose the best approach for prototyping, secure application development, or leveraging full SQL power, all while benefiting from features like advanced API capabilities, a user-friendly dashboard, type-safe SDKs, and rapid self-hosting.

Popularity

Points 5

Comments 1

What is this product?

Nuvix is a self-hostable, open-source backend-as-a-service platform. It's like having a ready-made backend for your applications that handles databases, APIs, and security, but with more control and adaptability. Its core innovation lies in its three schema types: `Document` for quick, Appwrite-like prototyping with manual security; `Managed` for secure applications with automatic Row Level Security (RLS) and permissions built-in; and `Unmanaged` for full access to raw PostgreSQL power, offering maximum flexibility for complex SQL needs. This approach solves the rigidity of single-schema systems and the security gaps of loosely defined ones, providing a balanced solution for diverse development needs. So, it helps you build backends faster and more securely, tailored to your project's specific requirements. What's in it for you? You get to pick the backend setup that best fits your project, saving time and reducing security headaches.

How to use it?

Developers can integrate Nuvix into their projects by cloning the GitHub repository and deploying it quickly using Docker Compose. Once running, they can connect their frontend applications (web, mobile, etc.) to Nuvix. The choice of schema type dictates how data is managed and secured. For rapid prototyping, the `Document` schema allows for quick data modeling. For production-ready, secure applications, the `Managed` schema automatically enforces access controls. For advanced data manipulation, the `Unmanaged` schema provides direct SQL access. Nuvix also offers a type-safe SDK that generates code based on your schema, providing autocompletion and reducing errors. So, you connect your app to Nuvix, choose your schema type, and start building your application logic, knowing your backend is handled. What's in it for you? A seamless integration that streamlines your development workflow and provides a robust backend foundation.

Product Core Function

· Three Schema Types (Document, Managed, Unmanaged): Offers flexibility to choose the best data modeling and security approach for different project phases and requirements, from rapid prototyping to highly secure production apps. This provides tailored backend solutions, so you don't have to compromise. What's in it for you? You can pick the backend setup that truly fits your project's needs.

· Advanced API Capabilities (e.g., Join Tables without FKs, Nested Filtering): Enables more powerful and efficient data querying and manipulation through APIs, even with complex relationships, without the need for strict foreign key constraints. This means faster and more flexible data retrieval. What's in it for you? You can build more sophisticated data interactions with less effort.