Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-29

SagaSu777 2025-10-30

Explore the hottest developer projects on Show HN for 2025-10-29. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN submissions highlight one clear trend: complex technologies are becoming accessible to individual developers. AI is moving from abstract concept to practical tool that developers use to automate tasks, boost productivity, and open new creative possibilities. The surge in AI-powered assistants, data-analysis tools, and creative platforms reflects a shift toward putting sophisticated capabilities in individual hands. Developers are not just building applications; they are building the tools that let others build. At the same time, the emphasis on open-source, local-first, and privacy-conscious software reflects growing demand for control and transparency in an increasingly interconnected digital world. This "build it yourself, make it accessible" spirit is the hacker ethos at its core. For developers and entrepreneurs, the lesson is to solve specific pain points with well-chosen technical approaches, embrace open-source collaboration, and prioritize user experience and data ownership. The future belongs to those who can turn complex technologies into accessible, powerful solutions.

Today's Hottest Product

Name

SQLite Graph Ext

Highlight

This project integrates graph database capabilities directly into SQLite, enabling Cypher query support for relationship-heavy data inside a widely available embedded database. Developers can study how a full query execution pipeline (lexer, parser, planner, executor) is built and how a relational database can be extended with graph features, which brings graph-style data modeling to any application that already uses SQLite.

Popular Category

AI/ML

Developer Tools

Data Management

Productivity Tools

Web Development

Popular Keyword

AI

LLM

Database

Optimization

Open Source

Developer Tool

Productivity

Automation

Technology Trends

AI-powered automation and assistance

Enhanced data management and querying

Developer productivity tools

Decentralized and local-first applications

Specialized programming languages

Creative AI tools

Performance optimization

Open-source solutions

Project Category Distribution

AI/ML Tools (25.0%)

Developer Tools/Utilities (20.0%)

Data Management/Databases (10.0%)

Productivity/Business Tools (15.0%)

Web Development Frameworks/Libraries (10.0%)

Creative/Media Tools (5.0%)

System Utilities/Languages (5.0%)

Hardware/IoT (5.0%)

Education/Learning (5.0%)

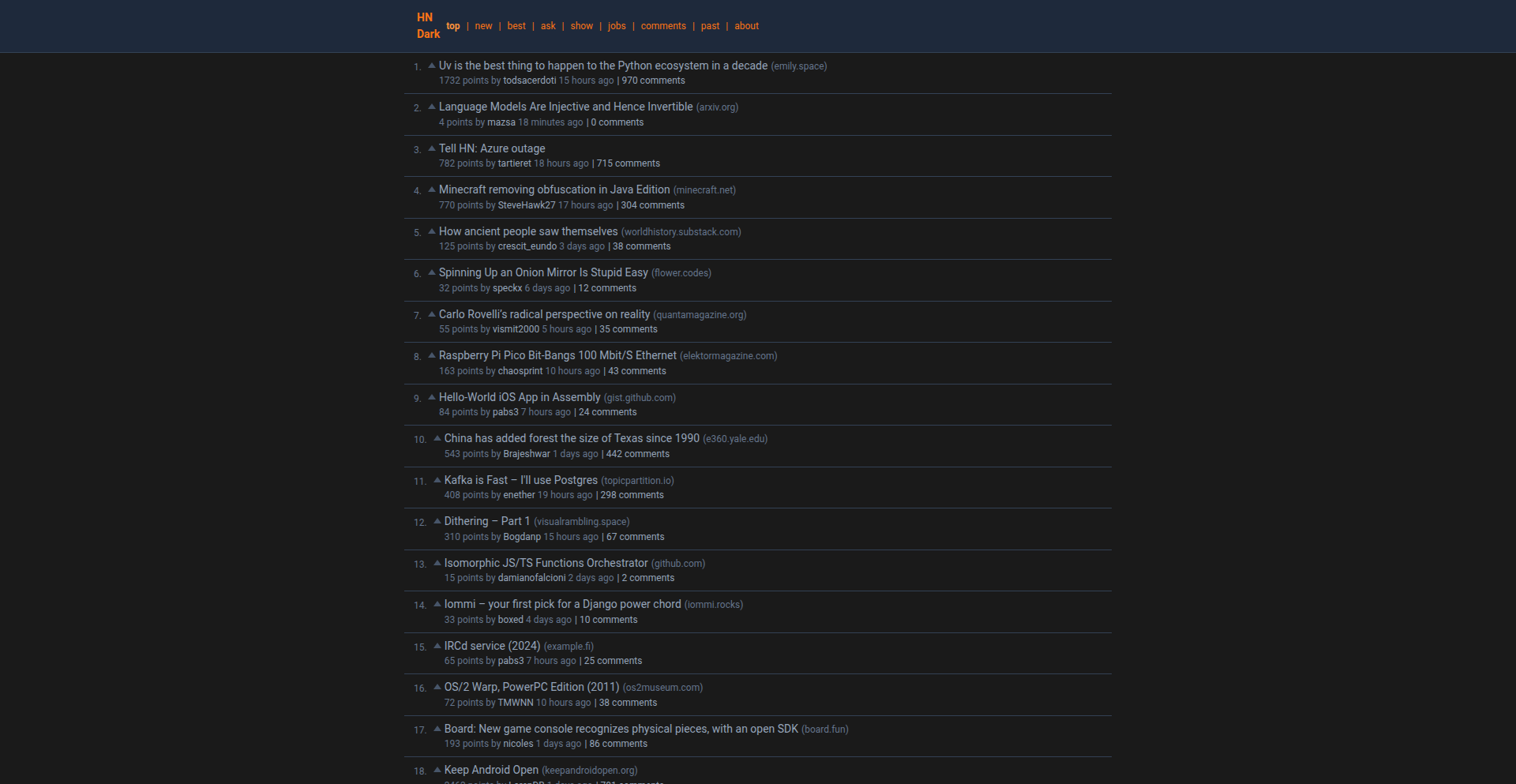

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | GermanVocabQuest | 102 | 87 |
| 2 | OverlayCanvas | 52 | 19 |
| 3 | SQLite Graph Cypher Engine | 28 | 17 |
| 4 | EstimatorAI: Predictive Effort Quantifier | 12 | 6 |
| 5 | FreeGeo AI Navigator | 9 | 3 |
| 6 | OrthoRoute GPU PCB Autorouter | 6 | 6 |
| 7 | Lucent Flow AI Orchestrator | 10 | 1 |
| 8 | 2LY: AI Agent Tool Integration Fabric | 6 | 5 |
| 9 | Go OHTTP Privacy Layer | 10 | 0 |
| 10 | FuzzyText Navigator | 9 | 0 |

1

GermanVocabQuest

Author

predictand

Description

GermanVocabQuest is a collection of gamified exercises designed to make learning German vocabulary more engaging and effective. It tackles the frustration of arbitrary German grammar rules by focusing on making memorization fun through interactive games, offering a novel approach to language acquisition for developers and learners alike. This project leverages simple yet innovative game mechanics to solidify vocabulary retention, directly addressing the pain point of difficult memorization in language learning.

Popularity

Points 102

Comments 87

What is this product?

GermanVocabQuest is an interactive platform that transforms the tedious process of learning German vocabulary into a series of fun games. Instead of dry flashcards or rote memorization, it uses game-based learning principles to help users remember words and phrases more easily. The innovation lies in its application of gamification to address the specific challenges of learning a language with complex grammar, like German, by creating patterns and making memorization intuitive and enjoyable. So, this is useful to you because it makes learning German vocabulary, a notoriously difficult task, significantly less frustrating and more effective.

How to use it?

Developers can integrate GermanVocabQuest into their learning routines or potentially embed its game mechanics into other educational applications. It's designed to be accessible, likely via a web interface or a downloadable application. Users would engage with different game modes, each targeting specific vocabulary categories or grammatical structures. For example, a user might play a 'word-matching' game to connect German nouns with their English translations, or a 'sentence-completion' game to practice verb conjugations in context. So, this is useful to you because it provides a ready-made, fun system to practice and master German words, saving you time and effort compared to traditional methods.

Product Core Function

· Vocabulary Matching Games: Players match German words with their English counterparts or corresponding images, reinforcing recognition and recall. This addresses the core need for memorizing individual words, providing immediate feedback and a sense of accomplishment with each correct match, making it useful for building a foundational vocabulary.

· Sentence Construction Puzzles: Users arrange words to form grammatically correct German sentences, practicing word order and basic sentence structure. This helps in understanding how words fit together in context, crucial for practical language use, making it useful for developing sentence fluency.

· Spelling Challenges: Players are presented with German words and must spell them correctly, improving spelling accuracy and familiarity with German orthography. This directly tackles the difficulty of German spelling, making it useful for precise pronunciation and written communication.

· Contextual Word Usage Drills: Games that require users to select the appropriate German word for a given context or definition, enhancing comprehension and application of vocabulary. This moves beyond simple definition recall to practical usage, making it useful for understanding nuance and choosing the right word in real conversations.

Product Usage Case

· A German language student struggling with memorizing noun genders could use the 'Gender Association' game in GermanVocabQuest, where each noun is paired with a color or symbol representing its gender, making recall easier and solving the problem of inconsistent memorization.

· A developer building a language learning platform could reuse the core game mechanics of GermanVocabQuest to add engaging vocabulary exercises to an existing curriculum, meeting the need for more interactive content and addressing the problem of users dropping off out of boredom.

· Someone preparing for a trip to Germany could use the 'Travel Vocabulary' themed games to quickly learn essential phrases and words related to booking hotels, ordering food, and asking for directions, solving the problem of feeling unprepared for real-world interactions.

· A language enthusiast who finds traditional methods uninspiring could use GermanVocabQuest as a fun supplement to their studies, enjoying the gamified experience and solving the problem of motivation by making learning feel like playing a game.

2

OverlayCanvas

Author

tomaszsobota

Description

OverlayCanvas is a minimalist macOS application that allows users to draw, highlight, or annotate directly on top of any other application or window. It's designed for quick, unobtrusive visual communication during pair programming, presentations, or demos, offering a simple alternative to complex web-based drawing tools. The app is sandboxed, collects no user data, and supports various drawing tools and export options, all accessible via global hotkeys.

Popularity

Points 52

Comments 19

What is this product?

OverlayCanvas is a lightweight desktop application for macOS that creates an interactive drawing layer over your existing screen content. Its core innovation lies in its ability to appear instantly on demand via a keyboard shortcut, allowing for immediate annotations without disrupting your workflow or switching applications. Unlike many other tools, it operates locally on your device, ensuring privacy and security. The technology leverages macOS's window management and drawing APIs to render the annotation layer seamlessly on top of any active window, providing a HUD-like (heads-up display) experience for visual feedback.

How to use it?

Developers can use OverlayCanvas by simply activating it with a pre-defined global hotkey. Once activated, a drawing interface appears, allowing them to select from various tools like pens, shapes, and highlighters. This is incredibly useful for pair programming sessions where one developer needs to quickly point out a specific line of code or a UI element to their partner. It can also be used during live demos to highlight important parts of an application or draw attention to specific features. Annotations can be exported as PNG images or simply kept as a visual guide. The ability to have per-screen canvases means each monitor can have its own independent drawing space, useful for multi-monitor setups.

Product Core Function

· Global Hotkey Activation: Quickly bring up the drawing canvas with a keyboard shortcut, enabling immediate visual communication without interrupting workflow. This is useful for on-the-fly explanations during screen sharing or remote collaboration, saving time and making instructions clearer.

· Diverse Drawing Tools: Offers a selection of pens, shapes, and highlighters to visually communicate various ideas or points of interest. This allows for precise marking of code snippets, UI elements, or complex diagrams, making technical discussions more effective.

· Per-Screen Canvases: Maintains separate drawing spaces for each monitor in a multi-display setup. This provides a clean and organized way to annotate across different parts of your workspace, preventing visual clutter and ensuring focused attention on the relevant screen.

· Focus Mode (Background Blur): Temporarily blurs the background content to emphasize the annotations. This feature is invaluable during presentations or tutorials to ensure the audience's attention is drawn solely to the highlighted areas or drawn elements.

· Local Data Storage & Privacy: Operates entirely on the user's device without collecting any personal data or requiring special system permissions. This ensures user privacy and security, making it a trustworthy tool for sensitive work environments.

· One-Click Export: Allows users to export their annotations as a PNG image. This is perfect for saving visual notes, sharing feedback, or documenting changes discussed during a collaborative session.

Product Usage Case

· During a pair programming session, a developer uses OverlayCanvas to circle a specific function definition and draw an arrow pointing to a related variable. This instantly clarifies the area of discussion for their partner without needing to type out a long explanation or switch to a separate diagramming tool, making the debugging or refactoring process more efficient.

· A presenter is demonstrating a new software feature. They use OverlayCanvas to highlight the buttons they are clicking and draw a path showing the user flow through the application. This guides the audience's attention and makes the demo easier to follow, especially for complex workflows.

· A UX/UI designer is reviewing a mockup with a stakeholder. They use OverlayCanvas to draw annotations directly on the mockup, suggesting changes to button placement or highlighting areas that need refinement. This provides immediate, actionable feedback that can be easily understood and acted upon.

· In a remote code review, a team member uses OverlayCanvas to draw a quick sketch of a proposed architectural change over a diagram. This visual representation is more intuitive and faster to create than descriptive text, facilitating quicker consensus among the team.

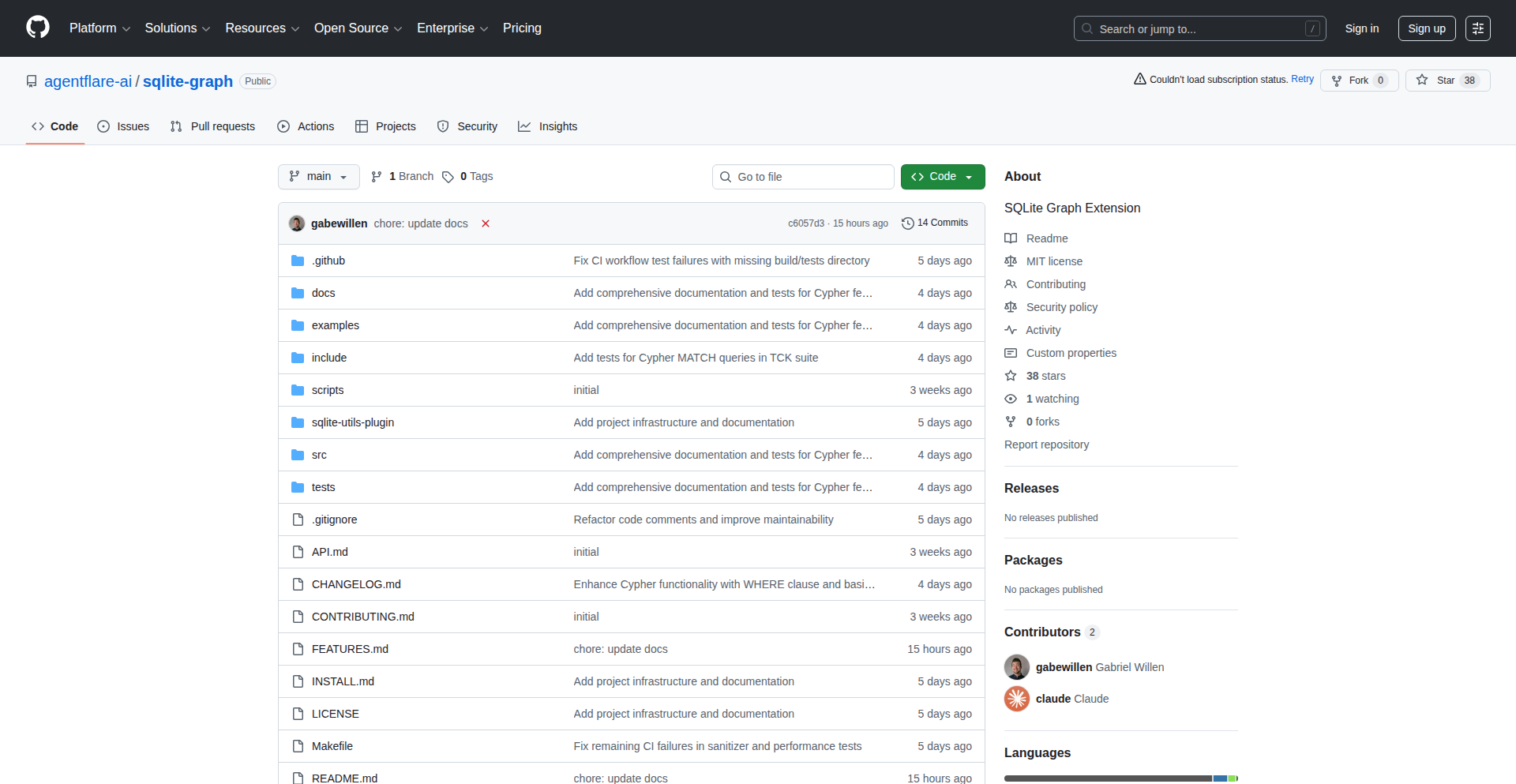

3

SQLite Graph Cypher Engine

Author

gwillen85

Description

This project integrates graph database capabilities directly into SQLite, enabling the use of the Cypher query language. It allows developers to store and query data as nodes and relationships, much like a dedicated graph database, but within the familiar and lightweight environment of SQLite. The innovation lies in building a full Cypher execution pipeline – from understanding the query to retrieving results – entirely within a SQLite extension, supporting key operations like creating graph structures and matching patterns with filters. This means you can leverage the power of graph queries without adding new infrastructure, making complex relational data exploration more accessible.

Popularity

Points 28

Comments 17

What is this product?

This project is a SQLite extension that transforms your SQLite database into a graph database. It understands and executes queries written in Cypher, a popular language for querying graph data. Think of it as adding a 'brain' to your existing SQLite database that can understand connections between data points. The core innovation is building this entire graph query processing engine, including parsing Cypher commands, planning how to find the data, and then actually fetching it, all as a plugin for SQLite. This allows for powerful graph analysis on your data without needing a separate, complex graph database system, offering a convenient and efficient way to work with connected data.

How to use it?

Developers load the extension into their SQLite connection and then issue Cypher commands through special SQL functions. For example, you can create nodes and relationships (such as people and their connections) with Cypher's CREATE syntax, then query those connections with MATCH, filtering on properties. This is useful wherever data has many interdependencies, such as social networks, recommendation engines, or complex workflows, and it can be added to existing applications that already use SQLite. The author's example loads the extension, creates a virtual graph table, and then executes Cypher statements that create data and find people older than 25 who know someone.

Product Core Function

· Full CREATE operations: This allows you to define and add nodes (like people or products) and relationships (like 'knows' or 'purchased') to your database, complete with properties for each. The value is being able to structure your data as a connected graph from the start, making it easier to represent complex real-world scenarios.

· MATCH with relationship patterns: This core feature lets you search for specific patterns of connections between nodes. For instance, you can find all users who are friends with each other. The value here is the ability to efficiently traverse and analyze relationships within your data, which is challenging with traditional relational databases.

· WHERE clause for property filtering: You can filter your graph queries based on the properties of nodes. For example, finding all people named 'Alice' or all products with a price greater than $50. This adds precision to your graph searches, allowing you to pinpoint the exact data you need.

· RETURN clause for basic projection: This function lets you specify which parts of your query results you want to see, serializing them into a JSON format. The value is getting structured and easily usable output from your graph queries, making it simple to integrate results into your application.

· Virtual table integration: This allows you to mix traditional SQL queries with Cypher graph queries within the same SQLite database. The value is immense flexibility, as you can leverage the strengths of both SQL for structured data and Cypher for relationship-based analysis in a unified way.
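The extension's own table layout and SQL function names are not reproduced here. As a rough mental model only, the sketch below uses Python's built-in `sqlite3` module with a hypothetical `nodes`/`edges` schema (an assumption for illustration, not the extension's storage format) to show what a pattern such as `MATCH (a:Person)-[:KNOWS]->(b:Person) WHERE a.age > 25 RETURN a.name, b.name` computes when written as plain SQL joins, with the result serialized to JSON in the way the RETURN clause is described above.

```python
import json
import sqlite3


def build_graph():
    """Hypothetical node/edge tables standing in for a small property graph."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE nodes (id INTEGER PRIMARY KEY, label TEXT, name TEXT, age INTEGER);
        CREATE TABLE edges (src INTEGER REFERENCES nodes(id),
                            dst INTEGER REFERENCES nodes(id),
                            type TEXT);
        -- roughly what CREATE (:Person {name: 'Alice', age: 30}) ... would store
        INSERT INTO nodes VALUES (1, 'Person', 'Alice', 30),
                                 (2, 'Person', 'Bob', 25),
                                 (3, 'Person', 'Carol', 35);
        INSERT INTO edges VALUES (1, 2, 'KNOWS'), (2, 3, 'KNOWS'), (3, 1, 'KNOWS');
    """)
    return conn


def match_knows(conn, min_age):
    # MATCH (a:Person)-[:KNOWS]->(b:Person) WHERE a.age > min_age RETURN a.name, b.name
    rows = conn.execute("""
        SELECT a.name, b.name
        FROM nodes AS a
        JOIN edges AS e ON e.src = a.id AND e.type = 'KNOWS'
        JOIN nodes AS b ON b.id = e.dst
        WHERE a.label = 'Person' AND b.label = 'Person' AND a.age > ?
        ORDER BY a.name, b.name
    """, (min_age,)).fetchall()
    # RETURN-style projection, serialized to JSON
    return json.dumps([{"a.name": a, "b.name": b} for a, b in rows])


if __name__ == "__main__":
    print(match_knows(build_graph(), 25))
    # prints [{"a.name": "Alice", "b.name": "Bob"}, {"a.name": "Carol", "b.name": "Alice"}]
```

Each extra hop in a Cypher pattern becomes another pair of joins in plain SQL, which is the bookkeeping a Cypher parser and planner are meant to take off your hands.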

Product Usage Case

· Building a social network feature: A developer could use this to store user profiles as nodes and friendships as relationships. They could then use Cypher queries to find mutual friends, identify influencers, or suggest connections, all within their existing SQLite application. This solves the problem of efficiently querying complex social connections without needing a separate graph database.

· Implementing a recommendation engine: For an e-commerce application, products and users can be nodes, with relationships like 'purchased' or 'viewed'. This engine can then power 'customers who bought this also bought that' features by matching patterns of past purchases. This provides a powerful recommendation capability by analyzing buying behavior patterns.

· Analyzing complex workflow or dependency data: In project management or software development, tasks or components can be represented as nodes with dependencies as relationships. Cypher queries can then be used to identify critical paths, detect circular dependencies, or understand the impact of a change. This helps in visualizing and managing intricate dependencies effectively.

· Geographic data analysis with connections: Representing locations as nodes and travel routes or proximity as relationships. Developers could query for all locations reachable within a certain distance or through a specific route. This allows for spatial analysis combined with network traversal.
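For the dependency-analysis case, detecting circular dependencies comes down to following edges until a walk returns to its starting node. A minimal sketch, again in plain `sqlite3` rather than through the extension, with a hypothetical `deps(task, depends_on)` table, does this with a recursive common table expression:

```python
import sqlite3


def circular_tasks(edges):
    """Return the tasks that sit on at least one dependency cycle.

    edges: iterable of (task, depends_on) pairs (hypothetical schema).
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE deps (task TEXT, depends_on TEXT)")
    conn.executemany("INSERT INTO deps VALUES (?, ?)", edges)
    rows = conn.execute("""
        WITH RECURSIVE walk(start, node, path) AS (
            -- every edge is the first hop of a walk; path looks like '>A>B>'
            SELECT task, depends_on, '>' || task || '>' || depends_on || '>'
            FROM deps
            UNION ALL
            -- extend the walk, never revisiting a node except to close back on start
            SELECT w.start, d.depends_on, w.path || d.depends_on || '>'
            FROM walk AS w
            JOIN deps AS d ON d.task = w.node
            WHERE w.node <> w.start
              AND (d.depends_on = w.start
                   OR instr(w.path, '>' || d.depends_on || '>') = 0)
        )
        SELECT DISTINCT start FROM walk WHERE node = start ORDER BY start
    """).fetchall()
    conn.close()
    return [task for (task,) in rows]


if __name__ == "__main__":
    print(circular_tasks([("A", "B"), ("B", "C"), ("C", "A"), ("C", "D"), ("D", "E")]))
```

Recording the visited path in each row is what guarantees termination: every recursive step either adds a node not yet on the path or closes the loop, after which that walk stops expanding.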

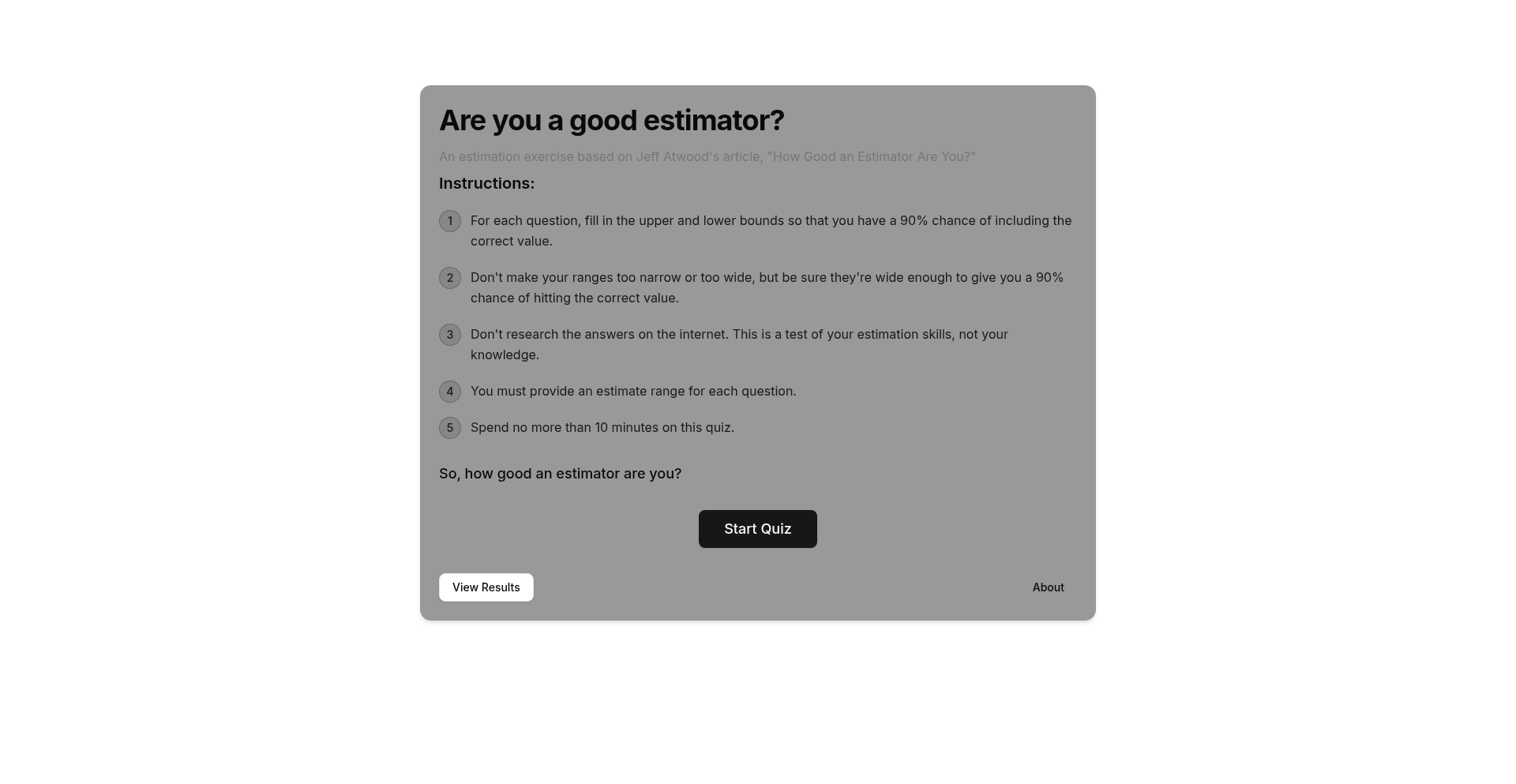

4

EstimatorAI: Predictive Effort Quantifier

Author

dcastm

Description

EstimatorAI is a novel tool that leverages a predictive model to help developers estimate the effort required for software development tasks. Instead of relying on gut feeling or simplistic formulas, it analyzes historical project data and task characteristics to provide more accurate and data-driven estimates. This tackles the pervasive problem of project delays and budget overruns caused by poor estimation.

Popularity

Points 12

Comments 6

What is this product?

EstimatorAI is a project that employs a machine learning model to predict the effort (time, complexity, etc.) needed for software development tasks. It's built on the insight that past performance and task attributes are strong indicators of future effort. The innovation lies in its ability to learn from your specific project's history, moving beyond generic estimation techniques to provide personalized, more reliable predictions. So, what's in it for you? It means fewer surprises, better resource allocation, and more predictable project timelines, ultimately leading to less stress and more successful project delivery.

How to use it?

Developers can integrate EstimatorAI into their workflow by feeding it historical data from their past projects, including task descriptions, estimated effort, and actual effort spent. The tool then trains its model on this data. When faced with a new task, developers input its characteristics (e.g., 'implement user authentication module,' 'refactor database schema'), and EstimatorAI provides a predicted effort range. This can be used to inform sprint planning, capacity planning, and stakeholder communication. The value for you is clearer visibility into what tasks will take how long, enabling you to commit to realistic deadlines and manage expectations effectively.
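EstimatorAI's model is not described in detail. As a deliberately simple, non-ML stand-in (an assumption for illustration, not the tool's method), the sketch below shows the same input/output shape: it learns from past (estimated, actual) pairs how far the team's estimates usually drift, then turns a naive estimate for a new task into a 10th/50th/90th-percentile effort range using the standard library's `statistics.quantiles`.

```python
import statistics


def effort_range(history, naive_estimate_hours):
    """Scale a naive estimate by the historical spread of actual/estimated ratios.

    history: list of (estimated_hours, actual_hours) for completed tasks.
    Returns (p10, p50, p90) effort in hours.
    """
    ratios = [actual / estimated for estimated, actual in history if estimated > 0]
    if len(ratios) < 2:
        raise ValueError("need at least two completed tasks with estimates")
    # 9 cut points (10th..90th percentile); 'inclusive' keeps them within the observed ratios
    cuts = statistics.quantiles(ratios, n=10, method="inclusive")
    low, mid, high = cuts[0], cuts[4], cuts[8]
    return (naive_estimate_hours * low,
            naive_estimate_hours * mid,
            naive_estimate_hours * high)


if __name__ == "__main__":
    past = [(4, 5), (8, 8), (2, 3), (10, 14), (6, 6), (5, 7)]
    print(effort_range(past, 10))  # a 10-hour gut estimate, recalibrated by history
```

A learned model would replace the single ratio distribution with one conditioned on task attributes, but the output contract (a range rather than a single number) is the part that feeds sprint and capacity planning.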

Product Core Function

· Historical Data Ingestion: The system accepts and processes past project data, including task details and completed effort. The technical value is in building a foundation for personalized estimation, enabling accurate predictions based on your team's unique performance. This is useful for any team looking to move beyond guesswork in planning.

· Predictive Modeling Engine: Utilizes machine learning algorithms to identify patterns and correlations between task attributes and effort. The innovation here is creating a dynamic estimation tool that learns and improves over time, offering more sophisticated insights than static methods. This provides you with data-backed projections, reducing the risk of under or over-committing resources.

· Effort Estimation Output: Generates a quantifiable estimate for new tasks, often presented as a range or confidence interval. The core value is providing actionable data points for planning and decision-making, helping you understand the potential scope and resource requirements. This directly translates to better project scheduling and resource management for your projects.

· Task Attribute Analysis: Breaks down new tasks into key characteristics that the model uses for prediction. This functional aspect allows for granular analysis of task complexity, enabling more precise estimations. For you, this means a deeper understanding of what makes a task take longer, aiding in task decomposition and better delegation.

Product Usage Case

· Sprint Planning Enhancement: A development team uses EstimatorAI to predict the effort for user stories in the upcoming sprint. By inputting the story details, they receive an estimated effort range, allowing them to populate the sprint board with a more realistic workload and avoid overcommitting. This helps you deliver on promises and build trust with stakeholders.

· Resource Allocation Optimization: A project manager uses EstimatorAI to forecast the effort for different phases of a new project. This data informs how many developers are needed and for how long, preventing bottlenecks and ensuring efficient team utilization. For you, this means smoother project execution and avoiding costly resource imbalances.

· Risk Assessment for Large Features: When estimating a large, complex feature, EstimatorAI can provide a probabilistic effort estimate, highlighting potential high-effort areas. This allows the team to proactively address risks or uncertainties, potentially breaking down the feature further or allocating more contingency time. The benefit for you is early identification of potential project derailers, allowing for proactive mitigation.

· Onboarding New Team Members: When new developers join a team, EstimatorAI can help provide initial estimates for their tasks, drawing from the collective historical data. This provides a benchmark and helps onboard new members by giving them a data-driven understanding of task scope. This helps you integrate new talent more effectively and ensure they can contribute meaningfully sooner.

5

FreeGeo AI Navigator

Author

0xferruccio

Description

FreeGeo is an innovative tool designed to optimize Large Language Model (LLM) outputs for better search engine visibility, acting as an 'SEO for LLMs'. It addresses the challenge of LLMs generating content that might not be discoverable by traditional search engines. The core innovation lies in its ability to analyze and inject relevant geographical and contextual signals into LLM prompts, guiding the AI to produce more search-friendly and location-aware responses. This is crucial for applications where localized information or context is paramount for user engagement and discoverability.

Popularity

Points 9

Comments 3

What is this product?

FreeGeo is a novel system that enhances the search engine optimization (SEO) capabilities of content generated by Large Language Models (LLMs). The technical innovation is its sophisticated prompt engineering approach. Instead of just feeding text to an LLM, FreeGeo intelligently incorporates geographical metadata and contextual cues into the prompt. This process essentially 'nudges' the LLM to prioritize and weave in information that search engines can better understand and rank, particularly for location-based queries. Think of it as giving the LLM a map and a set of local facts before it starts writing, ensuring its output is relevant and findable for specific regions. So, if you're building an application that needs to provide localized advice or information, this helps ensure that advice actually gets seen by people in the right places.

How to use it?

Developers can integrate FreeGeo into their LLM workflows by modifying their existing prompts. This involves using FreeGeo's library or API to dynamically enrich prompts with relevant geo-information before sending them to the LLM. For instance, when a user queries for 'best coffee shops', FreeGeo can automatically append location data (e.g., 'in San Francisco, California') to the prompt. This could be done via a simple function call within your backend code that prepares the prompt for your chosen LLM service (like OpenAI's GPT or Google's Gemini). This means your application can deliver more targeted and search-optimized LLM responses without needing to manually manage geo-tags for every interaction. So, if your app uses an LLM to answer user questions, this helps make those answers more likely to be found by users searching for information relevant to their location.

Product Core Function

· Geo-contextual prompt enrichment: Analyzes user input and injects relevant geographical information into LLM prompts. This helps the LLM understand the intended locale, leading to more precise and location-aware responses. The value is in ensuring LLM outputs are tuned to specific regions, making them more useful for local searches.

· LLM output discoverability enhancement: By guiding LLMs to generate content that incorporates search-friendly signals, FreeGeo improves the chances of that content appearing in search results. This is valuable because it makes LLM-powered content more accessible to a wider audience through organic search.

· Contextual signal integration: Beyond just location, FreeGeo can integrate other relevant contextual signals that aid searchability. This allows for a more nuanced and effective SEO strategy for LLM-generated text, meaning your LLM content can be found more easily for a wider range of contextual searches.

Product Usage Case

· Local business recommendation bots: Imagine a bot that recommends restaurants. By using FreeGeo, the bot can ensure that when a user asks for 'Italian restaurants', the LLM's response is tailored to the user's current city or a specified location, and that this information is discoverable by local search queries. This solves the problem of generic recommendations and ensures local businesses are found.

· Localized travel advisory services: A travel app powered by an LLM could use FreeGeo to provide country-specific or city-specific travel tips and alerts. The LLM can be guided to include local nuances and safety information relevant to the user's intended destination, making the advice more actionable and trustworthy. This is useful for ensuring travelers get the most relevant and up-to-date local information.

· Content generation for localized marketing campaigns: Marketers can use LLMs enhanced by FreeGeo to generate blog posts, social media updates, or product descriptions that are optimized for specific regions. For example, creating content for a winter clothing line that's relevant to Canadian audiences versus Australian audiences. This ensures marketing messages resonate with local contexts and are more likely to be discovered by target demographics.

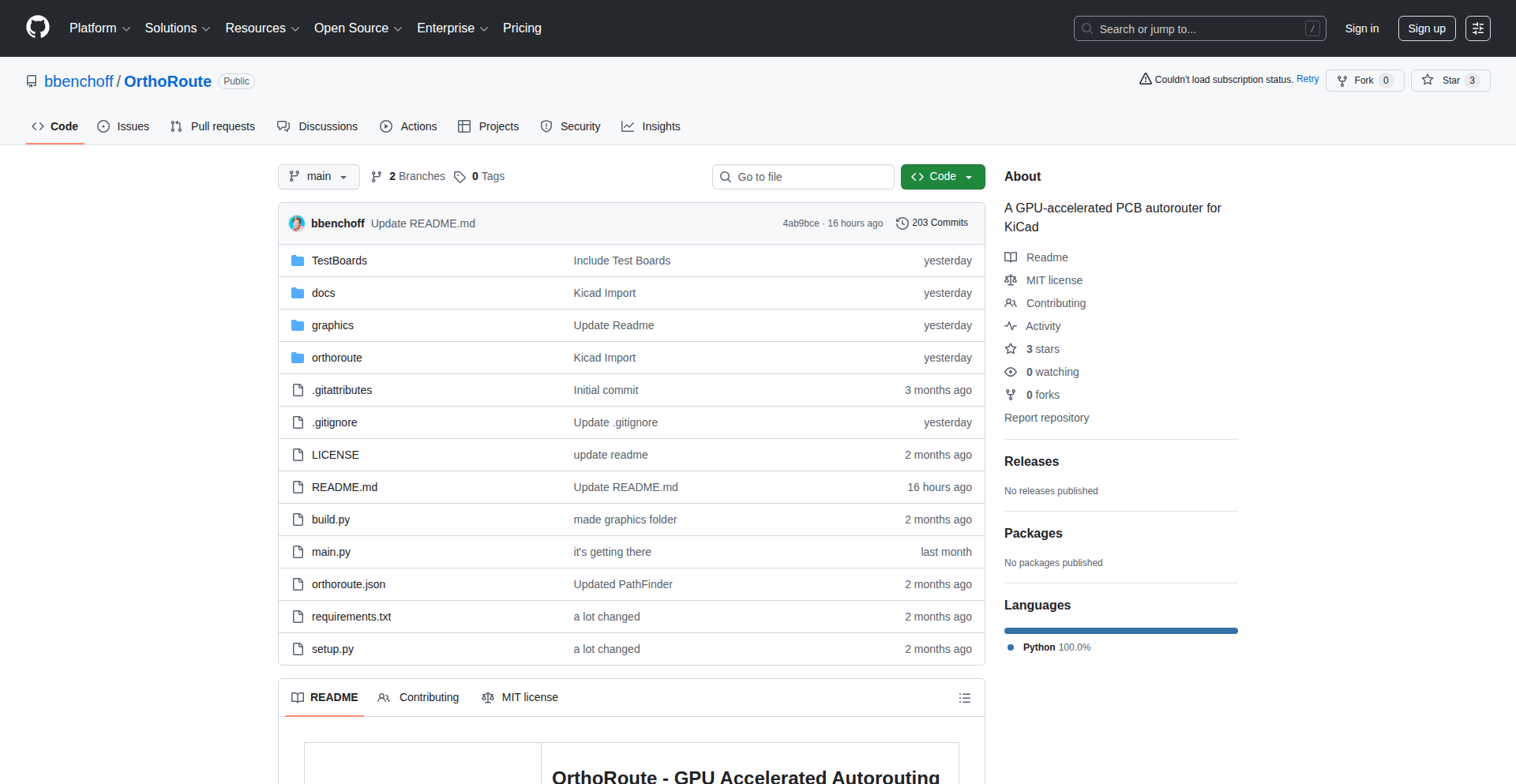

6

OrthoRoute GPU PCB Autorouter

Author

wanderingjew

Description

This project is a KiCad plugin that introduces a novel GPU-accelerated autorouting algorithm for printed circuit boards (PCBs). Inspired by FPGA routing techniques, it employs a 'Manhattan routing grid' to efficiently connect components using orthogonal traces. The innovation lies in leveraging CuPy for significant speedups, making complex routing tasks dramatically faster compared to CPU-bound methods. This addresses the time-consuming and often tedious manual process of routing intricate backplane designs, offering a powerful, albeit experimental, solution for electronic designers.

Popularity

Points 6

Comments 6

What is this product?

OrthoRoute GPU PCB Autorouter is a plugin for KiCad, a popular open-source electronic design automation software. Its core innovation is an 'autorouter' that automatically draws the electrical connections (traces) between components on a PCB. Unlike traditional autorouters, it uses a 'Manhattan routing grid' approach, meaning traces primarily run horizontally or vertically, mimicking how many high-density backplanes are designed. The real game-changer is its use of CuPy, a Python library that allows it to run computations on a Graphics Processing Unit (GPU). This makes the routing process exceptionally fast, especially for designs with thousands of connections, a task that would be prohibitively slow on a CPU alone. So, what's the benefit? It dramatically cuts down the time and effort needed to lay out complex PCBs, allowing for quicker design iterations and potentially enabling more sophisticated board designs that were previously too difficult to route manually.

How to use it?

Developers can integrate OrthoRoute GPU PCB Autorouter by installing it as a plugin within their KiCad environment. After installation, the plugin provides new routing options within KiCad's PCB layout editor. The user would typically define their desired routing strategy, such as opting for the Manhattan routing grid, and then initiate the autorouting process. The plugin leverages the GPU for its calculations, so a compatible NVIDIA GPU with CuPy installed is necessary for optimal performance. The output is a set of automatically generated traces on the PCB. This is useful for engineers and hobbyists working on projects with many interconnected components, like server backplanes or complex embedded systems, where manual routing would be a significant bottleneck. It streamlines the design process by automating a critical and often time-intensive step.

Product Core Function

· GPU-accelerated Manhattan routing grid: This feature uses the power of your graphics card to quickly find orthogonal paths for traces, making it significantly faster than CPU-based methods. This is valuable for speeding up the design process for complex boards, allowing you to route thousands of connections in minutes instead of hours.

· KiCad plugin integration: Seamlessly fits into the existing KiCad workflow, providing familiar tools and an intuitive user experience for PCB designers. This means you don't have to learn a new, complex software; you can enhance your current design environment.

· Automated trace generation: Automatically draws electrical connections between components on a PCB, reducing manual effort and potential for human error. This saves valuable design time and helps ensure accurate connections, especially in high-density designs.

· FPGA-inspired routing algorithm: Borrows efficient routing strategies from the field of Field-Programmable Gate Arrays, optimizing for orthogonal pathways. This leads to cleaner and more predictable routing, which can be beneficial for signal integrity and manufacturing.

· Pre-alpha experimental functionality: While still under development, it offers a glimpse into the future of PCB autorouting, demonstrating the potential of GPU computing in EDA. This provides early access to cutting-edge technology and allows developers to contribute to its evolution.
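To make "routing on a Manhattan grid" concrete, here is a minimal CPU sketch of routing a single net with Lee's breadth-first maze-routing algorithm, restricted to orthogonal moves. It is plain Python, not OrthoRoute's GPU/CuPy implementation; FPGA-style routers typically wrap a shortest-path search like this in repeated rip-up-and-reroute passes that raise the cost of congested cells, which is the part that benefits from GPU parallelism. The grid encoding (0 = free, 1 = blocked) is an assumption for illustration.

```python
from collections import deque


def lee_route(grid, start, goal):
    """Shortest orthogonal path between two cells; grid[r][c] == 1 marks a blocked cell.

    Returns the list of (row, col) cells from start to goal, or None if unroutable.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}              # visited cells and the cell we reached them from
    queue = deque([start])            # BFS wavefront expanding one step at a time
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        r, c = cell
        # Manhattan moves only: up, down, left, right (no diagonals)
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in prev:
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    if goal not in prev:
        return None                   # the wavefront never reached the goal
    # Backtrace from the goal to recover the trace
    path, cell = [], goal
    while cell is not None:
        path.append(cell)
        cell = prev[cell]
    return path[::-1]


if __name__ == "__main__":
    board = [[0, 0, 1, 0, 0],
             [0, 0, 1, 0, 0],
             [0, 0, 1, 0, 0],
             [0, 0, 1, 0, 0],
             [0, 0, 0, 0, 0]]         # a wall forces the trace around the bottom row
    print(lee_route(board, (0, 0), (0, 4)))
```

Because BFS expands in order of step count, the first time the wavefront reaches the goal it has found a shortest orthogonal route; routing thousands of nets means running searches like this many times, which is where GPU acceleration pays off.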

Product Usage Case

· Routing a complex 8000+ net backplane: A user needing to design a high-density backplane for servers or networking equipment can use this tool to automate the tedious process of connecting all the pins, saving potentially days of manual work and reducing the chance of errors in a very large design.

· Accelerating iteration cycles for embedded systems: An embedded systems engineer designing a complex microcontroller board with many peripherals can use this autorouter to quickly lay out the connections, allowing them to test different hardware configurations faster. This speeds up the overall product development cycle.

· Exploring novel PCB layouts for high-performance computing: Researchers or advanced hobbyists looking to push the boundaries of PCB design can leverage this tool to quickly experiment with routing strategies that might be too time-consuming to attempt manually. This enables exploration of more complex and efficient board layouts.

· Reducing development costs for small-to-medium electronics projects: A startup or a small team developing a new electronic gadget can use this autorouter to save on engineering hours, a significant cost factor in product development. This makes advanced PCB design more accessible and affordable.

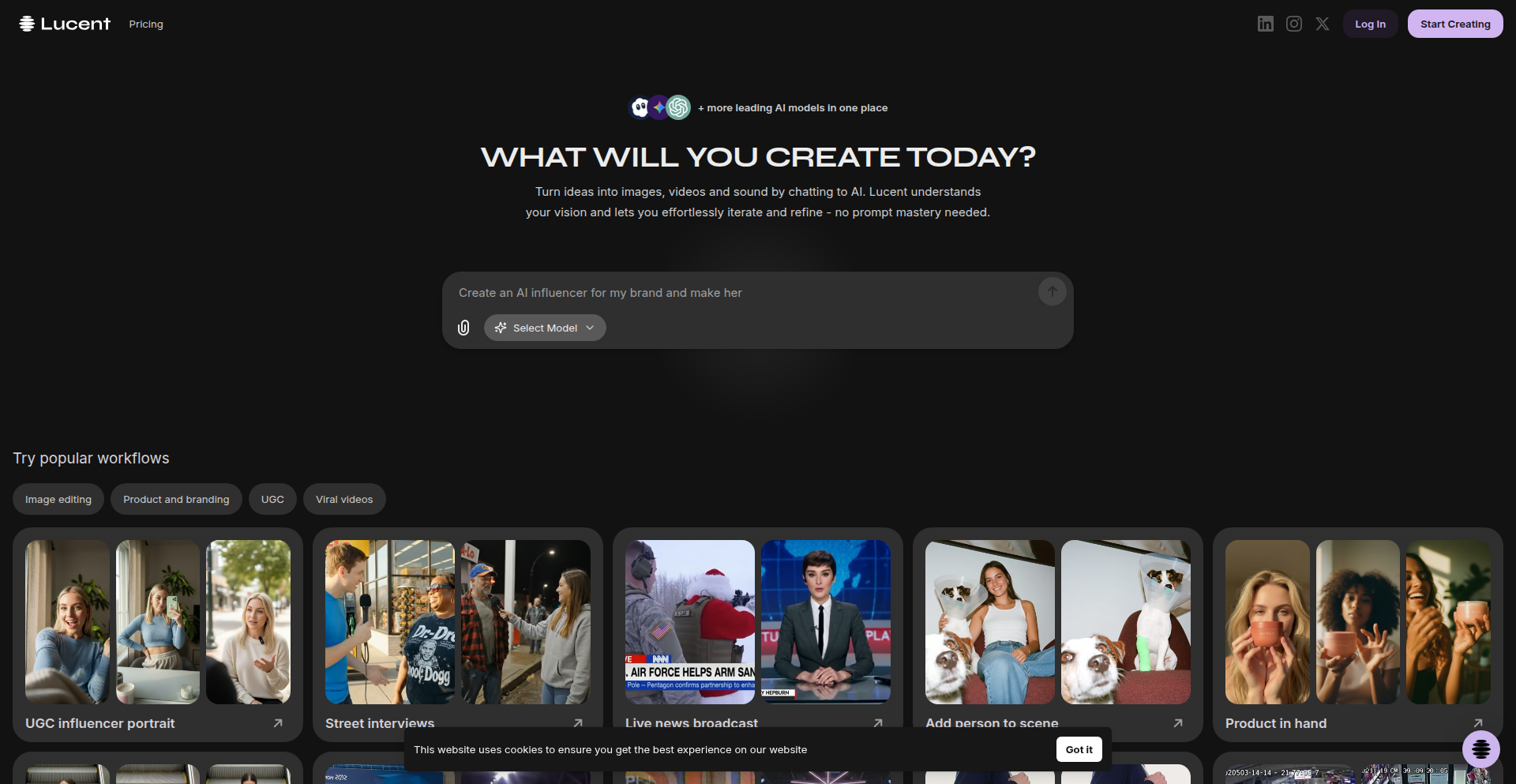

7

Lucent Flow AI Orchestrator

Author

Tinos

Description

Lucent Chat is an AI-powered creative assistant that consolidates multiple AI video and image generation tools (like Veo, Sora, Kling, etc.) into a single, seamless chat interface. It eliminates the fragmentation of current AI workflows by intelligently selecting the best model, optimizing prompts and parameters behind the scenes, and enabling effortless iteration and remixing of generated content. This product solves the frustration of juggling multiple tools, losing creative flow, and managing inconsistent styles when creating with AI.

Popularity

Points 10

Comments 1

What is this product?

Lucent Chat is an intelligent orchestration platform for AI-driven content creation. Instead of manually switching between different AI models for generating images and videos, users can describe their desired output in a single chat. Lucent Chat then acts as a smart agent, identifying the most suitable AI model (such as Sora for video or specific image generators) and automatically optimizing the underlying prompts and parameters. This innovation streamlines the creative process by abstracting away the complexity of individual AI tools, allowing users to focus on their vision rather than the technical execution. So, what does this mean for you? It means you can stop worrying about which AI tool to use and how to perfectly phrase your request for each one, and instead, just describe what you want and get results faster and more consistently.

How to use it?

Developers can use Lucent Chat by simply accessing the web interface and starting a conversation. They describe the image or video they want to create, specifying details like style, content, and mood. Lucent Chat then handles the backend logic of selecting the right AI model and fine-tuning the parameters. For iteration, users can easily 'remix' existing generations, providing further instructions to refine or alter the output. Integration possibilities could include API access in the future for programmatic content generation within other applications. So, how can you use this? Imagine needing a specific type of promotional video for your app; instead of spending hours figuring out how to use Sora effectively, you can tell Lucent Chat what you need, and it will generate it for you, allowing you to then ask for tweaks to the music or the opening shot.

Product Core Function

· Unified AI model orchestration: Seamlessly integrates and utilizes various advanced AI models for image and video generation, saving users the effort of managing multiple specialized tools. This means you get access to the best AI generation capabilities without needing to become an expert in each one, leading to better quality output.

· Intelligent prompt and parameter optimization: Automatically adjusts prompts and settings behind the scenes to achieve the best results from selected AI models, improving efficiency and output quality. This translates to less trial-and-error for you, as the system handles the complex tuning to get you closer to your desired outcome from the start.

· Effortless content iteration and remixing: Allows users to easily refine and build upon previously generated content through a simple remixing feature, accelerating the creative feedback loop. This is useful for you because once you have something close to what you want, you can make small adjustments without starting over, saving significant time and effort.

· Cross-model style consistency: Aims to maintain a consistent creative style across different AI models and generations, ensuring a cohesive final product. For you, this means your generated content will look and feel like it belongs together, even if it was produced by different underlying AI technologies.
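The orchestration idea can be sketched as a small planner that inspects a request, picks a model, folds a shared style phrase into the prompt, and reuses the same plan when remixing. This is a hypothetical Python sketch: the keyword rule, the `image-gen` placeholder model name, and the parameter defaults are assumptions, not Lucent Chat's actual selection logic, which the write-up does not describe.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field, replace

# Words that suggest the user wants moving images (an assumed, simplistic rule).
VIDEO_WORDS = {"video", "clip", "trailer", "animation", "animated"}


@dataclass(frozen=True)
class GenerationPlan:
    model: str
    prompt: str
    params: dict = field(default_factory=dict)


def plan_generation(request: str, style: str | None = None,
                    video_model: str = "Sora", image_model: str = "image-gen") -> GenerationPlan:
    """Pick a model for the request and fold the shared style into the prompt."""
    words = set(re.findall(r"[a-z]+", request.lower()))
    is_video = bool(words & VIDEO_WORDS)
    prompt = request.strip()
    if style:
        # Reusing the same style phrase across generations keeps outputs cohesive.
        prompt = f"{prompt}, in a consistent {style} style"
    params = {"duration_s": 8} if is_video else {"aspect_ratio": "1:1"}
    return GenerationPlan(video_model if is_video else image_model, prompt, params)


def remix(plan: GenerationPlan, instruction: str) -> GenerationPlan:
    """Refine an earlier generation: same model and parameters, extended prompt."""
    return replace(plan, prompt=f"{plan.prompt}. Revision: {instruction.strip()}")


first = plan_generation("A short promo video for my app", style="neon synthwave")
second = remix(first, "use a slower opening shot")
print(first.model, "|", second.prompt)
```

Keeping the model and parameters fixed during a remix is one simple way to get the "iterate without starting over" behavior; a real orchestrator would likely use a language model rather than keywords to classify requests.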

Product Usage Case

· A marketing team needs to create a series of short, visually distinct social media video ads. Instead of learning and operating Sora, Veo, and Kling separately, they can use Lucent Chat, describe the core message and desired visual style, and get multiple variations generated, optimized for each platform, all from one interface. This solves the problem of inconsistent branding and the overhead of learning multiple complex tools.

· An independent game developer wants to generate concept art for new characters and environments. They can describe their vision in Lucent Chat, specifying art styles like 'cyberpunk' or 'fantasy,' and get multiple character concepts and environment mockups. They can then iterate on a character design by asking for 'more futuristic armor' or 'a different facial expression,' streamlining the ideation process and saving valuable development time.

· A content creator wants to produce a unique animated explainer video. They can describe the narrative and visual style to Lucent Chat, which then uses its orchestration capabilities to generate segments using appropriate AI models, ensuring smooth transitions and a consistent aesthetic throughout the video. This addresses the challenge of combining different AI outputs into a coherent and professional-looking final product.

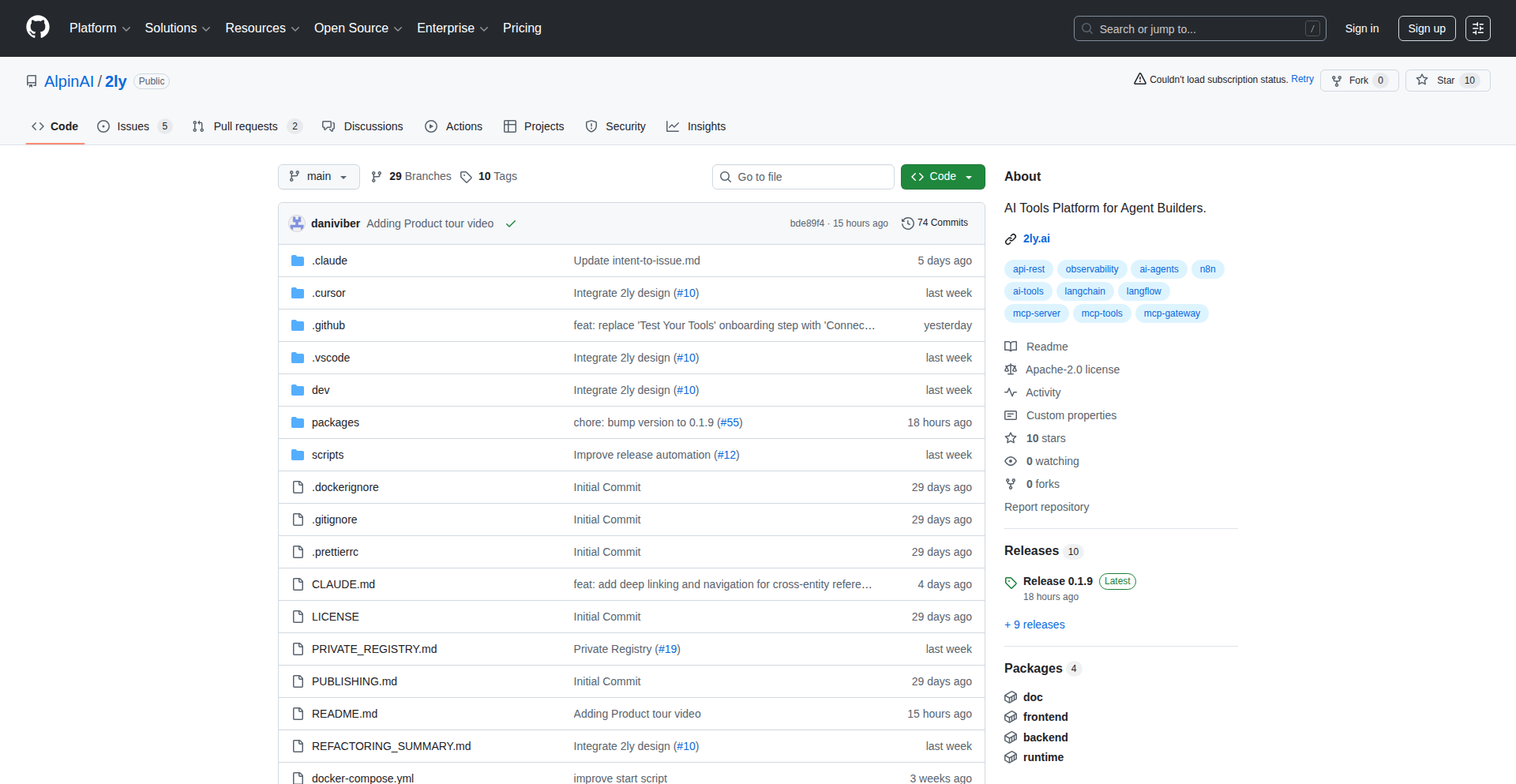

8

2LY: AI Agent Tool Integration Fabric

Author

EigerAI

Description

2LY is a developer-centric platform designed to simplify and accelerate the process of integrating third-party tools and APIs into AI agents. It addresses the common pain point of repetitive tool integration work by providing a standardized, abstract layer. This innovation lies in its approach to abstracting the complexities of different API interfaces, allowing developers to focus on AI agent logic rather than the intricacies of each external service.

Popularity

Points 6

Comments 5

What is this product?

2LY is a specialized framework that acts as an intelligent middleware for AI agents. Think of it as a universal adapter for AI. AI agents often need to interact with various external services – like sending emails, accessing databases, or querying search engines. Traditionally, developers have to write custom code for each integration. 2LY offers a more elegant solution by providing a consistent interface to a wide range of tools. Its core innovation is the 'integration fabric' concept, where it dynamically understands and maps AI agent requests to the specific requirements of external APIs. This means you don't need to rewrite integration code every time you add a new tool or change an existing one. The value here is saving immense development time and reducing errors associated with manual integration.

How to use it?

Developers can integrate 2LY into their AI agent projects by leveraging its SDK. You would define your AI agent's desired actions (e.g., 'send a notification', 'fetch user data') and then configure 2LY with the available tools and their corresponding API endpoints. 2LY handles the translation between the agent's high-level request and the low-level API calls. For instance, if your AI agent needs to send an email, you'd instruct 2LY to use an email sending tool. 2LY then finds the appropriate integration (e.g., SendGrid, Mailgun) and makes the necessary API calls. This allows for a plug-and-play approach to tool integration, making your AI agent more versatile and easier to maintain.

Product Core Function

· Abstracted Tool Interface: Provides a unified way for AI agents to interact with diverse external tools, regardless of their underlying API structure. Value: Significantly reduces the need for custom integration code, speeding up development and improving maintainability.

· Dynamic Integration Mapping: Automatically figures out how to connect an AI agent's request to the correct tool and its parameters. Value: Eliminates manual mapping and configuration, allowing for faster onboarding of new tools and adaptability.

· Pre-built Tool Connectors: Offers a library of ready-to-use integrations for common services like email, databases, cloud storage, and search APIs. Value: Saves developers from reinventing the wheel for standard integrations, enabling them to focus on unique AI functionalities.

· Configuration-driven Customization: Allows developers to easily add new tools or modify existing ones through configuration files rather than extensive code changes. Value: Enhances flexibility and empowers developers to extend the AI agent's capabilities without deep dives into the integration fabric.

· Error Handling and Resilience: Built-in mechanisms to gracefully handle API failures and retries for external tool interactions. Value: Improves the robustness and reliability of AI agents, ensuring smoother operation even when external services are temporarily unavailable.
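A configuration-driven tool layer with retries can be sketched in a few dozen lines. In this hypothetical Python version, a config maps agent actions (such as `send_email`) to connector functions, so swapping one email provider for another is a config change, and calls that raise a retryable error are retried with exponential backoff. `ToolRegistry`, `ToolError`, `from_config`, and the fake connectors are illustrative names, not 2LY's SDK.

```python
from __future__ import annotations

import time
from typing import Any, Callable


class ToolError(Exception):
    """Raised by a connector when a call fails in a way worth retrying."""


class ToolRegistry:
    """Maps abstract agent actions to concrete connector functions."""

    def __init__(self, max_retries: int = 3, base_delay: float = 0.1) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}
        self.max_retries = max_retries
        self.base_delay = base_delay

    @classmethod
    def from_config(cls, config: dict[str, str],
                    connectors: dict[str, Callable[..., Any]], **kwargs: Any) -> ToolRegistry:
        """Build a registry from {action: connector_name}; swapping providers is a config edit."""
        registry = cls(**kwargs)
        for action, connector_name in config.items():
            registry.register(action, connectors[connector_name])
        return registry

    def register(self, action: str, handler: Callable[..., Any]) -> None:
        self._tools[action] = handler

    def call(self, action: str, **kwargs: Any) -> Any:
        """Invoke the connector for `action`, retrying ToolError with exponential backoff."""
        handler = self._tools.get(action)
        if handler is None:
            raise KeyError(f"no tool registered for action {action!r}")
        for attempt in range(self.max_retries + 1):
            try:
                return handler(**kwargs)
            except ToolError:
                if attempt == self.max_retries:
                    raise  # out of retries: surface the failure to the agent
                time.sleep(self.base_delay * 2 ** attempt)
        raise AssertionError("unreachable")


# Hypothetical connectors standing in for real provider clients.
def sendgrid_send(to: str, subject: str) -> str:
    return f"sendgrid -> {to}: {subject}"


def mailgun_send(to: str, subject: str) -> str:
    return f"mailgun -> {to}: {subject}"


connectors = {"sendgrid": sendgrid_send, "mailgun": mailgun_send}
agent_tools = ToolRegistry.from_config({"send_email": "mailgun"}, connectors)
print(agent_tools.call("send_email", to="user@example.com", subject="Ticket created"))
```

The agent only ever asks for `send_email`; changing `"mailgun"` to `"sendgrid"` in the config reroutes every call without touching agent code.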

Product Usage Case

· Developing a customer support chatbot that needs to access a knowledge base, create support tickets, and send email responses: 2LY can integrate with knowledge base APIs (like Zendesk API), ticketing system APIs (like Jira API), and email services (like SendGrid) without the developer writing separate integration logic for each. This means the chatbot can quickly access information, log issues, and communicate with customers, all powered by 2LY's unified interface.

· Building an AI-powered data analysis agent that needs to pull data from various cloud storage services (e.g., AWS S3, Google Cloud Storage) and then process it using different computational tools: 2LY can connect to each cloud storage service and then abstract the data retrieval process. The agent can then request data from specific locations, and 2LY handles the underlying complexities of accessing different storage APIs.

· Creating a personal assistant AI that can schedule meetings, send reminders, and manage to-do lists by interacting with calendar and task management APIs (e.g., Google Calendar API, Microsoft To Do API): 2LY can provide a single point of interaction for these diverse services, allowing the AI to seamlessly manage a user's schedule and tasks without dealing with the individual API intricacies of each service.

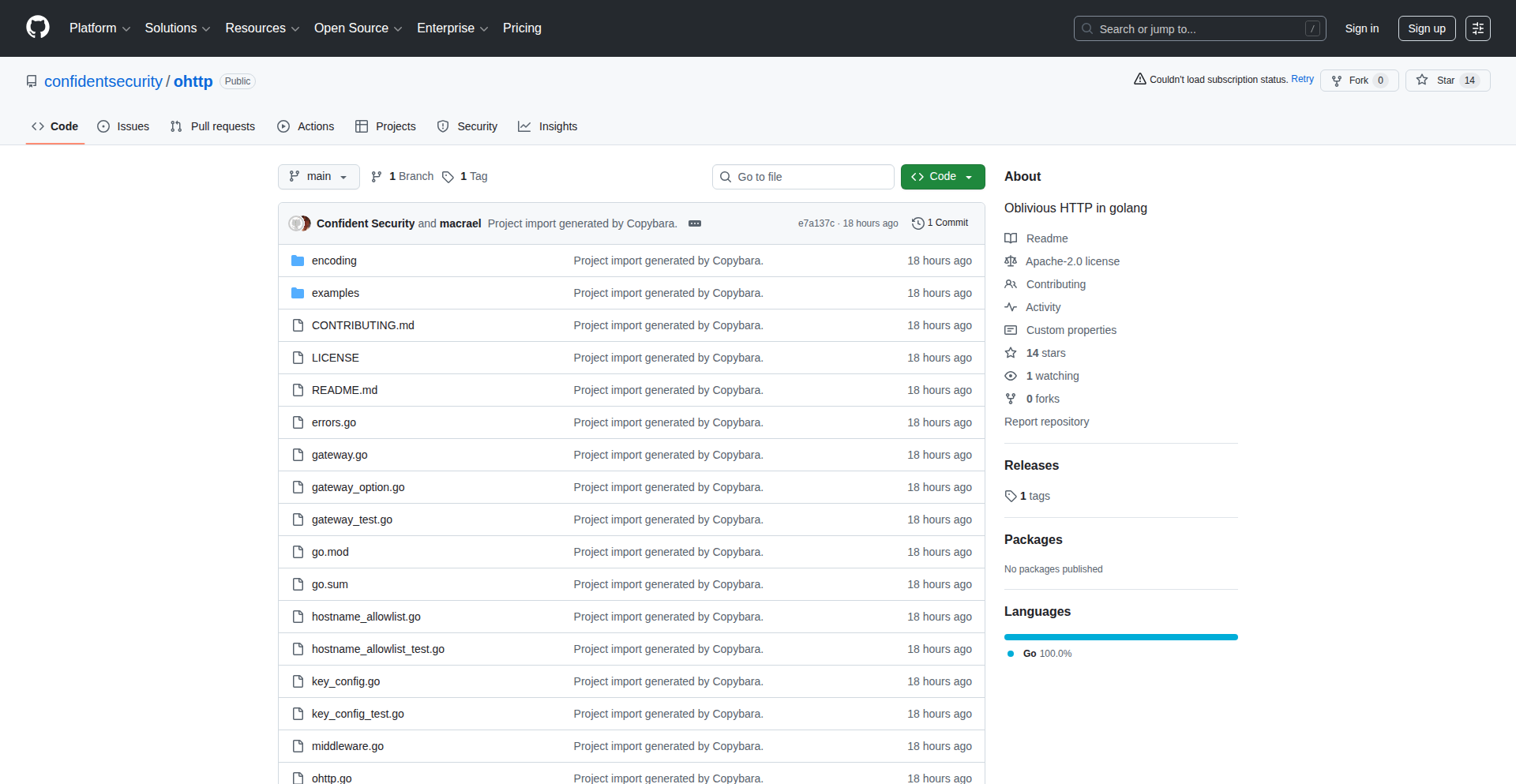

9

Go OHTTP Privacy Layer

Author

jmort

Description

This project provides a Go implementation of Oblivious HTTP (OHTTP), a protocol that separates who you are from what you send over the internet. It functions as an http.RoundTripper, meaning it can be plugged into existing Go HTTP clients. Its key property is that requests travel encrypted through a relay, so no single party learns both the client's identity (its IP address) and the content of its request. This is valuable because network providers and the relay see only ciphertext, while the server that handles the request never sees the client's IP address.

Popularity

Points 10

Comments 0

What is this product?

This project is an open-source Go library that implements the Oblivious HTTP (OHTTP) protocol (RFC 9458). OHTTP encrypts each request to the public key of an OHTTP gateway and sends it through a separate relay: the relay, like any observer on the network (including your internet service provider), can see who sent the request but not what it says, while the gateway and the target server can read the request but never see the client's IP address. The innovation here is a practical, high-quality Go implementation that allows developers to easily add this privacy layer to their applications. It's built on top of other Go libraries like 'twoway' and 'bhttp' for efficient message handling and secure key exchange (HPKE). So, this means you get a robust tool for sending requests without any single party being able to link your origin to the content or destination of the request.

How to use it?

Developers can integrate this OHTTP implementation into their Go applications by using it as an http.RoundTripper. This means it can be plugged directly into Go's standard `http.Client`. When making an HTTP request, the OHTTP layer first encrypts the request to the gateway's public key, hiding both its destination and its content from intermediaries. The encrypted request is sent to an OHTTP relay, which forwards it to the gateway without being able to read it; the gateway decrypts it and forwards it to the actual destination server. The response is encrypted by the gateway, travels back through the relay, and is decrypted by the client. This is useful for scenarios where end-to-end privacy is critical, such as when building privacy-focused applications or services that handle sensitive user data. The customizable HPKE feature also allows advanced users to integrate custom hardware security modules for encryption, adding another layer of control and security.
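The Go library performs the encapsulation internally, but the wire framing it must produce is fixed by RFC 9458: a 7-byte header naming the gateway key ID and the HPKE algorithms, which is also bound into the HPKE `info` string, followed by the HPKE encapsulated key (`enc`) and the ciphertext of the Binary HTTP request. The Python sketch below shows only that framing. The HPKE seal step is omitted, so `enc` and the ciphertext are placeholders, and the helper names are hypothetical, not this library's API.

```python
import struct

# HPKE algorithm identifiers registered in RFC 9180.
KEM_X25519_HKDF_SHA256 = 0x0020
KDF_HKDF_SHA256 = 0x0001
AEAD_AES_128_GCM = 0x0001
X25519_ENC_LEN = 32  # size of the HPKE encapsulated key for this KEM

HEADER = struct.Struct("!BHHH")  # key_id (1 byte), kem_id, kdf_id, aead_id (2 bytes each)


def request_header(key_id: int, kem_id: int, kdf_id: int, aead_id: int) -> bytes:
    return HEADER.pack(key_id, kem_id, kdf_id, aead_id)


def hpke_info(hdr: bytes) -> bytes:
    """The `info` string passed to HPKE setup, binding the header to the encryption."""
    return b"message/bhttp request" + b"\x00" + hdr


def encapsulated_request(hdr: bytes, enc: bytes, ciphertext: bytes) -> bytes:
    """Wire format sent to the relay: hdr || enc || ct."""
    return hdr + enc + ciphertext


def parse_encapsulated_request(data: bytes, enc_len: int = X25519_ENC_LEN):
    """What a gateway does first: split the message before HPKE decryption."""
    key_id, kem_id, kdf_id, aead_id = HEADER.unpack_from(data, 0)
    enc = data[HEADER.size:HEADER.size + enc_len]
    ciphertext = data[HEADER.size + enc_len:]
    return key_id, (kem_id, kdf_id, aead_id), enc, ciphertext


hdr = request_header(1, KEM_X25519_HKDF_SHA256, KDF_HKDF_SHA256, AEAD_AES_128_GCM)
# Placeholders: a real client gets enc and ciphertext from HPKE SetupBaseS + Seal,
# using hpke_info(hdr) as the info parameter and the Binary HTTP request as plaintext.
enc, ct = bytes(X25519_ENC_LEN), b"<sealed binary HTTP request>"
message = encapsulated_request(hdr, enc, ct)
print(hdr.hex(), len(message))
```

The client POSTs this message to the relay with the `message/ohttp-req` media type. The gateway uses the key ID to select its private key and rejects algorithm combinations it does not support. Response encapsulation uses a separate format that is not shown here.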

Product Core Function

· HTTP Request RoundTripper Implementation: Allows seamless integration with Go's standard http.Client, meaning developers can easily add OHTTP privacy to their existing applications without significant refactoring. This is valuable because it makes adopting OHTTP straightforward and less time-consuming.

· Chunked Transfer Encoding Support: Handles large data transfers by breaking them into smaller, manageable chunks. This is useful for uploading or downloading large files or streams, because chunks can be processed as they arrive instead of buffering the whole body, so even large requests get OHTTP privacy.

· Customizable HPKE (Hybrid Public Key Encryption): Enables developers to use different encryption algorithms or integrate custom hardware security modules for enhanced privacy and security. This provides flexibility for organizations with specific security requirements or existing hardware encryption solutions.

· Built on 'twoway' and 'bhttp' Libraries: Leverages well-tested and efficient Go libraries for handling encrypted messages and secure key exchanges. This ensures a reliable and performant implementation, reducing the burden on developers to build these complex cryptographic components from scratch.

Product Usage Case

· Private API Calls: A mobile application making requests to a backend API can use this OHTTP implementation to encrypt the API endpoint and payload. This prevents network providers from seeing which specific services the app is communicating with or what data is being sent, enhancing user privacy on mobile networks.

· Secure Data Uploads: A cloud storage service could accept user uploads over OHTTP. The relay would see only ciphertext, and the storage backend would receive each file and its metadata without learning which client IP address sent it.

· Anonymous Web Scraping: A web scraping tool could use OHTTP so that target sites see the gateway's address rather than the scraper's, and network observers cannot see which URLs are being fetched. This allows for more discreet data collection without revealing the scraper's origin or its specific targets.

· Privacy-Preserving Service Intermediation: A third-party service that needs to communicate with multiple backend services on behalf of a user can use OHTTP. This ensures that the third-party intermediary cannot see the actual destination or the content of the requests being made to the backend services, thus protecting user data from being exposed to an unnecessary party.

10

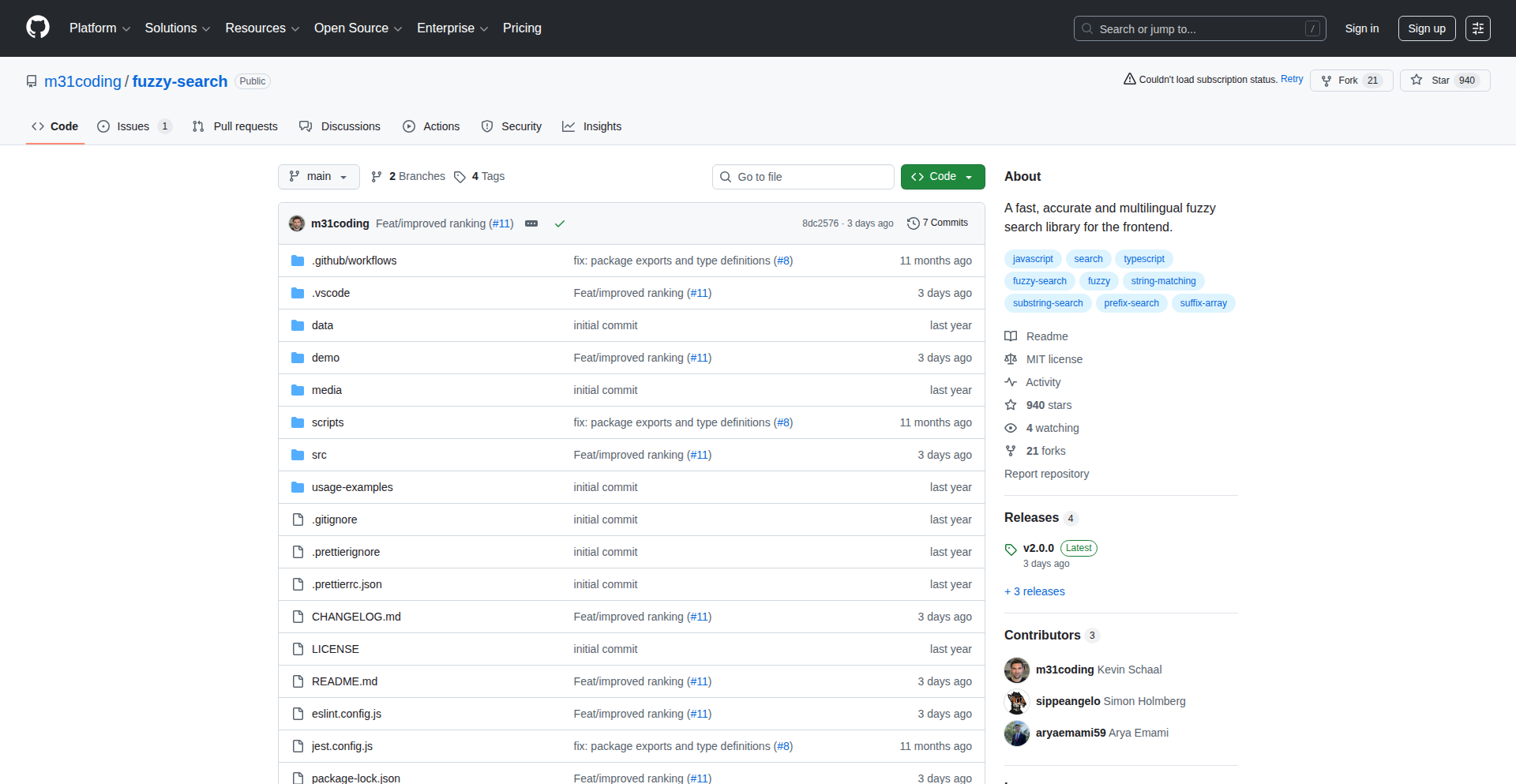

FuzzyText Navigator

Author

kmschaal

Description

A lightweight, zero-dependency JavaScript library for frontend fuzzy, substring, and prefix searching. It significantly enhances user experience by allowing fast and accurate text matching even with typos or partial inputs, making it ideal for dynamic lists, navigation, and data filtering. This translates to a smoother, more intuitive interaction for users, directly addressing the frustration of exact-match search limitations.

Popularity

Points 9

Comments 0

What is this product?

FuzzyText Navigator is a frontend JavaScript library that provides sophisticated text searching capabilities. It goes beyond simple exact matches by implementing fuzzy matching (handling typos and slight variations), substring matching (finding text within words or phrases), and prefix matching (finding text that starts with a given string). The core innovation lies in its efficient algorithm, which achieves high speed and accuracy across multiple languages with absolutely no external dependencies. This means it's easy to integrate and won't bloat your application's size. So, for you, this means building more robust and user-friendly search features without complex setup or performance concerns.

How to use it?

Developers can integrate FuzzyText Navigator by including the library in their frontend project and then initializing it with the data they want to search. It can be used to power search bars for lists of items, navigation menus, or any scenario where users need to quickly find information within a set of text data. For instance, you'd typically pass an array of strings or objects to the library, and then hook up its search function to an input field's 'oninput' event. This allows for real-time filtering as the user types. The 'no dependencies' aspect means you can simply drop the script in and start using it, making it incredibly versatile for web applications, single-page applications (SPAs), and even static websites. The value for you is a significant reduction in development time for implementing powerful search functionalities.

Product Core Function

· Fuzzy Matching: Handles common typos and variations in user input, ensuring that slightly misspelled queries still yield relevant results. This is valuable for improving user satisfaction by reducing search failures and making applications more forgiving of user errors.

· Substring Matching: Allows users to find text that appears anywhere within a string or word, not just at the beginning. This is useful for searching for specific keywords within longer descriptions or titles, providing more comprehensive search results.

· Prefix Matching: Quickly finds text that starts with the characters the user has typed. This is a common and intuitive search behavior, especially for auto-completion and navigation menus, offering immediate feedback and speeding up user interaction.

· High Performance: Engineered for speed, enabling real-time search results even with large datasets. This translates to a snappier and more responsive user interface, preventing user frustration caused by slow search operations.

· Multilingual Support: Designed to work effectively with various character sets and languages. This broadens the applicability of the library to a global audience without requiring separate implementations for different linguistic needs.

· Zero Dependencies: Can be used without relying on any other JavaScript libraries or frameworks. This simplifies integration, reduces bundle size, and avoids potential version conflicts, making it an ideal choice for any project, from small scripts to large applications.
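The three matching modes can be combined into one ranked search: prefix matches first, then substring matches, then fuzzy matches. FuzzyText Navigator is a JavaScript library whose exact algorithm isn't described here, so this Python sketch swaps in a simple technique for the fuzzy tier. It uses a subsequence test, which tolerates omitted characters (`t-sht` for `t-shirt`) but not substituted or transposed ones. Unicode NFKC normalization plus `casefold()` stands in for multilingual support. The function names are hypothetical.

```python
import unicodedata


def _normalize(text: str) -> str:
    # NFKC folds compatibility forms; casefold() handles case across scripts
    # (e.g. German "ß" folds to "ss").
    return unicodedata.normalize("NFKC", text).casefold()


def _is_subsequence(query: str, text: str) -> bool:
    # True if every query character appears in text, in order, gaps allowed.
    remaining = iter(text)
    return all(ch in remaining for ch in query)


def search(items: list[str], query: str) -> list[str]:
    """Rank items: prefix matches, then substring matches, then fuzzy matches."""
    q = _normalize(query)
    if not q:
        return list(items)
    ranked = []
    for index, item in enumerate(items):
        text = _normalize(item)
        if text.startswith(q):
            tier = 0
        elif q in text:
            tier = 1
        elif _is_subsequence(q, text):
            tier = 2
        else:
            continue  # no match at all: filtered out
        ranked.append((tier, index, item))  # index keeps the original order within a tier
    ranked.sort()
    return [item for _, _, item in ranked]


print(search(["About", "Components", "Compilers", "Comparison Pages"], "comp"))
print(search(["Smithson", "John Smith", "Jane Doe"], "smith"))
print(search(["blue t-shirt", "red hoodie"], "blue t-sht"))
```

For live filtering, `search` would be called from the input field's handler on each keystroke. A linear scan like this is usually fine for lists in the low thousands.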

Product Usage Case

· Enhancing a product catalog search bar: A user types 'blue t-sht' instead of 'blue t-shirt'. FuzzyText Navigator would still find 'blue t-shirt' due to fuzzy matching, ensuring the user sees the desired product. This solves the problem of users missing products due to minor spelling mistakes.

· Powering a navigation menu with autocomplete: As a user types 'comp' in a website's navigation, FuzzyText Navigator could quickly suggest 'Components', 'Compilers', and 'Comparison Pages' via prefix matching. This helps users navigate complex sites faster and discover relevant sections.

· Implementing a dynamic list filter: In an application displaying a long list of customer names, a developer can use substring matching to allow users to search for a name by typing just a part of it, like 'smith' to find 'John Smith' and 'Smithson'. This makes finding specific entries in large datasets much more efficient.

· Building a technical documentation search: When a developer searches for 'asynchronous I/O' but types 'asynchronous IO', the library's fuzzy matching still returns results for 'asynchronous I/O'. This ensures developers can quickly find the documentation they need, even with common typos or slight variations in technical terms.

11

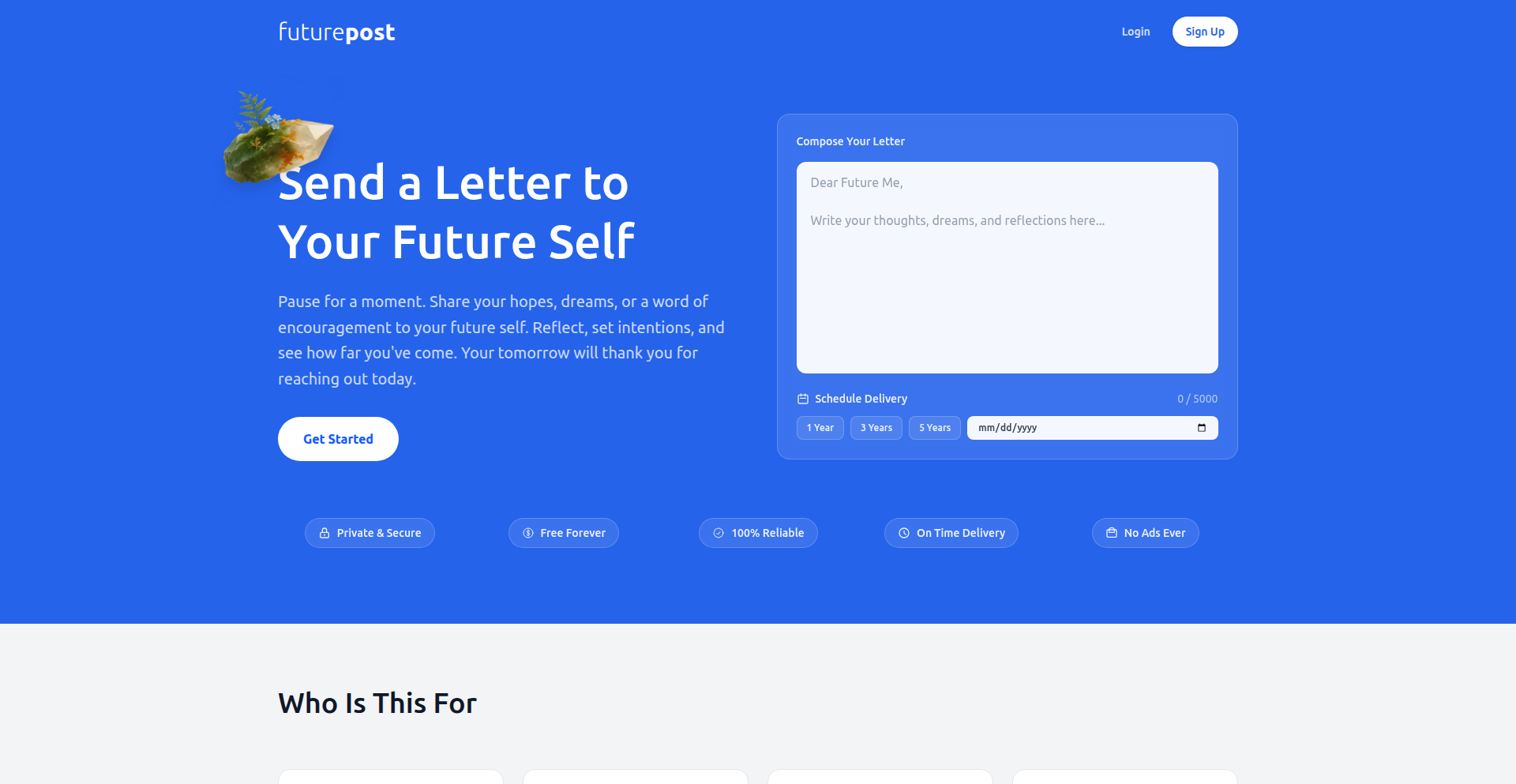

FuturePost.app: Eternal Time Capsule

Author

mrayushsoni

Description

FuturePost.app is a free, open-source alternative to FutureMe, built out of frustration with the commercialization of a beloved personal journaling tool. It offers a secure and efficient way to send messages to your future self, ensuring privacy and accessibility for everyone, without any subscription fees or data exploitation. The innovation lies in its commitment to being a permanent, community-driven digital time capsule.

Popularity

Points 4

Comments 4

What is this product?

FuturePost.app is a digital time capsule service that allows users to write messages and schedule them to be delivered to their future selves via email at a specified date. Unlike its commercial counterparts, FuturePost.app is built on a philosophy of perpetual free access and data privacy. Its technical foundation is a robust Ruby on Rails application paired with a PostgreSQL database, hosted on Heroku, emphasizing stability and longevity. The core innovation is the preservation of a cherished digital service's original intent – a personal, untainted space for reflection and foresight – by providing a technically sound and ethically driven free alternative.

How to use it?

Developers can use FuturePost.app by simply visiting the website, composing their message, and scheduling the delivery date. For those interested in contributing or understanding the underlying technology, the open-source nature of the project, built on Ruby on Rails and PostgreSQL, allows for inspection and potential contributions. Integration scenarios for developers could involve understanding how to build similar time-based delivery systems, secure message handling, or utilizing Rails for robust web applications. Its simple interface means anyone can use it for personal reflection, goal setting, or sending sentimental messages to future anniversaries.

Product Core Function

· Scheduled Message Delivery: Allows users to write messages and set future delivery dates. The value here is creating personal milestones and reminders, fostering self-reflection and accountability. This is technically achieved through a background job scheduler that processes queued emails based on their scheduled send times.

· Privacy-Focused Storage (inferred from the developer's stated intent): Encryption is not explicitly detailed, but the promise of no data selling implies a strong focus on privacy, likely involving secure storage and transmission. The value is user trust and data security, preventing personal thoughts from being compromised. A typical implementation would use TLS for data in transit and encryption for data at rest; strict end-to-end encryption would be difficult here, because the server must read each message in order to email it.

· Free and Open-Source Access: The project is offered completely free of charge, with its code available for the community. This value lies in democratizing access to this type of personal tool and fostering transparency and collaborative development. It's built on established, reliable technologies like Ruby on Rails and PostgreSQL, ensuring long-term viability.

· Ad-Free and No Data Selling Policy: The platform guarantees a pure user experience without intrusive advertisements or the misuse of personal data. The value is an uninterrupted and private journaling experience, respecting user autonomy. This is a design principle enforced by the architecture and deployment strategy, focusing solely on the core functionality.
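Scheduled delivery comes down to a queue ordered by delivery time and a periodic worker that sends whatever is due. The write-up describes a background job scheduler in a Rails and PostgreSQL app; this in-memory Python sketch illustrates only the ordering and "send what is due" logic. `MessageQueue`, `run_worker_tick`, and the `send` callback are hypothetical names, and a real service would persist the queue so messages survive restarts.

```python
from __future__ import annotations

import heapq
import itertools
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Callable


@dataclass(order=True)
class ScheduledMessage:
    deliver_at: datetime
    seq: int  # tie-breaker so equal timestamps keep insertion order
    recipient: str = field(compare=False)
    body: str = field(compare=False)


class MessageQueue:
    """In-memory stand-in for a persisted table of future messages."""

    def __init__(self) -> None:
        self._heap: list[ScheduledMessage] = []
        self._seq = itertools.count()

    def schedule(self, recipient: str, body: str, deliver_at: datetime) -> None:
        heapq.heappush(self._heap, ScheduledMessage(deliver_at, next(self._seq), recipient, body))

    def pop_due(self, now: datetime) -> list[ScheduledMessage]:
        """Remove and return every message whose delivery time has arrived."""
        due = []
        while self._heap and self._heap[0].deliver_at <= now:
            due.append(heapq.heappop(self._heap))
        return due


def run_worker_tick(queue: MessageQueue, send: Callable[[str, str], None], now: datetime) -> int:
    """One pass of the periodic background job: email everything that is due."""
    sent = 0
    for message in queue.pop_due(now):
        send(message.recipient, message.body)
        sent += 1
    return sent


queue = MessageQueue()
start = datetime(2025, 1, 1, tzinfo=timezone.utc)
queue.schedule("me@example.com", "Did you ship the side project?", start + timedelta(days=365))
queue.schedule("me@example.com", "Mid-year resolution check-in", start + timedelta(days=181))

outbox: list[tuple[str, str]] = []
run_worker_tick(queue, lambda to, body: outbox.append((to, body)), start + timedelta(days=200))
print(outbox)
```

Storing times as timezone-aware UTC values avoids off-by-hours deliveries when users and servers sit in different time zones.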

Product Usage Case

· Personal Growth Tracking: A developer can send a message to themselves one year from now, detailing their career goals and current skill set. Upon receiving the message, they can assess their progress and identify areas for further development, solving the problem of tracking long-term personal and professional growth.

· Sentimental Reminders: A parent can write a letter to their child, scheduled to be delivered on their 18th birthday. This provides a lasting, personal keepsake that bridges time and distance, offering sentimental value that a commercial service might eventually monetize away, solving the need for deeply personal and enduring connections.

· New Year's Resolutions Reinforcement: Users can write down their resolutions at the start of the year and schedule them to be delivered mid-year. This serves as a powerful reminder and motivational boost to stay on track with their goals, addressing the common challenge of forgotten resolutions.

· Technical Experimentation Playground: Developers can fork the open-source repository and experiment with adding new features, such as integrating with other journaling tools or exploring different background job processing mechanisms, thereby learning and contributing to the evolution of such services.

12

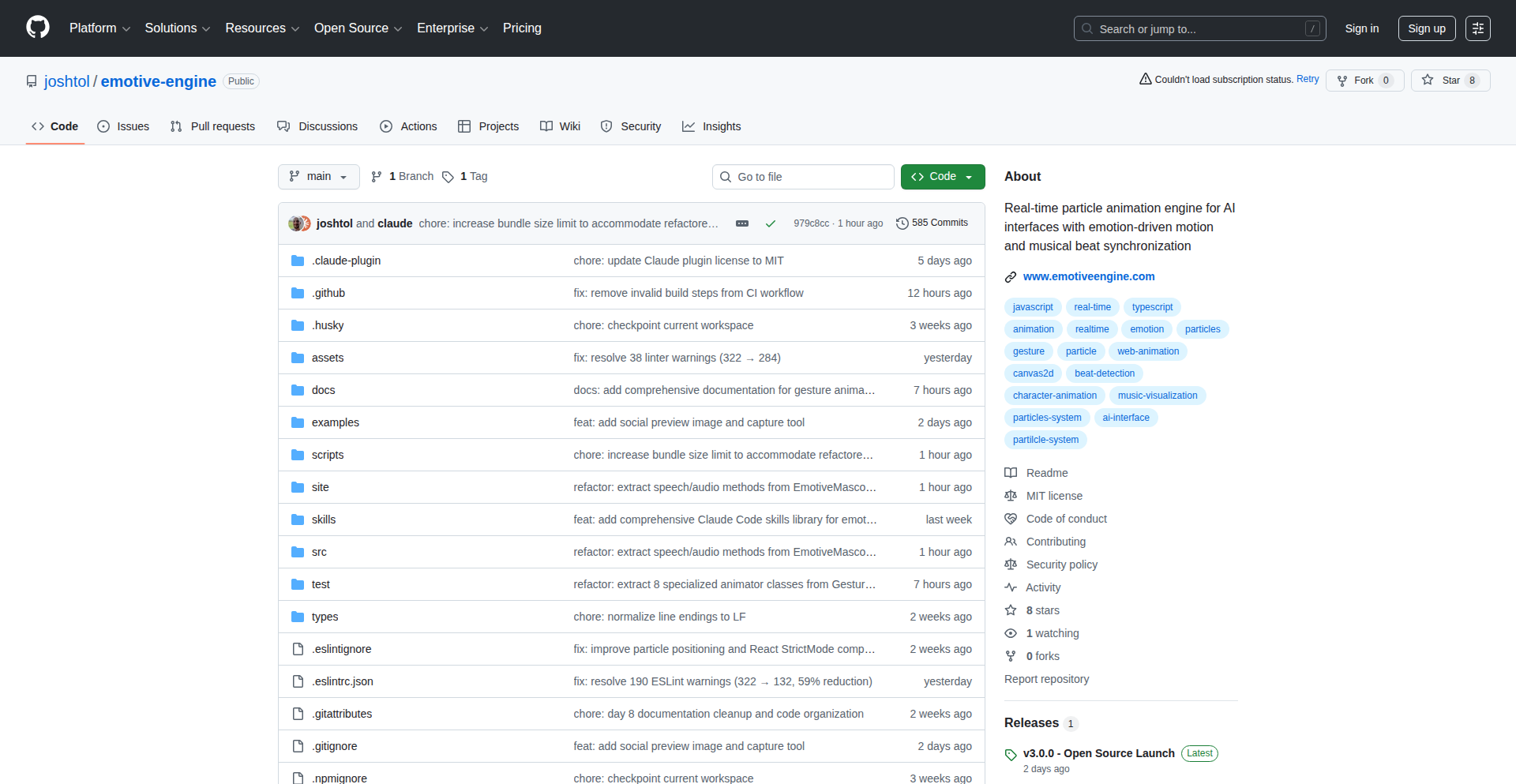

EmotiveEngine: Beat-Synced Animation

Author

emotiveengine

Description

Emotive Engine is a groundbreaking animation engine that synchronizes animations with musical time rather than milliseconds. This innovative approach solves the common problem of animations drifting out of sync when music tempo changes. By defining animation durations in beats, Emotive Engine ensures that animations automatically adapt to any BPM, creating a seamless and responsive experience, particularly valuable for AI interfaces and real-time character animation. So, this means your animated characters will always move in perfect rhythm with your music, no matter the tempo, making your applications feel more alive and engaging.

Popularity

Points 6

Comments 2

What is this product?

Emotive Engine is a 2D animation engine that fundamentally changes how animations are timed. Instead of using milliseconds, which are fixed units of time, it uses musical beats as its atomic unit. This means you define how long an animation should last in terms of beats (e.g., 'this animation takes 2 beats'). When the tempo of the music changes (beats per minute or BPM), the engine automatically recalculates the millisecond duration for that animation. For example, an animation set to 2 beats will take 1 second at 120 BPM, but it will take 1.33 seconds at 90 BPM. This ensures perfect synchronization with music regardless of tempo shifts, eliminating the need for manual recalculations. This is a significant innovation for applications that require tightly coupled visual and audio experiences. So, this helps you create smoother, more professional-looking animations that feel naturally connected to sound, without the headaches of manual timing adjustments.
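The conversion the engine performs reduces to one formula: a beat lasts 60,000 / BPM milliseconds, so a duration in beats becomes `beats * 60000 / bpm` milliseconds. A minimal Python sketch (the function name is hypothetical; Emotive Engine itself is a JavaScript library):

```python
def beats_to_ms(beats: float, bpm: float) -> float:
    """Duration of `beats` musical beats at tempo `bpm`, in milliseconds."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    return beats * 60_000 / bpm  # one beat lasts 60,000 / BPM ms


for tempo in (120, 90):
    print(tempo, "BPM:", round(beats_to_ms(2, tempo), 2), "ms")
```

With the figures from the paragraph above, `beats_to_ms(2, 120)` returns `1000.0` and `beats_to_ms(2, 90)` returns about `1333.33`.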

How to use it?

Developers can integrate Emotive Engine into their JavaScript-based applications, especially those using HTML Canvas for 2D graphics. You'd typically initialize the engine and then define animations by specifying their duration in beats and the animation curves or easing functions. These animations can then be triggered and controlled programmatically. For AI interfaces like chatbots or voice assistants, you can trigger animations based on spoken words or AI responses, ensuring the character's movements are timed to the rhythm of the dialogue. It can also be used for game development, interactive art installations, or any project where real-time character animation needs to be dynamically synchronized with music or rhythmic events. So, you can easily add sophisticated, rhythm-driven animations to your projects using a straightforward API.

Product Core Function

· Beat-based timing: Animations are defined in musical beats, automatically adjusting to tempo changes. This provides fluid and consistently timed animations regardless of musical pace, crucial for immersive experiences.

· Automatic tempo adaptation: The engine intelligently recalculates animation durations when the BPM changes, eliminating manual adjustments and ensuring perfect sync. This saves development time and prevents animation drift.

· Real-time animation control: Allows for dynamic triggering and manipulation of animations in response to user input or application events, enabling interactive and responsive interfaces.

· Canvas 2D rendering: Optimized for 60 FPS performance on mobile devices, providing smooth and visually appealing animations without taxing device resources. This ensures a high-quality user experience.

· Extensive testing: Over 2,500 passing tests point to a stable, reliable engine, giving developers confidence in its robustness. This means you can build on a solid foundation for your animation needs.
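Tempo adaptation also matters partway through an animation. One way to handle this, sketched here under the assumption that the render loop reports each frame's duration and the current BPM, is to accumulate progress in beats rather than milliseconds. A tempo change then simply alters how many beats each frame adds. `BeatAnimation` and `ease_in_out` are hypothetical names, not Emotive Engine's API, and smoothstep is just one example of an easing curve.

```python
class BeatAnimation:
    """Tracks progress in beats so tempo changes mid-animation stay in sync."""

    def __init__(self, duration_beats: float) -> None:
        if duration_beats <= 0:
            raise ValueError("duration_beats must be positive")
        self.duration_beats = duration_beats
        self.elapsed_beats = 0.0

    def advance(self, frame_ms: float, bpm: float) -> float:
        """Add one frame at the current tempo and return progress clamped to [0, 1]."""
        self.elapsed_beats += frame_ms * bpm / 60_000
        return min(self.elapsed_beats / self.duration_beats, 1.0)

    @property
    def done(self) -> bool:
        return self.elapsed_beats >= self.duration_beats


def ease_in_out(t: float) -> float:
    """Smoothstep easing: maps linear progress to a curve that starts and ends gently."""
    return t * t * (3 - 2 * t)


wave = BeatAnimation(duration_beats=2)
print(wave.advance(500, bpm=120))   # one beat elapsed at 120 BPM
print(wave.advance(1000, bpm=60))   # tempo halves; the second beat takes 1,000 ms
print(wave.done)
```

For a 2-beat animation, 500 ms at 120 BPM covers one beat (progress 0.5). If the tempo then drops to 60 BPM, the remaining beat takes 1,000 ms, and no stored millisecond durations need recalculating. At 60 FPS, a render loop would call `advance` with roughly 16.7 ms per frame.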

Product Usage Case

· AI Chatbot Character Animation: A chatbot's character blinks and gestures in time with its spoken responses. If the chatbot speaks faster or slower, the character's animations adjust accordingly to match the new rhythm, making the interaction feel more natural and engaging. Solves the problem of robotic-looking character animations that are out of sync with speech.

· Music Visualization: Creating visual elements that pulse and move precisely to the beat of a song. As the song's tempo changes, the visualizations fluidly adapt, providing a dynamic and captivating visual experience that is tightly coupled with the music. Solves the challenge of creating dynamic visualizers that respond accurately to tempo variations.

· Interactive Storytelling: A character in an interactive story performs actions timed to specific narrative cues or musical interludes. If the pacing of the story changes, the character's animations seamlessly adjust, enhancing the emotional impact and immersion of the narrative. Solves the problem of disconnected character actions in dynamic narrative pacing.

13

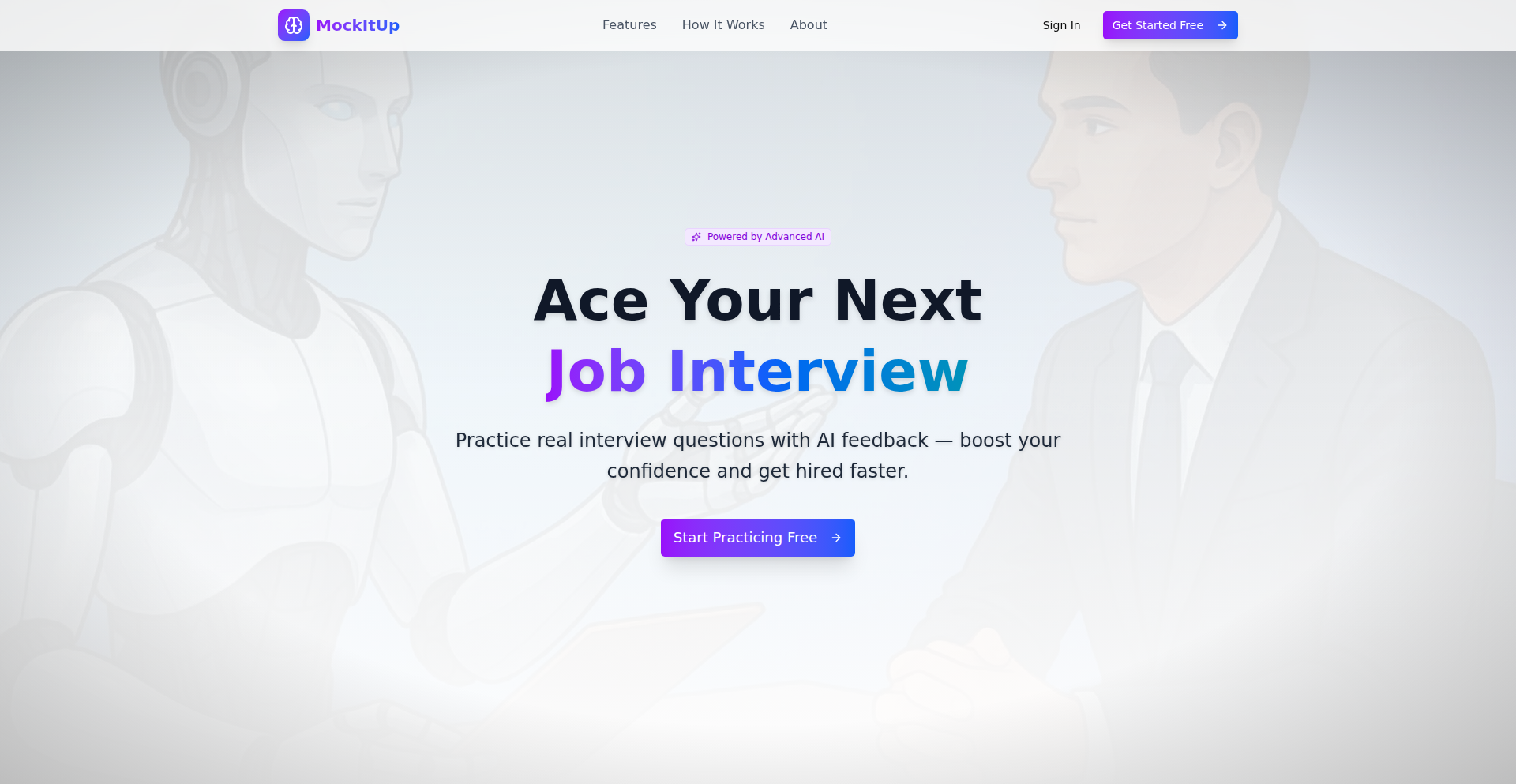

AI Interview Simulator

Author

aacmkt

Description

An AI-powered tool designed to help users practice job interviews. It simulates an interviewer, asking role-specific or technology-specific questions and providing feedback. This addresses the common challenge of limited access to realistic interview practice, offering a scalable and accessible solution for skill refinement. Its core innovation lies in leveraging AI to personalize the interview experience, making it more effective for job seekers.

Popularity

Points 4

Comments 3

What is this product?

This project is an AI-powered platform that simulates job interviews. It uses natural language processing (NLP) and machine learning (ML) models to understand user inputs and generate relevant interview questions. Drawing on common interview questions and typical responses, the AI mimics a human interviewer's questioning style and provides constructive feedback on answers. The core innovation is the dynamic generation of questions and personalized feedback, moving beyond static question banks to offer a more realistic and adaptive practice environment. So, this is useful because it allows you to practice your interview skills in a low-pressure environment with feedback, helping you identify areas for improvement before a real interview.

How to use it?

Developers can use this project by visiting mockitup.me. There, they select the role or technology they want to be interviewed for (e.g., React, Python, Product Manager). The AI then acts as the interviewer and asks questions related to the chosen domain. Users answer by speaking or typing, and the AI gives feedback on the quality and relevance of their answers. No setup or integration is required. The result is a ready-to-use way to practice interviews for specific technical roles and technologies, which directly supports preparation for particular job applications.

Product Core Function

· AI-driven question generation: The system dynamically generates interview questions based on selected roles or technologies, mimicking real interview scenarios. This is valuable for exposing users to a wide range of potential questions.

· Personalized feedback system: The AI analyzes user responses and provides constructive feedback on clarity, accuracy, and relevance, helping users refine their answers and communication skills. This is useful for understanding how your answers are perceived.

· Role and technology customization: Users can tailor the interview simulation to specific job requirements, ensuring practice is relevant to their target roles. This directly helps you prepare for the exact type of job you're applying for.

· Interactive interview simulation: The platform creates a conversational flow, allowing users to engage in a realistic back-and-forth with the AI interviewer. This makes the practice feel more authentic and engaging.

Product Usage Case

· A junior software engineer preparing for a React developer role can use the tool to practice front-end development questions and get feedback on their explanations of concepts like component lifecycle and state management. This helps them articulate their knowledge effectively.

· A data scientist looking for a Python-focused position can practice questions on data manipulation, machine learning algorithms, and Python libraries, receiving feedback on their coding logic and problem-solving approaches. This improves their technical interview performance.

· A product manager candidate can simulate an interview focusing on product strategy, user research, and prioritization, getting insights into how well they articulate their product vision and decision-making process. This helps them communicate their value proposition clearly.

14

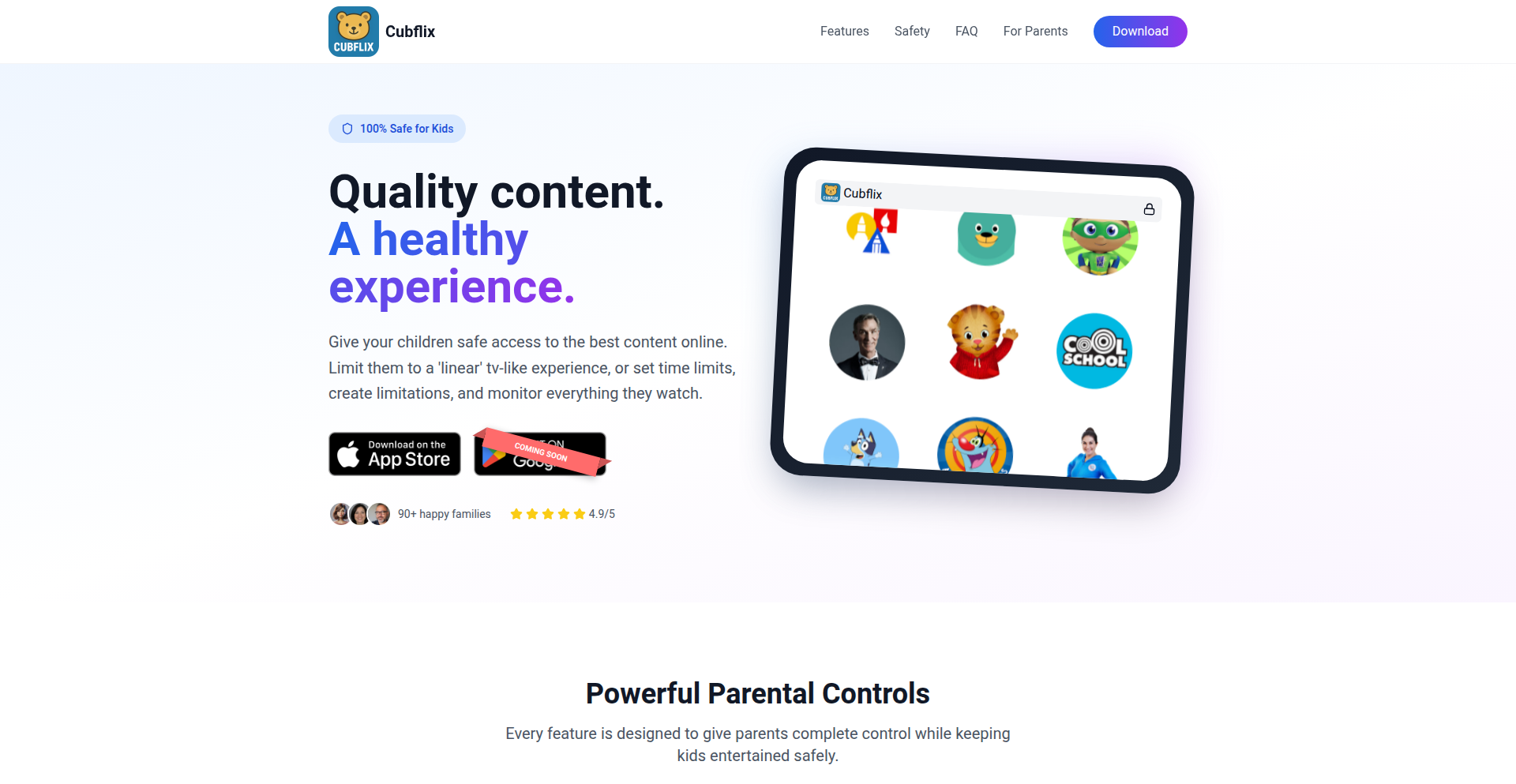

KidStream TV

Author

gillyb

Description

KidStream TV turns YouTube into a curated, TV-like experience for children, built around parent-approved educational channels. It addresses the overwhelming and potentially harmful content on YouTube by offering a focused, safer environment. Its innovation is a structured viewing flow akin to traditional television, which avoids endless scrolling and manipulative recommendation feeds and gives children healthier screen time.

Popularity

Points 3

Comments 3

What is this product?

KidStream TV is an iPad application designed to replicate the feel of watching traditional television, with content sourced from YouTube channels that parents have specifically selected. The core technical idea is to present videos from designated channels as a continuous, non-interactive feed, similar to a TV channel's programming. This bypasses YouTube's algorithmic recommendation engine, which can lead children to unsuitable content or to addictive short-form videos such as YouTube Shorts. By structuring the content flow, the app aims to offer a more focused and educational viewing experience, reminiscent of how previous generations consumed media but with access to modern educational resources. The value proposition is a safer, more controlled, and educationally enriching digital environment for children.
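A TV-style lineup like this can be built without any recommendation engine. The sketch below is one possible approach and is not taken from the app. It assumes video metadata (channel, title, duration) has already been fetched for each approved channel. It drops short videos using a 180-second cutoff, which is an assumption to approximate Shorts since the app may identify them differently, and then interleaves the remaining videos round-robin so no single channel dominates. The `Video` type and `build_lineup` function are hypothetical.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass
class Video:
    channel: str
    title: str
    duration_s: int


# Assumption: treat anything at or under 3 minutes as Shorts-like and skip it.
MIN_DURATION_S = 180


def build_lineup(approved: list[str], videos_by_channel: dict[str, list[Video]]) -> list[Video]:
    """Interleave long-form videos from approved channels into one TV-style queue.

    Only channels in `approved` are read, so videos from any other channel
    can never appear in the lineup.
    """
    per_channel = [
        [v for v in videos_by_channel.get(ch, []) if v.duration_s > MIN_DURATION_S]
        for ch in approved
    ]
    lineup: list[Video] = []
    # Take one video from each channel per "round", like a rotating schedule.
    for round_ in zip_longest(*per_channel):
        lineup.extend(v for v in round_ if v is not None)
    return lineup


videos = {
    "DinoDocs": [Video("DinoDocs", "T-Rex", 900), Video("DinoDocs", "Quick fact", 45)],
    "ScienceKids": [Video("ScienceKids", "Volcanoes", 600), Video("ScienceKids", "Magnets", 720)],
    "Unapproved": [Video("Unapproved", "Clickbait", 800)],
}
print([v.title for v in build_lineup(["DinoDocs", "ScienceKids"], videos)])
# ['T-Rex', 'Volcanoes', 'Magnets']
```

Playback then simply advances through the list in order, which is what makes the experience feel like a channel rather than a feed.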

How to use it?

Developers can integrate KidStream TV by leveraging its API to add or manage approved YouTube channels. For end-users (parents), it's an iPad app that requires initial setup where parents select and approve specific YouTube channels they deem appropriate for their children. Once set up, the app presents a continuous stream of videos from these approved channels, mimicking a TV channel lineup. This can be used in scenarios where parents want to ensure their children are exposed to high-quality educational content without the distractions and risks associated with the standard YouTube platform. For example, it can be used during designated 'screen time' to provide a structured and beneficial viewing experience.

Product Core Function

· Curated Channel Playlist: Allows parents to select and organize a list of YouTube channels for their children's viewing. The technical value is in creating a filtered content source, preventing exposure to unapproved material, and offering a predictable viewing experience.

· TV-like Viewing Experience: Presents videos in a continuous, non-interactive stream, similar to traditional television channels. This addresses the problem of children getting lost in infinite scrolling or clicking on distracting recommendations, providing a more focused and less addictive form of media consumption.

· Parental Control Dashboard: Provides parents with an interface to manage approved channels and potentially set viewing schedules. This offers peace of mind and granular control over their children's digital content consumption, a key feature for any responsible digital parenting tool.

· Educational Content Focus: By design, encourages the use of educational YouTube channels. The technical implementation prioritizes content quality and learning, aiming to leverage the vast educational resources on YouTube in a structured and beneficial manner.

Product Usage Case

· Scenario: A parent wants their child to learn about dinosaurs during their afternoon playtime but is concerned about YouTube's recommendation algorithm showing unrelated or inappropriate videos. How it solves the problem: The parent approves several dinosaur-themed educational YouTube channels in KidStream TV. The app then plays videos from these channels sequentially, offering a continuous, engaging, and educational dinosaur documentary experience without the risk of random or distracting content.

· Scenario: A family is on a long road trip and wants to provide their child with an entertaining yet educational experience on an iPad. How it solves the problem: Before the trip, the parent pre-loads KidStream TV with channels related to science experiments and animal facts. During the trip, the child can watch these curated videos without needing constant supervision or worrying about them stumbling upon inappropriate content, ensuring a safe and enriching entertainment experience.

· Scenario: A child tends to get engrossed in short, attention-grabbing videos on YouTube, which limits their ability to focus on longer, more educational content. How it solves the problem: KidStream TV prioritizes longer-form educational videos from approved channels and presents them in a structured, non-disruptive manner. This helps the child develop a habit of watching more in-depth content, fostering better concentration and deeper learning.

15

ThinHeat Dexterity Gloves

Author

imartibilli

Description

Ultra-thin, high-dexterity heated gloves for winter that integrate advanced heating elements to provide warmth without sacrificing finger movement. They address a common problem with winter gloves, which tend to be either bulky or not warm enough, and make precise tasks possible in freezing conditions.

Popularity

Points 5

Comments 1

What is this product?

This project is a pair of heated gloves designed to be exceptionally thin (0.4 mm) and highly flexible, allowing for fine motor skills even in cold weather. The innovation lies in the seamless integration of a novel heating element technology that is both powerful and unobtrusive. Unlike traditional heated gloves that are often bulky and stiff, these gloves use a proprietary conductive fabric and micro-heating circuitry embedded directly into the material. This means you get warmth without the clumsiness, so you can operate your smartphone, use tools, or perform other delicate tasks comfortably in the cold. The core idea is to solve the trade-off between warmth and dexterity that plagues most winter gloves.

How to use it?

Developers can use these gloves in any scenario requiring fine motor control outdoors during winter. This could include working on outdoor IoT projects, performing repairs on cold equipment, or even simply using a touchscreen device without freezing their fingers. The gloves are designed to be worn like regular gloves, and typically connect to a portable power source (like a small battery pack, often rechargeable via USB) that can be slipped into a pocket or attached to a belt. The heating can usually be controlled via a small button on the glove or the power pack, allowing users to adjust the warmth level as needed. Integration is straightforward – you just wear them and connect the power.
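The trade-off between warmth level and battery life can be estimated with basic electrical arithmetic. The sketch below assumes a resistive heating element driven by PWM (pulse-width modulation) from a USB power bank. The supply voltage, element resistance, duty cycle, battery capacity, and converter efficiency are all made-up placeholders for illustration and are not this product's specifications.

```python
# All figures are hypothetical placeholders, not specifications of this product.


def heater_power_w(supply_v: float, resistance_ohm: float, duty_cycle: float) -> float:
    """Average power of a resistive heater under PWM: P = duty * V^2 / R."""
    return duty_cycle * supply_v ** 2 / resistance_ohm


def runtime_hours(capacity_mah: float, cell_v: float, efficiency: float, load_w: float) -> float:
    """Battery runtime: stored energy (Wh) times converter efficiency, divided by load (W)."""
    energy_wh = capacity_mah / 1000 * cell_v
    return energy_wh * efficiency / load_w


# Example: two gloves, each a 10-ohm element on a 5 V supply, heat level at 50% duty.
per_glove = heater_power_w(5.0, 10.0, 0.5)  # 1.25 W per glove
total = 2 * per_glove                       # 2.5 W for the pair
hours = runtime_hours(5000, 3.7, 0.85, total)
print(f"{total:.2f} W -> about {hours:.1f} h")  # 2.50 W -> about 6.3 h
```

Lowering the duty cycle is how a button-selected "low" setting would stretch runtime. At 25% duty, the same pack would last roughly twice as long.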

Product Core Function

· Ultra-thin heating element: Enables warmth without compromising finger flexibility for precise tasks. This means you can still type, use tools, or operate small devices without your fingers feeling stiff and numb.

· High dexterity design: Allows for fine motor control, so you can perform delicate operations like using a touchscreen or manipulating small components without removing your gloves.

· Integrated micro-circuitry: Distributes heat evenly across the glove, providing consistent warmth and preventing cold spots, ensuring your entire hand stays comfortable.

· Portable power source compatibility: Offers convenient and adjustable heating, so you can tailor the warmth to your comfort level and the ambient temperature, ensuring prolonged use without discomfort.

Product Usage Case

· Outdoor electronics prototyping: A developer building an IoT device in a cold environment can use these gloves to solder small components and test connections precisely, avoiding the need to repeatedly take off bulky gloves.

· Winter smartphone usage: A user needing to navigate maps or respond to urgent messages on their smartphone in freezing temperatures can do so without their fingers becoming too cold to operate the touchscreen effectively.

· Cold weather fieldwork: A scientist or technician collecting data outdoors in winter can use specialized handheld instruments or take notes with a pen while keeping their hands warm and maintaining the necessary dexterity.

16

Databricks SQL AutoScaler & Cost Cutter

Author

karamazov

Description

This project is a Databricks SQL optimizer that intelligently manages autoscaling and cluster selection. It uses Machine Learning (ML) models to predict query runtimes and required capacity, which lets it run clusters more efficiently than the default cloud provider settings allow. The core innovation is maximizing resource utilization without compromising query latency, which leads to significant cost reductions. Think of it as a smart, automated manager for your Databricks data warehousing resources.

Popularity

Points 6

Comments 0

What is this product?

This project is a sophisticated optimizer for Databricks SQL workloads. It functions by acting like a 'Kubernetes for data warehousing,' meaning it takes over the complex tasks of autoscaling and choosing the right size and type of compute clusters. The 'magic' behind it is a scheduler powered by Machine Learning models. These models are trained to predict how long a query will take to run and how much capacity will be needed. Because the system can accurately predict these factors, it can push the hardware to run at higher utilization levels than the default cloud provider settings allow, effectively squeezing more performance out of the same resources and therefore cutting costs. So, it's a smart system that makes your data processing faster and cheaper by intelligently managing the underlying infrastructure.
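The link between better runtime predictions and lower cost can be shown with a small capacity calculation. The sketch below is a simplified illustration, not the project's scheduler. It swaps in a one-variable least-squares fit (runtime vs. GB scanned) as a stand-in for the ML model. It then sizes the number of clusters so the predicted work finishes by a deadline at a chosen target utilization. All function names, parameters, and numbers are hypothetical.

```python
import math
from typing import Callable


def fit_runtime_model(gb_scanned: list[float], runtimes_s: list[float]) -> Callable[[float], float]:
    """Least-squares fit of runtime = a + b * gb_scanned (a stand-in for an ML model)."""
    n = len(gb_scanned)
    mean_x = sum(gb_scanned) / n
    mean_y = sum(runtimes_s) / n
    sxx = sum((x - mean_x) ** 2 for x in gb_scanned)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(gb_scanned, runtimes_s))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return lambda gb: a + b * gb


def clusters_needed(predicted_runtimes_s: list[float], slots_per_cluster: int, deadline_s: float,
                    target_utilization: float = 0.9, min_clusters: int = 1,
                    max_clusters: int = 10) -> int:
    """Smallest cluster count whose slots can absorb the predicted work by the deadline,
    keeping each slot busy at most `target_utilization` of the time."""
    total_work_s = sum(predicted_runtimes_s)
    capacity_per_cluster_s = slots_per_cluster * deadline_s * target_utilization
    needed = math.ceil(total_work_s / capacity_per_cluster_s)
    return max(min_clusters, min(max_clusters, needed))


# Train on past queries, then predict runtimes for the current queue.
predict = fit_runtime_model([1, 2, 3, 4], [12, 22, 32, 42])  # runtime = 2 + 10 * GB
queue = [predict(gb) for gb in [11.8] * 10]                    # ten ~120 s queries
print(clusters_needed(queue, slots_per_cluster=4, deadline_s=60, target_utilization=0.5))  # 10
print(clusters_needed(queue, slots_per_cluster=4, deadline_s=60, target_utilization=0.9))  # 6
```

The two calls show the cost argument. With unreliable predictions, you need a conservative utilization target and more clusters. With trustworthy predictions, the target can be pushed higher and the same queue fits on fewer clusters. This aggregate estimate treats work as divisible, so a real scheduler would also need to handle single queries that run longer than the deadline.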

How to use it?

Developers and data engineers can integrate this optimizer into their Databricks SQL environments. It works by connecting to your Databricks workspace and then applying its intelligent scheduling and autoscaling logic to your SQL queries. Essentially, you point the optimizer at your Databricks SQL endpoints, and it takes over the management of the clusters that process your queries. This can be done through API integrations or by configuring the optimizer to manage specific Databricks SQL warehouses. The benefit to you is that you don't have to manually adjust cluster sizes or worry about idle resources; the system handles it all automatically, ensuring optimal performance and cost-efficiency for your data analytics and reporting tasks. It's designed to be a seamless addition to your existing Databricks setup, enhancing its operational efficiency.

Product Core Function

· Intelligent Autoscaling: Dynamically adjusts the number and size of compute clusters based on predicted workload demand, ensuring resources are always available without over-provisioning. This means your queries run fast, and you only pay for what you actually use, cutting down on wasted spending.

· Optimized Cluster Selection: Automatically chooses the most cost-effective and performant cluster type for each specific query or workload. This avoids using overly expensive or underpowered machines, leading to better overall efficiency and reduced operational costs.

· ML-Powered Predictive Scheduling: Utilizes machine learning models to forecast query runtimes and capacity needs. This foresight allows for proactive resource allocation and enables running clusters at higher utilization levels than standard configurations, directly translating to cost savings.