Show HN Today: Discover the Latest Innovative Projects from the Developer Community

Show HN Today: Top Developer Projects Showcase for 2025-10-28

SagaSu777 2025-10-29

Explore the hottest developer projects on Show HN for 2025-10-28. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The sheer volume of projects focused on AI agents and LLM integration highlights a significant trend: developers are moving beyond basic AI prompts to build robust, deployable AI-powered applications. We're seeing a strong emphasis on creating 'agent frameworks' and 'orchestration layers' like Dexto and Pipelex, which aim to manage complex multi-step AI workflows, connect agents to real-world tools, and ensure deterministic behavior. This shift signifies a maturing ecosystem where the focus is on making AI practical and reliable for business use cases, moving from hype to tangible solutions. For developers and entrepreneurs, this means opportunities abound in building the middleware, tooling, and platforms that enable the next generation of AI-driven automation and personalized experiences. The pursuit of efficiency is also evident in advancements like Apache Fory Rust, showcasing that even as AI capabilities grow, the foundational engineering principles of speed and data handling remain paramount. Simultaneously, the surge in productivity and utility tools, from simplified file sharing to intelligent content filtering, reflects a hacker ethos of using technology to cut through complexity and enhance individual workflows.

Today's Hottest Product

Name

Apache Fory Rust

Highlight

This project tackles the speed and efficiency of data serialization. By using compile-time code generation (avoiding slower runtime reflection) and a compact binary protocol with meta-packing, laid out to suit modern CPUs, Apache Fory Rust reports 10-20x faster performance on nested objects than JSON and Protobuf. It also offers cross-language serialization without IDL files, trait object serialization, and automatic circular reference handling, which makes it relevant to developers dealing with complex data structures and inter-process communication. Developers can learn about advanced serialization techniques, compile-time code generation strategies, and efficient binary data handling.

Popular Category

AI & Machine Learning

Developer Tools

Data Serialization

Agent Frameworks

Productivity Tools

Popular Keyword

AI Agents

LLM

Serialization

Developer Tools

Automation

Data Processing

Open Source

Technology Trends

AI Agent Orchestration

Efficient Data Serialization

Developer Productivity Tools

Cross-Language Compatibility

Deterministic AI

Interactive Content

Open Source Frameworks

Project Category Distribution

AI & Machine Learning (35%)

Developer Tools (25%)

Productivity & Utilities (15%)

Data & Infrastructure (10%)

Creative & Multimedia (5%)

Gaming (5%)

Education (5%)

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | BashViz | 216 | 74 |

| 2 | Fory-Rust Binary Weaver | 64 | 46 |

| 3 | Butter: LLM Behavior Cache | 33 | 21 |

| 4 | Dexto: AI Agent Orchestration Fabric | 34 | 5 |

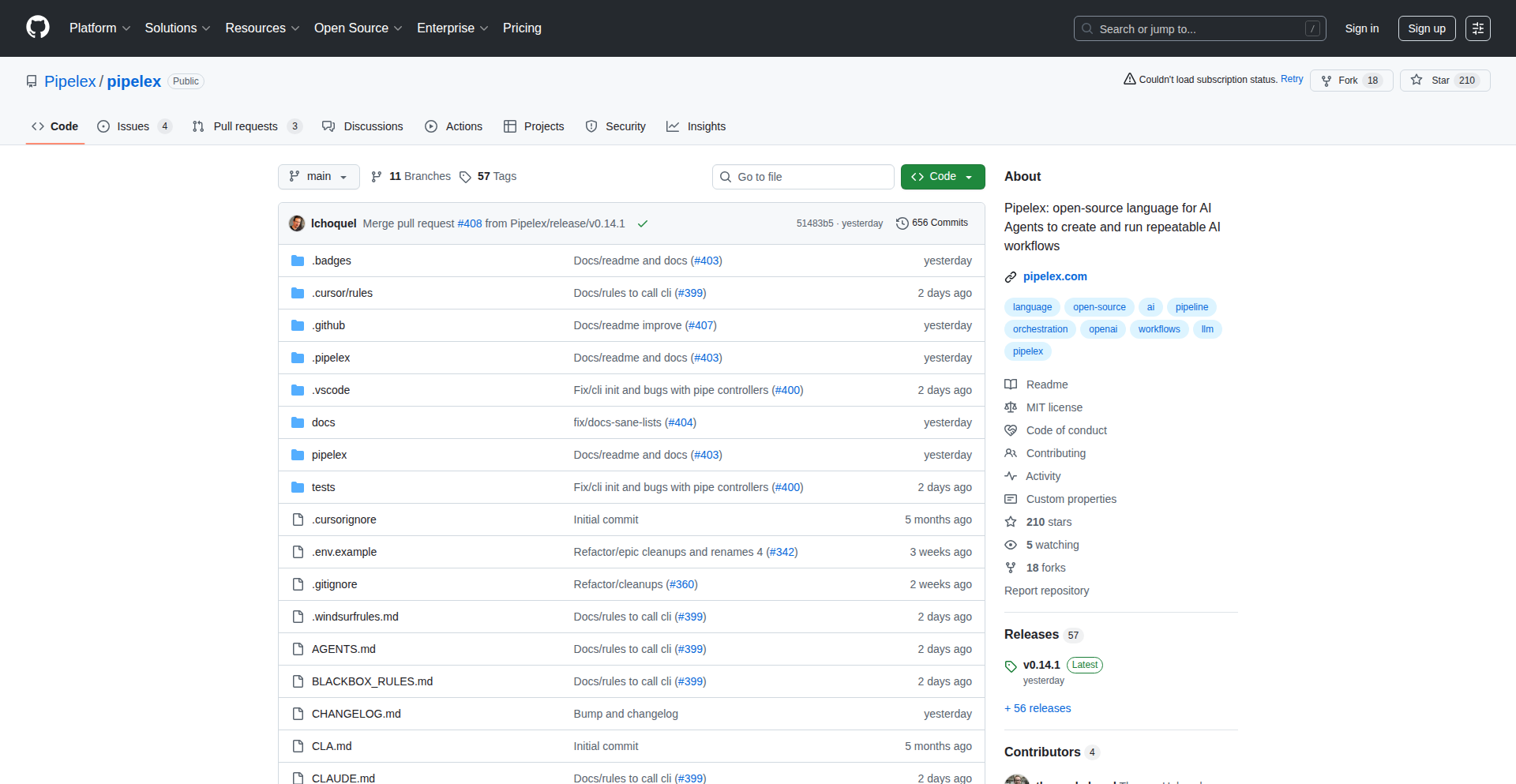

| 5 | Pipelex | 24 | 6 |

| 6 | NoGreeting | 13 | 13 |

| 7 | Zig Ordered Collections | 19 | 6 |

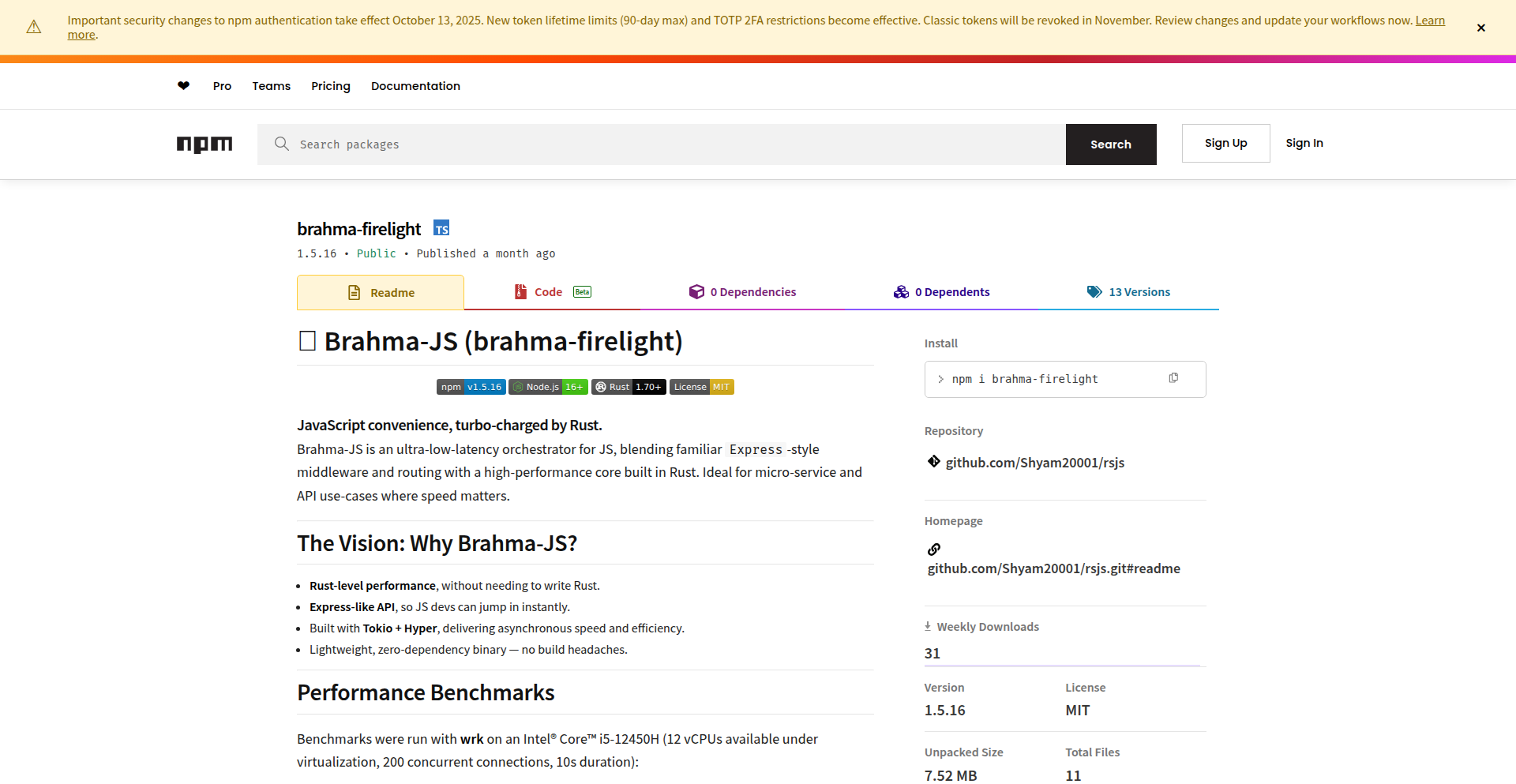

| 8 | RustNodeHTTP | 12 | 11 |

| 9 | Luzmo Custom Chart Builder | 15 | 1 |

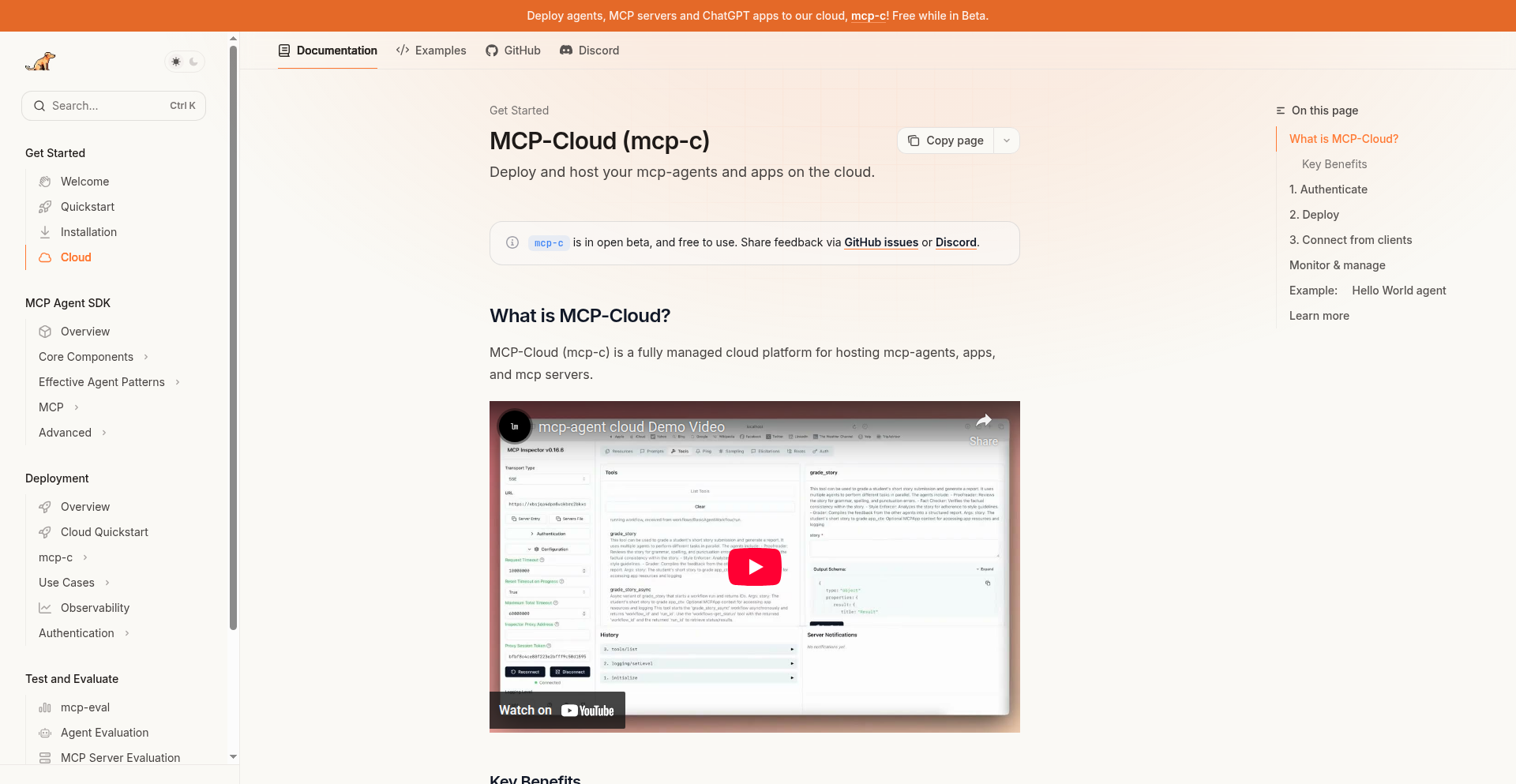

| 10 | MCP-Cloud Orchestrator | 8 | 2 |

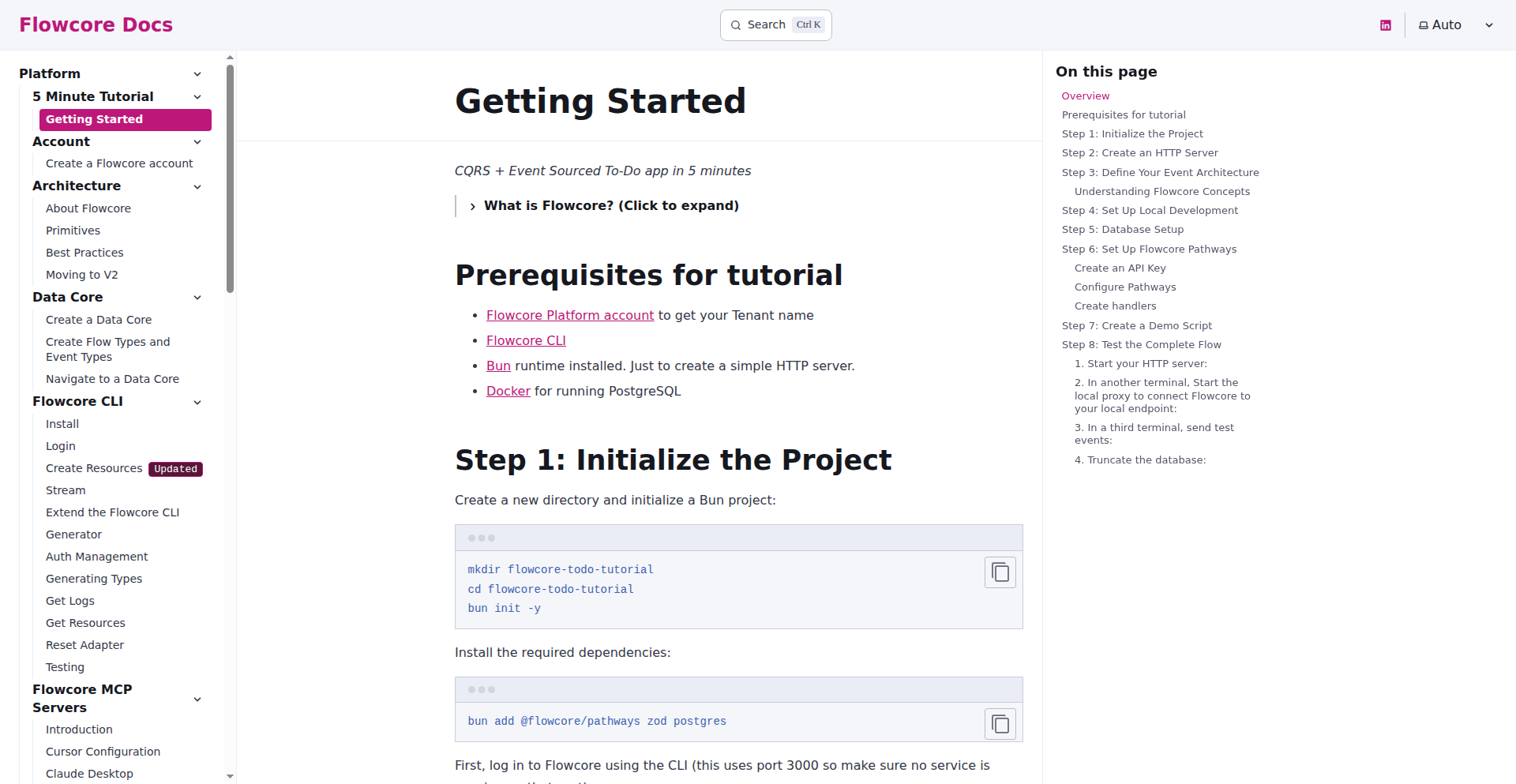

1

BashViz

Author

attogram

Description

BashViz is a collection of bash-scripted screensavers and visualizations, turning your terminal into a dynamic visual display. It leverages the power of plain text and command-line tools to create engaging graphics, demonstrating how even simple scripts can produce impressive visual effects. This project highlights the creative potential of the command line for aesthetic purposes.

Popularity

Points 216

Comments 74

What is this product?

BashViz is a repository of creative screensavers and visualizations built entirely using bash scripts. Instead of relying on heavy graphical libraries, it uses standard command-line utilities like `awk`, `sed`, `grep`, and character manipulation to generate dynamic and often mesmerizing visual patterns directly in your terminal. The innovation lies in its minimalist approach, proving that complex visual experiences can be achieved with fundamental scripting techniques, offering a unique blend of functionality and artistic expression for developers.

How to use it?

Developers can use BashViz by cloning the GitHub repository and running the individual bash scripts from their terminal. Each script typically runs as a standalone program, often in a loop, to create continuous animations or visualizations. For example, you might navigate to the project directory in your terminal and execute `./script_name.sh`. The scripts can be worked into a daily workflow by using them as terminal screensavers or running them during idle periods to add visual flair. The value is in turning a typically static terminal into a visually stimulating environment with minimal dependencies.

Product Core Function

· Dynamic ASCII Art Generation: Scripts use character sequences and their arrangement to create evolving visual patterns. This is valuable for adding aesthetic appeal to the terminal and showcasing creative use of text characters.

· Real-time Data Visualization (Text-based): Some scripts can process and visualize simple data streams or system information in real-time using text. This is useful for quick, low-overhead monitoring or for demonstrating data processing concepts visually.

· Algorithmic Pattern Creation: The core of the visualizations involves algorithms that generate complex and often unpredictable patterns based on mathematical or procedural rules. This offers educational value for understanding algorithmic art and the power of simple rules leading to complex outputs.

· Terminal-native Screensavers: The scripts are designed to run directly in the terminal, acting as a lightweight and portable alternative to traditional graphical screensavers. This is valuable for developers who spend a lot of time in the terminal and want a visually engaging experience without leaving their command-line environment.
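
The "simple rules, complex output" idea behind the algorithmic-pattern scripts can be illustrated outside of bash. The following is a minimal Python sketch, not code from the BashViz repository: it renders an elementary cellular automaton (Rule 90) as rows of text. The function names `step` and `render` are illustrative assumptions.

```python
def step(cells, rule=90):
    """Apply one generation of an elementary cellular automaton (edges wrap)."""
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right  # neighborhood as a 3-bit number
        out.append((rule >> index) & 1)              # look up that bit in the rule
    return out


def render(width=31, generations=16, rule=90):
    """Return the pattern as a list of text rows, starting from one live cell."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = []
    for _ in range(generations):
        rows.append("".join("#" if c else " " for c in cells))
        cells = step(cells, rule)
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```

Printing the rows one after another with a short delay would give a scrolling animation; a bash version would follow the same loop structure with arrays and `printf`.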

Product Usage Case

· As a terminal screensaver: When your terminal is idle, a script like 'matrix_rain.sh' can run, filling the screen with cascading green characters, similar to the iconic movie effect. This solves the problem of a blank, uninteresting terminal during downtime and adds a cool hacker aesthetic.

· During coding breaks: Running a script that generates evolving geometric patterns can serve as a brief, visually stimulating break from intense coding sessions. It's a way to quickly refresh your mind without switching contexts entirely.

· Demonstrating scripting prowess: A developer can showcase this project to illustrate how creative solutions can be built with fundamental bash scripting, highlighting the 'hacker' spirit of using available tools in innovative ways. This is valuable for learning and impressing peers.

· Educational tool for procedural generation: For those interested in game development or procedural art, these scripts offer a simplified, text-based introduction to how complex visuals can be generated programmatically. It helps answer 'how can I create interesting visual effects from code?'

2

Fory-Rust Binary Weaver

Author

chaokunyang

Description

Fory-Rust Binary Weaver is a high-performance serialization framework built in Rust. It reports 10-20x speed improvements over traditional formats like JSON and Protocol Buffers by using compile-time code generation, a compact binary protocol with meta-packing, and a little-endian layout suited to modern CPUs. It can serialize complex data structures, including trait objects, handle circular references automatically, and evolve schemas without explicit coordination between different language implementations, all without Interface Definition Language (IDL) files.

Popularity

Points 64

Comments 46

What is this product?

Fory-Rust Binary Weaver is a serialization framework. Serialization is the process of converting data structures into a format that can be transmitted or stored and then reconstructed later. Traditional formats like JSON or Protocol Buffers are widely used but can be slow, especially with deeply nested data. Fory-Rust addresses this by generating specialized code at compile time (compile-time codegen), so it does not rely on slower runtime reflection. It uses a binary format that packs data tightly and uses a little-endian layout, which matches the native byte order of most modern processors. Beyond speed, it offers cross-language serialization without IDL files (you can exchange data between Rust and Python, Java, or Go directly), support for Rust trait objects (values whose concrete type is known only at runtime and which are used through a shared trait), automatic detection and handling of circular references (where data points back to itself, a common serialization pitfall), and schema evolution, which lets you change your data structure over time without breaking older versions or requiring all parties to update simultaneously. In practice, this means applications can exchange and store data faster and more compactly, with fewer headaches around data compatibility and complex data types.
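
To make the "compact binary, little-endian" idea concrete, here is a minimal Python sketch using the standard `struct` module. It is not Fory's wire format: the record layout (a `uint32` id, a `float64` score, and a length-prefixed name) is a hypothetical example chosen only to show why a packed binary encoding tends to be smaller than the equivalent JSON text.

```python
import json
import struct

# Hypothetical record layout for illustration only; this is NOT Fory's wire format.
# "<" = little-endian, no padding; I = uint32, d = float64, H = uint16 length prefix.
HEADER = struct.Struct("<IdH")


def encode(user_id: int, score: float, name: str) -> bytes:
    """Pack the fixed-width fields, then append the UTF-8 name bytes."""
    raw = name.encode("utf-8")
    return HEADER.pack(user_id, score, len(raw)) + raw


def decode(blob: bytes):
    """Reverse of encode(): read the header, then slice out the name."""
    user_id, score, n = HEADER.unpack_from(blob, 0)
    name = blob[HEADER.size:HEADER.size + n].decode("utf-8")
    return user_id, score, name


record = (42, 97.5, "ada")
binary = encode(*record)
text = json.dumps({"user_id": 42, "score": 97.5, "name": "ada"}).encode("utf-8")

print(len(binary), len(text))  # the packed form is shorter than the JSON text
```

The saving comes from dropping field names and punctuation from every message; the trade-off is that both sides must agree on the layout, which is the problem schema metadata and schema evolution are meant to handle.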

How to use it?

Developers can integrate Fory-Rust Binary Weaver into their Rust projects. The primary use case is to replace existing JSON or Protobuf serialization with this faster, more efficient alternative. For example, if you're building a microservice architecture where services communicate frequently, Fory-Rust can drastically reduce the latency of data exchange. You would typically define your data structures in Rust, and Fory-Rust's compile-time codegen will generate the serialization and deserialization logic. Its cross-language feature means you can have a Rust service sending data that a Python or Java application can seamlessly consume, and vice-versa, without writing explicit conversion code or managing shared IDL files. For applications dealing with complex object graphs or needing to evolve their data models over time, Fory-Rust simplifies these challenges. So, how would you use it? You'd add Fory-Rust as a dependency in your Rust project, annotate your data structures with Fory-Rust's macros, and then use its functions to serialize and deserialize data. This directly translates to faster network requests, quicker data loading from storage, and a more robust system when your data structures change.

Product Core Function

· Compile-time Code Generation for Serialization: This eliminates runtime overhead from reflection, making serialization and deserialization significantly faster. This means your applications can process data much quicker, leading to better performance and responsiveness, especially in high-throughput scenarios.

· Compact Binary Protocol with Meta-packing: The data is packed tightly into a small binary format, reducing the amount of data that needs to be transmitted or stored. This saves bandwidth and storage space, which is crucial for cost-efficiency and performance in distributed systems and mobile applications.

· Little-Endian Layout Optimized for Modern CPUs: This ensures efficient processing on contemporary processors, further boosting speed. By aligning with how modern hardware works, data can be read and written with minimal computational effort, contributing to overall system speed.

· Cross-Language Serialization without IDL Files: Enables seamless data exchange between Rust and other languages like Python, Java, and Go without requiring separate schema definition files. This dramatically simplifies multi-language development, reduces synchronization efforts, and speeds up integration between different technology stacks.

· Trait Object Serialization (Box<dyn Trait>): Allows for the serialization of dynamic trait objects in Rust, which are challenging for many serialization frameworks. This is invaluable for applications using Rust's advanced features for abstracting behavior and polymorphism, enabling these complex structures to be serialized and communicated reliably.

· Automatic Circular Reference Handling: The framework automatically detects and manages data structures where objects reference each other in a loop. This prevents common serialization errors like infinite recursion and crashes, ensuring data integrity and system stability when dealing with complex, interconnected data models.

· Schema Evolution without Coordination: Allows data schemas to change over time without requiring explicit agreement or updates across all communicating systems. This makes systems more adaptable and easier to maintain, reducing the friction associated with software updates and feature rollouts.
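
Circular reference handling is easier to picture with a small example. The sketch below is a generic reference-tracking technique in Python, not Fory's implementation: each object is written once, and any later occurrence is replaced by the index of the earlier record. The `Node` class and both function names are assumptions made for illustration.

```python
class Node:
    """Tiny graph node; `next` may point back to an earlier node."""

    def __init__(self, name):
        self.name = name
        self.next = None


def serialize(root):
    """Flatten a Node graph into a list of records, using indices as references."""
    ids = {}        # id(obj) -> position in `records`
    records = []

    def visit(node):
        if node is None:
            return None
        key = id(node)
        if key in ids:               # already written: emit a back-reference
            return ids[key]
        ids[key] = len(records)
        record = {"name": node.name, "next": None}
        records.append(record)
        record["next"] = visit(node.next)
        return ids[key]

    visit(root)
    return records


def deserialize(records):
    """Rebuild the graph: create all nodes first, then wire up references."""
    if not records:
        return None
    nodes = [Node(r["name"]) for r in records]
    for node, r in zip(nodes, records):
        if r["next"] is not None:
            node.next = nodes[r["next"]]
    return nodes[0]
```

Without the `ids` lookup, a cycle such as `a -> b -> a` would recurse forever; with it, the second visit to `a` returns index `0` and the traversal stops.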

Product Usage Case

· High-Frequency Trading Systems: In financial applications where every millisecond counts, Fory-Rust's speed can reduce message latency between trading engines and data feeds, leading to faster order execution and potentially higher profits. The need for minimal latency in order to gain an edge makes this a prime use case.

· Real-time Multiplayer Games: For games with many players interacting simultaneously, minimizing the latency of game state updates between the server and clients is paramount. Fory-Rust's efficiency can lead to a smoother, more responsive gaming experience for users by ensuring critical game data is transmitted and processed quickly.

· IoT Data Ingestion: With potentially millions of IoT devices sending data, the efficiency and low overhead of Fory-Rust's serialization can significantly reduce the cost and improve the performance of data ingestion pipelines. Less data means lower transmission costs and faster processing, crucial for handling massive data volumes.

· Microservice Communication: When multiple microservices need to exchange data frequently, Fory-Rust can speed up inter-service communication, leading to a more performant and scalable overall architecture. The ability to communicate faster between these independent services directly impacts the overall application speed and responsiveness.

· Large-Scale Data Processing Pipelines: For batch processing or stream processing of massive datasets, Fory-Rust can accelerate the serialization and deserialization steps, making the entire pipeline run faster and more efficiently. When dealing with big data, every optimization in data handling translates to significant time and resource savings.

· Interfacing with Legacy Systems: The cross-language support without IDL files makes it easier to integrate modern Rust components with existing systems written in other languages, simplifying modernization efforts and reducing the complexity of bridging different technology stacks.

3

Butter: LLM Behavior Cache

Author

edunteman

Description

Butter is an LLM proxy that introduces 'muscle memory' for AI automations. By caching and replaying LLM responses, it makes agent systems deterministic, ensuring consistent behavior across multiple runs. This is crucial for applications where predictability is paramount, like in healthcare or finance, transforming unreliable AI into dependable automations.

Popularity

Points 33

Comments 21

What is this product?

Butter is an intermediary between your AI agent and the Large Language Model (LLM) it communicates with. Think of it as recording specific AI conversation flows, much as a programmer would write them down as code. When your agent asks the LLM something, Butter first checks whether it has seen this request before and has a recorded answer. If it has, it returns that answer immediately, so the agent behaves predictably. If it has not, it lets the LLM answer and then records the new interaction as a potential future 'memory'. The cache is 'template-aware': it can treat parts of a request that change between runs (like a name or address) as variables, which makes the cache useful well beyond exact-match requests. This addresses the problem of AI agents behaving erratically or inconsistently, a major hurdle for adopting AI in sensitive industries.

How to use it?

Developers can integrate Butter by simply changing the 'base_url' in their existing agent code to point to Butter's chat completions endpoint. This is straightforward because Butter mimics the standard OpenAI chat completions API. For example, if your agent currently sends requests to `https://api.openai.com/v1/chat/completions`, you would change it to `https://your-butter-instance.com/v1/chat/completions`. This allows your current AI automations to start benefiting from deterministic behavior without significant code refactoring. The recorded interaction tree acts like reusable code, guiding the AI through known paths and only resorting to fresh LLM calls for novel situations.
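
The base URL swap described above can be sketched with Python's standard library alone. This is a hedged illustration, not Butter's documented client setup: the request shape follows the OpenAI chat completions format, the host `your-butter-instance.com` is the placeholder used in the text, and the API key and model name are placeholders as well. The request is only built here, not sent.

```python
import json
import urllib.request

OPENAI_BASE = "https://api.openai.com/v1"
BUTTER_BASE = "https://your-butter-instance.com/v1"  # placeholder host from the text above


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) a chat completions request for the given base URL."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


messages = [{"role": "user", "content": "Book a table for two at 7pm"}]
# Only the base URL differs between the direct and the proxied request.
req = build_chat_request(BUTTER_BASE, "sk-placeholder", "gpt-4o-mini", messages)
print(req.full_url)
# To actually send it: urllib.request.urlopen(req)
```

Client libraries that accept a configurable base URL would need the same one-line change; the payload and headers stay as they were.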

Product Core Function

· Deterministic Agent Behavior: By caching and replaying LLM responses, Butter ensures that an AI agent will produce the same output for the same input every time, which is invaluable for building reliable automations.

· Template-Aware Caching: This advanced caching recognizes dynamic parts of requests (like names, dates, or custom identifiers) as variables, allowing for more flexible and efficient response retrieval without needing exact matches.

· Chat Completions Compatibility: Butter acts as a drop-in replacement for standard LLM APIs, making it easy to integrate into existing AI agent frameworks and workflows without substantial code changes.

· LLM Response Replay: Instead of always querying the LLM, Butter can replay previously seen and validated responses, significantly speeding up processes and reducing costs.

· Behavior Tree Construction: Butter builds a tree structure from observed conversations, effectively mapping out conditional branches in an automation's logic, similar to how a script would operate.
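
Template-aware caching can be approximated in a few lines. The sketch below is an assumption-heavy toy, not Butter's algorithm: the caller passes the variable values explicitly (the text does not say how Butter detects them), and the class name `TemplateCache` and the stand-in `fake_llm` are hypothetical.

```python
class TemplateCache:
    """Toy response cache keyed on a prompt template rather than the raw prompt."""

    def __init__(self, llm):
        self.llm = llm      # fallback callable: prompt -> response
        self.store = {}     # prompt template -> response template
        self.calls = 0      # number of real LLM calls made

    @staticmethod
    def _to_template(text, variables):
        # Replace each variable's value with a {name} placeholder.
        for name, value in variables.items():
            text = text.replace(value, "{" + name + "}")
        return text

    def complete(self, prompt, variables):
        key = self._to_template(prompt, variables)
        if key not in self.store:
            # Cache miss: call the model once and remember a templated response.
            self.calls += 1
            self.store[key] = self._to_template(self.llm(prompt), variables)
        # Replay: fill the stored response template with the current values.
        out = self.store[key]
        for name, value in variables.items():
            out = out.replace("{" + name + "}", value)
        return out


def fake_llm(prompt):
    """Stand-in for a real model call."""
    name = prompt.rsplit(" ", 1)[-1]
    return f"Hi {name}, your order is confirmed."


cache = TemplateCache(fake_llm)
first = cache.complete("Confirm the order for Alice", {"name": "Alice"})
second = cache.complete("Confirm the order for Bob", {"name": "Bob"})
print(first, second, cache.calls)
```

Both prompts reduce to the same template, so the second call is served from the cache with the new name substituted in and no second model call.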

Product Usage Case

· Automating customer support bots: Instead of AI generating unique responses every time for common queries, Butter can cache and replay accurate, pre-approved answers, ensuring brand consistency and customer satisfaction.

· Processing sensitive financial or medical data: In industries requiring high accuracy and auditability, Butter's deterministic nature guarantees that data processing logic is executed consistently, reducing the risk of errors and ensuring compliance.

· Building AI agents for legacy system interaction: For tasks that require predictable interactions with older software, Butter can store the sequences of commands and responses, making the AI agent behave like a reliable RPA bot but with AI's flexibility for edge cases.

· Testing and debugging AI workflows: By making LLM interactions deterministic, developers can isolate bugs more effectively in their agent logic, as they know the LLM's contribution is consistent and repeatable.

4

Dexto: AI Agent Orchestration Fabric

Author

shaunaks

Description

Dexto is a runtime and orchestration layer that turns any app, service, or tool into an AI assistant that can reason and act. It addresses the complexity of connecting Large Language Models (LLMs) to tools, managing context, adding memory and approval workflows, and tailoring agent behavior to specific use cases. Instead of hand-coding each integration, developers declare an agent's capabilities, its LLM, and its behavior in configuration, and Dexto runs the agent as an event-driven loop that handles reasoning, tool invocation, retries, state, and memory. Agents can run locally, in the cloud, or in a hybrid environment, and a CLI, web UI, and sample agents ease adoption. Dexto is modular and composable: new tools are easy to integrate, and agents can be exposed as services for other applications to consume, which supports cross-agent interaction and reuse.

Popularity

Points 34

Comments 5

What is this product?

Dexto is an AI agent orchestration platform. Think of it as a central hub for AI agents. Instead of developers writing lots of repetitive code to make an AI model (like ChatGPT) talk to other software (like your email or a file system) and remember things, Dexto lets you describe what you want the AI agent to do and what tools it can use. Dexto then handles all the behind-the-scenes work: figuring out what steps the AI should take, calling the right tools, remembering past conversations, and making sure everything runs smoothly. The innovation lies in moving from code-heavy agent development to a declarative configuration approach, making it much faster and simpler to build and deploy complex AI agents that can interact with the real world through various applications and services. This means you can turn almost any digital tool into a smart assistant without becoming an AI integration expert.

How to use it?

Developers can use Dexto by defining their AI agent's configuration in a simple format. This configuration specifies which LLM to use, what tools or services the agent can access (e.g., sending emails, searching the web, accessing a database), and how the agent should behave (its personality, tone, and any approval rules). Once configured, Dexto runs the agent as an independent process. Your application can then interact with Dexto by triggering the agent and subscribing to its events. For example, you could build a customer support chatbot that uses Dexto to access a knowledge base and respond to queries, or a marketing tool that uses Dexto to post updates on social media. Dexto provides a CLI for local development and deployment, a web UI for monitoring and management, and SDKs for integration into existing applications. The agents are event-driven, meaning they react to triggers and emit events that your application can listen to, allowing for seamless integration and user experience.

Product Core Function

· Declarative Agent Configuration: Define AI agent capabilities, LLM choices, and behavioral rules through configuration files rather than extensive code. This significantly speeds up development and makes agents easier to manage and update, so you can get your AI assistant up and running quickly.

· Runtime Orchestration Engine: Manages the entire lifecycle of an AI agent, including reasoning, task planning, tool invocation, and error handling. This means your AI agent can intelligently decide what to do next and execute complex workflows without you needing to micromanage every step, ensuring reliable operation.

· Tool and Service Integration: Seamlessly connect AI agents to a wide range of external tools and services via a modular architecture. This allows AI agents to perform real-world actions, from sending emails to interacting with databases, expanding their utility far beyond simple text generation.

· Context and Memory Management: Maintains conversation history and agent state, enabling agents to understand context and learn from past interactions. This allows for more natural and coherent conversations, and for agents to become more personalized and effective over time.

· Event-Driven Architecture: Agents operate as event-driven loops, emitting events that applications can subscribe to. This facilitates real-time interaction and integration, allowing your application to react instantly to agent actions and outcomes.

· Cross-Agent Communication and Reusability: Agents can be exposed as services and consumed by other agents or applications, fostering modularity and reuse. This promotes a 'build once, use anywhere' philosophy, allowing for the creation of complex, interconnected AI systems.

· Flexible Deployment Options: Agents can be deployed locally, in the cloud, or in a hybrid setup, providing adaptability to different infrastructure needs. This gives you the freedom to choose the deployment model that best suits your project's requirements and security policies.
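
The combination of declarative configuration and an event-driven loop can be sketched generically. The following Python example is not Dexto's API or configuration schema: the `CONFIG` field names, the `TOOLS` registry, and the stand-in `fake_llm` are all assumptions, used only to show the loop of asking the model, running an allowed tool with retries, and emitting events.

```python
# Hypothetical declarative config; the field names are illustrative, not Dexto's schema.
CONFIG = {
    "system_prompt": "You are a helpful calculator agent.",
    "tools": ["add"],
    "max_retries": 2,
}

TOOLS = {"add": lambda a, b: a + b}


def fake_llm(history):
    """Stand-in for a model: request a tool once, then answer from its result."""
    for event in reversed(history):
        if event["type"] == "tool_result":
            return {"type": "final", "text": f"The answer is {event['value']}."}
    return {"type": "tool_call", "tool": "add", "args": [2, 3]}


def run_agent(config, user_message, llm=fake_llm, on_event=print):
    """Loop: ask the model, run any allowed tool it requests, emit events, repeat."""
    history = [
        {"type": "system", "text": config["system_prompt"]},
        {"type": "user", "text": user_message},
    ]
    while True:
        decision = llm(history)
        on_event(decision)
        if decision["type"] == "final":
            return decision["text"]
        if decision["tool"] not in config["tools"]:
            raise PermissionError(f"tool not allowed: {decision['tool']}")
        for attempt in range(config["max_retries"] + 1):
            try:
                value = TOOLS[decision["tool"]](*decision["args"])
                break
            except Exception:
                if attempt == config["max_retries"]:
                    raise  # retries exhausted: surface the tool error
        result = {"type": "tool_result", "tool": decision["tool"], "value": value}
        history.append(result)
        on_event(result)
```

An application would pass its own `on_event` callback (for example, `events.append` or a websocket send) to react to tool calls and results as they happen, which is the role event subscriptions play in the description above.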

Product Usage Case

· Building a marketing assistant that automatically drafts and schedules social media posts across platforms. The agent uses Dexto to access a content calendar, generate post variations using an LLM, and then uses social media APIs to publish them, saving marketing teams significant manual effort.

· Creating a customer support bot that can access a company's knowledge base and product documentation to provide instant, accurate answers to customer queries. Dexto orchestrates the LLM's understanding of the query and its retrieval of relevant information, providing a better customer experience.

· Developing an internal tool that automates code review by connecting an LLM to a code repository. Dexto allows the agent to read code, identify potential issues, and suggest improvements, accelerating the development workflow for engineering teams.

· Enabling non-technical users to perform complex image manipulations like face detection or collage creation through natural language commands. Dexto connects an LLM to OpenCV functions, abstracting away the technical complexities and making powerful image editing accessible to everyone.

· Constructing agents that can interact with web browsers to perform tasks like data scraping or form filling. Dexto orchestrates the agent's navigation and interaction with web elements, enabling automated web-based workflows.

5

Pipelex

Author

lchoquel

Description

Pipelex is a novel Domain-Specific Language (DSL) and Python runtime designed to make repeatable AI workflows a reality. It offers a declarative approach, akin to Dockerfile or SQL for AI, allowing developers to define AI pipeline steps and interfaces. Its innovation lies in its 'agent-first' design, where each step includes natural language context, enabling LLMs to understand, audit, and optimize the workflow. This open-source project aims to bridge the gap between complex AI models and structured, reproducible execution by separating business logic from specific implementation details.

Popularity

Points 24

Comments 6

What is this product?

Pipelex is a specialized programming language and its execution engine built for creating AI workflows that can be repeated reliably. Think of it like giving a recipe to a computer for AI tasks. Instead of writing lots of complex code to connect different AI models and instructions together, you write a Pipelex script. This script clearly states 'what' needs to be done. The innovation is that Pipelex scripts are designed to be understood not just by humans, but also by AI models themselves. Each step in the script includes explanations in plain English about its purpose, what data it needs, and what data it produces. This makes it easier for AI agents to follow, check, and even improve the workflow. It's a way to get consistent results from AI, just like you can run the same SQL query multiple times and get the same data.

How to use it?

Developers can use Pipelex to build and manage complex AI processes. You define your workflow by writing a Pipelex script, specifying the sequence of AI operations, the inputs and outputs for each, and the natural language intent behind each step. This script can then be executed by the Pipelex Python runtime. It integrates with existing tools like n8n and VS Code through dedicated extensions, providing a familiar environment. For example, you could create a workflow to automatically summarize customer feedback, extract key information, and then generate a report, all defined declaratively in Pipelex. This makes it easy to reuse, modify, and share these AI processes across different projects or teams, ensuring consistency and reducing the need to rewrite code from scratch.

Product Core Function

· Declarative Workflow Definition: Allows you to describe what you want your AI workflow to achieve in a structured language, rather than writing imperative code that specifies how to achieve it. This simplifies the process and makes workflows easier to understand and maintain, translating business logic into executable steps.

· Agent-First Contextualization: Each step in a Pipelex workflow includes natural language descriptions of its purpose, inputs, and outputs. This rich context allows AI models to deeply understand the workflow, making them capable of better execution, auditing, and even self-optimization, leading to more intelligent and adaptable AI systems.

· Model and Provider Agnosticism: The Pipelex runtime is designed to be flexible, allowing different AI models and services to fill the defined steps. This means you can easily swap out AI providers or models without rewriting your entire workflow, providing immense flexibility and future-proofing your AI investments.

· Composable Workflow Architecture: Pipelex workflows can call and incorporate other workflows, fostering modularity and reusability. This allows developers to build complex systems by combining smaller, well-defined AI components, similar to how software libraries are used, accelerating development and promoting community sharing of AI patterns.

· Reproducible AI Execution: By providing a deterministic language and runtime, Pipelex ensures that AI workflows can be executed repeatedly with consistent results. This is crucial for debugging, testing, and deploying AI applications reliably, eliminating the guesswork often associated with AI model behavior.
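
The separation between what a workflow does and how each step is implemented can be shown with plain data structures. This Python sketch is not Pipelex syntax or its runtime: the `PIPELINE` structure, the `intent` field, and the `run` function are hypothetical stand-ins for a declarative definition with natural-language context per step.

```python
# A workflow described as data: each step names its inputs, output, and intent.
# The structure is a hypothetical stand-in, not Pipelex syntax.
PIPELINE = [
    {
        "name": "normalize",
        "intent": "Trim whitespace and lowercase the raw feedback text.",
        "inputs": ["feedback"],
        "output": "clean_text",
    },
    {
        "name": "count_words",
        "intent": "Count the words in the cleaned text.",
        "inputs": ["clean_text"],
        "output": "word_count",
    },
]

# Implementations are registered separately, so they can be swapped
# (for example, for a model call) without touching the pipeline definition.
IMPLEMENTATIONS = {
    "normalize": lambda feedback: feedback.strip().lower(),
    "count_words": lambda clean_text: len(clean_text.split()),
}


def run(pipeline, implementations, **initial):
    """Execute steps in order, passing named values through a shared context."""
    context = dict(initial)
    for step in pipeline:
        missing = [k for k in step["inputs"] if k not in context]
        if missing:
            raise KeyError(f"step {step['name']!r} is missing inputs: {missing}")
        args = {k: context[k] for k in step["inputs"]}
        context[step["output"]] = implementations[step["name"]](**args)
    return context


result = run(PIPELINE, IMPLEMENTATIONS, feedback="  Great Product, fast shipping  ")
print(result["clean_text"], result["word_count"])
```

Because the definition is data, a tool (or a model) can read the `intent`, `inputs`, and `output` fields to audit the workflow before anything runs.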

Product Usage Case

· Automated Content Generation: A developer can use Pipelex to build a workflow that takes a user prompt, generates multiple variations of text using different LLMs, evaluates them based on predefined criteria (like tone or factual accuracy), and selects the best output. This solves the problem of inconsistent or generic AI-generated content by creating a structured and auditable generation process.

· Data Extraction and Structuring from Unstructured Text: Imagine needing to extract specific information (like names, dates, and amounts) from a large volume of scanned documents or emails. Pipelex can define a workflow that first uses OCR to convert images to text, then employs an LLM to identify and extract the relevant data fields, and finally structures this data into a table or JSON format. This tackles the challenge of manual data entry and the unreliability of simple text parsing scripts.

· Building a Customer Support Agent: A company could use Pipelex to create an AI agent that handles common customer queries. The workflow could involve understanding the customer's request, querying a knowledge base, synthesizing an answer, and even escalating to a human agent if necessary. This addresses the need for scalable and consistent customer service by orchestrating multiple AI capabilities.

· AI Model Evaluation and Benchmarking: Developers can use Pipelex to create standardized tests for evaluating different AI models. A workflow could be designed to feed a specific dataset to various models, collect their outputs, and apply predefined metrics to score their performance. This provides a reproducible and objective way to compare AI models, aiding in model selection and improvement.

6

NoGreeting

Author

kuberwastaken

Description

NoGreeting is a lightweight web application designed to combat the common frustration of receiving messages that start with a simple 'hi' or 'hello' without any context. It provides a personalized link that, when shared, educates the sender on the importance of providing context upfront, thereby saving the recipient time and effort. The innovation lies in its elegant use of a simple web page to gently enforce better communication etiquette, built on the principle of 'no hello' in digital interactions.

Popularity

Points 13

Comments 13

What is this product?

NoGreeting gives you a ready-made, educational reply for people who message you without context. When someone sends a vague greeting like 'hi' and you're tired of the back-and-forth needed to find out what they want, you can share a unique link generated by NoGreeting. The link leads to a simple webpage that explains, in a friendly manner, why starting with context makes communication more efficient. It's like giving them a polite, automated explanation instead of typing it out yourself or, worse, trading one-line messages until the actual question appears. The underlying technical idea is to use a publicly accessible URL as a gentle but firm nudge toward better online etiquette, turning a common annoyance into an educational opportunity. This is a modern take on the 'no hello' concept, with support for custom names and greetings in different languages.

How to use it?

As a developer or anyone who values their time, you can use NoGreeting by first visiting the application (or running it yourself, as it's open source!). You'll be prompted to pick a name that will appear in the message and choose a 'greeting trigger' – the word that will initiate the explanation (e.g., 'hi', 'hello', 'hey'). You can also select one of 16 languages for the explanation. Once configured, NoGreeting generates a unique URL. You then place this URL in your social media bios, or simply send it as a reply when you receive a message that lacks context. When someone clicks the link, they are presented with a clear and concise explanation of why leading with context is beneficial. This saves you the effort of repeatedly explaining this concept, improving the quality of incoming messages and making your interactions more efficient. It's about setting expectations for your communication, making everyone's life easier.
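
One plausible way to carry the three settings (name, greeting trigger, language) is in the link's query string. The sketch below assumes exactly that. The base URL is a placeholder, and the parameter names `name`, `greeting`, and `lang` are hypothetical, since the source does not describe NoGreeting's real URL scheme.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Placeholder address, not the project's real URL.
BASE_URL = "https://example.com/nogreeting"

def build_link(name: str, greeting: str = "hi", lang: str = "en") -> str:
    """Encode the chosen settings as query parameters (hypothetical names)."""
    query = urlencode({"name": name, "greeting": greeting, "lang": lang})
    return f"{BASE_URL}?{query}"

def read_link(url: str) -> dict:
    """Recover the settings on the page side, taking the first value of each key."""
    params = parse_qs(urlsplit(url).query)
    return {key: values[0] for key, values in params.items()}

link = build_link("Ada Lovelace", greeting="hello", lang="fr")
print(link)
print(read_link(link))
```

Keeping all state in the URL means the page needs no database or account system, which fits a tool that is meant to be lightweight and easy to self-host.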

Product Core Function

· Contextual Greeting Explanation: Provides a customizable webpage that politely educates senders on the importance of providing context in their initial messages, saving the recipient from repetitive explanations. This directly addresses the issue of time wasted in message ping-pong.

· Personalized Link Generation: Allows users to create a unique URL associated with their chosen name and greeting trigger, making the shared link feel more personal and effective. This enhances the user experience and increases the likelihood of the message being heeded.

· Multi-language Support: Offers the explanation in 16 different languages, making it a globally applicable tool for improving communication across diverse networks. This broadens its utility and inclusivity.

· Open-Source Project: The code is publicly available on GitHub, allowing developers to inspect, contribute to, or even self-host the application. This fosters transparency and community involvement, embodying the hacker spirit of shared innovation.

· Customizable Greeting Triggers: Enables users to define specific words that will prompt the explanation, tailoring the tool to their preferred communication style and common annoyances. This provides flexibility and better targeting of the educational message.

Product Usage Case

· A freelance developer receives numerous DMs on social media starting with just 'hi' before clients ask for project quotes. By adding their NoGreeting link to their bio, potential clients are now presented with an explanation about providing project details upfront, leading to more informed initial inquiries and saving the developer time on clarifying questions.

· A busy professional who uses Slack for work receives direct messages from colleagues that are often vague. They can share their NoGreeting link when a message lacks clarity, ensuring future messages are more direct and action-oriented, leading to faster task completion and less context-switching.

· A community manager for an online forum sees many new members open with a bare greeting and wait for a reply before asking anything. By directing them to a NoGreeting link, the manager encourages members to put the full question, with relevant details, in their first message, so others can answer without a round of clarifying questions.

· An artist who wants to protect their time and creative energy from unsolicited requests can use NoGreeting to politely inform potential collaborators or fans about the need for clear proposals, filtering out casual inquiries and focusing on genuine opportunities.

7

Zig Ordered Collections

Author

habedi0

Description

This project introduces a foundational library for sorted collections in the Zig programming language. Sorted collections, like Java's TreeMap or C++'s std::map, are specialized data structures designed for efficient retrieval of data, particularly when you need to find individual items quickly or search for data within specific ranges. This library aims to bring these powerful capabilities to the Zig ecosystem, offering developers a new tool for performance-critical applications.

Popularity

Points 19

Comments 6

What is this product?

This is an early-stage library providing sorted collection data structures for the Zig programming language. Think of it as a highly organized digital filing cabinet: instead of dumping files in randomly, these collections keep your data in key order. That ordering makes searching fast. For example, given a collection of names, a sorted collection can find a specific name in a number of steps that typically grows with the logarithm of the collection size, or list all the names that start with 'A' without scanning the rest, where an unsorted list would need a full pass. The innovation lies in implementing these data structures in Zig, taking advantage of its low-level control and performance.

How to use it?

Developers can integrate this library into their Zig projects to manage data that requires ordered access. For instance, in a game, you might use it to store enemy positions sorted by distance from the player, allowing for quick identification of nearby threats. In a compiler, it could be used to store symbol tables, enabling rapid lookup of variable or function definitions. Integration typically involves importing the library's modules and using its provided functions to add, remove, and search for elements within the sorted collections. This provides a more efficient way to handle ordered data compared to manual sorting or using less specialized structures.

Product Core Function

· Sorted insertion: Efficiently adds new data while maintaining the overall sorted order of the collection. This is valuable for applications where data is constantly being updated and needs to remain searchable. (e.g., real-time analytics)

· Fast point lookup: Quickly retrieves a specific data item based on its key. This is crucial for applications requiring rapid data retrieval, such as database indexing or configuration loading. (e.g., finding a user by ID)

· Range queries: Allows for the retrieval of all data items within a specified range of keys. This is extremely useful for analytical tasks and data filtering. (e.g., finding all transactions within a date range)

· Efficient deletion: Removes data items while preserving the sorted structure, ensuring continued high performance for subsequent operations. This is important for dynamic datasets where items are frequently removed. (e.g., managing active user sessions)
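
The four operations listed above can be illustrated with a small sorted map. This is a Python sketch of the general idea and not the Zig library's API, which the source does not show. The `SortedMap` class and its method names are hypothetical. It keeps keys in a sorted list and uses binary search, so lookups take logarithmic time but insertions and deletions shift elements. Tree-based or skip-list structures avoid that shifting cost.

```python
import bisect
from typing import Any, List, Optional, Tuple

class SortedMap:
    """Minimal sorted key-value map backed by two parallel lists."""

    def __init__(self) -> None:
        self._keys: List[Any] = []
        self._values: List[Any] = []

    def _find(self, key: Any) -> Tuple[int, bool]:
        """Return (index, found) where index is the sorted position of key."""
        i = bisect.bisect_left(self._keys, key)
        return i, i < len(self._keys) and self._keys[i] == key

    def put(self, key: Any, value: Any) -> None:
        """Sorted insertion: overwrite an existing key or insert in order."""
        i, found = self._find(key)
        if found:
            self._values[i] = value
        else:
            self._keys.insert(i, key)
            self._values.insert(i, value)

    def get(self, key: Any) -> Optional[Any]:
        """Point lookup via binary search."""
        i, found = self._find(key)
        return self._values[i] if found else None

    def range(self, low: Any, high: Any) -> List[Tuple[Any, Any]]:
        """Range query: all pairs with low <= key <= high, in key order."""
        start = bisect.bisect_left(self._keys, low)
        end = bisect.bisect_right(self._keys, high)
        return list(zip(self._keys[start:end], self._values[start:end]))

    def remove(self, key: Any) -> bool:
        """Deletion that keeps the remaining keys sorted."""
        i, found = self._find(key)
        if found:
            del self._keys[i]
            del self._values[i]
        return found

sessions = SortedMap()
for user_id, name in [(30, "carol"), (10, "alice"), (20, "bob")]:
    sessions.put(user_id, name)
print(sessions.range(10, 20))
```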

Product Usage Case

· Implementing a high-performance recommendation engine: Developers could use sorted collections to store user preferences or item similarities, enabling quick identification of related items for personalized recommendations. This solves the problem of slow, brute-force comparisons by providing a structured way to query similar items.

· Building a real-time stock trading platform: Storing and querying stock prices in sorted order allows for rapid identification of price movements and execution of trades based on specific criteria. This addresses the need for millisecond-level responsiveness in financial applications.

· Developing a sophisticated scientific simulation: Maintaining simulation parameters or results in sorted collections allows for efficient analysis and retrieval of data points across different scales or conditions. This helps researchers quickly identify trends and outliers in complex datasets.

8

RustNodeHTTP

Author

StellaMary

Description

This project allows you to write Node.js applications using Rust, aiming to achieve significantly higher HTTP throughput. The innovation lies in bridging the performance gap between Node.js's JavaScript ecosystem and Rust's superior speed for I/O-bound tasks, especially for high-traffic web servers. So, this is useful for developers who need to handle a massive number of incoming requests without sacrificing responsiveness.

Popularity

Points 12

Comments 11

What is this product?

RustNodeHTTP is a novel approach to building Node.js applications by leveraging Rust's performance capabilities. Instead of writing your backend logic directly in JavaScript, you can now write it in Rust. This is achieved through a specialized runtime or binding that allows Rust code to interact seamlessly with the Node.js environment. The core technical insight is that Rust's compiled nature and efficient memory management, especially for network operations (like handling HTTP requests and responses), can drastically outperform typical JavaScript execution for I/O-intensive workloads. This means your server can process many more requests per second with fewer resources. So, this is useful because it offers a way to turbocharge your Node.js applications for extreme performance needs without abandoning the familiar Node.js ecosystem.

How to use it?

Developers can use RustNodeHTTP by writing critical performance-sensitive parts of their Node.js applications in Rust. This might involve creating Rust 'modules' or 'libraries' that are then imported and used within their existing JavaScript codebase. The project likely provides a build tool or a specific runtime that compiles the Rust code and integrates it into the Node.js process. Think of it like adding a high-performance engine to your car – you still drive it like a car, but it can go much faster. For integration, you would typically follow the project's documentation to set up the Rust toolchain, write your Rust code, compile it, and then import it into your Node.js application using standard module import mechanisms. So, this is useful for developers who have identified bottlenecks in their Node.js applications and want a way to optimize those specific parts using Rust's speed, without a full rewrite.

Product Core Function

· Rust-based HTTP server engine: Implements core HTTP request handling and response generation in highly optimized Rust code, offering significantly higher throughput compared to traditional Node.js. This allows for handling more concurrent connections with lower latency.

· Node.js interoperability layer: Provides mechanisms for Rust code to seamlessly call JavaScript functions and for JavaScript code to call Rust functions, enabling a hybrid development approach. This means you can incrementally adopt Rust for performance critical sections.

· Performance profiling and optimization hooks: Likely includes tools or patterns to identify performance bottlenecks in the Rust code that is integrated with Node.js. This helps developers pinpoint exactly where to focus their optimization efforts for maximum gains.

· Compiled Rust modules for Node.js: Enables packaging Rust code into dynamic libraries or modules that Node.js can load and execute directly, making it easy to integrate Rust's speed into existing projects.

Product Usage Case

· Building a high-throughput API gateway: A developer could use RustNodeHTTP to build an API gateway that needs to handle millions of requests per minute, proxying them to various backend services. By implementing the core proxy logic in Rust, they can achieve massive throughput and low latency, ensuring the gateway isn't a bottleneck. This solves the problem of a JavaScript-based gateway being overwhelmed by traffic.

· Real-time data streaming services: For applications that involve streaming large volumes of data in real-time (e.g., stock tickers, IoT sensor data), RustNodeHTTP can be used to build the backend. Rust's efficient handling of network I/O and concurrent connections is ideal for pushing data out to many clients simultaneously with minimal delay. This is useful for avoiding dropped data or sluggish updates in real-time applications.

· Web server for static content with heavy traffic: A website that serves a lot of static files but experiences extremely high traffic might benefit from a Rust-based server core. RustNodeHTTP could be used to implement the file serving logic, dramatically increasing the number of served files per second. This solves the problem of a standard Node.js server struggling to keep up with demand for static assets.

9

Luzmo Custom Chart Builder

Author

YannickCrabbe

Description

Luzmo is a powerful tool that allows developers to create and integrate entirely new, custom chart types directly into their dashboards. It addresses the common limitation of BI tools that offer a fixed set of chart options. By enabling developers to write their own visualization code, Luzmo seamlessly integrates bespoke charts, like network graphs, into interactive dashboards, solving the problem of visually representing complex relationships that standard charts cannot capture. So, this is useful because it lets you visualize your data in unique ways that are crucial for deep insights, beyond what typical tools offer.

Popularity

Points 15

Comments 1

What is this product?

Luzmo is a system designed for building highly specialized, custom chart types that go beyond the standard offerings of most Business Intelligence (BI) tools. The core innovation lies in its ability to let developers define their own data visualizations using code. Instead of being limited by pre-defined chart templates (like bar charts or pie charts), developers can create charts tailored to specific data relationships, such as network graphs to show connections between entities. Luzmo handles the underlying dashboard mechanics like data querying, filtering, and interactivity, allowing developers to focus purely on crafting the visual representation. This is valuable because it unlocks the ability to present complex data relationships visually in a way that’s impossible with off-the-shelf charting solutions, leading to more meaningful discoveries.

How to use it?

Developers can use Luzmo by leveraging its builder framework. This involves defining 'data slots' – essentially specifying what kind of data your custom chart will consume from the dashboard. Then, you write the visualization code (likely using JavaScript libraries like D3.js or similar) that instructs how this data should be rendered visually. Luzmo takes this code and allows you to integrate it into your dashboard as if it were a native chart type. This means your custom chart will automatically work with existing filters, interact with other charts, and adhere to the dashboard's overall theme, without requiring extensive manual integration or creating standalone, disconnected visualizations. This is useful for developers who need to create a dashboard that presents unique data relationships, ensuring the custom visualization is a seamless and functional part of the entire analytics experience.
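
To make the "data slots feed visualization code" idea concrete, here is a rough sketch of the data-shaping step a network-graph chart might perform before rendering. It is not Luzmo's API: the slot names `source`, `target`, and `value` and the `build_graph` function are hypothetical. A real custom chart would most likely do this in JavaScript and hand the result to a rendering library.

```python
from typing import Dict, List

def build_graph(rows: List[Dict]) -> Dict[str, List[Dict]]:
    """Turn slot-shaped rows into the nodes/links structure a graph renderer expects.

    Each row is assumed to carry three hypothetical slots:
    'source' and 'target' (category values) and 'value' (a numeric measure).
    """
    nodes: Dict[str, Dict] = {}
    links: List[Dict] = []
    for row in rows:
        src, dst, val = row["source"], row["target"], row["value"]
        for name in (src, dst):
            # A node's weight is the total value of the links touching it,
            # which a renderer could map to node size.
            node = nodes.setdefault(name, {"id": name, "weight": 0.0})
            node["weight"] += val
        links.append({"source": src, "target": dst, "value": val})
    return {"nodes": list(nodes.values()), "links": links}

rows = [
    {"source": "Rep A", "target": "Deal 1", "value": 50.0},
    {"source": "Rep A", "target": "Deal 2", "value": 20.0},
    {"source": "Rep B", "target": "Deal 2", "value": 30.0},
]
graph = build_graph(rows)
print(graph["nodes"])
```

Because the chart only ever sees rows shaped by its declared slots, the same rendering code keeps working when dashboard filters change which rows arrive.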

Product Core Function

· Custom Chart Definition: Developers can write code to define how their data is visualized, enabling unique chart types that standard BI tools don't support. This is valuable for creating visualizations that accurately represent complex relationships in data, leading to better understanding.

· Seamless Dashboard Integration: Custom charts are integrated as if they were native, meaning they automatically support filtering, cross-chart linking, and theming. This is valuable because it ensures that your unique visualizations are functional and consistent within the overall dashboard, saving significant development time.

· Data Slot Configuration: The ability to define specific data inputs for custom charts ensures that the visualization code receives the data it needs in a structured format. This is valuable for making custom charts robust and predictable when interacting with different datasets.

· Interactive Visualization: The framework supports creating interactive charts, allowing users to explore data by hovering, clicking, or drilling down within the custom visualization. This is valuable as it enhances user engagement and allows for deeper data exploration.

· Developer-Friendly Workflow: The project provides a structured approach for building, testing, and deploying custom chart types, often with accompanying tutorials and code examples. This is valuable as it lowers the barrier to entry for developers wanting to create advanced visualizations.

Product Usage Case

· Visualizing sales representative connections to open deals using a network graph, where node size represents deal value and color represents win probability. This helps sales managers quickly identify key relationships and opportunities, addressing the limitation of standard charts in representing complex interpersonal and deal dynamics.

· Creating a custom Sankey diagram to illustrate user flow through a complex application, showing how users navigate between different features. This provides a clear visual path of user journeys, helping product teams identify drop-off points and areas for UX improvement where typical funnel charts might be insufficient.

· Developing a unique geospatial visualization that overlays multiple layers of data (e.g., customer locations, service areas, competitor presence) with custom rendering logic. This allows businesses to gain richer insights into market penetration and strategic planning by visualizing spatially complex information beyond simple map markers.

· Building an interactive timeline that shows dependencies between project tasks with custom visual cues for status and risk. This offers project managers a more intuitive way to manage complex projects, highlighting critical paths and potential bottlenecks that might be obscured in a Gantt chart.

10

MCP-Cloud Orchestrator

Author

andrew_lastmile

Description

MCP-Cloud Orchestrator is a cloud platform designed to easily host and manage any MCP server, including agents and ChatGPT applications. It leverages Temporal for durable, long-running operations and makes deploying local MCP services to the cloud as simple as deploying a web application. So, what's in it for you? It allows developers to seamlessly transition their experimental AI agents and applications from local development to a robust, scalable cloud environment, unlocking new possibilities for persistent AI functionalities.

Popularity

Points 8

Comments 2

What is this product?

MCP-Cloud Orchestrator is a cloud-based service that acts as a central hub for running MCP (Model Context Protocol) compatible AI agents and applications. The core innovation lies in its approach to making complex AI agent hosting simple. Instead of dealing with intricate server setups, developers deploy their applications as remote SSE (Server-Sent Events) endpoints that adhere to the MCP specification. This means your AI agents can use advanced features like elicitation (asking the user for more information), sampling (requesting a model completion through the connected client), notifications, and logging. To ensure that these agents can handle long-running tasks without interruption, the platform uses Temporal, a workflow engine that provides fault tolerance and state management, allowing agents to pause, resume, and recover from failures. Think of it as a highly reliable and always-on environment for your AI creations. So, what's in it for you? It provides a professional, stable backend for your AI projects, making them accessible and resilient, unlike typical local experiments.
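
For a feel of what travels over such an endpoint, the sketch below builds a JSON-RPC 2.0 request and frames a response as a Server-Sent Event. MCP messages are JSON-RPC 2.0. The `tools/call` method and the `content` result layout follow my reading of the MCP specification and should be checked against it. The tool name `order_pizza`, its arguments, and the helper function names are hypothetical, and the platform's actual endpoint layout is not described in the source.

```python
import json

def make_tool_call(request_id: int, tool: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking the server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }

def to_sse_event(message: dict, event: str = "message") -> str:
    """Frame one JSON message as a Server-Sent Event (blank line ends the event)."""
    data = json.dumps(message, separators=(",", ":"))
    return f"event: {event}\ndata: {data}\n\n"

def parse_sse_event(raw: str) -> dict:
    """Extract and decode the data payload of a single SSE event."""
    data_lines = []
    for line in raw.splitlines():
        if line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format allows one optional space after the colon.
            data_lines.append(value[1:] if value.startswith(" ") else value)
    return json.loads("\n".join(data_lines))

request = make_tool_call(1, "order_pizza", {"size": "large"})  # hypothetical tool
response = {
    "jsonrpc": "2.0",
    "id": request["id"],  # responses echo the request id
    "result": {"content": [{"type": "text", "text": "Order placed."}]},
}
frame = to_sse_event(response)
print(frame)
print(parse_sse_event(frame)["result"]["content"][0]["text"])
```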

How to use it?

Developers can use MCP-Cloud Orchestrator to deploy their existing MCP-compatible agents or build new ones. The process is streamlined with a command-line interface (CLI) tool, similar to how one might deploy a modern web application. You can initialize a new agent, add dependencies (like OpenAI integrations), log in to your MCP-Cloud account, and then deploy. The platform handles the underlying infrastructure, including setting up Temporal workflows and exposing your agent as a stable SSE endpoint. You can then connect any MCP client, such as ChatGPT, Claude Desktop/Code, or Cursor, to your deployed agent. For example, you can deploy a custom OpenAI-powered application that helps with ordering pizza, making it accessible through an MCP client. So, what's in it for you? It significantly reduces the friction of deploying and managing sophisticated AI agents, allowing you to focus on building intelligent functionalities rather than worrying about infrastructure.

Product Core Function

· Durable Execution via Temporal: Enables long-running, fault-tolerant AI agents that can pause and resume operations without losing state, ensuring continuous availability. This is valuable for tasks that require persistent processing or extended interaction.

· MCP Protocol Compliance: Ensures seamless integration with various MCP clients by adhering to a standardized communication protocol, allowing your agents to interact with a wide range of AI tools and platforms.

· Simplified Cloud Deployment: Provides an easy-to-use CLI and workflow for deploying local MCP servers and agents to the cloud, abstracting away complex infrastructure management.

· Agent and App Hosting: Offers a dedicated cloud environment for hosting various types of MCP servers, including agents, ChatGPT applications, and other AI-powered services.

· Advanced MCP Features Support: Facilitates the use of advanced MCP features like elicitation, sampling, notifications, and logging within hosted applications, enabling richer agent interactions and data handling.
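
The "pause, resume, and recover" behavior in the first bullet above can be approximated with a checkpoint file. The sketch below is a deliberately simplified analogy: it does not use Temporal's API and is not how Temporal is implemented internally. `run_durable` and the step functions are hypothetical names.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict], object]]

def run_durable(steps: List[Step], state_path: str) -> Dict:
    """Run steps in order, persisting progress after each so a rerun skips finished work."""
    if os.path.exists(state_path):
        with open(state_path) as f:
            state = json.load(f)  # resume from a previous, interrupted run
    else:
        state = {"done": [], "results": {}}

    for name, fn in steps:
        if name in state["done"]:
            continue  # already completed before the interruption
        state["results"][name] = fn(state["results"])
        state["done"].append(name)
        with open(state_path, "w") as f:
            json.dump(state, f)  # checkpoint after every completed step
    return state["results"]

# Usage: the second step fails once, then succeeds on the rerun.
attempts = {"summarize": 0}

def fetch(results: Dict) -> str:
    return "order #1"

def summarize(results: Dict) -> str:
    attempts["summarize"] += 1
    if attempts["summarize"] == 1:
        raise RuntimeError("simulated crash")
    return results["fetch"].upper()

path = os.path.join(tempfile.mkdtemp(), "state.json")
workflow = [("fetch", fetch), ("summarize", summarize)]
try:
    run_durable(workflow, path)
except RuntimeError:
    pass  # the process "crashed" after the first step was checkpointed
print(run_durable(workflow, path))
```

Results must be JSON-serializable in this sketch. A production workflow engine also handles retries, timers, and concurrent workers, which a single checkpoint file does not.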

Product Usage Case

· Deploying a customer support chatbot as a long-running agent that can handle complex queries and resume conversations after interruptions, improving user experience. This solves the problem of chatbots going offline or losing context during extended interactions.

· Hosting a personalized AI assistant that learns user preferences over time and proactively offers suggestions, requiring persistent state management and continuous background processing. This tackles the challenge of creating AI assistants that truly adapt to individual users.

· Making a specialized AI tool, like a code generation assistant for a specific framework, accessible to a team by deploying it as a cloud service, allowing easy integration into their development workflow. This addresses the difficulty of sharing and managing specialized development tools.

· Building an interactive AI experience, such as a dynamic storytelling agent, that can engage users in extended narratives, leveraging features like elicitation to guide the story based on user input. This unlocks creative possibilities for interactive AI content.

11

SemanticBlogSearch

Author

iillexial

Description

A semantic search engine for engineering blogs and conferences, leveraging natural language processing to understand the meaning behind technical content, not just keywords. This innovation addresses the challenge of finding relevant, nuanced information within the vast and rapidly evolving landscape of technical documentation and discussions.

Popularity

Points 9

Comments 0

What is this product?

SemanticBlogSearch is a sophisticated search engine that goes beyond simple keyword matching. It uses advanced Natural Language Processing (NLP) techniques, like embedding models (e.g., sentence transformers), to understand the semantic meaning of your search queries and the content of engineering blogs and conference papers. Think of it like a super-smart librarian who doesn't just look for the exact words you typed, but also understands the concepts you're interested in. This means you'll find more relevant results, even if the exact phrasing isn't present in the original text. This innovation unlocks deeper insights from technical literature, helping you discover solutions and understand complex topics more effectively.

How to use it?

Developers can integrate SemanticBlogSearch into their research workflow to quickly find solutions to specific technical problems, explore new technologies, or understand complex architectural patterns. You can use it by inputting natural language questions or descriptions of the technical concepts you're seeking. For example, instead of searching for 'kubernetes deployment strategy', you could ask 'How can I safely roll out new versions of my applications in Kubernetes?'. The engine will then surface blog posts and conference talks that discuss concepts like blue-green deployments, canary releases, or rolling updates, even if they don't use your exact search terms. This makes it incredibly efficient for engineers to get up to speed on new topics or troubleshoot issues.

Product Core Function

· Semantic query understanding: Analyzes natural language queries to grasp the underlying intent and concepts, enabling more accurate retrieval of information, valuable for discovering solutions to nuanced technical problems.

· Content embedding and indexing: Processes engineering blogs and conference papers to create vector representations (embeddings) of their content, allowing for efficient similarity search based on meaning, crucial for finding relevant research across diverse technical sources.

· Ranked relevance results: Presents search results ordered by semantic relevance, ensuring users see the most pertinent information first, saving time and effort in information gathering for technical decision-making.

· Cross-source search: Indexes and searches across multiple engineering blogs and conference proceedings simultaneously, providing a comprehensive view of available technical knowledge, essential for understanding a topic from multiple perspectives.
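
The embedding-and-ranking idea in the list above comes down to comparing vectors. The sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are made up by hand for illustration. In a real system they would come from an embedding model and have hundreds of dimensions, and the source does not say which model or vector store the project uses.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 for the same direction, 0.0 for orthogonal vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank(query_vec: List[float], index: Dict[str, List[float]], top_k: int = 3) -> List[str]:
    """Return the titles of the top_k documents most similar to the query."""
    scored = [(cosine(query_vec, vec), title) for title, vec in index.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]

# Toy index: hand-made vectors standing in for real embeddings.
INDEX = {
    "Canary releases on Kubernetes": [0.9, 0.2, 0.0],
    "Tuning Kafka consumers": [0.1, 0.9, 0.1],
    "Adversarial training for vision models": [0.0, 0.1, 0.9],
}

# Pretend this vector is the embedding of
# "How can I safely roll out new versions of my applications?"
query = [0.8, 0.3, 0.0]
print(rank(query, INDEX, top_k=2))
```

The query shares no keywords with the top title here. The match comes entirely from the vectors pointing in a similar direction, which is the property semantic search relies on.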

Product Usage Case

· A software architect looking for best practices in microservices communication: Instead of sifting through hundreds of articles on 'API gateway' or 'message queues', they can ask 'What are the most reliable ways for microservices to talk to each other?', and SemanticBlogSearch will surface relevant discussions on gRPC, Kafka, REST, and their trade-offs, helping them make informed architectural choices.

· A junior developer encountering a specific error message: They can input the error and a brief description of their context, e.g., 'Python TypeError: 'NoneType' object is not iterable when processing data from an API'. The search engine will find discussions and code examples explaining the cause and common fixes, even if the exact error message isn't a direct match, providing faster troubleshooting.

· A researcher exploring a new machine learning technique: By describing the concept, like 'methods for improving the robustness of image recognition models against adversarial attacks', SemanticBlogSearch can uncover advanced research papers and blog posts that detail techniques like adversarial training or defensive distillation, accelerating their understanding and experimentation.

12

ZigFlipper-SafetyKit

Author

cat-whisperer

Description

This project offers a production-ready template for developing Flipper Zero applications using Zig. It addresses the common pain points of embedded C development, such as hard-to-debug runtime memory errors and null pointer dereferences, by leveraging Zig's memory safety features and compile-time error checking. The core innovation lies in bridging Zig's modern build system with the Flipper SDK's specific hardware target, enabling developers to write safer, more robust firmware without needing specialized IDEs.

Popularity

Points 6

Comments 0

What is this product?

This is a development template that allows you to write Flipper Zero applications using the Zig programming language. Instead of the typical C language for embedded systems, which can be prone to tricky memory errors that only appear when the device is running, this template uses Zig. Zig provides built-in features that catch many common programming mistakes before your code even runs on the Flipper Zero. This means fewer crashes, less time spent debugging on the actual hardware, and more reliable applications. The technical innovation is in making Zig's advanced build system and safety guarantees work seamlessly with the Flipper Zero's specific hardware requirements, using a clever two-step compilation process.

How to use it?

Developers can use this template by setting up their development environment with Zig and the Flipper Zero Universal Build Tool (UFBT). You'll write your Flipper Zero application logic in Zig files within this template's structure. The template handles the compilation process, translating your Zig code into a format that the Flipper Zero can understand and run. It integrates with UFBT for easy packaging and deployment to your Flipper Zero device. This means you can use your favorite text editor and Zig's command-line tools, avoiding the need for complex, specialized IDEs. It's designed for straightforward integration into your Flipper Zero app development workflow.

Product Core Function

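· Memory Safety: Zig catches many common memory errors earlier than C does. Optional types force null checks at compile time, and out-of-bounds accesses are caught at compile time when the index is known, or by runtime safety checks in safety-checked build modes, so a bug surfaces as a clear panic rather than silent corruption. Zig does not rule out every dangling pointer, but these checks make crashes on your Flipper Zero less likely, which translates to more stable applications and less debugging frustration.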

· Compile-Time Error Checking: The Zig compiler rigorously checks your code before it's deployed, catching a wide range of potential issues that would typically only surface at runtime in C. This upfront detection saves significant development time and effort.

· Bounds-Checked Arrays and Explicit Error Handling: Zig encourages explicit handling of potential errors and ensures that array accesses are within their defined limits. This reduces the risk of unexpected behavior and makes your application's logic clearer and more predictable.

· Cross-Platform Build System: The template provides a clean build system that works on various operating systems. This means you can develop your Flipper Zero apps from your preferred OS without compatibility headaches, ensuring a consistent development experience.

· UFBT Integration for Packaging and Deployment: Seamless integration with UFBT simplifies the process of building and deploying your Zig-based Flipper Zero applications to the device. This streamlines the workflow from writing code to running it on hardware.

· No Special IDE Required: You can develop applications using just Zig, UFBT, and your preferred text editor. This lowers the barrier to entry and allows for a more flexible and lightweight development setup, which is valuable for quick experimentation.

Product Usage Case

· Developing a custom Flipper Zero tool to interact with specific hardware peripherals: Instead of worrying about C's manual memory management leading to crashes when interfacing with sensors or communication modules, developers can use Zig's safety features to ensure the code interacting with these peripherals is robust and reliable, preventing unexpected device resets.

· Creating a more complex Flipper Zero application with intricate data structures: By using Zig's built-in memory safety and explicit error handling, developers can build applications that manipulate data without the constant fear of memory corruption that plagues C development. This allows for more ambitious features and a more stable end-user experience.

· Porting existing embedded C logic to Zig for improved reliability: Developers facing persistent memory bugs in their Flipper Zero C applications can use this template as a starting point to rewrite critical components in Zig, benefiting from compile-time checks and modern language features to eliminate those troublesome runtime issues.

13

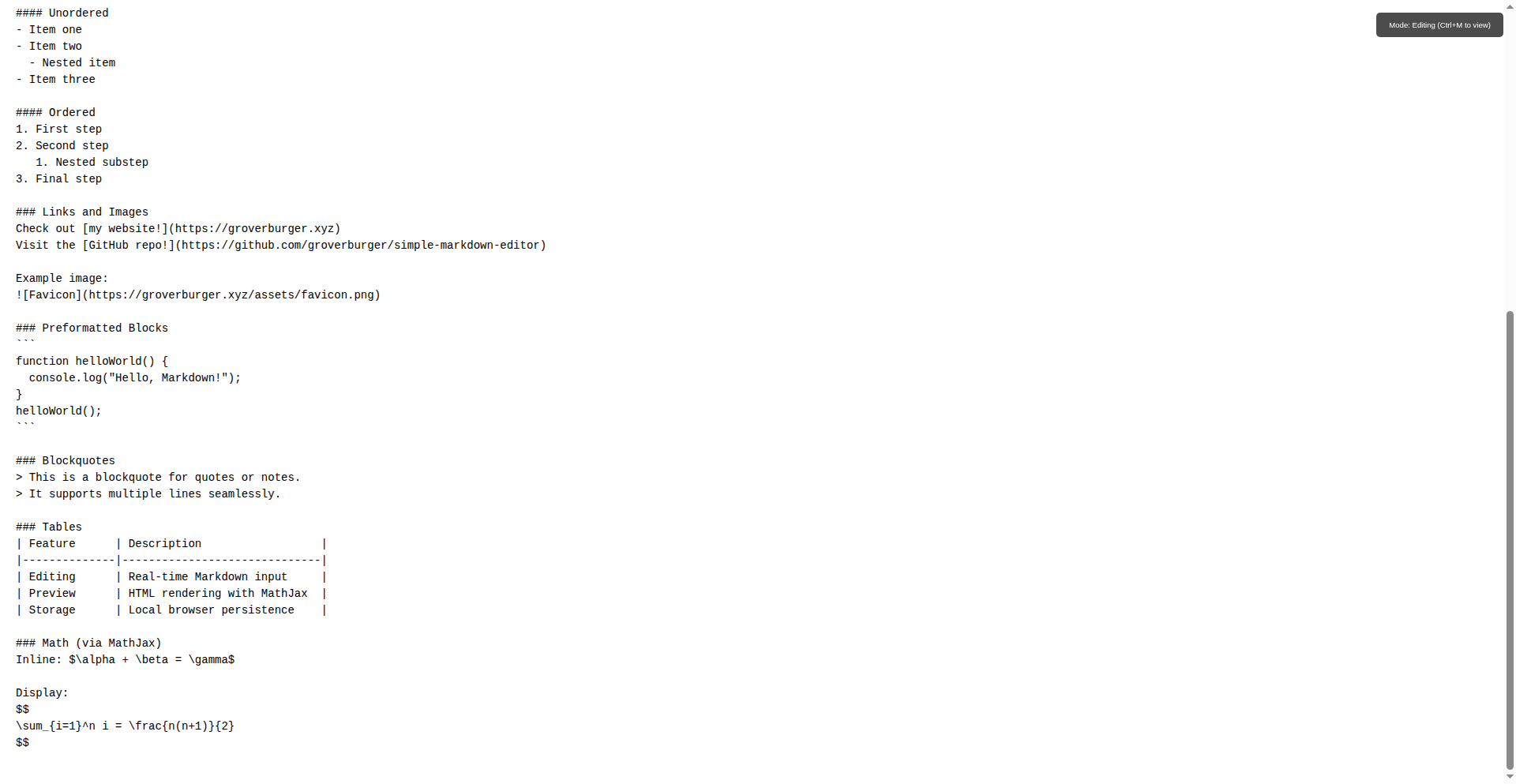

VanillaMarkdown Notes

Author

__grob

Description

A dead-simple Markdown editor and viewer built with vanilla JavaScript and HTML5. It focuses on essential features and lets users print the rendered HTML to PDF or paper with CTRL+P. With integrated MathJax support for mathematical equations and automatic saving to local storage, it works as a persistent notes app that keeps your progress between sessions.

Popularity

Points 3

Comments 3

What is this product?

This project is a minimalist Markdown editor and viewer built with vanilla JavaScript and HTML5. Its main innovation is restraint: rather than competing with feature-heavy editors, it offers a streamlined experience and lets you print the rendered Markdown to a physical printer or a PDF document by pressing CTRL+P. It also incorporates MathJax, a JavaScript library that renders mathematical equations within the Markdown. Finally, it uses the browser's local storage to save your notes automatically, so you can pick up where you left off in the same browser, effectively acting as a persistent digital notebook. So, why is this useful to you? It offers a distraction-free environment for writing and managing notes or documents that require basic formatting and the display of mathematical content, with the added benefit of easy document export and autosave.
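The autosave behavior can be sketched in a few lines. The snippet below is an illustration in Python rather than the project's own JavaScript: a plain dictionary stands in for the browser's `localStorage`, and the `NoteAutosaver` class and the `vanilla-notes-draft` key are hypothetical names, not taken from the project.

```python
import json


class NoteAutosaver:
    """Save the editor text on every change and restore it on startup."""

    def __init__(self, store, key="vanilla-notes-draft"):
        self.store = store  # dict standing in for window.localStorage
        self.key = key      # hypothetical storage key

    def on_change(self, text):
        # Browser storage holds strings only, so the draft is serialized first.
        self.store[self.key] = json.dumps({"text": text})

    def restore(self):
        # Return the saved draft, or an empty note if nothing was stored yet.
        raw = self.store.get(self.key)
        return json.loads(raw)["text"] if raw is not None else ""


browser_storage = {}
NoteAutosaver(browser_storage).on_change("# Lecture 3\nEuler's identity")
# A later session that shares the same storage gets the draft back.
restored = NoteAutosaver(browser_storage).restore()
```

In a browser the equivalent calls would be `localStorage.setItem` and `localStorage.getItem`. The data lives only in that browser profile, so clearing site data removes the notes.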

How to use it?

Developers can use this project as a foundational component for building simple note-taking applications, documentation generators, or quick content creation tools. Because it is written in vanilla JS, it pulls in no framework dependencies (MathJax, used for equations, is the only external library the description mentions), which keeps it lightweight and easy to integrate into existing web projects. You can embed the editor and viewer directly into your HTML, and it will handle the Markdown parsing and rendering. For more advanced use cases, you could extend it with additional Markdown features or combine it with other JavaScript libraries. Its primary use case is a ready-to-go Markdown editing experience in a web browser, suited to personal use or as a building block for web applications. So, how is this useful to you? It provides a quick and easy way to start building interactive text-based applications, allowing you to focus on your specific features rather than on boilerplate setup or complex library integrations.

Product Core Function

· Markdown to HTML rendering: Converts Markdown syntax into well-formatted HTML, making your text readable and presentable. This is useful for creating blog posts, documentation, or any content that benefits from simple markup.

· Print to PDF/Paper functionality: Allows users to directly print the rendered HTML content via CTRL+P, simplifying document generation and sharing. This is valuable for creating printable reports, study guides, or any document that needs to be in a physical or PDF format.

· MathJax integration: Renders mathematical equations and scientific notation seamlessly within the Markdown, crucial for academic, scientific, or technical writing. This is useful for educators, researchers, and students who need to present complex formulas.

· Local Storage Autosave: Automatically saves the user's content to the browser's local storage, preventing data loss and enabling a continuous editing experience. This is helpful for personal note-taking, journaling, or any situation where you want to ensure your work is always saved.

· Vanilla JS/HTML5 implementation: Built without application frameworks (MathJax aside), which keeps the page lightweight with little overhead. This is beneficial for developers who prioritize lightweight applications or need to integrate with existing codebases without introducing new dependencies.
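To make the rendering and MathJax items above concrete, here is a minimal sketch of how a tiny Markdown subset can be converted to HTML while leaving inline math untouched for a typesetter such as MathJax. It is written in Python for illustration and is not the project's code. The function names are hypothetical, only headings, bold, italics, and paragraphs are handled, and the `$...$` delimiter is an assumption (MathJax has to be configured to accept single dollar signs for inline math).

```python
import html
import re

MATH = re.compile(r"\$[^$\n]+\$")          # assumed inline math delimiter
BOLD = re.compile(r"\*\*(.+?)\*\*")
ITALIC = re.compile(r"\*(.+?)\*")
HEADING = re.compile(r"(#{1,6})\s+(.+)")   # single-line ATX heading
PLACEHOLDER = re.compile(r"\x00(\d+)\x00")


def render_inline(text):
    """Escape HTML and apply bold/italic, passing math spans through as-is."""
    stash = []

    def hide(match):
        # Set math aside so '*' or '<' inside a formula is not treated as markup.
        stash.append(html.escape(match.group(0)))
        return "\x00%d\x00" % (len(stash) - 1)

    text = html.escape(MATH.sub(hide, text))
    text = BOLD.sub(r"<strong>\1</strong>", text)
    text = ITALIC.sub(r"<em>\1</em>", text)
    return PLACEHOLDER.sub(lambda m: stash[int(m.group(1))], text)


def render_markdown(source):
    """Render blank-line-separated blocks as headings or paragraphs."""
    out = []
    for block in re.split(r"\n\s*\n", source.strip()):
        if not block:
            continue
        heading = HEADING.fullmatch(block)
        if heading:
            level = len(heading.group(1))
            out.append("<h%d>%s</h%d>" % (level, render_inline(heading.group(2)), level))
        else:
            out.append("<p>%s</p>" % render_inline(" ".join(block.splitlines())))
    return "\n".join(out)


page = render_markdown("# Notes\n\nEnergy is **conserved**: $E = m*c^2$ and *not* lost.")
```

Stashing the math before applying emphasis rules is the key design choice: without it, the `*` in `m*c^2` could pair with a later asterisk and corrupt the formula.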

Product Usage Case

· Personal Knowledge Management: A developer can use this to build a private, browser-based system for storing and organizing personal notes, research findings, and coding snippets, with the ability to easily print important information for offline access. This solves the problem of scattered notes and the need for a quick way to access key details.

· Quick Documentation Generation: Use it to quickly draft and render documentation for a small open-source project, allowing team members to easily review and print the latest version without needing complex build processes. This addresses the need for simple, shareable project documentation.

· Academic Note-Taking: A student can use this to take notes during lectures, incorporating mathematical formulas via MathJax, and then easily print or save the notes as a PDF for later study. This provides a dedicated tool for subjects requiring mathematical notation and easy document output.

· Simple Content Creation Tool: Integrate this into a website to allow users to create simple formatted content, like testimonials or user-generated tips, which can then be easily printed or saved. This offers a user-friendly way for visitors to contribute structured text.

14

Empathetic AI Communicator for Co-Parents

Author

solfox

Description

This project is an AI-powered communication tool designed to facilitate peaceful co-parenting after a divorce or a high-conflict relationship. It uses AI models from Google (Gemini) and OpenAI to filter out emotional language and focus discussions on child-centric matters. The innovation lies in using AI to remove the emotional burden from communication, preventing potential abuse and fostering a more business-like interaction, a critical need highlighted by personal experience and expert advice.

Popularity

Points 3

Comments 3

What is this product?

This is an AI-driven application built using Google Cloud, Firebase, and AI models like Gemini (with some OpenAI capabilities). It acts as an intelligent intermediary for co-parents. The core technology involves natural language processing (NLP) to analyze messages, identify potentially emotionally charged or accusatory language, and then rephrase them into neutral, child-focused statements. The innovation is in applying AI to a deeply human and emotionally volatile problem, aiming to create a safer communication channel and prevent the escalation of conflict, which can be a form of emotional abuse. For users, this means having a tool that helps them communicate more effectively and less harmfully with their ex-partner, especially when navigating sensitive child-related topics.

How to use it?

Developers can integrate this product into their existing communication platforms or build new co-parenting tools by leveraging its API. For end-users, the application acts as a messaging service where users compose messages, and the AI analyzes and refines them before sending. It can also analyze incoming messages, flagging potentially problematic content. The use case is clear: any situation requiring communication between divorced or separated parents about their children, especially where past conflicts or high emotions are a concern. The integration would involve setting up the backend services and integrating the front-end interface, potentially using frameworks like FlutterFlow for rapid development.

Product Core Function

· Emotional Tone Filtering: The AI analyzes message sentiment and removes aggressive, accusatory, or overly emotional language. This provides value by preventing misunderstandings and escalating arguments, making communication less stressful for parents.

· Child-Centric Rephrasing: Messages are automatically rephrased to focus solely on the children's needs and well-being. This is valuable because it keeps the conversation on track and ensures that the children remain the priority, even during difficult exchanges.

· Abuse Prevention Layer: The system is designed to identify and flag potential instances of emotional abuse in communication. This helps create a safer environment for both parents and children, reducing the risk of psychological harm.

· Business-like Communication Facilitation: The AI guides the conversation towards a more professional and factual tone. This is useful for parents who struggle to maintain objectivity, helping them manage logistics and decisions more efficiently without emotional baggage.

· Secure and Private Messaging: The platform ensures that communication is kept private and secure, which is crucial given the sensitive nature of co-parenting data. This provides peace of mind to users, knowing their conversations are protected.
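The compose, analyze, and refine flow behind the functions above can be sketched as follows. This is a rough illustration in Python under stated assumptions, not the product's implementation: the real tool relies on Gemini and OpenAI models, whereas here a hypothetical phrase list (`CHARGED_PHRASES`) does the flagging and `placeholder_rewrite` stands in for the model call. All names are invented for the example.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical phrase list; a real system would ask a language model instead.
CHARGED_PHRASES = ("you always", "you never", "your fault", "as usual")


@dataclass
class Review:
    original: str
    flagged: List[str] = field(default_factory=list)
    suggestion: str = ""


def review_message(text: str, rewrite: Callable[[str], str]) -> Review:
    """Flag charged phrases and, if any are found, ask `rewrite` for a neutral draft."""
    lowered = text.lower()
    flagged = [phrase for phrase in CHARGED_PHRASES if phrase in lowered]
    suggestion = rewrite(text) if flagged else text
    return Review(original=text, flagged=flagged, suggestion=suggestion)


def placeholder_rewrite(text: str) -> str:
    """Stand-in for a model call: keep only sentences free of charged phrases."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    kept = [s for s in sentences
            if not any(phrase in s.lower() for phrase in CHARGED_PHRASES)]
    return " ".join(kept)


draft = "You never show up on time. Pickup is at 3pm on Friday at the school."
review = review_message(draft, placeholder_rewrite)
```

Passing the rewriter in as a function keeps the flagging logic testable on its own and lets a model-backed rewriter be swapped in later. The sender would still review the suggestion before it goes out.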

Product Usage Case

· A divorced parent needs to discuss their child's upcoming school event with their ex-partner but knows their ex tends to be highly critical. Using this tool, they can draft a message about the event, and the AI will ensure it's phrased neutrally, focusing only on event details and participation, thus preventing an argument and ensuring the child's needs are met.

· Co-parents are struggling to agree on a shared custody schedule. The AI can help mediate their communication by rephrasing demands into collaborative requests and identifying common ground, simplifying the negotiation process and reducing the emotional toll on both parties.

· In a high-conflict separation, one parent uses accusatory language towards the other regarding child-rearing decisions. The AI flags these messages, prompting the sender to rephrase them in a more constructive manner, thereby preventing a cycle of blame and fostering a more cooperative approach to parenting.

· A parent needs to communicate essential medical information about their child to the other parent. The AI ensures the message is clear, concise, and free of any personal opinions or past grievances, making the transmission of critical information efficient and non-confrontational.

15

AI Era Developer Compass

Author

PdV

Description

This project is a practical guide, 'The New Rules,' designed to help developers navigate the rapidly evolving landscape shaped by Artificial Intelligence. It offers insights and strategies for adapting career paths, understanding new metrics for quality beyond traditional GitHub stars, and leveraging AI for team productivity. The core innovation lies in synthesizing years of distributed systems experience with a deep dive into what truly works in the AI era, providing actionable advice rather than just hype.

Popularity

Points 4

Comments 2

What is this product?

This is a comprehensive guide titled 'The New Rules,' offering a developer's perspective on surviving and thriving in the age of AI. It is not purely theoretical: it works through case studies, some concrete and some illustrative, and provides actionable advice. The author, with 15 years of experience in distributed systems, used AI tools like Claude and ChatGPT extensively in writing it, aiming to provide a quality, thought-provoking resource. The key innovation is its pragmatic approach to AI's impact on developer careers, team dynamics, and skill valuation, moving beyond generalized fear or excitement to actionable strategies. So, what's in it for you? It helps you understand how your skills and career might need to adapt to stay relevant and competitive in a future increasingly influenced by AI.

How to use it?

Developers can access 'The New Rules' primarily through a downloadable PDF, freely available under a CC BY 4.0 license, allowing sharing and adaptation. A companion website likely offers deeper discussions and supplementary materials. The book is structured into 16 chapters, each addressing a specific aspect of AI's impact on development. You can read it cover-to-cover for a foundational understanding or dip into specific chapters that address your immediate concerns, such as 'GitHub stars became meaningless' or 'Skills that got you to $100K won't get you to $200K'. The book is intended for personal study, team discussions, or even as a basis for workshops. So, how can you use it? You can download the PDF, read the parts most relevant to your career challenges, and discuss the ideas with your colleagues to collectively prepare for the AI-driven future of software development.

Product Core Function

· Actionable career adaptation strategies: Provides concrete advice on how developers can re-skill and pivot their careers to remain valuable in an AI-augmented workforce, helping you understand what new skills to focus on for future success.

· Rethinking quality metrics: Offers alternative ways to assess project quality and developer talent beyond vanity metrics like GitHub stars, assisting you in identifying genuinely good projects and contributors.

· AI-powered team productivity: Explores how small teams can gain a significant advantage by effectively integrating AI tools, enabling you to identify opportunities for your team to boost efficiency and output.

· Evolving developer moats: Argues that the traditional advantage of pure code quality is diminishing, shifting focus to judgment, architecture, and trust, guiding you to cultivate higher-value skills that AI cannot easily replicate.

Product Usage Case

· A developer facing job market uncertainty due to AI advancements can read the chapter on career path shifts to identify transferable skills and new areas of expertise to pursue, providing a clear roadmap for their professional development.