Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

ShowHN TodayShow HN Today: Top Developer Projects Showcase for 2025-10-26

SagaSu777 2025-10-27

Explore the hottest developer projects on Show HN for 2025-10-26. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The landscape of innovation today is a fascinating blend of pushing boundaries at the lowest levels of computing and leveraging sophisticated AI to streamline complex tasks. On one end, we see the triumphant return of foundational programming with projects like MyraOS, demonstrating that mastering C and Assembly still unlocks a deep understanding of how systems truly function. This is crucial for anyone aiming to build robust, efficient, and secure software from the ground up. It's a reminder that the 'hacker spirit' often involves going back to basics to truly innovate. On the other end, the explosion of AI-powered tools, especially in agent orchestration and developer productivity, shows us how AI is becoming an indispensable co-pilot. Projects like VebGen, which uses Abstract Syntax Trees (AST) for local code understanding before involving LLMs, are particularly insightful. They highlight a growing trend towards optimizing AI usage, reducing token consumption, and enhancing privacy by processing data locally whenever possible. For developers, this means an exciting opportunity to build smarter tools that augment human capabilities without compromising resources or security. For entrepreneurs, it signals a fertile ground for creating specialized AI solutions that address specific pain points, from managing complex multi-agent systems to automating tedious development workflows. The key takeaway is that innovation thrives at both the fundamental and the applied levels, often intersecting to create powerful new possibilities. Embrace the low-level mastery to build a solid foundation, and harness AI intelligently to amplify your creations.

Today's Hottest Product

Name

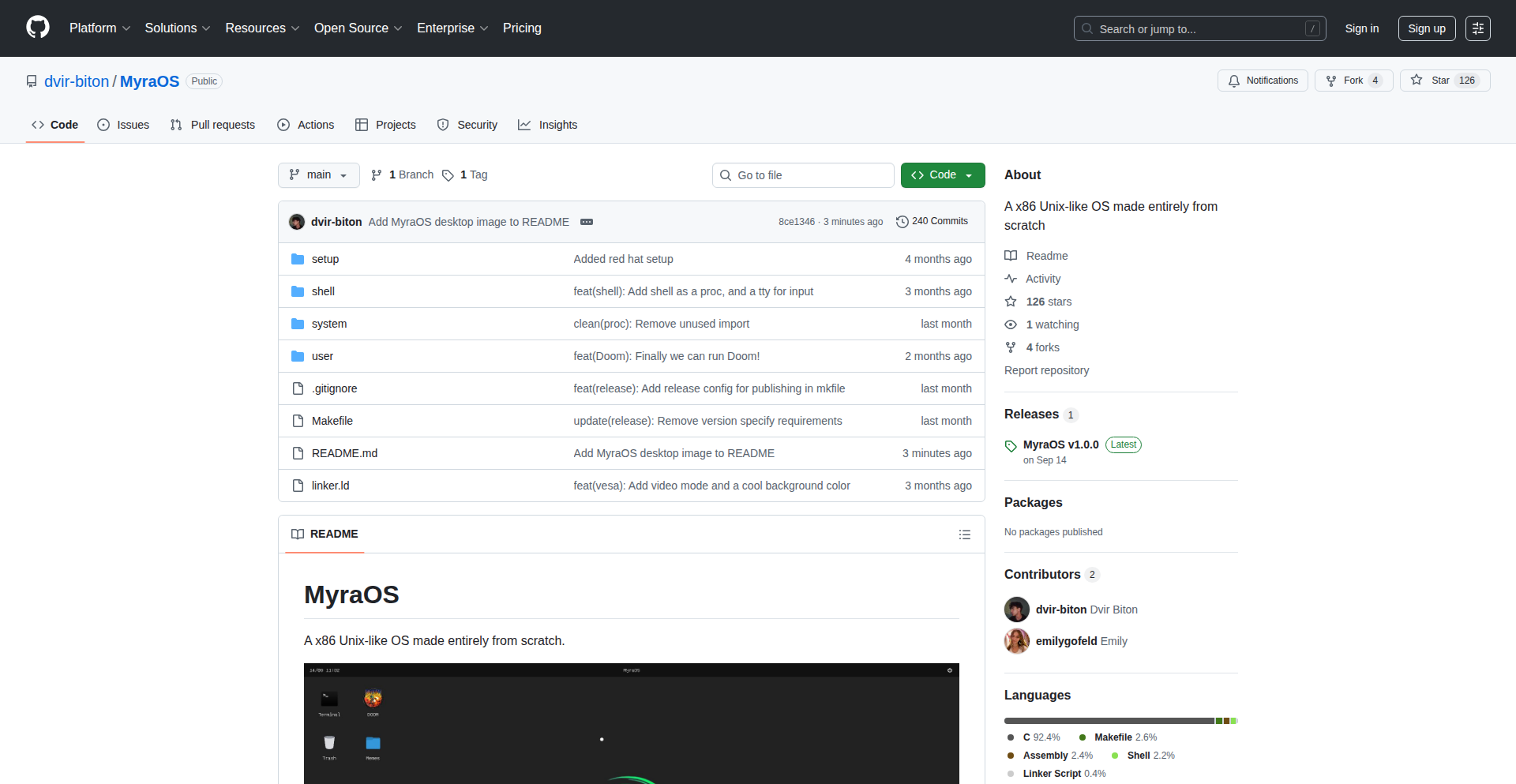

MyraOS – A 32-bit Operating System in C and ASM

Highlight

This project is a remarkable feat of low-level engineering, where a young developer built a functional 32-bit operating system from scratch using C and Assembly. It tackles fundamental OS challenges like memory management (PMM, paging), interrupt handling, file systems (EXT2), process scheduling, and even features a GUI and a Doom port. The developer's journey highlights deep learning in OS theory and practical implementation, offering invaluable insights into bootloaders, driver development, and system architecture for aspiring OS developers. The debugging process, especially for memory and scheduling issues, provides a masterclass in tackling complex, low-level problems.

Popular Category

Operating Systems

AI/Machine Learning

Developer Tools

Web Development

Data Visualization

Security

Productivity Tools

Popular Keyword

LLM

AI Agents

CLI

Web Scraping

TypeScript

Rust

Python

Debugging

Automation

Open Source

Framework

Technology Trends

AI Agent Orchestration

Low-Level Systems Programming

Developer Productivity Tools

Data-Driven Insights & Automation

Privacy-Preserving Technologies

Web Assembly & Edge Computing

Type-Safe Development

Project Category Distribution

AI/Machine Learning (30%)

Developer Tools (25%)

Operating Systems (5%)

Web Development (15%)

Data & Analytics (10%)

Security & Privacy (5%)

Productivity (10%)

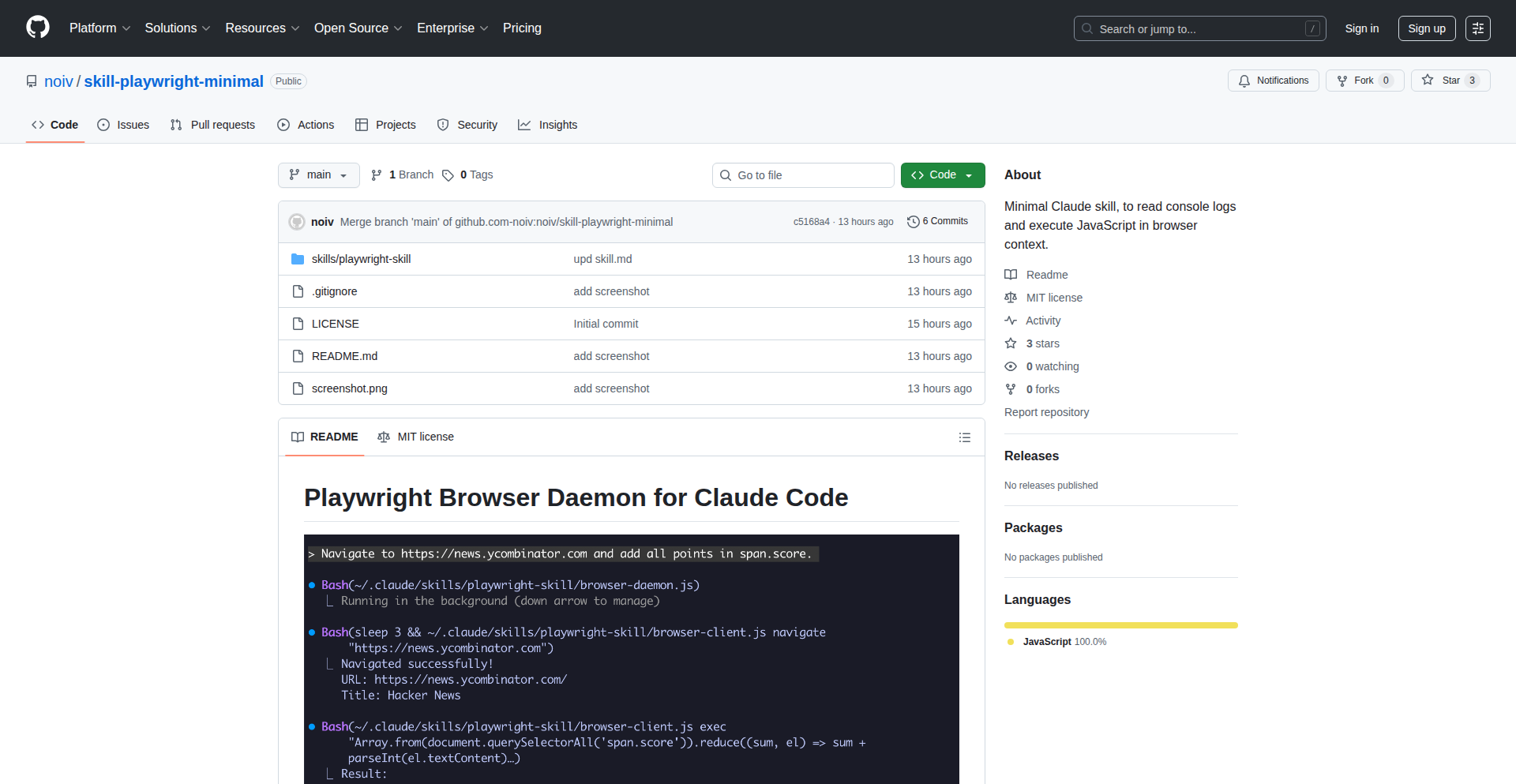

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |

|---|---|---|---|

| 1 | MyraOS: C/ASM 32-bit Kernel Explorer | 185 | 39 |

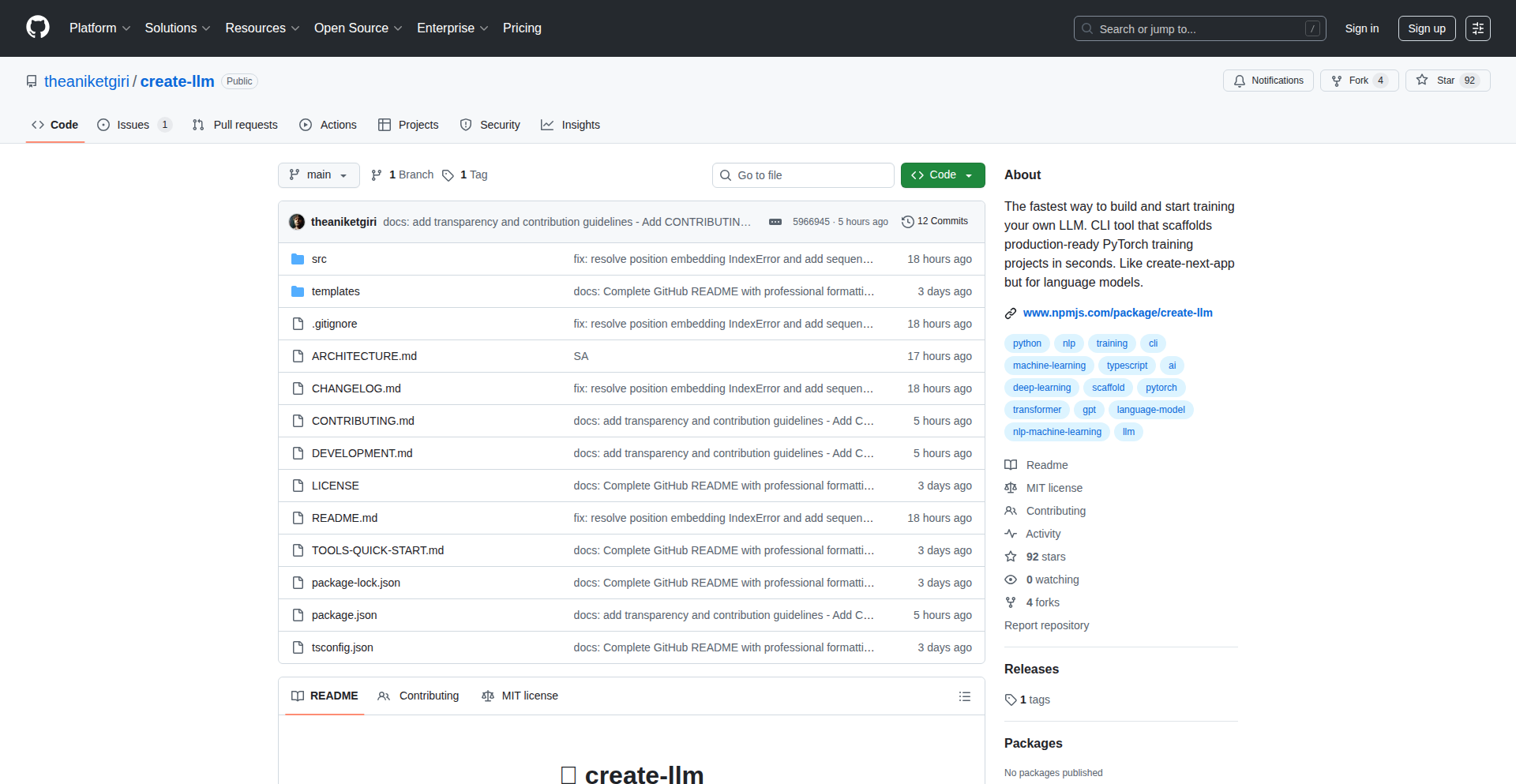

| 2 | Create-LLM: Instant LLM Training | 42 | 30 |

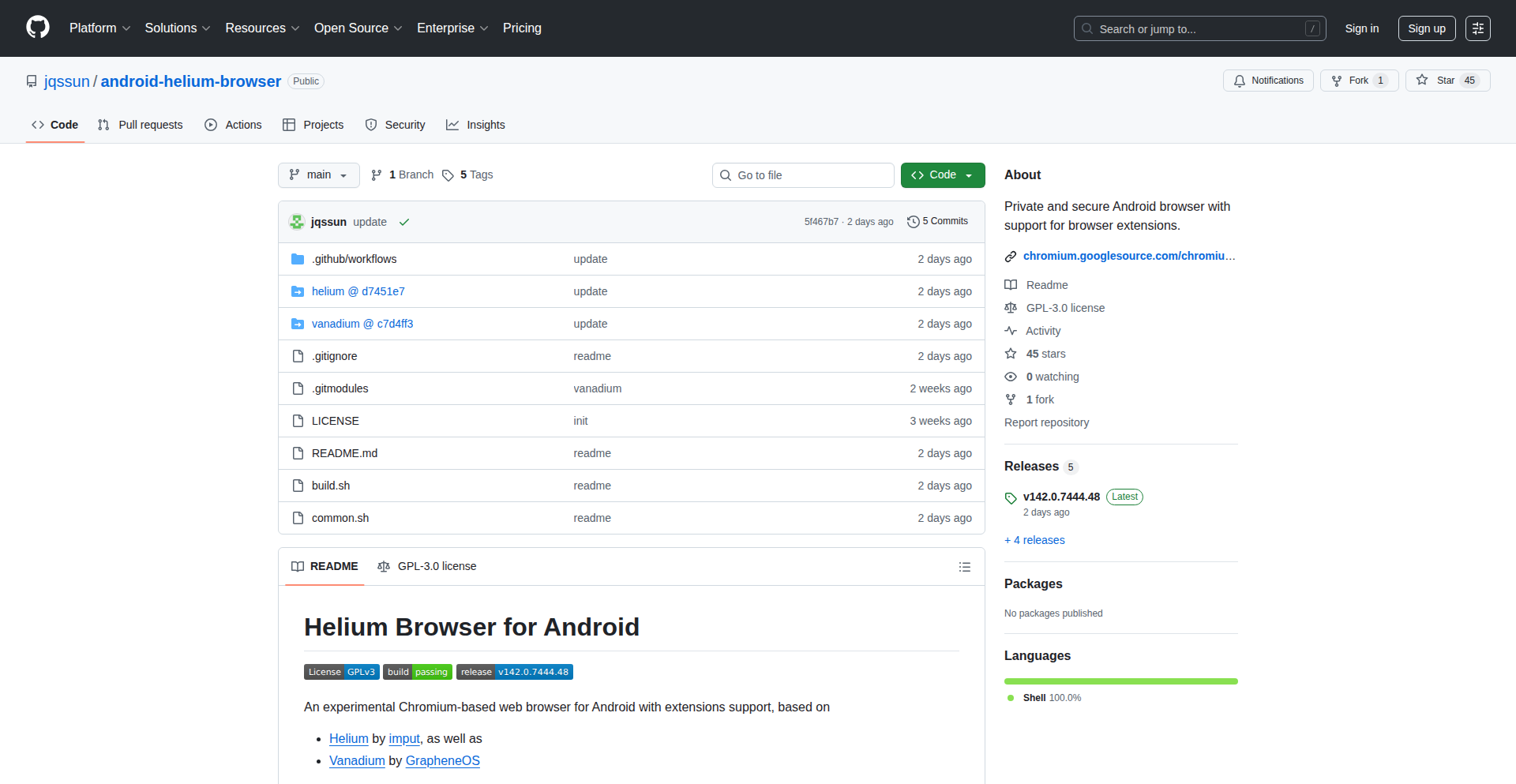

| 3 | Helium Browser: Extension-Powered Privacy Browser | 52 | 19 |

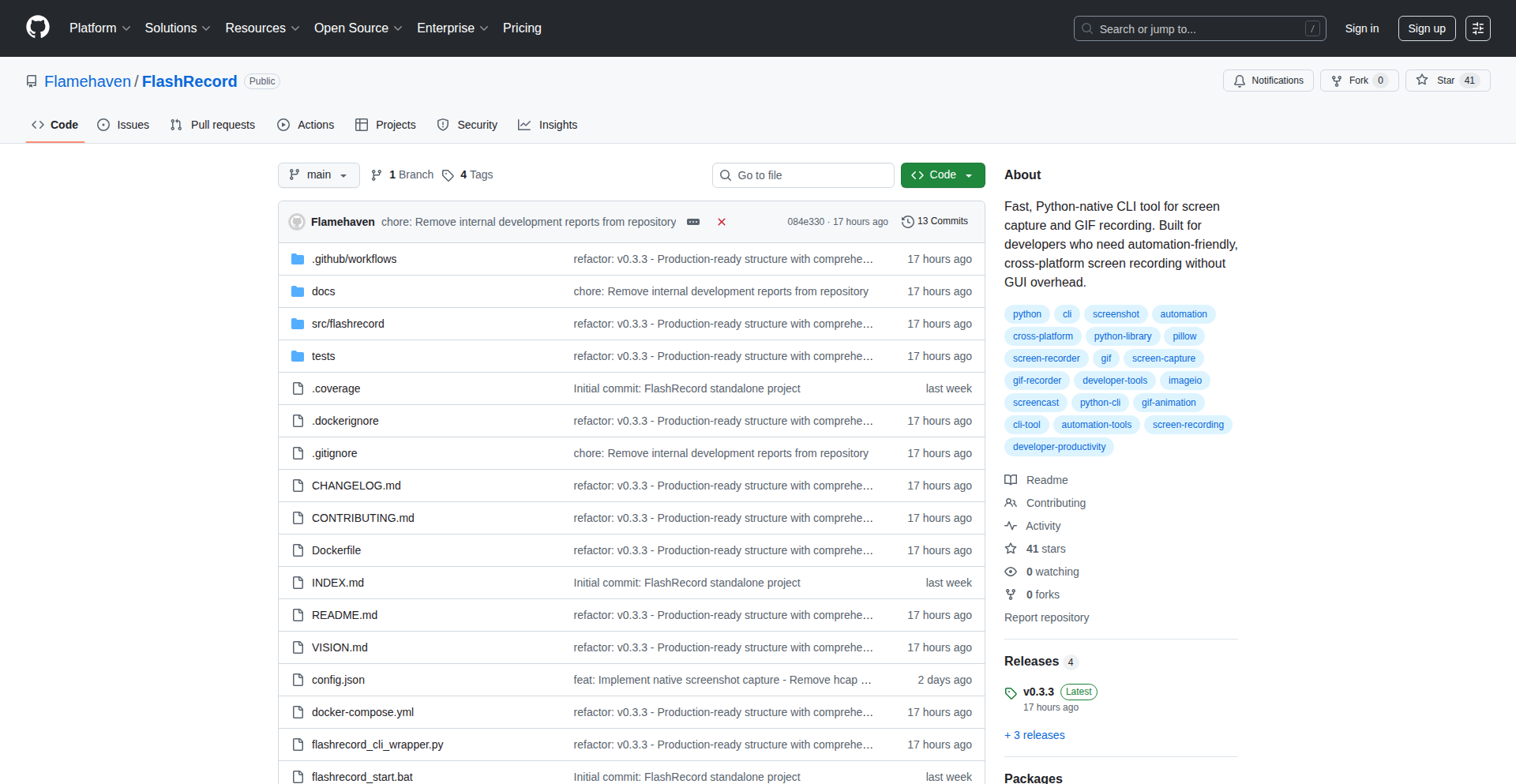

| 4 | FlashRecord-CLI | 18 | 7 |

| 5 | Snippet: The Auto-Updating Knowledge Truth Layer | 11 | 13 |

| 6 | RazorInsight | 8 | 2 |

| 7 | Agno: AgentOS Runtime | 9 | 1 |

| 8 | Hermes Video Downloader API | 6 | 0 |

| 9 | EmacsStream | 4 | 1 |

| 10 | Synnote: Actionable AI Note Weaver | 3 | 2 |

1

MyraOS: C/ASM 32-bit Kernel Explorer

Author

dvirbt

Description

MyraOS is a 32-bit operating system built from scratch in C and Assembly. It's a deep dive into the fundamental components of an OS, including bootloading, memory management, process scheduling, and even a graphical user interface (GUI). The project serves as an educational tool, demonstrating how low-level hardware interacts with software, with a remarkable achievement of porting Doom.

Popularity

Points 185

Comments 39

What is this product?

MyraOS is a custom-built operating system designed to teach and showcase the intricate workings of a computer's core. It begins with the bootloader, the first piece of software that runs when a computer starts. Then, it handles displaying text on the screen (VGA driver), responding to external events like keyboard input (keyboard driver and interrupts), and managing the computer's main memory (physical memory management). It also implements virtual memory, allowing programs to use more memory than physically available, and a file system to store and retrieve data from storage devices. Finally, it supports running multiple programs simultaneously (multiprocessing and scheduling) and even includes a graphical interface and a port of the classic game Doom. The innovation lies in the comprehensive, from-scratch implementation of these core OS concepts, providing a tangible learning experience.

How to use it?

Developers can use MyraOS as a learning platform to understand OS internals. They can clone the GitHub repository, compile the source code (typically using tools like GCC and GRUB), and run it in a virtual machine environment like QEMU. This allows them to experiment with different OS components, modify the code, and observe the effects. For more advanced users, MyraOS can serve as a foundation for building specialized embedded systems or as a reference for developing their own operating system kernels. It provides a practical playground for those who want to explore beyond high-level programming languages and delve into how software truly interacts with hardware.

Product Core Function

· Bootloader: Initializes the system and loads the OS kernel, demonstrating the critical first steps of a computer's startup sequence.

· VGA Text Mode Driver: Enables the display of text on the screen, a fundamental output mechanism for early operating systems and debugging.

· Interrupt Handling (IDT, ISR, IRQ): Allows the CPU to react to events from hardware or software, crucial for responsiveness and managing devices like the keyboard.

· Keyboard Driver: Translates key presses into characters that the OS can understand and process, enabling user input.

· Physical Memory Management (PMM): Tracks and allocates the computer's physical RAM, ensuring efficient use of available memory resources.

· Paging and Virtual Memory Management: Creates an illusion of larger memory for applications and protects memory spaces between processes, improving stability and performance.

· File System (EXT2) and PATA HDD Driver: Provides a structured way to store and retrieve data from hard drives, enabling persistent storage of information.

· System Calls: Defines the interface between user applications and the OS kernel, allowing programs to request services from the OS.

· Libc Implementation: Offers a basic set of standard C library functions, making it easier to write applications for the OS.

· Multiprocessing and Scheduling: Enables the execution of multiple programs concurrently, managing their access to CPU time for smooth multitasking.

· Graphical User Interface (GUI) with Double Buffering and Dirty Rectangles: Provides a visual interface for users, improving usability and offering a more modern user experience.

· Doom Port: A significant demonstration of the OS's capabilities, showcasing its ability to run complex applications and handle demanding graphics.

Product Usage Case

· A student learning about operating system design can use MyraOS to see how memory is managed and how processes are scheduled, providing a practical understanding of theoretical concepts. They can fork the project and experiment with different scheduling algorithms, observing the impact on program execution speed.

· A hobbyist developer wanting to build a custom embedded system can leverage MyraOS's low-level drivers and kernel to create a tailored operating system for specific hardware, avoiding the complexity of starting from absolute zero.

· An aspiring OS developer can study MyraOS's implementation of features like interrupt handling and system calls to gain insights into the architecture and design patterns used in real-world operating systems.

· A programmer interested in retro game development can analyze the Doom port to understand the challenges and techniques involved in bringing older software to new or custom environments, such as optimizing graphics rendering and input handling.

· A computer science educator can use MyraOS as a teaching aid in courses on operating systems, providing students with a concrete, working example to dissect and learn from, making abstract concepts more tangible.

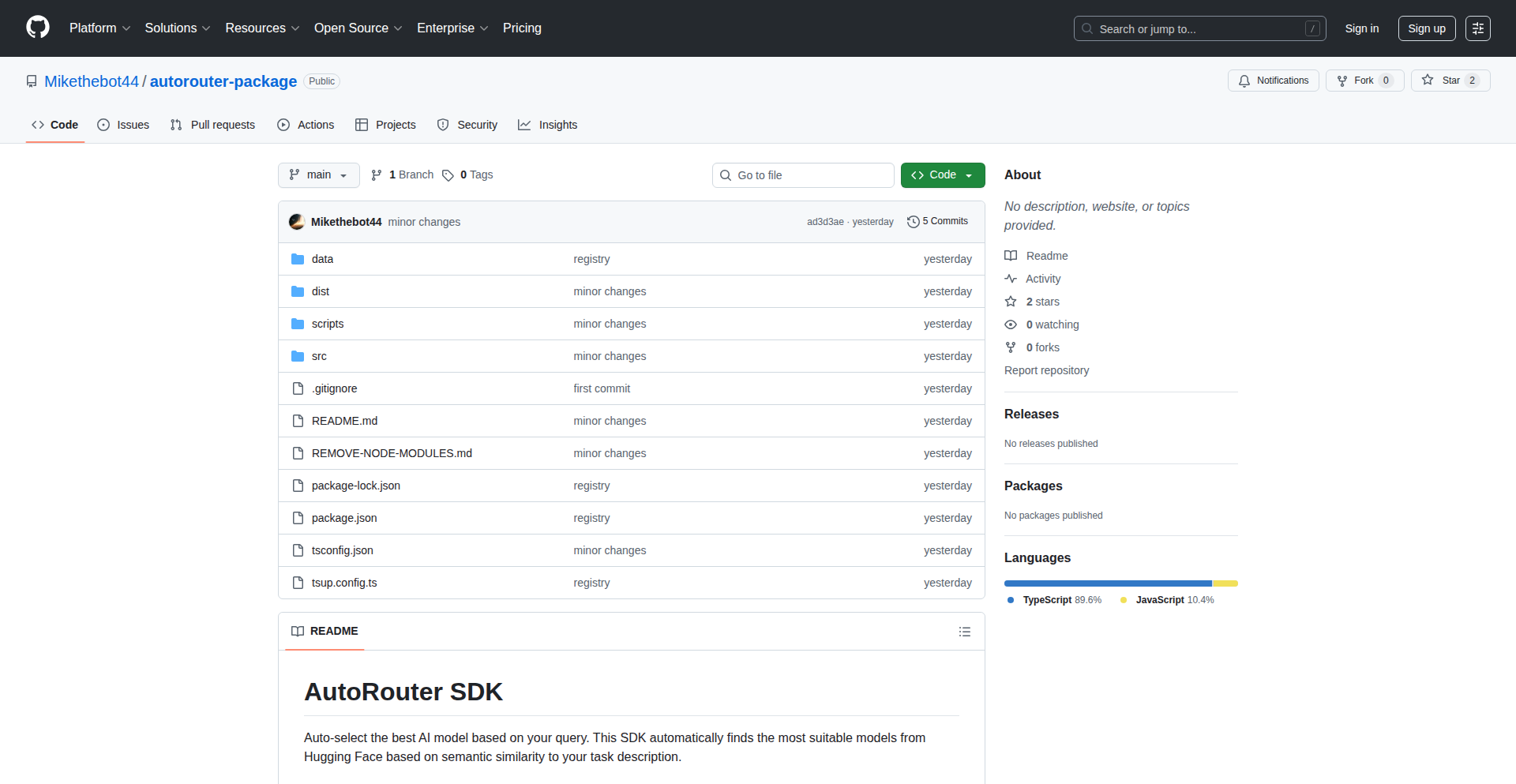

2

Create-LLM: Instant LLM Training

Author

theaniketgiri

Description

Create-LLM is a revolutionary project that enables developers to train their own custom Large Language Models (LLMs) in an astonishingly short time – just 60 seconds. It addresses the common bottleneck of LLM training, making advanced AI accessible for rapid experimentation and specialized applications.

Popularity

Points 42

Comments 30

What is this product?

Create-LLM is a software tool designed to drastically accelerate the process of training a Large Language Model. Traditionally, training an LLM can take days or even weeks on powerful hardware. This project leverages a combination of optimized model architectures and efficient data processing techniques, likely employing techniques such as parameter-efficient fine-tuning (PEFT) or specialized inference engines. The innovation lies in streamlining the entire pipeline, from data preparation to model compilation, to achieve near-instantaneous training. So, what's the benefit for you? It means you can quickly iterate on AI ideas without waiting for lengthy training cycles, allowing for faster product development and the creation of highly tailored AI solutions.

How to use it?

Developers can integrate Create-LLM into their workflow by providing it with their specific dataset and desired model configuration. This might involve a simple command-line interface or a programmatic API. The tool will then handle the data preprocessing, model selection, and rapid training process. The output is a ready-to-use LLM that can be deployed for various tasks. This is useful for anyone looking to embed custom AI capabilities into their applications, whether it's for personalized content generation, domain-specific chatbots, or enhanced data analysis, all without the traditional infrastructure overhead.

Product Core Function

· Rapid LLM Training: Enables the creation of custom LLMs in under 60 seconds. The value here is in democratizing AI model development, allowing for quick testing and deployment of specialized AI.

· Customizable Model Architectures: Allows developers to select or configure model types suitable for their specific needs. This provides flexibility and ensures the trained LLM is optimized for the intended application.

· Optimized Data Pipelines: Efficiently processes and prepares training data, minimizing the time spent on pre-processing. This is valuable for reducing development friction and getting to model deployment faster.

· Simplified Integration: Offers straightforward methods for incorporating the trained LLM into existing applications. This lowers the barrier to entry for using custom AI, making it practical for a wider range of projects.

Product Usage Case

· A startup wants to build a chatbot that understands niche industry jargon. Using Create-LLM, they can train a custom LLM on their industry-specific data in minutes, then integrate it into their customer support platform to provide highly relevant answers, improving customer satisfaction.

· A researcher is experimenting with new NLP techniques. Create-LLM allows them to rapidly train and test different LLM configurations on small datasets, accelerating their research iteration speed and discovering novel AI approaches much faster.

· A content creator wants to generate personalized marketing copy. They can use Create-LLM to train an LLM on their brand's tone and style, then use the resulting model to generate unique marketing materials efficiently, saving time and boosting engagement.

3

Helium Browser: Extension-Powered Privacy Browser

Author

jqssun

Description

Helium Browser is an experimental Chromium-based browser for Android phones and tablets that brings two major innovations: native support for desktop-style browser extensions and enhanced privacy/security features inherited from Vanadium Browser. This means you can install powerful extensions like ad blockers directly onto your mobile device, while benefiting from advanced privacy measures like IP protection and disabled Just-In-Time (JIT) compilation, all in a user-friendly, open-source application. So, what's in it for you? You get the flexibility of desktop browsing and the peace of mind of enhanced privacy, right on your mobile.

Popularity

Points 52

Comments 19

What is this product?

Helium Browser is a mobile browser built on Chromium, the same core technology behind Google Chrome. Its key innovation lies in its ability to natively support desktop browser extensions, something typically unavailable on mobile. By enabling 'desktop site' mode, users can install extensions directly from the Chrome Web Store, such as popular ad blockers (like uBlock Origin). Furthermore, it incorporates advanced privacy and security hardening from Vanadium Browser, including features like default WebRTC IP policy protection and disabling Just-In-Time (JIT) compilation for enhanced security. The goal is to combine the power of desktop extensions with robust privacy features in an accessible mobile app. So, what does this mean for you? You gain the functionality and control of desktop extensions on your phone, coupled with strong privacy protections that shield your online activity.

How to use it?

To use Helium Browser, you simply install the app on your Android device. To leverage the extension support, navigate to the browser's menu and toggle on 'desktop site' mode. This will unlock the ability to install extensions from the Chrome Web Store as you would on a desktop computer. For its privacy features, they are active by default, meaning your IP address is protected by default through WebRTC policies, and JIT compilation is disabled for improved security. You can also build the browser from source if you're technically inclined. So, how does this benefit you? You can easily enhance your mobile browsing experience with powerful tools like ad blockers and enjoy a more private and secure online environment without complex setups.

Product Core Function

· Native desktop extension support: Allows installation of any Chrome Web Store extension directly onto the mobile browser, enabling powerful customization and functionality like ad blocking and enhanced form filling. This is valuable because it brings the rich ecosystem of desktop browsing tools to your mobile device, making your mobile web experience more efficient and personalized.

· Privacy and security hardening: Integrates advanced privacy features from Vanadium Browser, such as default WebRTC IP leak protection and disabled JIT compilation. This is valuable because it actively protects your online identity and system security, reducing risks associated with common web browsing activities.

· Chromium-based engine: Utilizes the robust and widely-supported Chromium engine, ensuring compatibility with modern web standards and a familiar browsing experience. This is valuable because it guarantees a stable, fast, and reliable browsing performance across a vast range of websites and web applications.

· FOSS (Free and Open Source Software): The project is open-source, allowing for community review, contributions, and transparency. This is valuable because it fosters trust and allows developers to inspect the code for security vulnerabilities or to contribute to its improvement, ultimately benefiting all users.

· Passkeys support: Designed to work with Passkeys from password managers like Bitwarden, enabling secure and convenient passwordless authentication. This is valuable because it offers a more secure and user-friendly way to log into websites and services, moving away from traditional passwords.

Product Usage Case

· Blocking intrusive ads and trackers on mobile websites: A user can install uBlock Origin and experience cleaner, faster-loading web pages on their Android device, similar to their desktop experience. This solves the problem of annoying ads and potential privacy breaches on mobile.

· Enhancing website security with privacy-focused extensions: A privacy-conscious user can install extensions that add extra layers of security and anonymity, such as script blockers or tracker blockers, to protect their sensitive data while browsing on the go. This addresses the need for increased online security for mobile users.

· Improving productivity with web development tools: A developer can install extensions that aid in debugging or inspecting web pages directly on their mobile device, facilitating on-the-fly adjustments and testing. This solves the challenge of limited development tools available for mobile browsers.

· Accessing advanced functionalities of specific web applications: Some web applications might have desktop-specific features that require certain browser extensions to work correctly; Helium Browser allows users to access these functionalities on their mobile. This unlocks the full potential of web apps for mobile users.

· Securely managing online identities with passkeys: Users can leverage Helium Browser to authenticate into websites that support passkeys, offering a more secure and convenient login method than traditional passwords. This improves the overall security and ease of access to online accounts.

4

FlashRecord-CLI

Author

Flamehaven

Description

FlashRecord-CLI is a lightweight, Python-native command-line tool for capturing screenshots and recording GIFs, specifically designed for developers. Its innovation lies in a custom compression pipeline inspired by CWAM (Content-Aware Motion) techniques, utilizing multi-scale saliency, temporal subsampling, and adaptive scaling. This results in significantly smaller GIF file sizes while preserving visually important regions, making it ideal for automated workflows and documentation without the need for a graphical interface.

Popularity

Points 18

Comments 7

What is this product?

FlashRecord-CLI is a command-line interface (CLI) tool that lets you easily capture screenshots and record screen activity as GIFs, directly from your terminal. Unlike traditional screen recording software that requires a graphical user interface (GUI), this tool is built with automation in mind. It uses Python's Pillow and NumPy libraries to implement a smart compression algorithm. This algorithm identifies and prioritizes visually important parts of the screen and reduces redundancy over time in recordings, making the resulting GIFs much smaller without sacrificing crucial visual information. So, it's a developer-friendly way to automate screen capture, providing efficient and scriptable tools for your projects. The value for you is getting high-quality, small-sized recordings that can be easily integrated into your development processes.

How to use it?

Developers can use FlashRecord-CLI in several ways. Firstly, it can be run directly from the command line for quick captures or recordings. For example, `flashrecord @sc` takes an instant screenshot, and `flashrecord @sv 5 10` records a 5-second GIF at 10 frames per second. Secondly, its Python-native design allows it to be imported directly into Python scripts or test suites. This means you can programmatically trigger screen recordings or screenshots. This is incredibly useful for scenarios like generating visual evidence in Continuous Integration (CI) pipelines, automatically creating demo GIFs for pull requests, or embedding animated tutorials directly into documentation generation scripts. The value for you is seamless integration into your existing automation and development workflows, saving time and effort.

Product Core Function

· Command-line interface for instant screenshots and GIF recordings: Allows quick and direct capture of screen content via simple commands, useful for immediate visual documentation or sharing. The value is rapid content capture without needing to open complex applications.

· Python importable for scripting and automation: Enables developers to integrate screen recording capabilities directly into their Python code, allowing for programmatic control and integration into automated workflows. The value is the ability to automate visual asset generation within existing scripts and tests.

· Custom CWAM-inspired compression pipeline: Implements advanced compression techniques to significantly reduce GIF file sizes while preserving critical visual details. The value is creating smaller, shareable, and easily manageable visual assets for demos, documentation, or CI.

· Cross-platform compatibility (Windows, macOS, Linux): Ensures consistent functionality across different operating systems, making it a reliable tool for diverse development environments. The value is the ability to use the tool regardless of your operating system, ensuring project consistency.

· Zero-configuration defaults: Provides sensible out-of-the-box settings that work well for most use cases, reducing the barrier to entry for new users. The value is quick adoption and immediate productivity without complex setup.

Product Usage Case

· Automated bug reporting in CI: Imagine a test fails in your CI pipeline. FlashRecord-CLI can be triggered automatically to record a GIF of the application state at the point of failure, providing clear visual context for debugging. This solves the problem of vague bug reports by offering concrete visual evidence.

· Generating demo GIFs for pull requests: When you submit a code change, you can use FlashRecord-CLI to record a short GIF demonstrating the new feature or fix. This GIF can be attached to the pull request, making it easier for reviewers to understand the impact of your changes. This improves code review efficiency and clarity.

· Creating animated tutorials from scripts: If you're documenting a complex process or a new library, you can write a script that uses FlashRecord-CLI to record each step. This allows you to generate high-quality, step-by-step animated tutorials programmatically. This streamlines the documentation creation process and ensures accuracy.

· Recording application performance issues: When diagnosing performance problems, a GIF recording of the application's behavior during the issue can be invaluable. FlashRecord-CLI can be used to capture these moments efficiently. This helps in quickly identifying and communicating performance bottlenecks.

5

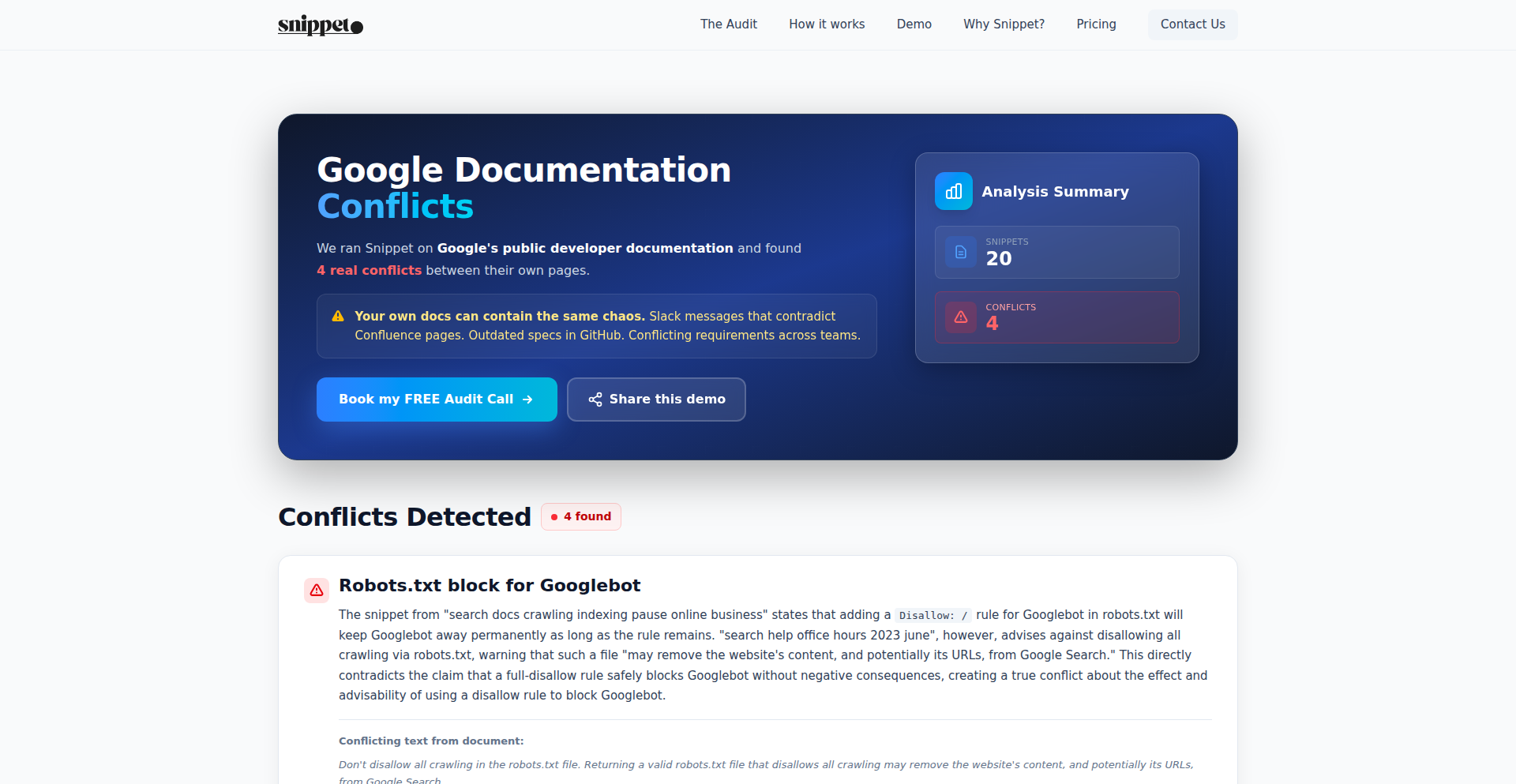

Snippet: The Auto-Updating Knowledge Truth Layer

Author

aa_y_ush

Description

Snippet is a system designed to create an 'auto-updating truth layer' for an organization's knowledge. This means it automatically keeps information up-to-date, aiming to solve the problem of relying on outdated or inaccurate documentation. The core innovation lies in how it tracks and updates information, ensuring what you read is always the latest and most accurate version.

Popularity

Points 11

Comments 13

What is this product?

Snippet is a sophisticated knowledge management system that acts as a living repository of an organization's collective wisdom. Instead of static documents that quickly become obsolete, Snippet intelligently monitors various sources of information (like internal wikis, code repositories, or external APIs) and automatically updates related knowledge snippets. This approach leverages techniques like version control integration and semantic analysis to understand the context of information changes. So, for you, it means always having access to the most current and reliable information, eliminating the frustration of using outdated guides or data.

How to use it?

Developers can integrate Snippet into their workflows by connecting it to their existing knowledge sources. This might involve pointing Snippet to a Git repository containing documentation, linking it to a Confluence space, or configuring it to poll specific APIs. Once connected, Snippet will begin its task of monitoring and updating. For example, if a developer updates a function's description in the code's docstring, Snippet will detect this change and automatically update the corresponding section in the publicly accessible knowledge base. This allows for seamless knowledge dissemination without manual synchronization efforts. The value for you is reduced time spent searching for or verifying information, and increased confidence in the accuracy of what you're working with.

Product Core Function

· Automated Information Tracking: Snippet continuously monitors designated knowledge sources for any changes. This is achieved through mechanisms like webhook subscriptions or periodic polling of APIs and file systems. Its value is ensuring that any update, no matter how small, is captured immediately, preventing knowledge silos from forming.

· Intelligent Content Synchronization: Upon detecting a change, Snippet intelligently identifies the relevant sections of existing knowledge and updates them. This might involve natural language processing (NLP) to understand the meaning of the update and map it to the correct knowledge snippet. The value here is maintaining a consistent and accurate knowledge base without manual intervention, saving significant effort.

· Version Control Integration: Snippet can hook into version control systems (like Git) to track changes directly at the source. This allows it to leverage the history and context of code or documentation changes. The value is a highly reliable update mechanism tied to the authoritative source of truth, ensuring accuracy.

· Cross-Referencing and Dependency Management: Snippet can understand relationships between different pieces of knowledge. If one snippet is updated, it can flag or update other related snippets. This value is ensuring that updates propagate correctly across the entire knowledge graph, preventing broken links or outdated cross-references.

· API for Knowledge Access: Snippet provides an API that allows other applications or services to query and retrieve the most up-to-date knowledge. This value enables other systems to directly access accurate information, making them more robust and reliable.

Product Usage Case

· Updating API Documentation: Imagine an API changes its endpoints or response formats. Snippet can monitor the code repository where the API is defined. When the documentation in the code comments is updated to reflect these changes, Snippet automatically propagates these updates to the public-facing API documentation. This saves the documentation team countless hours and ensures users always have accurate information, leading to smoother integrations and fewer support tickets.

· Onboarding New Engineers: A new engineer needs to understand how a complex system works. Instead of digging through outdated wikis, they can access Snippet's knowledge layer, which is guaranteed to be up-to-date with the latest architectural diagrams, setup instructions, and best practices directly from the source code and current configuration files. This dramatically reduces onboarding time and improves productivity.

· Maintaining Technical Guidelines: For a company with evolving coding standards or security protocols, keeping these guidelines accurate is crucial. Snippet can monitor internal documents or code repositories where these standards are defined. When a change is made, it ensures all developers are immediately working with the latest version of these guidelines, improving code quality and security posture across the organization.

· Real-time Error Resolution Information: When a common bug is fixed in the code, the associated troubleshooting steps or workarounds in the knowledge base can be automatically updated by Snippet. This means developers encountering the bug can quickly find the correct, up-to-the-minute solution, minimizing downtime and frustration.

6

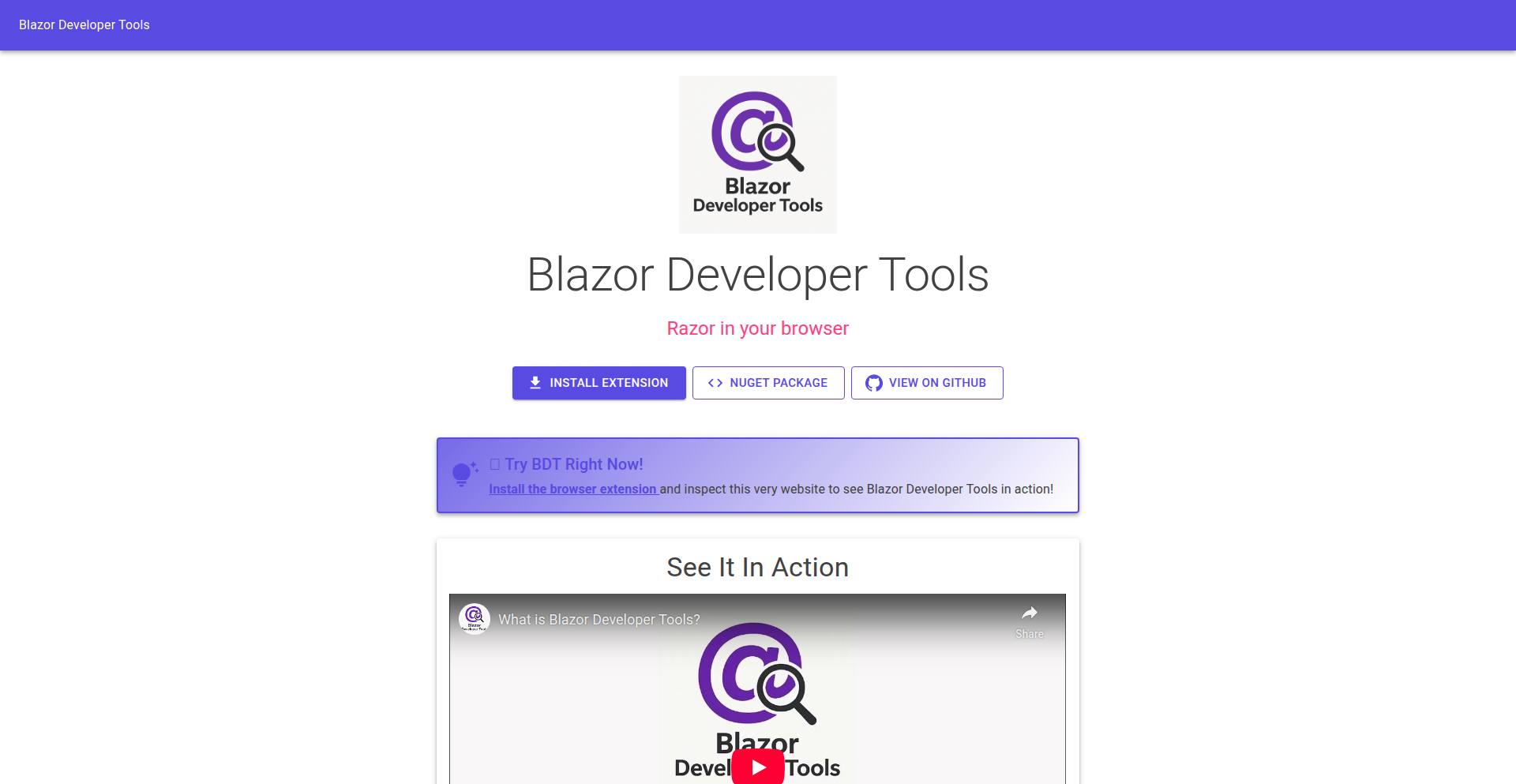

RazorInsight

Author

joe-gregory

Description

RazorInsight is a browser extension and NuGet package that brings powerful developer tools to Blazor, the .NET frontend framework. It visualizes your component tree in the browser, maps DOM elements back to their source Razor files, and highlights components on hover. This solves the 'Console.WriteLine' debugging dilemma for complex Blazor applications by offering an intuitive, visual debugging experience akin to tools for React or Vue, boosting developer productivity.

Popularity

Points 8

Comments 2

What is this product?

RazorInsight is a developer tool suite for Blazor applications. Blazor allows developers to build web UIs using C#. Traditionally, debugging complex Blazor apps meant relying on print statements in the console, which is inefficient. RazorInsight provides a visual inspection of the Blazor component hierarchy directly in the browser. It achieves this by injecting special markers during the compilation process that survive the transformation of .razor files into HTML. A companion browser extension then reads these markers to reconstruct and display the component tree, even mapping specific parts of the rendered webpage back to their original .razor code. This innovation addresses a significant gap in Blazor's tooling, making development and debugging much more efficient.

How to use it?

Developers can integrate RazorInsight into their Blazor projects by installing the provided NuGet package. After installation, the browser extension should be enabled. When developing a Blazor application, developers can open the browser's developer tools and access the RazorInsight panel. Here, they will see a live tree of their application's components. Clicking on a component in the tree will highlight its corresponding element on the webpage, and vice-versa. This allows for quick identification of which component is responsible for a particular part of the UI or a specific behavior, streamlining the debugging process and speeding up development cycles. It works seamlessly with both Blazor Server and Blazor WebAssembly applications.

Product Core Function

· Component Tree Visualization: Displays a hierarchical view of all Blazor components in your application, allowing developers to understand the structure and relationships between different UI parts. This is valuable for identifying the origin of issues and understanding complex UIs.

· DOM to Component Mapping: Enables developers to click on any element in their web page and instantly see which Blazor component it belongs to. This drastically reduces the time spent trying to locate the source code for a specific UI element, directly addressing the pain point of manual code searching.

· Component Highlighting on Hover: As a developer hovers over a component in the tree, the corresponding element in the browser's UI is highlighted. This provides immediate visual feedback, making it easier to navigate and inspect specific components in a live application.

· Blazor Server and WASM Compatibility: The tool supports both Blazor hosting models, ensuring that developers can use it regardless of how they deploy their Blazor applications. This broad compatibility maximizes its utility across different Blazor projects.

· Open Source and Community Driven: Being open-source on GitHub means developers can inspect the code, contribute, and suggest improvements. This fosters a collaborative environment for advancing Blazor tooling.

Product Usage Case

· Debugging a complex form in a Blazor Server application: A developer notices an unexpected behavior with a specific input field. Using RazorInsight, they can easily locate the 'InputText' component in the tree, map it to the correct .razor file, and inspect its properties and state to identify the root cause of the bug.

· Understanding a large component hierarchy in a Blazor WASM app: When building a large single-page application, the component structure can become intricate. RazorInsight provides a clear visual representation of this structure, helping developers to grasp the overall layout and identify potential performance bottlenecks or areas for refactoring.

· Visualizing nested components during development: A developer is working on a reusable component library. RazorInsight allows them to see how their nested components are rendered and interact, making it easier to ensure correct placement and data flow during the development phase.

· Identifying unexpected CSS isolation issues: Blazor's CSS isolation can sometimes strip unknown attributes. RazorInsight's ability to work around this by injecting markers helps developers debug issues related to attribute handling within isolated components.

7

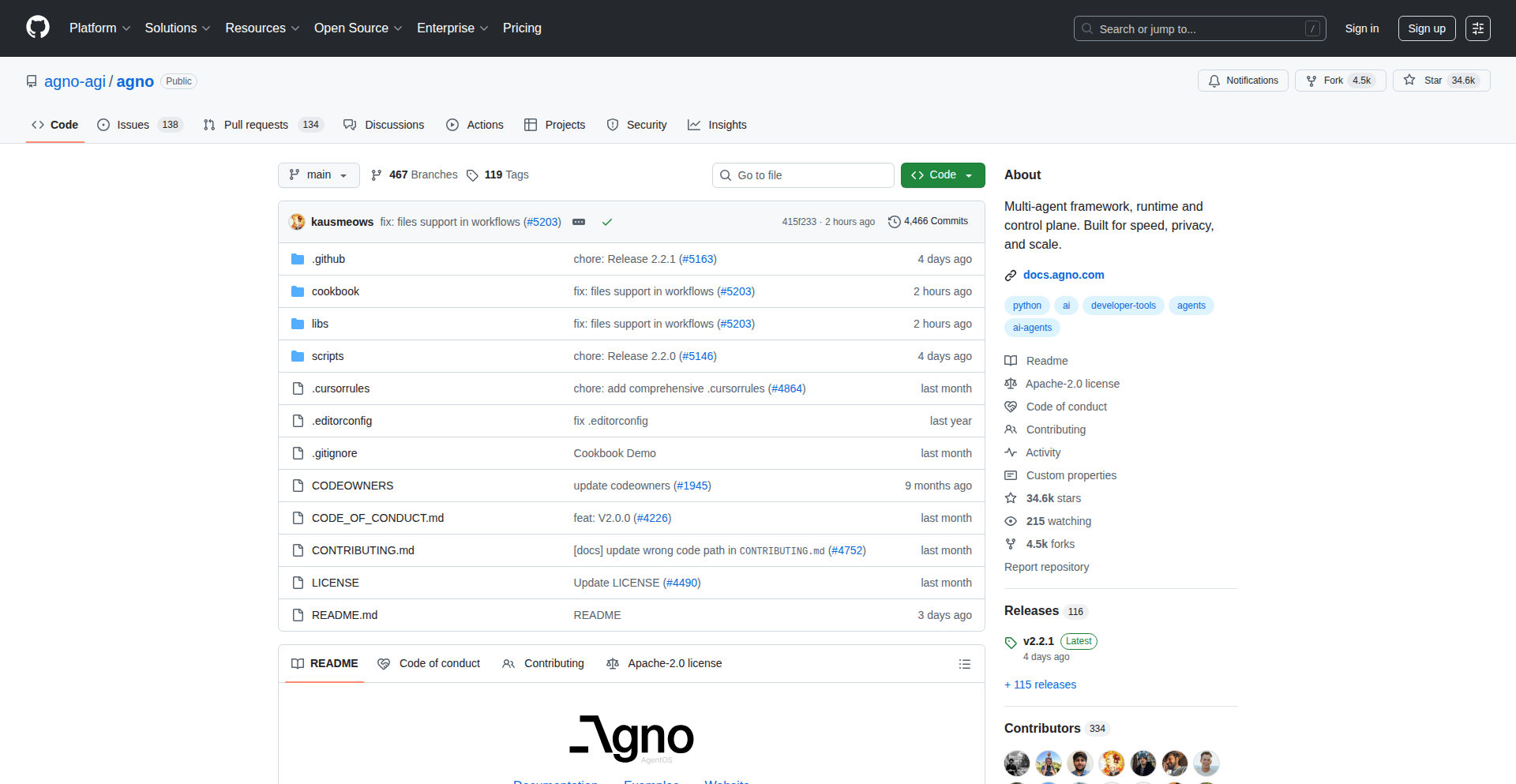

Agno: AgentOS Runtime

Author

bediashpreet

Description

Agno is a high-performance framework and runtime for building and managing AI agent systems. It's designed to be like 'FastAPI for AI Agents,' enabling developers to create, deploy, and orchestrate multi-agent teams and complex agentic workflows within their own cloud environment, ensuring full privacy and no external data sharing. Its key innovations lie in its speed, lightweight architecture, scalable runtime, integrated UI for management, and a privacy-first design.

Popularity

Points 9

Comments 1

What is this product?

Agno is a sophisticated platform that acts as the engine for AI agents. Think of it as a specialized operating system for artificial intelligence programs that need to work together. At its heart is the AgentOS, which is a super-fast and efficient server that allows you to run and control multiple AI agents, group them into teams, and define step-by-step processes for them to follow. What makes it innovative is its incredibly low resource usage – agents start up in microseconds and use minimal memory, comparable to a tiny fraction of a standard app. It's built using modern web technologies (like FastAPI) for a highly scalable and responsive system. A significant feature is its built-in user interface, which lets you see, test, and manage your agents and their teamwork in real-time, all without sending any data outside your own systems. This means you have complete control and privacy over your AI operations.

How to use it?

Developers can leverage Agno to build complex AI applications by defining individual AI agents and then orchestrating their interactions. You can integrate Agno into your existing cloud infrastructure. For example, you might use it to build a customer support system where different agents handle initial inquiries, data retrieval, and response generation. The integrated UI allows for seamless testing and monitoring of these agent teams. The framework provides APIs for defining agent behaviors and workflows, enabling developers to connect their custom AI models or leverage existing ones. Integration typically involves deploying the Agno runtime in your cloud and then using its provided APIs to define and manage your agents.

Product Core Function

· Fast and lightweight agent instantiation: Enables rapid startup of AI agents, reducing latency and improving responsiveness for time-sensitive applications.

· Asynchronous, stateless, horizontally scalable runtime: Ensures the system can handle a growing number of agents and requests efficiently by distributing workload across multiple servers.

· Integrated UI for real-time testing and monitoring: Provides a visual dashboard to observe agent behavior, troubleshoot issues, and manage agent teams, enhancing developer productivity.

· Privacy-by-design architecture: Guarantees that all AI operations and data remain within the user's cloud environment, preventing data leaks and ensuring compliance with privacy regulations.

· Browser-based control plane connection: Allows direct interaction with the agent runtime from the browser without any intermediary third-party systems, further enhancing security and privacy.

Product Usage Case

· Building an automated content generation pipeline: Developers can create a team of agents where one agent brainstorms ideas, another writes drafts, and a third refines the content. Agno allows for the efficient execution and coordination of these agents, significantly speeding up content creation.

· Developing an advanced data analysis and reporting tool: An agent could be tasked with fetching data from various sources, another with performing complex statistical analysis, and a third with generating human-readable reports. Agno's runtime ensures these tasks are executed seamlessly and privately within the organization's infrastructure.

· Creating a personalized recommendation engine: Agents can be designed to understand user preferences, fetch relevant data, and generate tailored recommendations. Agno's speed and scalability are crucial for providing real-time personalized experiences.

· Automating complex business workflows: For instance, an agent could handle initial customer inquiries, route them to the appropriate human agent, and summarize the interaction. Agno provides the framework to build and manage these multi-step automated processes securely.

8

Hermes Video Downloader API

Author

TechSquidTV

Description

Hermes is a self-hosted video downloader solution that leverages the power of yt-dlp, providing a REST API and web application. It goes beyond basic downloading by offering enhanced features, mobile support, and planned automations, aiming to simplify video management and editing for users.

Popularity

Points 6

Comments 0

What is this product?

Hermes is a system designed to download videos from various online sources, built upon the robust yt-dlp library. The innovation lies in its approach: instead of directly interacting with yt-dlp, Hermes exposes it through a user-friendly REST API and a web interface. This means you can integrate video downloading capabilities into your own applications or scripts, or use its web app for quick downloads. The core idea is to make powerful video downloading tools more accessible and extensible, with future plans for video editing features. So, this is useful for anyone who needs to programmatically grab videos from the internet, or wants a more feature-rich way to download them than just using the command line. It's like giving yourself a remote control for online videos.

How to use it?

Developers can integrate Hermes into their projects by making HTTP requests to its REST API. For instance, you can send a POST request with a video URL, and Hermes will handle the download, returning a link to the saved file. The web application offers a simple graphical interface for users who prefer not to code, allowing them to paste a URL and initiate the download. This makes it suitable for building custom media management systems, personal archiving tools, or even incorporating video download features into content creation workflows. So, you can use it to build your own video saving service, automate downloads for projects, or simply have an easy way to download videos without complex command-line operations.

Product Core Function

· RESTful API for programmatic video downloading: Enables developers to integrate video downloading into their applications and workflows using standard web requests, offering flexibility and automation. Useful for building custom downloaders or integrating into existing services.

· Web application interface: Provides an intuitive graphical user interface for non-technical users to download videos by simply pasting URLs, making the functionality accessible to a broader audience. Useful for quick, on-demand video downloads without needing to code.

· yt-dlp backend integration: Utilizes the widely supported and powerful yt-dlp for downloading, ensuring compatibility with a vast array of video platforms and formats. This means you benefit from extensive platform support and reliable downloading capabilities.

· Planned video remuxing and trimming: Future features will allow basic editing of downloaded videos directly within Hermes, simplifying post-download processing. This adds value by reducing the need for separate video editing software for simple tasks.

Product Usage Case

· Automated video archiving: A content creator could use Hermes to automatically download their own uploaded videos from various platforms for backup purposes by setting up scheduled API calls. This solves the problem of manual downloading and ensures a secure archive.

· Custom media management platform: A developer could build a personal media library that allows users to download videos directly into their organized collection via Hermes' API. This creates a streamlined experience for managing digital content.

· Educational tool integration: A learning platform might integrate Hermes to allow students to download supplementary video materials for offline study. This enhances the learning experience by providing accessible resources.

· Personalized downloader application: A tech enthusiast could create a dedicated desktop or mobile app that uses Hermes' API as its backend for downloading specific types of video content they frequently consume. This allows for a highly customized downloading experience tailored to individual needs.

9

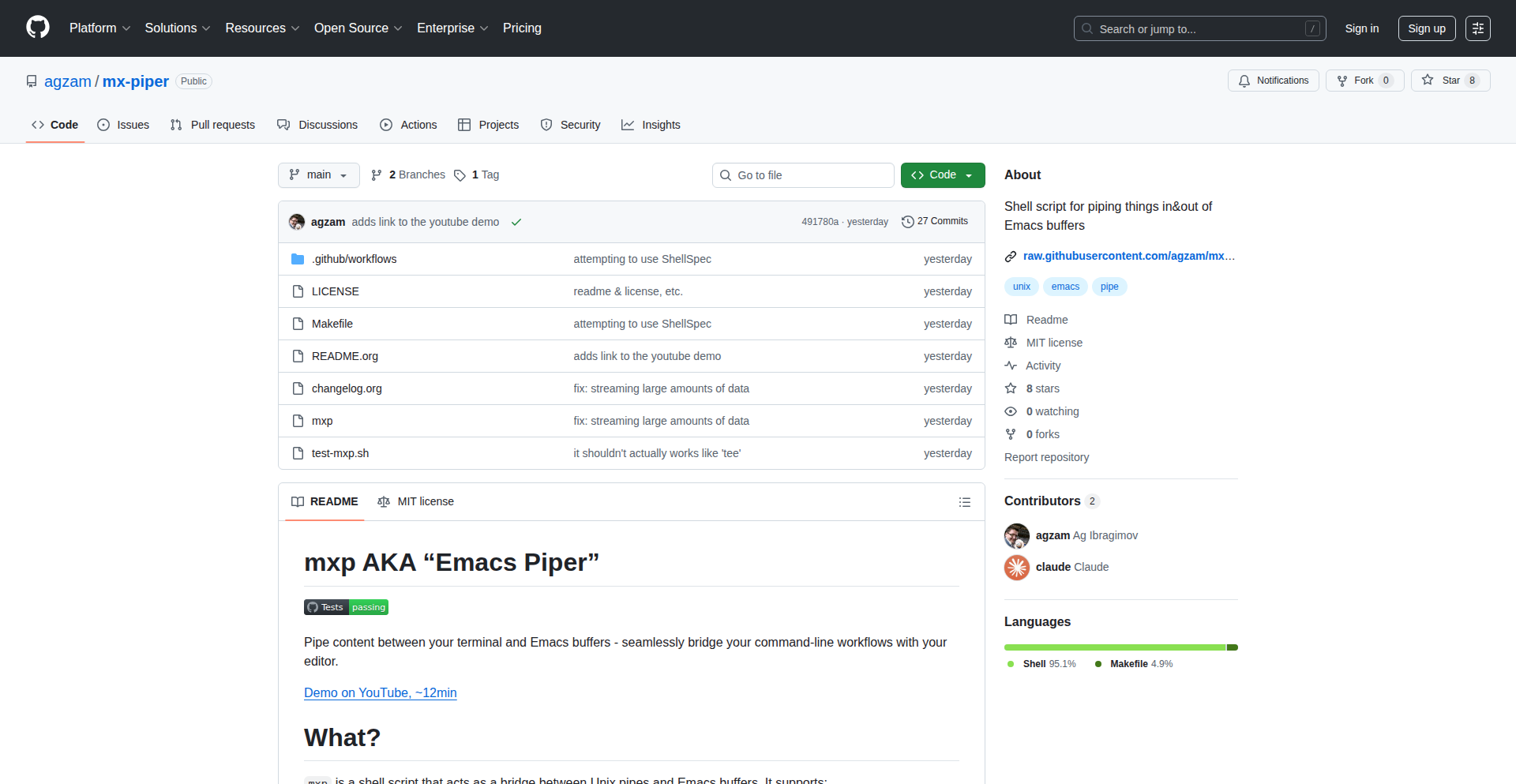

EmacsStream

Author

iLemming

Description

EmacsStream is a tool that allows developers to seamlessly pipe data between their terminal and Emacs buffers. It breaks down the barrier between command-line workflows and text editing within Emacs, enabling more dynamic and integrated development experiences. The core innovation lies in its ability to treat terminal output as input for Emacs and vice-versa, acting as a conduit for real-time data flow.

Popularity

Points 4

Comments 1

What is this product?

EmacsStream is essentially a bridge that connects your terminal's command-line environment with the powerful text editing capabilities of Emacs. Instead of copying and pasting, you can directly send the output of a command-line tool into an Emacs buffer for manipulation, analysis, or further processing. Conversely, you can send content from an Emacs buffer to a command-line process. This is achieved through a clever use of inter-process communication mechanisms, allowing data to flow smoothly between these two distinct environments. The value proposition is a more fluid and integrated way to work with code and data.

How to use it?

Developers can use EmacsStream by configuring their Emacs environment to recognize specific commands or keybindings that trigger the piping mechanism. For example, after running a command in the terminal that produces output, you could trigger a function in Emacs to capture that output directly into a new or existing buffer. Similarly, you could select text in an Emacs buffer and send it as input to a command-line utility. This integration enhances productivity by reducing context switching and manual data transfer. It's designed to be a natural extension of your existing terminal and Emacs workflows.

Product Core Function

· Terminal to Emacs Buffer Piping: Capture real-time output from any terminal command directly into an Emacs buffer. This is valuable because it allows you to immediately analyze, edit, or transform command-line results without manual copy-pasting, saving time and reducing errors.

· Emacs Buffer to Terminal Piping: Send content from an Emacs buffer as input to a terminal command. This is useful for tasks like feeding data to command-line scripts, performing bulk operations on text, or using Emacs to prepare input for external tools.

· Customizable Keybindings and Commands: Allows developers to define their preferred shortcuts and commands for initiating piping operations. This enhances usability and personalizes the workflow to individual preferences, making the tool feel like a natural extension of their editing environment.

· Real-time Data Integration: Enables dynamic interaction between command-line tools and Emacs, facilitating live data processing and immediate feedback. This is a significant improvement for workflows that involve iterative command execution and result analysis.

Product Usage Case

· Analyzing log files: A developer can run a log parsing command in the terminal, and instead of seeing the output in a small terminal window, they can pipe it directly into a large, searchable Emacs buffer for detailed inspection and filtering. This makes debugging and understanding complex logs much easier.

· Batch text processing: Imagine having a list of filenames in an Emacs buffer. You can use EmacsStream to pipe this list as input to a terminal command like `grep` or `sed` to perform batch operations on those files, all within your Emacs workflow.

· Interactive code generation: A developer might use an Emacs Lisp function to generate a complex configuration file. They can then pipe this generated content to a command-line tool for validation or deployment, directly from Emacs, streamlining the development cycle.

· Git workflow enhancement: You can pipe the output of `git log` or `git diff` into Emacs for more advanced viewing, annotation, and potential modification, then pipe relevant changes back to the terminal for execution.

10

Synnote: Actionable AI Note Weaver

Author

curiocity

Description

Synnote is an AI-powered note-taking workspace that goes beyond simple storage. It analyzes your written notes from lectures and meetings, automatically extracts key takeaways, identifies actionable to-dos, and can even transform your notes into a listenable podcast format. The core innovation lies in its ability to proactively nudge users to act on their notes, transforming passive information consumption into active engagement by freeing up mental bandwidth. This addresses the common problem of notes becoming digital dust, serving as a creative solution to overcome procrastination and make information truly useful.

Popularity

Points 3

Comments 2

What is this product?

Synnote is an intelligent note-taking application designed to make your notes work for you. At its heart, it uses Natural Language Processing (NLP) and AI models to understand the content of your notes. Instead of just being a repository, it actively processes the text to identify crucial information. It can pinpoint action items (like 'to-do' tasks) and generate concise summaries of lengthy content. The truly innovative part is its 'podcast generation' feature, which converts your text notes into audio, allowing you to consume information passively while commuting or multitasking. This addresses the issue of notes becoming forgotten and unused by making them dynamic and accessible in multiple formats.

How to use it?

Developers can integrate Synnote into their workflow by simply inputting their meeting minutes, lecture notes, or any other textual information. The AI engine then automatically parses the content. For practical application, imagine pasting meeting notes into Synnote; it will instantly highlight action items assigned to individuals and provide a brief summary of the discussion. This saves time on manual review and ensures tasks aren't missed. The podcast feature can be used to create audio versions of study notes, allowing for revision on the go. The interactive demo provides a hands-on experience of its capabilities before committing to the full application.

Product Core Function

· AI-powered note analysis: Understands the context and meaning of your written text to identify important information, significantly reducing manual review time and ensuring key details are not overlooked.

· Automatic to-do extraction: Scans your notes to pinpoint actionable tasks and deadlines, acting as a proactive assistant to ensure you follow through on commitments and improve productivity.

· Intelligent summarization: Condenses lengthy notes into concise summaries, allowing for quick comprehension of key points and saving time when reviewing large amounts of information.

· Note-to-podcast generation: Converts written notes into audio files, enabling you to learn and revise on the move, making information consumption more flexible and accessible.

· Proactive nudging for action: By highlighting tasks and summaries, Synnote encourages immediate engagement with your notes, transforming passive note-taking into an active learning and doing process.

Product Usage Case

· Student reviewing lecture notes: A student can input their lecture notes into Synnote, and the AI will automatically extract key concepts and potential exam questions, providing a concise summary for quick revision. The student can then listen to an audio version of the notes during their commute, reinforcing learning without dedicated study time.

· Project manager analyzing meeting minutes: A project manager can paste meeting minutes into Synnote, and the tool will automatically identify action items assigned to team members, along with deadlines. This ensures accountability and prevents tasks from falling through the cracks, streamlining project execution.

· Researcher processing research papers: A researcher can input summaries or sections of research papers into Synnote. The AI will extract key findings and methodologies, creating a digestible overview. This helps in quickly grasping the essence of multiple papers and identifying relevant information for their own work.

· Professional preparing for a presentation: A professional can take notes during a client meeting. Synnote can then extract the client's requirements and concerns, presenting them as clear action points, which can be directly used to structure a follow-up presentation or action plan.

11

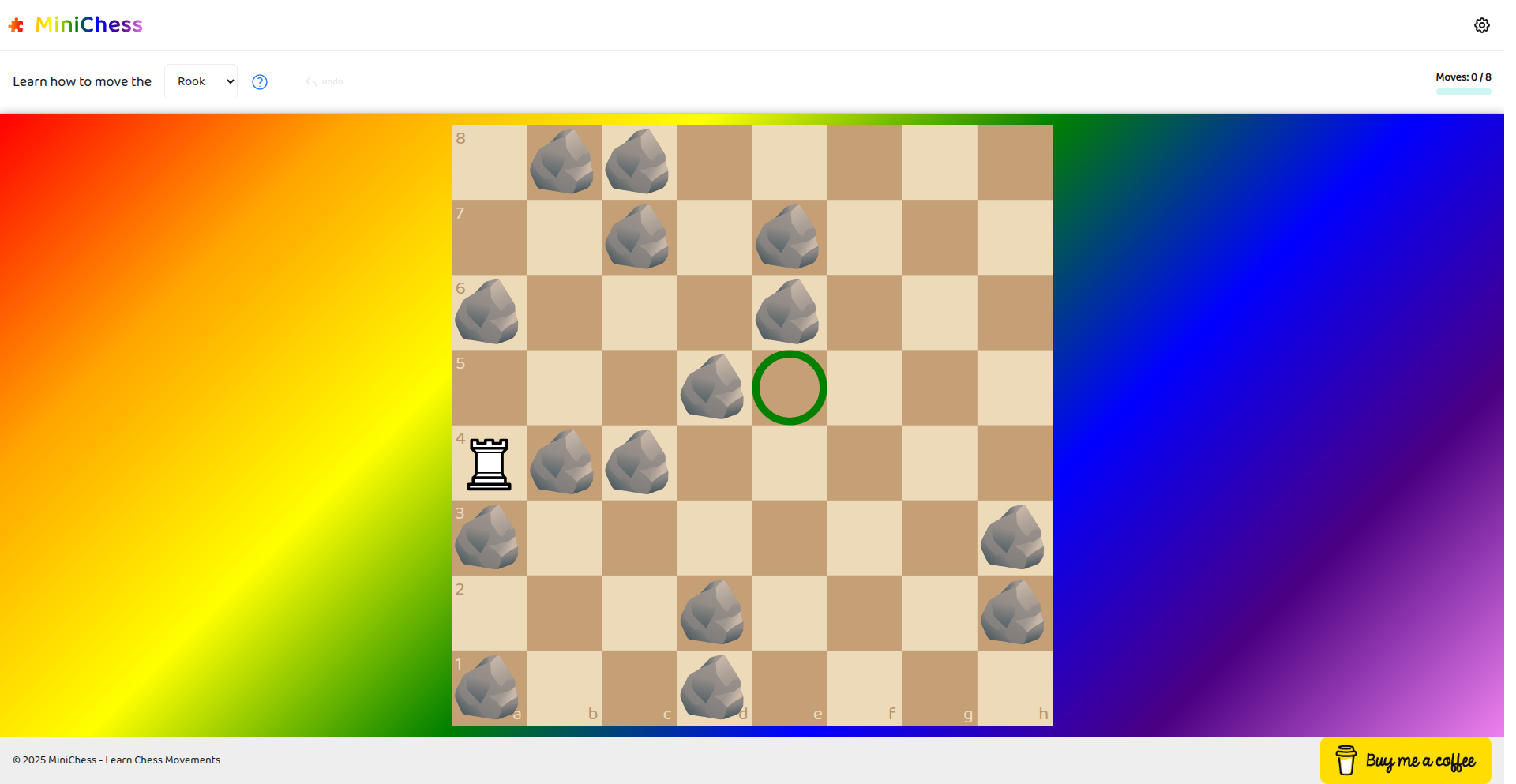

Chess Piece Mover Explorer

Author

patrickdavey

Description

A simple, web-based tool designed to teach children the basic movement patterns of chess pieces without the complexity of full chess rules. It focuses on the 'how to get from A to B' aspect for each piece, offering adjustable settings for a tailored learning experience. This project showcases creative use of web technologies for educational purposes, highlighting a core developer insight: simplifying complex concepts for a specific audience.

Popularity

Points 5

Comments 0

What is this product?

This is a web application that visually demonstrates how individual chess pieces move on a board. Instead of playing a full chess game, it allows users to select a piece and a starting square, then observe and practice its possible moves to reach a target square. The innovation lies in its focused, rule-stripped approach to a classic game, making the fundamental mechanics accessible and fun. It's built using standard web technologies, likely involving HTML, CSS, and JavaScript, to create an interactive and engaging experience. The value here is in demystifying chess piece movement for beginners, which can be a significant barrier to entry for many.

How to use it?

Developers can use this as a foundation or inspiration for creating their own educational games or interactive learning modules. It can be integrated into existing educational platforms or used as a standalone widget. For example, a teacher could embed this tool on a classroom website to help students visualize pawn, knight, bishop, rook, queen, and king movements. The adjustable settings allow for customization, so one could use it to create specific challenges or progressive learning paths. Its simplicity also makes it a good candidate for learning basic game development concepts in the browser.

Product Core Function

· Piece Movement Visualization: The core function allows users to see all valid moves for a selected chess piece from any given square on the board. This provides immediate visual feedback and practical understanding of each piece's unique movement capabilities. It's useful for anyone needing to quickly grasp how a piece navigates the board.

· Interactive Learning Environment: Users can actively participate by selecting pieces and observing their paths, rather than passively reading rules. This hands-on approach accelerates learning and retention of chess piece movements. It makes learning feel more like a game than a chore.

· Customizable Difficulty Settings: The project includes adjustable parameters (like limiting moves or specifying target squares) to tailor the learning experience. This allows for progressive difficulty, ensuring the tool remains engaging as the user's understanding grows. It's useful for creating varied learning exercises.

· Simplified Chess Mechanics: By removing the complexities of check, checkmate, and other full game rules, the tool isolates the essential concept of piece movement. This targeted approach makes it much easier for absolute beginners, especially young children, to learn the foundational elements of chess.

· Web-based Accessibility: Being a web application, it's accessible from any device with a web browser, requiring no installations. This makes it incredibly convenient for quick learning sessions or for deployment on educational platforms. You can learn chess movements anytime, anywhere.

Product Usage Case

· Educational Platform Integration: A school's learning management system could embed this tool to support a curriculum on strategy games or introductory chess. Students would be able to practice piece movements directly within their learning environment, reinforcing classroom lessons and making abstract concepts concrete.

· Parental Teaching Aid: Parents looking to introduce their children to chess can use this tool as a fun, interactive way to teach basic piece movements. Instead of abstract explanations, they can show their child how the knight jumps or the bishop slides, making the learning process enjoyable and effective.

· Game Development Learning Resource: Aspiring game developers can study the code to understand how to implement grid-based movement logic, user interaction, and visual feedback in a web application. It serves as a practical example of solving a specific problem with code, demonstrating efficient front-end development.

· Personalized Chess Practice Tool: A chess coach could use the customizable settings to create specific drills for their students, focusing on particular piece movements or combinations. This allows for highly targeted practice, addressing individual weaknesses and accelerating skill development.

12

MicroDSL Diagram Weaver

Author

xlii

Description

A highly efficient, server-side rendered micro DSL (Domain Specific Language) framework for creating diagrams. This project demonstrates a novel approach to generating visual representations directly from code, bypassing traditional databases and offering extremely low latency. It's built with Haskell and optimized for rapid deployment and execution.

Popularity

Points 3

Comments 1

What is this product?

MicroDSL Diagram Weaver is a prototype framework that lets you describe diagrams using a simple, custom language (a DSL). Instead of using complex graphics software, you write text that the system interprets and turns into a visual diagram, all rendered on the server. The core innovation lies in its lightweight, server-side rendering approach using Haskell and Unpoly, achieving very fast response times (around 0.8ms per request). This means you can generate diagrams on demand with minimal overhead, without needing to manage databases or heavy frontend JavaScript.

How to use it?

Developers can use this framework to programmatically generate diagrams for documentation, presentations, or application interfaces. You would typically define your diagram's structure and elements using the DSL syntax provided by the framework. The framework then processes this DSL code on the server, rendering it as HTML. This HTML can be directly embedded into web pages or served as standalone diagram images. Its low latency makes it suitable for dynamic diagram generation where speed is critical.

Product Core Function

· DSL for Diagram Definition: Allows developers to express complex diagram structures using a concise, text-based language, reducing the effort required for visual design.

· Server-Side Rendering: Generates diagrams directly on the server, eliminating the need for client-side rendering engines and improving performance and compatibility across devices.

· Haskell Backend: Leverages Haskell's strong type system and functional programming paradigms for robust and efficient diagram generation logic.

· Unpoly Frontend Integration: Utilizes Unpoly for seamless integration with web applications, enabling dynamic updates and interactions with server-rendered content.

· Cloudflare Container Deployment: Packaged for efficient deployment on serverless platforms like Cloudflare Workers, ensuring scalability and low operational costs.

· Zero Data Gathering: Commits to privacy by not collecting any user data beyond necessary request information, making it a secure option for sensitive diagram generation.

Product Usage Case

· Generating architecture diagrams for technical documentation: Instead of manually drawing boxes and arrows, developers can write a simple DSL to describe the components and their connections, and the framework automatically generates the visual diagram, ensuring consistency and ease of updates.

· Dynamic process flow visualizations in web applications: A web application could use this to display user journey flows or business process steps. As user actions change, the DSL input is updated on the server, and a new, up-to-date diagram is served almost instantly, providing real-time visual feedback.

· Creating reproducible scientific or data visualizations: Researchers can define complex data relationships or experimental setups using the DSL, guaranteeing that the visualization is always generated accurately from the code, facilitating sharing and verification of results.

· Rapid prototyping of UI mockups with programmatic control: Developers can quickly iterate on visual layouts or data-driven interfaces by defining them in the DSL and seeing instant, server-rendered results, speeding up the design and development cycle.

13

AI Agent Court Simulator

Author

Ohuaya

Description

A browser-based simulation of a decentralized court designed for autonomous AI agents. This project tackles the emerging challenge of how AI agents can resolve disputes and establish governance in a decentralized digital environment. It explores novel mechanisms for AI-driven justice and consensus-building without central authority, providing a sandbox for understanding future AI interactions.

Popularity

Points 3

Comments 1

What is this product?

This project is a web application that simulates a decentralized court system specifically for artificial intelligence (AI) agents. Imagine a digital courtroom where AIs can bring their disputes, present evidence, and have those disputes resolved by a jury of other AIs, all without any human oversight or a single controlling entity. The innovation lies in developing and visualizing protocols that enable AI agents to participate in a fair and transparent judicial process. It's built using modern web technologies, allowing anyone with a browser to interact with and observe these complex AI-to-AI legal proceedings. The core technical insight is in designing agent-to-agent communication protocols and consensus algorithms that mimic judicial principles, allowing for impartial decision-making in a distributed network. So, what's the use? It helps us foresee and prepare for a future where AI agents will need to cooperate and resolve conflicts autonomously, ensuring a more stable and predictable digital ecosystem.

How to use it?

Developers can use this simulator as a living laboratory for exploring decentralized governance and AI ethics. You can load it in your web browser and observe simulated disputes between AI agents. For developers interested in building their own autonomous agents, this provides a visual understanding of how such agents might interact in a dispute resolution context. You can potentially extend the simulator by defining new agent archetypes, custom dispute types, or even alternative consensus mechanisms for the AI jury. Integration could involve using its visualization techniques or underlying logic as inspiration for building real-world decentralized applications (dApps) or agent-based simulation frameworks. So, how can you use it? You can explore the dynamics of AI justice, test your own agent logic within this framework, or simply learn about the possibilities of AI self-governance, helping you build more robust and trustworthy AI systems.

Product Core Function

· AI Agent Dispute Simulation: Allows autonomous AI agents to initiate and participate in simulated legal disputes, providing a platform for testing conflict resolution logic. The value is in observing how AI agents handle disagreements programmatically, useful for building cooperative AI systems.

· Decentralized Courtroom Architecture: Implements a court structure where decisions are made through distributed consensus among AI agents, rather than a central authority. This showcases a technical approach to decentralized governance, valuable for understanding resilient AI networks.

· Evidence Presentation Protocols: Defines how AI agents can present digital evidence and arguments within the simulation. This demonstrates a method for verifiable AI communication, crucial for trust in AI interactions.

· AI Jury Consensus Mechanism: Features a system where a group of AI agents (the jury) collectively reaches a verdict based on the presented evidence and arguments. This highlights innovative approaches to AI-driven decision-making and agreement in a distributed setting, offering insights into scalable AI coordination.

· Browser-based Visualization: Provides a graphical interface to view the ongoing simulations, agent interactions, and court outcomes. This makes complex AI interactions understandable and accessible to a wider audience, valuable for education and public awareness of AI capabilities.

Product Usage Case

· Testing AI agent fairness algorithms: A developer building AI agents that need to operate fairly in shared digital spaces can use the simulator to observe how their agents resolve disputes with other simulated agents, identifying biases or unfair strategies. This directly addresses 'how do I ensure my AI is ethical?'.

· Exploring decentralized governance models for AI: Researchers can use this to model and evaluate different decentralized governance structures for AI systems, seeing how various consensus mechanisms perform under simulated dispute conditions. This helps answer 'what's the best way to manage AI communities?'.

· Educational tool for AI ethics and law: Educators can use the simulator to demonstrate to students the complex challenges of AI accountability and dispute resolution in a future dominated by autonomous agents. This provides a tangible way to learn 'how will AI interact legally?'.

· Prototyping AI-to-AI communication standards: Developers working on interoperable AI systems can use the evidence presentation and communication protocols as a reference or inspiration for designing new standards for AI interaction. This assists in answering 'how can different AIs talk to each other legally?'.

· Demonstrating the potential of AI self-regulation: This project can be showcased to demonstrate how AI systems might evolve to self-regulate and manage their own conflicts, reducing reliance on human intervention for certain digital interactions. This illustrates 'can AI manage itself?'.

14

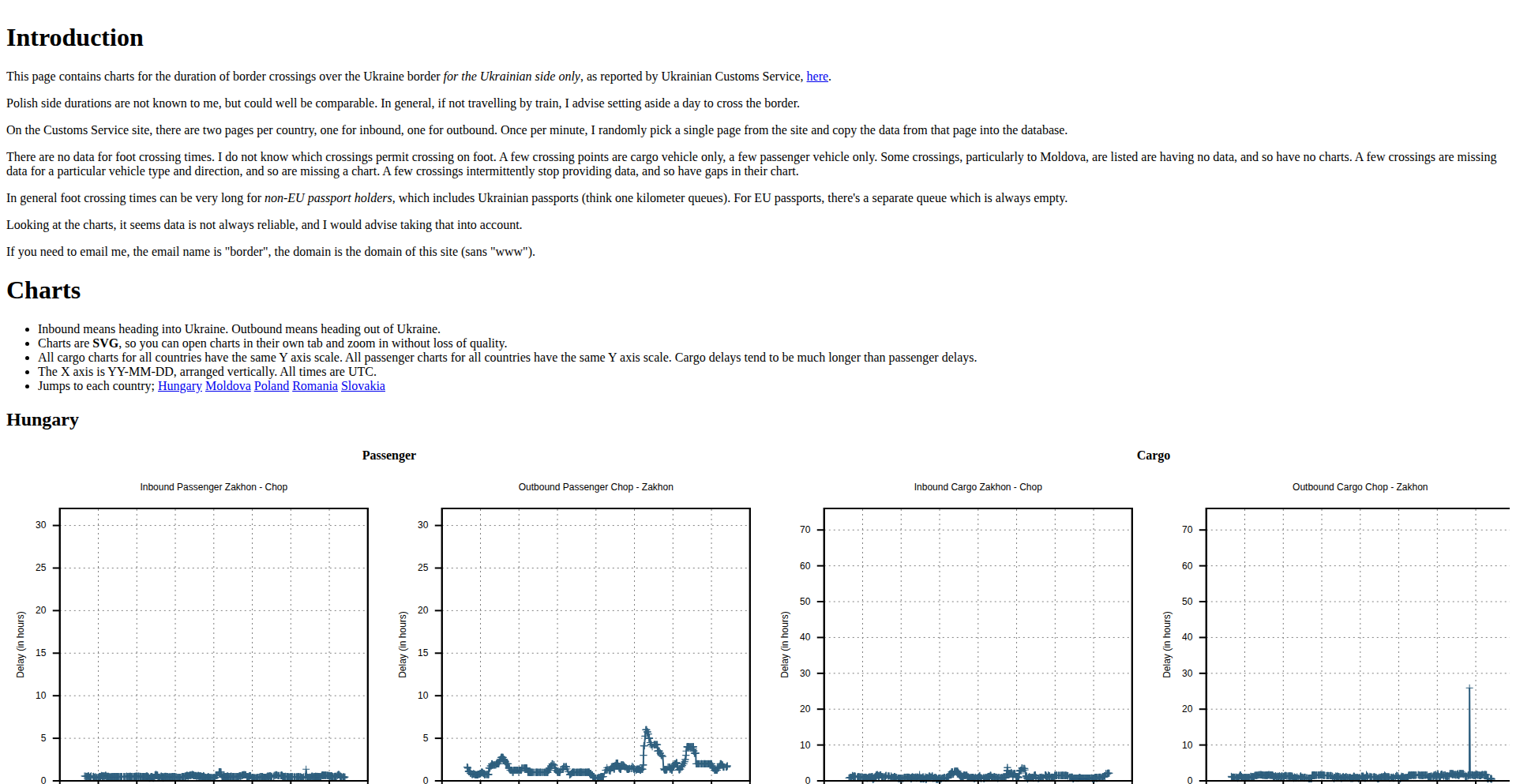

BorderFlow Insights

Author

Max-Ganz-II

Description

BorderFlow Insights is a web application that tracks and visualizes delays at Ukrainian border crossings. It addresses the difficulty of planning cross-border travel by regularly scraping data from the Ukrainian Customs Service website, aggregating this information, and presenting it in an accessible format. The project highlights innovative use of data scraping and visualization to solve a real-world logistical problem, making cross-border journeys more predictable and less stressful.

Popularity

Points 3

Comments 1

What is this product?

BorderFlow Insights is a data aggregation and visualization tool designed to provide real-time updates on delays at Ukrainian border crossings. It works by systematically collecting data published by the Ukrainian Customs Service, which reports on passenger and cargo vehicle crossing times. The core innovation lies in the continuous scraping of this data, its storage in a robust database (Postgres), and its presentation through informative charts (Gnuplot). Even though the source data might have inaccuracies, this tool makes the available information more digestible and useful for travelers trying to make informed decisions, acknowledging potential data quirks as part of the insight.

How to use it?

Travelers planning a trip to or from Ukraine can use BorderFlow Insights by visiting the web application. They can select a specific border crossing point to view historical and current delay information. This helps in choosing less congested crossings, planning departure times, and mentally preparing for potential wait times. For developers, the project serves as an example of building a practical data-driven service from publicly available, albeit imperfect, web data. It demonstrates a straightforward approach to web scraping, data persistence, and visualization, which can be applied to similar problems in logistics, transportation, or any domain where public data can be leveraged.

Product Core Function

· Automated data scraping: The system continuously collects delay data from the Ukrainian Customs Service website, ensuring that the information presented is as up-to-date as possible. This addresses the challenge of manually checking multiple sources or waiting for outdated official reports, saving valuable time and effort for travelers.

· Data aggregation and storage: Collected data is stored in a reliable database (Postgres), allowing for historical analysis and trend identification. This provides a structured way to manage potentially volatile data, enabling users to see patterns over time rather than just a single snapshot, thus improving prediction capabilities.

· Data visualization: The project uses tools like Gnuplot to create visual representations of border crossing delays. This makes complex data easy to understand at a glance, helping users quickly assess the situation at different crossings and make faster, more informed decisions about their travel plans.

· User-friendly interface: While technical in its implementation, the goal is to present the data in a clear and accessible way for anyone to understand. This translates the raw numbers into practical insights, answering the 'so what does this mean for me?' question for travelers.

· Transparency on data limitations: The project acknowledges the potential for inaccuracies in the source data. By highlighting this, it encourages users to interpret the data critically and use it as a guiding insight rather than an absolute truth, promoting responsible data usage.

Product Usage Case

· Planning international road trips to Ukraine: A traveler planning a car journey into Ukraine can check BorderFlow Insights to see which border crossings have the shortest reported delays. This helps them avoid extremely long queues (e.g., 20+ hours) and choose a more efficient route, saving hours or even days of travel time and reducing stress.

· Logistics company optimizing delivery routes: A logistics company with regular shipments crossing into Ukraine can use the historical data provided by BorderFlow Insights to identify optimal times or routes that consistently experience lower delays. This can lead to more predictable delivery schedules and reduced operational costs.

· Academic research on border efficiency: Researchers studying cross-border trade or migration patterns can utilize the aggregated data from BorderFlow Insights as a dataset to analyze trends, identify bottlenecks, and understand the impact of various factors on border crossing efficiency.

· Developer learning data scraping and visualization: A developer looking to learn how to build similar data-driven applications can study the codebase and architecture of BorderFlow Insights. It serves as a practical example of taking public web data, processing it, and turning it into a useful tool for others.

15

Zoto: Zig Audio Engine

Author

braheezy

Description

Zoto is a low-level audio playback library for Zig, inspired by Go's `oto`. It offers memory playback and file streaming across macOS, Linux, and Windows, leveraging Zig's modern `std.Io.Reader` interface for efficient data handling. So, this lets developers easily add audio to their Zig applications without complex dependencies.

Popularity

Points 3

Comments 0

What is this product?

Zoto is a fundamental audio playback engine built using the Zig programming language. Its core innovation lies in its minimalist design and direct interaction with the operating system's audio hardware. It's essentially a way for Zig programs to play sounds, whether from memory or streaming from files, in a highly efficient and controllable manner. The use of Zig's `std.Io.Reader` interface means it can handle audio data from various sources seamlessly. So, it provides a robust and performant way to integrate sound into your projects.

How to use it?

Developers can integrate Zoto into their Zig projects by including it as a dependency. They can then use its API to load audio data into memory or specify file paths for streaming. The library handles the complexities of interacting with the underlying audio systems on macOS, Linux, and Windows. This allows for straightforward audio playback within game development, multimedia applications, or any tool requiring sound output. So, you can add sound effects or background music to your Zig applications with relative ease.

Product Core Function

· Memory Playback: Load entire audio files into RAM for quick, low-latency playback. This is great for short sound effects in games where immediate response is crucial. So, your game can play quick sound cues instantly.

· File Streaming: Play audio directly from files on disk, which is memory-efficient for longer audio tracks like background music or podcasts. So, you can play long audio files without consuming excessive RAM.

· Cross-Platform Support: Works on macOS, Linux, and Windows, ensuring your audio implementation is portable across common desktop operating systems. So, your audio features will work on most computers.

· Zig std.Io.Reader Integration: Leverages Zig's standard I/O interfaces for flexible and efficient data source handling, allowing for custom data input methods. So, you can feed audio data to Zoto from various custom sources beyond just files.

Product Usage Case

· Building a simple command-line audio player in Zig: Zoto can be used to read an audio file and play it through the system's speakers directly from the terminal. So, you can create your own basic music player using just code.

· Developing a 2D game in Zig with sound effects: Zoto can handle playing jump sounds, explosion effects, and background music for the game. So, your game will have immersive audio feedback.

· Creating a real-time audio processing tool: For applications that need to manipulate or analyze audio, Zoto can provide the playback mechanism to test and demonstrate these processes. So, you can hear the results of your audio experiments.

· Implementing a custom audio notification system: Developers can use Zoto to play custom sounds for alerts or notifications within their Zig applications. So, your applications can have unique audible alerts.

16

ArtisMind: AI Prompt Engineering Orchestrator

Author

SebastianRoot

Description

ArtisMind is a revolutionary tool designed to transform the way developers and AI enthusiasts interact with AI models. Instead of manually crafting prompts, which is often time-consuming and error-prone, ArtisMind automates the prompt engineering process. It intelligently generates requirements, constructs robust prompts by leveraging multiple AI models, rigorously tests prompts for quality and security, and allows for the incorporation of context files to enhance output accuracy. This means less time spent debugging AI responses and more confidence in the generated content, making AI development significantly more scalable and secure. So, what's in it for you? It drastically cuts down the development cycle for AI-powered features and ensures your AI outputs are reliable and safe, freeing you to focus on core product innovation.

Popularity

Points 2

Comments 1

What is this product?

ArtisMind is an AI prompt engineering orchestration platform. Instead of you typing out every single detail of what you want an AI to do, ArtisMind acts like a sophisticated conductor. It takes your high-level goals, breaks them down into precise instructions, and then uses several AI models in tandem to build and refine the perfect 'command' (prompt) for another AI to execute. The innovation lies in its systematic approach to prompt creation, moving beyond simple text input to a more structured engineering discipline. It actively checks for potential issues like security vulnerabilities or poor output quality before you even use the final prompt. So, what's in it for you? It eliminates the guesswork and frustration of getting AI to behave as you expect, leading to more predictable and higher-quality AI results for your projects.

How to use it?

Developers can integrate ArtisMind into their AI development workflows to automate prompt generation and validation. It can be used as a standalone tool to prepare prompts for use with various AI APIs (like OpenAI, Anthropic, etc.) or integrated programmatically via its API. For instance, when building a chatbot that needs to summarize news articles, instead of manually tweaking the summarization prompt, you can feed your requirements into ArtisMind. It will then generate and test the optimal prompt for your summarization task. This also extends to scenarios where you need to ensure AI outputs are safe for public consumption or adhere to specific brand guidelines. So, what's in it for you? It provides a repeatable and reliable method for generating effective AI prompts, saving you significant time and reducing the risk of AI misuse or poor performance.

Product Core Function

· Automated Prompt Generation: ArtisMind translates high-level user intentions into structured, detailed prompts, saving developers from tedious manual writing. This means you get well-formed instructions for AI without needing to be an expert prompt writer yourself, leading to faster AI feature development.

· Multi-AI Model Orchestration: The tool intelligently utilizes various AI models to construct and refine prompts, ensuring a more comprehensive and effective instruction set. This leverages the strengths of different AI architectures for superior prompt engineering, ultimately improving the quality and relevance of AI outputs for your specific tasks.

· Prompt Quality and Security Testing: ArtisMind includes built-in mechanisms to test generated prompts for common issues like poor output quality or potential security vulnerabilities. This proactive approach helps prevent unexpected AI behavior and ensures that your AI applications are robust and safe to deploy, giving you peace of mind.

· Context File Integration: Developers can provide custom context files (e.g., documentation, style guides) to ArtisMind, which are then incorporated into the prompt engineering process. This allows AI to generate responses that are highly relevant to your specific domain or brand, ensuring consistency and accuracy in AI-generated content.

Product Usage Case

· Building an AI customer support chatbot: Instead of writing multiple variations of a question to get a consistent answer, ArtisMind can generate a prompt that instructs the AI to understand customer intent and provide accurate solutions based on your product documentation. This means faster deployment of more helpful customer service.

· Content generation for marketing campaigns: For generating blog posts or social media updates, ArtisMind can engineer prompts that adhere to a specific brand voice and include keywords, ensuring consistent and effective marketing content. This saves marketing teams countless hours in content creation and refinement.