Show HN Today: Discover the Latest Innovative Projects from the Developer Community


Show HN Today: Top Developer Projects Showcase for 2025-10-24

SagaSu777 2025-10-25

Explore the hottest developer projects on Show HN for 2025-10-24. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

The current wave of Show HN submissions highlights a strong movement towards empowering developers and users with greater control and privacy. We're seeing a clear trend of 'local-first' solutions, where complex processing is moved from the cloud to the user's device. This isn't just about saving money; it's about re-establishing data sovereignty and offering tangible benefits like offline functionality and enhanced privacy. For developers, this means exploring technologies like WebAssembly, client-side rendering, and efficient local data storage. For entrepreneurs, it's an opportunity to build tools that address the growing concerns around data security and the cost of cloud-based AI services. The 'hacker spirit' is alive and well in these projects, with creators taking on challenging problems – from understanding low-level CPU operations to making terabytes of video searchable locally – and building elegant, efficient solutions. This focus on utility, performance, and user control is a powerful signal for what's next in tech innovation, encouraging a mindset where we build the tools we need to understand and master complex systems.

Today's Hottest Product

Name

Edit Mind – Local Video Search and Analysis Tool

Highlight

This project tackles the challenge of searching through massive personal video archives without relying on expensive cloud services or compromising privacy. By performing transcription, object detection, facial recognition, and emotion analysis locally using Python with ML models and a ChromaDB vector database, it offers a powerful, privacy-preserving alternative to costly cloud APIs. Developers can learn about building local, data-intensive applications, integrating various ML models, and leveraging vector databases for semantic search.
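The semantic-search step described above can be illustrated without any ML stack. The following is a minimal sketch, not Edit Mind's implementation: it assumes each video scene has already been turned into an embedding vector, and a plain Python list with cosine similarity stands in for the ChromaDB vector database. The scene labels and three-dimensional vectors are invented for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical index: (scene label, toy embedding). A real pipeline would
# get these vectors from an embedding model and keep them in a vector store.
SCENES = [
    ("beach_sunset.mp4@00:12", [0.9, 0.1, 0.0]),
    ("birthday_party.mp4@03:40", [0.1, 0.9, 0.2]),
    ("dog_in_park.mp4@01:05", [0.0, 0.3, 0.9]),
]


def search(query_embedding, k=2):
    """Return the k scene labels whose embeddings are closest to the query."""
    scored = [(cosine_similarity(query_embedding, emb), label)
              for label, emb in SCENES]
    scored.sort(reverse=True)  # highest similarity first
    return [label for _, label in scored[:k]]
```

Because everything runs in-process, no video content or query text leaves the machine, which is the property the local-first approach is after.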

Popular Category

AI/ML Applications

Developer Tools

Privacy-focused Solutions

Data Management

Web Tools

Popular Keyword

AI

LLM

Privacy

Local First

Developer Tools

Data

Image Processing

Automation

CLI

API

Technology Trends

Local-first AI/ML processing

Privacy-centric development

Client-side web applications

Automated data management and organization

AI-powered developer tools

LLM application patterns

Specialized APIs for niche tasks

Performance optimization in Rust/WebAssembly

Project Category Distribution

Developer Tools & Utilities (30%)

AI/ML Applications (25%)

Web Applications & Services (20%)

Data Management & Analysis (15%)

Creative Tools & Media (10%)

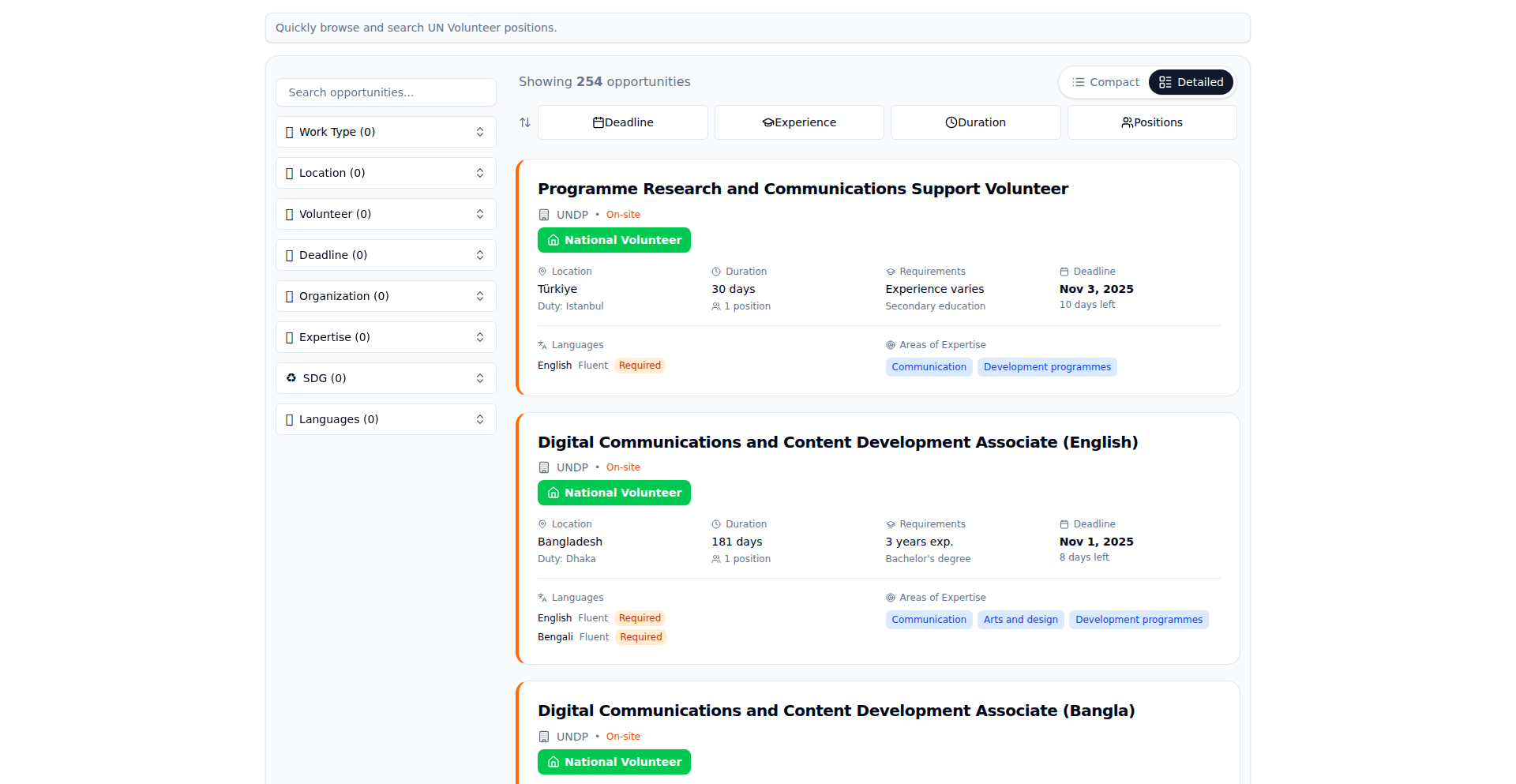

Today's Hot Product List

| Ranking | Product Name | Likes | Comments |
|---|---|---|---|
| 1 | PythonByteCPU | 70 | 19 |
| 2 | Client-Side Canvas Image Transformer | 41 | 33 |
| 3 | LinkdAPI: Real-time LinkedIn Data Stream | 11 | 9 |
| 4 | AgentML: Deterministic AI Agents | 17 | 3 |
| 5 | LLM Rescuer - Nil Safety Solver | 15 | 0 |
| 6 | ZeroCopy-SQLite-Dumper | 13 | 1 |
| 7 | TreadmillBridge-Mac | 13 | 0 |
| 8 | Inspec: Realtime Spec Scheduling for Interior Design | 10 | 0 |
| 9 | CLI-SQLite State Orchestrator | 8 | 2 |
| 10 | Client-Side Canvas Image Manipulator | 3 | 6 |

1

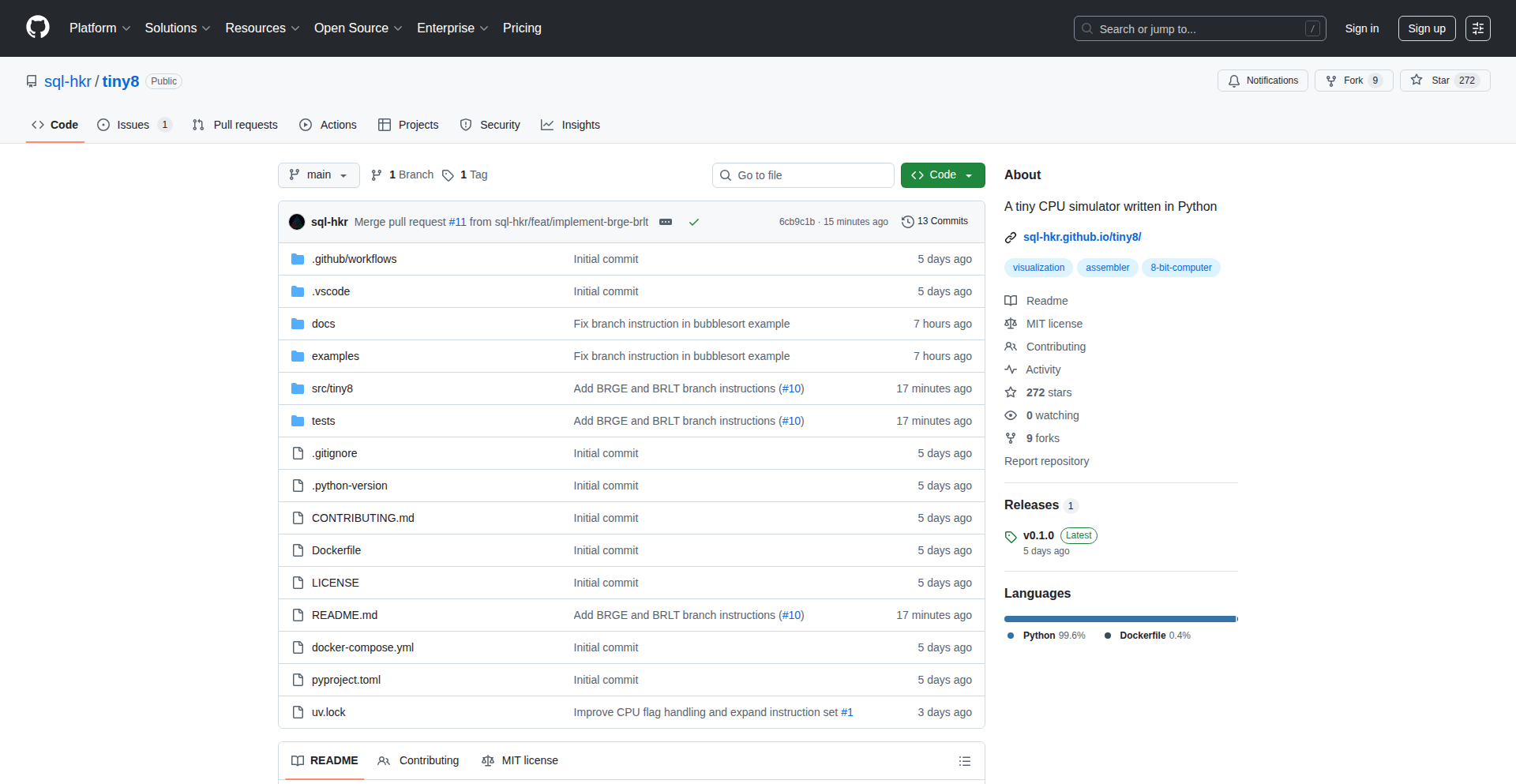

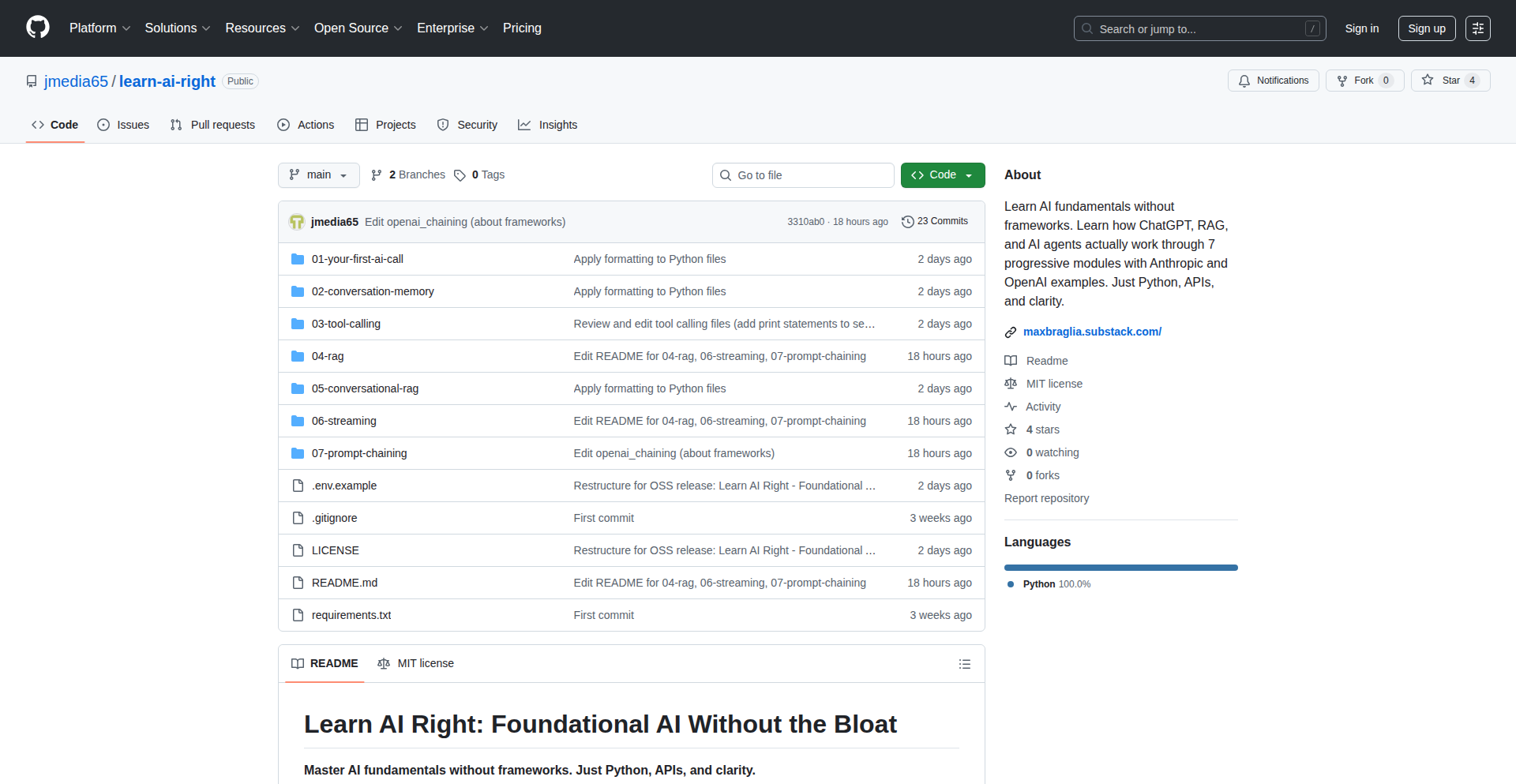

PythonByteCPU

Author

sql-hkr

Description

A Python-based 8-bit CPU simulator that visualizes low-level computer operations in real-time. It allows users to write simple assembly code and observe its step-by-step execution, offering a unique learning and experimentation tool for understanding how computers function at their core.

Popularity

Points 70

Comments 19

What is this product?

PythonByteCPU is a meticulously crafted 8-bit Central Processing Unit (CPU) simulator built entirely in Python. Instead of abstracting away the complexities, this project dives deep into the fundamental building blocks of computing. It brings to life the inner workings of a CPU by visualizing its core components: registers (where temporary data is held), memory (where instructions and data are stored), and the instruction pointer (which tracks the next instruction to be executed). The innovation lies in its real-time, visual feedback loop. When you feed it simple assembly code, you don't just get an output; you see each instruction being fetched, decoded, and executed, with the state of the registers and memory updating dynamically. This makes the abstract concept of CPU execution tangible and understandable, demystifying how code translates into actual machine operations. So, what's the value to you? It's like having a transparent window into the brain of a computer, allowing you to truly grasp the essence of computation without needing specialized hardware or complex software setups.
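To make the fetch-decode-execute cycle concrete, here is a minimal sketch of an 8-bit accumulator machine. It is not PythonByteCPU's code: the `TinyCPU` class, the four opcodes, and the two-byte instruction layout are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical two-byte instructions (opcode, operand); not PythonByteCPU's real ISA.
LOAD, ADD, STORE, HALT = 0x01, 0x02, 0x03, 0xFF


@dataclass
class TinyCPU:
    memory: list = field(default_factory=lambda: [0] * 256)
    acc: int = 0          # accumulator register
    ip: int = 0           # instruction pointer
    halted: bool = False

    def load_program(self, program, at=0):
        """Copy program bytes into memory starting at address `at`."""
        self.memory[at:at + len(program)] = program

    def step(self):
        """Run one fetch-decode-execute cycle."""
        opcode = self.memory[self.ip]                  # fetch opcode
        operand = self.memory[(self.ip + 1) & 0xFF]    # fetch operand
        if opcode == LOAD:                             # decode, then execute
            self.acc = operand
        elif opcode == ADD:
            self.acc = (self.acc + operand) & 0xFF     # wrap to 8 bits
        elif opcode == STORE:
            self.memory[operand] = self.acc
        elif opcode == HALT:
            self.halted = True
            return
        else:
            raise ValueError(f"unknown opcode {opcode:#04x} at {self.ip:#04x}")
        self.ip = (self.ip + 2) & 0xFF

    def run(self):
        """Step until HALT, printing register state after each instruction."""
        while not self.halted:
            self.step()
            print(f"ip={self.ip:#04x} acc={self.acc:#04x}")


cpu = TinyCPU()
cpu.load_program([LOAD, 200, ADD, 100, STORE, 0x80, HALT, 0])
cpu.run()
```

The sample program loads 200, adds 100, and stores the result at address 0x80. Because the accumulator is 8 bits wide, 300 wraps around to 44, which is the kind of behavior that becomes obvious once register state is printed after every step.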

How to use it?

Developers can utilize PythonByteCPU by writing simple, custom assembly programs directly within the simulator's environment. Once written, they can instruct the simulator to execute these programs step-by-step. This is done by interacting with the simulator's interface, which displays the current state of the CPU. For integration, the simulator can be extended or its core logic referenced for building more complex educational tools or for research into early computing architectures. A common technical use case would be to use it as a teaching aid in computer architecture courses, allowing students to experiment with different instruction sets and observe their effects. The value proposition here is its ease of use and the immediate visual feedback, making it an accessible platform for hands-on learning. For you, this means you can experiment with fundamental computer logic and see immediate, observable results, accelerating your understanding of how software interacts with hardware at the most basic level.

Product Core Function

· Real-time register visualization: Displays the current values of CPU registers as they change during instruction execution, allowing for immediate understanding of data manipulation. This is valuable for debugging and understanding variable states in low-level code.

· Memory visualization: Shows the contents of the CPU's memory, including loaded instructions and data, providing insight into how programs are stored and accessed. This helps in understanding memory management and data layout.

· Instruction step-by-step execution: Allows users to execute assembly code one instruction at a time, pausing after each operation to observe the effects on registers and memory. This is crucial for learning the sequential nature of program execution and identifying logical errors.

· Assembly code editor: Provides a simple interface to write and input custom 8-bit assembly programs for simulation. This empowers users to create their own experiments and test their understanding of assembly language.

· Instruction decoding visualization: Visually represents the process of the CPU fetching, decoding, and executing instructions, making the instruction cycle understandable. This clarifies how the CPU interprets and acts upon code.

· Customizable instruction set: While not explicitly stated as a core feature, the possibility to extend or modify the instruction set opens doors for exploring different CPU designs and their capabilities. This offers advanced users a way to experiment with computer architecture.

Product Usage Case

· Educational purposes: In computer science or engineering education, a professor can use PythonByteCPU to demonstrate fundamental concepts of computer architecture, assembly language, and the fetch-decode-execute cycle to students, making abstract topics concrete. Students can then use it to practice writing and debugging simple assembly programs, reinforcing their learning. The value is a more engaging and effective learning experience for students.

· Learning low-level programming: Aspiring software engineers or hobbyists interested in understanding how software truly runs on hardware can use PythonByteCPU to learn 8-bit assembly. By seeing their code executed instruction by instruction, they gain a deep appreciation for the underlying mechanics, which can inform their higher-level programming practices. The value is accelerated learning and a more profound understanding of computation.

· Prototyping simple logic circuits: For those interested in digital logic and hardware design, the simulator can serve as a platform to model and test very basic computational logic before implementing it in hardware. This allows for quick iteration and debugging of simple algorithmic processes. The value is a more accessible way to experiment with digital logic concepts.

· Debugging embedded systems understanding: While not a direct replacement for real hardware debugging, understanding the principles visualized in PythonByteCPU can aid in conceptually debugging very low-level issues in embedded systems by providing a simplified model of CPU behavior. The value is improved conceptual debugging skills for complex systems.

· Personal learning and curiosity: Anyone curious about how computers work at a fundamental level can use this simulator as an accessible entry point. It offers a hands-on way to explore the 'black box' of computing and satisfy intellectual curiosity about technology. The value is democratized access to fundamental computing knowledge.

2

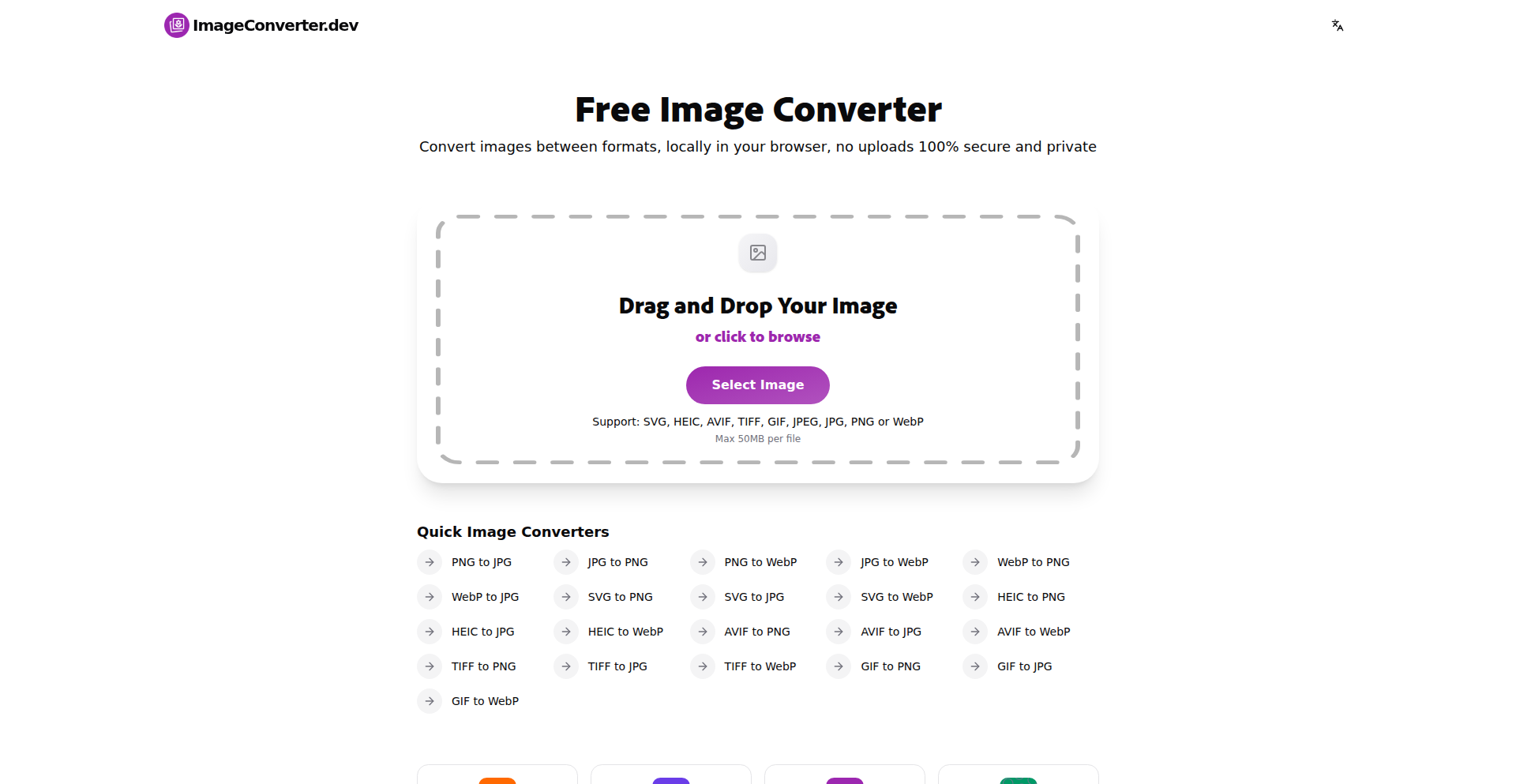

Client-Side Canvas Image Transformer

Author

wainguo

Description

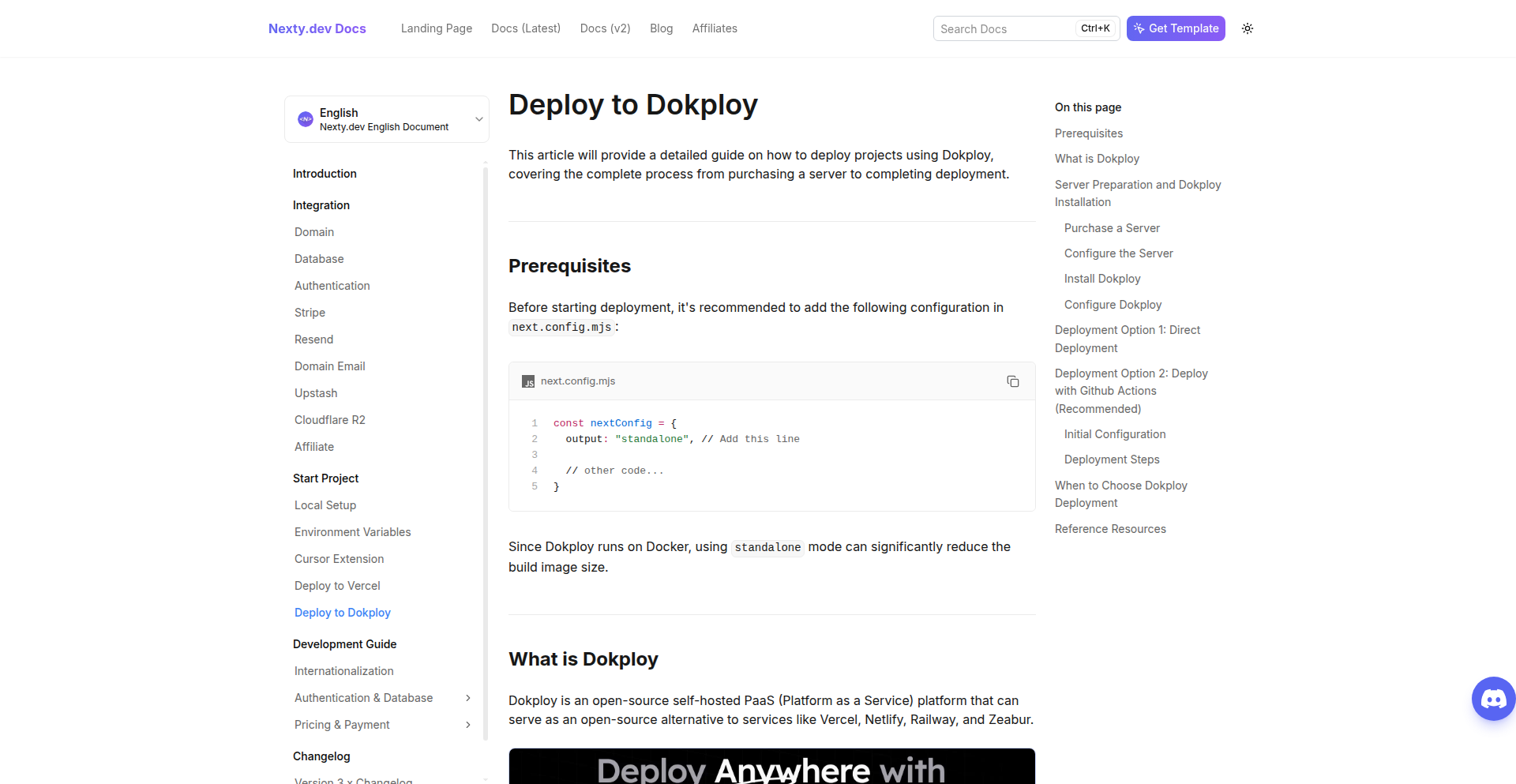

This project is a web-based image converter that operates entirely in the user's browser, eliminating the need to upload images to a server. It leverages browser technologies like the Canvas API and WebAssembly to perform conversions between JPG, PNG, and WebP formats quickly and privately. Its key innovation is enabling offline functionality and guaranteeing user data privacy by keeping all processing local.

Popularity

Points 41

Comments 33

What is this product?

This is a web application that converts images (JPG, PNG, WebP) directly in your browser without sending your files to any server. It achieves this using two browser technologies. The Canvas API is like a digital drawing board within your browser that can decode, draw, and re-encode images. WebAssembly is a compact binary format that lets code compiled from languages such as C, C++, or Rust run in the browser at near-native speed, which keeps the conversion fast and efficient even on less powerful devices. This means your images stay on your computer, ensuring privacy and speed, and once the site is loaded, you can even use it offline.

How to use it?

Developers can use this project as a direct tool for quick image format changes without worrying about uploading sensitive files. It's ideal for tasks like preparing assets for websites, social media, or personal projects where privacy and speed are paramount. The project's client-side nature means it can be easily integrated into other web applications or workflows that require image conversion functionality. For example, if you have a web application that needs to process user-uploaded images before displaying them, you could potentially incorporate this tool's logic to handle conversions directly in the user's browser, reducing server load and improving user experience.

Product Core Function

· Image format conversion (JPG, PNG, WebP): This allows users to seamlessly change image file types, which is crucial for web optimization and compatibility. The value is in providing a quick and easy way to get images into the format needed for different platforms without external tools or uploads.

· 100% client-side processing: This means all image manipulation happens directly on your device. The value here is significant for privacy-conscious users or those dealing with sensitive images, as it completely avoids the risk of data breaches or unauthorized access through a server.

· Offline functionality (PWA support): Once loaded, the converter works even without an internet connection. The value is in its reliability and accessibility; you can convert images anytime, anywhere, without needing to be online, making it a handy tool for travelers or those with intermittent internet access.

· High performance on mid-range devices: The technology stack is optimized for speed and efficiency. The value is that you don't need a super-powerful computer to use it; it's designed to be fast and responsive for a wide range of users, making advanced image processing accessible to everyone.

Product Usage Case

· A web designer needs to quickly convert a batch of screenshots from PNG to JPG for a website portfolio. Instead of using a desktop application or an online converter that requires uploads, they can use this tool directly in their browser, ensuring their work-in-progress designs remain private and the conversion is near-instantaneous.

· A blogger is writing a post and needs to convert several photos from JPG or PNG to WebP for better loading times. This tool provides a simple, privacy-preserving way to do this directly within their workflow, without needing to install new software or worry about their personal photos being stored on an external server.

· A developer is building a personal project that involves image manipulation and wants to minimize server costs. They can integrate the core logic of this client-side converter into their web app, allowing users to perform conversions without any backend processing, leading to lower infrastructure expenses and a faster user experience.

3

LinkdAPI: Real-time LinkedIn Data Stream

Author

LinkdAPI

Description

LinkdAPI is an unofficial LinkedIn API that allows developers to access public LinkedIn data in real-time, bypassing the limitations of traditional services that rely on outdated databases. This offers unprecedented access to current professional network information.

Popularity

Points 11

Comments 9

What is this product?

LinkdAPI is a developer tool that provides programmatic access to public data on LinkedIn. Unlike other services that might scrape or access historical snapshots of LinkedIn profiles, LinkdAPI focuses on delivering real-time updates. The core innovation lies in its ability to intercept and process publicly available data streams as they change, rather than relying on periodic data dumps. This means developers get the freshest information available, which is crucial for applications that need up-to-the-minute professional insights.

How to use it?

Developers can integrate LinkdAPI into their applications by making API calls. For instance, a sales team might use it to monitor competitor activity or identify new leads based on real-time profile updates. A recruitment platform could leverage it to find candidates whose skills or experience have recently changed. Integration typically involves setting up an API key and then using standard HTTP requests to fetch data, with responses usually in JSON format. This allows for seamless incorporation into web applications, scripts, or data analysis pipelines.

Product Core Function

· Real-time Profile Data Access: Provides the ability to retrieve current public profile information of LinkedIn users as it becomes available, enabling applications to react to the latest professional changes.

· Up-to-the-Minute Network Insights: Offers data streams that reflect the most recent updates in professional connections, job changes, and skill endorsements, valuable for market intelligence and lead generation.

· Unofficial but Direct Data Retrieval: Circumvents the delays and limitations often found with official or scraped data sources by accessing public information streams directly, leading to more accurate and timely results.

· Developer-Friendly API: Exposes data through a straightforward API interface, making it easy for developers to incorporate into existing or new projects with minimal effort.

· Event-Driven Data Updates: Potentially allows for data to be pushed or easily polled as changes occur, facilitating applications that require immediate awareness of professional network shifts.
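Reacting to profile changes usually comes down to comparing two snapshots of the same record. The sketch below shows that comparison on already-fetched JSON. It makes no network calls, and the field names (`headline`, `company`, `title`) and payload shape are assumptions for illustration, not LinkdAPI's actual response schema.

```python
import json


def diff_profiles(previous, current, watched=("headline", "company", "title")):
    """Return {field: (old, new)} for each watched field whose value changed."""
    changes = {}
    for key in watched:
        old, new = previous.get(key), current.get(key)
        if old != new:
            changes[key] = (old, new)
    return changes


# Hypothetical payloads, as they might look after two polls of the same profile.
yesterday = json.loads('{"id": "p1", "company": "Acme", "title": "Engineer"}')
today = json.loads('{"id": "p1", "company": "Globex", "title": "Engineer"}')

print(diff_profiles(yesterday, today))
```

A polling job could run this after each fetch and trigger outreach or alerts only when the returned dictionary is non-empty.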

Product Usage Case

· Sales Intelligence: A sales team can use LinkdAPI to monitor when a prospect changes their job title or company, triggering a timely outreach to offer relevant solutions.

· Recruitment Automation: A hiring platform can track new skills or experience listed on candidate profiles, automatically flagging them for relevant open positions.

· Market Research: Businesses can monitor industry trends by analyzing the skills and roles being adopted by professionals in real-time, informing strategic decisions.

· Professional Networking Tools: Developers can build advanced tools that notify users about significant career milestones of their connections or identify emerging experts in a field.

· Competitive Analysis: Companies can track public announcements or role changes of key personnel in competitor organizations to gain a strategic advantage.

4

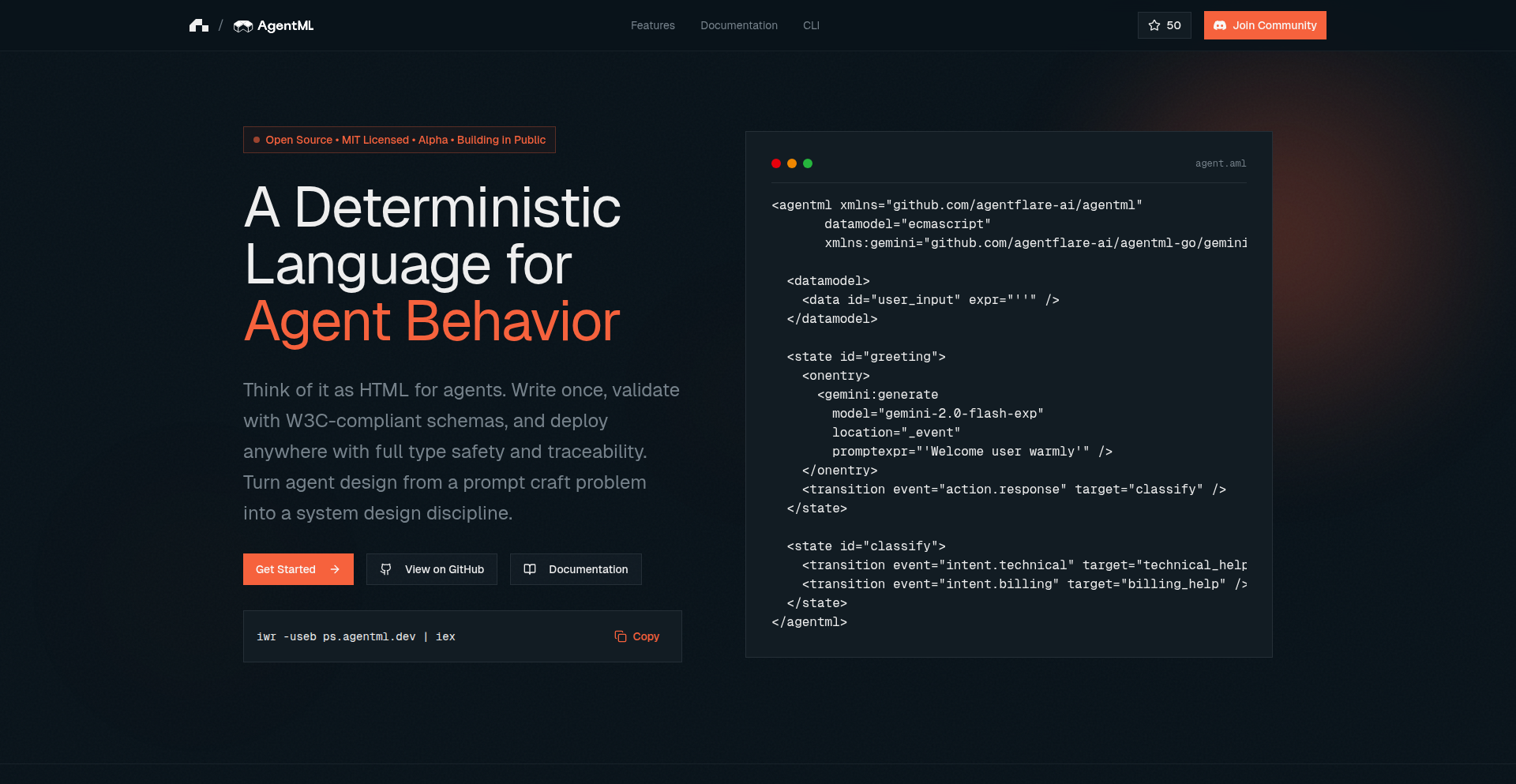

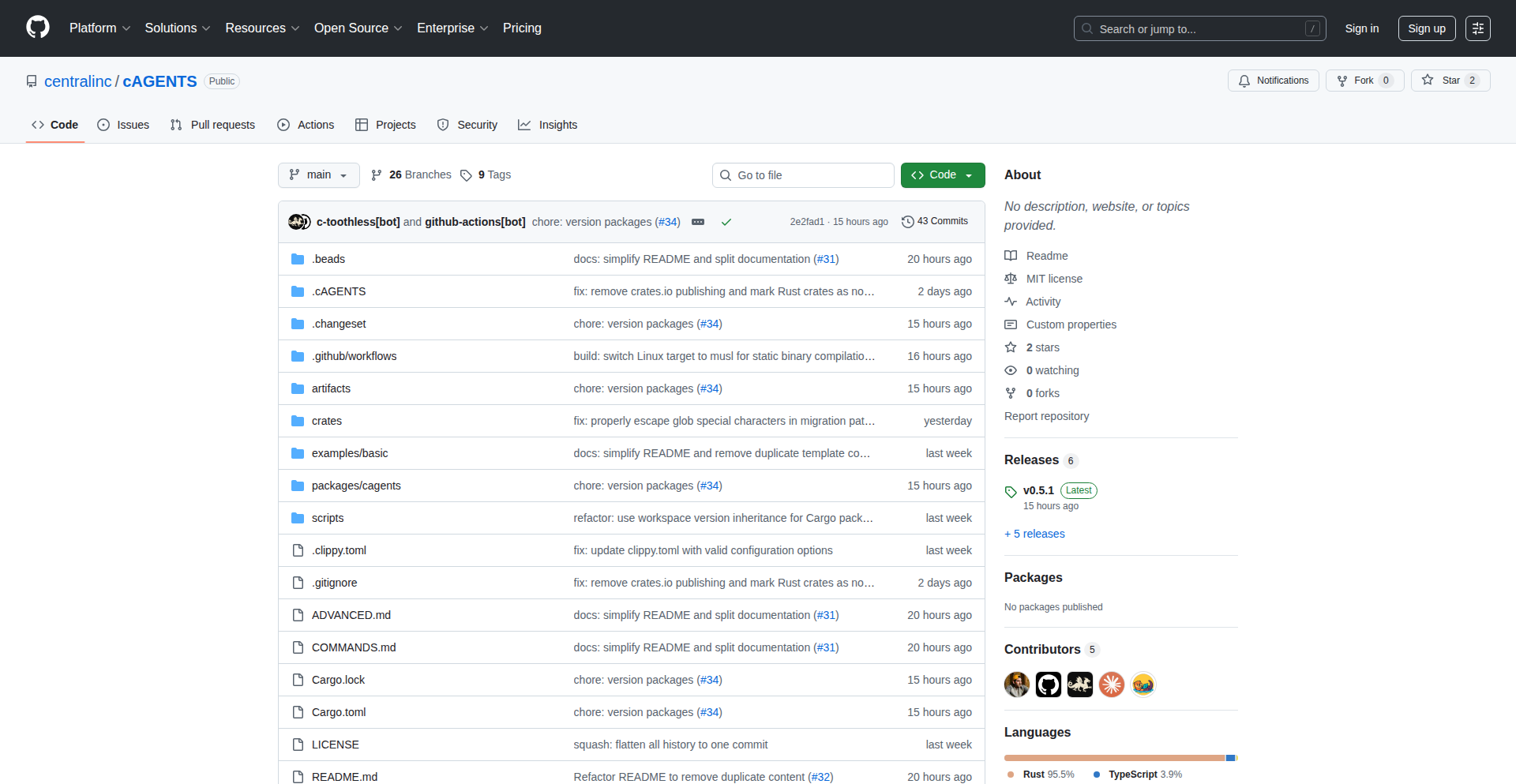

AgentML: Deterministic AI Agents

Author

gwillen85

Description

AgentML is an alpha-stage, MIT-licensed framework that allows for the creation of AI agents with deterministic behavior. Unlike many current AI models that can produce varied outputs for the same input, AgentML focuses on predictable and repeatable results, making AI more reliable for specific applications. This is achieved through a novel approach to agent design and execution.

Popularity

Points 17

Comments 3

What is this product?

AgentML is a software framework designed to build artificial intelligence agents that behave in a predictable and consistent manner. The core innovation lies in its approach to achieving 'determinism' in AI. Imagine you ask an AI to perform a task, and it always performs it in exactly the same way, with the same outcome, every single time. This is determinism. Most current AI, especially large language models, can be stochastic, meaning their outputs can vary. AgentML aims to eliminate this variability by providing a structured way to define agent logic and execution flow, ensuring that given the same initial state and input, the agent will always produce the same sequence of actions and final output. This predictability is crucial for applications requiring high reliability and auditability.

How to use it?

Developers can integrate AgentML into their projects to build specialized AI agents. This involves defining the agent's environment, its perception capabilities (what it can 'see' or take in), its internal state, and its action capabilities (what it can 'do'). The framework then manages the agent's decision-making process based on these definitions, ensuring each step is executed deterministically. This could be used for building automated systems in gaming, robotic control, scientific simulations, or any application where consistent AI behavior is paramount. Integration typically involves defining agent logic through configuration files or code, and then running the agent within the AgentML execution engine.
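One common way to get this kind of repeatability is an explicit state machine: every (state, event) pair maps to exactly one next state and action. The sketch below illustrates the idea in plain Python. It is not AgentML's API or file format, and the order-handling states, events, and action names are invented for the example.

```python
# Hypothetical transition table: (state, event) -> (next_state, action).
TRANSITIONS = {
    ("idle", "order_received"): ("validating", "check_inventory"),
    ("validating", "in_stock"): ("shipping", "create_shipment"),
    ("validating", "out_of_stock"): ("idle", "notify_customer"),
    ("shipping", "delivered"): ("idle", "close_order"),
}


def run_agent(events, state="idle"):
    """Feed events through the table and return (final_state, trace)."""
    trace = []
    for event in events:
        key = (state, event)
        if key not in TRANSITIONS:
            # Undefined behavior is rejected instead of improvised.
            raise ValueError(f"no transition from {state!r} on {event!r}")
        state, action = TRANSITIONS[key]
        trace.append((event, action, state))
    return state, trace


final_state, trace = run_agent(["order_received", "in_stock", "delivered"])
print(final_state, trace)
```

Since the table has no randomness and no hidden state, replaying the same event list always yields the same trace, which is what makes such an agent auditable.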

Product Core Function

· Deterministic action execution: Ensures that every action an agent takes is repeatable, leading to predictable outcomes and simplifying debugging and validation. This is valuable for building robust automated systems where failure due to unpredictable behavior is unacceptable.

· State management: Provides a structured way to track and update the agent's internal state, ensuring that the agent's understanding of its environment evolves consistently over time. This is key for complex tasks that require memory and learning over multiple steps.

· Perception-action loop: Models the fundamental cycle of an agent observing its environment and then taking actions, with a deterministic guarantee at each step. This is the foundational mechanism for creating intelligent behavior in a controlled manner.

· Modular agent design: Allows developers to build agents from reusable components, making it easier to construct complex AI systems and adapt them to new challenges. This promotes efficient development and allows for specialized agent capabilities to be swapped in and out.

Product Usage Case

· Building a rule-based trading bot: In financial applications, unpredictable AI behavior can lead to significant losses. AgentML allows developers to create a trading bot that follows a precise set of trading rules and executes trades with guaranteed consistency, ensuring that market fluctuations are reacted to in a planned and predictable way.

· Automated scientific experimentation: For complex simulations or experiments, ensuring that the AI agent performing the experiment follows the exact same protocol every time is critical for reproducibility. AgentML guarantees that the AI's actions in controlling parameters or analyzing data will be identical across multiple runs, facilitating scientific discovery.

· Developing AI-powered game NPCs: In video games, predictable Non-Player Characters (NPCs) can be easier to design and balance. AgentML allows for NPCs that exhibit consistent behavior patterns, making gameplay more understandable and controllable for players, while also simplifying game development.

· Robotic process automation (RPA) with AI: For automating repetitive business tasks, an AI that consistently performs actions in the same order and with the same logic is essential. AgentML can power RPA bots that reliably interact with software interfaces, ensuring that processes are executed without unexpected deviations.

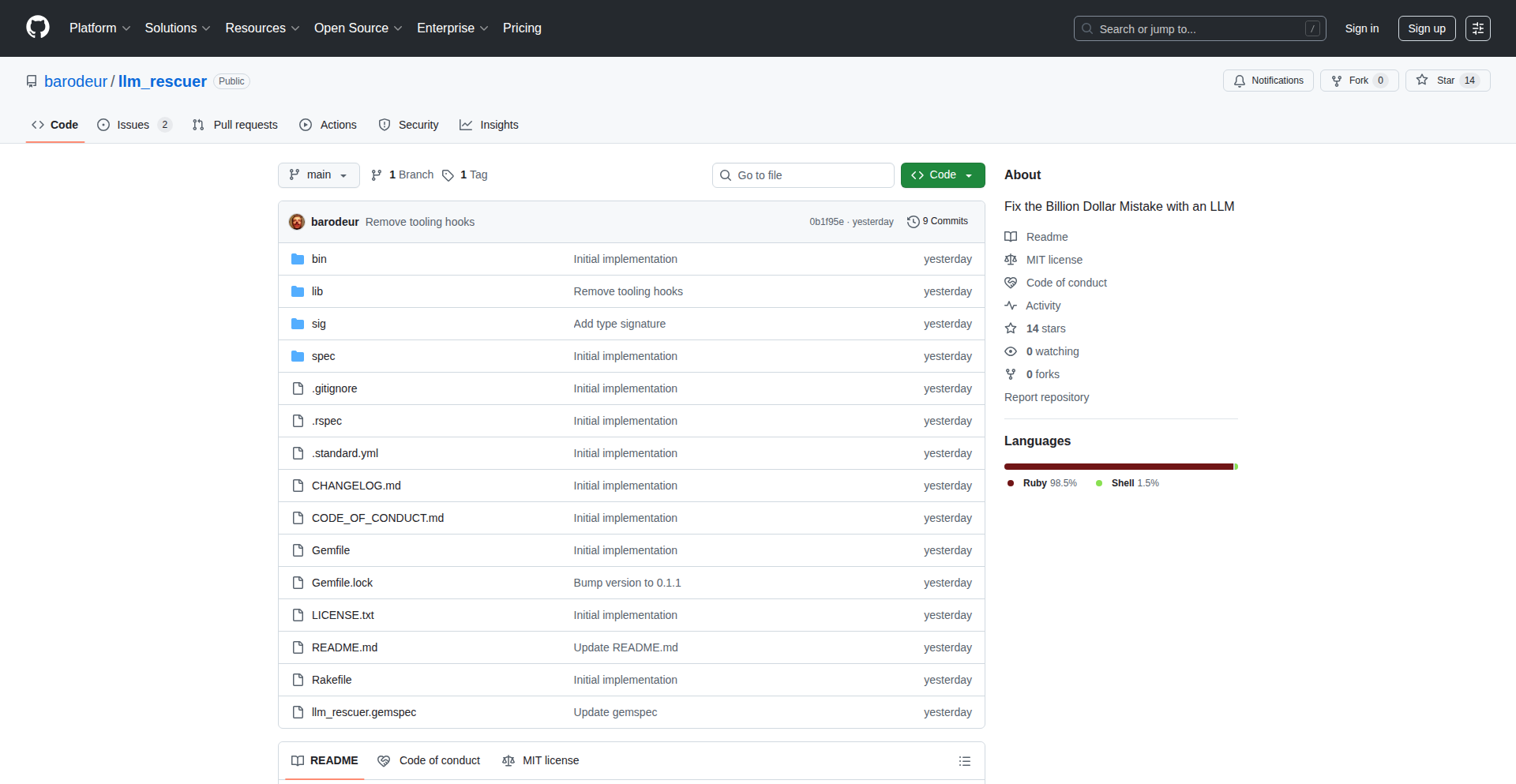

5

LLM Rescuer - Nil Safety Solver

Author

barodeur

Description

LLM Rescuer is a Ruby gem that uses Large Language Models (LLMs) to proactively identify and suggest fixes for the notorious 'billion dollar mistake': unexpected nil values, which in Ruby surface as a `NoMethodError` when a method is called on `nil`. It aims to bring a new layer of safety and robustness to Ruby applications by leveraging AI to predict and prevent common runtime errors.

Popularity

Points 15

Comments 0

What is this product?

LLM Rescuer is a Ruby gem designed to tackle nil errors, the problem often referred to as the 'billion dollar mistake' in programming. It works by employing Large Language Models (LLMs) to analyze your Ruby codebase. The LLM looks for common patterns that lead to nil errors (when a program calls a method on a variable that holds `nil`, which raises `NoMethodError` in Ruby). It then predicts where these issues might occur and provides actionable suggestions for developers to fix them before they cause runtime crashes. The innovation lies in using the predictive and pattern-recognition capabilities of LLMs to automate the detection of a very common and costly type of bug, offering a proactive approach to code quality.

How to use it?

Developers can integrate LLM Rescuer into their Ruby projects by installing it as a gem. Once installed, it can be run against their codebase, either as a standalone script or integrated into a CI/CD pipeline. The tool will analyze the Ruby files and output potential nil safety issues along with suggested code modifications. For instance, you could run `bundle exec llm_rescuer check path/to/your/code`. This allows you to catch these errors during development or before deployment, saving you debugging time and preventing unexpected application failures. It's like having an AI assistant that constantly watches for a specific type of bug.

Product Core Function

· Nil Safety Analysis: The core function is to scan Ruby code and identify code patterns that are likely to result in nil pointer exceptions. This is valuable because it helps developers find and fix potential bugs that would otherwise only surface during runtime, leading to application crashes. Developers benefit by having a proactive mechanism to improve code stability.

· AI-Powered Suggestions: Beyond just identifying issues, LLM Rescuer provides concrete code suggestions to resolve the detected nil safety problems. This saves developers significant time and effort in figuring out the best way to handle potential nil values, making the fixing process more efficient and less error-prone.

· Predictive Error Prevention: By leveraging LLMs, the gem can predict potential issues even in complex code structures. This preventative approach is crucial for maintaining high code quality and reducing the overall cost of software development and maintenance, especially in large and evolving codebases.

· Integration with Development Workflow: The ability to integrate LLM Rescuer into CI/CD pipelines means that code quality checks for nil safety can be automated. This ensures that newly introduced code doesn't compromise the stability of the application, providing continuous assurance of code robustness.

Product Usage Case

· In a large Rails application with a complex object graph, developers might be unsure if a nested attribute access is always safe. LLM Rescuer can analyze these accesses, like `user.profile.address.city`, and flag potential `nil` returns from `user`, `profile`, or `address`, suggesting defensive checks like `user&.profile&.address&.city` or `user.profile.address.city if user&.profile&.address`.

· During a code refactoring effort, a developer might introduce a change that inadvertently makes a previously safe variable potentially nil. Running LLM Rescuer as part of the refactoring process can immediately highlight these new vulnerabilities, allowing the developer to correct them before the change is merged, thus preventing regressions.

· For open-source Ruby projects, integrating LLM Rescuer into the continuous integration process can help maintain a high standard of code quality for contributors. It acts as an automated guardian against common pitfalls, making the project more reliable for its users.

· When onboarding new developers to a project, LLM Rescuer can serve as a learning tool by pointing out common Ruby pitfalls they might not be aware of, along with best practices for handling optional values. This accelerates their learning curve and reduces the introduction of new bugs.
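The kind of suggestion described in the first usage case, rewriting `user.profile.address.city` with Ruby's safe navigation operator, can be approximated with a simple text heuristic. The sketch below is a regex-based stand-in written in Python, not the gem's LLM-driven analysis: it flags any lowercase receiver followed by two or more plain method calls, so it will produce false positives that a real analyzer would need to filter.

```python
import re

# A lowercase receiver followed by two or more plain `.method` calls,
# e.g. user.profile.address.city. Chains already using `&.` do not match.
CHAIN = re.compile(r"\b[a-z_]\w*(?:\.[a-z_]\w*){2,}")


def suggest_safe_navigation(ruby_line):
    """Rewrite long plain call chains in a line of Ruby to use `&.`."""
    return CHAIN.sub(lambda m: m.group(0).replace(".", "&."), ruby_line)


print(suggest_safe_navigation("city = user.profile.address.city"))
```

Lines that contain only a single call, such as `user.name`, are left untouched, since the heuristic only targets the deeper chains where an intermediate `nil` is most likely.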

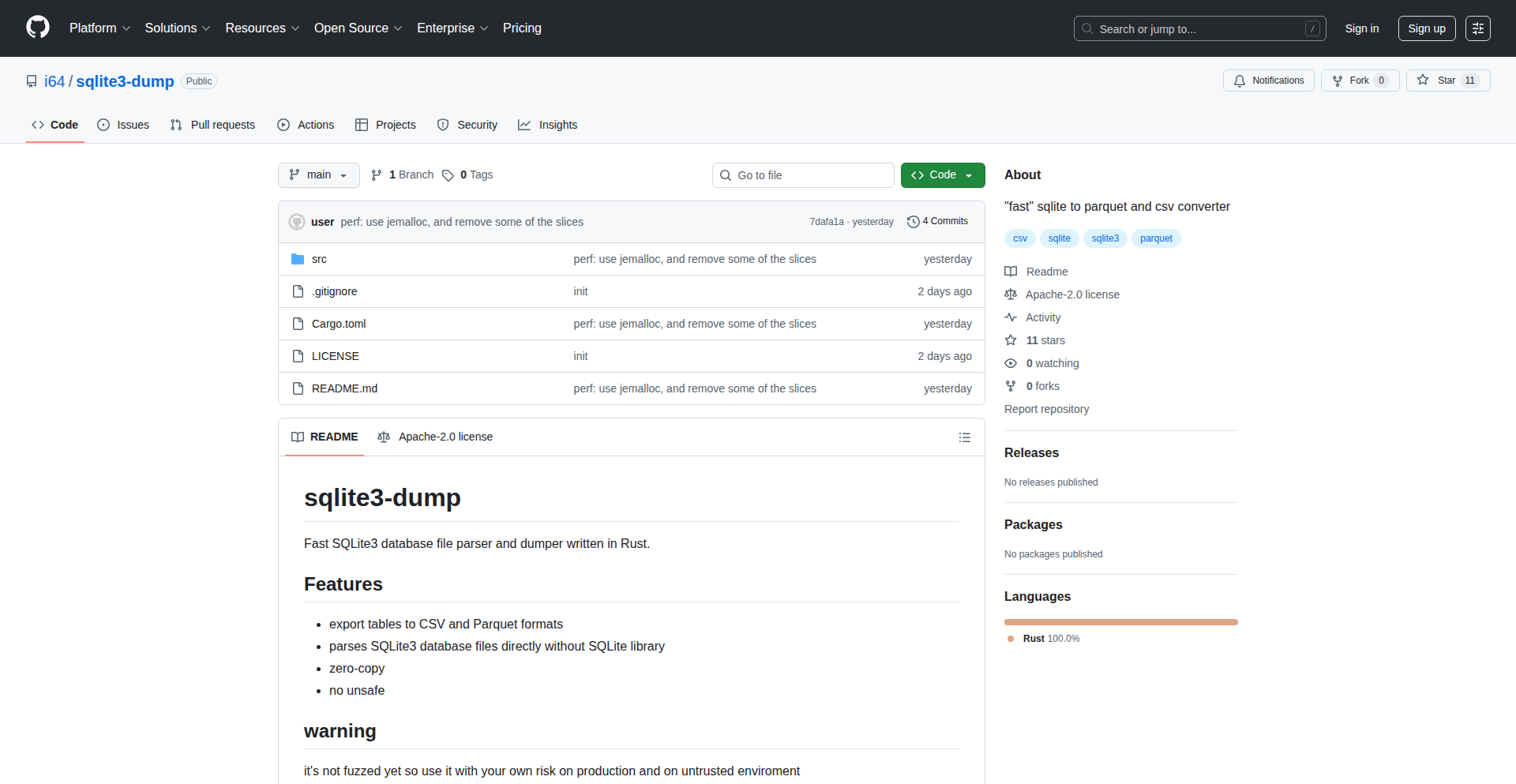

6

ZeroCopy-SQLite-Dumper

Author

Gave4655

Description

A high-performance, zero-copy utility for rapidly extracting data from SQLite databases into CSV and Parquet formats. It prioritizes speed and efficiency by avoiding unnecessary data copying, making it ideal for large database dumps.

Popularity

Points 13

Comments 1

What is this product?

This project is a specialized tool designed to quickly read data from SQLite database files and convert it into other formats like CSV (Comma Separated Values) and Parquet. The key innovation lies in its 'zero-copy' approach. Traditionally, when you read data from a file and process it, the data is often copied multiple times in memory. This tool tries to minimize or eliminate those copies, meaning it reads the data directly from the SQLite file and prepares it for output without intermediate copies. This significantly speeds up the dumping process, especially for very large SQLite files, by reducing the overhead of memory operations. So, it's like a super-fast, direct pipe for your SQLite data.

How to use it?

Developers can use this tool from their command line. It's designed to be run as a standalone utility. You would point it to your SQLite database file (`.sqlite` or `.db` extension) and specify the desired output format (CSV or Parquet) and a destination file. For example, you might run a command like `sqlite3-dump --input mydatabase.sqlite --output mydata.csv --format csv`. This allows for easy integration into data processing pipelines, scripting, or batch jobs where you need to extract data from SQLite quickly for further analysis or storage in a different system. So, it's a command you run on your terminal to get your data out fast.
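For comparison, the same SQLite-to-CSV export can be written with Python's standard library alone. This is a baseline sketch, not the tool's implementation: it copies every row through Python objects, so it is not zero-copy, and the function name and `batch_size` parameter are assumptions for illustration.

```python
import csv
import sqlite3


def dump_table_to_csv(conn, table, out_file, batch_size=10_000):
    """Stream one table from an open SQLite connection to CSV.

    Returns the number of data rows written.
    """
    # Table names cannot be bound as SQL parameters, so quote the identifier.
    quoted = '"' + table.replace('"', '""') + '"'
    cursor = conn.execute(f"SELECT * FROM {quoted}")
    writer = csv.writer(out_file)
    writer.writerow([col[0] for col in cursor.description])  # header row
    written = 0
    while True:
        rows = cursor.fetchmany(batch_size)  # bounded memory per batch
        if not rows:
            break
        writer.writerows(rows)
        written += len(rows)
    return written
```

To use it, open the database with `sqlite3.connect(...)`, open the destination with `open(path, "w", newline="")`, and pass both in. Fetching in batches keeps memory use flat for large tables, but each value is still materialized as a Python object, which is the overhead a zero-copy design tries to avoid.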

Product Core Function

· Zero-copy data extraction: Achieves higher performance by minimizing intermediate data copying between memory buffers, directly processing data from the SQLite file. This means faster dumps for you.

· SQLite to CSV conversion: Efficiently converts SQLite tables into the widely compatible CSV format, useful for spreadsheets and general data interchange. This gives you a familiar data format.

· SQLite to Parquet conversion: Converts SQLite tables into the highly efficient columnar Parquet format, ideal for big data analytics and storage systems. This provides a modern, performant format for large datasets.

· High-speed dumping: Optimized for speed, making it ideal for scenarios where large amounts of data need to be extracted quickly without performance bottlenecks. This saves you time on data extraction.

· Command-line interface: Provides a simple and direct way to interact with the tool, enabling easy integration into scripts and automated workflows. This makes it easy to automate your data extraction tasks.

Product Usage Case

· Migrating large SQLite databases to cloud data warehouses: If you have a substantial SQLite database and need to move its contents to a service like BigQuery or Snowflake, this tool can rapidly export the data into Parquet, which is often a preferred format for these platforms. This helps you get your data into the cloud faster.

· Generating datasets for machine learning: Researchers or data scientists who need to extract specific tables from an SQLite database for training machine learning models can use this to quickly generate CSV or Parquet files, saving valuable time in the data preparation phase. This means you can start your analysis or model training sooner.

· Archiving SQLite data for long-term storage: For compliance or historical record-keeping, you might need to export entire SQLite databases. This tool's speed makes it efficient for creating archival copies in Parquet format, which is well-suited for long-term, compressed storage. This makes archiving your data less of a chore.

· Building automated data pipelines: Developers can integrate this tool into scripts that regularly extract data from an SQLite source and feed it into other processing stages, such as data lakes or analytics platforms. This automates the process of getting data from SQLite to where you need it.

7

TreadmillBridge-Mac

Author

rane

Description

A macOS application that enables users to control their WalkingPad treadmills and track their workout history. It bridges the gap between the physical treadmill and a convenient digital interface on your Mac, offering a seamless experience for fitness tracking and control.

Popularity

Points 13

Comments 0

What is this product?

This project is a macOS application designed to provide enhanced control and data tracking for WalkingPad treadmills. It utilizes Bluetooth Low Energy (BLE) communication to interact with the treadmill, allowing users to start, stop, adjust speed, and change incline directly from their Mac. The innovation lies in its ability to not only provide real-time control but also to persistently store and visualize historical workout data, turning a standalone piece of hardware into a connected fitness device with a richer user experience. So, this is useful because it transforms your treadmill into a smarter device, making workouts more engaging and data-driven without needing to interact with the treadmill's often clunky built-in controls.

How to use it?

Developers can use TreadmillBridge-Mac by installing the application on their macOS computer. Once installed, they can connect their WalkingPad treadmill via Bluetooth through the application's interface. The app provides a user-friendly dashboard to start and stop the treadmill, adjust speed and incline using simple sliders or input fields. For historical data tracking, the app automatically logs workout sessions, allowing users to view past performance metrics. This can be integrated into personal fitness dashboards or even extended with custom scripting for advanced users. So, this is useful because it gives you a centralized hub on your familiar computer to manage your treadmill workouts and see your progress over time.

Product Core Function

· Bluetooth Treadmill Control: Utilizes BLE to establish a connection with WalkingPad treadmills, enabling remote start, stop, speed adjustment, and incline changes. The value is in providing effortless command over the treadmill from your Mac, enhancing convenience during workouts.

· Workout History Tracking: Automatically logs key metrics from each workout session, such as duration, distance, speed, and calories burned. The value is in providing a comprehensive record of your fitness journey, allowing for progress analysis and motivation.

· Data Visualization: Presents historical workout data in an easily digestible format, potentially through charts or graphs. The value is in making it simple to understand your fitness trends and identify areas for improvement.

· Mac-Native Interface: Offers a user experience tailored for macOS, ensuring seamless integration with the operating system and familiar controls. The value is in providing a familiar and intuitive environment for managing your fitness equipment.
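The history-tracking function above can be pictured as a small local log. The following is a minimal sketch in Python, not the app's actual code (the source does not describe its storage layer); the `workouts` table, its columns, and the helper names are all hypothetical.

```python
import sqlite3


def open_log(path=":memory:"):
    """Open (or create) a workout log. The schema here is an assumption."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS workouts (
               id INTEGER PRIMARY KEY,
               started_at TEXT NOT NULL,
               duration_s INTEGER NOT NULL,
               distance_km REAL NOT NULL
           )"""
    )
    return conn


def log_workout(conn, started_at, duration_s, distance_km):
    """Append one finished session to the log."""
    conn.execute(
        "INSERT INTO workouts (started_at, duration_s, distance_km) VALUES (?, ?, ?)",
        (started_at, duration_s, distance_km),
    )
    conn.commit()


def average_speed_kmh(conn):
    """Average speed over all logged sessions, or 0.0 if nothing is logged."""
    total_s, total_km = conn.execute(
        "SELECT COALESCE(SUM(duration_s), 0), COALESCE(SUM(distance_km), 0) FROM workouts"
    ).fetchone()
    return 0.0 if total_s == 0 else total_km / (total_s / 3600)
```

Aggregates such as average speed per week could then be computed with ordinary SQL over the same table and fed into whatever charting layer the interface uses.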

Product Usage Case

· Scenario: A user wants to seamlessly transition between different speeds during an interval training session without reaching for the treadmill remote. Usage: The user uses the TreadmillBridge-Mac application to quickly adjust the treadmill speed with a slider or keyboard shortcut. Problem Solved: Eliminates the disruption of manual adjustments, allowing for smoother and more effective interval training.

· Scenario: A fitness enthusiast wants to track their progress over several months to see improvements in endurance and speed. Usage: The user relies on TreadmillBridge-Mac to automatically log all their treadmill workouts and then reviews the historical data to observe trends in average speed and workout duration. Problem Solved: Provides a clear and automated way to monitor long-term fitness progress, fostering accountability and motivation.

· Scenario: A remote worker wants to get some light exercise during breaks without interrupting their workflow on their Mac. Usage: The user starts and controls the WalkingPad treadmill directly from their Mac application, allowing them to walk while continuing to work. Problem Solved: Integrates exercise into a busy work schedule by making treadmill operation convenient and unobtrusive.

· Scenario: A developer wants to experiment with automating treadmill workouts or integrating treadmill data into a larger fitness tracking system. Usage: The developer can analyze the BLE communication protocols used by TreadmillBridge-Mac and potentially extend its functionality to trigger specific workout routines based on external data or develop custom data analysis tools. Problem Solved: Provides an open platform for further technical exploration and customization of treadmill control and data.

8

Inspec: Realtime Spec Scheduling for Interior Design

Author

nick_cook

Description

Inspec is a web application designed to modernize the process of creating and managing Furniture, Fixtures & Equipment (FF&E) schedules for interior designers. These schedules are traditionally built in clunky Excel spreadsheets; Inspec instead offers real-time collaboration, version control, and professional PDF exports. It tackles the manual work and inefficiencies of current methods, providing a more streamlined and collaborative workflow. The innovation lies in its focused approach to replacing cumbersome document creation with a dedicated, user-friendly software solution, enabling designers to work more efficiently and accurately.

Popularity

Points 10

Comments 0

What is this product?

Inspec is specialized web-based software for interior designers to create and manage FF&E schedules. Instead of using Excel, which requires a lot of manual input and is prone to errors, Inspec provides a dedicated platform. The core technology is a modern full-stack TypeScript setup (T3 stack with Next.js, TypeScript, tRPC, Prisma, PostgreSQL) for a responsive and scalable application. Real-time collaboration is achieved using Pusher, ensuring that multiple users can work on a schedule simultaneously and see changes instantly. Revision control keeps track of all modifications, allowing designers to revert to previous states if needed. Background jobs run on BullMQ, a Redis-backed job queue, and handle resource-intensive tasks like generating professional PDF exports and potentially web scraping for data, freeing up the main application for immediate user interaction. The innovation is in applying these robust web technologies to a niche problem that was previously underserved by modern software, offering a familiar workflow for Excel users while introducing powerful collaborative and data management features.

How to use it?

Interior designers can use Inspec through their web browser. They would typically create a new project, define rooms, and then add items for each room, such as flooring, paint colors, lighting fixtures, and furniture. They can invite collaborators (e.g., other designers, clients) to view or edit the schedule in real-time. For on-site work, designers can generate professional PDF exports or QR codes that link to the latest version of the schedule, easily accessible by contractors and builders via their mobile devices. The system's customizable fields allow designers to adapt it to their specific project needs, mimicking the flexibility of spreadsheets but with the benefits of a dedicated application.

Product Core Function

· Realtime Collaboration: Enables multiple designers and stakeholders to edit FF&E schedules simultaneously, with changes visible instantly to everyone. This speeds up the decision-making process and reduces miscommunication, meaning you and your team can finalize specs faster and with fewer errors.

· Revision Control (Versioning): Automatically tracks all changes made to a schedule, allowing users to view history and revert to previous versions if necessary. This provides a safety net against accidental deletions or unwanted modifications, ensuring project integrity and peace of mind.

· Professional PDF Exports: Generates high-quality, customizable PDF documents that can be shared with clients, contractors, and suppliers. This ensures clear, branded documentation for project execution, making your professional output polished and easy to understand for all parties involved.

· QR Code Integration: Allows for the generation of QR codes that can be printed on physical documents or shared digitally. Contractors and builders can scan these codes on-site to access the most up-to-date version of the FF&E schedule, minimizing errors due to outdated information. This keeps everyone on the same page, reducing costly mistakes on the job site.

· Customizable Fields and Workflow: Offers flexibility in defining custom fields and maintains a familiar interface akin to Excel, minimizing the learning curve for designers. This means you can adapt the software to your unique project requirements without a steep learning curve, making the transition from existing tools smooth and efficient.

Product Usage Case

· A design firm is working on a large commercial project with multiple designers contributing to the FF&E schedule. Using Inspec, they can all collaborate in real-time, adding and modifying item specifications simultaneously without overwriting each other's work. This drastically reduces the time spent coordinating changes and ensures everyone is working with the latest data, leading to a more efficient and accurate final schedule.

· An interior designer needs to present a detailed FF&E schedule to a client for approval. They use Inspec to create the schedule, ensuring all details are accurate and well-organized. They then generate a professional PDF export to share with the client, who can easily review and provide feedback. This polished presentation enhances client confidence and streamlines the approval process.

· A contractor is on a construction site and needs to verify the exact specifications for a specific fixture. The designer has provided them with a QR code generated by Inspec. The contractor scans the code with their phone, instantly accessing the most current version of the FF&E schedule on their mobile device, ensuring they install the correct item and avoid costly errors or delays.

· During a project, a designer realizes a particular paint color chosen earlier is no longer suitable and needs to be changed across multiple rooms. Using Inspec's revision control, they can easily track where this paint color was specified, make the update, and review the change history to confirm the modification was applied correctly everywhere, ensuring consistency across the design.

9

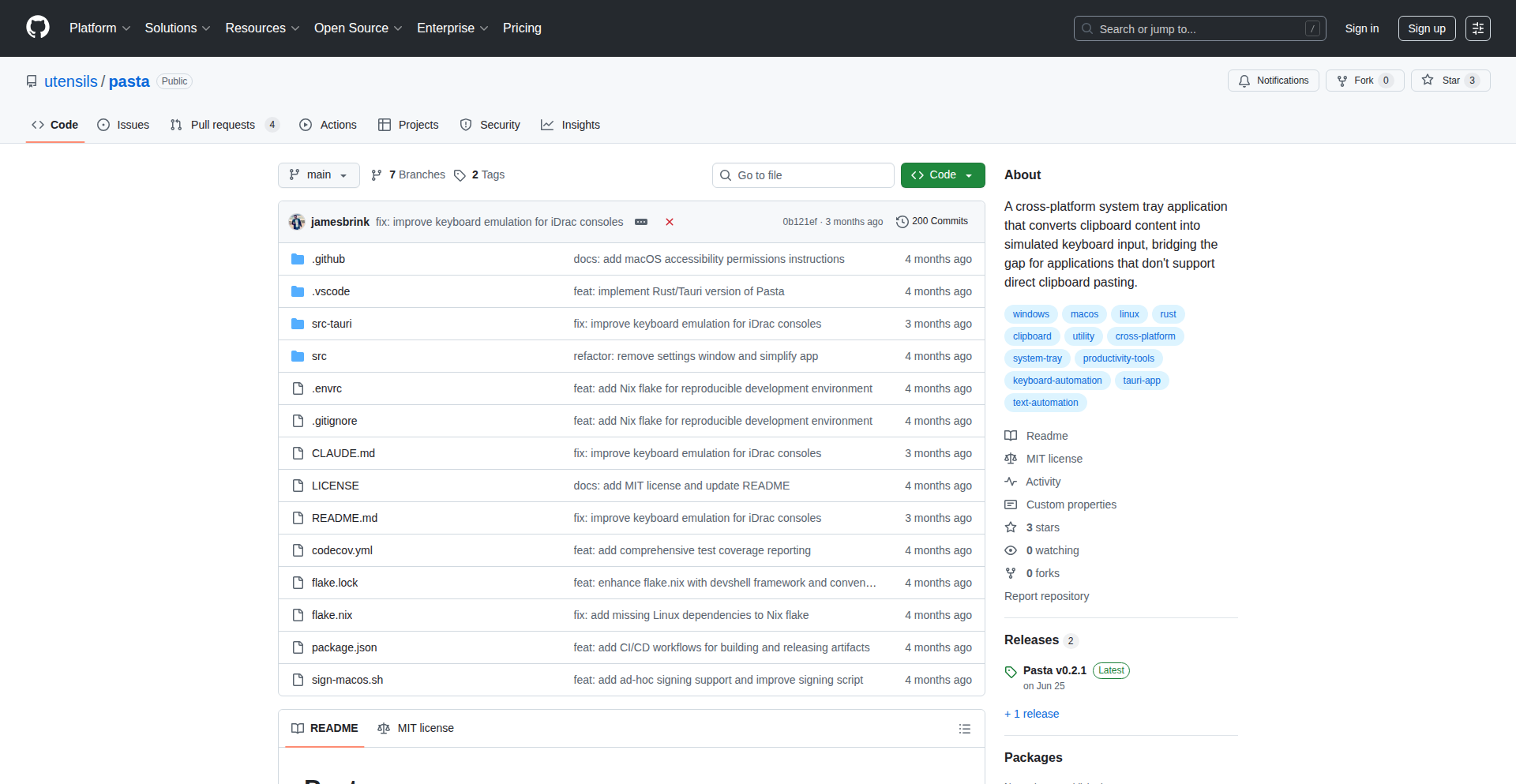

CLI-SQLite State Orchestrator

Author

jakedahn

Description

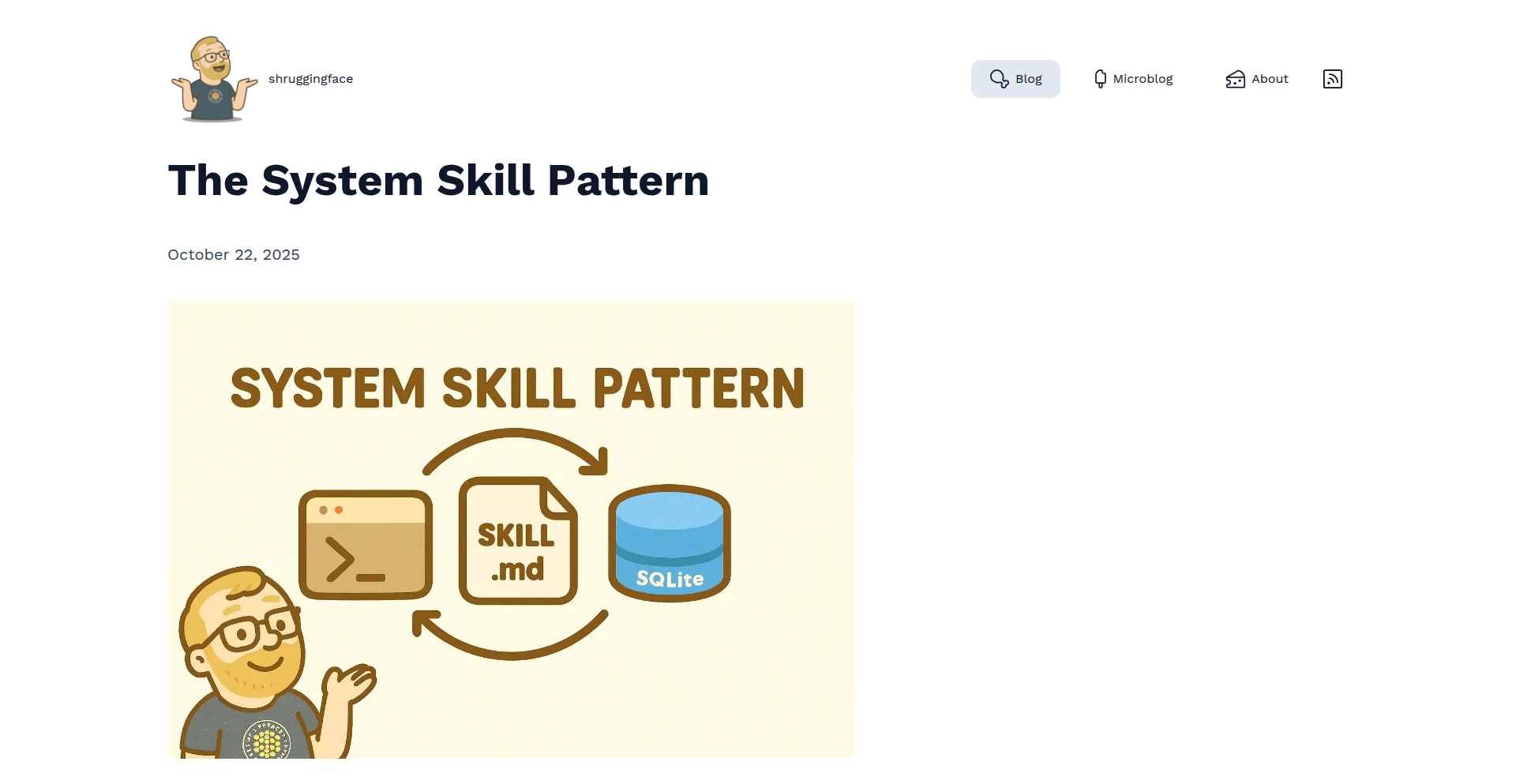

This project introduces a minimalist pattern for building robust, self-contained personal data systems. It combines a simple command-line interface (CLI) executable, a declarative operator guide (SKILL.md), and a local SQLite database for state persistence. The innovation lies in the ergonomic integration of these components, enabling AI models like Claude to automate complex workflows by repeatedly executing the CLI, processing its output, and updating the SQLite database. This pattern is easily shareable, allowing developers to distribute these 'System Skills' as plugins for AI platforms.

Popularity

Points 8

Comments 2

What is this product?

This is a foundational pattern for creating small, durable, and automated personal data systems. The core idea is to use three simple building blocks: a CLI application that performs a specific task, a SKILL.md file that tells an AI (or a human) how to run that CLI and what to expect, and an SQLite database to keep track of the system's progress and state. The technical innovation is in how these parts work together seamlessly. Instead of complex APIs or frameworks, you have a straightforward way to define a process. An AI can then 'turn the crank' by reading the SKILL.md, running the CLI, interpreting the output, and storing the results in the SQLite database, effectively animating a system over time. This makes it feel like you have a tiny, automated assistant for your personal data workflows. The value is in the simplicity and robustness it brings to building these automated tasks.

How to use it?

Developers can use this pattern to build personal automation tools or services. You would first create a CLI tool that performs a discrete action (e.g., fetching data, processing a file, sending a notification). Then, you'd write a SKILL.md file that clearly outlines how to run the CLI, what inputs it needs, and how to parse its output. This SKILL.md file also specifies how the output should update the SQLite database. Finally, you'd initialize an SQLite database to store the system's state. The real power comes when you integrate this with an AI model. The AI reads the SKILL.md, executes the CLI, and uses the output to update the SQLite database, allowing the AI to manage and advance the system's state over multiple interactions. You can then share these 'System Skills' as plugins, making your automated workflows accessible to others on AI platforms.
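As a rough illustration of the CLI-plus-SQLite half of this pattern, the sketch below implements a tiny session logger in Python. It is not taken from the project: the command names (`log`, `status`), the `sessions` table, and the default file name `skill_state.db` are assumptions chosen for the example.

```python
import argparse
import json
import sqlite3


def run(argv, db_path="skill_state.db"):
    """Run one CLI invocation and return its output as a JSON string."""
    parser = argparse.ArgumentParser(prog="pomo")
    sub = parser.add_subparsers(dest="command", required=True)
    log = sub.add_parser("log", help="record one completed session")
    log.add_argument("--minutes", type=int, default=25)
    sub.add_parser("status", help="report totals without changing state")
    args = parser.parse_args(argv)

    conn = sqlite3.connect(db_path)
    try:
        # State lives in a local SQLite file, so it survives between invocations.
        conn.execute(
            "CREATE TABLE IF NOT EXISTS sessions ("
            "id INTEGER PRIMARY KEY, minutes INTEGER NOT NULL)"
        )
        if args.command == "log":
            conn.execute("INSERT INTO sessions (minutes) VALUES (?)", (args.minutes,))
            conn.commit()
        count, total = conn.execute(
            "SELECT COUNT(*), COALESCE(SUM(minutes), 0) FROM sessions"
        ).fetchone()
    finally:
        conn.close()
    # Machine-readable output keeps parsing simple for an AI or a script.
    return json.dumps({"sessions": count, "total_minutes": total})
```

A SKILL.md for such a tool could tell the operator to call `log` after each completed session and to read `total_minutes` from the JSON output. Each invocation is stateless on its own; all continuity comes from the database file.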

Product Core Function

· Self-contained CLI executable: Provides a portable and easily runnable piece of logic for performing specific tasks. Its value lies in encapsulating functionality that can be triggered programmatically, making it a building block for automation.

· SKILL.md operator guide: Acts as a declarative instruction set for an AI or human operator, detailing how to execute the CLI, interpret its output, and manage the system's state. This simplifies interaction and enables AI-driven automation by providing clear, structured guidance.

· Local SQLite database for persistent state: Offers a simple, file-based database to store and retrieve the system's ongoing status, history, and configurations. This ensures durability and allows for easy resumption of processes, adding reliability to automated workflows.

· AI-driven workflow animation: Enables AI models to interact with the CLI and SQLite database to autonomously execute multi-step processes over time. This is valuable for complex task automation where the AI chooses its next step based on the state recorded so far.

· Plugin distribution mechanism: Allows developers to package and share their 'System Skills' as installable plugins for AI platforms, fostering community collaboration and the wider adoption of specialized automation tools.

Product Usage Case

· Automated personal task management: A developer could build a system to track personal goals. The CLI might fetch data from a personal finance API, the SKILL.md would define how to process this data and update an SQLite database with progress on savings goals, and an AI could periodically run this to report on goal achievement. This solves the problem of manually tracking progress and provides automated insights.

· Content curation and summarization pipeline: Imagine a system that monitors a specific RSS feed. The CLI could download new articles, the SKILL.md would specify how to extract text and generate summaries, and the SQLite database would store the articles and their summaries. An AI could then use this system to continuously update a curated list of interesting content, solving the problem of information overload.

· Customizable time management tools: The project's reference example is a Pomodoro timer. The CLI could start and stop a timer, and the SKILL.md would define the work/break intervals and how to log completed sessions in SQLite. An AI could then manage this system, prompting the user for work periods and tracking productivity, solving the problem of inefficient time usage.

· Personal data journaling and analysis: A user could create a system to log daily activities or moods. The CLI would allow for quick input of entries, the SKILL.md would define how to structure these entries and store them in SQLite, and an AI could be used to periodically analyze the journal data for patterns or trends, providing personal insights and solving the problem of scattered personal reflections.

10

Client-Side Canvas Image Manipulator

Author

wainguo

Description

ResizeImage.dev is a web application that allows users to resize, crop, and optimize images directly within their browser. It prioritizes user privacy and speed by performing all operations client-side, meaning no images are ever uploaded to a server. This is achieved using the browser's Canvas API and WebAssembly for efficient image processing.

Popularity

Points 3

Comments 6

What is this product?

This project is a privacy-focused image manipulation tool that runs entirely in your web browser. It leverages the browser's native Canvas API, which is like a digital drawing board, and WebAssembly, a technology that allows near-native speed for complex computations. Instead of sending your images to a remote server for resizing, all the heavy lifting happens locally on your device. This means your images are never uploaded, which protects your privacy and removes upload and download wait times. It supports common image formats like JPG, PNG, and WebP, and is designed to work offline as a Progressive Web App (PWA).

How to use it?

Developers can use ResizeImage.dev as a quick and secure way to prepare images for web content, social media posts, or any application where image size and format optimization are crucial. You can access it directly via the web application. To use it alongside your own projects, you could point users to the tool; if you were building a similar client-side solution, you would use the Canvas API and WebAssembly codecs within your own JavaScript codebase. This offers a 'no-BS' approach to image resizing, emphasizing speed and data protection.
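Whatever does the pixel work, a resize step first has to pick target dimensions that preserve the aspect ratio. The sketch below shows that arithmetic only, written in Python for brevity; the function name `fit_within` and the choice never to upscale are assumptions for illustration, not behavior confirmed for ResizeImage.dev.

```python
def fit_within(width, height, max_width, max_height):
    """Return (w, h) scaled to fit inside the bounding box, keeping aspect ratio.

    Images that already fit are returned unchanged (no upscaling).
    """
    if min(width, height, max_width, max_height) <= 0:
        raise ValueError("all dimensions must be positive")
    # The tighter of the two axis constraints decides the scale factor.
    scale = min(max_width / width, max_height / height, 1.0)
    # Round to whole pixels and never collapse a side to zero.
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 4000x3000 photo limited to a 1200x1200 box comes out as 1200x900. In a browser, those two numbers would be the size of the canvas the source image is drawn onto.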

Product Core Function

· Client-side image resizing: Enables resizing images to specific dimensions without uploading them, preserving user privacy and reducing latency. Valuable for web developers optimizing assets for faster page load times.

· In-browser image cropping: Allows users to select and extract specific portions of an image directly in the browser, useful for creating thumbnails or focusing on key elements without needing desktop software.

· Image format conversion (JPG, PNG, WebP): Supports popular image formats, offering flexibility in choosing the best format for different use cases and ensuring compatibility across various platforms.

· Offline functionality (PWA-ready): Works even without an internet connection, making it accessible and useful in environments with limited or no network access. This enhances usability and reliability for on-the-go users.

· Zero data collection and tracking: Guarantees that no user data or images are collected or tracked, providing a high level of security and peace of mind for sensitive or private image content.

Product Usage Case

· A freelance web designer needs to quickly resize multiple product images for an e-commerce client's website. Instead of uploading them to a server and waiting, they use ResizeImage.dev to resize and optimize all images locally within minutes, ensuring faster website loading times and a better user experience for the client's customers.

· A social media manager wants to crop a banner image to fit a specific platform's aspect ratio before posting. They use ResizeImage.dev to crop the image precisely and save it in WebP format for a smaller file size at comparable visual quality, so the post loads faster without looking worse.

· A developer is building a mobile-first web application and needs to handle user-uploaded profile pictures. They can direct users to ResizeImage.dev for initial resizing and optimization before submitting, ensuring that only optimized images are processed, reducing server load and improving app responsiveness.

11

Wsgrok: Cloud-Native Tunneling for Developers

Author

hussachai

Description

Wsgrok is a developer-centric, open-source alternative to ngrok, designed to expose local web servers to the internet. Its core innovation lies in its lightweight architecture and flexible domain management, allowing developers to easily map custom domains to their local development environments without the upfront costs associated with paid tiers. This addresses the common developer pain point of needing a public URL for testing webhooks, collaborating on local projects, or demoing applications, especially when free tiers of existing services are restrictive.

Popularity

Points 6

Comments 1

What is this product?

Wsgrok is essentially a secure tunnel that makes your local development server accessible from anywhere on the internet. Unlike services that charge for custom domain usage, Wsgrok allows you to easily configure your own domains to point to your local machine. It achieves this by running a small client on your machine that connects to a Wsgrok server in the cloud. This cloud server then acts as a gateway, forwarding incoming internet traffic to your local service. The innovation here is in the efficient handling of these connections and the developer-friendly approach to domain mapping, inspired by a desire to avoid paid limitations and build a more accessible tool.

How to use it?

Developers can use Wsgrok by installing the Wsgrok client on their local machine. Once installed, they can configure it to expose a specific port on their localhost (e.g., port 3000 for a web app). The key advantage is the ability to then associate a custom domain name (which they own) with this exposed tunnel. This means instead of accessing a random subdomain provided by a service, you can use your own domain like 'dev.yourcompany.com' to access your local development server. This is particularly useful for testing webhooks that expect specific hostnames, collaborating with others on a project running locally, or presenting a live demo of your work without deploying to a public server. Integration typically involves a simple command-line interface to start the tunnel and configure domain mappings.
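The domain-mapping idea can be pictured as a lookup from the incoming request's `Host` header to a registered tunnel. The following Python sketch is a hypothetical model of that lookup, not Wsgrok's actual implementation; the class name `TunnelRegistry` and its methods are invented for illustration, and it handles plain hostnames only.

```python
def normalize_host(host_header):
    """Lower-case a Host header value and drop any port and trailing dot."""
    host = host_header.strip().lower()
    host = host.split(":", 1)[0]  # hostnames only; IPv6 literals are not handled
    return host.rstrip(".")


class TunnelRegistry:
    """Maps custom domains to the local port a tunnel client exposed."""

    def __init__(self):
        self._routes = {}

    def register(self, domain, local_port):
        if not 1 <= local_port <= 65535:
            raise ValueError("local_port must be between 1 and 65535")
        self._routes[normalize_host(domain)] = local_port

    def resolve(self, host_header):
        """Return the local port for this Host header, or None if unmapped."""
        return self._routes.get(normalize_host(host_header))
```

With `dev.yourcompany.com` registered against port 3000, a request carrying `Host: DEV.yourcompany.com:443` would resolve to 3000, while an unregistered hostname resolves to `None` and could be answered with an error page.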

Product Core Function

· Custom Domain Exposure: Enables developers to use their own registered domains to access local services, providing a professional and flexible testing environment. This is valuable for webhooks that enforce hostname checks or for creating personalized demos.

· Lightweight Tunneling: Leverages efficient protocols to create secure tunnels between local machines and cloud servers, minimizing latency and resource consumption on the developer's machine.

· Cost-Effective Solution: Offers a free tier with advanced domain management capabilities, removing the financial barrier for developers who need more than basic tunneling.

· Developer-Friendly Interface: Provides a straightforward command-line interface for easy setup and configuration, allowing developers to quickly get their local projects online.

· Webhook Testing Facilitation: Simplifies the process of testing webhooks by providing a stable and customizable public URL, reducing development friction.

Product Usage Case

· Localhost Webhook Testing: A developer building a Slack bot needs to receive incoming webhook events. Wsgrok allows them to expose their local development server to a public URL using their own domain, e.g., 'slack-hook.myproject.com', enabling seamless testing without deploying to a staging server.

· Collaborative Development: A team is working on a web application. One developer needs to demo a new feature to their colleagues who are remote. Using Wsgrok, they can expose their local instance of the application using a custom domain like 'team-demo.internal.net', allowing others to interact with it in real-time.

· API Prototyping and Demo: A developer is creating a new API. Before formal deployment, they want to get early feedback from potential clients. Wsgrok allows them to present a live, publicly accessible version of their API at a custom URL such as 'api-preview.mycompany.io', facilitating quick iterations and client engagement.

· Mobile App Backend Testing: A mobile app developer needs to test their app's connection to a backend service running locally. Wsgrok provides a public URL for the local backend, allowing the mobile app on a physical device to connect and be tested as if it were interacting with a deployed service.

12

GitSemanticCommits

Author

MateusWorkSpace

Description

This project introduces a novel way to integrate semantic commits directly into Git, aiming to unlock new levels of understanding and automation for code changes. It focuses on standardizing commit messages with structured prefixes that convey the type and scope of a change, moving beyond traditional free-form descriptions. The innovation lies in its potential to make Git history more machine-readable and developer-friendly, enabling smarter tools and workflows.

Popularity

Points 3

Comments 3

What is this product?

GitSemanticCommits is a system designed to enforce and leverage semantic commit messages directly within your Git workflow. Instead of just writing a brief note about what changed, you're encouraged to use a structured format like 'feat: Add user authentication' or 'fix: Resolve login bug'. This structure isn't just for human readability; it's designed to be parsed by machines. The core innovation is making Git's commit history a richer source of information, enabling better tooling for code analysis, automated changelog generation, and more intelligent dependency management. So, this means your Git logs become more than just a history; they become a data source for smarter development processes.

How to use it?

Developers can integrate GitSemanticCommits by setting up Git hooks in their repositories. Because validation has to run after the message is written, this is typically Git's `commit-msg` hook rather than `pre-commit`. The hook validates the commit message against a predefined semantic structure before allowing the commit to be finalized. This might involve using simple scripts or a dedicated CLI tool that prompts for structured input. Tools and CI/CD pipelines can then be configured to read and act upon these semantic tags. For example, a CI/CD pipeline could automatically trigger a deployment for 'feat' commits or flag 'fix' commits for immediate review. So, this allows you to enforce consistent, informative commit messages from the start, making your team's code history more valuable and actionable.
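To make the validation step concrete, here is a minimal sketch of a message check in Python. It is not the project's code: the allowed type list, the optional `(scope)` syntax, and the function names are assumptions loosely modeled on common semantic-commit conventions.

```python
import re

# Hypothetical set of accepted commit types; a real project would choose its own.
ALLOWED_TYPES = ("feat", "fix", "docs", "chore", "refactor", "test")

# "type: subject" or "type(scope): subject"
PATTERN = re.compile(r"^(?P<type>[a-z]+)(\((?P<scope>[^()\s]+)\))?: (?P<subject>\S.*)$")


def parse_commit_subject(line):
    """Return the parsed parts of a semantic subject line, or None if invalid."""
    match = PATTERN.match(line.strip())
    if not match or match.group("type") not in ALLOWED_TYPES:
        return None
    return {
        "type": match.group("type"),
        "scope": match.group("scope"),
        "subject": match.group("subject"),
    }


def validate_message(message):
    """Check only the first line; body and footers are left unconstrained."""
    lines = message.splitlines()
    return bool(lines) and parse_commit_subject(lines[0]) is not None
```

Git's `commit-msg` hook receives the path to the message file as its first argument, so a hook script could read that file, call `validate_message`, and exit with a non-zero status to reject the commit.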

Product Core Function

· Semantic Commit Message Validation: This ensures that all commit messages adhere to a predefined structure (e.g., type: subject). The value is creating a consistent and predictable format for code changes, making history easier to understand for both humans and machines. This is useful for any development team aiming for better code governance and maintainability.

· Automated Changelog Generation: By parsing semantic commit types (like 'feat', 'fix', 'chore'), tools can automatically generate release notes or changelogs. The value here is saving developers significant manual effort and ensuring that release notes are accurate and up-to-date. This is applicable to projects of all sizes that need to communicate changes to users or stakeholders.

· Enhanced Git History Analysis: Semantic commits make it easier to filter and analyze Git history based on commit types. For instance, one could quickly see all features introduced or all bugs fixed in a given period. The value is improved debugging, code review efficiency, and a clearer overview of project evolution. This is particularly beneficial for large or complex projects with extensive commit histories.

· Tooling Integration Potential: The structured nature of semantic commits opens doors for integration with various development tools, such as AI-powered code review assistants or automated dependency update managers. The value is enabling more intelligent and automated development workflows. This is for forward-thinking teams looking to leverage advanced tooling to boost productivity.
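The changelog function in the list above reduces to grouping subject lines by their type prefix. The Python sketch below shows one way to do that under stated assumptions: the section titles and the decision to include only `feat` and `fix` entries are illustrative choices, not the project's documented behavior.

```python
from collections import defaultdict

# Hypothetical mapping from commit type to changelog section heading.
SECTION_TITLES = {"feat": "New Features", "fix": "Bug Fixes"}


def build_changelog(subjects):
    """Group semantic commit subjects into plain-text changelog sections."""
    grouped = defaultdict(list)
    for subject in subjects:
        prefix, sep, rest = subject.partition(": ")
        kind = prefix.split("(", 1)[0]  # drop an optional "(scope)" suffix
        if sep and kind in SECTION_TITLES and rest.strip():
            grouped[kind].append(rest.strip())

    lines = []
    for kind, title in SECTION_TITLES.items():
        if grouped[kind]:
            lines.append(title)
            lines.extend(f"- {entry}" for entry in grouped[kind])
    return "\n".join(lines)
```

The subject lines themselves could come from something like `git log --format=%s` between two release tags; commits whose type has no section (for example `chore`) are simply left out.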

Product Usage Case

· A frontend team uses semantic commits to automatically update their user-facing changelog on every merge to the main branch. When a developer commits a new feature with 'feat: Implement dark mode toggle', the CI/CD pipeline automatically adds this to the 'New Features' section of the website's release notes. This solves the problem of manually compiling release notes, saving hours of work per release.

· A backend developer is debugging a critical issue. By using Git history analysis tools that understand semantic commits, they can quickly filter all commits marked as 'fix' within the last week, significantly narrowing down the potential source of the bug. This accelerates the debugging process by providing focused search capabilities.

· A large open-source project utilizes semantic commits and a `commit-msg` hook to enforce a consistent contribution style. New contributors are guided to format their commit messages correctly, ensuring that the project's history remains clean and manageable. This solves the challenge of onboarding new developers and maintaining code quality in a distributed team.

13

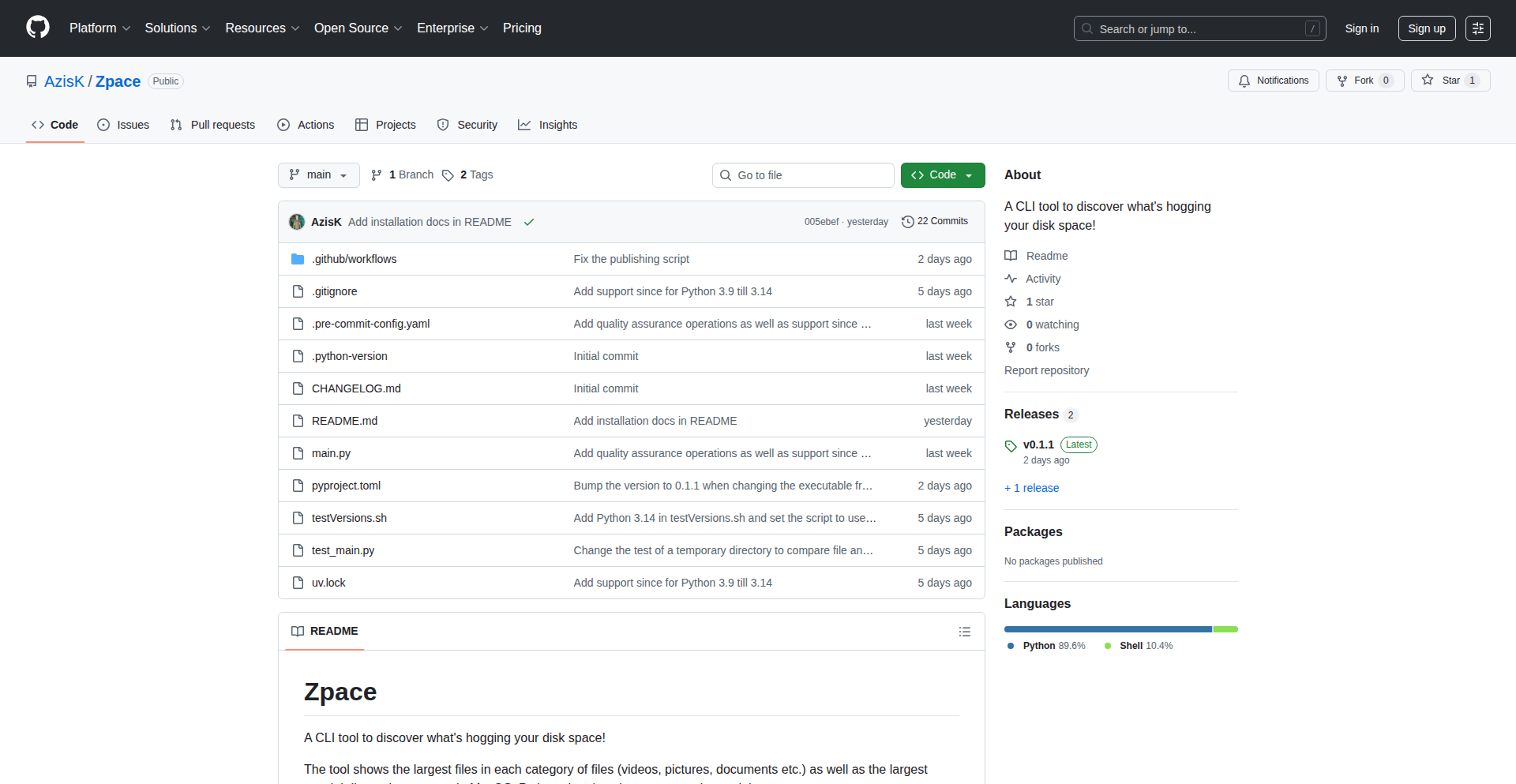

Zpace CLI: Disk Space Detective

Author

azisk1

Description

A simple open-source Python CLI application that helps you identify what's consuming your disk space. It provides an intuitive way to discover large files and directories, offering a command-line alternative to graphical tools for quick analysis. The innovation lies in its focused, efficient approach to a common developer frustration: running out of storage.

Popularity

Points 3

Comments 3

What is this product?

This project is a command-line interface (CLI) tool written in Python called Zpace. Its core function is to scan your file system and report which files and directories are taking up the most space. Think of it like a super-fast, text-based investigator for your hard drive. Unlike complex system utilities, Zpace is designed to be lightweight and easy to use, focusing on the single problem of finding disk space hogs. Its technical insight is to provide a quick, scriptable way to answer the question: 'Where did all my disk space go?' This is particularly useful for developers who frequently deal with large datasets, virtual environments, or build artifacts.

How to use it?

Developers can easily install Zpace using pip, Python's package installer: `pip install zpace`. Once installed, you simply run the command `zpace` in your terminal. The tool will then scan your current directory and its subdirectories, presenting a sorted list of the largest files and folders. This allows you to quickly pinpoint culprits like old project builds, large downloaded files, or bloated virtual machine images. Once you have confirmed an item is no longer needed, you can remove it with standard commands such as `rm` (or `rm -r` for directories), freeing up valuable disk space. It's designed to be integrated into your development workflow as a quick troubleshooting step.
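For readers curious what such a scan involves, the sketch below finds the largest files under a directory using only Python's standard library. It illustrates the general technique and is not Zpace's own implementation; the function names and the choice to skip symlinks are assumptions.

```python
import heapq
import os


def largest_files(root, limit=10):
    """Return up to `limit` (size_in_bytes, path) pairs, largest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.islink(path):
                    continue  # do not count symlink targets
                sizes.append((os.path.getsize(path), path))
            except OSError:
                continue  # file vanished or is unreadable; skip it
    return heapq.nlargest(limit, sizes)


def human_size(num_bytes):
    """Format a byte count with binary units, e.g. 1536 -> '1.5 KiB'."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB"):
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} TiB"
```

Calling `largest_files(".", limit=5)` and printing each entry with `human_size` gives a rough top-five report for the current directory.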

Product Core Function

· Disk Usage Analysis: Scans directories and subdirectories to identify the largest files and folders. This helps you understand where your storage is being consumed, providing immediate actionable insights.

· Sorted Output: Presents the findings in a sorted list, making it easy to quickly identify the top offenders. This saves you time by not having to manually sift through numerous files and folders.

· Command-Line Interface: Provides a text-based interface for easy integration into scripts and workflows. This allows for automated disk cleanup tasks or quick checks without needing a graphical interface.

· Lightweight and Fast: Designed to be efficient and quick, minimizing resource usage. This means you can run it frequently without impacting your system's performance, getting fast answers to your storage concerns.

· Simple Installation: Installable with a single pip command. This lowers the barrier to entry, making it accessible to all Python developers.

Product Usage Case

· A developer working on a machine learning project notices their disk is full. They run `zpace` and discover a massive dataset file that was downloaded twice. They then use `rm` to delete the duplicate, freeing up gigabytes of space.

· A web developer is troubleshooting a slow build process and suspects large dependencies. Running `zpace` reveals a forgotten virtual environment directory that has grown significantly. They remove it, improving build times and disk space.

· A student with limited laptop storage finds they can't install new software. They use `zpace` to find and delete old project files and downloaded installers they no longer need, allowing them to install essential tools.

· An automated script could periodically run `zpace` and log large files, alerting the user when storage thresholds are approaching. This proactive approach prevents disk space issues before they become critical.

14

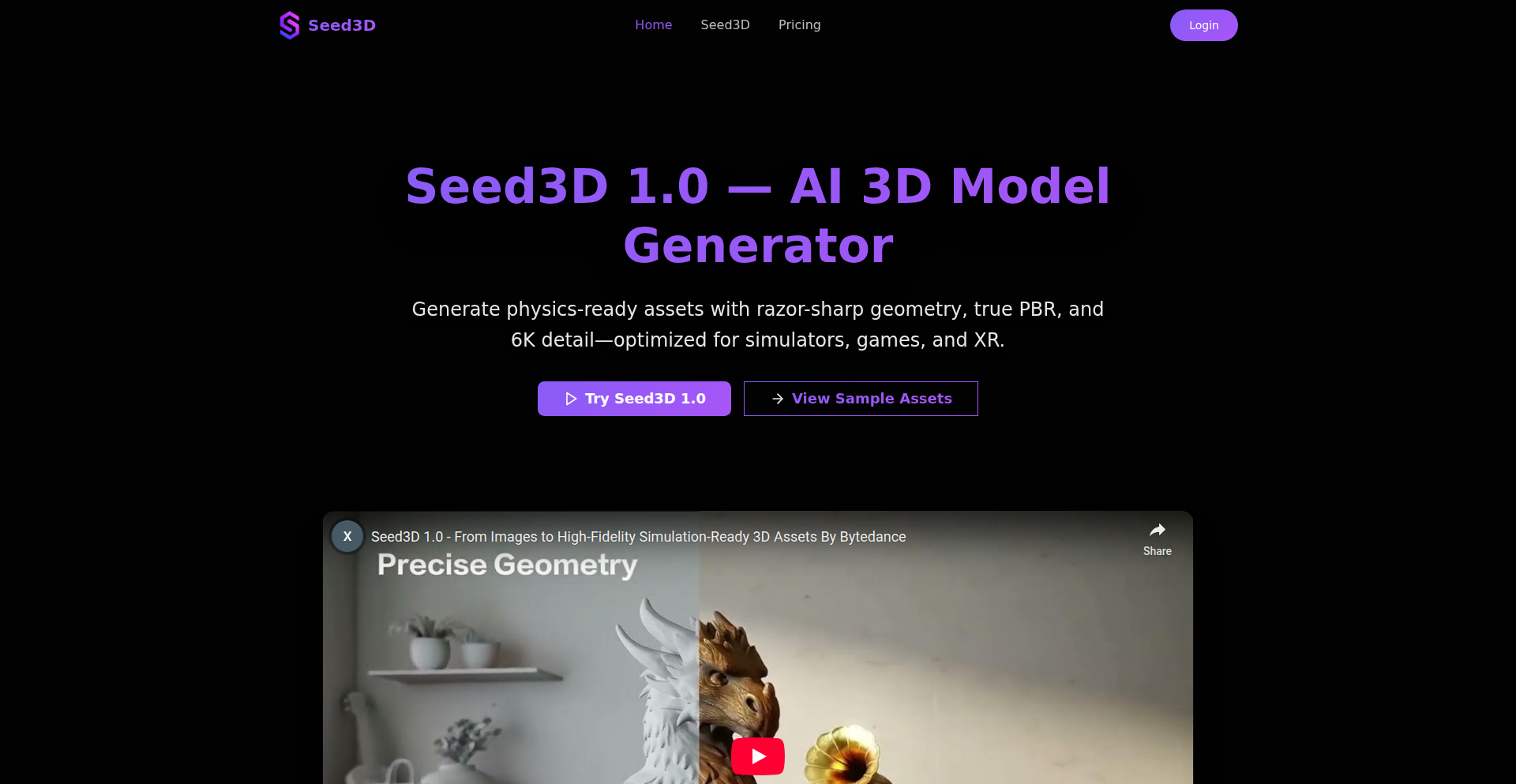

Seed3D: Precision 3D Reconstruction Engine

Author

lu794377

Description

Seed3D is a groundbreaking 3D modeling tool that transforms 2D images into highly detailed, physically accurate 3D models. It goes beyond typical AI or photogrammetry by reconstructing actual surfaces and edges, not just faking details with textures. This results in millimeter-precision geometry and physically accurate PBR materials, making models ready for simulations, games, and virtual environments without approximation. So, this is useful for creating digital assets that look and behave realistically in various applications.

Popularity

Points 4

Comments 1

What is this product?

Seed3D is a next-generation 3D reconstruction engine that takes 2D inputs (like photos) and generates solid, physics-ready 3D models. Unlike traditional methods that might use techniques like 'normal mapping' to fake surface detail, Seed3D reconstructs the actual geometric surfaces, edges, and fine details. This means it produces 'watertight' meshes – essentially 3D objects with no holes – that have incredibly crisp and true geometry, down to millimeter precision. It also generates full sets of physically based rendering (PBR) textures, such as albedo (base color), roughness, and metalness, at high resolutions (up to 6K). The geometry is designed to be 'physics-stable', meaning it works reliably for simulations and collision detection. The output formats are widely compatible with major platforms like Omniverse, Unity, and Unreal Engine. So, this is useful because it provides a way to create 3D assets with unparalleled accuracy and realism, which are essential for professional applications where precise representation matters.
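The term 'watertight' has a simple combinatorial core: in a closed triangle mesh, every edge is shared by exactly two faces. The Python sketch below checks that condition for an indexed triangle list. It is a generic illustration, not Seed3D's method, and it tests only this edge condition; it does not check face orientation or self-intersections.

```python
from collections import Counter


def is_watertight(faces):
    """Return True if every edge of the triangle mesh is used by exactly two faces.

    `faces` is an iterable of (a, b, c) vertex-index triples.
    """
    edge_counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with its endpoints sorted so direction is ignored.
            edge_counts[(min(u, v), max(u, v))] += 1
    # An empty mesh is not considered watertight.
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())
```

A tetrahedron passes this check; removing any one of its four faces leaves three edges with a single adjacent face, which is exactly the kind of hole that breaks collision detection and volume calculations.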

How to use it?

Developers can integrate Seed3D into their workflows to create 3D assets from real-world objects or concepts. The tool's core strength lies in its ability to generate simulation-grade geometry and materials from 2D data. For example, a robotics researcher could use Seed3D to quickly create accurate 3D models of their environment for simulation purposes, so that the digital representation closely mirrors the physical world. Game developers can use it to generate game assets with true geometric detail, improving visual fidelity and enabling more realistic physics interactions. For product visualization, it allows for the creation of digital twins with pinpoint accuracy. Export formats such as USD/USDZ, FBX, and glTF allow integration with common game engines and 3D rendering software. So, this is useful because it streamlines the process of creating highly accurate and production-ready 3D assets, saving time and improving the quality of digital experiences.

Product Core Function

· Generates watertight polygonal meshes with crisp, true geometry: This means that the 3D models produced are solid and have well-defined edges and surfaces, ensuring they are suitable for simulations and rendering without visual artifacts. Useful for creating reliable digital representations of real-world objects.

· Outputs full PBR texture sets (albedo, roughness, metalness, normal, AO): Provides all the necessary texture maps for realistic material rendering, allowing 3D objects to interact with light in a physically correct way. Useful for achieving photorealistic visuals in games and visualizations.

· Delivers 6K textures that hold up in extreme close-ups: High-resolution textures ensure that models maintain their detail and quality even when viewed up close, crucial for immersive experiences and detailed product showcases. Useful for maintaining visual fidelity in demanding applications.

· Ensures physics-stable topology for simulations and collisions: The generated mesh structure is optimized for physics engines, making it reliable for real-time simulations and accurate collision detection. Useful for creating believable virtual environments and interactive experiences.

· Exports USD/USDZ, FBX, and glTF, compatible with Omniverse, Unity, and Unreal Engine: Offers a range of industry-standard export formats, ensuring easy integration with popular 3D software and game development platforms. Useful for maximizing compatibility and workflow efficiency.

Product Usage Case

· Embodied AI and robotics researchers can use Seed3D to generate precise 3D models of environments or objects for training AI agents or testing robotic manipulation in simulations. By providing millimeter-accurate geometry and realistic physics properties, it allows for more reliable and effective training. Useful for accelerating AI and robotics development.

· Game and XR developers can employ Seed3D to create highly detailed and physically accurate 3D assets for their virtual worlds. This leads to more immersive gameplay and interactive experiences where objects behave predictably. Useful for enhancing the realism and performance of games and XR applications.

· Product visualization specialists and digital twin creators can use Seed3D to generate exact 3D replicas of physical products. This allows for detailed marketing presentations, virtual prototyping, and accurate digital twins for monitoring and maintenance. Useful for improving product design and marketing efforts.

· Simulation and graphics educators can use Seed3D as a tool to demonstrate advanced 3D reconstruction techniques and the importance of accurate geometry and physics in computer graphics. It provides a practical example for students to learn from. Useful for enriching technical education and practical learning.

15

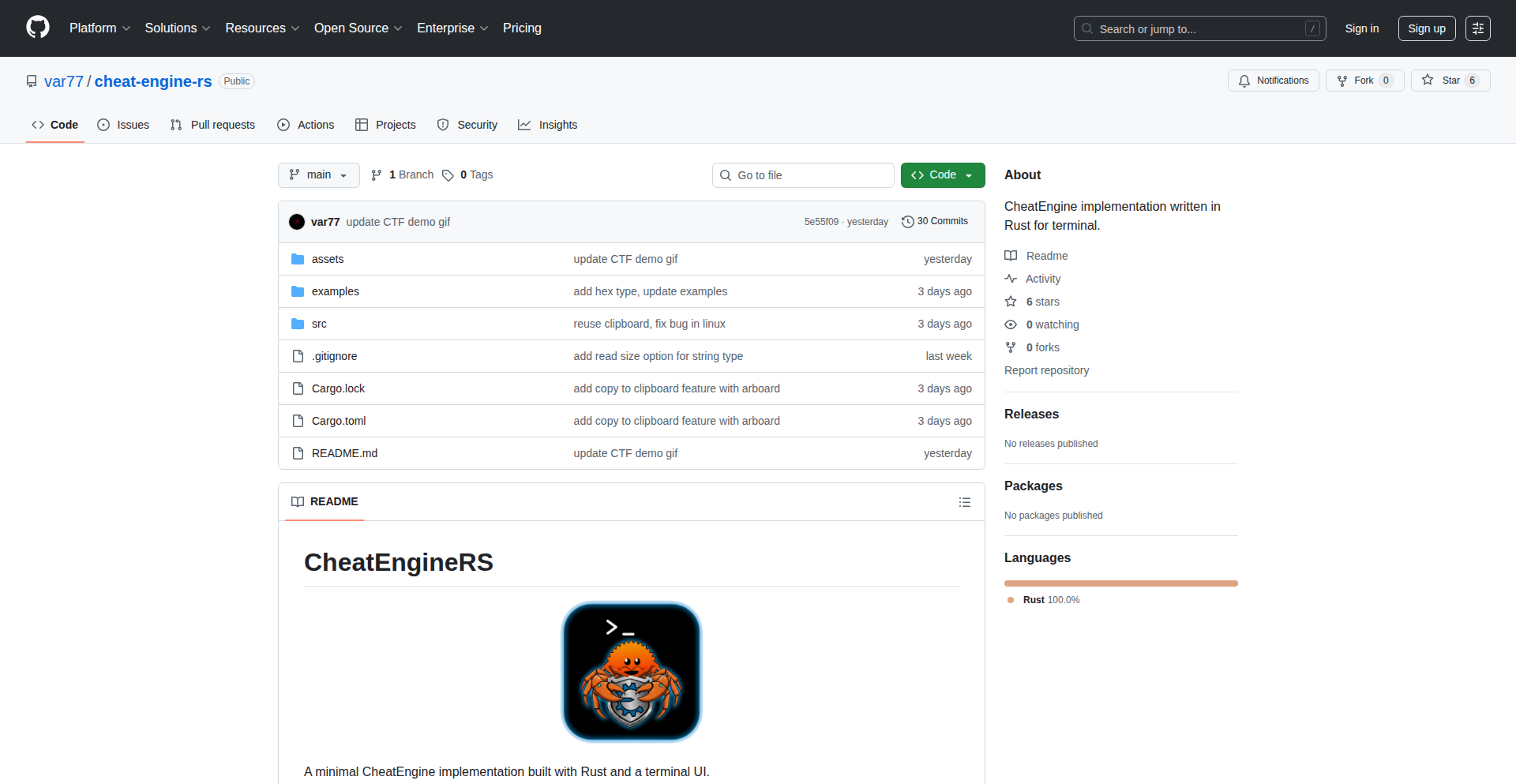

TermiMemory-Explorer

Author

varik77

Description

TermiMemory-Explorer is a Rust-based terminal application that functions as a command-line alternative to Cheat Engine. It allows users to inspect and modify the live memory of running processes directly from their terminal, offering powerful debugging and exploration capabilities with Vim-like navigation for enhanced usability. This project's innovation lies in bringing low-level memory manipulation tools into the accessible and efficient terminal environment.

Popularity

Points 3

Comments 2

What is this product?

TermiMemory-Explorer is a terminal-based tool written in Rust that acts like a command-line version of Cheat Engine. It lets you look into and change the memory that a program is currently using while it's running. The innovation here is bringing these advanced memory inspection and modification features to the command line, making them accessible without needing a graphical interface. It uses sophisticated techniques to read and write process memory safely, and its Vim-style navigation makes it efficient for power users.

How to use it?

Developers can use TermiMemory-Explorer to debug running applications, understand how software uses memory, or reverse engineer a program. You would typically start it by specifying the process ID (PID) you want to inspect. Once attached, you can search the process's memory for specific data types such as 4-byte or 8-byte integers, strings, or raw hexadecimal values. The matching values can then be modified directly from the terminal. The tool can be scripted or used as a standalone debugging utility in your development workflow.
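The search-then-modify workflow can be illustrated with a small sketch. It is not TermiMemory-Explorer's code: it works on an ordinary `bytearray` standing in for a process's memory, and assumes little-endian signed integers. A real tool would read the target process through operating-system facilities instead. The names `scan_int`, `rescan`, and `write_int` are hypothetical.

```python
import struct

# Assumed encodings: little-endian signed integers of 4 or 8 bytes.
FORMATS = {4: "<i", 8: "<q"}


def scan_int(memory, value, width=4):
    """Return every offset where `value` appears encoded as a `width`-byte integer."""
    needle = struct.pack(FORMATS[width], value)
    offsets = []
    start = memory.find(needle)
    while start != -1:
        offsets.append(start)
        start = memory.find(needle, start + 1)
    return offsets


def rescan(memory, offsets, value, width=4):
    """Narrow an earlier scan: keep only offsets that now hold `value`."""
    needle = struct.pack(FORMATS[width], value)
    return [o for o in offsets if memory[o:o + width] == needle]


def write_int(memory, offset, value, width=4):
    """Overwrite the integer stored at `offset` with `value`."""
    struct.pack_into(FORMATS[width], memory, offset, value)
```

The usual pattern is to scan for a known value (say, a health of 100), let the program change it, then call `rescan` with the new value to narrow the candidates to the one address that tracks it before writing.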

Product Core Function

· Live process memory exploration: Enables direct viewing of a program's memory contents, allowing developers to understand runtime data structures and identify potential issues.

· Memory searching (integers, strings, hex): Provides flexible search capabilities to locate specific data patterns within a process's memory, crucial for debugging and analysis.

· Customizable search byte length: Allows users to specify the number of bytes to read for string and hex searches, enabling precise targeting and prefix searches for more efficient data discovery.

· Vim-style navigation: Implements familiar Vim keybindings (j/k/G/gg) for moving through memory lists, significantly improving user experience and speed for terminal-based interactions.

· In-memory value modification: Permits direct alteration of memory values, offering a powerful tool for testing program behavior under different conditions or for patching live applications.
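The Vim-style navigation listed above boils down to a cursor that moves through a list of results. Here is a minimal sketch of that idea, assuming the keys arrive one character at a time; the `navigate` function is hypothetical and is not taken from the project.

```python
def navigate(keys, length, cursor=0):
    """Apply Vim-style keys (j, k, G, gg) to a cursor over `length` rows."""
    if length <= 0:
        return 0
    pending_g = False  # True after a single "g", waiting for the second one.
    for key in keys:
        if key == "g":
            if pending_g:
                cursor = 0  # "gg": jump to the first row.
            pending_g = not pending_g
            continue
        pending_g = False  # Any other key cancels a half-typed "gg".
        if key == "j":
            cursor = min(cursor + 1, length - 1)  # Down, clamped at the last row.
        elif key == "k":
            cursor = max(cursor - 1, 0)  # Up, clamped at the first row.
        elif key == "G":
            cursor = length - 1  # Jump to the last row.
    return cursor
```

Clamping at both ends keeps the cursor valid however many times a key is repeated, which matters when a search returns only a few matches.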

Product Usage Case

· Debugging a game to understand how game states are stored in memory, using TermiMemory-Explorer to search for specific numerical values representing player health or score and then modifying them to test game mechanics.

· Analyzing a network service to identify how sensitive data is handled in memory, by searching for known string patterns and observing their behavior or potential exposure.

· Reverse engineering a small utility to understand its internal workings, by inspecting memory for specific byte sequences and trying to infer program logic or identify data formats.

· Developing a custom memory scanner for a specific application by scripting TermiMemory-Explorer's search and read functionalities to automate the discovery of particular data types.

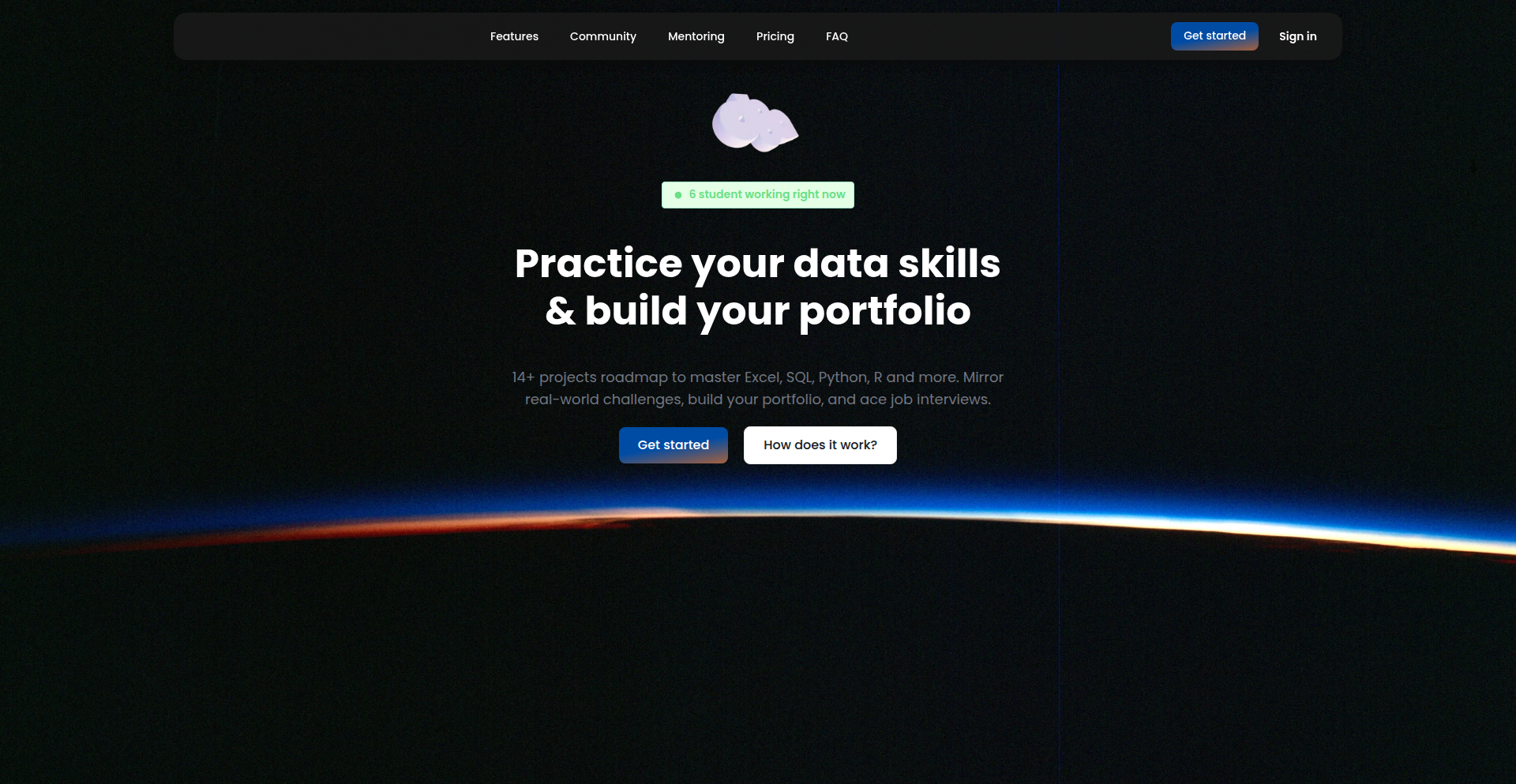

16

DataSkillForge

Author

mariusMDML

Description

DataSkillForge is a web application designed to empower aspiring data professionals by providing hands-on practice with real-world data projects. It addresses the challenge of building a strong portfolio for job interviews by offering a curated set of 14 projects spanning dashboarding, ETL, SQL, R, and Python. The core innovation lies in its guided learning approach and community feedback loop, allowing users to hone their skills and receive expert reviews, making them job-ready.

Popularity

Points 3

Comments 2

What is this product?

DataSkillForge is a learning platform for data analysts, scientists, and engineers. It's built upon a community-driven approach, offering a practical, project-based curriculum. The technology behind it involves a web application that hosts a variety of data-related projects. These projects are designed to simulate real-world tasks, requiring users to apply skills in areas like Extract, Transform, Load (ETL) processes, database querying with SQL, and programming in R and Python. The innovative aspect is the integration of a learning community and a system for project review, providing constructive feedback that goes beyond automated checks, helping users truly understand and improve their data analysis and manipulation techniques. So, what's the benefit? It's a structured way to gain practical experience and build confidence, directly addressing the gap between theoretical knowledge and the skills employers are looking for. This means you can build a portfolio that actually showcases your abilities, increasing your chances of landing a data-related job.

How to use it?

Developers and aspiring data professionals can access DataSkillForge through its web interface. Upon signing up, users are presented with a catalog of 14 diverse data projects. Each project provides a clear objective, datasets, and guidelines. Users can then work on these projects using their preferred tools and environments (e.g., local R/Python installations, SQL clients). Completed projects can be submitted on the platform, where members receive feedback from experienced professionals within the community. In practice, the platform serves as a central hub for skill development and portfolio building, and you can apply your existing data analysis and programming skills to complete the projects. So, how does this help you? You get a structured path to practice your skills, get valuable feedback, and create tangible proof of your capabilities for potential employers.

Product Core Function

· Guided Data Project Practice: Users can engage in 14 distinct data projects covering essential data science and engineering disciplines like dashboard creation, ETL pipelines, SQL querying, and R/Python scripting. This provides practical, hands-on experience, which is crucial for solidifying learning and demonstrating competence to employers.

· Community Feedback and Review: Beyond automated checks, users can submit their completed projects for review by experienced data professionals. This personalized feedback helps identify blind spots and areas for improvement, accelerating skill development and ensuring high-quality portfolio pieces.

· Portfolio Building Tools: The platform helps users curate their project work into a professional portfolio. This directly translates to a stronger application for data-related roles, as employers can see concrete examples of your problem-solving abilities.

· Skill Assessment and Improvement: By working through a variety of project types and receiving feedback, users can accurately assess their current skill level and identify specific areas that require further development, leading to targeted learning and professional growth.

Product Usage Case

· A recent graduate aiming for a data analyst role can use DataSkillForge to complete projects on building interactive dashboards with tools like Tableau or Power BI, demonstrating their ability to communicate insights visually. This directly addresses the need to showcase presentation skills to hiring managers.

· An aspiring data engineer can tackle ETL projects, learning to extract data from various sources, transform it into a usable format, and load it into a data warehouse, proving their proficiency in data pipeline management to potential employers.

· A data scientist seeking to transition into a new industry can practice SQL projects to master complex database queries and data manipulation techniques, which are fundamental for any data-intensive role. This helps them build a portfolio that reflects their adaptability and core data skills.

· A student preparing for job interviews can utilize the R and Python projects to implement statistical models and machine learning algorithms, showcasing their analytical and predictive modeling capabilities to recruiters looking for candidates with strong quantitative backgrounds.
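For readers unfamiliar with the ETL and SQL work mentioned in these cases, here is a minimal sketch of the extract, transform, load pattern using only the Python standard library. The dataset, table name, and function names are made up for illustration and are not taken from any DataSkillForge project.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; order 3 has a missing amount.
RAW_CSV = """order_id,region,amount
1,north,120.50
2,south,80.00
3,north,
4,south,45.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, normalize region, cast types."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue
        clean.append((int(row["order_id"]), row["region"].upper(), float(row["amount"])))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def revenue_by_region(conn):
    """Query the loaded data with SQL: total amount per region."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    ).fetchall()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

After the load, `revenue_by_region(conn)` aggregates the three valid orders by region. Keeping the three stages as separate functions makes each one easy to test and swap, which is the kind of structure a portfolio reviewer is likely to look for.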

17

StatScraperEngine

Author

SamTinnerholm

Description

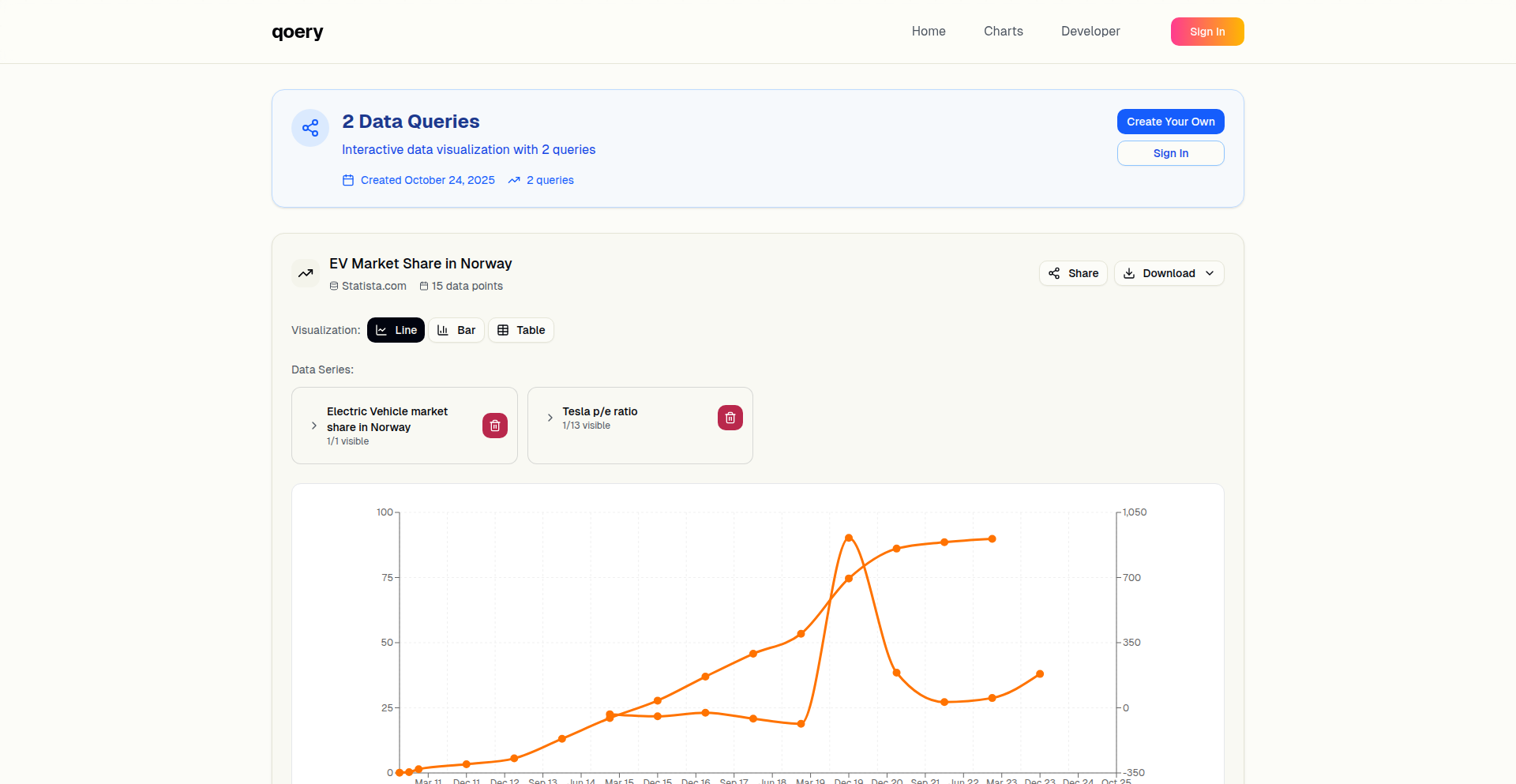

This project is a data engine designed to scrape statistics, aiming to disrupt existing subscription-based data providers like Statista by offering a free alternative. Its core innovation lies in its efficient and automated data extraction methodology, circumventing traditional paywalls and complex data aggregation methods.

Popularity

Points 4

Comments 1

What is this product?