Show HN Today: Discover the Latest Innovative Projects from the Developer Community

ShowHN Today

Show HN Today: Top Developer Projects Showcase for 2025-10-23

SagaSu777 2025-10-24

Explore the hottest developer projects on Show HN for 2025-10-23. Dive into innovative tech, AI applications, and exciting new inventions!

Summary of Today’s Content

Trend Insights

Today's Show HN reveals a strong surge in AI-driven innovation, with a significant focus on enhancing productivity and developer workflows. Projects like 'Deta Surf' and 'Twigg' exemplify the trend towards more intuitive, user-controlled AI interactions, moving beyond simple chat interfaces to context management and research acceleration. The emphasis on open-source and local-first solutions, as seen in 'Deta Surf' and 'OpenSnowcat,' highlights a growing demand for data privacy and user autonomy. For developers, this means an opportunity to build tools that not only leverage AI but also empower users with control and transparency. The emergence of decentralized web solutions like 'Nostr Web' points towards a future where content is more resilient and censorship-resistant, offering a fertile ground for innovation in secure and distributed applications. Furthermore, the practical application of AI in areas like motion sensing ('Tommy') and accessibility testing ('Clym') shows that complex technologies are being democratized to solve real-world problems, encouraging entrepreneurial spirit and a hacker mindset to tackle niche challenges with cutting-edge tech.

Today's Hottest Product

Name

Deta Surf – An open source and local-first AI notebook

Highlight

Surf tackles the fragmentation of digital research by offering a unified desktop app that integrates file management, web browsing, and AI-powered document generation. Its key innovation lies in its local-first, open-source approach to data storage and LLM integration. This means users retain control of their data and can choose their preferred AI models, offering significant flexibility and privacy. Developers can learn about building applications with a focus on user data sovereignty and the practical application of LLMs for content summarization and deep linking within research workflows.

Popular Category

AI/ML

Developer Tools

Productivity

Open Source

Popular Keyword

AI

LLM

Open Source

Productivity

Development Tools

Data Management

Decentralization

Technology Trends

Local-first AI applications

Decentralized web infrastructure

Enhanced LLM interaction interfaces

AI-driven productivity tools

Specialized hardware sensing

Open-source sustainability

Developer experience optimization

Project Category Distribution

AI/ML (30%)

Developer Tools (25%)

Productivity (20%)

Open Source (15%)

Web3/Decentralization (5%)

Hardware/IoT (5%)

Today's Hot Product List

| Ranking | Product Name | Points | Comments |
|---|---|---|---|
| 1 | SignalNoise ChronoFeed | 157 | 84 |
| 2 | SurfAI Notebook | 119 | 39 |
| 3 | TommyWave Sense | 81 | 65 |
| 4 | Nostr Web Weaver | 92 | 25 |
| 5 | Twigg: LLM Context Navigator | 76 | 26 |
| 6 | OpenSnowcat: The Unchained Analytics Engine | 66 | 16 |
| 7 | ChatGPT App Accelerator | 17 | 2 |
| 8 | A11yFlow Weaver | 18 | 0 |
| 9 | Coyote - Asynchronous AI Chat Companion | 7 | 10 |
| 10 | ScreenAsk: Instant Screen Capture Linker | 16 | 0 |

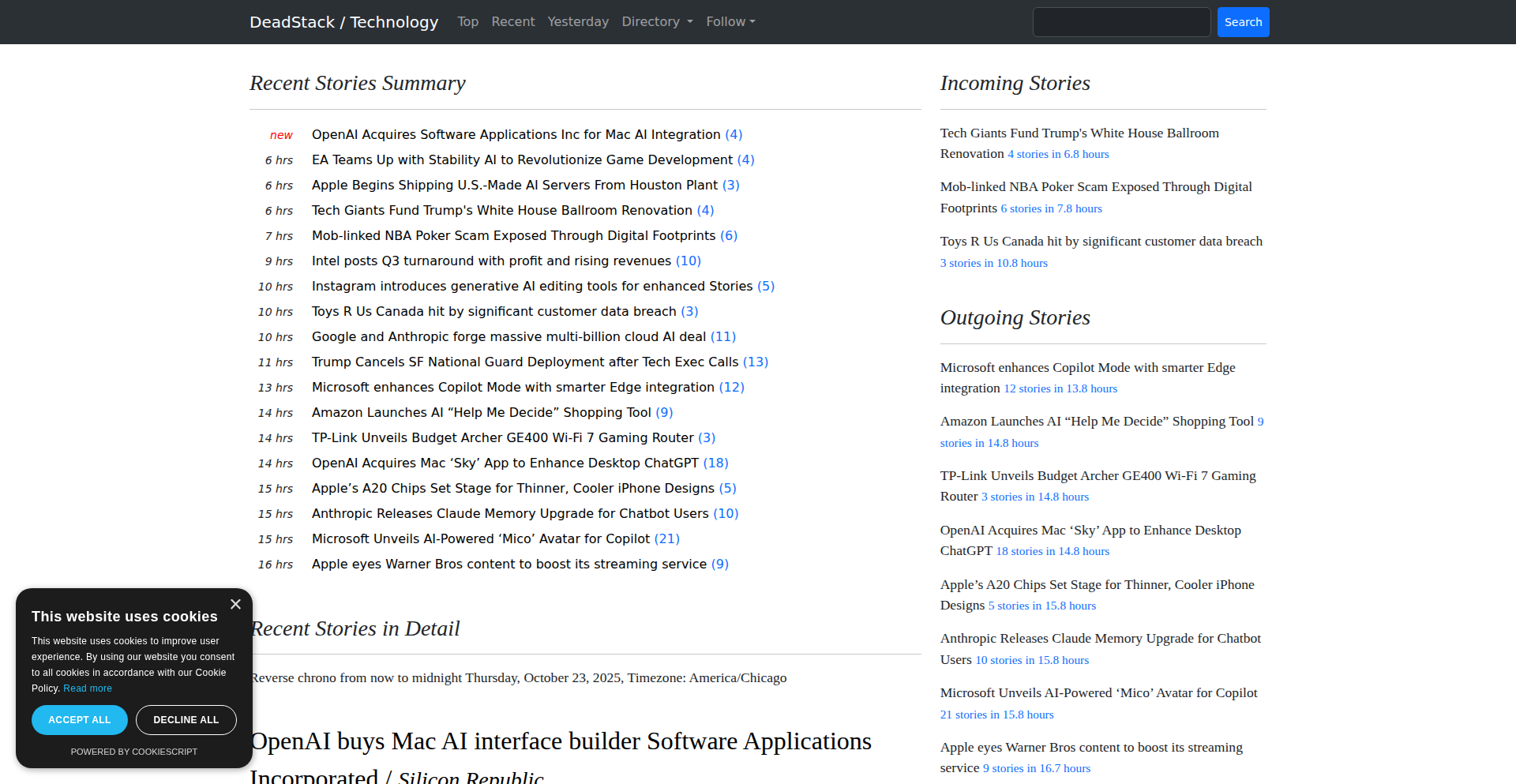

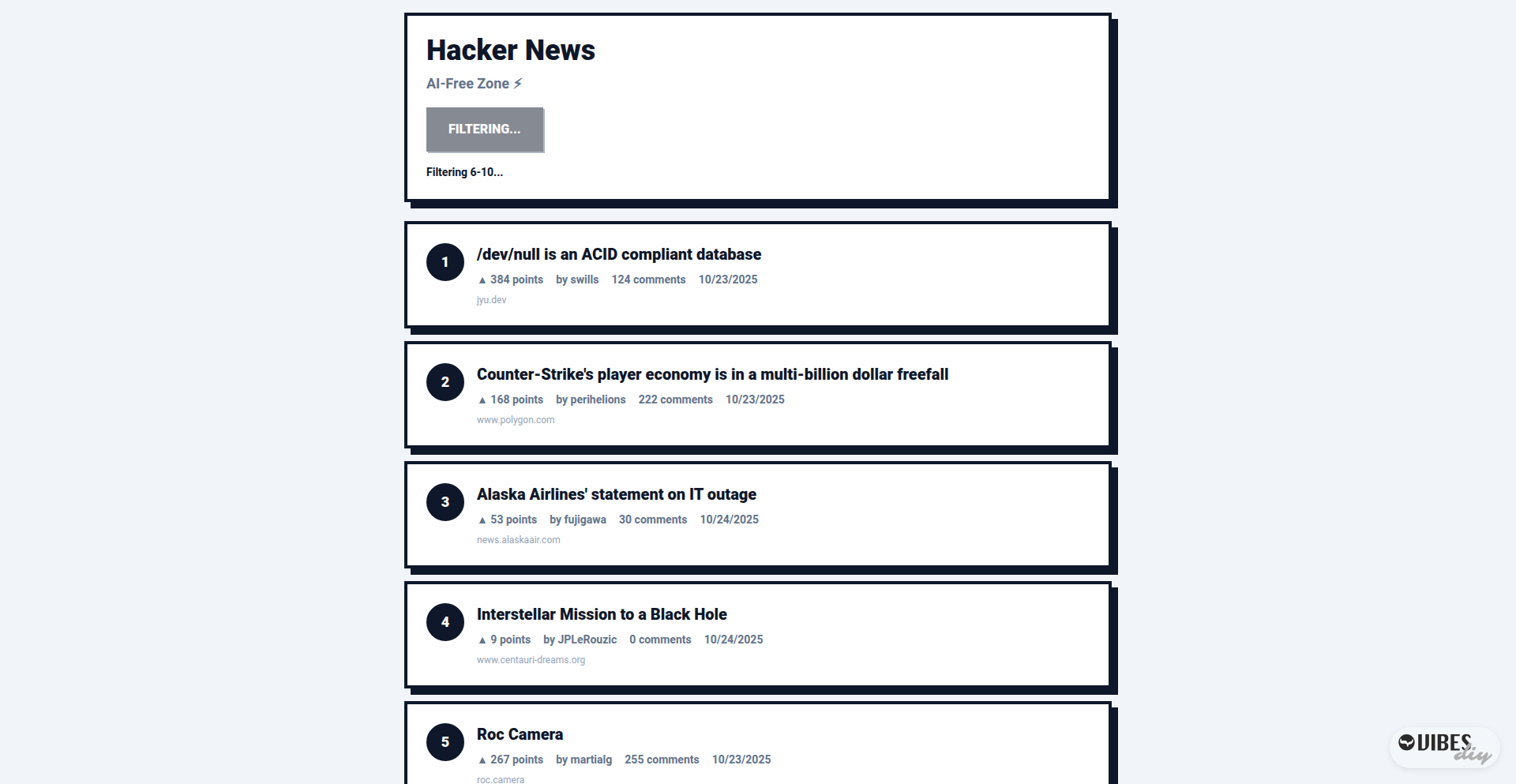

1

SignalNoise ChronoFeed

Author

dreadsword

Description

A meticulously curated, non-algorithmic reverse chronological feed of tech news, designed for clarity and speed. It filters out the noise, presenting only high-signal content, and offers LLM-assisted summaries for quick comprehension. This project prioritizes a minimalist, fast, and user-centric experience, embodying the hacker ethos of building tools for personal use and community benefit.

Popularity

Points 157

Comments 84

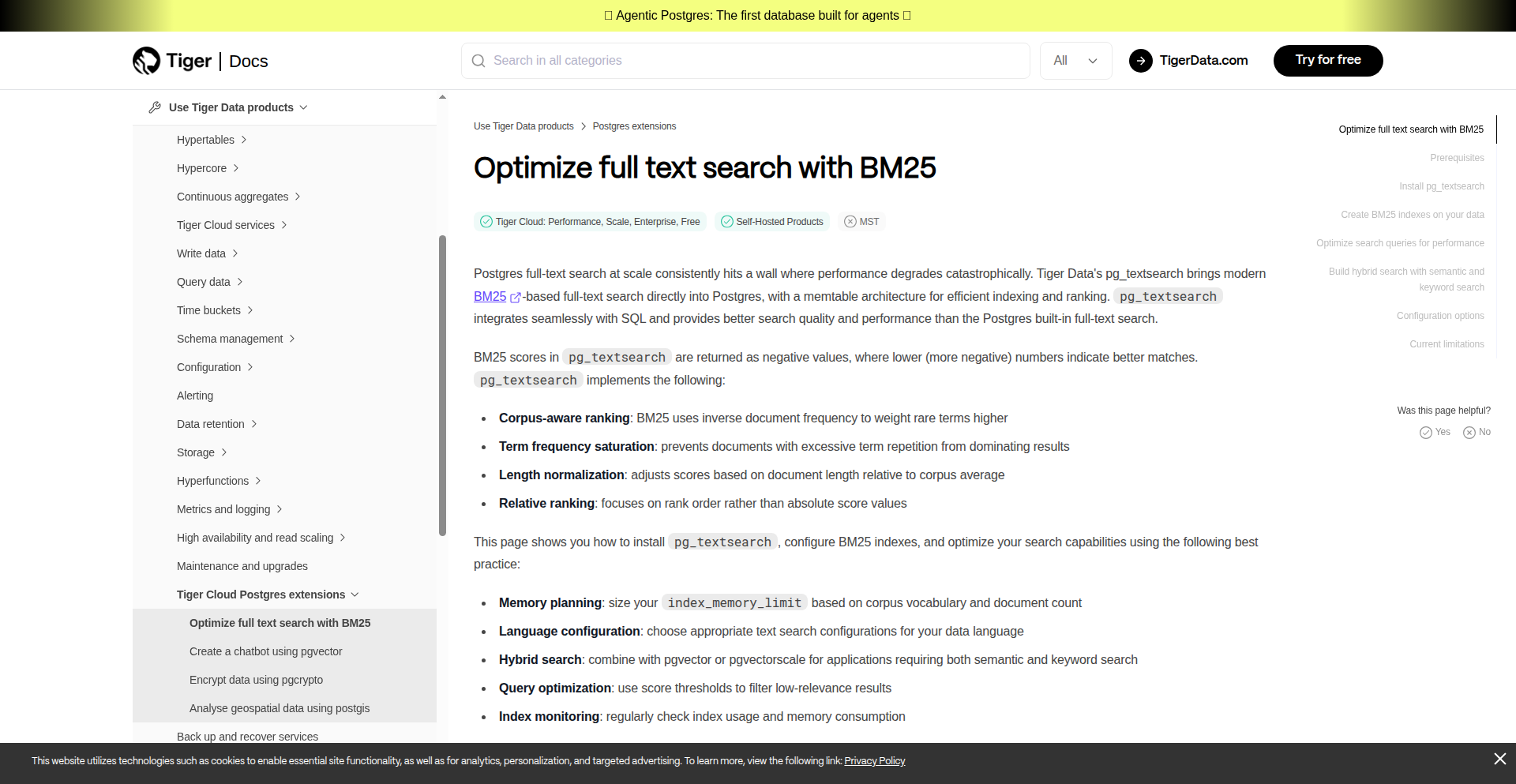

What is this product?

SignalNoise ChronoFeed is a tech news aggregator built on a simple principle: it lists news in reverse chronological order (newest first), without an algorithm trying to guess what you want. Think of it as a newspaper for tech that is always sorted by time. The innovation lies in its deliberate rejection of algorithmic ranking; instead it applies 'signal-to-noise' curation, aiming to show genuinely interesting and relevant tech news without the clutter. It also uses a large language model (LLM) to summarize articles, so you can grasp the essence of a story even faster. So, what does this mean for you? You get a clean, fast, and honest view of the tech world that cuts through the usual digital distractions, letting you find what matters to you rather than what an algorithm thinks you should see.

How to use it?

Developers can integrate SignalNoise ChronoFeed into their workflows by bookmarking the site for quick scanning of the latest tech developments. For more advanced use cases, the project's underlying principles could inspire the creation of custom news filtering tools or browser extensions that leverage similar 'signal-to-noise' heuristics. Imagine building a personalized news digest that only shows you articles about specific programming languages or cloud technologies that you've manually flagged as high-priority. The project's lightweight design also means it's incredibly fast to load, making it ideal for inclusion in developer dashboards or monitoring tools where every second counts. Essentially, you use it by visiting the site to get a rapid, high-quality overview of the tech landscape, or by learning from its design to build your own more specialized news consumption tools.
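The personalized-digest idea above can be sketched in a few lines of Python. This is a minimal illustration, not ChronoFeed's code: the `Story` record, its `tags` field, and `build_digest` are hypothetical names, and the sketch assumes stories have already been tagged by topic.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Story:
    title: str
    published: datetime
    tags: frozenset  # topic labels, e.g. frozenset({"rust", "cloud"})


def build_digest(stories, flagged):
    """Return stories matching any manually flagged topic, newest first.

    There is no relevance score: ordering is purely by publication time,
    which is what a reverse-chronological feed means.
    """
    matching = [s for s in stories if s.tags & flagged]
    return sorted(matching, key=lambda s: s.published, reverse=True)


if __name__ == "__main__":
    utc = timezone.utc
    feed = [
        Story("Rust async deep dive", datetime(2025, 10, 22, 9, tzinfo=utc), frozenset({"rust"})),
        Story("CSS layout tips", datetime(2025, 10, 23, 8, tzinfo=utc), frozenset({"css", "web"})),
        Story("Kubernetes operator guide", datetime(2025, 10, 23, 12, tzinfo=utc), frozenset({"cloud"})),
    ]
    for story in build_digest(feed, flagged={"rust", "cloud"}):
        print(story.published.isoformat(), story.title)
```

Keeping the filter (manual flags) separate from the ordering (time only) is what keeps such a digest non-algorithmic: nothing reorders stories by predicted interest.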

Product Core Function

· Reverse Chronological Feed: Presents news newest first, strictly by publication time, so you don't miss anything new and can see exactly how information flows. This is valuable because it allows for unbiased discovery and prevents new stories from being buried beneath older but algorithmically favored content. You can trust that what you see first is truly the latest.

· Signal-to-Noise Filtering: Curates content based on quality and relevance, reducing information overload. This is crucial for developers who need to stay informed efficiently. It means you spend less time sifting through irrelevant articles and more time focusing on impactful tech news.

· LLM-Powered Summaries: Provides concise summaries of articles, enabling rapid comprehension of key information. This is a time-saver for busy developers who need to quickly grasp the main points of an article before deciding to dive deeper.

· Lightweight and Fast Design: Optimized for speed and minimal resource usage, ensuring a smooth and efficient user experience. For developers, this means information is delivered to you almost instantly, without frustrating load times, enhancing productivity.

· Categorized Views: Offers organized sections for various tech topics, allowing for focused browsing. This helps developers quickly find news relevant to their specific interests, such as AI, web development, or cybersecurity, making research more targeted and effective.

Product Usage Case

· A freelance developer needs to stay updated on the latest JavaScript frameworks. They use SignalNoise ChronoFeed to quickly scan the 'Web Development' category each morning, leveraging the LLM summaries to get the gist of new library releases and framework updates. This saves them significant research time, allowing them to focus on coding projects rather than endless browsing.

· A CTO wants to monitor emerging trends in artificial intelligence without being overwhelmed by marketing hype. They rely on SignalNoise ChronoFeed's 'AI' section, trusting the 'signal-to-noise' filtering to present genuinely insightful articles and research papers. This helps them make informed strategic decisions by providing a clear, uncluttered view of important AI advancements.

· A student learning to code wants to understand the latest industry practices. They use the reverse chronological feed to see what's currently being discussed and adopted by leading tech companies, using the summaries to quickly grasp new concepts. This gives them a practical, real-world perspective that complements their academic learning.

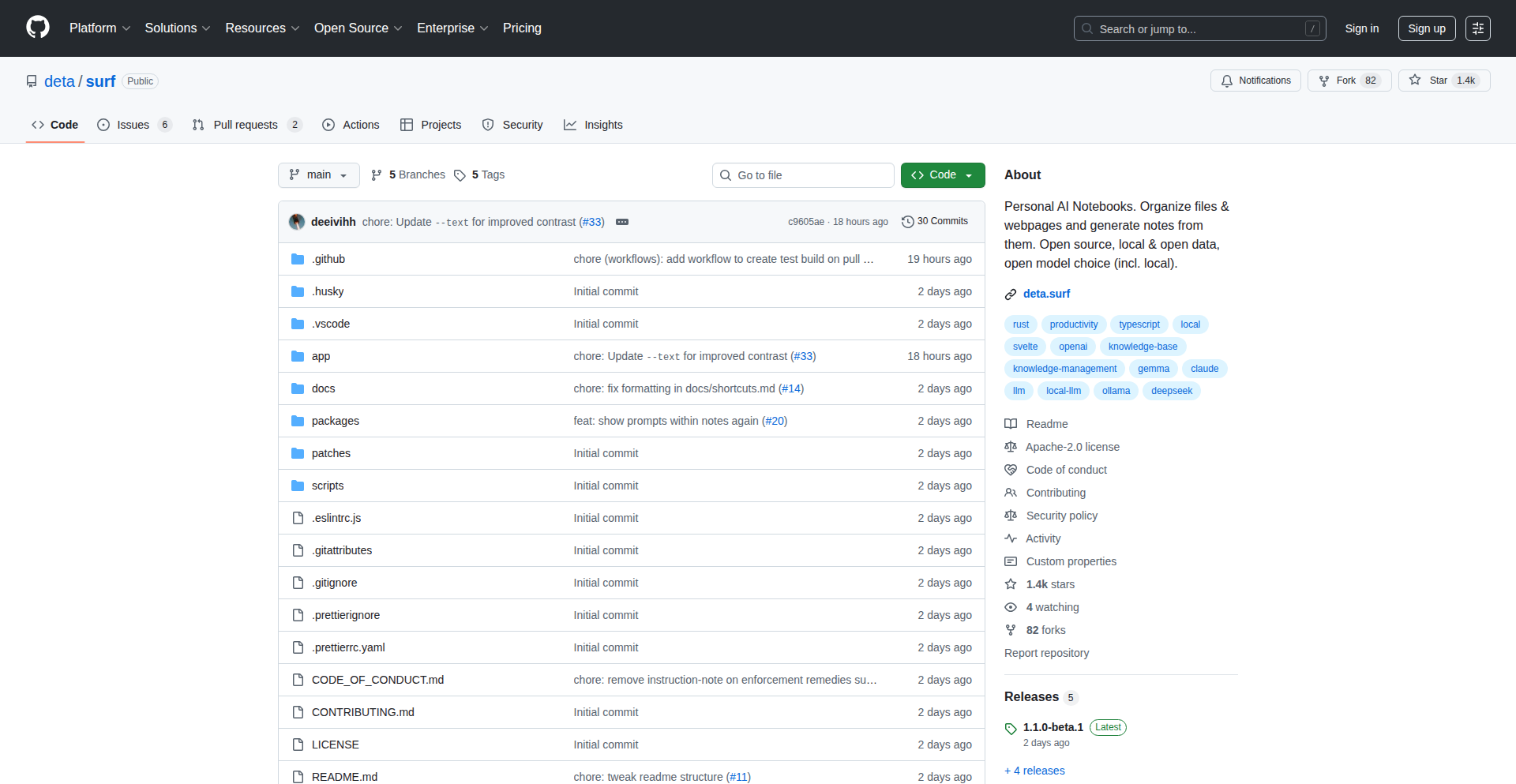

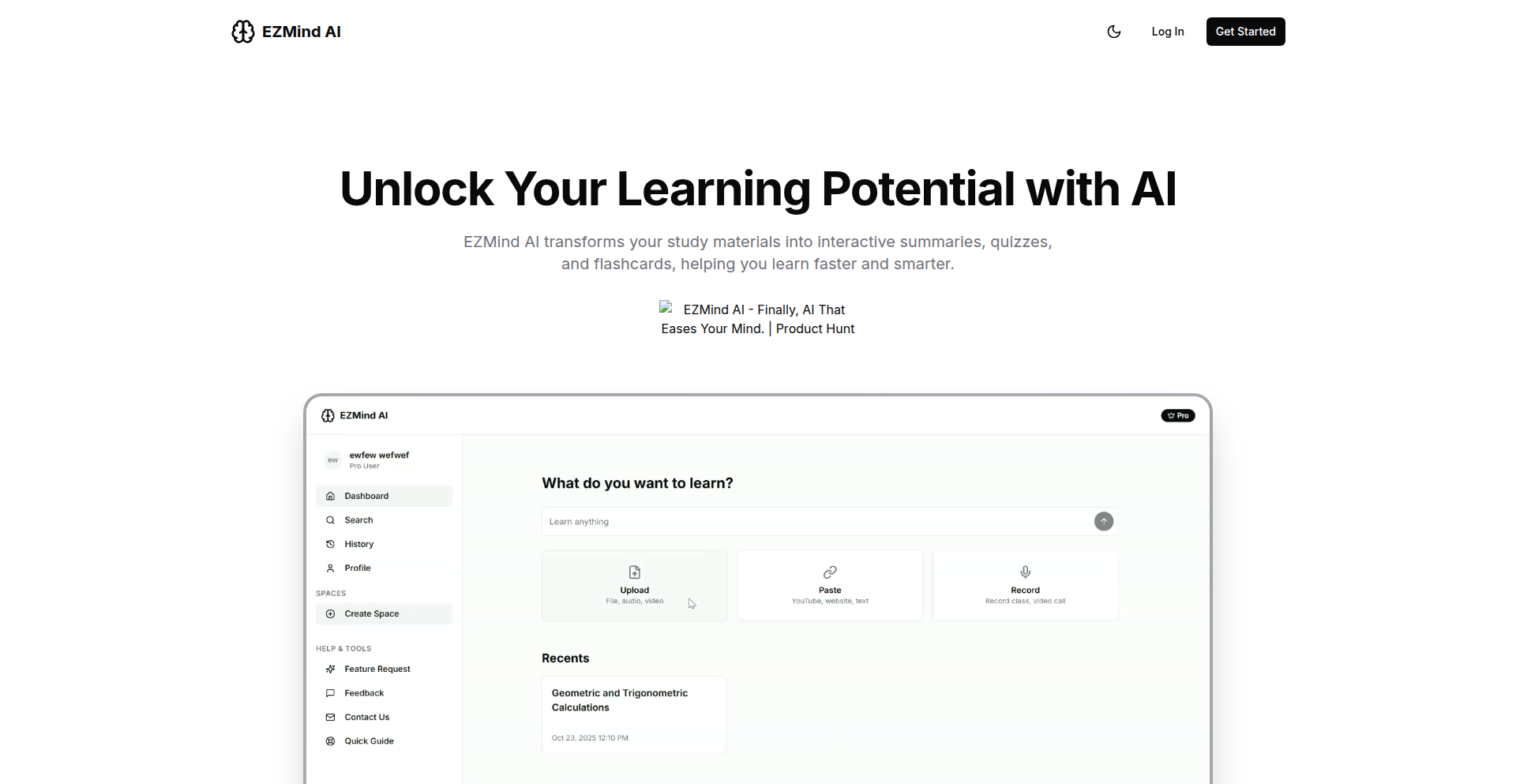

2

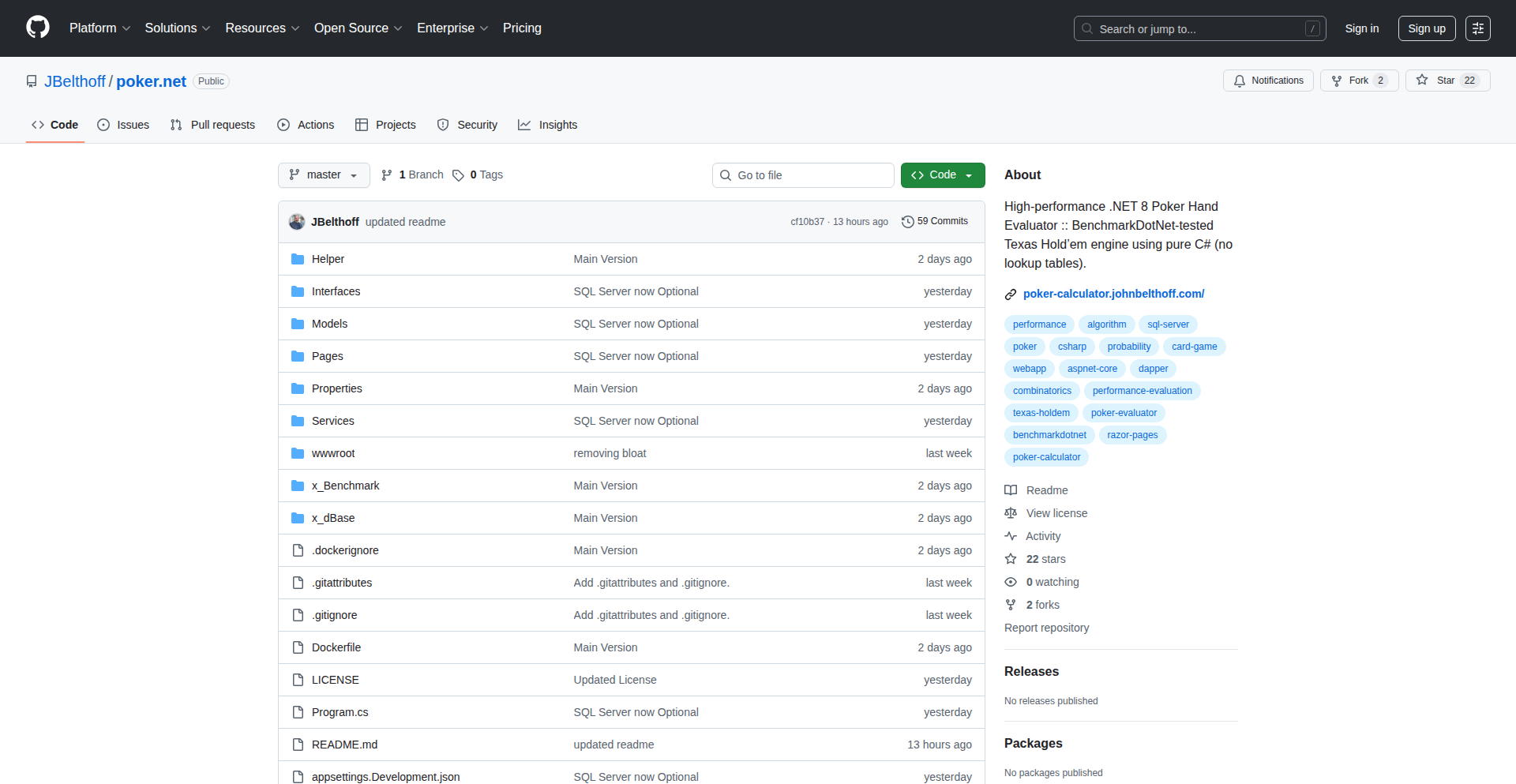

SurfAI Notebook

Author

mxek

Description

Surf is an open-source, local-first desktop application designed to streamline research and content creation. It tackles the common frustration of fragmented workflows across file managers, web browsers, and document editors by offering a unified environment. Surf features a multimedia library for organizing files and web pages into 'Notebooks' and an LLM-powered smart document generator that can synthesize information from your stored content. A key innovation is the ability to auto-generate editable documents with deep links back to the original sources (like specific PDF pages or YouTube timestamps), keeping users in control and data local. This approach aims to minimize manual work and data lock-in, allowing users to choose their preferred AI models and export their data in accessible formats.

Popularity

Points 119

Comments 39

What is this product?

SurfAI Notebook is a desktop application that acts as an intelligent research and writing companion. It solves the problem of jumping between different apps to gather information. Think of it as a personal digital assistant that helps you collect, organize, and then synthesize information from various sources like web pages, PDFs, or even YouTube videos. Its core innovation lies in its 'smart document' feature. This feature uses AI to read through your collected materials and automatically create a draft document, summarizing the key points and, crucially, providing direct links back to where the information came from. This means you can easily verify or dive deeper into the original sources without losing your place. It's built with a focus on privacy and control, storing your data locally and allowing you to choose your AI model, avoiding vendor lock-in.

How to use it?

Developers can use SurfAI Notebook to manage research projects, academic papers, or any task involving gathering and synthesizing information from multiple sources. You can save articles, PDFs, and links to web pages directly into 'Notebooks'. When you're ready to write, Surf can generate a draft document based on the content of your Notebook. For example, if you're researching a historical event, you could save several articles and a documentary. Surf can then generate an initial draft of an essay, citing specific paragraphs from the articles and timestamps from the documentary. It integrates by allowing you to import existing files and export generated documents in standard formats, and you can even plug in your own local AI models for processing, giving you maximum flexibility.

Product Core Function

· Multimedia Library: Save and organize various file types (web pages, PDFs, etc.) into collections called Notebooks, allowing for structured information gathering and easy retrieval. The value is in centralized organization, saving time spent searching across multiple locations.

· LLM-Powered Smart Document Generation: Automatically create editable documents that summarize content from your Notebooks, reducing manual writing effort. The value is in accelerating the initial drafting process and providing a structured starting point for content creation.

· Deep Linking to Source Material: Generated documents include direct links to specific parts of the original source (e.g., PDF page numbers, YouTube timestamps), enabling easy verification and further exploration. The value is in maintaining accuracy and facilitating deeper dives into information.

· Local-First Data Storage: All user data is stored locally on the user's machine in open and accessible formats, ensuring privacy and control. The value is in data ownership and security, reducing reliance on cloud services.

· Open Model Choice: Users can select and integrate different AI models (including custom and local LLMs) for document generation, offering flexibility and avoiding vendor lock-in. The value is in empowering users with choice and adaptability to different AI technologies.

· Editable Generated Documents: The smart documents produced by Surf are fully editable, allowing users to refine, expand, and customize the AI-generated content. The value is in providing a flexible foundation that users can build upon.
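Deep links like these can be built from standard URL conventions. The sketch below is not Surf's implementation; the `SourceRef` record and the `deep_link`/`cite` helpers are hypothetical. It relies on two widely supported conventions: the `#page=N` fragment that most PDF viewers honor, and YouTube's `t` query parameter for a start offset in seconds.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


@dataclass
class SourceRef:
    """Where a generated sentence came from (hypothetical model, not Surf's schema)."""
    url: str
    kind: str                      # "pdf" or "youtube" in this sketch
    page: Optional[int] = None     # 1-based PDF page
    seconds: Optional[int] = None  # offset into a video


def deep_link(ref: SourceRef) -> str:
    """Build a URL that opens the source at the cited location."""
    if ref.kind == "pdf" and ref.page is not None:
        # Most PDF viewers honor the "#page=N" fragment.
        return f"{ref.url.split('#')[0]}#page={ref.page}"
    if ref.kind == "youtube" and ref.seconds is not None:
        # YouTube reads the start offset from the "t" query parameter.
        parts = urlsplit(ref.url)
        query = dict(parse_qsl(parts.query))
        query["t"] = str(ref.seconds)
        return urlunsplit(parts._replace(query=urlencode(query)))
    return ref.url  # fall back to the plain source URL


def cite(sentence: str, ref: SourceRef) -> str:
    """Append a Markdown link back to the source."""
    return f"{sentence} [source]({deep_link(ref)})"


if __name__ == "__main__":
    pdf = SourceRef("file:///research/report.pdf", "pdf", page=12)
    video = SourceRef("https://www.youtube.com/watch?v=VIDEO_ID", "youtube", seconds=95)
    print(cite("Claim drawn from the report.", pdf))
    print(cite("Claim drawn from the lecture.", video))
```

Storing the structured reference (URL plus page or offset) rather than a finished link keeps the citation editable and lets the link be regenerated if the source moves.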

Product Usage Case

· A student researching a complex academic topic can save numerous research papers, web articles, and even relevant YouTube lectures into a single Notebook. They can then use Surf to generate an initial research paper draft, with each point in the draft linked back to the specific source article or video segment, saving hours of manual citation and summarization.

· A journalist working on an investigative report can gather all related documents, interview transcripts, and web research into a Notebook. Surf can help generate an outline or initial draft of their story, with direct links to key pieces of evidence, ensuring accuracy and efficient workflow.

· A content creator planning a video series can collect reference materials, script snippets, and inspiration from various websites and documents. Surf can assist in organizing these ideas and generating a draft script or content outline, with timestamps for visual references in video clips, streamlining the pre-production process.

· A developer building a knowledge base for a new project can save documentation, API references, and code snippets into Notebooks. Surf can then generate summaries or introductory guides, with links to specific documentation pages or code examples, making it easier for team members to get up to speed.

3

TommyWave Sense

Author

mike2872

Description

TommyWave Sense transforms ordinary ESP32 microcontrollers into advanced motion sensors that can detect movement through walls and obstacles using Wi-Fi signal analysis. This overcomes the limitations of traditional motion sensors by enabling hidden device placement and broader detection coverage, all while prioritizing user privacy through local data processing.

Popularity

Points 81

Comments 65

What is this product?

TommyWave Sense is a software project that leverages the Wi-Fi capabilities of ESP32 devices to sense motion. Instead of using infrared or other direct sensors, it analyzes subtle changes in Wi-Fi signals that are disrupted by human movement. Think of it like shining a flashlight in a dark room; you can see the shadow cast by an object. Similarly, TommyWave Sense detects how a person's movement alters the Wi-Fi signals bouncing around the environment. This innovative approach allows it to work even when the ESP32 device isn't in direct line of sight, penetrating walls and furniture. The core innovation lies in applying sophisticated signal processing techniques, often found in research, to make Wi-Fi sensing practical for everyday use. So, what does this mean for you? It means you can have motion detection without needing bulky sensors in every room or worrying about them being easily bypassed.
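The project doesn't spell out its signal-processing pipeline here, so the following is a deliberately simple stand-in rather than TommyWave's algorithm: a sliding-window standard deviation over Wi-Fi amplitude readings, with motion reported when the spread exceeds a threshold. The `MotionDetector` class, window size, and threshold are hypothetical and would need tuning per room; research-grade Wi-Fi sensing typically works on channel state information (CSI) with more elaborate filtering.

```python
from collections import deque
from statistics import pstdev


class MotionDetector:
    """Flag motion when Wi-Fi amplitude readings fluctuate more than a threshold.

    A moving person changes the multipath reflections between transmitter and
    receiver, so readings (RSSI, or CSI subcarrier magnitudes) spread out,
    while an empty room produces a nearly flat signal.
    """

    def __init__(self, window: int = 20, threshold: float = 2.0) -> None:
        self.samples = deque(maxlen=window)  # sliding window of recent readings
        self.threshold = threshold           # same units as the readings

    def update(self, amplitude: float) -> bool:
        """Add one reading; return True if the current window looks like motion."""
        self.samples.append(amplitude)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet
        return pstdev(self.samples) > self.threshold


if __name__ == "__main__":
    detector = MotionDetector(window=5, threshold=1.0)
    readings = [-50.0, -50.2, -49.9, -50.1, -50.0,  # quiet room (dBm)
                -45.0, -55.0, -44.0, -56.0, -47.0]  # someone walking through
    print([detector.update(r) for r in readings])
```

Because the detector only looks at how much the signal varies, not at its absolute level, it does not need line of sight to the person; any movement that disturbs the propagation paths raises the spread.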

How to use it?

Developers can integrate TommyWave Sense into their smart home projects or custom IoT applications. It is currently available as a Home Assistant Add-on or a Docker container, making it easy to deploy within existing home automation ecosystems. For those who prefer a more hands-on approach, the software can be flashed directly onto supported ESP32 devices, or it can work alongside existing ESPHome configurations. This flexibility allows for seamless integration into a wide range of setups, from simple personal projects to more complex smart building solutions. The value to you is a readily available solution that simplifies adding advanced, unobtrusive motion sensing to your connected devices.

Product Core Function

· Wi-Fi Signal Analysis for Motion Detection: Utilizes changes in Wi-Fi signal patterns to detect movement, offering a privacy-friendly alternative to cameras or traditional sensors. This is valuable because it allows for detection without direct line of sight, enabling hidden sensor placement and wider coverage areas in your home or building.

· Through-Wall Sensing Capability: Enables motion detection through common household obstacles like walls and furniture by interpreting how these materials affect Wi-Fi signals. The benefit here is that you can cover larger areas with fewer devices and place sensors discreetly without compromising functionality.

· ESP32 Device Compatibility: Supports a range of ESP32 microcontrollers, making it accessible to a broad developer community and allowing for cost-effective hardware deployment. This means you can likely use hardware you already have or easily acquire affordable ESP32 boards for your projects.

· Home Assistant Add-on and Docker Support: Provides easy integration into popular smart home platforms and containerized environments, simplifying deployment and management for users. This is useful because it lowers the barrier to entry for incorporating advanced sensing into your existing smart home setup.

· Local Data Processing and Privacy Focus: Ensures all data processing occurs on the user's local network, with no cloud dependency or data collection, addressing privacy concerns. This is crucial for users who want to avoid sending personal data to external servers, offering peace of mind and enhanced security for their smart home systems.

Product Usage Case

· Smart Home Automation: Imagine a system that detects when someone enters a room, even if the sensor is hidden in another part of the house, to automatically turn on lights or adjust the thermostat. This solves the problem of dead zones and aesthetically unpleasing sensor placement.

· Security Monitoring: Implement a low-profile security system that can detect unexpected movement in areas where traditional sensors might be easily noticed or tampered with. This provides an added layer of unobtrusive surveillance.

· Elderly Care and Presence Detection: Though stationary presence detection is a future feature, the current motion sensing can be used to monitor activity levels and alert caregivers to unusual patterns or lack of movement in specific areas. This offers a subtle way to ensure the well-being of loved ones.

· Smart Building Management: In commercial or industrial settings, use TommyWave Sense to monitor foot traffic flow or detect unauthorized movement in sensitive areas without intrusive camera systems. This provides valuable insights and security without compromising privacy.

· DIY IoT Projects: Hobbyists can use TommyWave Sense to add advanced motion sensing capabilities to their custom IoT projects, enabling more sophisticated and responsive interactions with their environment.

4

Nostr Web Weaver

Author

karihass

Description

Nostr Web Weaver is a groundbreaking project that enables website hosting and publishing entirely on the Nostr network. Instead of relying on traditional centralized servers, websites are constructed as a series of signed, verifiable messages (events) distributed across multiple 'relays'. This innovative approach makes websites inherently resistant to censorship, takedowns, and data loss, embodying the core principles of a decentralized web. The project includes tools for domain discovery via DNS, a command-line interface for versioned website deployments, and a browser extension for a seamless native browsing experience.

Popularity

Points 92

Comments 25

What is this product?

Nostr Web Weaver is a decentralized website hosting solution built upon the Nostr protocol. At its heart, it treats website content not as files on a server, but as a collection of cryptographically signed messages, called 'events' in Nostr terminology. These events are then broadcast and stored by a network of independent servers known as 'relays'. When someone wants to visit a website hosted this way, their browser or the Nostr Web Weaver extension fetches these events from various relays, reassembling the website. The innovation lies in leveraging Nostr's existing decentralized infrastructure for web hosting, providing inherent resilience and censorship resistance. So, this means your website can't be easily shut down by a single entity, offering unparalleled freedom of expression and data persistence. This is achieved through the secure and distributed nature of Nostr.
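The "signed, verifiable messages" above are ordinary Nostr events. Under NIP-01, an event's id is the SHA-256 hash of a compact JSON serialization of `[0, pubkey, created_at, kind, tags, content]`, and the author signs that id with a Schnorr signature over secp256k1. The Python sketch below computes only the id (signing needs a third-party secp256k1 library) and matches NIP-01 for ordinary text; edge cases involving unusual control characters may serialize differently. The kind number and `path` tag in the example are placeholders, since the specific event kinds Nostr Web uses are not listed here.

```python
import hashlib
import json


def serialize_event(pubkey: str, created_at: int, kind: int,
                    tags: list, content: str) -> str:
    """NIP-01 serialization: [0, pubkey, created_at, kind, tags, content] as compact JSON."""
    return json.dumps([0, pubkey, created_at, kind, tags, content],
                      separators=(",", ":"),   # no whitespace between tokens
                      ensure_ascii=False)      # keep non-ASCII characters verbatim


def event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """The event id is the hex SHA-256 of the UTF-8 serialized event."""
    serialized = serialize_event(pubkey, created_at, kind, tags, content)
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    author = "ab" * 32  # placeholder 32-byte public key in hex
    page = "<h1>Hello from a relay</h1>"
    # Placeholder kind and tag; Nostr Web's real event kinds are not listed here.
    print(event_id(author, 1_700_000_000, 1, [["path", "/index.html"]], page))
```

Since any change to the content changes the id, and the signature covers the id, a relay cannot alter a page without the tampering being detectable by the browser or extension that fetches it.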

How to use it?

Developers can deploy and manage website versions with the project's command-line publisher tool (nw-publisher). Deployments are versioned, so you can easily push updates or roll back to a previous version. For discovery, a site can be linked to a custom domain through a DNS TXT record (e.g., at _nweb.yourdomain.com), letting users reach it via a familiar domain name. The browser extension (nw-extension) provides a native way to browse these decentralized websites by interacting directly with Nostr relays. The project also supports dedicated Nostr event kinds for Nostr Web, keeping it compatible with the wider Nostr ecosystem. So, you can deploy your static site or web application to a censorship-resistant network and manage it efficiently with developer-friendly tools.

Product Core Function

· Decentralized Website Hosting: Websites are published as signed Nostr events and distributed across relays, making them resistant to censorship and takedowns. This offers a secure and persistent way to host your content online, free from the risks of single points of failure.

· Domain-Based Discovery (DNS TXT Records): Allows users to access Nostr-hosted websites using custom domain names via DNS TXT records, improving discoverability and user-friendliness. This means people can find your decentralized website using a familiar web address.

· CLI Publisher Tool (nw-publisher): Provides a command-line interface for versioned website deployments and management, simplifying the publishing process and enabling easy updates and rollbacks. This gives you precise control over your website's lifecycle.

· Browser Extension (nw-extension): Offers a native browsing experience for Nostr-hosted websites, enhancing usability and integration with the decentralized web. This makes viewing decentralized websites as seamless as browsing traditional ones.

· Nostr Relay v1.3.5 Support for Nostr Web Event Kinds: Ensures compatibility with the Nostr protocol and allows for the implementation of specific event types tailored for web content, enabling rich and dynamic decentralized websites. This ensures that the underlying technology supports complex web functionalities.

Product Usage Case

· Publishing a personal blog on Nostr Web Weaver: A developer can deploy their static blog using `nw-publisher`, link it to a custom domain using a DNS TXT record, and share the link with their followers. Because the blog's events are replicated across multiple relays, it stays reachable as long as at least one relay keeps serving them, giving a resilient, censorship-resistant platform for personal expression.

· Hosting a community forum: A community can host their forum on Nostr Web Weaver, leveraging the distributed nature to prevent any single entity from shutting down discussions. The browser extension ensures easy access for all members, fostering open and free communication.

· Distributing an open-source project's documentation: An open-source project can host its documentation on Nostr Web Weaver so that it remains available to developers worldwide even if a traditional hosting service becomes unavailable, provided the relays holding its events stay online.

· Creating a decentralized portfolio website: A creative professional can build a portfolio on Nostr Web Weaver, showcasing their work on a platform that is resistant to content removal. This offers peace of mind that their work will remain visible and accessible to potential clients or employers.

5

Twigg: LLM Context Navigator


Author

jborland

Description

Twigg is a context management interface designed for Large Language Models (LLMs), akin to 'Git for LLMs'. It addresses the limitations of linear LLM interfaces by providing a visual, tree-like structure for managing conversational context. This innovation allows users to explore different conversational tangents, easily navigate long-term projects, and maintain control over the input provided to LLMs, leading to more efficient and effective AI interactions.

Popularity

Points 76

Comments 26

What is this product?

Twigg is a context management tool that changes how developers and users interact with large language models (LLMs). Traditional LLM interfaces, like ChatGPT, present conversations linearly, making it difficult to track progress, explore different ideas, or manage complex, long-term projects. Twigg solves this with an interactive tree diagram. This visualization lets users branch conversations, pursue tangents without losing the main thread, and navigate back and forth through a project's history. Think of it as version control for your AI conversations: it gives you a clear overview and granular control over the information fed to the LLM, which improves its output and prevents context loss. So, what's in it for you? A more organized, powerful, and less frustrating experience when working with AI on any project, big or small.

How to use it?

Developers can use Twigg as a centralized hub for all their LLM interactions. It supports a wide range of major LLM providers including ChatGPT, Gemini, Claude, and Grok, allowing users to pick the best model for their task. Integration is straightforward: users can either use Twigg's hosted service with a subscription plan or leverage the 'Bring Your Own Key' (BYOK) option to connect their own API keys directly. The intuitive tree interface allows for easy manipulation of conversational context: users can cut, copy, and delete parts of the conversation tree to precisely define the context sent to the LLM. This makes it ideal for complex tasks like code generation, research synthesis, or creative writing where maintaining specific context is crucial. So, how does this benefit you? You can seamlessly integrate your preferred LLMs into a structured workflow, ensuring your AI assistants have the most relevant information for optimal performance, saving you time and improving the quality of AI-generated results.

Product Core Function

· Visual Context Tree: Provides an interactive, hierarchical view of LLM conversations, allowing for easy navigation and understanding of project progression. This is valuable because it prevents users from getting lost in long conversations and makes it simple to revisit specific points, improving efficiency in complex projects.

· Conversation Branching: Enables users to create parallel conversational paths (tangents) from a main thread, facilitating exploration of different ideas without losing the core context. This is useful for brainstorming, testing hypotheses, or exploring alternative solutions in a structured manner, leading to more creative and thorough outcomes.

· Context Manipulation Tools (Cut, Copy, Delete): Offers granular control over the information fed to LLMs by allowing users to precisely select, copy, and remove segments of the conversation tree. This is important for fine-tuning LLM output, ensuring only relevant context is provided, which directly enhances the accuracy and specificity of AI responses.

· Multi-LLM Support: Integrates with various leading LLM providers (ChatGPT, Gemini, Claude, Grok) through a unified interface. This offers flexibility and choice, allowing users to leverage the strengths of different models for diverse tasks, thus maximizing their productivity and the quality of their AI-driven work.

· Bring Your Own Key (BYOK) Option: Allows users to connect their own API keys from LLM providers, giving them direct access and control over their usage and costs. This is valuable for users who require specific API configurations, want to manage their expenses directly, or need to adhere to internal security policies, providing a personalized and secure LLM experience.
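Twigg's internal data model isn't described, but the core idea of branching a conversation and sending only the relevant path as context can be sketched as a tree. This is a minimal Python illustration under that assumption; the `Node` class and its `reply`, `context`, and `delete` methods are hypothetical names, not Twigg's API. Cutting or copying a branch would be variations on the same parent/child bookkeeping.

```python
from __future__ import annotations

from typing import Optional


class Node:
    """One message in a branching conversation (hypothetical model, not Twigg's)."""

    def __init__(self, role: str, text: str, parent: Optional[Node] = None) -> None:
        self.role = role          # "user" or "assistant"
        self.text = text
        self.parent = parent
        self.children: list[Node] = []

    def reply(self, role: str, text: str) -> Node:
        """Attach a child message; replying twice to one node creates a branch."""
        child = Node(role, text, parent=self)
        self.children.append(child)
        return child

    def context(self) -> list[dict[str, str]]:
        """Messages on the path from the root to this node, oldest first.

        Only this path would be sent to the LLM, so sibling branches never
        leak into each other's context.
        """
        path = []
        node: Optional[Node] = self
        while node is not None:
            path.append({"role": node.role, "content": node.text})
            node = node.parent
        path.reverse()
        return path

    def delete(self) -> None:
        """Detach this node, and with it its whole subtree."""
        if self.parent is not None:
            self.parent.children.remove(self)
            self.parent = None


if __name__ == "__main__":
    root = Node("user", "Design a cache for our API")
    answer = root.reply("assistant", "An LRU cache fits this access pattern.")
    lru = answer.reply("user", "Implement the LRU version.")
    lfu = answer.reply("user", "Compare it with LFU instead.")  # a tangent
    print([m["content"] for m in lfu.context()])
```

The list of `{"role", "content"}` dictionaries mirrors the message format common to chat-style LLM APIs, so a path could be passed to whichever provider is selected.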

Product Usage Case

· Software Development: A developer working on a large codebase can use Twigg to manage context for LLM-powered code generation or debugging. They can branch conversations to explore different refactoring strategies or API implementations, keeping the main code context clear. This solves the problem of losing track of specific code snippets or requirements in lengthy AI interactions, leading to faster and more accurate code development.

· Academic Research: A researcher using an LLM to synthesize information from multiple sources can organize their findings in Twigg's tree structure. They can create branches for different research questions or themes, easily linking source materials and generated summaries. This helps overcome the challenge of information overload and ensures that the final research output is coherent and well-supported, making the research process more efficient.

· Creative Writing: A novelist can use Twigg to develop characters and plotlines. They can branch off to explore different character backstories or plot twists, maintaining the main narrative thread. This solves the issue of creative ideas becoming muddled in a single chat, allowing for structured exploration and development of compelling narratives.

· Technical Documentation: A technical writer can use Twigg to generate and refine documentation for a complex software product. They can branch conversations to address different features or user scenarios, ensuring all aspects are covered accurately and consistently. This improves the clarity and comprehensiveness of technical documentation, making it easier for users to understand and utilize the product.

6

OpenSnowcat: The Unchained Analytics Engine

Author

joaocorreia

Description

OpenSnowcat is a community-driven fork of the popular Snowplow analytics pipeline, created in response to Snowplow's recent license change. It preserves the original Apache 2.0 license for the core collector and enricher components, ensuring that raw, unopinionated event data remains accessible and truly open-source for all developers. This project aims to maintain the spirit of open data and transparent analytics for the developer community, offering compatibility with existing Snowplow setups while introducing performance optimizations and modern integrations.

Popularity

Points 66

Comments 16

What is this product?

OpenSnowcat is essentially a continuation of the original Snowplow analytics platform, forked to safeguard its open-source nature. Its defining commitment is the Apache 2.0 license, which permits use, modification, and distribution of the software, including in production, without restrictive production clauses. This means developers can collect, process, and analyze their user event data without fear that a later license change will restrict the code they depend on. It is built on the foundational idea of providing raw, granular event data, allowing for deep customization and understanding of user behavior, in stark contrast to more opinionated, closed-source analytics solutions. Technically, it builds on the proven collector and enricher components, now maintained and enhanced by a community dedicated to open data practices. So, for you, it means a reliable, transparent, and free analytics tool that won't tie your hands later on.

How to use it?

Developers can integrate OpenSnowcat by setting up the collector to receive event data from their applications or websites. The collector passes this data to the enricher, which standardizes the events and adds contextual information, for example a geolocation derived from the IP address and device details parsed from the user agent. OpenSnowcat is designed to be fully compatible with existing Snowplow pipelines, making migration straightforward for current Snowplow users. It can be deployed in cloud environments or on-premises. It also integrates with modern event processing tools like Warpstream Bento for flexible data routing. Think of it as an engine for understanding your users that you can plug into your existing data infrastructure, sending processed data to destinations such as data warehouses (e.g., BigQuery, Snowflake) or data lakes for further analysis with tools like dbt. So, for you, it means you can continue to gain deep insights into user behavior without vendor lock-in, and with the freedom to choose how and where you store and analyze your data.
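The enrichment step can be pictured as a pure function from a raw event to an enriched copy. The sketch below is not OpenSnowcat's or Snowplow's code; the field names (`ip`, `user_agent`, `country`, `device`), the toy geolocation table, and the keyword-based device check are all hypothetical stand-ins for the real enrichments.

```python
from ipaddress import ip_address, ip_network
from typing import Optional

# Toy geolocation table over reserved documentation ranges; the country codes
# are made up. Real enrichers query a geolocation database instead.
GEO_TABLE = {
    ip_network("203.0.113.0/24"): "AU",
    ip_network("198.51.100.0/24"): "US",
}


def geolocate(ip: str) -> Optional[str]:
    """Return the country for an IP, or None when it is not in the table."""
    addr = ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return None


def device_type(user_agent: str) -> str:
    """Very rough user-agent classification (real pipelines use a UA parser)."""
    ua = user_agent.lower()
    if "bot" in ua or "spider" in ua:
        return "bot"
    if any(token in ua for token in ("mobile", "android", "iphone")):
        return "mobile"
    return "desktop"


def enrich(raw: dict) -> dict:
    """Return a copy of the raw event with derived context fields added."""
    enriched = dict(raw)  # the raw event stays untouched
    enriched["country"] = geolocate(raw["ip"])
    enriched["device"] = device_type(raw["user_agent"])
    return enriched


if __name__ == "__main__":
    event = {"event": "page_view", "ip": "203.0.113.7",
             "user_agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)"}
    print(enrich(event))
```

Returning a new dictionary instead of mutating the input mirrors the "raw, unopinionated" principle: the original event remains available for reprocessing if an enrichment rule changes.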

Product Core Function

· Raw Event Collection: Captures granular user interaction data directly from your applications, providing a complete picture of user journeys. The value here is fine-grained visibility into user actions, enabling detailed behavioral analysis.

· Event Enrichment: Automatically adds contextual information to raw events, such as geolocation, device type, and referrer. This provides richer data for analysis and reduces manual data preparation effort.

· Apache 2.0 Licensing: Guarantees unrestricted use, modification, and distribution for production environments, ensuring long-term freedom and preventing vendor lock-in. This means you can build your analytics infrastructure with confidence, knowing the license won't change unexpectedly.

· Snowplow Compatibility: Seamlessly integrates with existing Snowplow setups, allowing for easy migration and leveraging of current infrastructure. This minimizes disruption and cost when adopting OpenSnowcat.

· Performance Optimizations: Ongoing work on faster data processing and higher throughput, so your analytics pipeline can scale with your needs. This means insights from your data arrive sooner, enabling faster decision-making.

· Modern Tool Integrations: Connects with contemporary data processing and routing tools like Warpstream Bento. This allows for flexible and efficient management of your event data streams.

· Community-Driven Maintenance: Maintained and extended by a dedicated community, which supports ongoing development and timely bug fixes. This provides a stable, evolving platform for your analytics needs.
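The enrichment step can be pictured as a function that takes a raw event and returns a copy with derived fields added. The sketch below is a deliberately simplified stand-in, not OpenSnowcat's enricher. The device classification is a naive substring check, useragent, page_referrer, and refr_urlhost echo Snowplow's canonical event fields, and device_class is a hypothetical field name.

```python
from urllib.parse import urlsplit


def classify_device(user_agent: str) -> str:
    """Naive device classification from a user-agent string (illustrative only)."""
    ua = user_agent.lower()
    if "ipad" in ua or "tablet" in ua:
        return "tablet"
    if "mobi" in ua or "android" in ua or "iphone" in ua:
        return "mobile"
    return "desktop"


def enrich(raw_event: dict) -> dict:
    """Return a copy of the event with derived contextual fields added."""
    enriched = dict(raw_event)  # never mutate the raw event
    enriched["device_class"] = classify_device(raw_event.get("useragent", ""))
    referrer = raw_event.get("page_referrer")
    if referrer:
        enriched["refr_urlhost"] = urlsplit(referrer).hostname
    return enriched
```

Keeping the raw event untouched mirrors the raw-data philosophy described above: the original record stays available if the enrichment logic later changes.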

Product Usage Case

· E-commerce platform wanting to understand user conversion funnels in detail, from product view to checkout. OpenSnowcat allows them to capture every click and interaction, identifying drop-off points and optimizing the user experience for higher sales.

· SaaS company needing to track feature adoption and user engagement to improve product development. By using OpenSnowcat, they can precisely monitor how users interact with different features, prioritizing improvements based on actual usage patterns.

· Mobile app developer looking to gain deep insights into user behavior for personalized experiences. OpenSnowcat enables them to collect detailed event data, which can then be used to segment users and deliver tailored in-app content or offers.

· A data team migrating from a commercial analytics solution with restrictive licensing. OpenSnowcat provides them with a powerful, open-source alternative, allowing them to retain full control over their data and analytics pipeline without future licensing concerns.

· A gaming company aiming to analyze player behavior to improve game design and monetization strategies. OpenSnowcat's ability to handle high volumes of event data and provide raw access empowers them to understand player progression, in-game economies, and engagement drivers.
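The funnel analysis in the first use case comes down to counting how many users reached each step after completing the previous ones. A minimal sketch over already-collected events, assuming each event is a (user_id, event_name) pair sorted by time; the step names are hypothetical.

```python
from collections import defaultdict


def funnel(events, steps):
    """Count users reaching each funnel step in order.

    events: iterable of (user_id, event_name) tuples, already sorted by time.
    steps:  ordered list of event names defining the funnel.
    Returns (step, users_reached, conversion_from_previous_step) tuples.
    """
    progress = defaultdict(int)  # user_id -> number of steps completed so far
    for user, name in events:
        done = progress[user]
        if done < len(steps) and name == steps[done]:
            progress[user] = done + 1
    counts = [sum(1 for d in progress.values() if d > i) for i in range(len(steps))]
    rates = [1.0] + [counts[i] / counts[i - 1] if counts[i - 1] else 0.0
                     for i in range(1, len(counts))]
    return list(zip(steps, counts, rates))


events = [("u1", "product_view"), ("u2", "product_view"), ("u1", "add_to_cart"),
          ("u3", "add_to_cart"), ("u1", "checkout"), ("u2", "add_to_cart")]
print(funnel(events, ["product_view", "add_to_cart", "checkout"]))
# [('product_view', 2, 1.0), ('add_to_cart', 2, 1.0), ('checkout', 1, 0.5)]
```

User u3 is not counted because they never viewed a product first; the drop from cart to checkout (50%) is the kind of leak the e-commerce team would investigate.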

7

ChatGPT App Accelerator

Author

Eldodi

Description

This project is a TypeScript starter kit that dramatically speeds up the development of interactive widgets (apps) for OpenAI's ChatGPT. It addresses the slow development feedback loop associated with the official template by enabling Hot Module Reload (HMR) directly within the ChatGPT interface and streamlining the production build and deployment process. So, this helps you build and test ChatGPT apps much faster, making the whole experience feel modern and efficient.

Popularity

Points 17

Comments 2

What is this product?

This is a developer toolkit designed to make building apps that run inside ChatGPT smoother and faster. OpenAI's Apps SDK is built on MCP (the Model Context Protocol), and the official template's build process can be very slow. This starter kit uses Vite, a modern web build tool, to provide Hot Module Reload (HMR). HMR swaps updated modules into the running page without a full reload, and here it works directly inside ChatGPT. The kit also introduces a framework called Skybridge, which simplifies how your app's components talk to ChatGPT's tools and removes much of the manual setup. So, it's about making ChatGPT app development feel like building a regular, snappy web application rather than a slow, outdated one.
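Because the Apps SDK builds on MCP, each tool invocation from ChatGPT to your server travels as a JSON-RPC 2.0 message. The sketch below builds and answers the tools/call exchange defined in the MCP specification. The tool name show_chart and its arguments are hypothetical, and a real server would normally use an MCP SDK (or a kit like this one) rather than hand-written dictionaries.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request IDs must be unique within a session


def tools_call_request(name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def tools_call_result(request_json: str, text: str) -> str:
    """Build the matching response: same id, result content as typed items."""
    request = json.loads(request_json)
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request["id"],  # a response must echo the id of its request
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })
```

What the starter kit automates is everything around this exchange: serving the widget, wiring it to tool results, and reloading it while you edit.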

How to use it?

Developers can use this by cloning the provided GitHub repository, installing dependencies (npm or pnpm), and running a development server. This development server is configured with HMR, so changes you make to your React code appear instantly in the ChatGPT app without a full rebuild. You then use a tool like ngrok to expose your local development server to ChatGPT; by pasting the ngrok URL into your ChatGPT settings, you can interact with your app live. For production, the kit offers a streamlined build pipeline that can deploy instantly to platforms like Alpic.ai or any other Platform-as-a-Service (PaaS). This means you can quickly iterate on your ideas and then easily deploy your finished app.

Product Core Function

· Hot Module Reloading (HMR) for rapid iteration: Enables instant updates to your ChatGPT app within the ChatGPT interface as you make code changes, eliminating lengthy rebuilds. This is valuable because it drastically reduces development time and frustration, allowing you to see your changes immediately and build features more efficiently.

· Skybridge framework for simplified communication: This abstraction layer handles the complex communication between your React widgets and ChatGPT's tools, removing the need for manual iframe setup and wiring. This is valuable because it simplifies the developer's job by handling intricate technical details, allowing them to focus on the app's core logic and user experience.

· One-click production build and deployment: Offers a streamlined process to package your app and deploy it to hosting services, including optional auth and analytics. This is valuable because it makes the transition from development to a live, shareable app incredibly simple and fast, reducing the overhead of deployment.

· Modern development environment (Vite): Utilizes a fast and efficient build tool for the development server, providing a familiar and performant experience for web developers. This is valuable because it leverages industry-standard, high-performance tools that developers are already comfortable with, making the learning curve lower and the development process more enjoyable.

Product Usage Case

· Developing a complex data visualization widget for ChatGPT: Instead of waiting minutes for each chart update to render, a developer can use this starter kit to see changes in real-time as they adjust the data fetching or rendering logic, significantly speeding up the fine-tuning process.

· Building an interactive customer support tool within ChatGPT: A developer can quickly prototype and test the user interface and backend integrations of a tool that helps customer support agents by having instant feedback on their code changes, leading to a more polished and functional tool faster.

· Creating a custom coding assistant plugin for ChatGPT: When building a tool that generates code snippets or assists with programming tasks, the ability to instantly see how UI changes affect the user experience and test code generation logic directly within the ChatGPT environment is crucial for rapid development and bug fixing.

8

A11yFlow Weaver

Author

snupix

Description

An axe-core-powered web accessibility testing tool that blends automated checks with guided manual workflows. It helps developers and auditors pinpoint and fix accessibility issues, supporting broader web inclusivity and compliance with standards like WCAG. So, this means you get a more accessible website without manually hunting down every single problem, saving time and ensuring more users can access your content.

Popularity

Points 18

Comments 0

What is this product?

A11yFlow Weaver is an open-source tool designed to streamline web accessibility testing. It uses axe-core, a popular automated accessibility testing engine, to check websites against standards such as WCAG 2.0, 2.1, and 2.2, and EN 301 549. What sets it apart is how it combines these automated checks with guided manual review workflows. For issues that code alone cannot reliably detect, it walks testers through specific, contextual checks, such as confirming that keyboard navigation works properly or verifying color contrast in cases automation cannot resolve (for example, text over images or gradients). This hybrid approach produces a more comprehensive and accurate accessibility assessment. So, this means you get a deeper understanding of your website's accessibility than a quick automated scan provides, leading to a truly inclusive user experience.
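The hybrid model maps naturally onto axe-core's results object, which separates violations (automated failures) from incomplete (checks axe could not decide, which need a human). A minimal triage sketch, assuming the results were exported as JSON from an axe run. The sample data is invented for illustration and is not output from A11yFlow Weaver.

```python
IMPACT_ORDER = {"critical": 0, "serious": 1, "moderate": 2, "minor": 3}


def triage(axe_results: dict):
    """Split axe-core results into automated findings and a manual-review queue."""
    findings = []
    for rule in axe_results.get("violations", []):
        for node in rule.get("nodes", []):
            findings.append({
                "rule": rule["id"],
                "impact": rule.get("impact") or "minor",
                "target": node.get("target", []),
                "wcag_tags": [t for t in rule.get("tags", []) if t.startswith("wcag")],
            })
    # most severe first, so the worst problems lead the report (sort is stable)
    findings.sort(key=lambda f: IMPACT_ORDER.get(f["impact"], len(IMPACT_ORDER)))
    manual = [
        {"rule": rule["id"], "target": node.get("target", []), "help": rule.get("help", "")}
        for rule in axe_results.get("incomplete", [])
        for node in rule.get("nodes", [])
    ]
    return findings, manual


sample = {
    "violations": [
        {"id": "region", "impact": "moderate", "tags": ["cat.keyboard", "best-practice"],
         "nodes": [{"target": ["div.footer"]}]},
        {"id": "image-alt", "impact": "critical", "tags": ["cat.text-alternatives", "wcag2a", "wcag111"],
         "nodes": [{"target": ["img.hero"]}]},
    ],
    "incomplete": [
        {"id": "color-contrast", "help": "Elements must meet minimum color contrast ratio thresholds",
         "nodes": [{"target": ["h1.banner"]}]},
    ],
}

if __name__ == "__main__":
    automated, manual_queue = triage(sample)
    print(automated)
    print(manual_queue)
```

The manual queue is where guided workflows would take over: each item becomes a prompt for a tester rather than a pass/fail verdict.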

How to use it?

Developers can integrate A11yFlow Weaver into their development pipeline or use it as a standalone application. The tool runs on Mac, Windows, and Linux. It can be configured to define custom rules, map specific accessibility issues to remediation steps, and generate reports in various formats. This allows teams to tailor the testing process to their specific needs and workflows, whether it's for continuous integration or dedicated audit phases. So, this means you can easily embed accessibility checks into your existing development process and generate clear reports for stakeholders.
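For the continuous-integration case, a common pattern is to fail the build when the automated scan reports violations at or above a chosen severity. The sketch below reads an axe-core results JSON file and exits with a nonzero status on blocking issues. The file name, the default threshold, and the assumption that the tool can export such a file are illustrative, not documented behavior of A11yFlow Weaver.

```python
import json
import sys

SEVERITY = {"minor": 1, "moderate": 2, "serious": 3, "critical": 4}


def gate(axe_results: dict, fail_at: str = "serious") -> int:
    """Return 1 if any violation meets the severity threshold, else 0."""
    threshold = SEVERITY[fail_at]
    blocking = [
        v["id"] for v in axe_results.get("violations", [])
        if SEVERITY.get(v.get("impact") or "minor", 0) >= threshold
    ]
    for rule_id in blocking:
        print(f"blocking accessibility violation: {rule_id}", file=sys.stderr)
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # usage: python a11y_gate.py axe-results.json [minor|moderate|serious|critical]
    with open(sys.argv[1], encoding="utf-8") as fh:
        results = json.load(fh)
    sys.exit(gate(results, sys.argv[2] if len(sys.argv) > 2 else "serious"))
```

Starting with a strict threshold only for serious and critical issues lets a team adopt the gate without blocking every build on minor findings, then tighten it over time.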

Product Core Function

· Automated Accessibility Auditing using axe-core: This function leverages a robust engine to automatically scan web pages for common accessibility violations based on WCAG standards. It provides immediate feedback on many issues, saving significant manual effort. So, this means you can quickly identify common accessibility problems without needing to be an expert.

· Guided Manual Review Workflows: For issues that automation struggles to detect reliably, this feature provides step-by-step instructions for testers to perform specific manual checks. This ensures that complex or context-dependent accessibility issues are not missed. So, this means you can ensure your website is accessible even for nuanced problems that code can't easily find.

· Customizable Rules and Mappings: This allows teams to define their own accessibility rules or map identified issues to specific solutions and remediation guidance. This flexibility caters to unique project requirements and team expertise. So, this means you can adapt the testing to your project's specific needs and provide targeted solutions.

· Multi-Format Accessibility Reports: The tool can generate Accessibility Conformance Reports (ACRs) in various formats. This provides clear and documented evidence of the accessibility status of a website for compliance and communication purposes. So, this means you can easily share and document your website's accessibility progress with others.

· Cross-Platform Application: Available for Mac, Windows, and Linux, this ensures that developers and testers on different operating systems can utilize the tool without compatibility issues. So, this means the tool is accessible to a wide range of developers regardless of their operating system.

Product Usage Case

· A small startup developing a new web application wants to ensure their product is usable by everyone from day one. They integrate A11yFlow Weaver into their CI/CD pipeline, using the automated checks to catch common issues during development and the manual workflows for more in-depth testing before each release. This prevents costly redesigns later and expands their potential user base. So, this means they can build an inclusive product from the start, saving money and reaching more customers.

· A large enterprise with an existing complex website needs to comply with accessibility regulations. They use A11yFlow Weaver to conduct regular audits. The tool helps them efficiently identify areas needing improvement, prioritizes fixes based on custom rules, and generates formal ACRs for compliance reporting. So, this means they can efficiently meet legal requirements and demonstrate their commitment to accessibility.

· A freelance web designer is working with a client who has specific accessibility requirements for their e-commerce site. The designer uses A11yFlow Weaver to test the site, leveraging the tool's flexibility to configure rules relevant to the client's industry and providing detailed reports that clearly explain the issues and proposed solutions. So, this means they can deliver a high-quality, accessible website and clearly communicate its benefits to their client.

9

Coyote - Asynchronous AI Chat Companion

Author

michalwarda

Description

Coyote is an AI assistant designed for seamless, non-blocking interaction. Unlike traditional AI assistants that make you wait for each response, Coyote works asynchronously: you can keep chatting or switch to other tasks while it processes your requests in the background. The experience is modeled on texting a friend, and it removes disruptive waiting by handling emails, calendar events, and research tasks concurrently instead of leaving you hanging. The innovation lies in its deeply asynchronous architecture and its integration into familiar messaging platforms like WhatsApp, which make advanced AI accessible and natural for everyday use.

Popularity

Points 7

Comments 10

What is this product?

Coyote is an AI assistant built on a fundamentally different, asynchronous architecture. Instead of making you wait for the AI to finish a task before you can interact again, Coyote handles requests in the background, allowing for a continuous, natural conversation. Think of it like texting a friend who says 'I'm on it' and then you continue chatting while they go do the task. This is achieved through non-blocking operations and concurrent processing, meaning multiple requests can be handled simultaneously without the user experiencing any 'dead air' or interruption. The main technical insight is that by decoupling the user's interaction from the AI's processing time, the experience feels significantly more fluid and less demanding, making the AI feel like a true, ever-present assistant rather than a tool you have to actively manage.

How to use it?

Users can start using Coyote through a messaging platform: it is currently available via WhatsApp, so you simply text Coyote as you would any other contact, which makes for a low-friction entry point. For developers who want to build on this concept or add similar asynchronous AI capabilities to their own products, the underlying principle is to use non-blocking I/O and concurrent task management (e.g., async/await in languages that support it, or dedicated concurrency frameworks). This lets an application send off AI requests and keep responding to user input or performing other work without pausing. The value for developers lies in understanding and applying these asynchronous patterns to build more responsive, engaging applications, mirroring Coyote's 'no stop button' philosophy.
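The non-blocking pattern can be sketched with Python's asyncio: each incoming message is acknowledged immediately, the slow work runs as a background task, and the result is sent whenever it finishes. The reply callback and the simulated slow_task are hypothetical stand-ins for a messaging API and an LLM or tool call; this is not Coyote's implementation.

```python
import asyncio


async def slow_task(request: str, seconds: float) -> str:
    """Stand-in for a long-running job such as research or email drafting."""
    await asyncio.sleep(seconds)
    return f"done: {request}"


class Assistant:
    def __init__(self, reply):
        self.reply = reply    # callback that delivers a message to the user
        self.pending = set()  # keep task references so they are not garbage-collected

    def on_message(self, request: str, seconds: float = 0.05) -> None:
        """Acknowledge at once, then finish the work in the background."""
        self.reply(f"on it: {request}")
        task = asyncio.create_task(self._run(request, seconds))
        self.pending.add(task)
        task.add_done_callback(self.pending.discard)

    async def _run(self, request: str, seconds: float) -> None:
        self.reply(await slow_task(request, seconds))


async def demo() -> list:
    log = []
    bot = Assistant(log.append)
    bot.on_message("research AI ethics", 0.05)
    bot.on_message("schedule call with John", 0.01)  # does not wait for the first request
    log.append("user keeps chatting")                # the conversation continues immediately
    await asyncio.gather(*bot.pending)
    return log


if __name__ == "__main__":
    for line in asyncio.run(demo()):
        print(line)
```

The log shows both acknowledgments and the user's next message before any result, and the shorter task reports back first: replies arrive in completion order, not request order.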

Product Core Function

· Asynchronous Request Handling: Allows users to send commands to the AI and continue interacting without waiting for an immediate response. This is valuable because it eliminates frustrating wait times, making the AI feel more integrated into your workflow and reducing the feeling of being 'stuck'.

· Background Task Processing: Enables the AI to perform complex tasks like research, email drafting, or scheduling in the background. This is beneficial as it frees up the user's attention and time, allowing them to multitask and be more productive, as the AI is working 'for' them without demanding their constant focus.

· Continuous Conversation Flow: Maintains a natural, uninterrupted chat experience even when the AI is processing requests. The value here is in creating a more intuitive and human-like interaction, making AI assistants less robotic and more like a helpful companion you can talk to naturally, without awkward pauses.

· Messaging Platform Integration (WhatsApp, iMessage): Provides an accessible and familiar interface for users to interact with the AI. This is valuable because it removes the need to learn a new application or interface, lowering the barrier to entry and making advanced AI accessible to a broader audience through platforms they already use daily.

· Real-World Task Management: Focuses on practical applications like managing emails, calendars, and performing research. This highlights the practical utility of the AI, showing it can genuinely help with day-to-day productivity and information retrieval, directly answering the question 'how does this help me get things done?'

Product Usage Case

· Scenario: You're in a meeting and need to quickly find information or schedule a follow-up. How to use: Text Coyote 'Research the latest trends in AI ethics' or 'Schedule a 30-minute call with John tomorrow afternoon'. Problem Solved: Instead of interrupting the meeting or fumbling with a separate app, Coyote handles the request in the background, and you can continue focusing on the meeting. You'll get the results or confirmation when it's done, without any awkward pauses or disruptions.

· Scenario: You're multitasking and need to draft an email while also responding to instant messages. How to use: Text Coyote 'Draft an email to my team summarizing our Q3 performance'. Problem Solved: Coyote starts composing the email in the background, and you can immediately switch back to responding to your instant messages. You'll get a draft email to review and send later, all without feeling like you had to stop everything else to wait for the AI.

· Scenario: You're planning a trip and need to gather travel information and booking details. How to use: Text Coyote 'Find flights from New York to Tokyo for next month and suggest some hotels near Shibuya'. Problem Solved: Coyote can process these requests concurrently, researching flights and hotels simultaneously. You can continue discussing your travel plans with others or doing other things, and Coyote will present the compiled information when ready, making the planning process feel less burdensome and more efficient.
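The trip-planning case is a fan-out: independent sub-requests (flights, hotels) run at the same time and are combined once all of them finish. A minimal asyncio.gather sketch; search_flights and search_hotels are hypothetical placeholders that sleep to simulate latency and return canned strings.

```python
import asyncio
import time


async def search_flights(origin: str, dest: str) -> str:
    await asyncio.sleep(0.1)  # simulated network latency
    return f"flights {origin}->{dest}"


async def search_hotels(area: str) -> str:
    await asyncio.sleep(0.1)  # simulated network latency
    return f"hotels near {area}"


async def plan_trip() -> tuple:
    start = time.perf_counter()
    # both searches run concurrently, so total time is about the slowest one, not the sum
    flights, hotels = await asyncio.gather(
        search_flights("New York", "Tokyo"),
        search_hotels("Shibuya"),
    )
    return flights, hotels, time.perf_counter() - start


if __name__ == "__main__":
    print(asyncio.run(plan_trip()))
```

gather returns results in the order the coroutines were passed, regardless of which finishes first, which keeps the combining step simple.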

10

ScreenAsk: Instant Screen Capture Linker

Author

ladybro

Description

ScreenAsk is a tool designed to simplify the process of collecting screen recordings from customers for support purposes. It eliminates the need for customers to sign up, download, or install any software. By simply sharing a link, customers can record their screen, and the recordings are instantly available to the support team, complete with transcriptions and AI summaries. This innovation dramatically speeds up the troubleshooting process by allowing support agents to quickly see and understand customer issues.

Popularity

Points 16

Comments 0

What is this product?

ScreenAsk is a service that generates unique recording links you can share with anyone. When a customer clicks one of these links, they are prompted to record their screen directly in their web browser. The core technical idea is to use standard browser APIs, such as the Screen Capture API (getDisplayMedia) and the MediaRecorder API, to capture screen content and audio without any local software installation: no downloads, no sign-ups, just browser-based recording. Once recorded, the video is automatically uploaded to ScreenAsk's backend, where it is processed to add speech-to-text transcription and AI-generated summaries. This bypasses the traditional, cumbersome workflow in which customers must find, install, and operate a separate screen recording tool, saving time for both parties. So, what's in it for you? You can see the problem exactly as the customer experiences it, without asking them to jump through hoops.

How to use it?

Developers can use ScreenAsk in several ways. The most straightforward is by sharing a generated ScreenAsk link with a customer who is experiencing an issue. The customer clicks the link, follows the on-screen prompts to record their screen, and the recording is automatically sent to you. For deeper integration, ScreenAsk offers an embeddable widget for websites. This widget can be customized to match your brand's look and feel, and controlled via JavaScript. This allows you to trigger recordings directly from your application or website based on specific user actions or events. Furthermore, ScreenAsk provides integrations with popular services like Slack, Zapier, and Webhooks, enabling automated notifications and workflows when a new recording is submitted. So, how does this help you? You can seamlessly integrate visual problem-solving into your existing support workflows, trigger recordings programmatically within your app, and get instant alerts without manual checking.
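When wiring recordings into your own systems through webhooks, a receiver typically verifies that a request is authentic before acting on it. ScreenAsk's actual payload format and signing scheme are not described here, so everything below is an assumption illustrating the common pattern rather than its documented API: the JSON fields (customer, summary, url), the shared secret, and an HMAC-SHA256 hex signature over the raw body.

```python
import hashlib
import hmac
import json

SECRET = b"replace-with-shared-secret"  # hypothetical shared secret


def sign(body: bytes, secret: bytes = SECRET) -> str:
    """HMAC-SHA256 hex digest of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature: str, secret: bytes = SECRET) -> str:
    """Verify the signature, then turn a (hypothetical) recording event into a chat message."""
    # compare_digest avoids leaking timing information during the comparison
    if not hmac.compare_digest(sign(body, secret), signature):
        raise PermissionError("invalid webhook signature")
    event = json.loads(body)
    return (f"New recording from {event.get('customer', 'unknown')}: "
            f"{event.get('summary', '(no summary)')} {event.get('url', '')}").strip()
```

Signing the raw bytes, rather than re-serialized JSON, matters: re-encoding can change whitespace or key order and break an otherwise valid signature.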

Product Core Function

· On-demand screen recording via a shareable link: This allows anyone to record their screen without installing any software, simplifying the feedback loop for bug reporting or feature requests. Its value is in instantly capturing user issues as they happen.

· No customer sign-up or software installation required: This removes friction for end-users, ensuring higher participation rates and reducing the effort needed to gather crucial information. Its value is in making it incredibly easy for customers to provide help.

· Real-time recording upload and access: Recordings are available almost immediately after the user finishes recording, speeding up the diagnostic process. Its value is in minimizing the time spent waiting for information and accelerating issue resolution.

· Automatic transcription and AI summaries: This feature provides a text-based representation of the audio in the recording and a concise summary, allowing for quick scanning of issues. Its value is in saving time by highlighting key points within longer recordings.

· Embeddable and customizable widget: This allows developers to integrate screen recording functionality directly into their own websites or applications with full control over appearance and behavior. Its value is in creating a branded and seamless user experience for feedback collection.

· Notifications via Email, Slack, Zapier, and Webhooks: This enables automated alerts and integration with other business tools, streamlining support workflows. Its value is in ensuring you're immediately notified of new feedback and can automate follow-up actions.

Product Usage Case

· A SaaS company uses ScreenAsk to troubleshoot a complex bug reported by a user. Instead of lengthy email exchanges trying to understand the steps taken, they send a ScreenAsk link. The user records their screen as they encounter the bug, and the support team instantly receives a video with a transcription, allowing them to pinpoint the issue within minutes. This accelerates bug fixes and improves customer satisfaction.

· A game developer uses the ScreenAsk widget on their beta testing platform. When testers encounter a glitch, they can click a 'Report Issue' button that triggers a recording of their gameplay session. The recording, including network and console logs, is automatically attached to the bug report, providing developers with invaluable technical data for debugging. This drastically improves the quality and efficiency of game development.

· A customer success manager uses ScreenAsk to onboard new clients by giving each client a personalized recording link. When a client has a question about a specific feature, they can record their screen while trying to use it, and the recording goes directly to the success manager. This allows tailored, visual guidance that helps clients use the product effectively, improving adoption and reducing support load.

11

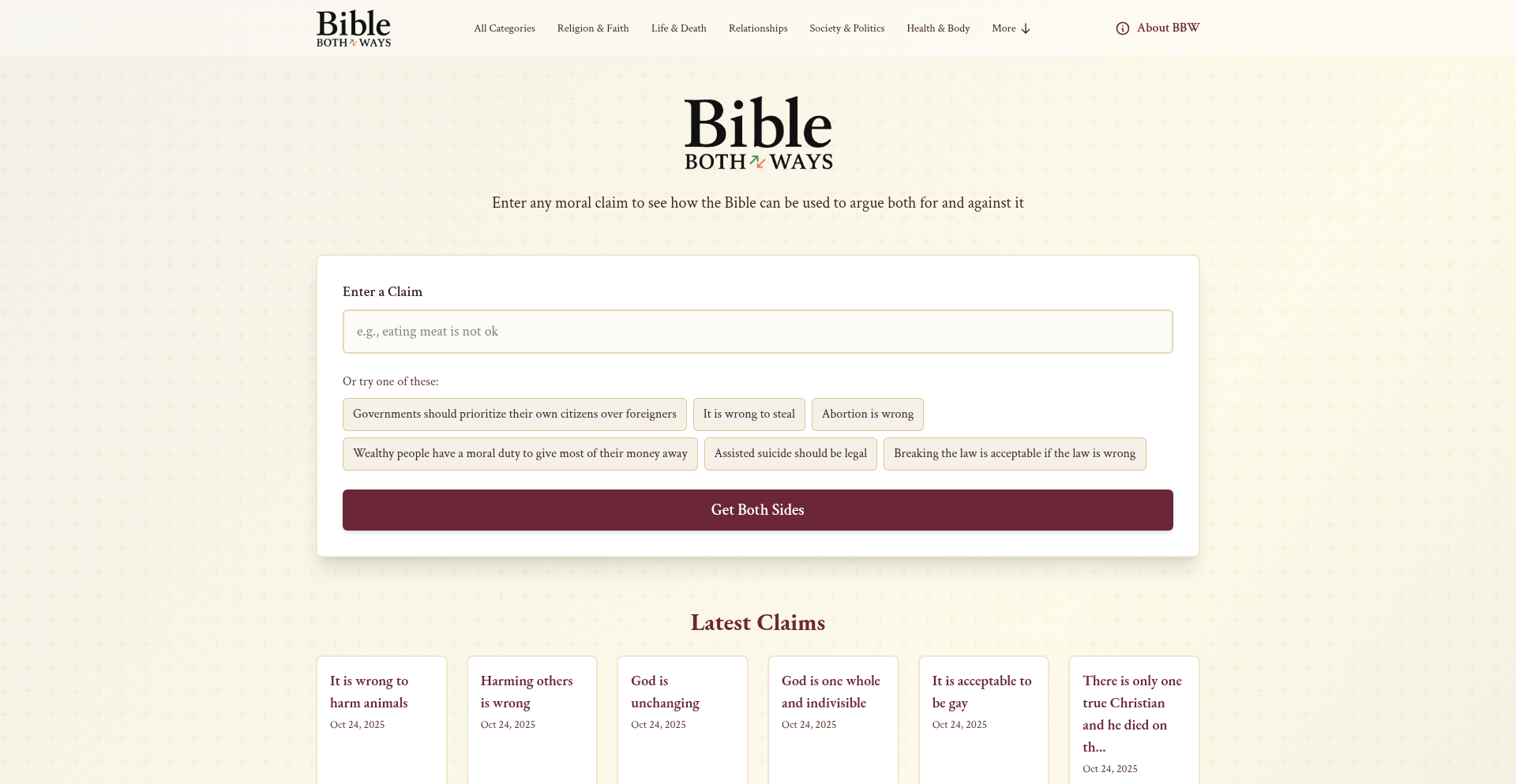

BibleBothWays

Author

jibbed123

Description

A web application that demonstrates how biblical scripture can be selectively used to support or oppose virtually any moral claim, highlighting the interpretive flexibility of religious texts.

Popularity

Points 7

Comments 8

What is this product?

BibleBothWays is a tool designed to explore the often contradictory nature of religious interpretations. It allows users to input a moral claim, and then it surfaces verses from the Bible that can be used to argue both for and against that claim. The innovation lies in its programmatic approach to 'cherry-picking' verses. Instead of relying on human interpretation for each claim, it leverages a structured database of verses and their potential interpretations, demonstrating how seemingly absolute texts can be bent to support diverse viewpoints. This showcases a technical approach to a philosophical and sociological observation.

How to use it?

Developers could use BibleBothWays as a component in applications dealing with comparative religion or ethical reasoning, or as a satirical tool. For instance, it could be integrated into a chatbot that discusses ethics, showing users how religious texts have historically been used to justify opposing stances. Such an integration would presumably involve API calls (if the project exposes an API) to retrieve relevant verses for user-submitted claims, possibly with natural language processing to map claims to thematic categories in the scripture database.

Product Core Function

· Claim Input and Verse Retrieval: Users enter a moral claim, and the system queries a curated database of biblical verses and their associated interpretations to find passages that can be used to support or oppose the claim. This demonstrates the power of structured data to represent complex textual information and retrieve relevant snippets for specific queries.

· Dual Interpretation Display: The application presents verses in a way that highlights how the same text can be interpreted in opposing ways, showcasing the algorithmic approach to identifying these dualities. This is valuable for understanding how to build systems that can recognize and present nuanced or contradictory information.

· Demonstration of Interpretive Flexibility: By showing how the Bible can 'justify anything,' the tool illustrates a key concept in textual analysis and the sociology of religion: the malleability of meaning based on context and intent. For developers, this highlights the challenges and opportunities in building AI that understands human language and intent, which is often ambiguous.

· Algorithmic Verse Selection: The underlying mechanism likely involves keyword matching, thematic categorization, or even more advanced natural language processing techniques to identify verses relevant to a given claim and its potential interpretations. This offers insight into the practical application of text analysis algorithms for specific domains.
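The keyword-and-theme approach speculated about above can be sketched in a few lines. Everything here is hypothetical: the themes, keywords, and the placeholder references Ref A to Ref D stand in for a curated database, and a real system would likely use embeddings or NLP rather than exact word matching.

```python
# Hypothetical curated index: theme -> keywords, plus passages tagged by stance.
THEMES = {
    "wealth": {"money", "wealth", "rich", "tax"},
    "violence": {"war", "violence", "fight", "sword"},
}
PASSAGES = {
    "wealth": {"for": ["Ref A"], "against": ["Ref B"]},
    "violence": {"for": ["Ref C"], "against": ["Ref D"]},
}


def match_themes(claim: str) -> list:
    """Return (sorted) themes whose keywords appear as whole words in the claim."""
    words = set(claim.lower().replace(",", " ").replace(".", " ").split())
    return sorted(theme for theme, keys in THEMES.items() if words & keys)


def both_ways(claim: str) -> dict:
    """Collect supporting and opposing passages for every matched theme."""
    result = {"for": [], "against": []}
    for theme in match_themes(claim):
        for stance in ("for", "against"):
            result[stance].extend(PASSAGES[theme][stance])
    return result
```

The point the project makes lives in the data, not the code: as long as every theme carries passages on both sides, any claim that matches a theme gets an argument in each direction.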

Product Usage Case

· Ethical Debate Simulation: A developer could integrate BibleBothWays into an educational platform to create interactive ethical debates. Users could explore how different religious perspectives, as represented by biblical interpretations, can lead to opposing conclusions on contemporary moral issues, thus demonstrating the practical application of text analysis in educational tools.

· Content Generation for Satire or Commentary: A creative developer might use this tool to generate humorous or thought-provoking content for blogs or social media. By inputting common sayings or controversial topics, the tool can generate ironic juxtapositions of biblical verses, showcasing how scripting can be used for creative commentary and artistic expression.

· Comparative Religious Studies Tool: Researchers or students could use BibleBothWays to quickly find examples of how scripture has been historically interpreted to support diverse social or moral positions. This offers a practical, code-driven way to explore textual evidence in academic fields.

· Building AI with Nuance: For developers working on AI that needs to understand human language and its inherent ambiguities, this project serves as a tangible example of how even seemingly definitive texts can be interpreted in contradictory ways. It underscores the complexity of building AI that can grapple with shades of meaning and context.

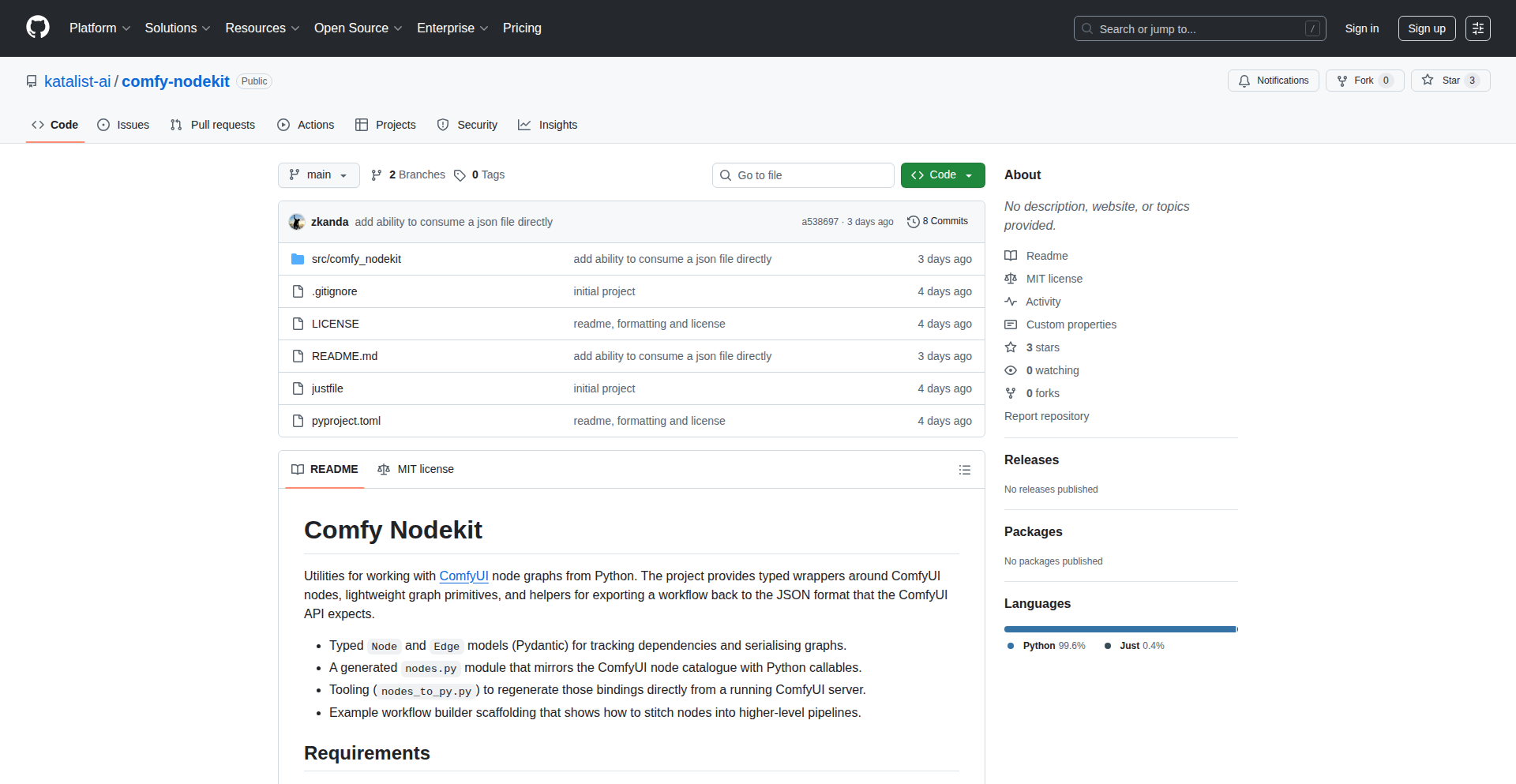

12

ComfyNodePy

Author

zkanda

Description

ComfyNodePy is a Python library designed to streamline the creation and management of ComfyUI workflows. It tackles the complexity of building and maintaining large, intricate ComfyUI graphs by allowing developers to define these workflows programmatically in Python. This approach offers a more organized, type-safe, and maintainable way to generate and modify workflows, especially for advanced use cases and custom nodes, ultimately solving the 'messiness' of hand-crafting complex JSON configurations.

Popularity

Points 9

Comments 3

What is this product?

ComfyNodePy is a Python library that acts as a programmatic interface for ComfyUI workflows. Instead of manually dragging and dropping nodes or editing raw JSON files to define complex AI art generation processes, you can write Python code to define these workflows. The innovation lies in its type-safe node factories, which ensure that connections between nodes are valid and prevent common errors. It can also automatically learn about your custom nodes installed in ComfyUI, ensuring your Python code stays compatible with your specific setup. This translates to a more robust and developer-friendly way to manage sophisticated ComfyUI graphs. The value is in making large, complex workflows manageable and less error-prone, translating messy JSON into clean Python code that's easier to understand, modify, and version.

How to use it?

Developers can integrate ComfyNodePy into their Python projects to programmatically build ComfyUI workflows. You would typically define nodes as Python objects using the library's factories, link them together, and then export the resulting structure into the JSON format that ComfyUI understands. This allows for dynamic workflow generation, such as creating hundreds of nodes on-the-fly based on certain parameters or user inputs. For example, a developer could use ComfyNodePy to generate a batch of customized image generation workflows by writing a single Python script, rather than manually configuring each one in the ComfyUI interface. This is particularly useful when dealing with custom nodes, as ComfyNodePy can introspect your running ComfyUI server to automatically generate Python bindings for them, ensuring seamless integration and preventing compatibility issues as your custom node setup evolves. The core idea is to replace manual configuration with automated code generation, saving significant development time and reducing errors for complex tasks.
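To show what exporting to ComfyUI's JSON involves, here is a minimal builder that emits ComfyUI's API-format prompt: a dict mapping node IDs to class_type and inputs, where a link is written as [source_node_id, output_index]. The Graph class is hypothetical and is not ComfyNodePy's API. The node class names are ComfyUI built-ins, while the checkpoint filename and prompts are placeholders.

```python
import json


class Graph:
    """Tiny hypothetical builder for ComfyUI API-format workflows."""

    def __init__(self):
        self.nodes = {}

    def add(self, class_type: str, **inputs) -> str:
        node_id = str(len(self.nodes) + 1)
        self.nodes[node_id] = {"class_type": class_type, "inputs": inputs}
        return node_id

    @staticmethod
    def out(node_id: str, index: int = 0) -> list:
        """Reference output slot `index` of another node, as ComfyUI expects."""
        return [node_id, index]

    def to_json(self) -> str:
        return json.dumps(self.nodes, indent=2)


g = Graph()
ckpt = g.add("CheckpointLoaderSimple", ckpt_name="model.safetensors")  # outputs: MODEL, CLIP, VAE
pos = g.add("CLIPTextEncode", text="a lighthouse at dusk", clip=g.out(ckpt, 1))
neg = g.add("CLIPTextEncode", text="blurry", clip=g.out(ckpt, 1))
latent = g.add("EmptyLatentImage", width=512, height=512, batch_size=1)
sampler = g.add("KSampler", model=g.out(ckpt, 0), positive=g.out(pos), negative=g.out(neg),
                latent_image=g.out(latent), seed=42, steps=20, cfg=7.0,
                sampler_name="euler", scheduler="normal", denoise=1.0)
image = g.add("VAEDecode", samples=g.out(sampler), vae=g.out(ckpt, 2))
g.add("SaveImage", images=g.out(image), filename_prefix="sketch")
```

To queue the result, POST {"prompt": <that dict>} to a running ComfyUI server's /prompt endpoint (port 8188 by default). Batch generation is then just a Python loop that builds many such graphs with different parameters.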

Product Core Function

· Type-safe node composition: Nodes are created through typed factories, so invalid connections between nodes are caught early in development rather than when the workflow runs. The value is in reducing bugs and making workflow construction more reliable.

· Automatic custom node introspection: The library can connect to a running ComfyUI server and discover custom nodes, generating Python representations for them. This means developers can use their custom nodes programmatically without manual effort and maintain compatibility even when custom nodes are updated. The value is in saving time and ensuring seamless integration of unique functionalities.

· JSON export to ComfyUI format: ComfyNodePy can export the Python-defined workflow into the exact JSON structure that ComfyUI expects, allowing for direct use within the ComfyUI interface. The value is in bridging the gap between programmatic definition and the actual execution environment.

· Programmatic workflow generation: Developers can write Python code to dynamically create complex workflows with potentially hundreds of nodes, enabling on-the-fly generation for tasks like batch processing or adaptive workflow creation. The value is in enabling powerful automation and handling of very complex scenarios that are difficult to manage manually.
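Custom-node introspection and type-safe composition fit together. ComfyUI's server describes every installed node, custom ones included, at its /object_info endpoint, listing input and output types. The sketch below uses a hand-written excerpt in that shape (heavily abbreviated; the real response has many more fields) to generate node factories that reject mismatched links when the graph is built. It illustrates the idea and is not ComfyNodePy's code; for brevity it checks only the inputs that are provided, not missing required ones.

```python
from itertools import count

OBJECT_INFO = {  # abbreviated excerpt in the shape returned by GET /object_info
    "CheckpointLoaderSimple": {
        "input": {"required": {"ckpt_name": [["model.safetensors"]]}},
        "output": ["MODEL", "CLIP", "VAE"],
    },
    "VAEDecode": {
        "input": {"required": {"samples": ["LATENT"], "vae": ["VAE"]}},
        "output": ["IMAGE"],
    },
}


class Output:
    """A typed reference to one output slot of a node."""

    def __init__(self, node_id: str, index: int, type_name: str):
        self.node_id = node_id
        self.index = index
        self.type_name = type_name


class Node:
    def __init__(self, node_id: str, class_type: str, inputs: dict, output_types: list):
        self.node_id = node_id
        self.class_type = class_type
        self.inputs = inputs
        self.outputs = [Output(node_id, i, t) for i, t in enumerate(output_types)]


def make_factory(class_type: str, spec: dict, ids):
    """Build a node constructor that checks link types against the schema."""
    required = spec["input"]["required"]

    def factory(**inputs) -> Node:
        for name, value in inputs.items():
            if name not in required:
                raise TypeError(f"{class_type} has no input {name!r}")
            expected = required[name][0]  # e.g. "VAE", or a list of choices for combo inputs
            if isinstance(value, Output) and value.type_name != expected:
                raise TypeError(f"{class_type}.{name} expects {expected}, got {value.type_name}")
        return Node(str(next(ids)), class_type, inputs, spec["output"])

    return factory


ids = count(1)
factories = {name: make_factory(name, spec, ids) for name, spec in OBJECT_INFO.items()}
```

Because the factories are generated from the server's own description, updating a custom node and re-fetching the schema is enough to keep the Python side in sync.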

Product Usage Case

· Building a system to automatically generate personalized AI art prompts and workflows based on user descriptions, where the complexity of the workflow can vary significantly. ComfyNodePy allows for dynamic construction of these workflows in Python, ensuring they are compatible with ComfyUI.

· Developing an internal tool for a creative agency to manage and version hundreds of AI image generation workflows. By defining these workflows in Python with ComfyNodePy, the agency can easily track changes, revert to previous versions, and generate new workflows efficiently, overcoming the limitations of manual JSON management.

· Integrating AI image generation into a larger application where workflows need to be generated and modified on demand based on user input or system events. ComfyNodePy provides the programmatic control needed to build and adapt these workflows without manual intervention, making the integration smooth and robust.

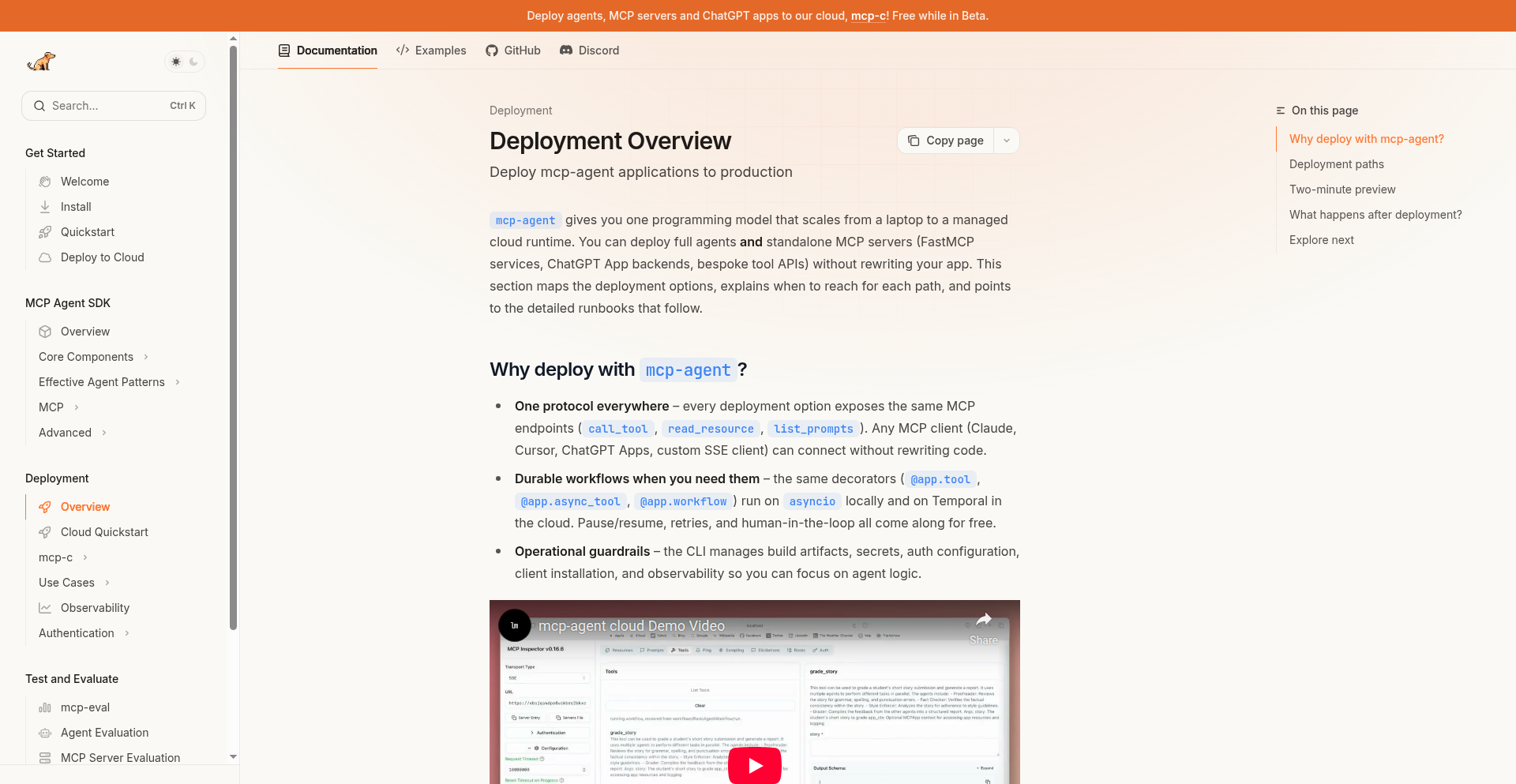

13

MCP-Cloud Weaver

Author

andrew_lastmile

Description

MCP-Cloud Weaver is a cloud platform designed to easily host and run any type of MCP server, including advanced AI agents and ChatGPT applications. It leverages Temporal for durable, long-running operations and offers a simple deployment process, transforming local MCP projects into cloud-accessible services. This allows developers to focus on building intelligent agents without worrying about infrastructure management, making complex AI applications more accessible.

Popularity

Points 11

Comments 1

What is this product?

MCP-Cloud Weaver is a cloud-based platform that simplifies deploying and running MCP (Model Context Protocol) servers, particularly ones that act as AI agents or integrate with clients such as ChatGPT. Its core design choice is Temporal, a durable workflow engine, so that long-running and stateful operations such as agent runs can be paused, resumed, and recovered after failures. Every application is exposed as a remote Server-Sent Events (SSE) endpoint that implements the full MCP specification, including elicitation (asking the user for additional information mid-task), sampling (requesting LLM completions through the connected client), and logging. In short, it turns a locally developed AI agent or application into a stable, cloud-hosted service and takes care of server management and persistent execution.
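Since each deployed app is an SSE endpoint, it may help to see what a client actually reads off that stream. The sketch below parses SSE text lines into events using the basic framing rules of the SSE standard; the JSON-RPC payload in the example stream is made up, and the client-to-server direction of MCP's transport is not shown.

```python
import json
from typing import Iterable, Iterator, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_type, data) pairs from Server-Sent Events text lines.

    Basic framing only: fields are `name: value`, multiple `data:` lines are
    joined with newlines, a blank line dispatches the event, and lines that
    start with ':' are comments (often used as keep-alives).
    """
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data_lines:
                yield event_type, "\n".join(data_lines)
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive
        name, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # one leading space after the colon is not part of the value
        if name == "event":
            event_type = value
        elif name == "data":
            data_lines.append(value)
        # other fields (id, retry) are ignored in this sketch


stream = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1,\n',
    'data:  "result": {"tools": []}}\n',
    "\n",
]
events = list(parse_sse(stream))
payload = json.loads(events[0][1])  # each event's data is one JSON-RPC message
```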

How to use it?

Developers can use MCP-Cloud Weaver to deploy existing mcp-agent projects or other MCP-compatible servers with the mcp-agent CLI. The typical flow is: initialize the project with `uvx mcp-agent init`, set it up with `uv init`, add dependencies with `uv add`, log in to the platform with `uvx mcp-agent login`, configure any secrets (such as an OpenAI API key), and deploy with `uvx mcp-agent deploy`. The deployed server is reachable at an SSE endpoint, so any MCP client, such as ChatGPT, Claude Desktop, Claude Code, or Cursor, can connect to it and use the hosted agent or application from its own workflow or development environment.
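Whichever client connects, the messages it exchanges with the deployed server are JSON-RPC 2.0 objects defined by the MCP specification. A minimal sketch of the two requests a client sends to discover and then call a tool follows; the preceding `initialize` handshake and the transport are omitted, and `order_pizza` with its arguments is a hypothetical tool.

```python
import itertools
import json
from typing import Any, Optional

_request_ids = itertools.count(1)  # ids let the client match responses to requests


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message envelope MCP is built on."""
    message: dict = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


list_tools = jsonrpc_request("tools/list")
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "order_pizza", "arguments": {"size": "large", "toppings": ["basil"]}},  # hypothetical tool
)
```

Because the envelope is the same for every client, a server hosted this way does not need client-specific code paths.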

Product Core Function

· Durable execution with Temporal: This allows your AI agents or applications to run for extended periods, pause and resume operations without losing state, and recover from failures, ensuring continuous availability and reliability for complex, long-running tasks.

· Full MCP spec implementation: Because the platform implements the whole MCP specification, agents can use advanced features such as elicitation (asking users for specific information), sampling (requesting LLM completions through the client), and logging for debugging and monitoring, providing a richer interaction model.

· Simplified cloud deployment: Taking your local MCP projects and deploying them to the cloud is streamlined, similar to deploying web applications. This removes the burden of server setup and maintenance, allowing you to focus on the intelligence of your agent.

· Remote SSE endpoint hosting: Each deployed application functions as a remote Server-Sent Events endpoint, making it easily discoverable and callable by various MCP clients, fostering broad interoperability within the AI ecosystem.
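The durability claim rests on Temporal's replay model: the results of completed steps are recorded in an event history, and a restarted workflow replays those results instead of re-running the work. The toy sketch below illustrates only that idea; it does not use Temporal's SDK, and real Temporal also requires deterministic workflow code and provides retries, timers, and distributed workers. `Journal`, `do_step`, and `agent_workflow` are hypothetical names.

```python
import json
from typing import Any, Callable, Optional


class Journal:
    """Toy event history: each completed step's result, in order, serializable as JSON."""

    def __init__(self, stored: str = "[]") -> None:
        self.events: list = json.loads(stored)
        self.cursor = 0  # index of the next step the workflow will ask for

    def run_step(self, fn: Callable[[], Any]) -> Any:
        if self.cursor < len(self.events):
            result = self.events[self.cursor]  # finished before the crash: replay, don't re-run
        else:
            result = fn()  # new work: execute it, then record the result
            self.events.append(result)
        self.cursor += 1
        return result

    def dump(self) -> str:
        return json.dumps(self.events)


executed: list = []  # side-effect log showing which steps really ran


def do_step(name: str) -> str:
    executed.append(name)
    return f"{name}: ok"


def agent_workflow(journal: Journal, fail_at: Optional[int] = None) -> list:
    results = []
    for i, name in enumerate(["plan", "search", "summarize"]):
        if i == fail_at:
            raise RuntimeError("worker crashed")  # simulated infrastructure failure
        results.append(journal.run_step(lambda name=name: do_step(name)))
    return results


first = Journal()
try:
    agent_workflow(first, fail_at=2)
except RuntimeError:
    pass
saved = first.dump()  # the history outlives the crashed worker

resumed = Journal(saved)
final = agent_workflow(resumed)  # replays plan/search, executes only summarize
```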

Product Usage Case

· Deploying a custom AI assistant for customer support: Developers can build an mcp-agent that understands customer queries, retrieves information from a knowledge base, and provides tailored responses. MCP-Cloud Weaver allows this agent to run continuously in the cloud, accessible by a customer service dashboard or chatbot interface, ensuring it's always ready to assist.

· Creating a code generation agent: An agent designed to assist developers with code writing can be hosted on MCP-Cloud Weaver. It can be integrated with IDEs like Cursor, providing real-time code suggestions and generation capabilities that remain persistent and responsive due to Temporal's durable execution.

· Building an AI-powered data analysis tool: A developer can create an agent that processes and analyzes large datasets. By deploying this agent to MCP-Cloud Weaver, it can perform these computationally intensive tasks in the cloud, with the results communicated back to the user or an application via the MCP protocol.

· Hosting interactive AI applications like an 'OpenAI Pizza App': This showcases how the platform can host specialized AI services that are accessible to any MCP client. Users can interact with the app through a web interface or other compatible clients to order or customize virtual pizzas, demonstrating the platform's ability to host engaging and functional AI experiences.

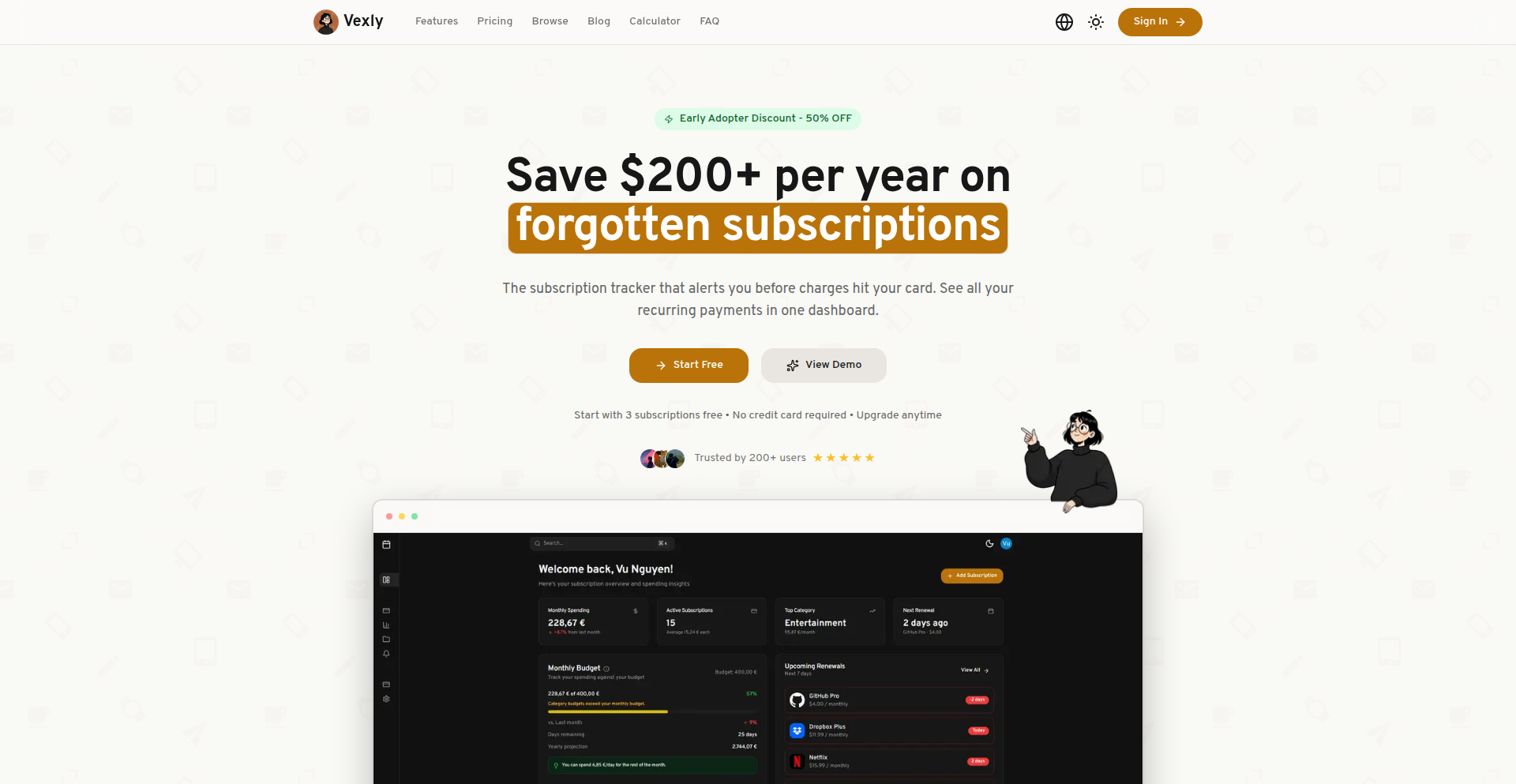

14

Subscription Tracker Pro

Author

hoangvu12

Description

A personal subscription-management website that gives a clear, centralized view of all your recurring digital services. It targets a common problem: losing track of subscriptions and paying unwanted charges as a result. Its main strength is a straightforward, user-centric design that helps users regain control over their digital spending.

Popularity

Points 5

Comments 3

What is this product?

This is a website designed to help you keep tabs on all the subscriptions you're paying for, like streaming services, software licenses, or online tools. The underlying technology likely involves a database to store your subscription details (name, cost, renewal date, etc.) and a frontend interface to display this information clearly. The innovation is in its simplicity and focus on solving a common pain point – the overwhelming nature of multiple subscriptions. It's a digital ledger for your recurring expenses.
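The write-up only guesses at the data model, so the following is a hypothetical schema, not the project's code. It sketches the core calculation such a ledger needs: normalizing monthly, quarterly, and yearly prices to a per-month figure before summing. `Decimal` is used so that currency amounts avoid binary floating-point rounding.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal

# Hypothetical schema: the project's actual data model is not described.
MONTHS_PER_CYCLE = {"monthly": 1, "quarterly": 3, "yearly": 12}


@dataclass
class Subscription:
    name: str
    cost: Decimal        # price charged once per billing cycle
    cycle: str           # one of the MONTHS_PER_CYCLE keys
    next_renewal: date
    category: str = "other"


def per_month(s: Subscription) -> Decimal:
    return s.cost / MONTHS_PER_CYCLE[s.cycle]


def monthly_total(subs: list) -> Decimal:
    """Sum of all subscriptions normalized to a monthly cost, rounded to cents."""
    return sum((per_month(s) for s in subs), Decimal("0")).quantize(Decimal("0.01"))


def by_category(subs: list) -> dict:
    """Monthly cost per category, for a simple spending breakdown."""
    totals: dict = {}
    for s in subs:
        totals[s.category] = totals.get(s.category, Decimal("0")) + per_month(s)
    return {k: v.quantize(Decimal("0.01")) for k, v in totals.items()}


subs = [
    Subscription("Streaming", Decimal("15.49"), "monthly", date(2025, 11, 3), "entertainment"),
    Subscription("IDE license", Decimal("120.00"), "yearly", date(2026, 2, 1), "software"),
    Subscription("Cloud backup", Decimal("30.00"), "quarterly", date(2025, 12, 15), "software"),
]
```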

How to use it?

Developers can use this project as a template or inspiration for building their own personal finance tools or for integrating subscription tracking into larger applications. For an end-user, it would typically involve signing up, manually entering subscription details, and then having a dashboard that shows everything at a glance. Integration might involve browser extensions to automatically detect subscriptions or APIs for syncing with financial services.

Product Core Function

· Subscription entry and categorization: Allows users to manually input details of each subscription, like service name, cost, billing cycle, and renewal date. This provides a foundational structure for tracking.

· Renewal date reminders: Notifies users about upcoming subscription renewals, giving them ample time to decide whether to continue or cancel. This directly prevents accidental recurring charges.

· Cost overview and analysis: Presents a summary of total monthly or annual subscription spending, helping users visualize their expenditures. This offers insight into where their money is going.

· Cancellation tracking: Helps users log when and how they canceled a subscription, creating a history and aiding in future decision-making. This builds a personal knowledge base for managing subscriptions efficiently.

· Data visualization: Potentially displays charts or graphs of subscription costs over time or by category. This makes complex spending patterns easier to understand at a glance.
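Renewal reminders come down to two small date calculations: finding subscriptions that renew within a reminder window, and advancing a renewal date by a billing period. The sketch below shows both under assumed names (`Renewal`, `due_soon`, `add_months` are hypothetical, not the project's code). Month arithmetic clamps to the last day of shorter months, so Jan 31 plus one month becomes Feb 28 (or Feb 29 in a leap year).

```python
import calendar
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Renewal:
    name: str
    next_renewal: date


def due_soon(subs: list, today: date, window_days: int = 7) -> list:
    """Subscriptions renewing between today and today + window_days (inclusive), soonest first."""
    horizon = today + timedelta(days=window_days)
    upcoming = [s for s in subs if today <= s.next_renewal <= horizon]
    return sorted(upcoming, key=lambda s: s.next_renewal)


def add_months(d: date, months: int) -> date:
    """Advance a date by whole months, clamping the day to the target month's length."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))
```

One design caveat: repeatedly advancing from a clamped date drifts (Jan 31, Feb 28, Mar 28), so storing the original billing day and computing each renewal from it avoids that.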

Product Usage Case

· A freelance developer juggling multiple SaaS tools for design, development, and project management can use this to see their monthly software spend and identify underutilized services to cut costs. It solves the problem of losing track of which tools they are actively using versus just paying for.

· A student managing various online learning platforms and entertainment subscriptions can get a clear picture of their expenses. This helps them budget effectively and avoid paying for services they rarely use during busy academic periods.

· Anyone who has experienced the frustration of an unexpected subscription renewal charge can use this to proactively manage their digital life, ensuring they only pay for what they truly value and use.

15

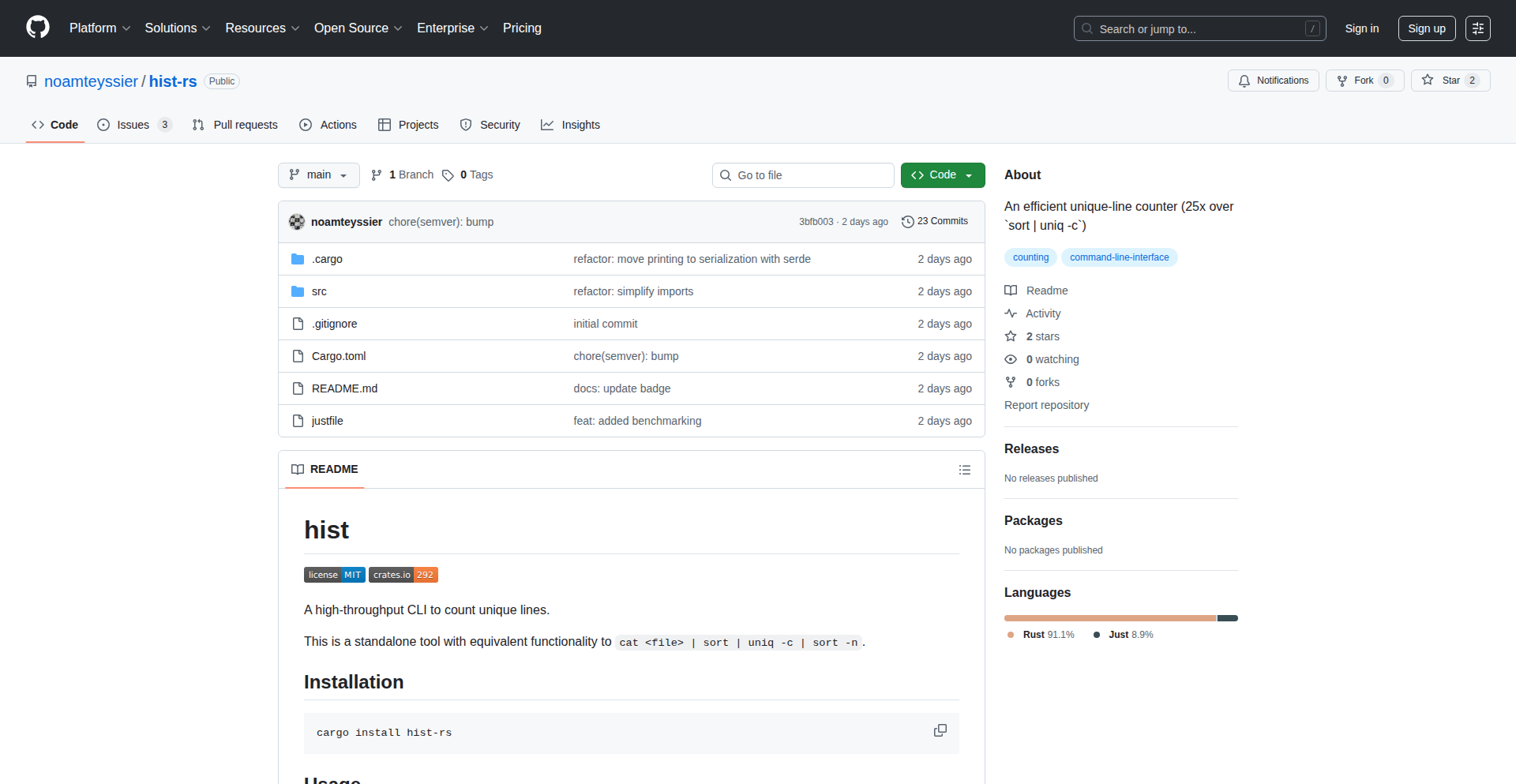

Hist: The Ultra-Fast Line Counter

Author

noamteyssier

Description

Hist is a high-performance command-line tool, written in Rust, for counting unique lines in files. Its author reports roughly 25x the throughput of the traditional `sort | uniq -c` pipeline, achieved through memory-allocation and hashing optimizations, so large files can be summarized in a fraction of the time. Hist also includes built-in regex filtering and table manipulation, making it a versatile tool for quick data analysis.

Popularity

Points 4

Comments 4

What is this product?

Hist is a command-line utility that counts the occurrences of each unique line in a file, a common summarization task usually handled with standard Unix tools. Its speed comes from memory-management techniques in its Rust implementation. First, arena allocation: instead of allocating memory separately for every unique line, Hist manages line storage in an arena. Second, the hashmap stores references to lines rather than full copies. Together these sharply reduce the number of memory operations and improve cache locality, which yields substantial speed gains. In practice, you get results on large datasets much faster and spend less time waiting on processing.
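Python cannot reproduce Rust's arena allocation or its speed, but the shape of the idea can be sketched: keep the whole input in one buffer and make each hash-map key a view into that buffer instead of a copied string. In the sketch below (not Hist's code), `memoryview` slices of a `bytes` object serve as the keys; read-only byte views are hashable and compare by content, so equal lines land on the same counter entry.

```python
from collections import Counter


def count_unique_lines(data: bytes) -> Counter:
    """Count occurrences of each line in `data` without copying line contents.

    The whole input lives in one buffer (a stand-in for an arena); each
    Counter key is a read-only memoryview slice pointing into that buffer.
    """
    buf = memoryview(data)
    counts: Counter = Counter()
    start, end_of_data = 0, len(data)
    while start < end_of_data:
        end = data.find(b"\n", start)
        if end == -1:  # last line without a trailing newline
            end = end_of_data
        counts[buf[start:end]] += 1  # hashed and compared by content, stored as a view
        start = end + 1
    return counts


sample = b"b\na\nb\nc\nb\na\n"
for line, n in count_unique_lines(sample).most_common():
    print(n, bytes(line).decode())  # prints "3 b", then "2 a", then "1 c"
```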

How to use it?

Hist drops into existing shell scripts and command-line workflows as a replacement for `sort | uniq -c`. Where you would run `sort data.txt | uniq -c` to count unique lines in `data.txt`, you can run `hist data.txt` instead. It also accepts piped input, so the output of other commands and data-processing tools can be fed straight into it. For more complex jobs, Hist supports integrated regex filtering, to count only lines that match a pattern, and table filtering for working with structured data. The result is a faster data-processing pipeline that handles larger files and performs filtering directly in the terminal.
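When adopting a faster replacement, it helps to have a plain reference to check its output against on small inputs. The sketch below computes the counts that `grep -E 'PATTERN' data.txt | sort | uniq -c` would report, ordered by descending count; it does not reproduce Hist's own flags or output format, and Python's regex dialect differs slightly from `grep -E`. `count_lines` is a hypothetical name.

```python
import re
from collections import Counter
from typing import Iterable, List, Optional, Tuple


def count_lines(lines: Iterable[str], pattern: Optional[str] = None) -> List[Tuple[str, int]]:
    """Count unique lines, optionally keeping only those matching `pattern`."""
    regex = re.compile(pattern) if pattern else None
    stripped = (line.rstrip("\n") for line in lines)
    counts = Counter(line for line in stripped if regex is None or regex.search(line))
    return counts.most_common()  # descending count; ties keep first-seen order


log = ["GET /a\n", "POST /b\n", "GET /a\n", "GET /c\n", "GET /a\n"]
```

With a file, `count_lines(open("data.txt"), r"^GET")` gives the same counts as the filtered Unix pipeline, which makes spot-checking a new tool's results straightforward.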

Product Core Function

· Ultra-fast unique line counting: Achieves significant performance gains over traditional methods by optimizing memory allocation and data handling, allowing for rapid processing of large files. This is valuable for anyone working with big data or needing quick summaries of text files.

· Optimized memory management (arena allocation, reference storage): Reduces the number of memory operations and improves cache efficiency, leading to a noticeable speed boost and less system strain. This benefits developers by providing faster execution times and more efficient resource utilization.

· Integrated regex filtering: Enables counting of unique lines that match specific regular expression patterns, adding a layer of precision to data analysis. This is useful for extracting and counting specific types of data entries within a larger dataset.